175 results for "Cache optimization" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

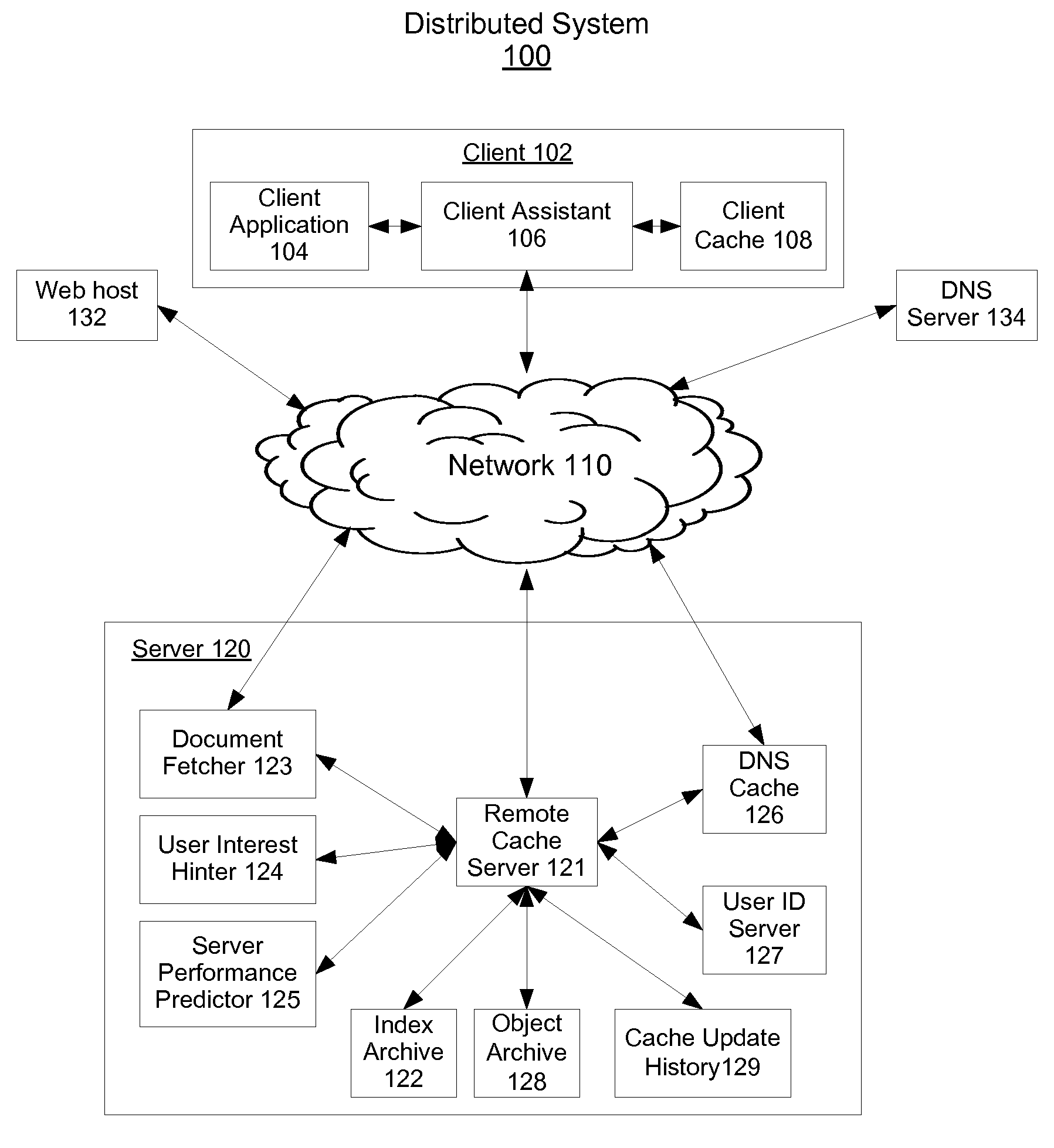

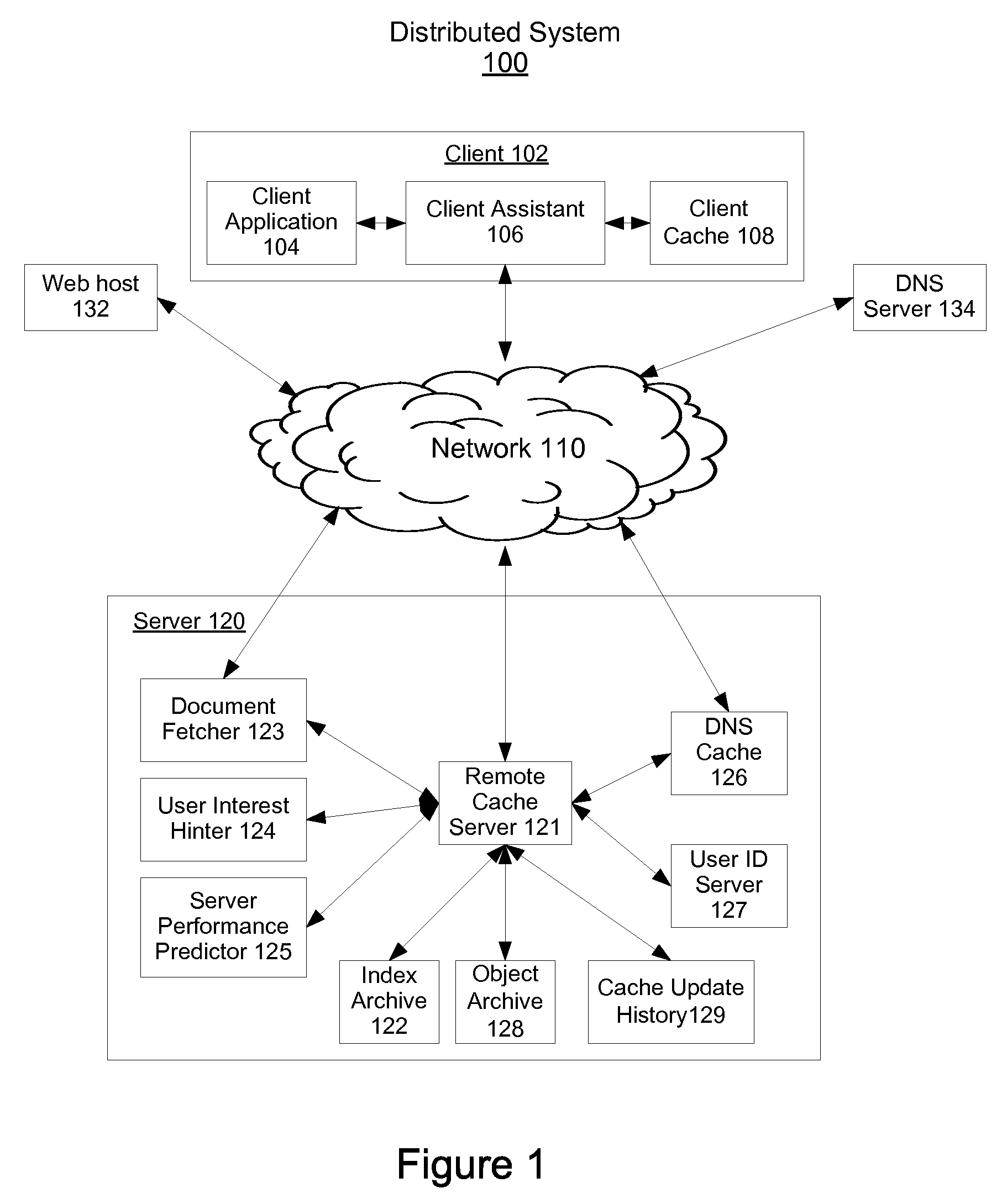

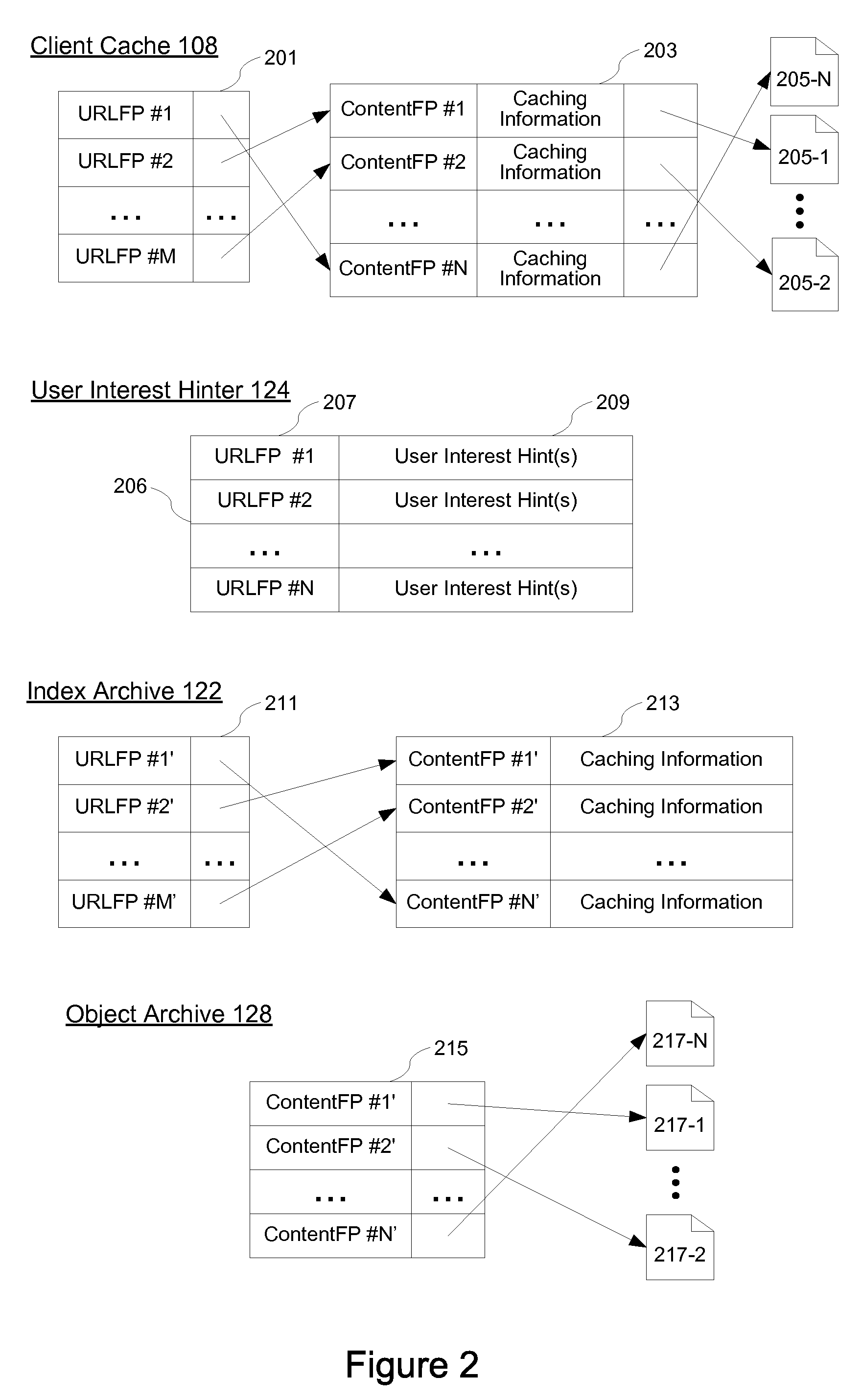

Systems and methods for cache optimization

Active | US8065275B2 | Digital data information retrieval; Digital data processing details; Cache optimization; Documentation

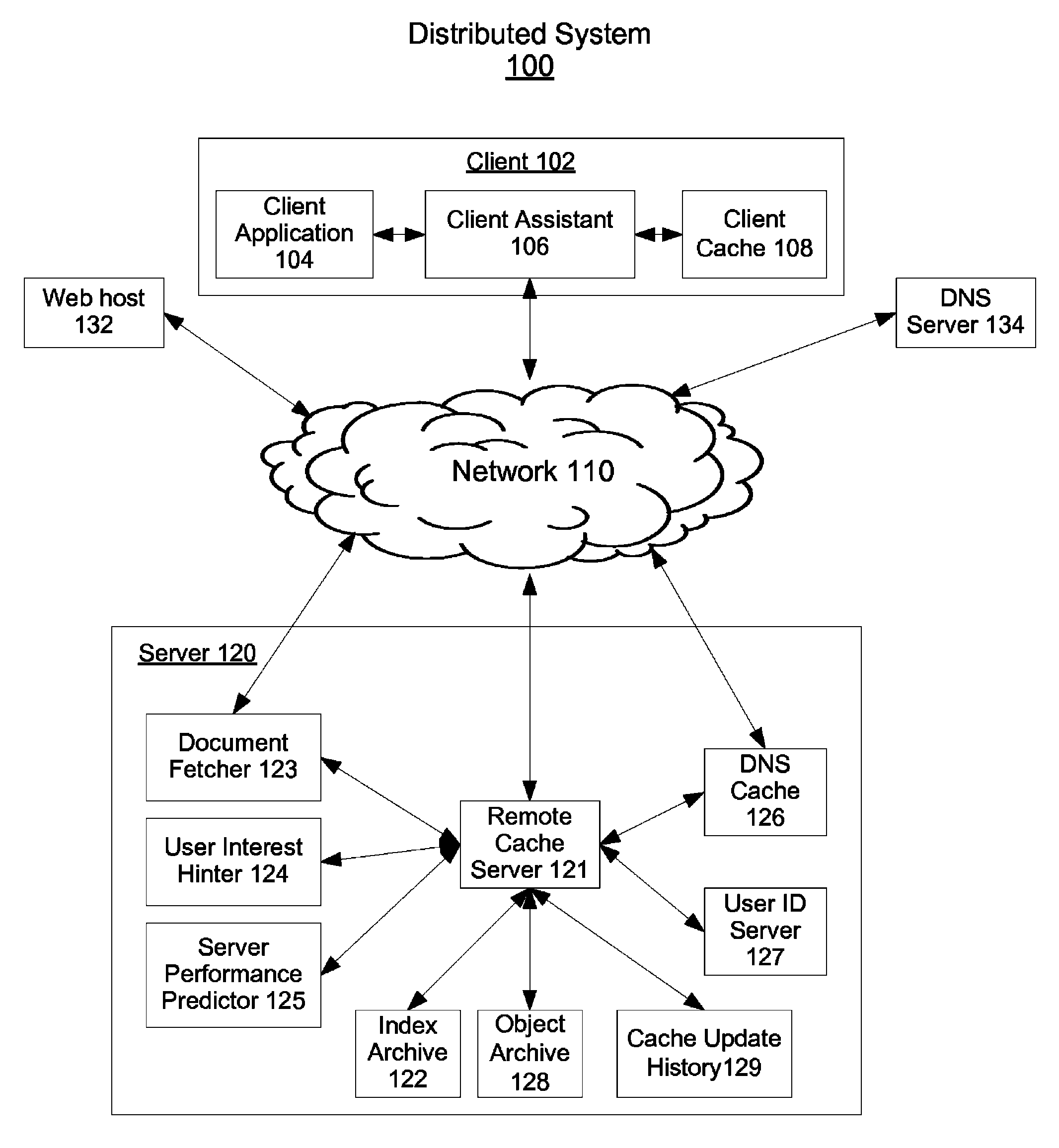

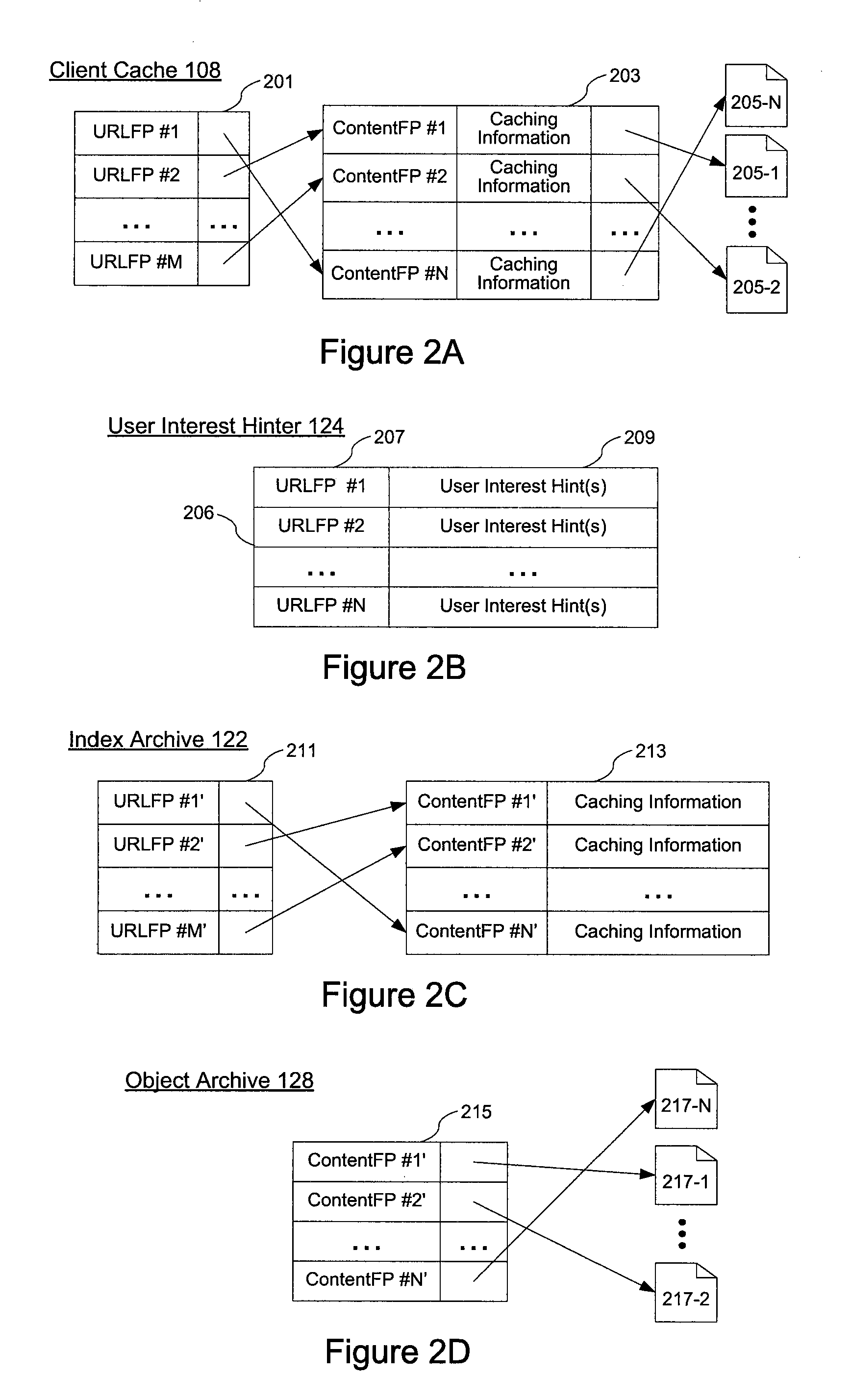

A server computer identifies a cached document and its associated cache update history in response to a request or in anticipation of a request from a client computer. The server computer analyzes the document's cache update history to determine if the cached document is de facto fresh. If the cached document is de facto fresh, the server computer then transmits the cached document to the client computer. Independently, the server computer also fetches an instance of the document from another source like a web host and updates the document's cache update history using the fetched instance of the document.

Owner:GOOGLE LLC
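
The abstract does not say how the cache update history is analyzed. As a minimal sketch, assuming the history records the times at which independent re-fetches observed new content, one plausible rule treats the cached copy as de facto fresh while the time since the last fetch is shorter than the median interval between observed changes. `CacheUpdateHistory` and `is_de_facto_fresh` are hypothetical names, and the median rule is an assumption, not Google's method.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from statistics import median


@dataclass
class CacheUpdateHistory:
    """Hypothetical per-document history stored next to the cached copy."""
    change_times: list[float] = field(default_factory=list)  # fetch times that saw new content
    last_fetch_time: float | None = None
    last_content_hash: str | None = None

    def record_fetch(self, now: float, content_hash: str) -> None:
        """Fold an independently fetched instance of the document into the history."""
        if self.last_content_hash is not None and content_hash != self.last_content_hash:
            self.change_times.append(now)
        self.last_content_hash = content_hash
        self.last_fetch_time = now


def is_de_facto_fresh(history: CacheUpdateHistory, now: float) -> bool:
    """Assumed rule: fresh if the copy is younger than the median change interval."""
    if history.last_fetch_time is None or len(history.change_times) < 2:
        return False  # too little history to estimate a change rate; stay conservative
    gaps = [b - a for a, b in zip(history.change_times, history.change_times[1:])]
    return now - history.last_fetch_time < median(gaps)
```

For example, after fetches at t = 0, 100, 200 and 300 that each returned different content, the copy counts as fresh at t = 350 but not at t = 450.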

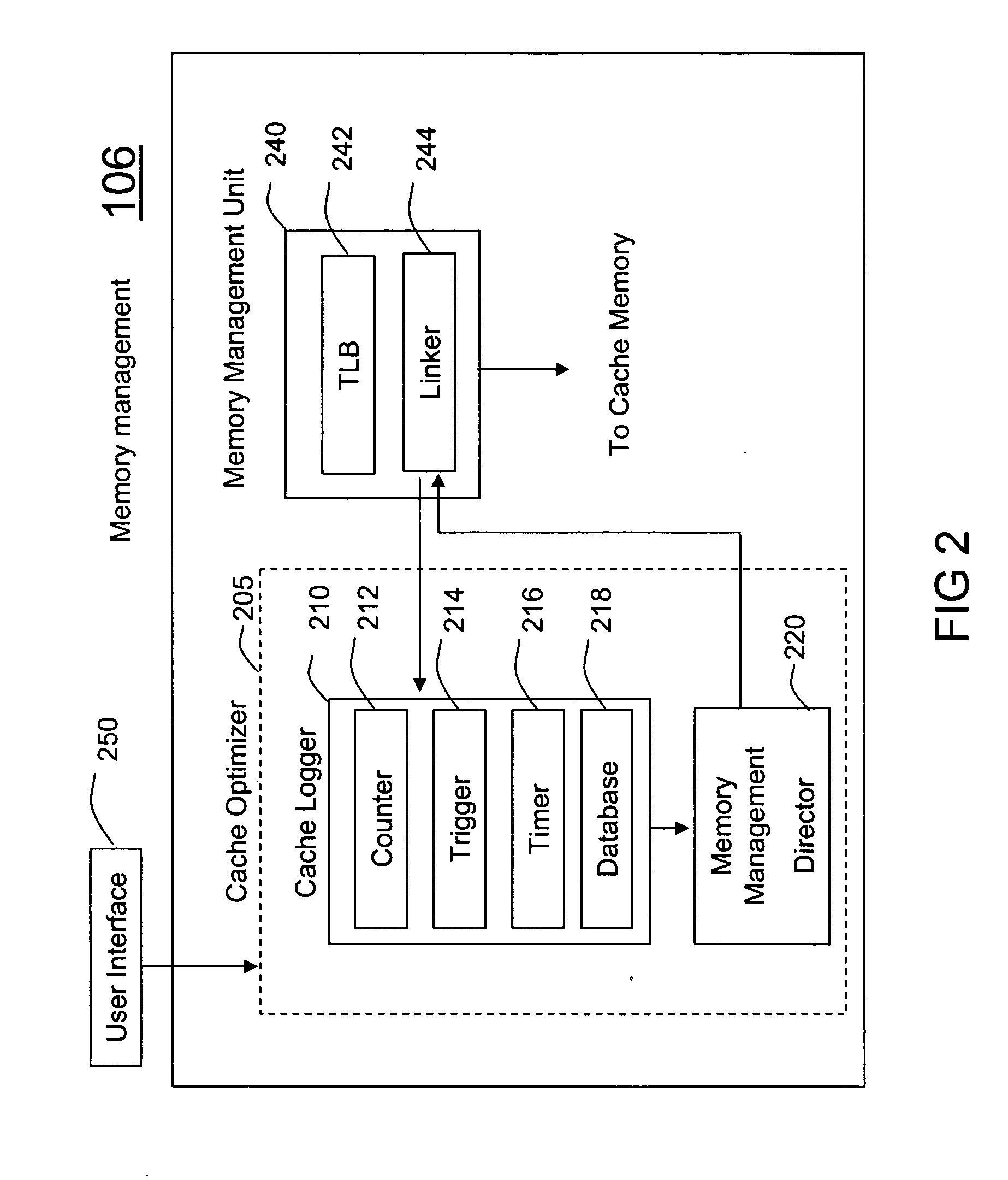

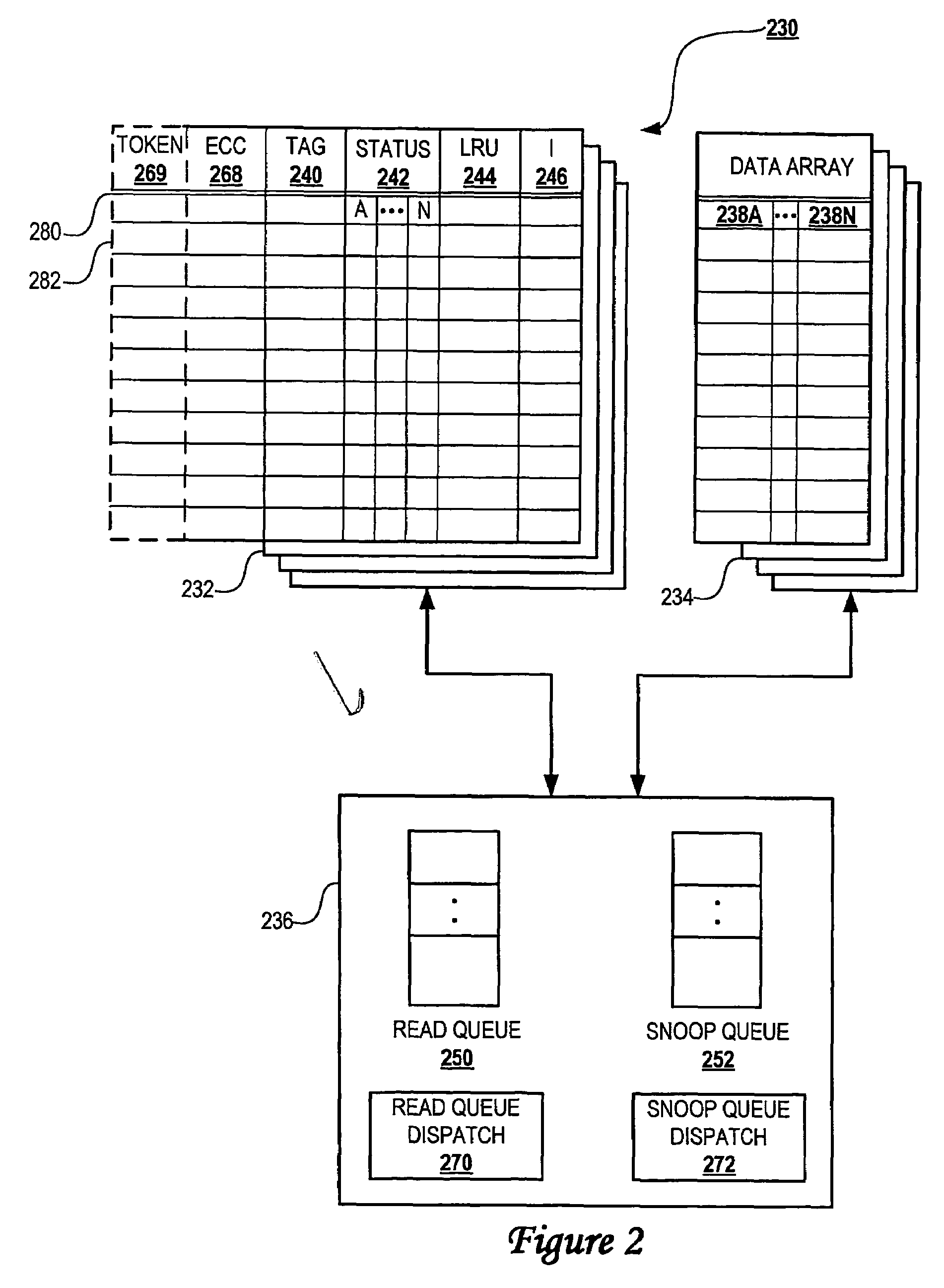

Method and system for run-time cache logging

Inactive | US20070150881A1 | Increase height; Maximize cache locality compile time; Error detection/correction; Specific program execution arrangements; Cache optimization; Parallel computing

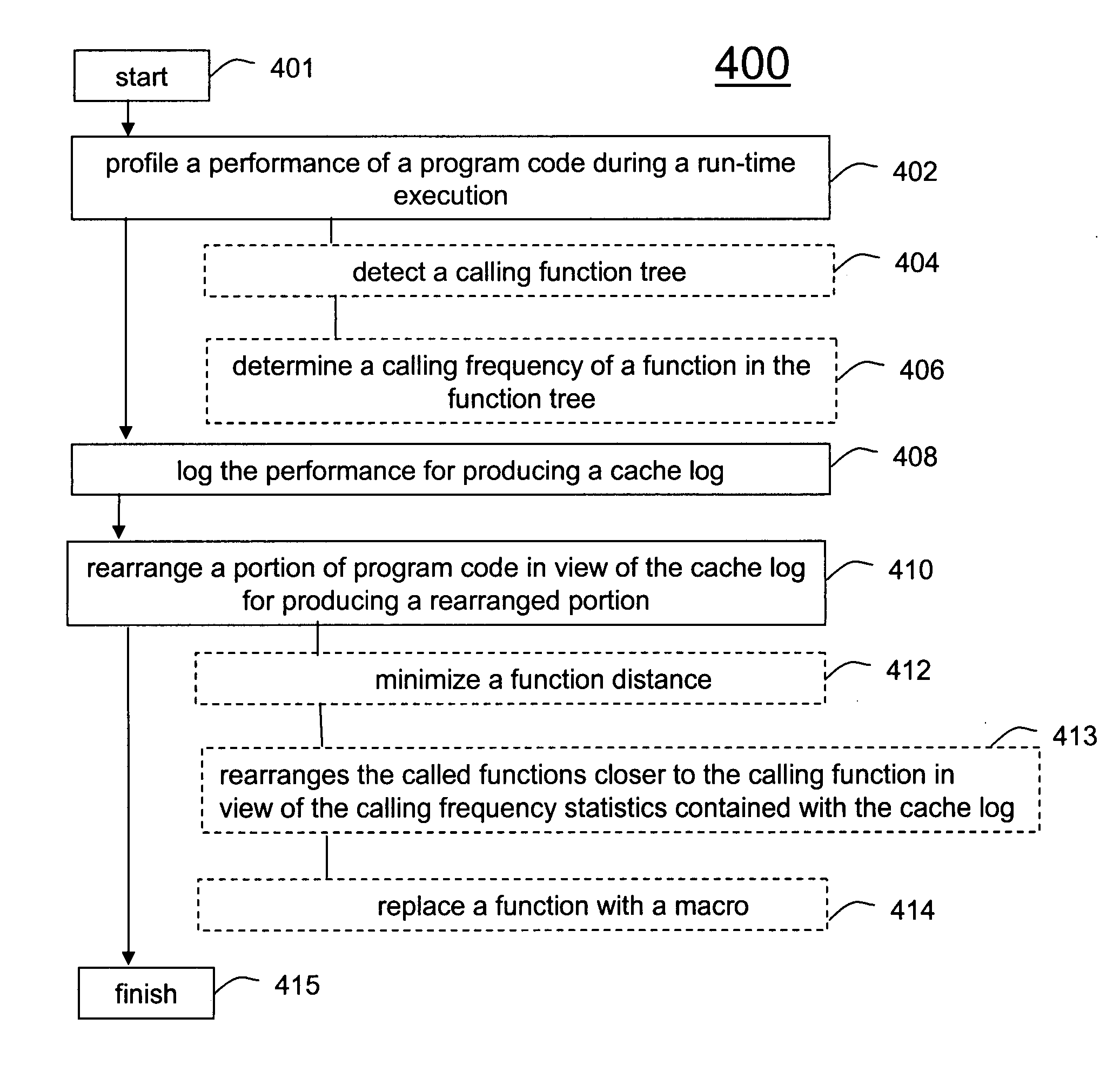

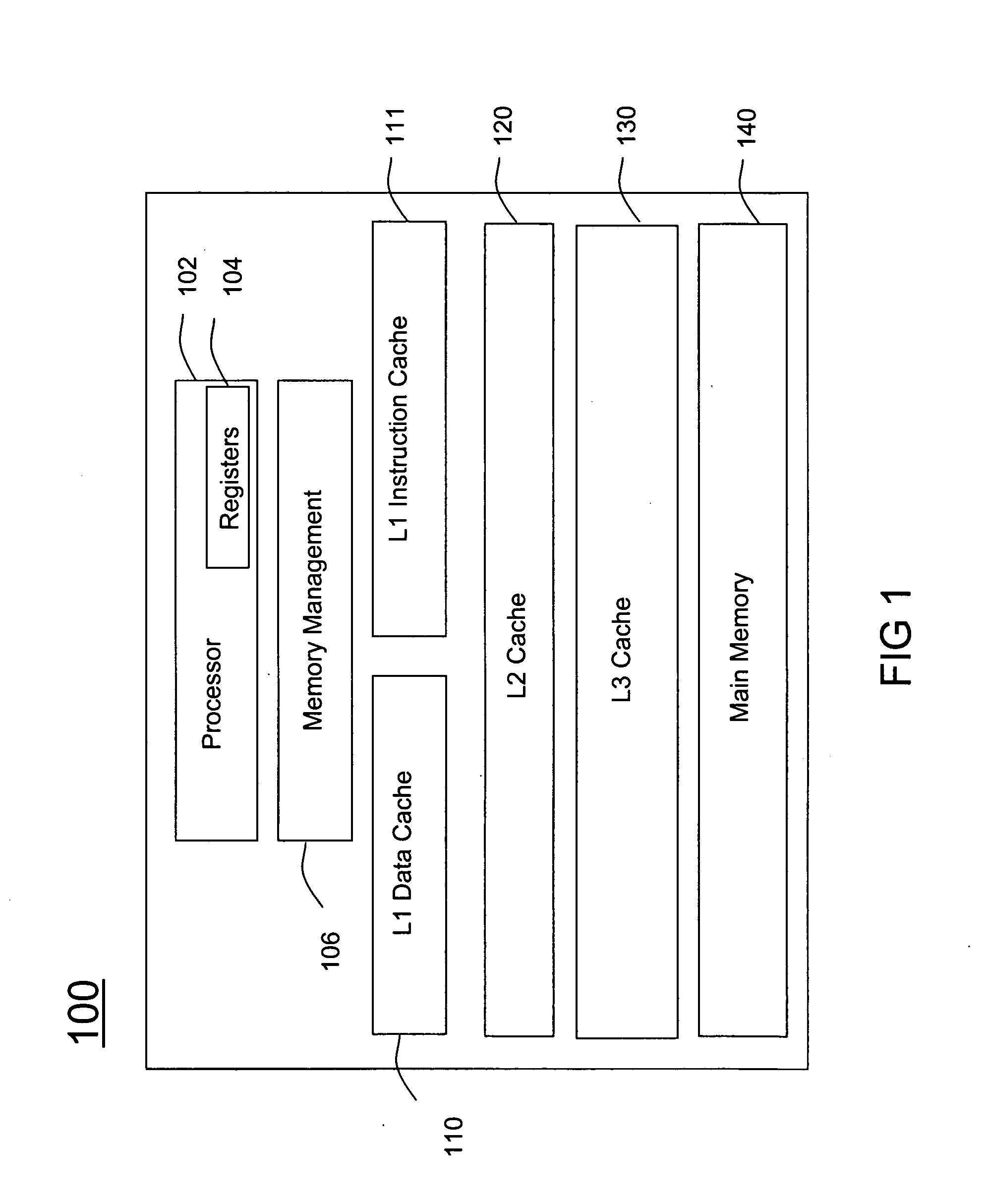

A method (400) and system (106) are provided for run-time cache optimization. The method includes profiling (402) the performance of program code during run-time execution, logging (408) that performance to produce a cache log, and rearranging (410) a portion of the program code in view of the cache log to produce a rearranged portion. The rearranged portion is supplied to a memory management unit (240) that manages at least one cache memory (110-140). The cache log can be collected during real-time operation of a communication device and fed back to a linking process (244) to maximize cache locality at compile time. The method further includes loading a saved profile corresponding to a run-time operating mode and reprogramming a new code image associated with the saved profile.

Owner:MOTOROLA INC
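
The abstract does not detail how the cache log is fed back to the linking process. One common approach, shown here as a minimal sketch and not as Motorola's method, counts how often each function appears in a run-time trace and emits a link order that places hot functions contiguously, which tends to improve instruction-cache locality. `build_cache_log` and `link_order` are hypothetical names.

```python
from collections import Counter


def build_cache_log(trace):
    """Count how often each function shows up in a run-time execution trace."""
    return Counter(trace)


def link_order(functions, cache_log):
    """Order functions hottest-first so frequently executed code is packed together.

    Functions that never ran keep their original relative order at the end.
    """
    position = {name: i for i, name in enumerate(functions)}
    return sorted(functions, key=lambda f: (-cache_log.get(f, 0), position[f]))
```

With the trace `["parse", "render", "parse", "log", "parse", "render"]` and the original order `["init", "parse", "render", "shutdown", "log"]`, the sketch yields `["parse", "render", "log", "init", "shutdown"]`.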

Systems and Methods for Cache Optimization

Active | US20080201331A1 | Digital data information retrieval; Digital data processing details; Cache optimization; Document preparation

A server computer identifies a cached document and its associated cache update history in response to a request or in anticipation of a request from a client computer. The server computer analyzes the document's cache update history to determine if the cached document is de facto fresh. If the cached document is de facto fresh, the server computer then transmits the cached document to the client computer. Independently, the server computer also fetches an instance of the document from another source like a web host and updates the document's cache update history using the fetched instance of the document.

Owner:GOOGLE LLC

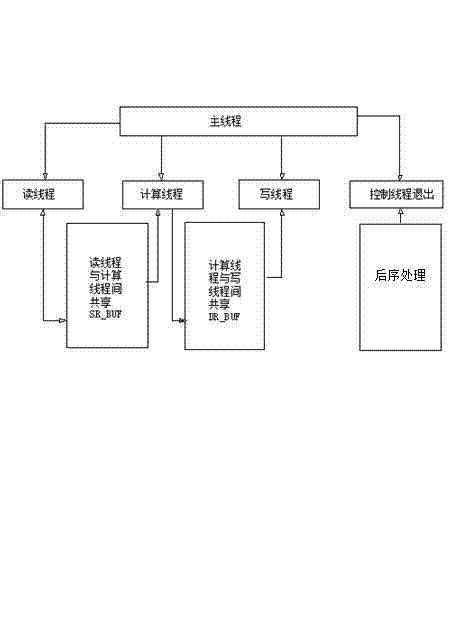

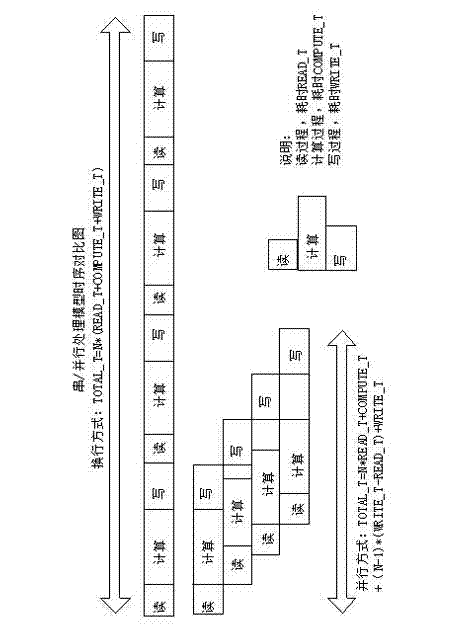

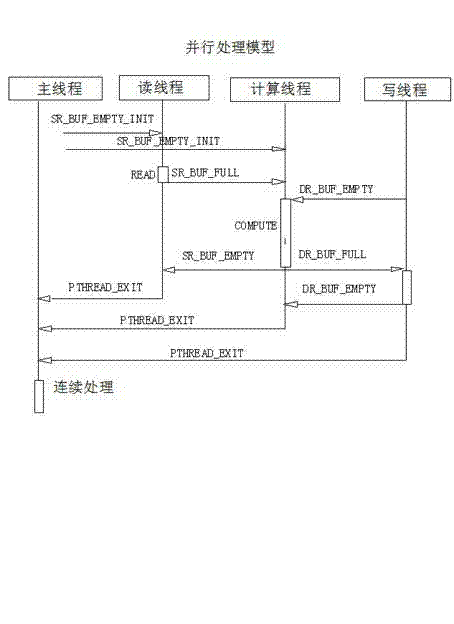

Software performance optimization method based on central processing unit (CPU) multi-core platform

Active | CN103049245A | Improve performance; Reduce running time; Resource allocation; Concurrent instruction execution; Cache optimization; Resource utilization

The invention provides a software performance optimization method based on a CPU multi-core platform. The method has three stages: software characteristic analysis, formulation of a parallel optimization scheme, and implementation and iterative tuning of that scheme. Specifically, it covers application software characteristic analysis, serial algorithm analysis, CPU multi-process/multi-thread parallel algorithm design, multi-buffer design, design of inter-thread communication modes, memory access optimization, cache optimization, processor vectorization optimization, mathematical function library optimization, and the like. The method is widely applicable wherever multi-threaded parallel processing is required. It guides software developers to parallelize existing software with multiple threads quickly and efficiently, with short development cycles and low development costs. It optimizes the software's use of system resources, overlaps (mutually masks) data reads, computation, and data write-back, shortens software running time as much as possible, significantly improves hardware resource utilization, and enhances both computing efficiency and overall software performance.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

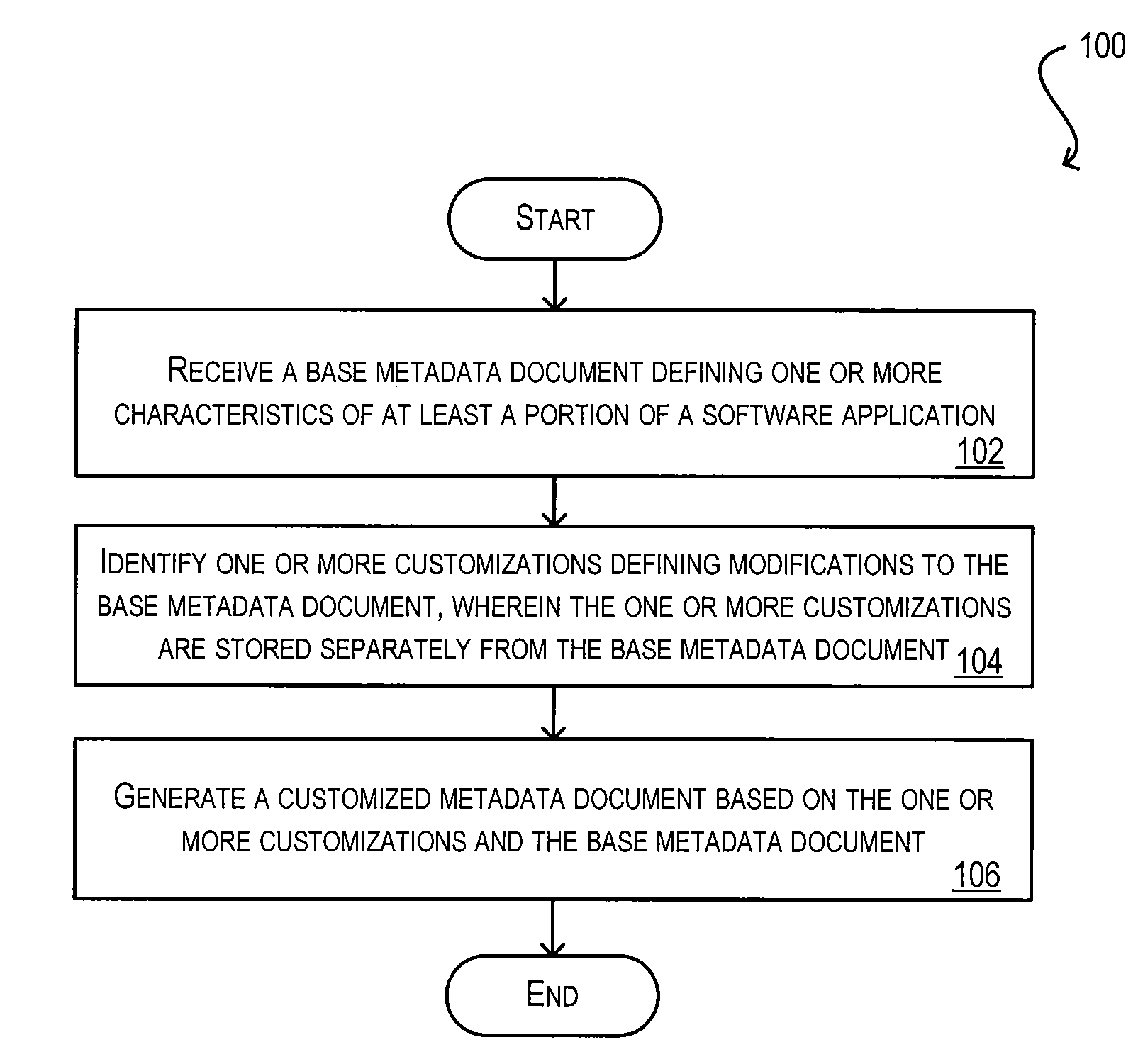

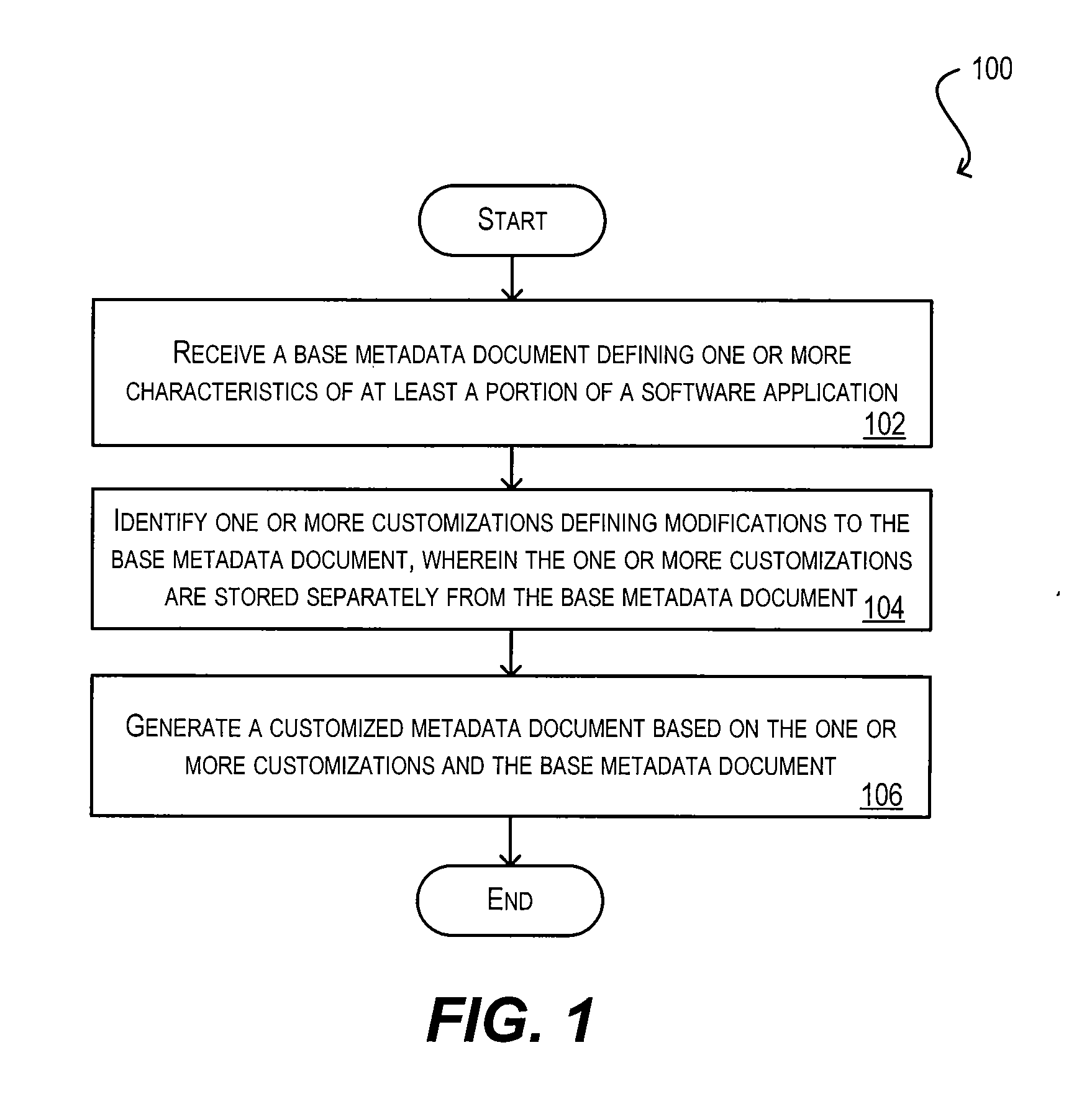

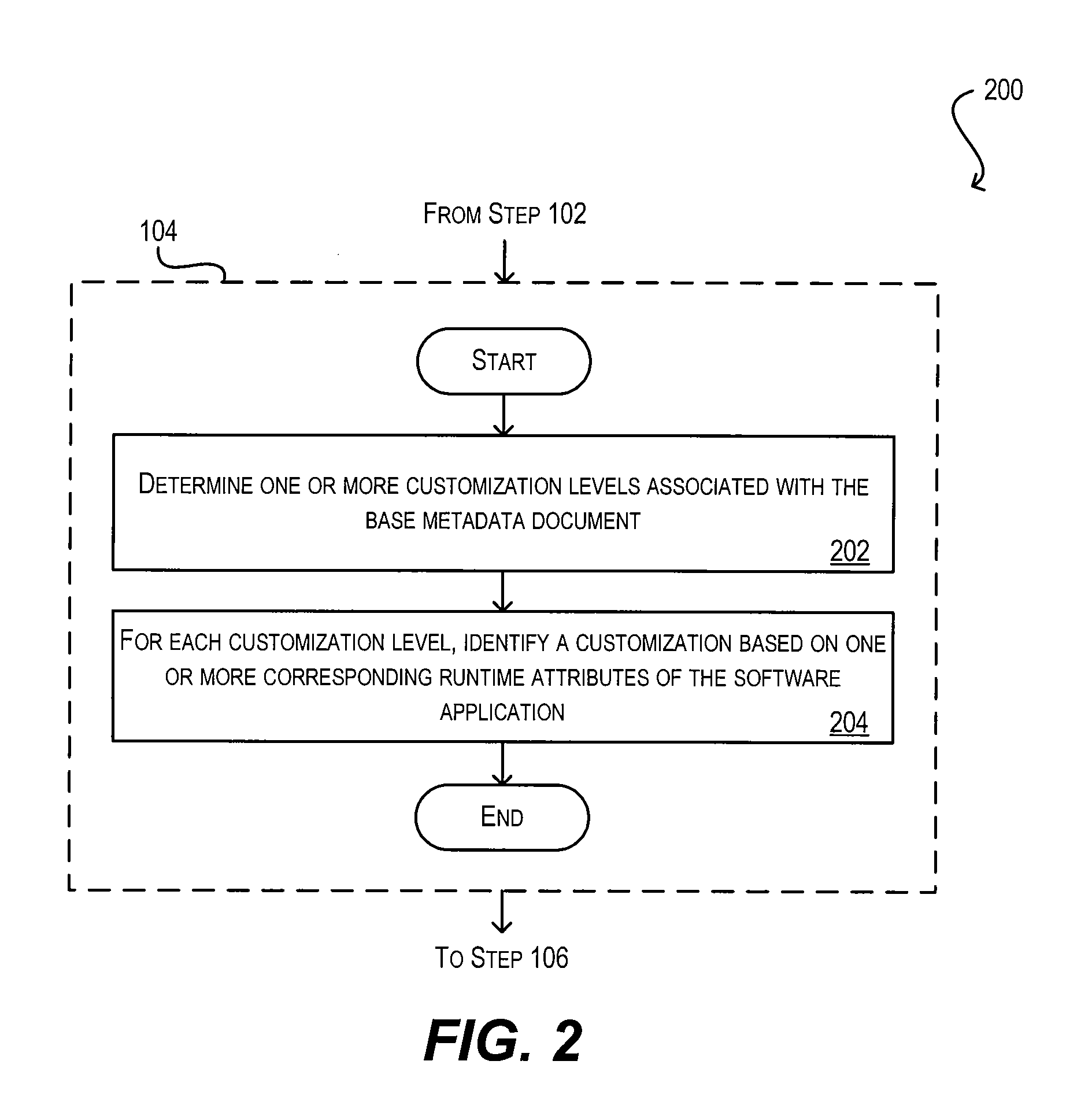

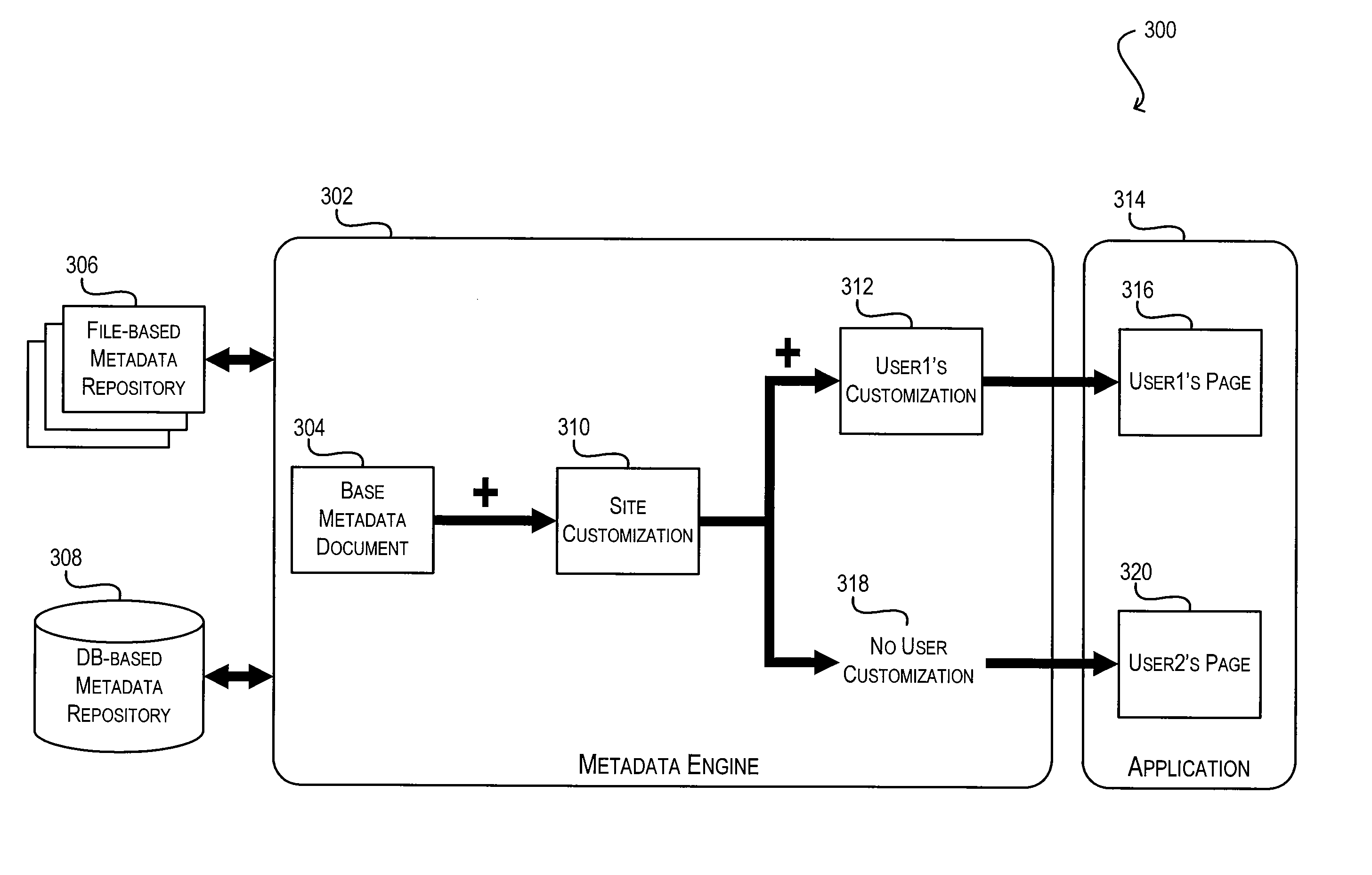

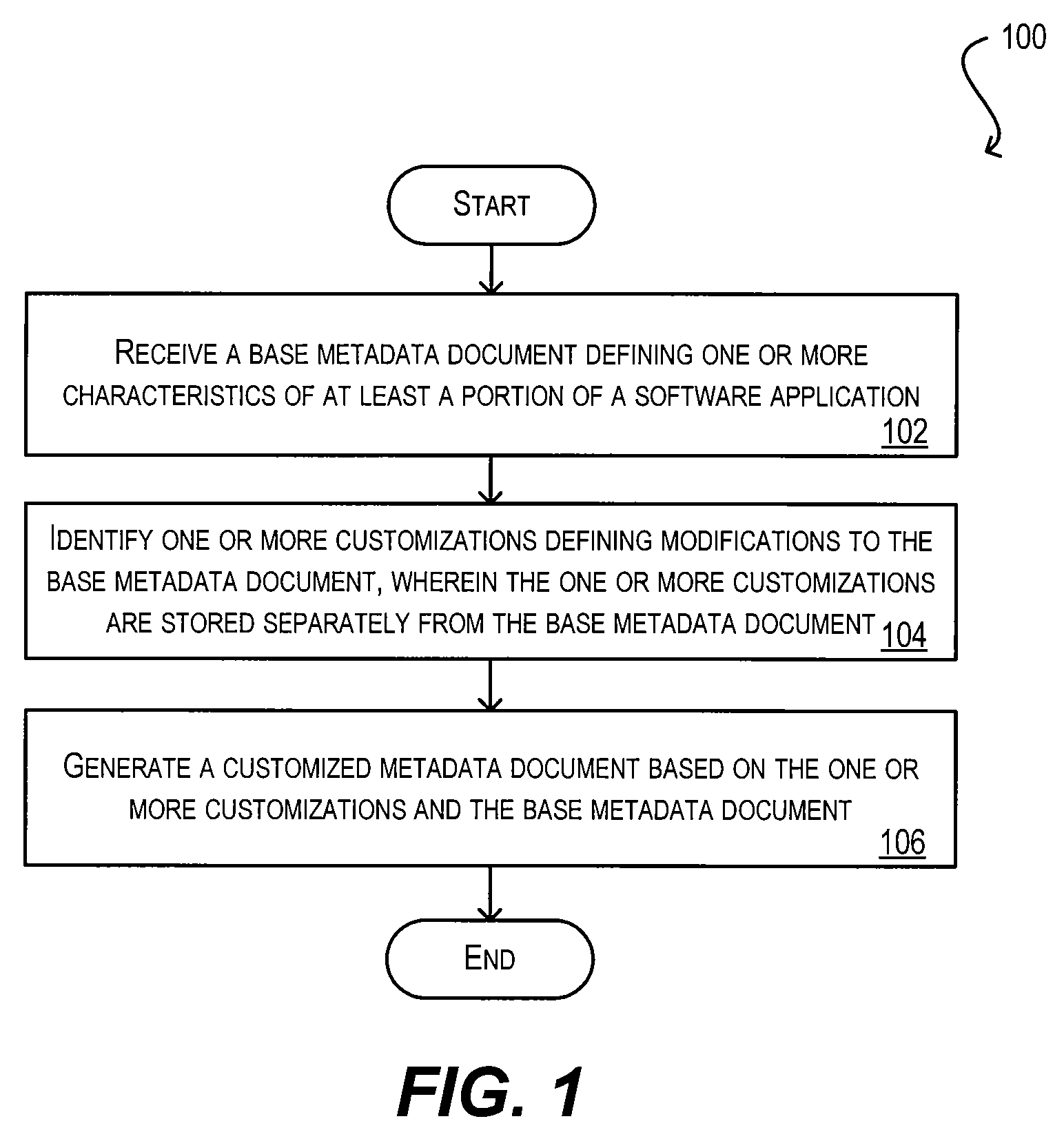

Customization creation and update for multi-layer XML customization

Active | US20090204943A1 | Efficiently provide; Efficiently provided; Version control; Specific program execution arrangements; Cache optimization; Application software

Embodiments of the present invention provide techniques for customizing aspects of a metadata-driven software application. In particular, embodiments of the present invention provide (1) a self-contained metadata engine for generating customized metadata documents from base metadata documents and customizations; (2) a customization syntax for defining customizations; (3) a customization creation / update component for creating and updating customizations; (4) a customization restriction mechanism for restricting the creation of new customizations by specific users or groups of users; and (5) memory and caching optimizations for optimizing the storage and lookup of customized metadata documents.

Owner:ORACLE INT CORP
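
Item (5) mentions caching customized metadata documents. As a minimal sketch, assuming customizations are flat key overrides applied layer by layer (for example site, then user), the merged document can be cached under the document ID plus the layer values that produced it, so all requests sharing a context reuse one merged copy. The class name, layer names and data shapes are assumptions, not Oracle's format.

```python
from copy import deepcopy


class CustomizedDocumentCache:
    """Merge base metadata with per-layer customizations and cache the result."""

    def __init__(self, base_docs, customizations, layer_order):
        self.base_docs = base_docs            # doc_id -> dict of base metadata
        self.customizations = customizations  # (doc_id, layer, layer_value) -> dict of overrides
        self.layer_order = layer_order        # e.g. ["site", "user"], least to most specific
        self._cache = {}
        self.misses = 0

    def get(self, doc_id, context):
        key = (doc_id,) + tuple(context.get(layer) for layer in self.layer_order)
        if key not in self._cache:
            self.misses += 1
            doc = deepcopy(self.base_docs[doc_id])  # never mutate the shared base document
            for layer in self.layer_order:
                doc.update(self.customizations.get((doc_id, layer, context.get(layer)), {}))
            self._cache[key] = doc
        return self._cache[key]
```

Callers receive the shared cached dict, so it should be treated as read-only; sharing one copy per context is what keeps memory use down in this sketch.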

Caching and memory optimizations for multi-layer XML customization

Active | US20090204629A1 | Efficiently provide; Efficiently provided; Digital data information retrieval; Digital data processing details; Cache optimization; Paper document

Embodiments of the present invention provide techniques for customizing aspects of a metadata-driven software application. In particular, embodiments of the present invention provide (1) a self-contained metadata engine for generating customized metadata documents from base metadata documents and customizations; (2) a customization syntax for defining customizations; (3) a customization creation / update component for creating and updating customizations; (4) a customization restriction mechanism for restricting the creation of new customizations by specific users or groups of users; and (5) memory and caching optimizations for optimizing the storage and lookup of customized metadata documents.

Owner:ORACLE INT CORP

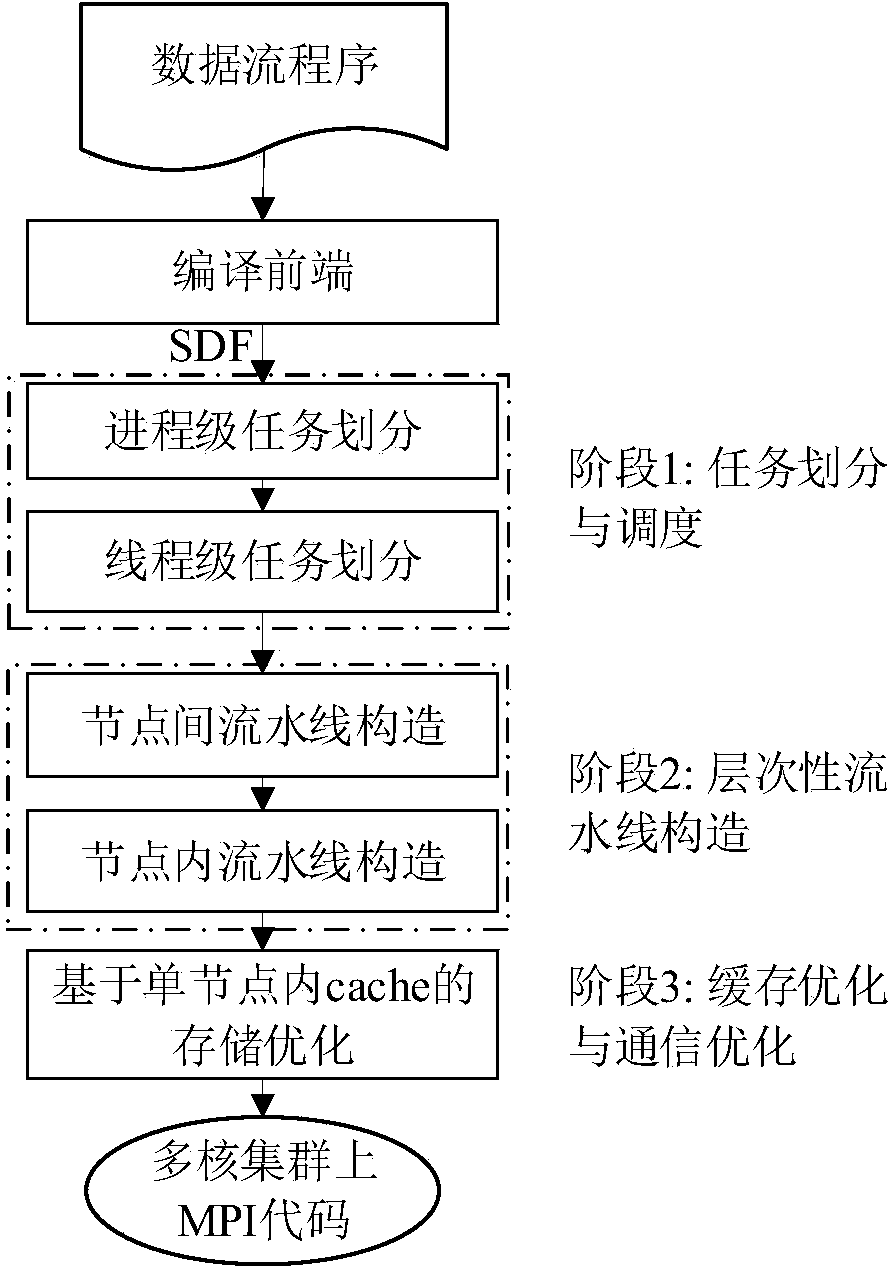

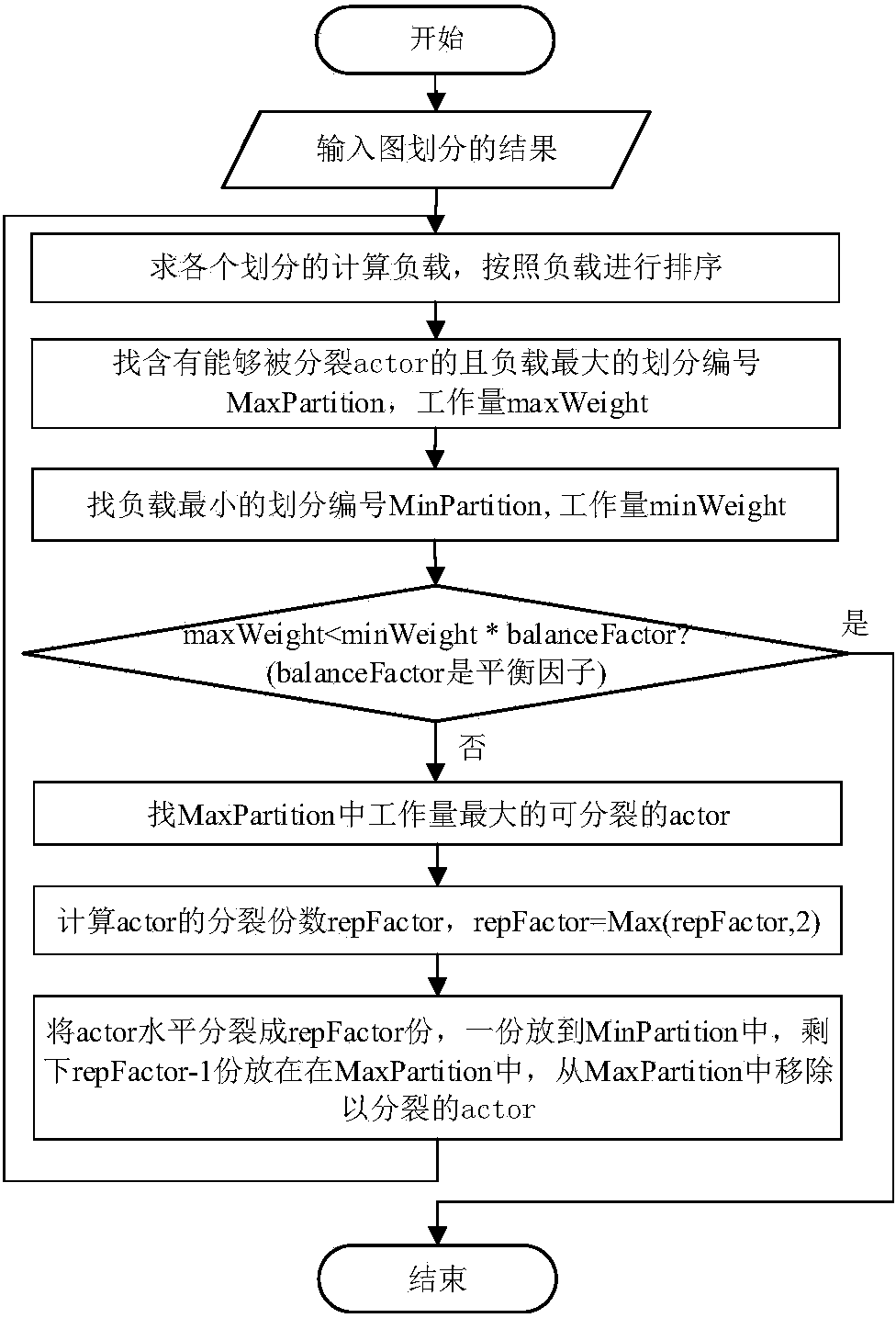

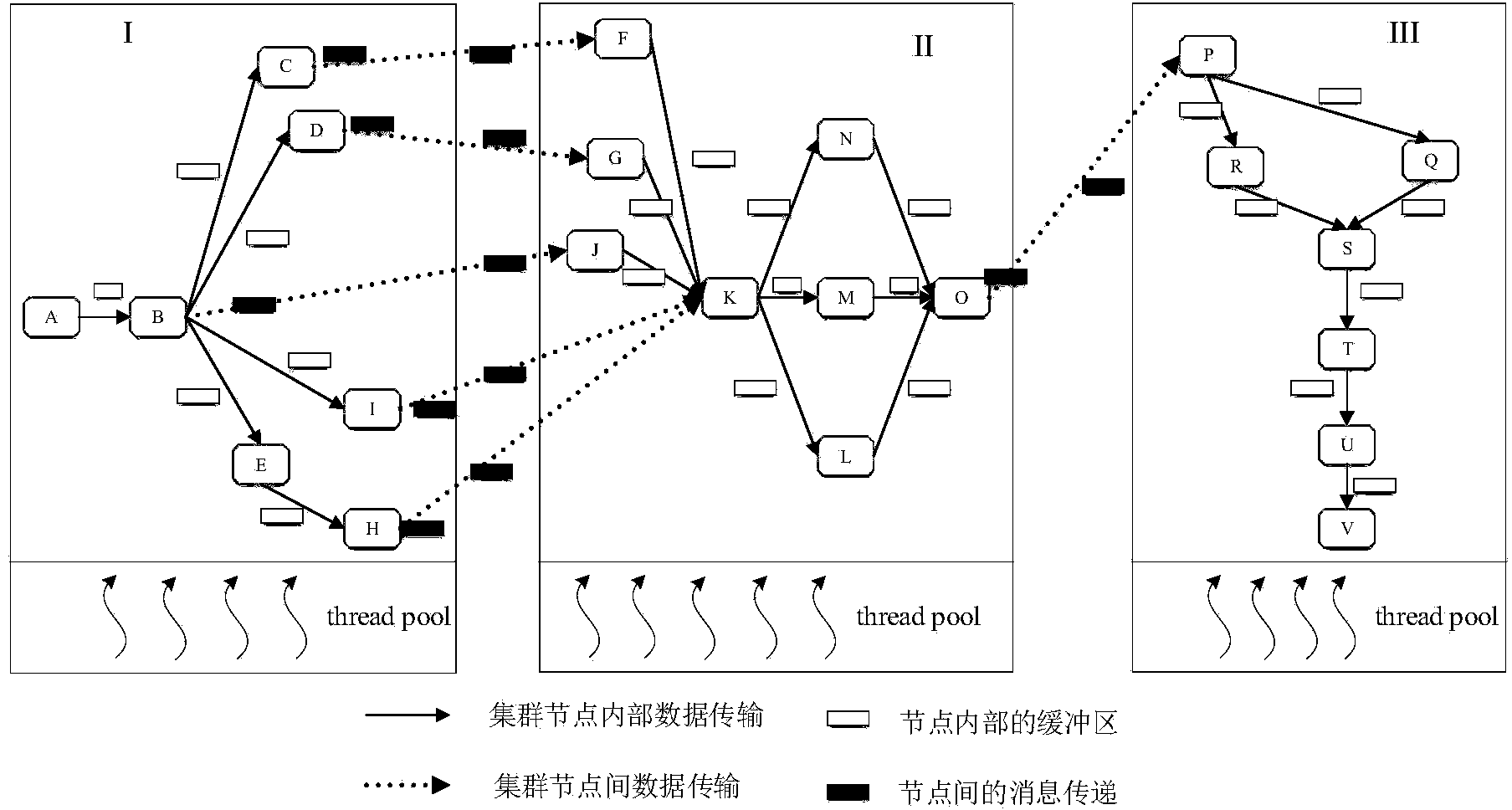

Data flow compilation optimization method oriented to multi-core cluster

Active | CN103970580A | Implementing a three-level optimization process; Improve execution performance; Resource allocation; Memory systems; Cache optimization; Data stream

The invention discloses a data flow compilation optimization method for multi-core cluster systems. The method comprises the following steps: determining task partitioning and scheduling, that is, the mapping of computation tasks to processing cores; according to the partitioning and scheduling results, constructing a hierarchical pipeline schedule with pipeline scheduling tables both among cluster nodes and among the cores within each node; and performing cache optimization according to the structural characteristics of the multi-core processor, the communication between cluster nodes, and the execution behavior of the data flow program on the multi-core processor. The method combines the data flow program with optimization techniques tied to the system architecture, fully exploiting the high load balance and high parallelism of mixed synchronous/asynchronous pipelined code on a multi-core cluster, and optimizes the program's cache accesses and communication according to the cluster's cache and communication patterns. As a result, program execution performance improves and execution time is reduced.

Owner:HUAZHONG UNIV OF SCI & TECH

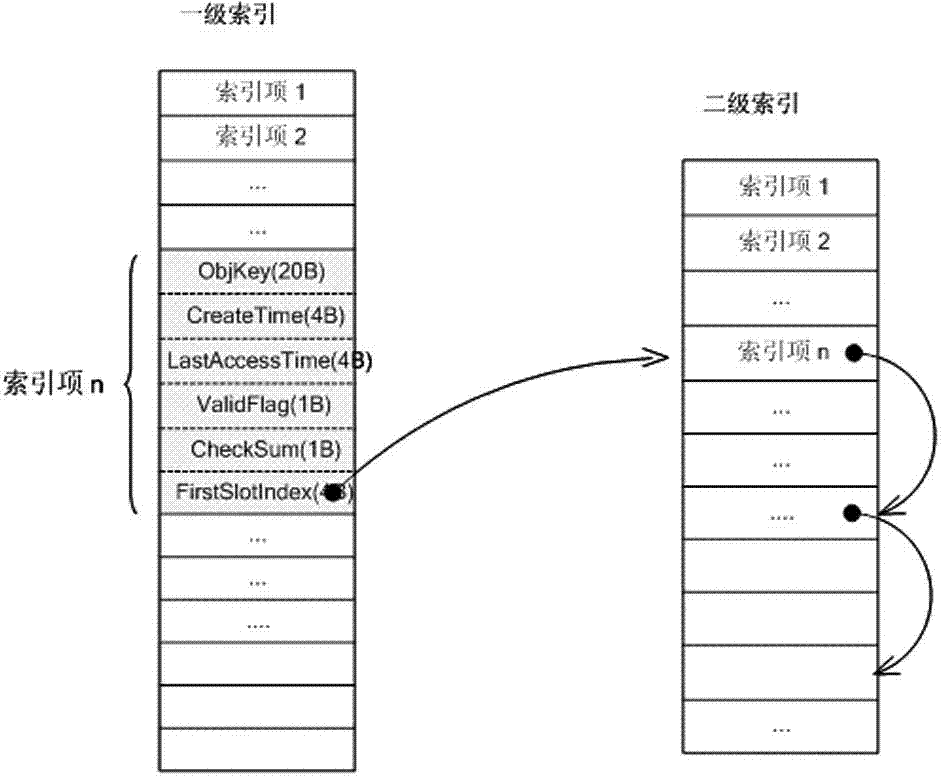

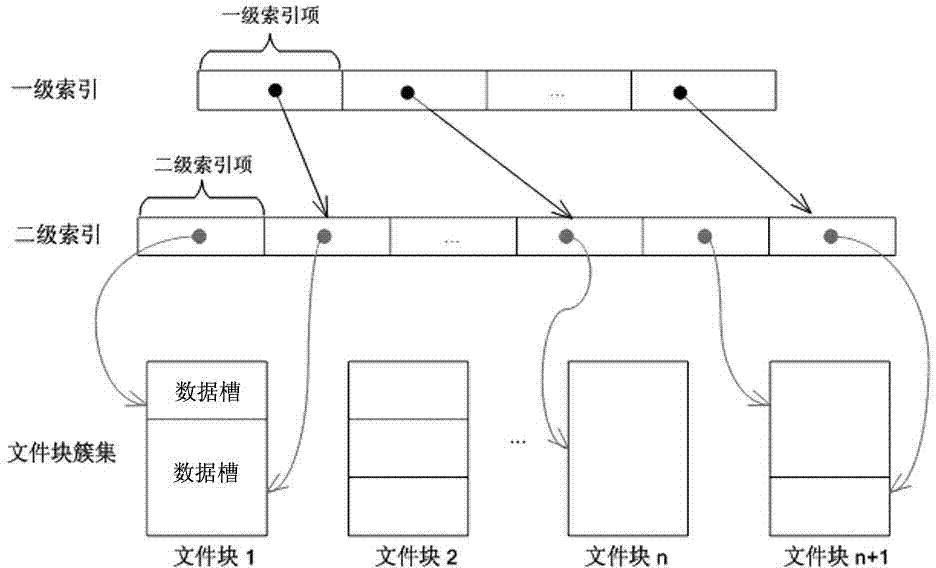

Object caching method based on disk

Active | CN103678638A | Take advantage of; Improve reading and writing efficiency; Memory addressing/allocation/relocation; Special data processing applications; Cache optimization; Failure mechanism

The invention relates to the technical field of disk caching, in particular to a disk-based object caching method. The file storage space is divided to build a file storage structure with a secondary index, and on that structure the method implements adding objects to the disk cache, retrieving and deleting cached objects, a cache invalidation mechanism, and a cache optimization and reorganization process. Object data is stored in fixed-size chunks: multiple small objects are merged into one chunk, while a large object is divided into several object blocks stored in separate chunks. Objects of any size and type can therefore be cached, and caching efficiency is improved.

Owner:XIAMEN YAXON NETWORKS CO LTD
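
The chunk layout described above can be sketched as follows, assuming a hypothetical chunk size and an in-memory list of `bytearray`s standing in for fixed-size regions of the cache file. The index maps each key to a list of `(chunk_id, offset, length)` extents, giving a two-level lookup from key to extents to chunk. Expiration and space reclamation are deliberately left out; the names are illustrative, not the patent's.

```python
CHUNK_SIZE = 4096  # hypothetical fixed chunk size in bytes


class ChunkedObjectCache:
    """Toy object cache that stores data in fixed-size chunks."""

    def __init__(self, chunk_size=CHUNK_SIZE):
        self.chunk_size = chunk_size
        self.chunks = []         # stand-ins for fixed-size regions of a cache file
        self.index = {}          # key -> list of (chunk_id, offset, length)
        self._open_chunk = None  # chunk currently being filled with small objects

    def _new_chunk(self):
        self.chunks.append(bytearray())
        return len(self.chunks) - 1

    def put(self, key, data: bytes):
        extents = []
        if len(data) < self.chunk_size:
            # Small object: pack it into the shared open chunk if it still fits.
            if (self._open_chunk is None
                    or len(self.chunks[self._open_chunk]) + len(data) > self.chunk_size):
                self._open_chunk = self._new_chunk()
            chunk = self.chunks[self._open_chunk]
            extents.append((self._open_chunk, len(chunk), len(data)))
            chunk.extend(data)
        else:
            # Large object: split into chunk-sized blocks, one chunk per block.
            for start in range(0, len(data), self.chunk_size):
                block = data[start:start + self.chunk_size]
                cid = self._new_chunk()
                self.chunks[cid].extend(block)
                extents.append((cid, 0, len(block)))
        self.index[key] = extents

    def get(self, key):
        extents = self.index.get(key)
        if extents is None:
            return None
        return b"".join(bytes(self.chunks[c][o:o + n]) for c, o, n in extents)

    def delete(self, key):
        # Only drops the index entry; reclaiming or compacting chunk space is not shown.
        self.index.pop(key, None)
```

With an 8-byte chunk size, two small objects share one chunk, while an 18-byte object occupies three further chunks.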

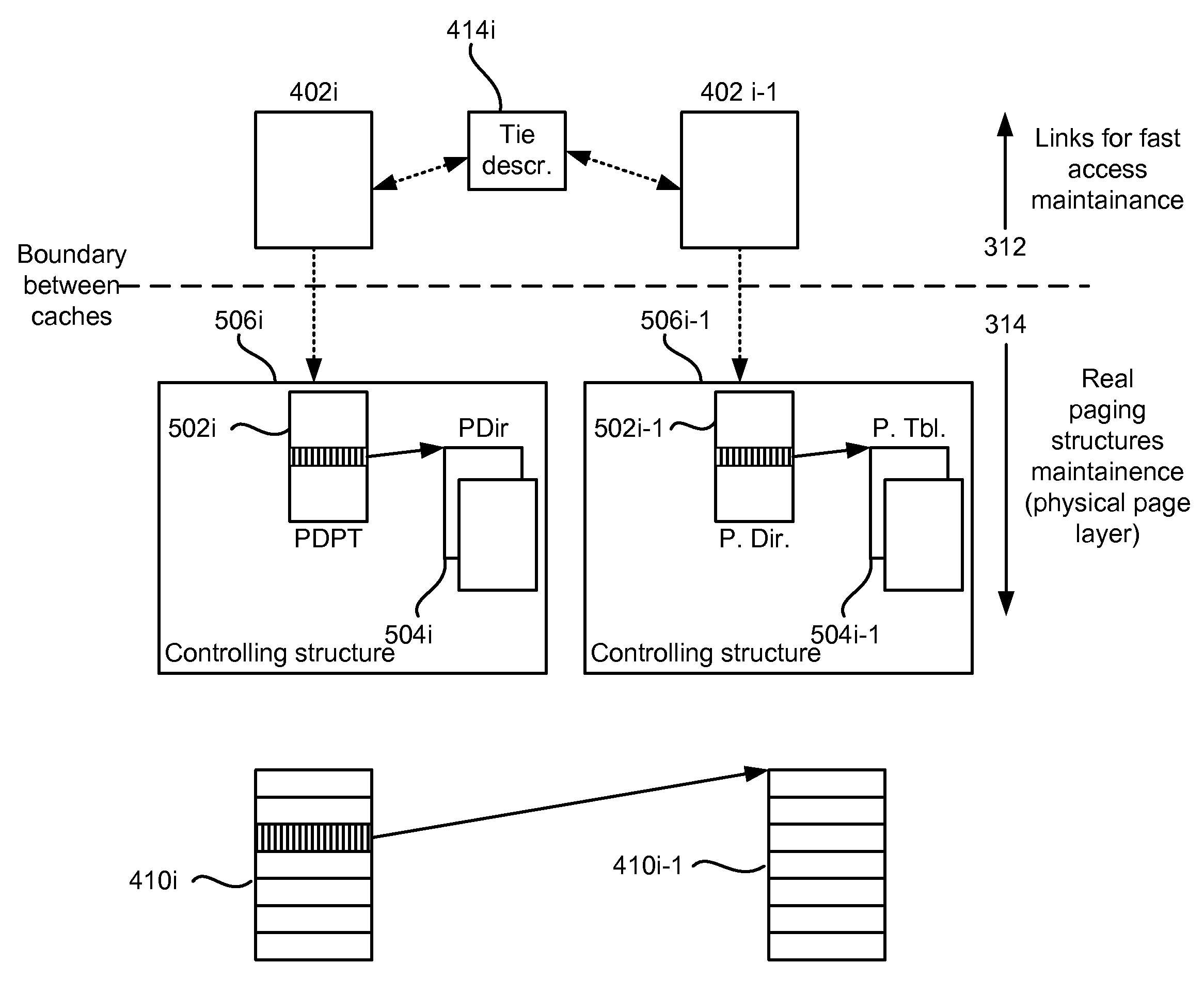

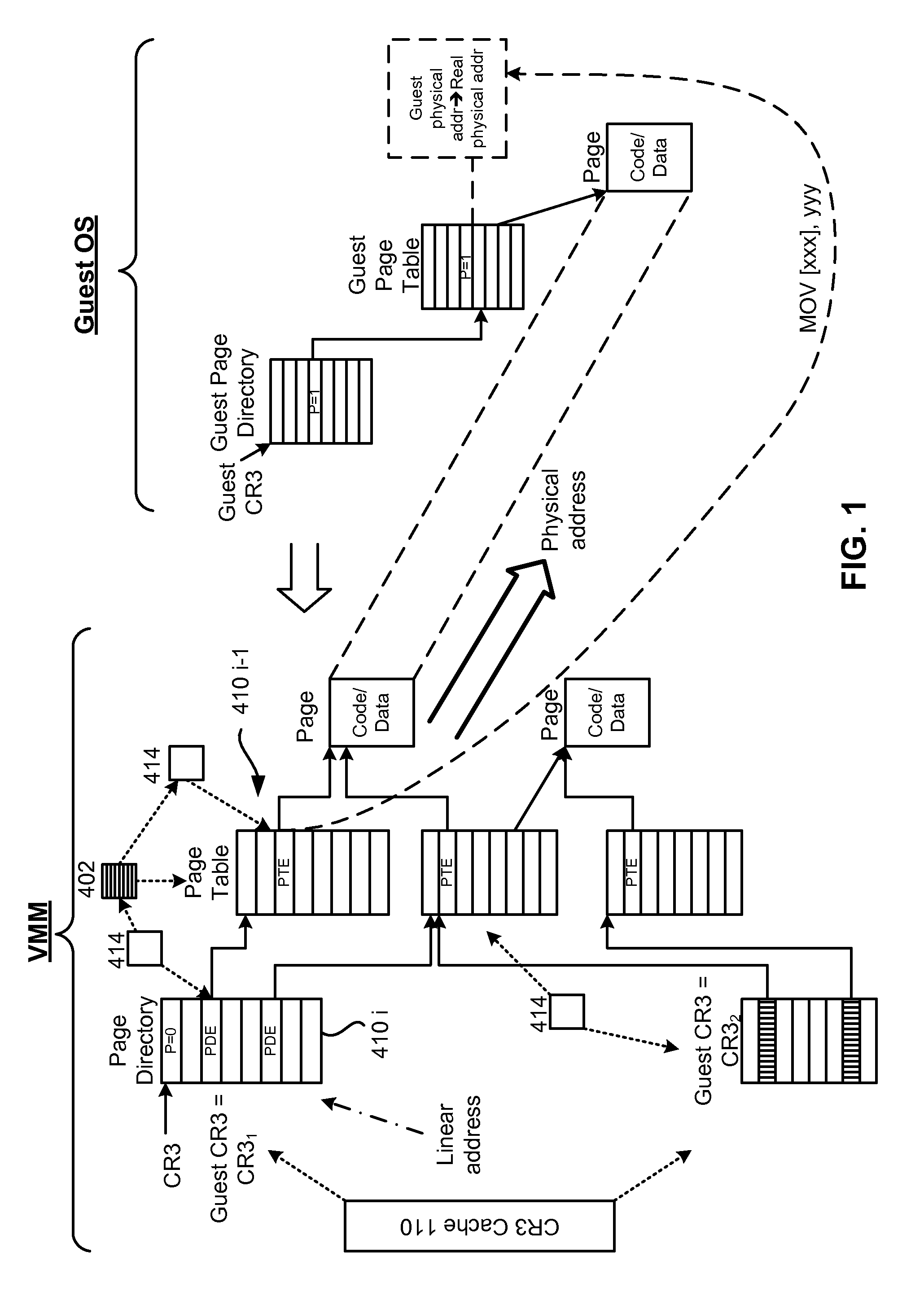

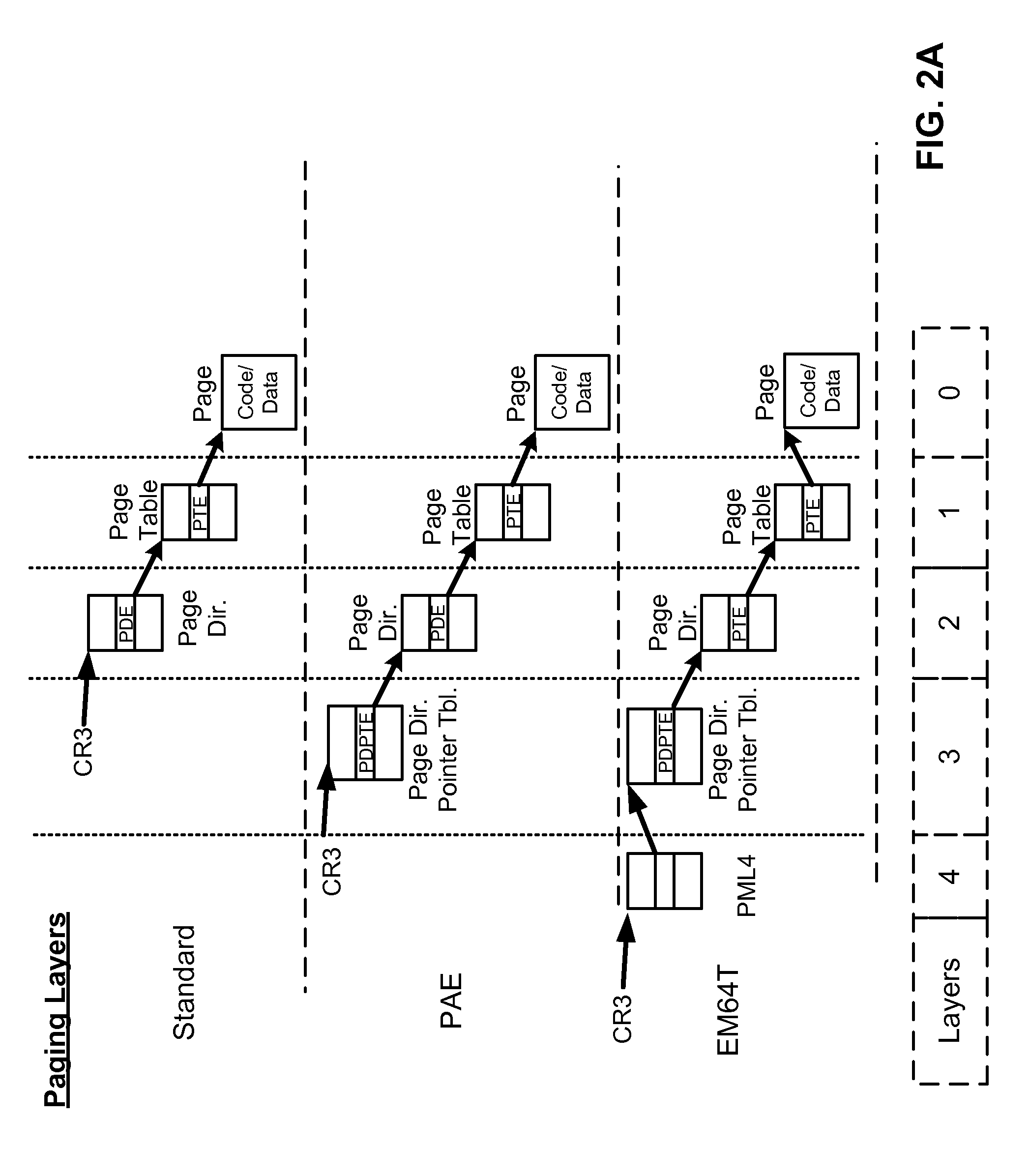

Paging cache optimization for virtual machine

Active | US7596677B1 | Memory addressing/allocation/relocation; Computer security arrangements; Virtualization; Cache optimization

A system, method and computer program product for virtualizing a processor include a virtualization system running on a computer system and controlling memory paging through hardware support for maintaining real paging structures. A Virtual Machine (VM) runs guest code and has at least one set of guest paging structures that correspond to guest physical pages in the guest virtualized linear address space. At least some of the guest paging structures are mapped to the real paging structures. For each guest physical page that is mapped to the real paging structures, a paging means handles a connection structure between the guest physical page and its real physical address. A cache of connection structures represents cached paths to the real paging structures. Each path is described by guest paging structure descriptors and by tie descriptors, and includes a plurality of nodes connected by the tie descriptors. Each guest paging structure descriptor is in a node of at least one path. Each guest paging structure points either to other guest paging structures or to guest physical pages. Each guest paging structure descriptor represents guest paging structure information for mapping guest physical pages to the real paging structures.

Owner:PARALLELS INT GMBH

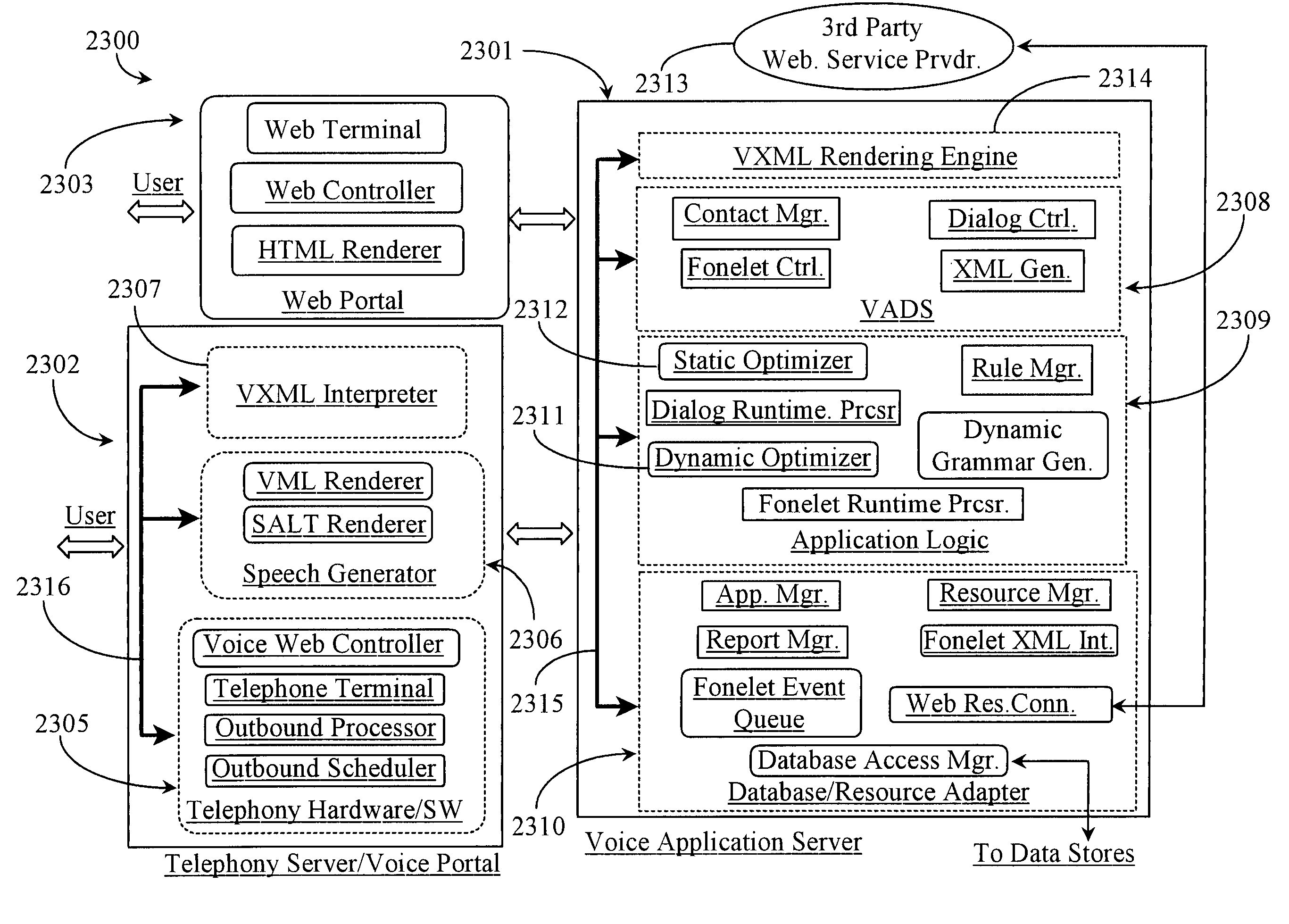

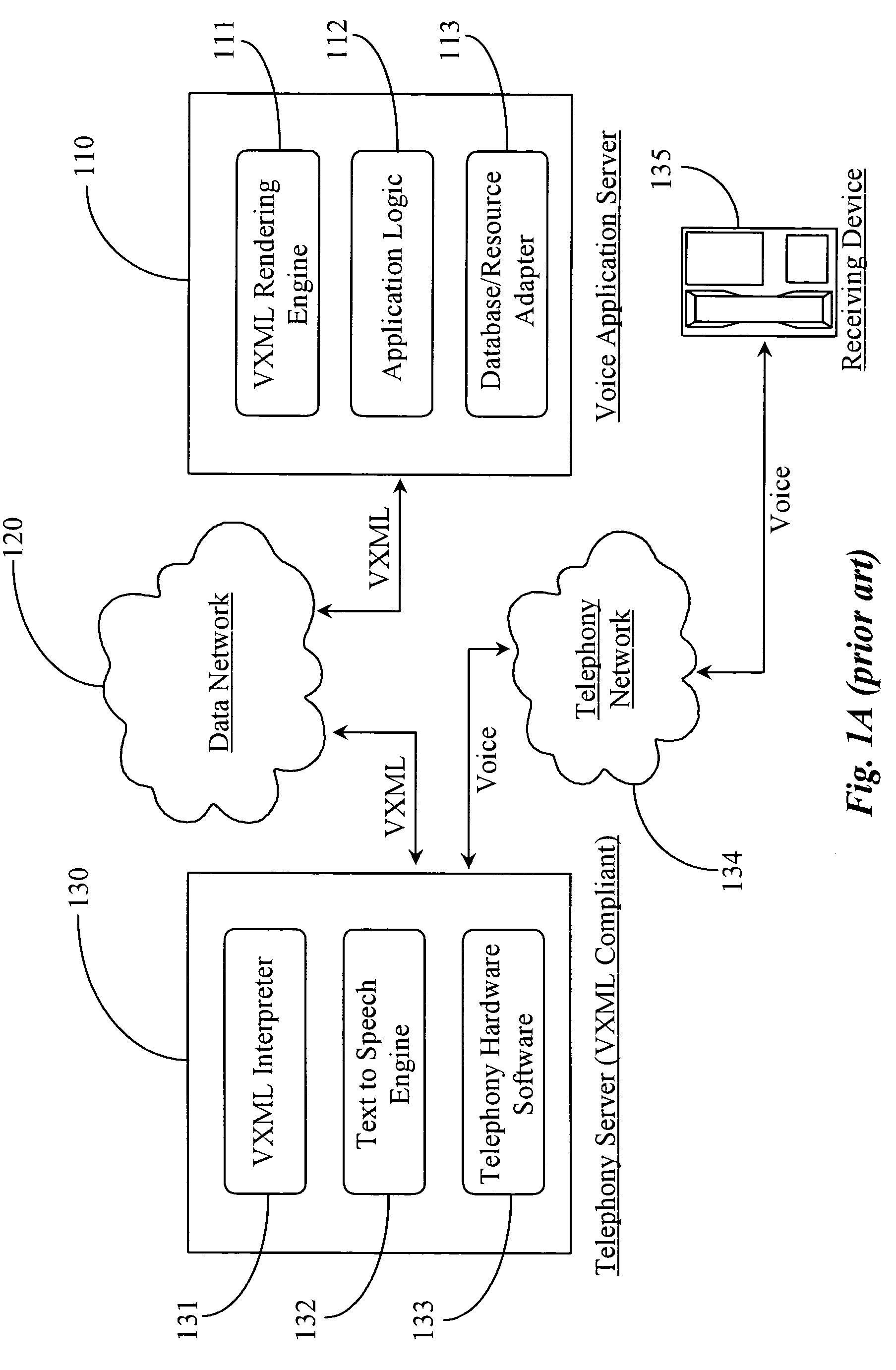

Method and apparatus for reducing data traffic in a voice XML application distribution system through cache optimization

In a voice-extensible markup-language-enabled voice application deployment architecture, an application logic for determining which portions of a voice application for deployment are cached at an application-receiving end system or systems has a processor for processing the voice application according to sequential dialog files of the application, a static content optimizer connected to the processor for identifying files containing static content, and a dynamic content optimizer connected to the processor for identifying files containing dynamic content. The application is characterized in that the optimizers determine which files should be cached at which end-system facilities, tag the files accordingly, and prepare those files for distribution to selected end-system cache facilities for local retrieval during consumer interaction with the deployed application.

Owner:HTC CORP

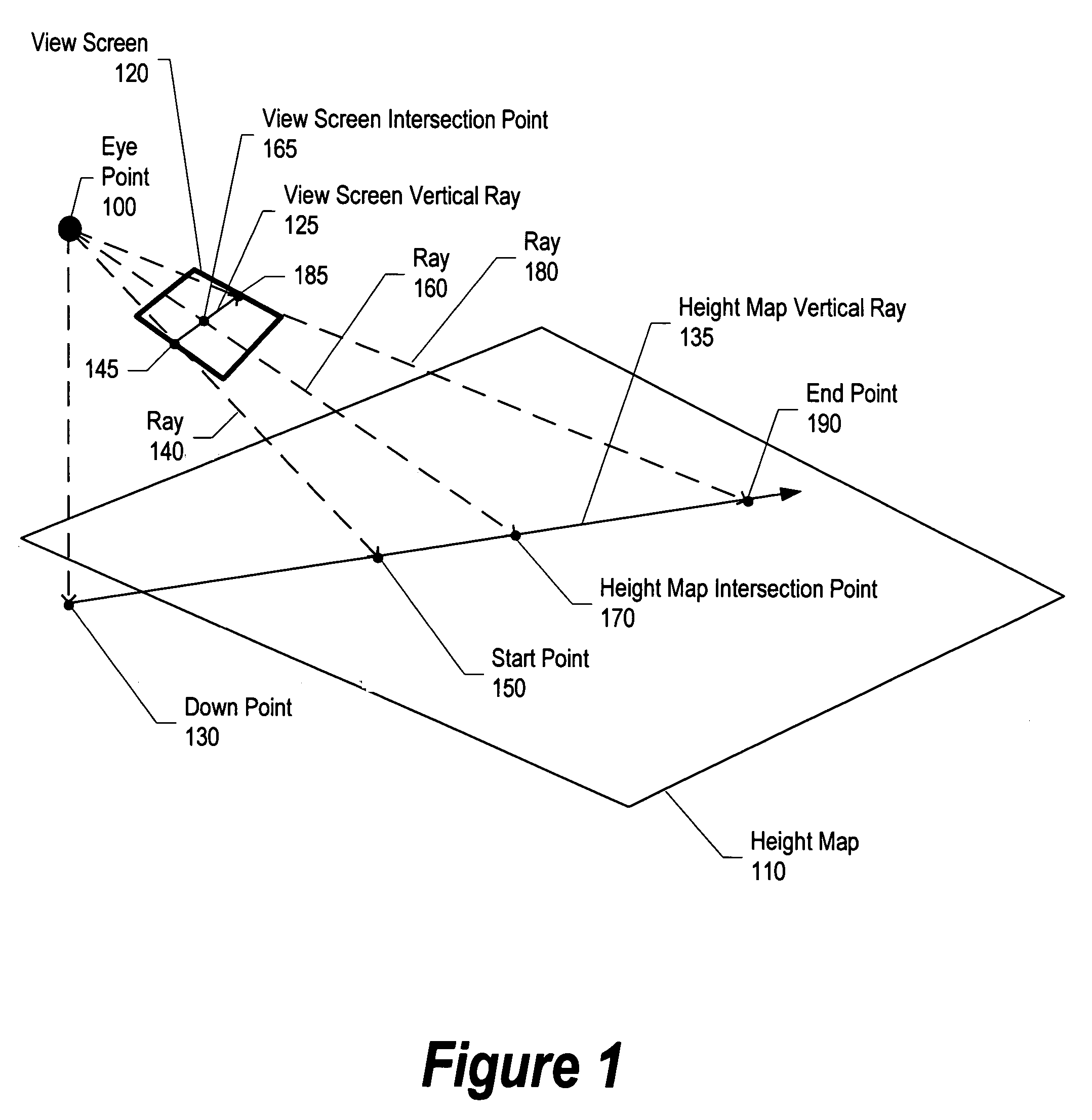

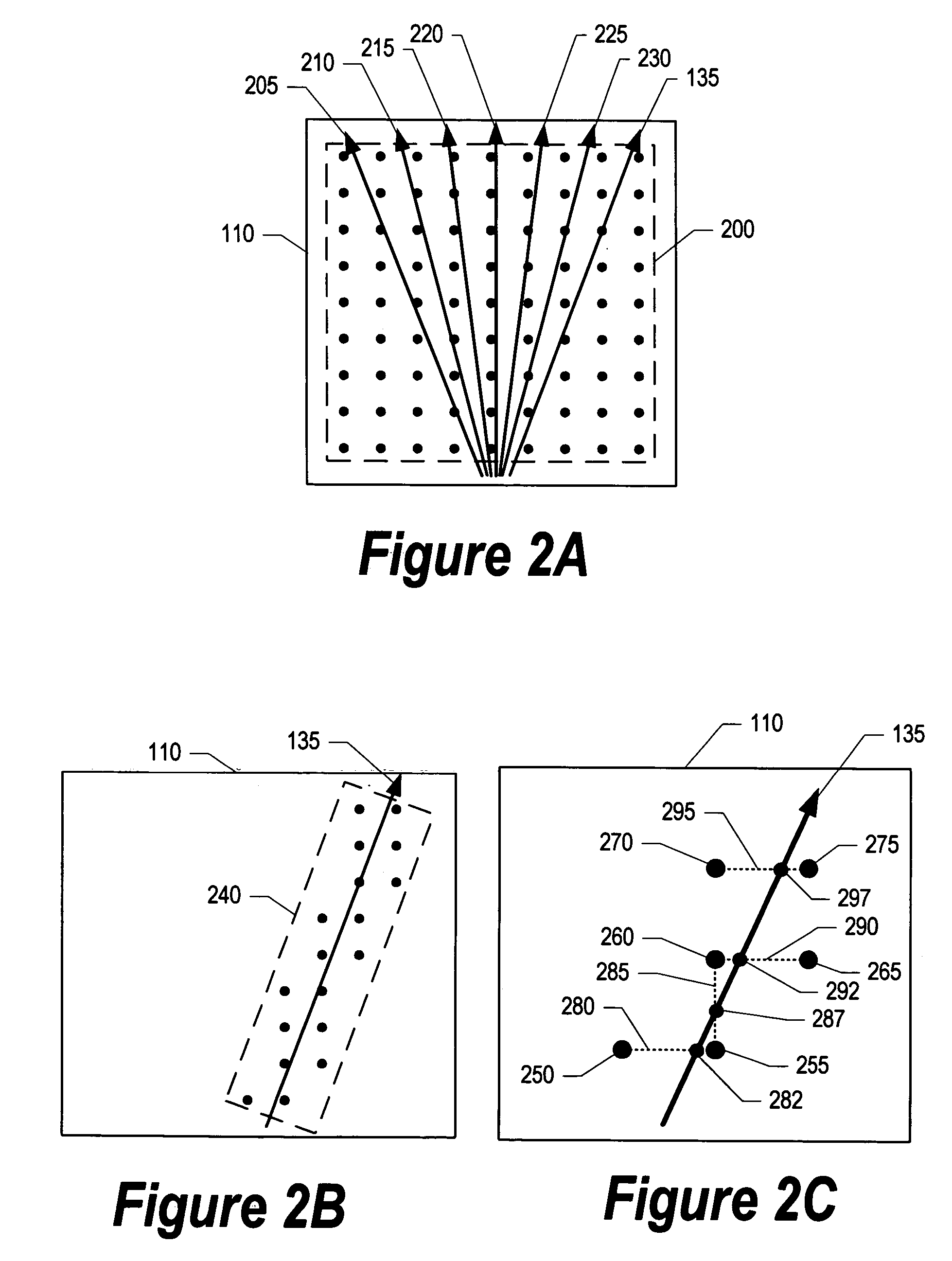

System and method for cache optimized data formatting

Inactive | US7298377B2 | Digital data processing details; Cathode-ray tube indicators; Pattern recognition; Cache optimization

A system and method for cache optimized data formatting is presented. A processor generates images by calculating a plurality of image point values using height data, color data, and normal data. Normal data is computed for a particular image point using pixel data adjacent to the image point. The computed normalized data, along with corresponding height data and color data, are included in a limited space data stream and sent to a processor to generate an image. The normalized data may be computed using adjacent pixel data at any time prior to inserting the normalized data in the limited space data stream.

Owner:IBM CORP
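
The abstract says normal data is computed from pixels adjacent to each image point and then packed with height and color data into a limited-space stream. A minimal sketch follows, assuming central differences for the normal and a hypothetical 11-byte record layout (float32 height, RGBA bytes, normal quantized to three signed bytes); the patent's actual record format is not given here.

```python
import math
import struct

# Hypothetical record layout: float32 height, RGBA color bytes, normal quantized to 3 int8s.
# "<" disables padding, so each record is 4 + 4 + 3 = 11 bytes.
RECORD = struct.Struct("<f4B3b")


def normal_at(height, x, y):
    """Estimate the unit surface normal at (x, y) from adjacent height samples
    with central differences; indices are clamped at the image border."""
    h, w = len(height), len(height[0])
    dx = (height[y][min(x + 1, w - 1)] - height[y][max(x - 1, 0)]) / 2.0
    dy = (height[min(y + 1, h - 1)][x] - height[max(y - 1, 0)][x]) / 2.0
    nx, ny, nz = -dx, -dy, 1.0
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    return nx / length, ny / length, nz / length


def pack_stream(height, color):
    """Interleave height, color and precomputed normal per point so that one
    sequential read brings everything needed for that point into cache."""
    out = bytearray()
    for y, row in enumerate(height):
        for x, z in enumerate(row):
            n = normal_at(height, x, y)
            out += RECORD.pack(z, *color[y][x], *(round(c * 127) for c in n))
    return bytes(out)
```

Precomputing the normal trades a few bytes per record for not having to read neighboring pixels at render time, which is the cache benefit the title refers to.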

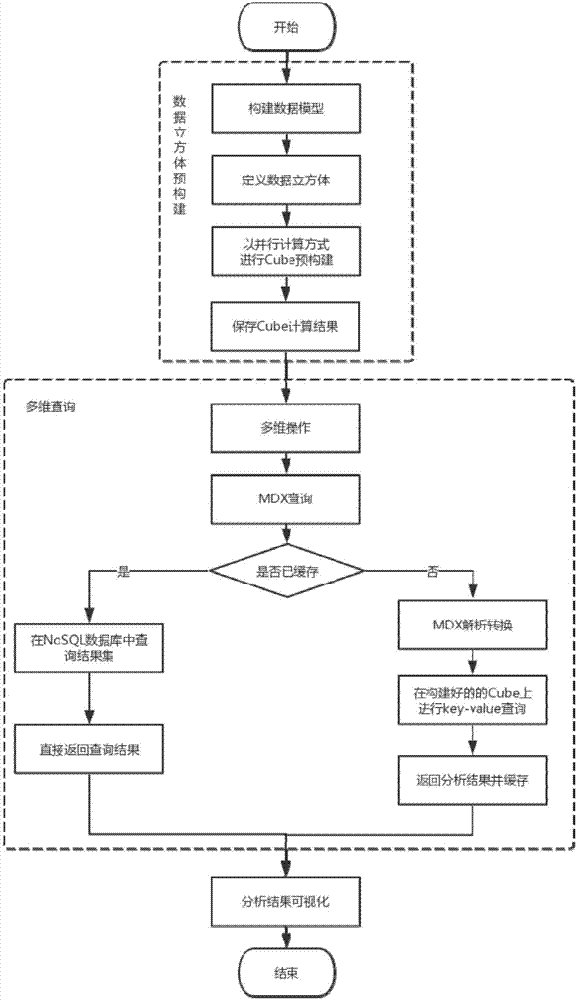

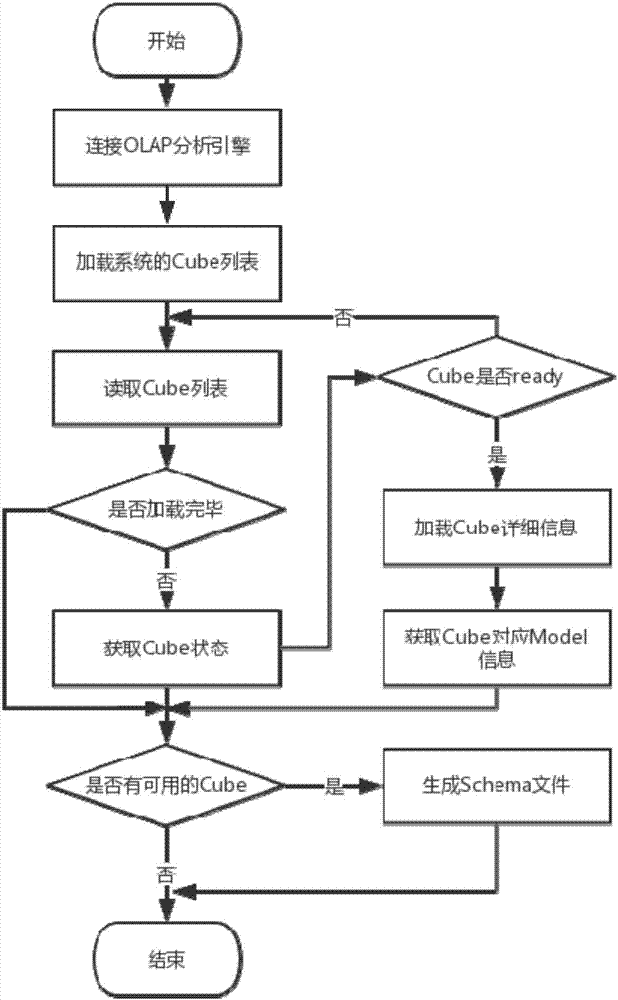

Distributed OLAP analysis method and system based on pre-computation

Inactive | CN107301206A | Meet storage requirements; Take full advantage of parallel processing performance; Multi-dimensional databases; Special data processing applications; Cache optimization; Data warehouse

The present invention discloses a distributed OLAP analysis method and system based on pre-computation. The method mainly comprises: constructing a data model on a distributed data warehouse and defining data cubes according to the data model; launching pre-computation tasks for the defined data cubes so that the cubes are pre-built by parallel computation, and storing the results in a distributed key-value storage system; through a series of steps, converting multi-dimensional analysis operations into key-value queries on the data cubes, obtaining analysis results directly from the pre-built cubes, and displaying them in a variety of charts; and using NoSQL to perform cache optimization on OLAP query operations. The method and system fully exploit the processing power of the Hadoop platform, and by pre-building data cubes they overcome the slow queries of traditional methods, which must recompute from the raw data on every query, thereby improving OLAP analysis efficiency and system performance.

Owner:SOUTH CHINA UNIV OF TECH
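
The idea of turning multi-dimensional queries into key-value lookups on a pre-built cube can be sketched in a single process, assuming a plain dict stands in for the distributed key-value store and a sum is the only aggregate. `build_cube` and `query` are hypothetical names. Because a full cube has 2^d cuboids for d dimensions, pre-building it is the expensive step that the patent runs in parallel on Hadoop.

```python
from collections import defaultdict
from itertools import combinations


def build_cube(rows, dimensions, measure):
    """Pre-aggregate `measure` over every subset of `dimensions` (every cuboid)
    into a flat key-value map: ((dim, ...), (value, ...)) -> total."""
    dims_sorted = sorted(dimensions)
    cube = defaultdict(float)
    for row in rows:
        for r in range(len(dims_sorted) + 1):
            for dims in combinations(dims_sorted, r):
                cube[(dims, tuple(row[d] for d in dims))] += row[measure]
    return dict(cube)


def query(cube, **filters):
    """Answer a slice or roll-up with one key lookup instead of scanning raw rows."""
    dims = tuple(sorted(filters))
    return cube.get((dims, tuple(filters[d] for d in dims)), 0.0)
```

For example, `query(cube, region="north")` returns the pre-aggregated total for that region, and `query(cube)` returns the grand total, each without touching the raw rows.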

Caching and memory optimizations for multi-layer XML customization

Active | US8538998B2 | Efficiently provided; Digital data information retrieval; Digital data processing details; Cache optimization; Application software

Embodiments of the present invention provide techniques for customizing aspects of a metadata-driven software application. In particular, embodiments of the present invention provide (1) a self-contained metadata engine for generating customized metadata documents from base metadata documents and customizations; (2) a customization syntax for defining customizations; (3) a customization creation / update component for creating and updating customizations; (4) a customization restriction mechanism for restricting the creation of new customizations by specific users or groups of users; and (5) memory and caching optimizations for optimizing the storage and lookup of customized metadata documents.

Owner:ORACLE INT CORP

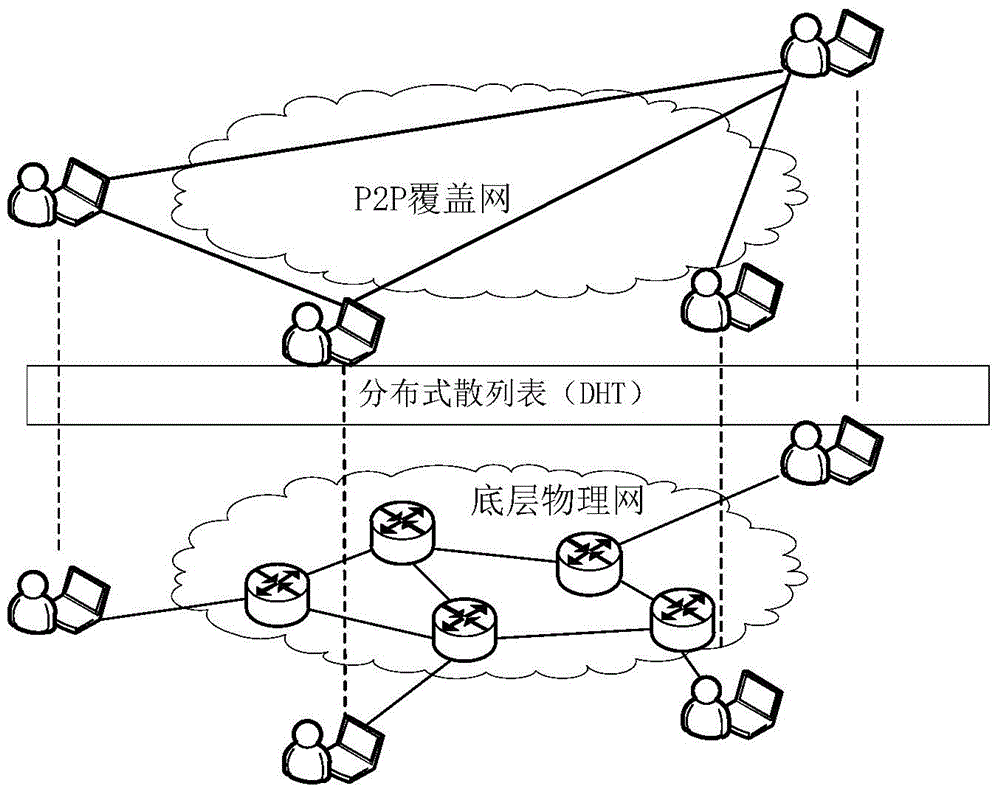

Distributive cache storage method for dynamic self-adaptive video streaming media

Active | CN105979274A | Increase profit; Reduce load; Digital video signal modification; Selective content distribution; Quality of service; Cache optimization

The invention provides a distributed cache storage method for dynamic adaptive video streaming. Working with the dynamic adaptive streaming encoding on the main server, the method encodes each video into versions at different bitrates. It also takes into account the differences in rate-distortion performance among different video content, the buffer capacity limits of the edge servers, the network connection conditions of different users, and the probability distribution of video-on-demand requests. The distributed cache optimization storage method then determines the subset of video versions that each edge server should cache, ultimately optimizing the overall quality of the videos users download through the edge servers. The method improves the utilization of the video content cached on edge servers, reduces the streaming load on the main server, and provides users with better video quality of service.

Owner:SHANGHAI JIAO TONG UNIV
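
The abstract does not spell out the optimization algorithm. As a stand-in, the sketch below uses a greedy heuristic for a multiple-choice knapsack: an edge cache keeps at most one bitrate version per video, and each step applies the add-or-upgrade with the best expected quality gain per extra unit of storage that still fits. The request probabilities, sizes and quality scores are illustrative assumptions, and per-user network conditions are ignored.

```python
def choose_versions(videos, capacity):
    """Pick at most one bitrate version per video for an edge cache.

    videos maps a name to (request_probability, [(size, quality), ...]).
    Each step applies the add-or-upgrade with the best expected quality gain
    per extra unit of storage that still fits; returns {name: version_index}.
    """
    chosen, used = {}, 0.0
    while True:
        best = None
        for name, (prob, versions) in videos.items():
            cur = chosen.get(name)
            cur_size, cur_quality = versions[cur] if cur is not None else (0.0, 0.0)
            for i, (size, quality) in enumerate(versions):
                extra, gain = size - cur_size, prob * (quality - cur_quality)
                if extra <= 0 or gain <= 0 or used + extra > capacity:
                    continue
                if best is None or gain / extra > best[0]:
                    best = (gain / extra, name, i, extra)
        if best is None:
            return chosen
        _, name, i, extra = best
        chosen[name] = i
        used += extra
```

Greedy ratio rules are not guaranteed optimal for knapsack-type problems; they are shown only because they are short and make the capacity/popularity trade-off visible.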

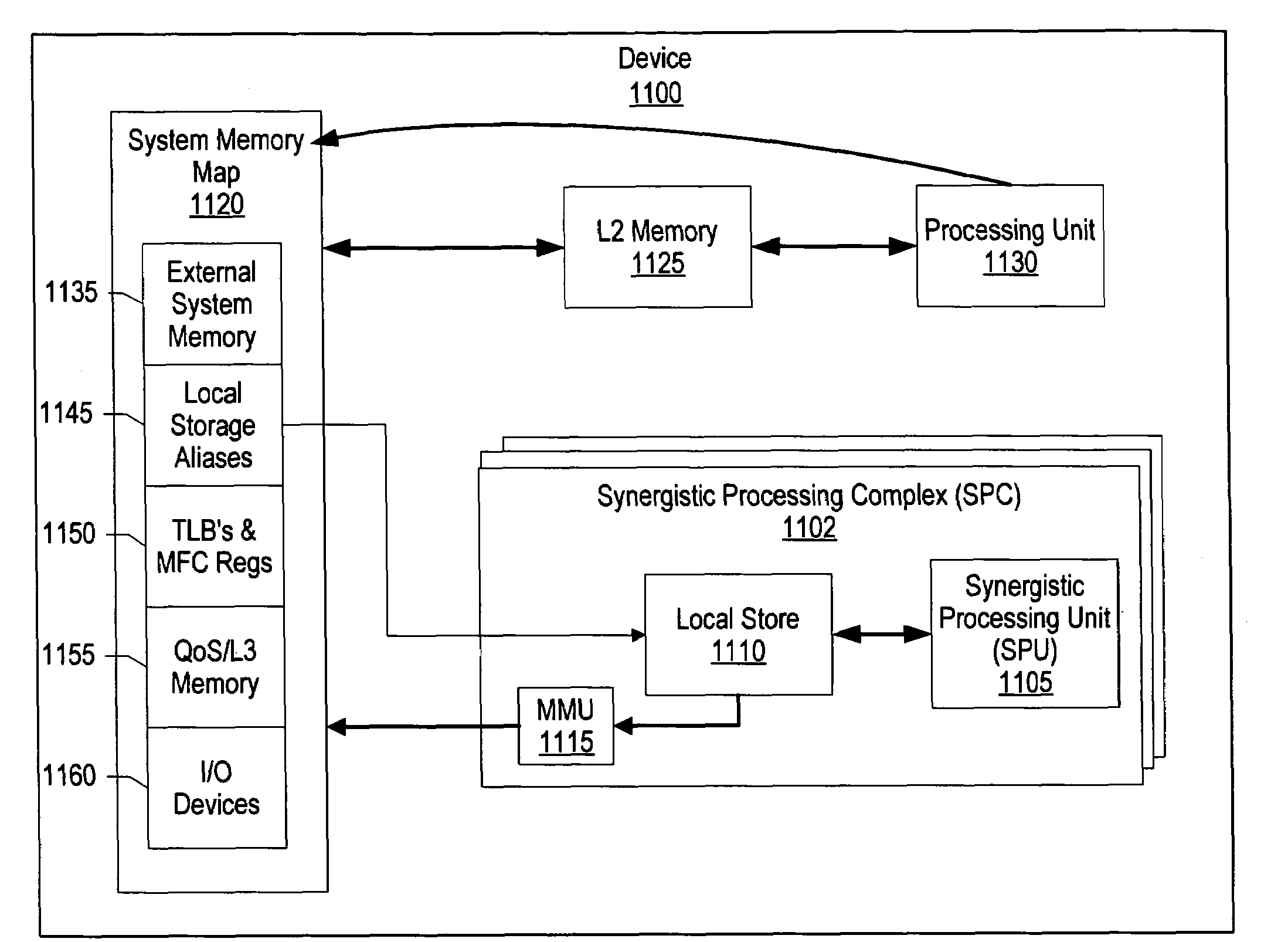

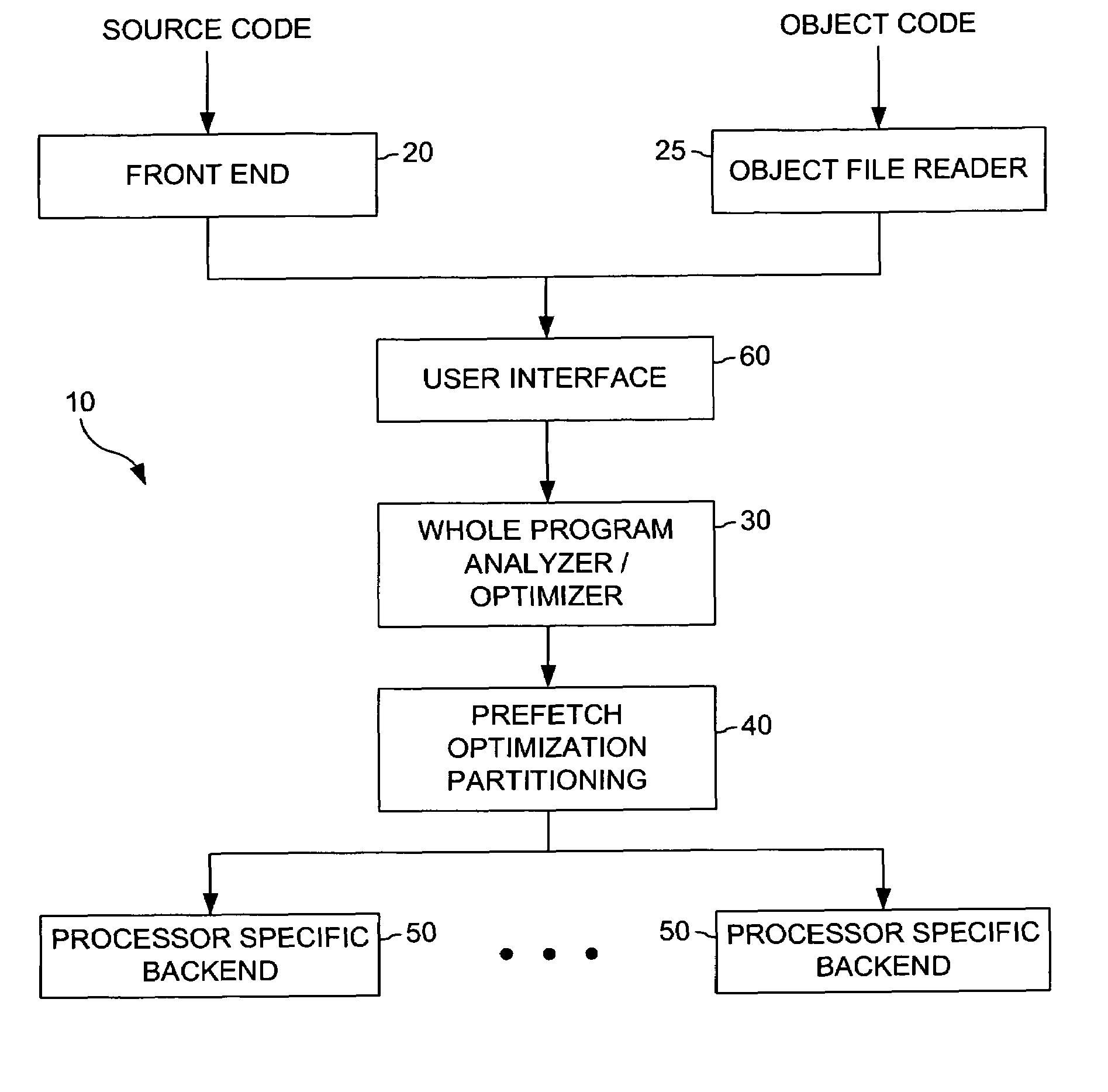

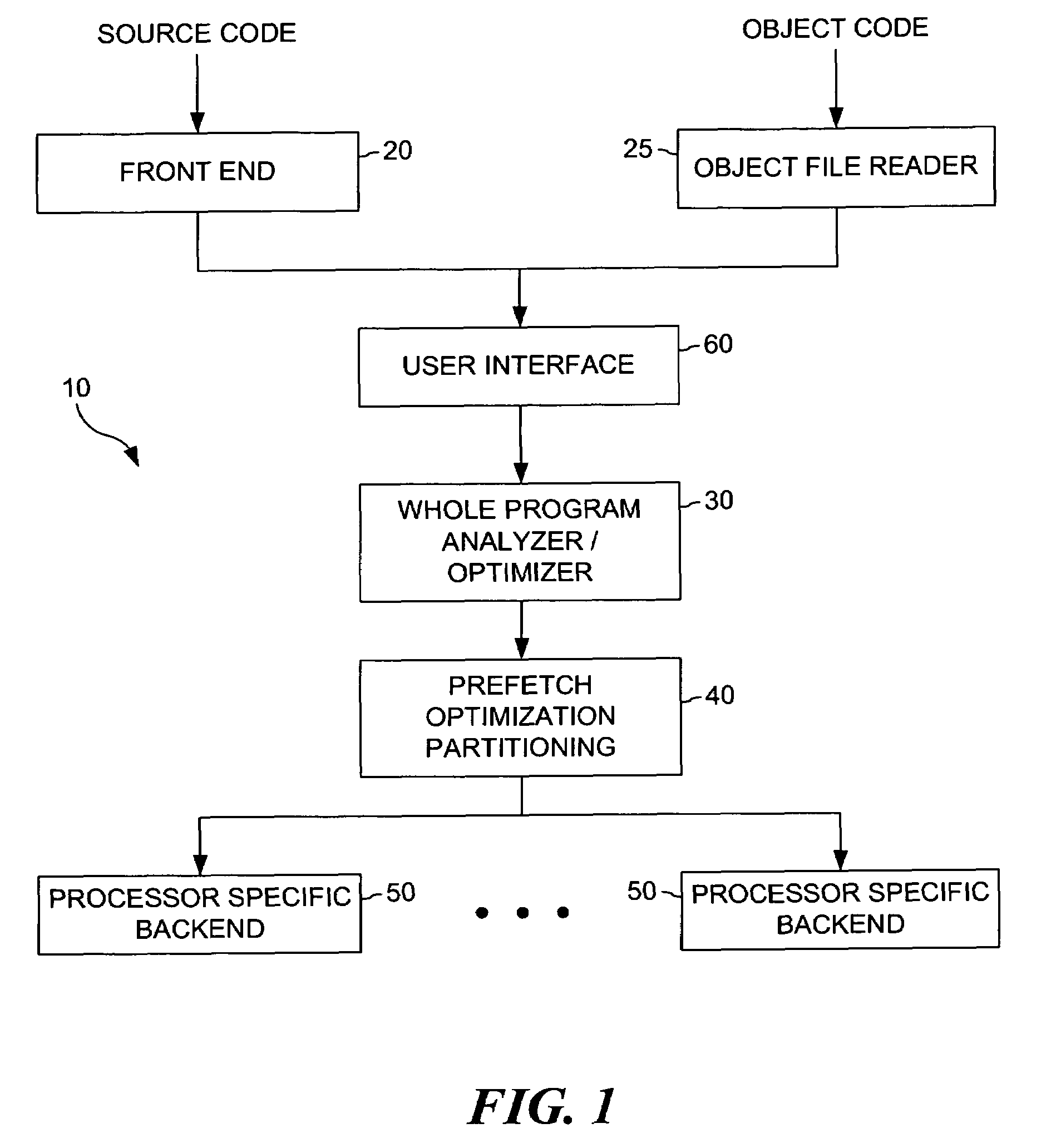

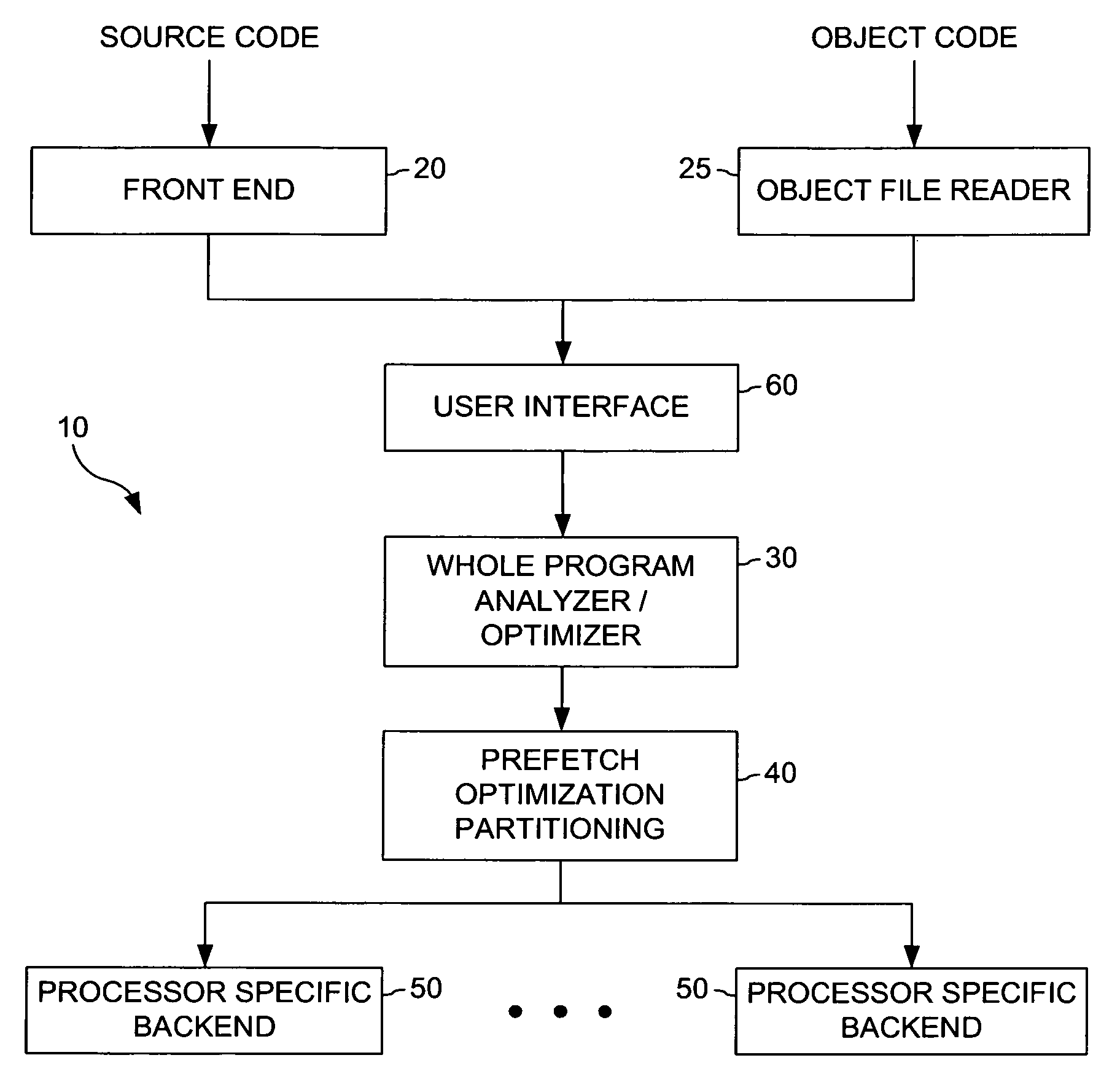

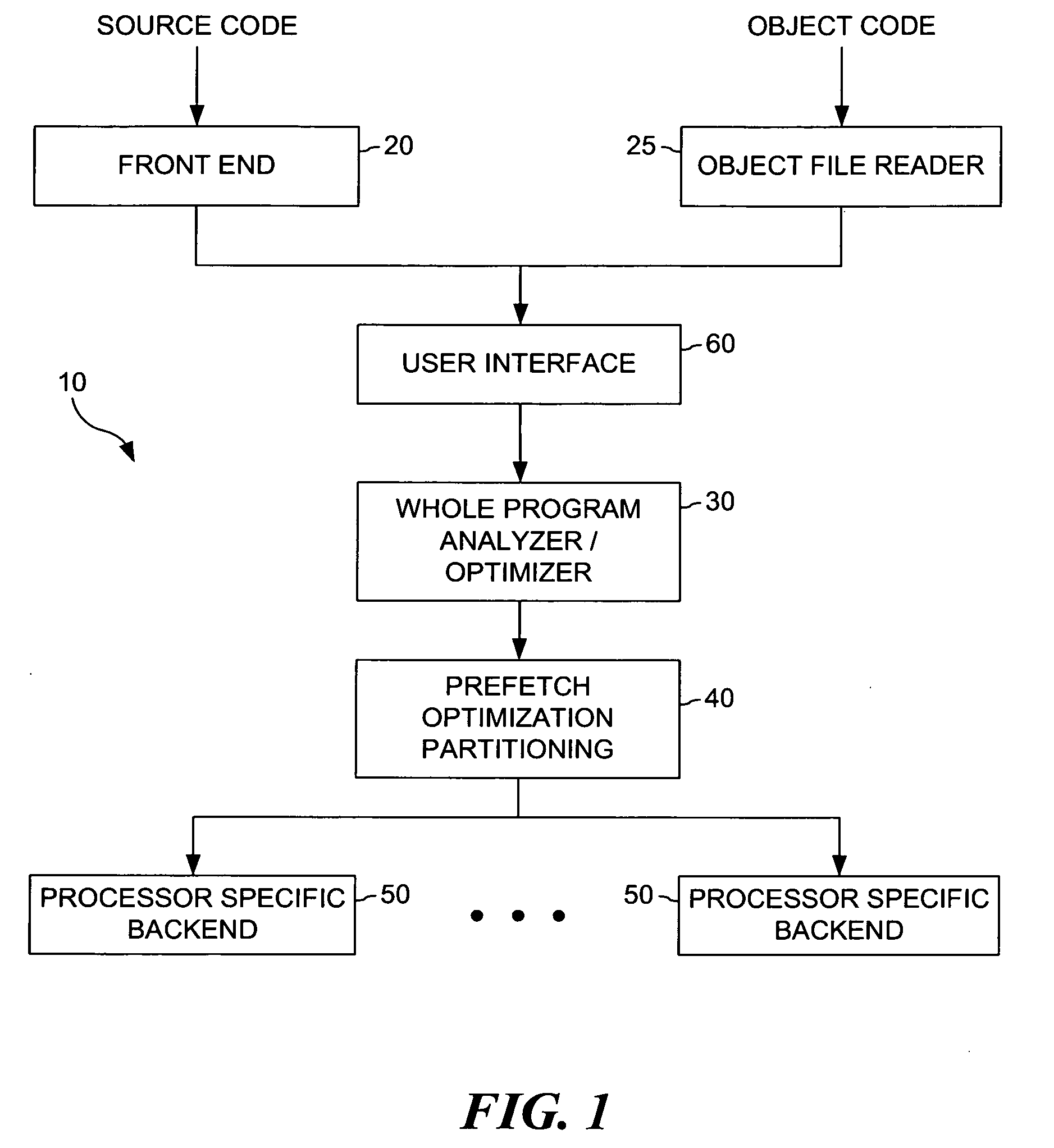

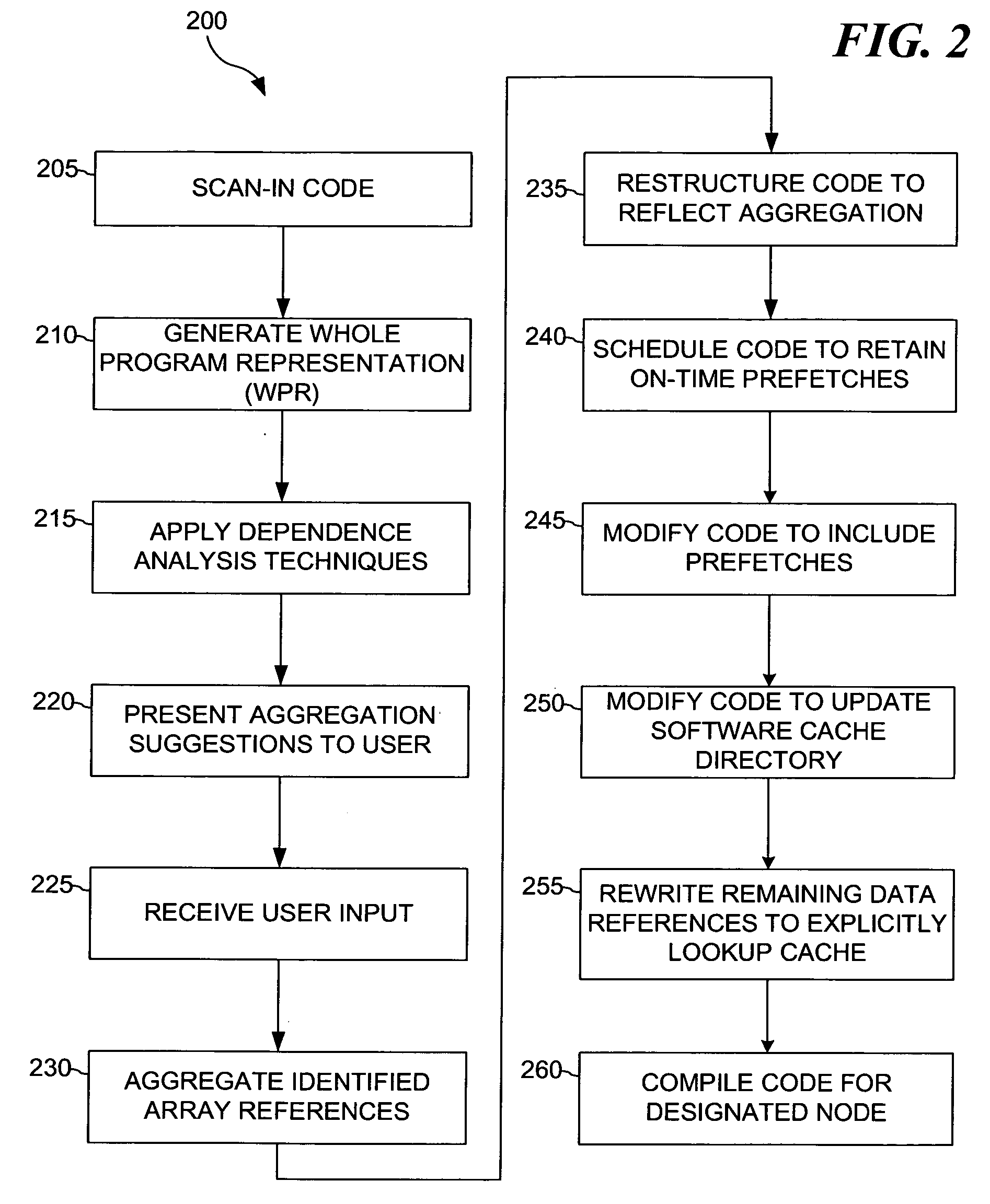

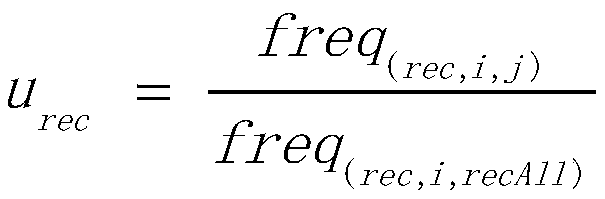

Software managed cache optimization system and method for multi-processing systems

The present invention provides for a method for computer program code optimization for a software managed cache in either a uni-processor or a multi-processor system. A single source file comprising a plurality of array references is received. The plurality of array references is analyzed to identify predictable accesses. The plurality of array references is analyzed to identify secondary predictable accesses. One or more of the plurality of array references is aggregated based on identified predictable accesses and identified secondary predictable accesses to generate aggregated references. The single source file is restructured based on the aggregated references to generate restructured code. Prefetch code is inserted in the restructured code based on the aggregated references. Software cache update code is inserted in the restructured code based on the aggregated references. Explicit cache lookup code is inserted for the remaining unpredictable accesses. Calls to a miss handler for misses in the explicit cache lookup code are inserted. A miss handler is included in the generated code for the program. In the miss handler, a line to evict is chosen based on recent usage and predictability. In the miss handler, appropriate DMA commands are issued for the evicted line and the missing line.

Owner:INT BUSINESS MASCH CORP
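
The generated lookup code and miss handler described above can be sketched as a toy model, assuming a Python list stands in for main memory and a log of `("get" | "put", tag)` tuples stands in for DMA commands. The abstract says the victim is chosen from recent usage and predictability but not how the two are combined; the policy here (evict the least recently used line that served a predictable access, else plain LRU) is an assumption.

```python
from collections import OrderedDict


class SoftwareCache:
    """Toy software-managed cache with an explicit lookup and miss handler."""

    def __init__(self, num_lines, line_size, memory):
        self.num_lines = num_lines
        self.line_size = line_size
        self.memory = memory        # list standing in for main memory reached via DMA
        self.lines = OrderedDict()  # tag -> (data, predictable); order = least to most recent
        self.dma_log = []           # record of ("get" or "put", tag) transfers

    def lookup(self, addr, predictable=False):
        """Explicit cache lookup inserted for an access; calls the miss handler on a miss."""
        tag, offset = divmod(addr, self.line_size)
        if tag in self.lines:
            self.lines.move_to_end(tag)  # refresh recency on a hit
        else:
            self._miss_handler(tag, predictable)
        return self.lines[tag][0][offset]

    def _miss_handler(self, tag, predictable):
        if len(self.lines) >= self.num_lines:
            # Assumed policy: evict the least recently used line that served a predictable
            # access (it is cheap to prefetch again); otherwise fall back to plain LRU.
            victim = next((t for t, (_, p) in self.lines.items() if p), next(iter(self.lines)))
            data, _ = self.lines.pop(victim)
            base = victim * self.line_size
            self.memory[base:base + self.line_size] = data  # write the evicted line back
            self.dma_log.append(("put", victim))
        base = tag * self.line_size
        self.lines[tag] = (self.memory[base:base + self.line_size], predictable)
        self.dma_log.append(("get", tag))
```

In the patented flow, predictable accesses would mostly be served by inserted prefetch code; this sketch only models the fallback path for the remaining accesses.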

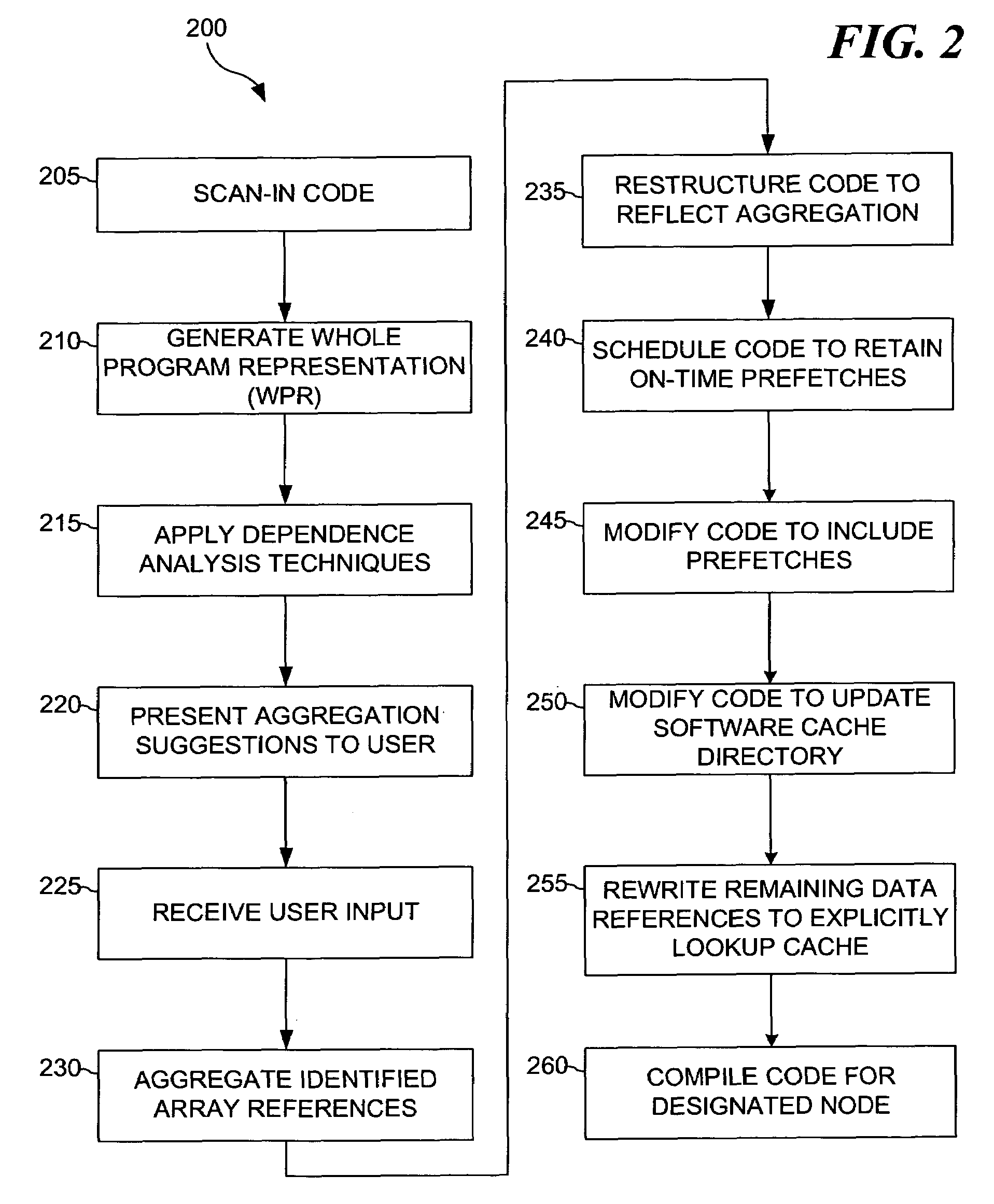

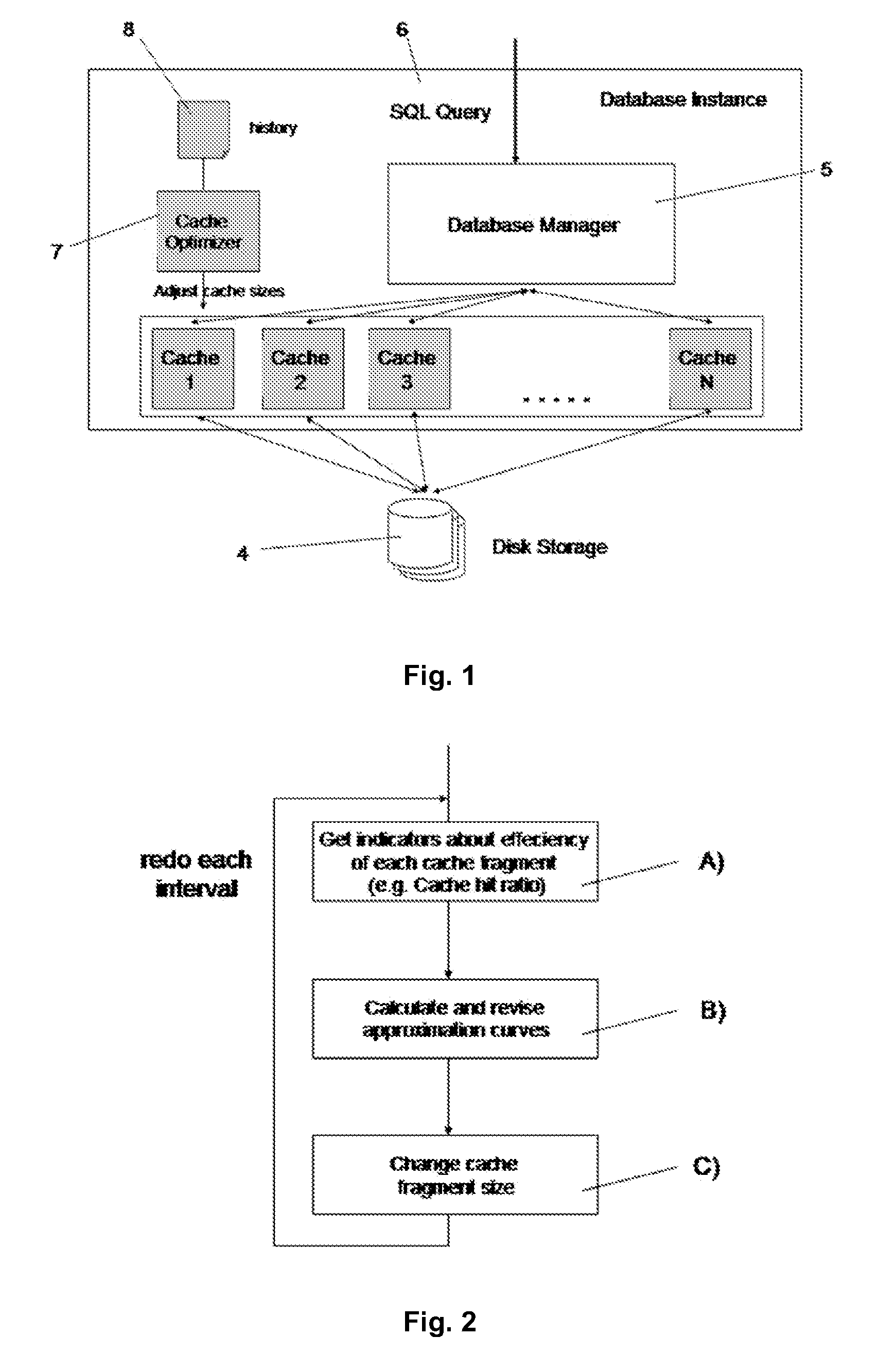

System and method to improve processing time of databases by cache optimization

Active | US7512591B2 | Improve performance; Improve precision; Digital data information retrieval; Data processing applications; Cache optimization; Fragment size

A system and method are disclosed to improve the processing time of a database system by continuous, automatic background optimization of a cache memory that is fragmented into a plurality of cache fragments. The system and method include collecting indicators about the efficiency of individual cache fragments by at least one of: measuring the cache hit ratio of each cache fragment, measuring the processing time that a CPU of the database system needs to prepare data in the individual cache fragments, and measuring the execution time the CPU needs to process the data in accordance with an SQL query. The system and method include calculating and revising approximation curves for the measured values of each cache fragment to find the combination of cache fragment sizes with the highest system throughput, and changing the sizes of the cache fragments accordingly to achieve that throughput.

Owner:SAP AG
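
Once an approximation curve exists for each fragment, finding a good combination of sizes can be sketched as a greedy allocation of memory units by marginal gain, which is optimal when every curve is concave. This assumes the curves have already been fitted from the measurements (fitting is not shown) and that memory is split into equal units; `allocate_fragments` and `saturating_curve` are hypothetical names, and the exponential shape is only an example.

```python
import math


def saturating_curve(max_gain, scale):
    """Example fitted curve: estimated gain approaches max_gain as the fragment grows."""
    return lambda units: max_gain * (1.0 - math.exp(-units / scale))


def allocate_fragments(benefit_curves, total_units):
    """Assign memory units to cache fragments one at a time, each to the fragment
    whose approximation curve promises the largest marginal throughput gain.

    benefit_curves maps a fragment name to f(units) -> estimated throughput.
    The greedy choice is optimal when every curve is concave (diminishing returns).
    """
    sizes = {name: 0 for name in benefit_curves}

    def marginal(name):
        f = benefit_curves[name]
        return f(sizes[name] + 1) - f(sizes[name])

    for _ in range(total_units):
        best = max(benefit_curves, key=marginal)
        sizes[best] += 1
    return sizes
```

Re-running the allocation whenever the curves are revised gives the continuous background resizing the abstract describes.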

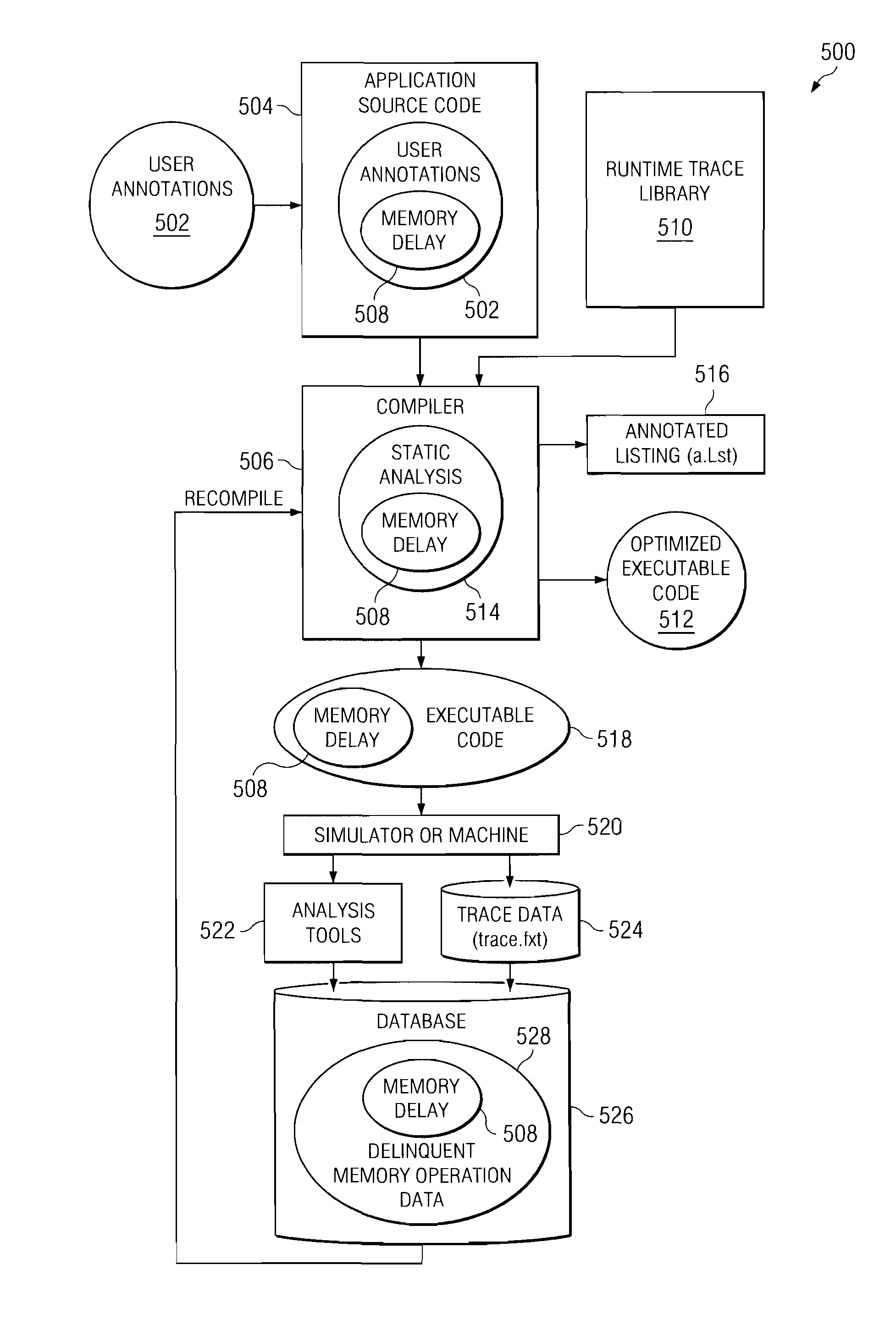

Uniform external and internal interfaces for delinquent memory operations to facilitate cache optimization

Active | US20080229028A1 | Improve application performance; Easy to implement; Memory architecture accessing/allocation; Software engineering; Cache optimization; Parallel computing

A computer-implemented method, software infrastructure and computer usable program code for improving application performance. A delinquent memory operation instruction is identified; this is an instruction associated with a number of cache misses that exceeds a threshold. A directive is inserted in a code region associated with the delinquent memory operation to form annotated code. The directive indicates the address of the delinquent memory operation instruction and the number of memory latency cycles expected to be required for that instruction to execute. The information included in the annotated code is used to optimize execution of an application associated with the delinquent memory operation instruction.

Owner:IBM CORP
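
The identification and annotation steps above can be sketched from profile data, assuming per-instruction miss counts and expected latencies are already available as dicts keyed by instruction address. The `#pragma delinquent_load` spelling is hypothetical; the abstract does not give the directive's syntax.

```python
def find_delinquent(miss_counts, threshold):
    """Instruction addresses whose profiled cache-miss count exceeds the threshold."""
    return sorted(pc for pc, misses in miss_counts.items() if misses > threshold)


def annotate(miss_counts, latency_cycles, threshold):
    """Emit one directive per delinquent instruction carrying its address and
    expected memory latency. The pragma spelling is hypothetical."""
    return [
        f"#pragma delinquent_load addr=0x{pc:x} latency={latency_cycles[pc]}"
        for pc in find_delinquent(miss_counts, threshold)
    ]
```

A downstream optimizer could then read these directives, for example to schedule prefetches far enough ahead to cover the stated latency.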

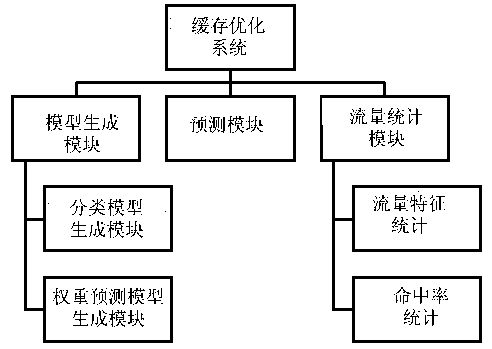

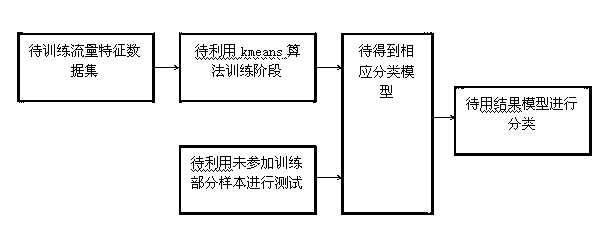

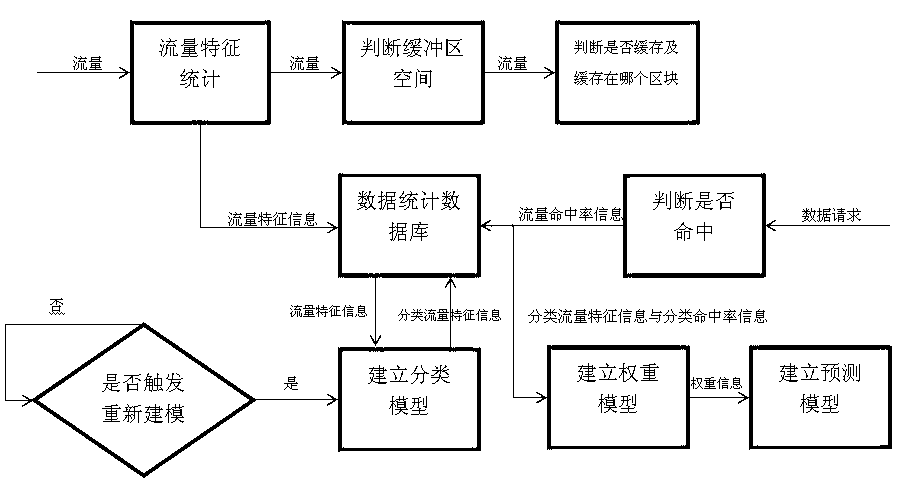

Network intermediate node cache optimization method based on flow characteristic analysis

Inactive | CN103023801A | Maximize utilization; Traffic conditions are closely related; Data switching networks; Protocol design; Cache optimization

The invention belongs to the field of computer network communication and particularly relates to a network intermediate node cache optimization method based on flow characteristic analysis. When a flow passes through the intermediate node, the method first classifies the flow and then, according to its type, decides how to handle it within the buffer space allocated to that type, based on that area's cache state and in combination with a least recently used (LRU) algorithm. The system periodically updates the flow classification model and redistributes buffer space among the new classes. The method mainly addresses the transparency problem of intermediate-node caching strategies: the cache algorithm at the network intermediate node is designed to be independent of any specific user-layer protocol, and the efficiency of a strategy that satisfies this transparency requirement is optimized.

Owner:FUDAN UNIV
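
The per-class buffer partitions with LRU replacement can be sketched as below, assuming quotas are counted in cached objects rather than bytes. `classify` is a hypothetical port-based stand-in for the periodically updated classification model, and `rebalance` models the periodic redistribution of buffer space by shrinking or growing each partition's quota.

```python
from collections import OrderedDict


def classify(dst_port):
    """Hypothetical stand-in for the flow classification model."""
    return {80: "web", 443: "web", 554: "video"}.get(dst_port, "other")


class PartitionedFlowCache:
    """One LRU partition per flow class; classes without a quota are not cached."""

    def __init__(self, quotas):
        self.quotas = dict(quotas)  # flow class -> max number of cached objects
        self.parts = {cls: OrderedDict() for cls in quotas}

    def access(self, flow_class, key, fetch):
        """Return (value, hit). On a miss, fetch upstream and cache within the class quota."""
        part = self.parts.setdefault(flow_class, OrderedDict())
        if key in part:
            part.move_to_end(key)
            return part[key], True
        value = fetch(key)
        quota = self.quotas.get(flow_class, 0)
        if quota > 0:
            if len(part) >= quota:
                part.popitem(last=False)  # LRU eviction inside this class only
            part[key] = value
        return value, False

    def rebalance(self, new_quotas):
        """Periodic redistribution of buffer space; shrinking evicts LRU entries."""
        self.quotas.update(new_quotas)
        for cls, quota in new_quotas.items():
            part = self.parts.setdefault(cls, OrderedDict())
            while len(part) > quota:
                part.popitem(last=False)
```

Keeping eviction inside each partition means a burst of one traffic type cannot flush cached objects belonging to another.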

Software managed cache optimization system and method for multi-processing systems

The present invention provides for a method for computer program code optimization for a software managed cache in either a uni-processor or a multi-processor system. A single source file comprising a plurality of array references is received. The plurality of array references is analyzed to identify predictable accesses. The plurality of array references is analyzed to identify secondary predictable accesses. One or more of the plurality of array references is aggregated based on identified predictable accesses and identified secondary predictable accesses to generate aggregated references. The single source file is restructured based on the aggregated references to generate restructured code. Prefetch code is inserted in the restructured code based on the aggregated references. Software cache update code is inserted in the restructured code based on the aggregated references. Explicit cache lookup code is inserted for the remaining unpredictable accesses. Calls to a miss handler for misses in the explicit cache lookup code are inserted. A miss handler is included in the generated code for the program. In the miss handler, a line to evict is chosen based on recent usage and predictability. In the miss handler, appropriate DMA commands are issued for the evicted line and the missing line.

Owner:IBM CORP

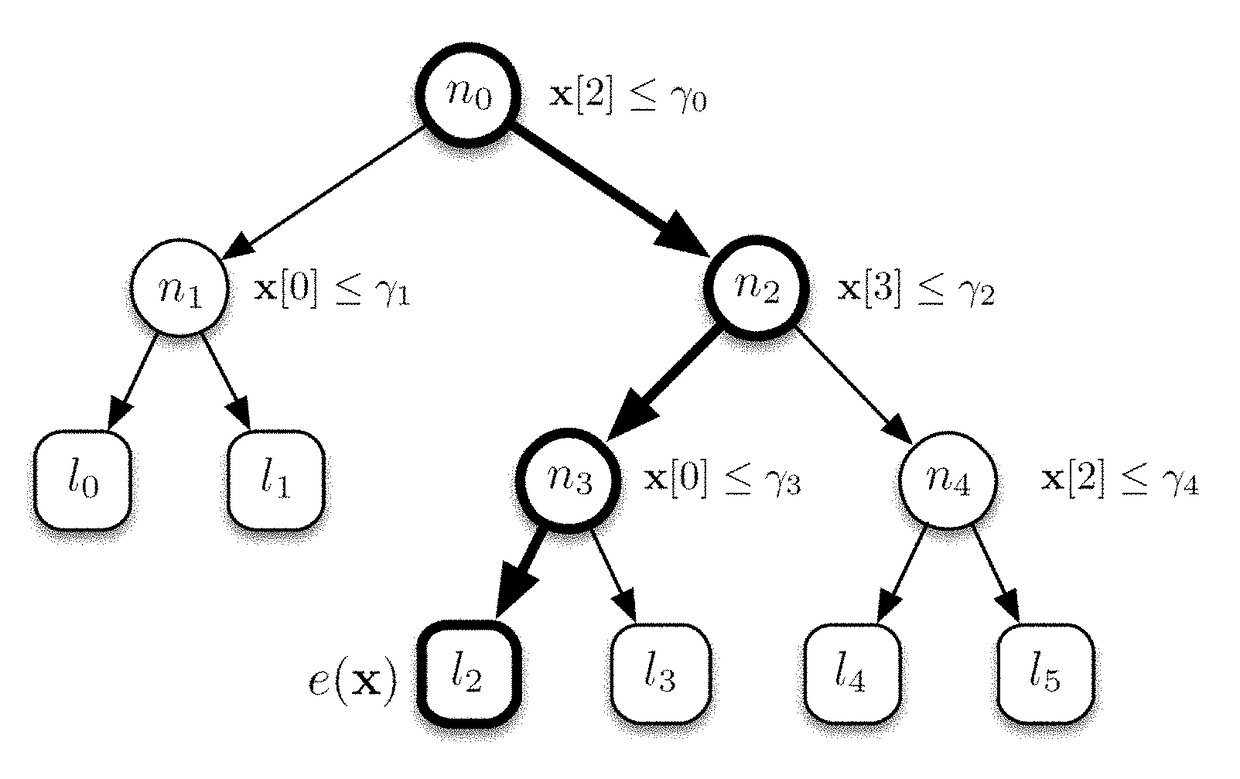

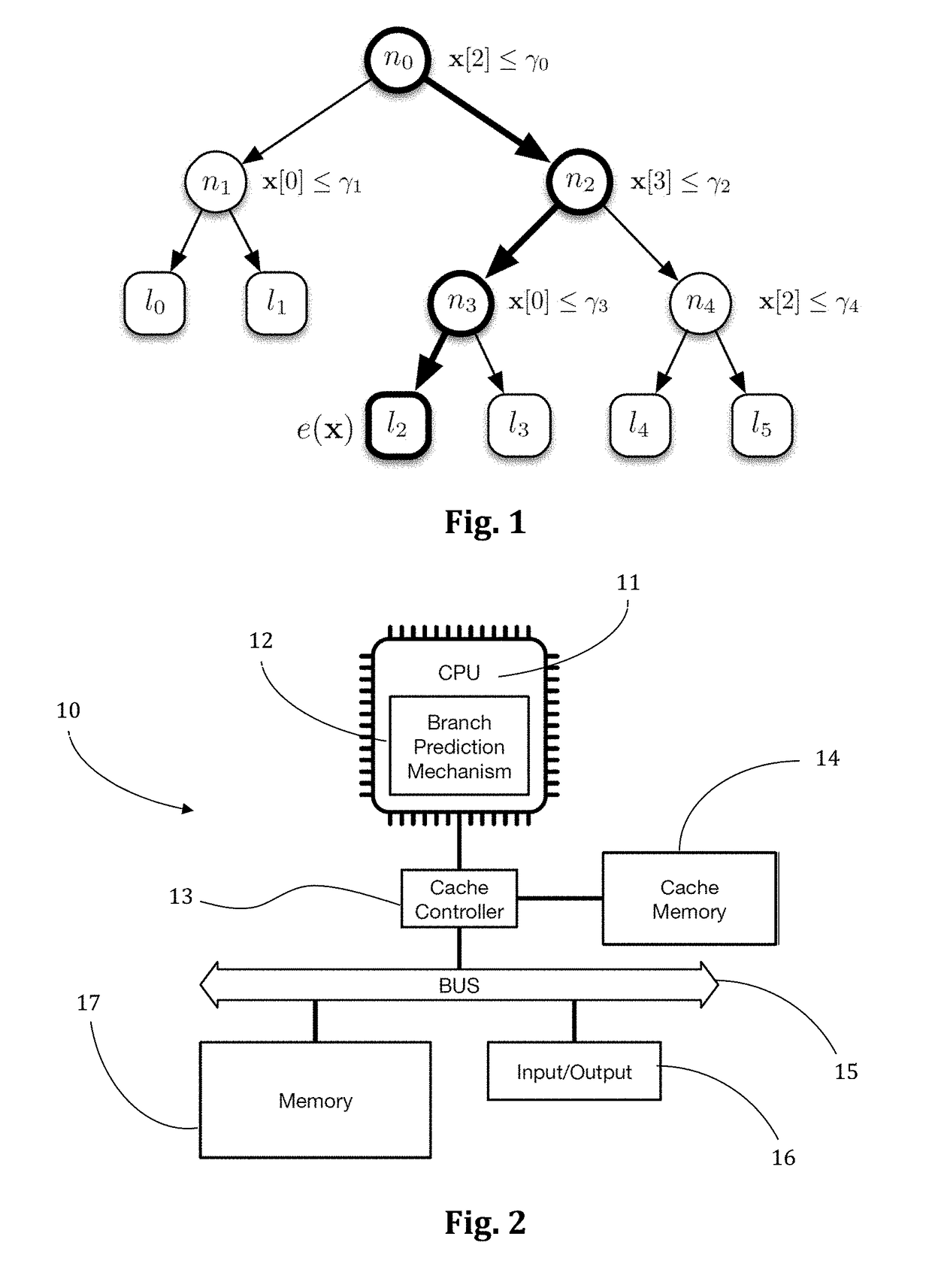

A method to rank documents by a computer, using additive ensembles of regression trees and cache optimisation, and search engine using such a method

Active | US20180217991A1 | Reduce scoring time; Improve recall; Ensemble learning; Inference methods; Documentation procedure; Data set

The present invention concerns a novel method to efficiently score documents (texts, images, audios, videos, and any other information file) by using a machine-learned ranking function modeled by an additive ensemble of regression trees. A main contribution is a new representation of the tree ensemble based on bitvectors, where the tree traversal, aimed to detect the leaves that contribute to the final scoring of a document, is performed through efficient logical bitwise operations. In addition, the traversal is not performed one tree after another, as one would expect, but it is interleaved, feature by feature, over the whole tree ensemble. Tests conducted on publicly available LtR datasets confirm unprecedented speedups (up to 6.5×) over the best state-of-the-art methods.

Owner:TISCALI SPA
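
The bitvector traversal described above can be sketched for a single document, assuming trees are nested tuples and a split sends a document left when `x[feature] <= threshold`. Each split node gets a mask that clears the leaves of its left subtree; ANDing the masks of all false nodes and taking the leftmost set bit yields the exit leaf. Nodes from all trees are grouped by feature and sorted by threshold, so the scan per feature stops at the first true test. This is a simplified sketch using Python integers as bitvectors, not the patented implementation.

```python
from collections import defaultdict


def _index_tree(node, leaves, nodes):
    """Number leaves left to right and record, for every split node, the leaf
    range of its left subtree. Returns the (first, last) leaf index of `node`."""
    if node[0] == "leaf":
        leaves.append(node[1])
        return len(leaves) - 1, len(leaves) - 1
    _, feature, threshold, left, right = node
    first, left_last = _index_tree(left, leaves, nodes)
    _, last = _index_tree(right, leaves, nodes)
    nodes.append((feature, threshold, first, left_last))
    return first, last


class BitvectorEnsemble:
    """Score an additive ensemble of regression trees with bitwise operations.

    Trees are tuples: ("split", feature, threshold, left, right) or ("leaf", value);
    a split sends x to the left child when x[feature] <= threshold.
    """

    def __init__(self, trees):
        self.leaf_values = []
        self.by_feature = defaultdict(list)  # feature -> [(threshold, tree_id, mask)]
        for tree_id, tree in enumerate(trees):
            leaves, nodes = [], []
            _index_tree(tree, leaves, nodes)
            full = (1 << len(leaves)) - 1
            for feature, threshold, first, left_last in nodes:
                left_bits = ((1 << (left_last - first + 1)) - 1) << first
                # When this test is false, every leaf in the left subtree is unreachable.
                self.by_feature[feature].append((threshold, tree_id, full & ~left_bits))
            self.leaf_values.append(leaves)
        for entries in self.by_feature.values():
            entries.sort(key=lambda e: e[0])  # ascending thresholds per feature

    def score(self, x):
        reach = [(1 << len(leaves)) - 1 for leaves in self.leaf_values]
        # Interleaved traversal: feature by feature across the whole ensemble.
        for feature, entries in self.by_feature.items():
            for threshold, tree_id, mask in entries:
                if x[feature] <= threshold:
                    break  # this and all later (larger) thresholds are true tests
                reach[tree_id] &= mask
        total = 0.0
        for tree_id, leaves in enumerate(self.leaf_values):
            exit_leaf = (reach[tree_id] & -reach[tree_id]).bit_length() - 1  # leftmost reachable leaf
            total += leaves[exit_leaf]
        return total
```

Scores match an ordinary root-to-leaf walk of each tree; the gain in the real method comes from fixed-width bitvectors and cache-friendly, branch-light loops, which plain Python does not reproduce.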

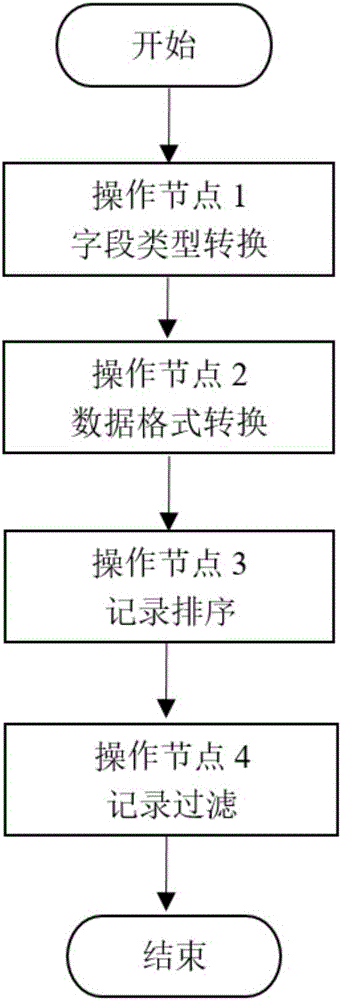

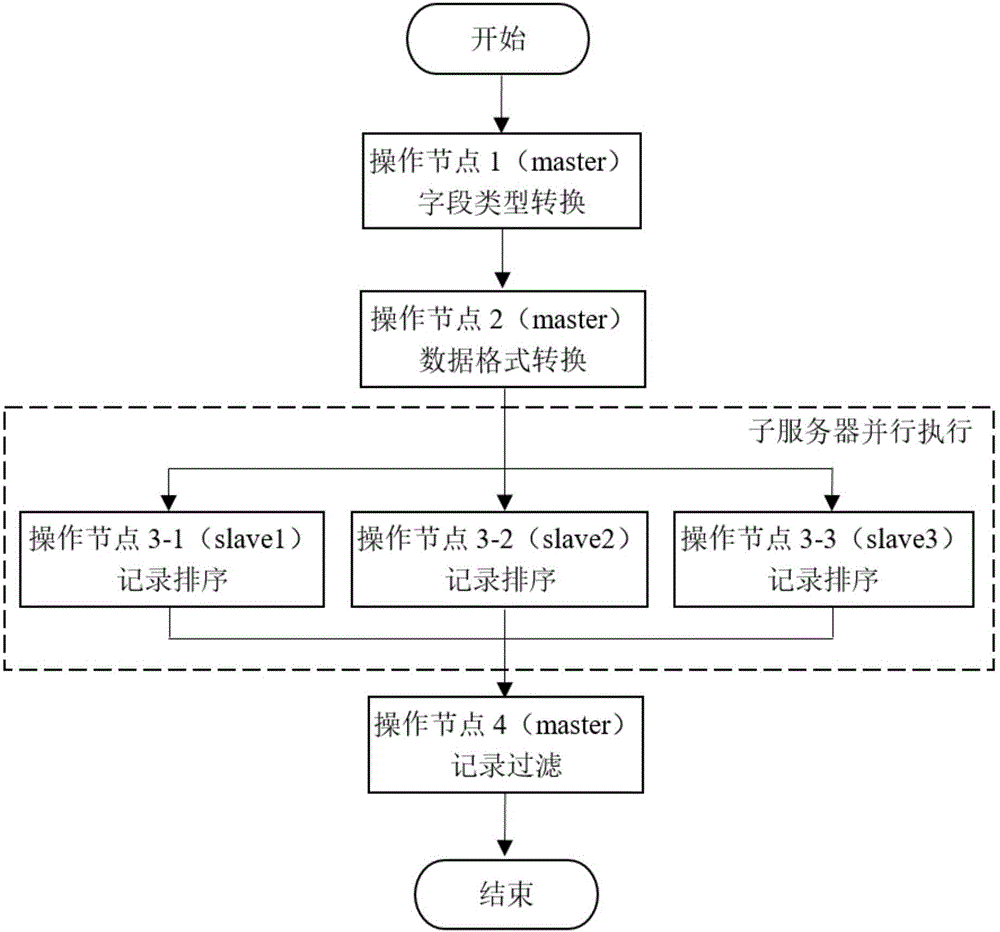

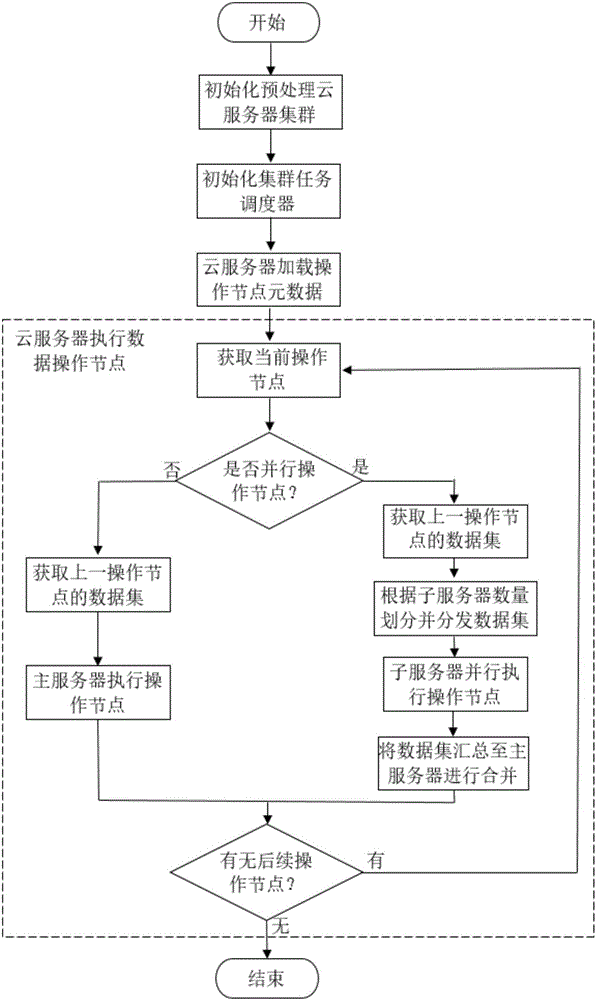

Social insurance big data distributed preprocessing method and system

Inactive | CN106126601A | Give full play to handling; Give full play to processing performance and provide certain scalability; Database distribution/replication; Multi-dimensional databases; Pretreatment method; Cache optimization

The invention discloses a social insurance big data distributed preprocessing method and system. According to the main technical scheme, the method comprises the steps of defining a data preprocessing process as a data preprocessing operation that contains a plurality of preprocessing operation nodes, and concurrently executing the preprocessing operation nodes in independent threads; allocating a plurality of executive threads to a data operation node with high complexity, and concurrently executing the data preprocessing operation by a distributed cloud server cluster; and loading and writing data of the distributed preprocessing system in a distributed file system by column, and performing cache optimization on the data writing operation by utilizing NoSQL. According to the method and the system, the processing performance of preprocessing cloud servers is brought into full play, the performance bottleneck of a single server is overcome, redundant data transmission between the servers and data nodes of the HDFS (Hadoop Distributed File System) is avoided, and the efficiency of loading the data in the HDFS is improved, so that the big data preprocessing efficiency is enhanced.

Owner:SOUTH CHINA UNIV OF TECH

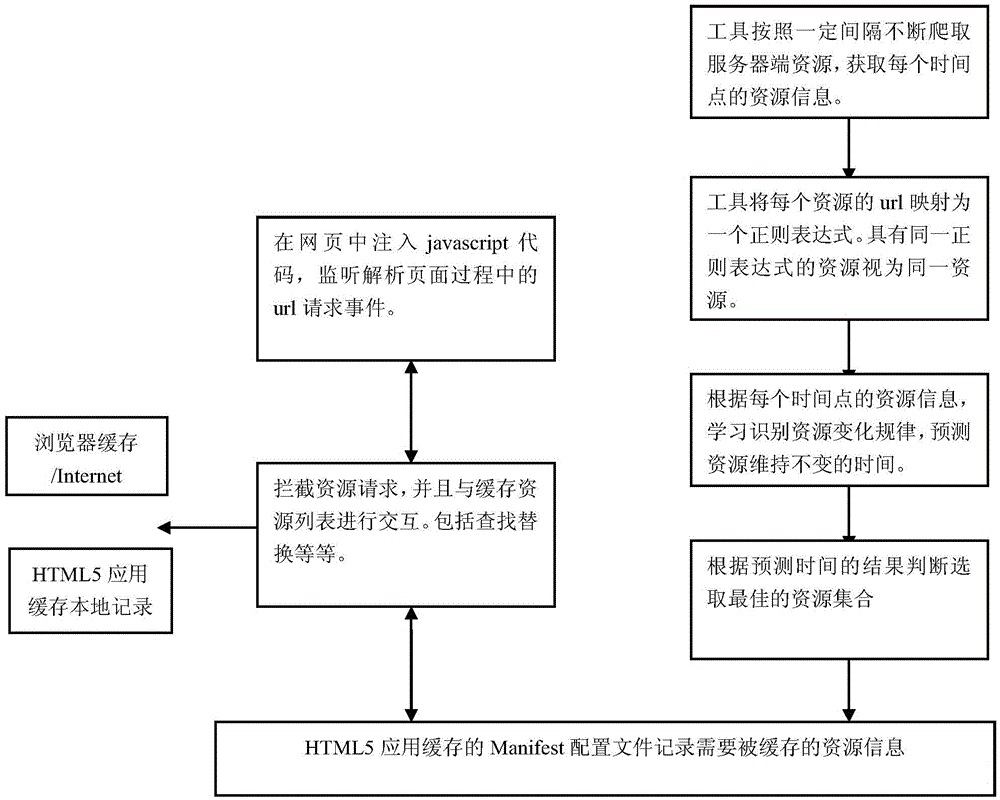

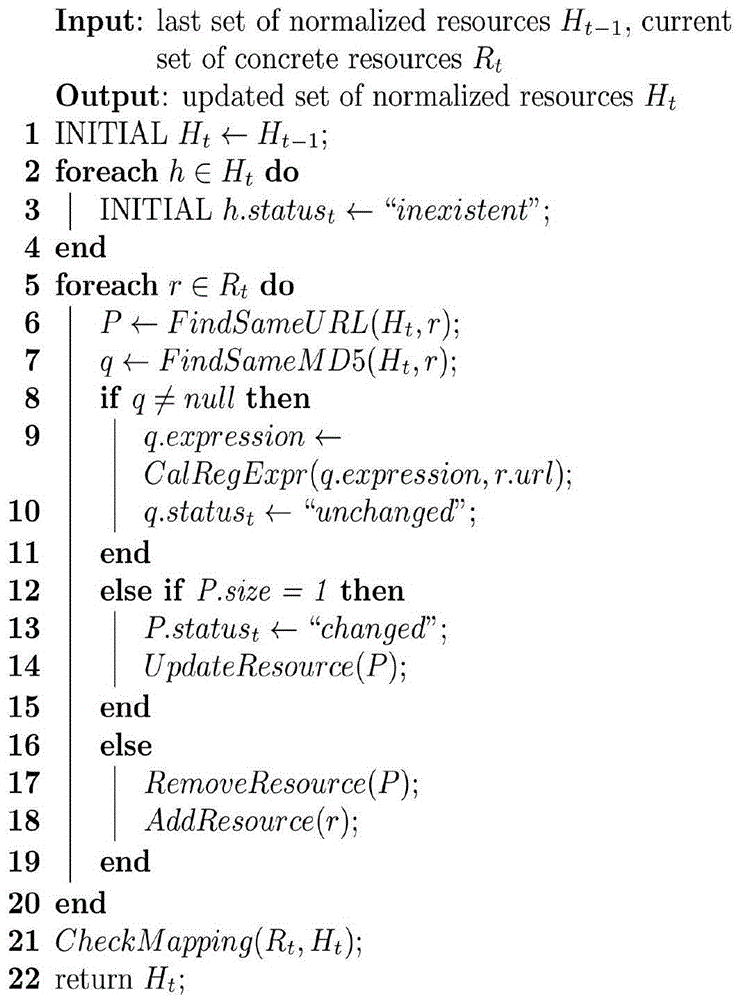

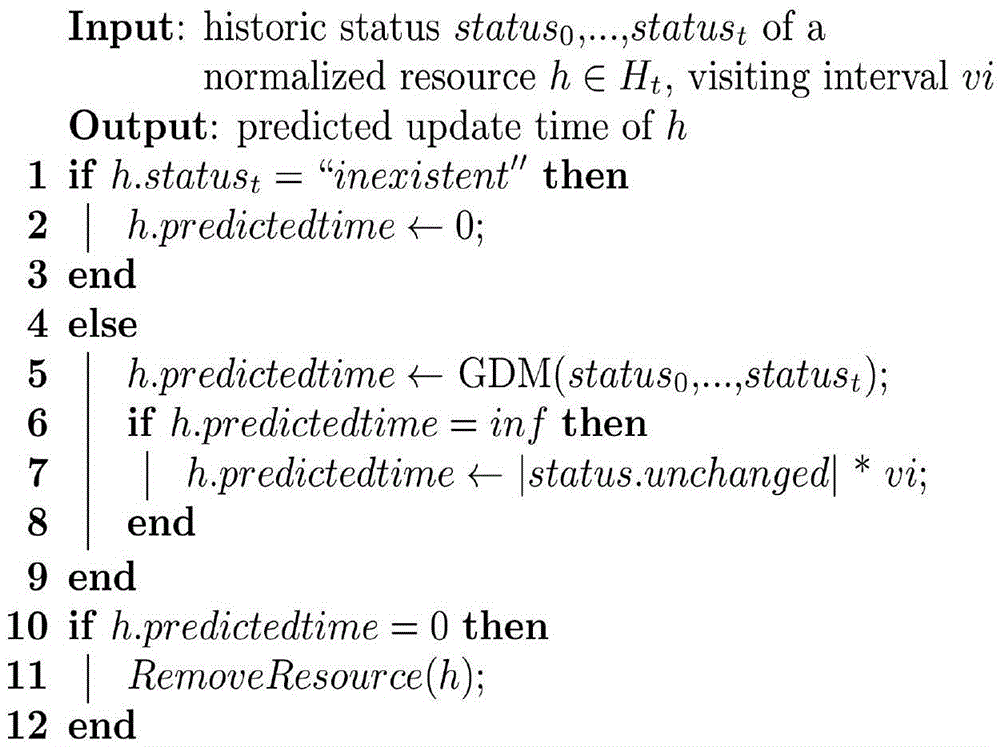

HTML5 application cache based mobile Web cache optimization method

Active | CN105550338A | Easy access; Improve cache hit ratio; Web data indexing; Information format; HTML5; Cache optimization

Owner:PEKING UNIV

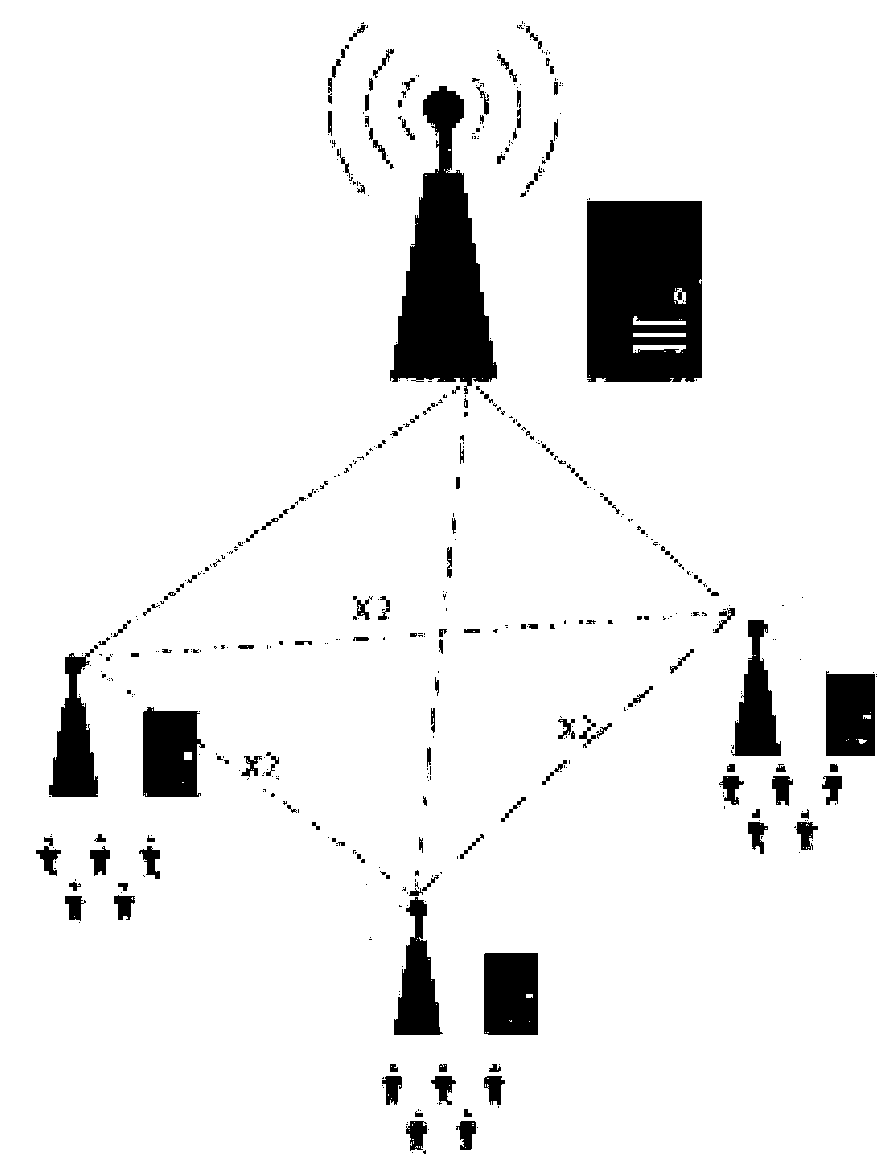

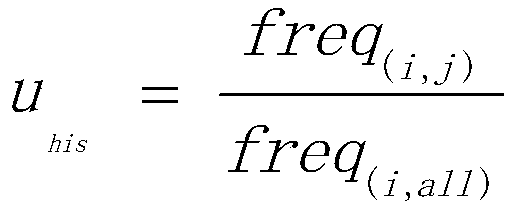

Mobile edge caching method based on region user interest matching

Active | CN110730471A | Improve caching efficiency; Short response time; Network traffic/resource management; Effective solution; Computer network

The invention relates to a mobile edge caching method based on regional user interest matching and belongs to the technical field of caching. The method comprises the following steps: S1, establishing a regional user preference model; S2, establishing a joint cache optimization strategy; S3, establishing a cache system model; and S4, designing a cache algorithm. With the caching mechanism of the invention, the video caching gain can be maximized while keeping the cached videos diverse. Compared with current caching mechanisms, it effectively improves the video caching benefit and user QoE, and provides an effective solution for caching and online viewing of large-scale ultra-high-definition video in future 5G scenarios.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

System, method and computer program product for application-level cache-mapping awareness and reallocation requests

In view of the shortcomings of prior-art cache optimization techniques, the present invention provides an improved method, system, and computer program product that can optimize cache utilization. In one embodiment, an application requests a kernel cache map from a kernel service and receives the kernel cache map. The application designs an optimum cache footprint for a data set from the application. In one embodiment, the application transmits a memory reallocation order to a memory manager. In one embodiment, the memory reallocation order contains the optimum cache footprint. In one embodiment, the application transmits the memory reallocation order to a reallocation services tool within the memory manager.

Owner:GOOGLE LLC

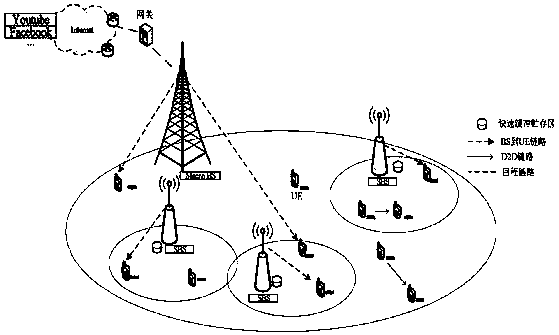

Method for supporting D2D-cellular heterogeneous network joint user association and content cache

Active | CN108156596A | Excellent strategy; Minimize the total transmission delay; Transmission; Machine-to-machine/machine-type communication service; Cache optimization; Simulation

The invention relates to a method for supporting joint user association and content caching in D2D-cellular heterogeneous networks and belongs to the technical field of wireless communication. The method comprises the following steps: S1, modeling a user content requirement identifier; S2, modeling a user association variable; S3, modeling a user content cache variable; S4, modeling the user data transmission rate; S5, modeling the total user transmission delay; S6, modeling the transmission delay in user D2D mode; S7, modeling the transmission delay when a user associates with a small cell base station; S8, modeling the transmission delay when a user associates with a macro cell base station; S9, modeling the constraints on joint user association and content caching; and S10, determining the user association mode and the content cache optimization strategy that minimize the total user transmission delay. The method achieves the optimal user association strategy, optimal content placement, and the minimum total user content transmission delay while guaranteeing each user's minimum data rate requirement.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

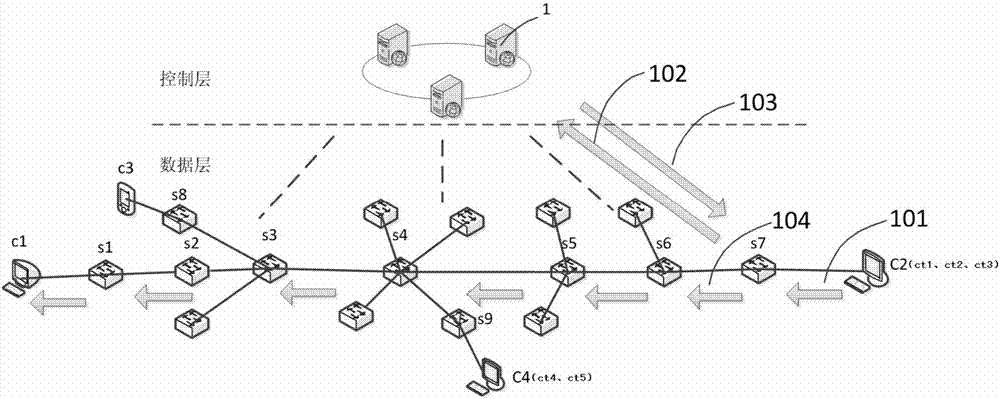

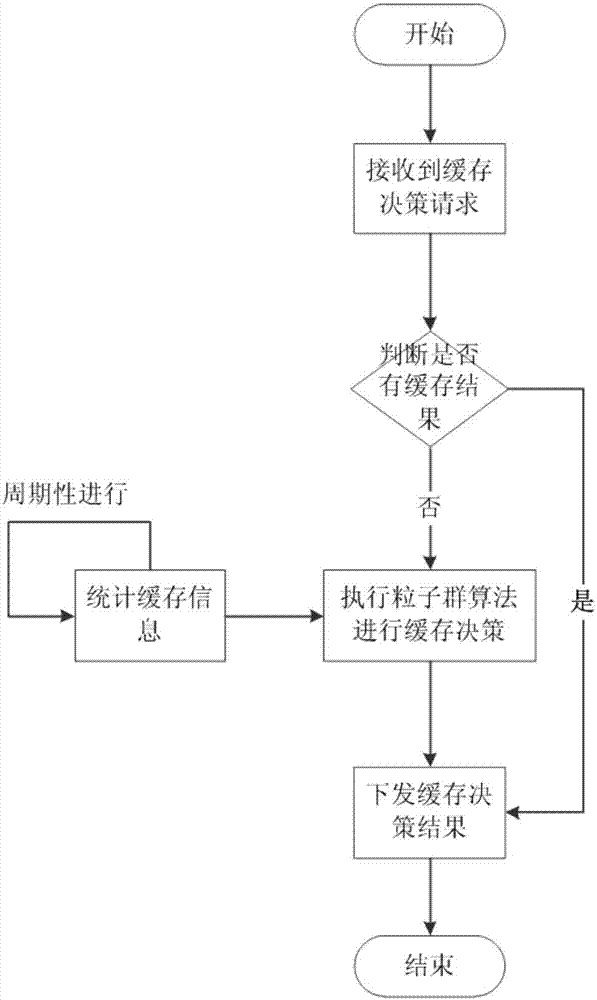

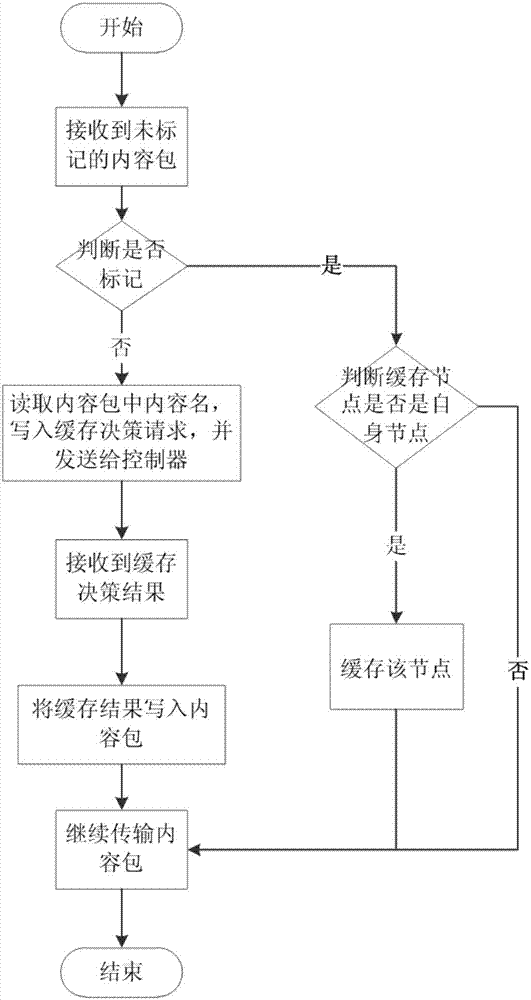

Content center network caching method based on software defined network

Active | CN107105043A | Low elongation; Improve cache hit ratio; Data switching networks; Cache optimization; Mathematical model

The invention provides a content-centric network caching method based on a software-defined network (SDN). Under a converged SDN and content-centric network architecture, a controller uses its view of the overall network topology and cached information to perform centralized control and global cache optimization over caching nodes and content. The controller periodically collects statistics on the cached information and then performs cache optimization in response to cache decision requests from the data layer. The method incorporates node importance, node edge degree, and content popularity into the cache decision strategy, builds a mathematical model from this information, and optimizes the model with a particle swarm optimization algorithm. The method fully exploits the controller's global control and logical centralization, so that cache decisions can be optimized through multi-node coordination.

Owner:XI AN JIAOTONG UNIV

Cache optimization method of real-time vertical search engine objects

Inactive | CN101667198A | Increase profit, Improve experience, Special data processing applications, Cache optimization, Dynamic balance

The invention discloses a cache optimization method for objects in a real-time vertical search engine, comprising the following steps: predicting the popularity trend of different objects and calculating their cache weights by using the relationship between objects and object properties; calculating the initial distribution of the crawling quota among objects, and the method for adjusting it, by using the facts that users' queries for the same object follow a Poisson process and that data crawling is query-driven; and calculating a dynamic balancing method for the crawling quota of each object by using the fact that changes to the underlying data follow a Poisson process. The invention increases the vertical search engine's utilization of its crawling quota for each data site, improves the user experience of the real-time vertical search engine, and enables the engine to adapt its configuration to different data sites.

Owner:ZHEJIANG UNIV
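The abstract does not give its quota formulas. One well-known way to split a crawl budget when data changes follow a Poisson process with rate λ is to use the expected freshness of a copy re-crawled at frequency f, namely (1 − e^(−λ/f)) / (λ/f). The sketch below swaps in that technique and weights each object's freshness by a hypothetical query rate; it is an assumption-labeled illustration, not the patented allocation method. Freshness has diminishing marginal gains in f, so handing out unit quota slots greedily to the largest marginal gain gives the best integer allocation for this objective.

```python
import heapq
import math


def expected_freshness(crawl_rate, change_rate):
    """Expected freshness of a copy re-crawled at fixed intervals 1/crawl_rate
    when the source changes as a Poisson process with rate change_rate (> 0):
    (1 - exp(-x)) / x with x = change_rate / crawl_rate."""
    if crawl_rate == 0:
        return 0.0
    x = change_rate / crawl_rate
    return (1.0 - math.exp(-x)) / x


def allocate_quota(query_rates, change_rates, total_quota):
    """Greedily give crawl slots, one at a time, to the object whose
    query-weighted freshness gains most (query_rates are hypothetical weights)."""
    n = len(query_rates)
    alloc = [0] * n

    def gain(i):
        a = alloc[i]
        return query_rates[i] * (expected_freshness(a + 1, change_rates[i])
                                 - expected_freshness(a, change_rates[i]))

    # Max-heap via negated gains; ties go to the lower index.
    heap = [(-gain(i), i) for i in range(n)]
    heapq.heapify(heap)
    for _ in range(total_quota):
        _, i = heapq.heappop(heap)
        alloc[i] += 1
        heapq.heappush(heap, (-gain(i), i))  # its next slot is worth less
    return alloc
```

Rerunning the allocation as estimated query and change rates drift would be one way to approximate the dynamic balancing step described above.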

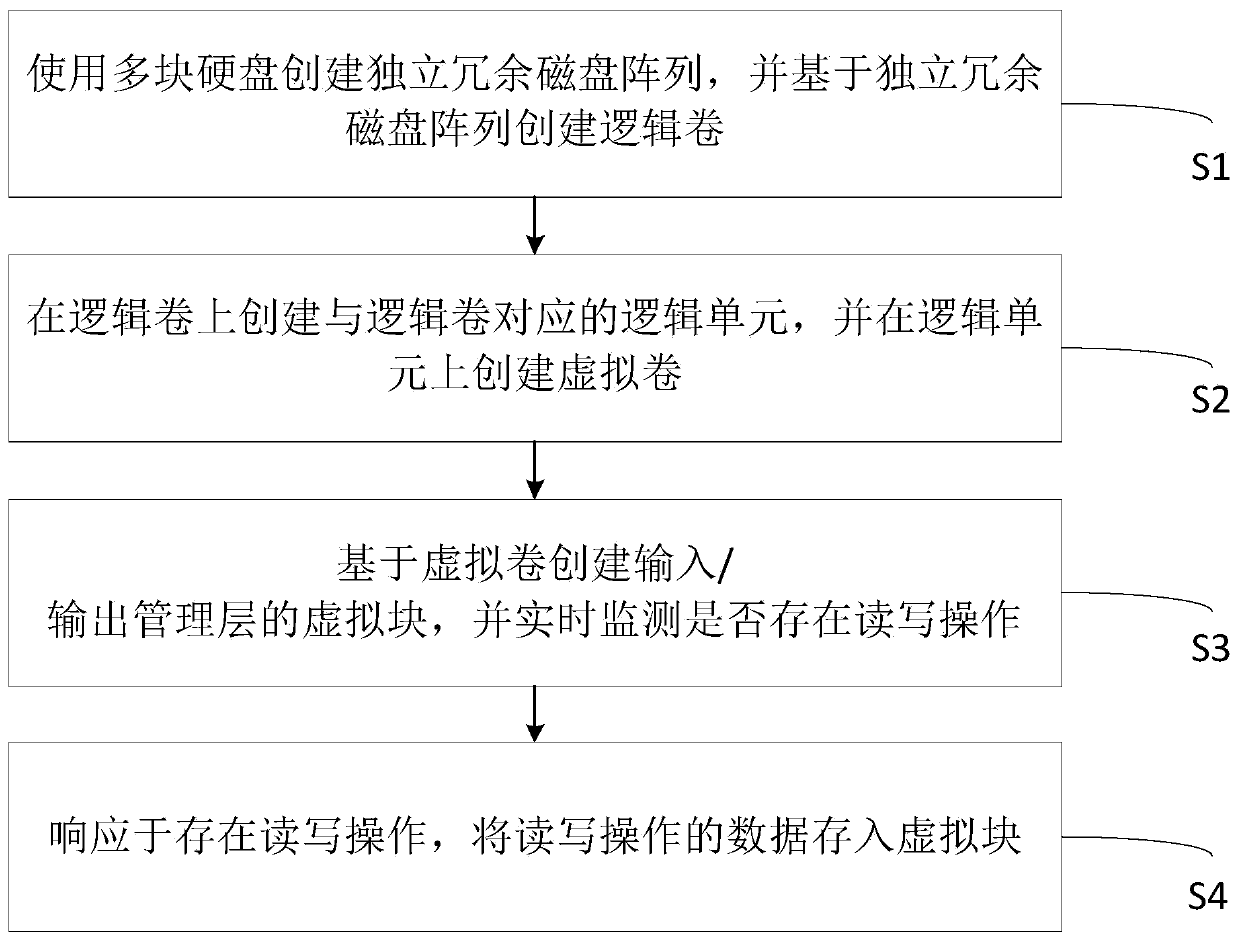

Storage cache optimization method and system, equipment and medium

Inactive | CN111240595A | Improve read and write speed, Increase storage capacity, Input/output to record carriers, Memory systems, Computer architecture, Cache optimization

The invention discloses a storage cache optimization method and system, a device, and a storage medium. The method comprises the following steps: building a redundant array of independent disks (RAID) from a plurality of hard disks, and building a logical volume on the array; creating a logical unit corresponding to the logical volume, and creating a virtual volume on the logical unit; creating a virtual block in the input/output management layer based on the virtual volume, and monitoring in real time whether a read or write operation occurs; and, when a read or write operation occurs, storing its data in the virtual block. Because the virtual block sits between the RAID array and the file system, application reads and writes reach the virtual block first, which greatly improves storage read/write speed and overall storage performance.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD
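The abstract does not say how the virtual block manages its contents. As a minimal sketch, assuming an LRU write-back policy (an assumption, not the disclosed design), a block cache in front of a slower backing device could look like the following; the class name and the dict-like `backend` interface standing in for the RAID-backed volume are hypothetical.

```python
from collections import OrderedDict


class WriteBackBlockCache:
    """LRU write-back cache of fixed-size blocks in front of a slower device.

    `backend` is any dict-like mapping block number -> bytes and stands in
    for the RAID-backed logical volume (hypothetical interface).
    """

    def __init__(self, backend, capacity_blocks, block_size=4096):
        self.backend = backend
        self.capacity = capacity_blocks
        self.block_size = block_size
        self.entries = OrderedDict()  # block number -> [data, dirty flag]

    def _evict(self):
        # Drop least recently used blocks, writing dirty ones back first.
        while len(self.entries) > self.capacity:
            blk, (data, dirty) = self.entries.popitem(last=False)
            if dirty:
                self.backend[blk] = data

    def read(self, blk):
        if blk in self.entries:  # cache hit: serve from memory
            self.entries.move_to_end(blk)
            return self.entries[blk][0]
        # Cache miss: fetch from the backend (zero-filled if never written).
        data = self.backend.get(blk, bytes(self.block_size))
        self.entries[blk] = [data, False]
        self._evict()
        return data

    def write(self, blk, data):
        # Writes complete in the cache and are marked dirty for later write-back.
        self.entries[blk] = [data, True]
        self.entries.move_to_end(blk)
        self._evict()

    def flush(self):
        # Persist every dirty block and mark it clean.
        for blk, entry in self.entries.items():
            if entry[1]:
                self.backend[blk] = entry[0]
                entry[1] = False
```

Write-back caching makes writes fast but leaves dirty blocks vulnerable until they are flushed or evicted; a write-through variant (updating `backend` inside `write`) trades some speed for durability.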

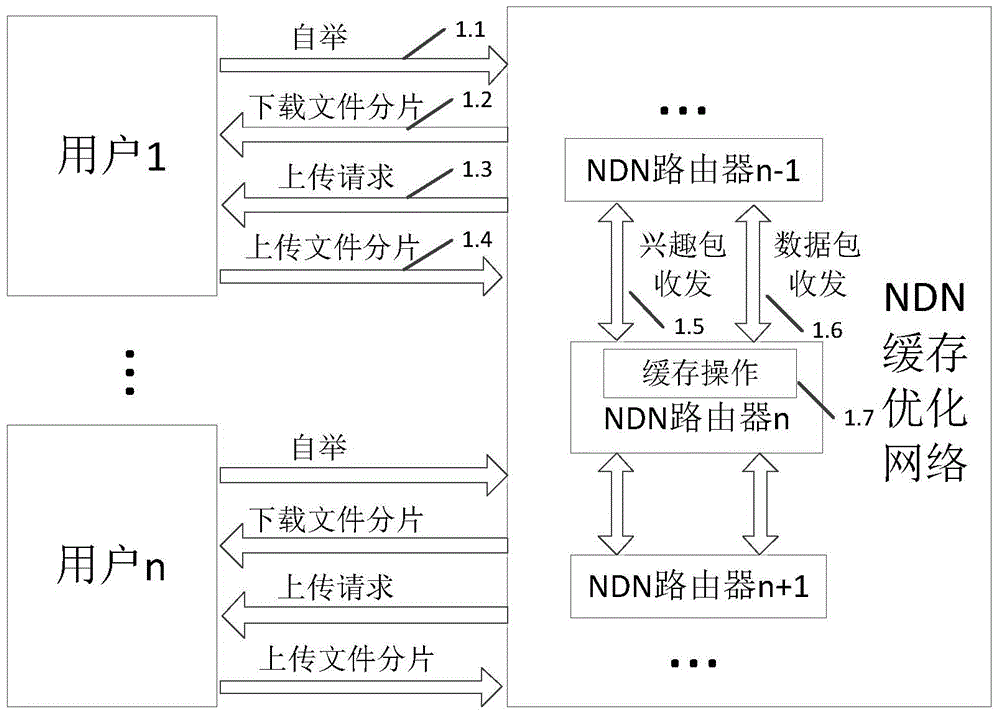

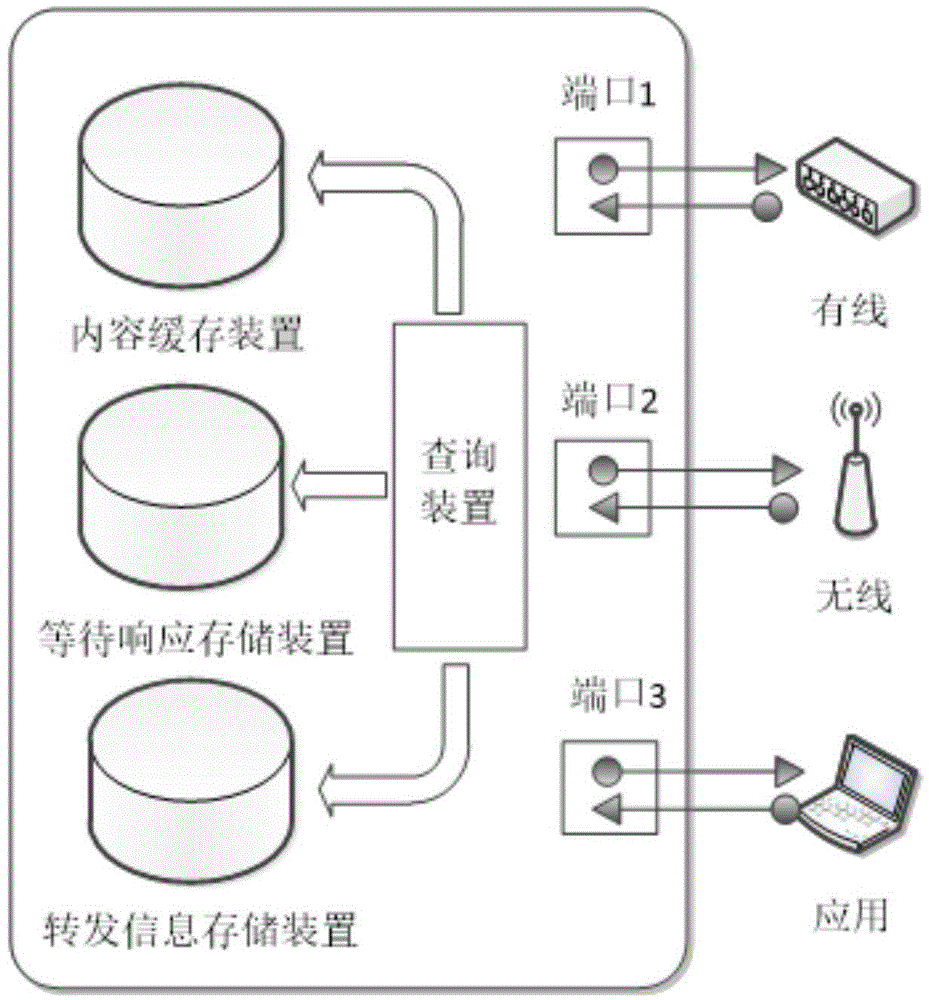

File transmission method and apparatus based on NDN cache optimization

Inactive | CN104967677A | Reduce disturbance, Improve robustness, Transmission, Response table, Cache optimization

The invention relates to a file transmission method and apparatus based on NDN cache optimization. The method comprises: first, when a peer device receives a request for a content segment and the content cache device holds that segment, sending the segment back out of the requesting port; second, if the content cache device does not hold the segment but the wait-for-response table contains its name, recording the requesting port in the table entry for that name; and third, if the wait-for-response table does not contain the name, searching the forwarding information table, and if the forwarding information table does not contain the name of the content segment or the combination of request ports, recording the name of the content segment and the requesting ports in the wait-for-response table. The method addresses the short lifetime of shared files, the high network data redundancy, and the large bandwidth consumption of the prior art.

Owner:WUXI LIANGZIYUN DIGITAL NEW MEDIA TECH CO LTD
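The three steps closely resemble the standard NDN Interest pipeline, where the content cache device corresponds to the Content Store, the wait-for-response table to the Pending Interest Table (PIT), and the forwarding information table to the FIB. The sketch below follows that standard pipeline with plain dicts; the class and method names are hypothetical, and because the abstract's handling of the no-FIB-match case is not fully specified, this sketch simply drops such requests.

```python
class Forwarder:
    """Toy NDN-style forwarder: Content Store, PIT, and FIB as dicts."""

    def __init__(self):
        self.cs = {}   # content name -> cached data
        self.pit = {}  # content name -> set of ports awaiting the data
        self.fib = {}  # name prefix -> outgoing port

    def on_interest(self, name, in_port):
        # Step 1: cache hit, answer directly on the requesting port.
        if name in self.cs:
            return ("data", in_port, self.cs[name])
        # Step 2: already pending, just remember one more requester.
        if name in self.pit:
            self.pit[name].add(in_port)
            return ("aggregated", None, None)
        # Step 3: consult the FIB, record the request, forward upstream.
        out_port = self._longest_prefix_match(name)
        if out_port is None:
            return ("drop", None, None)
        self.pit[name] = {in_port}
        return ("forward", out_port, None)

    def on_data(self, name, data):
        # Only solicited data is cached and returned to every waiting port.
        ports = self.pit.pop(name, None)
        if ports is None:
            return []
        self.cs[name] = data
        return sorted(ports)

    def _longest_prefix_match(self, name):
        parts = name.strip("/").split("/")
        for i in range(len(parts), 0, -1):
            prefix = "/" + "/".join(parts[:i])
            if prefix in self.fib:
                return self.fib[prefix]
        return None
```

Aggregating repeated requests in the PIT means only one copy of a segment travels upstream, and caching it on return lets later requests be served locally, which matches the redundancy and bandwidth goals stated above.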

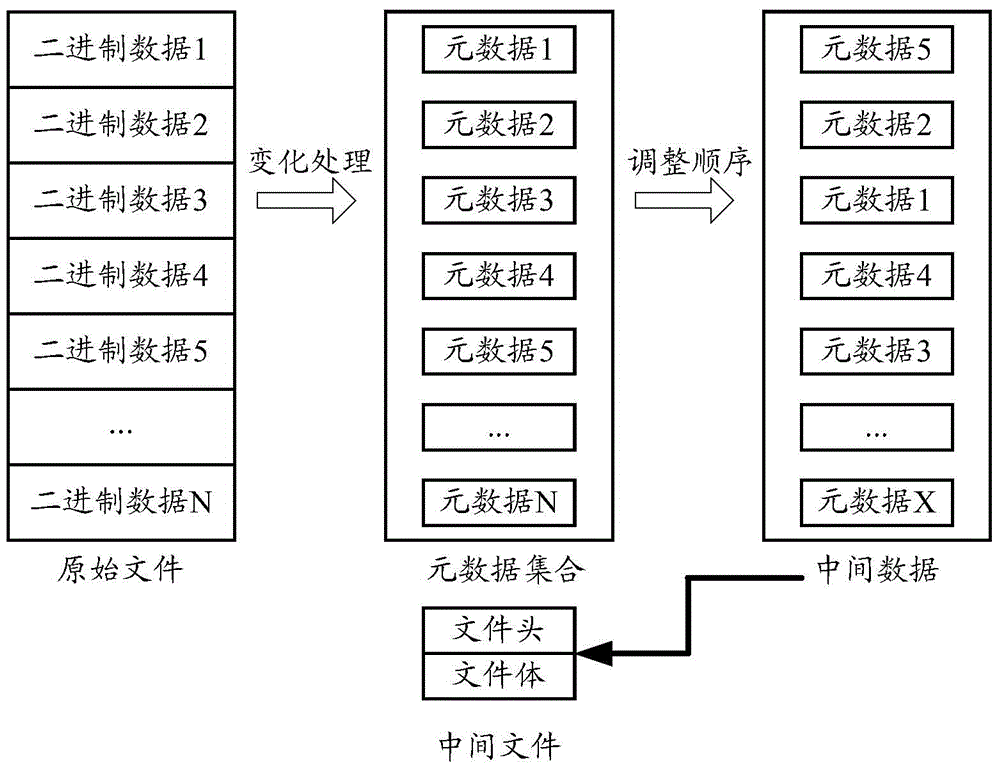

Differential upgrade patch manufacturing method and device

Inactive | CN104461593A | Reduce processing, Improve production efficiency, Software engineering, Program loading/initiating, Cache optimization, Data sequences

The invention discloses a differential upgrade patch manufacturing method and device. The method comprises: obtaining the binary data of a source version and applying mathematical transformation and sorting to the data to obtain a metadata set, the metadata set comprising set attribute data with the minimum base unit; serializing the data in the metadata set into an intermediate file; and, when a differential upgrade patch needs to be produced, performing differential analysis of the source version and a target version according to the intermediate file to build the patch. Producing the patch from the intermediate file improves manufacturing efficiency and shortens manufacturing time. In addition, because a cache optimization mechanism is introduced, the most time-consuming stage of producing a differential patch with a differential patch tool is shortened, which further improves working efficiency, reduces labor costs, and promotes wider application of differential upgrade technology.

Owner:ZTE CORP
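The abstract does not specify its transformation or file format. As a hypothetical illustration of why a precomputed intermediate file helps, the sketch below assumes an rsync-style fixed-block hash index of the source version, serialized to JSON once and reused to diff any target into copy/insert operations. All function names and the block size are assumptions, not ZTE's method.

```python
import hashlib
import json


def build_index(source, block=4):
    """Serialize a hash -> offset index of the source's fixed-size blocks.

    This JSON string plays the role of a reusable intermediate file: it is
    computed once per source version and reused for every target.
    """
    index = {}
    for off in range(0, len(source) - block + 1, block):
        digest = hashlib.sha256(source[off:off + block]).hexdigest()
        index.setdefault(digest, off)  # keep the first occurrence
    return json.dumps({"block": block, "index": index})


def make_patch(index_json, target):
    """Emit ("copy", offset, length) and ("insert", bytes) operations."""
    meta = json.loads(index_json)
    block, index = meta["block"], meta["index"]
    ops, literal, i = [], bytearray(), 0
    while i < len(target):
        chunk = target[i:i + block]
        off = None
        if len(chunk) == block:
            off = index.get(hashlib.sha256(chunk).hexdigest())
        if off is not None:
            if literal:  # close the pending run of unmatched bytes
                ops.append(("insert", bytes(literal)))
                literal = bytearray()
            ops.append(("copy", off, block))
            i += block
        else:
            literal.append(target[i])  # unmatched byte goes into a literal run
            i += 1
    if literal:
        ops.append(("insert", bytes(literal)))
    return ops


def apply_patch(source, ops):
    """Rebuild the target from the source version and the patch operations."""
    out = bytearray()
    for op in ops:
        if op[0] == "copy":
            _, off, length = op
            out += source[off:off + length]
        else:
            out += op[1]
    return bytes(out)
```

For example, diffing `b"hello world, hello patch"` against `b"hello there, hello patch!"` copies the unchanged blocks and inserts only `b"o there,"` and `b"!"`. Production tools typically use a rolling checksum instead of rehashing at every byte offset, which this sketch does for simplicity.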


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com