160 results about how to "Improve caching efficiency" patented technology

System and method for enabling de-duplication in a storage system architecture

Active · US7747584B1 · Effectively ensure · Improve efficiency · Digital data information retrieval · Digital data processing details · Data content · Data store

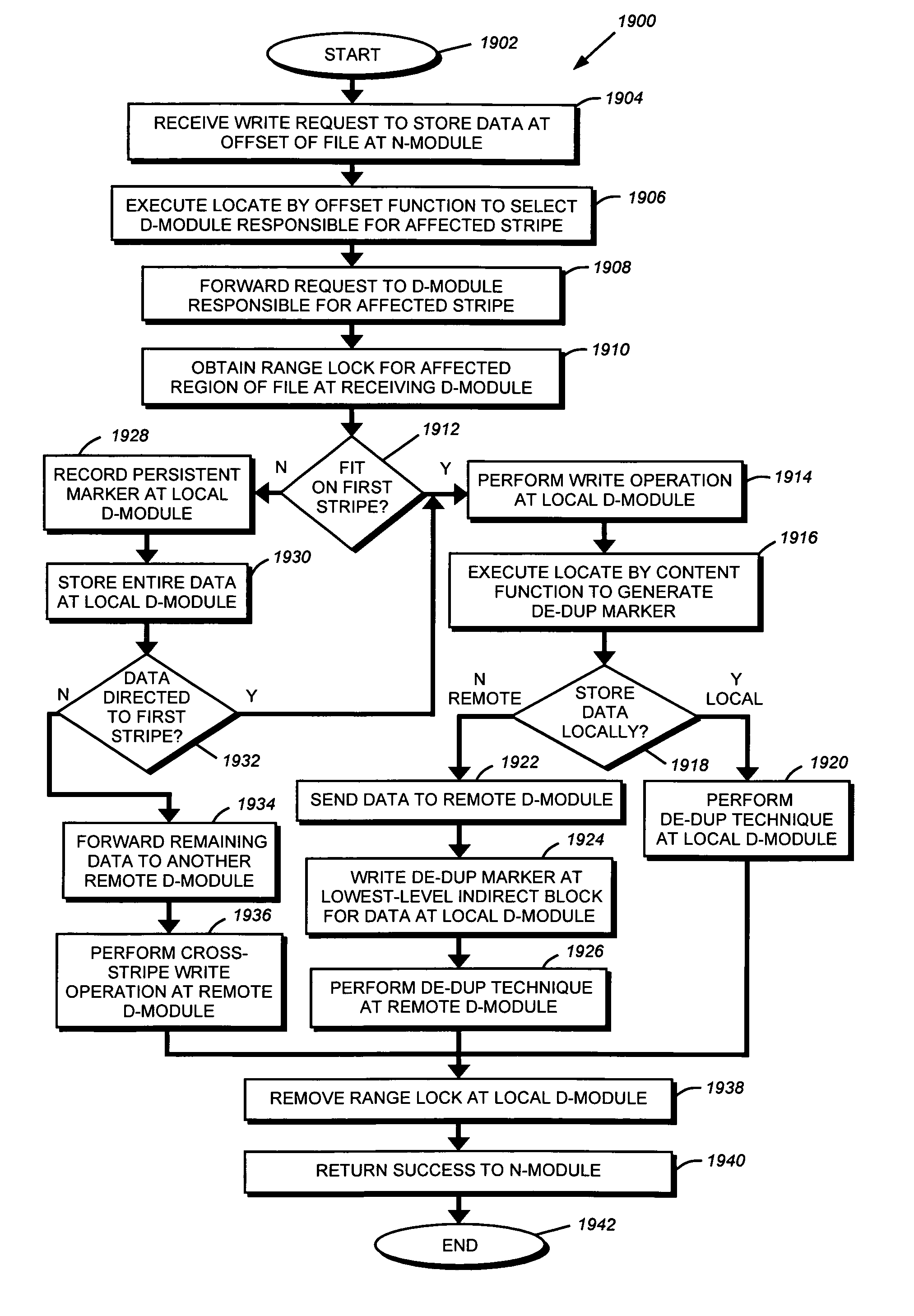

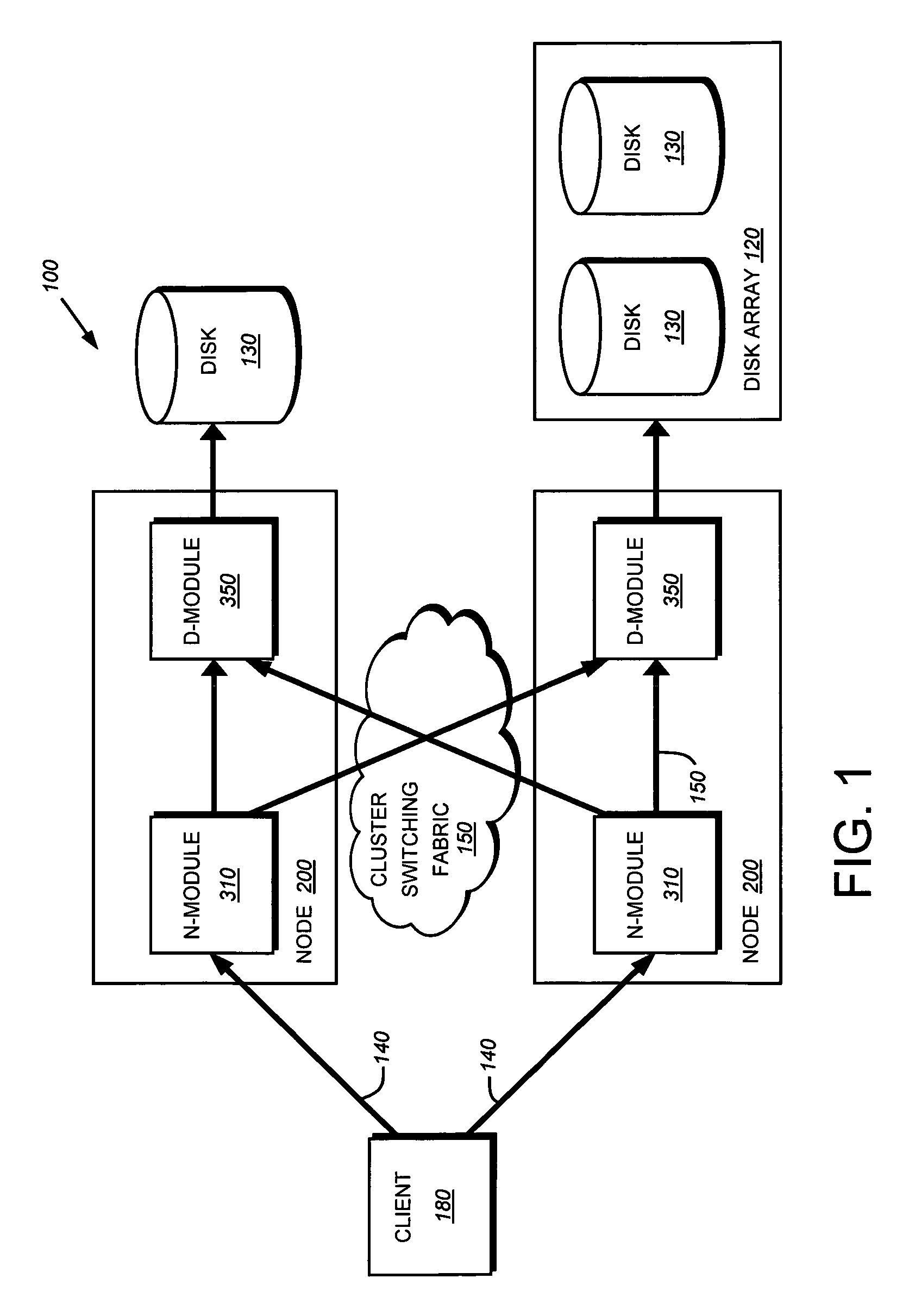

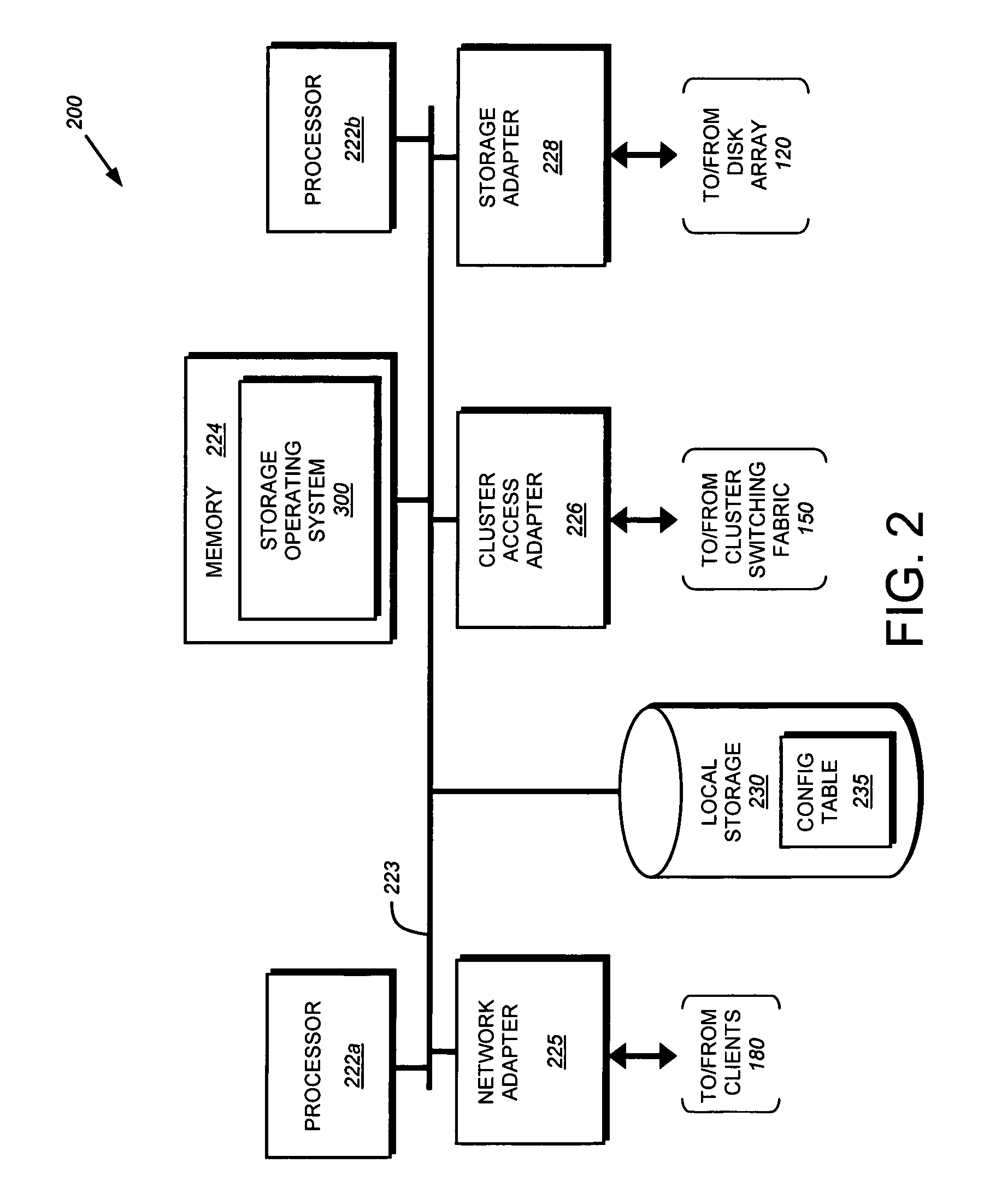

A system and method enables de-duplication in a storage system architecture comprising one or more volumes distributed across a plurality of nodes interconnected as a cluster. De-duplication is enabled through the use of file offset indexing in combination with data content redirection. File offset indexing is illustratively embodied as a Locate by offset function, while data content redirection is embodied as a novel Locate by content function. In response to input of, inter alia, a data container (file) offset, the Locate by offset function returns a data container (file) index that is used to determine a storage server that is responsible for a particular region of the file. The Locate by content function is then invoked to determine the storage server that actually stores the requested data on disk. Notably, the content function ensures that data is stored on a volume of a storage server based on the content of that data rather than based on its offset within a file. This aspect of the invention ensures that all blocks having identical data content are served by the same storage server so that it may implement de-duplication to conserve storage space on disk and increase cache efficiency of memory.

Owner:NETWORK APPLIANCE INC
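The content-based placement described above can be sketched in Python. This is a minimal illustration under assumptions, not NetApp's implementation: it models only the Locate by content step (the Locate by offset step is omitted), uses a SHA-256 digest of each block as its content key, and picks a server by taking the digest modulo the number of servers. `StorageServer`, `locate_by_content` and `write_file` are hypothetical names.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class StorageServer:
    """Hypothetical server that de-duplicates the blocks routed to it."""
    name: str
    blocks: dict[str, bytes] = field(default_factory=dict)   # digest -> data
    refcount: dict[str, int] = field(default_factory=dict)   # digest -> references

    def store(self, digest: str, data: bytes) -> None:
        # Identical content always arrives at this same server, so a
        # repeated block only increments its reference count.
        if digest not in self.blocks:
            self.blocks[digest] = data
        self.refcount[digest] = self.refcount.get(digest, 0) + 1


def locate_by_content(data: bytes, servers: list[StorageServer]) -> tuple[str, StorageServer]:
    """Choose a server from the block's content rather than its file offset."""
    digest = hashlib.sha256(data).hexdigest()
    return digest, servers[int(digest, 16) % len(servers)]


def write_file(blocks: list[bytes], servers: list[StorageServer]) -> list[tuple[str, str]]:
    """Store each block and return a file map of (server name, digest) per block."""
    file_map = []
    for block in blocks:
        digest, server = locate_by_content(block, servers)
        server.store(digest, block)
        file_map.append((server.name, digest))
    return file_map
```

Because identical blocks produce the same digest, they always land on the same server, which can keep a single copy and count references; that single copy is also what lets the server's memory cache hold more distinct data.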

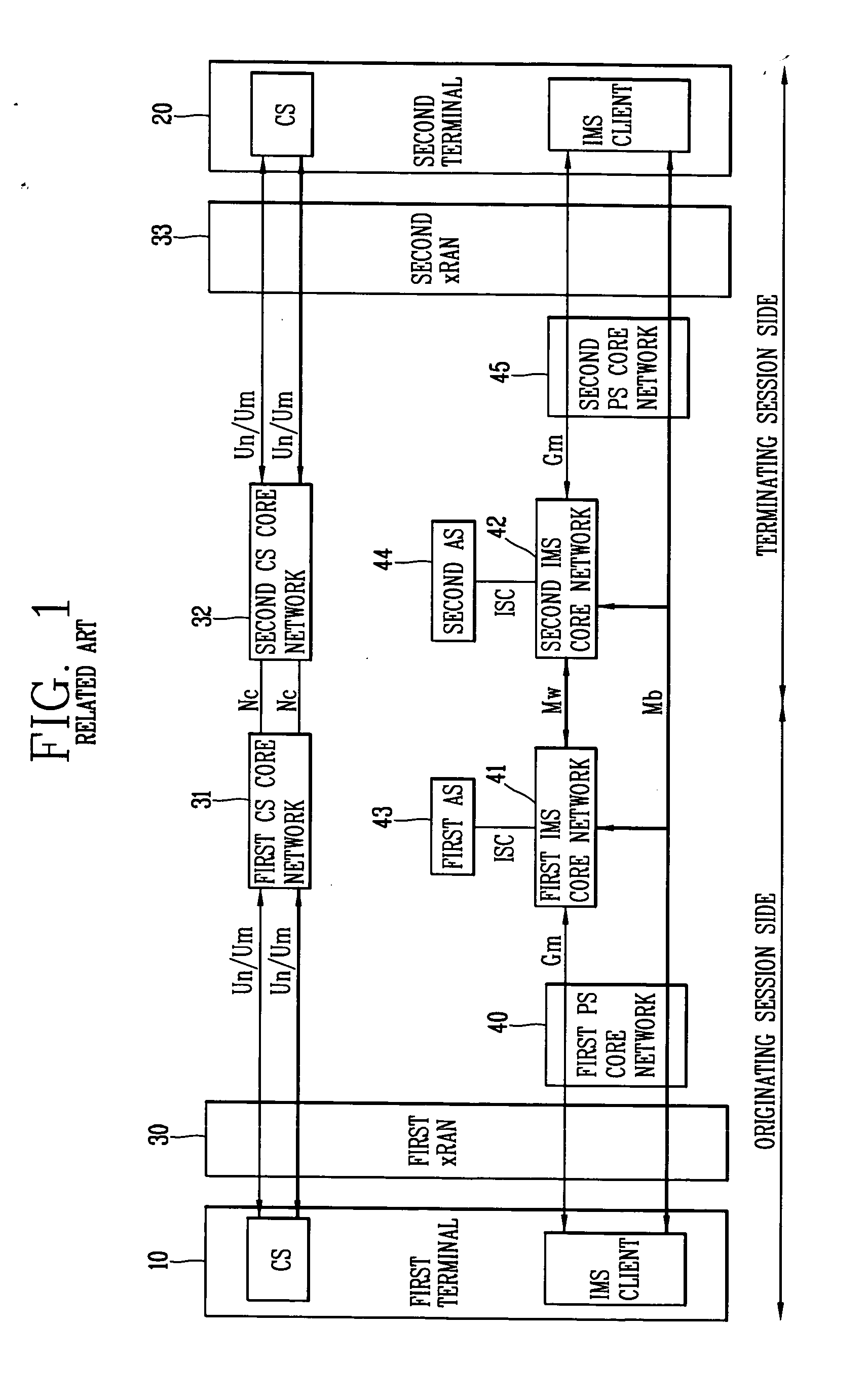

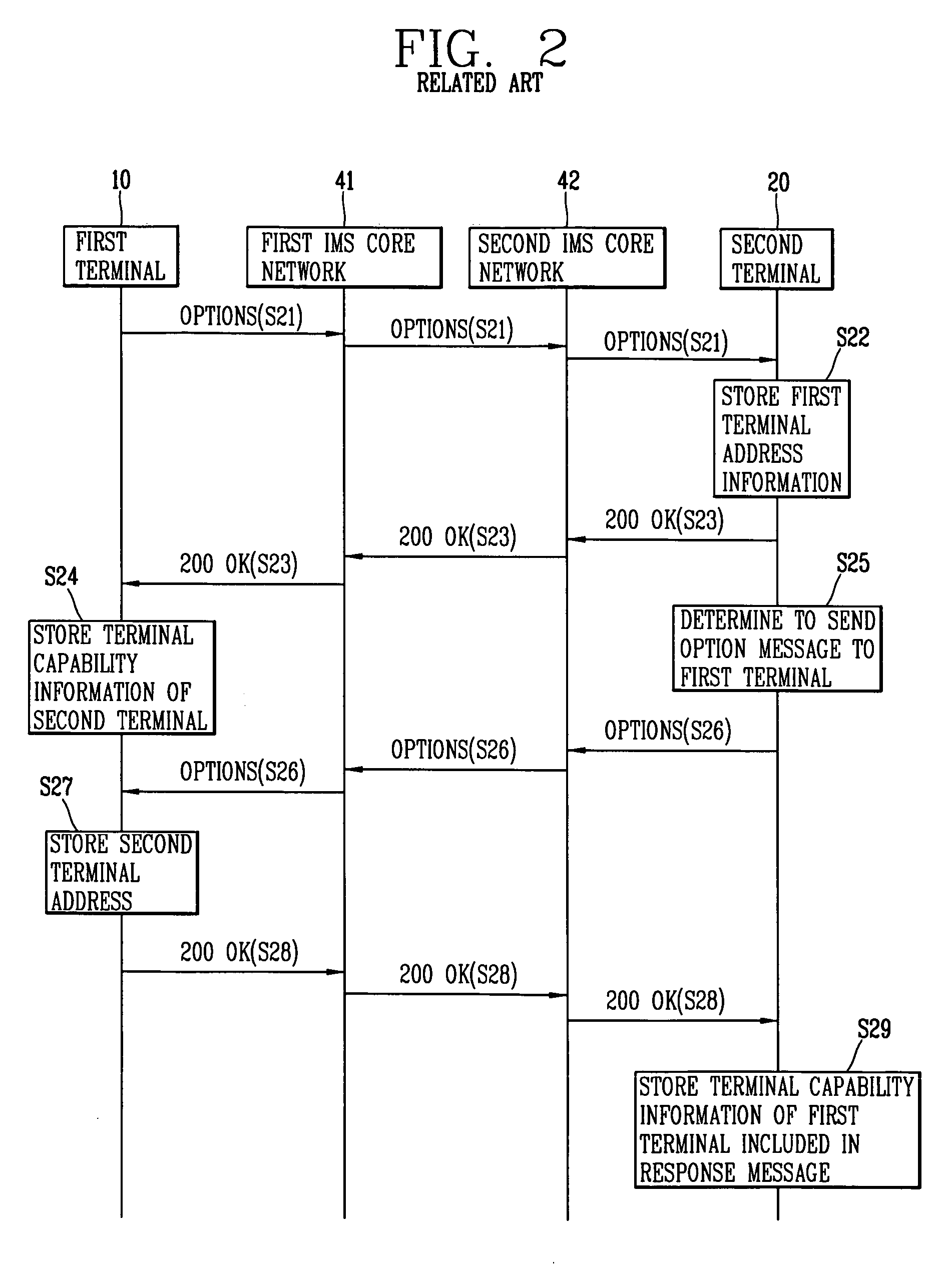

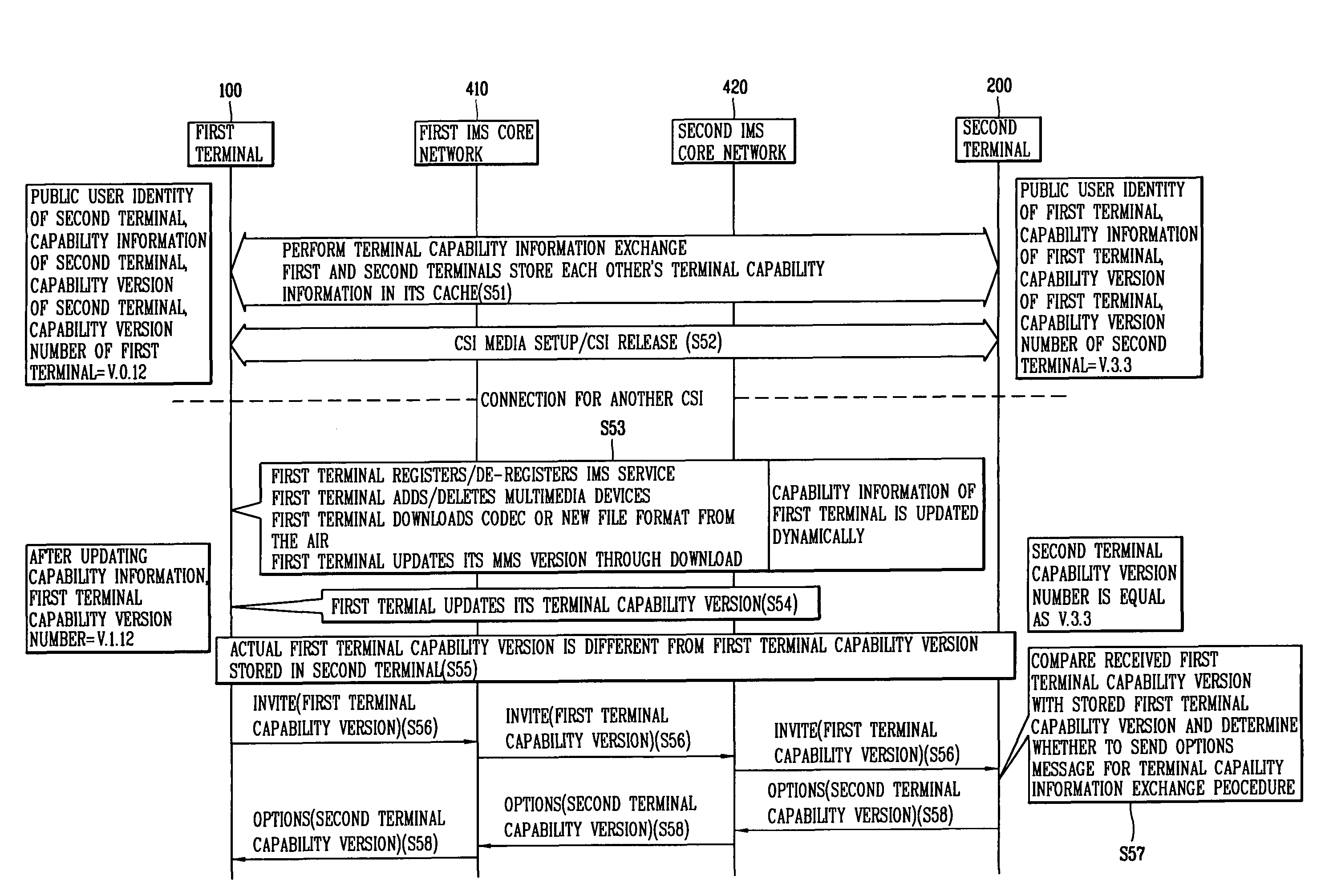

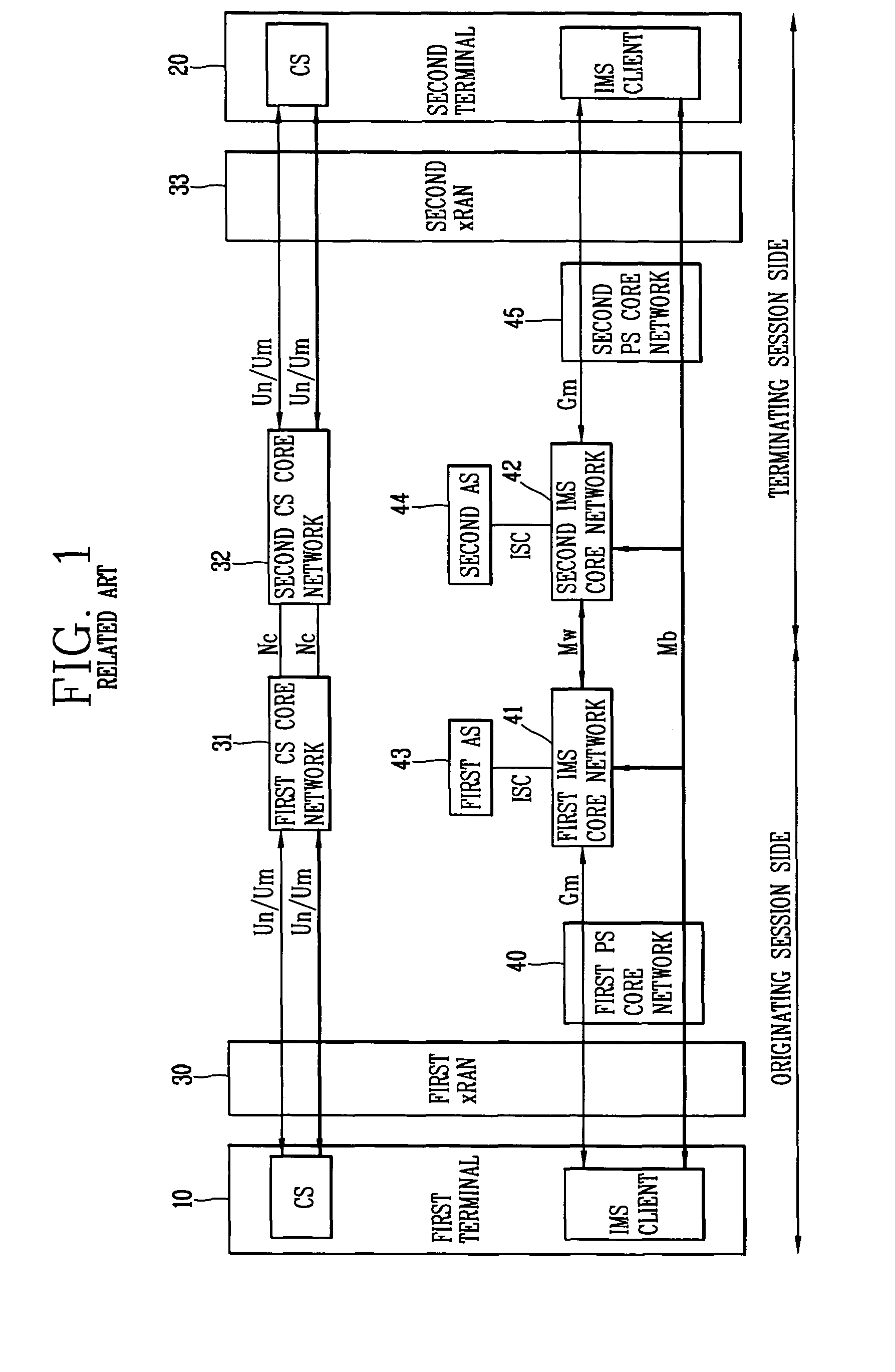

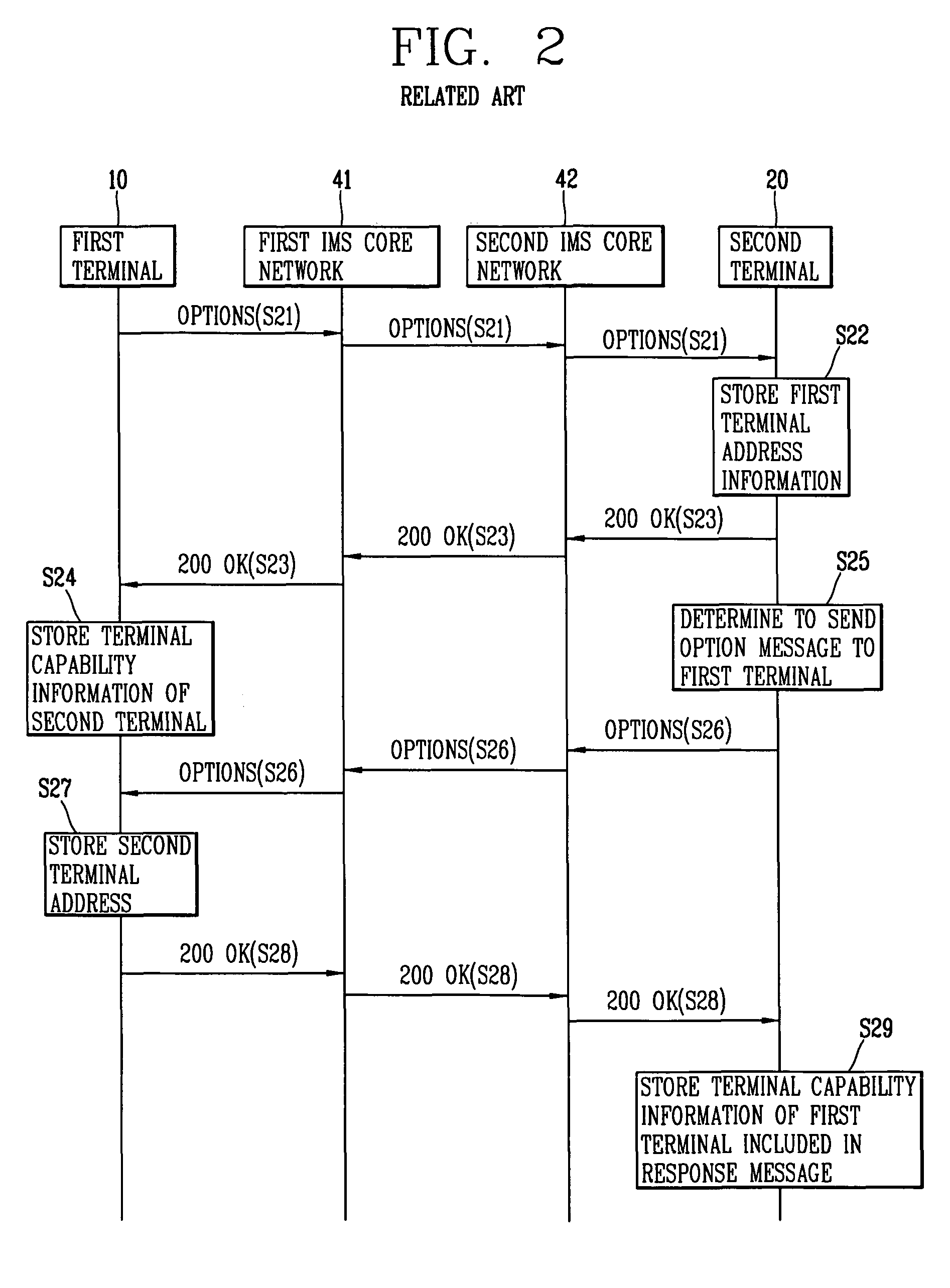

Terminal, method and system for performing combination service using terminal capability version

Active · US20070002840A1 · Simple procedure · Improve caching efficiency · Connection management · Data switching by path configuration · Operating system · Service use

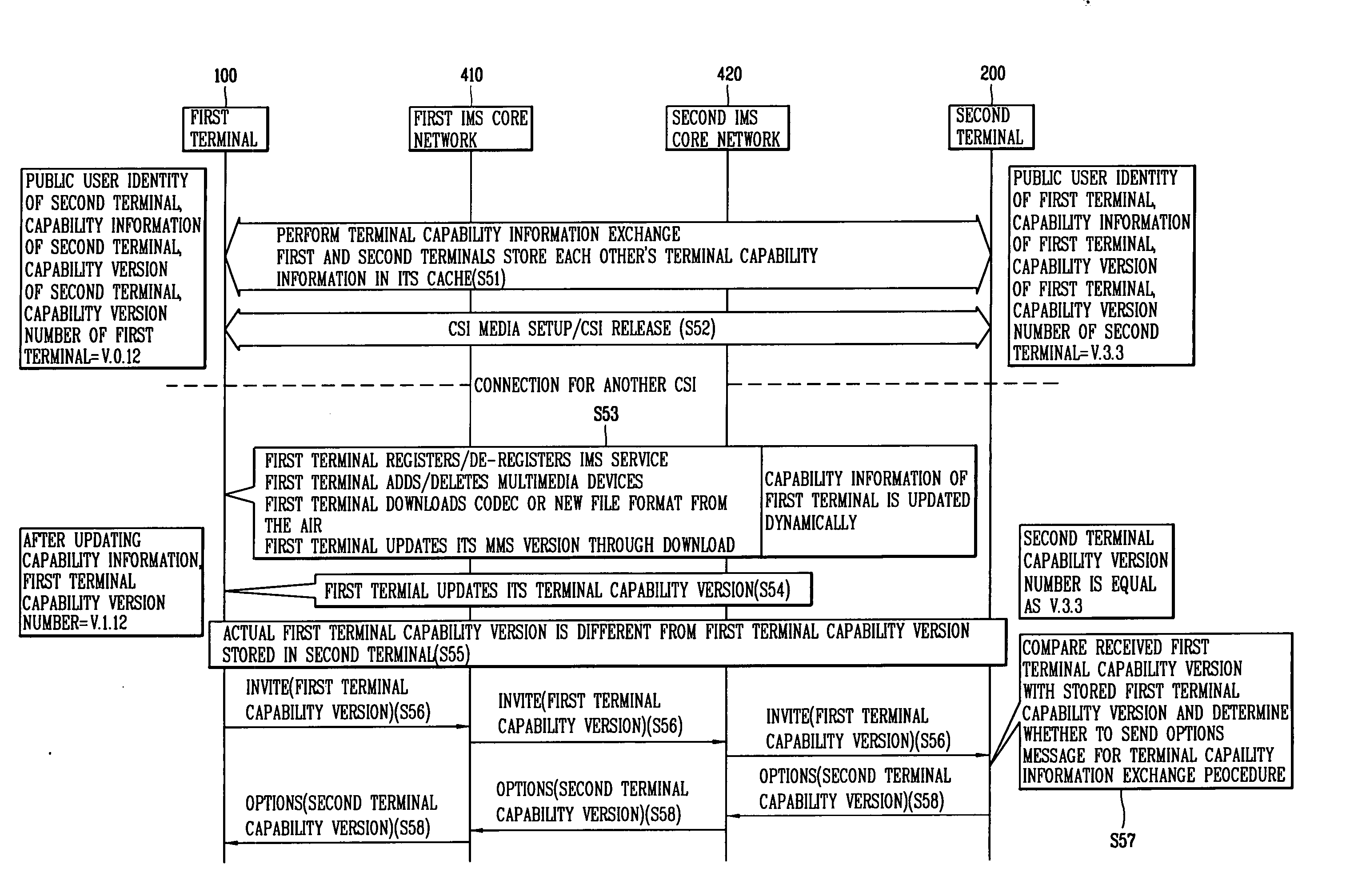

A terminal, method and system for providing a CS service, a SIP-based service, or a CSI service are provided. According to an embodiment, the terminal includes a controller that receives a terminal capability version of at least one target terminal, compares the received version with a previously stored terminal capability version of that target terminal, and, based on the comparison result, determines whether to request terminal capability information from the at least one target terminal, wherein the terminal capability version identifies a version of the capabilities of the at least one target terminal.

Owner:LG ELECTRONICS INC
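The version comparison in this claim can be illustrated with a small Python sketch. It assumes integer version numbers and a caller-supplied `fetch_info` callable that stands in for the actual capability query; `CapabilityCache` is a hypothetical name, and no CS, SIP or CSI signalling is modelled.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CapabilityCache:
    """Stored capability version and info for each known target terminal."""
    versions: dict[str, int] = field(default_factory=dict)
    info: dict[str, dict] = field(default_factory=dict)

    def needs_request(self, terminal_id: str, received_version: int) -> bool:
        # Ask for full capability information only if the received version
        # differs from the stored one (or nothing is stored yet).
        return self.versions.get(terminal_id) != received_version

    def on_version(self, terminal_id: str, received_version: int,
                   fetch_info: Callable[[str], dict]) -> dict:
        if self.needs_request(terminal_id, received_version):
            self.info[terminal_id] = fetch_info(terminal_id)
            self.versions[terminal_id] = received_version
        return self.info[terminal_id]
```

Only the short version number travels on every exchange; the full capability information is requested again only when that number changes.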

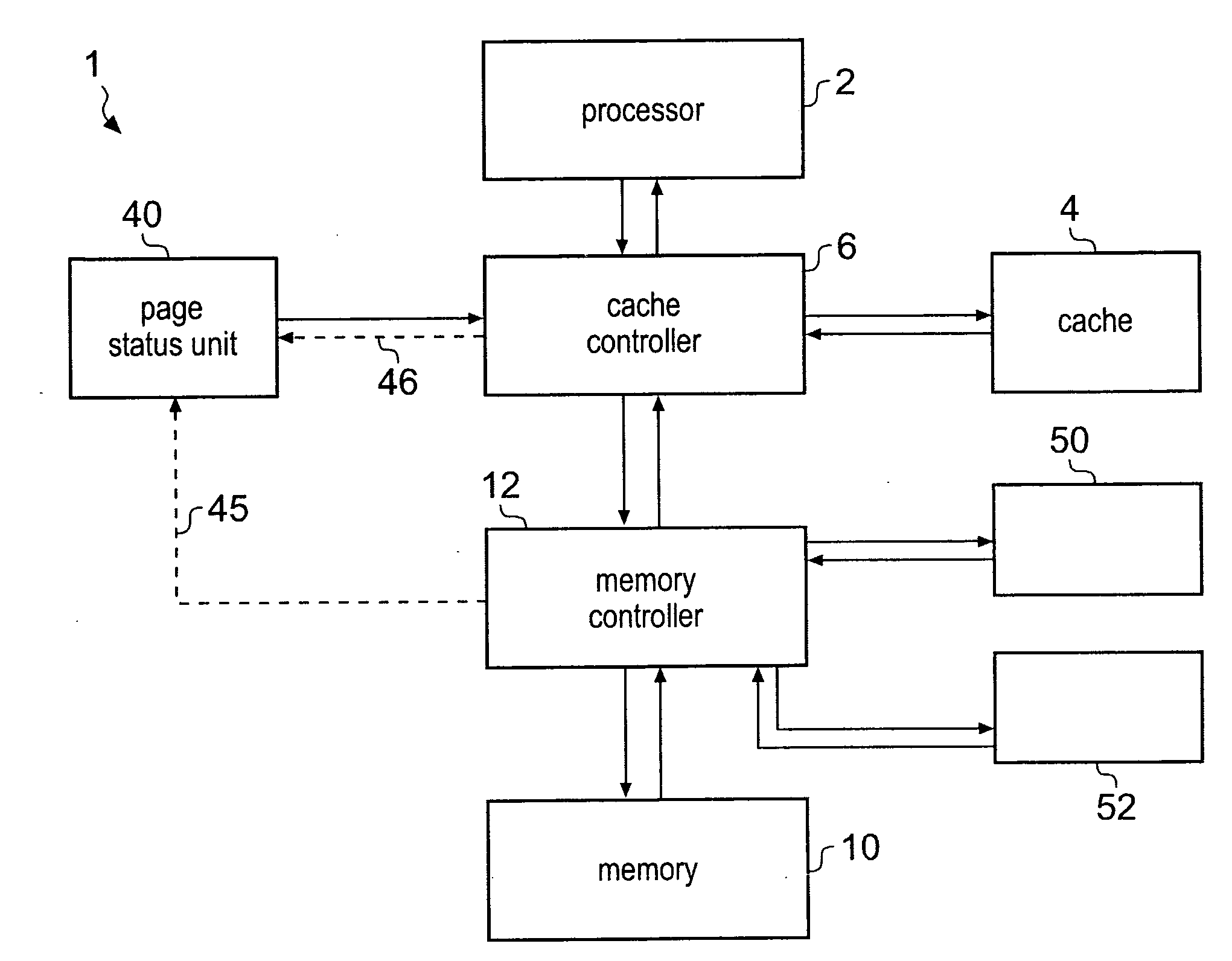

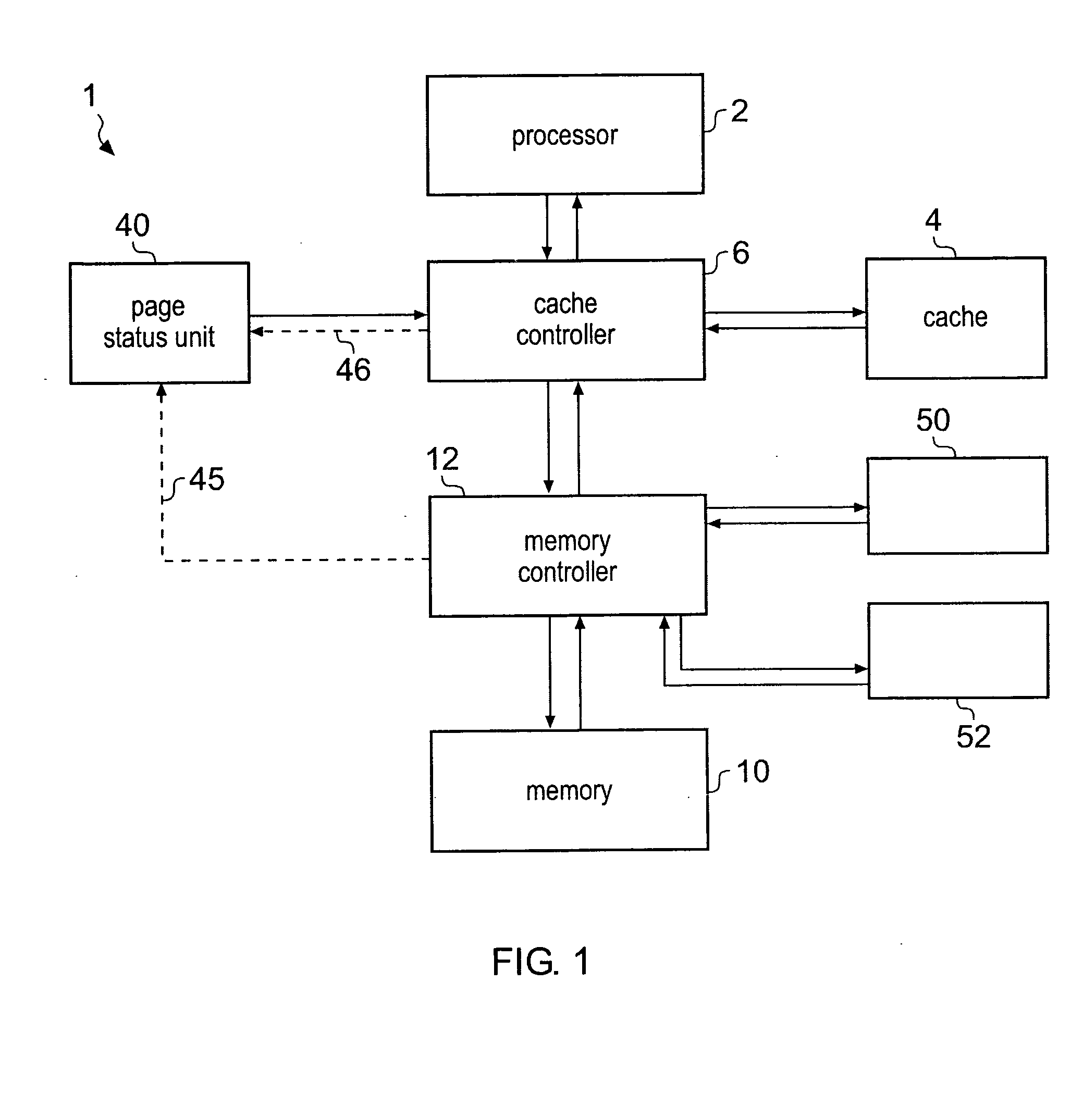

Efficiency of cache memory operations

Inactive · US20090265514A1 · Improve caching efficiency · Easy access · Memory systems · Parallel computing · Cache management

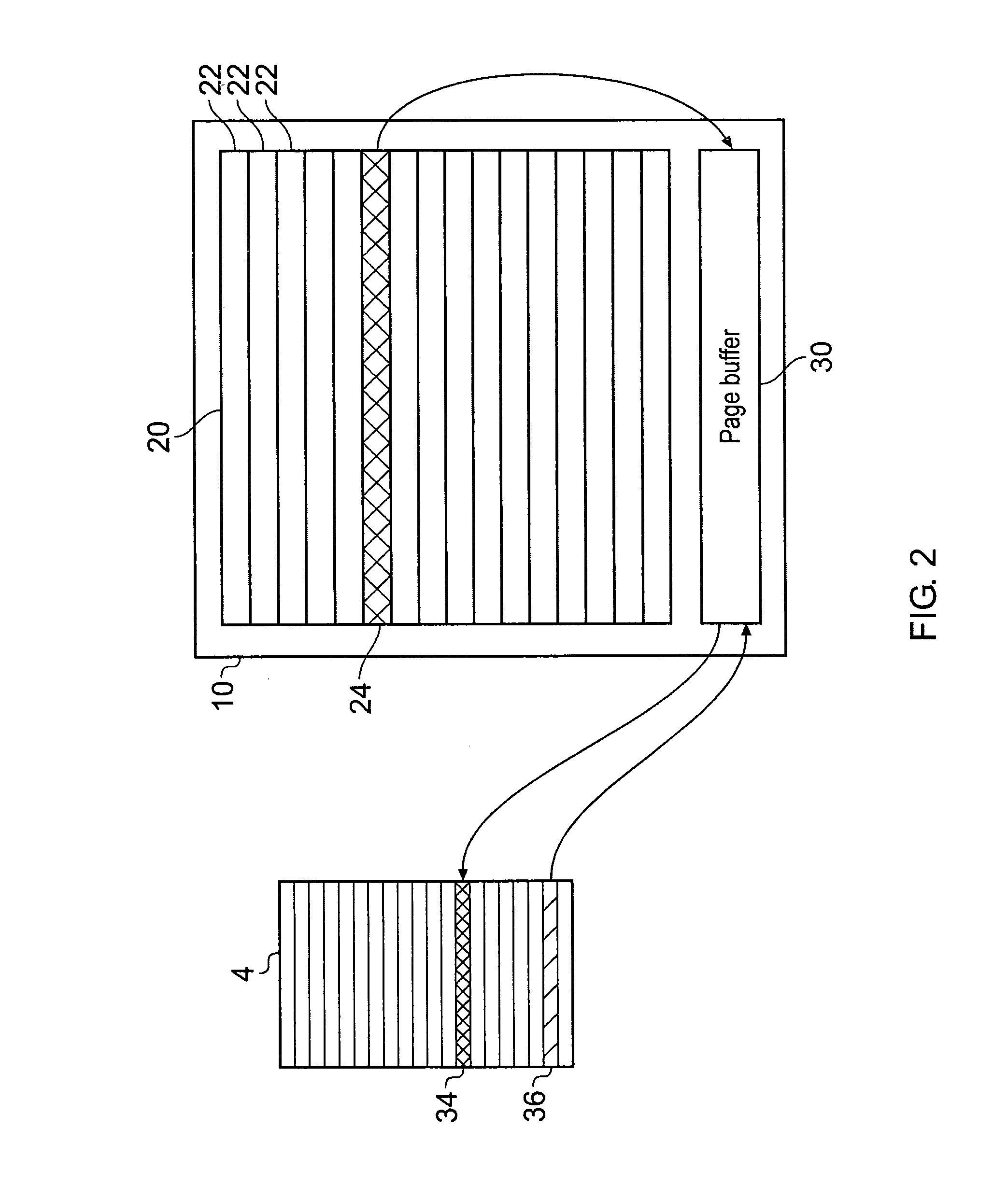

A processing system 1 including a memory 10 and a cache memory 4 is provided with a page status unit 40 that supplies a cache controller with a page open indication identifying one or more open pages of data values in memory. At least one of the cache management operations performed by the cache controller is responsive to the page open indication, so that the efficiency and/or speed of the processing system can be improved.

Owner:ARM LTD

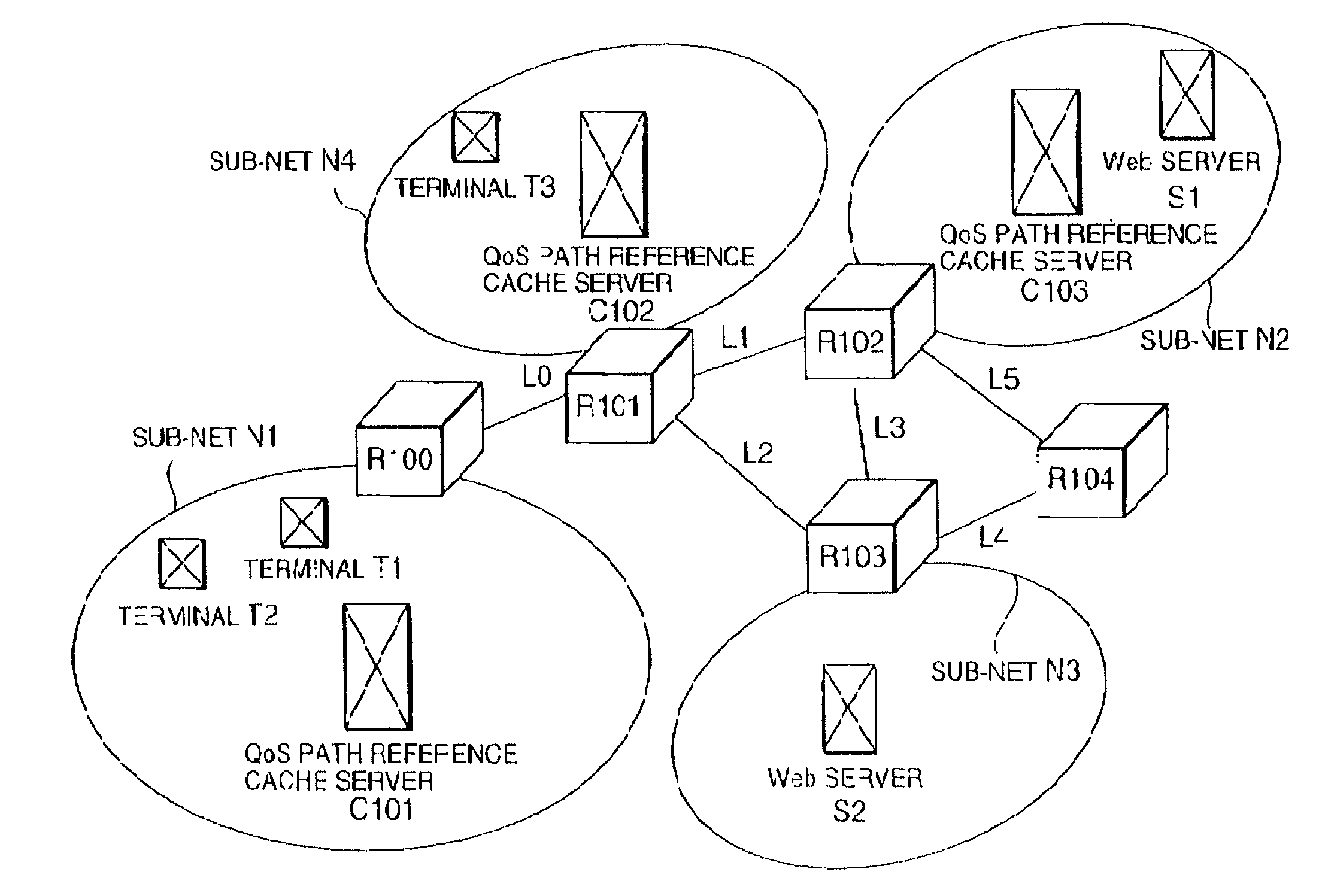

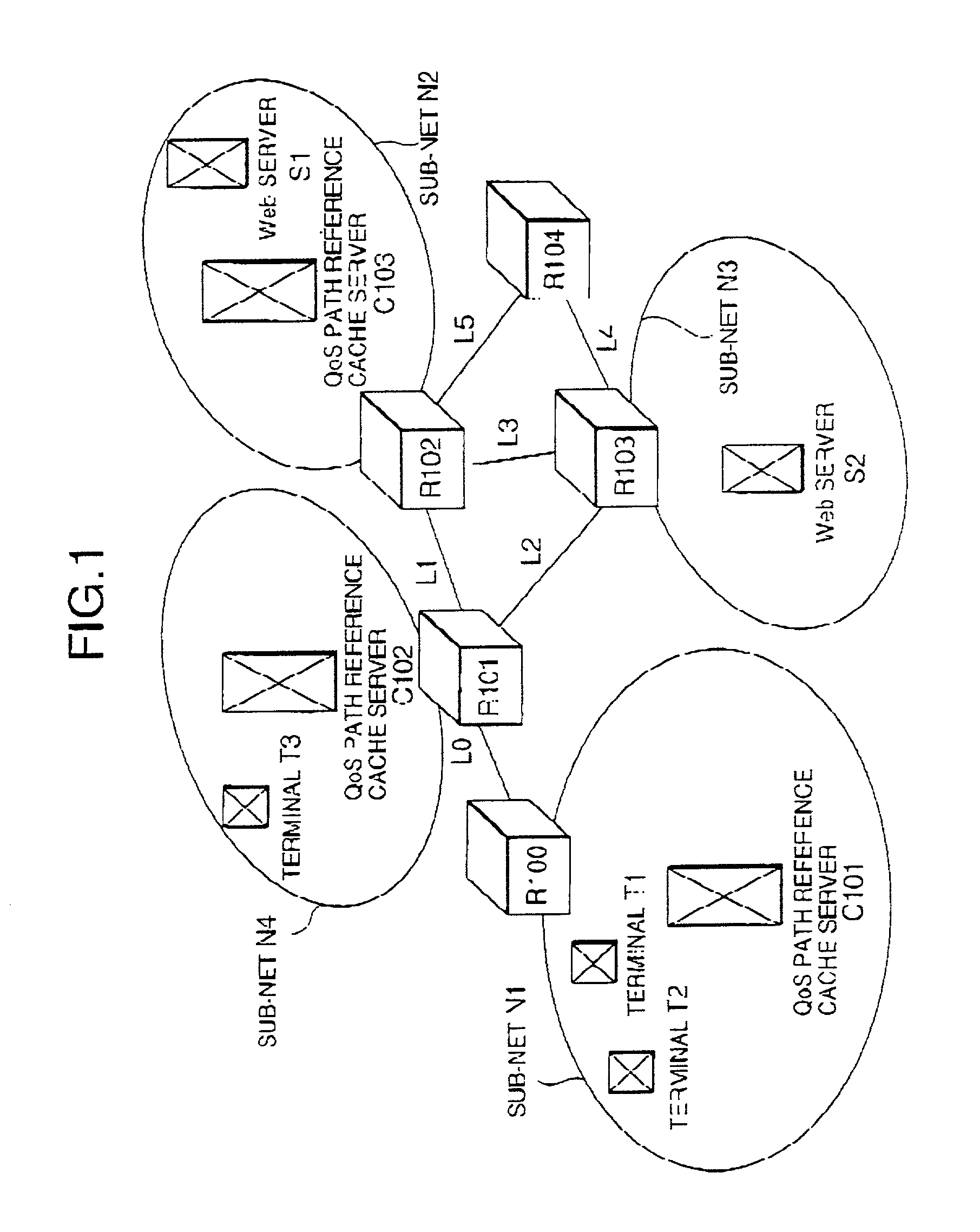

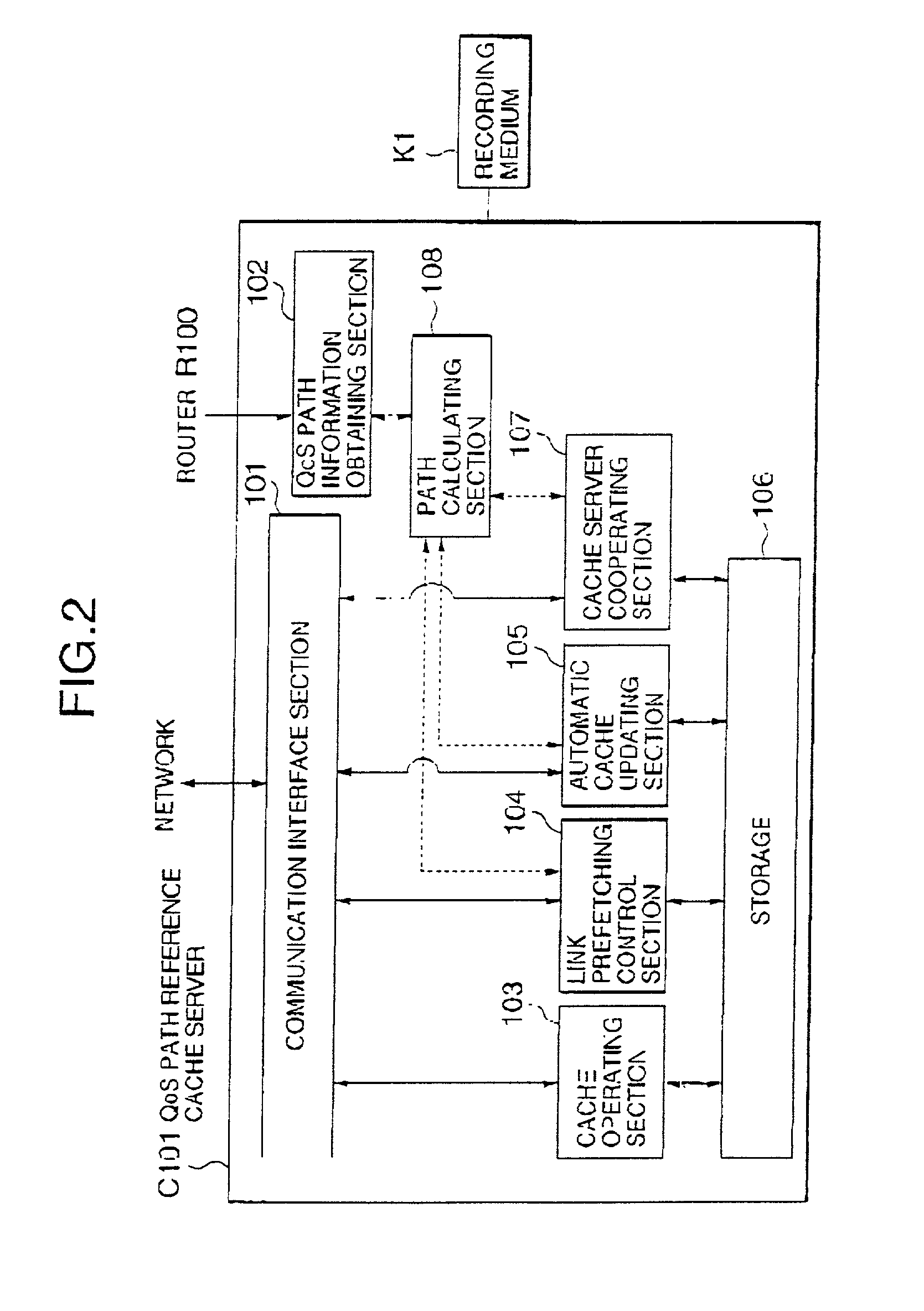

Technique for enhancing effectiveness of cache server

Inactive · US20050246347A1 · Increase probability · Improve caching efficiency · Digital data information retrieval · Digital data processing details · Cache server · Parallel computing

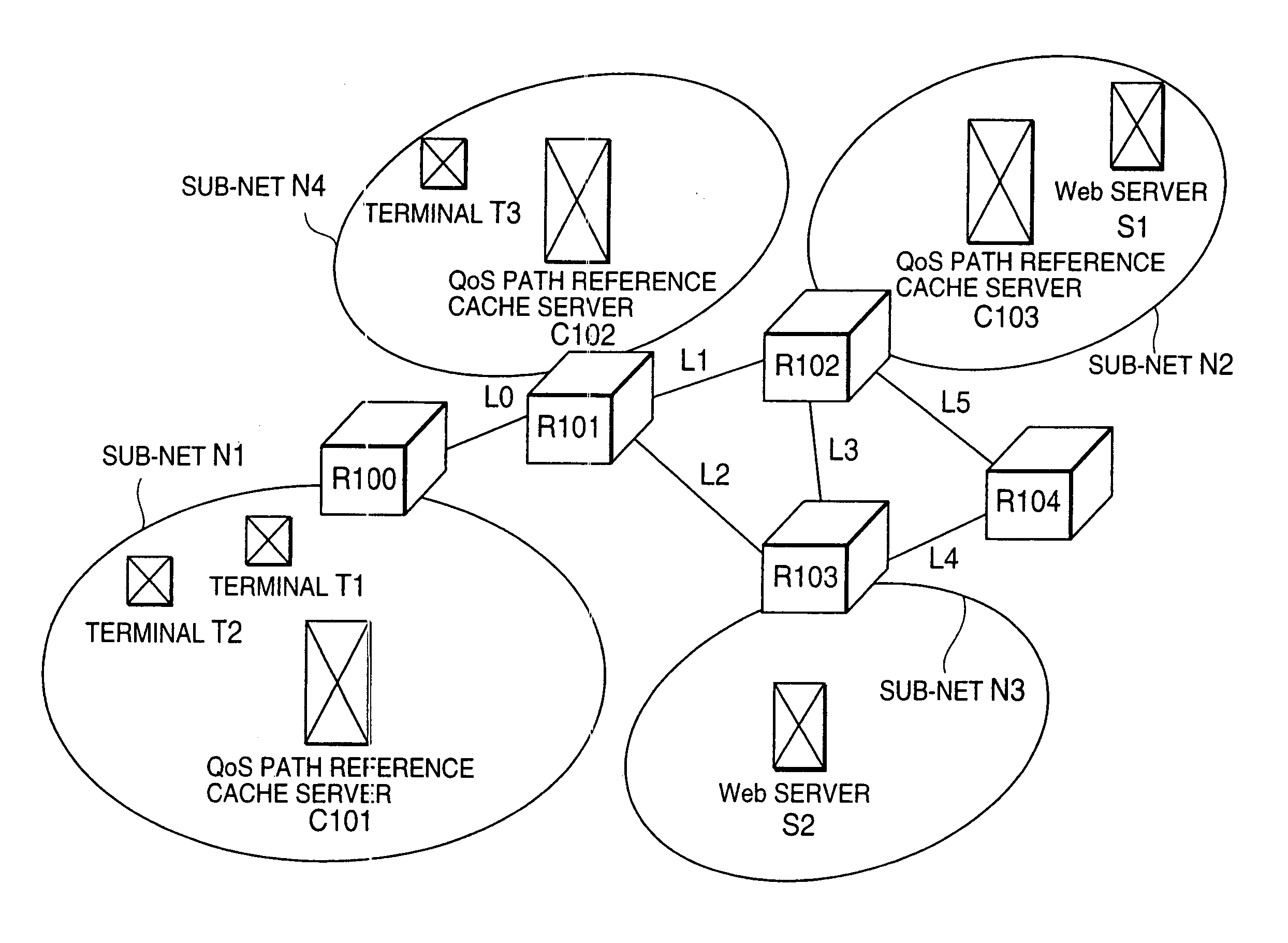

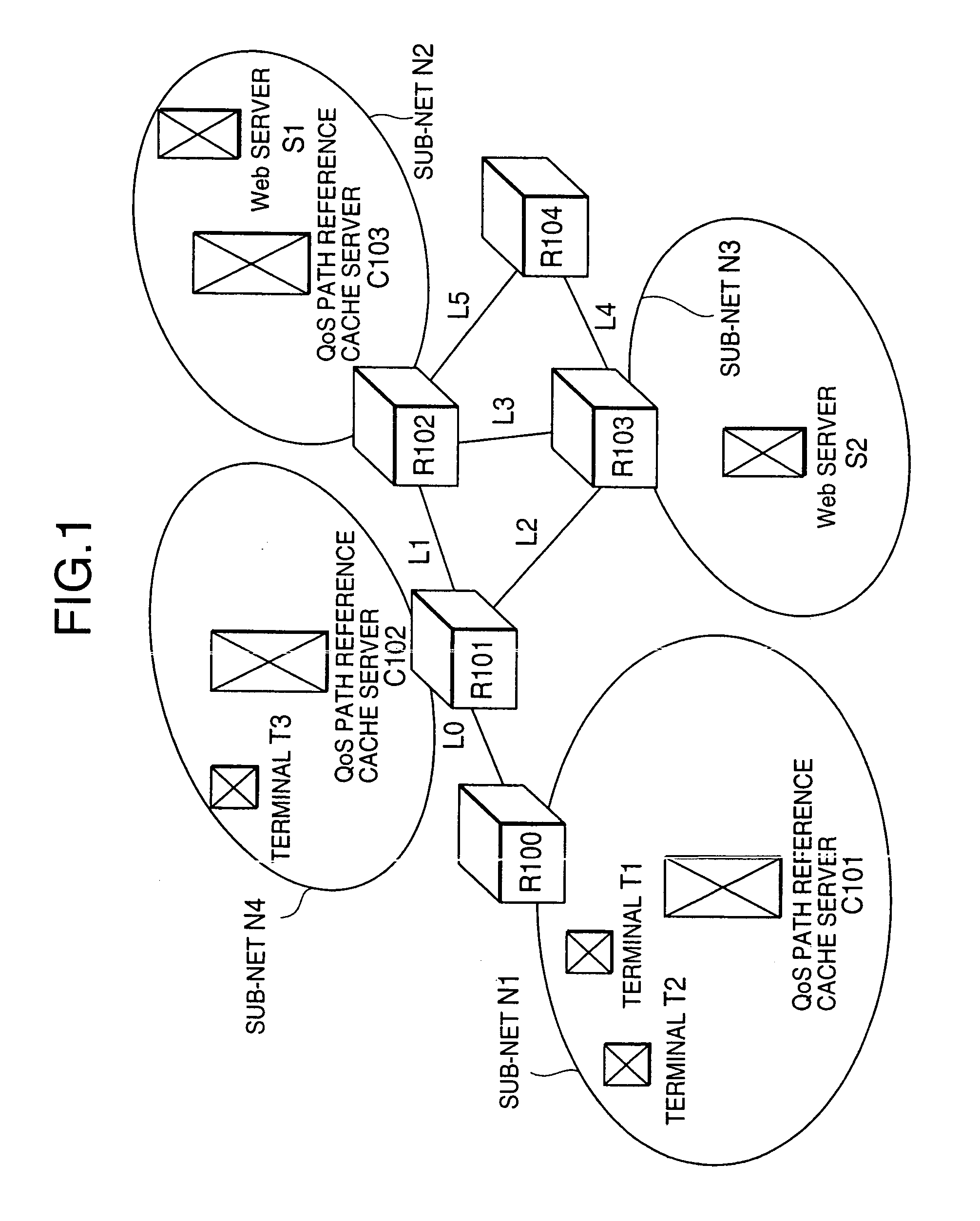

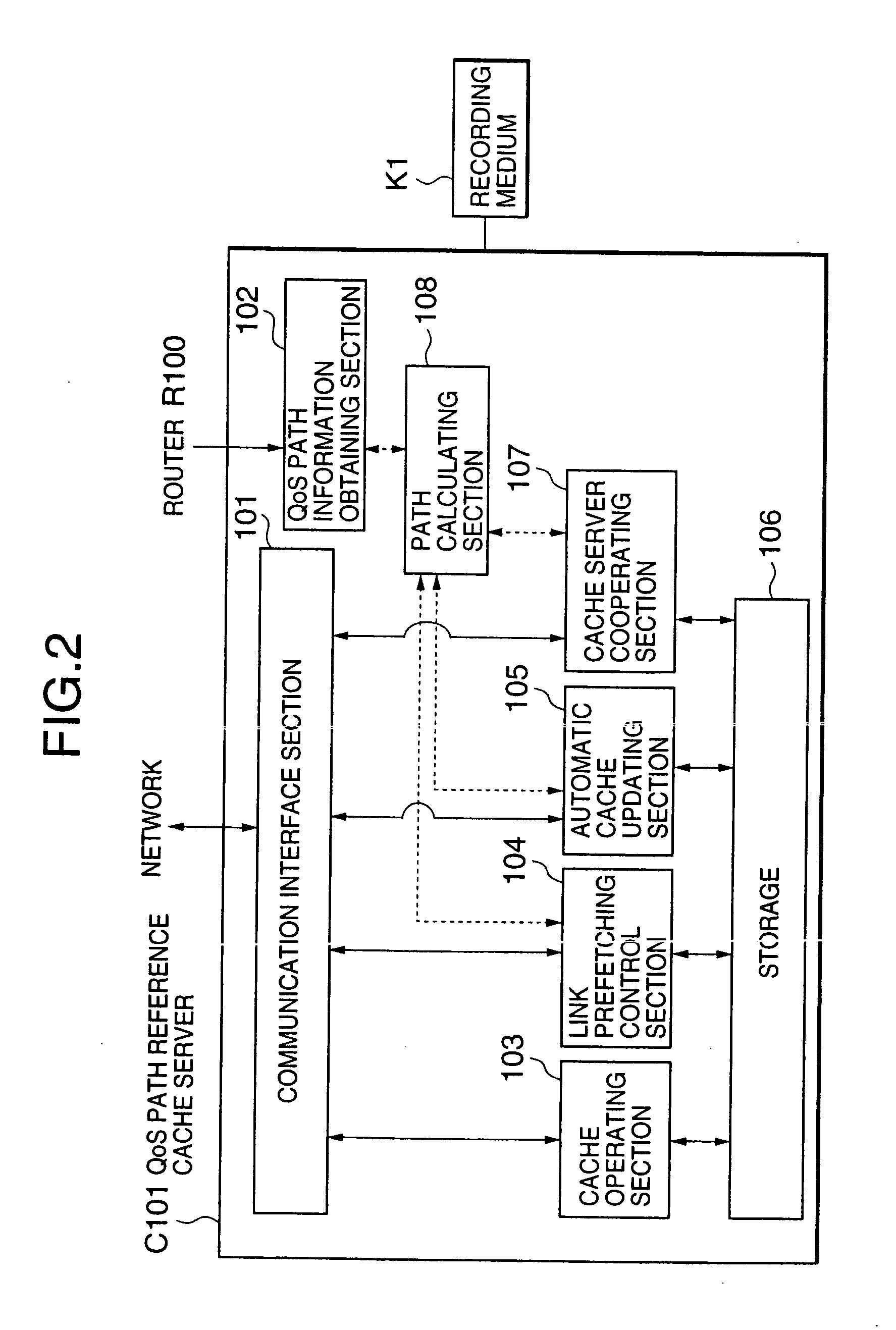

A path calculating section obtains a path suitable for carrying out an automatic cache updating operation, a link prefetching operation, and a cache server cooperating operation, based on QoS path information that includes network path information and path load information obtained by a QoS path information obtaining section. An automatic cache updating section, a link prefetching control section, and a cache server cooperating section carry out respective ones of the automatic cache updating operation, the link prefetching operation, and the cache server cooperating operation, by utilizing the path obtained. For example, the path calculating section obtains a maximum remaining bandwidth path as the path.

Owner:NEC CORP
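The abstract's example of choosing a maximum remaining bandwidth path is the classic widest-path (maximum-bottleneck) problem. The Python sketch below solves it with a Dijkstra-style search; it is an illustration under assumptions, not NEC's method: the graph is a dict mapping each node to `{neighbour: remaining_bandwidth}`, and `widest_path` is a hypothetical name.

```python
import heapq


def widest_path(graph: dict[str, dict[str, float]], src: str, dst: str) -> tuple[float, list[str]]:
    """Return (bottleneck bandwidth, path) maximising the smallest remaining
    bandwidth along the path. graph[u][v] is the remaining bandwidth of u->v."""
    best = {src: float("inf")}          # widest bottleneck found so far per node
    prev: dict[str, str] = {}
    heap = [(-best[src], src)]          # max-heap via negated widths
    while heap:
        neg_width, u = heapq.heappop(heap)
        width = -neg_width
        if u == dst:
            break                       # first pop of dst is final
        if width < best.get(u, 0.0):
            continue                    # stale heap entry
        for v, bw in graph.get(u, {}).items():
            cand = min(width, bw)       # bottleneck if we extend through u
            if cand > best.get(v, 0.0):
                best[v] = cand
                prev[v] = u
                heapq.heappush(heap, (-cand, v))
    if dst not in best:
        return 0.0, []
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return best[dst], path[::-1]
```

The greedy search is valid because extending a path can never increase its bottleneck, so nodes are settled in order of decreasing width, just as Dijkstra settles them in order of increasing distance.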

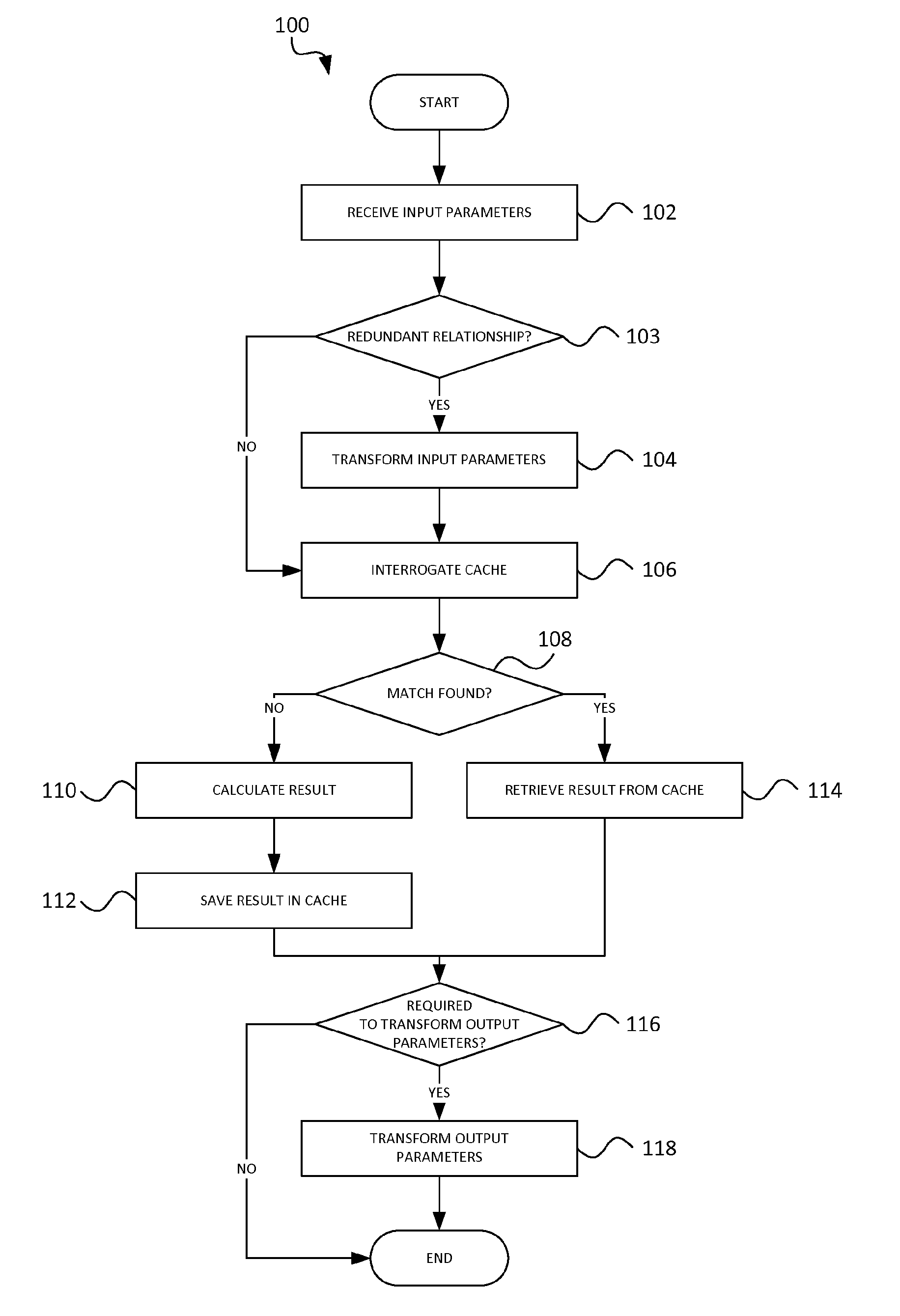

Method of operating a computing device to perform memoization

Inactive · US20110302371A1 · Improve caching efficiency · Reduce in quantity · Digital data processing details · Memory addressing/allocation/relocation · Parallel computing · Memoization

Owner:CSIR

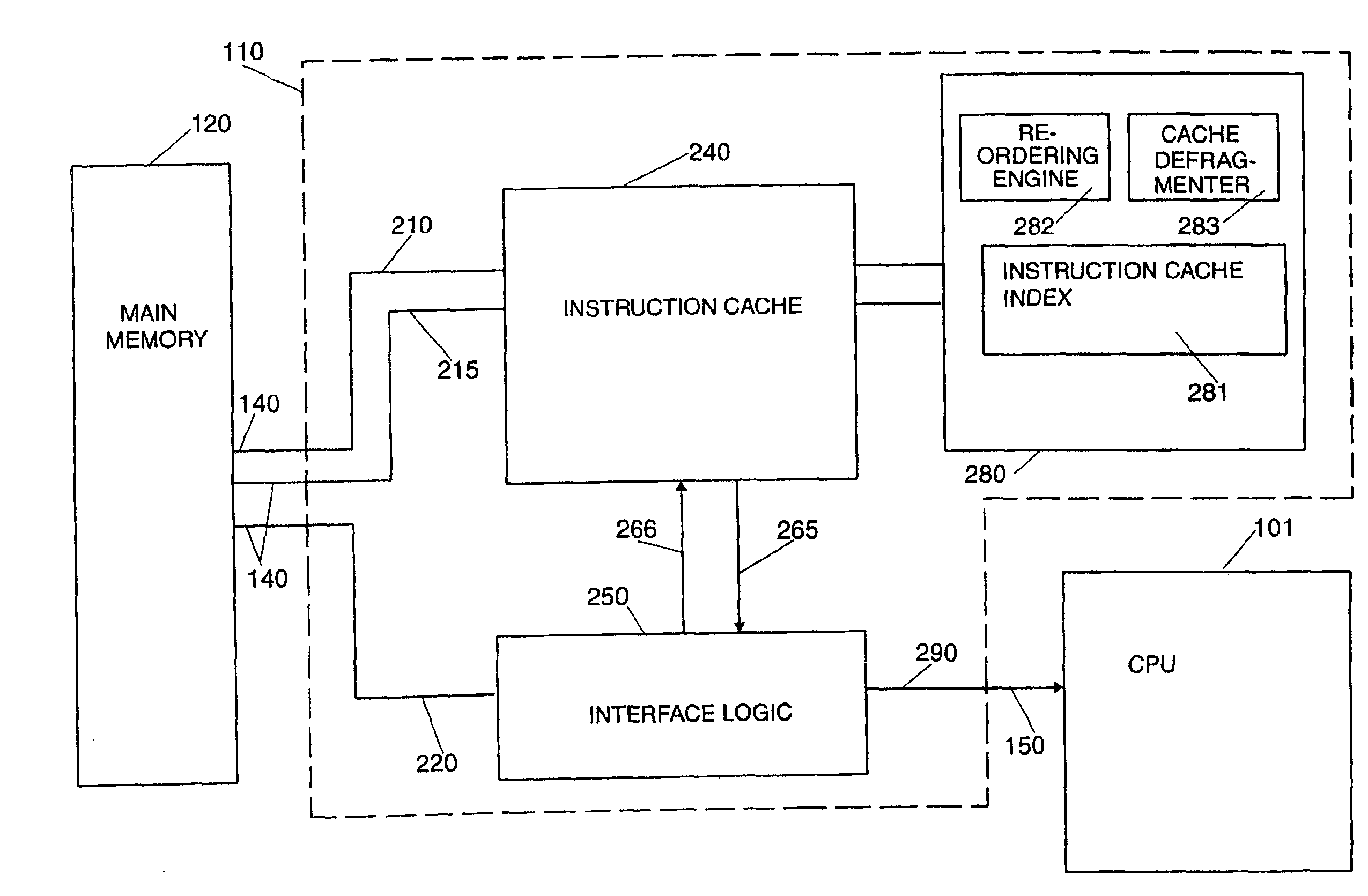

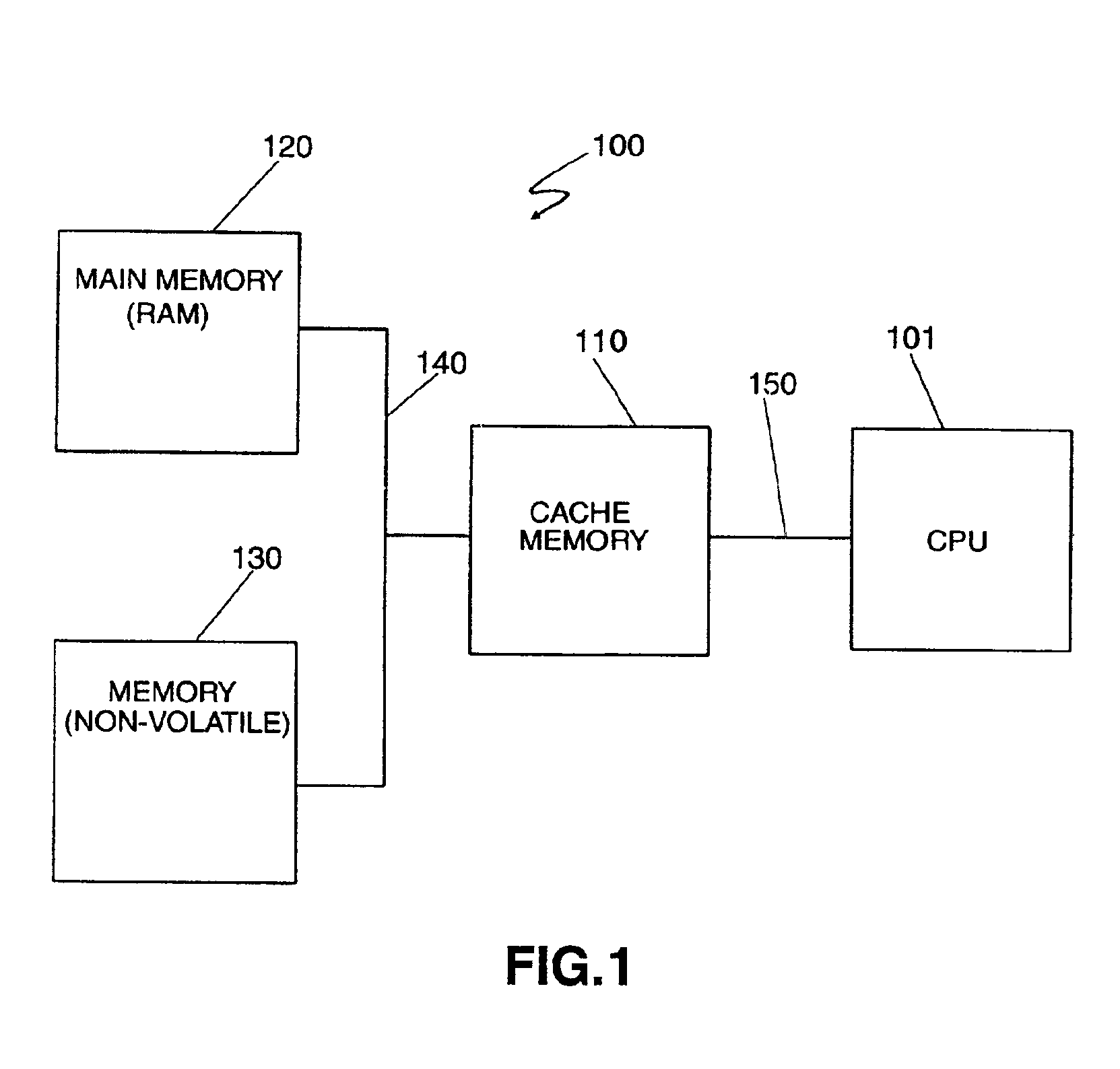

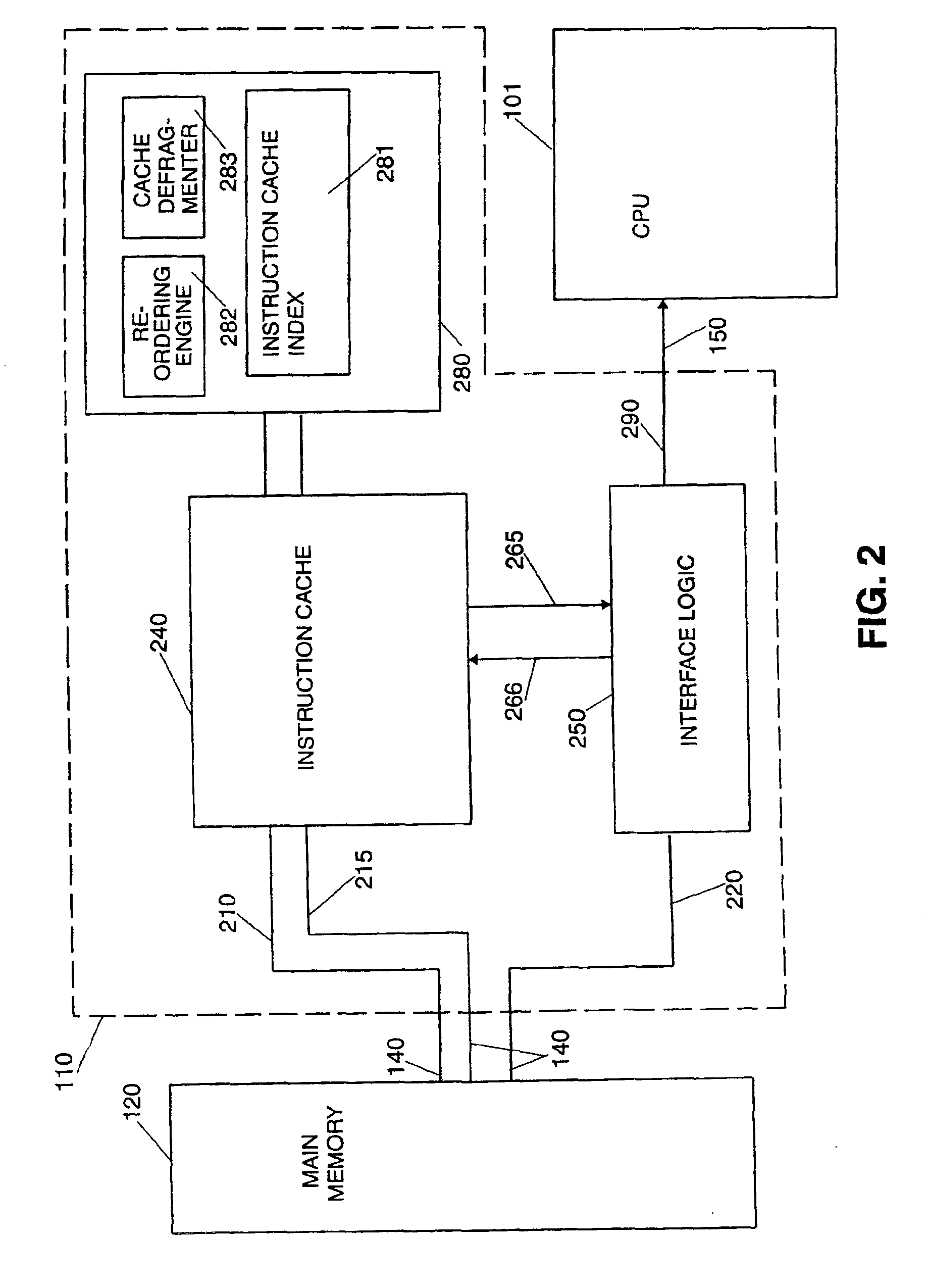

Scheme for reordering instructions via an instruction caching mechanism

Inactive · US6920530B2 · Reduce complexity · Improve caching efficiency · Program control using stored programs · Memory addressing/allocation/relocation · Basic block · Store instruction

A method and system for storing instructions retrieved from memory in a memory cache to provide the instructions to a processor. First, a new instruction is received from the memory. The system then determines whether the new instruction is the start of a basic block of instructions. If it is, the system determines whether that basic block of instructions is stored in the memory cache. If the basic block is not stored in the memory cache, the system retrieves the basic block for the new instruction from the memory. The system then stores the basic block of instructions in a buffer. Next, the system predicts, from this basic block, the next basic block of instructions needed by the processor, determines whether that next block is stored in the memory cache, and retrieves it from the memory if it is not.

Owner:ORACLE INT CORP
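The basic-block caching and next-block prefetch described above can be sketched as follows. Everything concrete here is an assumption for illustration: the program is a Python list with one opcode string per address, any opcode starting with `br` ends a basic block, the separate buffer stage is omitted, and the predictor simply assumes the fall-through block comes next. `BlockCache` is a hypothetical name.

```python
from dataclasses import dataclass, field


@dataclass
class BlockCache:
    """Caches whole basic blocks keyed by their start address (a sketch)."""
    memory: list[str]                                   # one opcode per address
    cache: dict[int, list[str]] = field(default_factory=dict)
    fetches: int = 0                                    # block reads from memory

    def _read_block(self, start: int) -> list[str]:
        # A basic block runs up to and including the first branch opcode.
        self.fetches += 1
        block = []
        for op in self.memory[start:]:
            block.append(op)
            if op.startswith("br"):
                break
        return block

    def get_block(self, start: int) -> list[str]:
        if start not in self.cache:
            self.cache[start] = self._read_block(start)
        block = self.cache[start]
        # Assumed predictor: the fall-through block is needed next, so
        # prefetch it if it is not already cached.
        nxt = start + len(block)
        if nxt < len(self.memory) and nxt not in self.cache:
            self.cache[nxt] = self._read_block(nxt)
        return block
```

A real predictor would also consider branch targets; fall-through is used here only because it needs no branch history.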

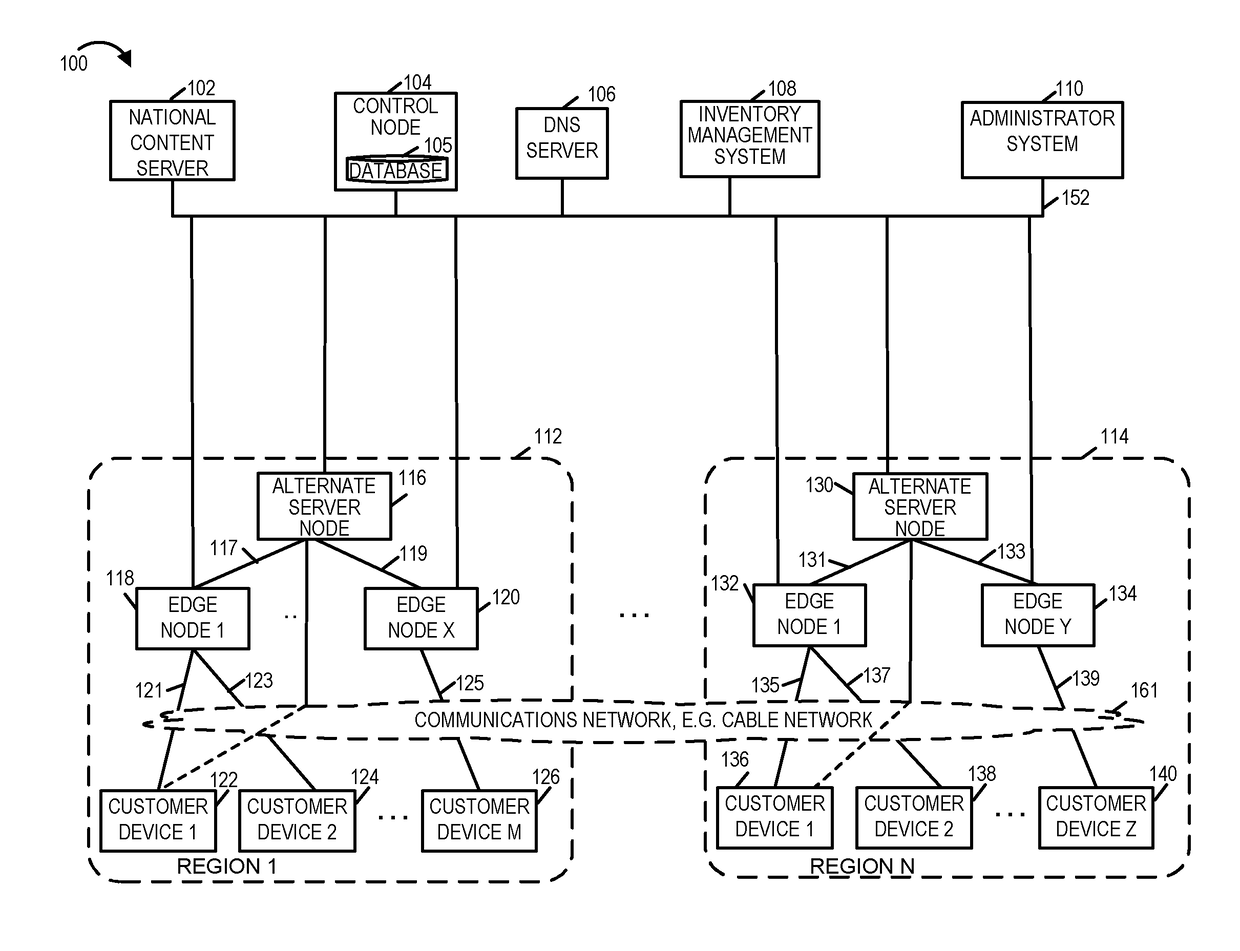

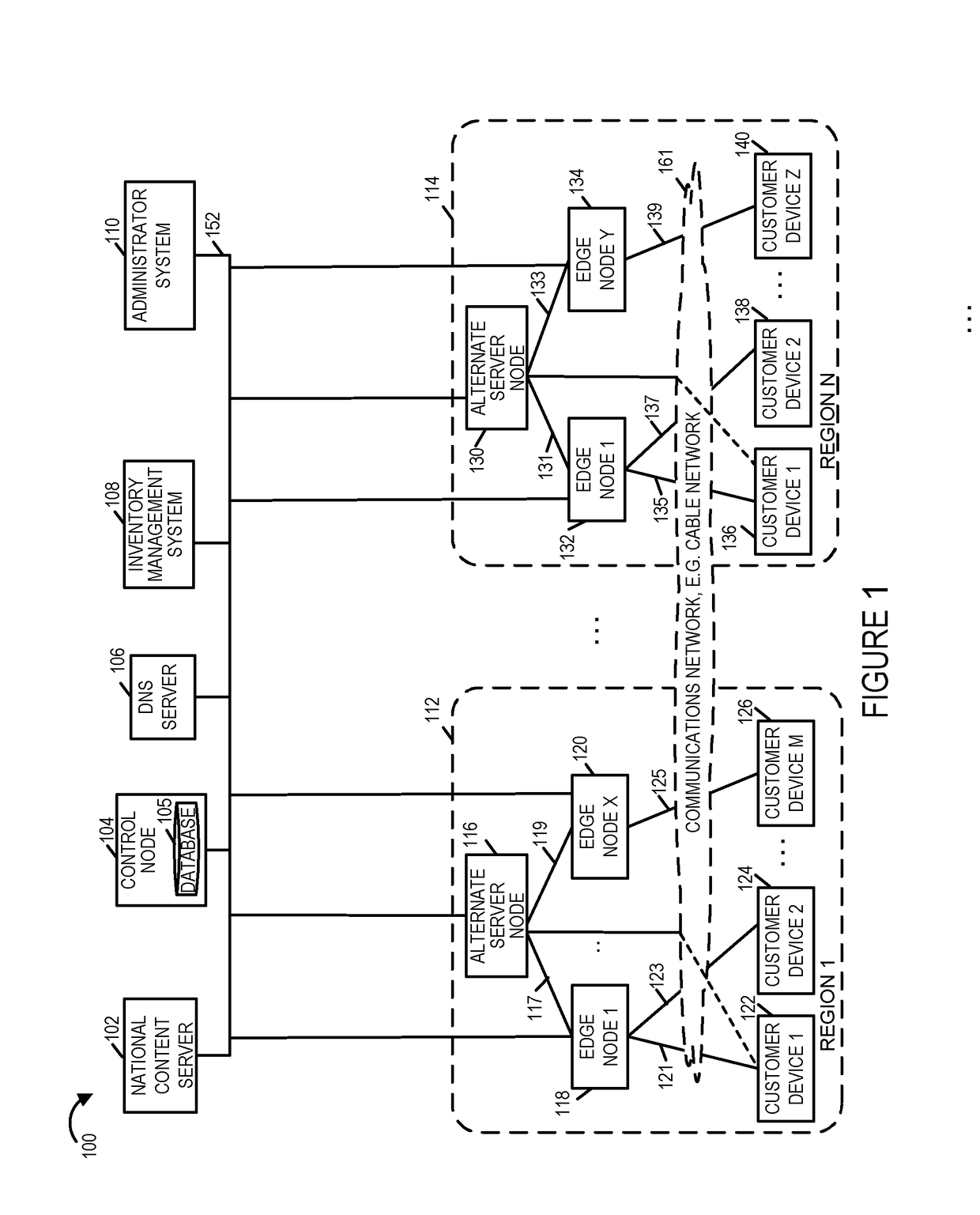

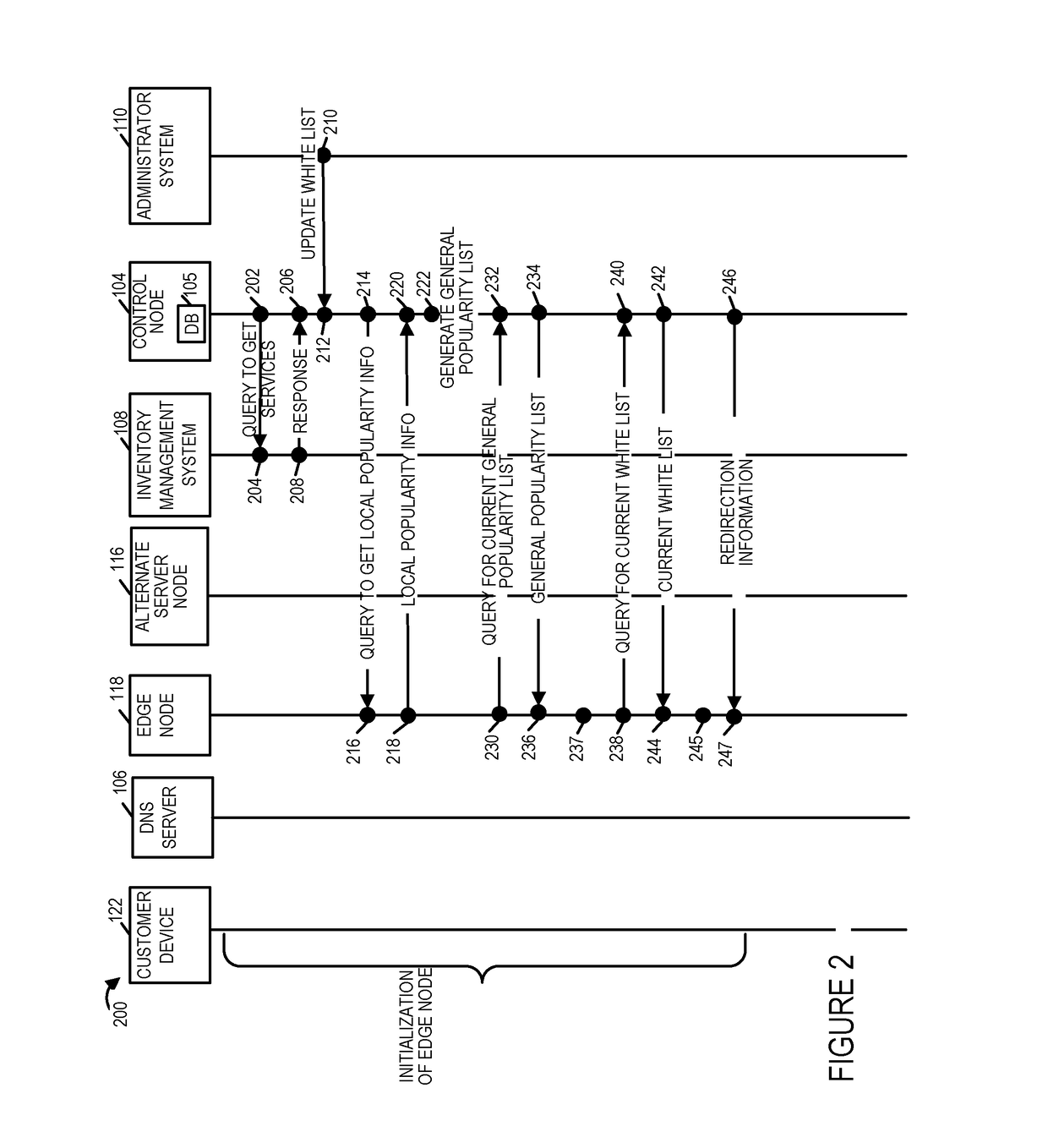

Methods and apparatus for serving content to customer devices based on dynamic content popularity

Methods and apparatus for processing requests for content received from customer devices are described. A decision on how to respond to a request for content is made at an edge node based on a locally maintained popularity list. The local popularity list reflects the local popularity of individual pieces of content at the edge node. A white list of content to be cached and served irrespective of popularity is sometimes used in combination with the local popularity list to make decisions as to how to respond to individual requests for content. In some embodiments the edge node decides on one of the following responses to a content request: i) cache and serve the requested content; ii) serve but don't cache the requested content; or iii) redirect the content request to another node, e.g., an alternate serving node, which can respond to the content request.

Owner:TIME WARNER CABLE ENTERPRISES LLC
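The three-way decision made at the edge node can be sketched in Python. The request-count thresholds, the use of a plain counter as the local popularity list, and the names `EdgeNode` and `Action` are assumptions; the abstract does not say how popularity is measured or compared.

```python
from collections import Counter
from enum import Enum


class Action(Enum):
    CACHE_AND_SERVE = 1
    SERVE_NO_CACHE = 2
    REDIRECT = 3


class EdgeNode:
    def __init__(self, cache_threshold: int, serve_threshold: int, white_list=()):
        # Hypothetical thresholds on the local request count.
        self.cache_threshold = cache_threshold
        self.serve_threshold = serve_threshold
        self.white_list = set(white_list)
        self.popularity = Counter()     # local popularity list: content id -> requests
        self.cached = set()

    def handle(self, content_id: str) -> Action:
        self.popularity[content_id] += 1
        count = self.popularity[content_id]
        # White-listed content is cached and served irrespective of popularity.
        if content_id in self.white_list or count >= self.cache_threshold:
            self.cached.add(content_id)
            return Action.CACHE_AND_SERVE
        if count >= self.serve_threshold:
            return Action.SERVE_NO_CACHE
        # Not popular enough locally: send the request to an alternate node.
        return Action.REDIRECT
```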

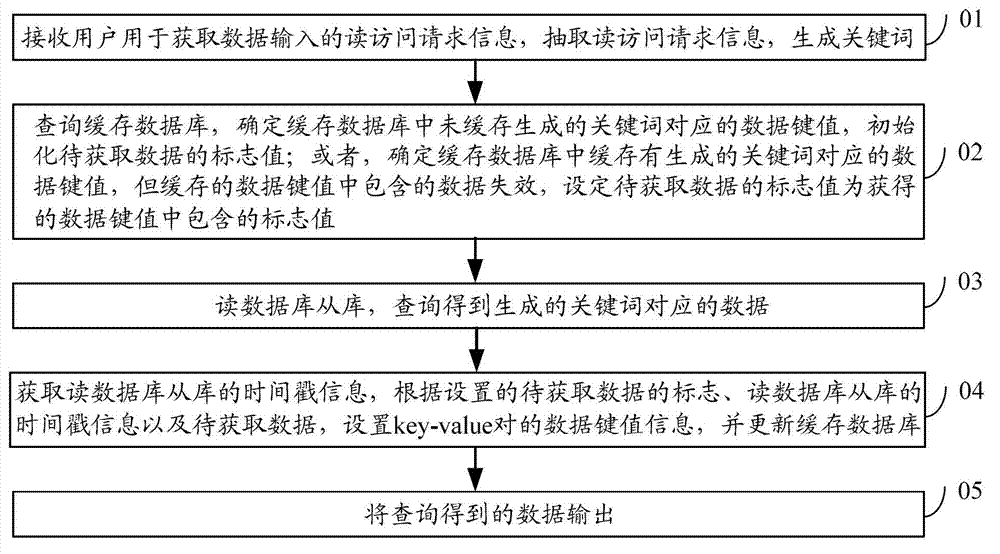

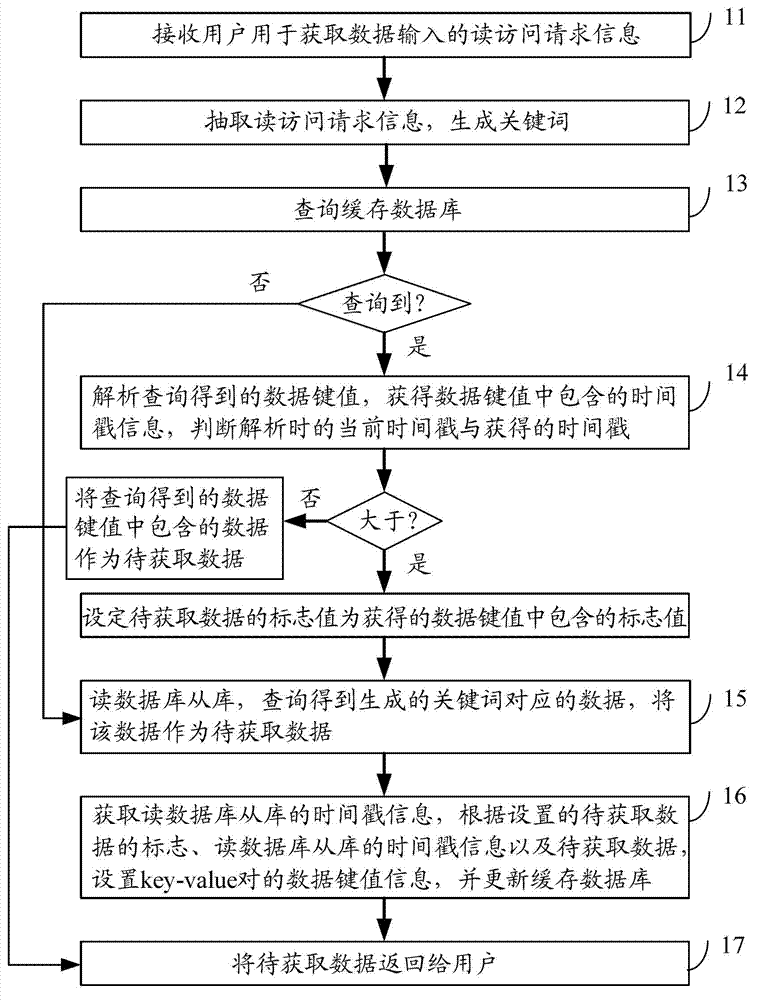

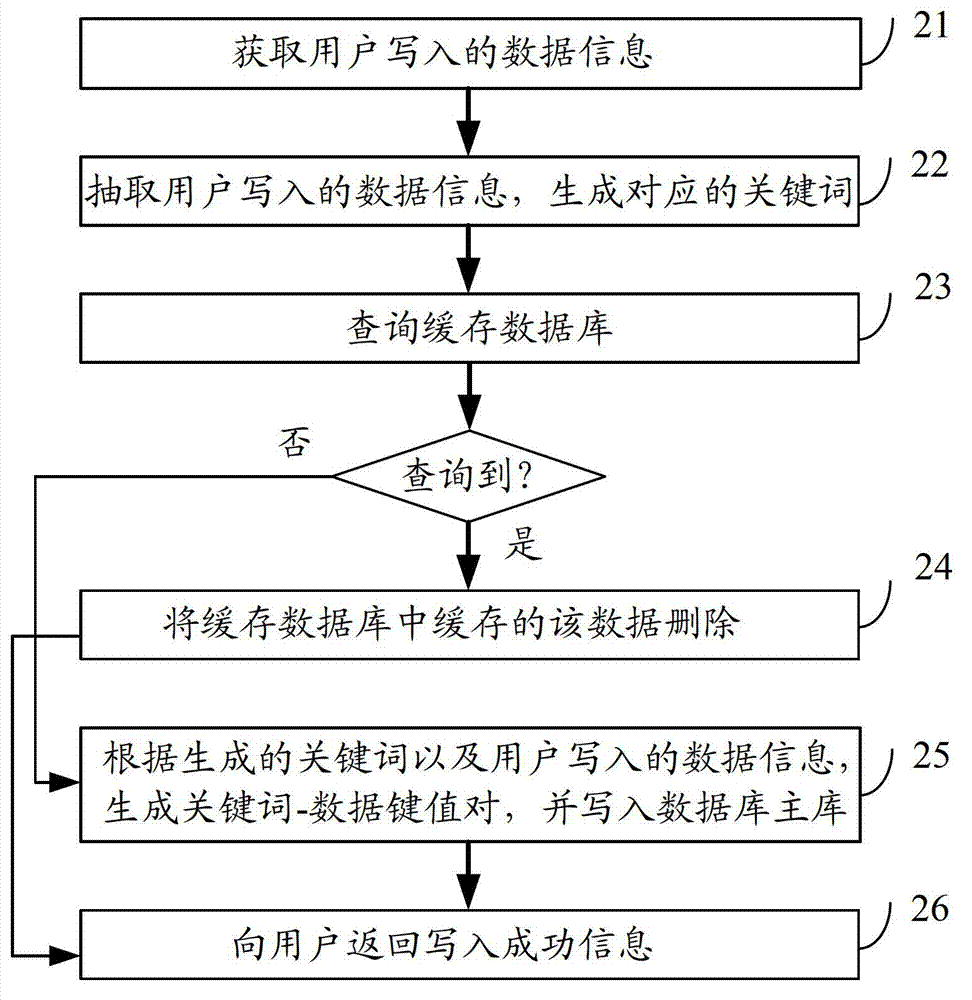

Method and device for reading data based on data cache

Active · CN102902730A · Improve caching efficiency · Improve performance · Special data processing applications · Data input · Operating system

The invention discloses a method and a device for reading data based on a data cache. The method comprises: receiving a user's read-access request for data and extracting the generated keyword; if no cache entry has been generated for the keyword in the cache database, determining the data key value corresponding to the keyword and initializing a flag value for the data to be obtained; or, if a cache entry has been generated for the keyword in the cache database but the data contained in the cached key value is invalid, setting the flag value for the data to be obtained to the flag value contained in that key value; reading and querying a sub-database of the database to obtain the data corresponding to the generated keyword; obtaining timestamp information of that sub-database, setting the key-value pair information according to the flag value, the sub-database timestamp, and the obtained data, and updating the cache database; and outputting the queried data. The method and device improve data caching efficiency and optimize the overall performance of the data cache.

Owner:新浪技术(中国)有限公司

System for displaying cached webpages, a server therefor, a terminal therefor, a method therefor and a computer-readable recording medium on which the method is recorded

Inactive · US20120311419A1 · Increase probability · Improve caching efficiency · Digital data information retrieval · Transmission · Cache server · Web service

The present invention relates to a system, a server, a terminal, and a method for displaying cached webpages, and to a computer-readable recording medium on which the method is recorded. The system comprises: a Web service server that stores at least one webpage; a caching server that collects, from the webpage(s), links to webpages matching preset conditions and creates a caching page list containing at least one of the collected links; and a terminal that uses the links in the caching page list to cache the corresponding webpage(s) and, when a user calls up a specific webpage, simultaneously displays the input link and the links to the cached webpages. The terminal that receives the caching page list displays the links it contains and displays links to cached webpages separately from links to non-cached webpages.

Owner:SK PLANET CO LTD

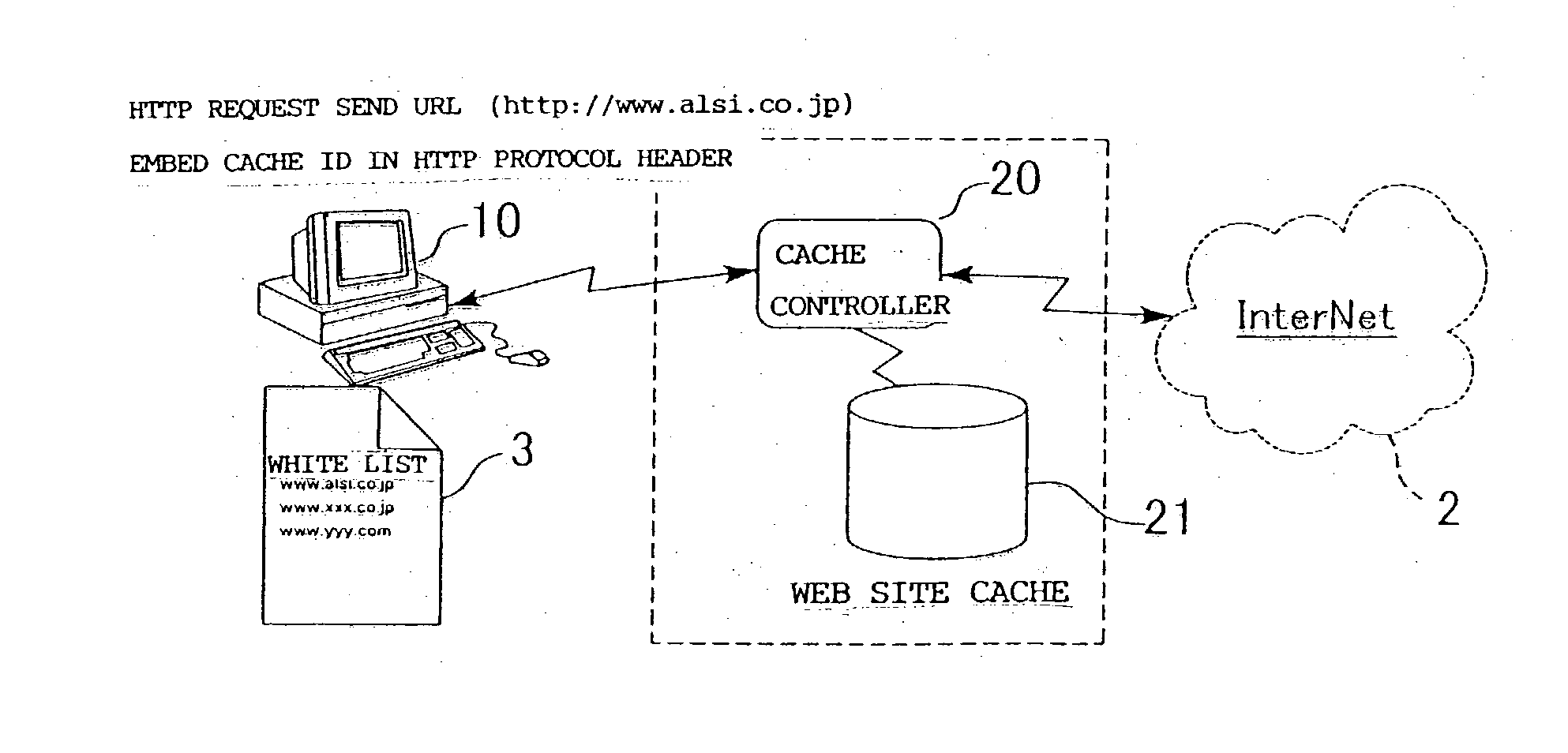

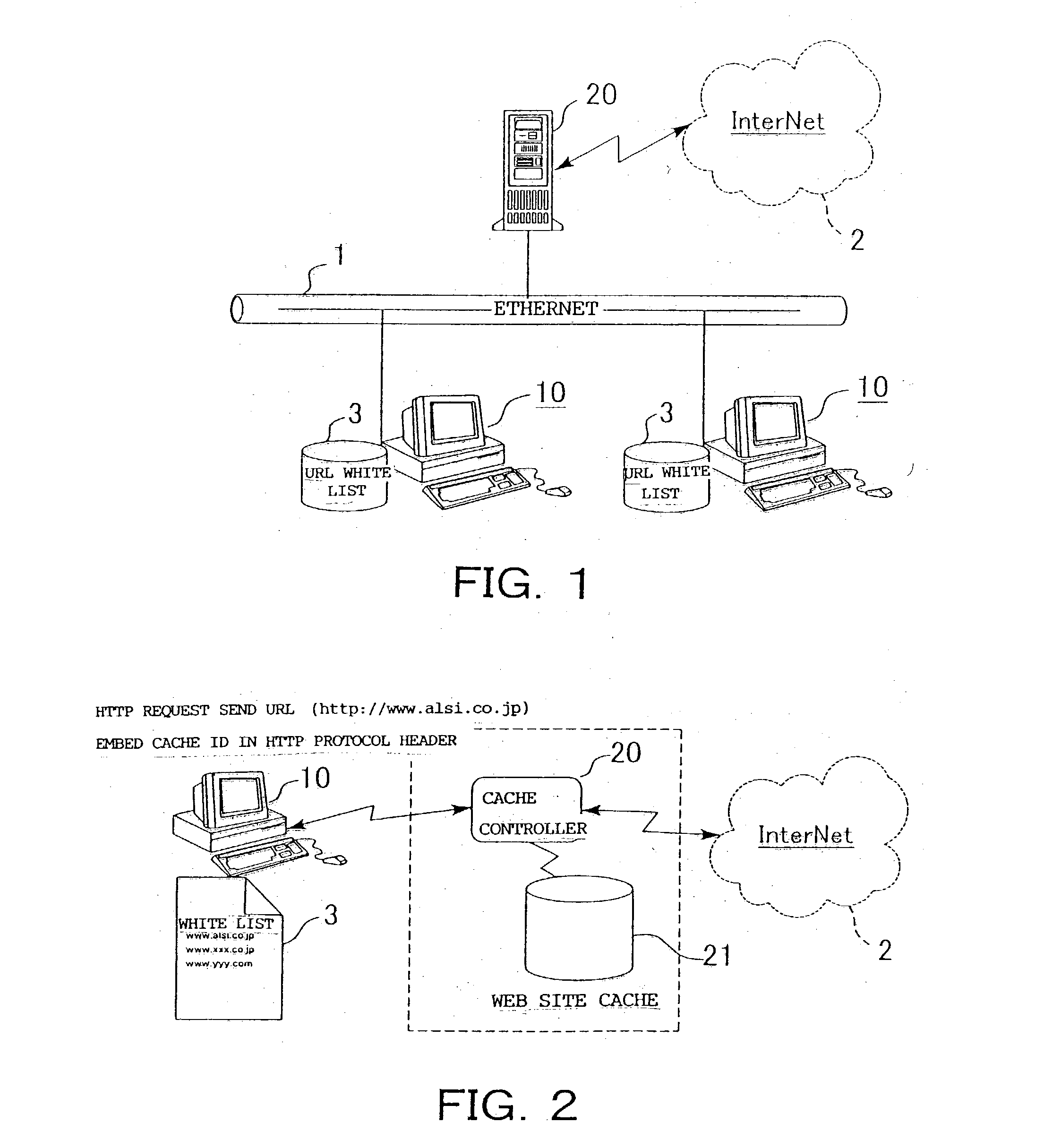

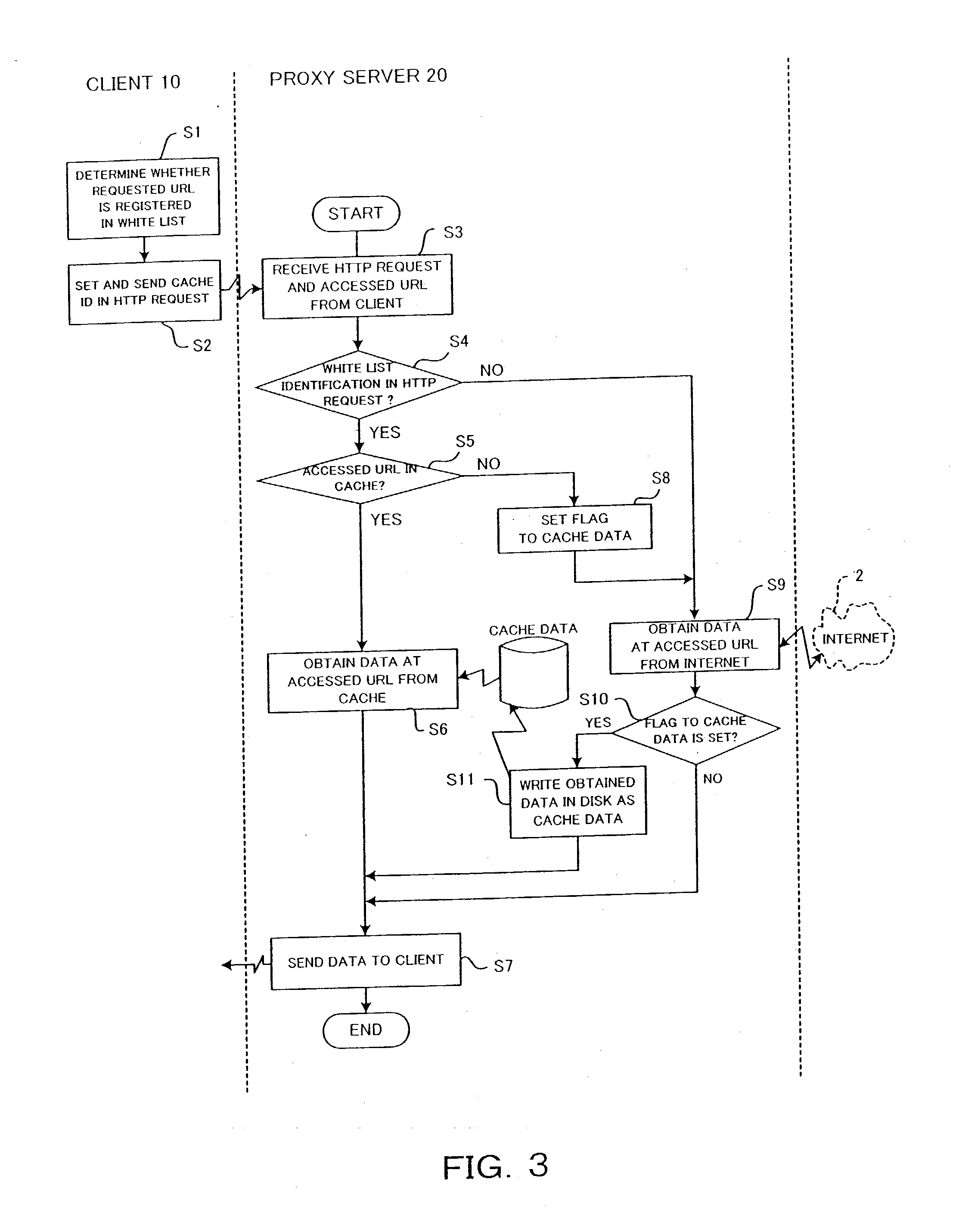

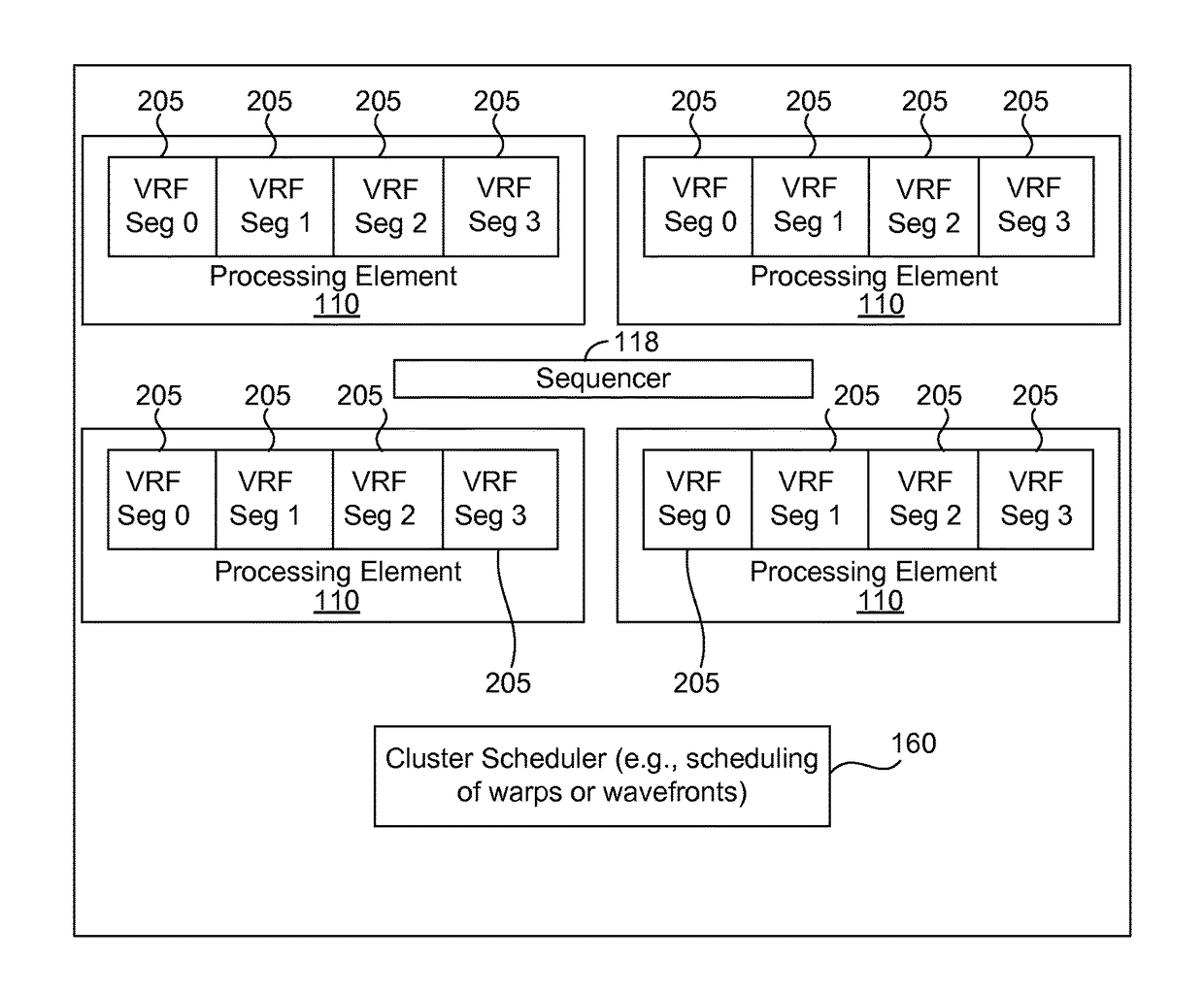

Cache control method of proxy server with white list

Inactive · US20040073604A1 · Efficient implementation · Improve caching efficiency · Multiple digital computer combinations · Non-linear optics · Uniform resource locator · Client-side

A cache control method is provided that synchronizes the cache state of a proxy server with the clients' usage state and can improve cache efficiency regardless of the time of day. In this method, a proxy server with a white list relays data between one or more clients connected to one communication network and a data server connected to another communication network. The method includes the steps of determining whether a URL requested by a user of a client is registered in the white list and, if it is, caching the data at that URL.

Owner:CHIERU
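The white-list check at the heart of this method can be sketched in a few lines of Python. The `fetch` callable stands in for the relay to the data server, and the class name is hypothetical; the synchronization of cache state with client usage state is not modelled.

```python
from typing import Callable


class WhiteListProxy:
    """Proxy that caches responses only for white-listed URLs."""

    def __init__(self, white_list: set[str], fetch: Callable[[str], bytes]):
        self.white_list = white_list
        self.fetch = fetch                    # stand-in for the request to the data server
        self.cache: dict[str, bytes] = {}

    def get(self, url: str) -> bytes:
        if url not in self.white_list:
            return self.fetch(url)            # relay only; never cached
        if url not in self.cache:
            self.cache[url] = self.fetch(url)
        return self.cache[url]
```

Restricting the cache to white-listed URLs keeps rarely reused responses from evicting the ones clients actually request again.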

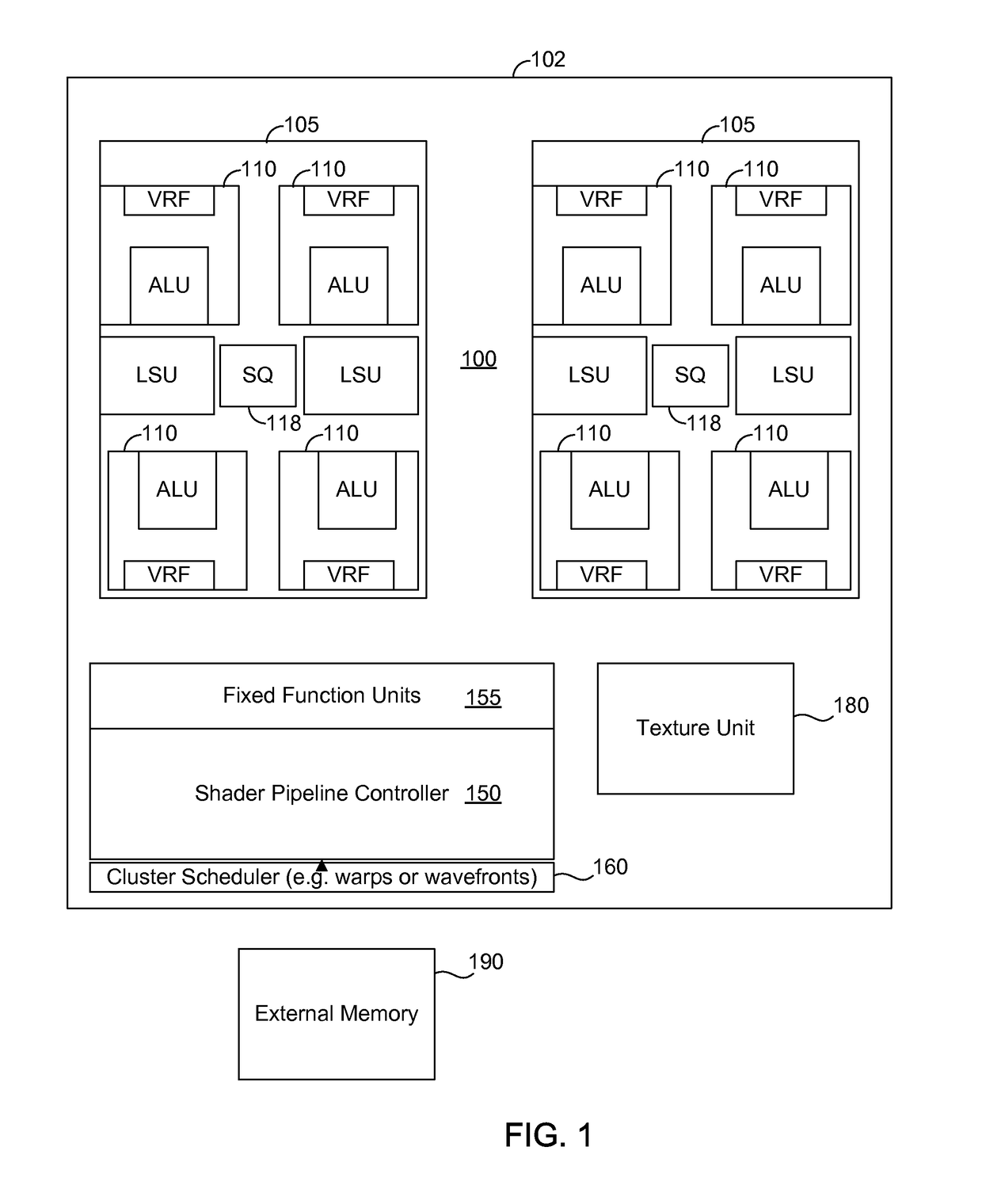

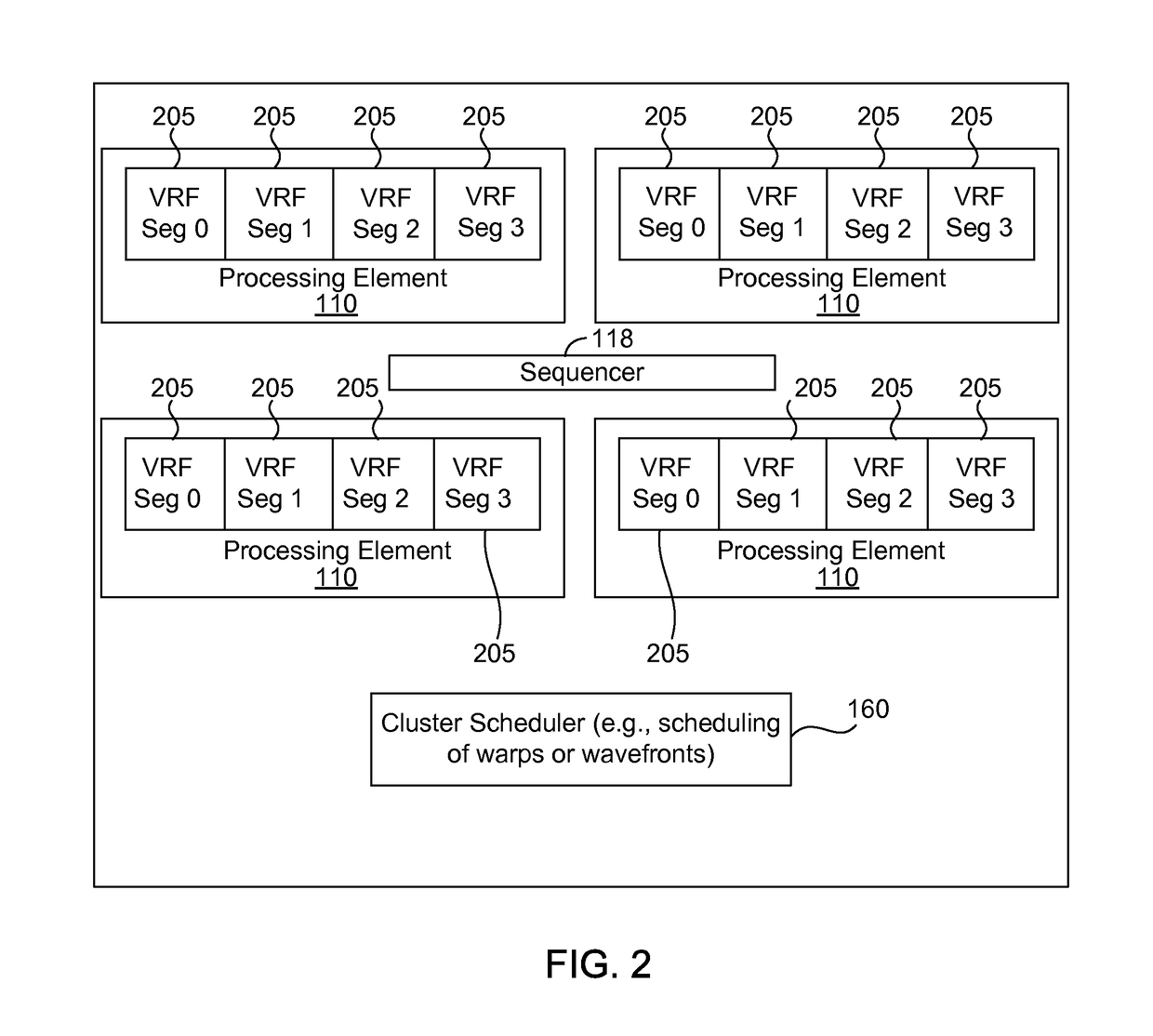

Warp clustering

Active · US9804666B2 · Reduced power data retention mode · Improve caching efficiency · Volume/mass flow measurement · Image memory management · Wavefront · Processor register

Units of shader work, such as warps or wavefronts, are grouped into clusters. An individual vector register file of a processor is operated as segments, where a segment may be independently operated in an active mode or a reduced power data retention mode. The scheduling of the clusters is selected so that a cluster is allocated a segment of the vector register file. Additional sequencing may be performed for a cluster to reach a synchronization point. Individual segments are placed into the reduced power data retention mode during a latency period when the cluster is waiting for execution of a request, such as a sample request.

Owner:SAMSUNG ELECTRONICS CO LTD

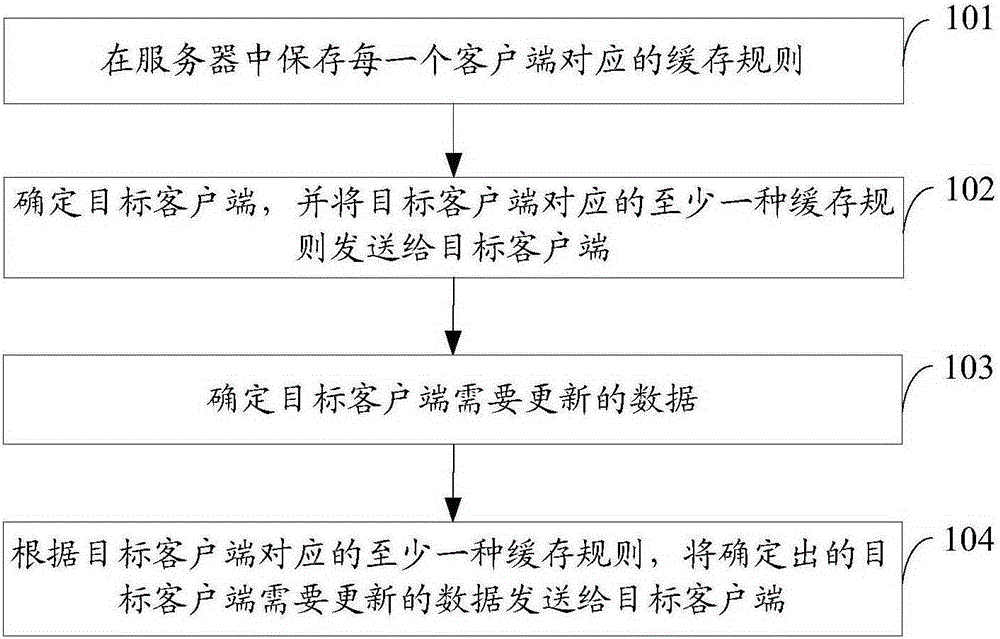

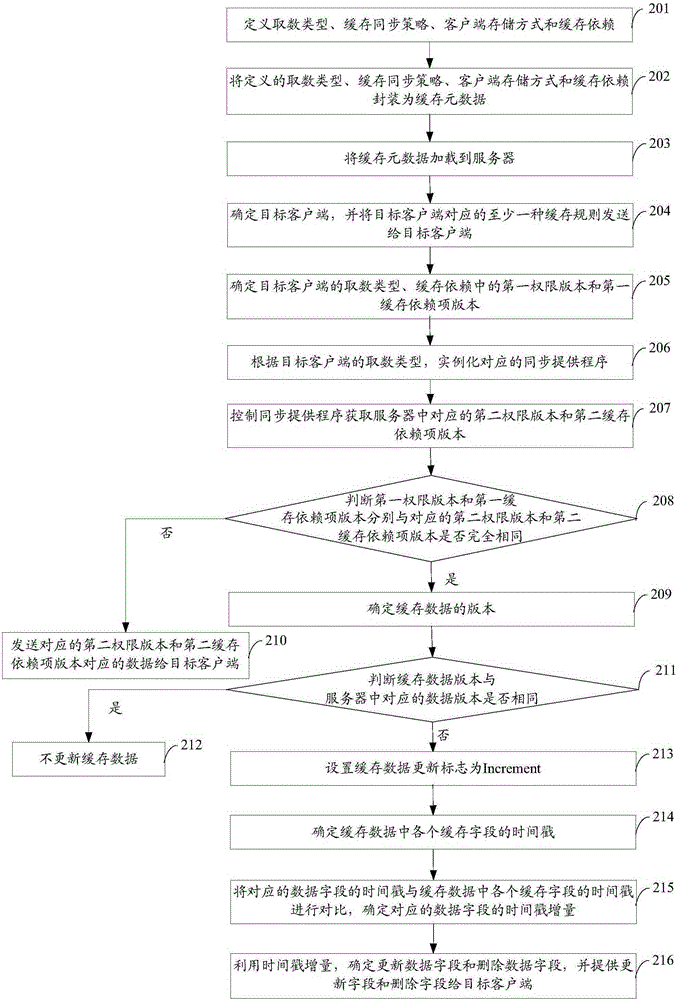

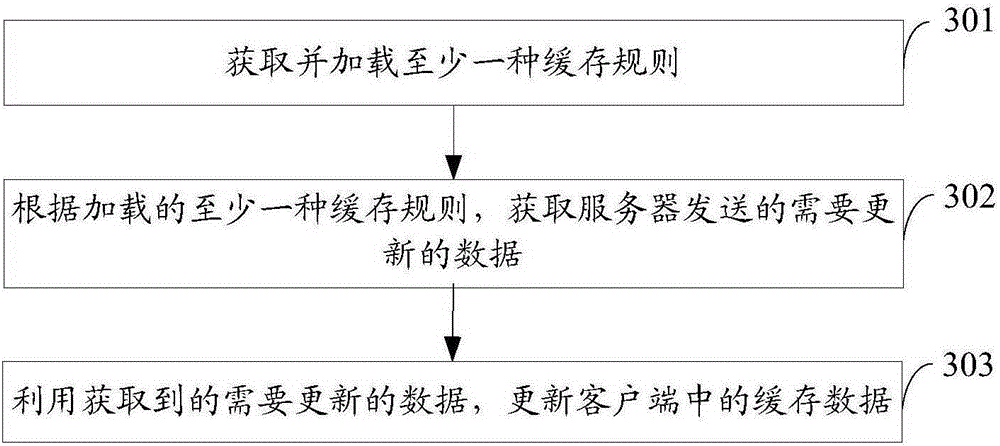

Data caching synchronization method, server and client side

The invention provides a data caching synchronization method, a server, and a client side. The method is applied to the server and comprises the following steps: storing, in the server, the caching rules corresponding to each client side; determining a target client side and sending it at least one caching rule corresponding to it; determining the data of the target client side that needs to be updated; and sending that data to the target client side according to the at least one caching rule corresponding to it. In this way, the caching efficiency of the client side is improved.

Owner:INSPUR COMMON SOFTWARE

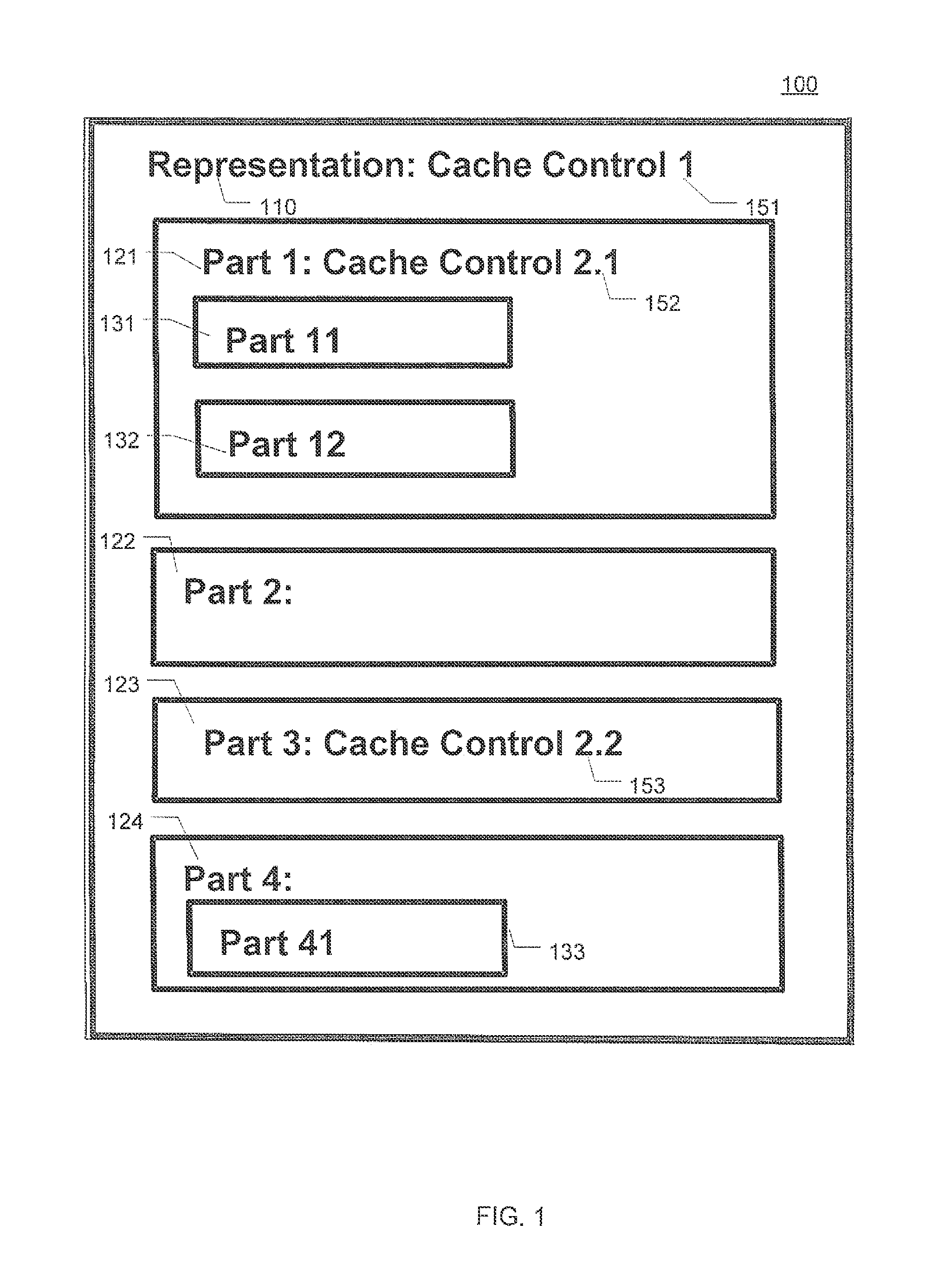

Differential cache for representational state transfer (REST) API

Active · US20150334043A1 · Improve efficiency · Improve effectiveness · Digital computer details · Hybrid transport · Expiration Time · Representational state transfer

System and method of differential cache control. Different parts of a representation are controlled by different cache expiration times. A differential control scheme may adopt a hierarchical control structure in which a subordinate-level control policy can override its superordinate-level control policies. Different parts of the representation can be updated in a cache separately. Differential cache control can be implemented by programming a cache control directive in HTTP/1.1. The respective cache expiration times and their control scopes can be specified in a response header and/or a response document provided by a server.

Owner:FUTUREWEI TECH INC
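The hierarchical override rule can be illustrated with a Python sketch in which representation parts are identified by slash-separated paths and each level may carry its own max-age in seconds. The path scheme, the `policies` mapping, and the function and class names are assumptions for illustration; the sketch does not reproduce the HTTP/1.1 directive syntax the patent describes.

```python
import time


def effective_max_age(part: str, policies: dict[str, int]) -> int:
    """Max-age for a part: the most specific (deepest) level with a policy wins."""
    segments = [s for s in part.strip("/").split("/") if s]
    # Walk from the part itself up to the root "/".
    for depth in range(len(segments), -1, -1):
        prefix = "/" + "/".join(segments[:depth])
        if prefix in policies:
            return policies[prefix]
    raise KeyError(f"no cache policy covers {part!r}")


class DifferentialCache:
    """Each part of a representation expires on its own schedule."""

    def __init__(self, policies: dict[str, int], clock=time.monotonic):
        self.policies = policies
        self.clock = clock
        self.entries = {}                     # part -> (value, expires_at)

    def put(self, part: str, value) -> None:
        ttl = effective_max_age(part, self.policies)
        self.entries[part] = (value, self.clock() + ttl)

    def get(self, part: str):
        entry = self.entries.get(part)
        if entry is None or self.clock() >= entry[1]:
            return None                       # missing or expired: refetch only this part
        return entry[0]
```

With `{"/": 300, "/order/status": 5}`, a fast-changing order status is refreshed every 5 seconds while the rest of the order stays cached for 5 minutes, instead of the whole representation expiring at the shortest lifetime.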

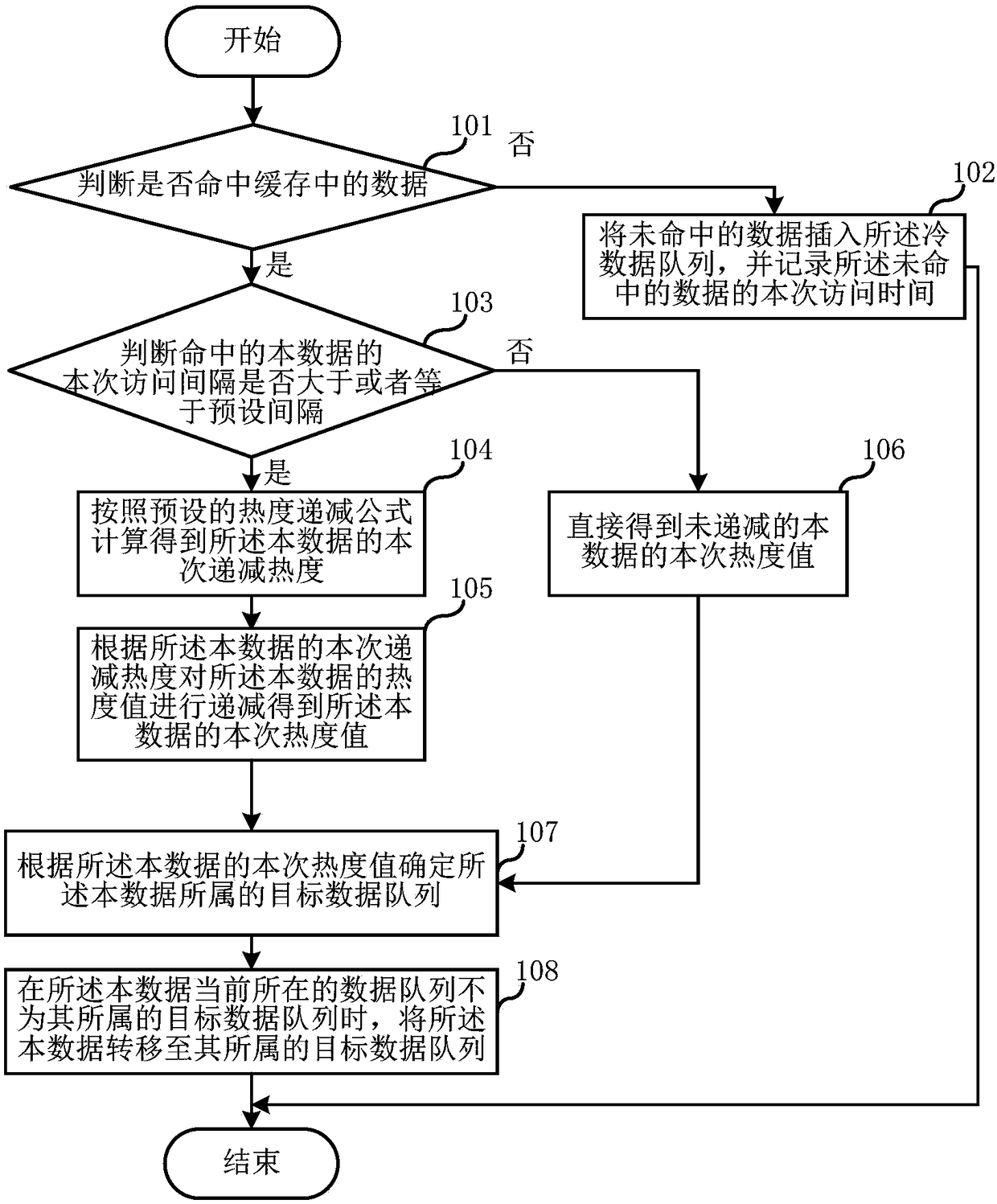

Heat management method based on cached data, server, and storage medium

Active · CN109471875A · Accurate identification · Improve caching efficiency · Database updating · Special data processing applications · Heat management · Access time

The embodiment of the invention relates to the technical field of hot-spot data identification and discloses a heat management method based on cached data, a server, and a storage medium. The method comprises the following steps: when data in the cache is hit, judging whether the current access interval of the hit data is greater than or equal to a preset interval, where the current access interval is the time between the current access time and the previous access time of the data; if it is, calculating the current heat decrease of the data according to a preset heat-decrease formula; decreasing the heat value of the data by that amount to obtain its current heat value; determining, from the current heat value, the target data queue to which the data belongs; and, when the data queue to which the data currently belongs is not the target data queue, moving the data to the target data queue. As a result, hot data can be identified more accurately and caching efficiency can be improved.

Owner:CHINANETCENT TECH
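The hit-time heat update can be sketched in Python as below. The abstract does not give the preset interval, the heat-decrease formula or the queue boundaries, so `PRESET_INTERVAL`, the linear decrease per elapsed interval and the hot/warm/cold thresholds are all hypothetical; how heat is increased is likewise not described and is left out.

```python
from dataclasses import dataclass

PRESET_INTERVAL = 60.0       # hypothetical preset interval, seconds
DECAY_PER_INTERVAL = 1.0     # hypothetical heat decrease per elapsed interval
QUEUES = [(10.0, "hot"), (3.0, "warm"), (float("-inf"), "cold")]  # hypothetical bounds


@dataclass
class Entry:
    heat: float
    last_access: float
    queue: str


def target_queue(heat: float) -> str:
    # First queue whose lower bound the heat value reaches.
    return next(name for bound, name in QUEUES if heat >= bound)


def on_hit(key: str, entry: Entry, now: float, queues: dict[str, set]) -> None:
    interval = now - entry.last_access        # current access interval
    if interval >= PRESET_INTERVAL:
        # Hypothetical decrease formula: linear in whole elapsed intervals.
        decrease = DECAY_PER_INTERVAL * (interval // PRESET_INTERVAL)
        entry.heat = max(0.0, entry.heat - decrease)
    entry.last_access = now
    target = target_queue(entry.heat)
    if target != entry.queue:                 # move the data to its target queue
        queues[entry.queue].discard(key)
        queues[target].add(key)
        entry.queue = target
```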

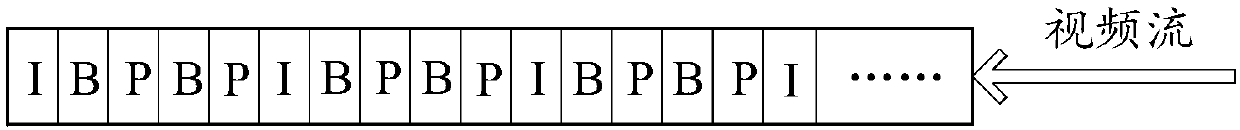

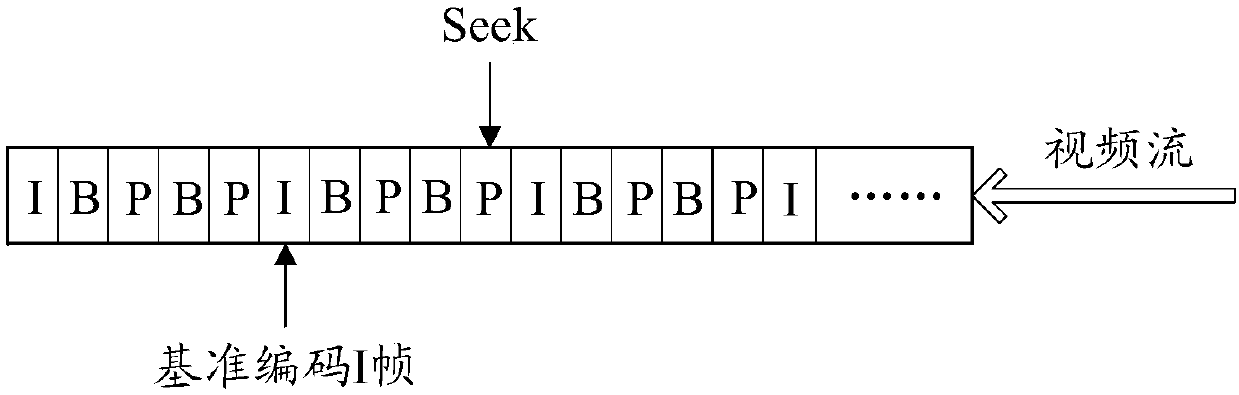

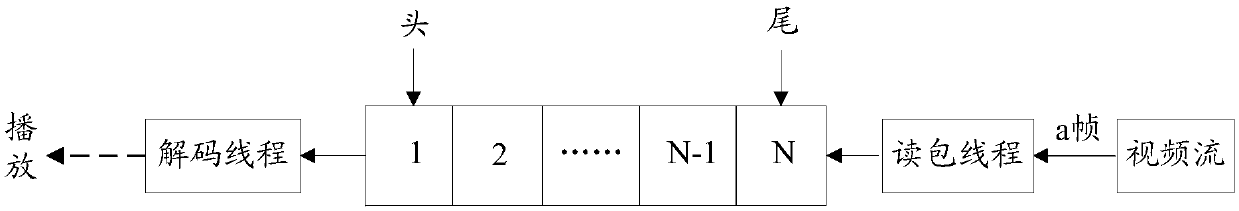

Video caching method and device and readable storage medium

Active · CN110312156A · Improve applicability · Strong applicability · Selective content distribution · Data processing · Memory operation

The invention discloses a video caching method and device and a readable storage medium, and belongs to the technical field of data processing. In the video caching method, when a target video stream to be cached is obtained, a target idle memory block is taken from an idle memory buffer queue and used to buffer the target video stream, with I frames separated from non-I frames. The memory in the idle memory buffer queue is idle memory obtained after previously cached video data has been released, so buffering the target video stream in it enables memory reuse. As a result, frequent memory operations and memory fragmentation are reduced, video caching efficiency is improved, and I frames are stored separately from B and P frames in the target video stream, which makes subsequent frame location easier and more efficient.

Owner:TENCENT TECH (SHENZHEN) CO LTD

Ping pong buffer device

Inactive · CN1585373A · Solve the waste of resources · Save circuit resources · Memory addressing/allocation/relocation · Store-and-forward switching systems · Input selection · Data cache

The device comprises a module for analyzing additional information about the data, a selector for the input additional information, an analyzing module for the input data, a write-cache selector, a module for handling write-jump conditions, a ping-pong cache module, a selector for the output additional information, and a read-cache selector. The invention can cache different types of data in a data stream using only one ping-pong cache.

Owner:ZTE CORP
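The core ping-pong mechanism, in which one bank is written while the other is read, can be sketched in Python. This covers only the double-buffer behaviour, not the selectors and additional-information analysis of ZTE's device; to hold mixed data types in one buffer, each item could carry a type tag such as a `(kind, payload)` tuple. `PingPongBuffer` is a hypothetical name.

```python
class PingPongBuffer:
    """Two banks: the writer fills one while the reader drains the other."""

    def __init__(self, bank_size: int):
        self.bank_size = bank_size
        self.banks: list[list] = [[], []]
        self.write_bank = 0                   # index of the bank being written

    def _maybe_swap(self) -> None:
        # Hand a full write bank to the reader once the other bank is empty.
        other = 1 - self.write_bank
        if len(self.banks[self.write_bank]) == self.bank_size and not self.banks[other]:
            self.write_bank = other

    def write(self, item) -> bool:
        """Append an item; return False if both banks are busy (writer must wait)."""
        bank = self.banks[self.write_bank]
        if len(bank) == self.bank_size:
            return False
        bank.append(item)
        self._maybe_swap()
        return True

    def read_bank(self) -> list:
        """Drain the bank that is not being written."""
        read = 1 - self.write_bank
        data, self.banks[read] = self.banks[read], []
        self._maybe_swap()
        return data
```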

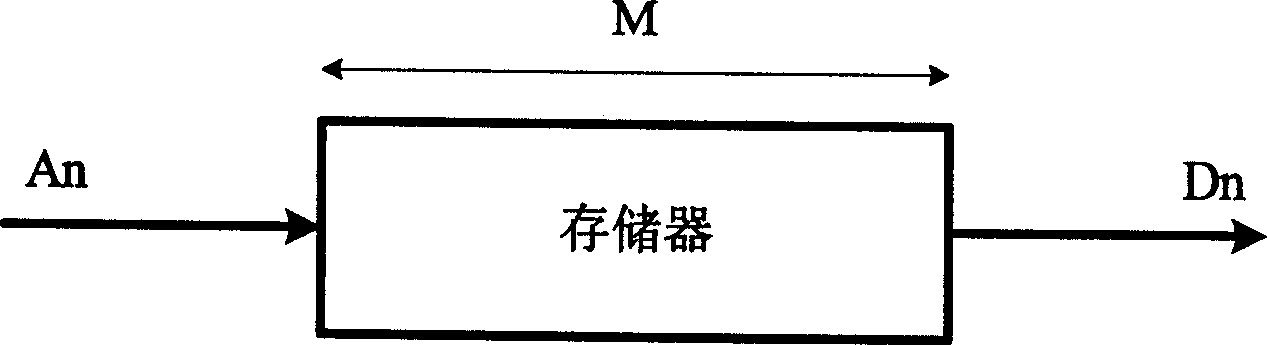

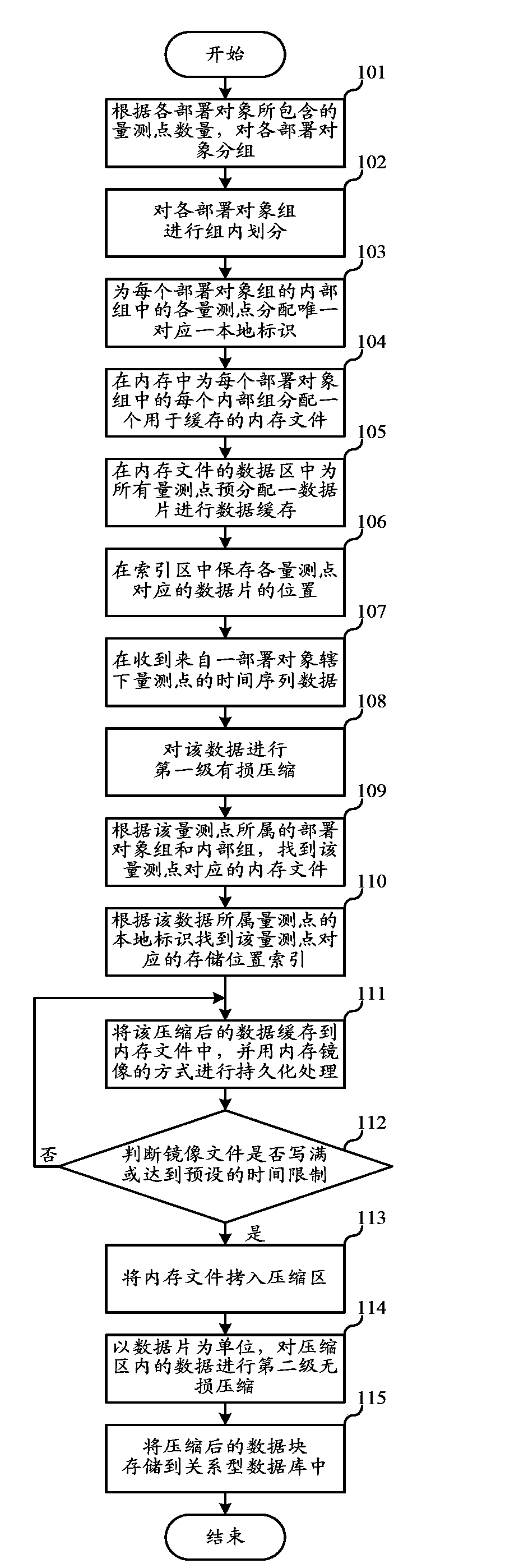

Multisource time series data compression storage method

Active · CN103914449A · Improve efficiency · Improve data query speed · Relational databases · Special data processing applications · Data compression · Lossy compression

The invention discloses a multisource time series data compression storage method comprising the following steps: grouping deployment objects; dividing each deployment-object group into internal groups; allocating, in memory, a memory file used for caching to each internal group; when time series data of a measuring point is received, performing first-level lossy compression, locating the memory file corresponding to the measuring point according to the deployment-object group and internal group to which it belongs, and caching the compressed data in that memory file; and, when a memory file is full or reaches a preset time limit, mapping it to the hard disk, performing second-level lossy compression, and storing the compressed data blocks in a relational database. The method allows the corresponding memory file to be found quickly when data is cached, so storage positions are located rapidly and caching efficiency is improved; the partitioned compression improves compression efficiency and effectively saves hard-disk capacity; and the relational database improves data reading speed.

Owner:ASAT CHINA TECH

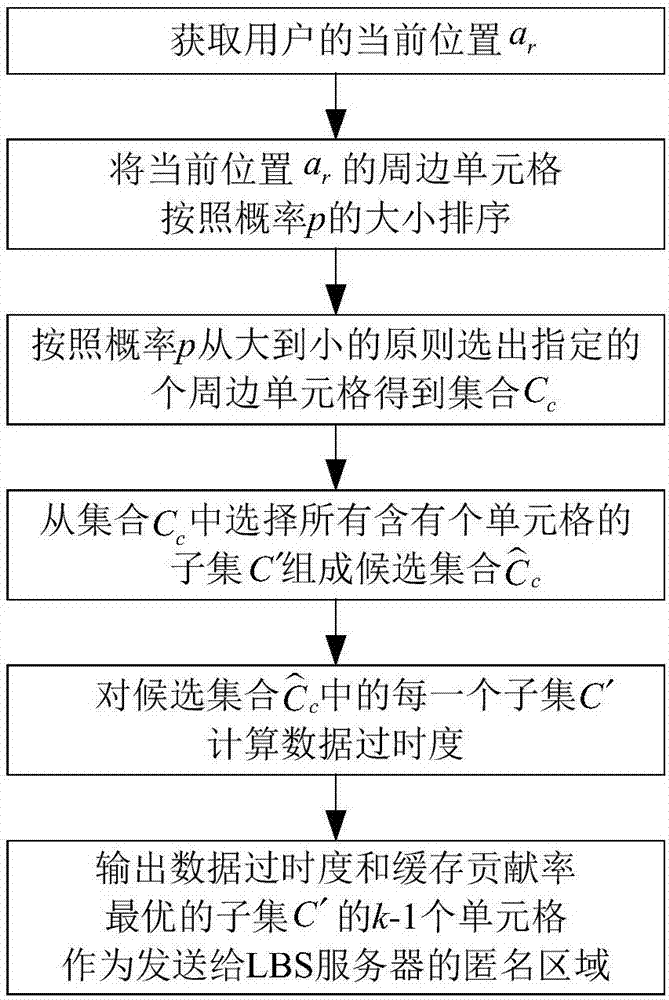

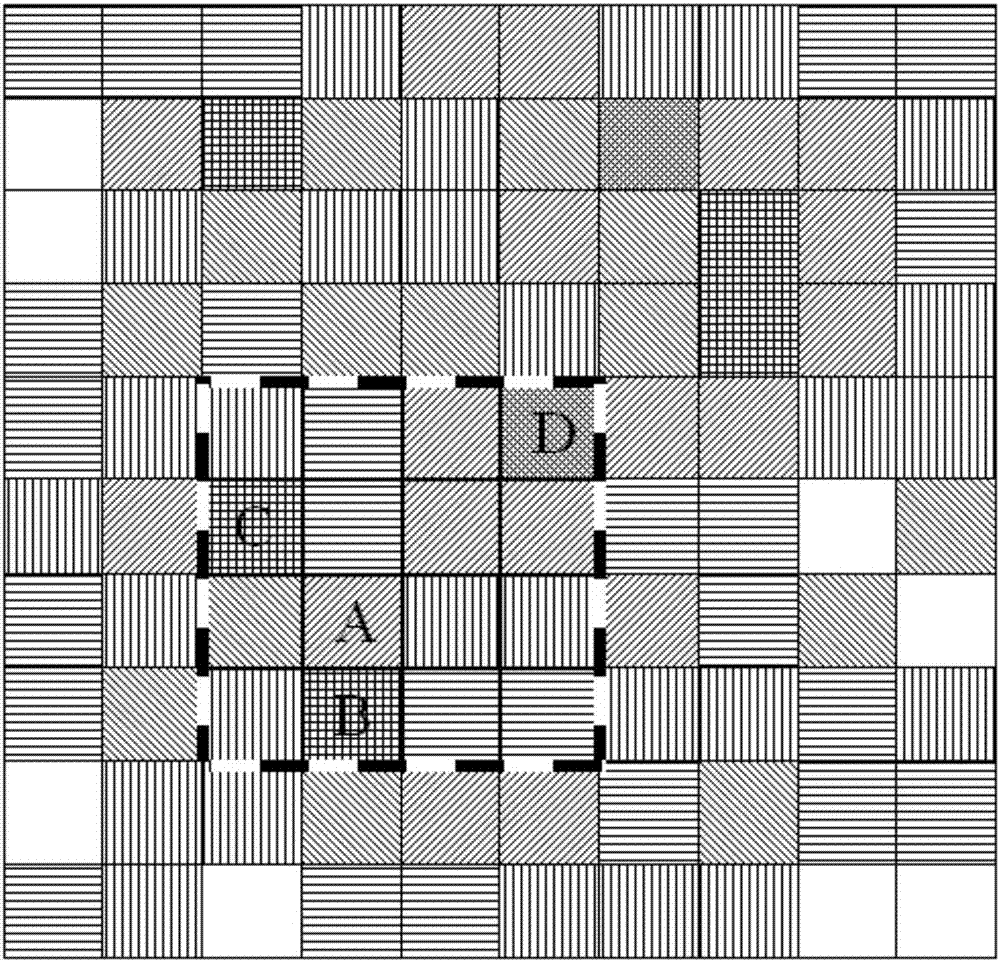

Anonymous area generation method and location privacy protection method

Inactive · CN106954182A · Efficient use of · Reduce overhead · Transmission · Location information based service · Protection mechanism · Privacy protection

The invention discloses an anonymous area generation method and a location privacy protection method. The anonymous area generation method generates an anonymous area based on a spatial K-anonymity method. The location privacy protection method adopts a multi-level access protection mechanism and uses caching: when a target user sends an LBS request, POI data is obtained in priority order from the target user's local cache, neighbouring users in the network, and the LBS server. When the POI data must be obtained from the LBS server, an anonymous area is generated with the anonymous area generation method, the LBS request is sent to the LBS server with that anonymous area as the user's anonymous location, and the POI data returned by the LBS server is output. By combining caching with the spatial K-anonymity method, the methods protect users' location privacy, reduce the number of query requests users send as far as possible, and raise users' privacy protection level, thereby addressing the heavy load on the server and channel and the low reuse rate of information.

Owner:步步高电子商务有限责任公司
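The three-level lookup with K-anonymous cloaking can be sketched in Python. The cloaking rule used here (the bounding box of the user and its k-1 nearest users) is a common simple spatial K-anonymity technique swapped in for illustration, since the abstract does not define the patent's own region generation; the function names and the `lbs_query` callable are hypothetical.

```python
import math
from typing import Callable

Point = tuple[float, float]


def cloaking_box(user: Point, others: list[Point], k: int) -> tuple[float, float, float, float]:
    """Bounding box (min_x, min_y, max_x, max_y) covering the user and its
    k-1 nearest other users, so the region contains at least k users."""
    if len(others) < k - 1:
        raise ValueError("not enough users for k-anonymity")
    nearest = sorted(others, key=lambda p: math.dist(p, user))[: k - 1]
    pts = [user, *nearest]
    xs, ys = [p[0] for p in pts], [p[1] for p in pts]
    return min(xs), min(ys), max(xs), max(ys)


def get_poi(query: str, local_cache: dict, neighbours: list[dict],
            lbs_query: Callable, user: Point, others: list[Point], k: int):
    # Priority 1: the target user's local cache.
    if query in local_cache:
        return local_cache[query]
    # Priority 2: caches of neighbouring users in the network.
    for cache in neighbours:
        if query in cache:
            local_cache[query] = cache[query]
            return cache[query]
    # Priority 3: the LBS server, queried with the region, not the exact location.
    region = cloaking_box(user, others, k)
    result = lbs_query(query, region)
    local_cache[query] = result
    return result
```

Every answer served from a local or neighbour cache is a query the LBS server never sees, which is how caching both lowers server load and reduces location exposure.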

Terminal, method and system for performing combination service using terminal capability version

Active · US8401004B2 · Simple procedure · Improve caching efficiency · Special service for subscribers · Connection management · Computer terminal · Service use

Owner:LG ELECTRONICS INC

Technique for enhancing effectiveness of cache server

Inactive · US7146430B2 · Improve caching efficiency · Reduce traffic · Digital data information retrieval · Error prevention · Cache server · Parallel computing

A path calculating section obtains a path suitable for carrying out an automatic cache updating operation, a link prefetching operation, and a cache server cooperating operation, based on QoS path information that includes network path information and path load information obtained by a QoS path information obtaining section. An automatic cache updating section, a link prefetching control section, and a cache server cooperating section carry out respective ones of the automatic cache updating operation, the link prefetching operation, and the cache server cooperating operation, by utilizing the path obtained. For example, the path calculating section obtains a maximum remaining bandwidth path as the path.

Owner:NEC CORP

Method oriented to prediction-based optimal cache placement in a content-centric network

Active · CN104166630A · Reduce access latency · Reduce redundancy · Input/output to record carriers · Memory addressing/allocation/relocation · Array data structure · Data diversity

The invention belongs to the technical field of networks and relates in particular to a method for prediction-based optimal cache placement in a content-centric network, which can be used for data caching in such networks. The method includes the following steps: cache placement schemes are encoded as binary strings, with 1 denoting a cached object and 0 a non-cached object, and an initial population is generated randomly; the profit value of each cache placement scheme is calculated, and the maximum profit value is found and stored in an array max; a selection operation based on individual fitness division is performed; a crossover operation based on individual correlation is performed; a mutation operation based on gene blocks is performed; a new population, i.e., a new set of cache placement schemes, is generated; and whether the array max has stabilized is judged, and if so, the maximum-profit cache placement is obtained. The method effectively reduces user access delay, lowers the duplicate content request rate and network content redundancy, enhances network data diversity, markedly improves the cache performance of the whole network, and achieves higher cache efficiency.

Owner:HARBIN ENG UNIV
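The encode, evaluate, select, cross over, and mutate loop in this method is a genetic algorithm, and can be sketched in Python as follows. The profit function (total benefit of cached objects, zero if a capacity is exceeded) is a hypothetical stand-in for the patent's prediction-based profit, and tournament selection, one-point crossover and bit-flip mutation are standard operators substituted for the patent's fitness-division, correlation-based and gene-block variants. The loop stops when the best profit has not improved for `patience` generations, one simple reading of "the array max has stabilized".

```python
import random


def profit(bits, benefit, size, capacity):
    """Hypothetical profit: benefit of cached objects, or 0 if over capacity."""
    used = sum(s for b, s in zip(bits, size) if b)
    if used > capacity:
        return 0
    return sum(v for b, v in zip(bits, benefit) if b)


def ga_cache_placement(benefit, size, capacity, pop_size=30, patience=15,
                       p_mut=0.05, seed=0):
    rng = random.Random(seed)
    n = len(benefit)

    def fit(ind):
        return profit(ind, benefit, size, capacity)

    # Each individual is a binary string: 1 = object cached, 0 = not cached.
    population = [[rng.randint(0, 1) for _ in range(n)] for _ in range(pop_size)]
    best = max(population, key=fit)
    history = [fit(best)]                 # best profit per generation (the "max" array)
    stable = 0
    while stable < patience:
        # Tournament selection (standard operator swapped in for the patent's).
        parents = [max(rng.sample(population, 2), key=fit) for _ in range(pop_size)]
        children = []
        for a, b in zip(parents[::2], parents[1::2]):
            cut = rng.randrange(1, n) if n > 1 else 0      # one-point crossover
            for child in (a[:cut] + b[cut:], b[:cut] + a[cut:]):
                # Bit-flip mutation with probability p_mut per gene.
                children.append([bit ^ (rng.random() < p_mut) for bit in child])
        population = children + [best]   # elitism: keep the best scheme so far
        gen_best = max(population, key=fit)
        if fit(gen_best) > fit(best):
            best, stable = gen_best, 0
        else:
            stable += 1
        history.append(fit(best))
    return best, fit(best), history
```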

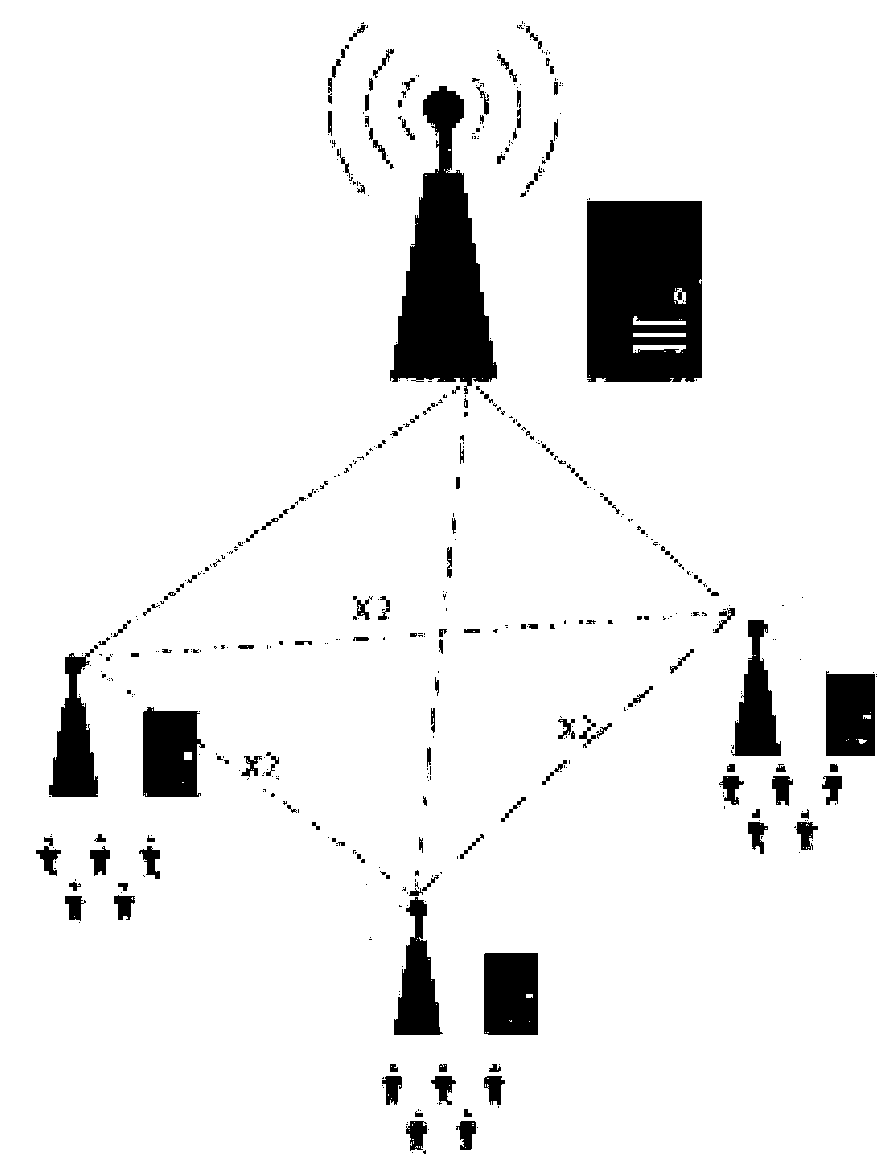

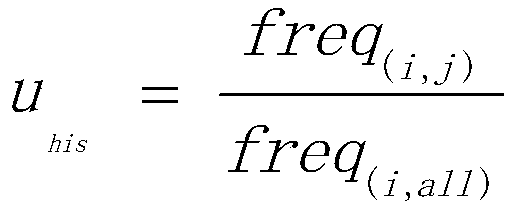

Mobile edge caching method based on region user interest matching

Active · CN110730471A · Improve caching efficiency · Short response time · Network traffic/resource management · Effective solution · Computer network

The invention relates to a mobile edge caching method based on regional user interest matching and belongs to the technical field of caching. The method comprises the following steps: S1, establishing a regional user preference model; S2, establishing a joint cache optimization strategy; S3, establishing a cache system model; S4, designing a cache algorithm. With the caching mechanism of the invention, the video caching gain can be maximized while satisfying the diversity requirement on cached videos. Compared with current caching mechanisms, it effectively improves the video caching benefit and users' QoE, and provides an effective solution for caching and online viewing of large-scale ultra-high-definition video in future 5G scenarios.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

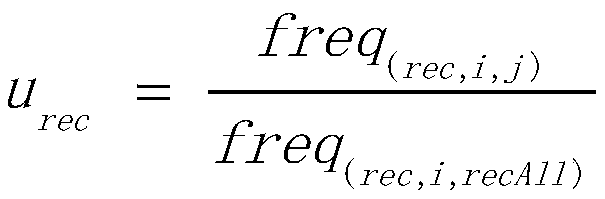

Method and system for providing multimedia information on demand over wide area networks

Inactive · US20070233893A1 · Lower latency · Improve efficiency · Multiple digital computer combinations · Selective content distribution · Stream data · Data content

Systems and methods for delivering streaming data content to a client device over a data communication network in response to a request for the data content from the client device. The client request is received by a server or a controller device that is typically located on a network switch device. If received by a server, the server sends a request to the controller device to control the transfer of the requested data to the client. The controller device includes the processing capability required for retrieving the streaming data and delivering the streaming data directly to the client device without involving the server system. In some cases, the controller device mirrors the data request to another controller device to handle the data processing and delivery functions. In other cases, the controller device coordinates the delivery of the requested data using one or more other similar controller devices in a pipelined fashion.

Owner:EMC IP HLDG CO LLC

System for displaying cached webpages, a server therefor, a terminal therefor, a method therefor and a computer-readable recording medium on which the method is recorded

Inactive · CN102812452A · Raise the possibility · Improve caching efficiency · Multiple digital computer combinations · Wireless communication services · Cache server · User input

Owner:SK PLANET CO LTD

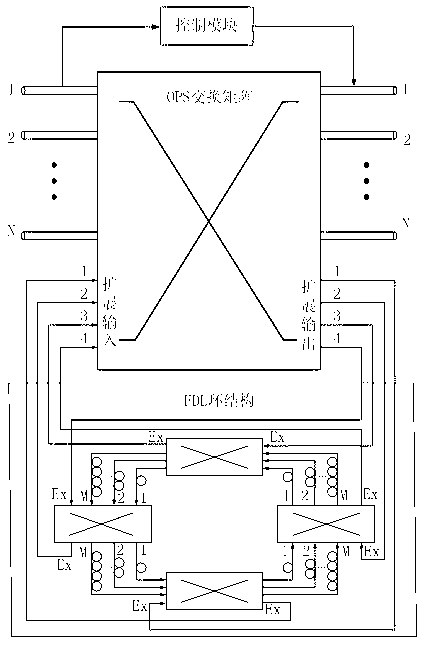

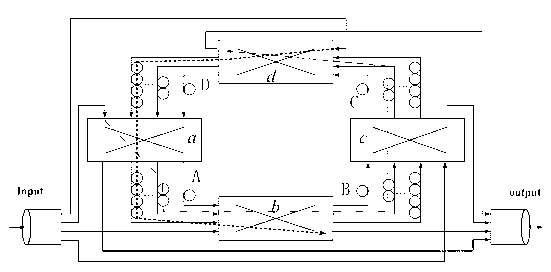

Feedback shared optical buffer device and buffering method based on an FDL (fiber delay line) loop

Inactive · CN102843294A · Improve the probability of cache success · Increase profit · Multiplex system selection arrangements · Data switching networks · Fiber · Computer hardware

The invention provides a feedback shared optical buffer device and buffering method based on an FDL (fiber delay line) loop, and relates to the technical field of optical fiber communication. The FDL loop comprises four sub-switching matrices and four FDL buffer groups. When an optical packet at an OPS (optical packet switching) node is blocked by contention, the conflicting packet can enter an extended input port of the FDL loop from any extended output port of the OPS node; an available FDL in the loop is selected to buffer the packet until the OPS node's output port is free, after which the packet leaves the FDL loop through the extended output port of the FDL buffer group and is switched to the free output port through the OPS matrix. Because conflicting packets enter and leave the FDL loop through its extended input/output ports, and the feedback connection lets a packet pass through the FDL buffer groups multiple times, both the probability of successfully buffering conflicting packets and the utilization of the FDL loop are improved. This addresses port contention in optical packet switching networks and the limited utilization of a finite number of FDLs.

Owner:CHONGQING UNIV OF POSTS & TELECOMM

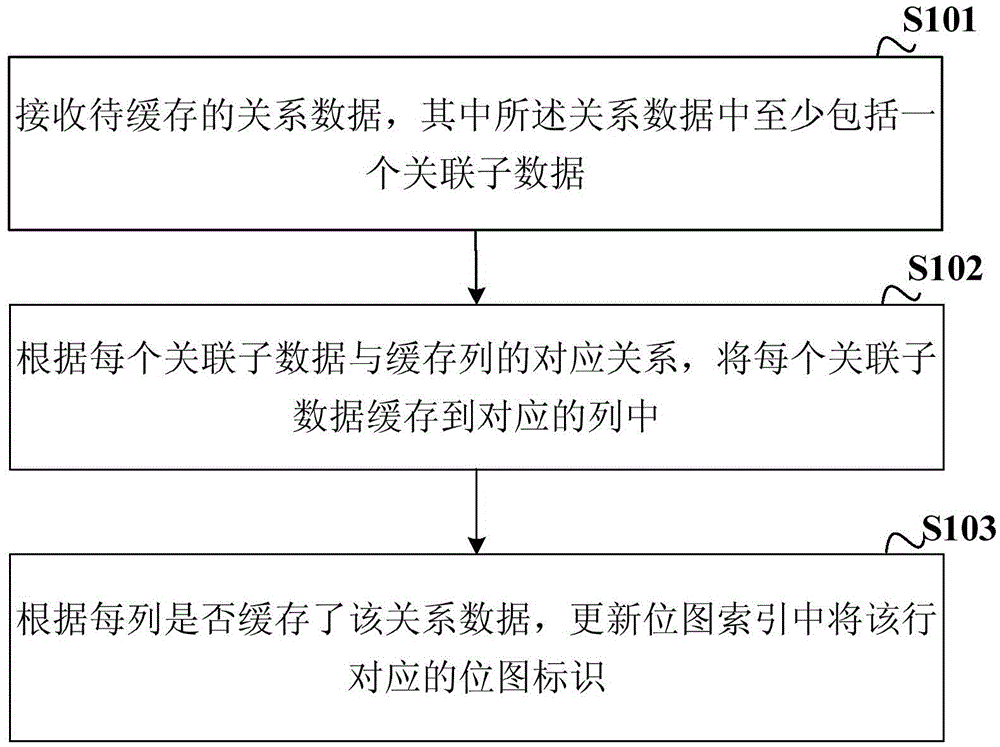

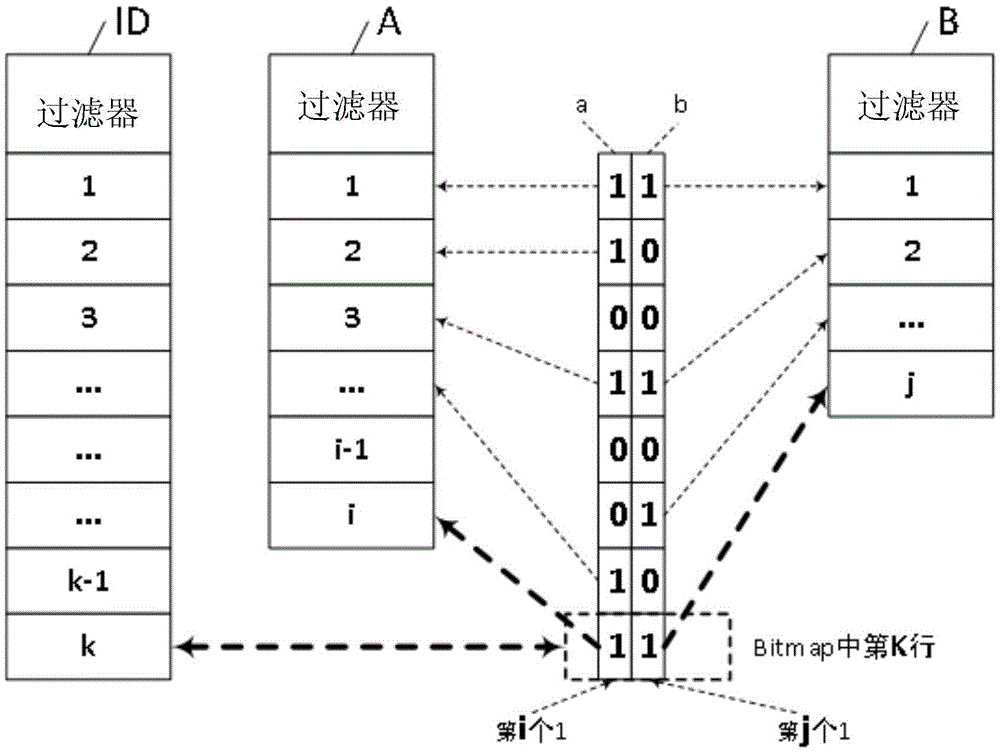

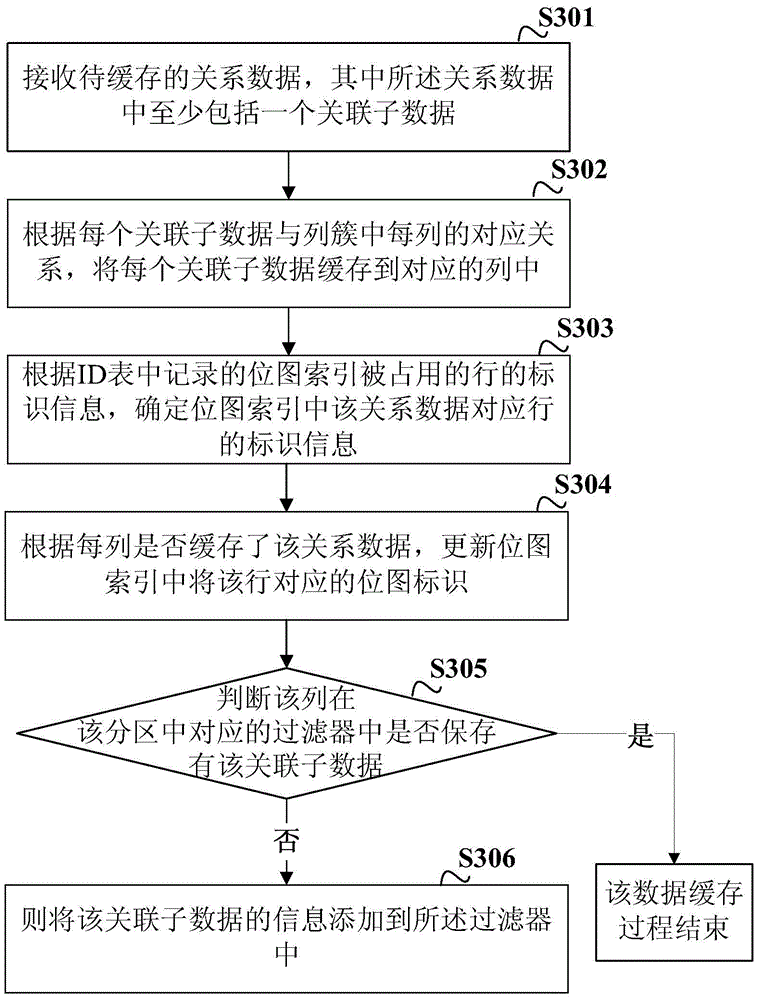

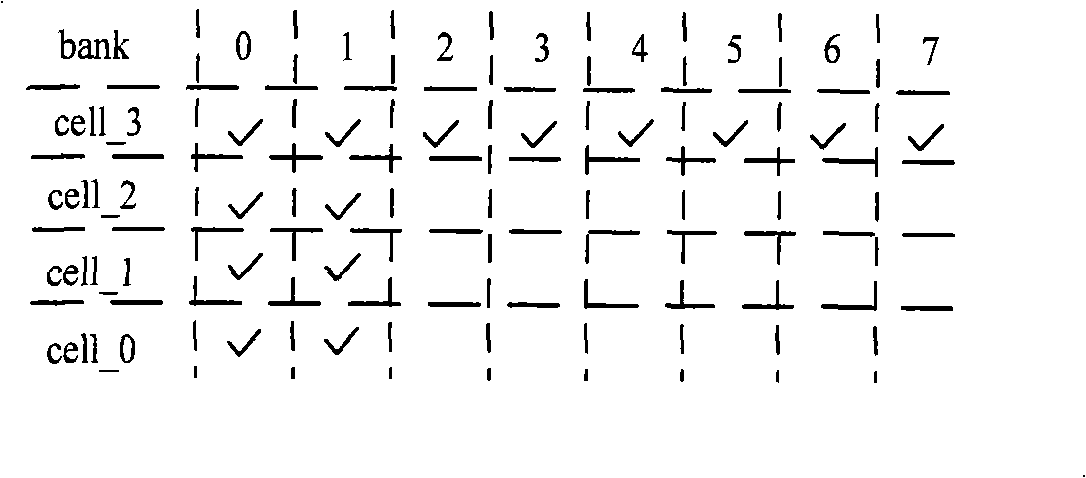

Relational data cache and inquiry method and device

Active · CN106682042A · Improve caching efficiency · Increase profit · Special data processing applications · Operating system

An embodiment of the invention discloses a relational data cache and query method and device. The cache method includes: receiving relational data to be cached and caching each piece of associated sub-data into its corresponding column, according to the correspondence between the associated sub-data and the columns of a column cluster; and updating the bitmap identifiers of the corresponding rows in a bitmap index according to whether relational data is cached in each column. Because the relational data is divided into multiple pieces of associated sub-data that are cached separately, based on the bitmap index and the correspondence between the columns, the cache efficiency of the relational data is improved. Moreover, the method is applied to the memory of an electronic device and increases memory utilization.

Owner:HANGZHOU HIKVISION DIGITAL TECH
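The column-wise caching with a per-row bitmap can be sketched in Python. Integers serve as bitmaps (bit i set means column i of that row is cached); the class and method names and the row-id keying are assumptions, and the query side is reduced to a single "rows having these columns" lookup.

```python
class ColumnClusterCache:
    """Caches relational records column by column, with a bitmap per row
    recording which columns hold data (bit i set -> column i cached)."""

    def __init__(self, columns: list[str]):
        self.columns = columns
        self.col_index = {c: i for i, c in enumerate(columns)}
        self.store: dict[str, dict[int, object]] = {c: {} for c in columns}
        self.bitmap: dict[int, int] = {}          # row id -> bitmap

    def put(self, row_id: int, record: dict[str, object]) -> None:
        bits = self.bitmap.get(row_id, 0)
        for col, value in record.items():         # split the record into sub-data
            self.store[col][row_id] = value
            bits |= 1 << self.col_index[col]
        self.bitmap[row_id] = bits

    def rows_with(self, *cols: str) -> list[int]:
        """Rows that have all the given columns cached, found via bitmap masks."""
        mask = 0
        for col in cols:
            mask |= 1 << self.col_index[col]
        return sorted(r for r, bits in self.bitmap.items() if (bits & mask) == mask)

    def get(self, row_id: int, col: str):
        return self.store[col].get(row_id)
```

Checking a bitmask answers "is this column cached for this row?" without touching the column stores, and rows that lack a column consume no space in it.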

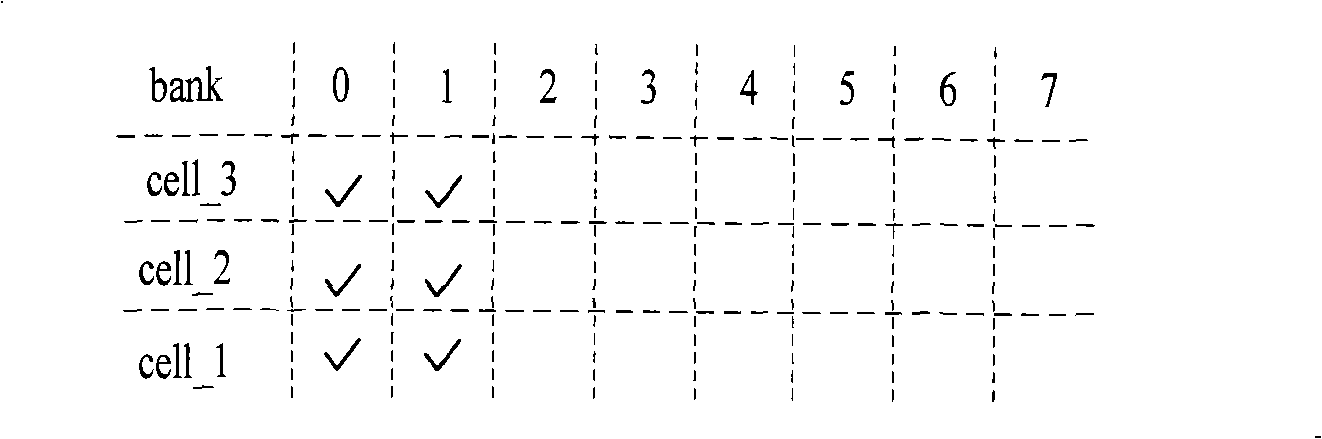

Data reading and writing method and device

Inactive · CN101316240A · Improve caching efficiency · Easy to operate · Data switching networks · Electric digital data processing · Distributed computing · Weight value

The embodiment of the invention discloses a method and a device for reading and writing data, comprising the following steps: a request is segmented according to a preset block data size, and the segmented requests are stored in a block data queue; the weight values of the different block data queues that satisfy the time-sequence parameters are compared; and the block data queues are scheduled according to the comparison result. By optimizing the overall order of operations, the method and device improve cache efficiency more effectively.

Owner:HUAWEI TECH CO LTD

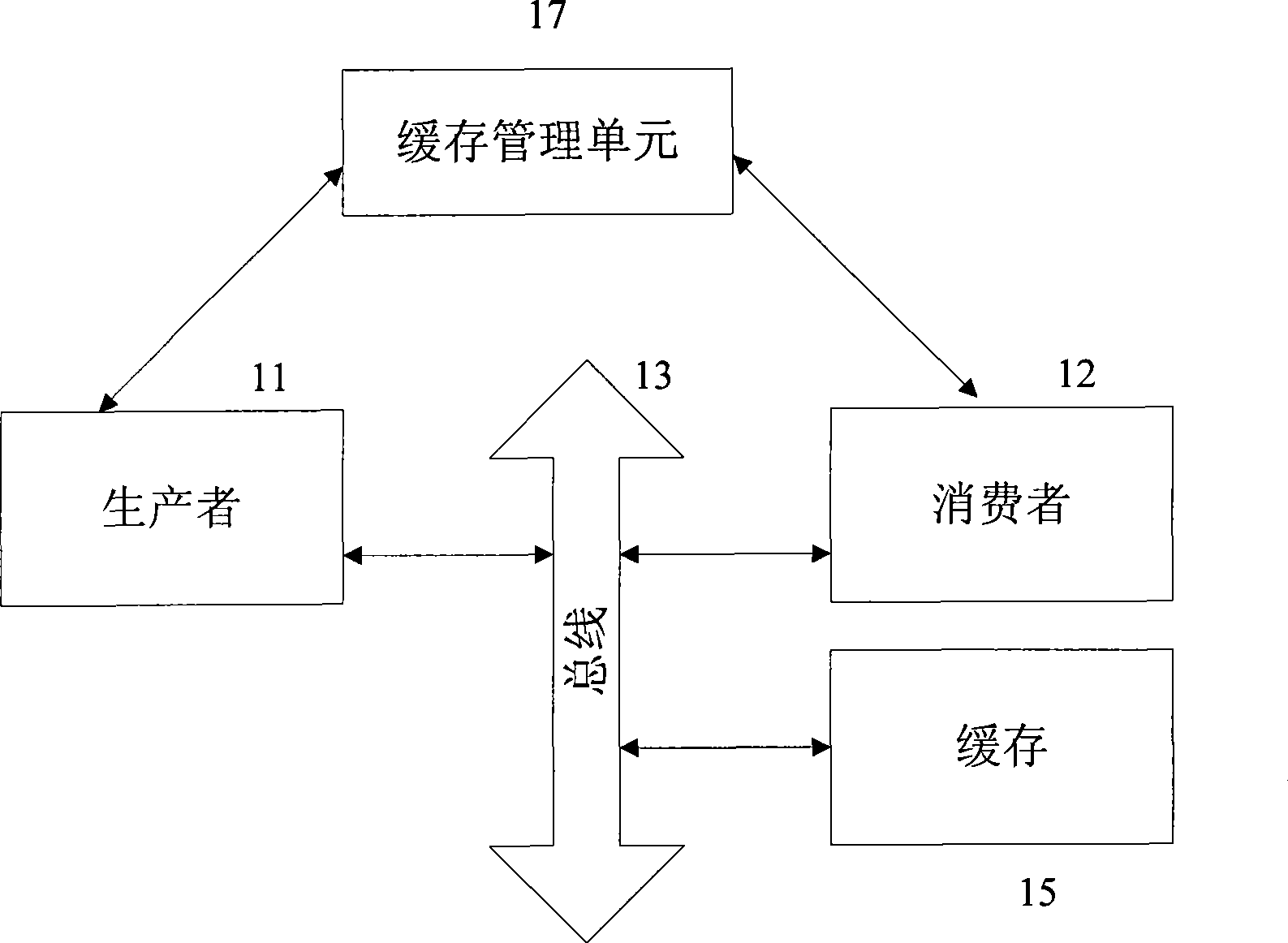

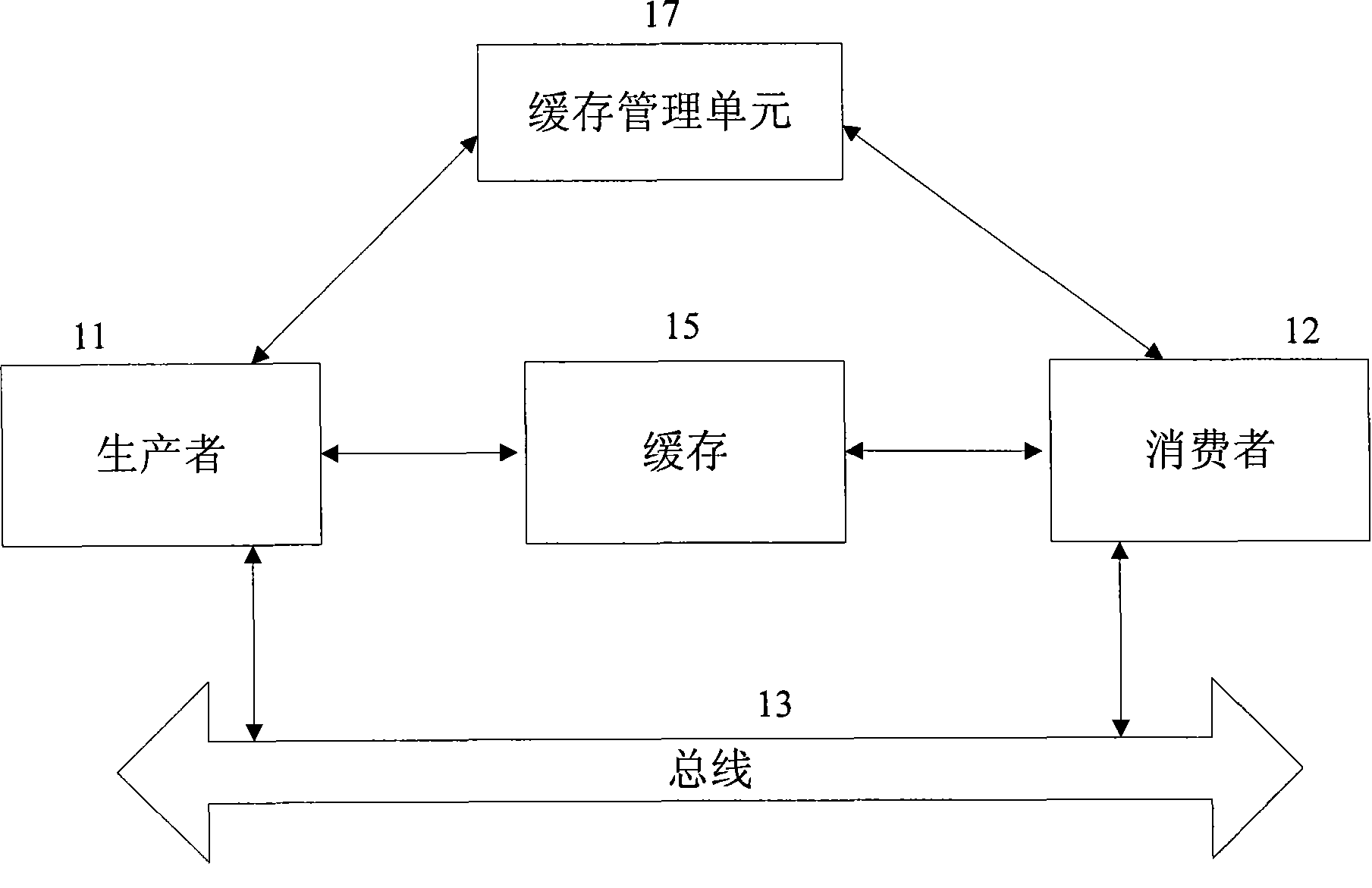

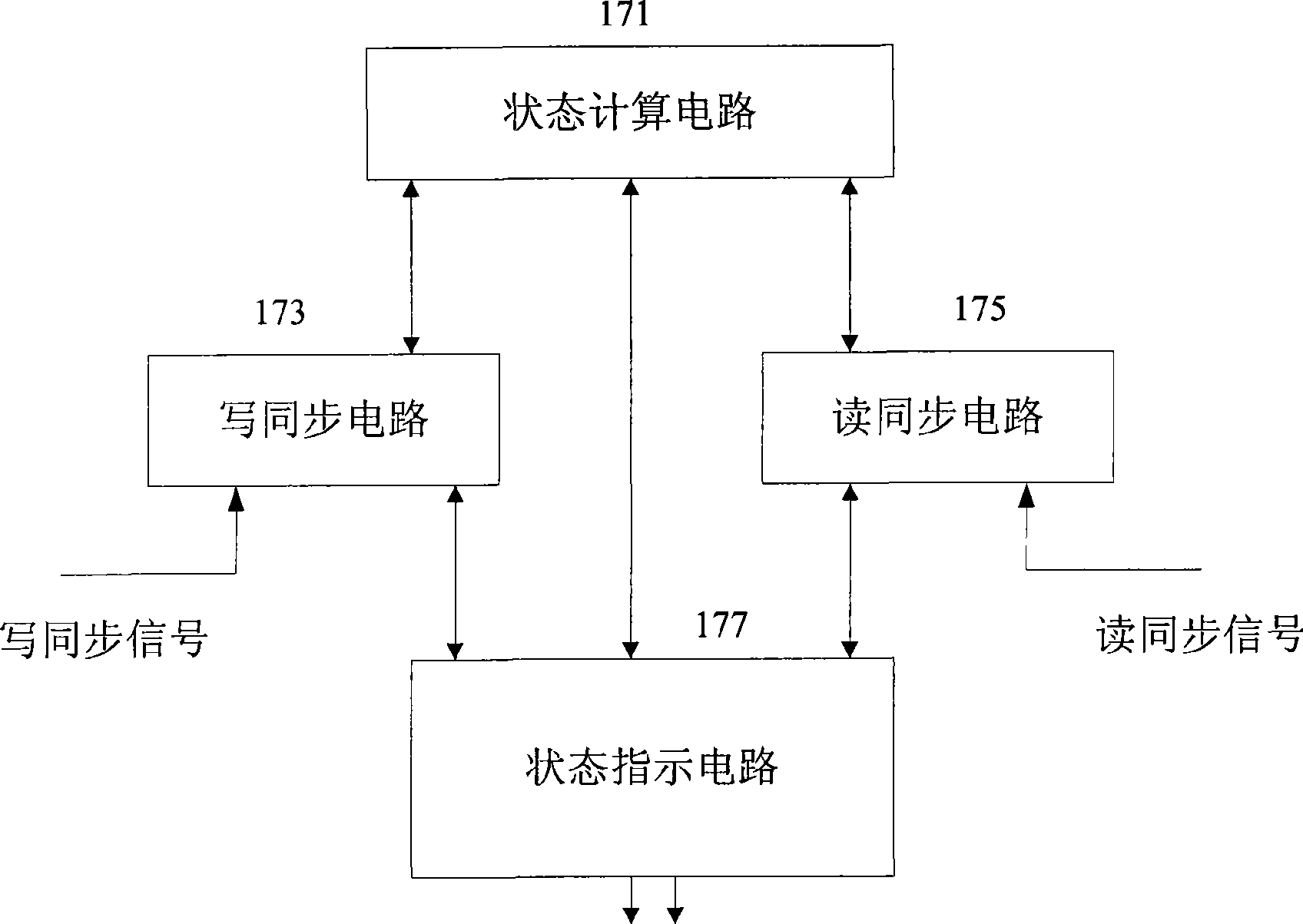

Caching management unit and caching management method

Inactive · CN101430663A · Take advantage of · Improve caching efficiency · Memory addressing/allocation/relocation · Management unit · Parallel computing

The invention discloses a cache management unit and a cache management method. The cache management unit comprises: a state calculation circuit that counts the valid data in a cache and derives a cache state from that count; a state indication circuit that outputs the cache state to a producer and/or a consumer; a write synchronization circuit that sends a write synchronization signal to perform a write synchronization operation on the state calculation circuit each time the producer actually writes data into the cache; a read synchronization circuit that sends a read synchronization signal to perform a read synchronization operation on the state calculation circuit each time the consumer receives data from the cache; a write pre-synchronization circuit that sends a write pre-synchronization signal to perform a write pre-synchronization operation on the state calculation circuit each time the producer issues a write command; and a read pre-synchronization circuit that sends a read pre-synchronization signal to perform a read pre-synchronization operation on the state calculation circuit each time the consumer issues a read command. The cache management unit and method help improve overall cache efficiency.

Owner:SHANGHAI MAGIMA DIGITAL INFORMATION

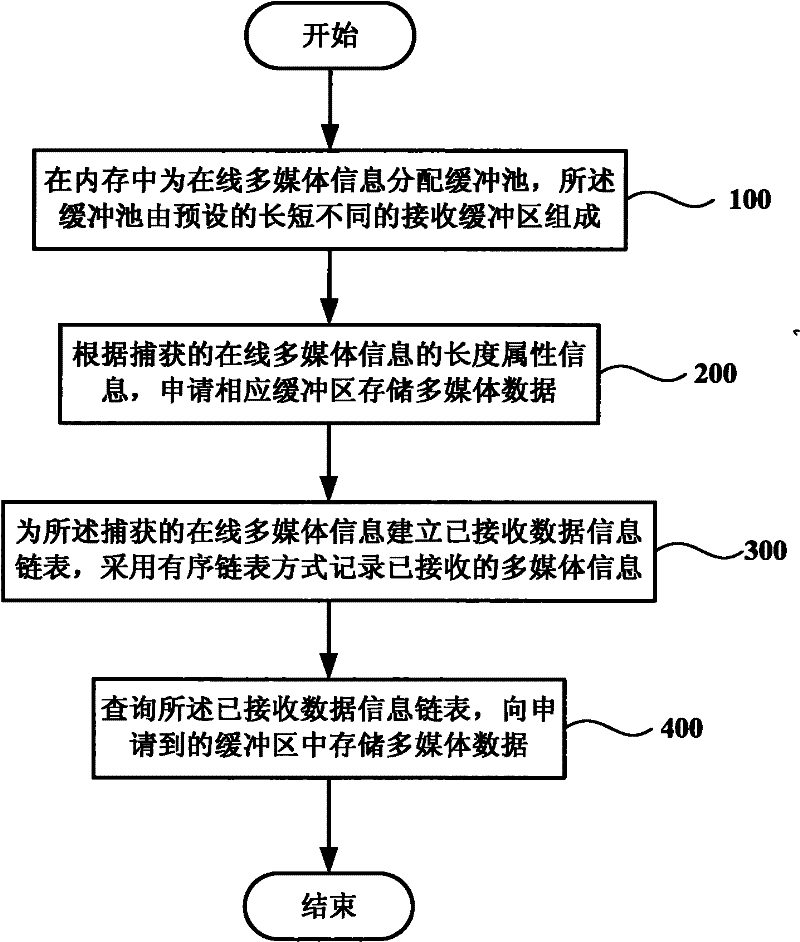

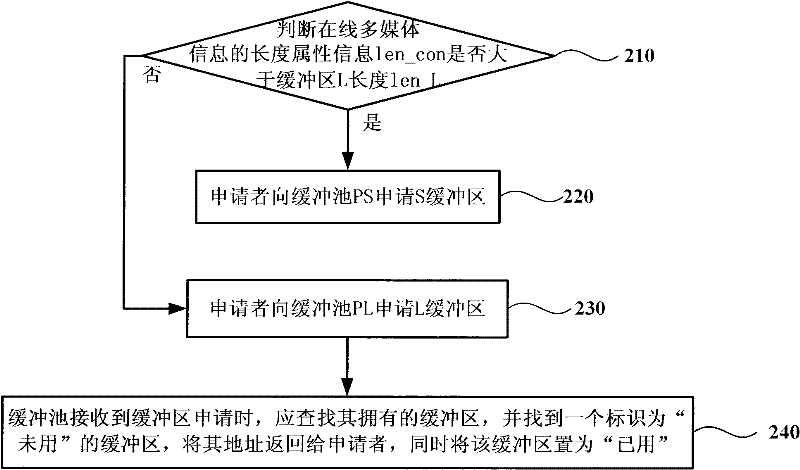

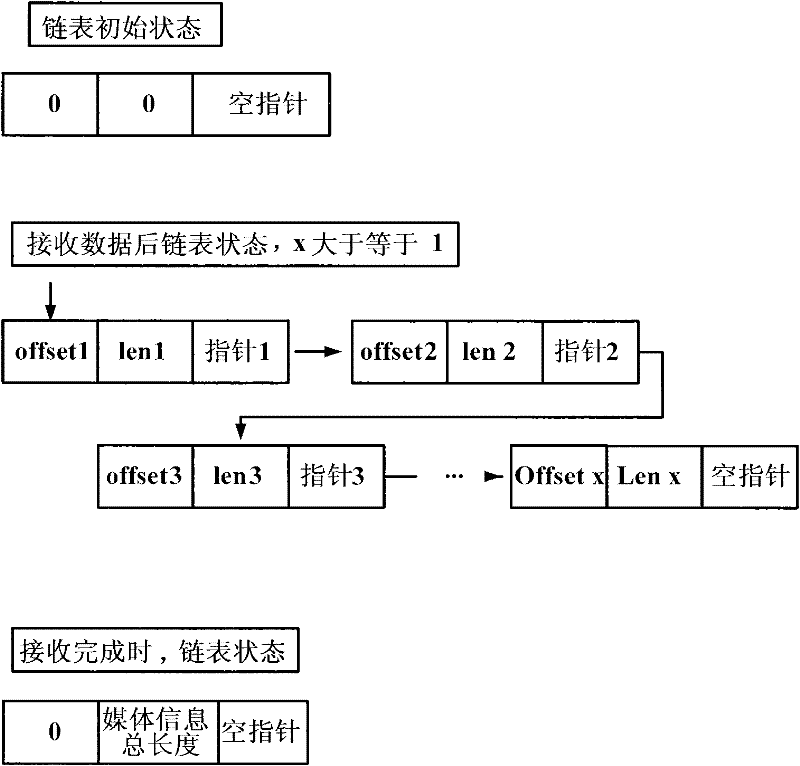

A data caching method and system for online multimedia information mining

Active · CN102270207A · Improve stability · Meet the requirements of high data volume concurrency · Special data processing applications · Internal memory · Term memory

The invention discloses a data caching method and system oriented to online multimedia information mining. The method comprises the following steps: allocating a cache pool for the online multimedia information in internal memory, the cache pool consisting of preset receive buffers of different lengths; requesting a suitable buffer to store the multimedia data according to the length attribute of the captured online multimedia information; building a received-data information linked list for the captured online multimedia information and recording the received multimedia information as an ordered linked list; and querying the received-data information linked list and storing the multimedia data in the buffers.

Owner:中科海微(北京)科技有限公司
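The size-class pool and ordered record described above can be sketched in Python. The buffer lengths and counts, the smallest-fit rule and the names are assumptions; a Python list stands in for the ordered linked list, and multimedia capture itself is not modelled.

```python
import bisect


class BufferPool:
    """Cache pool of pre-allocated receive buffers in several fixed lengths."""

    def __init__(self, counts_by_size: dict[int, int]):
        self.sizes = sorted(counts_by_size)
        self.free = {size: [bytearray(size) for _ in range(n)]
                     for size, n in counts_by_size.items()}
        # Ordered record of received items (a list stands in for the linked list).
        self.received: list[tuple[int, bytearray]] = []

    def store(self, payload: bytes) -> bytearray:
        # Pick the smallest free buffer whose length fits the payload.
        start = bisect.bisect_left(self.sizes, len(payload))
        for size in self.sizes[start:]:
            if self.free[size]:
                buf = self.free[size].pop()
                buf[: len(payload)] = payload
                self.received.append((len(payload), buf))
                return buf
        raise MemoryError(f"no free buffer for {len(payload)} bytes")

    def release(self, buf: bytearray) -> None:
        # Return the buffer to the free list of its size class for reuse.
        self.free[len(buf)].append(buf)
```

Pre-allocating buffers in a few fixed lengths avoids allocating memory per captured item, which is the main cost under high-concurrency capture.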

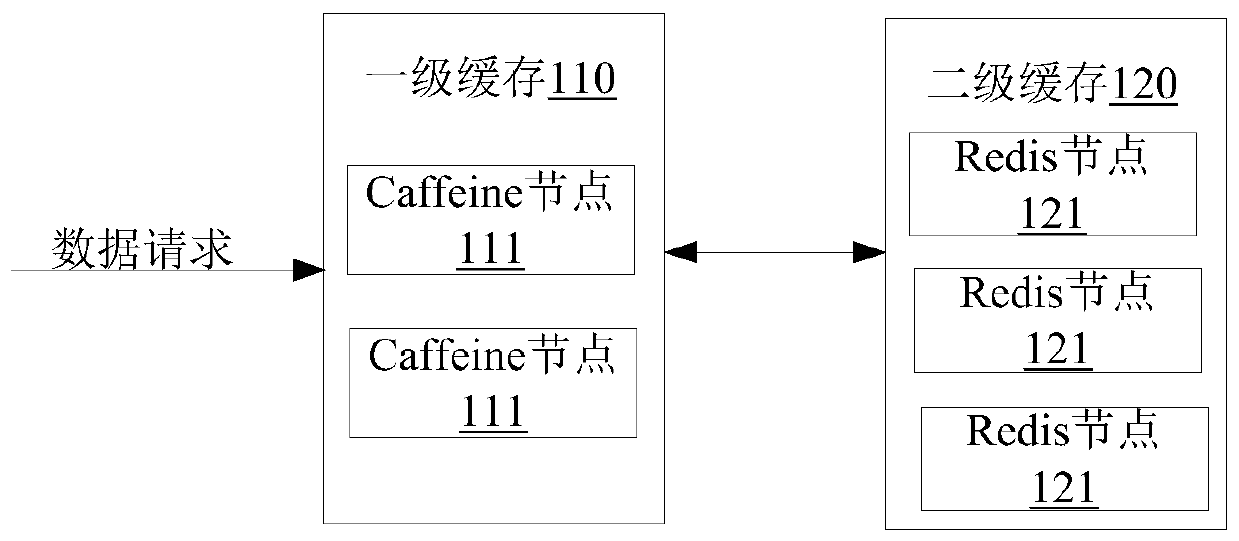

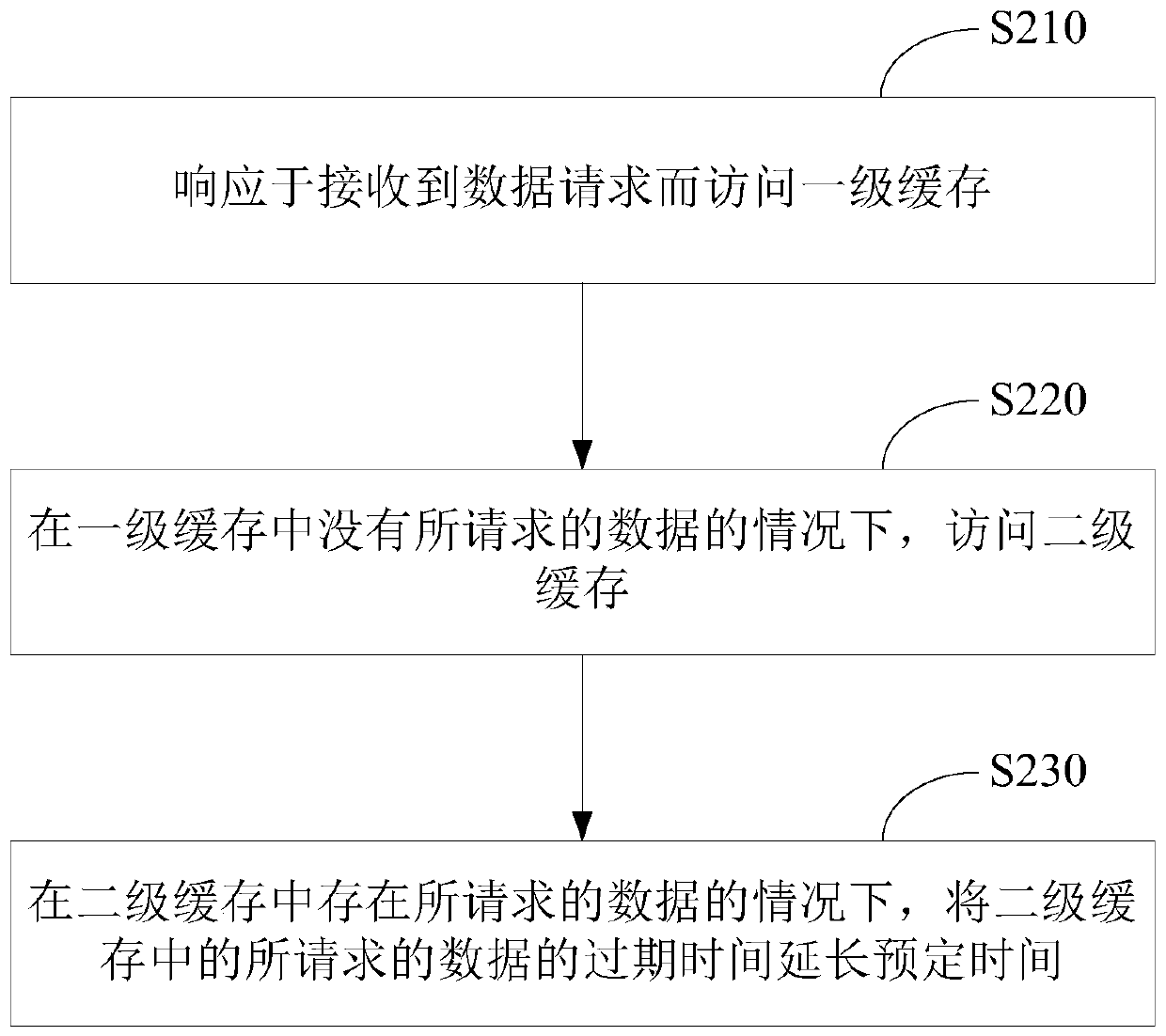

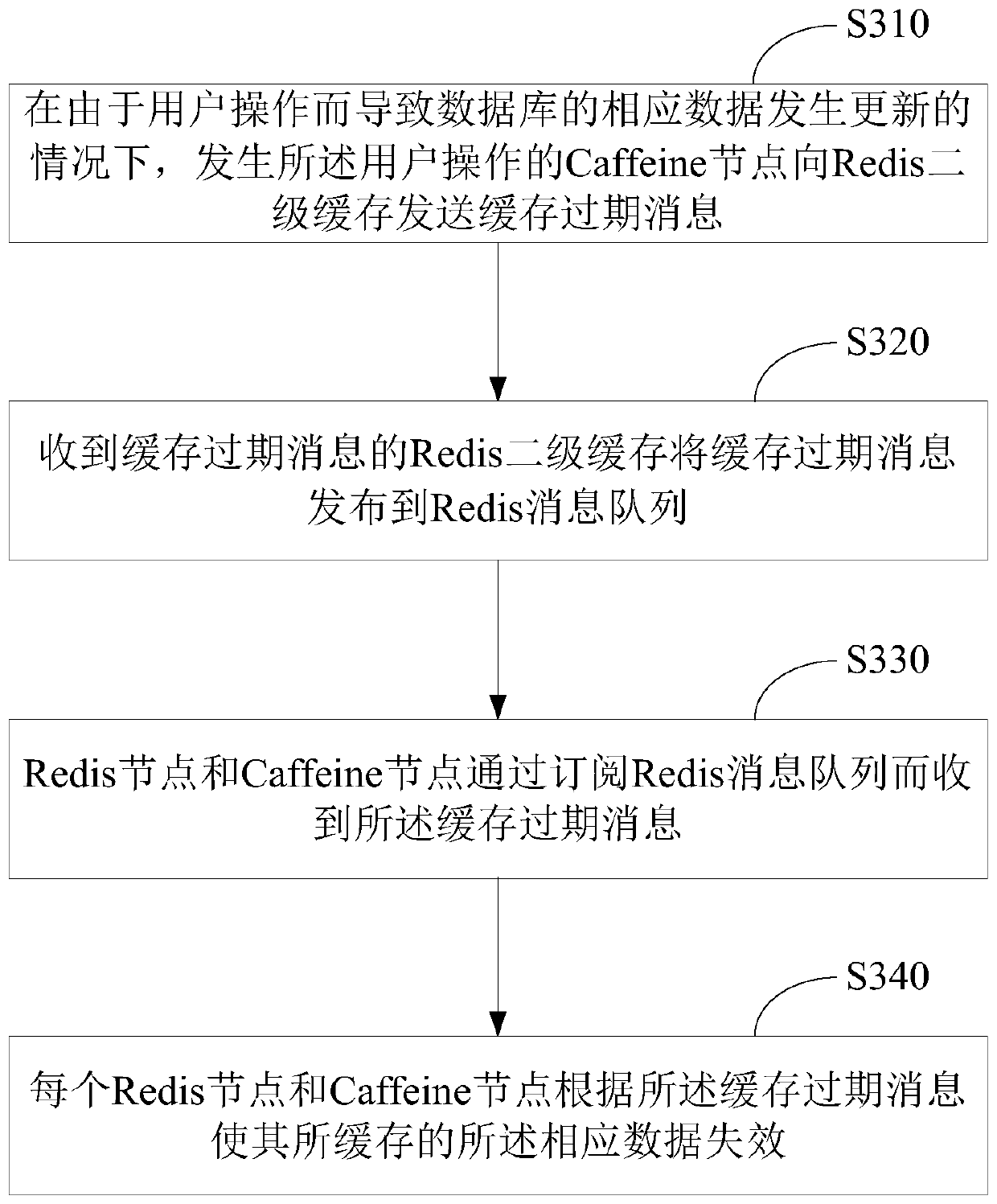

Multilevel cache system, access control method and device thereof and storage medium

Pending · CN110069419A · Improve caching efficiency · Improve caching capacity · Transmission · Memory systems · Expiration Time · Access control matrix

The invention relates to the technical field of cloud storage, and in particular to a multi-level caching system, an access control method and device for it, and a storage medium. The method comprises the following steps: accessing a first-level cache in response to receiving a data request; if the requested data does not exist in the first-level cache, accessing the second-level cache; and, if the requested data exists in the second-level cache, extending its expiration time in the second-level cache by a predetermined time. The embodiments of the invention provide an optimized distributed storage technique that improves cache efficiency and reduces the cost of expanding cache capacity.

Owner:CHINA PING AN LIFE INSURANCE CO LTD
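The two-level lookup with expiration extension can be sketched in Python. The TTL values, the extension amount and the `load` callable (standing in for the backing store) are hypothetical, and backfilling the first-level cache after a lower-level hit is an added assumption that the abstract does not state.

```python
import time


class TTLCache:
    """Minimal cache whose entries expire at an absolute time."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock
        self.data = {}                        # key -> (value, expires_at)

    def get(self, key):
        entry = self.data.get(key)
        if entry is None or self.clock() >= entry[1]:
            self.data.pop(key, None)          # drop expired entries lazily
            return None
        return entry[0]

    def put(self, key, value, ttl: float) -> None:
        self.data[key] = (value, self.clock() + ttl)

    def extend(self, key, extra: float) -> None:
        value, expires_at = self.data[key]
        self.data[key] = (value, expires_at + extra)


def lookup(key, l1: TTLCache, l2: TTLCache, load, extension=60.0,
           l1_ttl=10.0, l2_ttl=300.0):
    value = l1.get(key)
    if value is not None:
        return value
    value = l2.get(key)
    if value is not None:
        l2.extend(key, extension)             # L2 hit: extend its expiration time
    else:
        value = load(key)                     # miss in both levels
        l2.put(key, value, l2_ttl)
    l1.put(key, value, l1_ttl)                # assumption: backfill the first level
    return value
```

Extending the expiration on every second-level hit keeps frequently requested data resident without raising the default TTL for everything, which is one way the scheme limits cache capacity growth.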

© 2025 PatSnap. All rights reserved.