433 results about "Cache access" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

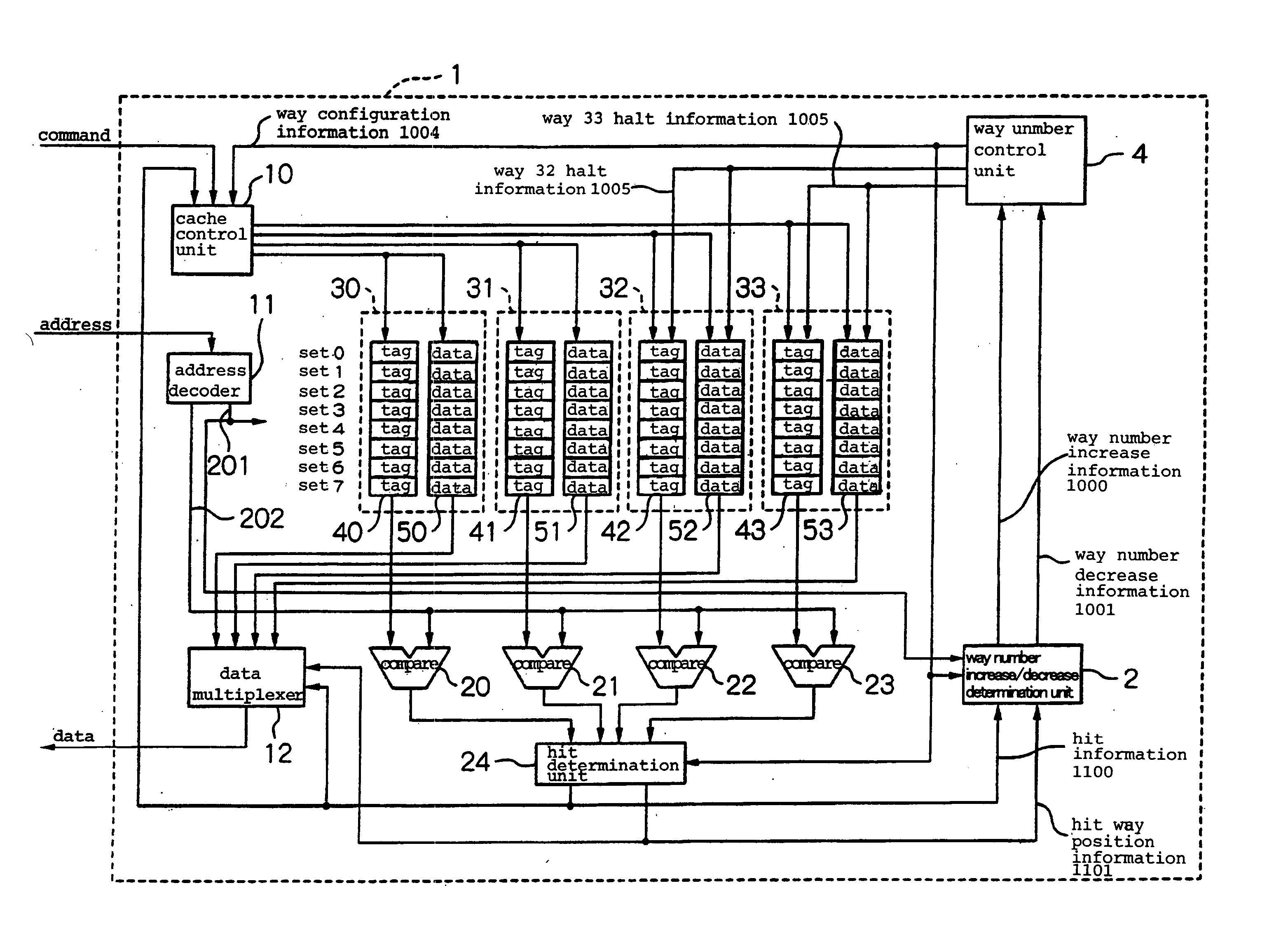

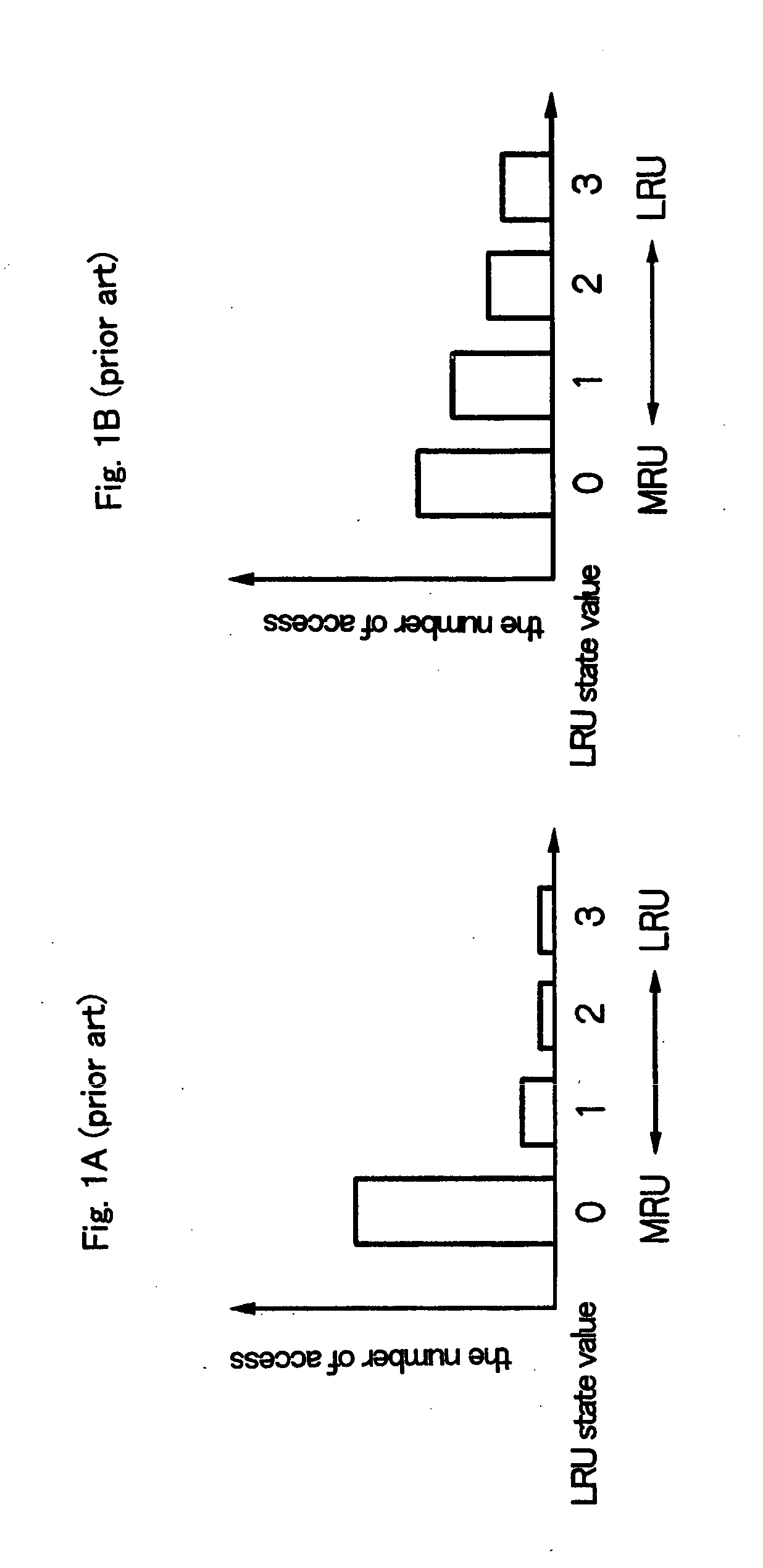

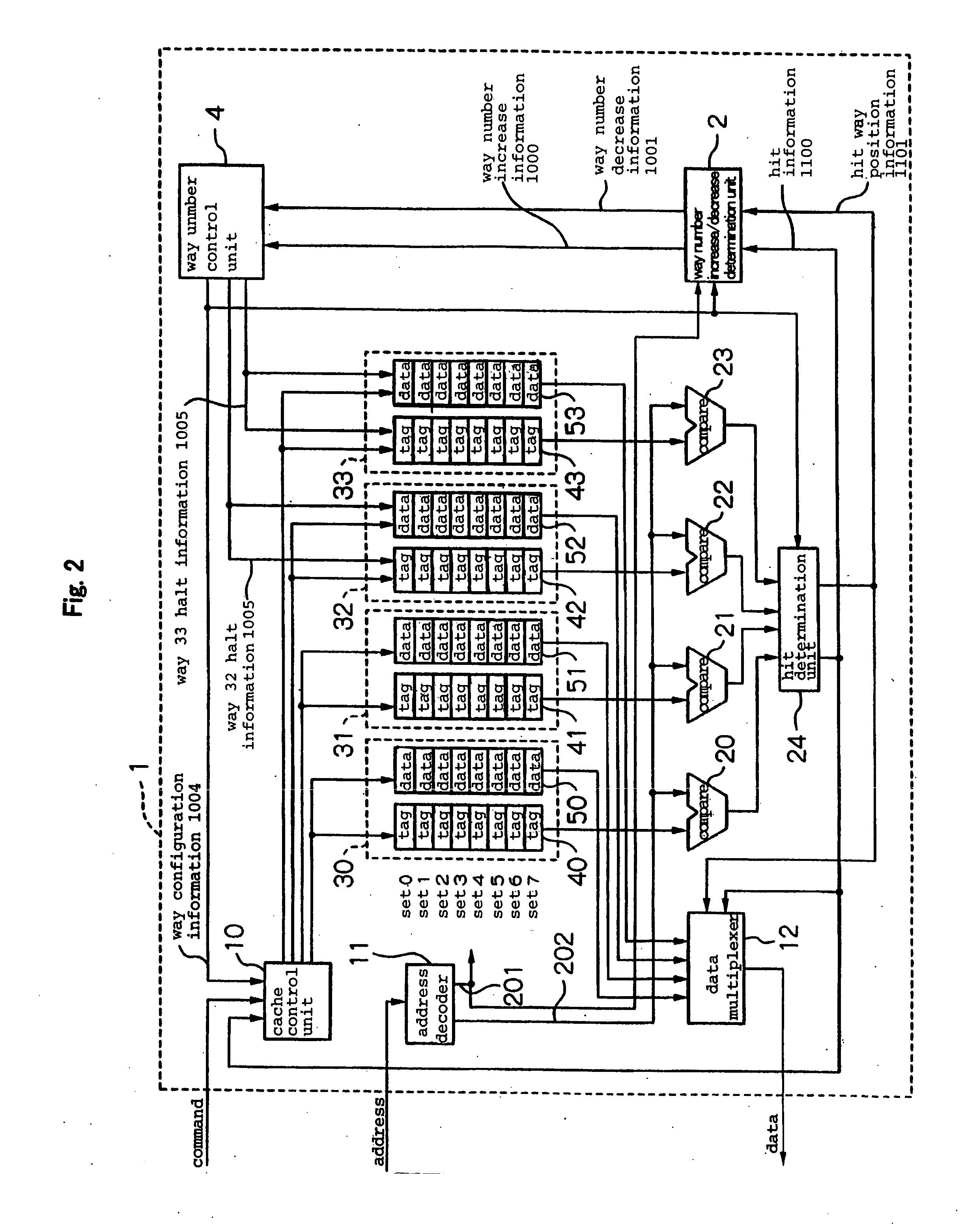

Cache memory with the number of operated ways being changed according to access pattern

Inactive | US20050246499A1 | Improve performance | Reduce power consumption | Memory architecture accessing/allocation | Energy efficient ICT | Cache access | Time of use

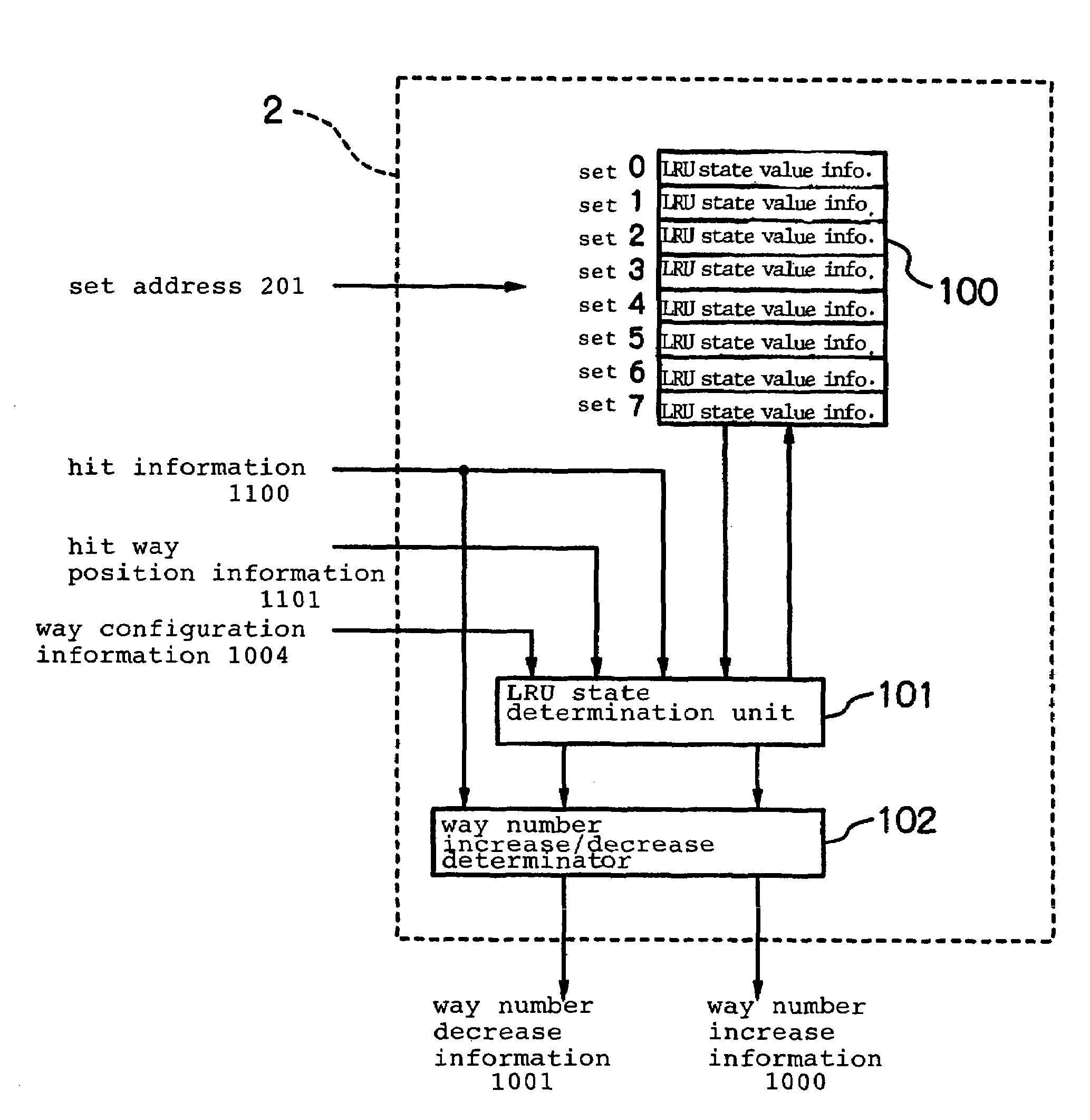

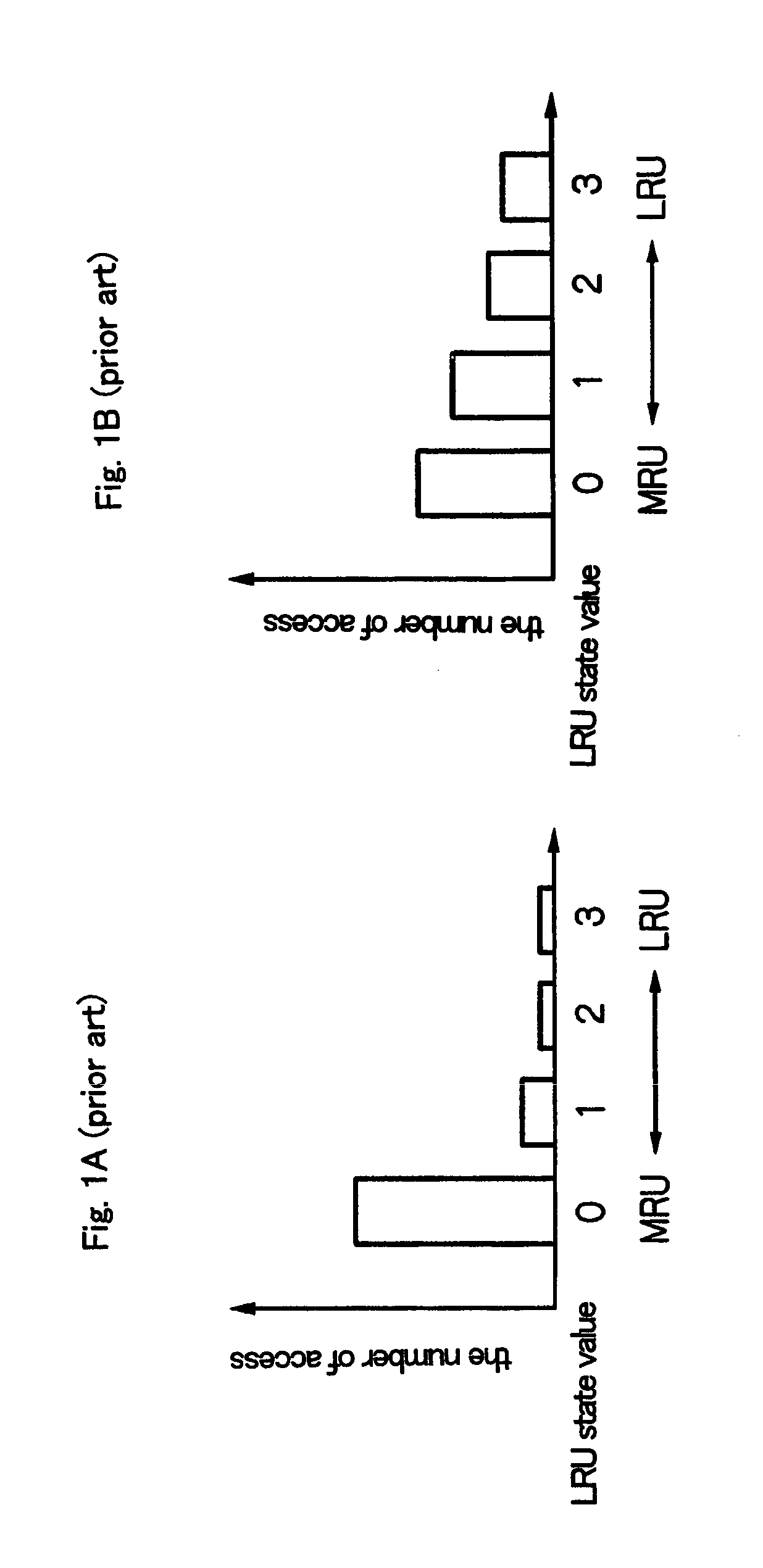

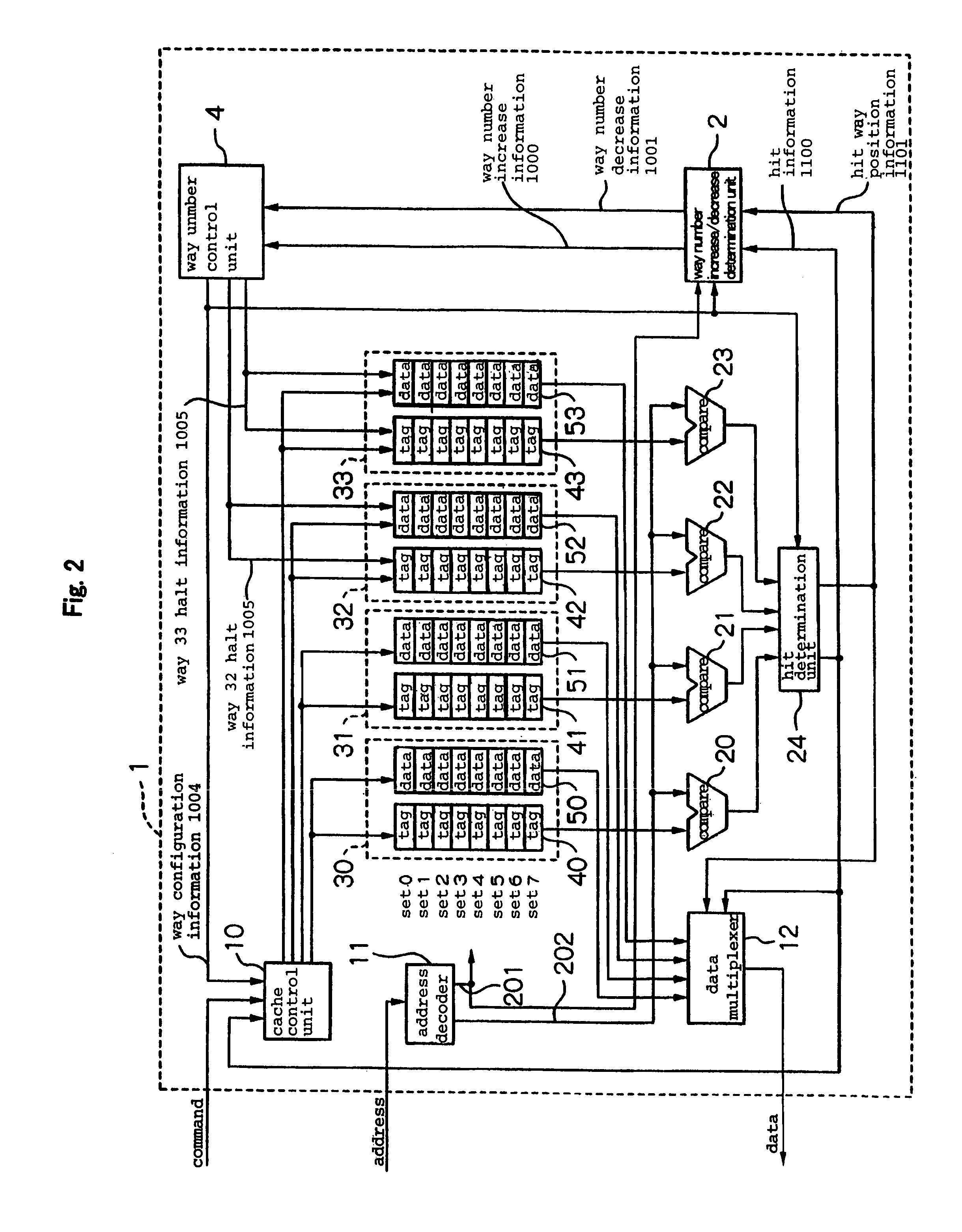

An improvement in performance and a reduction of power consumption in a cache memory can both be effectively realized by increasing or decreasing the number of operated ways in accordance with access patterns. A hit determination unit determines the hit way when a cache access hit occurs. A way number increase/decrease determination unit manages, for each of the ways that are in operation, the order from the way for which the time of use is most recent to the way for which the time of use is oldest. The way number increase/decrease determination unit then finds the rank of the hit ways that have been obtained in the hit determination unit and counts the number of hits for each rank in the order. The way number increase/decrease determination unit further determines increase or decrease of the number of operated ways based on the access pattern that is indicated by the relation of the number of hits to each rank in the order. A way number control unit then selects operation or halt of operation for each way in accordance with the determination to increase or decrease the number of operated ways.

Owner:NEC CORP +1
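
The rank-counting idea in this abstract can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the use of the oldest active rank as the deciding signal, and both thresholds are hypothetical and do not come from the patent text.

```python
from collections import Counter

def record_hit(lru_order, hit_way, rank_hits):
    """Count a hit by its LRU rank (0 = most recently used), then promote the way."""
    rank = lru_order.index(hit_way)
    rank_hits[rank] += 1
    lru_order.insert(0, lru_order.pop(rank))
    return rank

def decide_way_change(rank_hits, active_ways, shrink_below=0.02, grow_above=0.10):
    """Return -1 (halt a way), +1 (start a way) or 0 (no change).

    Assumption: the share of hits landing on the oldest active rank is used
    as the access-pattern signal; the thresholds are illustrative only.
    """
    total = sum(rank_hits.values())
    if total == 0:
        return 0
    share_oldest = rank_hits[active_ways - 1] / total
    if share_oldest < shrink_below and active_ways > 1:
        return -1
    if share_oldest > grow_above:
        return 1
    return 0

# Example: hits concentrate on the two most recent ranks of a 4-way cache.
order = [0, 1, 2, 3]
hits = Counter()
for way in [0, 0, 1, 0, 1, 0]:
    record_hit(order, way, hits)
```

With this trace no hit ever reaches the oldest rank, so `decide_way_change(hits, 4)` suggests halting one way. A real controller would also cap the way count at the hardware maximum.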

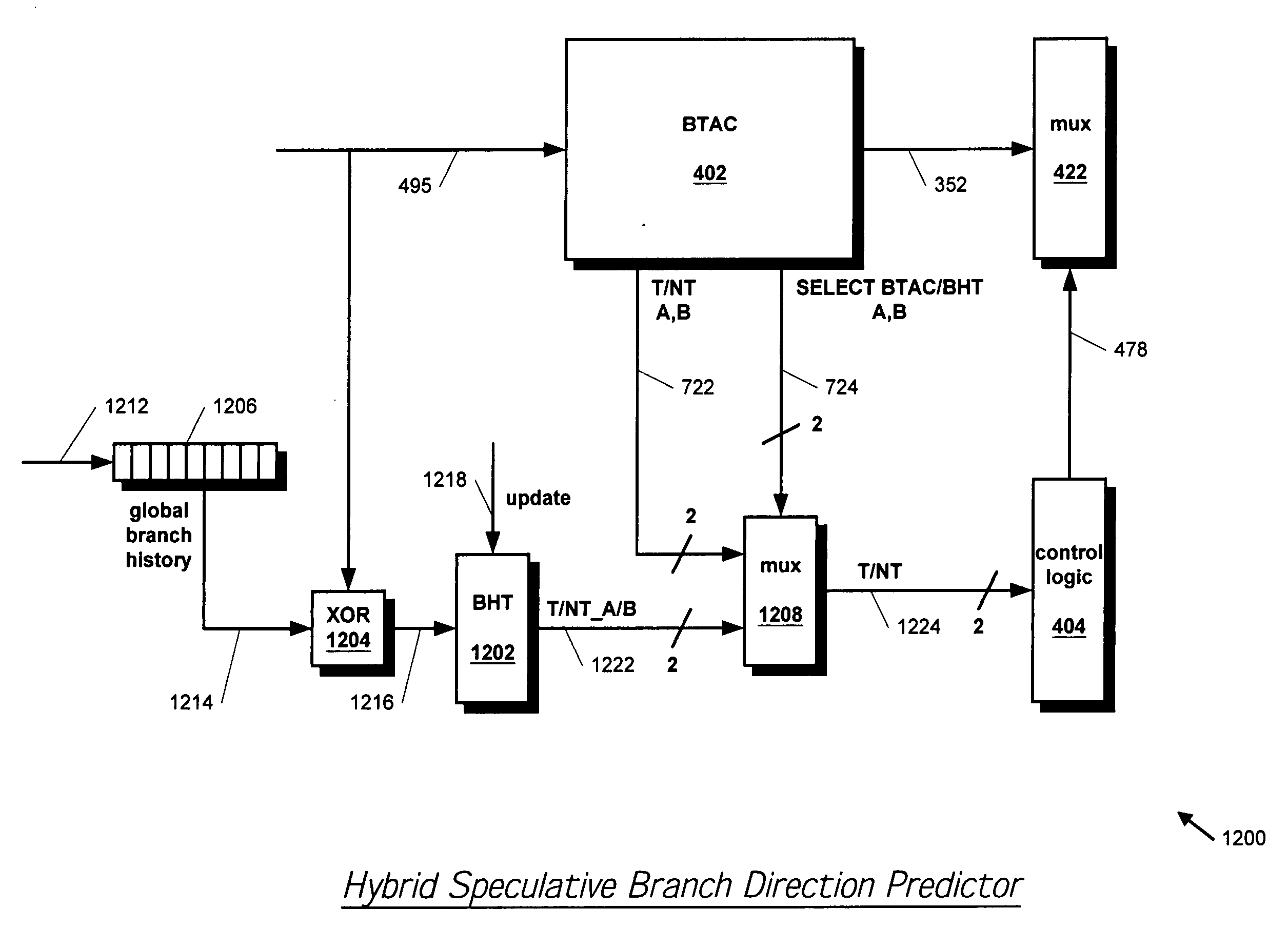

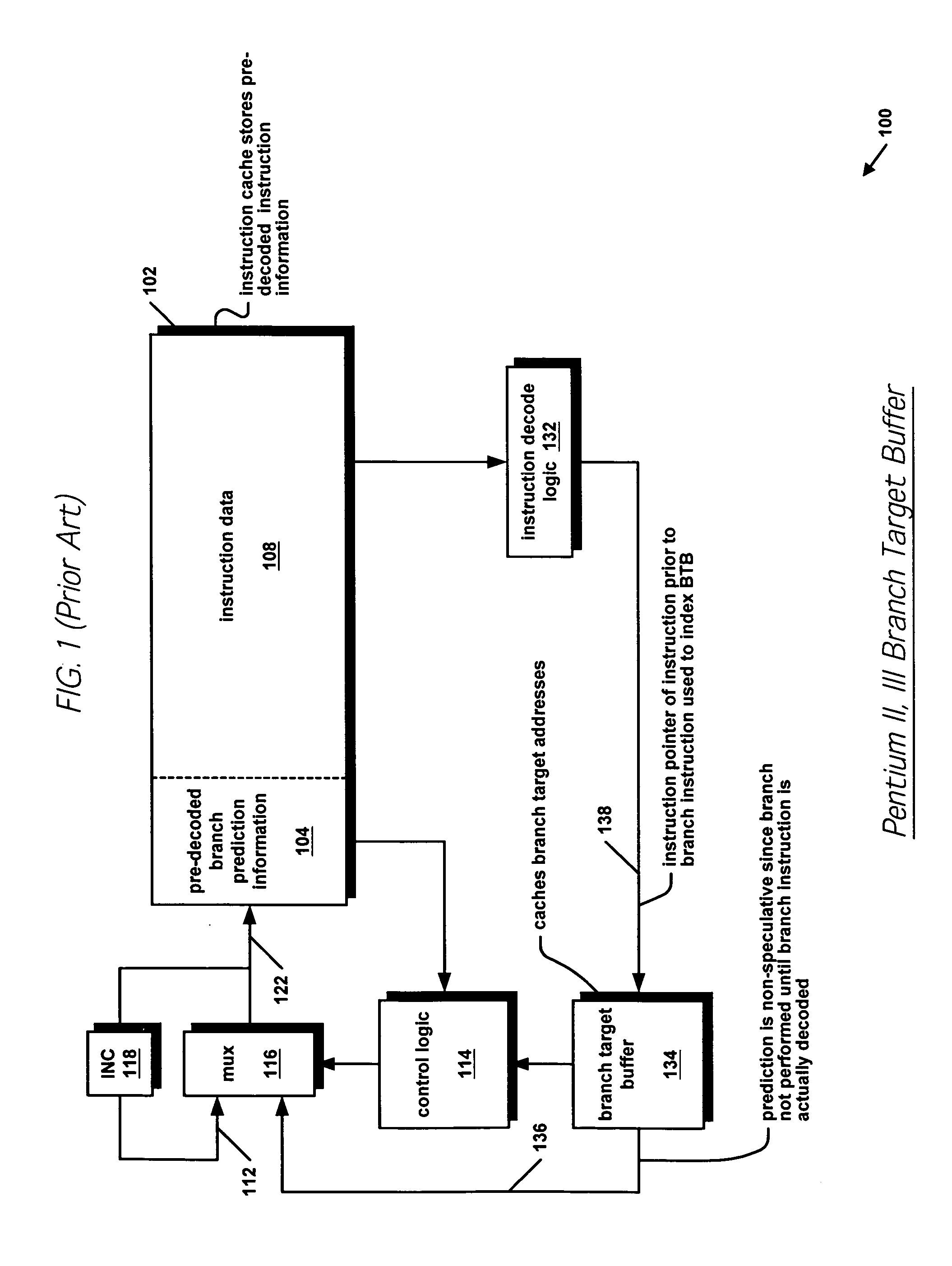

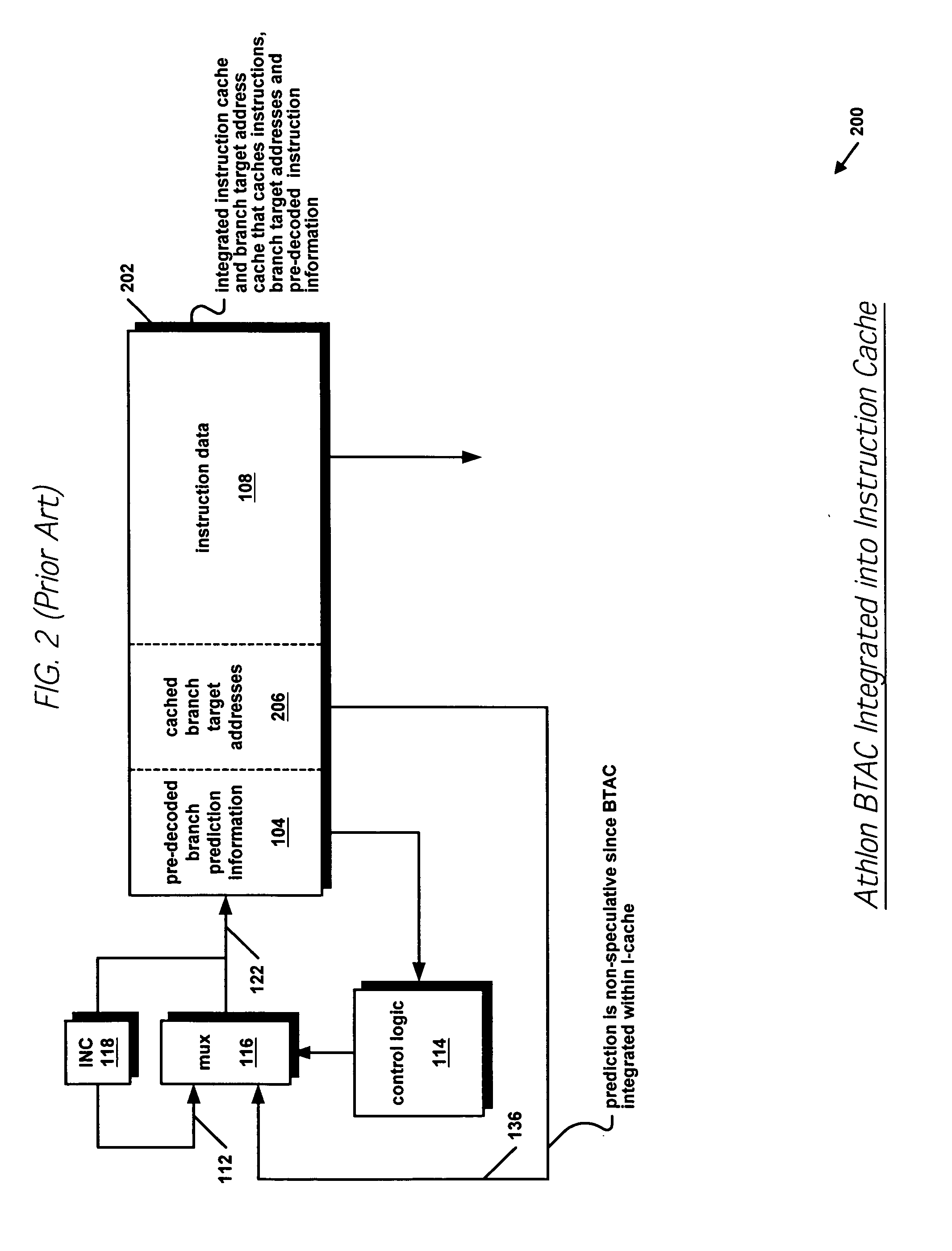

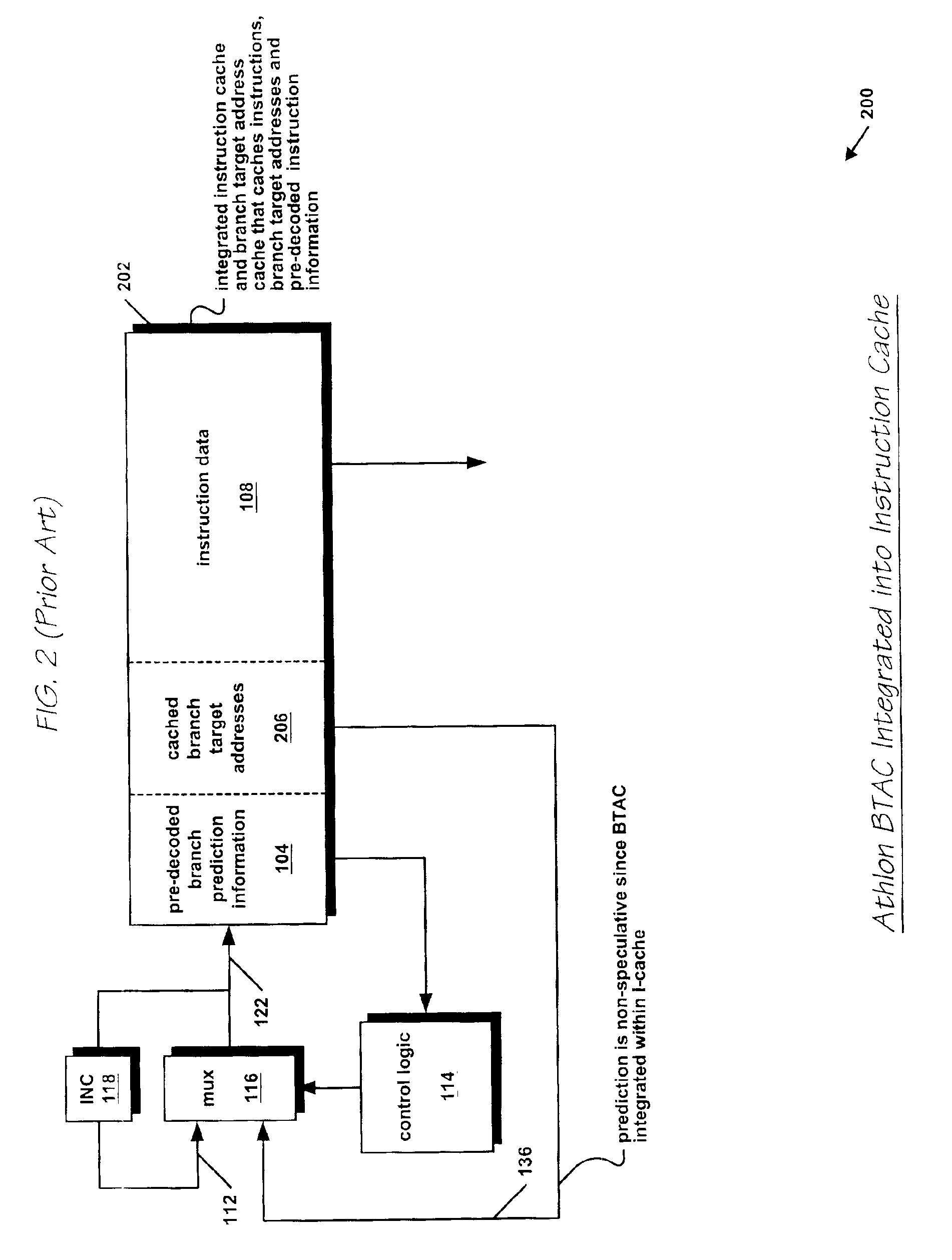

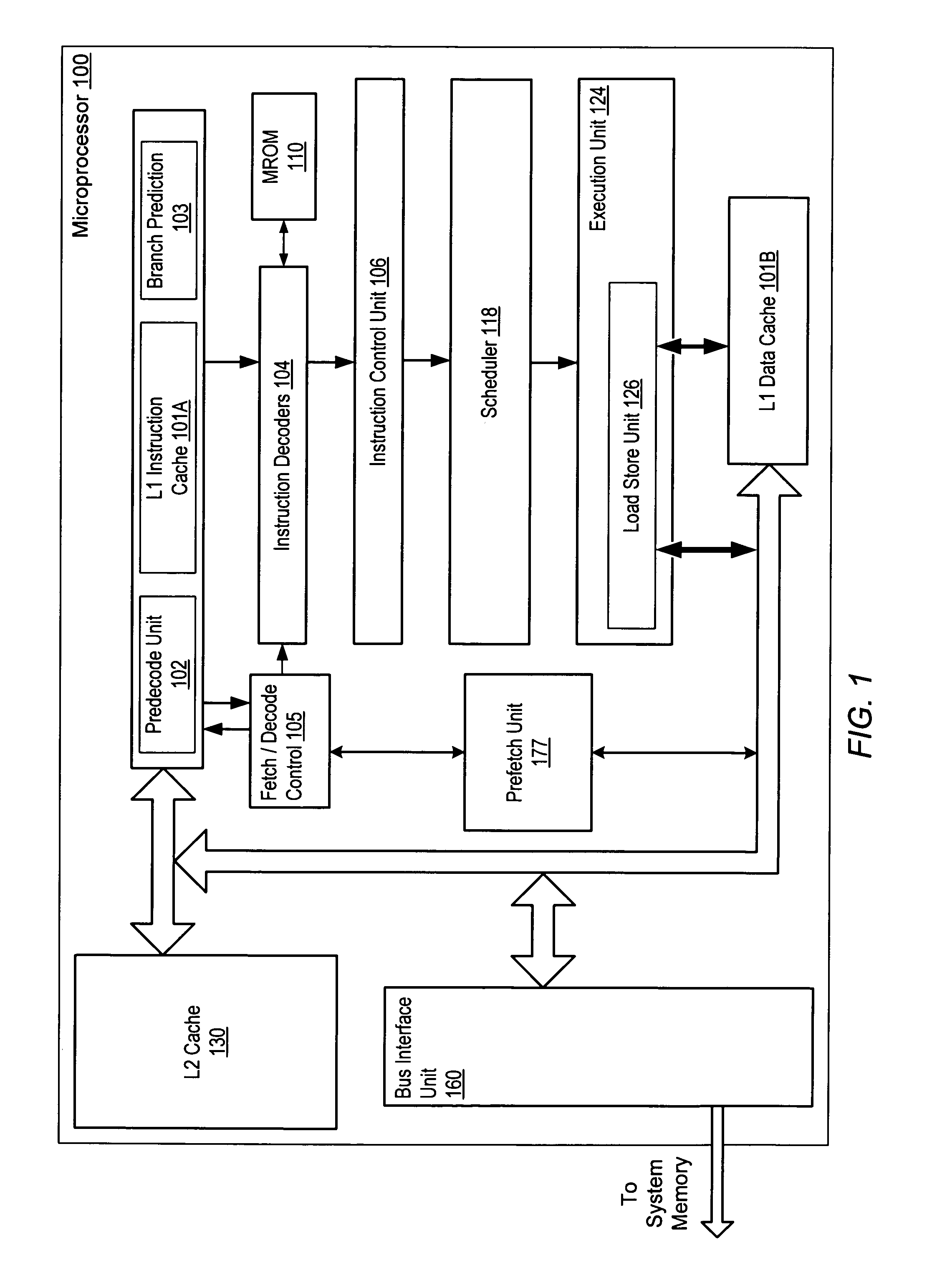

Speculative hybrid branch direction predictor

Inactive | US20050132175A1 | Improve accuracy | Reducing overall branch penalty | Instruction analysis | Digital computer details | Cache access | Parallel computing

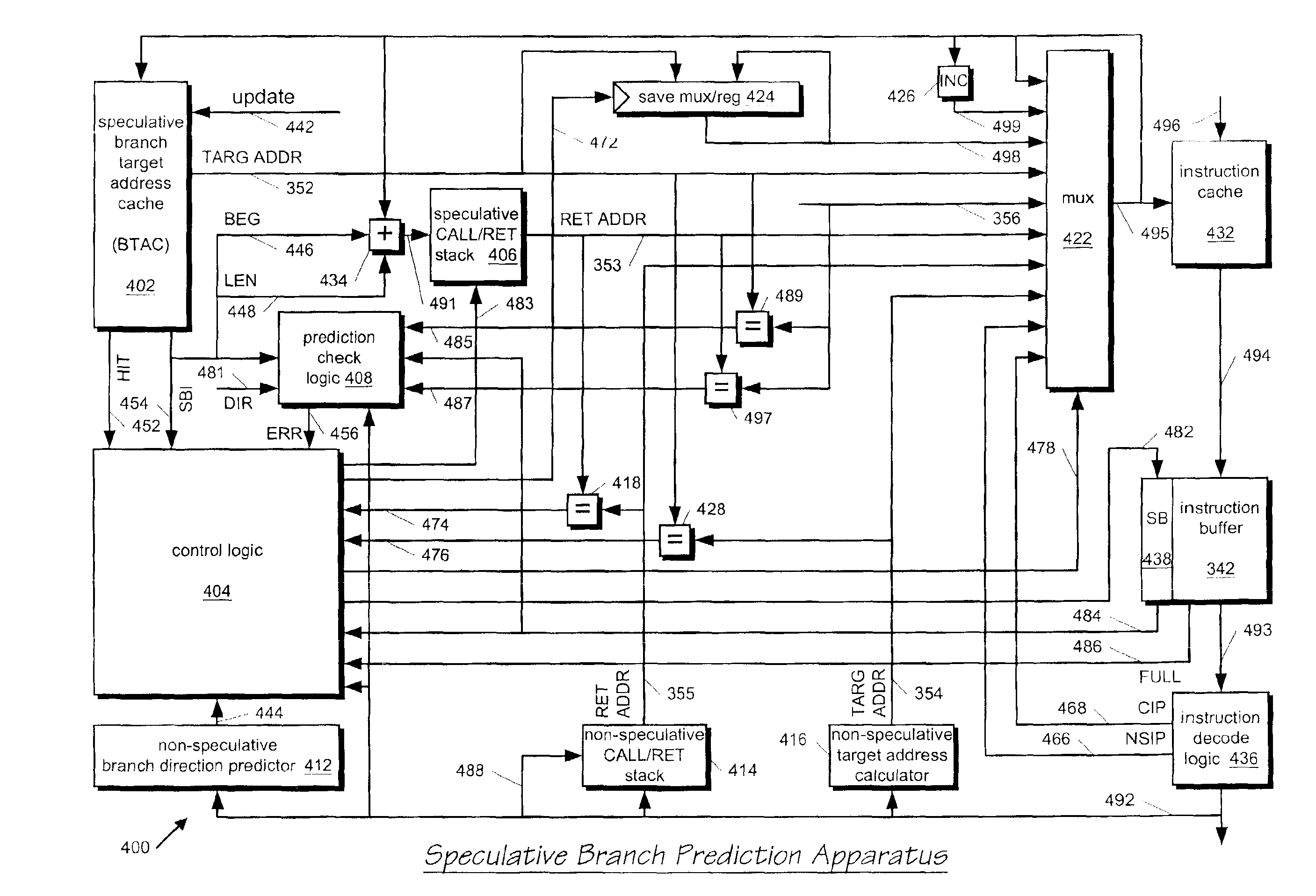

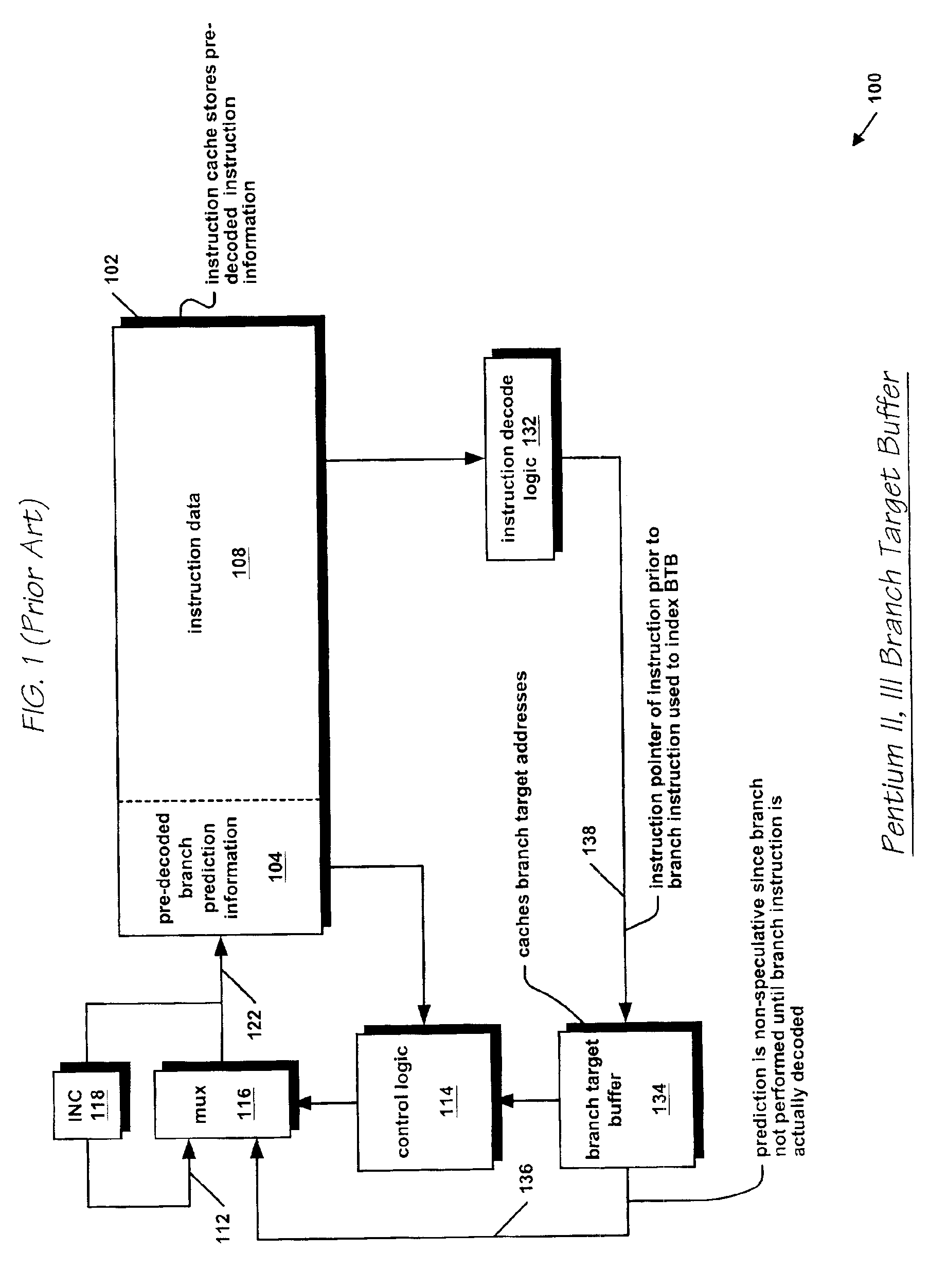

An apparatus for speculatively predicting the direction of a branch instruction in a pipelined microprocessor in a hybrid fashion. A branch target address cache (BTAC) stores a direction prediction about executed branch instructions. The BTAC is indexed by an instruction cache fetch address. The BTAC is accessed in parallel with the instruction cache access, such that the direction prediction is provided before the actual instruction is decoded which is presumed to be a branch instruction corresponding to the direction prediction stored in the BTAC. In parallel with the BTAC access, a branch history table (BHT) is accessed to provide a second speculative direction prediction. The BHT is indexed with a gshare function of the instruction cache fetch address and a global branch history stored in a global branch history register. The BTAC also provides a selector that selects between the two speculative direction predictions.

Owner:IP FIRST
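
As a rough software model of the scheme described above, the sketch below keeps a BTAC entry per fetch address holding a direction prediction plus a selector bit, and a BHT indexed by the XOR of the fetch address and the global history (the usual gshare function). The class name, the 2-bit saturating counters, the table size, and the update policy are assumptions made for illustration; the abstract does not specify them.

```python
class HybridPredictor:
    """Toy model: BTAC direction + gshare-indexed BHT, chosen by a BTAC selector."""

    def __init__(self, index_bits=10):
        self.mask = (1 << index_bits) - 1
        self.bht = [1] * (1 << index_bits)  # assumed 2-bit counters, weakly not-taken
        self.btac = {}                      # fetch address -> (taken, use_bht)
        self.ghr = 0                        # global branch history register

    def _gshare_index(self, fetch_addr):
        # gshare: XOR the fetch address with the global history
        return (fetch_addr ^ self.ghr) & self.mask

    def predict(self, fetch_addr):
        entry = self.btac.get(fetch_addr)
        if entry is None:
            return None                     # BTAC miss: no speculative prediction
        btac_taken, use_bht = entry
        bht_taken = self.bht[self._gshare_index(fetch_addr)] >= 2
        return bht_taken if use_bht else btac_taken

    def update(self, fetch_addr, taken, use_bht):
        # Assumed training policy: train the BHT, refresh the BTAC, shift the history.
        i = self._gshare_index(fetch_addr)
        self.bht[i] = min(3, self.bht[i] + 1) if taken else max(0, self.bht[i] - 1)
        self.btac[fetch_addr] = (taken, use_bht)
        self.ghr = ((self.ghr << 1) | int(taken)) & self.mask
```

In hardware both lookups happen in parallel with the instruction cache access; the sequential Python calls only model the selection logic.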

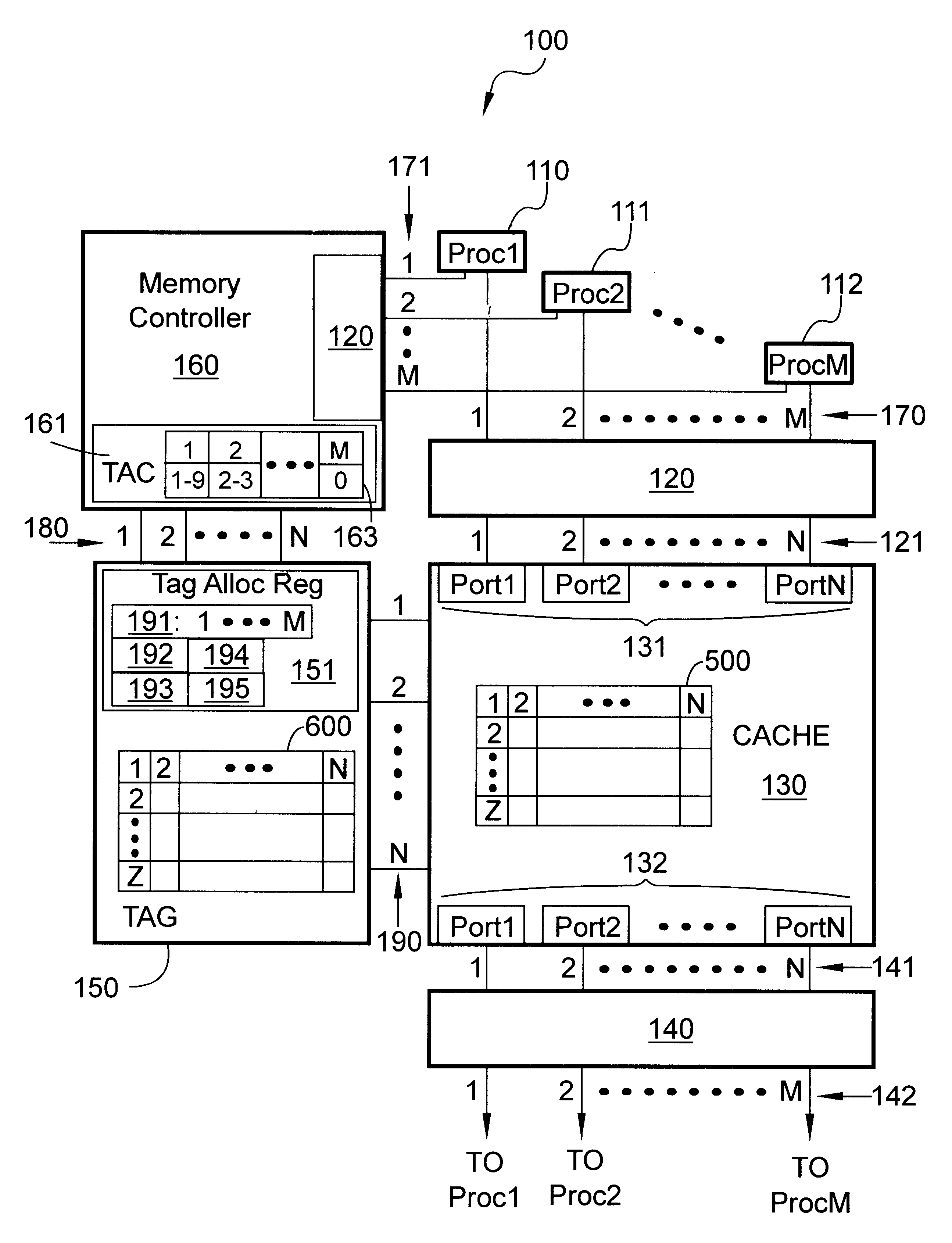

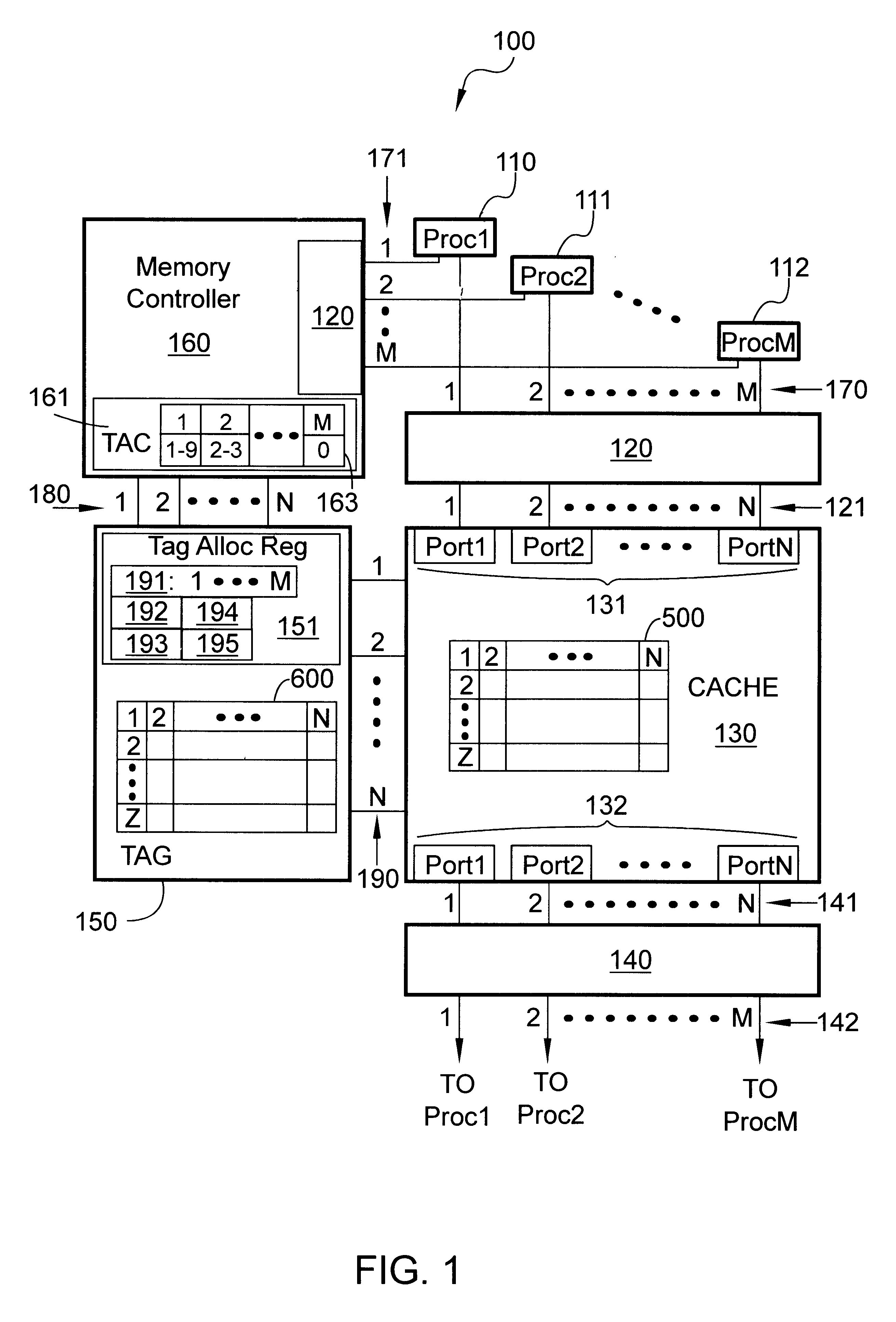

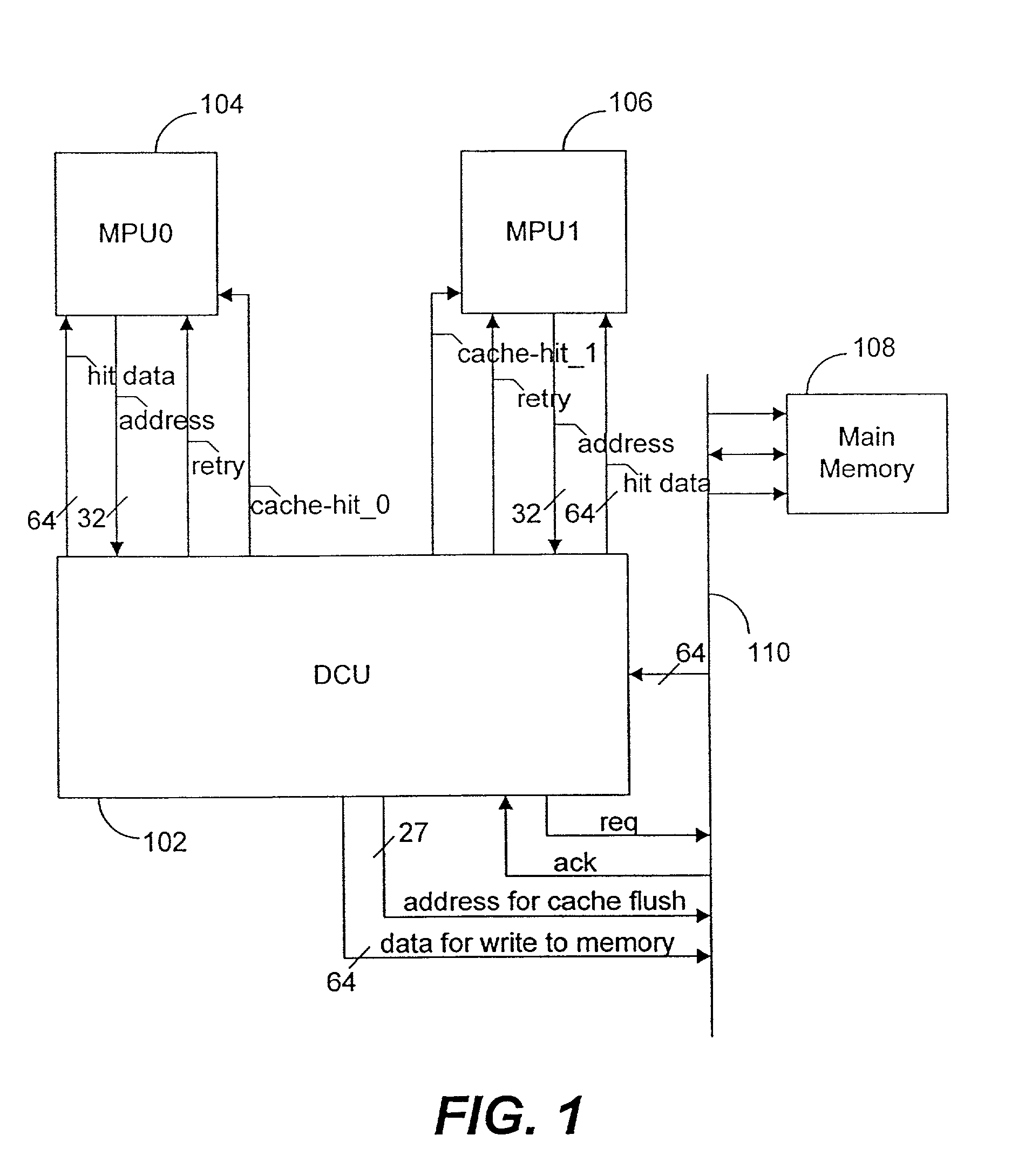

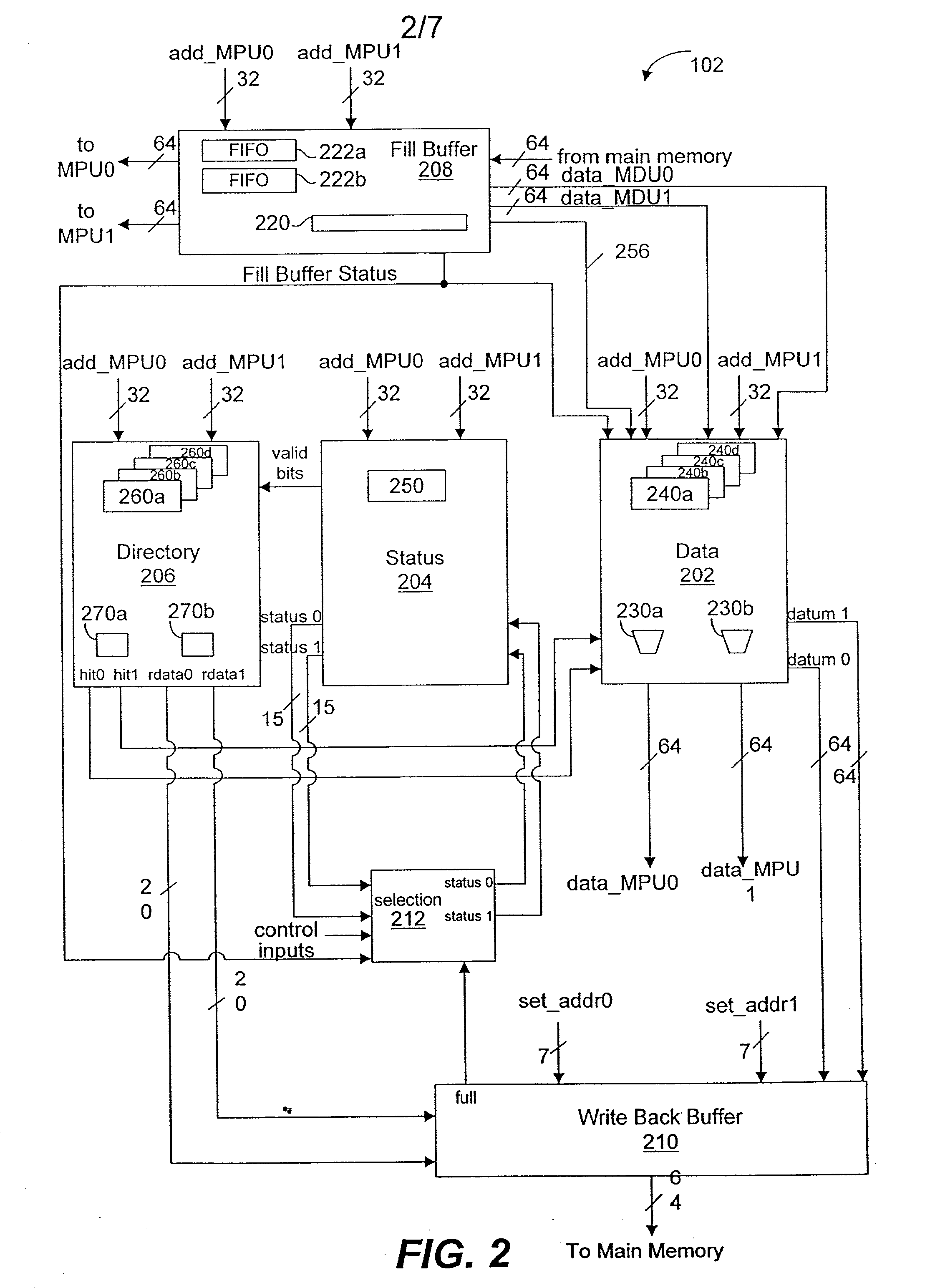

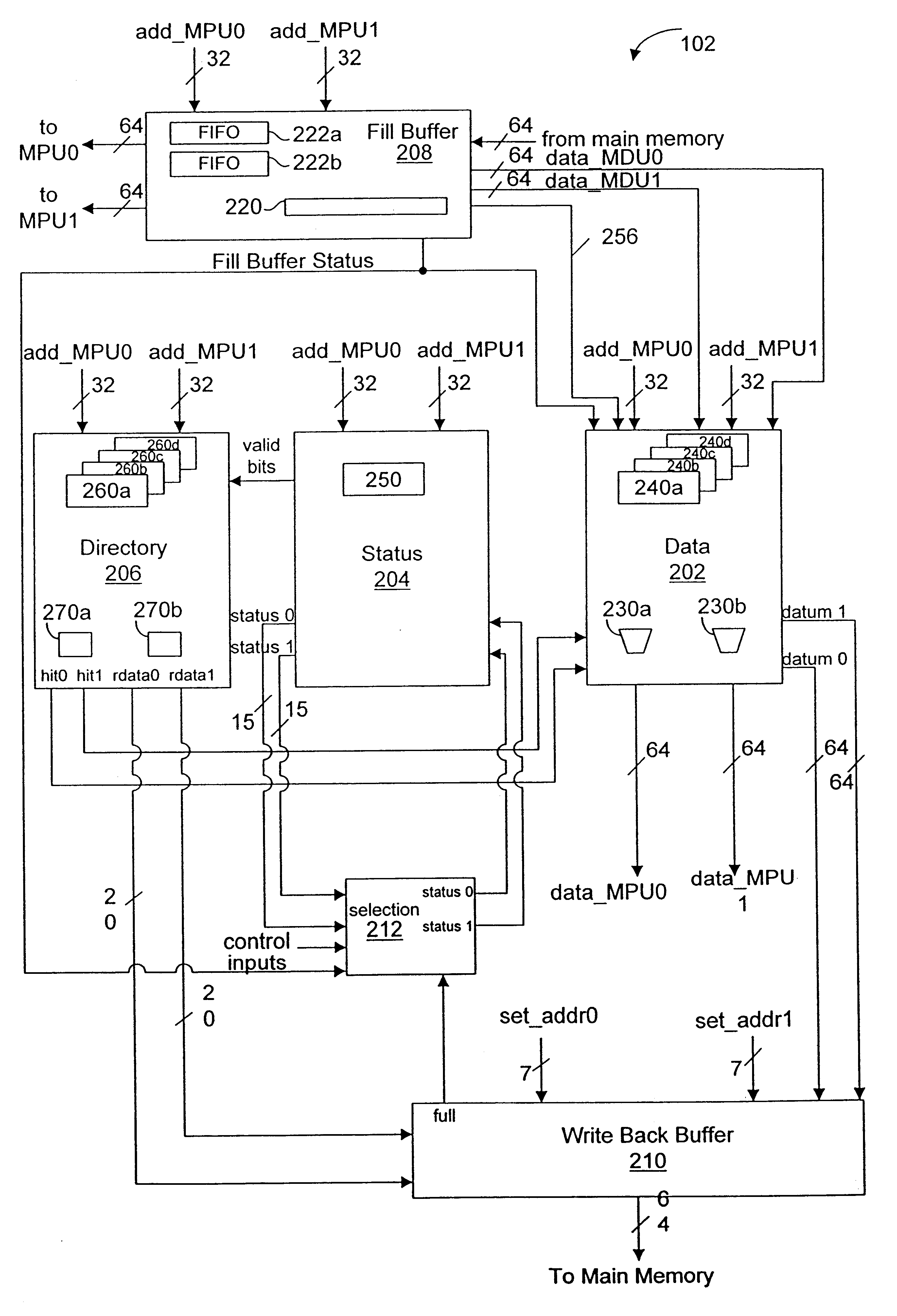

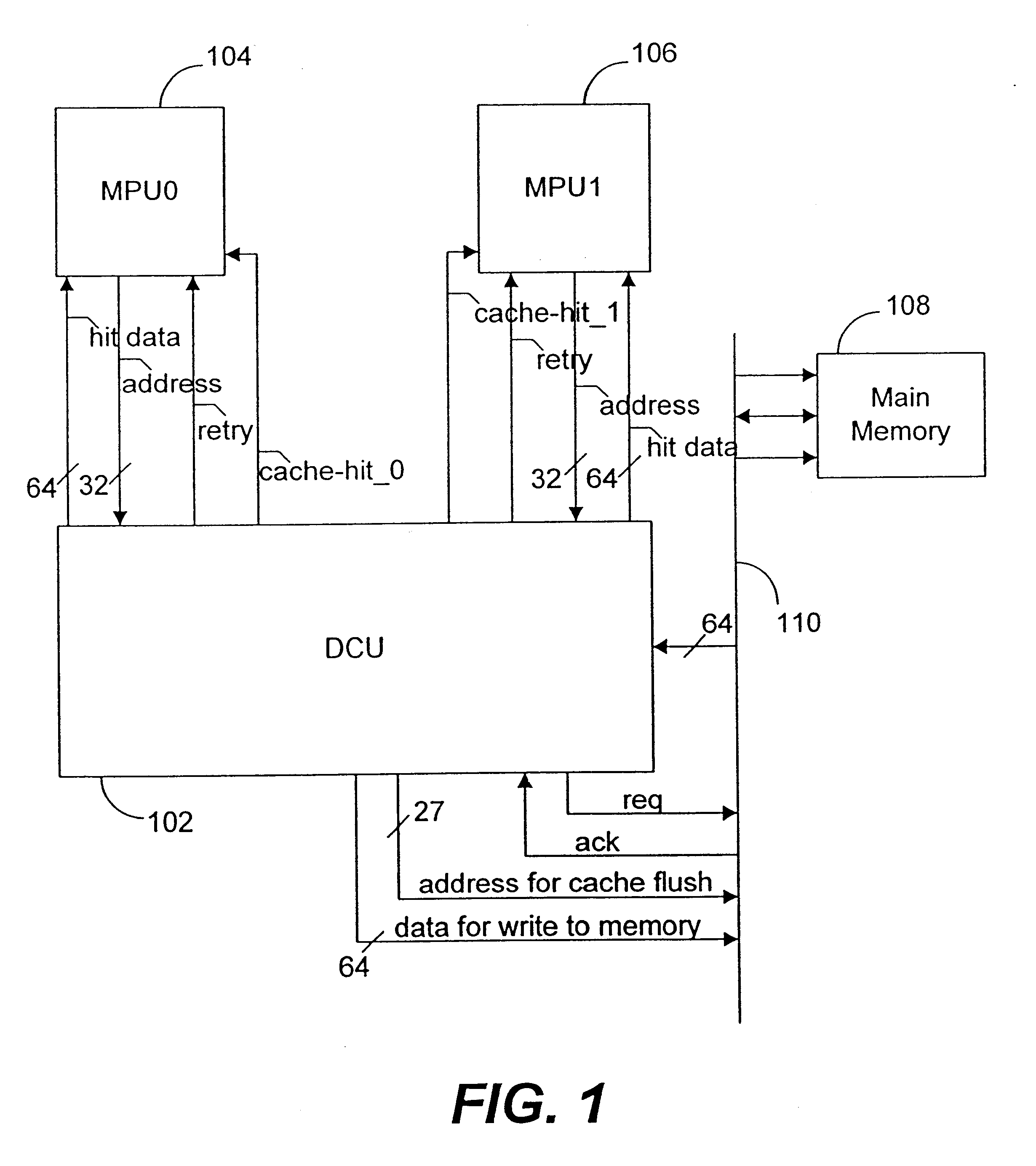

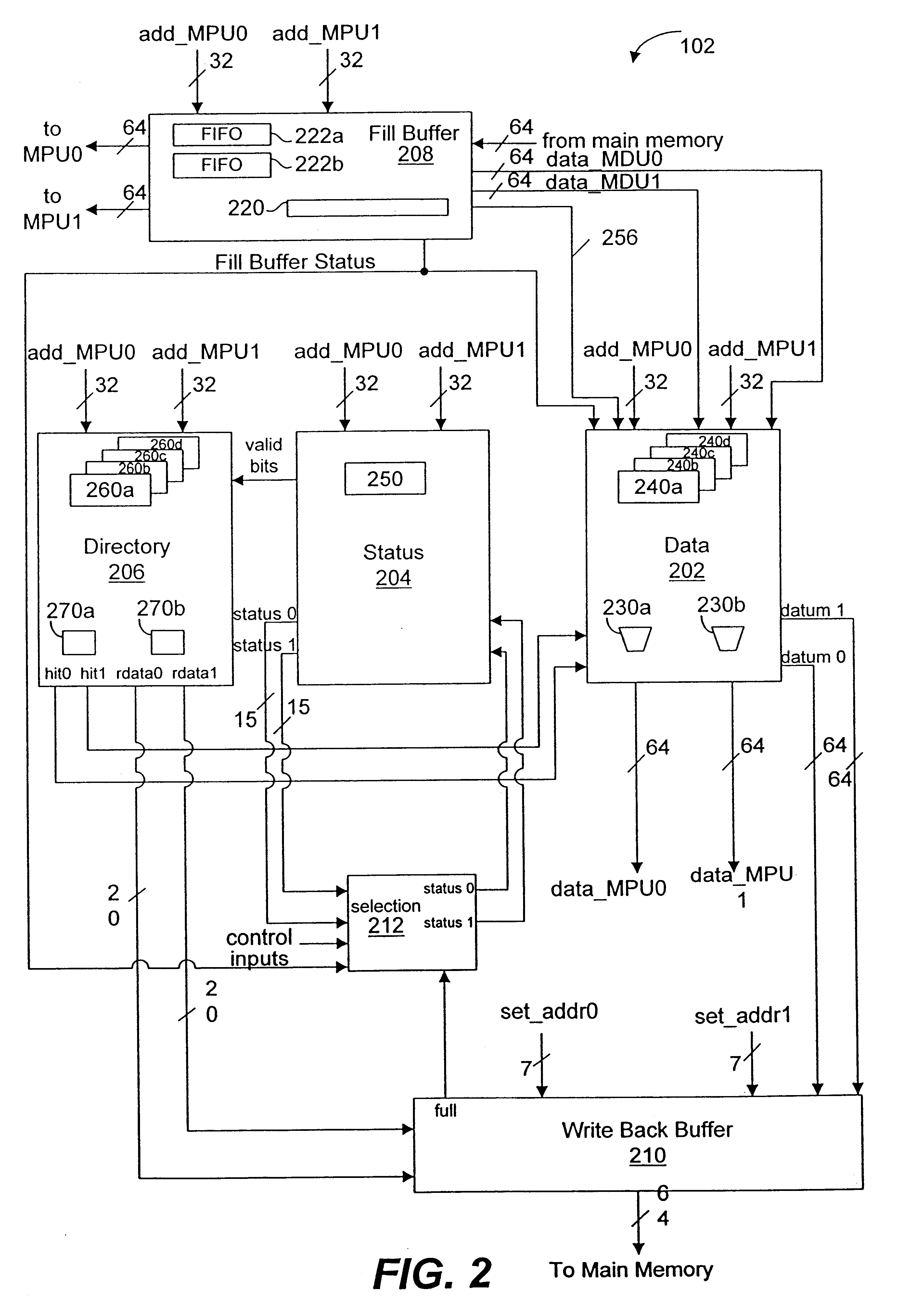

Performance based system and method for dynamic allocation of a unified multiport cache

Inactive | US6604174B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache access | Parallel computing

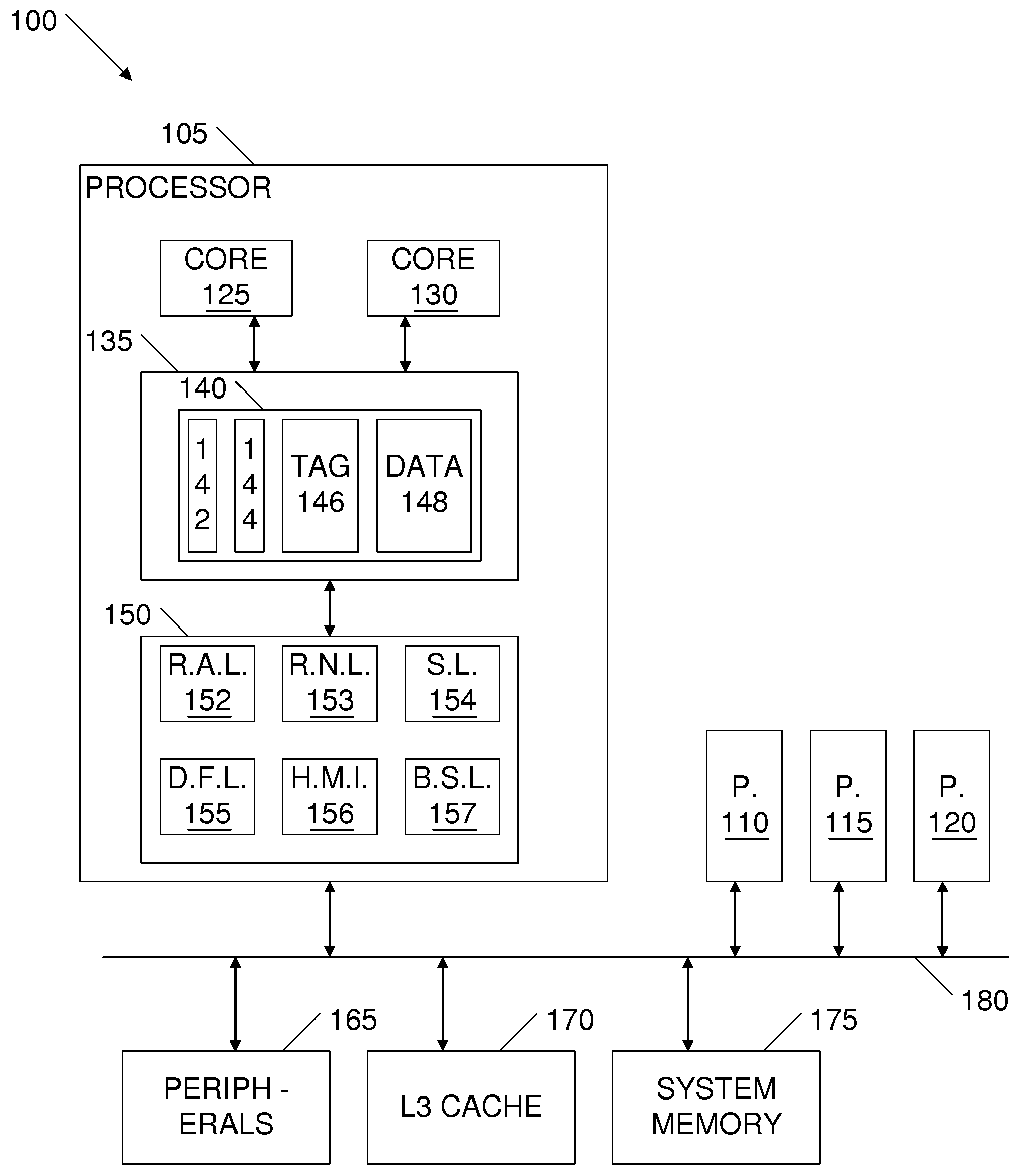

The present invention provides a performance based system and method for dynamic allocation of a unified multiport cache. A multiport cache system is disclosed that allows multiple single-cycle look ups through a multiport tag and multiple single-cycle cache accesses from a multiport cache. Therefore, multiple processes, which could be processors, tasks, or threads can access the cache during any cycle. Moreover, the ways of the cache can be allocated to the different processes and then dynamically reallocated based on performance. Most preferably, a relational cache miss percentage is used to reallocate the ways, but other metrics may also be used.

Owner:GOOGLE LLC
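
One way to picture performance-based reallocation of ways is the sketch below. It assumes that "relational cache miss percentage" means comparing the miss percentages of the competing processes, and it moves a single way per decision from the process with the lowest miss rate to the one with the highest. The function name, the one-way step, and the `min_ways` floor are all hypothetical.

```python
def reallocate_ways(allocation, misses, accesses, min_ways=1):
    """Return a new {process: ways} mapping after one reallocation step."""
    rates = {
        p: (misses[p] / accesses[p] if accesses[p] else 0.0)
        for p in allocation
    }
    worst = max(rates, key=rates.get)  # highest miss rate: receives a way
    best = min(rates, key=rates.get)   # lowest miss rate: gives one up
    new_alloc = dict(allocation)
    if worst != best and rates[worst] > rates[best] and new_alloc[best] > min_ways:
        new_alloc[best] -= 1
        new_alloc[worst] += 1
    return new_alloc
```

Called periodically with fresh counters, this converges toward giving more ways to whichever process is missing most, which is the behavior the abstract describes in general terms.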

Multiple variable cache replacement policy

A method for selecting a candidate to mark as overwritable in the event of a cache miss while attempting to avoid a write back operation. The method includes associating a set of data with the cache access request, where each datum of the set is associated with a way, then choosing an invalid way among the set. Where no invalid ways exist among the set, the next step is determining a way that is not most recently used among the set. Next, the method determines whether a shared resource is crowded. When the shared resource is not crowded, the not most recently used way is chosen as the candidate. Where the shared resource is crowded, the next step is to determine whether the not most recently used way differs from an associated source in the memory; where the not most recently used way is the same as an associated source in the memory, the not most recently used way is chosen as the candidate. Where the not most recently used way differs from an associated source in the memory, the candidate is chosen as the way among the set that does not differ from an associated source in the memory. Where all ways among the set differ from respective sources in the memory, the not most recently used way is chosen as the candidate and the not most recently used way is stored in the shared resource.

Owner:SUN MICROSYSTEMS INC
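
The decision sequence in this abstract maps naturally onto a short function. The sketch below is a minimal reading of it, assuming each set has at least two ways and exactly one way flagged as most recently used; the `Way` fields and the function name are hypothetical, and "differs from its source in memory" is modeled as a `dirty` flag.

```python
from dataclasses import dataclass

@dataclass
class Way:
    valid: bool
    dirty: bool  # True when the line differs from its source in memory
    mru: bool    # True for the most recently used way

def select_victim(ways, resource_crowded):
    """Return (index, needs_write_back) for the way to mark as overwritable."""
    # 1. Prefer any invalid way.
    for i, w in enumerate(ways):
        if not w.valid:
            return i, False
    # 2. Otherwise pick a way that is not the most recently used.
    nmru = next(i for i, w in enumerate(ways) if not w.mru)
    # 3. If the shared resource has room, take the NMRU way as is.
    if not resource_crowded:
        return nmru, ways[nmru].dirty
    # 4. Crowded: a clean NMRU way needs no write back.
    if not ways[nmru].dirty:
        return nmru, False
    # 5. Crowded and NMRU is dirty: look for any clean way instead.
    for i, w in enumerate(ways):
        if not w.dirty:
            return i, False
    # 6. Every way is dirty: fall back to NMRU and use the shared resource.
    return nmru, True
```

The second element of the result says whether the victim would have to go through the shared resource (for example a write-back buffer), which is the cost the method tries to avoid when that resource is crowded.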

Enabling and disabling cache bypass using predicted cache line usage

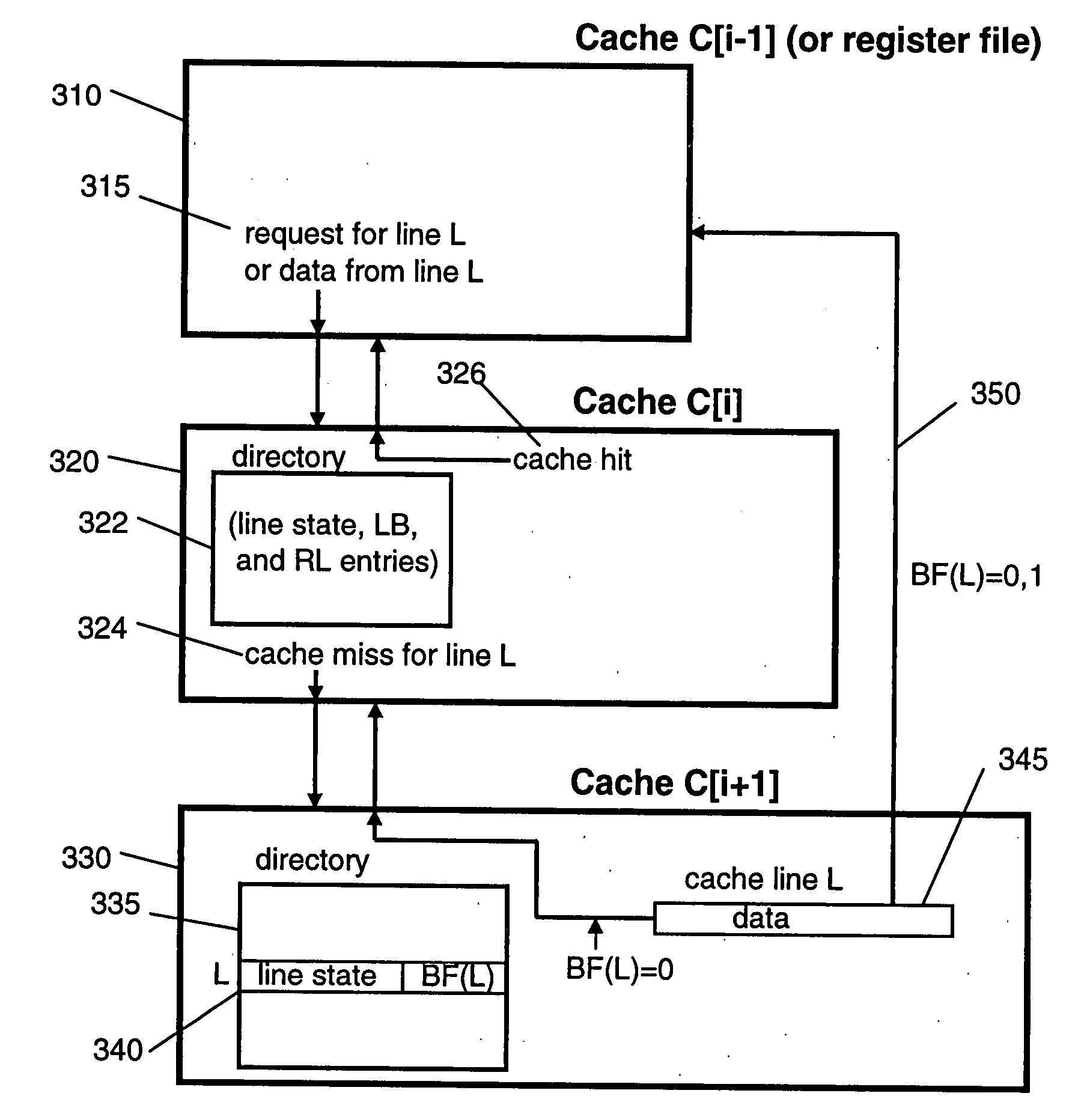

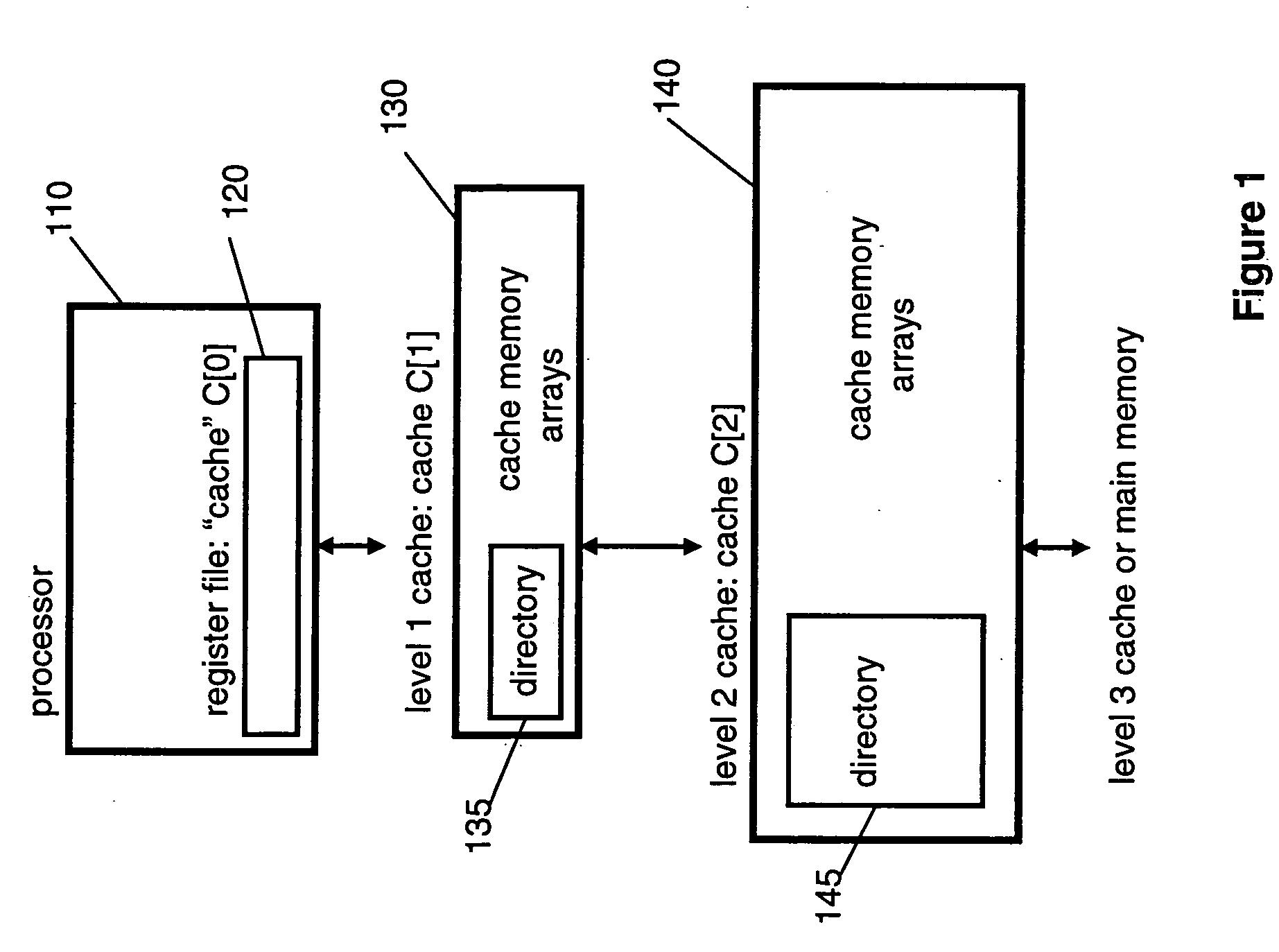

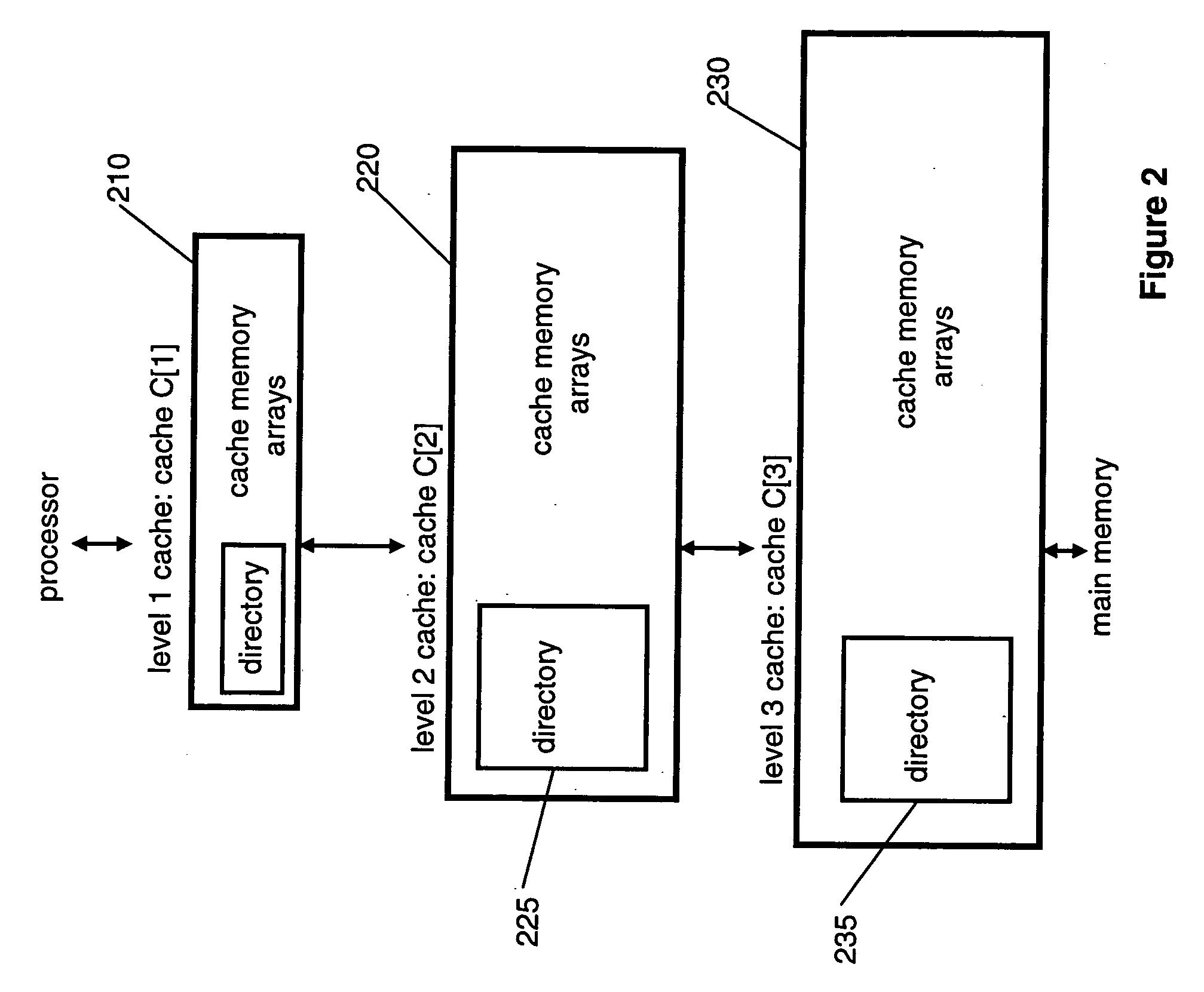

Inactive | US20060112233A1 | Improve system performance | Improve hit rate | Memory systems | Cache hierarchy | Cache access

Arrangements and method for enabling and disabling cache bypass in a computer system with a cache hierarchy. Cache bypass status is identified with respect to at least one cache line. A cache line identified as cache bypass enabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is bypassed, while a cache line identified as cache bypass disabled is transferred to one or more higher level caches of the cache hierarchy, whereby a next higher level cache in the cache hierarchy is not bypassed. Included is an arrangement for selectively enabling or disabling cache bypass with respect to at least one cache line based on historical cache access information.

Owner:IBM CORP

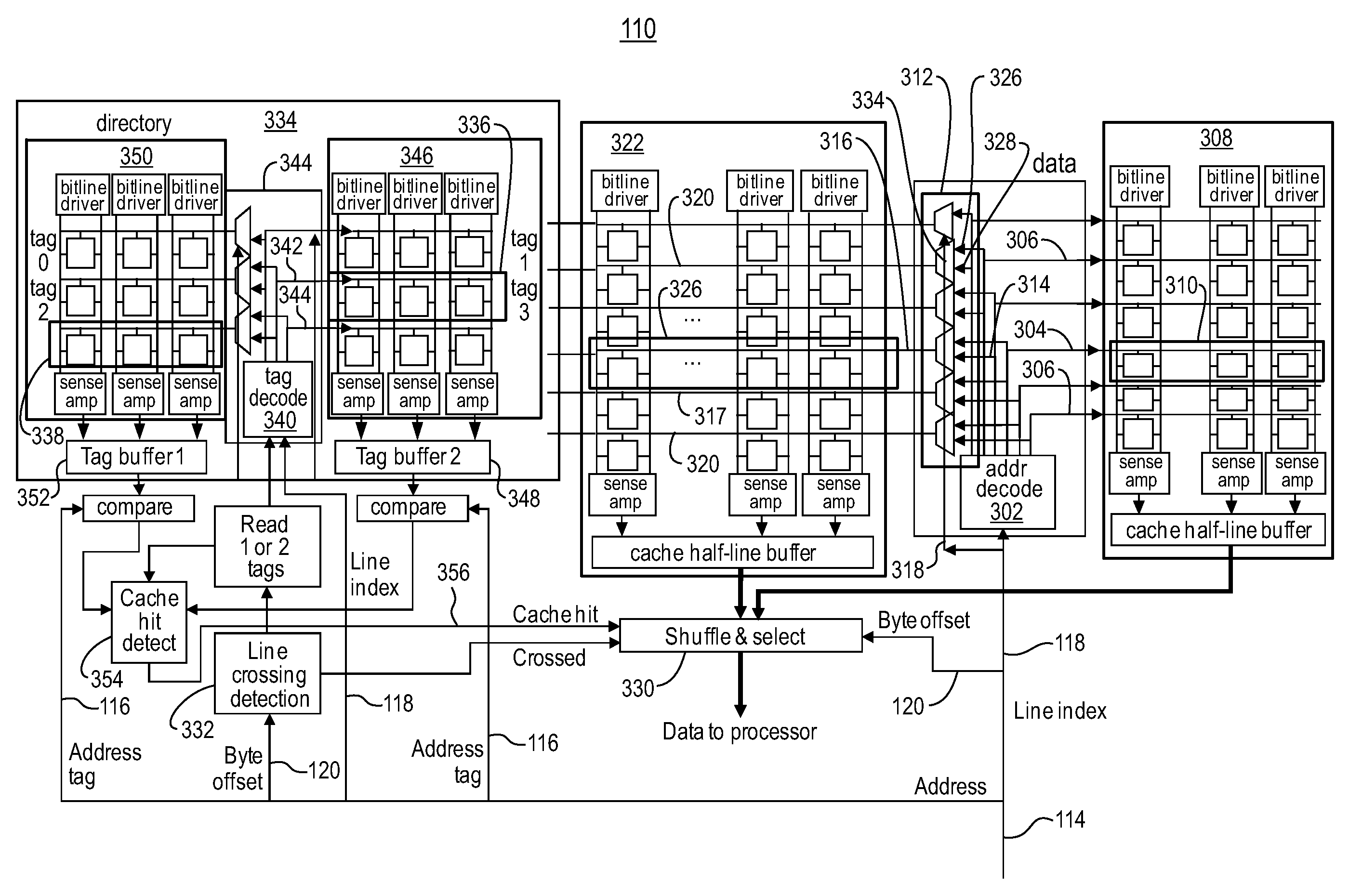

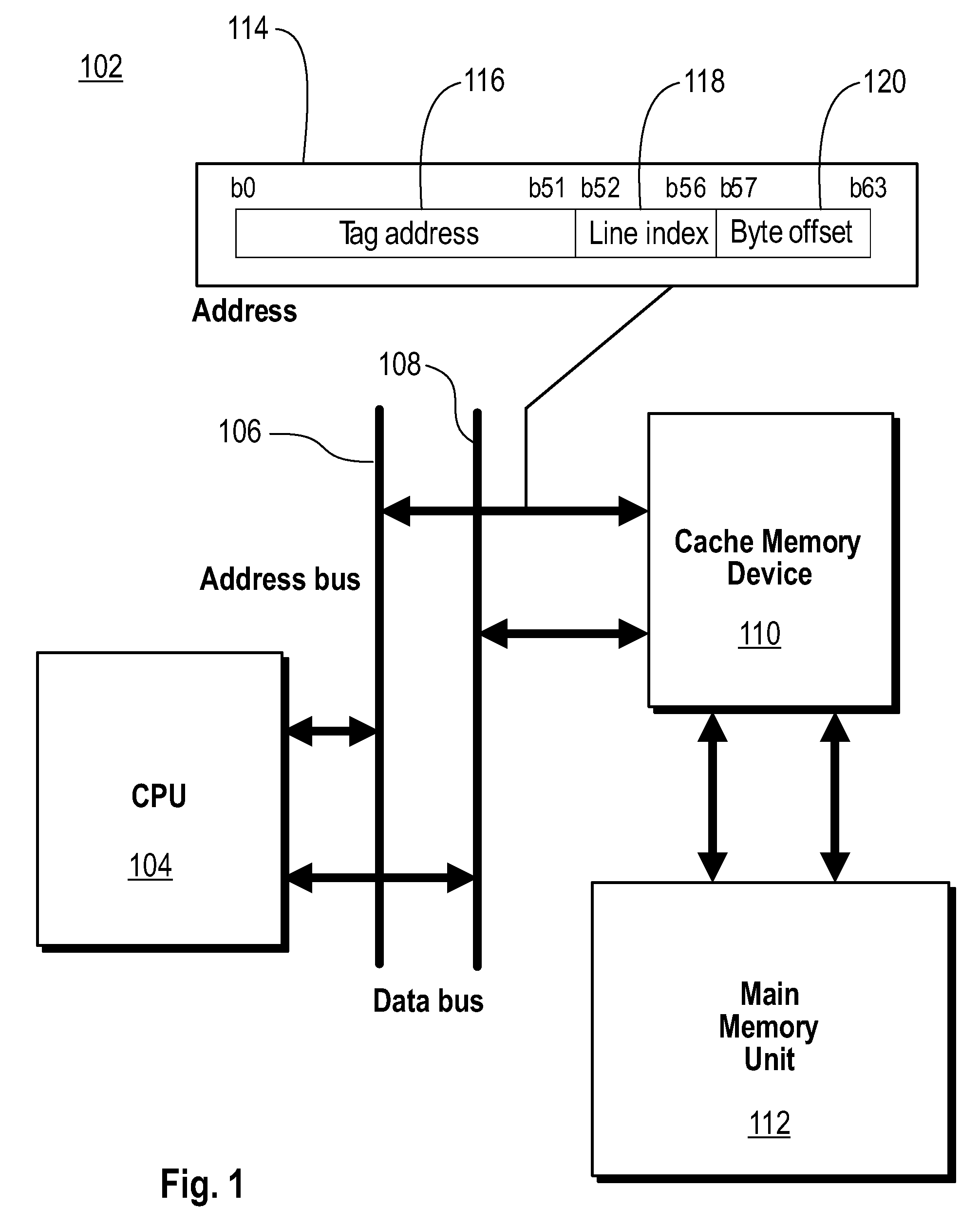

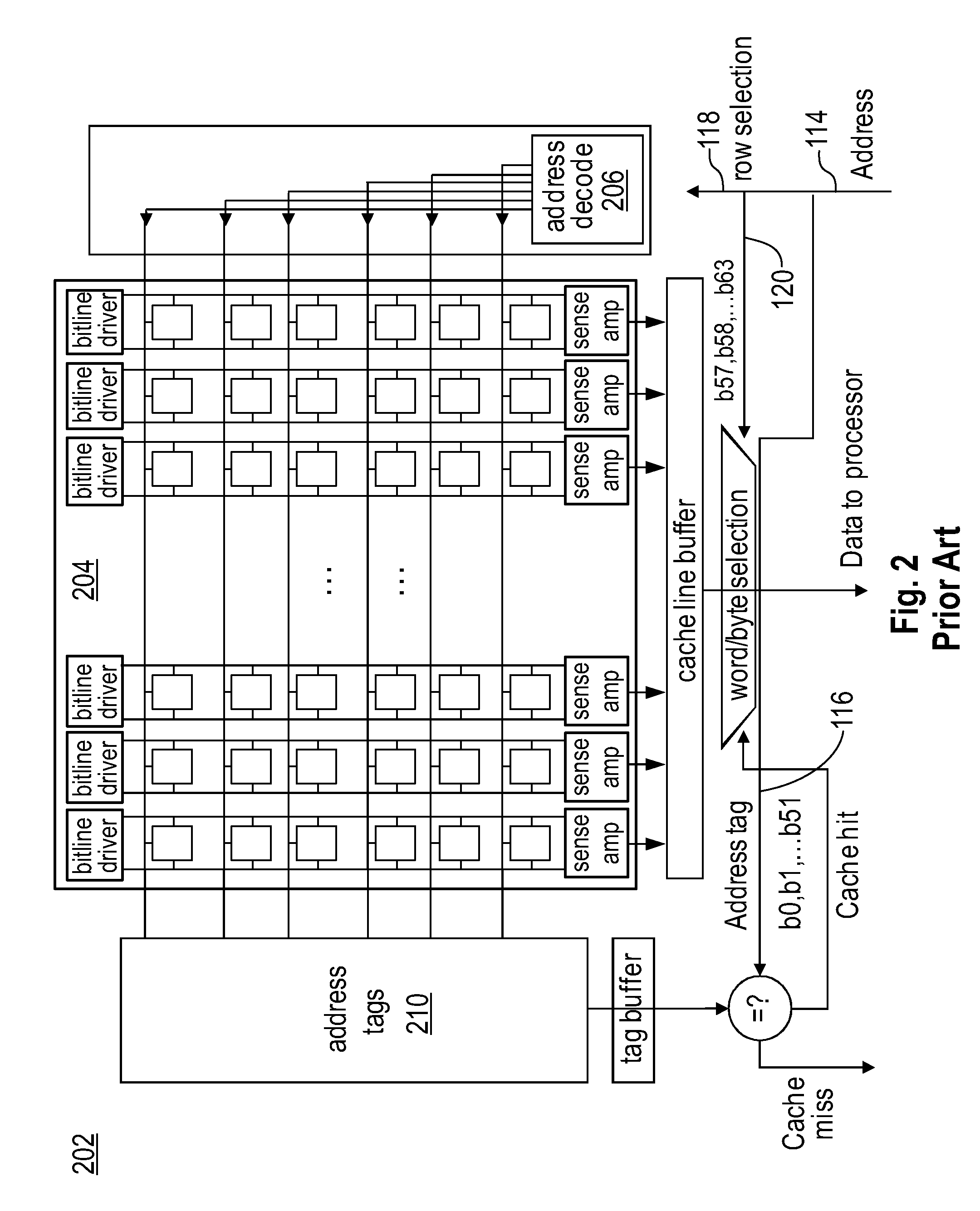

High performance unaligned cache access

A cache memory device and method for operating the same. One embodiment of the cache memory device includes an address decoder decoding a memory address and selecting a target cache line. A first cache array is configured to output a first cache entry associated with the target cache line, and a second cache array coupled to an alignment unit is configured to output a second cache entry associated with the alignment cache line. The alignment unit coupled to the address decoder selects either the target cache line or a neighbor cache line proximate the target cache line as an alignment cache line output. Selection of either the target cache line or a neighbor cache line is based on an alignment bit in the memory address. A tag array cache is split into even and odd cache lines tags, and provides one or two tags for every cache access.

Owner:IBM CORP

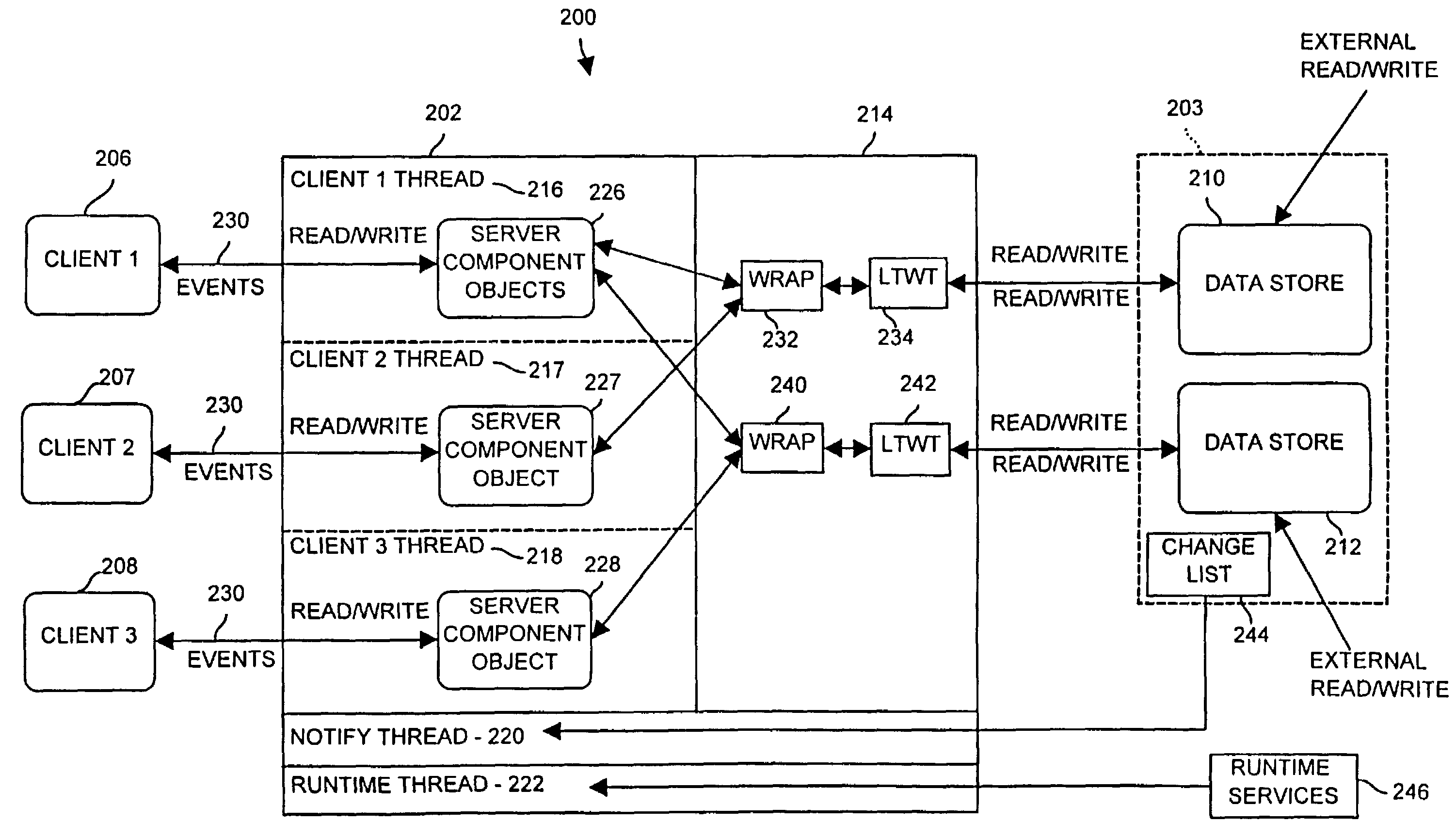

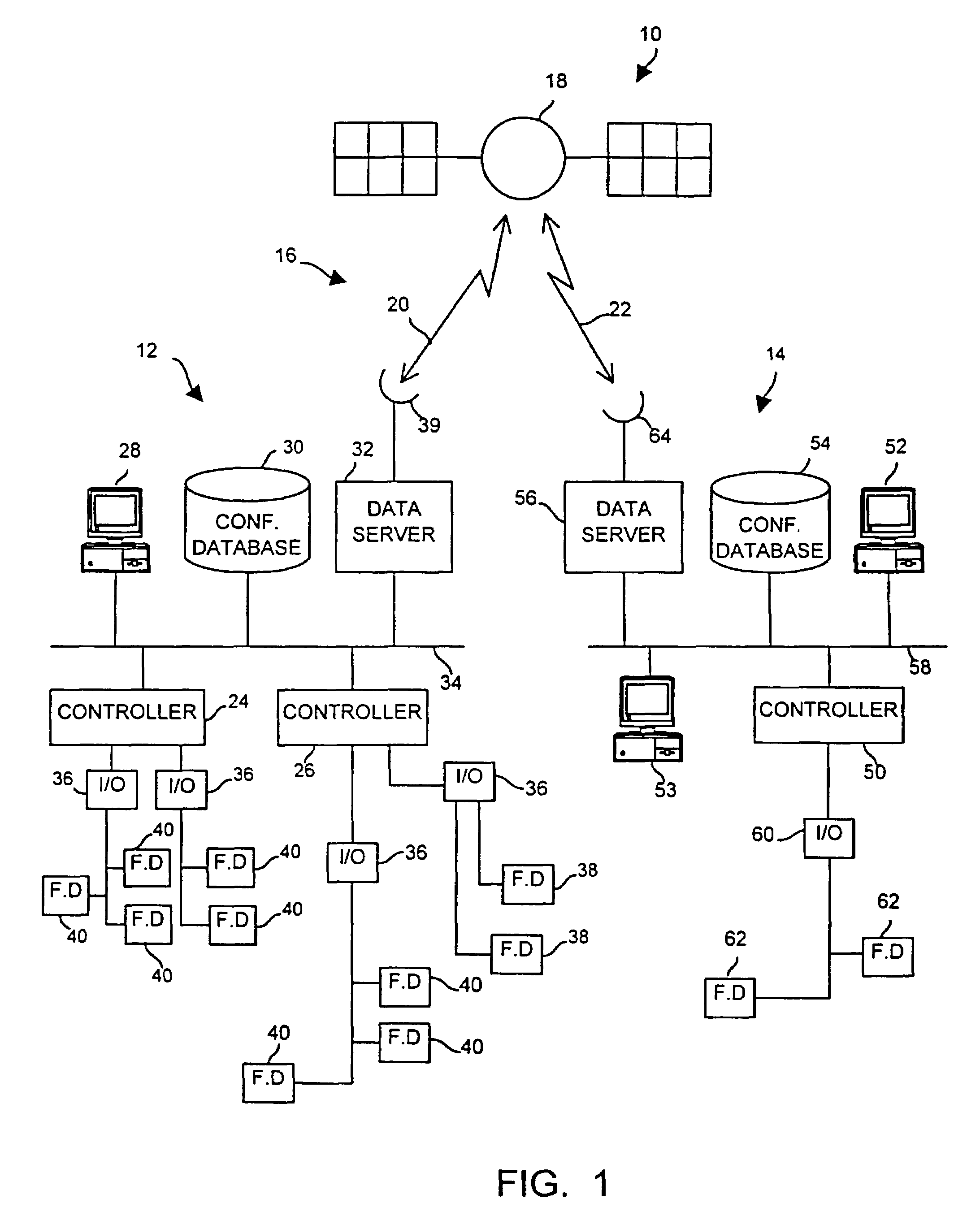

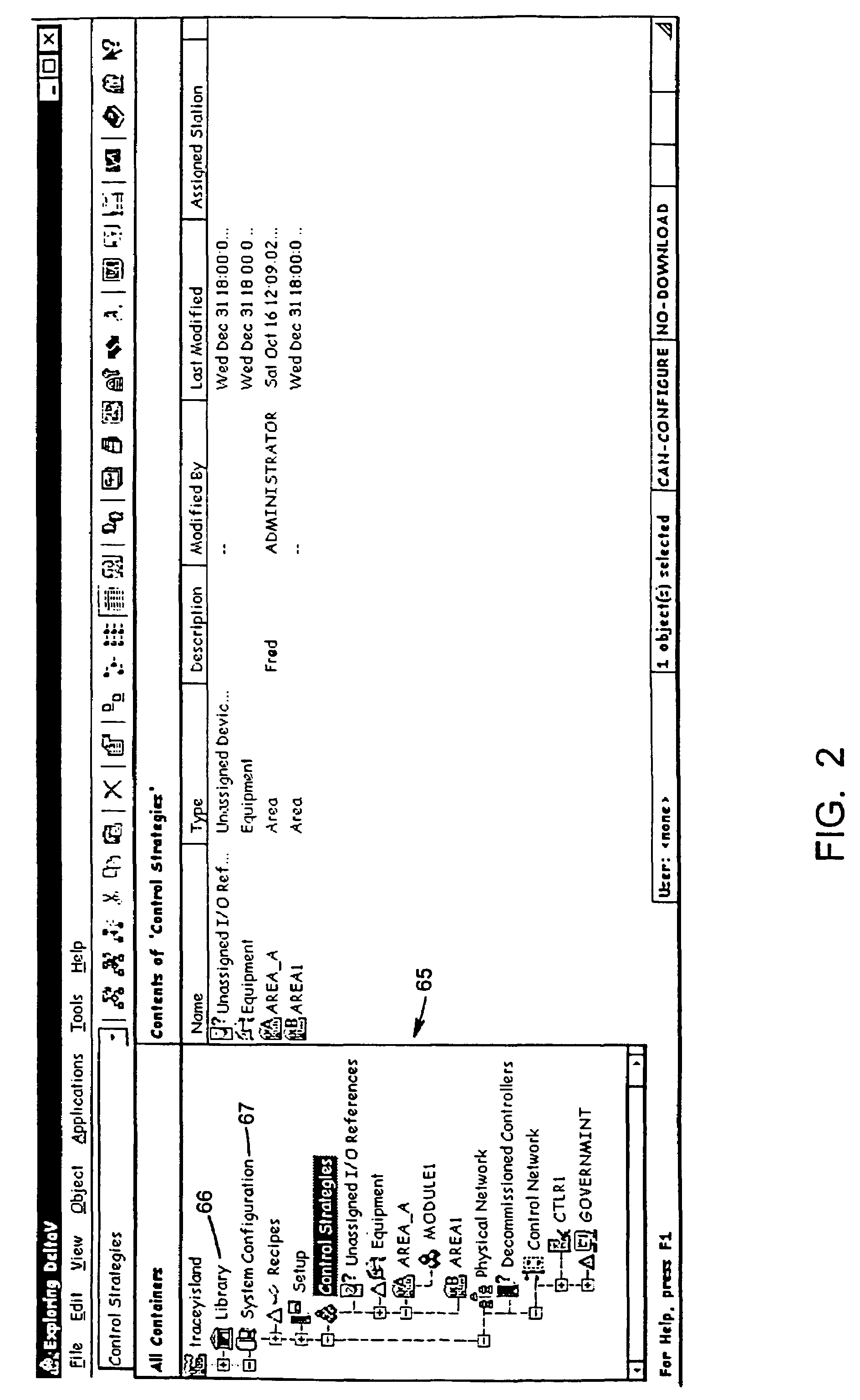

Accessing and updating a configuration database from distributed physical locations within a process control system

Inactive | US7127460B2 | Reduce the number of reads | Increase speed | Data processing applications | Program loading/initiating | Cache access | Control system

A configuration database includes multiple databases distributed at a plurality of physical locations within a process control system. Each of the databases may store a subset of the configuration data and this subset of configuration data may be accessed by users at any of the sites within the process control system. A database server having a shared cache accesses a database in a manner that enables multiple subscribers to read configuration data from the database with only a minimal number of reads to the database. To prevent the configuration data being viewed by subscribers within the process control system from becoming stale, the database server automatically detects changes to an item within the configuration database and sends notifications of changes made to the item to each of the subscribers of that item so that a user always views the state of the configuration as it actually exists within the configuration database.

Owner:FISHER-ROSEMOUNT SYST INC

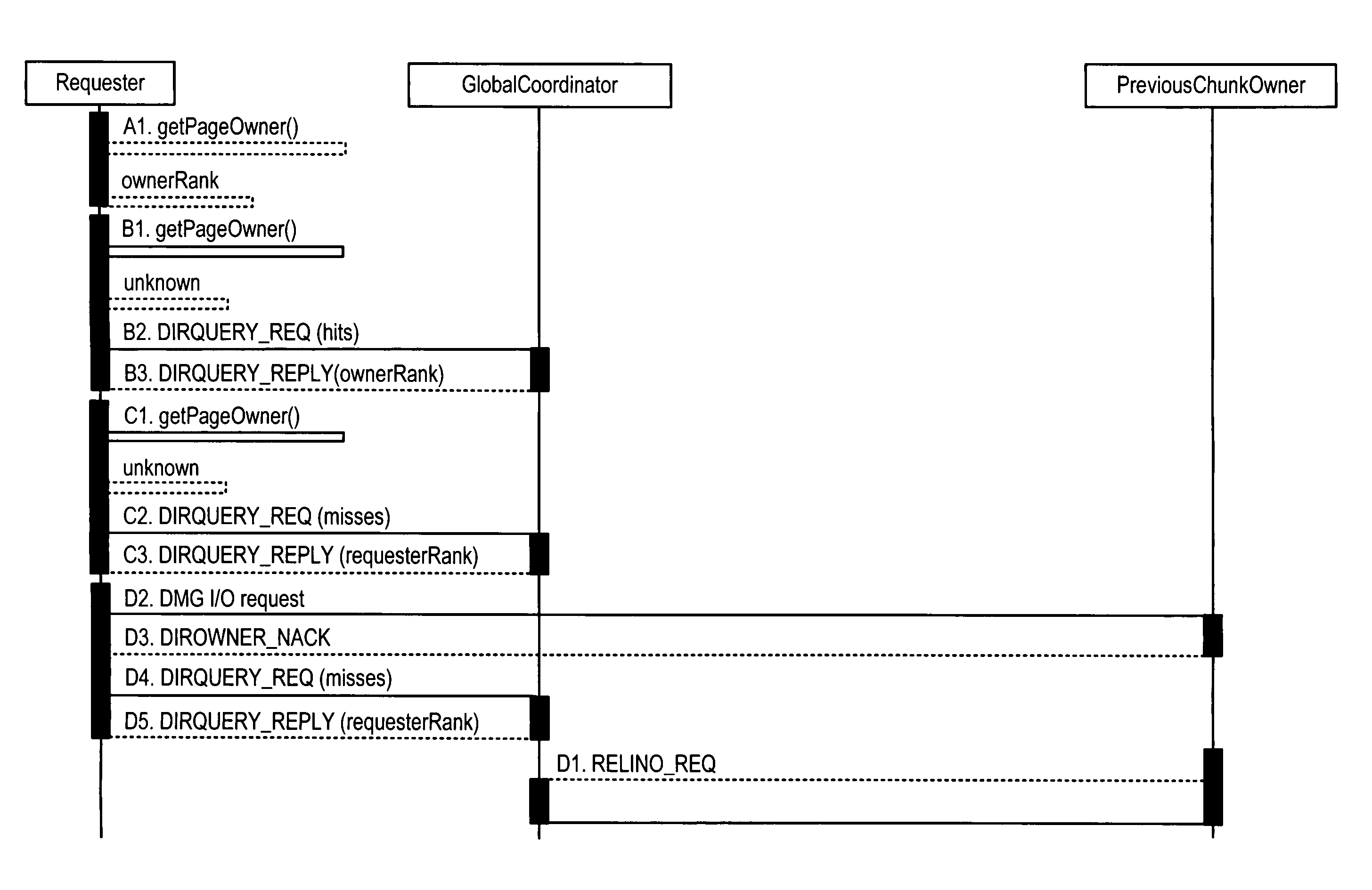

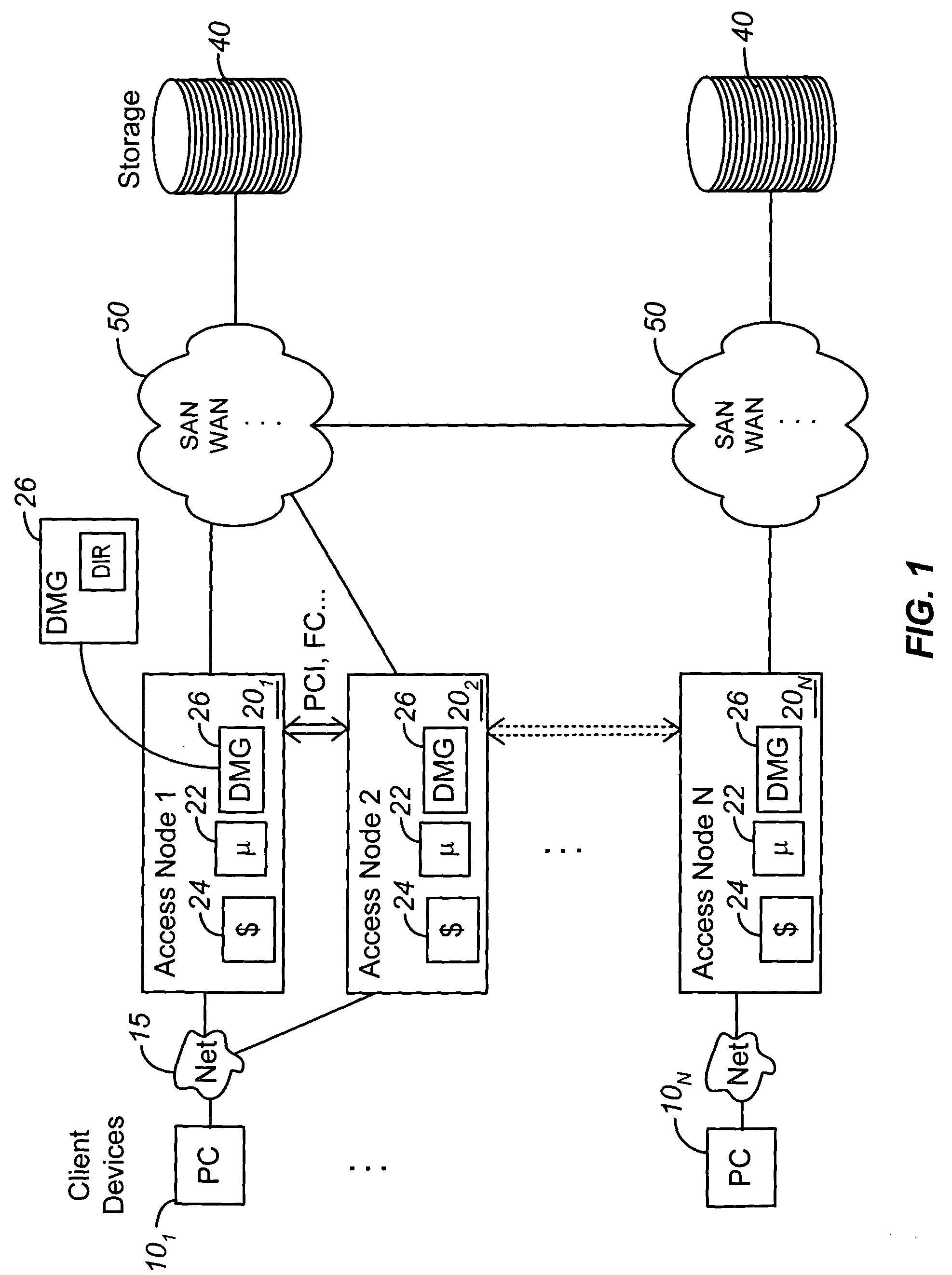

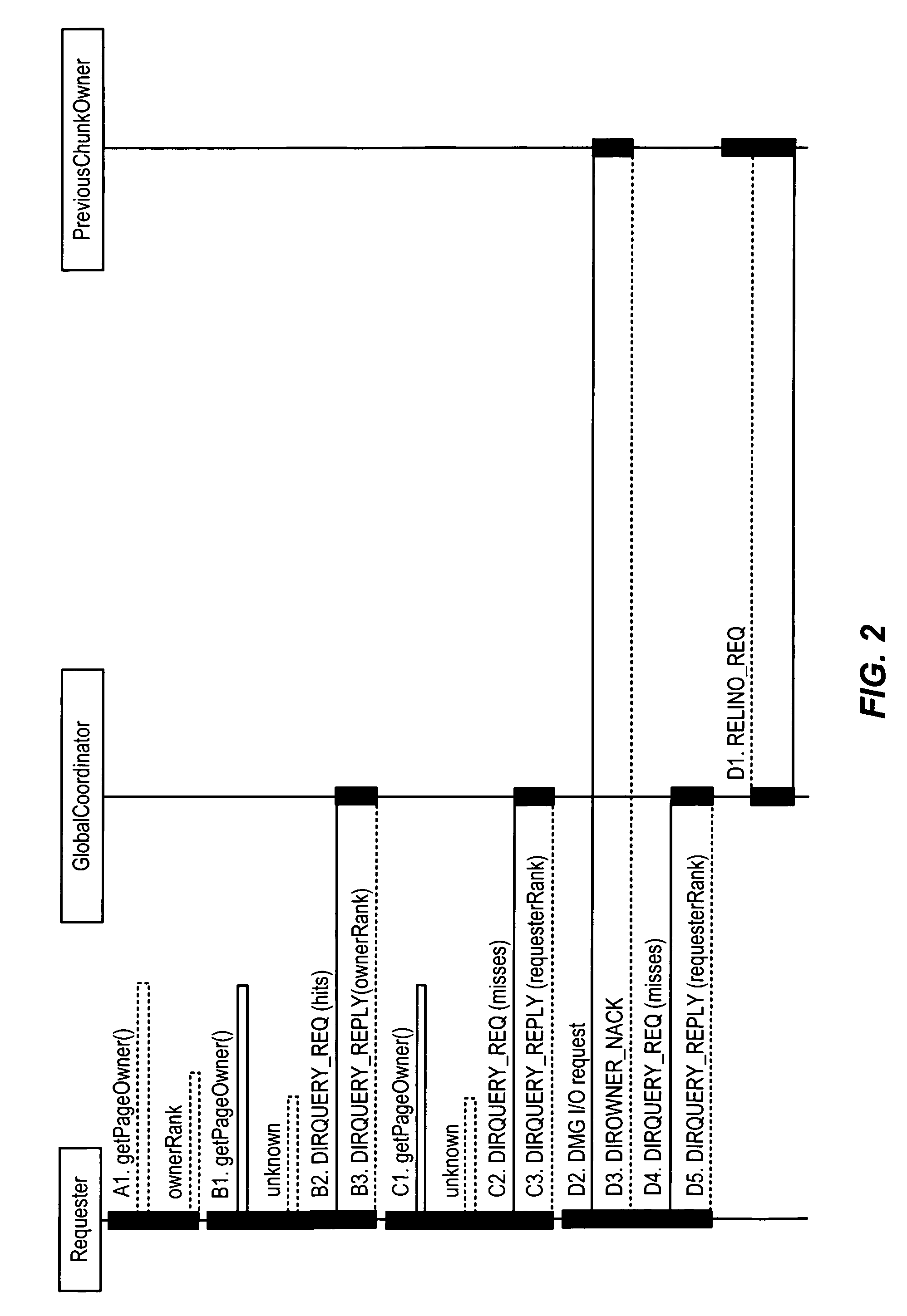

Systems and methods for providing distributed cache coherence

Active | US7975018B2 | Reduce bandwidth requirements | Reduce in quantity | Digital computer details | Transmission | Computer network | Cache access

A plurality of access nodes sharing access to data on a storage network implement a directory based cache ownership scheme. One node, designated as a global coordinator, maintains a directory (e.g., table or other data structure) storing information about I/O operations by the access nodes. The other nodes send requests to the global coordinator when an I/O operation is to be performed on identified data. Ownership of that data in the directory is given to the first requesting node. Ownership may transfer to another node if the directory entry is unused or quiescent. The distributed directory-based cache coherency allows for reducing bandwidth requirements between geographically separated access nodes by allowing localized (cached) access to remote data.

Owner:EMC IP HLDG CO LLC
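
A minimal sketch of the ownership rule follows: the first requester becomes owner, and ownership moves only when the current entry is quiescent. The class, the method names, and the explicit `quiesce` call are assumptions for illustration; the abstract does not say how a directory entry becomes unused or quiescent.

```python
class GlobalCoordinator:
    """Directory mapping each data block to its owning access node (a sketch)."""

    def __init__(self):
        self.directory = {}  # block -> {"owner": node, "active": bool}

    def request(self, node, block):
        """Return the node that owns `block` after this I/O request."""
        entry = self.directory.get(block)
        if entry is None:
            # First requester takes ownership.
            entry = self.directory[block] = {"owner": node, "active": True}
        elif entry["owner"] == node:
            entry["active"] = True
        elif not entry["active"]:
            # Quiescent entry: ownership may transfer to the new requester.
            entry["owner"], entry["active"] = node, True
        return entry["owner"]

    def quiesce(self, node, block):
        """Hypothetical hook: the owner signals it has no I/O in flight."""
        entry = self.directory.get(block)
        if entry and entry["owner"] == node:
            entry["active"] = False
```

A node that is returned as owner can serve the data from its local cache; any other node would have to go through the owner, which is where the bandwidth saving between distant sites comes from.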

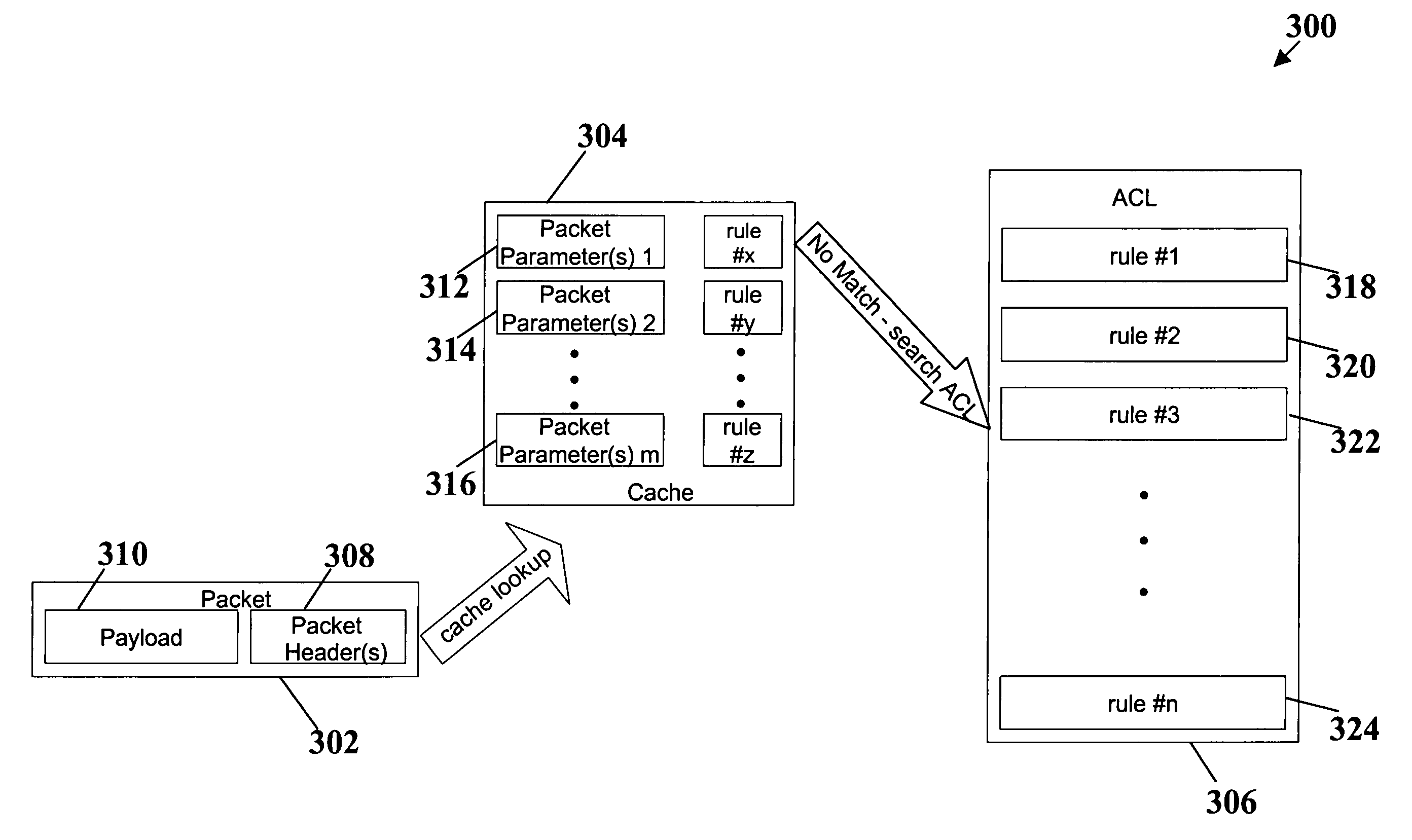

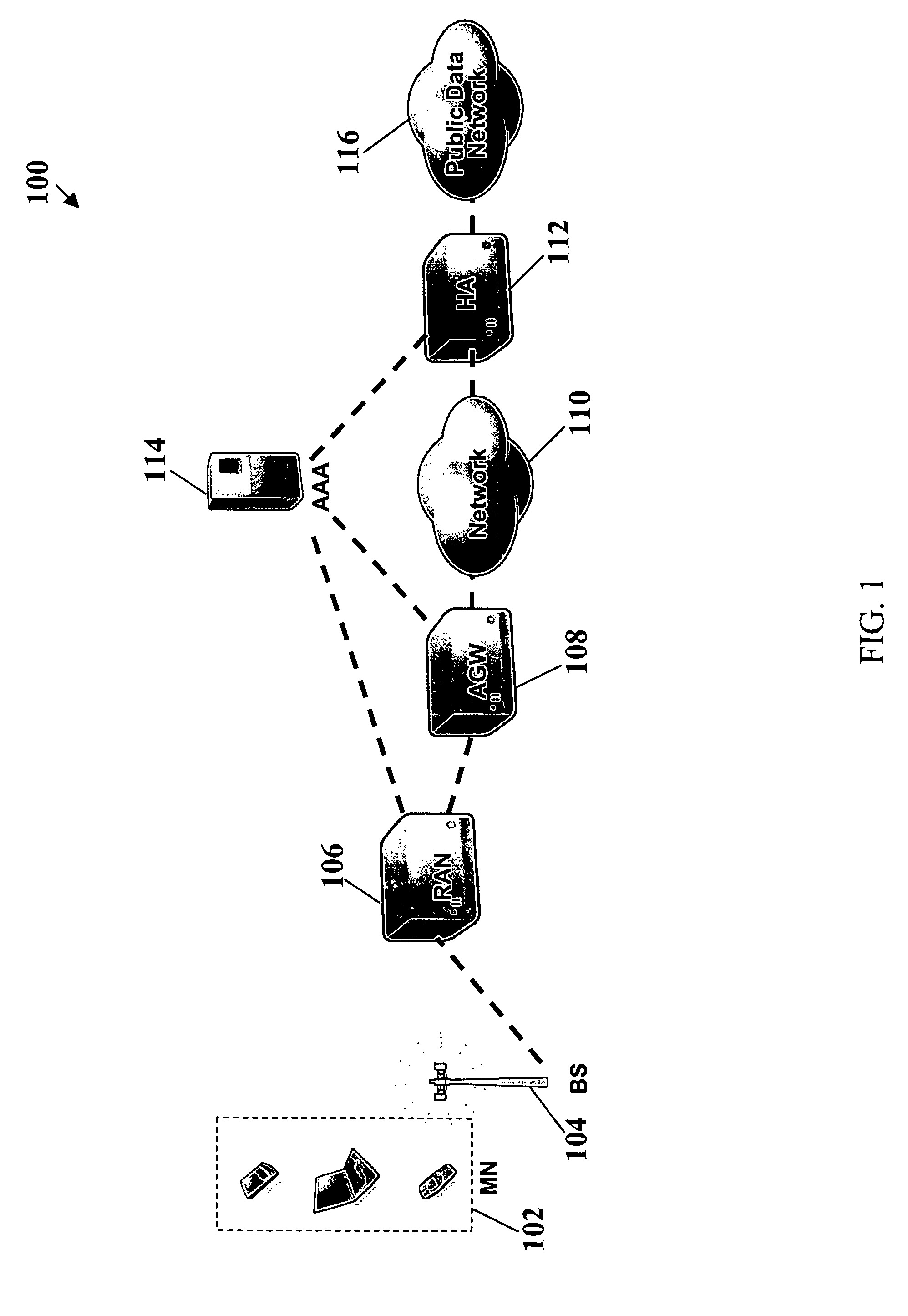

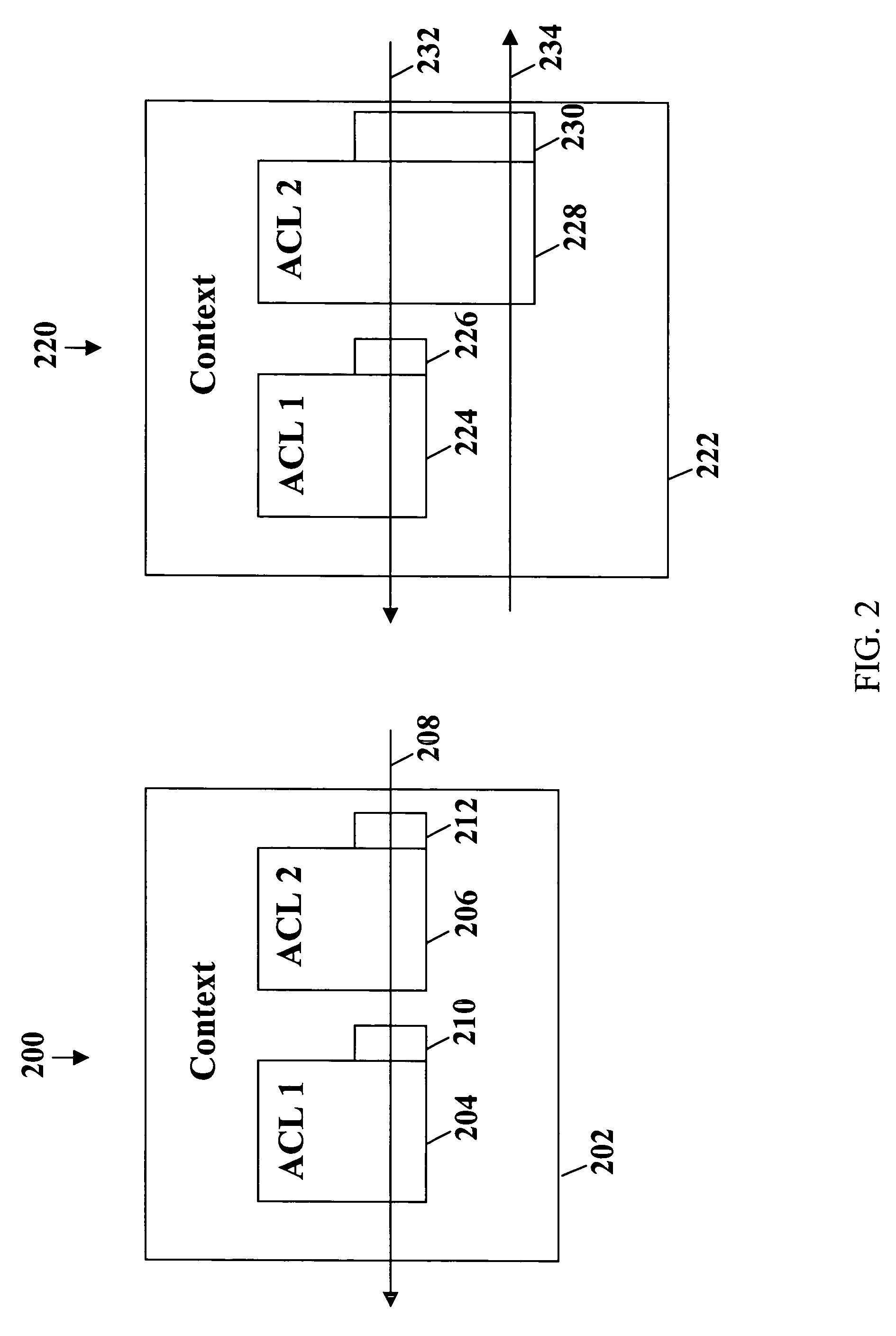

System and method for caching access rights

Owner:CISCO TECH INC

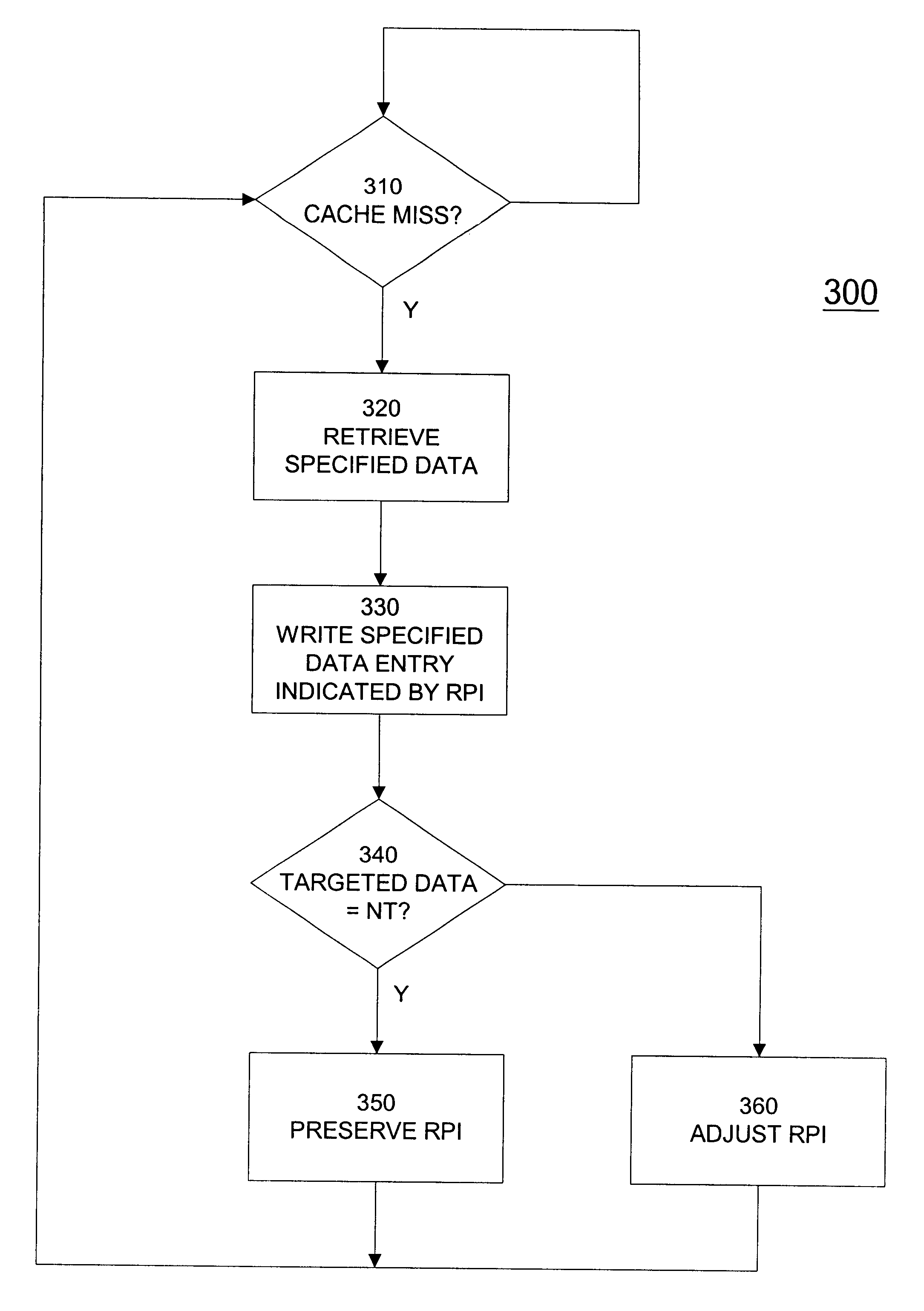

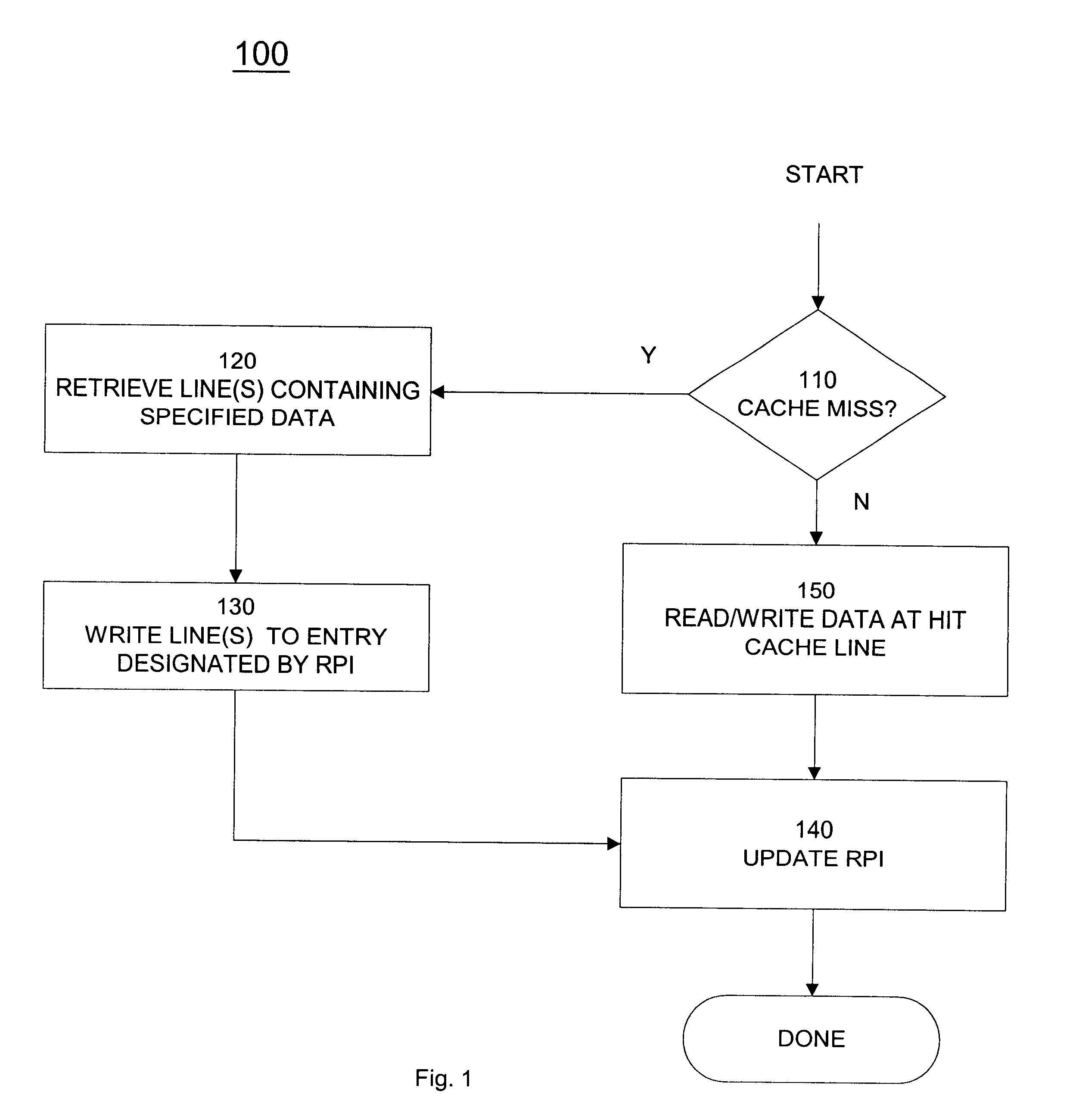

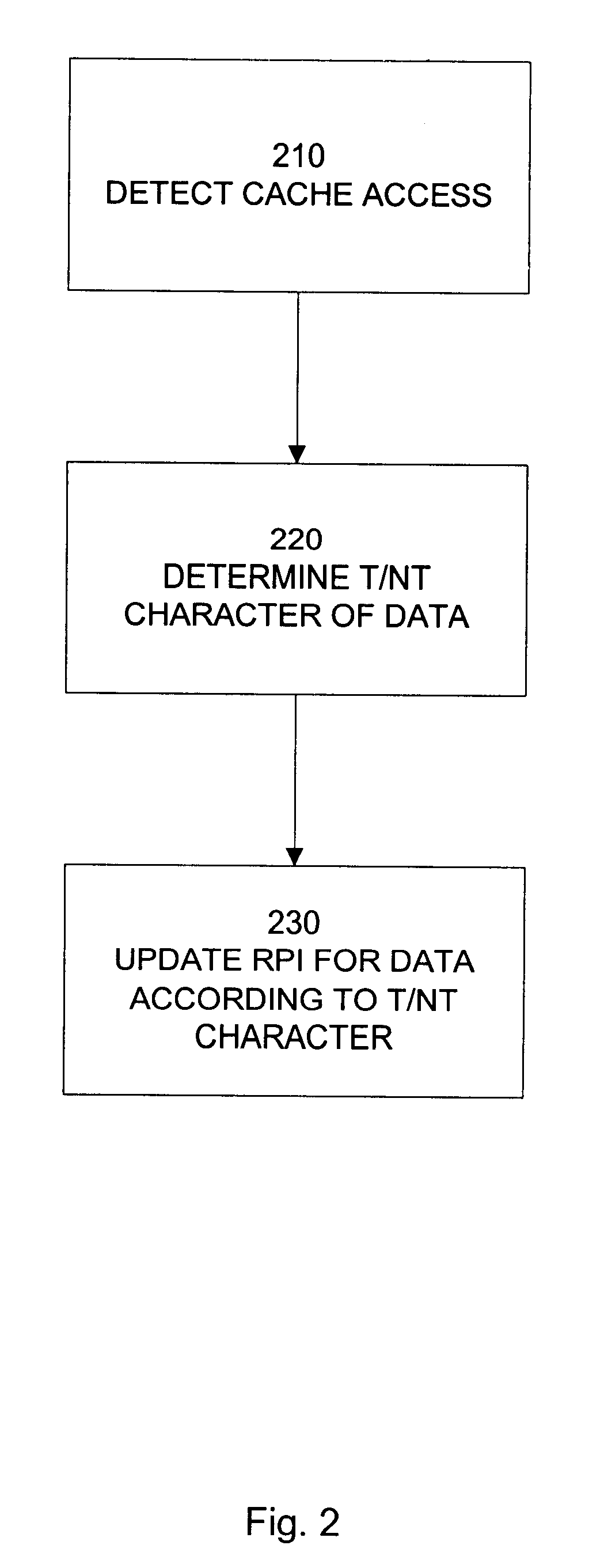

Method and apparatus for managing temporal and non-temporal data in a single cache structure

A method is provided for managing temporal and non-temporal data in the same cache structure. The temporal or non-temporal character of data targeted by a cache access is determined, and a cache entry for the data is identified. When the targeted data is temporal, a replacement priority indicator associated with the identified cache entry is updated to reflect the access. When the targeted data is non temporal, the replacement priority indicator associated with the identified cache entry is preserved. The method may also be implemented by employing a first algorithm to update the replacement priority indicator for temporal data and a second, different algorithm to update the replacement priority indicator for non-temporal data.

Owner:INTEL CORP
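
The first variant of the method (update the replacement priority for temporal data, preserve it for non-temporal data) can be modeled with a single LRU-ordered set. This is a sketch under assumptions: the class name and list-based LRU are hypothetical, and on a miss the victim is taken to be the least recently used entry.

```python
class TemporalAwareSet:
    """One cache set whose LRU order is only updated by temporal accesses."""

    def __init__(self, ways):
        self.ways = ways
        self.lines = []  # tags ordered from next-to-evict to most recently promoted

    def access(self, tag, temporal):
        """Return True on a hit, False on a miss (the line is then filled)."""
        if tag in self.lines:
            if temporal:
                # Temporal hit: update the replacement priority.
                self.lines.remove(tag)
                self.lines.append(tag)
            # Non-temporal hit: priority is preserved.
            return True
        if len(self.lines) == self.ways:
            self.lines.pop(0)  # evict the entry with the lowest priority
        if temporal:
            self.lines.append(tag)
        else:
            # The filled entry keeps the victim's (lowest) priority.
            self.lines.insert(0, tag)
        return False
```

Because non-temporal lines never get promoted, they are the first to be replaced and do not push frequently reused temporal data out of the set.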

Speculative hybrid branch direction predictor

Inactive | US6886093B2 | Improve accuracy | Reducing overall branch penalty | Instruction analysis | Digital computer details | Cache access | Processor register

An apparatus for speculatively predicting the direction of a branch instruction in a pipelined microprocessor in a hybrid fashion. A branch target address cache (BTAC) stores a direction prediction about executed branch instructions. The BTAC is indexed by an instruction cache fetch address. The BTAC is accessed in parallel with the instruction cache access, such that the direction prediction is provided before the actual instruction is decoded which is presumed to be a branch instruction corresponding to the direction prediction stored in the BTAC. In parallel with the BTAC access, a branch history table (BHT) is accessed to provide a second speculative direction prediction. The BHT is indexed with a gshare function of the instruction cache fetch address and a global branch history stored in a global branch history register. The BTAC also provides a selector that selects between the two speculative direction predictions.

Owner:IP FIRST

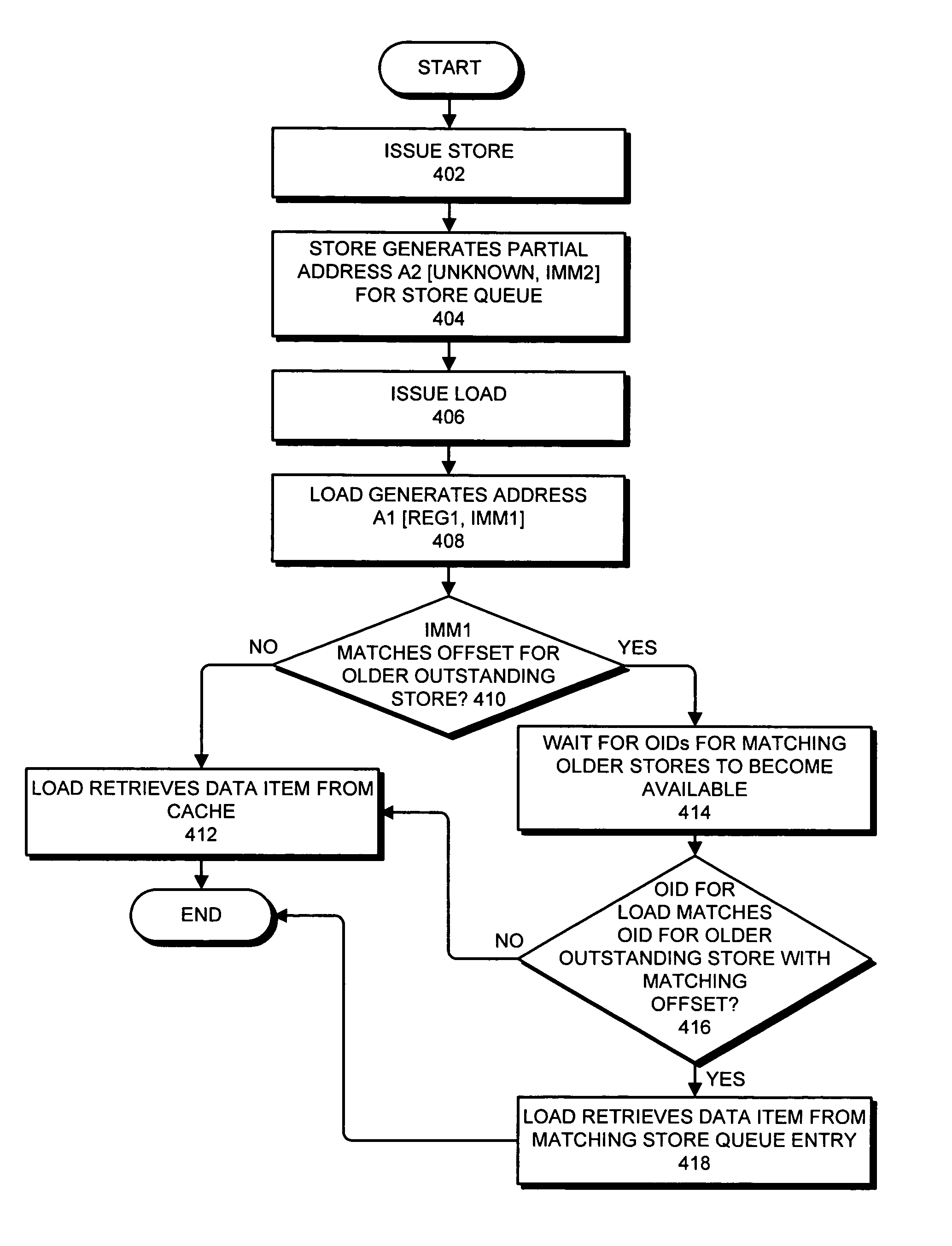

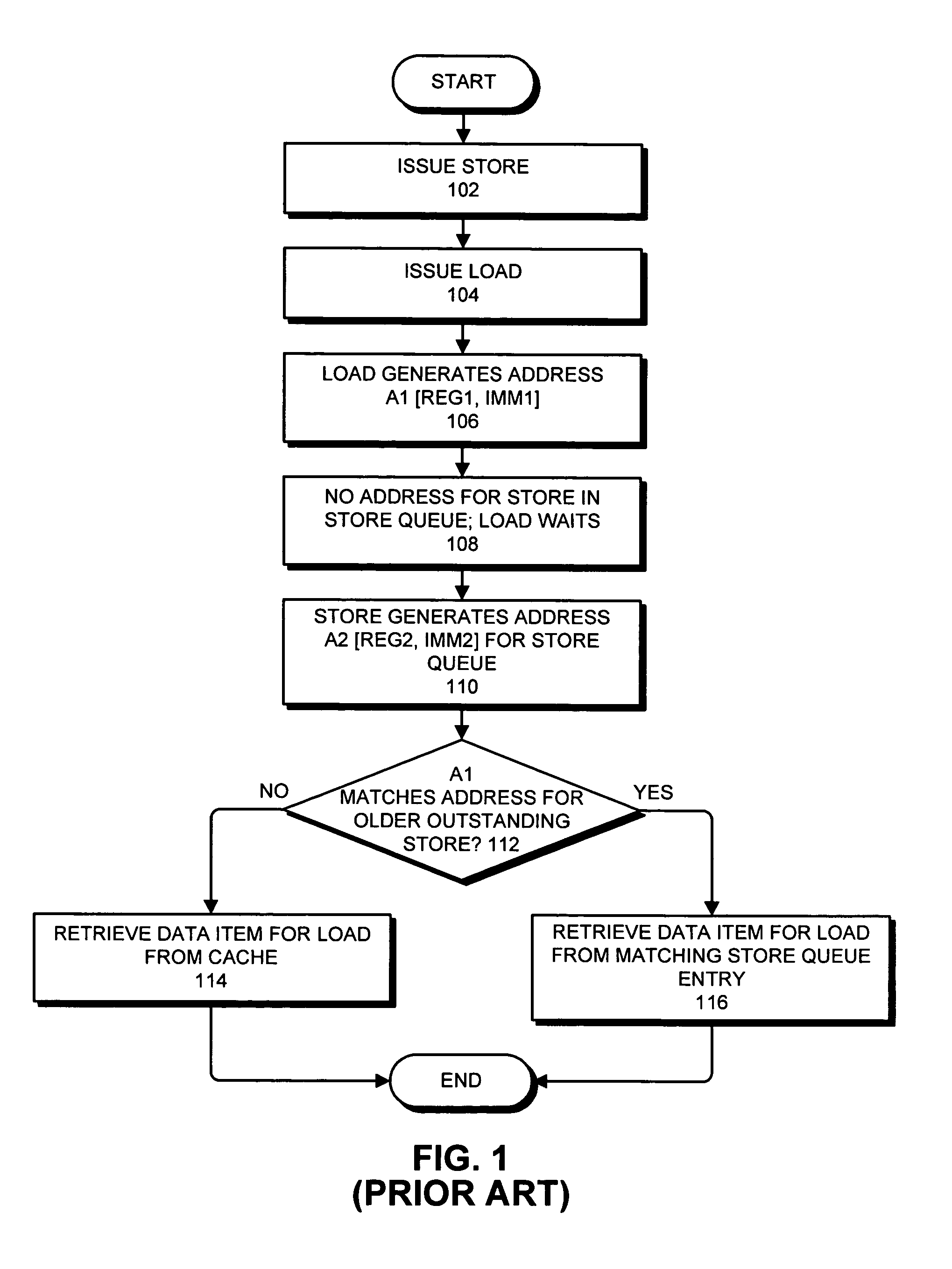

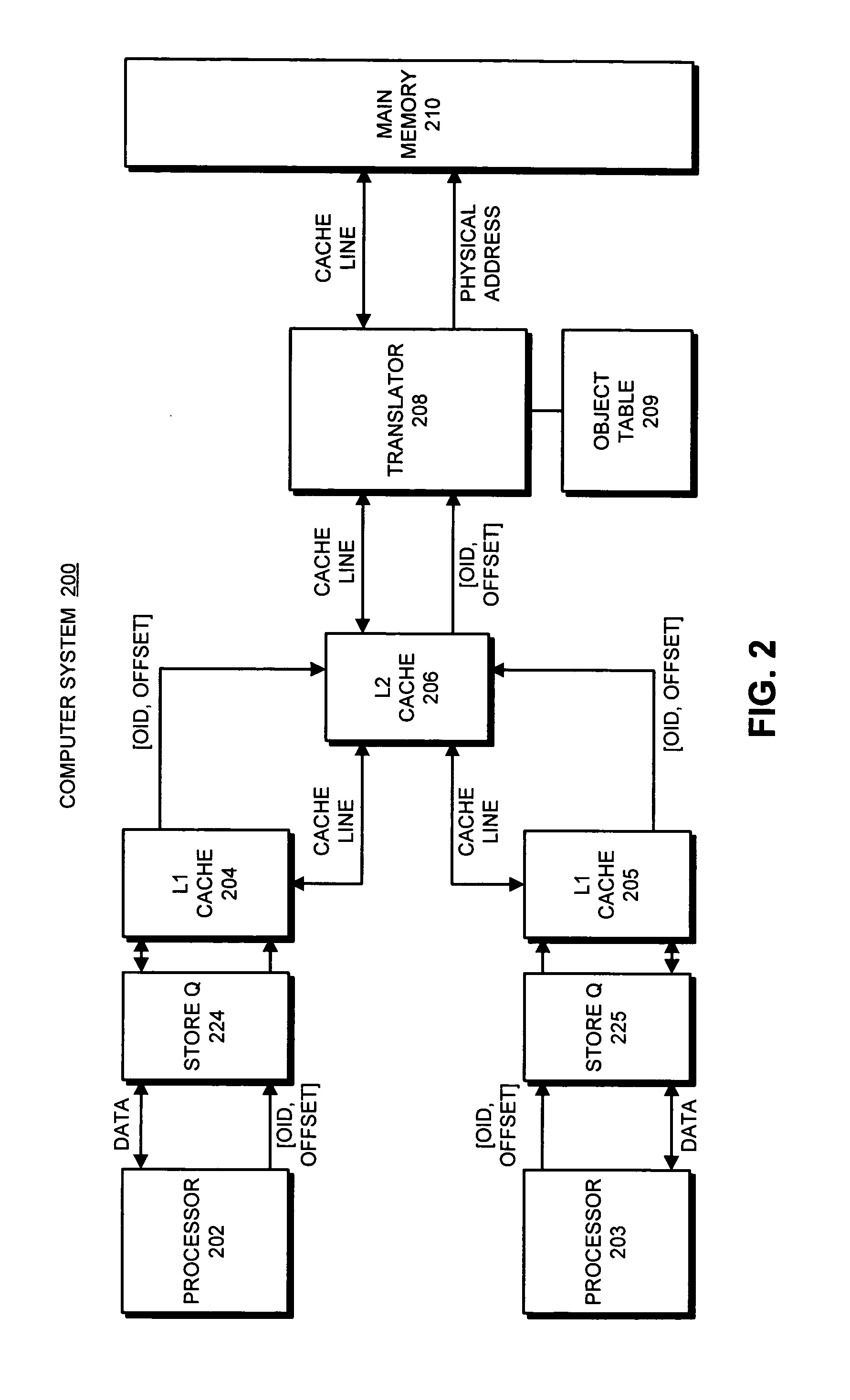

Detecting RAW hazards in an object-addressed memory hierarchy by comparing an object identifier and offset for a load instruction to object identifiers and offsets in a store queue

One embodiment of the present invention provides a system that processes memory-access instructions in an object-addressed memory hierarchy. During operation, the system receives a load instruction to be executed, wherein the load instruction loads a data item from an object, and wherein the load instruction specifies an object identifier (OID) for the object and an offset for the data item within the object. Next, the system compares the OID and the offset for the data item against OIDs and offsets for outstanding store instructions in a store queue. If the offset for the data item does not match any of the offsets for the outstanding store instructions in the store queue, and hence no read-after-write (RAW) hazard exists, the system performs a cache access to retrieve the data item for the load instruction.

Owner:ORACLE INT CORP
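
The comparison step can be sketched as a scan of the store queue for a matching (OID, offset) pair. The names below are hypothetical. The abstract only states that the cache is accessed when no hazard exists; returning the youngest matching store's value on a hazard (store-to-load forwarding) is an assumption added so the example is complete.

```python
from typing import NamedTuple, Optional

class Store(NamedTuple):
    oid: int     # object identifier
    offset: int  # offset of the data item within the object
    value: int

def find_raw_hazard(oid, offset, store_queue) -> Optional[Store]:
    """Return the youngest outstanding store to the same (OID, offset), if any."""
    for store in reversed(store_queue):  # queue is ordered oldest -> youngest
        if store.oid == oid and store.offset == offset:
            return store
    return None

def execute_load(oid, offset, store_queue, cache):
    hazard = find_raw_hazard(oid, offset, store_queue)
    if hazard is None:
        # No read-after-write hazard: perform the cache access.
        return cache[(oid, offset)]
    # Assumption: forward the pending store's value instead of reading the cache.
    return hazard.value
```

Here `cache` is just a dictionary keyed by `(oid, offset)`, standing in for an object-addressed cache lookup.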

Cache memory with the number of operated ways being changed according to access pattern

Inactive | US7437513B2 | Improve performance | Reduce power consumption | Memory architecture accessing/allocation | Energy efficient ICT | Cache access | Time of use

An improvement in performance and a reduction of power consumption in a cache memory can both be effectively realized by increasing or decreasing the number of operated ways in accordance with access patterns. A hit determination unit determines the hit way when a cache access hit occurs. A way number increase/decrease determination unit manages, for each of the ways that are in operation, the order from the way for which the time of use is most recent to the way for which the time of use is oldest. The way number increase/decrease determination unit then finds the rank of the hit ways that have been obtained in the hit determination unit and counts the number of hits for each rank in the order. The way number increase/decrease determination unit further determines increase or decrease of the number of operated ways based on the access pattern that is indicated by the relation of the number of hits to each rank in the order. A way number control unit then selects operation or halt of operation for each way in accordance with the determination to increase or decrease the number of operated ways.

Owner:NEC CORP +1

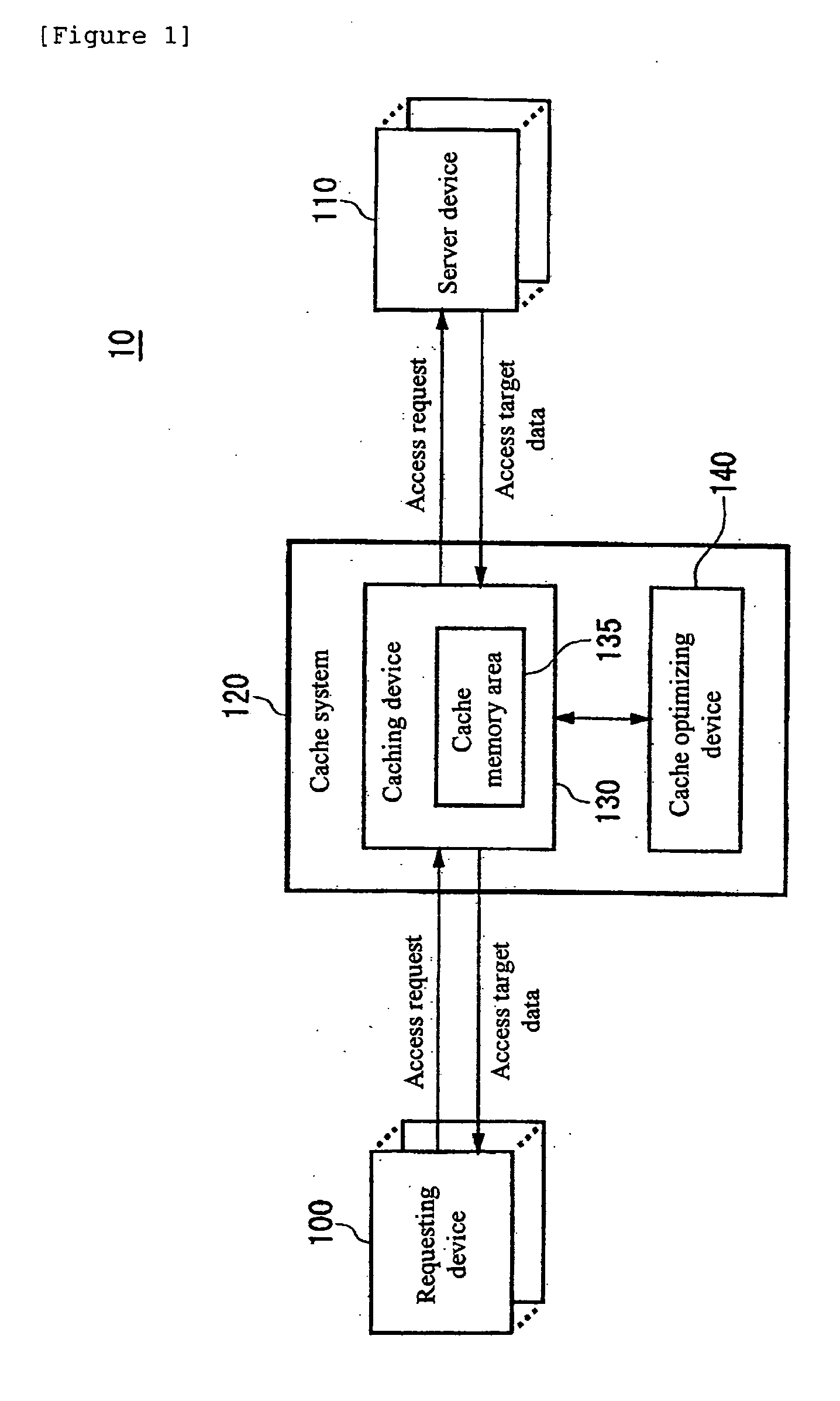

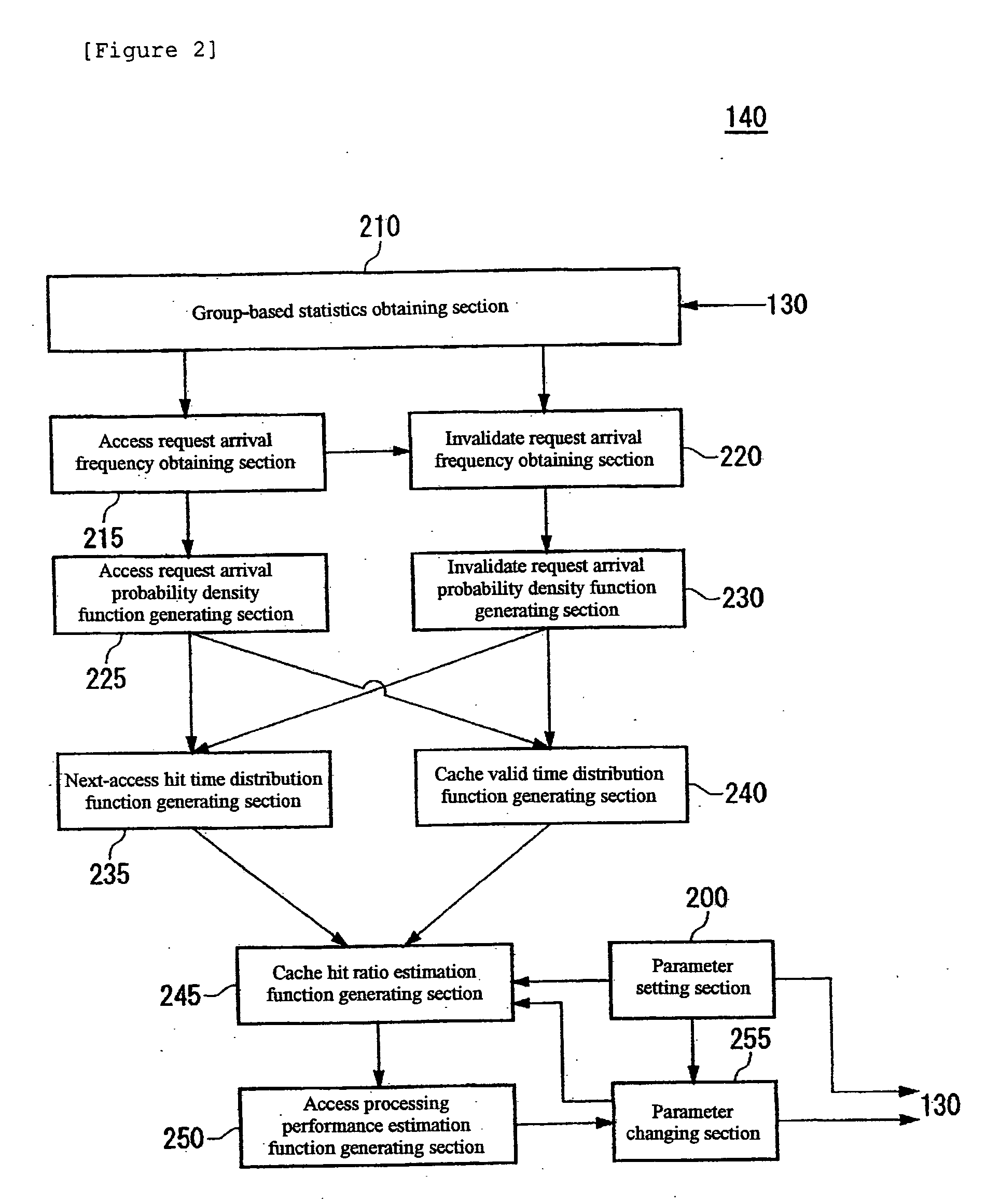

Cache hit ratio estimating apparatus, cache hit ratio estimating method, program, and recording medium

Inactive | US20050268037A1 | Improve accuracy | Easy to process | Input/output to record carriers | Error detection/correction | Cache access | Normal density

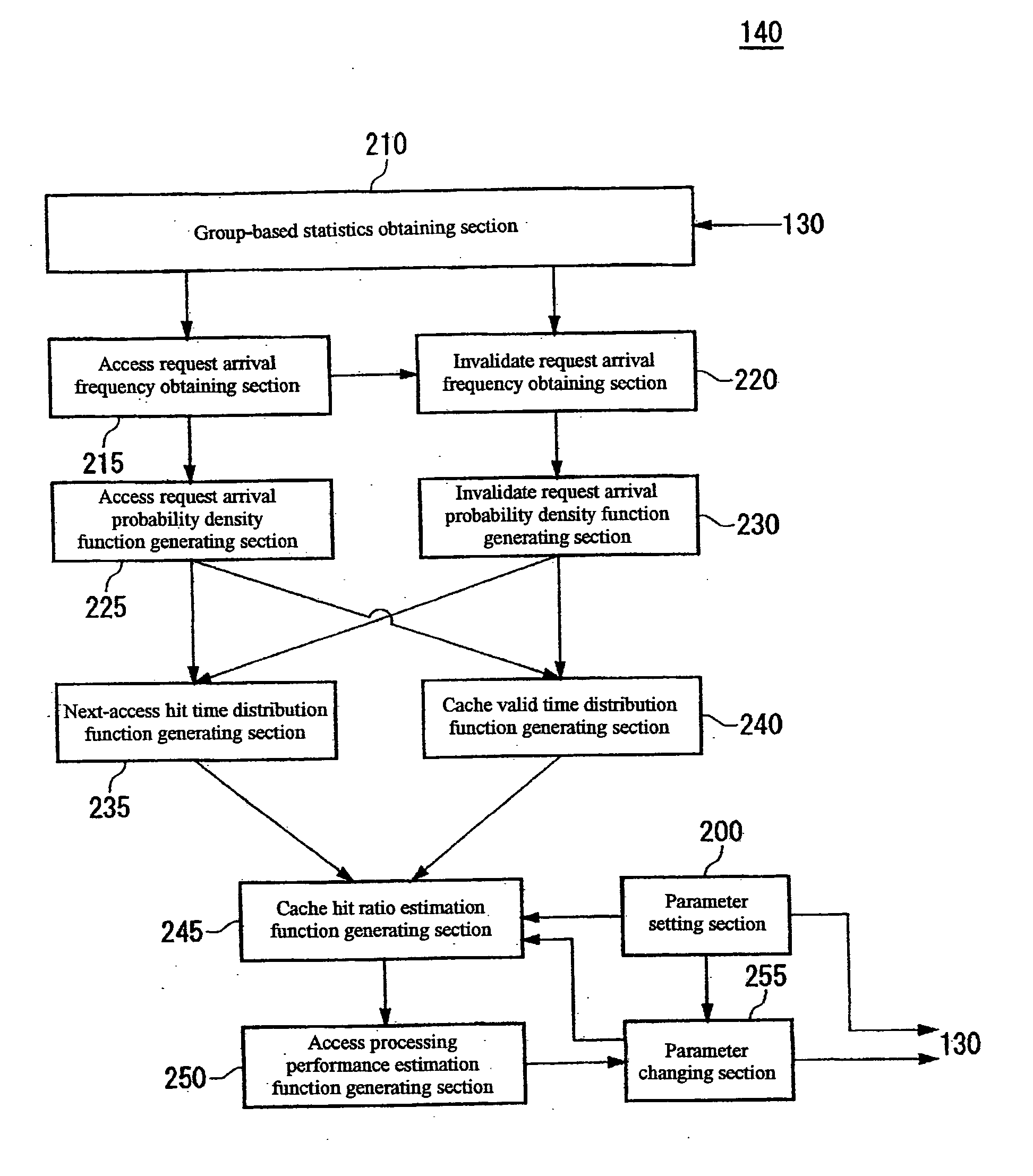

Determining a cache hit ratio of a caching device analytically and precisely. There is provided a cache hit ratio estimating apparatus for estimating the cache hit ratio of a caching device, caching access target data accessed by a requesting device, including: an access request arrival frequency obtaining section for obtaining an average arrival frequency measured for access requests for each of the access target data; an access request arrival probability density function generating section for generating an access request arrival probability density function which is a probability density function of arrival time intervals of access requests for each of the access target data on the basis of the average arrival frequency of access requests for the access target data; and a cache hit ratio estimation function generating section for generating an estimation function for the cache hit ratio for each of the access target data on the basis of the access request arrival probability density function for the plurality of the access target data.

Owner:GOOGLE LLC

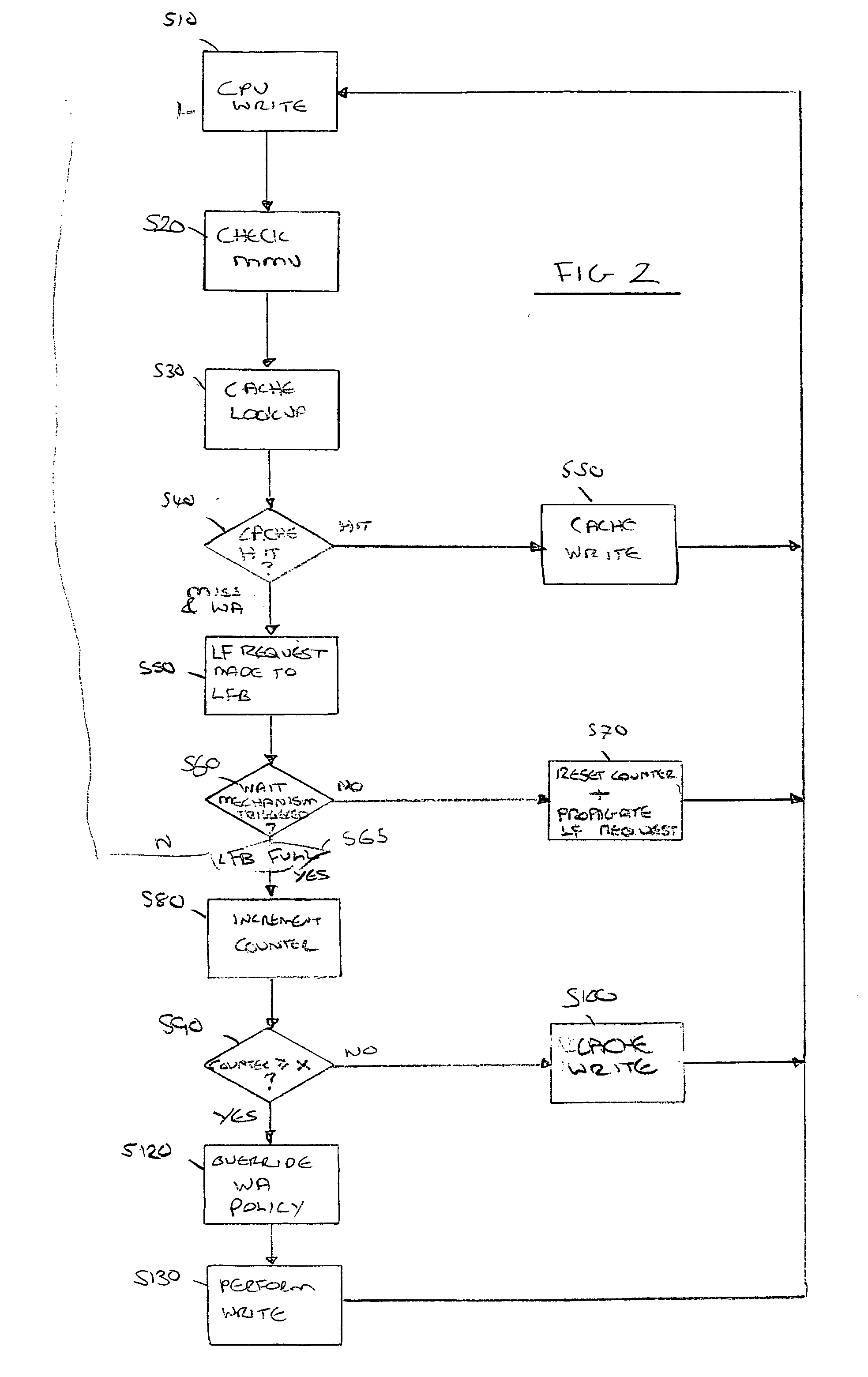

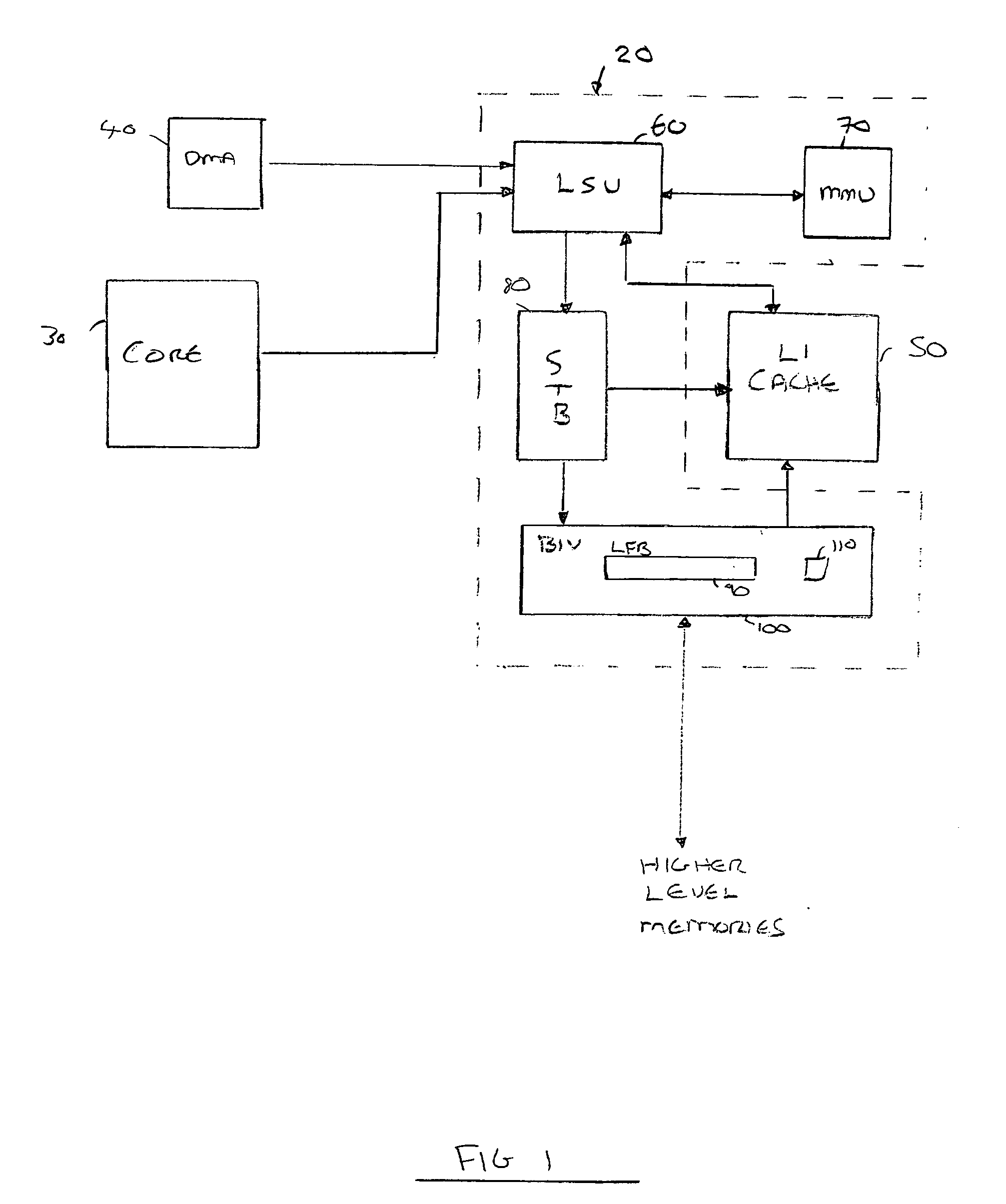

Cache controller

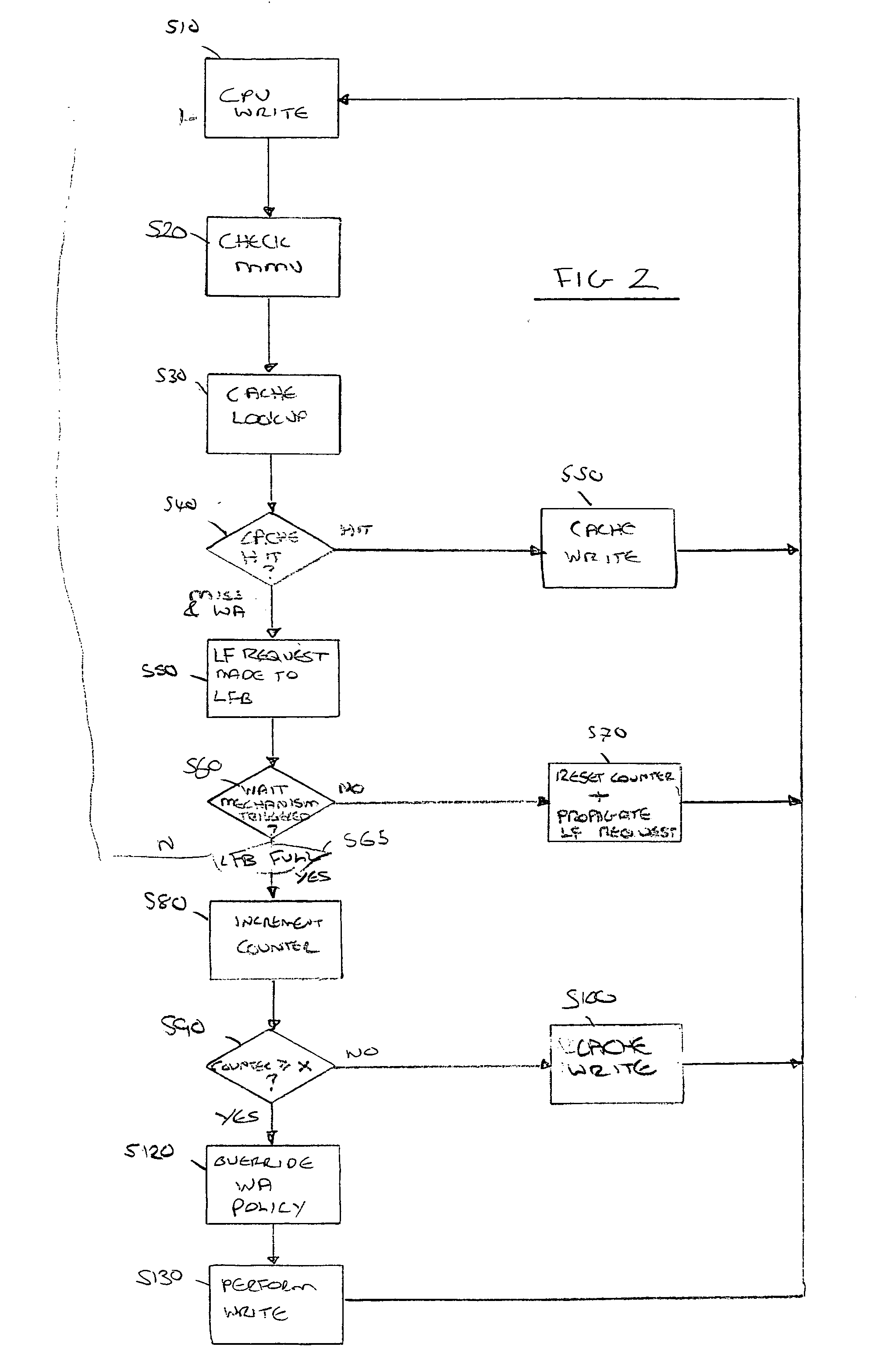

Inactive | US20070079070A1 | Adequate performance balance | Unlikely to result | Memory architecture accessing/allocation | Memory systems | Cache access | Sequential data

A cache controller and a method are provided. The cache controller comprises: request reception logic operable to receive a write request from a data processing apparatus to write a data item to memory; and cache access logic operable to determine whether a caching policy associated with the write request is write allocate, whether the write request would cause a cache miss to occur, and whether the write request is one of a number of write requests which together would cause greater than a predetermined number of sequential data items to be allocated in the cache and, if so, the cache access logic is further operable to override the caching policy associated with the write request to non-write allocate. In this way, in the event that the number of consecutive data items to be allocated within the cache exceeds the predetermined number, the cache access logic will consider it highly likely that the write requests are associated with a block transfer operation and, accordingly, will override the write allocate caching policy. The write request will proceed but without the write allocate caching policy being applied. Hence, the pollution of the cache with these sequential data items is reduced.

Owner:ARM LTD
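
The override rule can be approximated in software with a run-length counter over consecutive writes. In the sketch below, sequential "data items" are simplified to consecutive cache lines, and the class name, `max_sequential`, and `line_size` are illustrative assumptions rather than values from the abstract.

```python
class WriteAllocateFilter:
    """Decide per write whether a write-allocate policy should still be applied."""

    def __init__(self, max_sequential=4, line_size=64):
        self.max_sequential = max_sequential
        self.line_size = line_size
        self.last_line = None
        self.run_length = 0

    def should_allocate(self, address, write_allocate, is_miss):
        line = address // self.line_size
        if self.last_line is not None and line == self.last_line + 1:
            self.run_length += 1      # the sequential run continues
        elif line != self.last_line:
            self.run_length = 1       # a new run starts
        self.last_line = line
        if not (write_allocate and is_miss):
            return False              # nothing to allocate for this request
        # Override to non-write-allocate once the run is longer than the limit.
        return self.run_length <= self.max_sequential
```

A long `memcpy`-style burst therefore allocates only its first few lines, while isolated writes keep their normal write-allocate behavior.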

Time and power reduction in cache accesses

Inactive | US20070028051A1 | Reduce the number of steps | Reduce width | Memory architecture accessing/allocation | Energy efficient ICT | Cache access | Data buffer

Owner:ARM LTD +1

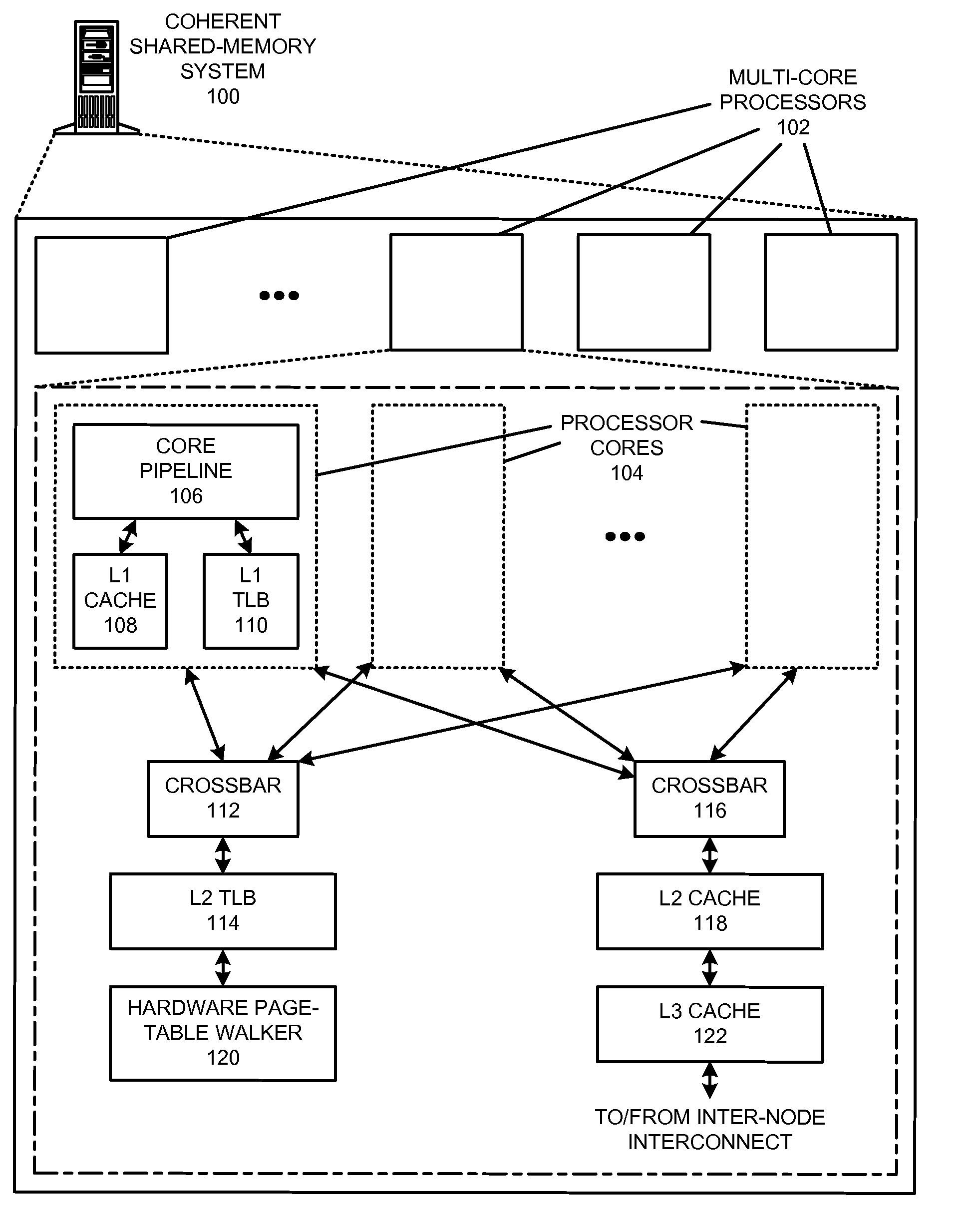

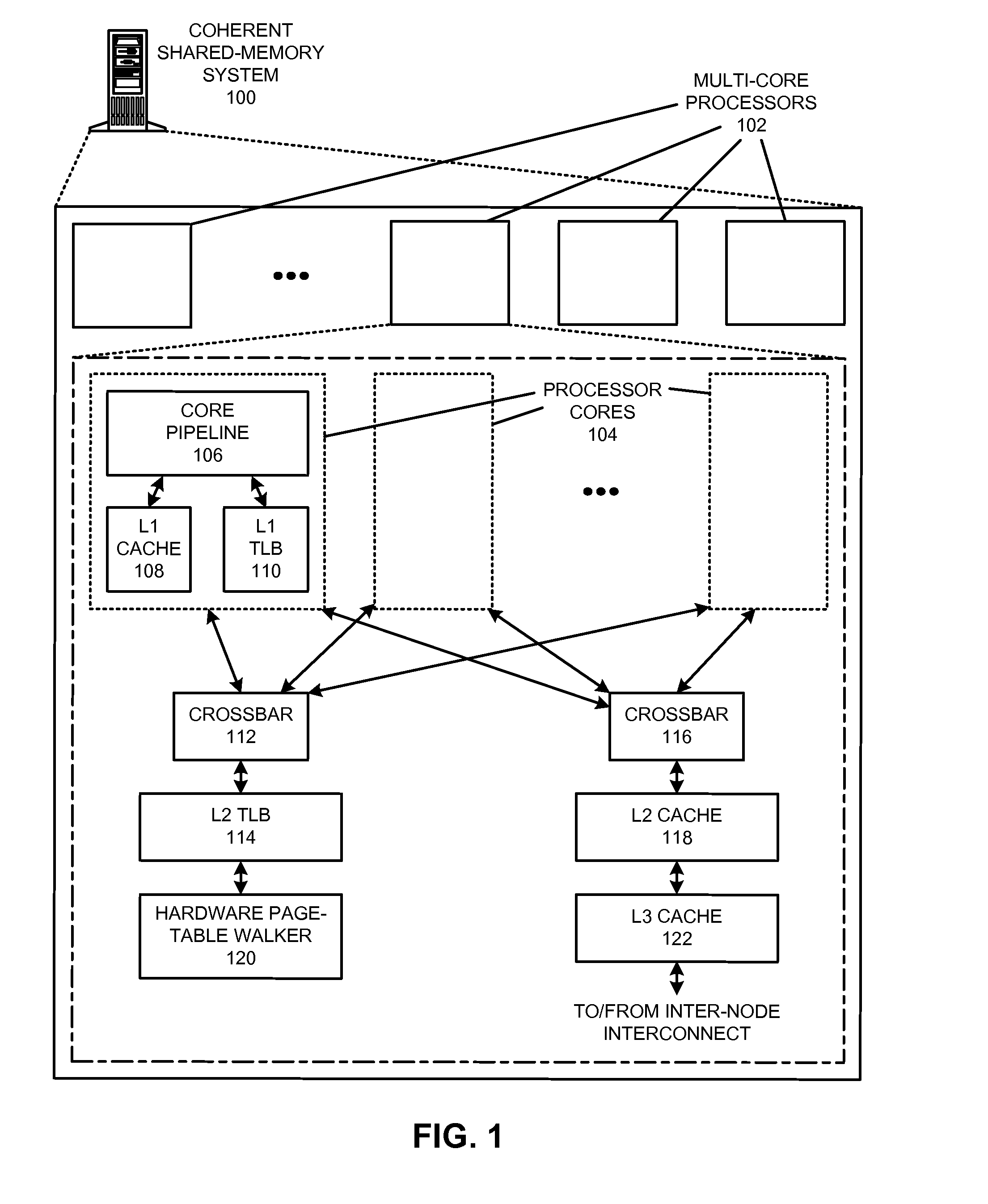

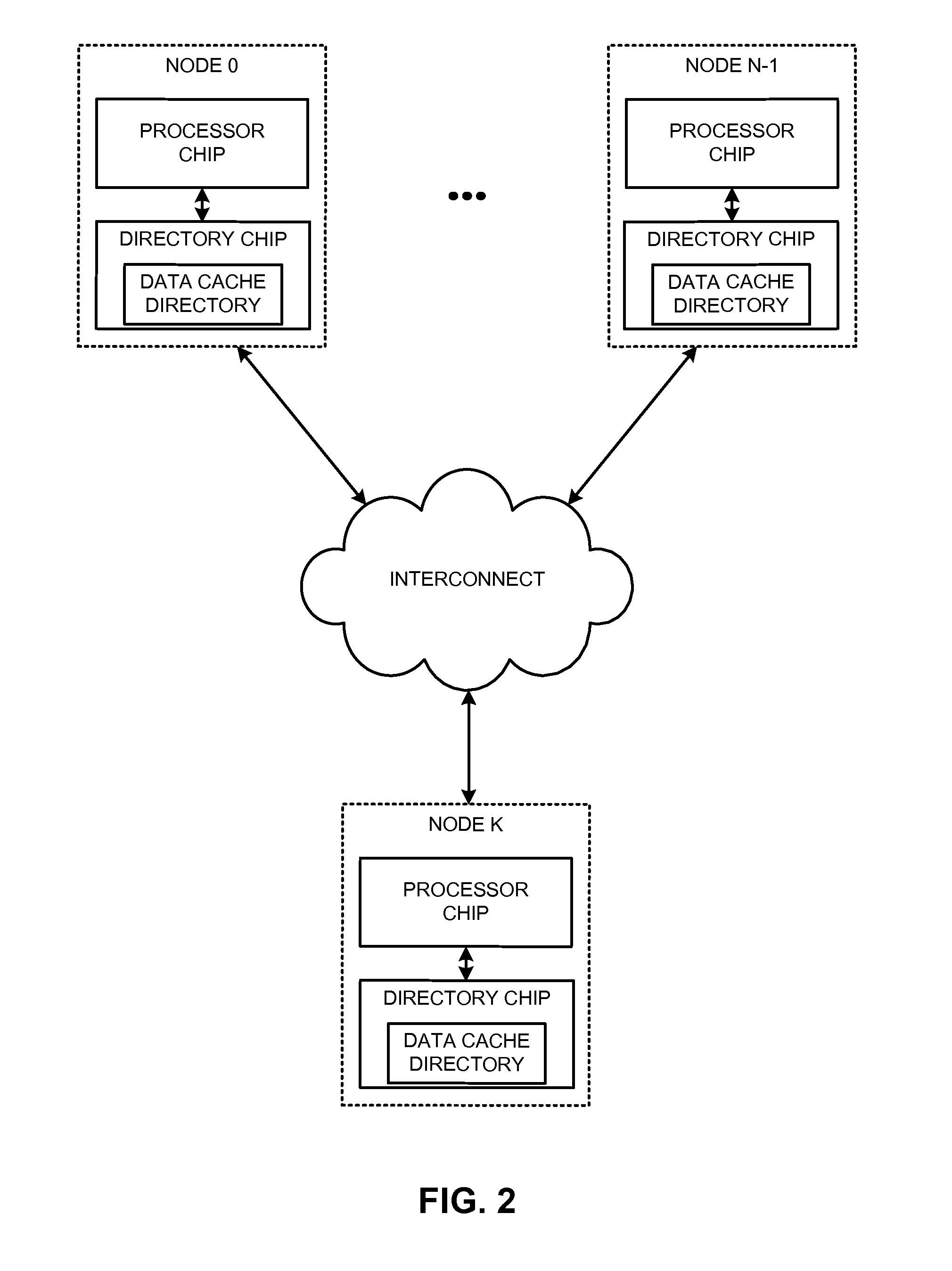

Using a shared last-level TLB to reduce address-translation latency

Active | US20140052917A1 | Increase the number of | Easy to identify | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data coherence | Cache access

The disclosed embodiments provide techniques for reducing address-translation latency and the serialization latency of combined TLB and data cache misses in a coherent shared-memory system. For instance, the last-level TLB structures of two or more multiprocessor nodes can be configured to act together as either a distributed shared last-level TLB or a directory-based shared last-level TLB. Such TLB-sharing techniques increase the total amount of useful translations that are cached by the system, thereby reducing the number of page-table walks and improving performance. Furthermore, a coherent shared-memory system with a shared last-level TLB can be further configured to fuse TLB and cache misses such that some of the latency of data coherence operations is overlapped with address translation and data cache access latencies, thereby further improving the performance of memory operations.

Owner:ORACLE INT CORP

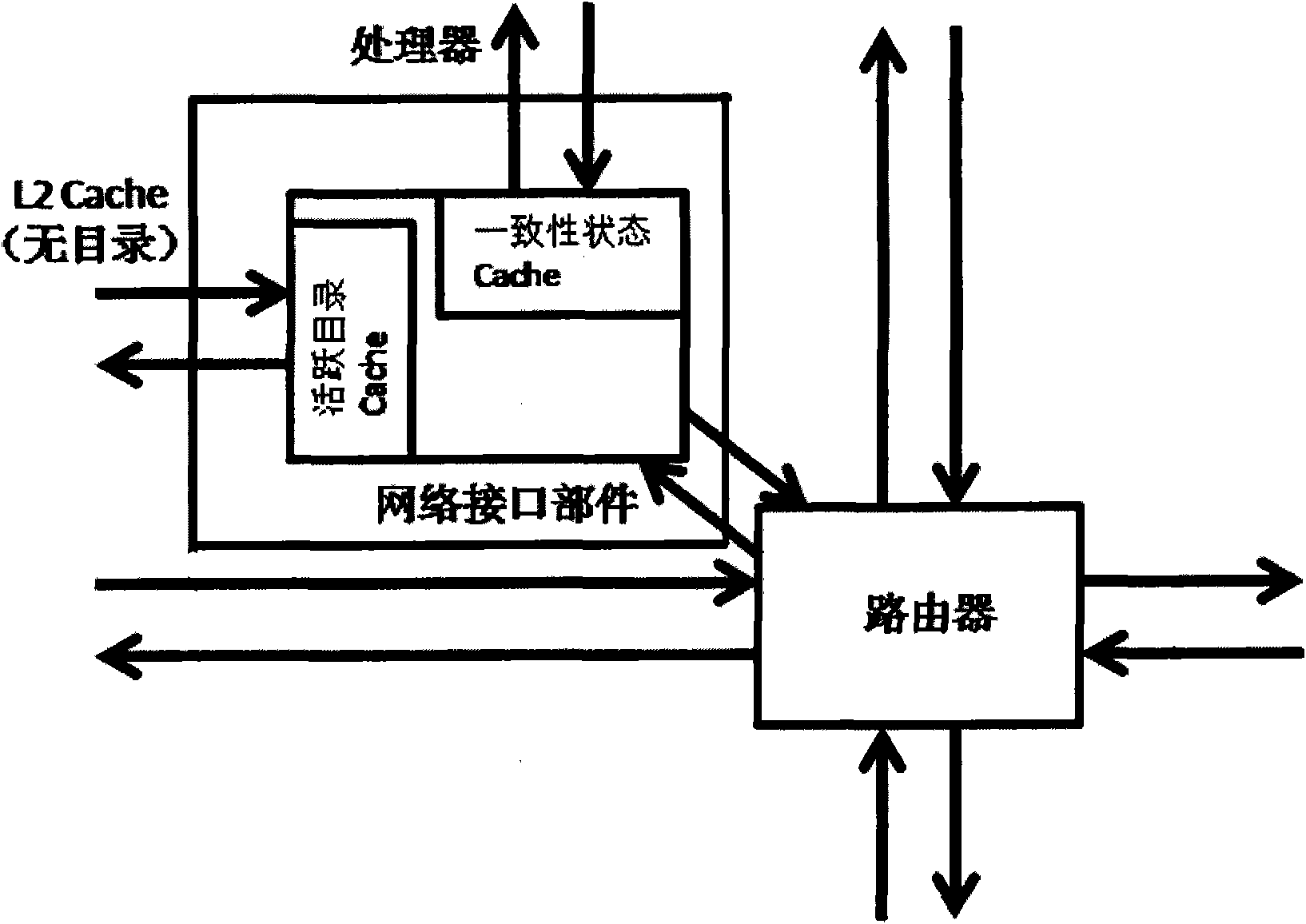

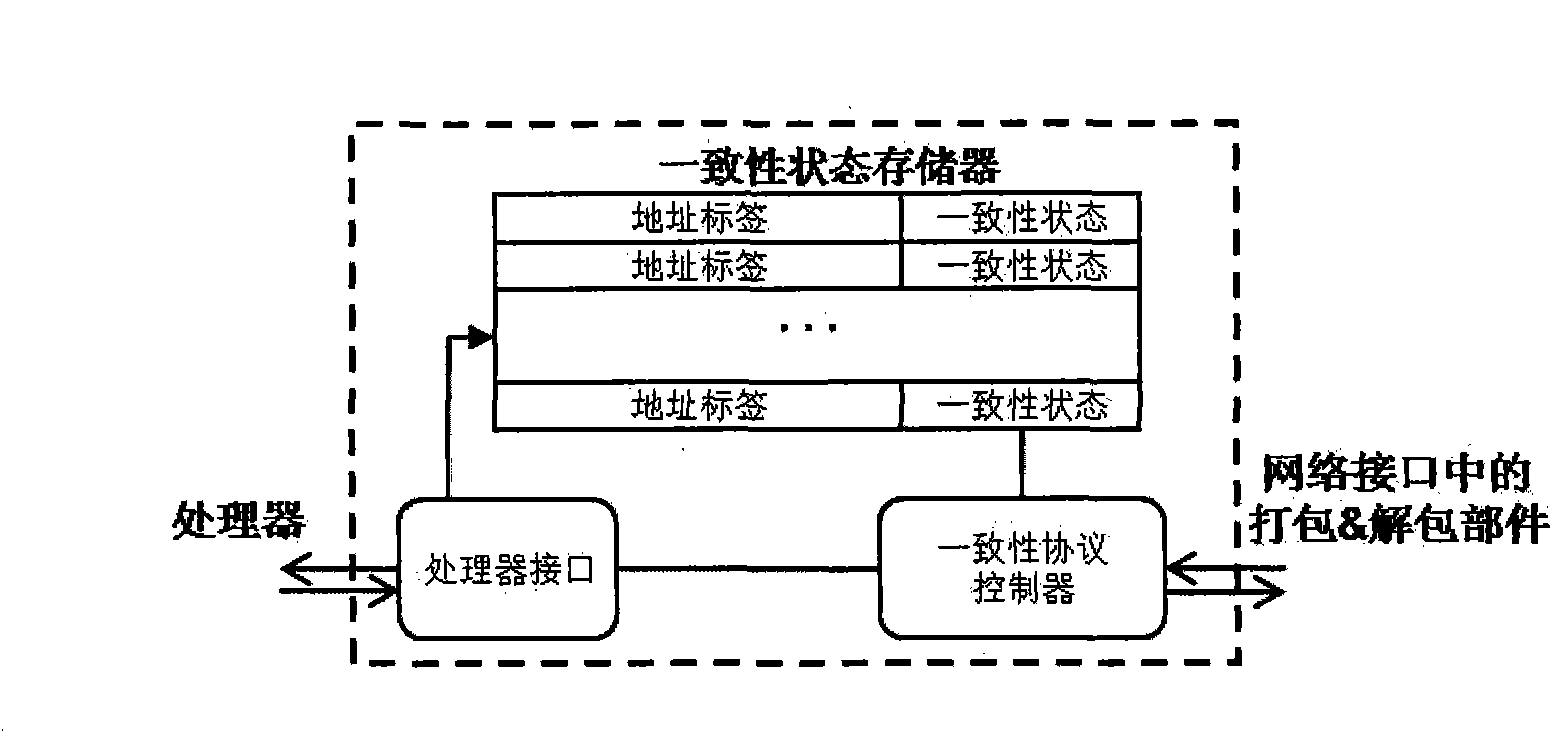

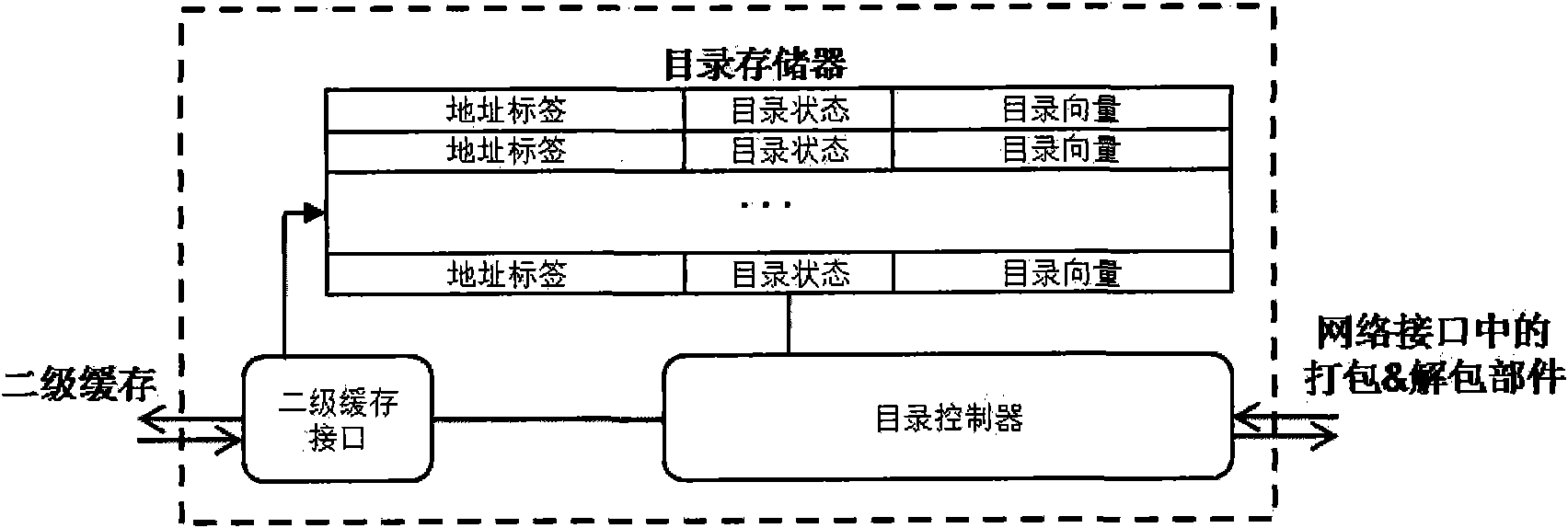

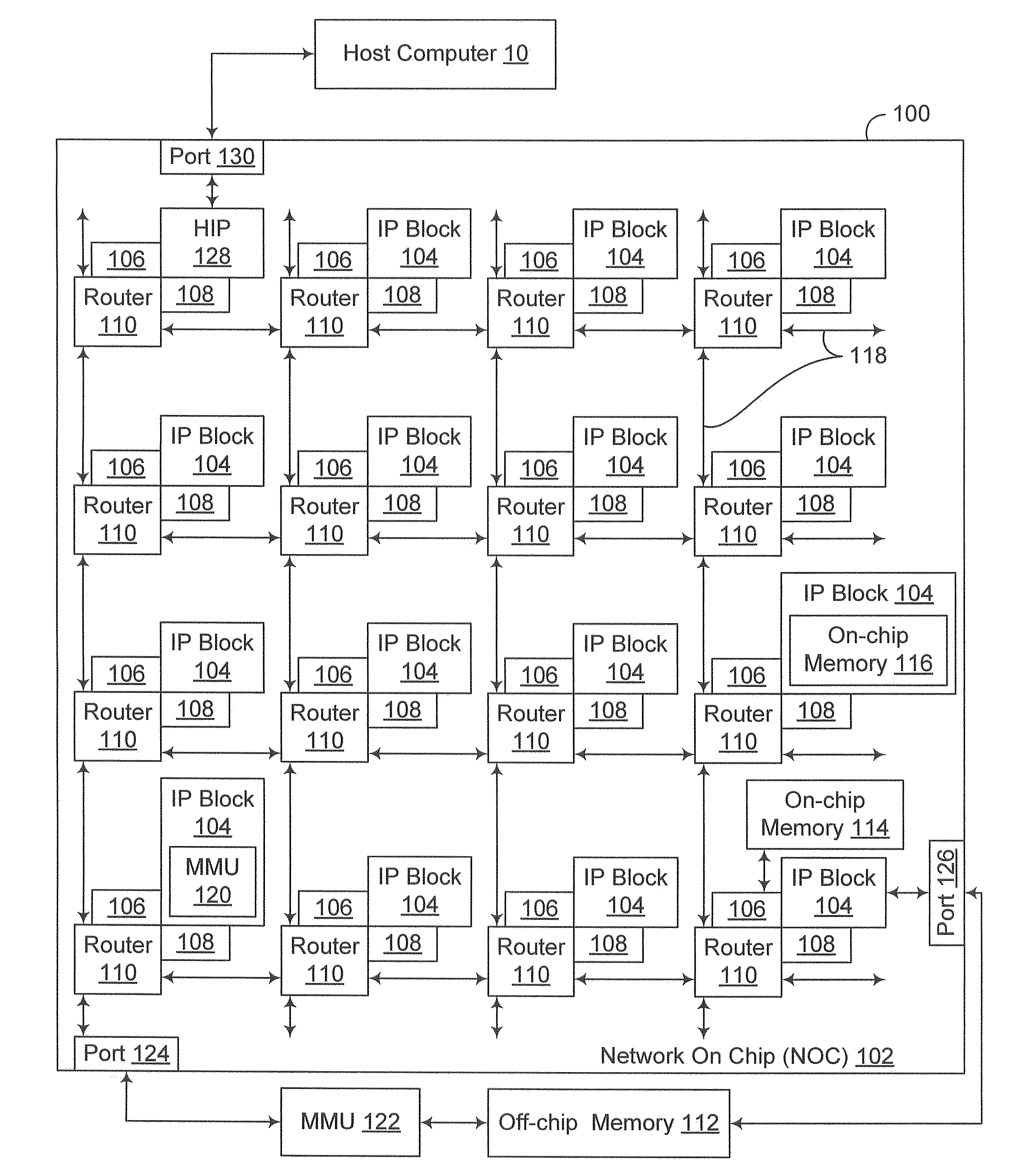

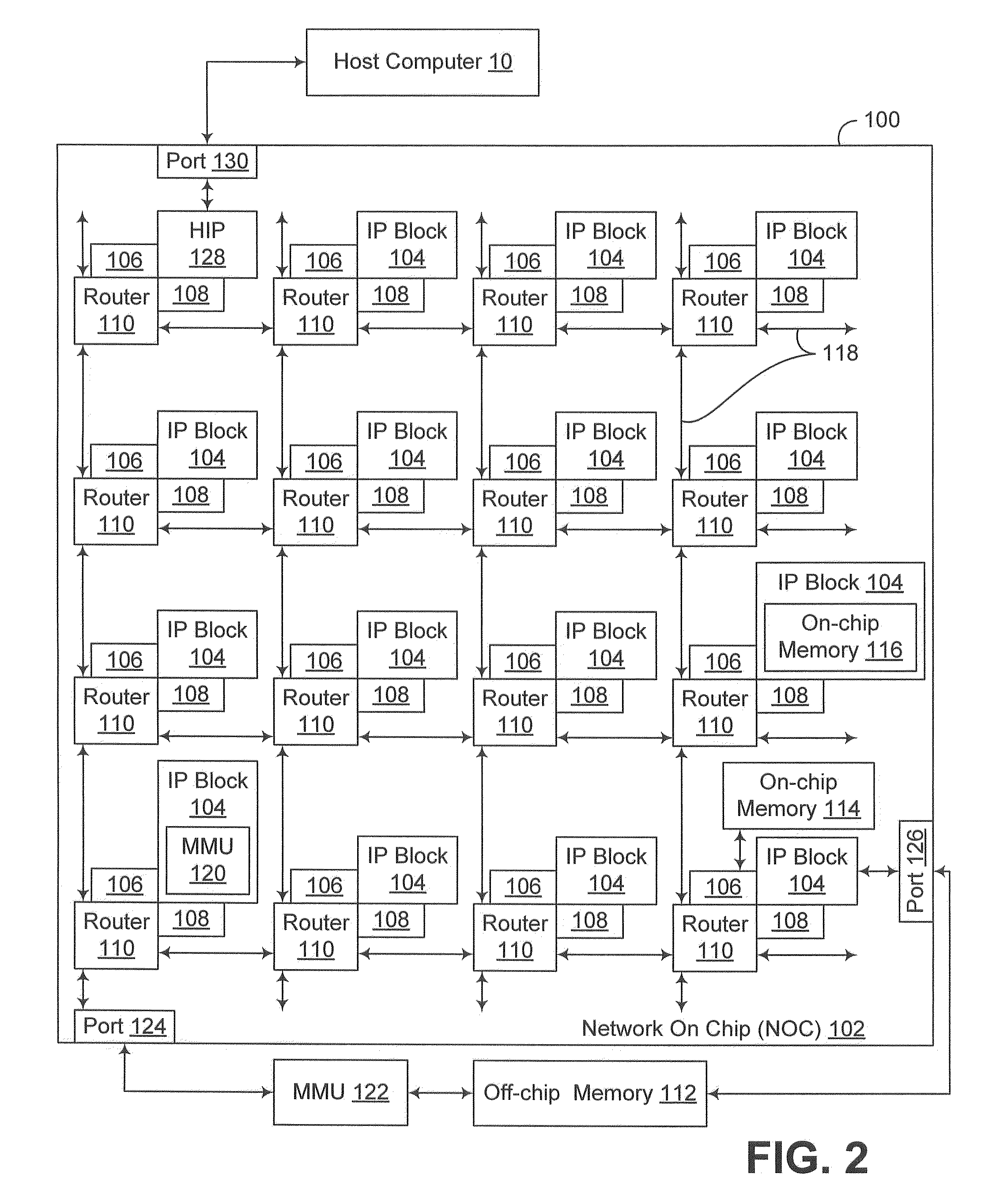

On-chip network system supporting cache coherence and data request method

Inactive | CN101958834A | Simplify the design process | Simplify the verification process | Digital computer details | Data switching networks | Cache access | Structure of Management Information

The invention discloses an on-chip network system supporting cache coherence. The network system comprises a network interface part and a router, wherein the network interface part is connected with the router, a multi-core processor and a second level cache; a consistent state cache connected with the multi-core processor is additionally arranged in the network interface part and is used for storing and maintaining the consistent state of a data block in a first level cache of the multi-core processor; and an active directory cache connected with the second level cache is also additionally arranged in the network interface part and is used for caching and maintaining the directory information of the data block usually accessed by the first level cache. Coherence maintenance work is separated from the work of a processor, directory maintenance work is separated from the work of the second level cache, and the directory structure in the second level cache is eliminated, so that the design and the verification process of the multi-core processor are simplified, the storage cost of a chip is reduced, and the performance of the multi-core processor is improved. The invention also discloses a data request method of the system.

Owner:TSINGHUA UNIV

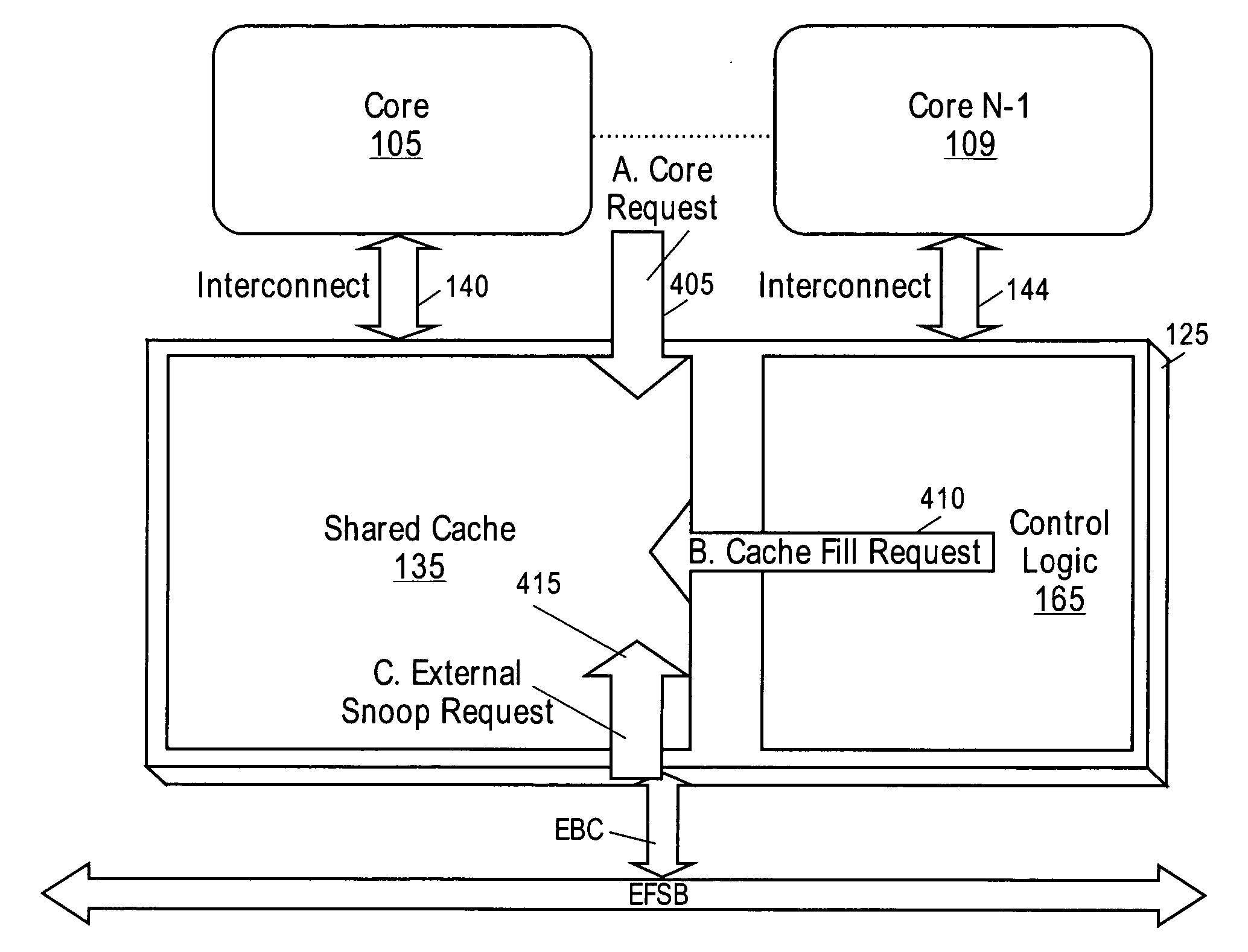

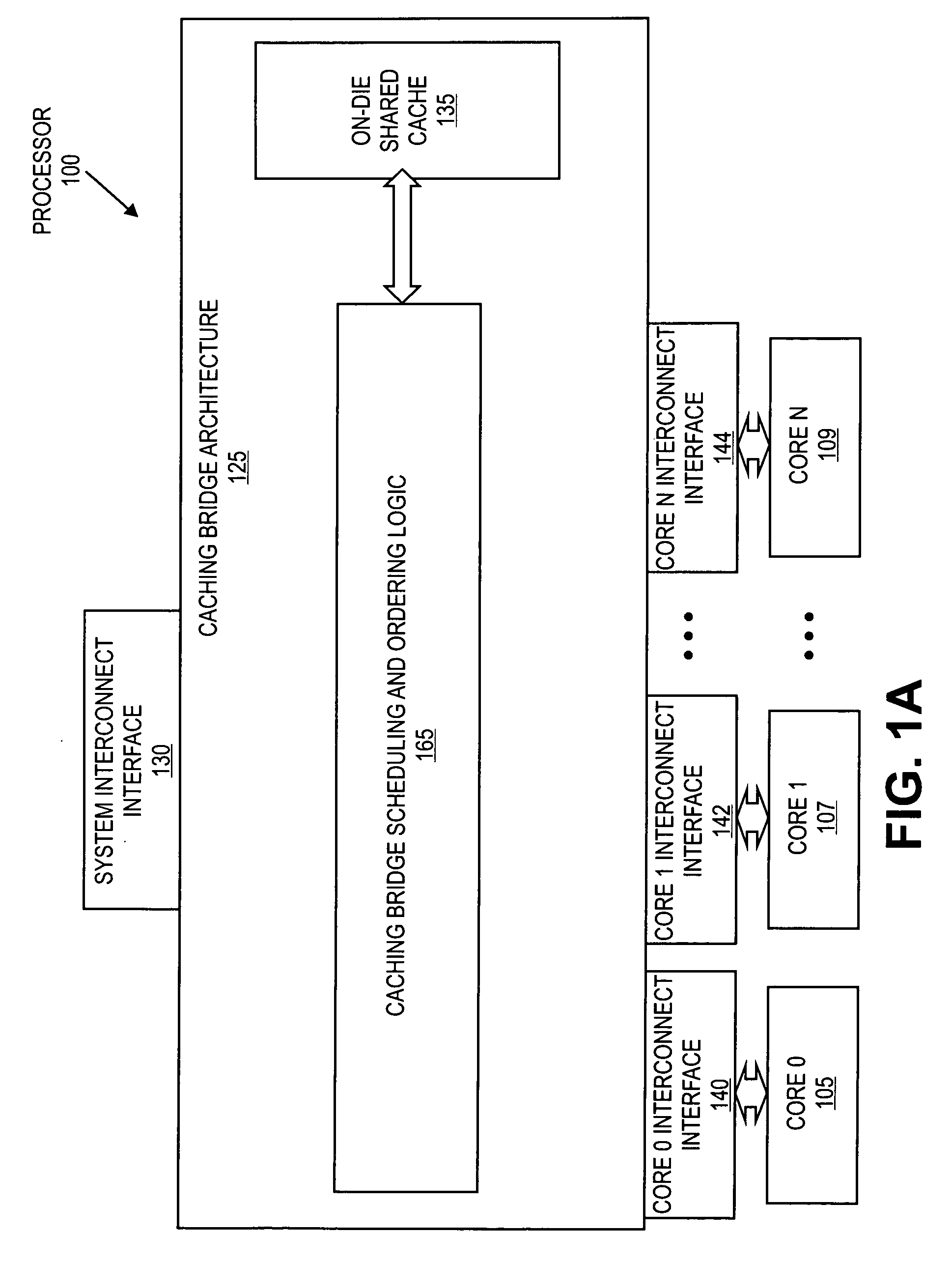

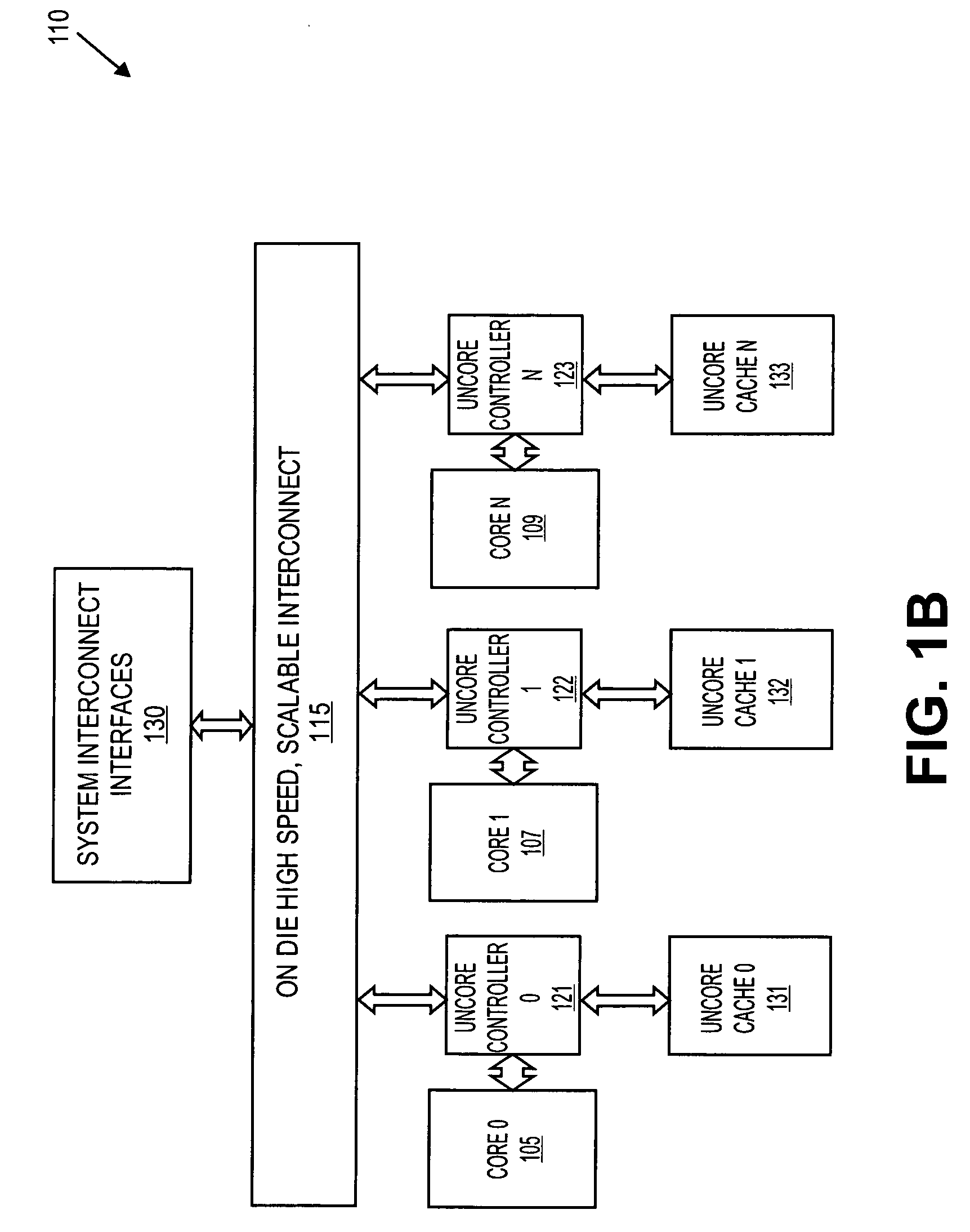

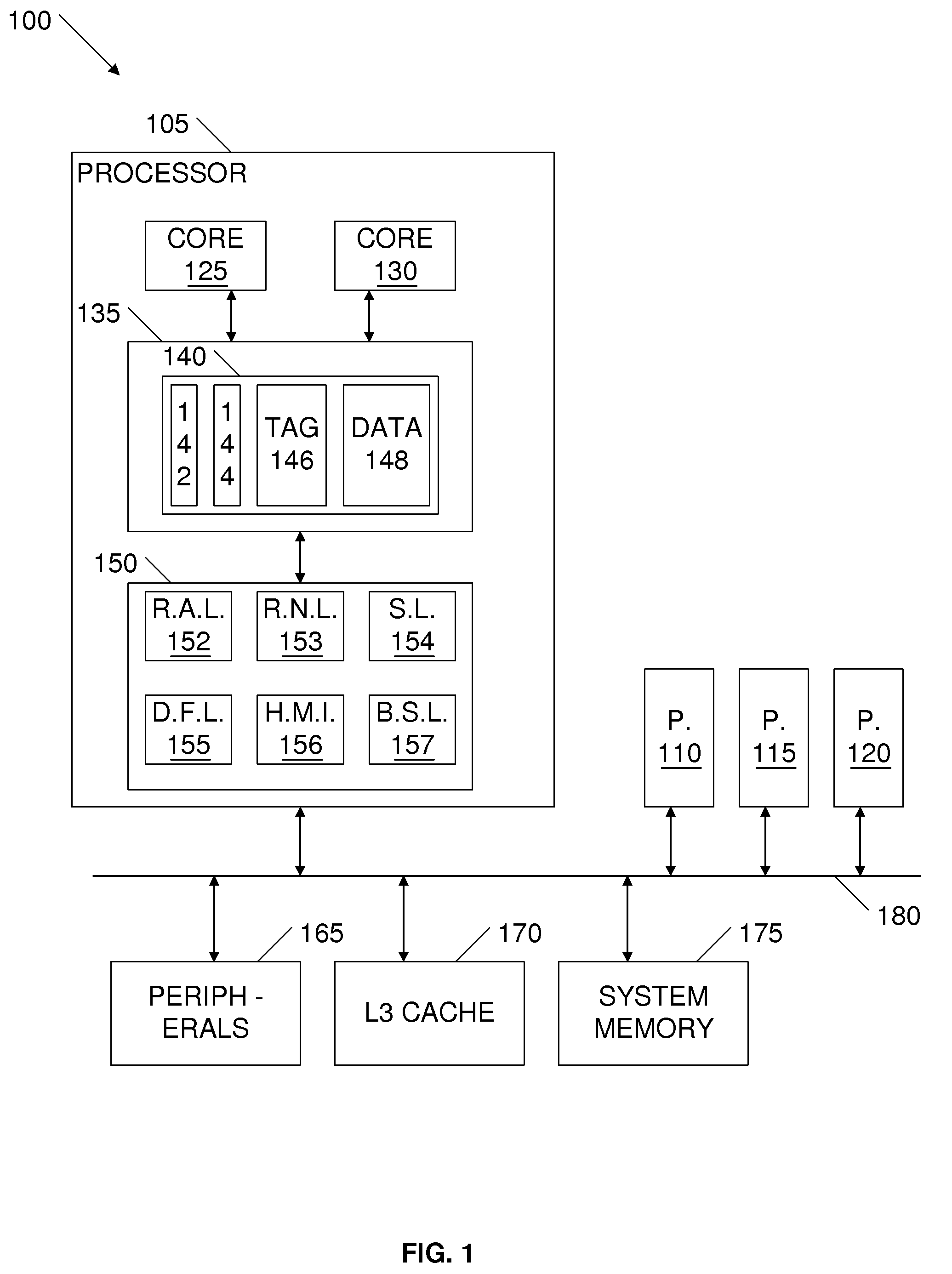

Cache coherency sequencing implementation and adaptive LLC access priority control for CMP

Inactive | US20070005909A1 | Memory architecture accessing/allocation | Memory systems | Cache access | Parallel computing

A method and apparatus for cache coherency sequencing implementation and adaptive LLC access priority control are disclosed. One embodiment provides mechanisms to resolve last level cache access priority among multiple internal CMP cores, internal snoops and external snoops. Another embodiment provides mechanisms for implementing cache coherency in a multi-core CMP system.

Owner:INTEL CORP

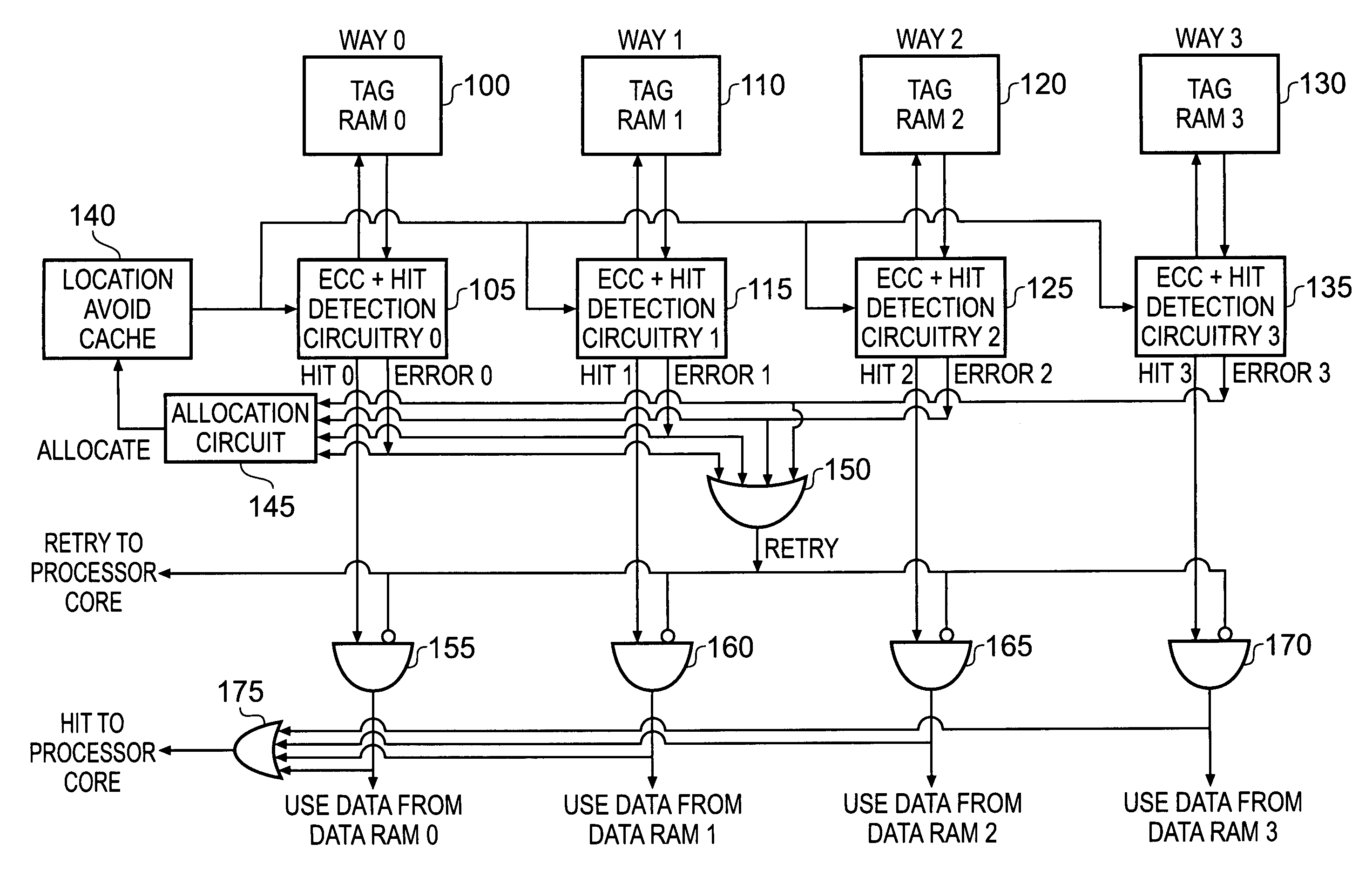

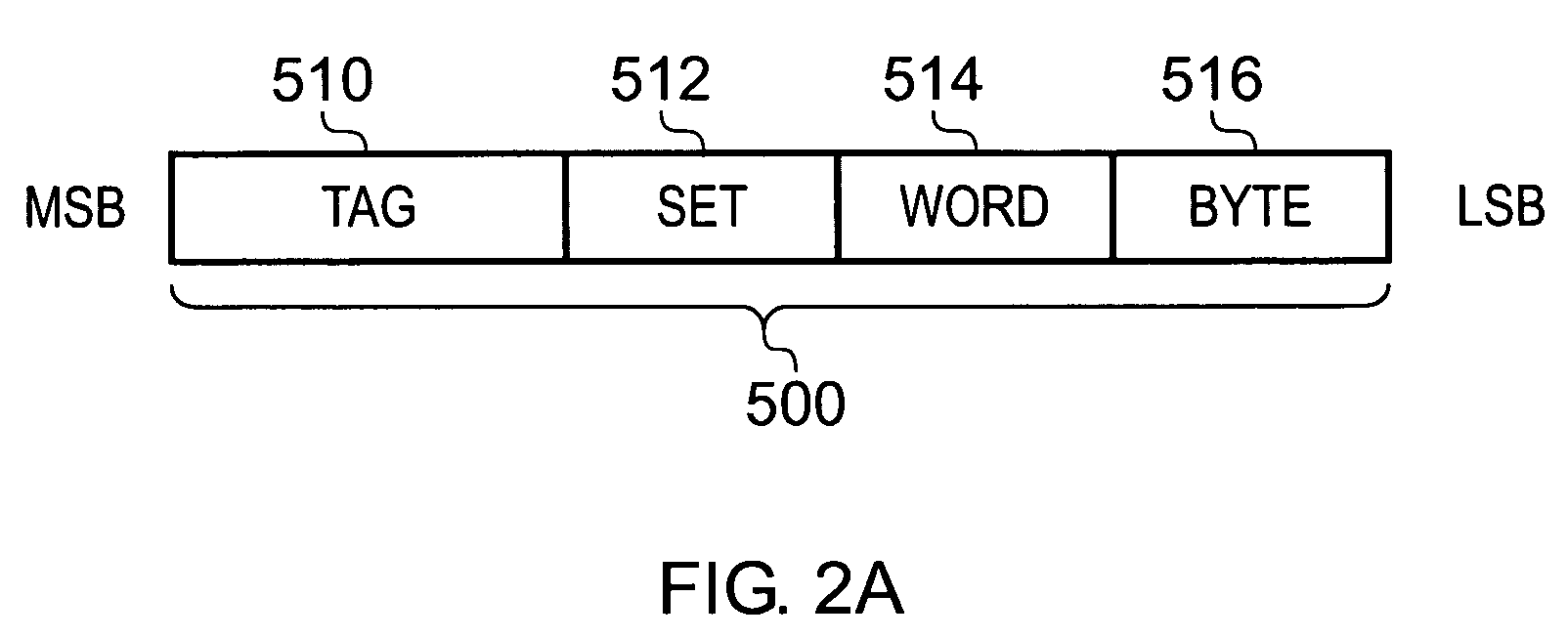

Handling of hard errors in a cache of a data processing apparatus

Active | US20090164727A1 | The process is simple and effective | Optimization mechanism | Error detection/correction | Digital storage | Cache access | Parallel computing

A data processing apparatus and method are provided for handling hard errors occurring in a cache of the data processing apparatus. The cache storage comprises data storage having a plurality of cache lines for storing data values, and address storage having a plurality of entries, with each entry identifying for an associated cache line an address indication value, and each entry having associated error data. In response to an access request, a lookup procedure is performed to determine with reference to the address indication value held in at least one entry of the address storage whether a hit condition exists in one of the cache lines. Further, error detection circuitry determines with reference to the error data associated with the at least one entry of the address storage whether an error condition exists for that entry. Additionally, cache location avoid storage is provided having at least one record, with each record being used to store a cache line identifier identifying a specific cache line. On detection of the error condition, one of the records in the cache location avoid storage is allocated to store the cache line identifier for the specific cache line associated with the entry for which the error condition was detected. Further, the error detection circuitry causes a clean and invalidate operation to be performed in respect of the specific cache line, and the access request is then re-performed. The cache access circuitry is arranged to exclude any specific cache line identified in the cache location avoid storage from the lookup procedure. This provides a simple and effective mechanism for handling hard errors that manifest themselves within a cache during use, so as to ensure correct operation of the cache in the presence of such hard errors. Further, the technique can be employed not only in association with write through caches but also write back caches, thus providing a very flexible solution.

Owner:ARM LTD

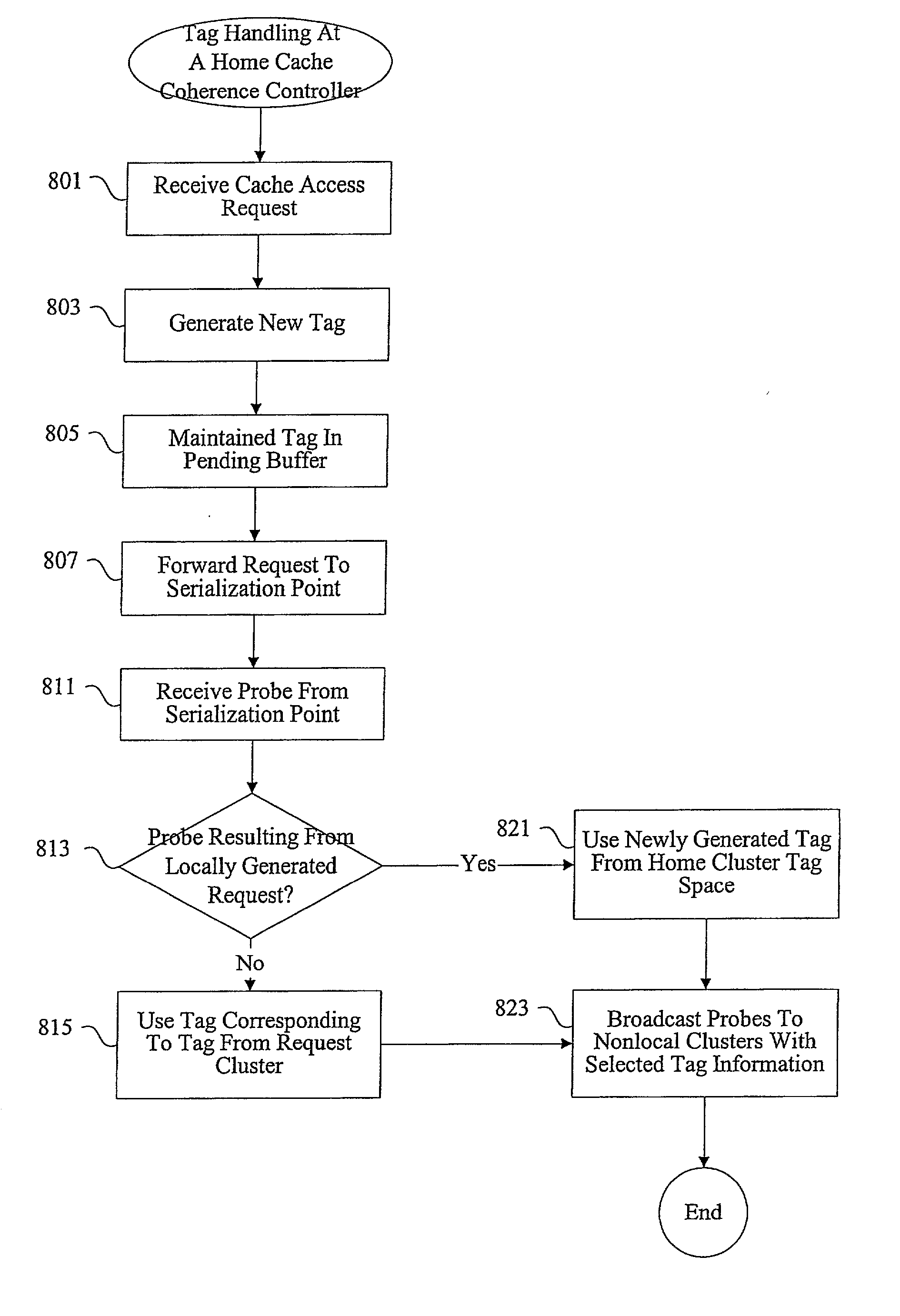

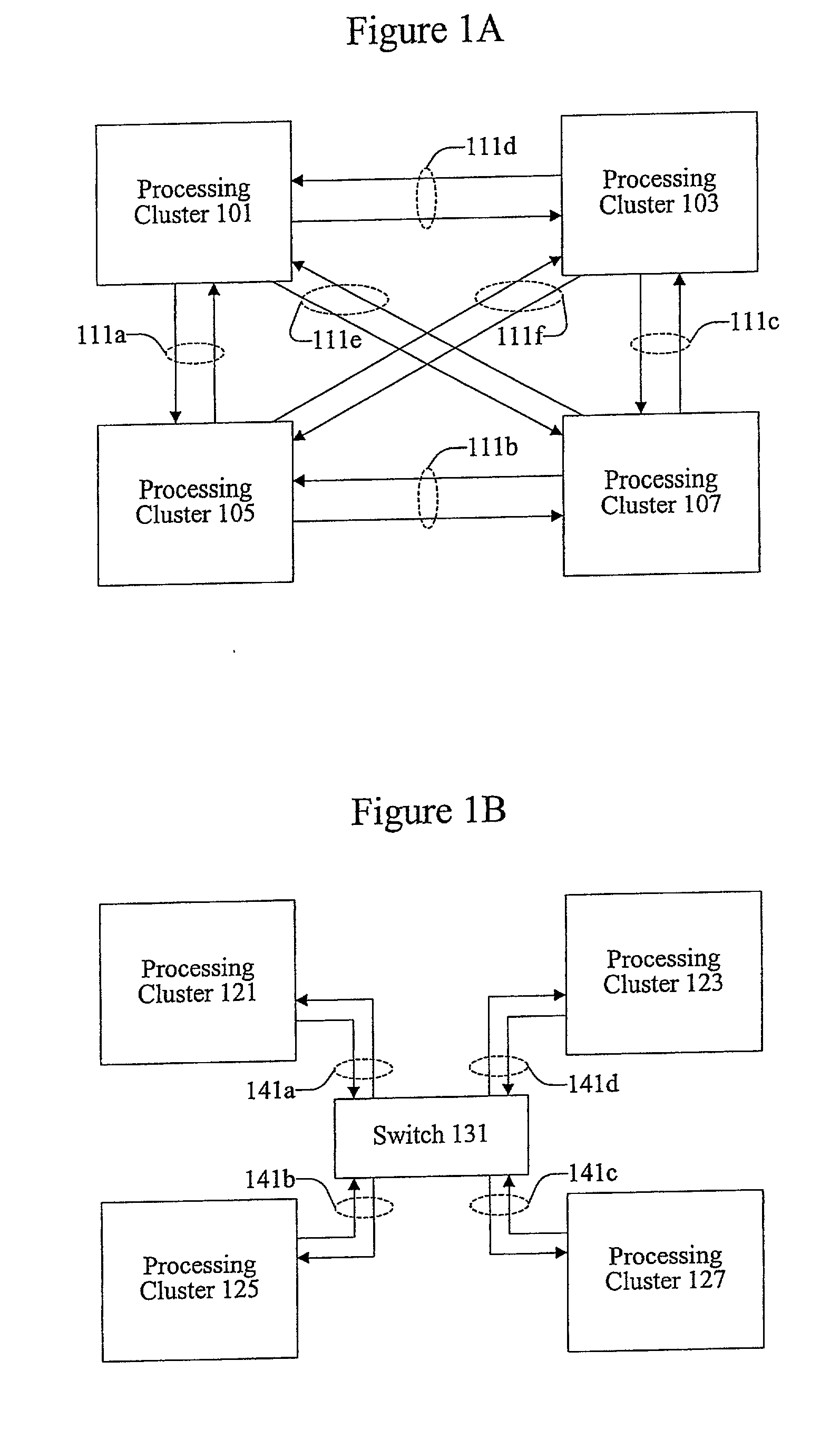

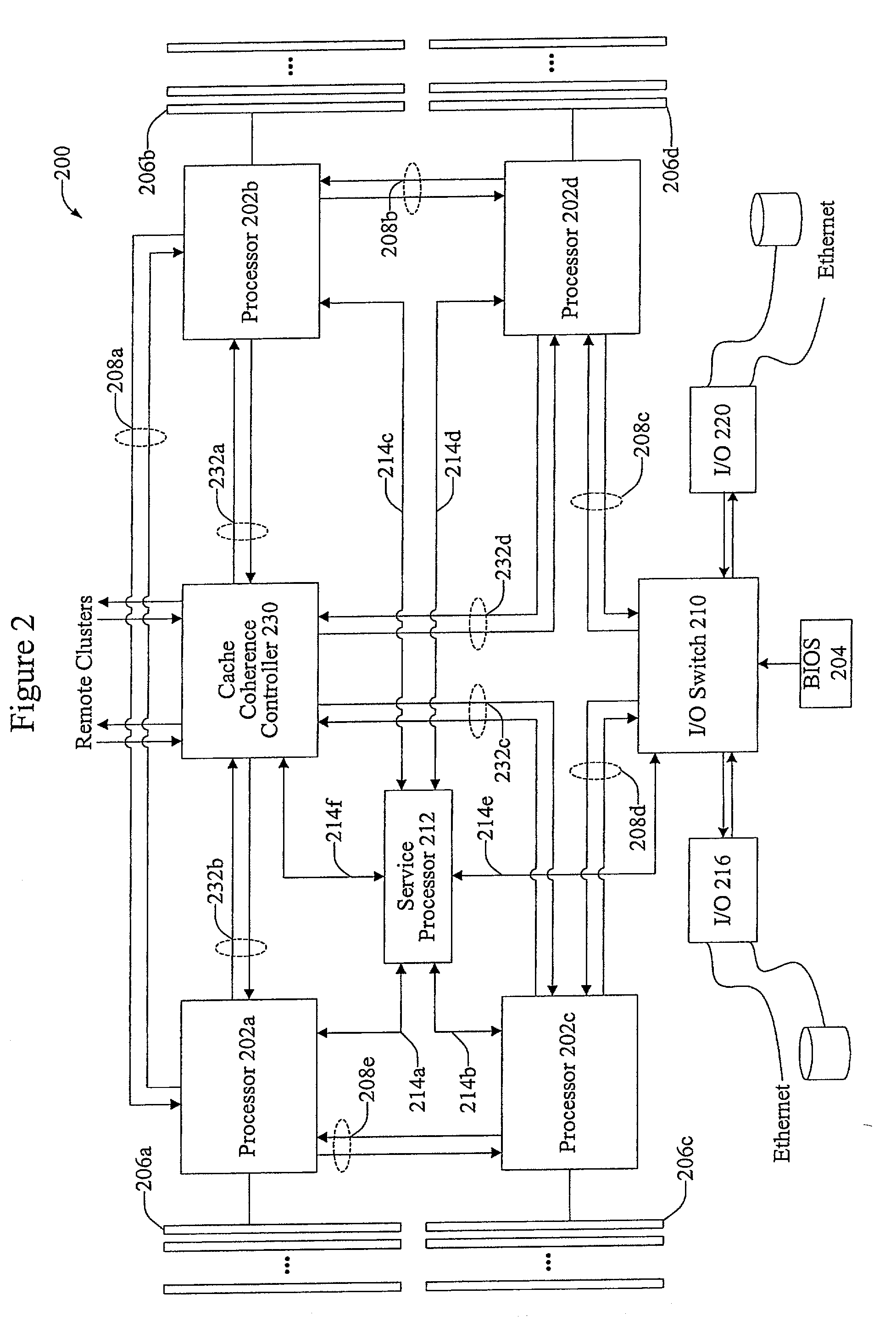

Methods and apparatus for responding to a request cluster

Active | US20030212741A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache access | Cluster systems

According to the present invention, methods and apparatus are provided for increasing the efficiency of data access in a multiple processor, multiple cluster system. A home cluster of processors receives a cache access request from a request cluster. The home cluster includes mechanisms for instructing probed remote clusters to respond to the request cluster instead of to the home cluster. The home cluster can also include mechanisms for reducing the number of probes sent to remote clusters. Techniques are also included for providing the requesting cluster with information to determine the number of responses to be transmitted to the requesting cluster as a result of the reduction in the number of probes sent at the home cluster.

Owner:SANMINA-SCI CORPORATION

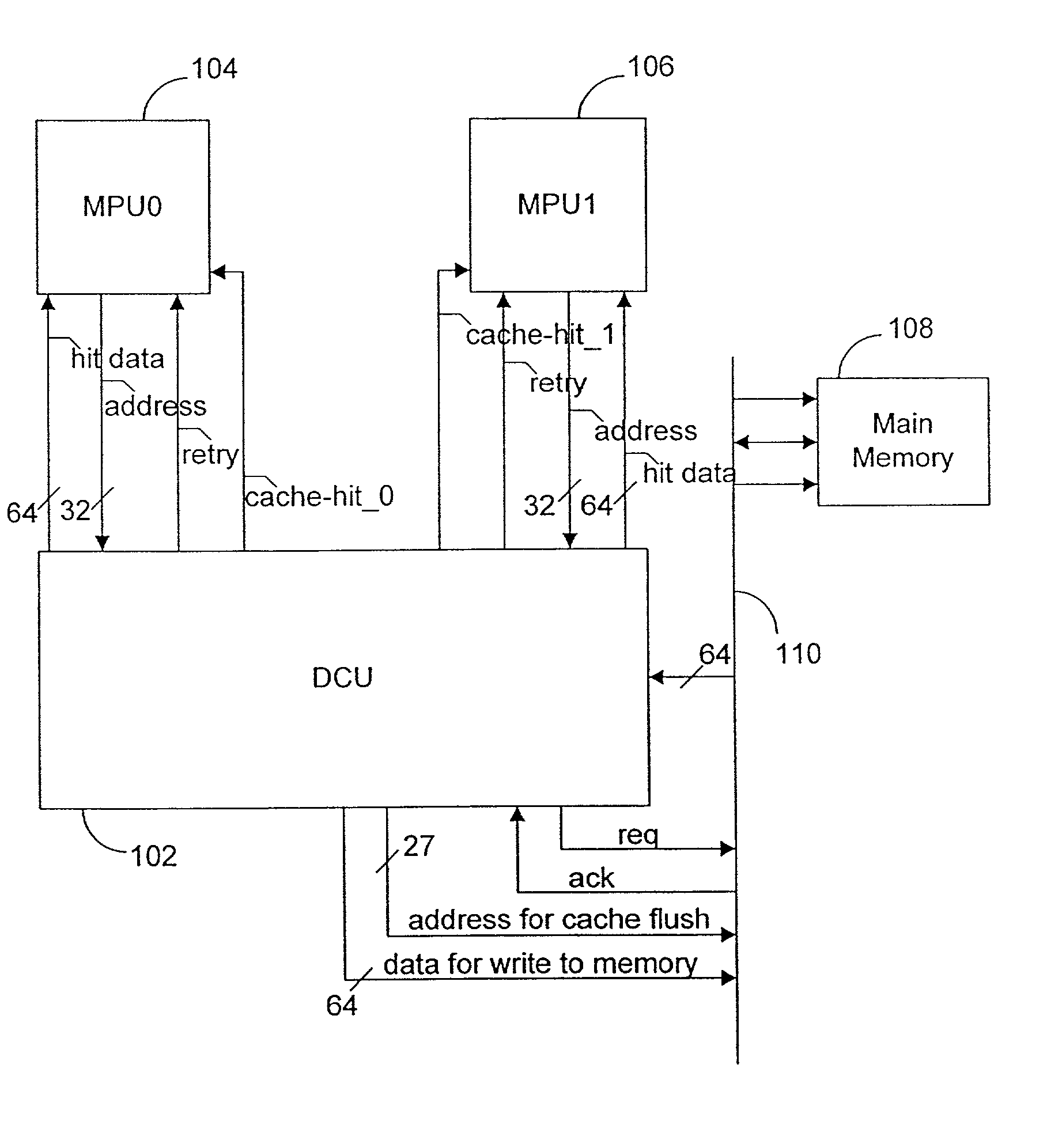

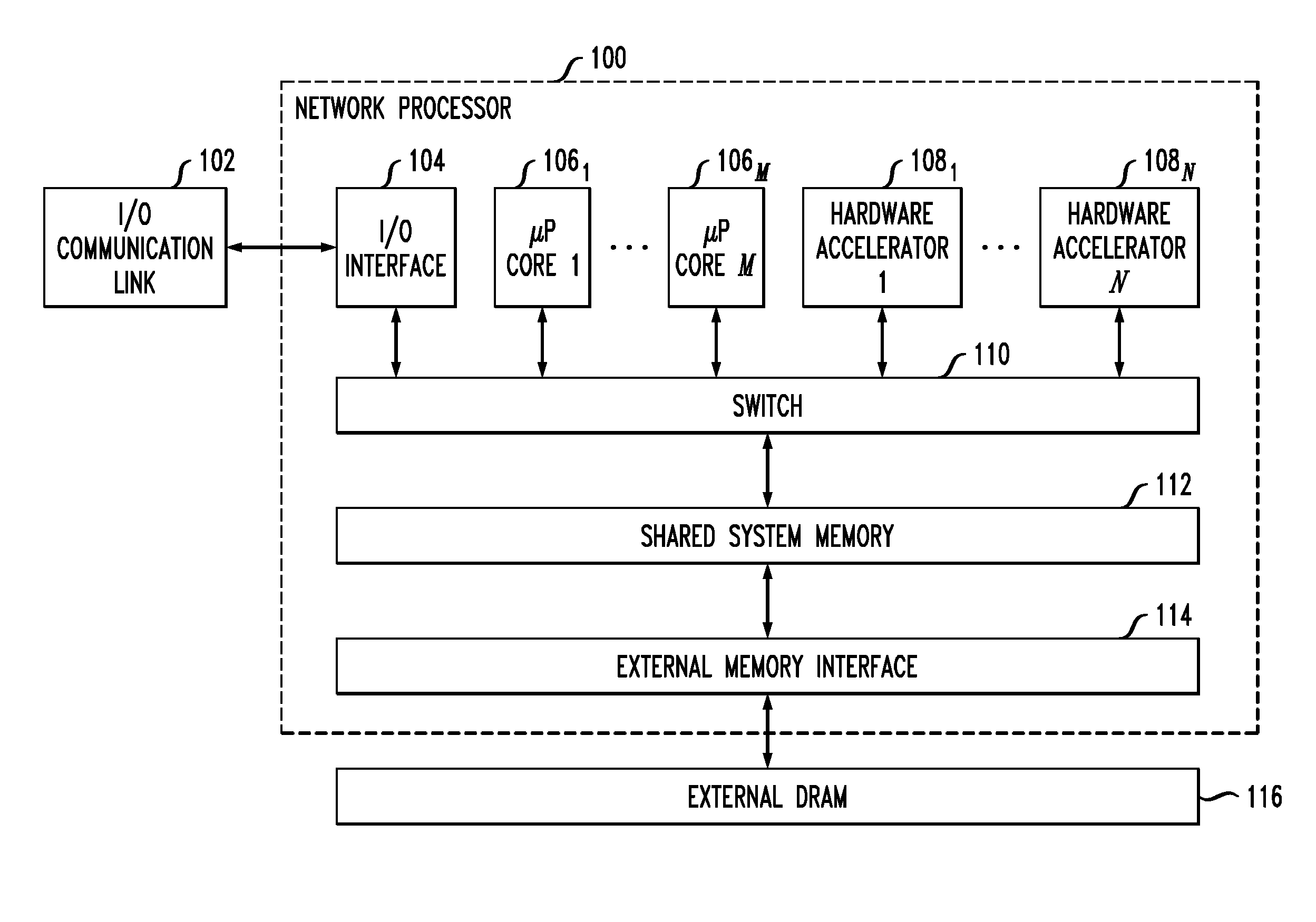

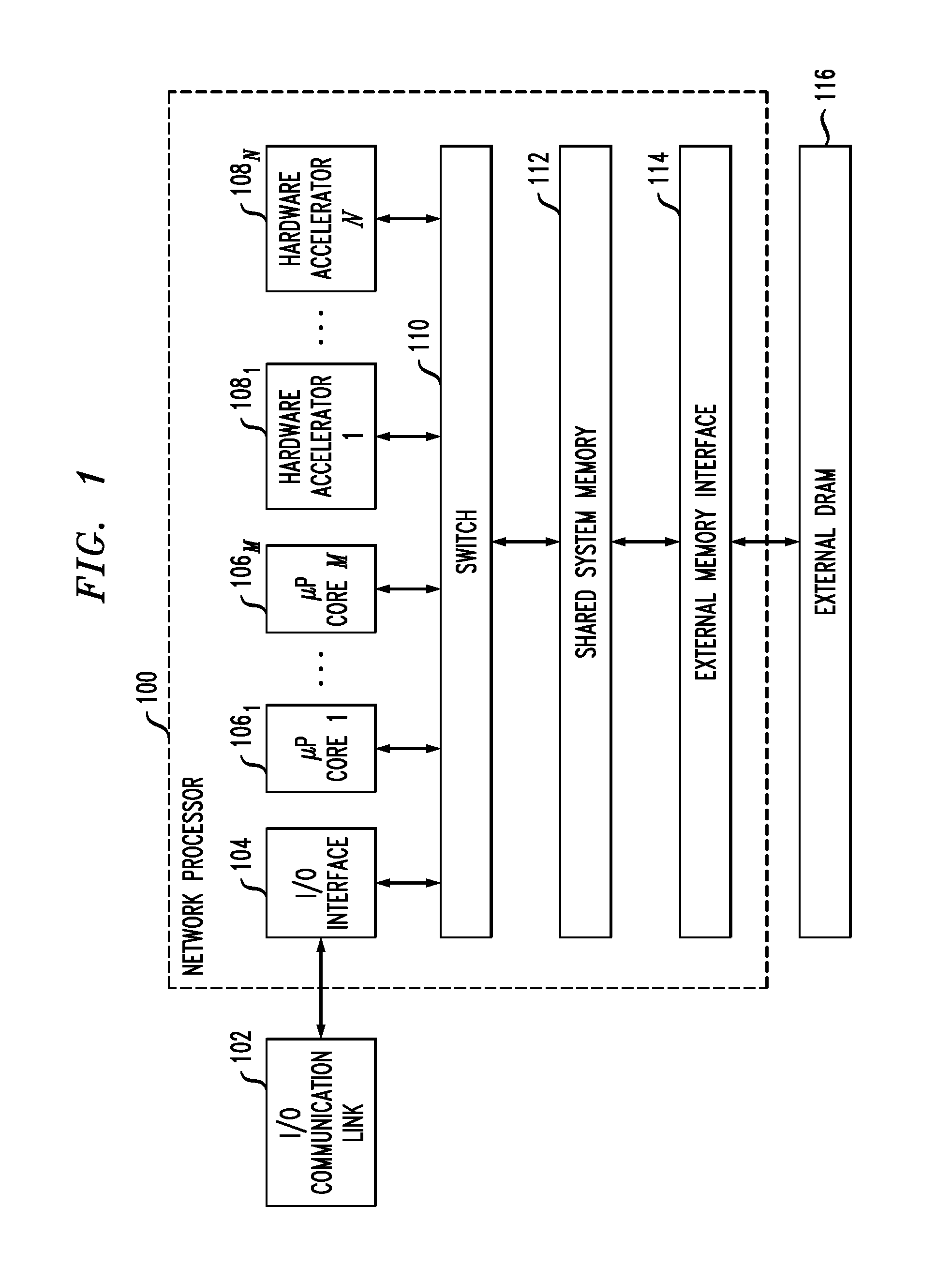

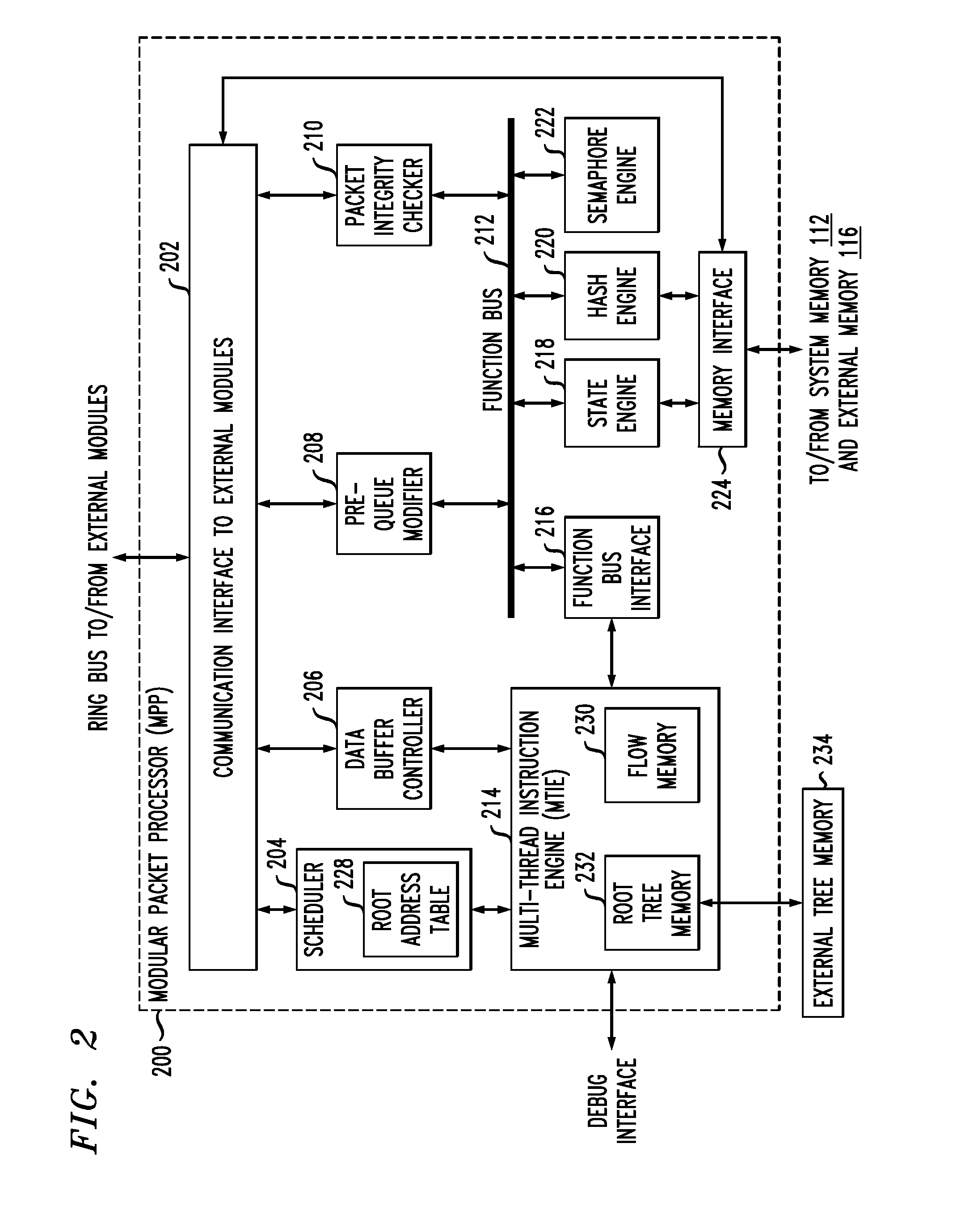

Concurrent, coherent cache access for multiple threads in a multi-core, multi-thread network processor

Inactive | US20110225372A1 | Well formed | Memory addressing/allocation/relocation | Digital computer details | Data pack | Computer architecture

Described embodiments provide a packet classifier of a network processor having a plurality of processing modules. A scheduler generates a thread of contexts for each task generated by the network processor corresponding to each received packet. The thread corresponds to an order of instructions applied to the corresponding packet. A multi-thread instruction engine processes the threads of instructions. A state engine operates on instructions received from the multi-thread instruction engine, the instructions including a cache access request to a local cache of the state engine. A cache line entry manager of the state engine translates between a logical index value of data corresponding to the cache access request and a physical address of data stored in the local cache. The cache line entry manager manages data coherency of the local cache and allows one or more concurrent cache access requests to a given cache data line for non-overlapping data units.

Owner:INTEL CORP

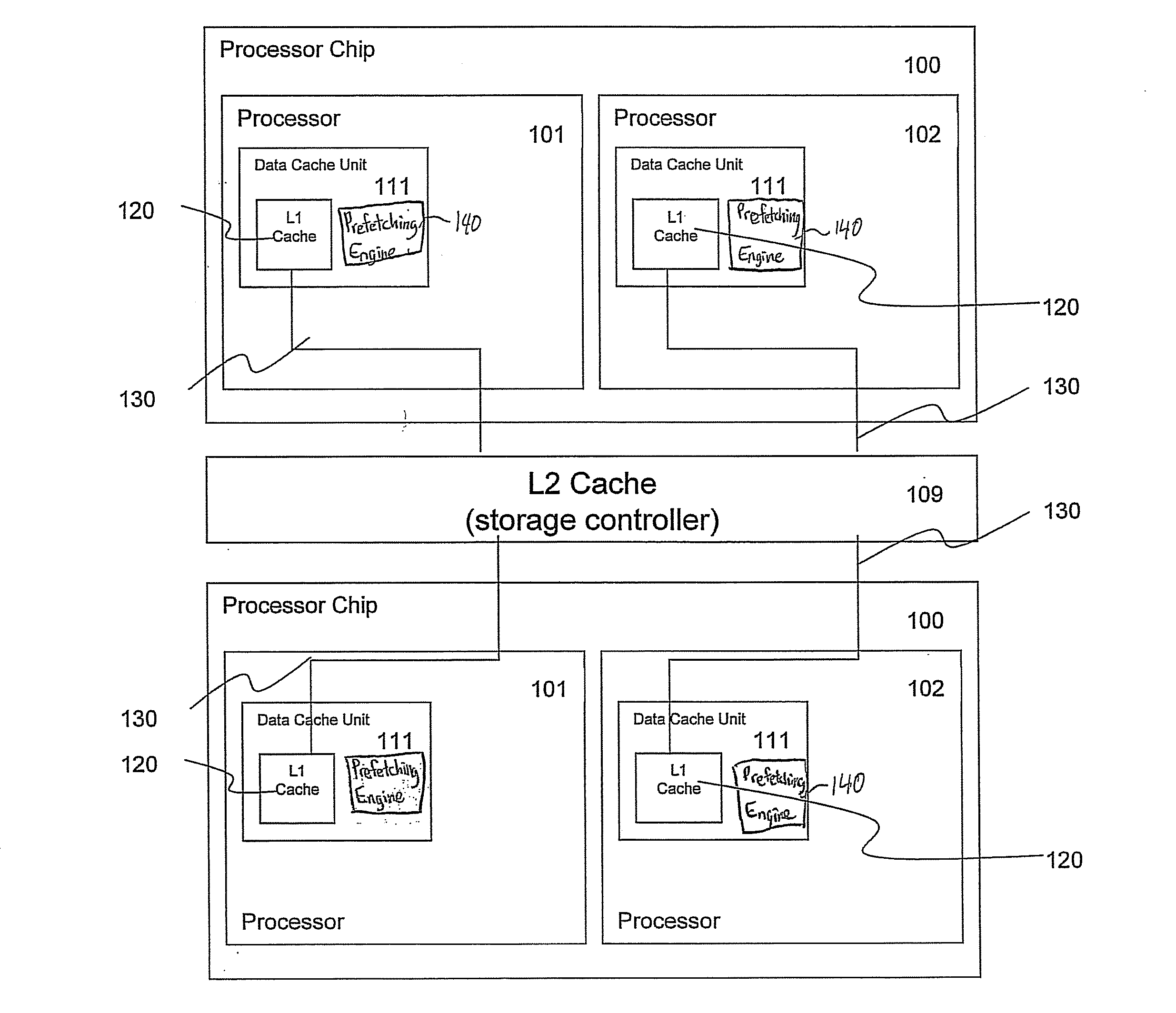

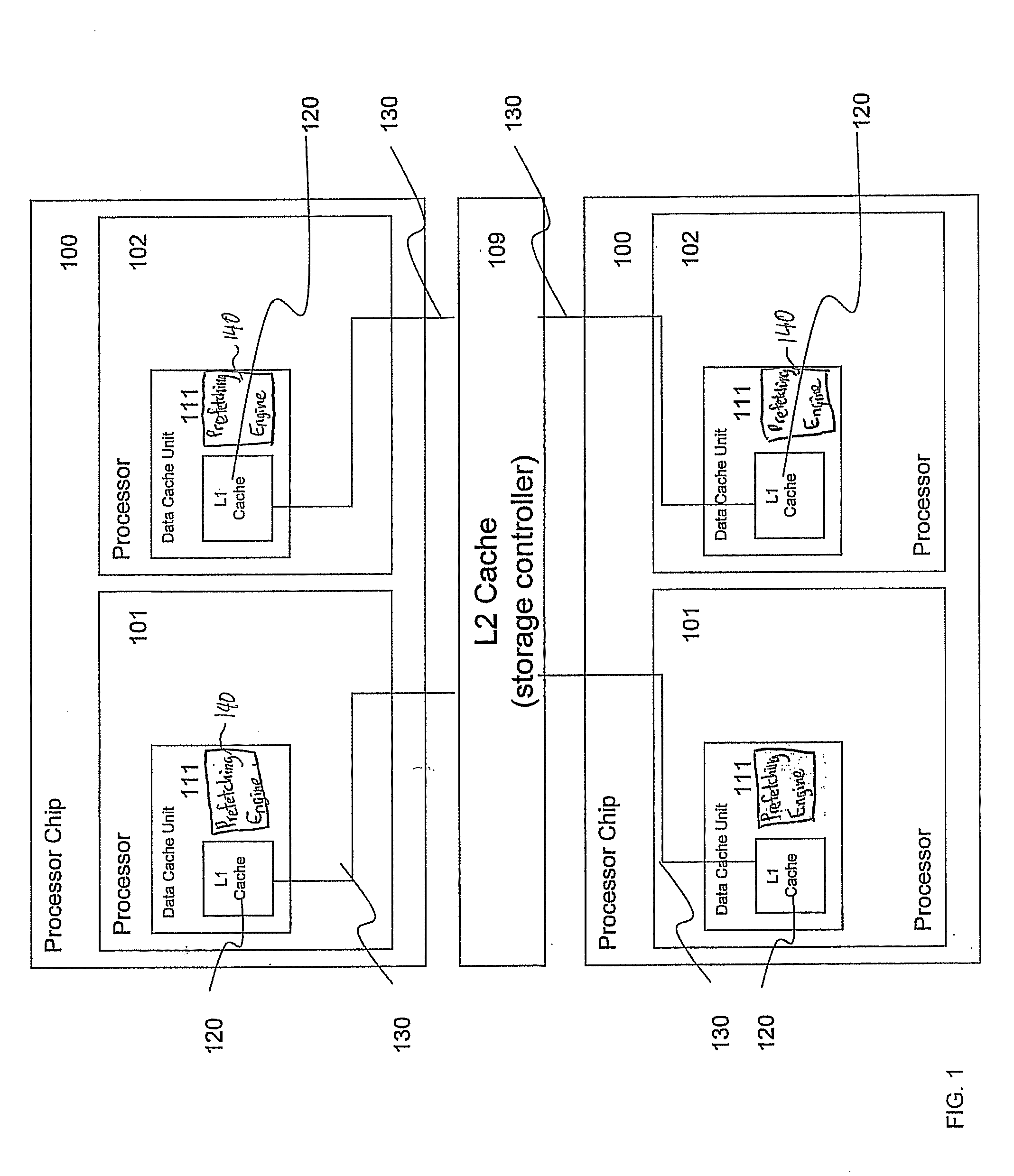

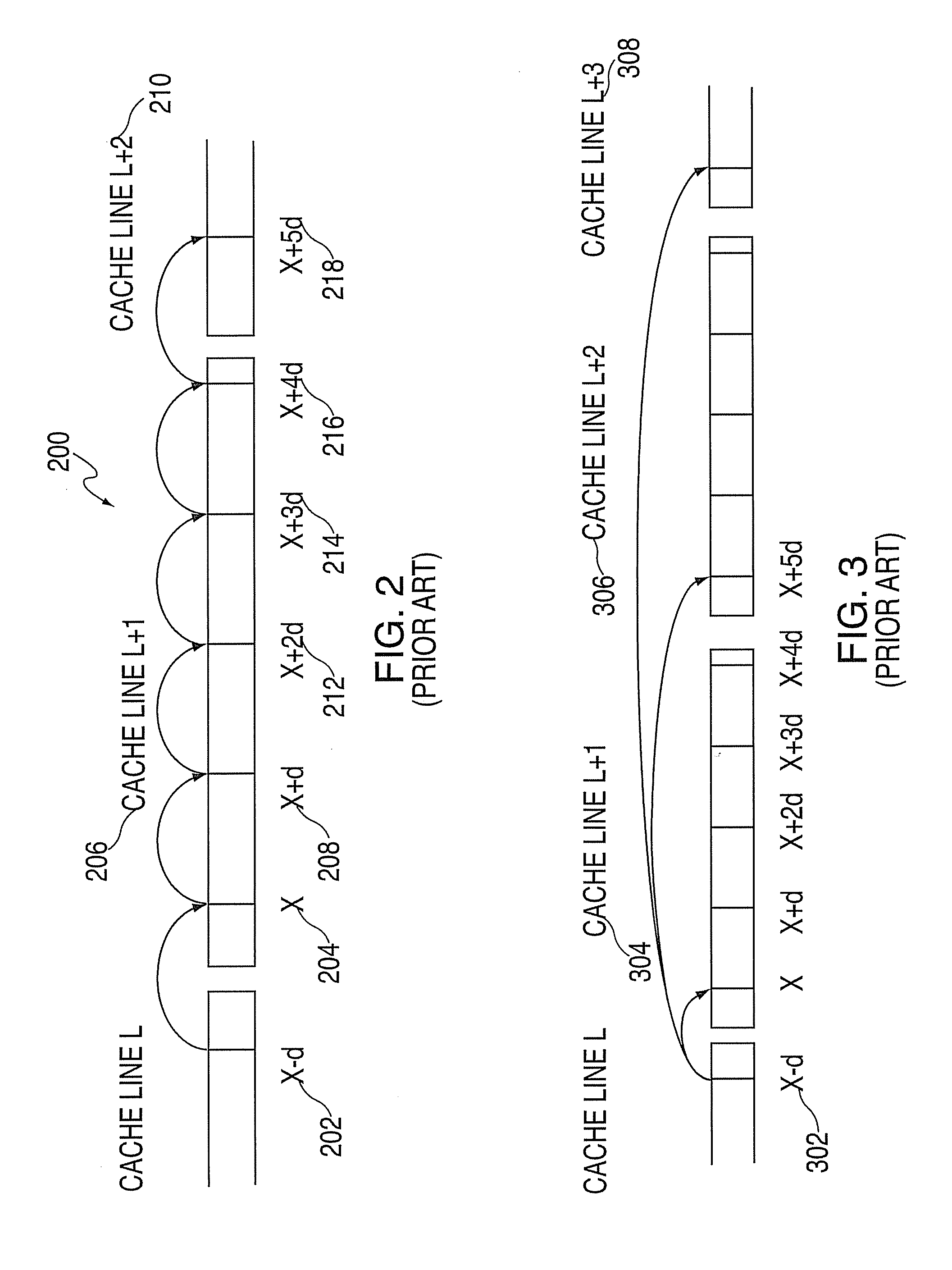

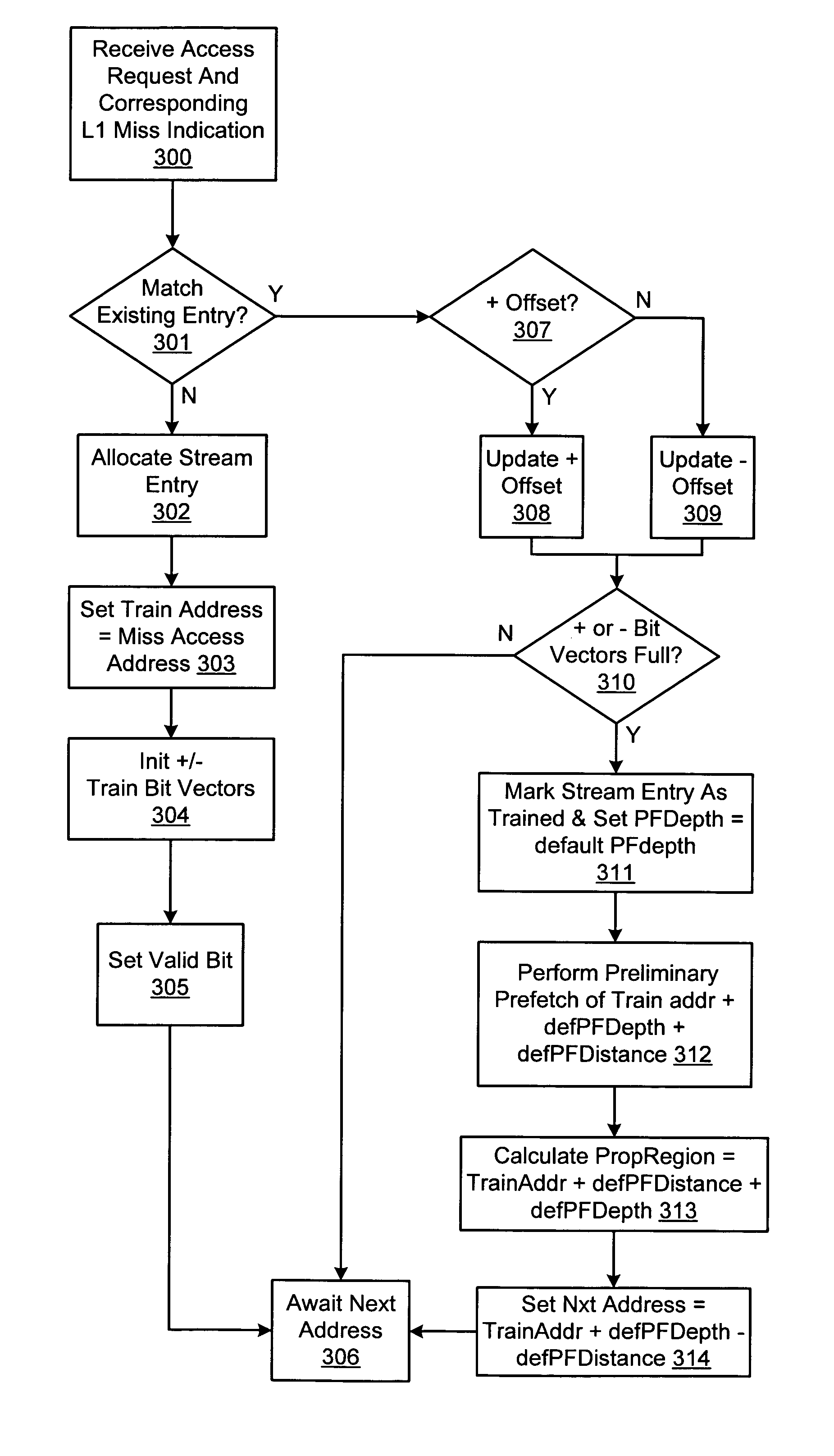

System, method and computer program product for enhancing timeliness of cache prefetching

Inactive | US20090216956A1 | Improve timeliness | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache access | Parallel computing

A system, method, and computer program product for enhancing timeliness of cache memory prefetching in a processing system are provided. The system includes a stride pattern detector to detect a stride pattern for a stride size in an amount of bytes as a difference between successive cache accesses. The system also includes a confidence counter. The system further includes eager prefetching control logic for performing a method when the stride size is less than a cache line size. The method includes adjusting the confidence counter in response to the stride pattern detector detecting the stride pattern, comparing the confidence counter to a confidence threshold, and requesting a cache prefetch in response to the confidence counter reaching the confidence threshold. The system may also include selection logic to select between the eager prefetching control logic and standard stride prefetching control logic.

Owner:IBM CORP
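
The eager path (stride smaller than a cache line, confidence counter compared to a threshold) can be sketched as below. The class name, the reset-on-mismatch policy, and the choice to prefetch the neighboring line in the stride's direction are assumptions for illustration; the abstract does not say which address is requested.

```python
class EagerStridePrefetcher:
    """Toy stride detector with a confidence counter for sub-line strides."""

    def __init__(self, line_size=128, threshold=2):
        self.line_size = line_size
        self.threshold = threshold
        self.last_addr = None
        self.stride = None
        self.confidence = 0

    def access(self, addr):
        """Return the line address to prefetch, or None."""
        prefetch = None
        if self.last_addr is not None:
            stride = addr - self.last_addr  # difference between successive accesses
            if stride != 0 and stride == self.stride:
                self.confidence += 1        # the stride pattern was detected again
            else:
                self.stride = stride        # assumed policy: restart on a mismatch
                self.confidence = 0
            small = 0 < abs(stride) < self.line_size
            if small and self.confidence >= self.threshold:
                step = 1 if stride > 0 else -1
                prefetch = (addr // self.line_size + step) * self.line_size
        self.last_addr = addr
        return prefetch
```

With an 8-byte stride and a 128-byte line, the prefetch for the next line is requested after a few accesses instead of when the stream finally crosses the line boundary, which is the timeliness gain the abstract is after.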

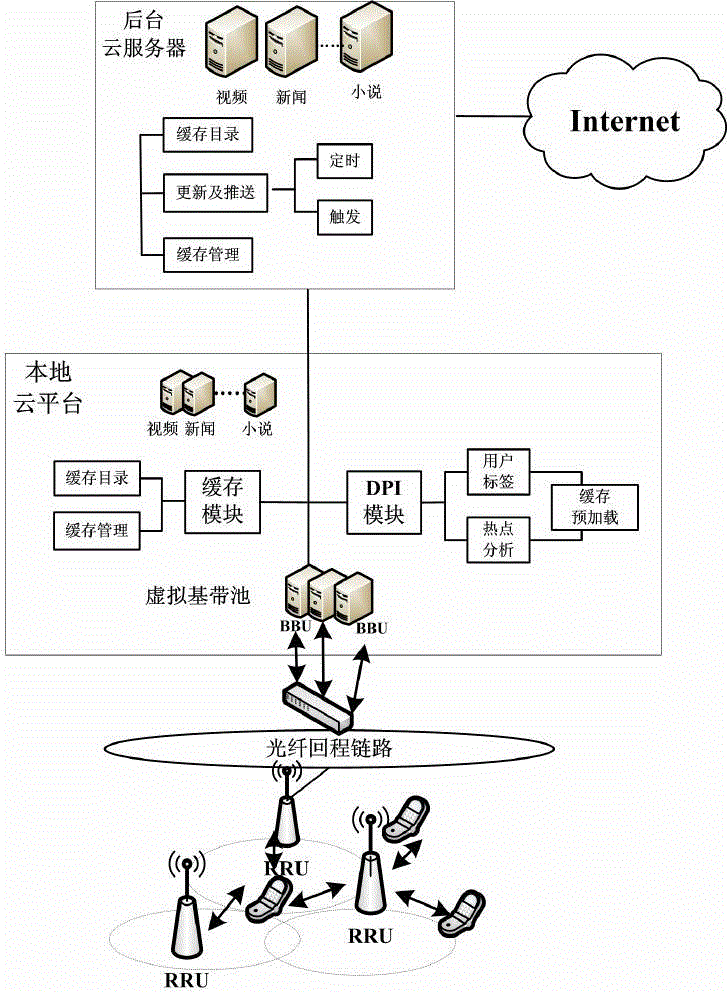

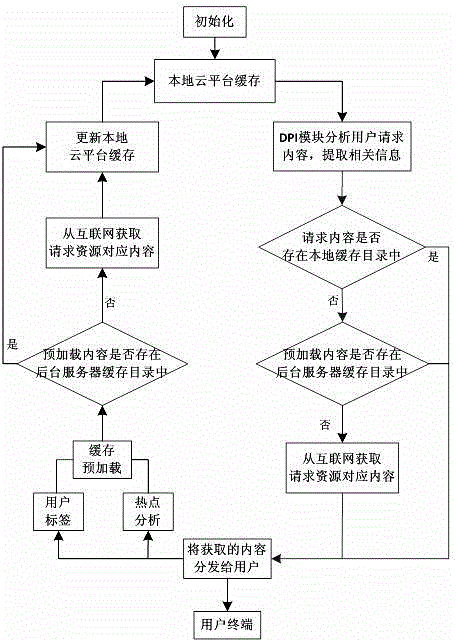

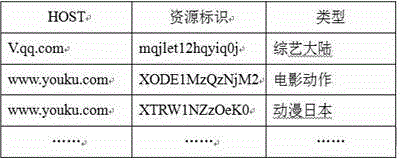

C-RAN based internet content caching and preloading method and system

The invention discloses a C-RAN based internet content caching and preloading technology comprising the following steps: query the cache directory that corresponds to the classification of the requested resource; if the requested content is saved in the local cache directory, return it directly to the user; if it is not, query the cache directory of a cloud platform server; if that query also fails, acquire the resource through the internet and return it to the user; keep popularity ("hot") statistics separately for cached and un-cached accessed content; derive the user's preferences from the user's access records and analyze the user tag composition ratio; and when the hot value reaches its upper limit or the user tag composition ratio exceeds the threshold, trigger a caching and preloading unit, which requests a preloading cache from a background server. The technology targets future mobile communication development: it optimizes content cache storage across the whole internet, increases the storage utilization rate, saves inter-network settlement cost, and improves the user experience.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
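
The lookup order (local cache directory, then cloud platform cache, then the internet) together with a simple popularity counter can be sketched as follows. All names and the `hot_limit` value are hypothetical, and the user-tag composition ratio from the abstract is left out to keep the example short.

```python
def fetch(url, local_cache, cloud_cache, origin_fetch, hot_counts, hot_limit=3):
    """Return (content, source, trigger_preload) for one user request."""
    # Popularity statistics are kept for cached and un-cached content alike.
    hot_counts[url] = hot_counts.get(url, 0) + 1
    trigger_preload = hot_counts[url] >= hot_limit
    if url in local_cache:
        return local_cache[url], "local", trigger_preload
    if url in cloud_cache:
        return cloud_cache[url], "cloud", trigger_preload
    # Both cache directories missed: go out to the internet.
    return origin_fetch(url), "internet", trigger_preload
```

When `trigger_preload` becomes true, a caller would hand the URL to whatever component performs the preloading; that component is outside this sketch.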

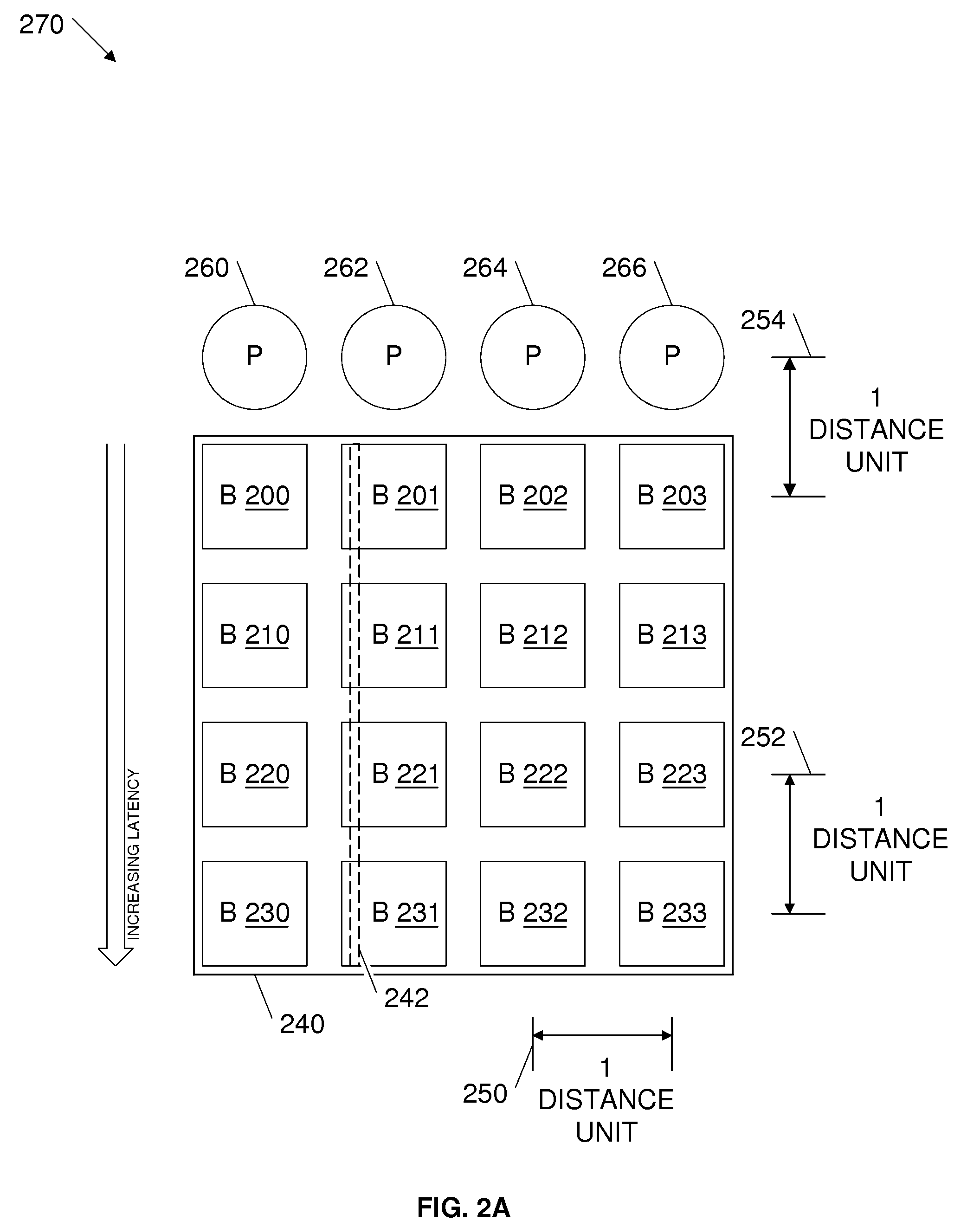

Power conservation in vertically-striped NUCA caches

Inactive | US20100275049A1 | Energy efficient ICT | Volume/mass flow measurement | Cache access | Parallel computing

Embodiments that dynamically conserve power in non-uniform cache access (NUCA) caches are contemplated. Various embodiments comprise a computing device having one or more processors coupled with one or more NUCA cache elements. The NUCA cache elements may comprise one or more banks of cache memory, wherein ways of the cache are vertically distributed across multiple banks. To conserve power, the computing devices generally turn off groups of banks, in a sequential manner according to different power states, based on the access latencies of the banks. The computing devices may first turn off groups having the greatest access latencies. The computing devices may conserve additional power by turning off more groups of banks according to different power states, continuing to turn off groups with larger access latencies before turning off groups with smaller access latencies.

Owner:IBM CORP
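
The ordering rule (highest-latency groups go dark first, deeper power states switch off more groups) reduces to a sort. In this sketch the function name, the fixed `group_size`, and the convention that `power_state` equals the number of groups switched off are all assumptions.

```python
def banks_to_power_off(bank_latency, group_size, power_state):
    """Return the set of bank ids that are off in the given power state.

    bank_latency: {bank_id: access latency in cycles}
    power_state:  0 = all banks on; each higher state turns off one more group.
    """
    by_latency = sorted(bank_latency, key=bank_latency.get, reverse=True)
    return set(by_latency[: group_size * power_state])
```

For example, with four banks at 4, 6, 9 and 12 cycles and one bank per group, power state 2 switches off the 12-cycle and 9-cycle banks and keeps the two fastest banks running.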

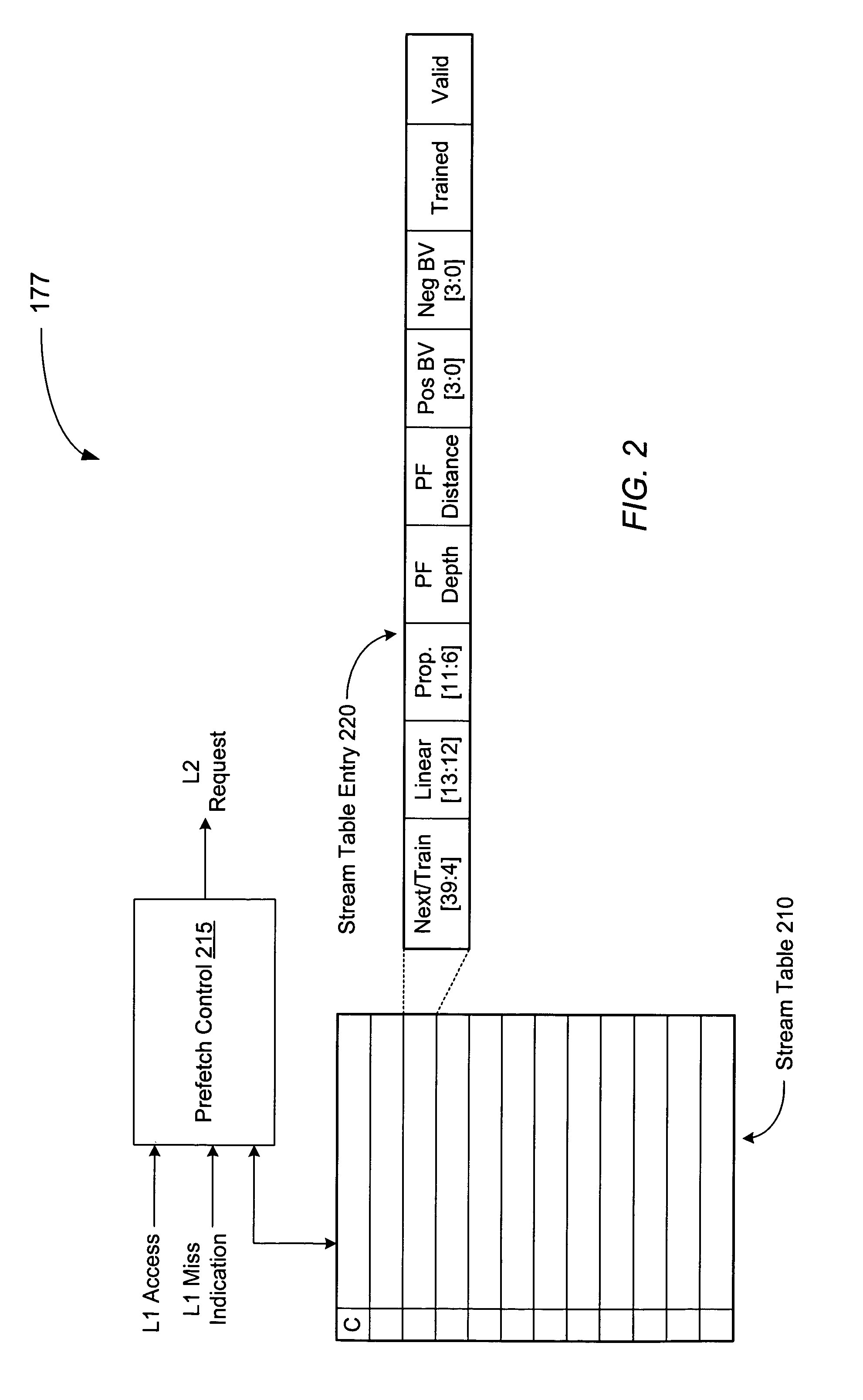

Prefetch unit for use with a cache memory subsystem of a cache memory hierarchy

Active | US7836259B1 | Memory architecture accessing/allocation | Memory systems | Memory hierarchy | Cache hierarchy

A prefetch unit for use with a cache subsystem. The prefetch unit includes a stream storage coupled to a prefetch control. The stream storage may include a plurality of locations configured to store a plurality of entries each corresponding to a respective range of prefetch addresses. The prefetch control may be configured to prefetch an address in response to receiving a cache access request including an address that is within the respective range of prefetch addresses of any of the plurality of entries.

Owner:ADVANCED MICRO DEVICES INC
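
A range-matching stream table of this kind might look like the sketch below. The class and method names are hypothetical, and two behaviors are assumptions not stated in the abstract: the address prefetched is the first line past the matched range, and the range then slides forward by one line.

```python
class StreamPrefetcher:
    """Stream storage holding address ranges; a hit in any range triggers a prefetch."""

    def __init__(self, line_size=64):
        self.line_size = line_size
        self.streams = []  # each entry is [start, end) in bytes

    def add_stream(self, start, length_lines):
        self.streams.append([start, start + length_lines * self.line_size])

    def on_access(self, addr):
        """Return the address to prefetch, or None if no stream entry matches."""
        for stream in self.streams:
            if stream[0] <= addr < stream[1]:
                prefetch_addr = stream[1]       # assumed: first line past the range
                stream[0] += self.line_size     # assumed: slide the window forward
                stream[1] += self.line_size
                return prefetch_addr
        return None
```

An access that falls outside every stored range returns `None`, leaving it to other logic (not modeled here) to decide whether a new stream entry should be created.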

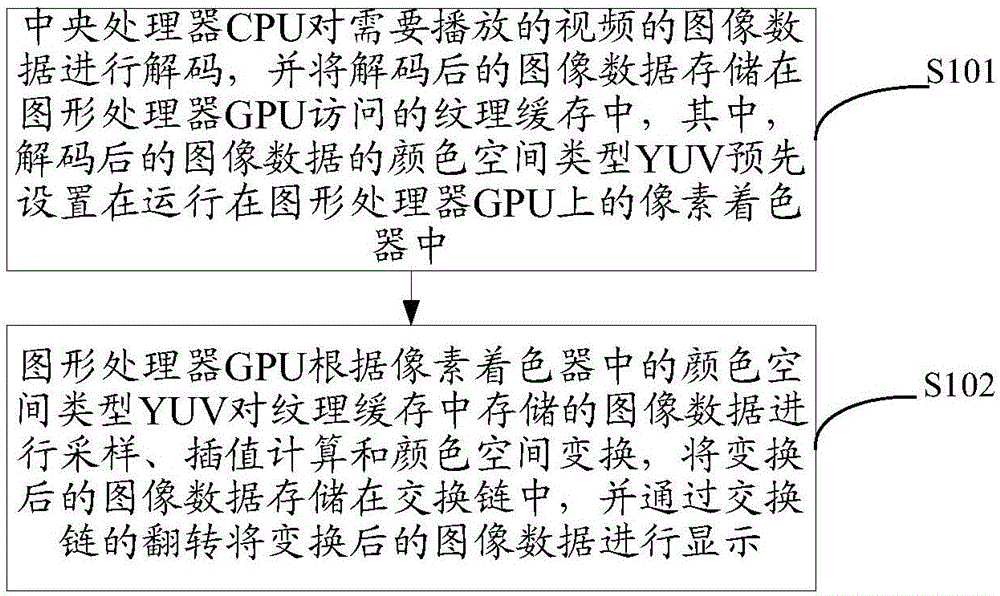

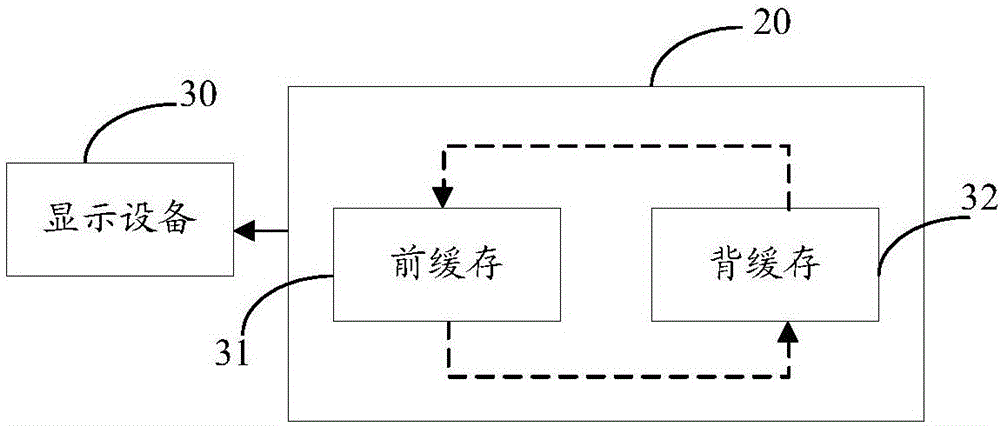

Video rendering method and device

Inactive | CN106210883A | Reduce loss | Color signal processing circuits | Selective content distribution | Cache access | Graphics processing unit

The present invention discloses a video rendering method and device that aim to reduce central processing unit (CPU) performance loss in video rendering. In the method, the CPU decodes the image data of a to-be-played video and stores the decoded image data in a pattern cache accessed by a graphics processing unit (GPU), where the YUV color space type of the decoded image data is preset in a pixel shader running on the GPU. The GPU then performs sampling, interpolation, and color space conversion on the image data stored in the pattern cache according to the YUV color space type in the pixel shader, stores the converted image data in a swap chain, and the converted image data is displayed by flipping the swap chain.

Owner:ZHEJIANG DAHUA TECH CO LTD

Vector register file caching of context data structure for maintaining state data in a multithreaded image processing pipeline

Active | US20130044117A1 | Easy access | Minimize memory bus utilization | Register arrangements | Program control using wired connections | Graphics | General purpose

Frequently accessed state data used in a multithreaded graphics processing architecture is cached within a vector register file of a processing unit to optimize accesses to the state data and minimize memory bus utilization associated therewith. A processing unit may include a fixed point execution unit as well as a vector floating point execution unit, and a vector register file utilized by the vector floating point execution unit may be used to cache state data used by the fixed point execution unit and transferred as needed into the general purpose registers accessible by the fixed point execution unit, thereby reducing the need to repeatedly retrieve and write back the state data from and to an L1 or lower level cache accessed by the fixed point execution unit.

Owner:SAMSUNG ELECTRONICS CO LTD

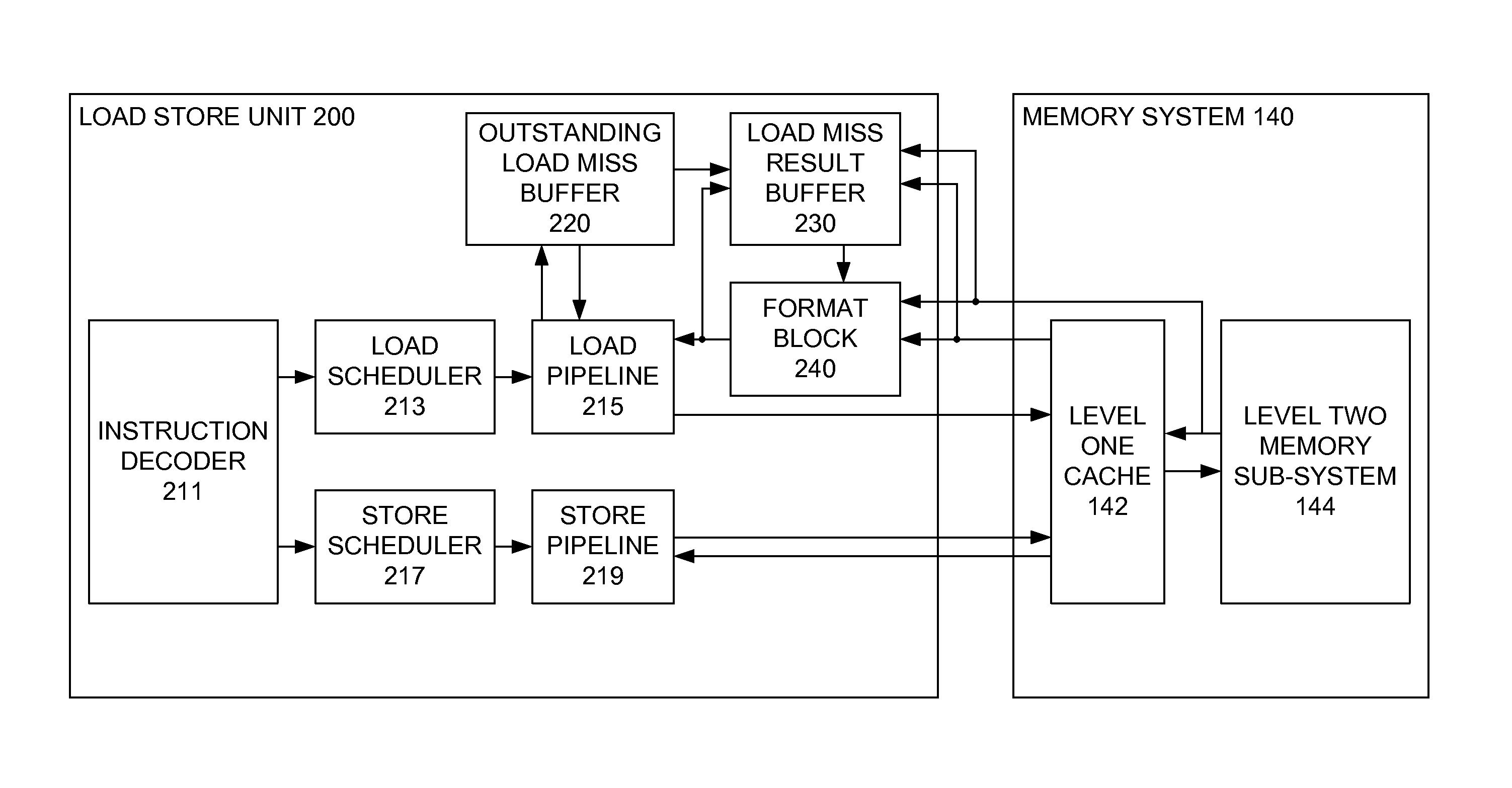

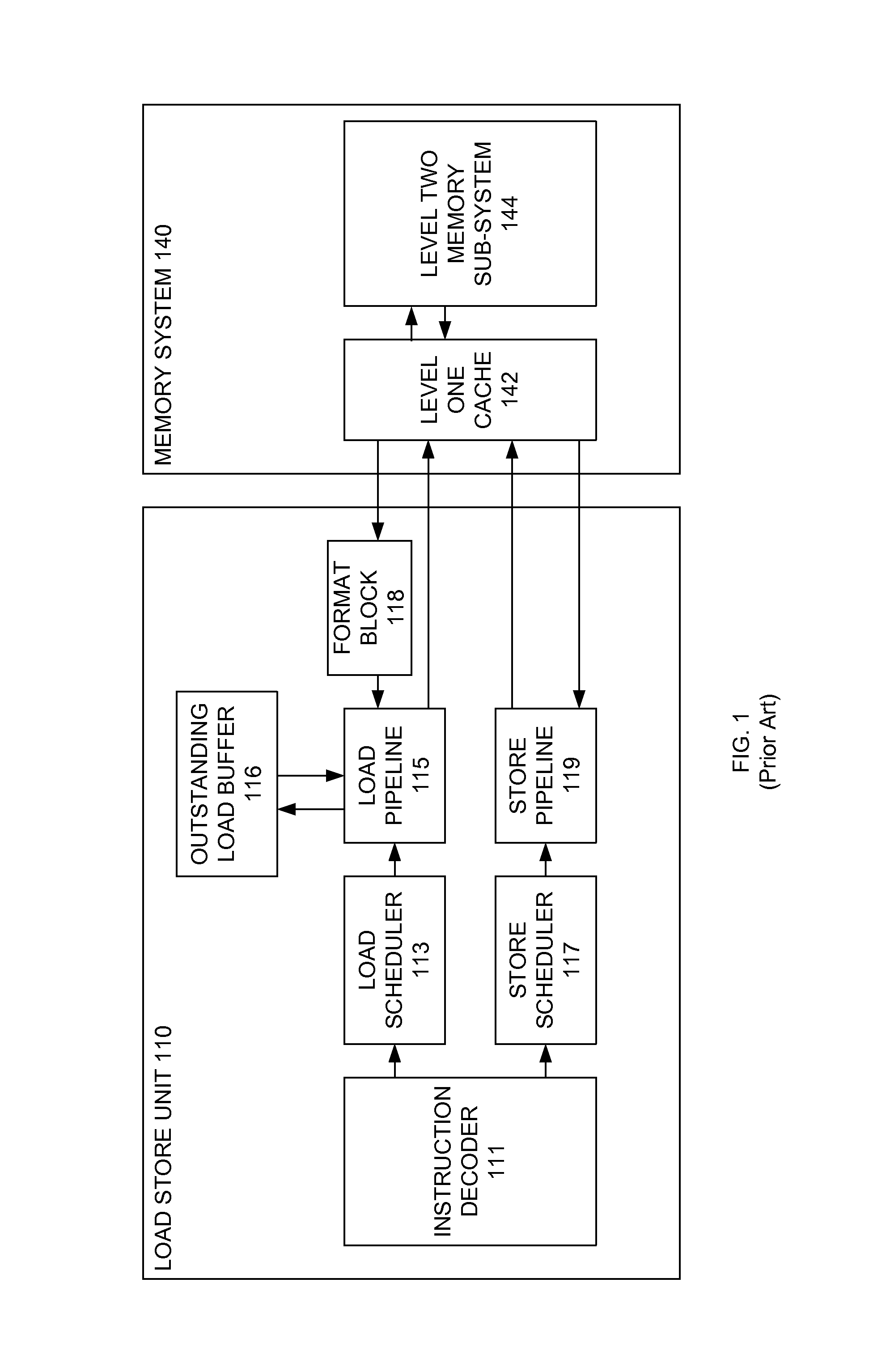

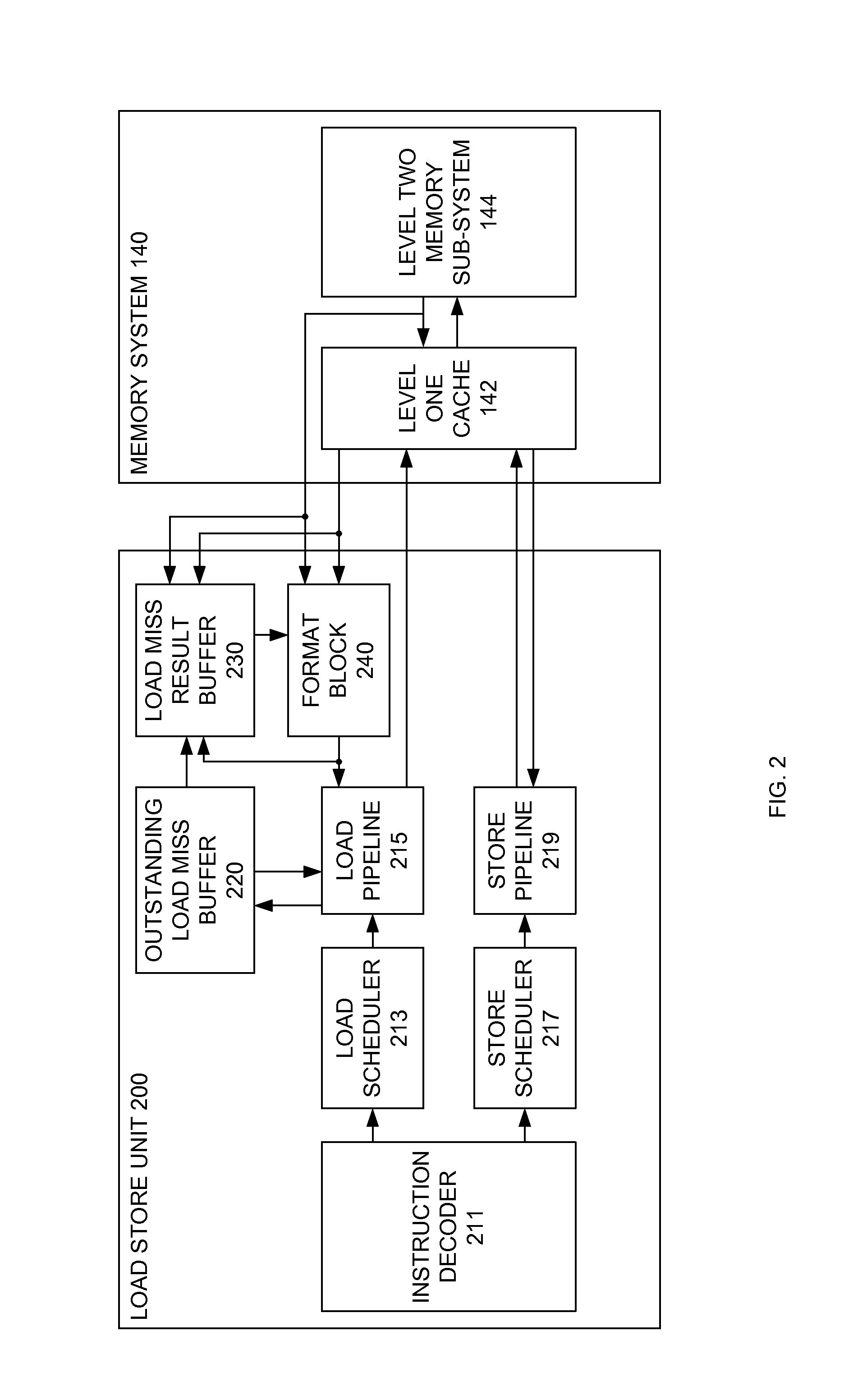

Outstanding load miss buffer with shared entries

Active | US8850121B1 | Input/output to record carriers | Memory addressing/allocation/relocation | Cache access | Load instruction

A load/store unit with an outstanding load miss buffer and a load miss result buffer is configured to read data from a memory system having a level one cache. Missed load instructions are stored in the outstanding load miss buffer. The load/store unit retrieves data for multiple dependent missed load instructions using a single cache access and stores the data in the load miss result buffer. The outstanding load miss buffer stores a first missed load instruction in a first primary entry. Additional missed load instructions that are dependent on the first missed load instruction are stored in dependent entries of the first primary entry or in shared entries. If a shared entry is used for a missed load instruction, the shared entry is associated with the primary entry.

Owner:AMPERE COMPUTING LLC
