378 results about "Cache coherence" patented technology

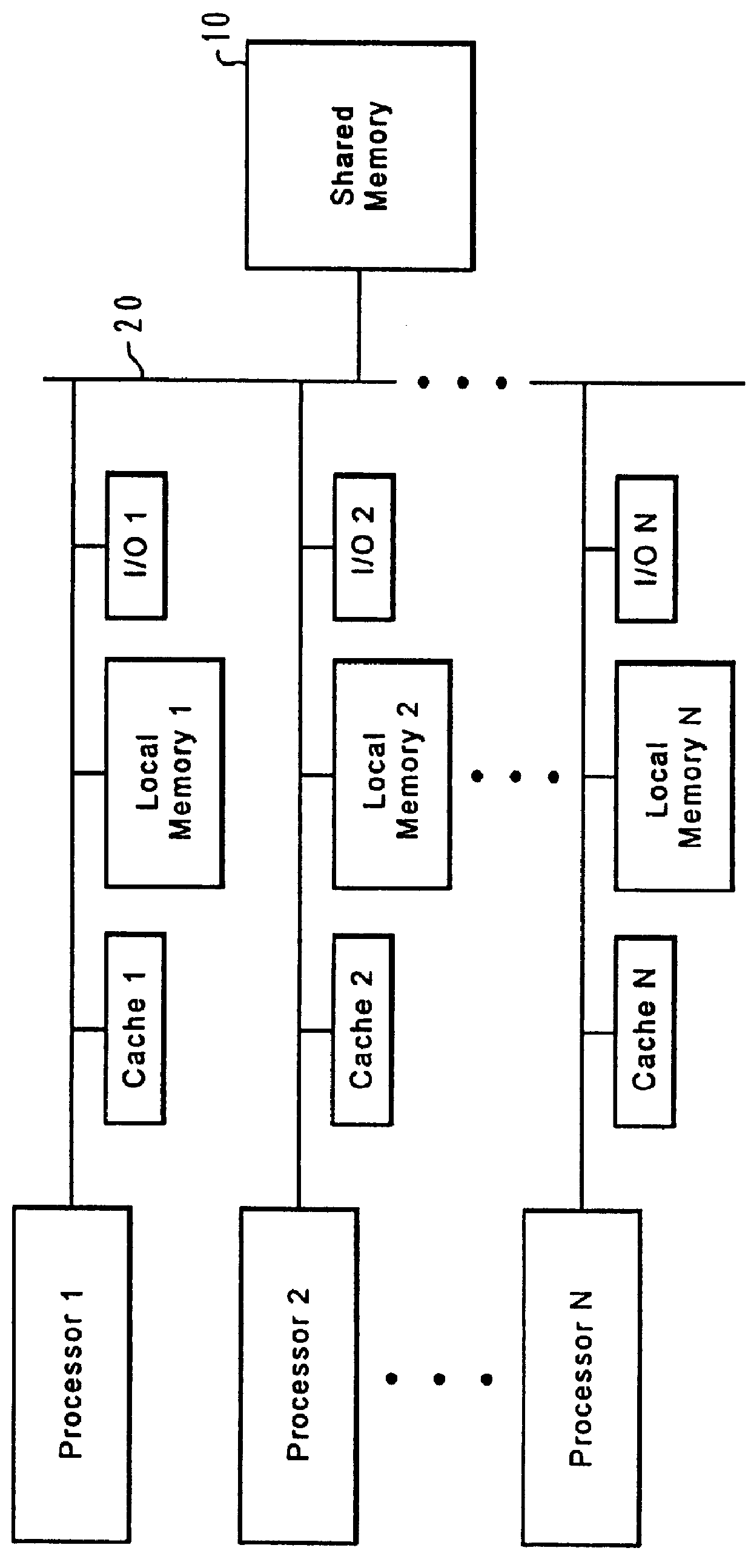

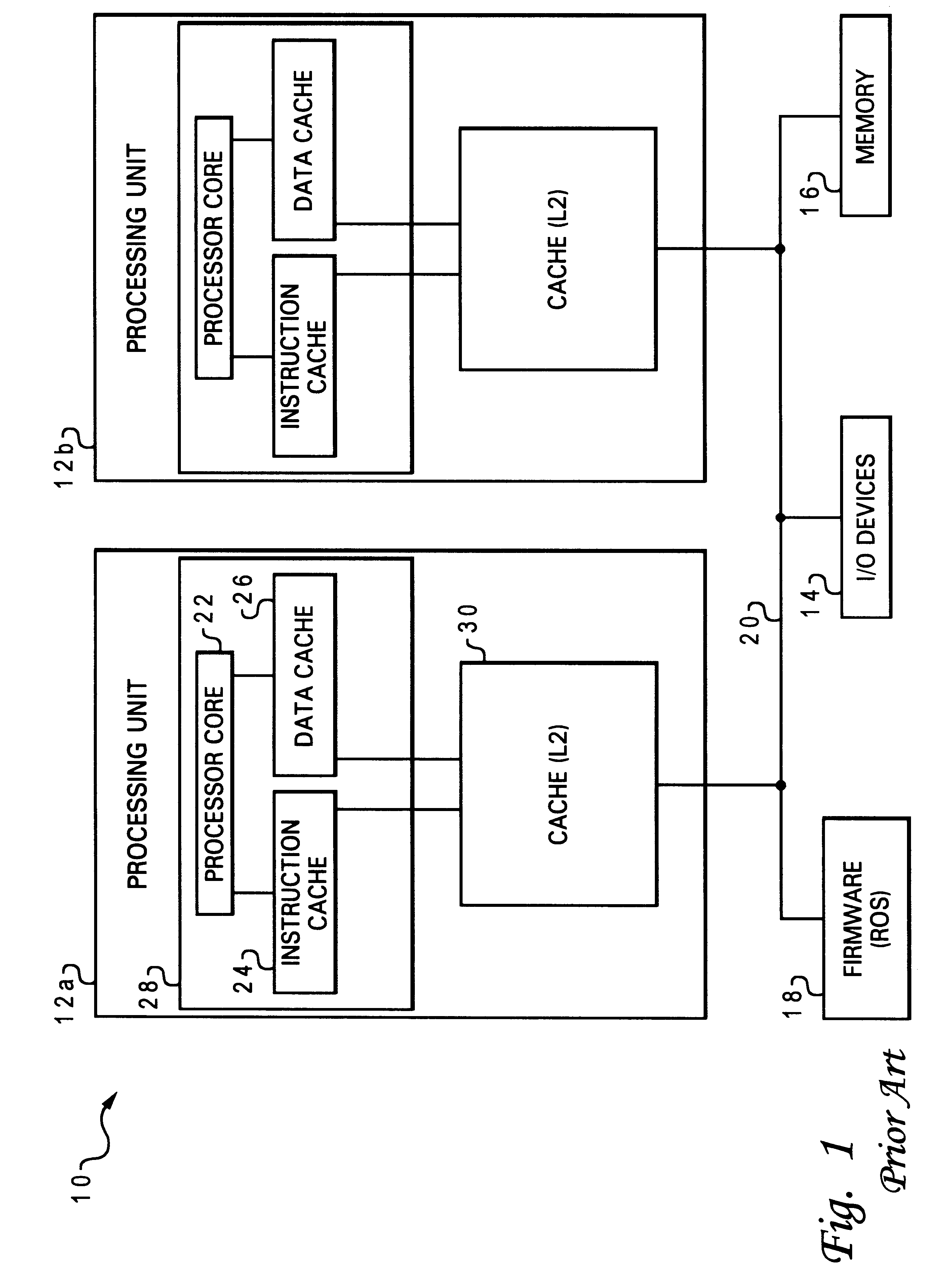

In computer architecture, cache coherence is the uniformity of shared resource data that ends up stored in multiple local caches. When clients in a system maintain caches of a common memory resource, problems may arise with incoherent data, which is particularly the case with CPUs in a multiprocessing system.

System and method for simplifying cache coherence using multiple write policies

Active | US20130254488A1 | Improve power efficiency | Reduce hardware costs | Energy efficient ICT | Memory addressing/allocation/relocation | Cache hierarchy | Cache coherence

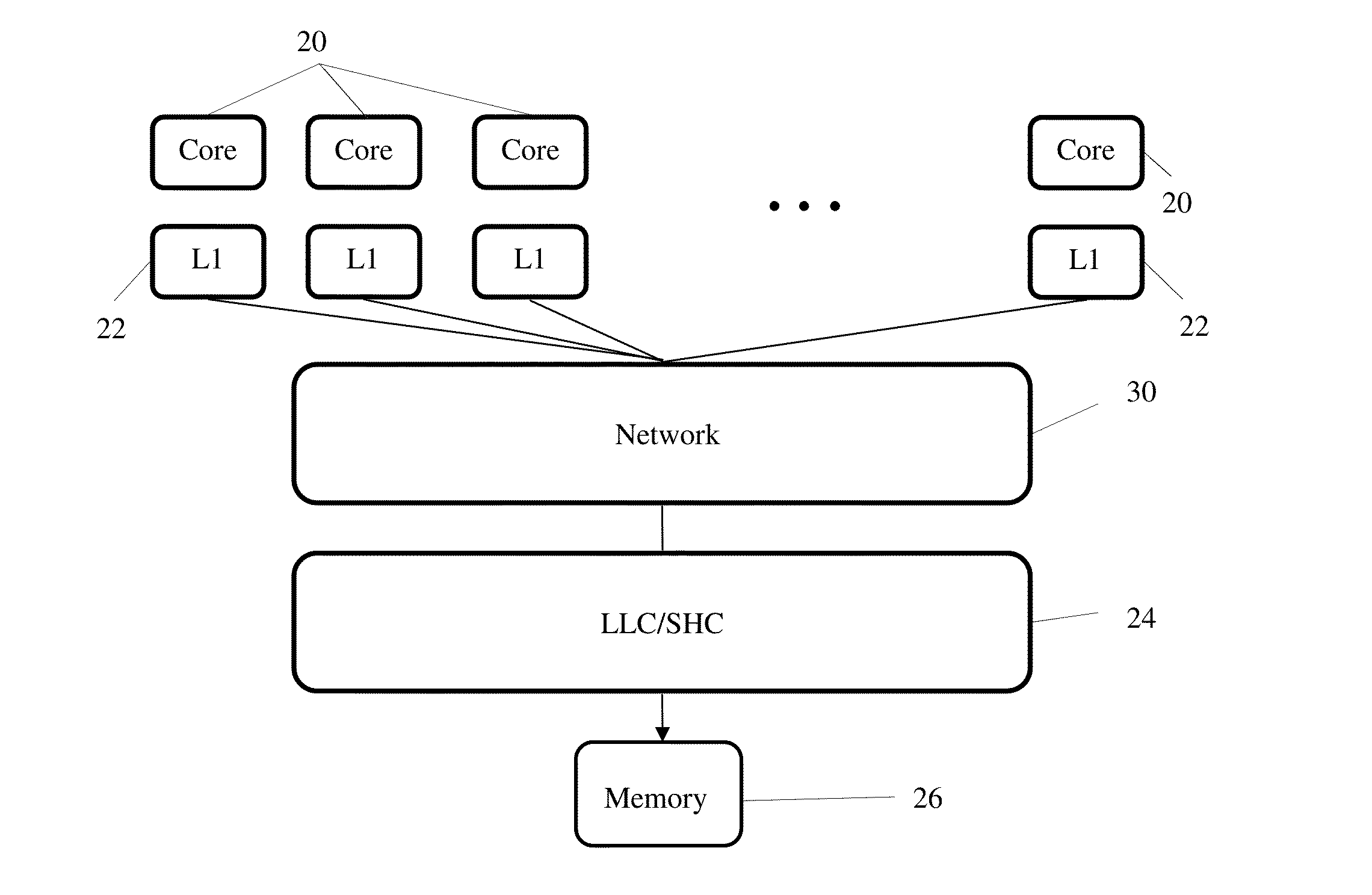

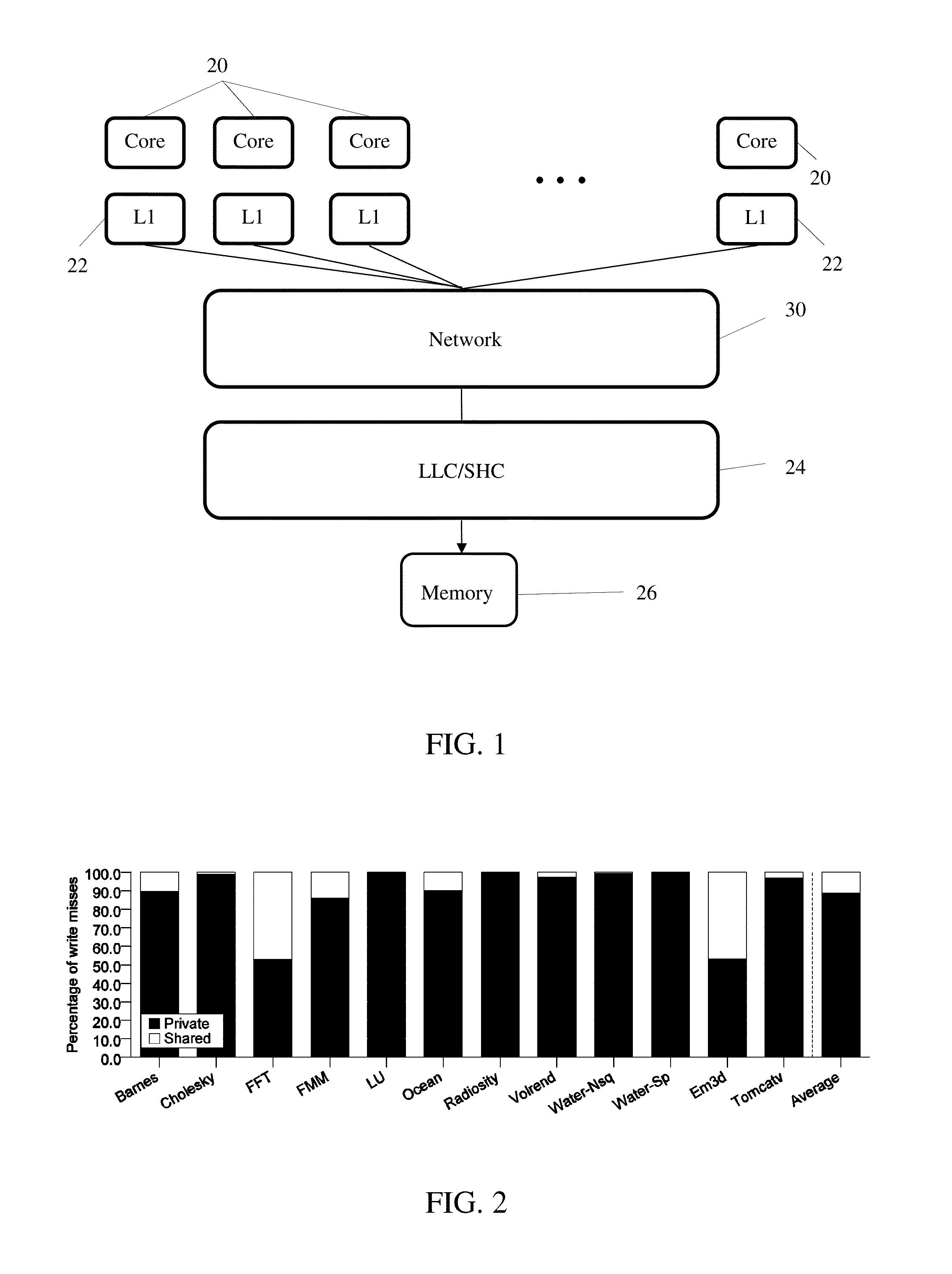

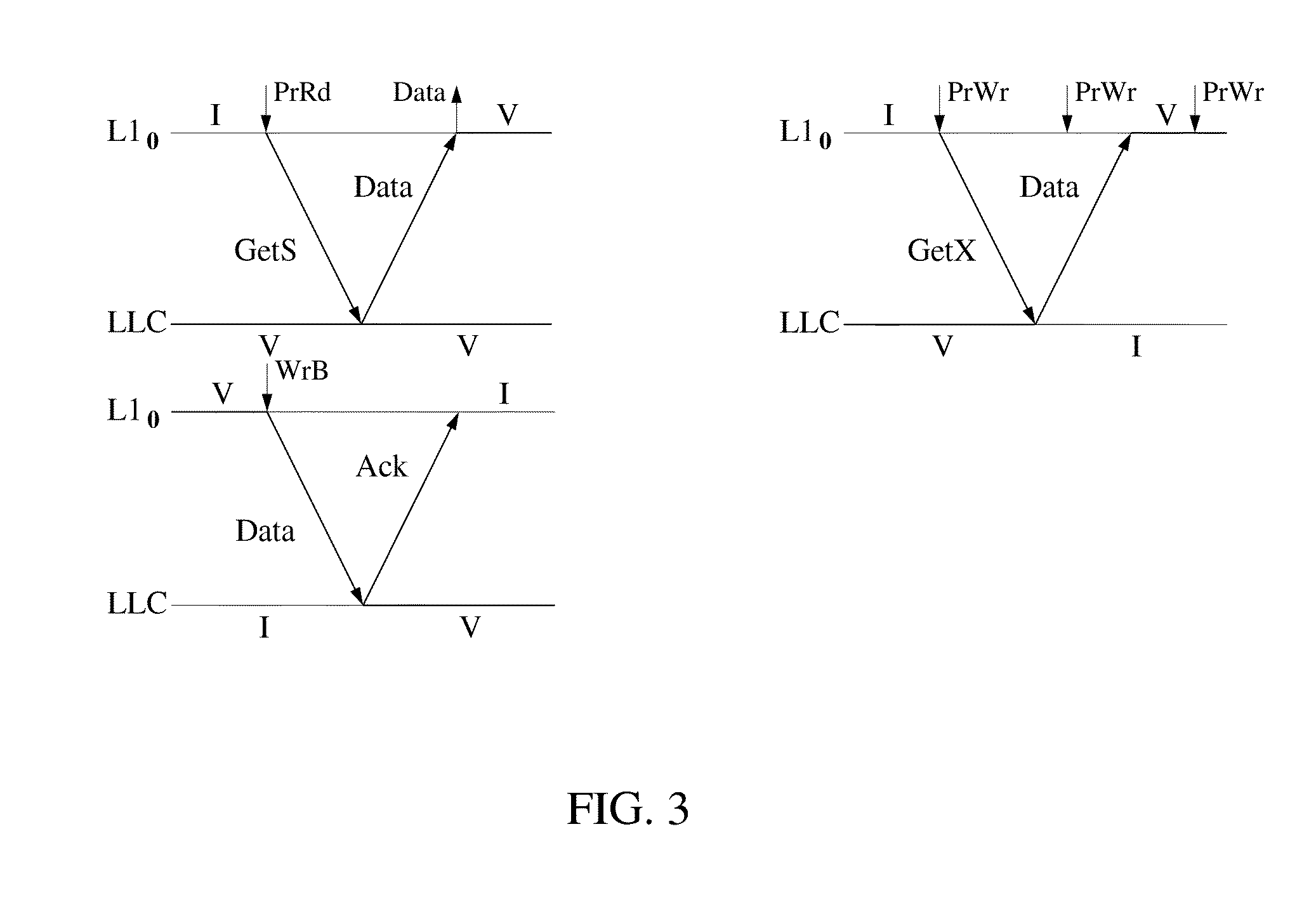

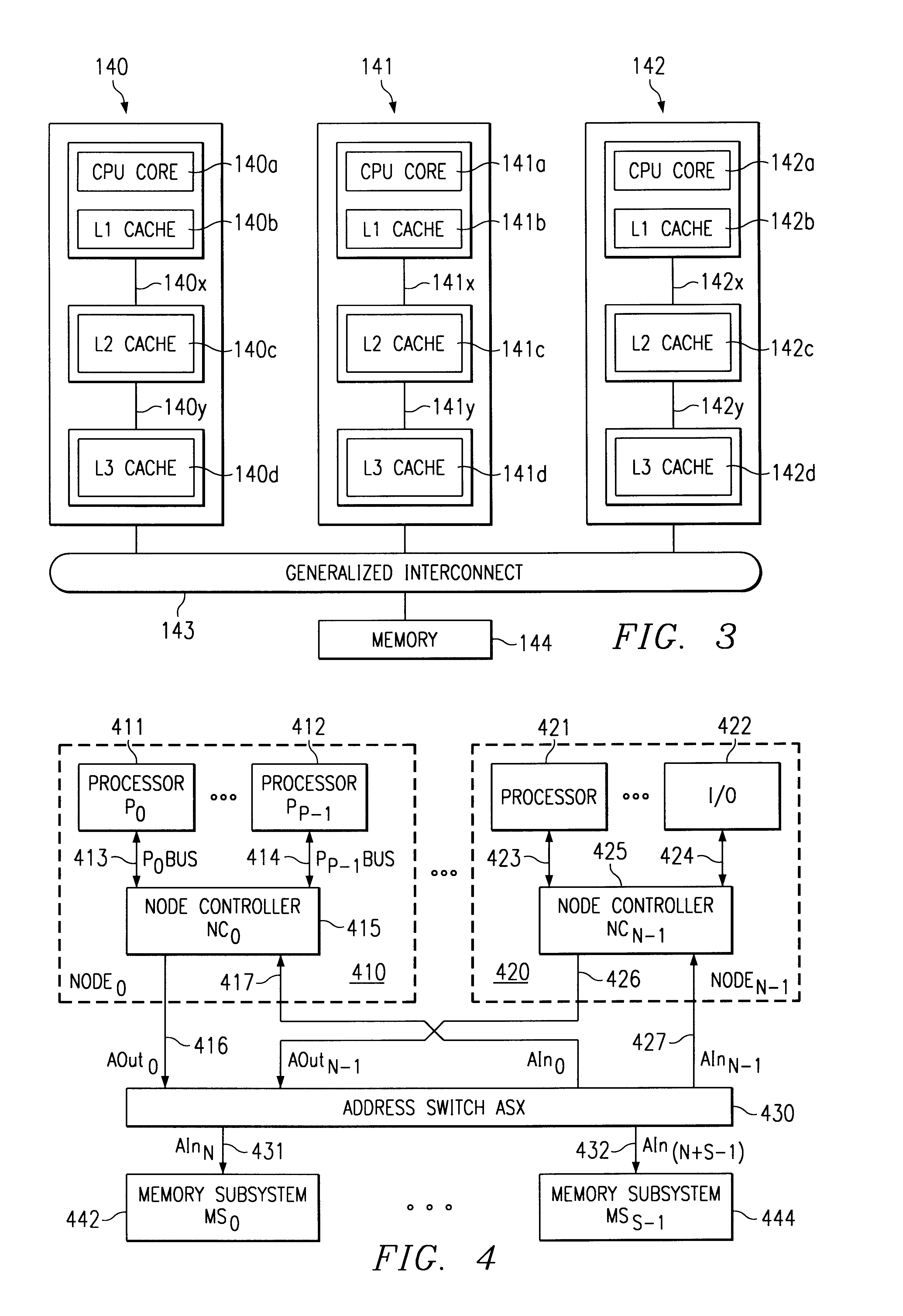

System and methods for cache coherence in a multi-core processing environment having a local/shared cache hierarchy. The system includes multiple processor cores, a main memory, and a local cache memory associated with each core for storing cache lines accessible only by the associated core. Cache lines are classified as either private or shared. A shared cache memory is coupled to the local cache memories and main memory for storing cache lines. The cores follow a write-back to the local memory for private cache lines, and a write-through to the shared memory for shared cache lines. Shared cache lines in local cache memory enter a transient dirty state when written by the core. Shared cache lines transition from a transient dirty to a valid state with a self-initiated write-through to the shared memory. The write-through to shared memory can include only data that was modified in the transient dirty state.

Owner:ETA SCALE AB
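The two write policies in the abstract above can be illustrated with a toy model. This is only a sketch under stated assumptions, not the patented design: the `LocalCache` class, the `State` names, the word-level bookkeeping, and the use of a plain dict as the shared cache are all hypothetical, and the private/shared classification is simply passed in by the caller.

```python
from enum import Enum


class State(Enum):
    INVALID = "invalid"
    VALID = "valid"
    DIRTY = "dirty"                      # private lines (write-back policy)
    TRANSIENT_DIRTY = "transient_dirty"  # shared lines awaiting write-through


class LocalCache:
    """Toy per-core cache; names and layout are illustrative assumptions."""

    def __init__(self, shared_cache):
        self.shared_cache = shared_cache  # dict standing in for the shared cache
        self.lines = {}                   # addr -> [state, {word: value}, modified words]

    def write(self, addr, word, value, is_shared):
        entry = self.lines.setdefault(addr, [State.INVALID, {}, set()])
        entry[1][word] = value
        if is_shared:
            # Shared line: remember which words changed, enter transient dirty.
            entry[2].add(word)
            entry[0] = State.TRANSIENT_DIRTY
        else:
            # Private line: stay dirty locally until evicted (write-back).
            entry[0] = State.DIRTY

    def self_write_through(self, addr):
        """Push only the modified words to the shared cache, then become valid."""
        entry = self.lines[addr]
        if entry[0] is not State.TRANSIENT_DIRTY:
            return {}
        diff = {word: entry[1][word] for word in entry[2]}
        self.shared_cache.setdefault(addr, {}).update(diff)
        entry[2].clear()
        entry[0] = State.VALID
        return diff
```

In this sketch a write to a shared line does not reach the shared cache until `self_write_through` runs, which mirrors the transient dirty to valid transition described in the abstract.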

Method and apparatus for maintaining cache coherency in a computer system having multiple processor buses

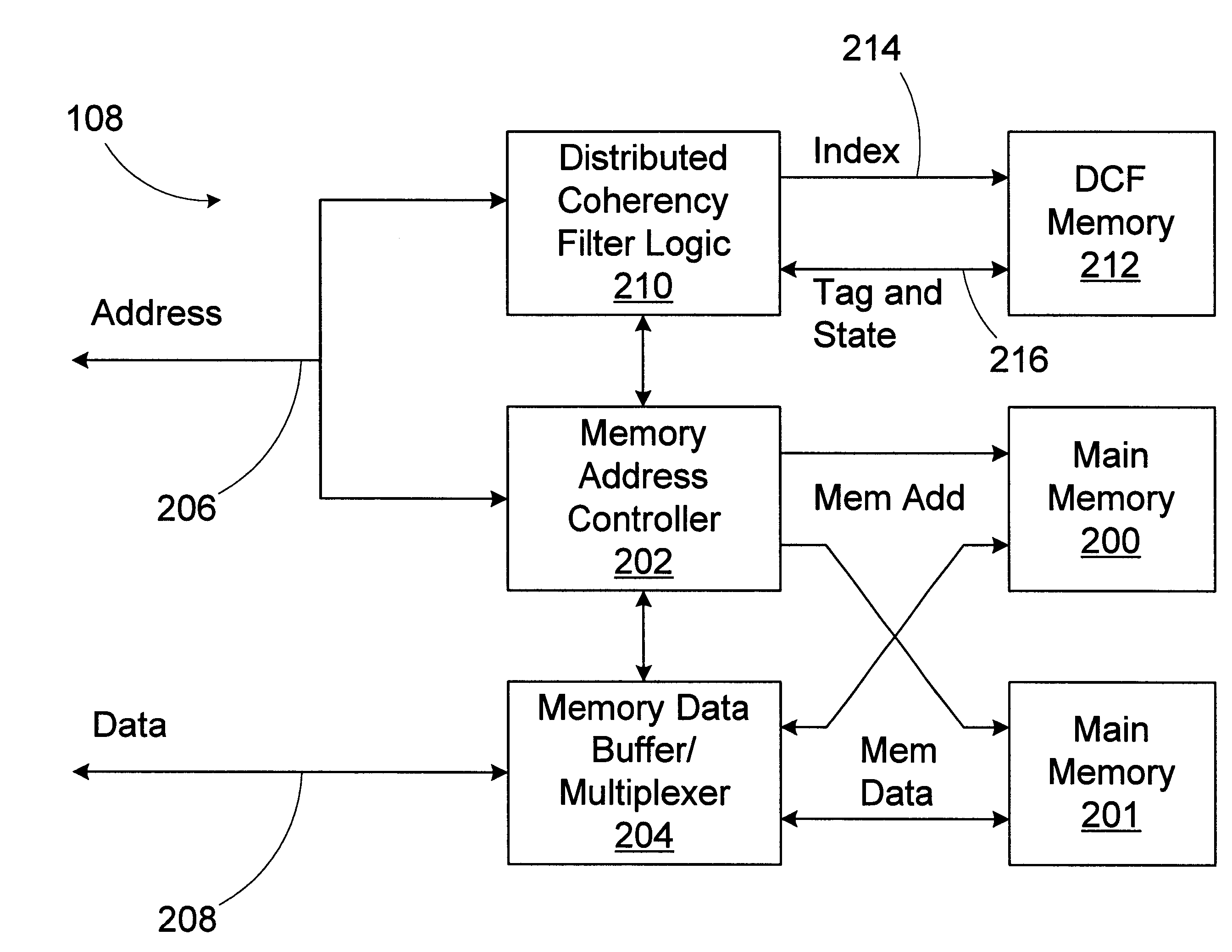

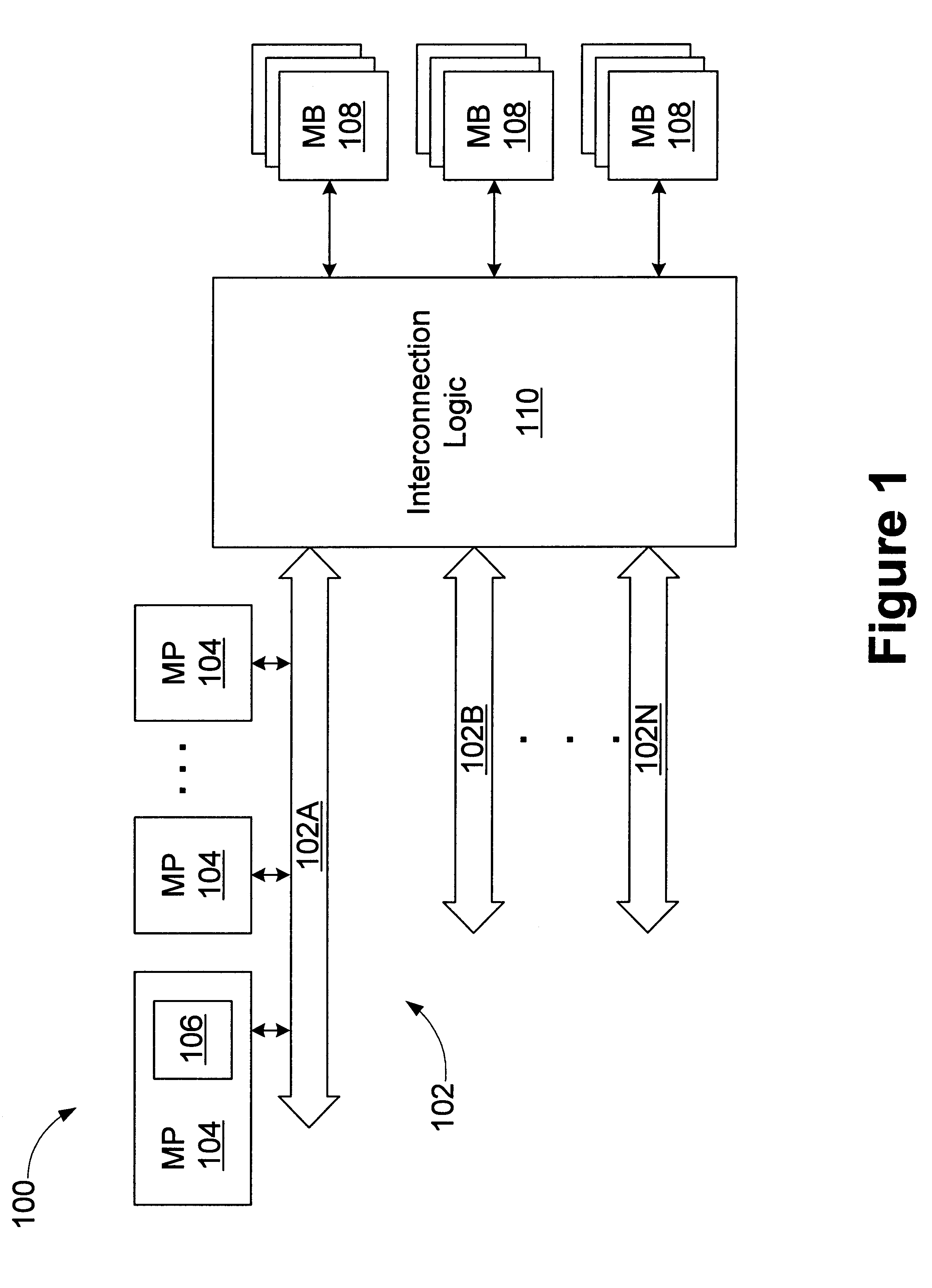

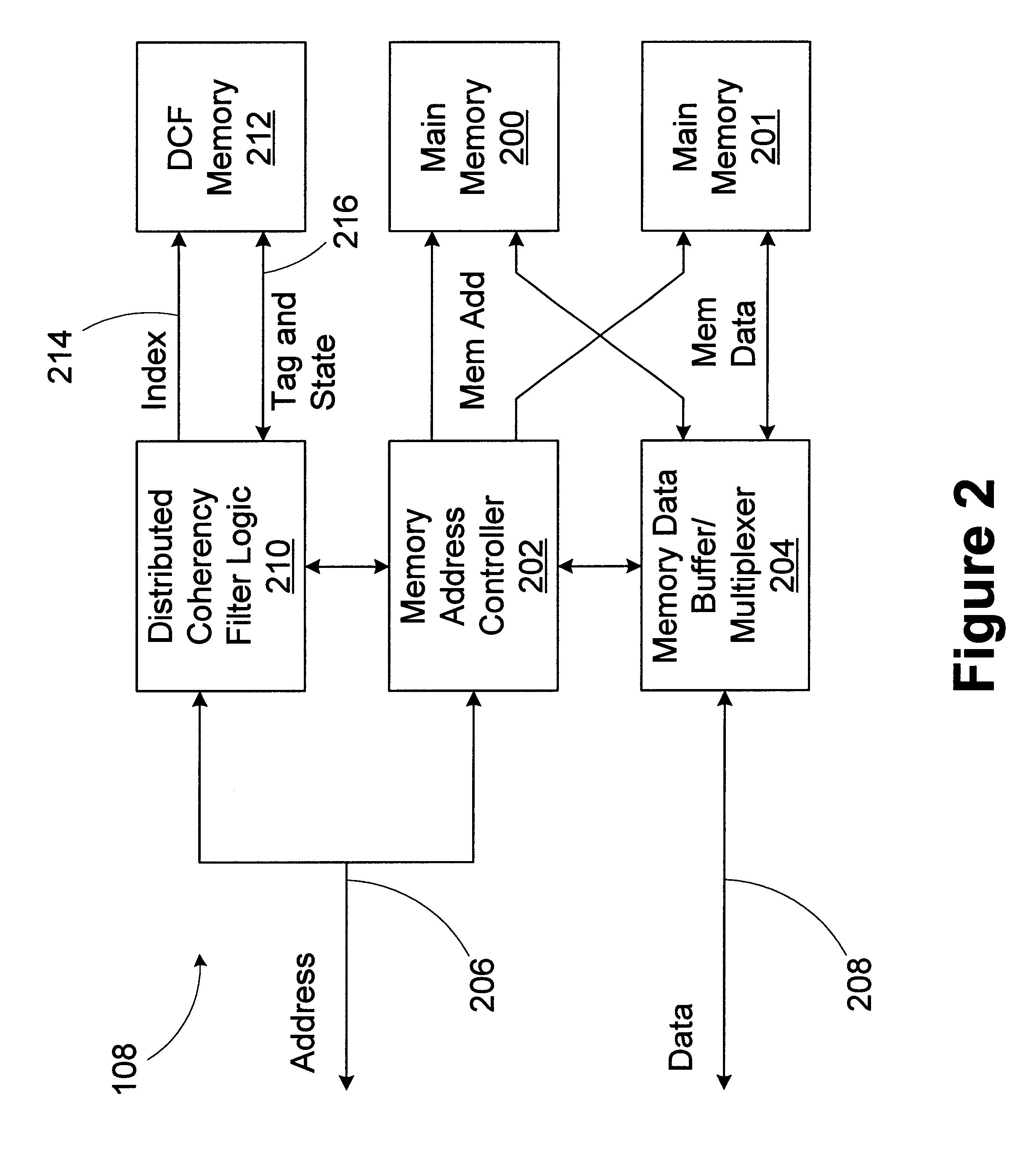

A computer system includes a plurality of processor buses and a memory bank. A plurality of processors is coupled to the processor buses. At least a portion of the processors have associated cache memories arranged in cache lines. The memory bank is coupled to the processor buses. The memory bank includes a main memory and a distributed coherency filter. The main memory is adapted to store data corresponding to at least a portion of the cache lines. The distributed coherency filter is adapted to store coherency information related to the cache lines associated with each of the processor buses. A method for maintaining cache coherency among processors coupled to a plurality of processor buses is provided. Lines of data are stored in a main memory. A memory request is received for a particular line of data in the main memory from one of the processor buses. Coherency information is stored related to the lines of data associated with each of the processor buses. The coherency information is accessed based on the memory request.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

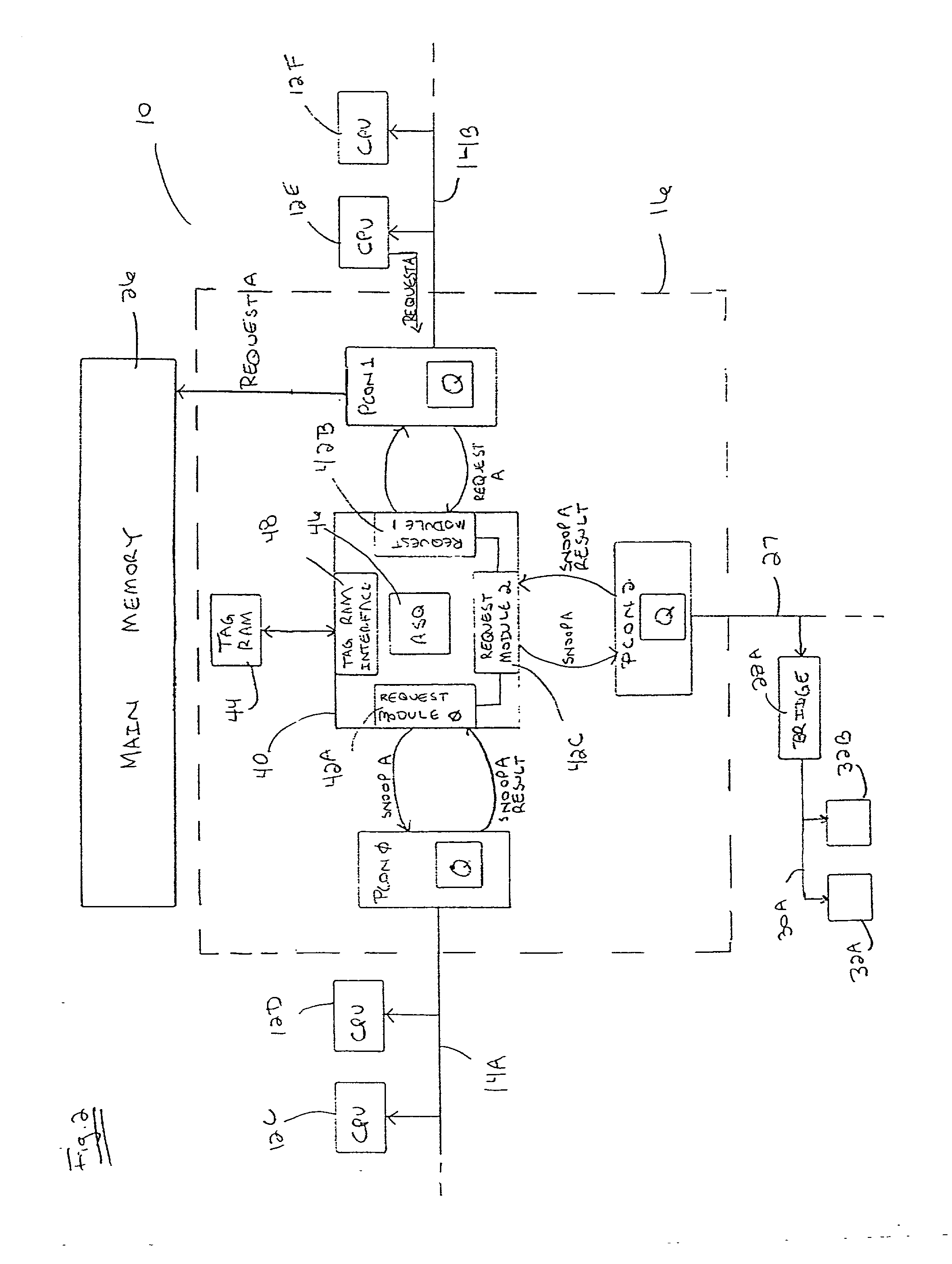

Conservative shadow cache support in a point-to-point connected multiprocessing node

A point-to-point connected multiprocessing node uses a snooping-based cache-coherence filter to selectively direct relays of data request broadcasts. The filter includes shadow cache lines that are maintained to hold copies of the local cache lines of integrated circuits connected to the filter. The shadow cache lines are provided with additional entries so that if newly referenced data is added to a particular local cache line by “silently” removing an entry in the local cache line, the newly referenced data may be added to the shadow cache line without forcing the “blind” removal of an entry in the shadow cache line.

Owner:ORACLE INT CORP

Shared bypass bus structure

Inactive | US6912612B2 | Error prevention | Frequency-division multiplex details | Crossbar switch | Latency (engineering)

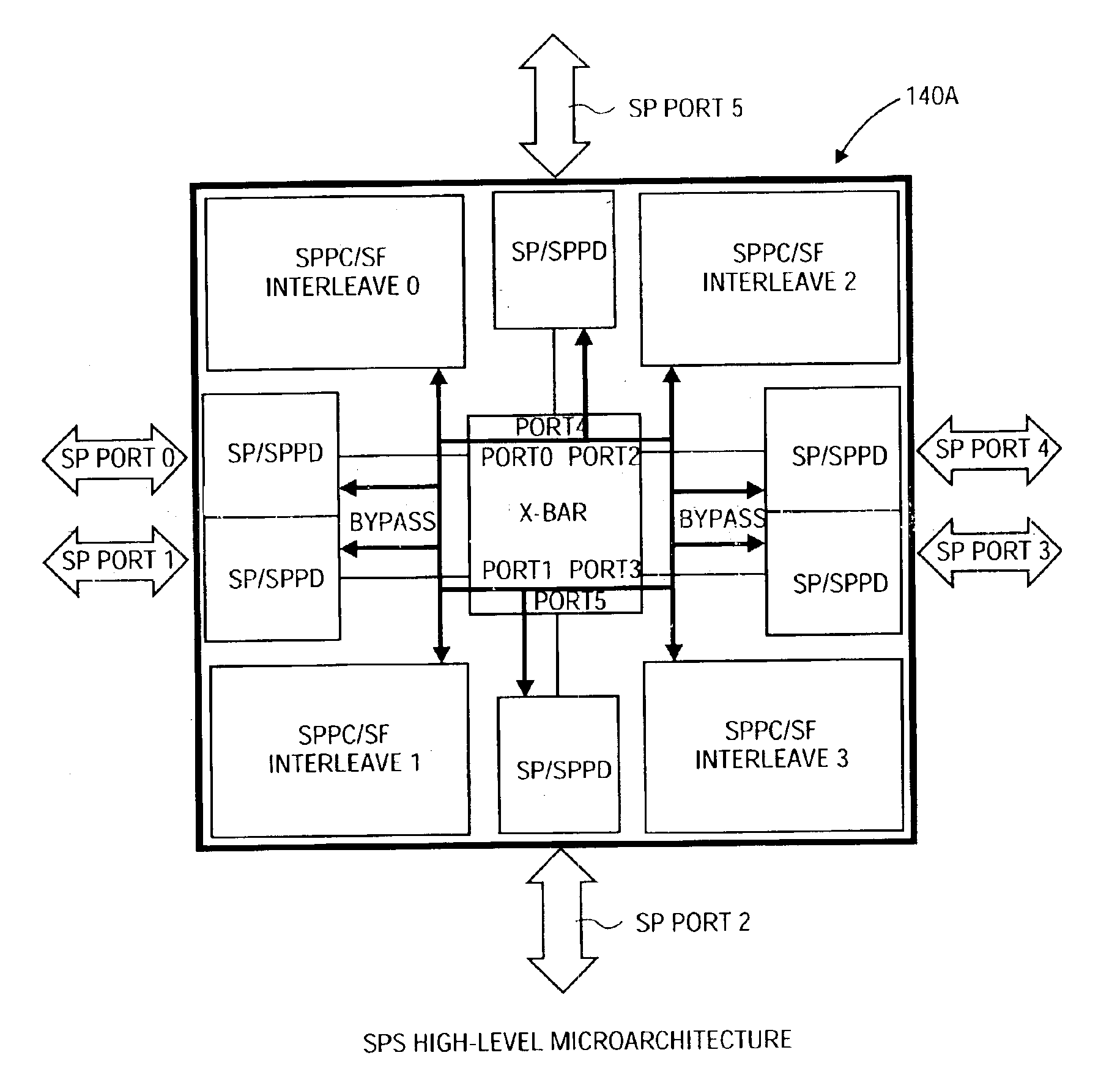

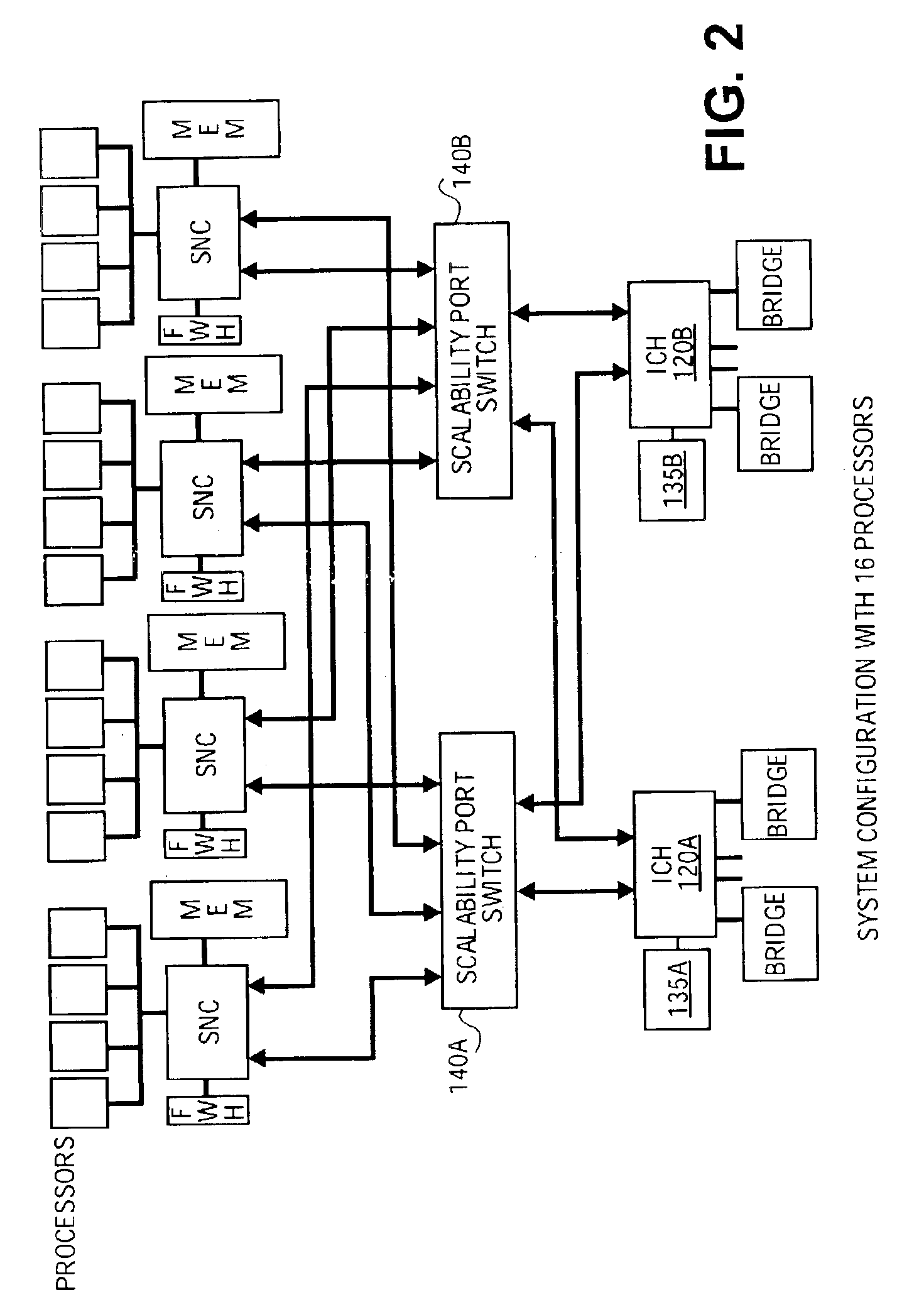

A shared bypass bus structure for low-latency coherency controller access in a coherent scalable switch. In a coherent scalable switch with multiple coherent interconnect ports, distributed coherency control structures, and a crossbar interface between them, a shared bypass bus permits data transfer between the coherent interconnect ports and the coherency control structures while bypassing the crossbar interface. Some embodiments may comprise scalable switches to support one or more sets of processors with substantially independent snoop or cache coherency paths or arrangements.

Owner:INTEL CORP

Method and apparatus to distribute interrupts to multiple interrupt handlers in a distributed symmetric multiprocessor system

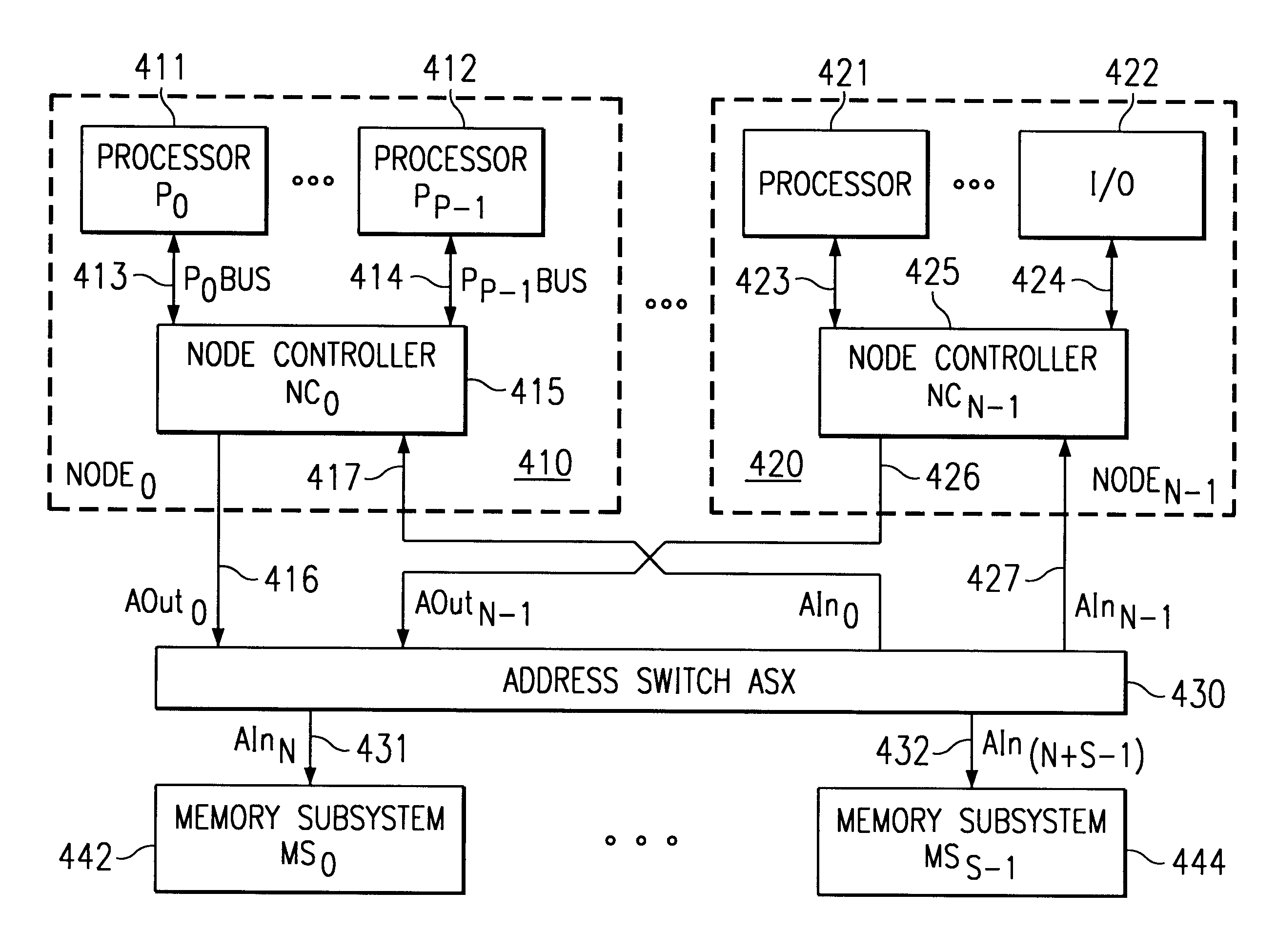

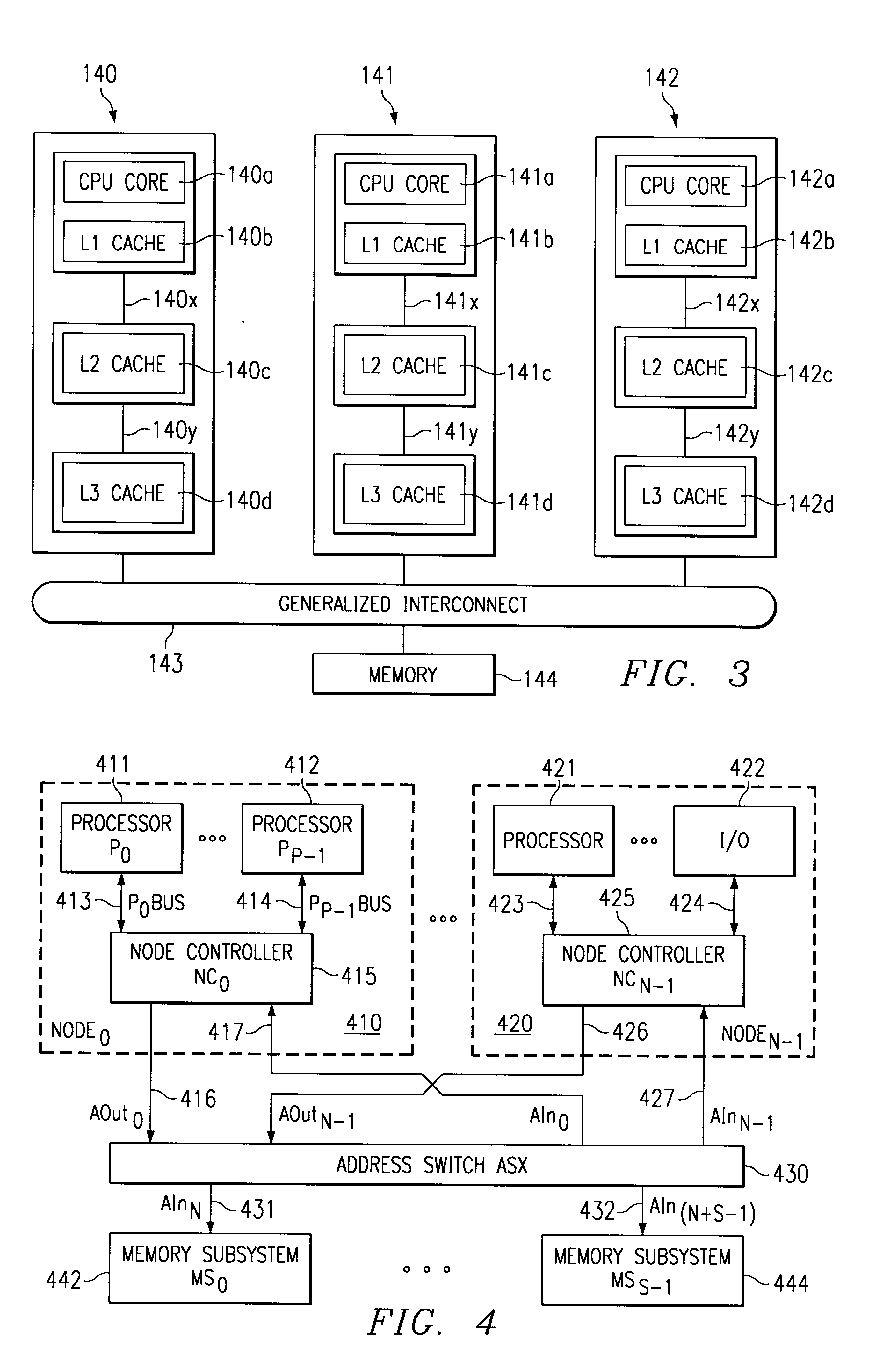

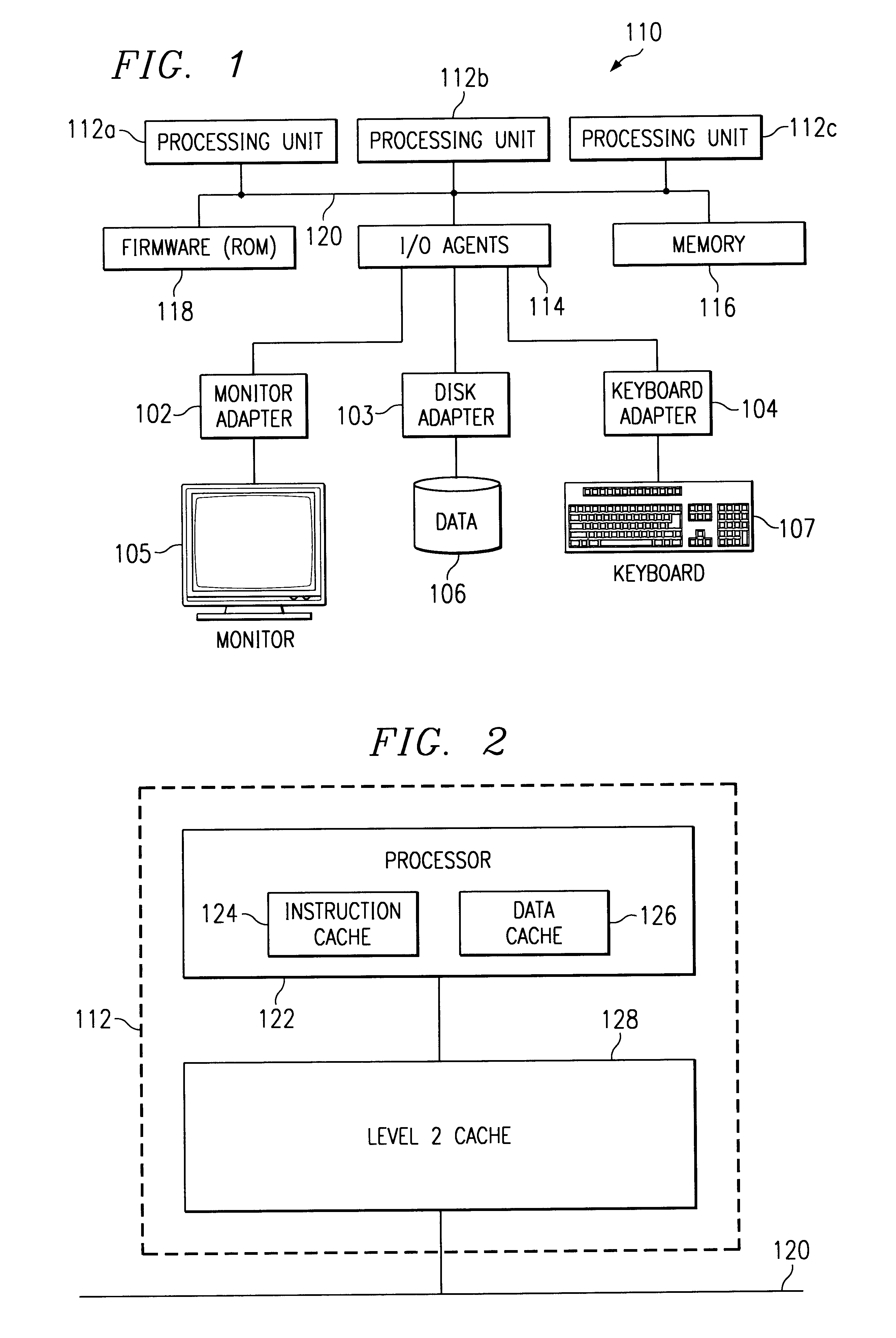

A distributed system structure for a large-way, symmetric multiprocessor system using a bus-based cache-coherence protocol is provided. The distributed system structure contains an address switch, multiple memory subsystems, and multiple master devices, either processors, I/O agents, or coherent memory adapters, organized into a set of nodes supported by a node controller. The node controller receives transactions from a master device, communicates with a master device as another master device or as a slave device, and queues transactions received from a master device. Since the achievement of coherency is distributed in time and space, the node controller helps to maintain cache coherency. The node controller also implements an interrupt arbitration scheme designed to choose among multiple eligible interrupt distribution units without using dedicated sideband signals on the bus.

Owner:GOOGLE LLC

System and method for maintaining cache coherency without external controller intervention

Inactive | US7043610B2 | Efficiently detects | Efficiently resolve | Memory addressing/allocation/relocation | Segment descriptor | Control store

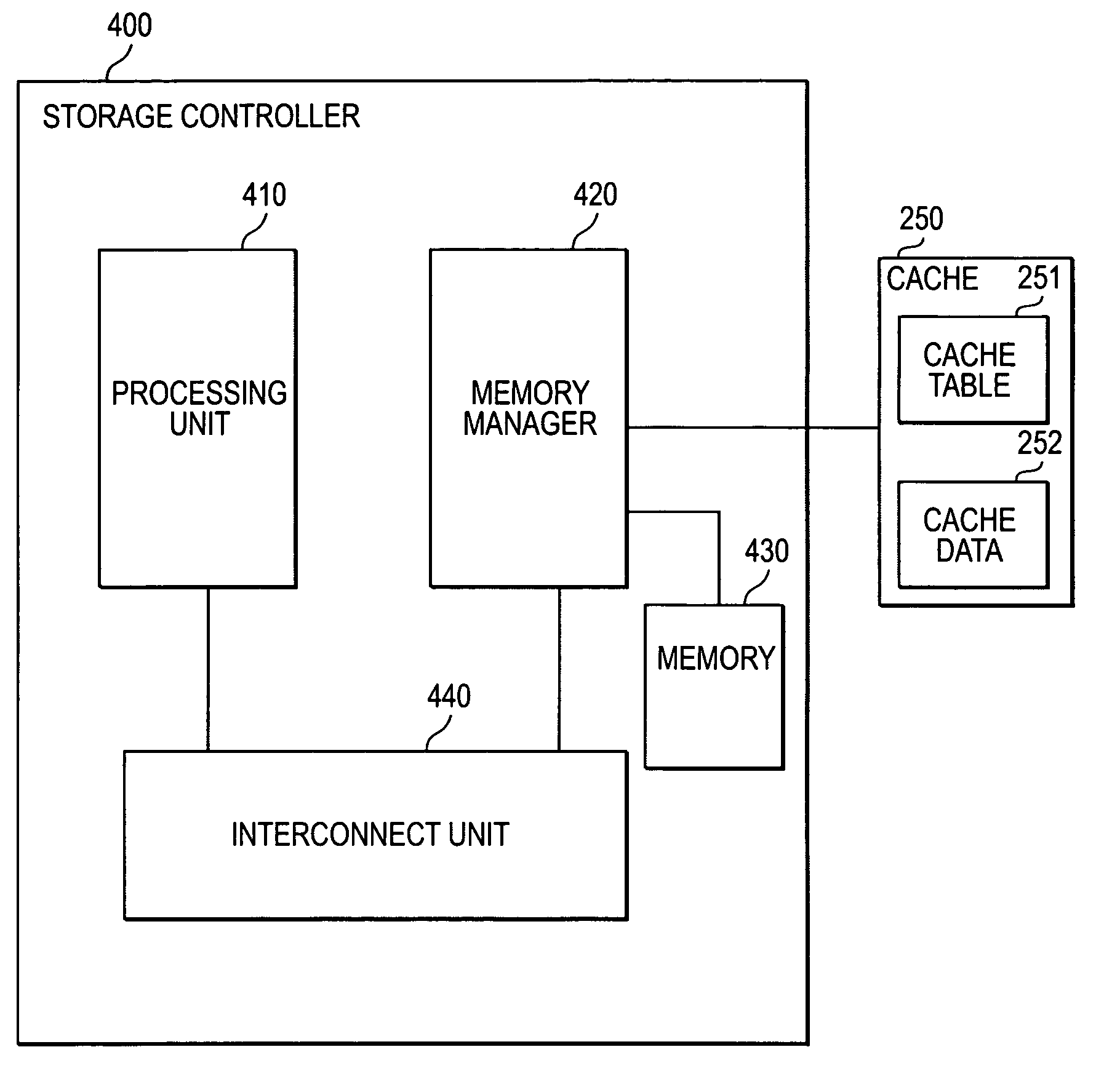

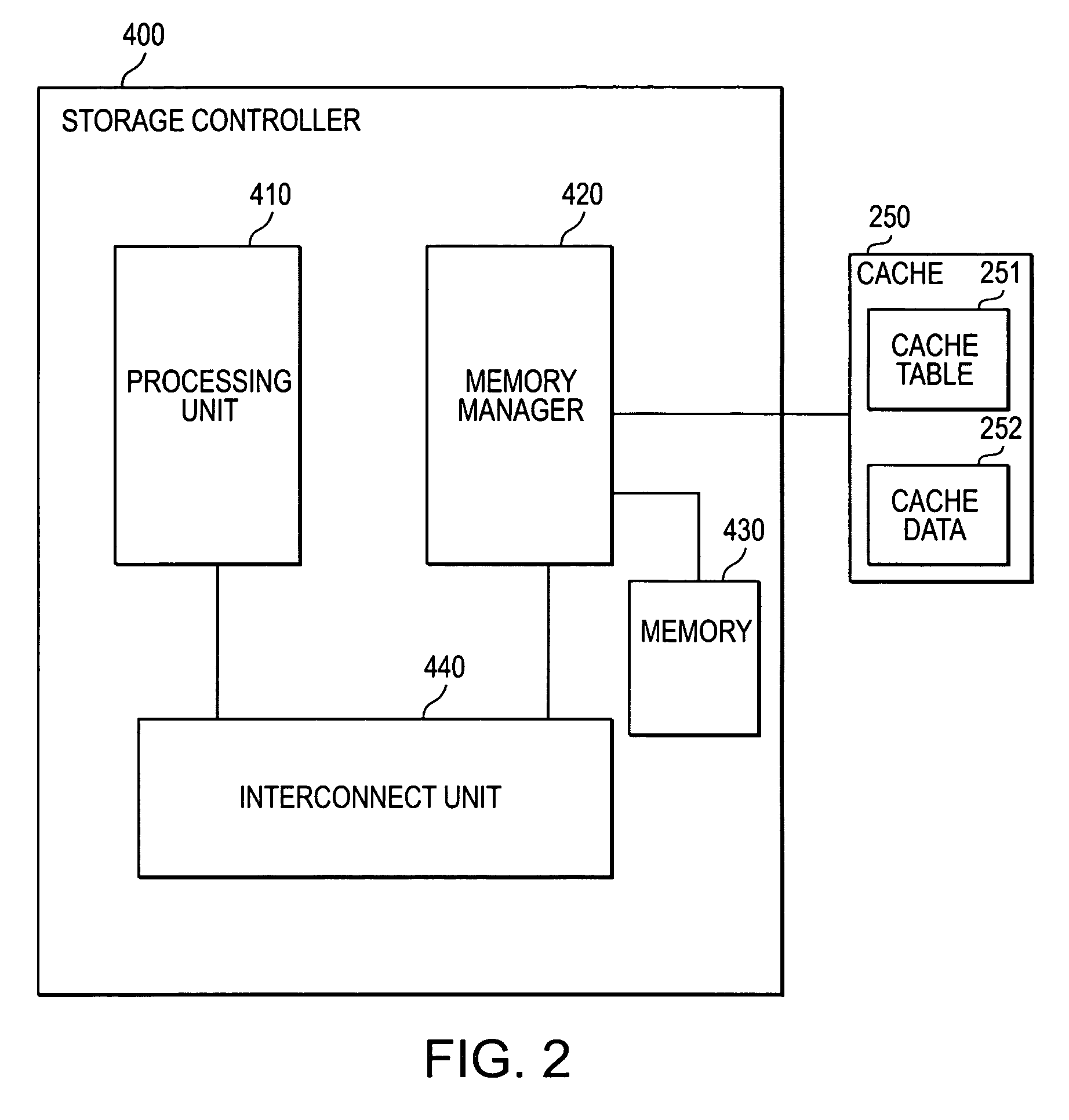

A disk array includes a system and method for cache management and conflict detection. Incoming host commands are processed by a storage controller, which identifies a set of at least one cache segment descriptor (CSD) associated with the requested address range. Command conflict detection can be quickly performed by examining the state information of each CSD associated with the command. The use of CSDs therefore permits the present invention to rapidly and efficiently perform read and write commands and detect conflicts.

Owner:ADAPTEC

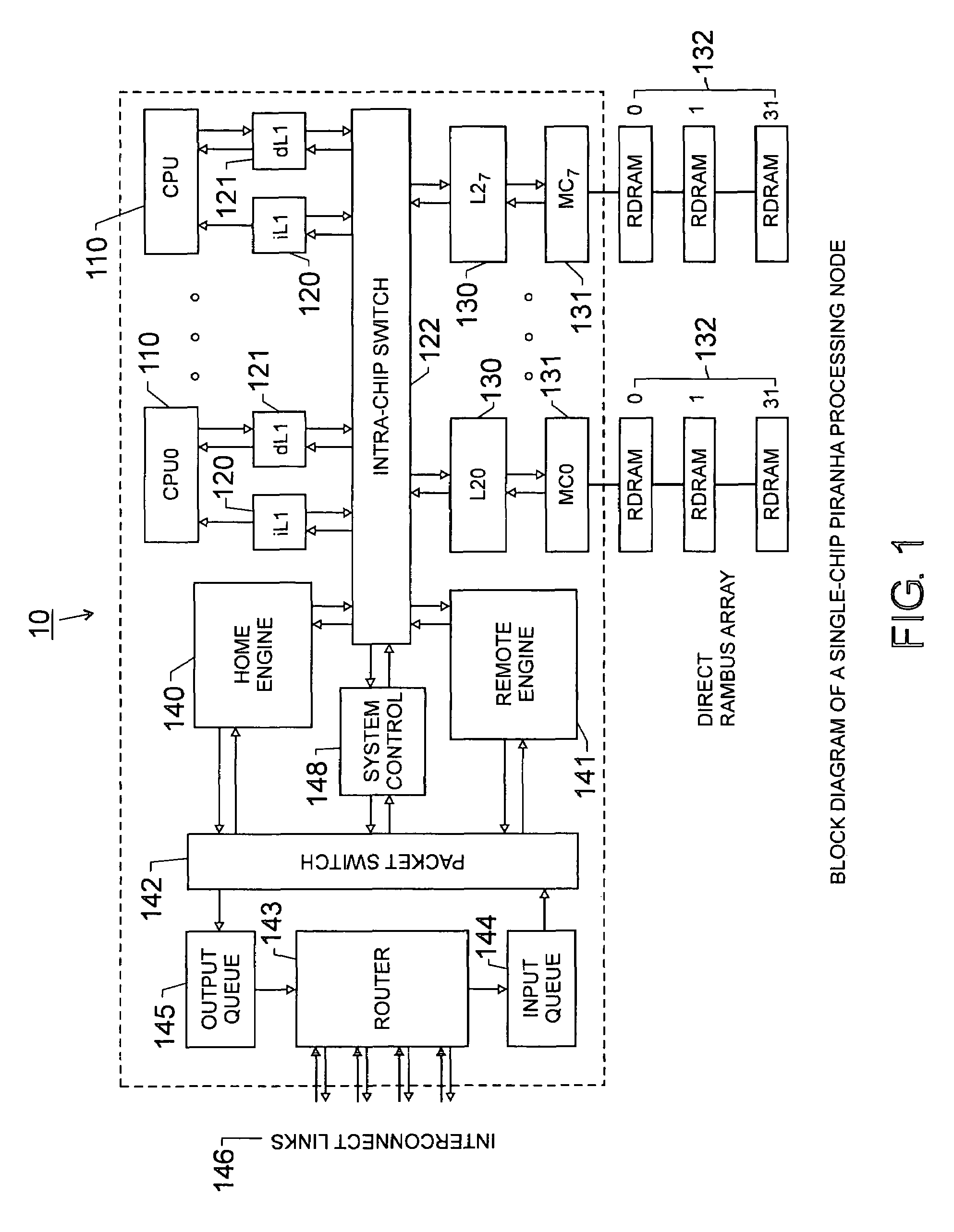

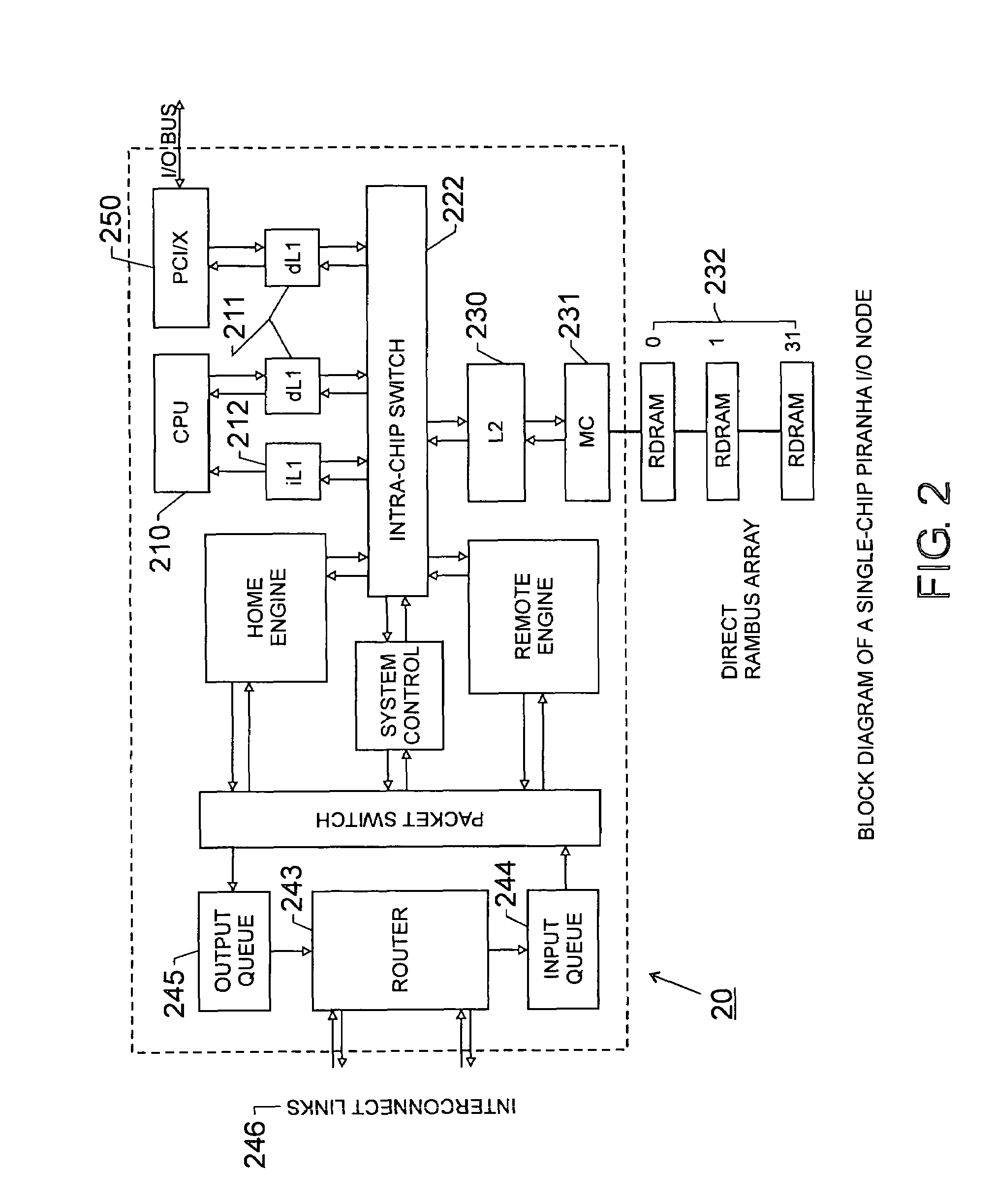

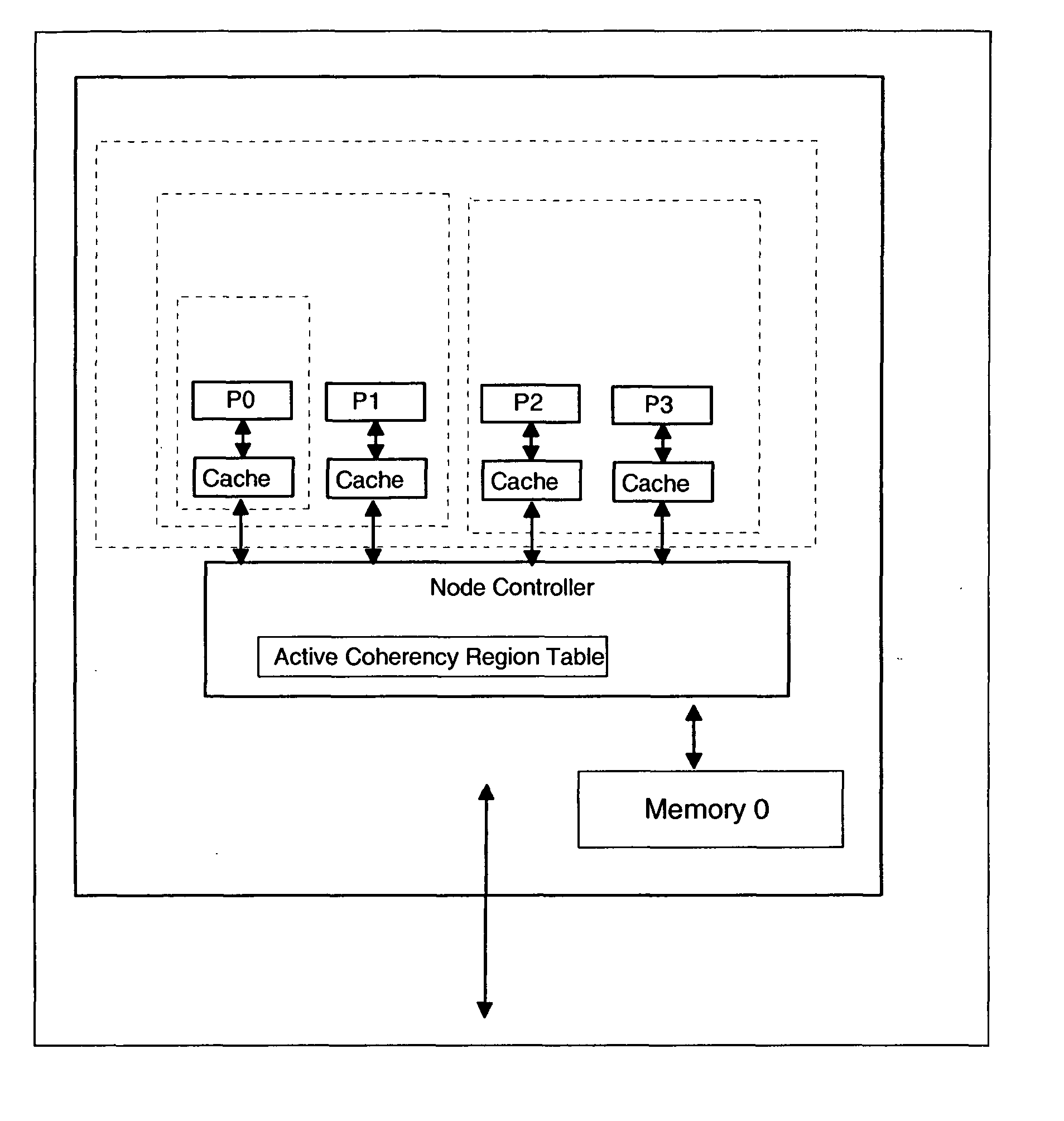

Scalable architecture based on single-chip multiprocessing

Inactive | US6988170B2 | Short time | Small investment | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Processing core

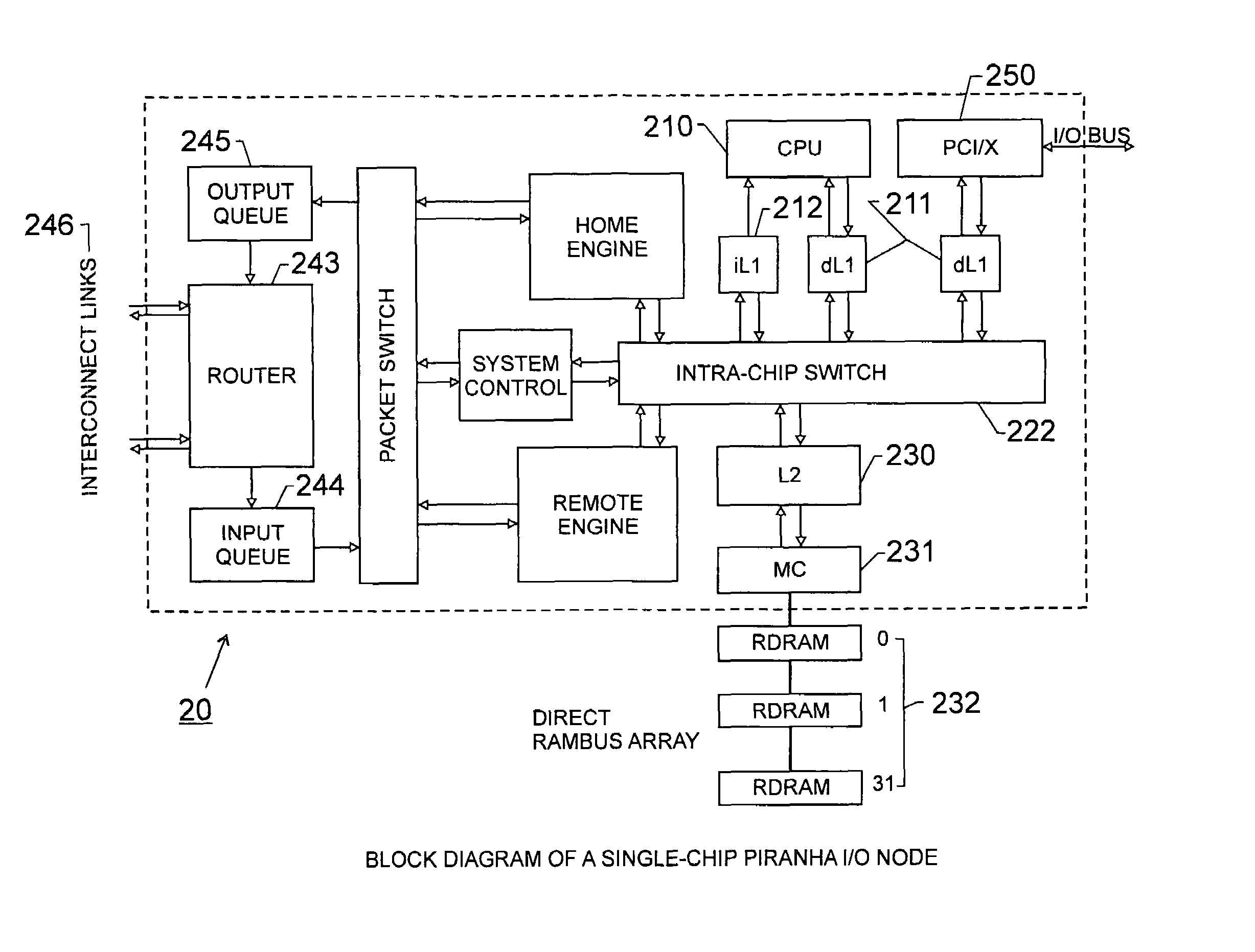

A chip-multiprocessing system with scalable architecture, including on a single chip: a plurality of processor cores; a two-level cache hierarchy; an intra-chip switch; one or more memory controllers; a cache coherence protocol; one or more coherence protocol engines; and an interconnect subsystem. The two-level cache hierarchy includes first level and second level caches. In particular, the first level caches include a pair of instruction and data caches for, and private to, each processor core. The second level cache has a relaxed inclusion property, the second-level cache being logically shared by the plurality of processor cores. Each of the plurality of processor cores is capable of executing an instruction set of the ALPHA™ processing core. The scalable architecture of the chip-multiprocessing system is targeted at parallel commercial workloads. A showcase example of the chip-multiprocessing system, called the PIRAHNA™ system, is a highly integrated processing node with eight simpler ALPHA™ processor cores. A method for scalable chip-multiprocessing is also provided.

Owner:SK HYNIX INC

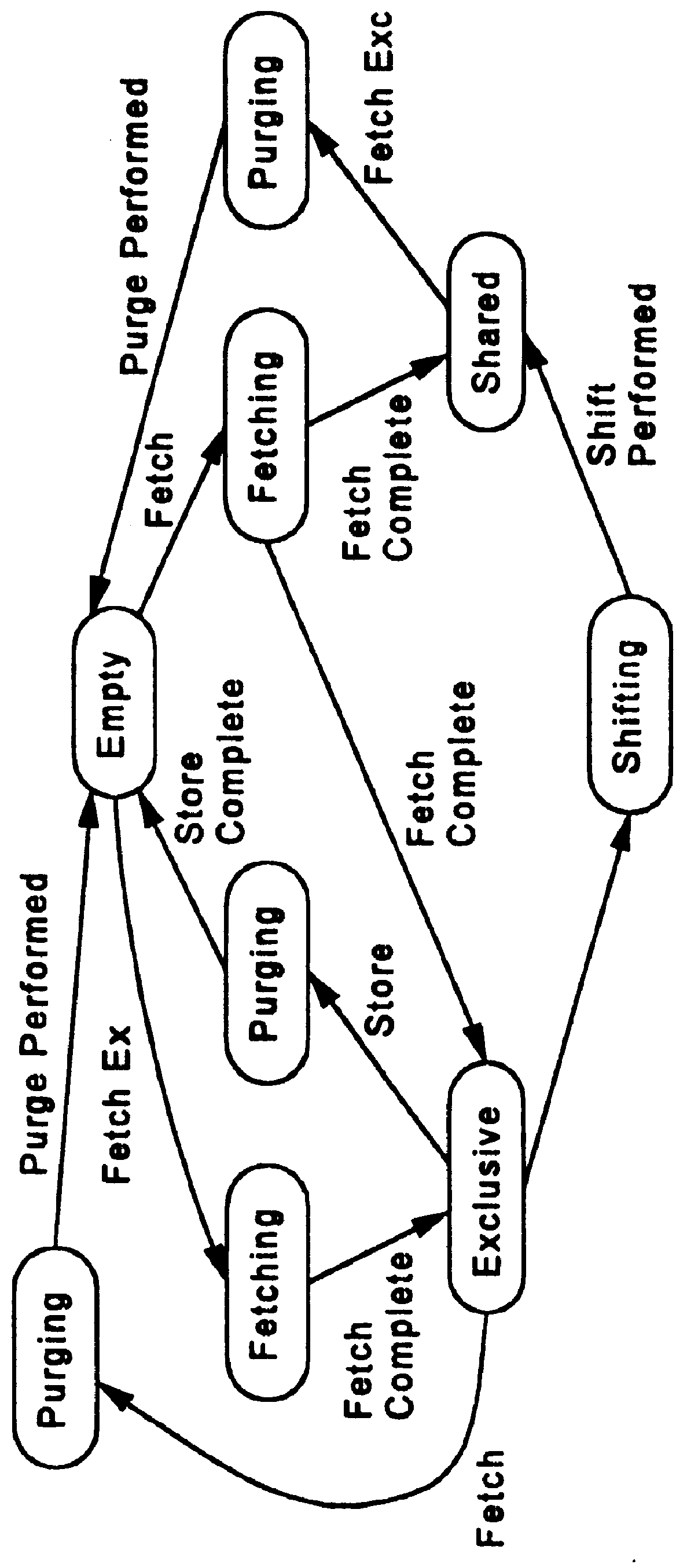

Method and cache-coherence system allowing purging of mid-level cache entries without purging lower-level cache entries

A method and apparatus for purging data from a middle cache level without purging the corresponding data from a lower cache level (i.e., a cache level closer to the processor using the data), and replacing the purged first data with other data of a different memory address than the purged first data, while leaving the data of the first cache line in the lower cache level. In some embodiments, in order to allow such mid-level purging, the first cache line must be in the "shared state" that allows reading of the data, but does not permit modifications to the data (i.e., modifications that would have to be written back to memory). If it is desired to modify the data, a directory facility will issue a purge to all caches of the shared-state data for that cache line, and then the processor that wants to modify the data will request an exclusive-state copy to be fetched to its lower-level cache and to all intervening levels of cache. Later, when the data in the lower cache level is modified, the modified data can be moved back to the original memory from the caches. In some embodiments, a purge of all shared-state copies of the first cache-line data from any and all caches having copies thereof is performed as a prerequisite to doing this exclusive-state fetch.

Owner:RPX CORP +1

High-speed memory storage unit for a multiprocessor system having integrated directory and data storage subsystems

Inactive | US6415364B1 | Easy to manage | Improve system throughput | Memory addressing/allocation/relocation | Input/output processes for data processing | High speed memory | Impact system

A high-speed memory system is disclosed for use in supporting a directory-based cache coherency protocol. The memory system includes at least one data system for storing data, and a corresponding directory system for storing the corresponding cache coherency information. Each data storage operation involves a block transfer operation performed to multiple sequential addresses within the data system. Each data storage operation occurs in conjunction with an associated read-modify-write operation performed on cache coherency information stored within the corresponding directory system. Multiple ones of the data storage operations may be occurring within one or more of the data systems in parallel. Likewise, multiple ones of the read-modify-write operations may be performed to one or more of the directory systems in parallel. The transfer of address, control, and data signals for these concurrently performed operations occurs in an interleaved manner. The use of block transfer operations in combination with the interleaved transfer of signals to memory systems prevents the overhead associated with the read-modify-write operations from substantially impacting system performance. This is true even when data and directory systems are implemented using the same memory technology.

Owner:UNISYS CORP

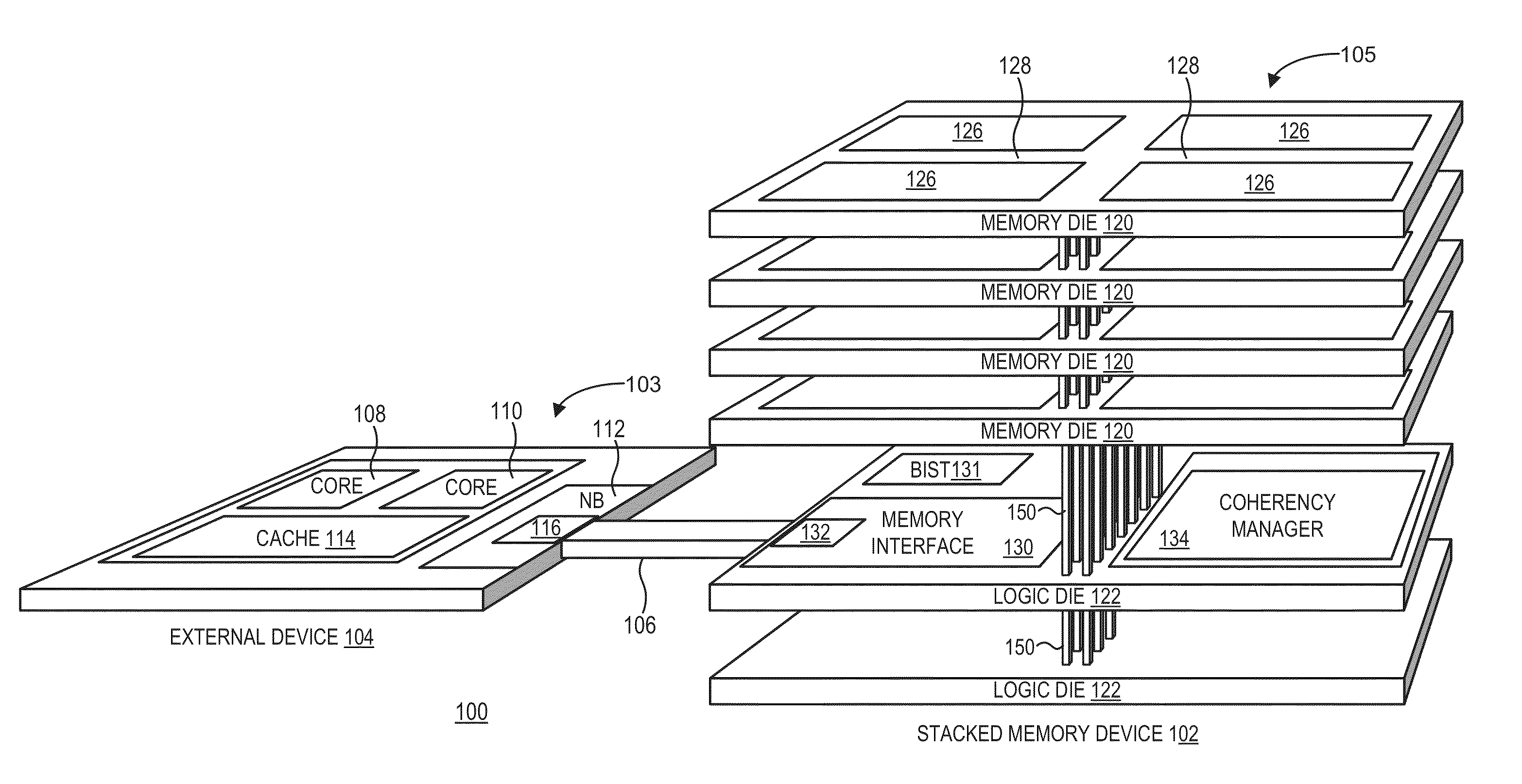

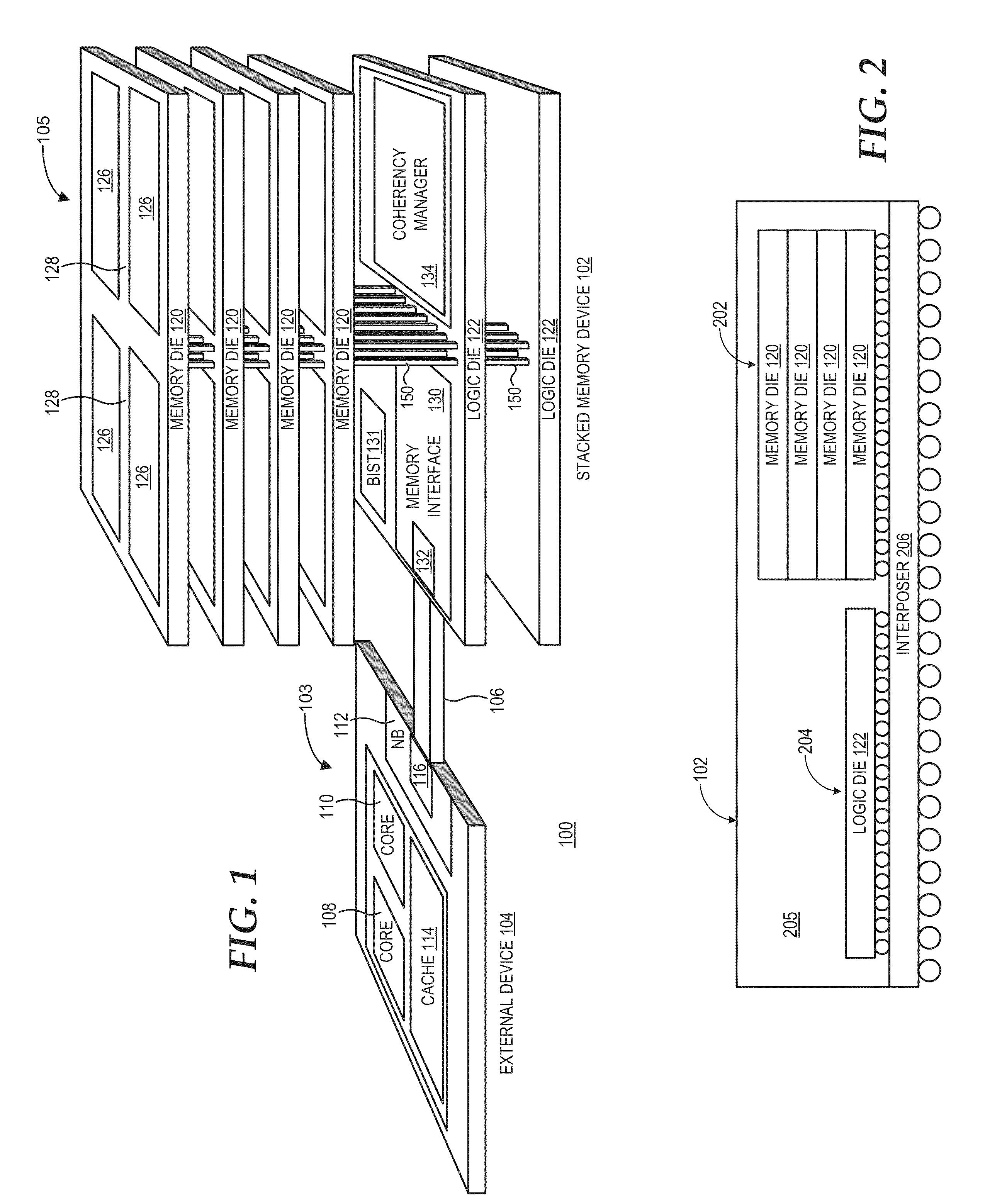

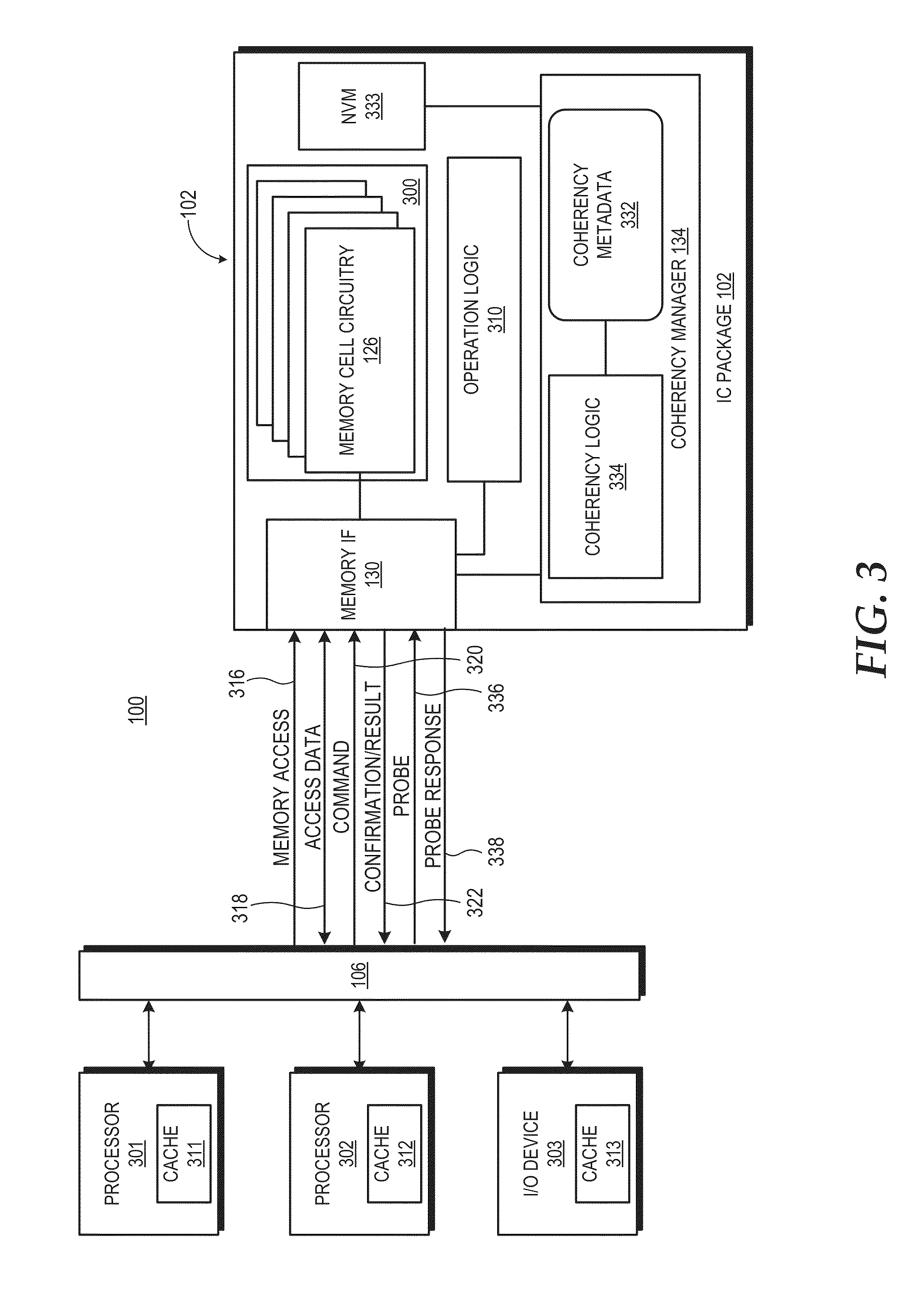

Cache coherency using die-stacked memory device with logic die

Active | US20140181417A1 | Solid-state devices | Memory addressing/allocation/relocation | High bandwidth | Memory interface

A die-stacked memory device implements an integrated coherency manager to offload cache coherency protocol operations for the devices of a processing system. The die-stacked memory device includes a set of one or more stacked memory dies and a set of one or more logic dies. The one or more logic dies implement hardware logic providing a memory interface and the coherency manager. The memory interface operates to perform memory accesses in response to memory access requests from the coherency manager and the one or more external devices. The coherency manager comprises logic to perform coherency operations for shared data stored at the stacked memory dies. Due to the integration of the logic dies and the memory dies, the coherency manager can access shared data stored in the memory dies and perform related coherency operations with higher bandwidth and lower latency and power consumption compared to the external devices.

Owner:ADVANCED MICRO DEVICES INC

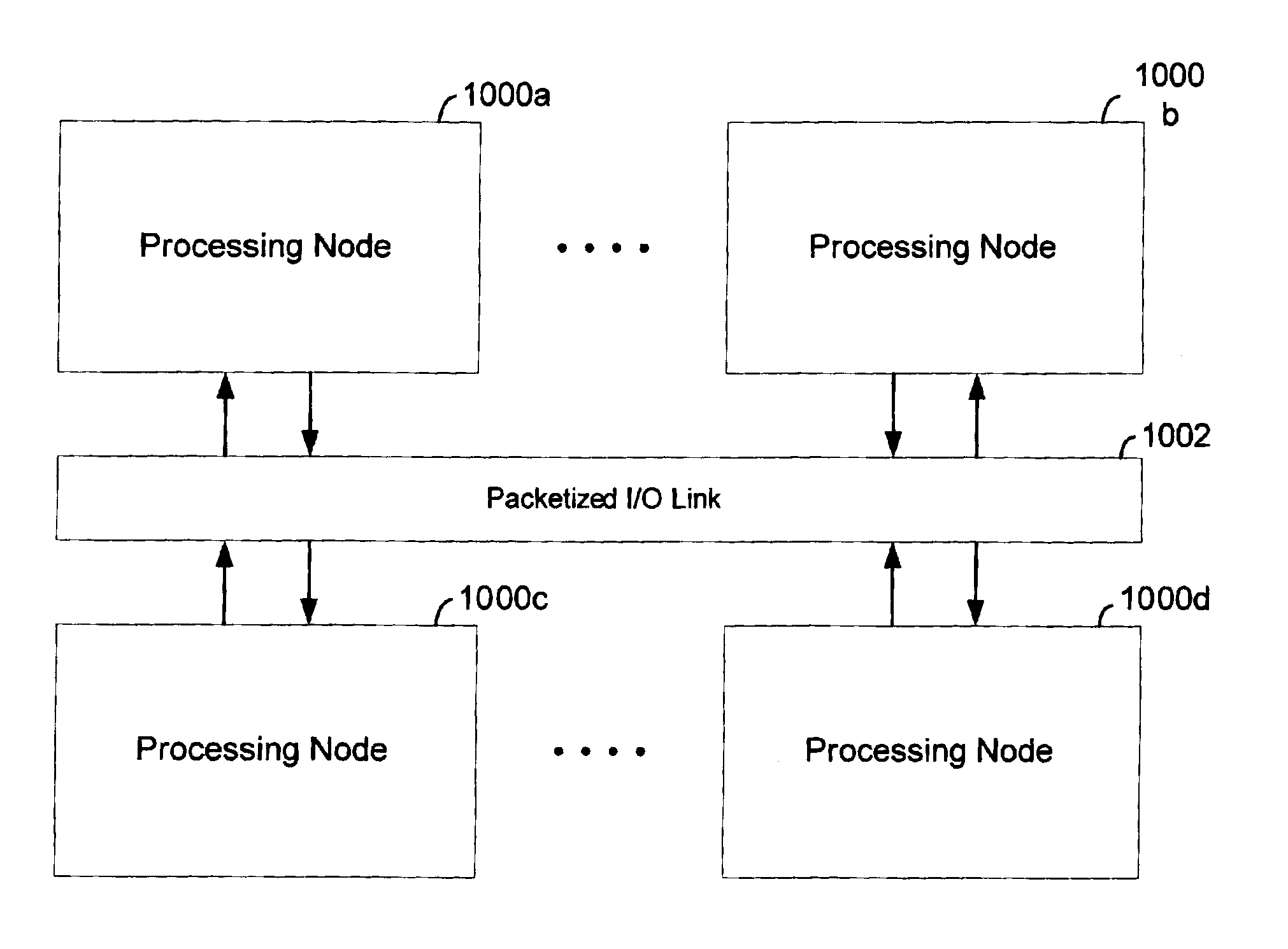

Scalable cache coherent distributed shared memory processing system

A packetized I/O link such as the HyperTransport protocol is adapted to transport memory coherency transactions over the link to support cache coherency in distributed shared memory systems. The I/O link protocol is adapted to include additional virtual channels that can carry command packets for coherency transactions over the link in a format that is acceptable to the I/O protocol. The coherency transactions support cache coherency between processing nodes interconnected by the link. Each processing node may include processing resources that themselves share memory, such as a symmetrical multiprocessor configuration. In this case, coherency must be maintained at both the intranode level and the internode level. A remote line directory is maintained by each processing node so that it can track the state and location of all of the lines from its local memory that have been provided to other remote nodes. A node controller initiates transactions over the link in response to local transactions initiated within itself, and initiates transactions over the link based on local transactions initiated within itself. Flow control is provided for each of the coherency virtual channels either by software through credits or through a buffer free command packet that is sent to a source node by a target node indicating the availability of virtual channel buffering for that channel.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Managing sparse directory evictions in multiprocessor systems via memory locking

Active | US20050251626A1 | Enhanced interaction | Facilitate eviction | Data processing applications | Memory addressing/allocation/relocation | Multi processor | Parallel computing

Cache coherence directory eviction mechanisms are described for use in computer systems having a plurality of multiprocessor clusters. Interaction among the clusters is facilitated by a cache coherence controller in each cluster. A cache coherence directory is associated with each cache coherence controller identifying memory lines associated with the local cluster that are cached in remote clusters. Techniques are provided for managing eviction of entries in the cache coherence directory by locking memory lines in a home cluster without causing a memory controller to generate probes to processors in the home cluster.

Owner:SANMINA-SCI CORPORATION

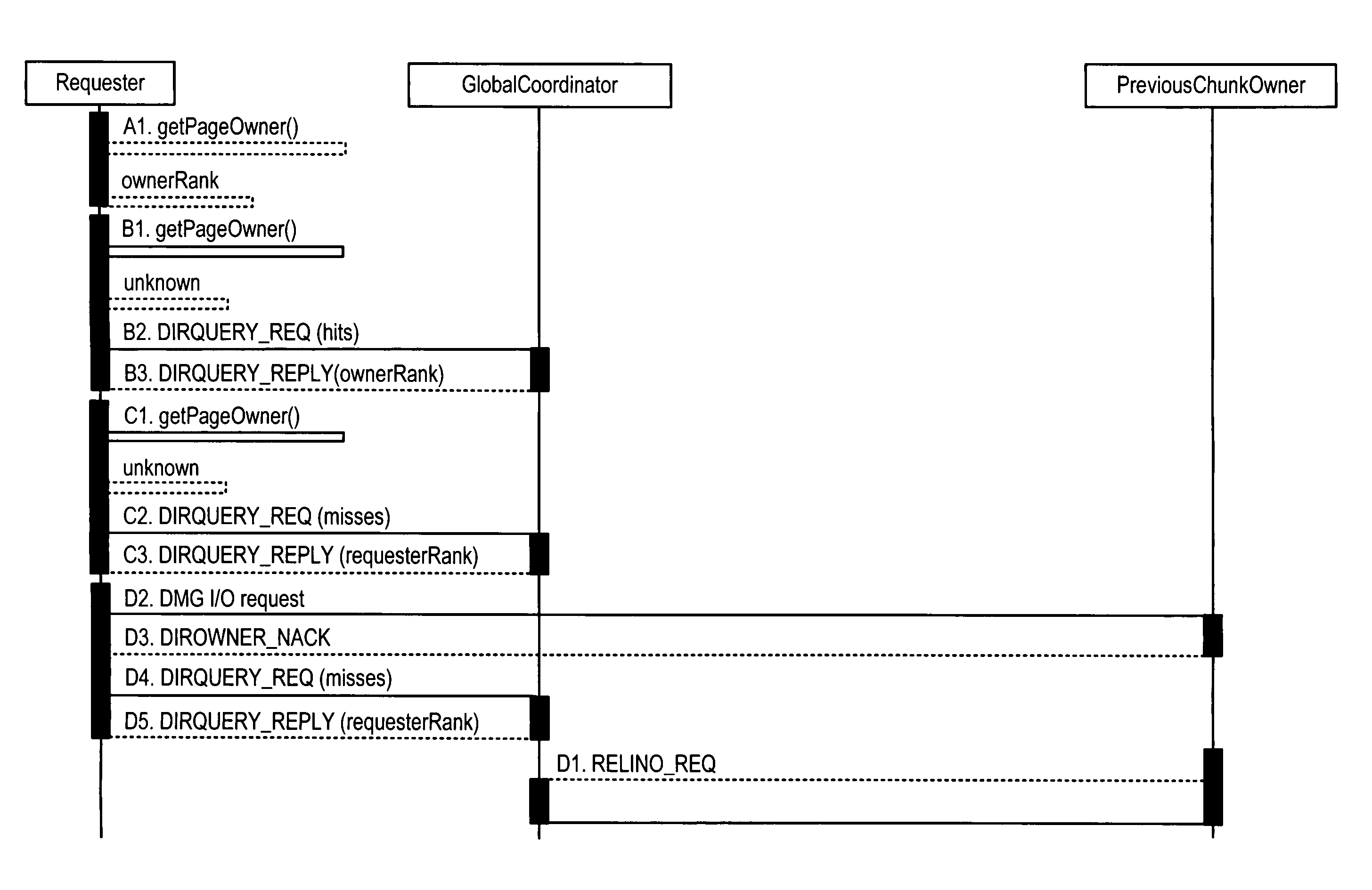

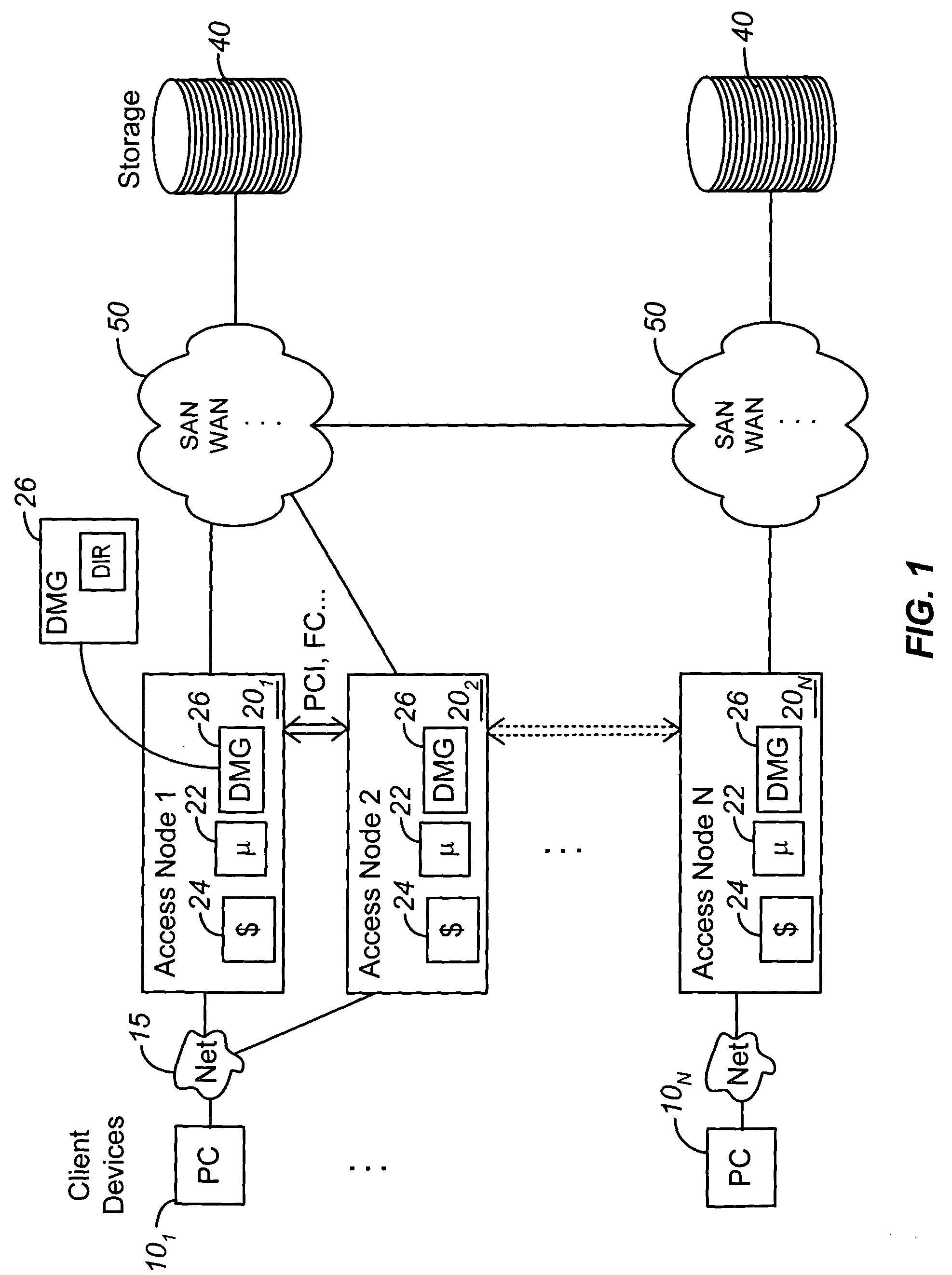

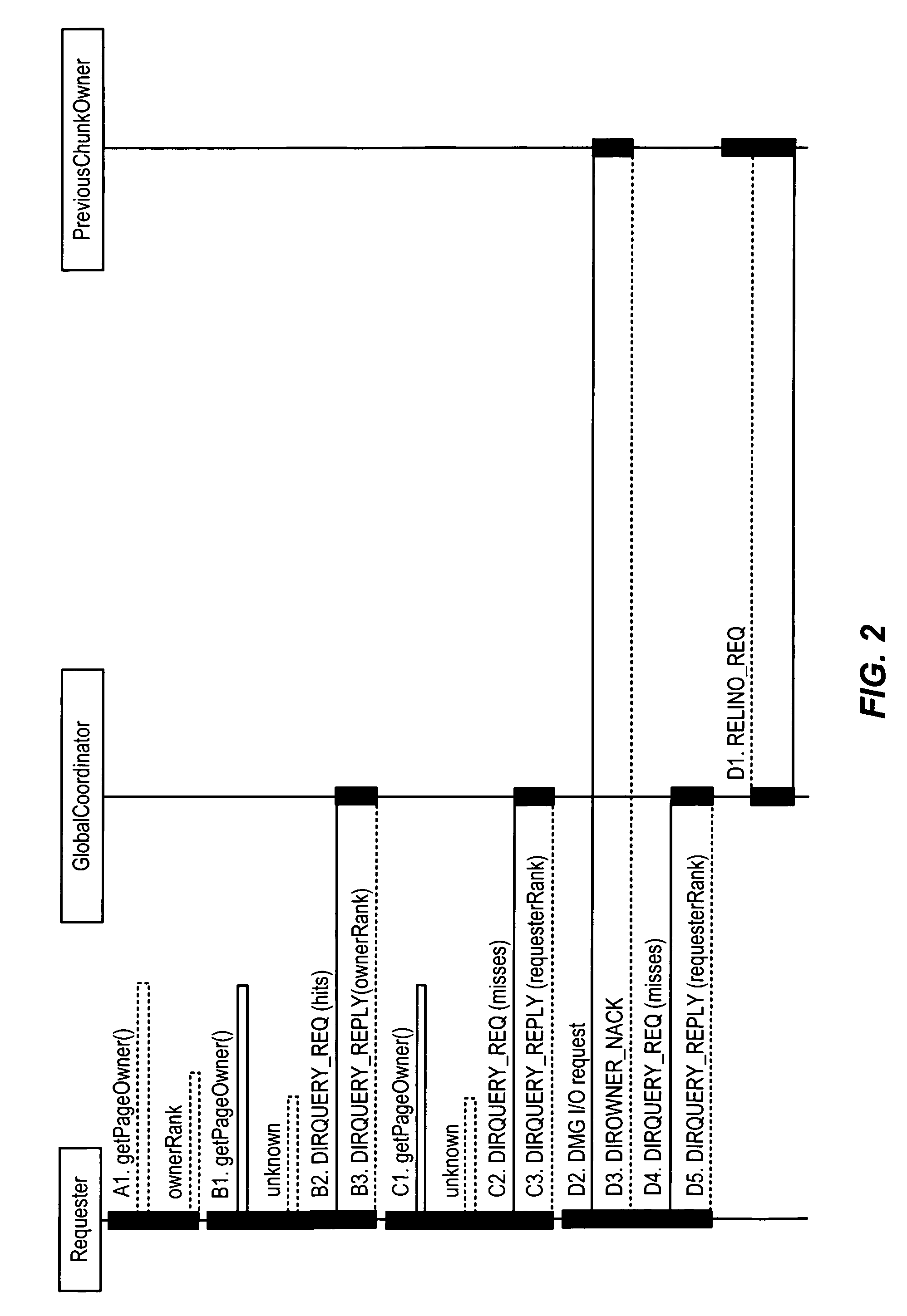

Systems and methods for providing distributed cache coherence

Active | US7975018B2 | Reduce bandwidth requirements | Reduce in quantity | Digital computer details | Transmission | Computer network | Cache access

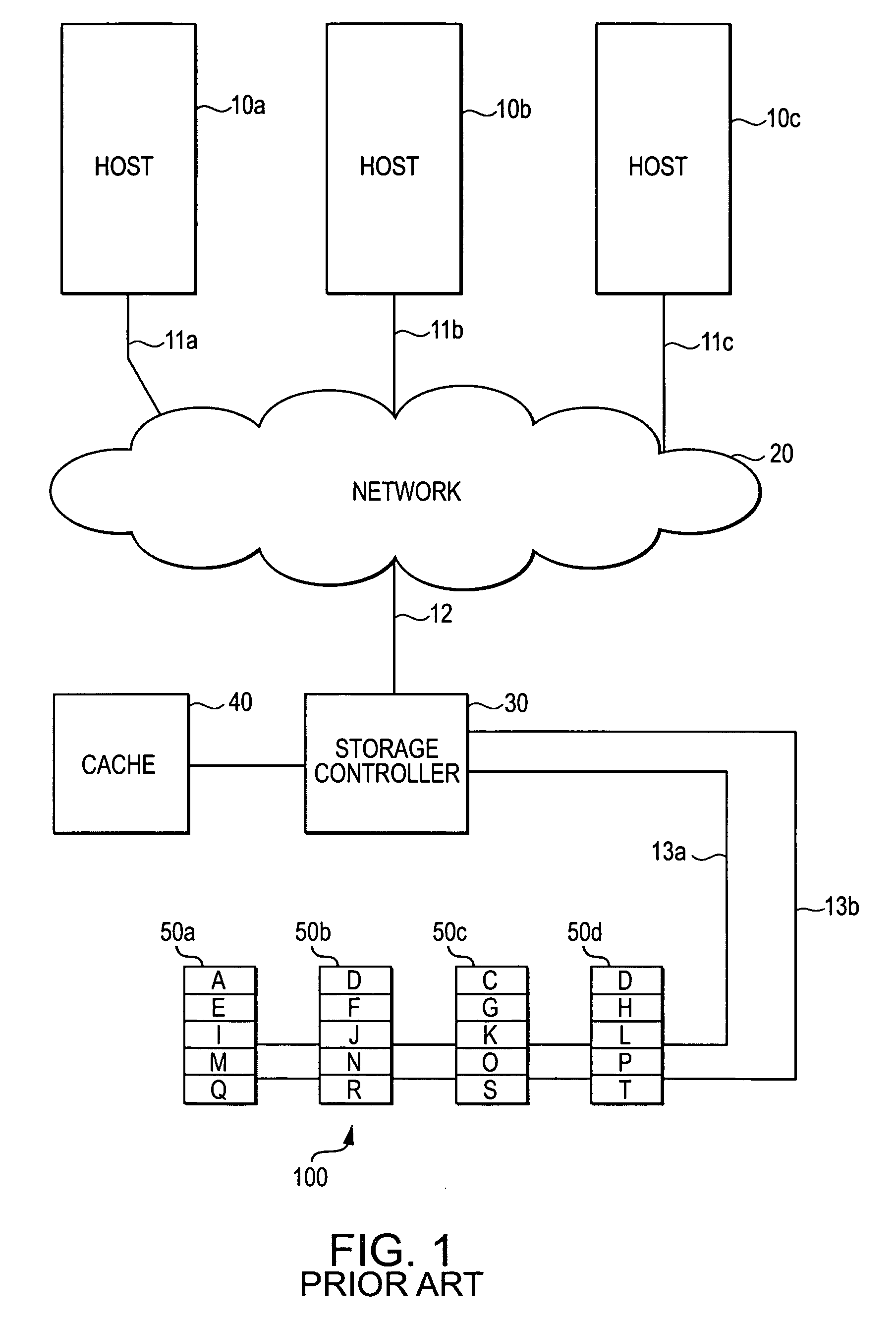

A plurality of access nodes sharing access to data on a storage network implement a directory based cache ownership scheme. One node, designated as a global coordinator, maintains a directory (e.g., table or other data structure) storing information about I/O operations by the access nodes. The other nodes send requests to the global coordinator when an I/O operation is to be performed on identified data. Ownership of that data in the directory is given to the first requesting node. Ownership may transfer to another node if the directory entry is unused or quiescent. The distributed directory-based cache coherency allows for reducing bandwidth requirements between geographically separated access nodes by allowing localized (cached) access to remote data.

Owner:EMC IP HLDG CO LLC
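The ownership rule in the abstract above (first requester owns the data; ownership may move once the entry is unused or quiescent) can be sketched as follows. The `GlobalCoordinator` class, its method names, and the single `active` flag used to model quiescence are assumptions made for illustration and do not come from the patent.

```python
class GlobalCoordinator:
    """Toy directory kept by one coordinating node (hypothetical API)."""

    def __init__(self):
        # block id -> {"owner": node id, "active": bool}
        self.directory = {}

    def request(self, node, block):
        """Return the node that owns `block` after this request is handled."""
        entry = self.directory.get(block)
        if entry is None or not entry["active"]:
            # Unused or quiescent entry: the requesting node becomes the owner.
            self.directory[block] = {"owner": node, "active": True}
            return node
        # Otherwise the existing owner keeps the block.
        return entry["owner"]

    def release(self, node, block):
        """Mark the entry quiescent once the owner has finished its I/O."""
        entry = self.directory.get(block)
        if entry is not None and entry["owner"] == node:
            entry["active"] = False
```

For example, if node "A" requests a block first, a later request from node "B" returns "A" until "A" calls `release`, after which "B" can take ownership.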

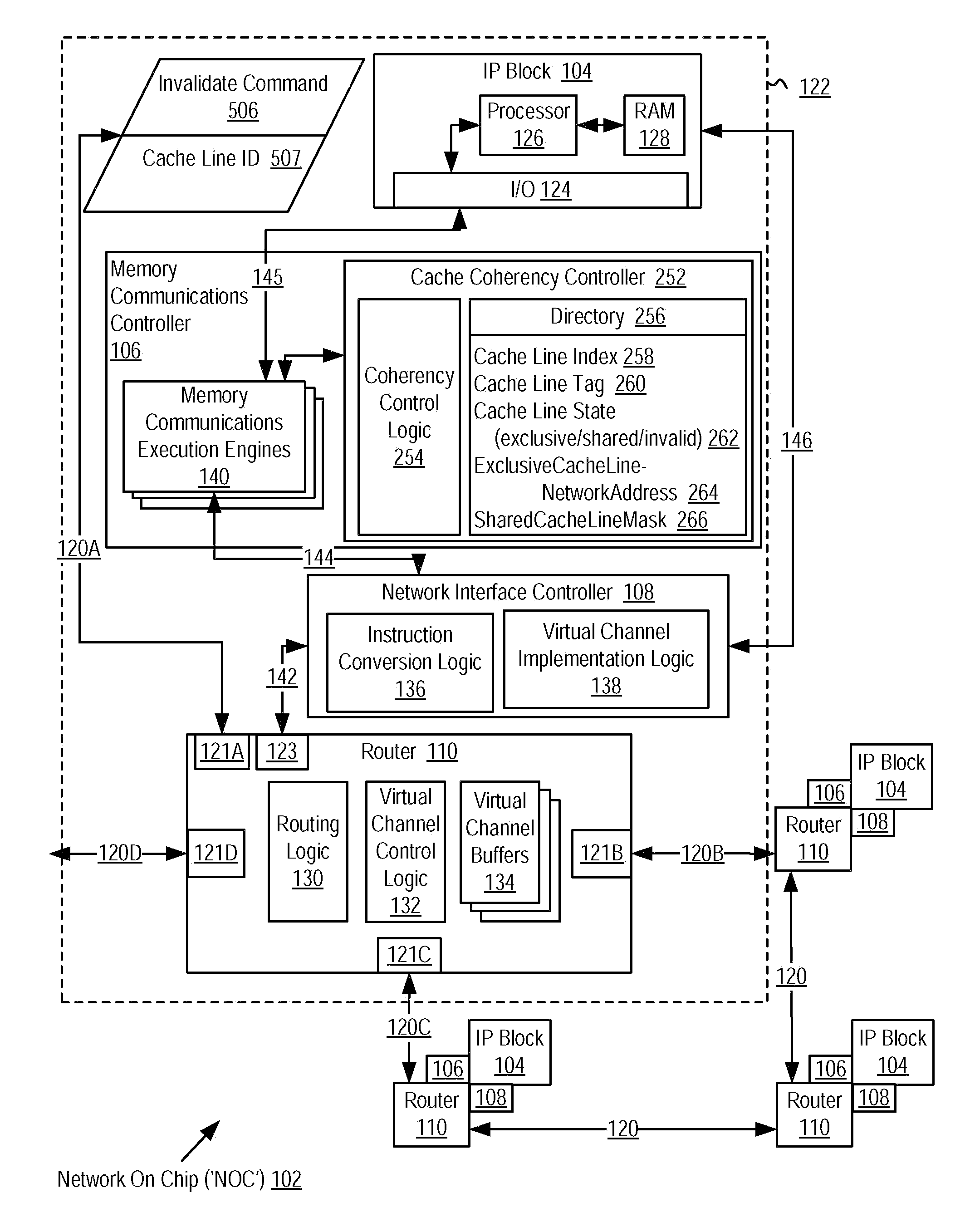

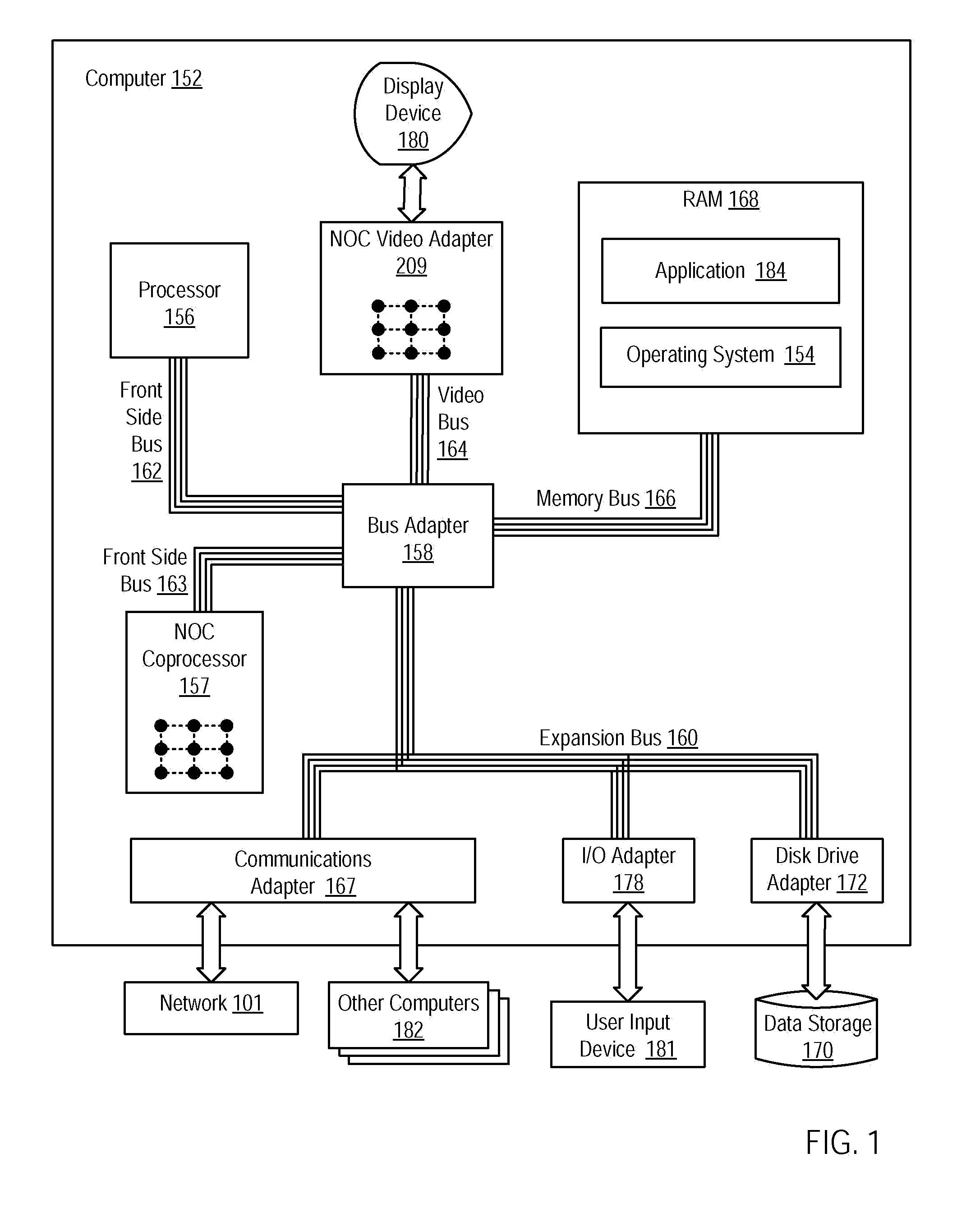

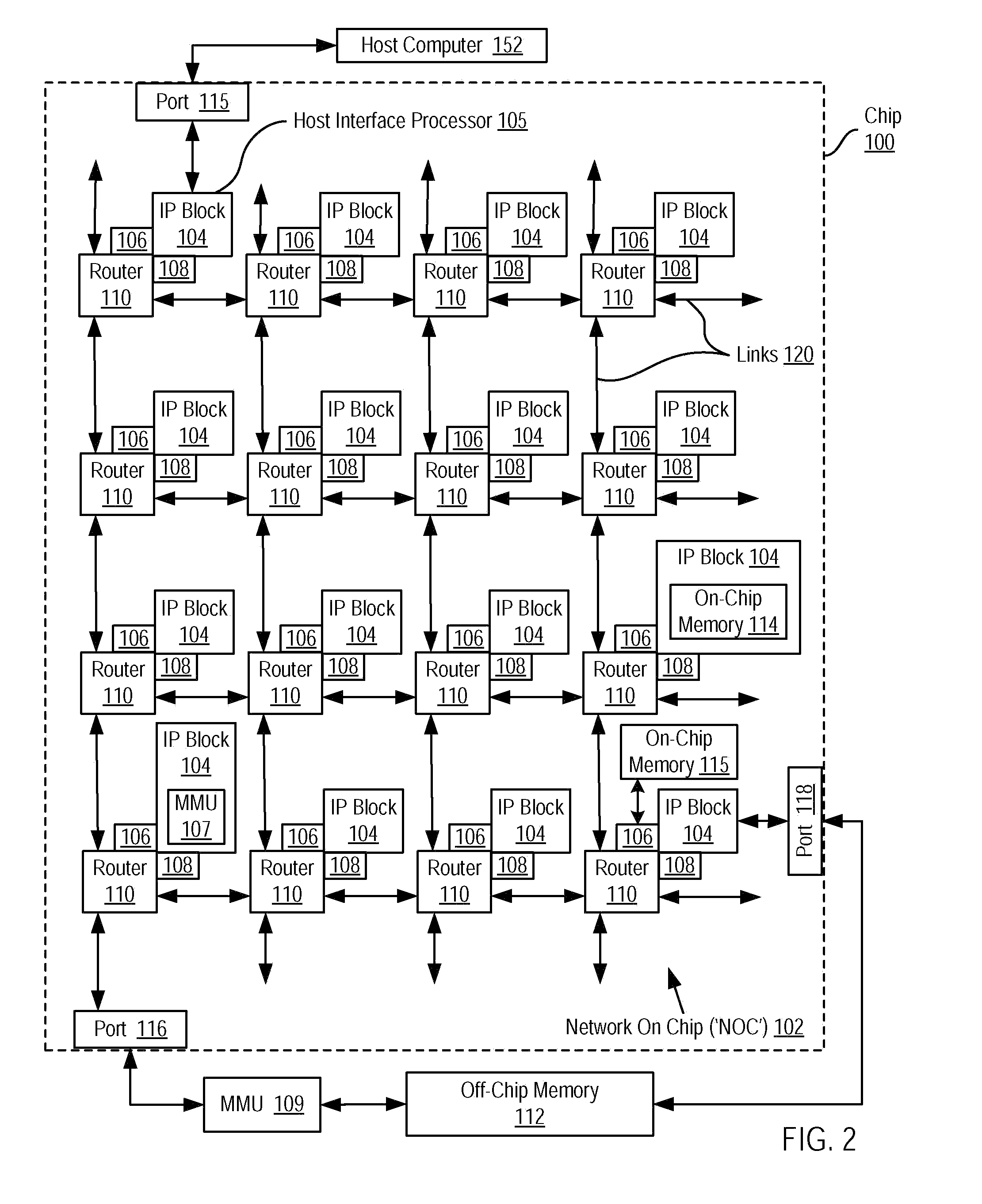

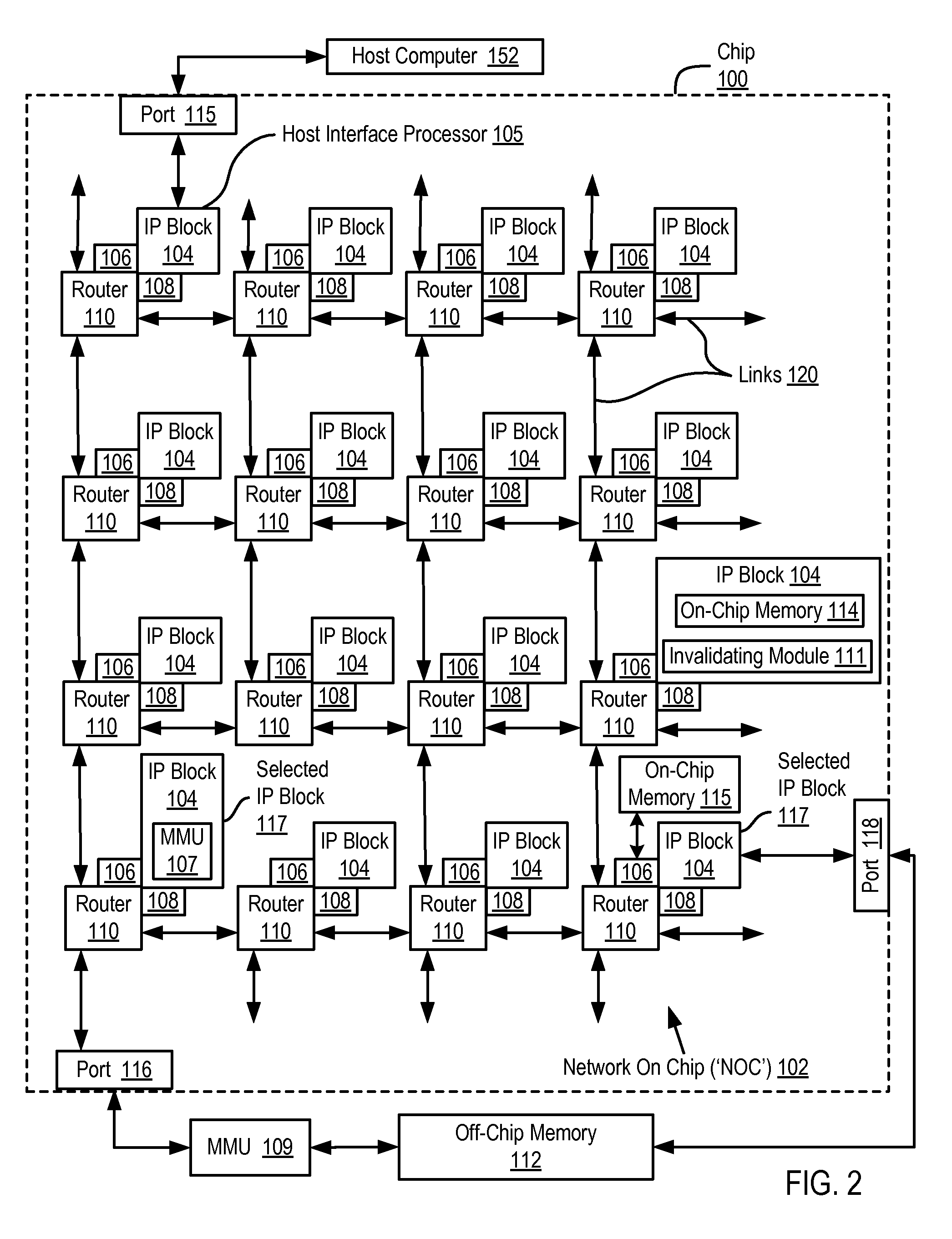

Network On Chip that Maintains Cache Coherency with Invalidate Commands

A network on chip (‘NOC’) that maintains cache coherency, the NOC including integrated processor (‘IP’) blocks, routers, memory communications controllers, and network interface controllers, each IP block adapted to a router through a memory communications controller and a network interface controller, at least one memory communications controller further comprising a cache coherency controller, each memory communications controller controlling communication between an IP block and memory, and each network interface controller controlling inter-IP block communications through routers, wherein the memory communications controller is configured to execute a memory access instruction and to determine a state of a cache line addressed by the memory access instruction, the state of the cache line being one of shared, exclusive, or invalid; the memory communications controller is configured to broadcast an invalidate command to a plurality of IP blocks of the NOC if the state of the cache line is shared; and the memory communications controller is configured to transmit an invalidate command only to an IP block that controls a cache where the cache line is stored if the state of the cache line is exclusive.

Owner:IBM CORP
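The state-dependent routing of invalidate commands described in the abstract above reduces to a small decision function. The sketch below is illustrative only: the function name, the string state labels, and the `holder` parameter are assumptions, and real hardware would issue commands over the NOC rather than return a list.

```python
def invalidate_targets(state, ip_blocks, holder=None):
    """Return the IP blocks that should receive an invalidate command.

    state     -- "shared", "exclusive", or "invalid" (labels are assumed)
    ip_blocks -- all IP blocks on the NOC
    holder    -- the IP block controlling the cache that holds an exclusive line
    """
    if state == "shared":
        # Shared line: broadcast to the IP blocks of the NOC.
        return list(ip_blocks)
    if state == "exclusive":
        # Exclusive line: only the IP block whose cache stores it.
        if holder is None:
            raise ValueError("an exclusive line needs a known holder")
        return [holder]
    if state == "invalid":
        # Nothing is cached, so nothing needs invalidating.
        return []
    raise ValueError(f"unknown cache line state: {state!r}")
```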

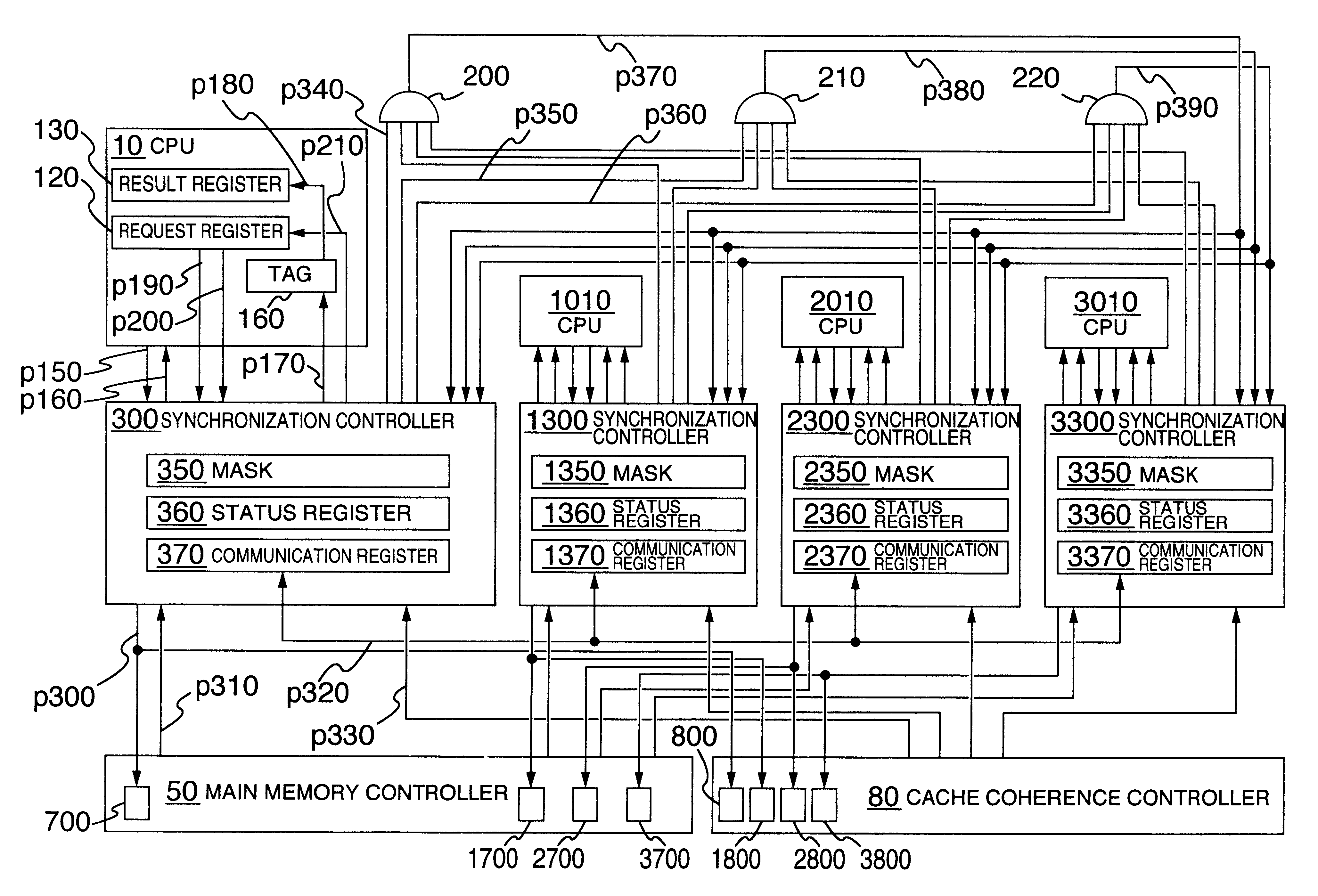

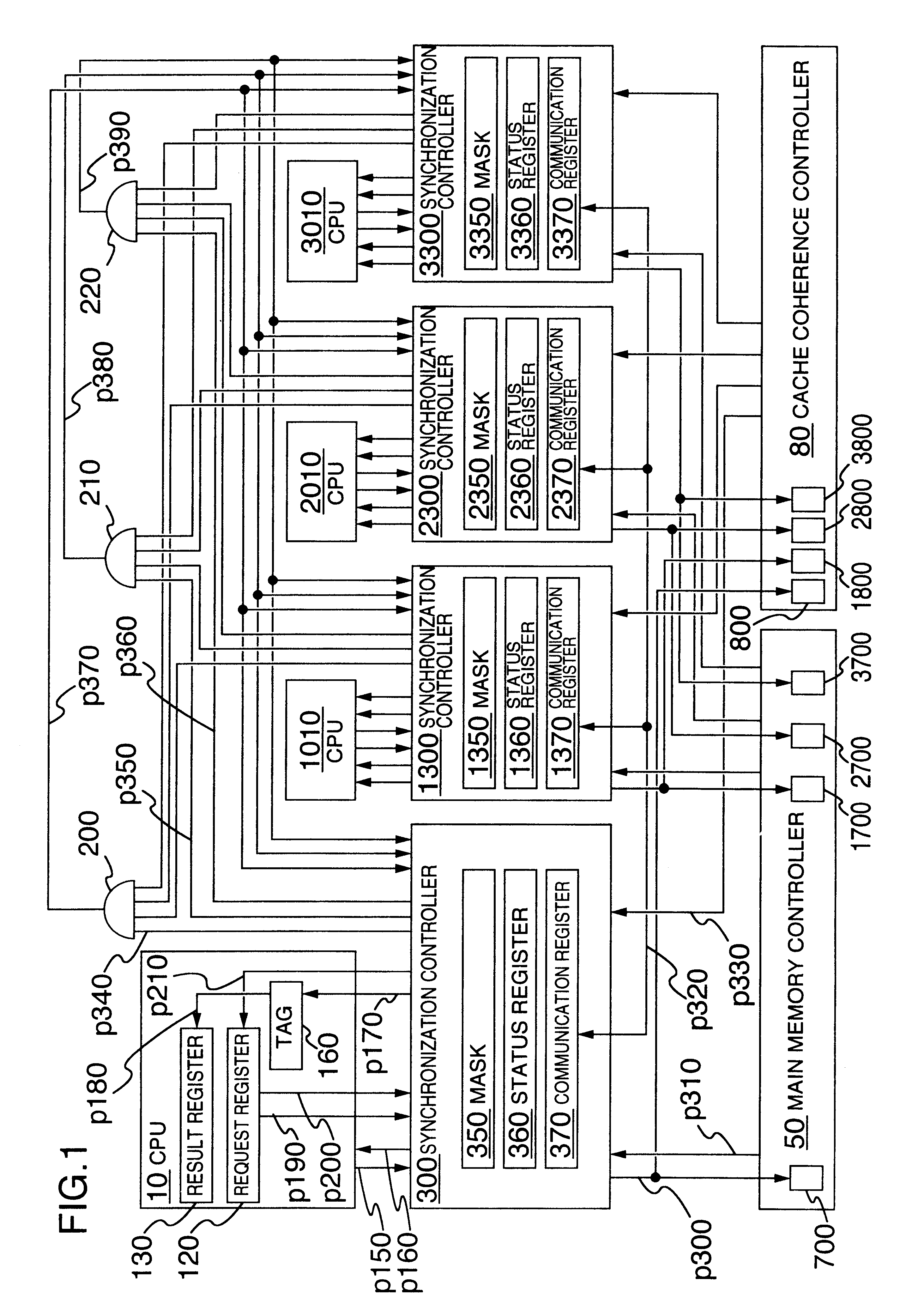

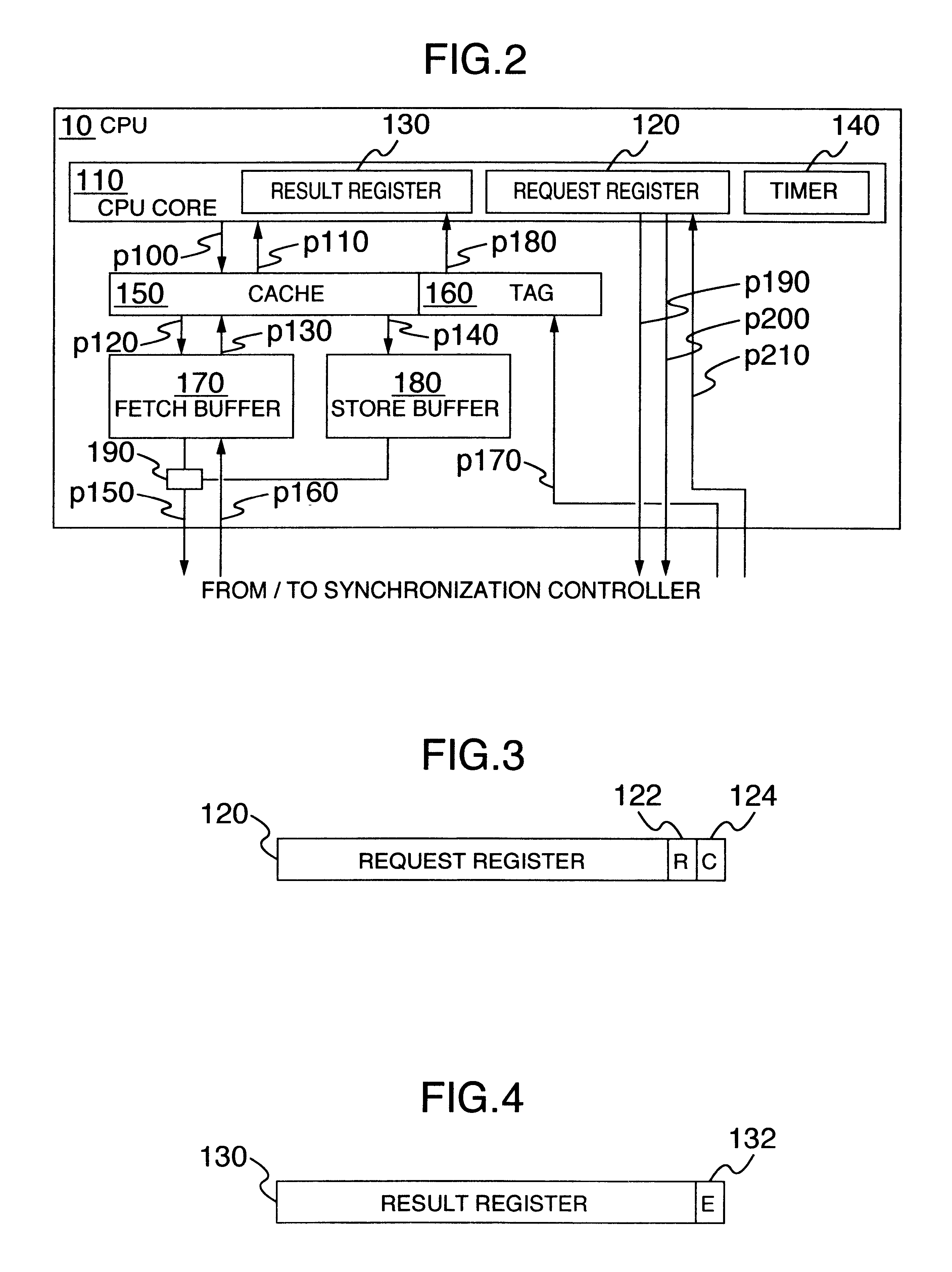

Multiprocessor synchronization and coherency control system

Inactive | US6466988B1 | Program synchronisation | Memory addressing/allocation/relocation | Memory type | Connection type

A shared main memory type multiprocessor is arranged to have a switch connection type. The multiprocessor prepares an instruction for outputting a synchronization transaction. When each CPU executes this instruction, after all the transactions of the preceding instructions are output, the synchronization transaction is output to the main memory and the coherence controller. By the synchronization transaction, the main memory serializes the memory accesses and the coherence controller guarantees the completion of the cache coherence control. This makes it possible to serialize the memory accesses and guarantee the completion of the cache coherence control at the same time.

Owner:HITACHI LTD

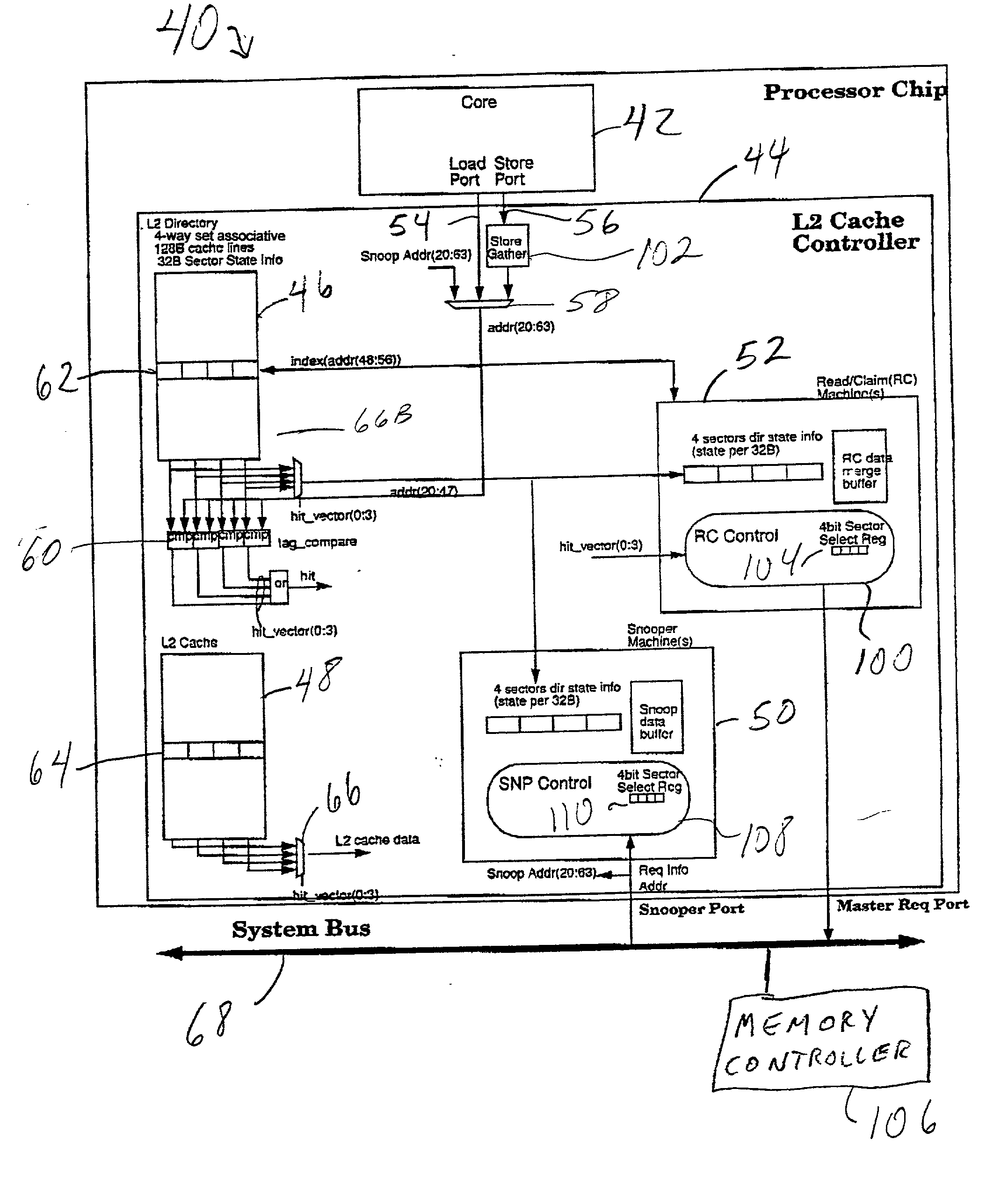

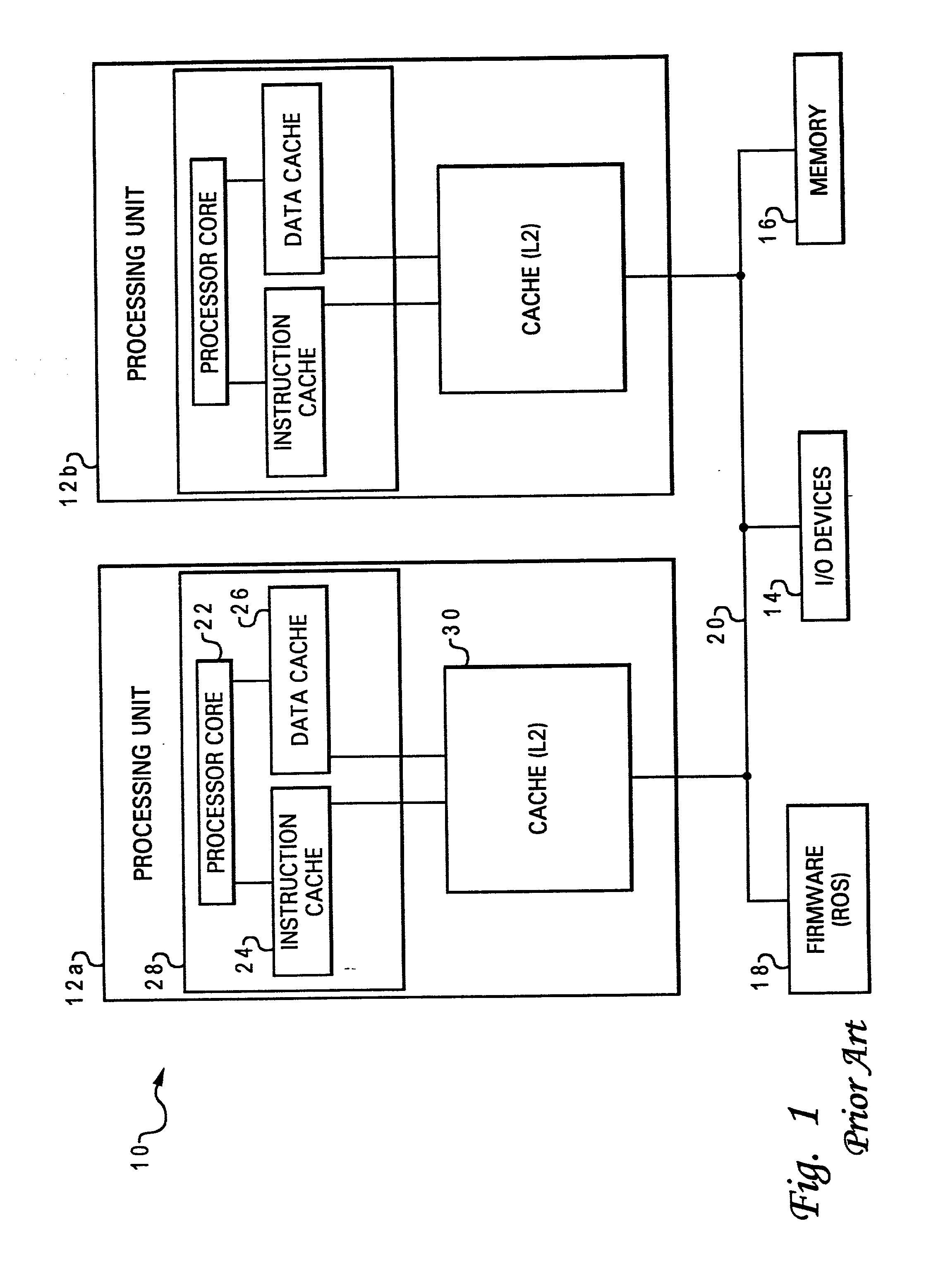

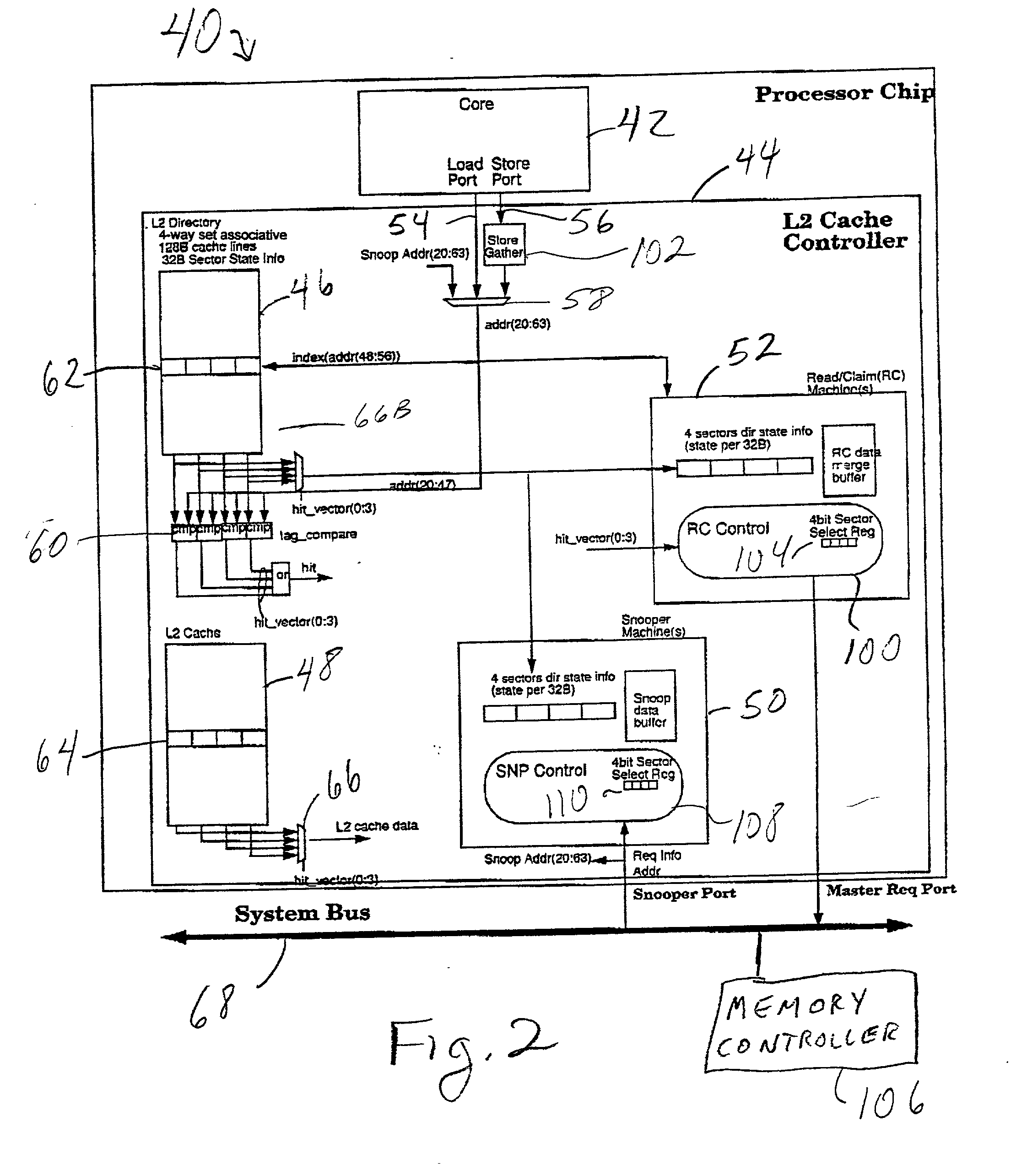

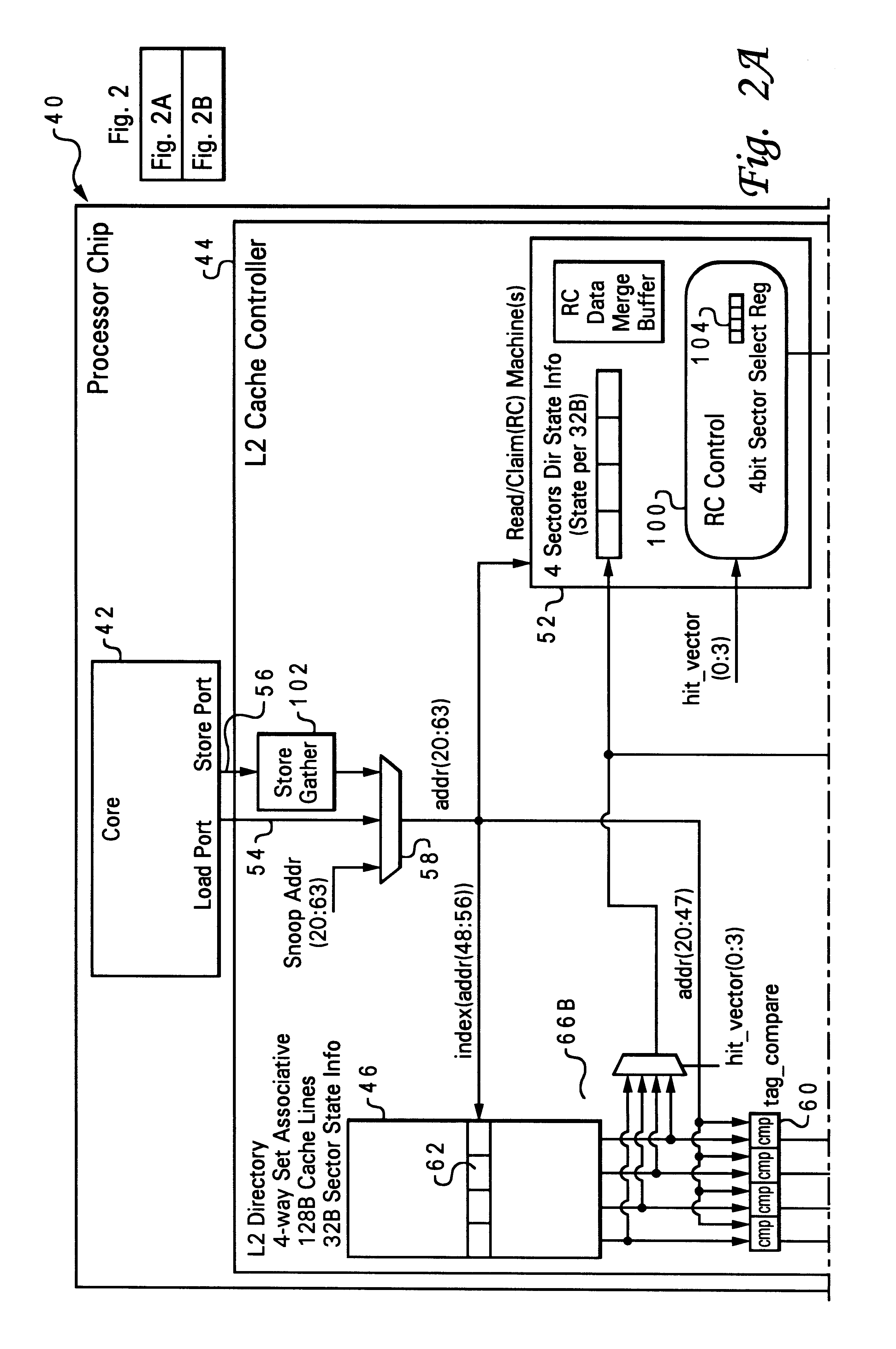

Multiprocessor computer system with sectored cache line mechanism for cache intervention

A method of maintaining coherency in a multiprocessor computer system wherein each processing unit's cache has sectored cache lines. A first cache coherency state is assigned to one of the sectors of a particular cache line, and a second cache coherency state, different from the first cache coherency state, is assigned to the overall cache line while maintaining the first cache coherency state for the first sector. The first cache coherency state may provide an indication that the first sector contains a valid value which is not shared with any other cache (i.e., an exclusive or modified state), and the second cache coherency state may provide an indication that at least one of the sectors in the cache line contains a valid value which is shared with at least one other cache (a shared, recently-read, or tagged state). Other coherency states may be applied to other sectors in the same cache line. Partial intervention may be achieved by issuing a request to retrieve an entire cache line, and sourcing only a first sector of the cache line in response to the request. A second sector of the same cache line may be sourced from a third cache. Other sectors may also be sourced from a system memory device of the computer system as well. Appropriate system bus codes are utilized to transmit cache operations to the system bus and indicate which sectors of the cache line are targets of the cache operation.

Owner:GOOGLE LLC

Cache coherence protocol for a multiple bus multiprocessor system

Inactive | US6868481B1 | Improve performance | Memory addressing/allocation/relocation | Multiple digital computer combinations | Multi processor | Computerized system

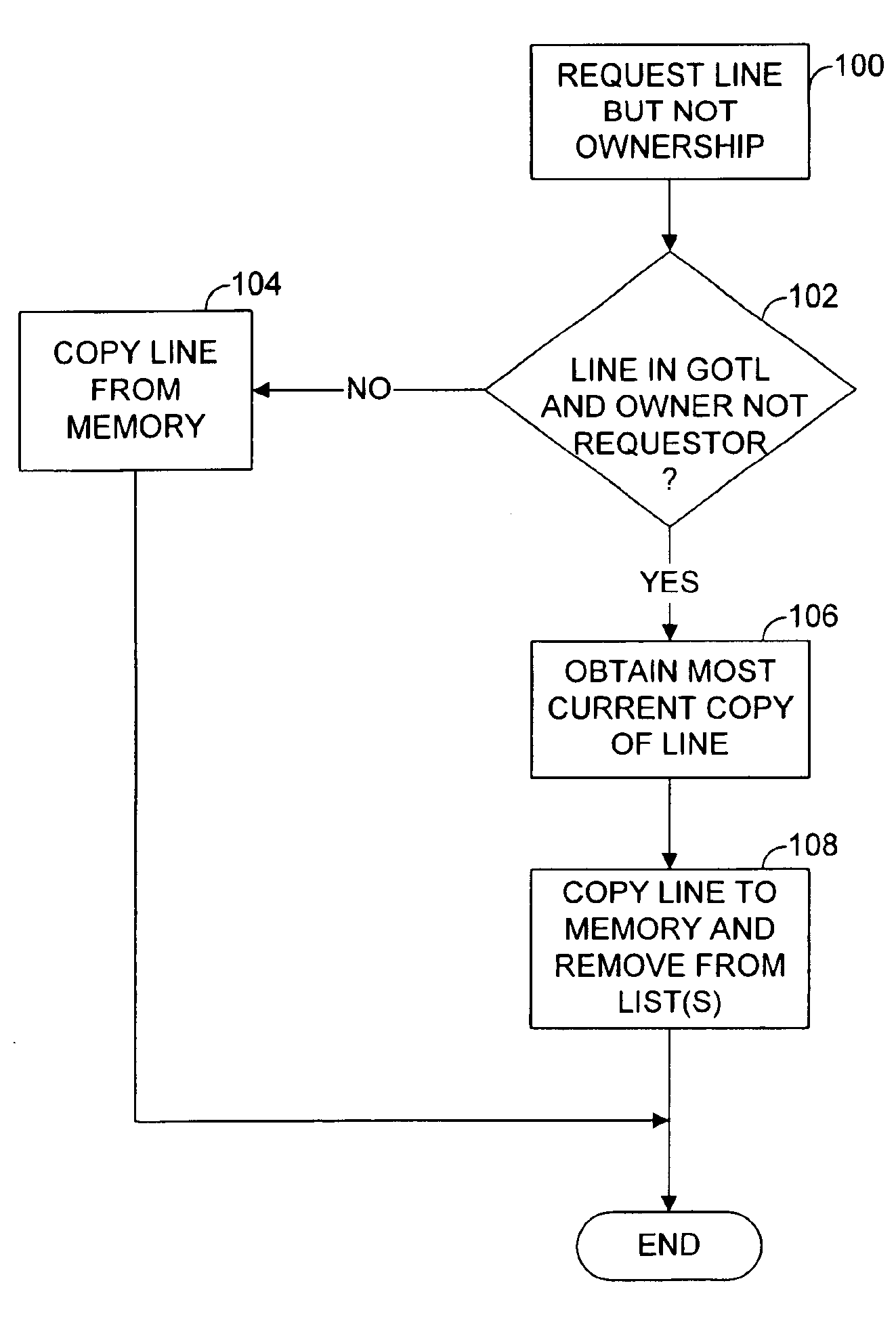

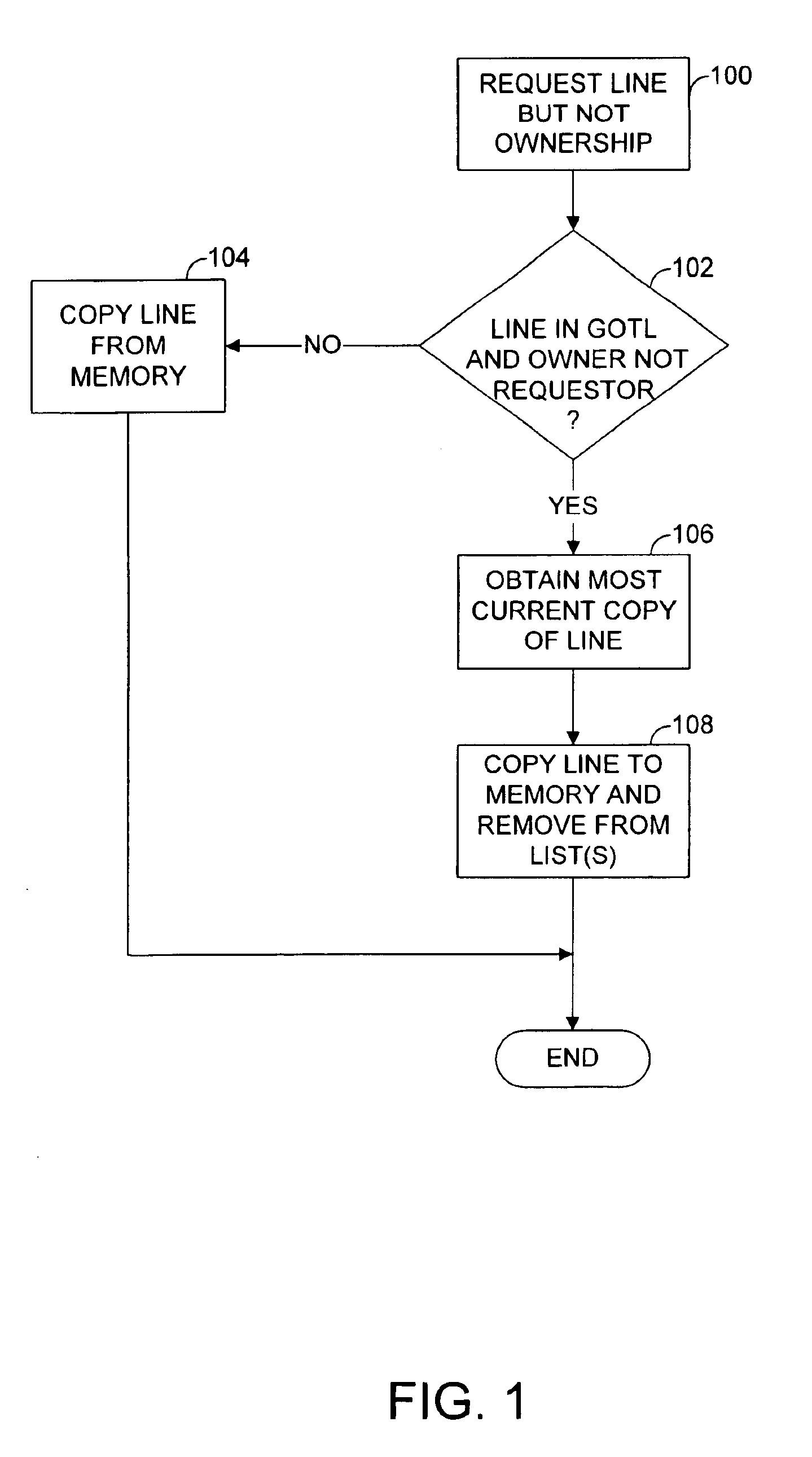

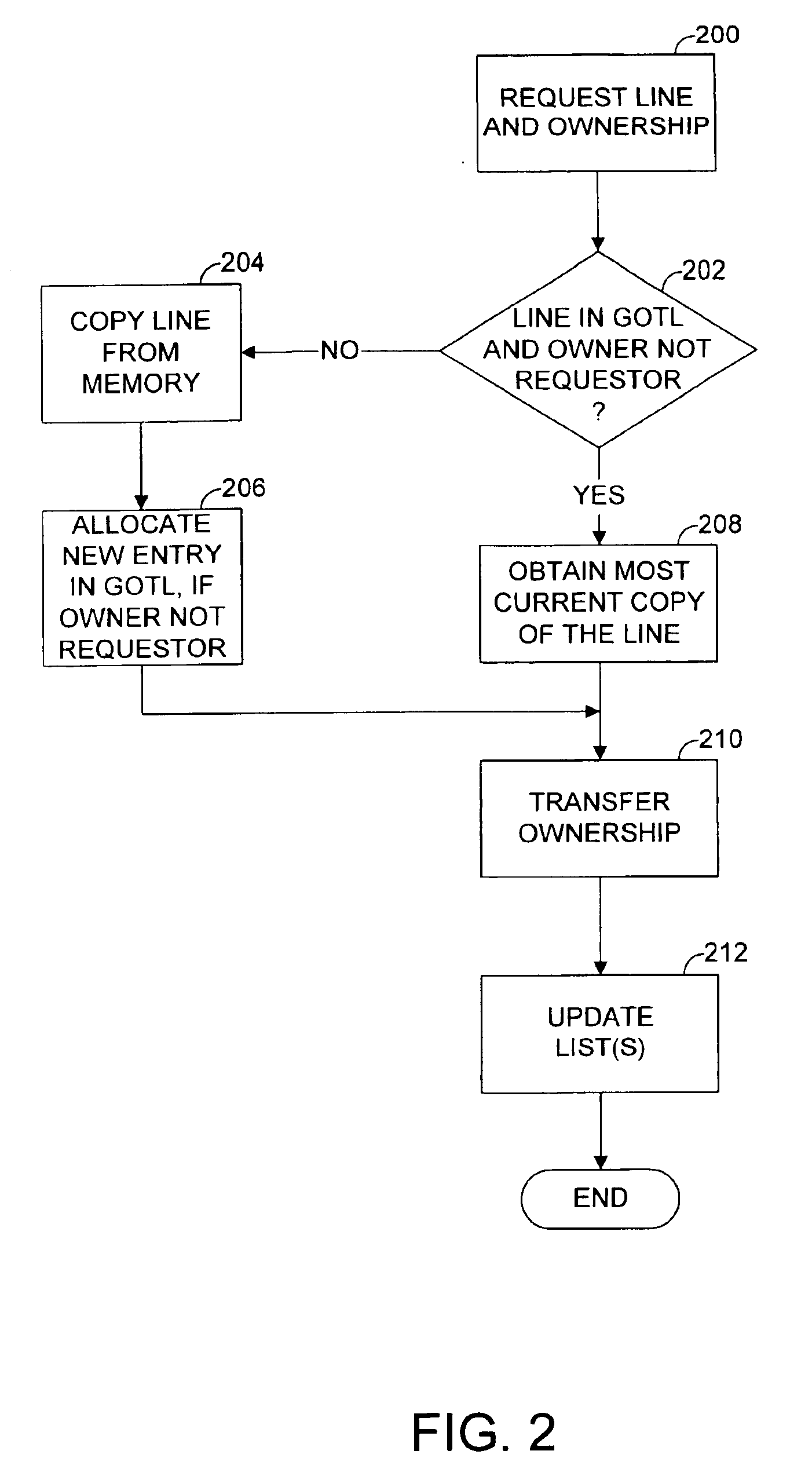

A computer system maintains a list of tags (called a Global Ownership Tag List (GOTL)) for all the cache lines in the system that are owned by a cache. The GOTL is used for cache coherence. There may be one central GOTL. Alternatively, the GOTL may be distributed, so that every device that can request a copy of memory data maintains a local copy of the GOTL. The GOTL can be limited to a relatively small size. For a limited size list, a tag may need to be evicted to make room for a new tag. A line associated with an evicted tag must be written back to memory.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
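A size-limited ownership tag list with eviction, as outlined in the abstract above, might look like the sketch below. The abstract does not say which tag is evicted, so the least-recently-recorded choice here is an assumption, as are the class name, the method names, and the `write_back` callback.

```python
from collections import OrderedDict


class GlobalOwnershipTagList:
    """Toy GOTL: maps cache-line tags to the cache that owns the line."""

    def __init__(self, capacity, write_back):
        self.capacity = capacity
        self.write_back = write_back  # callback(tag, owner), assumed interface
        self.tags = OrderedDict()     # oldest entry first

    def record_owner(self, tag, owner):
        if tag in self.tags:
            # Existing tag: refresh its position and update the owner.
            self.tags.move_to_end(tag)
        elif len(self.tags) >= self.capacity:
            # List is full: evict the oldest tag and force a write-back,
            # since a line with an evicted tag must be written to memory.
            old_tag, old_owner = self.tags.popitem(last=False)
            self.write_back(old_tag, old_owner)
        self.tags[tag] = owner

    def owner_of(self, tag):
        """Return the owning cache, or None if no cache owns the line."""
        return self.tags.get(tag)
```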

Method and apparatus for avoiding data bus grant starvation in a non-fair, prioritized arbiter for a split bus system with independent address and data bus grants

Inactive | US6535941B1 | Reduce delays | Speeding up data bus grant process | Memory systems | Multi processor | Address bus

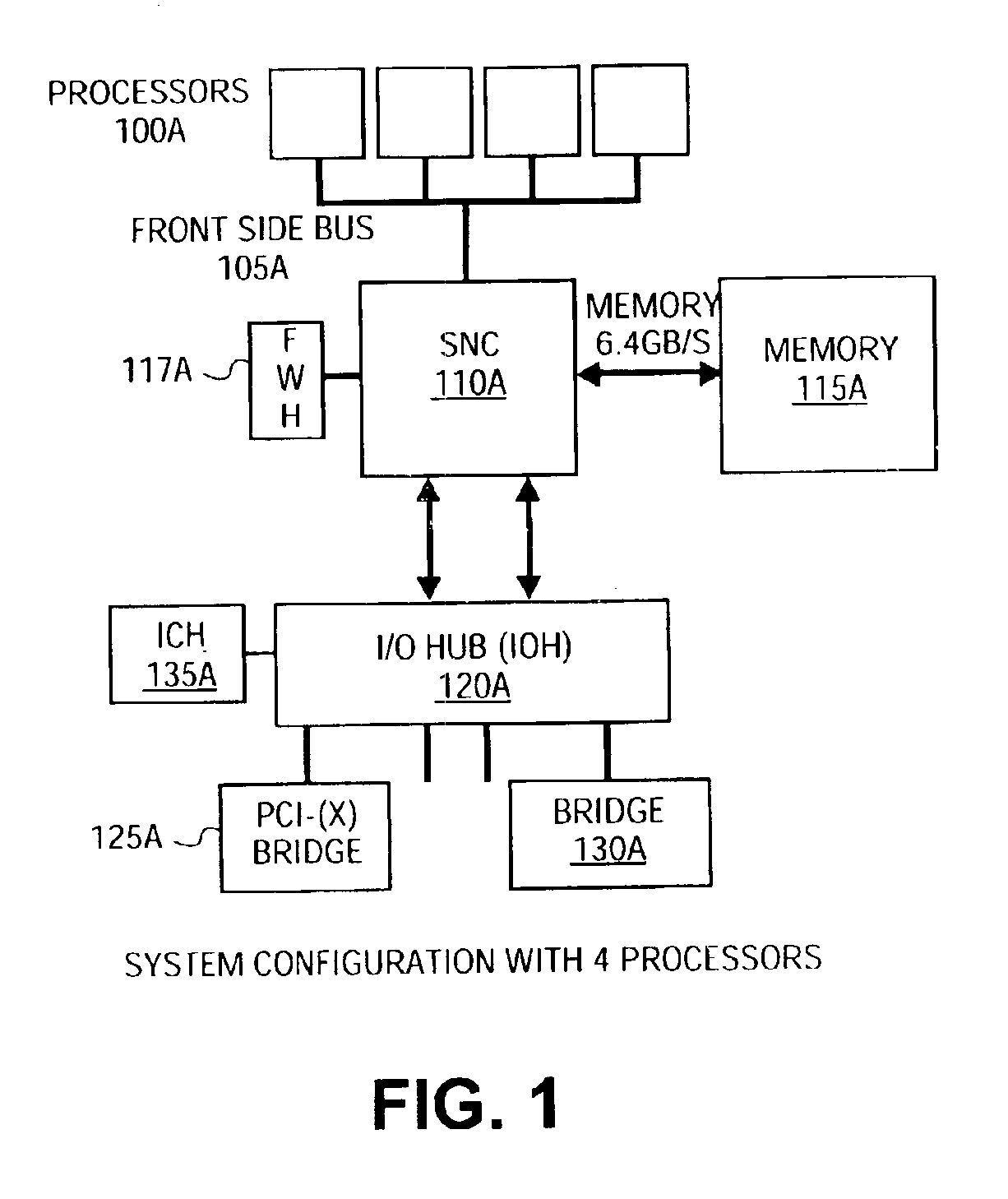

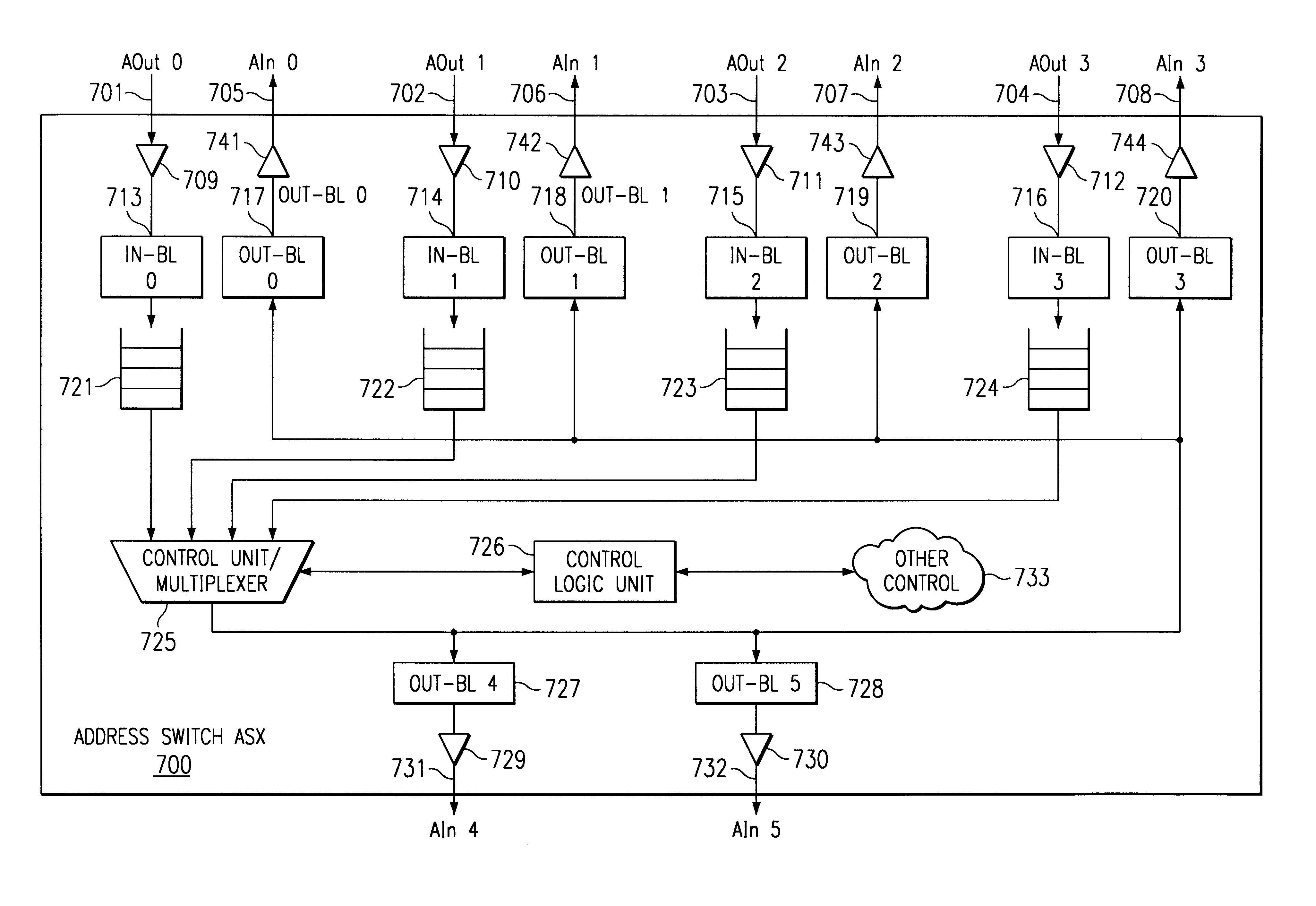

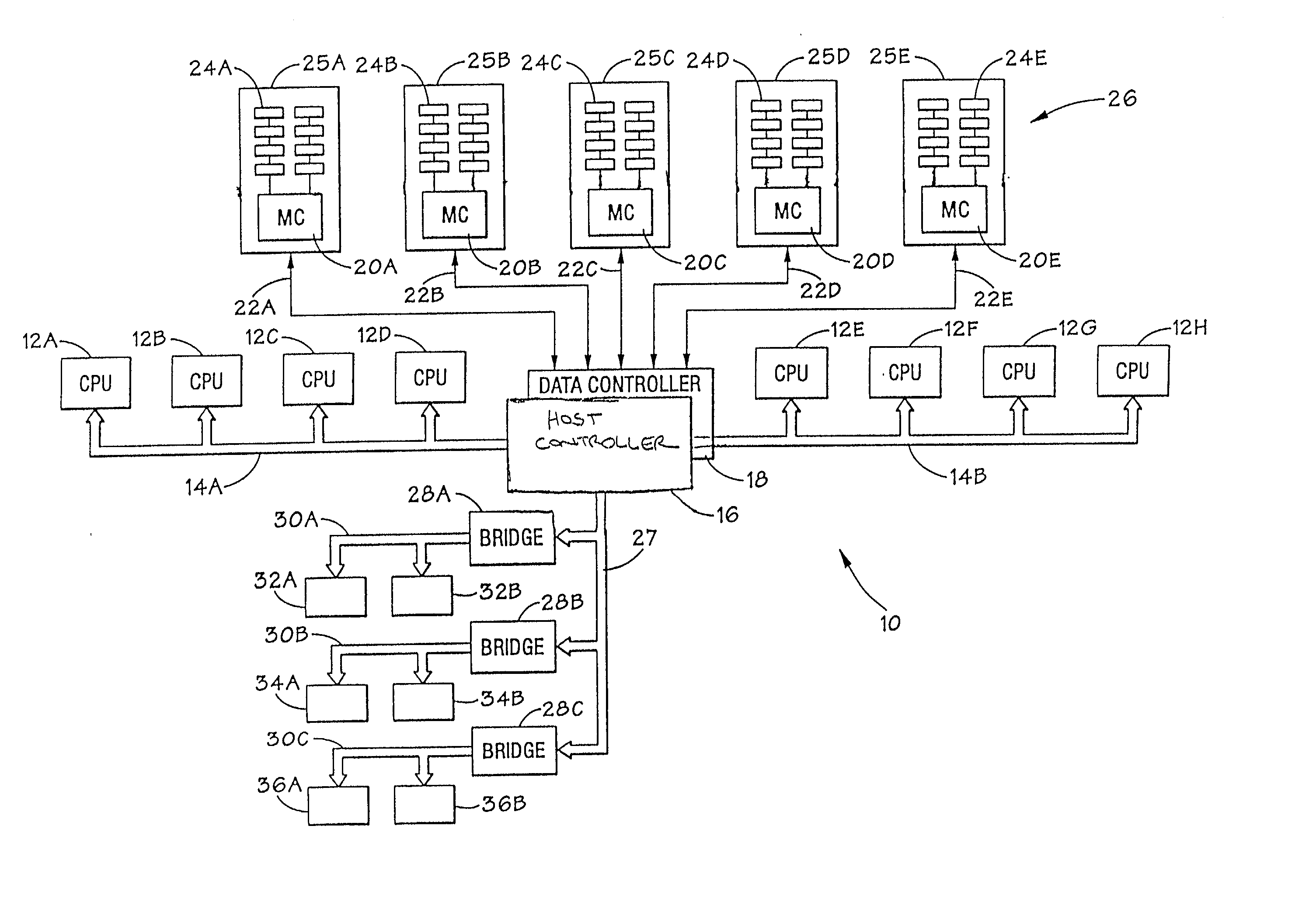

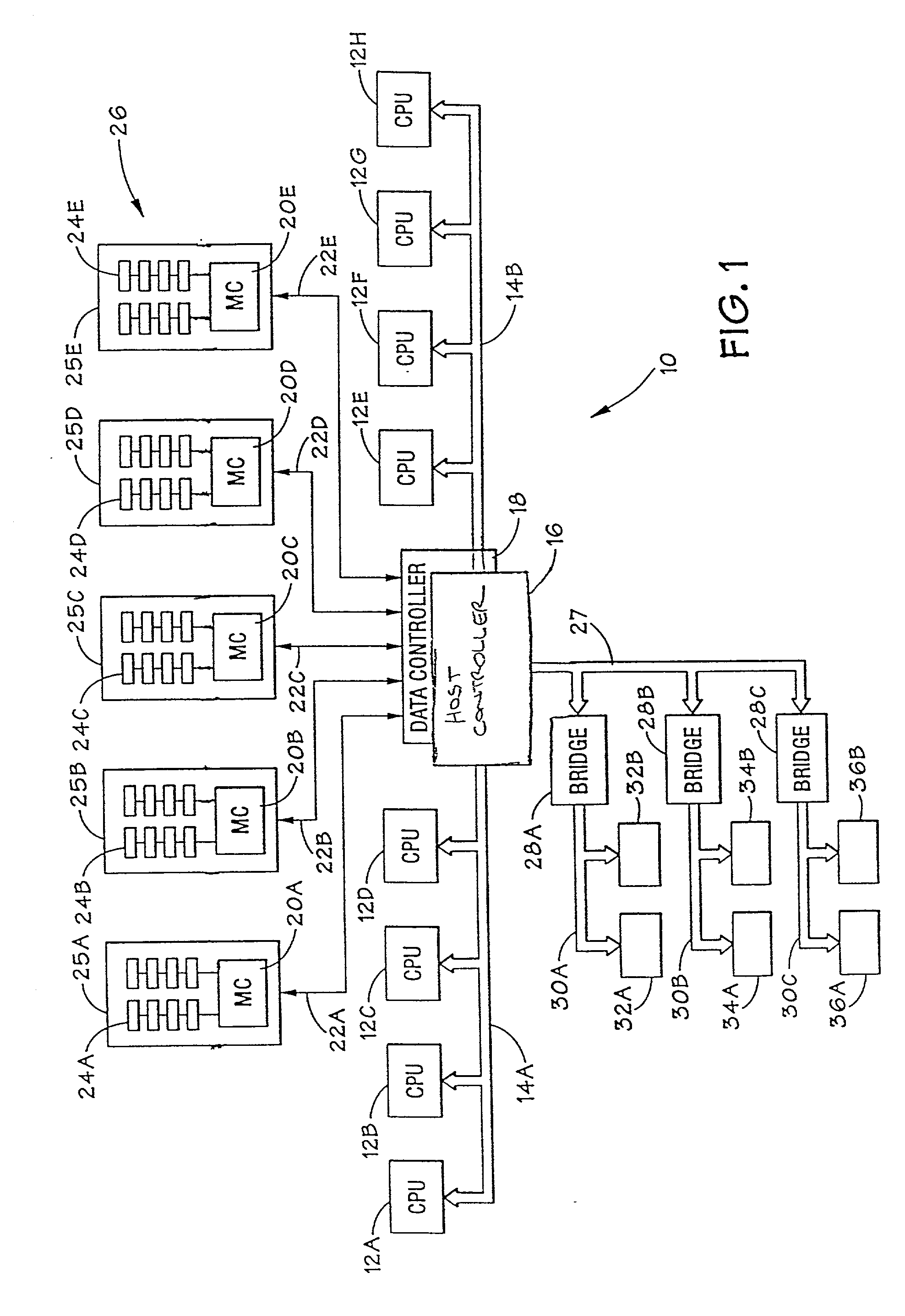

A distributed system structure for a large-way, symmetric multiprocessor system using a bus-based cache-coherence protocol is provided. The distributed system structure contains an address switch, multiple memory subsystems, and multiple master devices, either processors, I/O agents, or coherent memory adapters, organized into a set of nodes supported by a node controller. The node controller receives transactions from a master device, communicates with a master device as another master device or as a slave device, and queues transactions received from a master device. Since the achievement of coherency is distributed in time and space, the node controller helps to maintain cache coherency. In order to reduce the delays in giving address bus grants, a bus arbiter for a bus connected to a processor and a particular port of the node controller parks the address bus towards the processor. A history of address bus grants is kept to determine whether any of the previous address bus grants could be used to satisfy an address bus request associated with a data bus request. If one of them qualifies, the data bus grant is given immediately, speeding up the data bus grant process by anywhere from one to many cycles depending on the requests for the address bus from the higher priority node controller.

Owner:IBM CORP

Location-aware cache-to-cache transfers

Inactive | US20050240735A1 | Low cache intervention cost | Low cost | Energy efficient ICT | Memory addressing/allocation/relocation | Parallel computing | Cache coherence

In shared-memory multiprocessor systems, cache interventions from different sourcing caches can result in different cache intervention costs. With location-aware cache coherence, when a cache receives a data request, the cache can determine whether sourcing the data from the cache will result in less cache intervention cost than sourcing the data from another cache. The decision can be made based on appropriate information maintained in the cache or collected from snoop responses from other caches. If the requested data is found in more than one cache, the cache that has or likely has the lowest cache intervention cost is generally responsible for supplying the data. The intervention cost can be measured by performance metrics that include, but are not limited to, communication latency, bandwidth consumption, load balance, and power consumption.

Owner:IBM CORP
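The selection rule in the abstract above (the cache with the lowest intervention cost supplies the data) can be sketched as a weighted minimum. The linear weighting, the metric names, and both function names are assumptions for illustration; the abstract only lists the kinds of metrics that may contribute to the cost.

```python
def intervention_cost(metrics, weights):
    """Combine per-cache metrics into one cost (linear weighting is assumed)."""
    return sum(weights[name] * metrics.get(name, 0.0) for name in weights)


def choose_sourcing_cache(candidates, weights):
    """Pick the cache holding the line with the lowest estimated cost.

    candidates -- {cache name: {metric name: value}} for caches holding the line
    weights    -- {metric name: weight}
    """
    if not candidates:
        return None  # no cache holds the line; memory would supply it
    return min(
        candidates,
        key=lambda cache: intervention_cost(candidates[cache], weights),
    )
```

With candidates `{"L2_a": {"latency": 40, "power": 2}, "L2_b": {"latency": 15, "power": 3}}` and equal weights, the sketch selects `"L2_b"` because its combined cost (18) is lower than that of `"L2_a"` (42).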

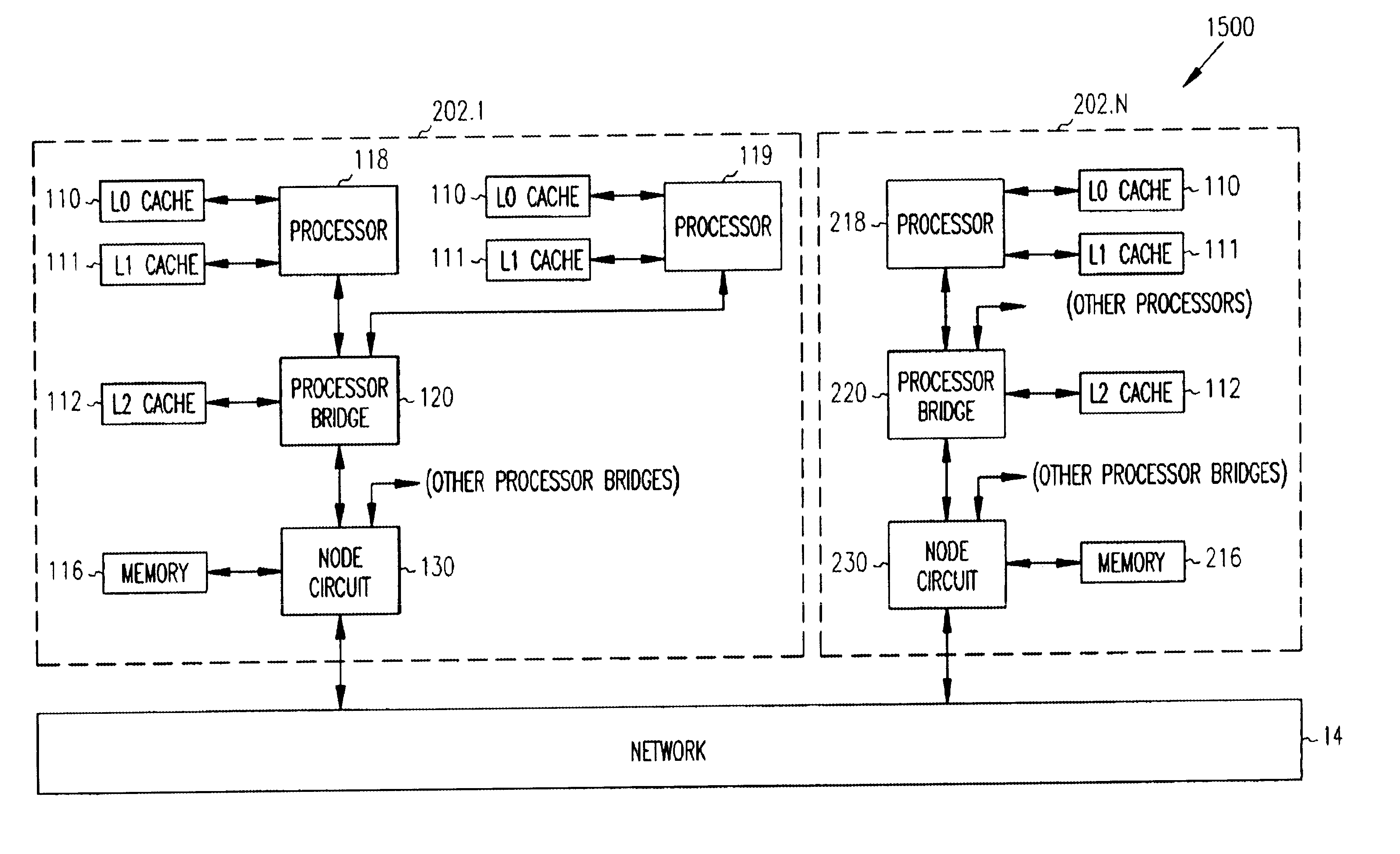

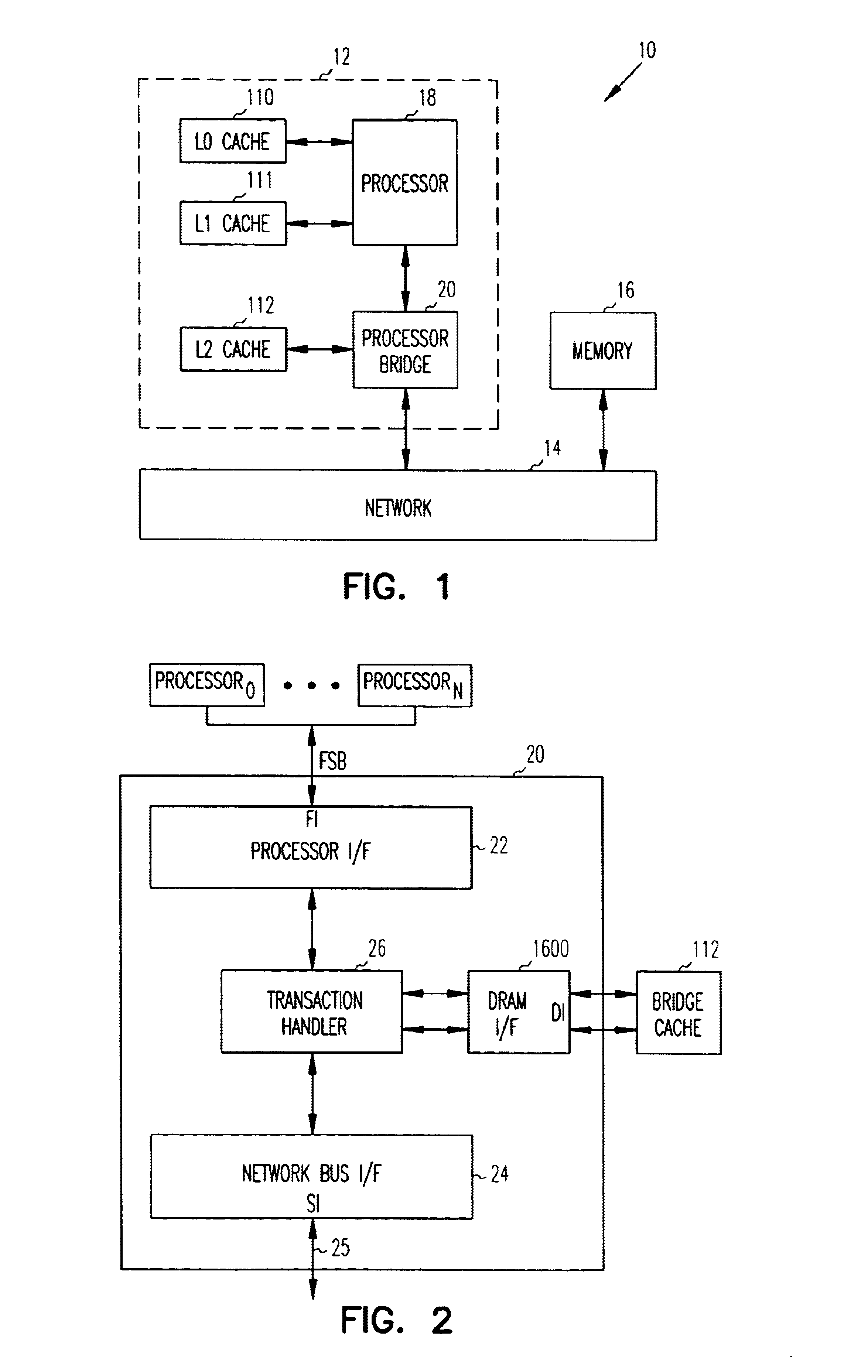

Multiprocessor computer system having multiple coherency regions and software process migration between coherency regions without cache purges

Inactive | US20050021913A1 | Eliminate need | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Multi processor | Physics processing unit

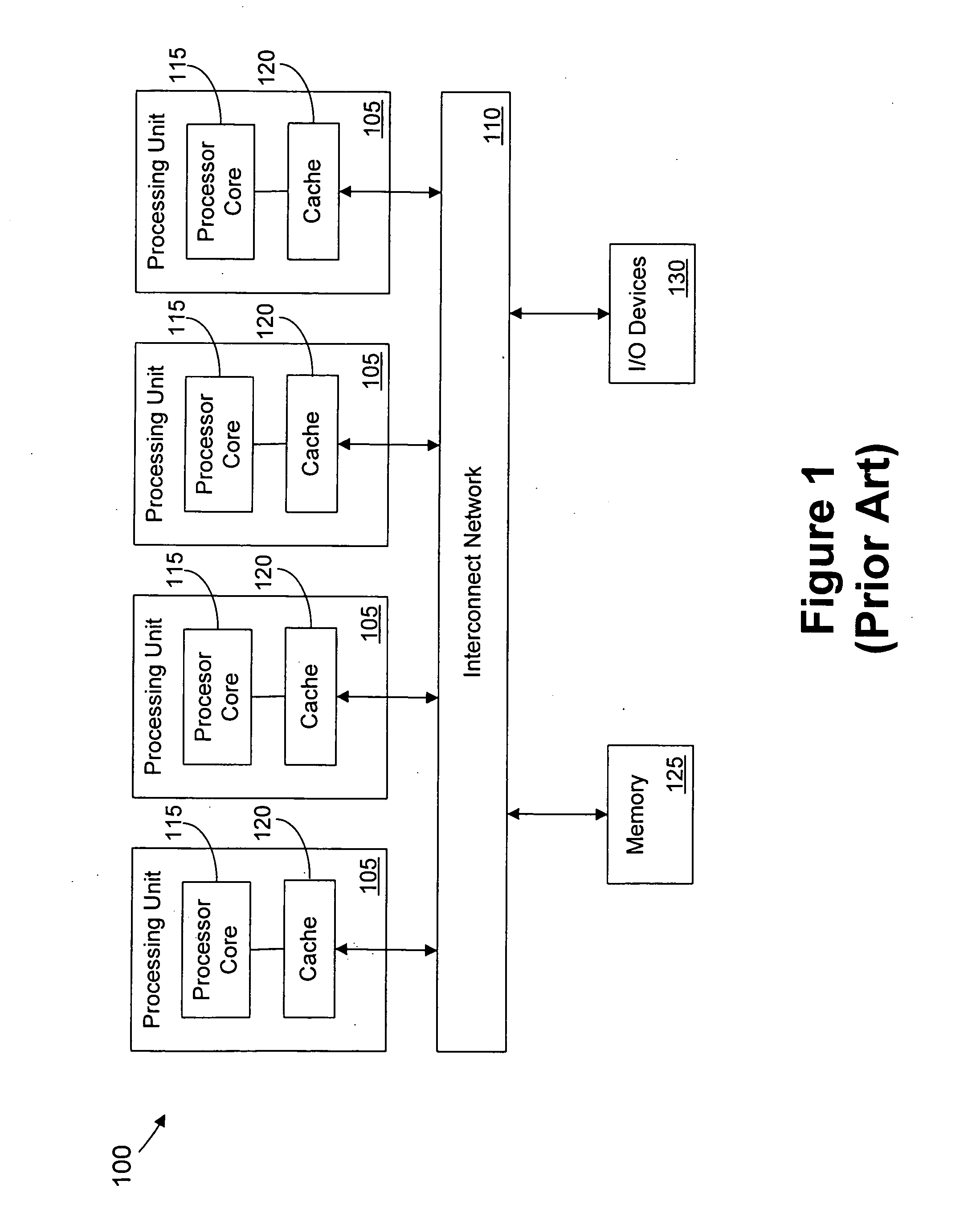

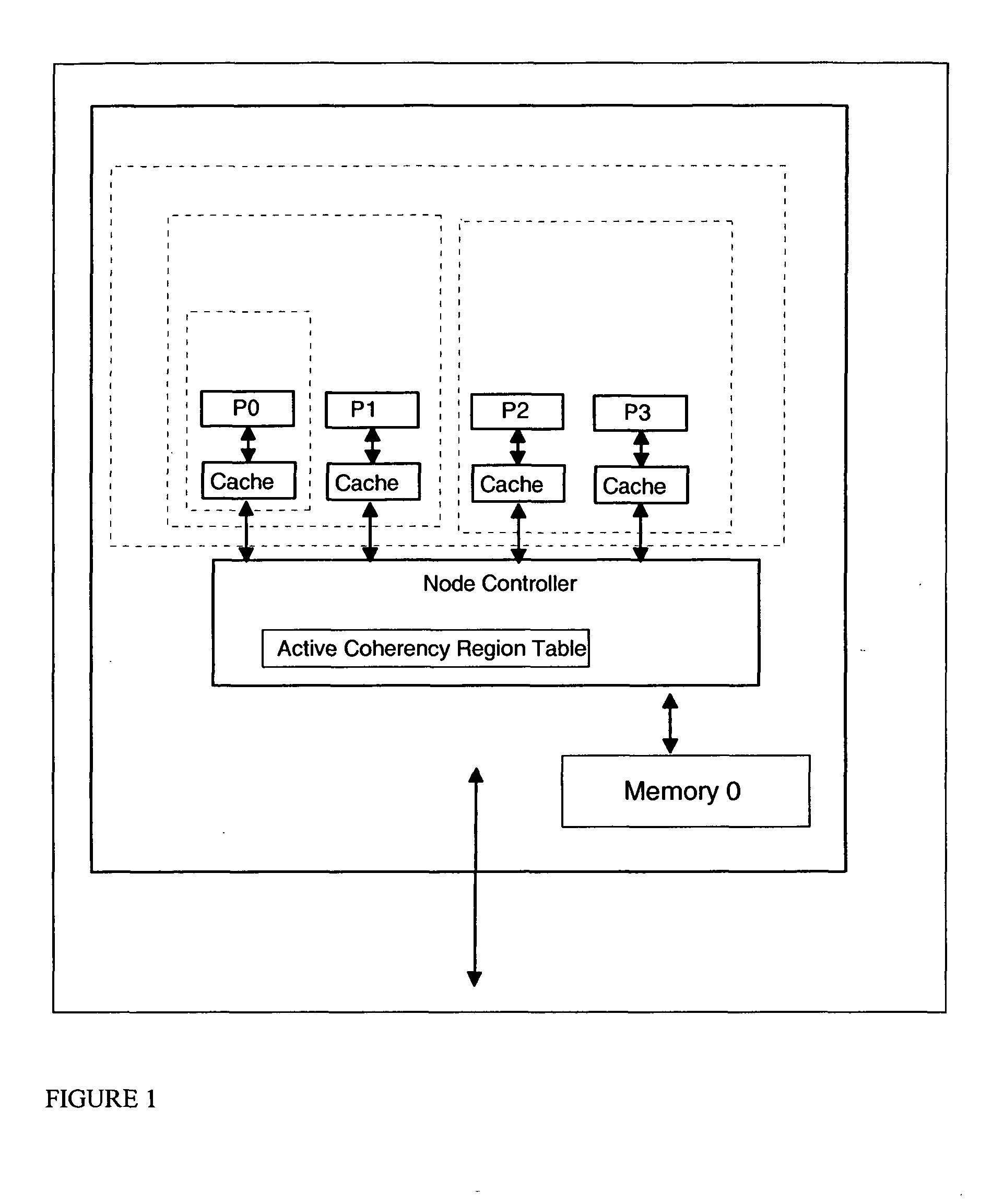

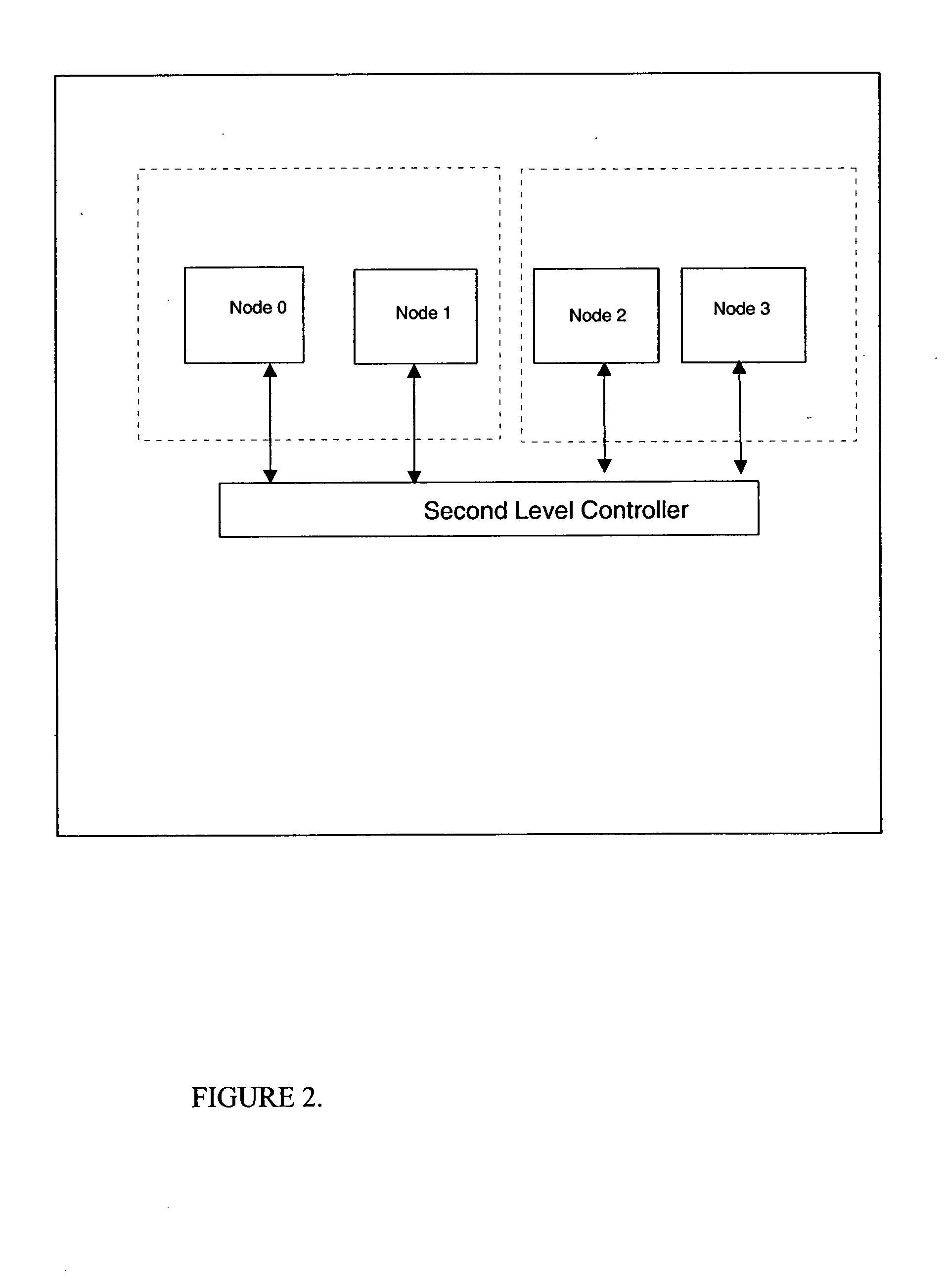

A multiprocessor computer system has a plurality of processing nodes which use processor state information to determine which coherent caches in the system are required to examine a coherency transaction produced by a single originating processor's storage request. A node of the computer has dynamic coherency boundaries such that the hardware uses only a subset of the total processors in a large system for a single workload at any specific point in time and can optimize the cache coherency as the supervisor software or firmware expands and contracts the number of processors which are being used to run any single workload. Multiple instances of a node can be connected with a second level controller to create a large multiprocessor system. The node controller uses the mode bits to determine which processors must receive any given transaction that is received by the node controller. The second level controller uses the mode bits to determine which nodes must receive any given transaction that is received by the second level controller. Logical partitions are mapped to allowable physical processors. Cache coherence regions which encompass subsets of the total number of processors and caches in the system are chosen for their physical proximity. A distinct cache coherency region can be defined for each partition using a hypervisor.

Owner:IBM CORP

Next snoop predictor in a host controller

A technique for optimizing cycle time in maintaining cache coherency. Specifically, a method and apparatus are provided to optimize the processing of requests in a multi-processor-bus system which implements a snoop-based coherency scheme. The acts of snooping a bus for a first address and searching a posting queue for the next address to be snooped are performed simultaneously to minimize the request cycle time.

Owner:VALTRUS INNOVATIONS LTD +1

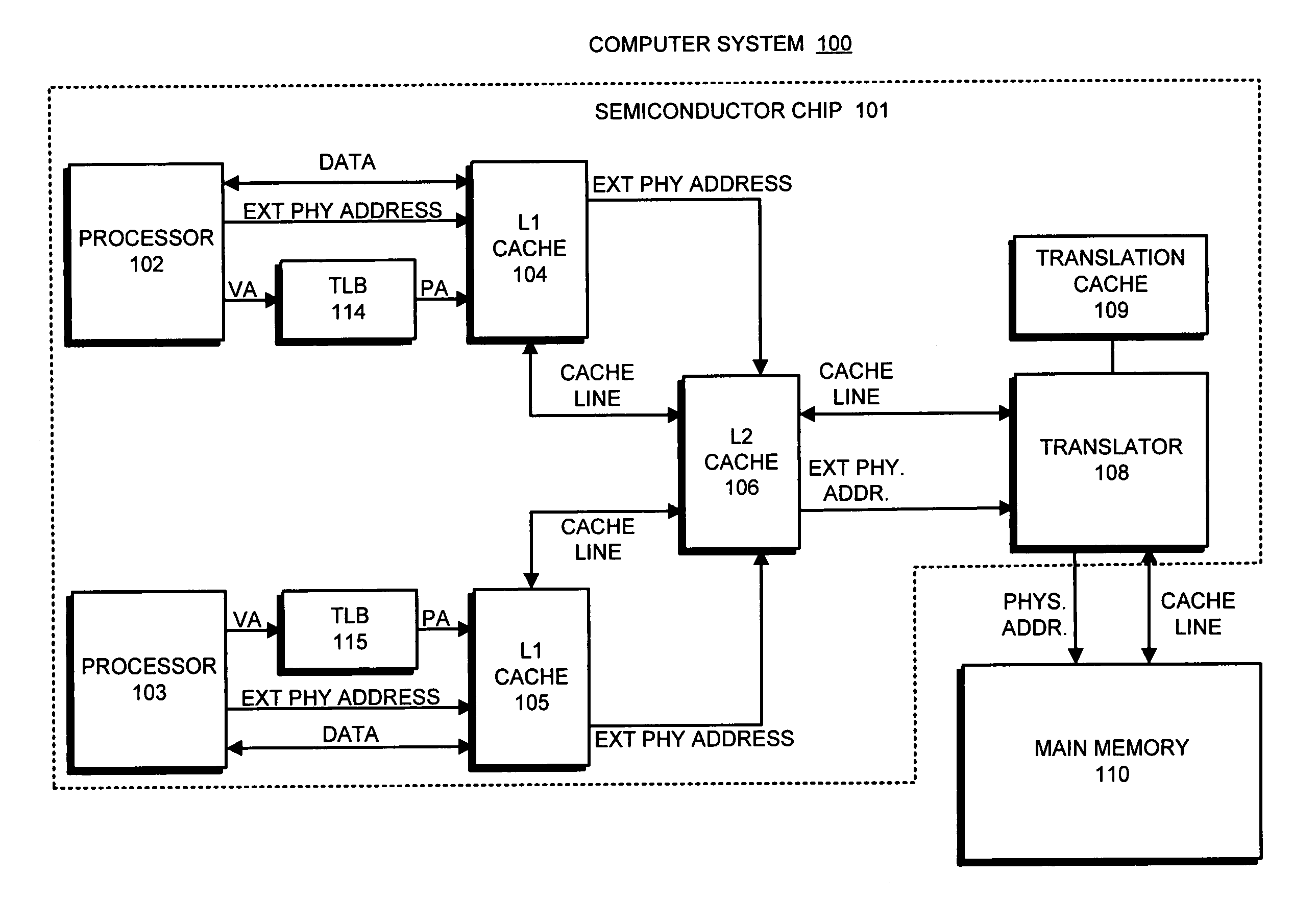

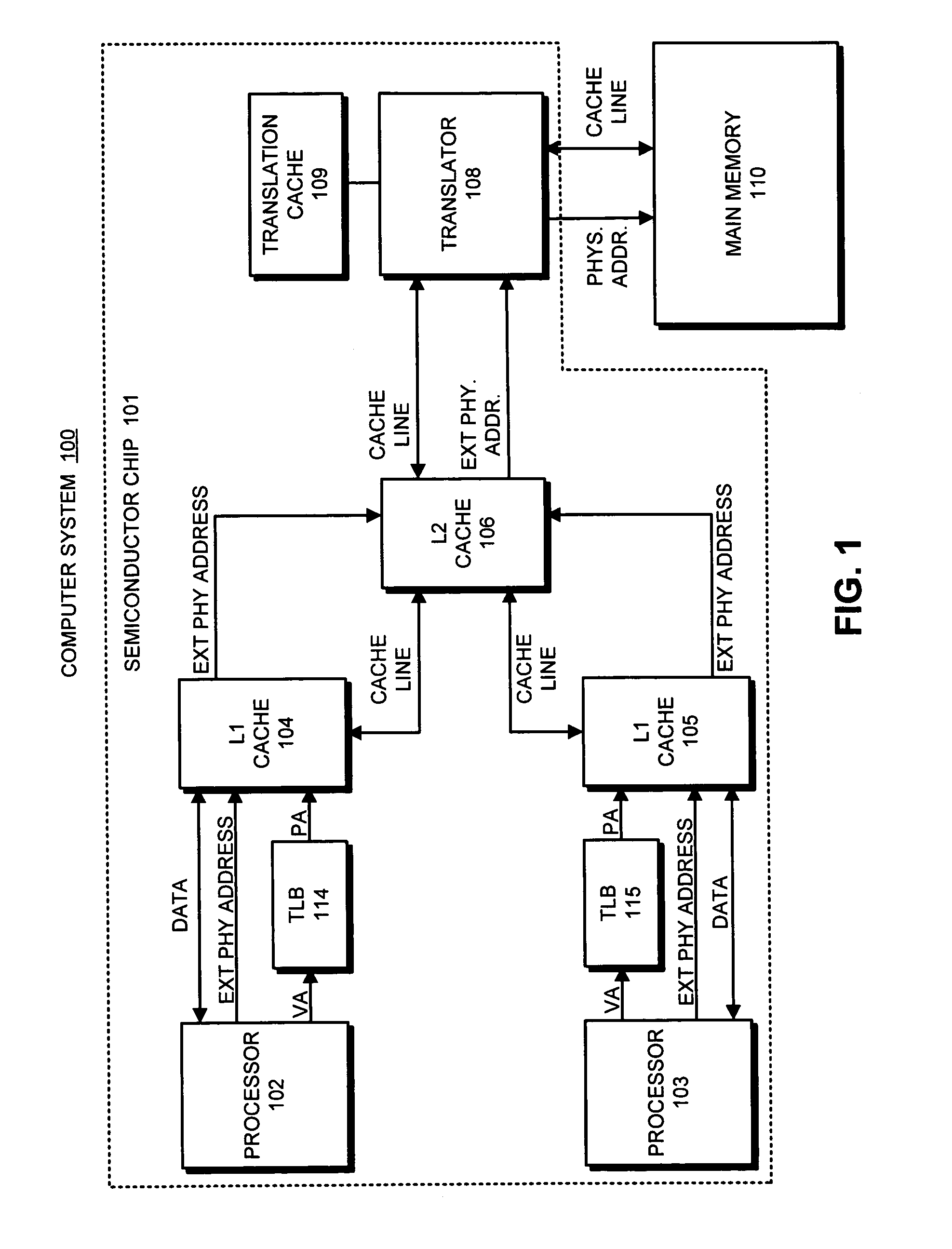

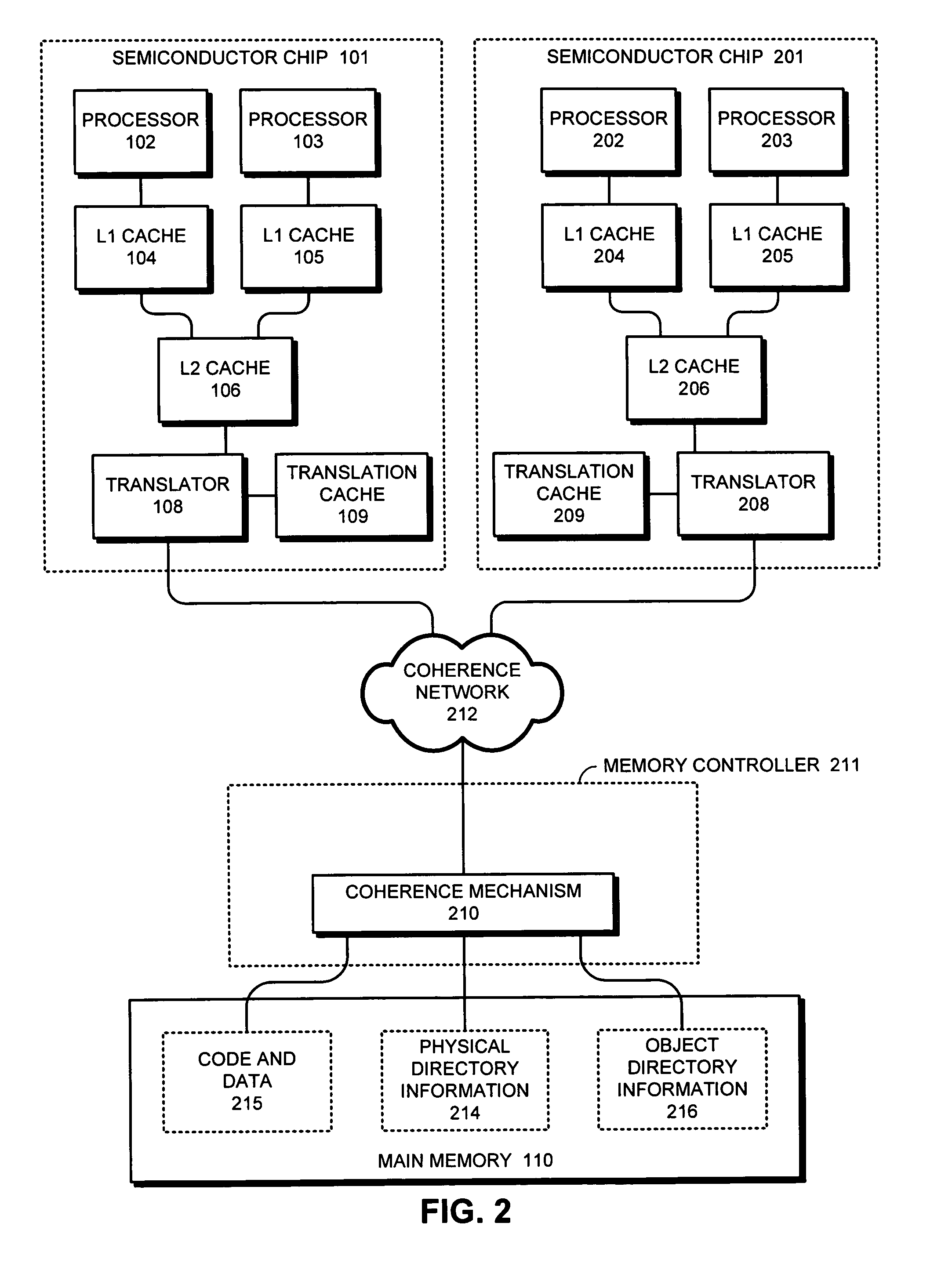

Avoiding inconsistencies between multiple translators in an object-addressed memory hierarchy

Active | US7167956B1 | Avoid inconsistencies | Memory addressing/allocation/relocation | Micro-instruction address formation | Memory hierarchy | Object based

One embodiment of the present invention provides a system that avoids inconsistencies between multiple translators in an object-addressed memory hierarchy. This object-addressed memory hierarchy includes an object cache, which supports references to object cache lines based on object identifiers instead of physical addresses. During operation, the system receives a read-to-share (RTS) signal for an object cache line, wherein the RTS signal is received from a requesting processor as part of a cache-coherence operation. If no processor owns the object cache line, the system causes the requesting processor to become the owner of the object cache line instead of merely holding a copy of the object cache line in the shared state. The system also generates a translation for the object cache line in a translator associated with the requesting processor, wherein the translation maps an object identifier and a corresponding offset to a physical address for the object cache line and reconstructs the contents of the object cache line by reading from memory at that physical address. In this way, if the requesting processor owns the object cache line, a subsequent processor that requests the same object cache line will receive the object cache line from the requesting processor, and will not generate an additional translation for the object cache line. This ensures that multiple translators will not generate inconsistent translations for the same object cache line.

Owner:ORACLE INT CORP

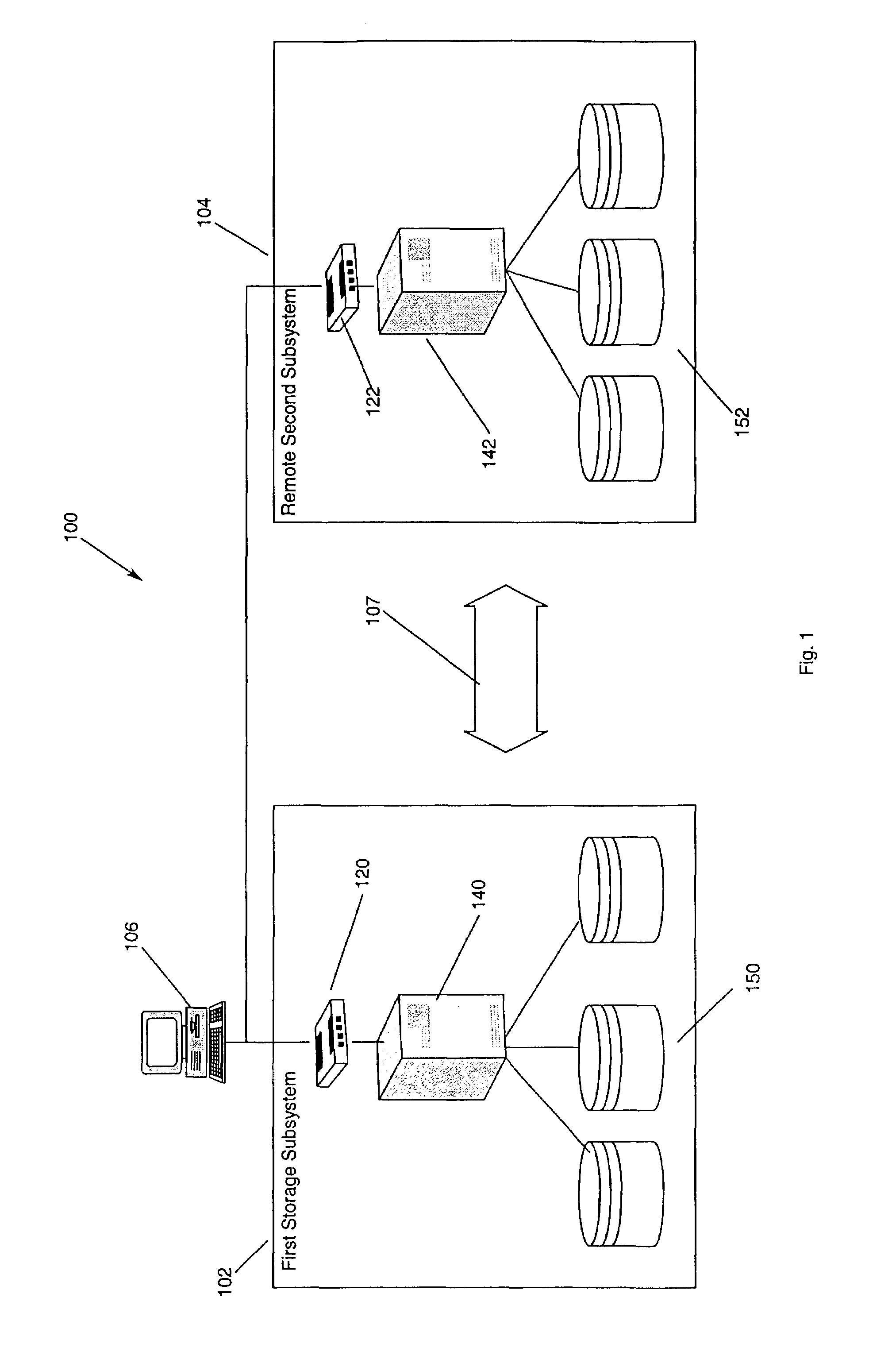

Method, apparatus and program storage device for maintaining data consistency and cache coherency during communications failures between nodes in a remote mirror pair

Inactive | US7120824B2 | Maintain data consistency | Data processing applications | Error detection/correction | Timestamp | Computer science

A method, apparatus and program storage device for maintaining data consistency and cache coherency during communications failures between nodes in a remote mirror pair. A link between a mirror pair of storage systems is monitored. During a link failure between a first storage system and a second storage system, reads and writes on the first and second storage systems are independently performed, and write data and associated timestamps are maintained for each write in a queue on the first and second storage systems. After link reestablishment, volume sets on the first and second storage systems are resynchronized using write data and associated timestamps.

Owner:IBM CORP
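One way to picture the timestamp-based resynchronization in the abstract above is a merge of the two write queues. The abstract does not state how conflicting writes are resolved, so the latest-timestamp-wins rule below is purely an assumption, as are the function name and the `(timestamp, block, data)` tuple layout.

```python
def resynchronize(queue_first, queue_second):
    """Merge queued writes from both storage systems after the link returns.

    Each queue holds (timestamp, block, data) tuples recorded during the outage.
    Assumed policy: for each block, the write with the latest timestamp wins.
    On equal timestamps, the entry from queue_second is applied last.
    """
    merged = {}
    # sorted() is stable, so equal timestamps keep first-then-second order.
    for _timestamp, block, data in sorted(
        queue_first + queue_second, key=lambda write: write[0]
    ):
        merged[block] = data
    return merged  # block -> data to apply to both volume sets
```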

Method and system for maintaining cache coherence in a multiprocessor-multicache environment having unordered communication

A method and system for providing cache coherence despite unordered interconnect transport. In a computer system of multiple memory devices or memory units having shared memory and an interconnect characterized by unordered transport, the method comprises sending a request packet over the interconnect from a first memory device to a second memory device requiring that an action be carried out on shared memory held by the second memory device. If the second memory device determines that the shared memory is in a transient state, the second memory device returns the request packet to the first memory device; otherwise, the request is carried out by the second memory device. The first memory device will continue to resend the request packet each time that the request packet is returned.

Owner:IBM CORP
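The return-and-resend behaviour in the abstract above can be sketched with a toy target device. The `MemoryDevice` class, the `(address, value)` packet shape, the `between_attempts` hook, and the `max_attempts` guard are all assumptions added so the example is self-contained and terminates; the abstract itself describes resending for as long as the packet keeps being returned.

```python
class MemoryDevice:
    """Toy target; `transient` holds addresses whose state is still in flux."""

    def __init__(self):
        self.memory = {}
        self.transient = set()

    def receive(self, packet):
        addr, value = packet
        if addr in self.transient:
            return packet          # return the request packet to the sender
        self.memory[addr] = value  # otherwise carry out the request
        return None


def send_until_accepted(target, packet, between_attempts=lambda: None,
                        max_attempts=1000):
    """Resend `packet` each time it is returned; report the attempts used."""
    for attempt in range(1, max_attempts + 1):
        if target.receive(packet) is None:
            return attempt
        between_attempts()  # hypothetical hook, e.g. wait or let state settle
    raise RuntimeError("request was still being returned after max_attempts")
```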

Cache coherence protocol

Inactive | US20050240734A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing | Distributed cache

A cache coherence protocol facilitates a distributed cache coherency conflict resolution in a multi-node system to resolve conflicts at a home node.

Owner:INTEL CORP

Multiprocessor computer system with sectored cache line mechanism for cache intervention

A method of maintaining coherency in a multiprocessor computer system wherein each processing unit's cache has sectored cache lines. A first cache coherency state is assigned to one of the sectors of a particular cache line, and a second cache coherency state, different from the first cache coherency state, is assigned to the overall cache line while maintaining the first cache coherency state for the first sector. The first cache coherency state may provide an indication that the first sector contains a valid value which is not shared with any other cache (i.e., an exclusive or modified state), and the second cache coherency state may provide an indication that at least one of the sectors in the cache line contains a valid value which is shared with at least one other cache (a shared, recently-read, or tagged state). Other coherency states may be applied to other sectors in the same cache line. Partial intervention may be achieved by issuing a request to retrieve an entire cache line, and sourcing only a first sector of the cache line in response to the request. A second sector of the same cache line may be sourced from a third cache. Other sectors may also be sourced from a system memory device of the computer system as well. Appropriate system bus codes are utilized to transmit cache operations to the system bus and indicate which sectors of the cache line are targets of the cache operation.

Owner:GOOGLE LLC

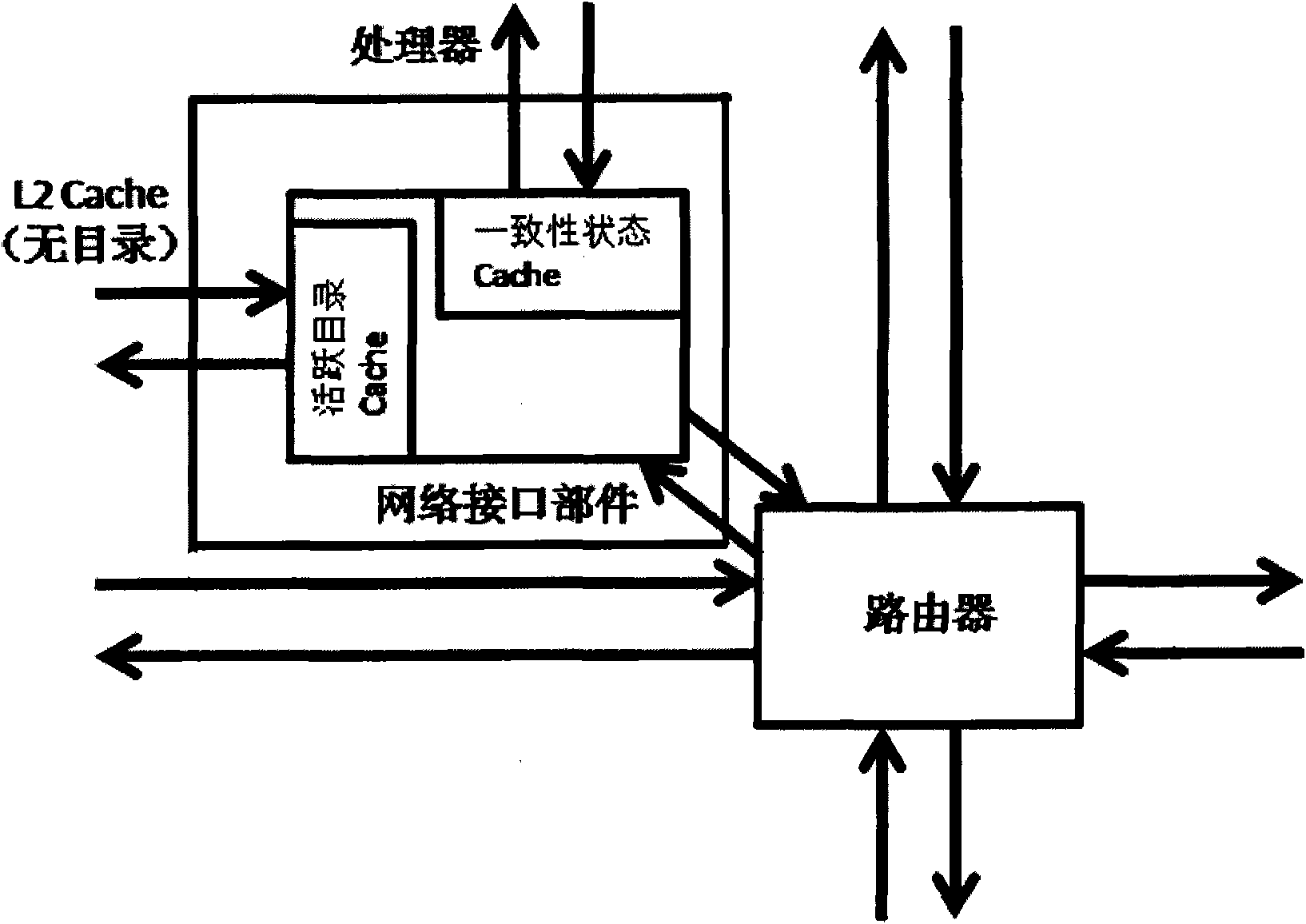

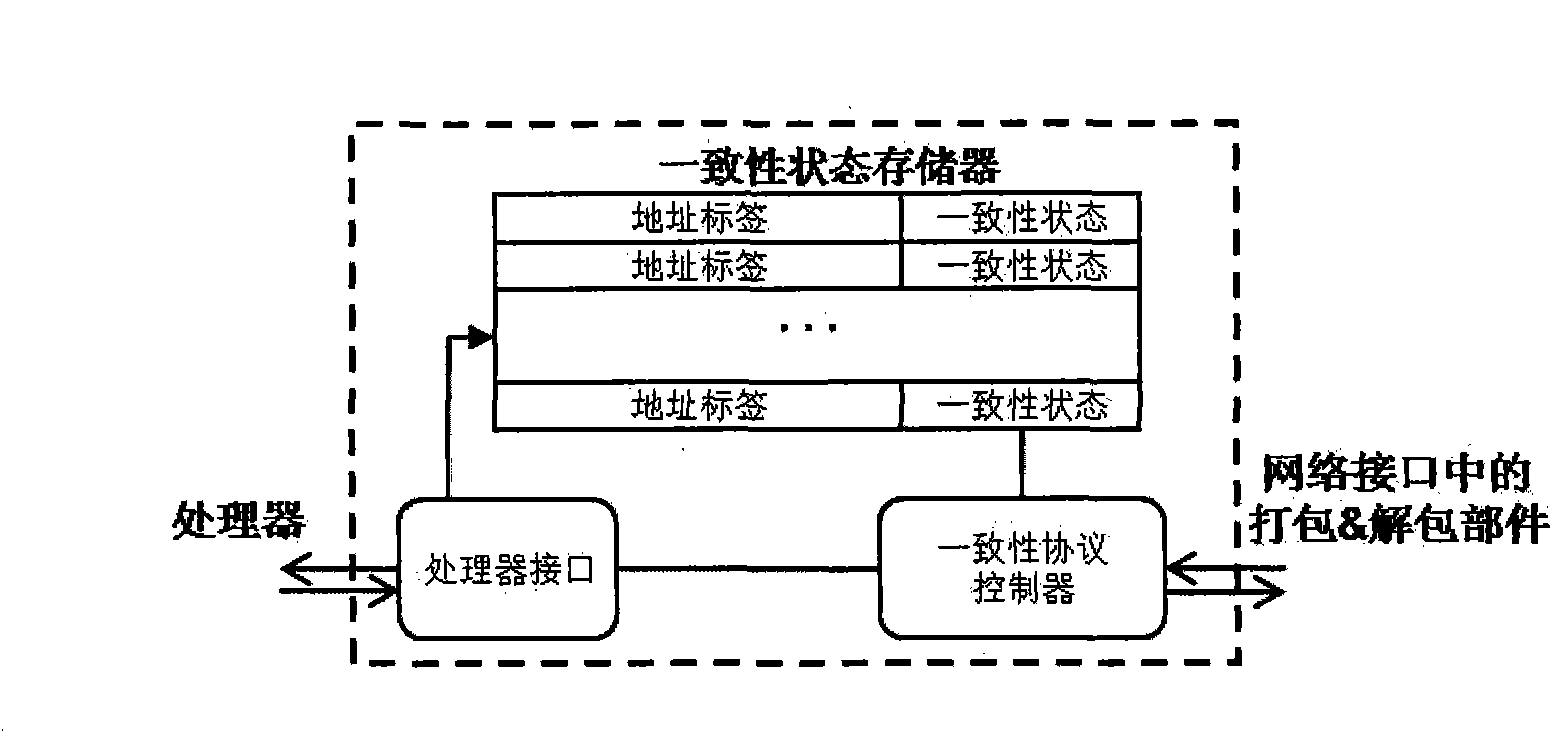

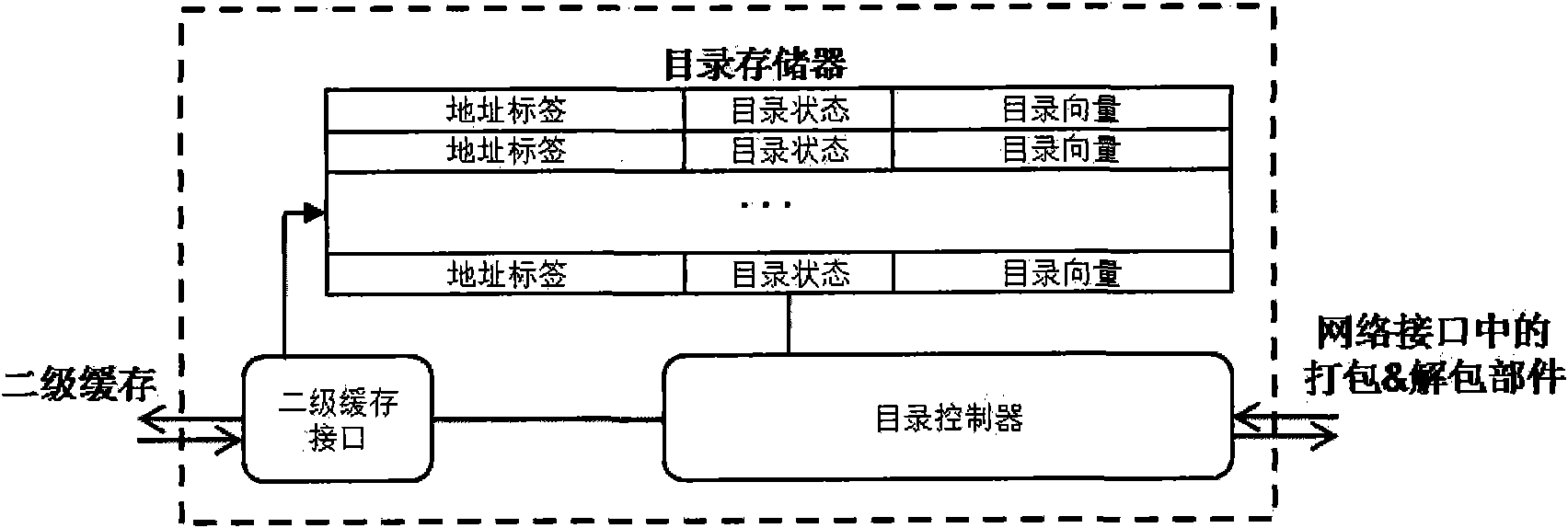

On-chip network system supporting cache coherence and data request method

Inactive | CN101958834A | Simplify the design process | Simplify the verification process | Digital computer details | Data switching networks | Cache access | Structure of Management Information

The invention discloses an on-chip network system supporting cache coherence. The network system comprises a network interface part and a router, wherein the network interface part is connected with the router, a multi-core processor and a second-level cache. A coherence state cache connected with the multi-core processor is added to the network interface part and is used for storing and maintaining the coherence state of data blocks in the first-level cache of the multi-core processor. An active directory cache connected with the second-level cache is also added to the network interface part and is used for caching and maintaining the directory information of data blocks frequently accessed by the first-level cache. Coherence maintenance work is separated from the work of the processor, directory maintenance work is separated from the work of the second-level cache, and the directory structure in the second-level cache is eliminated, so that the design and verification process of the multi-core processor is simplified, the storage cost of the chip is reduced, and the performance of the multi-core processor is improved. The invention also discloses a data request method of the system.

Owner:TSINGHUA UNIV

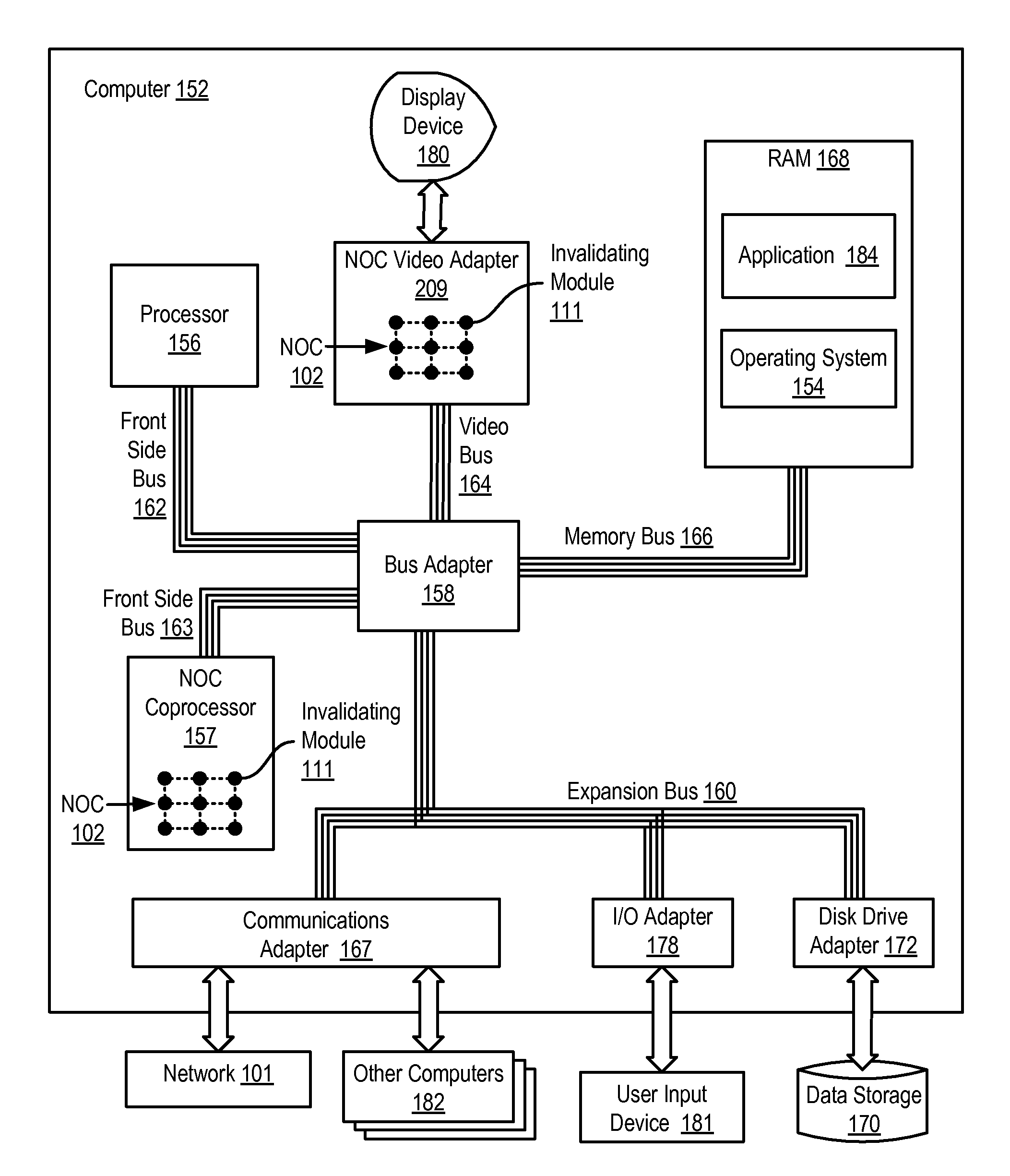

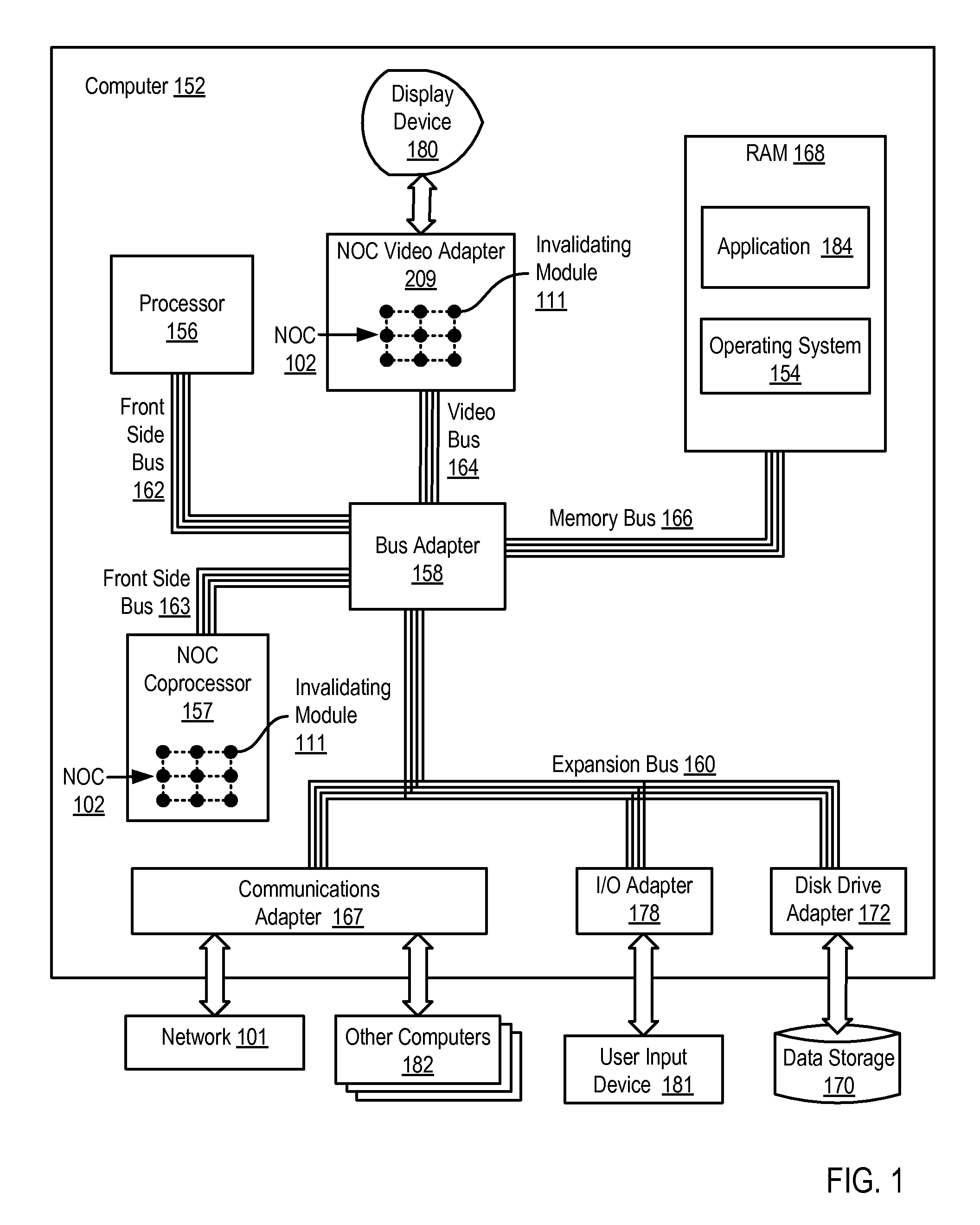

Network on Chip That Maintains Cache Coherency with Invalidation Messages

A network on chip (‘NOC’), and methods of operation of a NOC, that maintain cache coherency with invalidation messages. The NOC comprises integrated processor (‘IP’) blocks, routers, memory communications controllers, and network interface controllers, with each IP block adapted to a router through a memory communications controller and a network interface controller. Each memory communications controller controls communication between an IP block and memory, and each network interface controller controls inter-IP block communications through routers. The NOC also includes an invalidating module configured to send an invalidation message to selected IP blocks, the invalidation message representing an instruction to invalidate cached memory. Each selected IP block is configured to invalidate the contents of the cached memory responsive to receiving the invalidation message.

Owner:IBM CORP
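As a rough illustration of the invalidation-message flow in this abstract, the Python sketch below models IP blocks with private caches and an invalidating module. Routers and controllers are omitted, and the class names, the `write` method, and the policy of selecting only blocks that currently cache the address are hypothetical simplifications, not details from the patent.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class InvalidationMessage:
    """Instruction to drop the cached copy of one memory address."""
    address: int


@dataclass
class IPBlock:
    name: str
    cache: dict = field(default_factory=dict)  # address -> cached value

    def receive(self, msg):
        # Invalidate the cached contents in response to the message.
        self.cache.pop(msg.address, None)


class InvalidatingModule:
    """Sends invalidation messages to selected IP blocks on a write."""

    def __init__(self, blocks):
        self.blocks = blocks

    def write(self, writer, address, value, memory):
        memory[address] = value
        writer.cache[address] = value
        # Assumed selection policy: every other block caching this address.
        selected = [b for b in self.blocks
                    if b is not writer and address in b.cache]
        for block in selected:
            block.receive(InvalidationMessage(address))
        return [b.name for b in selected]
```

In this sketch a write by one block removes stale copies held by the others, so a later read on those blocks has to go back to memory.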

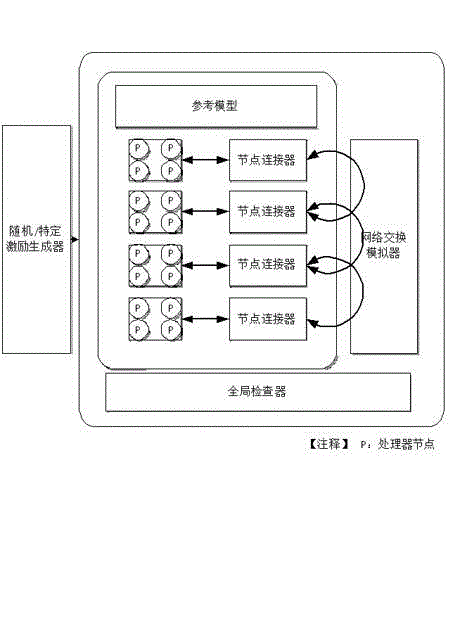

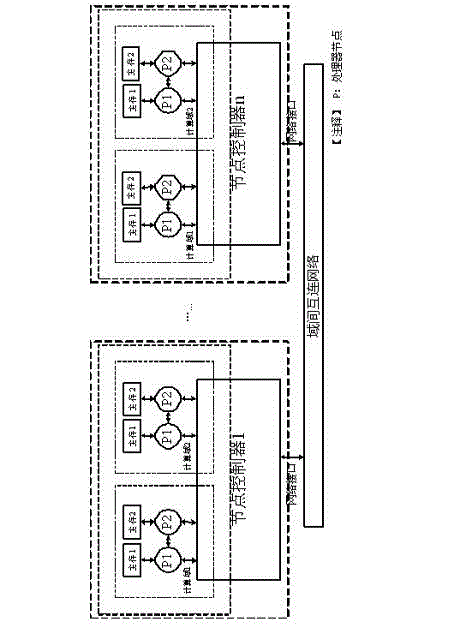

Extension Cache Coherence protocol-based multi-level consistency domain simulation verification and test method

Active | CN103150264A | Improve performance | Reduce Topological Complexity | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Uniform memory access | Multi processor

The invention discloses a simulation verification and test method for multi-level consistency domains based on an extended Cache Coherence protocol. A protocol simulation model of a multi-level consistency domain CC-NUMA (Cache Coherent Non-Uniform Memory Access) system is constructed on the extended Cache Coherence protocol. A protocol table lookup and state transition execution mechanism in the key nodes of the system ensures that the Cache Coherence protocol is maintained within a single computing domain and simultaneously among a plurality of computing domains, so that intra-domain and inter-domain transmission remains accurate and stable. A verification method driven by evaluation of trusted protocol entry transition coverage is also provided: transactions are processed by loading an optimized transaction generator stimulus model, a coverage index is obtained after the run ends, and verification efficiency is higher than with a random transaction stimulus mechanism. Constructing a multi-processor, multi-consistency-domain verification system model and running the associated simulation verification further confirms the applicability and effectiveness of the method.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD

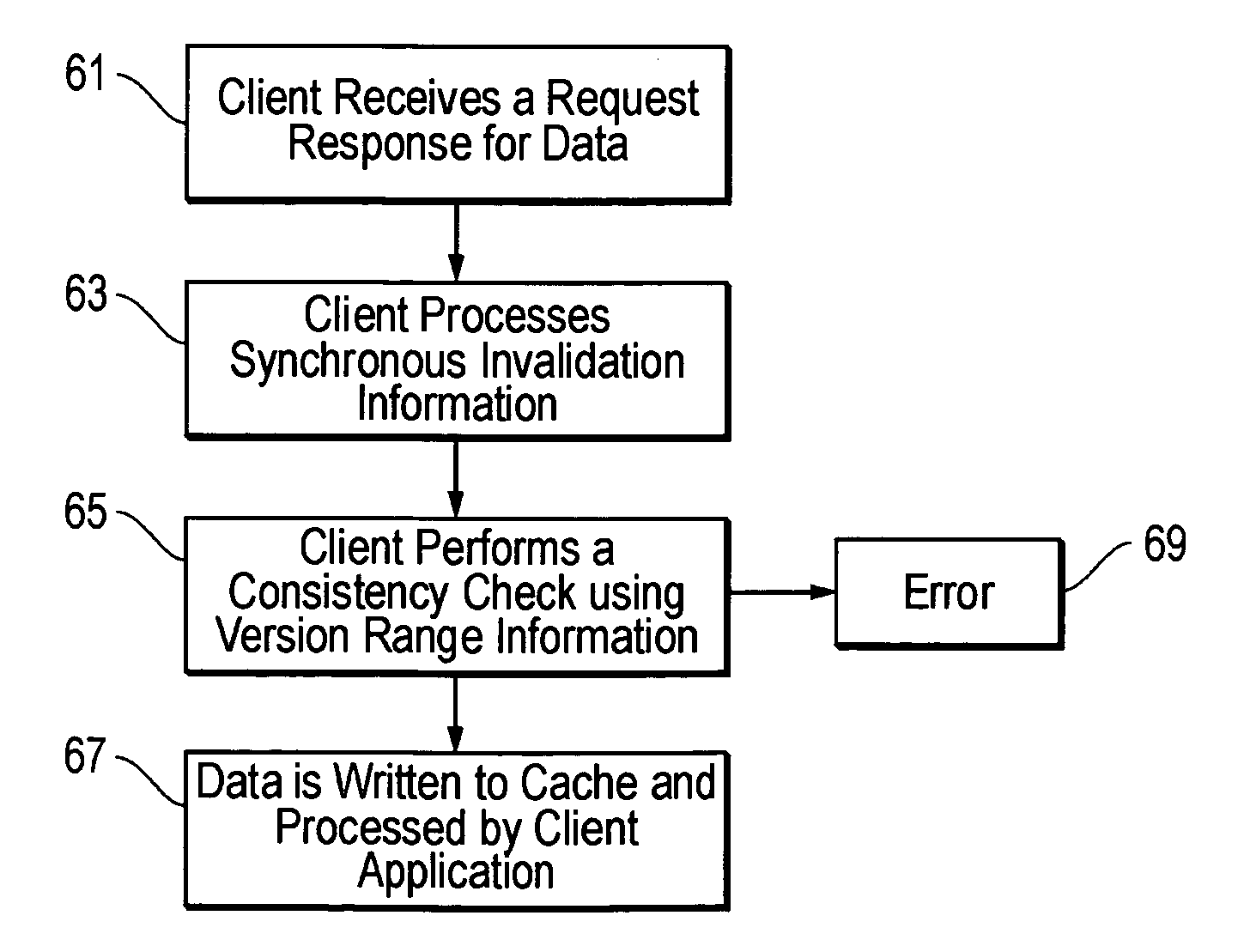

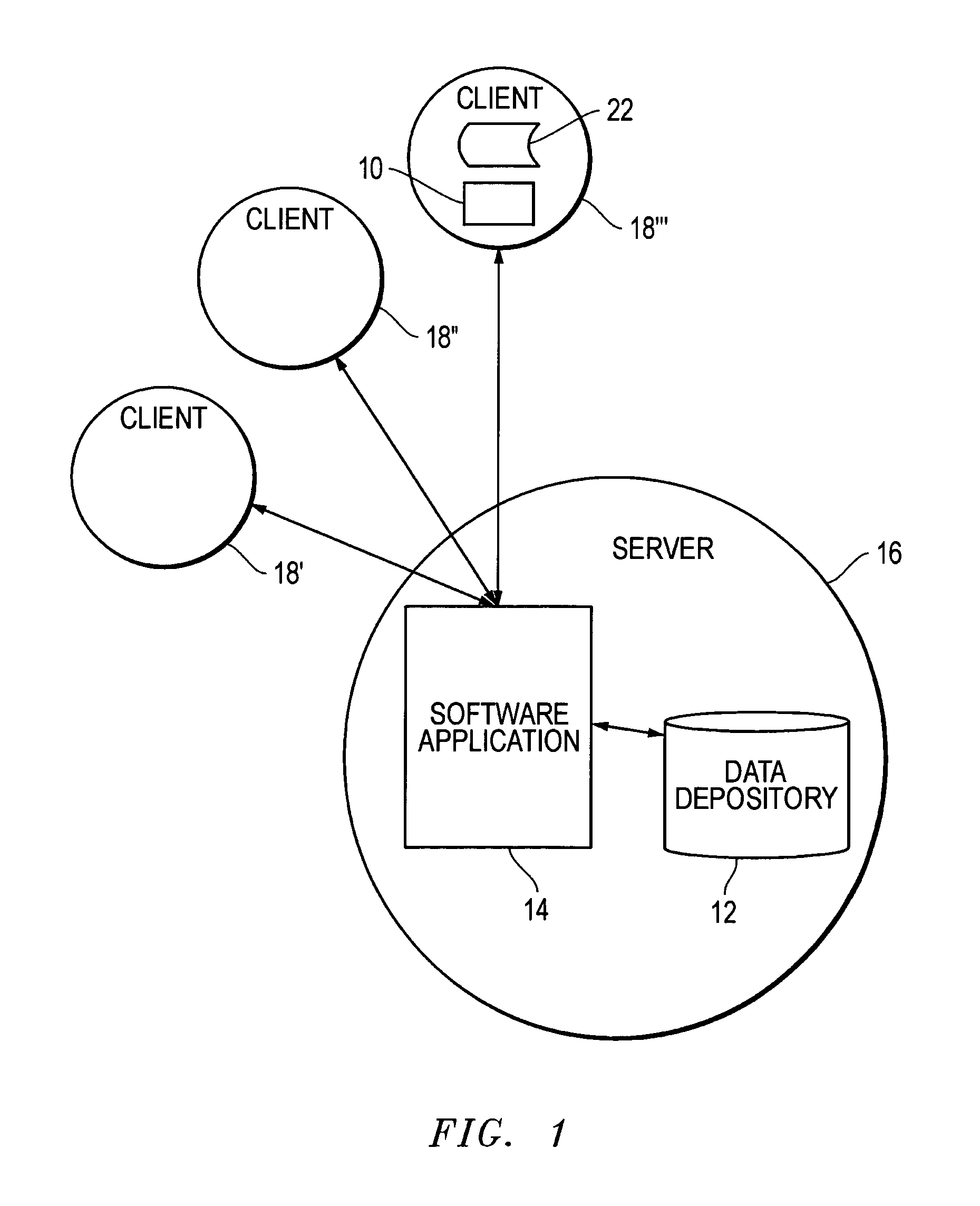

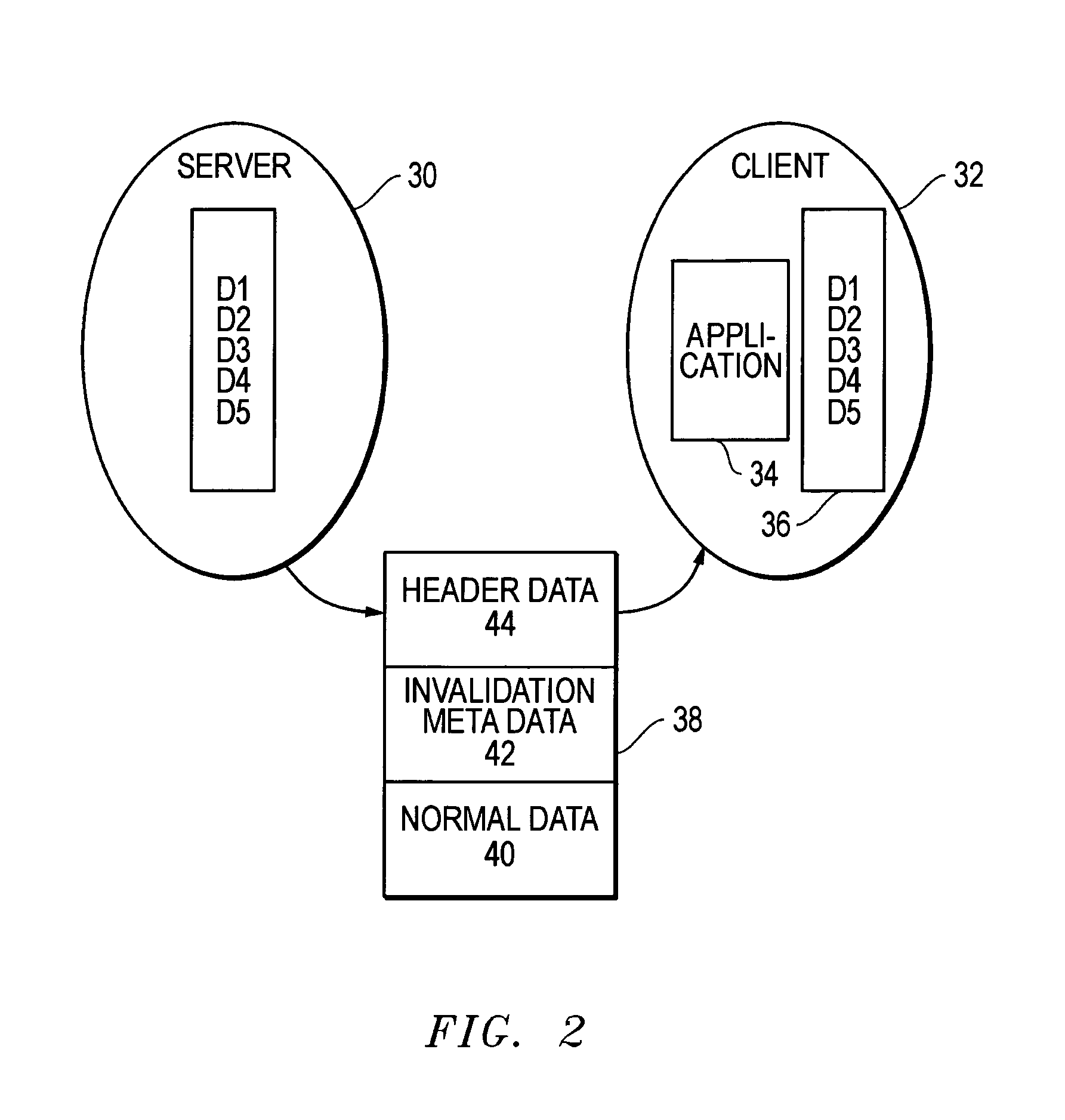

System and method of maintaining functional client side data cache coherence

Inactive | US6996584B2 | Maximum performance | Improve performance | Memory architecture accessing/allocation | Digital data information retrieval | Database server | Client-side

The present invention provides functional client side data cache coherence distributed across database servers and clients. This system includes an application resident on a client operable to request access to data, wherein the client is coupled to a local memory cache operable to store requested data. The client is coupled to a remote memory storage system, such as disk storage or network resources, by a communication pathway. This remote memory storage system is operable to store data, process requests for specified data, retrieve the specified data from within the remote memory storage system, and transmit the requested data to the client with annotated version information. The data received by the client is verified as being coherent with any downstream linked information stored in the client's local memory cache. Otherwise, updated coherent data is requested, received and verified prior to being used by the client and its resident applications.

Owner:ACTIAN CORP
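The version-annotated check described in this abstract can be sketched in Python as follows. The `Server` and `Client` classes, the integer version counter, and the shape of the annotation (a map from each linked key to its current version) are assumptions chosen for illustration; the patent does not specify this format.

```python
class Server:
    """Remote store that returns data annotated with version information."""

    def __init__(self):
        self.rows = {}  # key -> (value, version, linked keys)

    def put(self, key, value, links=()):
        old_version = self.rows[key][1] if key in self.rows else 0
        self.rows[key] = (value, old_version + 1, tuple(links))

    def get(self, key):
        value, version, links = self.rows[key]
        # Annotation (assumed format): current version of each linked row.
        return value, version, {k: self.rows[k][1] for k in links}


class Client:
    """Client with a local cache that is verified against the annotations."""

    def __init__(self, server):
        self.server = server
        self.cache = {}      # key -> (value, version)
        self.refetched = []  # linked keys that had to be refreshed

    def read(self, key):
        value, version, links = self.server.get(key)
        for linked_key, linked_version in links.items():
            cached = self.cache.get(linked_key)
            if cached is not None and cached[1] != linked_version:
                # Cached linked data is stale: request the updated copy.
                v, ver, _ = self.server.get(linked_key)
                self.cache[linked_key] = (v, ver)
                self.refetched.append(linked_key)
        self.cache[key] = (value, version)
        return value
```

Reading a row thus refreshes any stale linked rows in the local cache before the application uses the result.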
