291 results about "Remote memory" patented technology

Remote memory is the ability to remember things and events from many years earlier. It is a function of long-term memory, which the brain stores differently from, and in different areas than, recent or short-term memories.

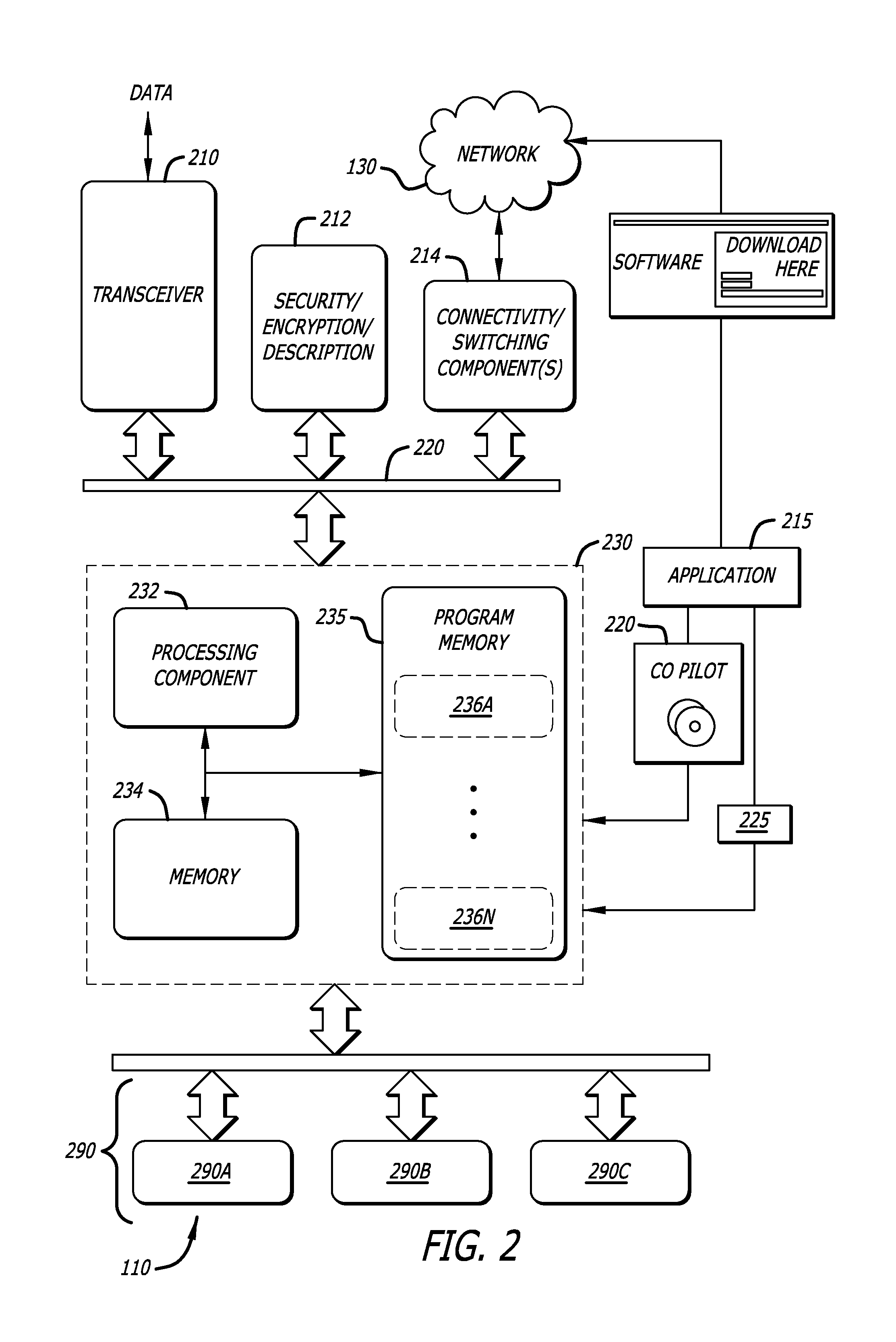

Data security system and method for portable device

Active | US7313825B2 | Ease overhead performance | High overhead performance | Peptide/protein ingredients | Digital data processing details | Information processing | Event trigger

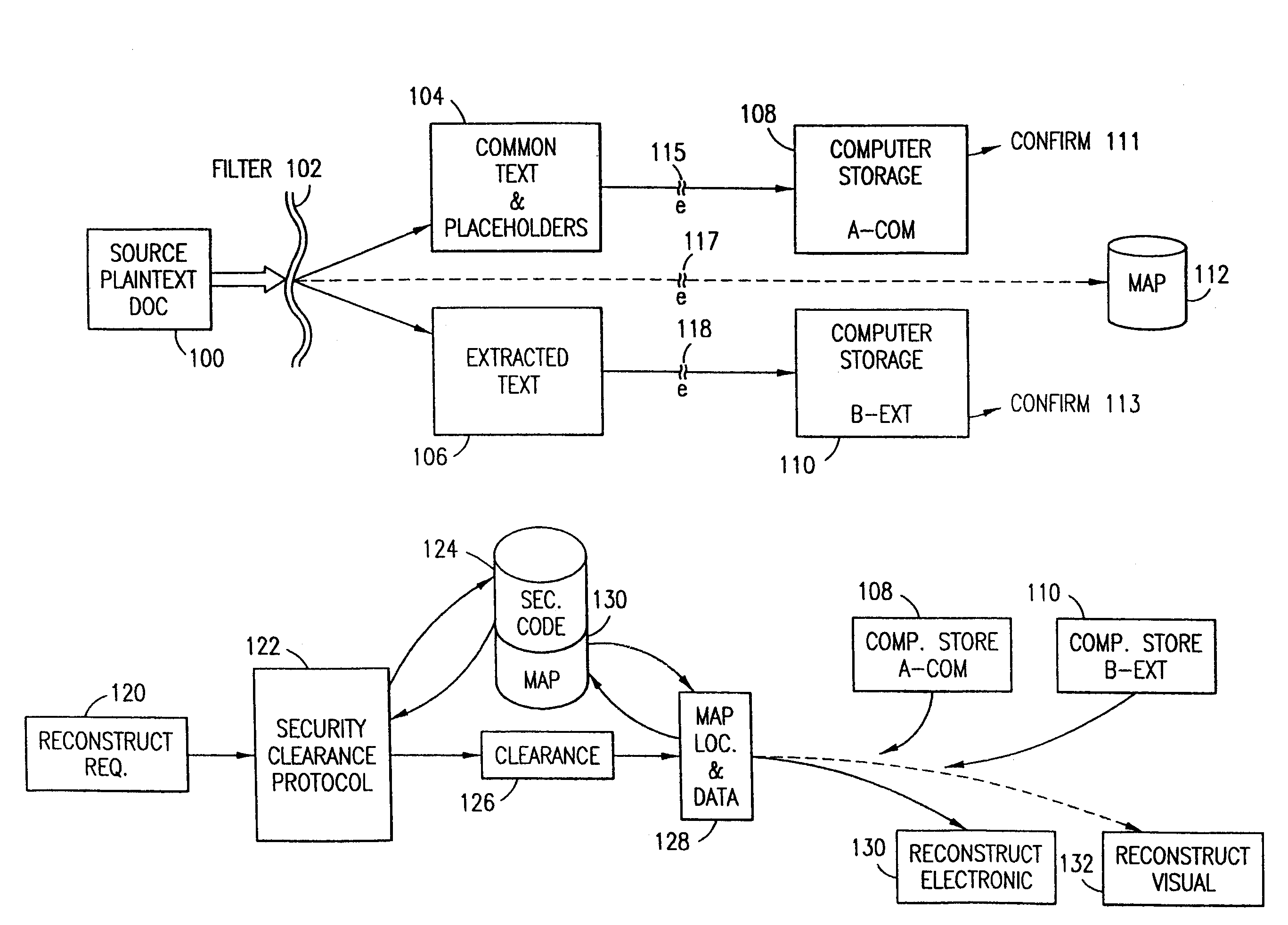

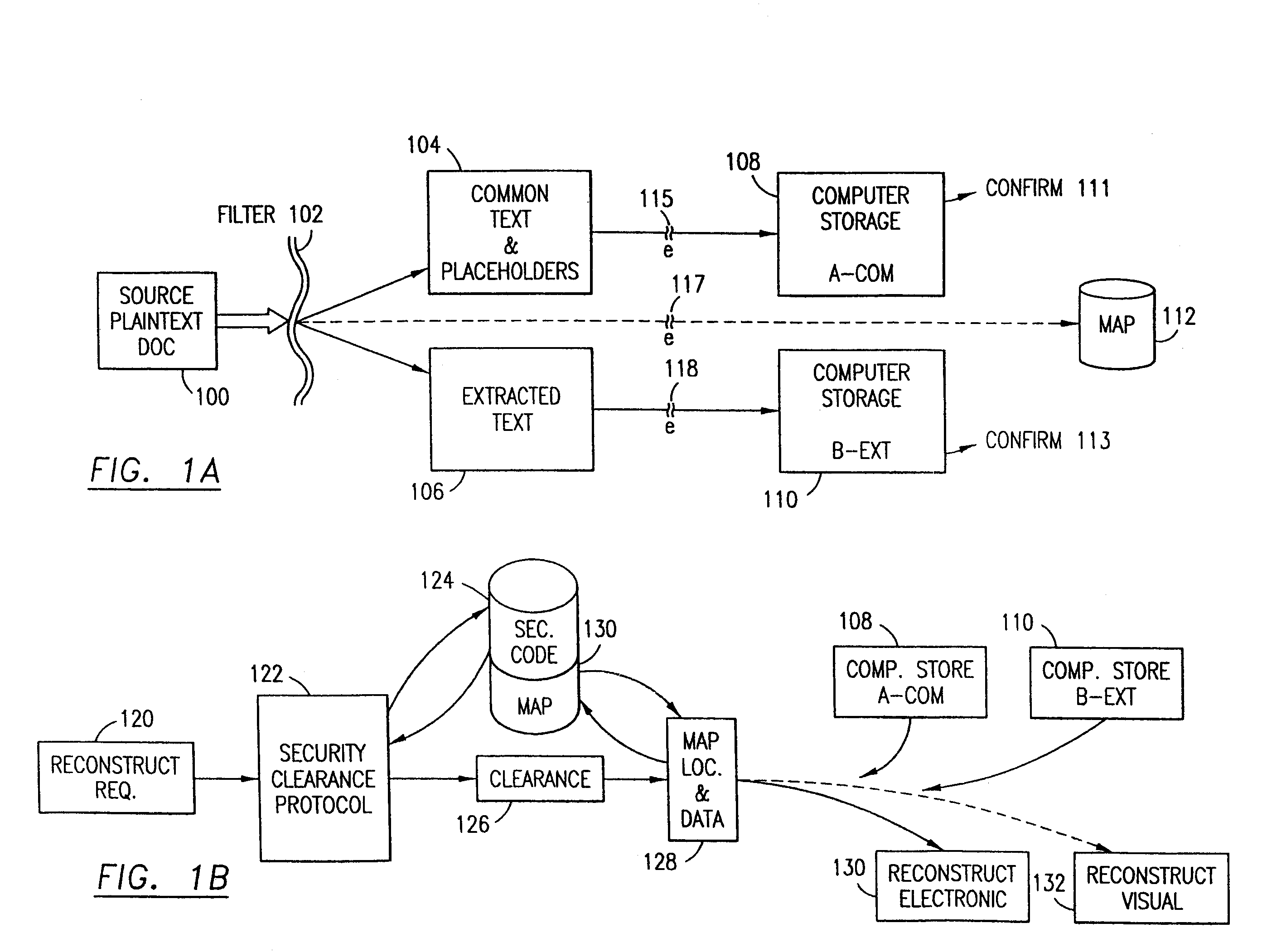

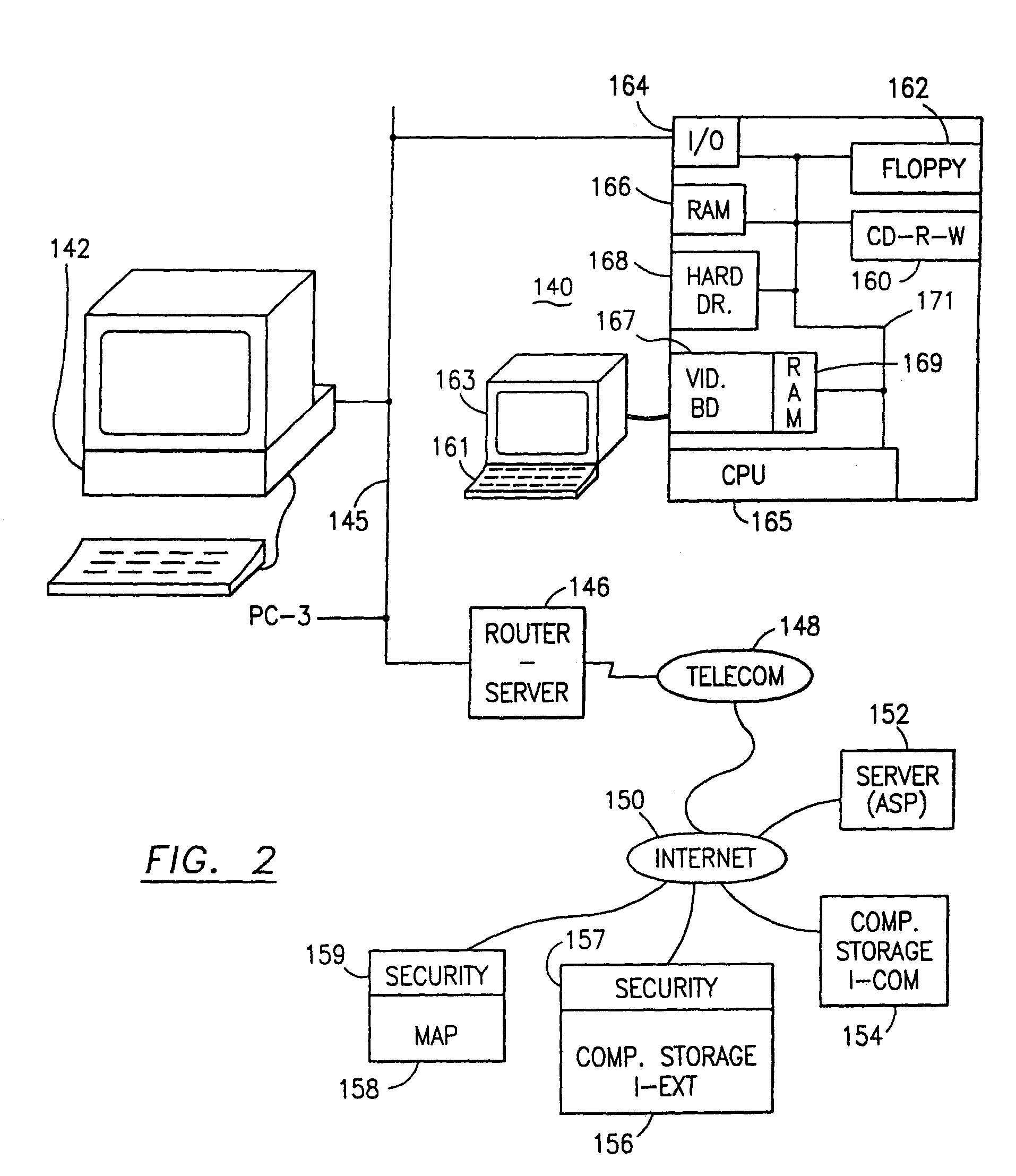

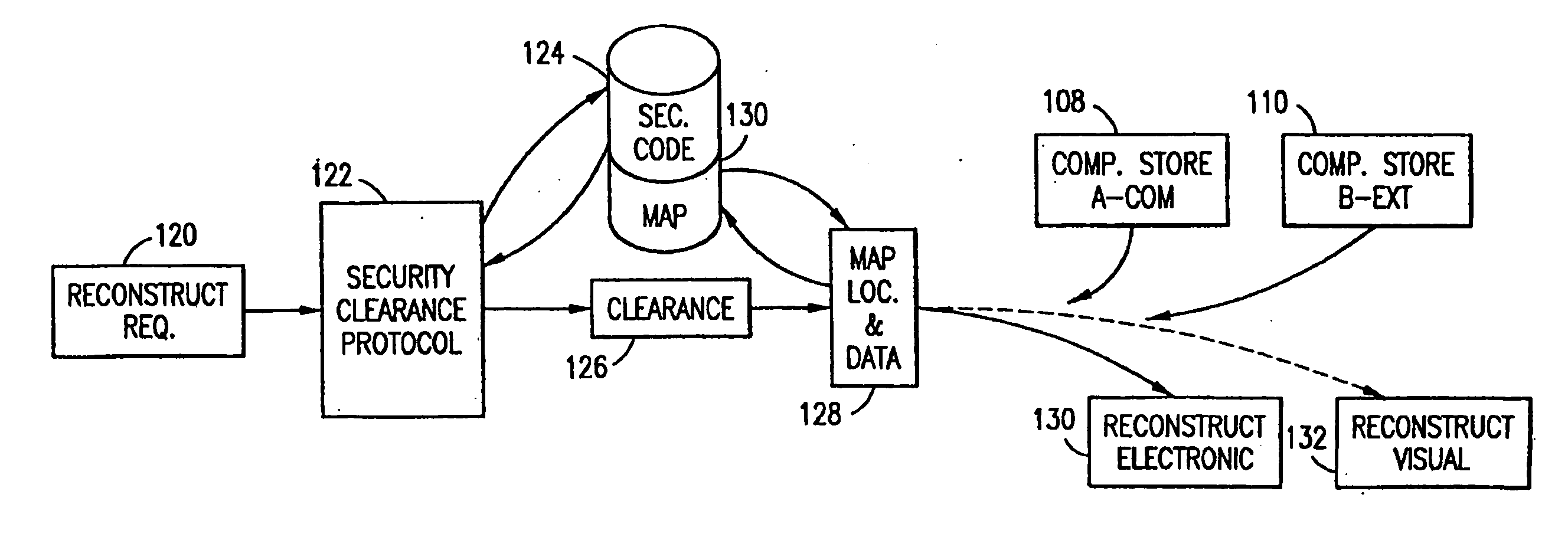

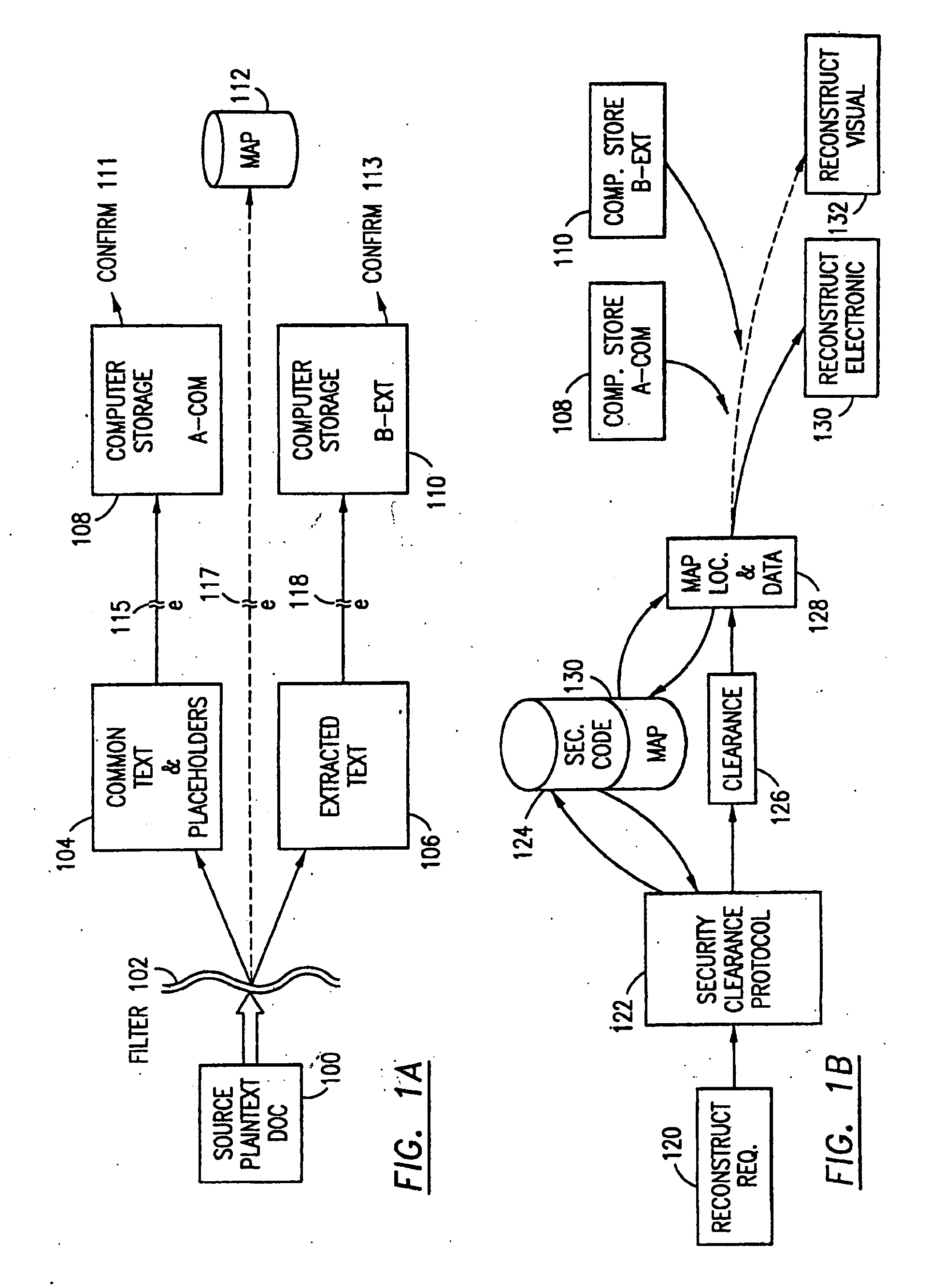

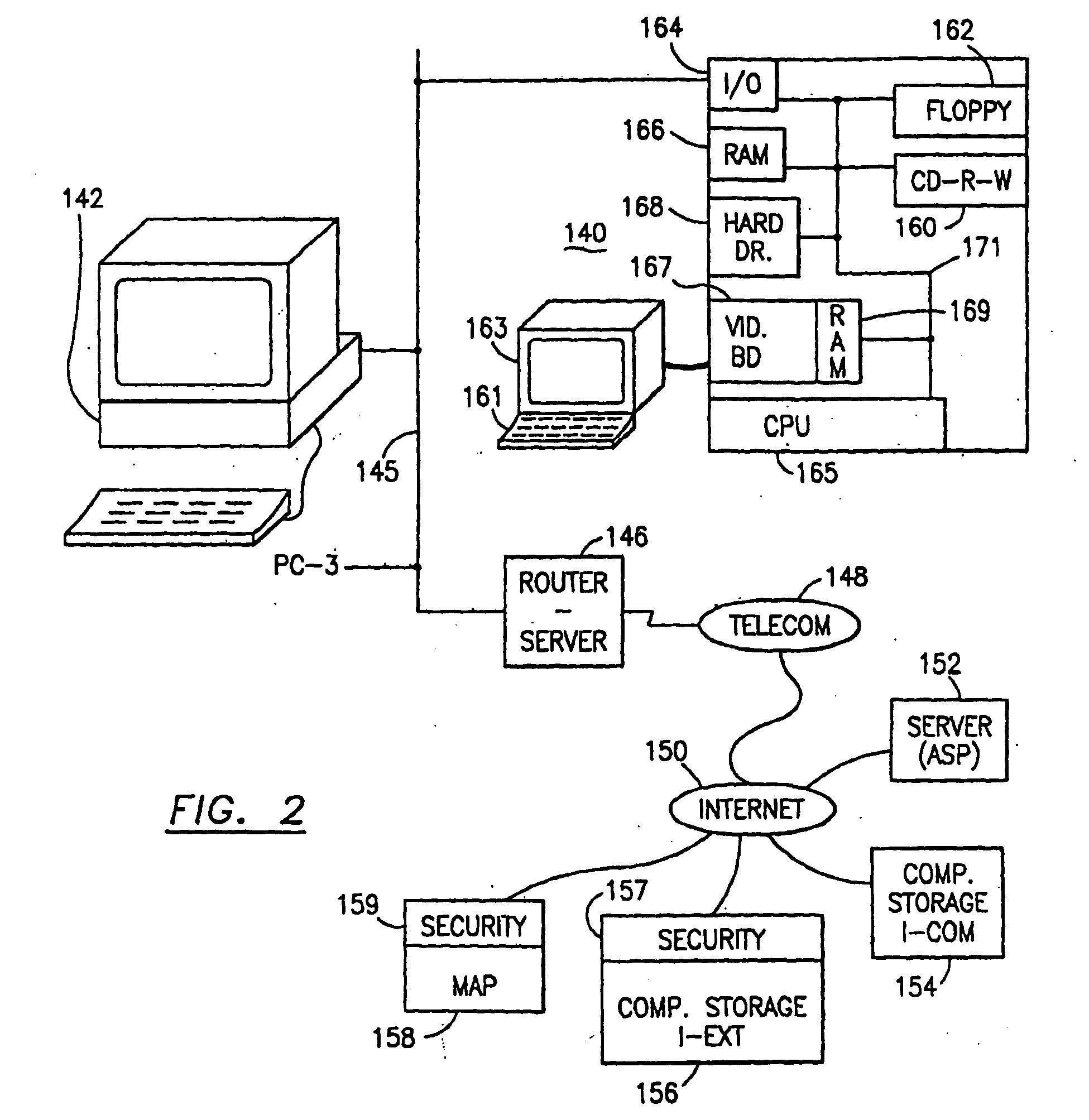

The method, used with a portable computing device, secures security-sensitive words, icons, etc. by determining whether the device is located inside or outside a predetermined region and then extracting the security data from the file, text, or other data object. The extracted data is separated from the remainder data and stored either on media in a local drive or remotely, typically via a wireless network, in a remote store. Encryption is used to further enhance security levels. Extraction may be automatic, when the portable device is beyond a predetermined territory, or triggered by an event, such as “save document” or a time-out routine. Reconstruction of the data is permitted only in the presence of a predetermined security clearance and within certain geographic territories. A computer readable medium containing programming instructions carrying out the methodology for securing data is also described herein. An information processing system for securing data is also described.

Owner:DIGITAL DOORS
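
The extract-and-reconstruct flow in the US7313825B2 abstract can be illustrated with a minimal Python sketch. Everything named here is hypothetical and not taken from the patent: the rectangular `REGION` geofence, the `[REDACTED]` placeholder, and the word-level matching are assumptions made for illustration, and the encryption step is omitted.

```python
# Hypothetical rectangular geofence: (min_lat, min_lon, max_lat, max_lon).
REGION = (40.0, -75.0, 41.0, -73.0)
PLACEHOLDER = "[REDACTED]"  # assumed marker left in the remainder data


def in_region(lat: float, lon: float, region=REGION) -> bool:
    """Return True if the device location lies inside the permitted region."""
    min_lat, min_lon, max_lat, max_lon = region
    return min_lat <= lat <= max_lat and min_lon <= lon <= max_lon


def extract(text: str, sensitive: set) -> tuple:
    """Split text into remainder data and extracted (position, word) pairs."""
    remainder, extracted = [], []
    for i, word in enumerate(text.split()):
        if word.lower() in sensitive:
            extracted.append((i, word))   # would go to the local or remote store
            remainder.append(PLACEHOLDER)
        else:
            remainder.append(word)
    return " ".join(remainder), extracted


def reconstruct(remainder: str, extracted: list, lat: float, lon: float,
                cleared: bool) -> str:
    """Rebuild the text only with clearance and inside the permitted region."""
    if not (cleared and in_region(lat, lon)):
        raise PermissionError("reconstruction not permitted here")
    words = remainder.split()
    for i, word in extracted:
        words[i] = word
    return " ".join(words)
```

Calling `extract("ship the falcon prototype to boston", {"falcon", "boston"})` yields a remainder with two placeholders plus the two extracted words, and `reconstruct` restores the original only when `cleared` is true and the coordinates fall inside `REGION`.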

Data Security System and with territorial, geographic and triggering event protocol

Active | US20090178144A1 | Ease overhead performance | High overhead performance | Digital data processing details | Analogue secrecy/subscription systems | Information processing | Event trigger

The method, program and information processing system secure data, and particularly security-sensitive words, characters or data objects in the data, in a computer system with territorial, geographic and triggering event protocols. The method and system determine whether the device is located inside or outside a predetermined region and then extract security data from the file, text, or other data object. The extracted data is separated from the remainder data and stored either on media in a local drive or remotely, typically via a wireless network, in a remote store. Encryption is used to further enhance security levels. Extraction may be automatic, when the portable device is beyond a predetermined territory, or triggered by an event, such as “save document” or a time-out routine. Reconstruction of the data is permitted only with security clearance and within certain geographic territories. An information processing system for securing data is also described.

Owner:DIGITAL DOORS

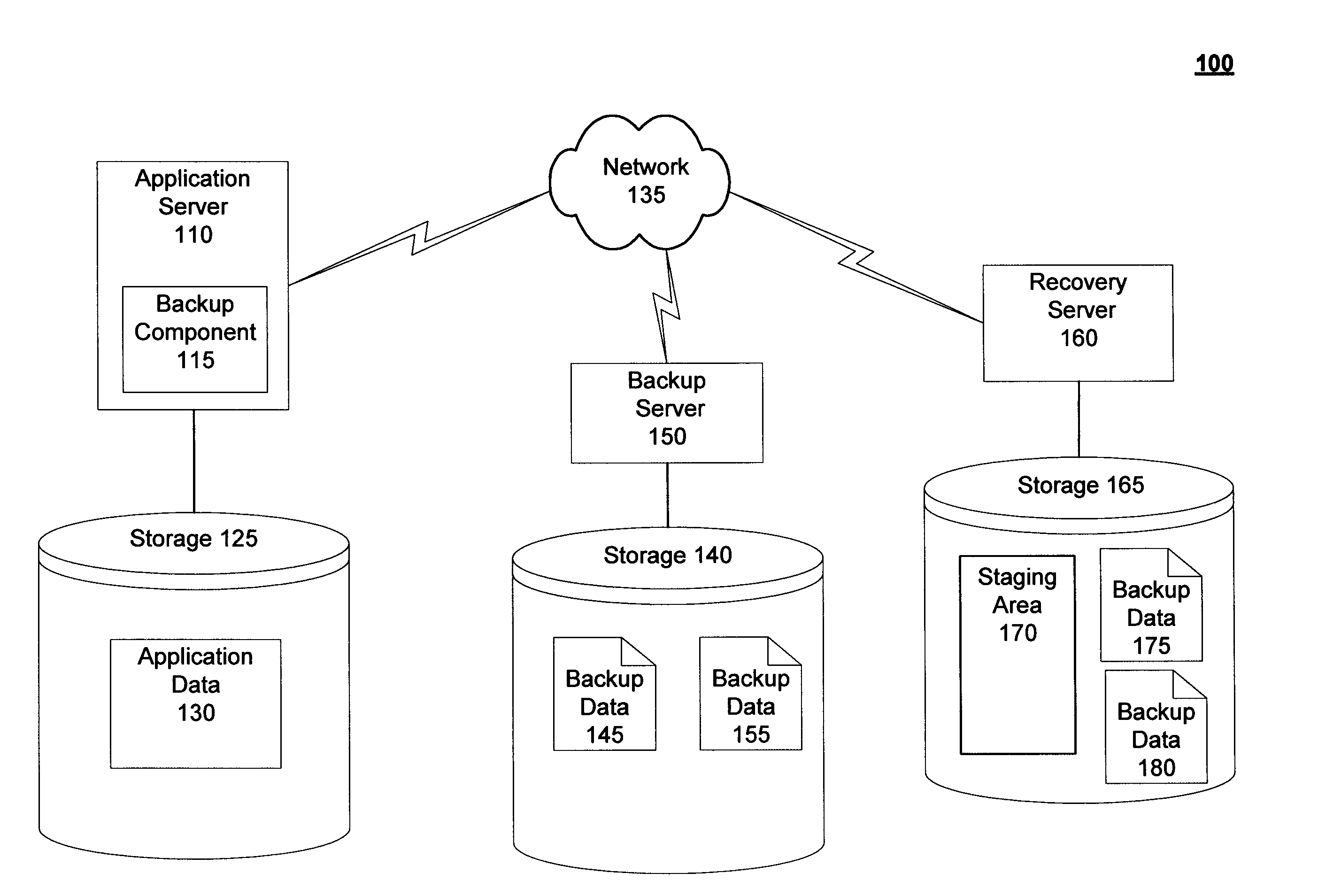

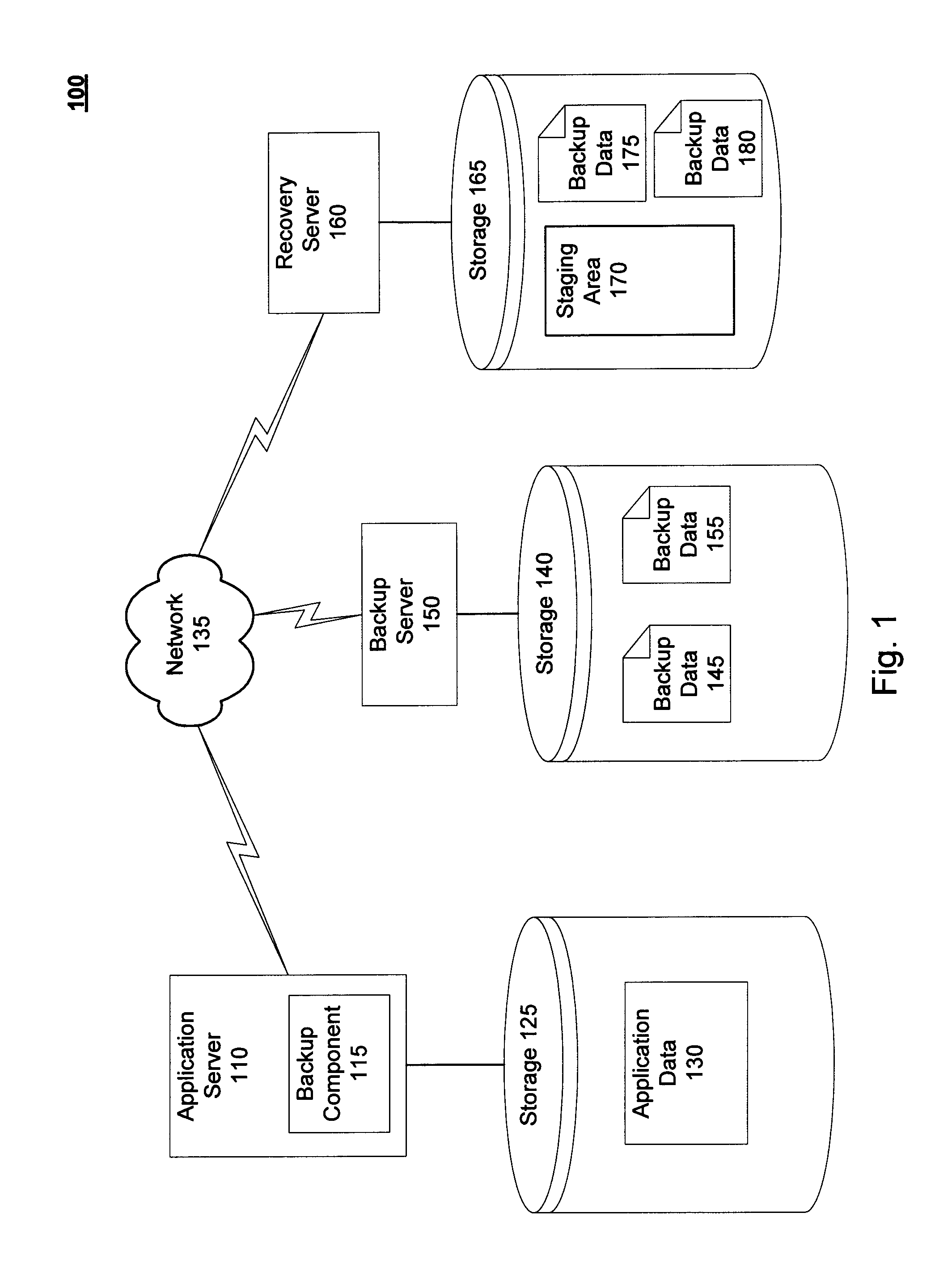

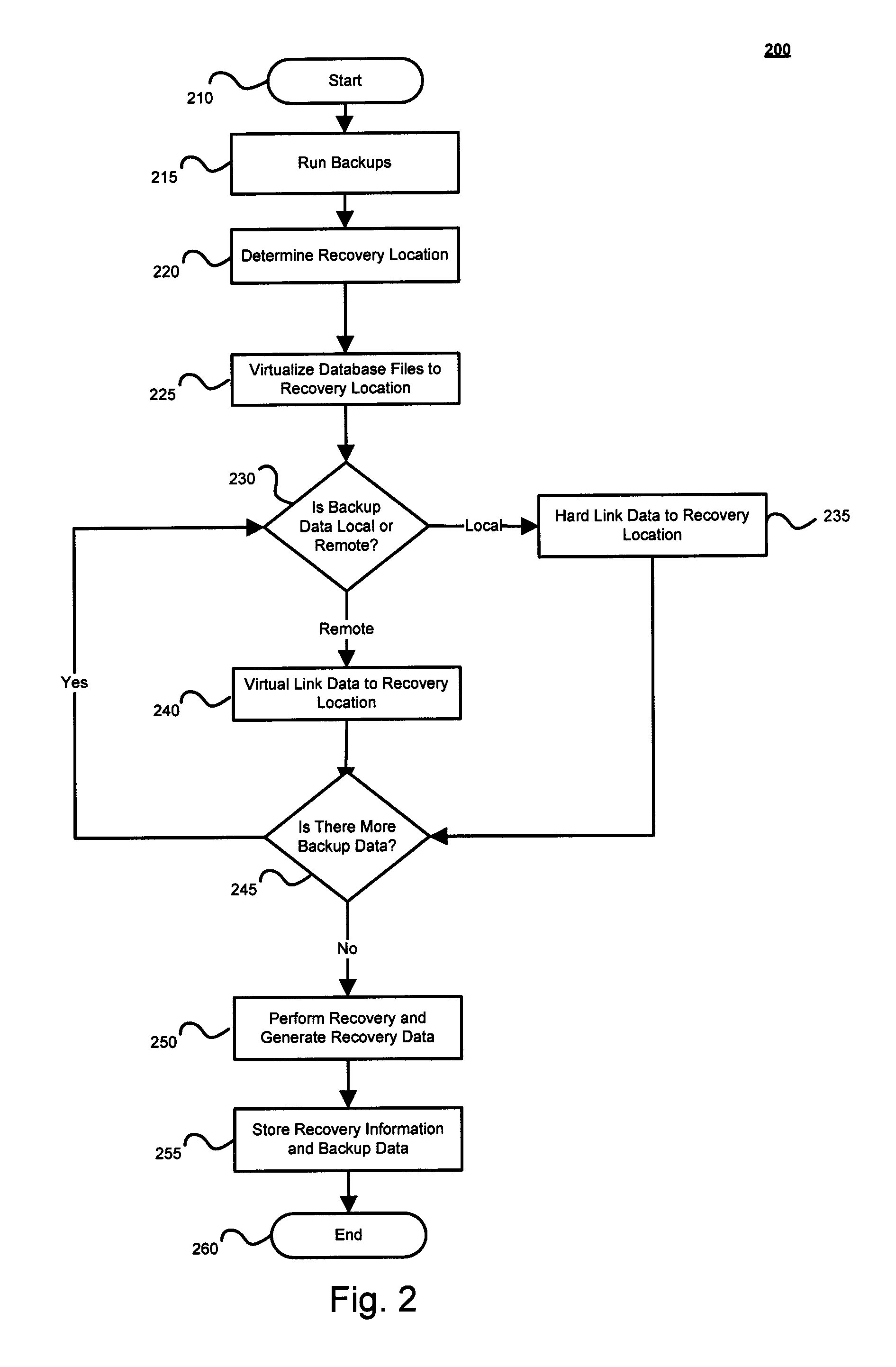

Techniques for granular recovery of data from local and remote storage

Techniques for granular recovery of data from local and remote storage are disclosed. In one particular exemplary embodiment, the techniques may be realized as a method for recovery of data from local and remote storage comprising determining a recovery location, determining a location of backup data, hard linking one or more portions of the backup data to the recovery location in the event that the one or more portions of the backup data to be hard linked are determined to be on a volume of the recovery location, virtually linking one or more portions of the backup data to the recovery location in the event that the one or more portions of the backup data to be virtually linked are determined to be on a volume different from the volume of the recovery location, and performing recovery utilizing one or more portions of recovery data.

Owner:VERITAS TECH
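
The linking decision in the granular-recovery abstract (hard link on the same volume, virtual link otherwise) might look roughly like the sketch below. It assumes a POSIX-style filesystem, compares `st_dev` values to decide whether two paths share a volume, and uses a symbolic link as a stand-in for "virtual linking"; the patent does not say that a symlink is the mechanism, and `link_for_recovery` is a hypothetical name.

```python
import os


def link_for_recovery(backup_path: str, recovery_dir: str) -> str:
    """Link one backup file into recovery_dir; return 'hard' or 'virtual'."""
    target = os.path.join(recovery_dir, os.path.basename(backup_path))
    # Same device number is used here as the test for "same volume".
    same_volume = os.stat(backup_path).st_dev == os.stat(recovery_dir).st_dev
    if same_volume:
        os.link(backup_path, target)  # hard link: no data is copied
        return "hard"
    # Assumption: a symlink approximates the "virtual link" across volumes.
    os.symlink(os.path.abspath(backup_path), target)
    return "virtual"
```

Either way the recovery location can read the backup data without a bulk copy, which appears to be the point of the technique.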

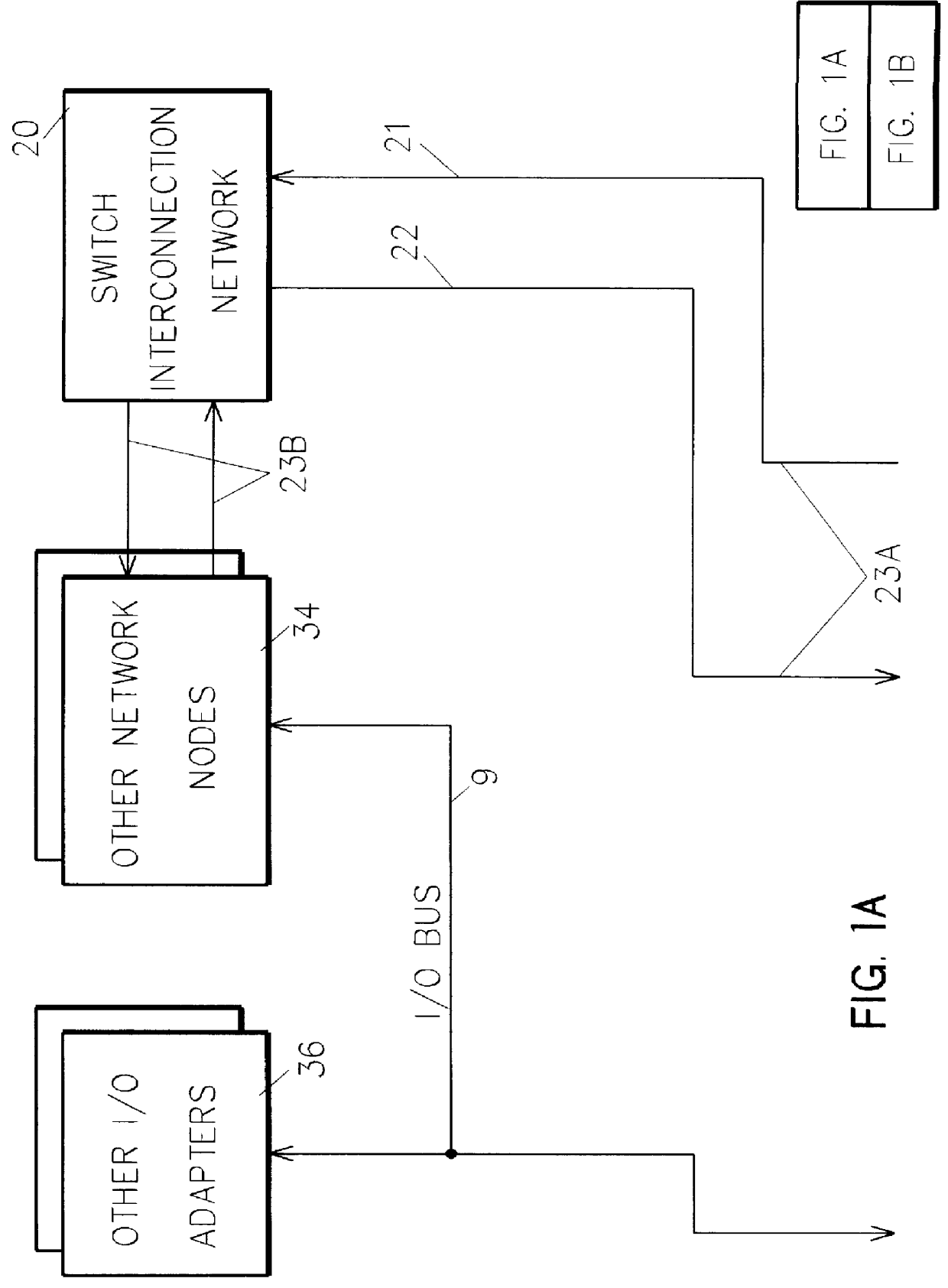

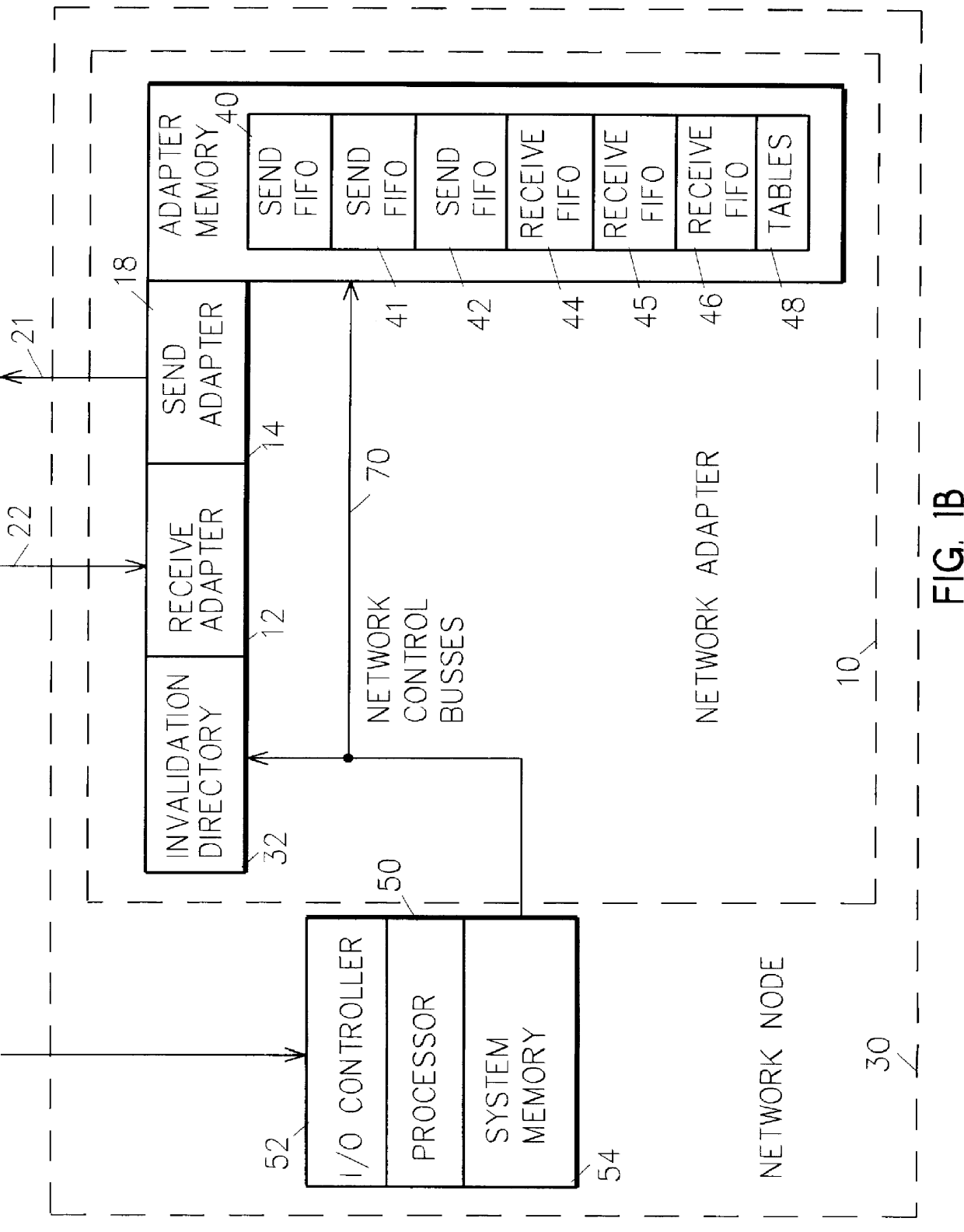

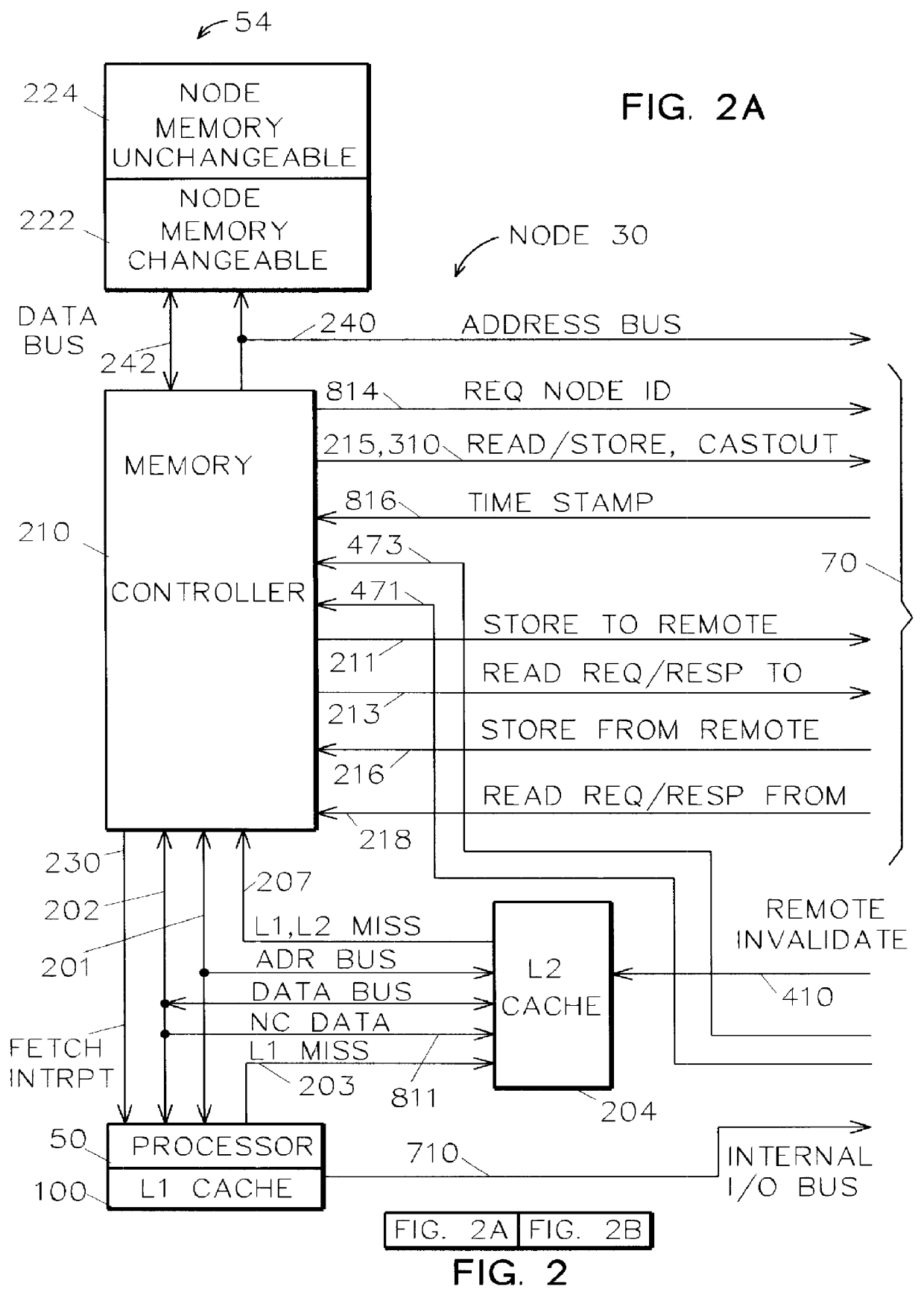

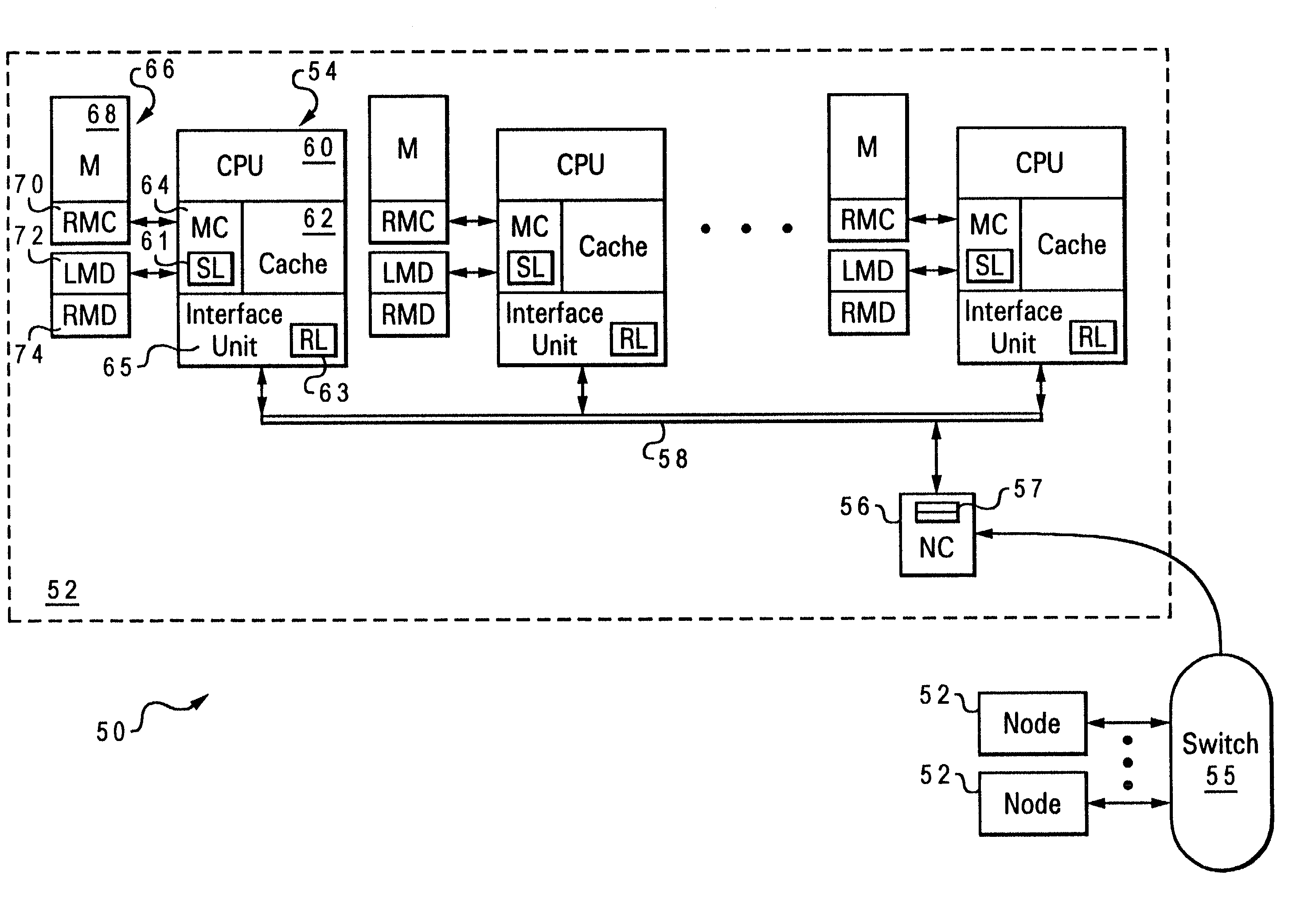

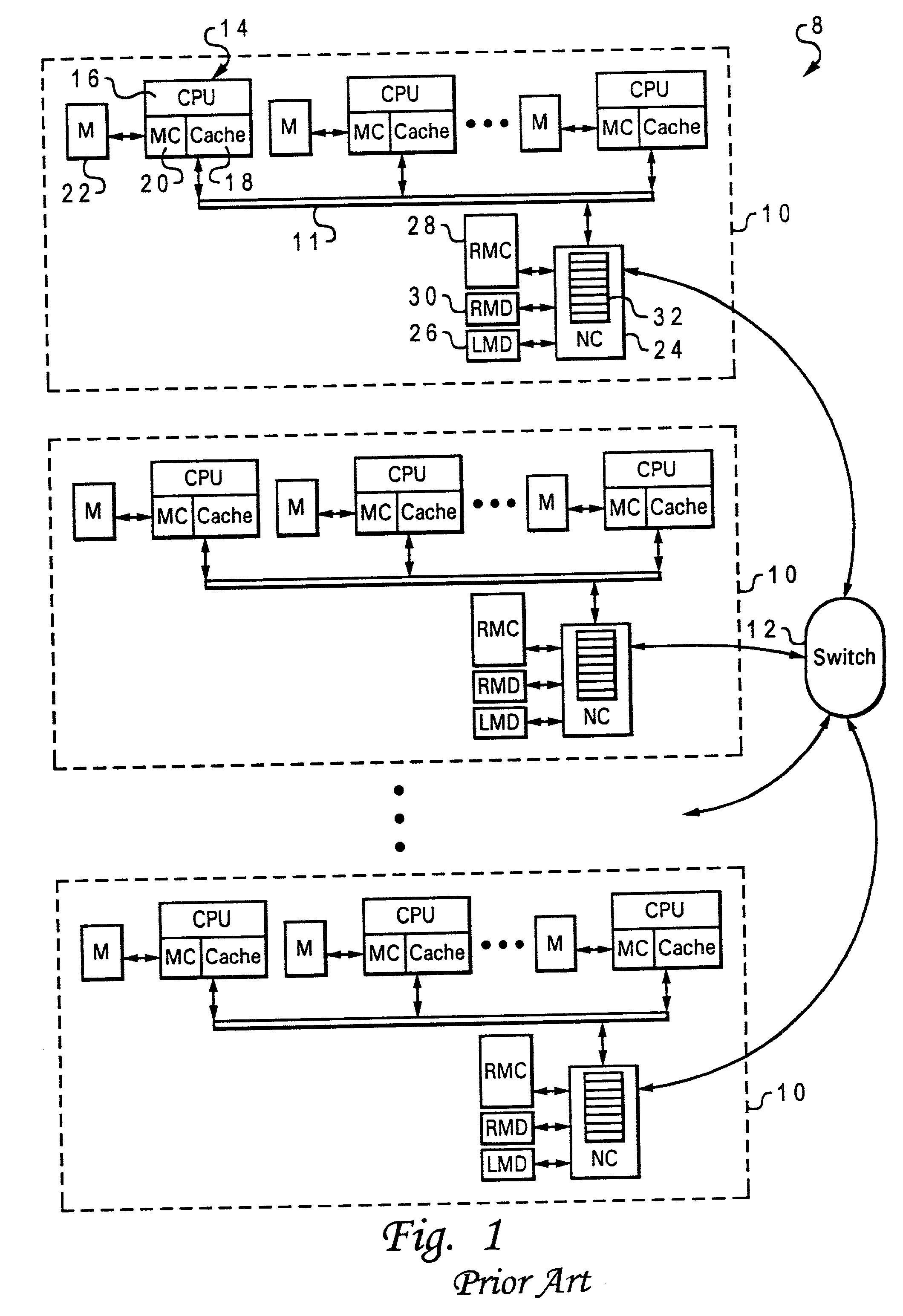

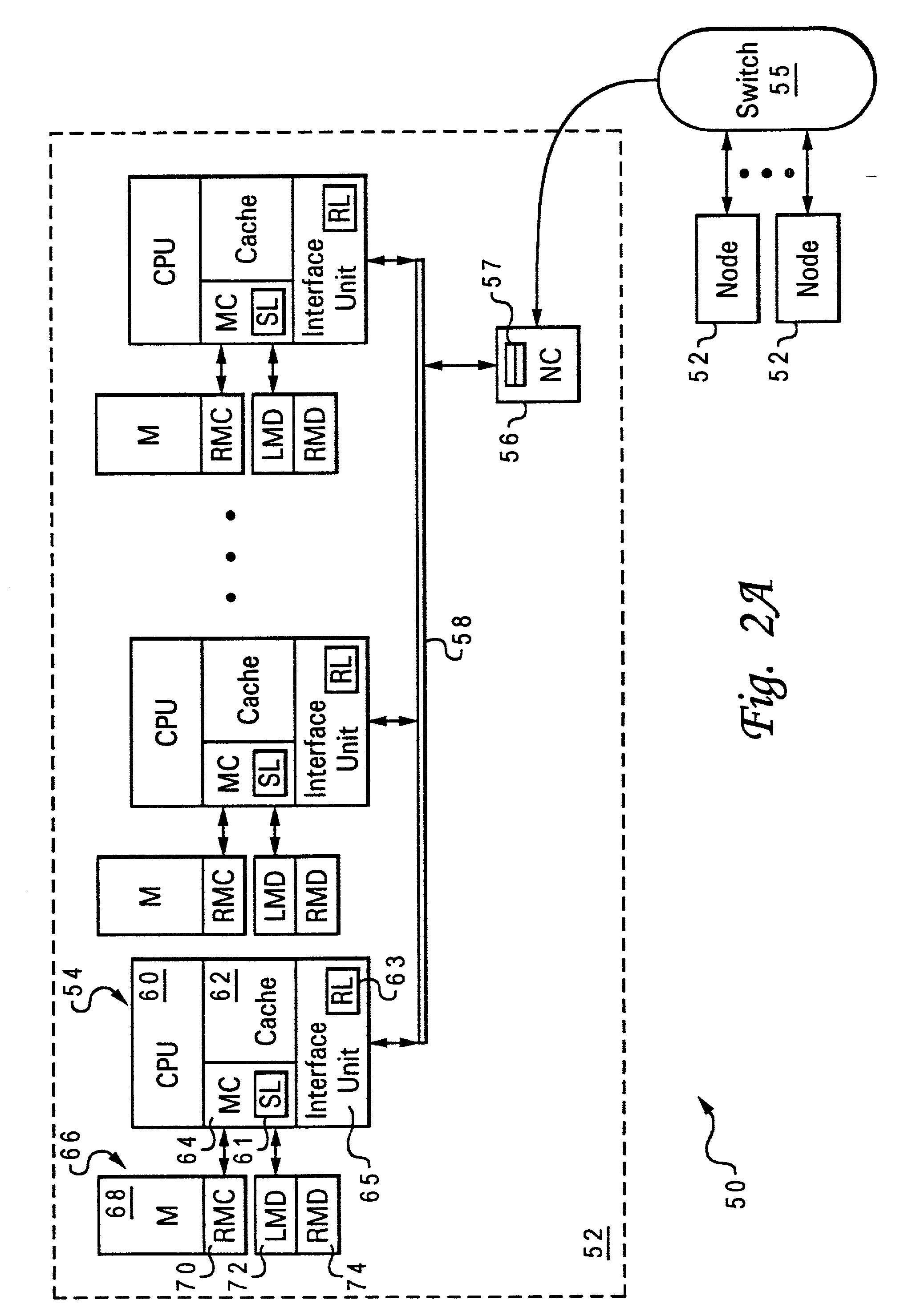

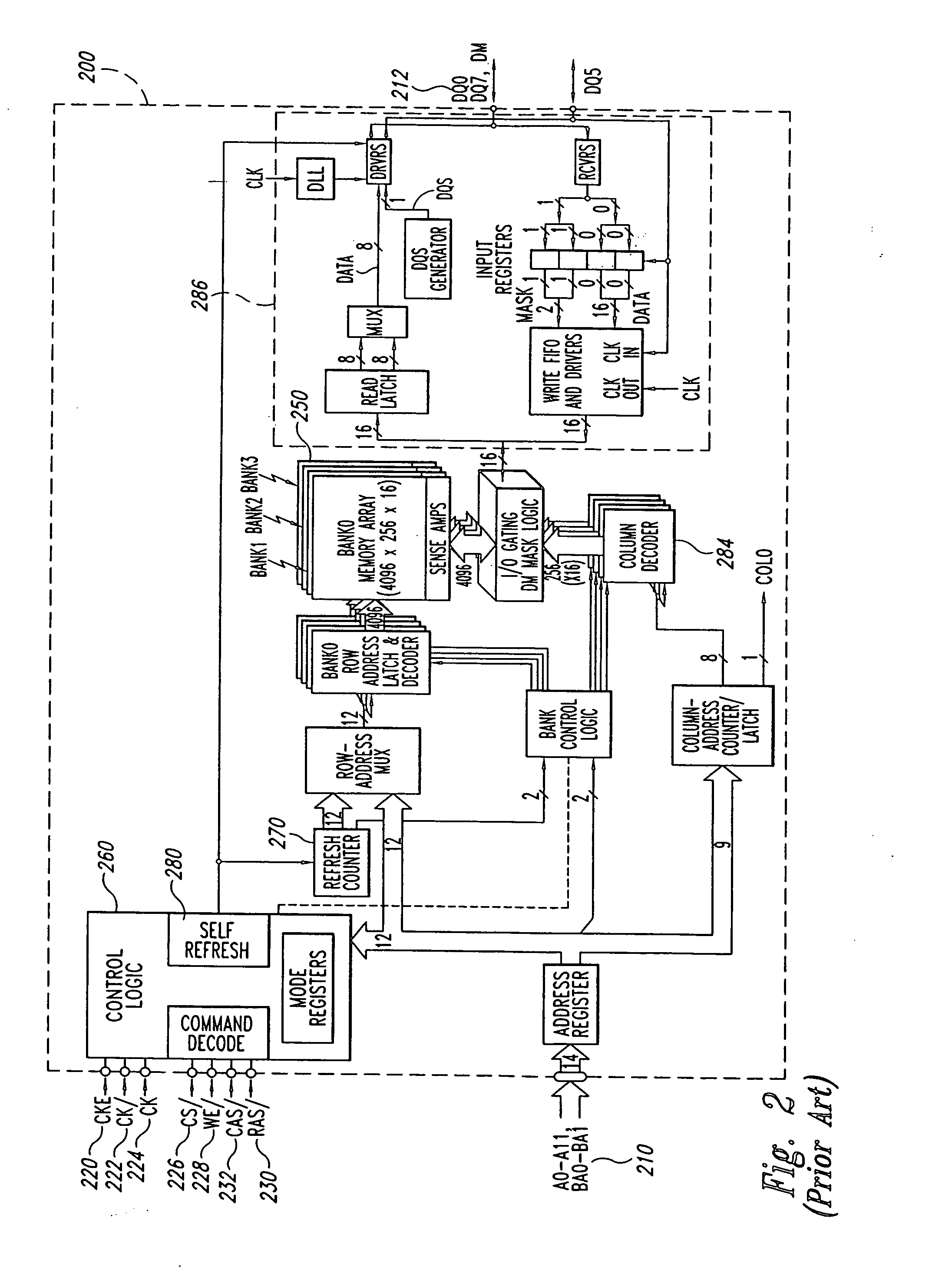

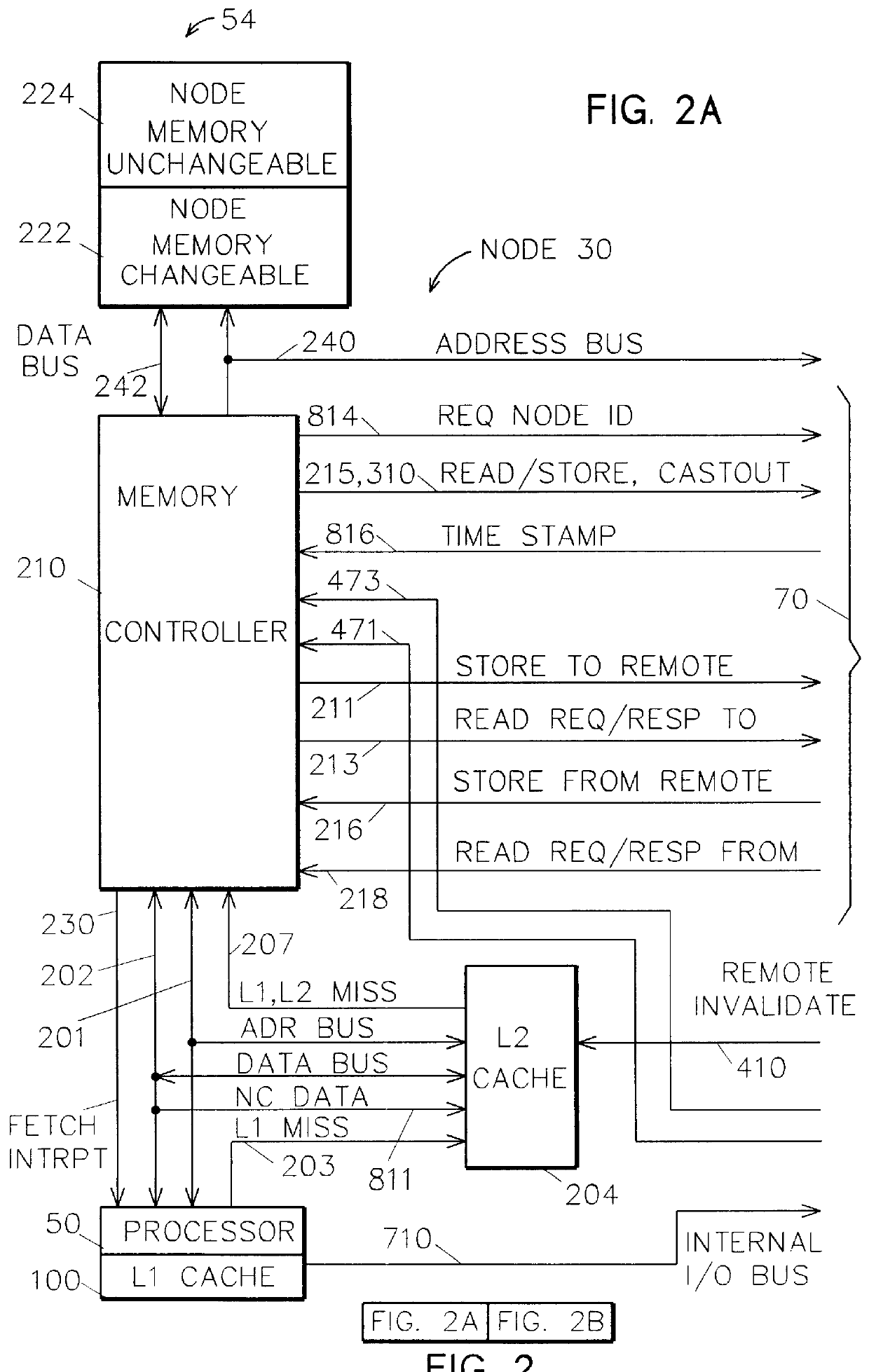

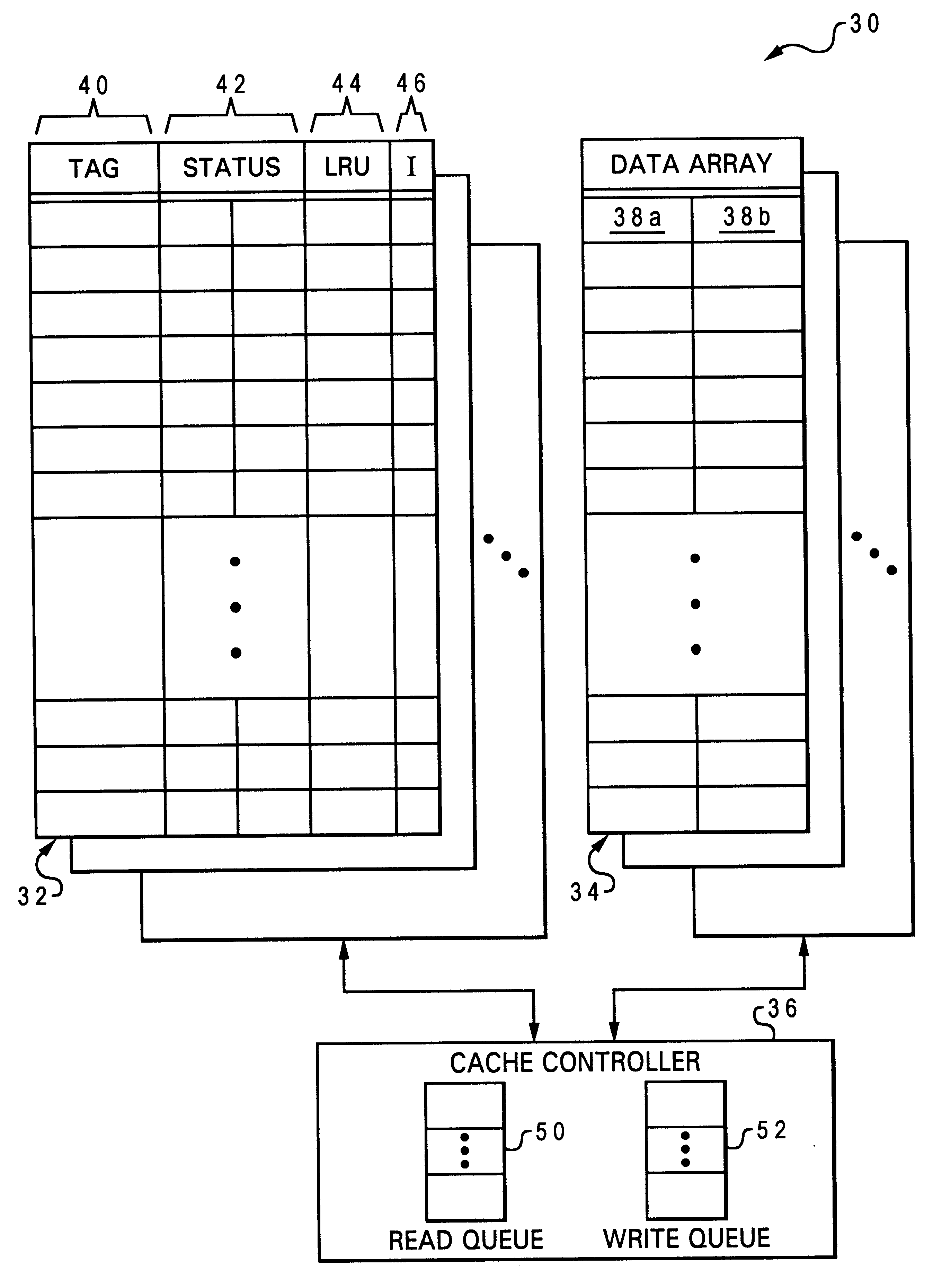

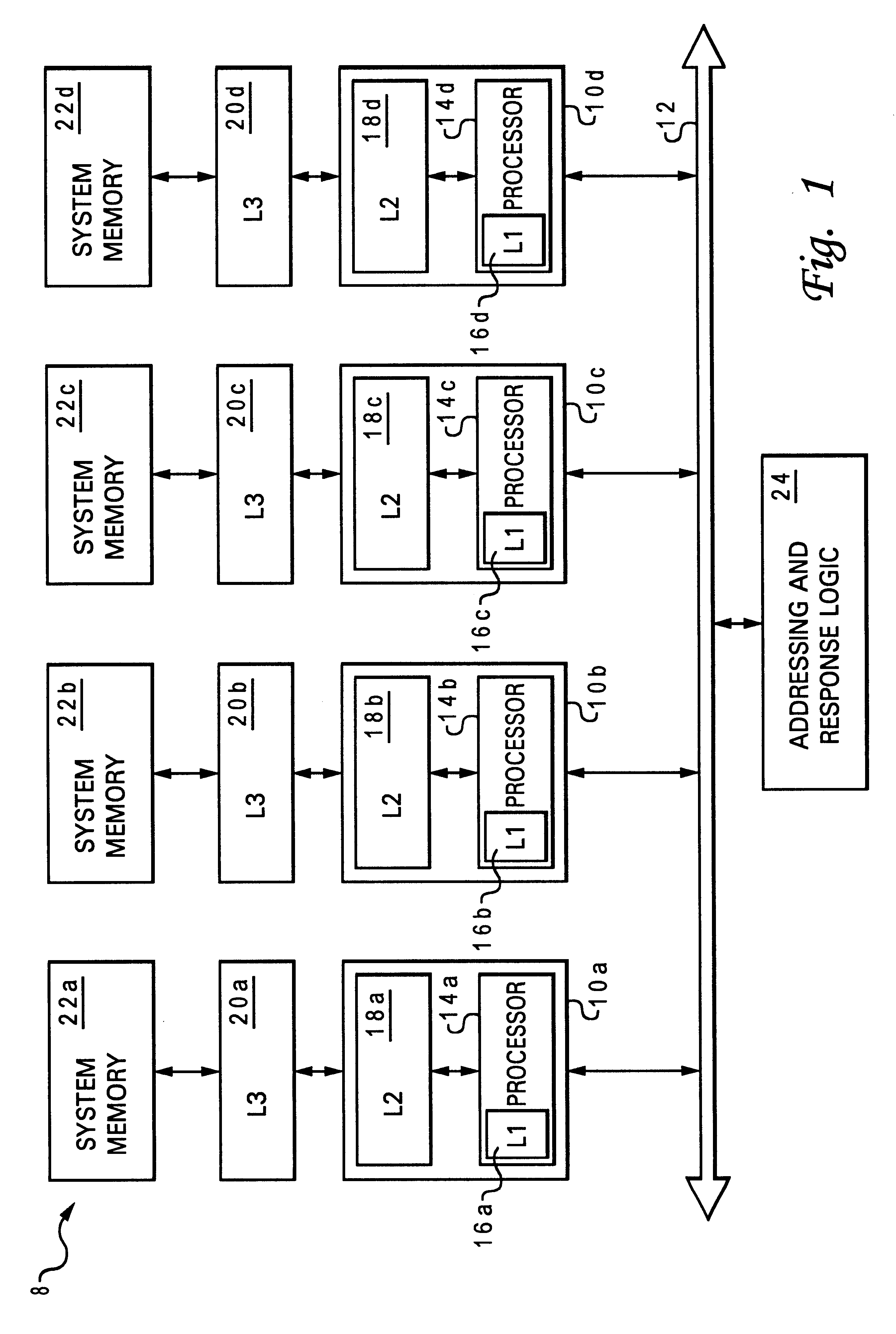

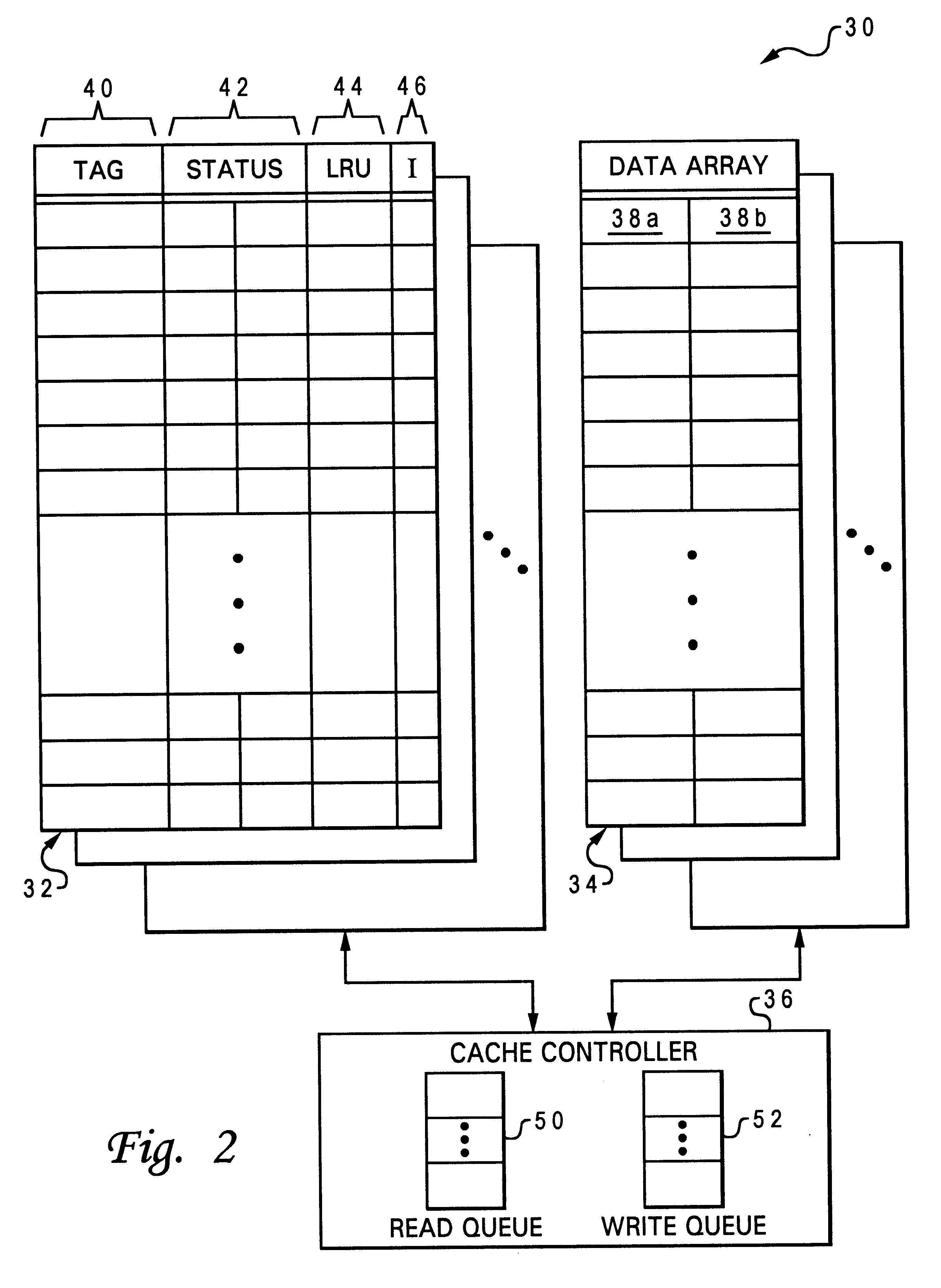

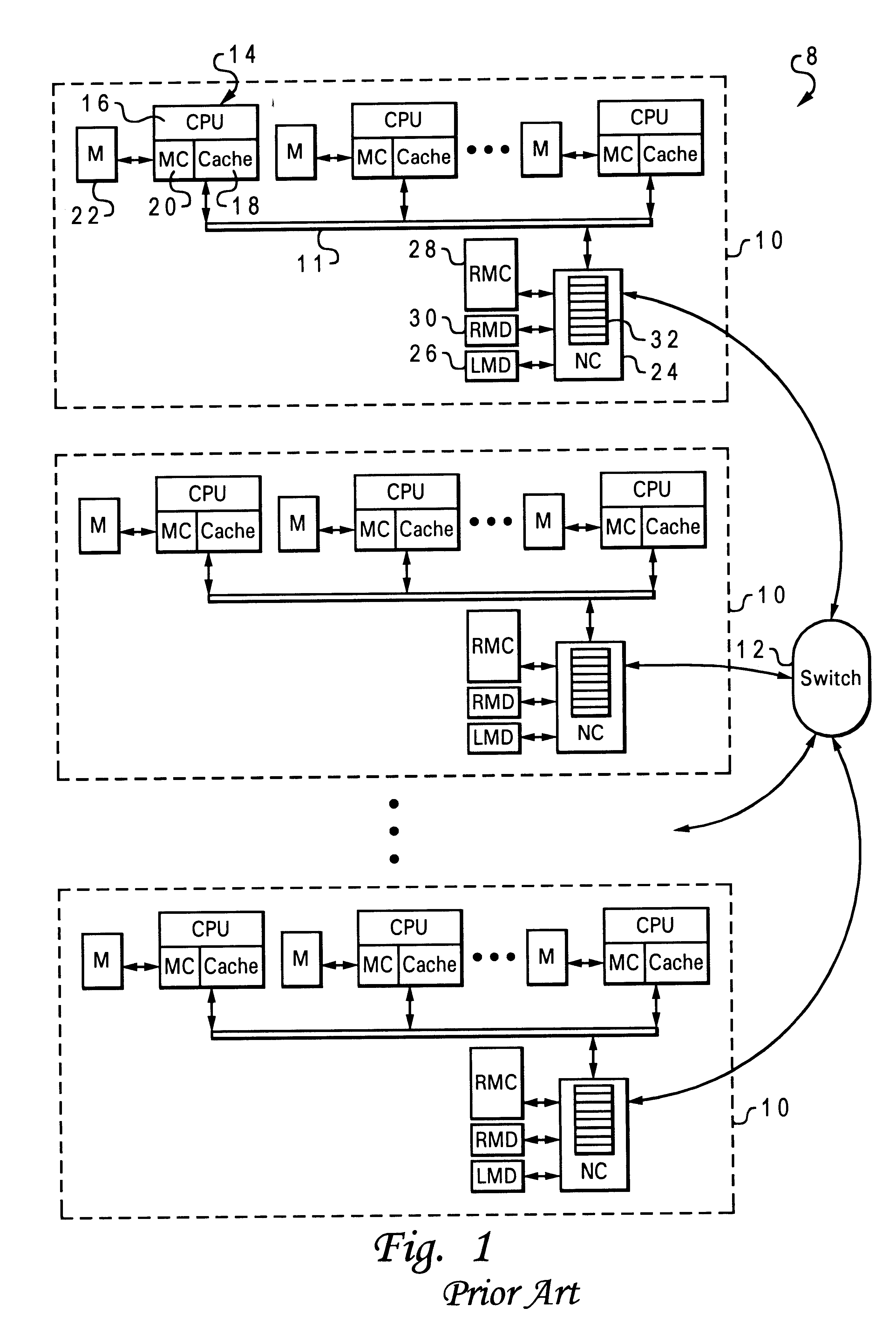

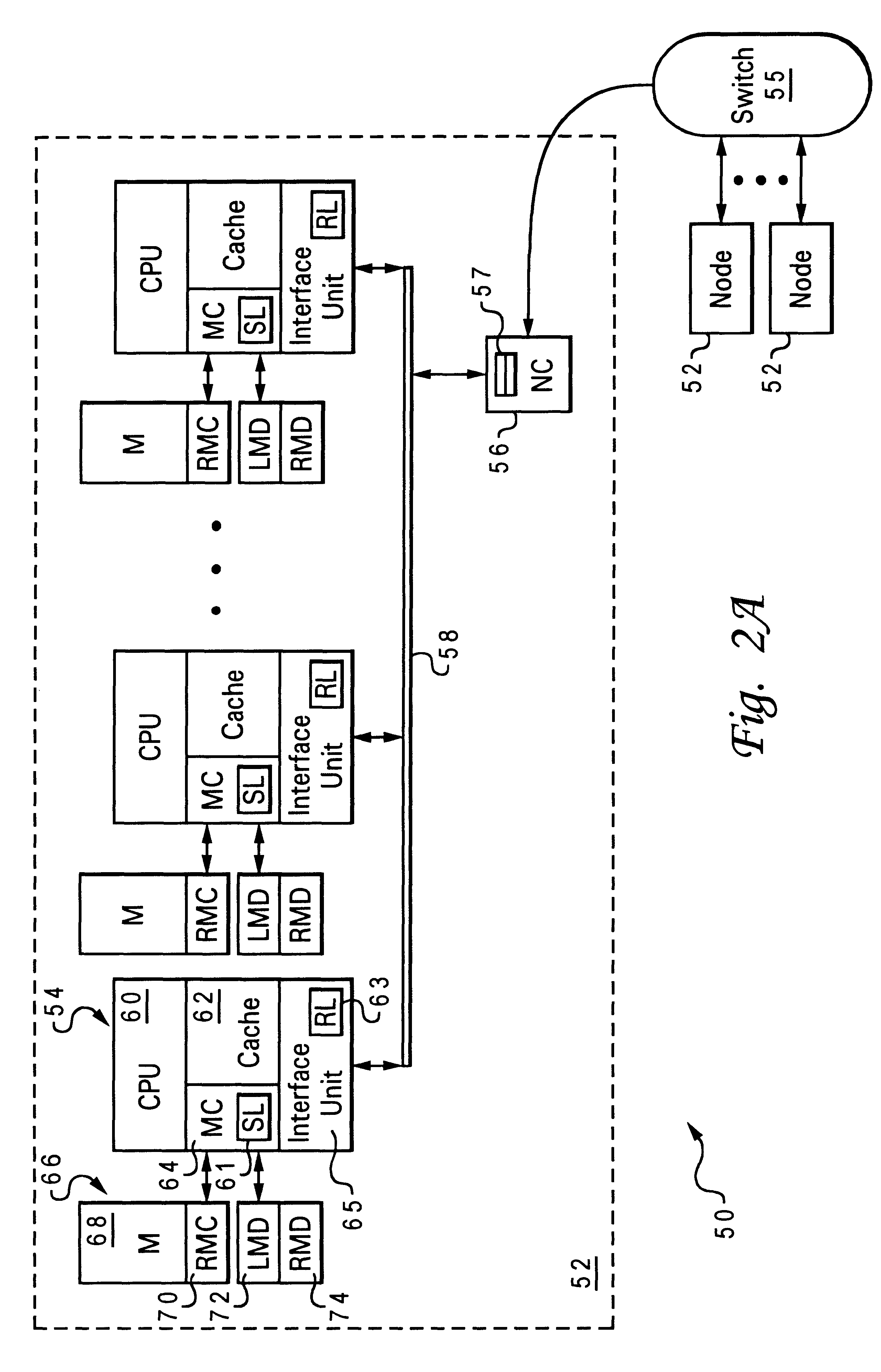

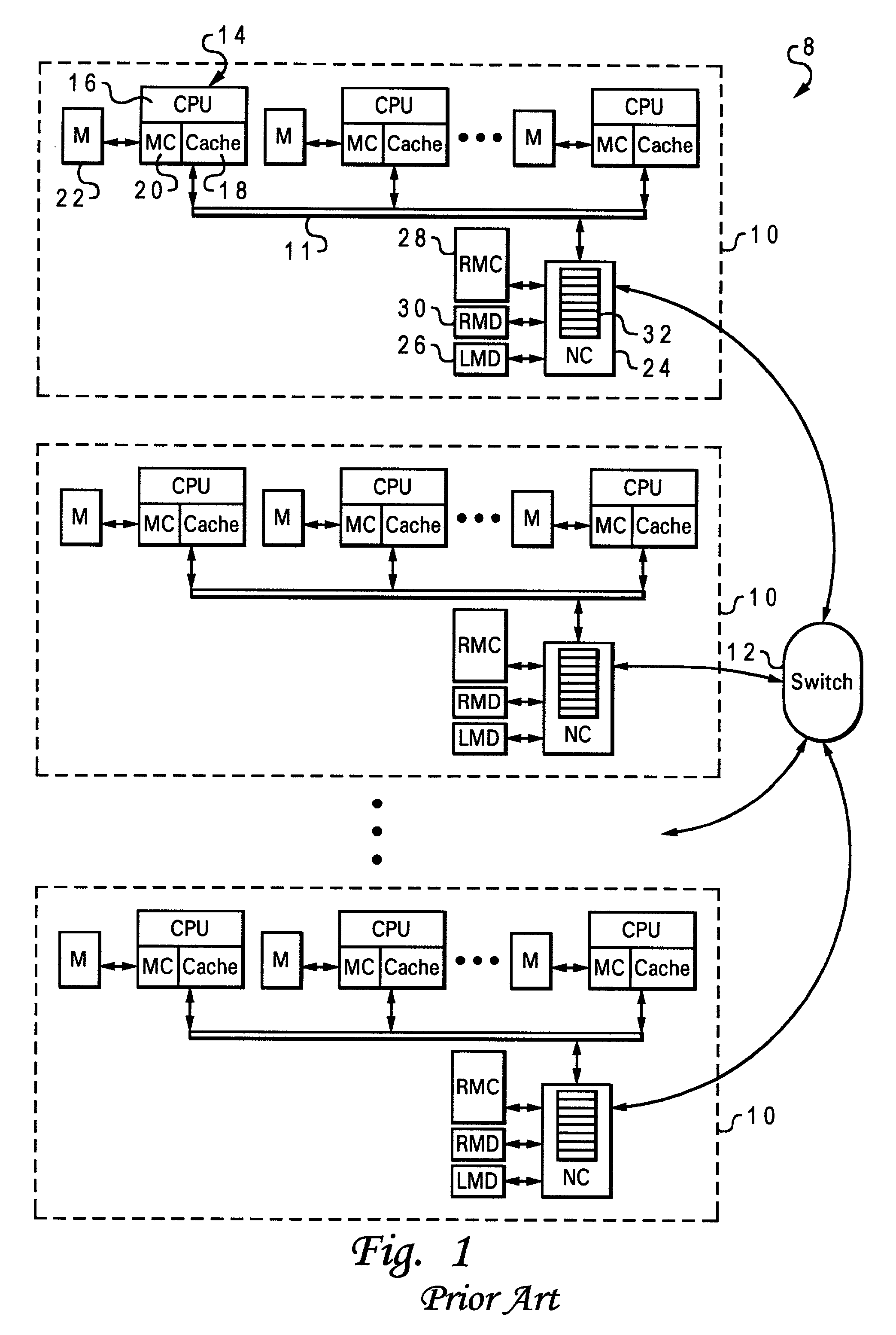

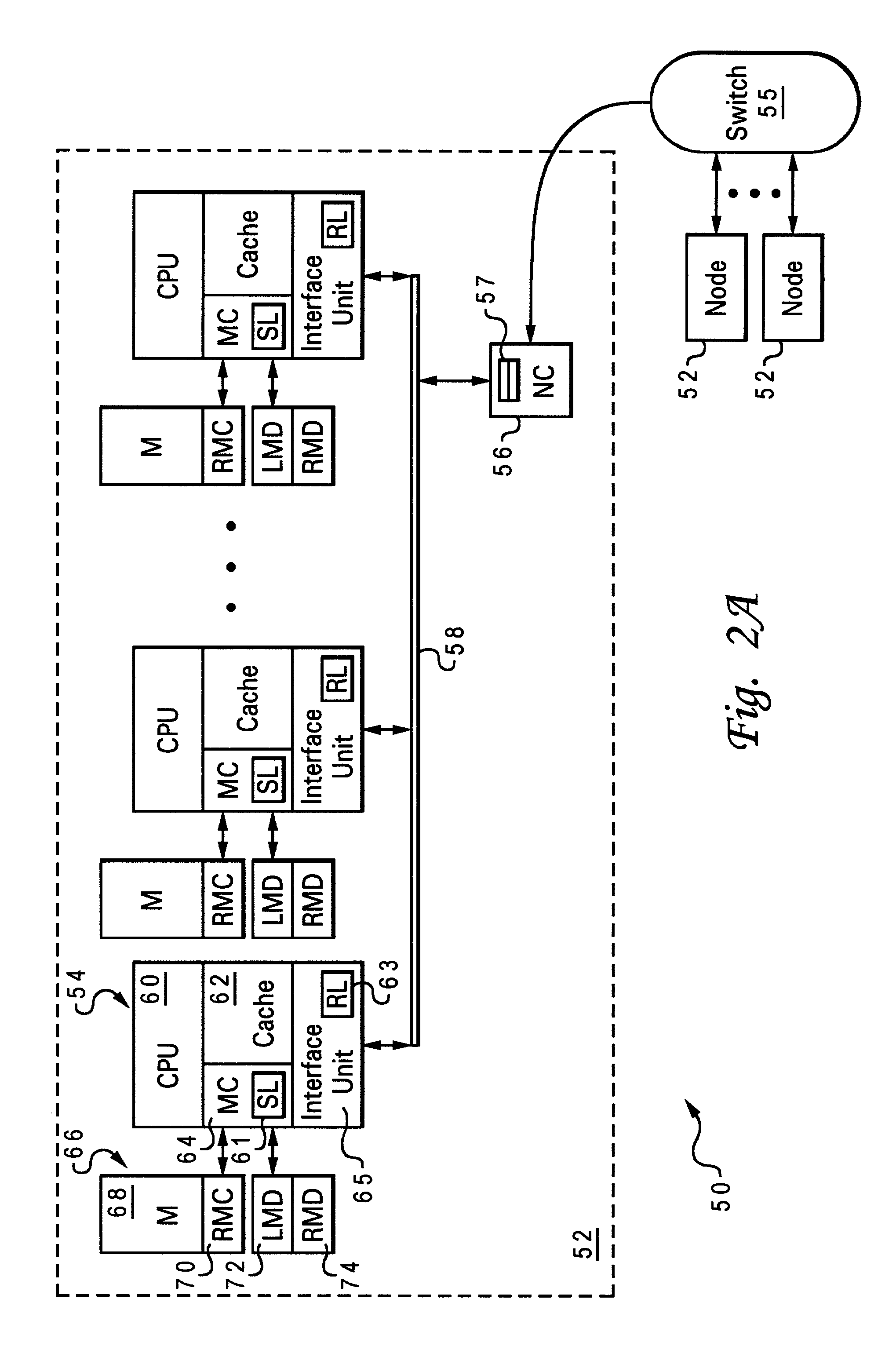

Memory controller for controlling memory accesses across networks in distributed shared memory processing systems

Inactive | US6044438A | More efficient cache coherent system | Data processing applications | Memory addressing/allocation/relocation | Remote memory access | Remote direct memory access

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network, while controlling cache coherency efficiently across the network. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and handle efficiently invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

Methods and apparatus for providing a prepaid, remote memory customer account for the visually impaired

Inactive | US6345766B1 | Complete banking machines | Ticket-issuing apparatus | Prepaid telephone call | Telephone card

The present invention includes a prepaid telephone calling card for use by the visually impaired. A braille printer is configured to print a wallet-sized, plastic prepaid telephone calling card having one or more information fields printed in braille. A first information field printed in braille corresponds to an access number through which an individual can access a host computer for completing prepaid telephone calls. A second field printed in braille corresponds to a unique account code associated with the prepaid card. Various other information fields may also be printed in braille on the card, as desired. The information fields may also be printed in very large type face to facilitate reading by individuals who are not blind, yet substantially visually impaired.

Owner:LIBERTY PEAK VENTURES LLC

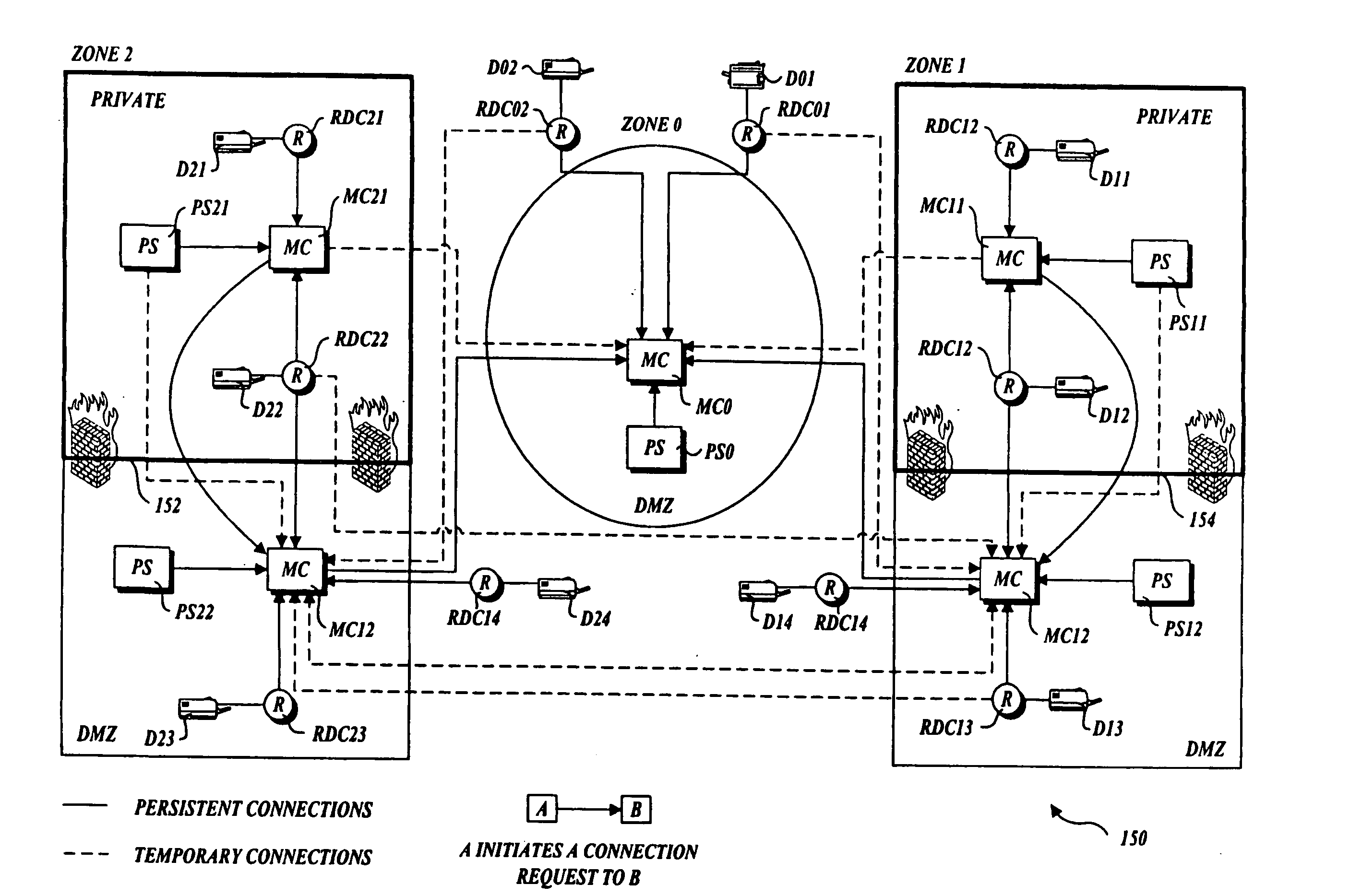

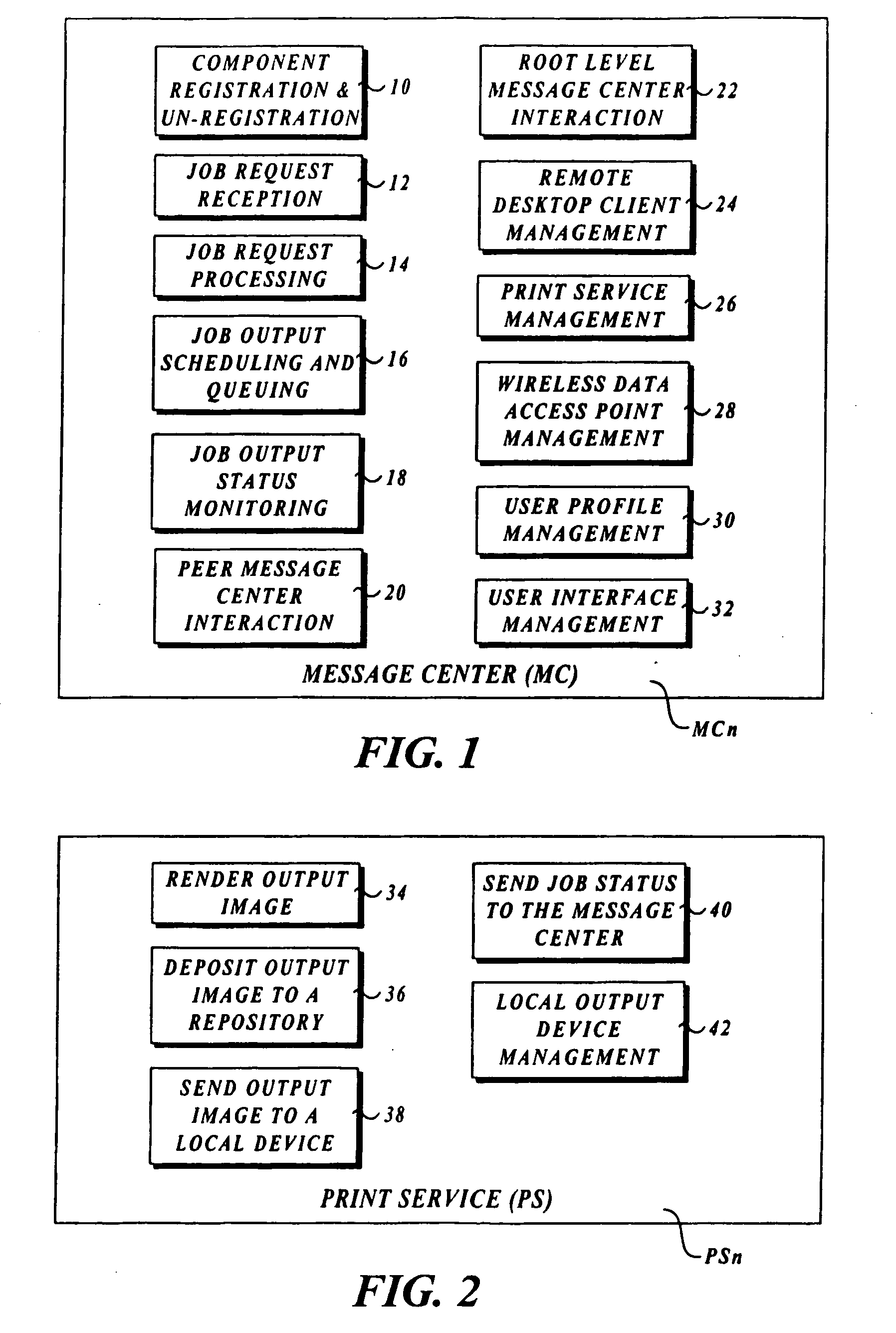

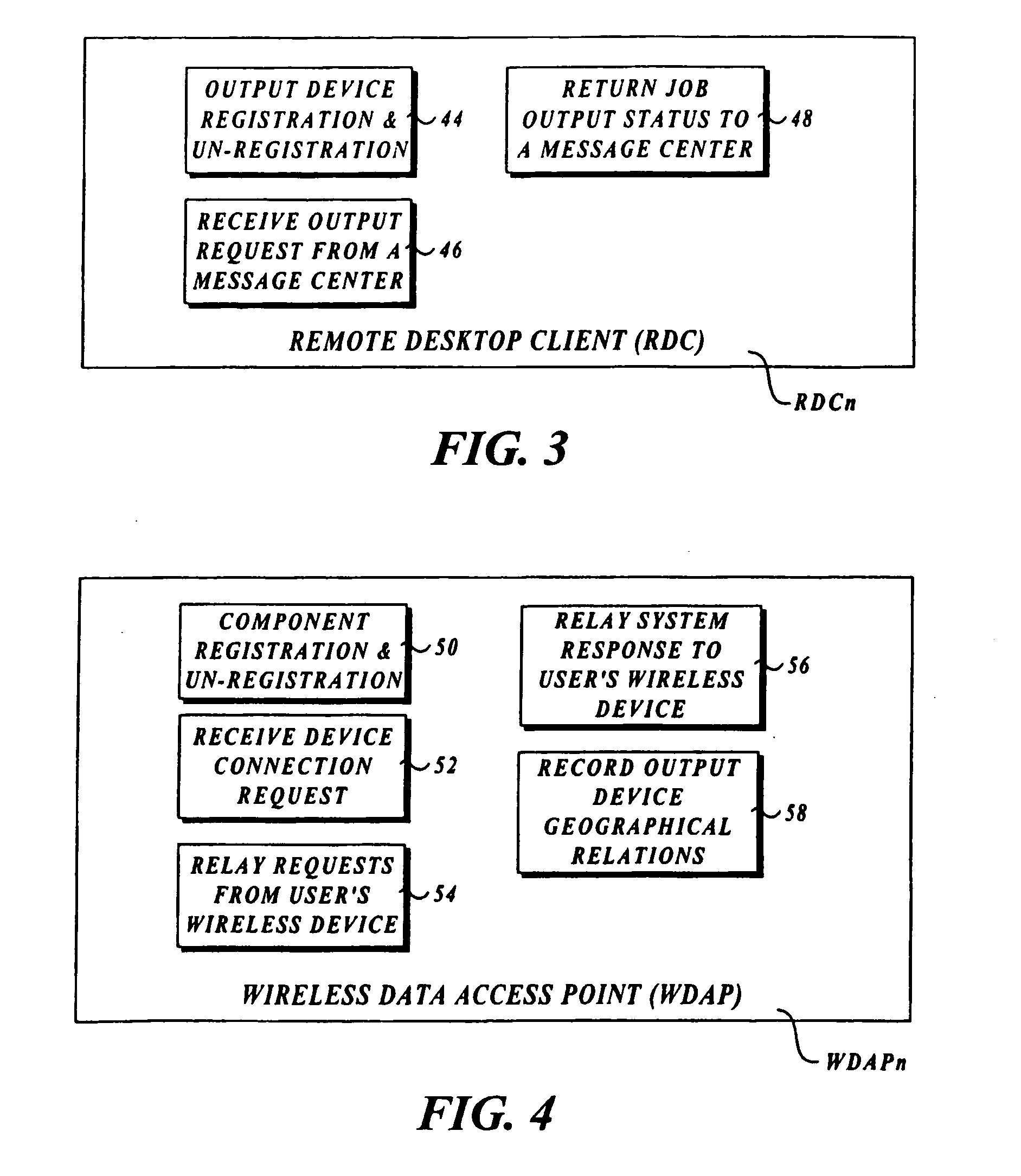

Output management system and method for enabling printing via wireless devices

Inactive | US20070022180A1 | Data switching by path configuration | Multiple digital computer combinations | Store and forward | Output device

A system and method for managing output such as printing, faxing, and e-mail over various types of computer networks. In one aspect, the method provides for printing via a wireless device. The system provides renderable data to the wireless device by which a user-interface (UI) may be rendered. The UI enables users to select source data and an output device on which the source data are to be printed. The source data are then retrieved from a local or remote store and forwarded to a print service, which renders output image data corresponding to the source data and the output device that was selected. The output image data are then submitted to the output device to be physically rendered. The user-interfaces enable wired and wireless devices to access the system. The system enables documents to be printed by reference, and enables access to resources behind firewalls.

Owner:KYOCERA DOCUMENT SOLUTIONS INC

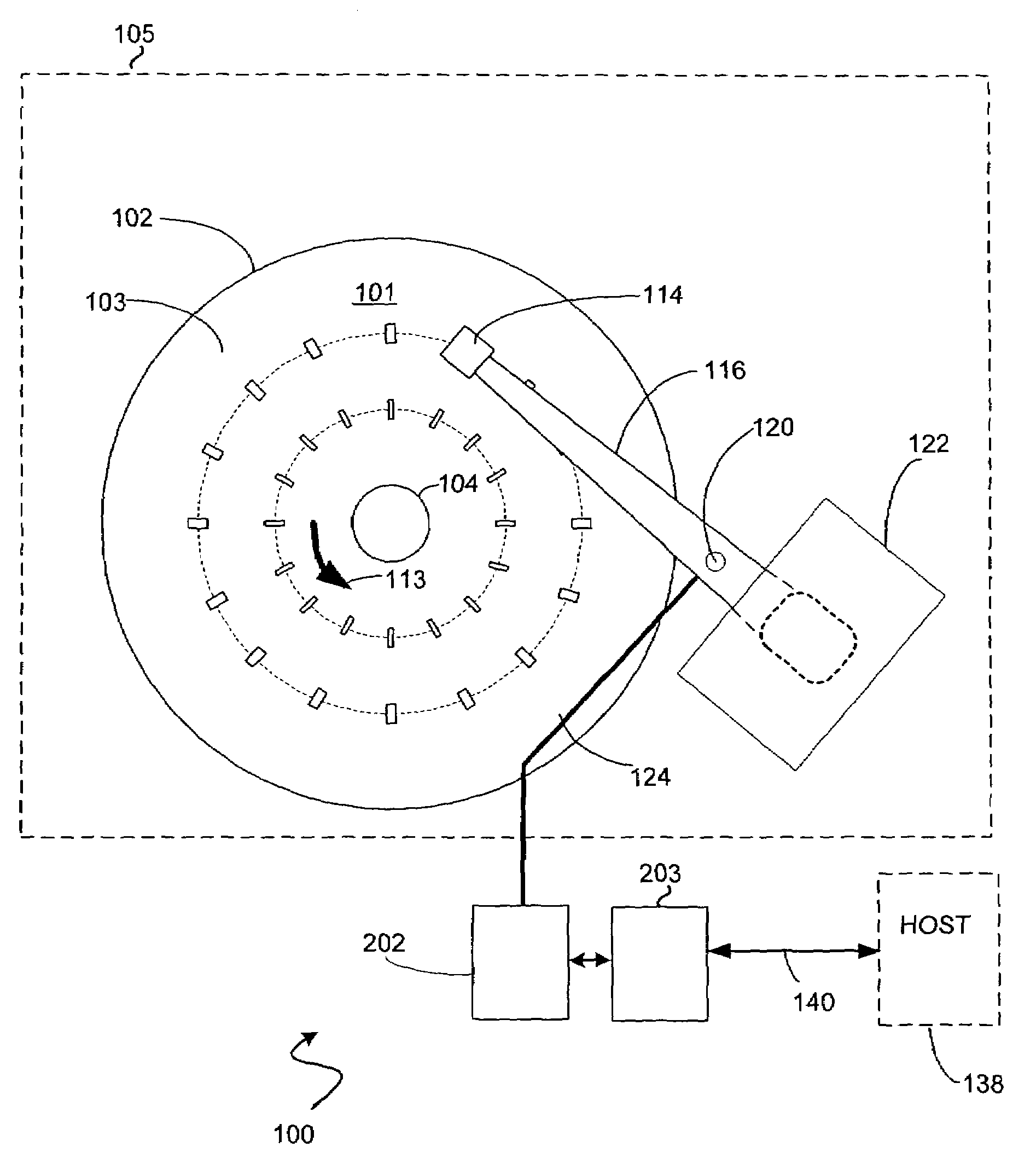

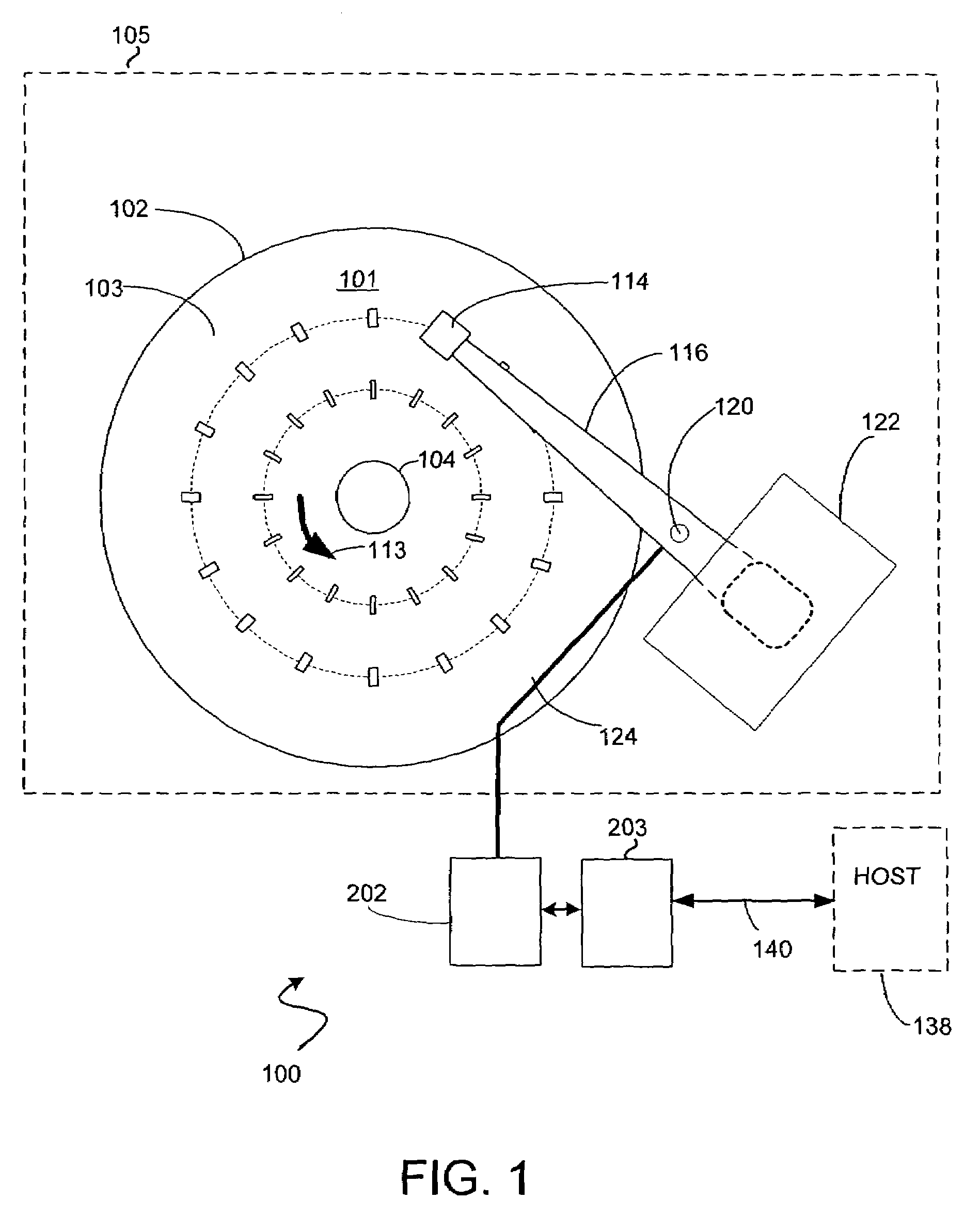

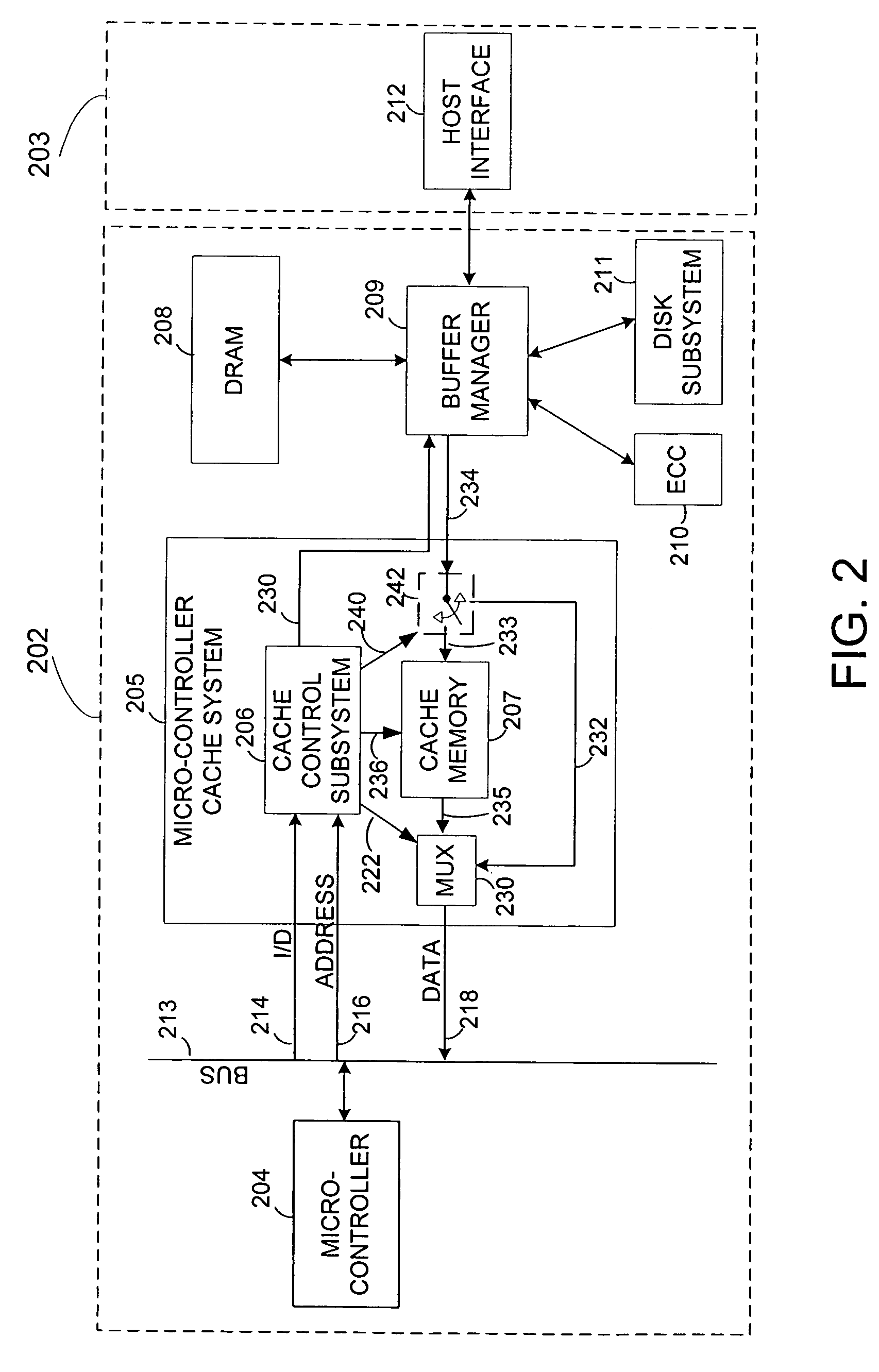

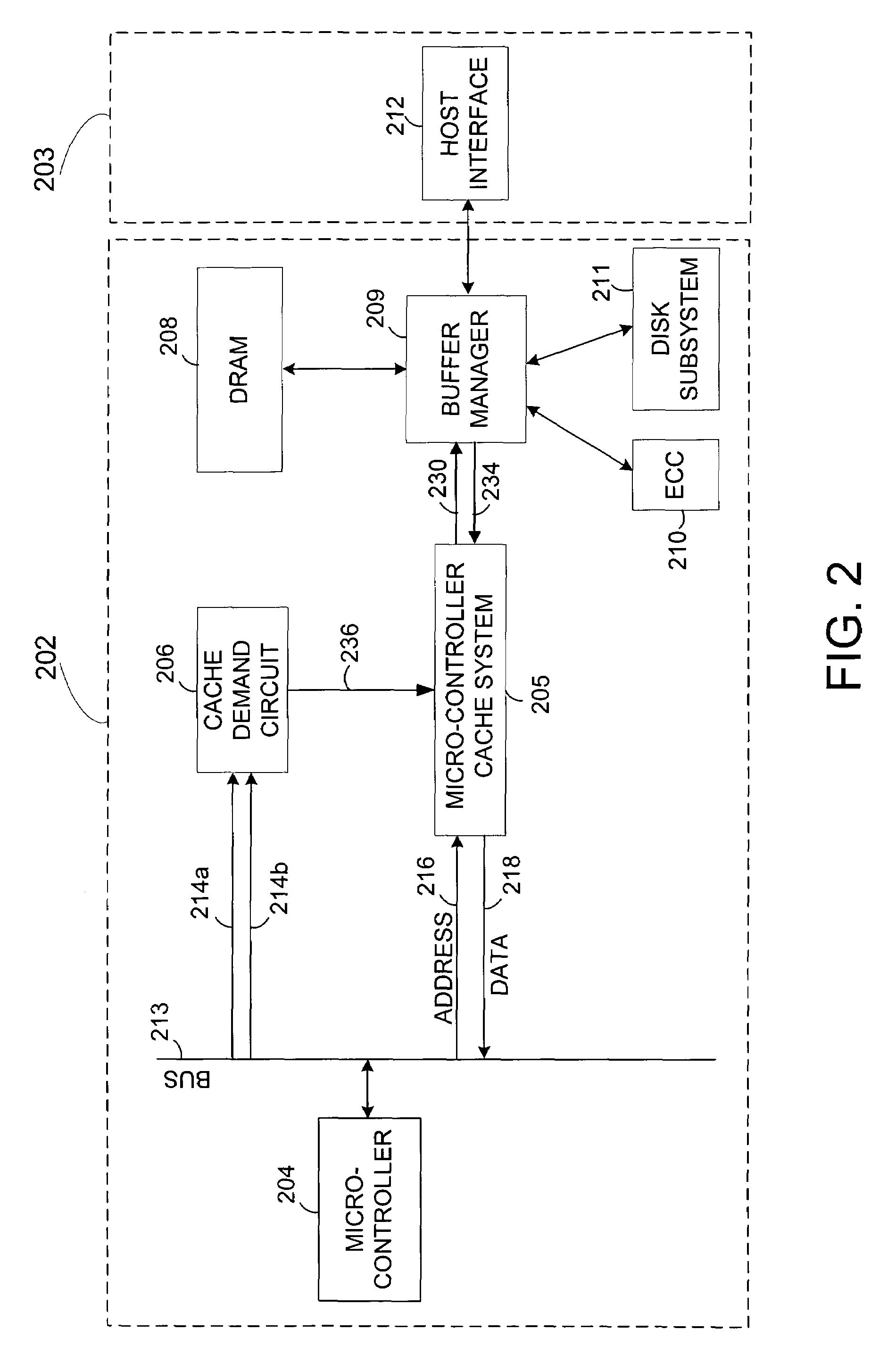

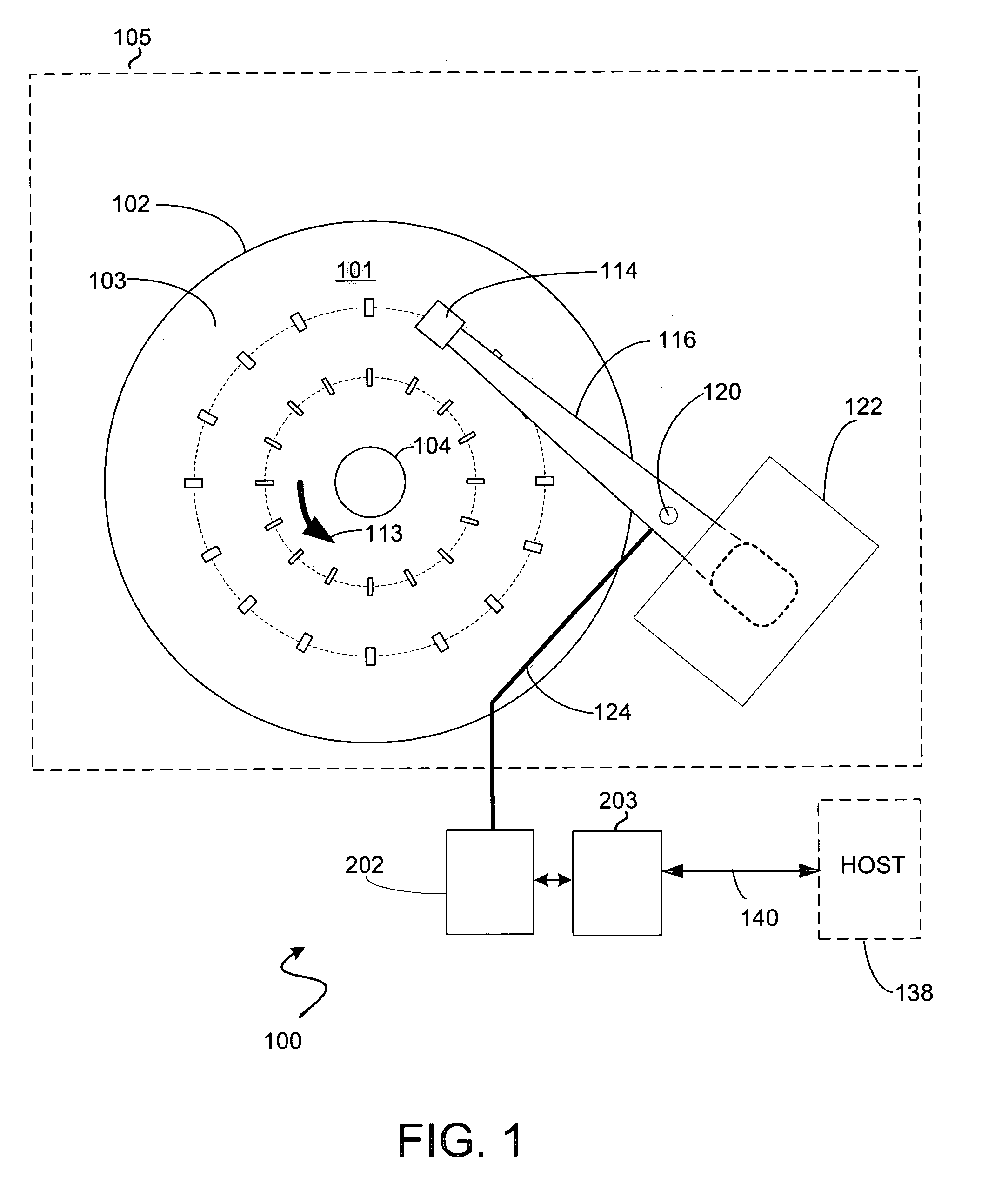

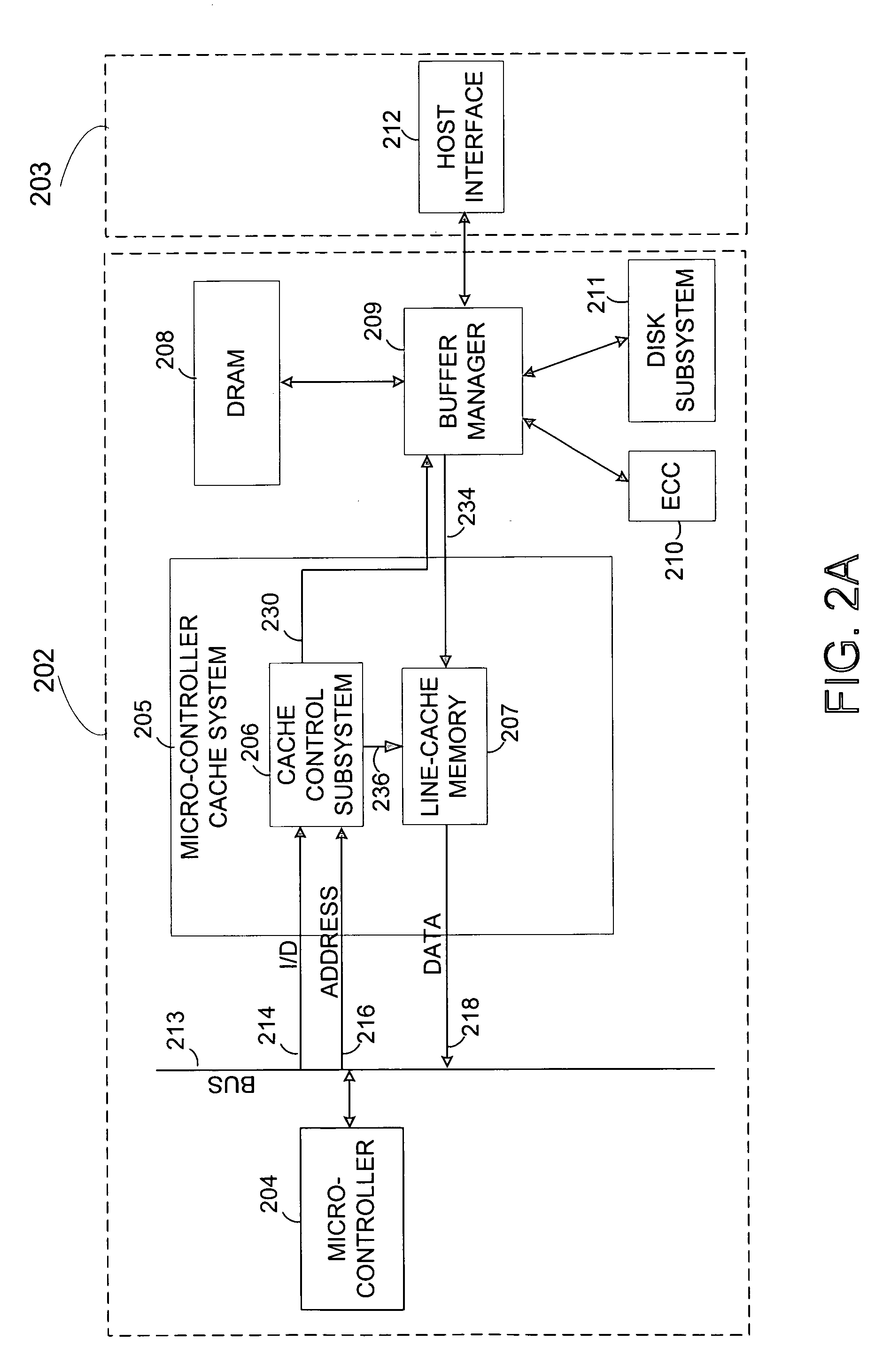

Fetch operations in a disk drive control system

Inactive | US7194576B1 | Easy to operate | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Control system | Parallel computing

A method and system for improving fetch operations between a micro-controller and a remote memory via a buffer manager in a disk drive control system comprising a micro-controller, a micro-controller cache system having a cache memory and a cache-control subsystem, and a buffer manager communicating with the micro-controller cache system and the remote memory. The invention includes receiving a data-request from the micro-controller in the cache-control subsystem, wherein the data-request comprises a request for at least one of instruction code and non-instruction data. The invention further includes providing the requested data to the micro-controller if the requested data reside in the cache memory, determining whether the received data-request is for non-instruction data if the requested data do not reside in the cache memory, fetching the non-instruction data from the remote memory by the micro-controller cache system via the buffer manager, and bypassing the cache memory to preserve its contents and provide the fetched non-instruction data to the micro-controller.

Owner:WESTERN DIGITAL TECH INC
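
As a rough illustration of the bypass behaviour described in the US7194576B1 abstract, the following Python sketch models the cache as a dictionary. The class and attribute names are hypothetical, the remote memory behind the buffer manager is reduced to a plain `dict`, and the choice to fill the cache on instruction misses is an assumption, since the abstract only specifies what happens for non-instruction data.

```python
class MicroControllerCache:
    """Toy model of a cache that non-instruction misses bypass."""

    def __init__(self, remote_memory: dict):
        self.remote = remote_memory  # stand-in for memory behind the buffer manager
        self.lines = {}              # cached address -> value
        self.remote_fetches = 0      # counts trips to remote memory

    def _fetch_remote(self, addr: int) -> int:
        self.remote_fetches += 1
        return self.remote[addr]

    def read(self, addr: int, is_instruction: bool) -> int:
        if addr in self.lines:       # hit: serve directly from the cache
            return self.lines[addr]
        value = self._fetch_remote(addr)
        if is_instruction:
            # Assumption: instruction misses fill the cache as usual.
            self.lines[addr] = value
        # Non-instruction data is returned without being cached,
        # so existing cache contents are preserved.
        return value
```

A data read on a miss therefore leaves `lines` untouched, while a repeated instruction read is served without another remote fetch.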

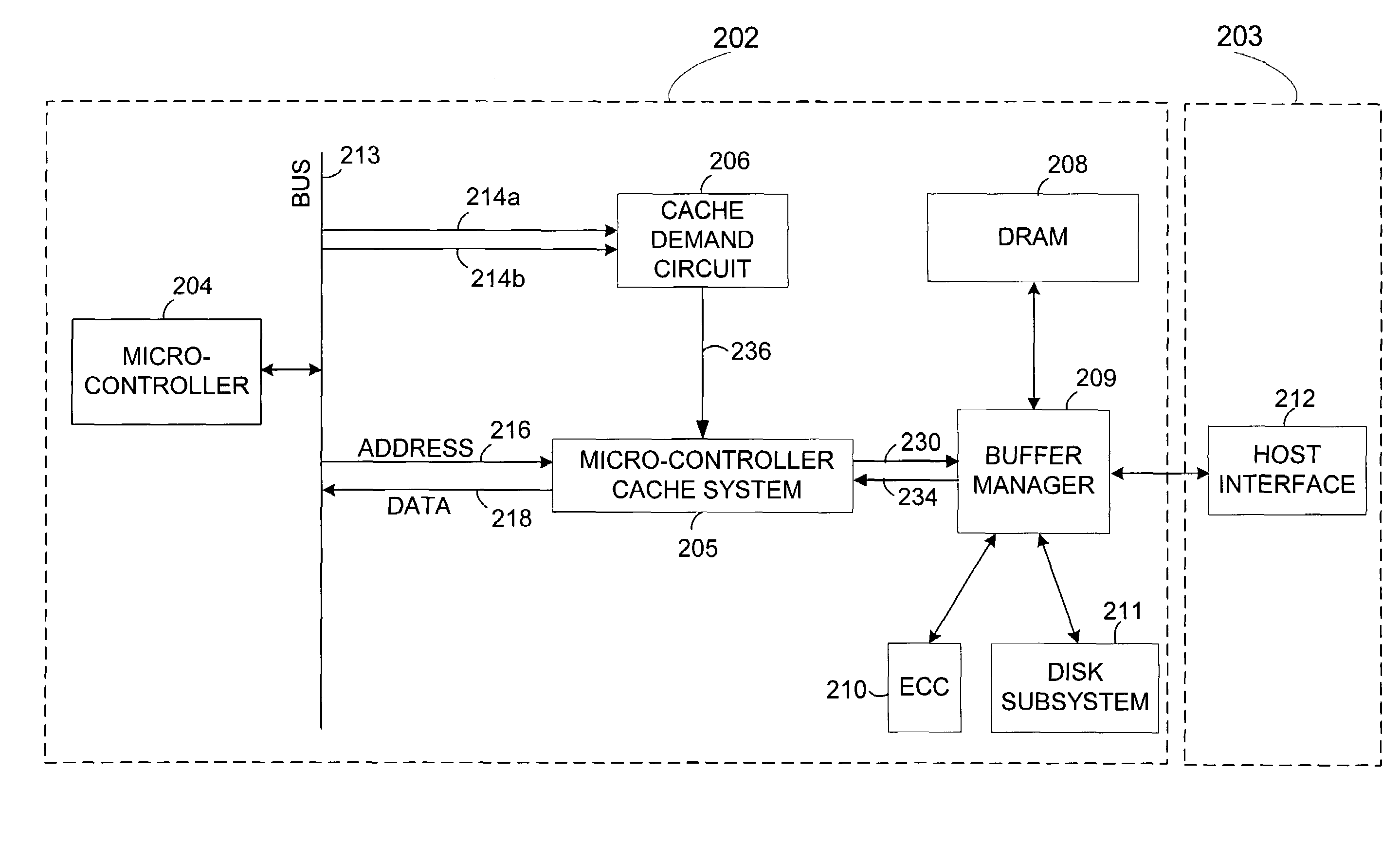

Instruction prefetch caching for remote memory

Active | US7240161B1 | Digital computer details | Concurrent instruction execution | Memory address | Control system

A disk drive control system comprising a micro-controller, a micro-controller cache system adapted to store micro-controller data for access by the micro-controller, a buffer manager adapted to provide the micro-controller cache system with micro-controller requested data stored in a remote memory, and a cache demand circuit adapted to: a) receive a memory address and a memory access signal, and b) cause the micro-controller cache system to fetch data from the remote memory via the buffer manager based on the received memory address and memory access signal prior to a micro-controller request.

Owner:WESTERN DIGITAL TECH INC

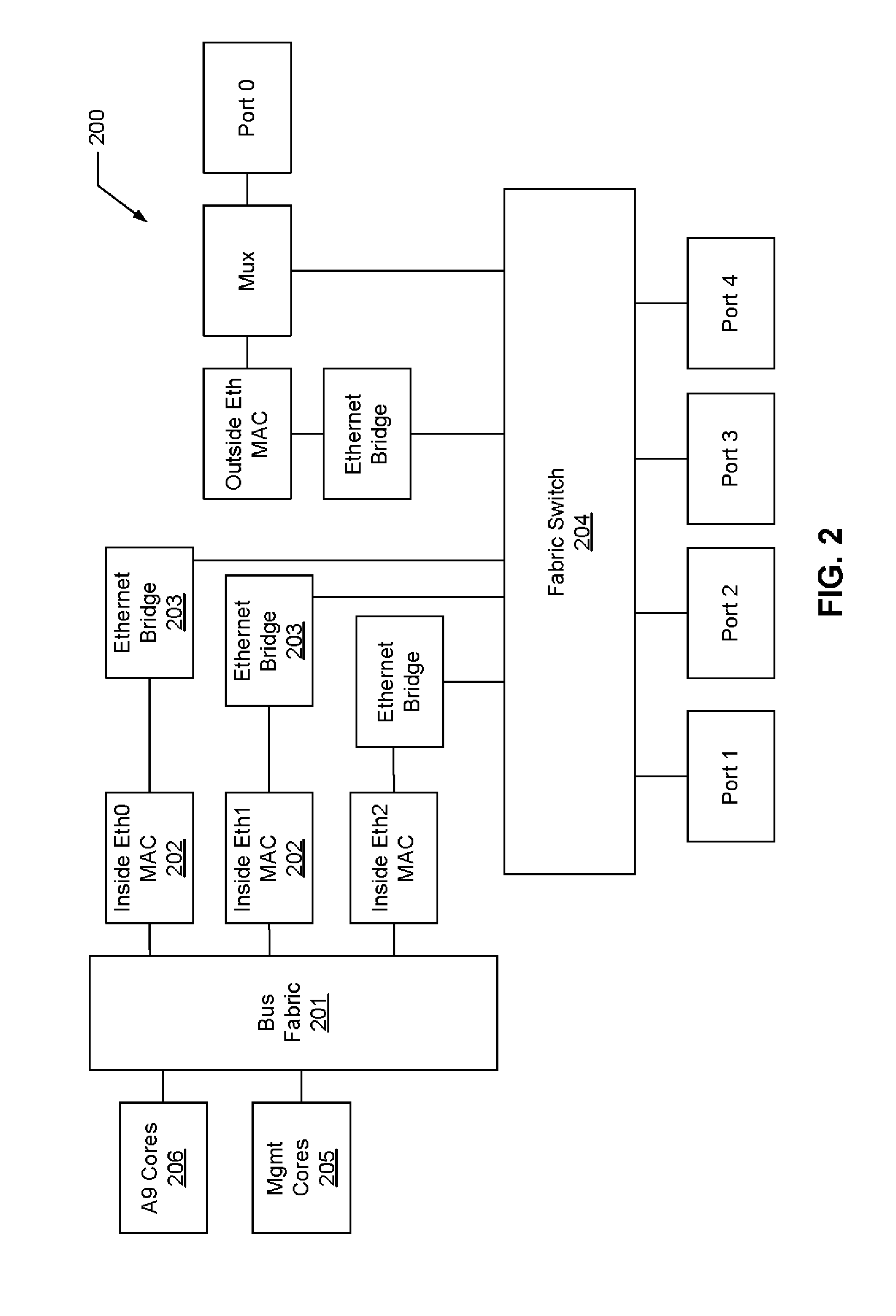

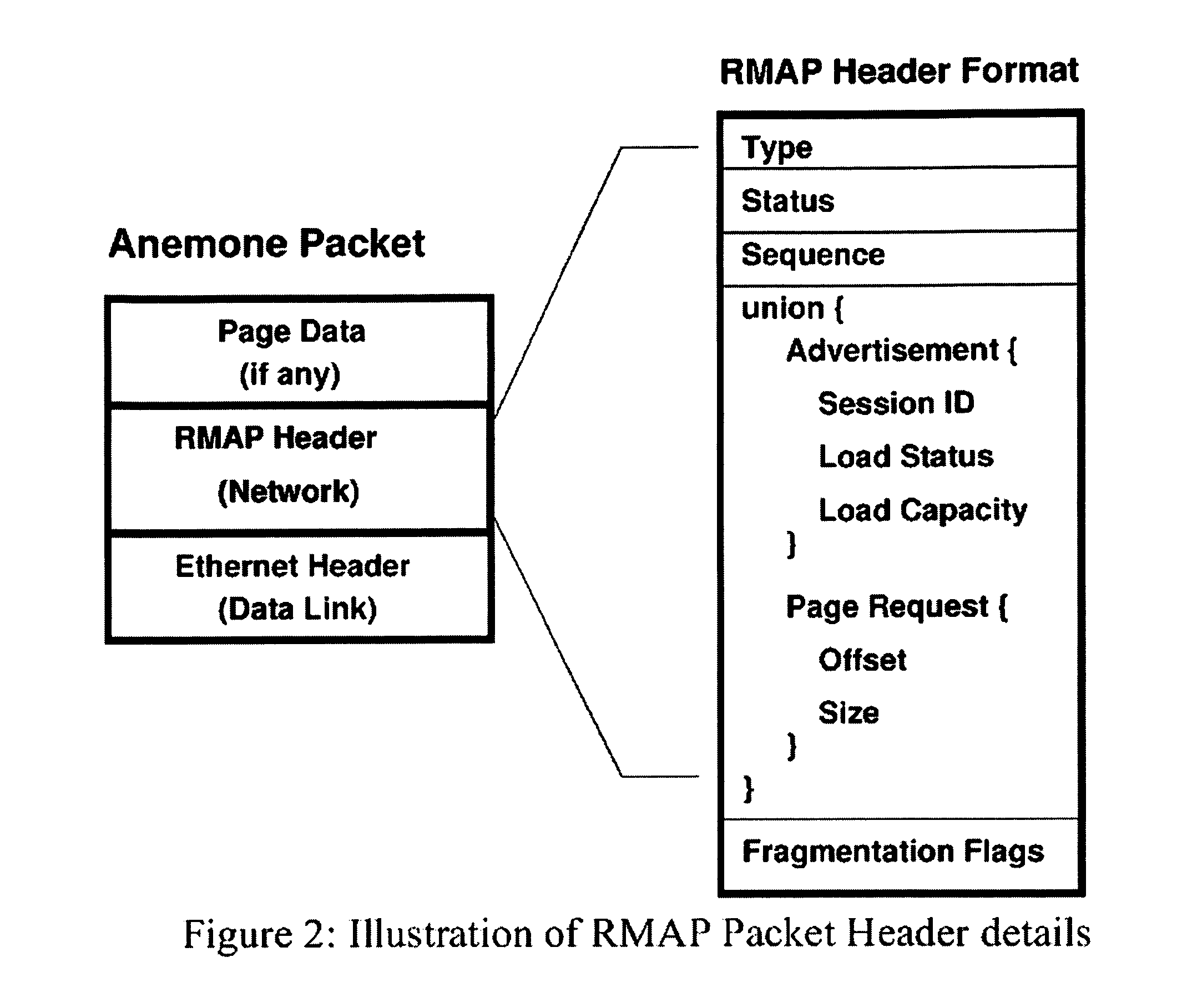

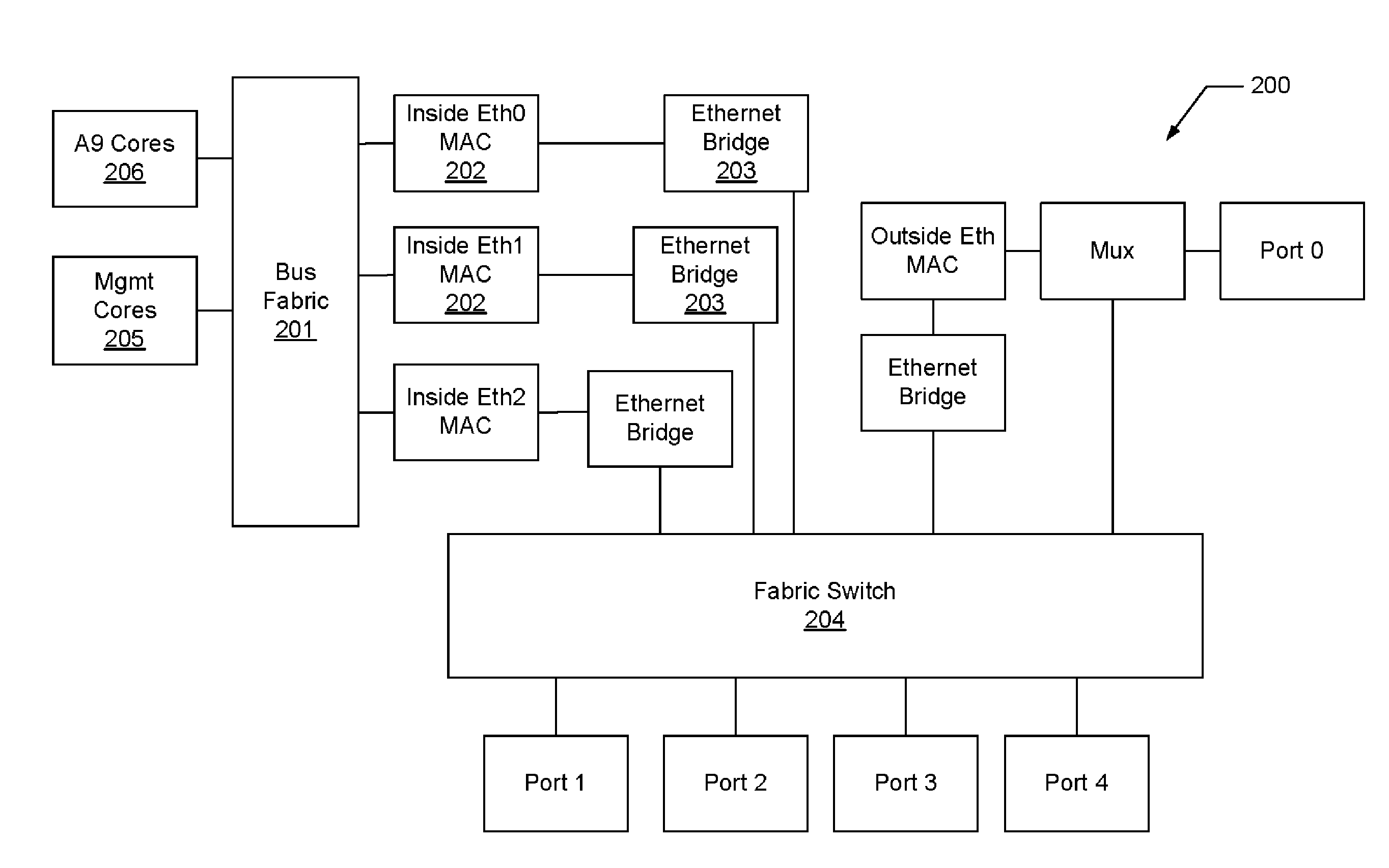

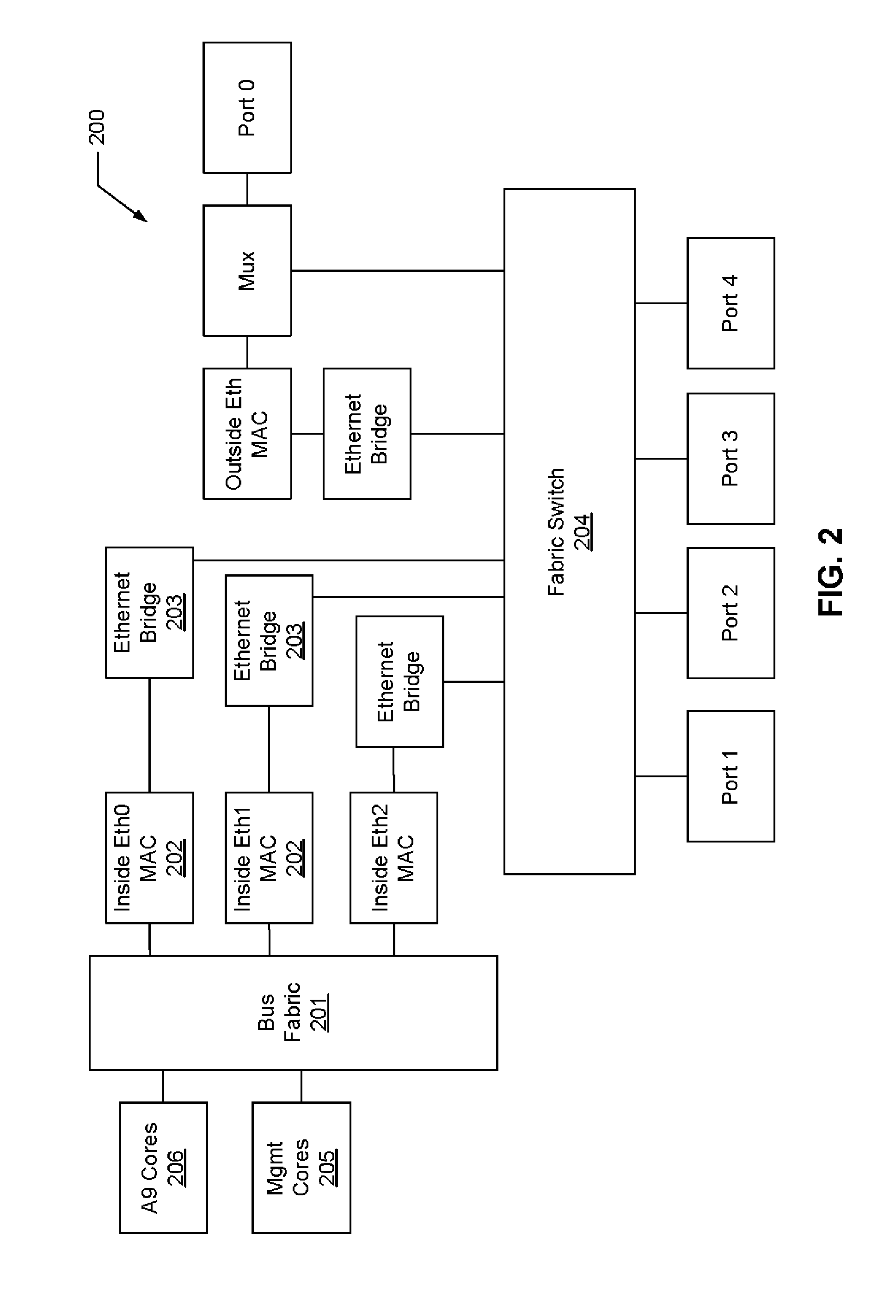

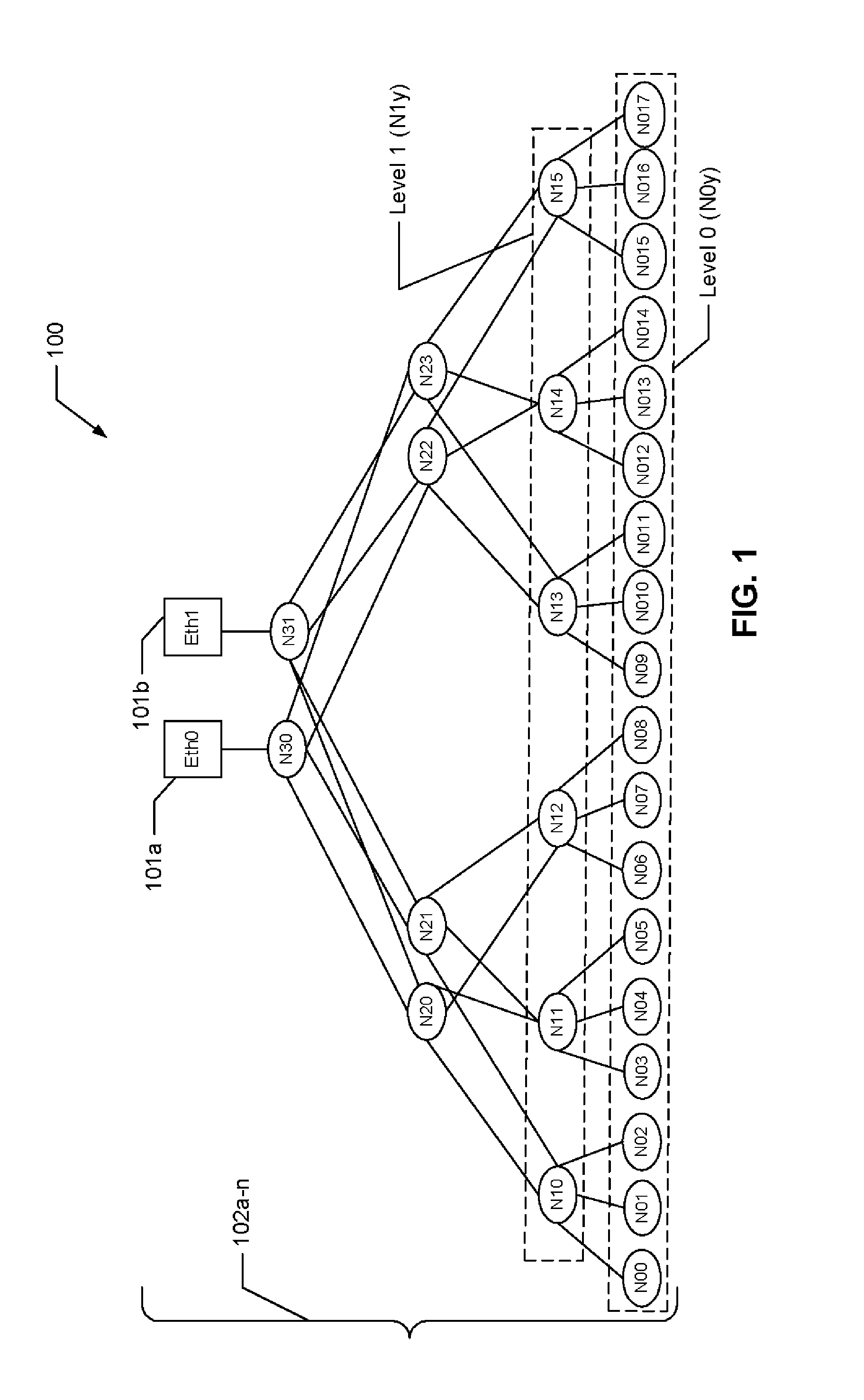

System and Method for Using a Multi-Protocol Fabric Module Across a Distributed Server Interconnect Fabric

Active | US20120207165A1 | Improve functionality | Improve optimization | Digital computer details | Data switching by path configuration | Personalization | Structure of Management Information

A multi-protocol personality module enabling load/store from remote memory, remote DMA transactions, and remote interrupts, which permits enhanced performance, power utilization and functionality. In one form, the module is used as a node in a network fabric and adds a routing header to packets entering the fabric, maintains the routing header for efficient node-to-node transport, and strips the header when the packet leaves the fabric. In particular, a remote bus personality component is described. Several use cases of the Remote Bus Fabric Personality Module are disclosed: 1) memory sharing across a fabric-connected set of servers; 2) the ability to access physically remote I/O devices across this fabric of connected servers; and 3) the sharing of such physically remote I/O devices, such as storage peripherals, by multiple fabric-connected servers.

Owner:III HLDG 2

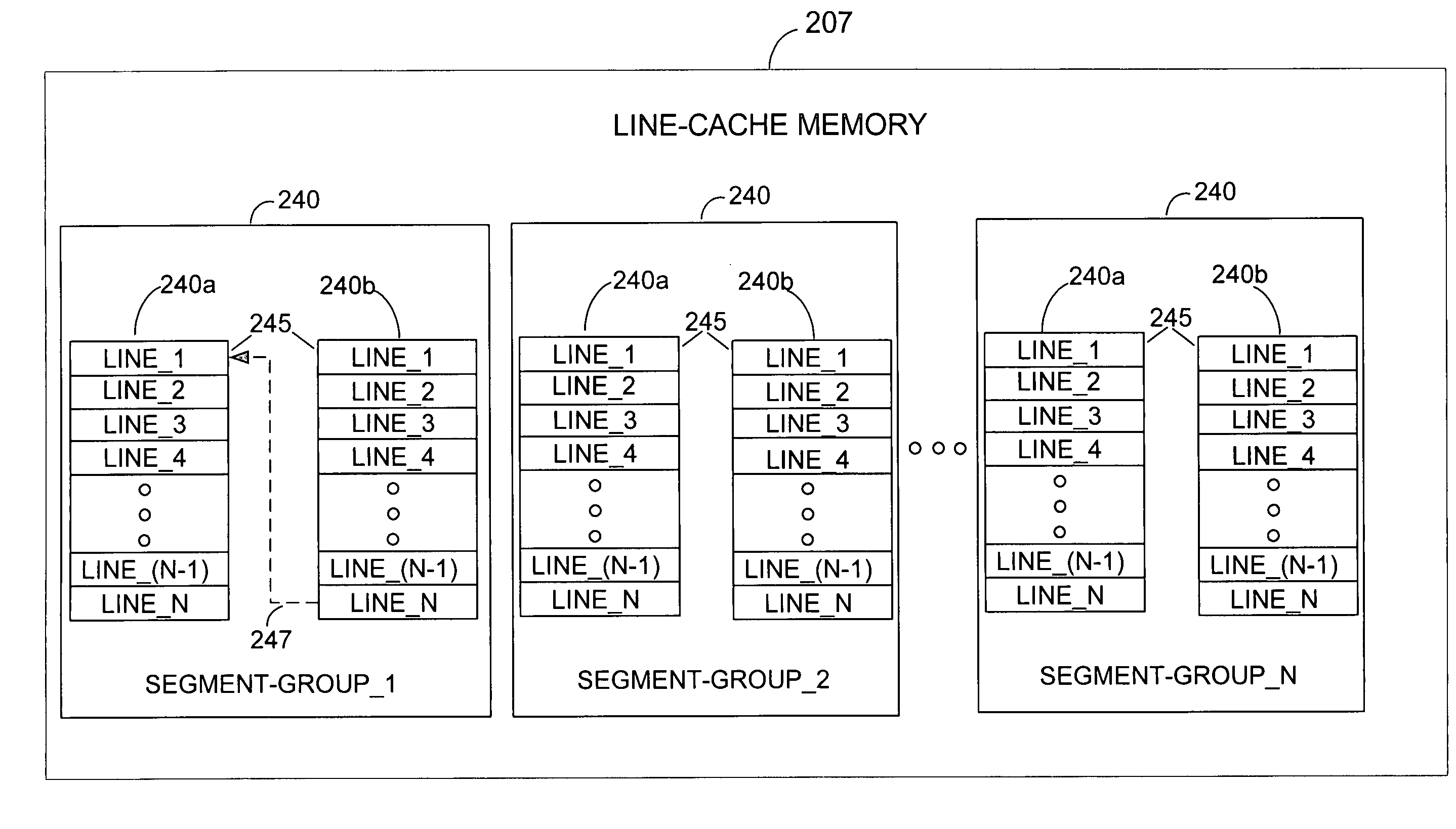

Reducing micro-controller access time to data stored in a remote memory in a disk drive control system

Inactive | US7111116B1 | Shorten access time | Reducing micro-controller access time | Memory addressing/allocation/relocation | Control system | Access time

A method and system for reducing micro-controller access time to information stored in the remote memory via the buffer manager in a disk drive control system comprising a micro-controller, a micro-controller cache system having a plurality of line-cache segments grouped into at least one line-cache segment-group, and a buffer manager communicating with the micro-controller cache system and a remote memory. The method and system includes receiving in the micro-controller cache system a current data-request from the micro-controller, providing the current requested data to the micro-controller if the current requested data resides in a first line-cache segment of a first segment-group, and automatically filling a second line-cache segment of the first segment-group with data retrieved from the remote memory wherein the retrieved data is sequential in the remote memory to the provided current requested data.

Owner:WESTERN DIGITAL TECH INC

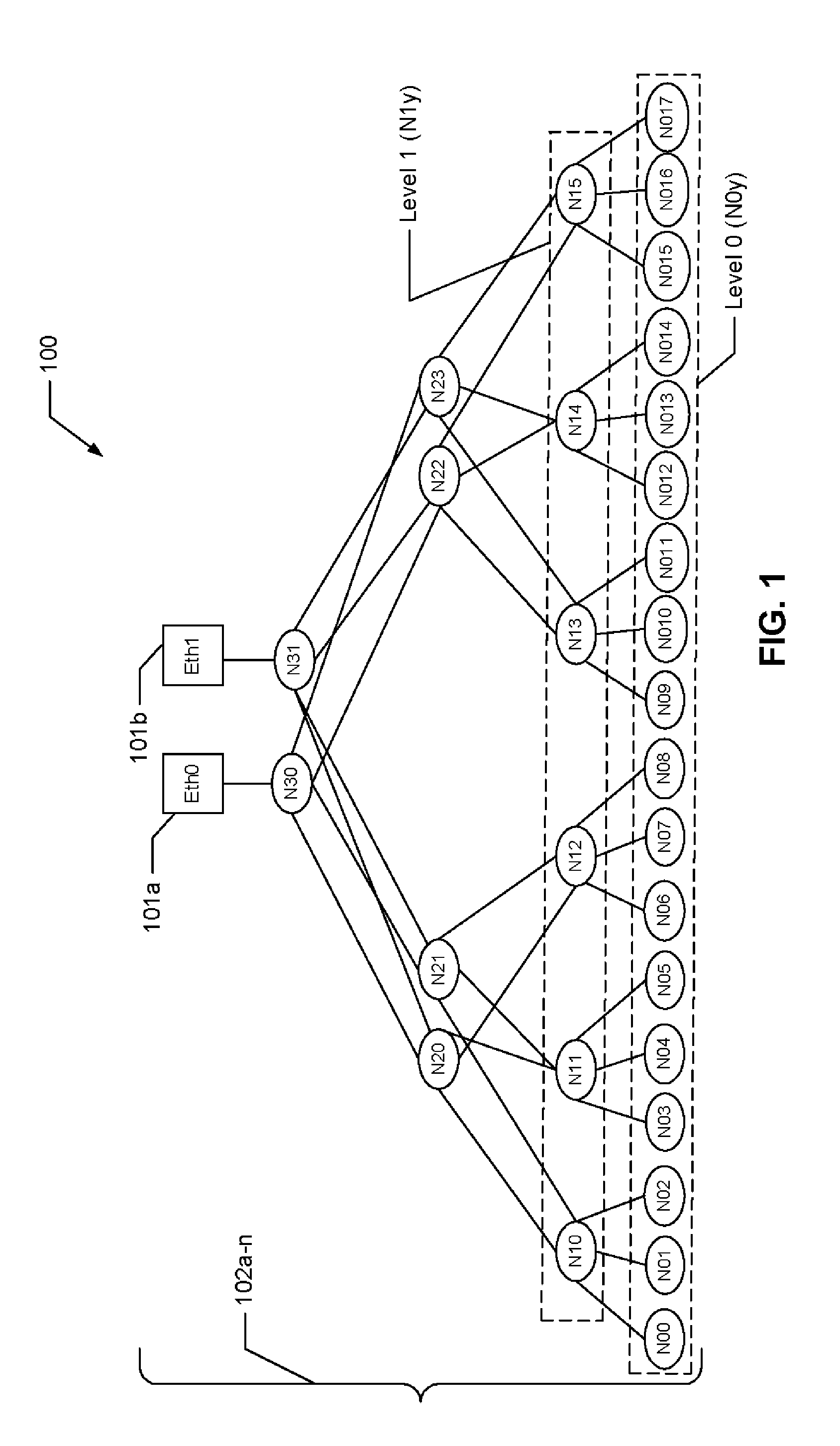

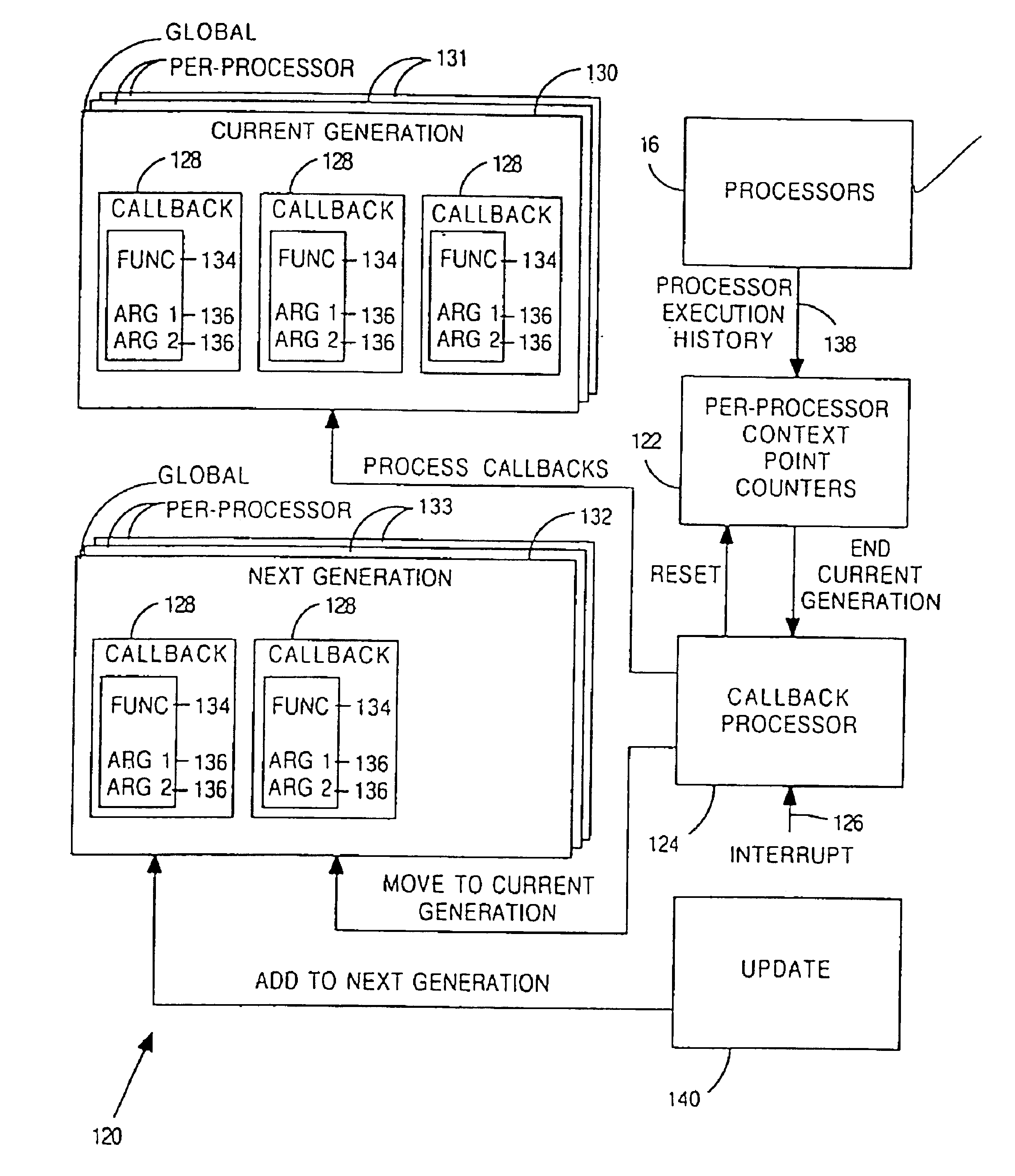

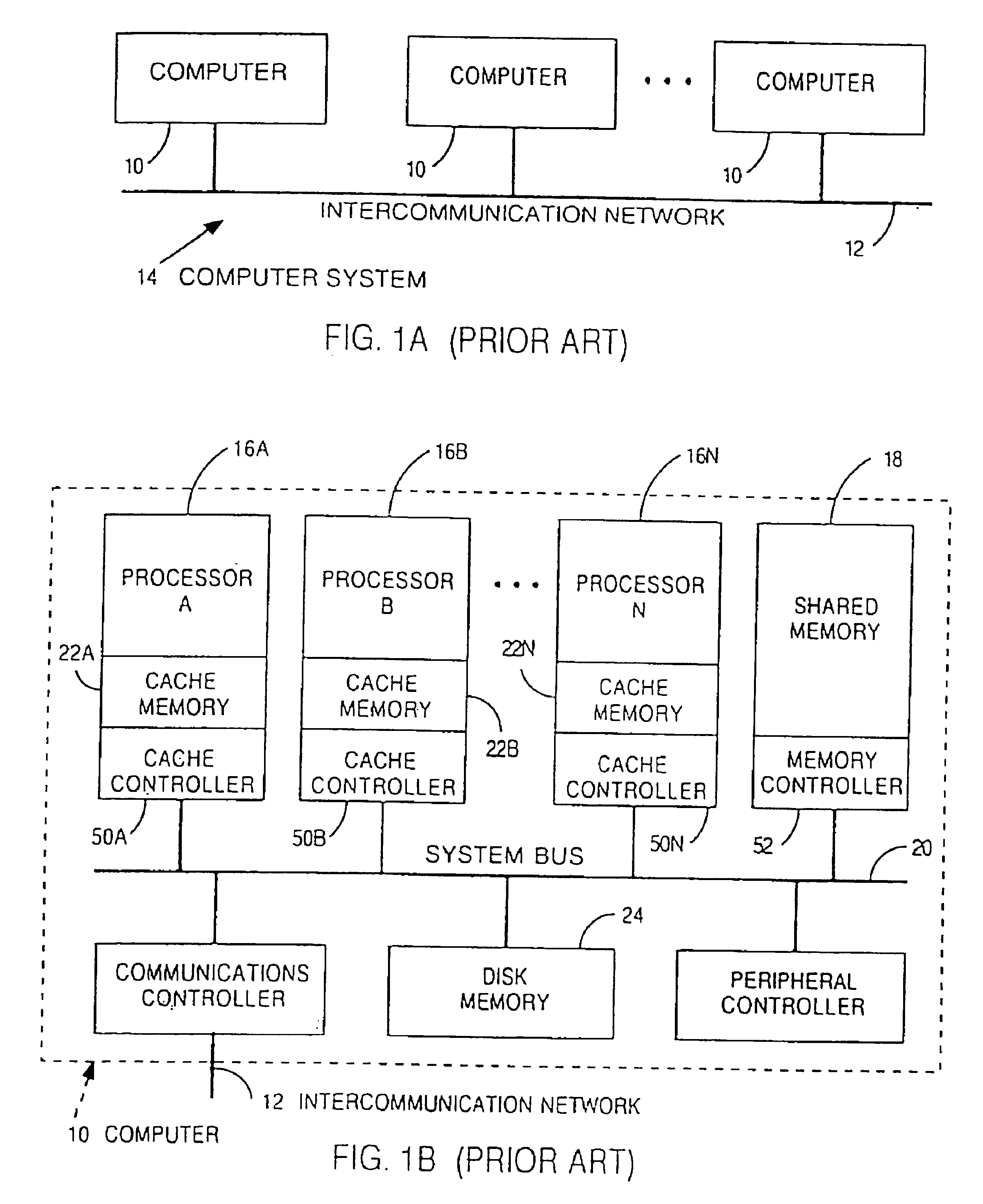

High speed methods for maintaining a summary of thread activity for multiprocessor computer systems

Inactive | US6886162B1 | Reduce in quantity | Multiprogramming arrangements | Memory systems | Quiescent state | Processor node

A high-speed method for maintaining a summary of thread activity reduces the number of remote-memory operations for an n-processor, multiple-node computer system from n² to (2n−1) operations. The method uses a hierarchical summary-of-thread-activity data structure that includes structures such as first and second level bit masks. The first level bit mask is accessible to all nodes and contains a bit per node, the bit indicating whether the corresponding node contains a processor that has not yet passed through a quiescent state. The second level bit mask is local to each node and contains a bit per processor per node, the bit indicating whether the corresponding processor has not yet passed through a quiescent state. The method includes determining from a data structure on the processor's node (such as a second level bitmask) if the processor has passed through a quiescent state. If so, it is then determined from the data structure if all other processors on its node have passed through a quiescent state. If so, it is then indicated in a data structure accessible to all nodes (such as the first level bitmask) that all processors on the processor's node have passed through a quiescent state. The local generation number can also be stored in the data structure accessible to all nodes. If a processor determines from this data structure that the processor is the last processor to pass through a quiescent state, the processor updates the data structure for storing a number of the current generation stored in the memory of each node.

Owner:SEQUENT COMPUTER SYSTEMS
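
The two-level bit mask in the US6886162B1 abstract can be sketched in a few lines of Python. This is a single-threaded illustration under stated assumptions: the class and method names are hypothetical, locking is omitted, and `remote_ops` simply counts updates to the shared first-level mask rather than reproducing the patent's operation count.

```python
class QuiescentSummary:
    """Two-level summary: one shared node mask, one local CPU mask per node."""

    def __init__(self, nodes: int, cpus_per_node: int):
        self.nodes = nodes
        self.cpus_per_node = cpus_per_node
        self.generation = 0
        self.remote_ops = 0  # updates to the mask shared by all nodes
        self._reset()

    def _reset(self) -> None:
        # A set bit means "has not yet passed through a quiescent state".
        self.node_mask = (1 << self.nodes) - 1
        self.cpu_masks = [(1 << self.cpus_per_node) - 1
                          for _ in range(self.nodes)]

    def quiesce(self, node: int, cpu: int) -> bool:
        """Record a quiescent state; return True if it closed the generation."""
        bit = 1 << cpu
        if not self.cpu_masks[node] & bit:
            return False                   # already recorded this generation
        self.cpu_masks[node] &= ~bit       # node-local update only
        if self.cpu_masks[node]:
            return False                   # other CPUs on this node still pending
        self.node_mask &= ~(1 << node)     # whole node done: touch shared mask
        self.remote_ops += 1
        if self.node_mask:
            return False                   # other nodes still pending
        self.generation += 1               # last CPU overall starts a new generation
        self._reset()
        return True
```

Most calls touch only the node-local mask; the shared mask is written once per node per generation, which is the source of the reduction in remote-memory traffic that the abstract describes.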

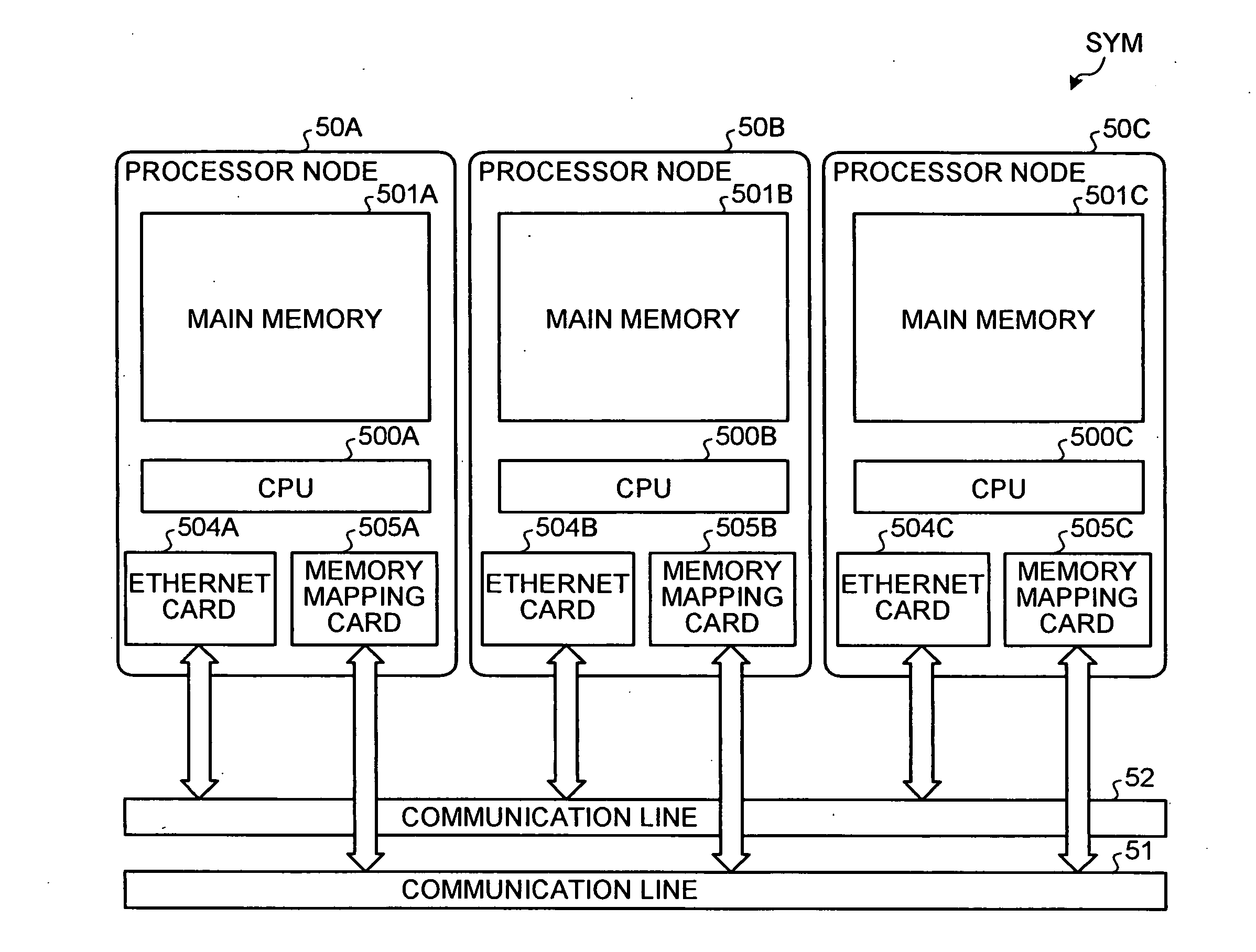

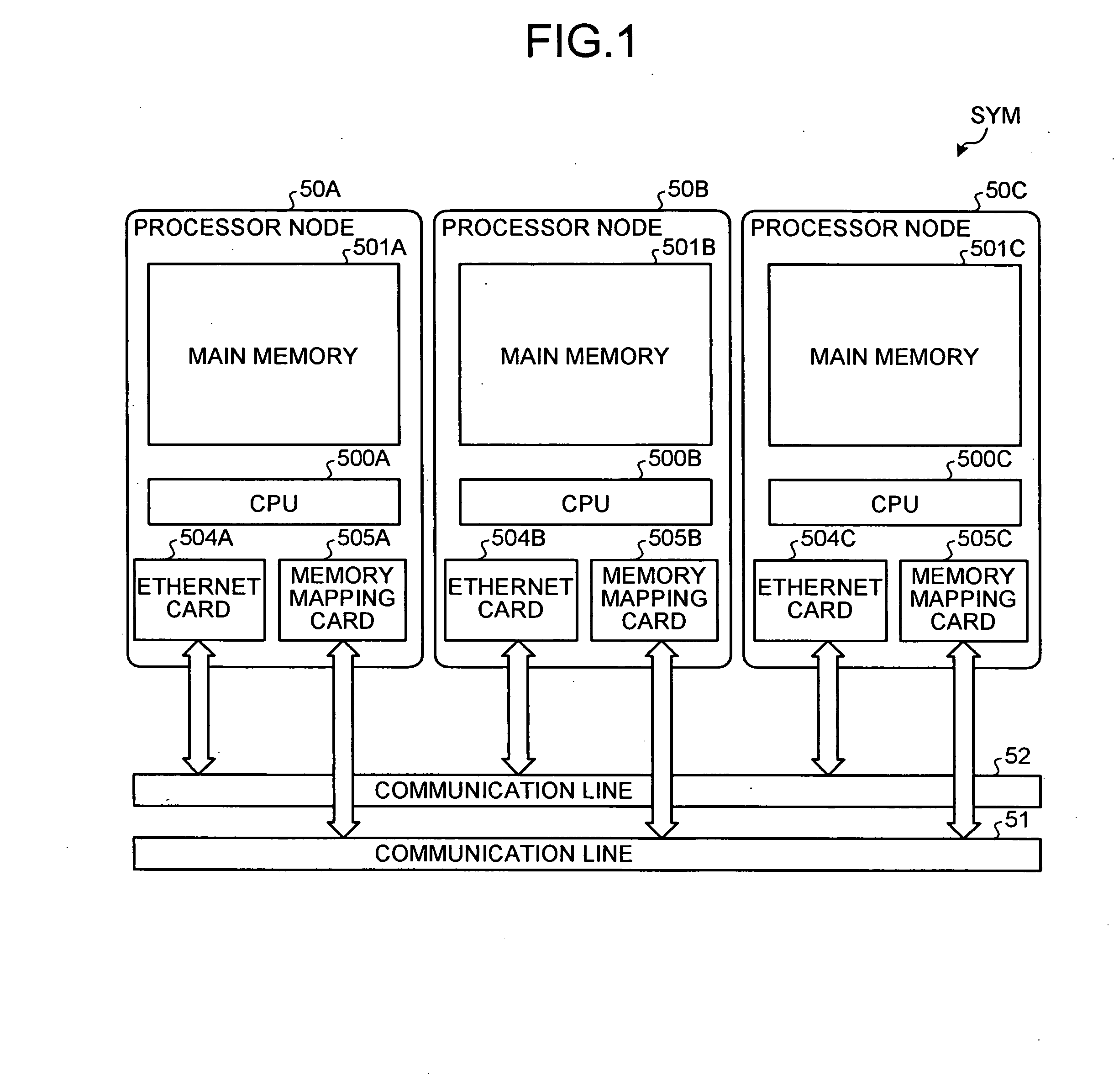

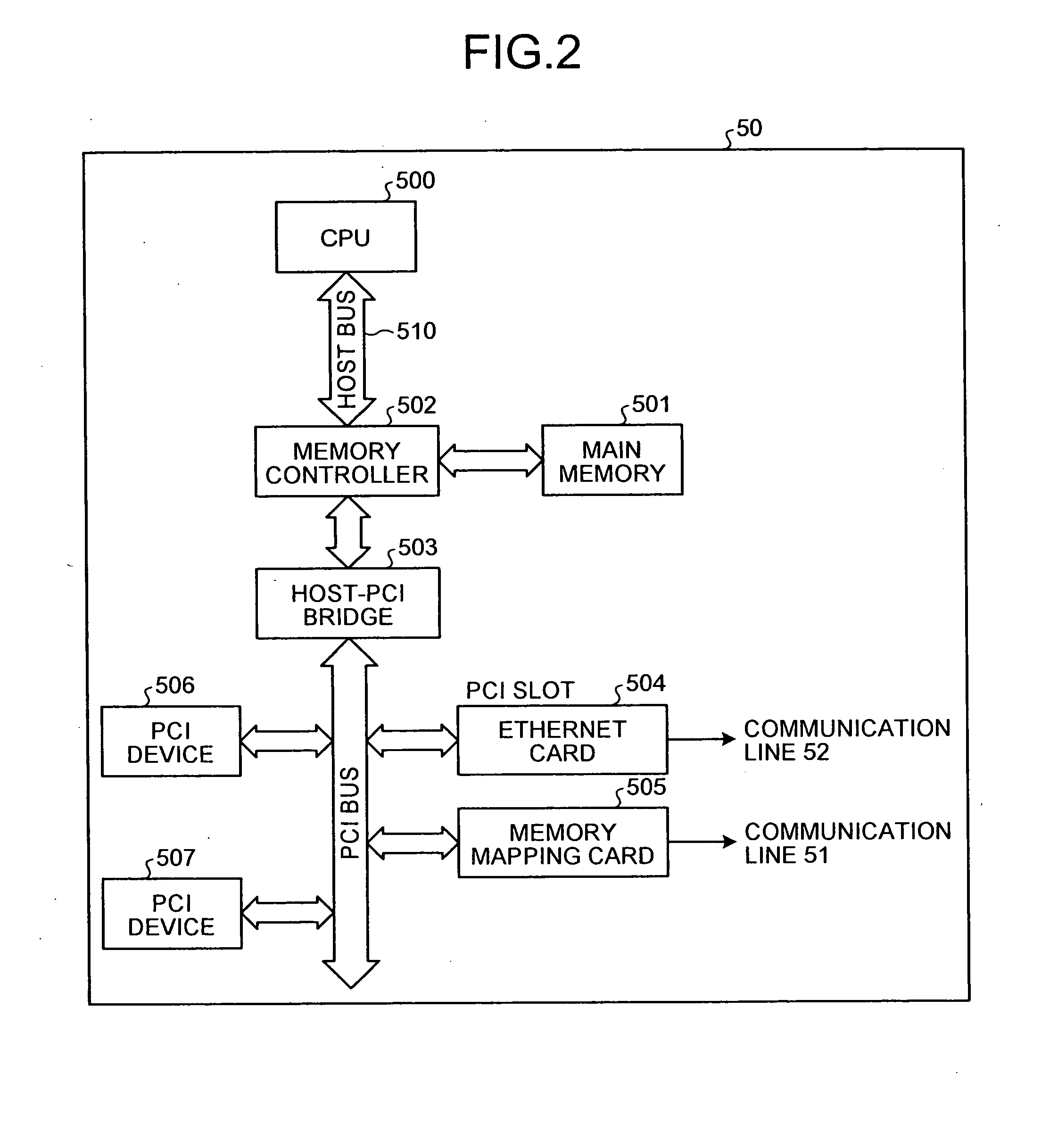

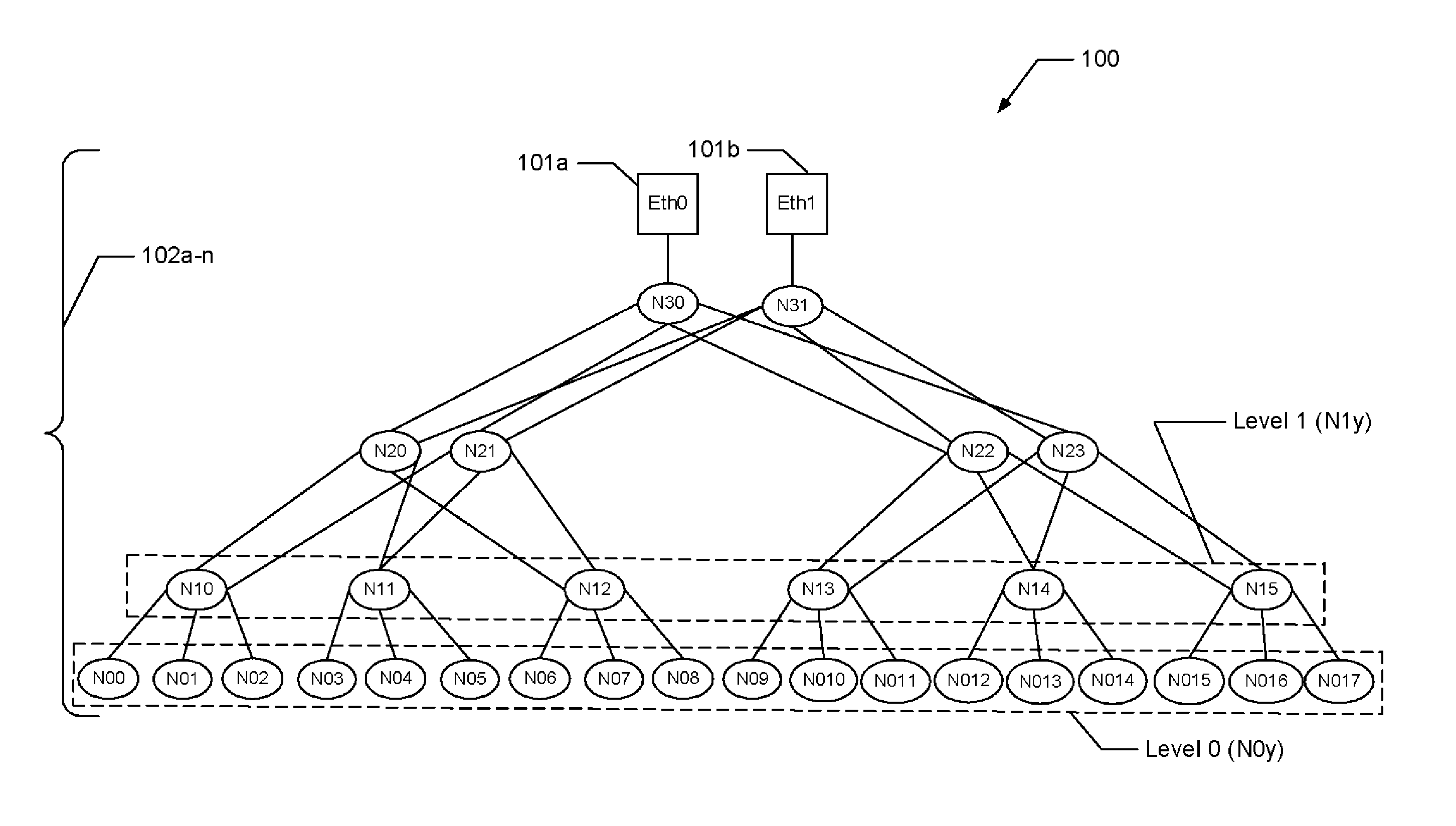

Multiprocessor system

Inactive | US20090077326A1 | Solve problems | Memory addressing/allocation/relocation | General purpose stored program computer | Communication unit | Management unit

A memory mapping unit requests allocation of a remote memory to memory mapping units of other processor nodes via a second communication unit, and requests creation of a mapping connection to a memory-mapping managing unit of a first processor node via the second communication unit. The memory-mapping managing unit creates the mapping connection between a processor node and other processor nodes according to a connection creation request from the memory mapping unit, and then transmits a memory mapping instruction for instructing execution of a memory mapping to the memory mapping unit via a first communication unit of the first processor node.

Owner:RICOH KK

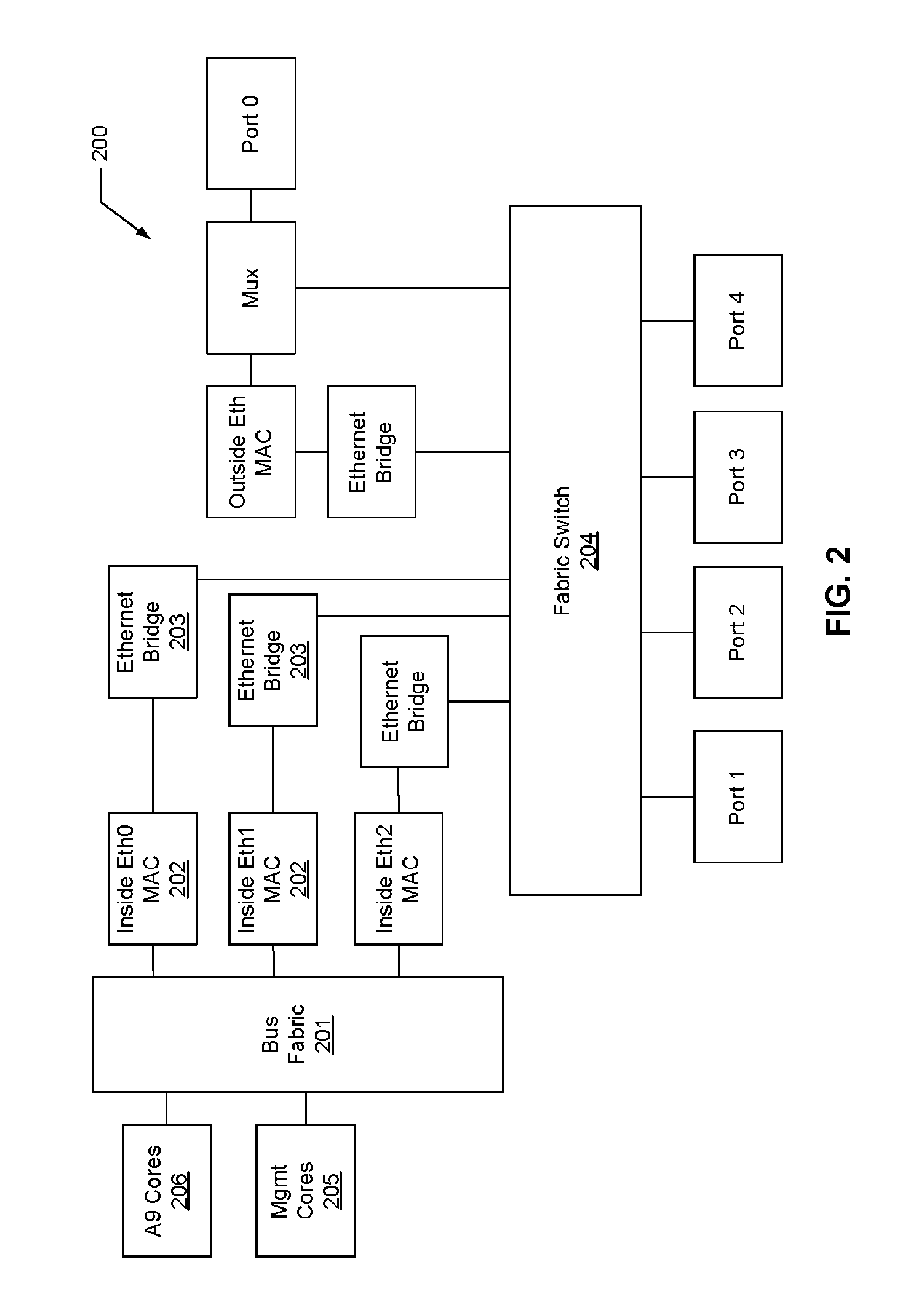

Two-stage request protocol for accessing remote memory data in a NUMA data processing system

Inactive | US20030009643A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Remote memory | Receipt

A non-uniform memory access (NUMA) computer system includes a remote node coupled by a node interconnect to a home node having a home system memory. The remote node includes a local interconnect, a processing unit and at least one cache coupled to the local interconnect, and a node controller coupled between the local interconnect and the node interconnect. The processing unit first issues, on the local interconnect, a read-type request targeting data resident in the home system memory with a flag in the read-type request set to a first state to indicate only local servicing of the read-type request. In response to inability to service the read-type request locally in the remote node, the processing unit reissues the read-type request with the flag set to a second state to instruct the node controller to transmit the read-type request to the home node. The node controller, which includes a plurality of queues, preferably does not queue the read-type request until receipt of the reissued read-type request with the flag set to the second state.

Owner:IBM CORP
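
A minimal sketch of the two-stage request idea from the US20030009643A1 abstract follows. The `Flag` values, the `NodeController` class and the dictionary-based cache and home memory are all hypothetical stand-ins chosen for illustration; real NUMA hardware does this with bus transactions, not Python calls.

```python
from enum import Enum


class Flag(Enum):
    LOCAL_ONLY = 0  # first state: service the request locally only
    FORWARD = 1     # second state: node controller may send it to the home node


class NodeController:
    """Forwards requests to the home node, but only in the second stage."""

    def __init__(self, home_memory: dict):
        self.home_memory = home_memory
        self.queued = []  # requests actually accepted into a queue

    def snoop(self, addr: int, flag: Flag):
        if flag is Flag.LOCAL_ONLY:
            return None   # first stage: neither queued nor forwarded
        self.queued.append(addr)
        return self.home_memory[addr]


def read(addr: int, local_cache: dict, controller: NodeController):
    """Issue a read-type request in up to two stages."""
    controller.snoop(addr, Flag.LOCAL_ONLY)      # stage 1 is visible but ignored
    if addr in local_cache:
        return local_cache[addr]                 # serviced inside the remote node
    return controller.snoop(addr, Flag.FORWARD)  # stage 2: reissue with flag set
```

Requests that hit locally never occupy a node-controller queue entry, which seems to be the benefit the abstract is after.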

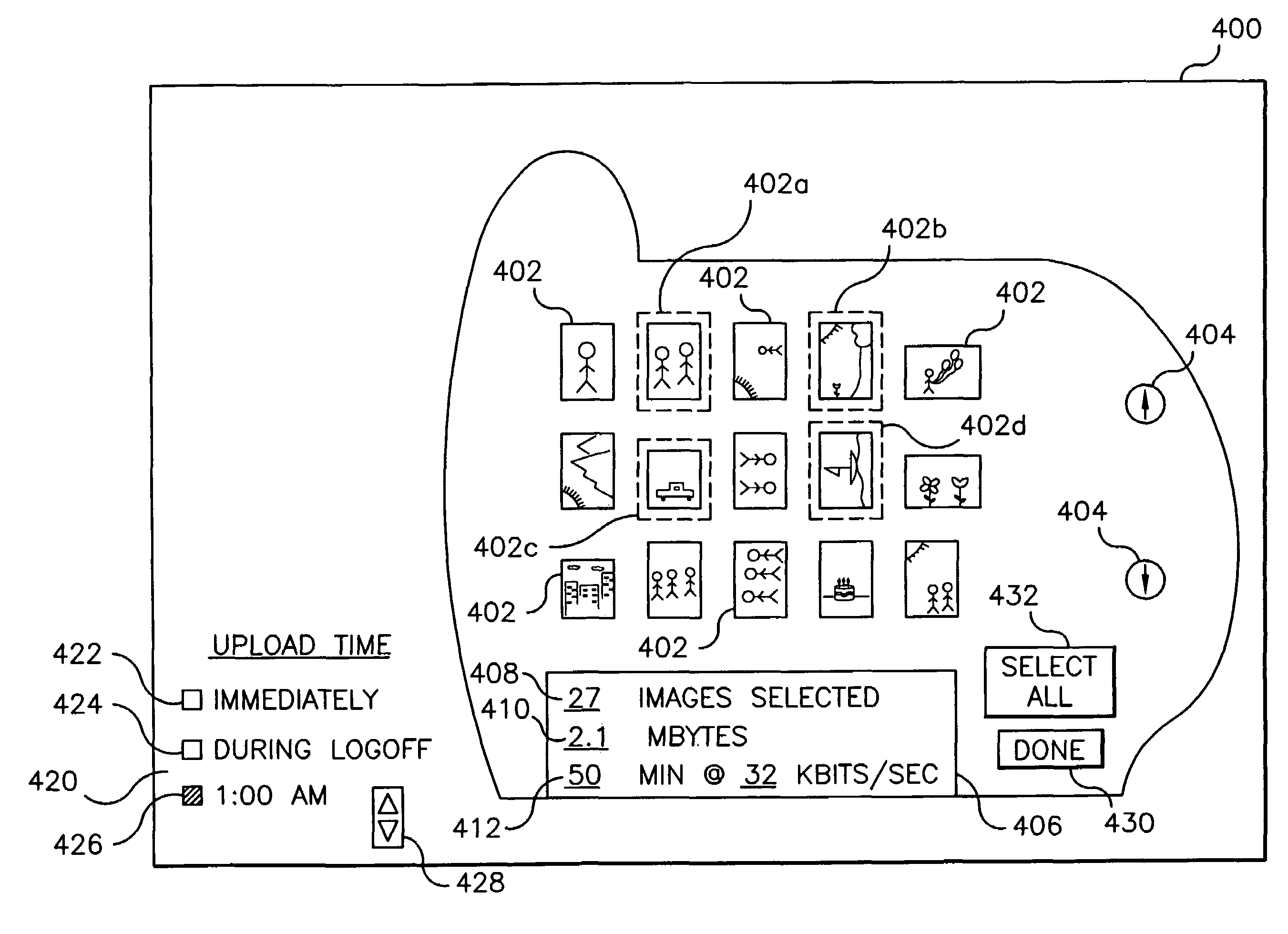

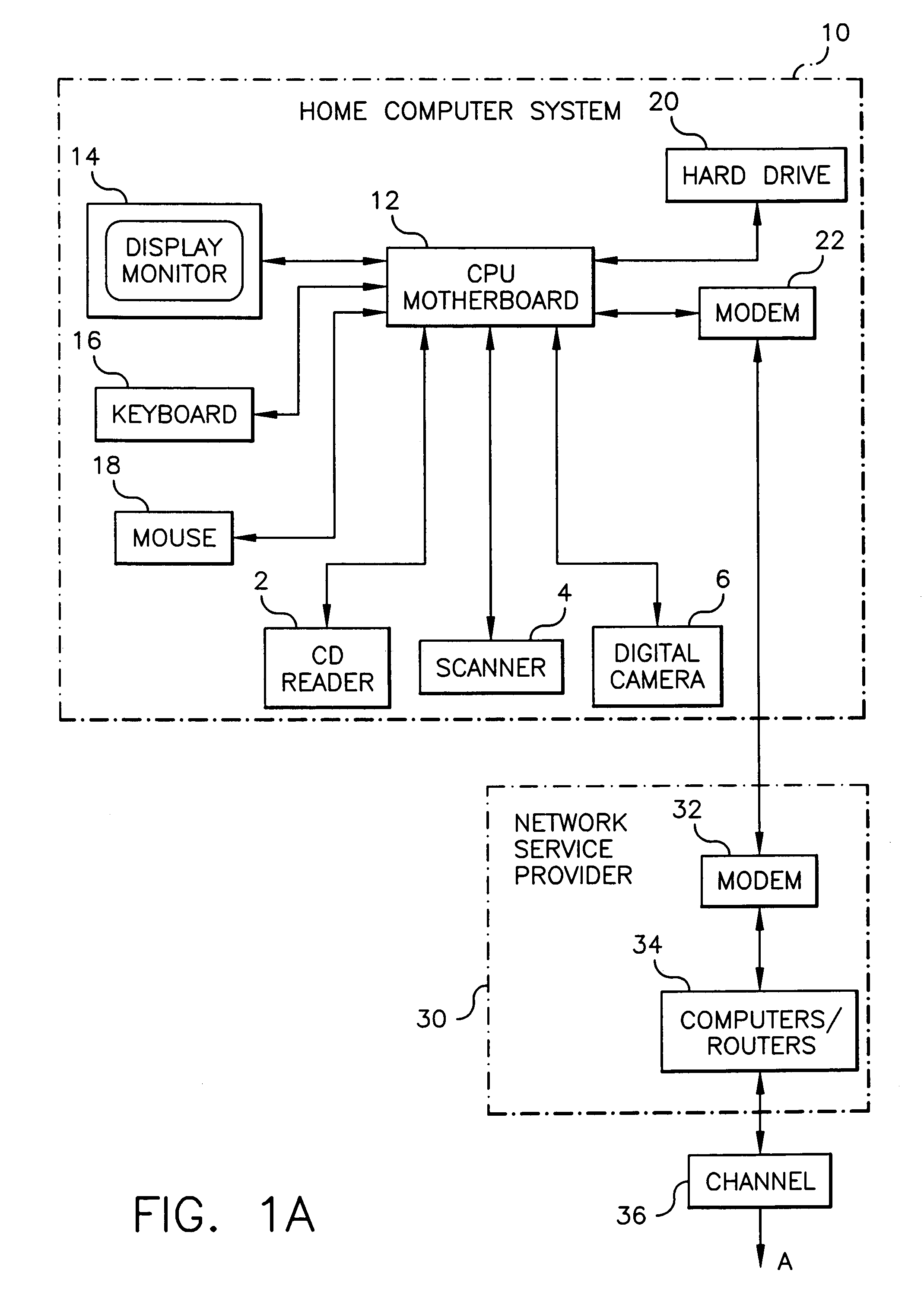

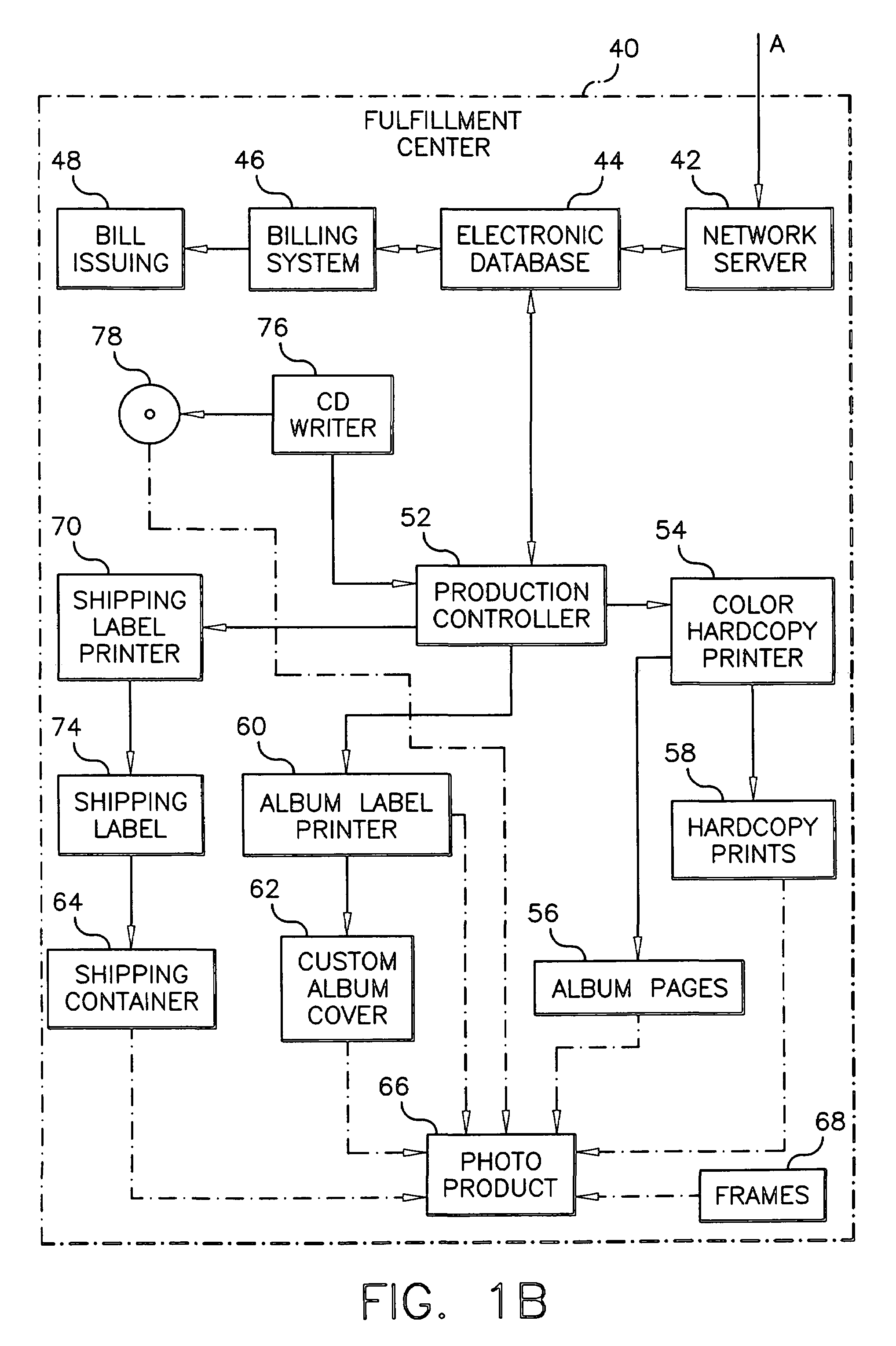

Effective transfer of images from a user to a service provider

Inactive | US6950198B1 | Easy to get | Television system details | Visual representation by photographic printing | Service provision | Thumbnail Image

A method of selecting images from a plurality of images previously stored in a local digital memory, ordering services to be provided utilizing the images, and transferring such images over a channel to a remote digital memory where the services are to be provided includes storing a plurality of images in the local memory location along with corresponding thumbnail images and displaying at least a subset of the thumbnail images for viewing by a user. The user selects those images to be transferred after viewing the displayed thumbnail images and provides image identifiers for each selected image to be uploaded to the remote location and a service order which specifies the services to be provided utilizing such selected images. The remote location confirms the receipt of the service order. The images are transferred over the channel to the remote memory location at a suitable time for effective data transfer whereby the ordered services can be subsequently provided.

Owner:MONUMENT PEAK VENTURES LLC

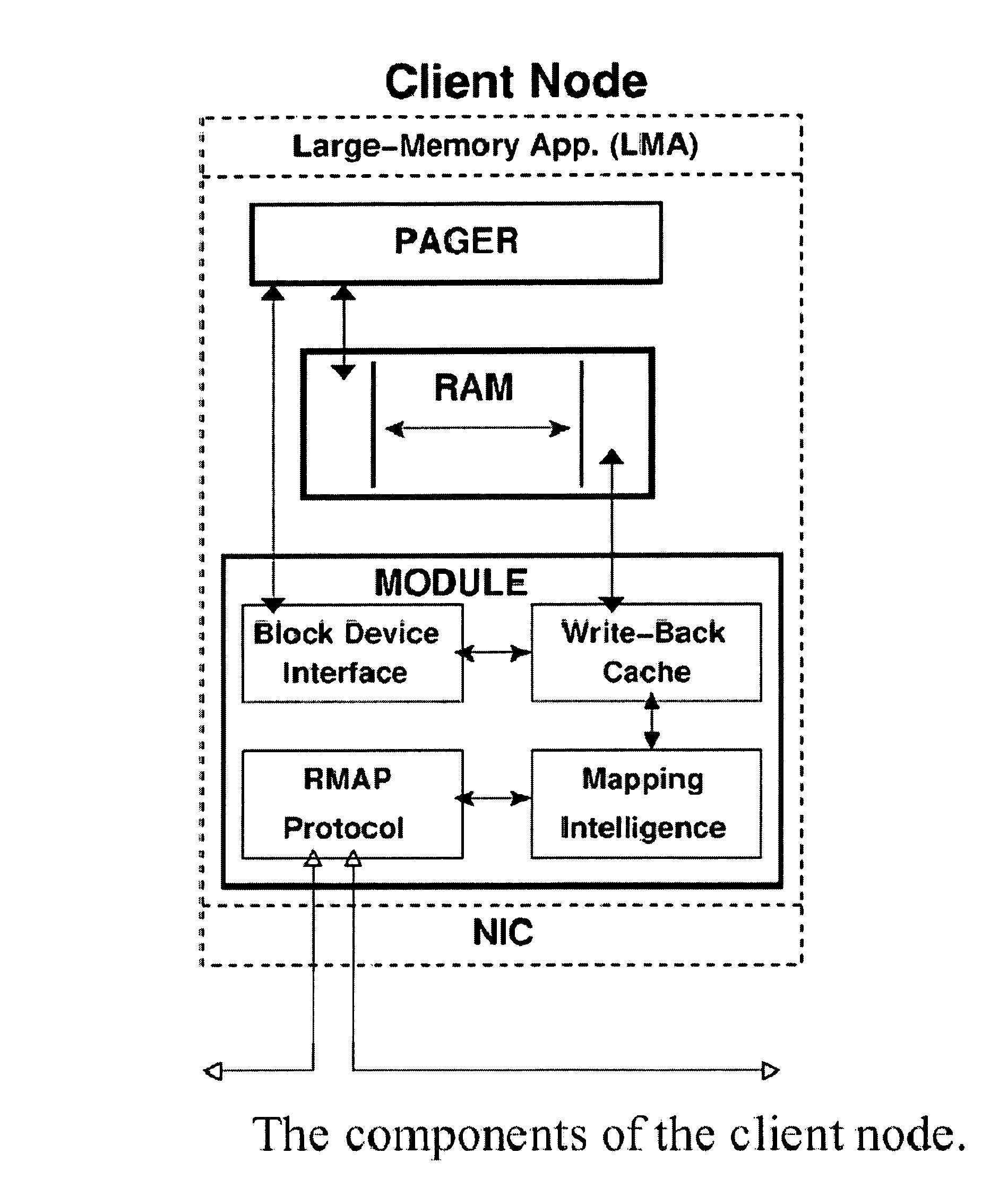

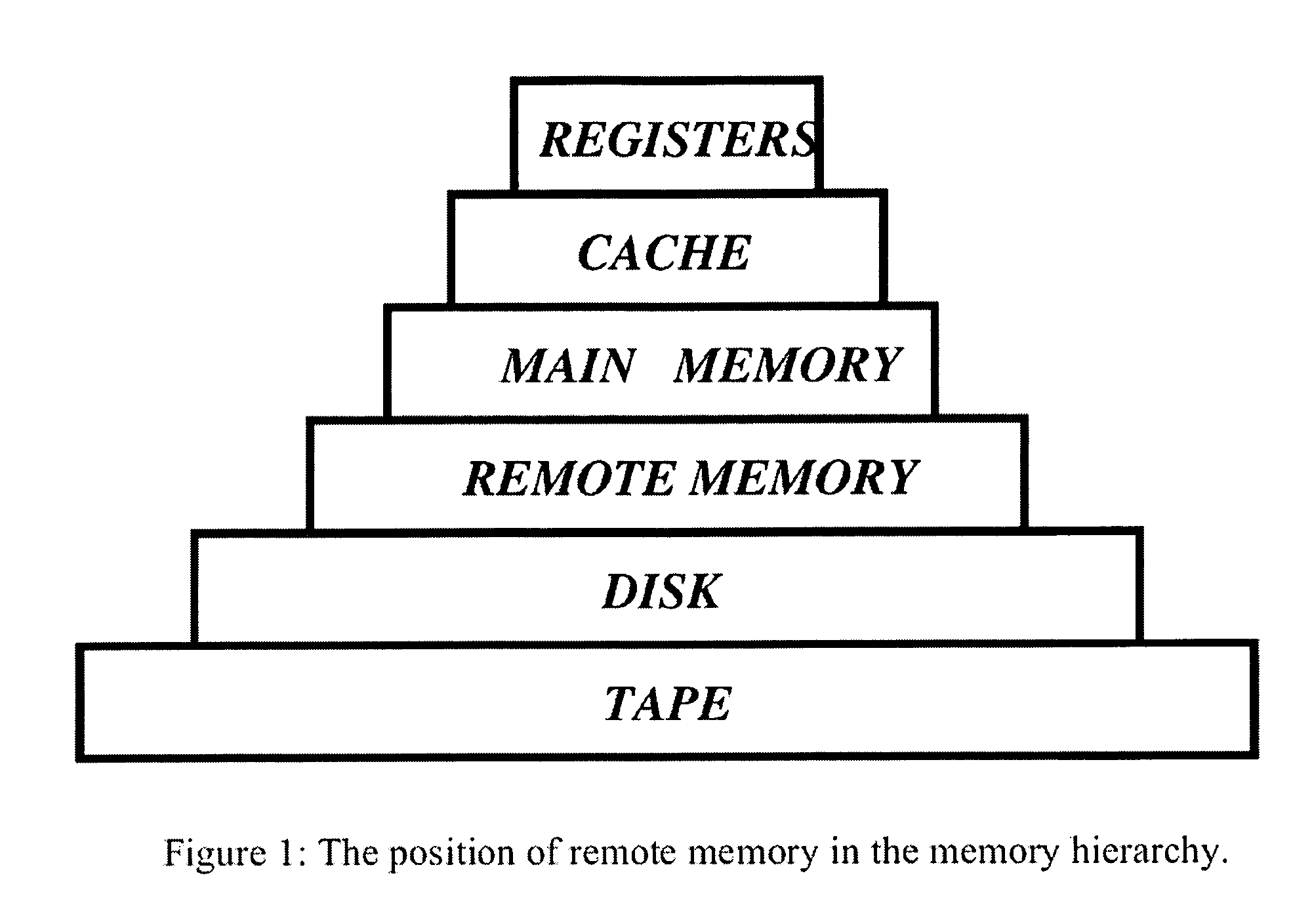

Distributed adaptive network memory engine

Active | US7917599B1 | Processing speed | Lower latency | Multiple digital computer combinations | Transmission | Mass storage | Operational system

Memory demands of large-memory applications continue to remain one step ahead of the improvements in DRAM capacities of commodity systems. Performance of such applications degrades rapidly once the system hits the physical memory limit and starts paging to the local disk. A distributed network-based virtual memory scheme is provided which treats remote memory as another level in the memory hierarchy between very fast local memory and very slow local disks. Performance over gigabit Ethernet shows significant gains over local disk. Large memory applications may access potentially unlimited network memory resources without requiring any application or operating system code modifications, relinking or recompilation. A preferred embodiment employs kernel-level driver software.

Owner:THE RES FOUND OF STATE UNIV OF NEW YORK

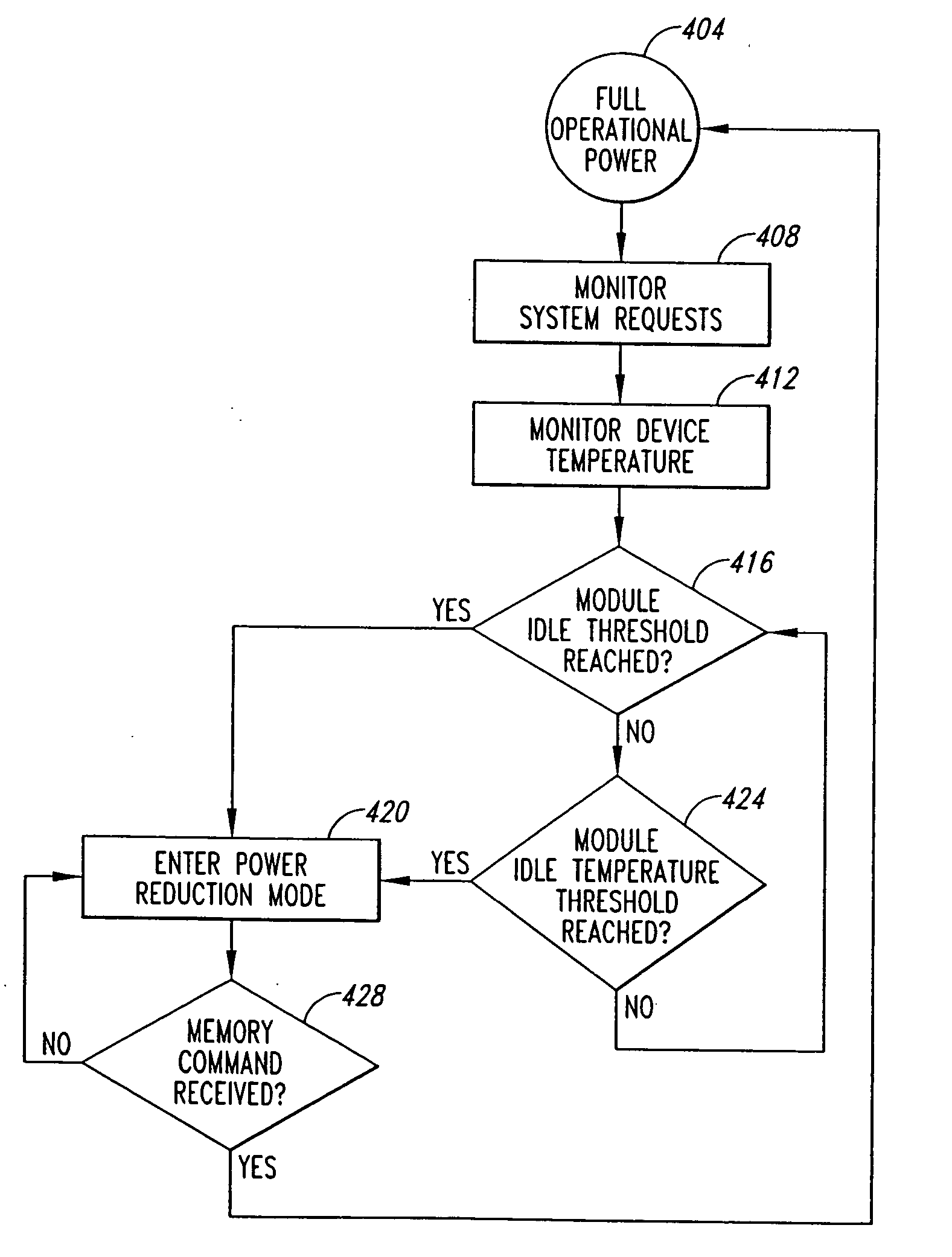

System and method for selective memory module power management

Inactive | US20060206738A1 | Reduced responsiveness | Reduce usage | Energy efficient ICT | Volume/mass flow measurement | Activity level | System usage

A memory module includes a memory hub that monitors utilization of the memory module and directs devices of the memory module to a reduced power state when the module is not being used at a desired level. System utilization of the memory module is monitored by tracking system usage, manifested by read and write commands issued to the memory module, or by measuring temperature changes indicating a level of device activity beyond normal refresh activity. Alternatively, measured activity levels can be transmitted over a system bus to a centralized power management controller which, responsive to the activity level packets transmitted by remote memory modules, directs devices of those remote memory modules to a reduced power state. The centralized power management controller could be disposed on a master memory module or in a memory or system controller.

Owner:ROUND ROCK RES LLC

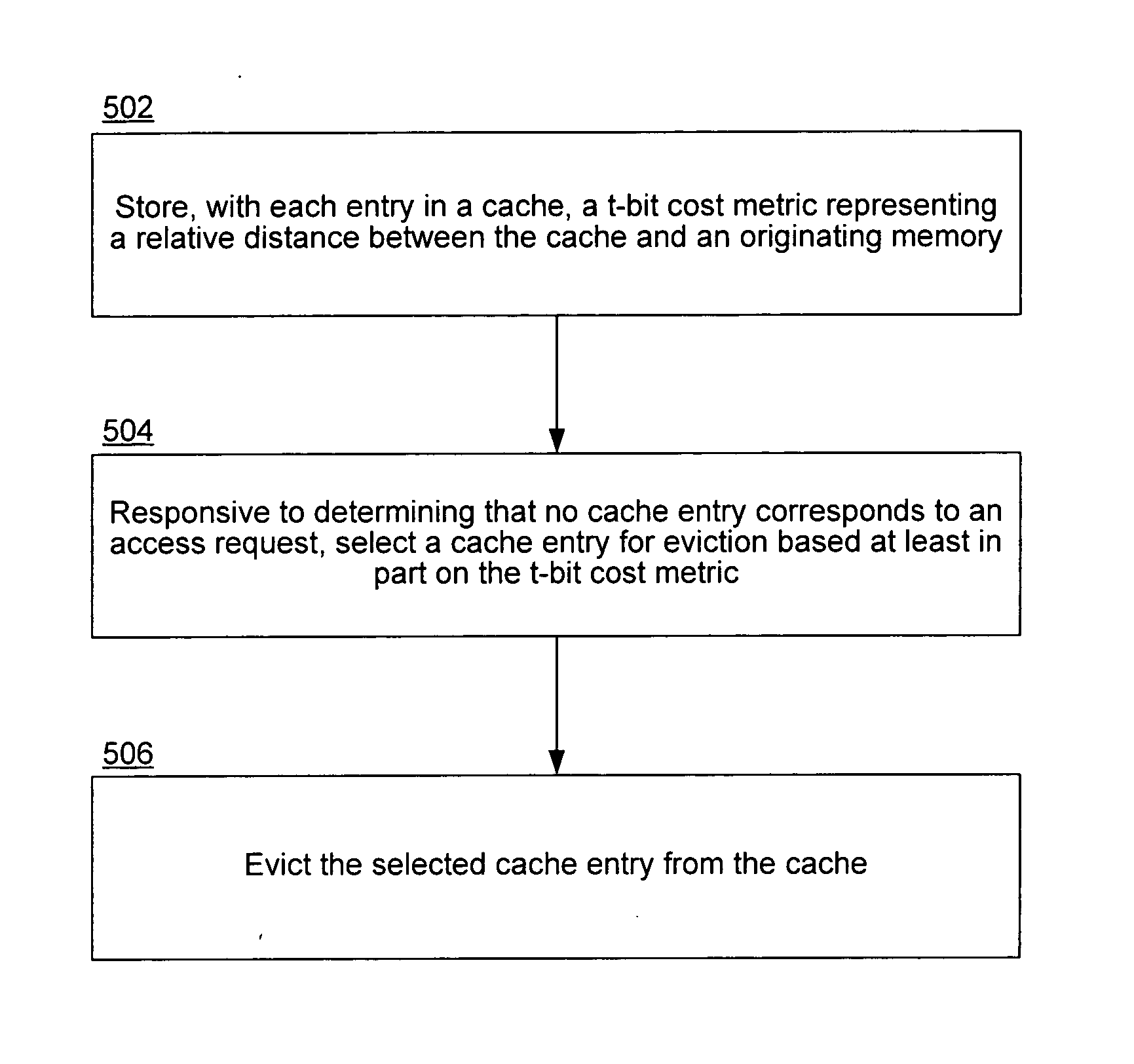

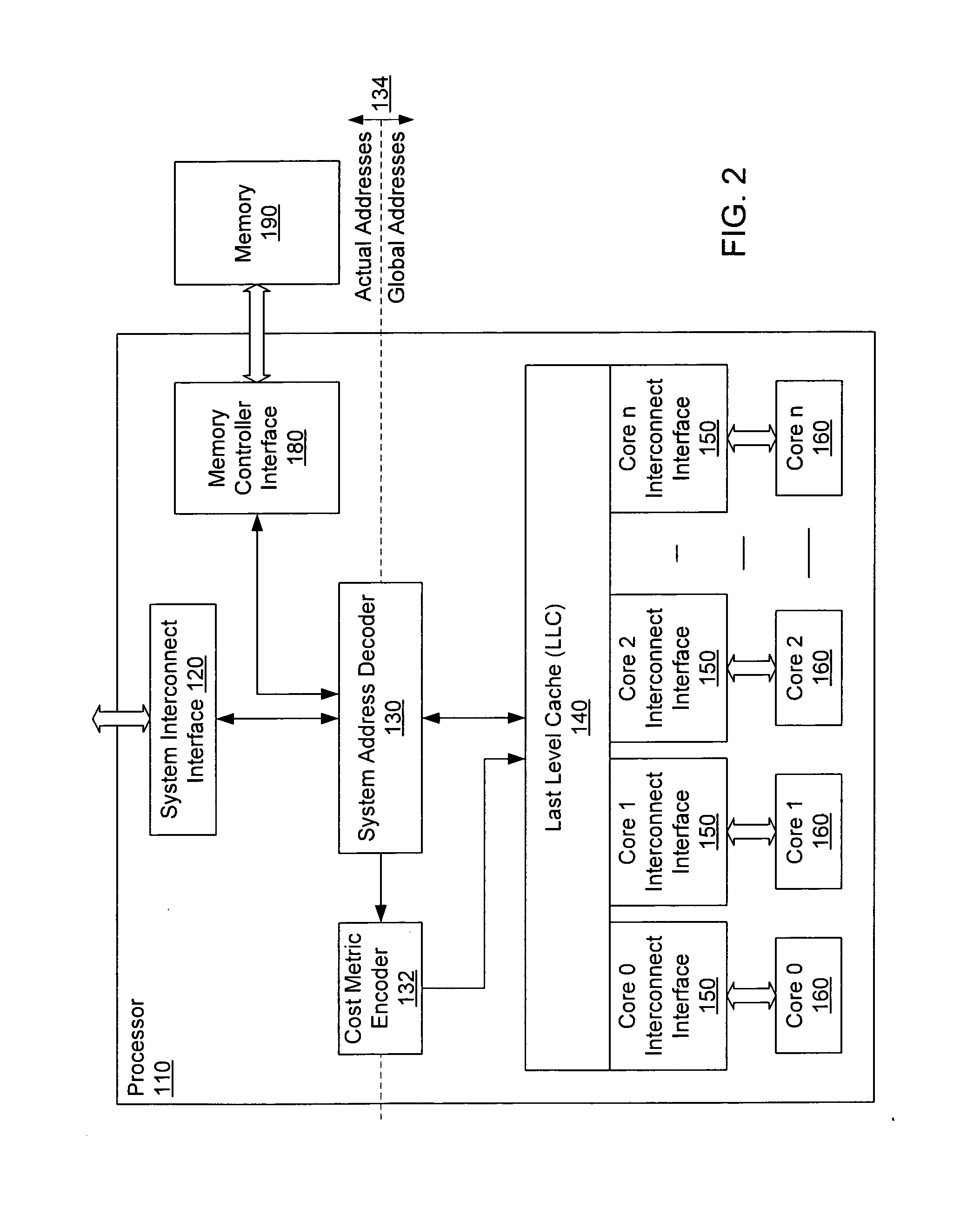

Home node aware replacement policy for caches in a multiprocessor system

Inactive | US20070156964A1 | Memory architecture accessing/allocation | Memory systems | Cost metric | Multi processor

A home node aware replacement policy for a cache chooses to evict lines which belong to local memory over lines which belong to remote memory, reducing the average transaction cost of incorrect cache line replacements. With each entry, the cache stores a t-bit cost metric (t≧1) representing a relative distance between said cache and an originating memory for the respective cache entry. Responsive to determining that no cache entry corresponds to an access request, the replacement policy selects a cache entry for eviction from the cache based at least in part on the t-bit cost metric. The selected cache entry is then evicted from the cache.

Owner:INTEL CORP
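
The home-node-aware policy in the US20070156964A1 abstract amounts to preferring victims whose backing memory is cheap to re-fetch. The sketch below is one possible reading, not the patented logic: the `Entry` fields are hypothetical, and breaking ties by least-recent use is an added assumption (the abstract only says the choice is based "at least in part" on the cost metric).

```python
from dataclasses import dataclass


@dataclass
class Entry:
    tag: int
    cost: int       # t-bit distance metric: 0 = local memory, larger = farther away
    last_used: int  # timestamp of the most recent access


def choose_victim(entries: list) -> Entry:
    """Pick the entry that is cheapest to re-fetch, oldest first on ties."""
    return min(entries, key=lambda e: (e.cost, e.last_used))
```

With entries `Entry(1, 1, 0)`, `Entry(2, 0, 5)` and `Entry(3, 0, 3)`, the function selects tag 3: both cost-0 lines come from local memory, and tag 3 is the less recently used of the two, while the older but remote tag 1 is kept.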

System and method for using a multi-protocol fabric module across a distributed server interconnect fabric

Active | US8599863B2 | Extend performance and power optimization and functionality | Digital computer details | Data switching by path configuration | Personalization | Structure of Management Information

A multi-protocol personality module enabling load/store from remote memory, remote Direct Memory Access (DMA) transactions, and remote interrupts, which permits enhanced performance, power utilization and functionality. In one form, the module is used as a node in a network fabric and adds a routing header to packets entering the fabric, maintains the routing header for efficient node-to-node transport, and strips the header when the packet leaves the fabric. In particular, a remote bus personality component is described. Several use cases of the Remote Bus Fabric Personality Module are disclosed: 1) memory sharing across a fabric-connected set of servers; 2) the ability to access physically remote Input/Output (I/O) devices across this fabric of connected servers; and 3) the sharing of such physically remote I/O devices, such as storage peripherals, by multiple fabric-connected servers.

Owner:III HLDG 2

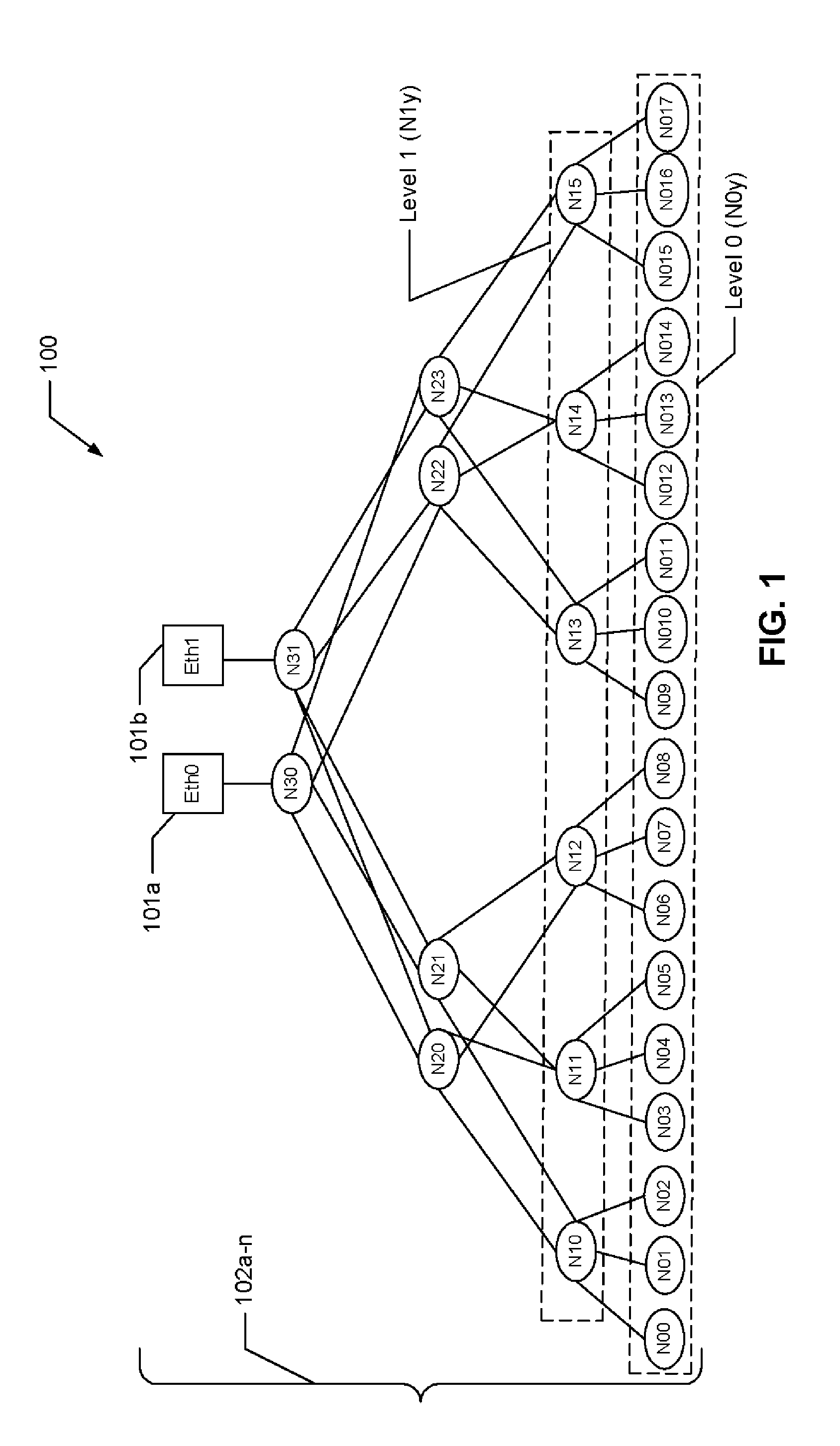

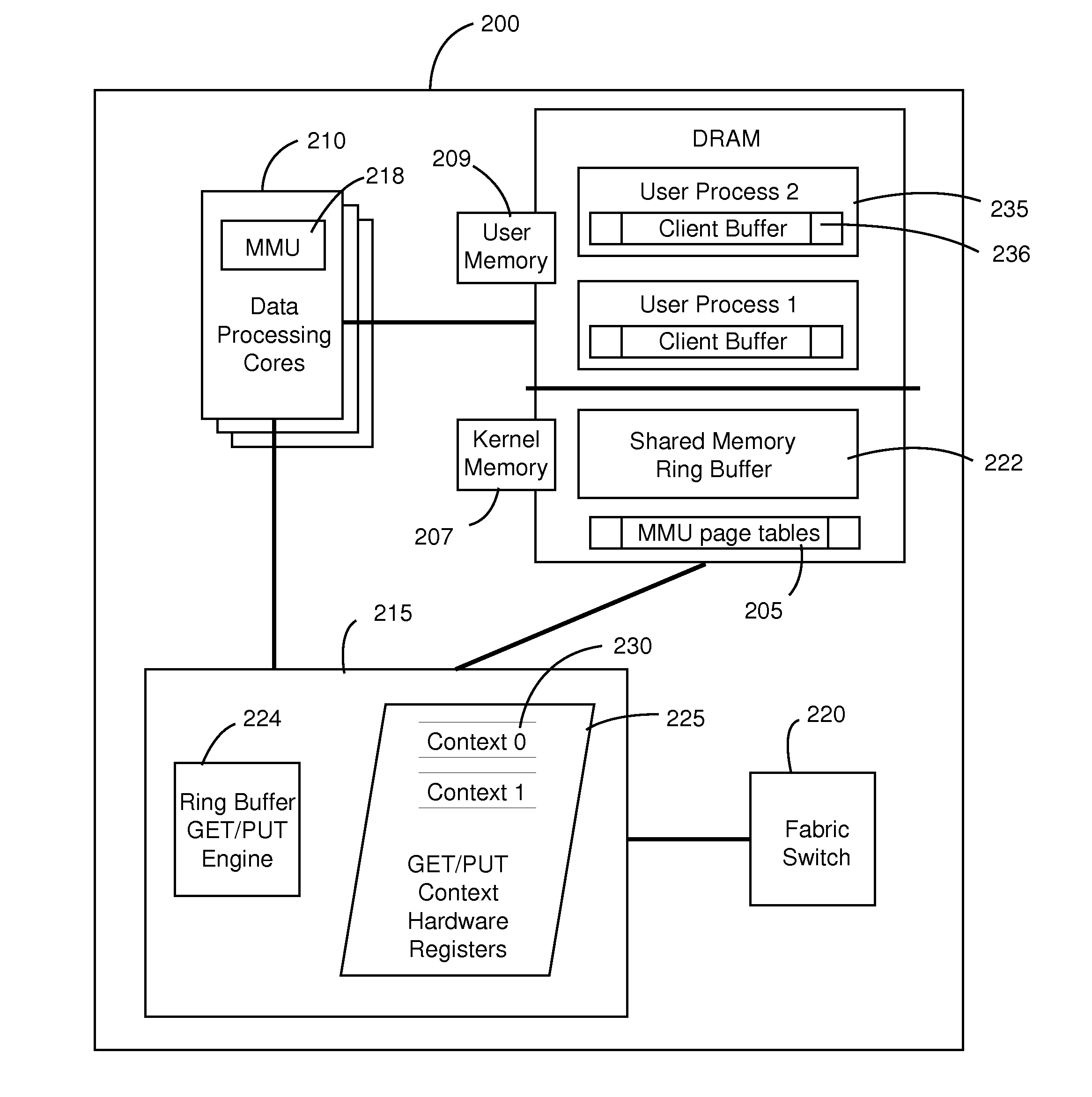

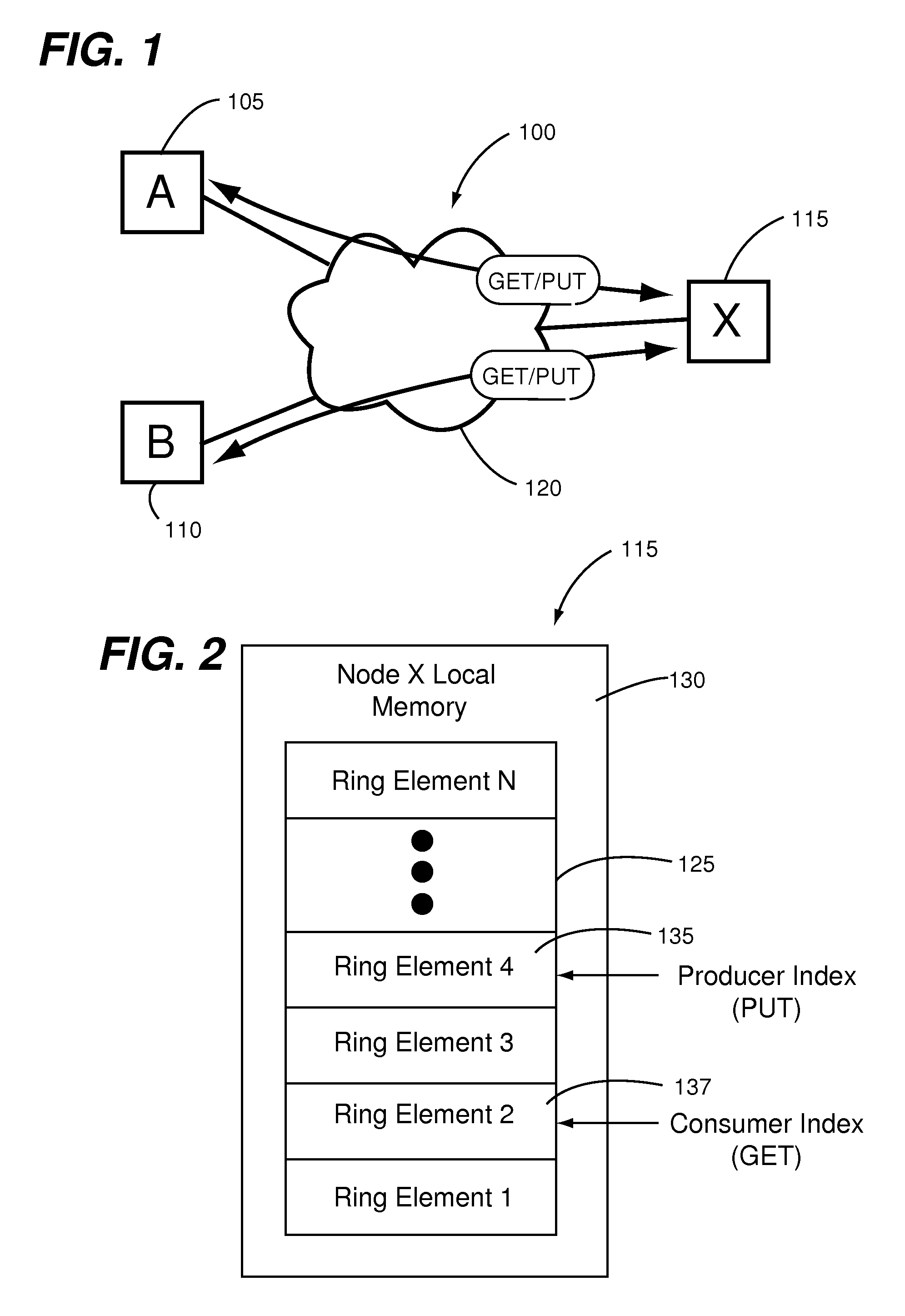

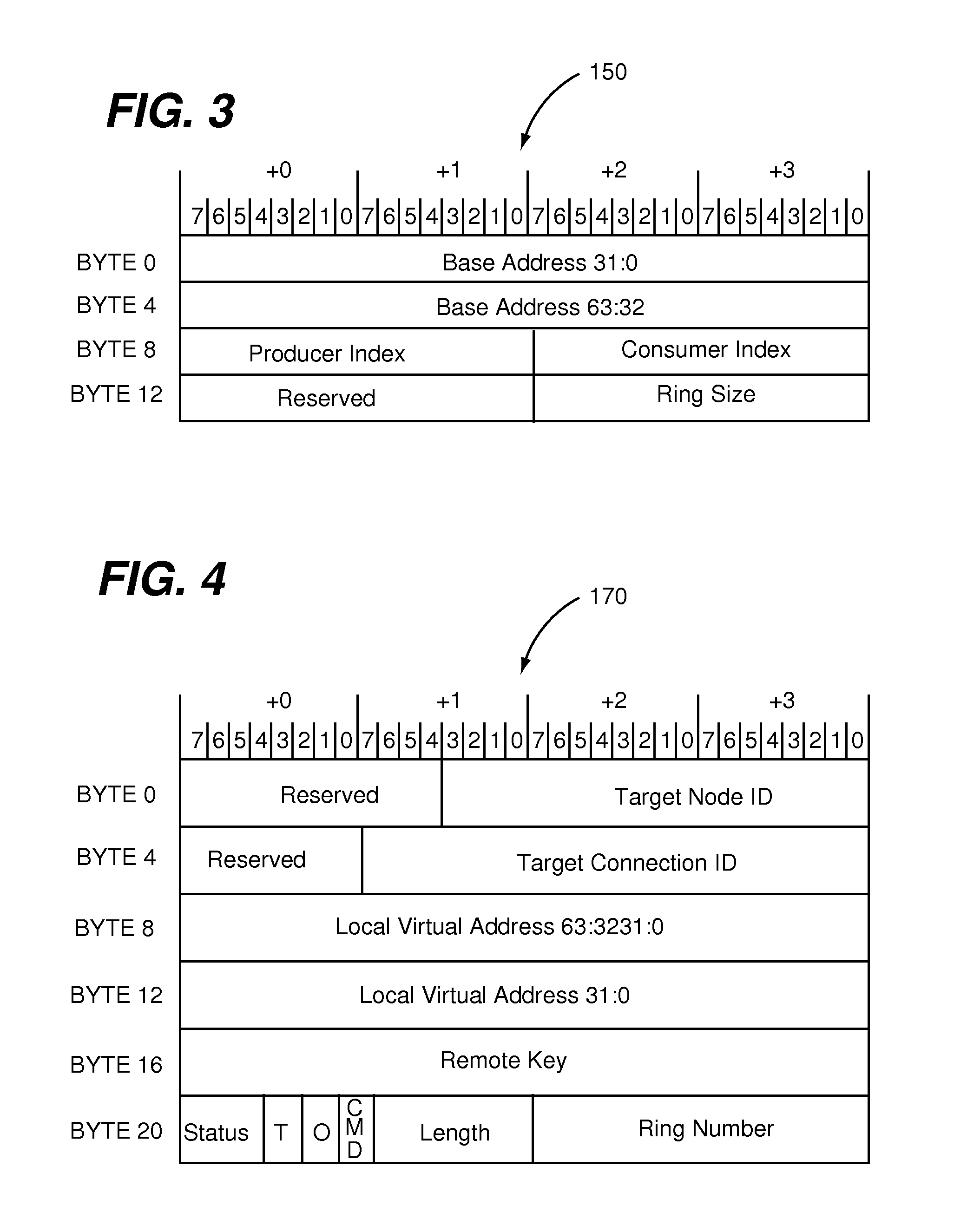

Remote memory ring buffers in a cluster of data processing nodes

Active | US20150039840A1 | Interprogram communication | Digital computer details | Processor register | Structure of Management Information

A data processing node has an inter-node messaging module including a plurality of sets of registers each defining an instance of a GET/PUT context and a plurality of data processing cores each coupled to the inter-node messaging module. Each one of the data processing cores includes a mapping function for mapping each one of a plurality of user level processes to a different one of the sets of registers and thereby to a respective GET/PUT context instance. Mapping each one of the user level processes to the different one of the sets of registers enables a particular one of the user level processes to utilize the respective GET/PUT context instance thereof for performing a GET/PUT action to a ring buffer of a different data processing node coupled to the data processing node through a fabric without involvement of an operating system of any one of the data processing cores.

Owner:III HLDG 2

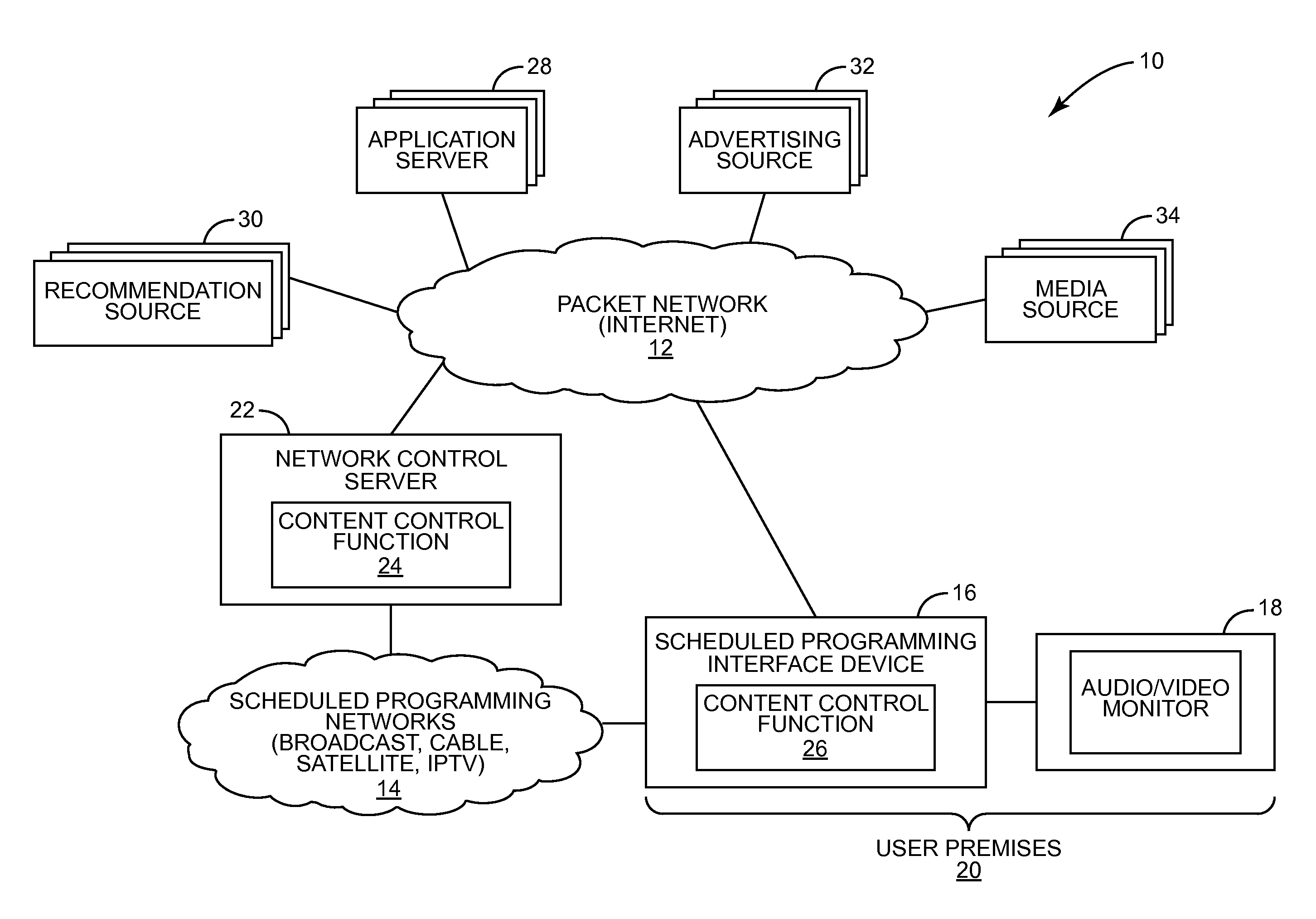

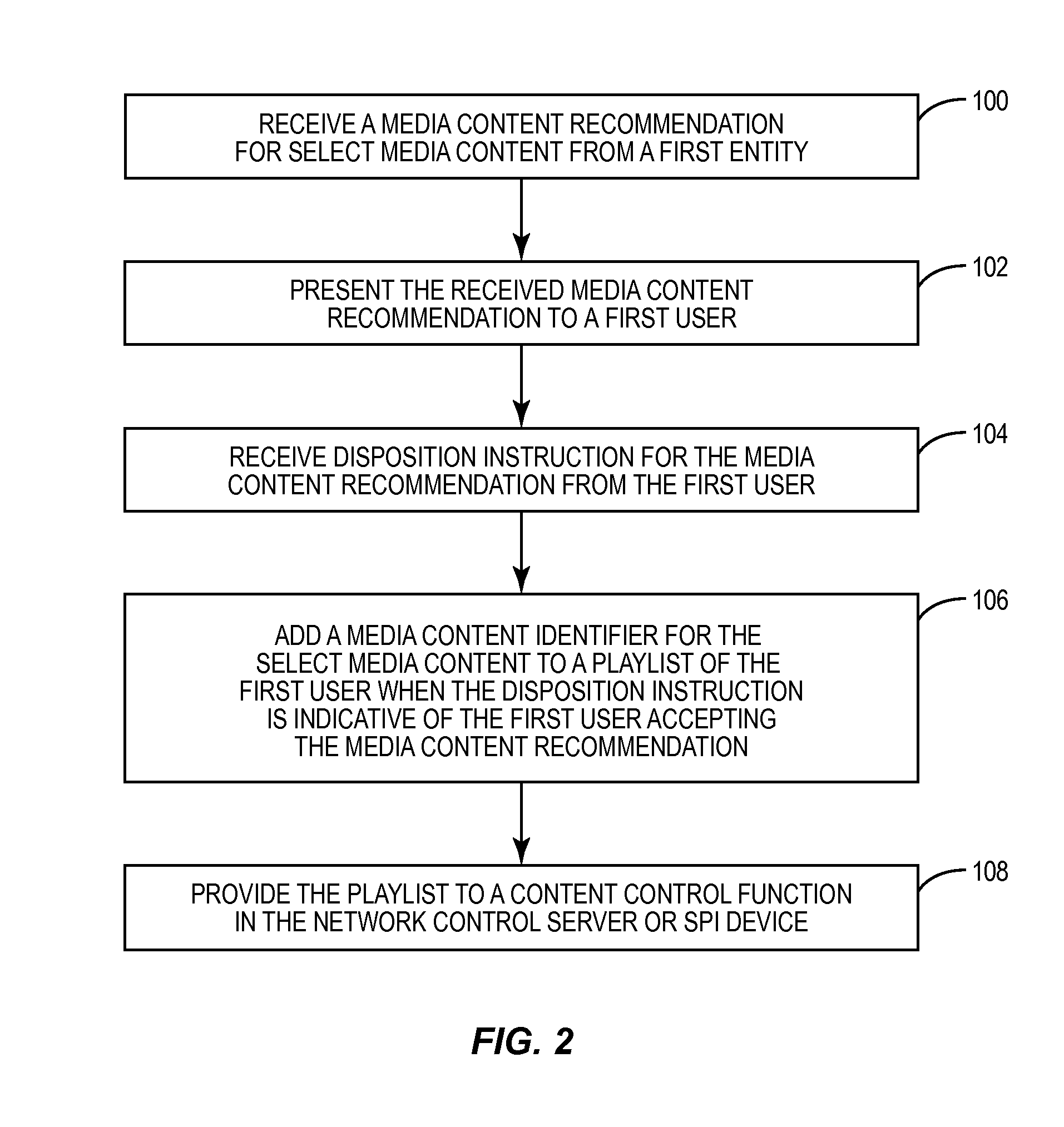

Playlist execution in a scheduled programming environment

Inactive | US20090292376A1 | Television system details | Selective content distribution | Recording media | Control function

The present invention relates to generating and executing a playlist in a scheduled programming environment. In one embodiment, a media content recommendation for select media content is received from a first entity. The select media content for the media content recommendation is then added to a playlist for a first user. The playlist will identify numerous media content items to be consumed at particular times, in a particular order, or a combination thereof. The playlist is provided to a content control function, which controls, either directly or remotely, a scheduled programming interface (SPI) device to execute the playlist. The SPI device effectively consumes the media content items of the playlist in an automated fashion. Consumption of the media content items may include presenting the media content to the first user via an audio or video monitor, recording the media content in local or remote storage, or a combination thereof.

Owner:RPX CLEARINGHOUSE

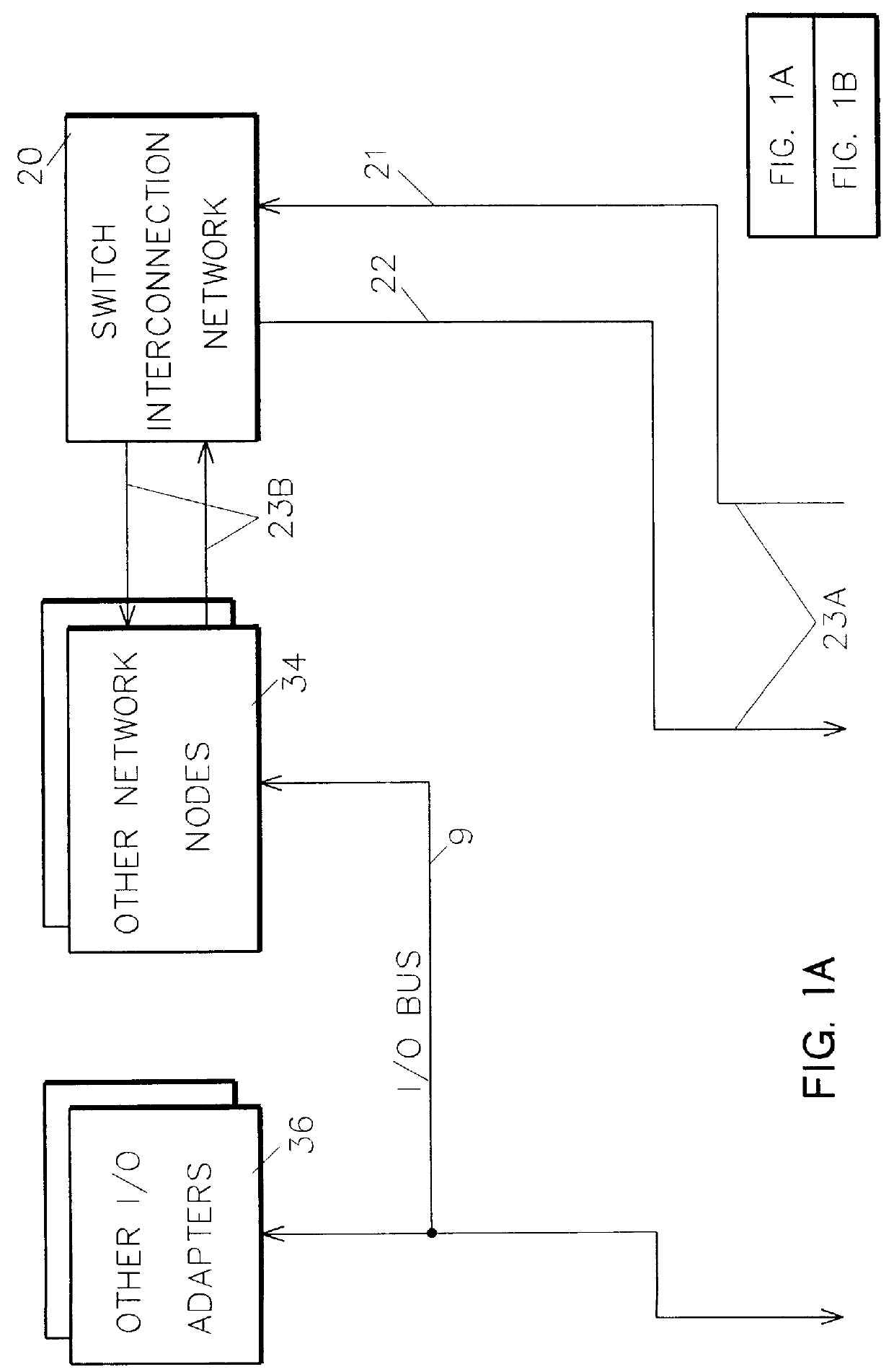

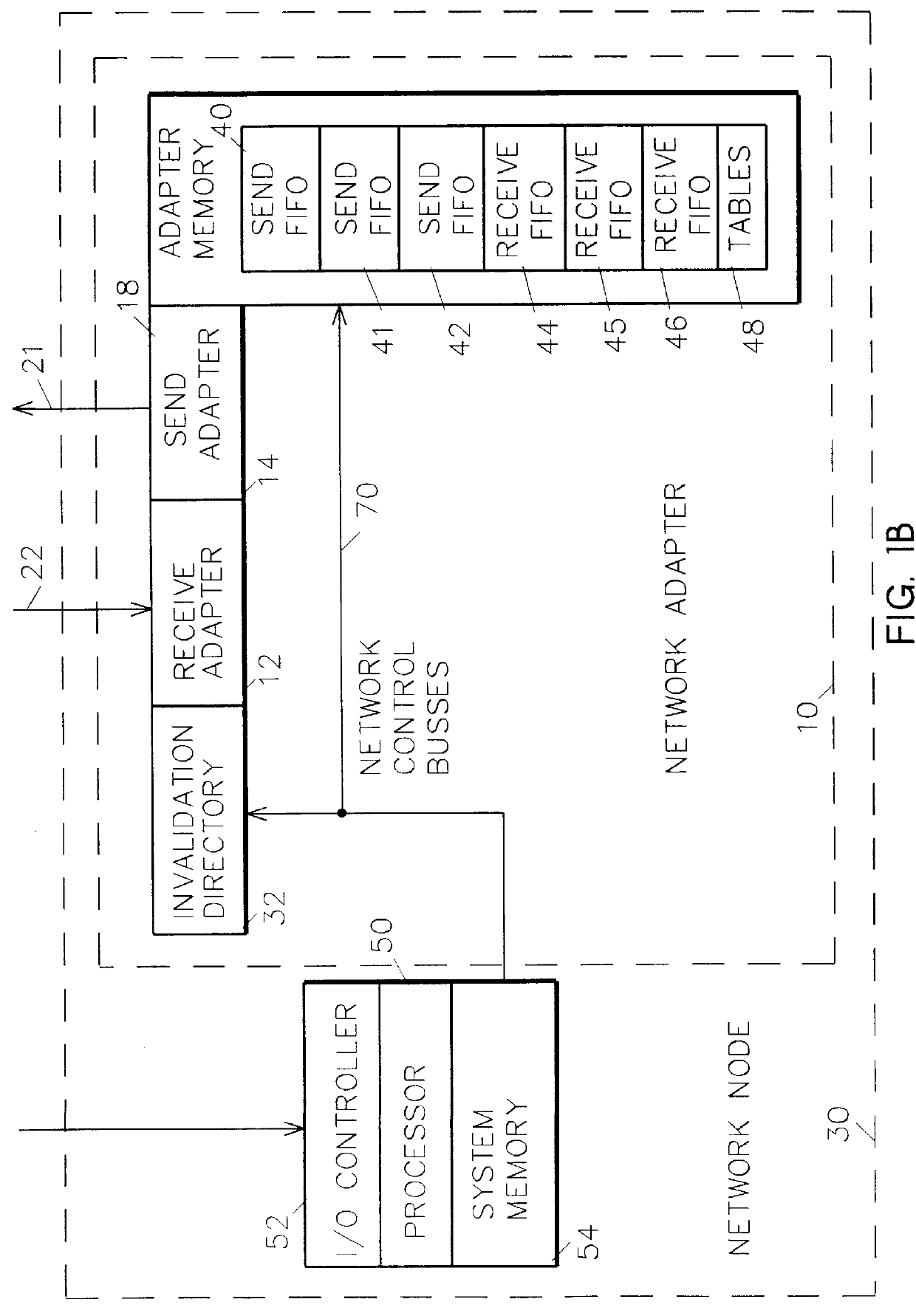

Bi-directional network adapter for interfacing local node of shared memory parallel processing system to multi-stage switching network for communications with remote node

Inactive | US6122674A | Optimize network | Data processing applications | Memory addressing/allocation/relocation | Remote memory access | Exchange network

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network, while controlling cache coherency efficiently across the network. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and handle efficiently invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

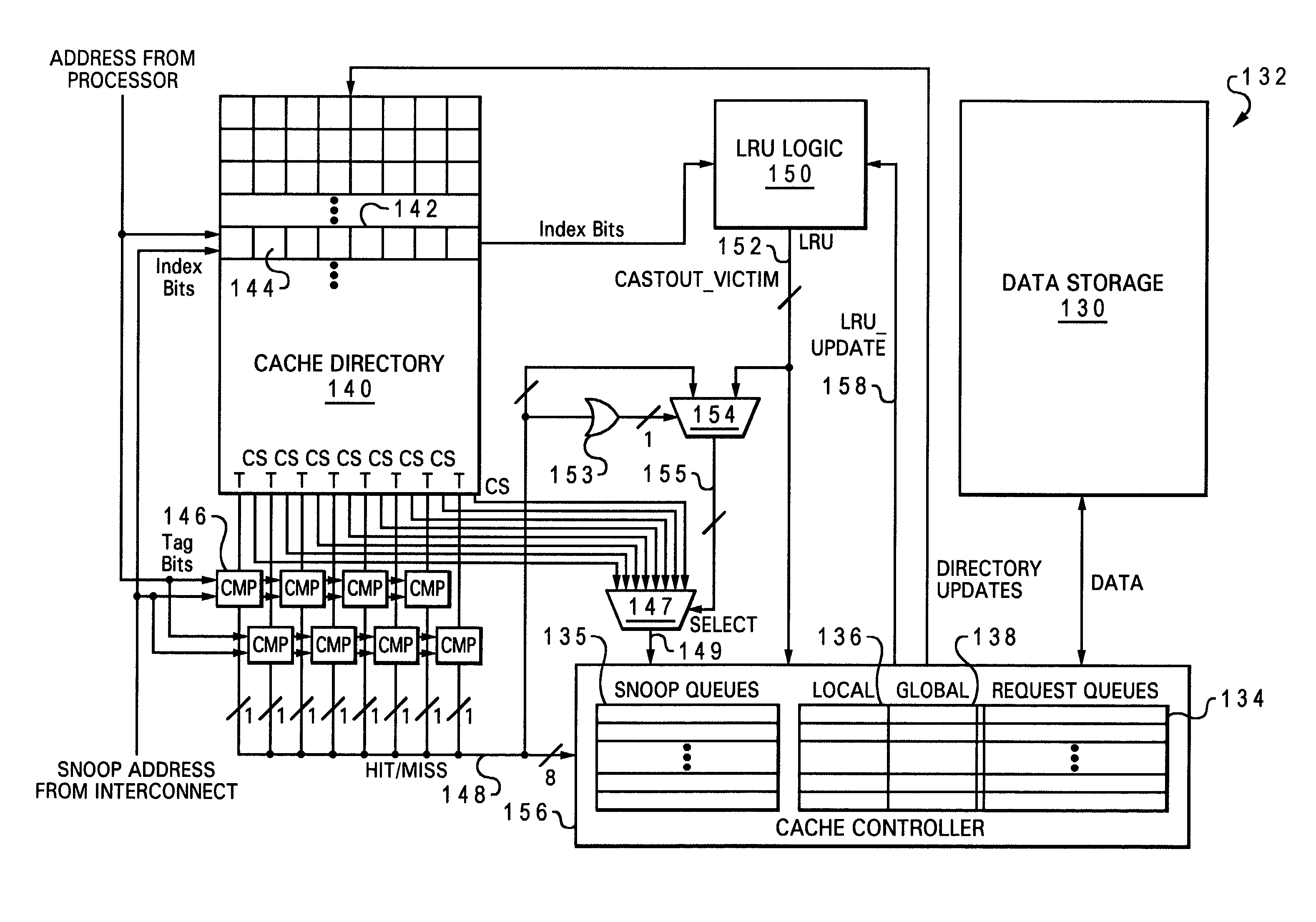

Data processing system, cache, and method that select a castout victim in response to the latencies of memory copies of cached data

A data processing system includes a processing unit, a distributed memory including a local memory and a remote memory having differing access latencies, and a cache coupled to the processing unit and to the distributed memory. The cache includes a congruence class containing a plurality of cache lines and a plurality of latency indicators that each indicate an access latency to the distributed memory for a respective one of the cache lines. The cache further includes a cache controller that selects a cache line in the congruence class as a castout victim in response to the access latencies indicated by the plurality of latency indicators. In one preferred embodiment, the cache controller preferentially selects as castout victims cache lines having relatively short access latencies.

Owner:IBM CORP

System and method for using a multi-protocol fabric module across a distributed server interconnect fabric

Active | US20150103826A1 | Extend performance and power optimization and functionality | Data switching by path configuration | Personalization | Structure of Management Information

A multi-protocol personality module enabling load/store from remote memory, remote Direct Memory Access (DMA) transactions, and remote interrupts, which permits enhanced performance, power utilization and functionality. In one form, the module is used as a node in a network fabric and adds a routing header to packets entering the fabric, maintains the routing header for efficient node-to-node transport, and strips the header when the packet leaves the fabric. In particular, a remote bus personality component is described. Several use cases of the Remote Bus Fabric Personality Module are disclosed: 1) memory sharing across a fabric-connected set of servers; 2) the ability to access physically remote Input/Output (I/O) devices across this fabric of connected servers; and 3) the sharing of such physically remote I/O devices, such as storage peripherals, by multiple fabric-connected servers.

Owner:III HLDG 2

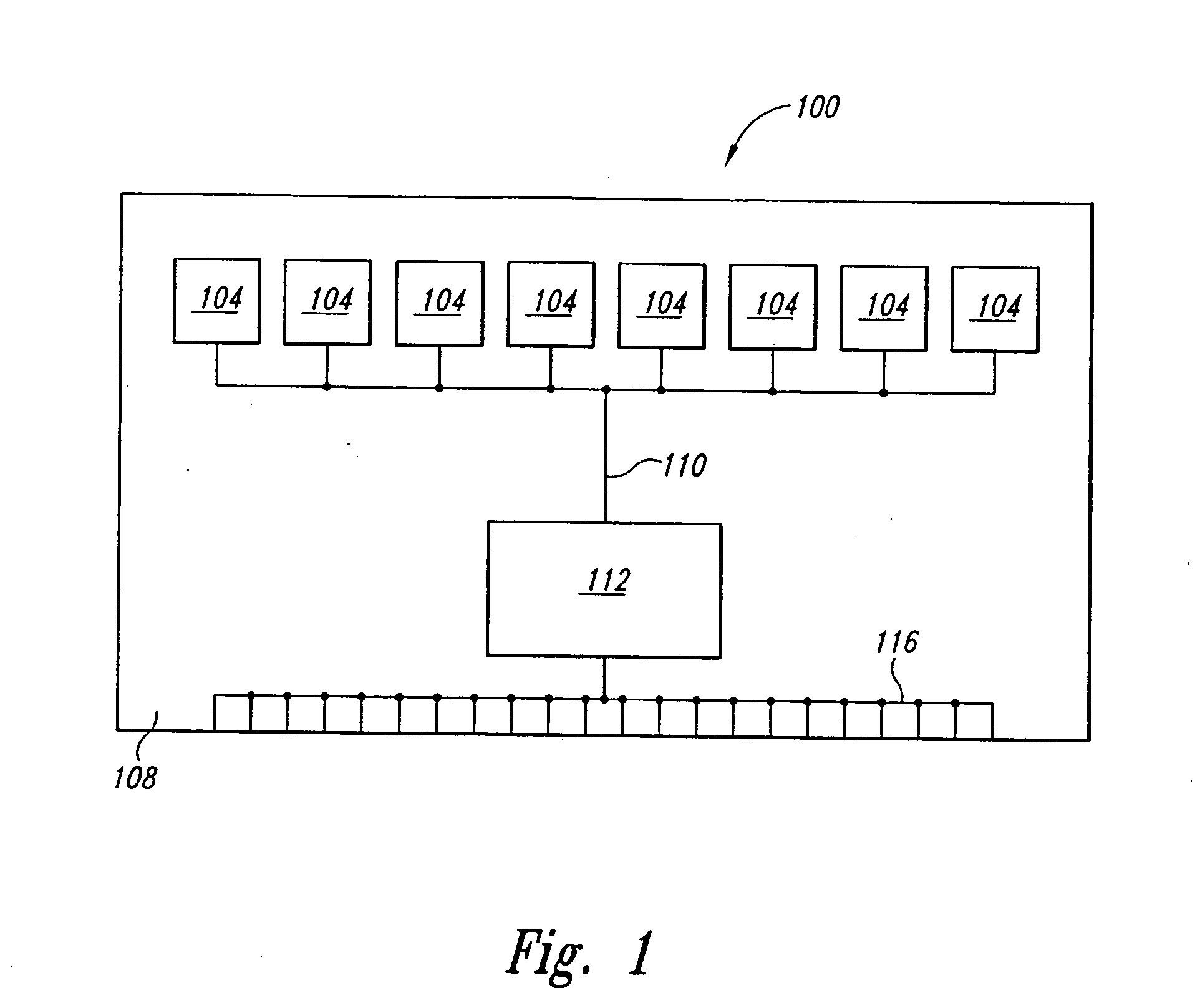

Arbitration system having a packet memory and method for memory responses in a hub-based memory system

A memory hub module includes a decoder that receives memory requests and determines a memory request identifier associated with each memory request. A packet memory receives memory request identifiers and stores the memory request identifiers. A packet tracker receives remote memory responses, associates each remote memory response with a memory request identifier, and removes the memory request identifier from the packet memory. A multiplexor receives remote memory responses and local memory responses. The multiplexor selects an output responsive to a control signal. Arbitration control logic is coupled to the multiplexor and the packet memory and develops the control signal to select a memory response for output.

Owner:MICRON TECH INC

Two-stage request protocol for accessing remote memory data in a NUMA data processing system

Inactive | US6615322B2 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Computerized system

A non-uniform memory access (NUMA) computer system includes a remote node coupled by a node interconnect to a home node having a home system memory. The remote node includes a local interconnect, a processing unit and at least one cache coupled to the local interconnect, and a node controller coupled between the local interconnect and the node interconnect. The processing unit first issues, on the local interconnect, a read-type request targeting data resident in the home system memory with a flag in the read-type request set to a first state to indicate only local servicing of the read-type request. In response to inability to service the read-type request locally in the remote node, the processing unit reissues the read-type request with the flag set to a second state to instruct the node controller to transmit the read-type request to the home node. The node controller, which includes a plurality of queues, preferably does not queue the read-type request until receipt of the reissued read-type request with the flag set to the second state.

Owner:INT BUSINESS MASCH CORP

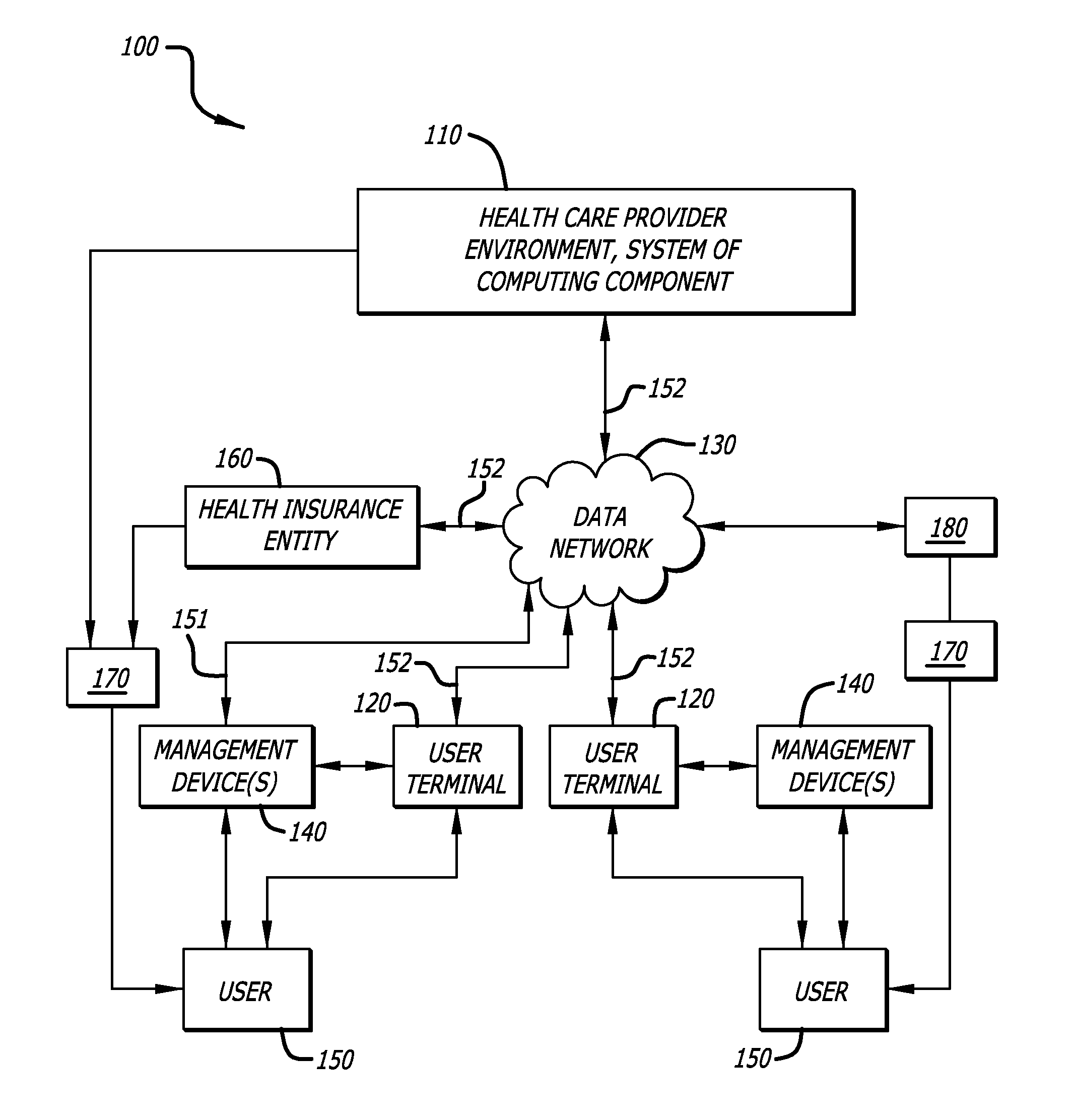

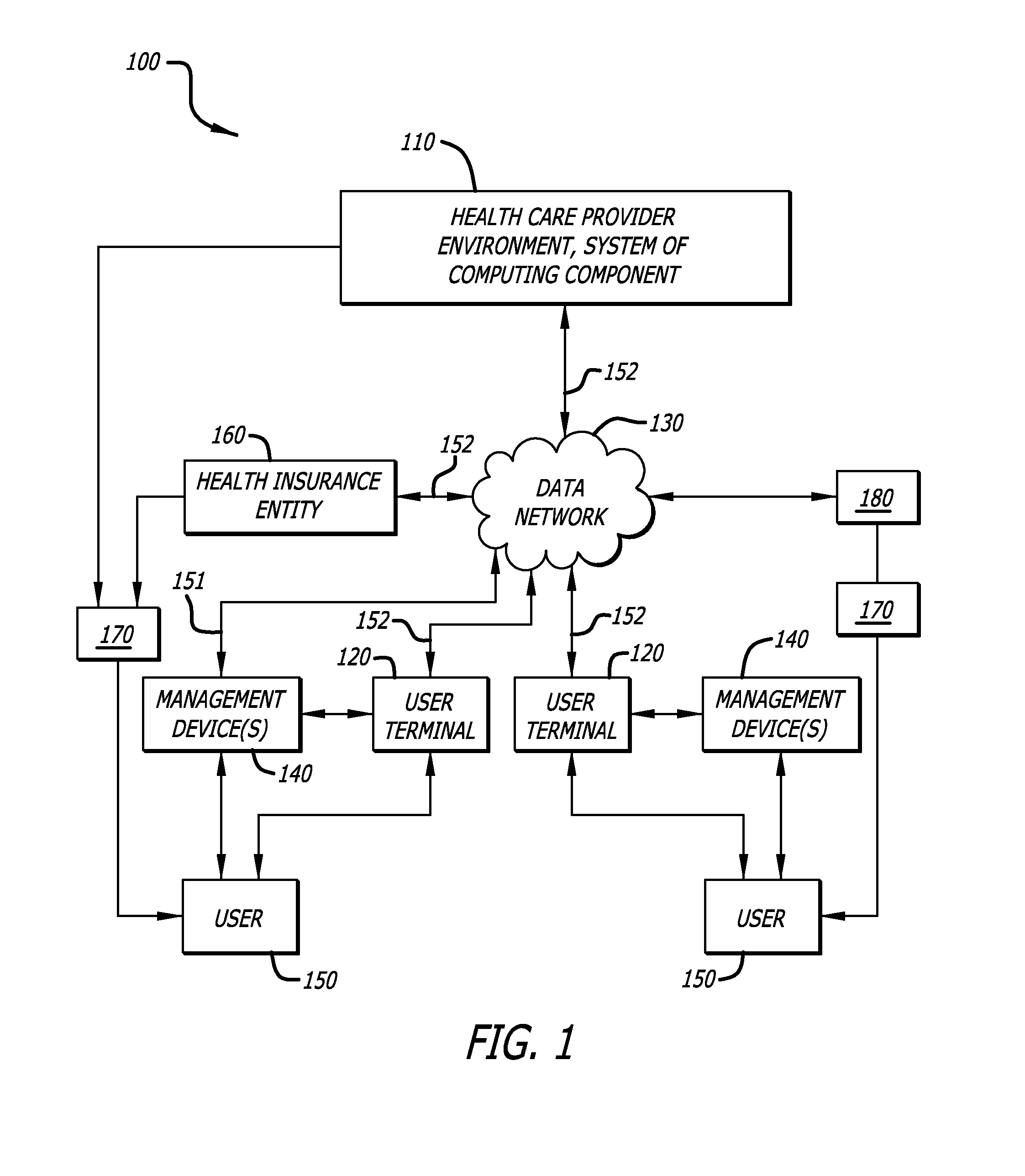

System and method for analysis of medical data to encourage health care management

A system and method analyze the medical data of a patient with a disease-afflicted health condition and take action to encourage the patient to perform wellness-enhancing activities and to take and report medical data more frequently. Consideration in the form of reduced insurance costs, medical supply costs, and medical equipment costs is given to patients who comply. Health care providers (HCPs) are advised that analysis of the patient's medical data is reimbursable and are also encouraged to perform such analyses through rewards. Patient data may be stored in a remote memory site and accessed by HCPs for analysis, and proprietary software may be used to communicate directly with insurance companies or other medical benefit entities.

Owner:ABBOTT DIABETES CARE INC

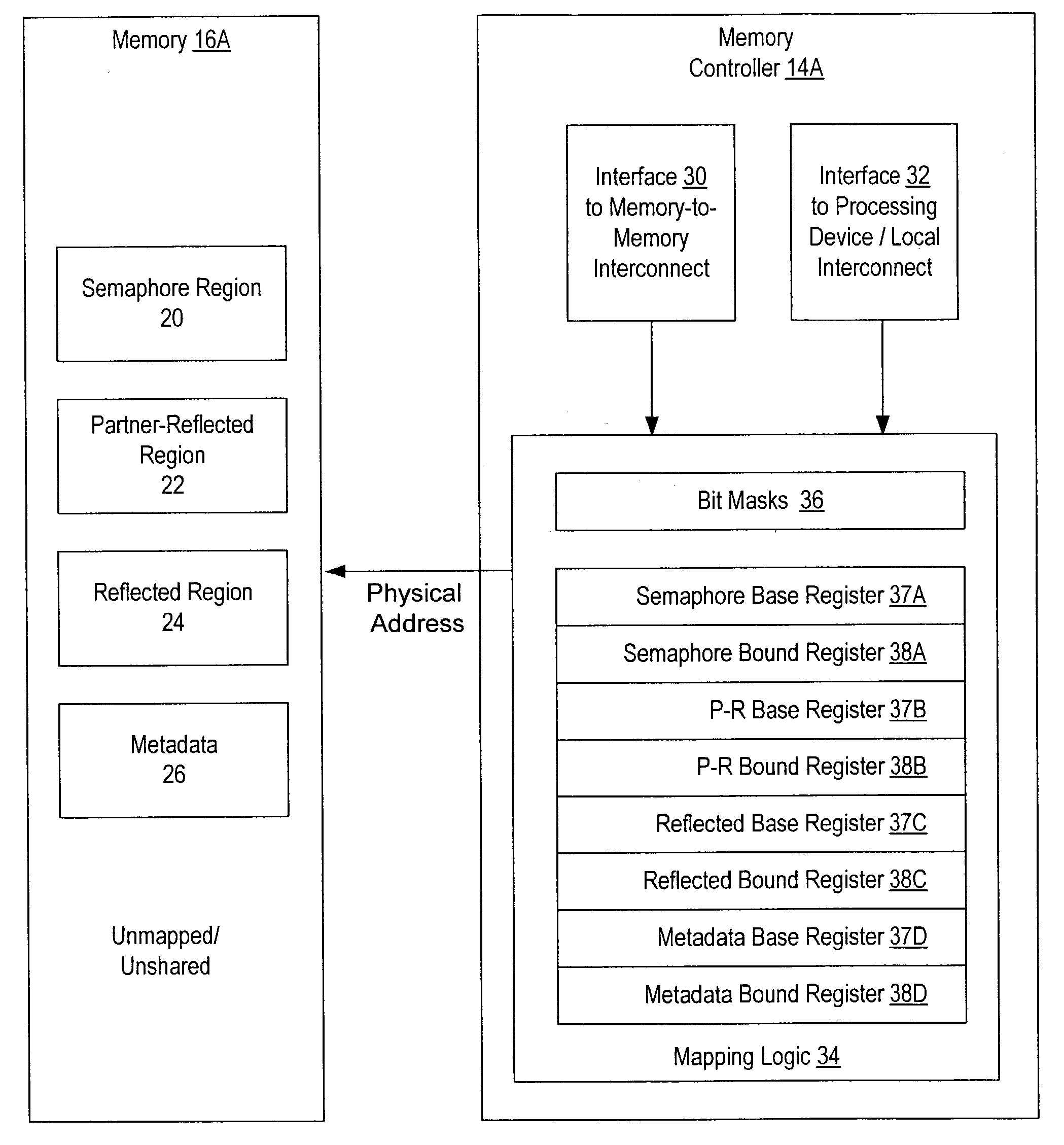

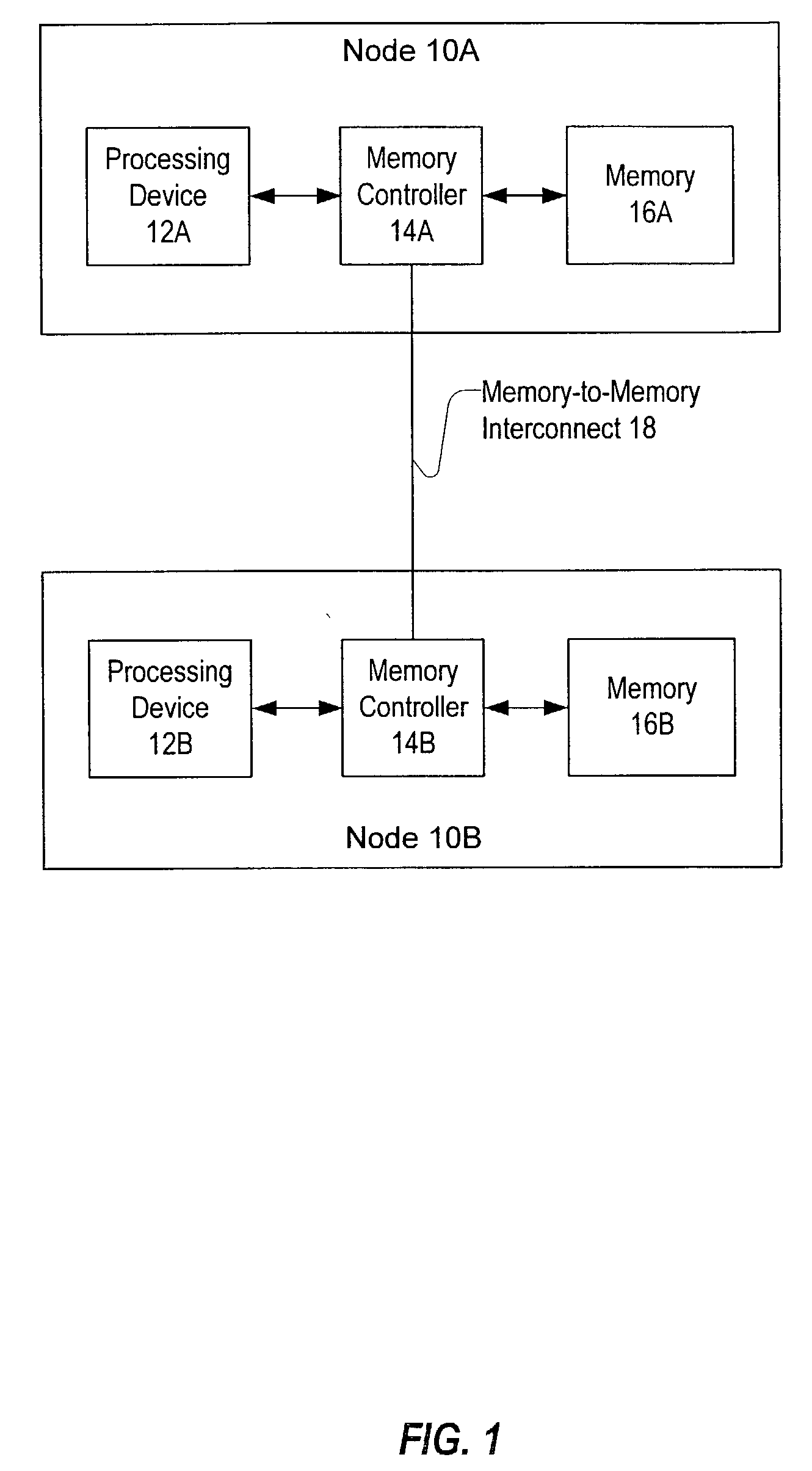

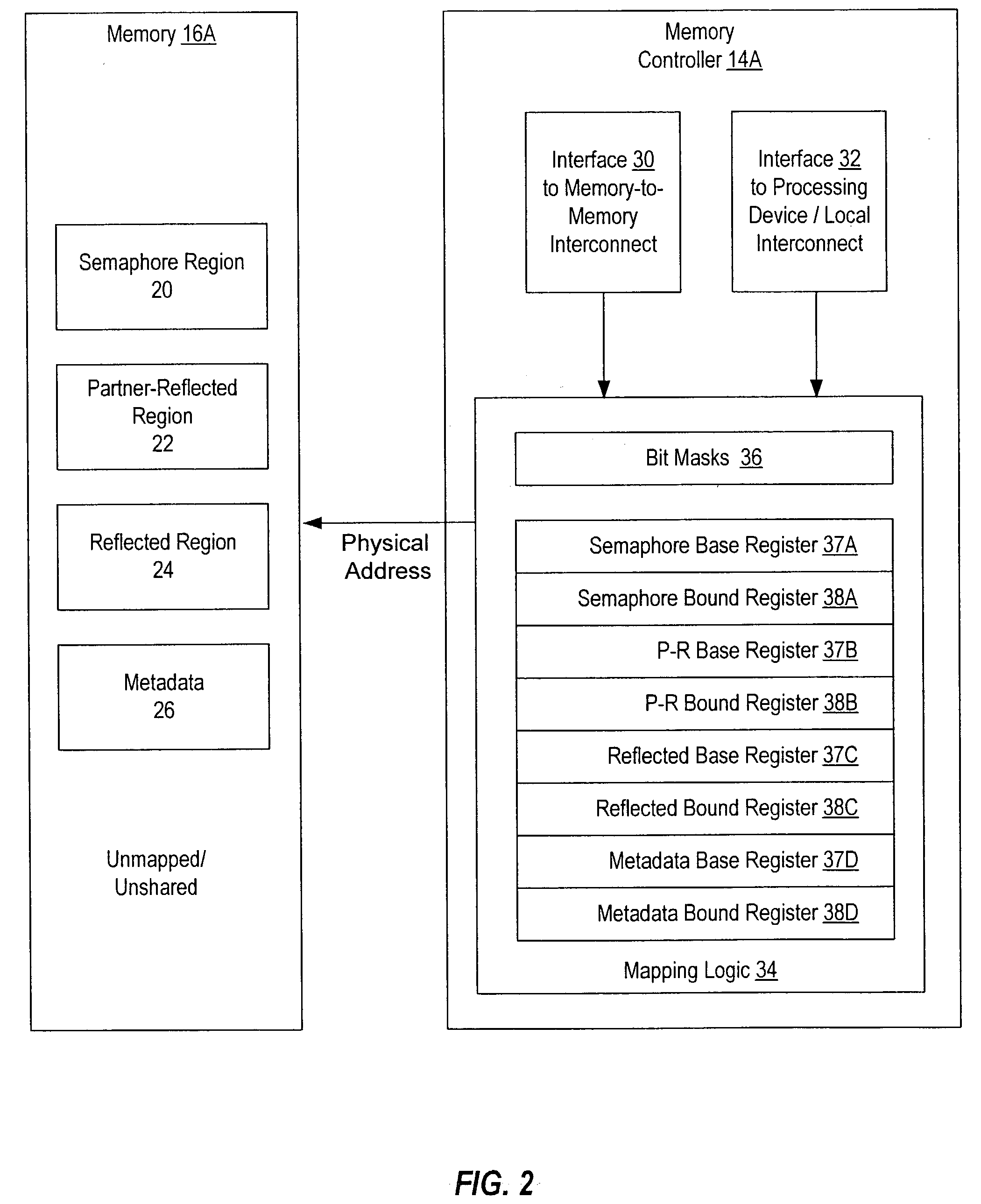

System and method for sharing memory among multiple storage device controllers

Inactive | US20040117562A1 | Memory addressing/allocation/relocation | Digital computer details | Remote memory access | Network Communication Protocols

Each node's memory controller may be configured to send and receive messages on a dedicated memory-to-memory interconnect according to the communication protocol and to responsively perform memory accesses in a local memory. The type of message sent on the interconnect may depend on which memory region is targeted by a memory access request local to the sending node. If certain regions are targeted locally, a memory controller may delay performance of a local memory access until the memory access has been performed remotely. Remote nodes may confirm performance of the remote memory accesses via the memory-to-memory interconnect.

Owner:ORACLE INT CORP
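
The ordering rule in this abstract (for certain regions, complete the remote access first, then the local one) can be illustrated with a synchronous toy model. This is a sketch under stated assumptions: `MemoryController`, `shared_range`, and `handle_remote_write` are hypothetical names, direct method calls stand in for messages on the memory-to-memory interconnect, and the abstract does not specify the actual protocol.

```python
class MemoryController:
    """Toy memory controller; peers model nodes on a memory-to-memory interconnect."""

    def __init__(self, name, shared_range):
        self.name = name
        self.memory = {}                  # local memory: address -> value
        self.peers = []
        self.shared_range = shared_range  # addresses that must also be written remotely
        self.log = []                     # records the order of operations

    def connect(self, other):
        self.peers.append(other)
        other.peers.append(self)

    def handle_remote_write(self, addr, value):
        # Perform the access in local memory on behalf of a remote node,
        # then confirm it (the return value models the confirmation message).
        self.memory[addr] = value
        return True

    def write(self, addr, value):
        if addr in self.shared_range:
            # Targeted region is shared: delay the local write until every
            # remote node has confirmed its own write.
            acks = [peer.handle_remote_write(addr, value) for peer in self.peers]
            if not all(acks):
                raise RuntimeError("remote write was not confirmed")
            self.log.append(("remote_confirmed", addr))
        self.memory[addr] = value
        self.log.append(("local_write", addr))
```

A write outside `shared_range` skips the remote step entirely, which mirrors the abstract's statement that the message type depends on the targeted memory region.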

Non-uniform memory access (NUMA) data processing system having remote memory cache incorporated within system memory

A non-uniform memory access (NUMA) computer system and associated method of operation are disclosed. The NUMA computer system includes at least a remote node and a home node coupled to an interconnect. The remote node contains at least one processing unit coupled to a remote system memory, and the home node contains at least a home system memory. To reduce access latency for data from other nodes, a portion of the remote system memory is allocated as a remote memory cache containing data corresponding to data resident in the home system memory. In one embodiment, access bandwidth to the remote memory cache is increased by distributing the remote memory cache across multiple system memories in the remote node.

Owner:IBM CORP
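
A minimal sketch of the idea in this abstract: part of the remote node's system memory acts as a cache for home-node data, and the cache is spread across several system memories. The address-interleaving scheme, the FIFO eviction, and all names (`RemoteMemoryCache`, `banks`, `lines_per_memory`) are assumptions made for illustration and are not taken from the patent.

```python
class RemoteMemoryCache:
    """Toy remote memory cache carved out of several system memories."""

    def __init__(self, home_memory, num_system_memories=2, lines_per_memory=4):
        self.home = home_memory  # stands in for the home system memory
        # One cache slice per system memory in the remote node.
        self.banks = [dict() for _ in range(num_system_memories)]
        self.lines_per_memory = lines_per_memory
        self.hits = 0
        self.misses = 0

    def _bank(self, address):
        # Assumed distribution: interleave addresses across system memories.
        return self.banks[address % len(self.banks)]

    def read(self, address):
        bank = self._bank(address)
        if address in bank:
            self.hits += 1       # serviced from the remote node's own memory
            return bank[address]
        self.misses += 1         # must fetch from the home node
        value = self.home[address]
        if len(bank) >= self.lines_per_memory:
            # Assumed FIFO eviction: dicts preserve insertion order.
            bank.pop(next(iter(bank)))
        bank[address] = value
        return value
```

Because consecutive addresses land in different slices, reads to neighboring lines can be served by different system memories, which is one way to picture the bandwidth benefit the abstract mentions.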

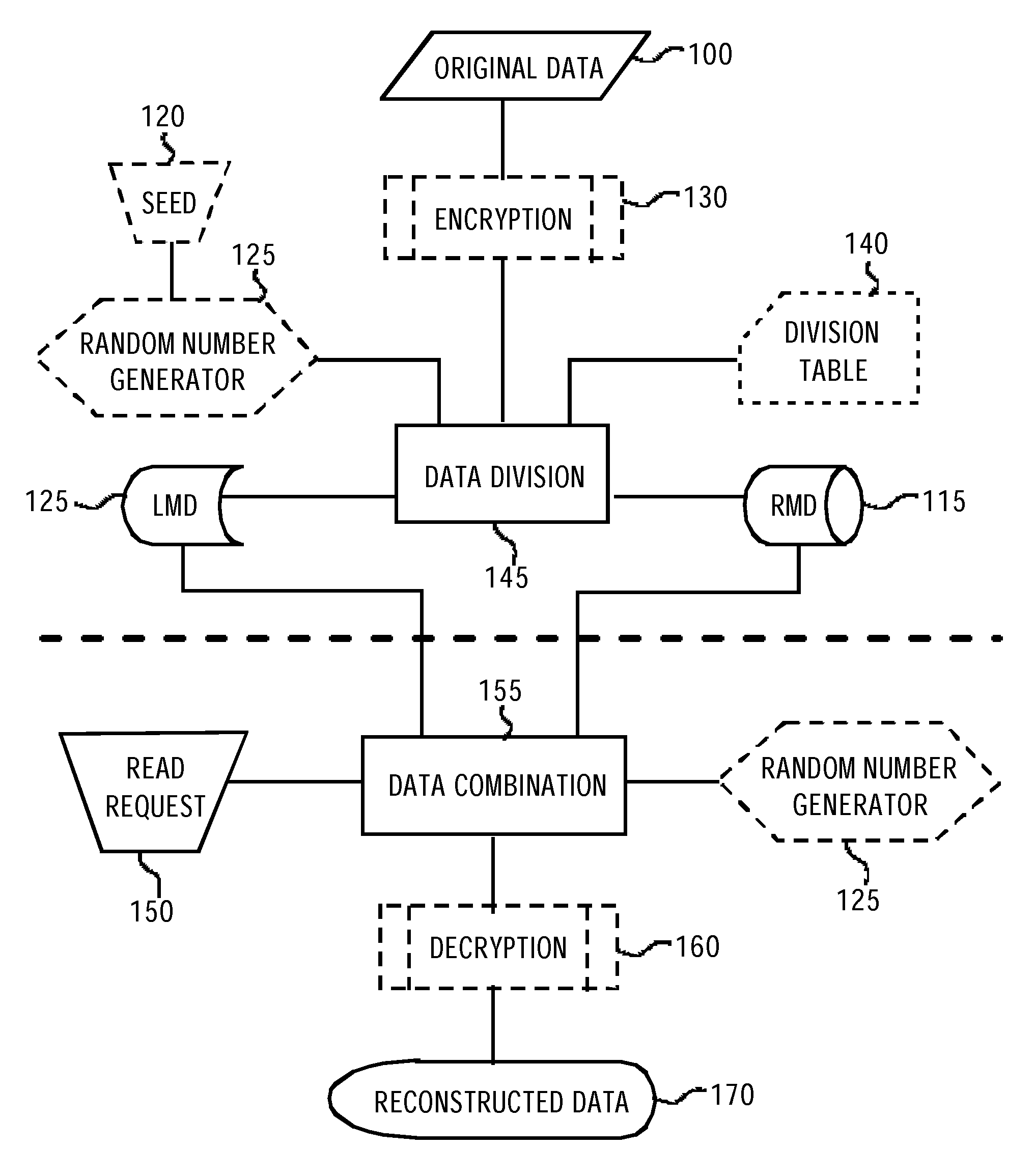

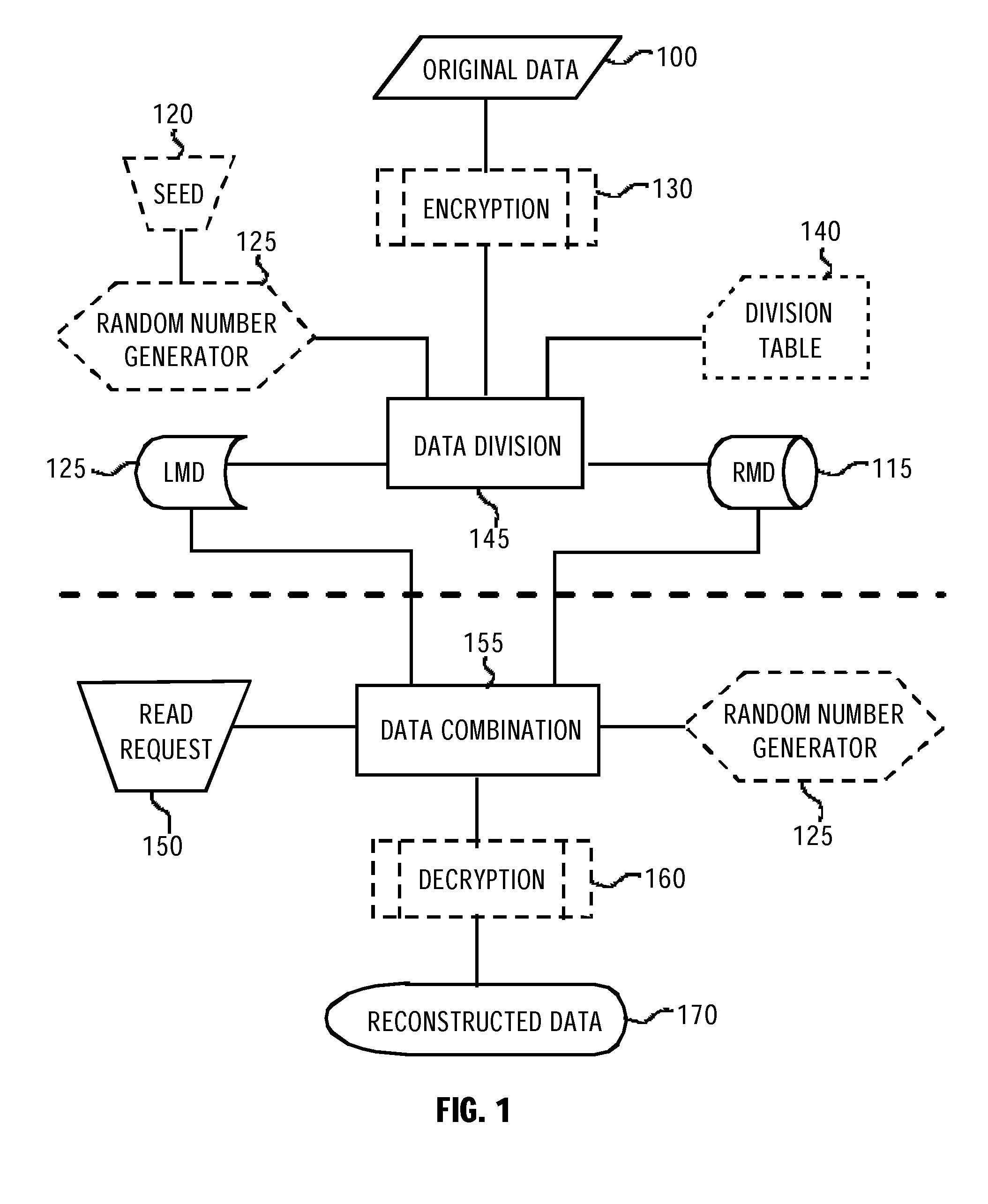

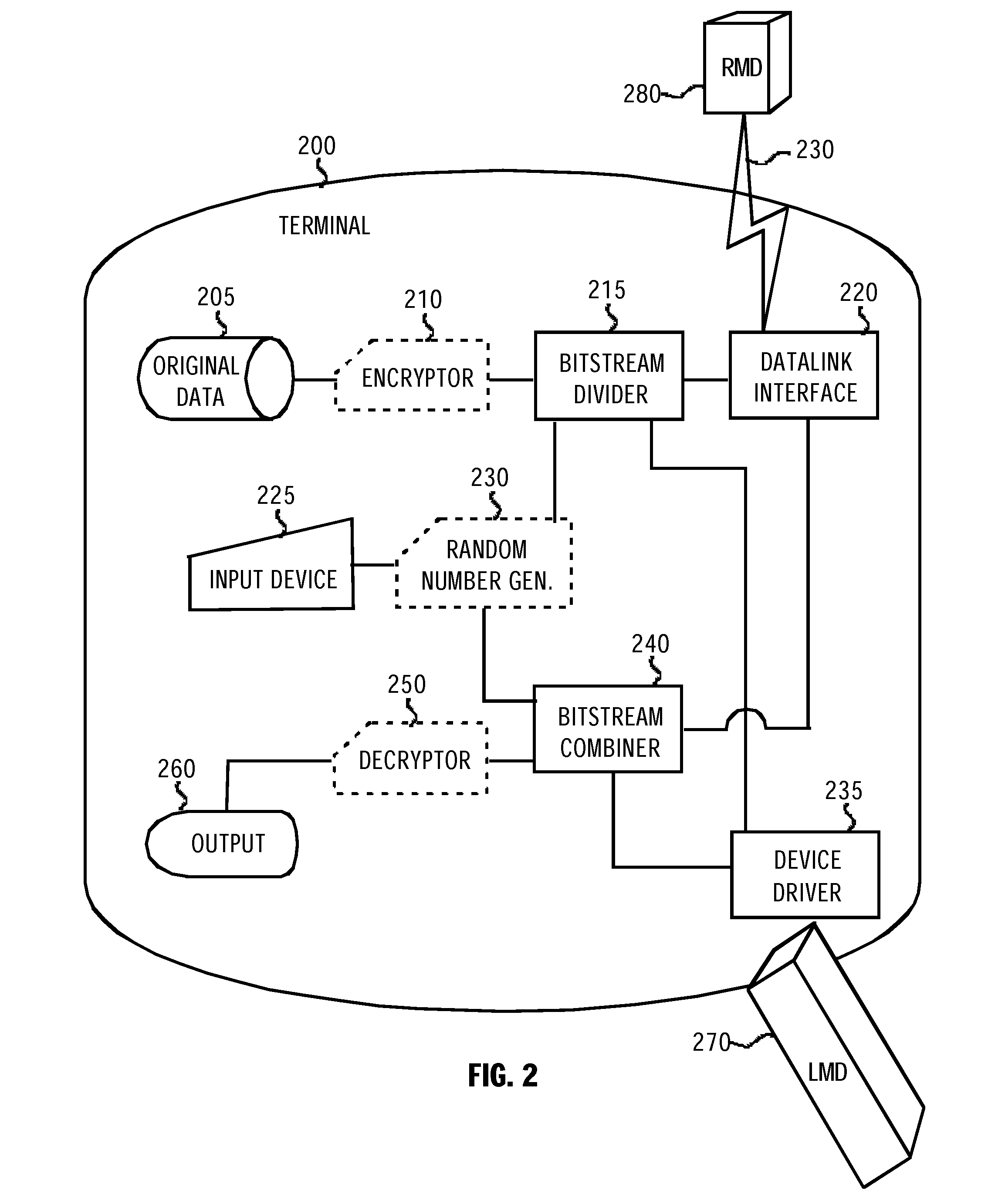

Method and Apparatus for Securing Data in a Memory Device

Inactive | US20120300931A1 | Unauthorized memory use protection | Hardware monitoring | Original data | Computer terminal

A method and a terminal for securing information in a local memory device that can be coupled to a terminal having a data link interface. At the terminal, the method divides original data into a first portion and a second portion. The method stores the first portion in the local memory device and sends the second portion for storage in a remote memory device. Upon obtaining an authorized read request targeting the original data, the method retrieves the second portion and combines the two portions. The method provides high data security if the data is encrypted prior to the dividing step. Another aspect of the invention comprises a terminal capable of at least combining the first and second data portions to reconstruct the original data, and preferably of performing the dividing step as well. The data may or may not be encrypted.

Owner:SPLITSTREEM
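
The divide, store, and recombine steps in this abstract can be sketched as follows. The abstract does not say how the data is divided, so the byte-interleaving split here is purely an assumption, as are the names `divide`, `combine`, and `Terminal`. Dictionaries stand in for the local and remote memory devices, and the optional encryption step is omitted.

```python
def divide(data: bytes):
    """Split data into two portions (assumed scheme: even and odd byte positions)."""
    return data[0::2], data[1::2]


def combine(first: bytes, second: bytes) -> bytes:
    """Reverse divide() by interleaving the two portions."""
    out = bytearray(len(first) + len(second))
    out[0::2] = first
    out[1::2] = second
    return bytes(out)


class Terminal:
    """Toy terminal that keeps one portion locally and sends the other away."""

    def __init__(self, local_store: dict, remote_store: dict):
        self.local = local_store    # stands in for the local memory device
        self.remote = remote_store  # stands in for the remote memory device

    def secure_write(self, key: str, data: bytes) -> None:
        first, second = divide(data)
        self.local[key] = first
        self.remote[key] = second   # in practice sent over the data link interface

    def read(self, key: str, authorized: bool) -> bytes:
        # Only an authorized read request triggers retrieval and recombination.
        if not authorized:
            raise PermissionError("read request is not authorized")
        return combine(self.local[key], self.remote[key])
```

Neither store alone holds the original data, so losing the local device exposes only one portion; encrypting before dividing, as the abstract suggests, would make a single portion less useful still.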

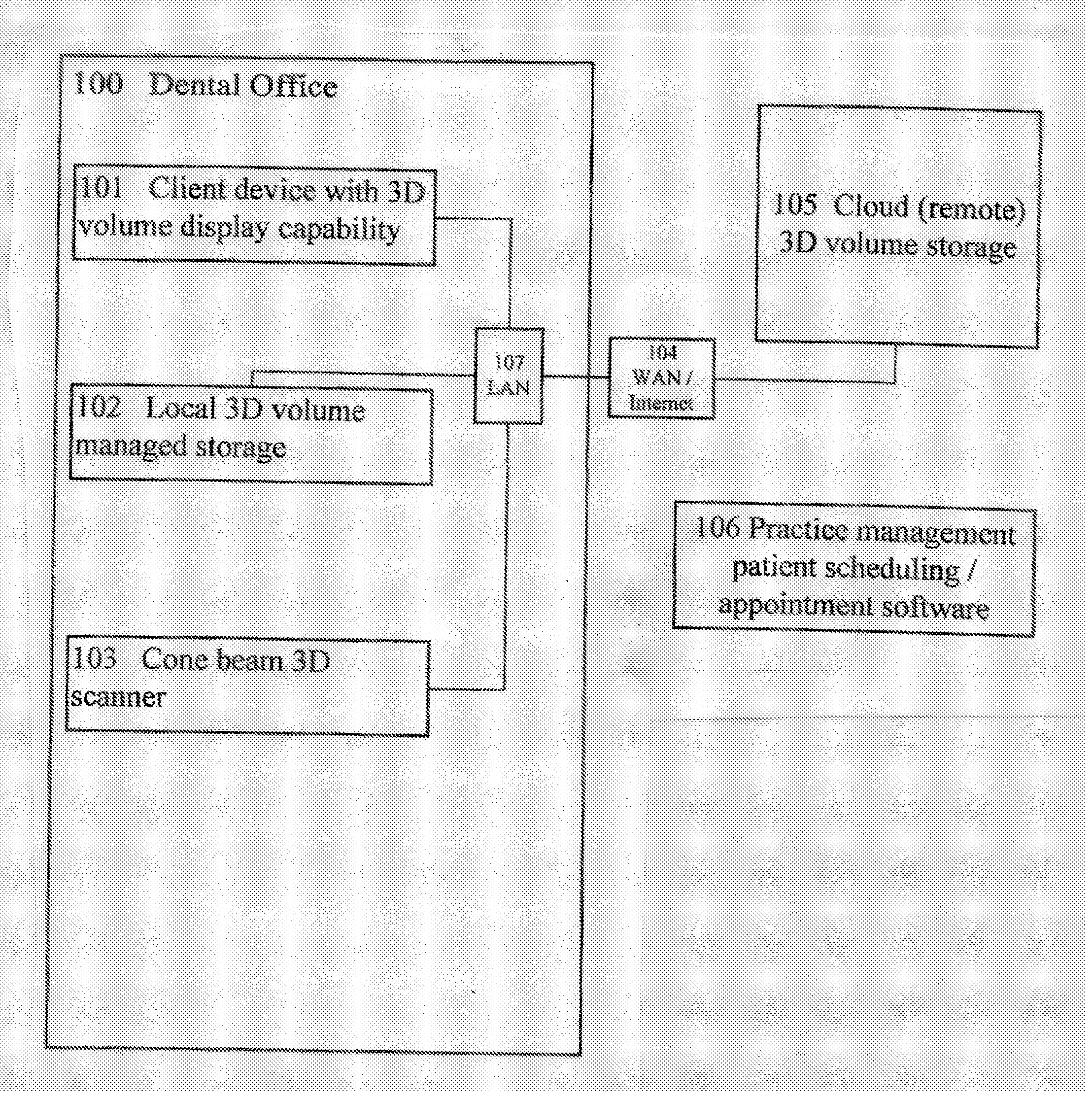

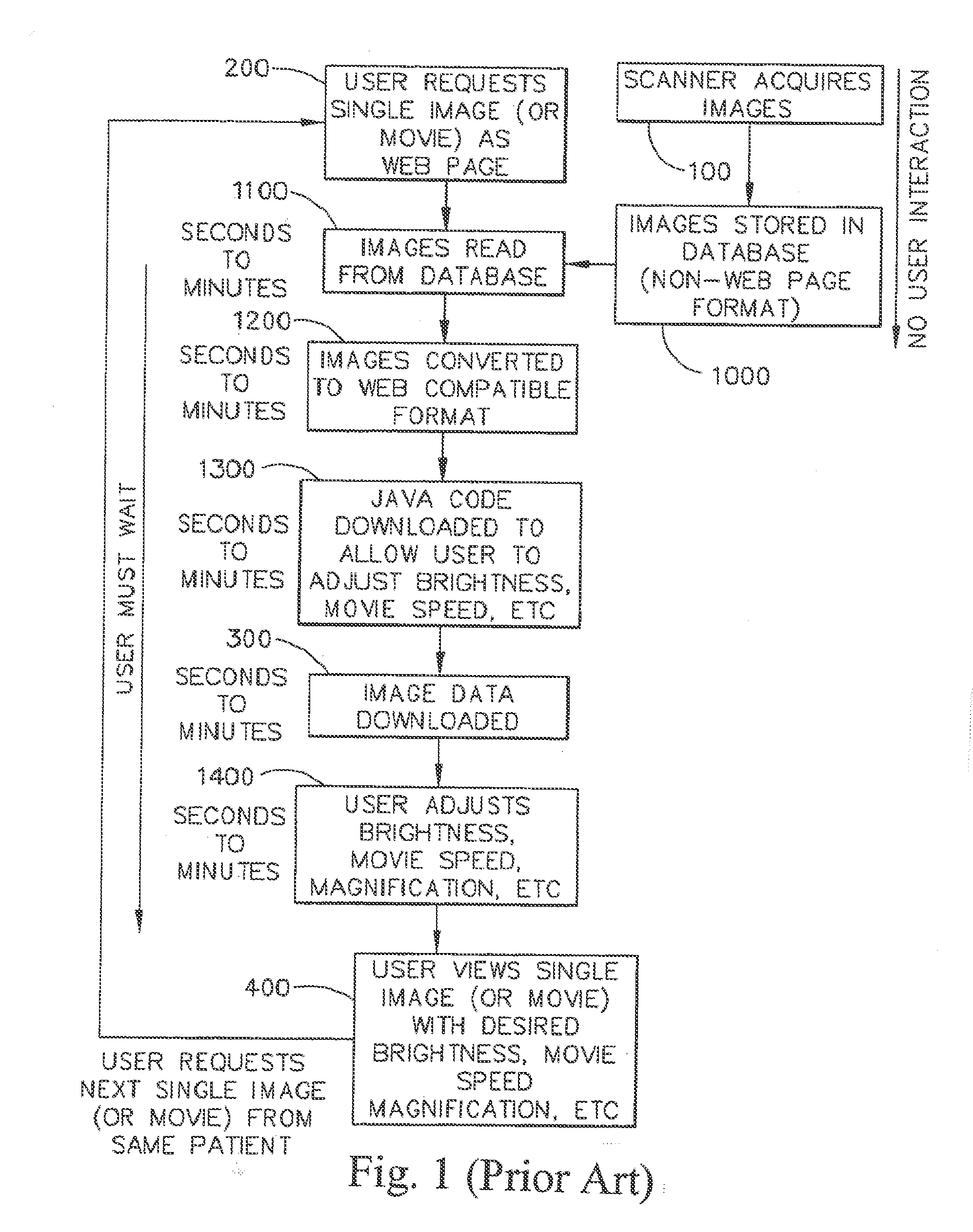

3D cone beam dental imaging system

A 3D dental imaging system produces 3D volumes of a patient and transfers them to and from a remote cloud storage device. It includes 3D volume imaging software for displaying cone beam images; the software retrieves volumes from storage located in the dental office. The 3D volumes are acquired via a cone beam scanner located in the dental office and can be stored both locally, in the dental office, and remotely, away from it. The 3D volumes are transferred between the local storage and the remote storage. Information about a patient's future appointments is retrieved from patient scheduling software, and the 3D volumes are automatically downloaded from remote storage to local storage based on that appointment information.

Owner:GOLAY DOUGLAS A
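
The appointment-driven download described in this abstract can be pictured with a short sketch. Everything here is hypothetical: the function name `prefetch_volumes`, the `lookahead_days` window, and the use of dictionaries keyed by patient ID for the local and remote stores are assumptions, since the abstract gives no such details.

```python
from datetime import date, timedelta


def prefetch_volumes(appointments, local_store, remote_store, today, lookahead_days=1):
    """Copy 3D volumes for upcoming appointments from remote to local storage.

    appointments: iterable of (patient_id, appointment_date) pairs, as they
    might be read from scheduling software. Returns the patient IDs downloaded.
    """
    cutoff = today + timedelta(days=lookahead_days)
    downloaded = []
    for patient_id, appointment_date in appointments:
        upcoming = today <= appointment_date <= cutoff
        if upcoming and patient_id not in local_store and patient_id in remote_store:
            # Stands in for the download from cloud storage to the dental office.
            local_store[patient_id] = remote_store[patient_id]
            downloaded.append(patient_id)
    return downloaded
```

Run once a day, a routine like this would leave the volumes for the next day's patients already on local storage before the imaging software asks for them.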


© 2025 PatSnap. All rights reserved.