331 results for the patented technology "Memory sharing"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

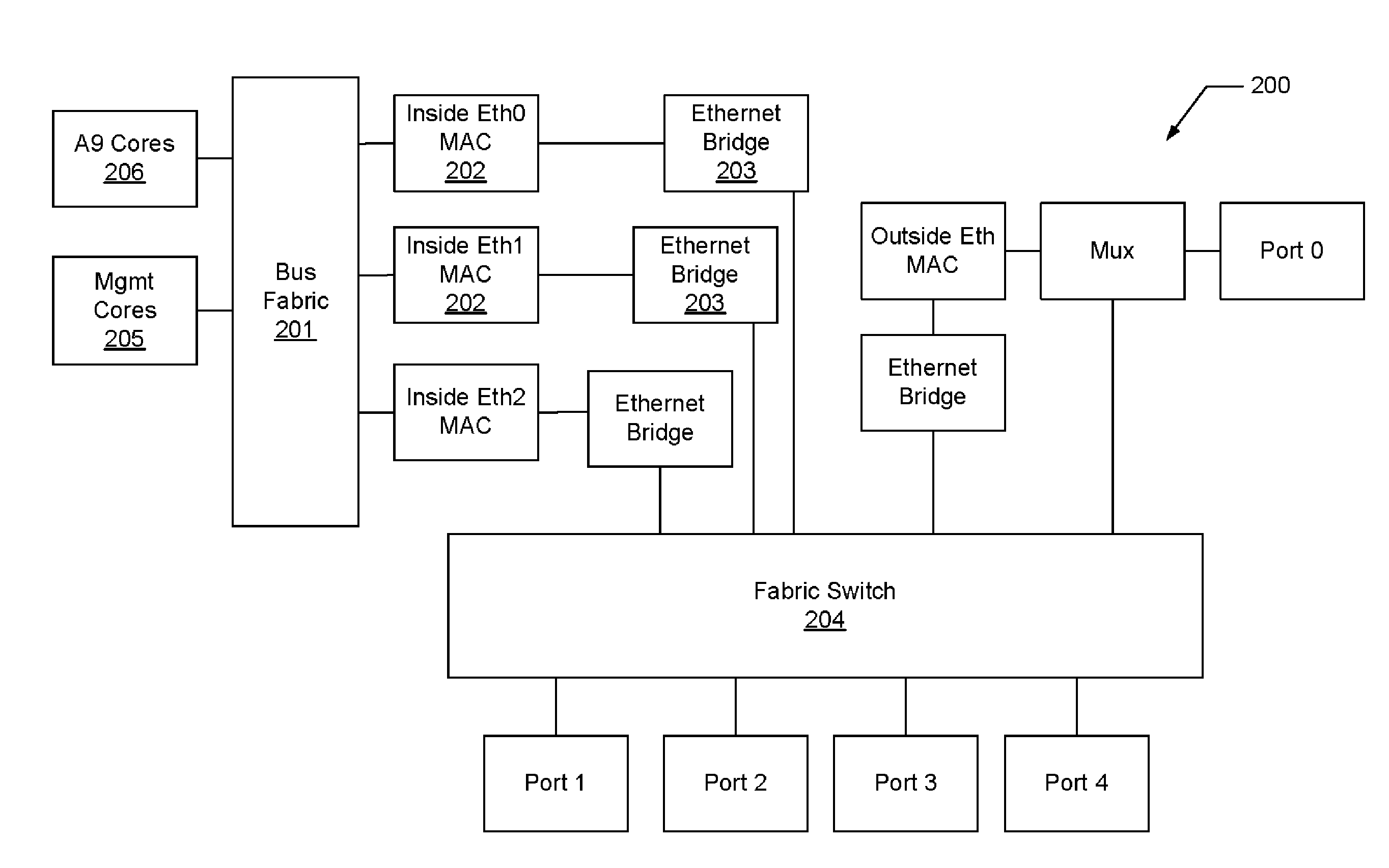

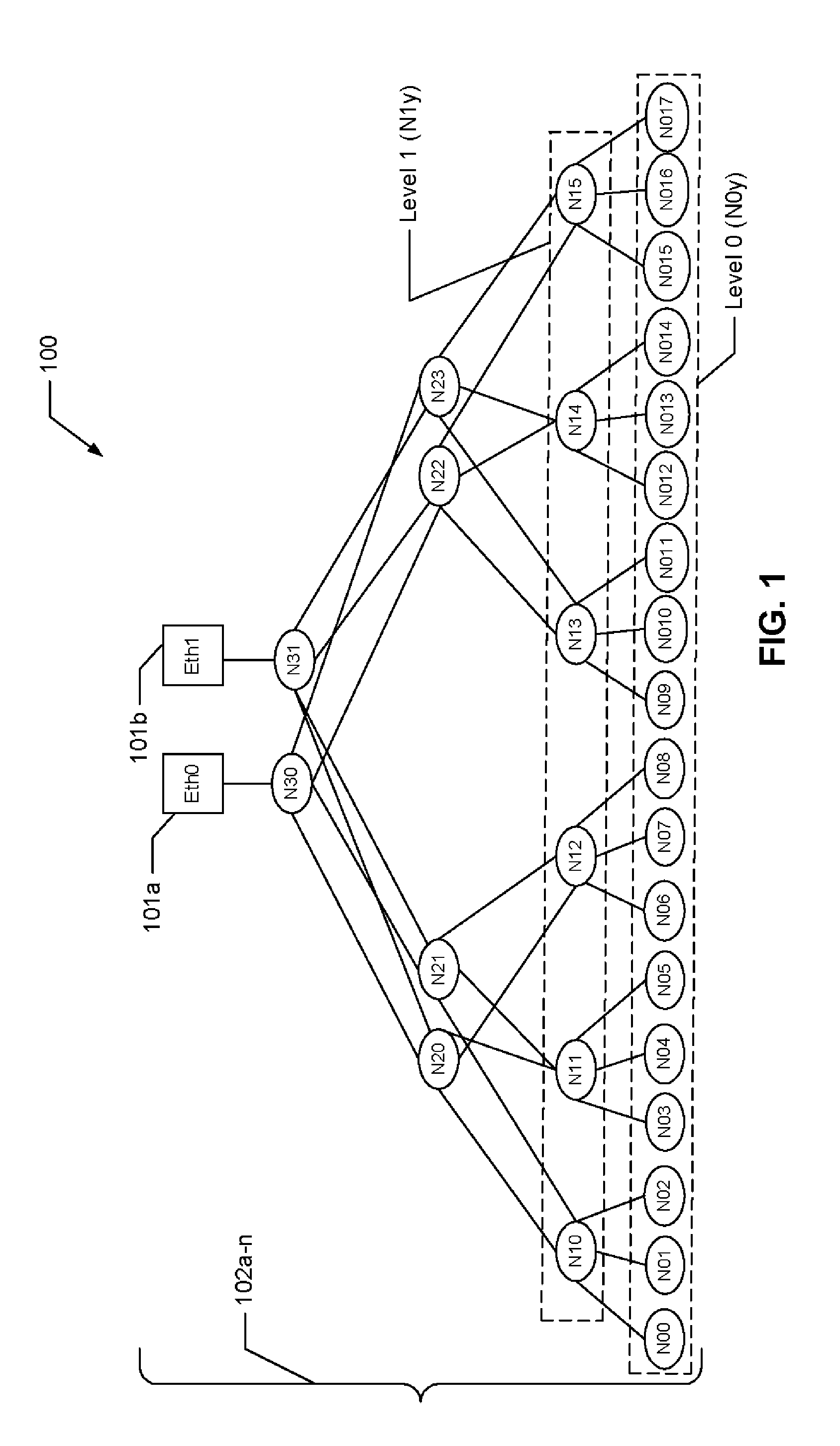

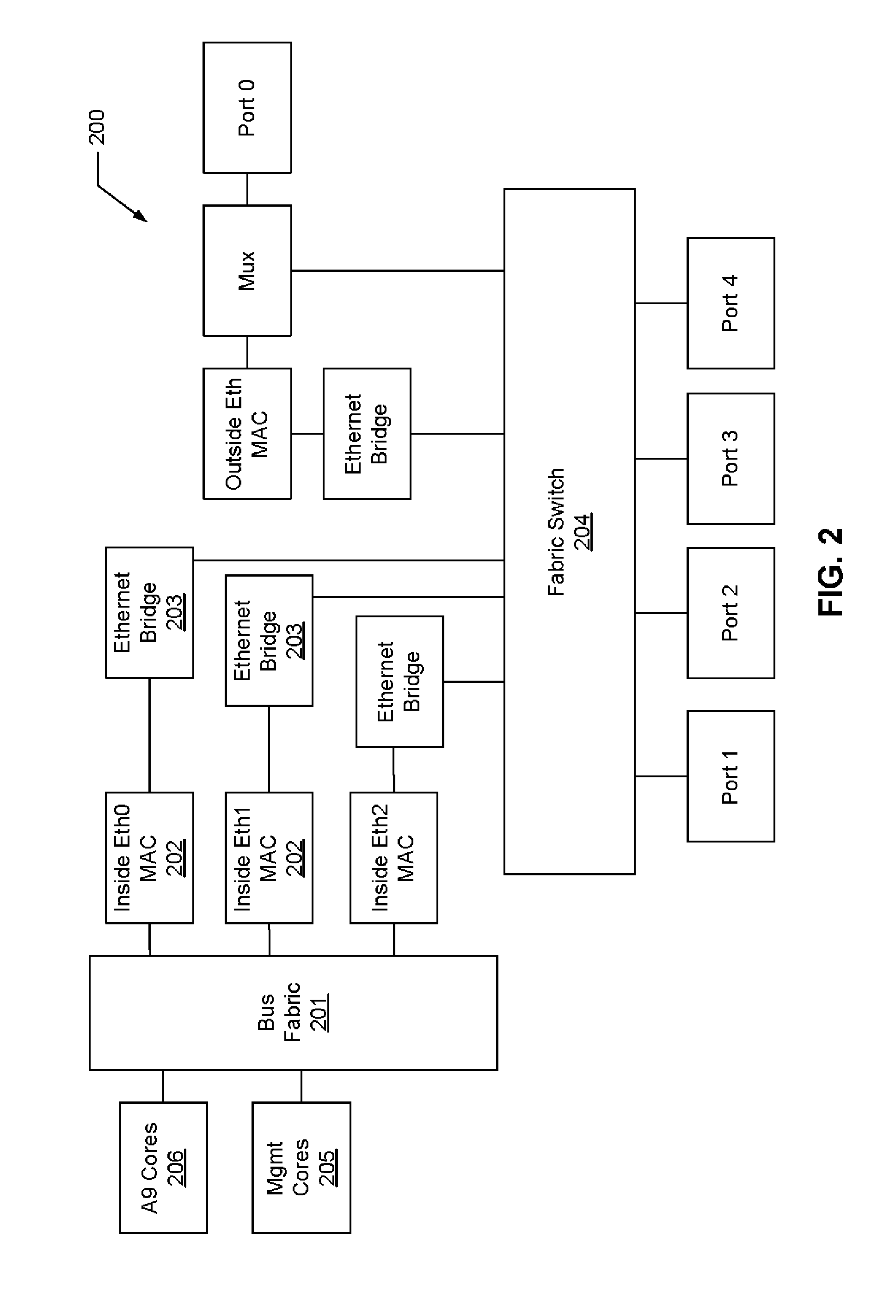

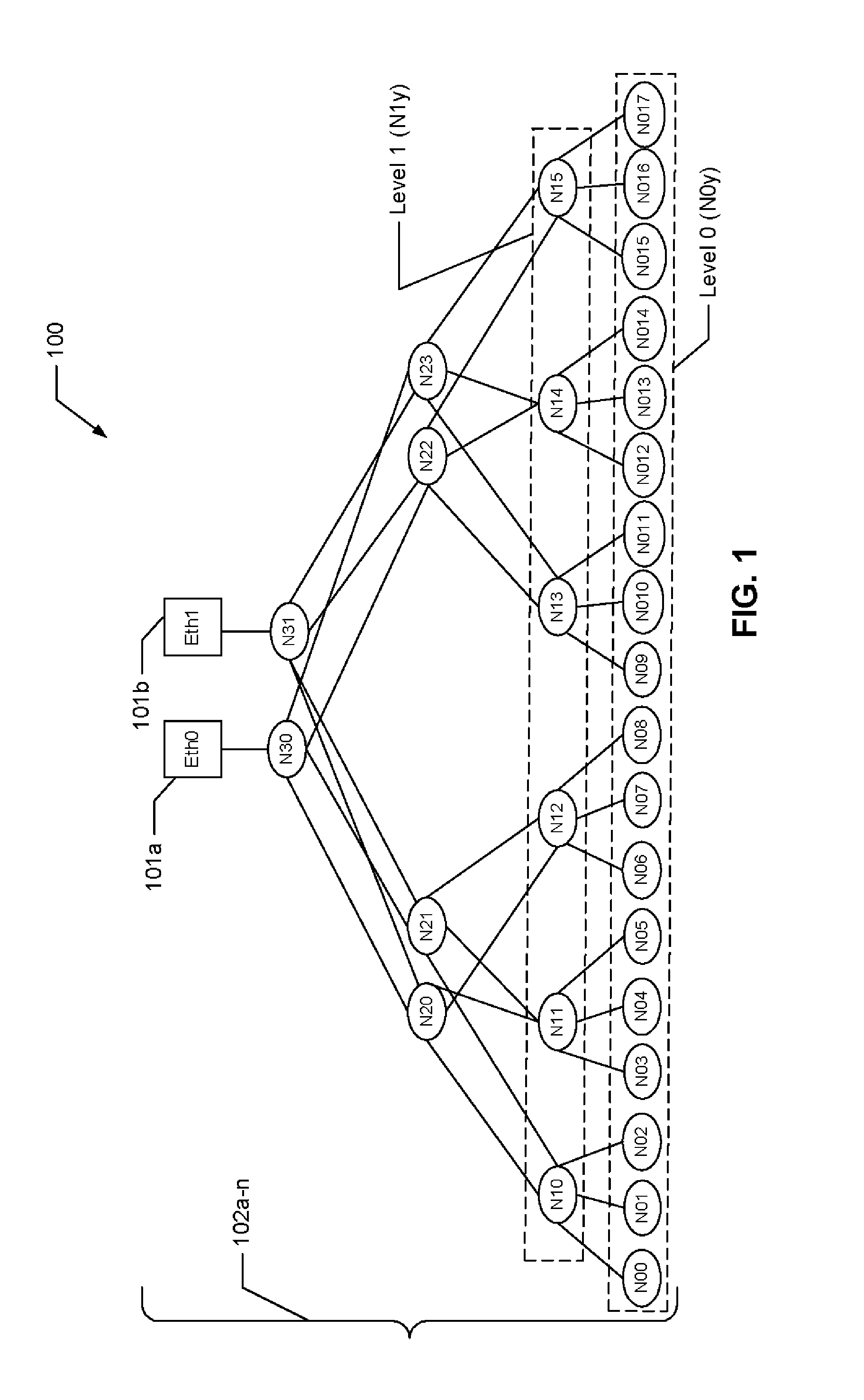

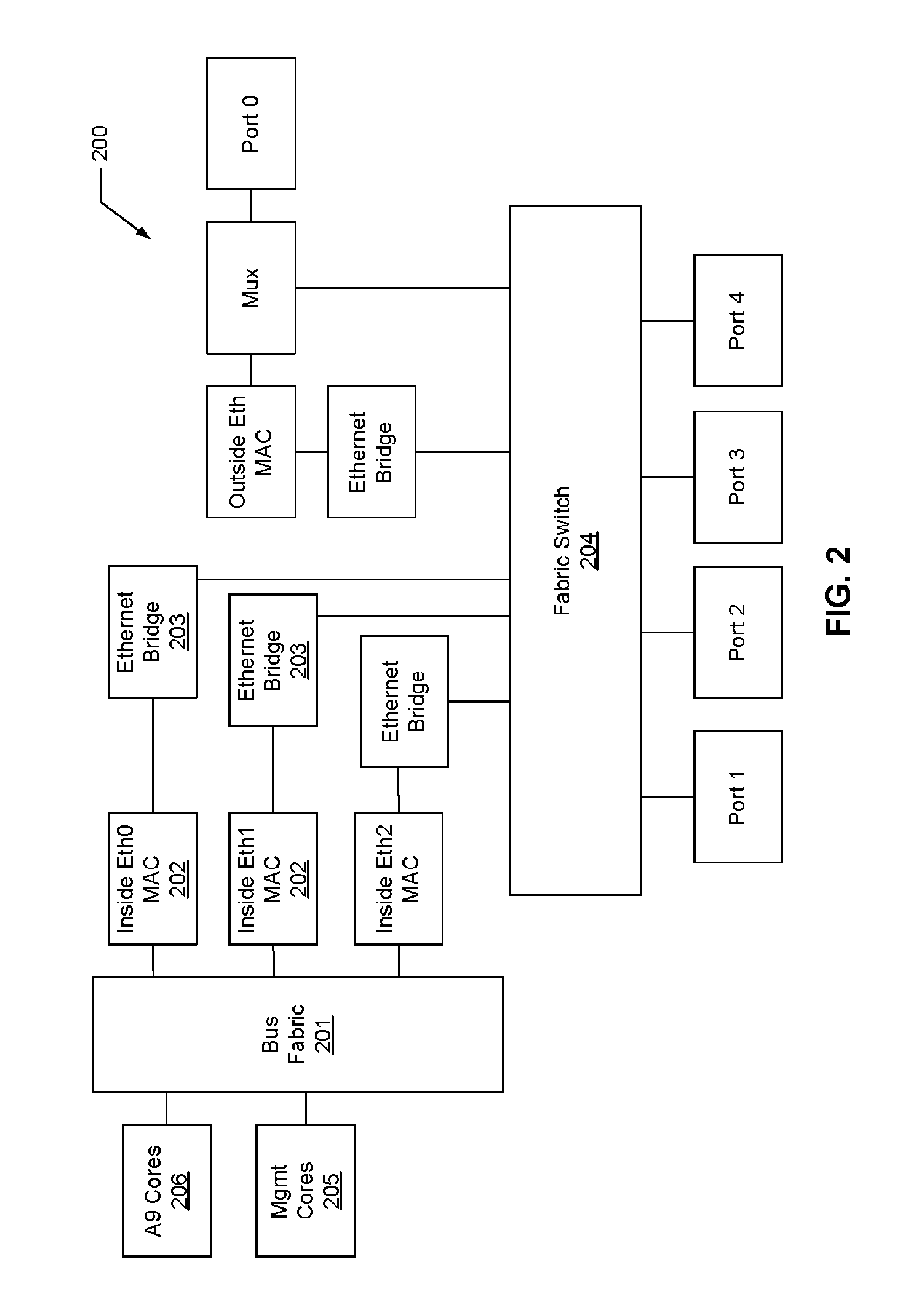

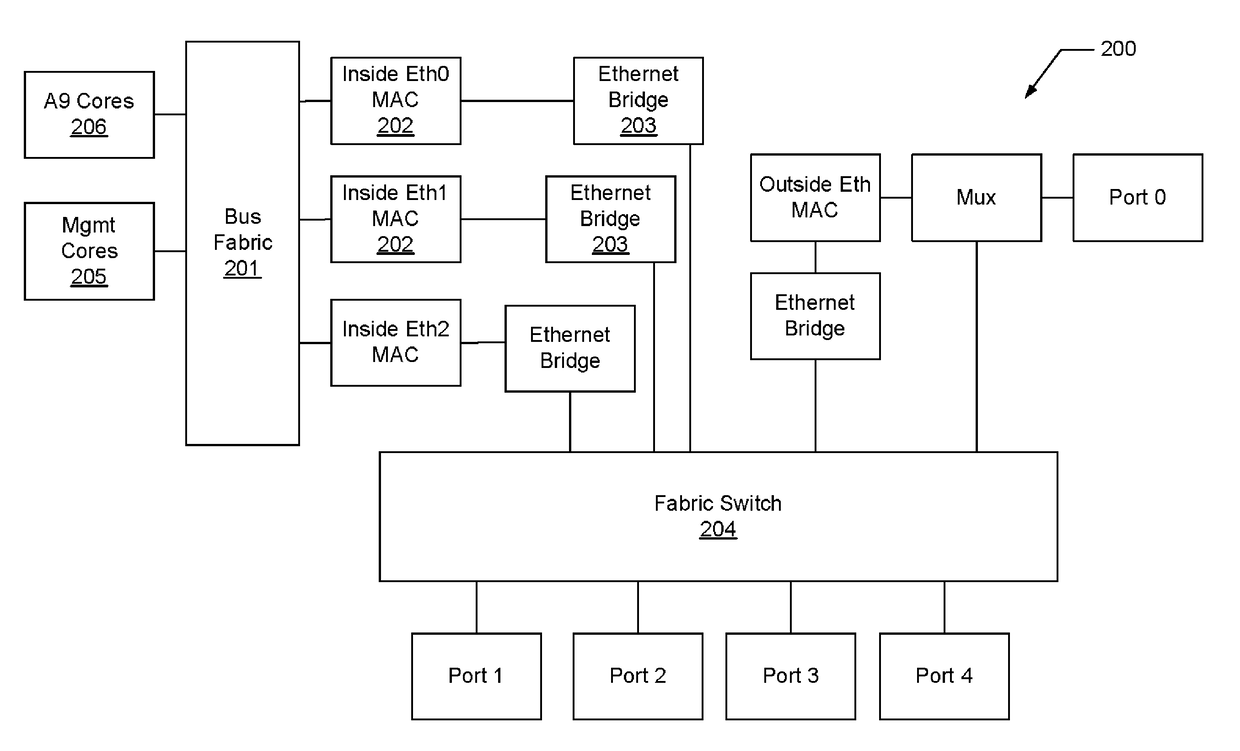

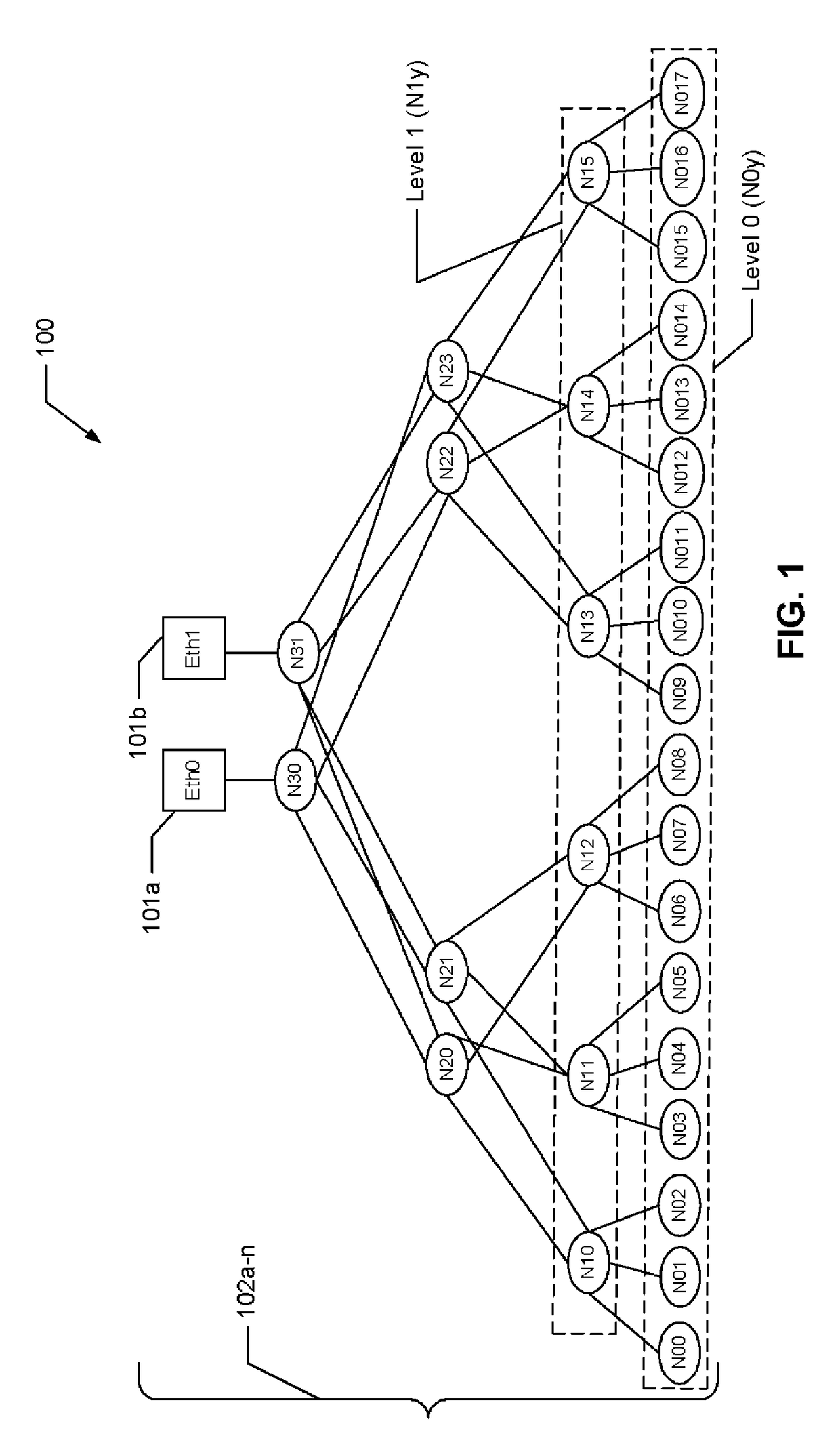

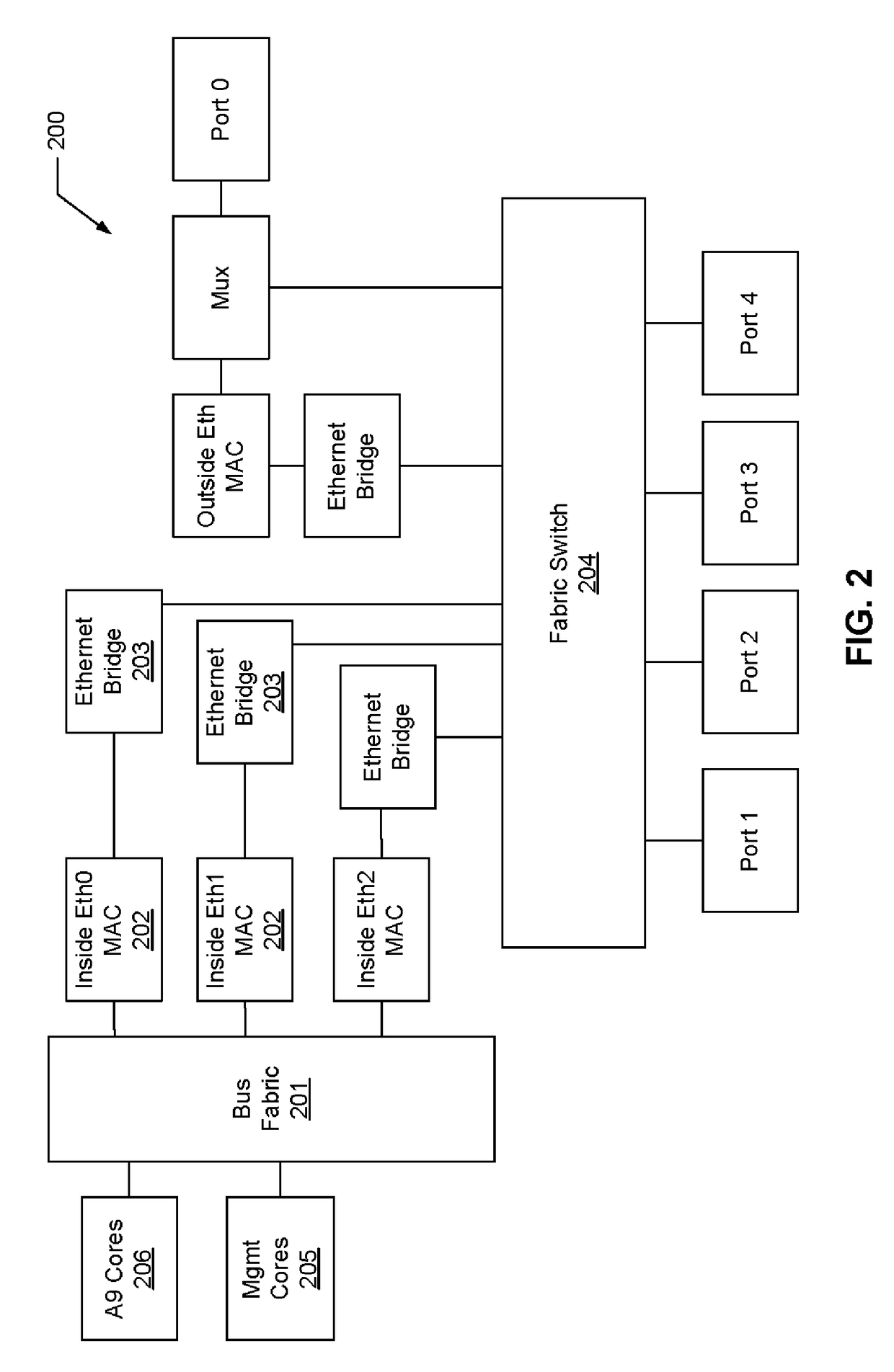

System and method for using a multi-protocol fabric module across a distributed server interconnect fabric

Status: Active | Publication: US8599863B2 | Tags: Extend performance and power optimization and functionality; Digital computer details; Data switching by path configuration; Personalization; Structure of Management Information

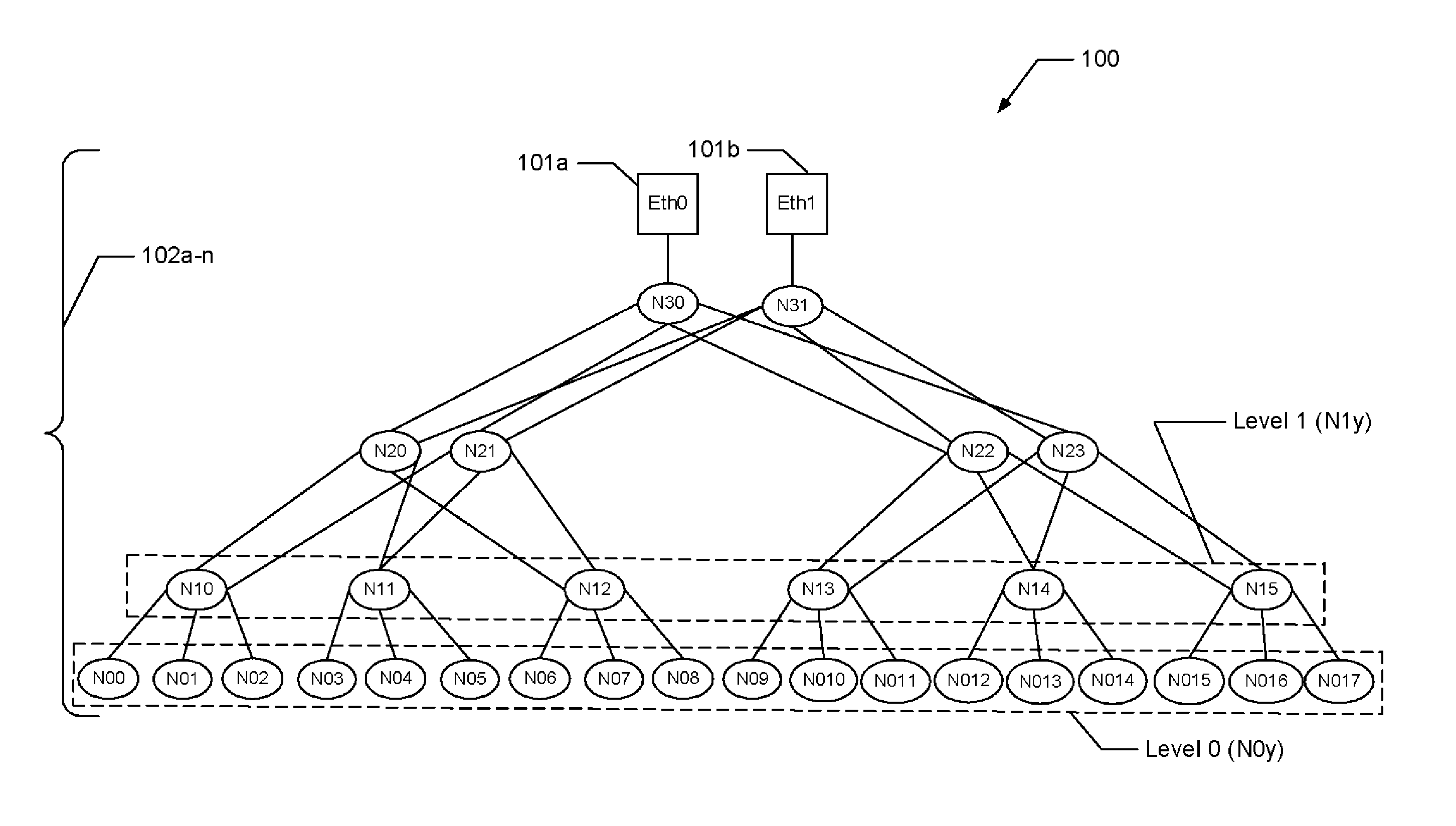

A multi-protocol personality module enabling load/store from remote memory, remote Direct Memory Access (DMA) transactions, and remote interrupts, which permits enhanced performance, power utilization, and functionality. In one form, the module is used as a node in a network fabric: it adds a routing header to packets entering the fabric, maintains the routing header for efficient node-to-node transport, and strips the header when the packet leaves the fabric. In particular, a remote bus personality component is described. Several use cases of the Remote Bus Fabric Personality Module are disclosed: 1) memory sharing across a fabric-connected set of servers; 2) the ability to access physically remote Input/Output (I/O) devices across this fabric of connected servers; and 3) the sharing of such physically remote I/O devices, such as storage peripherals, by multiple fabric-connected servers.

Owner:III HLDG 2
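
The header handling in the abstract above (add a routing header on ingress, keep it for node-to-node transport, strip it on egress) can be pictured with a minimal sketch. The header layout (2-byte destination node, 2-byte source node, 1-byte hop count) and the function names `enter_fabric`, `forward`, and `leave_fabric` are hypothetical, chosen only for illustration; the patent does not specify them.

```python
import struct

# Hypothetical routing header: destination node, source node, hop count.
HEADER = struct.Struct("!HHB")

def enter_fabric(payload: bytes, src: int, dst: int) -> bytes:
    """Ingress: prepend a routing header as the packet enters the fabric."""
    return HEADER.pack(dst, src, 0) + payload

def forward(frame: bytes) -> bytes:
    """Node-to-node hop: the header stays in place; only the hop count changes."""
    dst, src, hops = HEADER.unpack_from(frame)
    return HEADER.pack(dst, src, hops + 1) + frame[HEADER.size:]

def leave_fabric(frame: bytes) -> tuple[int, bytes]:
    """Egress: strip the routing header, returning (hop count, original payload)."""
    _dst, _src, hops = HEADER.unpack_from(frame)
    return hops, frame[HEADER.size:]

frame = enter_fabric(b"load 0x1000", src=1, dst=7)
for _ in range(3):  # three intermediate fabric nodes
    frame = forward(frame)
print(leave_fabric(frame))  # (3, b'load 0x1000')
```

The payload leaves the fabric byte-for-byte unchanged, which is what lets the fabric stay transparent to the endpoints.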

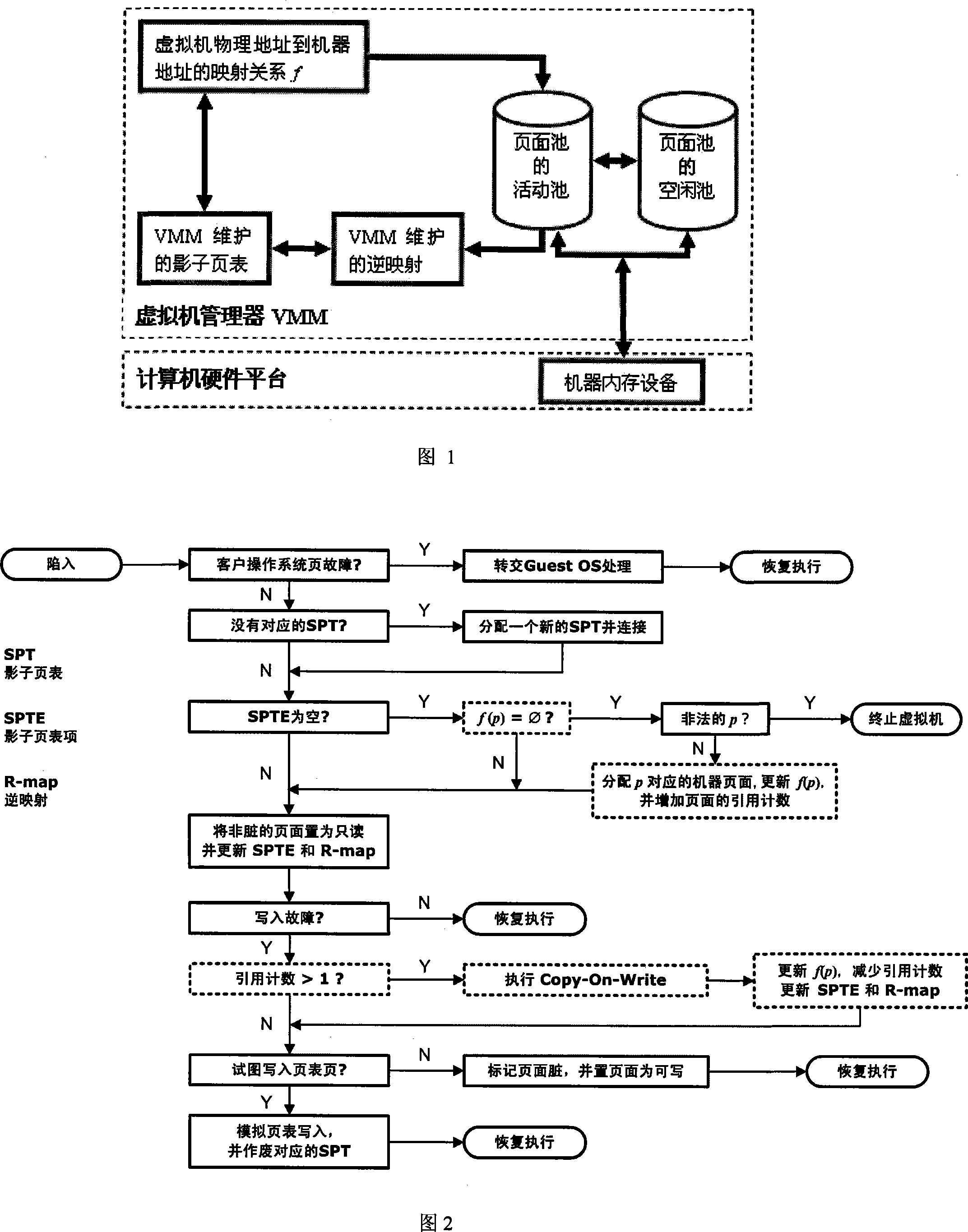

Dynamic memory mapping method for a virtual machine manager

Status: Inactive | Publication: CN101158924A | Tags: Realize page fetching on demand; Implement memory sharing; Memory addressing/allocation/relocation; Software simulation/interpretation/emulation; Dynamic management; Memory sharing

The invention discloses a dynamic memory mapping method for a virtual machine manager. The steps are: separately establish a virtual machine page pool and a virtual machine manager page pool; when a virtual machine access violation occurs, the virtual machine manager dynamically establishes and updates, in the virtual machine manager page pool, the mapping f(p) from the physical memory set P to the machine memory set M. The method simultaneously supports on-demand page fetching at the upper layer, virtual storage, and memory sharing, so that the virtual machine manager can dynamically manage and allocate virtual machine memory while fully guaranteeing the virtual machines' memory access performance.

Owner:PEKING UNIV
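
A minimal sketch of the on-demand mapping described above: the virtual machine manager keeps f as a dictionary from guest-physical page numbers (set P) to machine page numbers (set M) and fills an entry only when a guest access faults. The class name, the free-list page pool, and the `share` helper are illustrative assumptions, not the method's actual data structures.

```python
class VmmPagePool:
    """Hypothetical VMM page pool holding the mapping f: P -> M."""

    def __init__(self, machine_pages: int):
        self.free = list(range(machine_pages))  # unused machine pages
        self.f: dict[int, int] = {}             # guest-physical -> machine

    def access(self, p: int) -> int:
        """Translate p, establishing f(p) on the first (faulting) access."""
        if p not in self.f:  # access violation: no mapping yet
            if not self.free:
                raise MemoryError("machine page pool exhausted")
            self.f[p] = self.free.pop(0)
        return self.f[p]

    def share(self, p: int, q: int) -> None:
        """Memory sharing: map guest page q to the same machine page as p.

        Simplified: a real VMM would also release q's previous machine page.
        """
        self.f[q] = self.access(p)

pool = VmmPagePool(machine_pages=4)
print(pool.access(10), pool.access(42), pool.access(10))  # 0 1 0
pool.share(42, 99)
print(pool.f)  # {10: 0, 42: 1, 99: 1}
```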

System and method for grouping processors

Status: Inactive | Publication: US20050081201A1 | Tags: Guaranteed latency; Affect performance; Resource allocation; Memory systems; Group property; Term memory

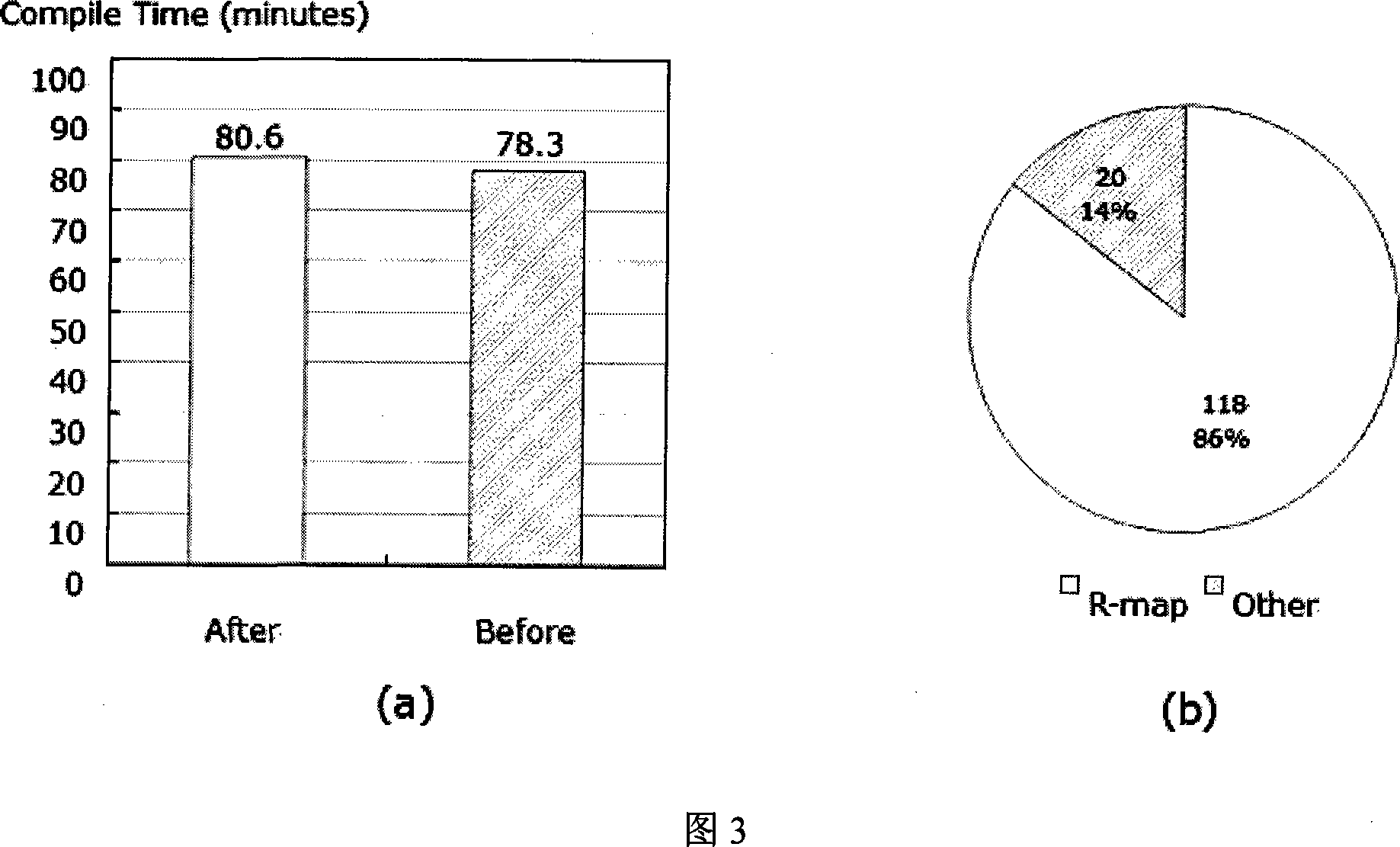

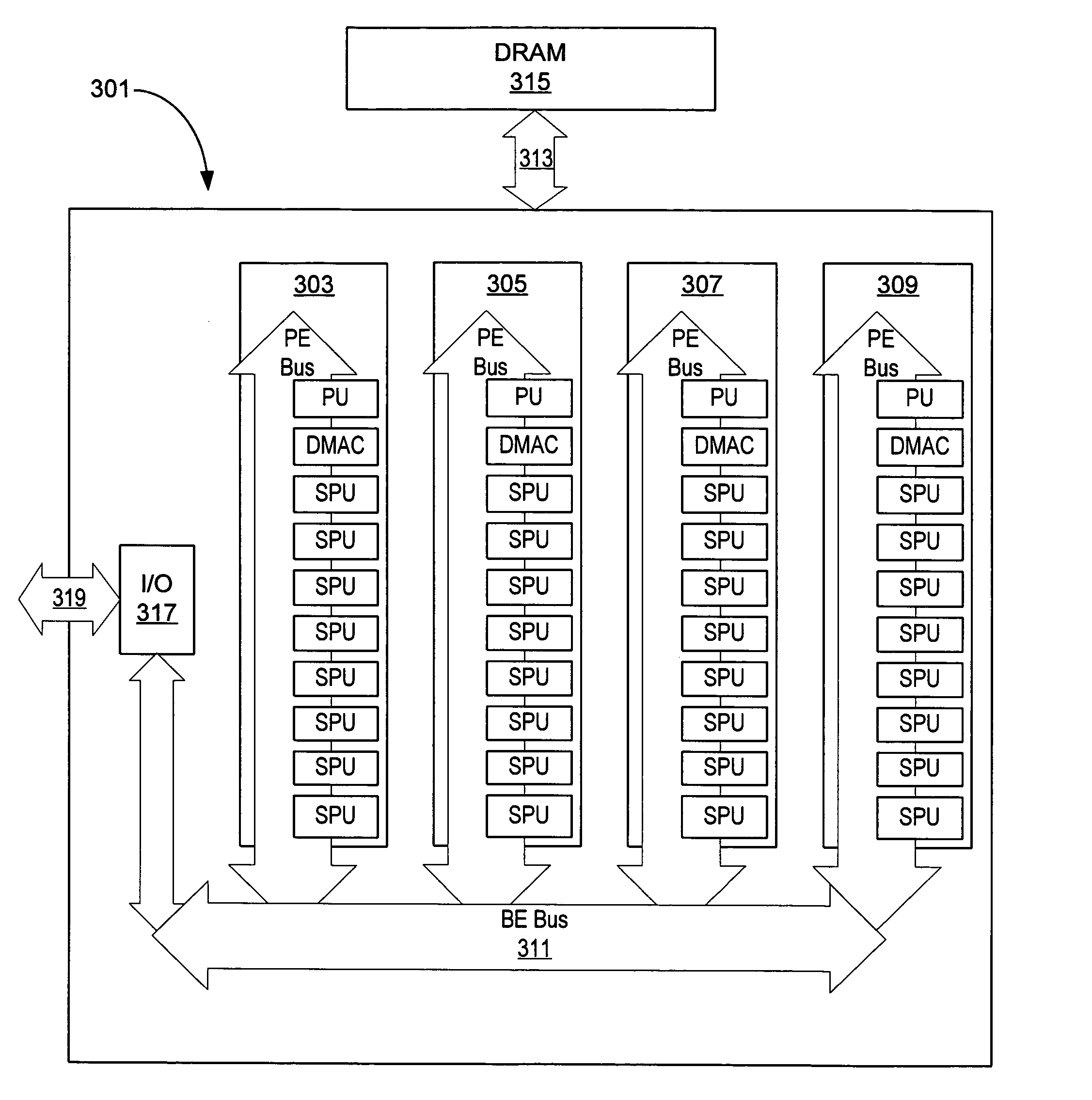

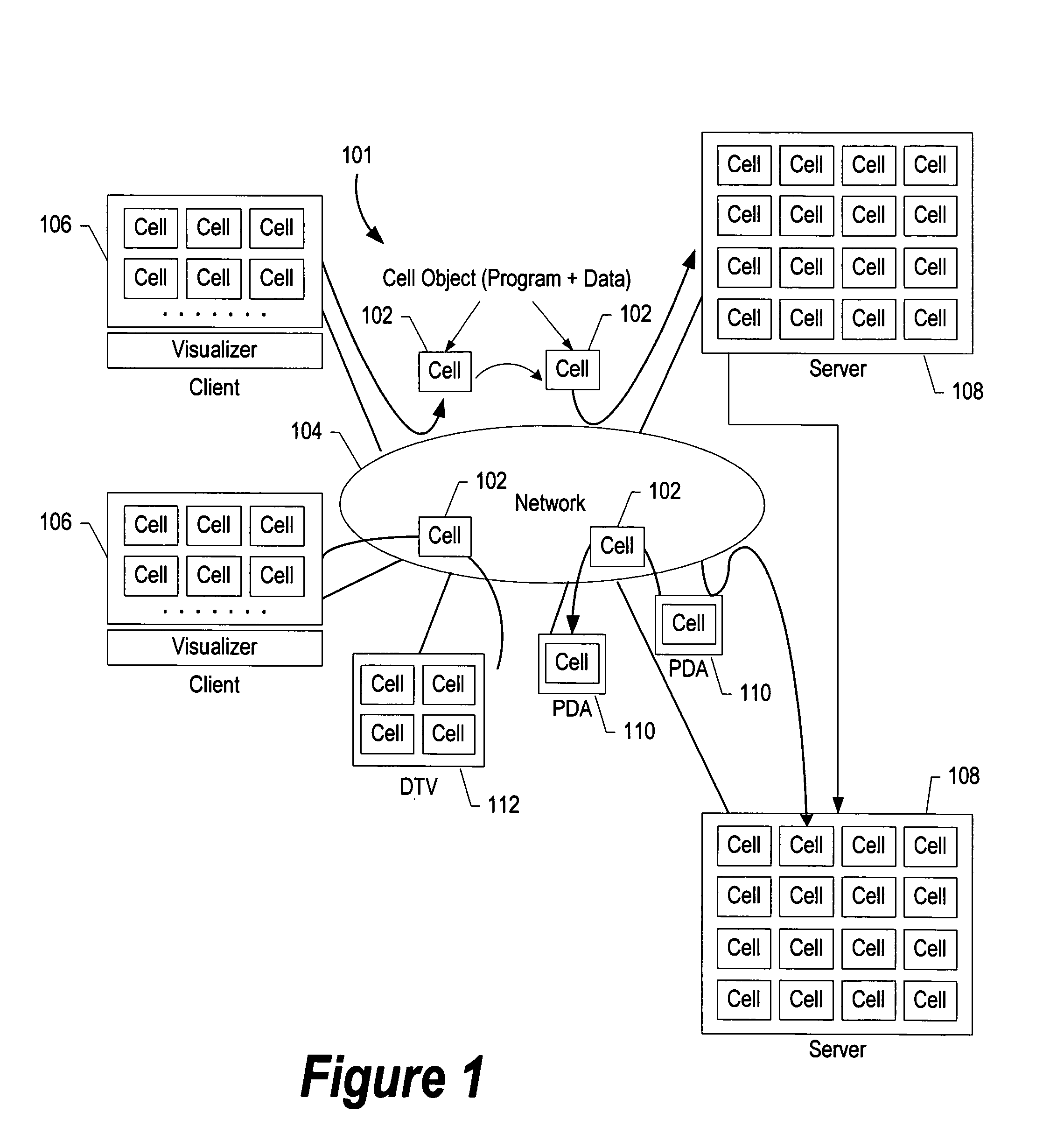

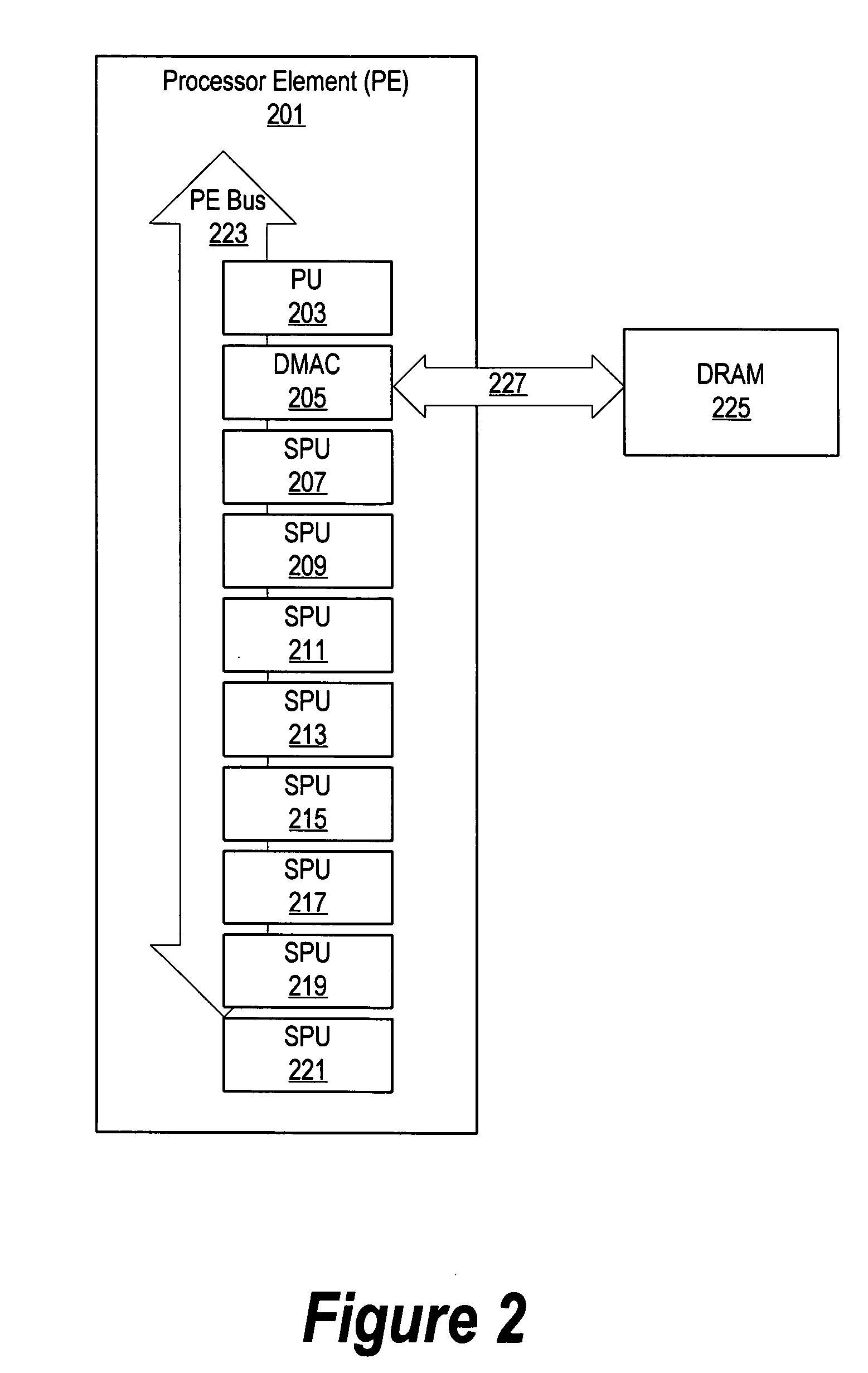

A system and method for grouping processors is presented. A processing unit (PU) initiates an application and identifies the application's requirements. The PU assigns one or more synergistic processing units (SPUs) and a memory space to the application in the form of a group. The application specifies whether the task requires shared memory or private memory. Shared memory is a memory space that is accessible by the SPUs and the PU. Private memory, however, is a memory space that is only accessible by the SPUs that are included in the group. When the application executes, the resources within the group are allocated to the application's execution thread. Each group has its own group properties, such as address space, policies (e.g., real-time, FIFO, run-to-completion), and priority (i.e., low or high). These group properties are used during thread execution to determine which groups take precedence over other tasks.

Owner:TAHOE RES LTD
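
The group concept above can be sketched as a record that carries its SPUs, its memory mode, and its properties, with a scheduler that picks high-priority groups first. The field names, the two-level priority strings, and the `can_access` and `next_group` helpers are hypothetical simplifications of the abstract, not the patented design.

```python
from dataclasses import dataclass

@dataclass
class Group:
    """Hypothetical processor group: SPUs, memory mode, and group properties."""
    name: str
    spus: set[int]
    shared_memory: bool     # shared: PU and SPUs; private: group SPUs only
    priority: str = "low"   # "low" or "high", as in the abstract
    policy: str = "FIFO"    # e.g. real-time, FIFO, run-to-completion

    def can_access(self, unit: str) -> bool:
        """unit is "PU" or "SPU<n>"."""
        if self.shared_memory:
            return True
        return unit.startswith("SPU") and int(unit[3:]) in self.spus

def next_group(groups: list[Group]) -> Group:
    """High-priority groups run first; ties keep submission order."""
    return min(groups, key=lambda g: g.priority != "high")

video = Group("video", {0, 1}, shared_memory=False, priority="high")
batch = Group("batch", {2, 3, 4}, shared_memory=True)
print(next_group([batch, video]).name)                    # video
print(video.can_access("PU"), video.can_access("SPU1"))  # False True
```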

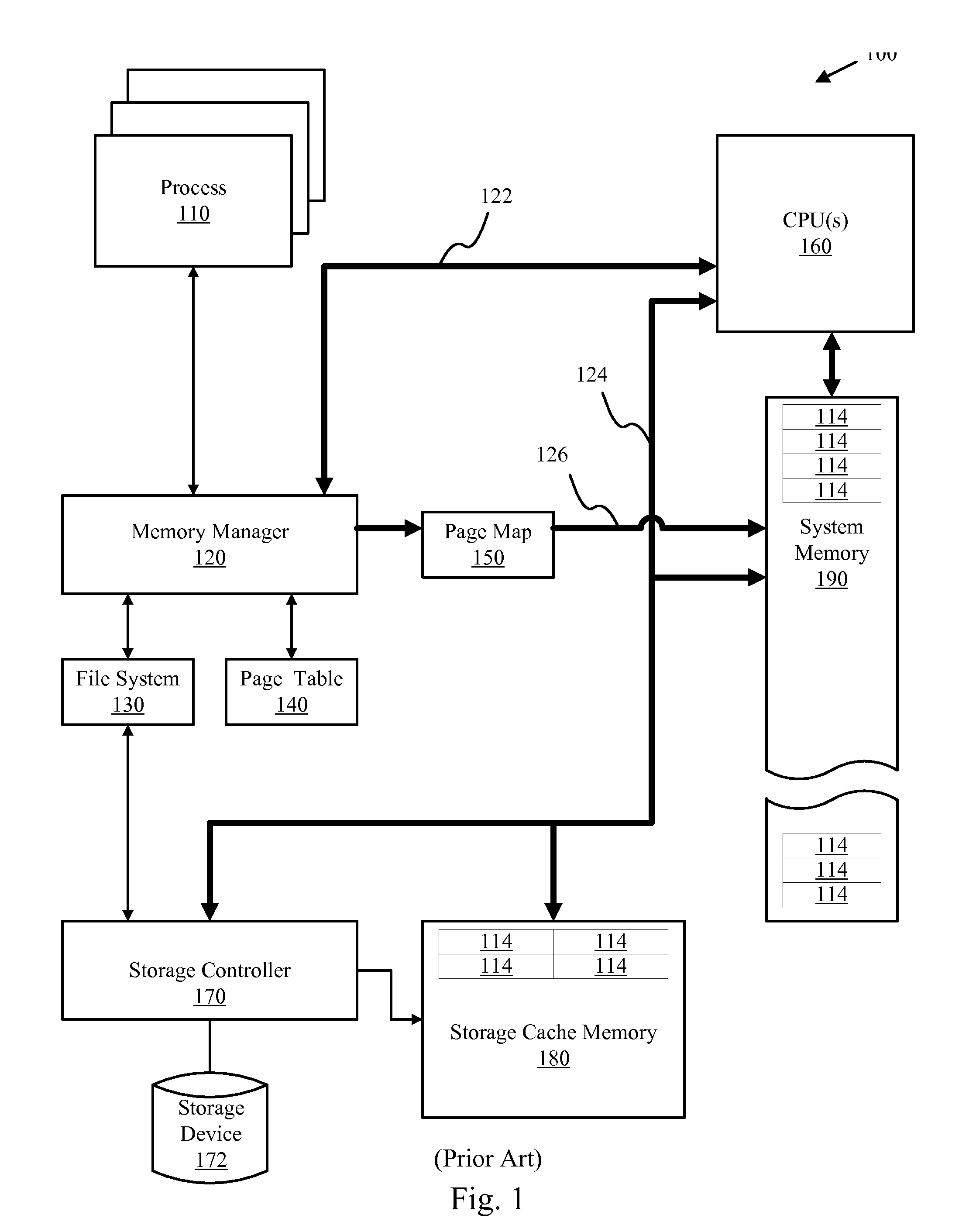

Apparatus method and system for fault tolerant virtual memory management

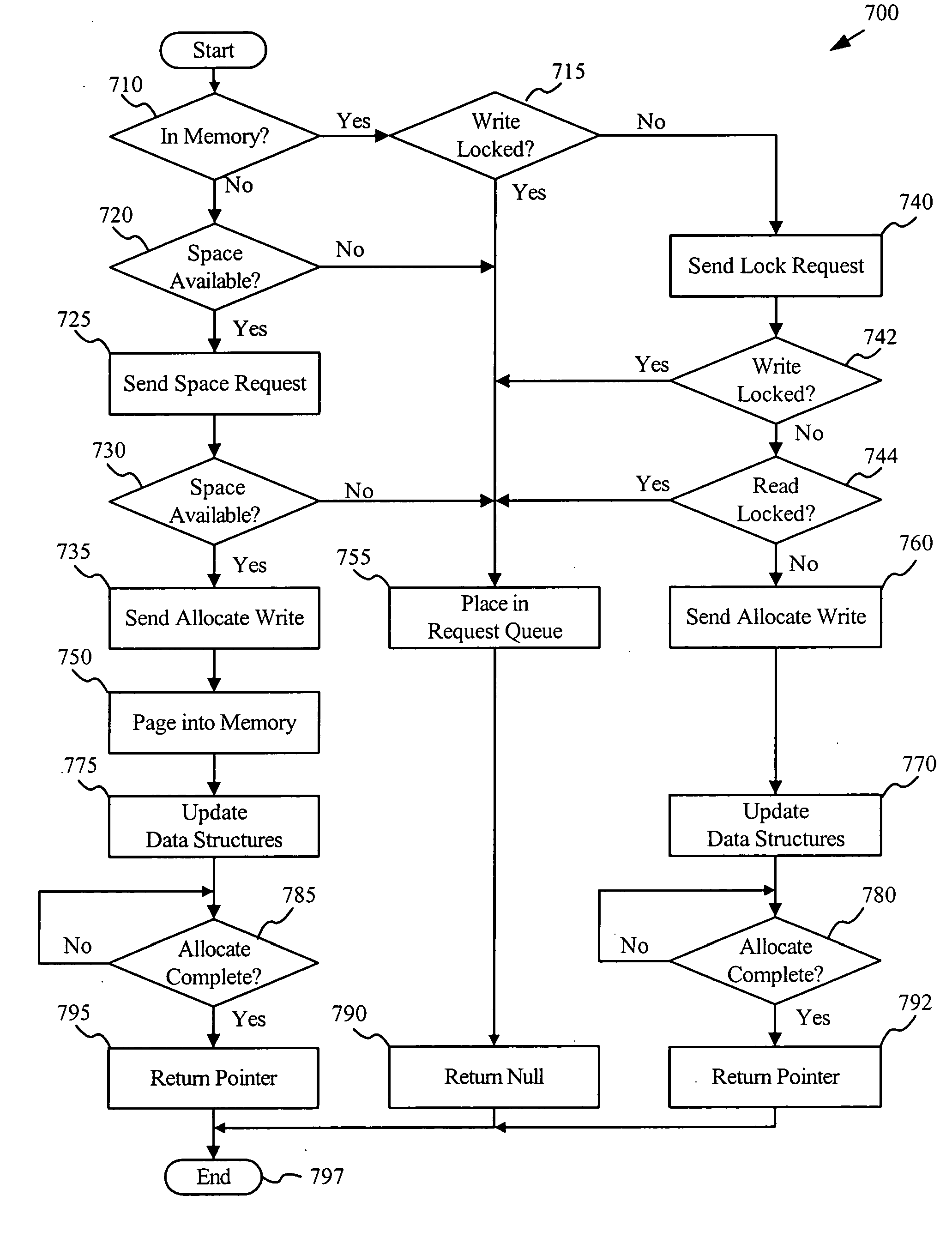

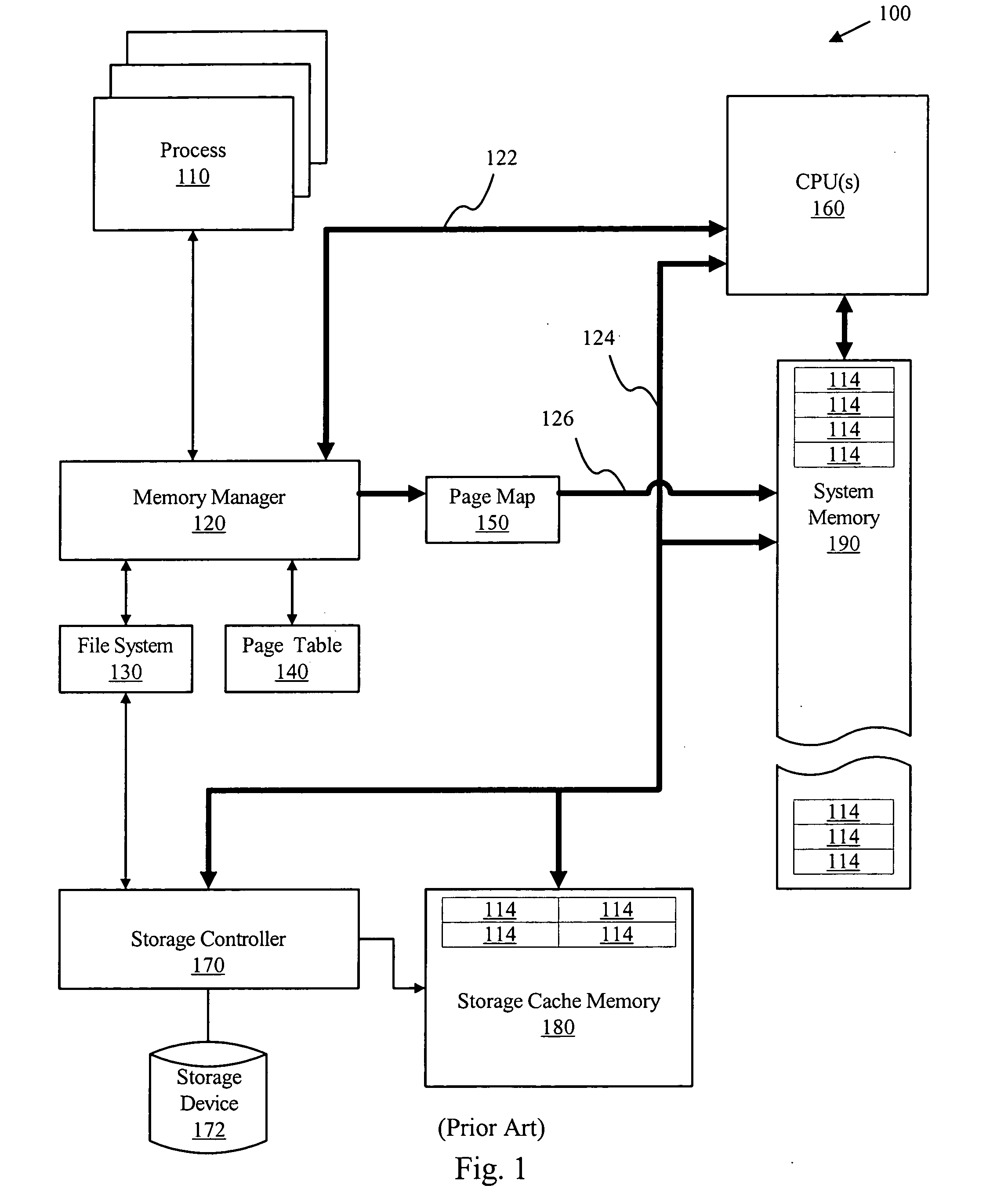

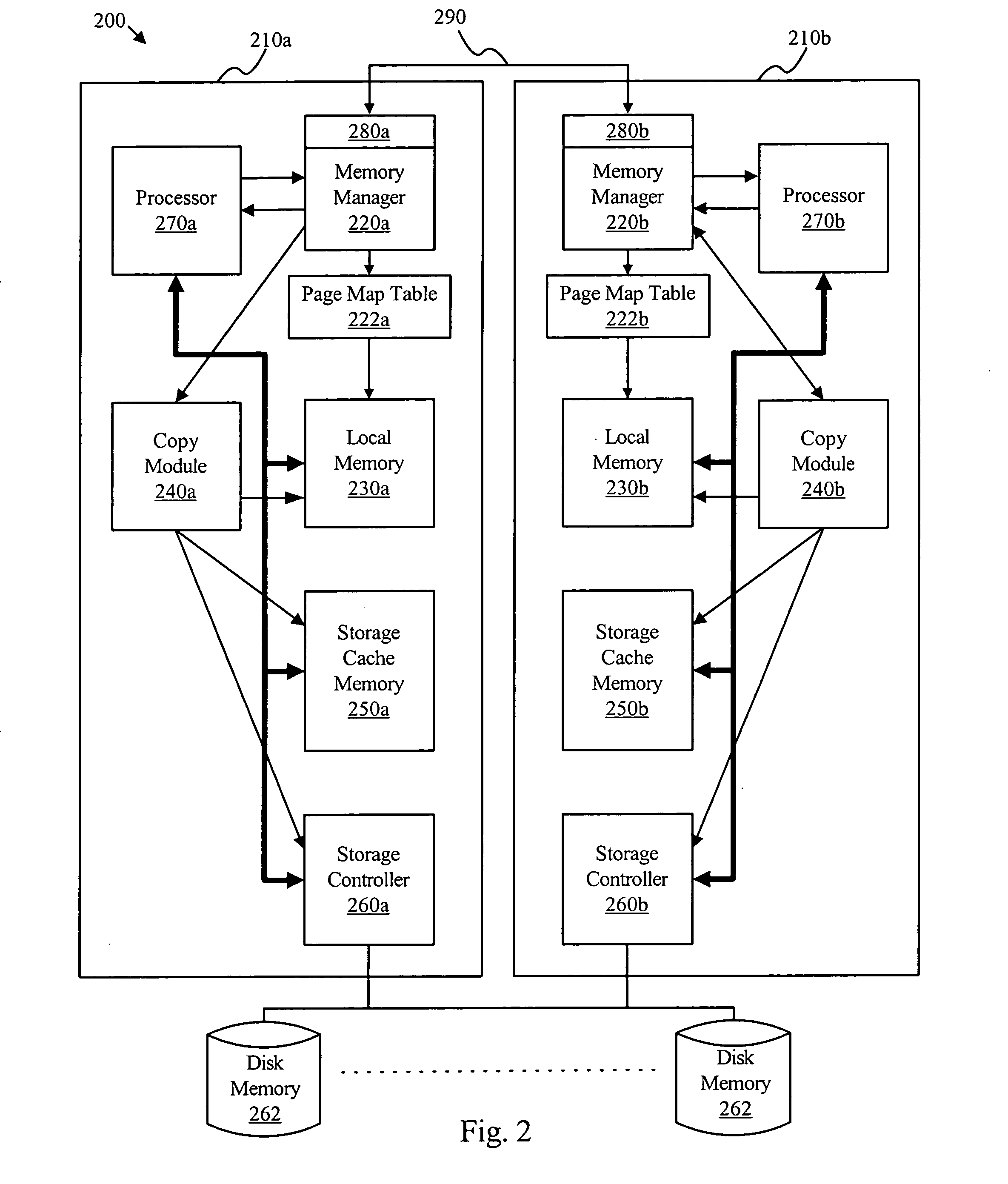

Status: Inactive | Publication: US20050132249A1 | Tags: Facilitating fault tolerance; The process is fast and efficient; Memory loss protection; Error detection/correction; Memory map; Writing messages

A fault-tolerant synchronized virtual memory manager for use in a load-sharing environment manages memory allocation, memory mapping, and memory sharing in a first processor, while maintaining synchronization of the memory space of the first processor with the memory space of at least one partner processor. In one embodiment, synchronization is maintained via paging synchronization messages such as a space request message, an allocate memory message, a release memory message, a lock request message, a read header message, a write page message, a sense request message, an allocate read message, an allocate write message, and/or a release pointer message. Paging synchronization facilitates recovery operations without the cost and overhead of prior-art fault-tolerant systems.

Owner:IBM CORP
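
One way to picture paging synchronization: every allocation, page write, or release on the primary is applied locally and also sent as a message to the partner, which replays it so both memory spaces stay identical. Only three of the listed message types (allocate memory, write page, release memory) are modeled; the tuple message format, the 4 KiB page size, and the class name are assumptions for illustration.

```python
PAGE_SIZE = 4096  # assumed page size

class SyncedPager:
    """Hypothetical pager that mirrors its memory space to a partner."""

    def __init__(self, partner=None):
        self.pages: dict[int, bytearray] = {}
        self.partner = partner

    def handle(self, msg):
        """Apply one paging synchronization message to the local memory space."""
        kind, page, data = msg
        if kind == "ALLOCATE_MEMORY":
            self.pages[page] = bytearray(PAGE_SIZE)
        elif kind == "WRITE_PAGE":
            self.pages[page][: len(data)] = data
        elif kind == "RELEASE_MEMORY":
            del self.pages[page]
        else:
            raise ValueError(f"unsupported message {kind}")

    def send(self, kind, page, data=b""):
        """Apply a change locally, then send the same message to the partner."""
        msg = (kind, page, data)
        self.handle(msg)
        if self.partner is not None:
            self.partner.handle(msg)

backup = SyncedPager()
primary = SyncedPager(partner=backup)
primary.send("ALLOCATE_MEMORY", 3)
primary.send("WRITE_PAGE", 3, b"state")
print(backup.pages[3][:5] == primary.pages[3][:5])  # True
```

If the primary fails, the partner already holds the same page contents and can resume from them.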

System and method for using a multi-protocol fabric module across a distributed server interconnect fabric

Status: Active | Publication: US20150103826A1 | Tags: Extend performance and power optimization and functionality; Data switching by path configuration; Personalization; Structure of Management Information

A multi-protocol personality module enabling load/store from remote memory, remote Direct Memory Access (DMA) transactions, and remote interrupts, which permits enhanced performance, power utilization, and functionality. In one form, the module is used as a node in a network fabric: it adds a routing header to packets entering the fabric, maintains the routing header for efficient node-to-node transport, and strips the header when the packet leaves the fabric. In particular, a remote bus personality component is described. Several use cases of the Remote Bus Fabric Personality Module are disclosed: 1) memory sharing across a fabric-connected set of servers; 2) the ability to access physically remote Input/Output (I/O) devices across this fabric of connected servers; and 3) the sharing of such physically remote I/O devices, such as storage peripherals, by multiple fabric-connected servers.

Owner:III HLDG 2

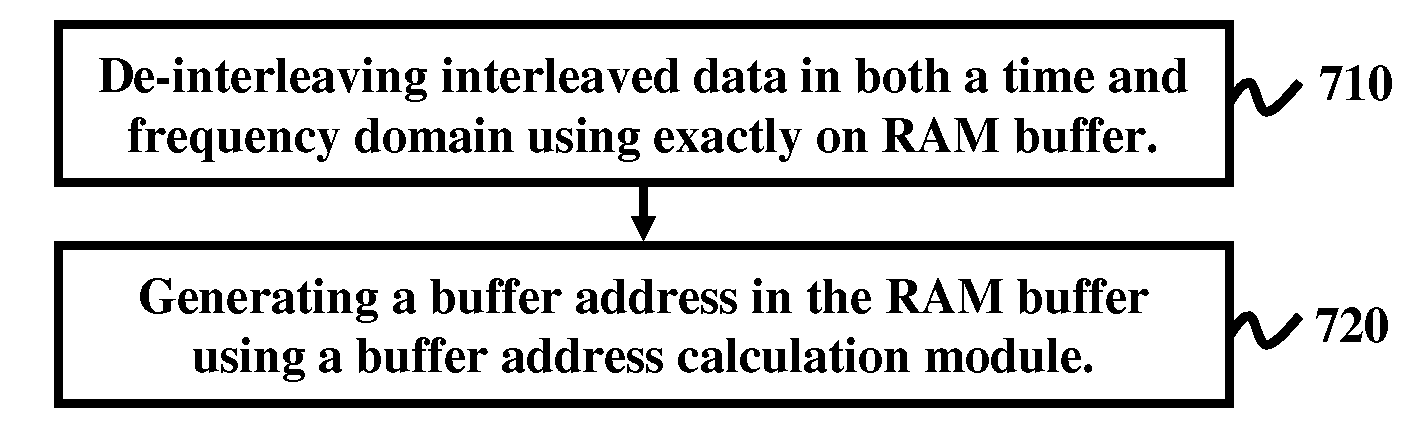

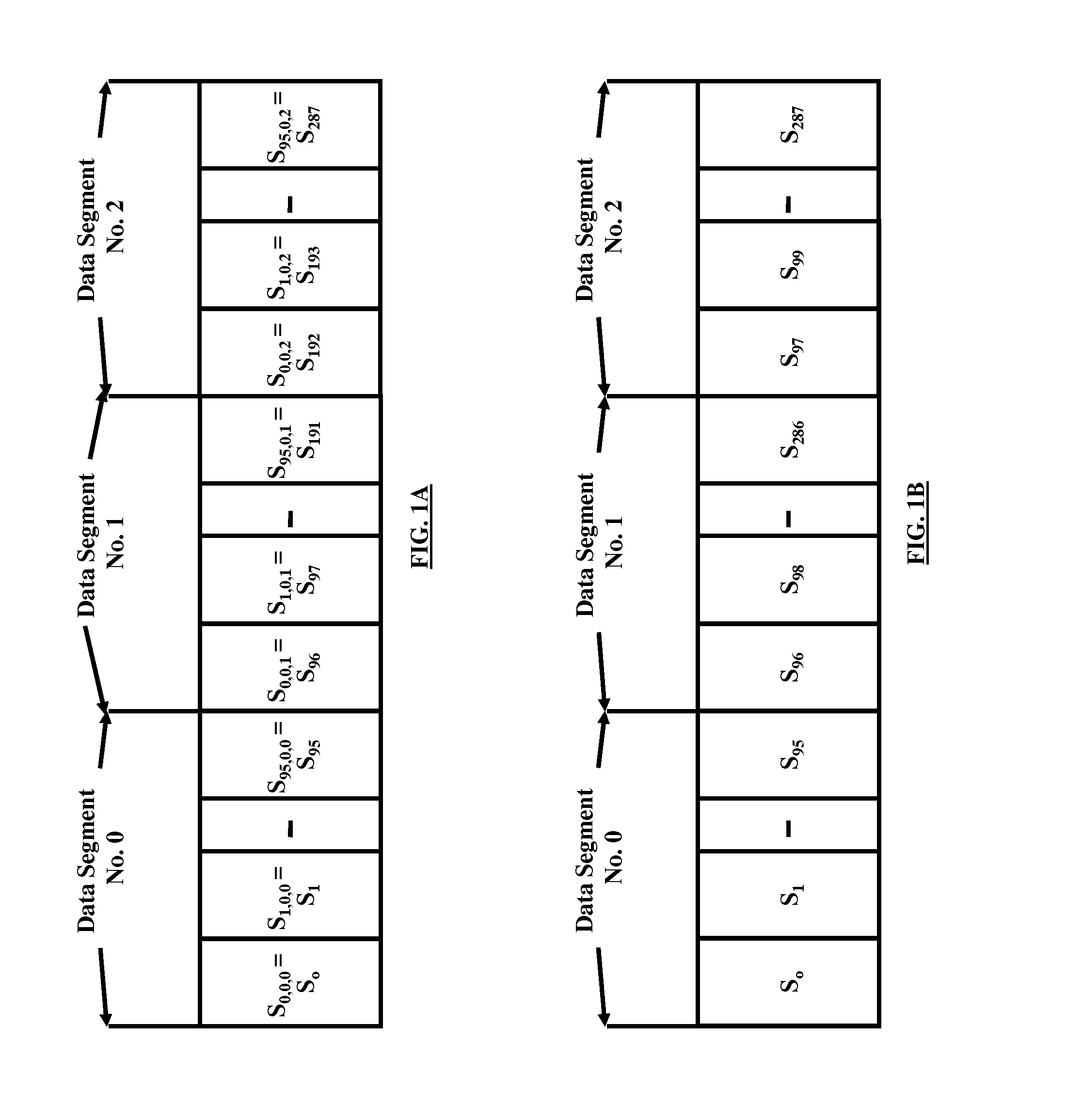

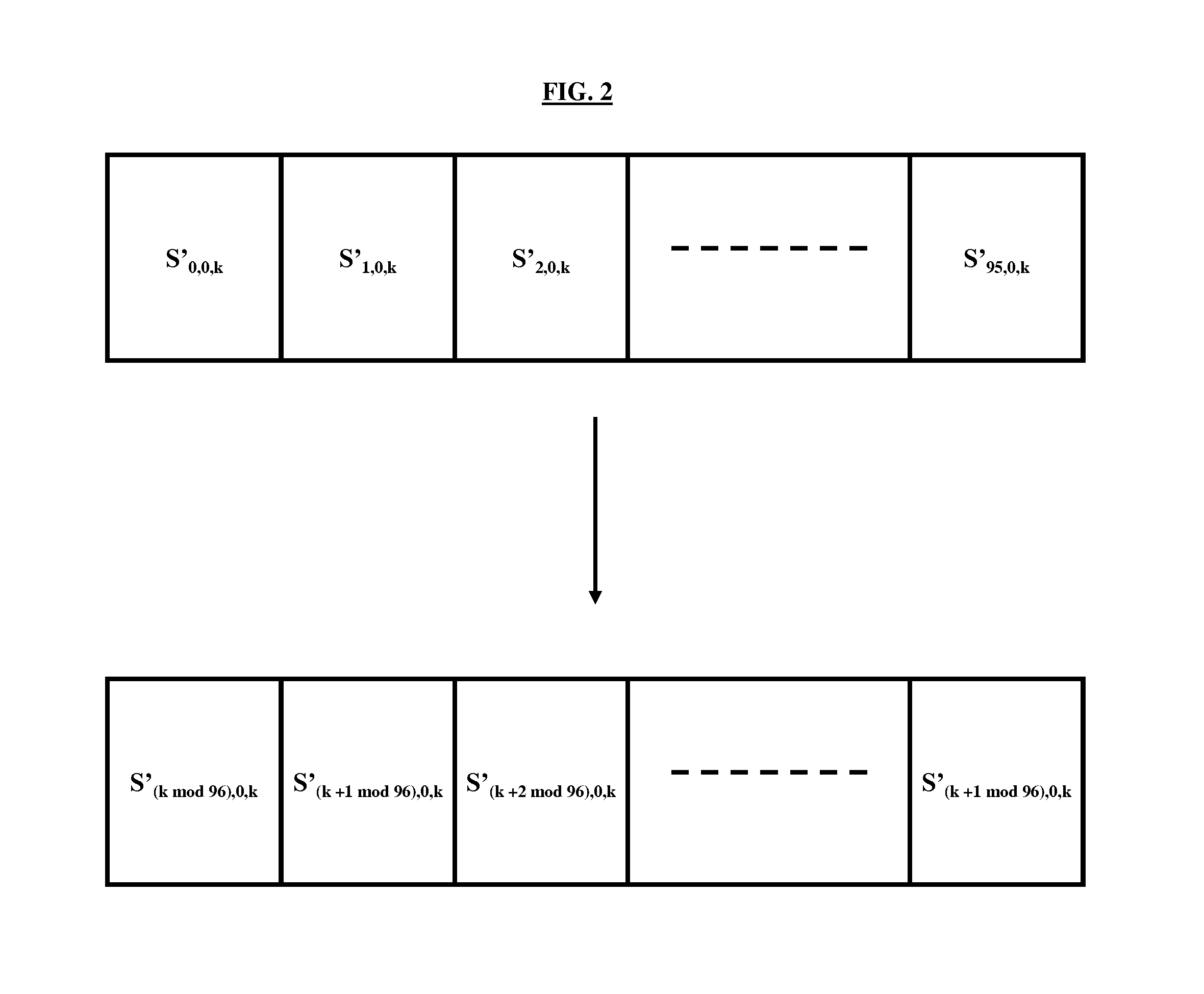

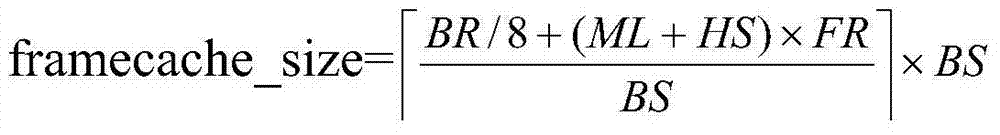

Memory sharing of time and frequency de-interleaver for isdb-t receivers

Status: Inactive | Publication: US20090300300A1 | Tags: Reduce area; Increase in size; Modulated-carrier systems; Forward error control use; Memory sharing; Real-time computing

Time and frequency de-interleaving of interleaved data in an Integrated Services Digital Broadcasting Terrestrial (ISDB-T) receiver uses exactly one random access memory (RAM) buffer in the receiver, which performs both time and frequency de-interleaving of the interleaved data, together with a buffer address calculation module that generates addresses within the buffer. By sharing memory between the time and frequency de-interleavers, that is, by combining the frequency and time de-interleaver buffers into one RAM, the system reduces the memory size required for de-interleaving in an ISDB-T receiver.

Owner:ATMEL CORP

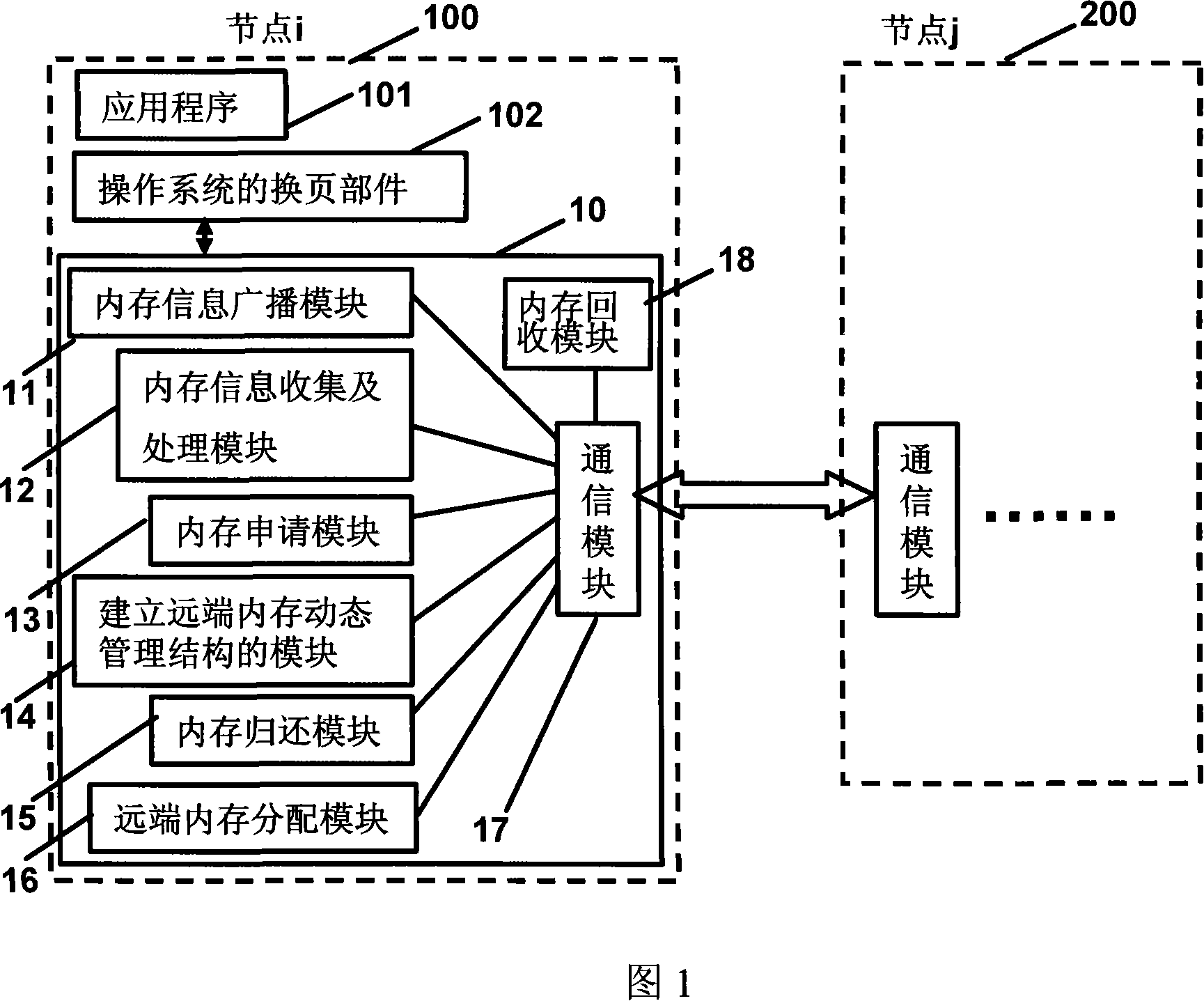

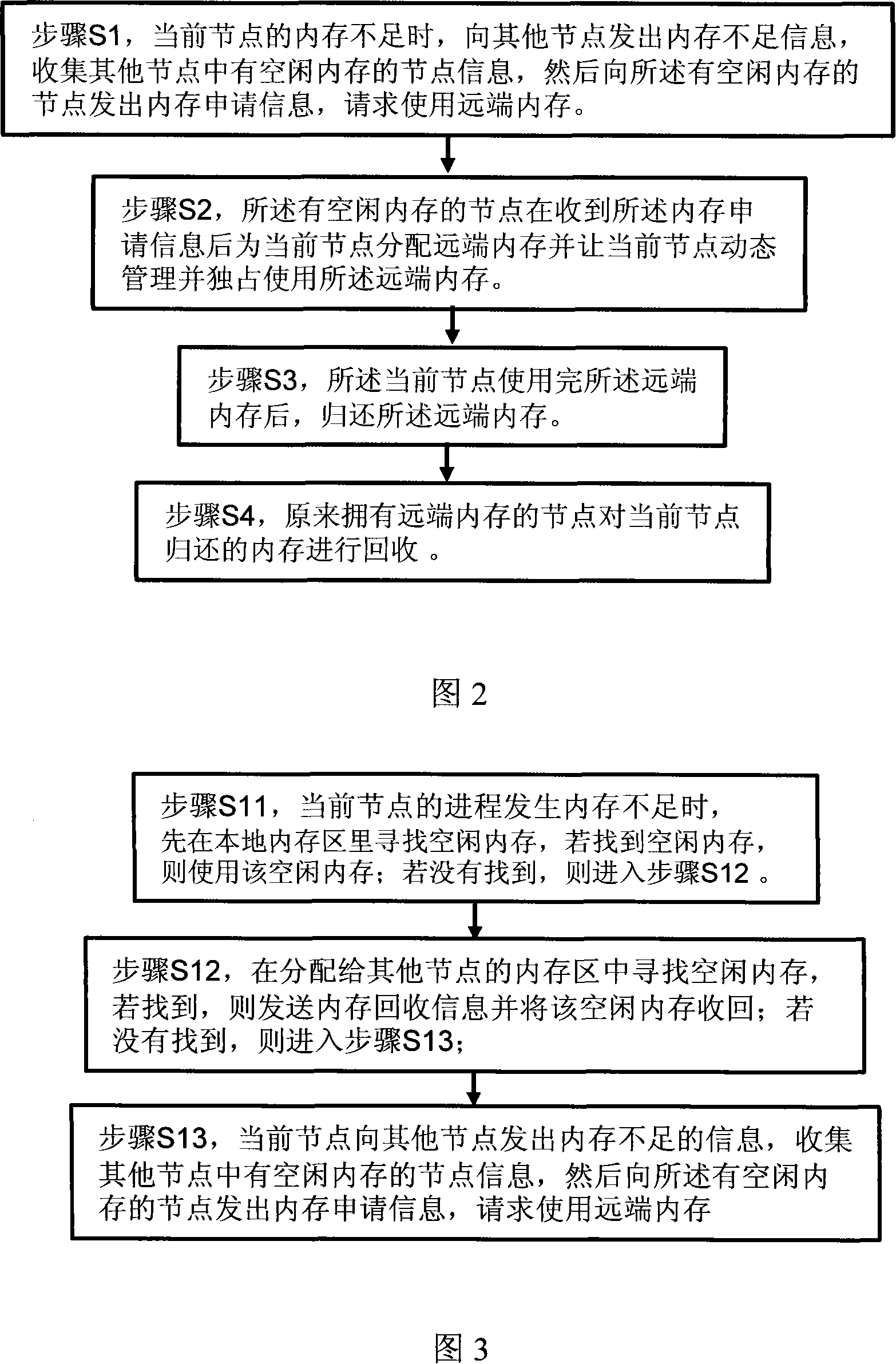

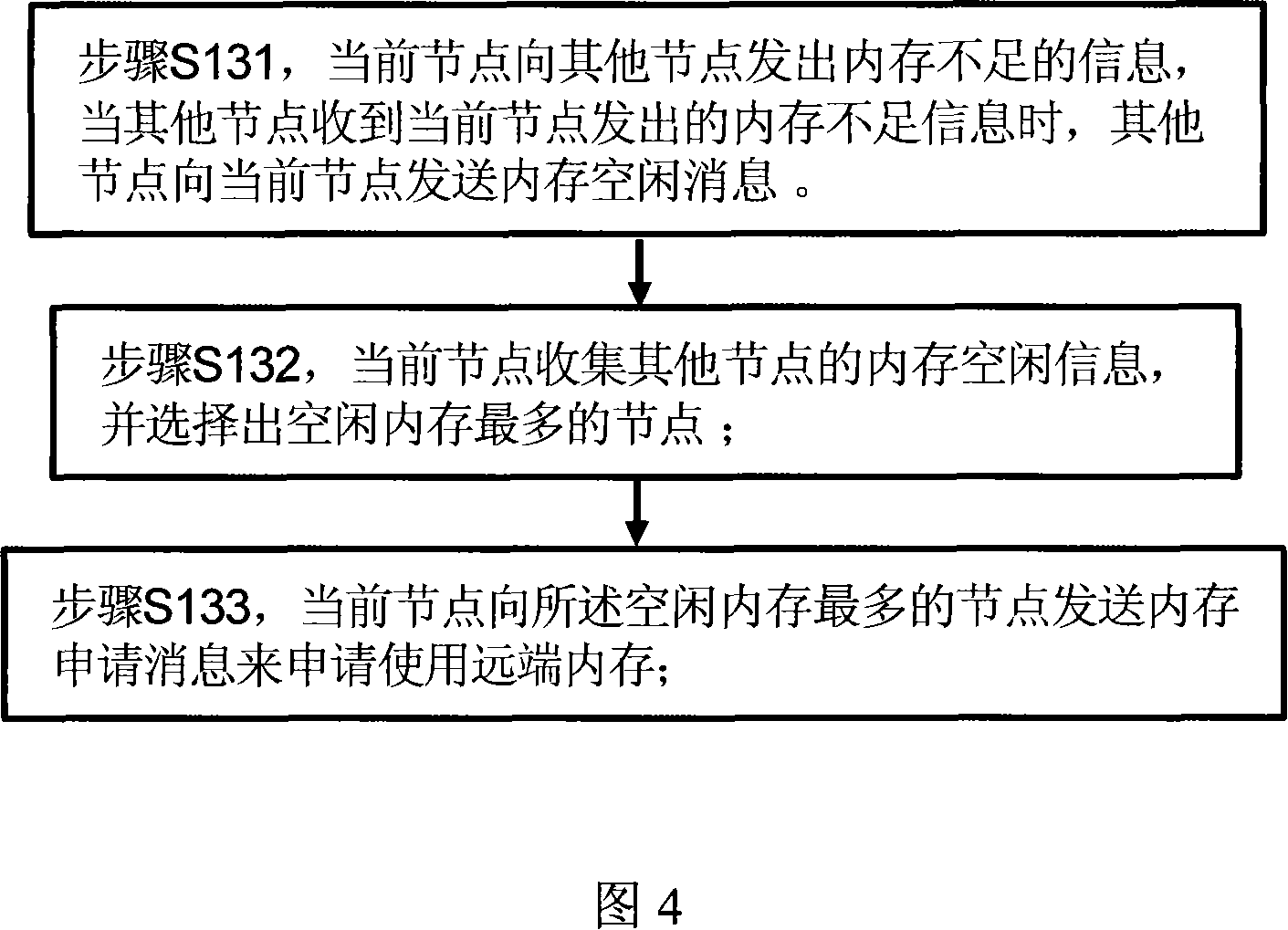

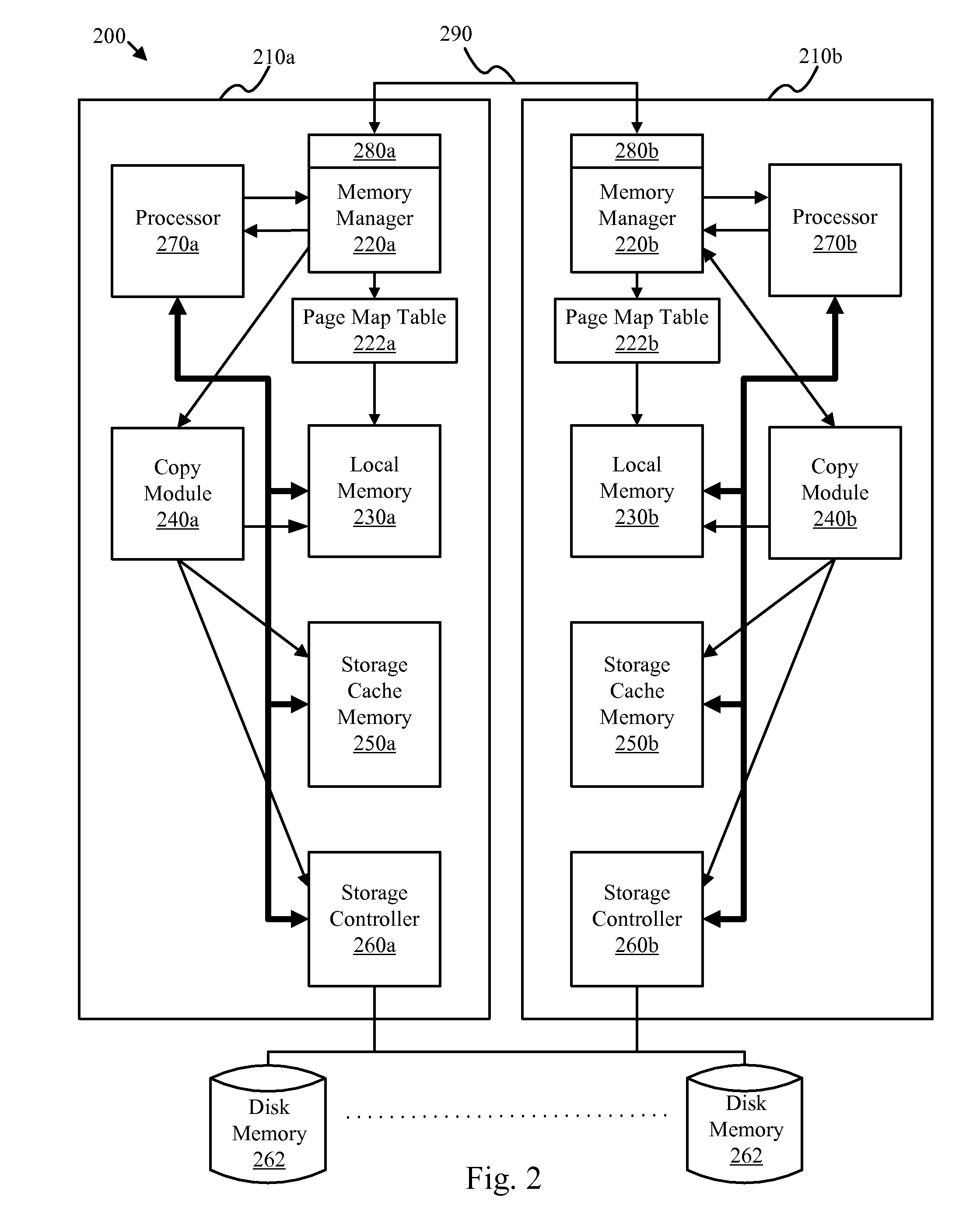

Memory sharing system, device and method

Status: Active | Publication: CN101158927A | Tags: Increase profit; Improve reliability; Memory addressing/allocation/relocation; Operational system; Out of memory

The invention discloses a memory sharing system, device, and method for a multi-core NUMA system. The system comprises a plurality of nodes; the operating system of each node includes a memory sharing device, which comprises a memory information collection and processing module, a memory request module, and a module that establishes a dynamic management structure for remote memory and is connected to a communication module. The method comprises the following steps: step S1, when the current node is short of memory, it sends memory-shortage information to the other nodes, collects information about the nodes that have free memory, and then sends a memory request to those nodes asking to use their remote memory; step S2, after receiving the memory request, a node with free memory allocates remote memory to the current node, which then dynamically manages and exclusively uses that remote memory. By borrowing free memory from remote nodes, the invention balances load across the entire system.

Owner:HUAWEI TECH CO LTD
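
Steps S1 and S2 above can be sketched as a simple borrowing protocol between in-process node objects. The `Node` class, the page-count units, and the policy of asking the node with the most free memory first are hypothetical; the abstract does not say how a lender is chosen.

```python
class Node:
    """Hypothetical NUMA node that can lend or borrow memory pages."""

    def __init__(self, name: str, free_pages: int):
        self.name = name
        self.free_pages = free_pages
        self.borrowed: dict[str, int] = {}  # lender -> pages used exclusively

    def grant(self, pages: int) -> int:
        """Step S2: a node with free memory allocates remote memory."""
        given = min(pages, self.free_pages)
        self.free_pages -= given
        return given

    def request(self, pages: int, others: list["Node"]) -> int:
        """Step S1: on a shortage, ask nodes with free memory for the remainder."""
        local = min(pages, self.free_pages)
        self.free_pages -= local
        needed = pages - local
        # Assumed policy: ask the node reporting the most free memory first.
        for lender in sorted(others, key=lambda n: n.free_pages, reverse=True):
            if needed == 0:
                break
            got = lender.grant(needed)
            if got:
                self.borrowed[lender.name] = self.borrowed.get(lender.name, 0) + got
                needed -= got
        return pages - needed  # pages actually obtained

a, b, c = Node("A", 2), Node("B", 5), Node("C", 1)
print(a.request(6, [b, c]), a.borrowed, b.free_pages)  # 6 {'B': 4} 1
```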

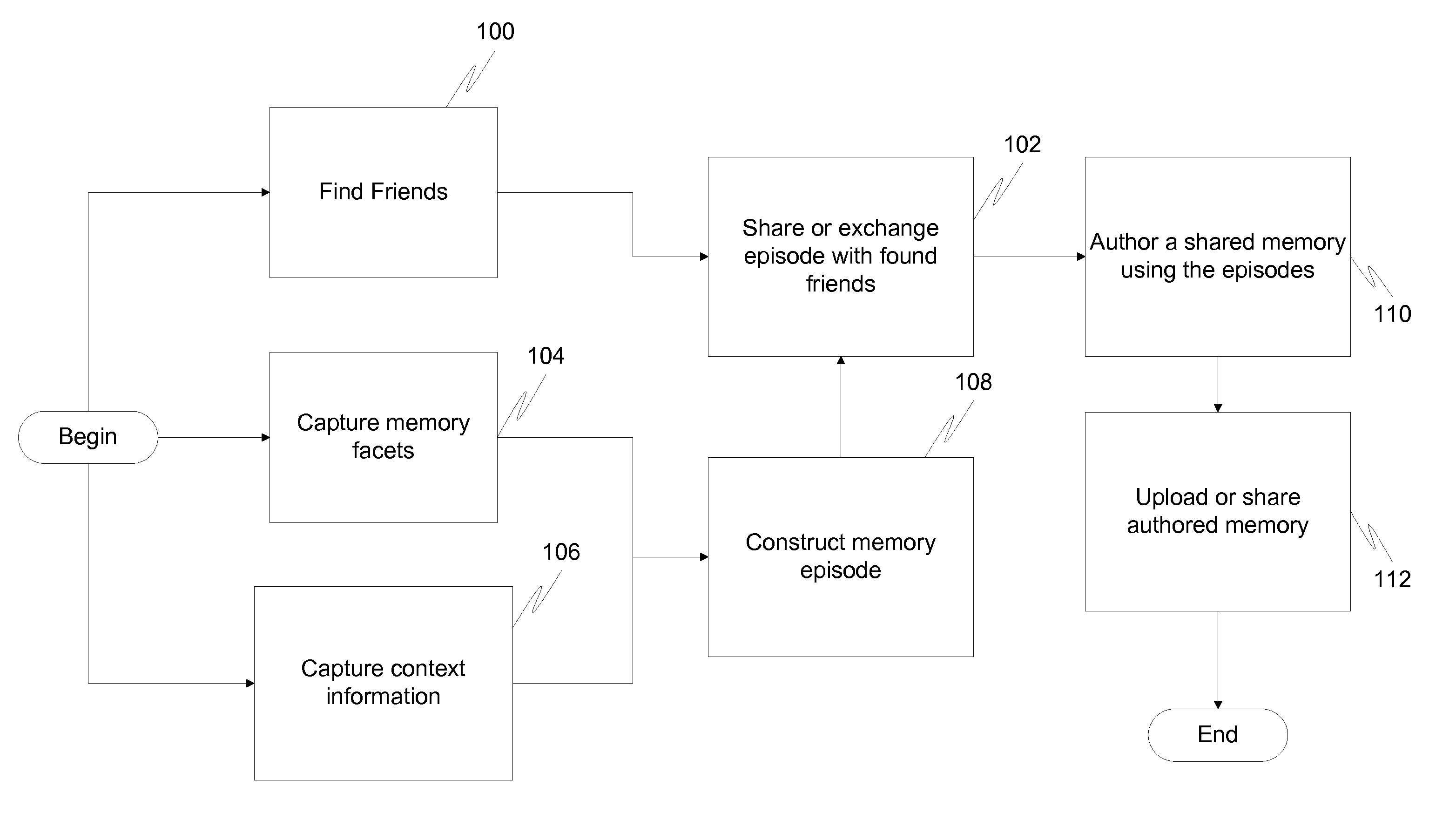

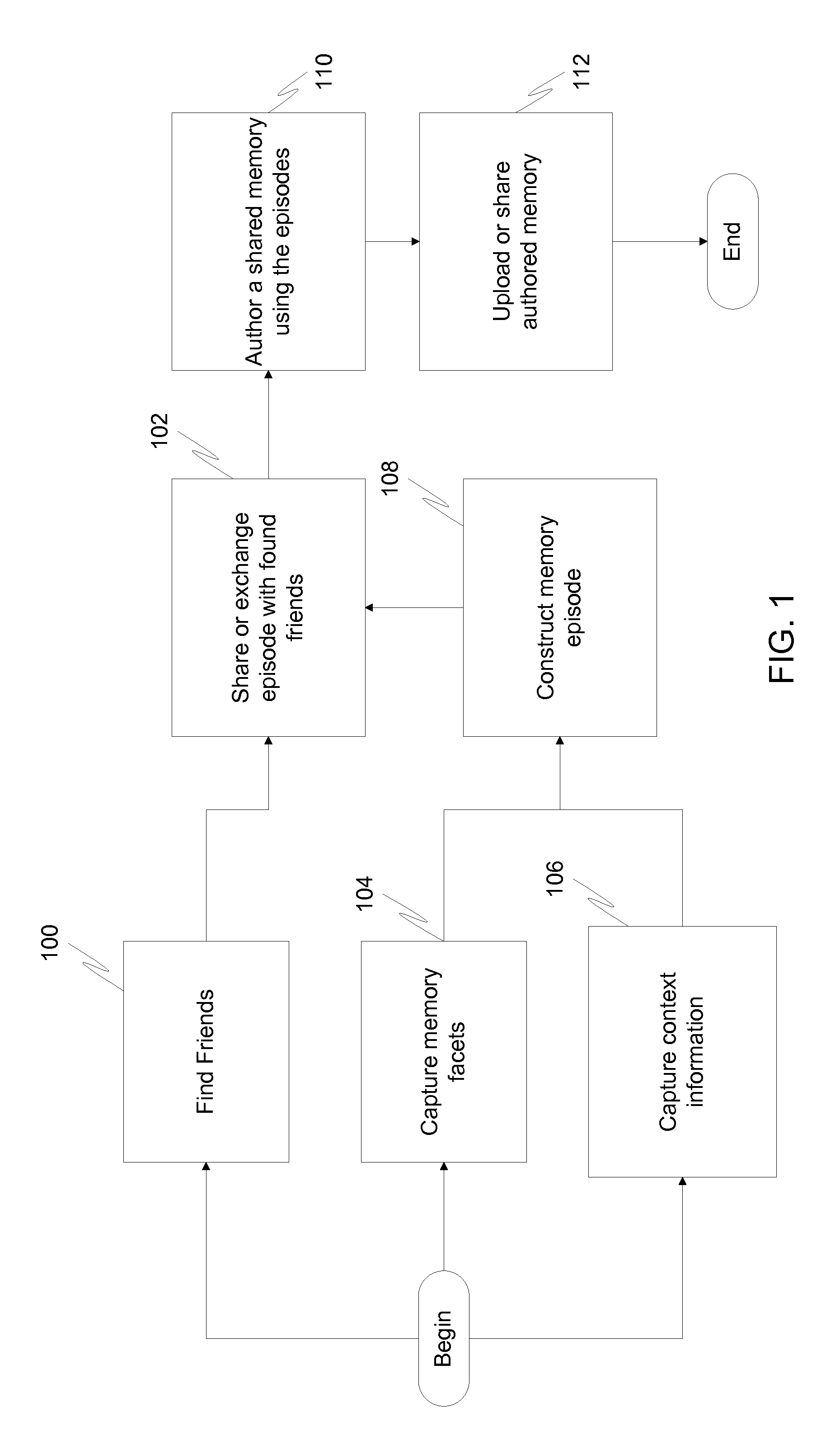

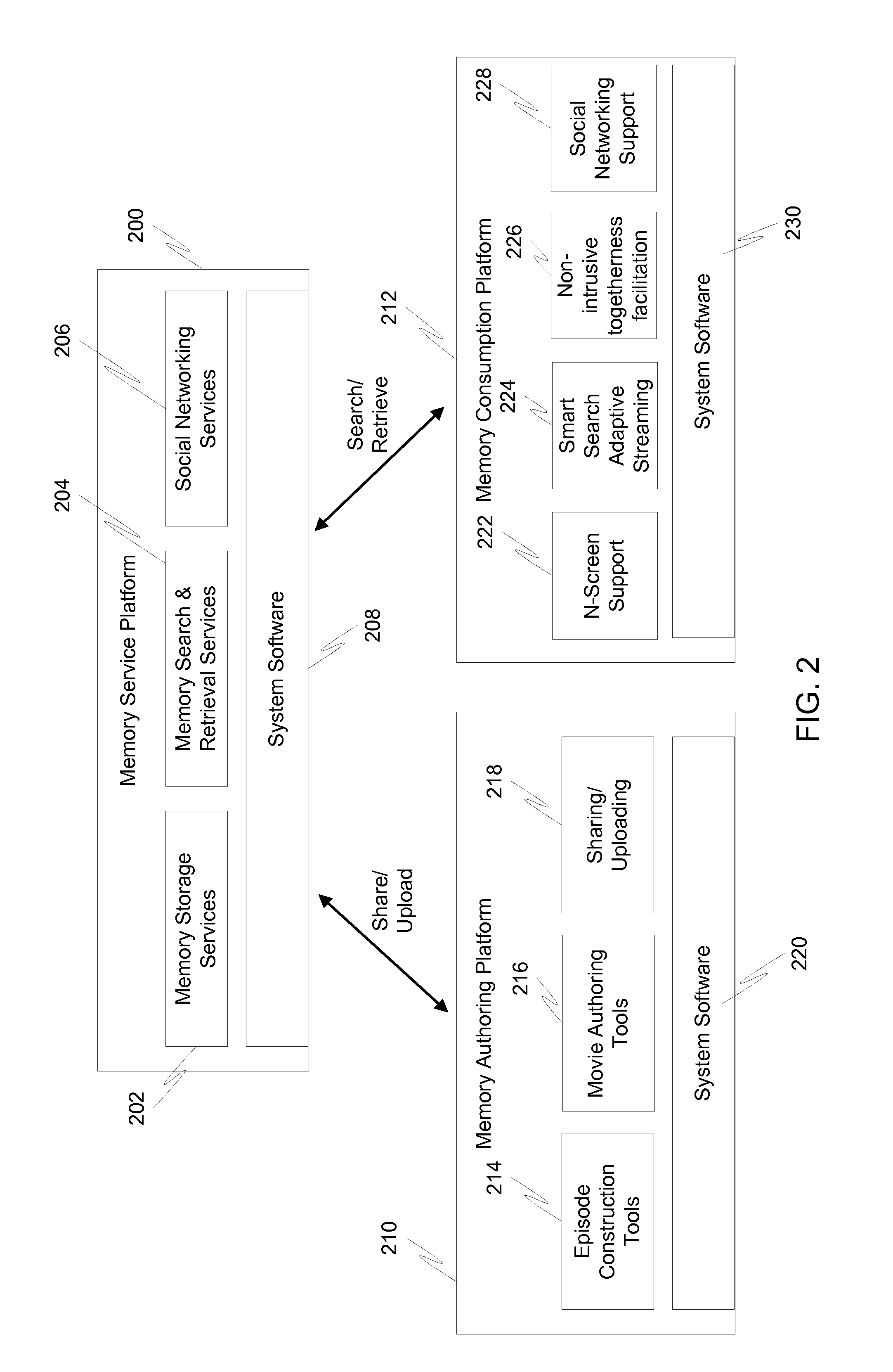

Life-logging and memory sharing

Status: Inactive | Publication: US20130038756A1 | Tags: Television system details; Input/output to record carriers; Memory object; Memory sharing

In a first embodiment of the present invention, a method for creating a memory object on an electronic device is provided, comprising: capturing a facet using the electronic device; recording sensor information relating to an emotional state of a user of the electronic device at the time the facet was captured; determining an emotional state of the user based on the recorded sensor information; and storing the facet along with the determined emotional state as a memory object.

Owner:SAMSUNG ELECTRONICS CO LTD

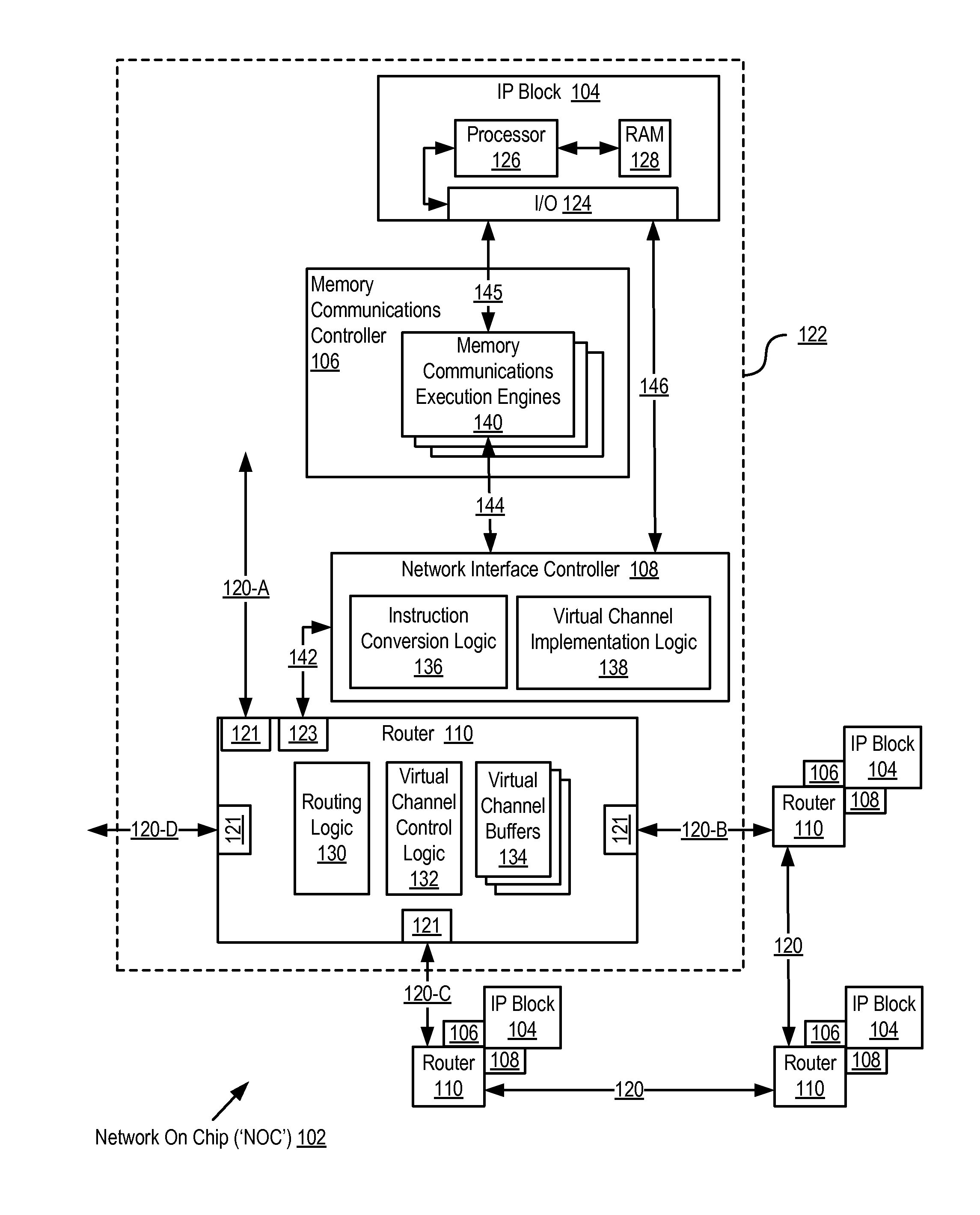

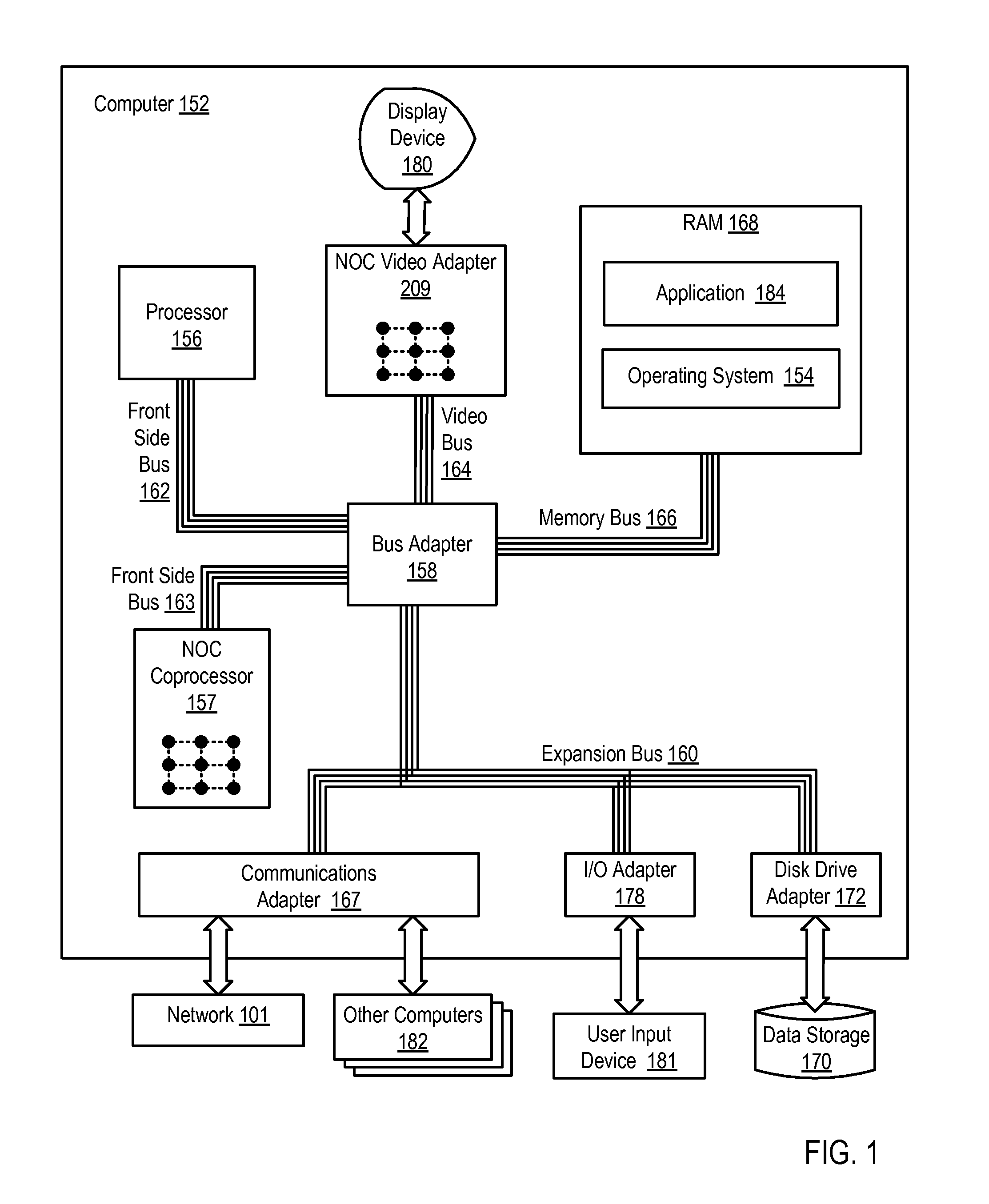

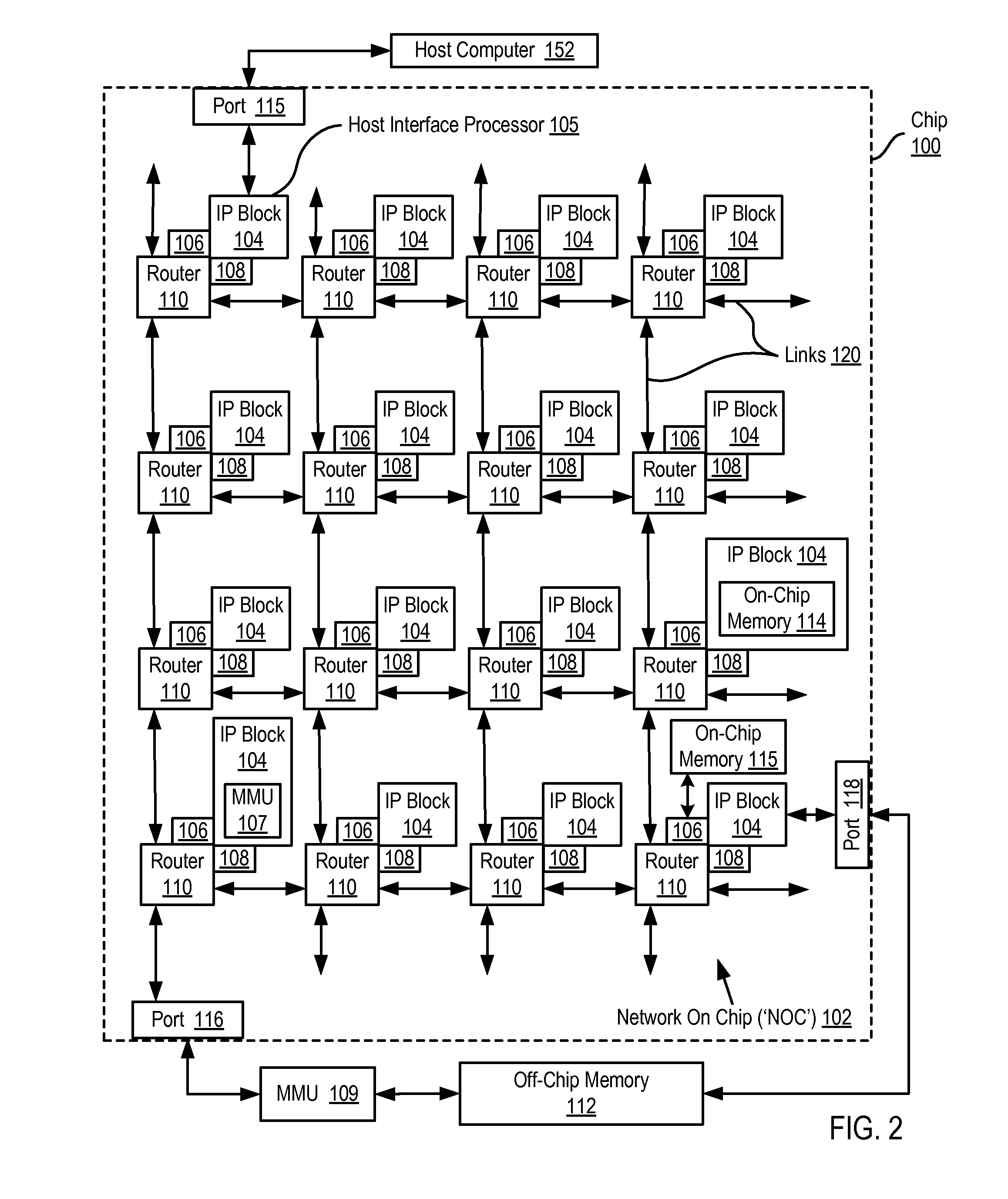

Software Pipelining On A Network On Chip

Memory sharing in a software pipeline on a network on chip ('NOC'). The NOC includes integrated processor ('IP') blocks, routers, memory communications controllers, and network interface controllers; each IP block is adapted to a router through a memory communications controller and a network interface controller, each memory communications controller controls communications between an IP block and memory, and each network interface controller controls inter-IP-block communications through routers. The method includes segmenting a computer software application into stages of a software pipeline, the software pipeline comprising one or more paths of execution; allocating memory to be shared among at least two stages, including creating a smart pointer that includes data elements for determining when the shared memory can be deallocated; determining, in dependence upon those data elements, that the shared memory can be deallocated; and deallocating the shared memory.

Owner:INT BUSINESS MASCH CORP
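
The smart pointer's "data elements for determining when the shared memory can be deallocated" behave much like a reference count. The sketch below assumes exactly that: each pipeline stage that still needs the buffer holds one reference, and the buffer is freed when the last stage releases it. The class and method names are hypothetical, and the patent may track deallocation differently.

```python
class SharedBuffer:
    """Hypothetical smart pointer shared by software-pipeline stages."""

    def __init__(self, size: int, stages: int):
        self.data = bytearray(size)
        self.refs = stages        # stages that have not released the buffer yet
        self.freed = False

    def release(self) -> None:
        """Called by a stage when it no longer needs the buffer."""
        if self.freed:
            raise RuntimeError("buffer already deallocated")
        self.refs -= 1
        if self.refs == 0:        # no stage can still use the memory
            self.data = None      # deallocate
            self.freed = True

buf = SharedBuffer(size=64, stages=2)
buf.data[0] = 0xAB     # stage 1 produces
buf.release()          # stage 1 done
value = buf.data[0]    # stage 2 consumes
buf.release()          # stage 2 done: buffer deallocated
print(value, buf.freed)  # 171 True
```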

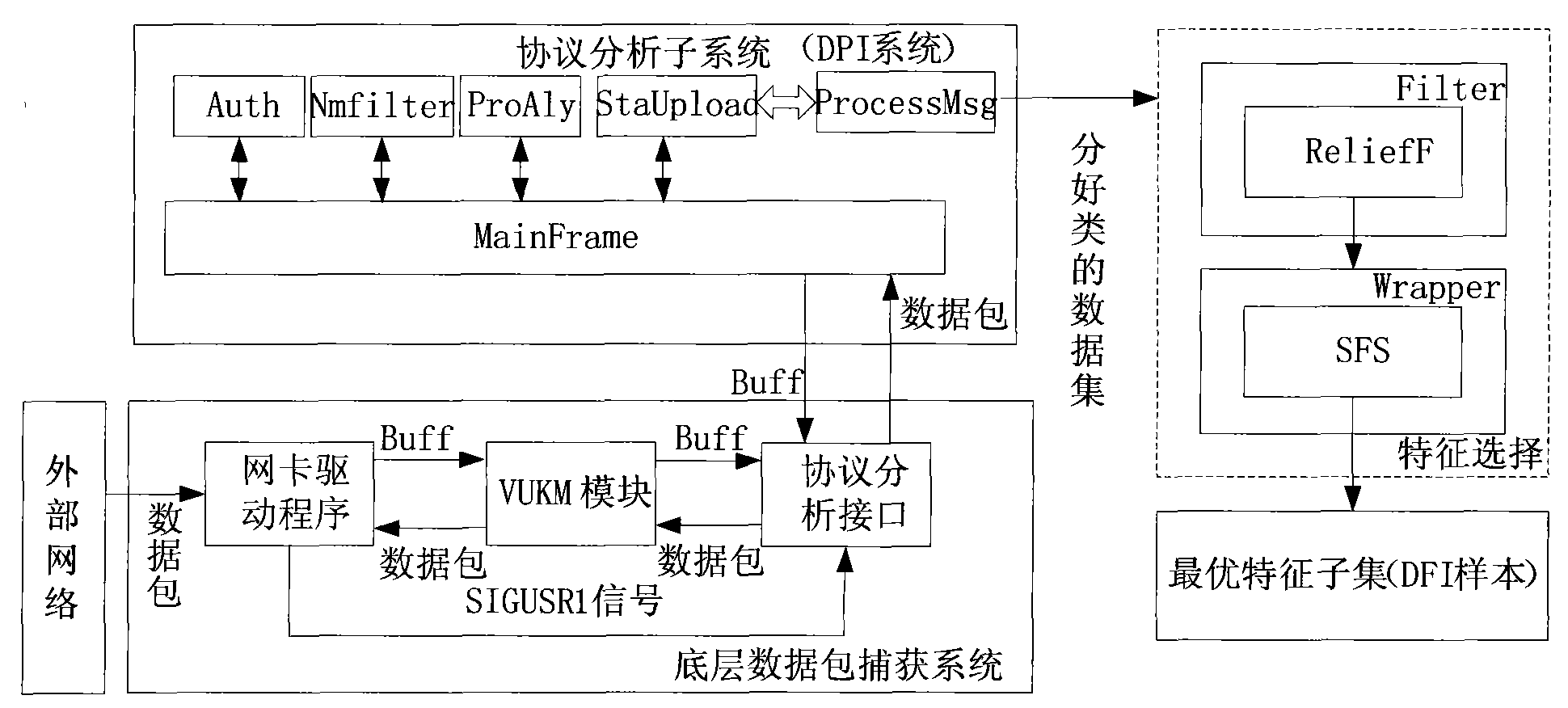

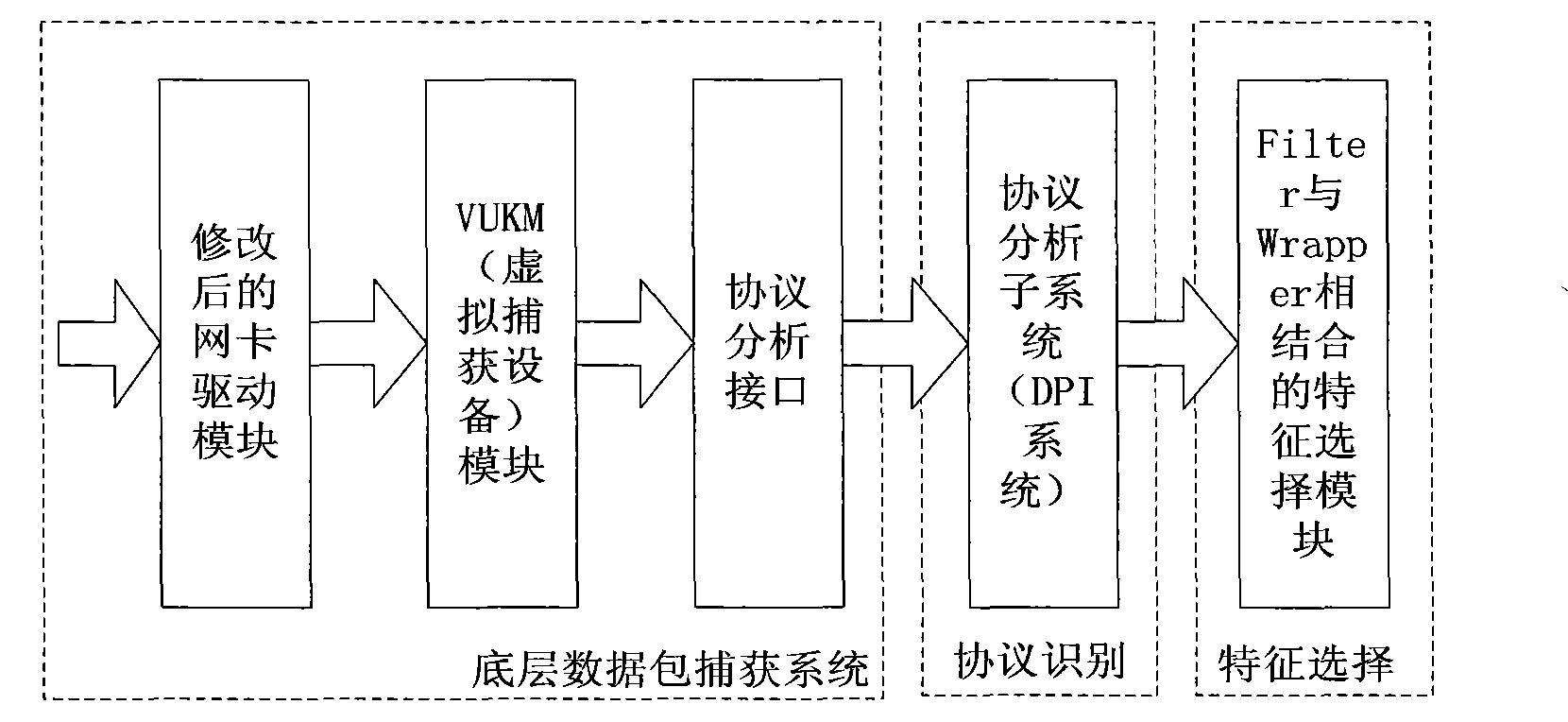

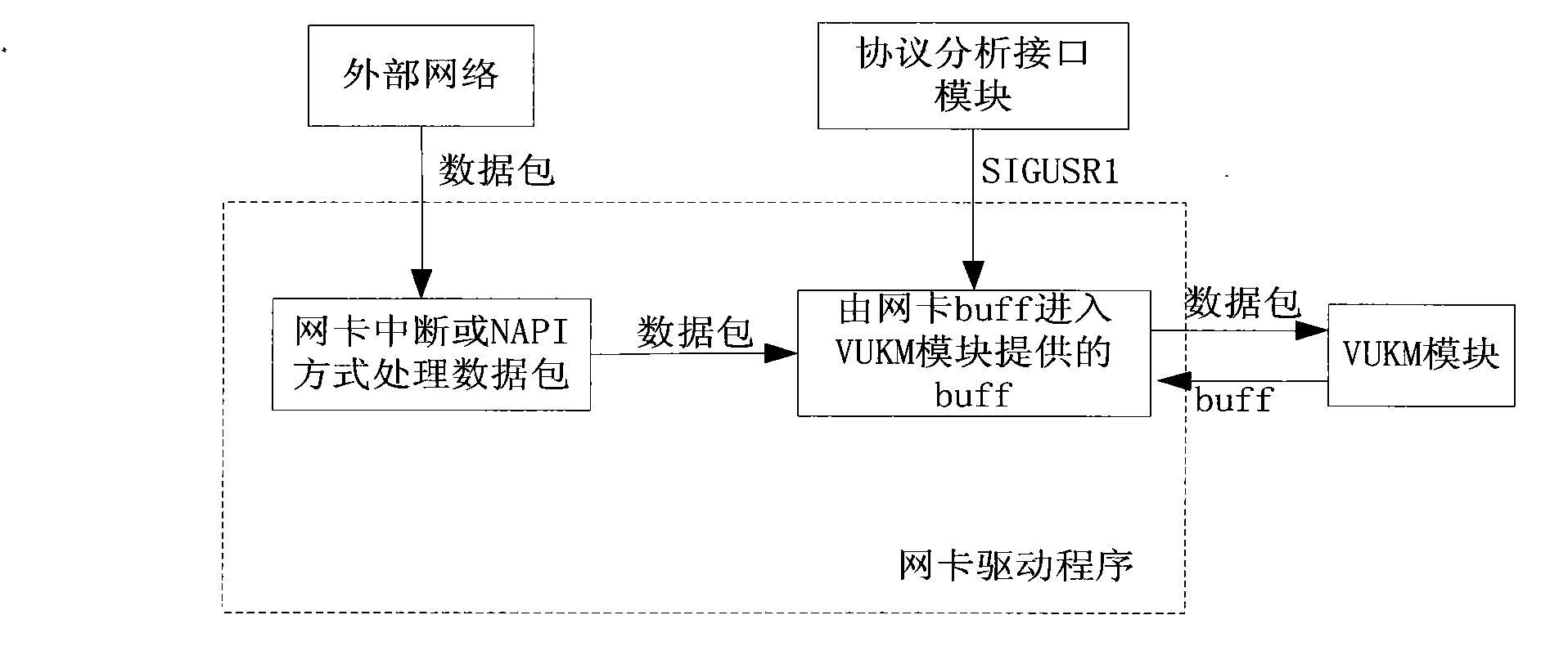

High-speed capturing method of bottom-layer data packet based on Linux

Status: Inactive | Publication: CN101841470A | Tags: Overcome disadvantages; Improve acquisition efficiency; Memory addressing/allocation/relocation; Data switching networks; Original data; GNU/Linux

The invention provides a Linux-based method for high-speed capture of low-level (bottom-layer) data packets. A virtual capture device module (VUKM module) modifies the network card driver so that packets arriving at the network card bypass the kernel protocol stack and are passed directly to a subsequent module for processing, realizing memory sharing between user space and kernel space. The kernel space transfers packets at high speed to an upper-layer analysis and processing interface module, and a mechanism is provided that lets the upper-layer application and the network card access the VUKM module without conflicts, so that captured packets can be processed further. The method can capture raw packets from the network card at high speed in a gigabit network environment, overcoming the shortcomings of traditional packet-capture techniques and improving capture efficiency.

Owner:SOUTHEAST UNIV
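
The abstract does not describe the VUKM data structure. A common way to let a kernel-side producer and a user-space consumer share packet buffers without conflicts is a single-producer/single-consumer ring, in which each side advances only its own index. The sketch below models that swapped-in technique in Python; a real shared-memory ring would also need fixed-size slots and memory barriers, which are omitted here.

```python
class PacketRing:
    """Single-producer/single-consumer ring, as might sit in shared memory.

    The driver (producer) only advances `head`; the application (consumer)
    only advances `tail`, so neither side overwrites the other's index.
    """

    def __init__(self, slots: int):
        self.slots = [b""] * slots
        self.head = 0  # next slot the producer writes
        self.tail = 0  # next slot the consumer reads

    def push(self, packet: bytes) -> bool:
        if self.head - self.tail == len(self.slots):
            return False  # ring full: packet dropped
        self.slots[self.head % len(self.slots)] = packet
        self.head += 1
        return True

    def pop(self):
        if self.tail == self.head:
            return None  # ring empty
        packet = self.slots[self.tail % len(self.slots)]
        self.tail += 1
        return packet

ring = PacketRing(slots=2)
print([ring.push(p) for p in (b"p1", b"p2", b"p3")])  # [True, True, False]
print(ring.pop(), ring.pop(), ring.pop())  # b'p1' b'p2' None
```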

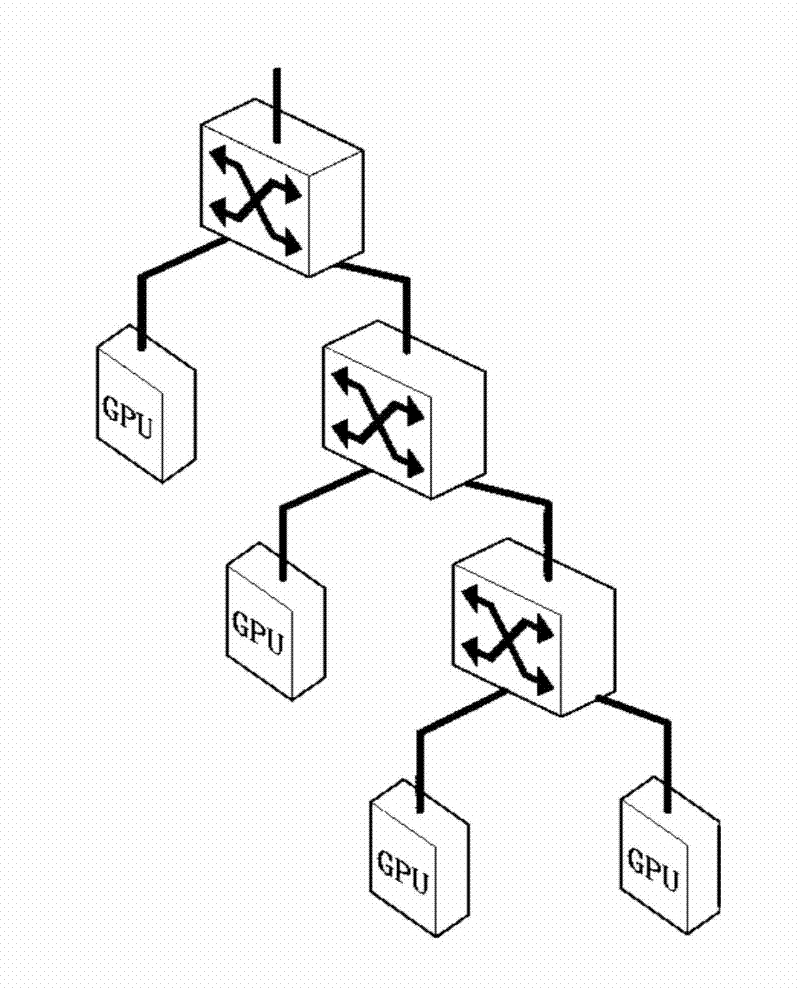

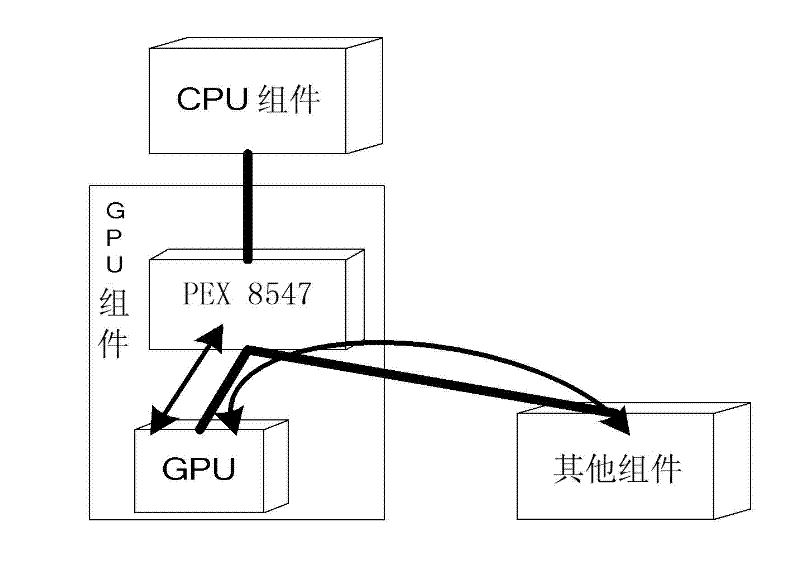

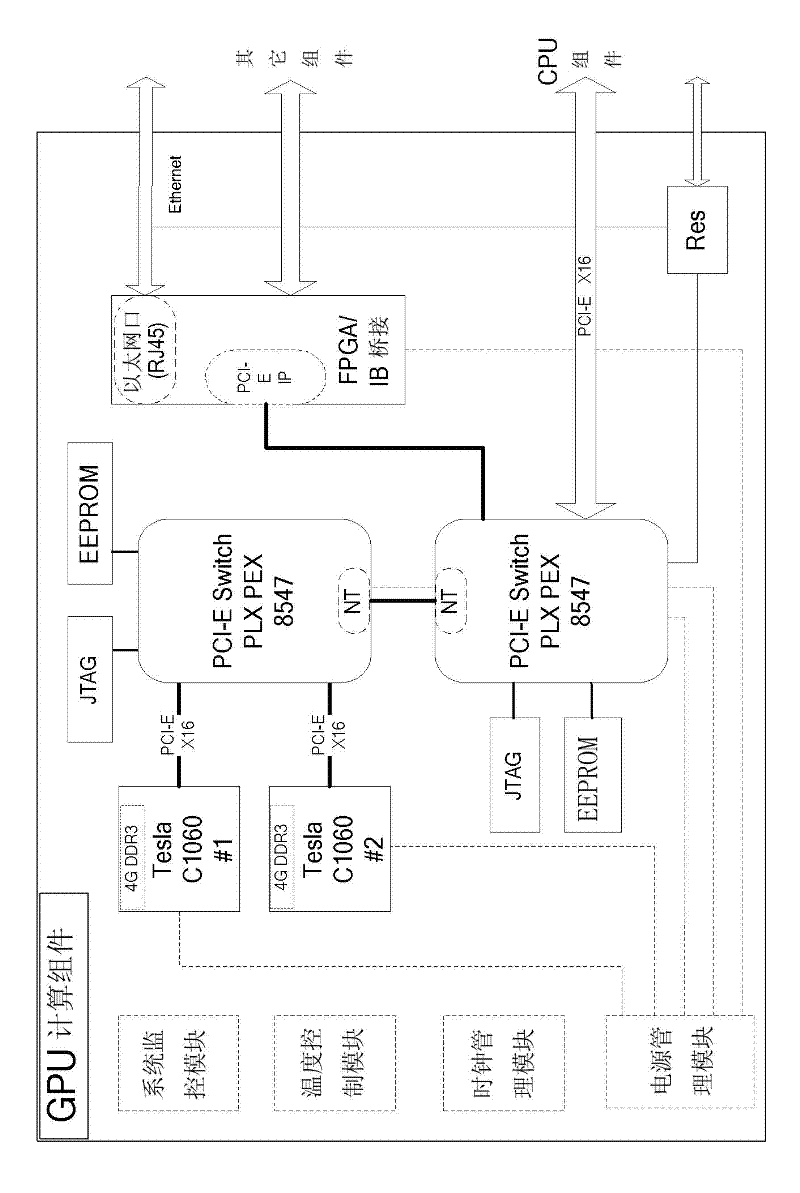

Multi-GPU (graphic processing unit) interconnection system structure in heterogeneous system

Status: Active | Publication: CN102541804A | Tags: Solving High-Speed Interconnect Problems; Flexible; Digital computer details; Image data processing details; Computer architecture; Multi GPU

The invention relates to the problem of GPU (graphics processing unit) hardware configuration management in heterogeneous systems, in the technical field of computer communication, and in particular to a multi-GPU interconnection architecture in a heterogeneous system. In a hybrid, heterogeneous, high-performance computer system that combines multi-core accelerators with a multi-core general-purpose processor, the multi-core accelerators are interconnected with one another, and with the general-purpose processor, in multiple stages using multi-port switch chips based on the PCI-E (Peripheral Component Interconnect Express) bus, forming a multistage switching structure whose external interfaces are reconfigurable. The architecture solves the problem of high-speed interconnection among multiple GPUs, and between the GPUs and the CPU, in traditional heterogeneous systems. It is a flexible and scalable hardware architecture that supports interface flexibility and realizes transparent transmission and memory sharing.

Owner:THE PLA INFORMATION ENG UNIV +1

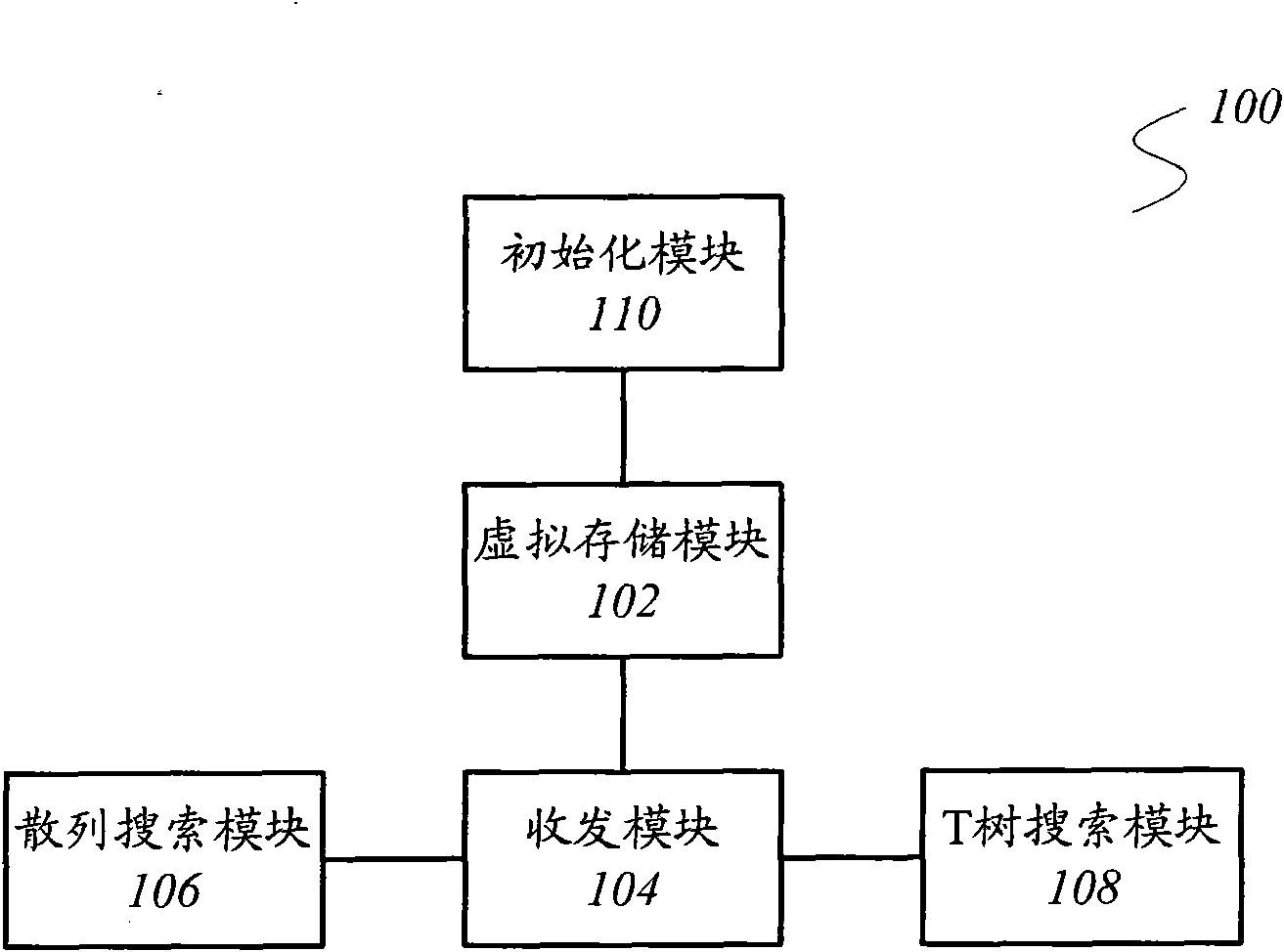

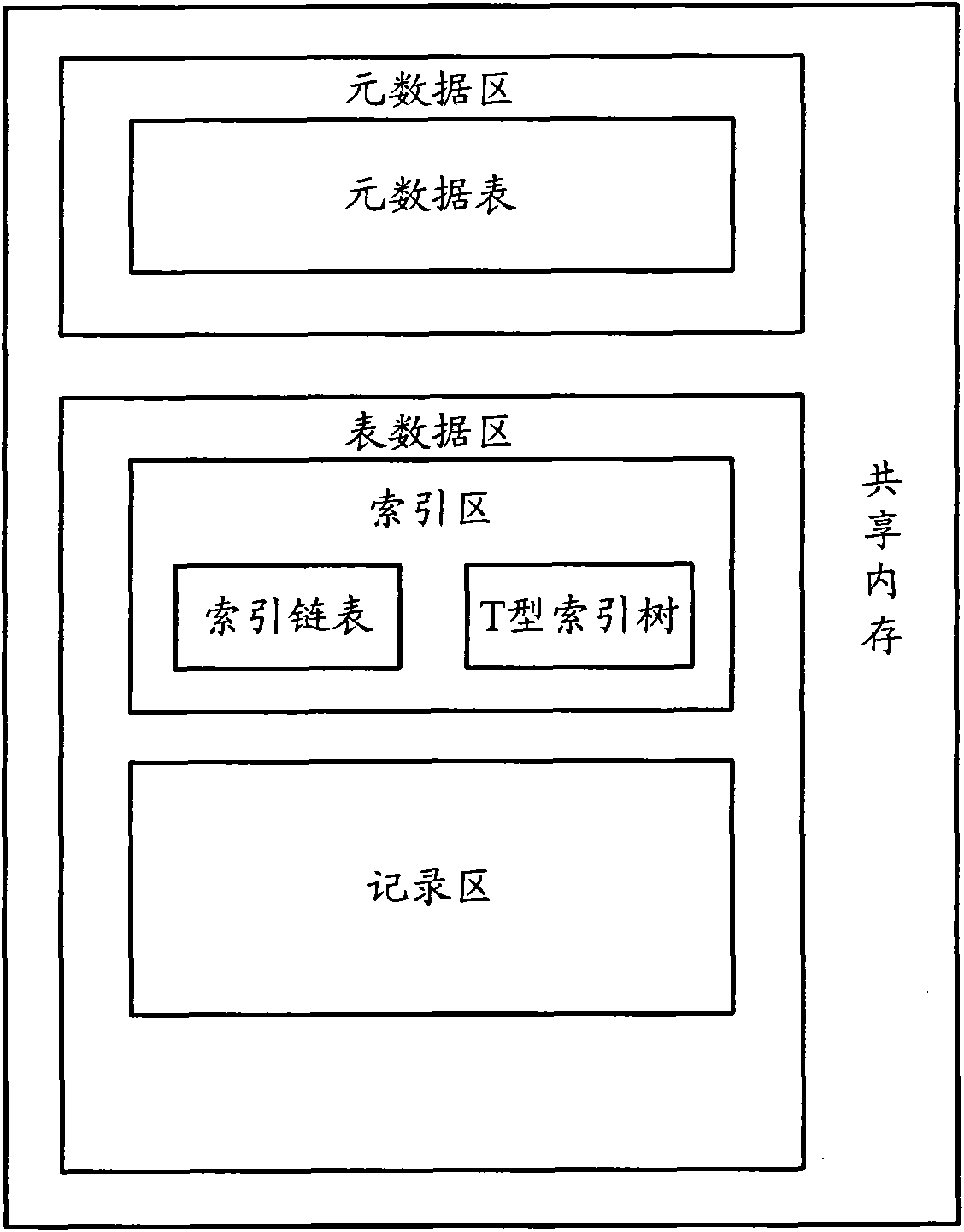

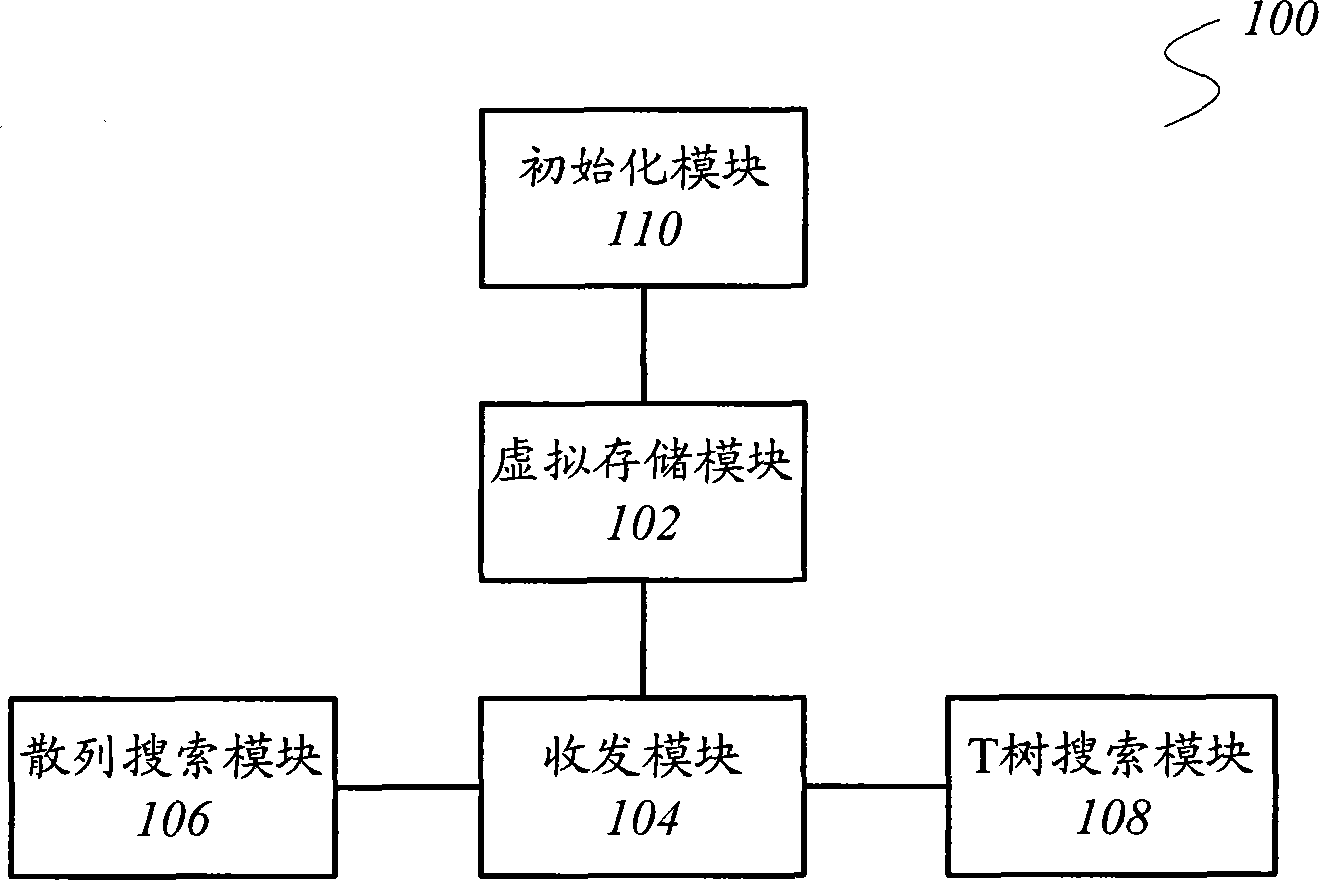

Data cache system and data inquiry method

Status: Inactive | Publication: CN102122285A | Tags: Easy to manage; Easy to use; Special data processing applications; Memory sharing; Computer science

The invention relates to caching technology and provides a data cache system and a data query method that address shortcomings of the traditional Memcached system, such as its lack of support for multiple indexes. The data cache system comprises a virtual storage module, a forwarding module, and a hash search module. The virtual storage module resides in shared memory; the forwarding module receives a search request, determines its type, and forwards it; and the hash search module receives search requests of the single-value type, extracts the key, looks up the corresponding index information in a metadata table according to the key type, finds the corresponding hash index linked list in the index domain according to that index information, locates the matching index in a sub-list according to the hash result, and retrieves the matching data record via that index. The invention also provides a data query method. The solution supports multiple indexes and stores data records in shared memory, overcoming the shortcomings of the traditional Memcached system.

Owner:卓望数码技术(深圳)有限公司 (Zhuowang Digital Technology (Shenzhen) Co., Ltd.)
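
The multi-index lookup path described above (key type, then metadata table, then hash index, then record) can be approximated with ordinary dictionaries. This sketch replaces the shared-memory linked lists with Python dicts and assumes every record is indexed by each field named in the metadata table; all names are hypothetical.

```python
class MultiIndexCache:
    """Hypothetical cache whose records can be found by several key types."""

    def __init__(self, indexed_fields: list[str]):
        self.records: list[dict] = []  # stands in for the shared-memory record area
        # Metadata table: key type -> hash index (key value -> record positions).
        self.metadata = {f: {} for f in indexed_fields}

    def put(self, record: dict) -> None:
        pos = len(self.records)
        self.records.append(record)
        for field_name, index in self.metadata.items():
            index.setdefault(record[field_name], []).append(pos)

    def get(self, key_type: str, key) -> list[dict]:
        """Single-value search: pick the index by key type, then hash lookup."""
        index = self.metadata[key_type]  # KeyError for an unindexed key type
        return [self.records[i] for i in index.get(key, [])]

cache = MultiIndexCache(["user_id", "phone"])
cache.put({"user_id": 1, "phone": "555-0100", "name": "Ann"})
cache.put({"user_id": 2, "phone": "555-0199", "name": "Bo"})
print(cache.get("phone", "555-0199")[0]["name"])  # Bo
print(cache.get("user_id", 1)[0]["name"])          # Ann
```

Unlike a single-key Memcached lookup, the same record is reachable through either index.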

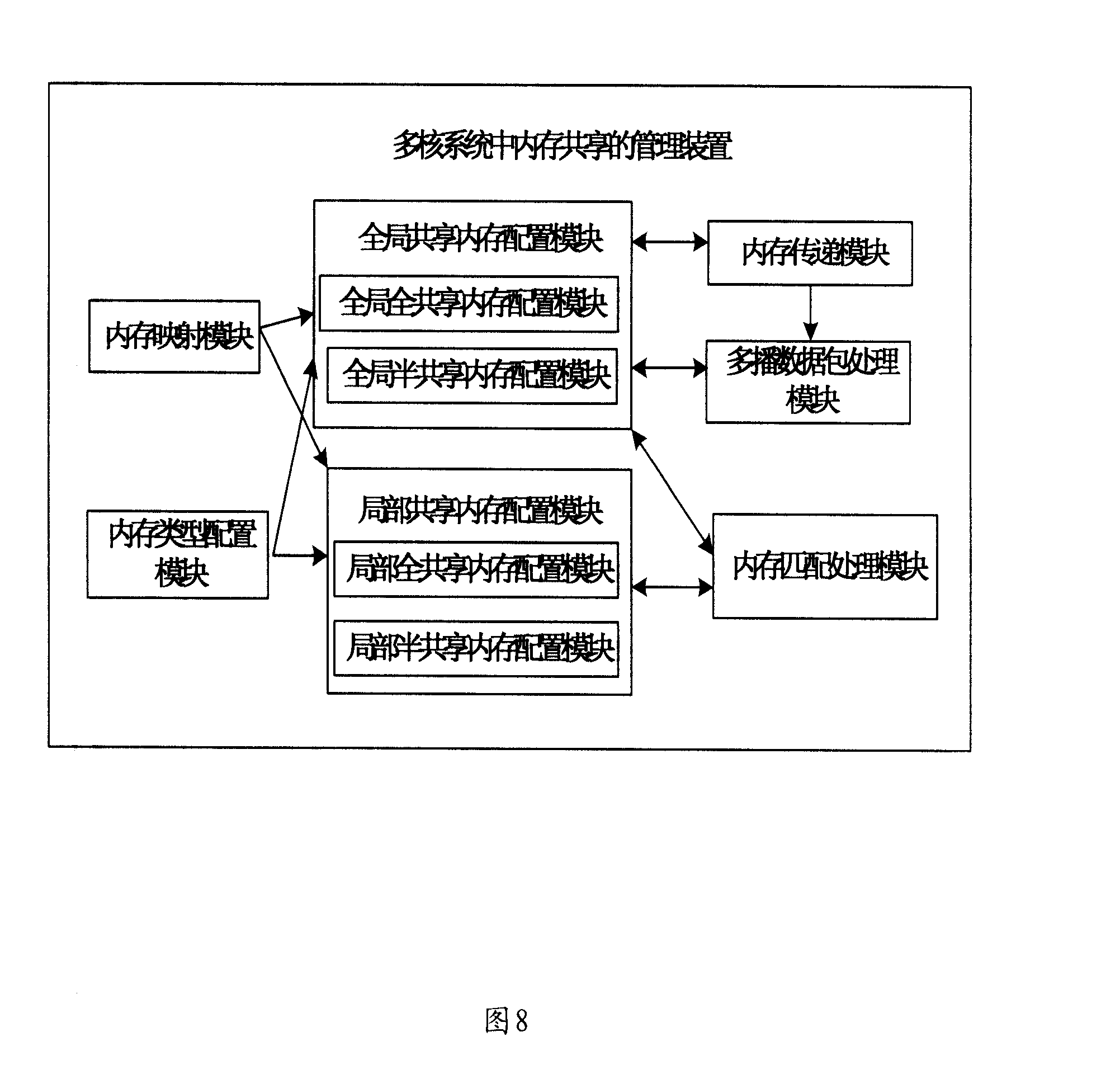

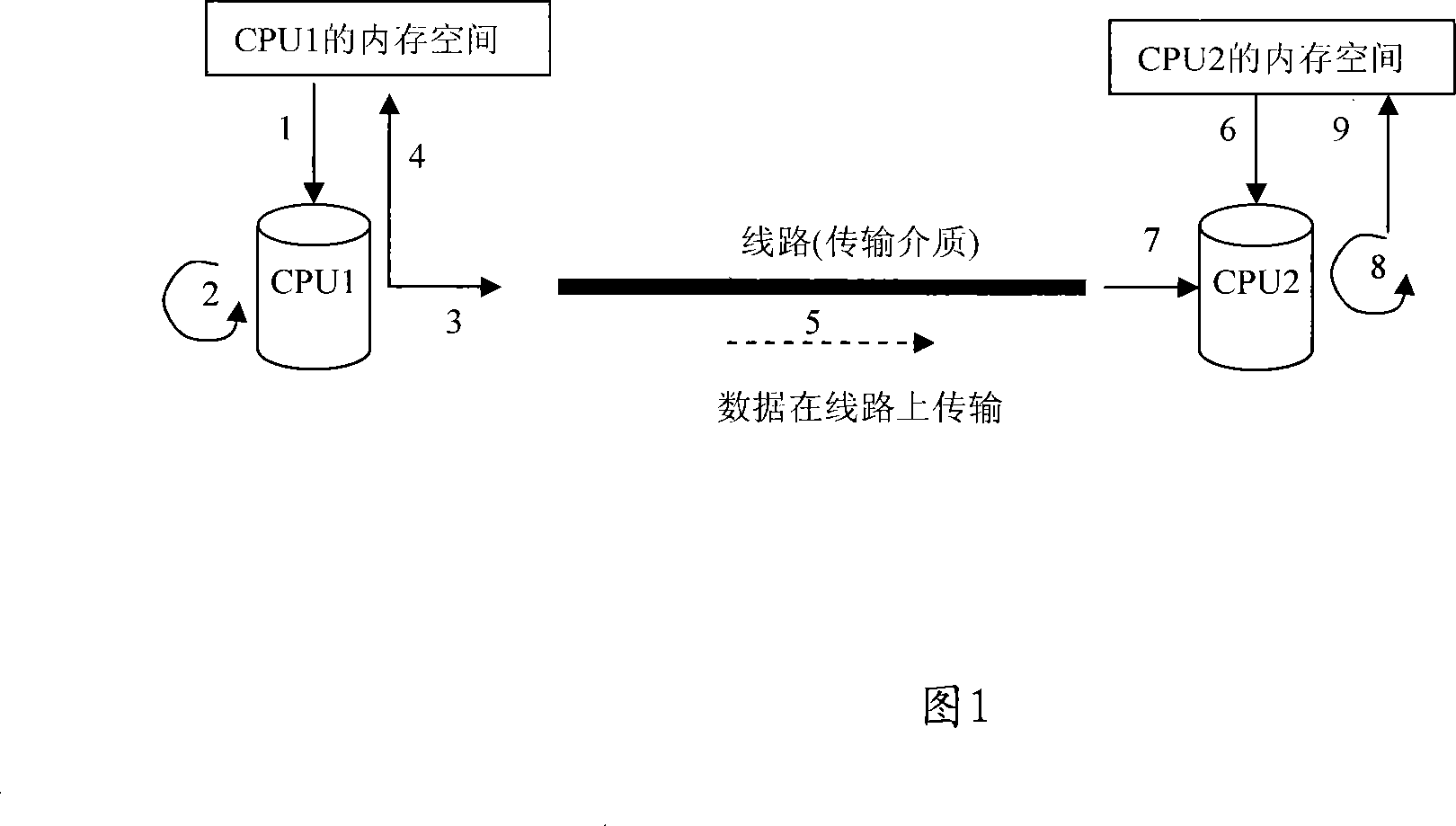

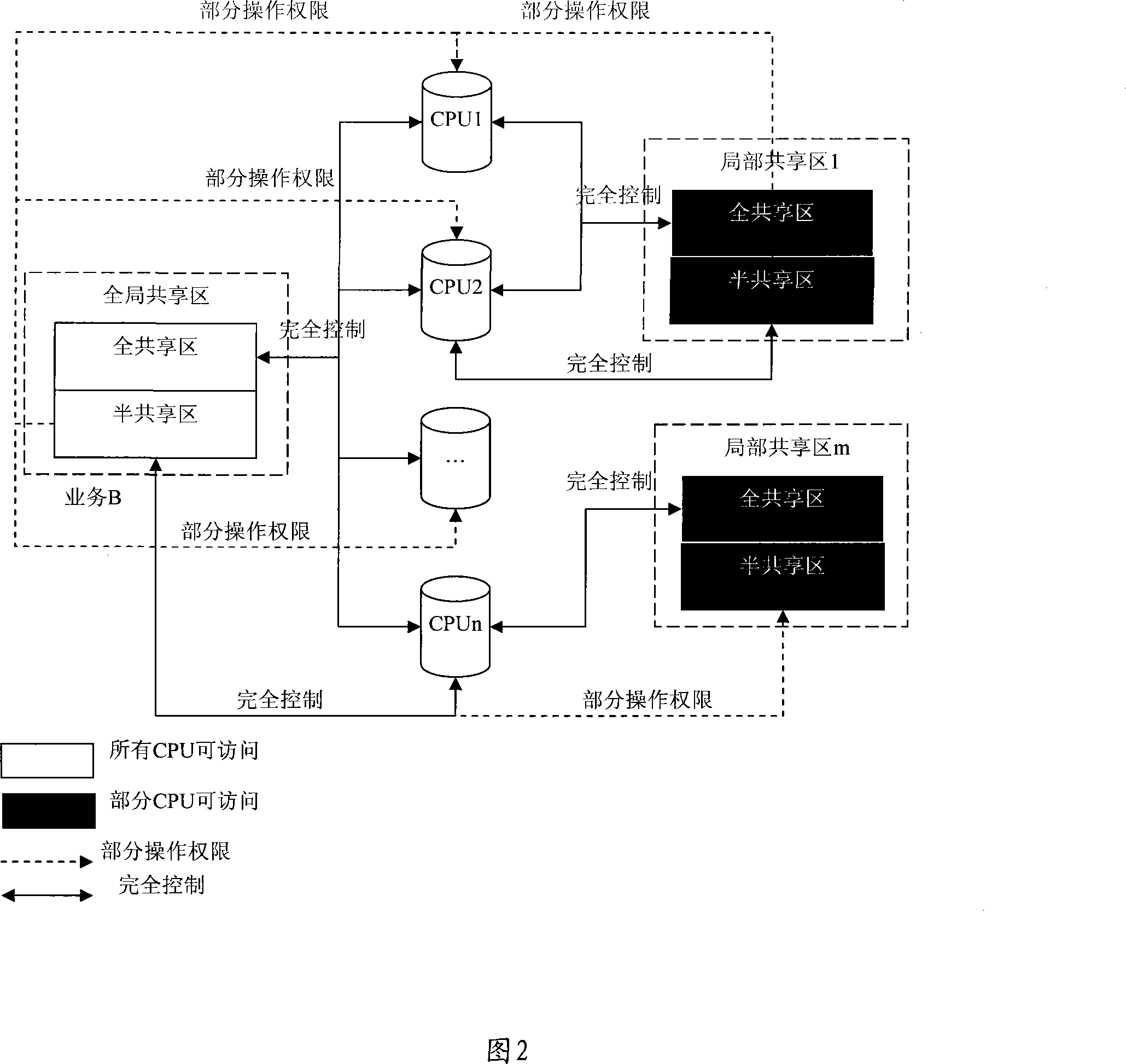

Management method and device for shared memory in a multi-core system

Status: Inactive | Publication: CN101246466A | Tags: Improve performance; Reduce the chance of shared memory access conflicts; Memory addressing/allocation/relocation; Digital computer details; Memory sharing; Core system

The invention provides a management method and device for shared memory in a multi-core system. The method mainly comprises configuring global shared memory and local shared memory in the multi-core system: all central processing units (CPUs) in the system can access the global shared memory, while only a subset of the CPUs can access a given local shared memory. The device mainly comprises a global shared memory configuration module and a local shared memory configuration module. The invention allows shared memory to be transferred freely between CPUs and supports flexible configuration of shared memory on each CPU, so that various memory-sharing schemes can coexist. This simplifies communication among cores, greatly reduces the probability of shared memory access conflicts, and helps improve the performance of the multi-core system.

Owner:HUAWEI TECH CO LTD
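
The global/local split above amounts to an access rule per region: a global region admits every CPU, while a local region admits only the CPUs it is configured for, and a local region can be handed to a different set of CPUs. The region table, region names, and the `can_access` and `transfer` functions are a hypothetical illustration of that rule.

```python
from typing import Optional

# Hypothetical configuration: region name -> CPUs allowed (None means all CPUs).
REGIONS: dict[str, Optional[frozenset[int]]] = {
    "global": None,
    "local_net": frozenset({0, 1}),
    "local_media": frozenset({2, 3}),
}

def can_access(cpu: int, region: str) -> bool:
    allowed = REGIONS[region]
    return allowed is None or cpu in allowed

def transfer(region: str, cpus: set[int]) -> None:
    """Reassign a local shared memory region to a different set of CPUs."""
    if REGIONS[region] is None:
        raise ValueError("global shared memory is visible to all CPUs")
    REGIONS[region] = frozenset(cpus)

print([can_access(3, r) for r in REGIONS])  # [True, False, True]
transfer("local_net", {3})
print(can_access(3, "local_net"), can_access(0, "local_net"))  # True False
```

Because only the listed CPUs touch a local region, contention on it is limited to that subset, which is the conflict reduction the abstract claims.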

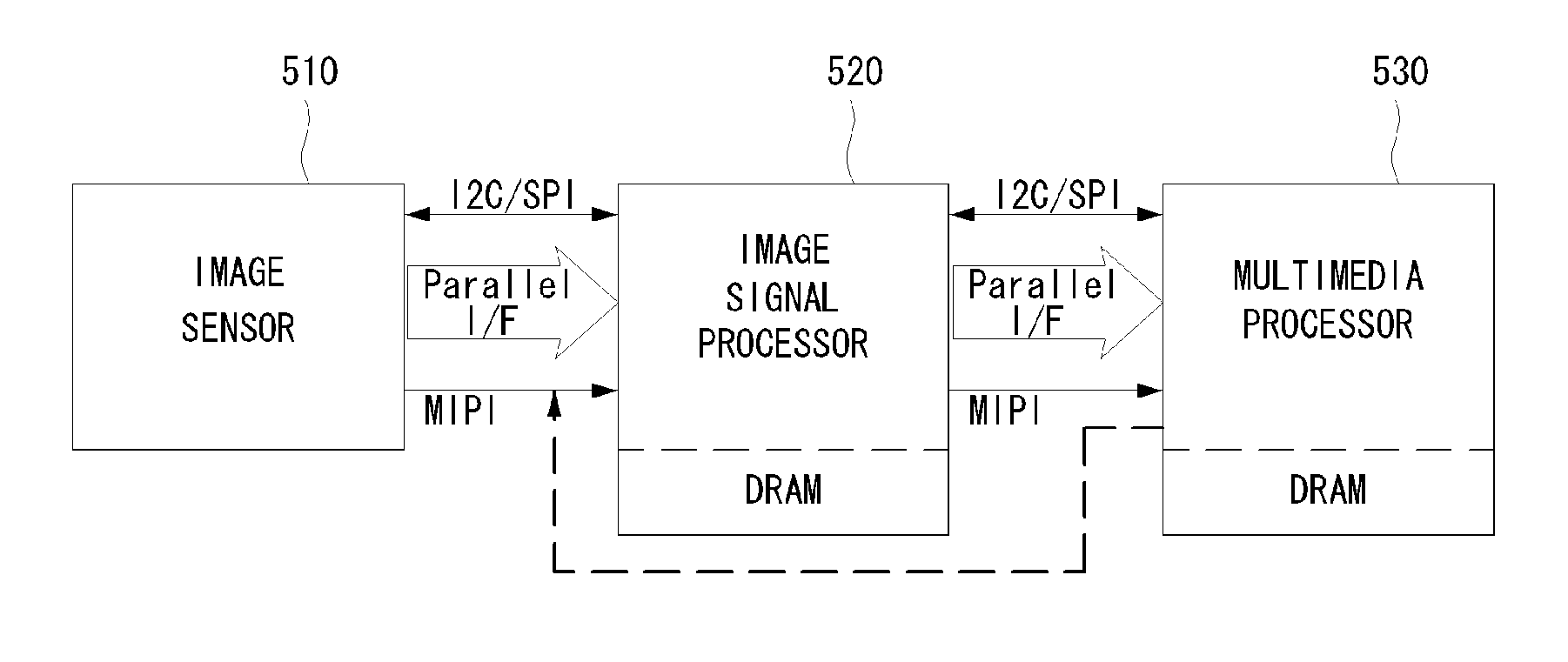

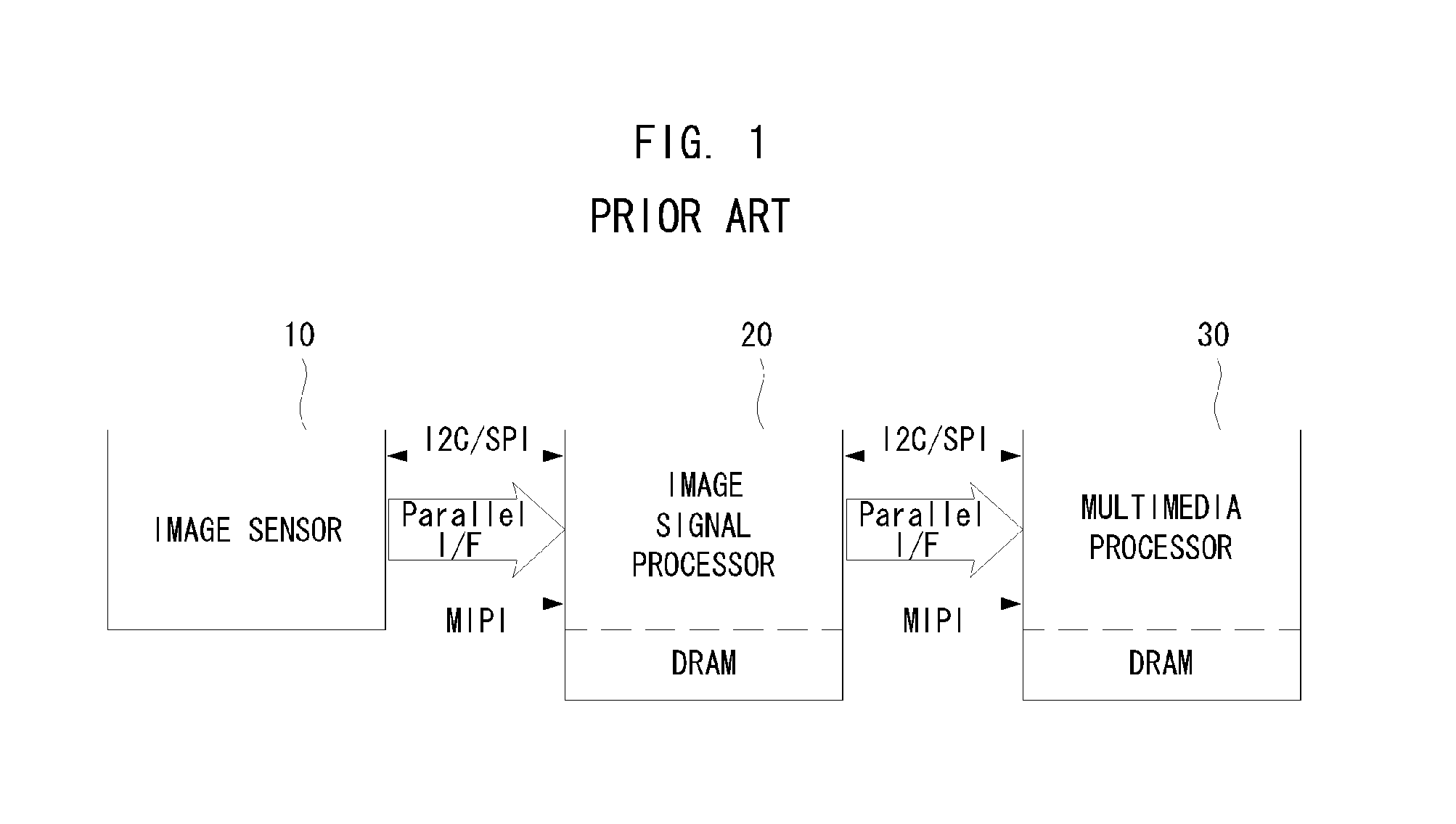

Imaging device and method for sharing memory among chips

Status: Inactive | Publication: US20110149116A1 | Tags: Efficient use of; Efficiently share a memory; Television system details; Color television details; Memory sharing; Computer terminal

An imaging device and a memory sharing method are provided. The imaging device includes: an image sensor; an image signal processor chip that includes a frame memory and that receives and processes a video signal from the image sensor; and a control processor chip that receives video information from the image signal processor chip via a first serial interface and that accesses the frame memory via a second serial interface, the one used by the image signal processor chip to receive the video image signal from the image sensor. Accordingly, resources can be used efficiently in a mobile communication terminal having a camera function.

Owner:MTEKVISION CO LTD

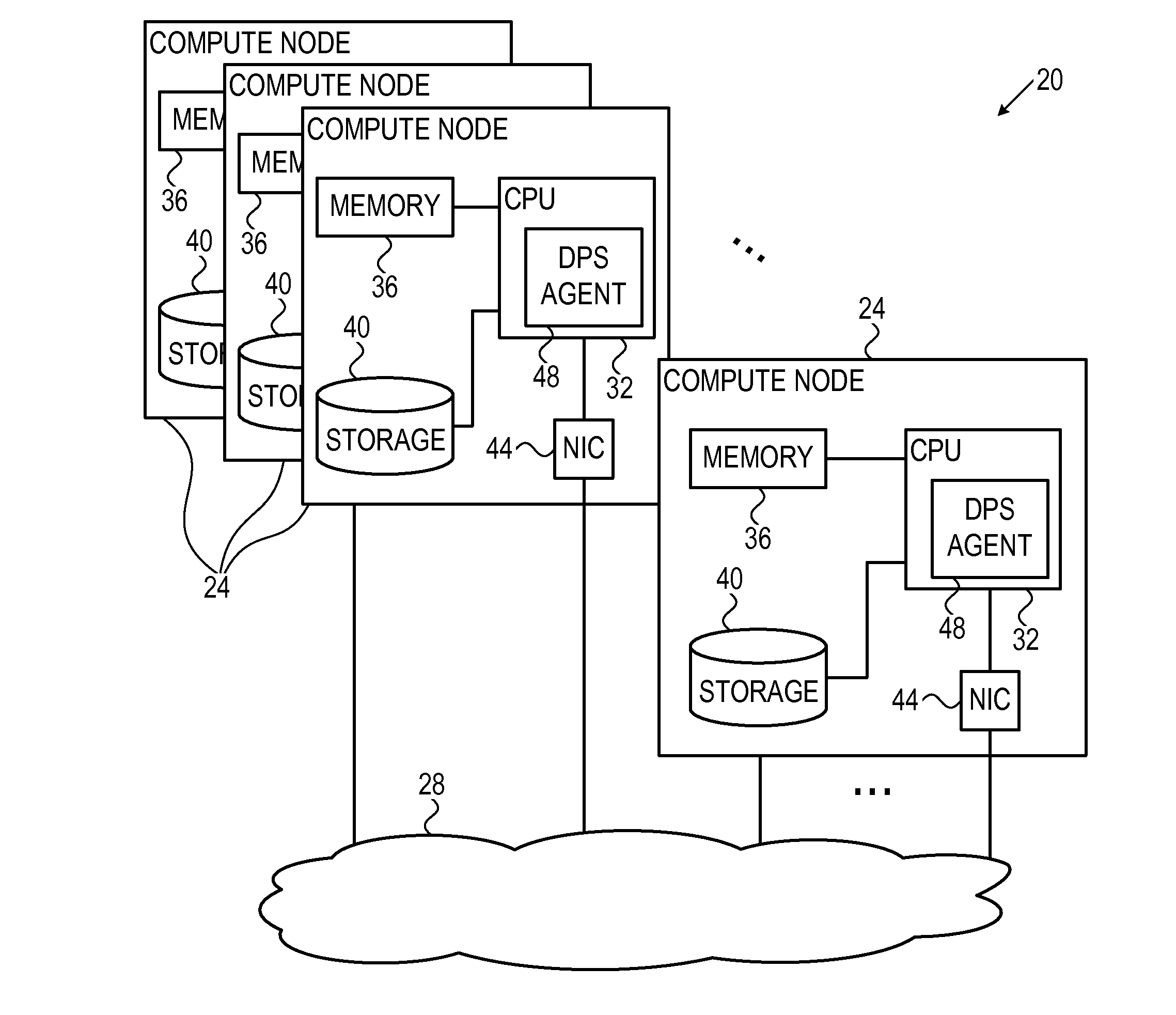

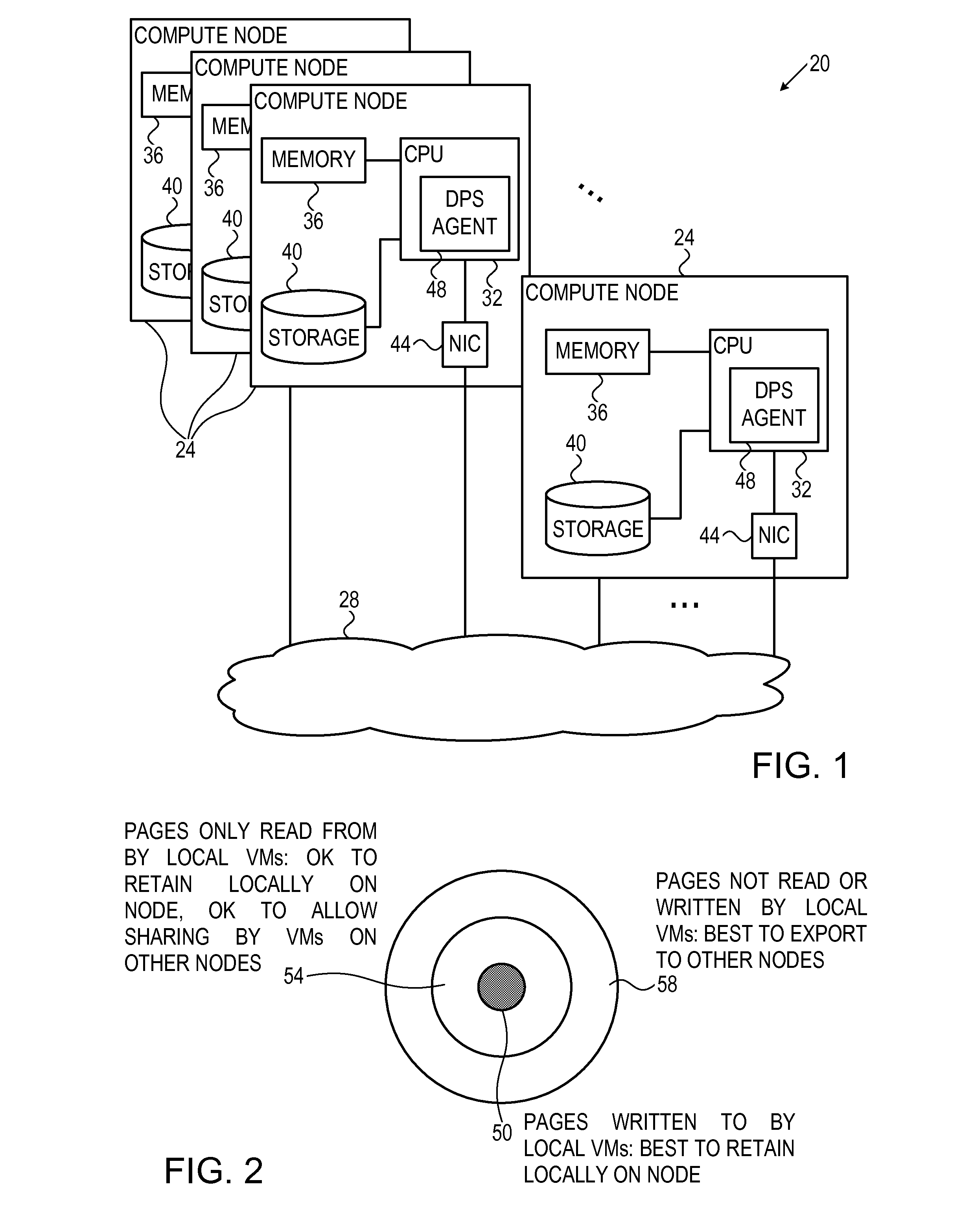

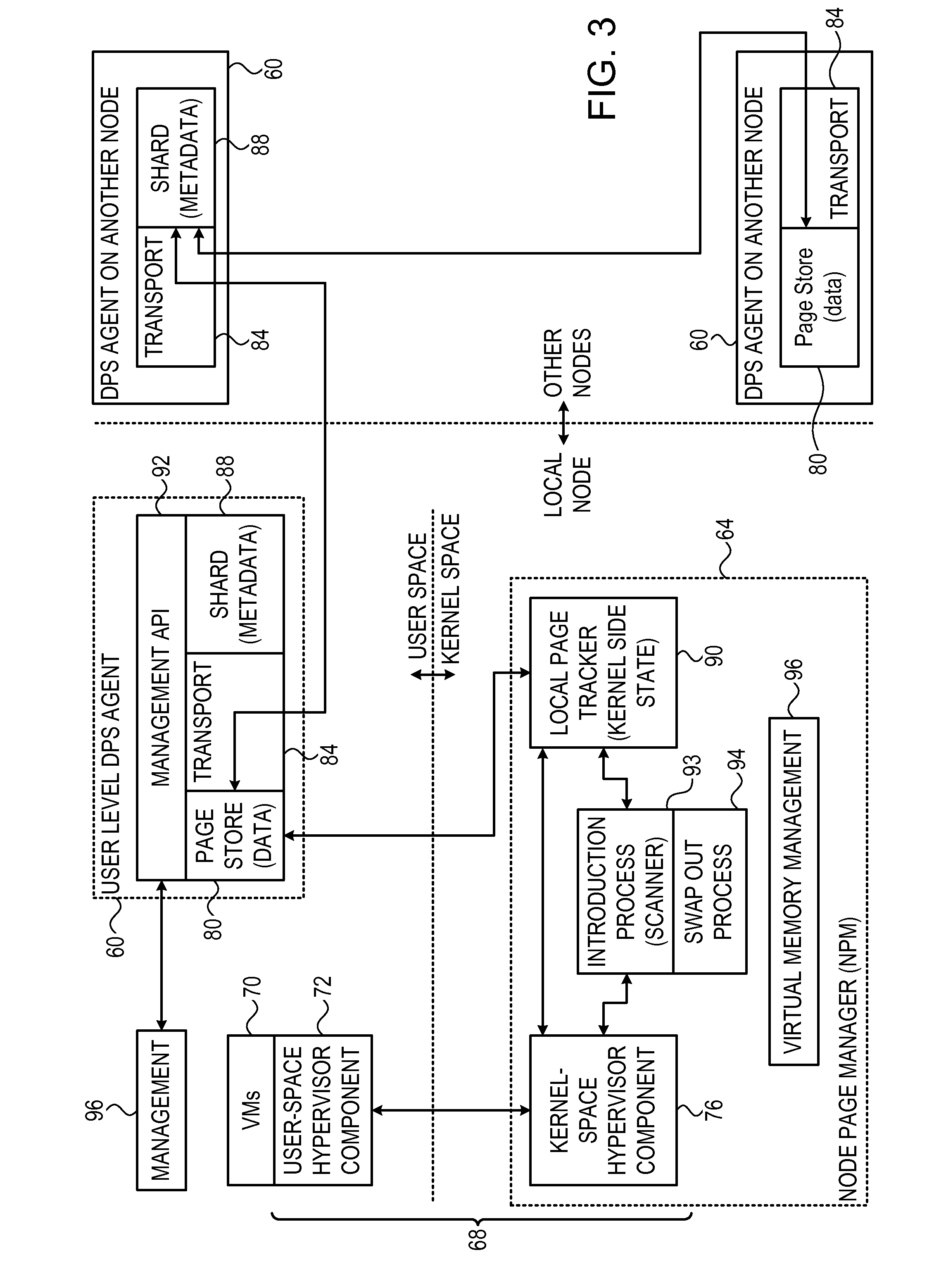

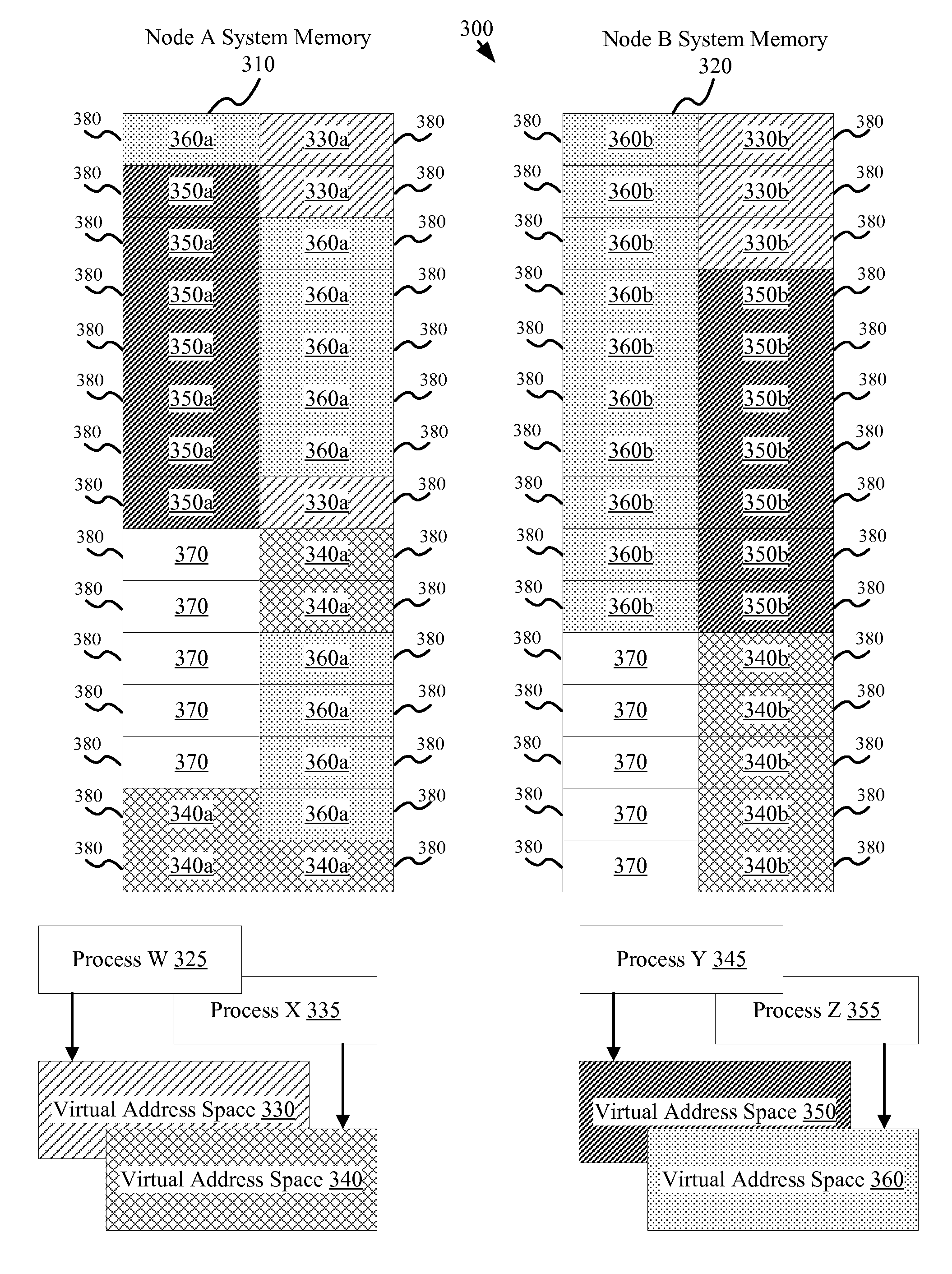

Memory resource sharing among multiple compute nodes

Status: Inactive | Publication: US20150234669A1 | Tags: Input/output to record carriers; Transmission; Memory sharing; Distributed computing

A method includes running on multiple compute nodes respective memory sharing agents that communicate with one another over a communication network. One or more local Virtual Machines (VMs), which access memory pages, run on a given compute node. Using the memory sharing agents, the memory pages that are accessed by the local VMs are stored on at least two of the compute nodes, and the stored memory pages are served to the local VMs.

Owner:MELLANOX TECHNOLOGIES LTD
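
The requirement above that pages accessed by local VMs be stored on at least two compute nodes can be sketched as placing each page on two distinct nodes and serving reads from any surviving copy. The hash-based placement policy, the `Cluster` class, and its `down` set for simulating failures are hypothetical; the abstract does not specify how nodes are chosen.

```python
import hashlib

class Cluster:
    """Hypothetical set of memory sharing agents, one per compute node."""

    def __init__(self, nodes: list[str]):
        assert len(nodes) >= 2, "need at least two nodes for two copies"
        self.nodes = nodes
        self.store = {n: {} for n in nodes}  # node -> {page_id: bytes}
        self.down: set[str] = set()

    def _placement(self, page_id: int) -> list[str]:
        """Choose two distinct nodes for a page (assumed policy)."""
        h = int(hashlib.sha256(str(page_id).encode()).hexdigest(), 16)
        first = h % len(self.nodes)
        return [self.nodes[first], self.nodes[(first + 1) % len(self.nodes)]]

    def put_page(self, page_id: int, data: bytes) -> None:
        for node in self._placement(page_id):
            self.store[node][page_id] = data

    def get_page(self, page_id: int) -> bytes:
        """Serve the page to a local VM from any node still holding a copy."""
        for node in self._placement(page_id):
            if node not in self.down and page_id in self.store[node]:
                return self.store[node][page_id]
        raise KeyError(page_id)

c = Cluster(["n1", "n2", "n3"])
c.put_page(7, b"vm page")
c.down.add(c._placement(7)[0])  # lose the first copy
print(c.get_page(7))  # b'vm page'
```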

Apparatus method and system for fault tolerant virtual memory management

Status: Inactive | Publication: US7107411B2 | Tags: Facilitating fault tolerance; Small size; Memory loss protection; Error detection/correction; Writing messages; Memory sharing

A fault-tolerant synchronized virtual memory manager for use in a load-sharing environment manages memory allocation, memory mapping, and memory sharing in a first processor, while maintaining synchronization of the memory space of the first processor with the memory space of at least one partner processor. In one embodiment, synchronization is maintained via paging synchronization messages such as a space request message, an allocate memory message, a release memory message, a lock request message, a read header message, a write page message, a sense request message, an allocate read message, an allocate write message, and/or a release pointer message. Paging synchronization facilitates recovery operations without the cost and overhead of prior-art fault-tolerant systems.

Owner:INT BUSINESS MASCH CORP

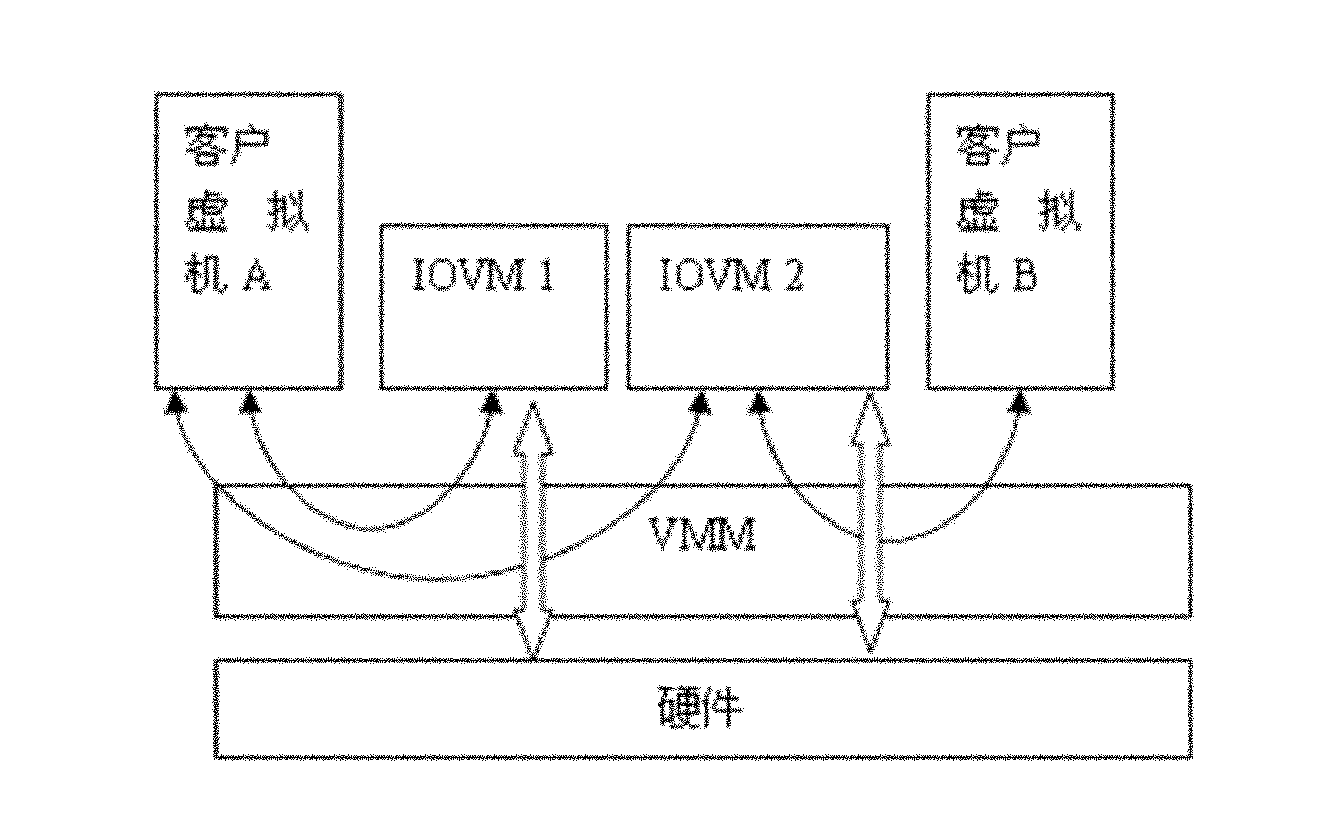

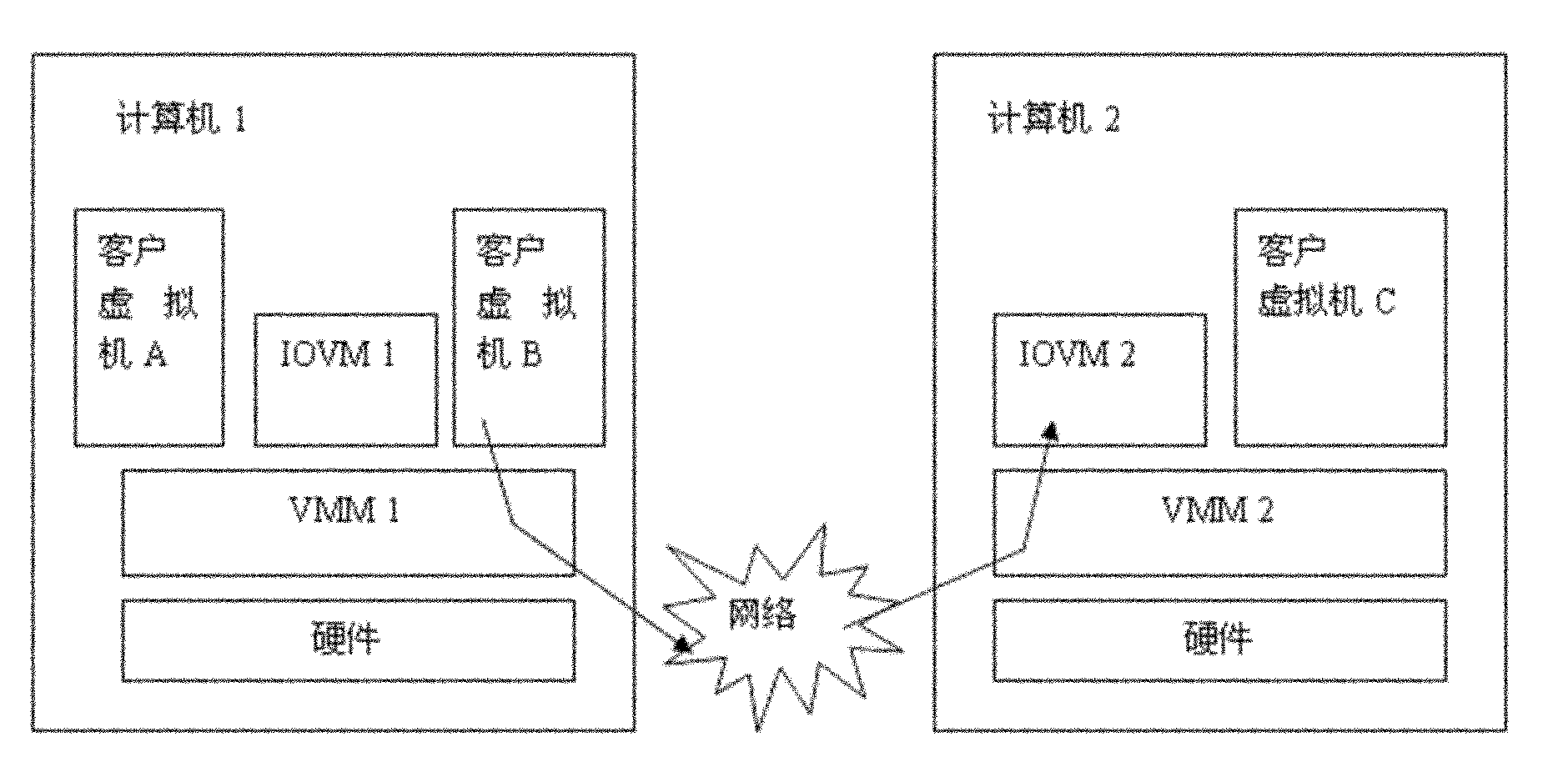

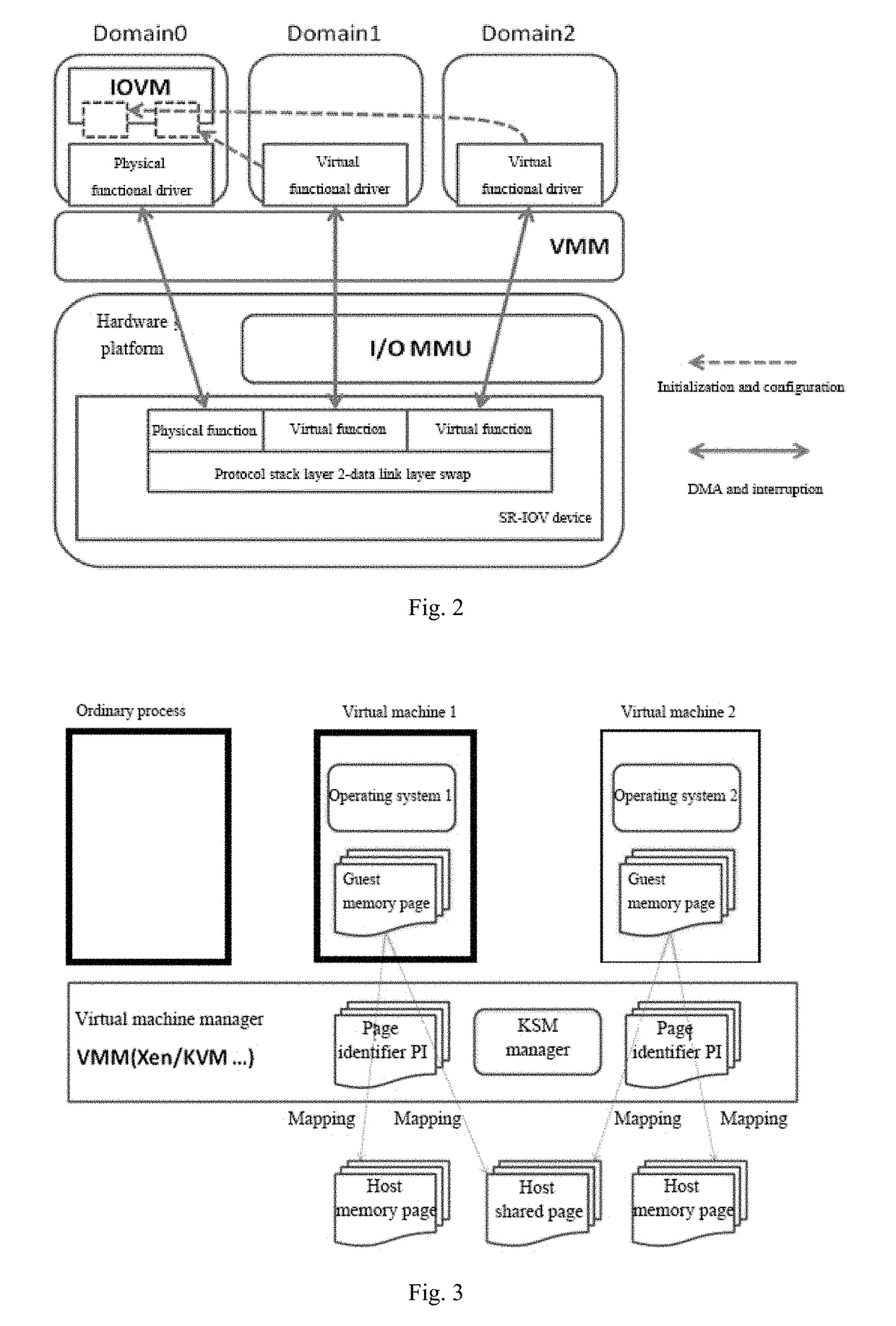

Virtual machine system for input/output equipment virtualization outside virtual machine monitor

Status: Active | Publication: CN101976200A | Tags: Reduce the burden on; Improve isolation; Transmission; Software simulation/interpretation/emulation; Virtualization; Memory sharing

The invention provides a virtual machine system that performs input/output (I/O) device virtualization outside the virtual machine monitor. The system comprises the virtual machine monitor, on which a plurality of I/O virtual machines run; all guest I/O operations and device sharing are handled by these I/O virtual machines, which are managed and scheduled by the virtual machine monitor, and an inter-domain communication mechanism and a memory sharing mechanism are established between the I/O virtual machines and the virtual machine monitor. On a processor platform with hardware-assisted virtualization, the I/O processing and sharing functions are extracted from the virtual machine monitor, and I/O processing, sharing, and scheduling are performed in privileged guest virtual machines running above the virtual machine monitor to implement I/O virtualization. This achieves better isolation and security while reducing the burden on the virtual machine monitor.

Owner:ZHEJIANG UNIV

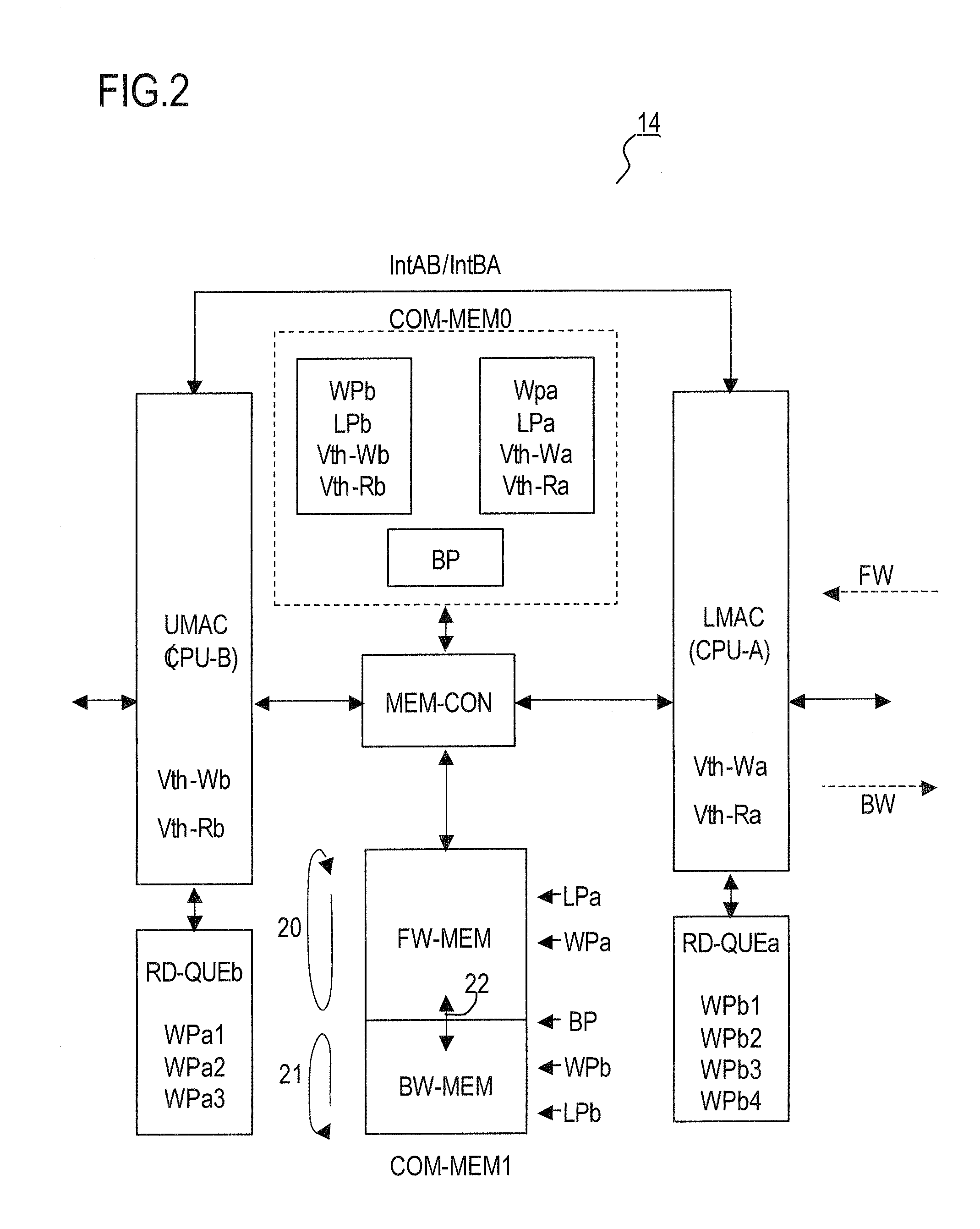

Memory-sharing system device

Status: Inactive | Publication: US20080320243A1 | Tags: Memory addressing/allocation/relocation; Digital computer details; Memory sharing; Data transmission

A memory-sharing system device has a shared memory divided into forward-direction and backward-direction memory areas; a first processor that inputs transfer data in the forward direction and writes it to the forward-direction memory area, and that reads transfer data in the backward direction from the backward-direction memory area and outputs it; and a second processor for transferring data in the backward direction. The first or second processor sets memory release criteria for the forward-direction and backward-direction memory areas, respectively, and performs memory release processing when the used memory area reaches the memory release criterion. The first or second processor also monitors the forward-direction and backward-direction data transfer speeds and changes the memory release criterion depending on the data transfer speed.

Owner:FUJITSU SEMICON LTD
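
The adaptive release rule above can be sketched as a threshold that tracks transfer speed: when data arrives faster than nominal, release starts earlier so the area does not overflow. The inverse scaling rule, the 75% base fraction, and the class name are assumptions; the abstract only says that the criterion changes with the measured speed.

```python
class DirectionalArea:
    """One direction (forward or backward) of the hypothetical shared memory."""

    def __init__(self, capacity: int, base_fraction: float = 0.75):
        self.capacity = capacity
        self.base_fraction = base_fraction
        self.used = 0
        self.threshold = int(capacity * base_fraction)

    def update_criterion(self, speed: float, nominal_speed: float) -> None:
        """Lower the release threshold as the transfer speed rises (assumed rule)."""
        ratio = max(speed / nominal_speed, 1.0)
        self.threshold = int(self.capacity * self.base_fraction / ratio)

    def write(self, nbytes: int) -> bool:
        """Store data; return True if memory release processing was triggered."""
        self.used += nbytes
        if self.used >= self.threshold:
            self.used = 0  # stand-in for releasing already-consumed data
            return True
        return False

forward = DirectionalArea(capacity=1000)
print(forward.threshold)  # 750
forward.update_criterion(speed=300.0, nominal_speed=100.0)
print(forward.threshold)  # 250
print(forward.write(200), forward.write(100))  # False True
```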

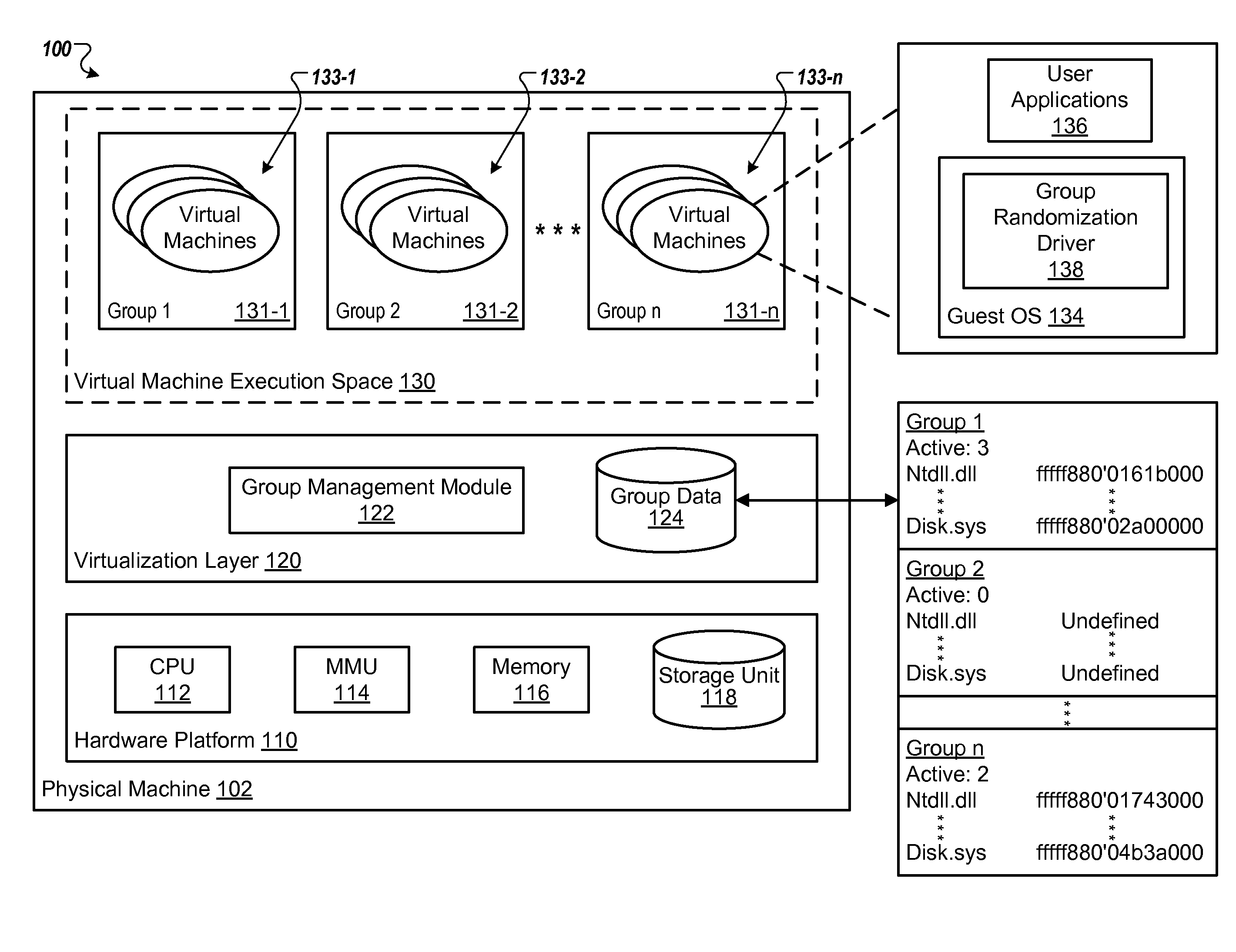

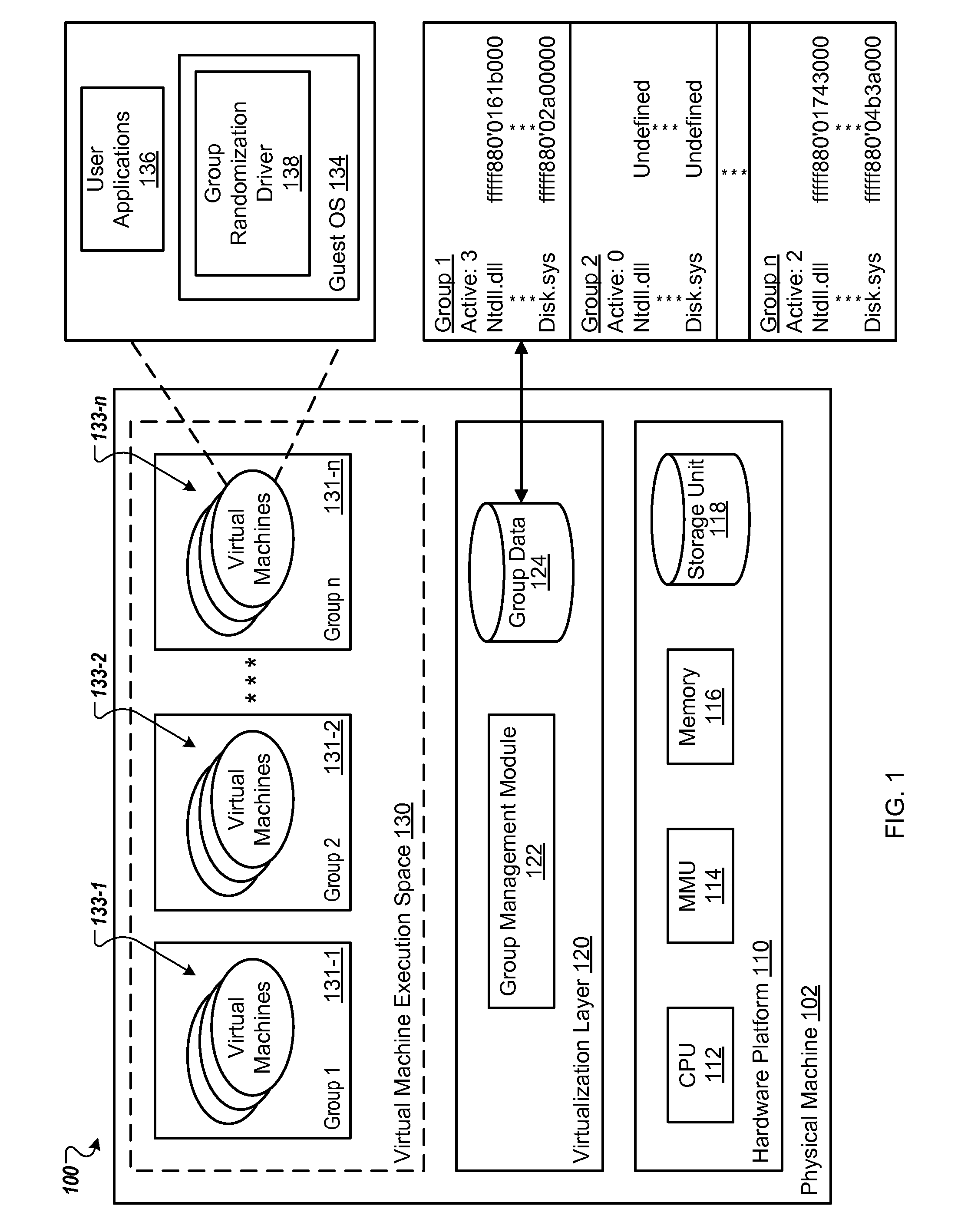

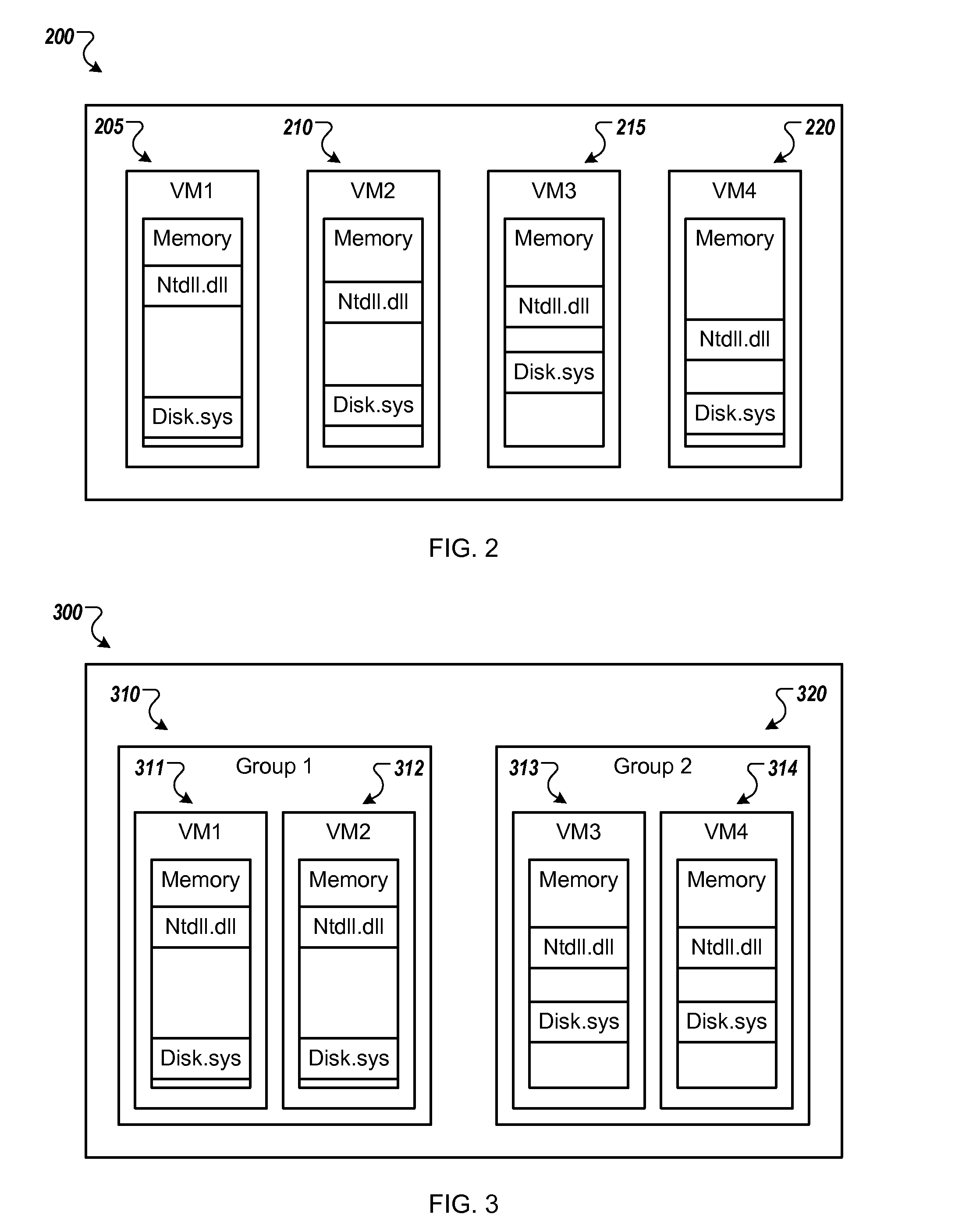

Optimizing memory sharing in a virtualized computer system with address space layout randomization enabled in guest operating systems

Status: Active | Publication: US20150261576A1 | Tags: Improving memory sharing; Memory architecture accessing/allocation; Resource allocation; Virtualization; Operational system

Systems and techniques are described for optimizing memory sharing. A described technique includes grouping virtual machines (VMs) into groups including a first group; initializing a first VM in the first group, wherein initializing the first VM includes identifying, for each of one or more first memory pages for the first VM, a respective base address for storing the first memory page using an address randomization technique, and storing, for each of the one or more first memory pages, data associating the first memory page with the respective base address for the first group, and initializing a second VM while the first VM is active, wherein initializing the second VM includes determining that the second VM is a member of the first group, and in response, storing one or more second memory pages for the second VM using the respective base addresses stored for the first group.

Owner:VMWARE INC
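
The grouping technique above can be sketched as follows: the first VM in a group draws randomized base addresses for its pages and records them for the group, and later VMs in the same group reuse those bases, so identical pages sit at identical addresses and stay shareable. The page names, the 4 KiB alignment, the 32-bit address range, and the use of Python's `random` module as a stand-in for the guest's address randomization are illustrative assumptions.

```python
import random

PAGE = 4096  # assumed page alignment

class AslrGroups:
    """Hypothetical record of randomized base addresses per VM group."""

    def __init__(self, seed=None):
        self.rng = random.Random(seed)
        self.bases: dict[str, dict[str, int]] = {}  # group -> {page name: base}

    def init_vm(self, group: str, pages: list[str]) -> dict[str, int]:
        """Return base addresses for a VM's pages, reusing the group's bases."""
        recorded = self.bases.setdefault(group, {})
        layout = {}
        for name in pages:
            if name not in recorded:  # first VM in the group: randomize
                recorded[name] = self.rng.randrange(0, 1 << 32, PAGE)
            layout[name] = recorded[name]
        return layout

groups = AslrGroups(seed=1)
vm1 = groups.init_vm("web", ["kernel", "libc"])
vm2 = groups.init_vm("web", ["kernel", "libc"])
vm3 = groups.init_vm("db", ["kernel", "libc"])  # separate group, own bases
print(vm1 == vm2)  # True
```

Randomization is still applied per group, so VMs in different groups keep independent layouts.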

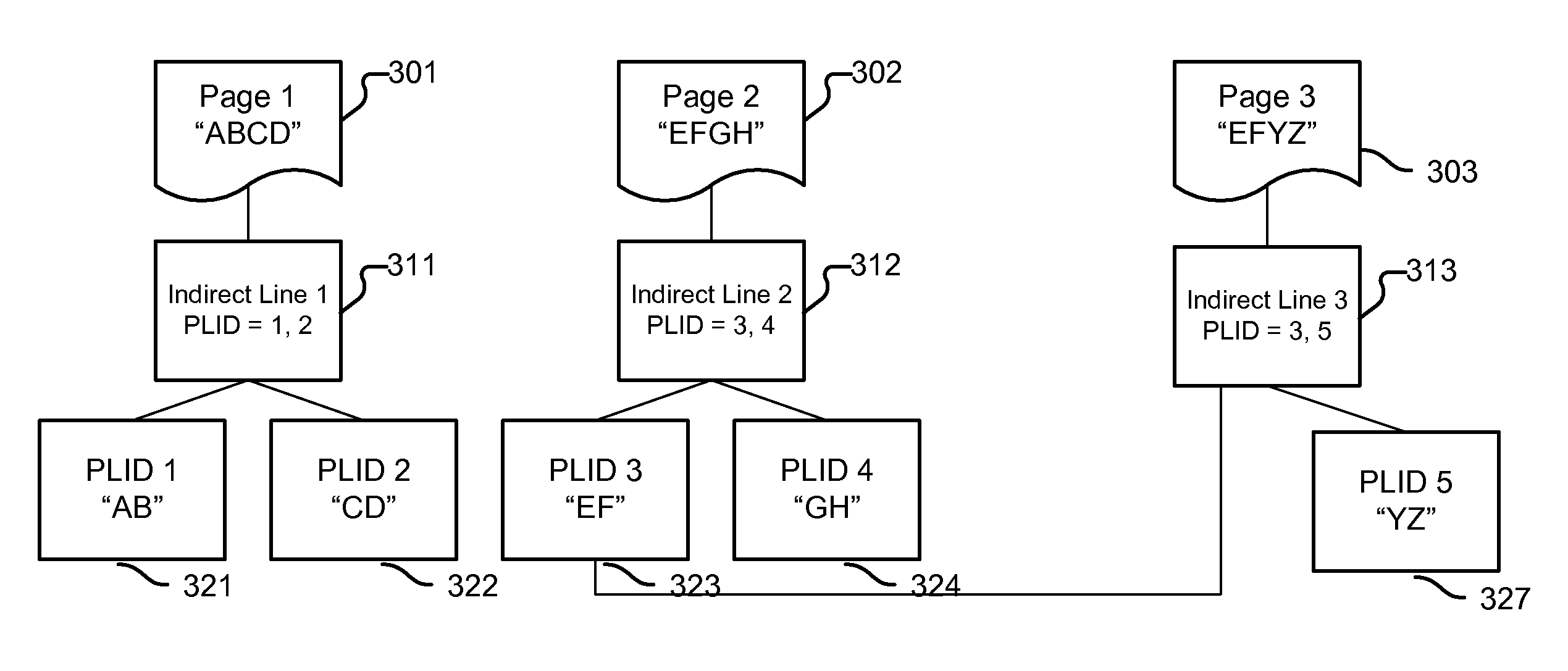

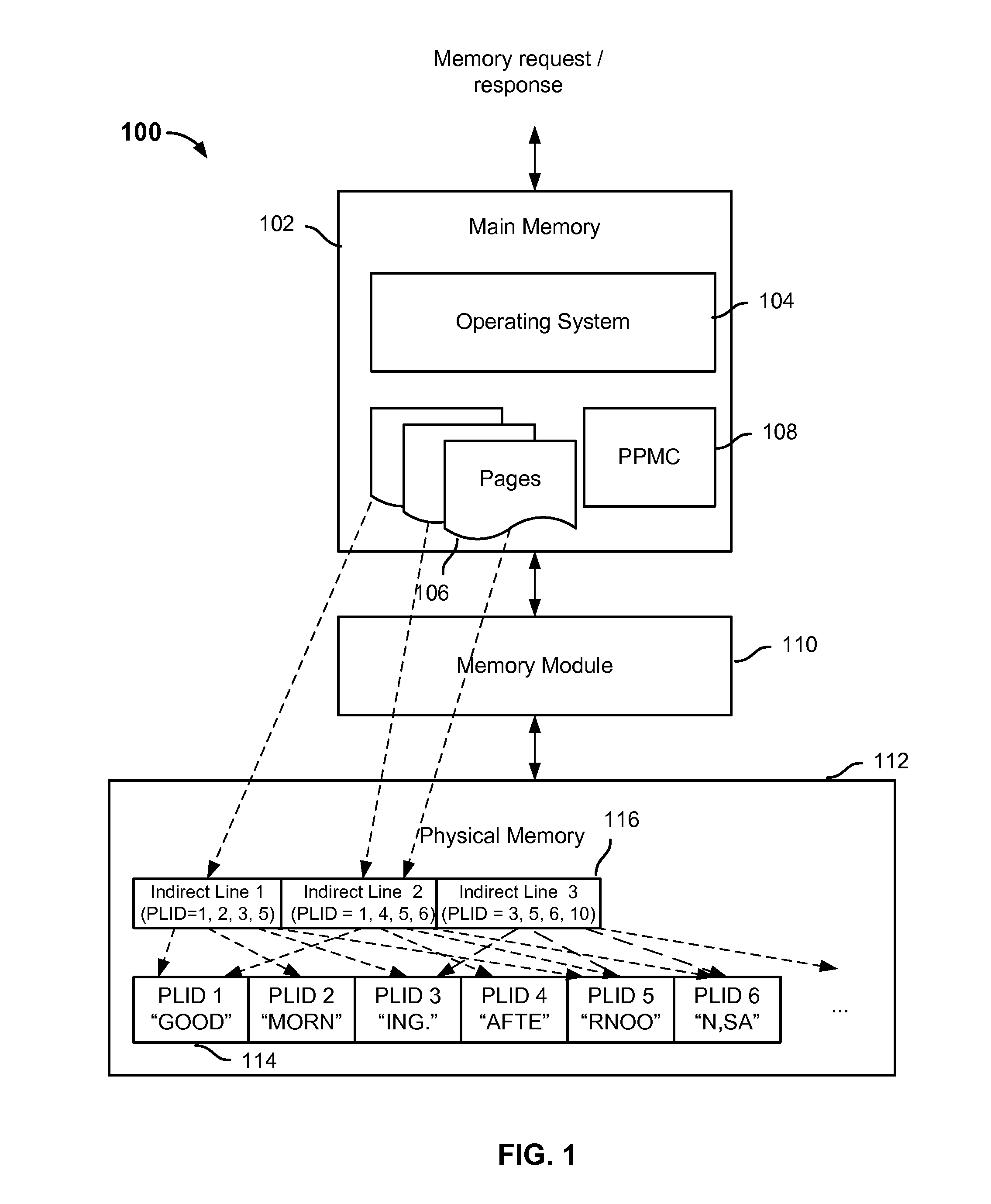

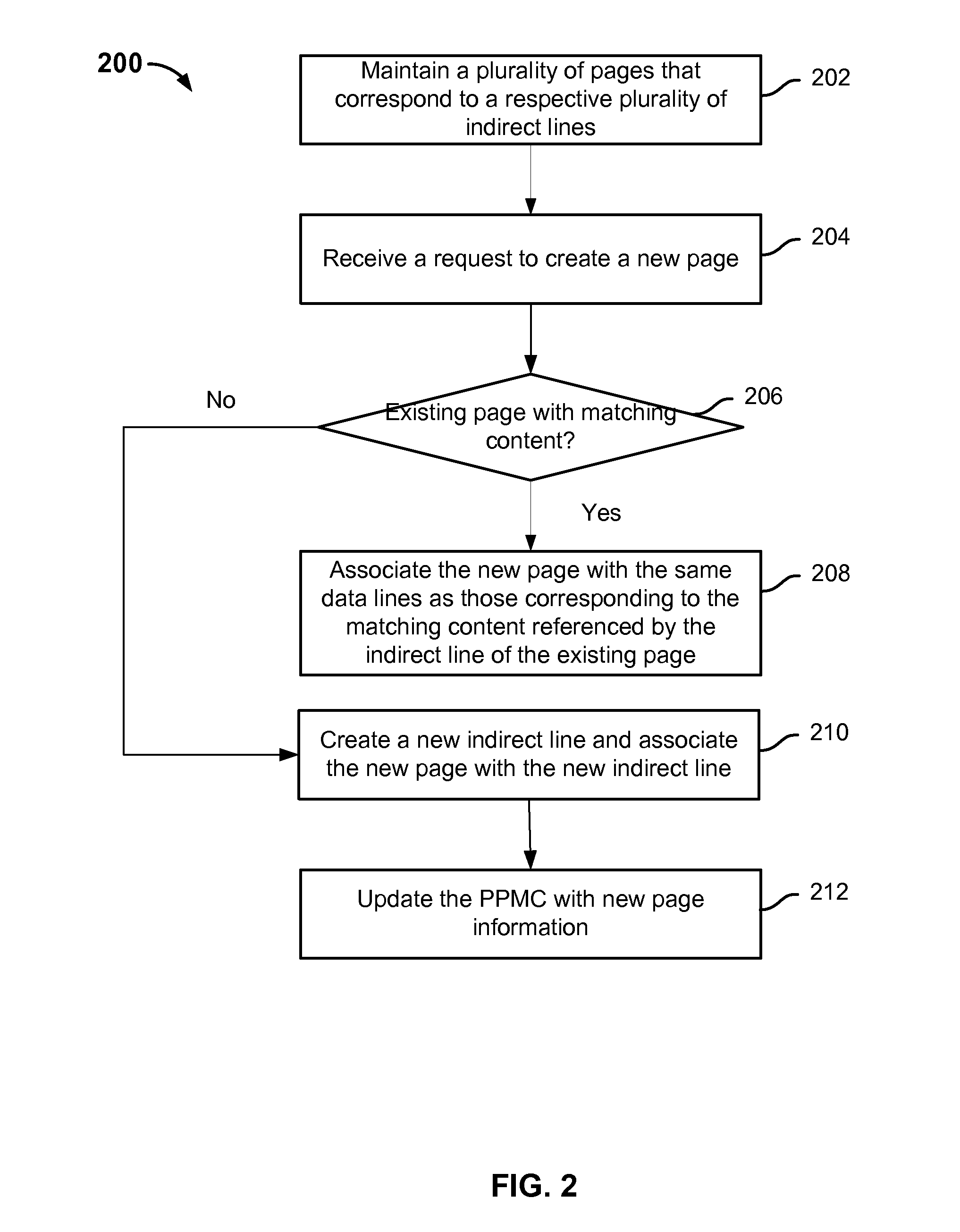

Memory sharing and page deduplication using indirect lines

Status: Active | Publication: US9501421B1 | Tags: Input/output to record carriers; Memory addressing/allocation/relocation; Memory sharing; Memory management unit

Memory management includes maintaining a plurality of physical pages corresponding to a respective plurality of indirect lines, where each of the plurality of indirect lines corresponds to a set of one or more data lines. Memory management further includes receiving a request to create a new physical page; determining whether there is an existing physical page whose content matches the new physical page; and, if there is, associating the new physical page with the same data lines as those referenced by the indirect line associated with the existing physical page.

Owner:INTEL CORP
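
The deduplication step above can be sketched with content hashing: each physical page is an indirect line listing data-line IDs, and a new page whose content matches an existing page gets its own indirect line pointing at the existing data lines. The 64-byte line size, the SHA-256 content index, and the class name are assumptions, not details from the patent; a real design would also need copy-on-write before a shared data line is modified.

```python
import hashlib

LINE = 64  # assumed data-line size in bytes

class DedupMemory:
    """Hypothetical memory where each physical page is an indirect line."""

    def __init__(self):
        self.data_lines: list[bytes] = []
        self.indirect: dict[int, list[int]] = {}  # page -> data-line IDs
        self.by_hash: dict[str, int] = {}         # content hash -> first page

    def read_page(self, page: int) -> bytes:
        return b"".join(self.data_lines[i] for i in self.indirect[page])

    def create_page(self, page: int, content: bytes) -> bool:
        """Create a page; return True if it reuses an existing page's lines."""
        digest = hashlib.sha256(content).hexdigest()
        match = self.by_hash.get(digest)
        # Confirm the bytes really match rather than trusting the hash alone.
        if match is not None and self.read_page(match) == content:
            self.indirect[page] = list(self.indirect[match])  # same data lines
            return True
        ids = []
        for off in range(0, len(content), LINE):
            self.data_lines.append(content[off:off + LINE])
            ids.append(len(self.data_lines) - 1)
        self.indirect[page] = ids
        self.by_hash.setdefault(digest, page)
        return False

mem = DedupMemory()
print(mem.create_page(1, b"A" * 256), mem.create_page(2, b"A" * 256))  # False True
print(len(mem.data_lines))  # 4: page 2 added no new data lines
```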

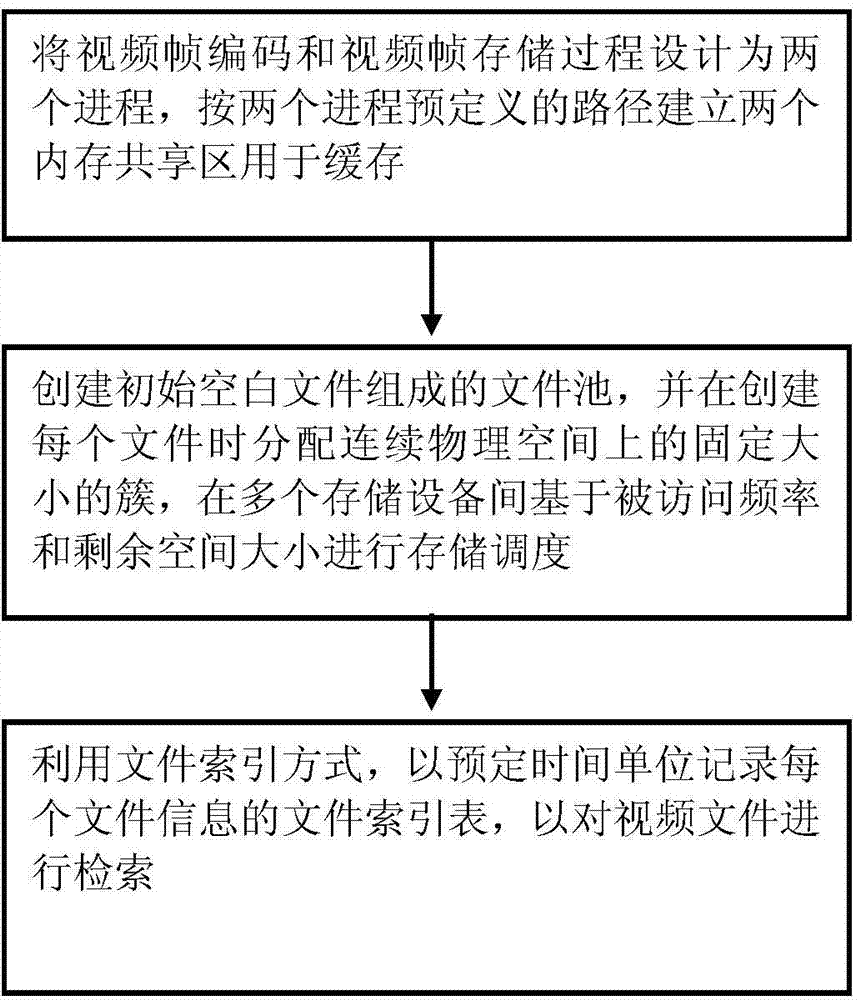

Network-based data storage method

Status: Inactive | Publication: CN104750858A | Tags: Increase the amount of data; Stable storage; Memory addressing/allocation/relocation; Special data processing applications; Physical space; Access frequency

The invention provides a network-based data storage method comprising the following steps: splitting video frame encoding and video frame storage into two processes, and creating two shared memory areas for caching according to paths predefined by the two processes; creating a file pool of initially empty files of a specified size, allocating groups of fixed-size clusters in contiguous physical space, and scheduling storage across multiple storage devices based on access frequency and the remaining space of each device; and recording, per preset time unit, a file index table of file information, which is used to retrieve video files. The method achieves stable storage and fast, accurate retrieval at high data volumes, and is practical and reliable.

Owner:CHENGDU YINGTAI SCI & TECH

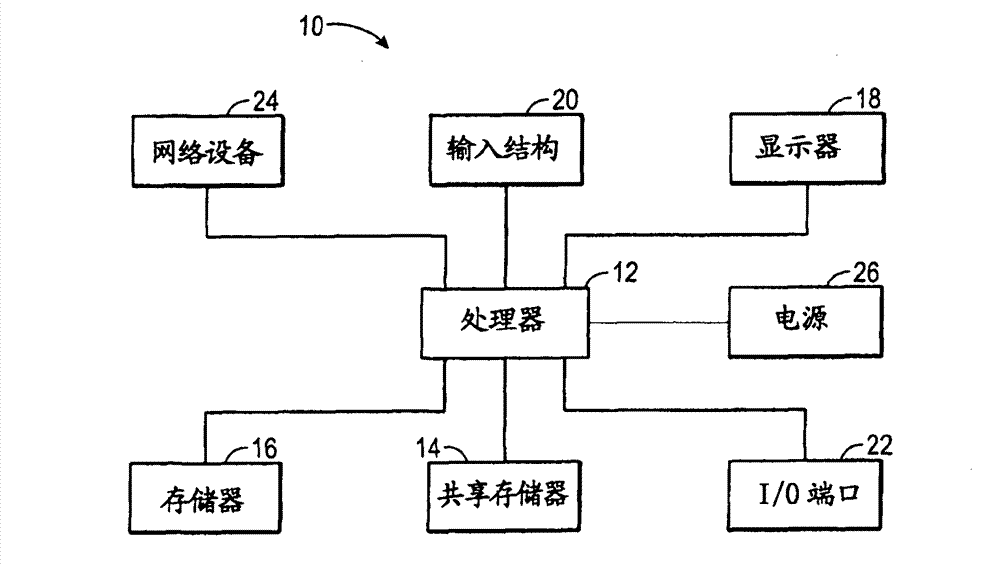

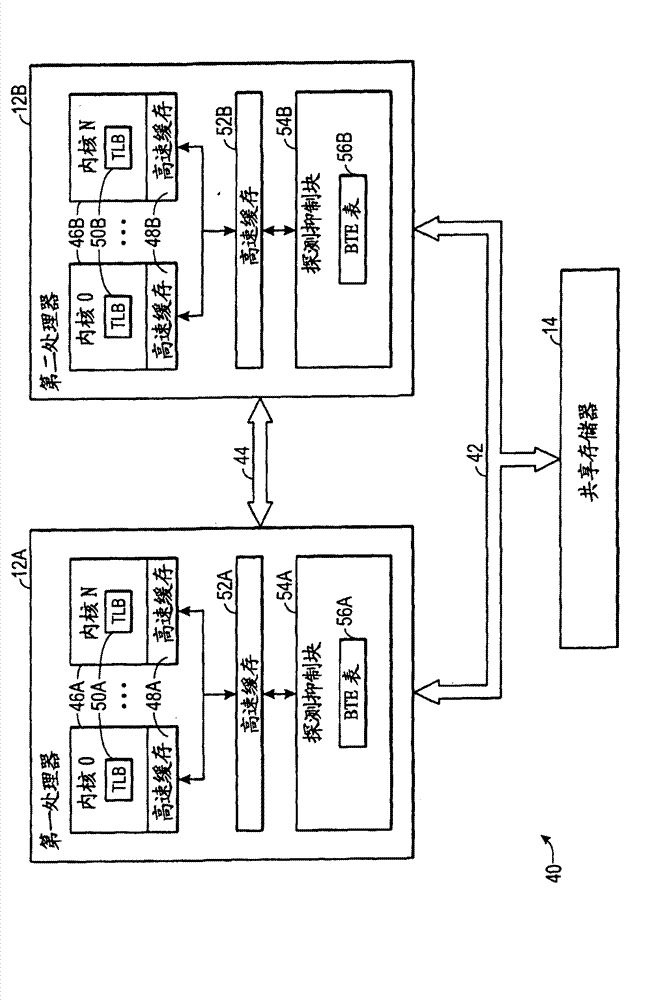

Systems, methods, and devices for cache block coherence

Status: Active | Publication: CN102880558A | Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Memory sharing

Systems, methods, and devices for efficient cache coherence between memory-sharing devices 12A and 12B are provided. In particular, snoop traffic may be suppressed based at least partly on a table of block tracking entries (BTEs). Each BTE may indicate whether groups of one or more cache lines of a block of memory could potentially be in use by another memory-sharing device 12A and 12B. By way of example, a memory-sharing device 12 may employ a table of BTEs 56 that each has several cache status entries. When a cache status entry indicates that none of a group of one or more cache lines could possibly be in use by another memory-sharing device 12, a snoop request for any cache lines of that group may be suppressed without jeopardizing cache coherence.

Owner:APPLE INC
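
The snoop-suppression logic above can be sketched as a table keyed by memory block, with one status bit per group of cache lines: a clear bit means no line in that group could be in use by the other device, so the snoop can be skipped. The block and group sizes, the conservative treatment of untracked blocks, and the method names are assumptions for illustration.

```python
BLOCK = 4096  # assumed bytes per tracked memory block
GROUP = 256   # assumed bytes per group of cache lines (one status bit)

class BteTable:
    """Hypothetical table of block tracking entries (BTEs)."""

    def __init__(self):
        self.entries: dict[int, int] = {}  # block number -> bitmask of groups

    def _locate(self, addr: int) -> tuple[int, int]:
        return addr // BLOCK, (addr % BLOCK) // GROUP

    def track_block(self, addr: int) -> None:
        """Start tracking a block known to be unused by the other device."""
        self.entries.setdefault(addr // BLOCK, 0)

    def mark_possibly_in_use(self, addr: int) -> None:
        """The other device may now cache lines in this address's group."""
        block, group = self._locate(addr)
        self.entries[block] = self.entries.get(block, 0) | (1 << group)

    def needs_snoop(self, addr: int) -> bool:
        block, group = self._locate(addr)
        if block not in self.entries:
            return True  # untracked block: snoop conservatively
        return bool((self.entries[block] >> group) & 1)

bte = BteTable()
bte.track_block(0x1000)
bte.mark_possibly_in_use(0x1100)
print(bte.needs_snoop(0x1040), bte.needs_snoop(0x1100), bte.needs_snoop(0x9000))  # False True True
```

Treating untracked blocks as "must snoop" keeps the filter safe: it can only remove snoops that are provably unnecessary.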

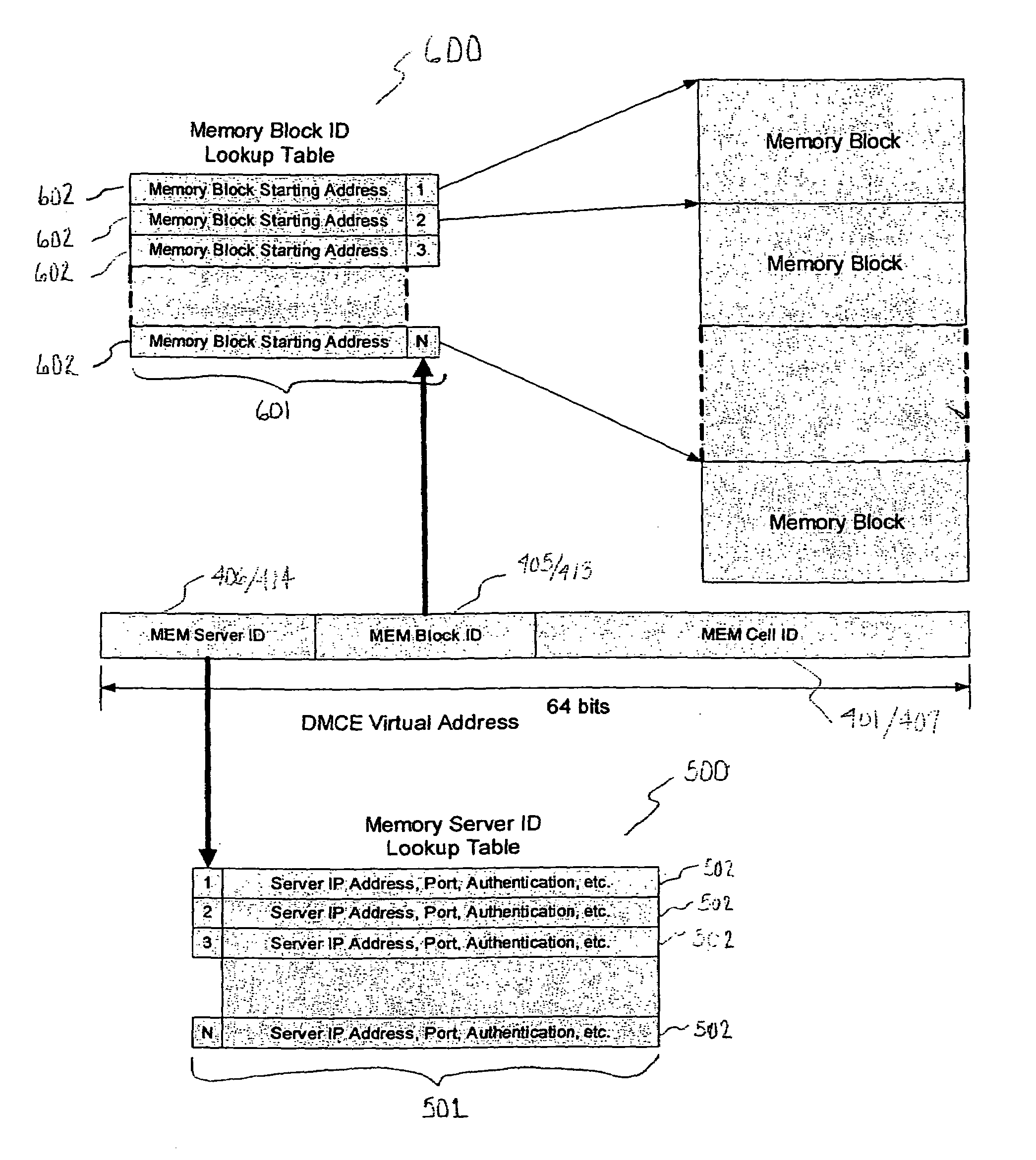

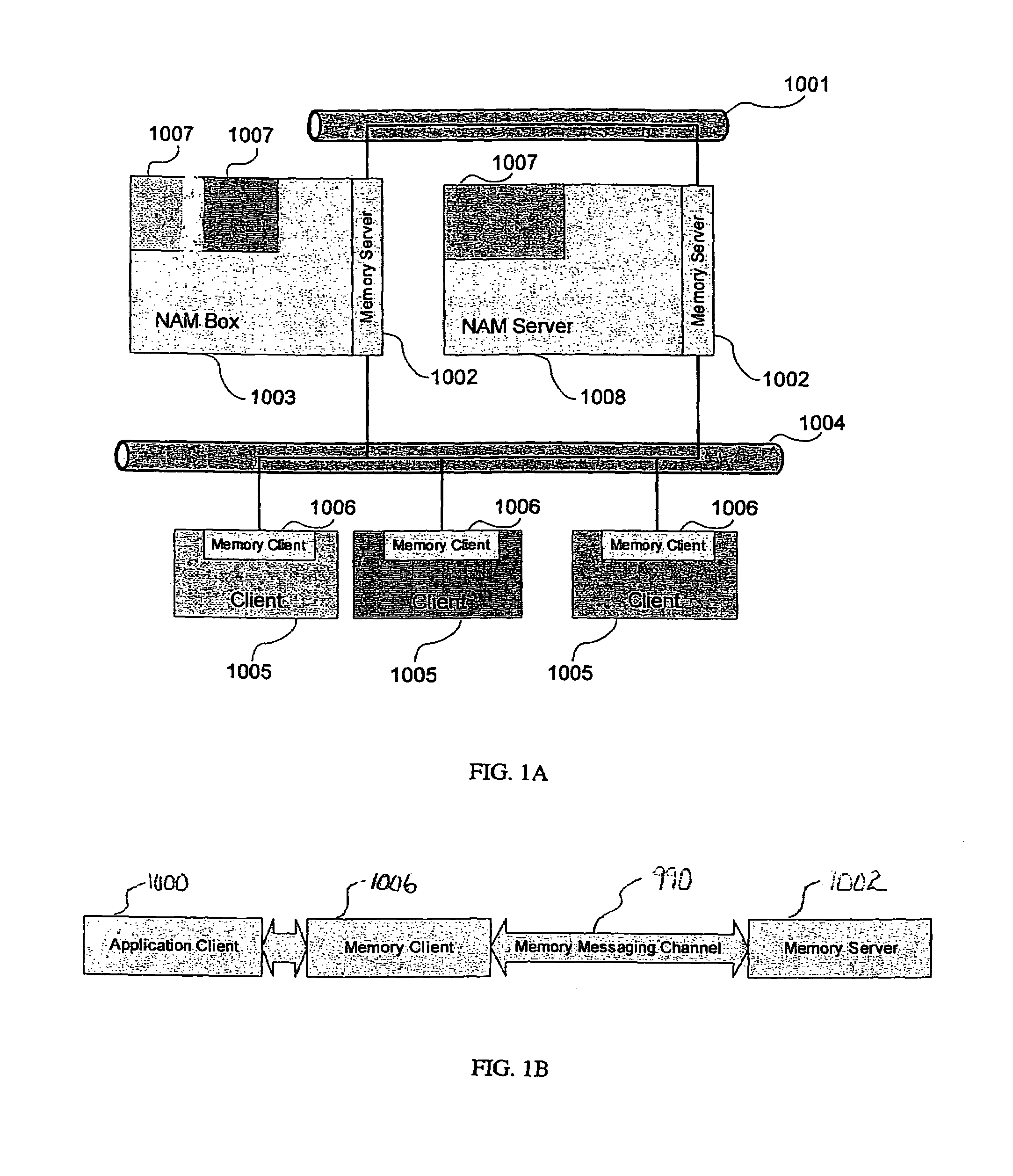

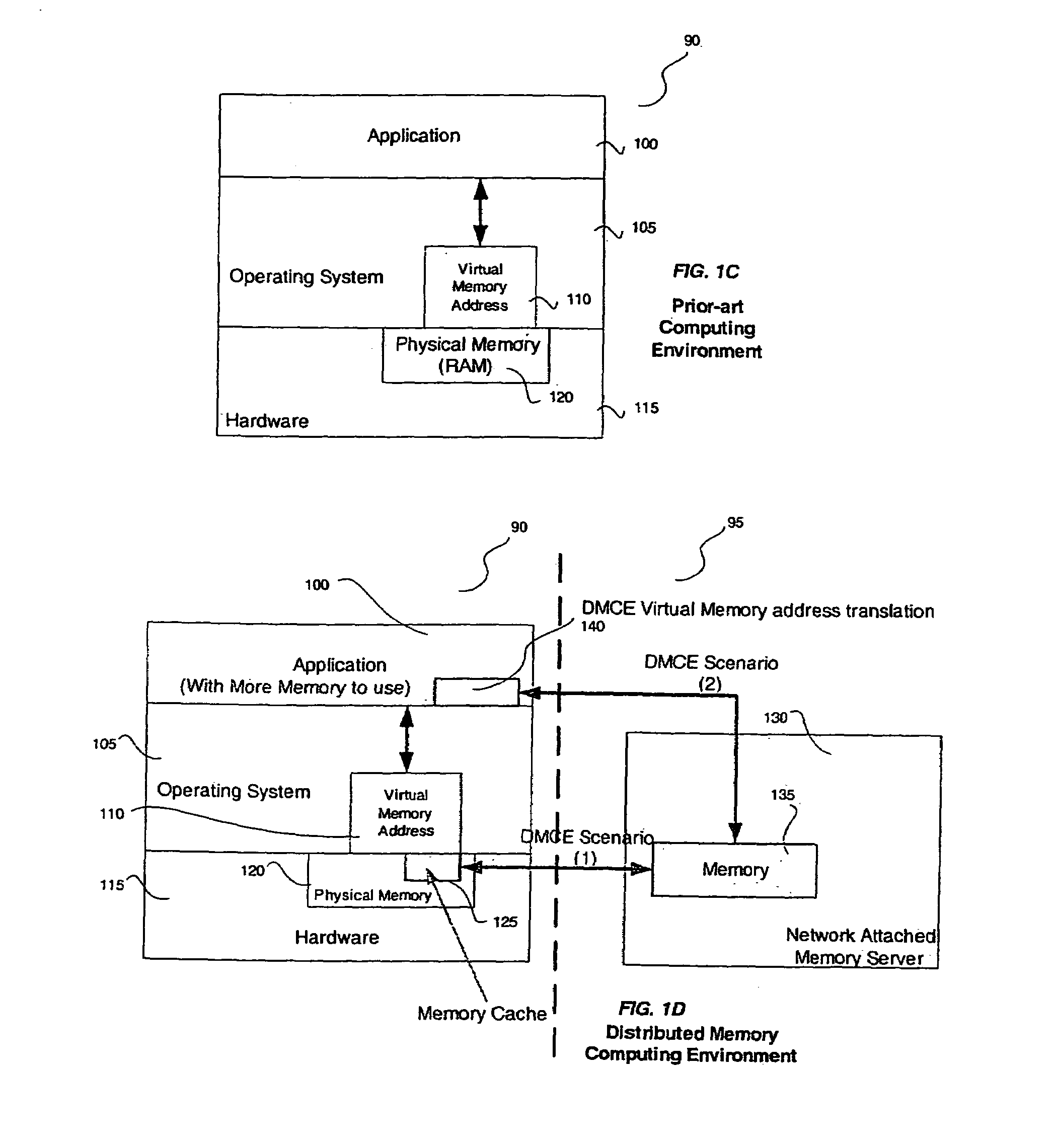

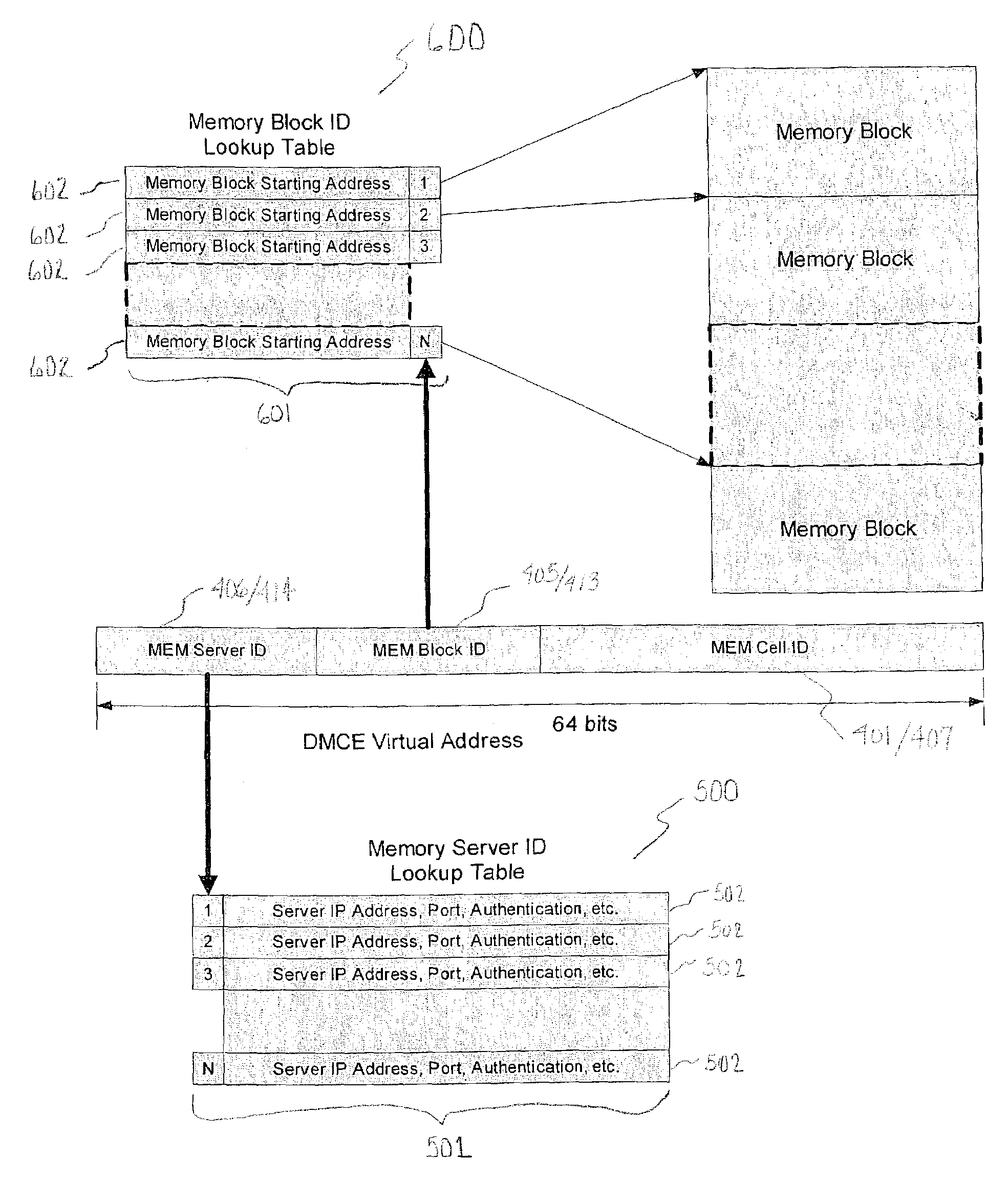

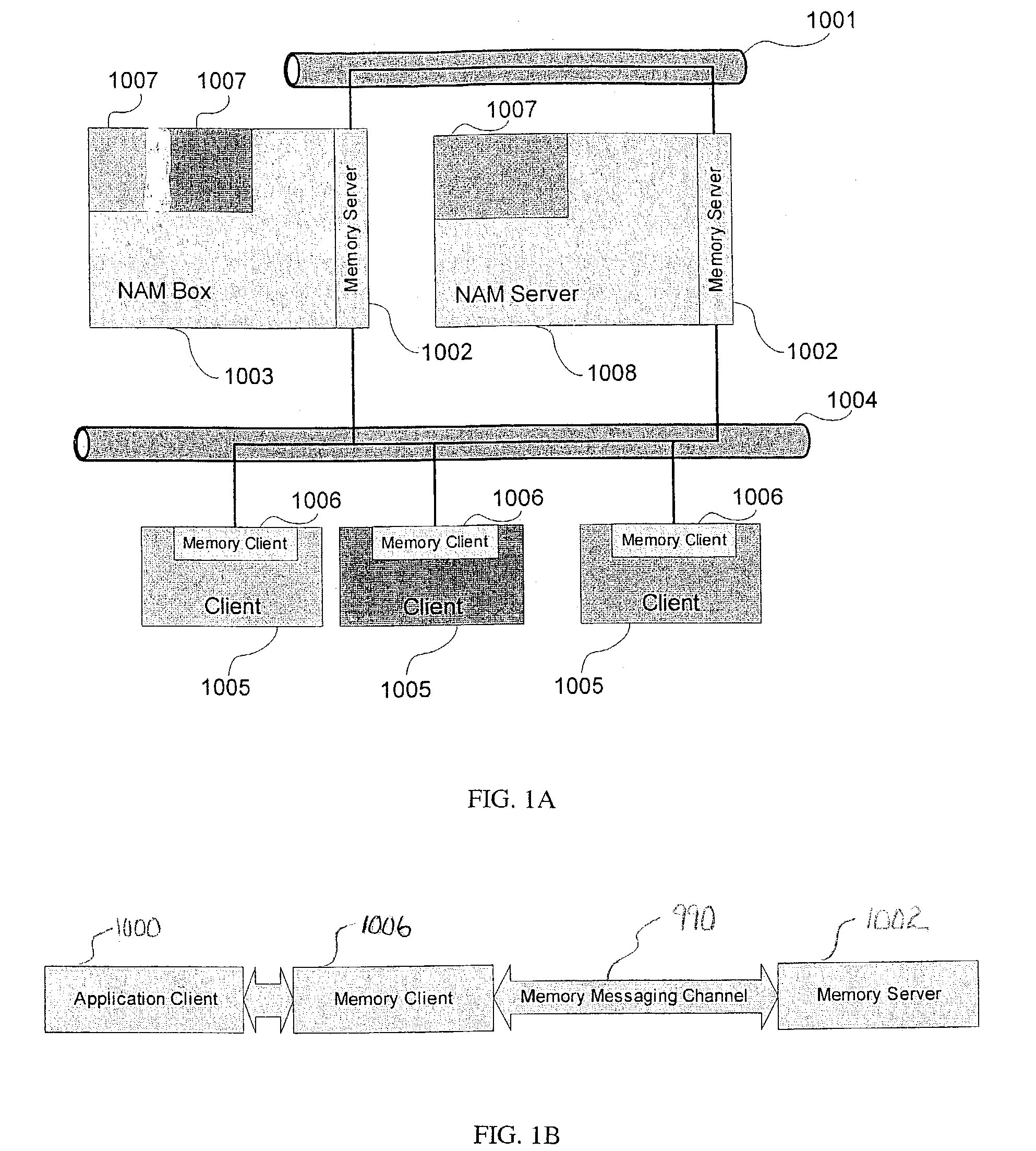

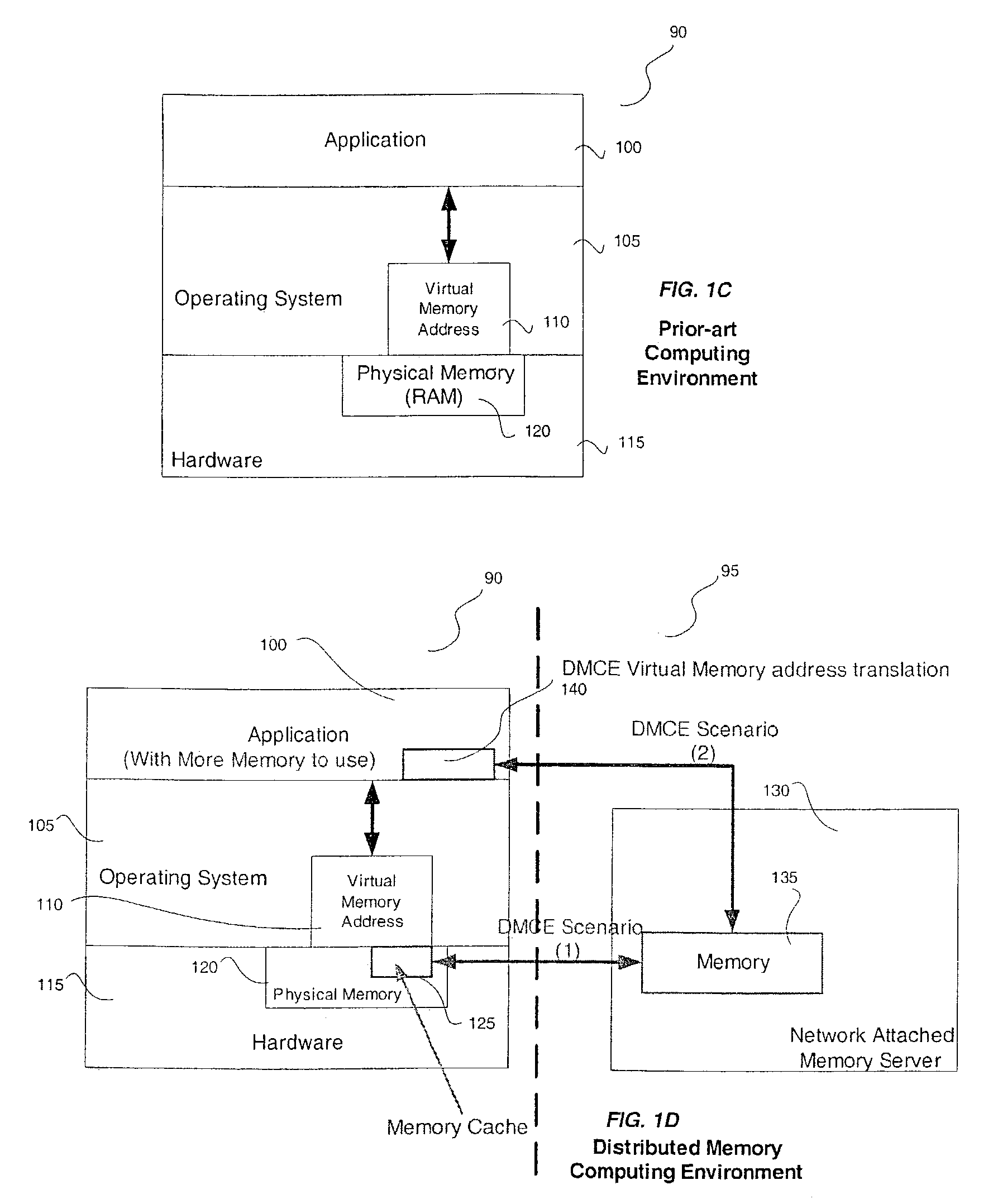

Network attached memory and implementation thereof

Status: Inactive | Publication: US7149855B2 | Tags: Improve fault tolerance; More sharing; Memory addressing/allocation/relocation; Digital storage; Distributed memory; Area network

A Distributed Memory Computing Environment (herein called "DMCE") architecture and implementation is disclosed in which any computer equipped with a memory agent can borrow memory from other computer(s) equipped with a memory server on a distributed network. A memory backup and recovery subsystem, optional within the Distributed Memory Computing system, is also disclosed. A Network Attached Memory (herein called "NAM", "NAM Box", or "NAM Server") appliance is disclosed as a dedicated memory-sharing device attached to a network. A Memory Area Network (herein called "MAN") is further disclosed: a network of memory device(s) or memory server(s) that provide memory sharing service to memory-demanding computer(s) or the like. When one memory device or memory server fails, its service is seamlessly transferred to other memory device(s) or memory server(s).

Owner:INTELITRAC

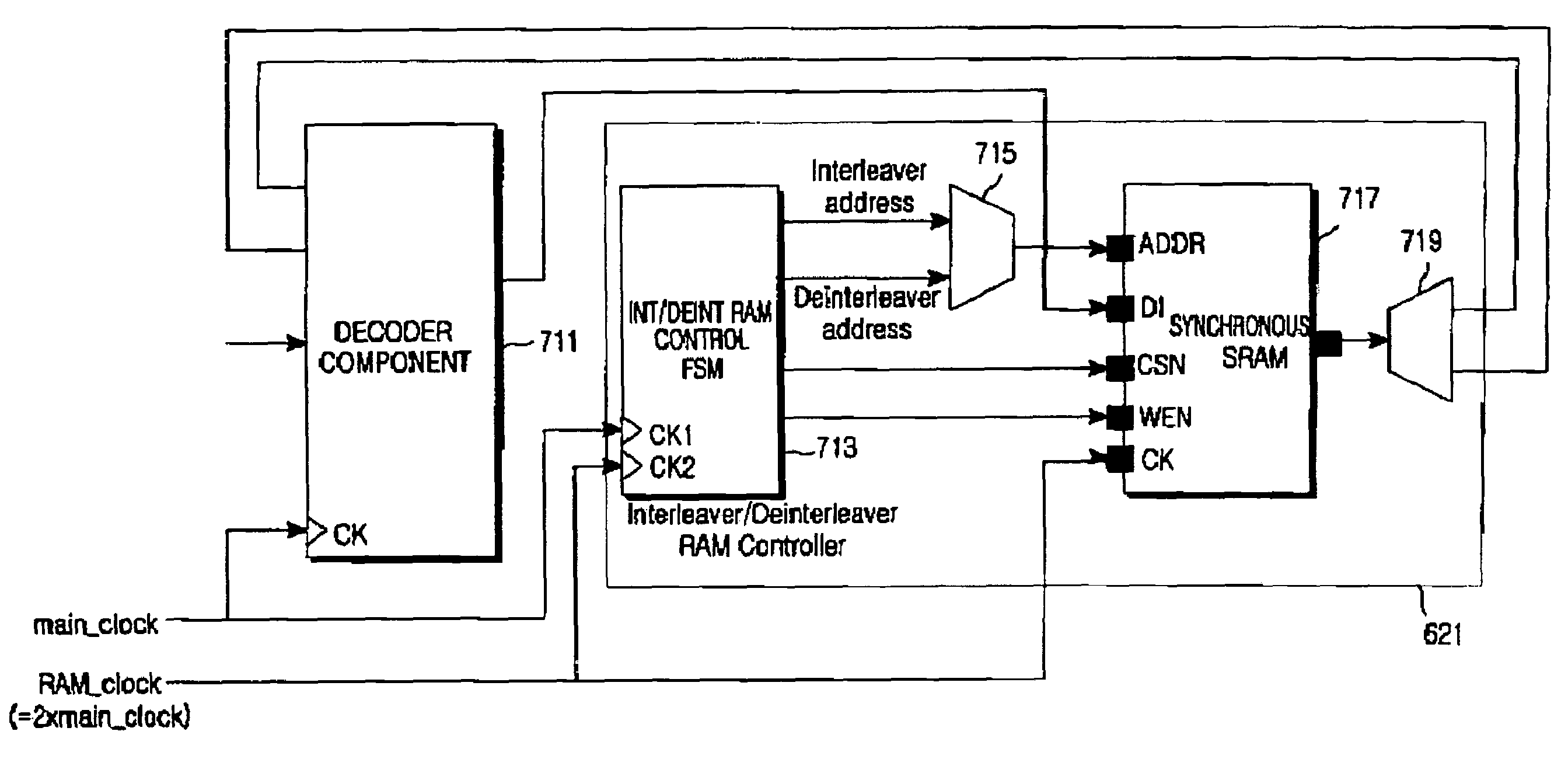

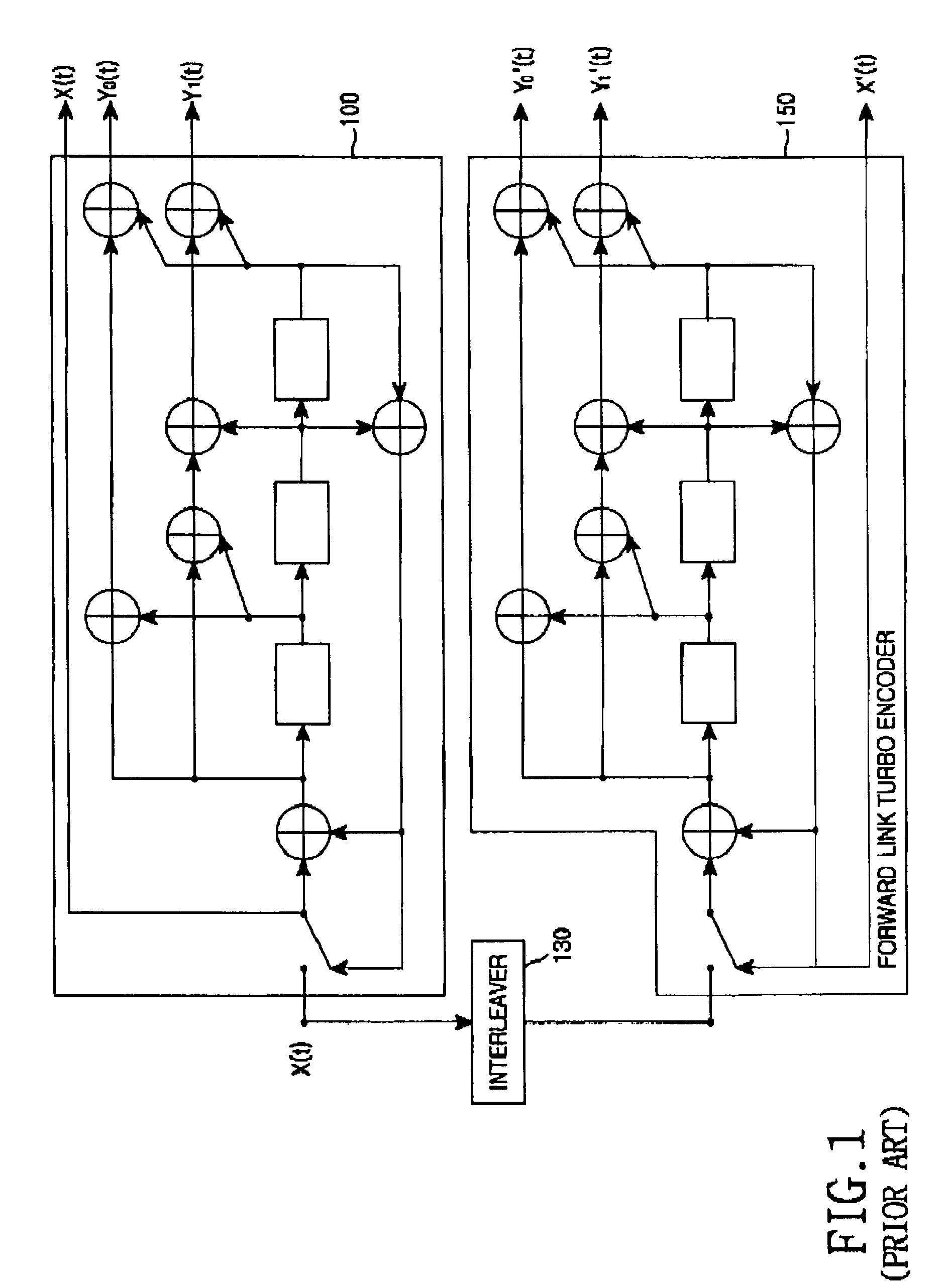

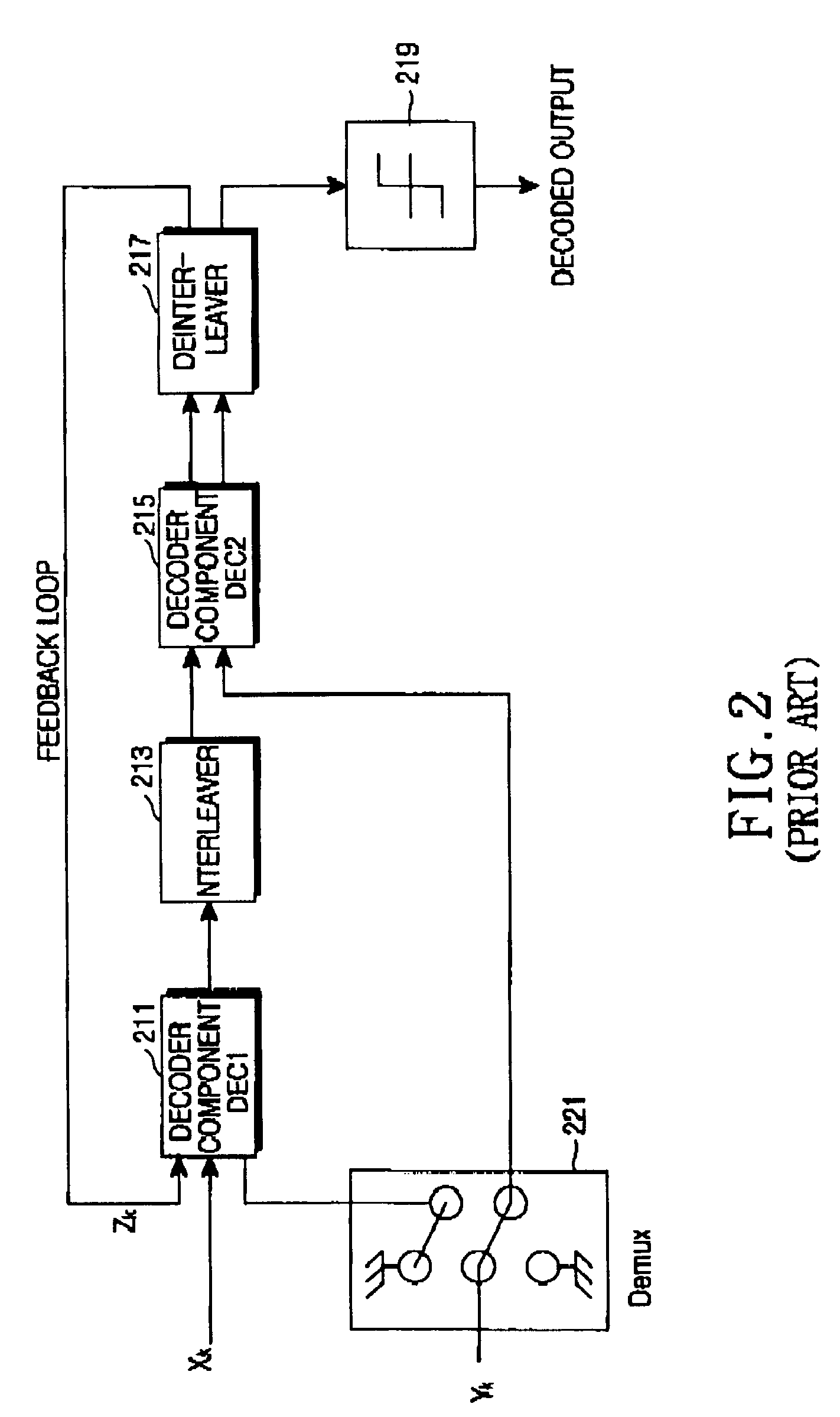

Apparatus and method for memory sharing between interleaver and deinterleaver in a turbo decoder

Status: Active | Publication: US6988234B2 | Tags: Data representation error detection/correction; Code conversion; Control signal; Delayed time

An apparatus for memory sharing between an interleaver and a deinterleaver in a turbo decoder is disclosed. A memory writes therein data obtained by interleaving data decoded by a decoder and writes therein data obtained by deinterleaving the data decoded by the decoder, in response to a control signal. A controller reads the stored interleaved data for a delay time of the decoder, generates a first control signal for writing the interleaved signal in the memory after a lapse of the delay time, writes the deinterleaved data in the memory for the delay time, and generates a second control signal for reading the stored deinterleaved data after a lapse of the delay time.

Owner:SAMSUNG ELECTRONICS CO LTD

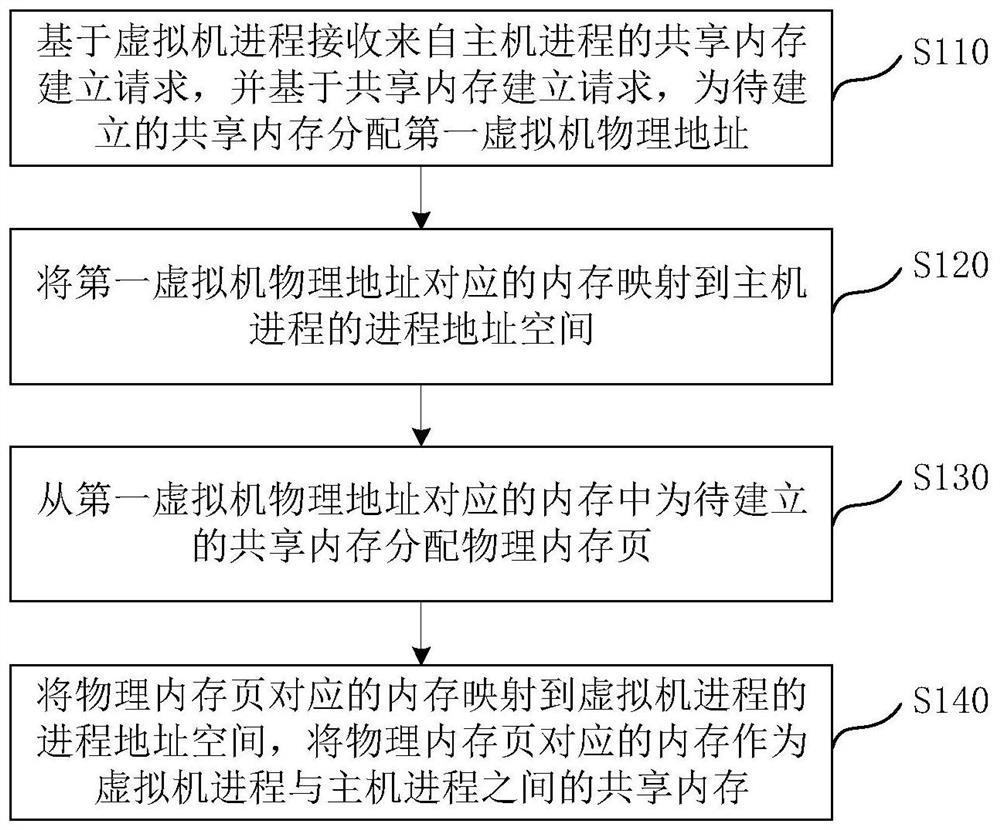

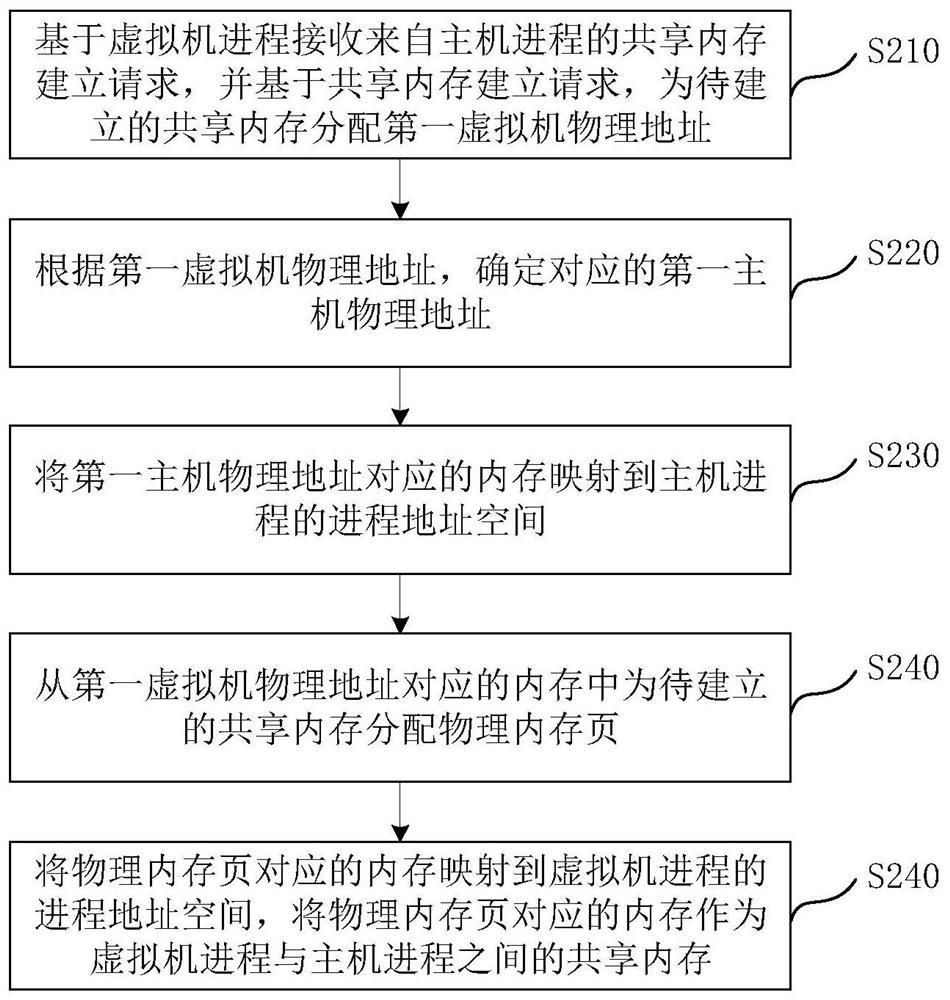

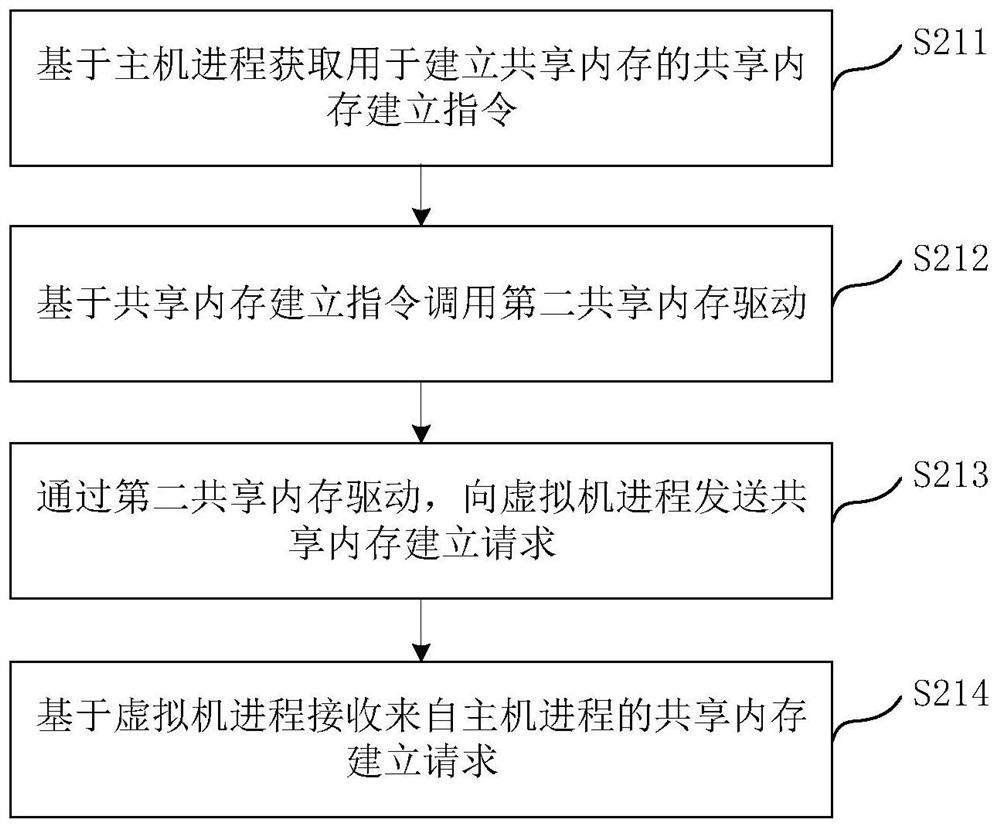

Memory sharing method and device, electronic equipment and storage medium

Status: Pending | Publication: CN111813584A | Tags: Reduce sharing granularity; Reduce the risk of security incidents; Resource allocation; Interprogram communication; Physical address; Memory sharing

The invention discloses a memory sharing method and device, electronic equipment, and a storage medium. It relates to the technical field of computers and is applied to a data sharing system comprising a host and a virtual machine running in the host, where at least one host process runs in the host and at least one virtual machine process runs in the virtual machine. The method comprises the following steps: the virtual machine process receives a shared memory establishment request from the host process, and a first virtual machine physical address is allocated to the shared memory to be established based on that request; the memory corresponding to the first virtual machine physical address is mapped into the process address space of the host process; a physical memory page for the shared memory to be established is allocated from the memory corresponding to the first virtual machine physical address; and the memory corresponding to that physical memory page is mapped into the process address space of the virtual machine process and used as shared memory between the virtual machine process and the host process. The invention realizes secure memory sharing between the virtual machine and the host process.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD

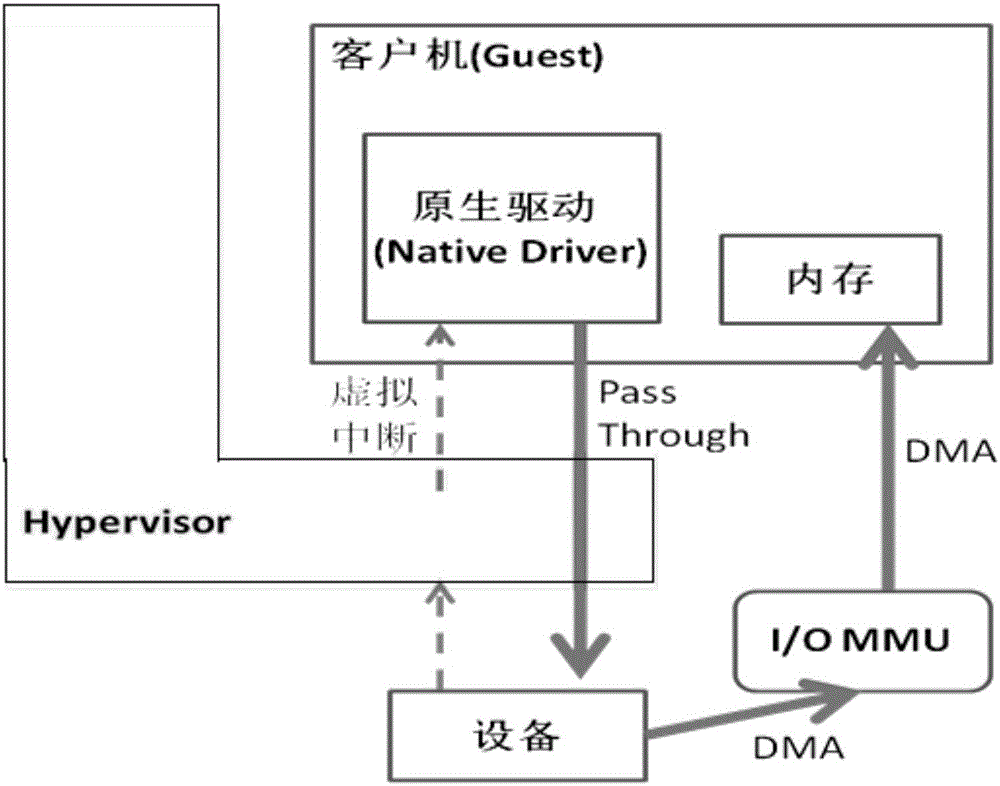

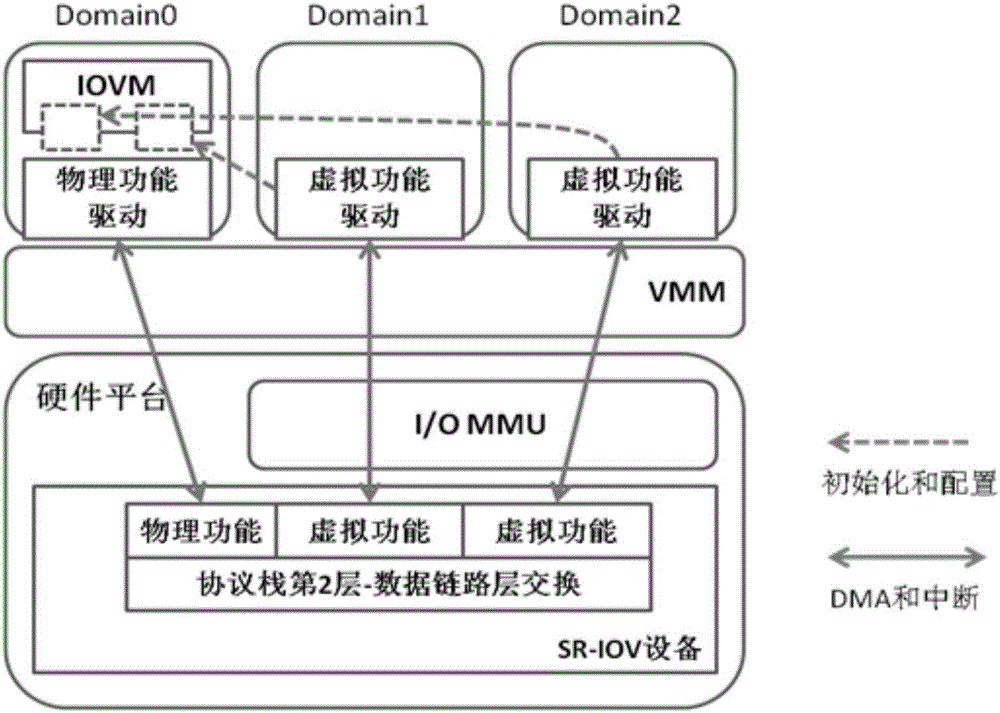

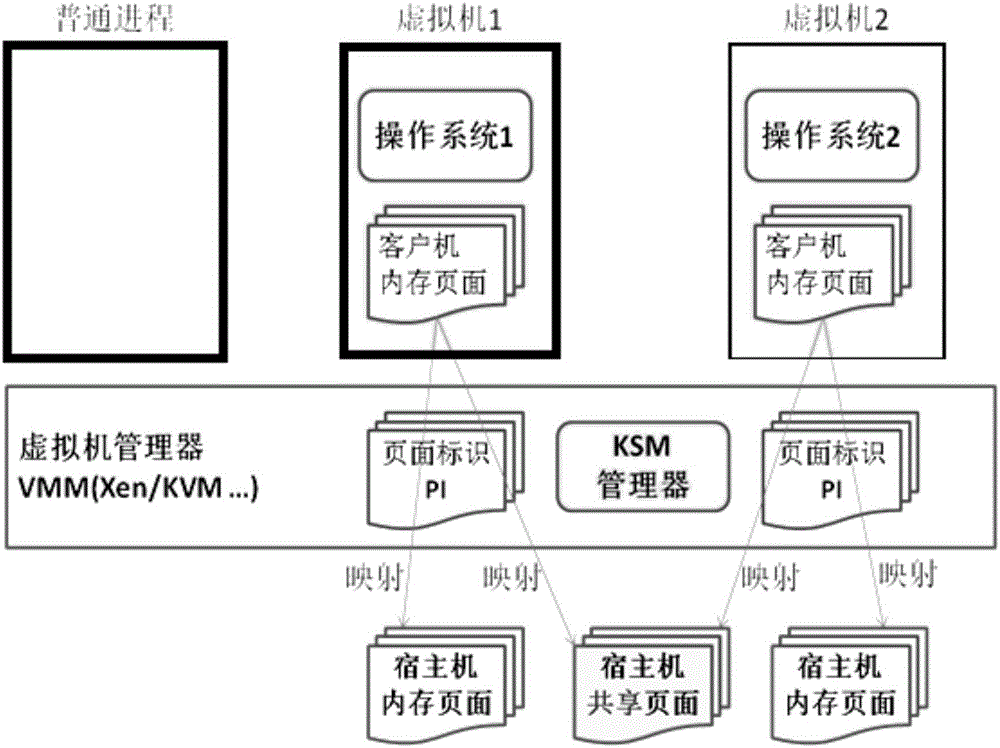

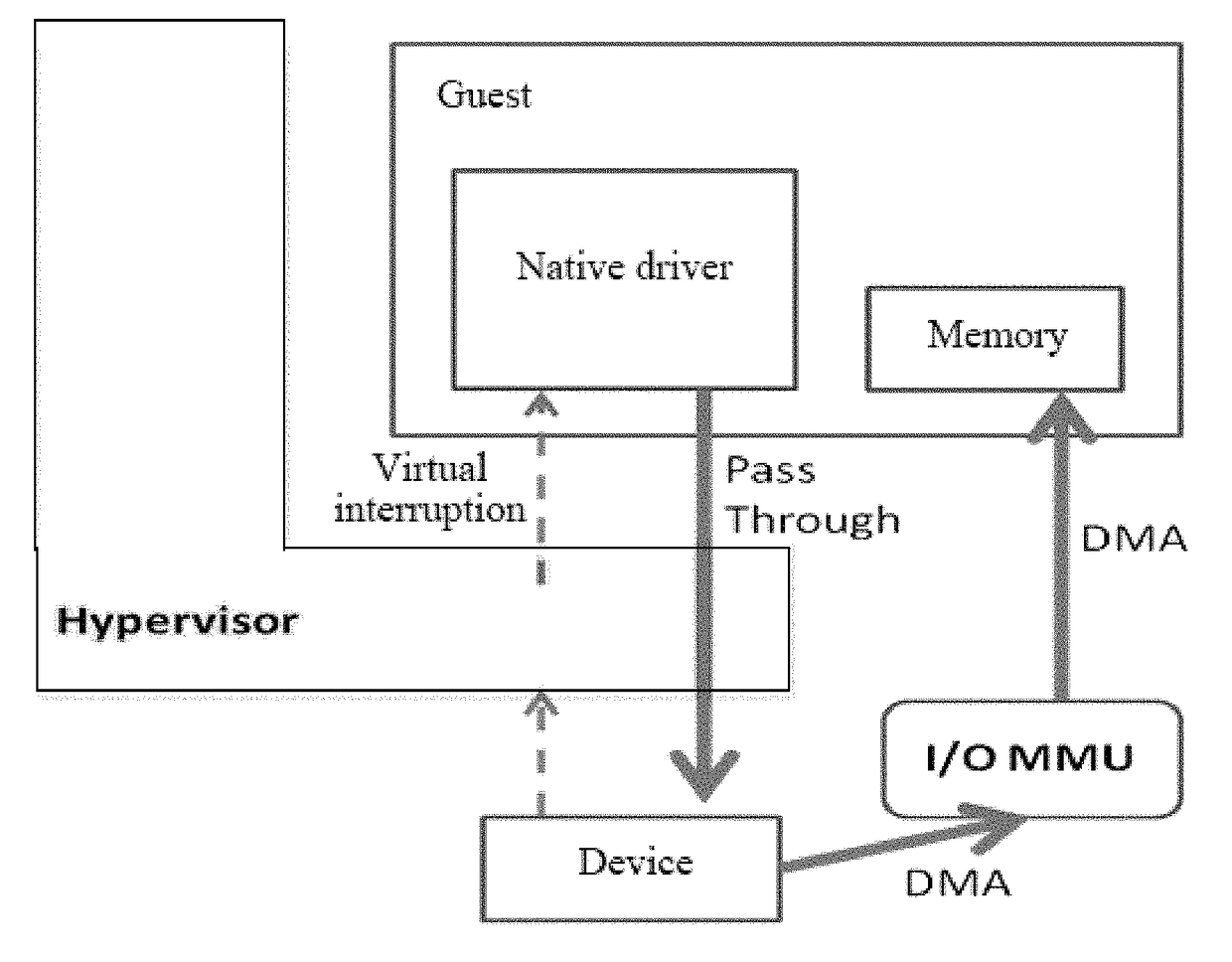

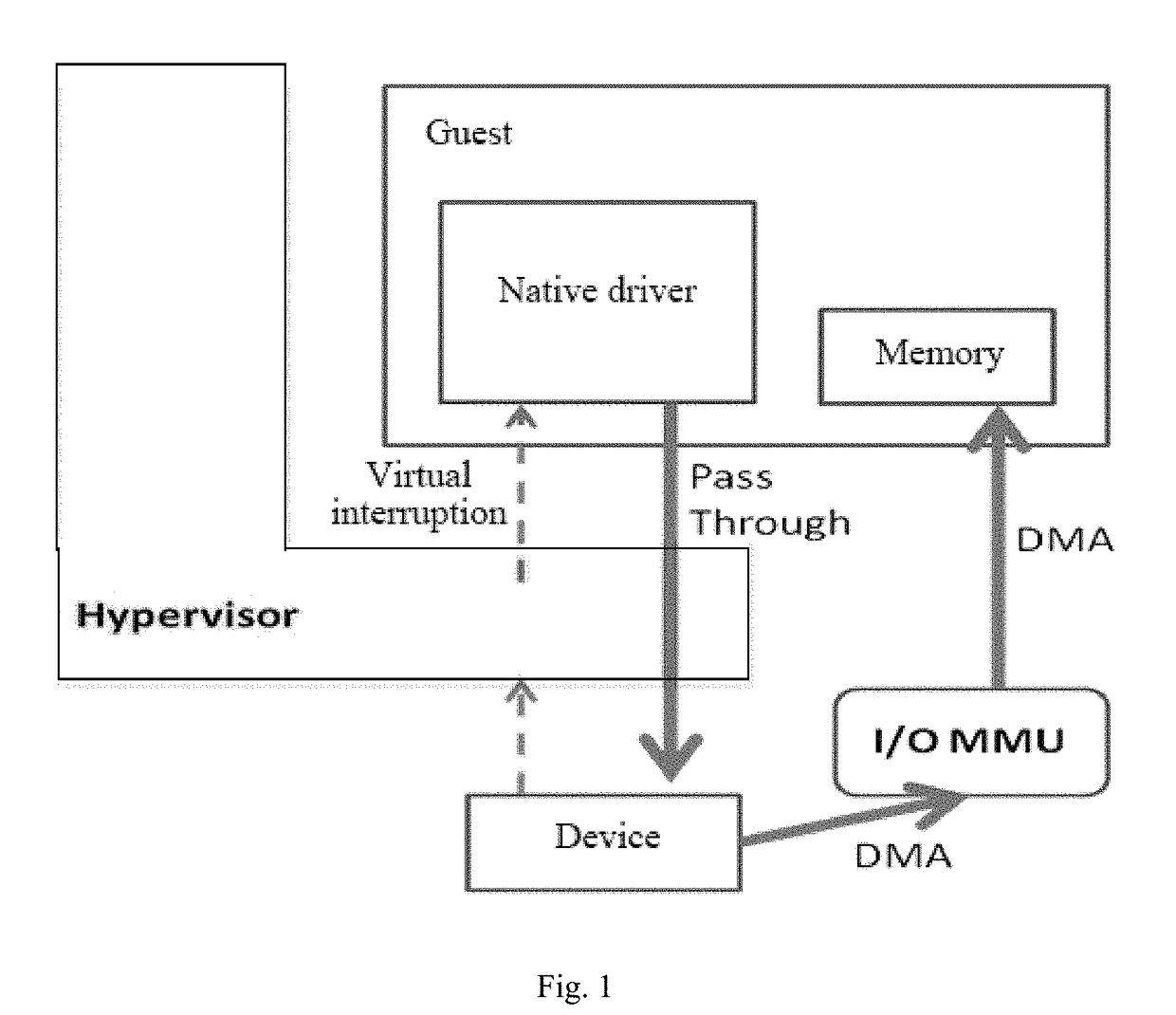

Virtual machine memory sharing method based on combination of KSM and Pass-through

Status: Active | Publication: CN106155933A | Tags: Troubleshooting Shared Merge Issues; Easy to operate; Memory architecture accessing/allocation; Software simulation/interpretation/emulation; Write protection; Direct memory access

The invention discloses a virtual machine memory sharing method based on the combination of KSM and pass-through. The method comprises the following steps: the virtual machine manager determines whether the operating system of each guest uses an IOMMU (Input/Output Memory Management Unit); if not, the guest does not participate in KSM shared mapping; if so, each memory page of the guest is checked to determine whether it is a mapping page. Mapping pages of each guest are mapped into the host; for all non-mapping pages, while the pass-through properties are preserved, KSM is used to merge memory pages with identical content across multiple virtual machines, and write protection is applied at the same time. Because guest memory pages are divided into those dedicated to DMA (Direct Memory Access) and those for non-DMA use, KSM is applied selectively to non-DMA pages only, and memory resources are saved while the pass-through properties are preserved.

Owner:MASSCLOUDS INNOVATION RES INST (BEIJING) OF INFORMATION TECH

Distributed memory computing environment and implementation thereof

Active · US7043623B2 · Improve fault tolerance · More sharing · Memory addressing/allocation/relocation · Digital storage · Distributed memory · Area network

A Distributed Memory Computing Environment (herein called “DMCE”) architecture and implementation are disclosed, in which any computer equipped with a memory agent can borrow memory from other computers equipped with a memory server on a distributed network. A memory backup and recovery subsystem is also disclosed as an optional part of the DMCE. A Network Attached Memory (herein called “NAM”, “NAM Box”, or “NAM Server”) appliance is disclosed as a dedicated memory-sharing device attached to a network. A Memory Area Network (herein called “MAN”) is further disclosed: a network of memory devices or memory servers that provide memory-sharing services to memory-demanding computers or similar machines. When one memory device or memory server fails, its service transfers seamlessly to other memory devices or memory servers.
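
The borrowing and failover behaviour can be sketched with in-process objects standing in for networked machines. The names `MemoryServer` and `MemoryAgent`, the block-based interface, and the policy of writing each block to two servers are hypothetical; the abstract describes memory borrowing, optional backup, and seamless failover, but not a specific replication scheme.

```python
class MemoryServer:
    """In-process stand-in for a networked memory server (e.g. a NAM box)."""

    def __init__(self, name, capacity_blocks):
        self.name = name
        self.capacity_blocks = capacity_blocks
        self.blocks = {}
        self.alive = True  # flip to False to simulate a failure

    def put(self, block_id, data):
        if not self.alive:
            raise ConnectionError(f"{self.name} is unreachable")
        if block_id not in self.blocks and len(self.blocks) >= self.capacity_blocks:
            raise MemoryError(f"{self.name} has no free blocks")
        self.blocks[block_id] = bytes(data)

    def get(self, block_id):
        if not self.alive:
            raise ConnectionError(f"{self.name} is unreachable")
        return self.blocks[block_id]


class MemoryAgent:
    """Client-side agent that borrows remote memory and keeps backup copies."""

    def __init__(self, servers, copies=2):
        self.servers = servers
        self.copies = copies  # assumed policy: primary plus one backup
        self.holders = {}     # block_id -> servers holding a copy, primary first

    def write(self, block_id, data):
        holders = []
        for server in self.servers:
            if len(holders) == self.copies:
                break
            try:
                server.put(block_id, data)
                holders.append(server)
            except (ConnectionError, MemoryError):
                continue  # try the next server on the memory area network
        if not holders:
            raise MemoryError("no memory server could store the block")
        self.holders[block_id] = holders

    def read(self, block_id):
        for server in self.holders[block_id]:
            try:
                return server.get(block_id)
            except ConnectionError:
                continue  # fail over to the backup copy
        raise ConnectionError(f"all copies of {block_id!r} are unreachable")


if __name__ == "__main__":
    servers = [MemoryServer("nam-a", 2), MemoryServer("nam-b", 2), MemoryServer("nam-c", 2)]
    agent = MemoryAgent(servers)
    agent.write("blk0", b"borrowed page")
    servers[0].alive = False      # the primary memory server fails
    print(agent.read("blk0"))     # b'borrowed page', now served by nam-b
```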

Owner:INTELITRAC

System and method for using a multi-protocol fabric module across a distributed server interconnect fabric

Active · US9680770B2 · Extend performance and power optimization and functionality · Data switching networks · Personalization · Structure of Management Information

Owner:III HLDG 2

Memory sharing method of virtual machines based on combination of KSM and pass-through

Inactive · US20180011797A1 · Improve balance · Efficient solution · Memory architecture accessing/allocation · Software simulation/interpretation/emulation · Write protection · Software engineering

A memory sharing method for virtual machines that combines KSM and pass-through, including: a virtual machine manager judges whether the operating systems of the guests use an IOMMU; if not, the guest does not participate in KSM shared mapping; if so, the memory pages of each guest are examined to determine whether they are mapping pages; if they are, the mapping pages are kept mapped into the host; if not, on the premise of preserving the pass-through properties, KSM is applied to all non-mapping pages to merge memory pages with identical contents across the virtual machines, with write-protection processing performed simultaneously. Guest memory pages are thus divided into pages dedicated to DMA and pages for non-DMA purposes, and KSM is applied selectively to the non-DMA pages only, so that memory resources are saved while the pass-through properties are preserved.

Owner:MASSCLOUDS INNOVATION RES INST (BEIJING) OF INFORMATION TECH

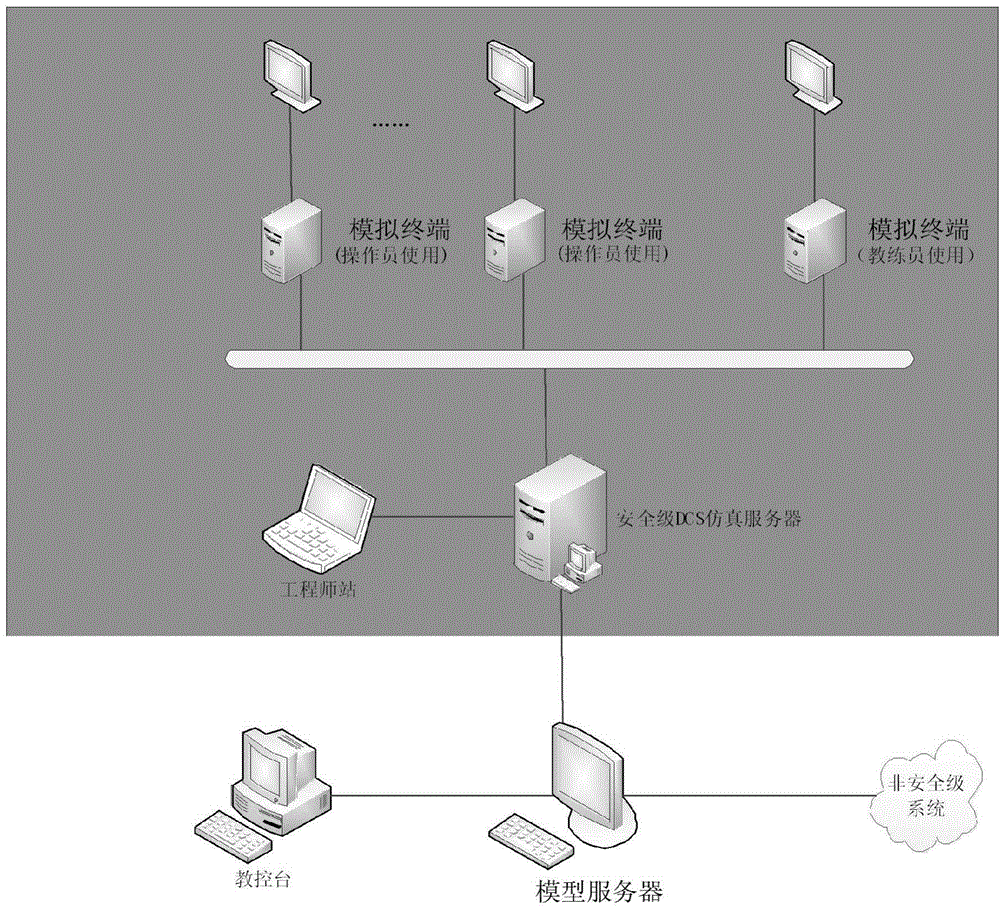

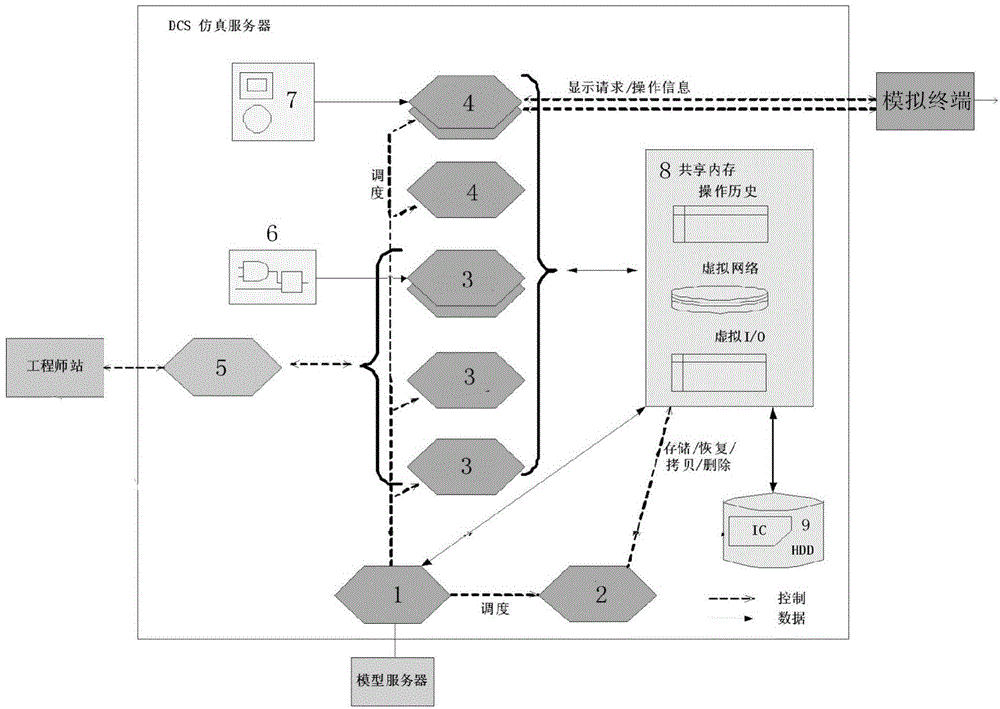

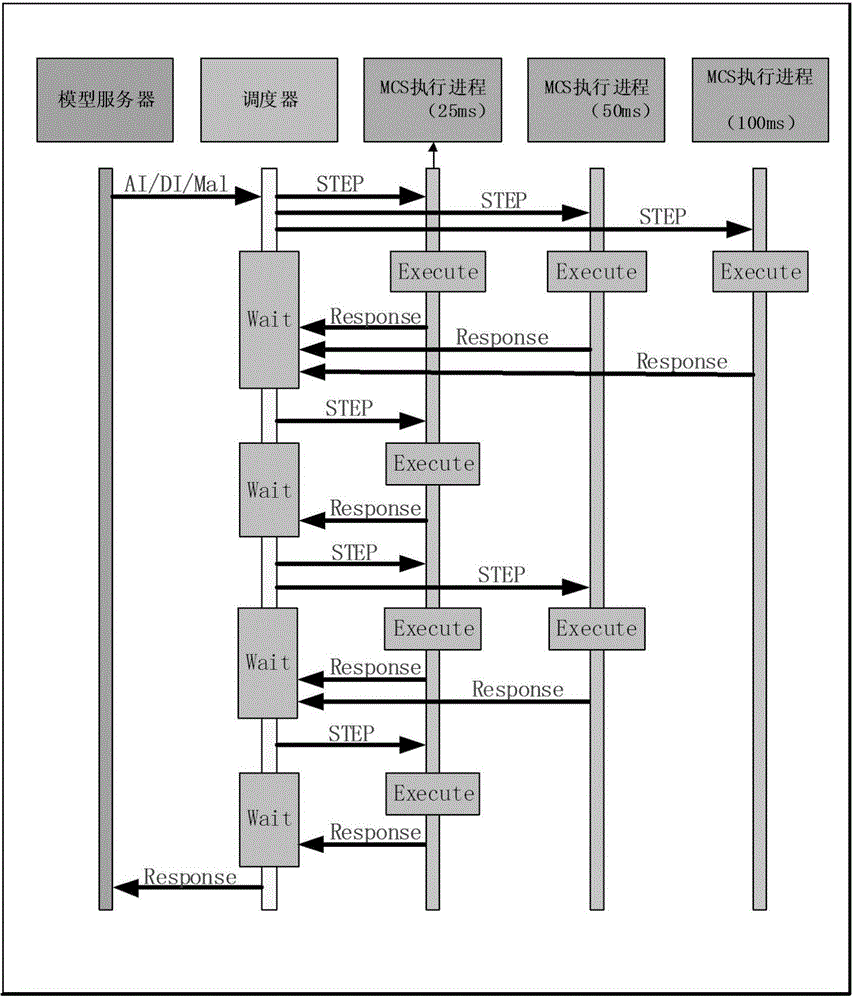

Digitized security level control system simulation device of nuclear power plants

Active · CN104809932A · Cosmonautic condition simulations · Educational models · Nuclear power plant · Memory sharing

The invention discloses a simulation device for the digitized security-level control system of a nuclear power plant. The device is connected to a model server over a network and comprises a security-level DCS simulation server and n simulation terminals, where n ≥ 1. The security-level DCS simulation server simulates the security-level DCS portion of the digitized security-level control system, and the simulation terminals reproduce the real displays and operations. The security-level DCS simulation server comprises a scheduler (1), a data manager (2), an MCS execution process unit (3), an SCID execution process unit (4), an application logic unit (6), a display DB unit (7), and a memory sharing unit (8). A single server simulates both the normal-operation and fault conditions of multiple on-site DCSs; the configuration files of the actual DCSs can be parsed; system monitoring is performed through real engineer stations; and the simulated system approximates the real system as closely as possible.
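
The abstract lists a memory sharing unit (8) but does not describe how it is organized. One way for separate simulation processes (for example, an execution process and a display unit) to exchange process-variable values is a named shared-memory table; the sketch below assumes that design and uses Python's standard `multiprocessing.shared_memory` module (Python 3.8+). The one-double-per-point layout and the helper names are hypothetical, and a second handle attached by name stands in for a separate process.

```python
import struct
from multiprocessing import shared_memory

POINT_FMT = "<d"  # assumed layout: each point is a little-endian 64-bit float
POINT_SIZE = struct.calcsize(POINT_FMT)


def create_point_table(num_points):
    """Create a named shared-memory block holding num_points values."""
    return shared_memory.SharedMemory(create=True, size=num_points * POINT_SIZE)


def write_point(shm, index, value):
    # Writer side, e.g. a hypothetical execution process publishing a value.
    struct.pack_into(POINT_FMT, shm.buf, index * POINT_SIZE, value)


def read_point(shm, index):
    # Reader side, e.g. a hypothetical display unit polling the table.
    return struct.unpack_from(POINT_FMT, shm.buf, index * POINT_SIZE)[0]


if __name__ == "__main__":
    table = create_point_table(8)
    try:
        # Attaching by name is how another process would open the same block.
        viewer = shared_memory.SharedMemory(name=table.name)
        write_point(table, 3, 288.5)
        print(read_point(viewer, 3))  # 288.5
        viewer.close()
    finally:
        table.close()
        table.unlink()  # release the block once every user has closed it
```

A real simulator would also need synchronization (a lock or sequence counter) so that readers never observe a half-written update; the sketch omits this for brevity.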

Owner:CHINA TECHENERGY +1

© 2025 PatSnap. All rights reserved.