97 results about "Multi gpu" patented technology

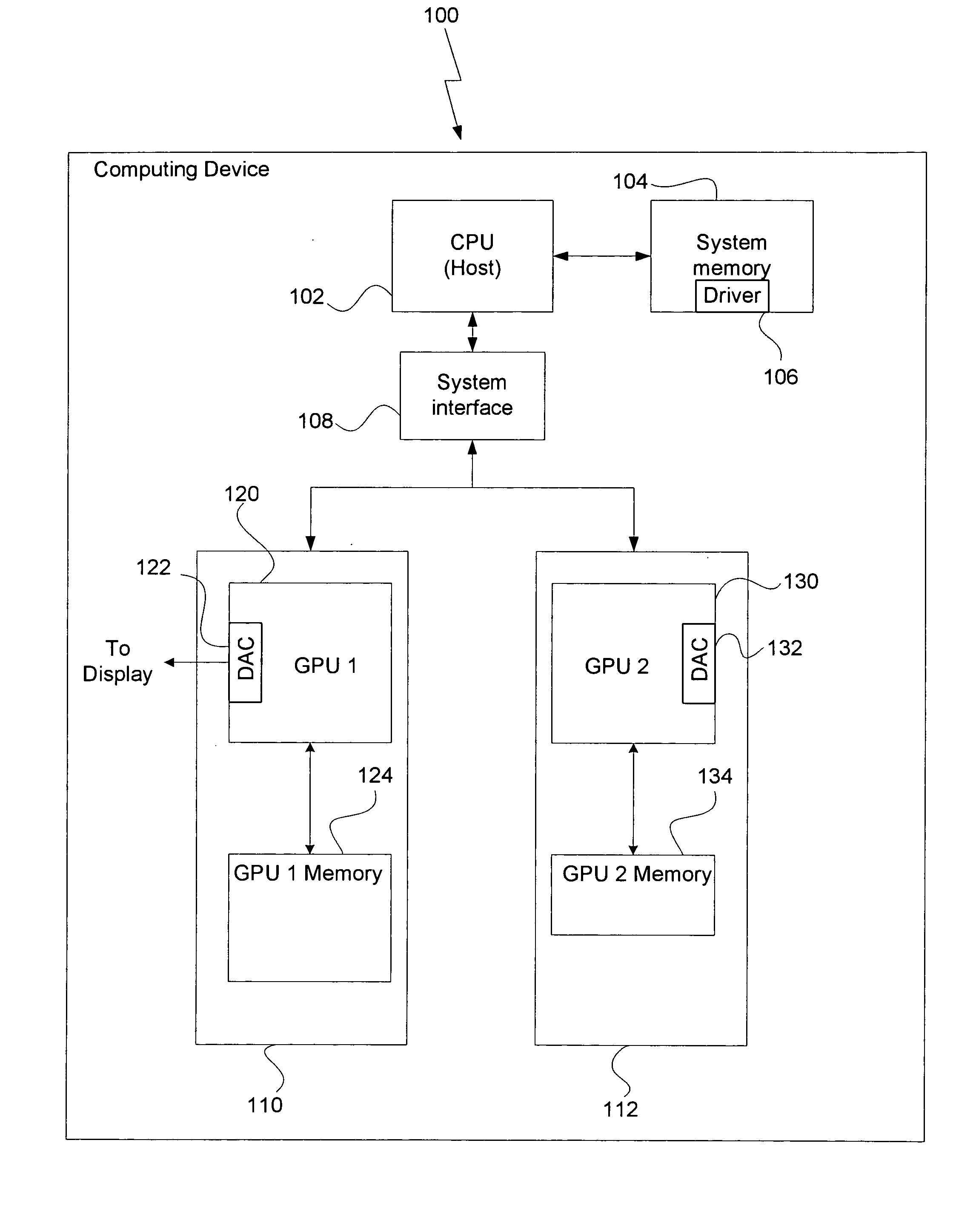

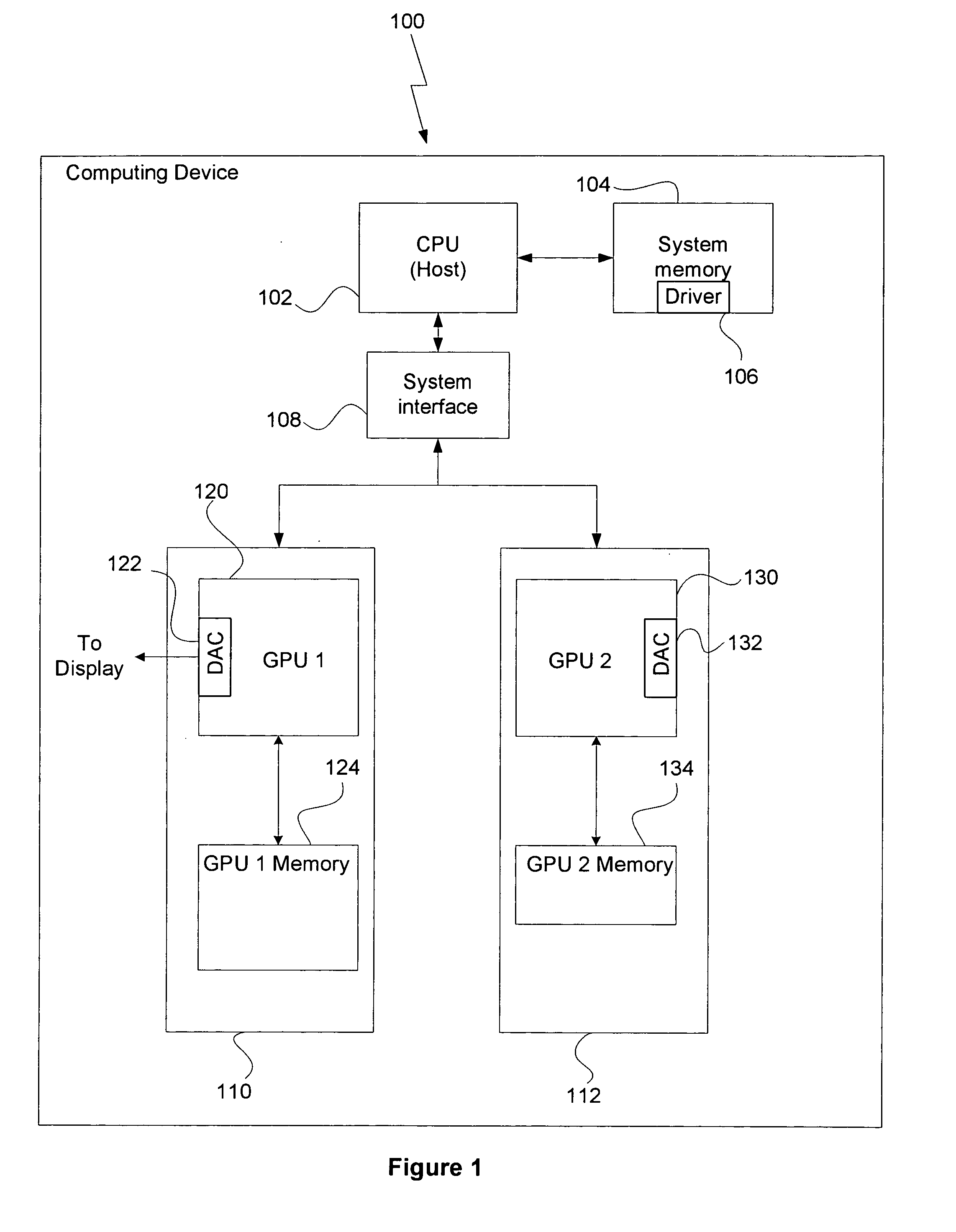

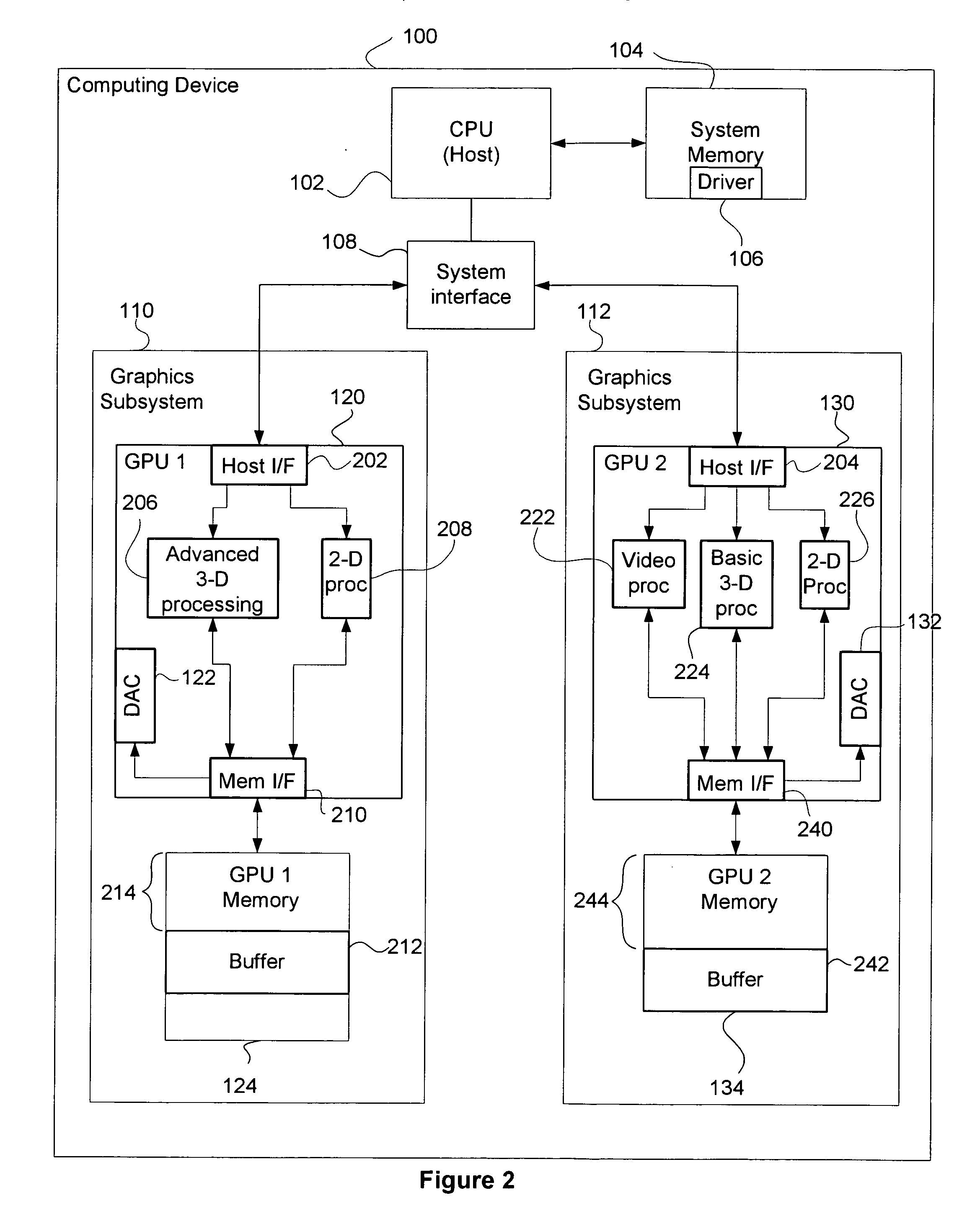

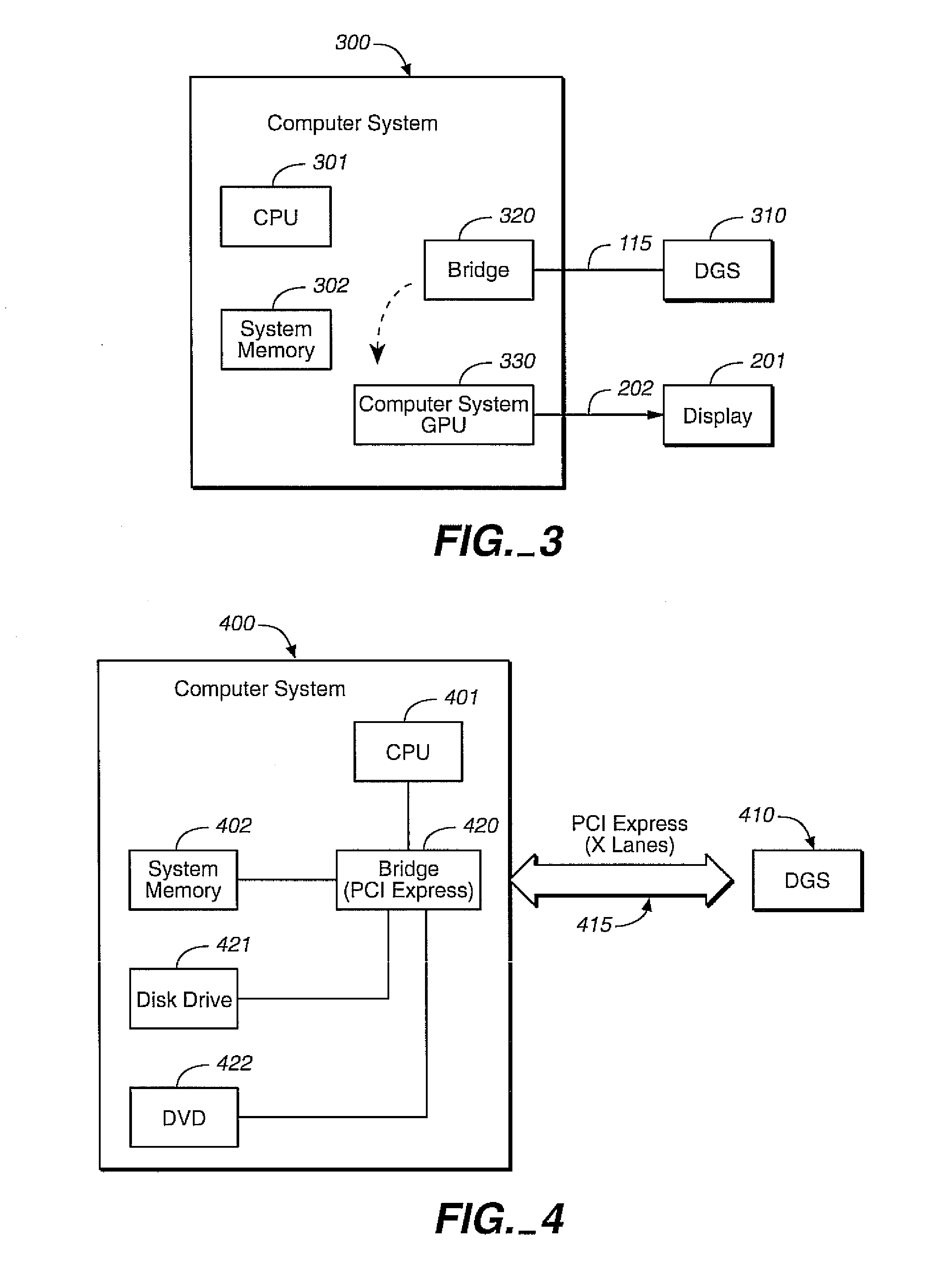

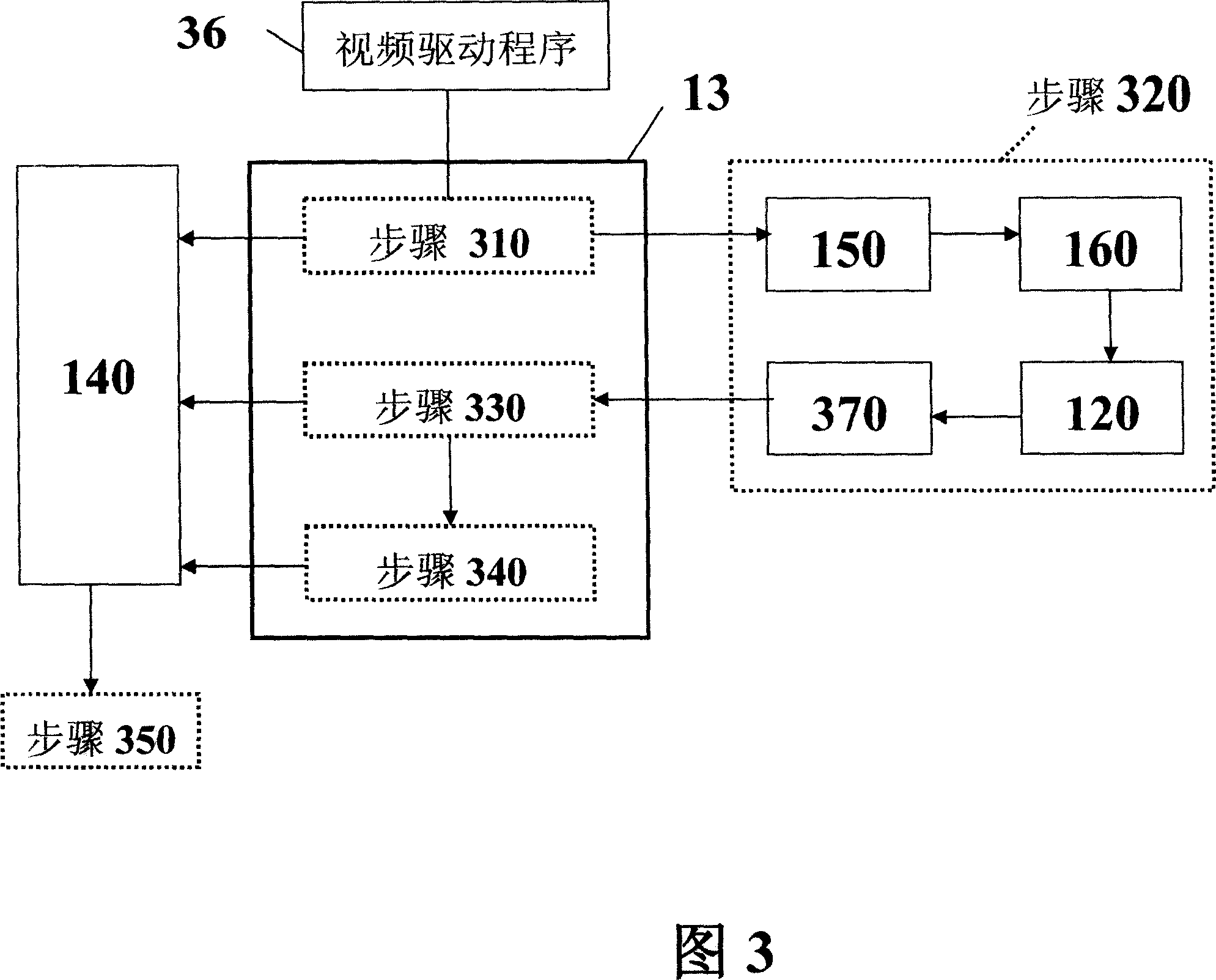

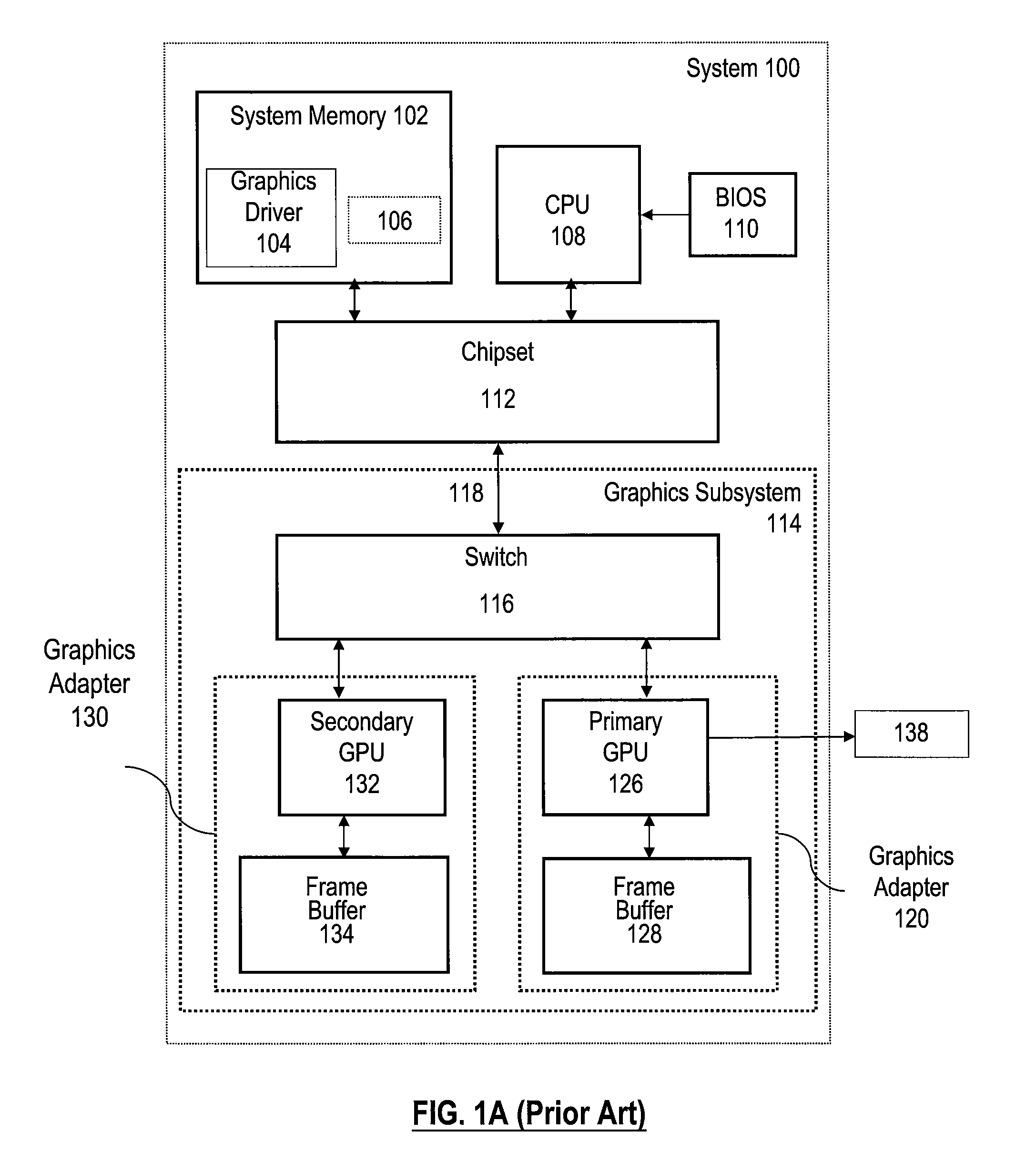

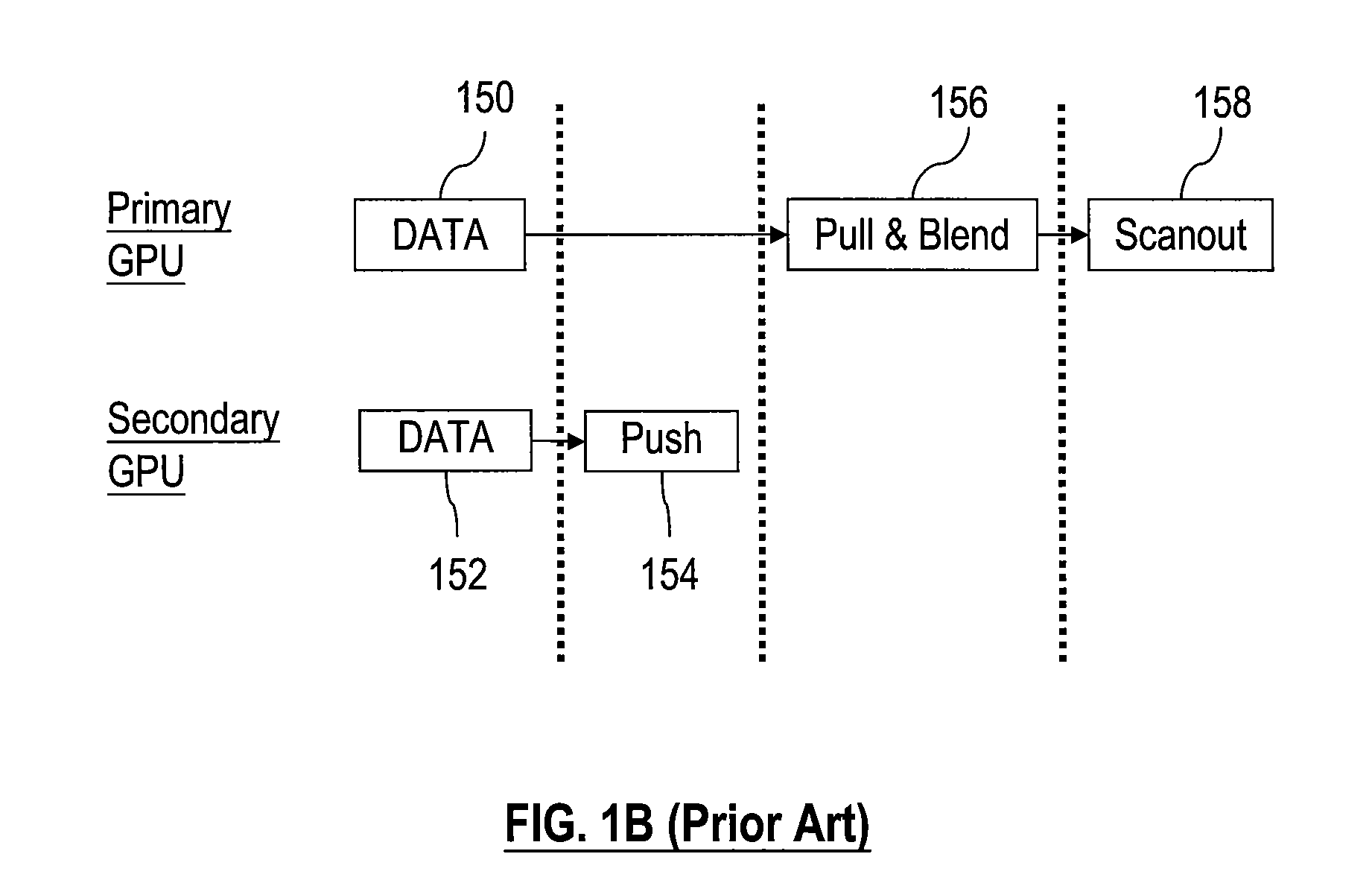

Asymmetric multi-GPU processing

Active | US20070195099A1 | Improve processing efficiency | Television system details | Picture reproducers using cathode ray tubes | Multi gpu | Parallel computing

A system for processing video data includes a host processor, a first media processing device coupled to a first buffer, the first media processing device configured to perform a first processing task on a frame of video data, and a second media processing device coupled to a second buffer, the second media processing device configured to perform a second processing task on the processed frame of video data. The architecture allows the two devices to have asymmetric video processing capabilities. Thus, the first device may advantageously perform a first task, such as decoding, while the second device performs a second task, such as post processing, according to the respective capabilities of each device, thereby increasing processing efficiency relative to prior art systems. Further, one driver may be used for both devices, enabling applications to take advantage of the system's accelerated processing capabilities without requiring code changes.

Owner:NVIDIA CORP
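
The two-stage arrangement in the abstract above (one device decodes, the other post-processes, with a buffer between them) can be illustrated with a minimal Python sketch. It is not taken from the patent: threads stand in for the two media processing devices, a bounded queue stands in for the second device's buffer, and `run_pipeline`, `decode` and `post_process` are hypothetical names chosen for illustration.

```python
import queue
import threading


def run_pipeline(frames, decode, post_process):
    """Run decode and post_process as two concurrent stages linked by a buffer."""
    handoff = queue.Queue(maxsize=2)  # stands in for the second device's buffer
    results = []
    done = object()                   # sentinel marking the end of the stream

    def stage_one():
        # First "device": performs the first task on every frame.
        for frame in frames:
            handoff.put(decode(frame))
        handoff.put(done)

    def stage_two():
        # Second "device": performs the second task on each processed frame.
        while True:
            item = handoff.get()
            if item is done:
                break
            results.append(post_process(item))

    workers = [threading.Thread(target=stage_one),
               threading.Thread(target=stage_two)]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return results
```

Because there is a single producer and a single consumer on a FIFO queue, frames leave the pipeline in the order they entered it, while the two stages overlap in time.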

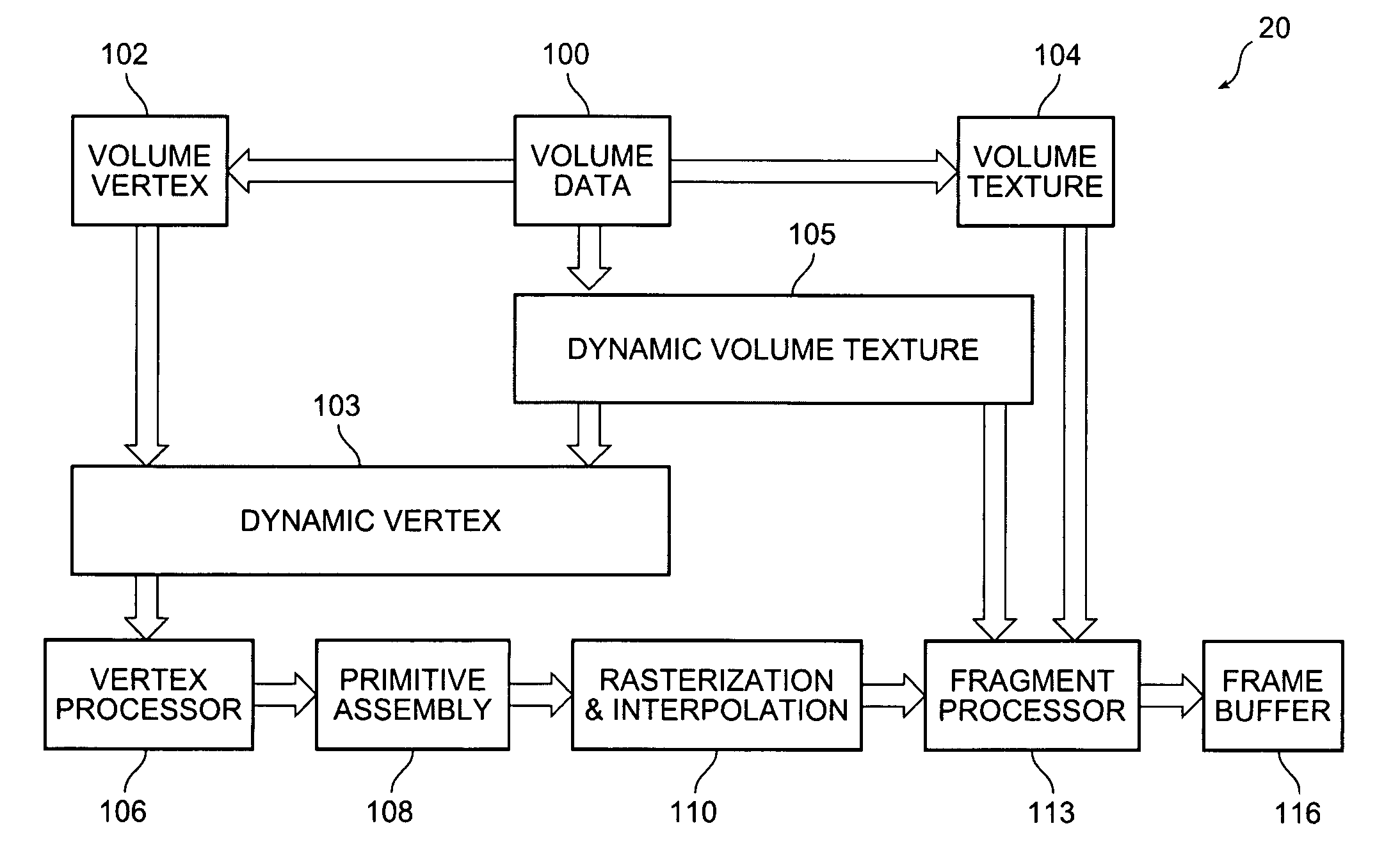

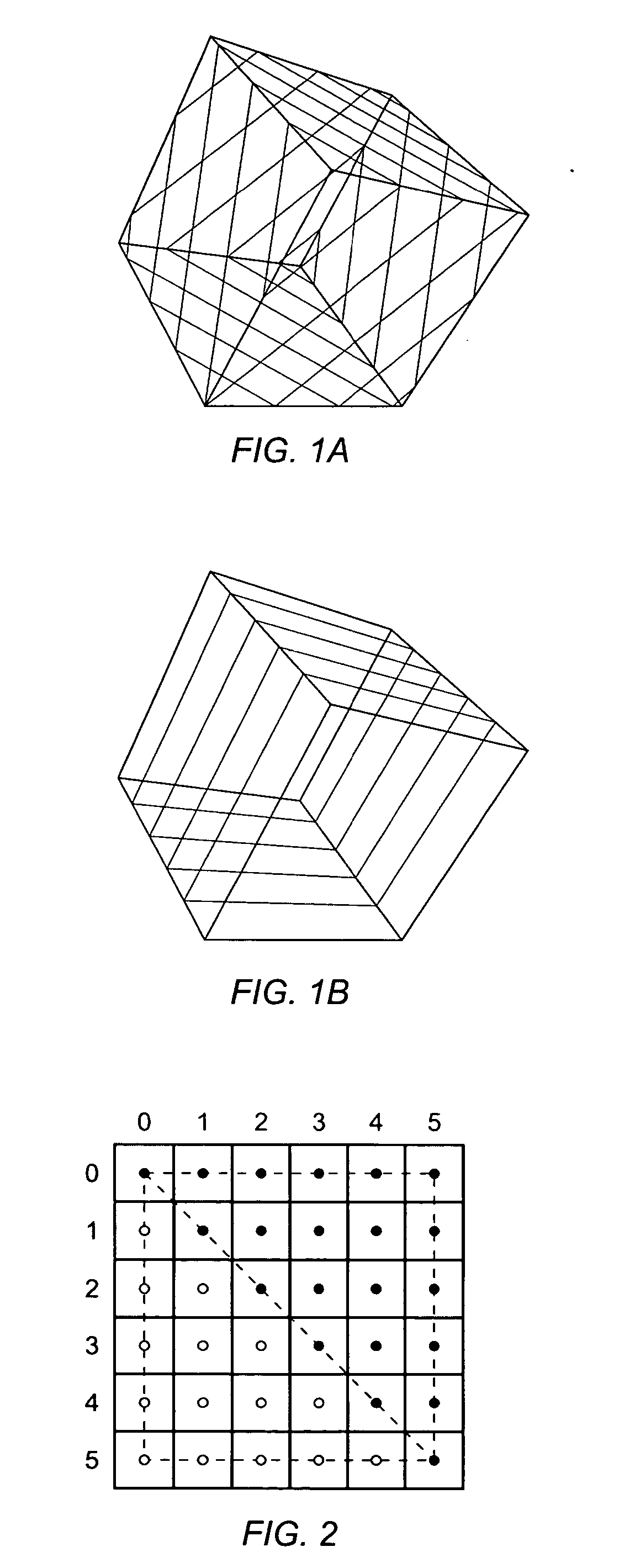

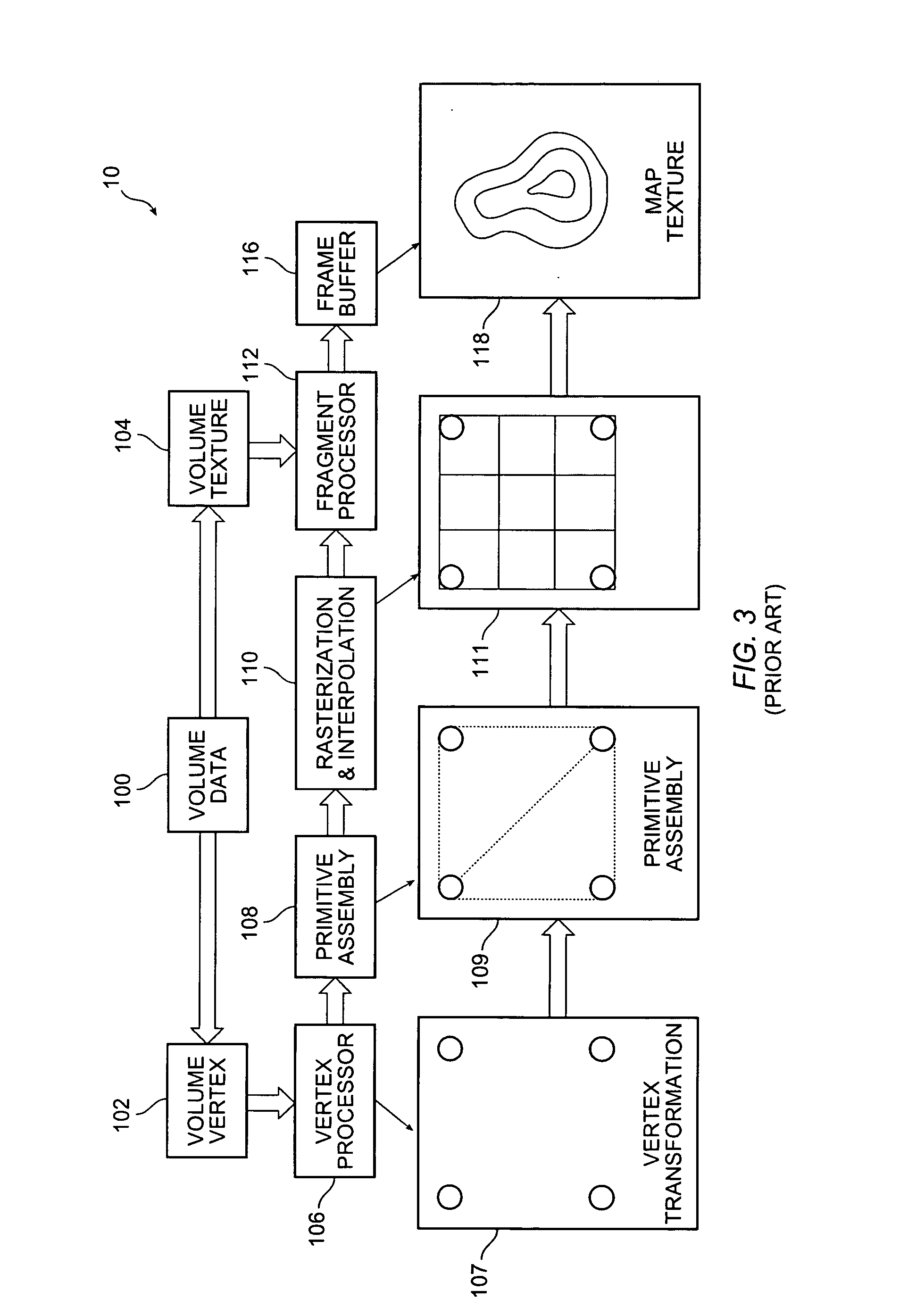

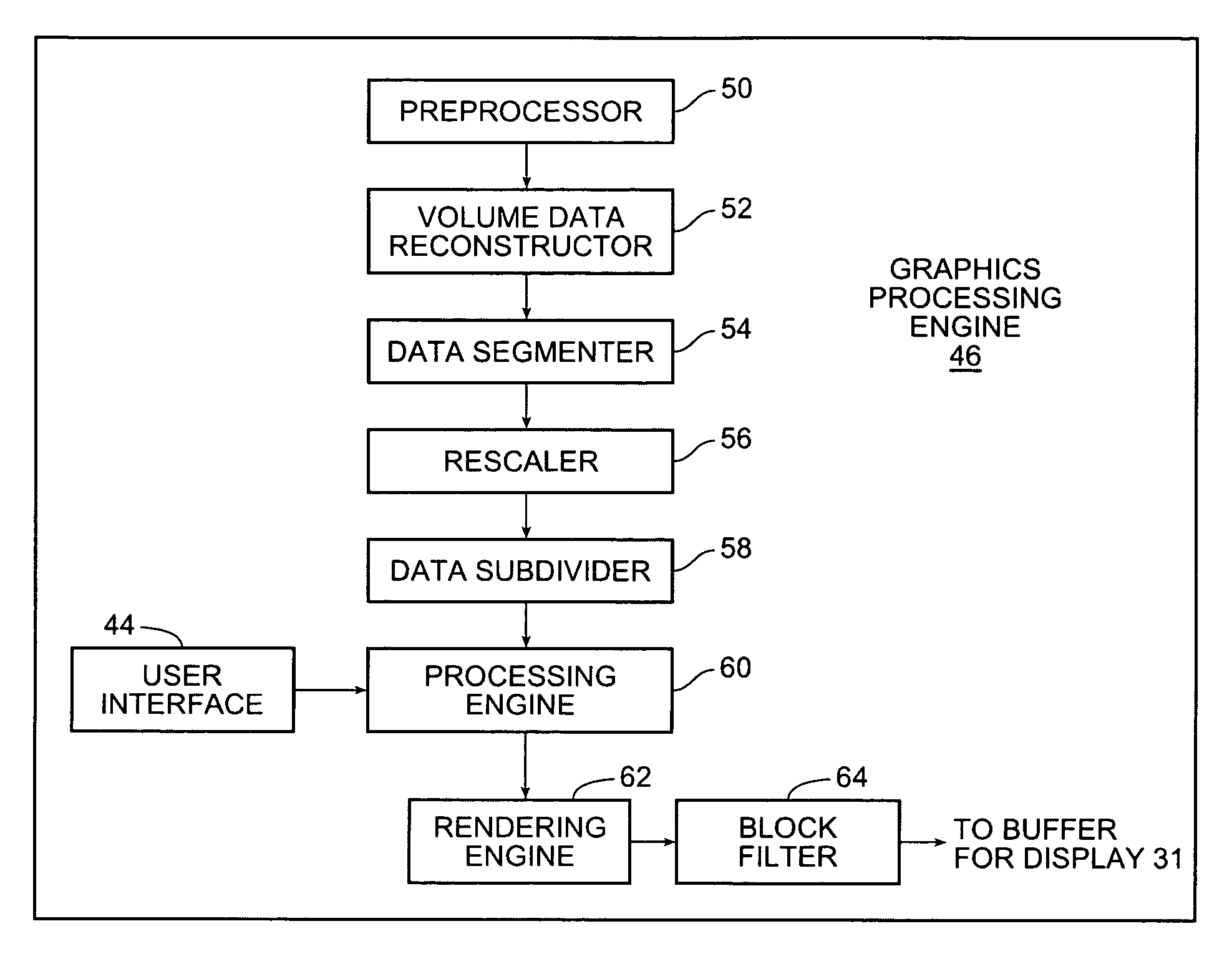

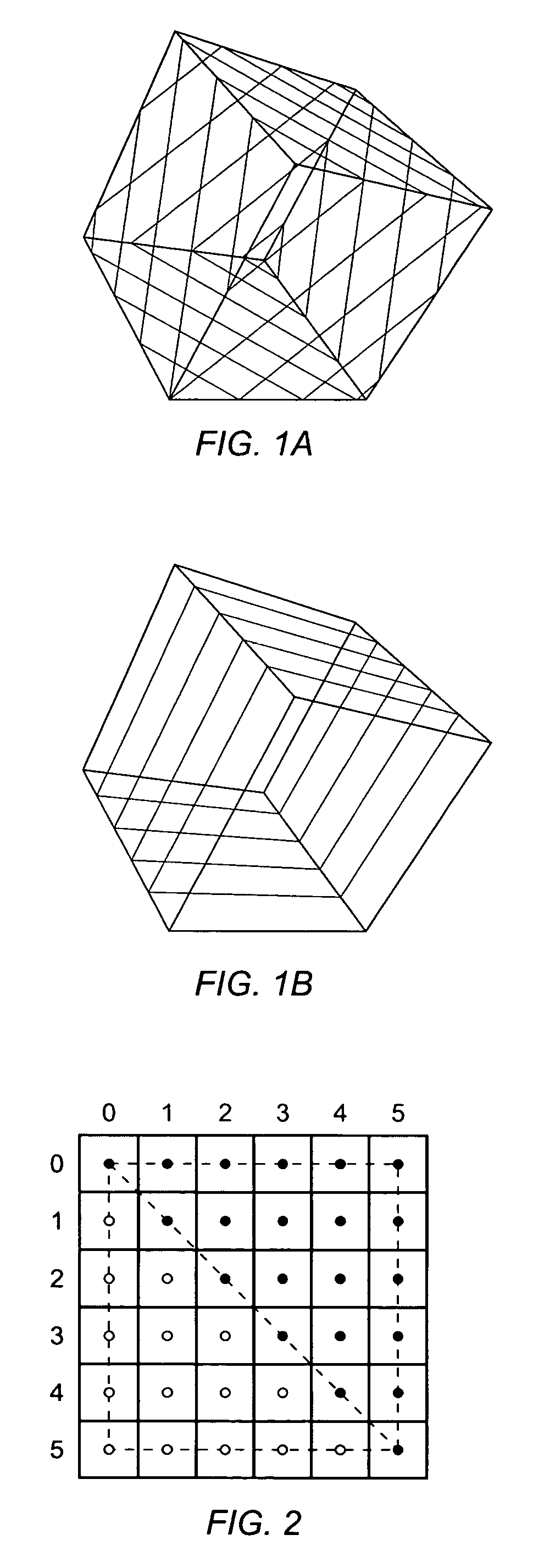

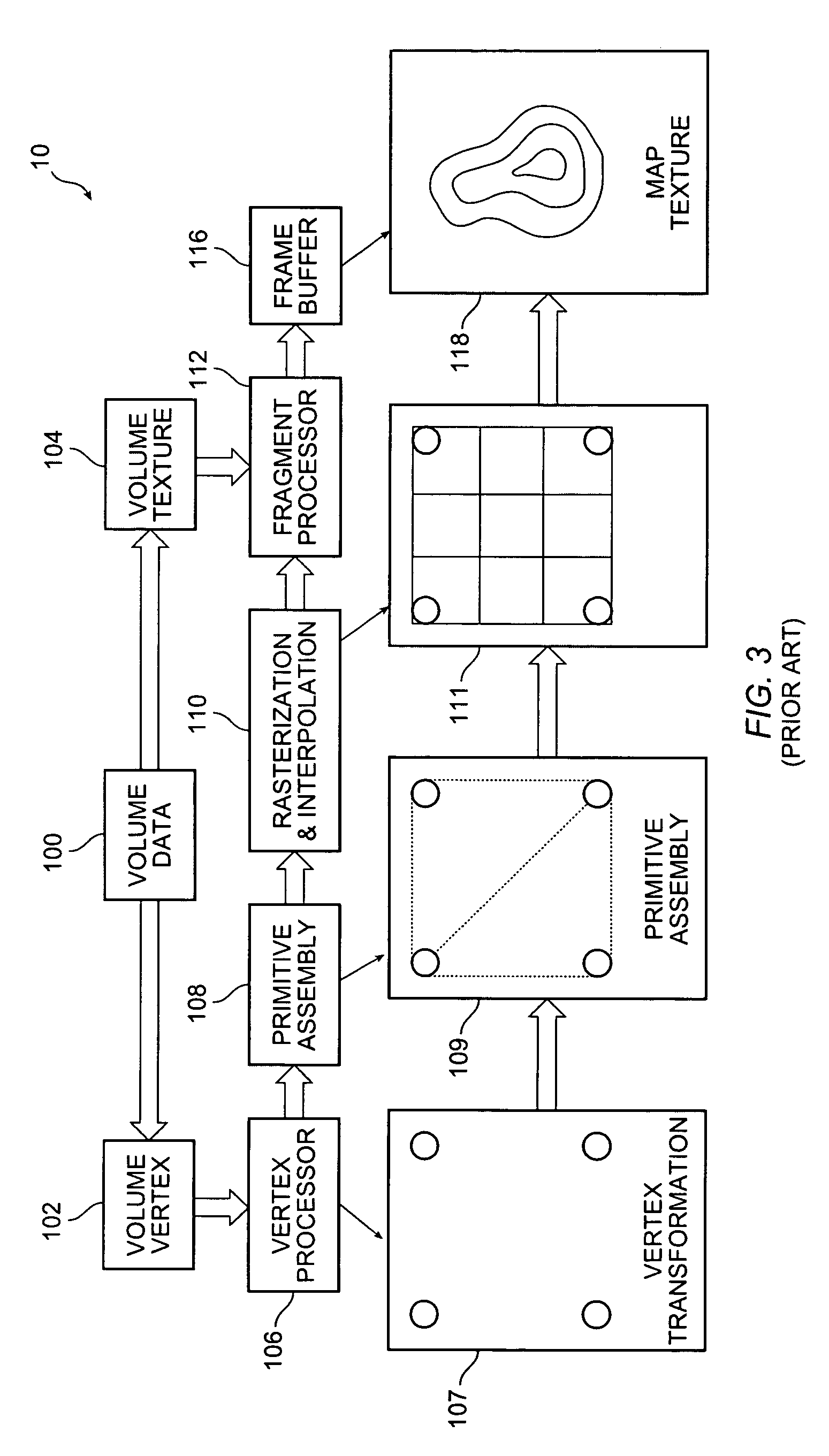

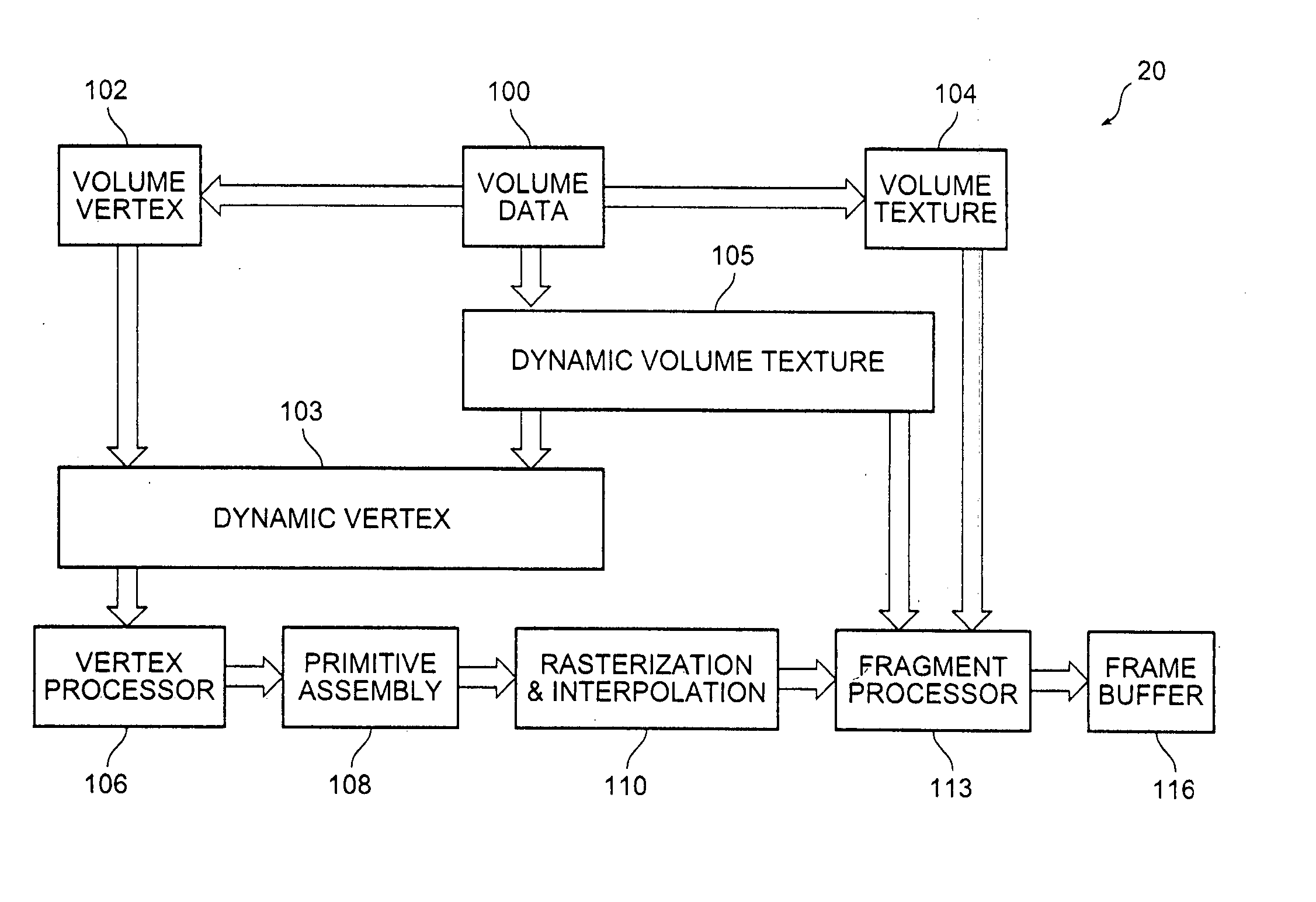

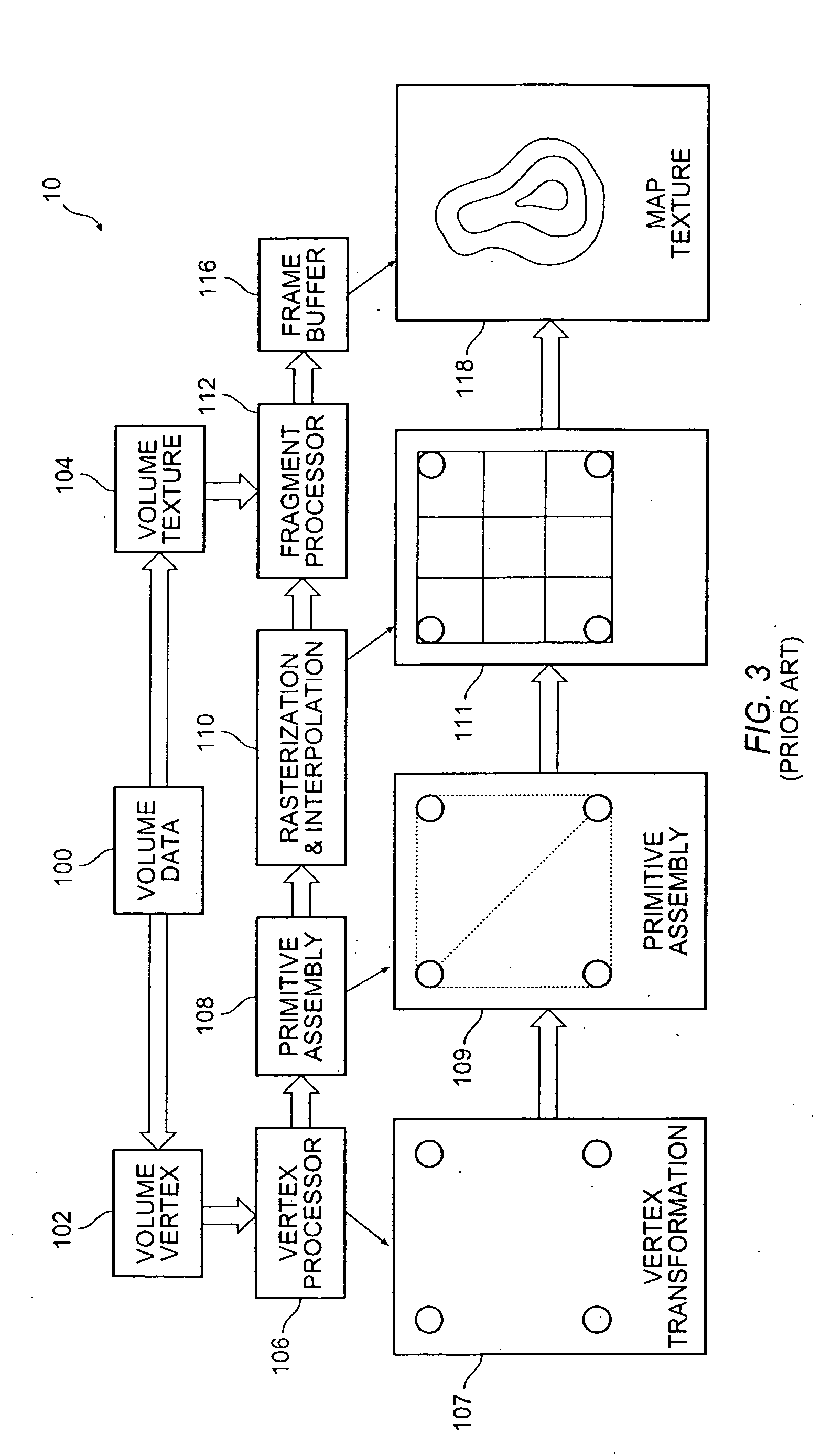

Block-based fragment filtration with feasible multi-GPU acceleration for real-time volume rendering on conventional personal computer

Active | US20050231503A1 | Reduce the burden on | Details involving 3D image data | Cathode-ray tube indicators | Voxel | Filtration

A computer-based method and system for interactive volume rendering of a large volume data on a conventional personal computer using hardware-accelerated block filtration optimizing uses 3D-textured axis-aligned slices and block filtration. Fragment processing in a rendering pipeline is lessened by passing fragments to various processors selectively in blocks of voxels based on a filtering process operative on slices. The process involves generating a corresponding image texture and performing two-pass rendering, namely a virtual rendering pass and a main rendering pass. Block filtration is divided into static block filtration and dynamic block filtration. The static block filtration locates any view-independent unused signal being passed to a rasterization pipeline. The dynamic block filtration determines any view-dependent unused block generated due to occlusion. Block filtration processes utilize the vertex shader and pixel shader of a GPU in conventional personal computer graphics hardware. The method is for multi-thread, multi-GPU operation.

Owner:THE CHINESE UNIVERSITY OF HONG KONG

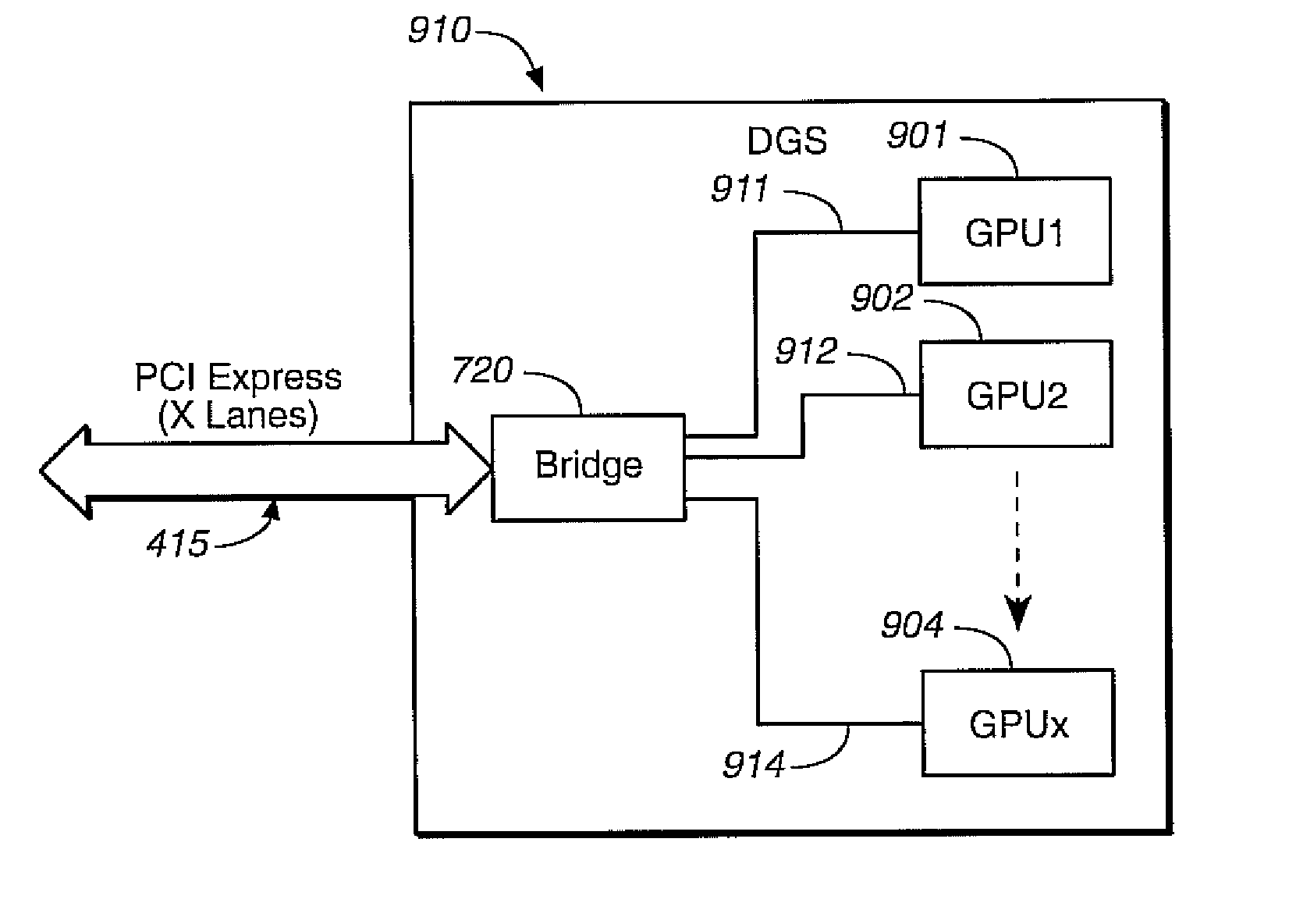

Multiple GPU graphics system for implementing cooperative graphics instruction execution

Active | US7663633B1 | Limit scalability | Cathode-ray tube indicators | Multiple digital computer combinations | Graphics | Multiplexer

A multiple GPU (graphics processor unit) graphics system is disclosed. The multiple GPU graphics system includes a plurality of GPUs configured to execute graphics instructions from a computer system. A GPU output multiplexer and a controller unit are coupled to the GPUs. The controller unit is configured to control the GPUs and the output multiplexer such that the GPUs cooperatively execute the graphics instructions from the computer system.

Owner:NVIDIA CORP

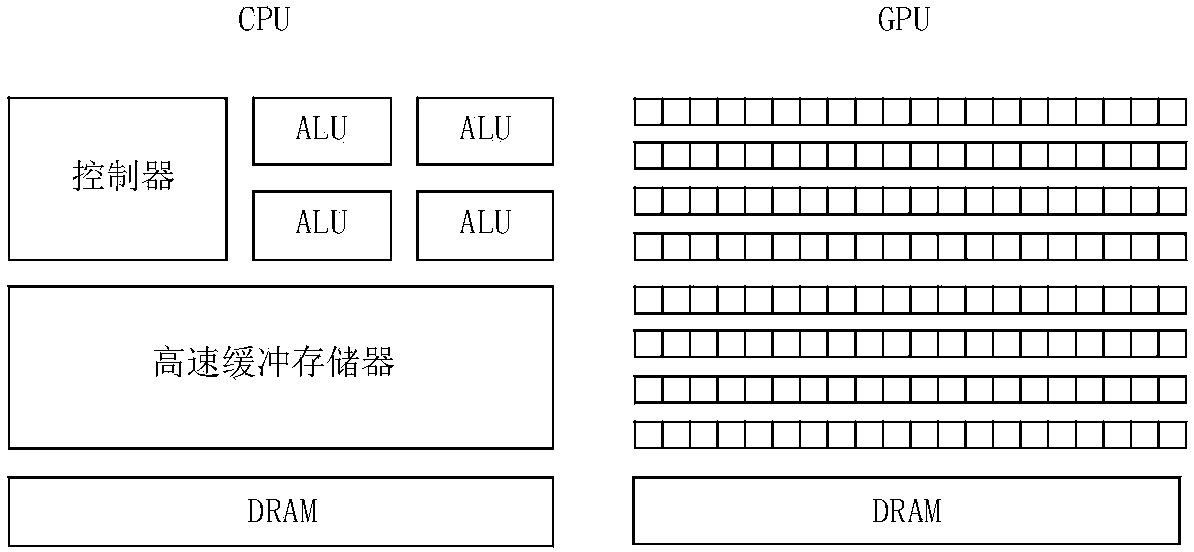

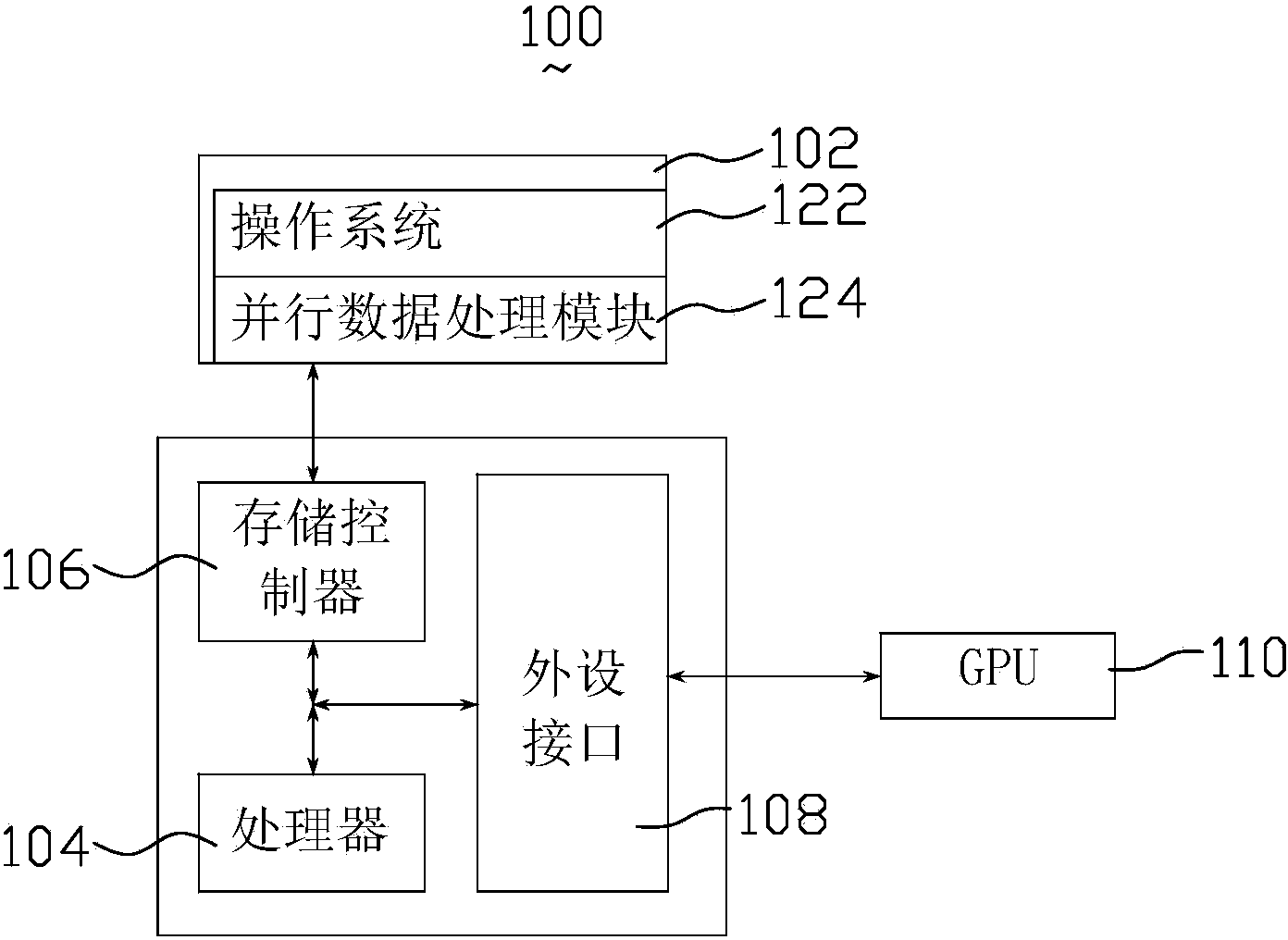

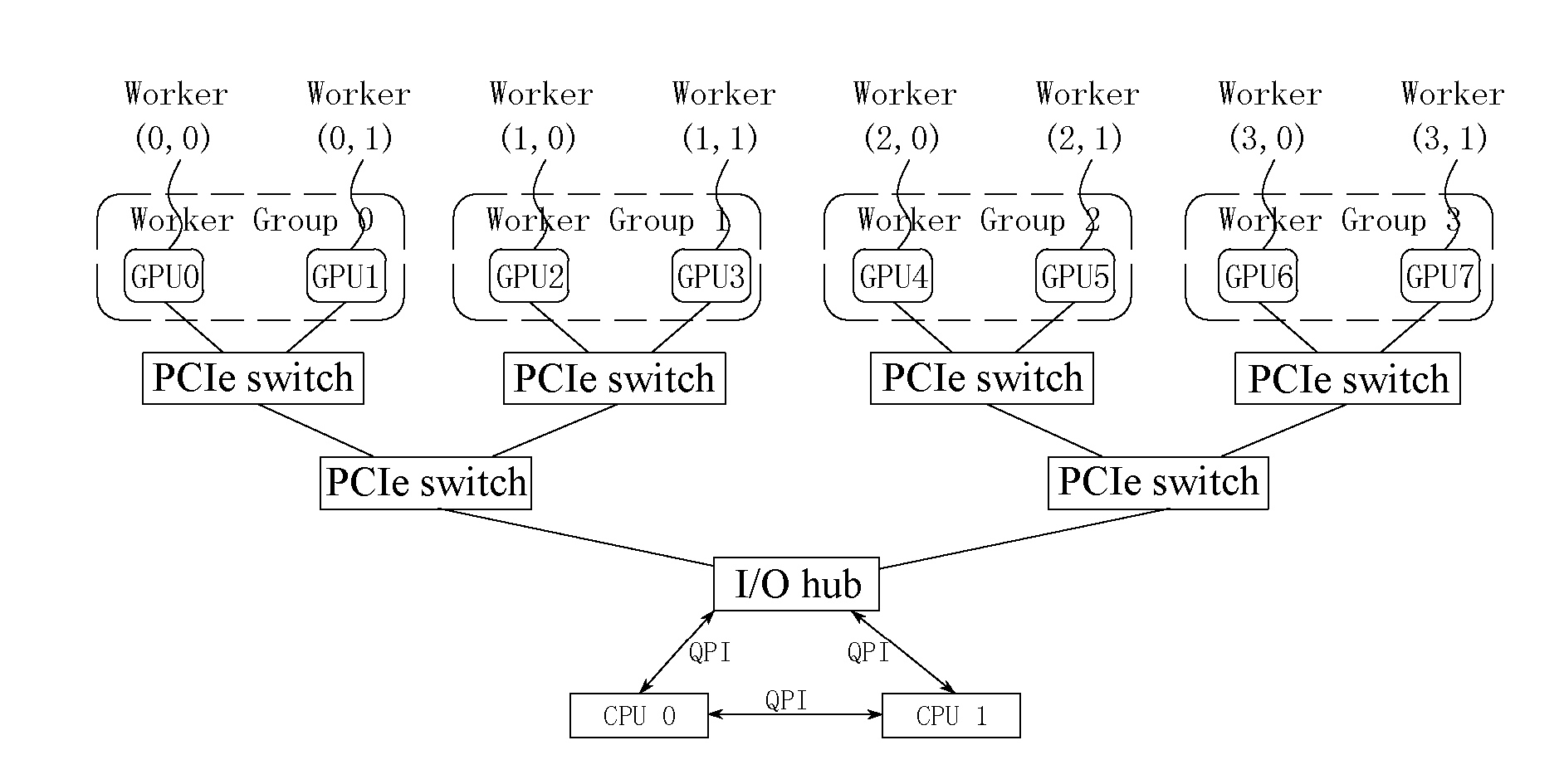

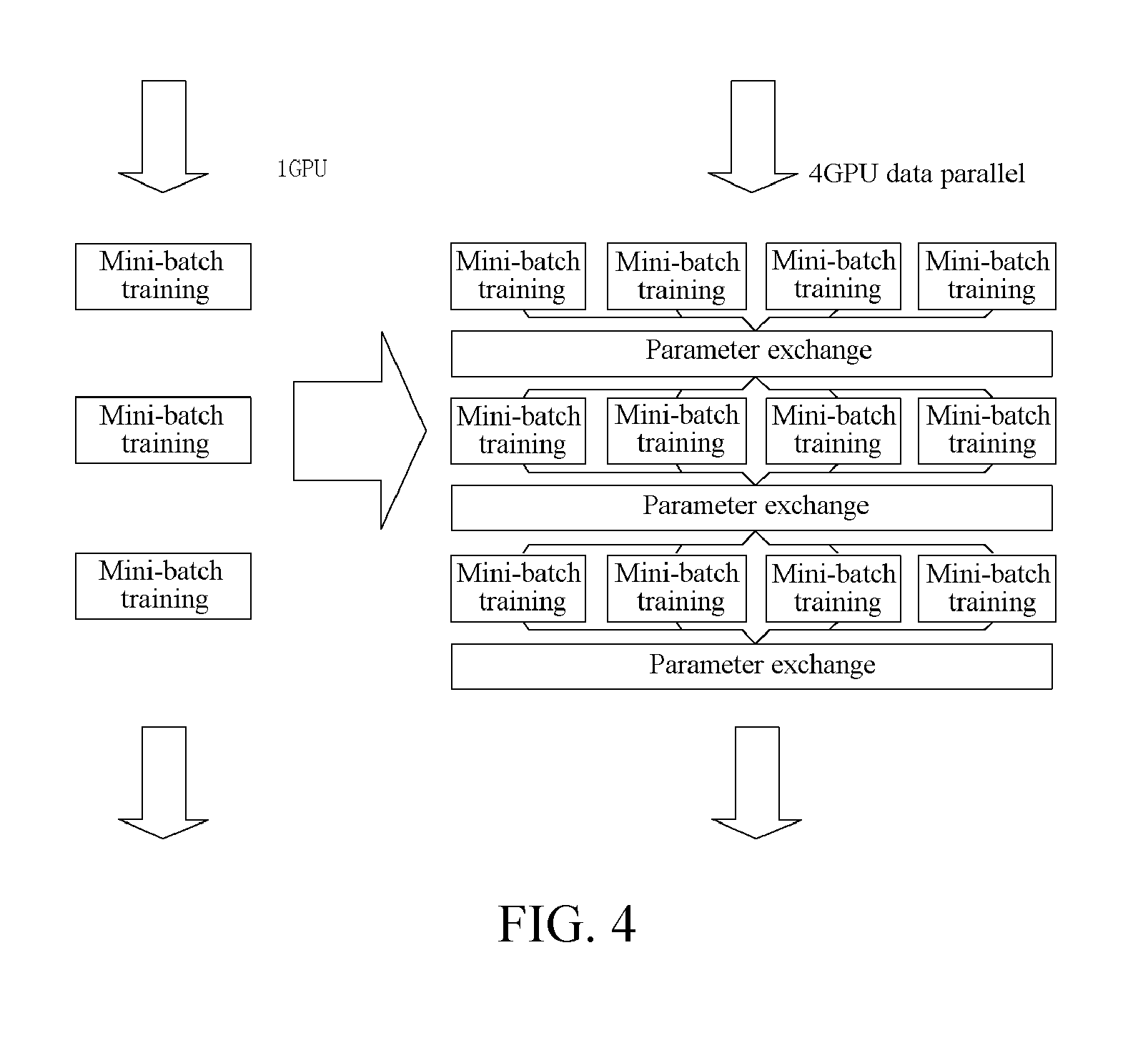

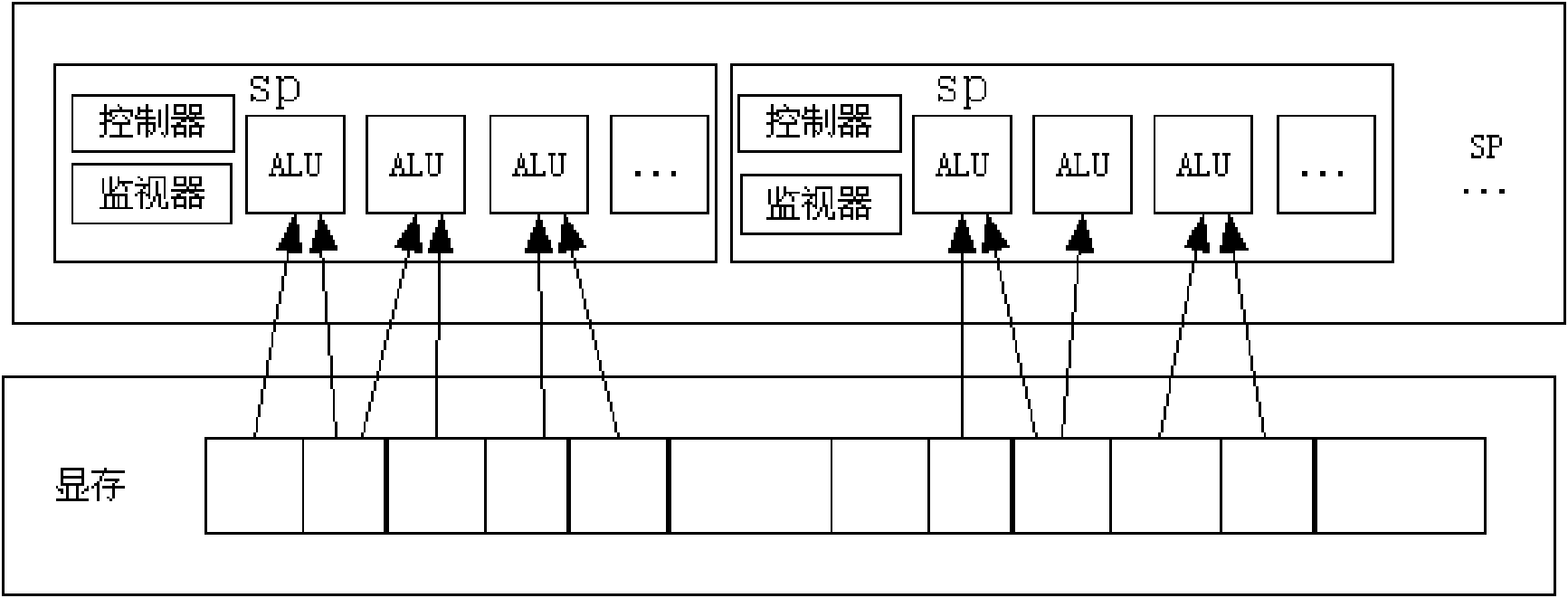

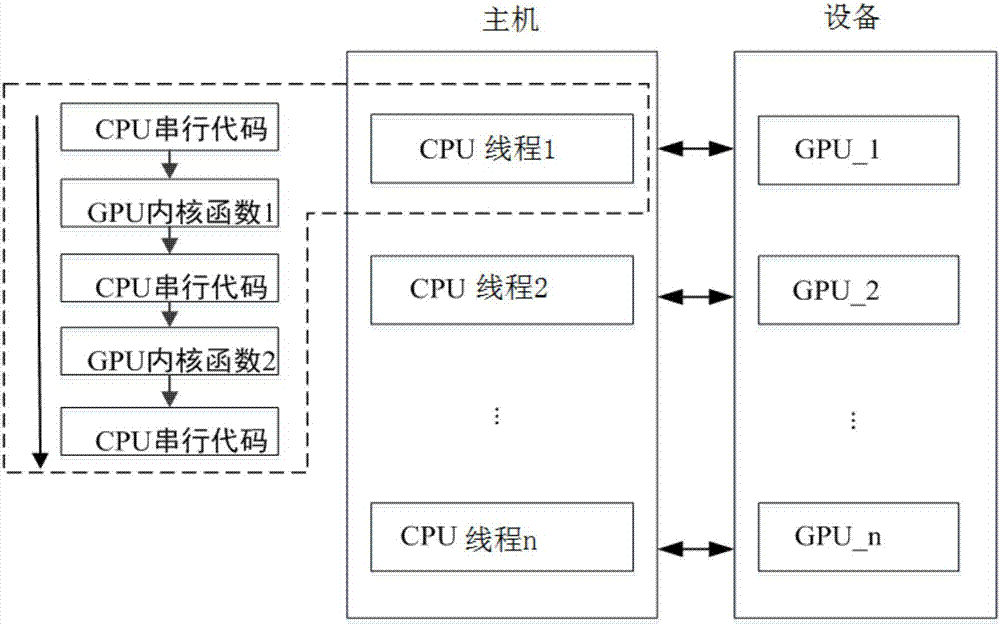

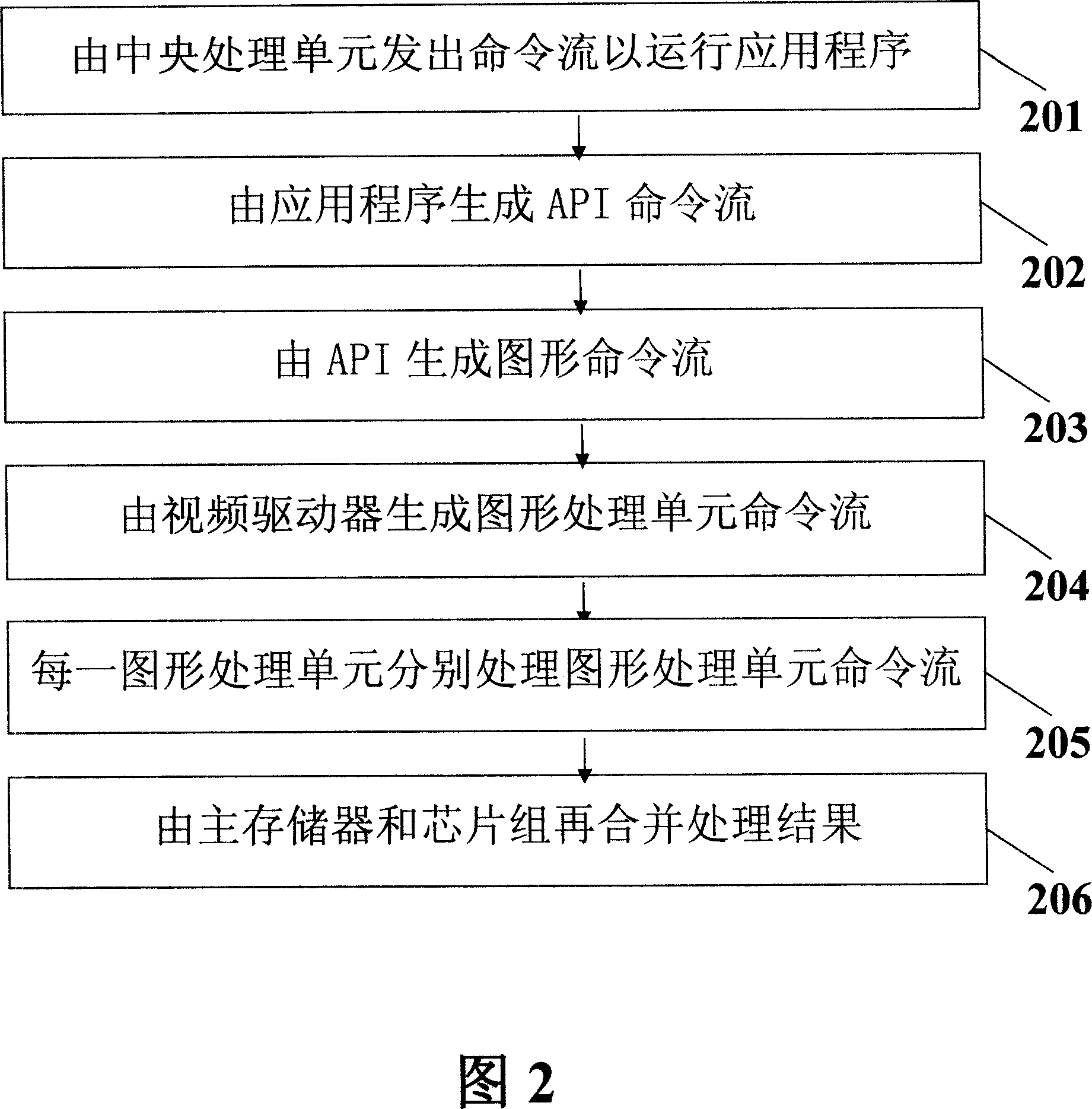

Parallel model processing method and device based on multiple graphics processing units

Active | CN104036451A | Improve processing efficiency | Improve the efficiency of storage access | Processor architectures/configuration | Program control | Graphics | Video memory

The invention relates to a parallel model processing method based on multiple graphics processing units (GPUs). The method includes the steps: creating multiple Workers used for respectively controlling multiple Worker Groups in a central processing unit (CPU), wherein each Worker Group comprises the GPUs; binding each Worker with one corresponding GPU; loading one Batch of training data from a nonvolatile memory into a GPU video memory corresponding to one Worker Group; transmitting data, needed by the GPUs for data processing, among the GPUs corresponding to one Worker Group in a Peer to Peer manner; controlling the GPUs to perform data processing in parallel through the Workers. By the method, efficiency of parallel data processing of the GPUs can be improved. Besides, the invention further provides a parallel data processing device.

Owner:SHENZHEN TENCENT COMP SYST CO LTD

Efficient multi-chip GPU

Active | US7616206B1 | Raise the ratio | More scalable | Cathode-ray tube indicators | Multiple digital computer combinations | Data compression | Extensibility

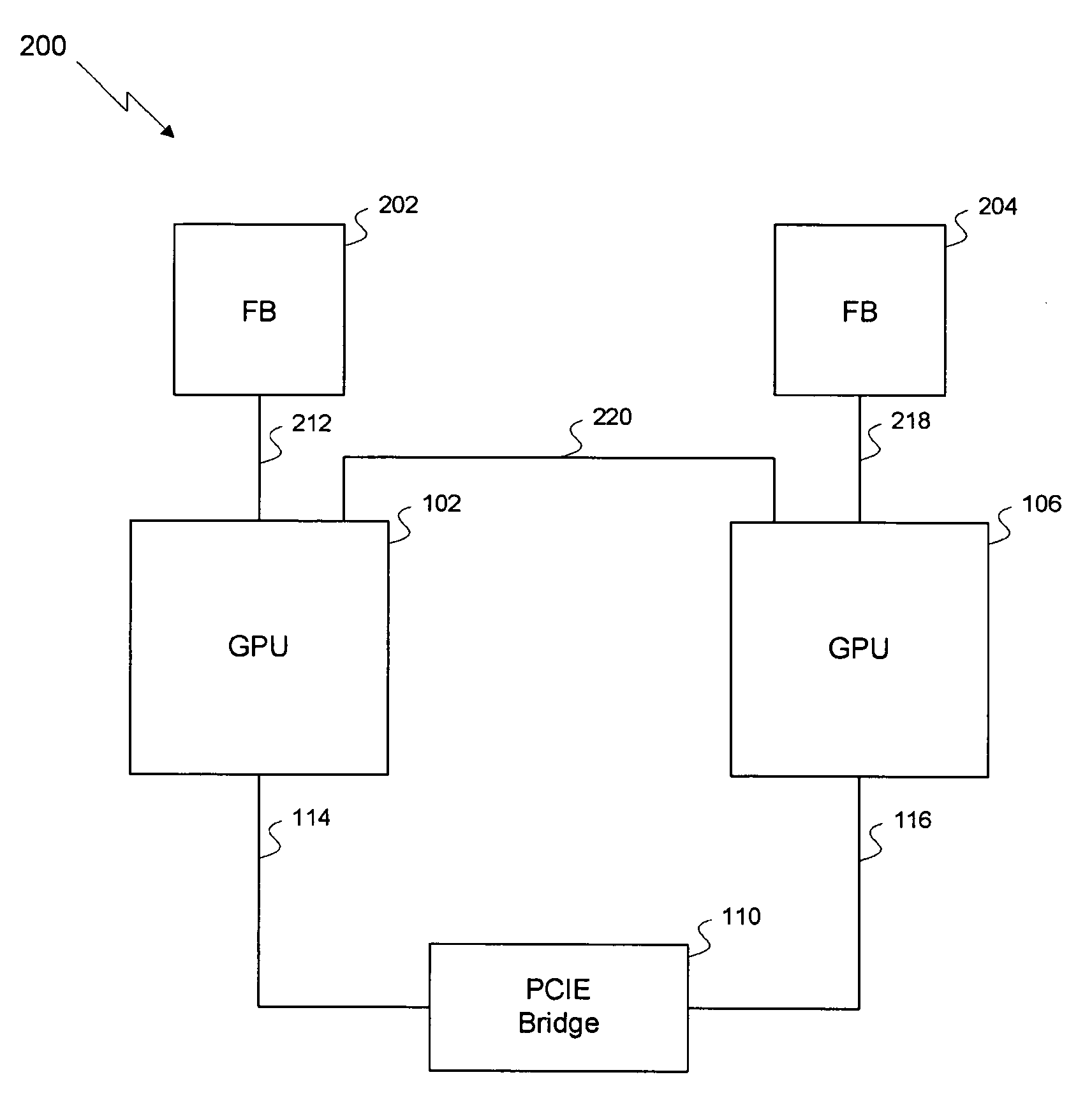

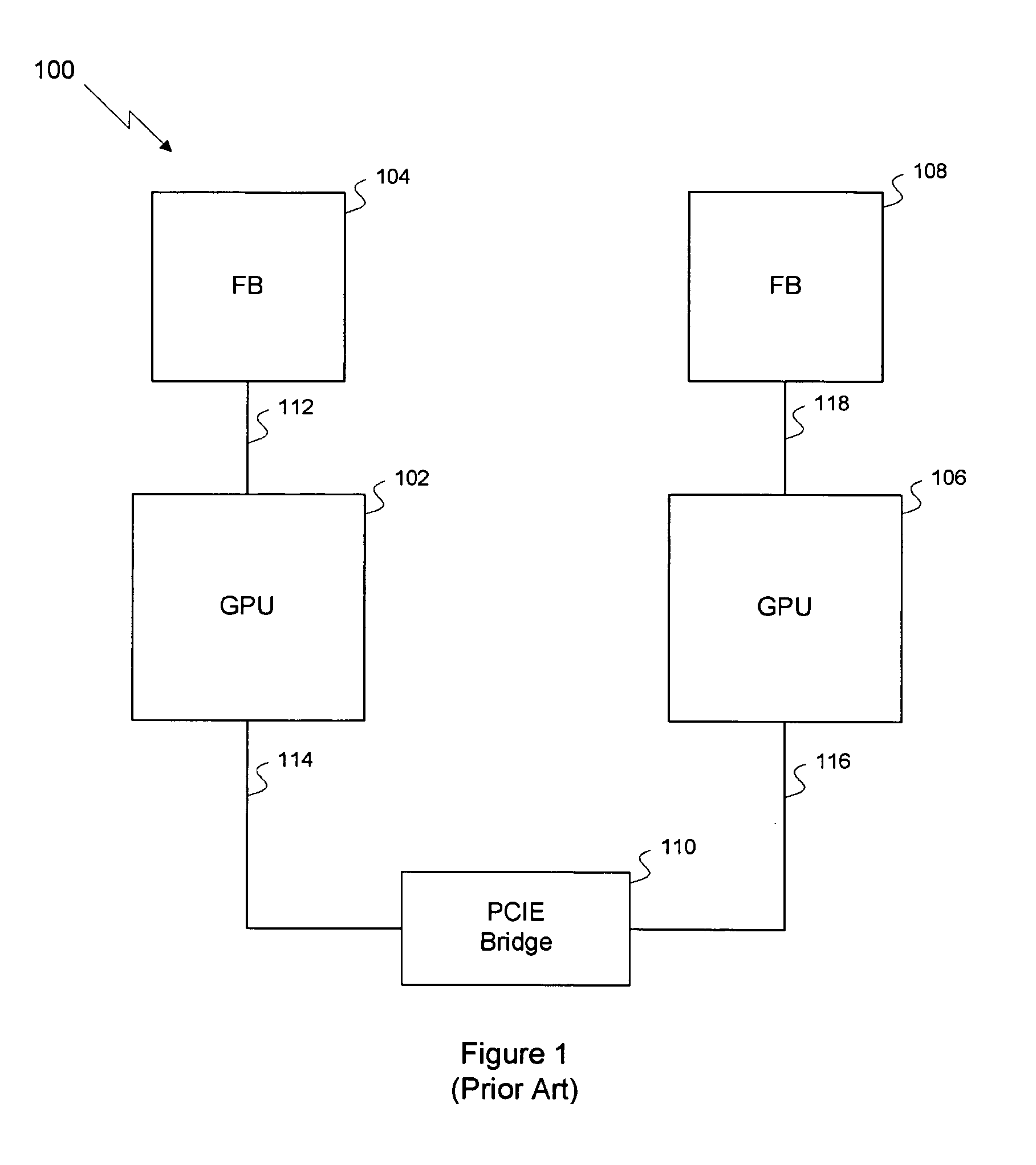

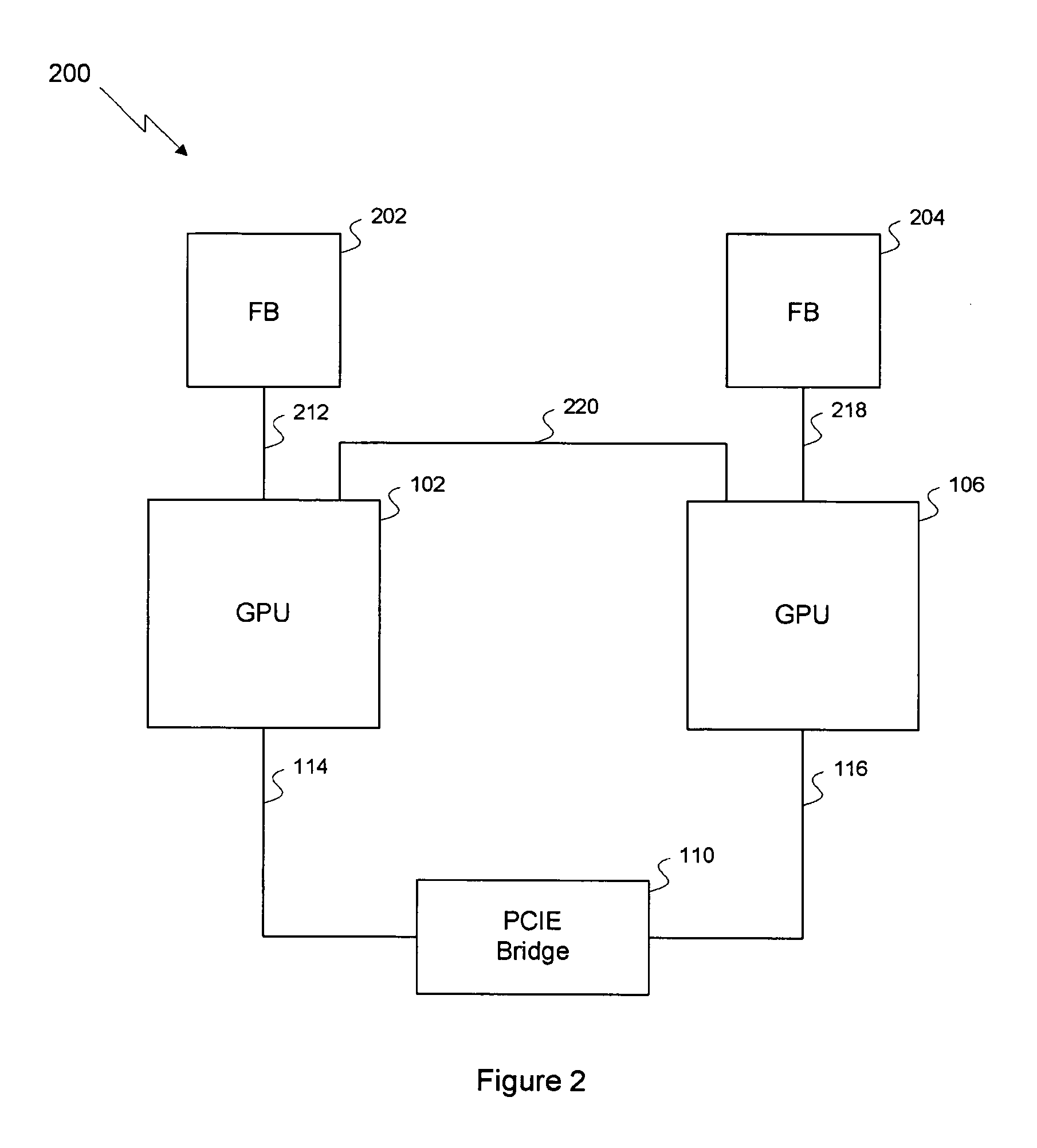

One embodiment of the invention sets forth a technique for efficiently combining two graphics processing units (“GPUs”) to enable an improved price-performance tradeoff and better scalability relative to prior art multi-GPU designs. Each GPU's memory interface is split into a first part coupling the GPU to its respective frame buffer and a second part coupling the GPU directly to the other GPU, creating an inter-GPU private bus. The private bus enables higher bandwidth communications between the GPUs compared to conventional communications through a PCI Express™ bus. Performance and scalability are further improved through render target interleaving; render-to-texture data duplication; data compression; using variable-length packets in GPU-to-GPU transmissions; using the non-data pins of the frame buffer interfaces to transmit data signals; duplicating vertex data, geometry data and push buffer commands across both GPUs; and performing all geometry processing on each GPU.

Owner:NVIDIA CORP

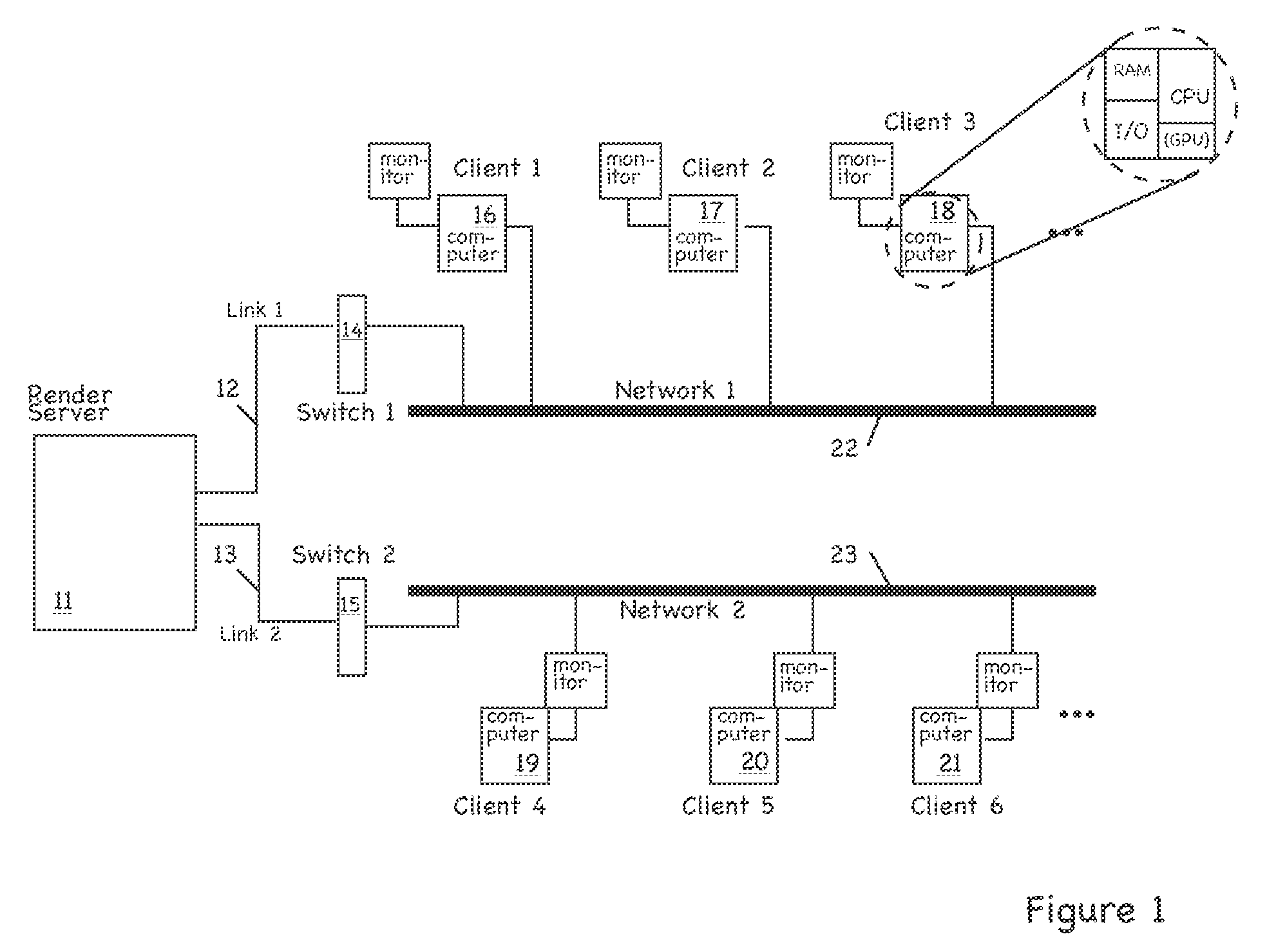

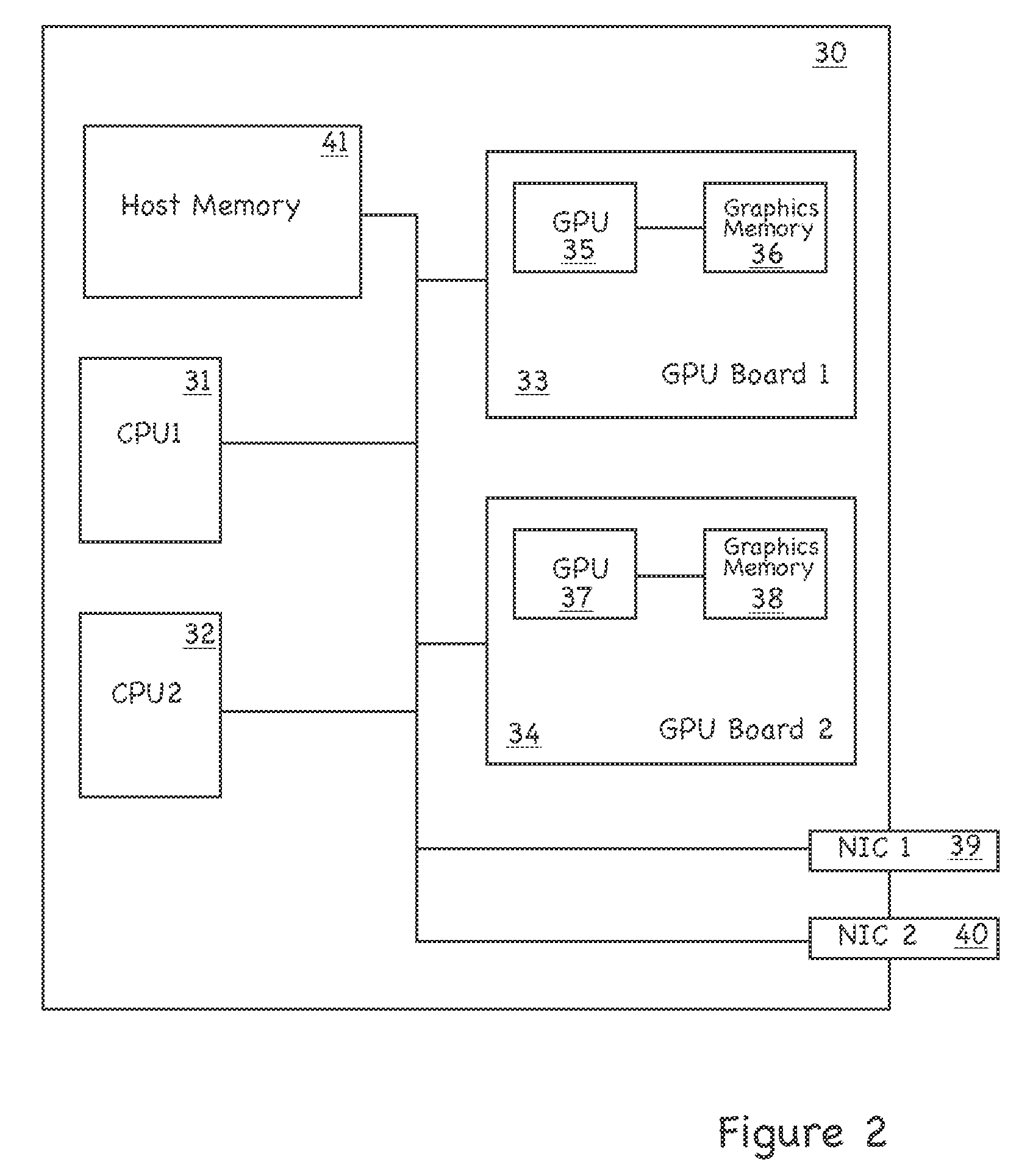

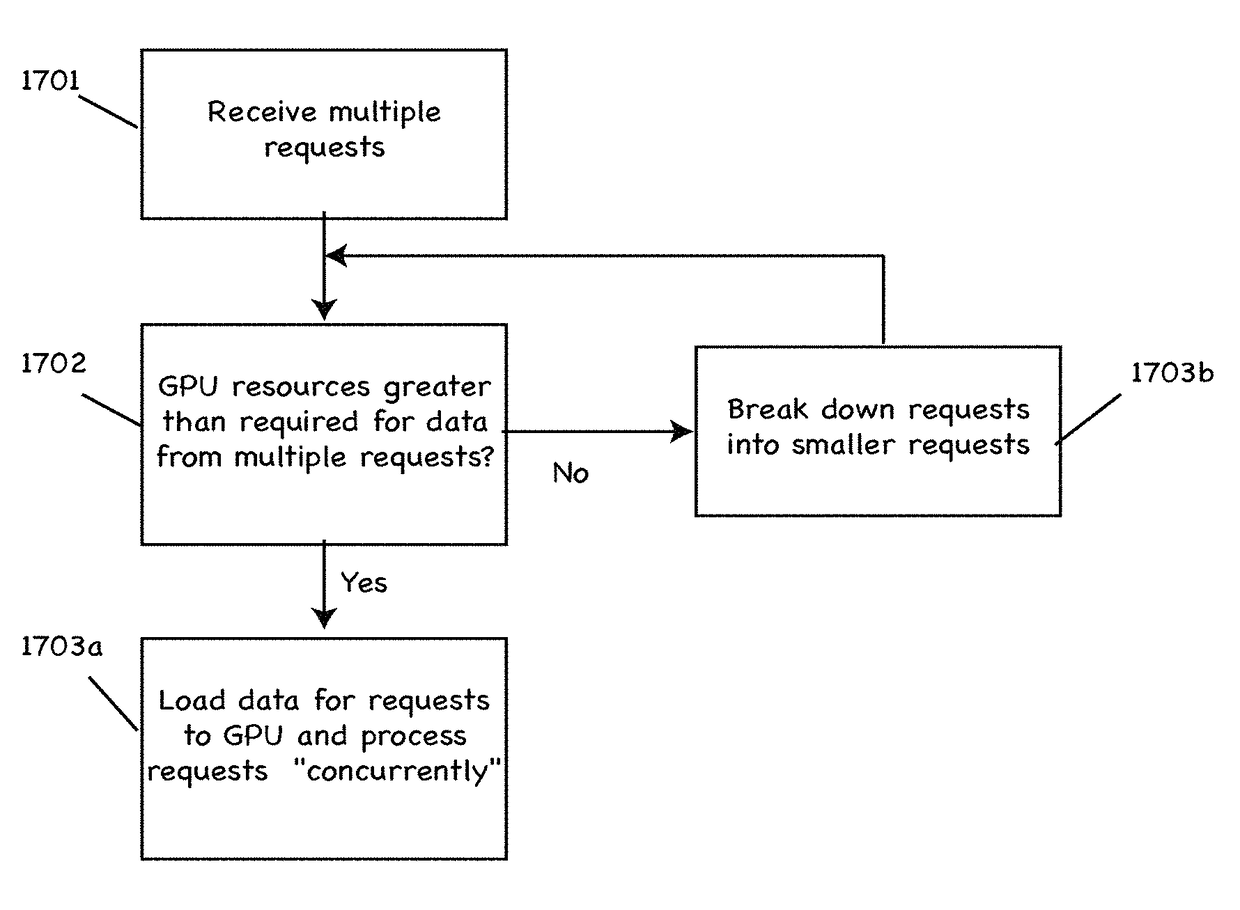

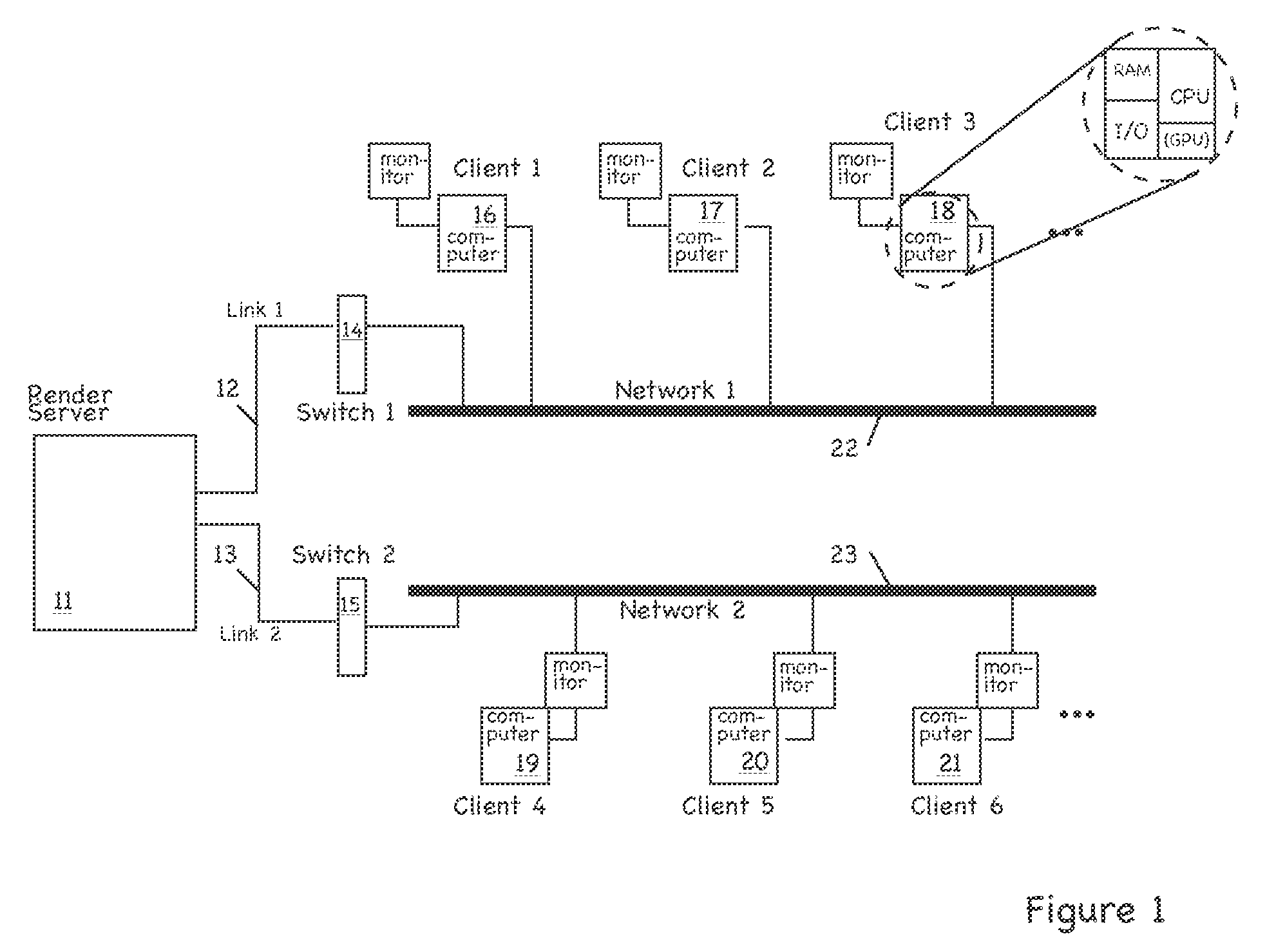

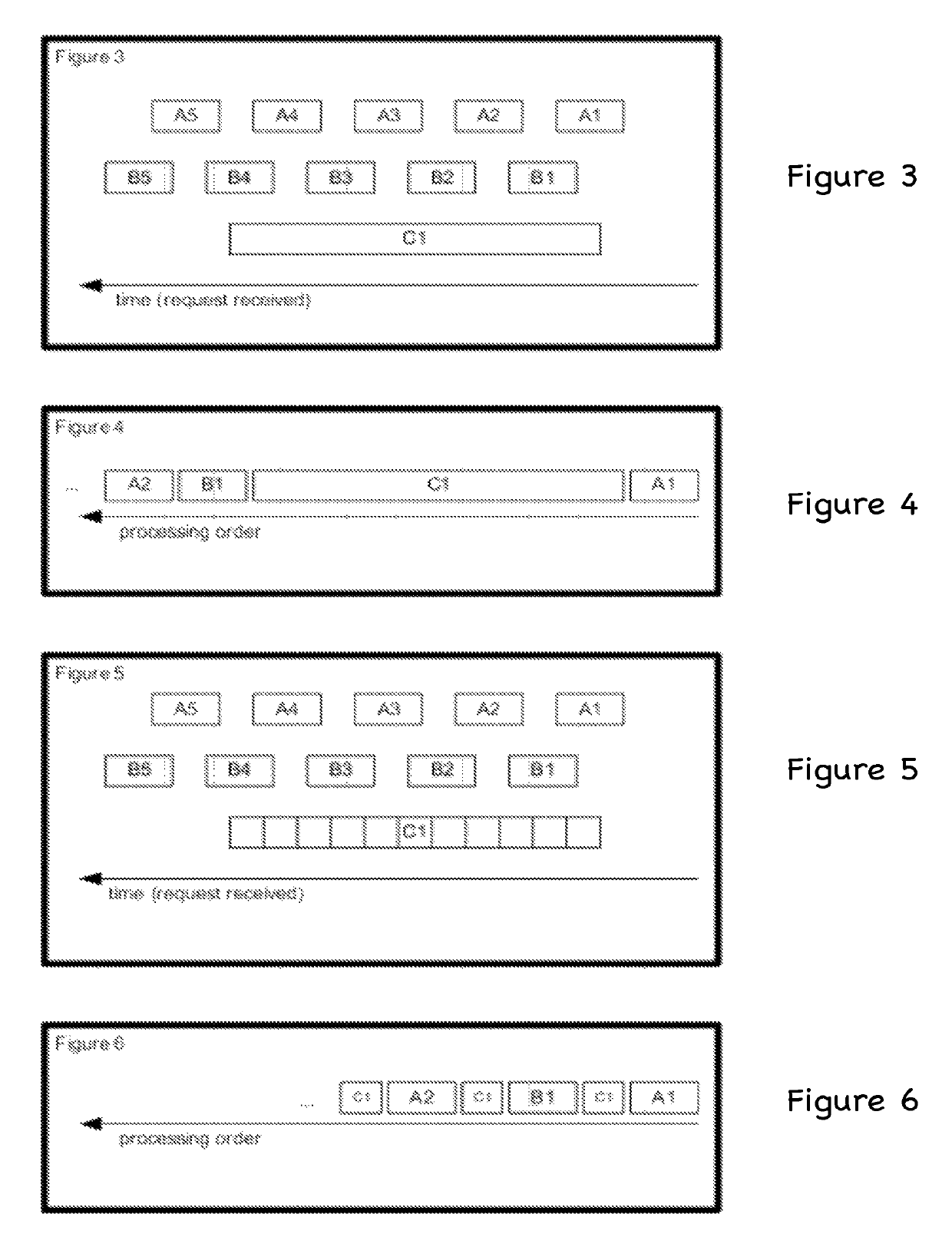

Multi-user multi-GPU render server apparatus and methods

Active | US8319781B2 | Minimize time | Less computation time | Multiprogramming arrangements | Cathode-ray tube indicators | Graphics | Digital data

The invention provides, in some aspects, a system for rendering images, the system having one or more client digital data processors and a server digital data processor in communications coupling with the one or more client digital data processors, the server digital data processor having one or more graphics processing units. The system additionally comprises a render server module executing on the server digital data processor and in communications coupling with the graphics processing units, where the render server module issues a command in response to a request from a first client digital data processor. The graphics processing units on the server digital data processor simultaneously process image data in response to interleaved commands from (i) the render server module on behalf of the first client digital data processor, and (ii) one or more requests from (a) the render server module on behalf of any of the other client digital data processors, and (b) other functionality on the server digital data processor.

Owner:PME IP

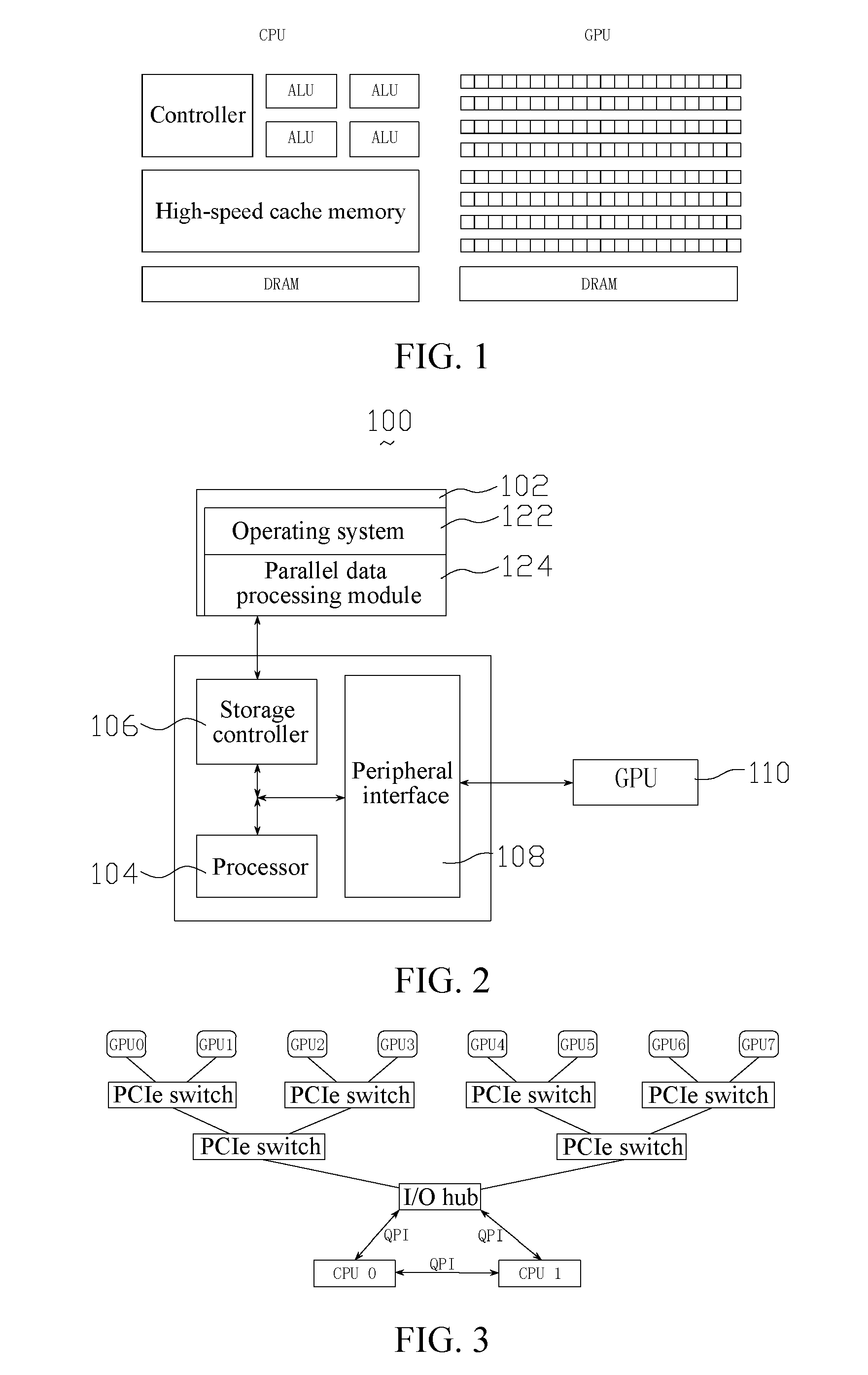

Data parallel processing method and apparatus based on multiple graphic processing units

Active | US20160321777A1 | Enhance data parallel processing efficiency | Improve Parallel Processing Efficiency | Resource allocation | Program synchronisation | Graphics | Video memory

A parallel data processing method based on multiple graphic processing units (GPUs) is provided, including: creating, in a central processing unit (CPU), a plurality of worker threads for controlling a plurality of worker groups respectively, the worker groups including one or more GPUs; binding each worker thread to a corresponding GPU; loading a plurality of batches of training data from a nonvolatile memory to GPU video memories in the plurality of worker groups; and controlling the plurality of GPUs to perform data processing in parallel through the worker threads. The method can enhance efficiency of multi-GPU parallel data processing. In addition, a parallel data processing apparatus is further provided.

Owner:TENCENT TECH (SHENZHEN) CO LTD
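
As a rough illustration of the worker-thread arrangement described in the abstract above, the following Python sketch creates one CPU thread per GPU and lets each thread process its share of the training batches. It is an assumption-laden sketch, not the patented method: the GPUs are simulated by plain integer ids, batches are assigned round-robin, and `run_workers` and `process_batch` are hypothetical names.

```python
import threading


def run_workers(num_gpus, batches, process_batch):
    """Create one worker thread per (simulated) GPU and process batches in parallel."""
    results = [None] * len(batches)

    def worker(gpu_id):
        # Each worker is tied to exactly one GPU id and handles every
        # num_gpus-th batch, starting at its own index.
        for i in range(gpu_id, len(batches), num_gpus):
            results[i] = process_batch(gpu_id, batches[i])

    threads = [threading.Thread(target=worker, args=(g,)) for g in range(num_gpus)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Each thread writes only to its own result slots, so no lock is needed in this simplified version.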

Block-based fragment filtration with feasible multi-GPU acceleration for real-time volume rendering on conventional personal computer

Active | US7154500B2 | Reduce the burden on | Details involving 3D image data | Cathode-ray tube indicators | Voxel | Filtration

A computer-based method and system for interactive volume rendering of a large volume data on a conventional personal computer using hardware-accelerated block filtration optimizing uses 3D-textured axis-aligned slices and block filtration. Fragment processing in a rendering pipeline is lessened by passing fragments to various processors selectively in blocks of voxels based on a filtering process operative on slices. The process involves generating a corresponding image texture and performing two-pass rendering, namely a virtual rendering pass and a main rendering pass. Block filtration is divided into static block filtration and dynamic block filtration. The static block filtration locates any view-independent unused signal being passed to a rasterization pipeline. The dynamic block filtration determines any view-dependent unused block generated due to occlusion. Block filtration processes utilize the vertex shader and pixel shader of a GPU in conventional personal computer graphics hardware. The method is for multi-thread, multi-GPU operation.

Owner:THE CHINESE UNIVERSITY OF HONG KONG

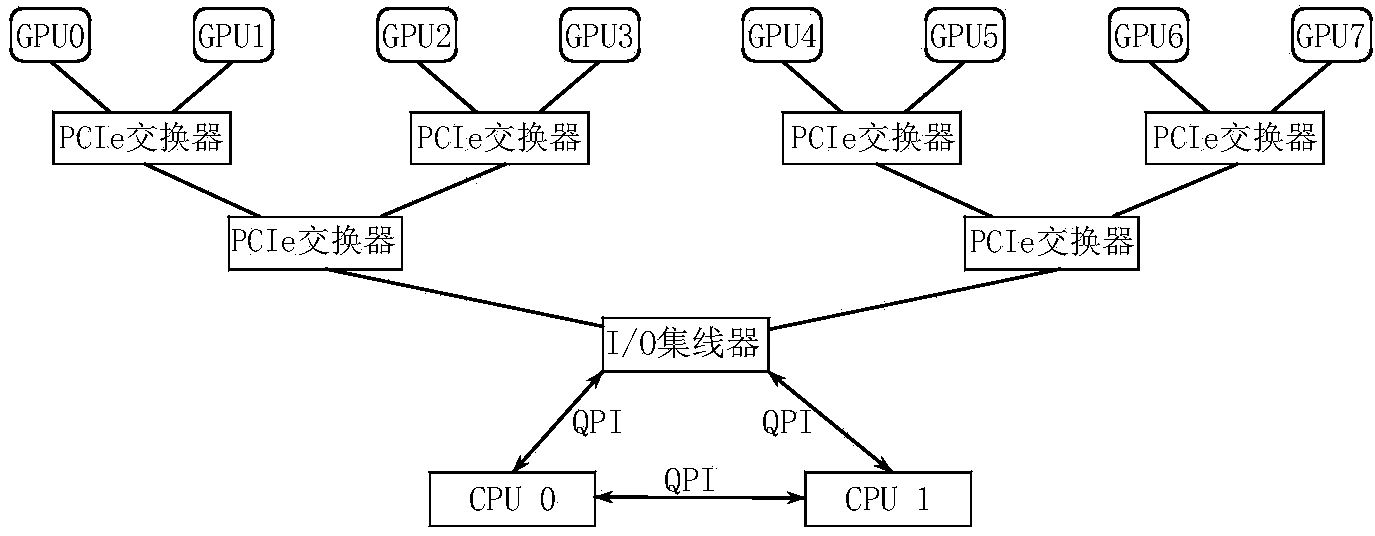

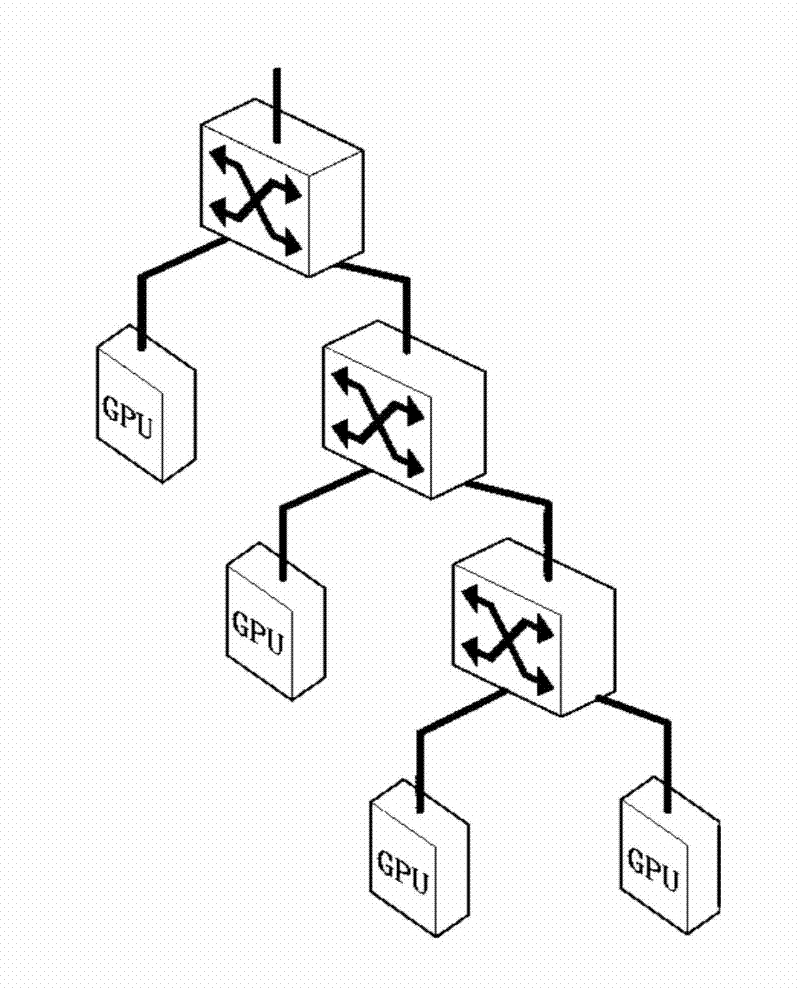

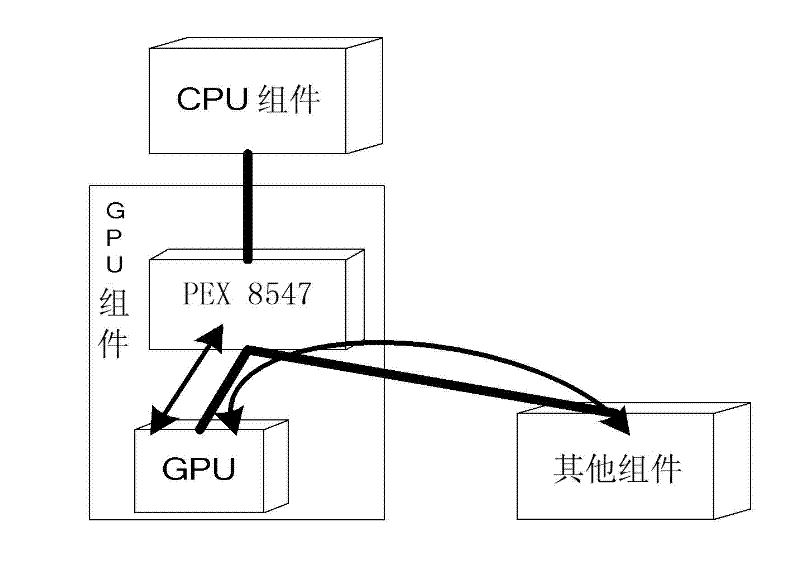

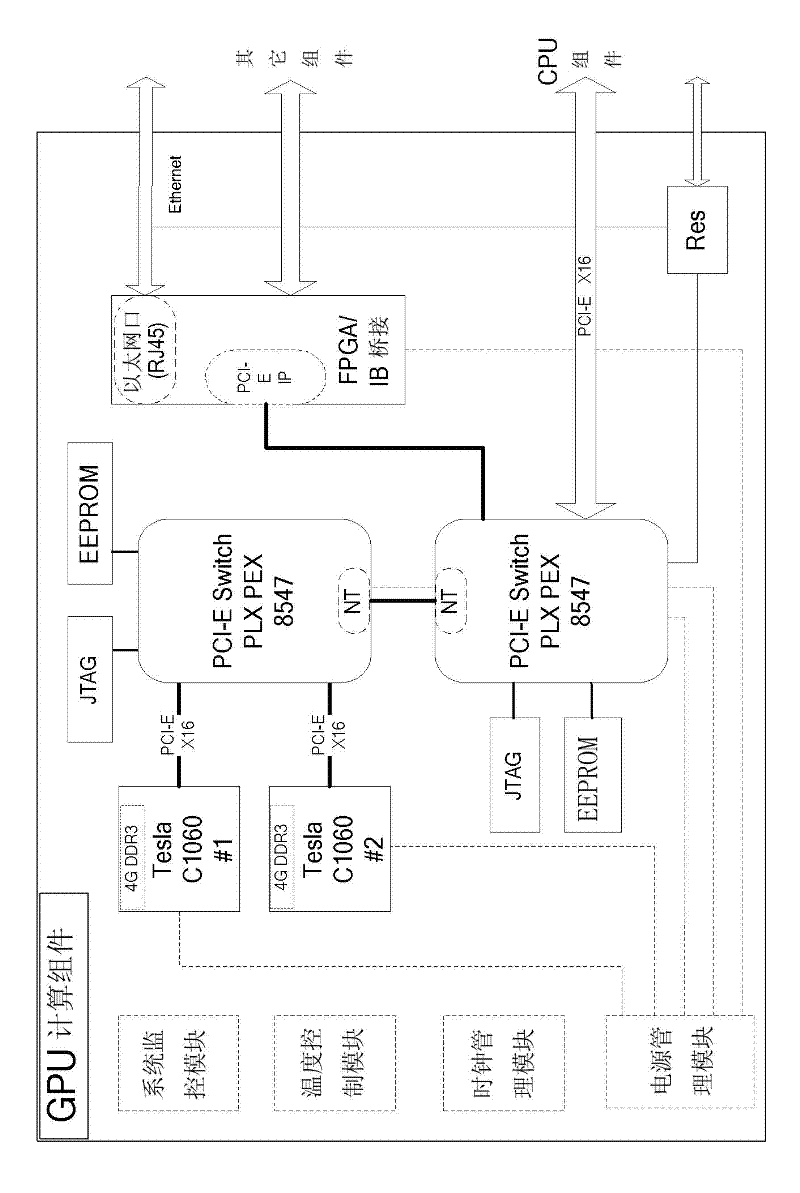

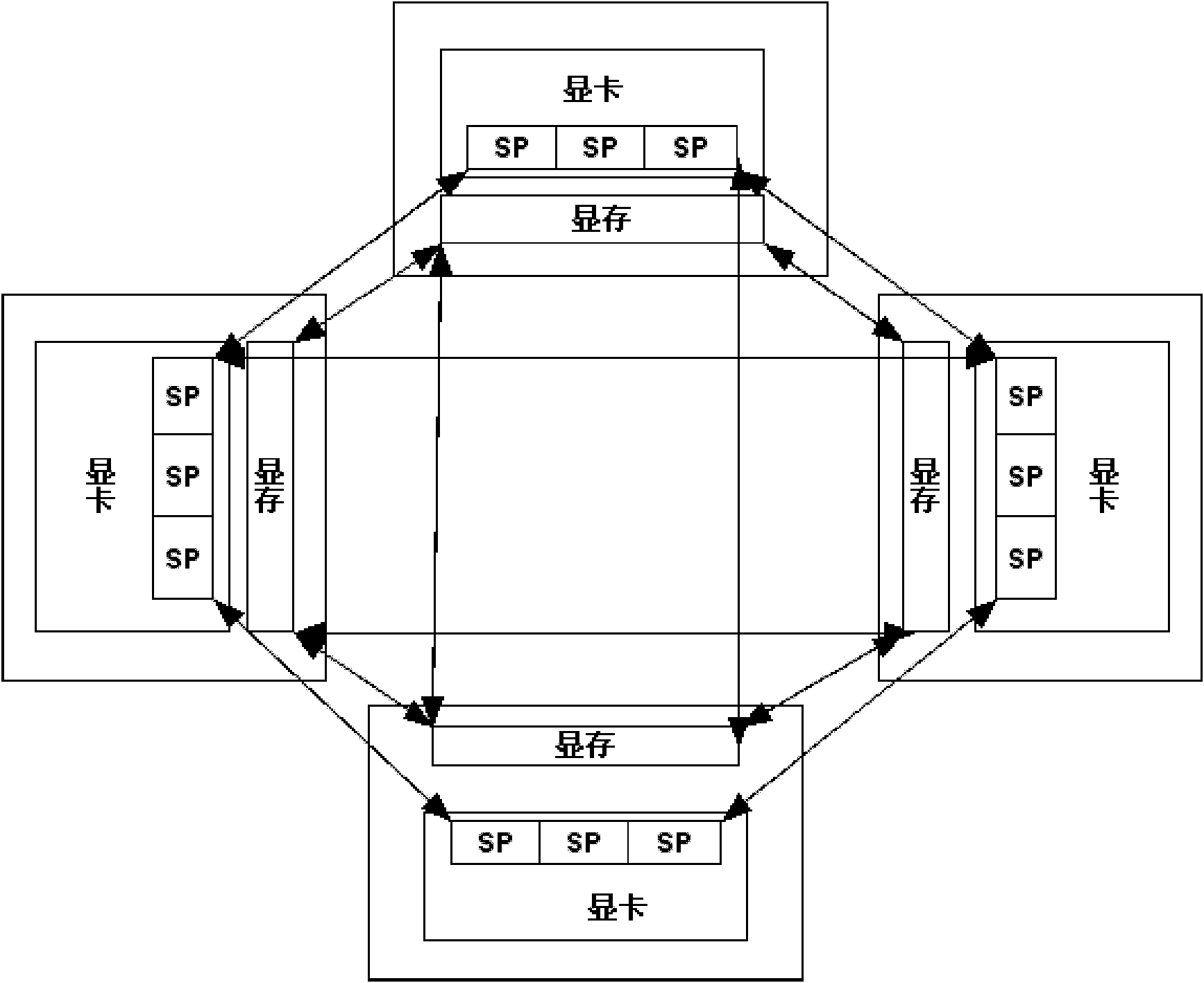

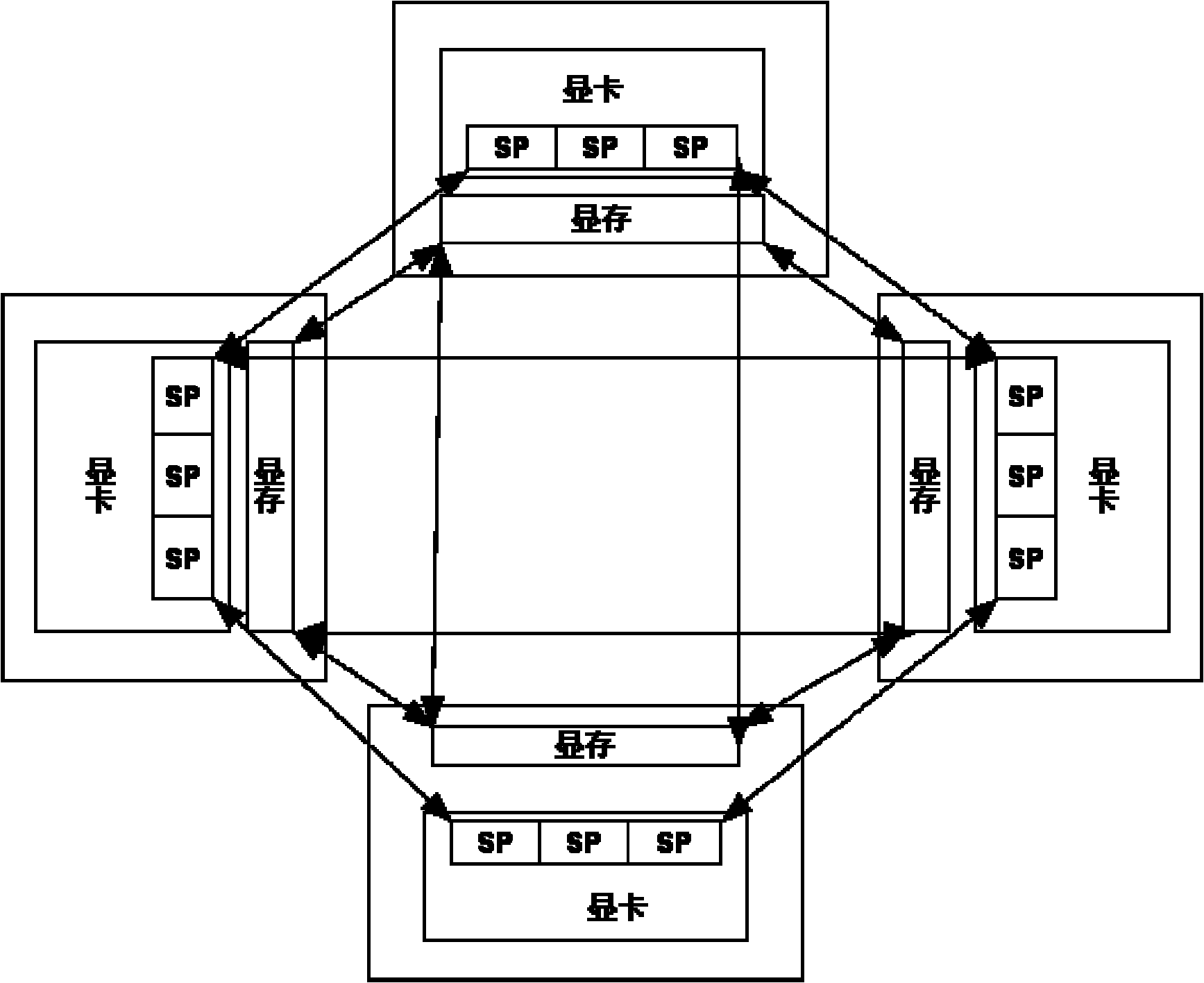

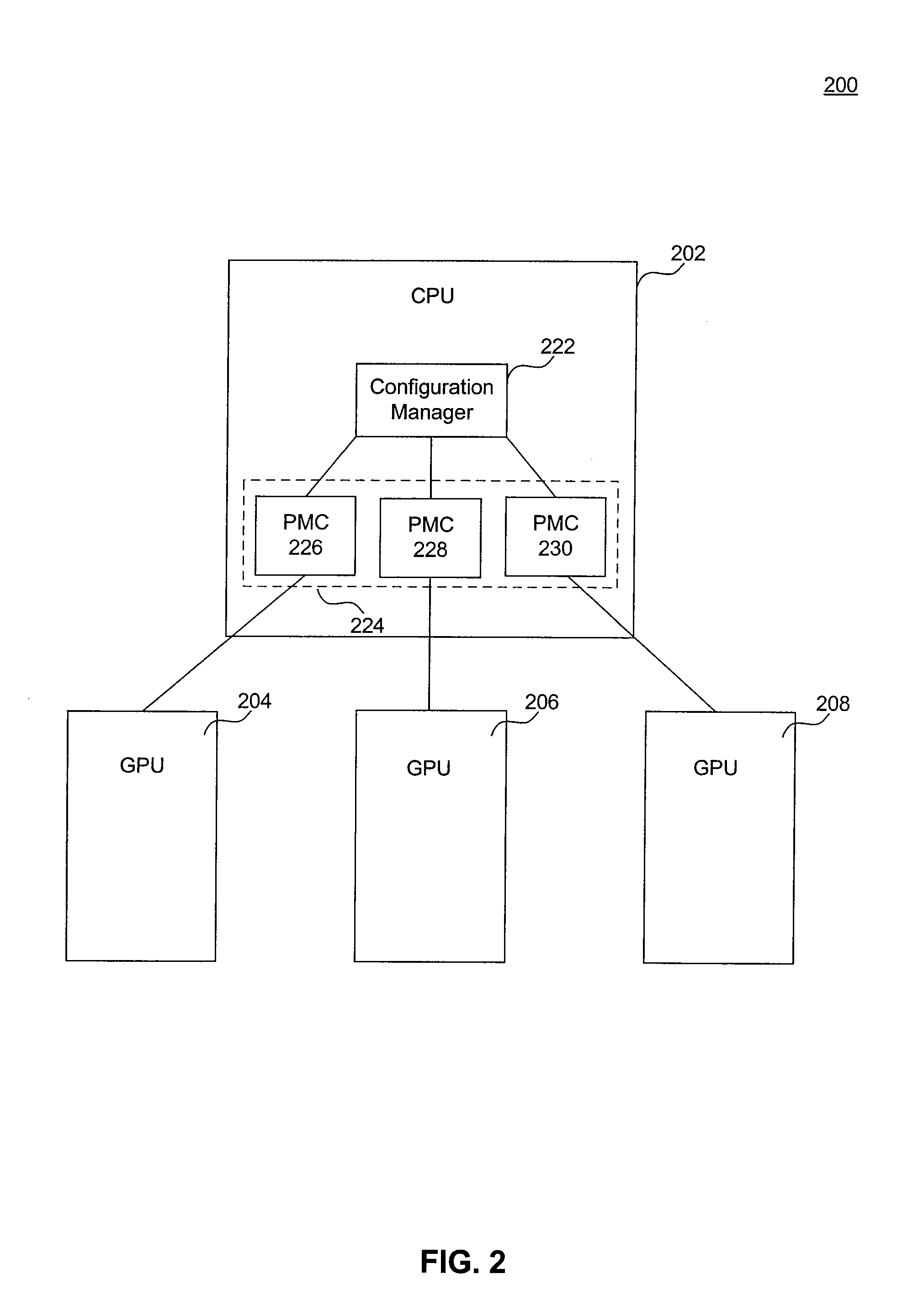

Multi-GPU (graphic processing unit) interconnection system structure in heterogeneous system

Active | CN102541804A | Solving High-Speed Interconnect Problems | Flexible | Digital computer details | Image data processing details | Computer architecture | Multi gpu

The invention relates to GPU (graphics processing unit) hardware configuration management in heterogeneous systems, in the technical field of computer communication, and in particular to a multi-GPU interconnection system structure in a heterogeneous system. The structure is based on a hybrid, heterogeneous, high-performance computer system that combines multi-core accelerators with a multi-core general-purpose processor. Multi-port switch chips based on PCI-E (Peripheral Component Interconnect Express) buses provide multistage interconnection among the multi-core accelerators, and between the accelerators and the general-purpose processor, forming a multistage switching structure whose external interface is reconfigurable. The structure solves the high-speed interconnection problem among multiple GPUs, and between the GPUs and the CPU, in traditional heterogeneous systems. It is a flexible, expandable hardware system structure that supports interface flexibility and realizes transparent transmission and memory sharing.

Owner:THE PLA INFORMATION ENG UNIV +1

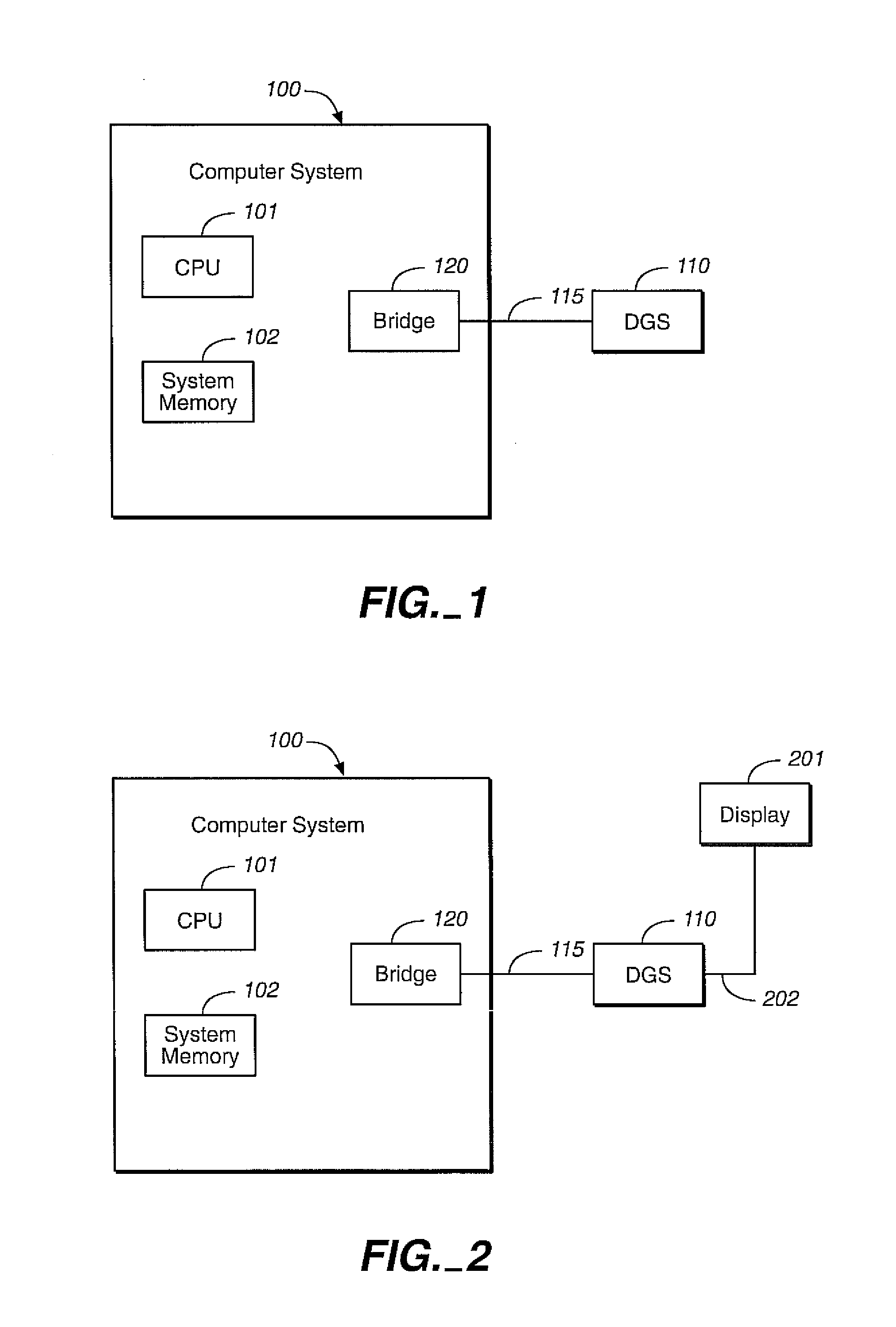

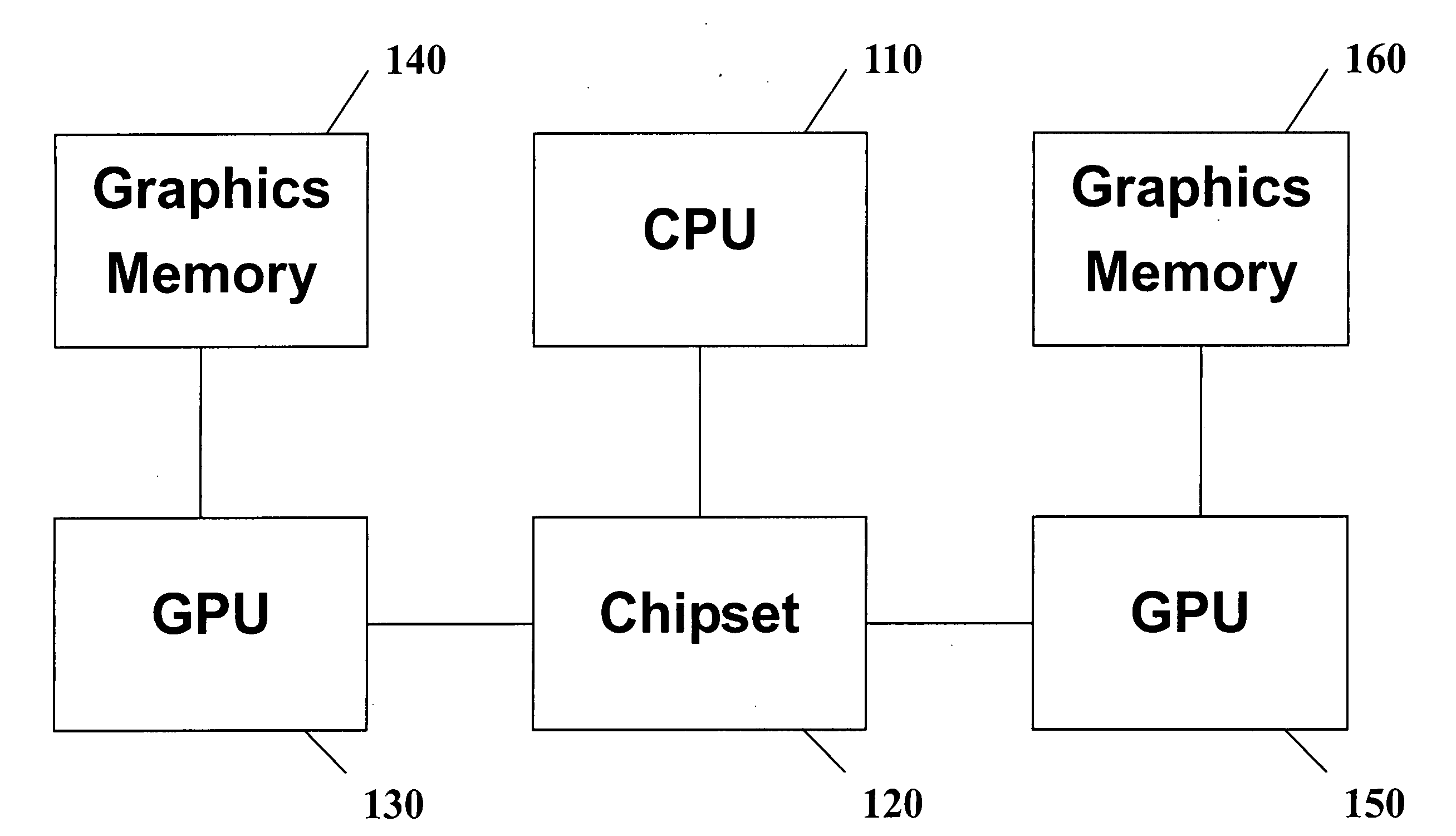

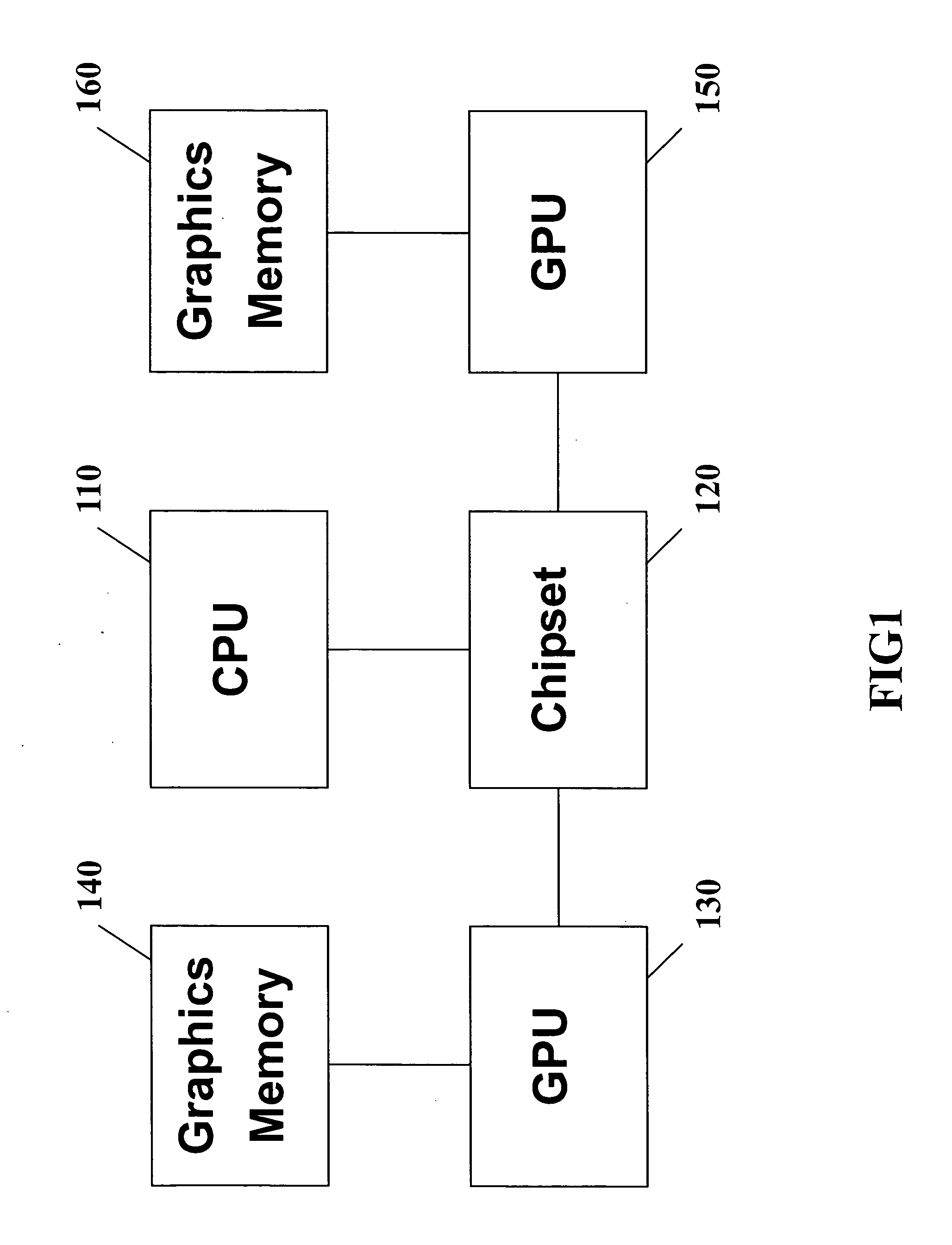

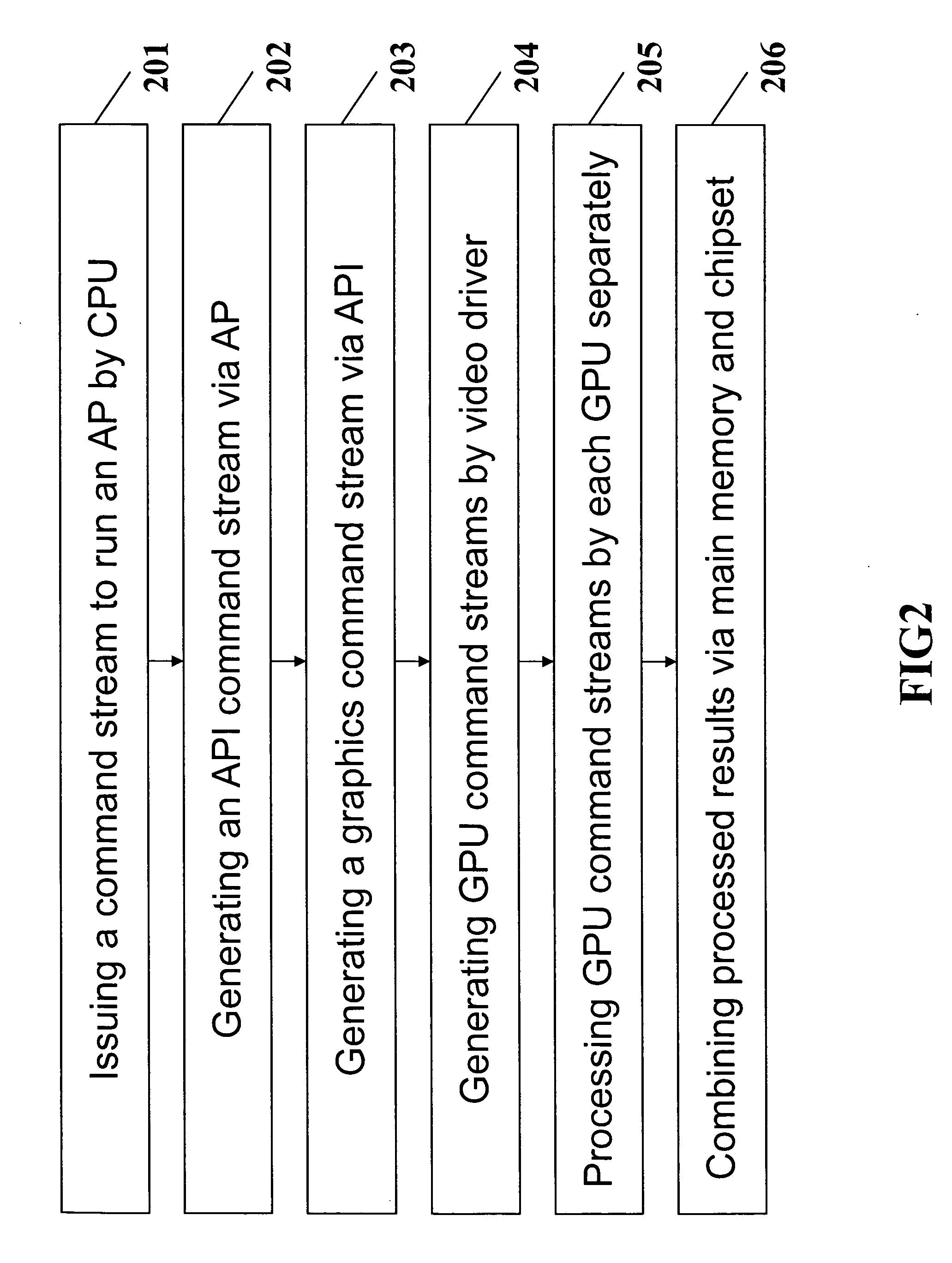

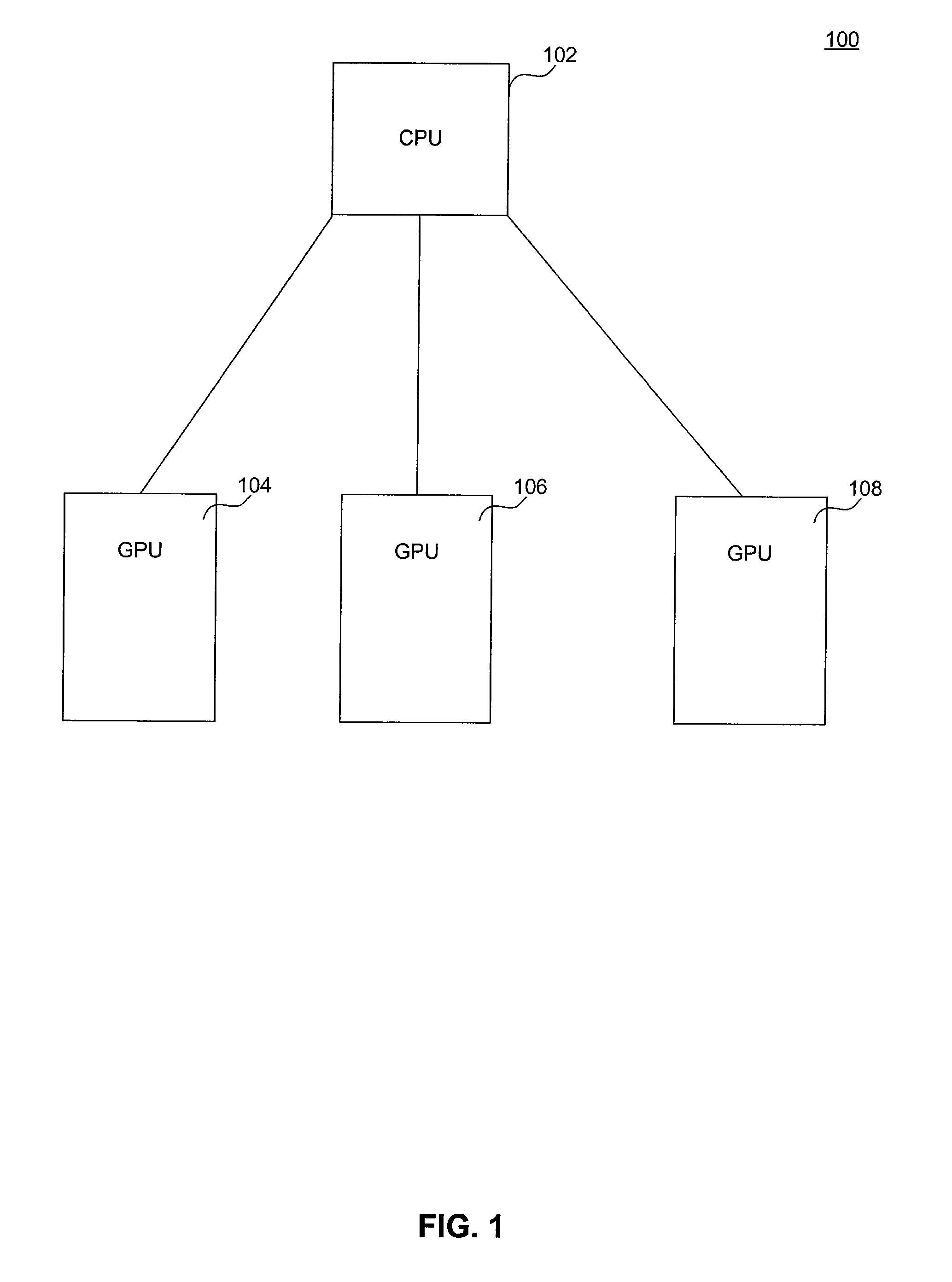

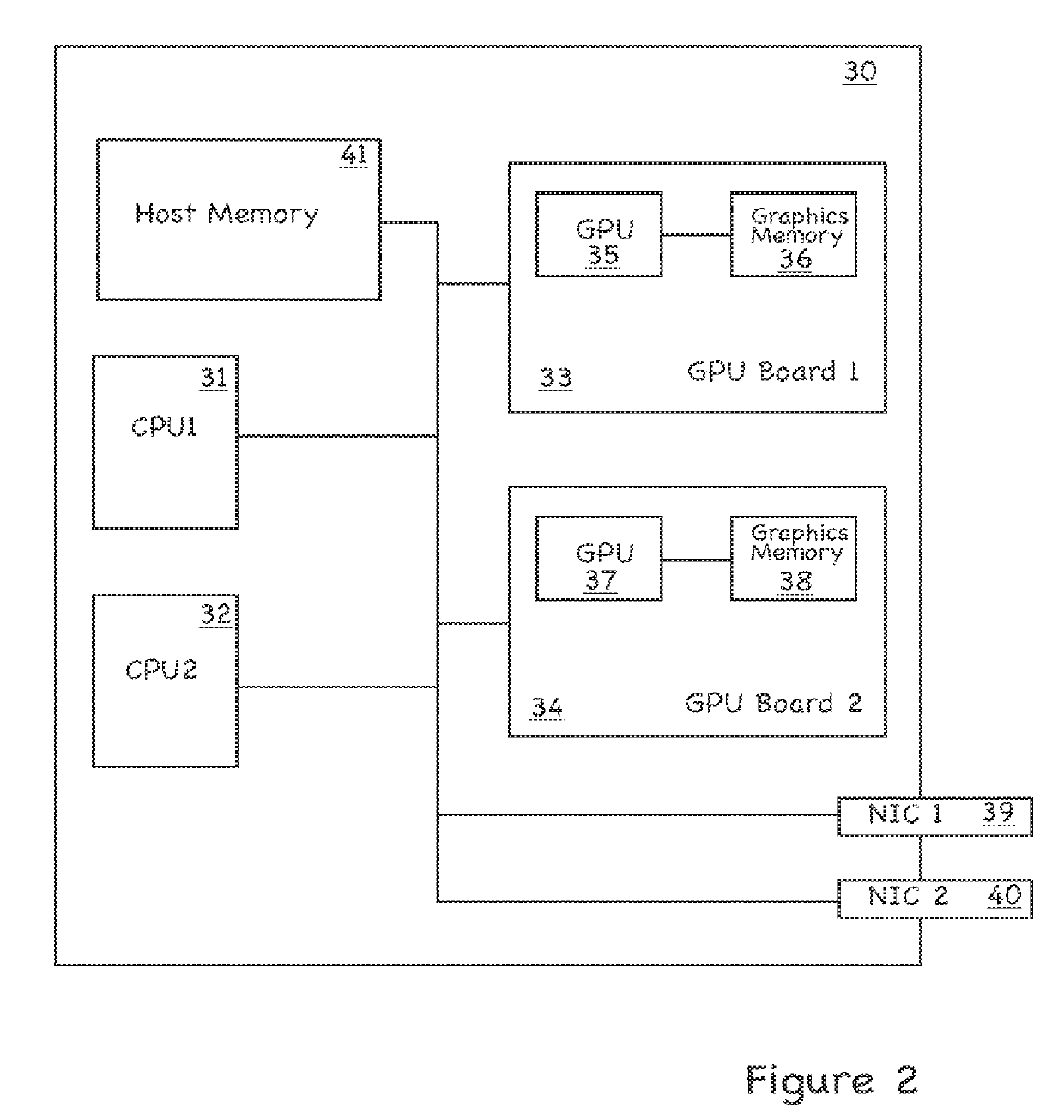

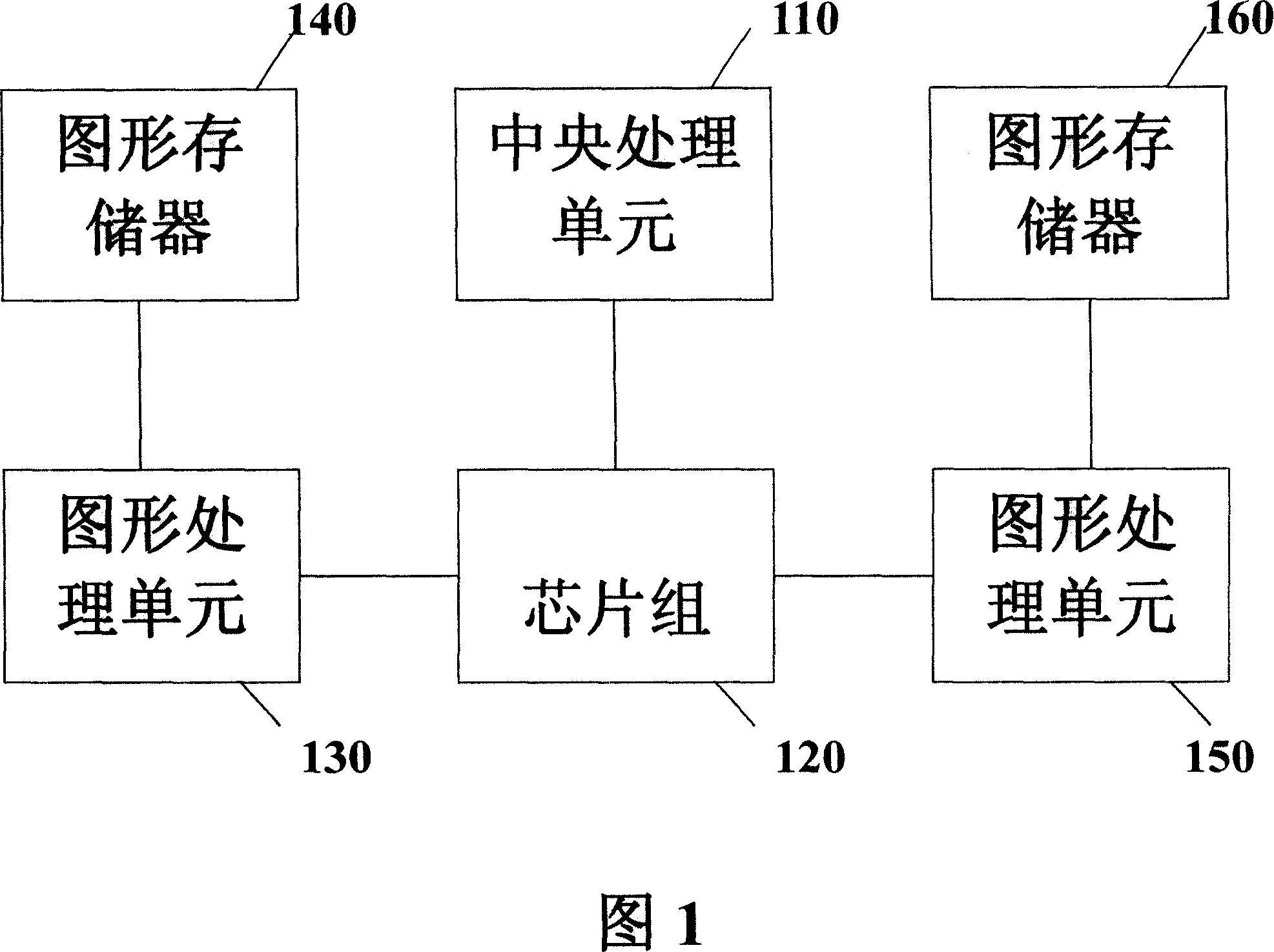

Multi-GPU rendering system

Inactive | US20080030510A1 | Improve system performance | Improve performance | Processor architectures/configuration | Electric digital data processing | Graphics | Multi gpu

A multi-GPU rendering system according to a preferred embodiment of the present invention includes a CPU, a chipset, a first GPU (graphics processing unit), a first graphics memory for the first GPU, a second GPU, and a second graphics memory for the second GPU. The chipset is electrically connected to the CPU, the first GPU and the second GPU. Graphics content is divided into two parts for the two GPUs to process separately. The two parts may be the same or different in size. The two processed results are combined in one of the two graphics memories to form a complete image stream, which is then output to a display by the GPU.

Owner:XGI TECHNOLOGY
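
The divide, process and combine flow in the abstract above can be sketched in a few lines of Python. This is only an illustration under simple assumptions: a frame is a list of rows, the split is horizontal at a caller-chosen row, and `split_render`, `render_a` and `render_b` are hypothetical stand-ins for the work done by the two GPUs.

```python
def split_render(rows, split_at, render_a, render_b):
    """Split a frame into two parts, render each separately, and recombine."""
    top = rows[:split_at]      # part handled by the first (simulated) GPU
    bottom = rows[split_at:]   # part handled by the second (simulated) GPU
    # The two results are concatenated in one place, mirroring the step in
    # which both results are combined in one of the graphics memories.
    return render_a(top) + render_b(bottom)
```

Choosing `split_at` away from the midpoint gives the two parts different sizes, which the abstract explicitly allows.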

Method for reducing power consumption based on dynamic task migrating technology in multi-GPU (Graphic Processing Unit) system

Inactive | CN101901042A | Effective start | Effective close | Energy efficient ICT | Resource allocation | Resource utilization | Multi gpu

The invention relates to a method, in the technical field of computers, for reducing power consumption in a multi-GPU (graphics processing unit) system based on dynamic task migration. The method comprises the following steps: mounting a GPU utilization monitor on each GPU to obtain the average utilization of each GPU over a time window T; when the utilization of the i-th GPU is R1, migrating all tasks on the i-th GPU to a GPU whose utilization is R2 and shutting down the i-th GPU; when the utilization of the j-th GPU is 100 percent, migrating part of the tasks on the j-th GPU to a GPU whose utilization is R3; when the utilization of all running GPUs exceeds a threshold R4 and the system has a shut-down GPU, automatically starting a shut-down GPU and allocating new computing tasks to it; and repeating these steps until all GPUs are used for running the program. The invention monitors resource utilization in real time, can effectively reduce GPU power consumption, and optimizes communication among the GPUs.

Owner:SHANGHAI JIAO TONG UNIV
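
The migration rules in the abstract above can be sketched as a small planning function. The abstract does not give values for R1 to R4 or say how they are compared, so the defaults below and the "at or below" interpretation are assumptions. `plan_actions` and the action names are hypothetical as well.

```python
def plan_actions(util, powered_on, r1=0.2, r2=0.6, r3=0.6, r4=0.9):
    """Plan one monitoring period.

    util: dict mapping GPU id -> average utilization in [0, 1] over the window T
    powered_on: set of GPU ids that are currently running
    Returns a list of (action, source_gpu, target_gpu) tuples.
    """
    running = sorted(powered_on)
    off = sorted(set(util) - set(powered_on))

    # Every running GPU is above r4 and a shut-down GPU exists: start one.
    if running and off and all(util[g] > r4 for g in running):
        return [("power_on", None, off[0])]

    actions = []
    receivers = set()

    # Saturated GPUs hand part of their work to the least-loaded GPU at or below r3.
    for g in running:
        if util[g] >= 1.0:
            targets = [o for o in running if o != g and util[o] <= r3]
            if targets:
                dst = min(targets, key=lambda o: util[o])
                actions.append(("migrate_some", g, dst))
                receivers.add(dst)

    # Lightly loaded GPUs (at or below r1) are drained and shut down,
    # unless they were just chosen to receive work.
    for g in running:
        if util[g] <= r1 and g not in receivers:
            targets = [o for o in running if o != g and r1 < util[o] <= r2]
            if targets:
                dst = min(targets, key=lambda o: util[o])
                actions.append(("migrate_all_and_power_off", g, dst))
    return actions
```

Handling saturated GPUs first keeps the sketch from shutting down a GPU that has just been picked to absorb overflow work. That ordering is a design choice of this sketch, not something stated in the abstract.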

Multi-user multi-GPU render server apparatus and methods

Active | US9904969B1 | Fast time | Strong interaction | Cathode-ray tube indicators | Multiple digital computer combinations | Graphics | Digital data

The invention provides, in some aspects, a system for rendering images, the system having one or more client digital data processors and a server digital data processor in communications coupling with the one or more client digital data processors, the server digital data processor having one or more graphics processing units. The system additionally comprises a render server module executing on the server digital data processor and in communications coupling with the graphics processing units, where the render server module issues a command in response to a request from a first client digital data processor. The graphics processing units on the server digital data processor simultaneously process image data in response to interleaved commands from (i) the render server module on behalf of the first client digital data processor, and (ii) one or more requests from (a) the render server module on behalf of any of the other client digital data processors, and (b) other functionality on the server digital data processor.

Owner:PME IP

Multi-user multi-gpu render server apparatus and methods

Active | US20090201303A1 | Fast time | Strong interaction | Cathode-ray tube indicators | Processor architectures/configuration | Digital data | Graphics

The invention provides, in some aspects, a system for rendering images, the system having one or more client digital data processors and a server digital data processor in communications coupling with the one or more client digital data processors, the server digital data processor having one or more graphics processing units. The system additionally comprises a render server module executing on the server digital data processor and in communications coupling with the graphics processing units, where the render server module issues a command in response to a request from a first client digital data processor. The graphics processing units on the server digital data processor simultaneously process image data in response to interleaved commands from (i) the render server module on behalf of the first client digital data processor, and (ii) one or more requests from (a) the render server module on behalf of any of the other client digital data processors, and (b) other functionality on the server digital data processor.

Owner:PME IP

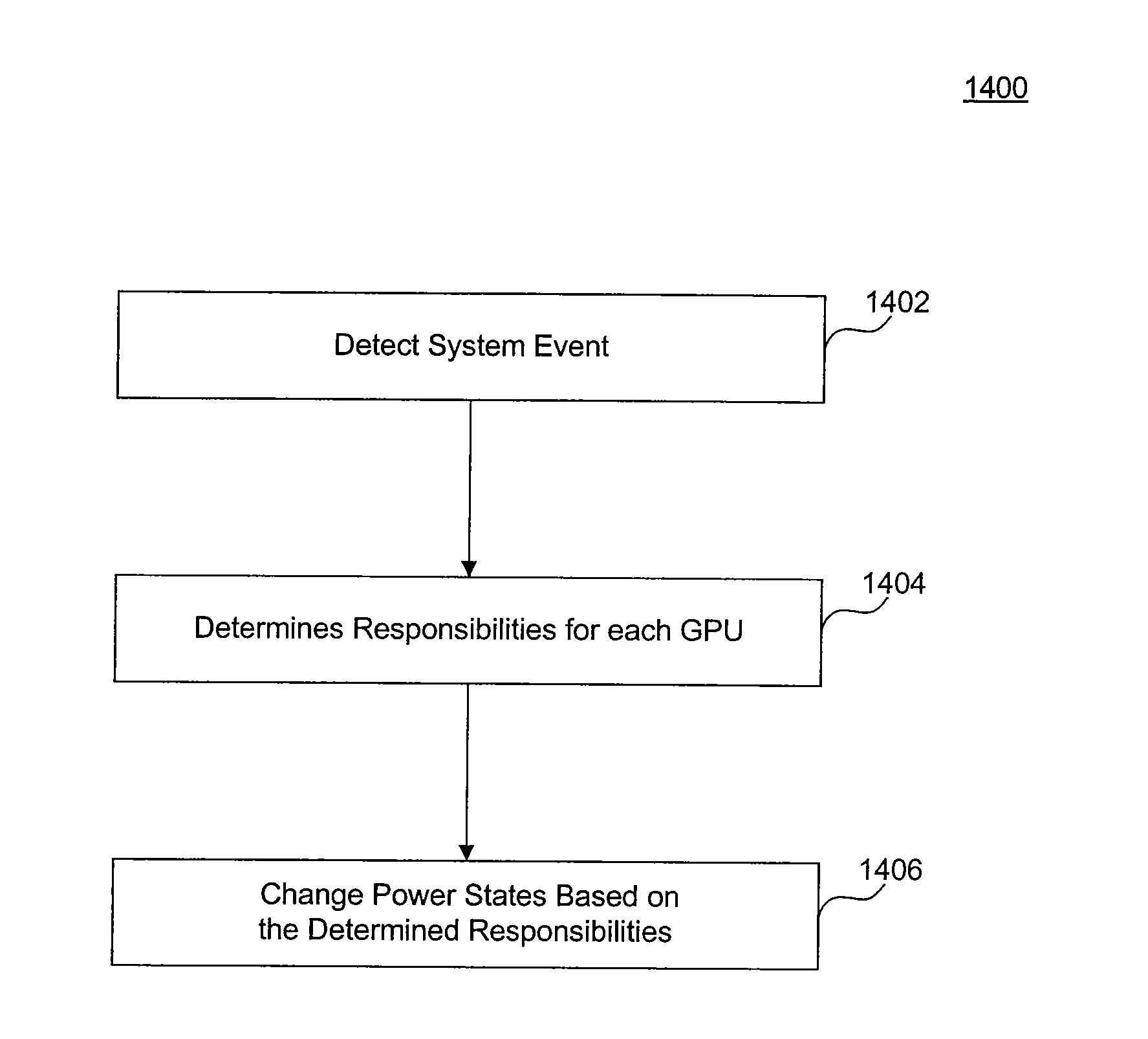

Power Management in Multi-GPU Systems

A method of power management is provided. The method includes detecting an event, assigning a first responsibility to a first graphics processing unit (GPU) and a second responsibility to a second GPU, and changing a power state of the first and second GPUs based on the first and second responsibilities, respectively. The first responsibility is different from the second responsibility.

Owner:ATI TECH INC

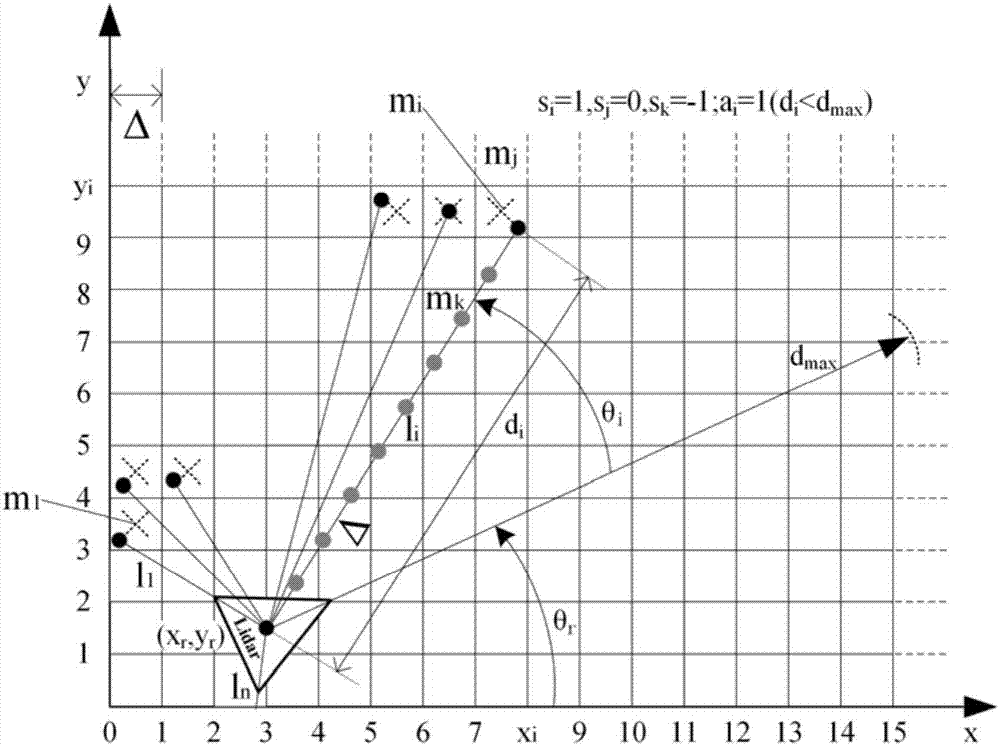

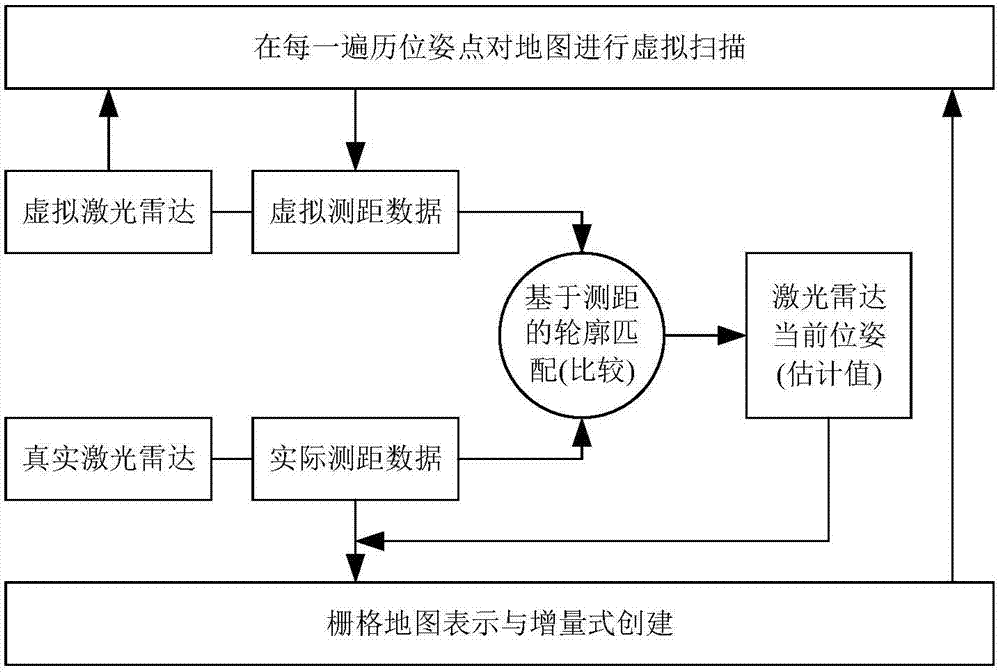

Virtual scanning and ranging matching-based AGV laser SLAM method

Inactive | CN107239076A | Improve real-time performance | Increase flexibility | Position/course control in two dimensions | Triangulation | Artificial intelligence

The invention discloses a virtual scanning and ranging matching-based AGV laser SLAM method, and relates to mobile robot navigation and positioning. The method comprises a grid map representation and establishment method, a virtual scanning and matching positioning method and an algorithm instantaneity improving method. The method using a contour traversal matching principle comprises the specific steps of scanning a map by adopting a virtual laser radar at each traversal position, directly comparing virtually scanned data with data of a current laser radar, finding out optimal position information of an AGV robot and then incrementally building the map. For the problems that most of existing laser SLAM algorithms are aimed at a low-precision sensor, and the filtering, estimating and optimizing stability and the positioning accuracy cannot be absolutely ensured and the application requirements of the industrial AGV robot cannot be easily met, the problem of preliminary construction and calibration in navigation by adopting a reflector board and a triangulation principle can be solved by adopting multi-GPU parallel processing and changing an initial propulsion position of virtual ranging, and the flexibility, the reliability and the precision are improved.

Owner:仲训昱

Method and apparatus for efficient use of graphics processing resources in a virtualized execution environment

Active | US20180089881A1 | Program initiation/switching | Software simulation/interpretation/emulation | Virtualization | Graphics

An apparatus and method are described for an efficient multi-GPU virtualization environment. For example, one embodiment of an apparatus comprises: a plurality of graphics processing units (GPUs) to be shared by a plurality of virtual machines (VMs) within a virtualized execution environment; a shared memory to be shared between the plurality of VMs and GPUs executed within the virtualized graphics execution environment; the GPUs to collect performance data related to execution of commands within command buffers submitted by the VMs, the GPUs to store the performance data within the shared memory; and a GPU scheduler and/or driver to schedule subsequent command buffers to the GPUs based on the performance data.

Owner:INTEL CORP
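
One simple way to picture scheduling "based on the performance data" is a least-busy policy. The abstract does not specify the policy or the form of the data, so the following Python sketch rests on assumptions: a dict of accumulated busy time per GPU plays the role of the shared-memory performance data, each command buffer carries a measured cost, and `pick_gpu` and `schedule` are hypothetical names.

```python
def pick_gpu(busy_time):
    """Return the GPU with the least accumulated busy time (ties: lowest id)."""
    return min(sorted(busy_time), key=lambda g: busy_time[g])


def schedule(buffers, busy_time):
    """Place each (name, cost) command buffer on the currently least-busy GPU.

    busy_time is updated in place, mimicking performance data that GPUs
    write back after executing a command buffer.
    """
    placement = {}
    for name, cost in buffers:
        gpu = pick_gpu(busy_time)
        placement[name] = gpu
        busy_time[gpu] += cost
    return placement
```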

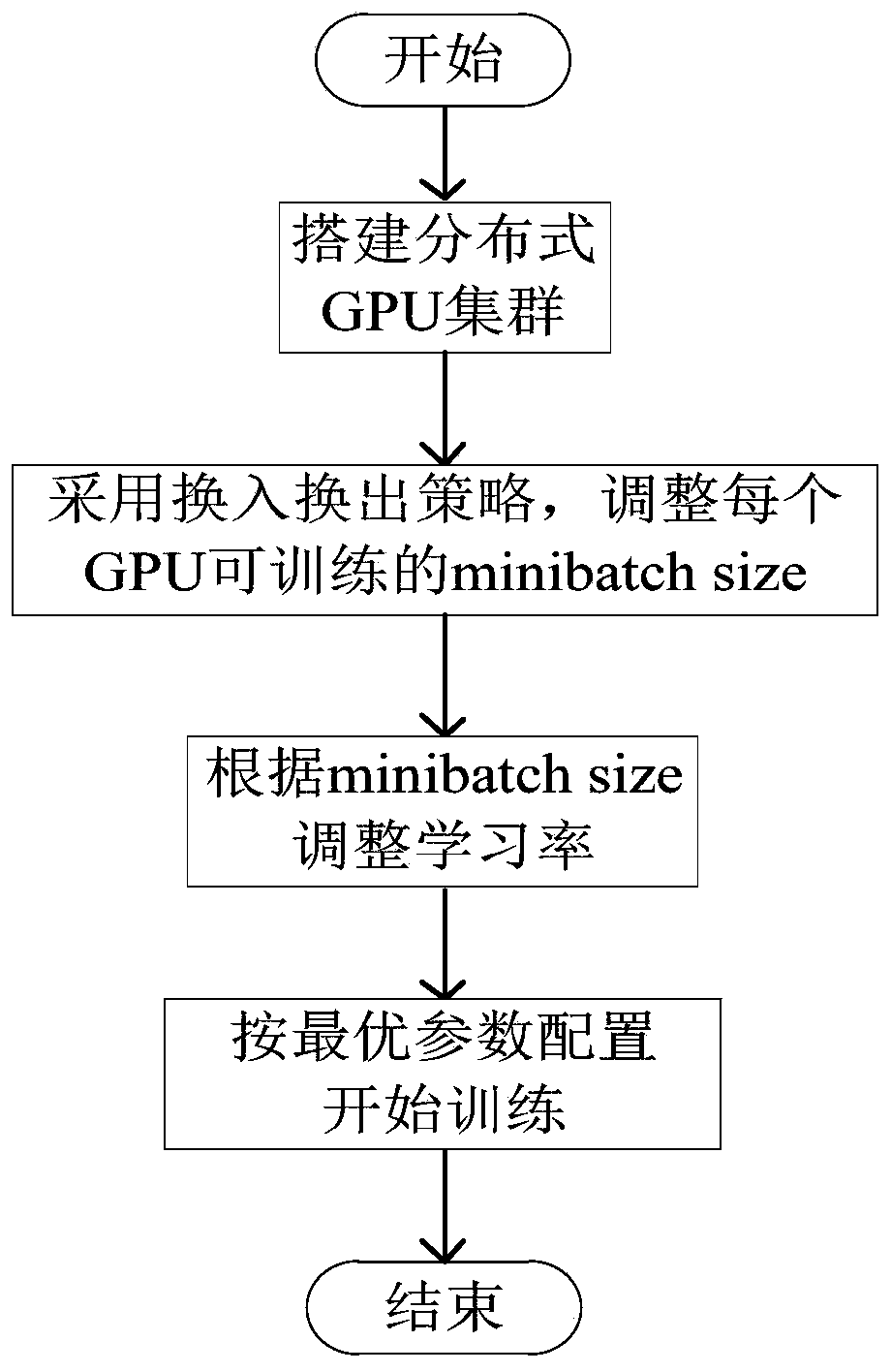

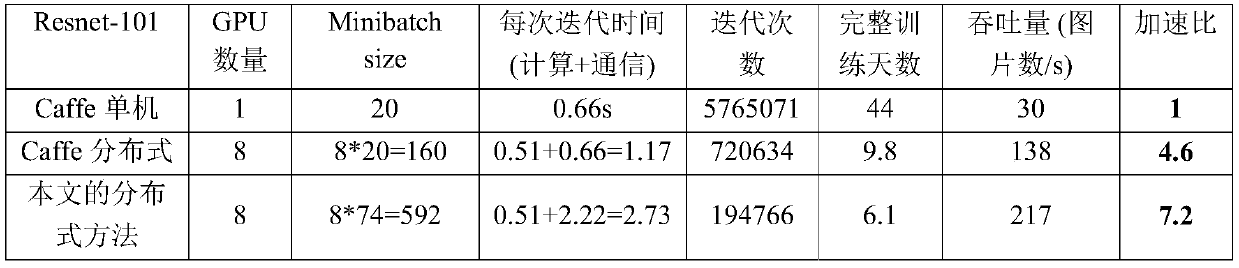

A distributed acceleration method and system for a deep learning training task

Active | CN109902818A | Accelerated training | Does not affect training accuracy | Processor architectures/configuration | Physical realisation | Multi gpu | Hyper parameters

The invention relates to a distributed acceleration method and system for a deep learning training task. The method comprises the following steps: (1) building a distributed GPU training cluster; (2) adopting a swap-in/swap-out strategy and adjusting the minibatch size on a single GPU working node in the distributed GPU training cluster; (3) adjusting the learning rate according to the minibatch size determined in step (2); and (4) carrying out deep learning training with the hyper-parameters determined in steps (2) and (3), namely the minibatch size and the learning rate. Without affecting training accuracy, the method greatly reduces communication time, simply and efficiently, by reducing the number of parameter-update communications between clusters. Compared with a single-GPU mode, cluster scaling efficiency can be fully improved in a multi-GPU mode, and training of ultra-deep neural network models is accelerated.

Owner:INST OF INFORMATION ENG CAS
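
The link between minibatch size, learning rate and communication count in the abstract above can be made concrete with a short Python sketch. The abstract does not say how the learning rate is adjusted. The linear scaling used here is a common heuristic and is an assumption, as are the function names and the example numbers.

```python
import math


def scaled_learning_rate(base_lr, base_batch, new_batch):
    """Scale the learning rate in proportion to the minibatch size (assumed rule)."""
    return base_lr * new_batch / base_batch


def updates_per_epoch(num_samples, per_gpu_batch, num_gpus):
    """Number of synchronized parameter updates needed to see every sample once."""
    return math.ceil(num_samples / (per_gpu_batch * num_gpus))
```

With 50,000 samples on 8 GPUs, raising the per-GPU minibatch from 64 to 256 reduces the number of parameter updates per epoch from 98 to 25, which is the kind of reduction in update communication the abstract refers to.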

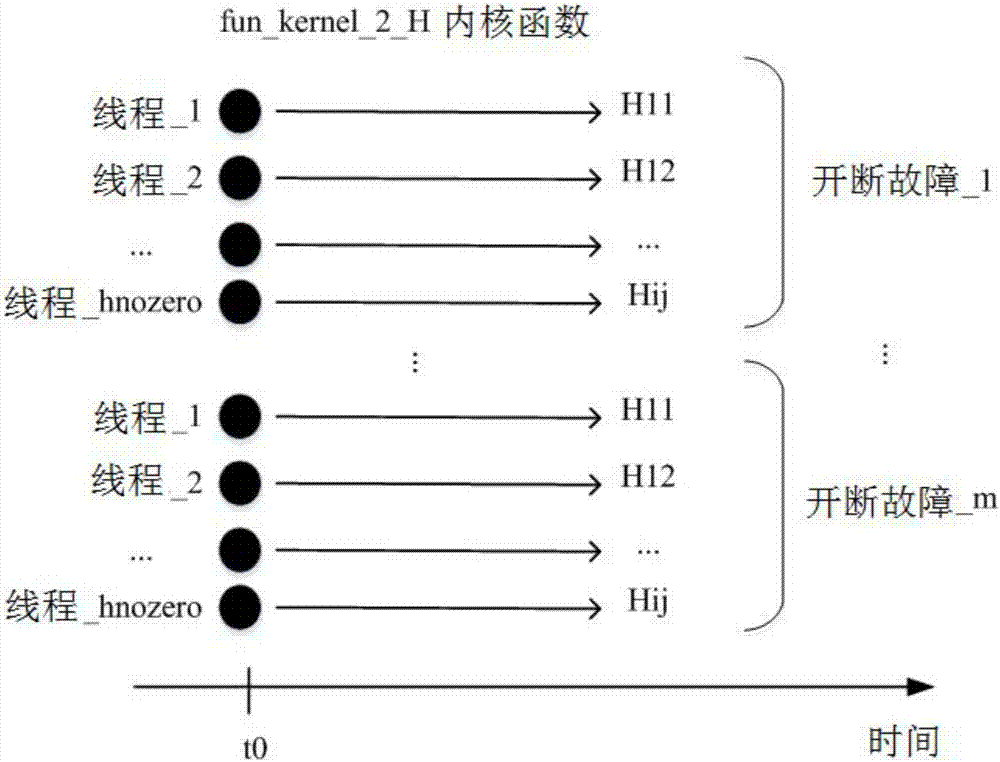

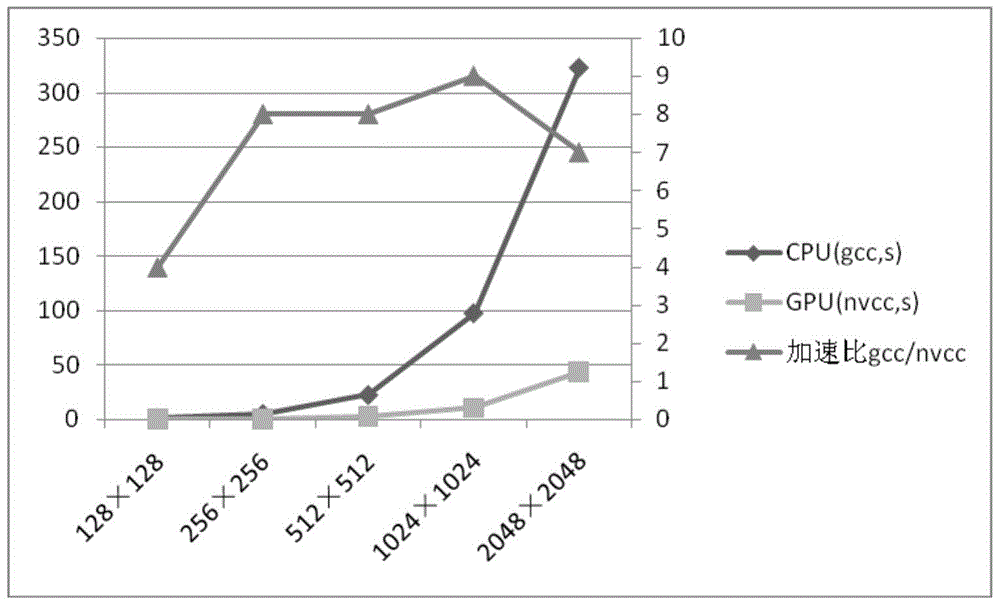

CPU+multi-GPU heterogeneous mode static safety analysis calculation method

Inactive | CN106874113A | Improve static security analysis scanning calculation efficiency | Data processing applications | Resource allocation | Hybrid programming | Multi gpu

The invention discloses a CPU+multi-GPU heterogeneous mode static safety analysis calculation method. To meet the requirement for fast static safety analysis scanning of large power grids in practical engineering applications, the method uses OpenMP multithreading on the CUDA (Compute Unified Device Architecture) platform to allocate a thread count according to the system's GPU configuration and the calculation requirement, with each thread corresponding to exactly one GPU. On the basis of hybrid CPU and GPU programming, a CPU+multi-GPU heterogeneous calculation mode is constructed to complete the parallel calculation of preconceived faults collaboratively. On the basis of single preconceived-fault load flow calculation, multiple cut-off load flow iterative processes run in highly synchronized parallel. Element-level fine-grained parallelism greatly improves the parallel processing capability of preconceived fault scanning for static safety analysis, providing strong technical support for online safety analysis and early-warning scanning in the integrated dispatching system of a large interconnected power grid.

Owner:NARI TECH CO LTD +2

Block-based fragment filtration with feasible multi-GPU acceleration for real-time volume rendering on conventional personal computer

A computer-based method and system for interactive volume rendering of a large volume data on a conventional personal computer using hardware-accelerated block filtration optimizing uses 3D-textured axis-aligned slices and block filtration. Fragment processing in a rendering pipeline is lessened by passing fragments to various processors selectively in blocks of voxels based on a filtering process operative on slices. The process involves generating a corresponding image texture and performing two-pass rendering, namely a virtual rendering pass and a main rendering pass. Block filtration is divided into static block filtration and dynamic block filtration. The static block filtration locates any view-independent unused signal being passed to a rasterization pipeline. The dynamic block filtration determines any view-dependent unused block generated due to occlusion. Block filtration processes utilize the vertex shader and pixel shader of a GPU in conventional personal computer graphics hardware. The method is for multi-thread, multi-GPU operation.

Owner:THE CHINESE UNIVERSITY OF HONG KONG

Multi-GPU molecular dynamics simulation method for structural material radiation damage

Active | CN105787227A | Reduce energy costs | Reduce maintenance costs | Design optimisation/simulation | Special data processing applications | NODAL | Time evolution

The invention discloses a multi-GPU molecular dynamics simulation method for structural material radiation damage. The method comprises the following steps: initializing; dynamically dividing grids for each node; performing inter-node communication; establishing a sorted cell list on the GPU; updating the time step; finding the correspondence between each particle and its grid number according to the particle's coordinates; predicting the displacement, velocity and acceleration of each particle; calculating the force on each particle; using the force to correct the displacement, velocity and acceleration of each particle; keeping the system temperature constant by correcting velocities according to the ensemble; using a periodic boundary condition to correct the particle positions; storing the current calculation result; and iterating the above steps until a preset number of steps is reached. The method can efficiently and conveniently simulate the material radiation damage process on larger spatial and temporal scales and explain the long-time evolution law of radiation damage at the micro-scale.

Owner:INST OF MODERN PHYSICS CHINESE ACADEMY OF SCI
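
Two of the steps listed in the abstract above, mapping a particle to its grid cell and applying the periodic boundary condition, can be sketched as follows. This is an illustrative Python sketch rather than the patented GPU implementation: it assumes a cubic simulation box with its origin at zero and a uniform grid, and `wrap_periodic` and `cell_index` are hypothetical names.

```python
def wrap_periodic(x, box):
    """Map a coordinate back into [0, box) under a periodic boundary condition."""
    return x % box


def cell_index(pos, box, cells_per_side):
    """Return the linear index of the grid cell containing a 3D position."""
    cell_size = box / cells_per_side
    # The clamp guards against a wrapped coordinate rounding up to exactly `box`.
    ix, iy, iz = (
        min(int(wrap_periodic(c, box) / cell_size), cells_per_side - 1)
        for c in pos
    )
    return (ix * cells_per_side + iy) * cells_per_side + iz
```

Sorting particles by this cell index is one common way to build the kind of sorted cell list the abstract mentions, since particles in the same cell then sit next to each other in memory.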

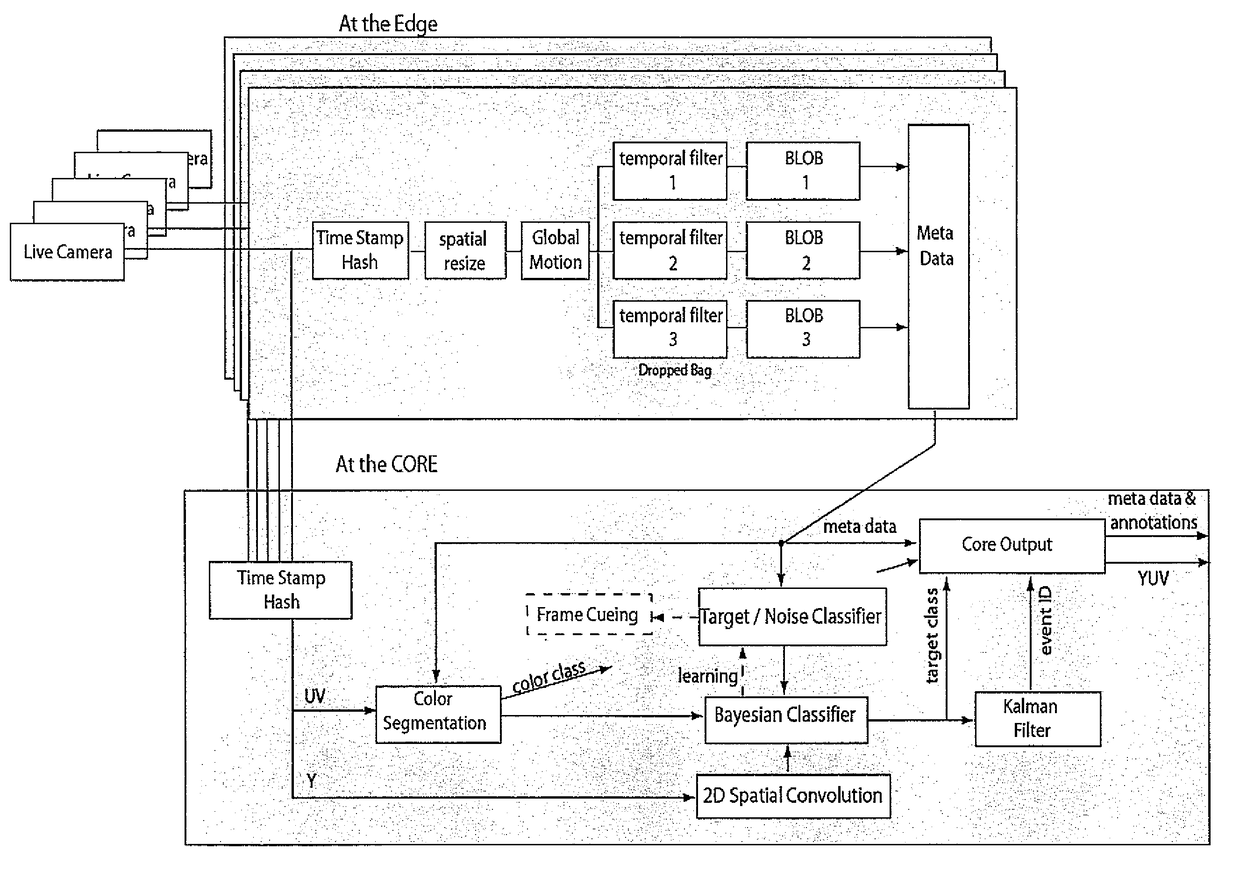

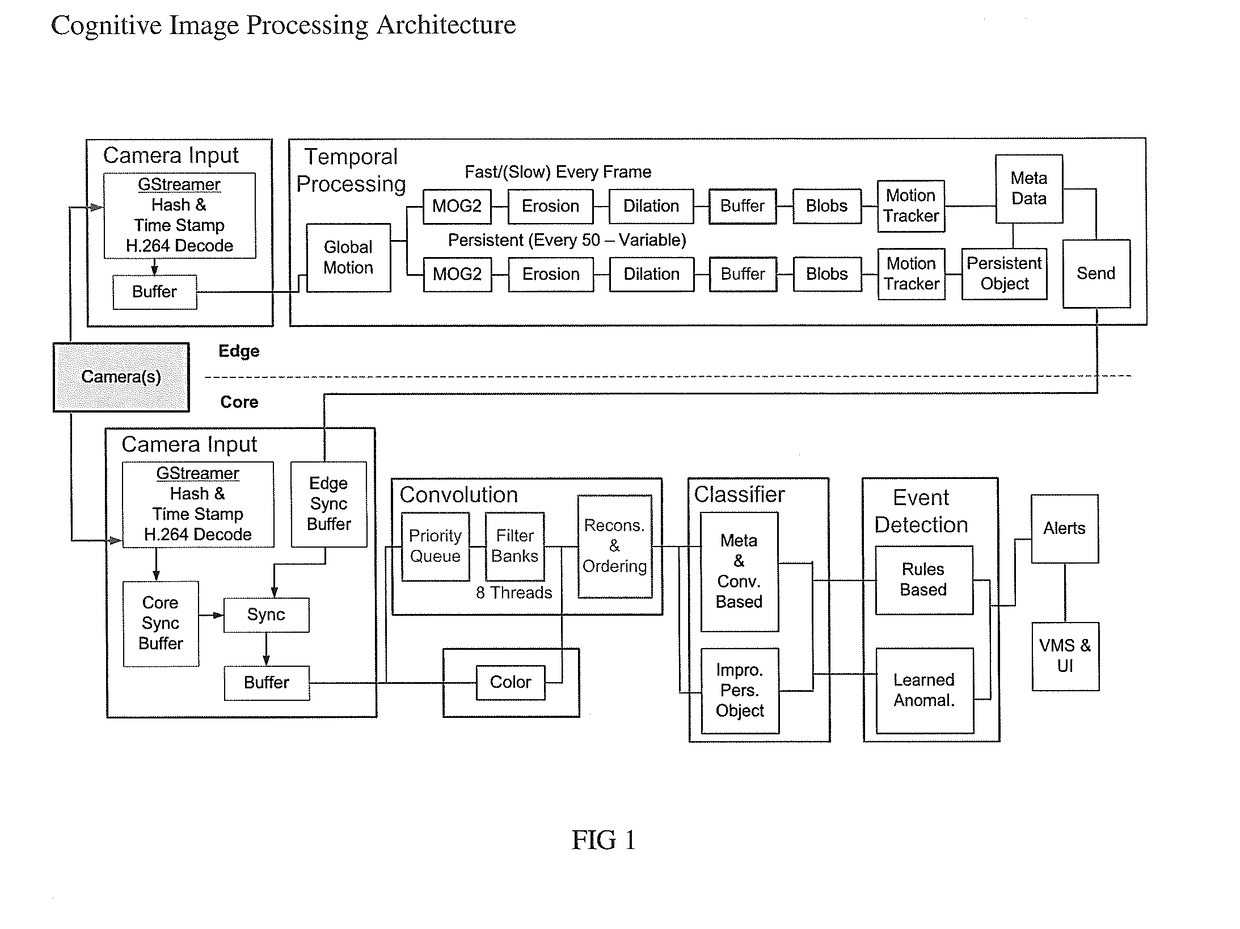

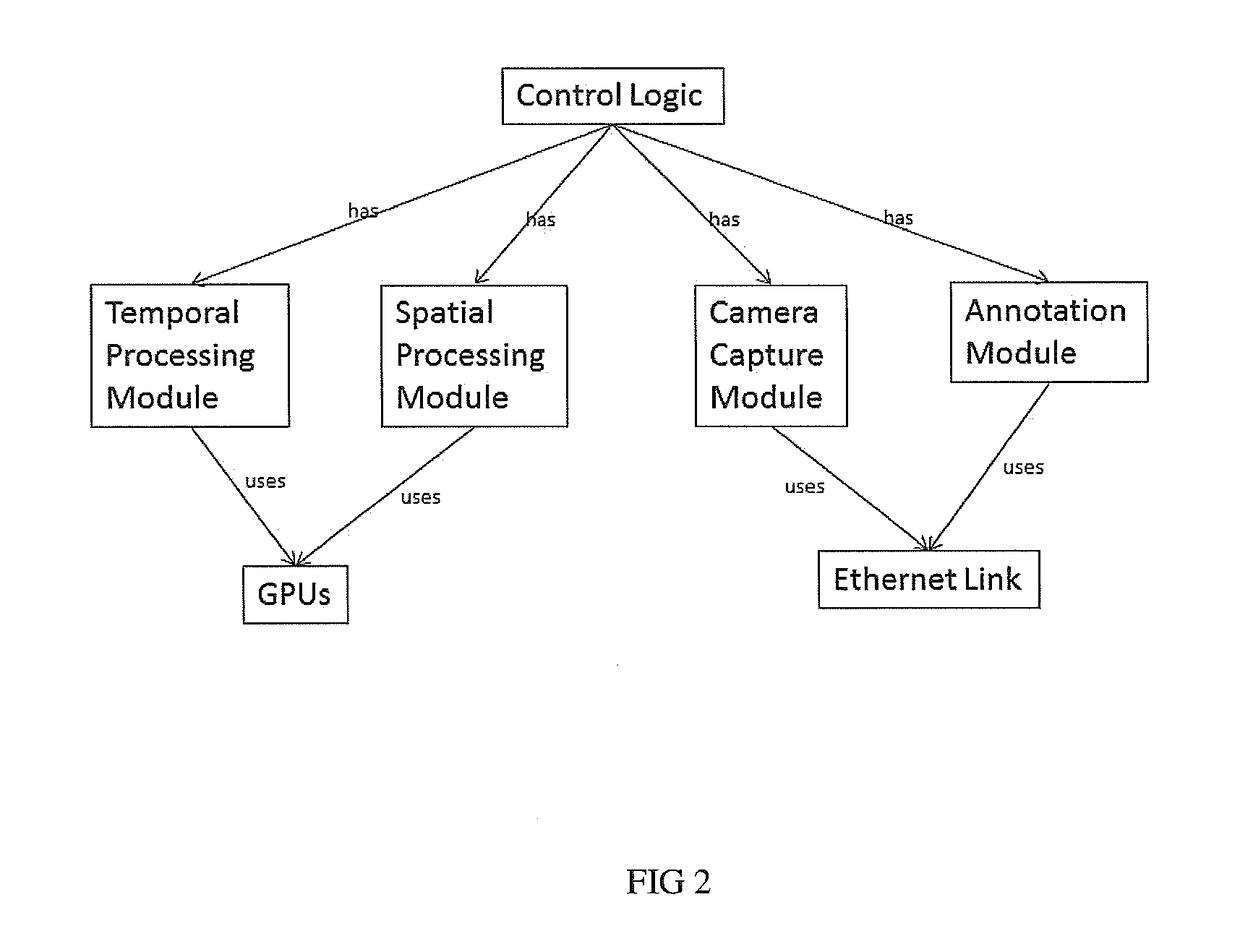

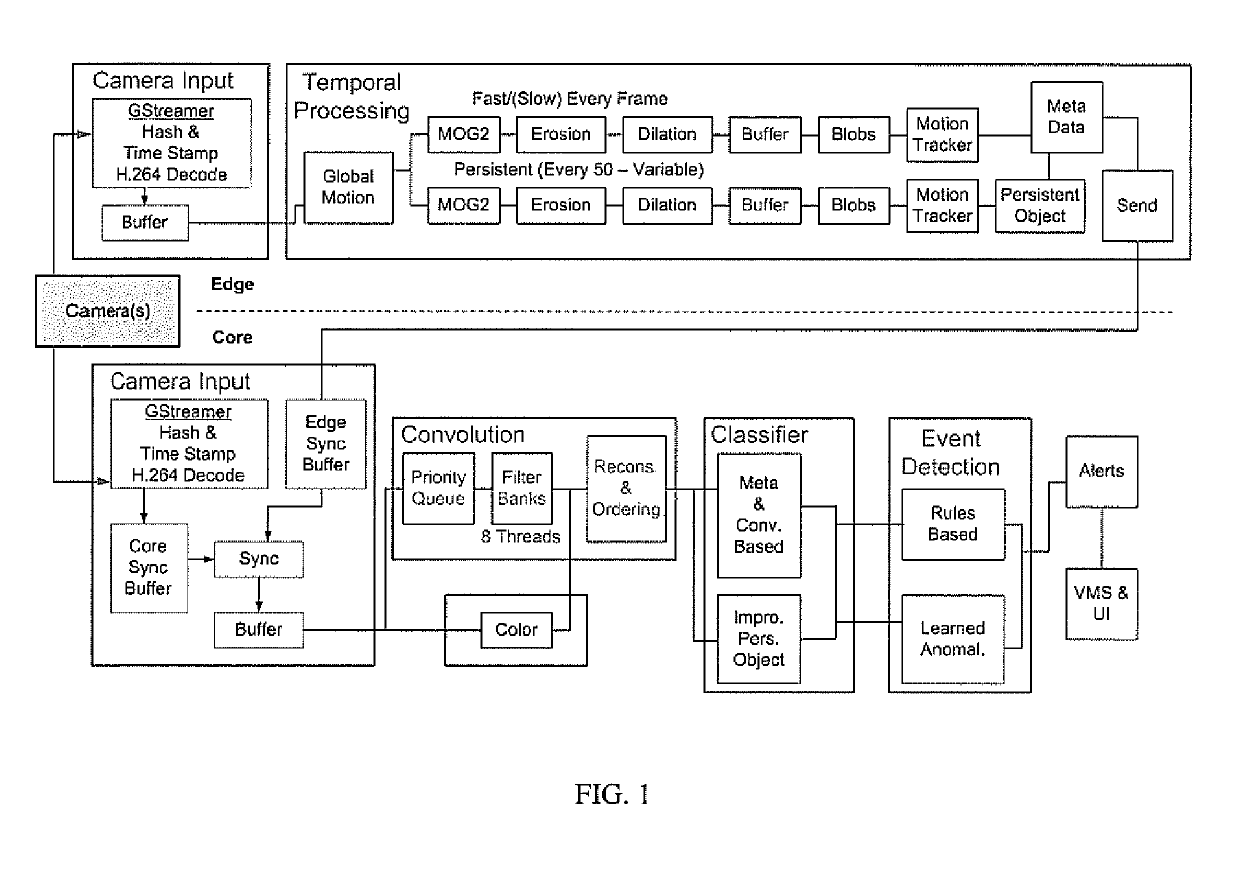

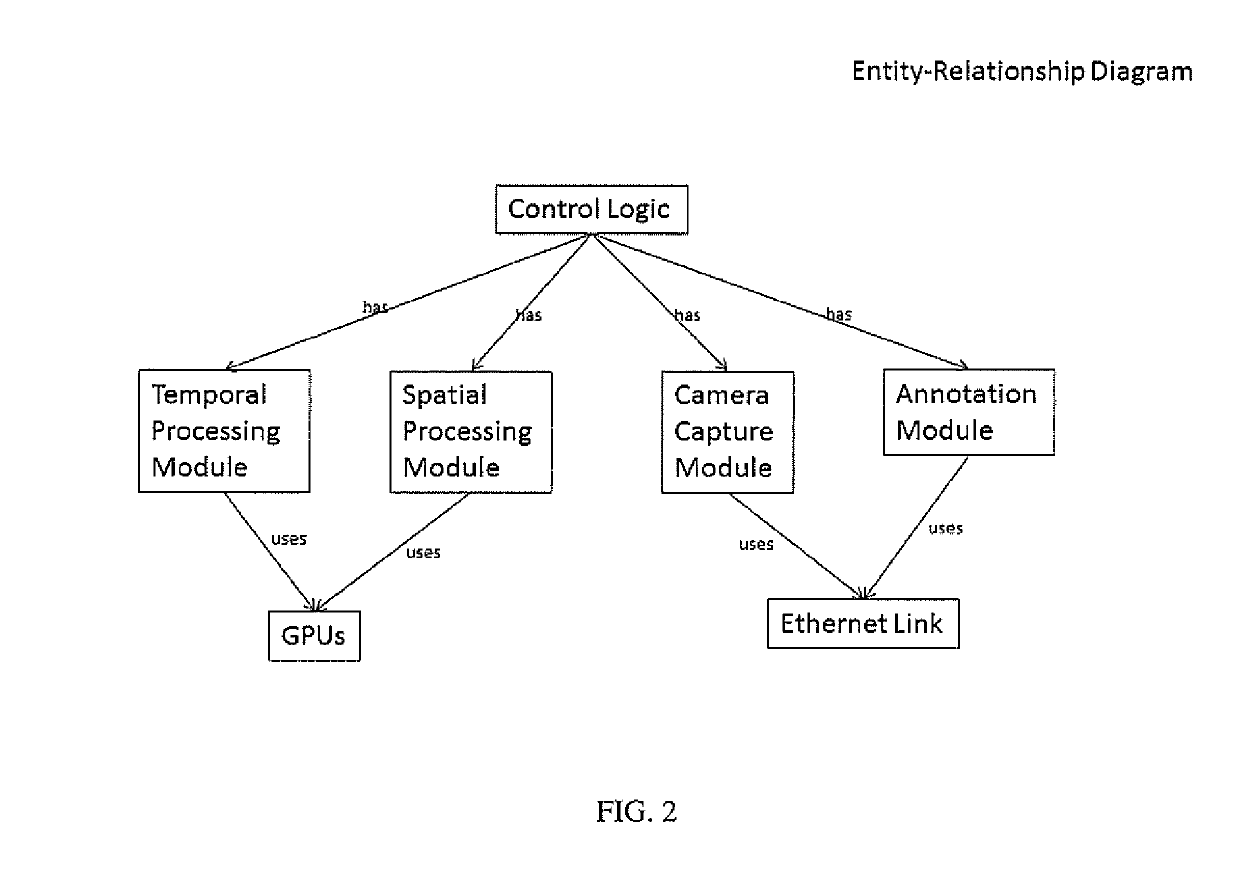

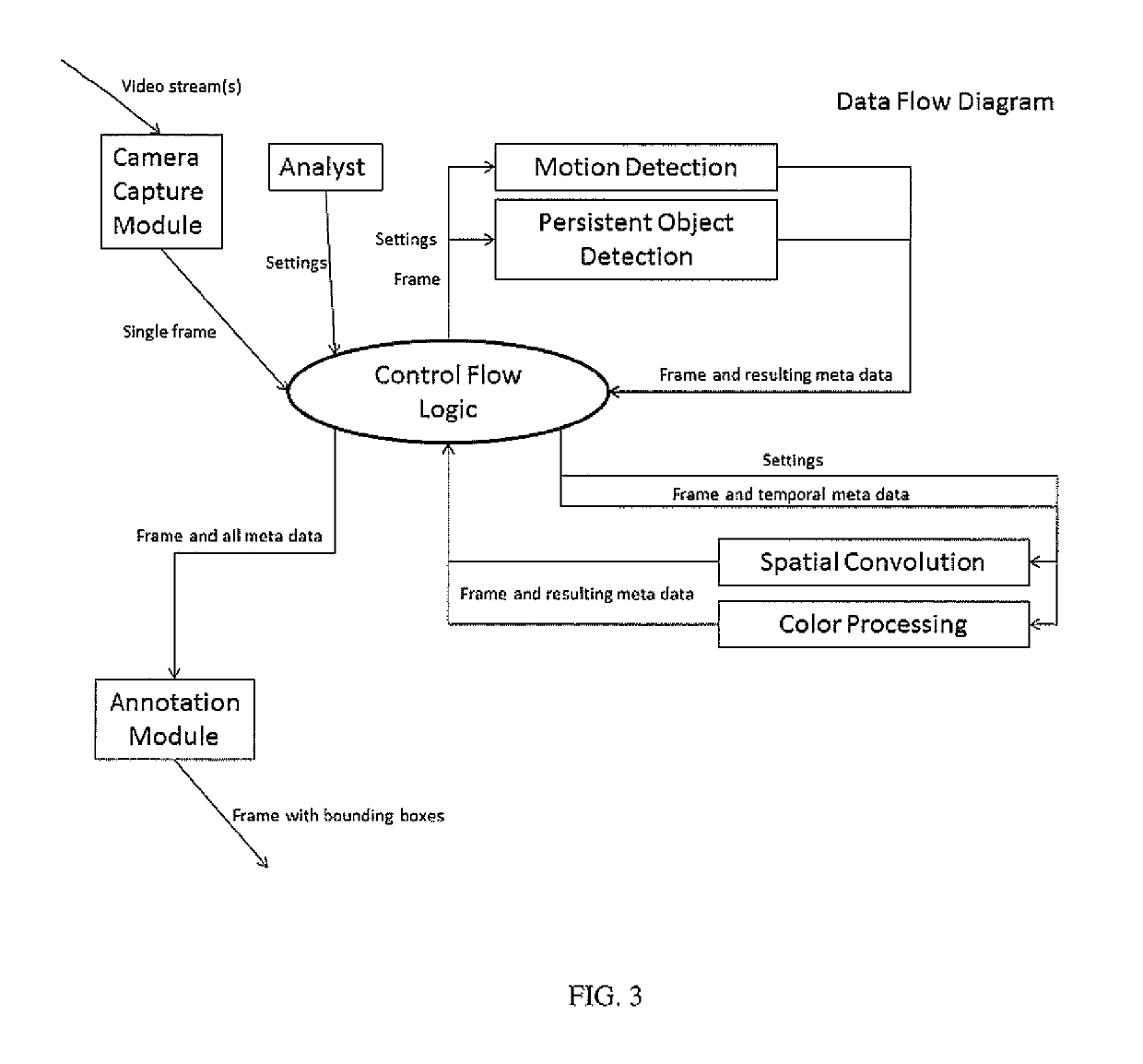

Methods and Devices for Cognitive-based Image Data Analytics in Real Time

An invention is disclosed for a real time video analytic processor that embodies algorithms and processing architectures that process a wide variety of sensor images in a fashion that emulates how the human visual path processes and interprets image content. Spatial, temporal, and color content of images are analyzed and the salient features of the images determined. These salient features are then compared to the salient features of objects of user interest in order to detect, track, classify, and characterize the activities of the objects. Objects or activities of interest are annotated in the image streams and alerts of critical events are provided to users. Instantiation of the cognitive processing can be accomplished on multi-FPGA and multi-GPU processing hardware.

Owner:IRVINE SENSORS

Multi-user multi-GPU render server apparatus and methods

Active | US10311541B2 | Minimize time | Less computation time | Image memory management | Cathode-ray tube indicators | Graphics | Digital data

The invention provides, in some aspects, a system for rendering images, the system having one or more client digital data processors and a server digital data processor in communications coupling with the one or more client digital data processors, the server digital data processor having one or more graphics processing units. The system additionally comprises a render server module executing on the server digital data processor and in communications coupling with the graphics processing units, where the render server module issues a command in response to a request from a first client digital data processor. The graphics processing units on the server digital data processor simultaneously process image data in response to interleaved commands from (i) the render server module on behalf of the first client digital data processor, and (ii) one or more requests from (a) the render server module on behalf of any of the other client digital data processors, and (b) other functionality on the server digital data processor.

Owner:PME IP

Multi-gpu rendering system

Inactive | CN101118645A | Improve performance | Digital computer details | Image memory management | Graphics | Multi gpu

A multi-GPU rendering system according to a preferred embodiment of the present invention includes a CPU, a chipset, a first GPU (graphics processing unit), a first graphics memory for the first GPU, a second GPU, and a second graphics memory for the second GPU. The chipset is electrically connected to the CPU, the first GPU and the second GPU. Graphics content is divided into two parts for the two GPUs to process separately. The two parts may be the same or different in size. The two processed results are combined in one of the two graphics memories to form a complete image stream, which is then output to a display by the GPU.

Owner:XGI TECHNOLOGY

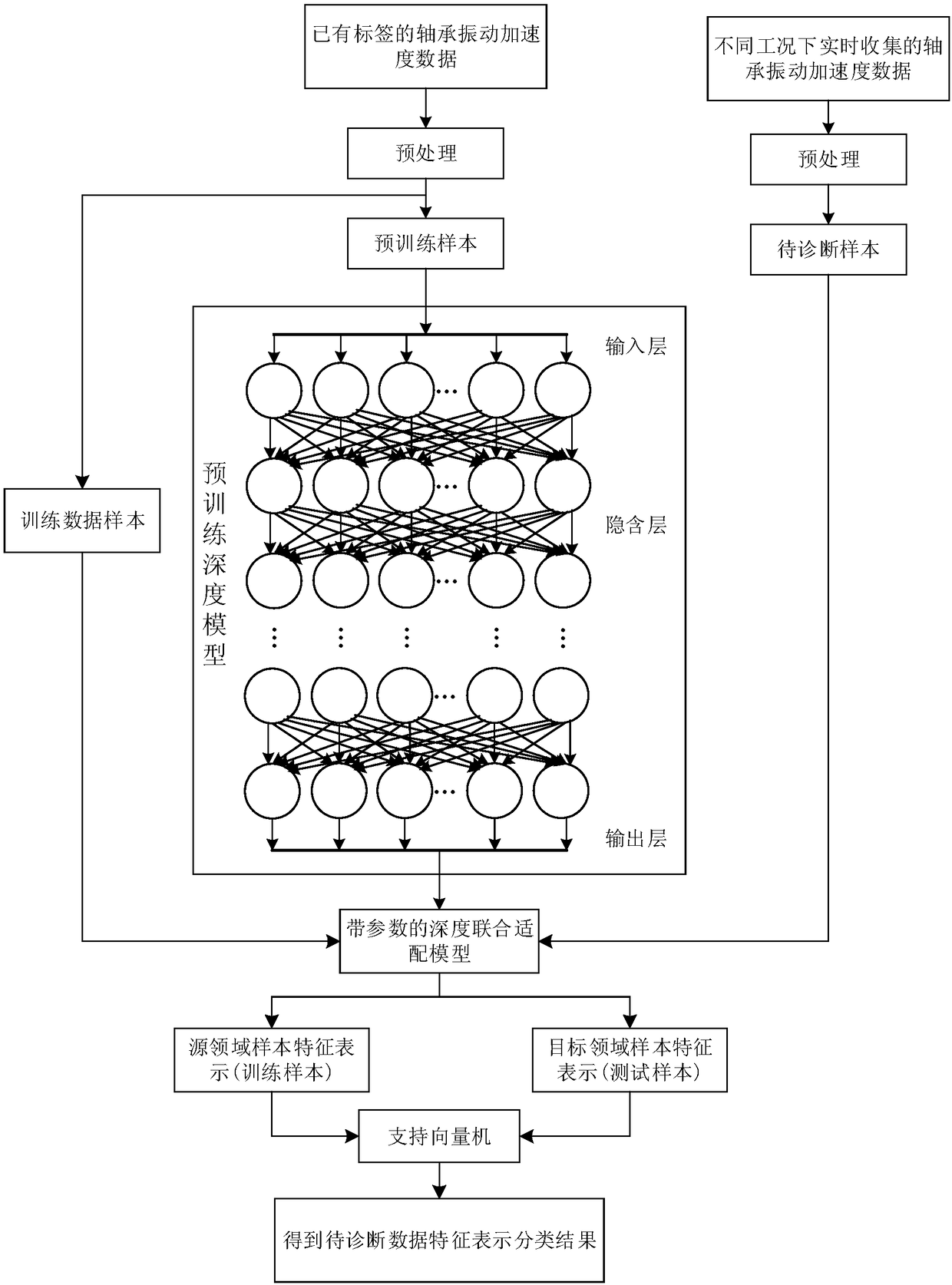

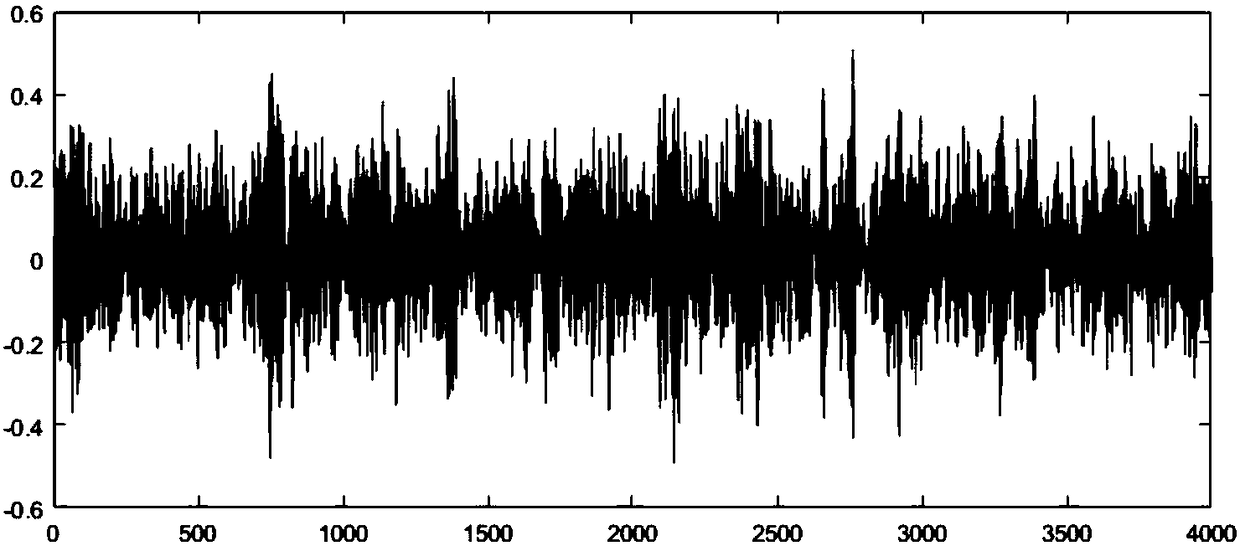

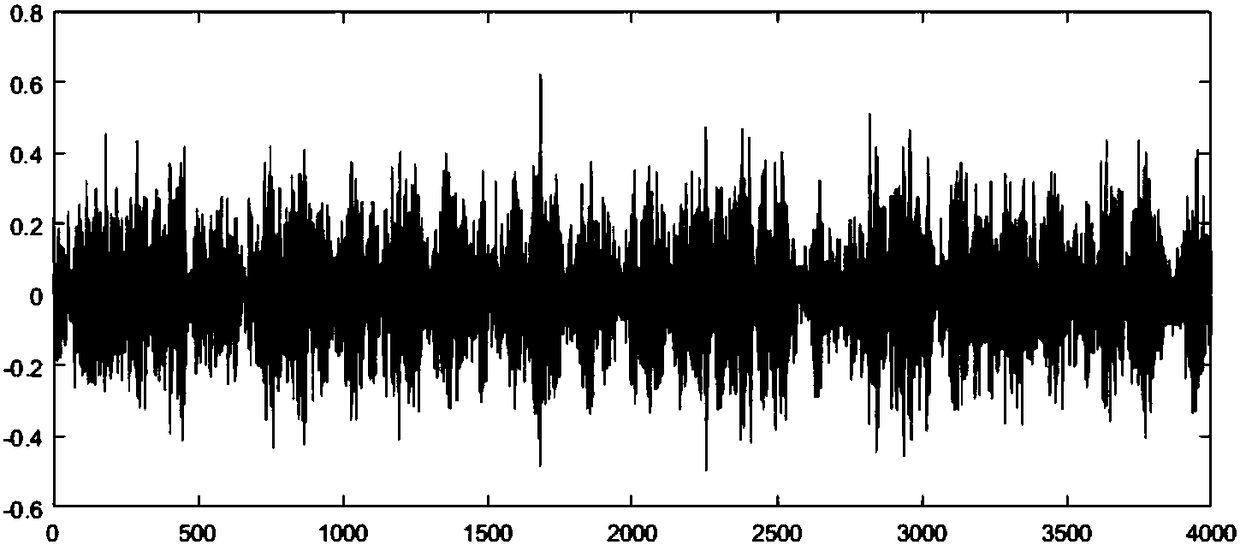

Wind turbine generator bearing fault diagnosing method based on deep joint adaptation network

Active | CN108344574A | Reduce operational complexity | High similarity | Machine bearings testing | Electricity | Fusion mechanism

The invention discloses a wind turbine generator bearing fault diagnosing method based on a deep joint adaptation network. The method comprises the following steps: 1) establishing a multi-element fusion database; 2) establishing a deep joint adaptation model; 3) establishing a wind turbine generator bearing fault diagnosing model of the deep joint adaptation network; 4) establishing a multi-GPU cluster computing system. Based on the distribution differences between training data and target data monitored under different actual working conditions, the method explores a mechanism for representing inter-domain invariant characteristics and correcting probability distribution differences, and provides a fault target recognition strategy based on inter-domain joint distribution adaptation and a common-characteristic deep learning fusion mechanism. It exploits the advantages of a deep self-encoding network: characteristics need not be selected manually, better, more abstract, higher-level characteristics are extracted automatically, and the computational complexity of the classification algorithm is reduced. The method is especially suitable for noisy, large-data, structurally complex, dynamic systems.

Owner:安赛尔(长沙)机电科技有限公司

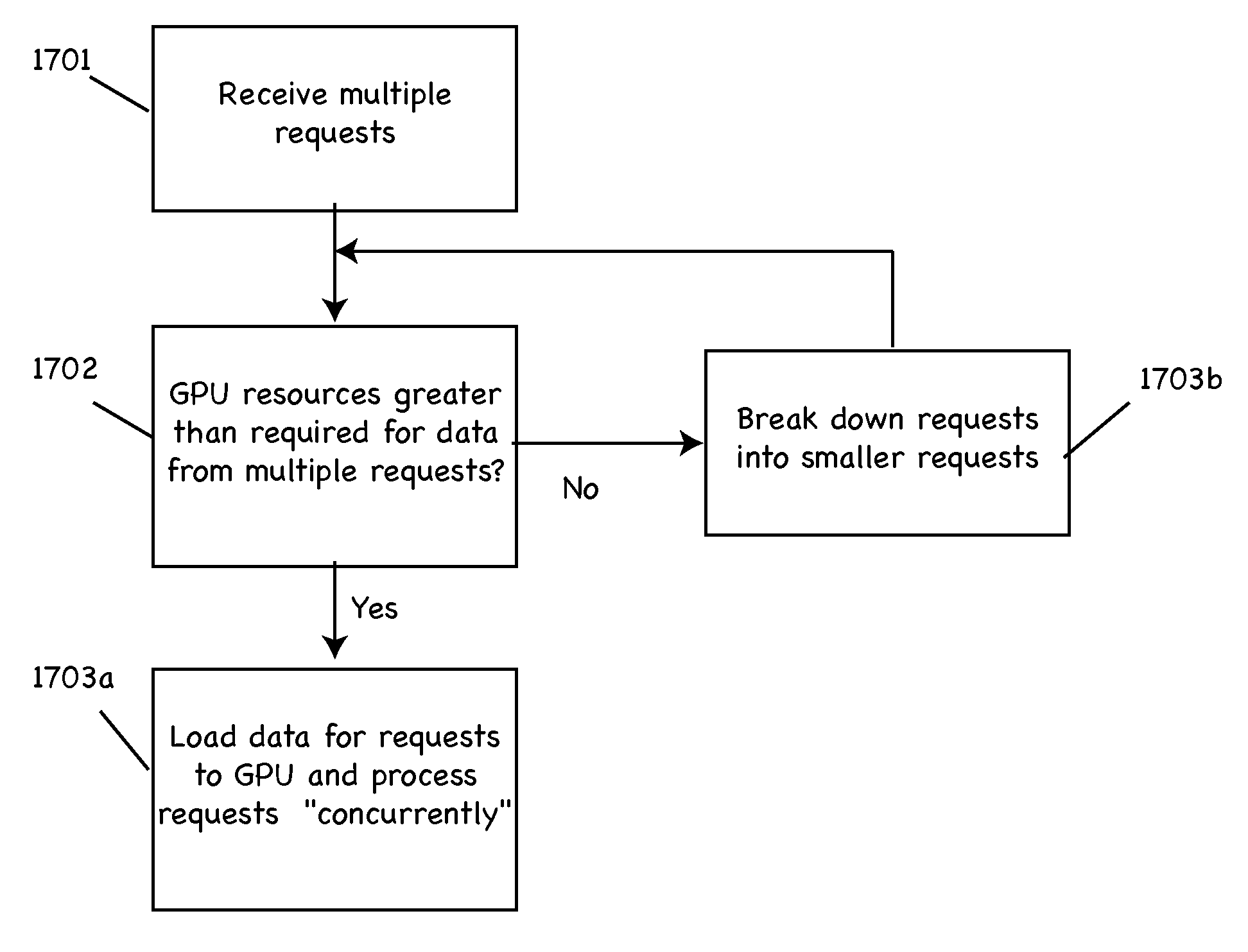

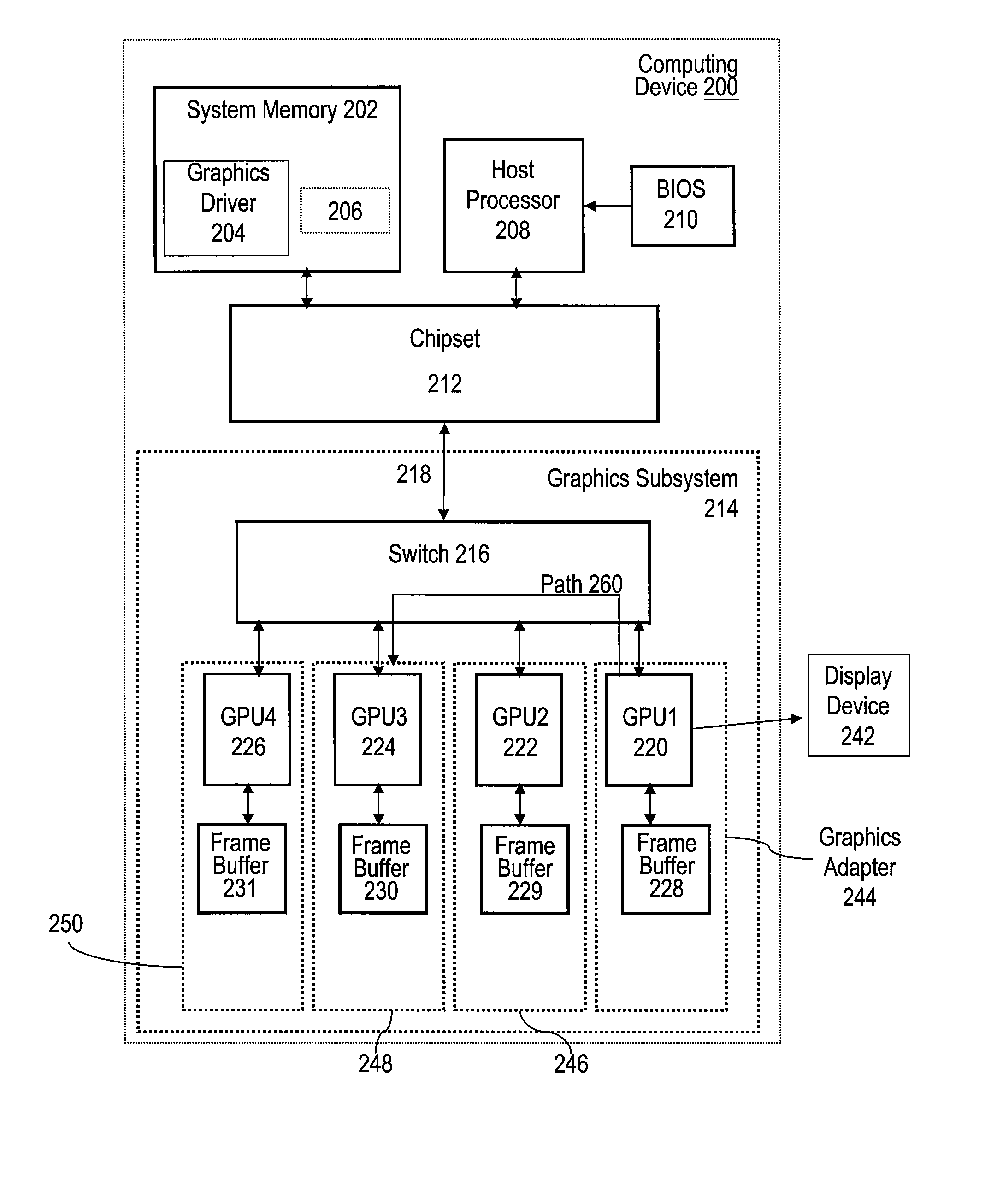

Method and system for using a GPU frame buffer in a multi-GPU system as cache memory

Active | US8345052B1 | Memory architecture accessing/allocation | Image memory management | Multi gpu | Data buffer

A method and system for using a graphics processing unit (“GPU”) frame buffer in a multi-GPU computing device as cache memory are disclosed. Specifically, one embodiment of the present invention sets forth a method, which includes the steps of designating a first GPU subsystem in the multi-GPU computing device as a rendering engine, designating a second GPU subsystem in the multi-GPU computing device as a cache accelerator, and directing an upstream memory access request associated with an address from the first GPU subsystem to a port associated with a first address range, wherein the address falls within the first address range. The first and the second GPU subsystems include a first GPU and a first frame buffer and a second GPU and a second frame buffer, respectively.

Owner:NVIDIA CORP
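
The routing step in the abstract above, sending a memory access request to the port whose address range contains the request's address, can be illustrated with a small lookup. The port names and address ranges below are invented for the example, and `route` is a hypothetical helper, not an interface described in the patent.

```python
def route(address, port_ranges):
    """Return the port whose half-open range [start, end) contains the address."""
    for port, (start, end) in port_ranges.items():
        if start <= address < end:
            return port
    return None  # no configured range covers this address


# Hypothetical address map: one range served by the cache-accelerator GPU's
# frame buffer, one served by system memory.
EXAMPLE_RANGES = {
    "cache_gpu_port": (0x1000, 0x2000),
    "system_port": (0x2000, 0x3000),
}
```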

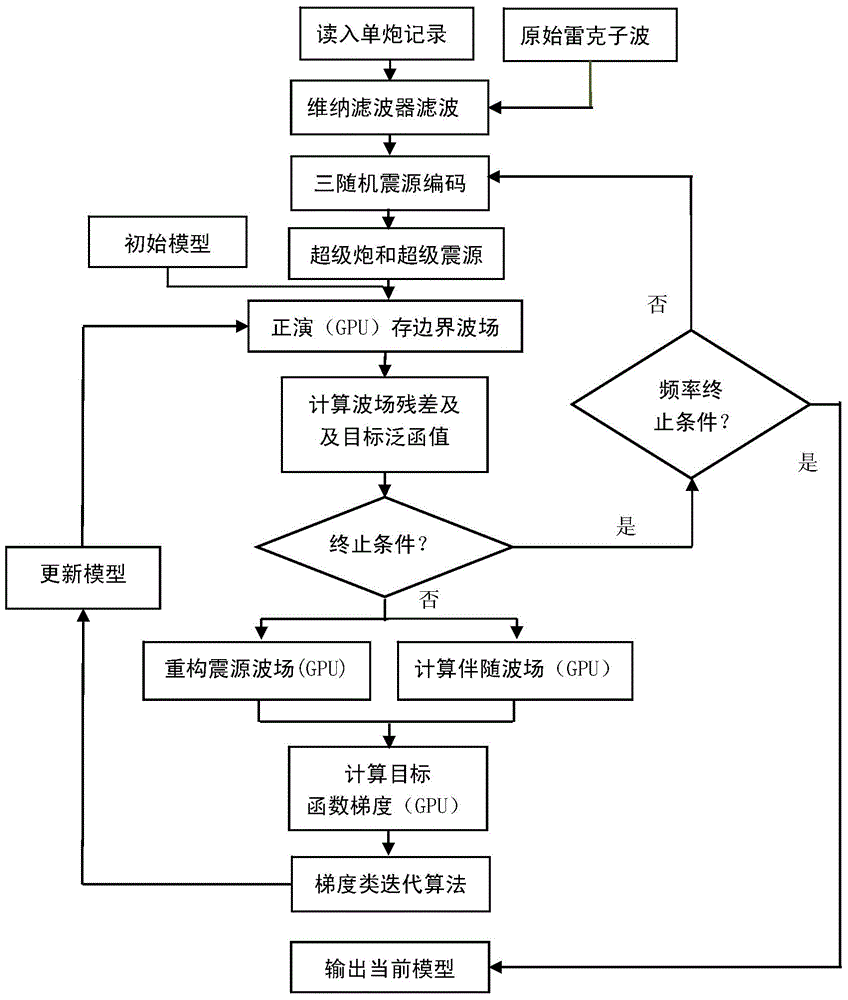

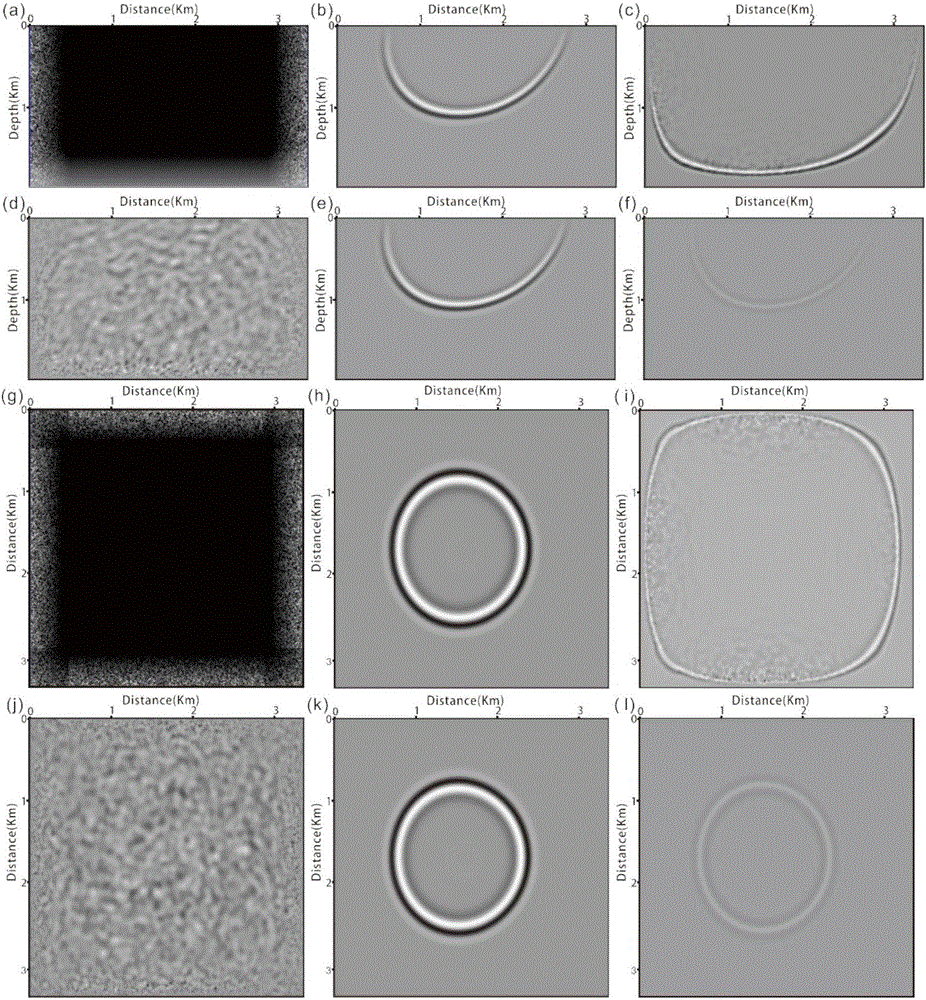

Efficient time domain full waveform inversion method

Active | CN105319581A | Reduce the number of simulations | Reduce input and output | Seismic signal processing | Wave equation | Wave field

The present invention provides an efficient time domain full waveform inversion method, belonging to the field of efficient, low-storage, high-precision and high-speed modeling of petroleum seismic data. The method comprises the following steps: (1) using the original seismic observation records to randomly form super-shot gathers in different frequency bands through a source polarity randomizing scheme, a source position randomizing scheme and a source number randomizing scheme; (2) accelerating the forward modeling of high-order staggered-mesh finite differences of a sound wave equation system and an elastic wave system of the one-form basis of the time domain through a multi-GPU parallel computing technique based on CUDA, and obtaining a super-shot gather in the ifreq frequency band simulated by the GPU; and (3) simulating propagation from different seismic sources through multi-GPU nucleus and through CPU and GPU collaboration during forward modeling, and storing the boundary data of the seismic source wave field and all the wave fields at the last moment in computer memory.

Owner:CHINA PETROLEUM & CHEM CORP +1
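
Step (1) of this abstract, forming a super-shot gather with randomized source number, position and polarity, can be sketched as follows. This is a minimal illustration, not the patented procedure: the function name `form_super_shot`, its parameters, and the representation of each shot record as a flat list of samples are assumptions, and the frequency-band filtering mentioned in the abstract is left out.

```python
import random


def form_super_shot(shot_records, rng, min_shots=1):
    """Sum a random subset of shot records with random polarities.

    shot_records: list of shots, each a list of float samples of equal length.
    rng: a random.Random instance, so that results are reproducible.
    Returns (super_shot, polarity), where polarity maps each chosen shot
    index to the sign (+1.0 or -1.0) applied to it.
    """
    n_total = len(shot_records)
    # Source-number randomization: how many shots enter the super-shot.
    n_used = rng.randint(min_shots, n_total)
    # Source-position randomization: which shots are used.
    chosen = rng.sample(range(n_total), n_used)
    # Source-polarity randomization: a random sign per chosen shot.
    polarity = {i: rng.choice((-1.0, 1.0)) for i in chosen}

    n_samples = len(shot_records[0])
    super_shot = [0.0] * n_samples
    for i in chosen:
        for k in range(n_samples):
            super_shot[k] += polarity[i] * shot_records[i][k]
    return super_shot, polarity
```

Because many sources are simulated together as one encoded super-shot, fewer forward simulations are needed per iteration, which matches the "reduce the number of simulations" effect listed for this patent.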

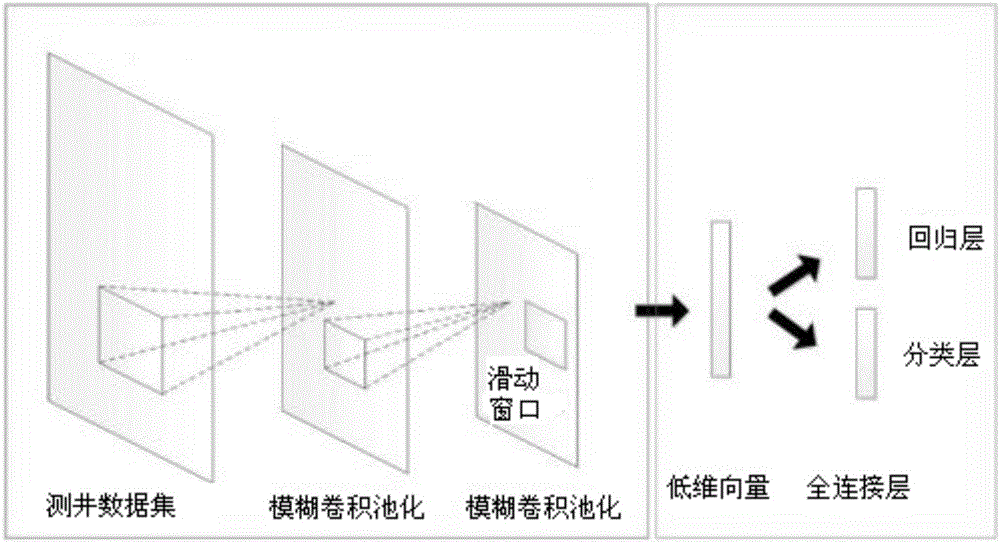

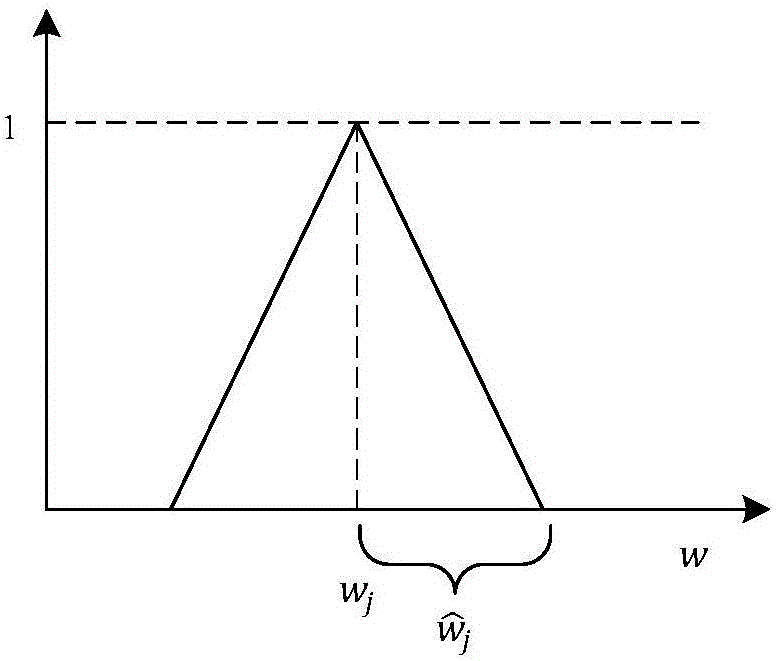

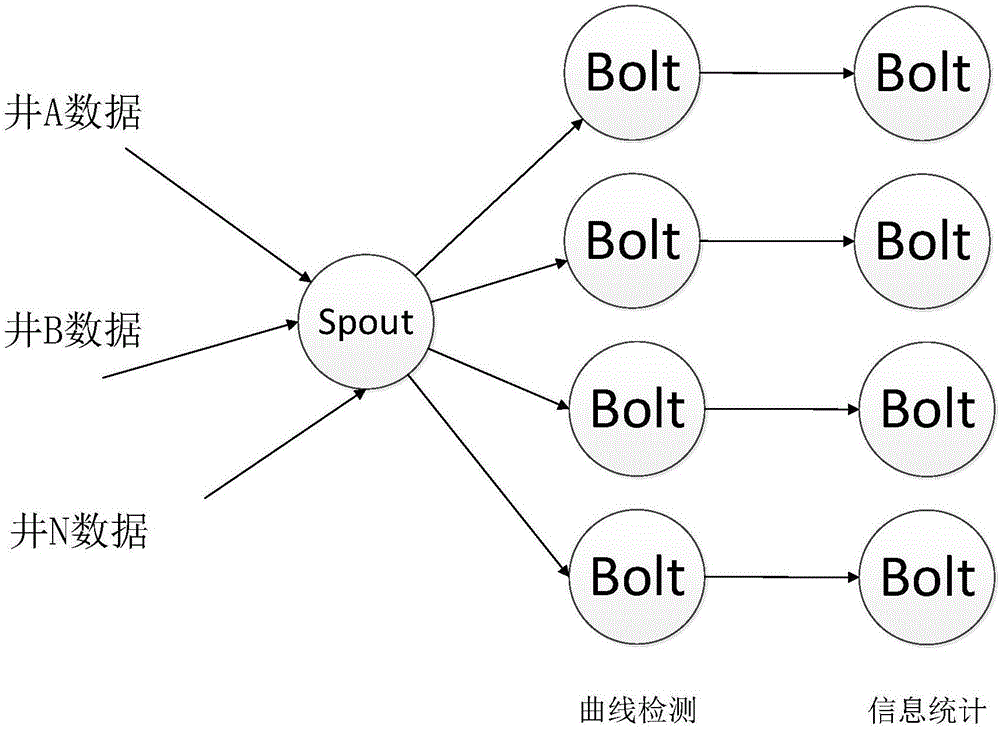

Parallelization method of convolutional neural networks in fuzzy region under big-data environment

Active | CN106372402A | Simple structure | Optimization parameters | Neural architectures | Informatics | Obfuscation | Nerve network

The invention discloses a parallelization method of convolutional neural networks in a fuzzy region (FR-CNN) under a big-data environment. The parallelization method comprises the following steps: firstly, constructing the convolutional neural networks in the fuzzy region, putting a given target assumption region and object identification into the same network, carrying out convolutional calculation, and updating the weights of the whole network during training; and secondly, dividing an input log data set into a plurality of small data sets, introducing multiple workflows that pass through the convolutional neural networks in the fuzzy region in parallel for convolution and pooling, and independently training on each small data set by gradient descent. With this parallelization method, the network structure and parameters are optimized, and relatively good analysis performance and precision are achieved. Furthermore, the number of FR-CNN obfuscation layers is adjusted for different log data sets, so that the extracted features reflect the characteristics of oil-gas reservoirs well and the fuzzification problem of the log data can be solved. Parallel training and execution of the FR-CNN are carried out on multiple GPUs, which improves the efficiency of the FR-CNN.

Owner:CHINA UNIV OF PETROLEUM (EAST CHINA)
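
The second step of this abstract, dividing the data set into small data sets and training on each independently by gradient descent, can be sketched as below. This is only an illustration under loud assumptions: a one-parameter linear model stands in for the FR-CNN, CPU threads stand in for the GPUs, and the names `split_dataset`, `train_shard` and `train_in_parallel` are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_dataset(samples, n_shards):
    """Divide the samples into n_shards interleaved small data sets."""
    return [samples[i::n_shards] for i in range(n_shards)]


def train_shard(shard, lr=0.05, epochs=200):
    """Fit y ~ w * x on one shard by gradient descent on the mean squared error.

    The shard must be non-empty. The linear model is a stand-in for the
    network described in the abstract.
    """
    w = 0.0
    for _ in range(epochs):
        grad = sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)
        w -= lr * grad
    return w


def train_in_parallel(samples, n_shards):
    """Train one model per shard, with one worker per shard."""
    shards = split_dataset(samples, n_shards)
    with ThreadPoolExecutor(max_workers=n_shards) as pool:
        return list(pool.map(train_shard, shards))
```

The abstract does not say how the independently trained workers are combined, so this sketch simply returns one fitted weight per shard.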

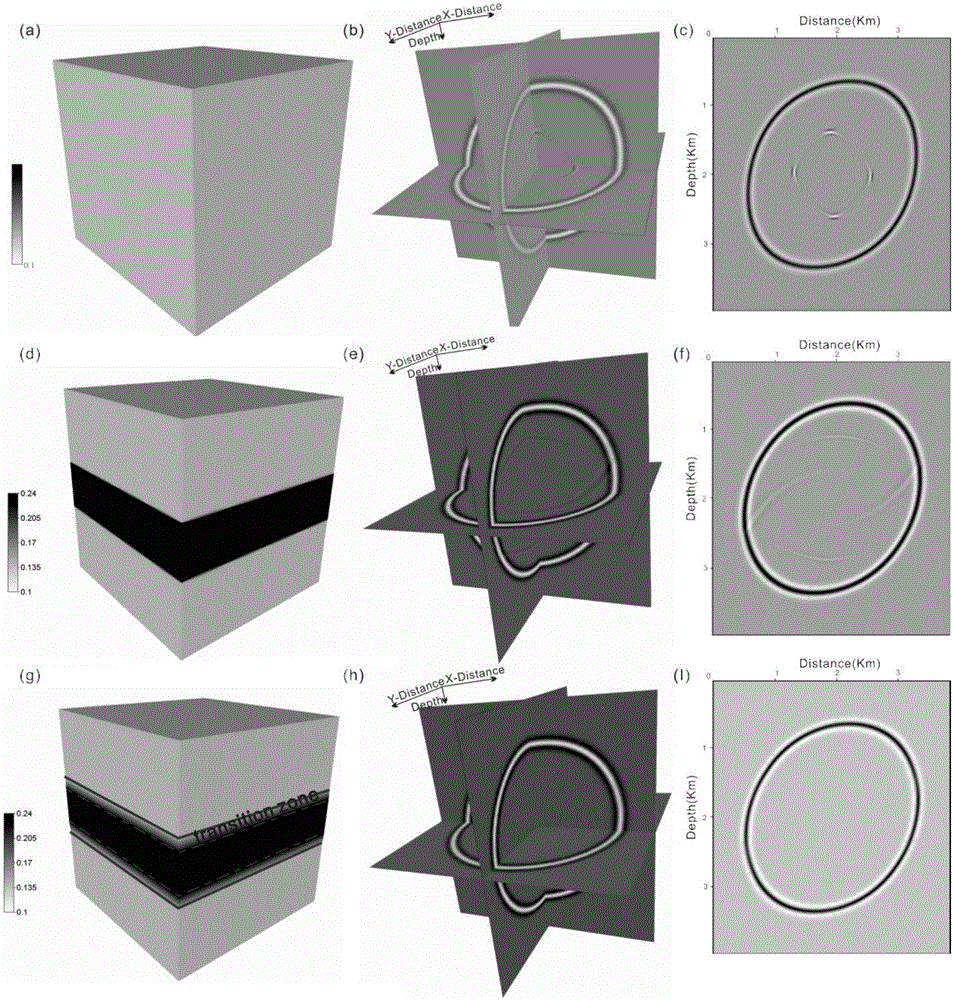

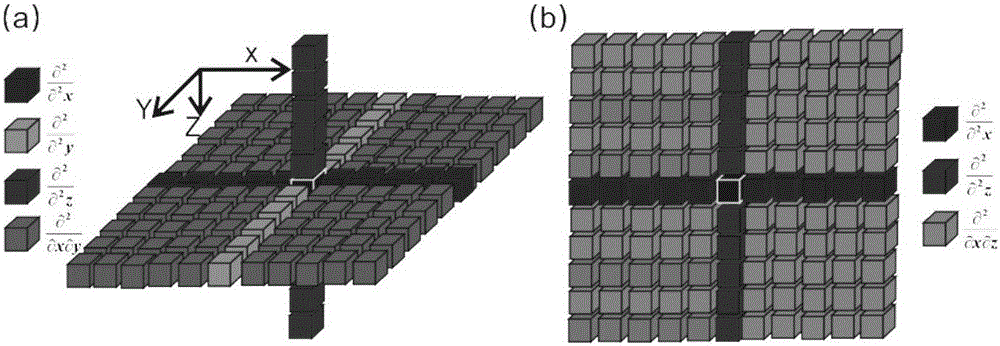

Multi GPU calculation based reverse time migration imaging method of 3D TTI medium

Active | CN105717539A | Increase occupancy | Good energy focus | Seismic signal processing | Reverse time | Wave field

The invention discloses a multi-GPU calculation based reverse time migration imaging method for a 3D TTI medium. In the method, a stable second-order coupled equation set serves as the calculation model; finite SV-wave components are introduced to enhance the stability of wave field propagation; an anisotropy parameter matching method is used to suppress interference from pseudo-transverse waves; uniform anisotropic boundaries corresponding to a random velocity boundary and other parameters are introduced, so that storing large amounts of wave field data and frequent disk reads and writes are avoided; and a GPU-based CUDA platform is used to realize high-performance parallel calculation. The second-order coupled quasi-acoustic equation in the TTI medium is solved by a finite-difference method on the basis of multi-GPU parallel calculation technology. Wave field propagation is stable, pseudo-transverse waves are suppressed effectively, the random boundary reduces wave field storage and disk reads and writes, and a highly parallel multi-asynchronous-stream strategy across multiple GPUs significantly improves calculation efficiency, so that the imaging method meets the requirements for industrial application of anisotropic reverse time migration.

Owner:CHINA UNIV OF GEOSCIENCES (BEIJING)

Methods and Devices for Cognitive-based Image Data Analytics in Real Time Comprising Convolutional Neural Network

Inactive | US20190138830A1 | Television system details | Character and pattern recognition | Multi gpu | Data profiling

A real time video analytic processor that uses a trained convolutional neural network that embodies algorithms and processing architectures that process a wide variety of sensor images in a fashion that emulates how the human visual path processes and interprets image content. Spatial, temporal, and color content of images are analyzed and the salient features of the images determined. These salient features are compared to the salient features of objects of user interest in order to detect, track, classify, and characterize the activities of the objects. Objects or activities of interest are annotated in the image streams and alerts of critical events are provided to the user. Instantiation of the cognitive processing can be accomplished on multi-FPGA and multi-GPU processing hardware.

Owner:IRVINE SENSORS

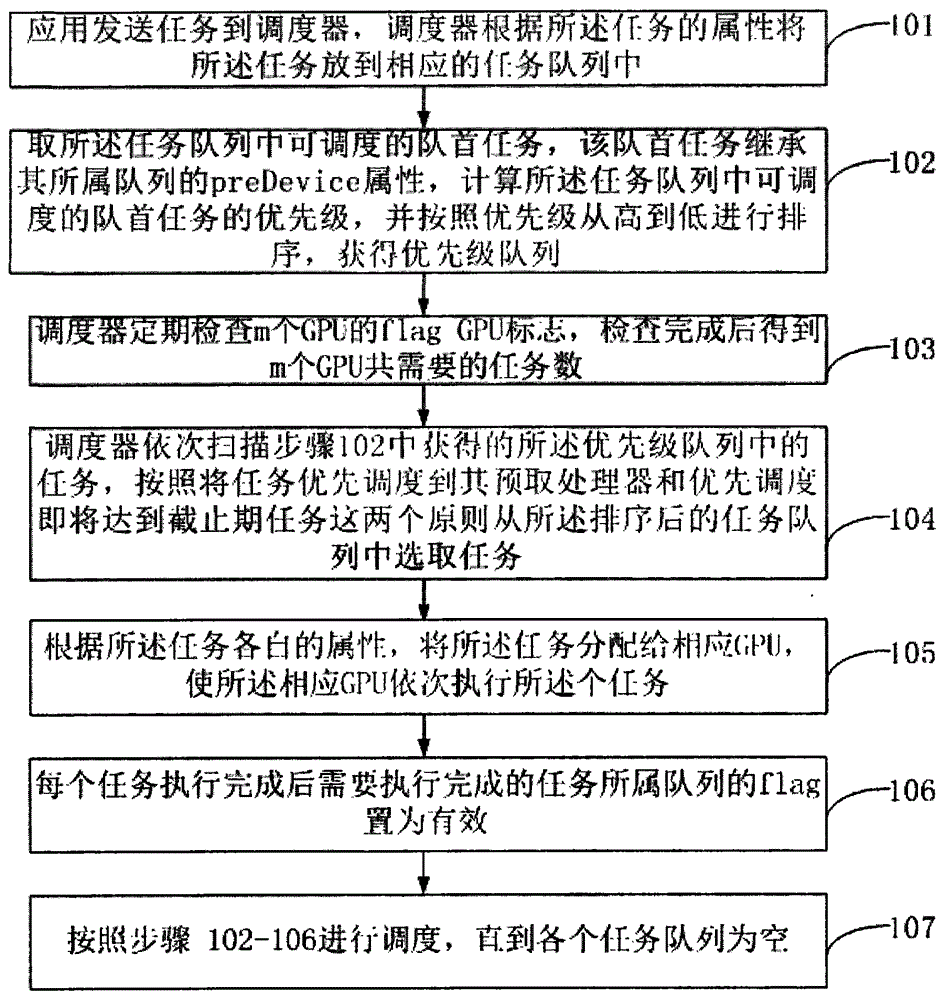

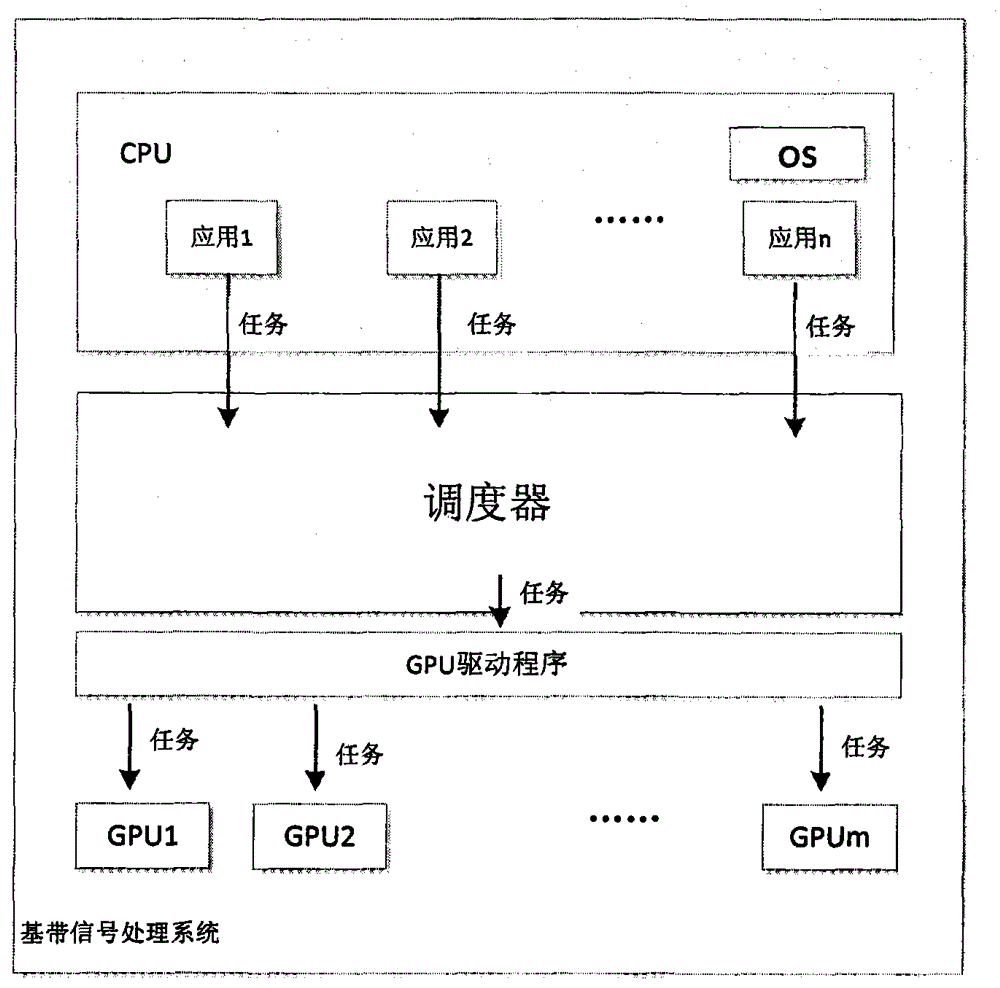

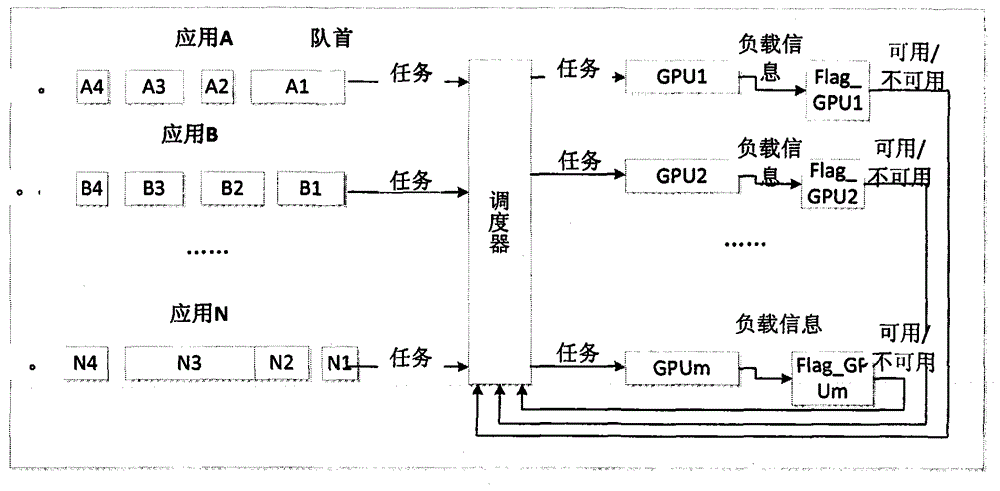

Baseband signal processing task parallelism real-time scheduling method based on multiple GPUs

Inactive | CN104156264A | Reduce waste | Improve real-time performance | Program initiation/switching | Resource allocation | Multi gpu | Signal processing

The embodiment of the invention provides a baseband signal processing task parallelism real-time scheduling method based on multiple GPUs, and belongs to the technical field of computers. The method can improve the real-time performance and throughput of baseband signal processing. The method includes the following steps: an application sends tasks to a scheduler, and the scheduler places the tasks in the corresponding task queues according to the attributes of the tasks; the scheduler periodically checks the flag_GPU marks of the m GPUs, and from this check obtains the number m' of tasks needed by the m GPUs; m' tasks are selected in order for the GPUs, and the m' tasks inherit the preDevice attributes of the task queues to which they belong; according to the preDevice attribute of each of the m' tasks, the m' tasks are distributed to a prefetched processor, and the GPUs execute the m' tasks; after the tasks are executed, the flags of the queues to which the executed tasks belong are set to valid. These steps repeat until all the task queues are empty.

Owner:NORTHWESTERN POLYTECHNICAL UNIV
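
One possible reading of the scheduling loop in this abstract is sketched below. It is an interpretation rather than the patented method: the abstract names only `flag_GPU` and `preDevice`, so the classes `Task`, `TaskQueue` and `Scheduler`, the meaning given to the queue flag, and the rule of one in-flight task per queue are all assumptions.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Task:
    name: str
    kind: str             # attribute used to choose a task queue
    pre_device: int = -1  # inherited from the queue when dispatched


@dataclass
class TaskQueue:
    pre_device: int                              # GPU this queue prefers
    tasks: deque = field(default_factory=deque)
    valid: bool = True                           # assumed: True when nothing is in flight


class Scheduler:
    def __init__(self, queue_devices, n_gpus):
        # queue_devices maps a task kind to the preferred GPU index of its queue.
        self.queues = {kind: TaskQueue(dev) for kind, dev in queue_devices.items()}
        self.flag_gpu = [True] * n_gpus          # assumed: True means the GPU wants a task

    def submit(self, task):
        """Place a task in the queue that matches its attribute."""
        self.queues[task.kind].tasks.append(task)

    def poll(self):
        """One periodic check: dispatch at most one task to each idle GPU."""
        dispatched = []
        for queue in self.queues.values():
            if not queue.tasks or not queue.valid:
                continue
            if not self.flag_gpu[queue.pre_device]:
                continue
            task = queue.tasks.popleft()
            task.pre_device = queue.pre_device   # task inherits preDevice
            self.flag_gpu[queue.pre_device] = False
            queue.valid = False
            dispatched.append(task)
        return dispatched

    def complete(self, task):
        """Mark the GPU idle again and set the task's queue flag to valid."""
        self.flag_gpu[task.pre_device] = True
        self.queues[task.kind].valid = True
```

In use, the application would call `submit` for each task, a timer would call `poll` periodically, and each GPU worker would call `complete` when it finishes, until every queue is empty.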


© 2025 PatSnap. All rights reserved.