469 results about "CUDA" patented technology

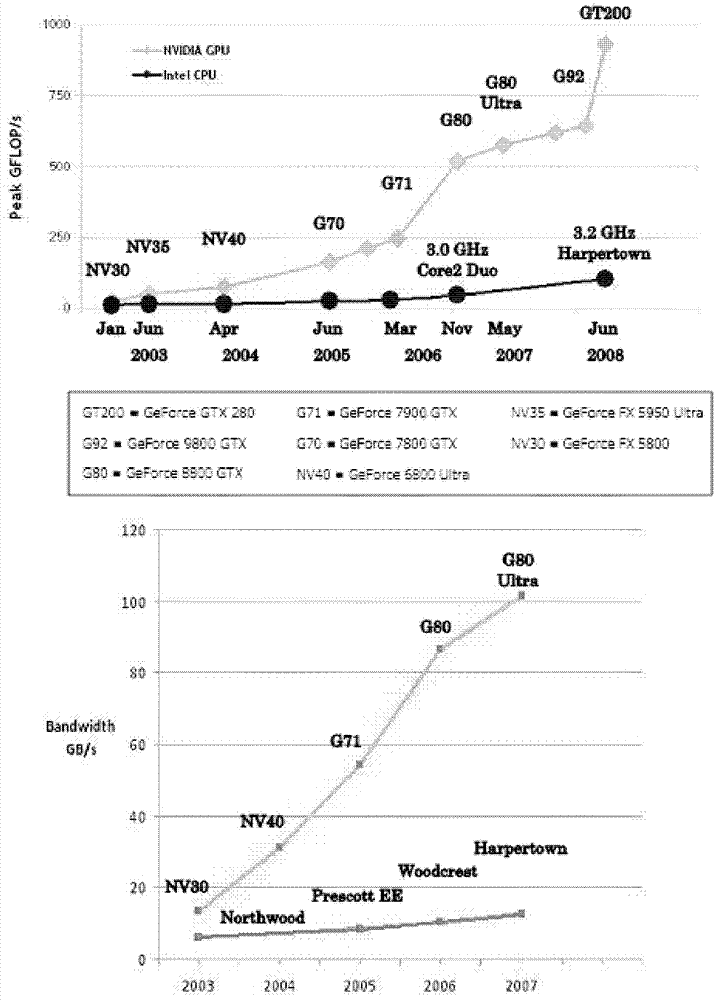

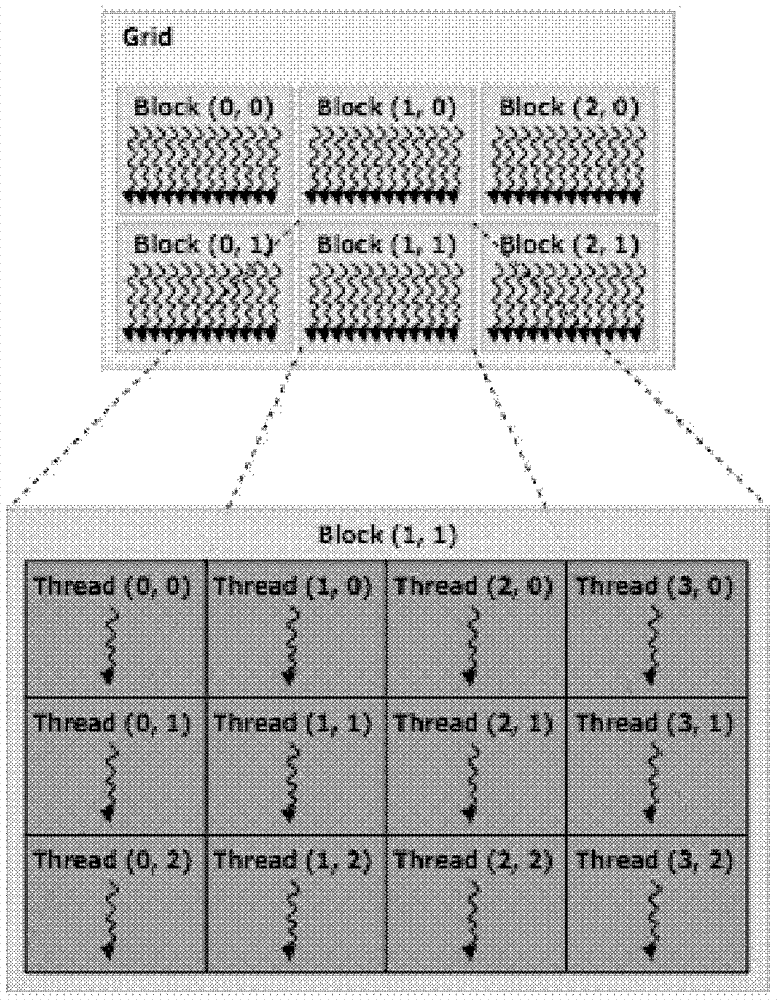

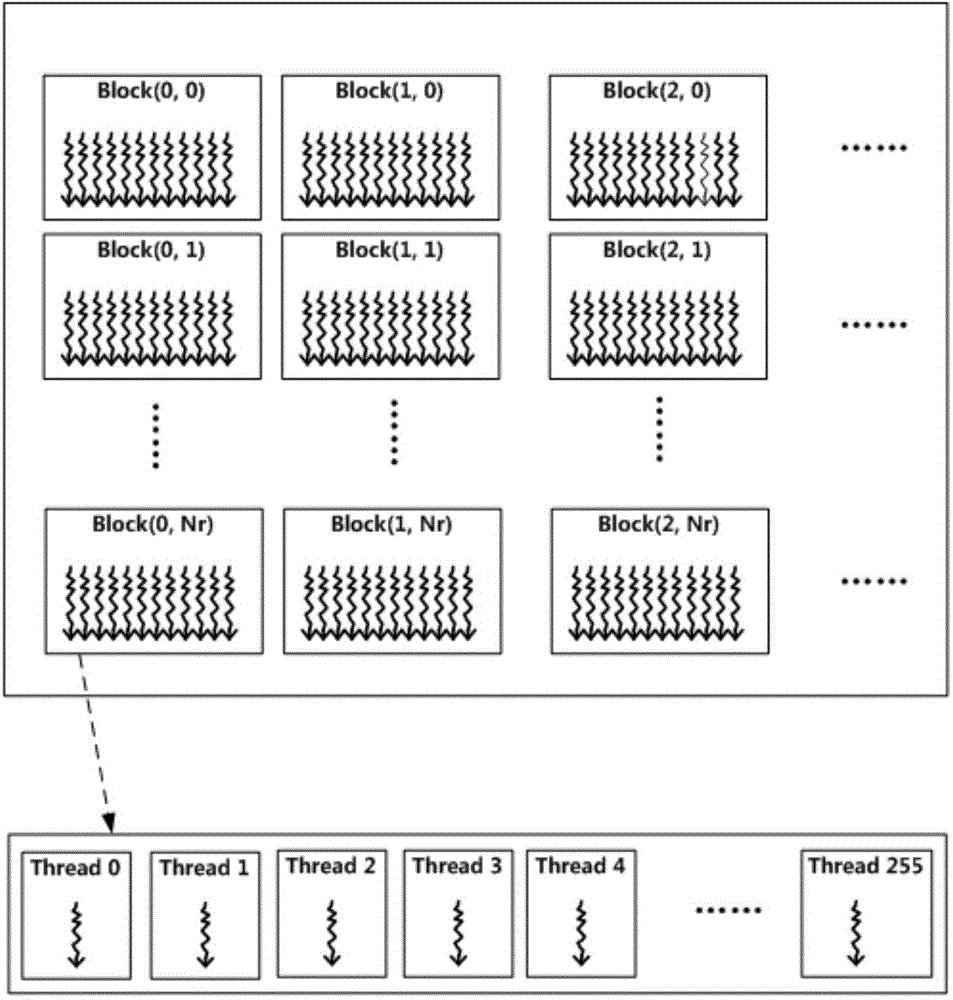

CUDA (Compute Unified Device Architecture) is a parallel computing platform and application programming interface (API) model created by Nvidia. It allows software developers and software engineers to use a CUDA-enabled graphics processing unit (GPU) for general purpose processing — an approach termed GPGPU (General-Purpose computing on Graphics Processing Units). The CUDA platform is a software layer that gives direct access to the GPU's virtual instruction set and parallel computational elements, for the execution of compute kernels.

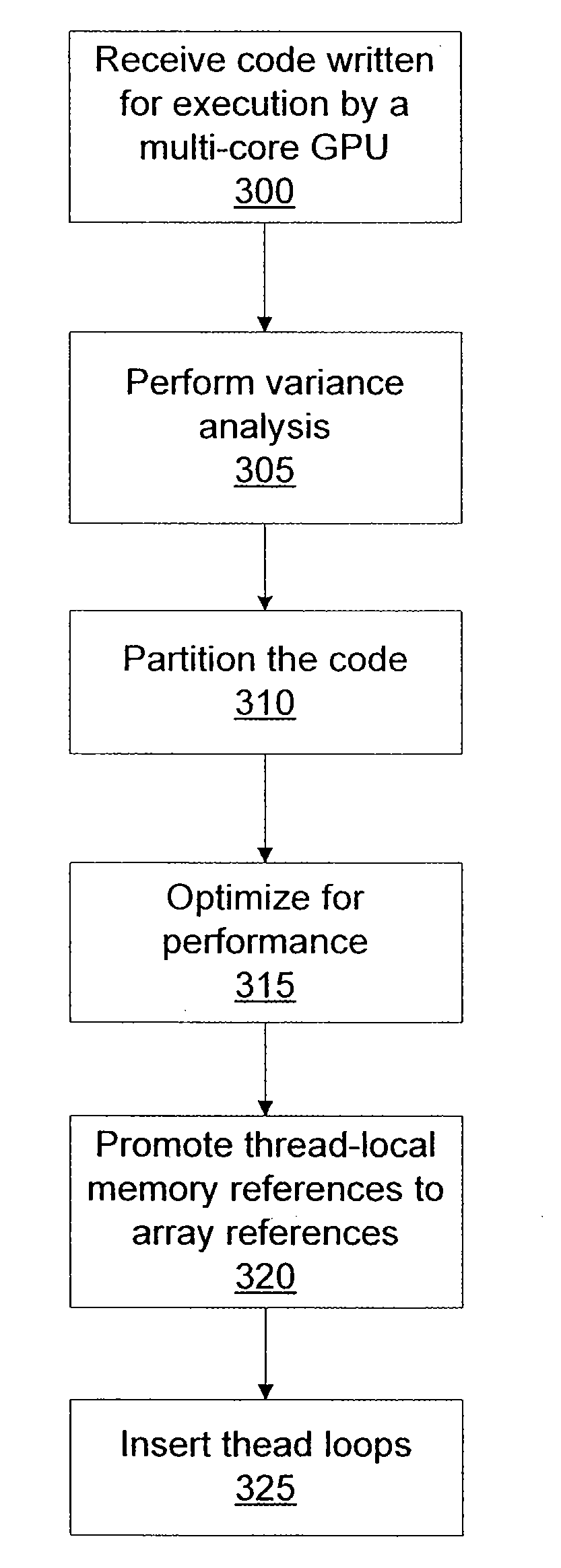

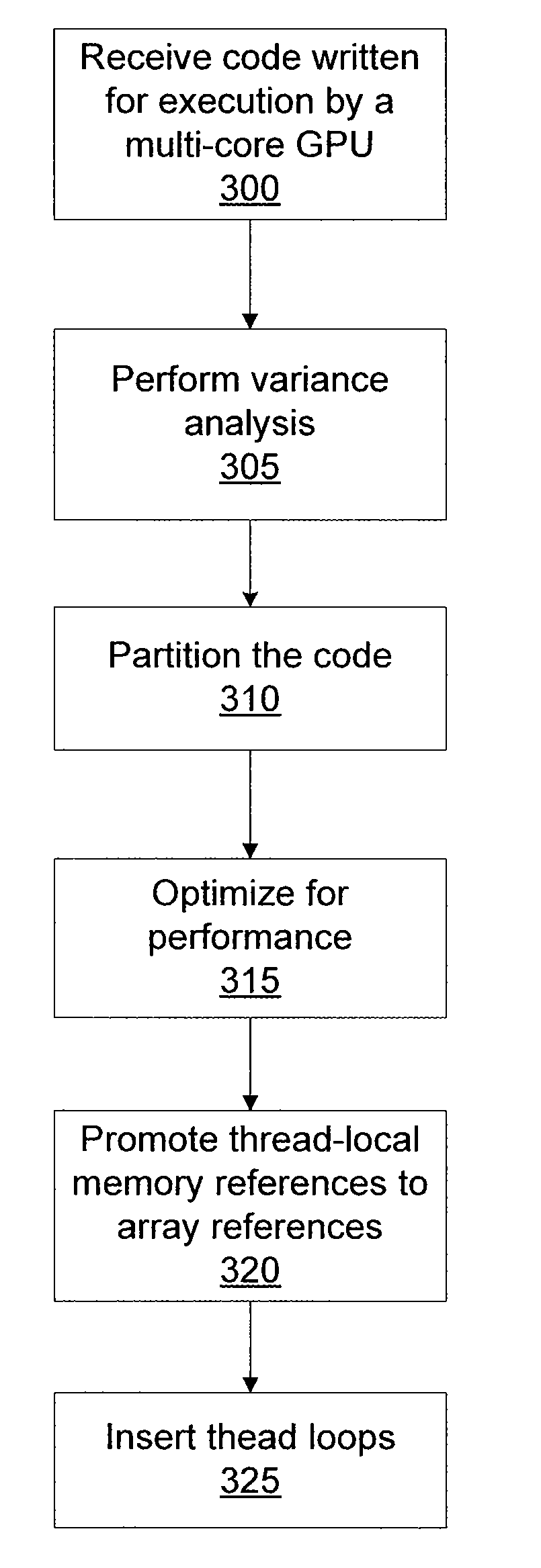

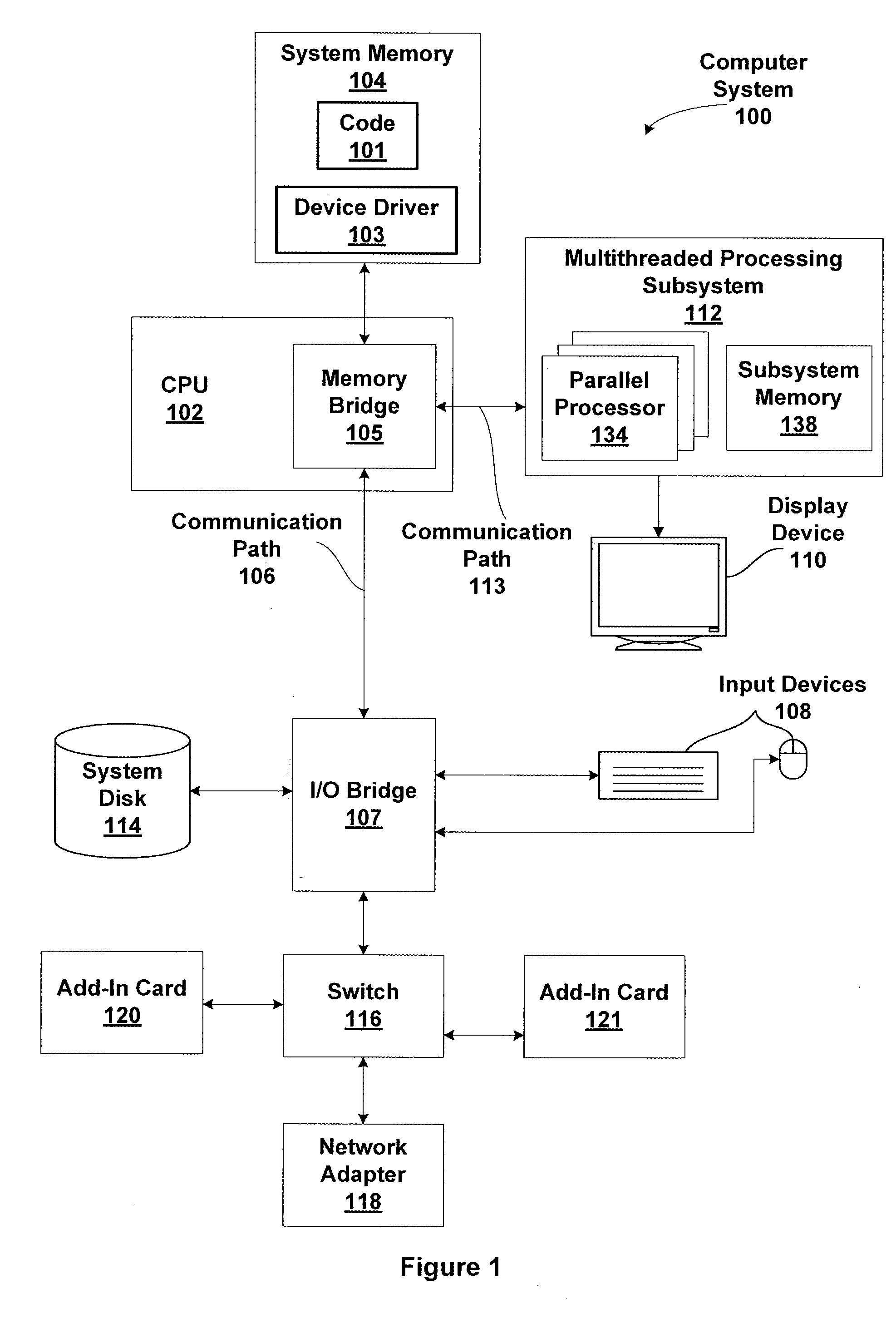

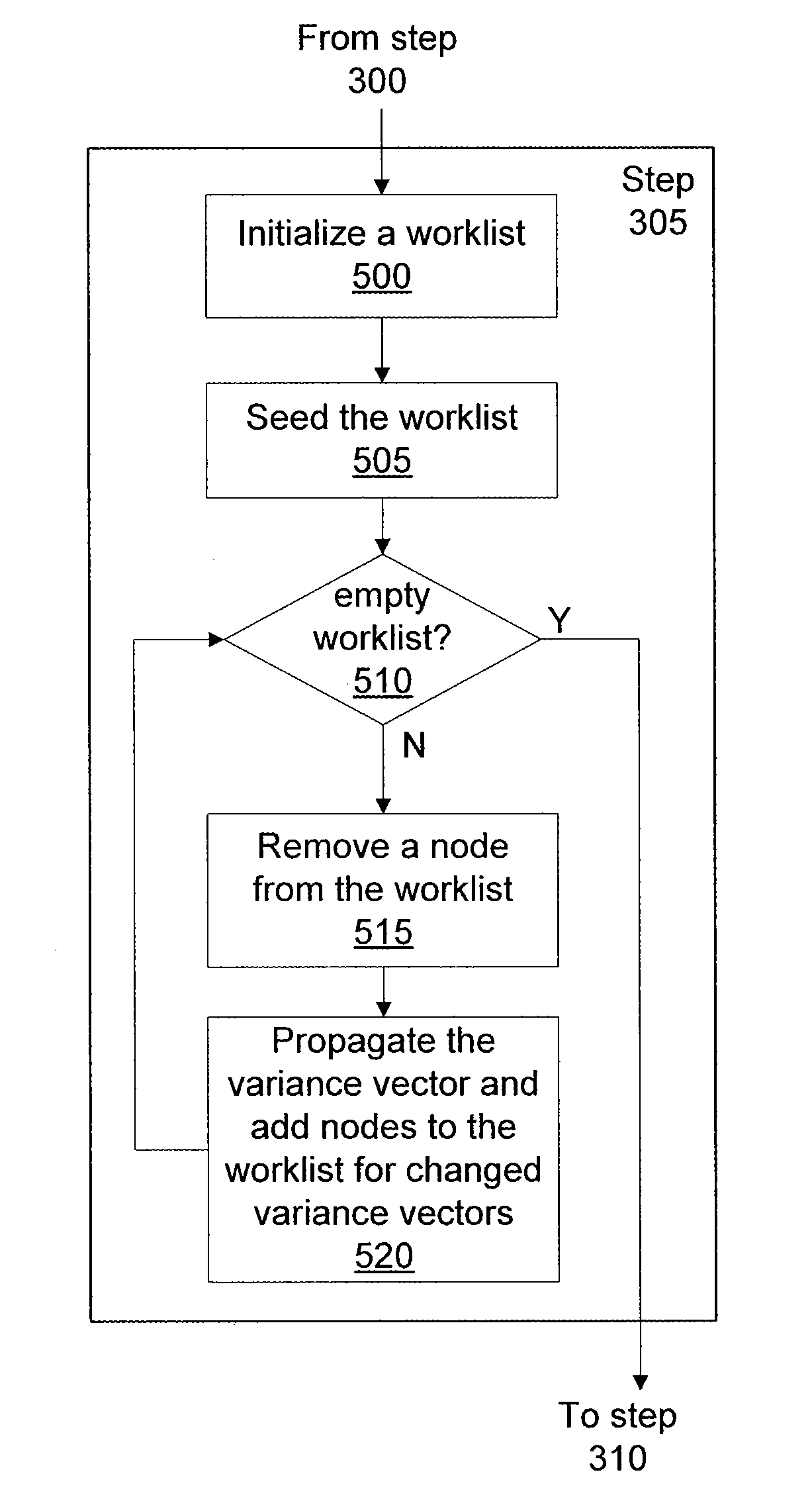

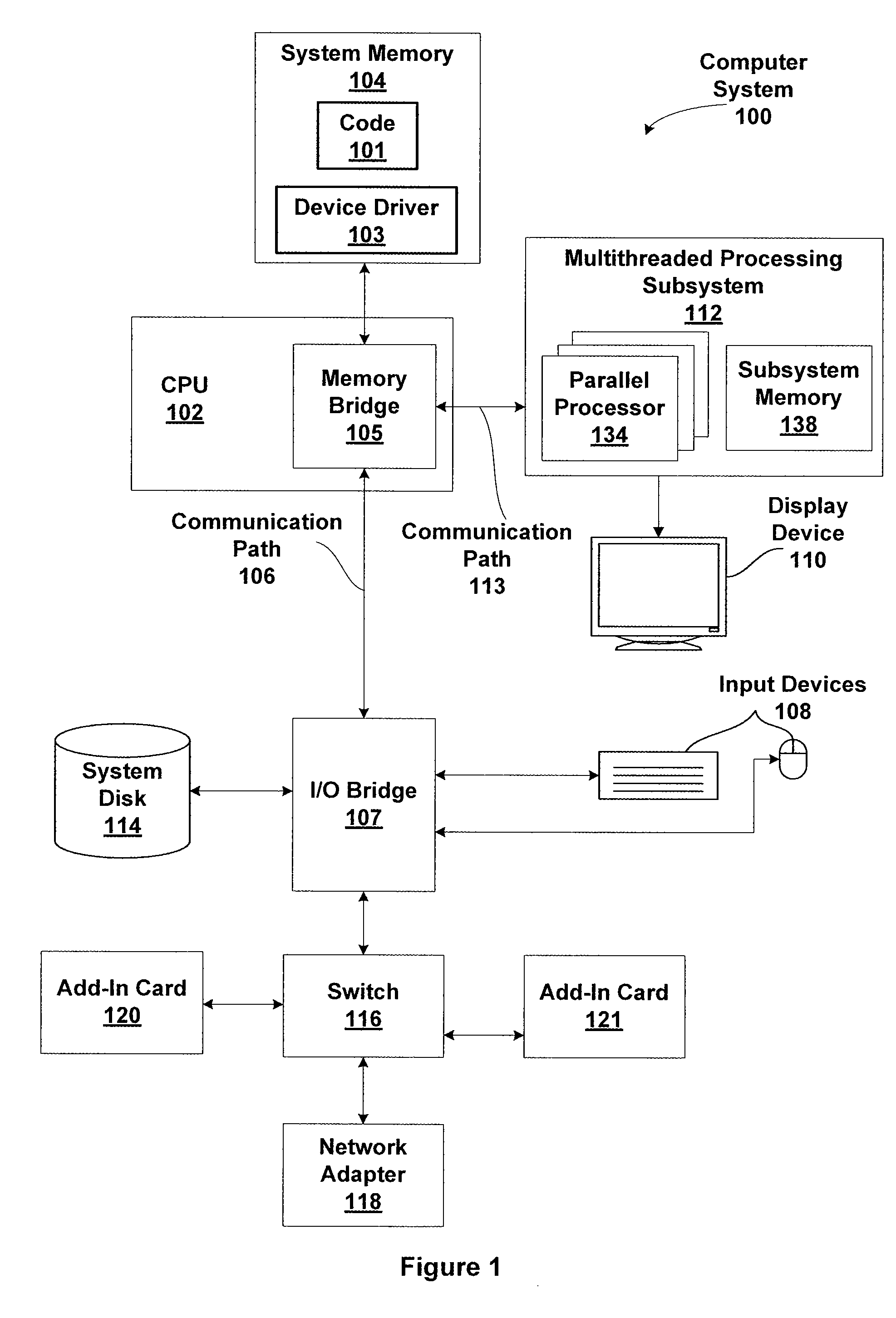

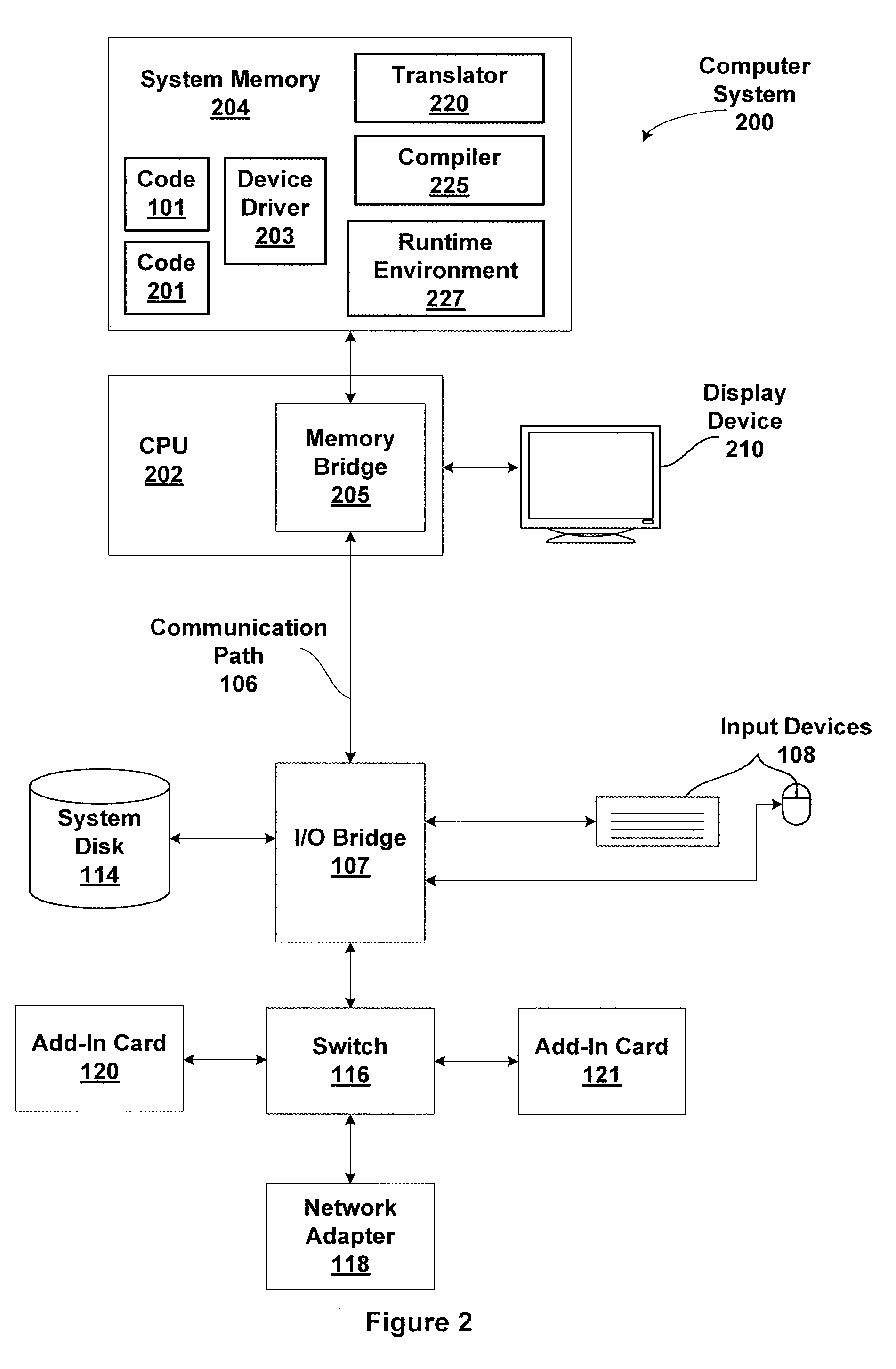

Variance analysis for translating CUDA code for execution by a general purpose processor

Active · US20090259997A1 · Error detection/correction · Specific program execution arrangements · General purpose · Graphics

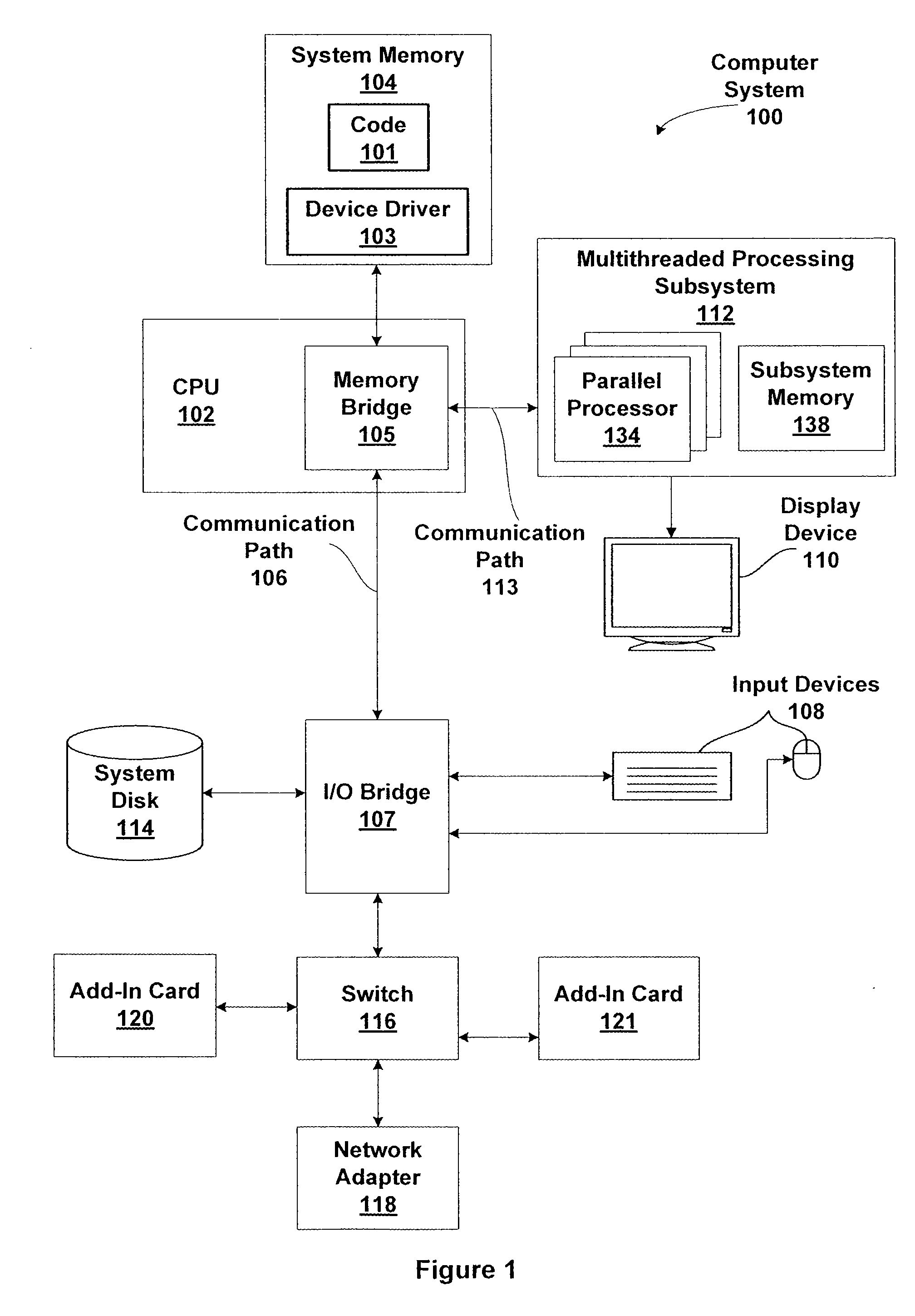

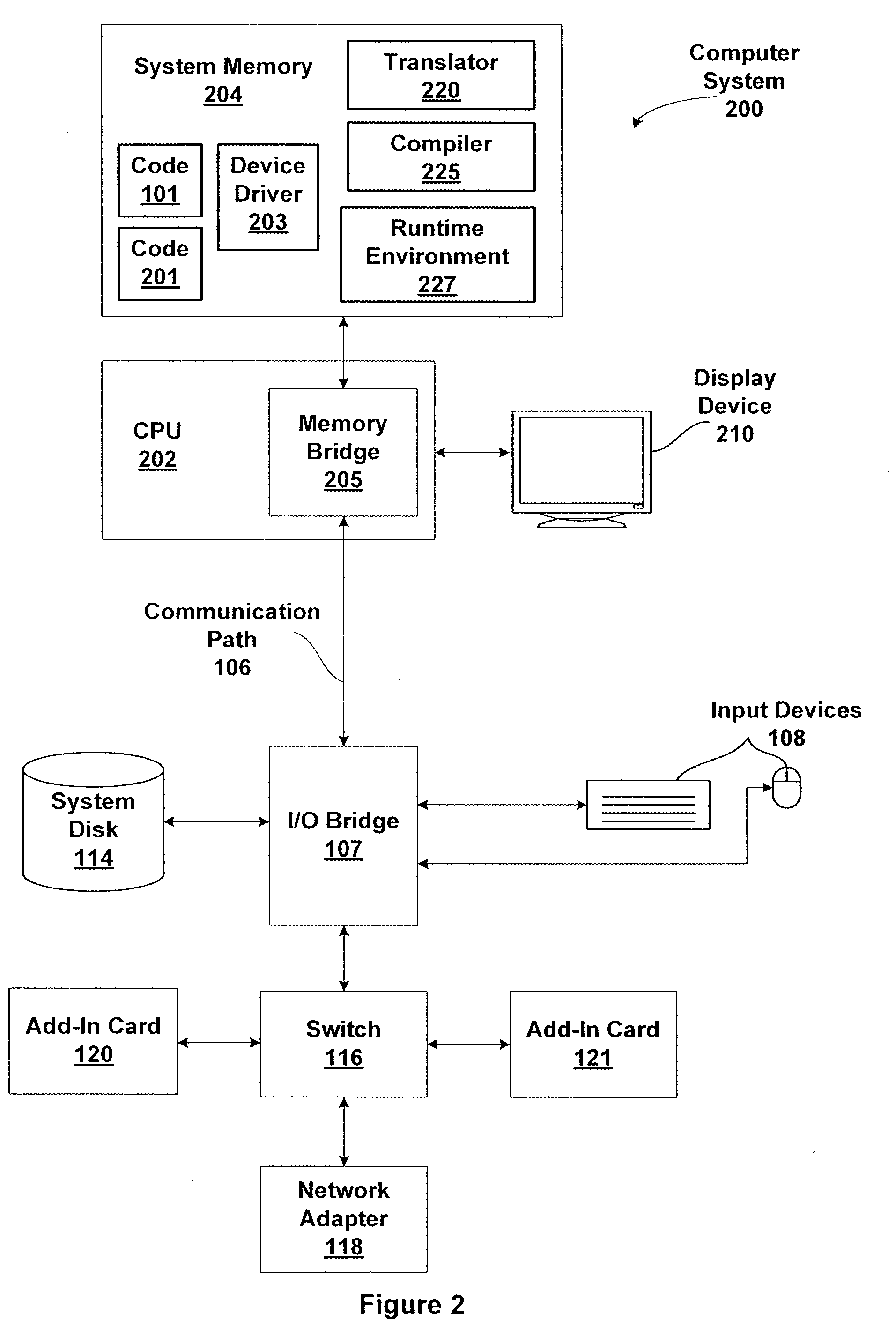

One embodiment of the present invention sets forth a technique for translating application programs written using a parallel programming model for execution on a multi-core graphics processing unit (GPU) so that they can be executed by a general purpose central processing unit (CPU). Portions of the application program that rely on specific features of the multi-core GPU are converted by a translator for execution by a general purpose CPU. The application program is partitioned into regions of synchronization-independent instructions. The instructions are classified as convergent or divergent, and divergent memory references that are shared between regions are replicated. Thread loops are inserted to ensure correct sharing of memory between various threads during execution by the general purpose CPU.

Owner:NVIDIA CORP
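The partitioning and thread-loop idea in the abstract above can be illustrated with a small CPU emulation. This is only a sketch under stated assumptions, not the patented translator: the kernel, the block size `BLOCK_DIM` and the function name `run_block` are hypothetical, and the per-thread list `local` is one reading of what "replicating" a value shared between regions could look like.

```python
# Sketch: emulate one CUDA thread block on a CPU with "thread loops".
# The kernel body is split at its barrier into two regions; each region
# runs inside a loop over all thread IDs, so the barrier becomes the
# boundary between the two loops.

BLOCK_DIM = 8  # hypothetical block size


def run_block(data):
    """CPU version of a kernel that mixes each value with its mirror slot."""
    shared = [0] * BLOCK_DIM   # stands in for __shared__ memory
    local = [0] * BLOCK_DIM    # per-thread value that lives across the barrier,
                               # replicated once per thread ID
    out = [0] * BLOCK_DIM

    # Region 1 (before the barrier): each "thread" writes its own slot.
    for tid in range(BLOCK_DIM):
        local[tid] = data[tid] + 1
        shared[tid] = local[tid]

    # The barrier is implied: region 1 has finished for every thread
    # before region 2 starts.

    # Region 2 (after the barrier): each "thread" reads another thread's slot.
    for tid in range(BLOCK_DIM):
        out[tid] = shared[BLOCK_DIM - 1 - tid] * local[tid]
    return out
```

Running the two regions as separate loops is what keeps the read in region 2 from seeing a slot that has not been written yet, which is the role the barrier plays on the GPU.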

Tattoo image classification method based on deep learning

Active · CN103996056A · Increase workload · Improve reliability · Character and pattern recognition · Learning machine · Imaging quality

The invention discloses a tattoo image classification method based on deep learning. The method comprises the following steps: 1) transforming samples: 1.1) affine transformation, 1.2) elastic transformation, 1.3) occlusion simulation and 1.4) whitening; 2) carrying out autoencoder pre-training: training on a large number of color tattoo images with an autoencoder optimized by CUDA (Compute Unified Device Architecture) to obtain common edge information of the tattoo images, and applying the extracted information to the first layer of a convolutional network; 3) training the convolutional network on the transformed samples, starting from the result obtained by the autoencoder. The disclosed image classification method is largely unaffected by illumination direction, skin color, hair, lighting, image quality and the like, and has the advantages of good reliability and high implementation efficiency.

Owner:ZHEJIANG UNIV OF TECH

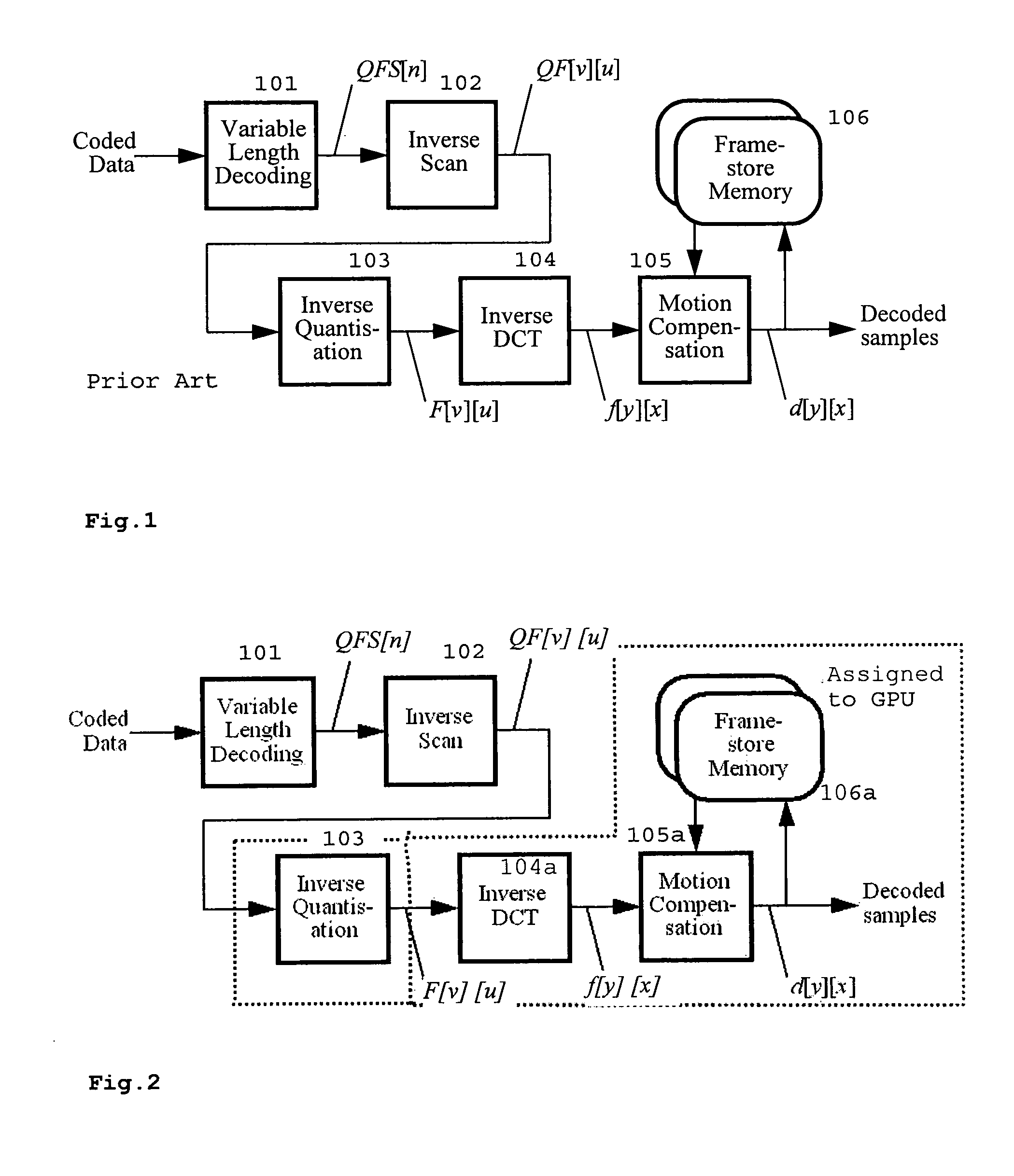

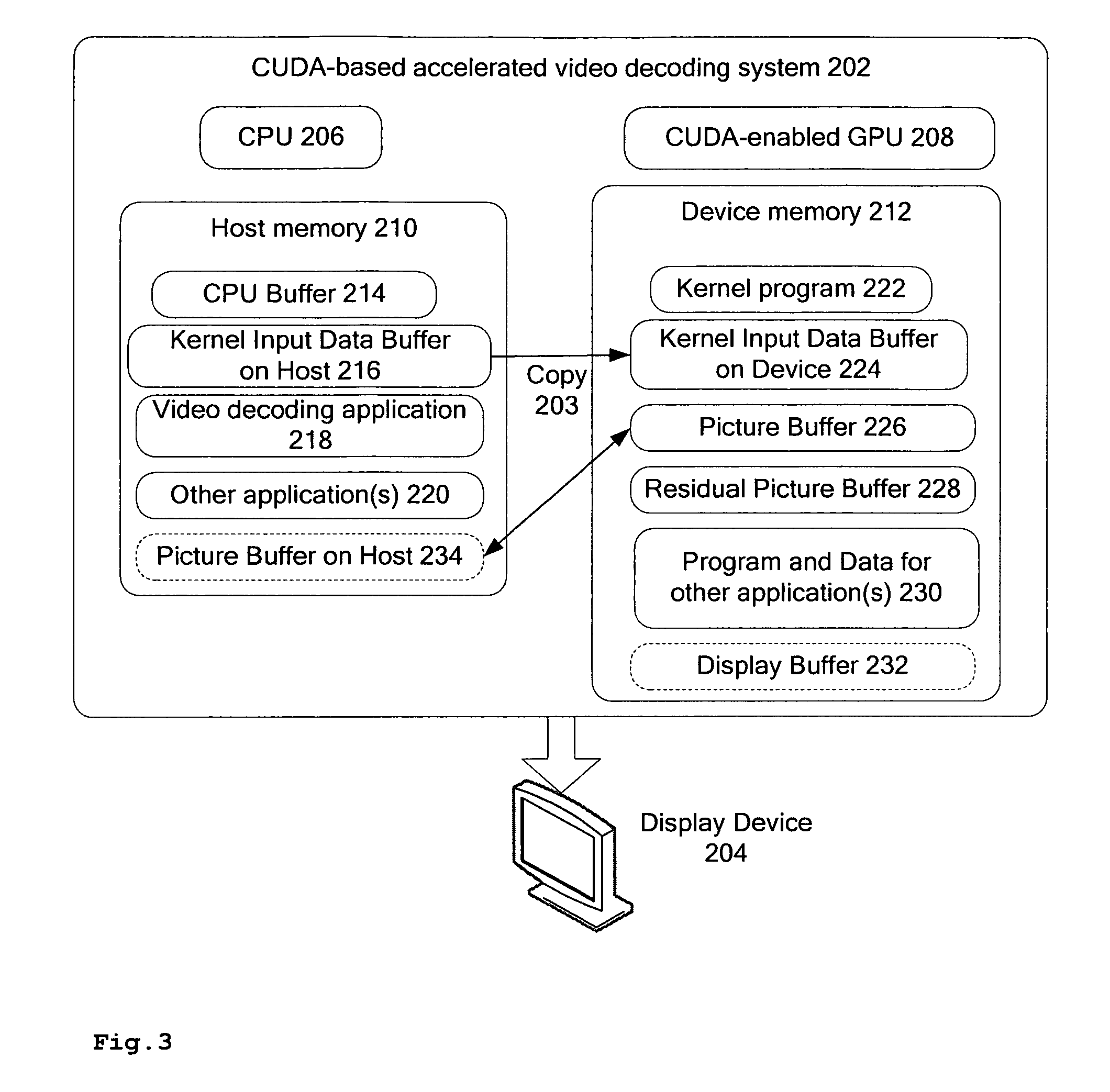

Method for video decoding supported by graphics processing unit

Inactive · US20100135418A1 · Picture reproducers using cathode ray tubes · Picture reproducers with optical-mechanical scanning · Graphics · Data transport

A method for utilizing a CUDA based GPU to accelerate a complex, sequential task such as video decoding, comprises decoding on a CPU headers and macroblocks of encoded video, performing inverse quantization (on CPU or GPU), transferring the picture data to GPU, where it is stored in a global buffer, and then on the GPU performing inverse waveform transforming of the inverse quantized data, performing motion compensation, buffering the reconstructed picture data in a GPU global buffer, determining if the decoded picture data are used as reference for decoding a further picture, and if so, copying the decoded picture data from the GPU global buffer to a GPU texture buffer. Advantages are that the data communication between CPU and GPU is minimized, the workload of CPU and GPU is balanced and the modules off-loaded to GPU can be efficiently realized since they are data-parallel and compute-intensive.

Owner:INTERDIGITAL VC HLDG INC

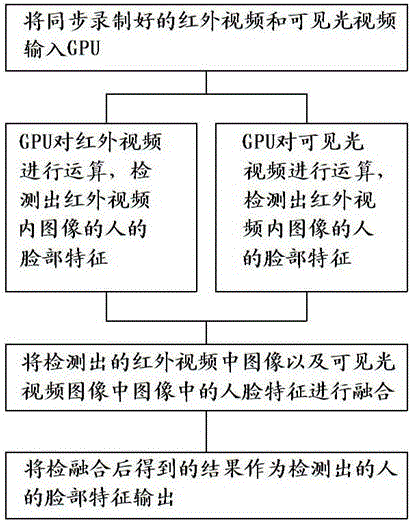

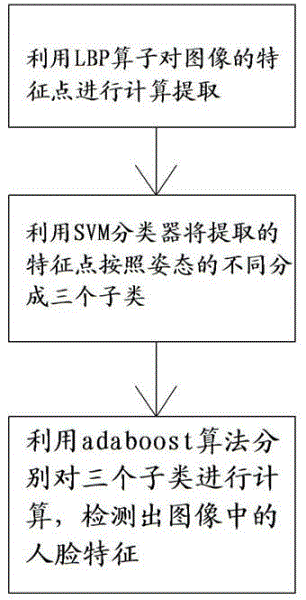

Multispectral face detection method based on graphics processing unit (GPU)

Inactive · CN102622589A · Break the limitation of only being able to detect positive faces · Character and pattern recognition · Face detection · Svm classifier

The invention discloses a multispectral face detection method based on a graphics processing unit (GPU). A GPU based on the compute unified device architecture (CUDA) processes an infrared light video and a visible light video that are recorded synchronously, so that features of a face are detected in the infrared light image and in the visible light image respectively; the infrared detection result and the visible light detection result are then combined synchronously, and the combined result is output as the face feature of a person. Because the method combines face detection results from the infrared light image and the visible light image, detection is not influenced by lighting, the detected face image is an accurate visible light image, and a face can be detected in a harsh environment. During detection, a support vector machine (SVM) classifier is constructed to classify face poses; based on the output of this pose classifier, face detection using an AdaBoost detection algorithm is performed on each pose subtype of the infrared images. With this technique, faces with various poses in the infrared images can be detected, which removes the limitation that only frontal faces in infrared images can be detected.

Owner:陈遇春

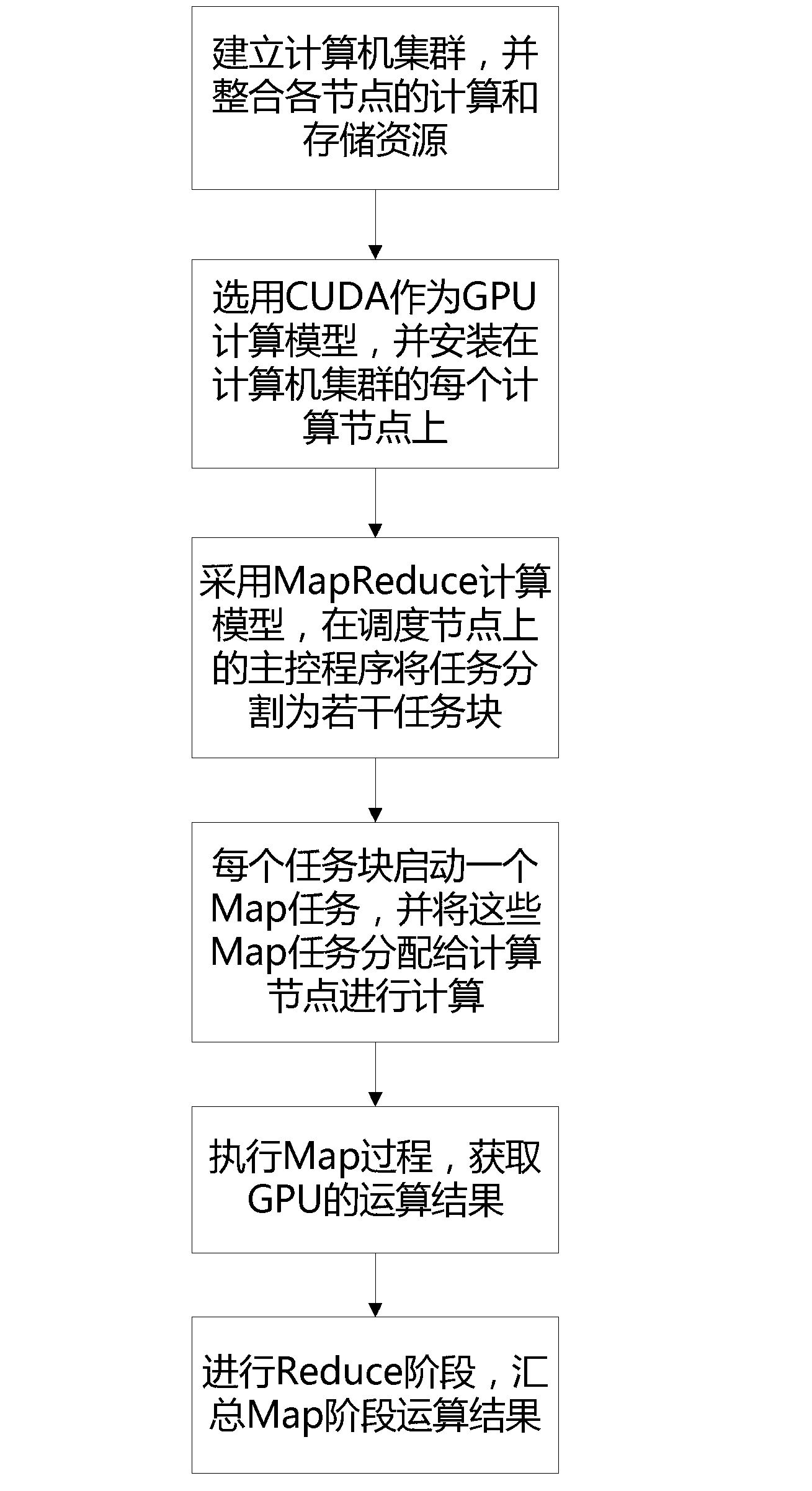

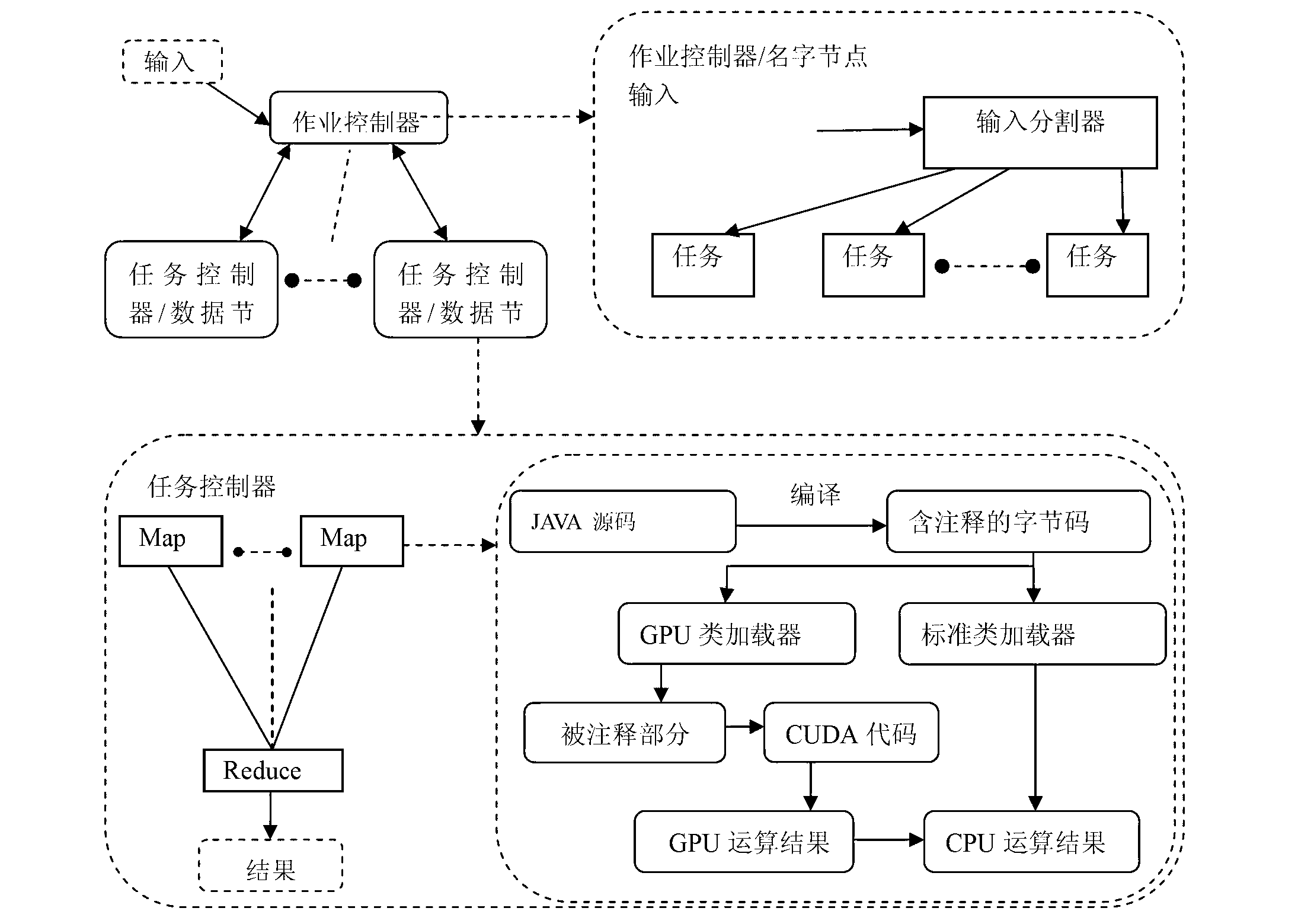

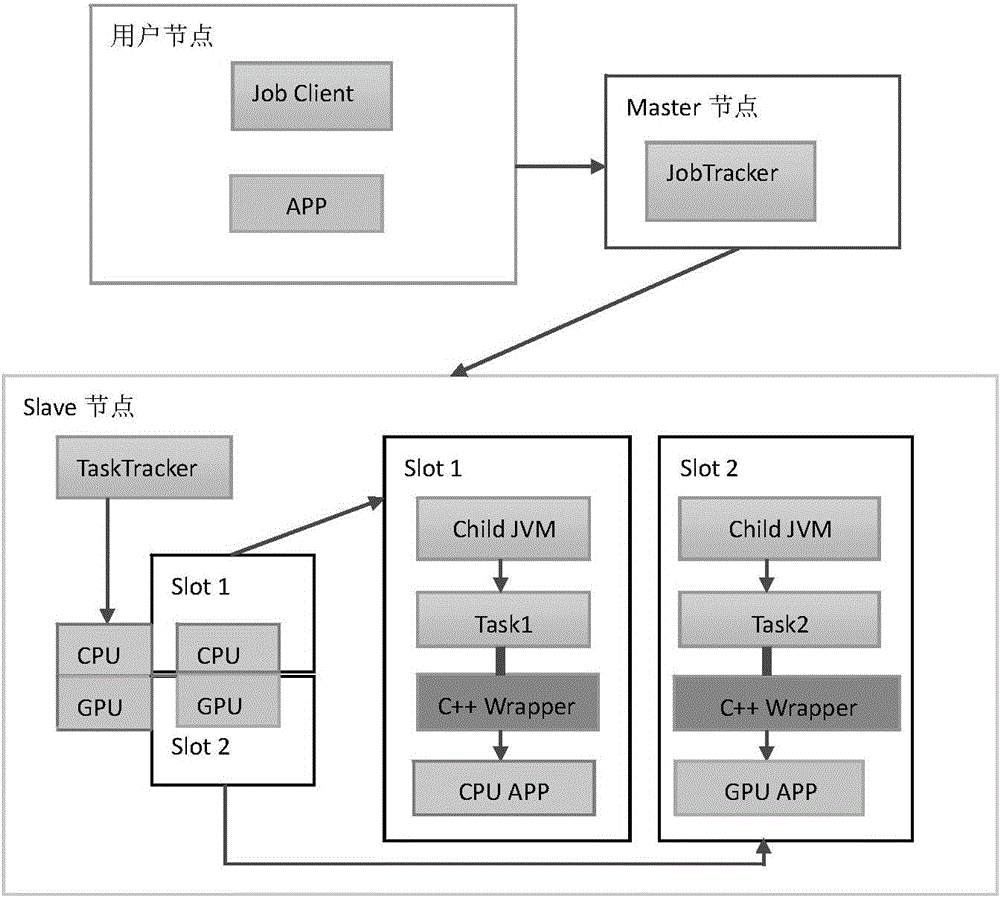

CPU/GPU (Central Processing Unit/ Graphic Processing Unit) cooperative processing method oriented to mass data high-performance computation

Inactive · CN102708088A · Realize unified scheduling · Realize comprehensive utilization · Concurrent instruction execution · Multiple digital computer combinations · Computer cluster · Source code

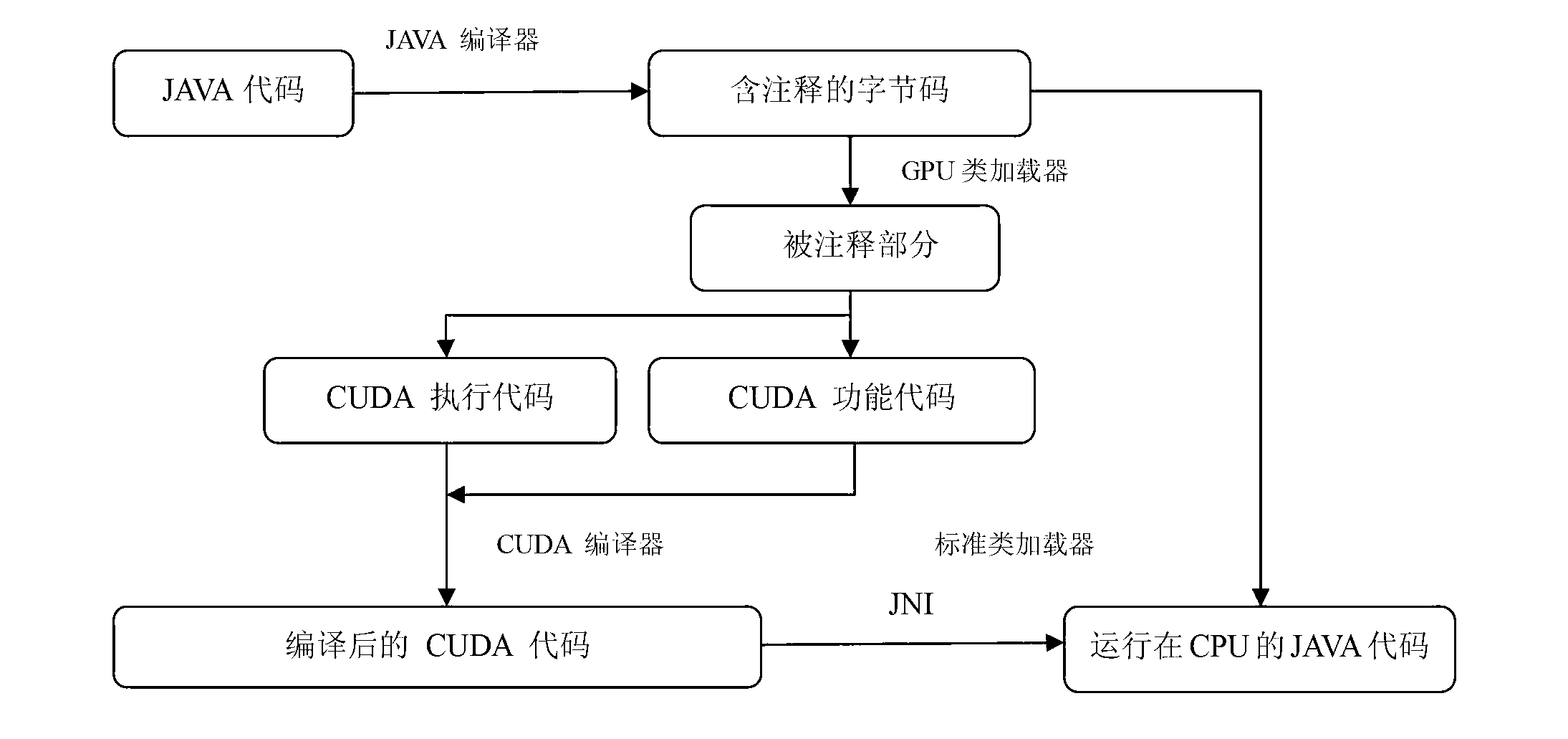

The invention provides a CPU/GPU (Central Processing Unit/Graphics Processing Unit) cooperative processing method oriented to mass data high-performance computation, which addresses the low operating efficiency of mass data computation. A set of Java comment code conventions is designed and a computer cluster composed of multiple computers is built; an improved Hadoop platform is deployed in the cluster, and the Java comment code conventions and a GPU class loader are added to the improved platform. A version of CUDA (Compute Unified Device Architecture) is installed on each computing node, so that when programming, the user can conveniently use the GPU computation resource in the Map function of MapReduce through the comment code. The method realizes unified scheduling and utilization of CPU and GPU computing power on the computer cluster, so that applications that are both data-intensive and computation-intensive can be run efficiently, and the resulting source code is portable and convenient for the programmer to develop.

Owner:BEIJING INSTITUTE OF TECHNOLOGY

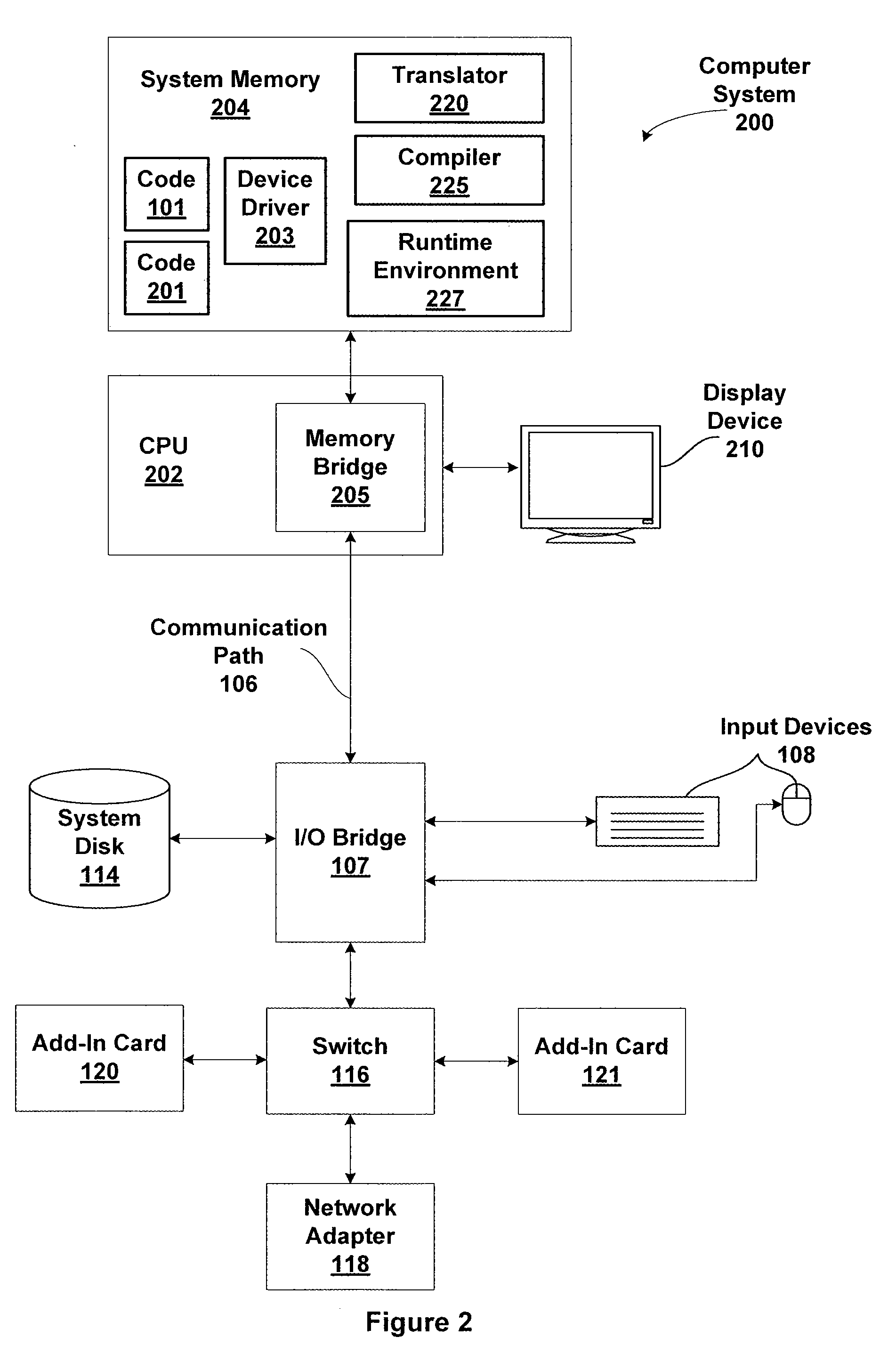

Partitioning CUDA code for execution by a general purpose processor

Active · US20090259996A1 · Software engineering · Specific program execution arrangements · Graphics · General purpose

One embodiment of the present invention sets forth a technique for translating application programs written using a parallel programming model for execution on a multi-core graphics processing unit (GPU) so that they can be executed by a general purpose central processing unit (CPU). Portions of the application program that rely on specific features of the multi-core GPU are converted by a translator for execution by a general purpose CPU. The application program is partitioned into regions of synchronization-independent instructions. The instructions are classified as convergent or divergent, and divergent memory references that are shared between regions are replicated. Thread loops are inserted to ensure correct sharing of memory between various threads during execution by the general purpose CPU.

Owner:NVIDIA CORP

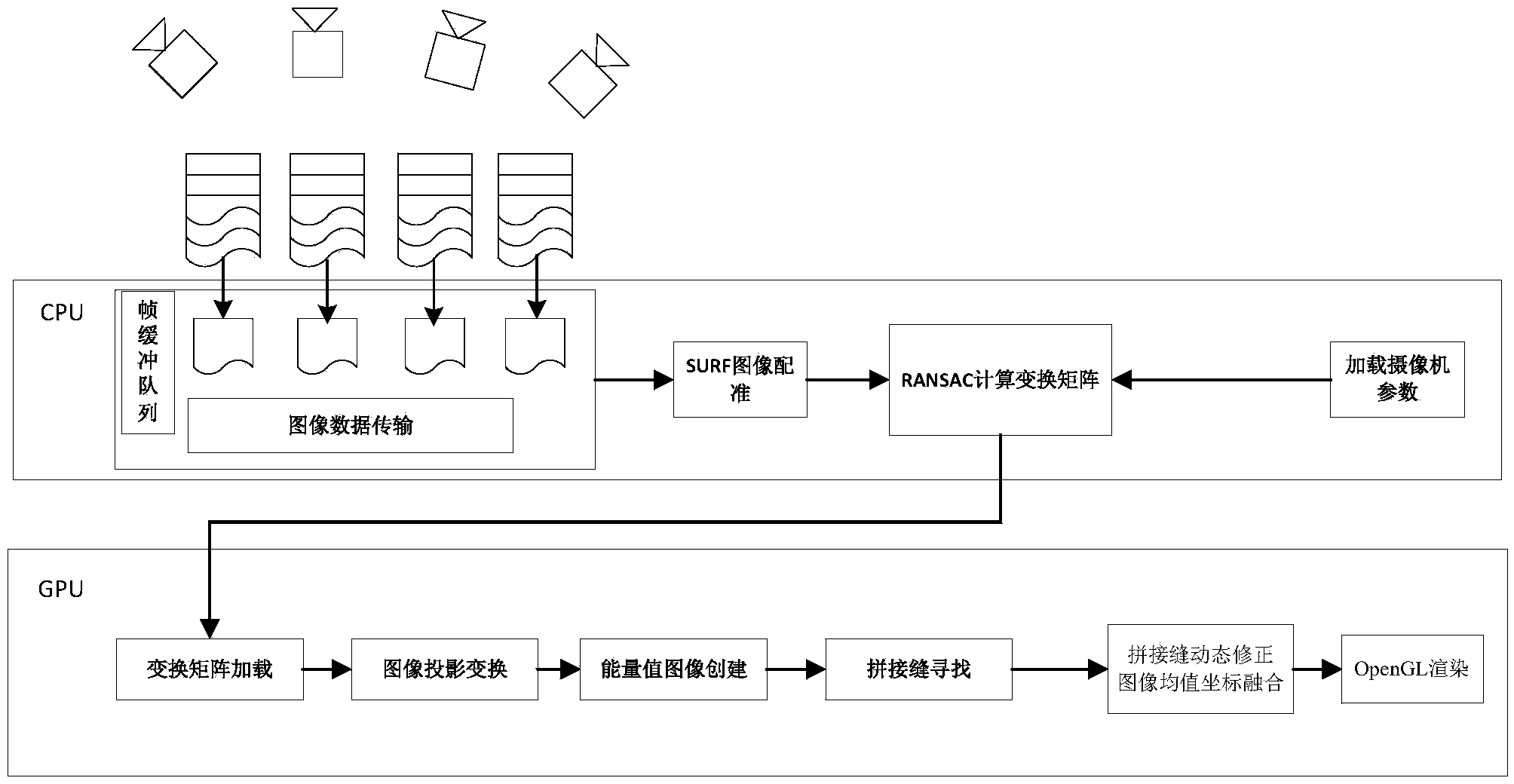

Multi-video real-time panoramic fusion splicing method based on CUDA

Inactive · CN103997609A · Improve monitoring effect · Image enhancement · Television system details · Ultrahigh resolution · Image resolution

The invention discloses a multi-video real-time panoramic fusion splicing method based on a CUDA. The splicing method includes the step of system initialization and the step of real-time video frame fusion, wherein the step of system initialization is executed at the CPU end of the CUDA, and the step of real-time video frame fusion is executed at the GPU end; according to CUDA-based stream processing modes S21, S22, S23 and S24, four concurrent processed execution streams are set up at the GPU end, and S21, S22, S23 and S24 are deployed to corresponding stream processing sequences. Compared with the prior art, the multi-video and real-time panoramic fusion splicing method has the following advantages that multi-video real-time panoramic videos without ghost images or color brightness difference are achieved, the ultrahigh-resolution overall monitoring effect on large-scale scenes such as an airport, a garden and a square is remarkable, and the application prospect is wide.

Owner:WISESOFT CO LTD +1

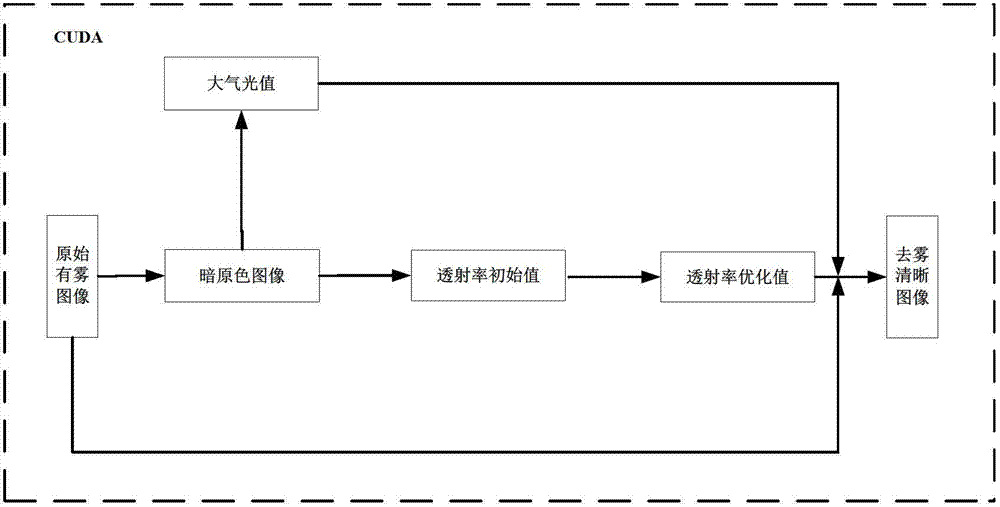

Real-time image defogging method based on CUDA (Compute Unified Device Architecture)

Inactive · CN103049890A · Good and fast real-time fog image restoration effect · Image enhancement · Working environment · Filter algorithm

The invention relates to the fields of computer application technology and computer vision, and in particular to a real-time image defogging method based on CUDA (Compute Unified Device Architecture). The method comprises the following steps: creating a collaborative work environment for a CPU (Central Processing Unit) and a GPU (Graphics Processing Unit) by using CUDA; inputting an original foggy image and obtaining its dark channel image as well as the atmospheric light value of the dark channel image; obtaining an initial transmissivity value of the original foggy image according to the dark channel prior, and obtaining the optimized transmissivity by using a guided filtering algorithm; and determining a defogged restored image from the original foggy image, the transmissivity distribution and the atmospheric light value in an atmospheric scattering model. The method establishes a programming model in which the CPU and the GPU work cooperatively, combining the advantages of both; the atmospheric light value and the transmissivity distribution are estimated by using the dark channel prior and the atmospheric scattering model, so that a good and fast restoration of real-time fog-degraded images is achieved.

Owner:WISESOFT CO LTD +1
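The defogging steps in the entry above (dark channel, atmospheric light, transmissivity, restoration through the atmospheric scattering model) can be sketched on the CPU as follows. This is a minimal illustration, not the patented method: the guided filtering refinement is omitted, the atmospheric light is simply taken from the pixel with the largest dark-channel value, and the parameters `omega`, `t0` and `radius` are assumed values. Images are nested lists of RGB tuples in the range 0 to 1.

```python
def dark_channel(img, radius=1):
    """Per-pixel minimum over colour channels and a (2r+1) x (2r+1) patch."""
    h, w = len(img), len(img[0])
    mins = [[min(px) for px in row] for row in img]
    return [
        [
            min(
                mins[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            )
            for x in range(w)
        ]
        for y in range(h)
    ]


def defog(img, omega=0.95, t0=0.1, radius=1):
    """Restore J from I = J*t + A*(1 - t), with t estimated from the dark channel."""
    h, w = len(img), len(img[0])
    dark = dark_channel(img, radius)
    # Assumption: atmospheric light A is the colour of the haziest pixel.
    ay, ax = max(
        ((y, x) for y in range(h) for x in range(w)),
        key=lambda p: dark[p[0]][p[1]],
    )
    A = img[ay][ax]
    normalised = [
        [tuple(c / max(a, 1e-6) for c, a in zip(px, A)) for px in row]
        for row in img
    ]
    dark_norm = dark_channel(normalised, radius)
    restored = []
    for y in range(h):
        row = []
        for x in range(w):
            t = max(1.0 - omega * dark_norm[y][x], t0)  # clamp to avoid blow-up
            row.append(tuple((c - a) / t + a for c, a in zip(img[y][x], A)))
        restored.append(row)
    return restored
```

Every pixel is computed independently of the others once the dark channel is known, which is why this kind of pipeline maps naturally onto one GPU thread per pixel.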

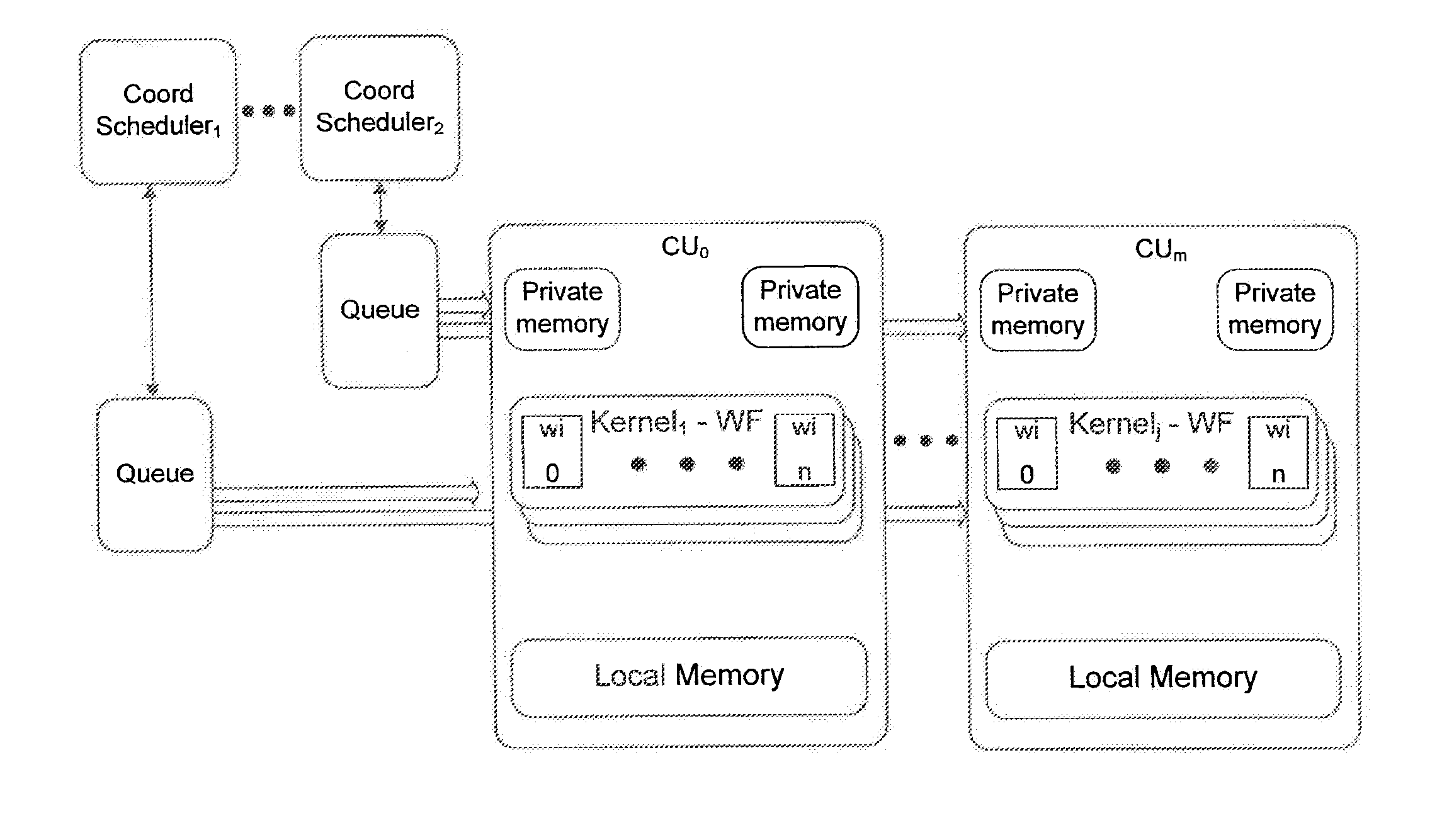

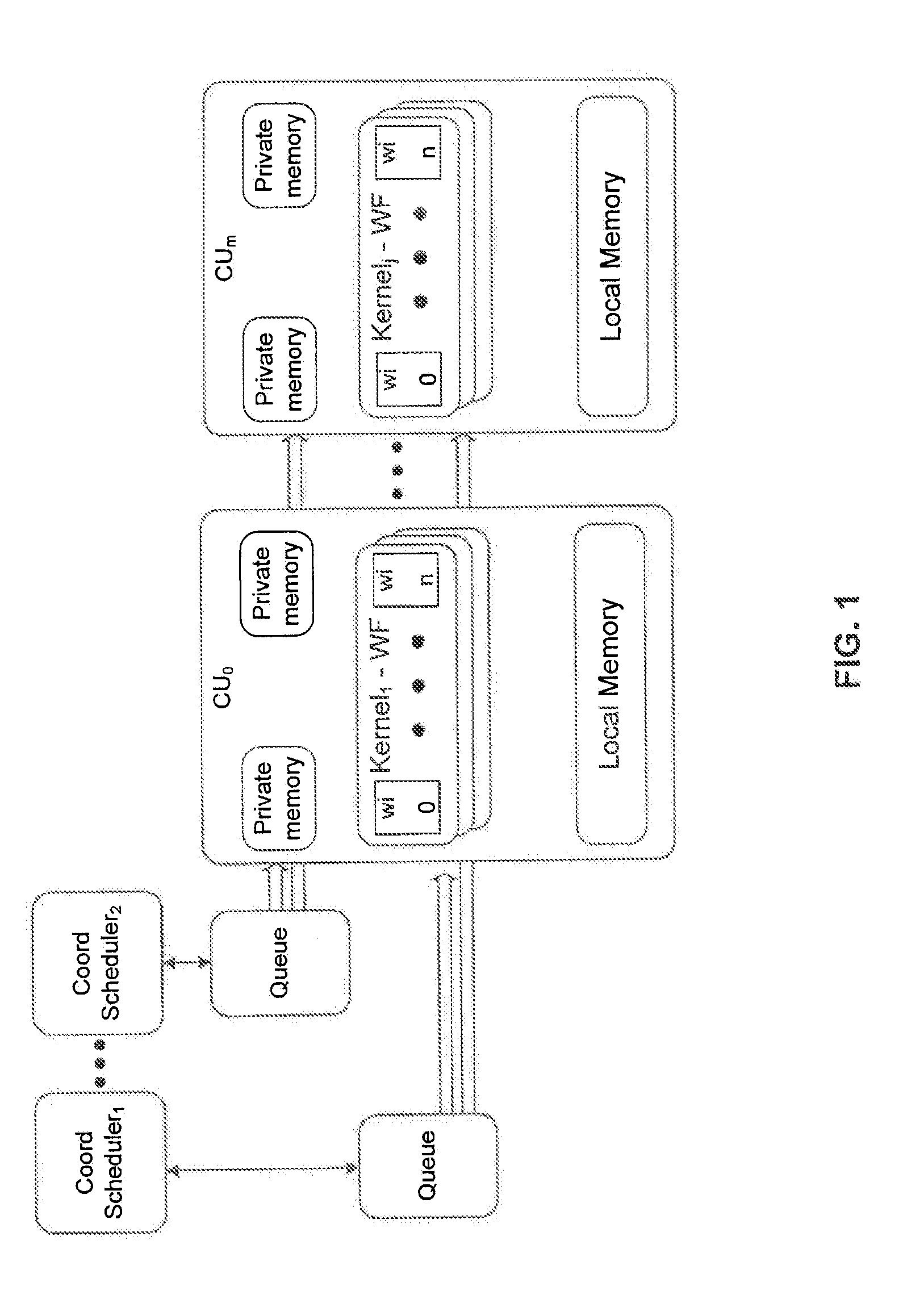

Heterogeneous Parallel Primitives Programming Model

Inactive · US20130332937A1 · Resource allocation · Memory systems · Symmetric multiprocessor system · Standardization

With the success of programming models such as OpenCL and CUDA, heterogeneous computing platforms are becoming mainstream. However, these heterogeneous systems are low-level, not composable, and their behavior is often implementation defined even for standardized programming models. In contrast, the method and system embodiments for the heterogeneous parallel primitives (HPP) programming model disclosed herein provide a flexible and composable programming platform that guarantees behavior even in the case of developing high-performance code.

Owner:ADVANCED MICRO DEVICES INC

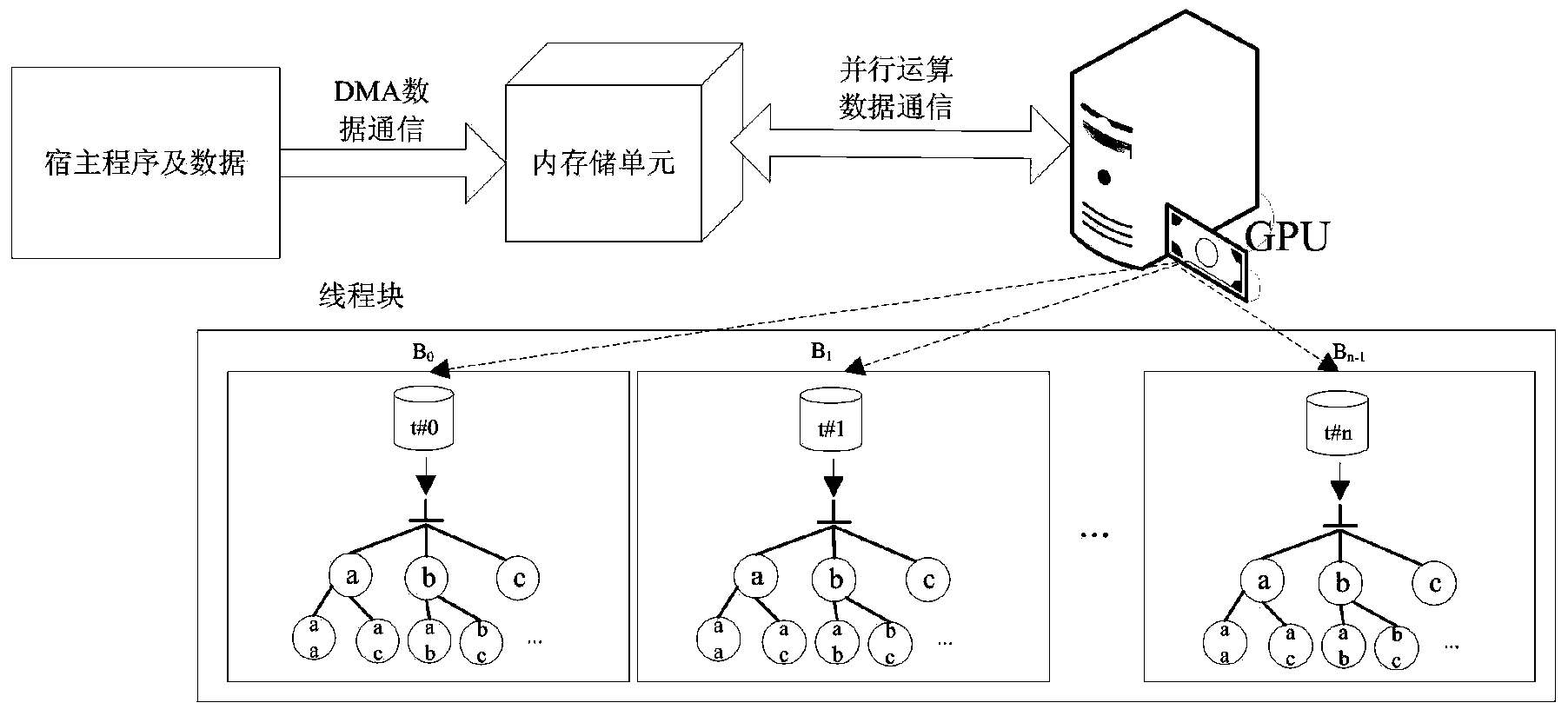

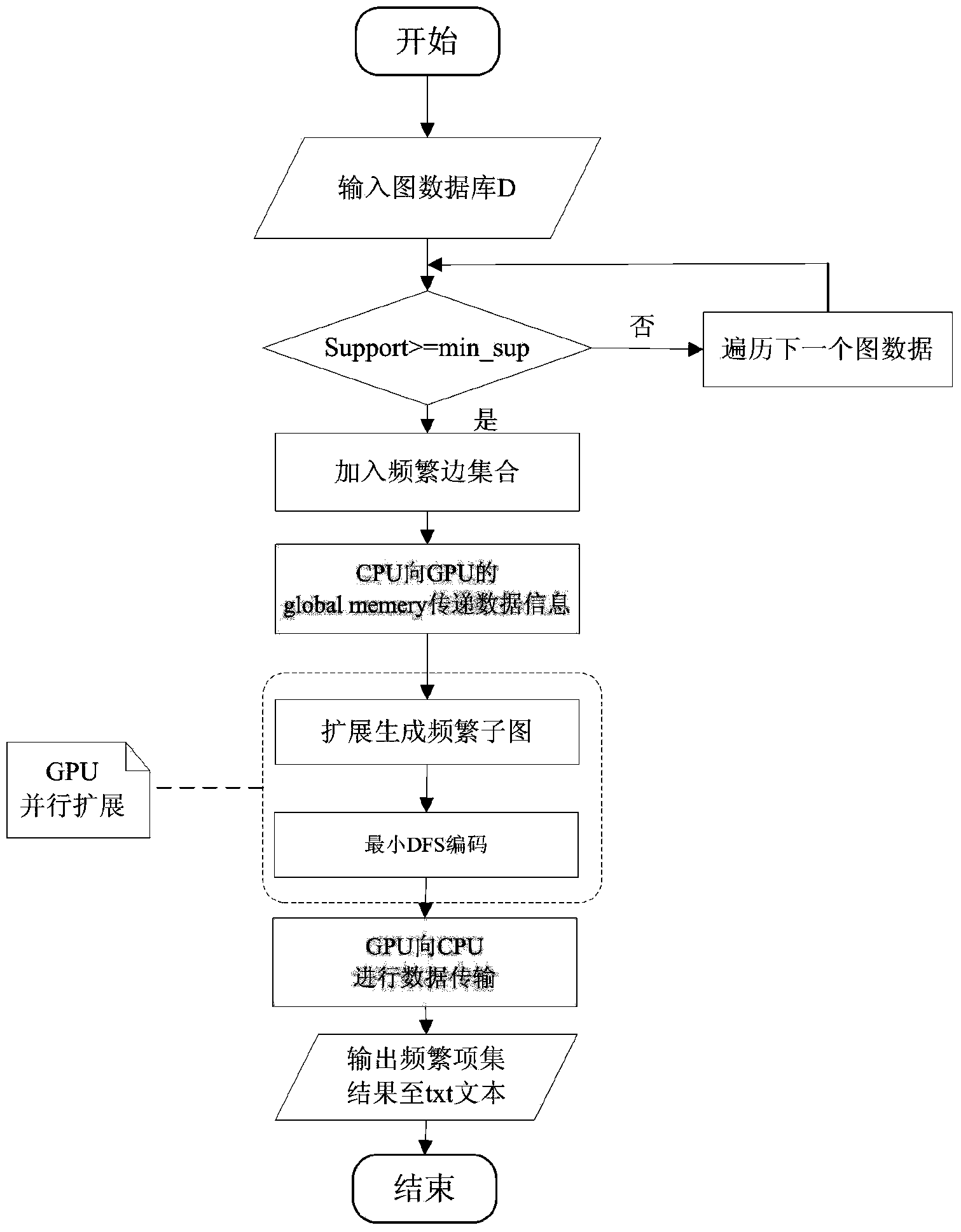

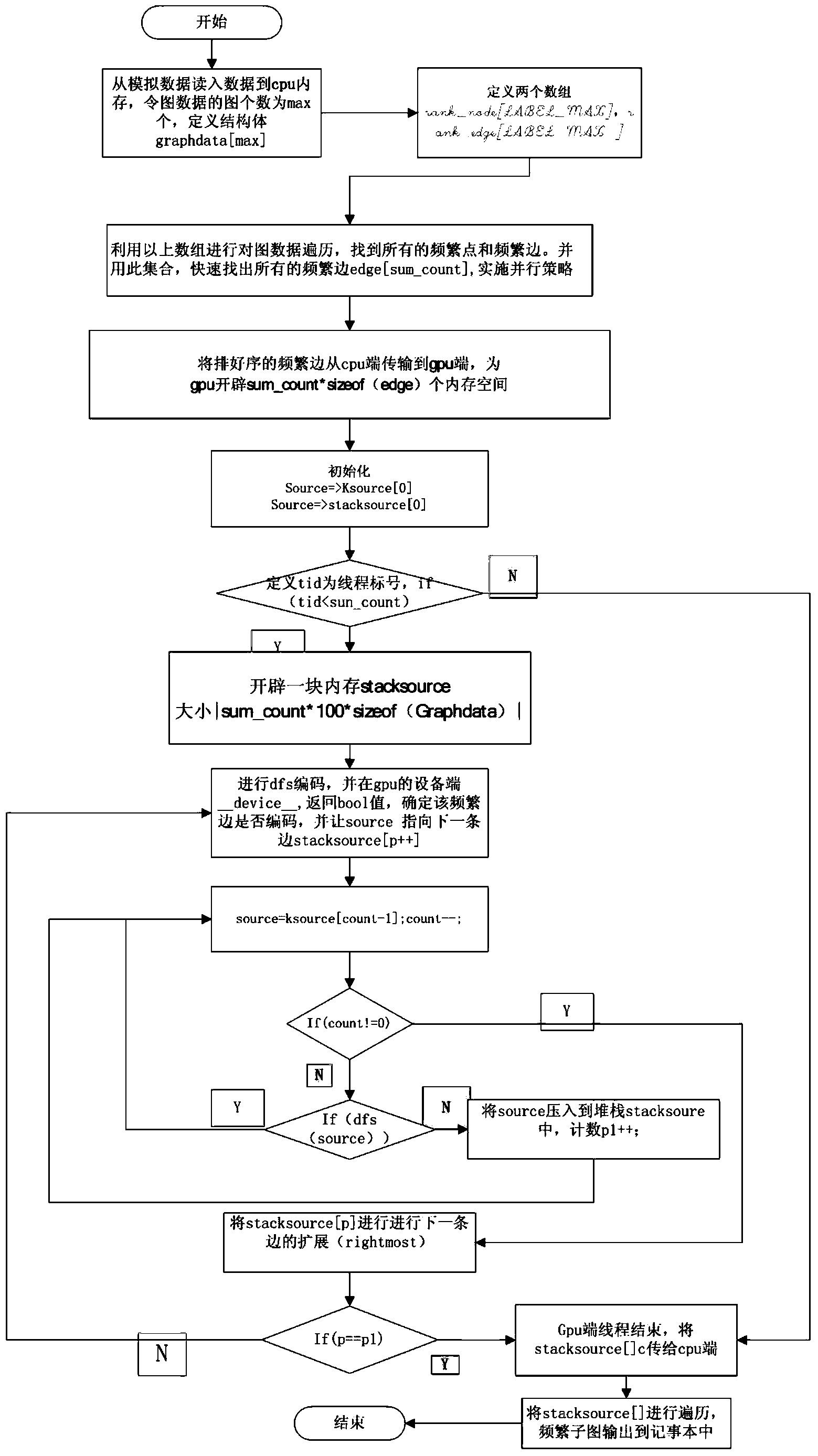

Frequent subgraph excavating method based on graphic processor parallel computing

Active · CN103559016A · Collaborative Computing Realization · Improve data processing capabilities · Resource allocation · Concurrent instruction execution · Graphics · Data set

The invention discloses a frequent subgraph mining method based on graphics processor parallel computing. The method includes dividing the work into a plurality of thread blocks on a graphics processing unit (GPU), evenly distributing frequent edges to different threads for parallel processing, obtaining different extension subgraphs through rightmost extension, returning the graph mining data set obtained by each thread to its thread block, and finally having the GPU communicate with memory and return the result to a central processing unit (CPU) for processing. The graph mining method is feasible and effective: graph mining performance is optimized in a data-intensive environment, graph mining efficiency is improved, data for scientific research analysis, market research and the like is provided quickly and reliably, and a parallel mining method on the compute unified device architecture (CUDA) is achieved.

Owner:JIANGXI UNIV OF SCI & TECH

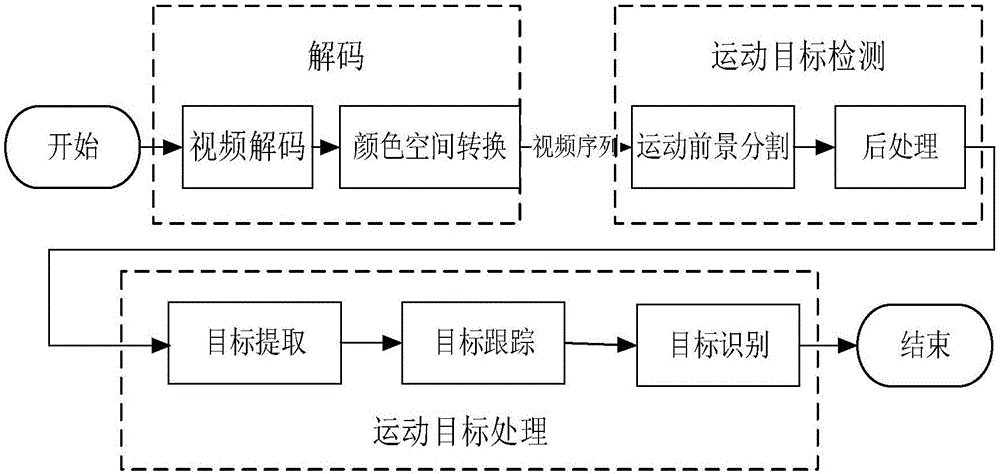

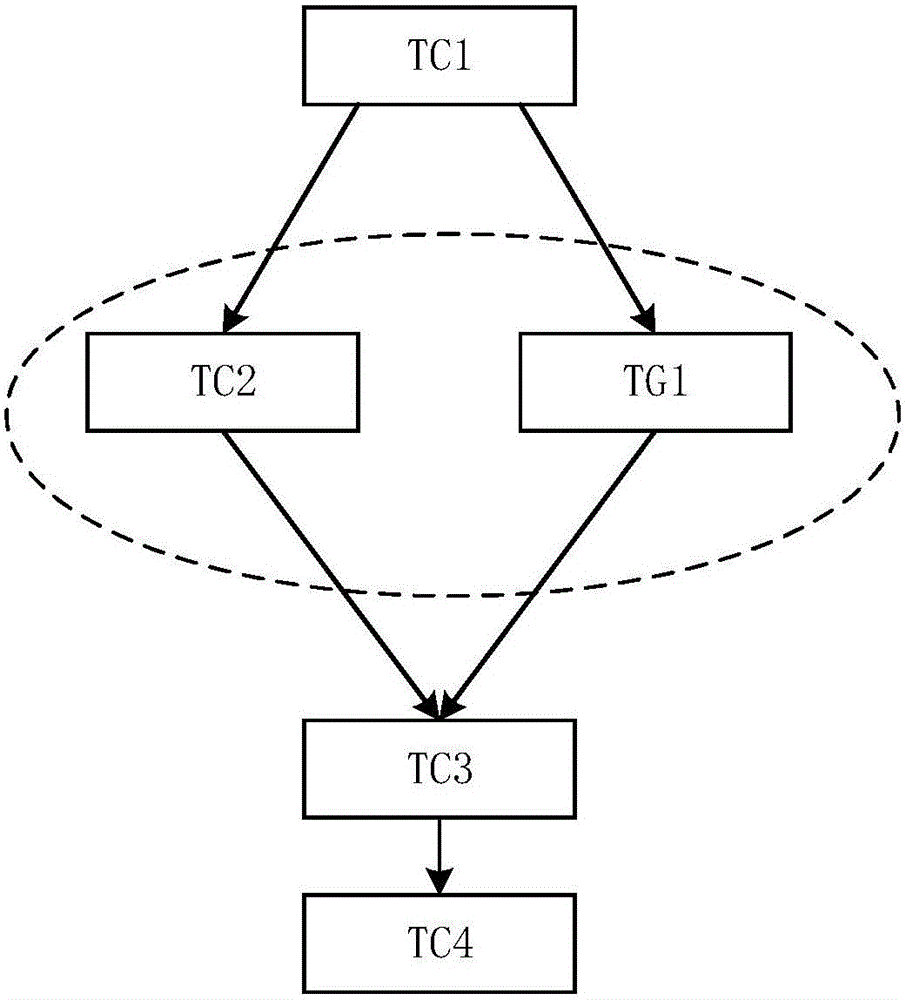

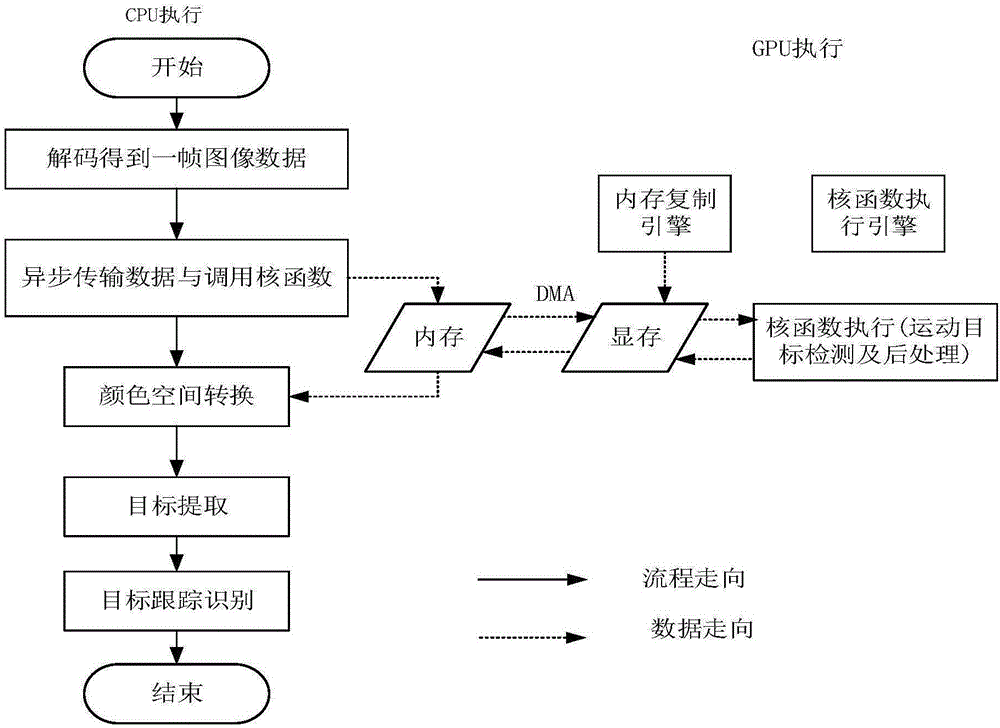

Video analysis and accelerating method based on thread level flow line

Active · CN106358003A · Full use of computing power · Reduce communication overhead · Television system details · Color television details · Adjacent level · Computational resource

The invention discloses a video analysis and accelerating method. The method comprises the following steps: dividing a video frame processing task into four levels of subtasks in sequential order, and allocating the subtasks to the GPU and the CPU for processing; implementing each level of subtask as a thread that passes its data to the thread for the next subtask after processing, with all the threads executing concurrently; pausing and waiting when no new task exists or when the thread for the next level of subtask has not finished processing; using a first-in first-out (FIFO) buffer queue to pass data between the threads for two adjacent levels of subtasks; and realizing asynchronous, cooperative concurrency of CPU and GPU subtasks through asynchronous invocation of CUDA functions for two subtasks that do not depend on each other. The method makes effective use of the various computing resources in a heterogeneous system, establishes a reasonable task scheduling mechanism, and reduces the communication overhead between different processors, so that the computing power of each computing resource is fully exploited and system efficiency is improved.

Owner:HUAZHONG UNIV OF SCI & TECH
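The thread-level pipeline in the entry above, with one thread per subtask level and a FIFO buffer between adjacent levels, can be sketched with standard threads and bounded queues. This is an illustrative sketch only: the function names, the `capacity` value and the end-of-stream marker are assumptions, and the CUDA asynchronous calls from the abstract are not modelled.

```python
import queue
import threading

DONE = object()  # end-of-stream marker passed down the pipeline


def run_level(work, inbox, outbox):
    """One pipeline level: wait for input, process it, hand it to the next level."""
    while True:
        item = inbox.get()          # blocks while no new task exists
        if item is DONE:
            outbox.put(DONE)
            return
        outbox.put(work(item))      # blocks while the next level's FIFO is full


def run_pipeline(frames, levels, capacity=2):
    """Push frames through the levels in order and return the final results."""
    # Bounded FIFOs between levels; the last queue is unbounded so that
    # results can be collected after all threads have finished.
    queues = [queue.Queue(maxsize=capacity) for _ in levels] + [queue.Queue()]
    threads = [
        threading.Thread(target=run_level, args=(fn, queues[i], queues[i + 1]))
        for i, fn in enumerate(levels)
    ]
    for t in threads:
        t.start()
    for frame in frames:
        queues[0].put(frame)
    queues[0].put(DONE)
    for t in threads:
        t.join()
    results = []
    while True:
        item = queues[-1].get()
        if item is DONE:
            return results
        results.append(item)
```

Because each level is a single thread reading from a FIFO, frames leave the pipeline in the order they entered, while different frames are being processed at different levels at the same time.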

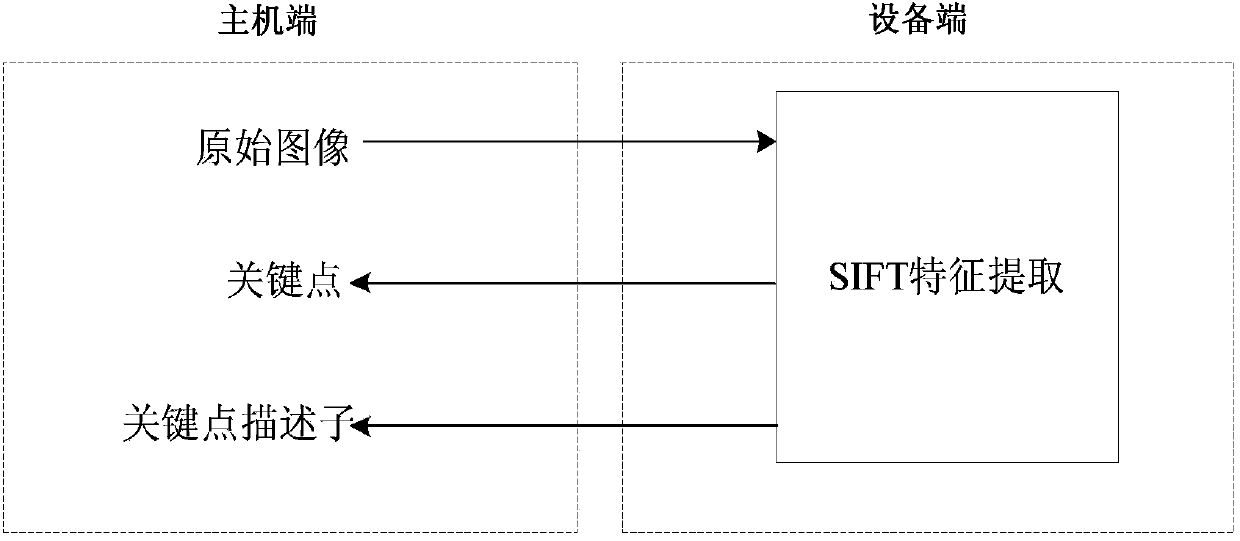

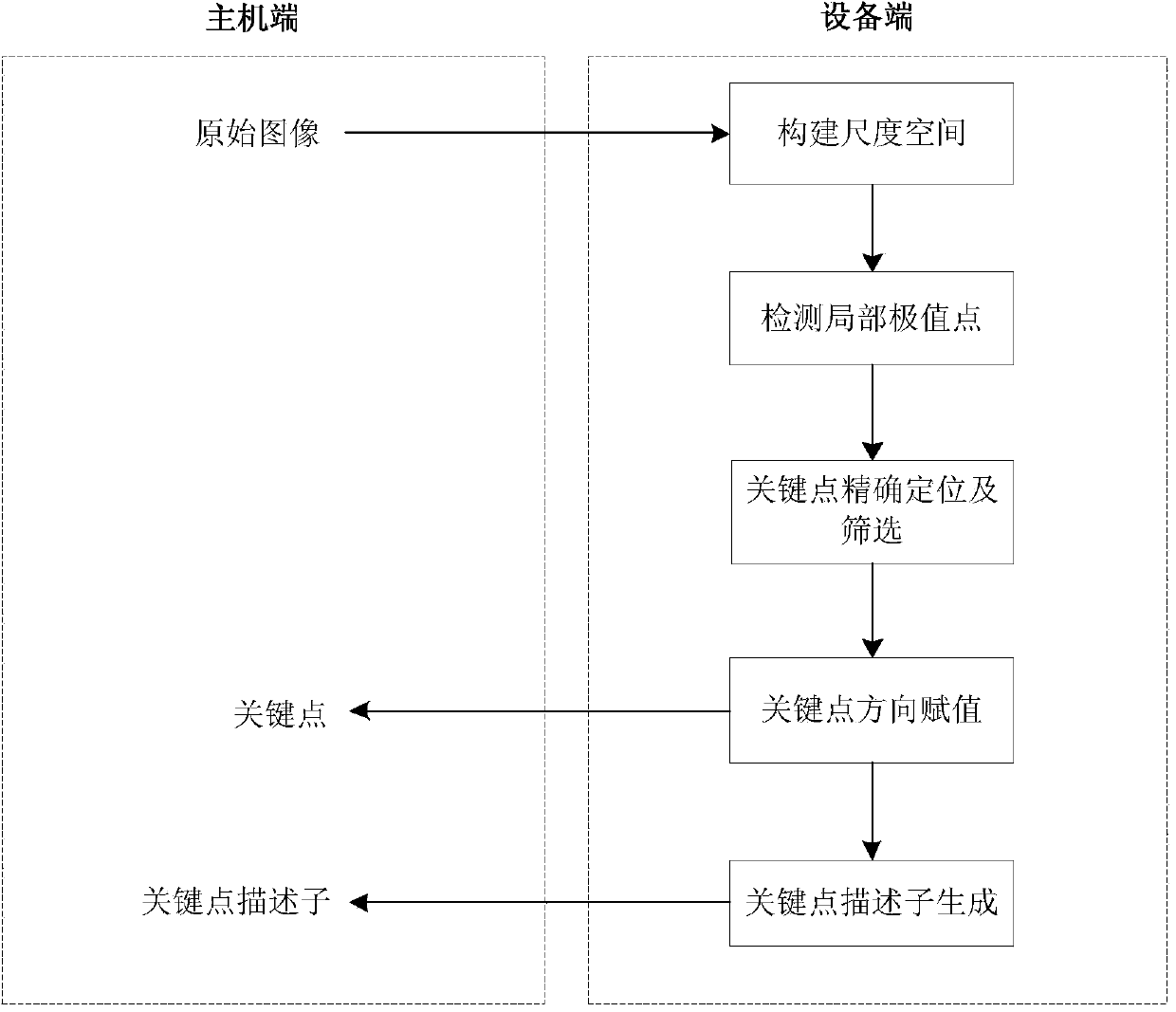

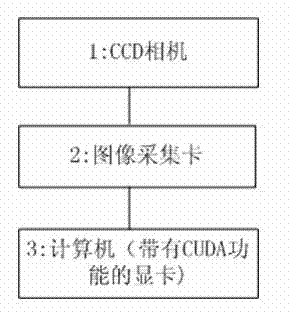

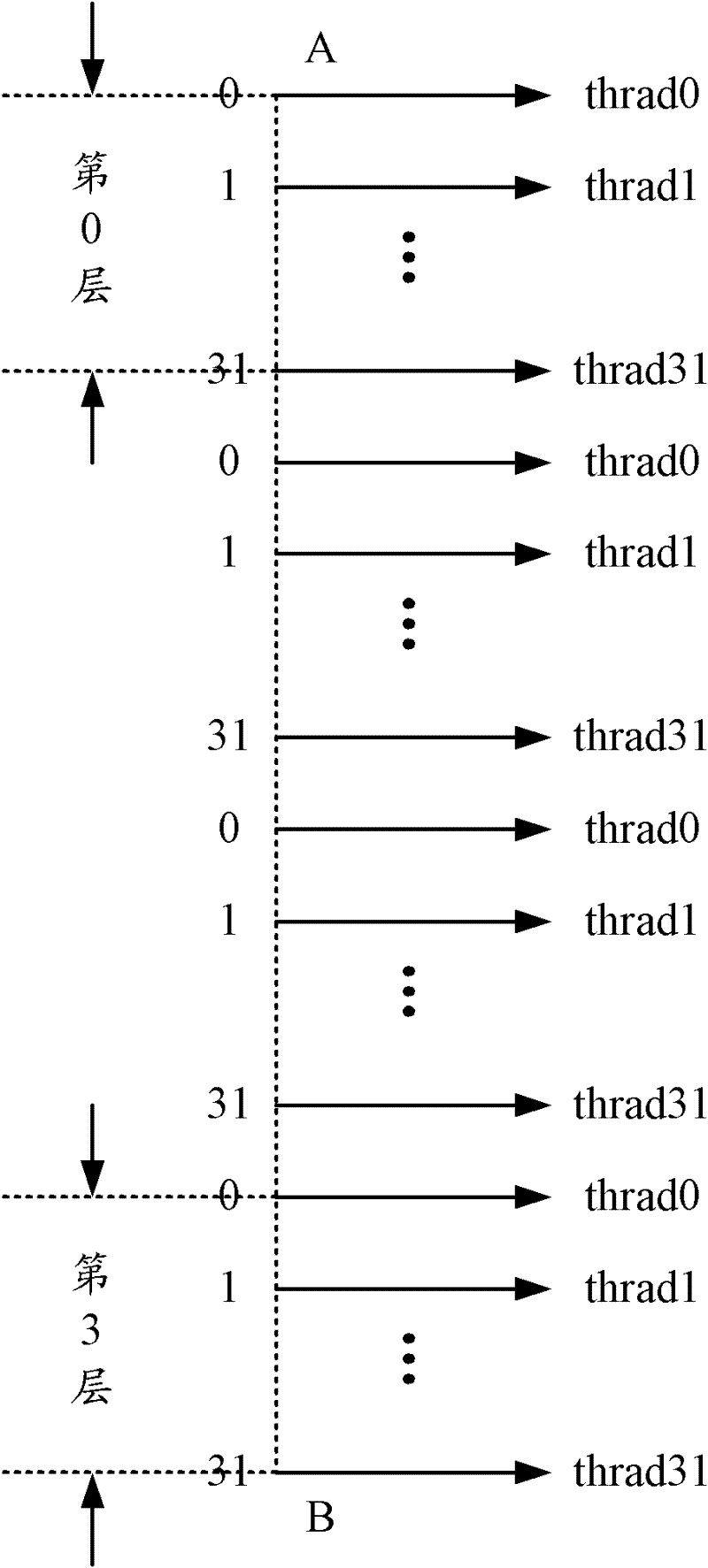

SIFT parallelization system and method based on recursion Gaussian filtering on CUDA platform

Inactive · CN103593850A · Calculation speed · Meet real-time requirements · Image enhancement · Image analysis · Feature extraction · Image transfer

Provided are a SIFT parallelization system and method based on recursive Gaussian filtering on a CUDA platform. The method comprises the following steps: first, original images are transmitted to the GPU end, where a series of Gaussian filtering and downsampling operations establishes a Gaussian pyramid (Gaussian filtering is performed by a recursive Gaussian filter), and adjacent images are then subtracted to obtain a difference-of-Gaussians pyramid; second, an image is loaded with a thread block as the unit, each thread processes one pixel, and the pixel is compared with its 26 adjacent pixels to obtain local extreme points; third, each thread processes one local extreme point, and key points are located and selected; fourth, one thread block calculates the direction of one key point, one thread calculates the direction and the amplitude value of one pixel in the neighbourhood of the key point, the direction and the amplitude value are accumulated into a gradient histogram through the atomic addition provided by CUDA, and information such as the coordinates and directions of the key points is transmitted to the host end according to the key point directions obtained from the gradient histogram; fifth, one thread block calculates one key point descriptor, the result is then transmitted to the host end, and SIFT feature extraction is achieved. The system and method improve the calculating speed of the SIFT algorithm.

Owner:北京航空航天大学深圳研究院
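The second step of the SIFT entry above, comparing each pixel with its 26 neighbours, can be written down compactly. The sketch below assumes a difference-of-Gaussians stack stored as nested lists indexed `dog[scale][row][col]` and a strict comparison; both are illustrative choices, and the function names are hypothetical. On the GPU each call to `is_local_extremum` would correspond to the work of one thread.

```python
def is_local_extremum(dog, s, y, x):
    """True if dog[s][y][x] is strictly above or strictly below all 26 neighbours.

    The 26 neighbours are the 8 surrounding pixels in the same scale plus
    the 9 pixels in each of the two adjacent scales.
    """
    centre = dog[s][y][x]
    neighbours = [
        dog[s + ds][y + dy][x + dx]
        for ds in (-1, 0, 1)
        for dy in (-1, 0, 1)
        for dx in (-1, 0, 1)
        if (ds, dy, dx) != (0, 0, 0)
    ]
    return centre > max(neighbours) or centre < min(neighbours)


def find_extrema(dog):
    """Scan all interior positions of the stack and return extremum coordinates."""
    scales, h, w = len(dog), len(dog[0]), len(dog[0][0])
    return [
        (s, y, x)
        for s in range(1, scales - 1)
        for y in range(1, h - 1)
        for x in range(1, w - 1)
        if is_local_extremum(dog, s, y, x)
    ]
```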

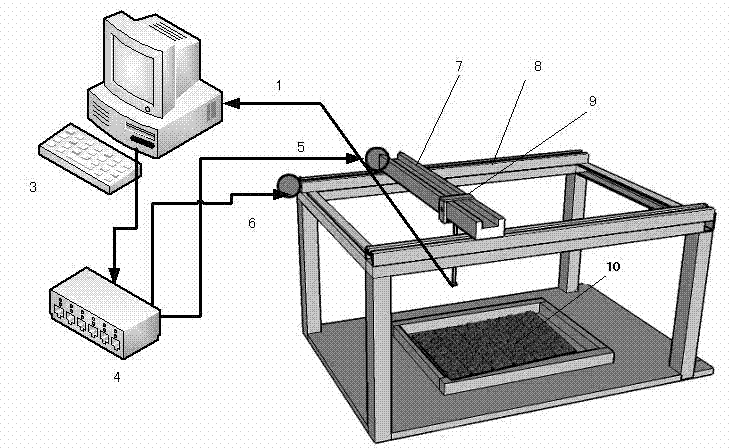

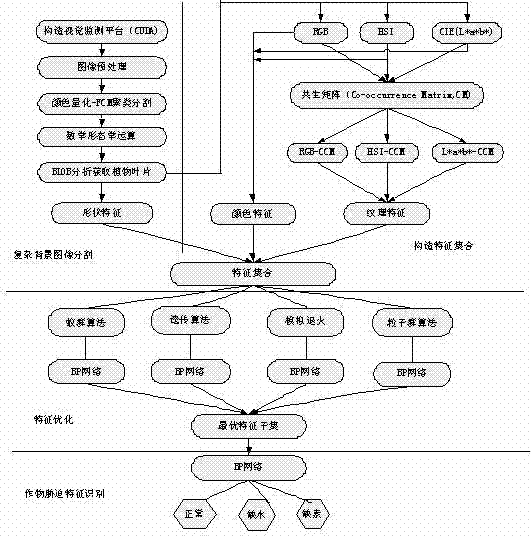

Identification method for stressed state of water fertilizer of greenhouse crop on basis of computer vision technology

Inactive · CN102789579A · Meet real-time requirements · Character and pattern recognition · Neural learning methods · Research Object · Greenhouse crops

The invention relates to an identification method for a stressed state of a water fertilizer of a greenhouse crop on basis of a computer vision technology. According to the identification method provided by the invention, a crop under a greenhouse environment is taken as a research object; a computer vision monitoring platform is constructed; a plant image segmentation method which adapts to changes in natural illumination and to complex scenes is researched; features of morphology, color, texture and the like are extracted from the obtained plant leaf image, and sufficient feature sets are constructed; a heuristic search algorithm, such as a genetic algorithm, a simulated annealing algorithm, an ant colony algorithm, a particle swarm optimization, or the like, is combined with a neural network technique to search for the optimal feature subset; and a BP (Back Propagation) neural network is utilized to identify a stressed characteristic of the crop. A camera is moved by using a horizontal positioning system, so that the plant image is obtained from all directions; the algorithm operation is realized by using a CUDA (Compute Unified Device Architecture) hardware platform, so as to meet the real-time demand on monitoring; and the invention provides a technical method for measuring destructiveness under the stressed state of the water fertilizer of the greenhouse crop and the application prospect is wide.

Owner:TONGJI UNIV

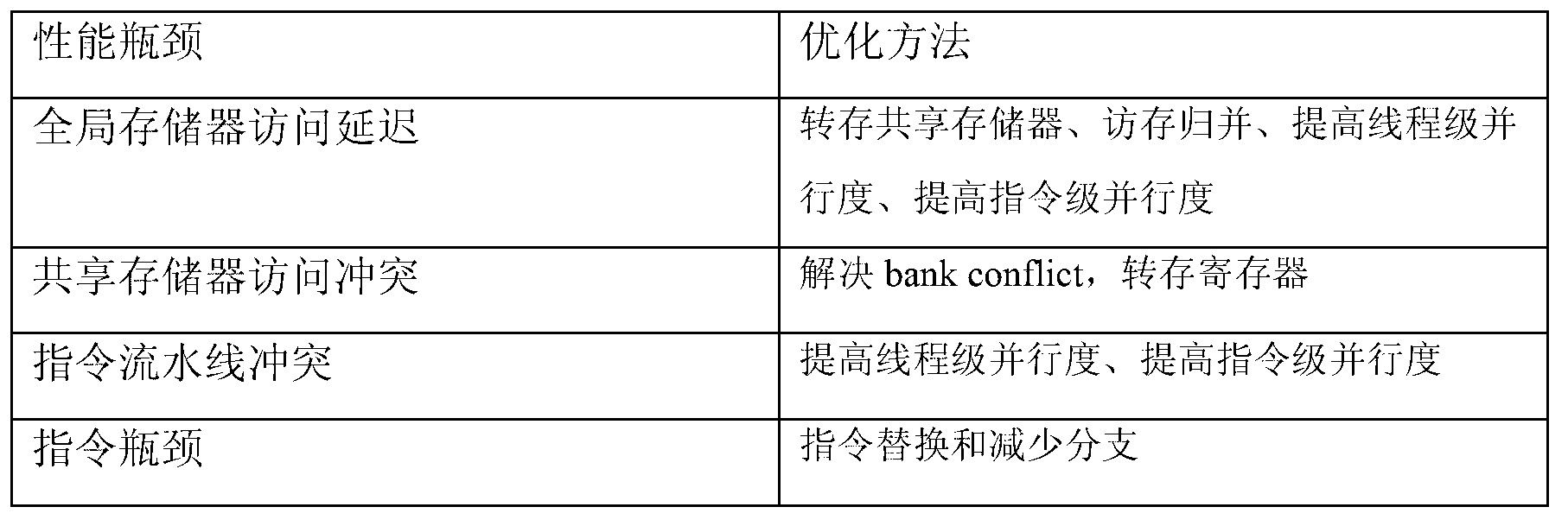

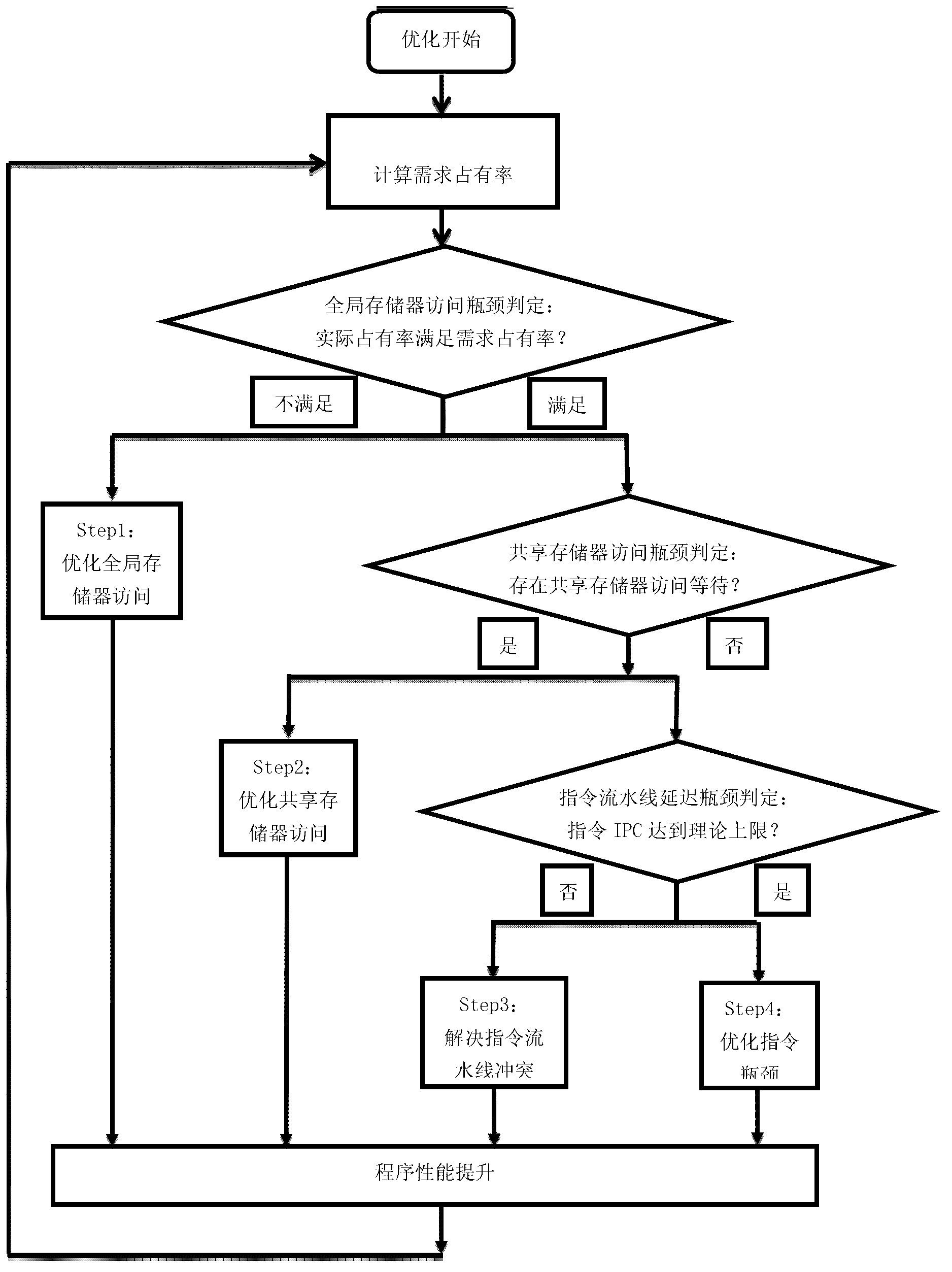

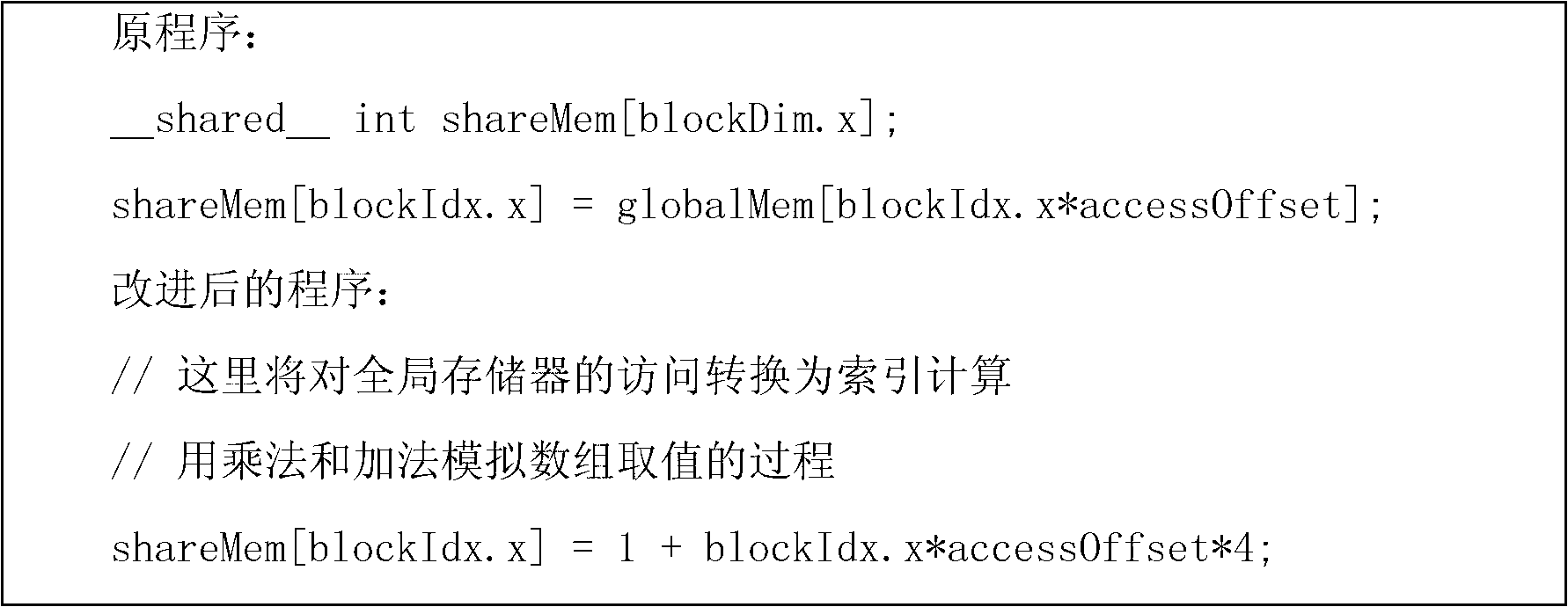

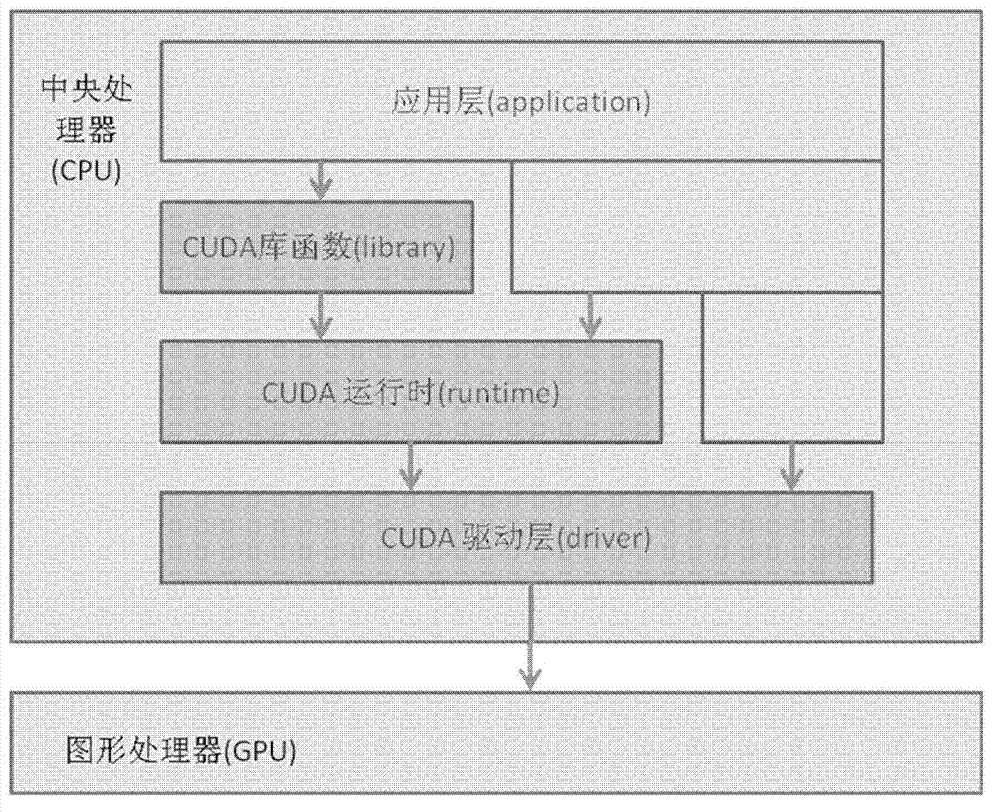

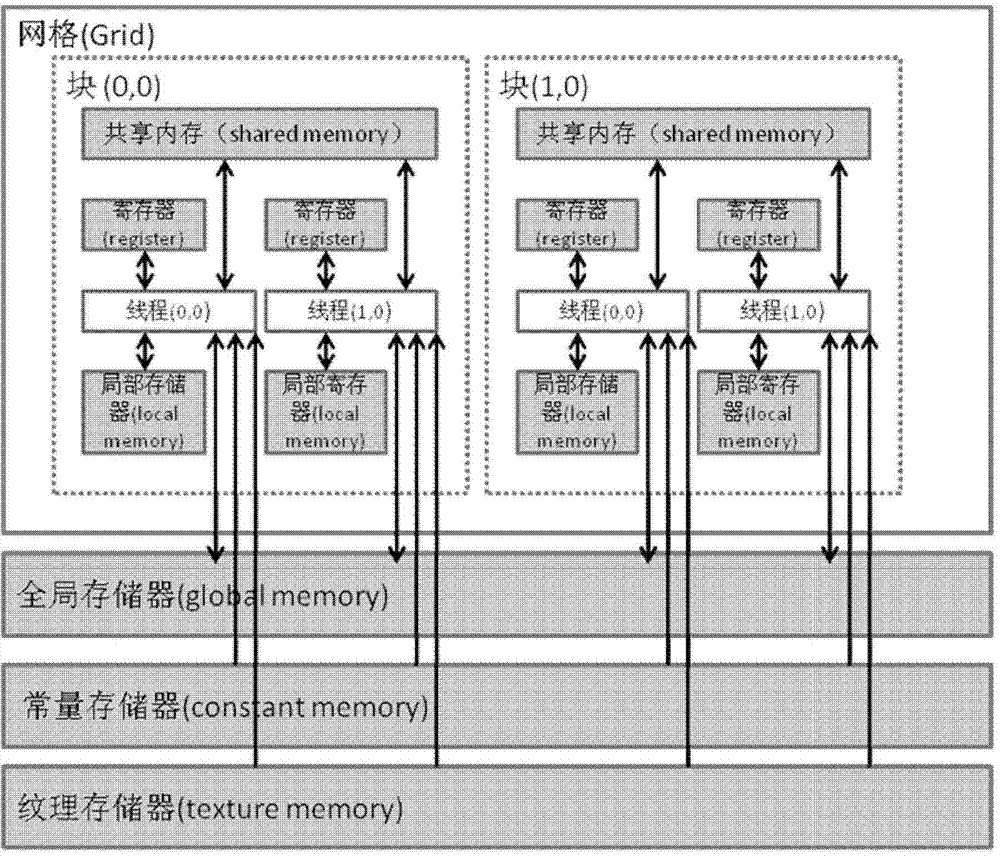

Graphics processing unit (GPU) program optimization method based on compute unified device architecture (CUDA) parallel environment

The invention relates to a graphics processing unit (GPU) program optimization method based on the compute unified device architecture (CUDA) parallel environment. The method defines the performance bottlenecks of a GPU program kernel, which are, ranked by level, global memory access latency, shared memory access conflicts, instruction pipeline conflicts and instruction bottlenecks. A practical criterion for identifying each performance bottleneck and a method for resolving it are provided. Global memory access latency is optimized by moving data to shared memory, coalescing accesses, improving thread-level parallelism and improving instruction-level parallelism. Shared memory access conflicts and instruction pipeline conflicts are optimized by resolving bank conflicts, moving data to registers, improving thread-level parallelism and improving instruction-level parallelism. Instruction bottlenecks are optimized by instruction replacement and branch reduction. The method provides a basis for CUDA programming and optimization, helps a programmer conveniently find the performance bottlenecks in a CUDA program and optimize them efficiently and in a targeted way, and enables the CUDA program to exploit the computing ability of the GPU device to the greatest extent.

Owner:北京微视威信息科技有限公司
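The shared memory bank conflicts mentioned in the entry above come down to simple index arithmetic, which the following sketch models on the CPU. It assumes 32 banks of 4-byte words and a warp of 32 threads, which matches many NVIDIA GPUs but is an assumption here rather than something the entry states; the function names are hypothetical.

```python
from collections import Counter

NUM_BANKS = 32   # assumption: 32 banks, one 4-byte word wide each
WARP_SIZE = 32   # assumption: 32 threads access shared memory together


def conflict_degree(word_indices):
    """Largest number of distinct words in one access that fall into the same bank.

    A value of 1 means no conflict. Threads that read the very same word
    are counted once, since such reads can be served together.
    """
    per_bank = Counter(index % NUM_BANKS for index in set(word_indices))
    return max(per_bank.values())


def column_access(row_width):
    """Word indices touched when thread t reads element [t][0] of a row-major tile."""
    return [t * row_width for t in range(WARP_SIZE)]
```

With a tile that is 32 words wide, `conflict_degree(column_access(32))` is 32, because every thread lands in bank 0. Padding each row by one word, `column_access(33)`, spreads the same accesses over all banks and brings the degree down to 1, which is a common way of resolving this kind of conflict.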

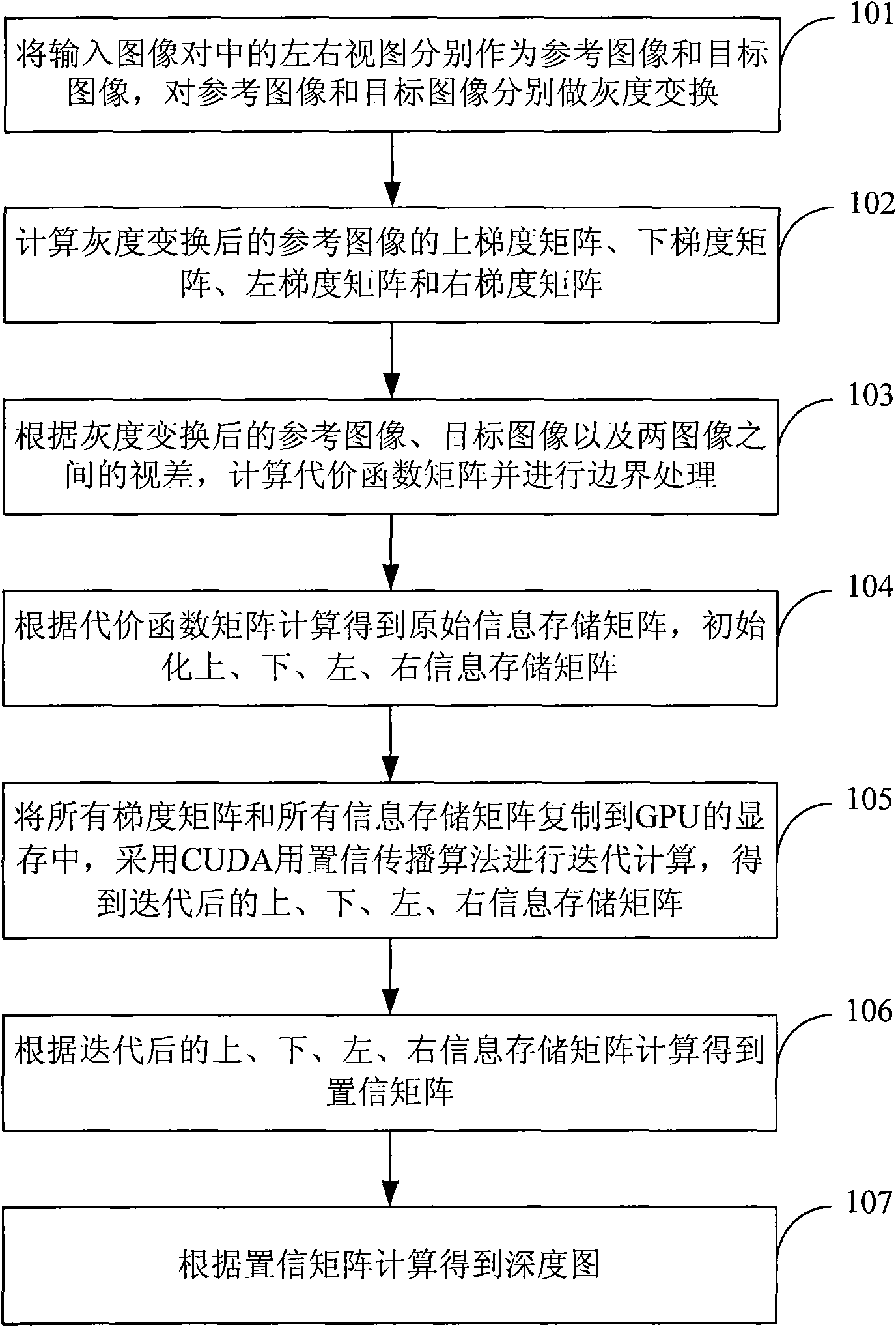

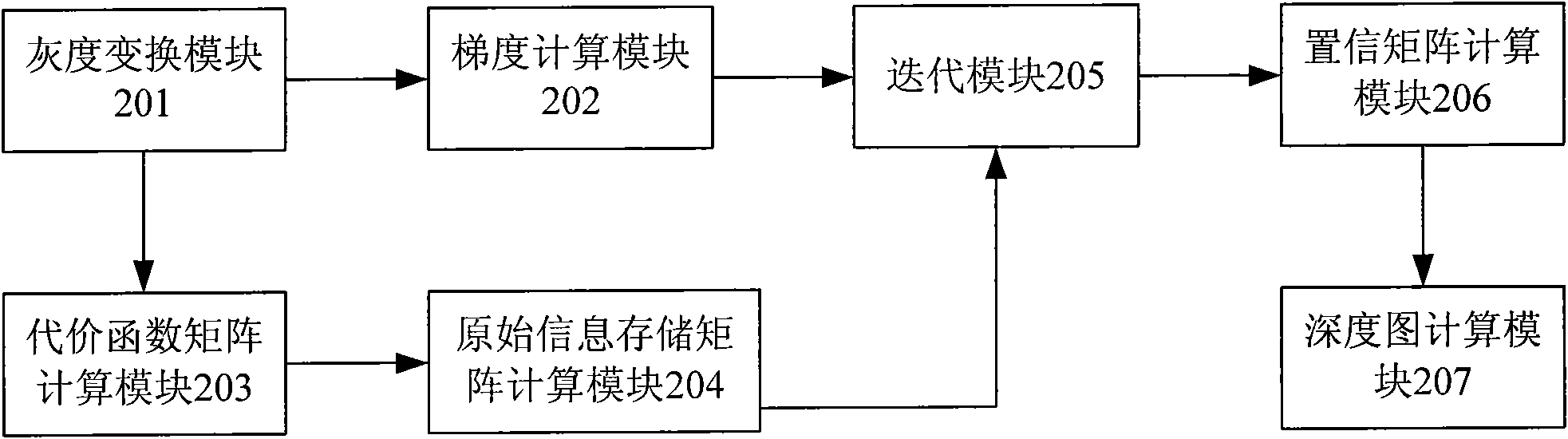

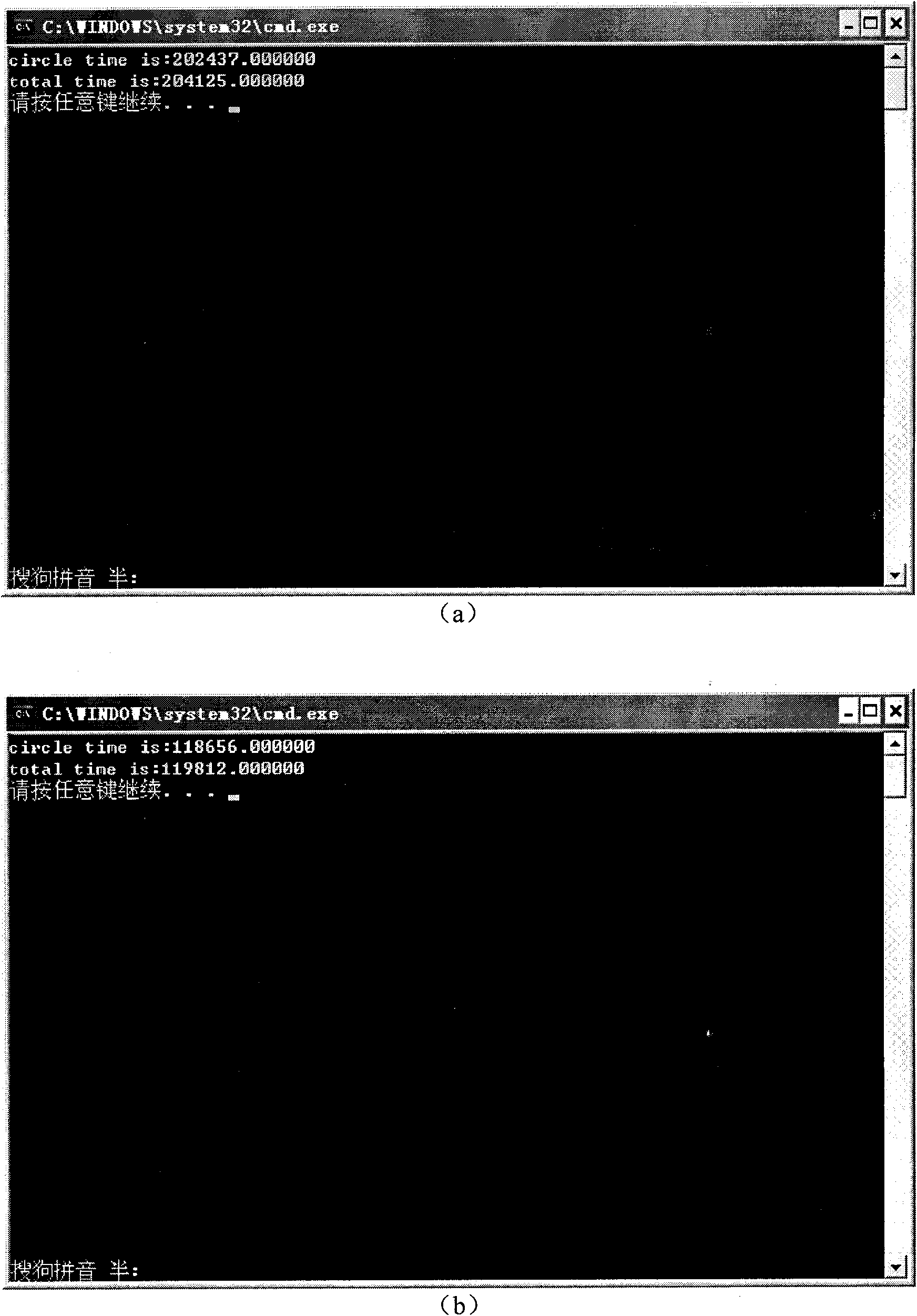

Method and device for generating depth map

Active · CN101605270A · Resolution is slow · Quality improvement · Image analysis · Stereoscopic systems · Parallax · Reference image

The invention discloses a method and a device for generating a depth map, which belongs to the technical field of computer vision. The method comprises the following steps: performing gray-scale transformation by using a left view and a right view of an input image pair as a reference image and a target image respectively; computing upper, lower, left and right gradient matrixes of the reference image after the gray-scale transformation; computing a cost function matrix according to parallax, and processing borders; computing an original information storage matrix, and initializing upper, lower, left and right information storage matrixes; performing iterative calculation in a GPU memory by using a CUDA belief propagation algorithm to acquire upper, lower, left and right information storage matrixes after iteration, and computing a belief matrix according to the result of the iteration; and computing the depth map according to the belief matrix. The device comprises a gray-scale transformation module, a gradient computation module, a cost function matrix computation module, an original information storage matrix computation module, an iteration module, a belief matrix computation module and a depth map computation module. The method and the device improve computing efficiency of the depth map and realize high-quality and fast generation of the depth map.

Owner:TSINGHUA UNIV

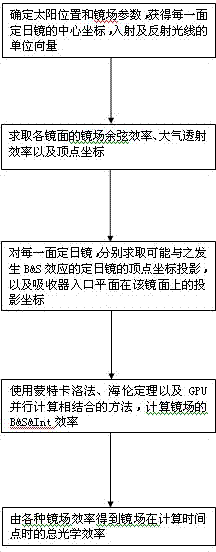

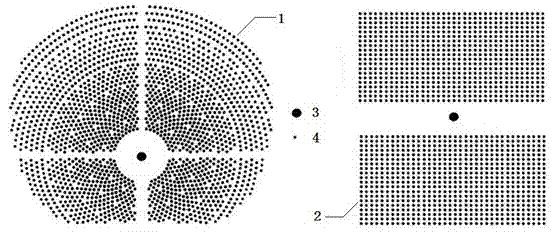

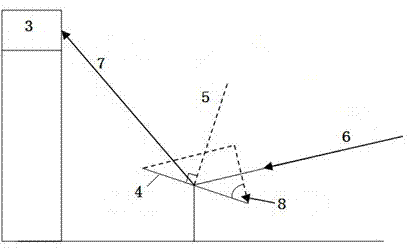

Calculating method for mirror field optical efficiency on basis of graphics processing unit (GPU) tower type solar energy thermoelectric system

The invention discloses a calculating method for mirror field optical efficiency on the basis of a graphics processing unit (GPU) tower type solar energy thermoelectric system, which comprises: obtaining the mirror-centre coordinate matrix of the mirror field, determining the position of the sun, and obtaining the mirror field cosine and atmospheric transmission efficiency; determining the heliostats that may have blocking and shading (B and S) effects on each mirror, translating the vertices of a row of mirrors to the plane of the mirror being calculated, and recording the coordinates after transformation; projecting the coordinates of the vertices of the absorber inlet onto the plane of each heliostat and recording the coordinate data; and using the Monte Carlo method and Heron's formula to calculate the B and S and intercept (B and S and Int) efficiency of the heliostats from the recorded coordinate data, using a compute unified device architecture (CUDA) calculating platform and a GPU double-layer parallel structure to accelerate the calculation, and combining the various efficiencies to obtain the total optical efficiency of the mirror field. The method can improve the simulation speed for the mirror field optical efficiency of a tower type solar power station while ensuring accuracy, and thereby saves optimization cost.

Owner:ZHEJIANG UNIV

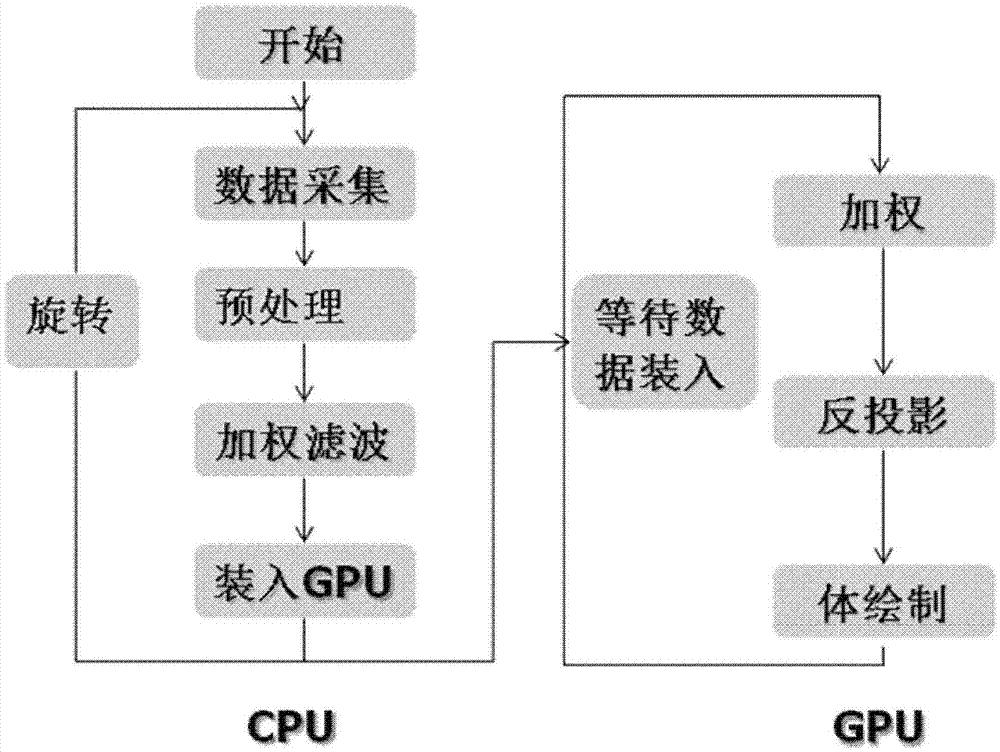

CT (computerized tomography) on-line reconstruction and real-time visualization method based on CUDA (compute unified device architecture)

The invention provides a CT (computerized tomography) on-line reconstruction and visualization method based on CUDA (compute unified device architecture). The method comprises the following steps: obtaining projection data and pre-processing the obtained projection data; performing the FDK (Feldkamp, Davis and Kress) weighted filtering on a CPU (central processing unit); using CUDA acceleration to perform the FDK weighted back projection; and using CUDA acceleration to perform volume rendering. The method can be used for on-line reconstruction of cone beam CT data, so that a cone beam CT system can reconstruct a three-dimensional CT image while the projection data is still being sampled, and a complete CT image is obtained once the projection data is complete, thereby realizing real-time on-line feedback.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

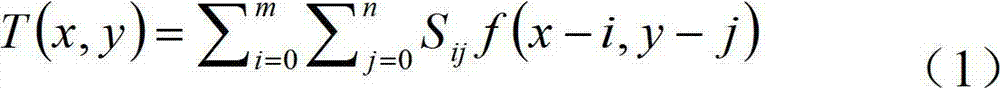

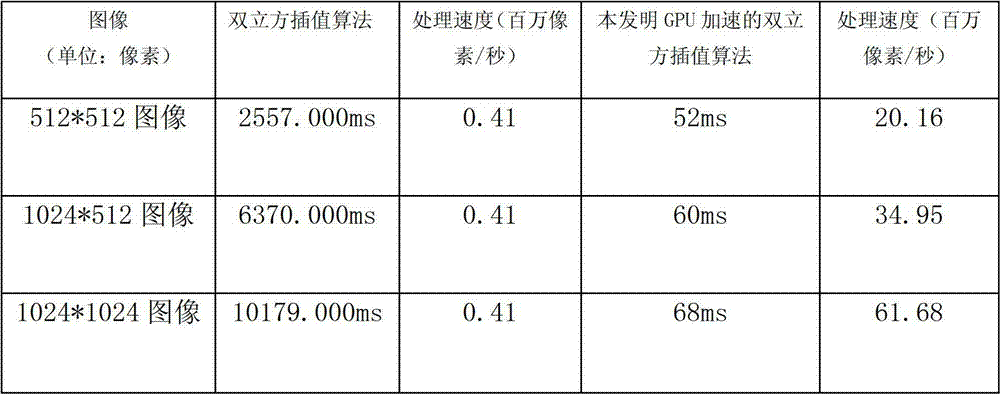

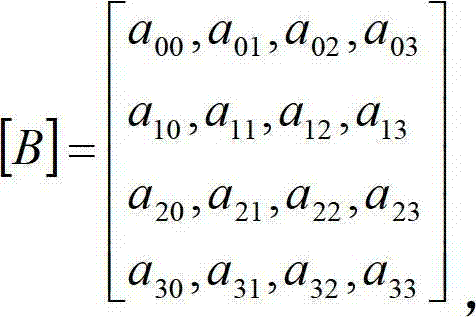

Method for fast zooming two-dimensional seismic image based on GPU (graphic processing unit)

Inactive · CN102831577A · Boost zoom · Improve interactivity · Geometric image transformation · Image data processing details · Graphics · Color image

The invention discloses a method for fast zooming a two-dimensional seismic image based on a GPU (graphics processing unit). The method comprises the following steps: introducing a GPU-accelerated bicubic interpolation algorithm into a seismic color image zooming application so as to improve the zooming and interaction effects; introducing CUDA (Compute Unified Device Architecture) and OpenGL (Open Graphics Library) interoperation into the color image zooming application so as to accelerate the display of the zoomed color image; and applying a data segmentation technique to the seismic profile color image display so as to solve the display and zooming problems of mass seismic profile data. The method offers a high-quality bicubic interpolation result that is free from mosaics and smooth at edge transitions, zooms quickly, and can zoom mass data in real time. The memory restriction of the adopted algorithm is much lower than that of normal bicubic interpolation, so the method is suitable for processing seismic profile images with mass data.

Owner:UNIV OF ELECTRONIC SCI & TECH OF CHINA
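As an illustration of the bicubic interpolation that the entry above accelerates on the GPU, here is a plain CPU sketch. It uses the common cubic convolution kernel with parameter `a = -0.5` and clamps coordinates at the image border; the entry does not say which kernel or border rule the patent uses, so both are assumptions, as are the function names. The image is a nested list of scalar values.

```python
import math


def cubic_weight(t, a=-0.5):
    """Cubic convolution kernel; a = -0.5 is an assumed, commonly used value."""
    t = abs(t)
    if t <= 1:
        return (a + 2) * t**3 - (a + 3) * t**2 + 1
    if t < 2:
        return a * t**3 - 5 * a * t**2 + 8 * a * t - 4 * a
    return 0.0


def bicubic_sample(img, y, x):
    """Interpolate img at fractional (y, x) from the surrounding 4 x 4 pixels."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for j in range(y0 - 1, y0 + 3):
        wy = cubic_weight(y - j)
        row = img[min(max(j, 0), h - 1)]          # clamp at the border
        for i in range(x0 - 1, x0 + 3):
            value += wy * cubic_weight(x - i) * row[min(max(i, 0), w - 1)]
    return value
```

Zooming an image then amounts to calling `bicubic_sample` once per output pixel with the corresponding source coordinate, and since the output pixels do not depend on each other, each one can be assigned to its own GPU thread.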

Big data appliance realizing method based on CPU-GPU heterogeneous cluster

Active · CN104536937A · Realize unified scheduling · Solve the problem of low computing efficiency · Multiple digital computer combinations · Computer cluster · Heterogeneous cluster

The invention belongs to the technical field of cloud computing, and provides a big data appliance realizing method based on a CPU-GPU heterogeneous cluster. The method includes the steps that the computer cluster is set up and comprises a Master node provided with a CPU and other Slave nodes provided with CPUs and GPUs; a CUDA is installed on each Slave node; a MapReduce model provided by a Hadoop is selected, a Map task is started for each task block, and the Map tasks are transmitted to the Slave nodes to be calculated; the Slave nodes divide the received Map tasks into corresponding proportions, dispatch the proportioned Map tasks to the CPUs or the GPUs to execute Map and Reduce operation and transmit operation results to the Master node; the Master node receives the operation results fed back by all the Slave nodes and finishes all task processing.

Owner:SHENZHEN INST OF ADVANCED TECH
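The step in the entry above where a Slave node divides its Map tasks between CPU and GPU "into corresponding proportions" can be pictured with a small helper. The entry does not state how the proportion is chosen; deriving it from measured throughput, as `share_from_rates` does below, is purely an assumption for illustration, and both function names are hypothetical.

```python
def share_from_rates(cpu_rate, gpu_rate):
    """Fraction of tasks to give the GPU, assuming shares follow throughput."""
    return gpu_rate / (cpu_rate + gpu_rate)


def split_tasks(tasks, gpu_share):
    """Split a list of Map tasks into (cpu_tasks, gpu_tasks) by a share in [0, 1]."""
    if not 0.0 <= gpu_share <= 1.0:
        raise ValueError("gpu_share must be between 0 and 1")
    n_gpu = round(len(tasks) * gpu_share)
    return tasks[n_gpu:], tasks[:n_gpu]
```

For example, if the GPU is measured to be three times as fast as the CPU on a node, `share_from_rates(1.0, 3.0)` gives 0.75 and six of every eight tasks go to the GPU.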

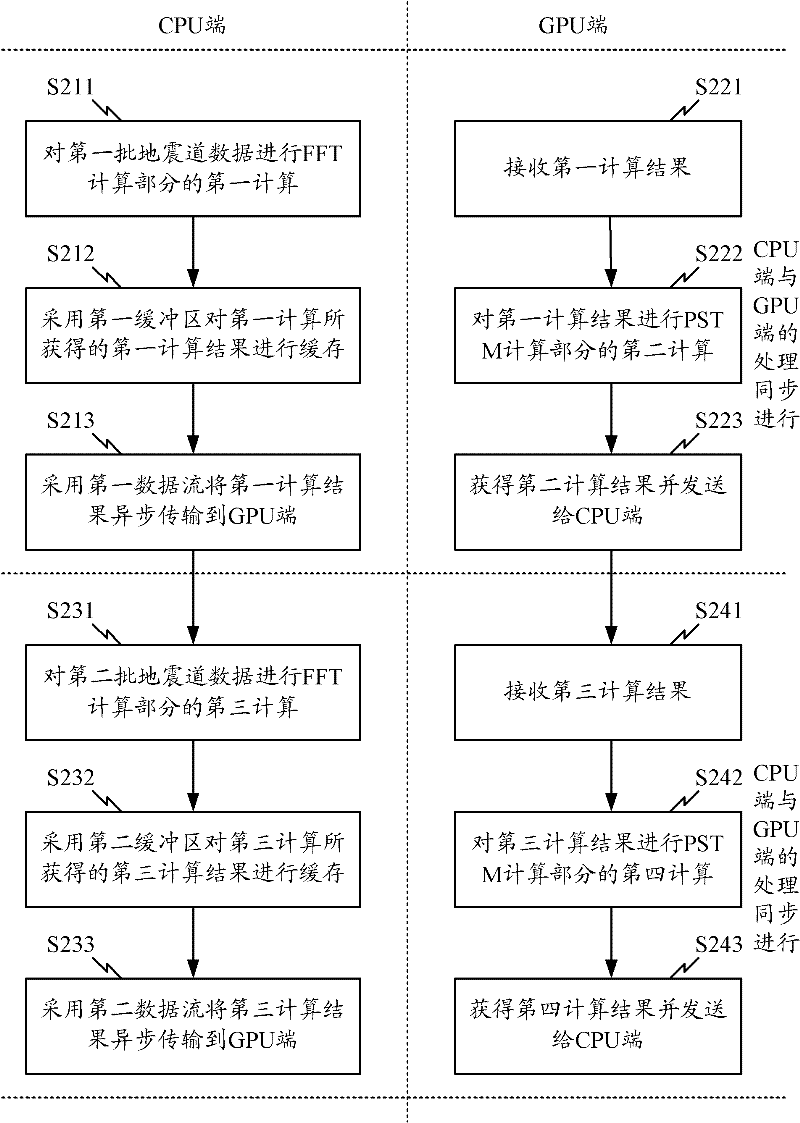

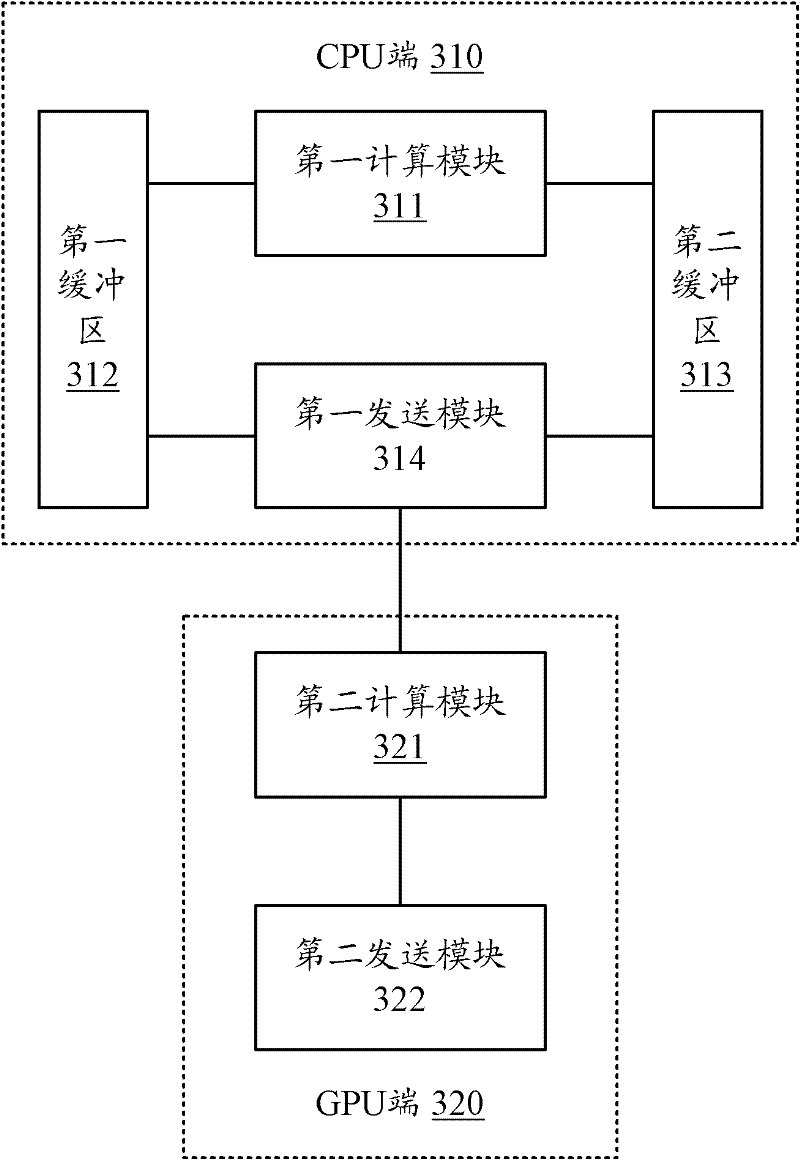

Method and system for processing seismic pre-stack time migration

Active · CN102243321A · Reduce processing time · Overcoming the time-consuming defect of pre-stack time migration processing · Seismic signal processing · Channel data · Fast Fourier transform

The invention discloses a method and system for processing seismic pre-stack time migration, aiming to overcome the long processing time of PSTM (PreStack Time Migration) in the prior art. The method comprises the following steps: dividing the PSTM program code into an FFT (Fast Fourier Transform) calculation part and a PSTM calculation part, feeding all seismic channel data to the CPU (Central Processing Unit) end in two batches for time-shared calculation, and asynchronously transmitting the calculated result to the GPU (Graphics Processing Unit) end; while the GPU carries out the PSTM calculation part on one batch of data, the CPU carries out the FFT calculation part on the other batch of seismic data. In the technical scheme of the invention, the CPU and the GPU transfer data asynchronously through the stream technology of CUDA (Compute Unified Device Architecture), which overcomes the long processing time of PSTM in the prior art and greatly reduces implementation difficulty and cost.

Owner:SUZHOU LANGCHAO INTELLIGENT TECH CO LTD

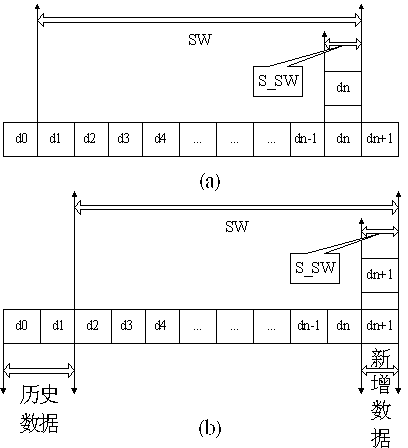

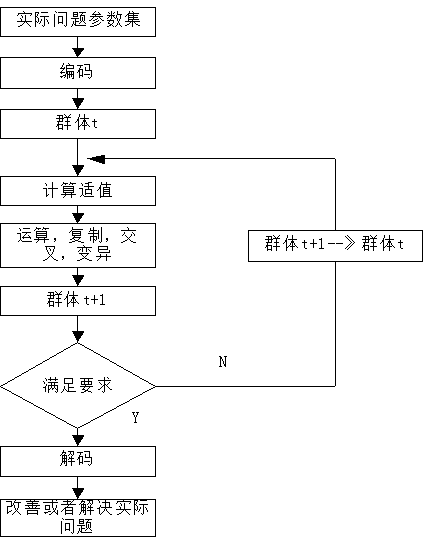

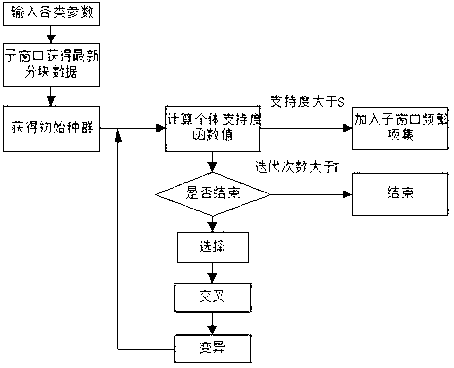

Data flow parallel processing method based on GPU-CUDA platform and genetic algorithm

Inactive · CN103279332A · Efficiently obtained · Improve operating experience · Genetic models · Concurrent instruction execution · Streaming data · Data stream

The invention provides a data flow parallel processing method based on a GPU-CUDA platform and a genetic algorithm. The data flow parallel processing method comprises the following steps: dynamically mining frequent item sets of newest data, and starting the searching process from a group of initial populations, wherein each individual in the populations can be a possible frequent pattern; adopting a sliding window mode according to the characteristics of a data flow to perform streaming data mining, and adopting a nested child window model based on a sliding window in terms of features of frequent item set mining; performing frequent item set mining by adopting a GPU-CUDA parallel processing technology according to the characteristics that the data flow is large in data amount and requires real-time processing; and finally obtaining the frequent item sets of data in the current sliding window by comprehensively processing the frequent item sets of nested child windows in the sliding window. Compared with the prior art, by means of the data flow parallel processing method, the frequent item sets of the flow data are processed through the strong floating-point calculation capability of a GPU and a CUDA accelerating technology for programming on the GPU, modeling can be performed by adopting a parallel mode of the genetic algorithm, and user operation experience is improved.

Owner:LANGCHAO ELECTRONIC INFORMATION IND CO LTD
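The sliding window with nested child windows described in the entry above can be sketched as follows. This illustration deliberately swaps the genetic search of the patent for plain exhaustive counting of 1-item and 2-item sets, and runs on the CPU; the class name, the method names and the support threshold are all hypothetical. The point is only to show how per-child-window results are combined into the result for the current sliding window.

```python
from collections import Counter, deque
from itertools import combinations


class SlidingWindowMiner:
    """Keeps itemset counts per child window; the window is the last N of them."""

    def __init__(self, num_subwindows, min_support):
        # deque(maxlen=...) drops the oldest child window as the window slides.
        self.subwindows = deque(maxlen=num_subwindows)
        self.min_support = min_support

    def add_subwindow(self, transactions):
        """Count 1- and 2-itemsets in one child window (exhaustive, not genetic)."""
        counts = Counter()
        for transaction in transactions:
            items = sorted(set(transaction))
            for size in (1, 2):
                counts.update(combinations(items, size))
        self.subwindows.append(counts)

    def frequent_itemsets(self):
        """Merge the child-window counts and keep itemsets meeting min_support."""
        total = Counter()
        for counts in self.subwindows:
            total.update(counts)
        return {s: c for s, c in total.items() if c >= self.min_support}
```

Counting each child window is independent of the others, so that is the part that could be handed to parallel workers, with only the cheap merge left for the host.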

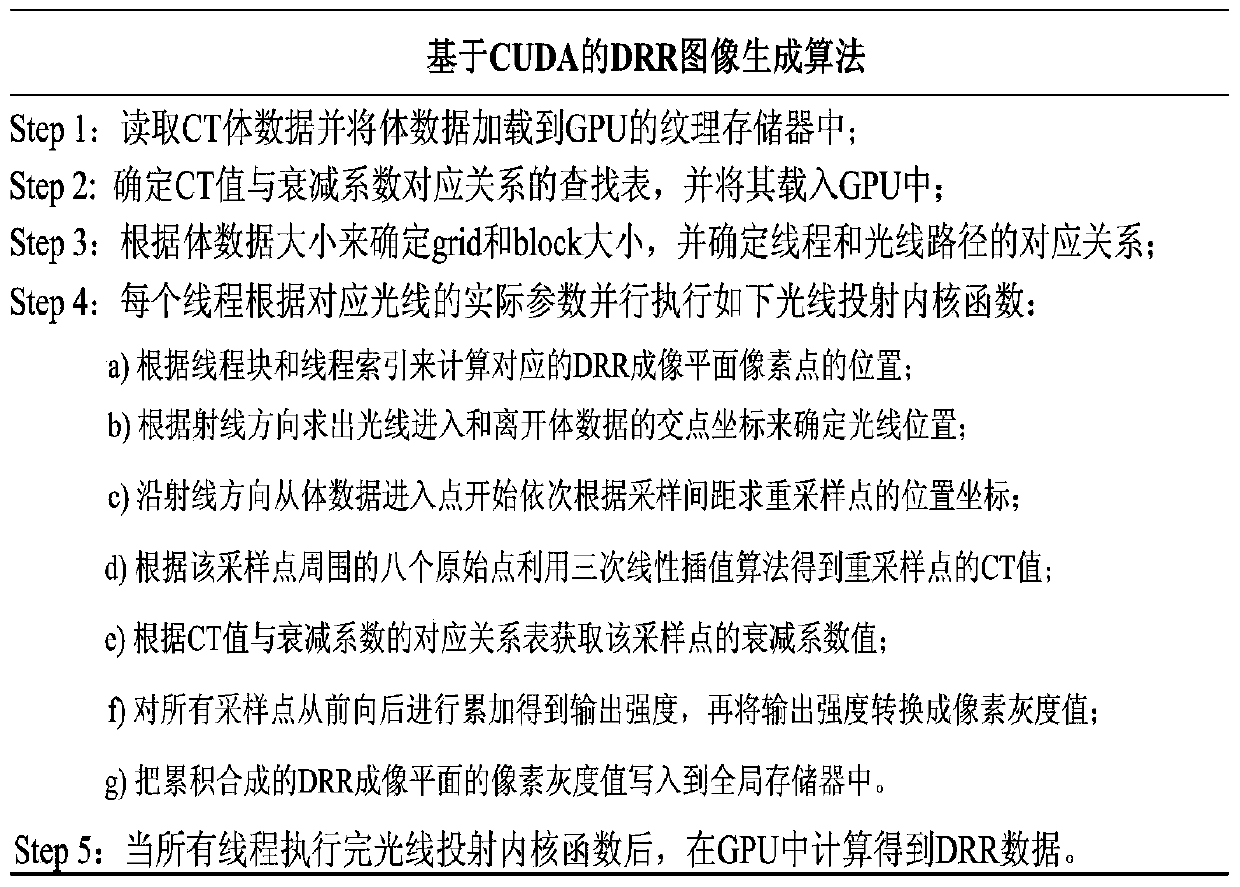

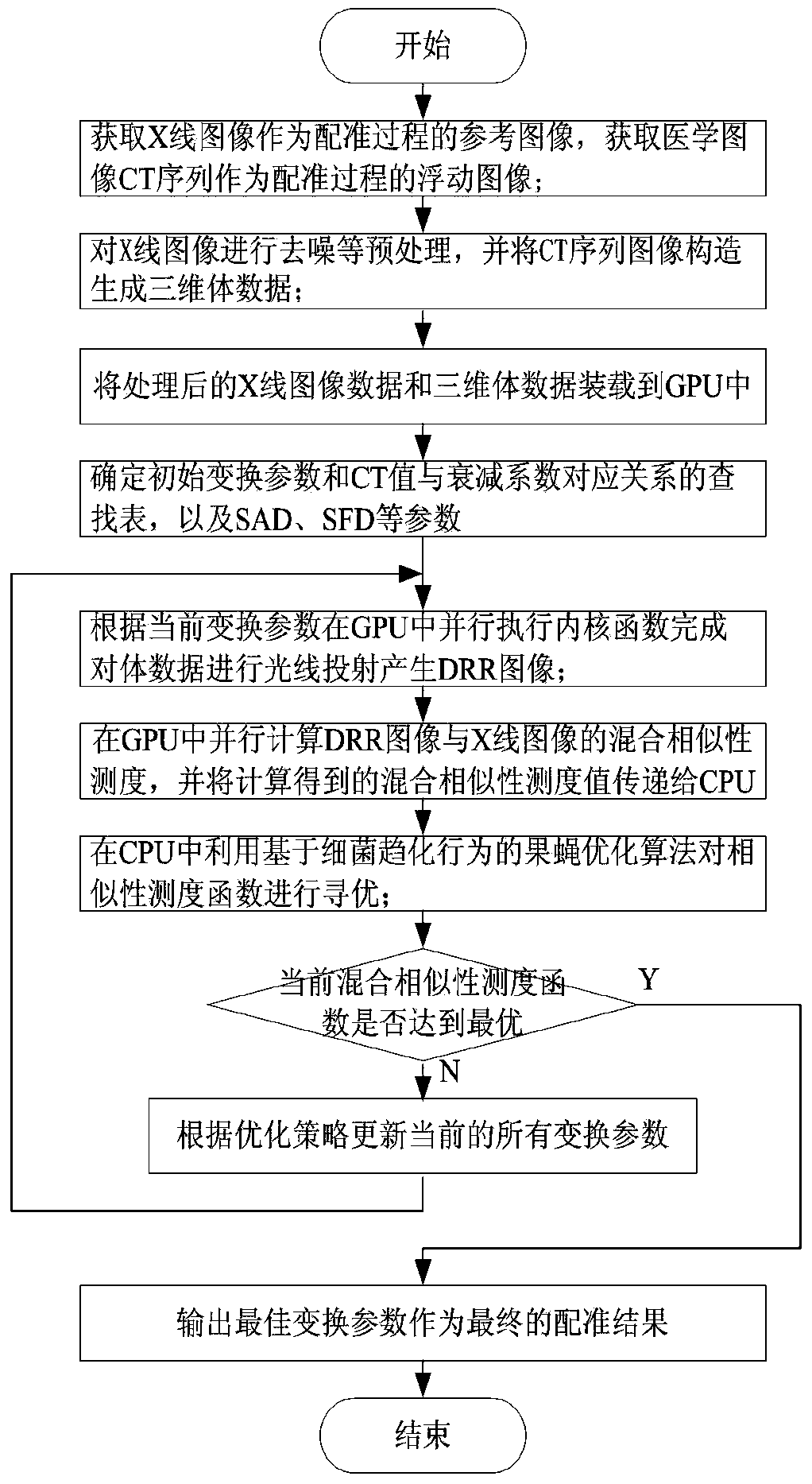

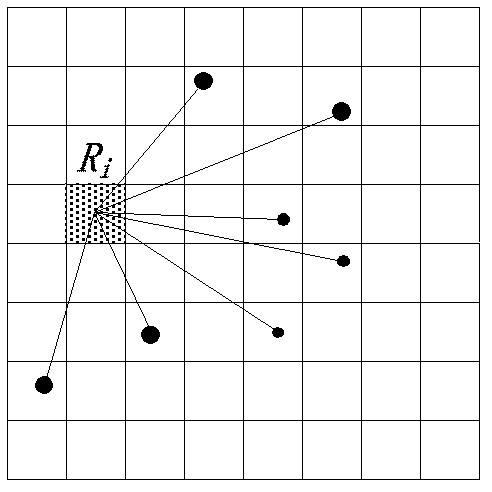

2D-3D medical image parallel registration method based on combination similarity measure

Inactive · CN104134210A · Simple calculation · High precision · Image analysis · Generation process · Parallel programming model

The invention discloses a 2D-3D medical image parallel registration method based on combination similarity measure. The method comprises the following steps: firstly, using a CUDA (Compute Unified Device Architecture) parallel computing model to finish a quick generation process of a DRR (Digitally Reconstructed Radiograph) image; combining a SAD (Sum of Absolute Difference) with PI (pattern intensity) as new similarity measure to carry out parallel computation on GPU (Graphics Processing Unit); and finally, transferring a combination similarity measure value to CPU (Central Processing Unit), and adopting a fruit fly optimization algorithm based on bacterial chemotaxis behaviors to optimize for looking for an optimal registration parameter. An experiment verifies the performance of the method to show that the execution speed of the method is effectively improved since DRR high-speed generation and the mixed similarity measure are realized in the GPU. Meanwhile, compared with the single similarity measure, the invention adopts the mixed similarity measure to improve the accuracy of a registration result.

Owner:LANZHOU JIAOTONG UNIV
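The two similarity measures named in the abstract above can be sketched on the CPU as follows. This is an illustration under assumptions: the pattern intensity function uses a commonly cited form (a sum of sigma²/(sigma² + d²) over neighbouring pixels of the difference image), the `sigma` and `radius` defaults are arbitrary, and no intensity scaling is applied. The abstract does not say how SAD and PI are combined, so that step is left out.

```python
def sum_of_absolute_differences(a, b):
    """SAD between two equally sized 2D images given as lists of rows."""
    return sum(
        abs(pa - pb)
        for row_a, row_b in zip(a, b)
        for pa, pb in zip(row_a, row_b)
    )


def pattern_intensity(a, b, sigma=10.0, radius=1):
    """Pattern intensity of the difference image (assumed textbook form)."""
    h, w = len(a), len(a[0])
    diff = [[a[y][x] - b[y][x] for x in range(w)] for y in range(h)]
    # Neighbour offsets inside a disc of the given radius, excluding the pixel itself.
    offsets = [
        (dy, dx)
        for dy in range(-radius, radius + 1)
        for dx in range(-radius, radius + 1)
        if 0 < dy * dy + dx * dx <= radius * radius
    ]
    total = 0.0
    for y in range(h):
        for x in range(w):
            for dy, dx in offsets:
                ny, nx = y + dy, x + dx
                if 0 <= ny < h and 0 <= nx < w:
                    delta = diff[y][x] - diff[ny][nx]
                    total += sigma * sigma / (sigma * sigma + delta * delta)
    return total
```

Both functions touch each pixel independently of the others, which is why measures of this kind map naturally onto one GPU thread per pixel followed by a reduction.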

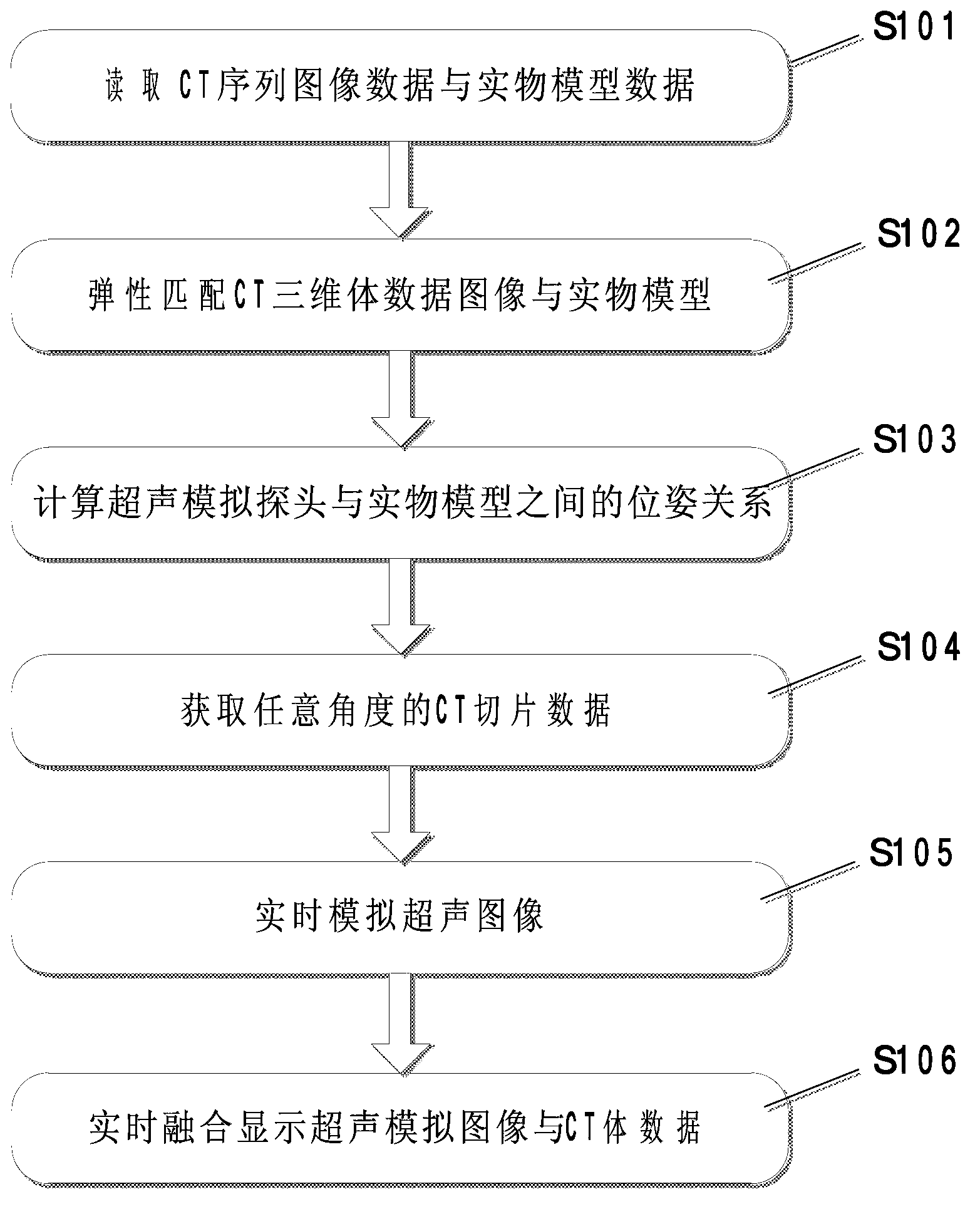

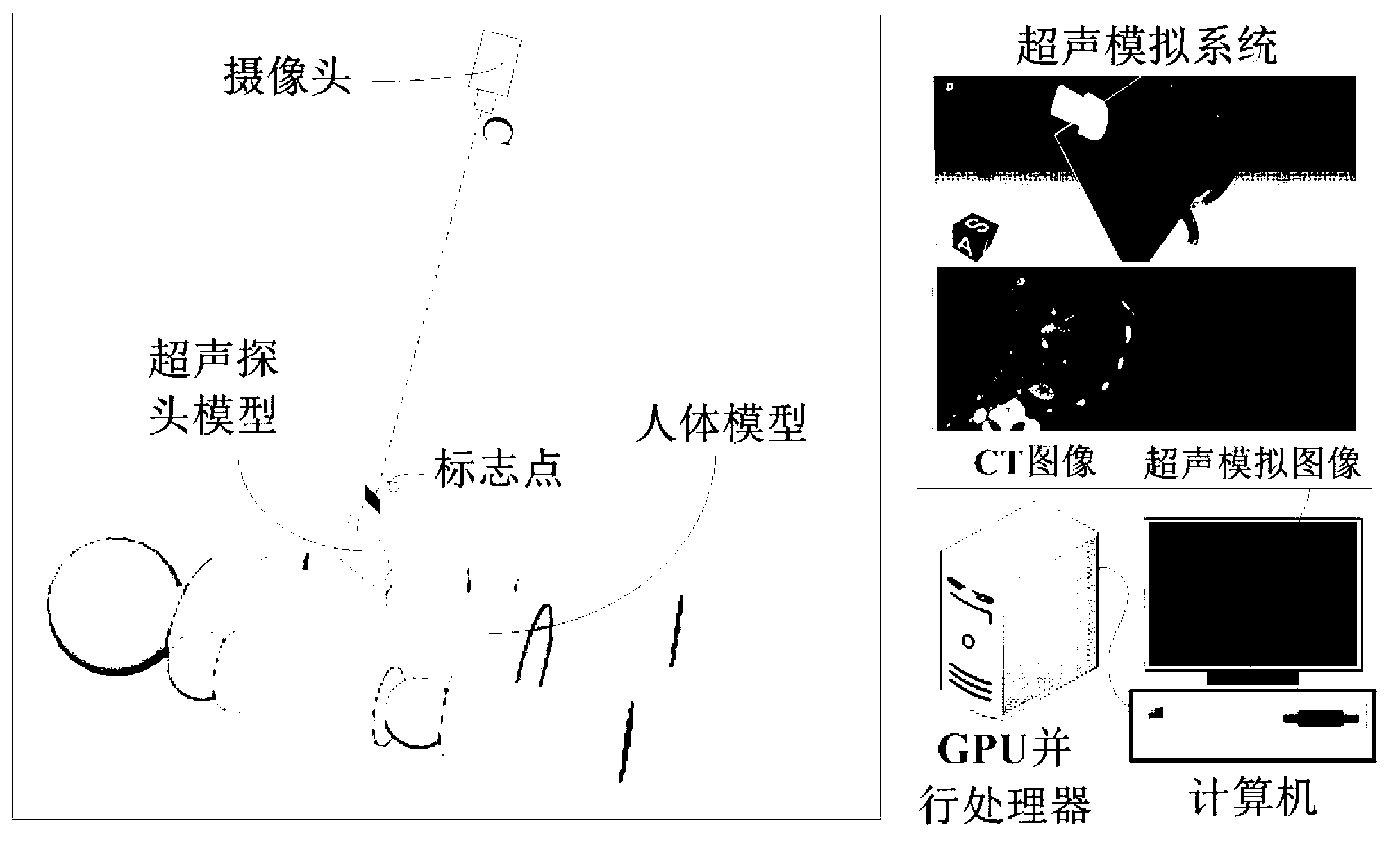

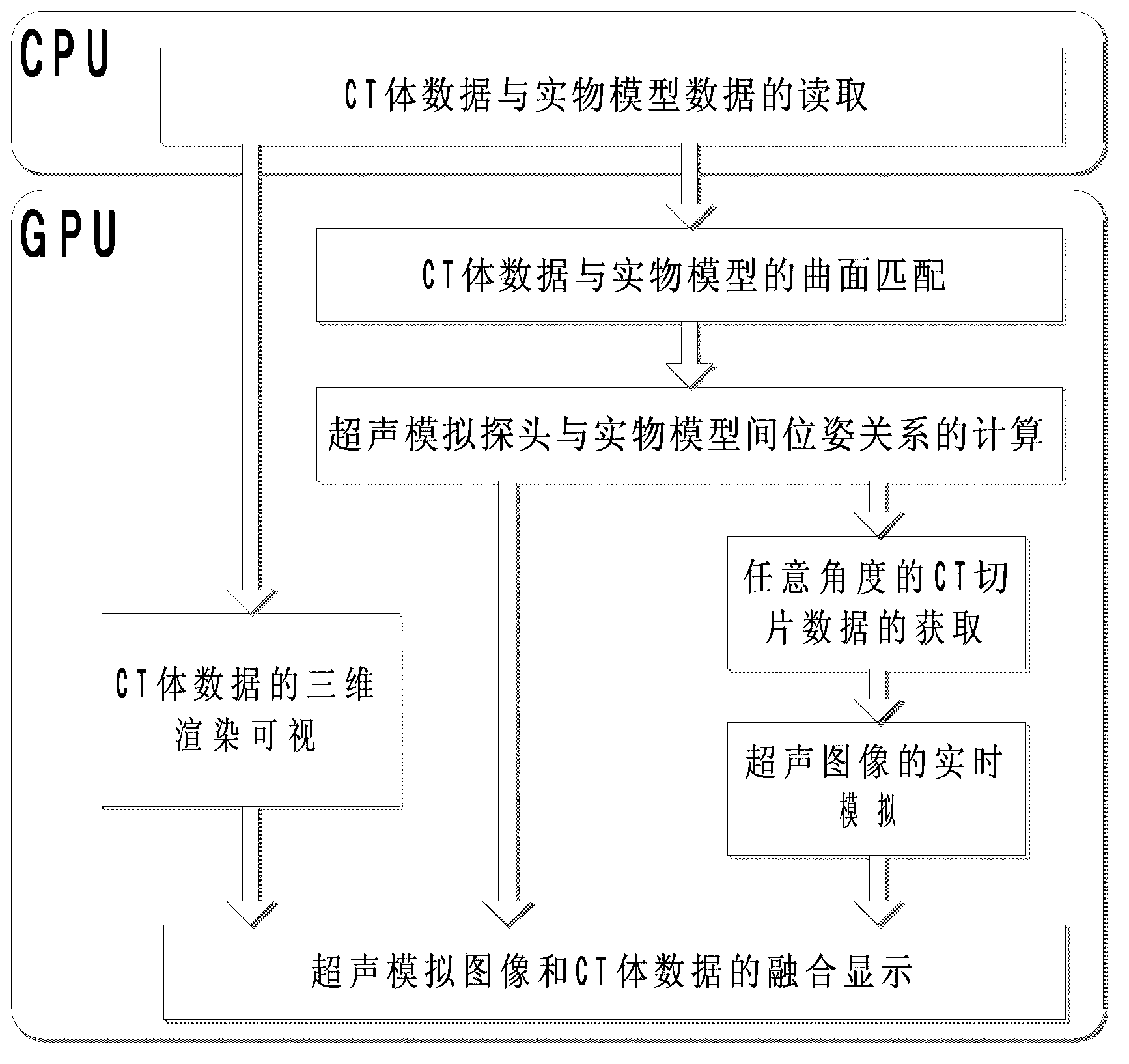

Ultrasonic training system based on CT (Computed Tomography) image simulation and positioning

Active | CN103295455A | Reduce computational complexity | The pose matrix is accurate | Educational models | Human body | Three dimensional ct

The invention provides an ultrasonic training system based on CT (Computed Tomography) image simulation and positioning. Ultrasound image simulation and CT volumetric data rendering are accelerated on a GPU (Graphics Processing Unit), which improves the real-time performance of the system. A curved surface matching module matches the surface of the loaded human body CT volumetric data to the physical model data, taking the physical model as the reference, and applies an elastic transformation of the surface using thin plate spline interpolation; an ultrasonic simulation probe position tracking module computes the position of the simulated ultrasonic probe relative to the physical model in real time by tracking marker points, and obtains CT slice images at arbitrary angles from the position matrix; an image enhancement and ultrasonic image simulation generation module improves the vessel contrast in the CT images with a multi-scale enhancement method and simulates ultrasound images from the CT volumetric data; and an integration display module uses CUDA (Compute Unified Device Architecture) to accelerate rendering of the CT volumetric data, and fuses and displays the simulated ultrasound images and the three-dimensional CT images according to the obtained position matrix.

Owner:ARIEMEDI MEDICAL SCI BEIJING CO LTD

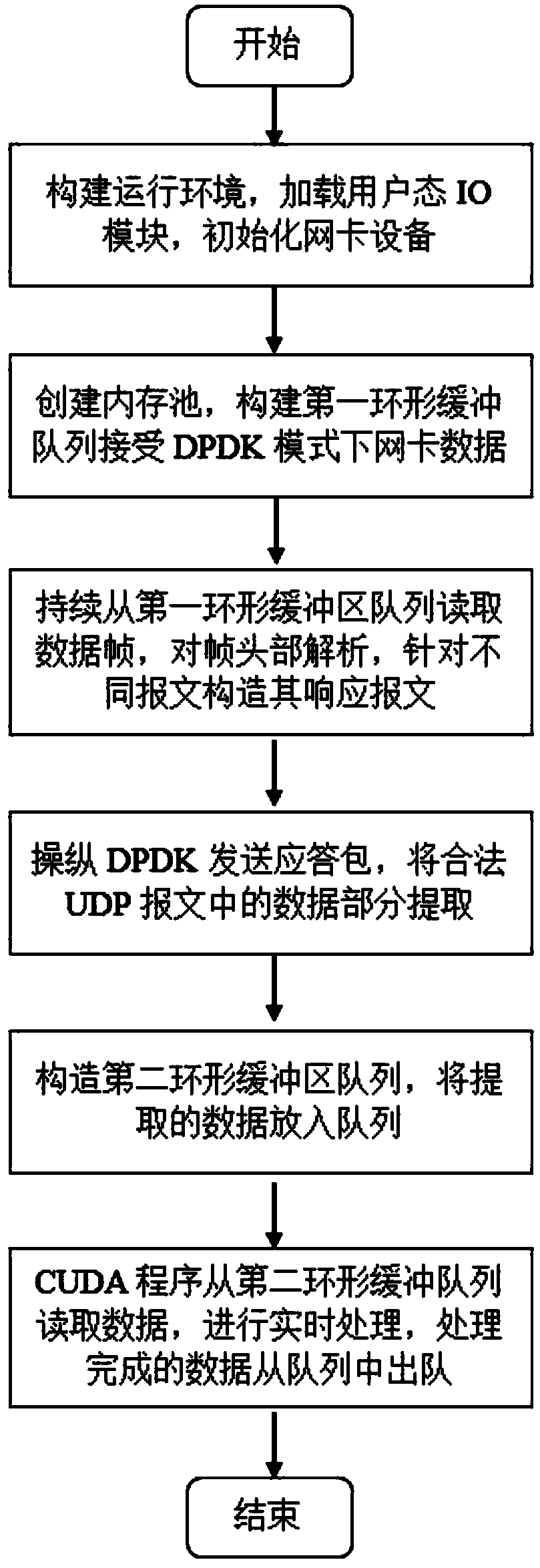

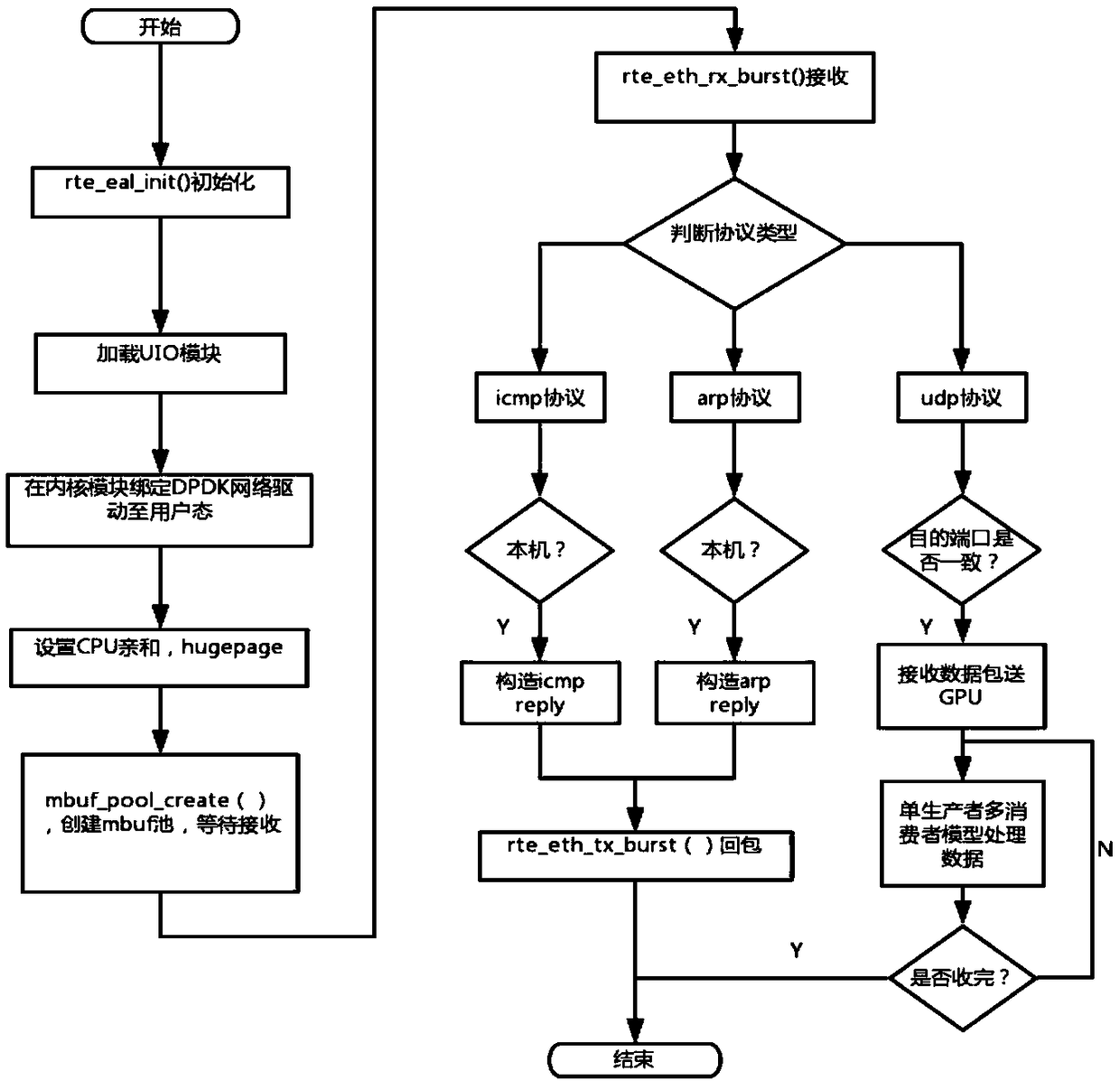

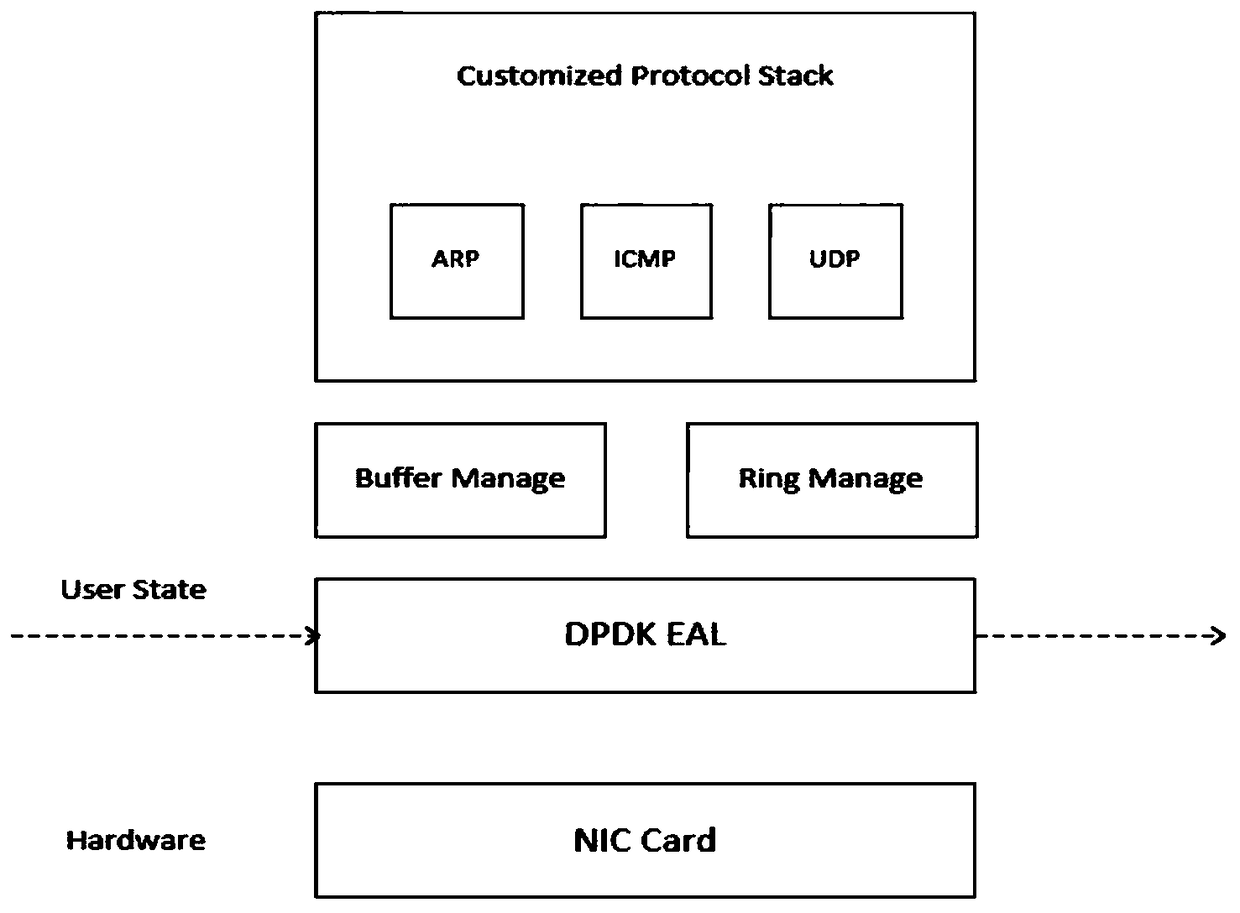

DPDK-based astronomy data acquisition and real-time processing method

The invention relates to a DPDK-based astronomy data acquisition and real-time processing method and belongs to the field of network data packet processing. The method comprises the steps of: constructing a DPDK operating environment; creating a memory pool; reading data frames from a ring buffer, parsing the frame headers, and constructing response messages; checking the validity of the UDP data packets and extracting their data payload; placing the extracted data into a second ring buffer; and reading the data from that ring buffer and processing it in real time with a CUDA program. The invention exploits the performance advantage of DPDK over a traditional kernel-based TCP/IP protocol stack for high-speed I/O, and implements parts of the TCP/IP protocol stack in user space on top of a lock-free ring buffer queue, so that data is received without loss in a 10 gigabit network environment; compared with packet reception through the traditional protocol stack, performance is greatly improved.

Owner:KUNMING UNIV OF SCI & TECH
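The ring buffer that hands packets from the receive stage to the processing stage in the abstract above can be sketched as below. This is only the index arithmetic of a single-producer, single-consumer ring, written in Python for readability; it is not DPDK's own ring API, and the class name `RingBuffer` and its methods are hypothetical. A real lock-free ring would also need atomic loads and stores with suitable memory ordering.

```python
class RingBuffer:
    """Fixed-capacity single-producer/single-consumer ring (illustrative only)."""

    def __init__(self, capacity):
        self._slots = [None] * capacity
        self._capacity = capacity
        self._head = 0  # total items consumed so far (advanced by the consumer only)
        self._tail = 0  # total items produced so far (advanced by the producer only)

    def try_put(self, item):
        """Store an item; return False instead of blocking when the ring is full."""
        if self._tail - self._head == self._capacity:
            return False
        self._slots[self._tail % self._capacity] = item
        self._tail += 1
        return True

    def try_get(self):
        """Return the oldest item, or None when the ring is empty."""
        if self._head == self._tail:
            return None
        item = self._slots[self._head % self._capacity]
        self._head += 1
        return item
```

Since the producer writes only `_tail` and the consumer writes only `_head`, neither side needs a lock to decide whether a slot is free, which is the property the lock-free design relies on.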

Method for sharing GPU (graphics processing unit) by multiple tasks based on CUDA (compute unified device architecture)

Inactive | CN102708009A | Simplify programming work | Improve performance | Resource allocation | Constraint relation | CUDA

The invention discloses a method for sharing a GPU (graphics processing unit) among multiple tasks based on CUDA (compute unified device architecture). The method includes: creating a mapping table in Global Memory that determines, for each Block of the merged Kernel, the task number and the task block number that the Block executes; launching N Blocks with a single Kernel each time, where N equals the sum of the task block counts of all the tasks; satisfying the constraint relations among the original tasks by a flagging and blocking-wait method; and sharing the Shared Memory among the multiple tasks through pre-allocation and static distribution. With this method, multi-task sharing can be realized simply and conveniently on the existing GPU hardware architecture, programming work in practical applications is simplified, and good performance is obtained under certain conditions.

Owner:HUAWEI TECH CO LTD +1
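The mapping table described in the abstract above can be pictured with a short host-side sketch. It assumes the simplest possible layout, in which the blocks of each task are laid out consecutively; the function name `build_mapping_table` is hypothetical, and the flagging and blocking-wait synchronization is not modelled.

```python
def build_mapping_table(task_block_counts):
    """Map each block index of the merged kernel to (task number, task block number).

    task_block_counts[i] is the number of blocks that task i needs; the merged
    kernel is launched with N = sum(task_block_counts) blocks.
    """
    table = []
    for task_id, block_count in enumerate(task_block_counts):
        for task_block in range(block_count):
            table.append((task_id, task_block))
    return table
```

For example, `build_mapping_table([2, 1, 3])` yields six entries, so block 3 of the merged kernel would look up entry `(2, 0)` and run block 0 of task 2.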

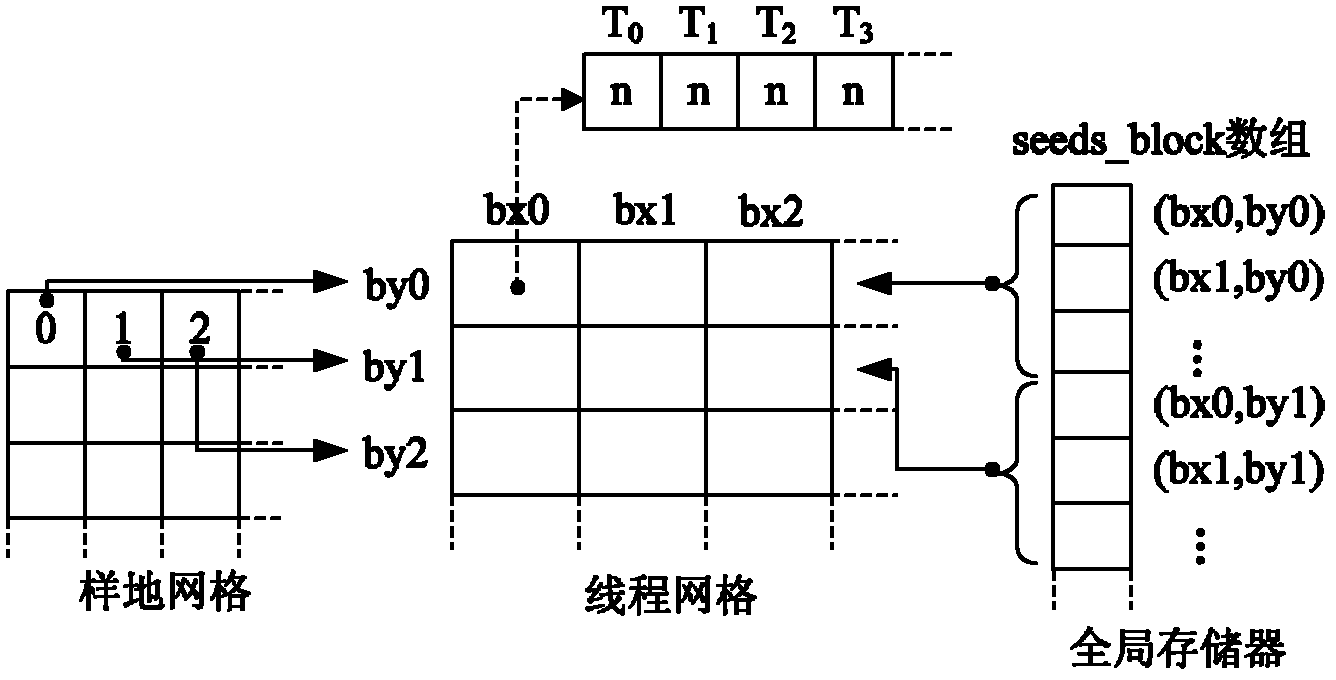

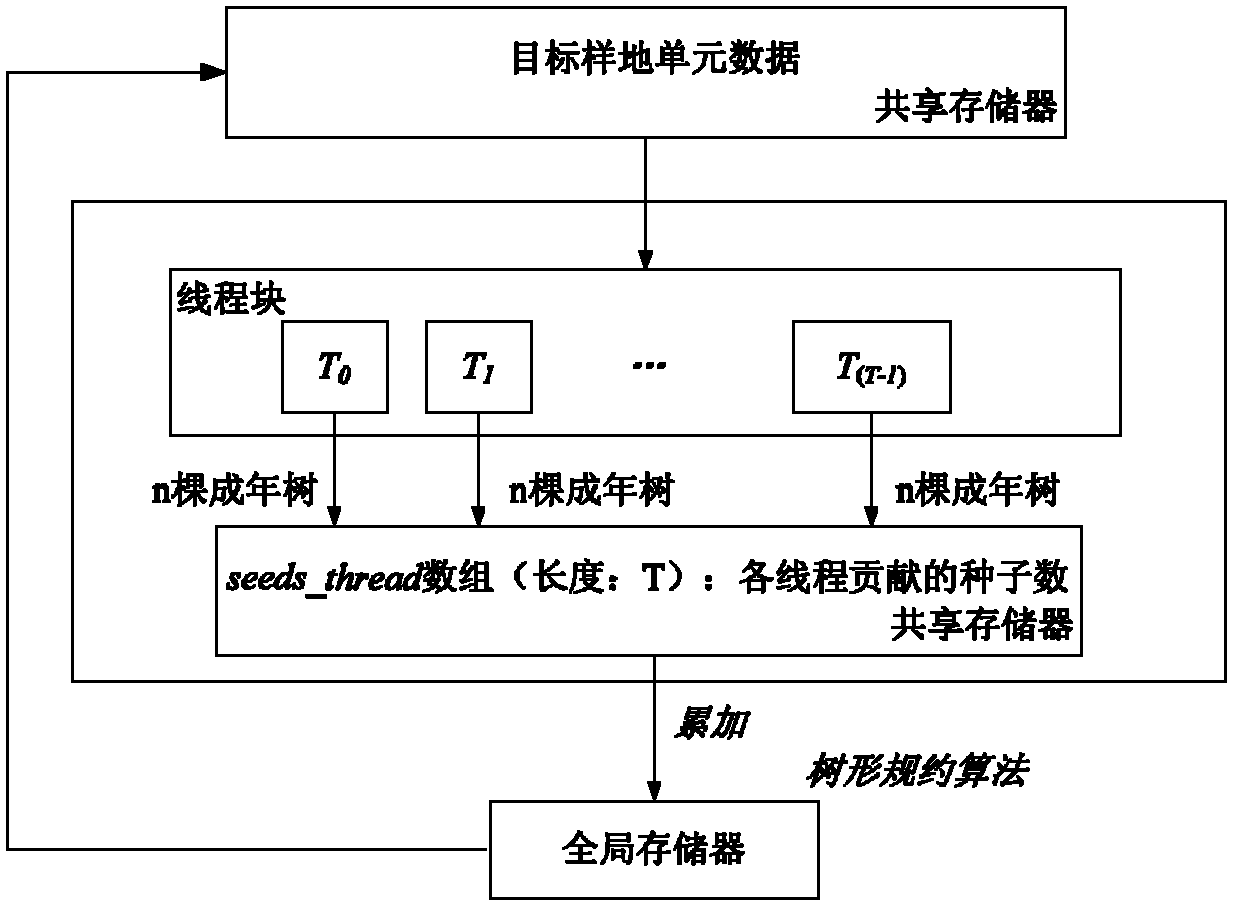

Parallel acquisition method for seed distribution data based on CUDA

Inactive | CN102662641A | Innovative ideas | Great innovation | Concurrent instruction execution | Special data processing applications | Core function | Video memory

The invention discloses a parallel acquisition method for seed distribution data based on CUDA (Compute Unified Device Architecture). The method involves a host side executed on a CPU (Central Processing Unit) and a device side executed on a GPU (Graphics Processing Unit) under CUDA. It comprises the following steps: first, allocating and initializing the input data memory space; second, allocating the input data video memory space; third, transferring the input data from memory to video memory; fourth, allocating the input data video memory space; fifth, allocating the input data memory space; and sixth, setting the kernel execution configuration of the device side and calling the device-side kernel function that calculates the seed distribution. With this method, better computational performance is obtained while precision is maintained, and the evolution simulation time is shortened.

Owner:ZHEJIANG UNIV OF TECH

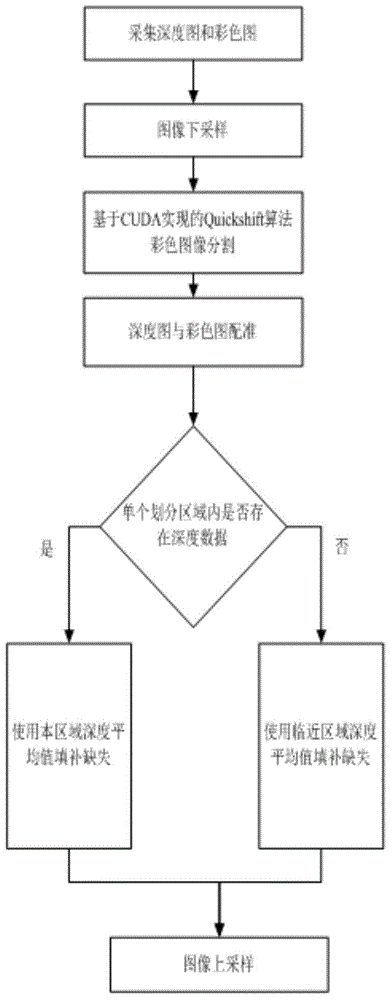

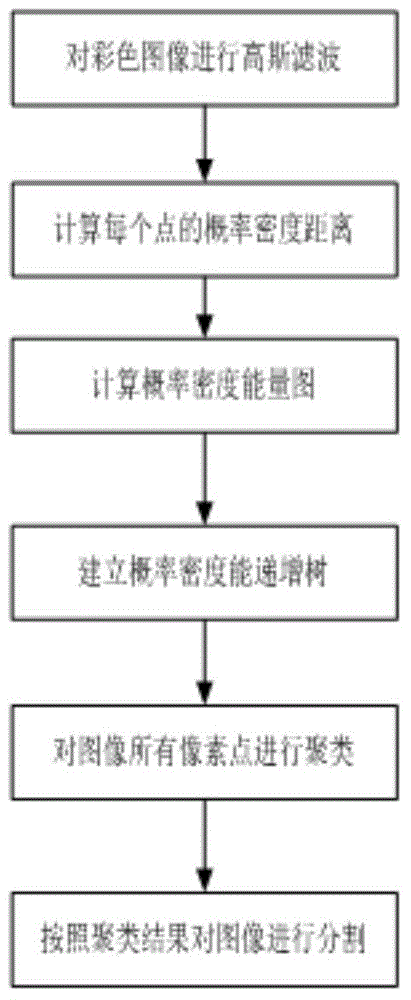

Technology for restoring depth image and combining virtual and real scenes based on GPU (Graphic Processing Unit)

Inactive | CN105096311A | Achieve correct registration | Quality improvement | Image analysis | Computer vision | Graphics processing unit

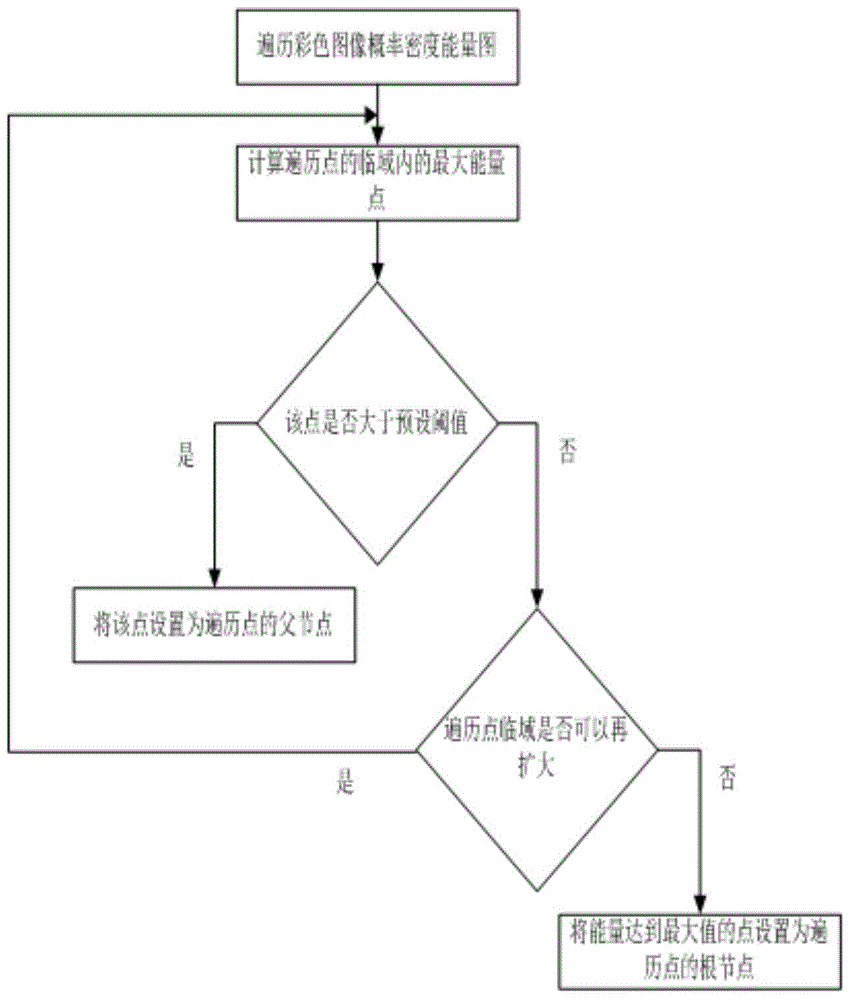

The invention discloses a technology for restoring a depth image and combining virtual and real scenes based on a GPU (Graphic Processing Unit). The technology mainly comprises the following steps: (1) collecting the depth image and a colour image; (2) down-sampling the images to keep the restoration speed real-time; (3) segmenting the colour image with the QuickShift algorithm, implemented in CUDA (Compute Unified Device Architecture) on the GPU; (4) using the segmentation result of the colour image to process the segments that lack depth data: the Kinect depth image and colour image are first registered, a missing area is then filled with the average depth value of its region if the region contains depth data, or with the average depth value of a neighbouring region if all depth information in the region is missing; and (5) up-sampling the images. By combining image sampling with a CUDA implementation of the QuickShift algorithm on the GPU, the invention solves the hole-repair problem of Kinect depth images; on this basis, virtual objects and real objects are superimposed so that they can occlude one another, which enhances the realism of the interaction.

Owner: Science Communication Research Center, Chinese Academy of Sciences (中国科学院科学传播研究中心)
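Step (4) of the abstract above, filling missing depth per colour segment, can be sketched on the CPU as follows. The sketch makes several assumptions that the abstract does not state: missing depth is encoded as `0`, the segmentation is given as a label image of the same size, and the "neighbourhood region" is taken to be the valid pixels directly adjacent to the segment. The function name `fill_missing_depth` is hypothetical.

```python
def fill_missing_depth(depth, labels, missing=0):
    """Fill missing depth pixels using the mean depth of their segment.

    depth and labels are equally sized 2D lists; labels[y][x] is the segment id.
    Segments with no valid depth fall back to the mean of adjacent valid pixels.
    """
    h, w = len(depth), len(depth[0])
    segments = {}
    for y in range(h):
        for x in range(w):
            segments.setdefault(labels[y][x], []).append((y, x))

    filled = [row[:] for row in depth]
    for label, pixels in segments.items():
        valid = [depth[y][x] for y, x in pixels if depth[y][x] != missing]
        if not valid:
            # Whole segment is missing: use valid 4-neighbours outside the segment.
            valid = [
                depth[ny][nx]
                for y, x in pixels
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
                if 0 <= ny < h and 0 <= nx < w
                and labels[ny][nx] != label
                and depth[ny][nx] != missing
            ]
        if not valid:
            continue  # nothing to borrow from; leave the hole as it is
        mean = sum(valid) / len(valid)
        for y, x in pixels:
            if depth[y][x] == missing:
                filled[y][x] = mean
    return filled
```

The averages are always taken from the original depth image rather than from already filled pixels, so the result does not depend on the order in which segments are visited.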

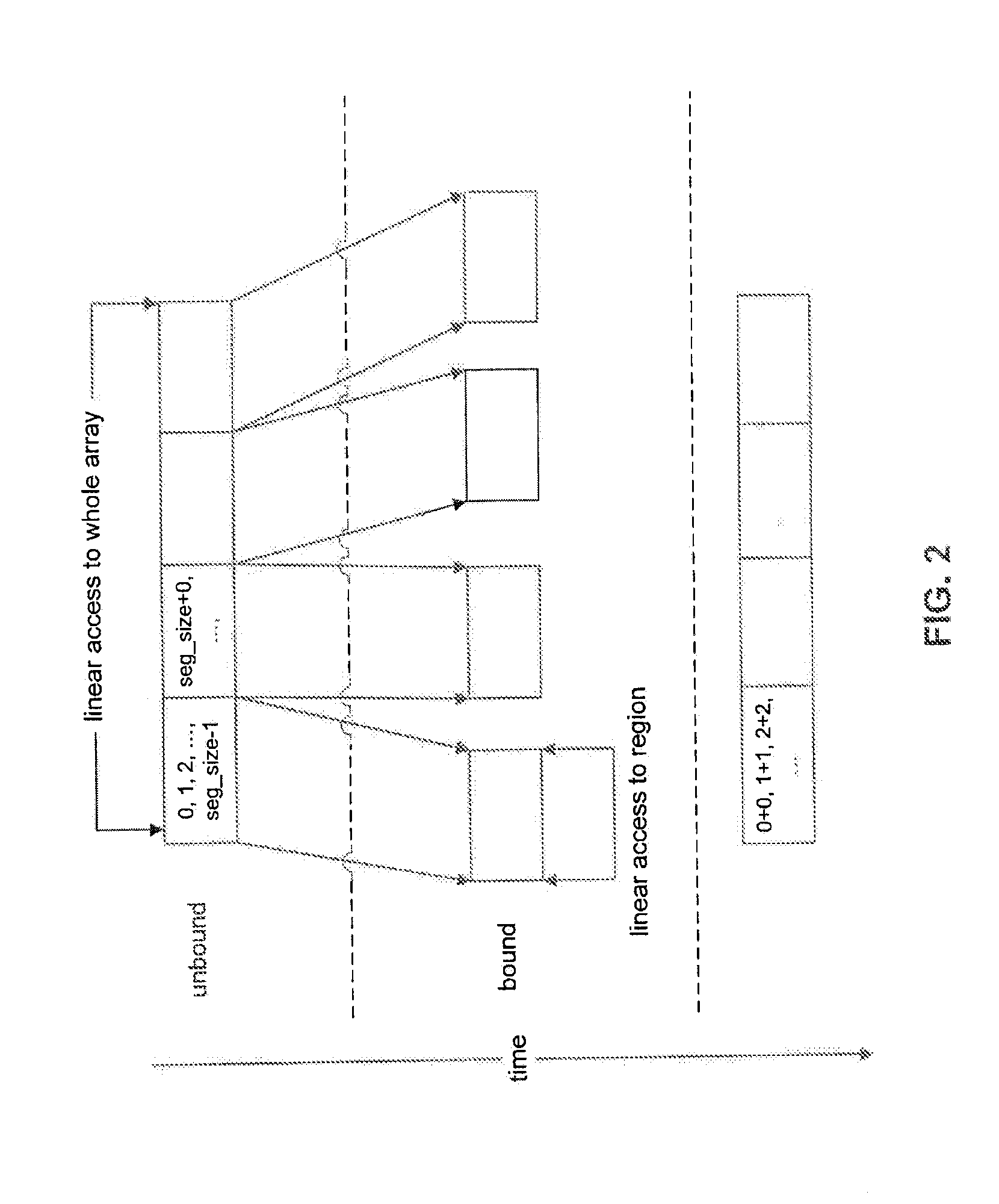

Partitioning CUDA code for execution by a general purpose processor

One embodiment of the present invention sets forth a technique for translating application programs, written using a parallel programming model for execution on a multi-core graphics processing unit (GPU), for execution by a general purpose central processing unit (CPU). Portions of the application program that rely on specific features of the multi-core GPU are converted by a translator for execution by a general purpose CPU. The application program is partitioned into regions of synchronization-independent instructions. The instructions are classified as convergent or divergent, and divergent memory references that are shared between regions are replicated. Thread loops are inserted to ensure correct sharing of memory between various threads during execution by the general purpose CPU.

Owner:NVIDIA CORP
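The partitioning and thread-loop idea in the abstract above can be illustrated with a toy example. Consider a GPU kernel in which every thread of a block first copies one element into shared memory, waits at a barrier, and then reads the element written by a different thread. The sketch below shows, in Python and purely as an assumption about what such translated code might look like, the same computation on a CPU: the code is split at the barrier into two regions, and each region is wrapped in its own loop over thread indices. The function name `reverse_block_on_cpu` is hypothetical.

```python
def reverse_block_on_cpu(data):
    """CPU version of a block-wide reversal that uses one barrier on the GPU."""
    num_threads = len(data)
    shared = [None] * num_threads  # stands in for the block's shared memory
    out = [None] * num_threads

    # Region 1 (code before the barrier), run for every thread index.
    for tid in range(num_threads):
        shared[tid] = data[tid]

    # The barrier becomes the boundary between the two thread loops: region 2
    # starts only after every "thread" has finished region 1.

    # Region 2 (code after the barrier), run for every thread index.
    for tid in range(num_threads):
        out[tid] = shared[num_threads - 1 - tid]

    return out
```

Running both regions inside a single loop would read `shared` entries that have not been written yet, which is why the split at the synchronization point matters.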

Implement method of parallel fluid calculation based on entropy lattice Boltzmann model

Active | CN103425833A | Reduced simulation time | Shorten the time | Special data processing applications | Performance index | Iterative method

The invention discloses an implementation method of parallel fluid calculation based on an entropy lattice Boltzmann model (ELBM), and provides a parallel implementation of the model on NVIDIA GPUs, the mainstream graphics cards in current general-purpose computing. By applying CUDA on an NVIDIA GPU, and by measuring the speed-up ratio of the parallel fluid calculation and comparing the performance index of lattice nodes updated per second, the ELBM simulation time is shown to be shortened by one third relative to the simulation time on a CPU. Directly solving for the parameter alpha by approximation is more efficient than an iterative method, reducing time by 31.7% on average. The method makes full use of the hardware resources of the system and verifies the parallel calculation mode of the entropy lattice Boltzmann model at the level of actual operation, so the efficiency of the whole parallel fluid calculation is improved markedly.

Owner:HUNAN UNIV
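The two performance figures mentioned in the abstract above, the speed-up ratio and the number of lattice nodes updated per second, are simple ratios. A minimal sketch, assuming a two-dimensional lattice and wall-clock timings supplied by the caller (the function names are hypothetical and no measured values from the patent are reproduced):

```python
def lattice_updates_per_second(nx, ny, steps, seconds):
    """Lattice node updates per second for an nx-by-ny grid run for `steps` steps."""
    return nx * ny * steps / seconds


def speedup_ratio(cpu_seconds, gpu_seconds):
    """How many times faster the GPU run is than the CPU run."""
    return cpu_seconds / gpu_seconds
```

For instance, a 100 by 100 lattice advanced 10 steps in 2 seconds corresponds to 50,000 node updates per second.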

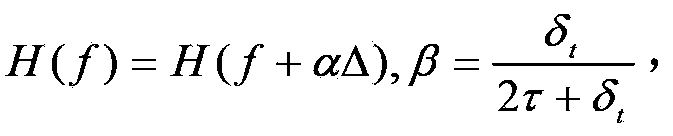

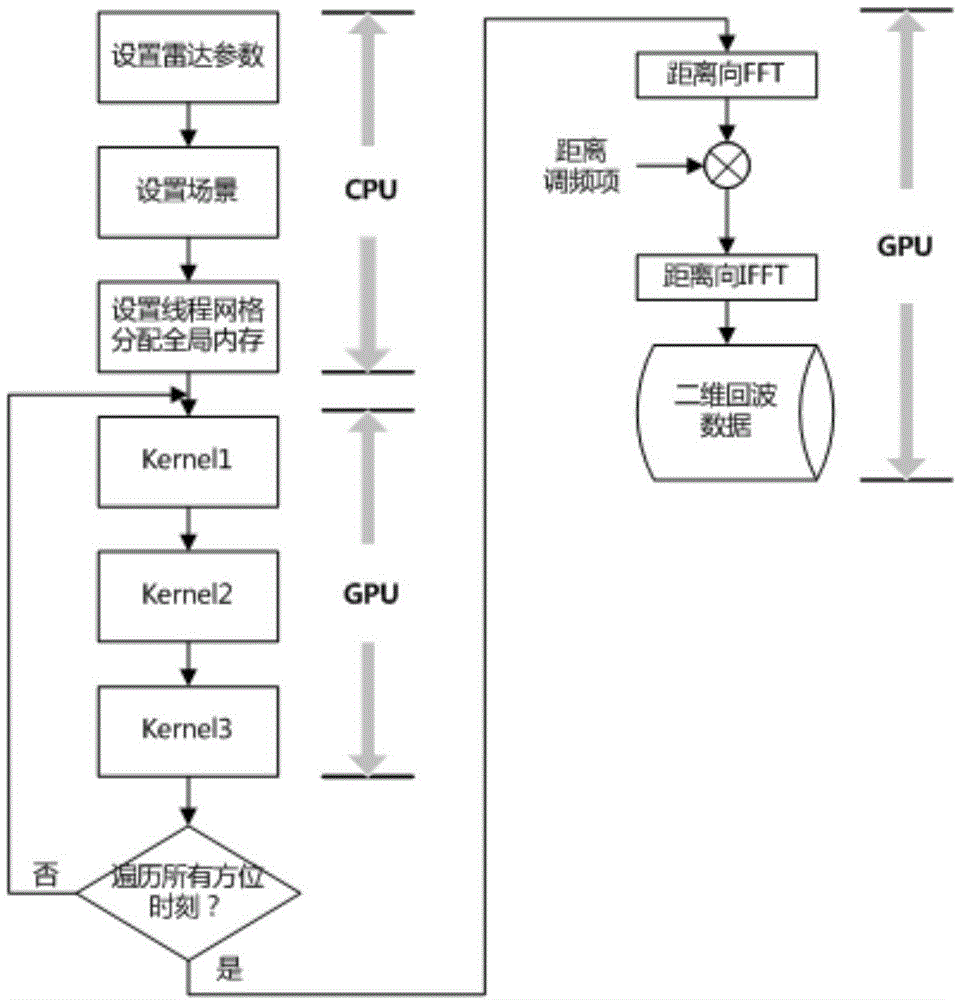

Missile-borne SAR echo simulation and imaging method based on LabVIEW

Inactive | CN104459666A | Realize echo simulation | Achieving processing power | Wave based measurement systems | Synthetic aperture radar | Radar signal processing

The invention belongs to the technical field of radar signal processing, and particularly relates to a SAR face echo simulation and missile-borne SAR imaging method based on LabVIEW. The method comprises the following steps: the working parameters of a synthetic aperture radar are entered in a LabVIEW interface; the missile-borne SAR echo simulation process is encapsulated into a DLL function using the CUDA framework; LabVIEW passes the working parameters of the synthetic aperture radar to a library function node; and the library function node calls the DLL function to obtain the echo data of the SAR observation scene at all azimuth times.

Owner:XIDIAN UNIV

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com