85 results about "Parallel programming model" patented technology

In computing, a parallel programming model is an abstraction of parallel computer architecture in which it is convenient to express algorithms and their composition in programs. The value of a programming model can be judged on its generality (how well a range of different problems can be expressed for a variety of different architectures) and on its performance (how efficiently the compiled programs execute). A parallel programming model can be implemented as a library invoked from a sequential language, as an extension to an existing language, or as an entirely new language.

On-chip shared memory based device architecture

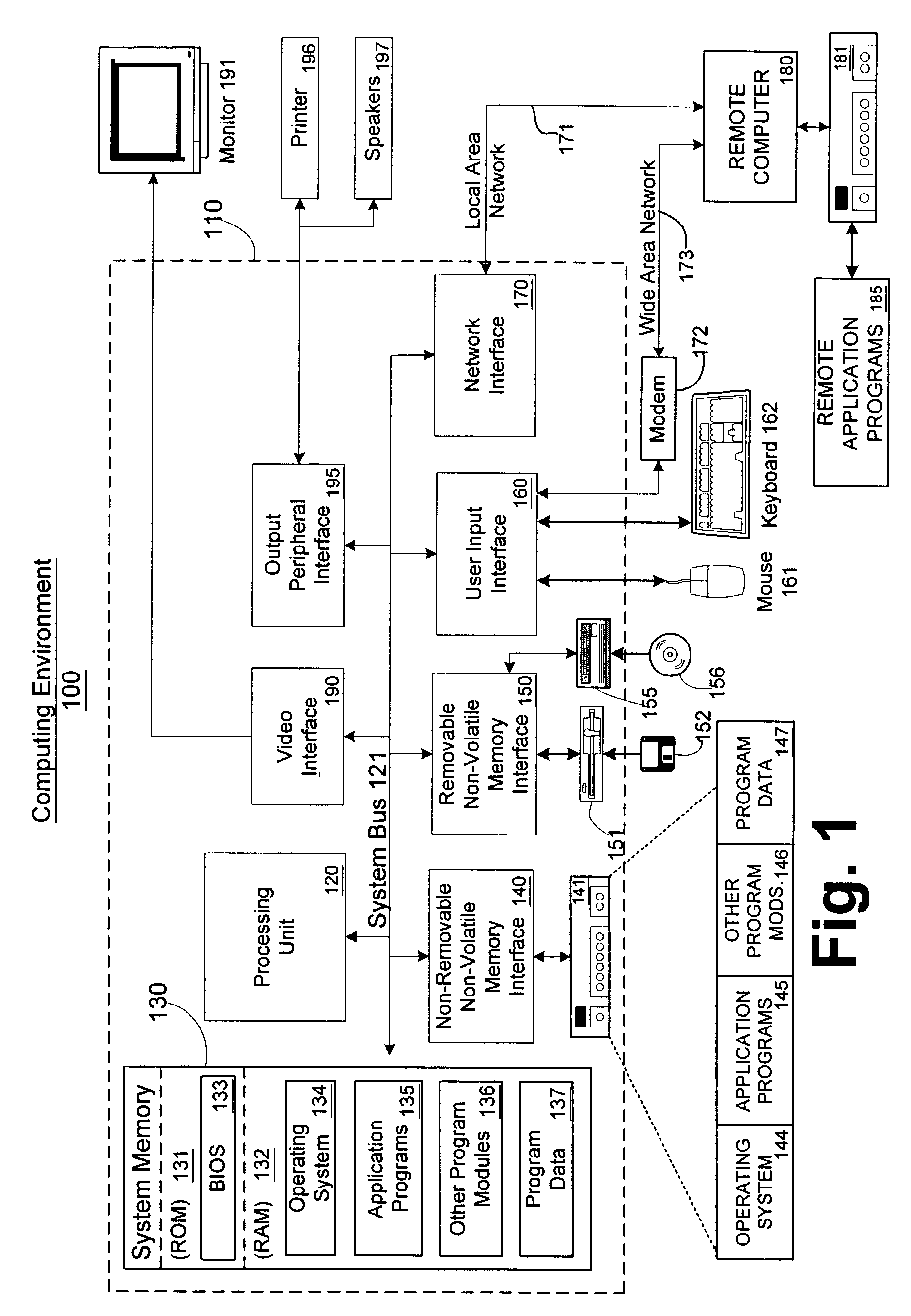

Active · US7743191B1 · Reduce disadvantages · Low cost · Redundant array of inexpensive disk systems · Record information storage · Extensibility · RAID

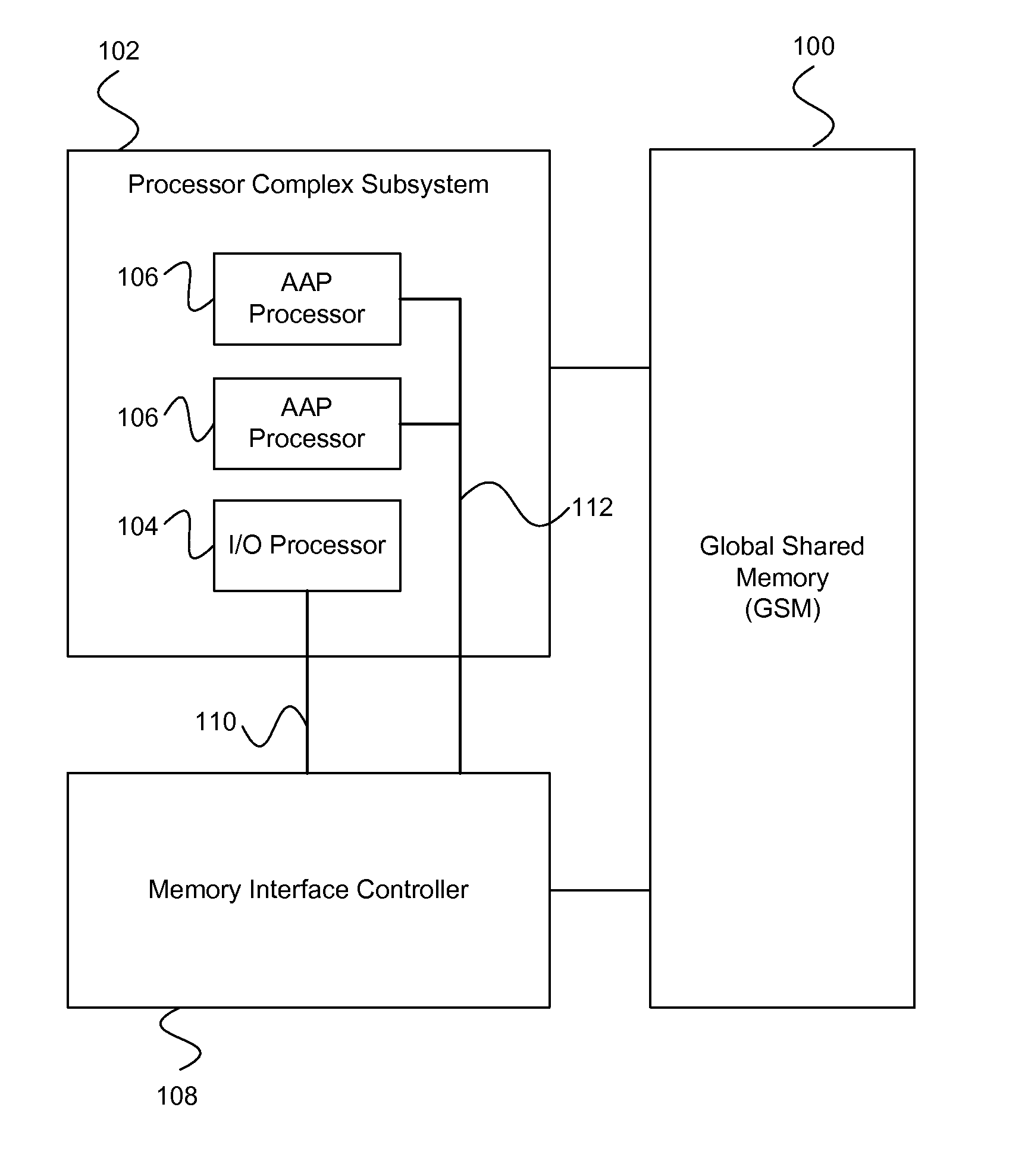

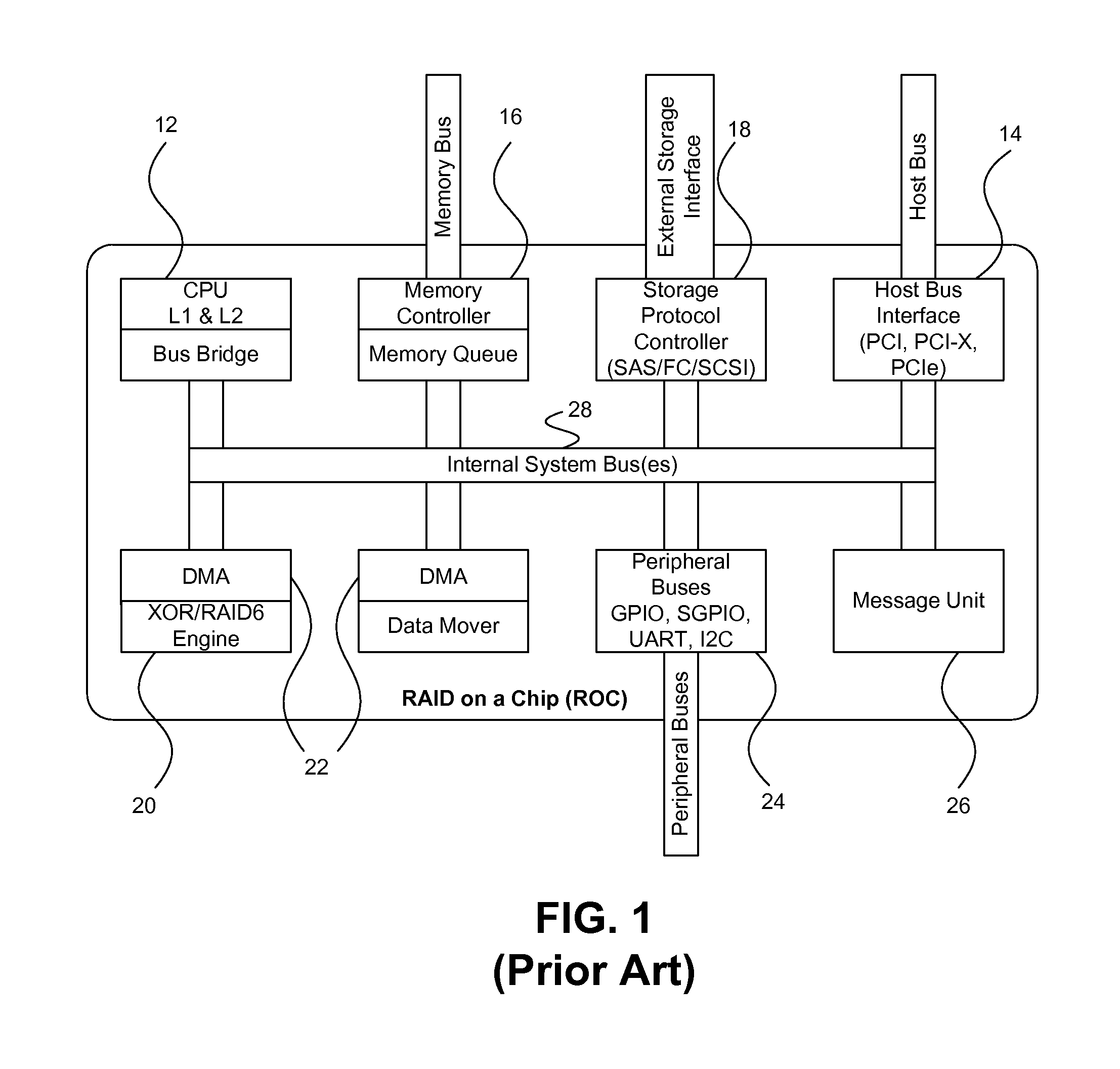

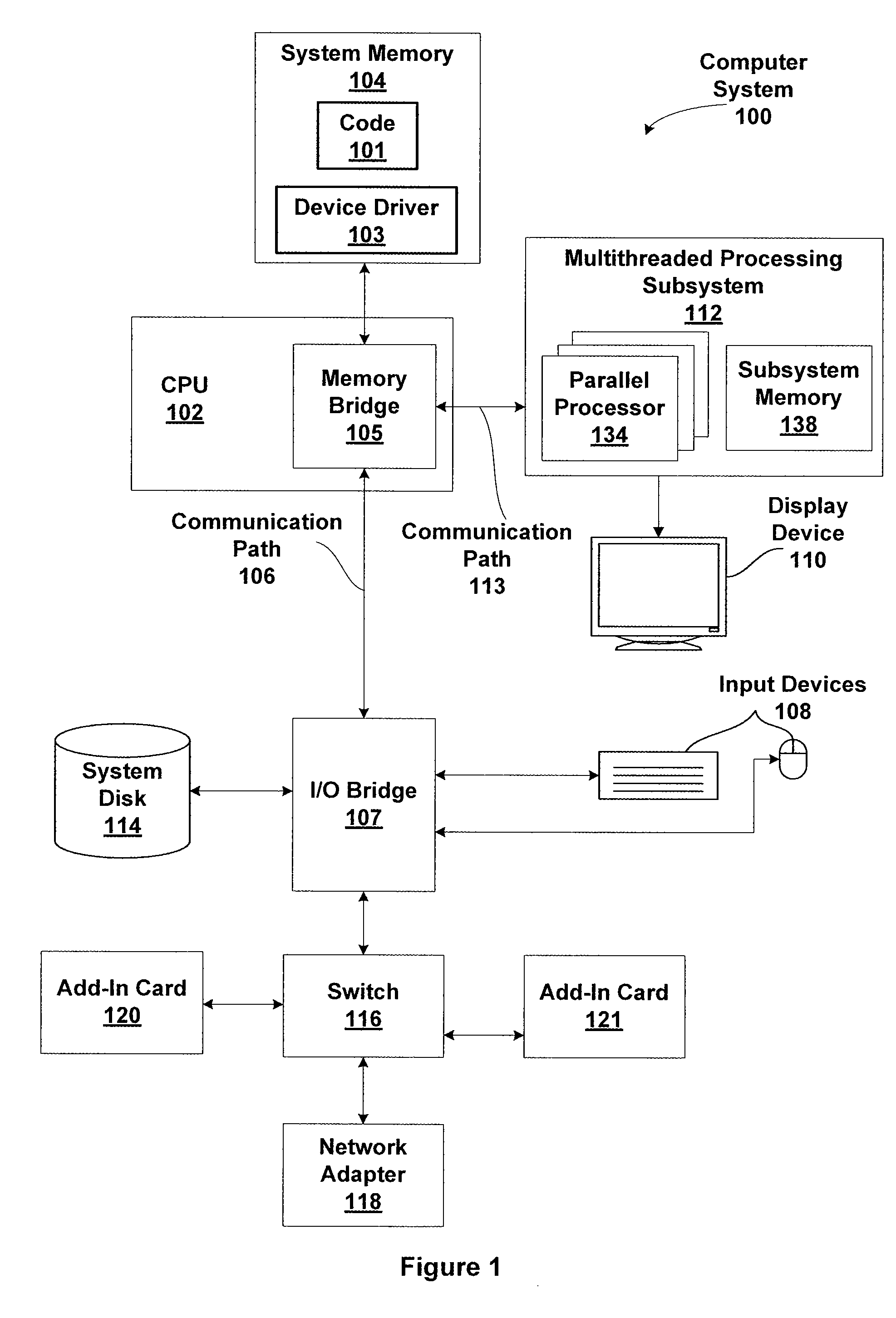

A method and architecture are provided for SOC (System on a Chip) devices for RAID processing, commonly referred to as RAID-on-a-Chip (ROC). The architecture uses a shared memory structure as the interconnect mechanism among hardware components, CPUs, and software entities. The shared memory structure provides a common scratchpad buffer space for holding data that is processed by the various entities, provides interconnection for process / engine communications, and provides a queue for message passing using a common communication method that is agnostic to whether the engines are implemented in hardware or software. A plurality of hardware engines are supported as masters of the shared memory. The architecture provides superior throughput performance, flexibility in software / hardware co-design, and scalability of both functionality and performance, and it supports a very simple abstracted parallel programming model for parallel processing.

Owner:MICROSEMI STORAGE SOLUTIONS

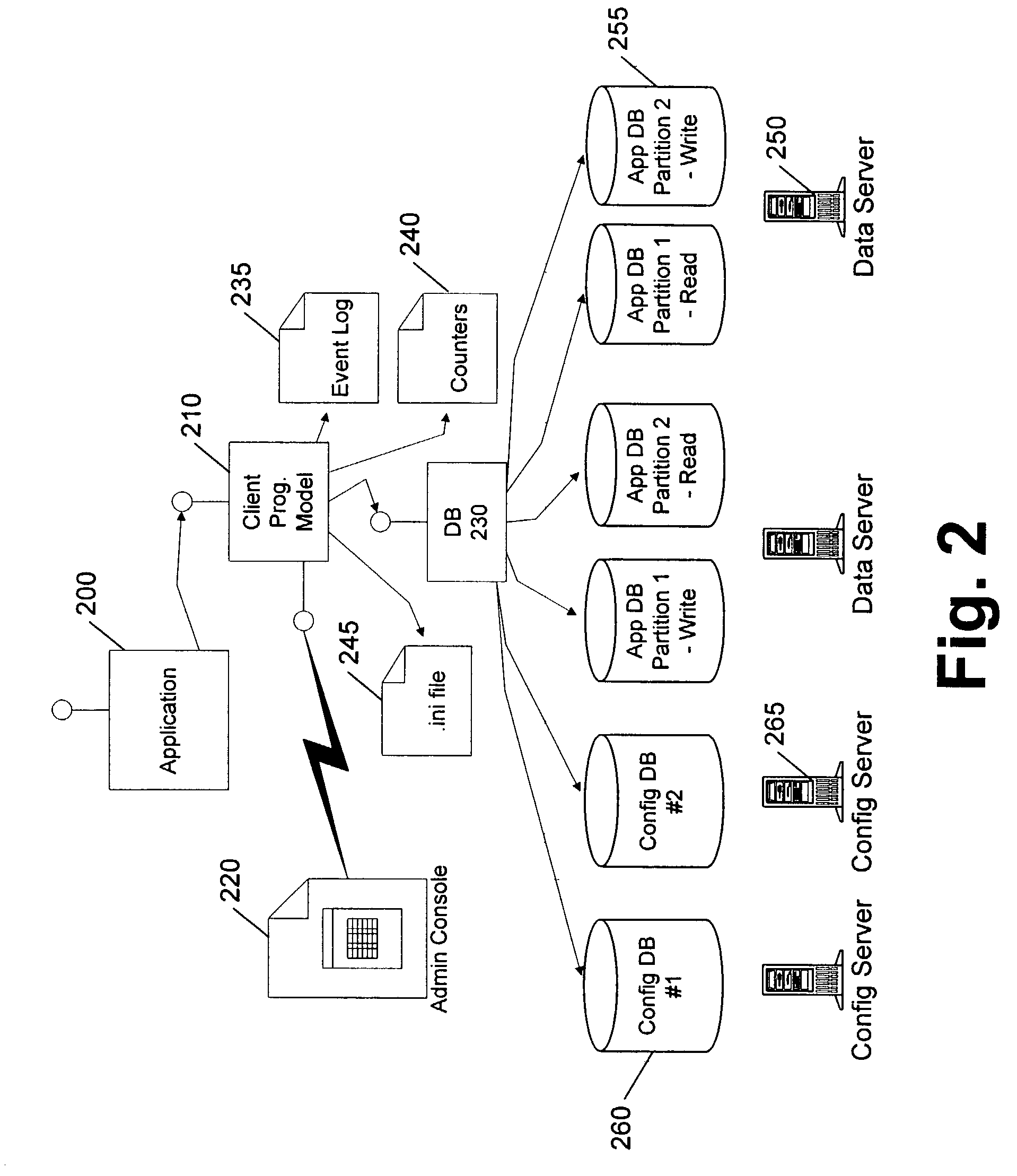

Client programming model with abstraction

Inactive · US7058958B1 · Digital data information retrieval · Data processing applications · Failover · Parallel programming model

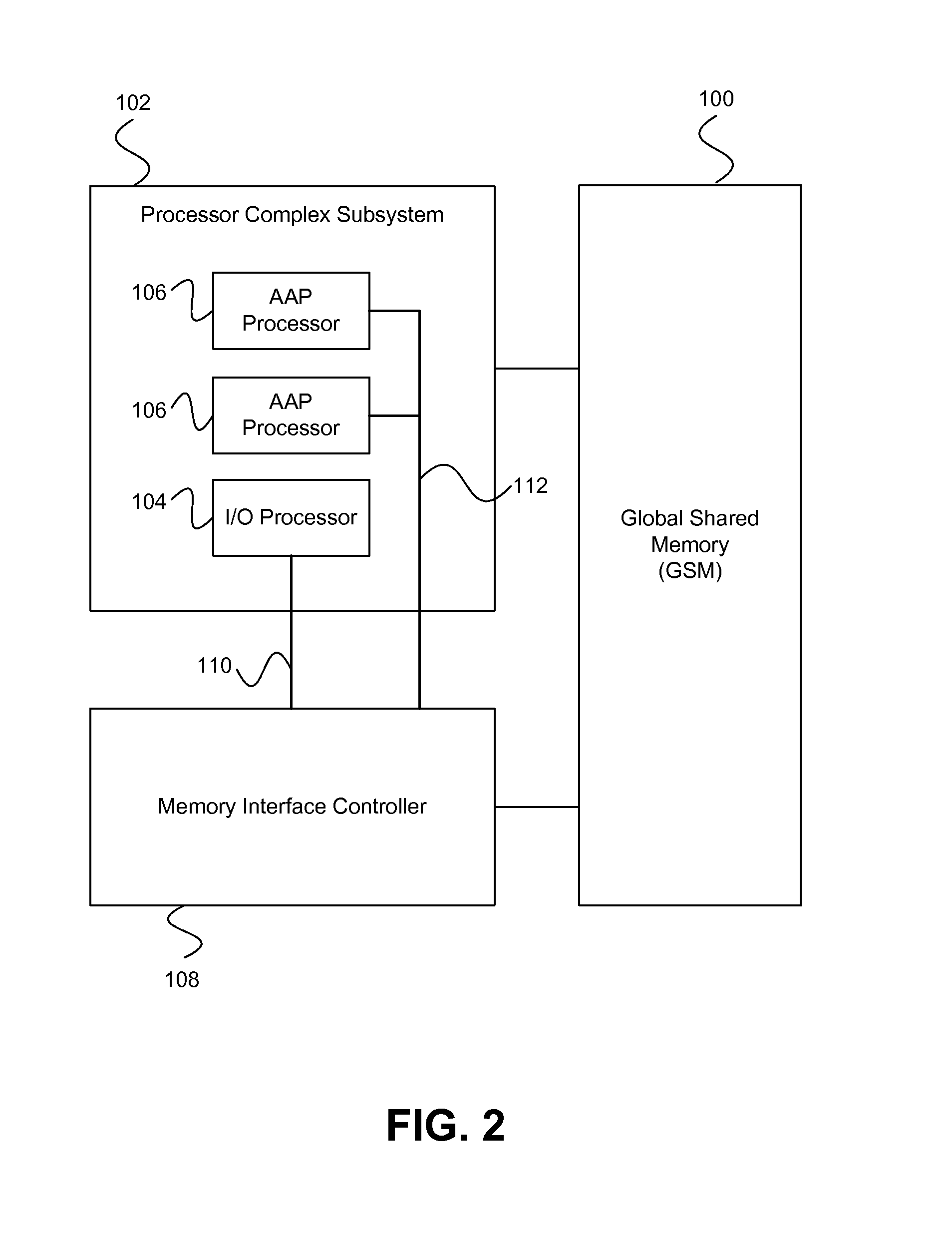

A client programming model with abstraction hides from an underlying client application or application program interface (API) the details of where each element of data is located, and which copy of the data is resident on an available server and associated databases. The model wraps a database, such as a virtual database, and provides data-dependent and application-dependent routing, failover, and operational administration.

Owner:MICROSOFT TECH LICENSING LLC

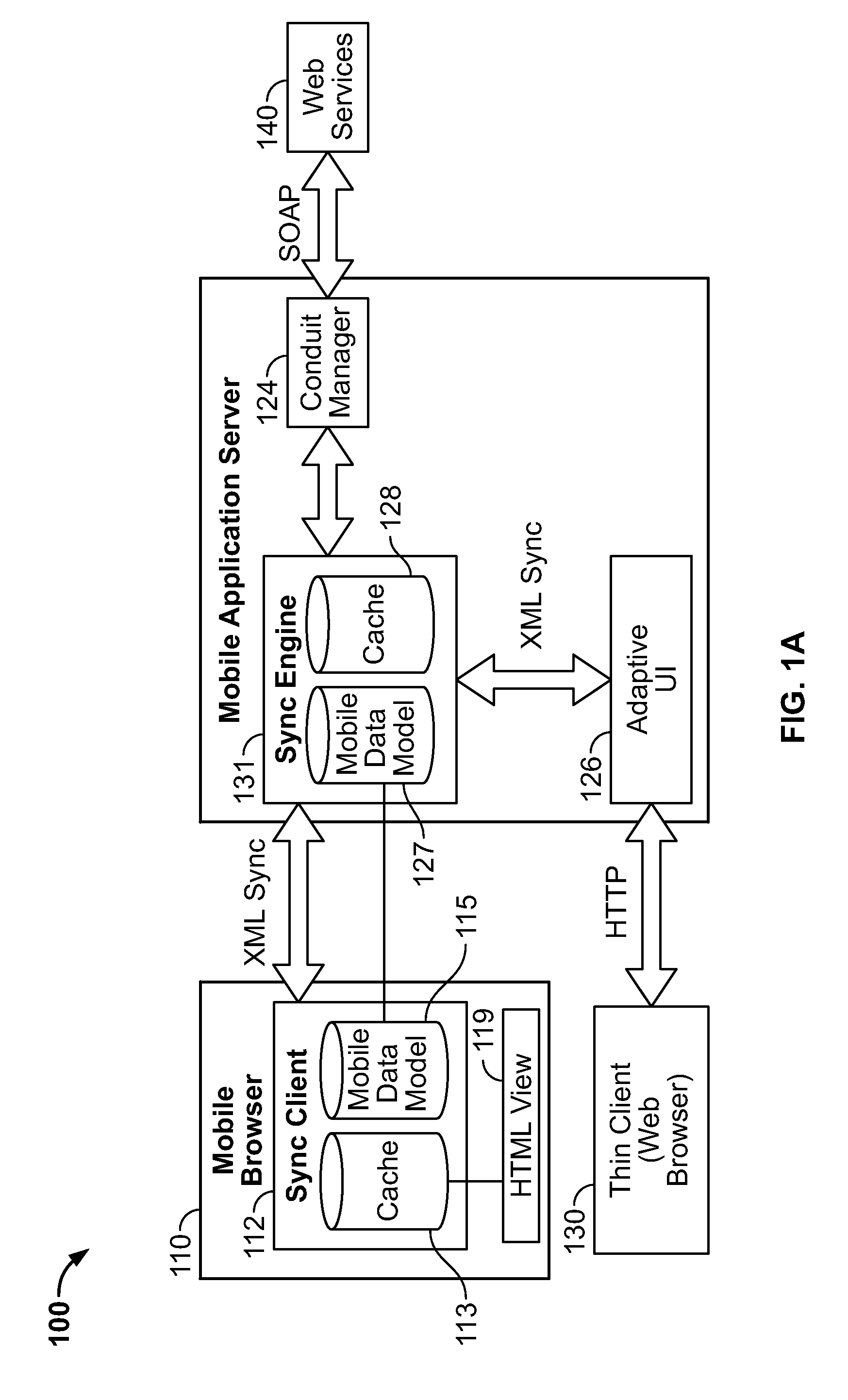

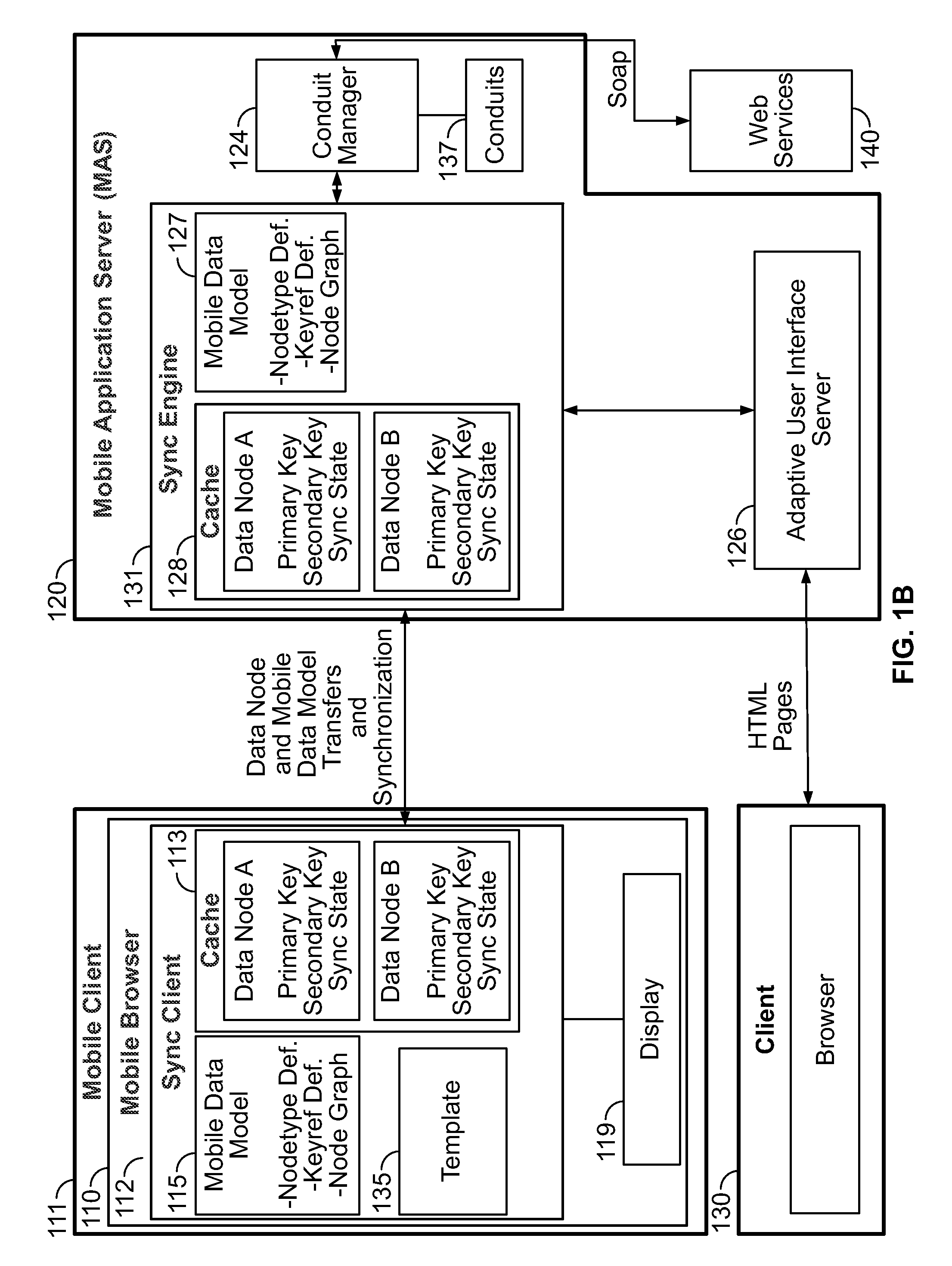

Occasionally-connected application server

Active · US7650432B2 · Efficient traversal and synchronization of data · Database distribution/replication · Multiple digital computer combinations · Application server · Parallel programming model

A framework is provided for developing, deploying, and managing sophisticated mobile solutions, with a simple Web-like programming model that integrates with existing enterprise components. Mobile applications may consist of a data model definition, user interface templates, a client side controller, which includes scripts that define actions, and, on the server side, a collection of conduits, which describe how to mediate between the data model and the enterprise. In one embodiment, the occasionally-connected application server assumes that data used by mobile applications is persistently stored and managed by external systems. The occasionally-connected data model can be a metadata description of the mobile application's anticipated usage of this data, and can be optimized to enable the efficient traversal and synchronization of this data between occasionally connected devices and external systems.

Owner:ORACLE INT CORP

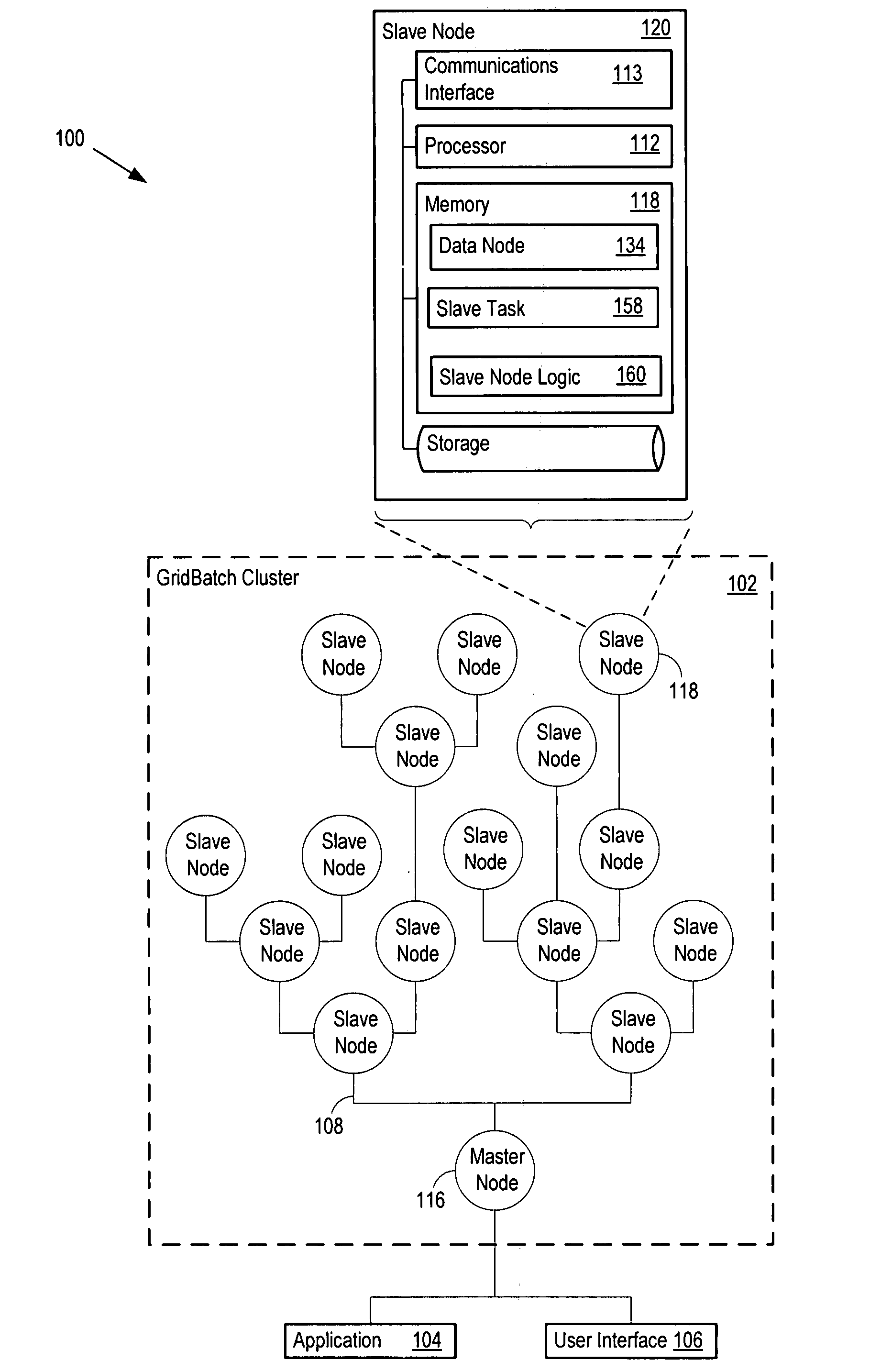

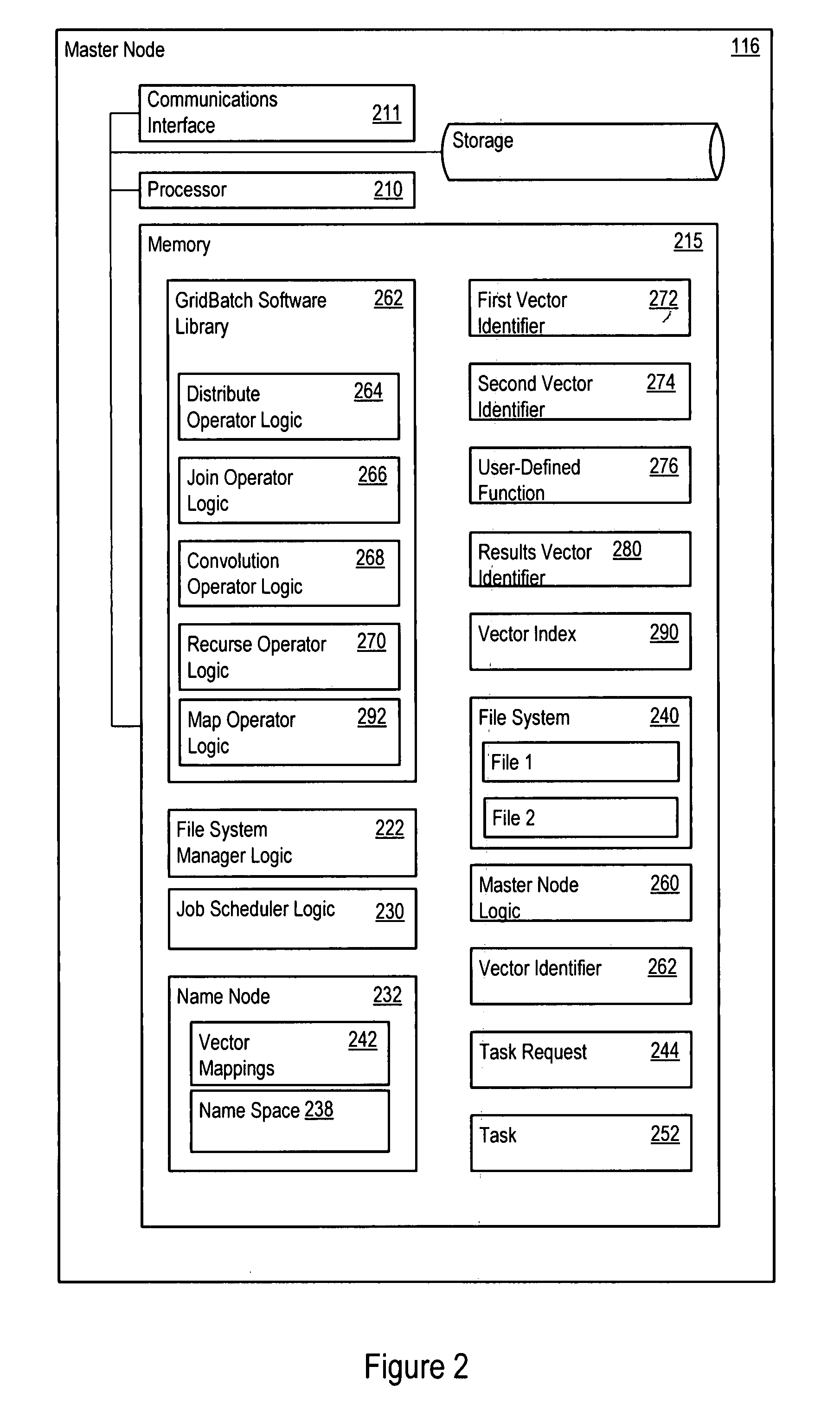

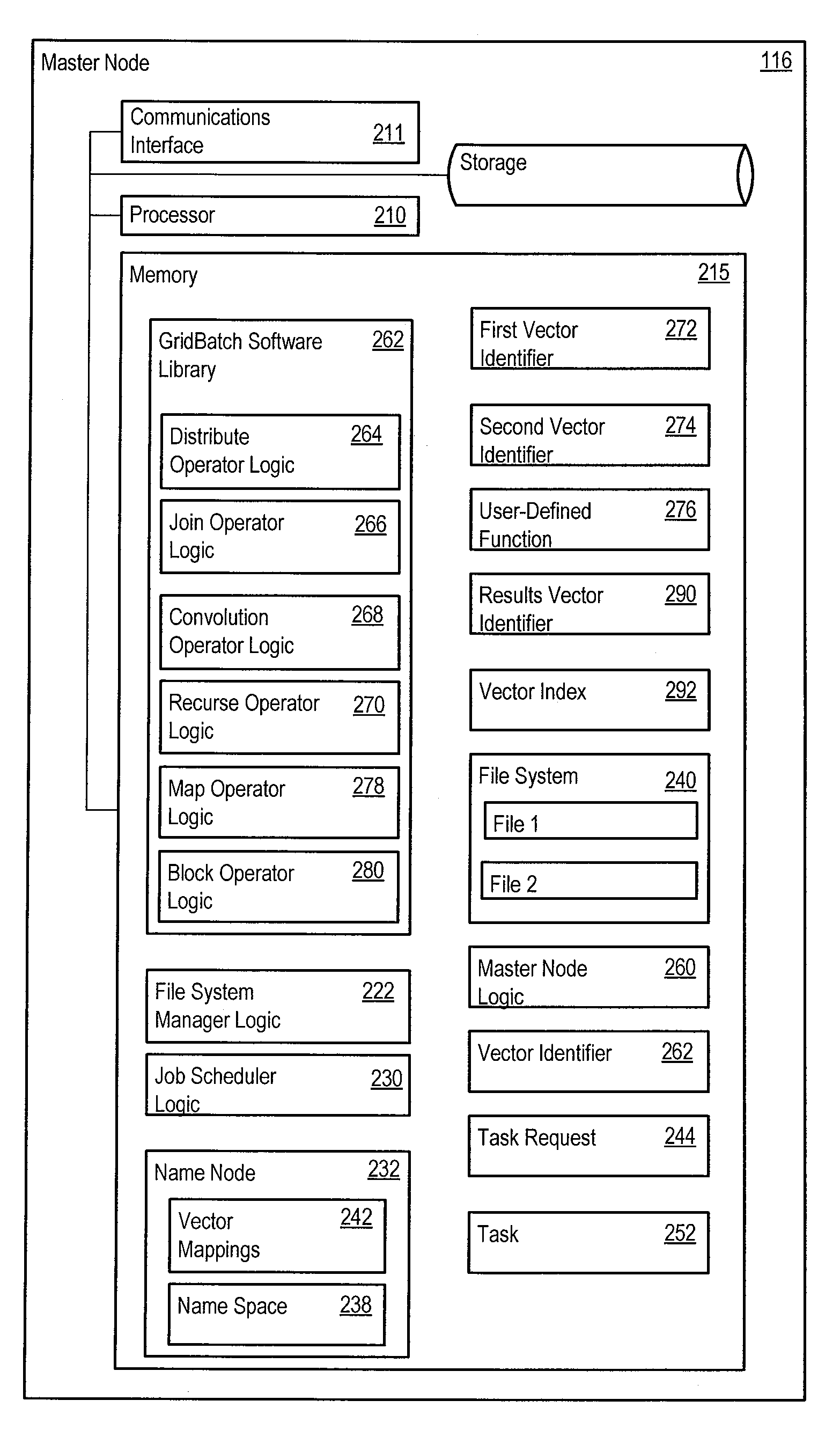

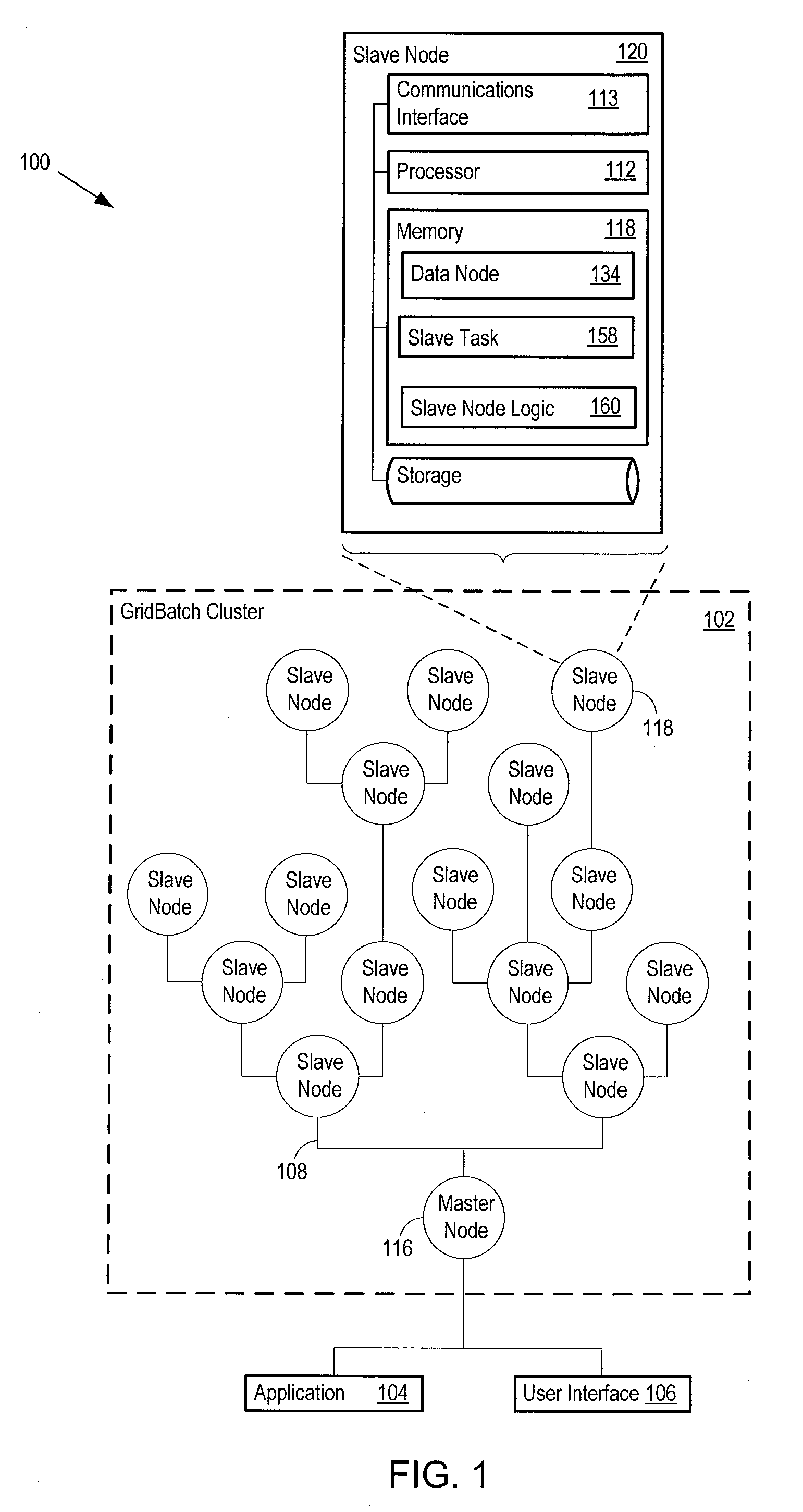

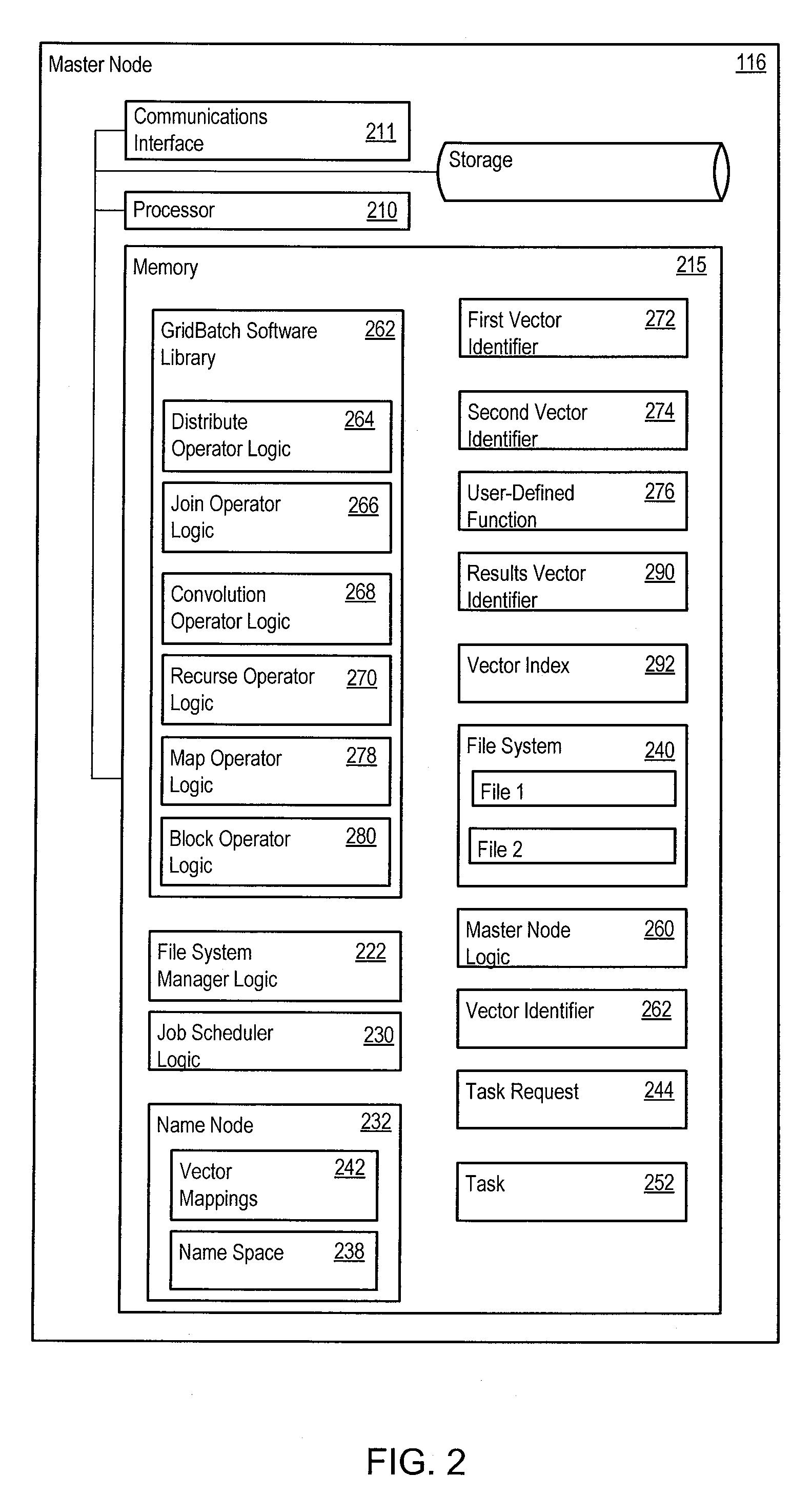

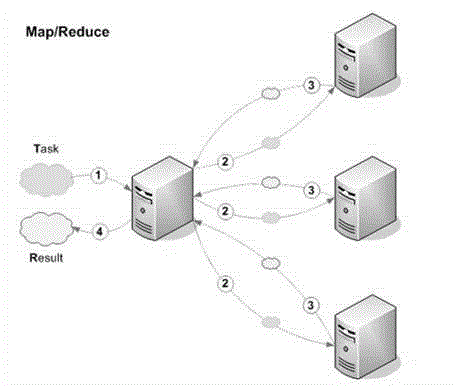

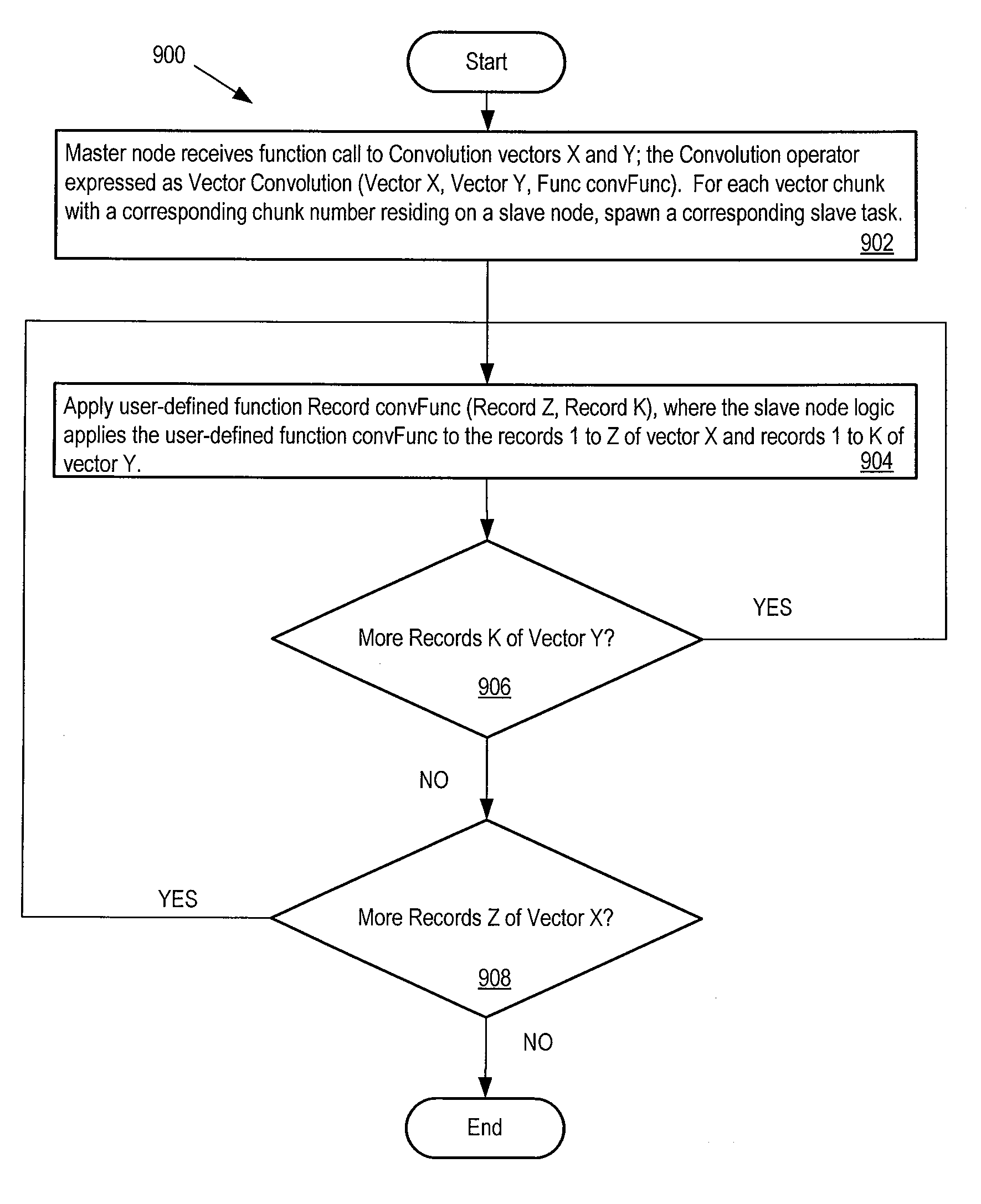

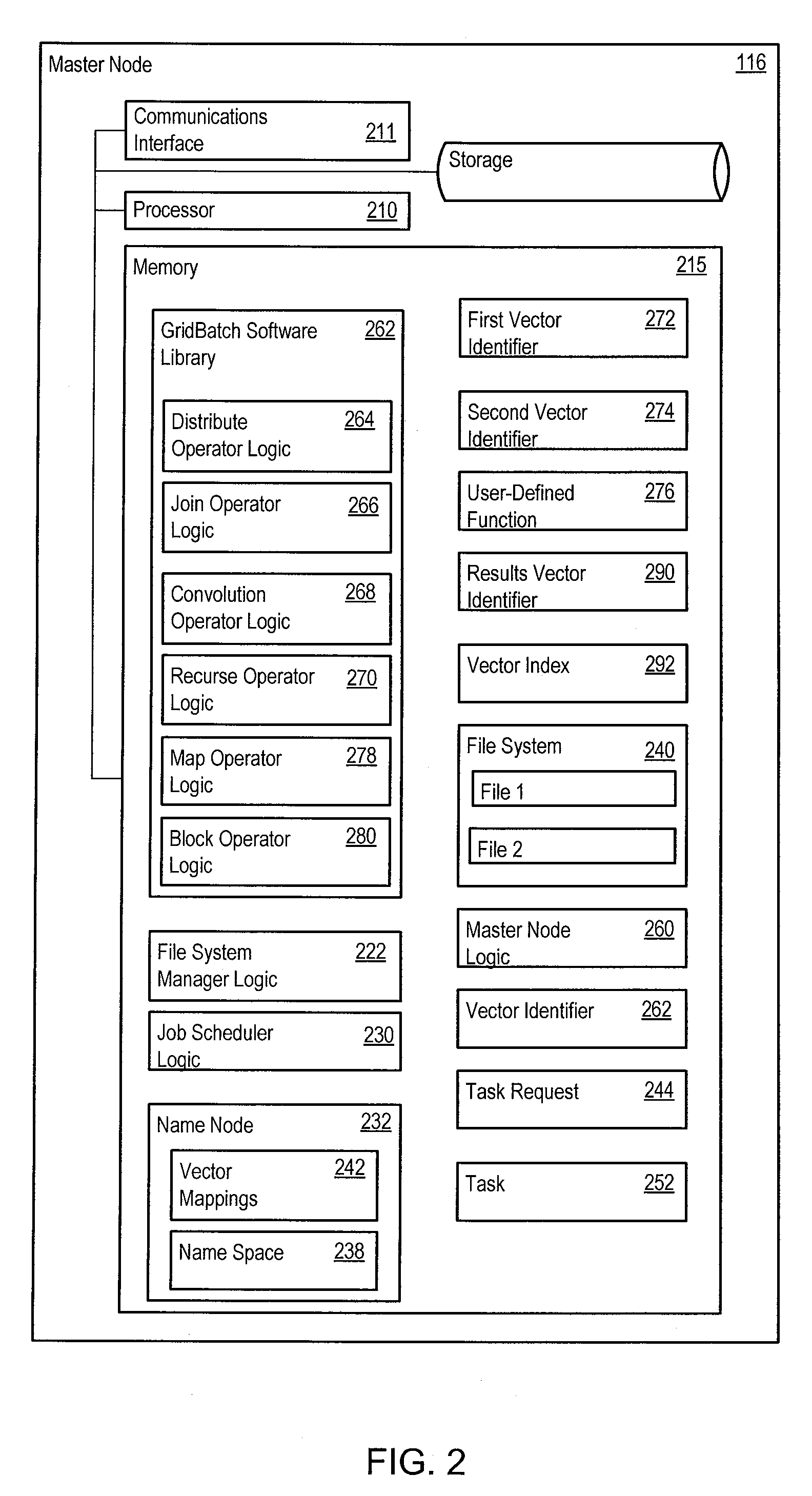

Infrastructure for parallel programming of clusters of machines

Active · US20090089544A1 · Easy to convert · Improve performance · Digital data information retrieval · General purpose stored program computer · Parallel programming model · Multi processor

GridBatch provides an infrastructure framework that hides from programmers the complexities and burdens of developing the logic and programming the applications that implement detailed parallelized computations. A programmer may use GridBatch to implement parallelized computational operations that minimize network bandwidth requirements and that efficiently partition and coordinate computational processing in a multiprocessor configuration. GridBatch provides an effective and lightweight approach to rapidly building parallelized applications using economically viable multiprocessor configurations that achieve the highest performance results.

Owner:ACCENTURE GLOBAL SERVICES LTD

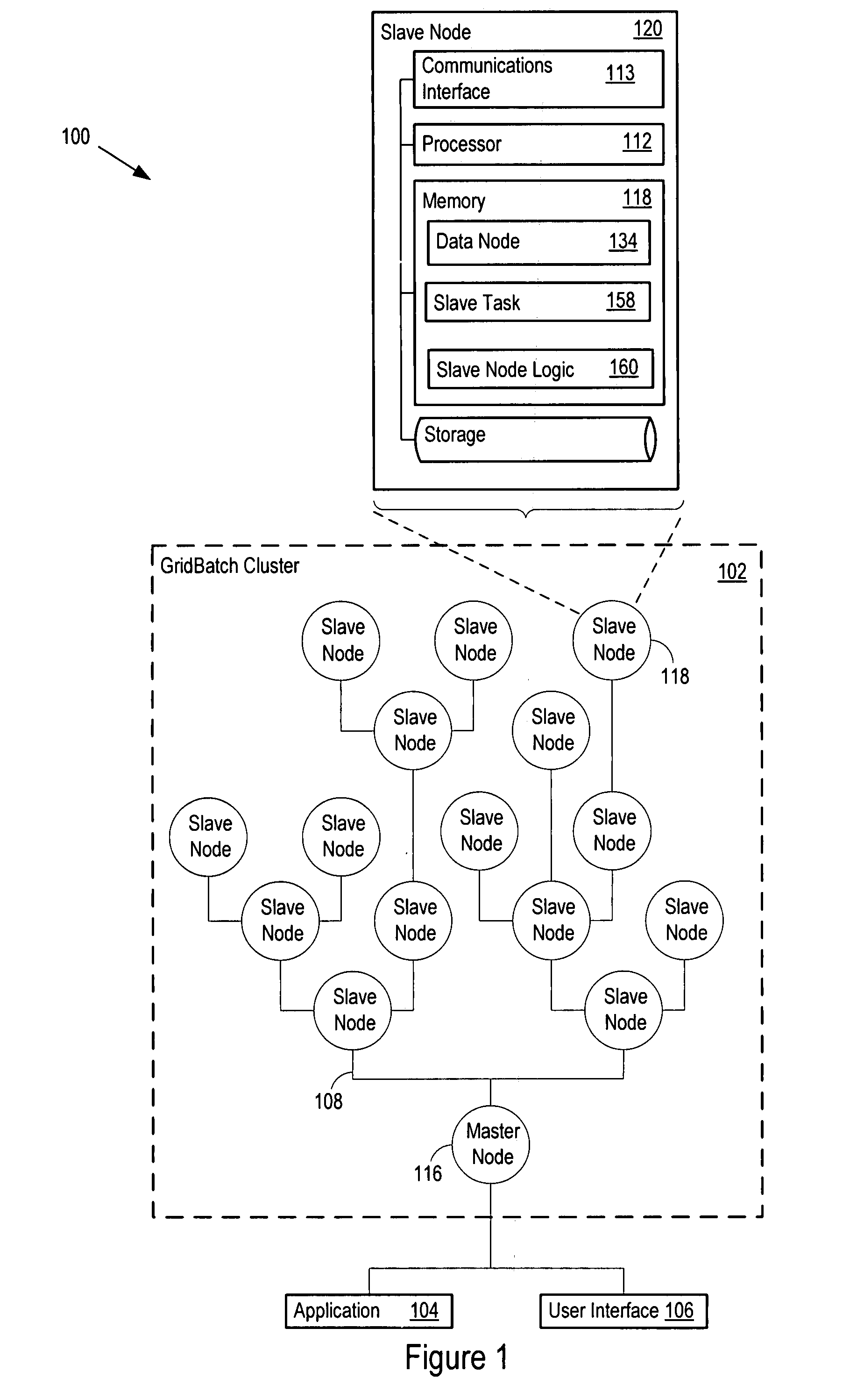

Infrastructure for parallel programming of clusters of machines

Inactive · US20090089560A1 · Easy to convert · Improve performance · Analogue secrecy/subscription systems · Multiple digital computer combinations · Multi processor · Parallel programming model

GridBatch provides an infrastructure framework that hides from programmers the complexities and burdens of developing the logic and programming the applications that implement detailed parallelized computations. A programmer may use GridBatch to implement parallelized computational operations that minimize network bandwidth requirements and that efficiently partition and coordinate computational processing in a multiprocessor configuration. GridBatch provides an effective and lightweight approach to rapidly building parallelized applications using economically viable multiprocessor configurations that achieve the highest performance results.

Owner:ACCENTURE GLOBAL SERVICES LTD

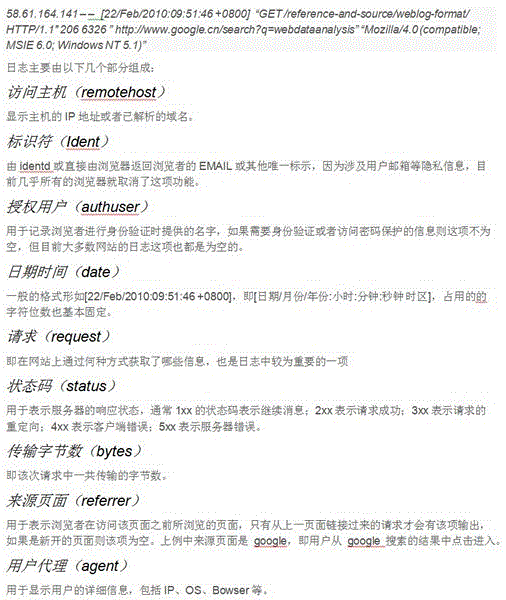

Website operation state monitoring and anomaly detection based on MapReduce

The invention discloses website operation state monitoring and anomaly detection based on MapReduce. Using the MapReduce parallel programming model, state monitoring and anomaly detection are performed by extracting the most informative data points from massive log files and applying an effective strategy to capture abnormal behaviour during access accurately. A website log is analyzed with four parallel strategies, namely state monitoring, feature-based anomaly detection, traffic peak detection, and decision-tree learning of access rules, so that the website operation state can be monitored and abnormal access can be detected.

Owner:常熟市支塘镇新盛技术咨询服务有限公司
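
As a rough illustration of the state-monitoring strategy in the abstract above, the sketch below counts HTTP status codes in access-log lines with a map phase and a reduce phase in plain Python. The log format, the sample lines, and the function names (`map_line`, `reduce_counts`, `status_histogram`) are assumptions made for illustration; the abstract does not specify them, and a real deployment would run on a MapReduce framework rather than in a single process.

```python
from collections import Counter
from functools import reduce

# Hypothetical log format: "<client_ip> <path> <status>"
SAMPLE_LOG = [
    "10.0.0.1 /index.html 200",
    "10.0.0.2 /login 500",
    "10.0.0.1 /index.html 200",
    "10.0.0.3 /missing 404",
]

def map_line(line):
    """Map phase: emit a count of one for the status code of a single log line."""
    _ip, _path, status = line.split()
    return Counter({status: 1})

def reduce_counts(left, right):
    """Reduce phase: merge two partial status-code counts."""
    return left + right

def status_histogram(lines):
    """Apply the map step to every line, then fold the partial counts together."""
    return reduce(reduce_counts, map(map_line, lines), Counter())

histogram = status_histogram(SAMPLE_LOG)
```

Because `reduce_counts` is associative, the partial counts could be merged in any grouping, which is what lets the map outputs be combined in parallel.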

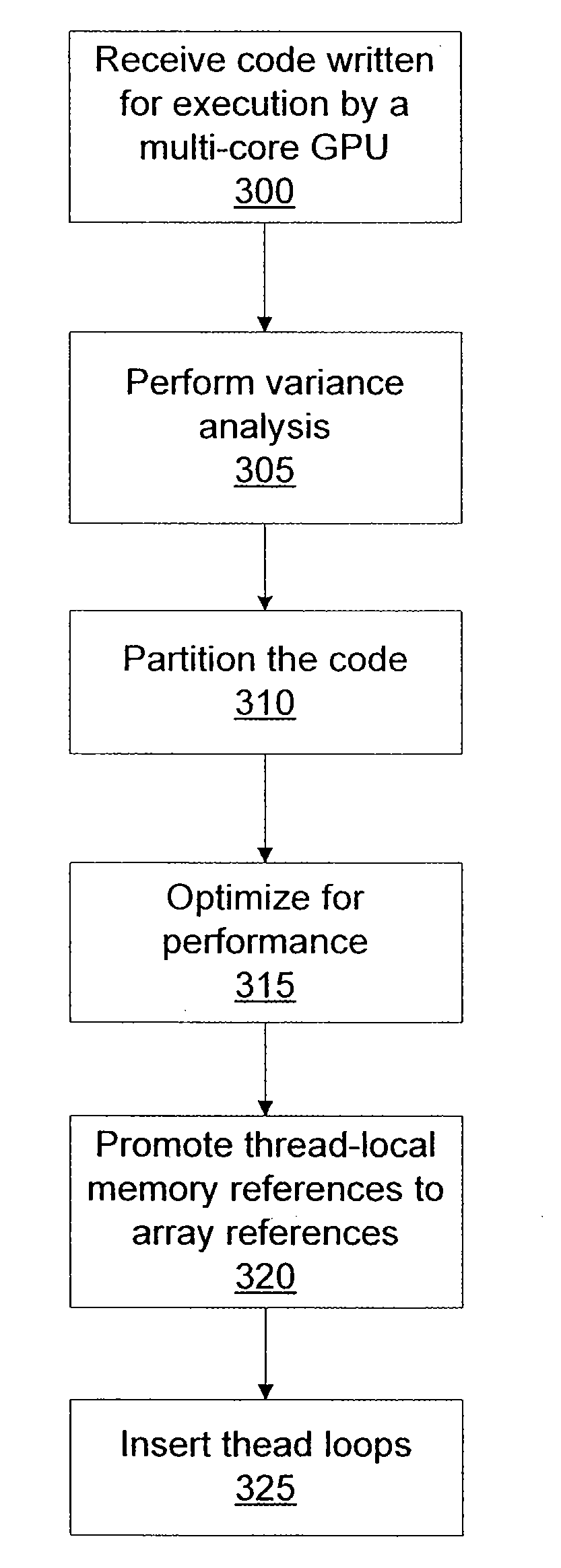

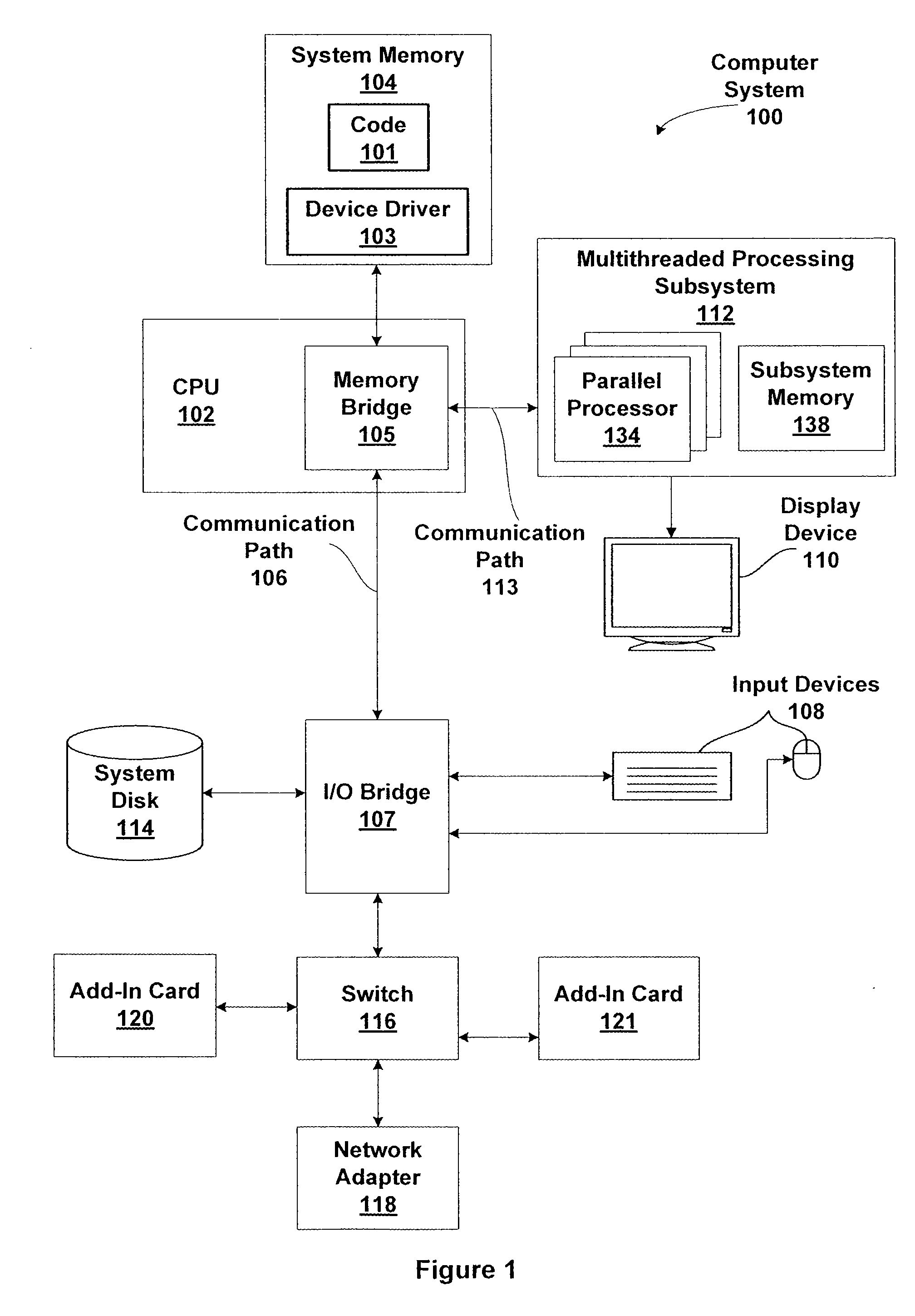

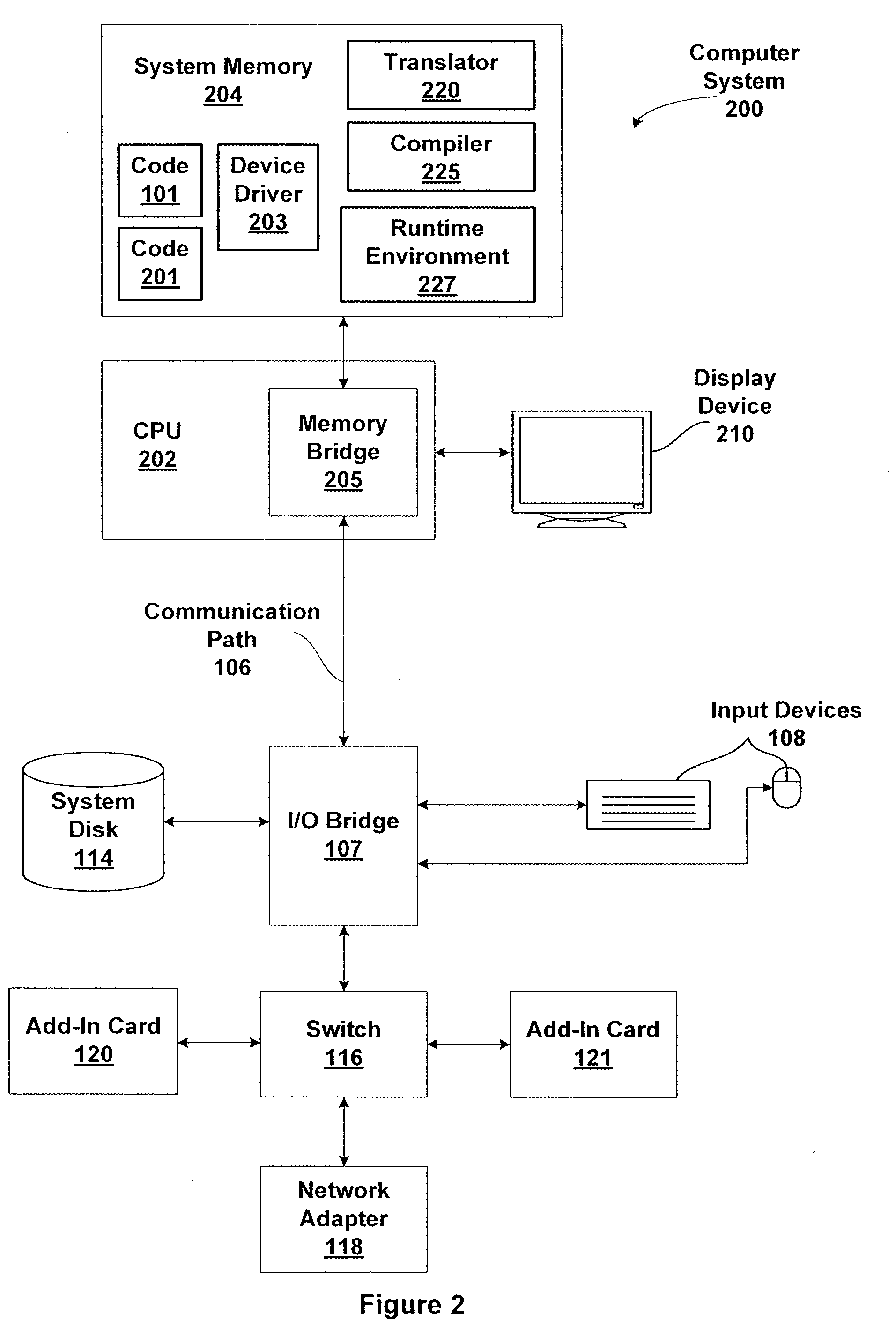

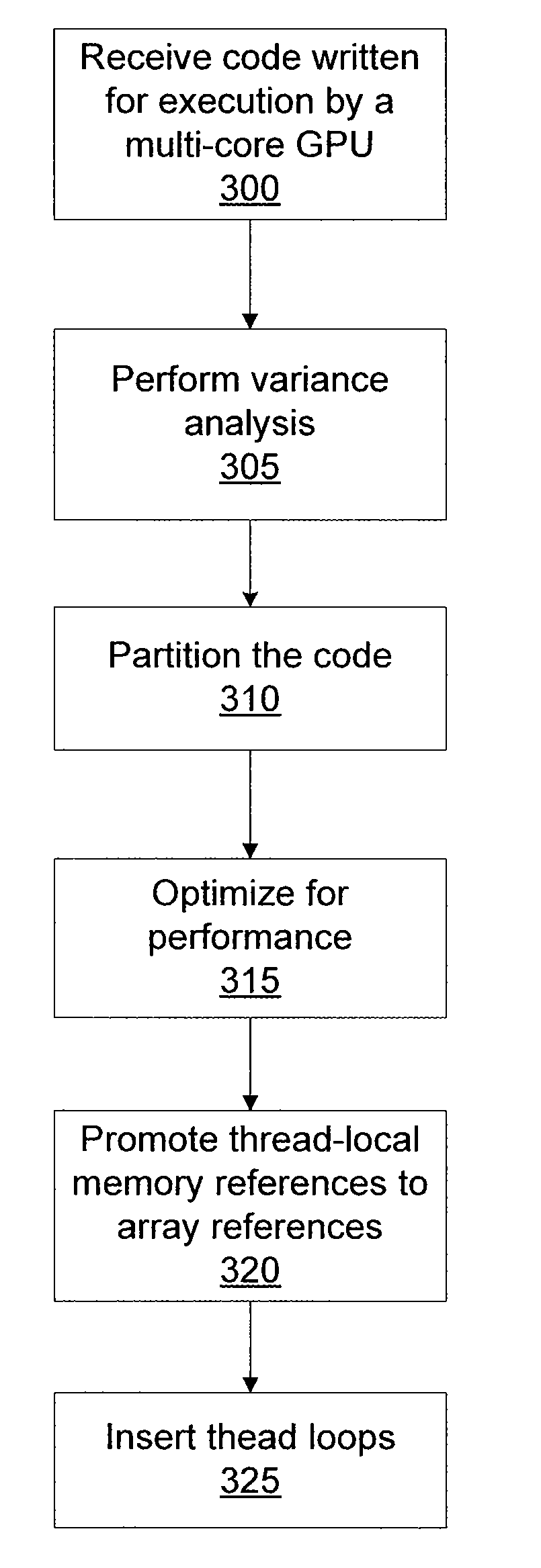

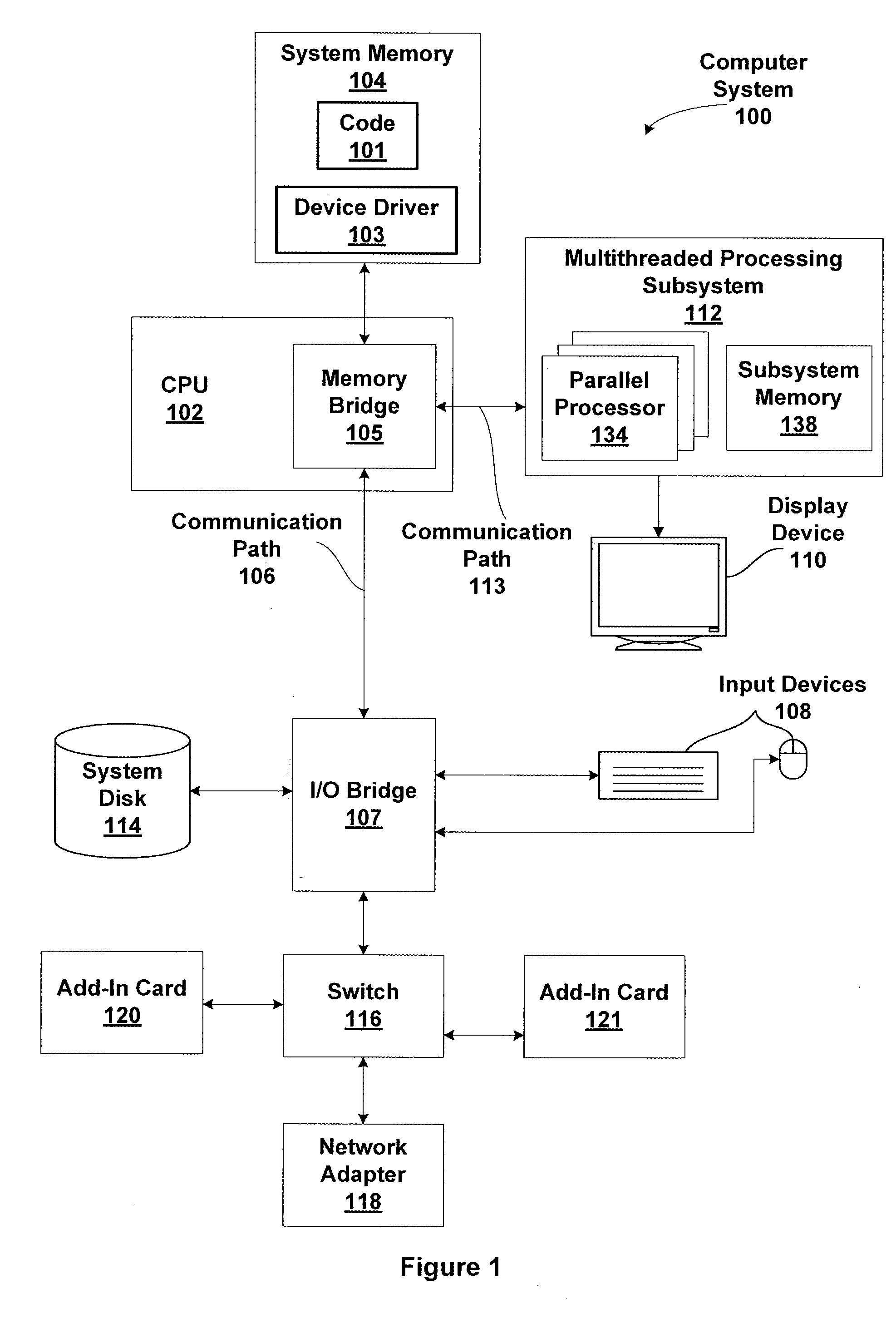

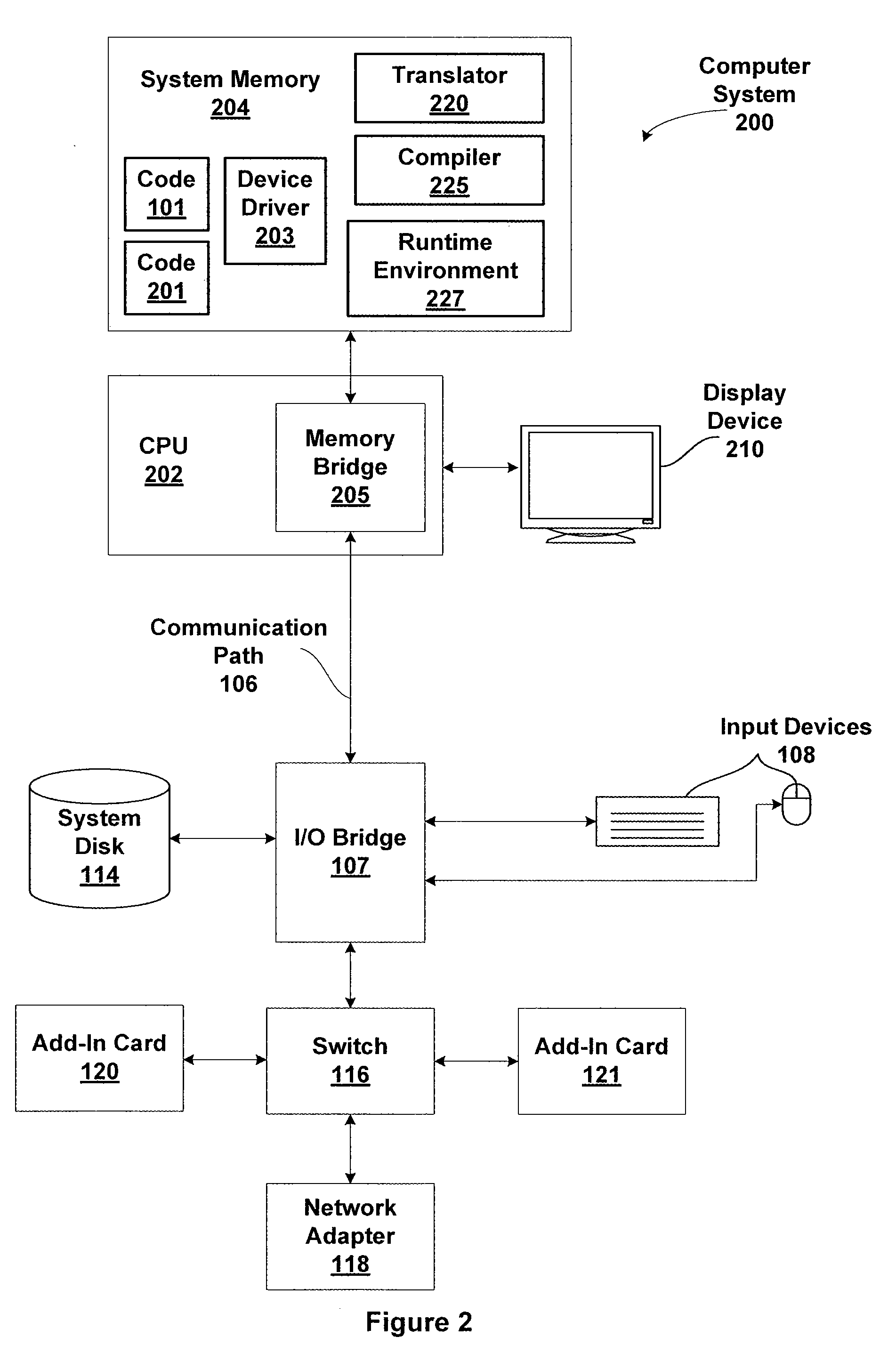

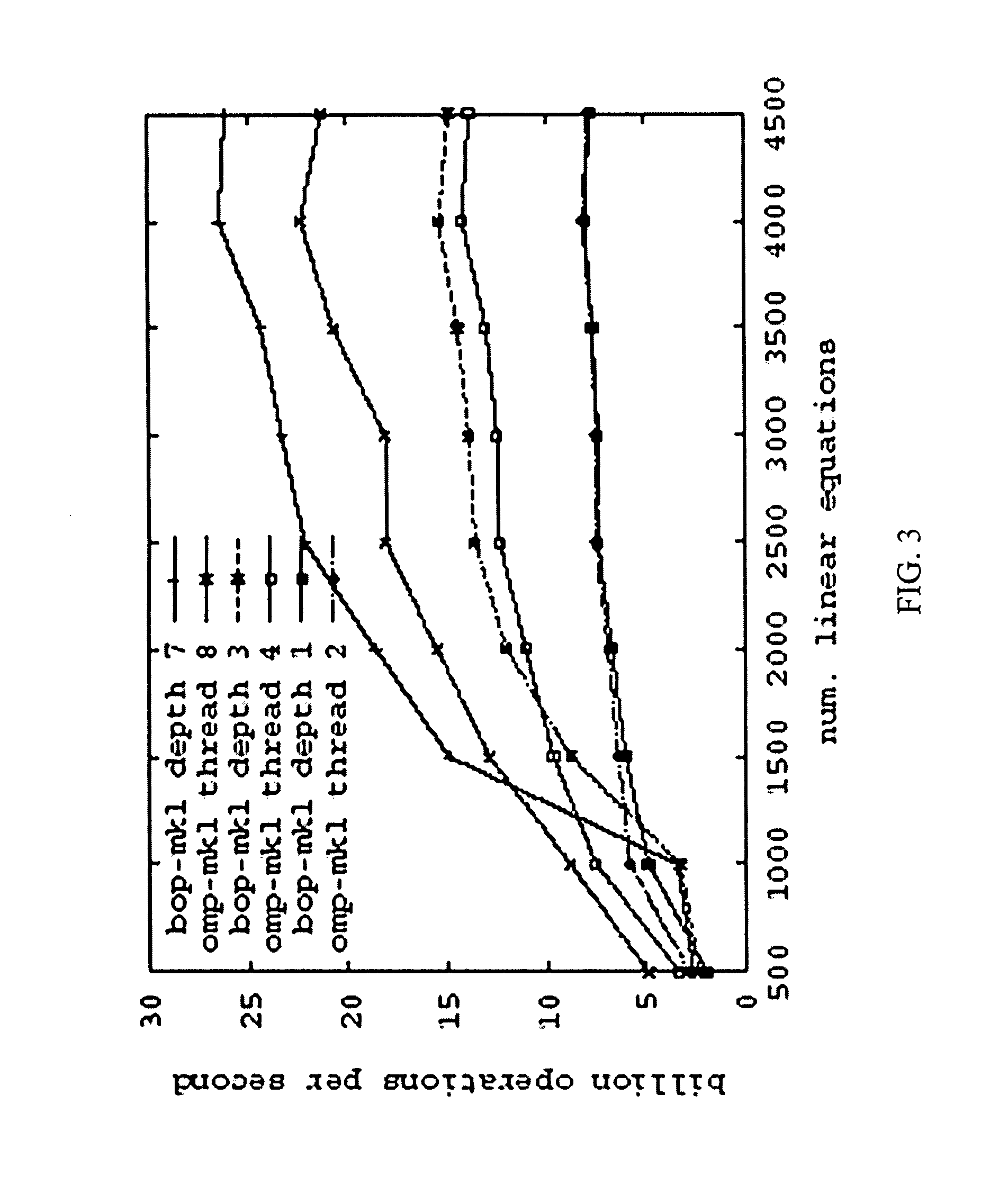

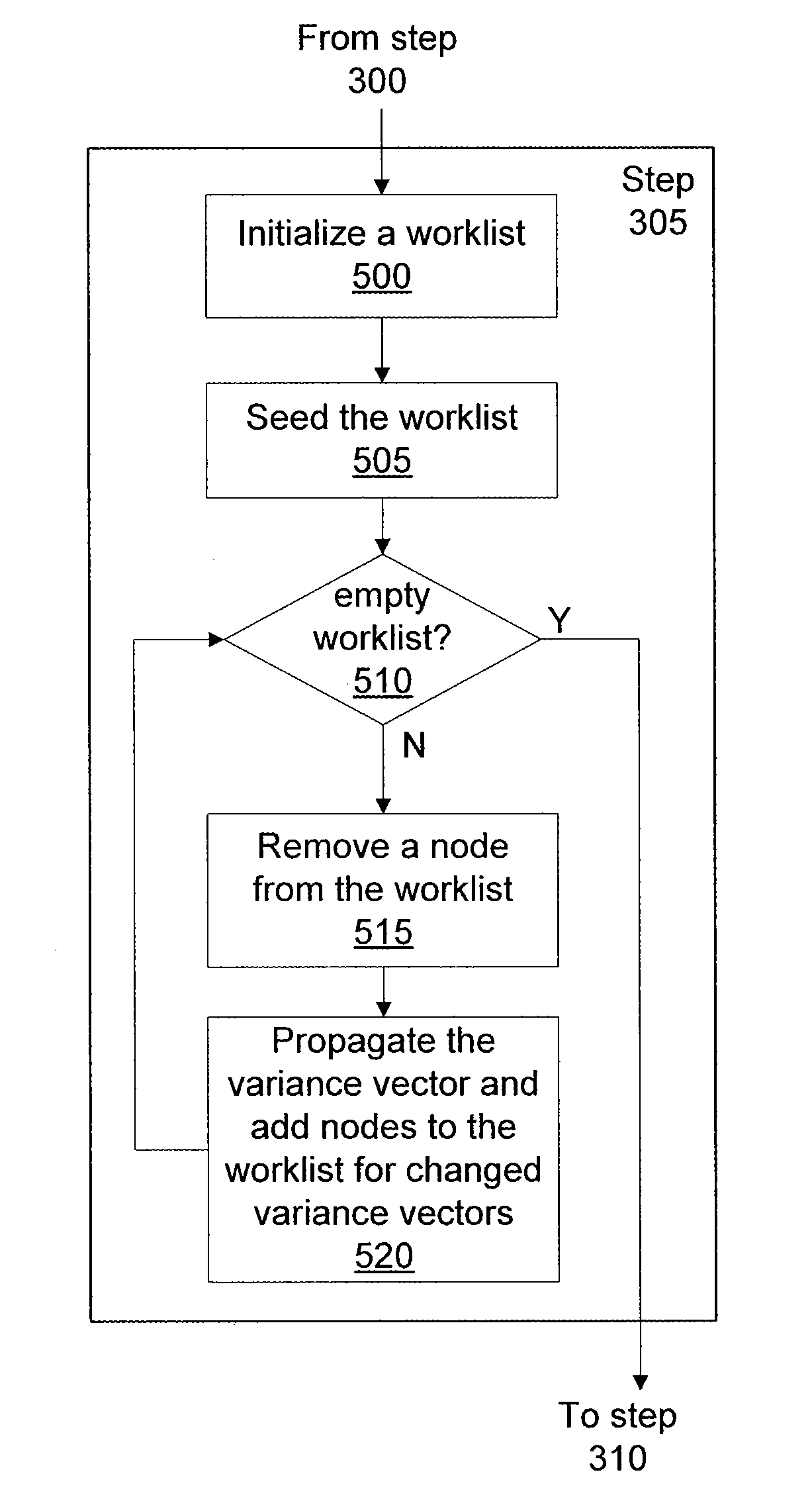

Variance analysis for translating CUDA code for execution by a general purpose processor

Active · US20090259997A1 · Error detection/correction · Specific program execution arrangements · General purpose · Graphics

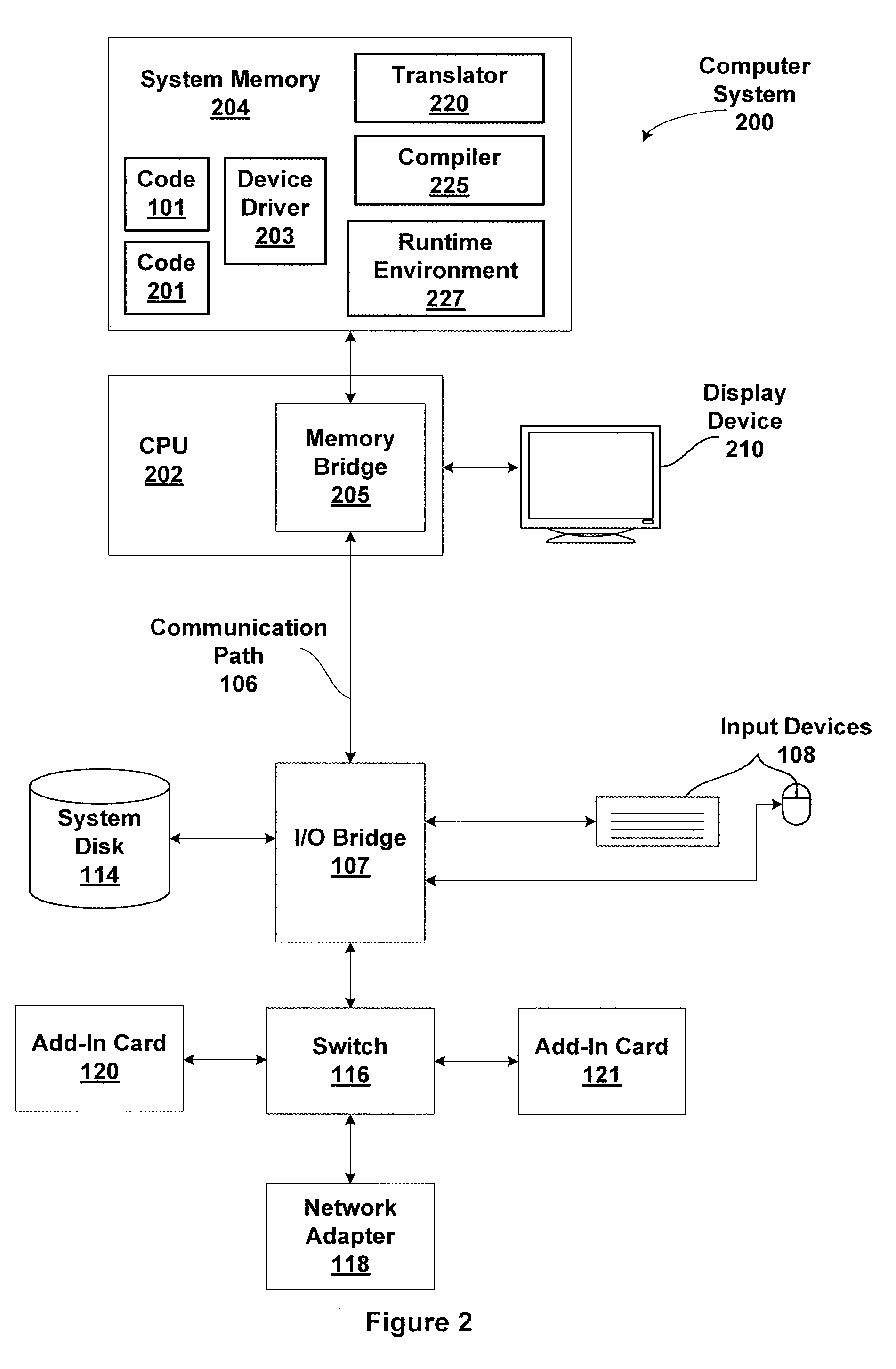

One embodiment of the present invention sets forth a technique for translating application programs written using a parallel programming model for execution on a multi-core graphics processing unit (GPU) so that they can be executed by a general purpose central processing unit (CPU). Portions of the application program that rely on specific features of the multi-core GPU are converted by a translator for execution by a general purpose CPU. The application program is partitioned into regions of synchronization-independent instructions. The instructions are classified as convergent or divergent, and divergent memory references that are shared between regions are replicated. Thread loops are inserted to ensure correct sharing of memory between various threads during execution by the general purpose CPU.

Owner:NVIDIA CORP
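
To make the idea of thread loops concrete, here is a minimal sketch in Python, not the translator the patent describes. It assumes a hypothetical kernel in which each GPU thread writes one element to shared memory, waits at a barrier, and then reads its neighbour's element. On a single CPU thread, each synchronization-independent region is wrapped in its own loop over thread IDs, and the barrier becomes the boundary between the loops. The function name `kernel_on_cpu` and the kernel logic are invented for illustration.

```python
def kernel_on_cpu(data):
    """Emulate one thread block of a hypothetical kernel on a single CPU thread.

    Original per-thread logic (pseudocode):
        shared[tid] = data[tid] * 2
        barrier()
        out[tid] = shared[(tid + 1) % n]
    """
    n = len(data)
    shared = [0] * n
    out = [0] * n

    # Region 1: everything before the barrier, wrapped in a thread loop.
    for tid in range(n):
        shared[tid] = data[tid] * 2

    # The barrier is replaced by the boundary between the two loops:
    # region 2 starts only after every "thread" has finished region 1.

    # Region 2: everything after the barrier, wrapped in its own thread loop.
    for tid in range(n):
        out[tid] = shared[(tid + 1) % n]

    return out

result = kernel_on_cpu([1, 2, 3, 4])
```

Fusing the two loops into one would be incorrect here, because thread `tid` would read `shared[tid + 1]` before the neighbouring thread had written it.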

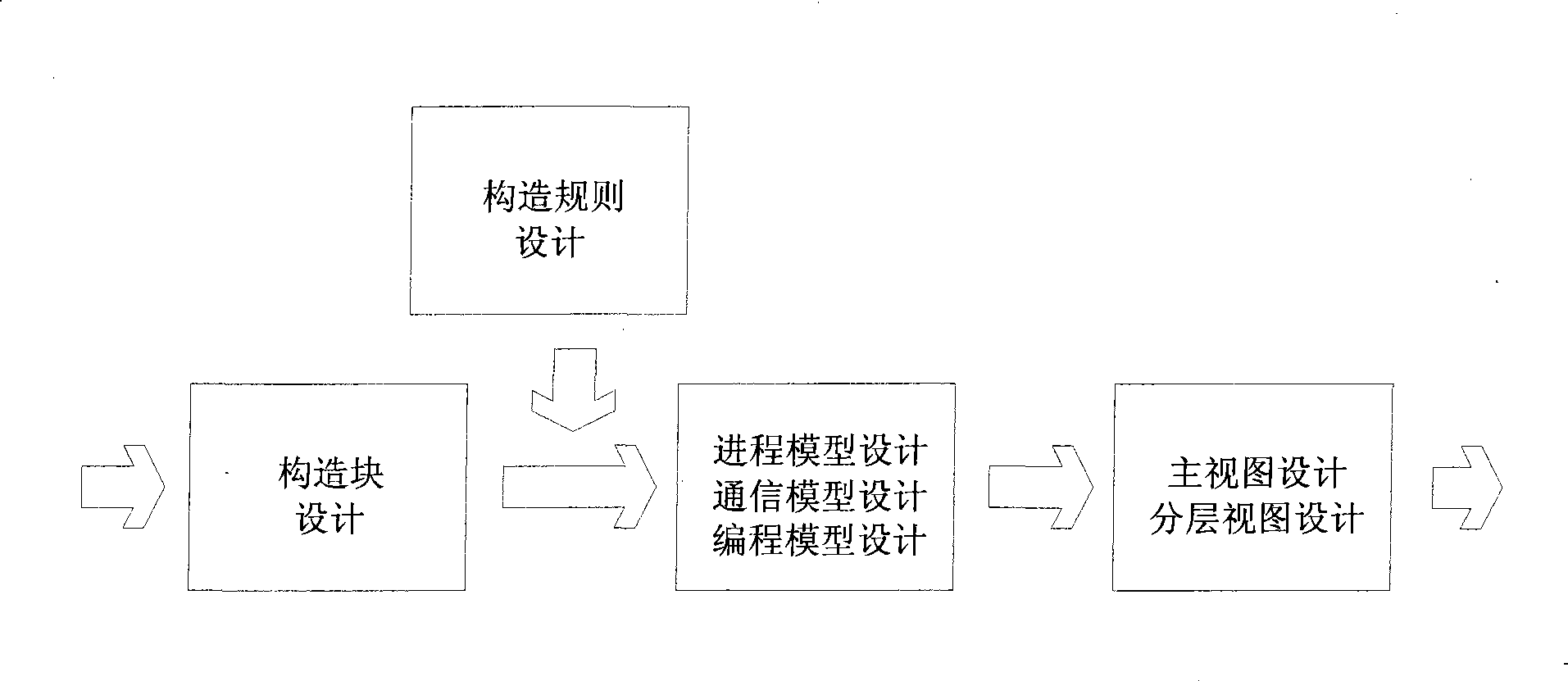

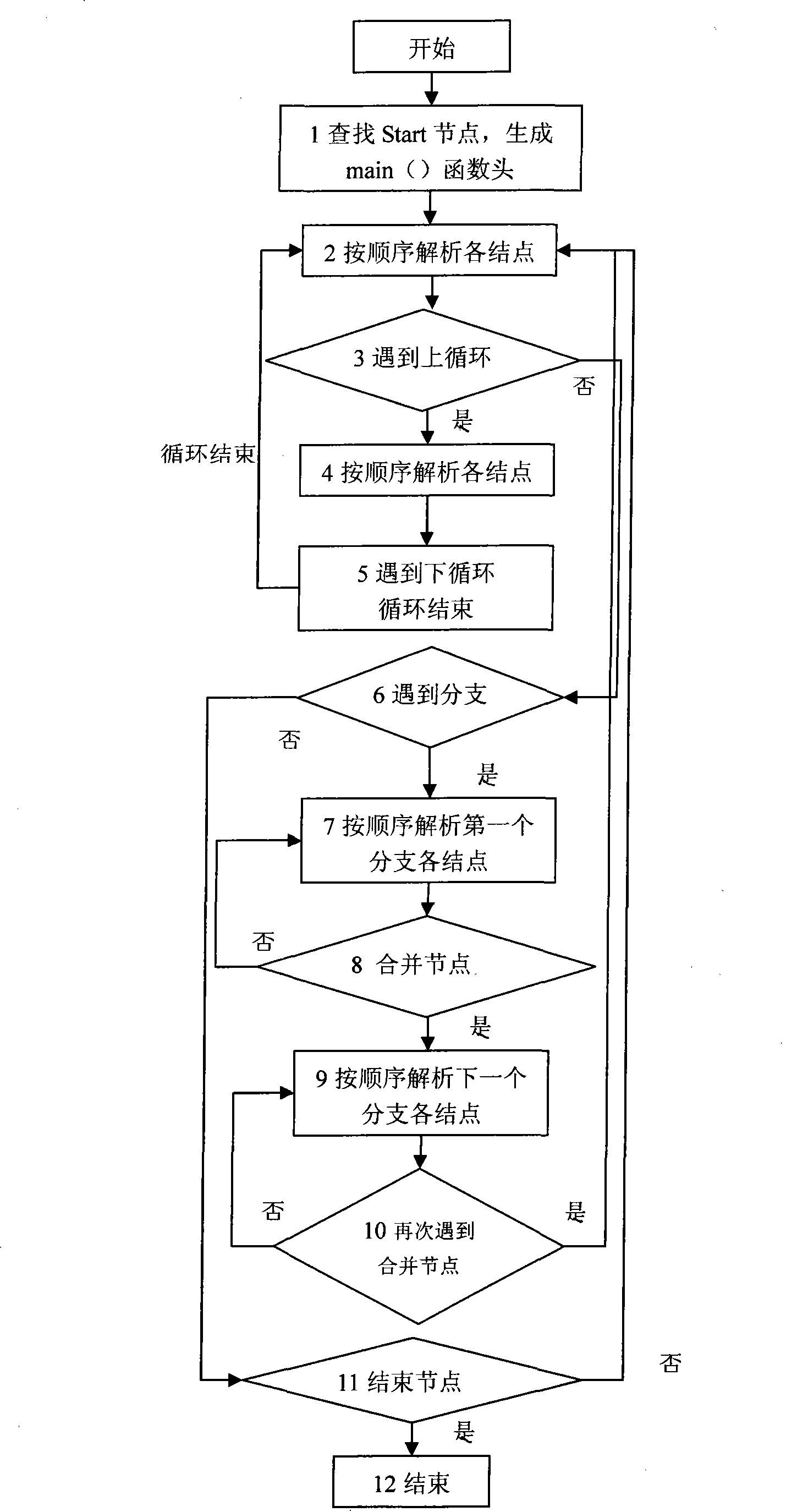

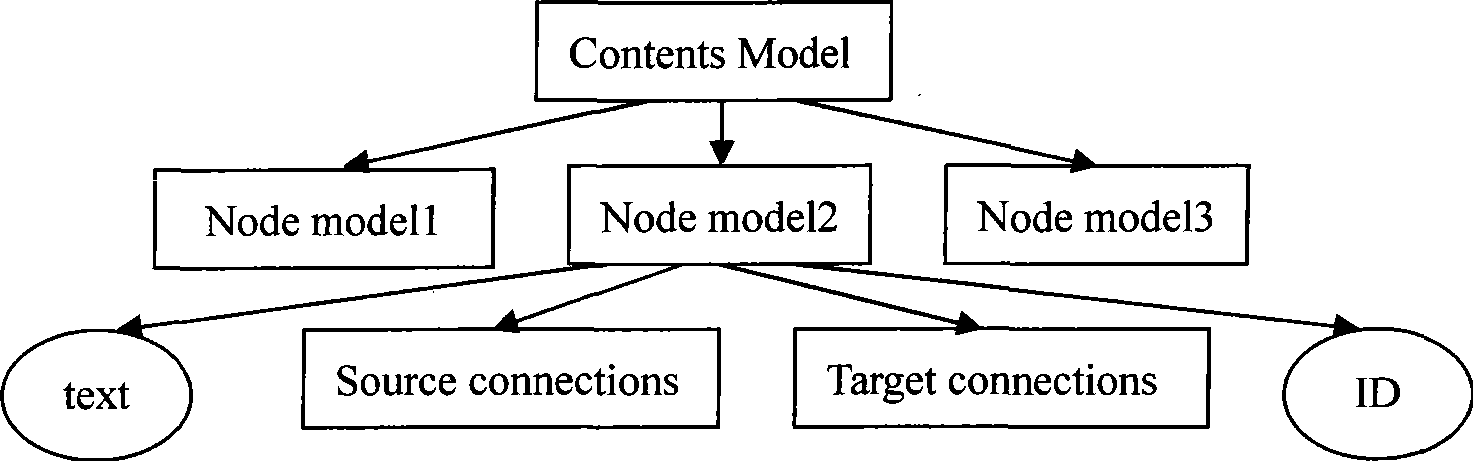

MPI parallel programming system based on visual modeling and automatic skeleton code generation method

Inactive · CN101464799A · Improve versatility · Easy to operate · Multiprogramming arrangements · Specific program execution arrangements · Graphics · Code editor

The invention discloses an MPI parallel programming system based on visual modeling and a method for automatically generating skeleton code. A user interface layer provides the model through a visual operating interface, and a code generating layer analyzes the model. The user interface layer consists of a graphic editor used for graphical modeling and a code editor used as the environment for modifying the generated code skeleton. The code generating layer comprises an algorithm skeleton module, a model verifying module, and a code generating module: the algorithm skeleton module describes the features and actions of a pattern, re-encapsulates the design pattern, and provides a program skeleton; the model verifying module verifies the logical correctness of the model before code is generated; and the code generating module invokes different standard parallel libraries according to the user's needs. Compared with the prior art, the system and the method are broadly applicable, so that even practitioners without parallel programming expertise can have the code skeleton generated automatically through visual modeling.

Owner:TIANJIN UNIV

Partitioning CUDA code for execution by a general purpose processor

Active · US20090259996A1 · Software engineering · Specific program execution arrangements · Graphics · General purpose

One embodiment of the present invention sets forth a technique for translating application programs written using a parallel programming model for execution on a multi-core graphics processing unit (GPU) so that they can be executed by a general purpose central processing unit (CPU). Portions of the application program that rely on specific features of the multi-core GPU are converted by a translator for execution by a general purpose CPU. The application program is partitioned into regions of synchronization-independent instructions. The instructions are classified as convergent or divergent, and divergent memory references that are shared between regions are replicated. Thread loops are inserted to ensure correct sharing of memory between various threads during execution by the general purpose CPU.

Owner:NVIDIA CORP

Multi-level parallel programming method

Active · CN101887367A · Increase profit · Reduce complexity · Multiprogramming arrangements · Specific program execution arrangements · Fault tolerance · Hardware architecture

The invention discloses a multi-level parallel programming method and relates to the field of parallel programming models, patterns, and methods. The method takes full advantage of the characteristics of a hybrid hardware architecture and markedly improves the utilization of a cluster hardware environment. It helps developers simplify parallel programming and reuse existing parallel code, reduces programming complexity and the error rate through inter-process and intra-process processing, and further reduces the error rate by taking fault tolerance fully into account.

Owner:TIANJIN UNIV

Mobile applications

Active · US8645973B2 · Multiprogramming arrangements · Transmission · Application server · Parallel programming model

A framework is provided for developing, deploying, and managing sophisticated mobile solutions, with a simple Web-like programming model that integrates with existing enterprise components. Mobile applications may consist of a data model definition, user interface templates, a client side controller, which includes scripts that define actions, and, on the server side, a collection of conduits, which describe how to mediate between the data model and the enterprise. In one embodiment, the occasionally-connected application server assumes that data used by mobile applications is persistently stored and managed by external systems. The occasionally-connected data model can be a metadata description of the mobile application's anticipated usage of this data, and can be optimized to enable the efficient traversal and synchronization of this data between occasionally connected devices and external systems.

Owner:ORACLE INT CORP

Infrastructure for parallel programming of clusters of machines

Inactive · US7970872B2 · Easy to convert · Improve performance · Analogue secrecy/subscription systems · Multiple digital computer combinations · Parallel programming model · Application software

GridBatch provides an infrastructure framework that hides from programmers the complexities and burdens of developing the logic and programming the applications that implement detailed parallelized computations. A programmer may use GridBatch to implement parallelized computational operations that minimize network bandwidth requirements and that efficiently partition and coordinate computational processing in a multiprocessor configuration. GridBatch provides an effective and lightweight approach to rapidly building parallelized applications using economically viable multiprocessor configurations that achieve the highest performance results.

Owner:ACCENTURE GLOBAL SERVICES LTD

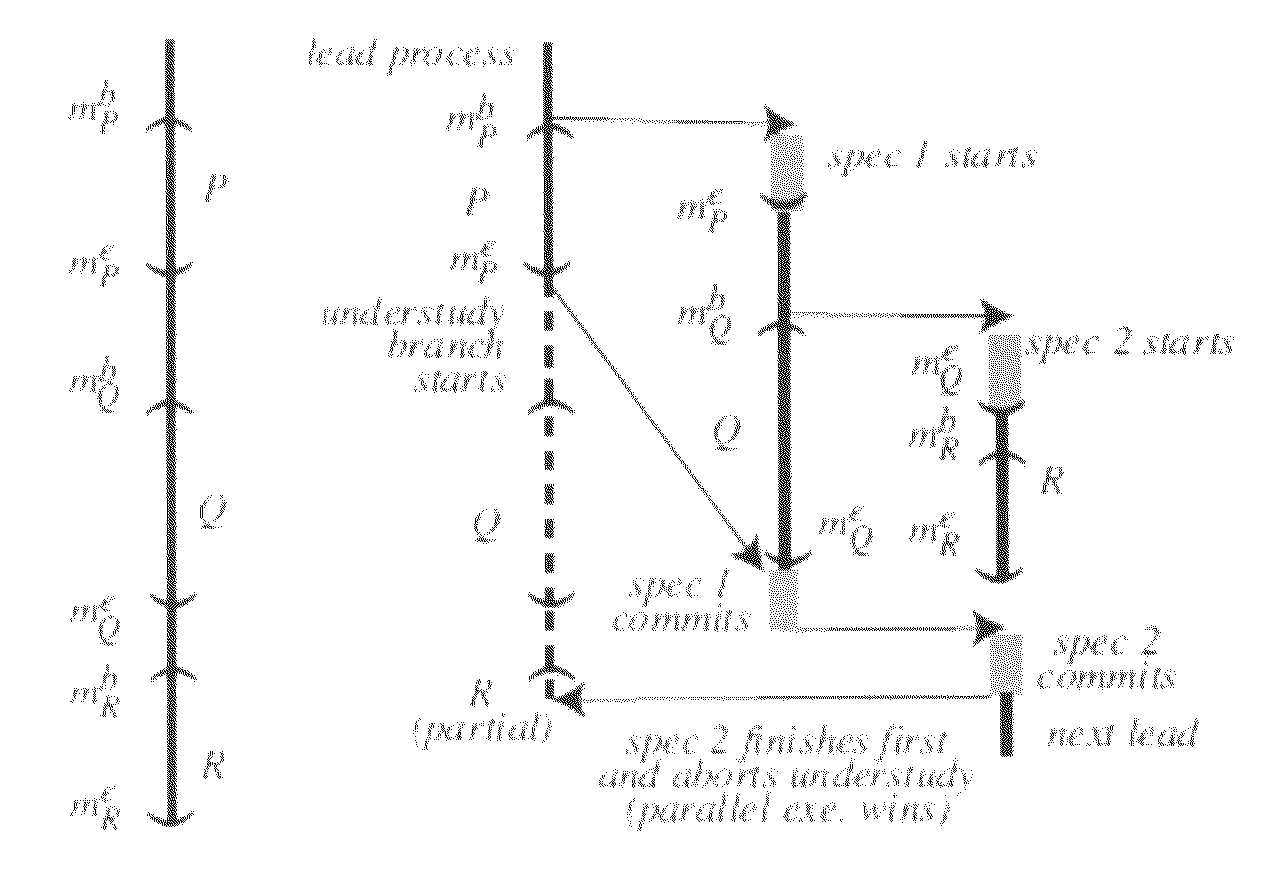

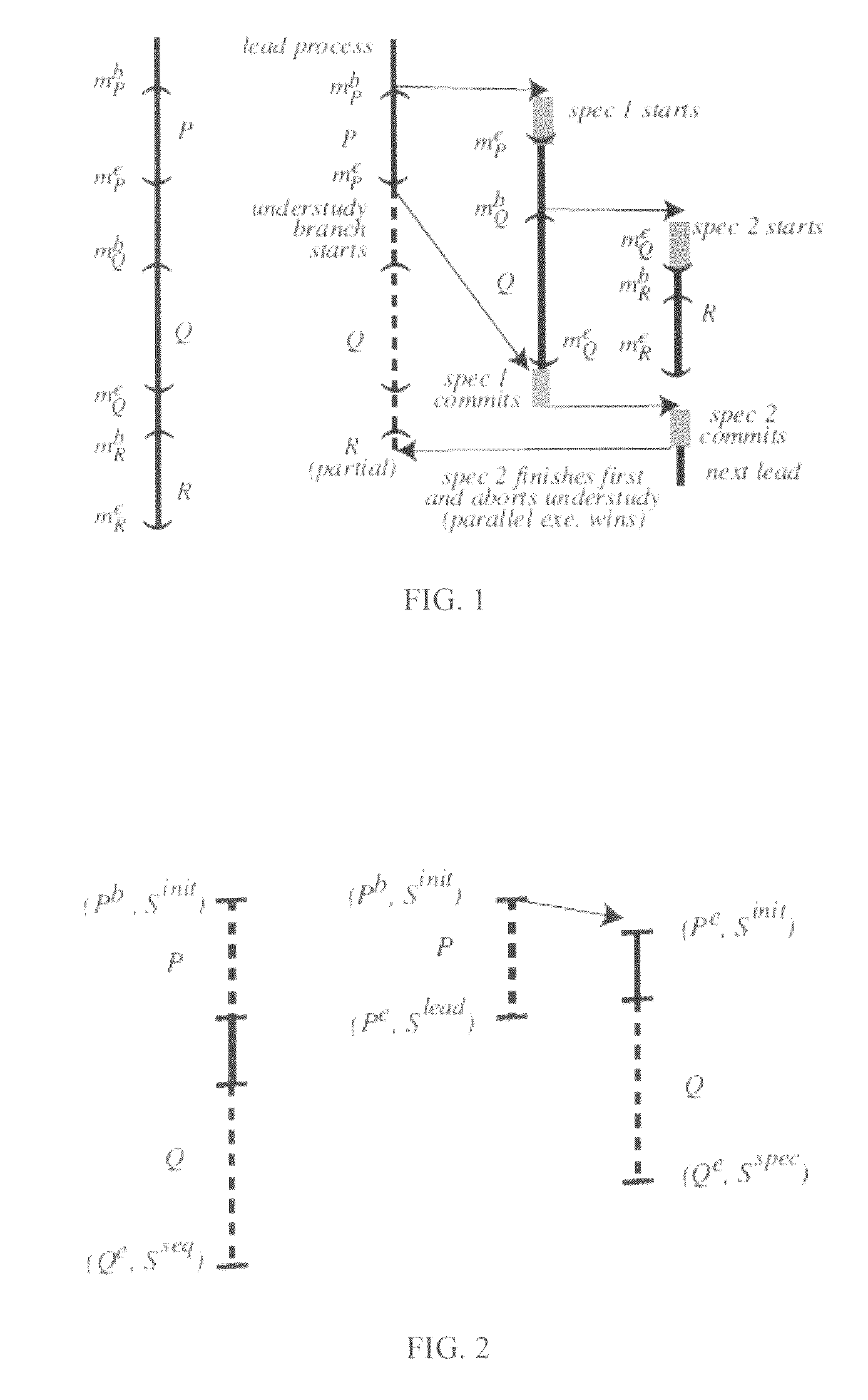

Parallel programming using possible parallel regions and its language profiling compiler, run-time system and debugging support

Inactive · US8549499B1 · Ensure performance · Improve programming performance · Software engineering · Digital computer details · Parallel programming model · Running time

A method of dynamic parallelization for programs in systems having at least two processors includes examining computer code of a program to be performed by the system, determining a largest possible parallel region in the computer code, classifying data to be used by the program based on a usage pattern and initiating multiple, concurrent processes to perform the program. The multiple, concurrent processes ensure a baseline performance that is at least as efficient as a sequential performance of the computer code.

Owner:UNIVERSITY OF ROCHESTER

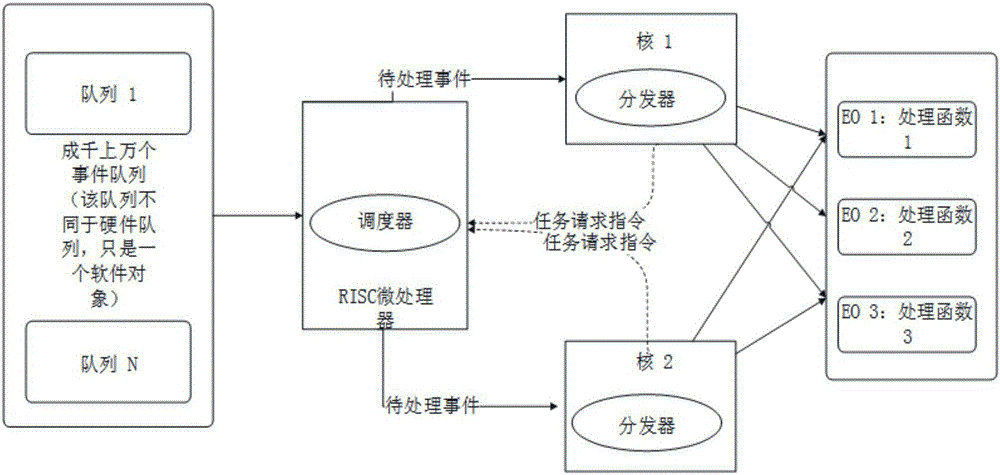

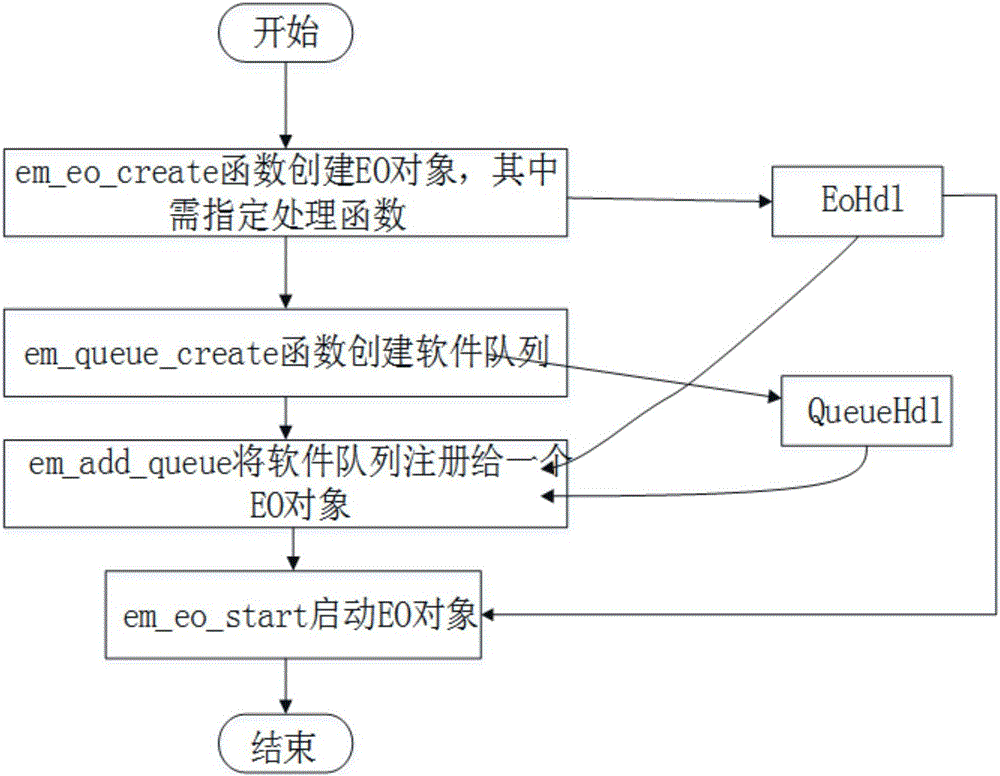

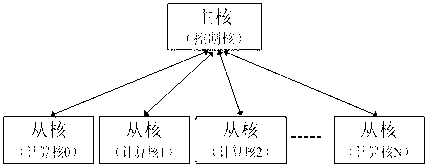

Method for realizing dynamic dispatching distribution of task by multi-core embedded DSP (Digital Signal Processor)

Active · CN105045658A · Improve scalability · Meet the application requirements of dynamic load balancing · Program initiation/switching · Resource allocation · Computer architecture · Operational system

The invention discloses a method for realizing dynamic task scheduling and dispatch on a multicore embedded DSP (Digital Signal Processor). The KeyStone platform from TI (Texas Instruments) provides OpenEM (Event Machine), a multicore runtime system library built on Multicore Navigator. OpenEM is independent of any operating system and makes it possible to schedule and dispatch tasks dynamically and to balance load across cores. The DSP cores of a multicore embedded processor based on the KeyStone architecture are divided into a main core and slave cores: the main core performs the global initialization of the programming model, and every core performs its own local initialization. The programming model consists of event generation on the main core, an event driver, event scheduling and dispatch by OpenEM, and event processing on the slave cores. The invention gives embedded software developers a uniform parallel programming model for multicore embedded DSPs based on OpenEM. The method is highly scalable, suits most multicore or many-core embedded processors based on the KeyStone architecture, and meets the need for task scheduling and dispatch with dynamic load balancing in a multicore environment.

Owner:杭州普锐视科技有限公司

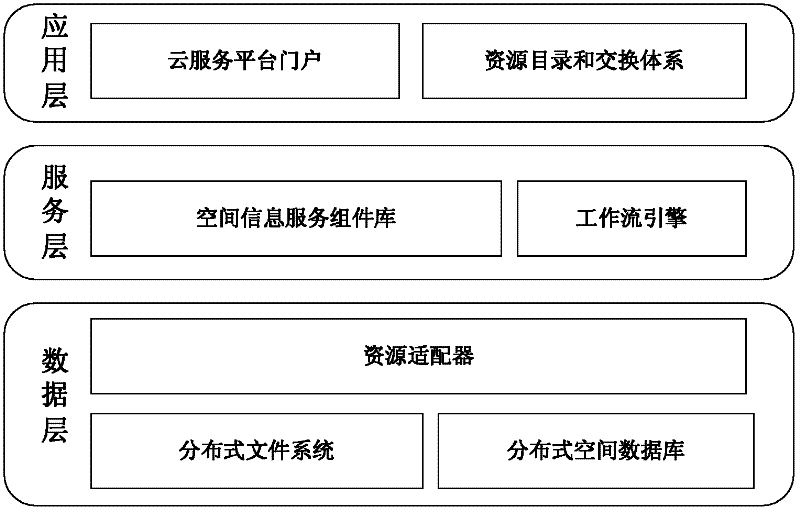

Cloud computing-based spatial information service system

Inactive · CN102377824A · Meet various needs · High feasibility · Transmission · Parallel programming model · Data link layer

The embodiment of the invention discloses a cloud computing-based spatial information service system. The cloud computing-based spatial information service system comprises a data layer, a service layer and an application layer, wherein the data layer is used for storing and managing data resources; the service layer is used for encapsulating spatial information service components and performing service process modeling by using a workflow engine; the application layer is used for providing external service; the data layer consists of a distributed file system, a distributed spatial database and a resource adapter; the service layer consists of a spatial information service component library and the workflow engine; and the application layer consists of a cloud service platform portal, a resource directory and an exchange system. The cloud computing-based spatial information service system adopts the technologies of the distributed file system, the distributed database, parallel programming models and workflows and the like, supports mass data storage, the resource directory, the exchange system and parallel program processing, can provide integrated service from data to processing functions, has high feasibility and can meet various requirements of spatial information service.

Owner:江西省南城县网信电子有限公司

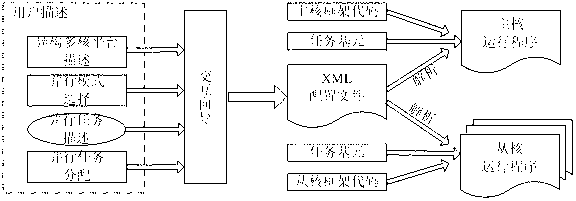

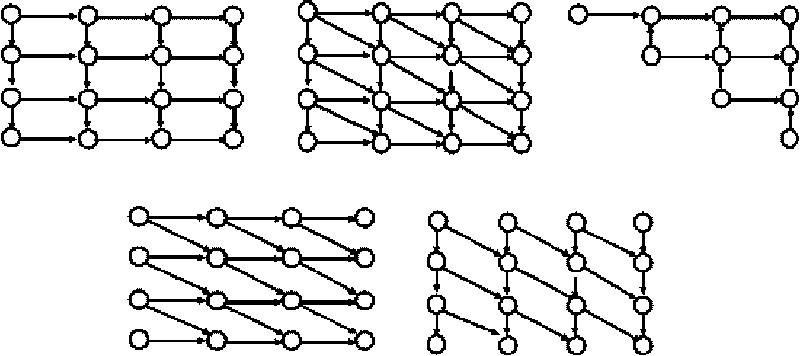

User description based programming design method on embedded heterogeneous multi-core processor

Inactive · CN102707952A · Outstanding Features · Highlight significant progress · Specific program execution arrangements · Performance computing · Parallel programming model

The invention relates to a user description based programming design method on an embedded heterogeneous multi-core processor. Through a configuration wizard in a graphical interface, the user describes the heterogeneous multi-core processor platform and the task, sets a parallel mode, creates and registers element tasks, and generates a task relation graph (a directed acyclic graph, DAG). The element tasks are statically assigned to the cores of the heterogeneous multi-core processor, and the platform characteristics, parallel requirements, and task assignment are expressed in a configuration file (extensible markup language, XML). After the configuration file is parsed for parallelism, each element task is embedded at the position of its task label in the heterogeneous multi-core framework code, a corresponding serial source program is constructed, and a serial compiler is invoked to generate executable code for the heterogeneous multi-core processor. The method avoids conventional parallel programming practices such as developing a parallel compiler on a general personal computer (PC) or a high-performance computing platform, establishing a parallel programming language, and porting a parallel library. It greatly reduces the difficulty of developing parallel programs for heterogeneous multi-core processor platforms in the embedded field and achieves parallel programming based on user description and an interactive parallelization guide.

Owner:SHANGHAI UNIV

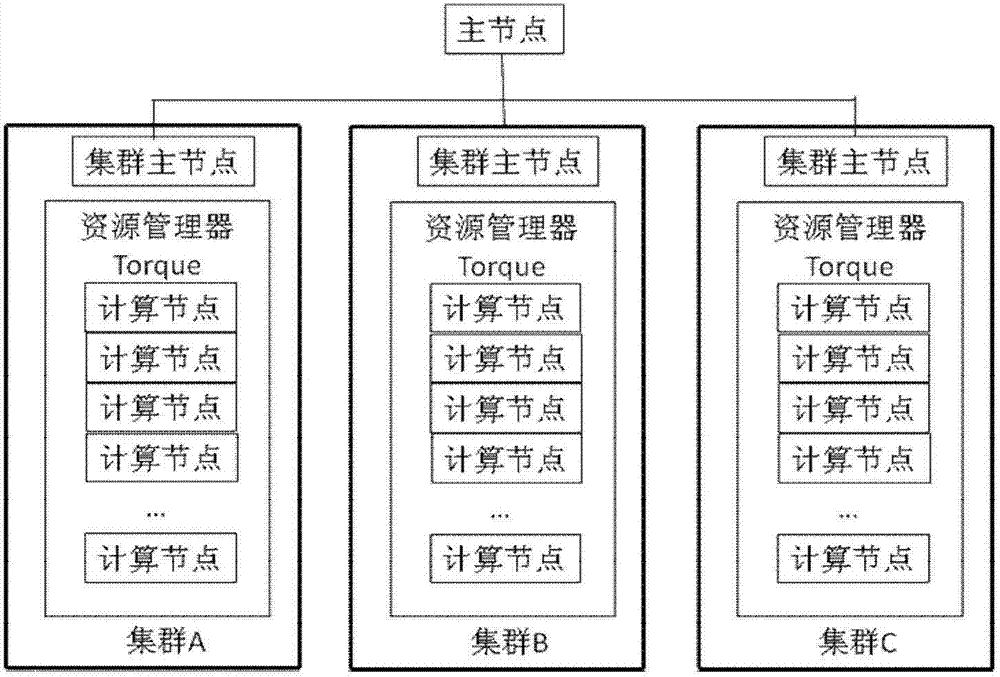

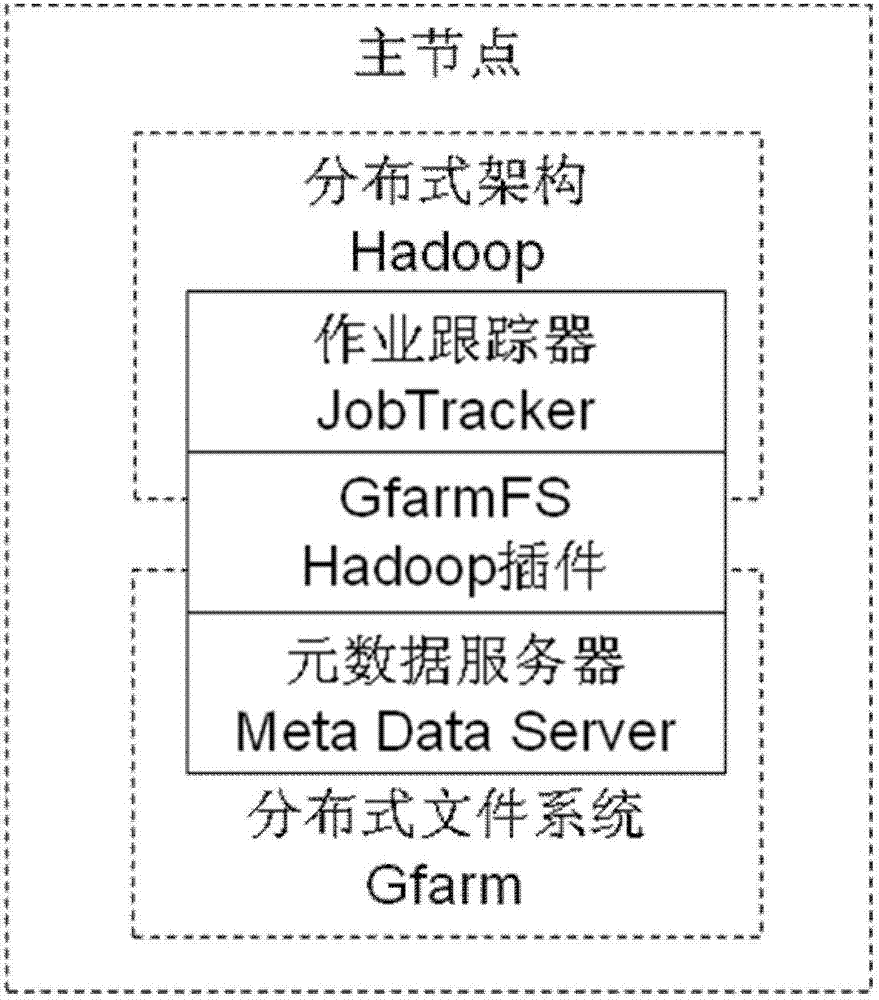

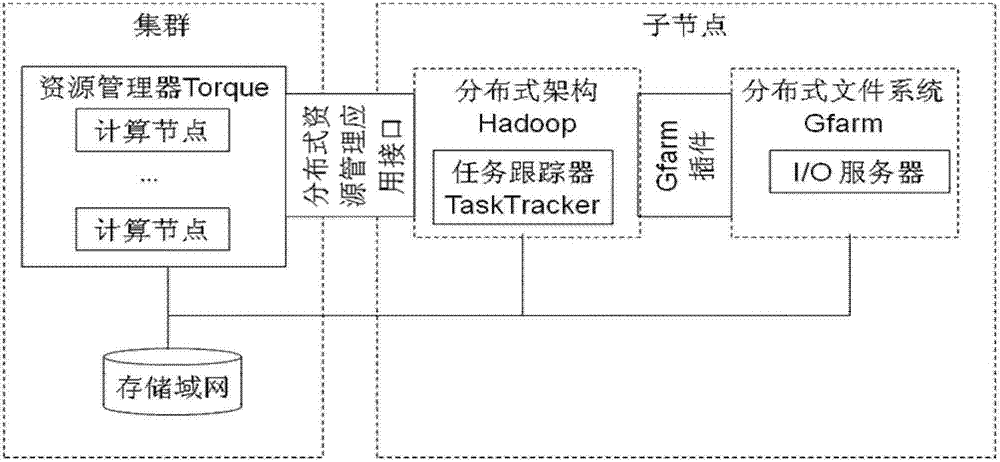

Parallel programming method oriented to data intensive application based on multiple data architecture centers

Inactive · CN102880510A · Easy to handle · Improve parallel efficiency · Resource allocation · Collocation · Cluster systems

The invention relates to a parallel programming method oriented to data intensive application based on multiple data architecture centers. The method comprises the following steps: constructing a main node of the system architecture, constructing a sub node of the system architecture, loading, execution, and the like. The method has the following advantages: practitioners in large-scale data-intensive scientific fields do not need to be familiar with parallel computing across multiple data centers, nor with the MapReduce and Message Passing Interface (MPI) parallel programming technologies used in high-performance computing; several distributed clusters are simply configured, and a MapReduce computing task is loaded onto them; the hardware and software configuration of the existing cluster system does not need to be changed, and the architecture can quickly parallelize data-intensive applications based on the MapReduce programming model across multiple data centers. As a result, relatively high parallelization efficiency is achieved, and the capacity to process large-scale distributed data-intensive scientific data is greatly improved.

Owner:CENT FOR EARTH OBSERVATION & DIGITAL EARTH CHINESE ACADEMY OF SCI

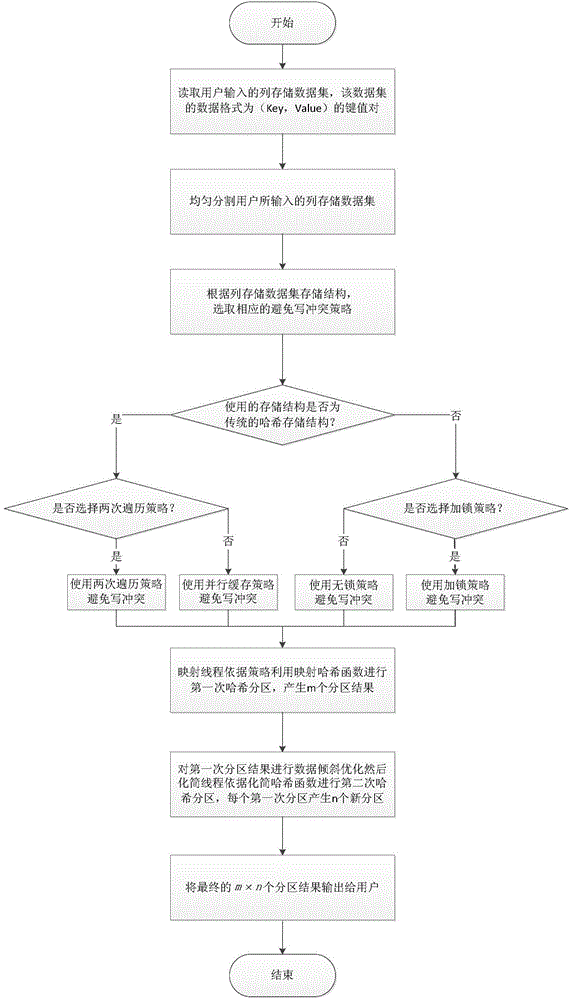

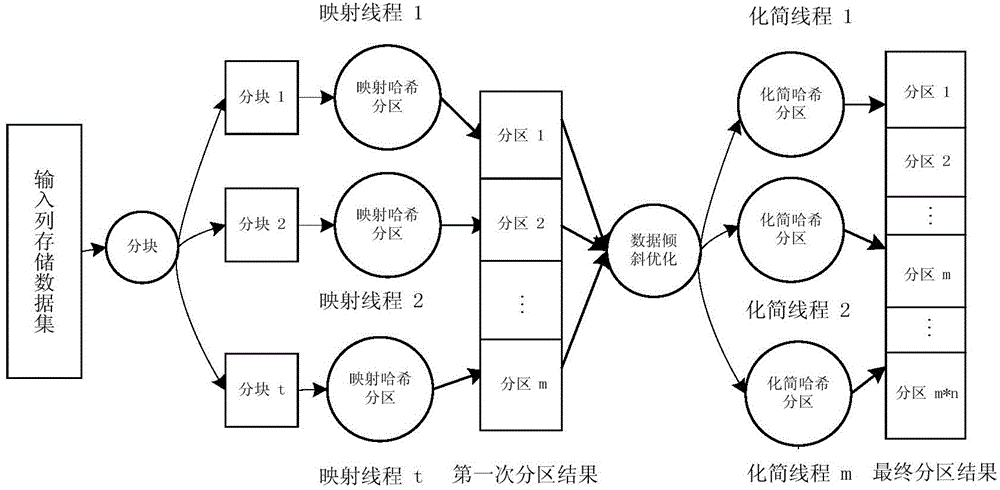

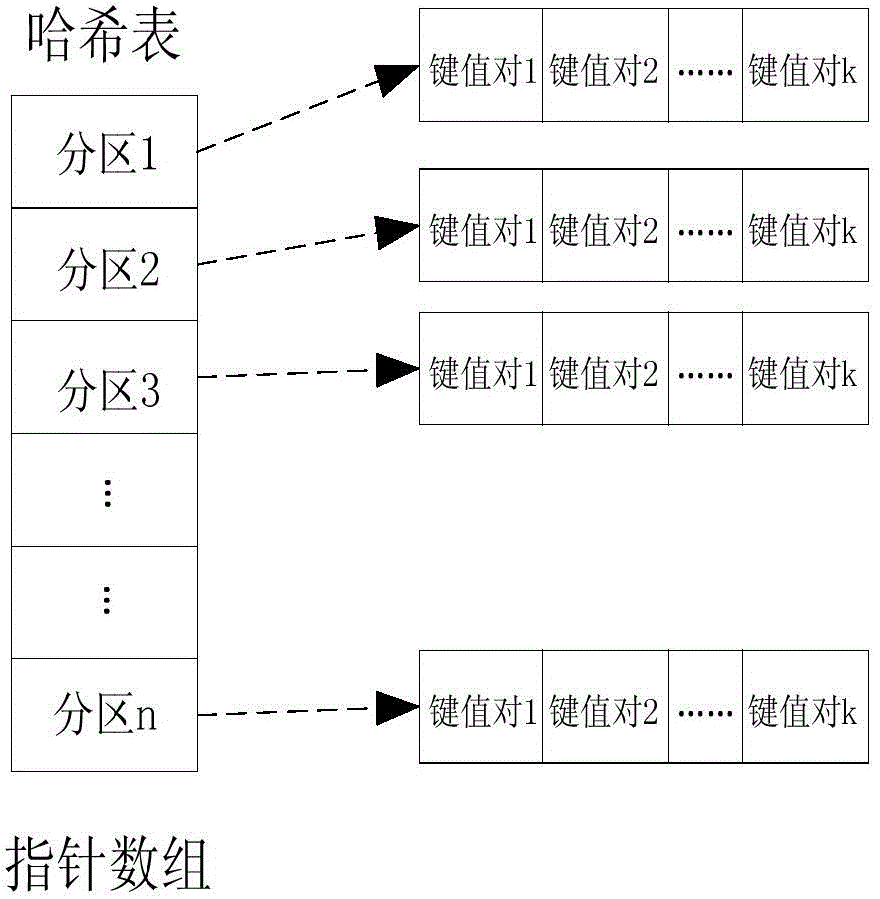

Multi-core parallel hash partitioning optimizing method based on column storage

Active · CN104133661A · Improve performance · Improve cache efficiency · Concurrent instruction execution · Special data processing applications · On column · Data set

The invention discloses a multi-core parallel hash partitioning optimizing method based on column storage. The method mainly solves the problem that existing parallel hash partitioning algorithms cannot use the resources of a multi-core processor efficiently. Data partitioning tasks are dynamically distributed to multiple cores using the MapReduce parallel programming model, and a strategy for avoiding write conflicts is selected according to the storage structure of the column-store data set. A map thread performs the primary hash partitioning; after data skew optimization, the primary result is passed to a reduce thread for secondary hash partitioning; the final hash partitioning result is then returned. The method exploits the ability of a multi-core processor to execute tasks in parallel, suits input data with various distributions, improves cache efficiency and the overall performance of the multi-core processor, and can be used for multi-core parallel multi-step hash partitioning of column-store data sets.

Owner:XIDIAN UNIV
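
The two-pass flow in the abstract above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the patented method: keys are small integers, the hash is a plain modulo, write conflicts are avoided by giving each map worker private buckets, and the data skew optimization step is omitted. `PRIMARY`, `SECONDARY`, and all function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

PRIMARY = 4    # number of primary partitions (assumed)
SECONDARY = 2  # sub-partitions per primary partition (assumed)

def map_partition(chunk):
    """Map step: hash one input chunk into buckets private to this worker."""
    buckets = [[] for _ in range(PRIMARY)]
    for key in chunk:
        buckets[key % PRIMARY].append(key)
    return buckets

def reduce_partition(keys):
    """Reduce step: refine one primary partition with a second hash."""
    buckets = [[] for _ in range(SECONDARY)]
    for key in keys:
        buckets[(key // PRIMARY) % SECONDARY].append(key)
    return buckets

def two_pass_partition(column, workers=2):
    """Partition a column of integer keys in two parallel passes."""
    chunks = [column[i::workers] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        per_chunk = list(pool.map(map_partition, chunks))
        # Merge the private buckets of all map workers, one primary partition at a time.
        primary = [
            [key for buckets in per_chunk for key in buckets[p]]
            for p in range(PRIMARY)
        ]
        return list(pool.map(reduce_partition, primary))

partitions = two_pass_partition(list(range(16)))
```

Private per-worker buckets trade extra memory for the absence of locks; the abstract indicates that the actual conflict-avoidance strategy depends on the storage structure of the data set.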

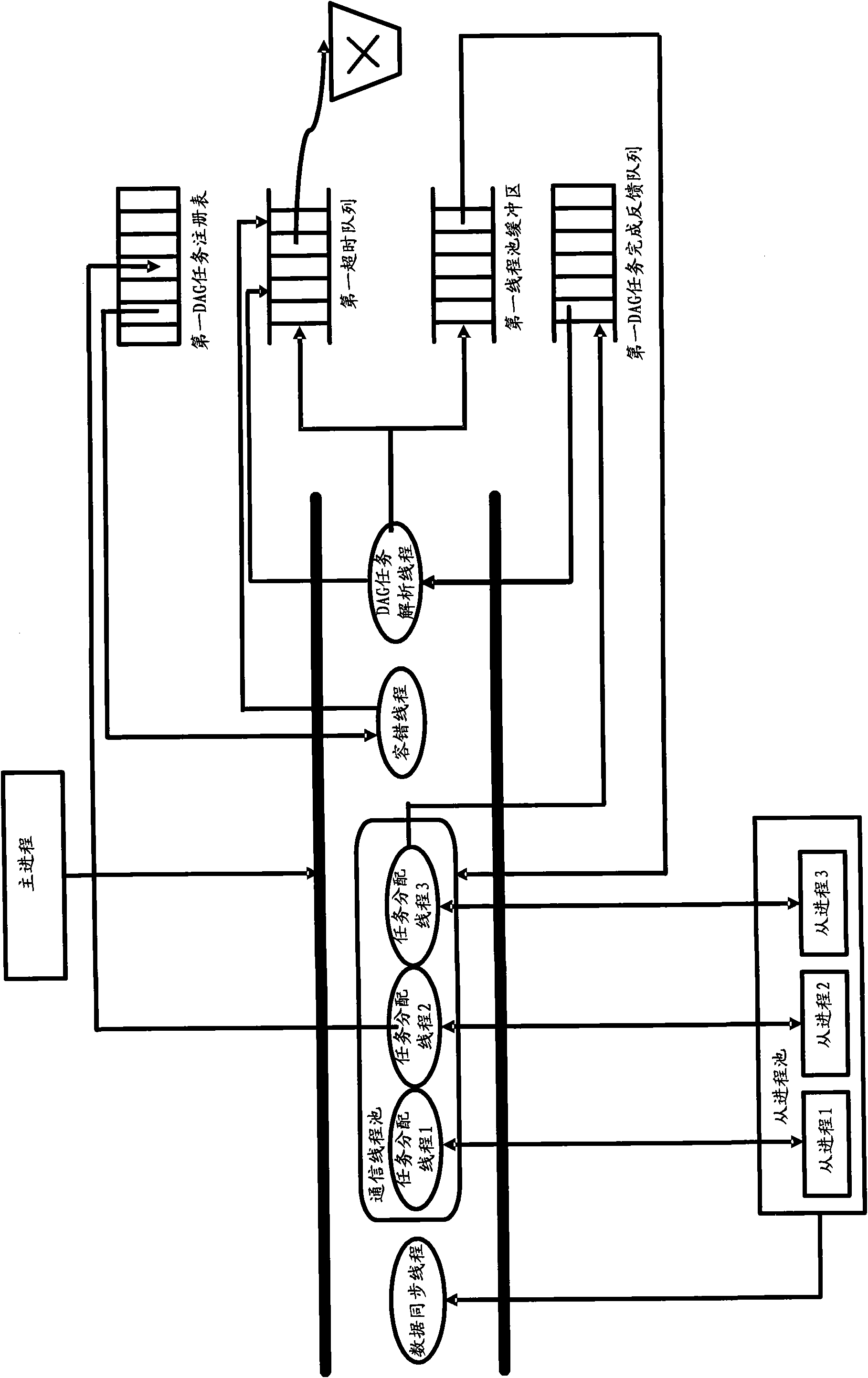

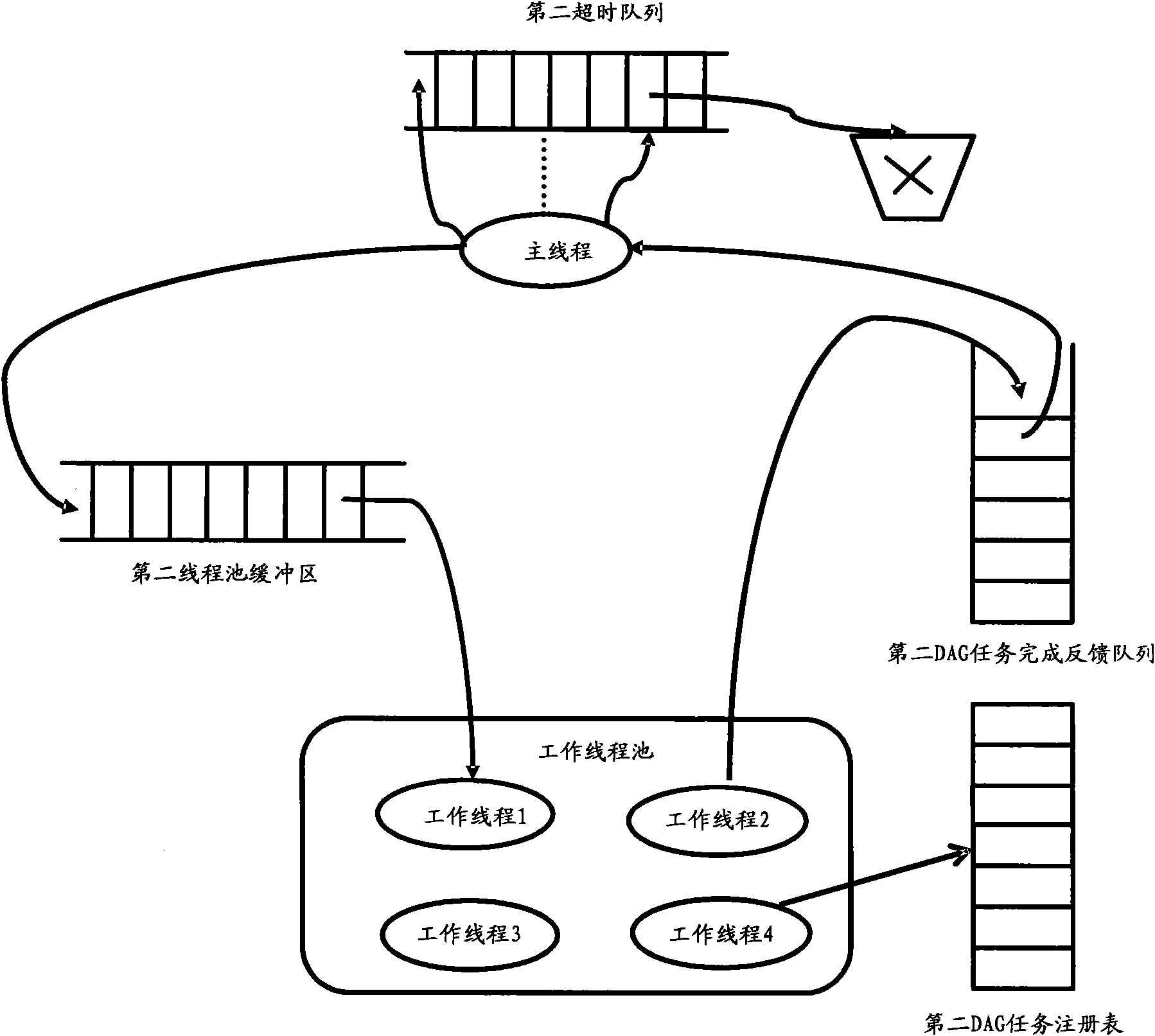

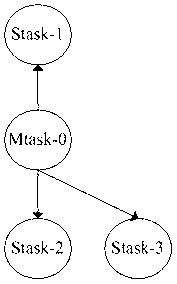

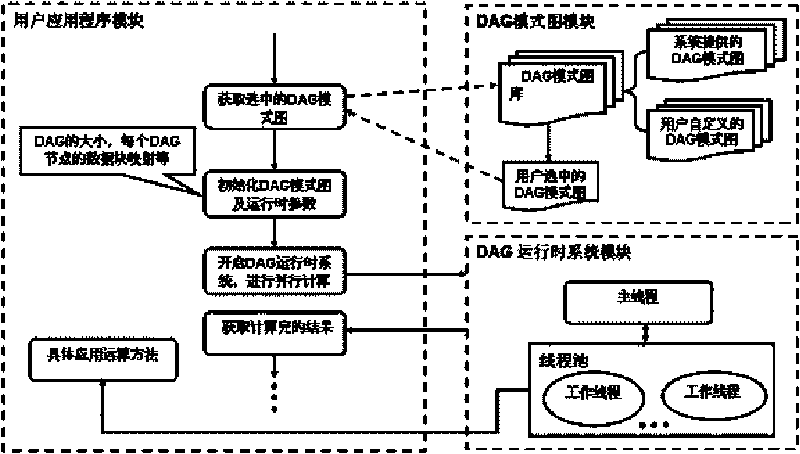

Parallel programming model system of DAG oriented data driving type application and realization method

Inactive · CN101710286A · Reasonable structure · Improve performance · Concurrent instruction execution · Specific program execution arrangements · Fault tolerance · Parallel programming model

The invention discloses a parallel programming model system for DAG-oriented data-driven applications and a method for realizing it. The system comprises a DAG pattern graph module, a user application program module, and a DAG runtime system module. The DAG pattern graph module comprises a DAG pattern graph library; the user application program module is used for user initialization and for specifying the concrete parallel algorithm; and the DAG runtime system module comprises a main thread and a thread pool. The main thread parses and updates the DAG pattern graph, issues and dispatches data blocks, and handles fault tolerance. The thread pool comprises a queue buffer and worker threads: the queue buffer is the data interface between the main thread and the worker threads, and each worker thread repeatedly fetches computing tasks from the queue buffer and executes them. Compared with the prior art, the invention makes it easier for non-computer professionals to design and develop parallel computing applications, shortens the development cycle of parallel software, and gives the finished application a more reasonable structure and better performance.

Owner:TIANJIN UNIV
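
The division of labour between the main thread and the thread pool described above might look roughly like the following sketch. It uses Python's standard `concurrent.futures` pool in place of the patent's queue buffer and omits fault tolerance entirely; `run_dag`, the task names, and the dictionary-based graph representation are assumptions made for illustration.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

def run_dag(tasks, deps, workers=4):
    """Run callables in dependency order on a thread pool.

    tasks: name -> callable taking a dict of its dependencies' results
    deps:  name -> list of task names that must finish first
    """
    results = {}
    pending = set(tasks)
    running = {}  # future -> task name
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while pending or running:
            # Main thread: dispatch every task whose dependencies are all done.
            ready = [t for t in pending
                     if all(d in results for d in deps.get(t, []))]
            for name in ready:
                pending.remove(name)
                inputs = {d: results[d] for d in deps.get(name, [])}
                running[pool.submit(tasks[name], inputs)] = name
            if not running:
                raise ValueError("cycle or missing dependency in DAG")
            # Wait for at least one worker to finish, then update the graph state.
            done, _ = wait(list(running), return_when=FIRST_COMPLETED)
            for future in done:
                results[running.pop(future)] = future.result()
    return results

tasks = {
    "a": lambda inputs: 1,
    "b": lambda inputs: inputs["a"] + 1,
    "c": lambda inputs: inputs["a"] * 10,
    "d": lambda inputs: inputs["b"] + inputs["c"],
}
deps = {"b": ["a"], "c": ["a"], "d": ["b", "c"]}
results = run_dag(tasks, deps)
```

In this example `b` and `c` become ready at the same time once `a` completes, so the pool can run them concurrently before `d` is dispatched.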

2D-3D medical image parallel registration method based on combination similarity measure

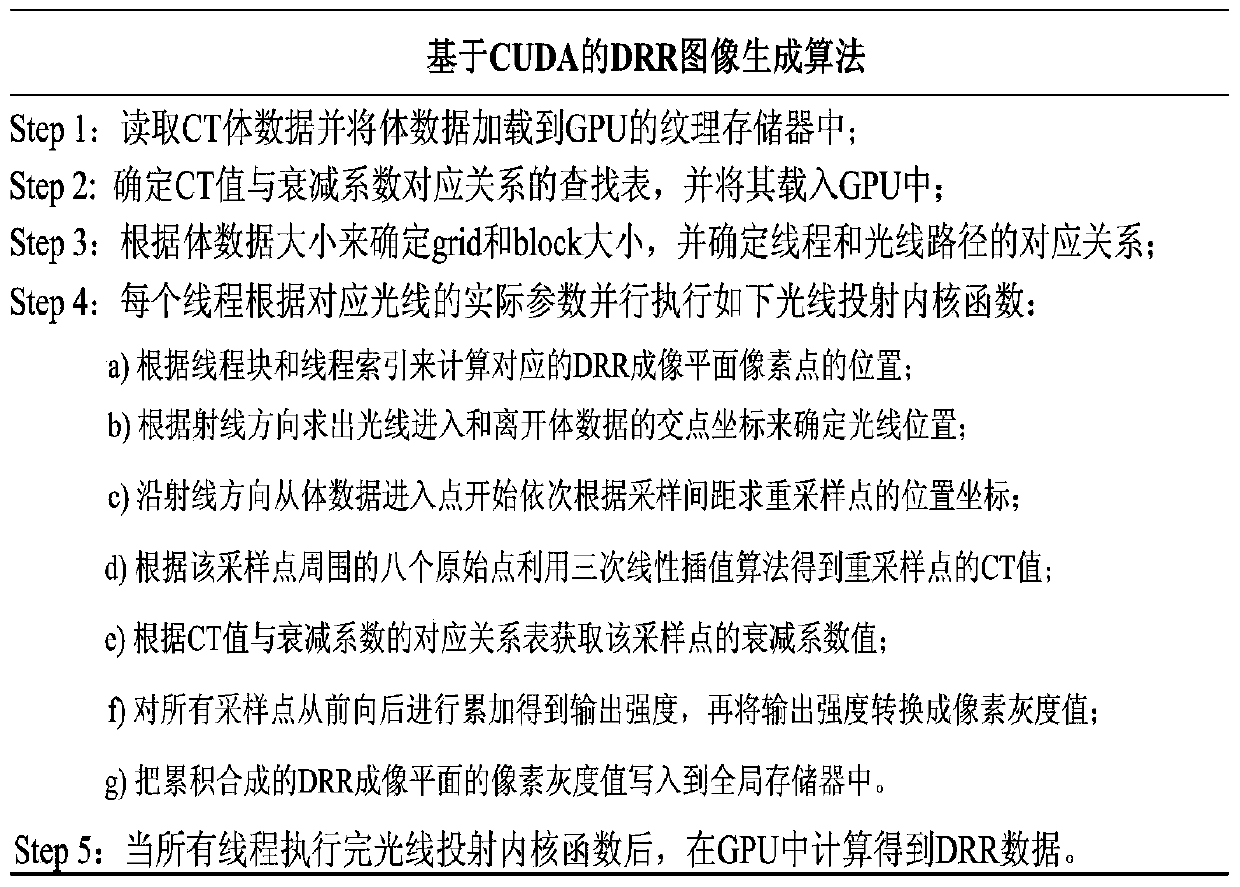

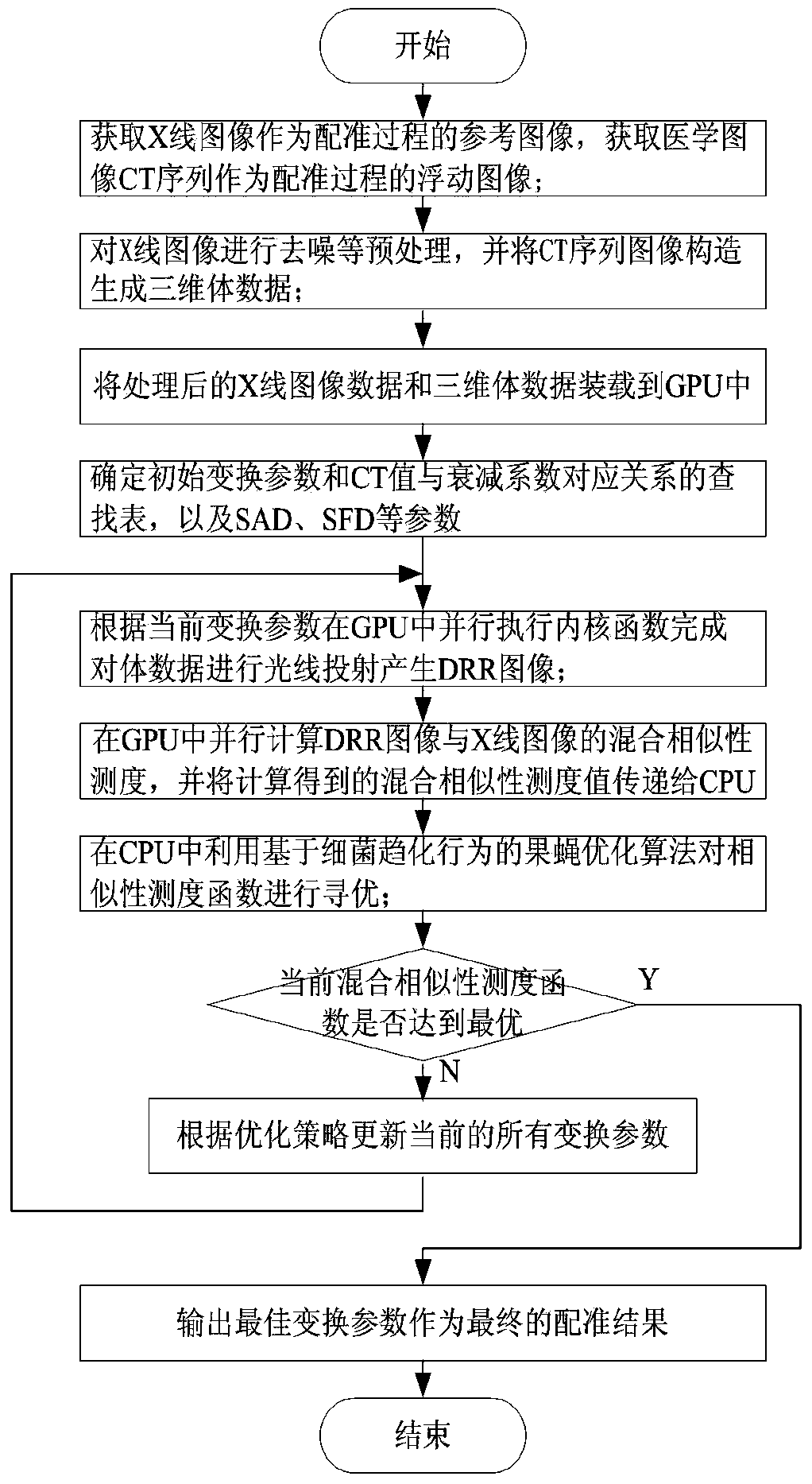

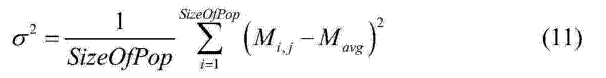

Inactive · CN104134210A · Simple calculation · High precision · Image analysis · Generation process · Parallel programming model

The invention discloses a 2D-3D medical image parallel registration method based on combination similarity measure. The method comprises the following steps: first, using the CUDA (Compute Unified Device Architecture) parallel computing model to rapidly generate the DRR (Digitally Reconstructed Radiograph) image; then combining SAD (Sum of Absolute Differences) with PI (Pattern Intensity) as a new similarity measure computed in parallel on the GPU (Graphics Processing Unit); and finally, transferring the combined similarity measure value to the CPU (Central Processing Unit) and using a fruit fly optimization algorithm based on bacterial chemotaxis behaviors to search for the optimal registration parameters. Experiments show that the execution speed of the method is effectively improved because high-speed DRR generation and the mixed similarity measure are implemented on the GPU. Meanwhile, compared with a single similarity measure, the mixed similarity measure improves the accuracy of the registration result.

Owner:LANZHOU JIAOTONG UNIV

Search method based on big data

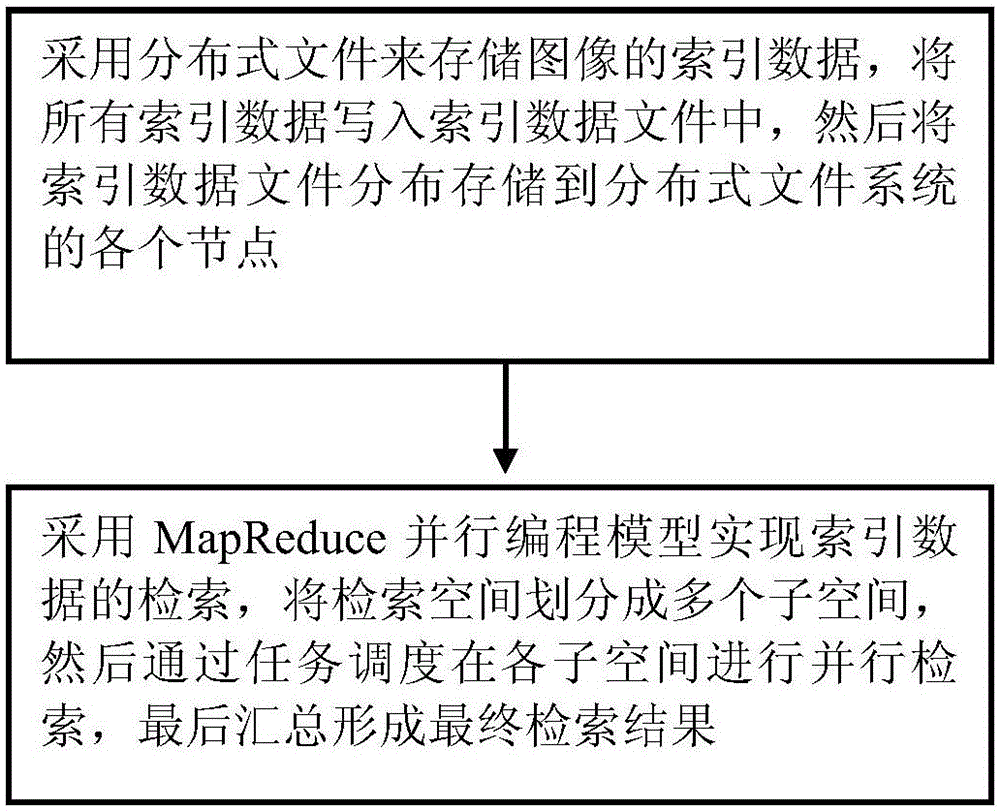

Inactive · CN105117502A · Improve retrieval efficiency · Avoid performance bottlenecks · Special data processing applications · Distributed File System · Parallel programming model

The invention provides a search method based on big data. The method comprises steps as follows: index data of images are stored by the aid of distributed files, all the index data are written into index data files, and then the index data files are distributed and stored in various nodes of a distributed file system; the index data are searched through a MapReduce parallel programming model, a search space is divided into multiple subspaces, then parallel searches are performed on the subspaces through task scheduling, and finally, a final search result is formed through summarizing. With the adoption of the search method based on the big data, processes for storing and searching the data of the images by the aid of the distributed system are optimized, the search efficiency is improved, and the performance bottleneck is overcome.

Owner:SICHUAN ZHONGKE TENGXIN TECH
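
The split, search, and summarize flow in the abstract above can be illustrated with a small in-process sketch. It assumes each index entry is a `(key, value)` pair with a single numeric feature and that closeness is the absolute difference to the query; these choices, the sample data, and the function names are hypothetical, and a real system would read shards from a distributed file system instead of a Python list.

```python
import heapq
from concurrent.futures import ThreadPoolExecutor

def search_subspace(args):
    """Map step: score the entries of one shard against the query (lower is closer)."""
    shard, query, k = args
    scored = ((abs(value - query), key) for key, value in shard)
    return heapq.nsmallest(k, scored)

def parallel_search(index, query, k=2, shards=3):
    """Split the index into shards, search them in parallel, and merge the hits."""
    parts = [index[i::shards] for i in range(shards)]
    with ThreadPoolExecutor(max_workers=shards) as pool:
        partial = pool.map(search_subspace, [(p, query, k) for p in parts])
        # Reduce step: merge the per-shard candidates into the final top k.
        return heapq.nsmallest(k, (hit for hits in partial for hit in hits))

# Hypothetical index data: image name and a single scalar feature.
index = [("img1", 10), ("img2", 42), ("img3", 40), ("img4", 90), ("img5", 45)]
top = parallel_search(index, query=41)
```

Each shard returns at most `k` candidates, so the merge step handles only `k * shards` items regardless of the index size.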

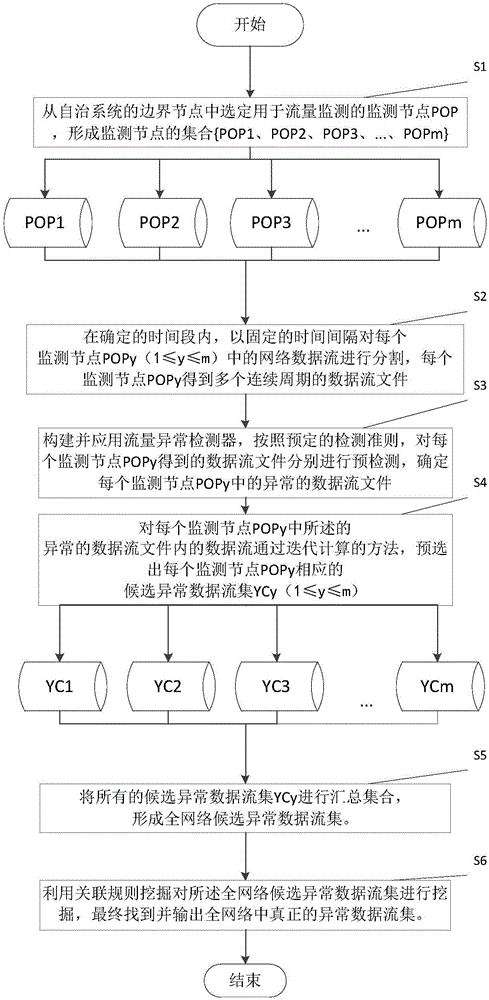

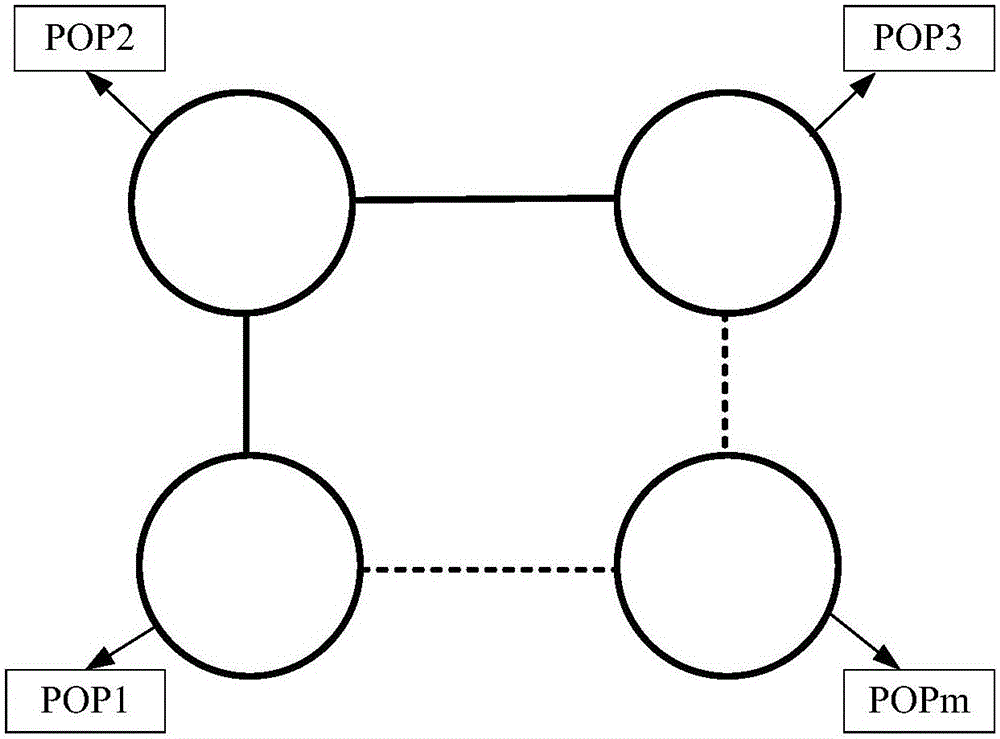

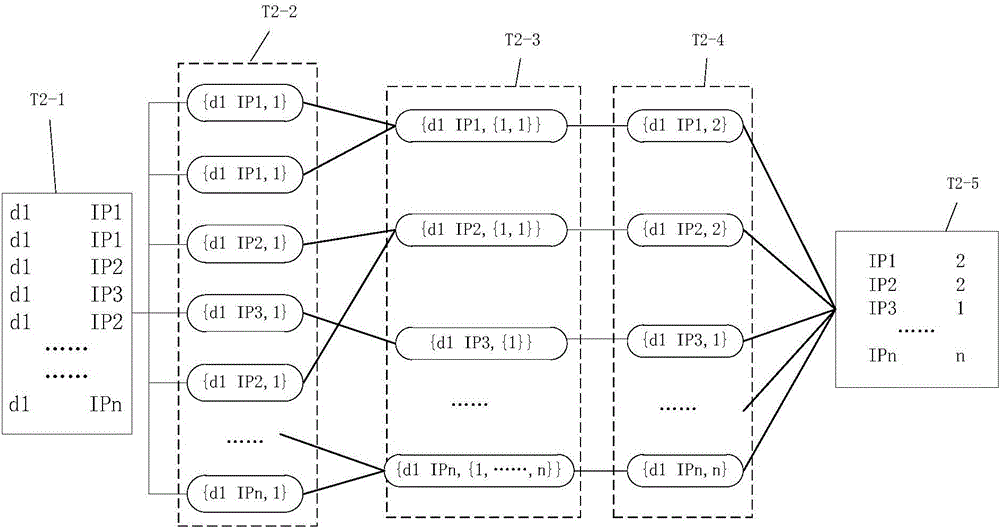

Full-network traffic abnormality extraction method

The invention discloses a full-network traffic abnormality extraction method. The method comprises the following steps: step one, selecting monitoring nodes of an autonomous system; step two, segmenting the network data stream of each monitoring node at a fixed time interval within a determined time slot, so that each monitoring node obtains data stream files for multiple consecutive periods; step three, constructing a traffic abnormality detector and applying it to pre-detect the data stream files of each monitoring node, thereby determining the abnormal data stream files; step four, performing iterative calculation on the data streams in the abnormal data stream files of each monitoring node to pre-select candidate abnormal data stream sets; step five, summarizing all candidate abnormal data stream sets to form a full-network candidate abnormal data stream set; step six, mining the full-network candidate abnormal data stream set using association rules to find the real abnormal data stream set. With the disclosed method, abnormal data streams can be captured efficiently and accurately based on the MapReduce parallel programming model.

Owner:中国人民解放军防空兵学院

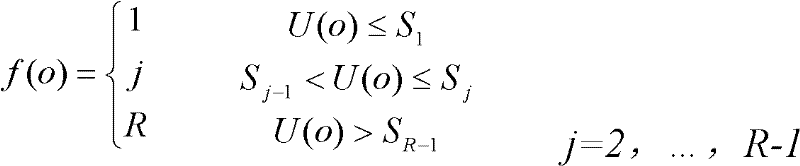

Dependent-chance two-layer programming model-based transmission network programming method

The invention relates to a dependent-chance two-layer programming model-based transmission network programming method. A dependent-chance two-layer nonlinear programming model for the programming of a transmission network is established. The upper-layer programming of the model aims to maximize a probability that return on investment of the transmission network is greater than a certain ideal value, and a constraint is about the erection number of candidate lines. The lower-layer programming of the model comprises two sub-problems, wherein one is the social benefit maximization of a system under a normal running condition, the other is maximization of a probability that the total load shedding amount of the system under a failure running condition is lower than a certain specified value, and a constraint is a failure running constraint. A hybrid algorithm combining a Monte-Carlo method, a genetic algorithm and an interior point algorithm is disclosed for solving the dependent-chance two-layer programming model. The problem of risks in the programming of the transmission network is rationally solved, a dependent-chance two-layer programming modeling-based concept is applied to the programming of the transmission network, and the aim of higher probability of realizing a rate of return on investment in an uncertain environment is fulfilled.

Owner:SHANGHAI UNIVERSITY OF ELECTRIC POWER

Partitioning CUDA code for execution by a general purpose processor

One embodiment of the present invention sets forth a technique for translating application programs written using a parallel programming model for execution on a multi-core graphics processing unit (GPU) so that they can be executed by a general purpose central processing unit (CPU). Portions of the application program that rely on specific features of the multi-core GPU are converted by a translator for execution by a general purpose CPU. The application program is partitioned into regions of synchronization-independent instructions. The instructions are classified as convergent or divergent, and divergent memory references that are shared between regions are replicated. Thread loops are inserted to ensure correct sharing of memory between various threads during execution by the general purpose CPU.

Owner:NVIDIA CORP

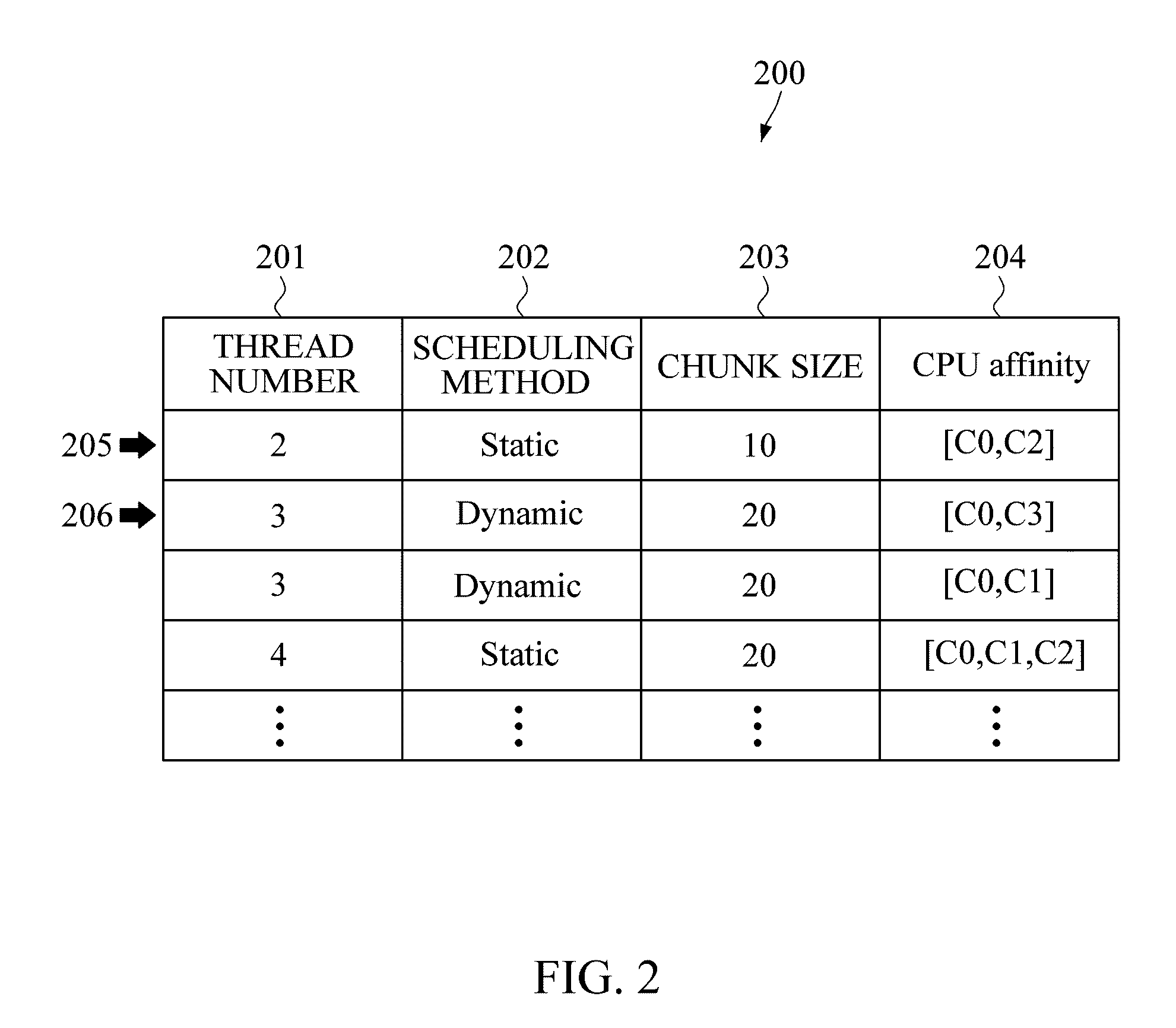

Apparatus and method for controlling parallel programming

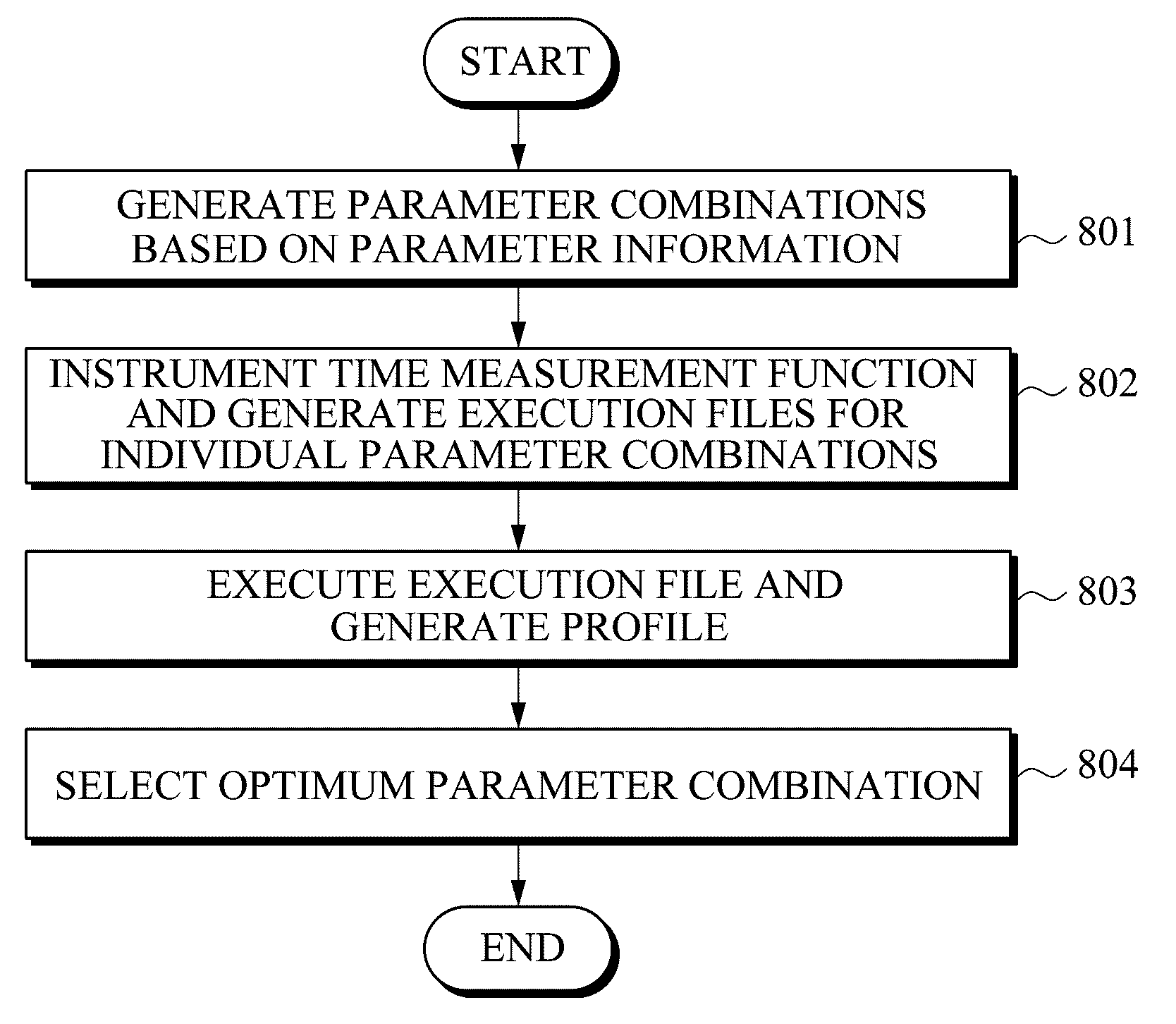

A parallel programming adjusting apparatus and method are provided. Parameters of a parallel programming model that influence system performance are grouped into parameter sets, and the sets are combined across groups to generate parameter combinations. Execution files are executed for each parameter combination, and the runtime of a parallel region is measured for each combination. An optimum parameter combination is selected based on the measured runtimes.

Owner:SAMSUNG ELECTRONICS CO LTD

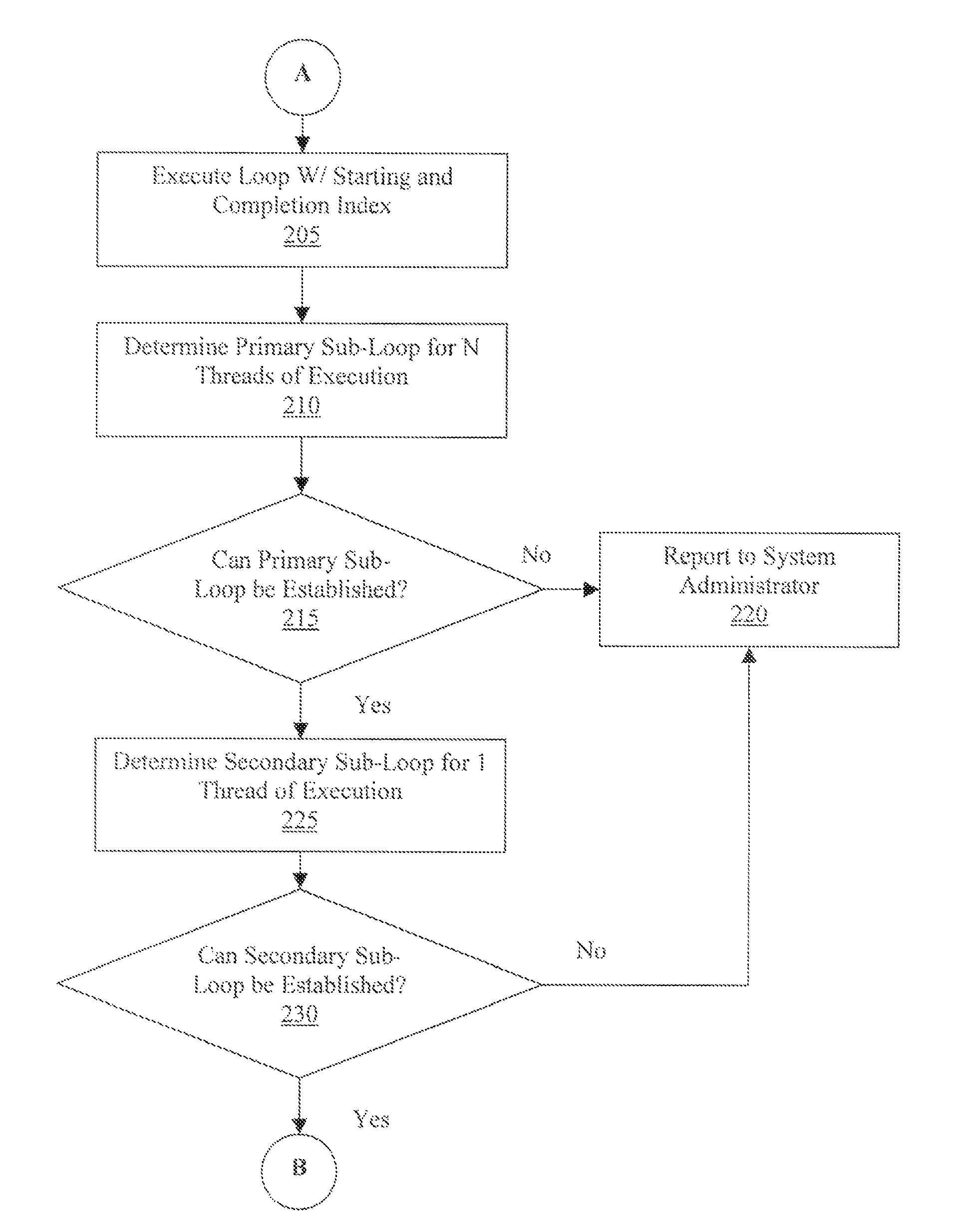

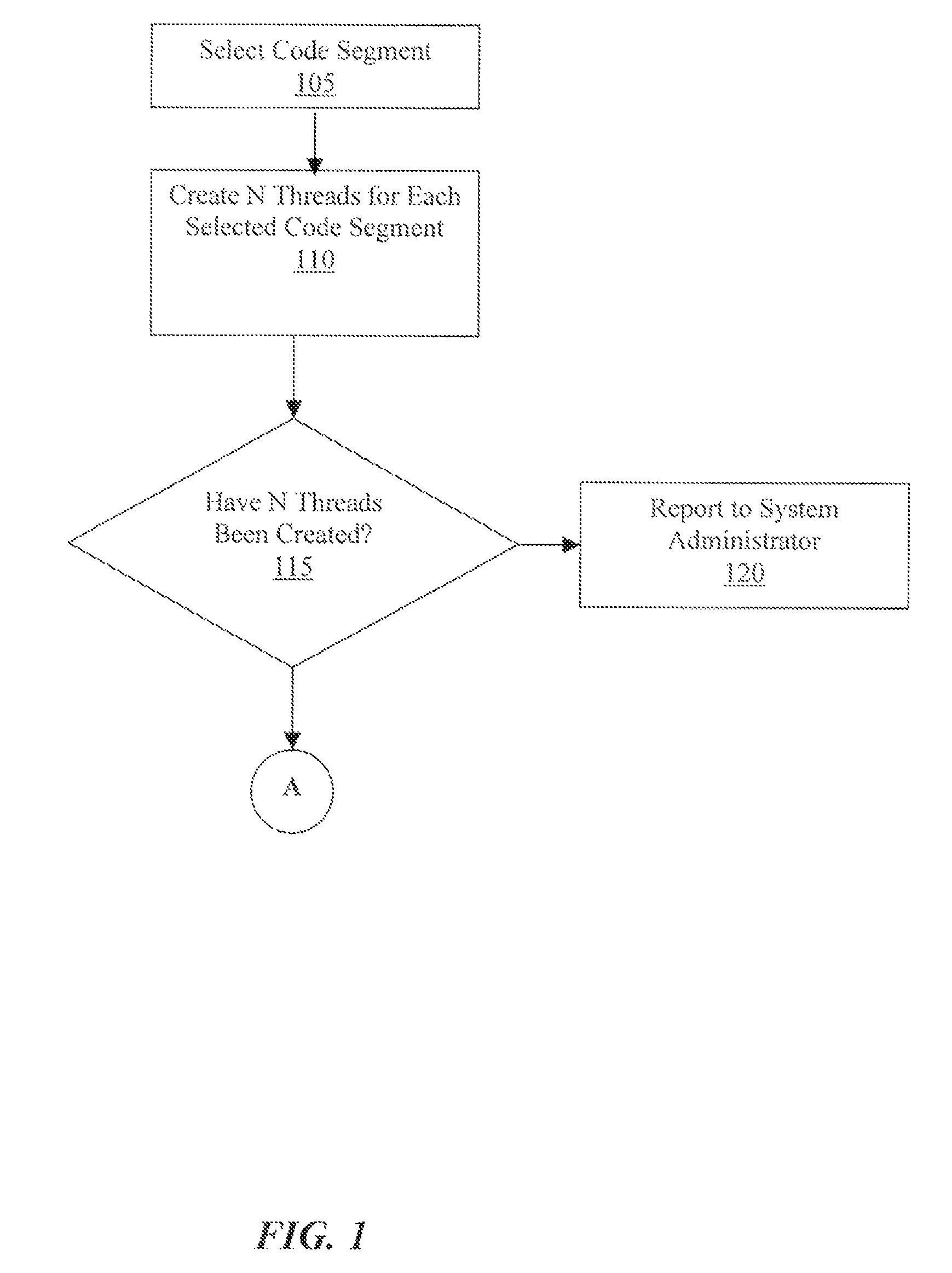

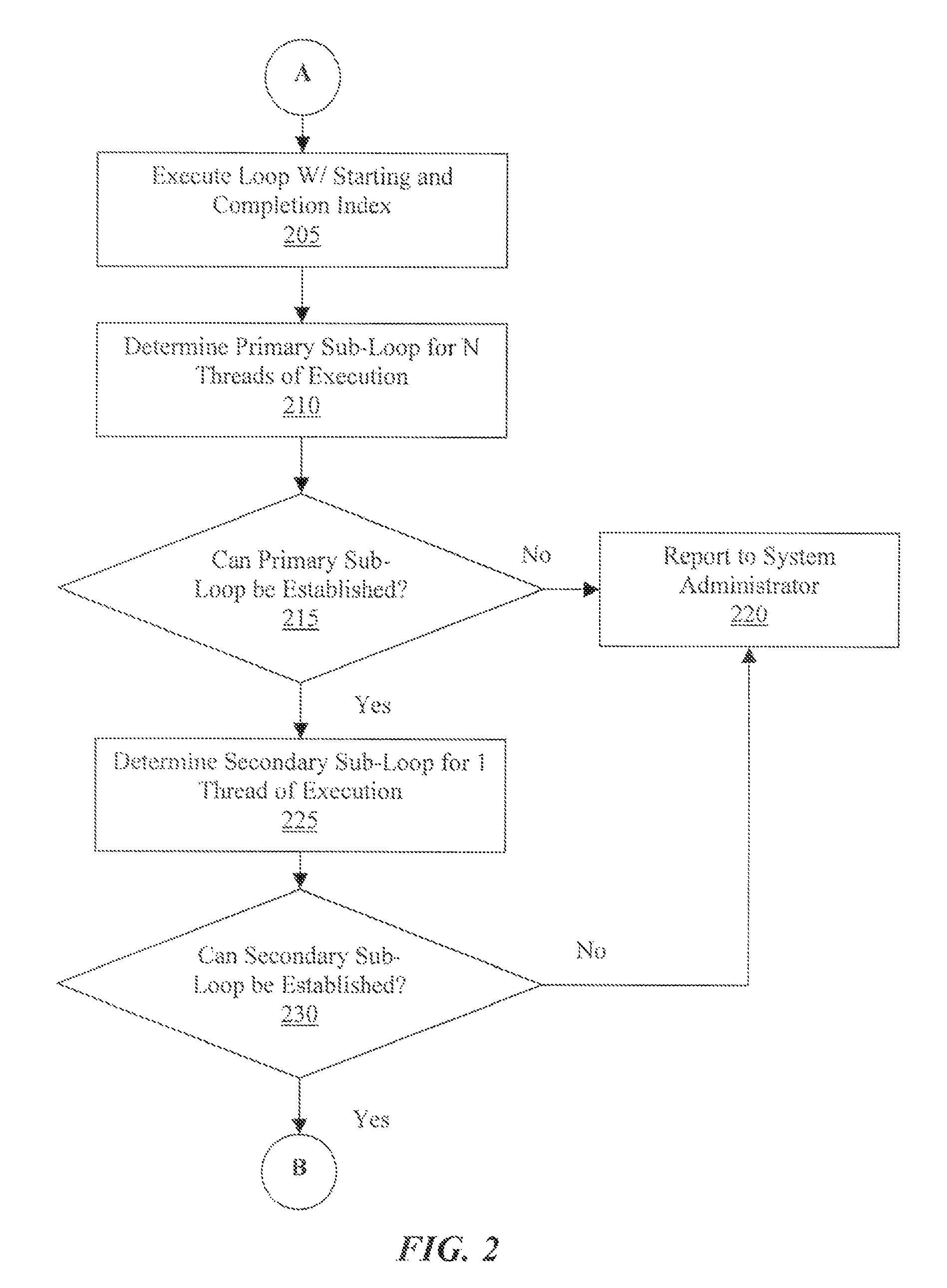

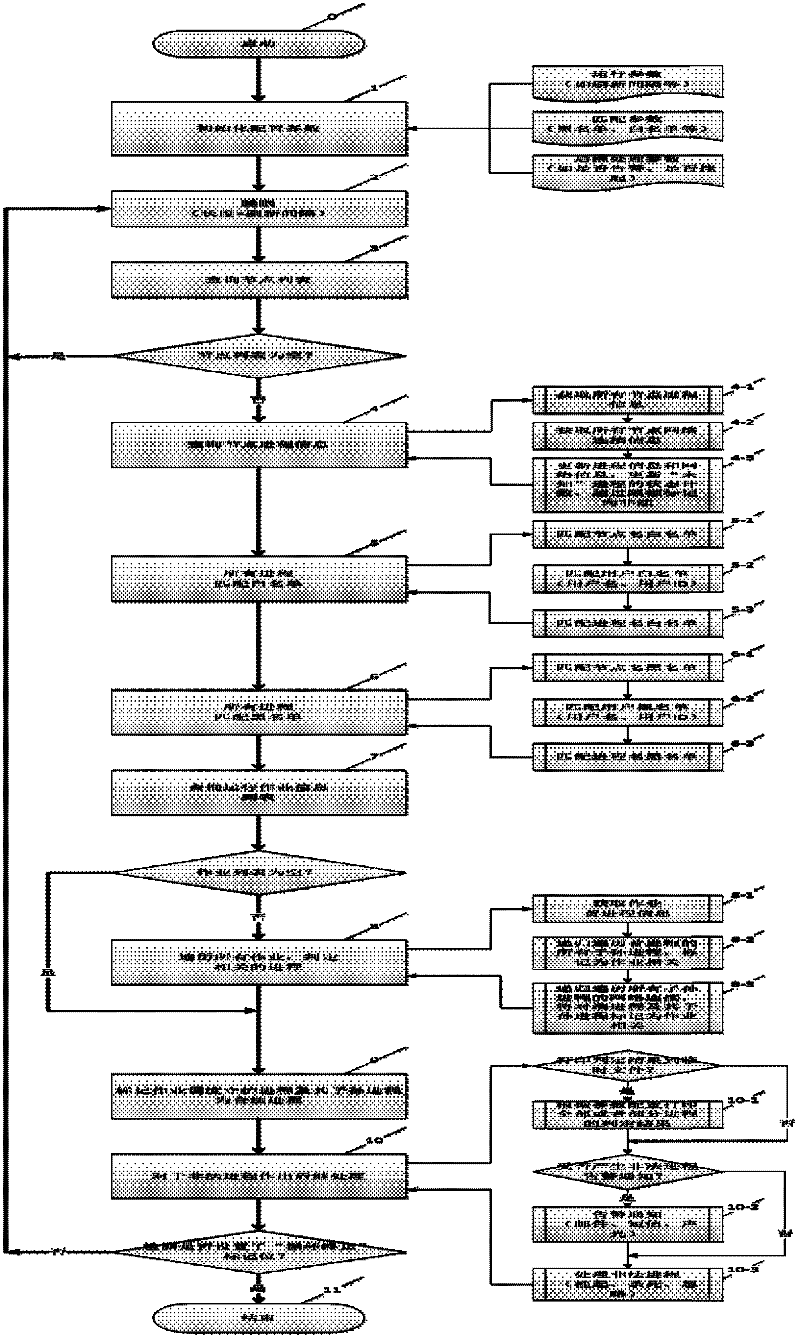

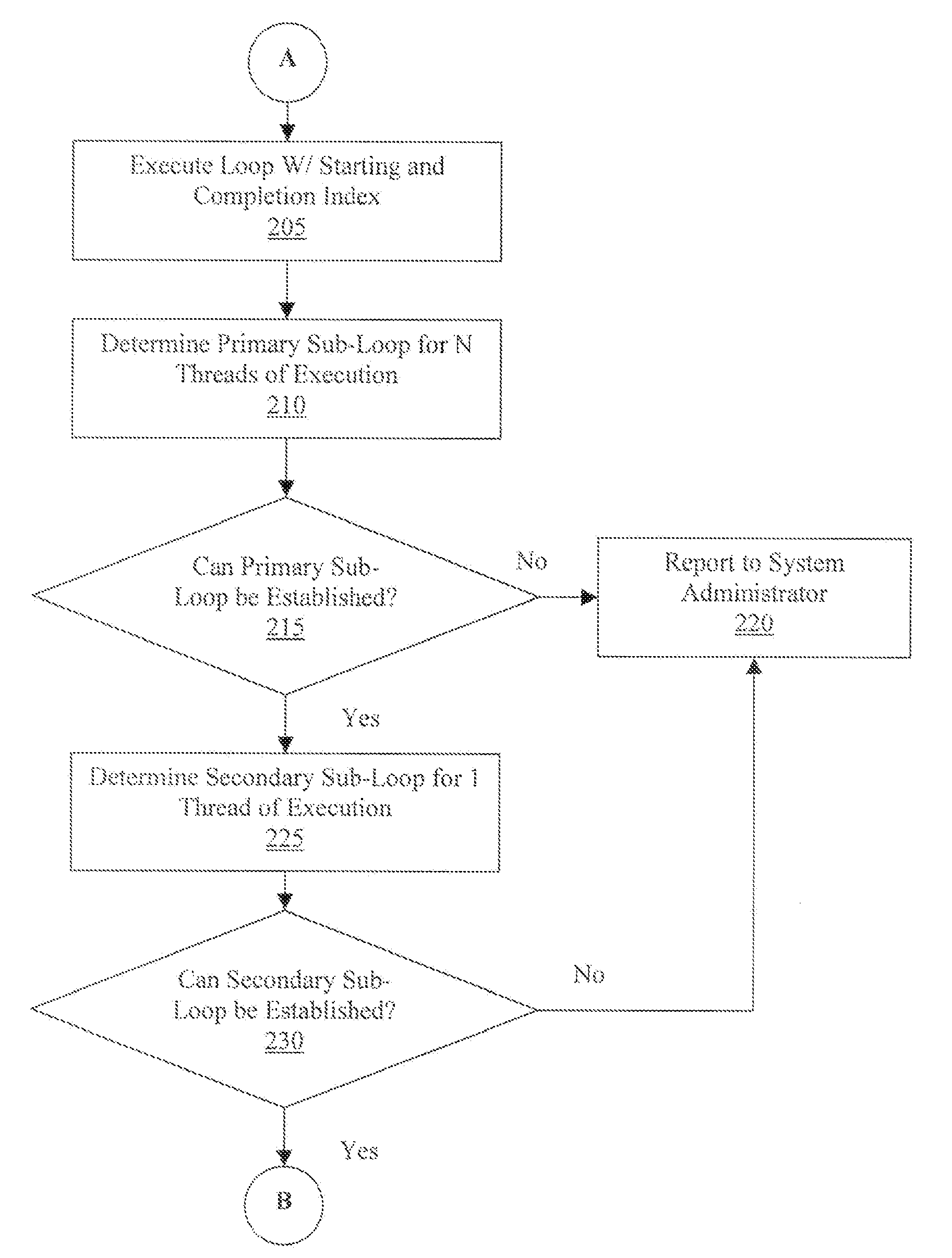

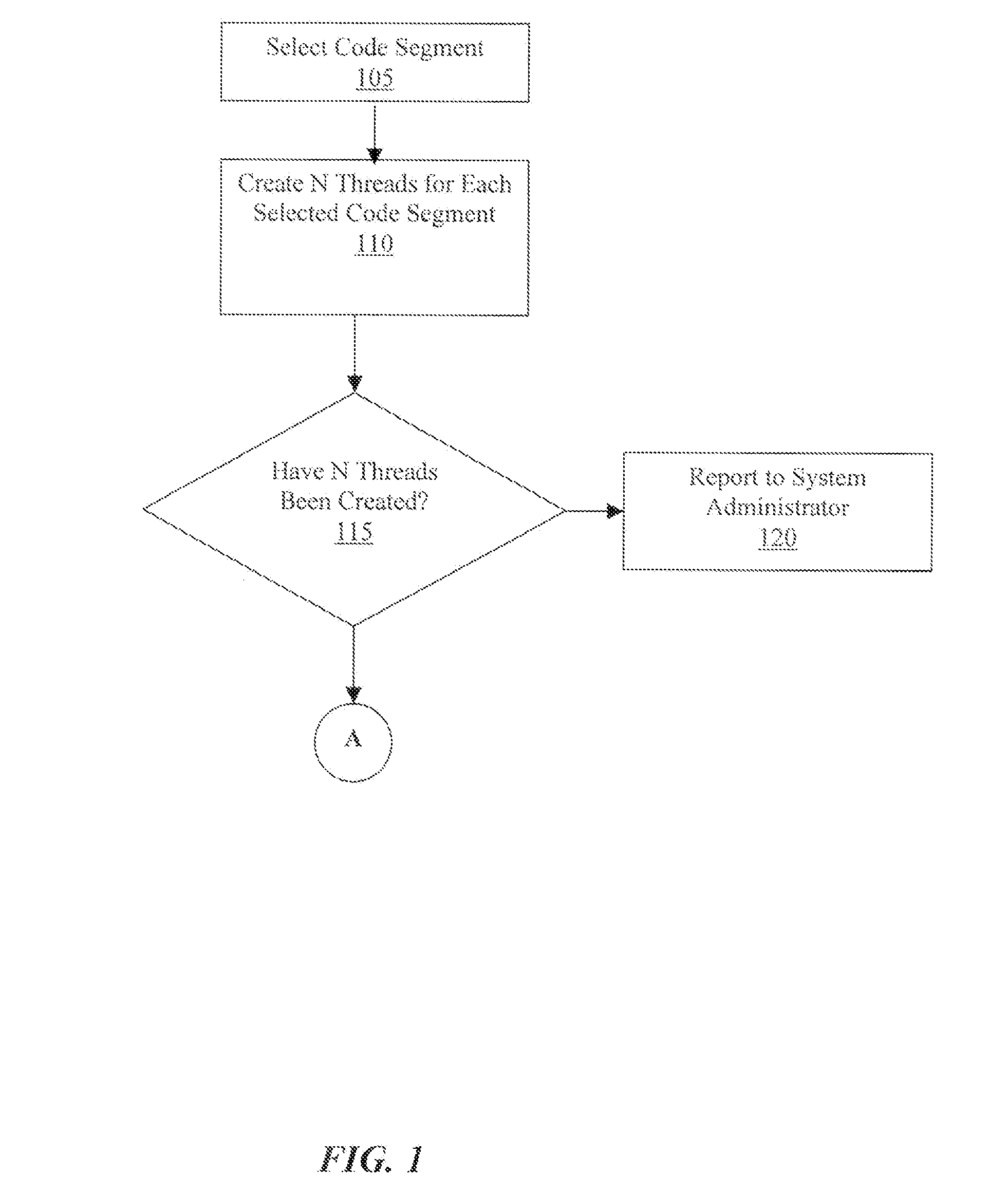

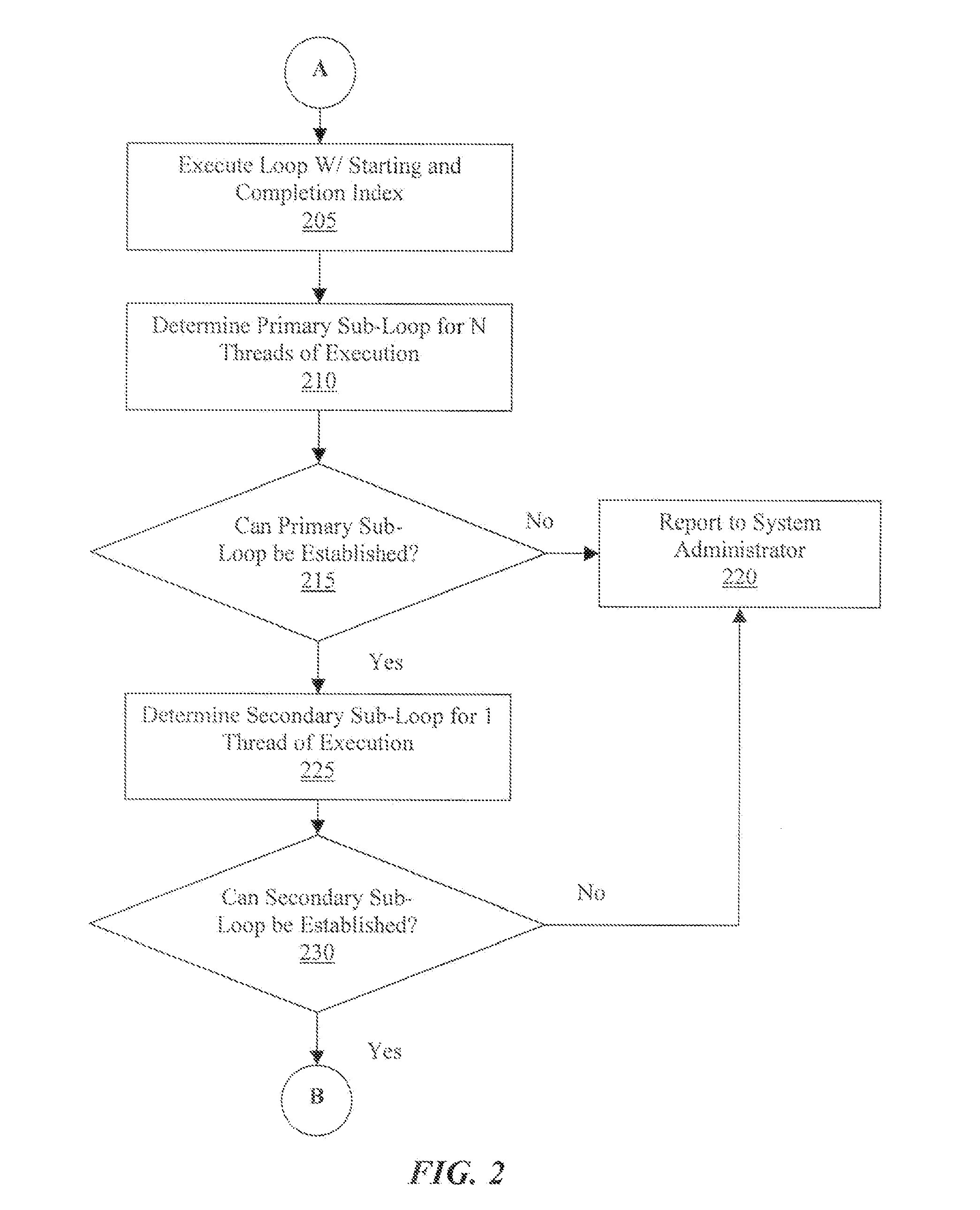

Method to examine the execution and performance of parallel threads in parallel programming

Inactive | US8046745B2 | Software engineering | Specific program execution arrangements | Parallel programming model | Application software

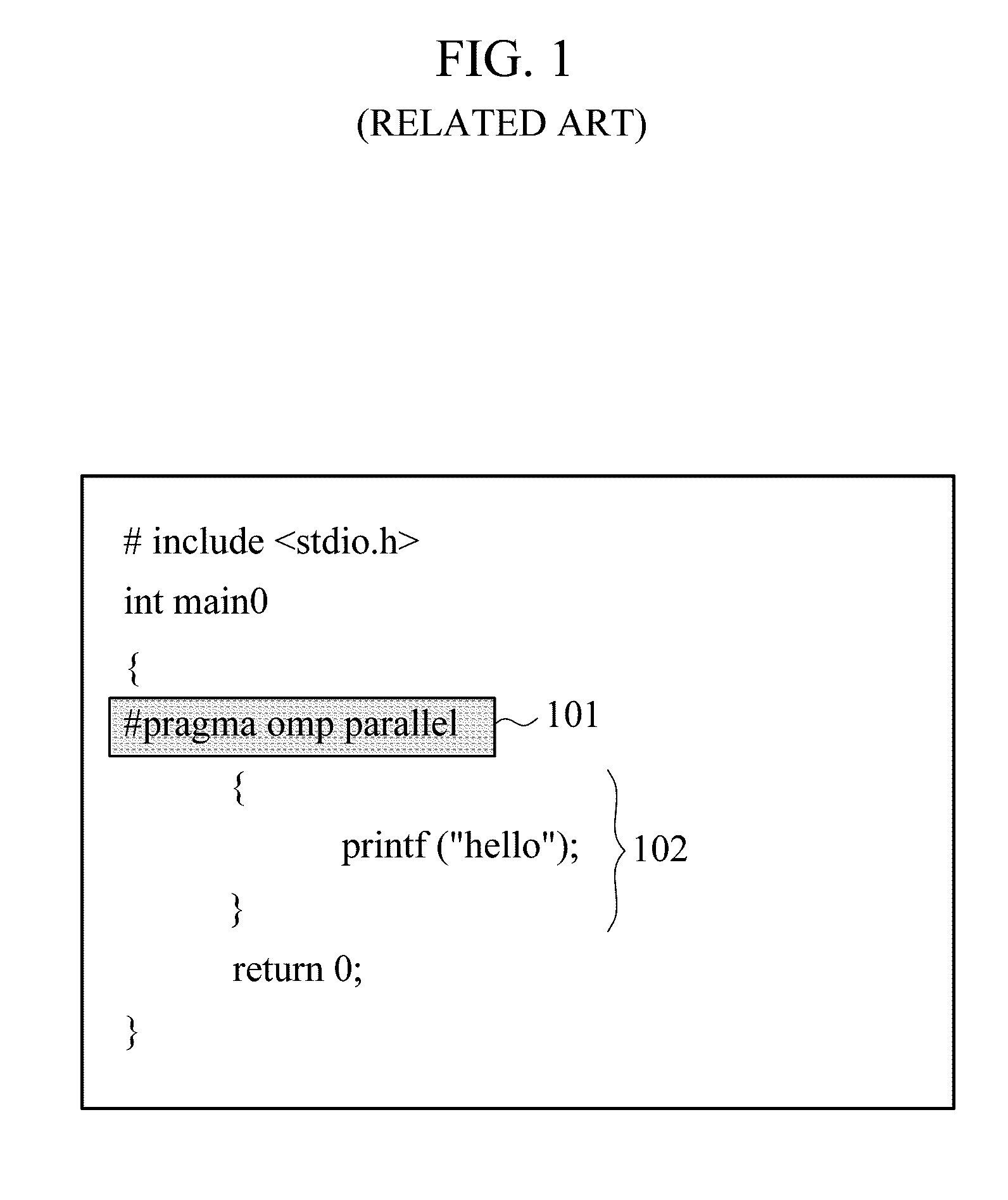

The present invention relates to compiler generated code for parallelized code segments, wherein the generated code is used to determine if an expected number of parallel processing threads is created for a parallel processing application, in addition to determining the performance impact of using parallel threads of execution. In the event the expected number of parallel threads is not generated, notices and alerts are generated to report the thread creation problem. Further, a method is disclosed for the collection of performance metrics for N threads of execution and one thread of execution, and thereafter performing a comparison operation upon the execution threads. Notices and alerts are generated to report the resultant performance metrics for the N threads of execution versus the one thread of execution.

Owner:INT BUSINESS MASCH CORP
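The two checks in the abstract, whether the expected number of threads was created and how N threads compare with one, can be illustrated with hand-written instrumentation. The patent concerns compiler-generated code; the sketch below is only an analogy in Python, and the names `run_with_threads` and `examine` and the alert wording are assumptions.

```python
import threading
import time

def run_with_threads(work, n_threads):
    """Run work(index, n_threads) on n_threads threads; return (threads that ran, seconds)."""
    ran = []
    lock = threading.Lock()

    def body(index):
        with lock:
            ran.append(index)  # instrumentation: record that this thread started
        work(index, n_threads)

    threads = [threading.Thread(target=body, args=(i,)) for i in range(n_threads)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(ran), time.perf_counter() - start

def examine(work, expected_threads):
    """Check the thread count, then compare N-thread and 1-thread runtimes."""
    alerts = []
    created, time_n = run_with_threads(work, expected_threads)
    if created != expected_threads:
        alerts.append(f"expected {expected_threads} threads, observed {created}")
    _, time_1 = run_with_threads(work, 1)
    alerts.append(
        f"speedup of {expected_threads} threads over 1 thread: {time_1 / time_n:.2f}x"
    )
    return {"created": created, "time_n": time_n, "time_1": time_1, "alerts": alerts}
```

Here `work(index, n_threads)` is expected to perform the `index`-th share of a job split `n_threads` ways, so the single-thread run does the whole job and the two timings are comparable.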

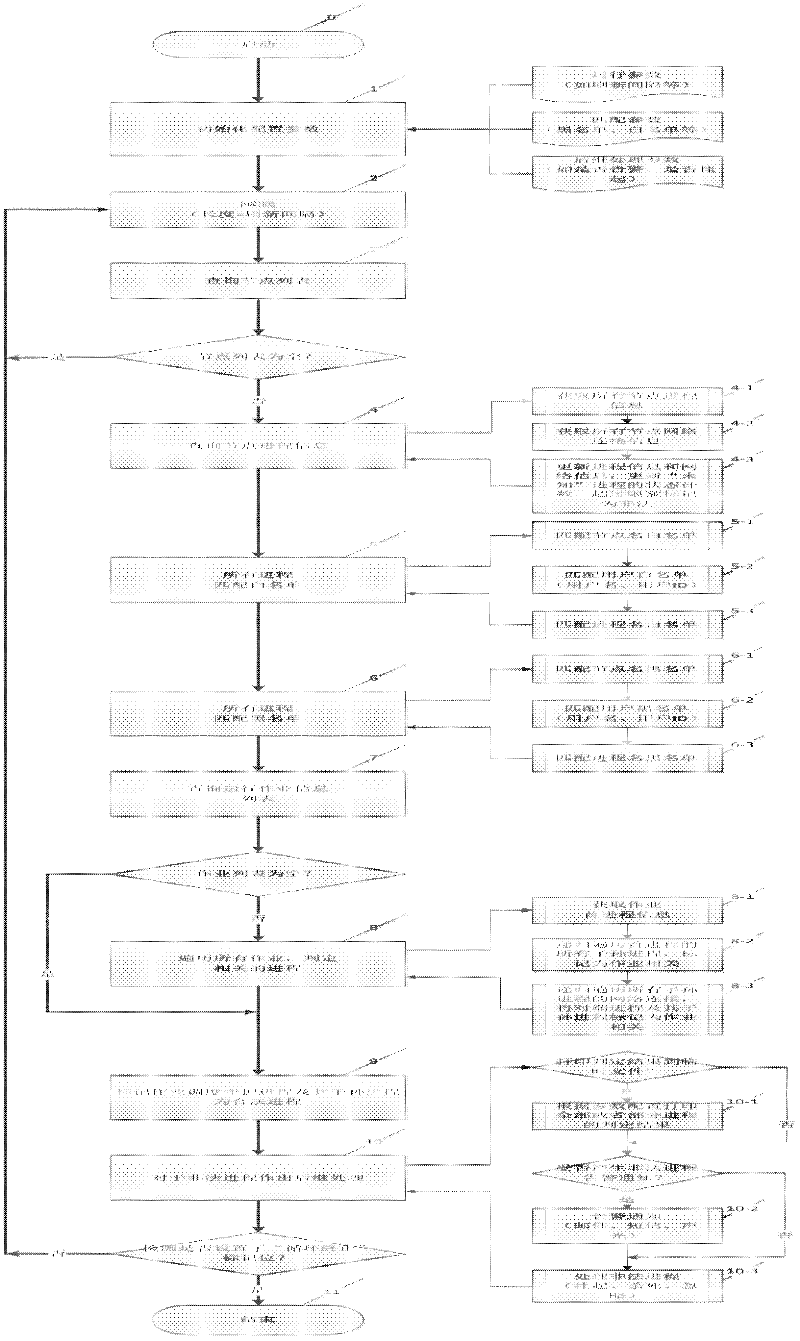

Illegal job monitor method based on process scanning

Active | CN102521101A | Simplify work | Flexible configuration | Hardware monitoring | Multiprogramming arrangements | Operational system | Parallel programming model

The invention provides an illegal job monitor method based on process scanning. The method comprises the following steps: first, initializing a process blacklist and whitelist; second, reading the detailed job information from the job scheduler; third, acquiring the process information and network connection information of all computation nodes; then, using the information acquired in the preceding steps and a configured strategy, determining how each process relates to the blacklist, the whitelist and the scheduled jobs, and thereby determining whether the process is legal; finally, handling illegal processes according to the determination results and a preset processing strategy, and updating the state counts of unknown processes. The advantage of the method is that it determines the correlation between a process and a job from the job information and the operating system information (processes and network connections) alone, without regard to the parallel programming model used by the user; and the work of administrators is greatly simplified by flexibly configuring multiple kinds of whitelists, blacklists and subsequent processing strategies.

Owner:DAWNING INFORMATION IND BEIJING +1
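One way to picture the classification step is the sketch below. The abstract leaves the actual strategy unspecified, so everything concrete here is an assumption: processes are matched by name, a process is tied to a job when its user has a job scheduled on the same node, and an unknown process is escalated to illegal after it has been seen in `threshold` consecutive-style scans. Network connection information is not modeled.

```python
def classify(process, whitelist, blacklist, job_users_by_node):
    """Return 'illegal', 'legal' or 'unknown' for one scanned process (assumed rules)."""
    if process["name"] in blacklist:
        return "illegal"
    if process["name"] in whitelist:
        return "legal"
    # Assumed correlation rule: the owner has a scheduled job on this node.
    if process["user"] in job_users_by_node.get(process["node"], set()):
        return "legal"
    return "unknown"

def scan(processes, whitelist, blacklist, job_users_by_node, unknown_counts, threshold=2):
    """Classify every process and return the (node, pid) keys judged illegal.

    unknown_counts is updated in place and carries state across scans.
    """
    illegal = []
    for process in processes:
        key = (process["node"], process["pid"])
        verdict = classify(process, whitelist, blacklist, job_users_by_node)
        if verdict == "unknown":
            unknown_counts[key] = unknown_counts.get(key, 0) + 1
            if unknown_counts[key] >= threshold:
                verdict = "illegal"
        else:
            unknown_counts.pop(key, None)  # reset the counter once the process is explained
        if verdict == "illegal":
            illegal.append(key)
    return illegal
```

What happens to the returned processes (kill, notify, log) corresponds to the preset processing strategy in the abstract and is left out of the sketch.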

Method to examine the execution and performance of parallel threads in parallel programming

Inactive | US20080134150A1 | Software engineering | Specific program execution arrangements | Parallel programming model | Application software

The present invention relates to compiler generated code for parallelized code segments, wherein the generated code is used to determine if an expected number of parallel processing threads is created for a parallel processing application, in addition to determining the performance impact of using parallel threads of execution. In the event the expected number of parallel threads is not generated, notices and alerts are generated to report the thread creation problem. Further, a method is disclosed for the collection of performance metrics for N threads of execution and one thread of execution, and thereafter performing a comparison operation upon the execution threads. Notices and alerts are generated to report the resultant performance metrics for the N threads of execution versus the one thread of execution.

Owner:IBM CORP

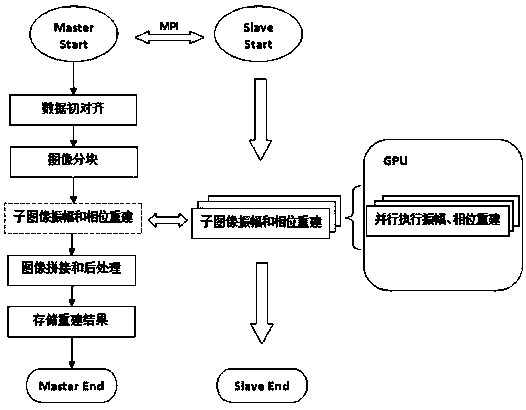

Parallel realization method for reconstructing spot diagram in astronomic image by K-T algorithm

Inactive | CN107451955A | Troubleshooting High-Resolution Reconstructions | Improve acceleration performance | Image enhancement | Image analysis | Imaging processing | Parallel programming model

The invention relates to a parallel realization method for high-resolution reconstruction of a spot diagram in an astronomic image by a K-T algorithm, and belongs to the field of astronomical image processing and computing. The method comprises the following steps: first, the main process on the CPU reads the image and parameter files and calculates the related physical parameters for image preprocessing and data alignment; second, the full field-of-view image of every frame is segmented into numerous sub-blocks according to the size of the isoplanatic region; third, the sub-block frames are distributed to independent sub-processes, which perform parallel computation using the CUDA parallel programming model to reconstruct the Fourier amplitude and phase; finally, each sub-process applies an inverse Fourier transform to each sub-block, combining the Fourier amplitude and phase, to obtain a reconstructed image, and the main process splices all the sub-blocks together to finish the reconstruction. The method applies a CPU+GPU hybrid heterogeneous parallel mode and a GPU-aware MPI mechanism to the high-resolution reconstruction of the spot diagram in the astronomic image, and it scales relatively well.

Owner:KUNMING UNIV OF SCI & TECH
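The split, process-in-parallel, and splice structure of the method can be sketched independently of the reconstruction itself. In the sketch below a thread pool stands in for the MPI sub-processes and CUDA kernels, a single frame is handled instead of a frame sequence, and `per_block` is a placeholder for the per-block Fourier amplitude and phase reconstruction; none of these simplifications come from the patent.

```python
from concurrent.futures import ThreadPoolExecutor

def split_blocks(image, size):
    """Cut a 2-D list into size x size sub-blocks, each tagged with its top-left corner."""
    height, width = len(image), len(image[0])
    blocks = []
    for r in range(0, height, size):
        for c in range(0, width, size):
            block = [row[c:c + size] for row in image[r:r + size]]
            blocks.append(((r, c), block))
    return blocks

def stitch(shape, results):
    """Write processed sub-blocks back into a full-size image."""
    height, width = shape
    out = [[0] * width for _ in range(height)]
    for (r, c), block in results:
        for i, row in enumerate(block):
            out[r + i][c:c + len(row)] = row
    return out

def reconstruct(image, size, per_block):
    """Split, process every sub-block concurrently, then splice the results."""
    blocks = split_blocks(image, size)
    with ThreadPoolExecutor() as pool:
        processed = list(
            pool.map(lambda item: (item[0], per_block(item[1])), blocks)
        )
    return stitch((len(image), len(image[0])), processed)
```

Because each sub-block is processed without reference to the others, the work can be handed to independent workers and only the final splice needs a single coordinating process, which mirrors the main-process and sub-process roles in the abstract.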

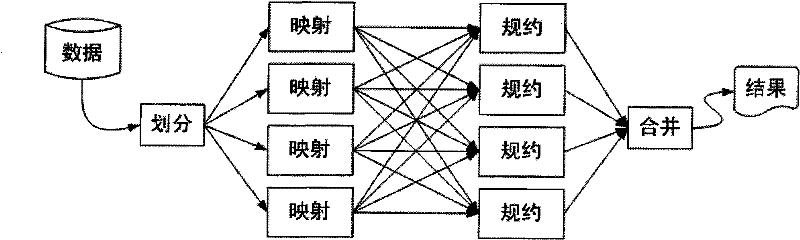

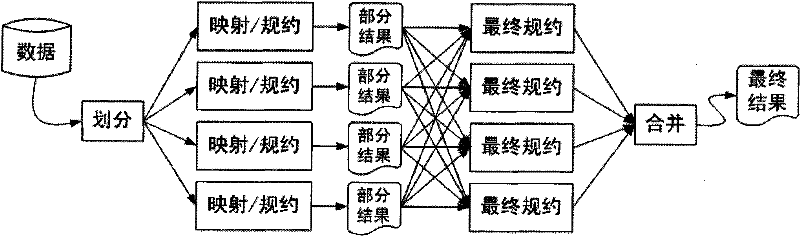

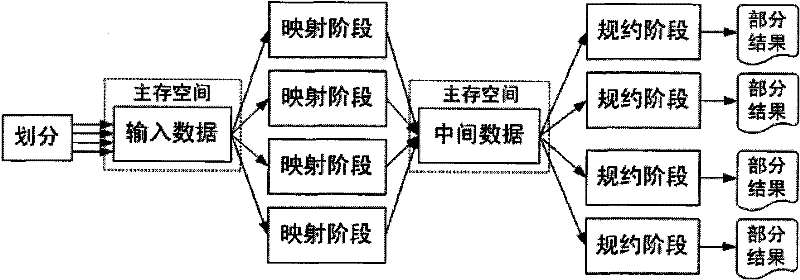

Many-core environment-oriented division mapping/reduction parallel programming model

Inactive | CN102193830A | Avoid idling | Increase profit | Resource allocation | Concurrent instruction execution | Parallel programming model | Resource utilization

The invention belongs to the field of computer software application and particularly relates to a many-core environment-oriented division mapping / reduction parallel programming model. The programming model comprises a division mapping / reduction parallel programming model and a main storage multiplexing, many-core scheduling and assembly line execution technique, wherein the division mapping / reduction parallel programming model is used for partitioning mass data, and the main storage multiplexing, many-core scheduling and assembly line execution technique is used for optimizing resource utilization in a many-core environment. By adopting the programming model, mass data processing capacity can be effectively improved in the many-core environment; and by exploiting the structural characteristics of a many-core system, main storage usage is reduced through multiplexing, cache access is optimized, the hit rate is increased, idling of processing units is prevented, and execution efficiency is increased. The programming model is simple for an application programmer, and program source code does not need to be modified. The input and output of the programming model are fully consistent with those of a mapping / reduction model. The programming model can be applied to a many-core computing system for processing large-scale data.

Owner:FUDAN UNIV
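The claim that a partitioned map / reduce run produces the same output as a plain one can be shown with a small word count. This is a sketch under assumptions: word counting is an arbitrary example job, partitions are fixed-size slices of the input, and processing them one after another stands in for the main storage multiplexing described above. Many-core scheduling and pipelining are not modeled.

```python
from collections import Counter

def map_reduce(lines):
    """Plain word count: map each line to words, reduce by summing counts."""
    counts = Counter()
    for line in lines:
        counts.update(line.split())
    return counts

def partitioned_map_reduce(lines, partition_size):
    """Run map/reduce on one partition at a time and merge the partial results.

    Only one partition's intermediate data is alive at any moment, so the
    working set stays small regardless of the total input size.
    """
    total = Counter()
    for start in range(0, len(lines), partition_size):
        partial = map_reduce(lines[start:start + partition_size])
        total.update(partial)  # Counter.update adds counts rather than replacing them
    return total
```

The merge step works here because summing counts is associative and commutative; a job whose reduce function lacks those properties would need a different way of combining partial results.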
