Divide-and-conquer map/reduce parallel programming model for many-core environments

A programming model and environment technology, applied to concurrent instruction execution, resource allocation, multiprogramming arrangements, and related fields. It addresses two problems: existing approaches limit overall platform capability and do not take full advantage of the characteristics of many-core platforms. Its effects are a higher hit rate, greater capacity for processing massive data, and improved execution efficiency.

Embodiment 1

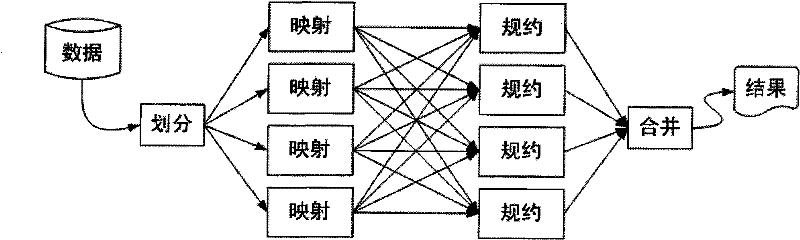

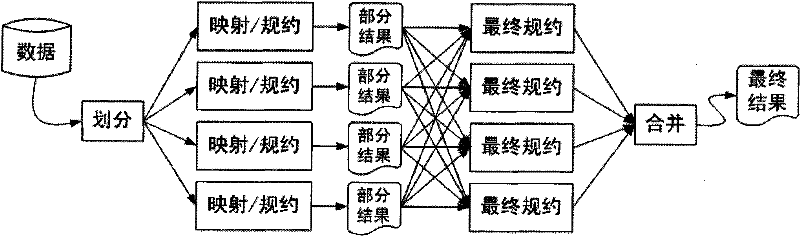

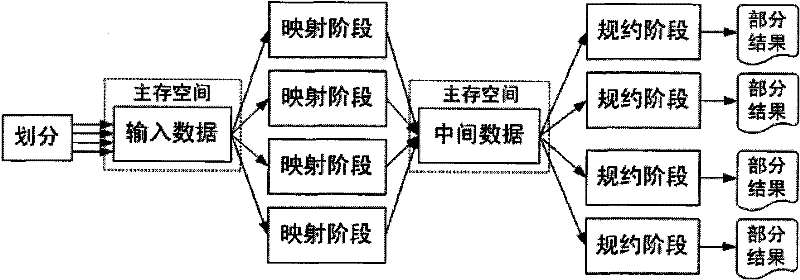

[0023] The execution flow of an exemplary divide-and-conquer map/reduce model is shown in Figure 2. Compared with the plain map/reduce model, the divide-and-conquer model executes the "map/reduce" phase in a loop. Each map/reduce phase is equivalent to one complete run under the original map/reduce model; the only difference is that its input is just one part of the whole massive data set. Accordingly, the model's runtime system first divides the massive data set at a coarse granularity, according to the current system resource status, to produce the inputs for the looped "map/reduce" execution. Then, in each "map/reduce" phase, it distributes that part of the input data at a fine granularity to the execution units of the "map" stage. The "partial results" produced by each map/reduce operation are held in main memory for further processing. When the entire massive data set completes the map/reduce operation, the "fi...
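The loop described in [0023] can be illustrated with a minimal Python sketch. It is not the patented runtime. The names `map_task`, `reduce_task`, `split`, and `divide_and_conquer_mapreduce` are hypothetical, word counting stands in for the user's map and reduce functions, and a thread pool stands in for the many-core execution units. The coarse chunk size is passed in as a fixed parameter, whereas the text derives it from the current system resource status. The step that follows the loop is truncated in the text above, so the final merge of partial results is an assumption.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def map_task(records):
    """Map stage: count the words in one fine-grained slice of input."""
    counts = Counter()
    for line in records:
        counts.update(line.split())
    return counts


def reduce_task(mapped):
    """Reduce stage: combine a list of word-count tables into one."""
    total = Counter()
    for counts in mapped:
        total.update(counts)
    return total


def split(items, size):
    """Cut a list into consecutive pieces of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def divide_and_conquer_mapreduce(dataset, coarse_size, workers):
    partial_results = []  # "partial results" held in main memory
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Coarse-grained division: one chunk per map/reduce round.
        for chunk in split(dataset, coarse_size):
            # Fine-grained division: spread the chunk over the map workers.
            fine_size = max(1, -(-len(chunk) // workers))  # ceiling division
            mapped = list(pool.map(map_task, split(chunk, fine_size)))
            partial_results.append(reduce_task(mapped))
    # Assumed final step (truncated in the source): merge all partial results.
    return reduce_task(partial_results)


if __name__ == "__main__":
    lines = ["a b a", "b c", "a", "c c b"]
    print(divide_and_conquer_mapreduce(lines, coarse_size=2, workers=2))
```

Each pass of the outer loop is a complete map/reduce run over one coarse chunk, so only that chunk's intermediate data is live at a time; the per-round results are small word-count tables that stay in memory until the loop ends.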
