7816 results about How to "Calculation speed" patented technology

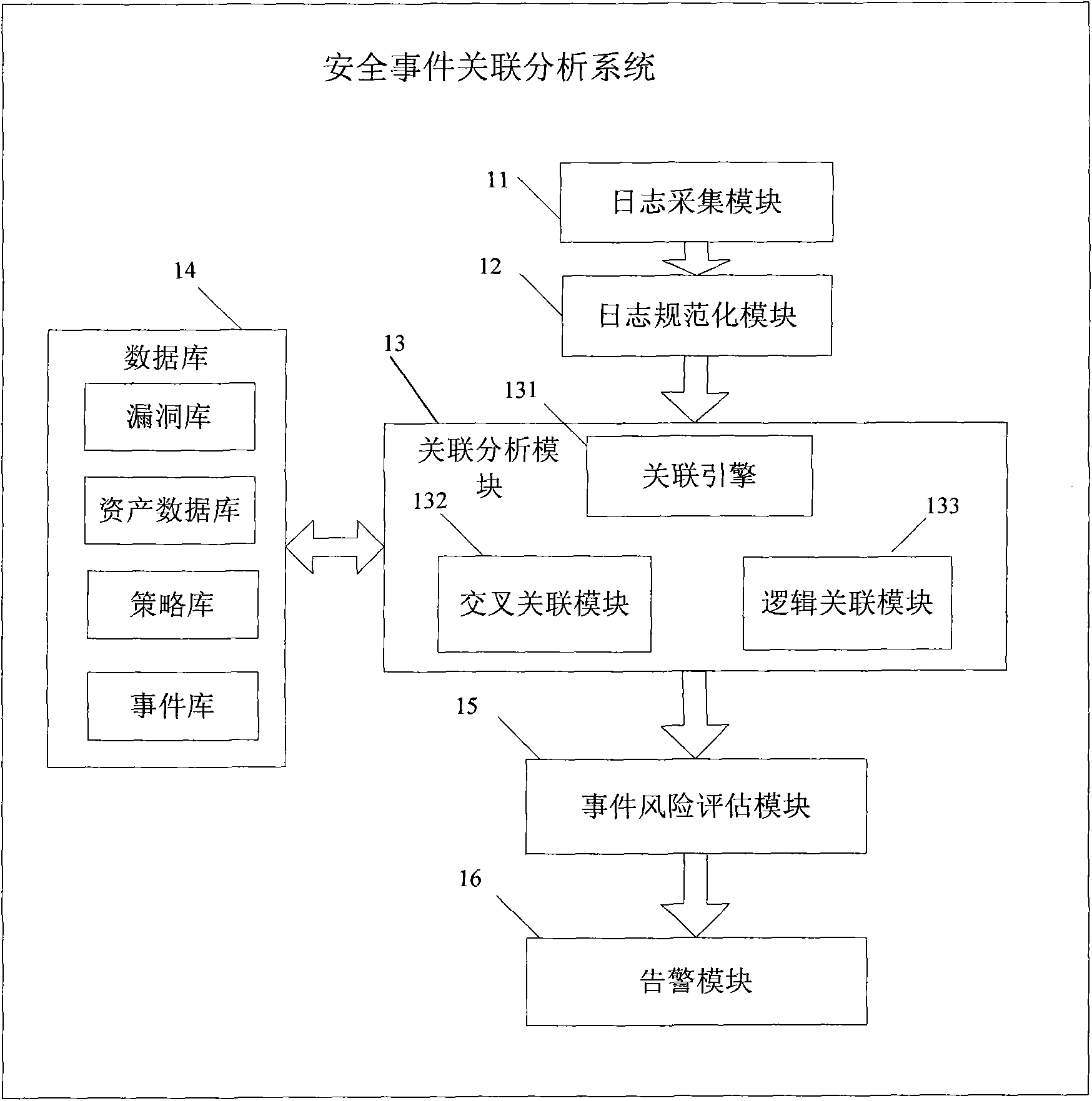

Log correlation analysis system and method

Active | CN101610174A | Calculation speed | Reduce false alarms | Data switching networks | Event correlation | Cross correlation analysis

The invention provides a log event correlation analysis method comprising the steps of: collecting log data; extracting characteristic data from the log data through preset regular expressions; constructing log events in a uniform format from the extracted characteristic data; querying the handling strategies for the log events; performing cross correlation analysis and event flow logic correlation analysis on the events as the strategies direct; and performing risk evaluation on the log events and responding automatically. The method effectively reduces false alarms and improves the objectivity of risk evaluation, and its warnings give users more practical guidance. The invention also provides a log event correlation analysis system corresponding to the method.
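
The first steps of the abstract (regular-expression extraction into uniformly formatted events, followed by a correlation pass) can be illustrated with a minimal Python sketch. The log layout, the field names, the `LogEvent` type, and the toy rule in `correlate` are all assumptions made for illustration; the patent abstract does not specify any of them.

```python
import re
from dataclasses import dataclass

# Hypothetical log layout: "YYYY-MM-DD HH:MM:SS host LEVEL message".
# The patent only says "a preset regular expression"; this one is an assumption.
LOG_PATTERN = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) "
    r"(?P<level>[A-Z]+) "
    r"(?P<message>.*)"
)


@dataclass(frozen=True)
class LogEvent:
    """Uniform event format built from the extracted fields (illustrative)."""
    timestamp: str
    host: str
    level: str
    message: str


def parse_line(line):
    """Return a LogEvent, or None when the line does not match the pattern."""
    match = LOG_PATTERN.fullmatch(line.strip())
    if match is None:
        return None
    return LogEvent(**match.groupdict())


def correlate(events, level="ERROR", min_hosts=2):
    """Toy cross-correlation rule: keep a message only when at least
    min_hosts distinct hosts reported it at the given level."""
    hosts_by_message = {}
    for event in events:
        if event.level == level:
            hosts_by_message.setdefault(event.message, set()).add(event.host)
    return {msg for msg, hosts in hosts_by_message.items() if len(hosts) >= min_hosts}
```

Requiring agreement between several sources before raising an alert is one simple way a correlation step can cut false alarms; the actual strategies in the patent are likely richer than this rule.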

Owner:SHENZHEN Y&D ELECTRONICS CO LTD

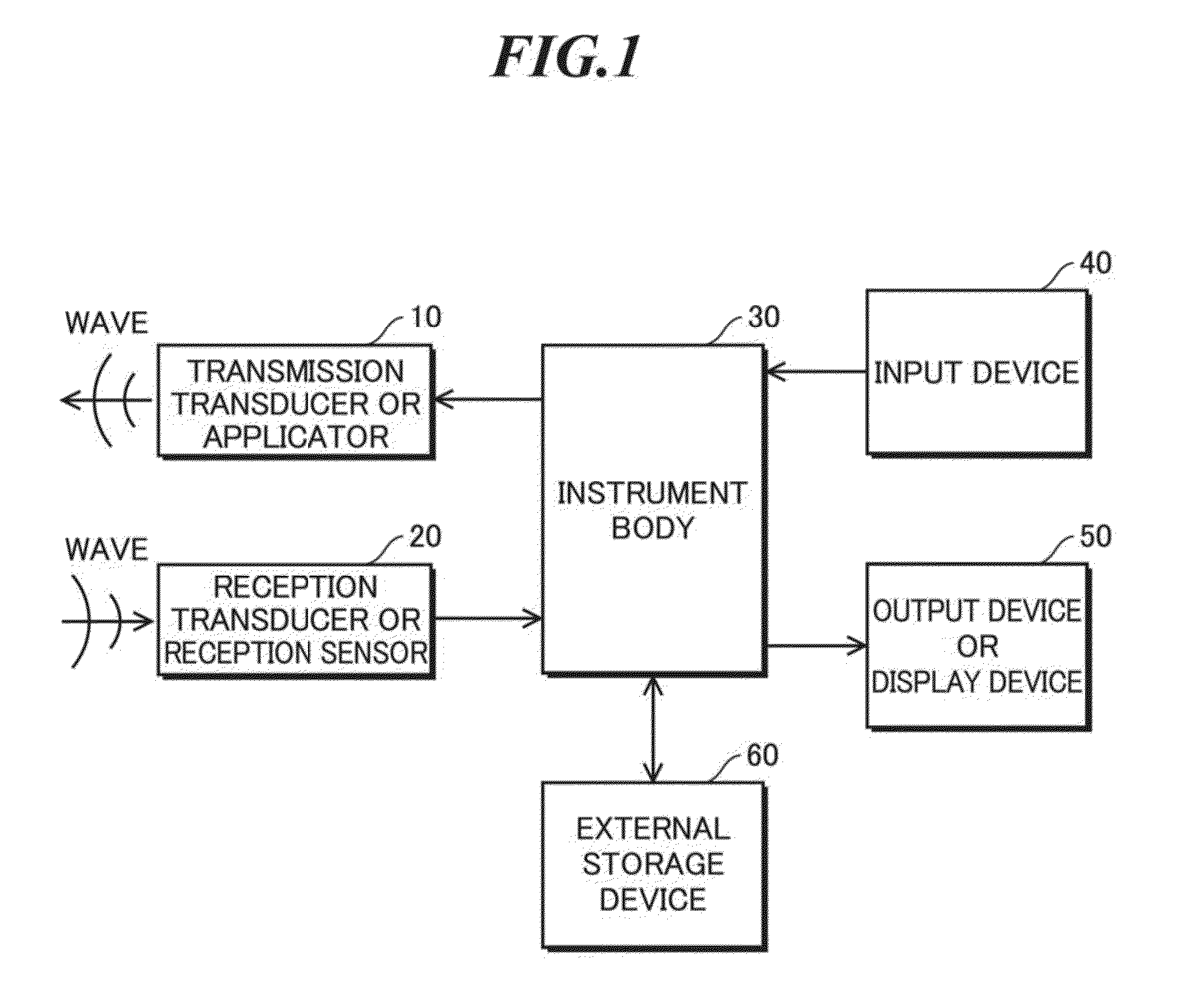

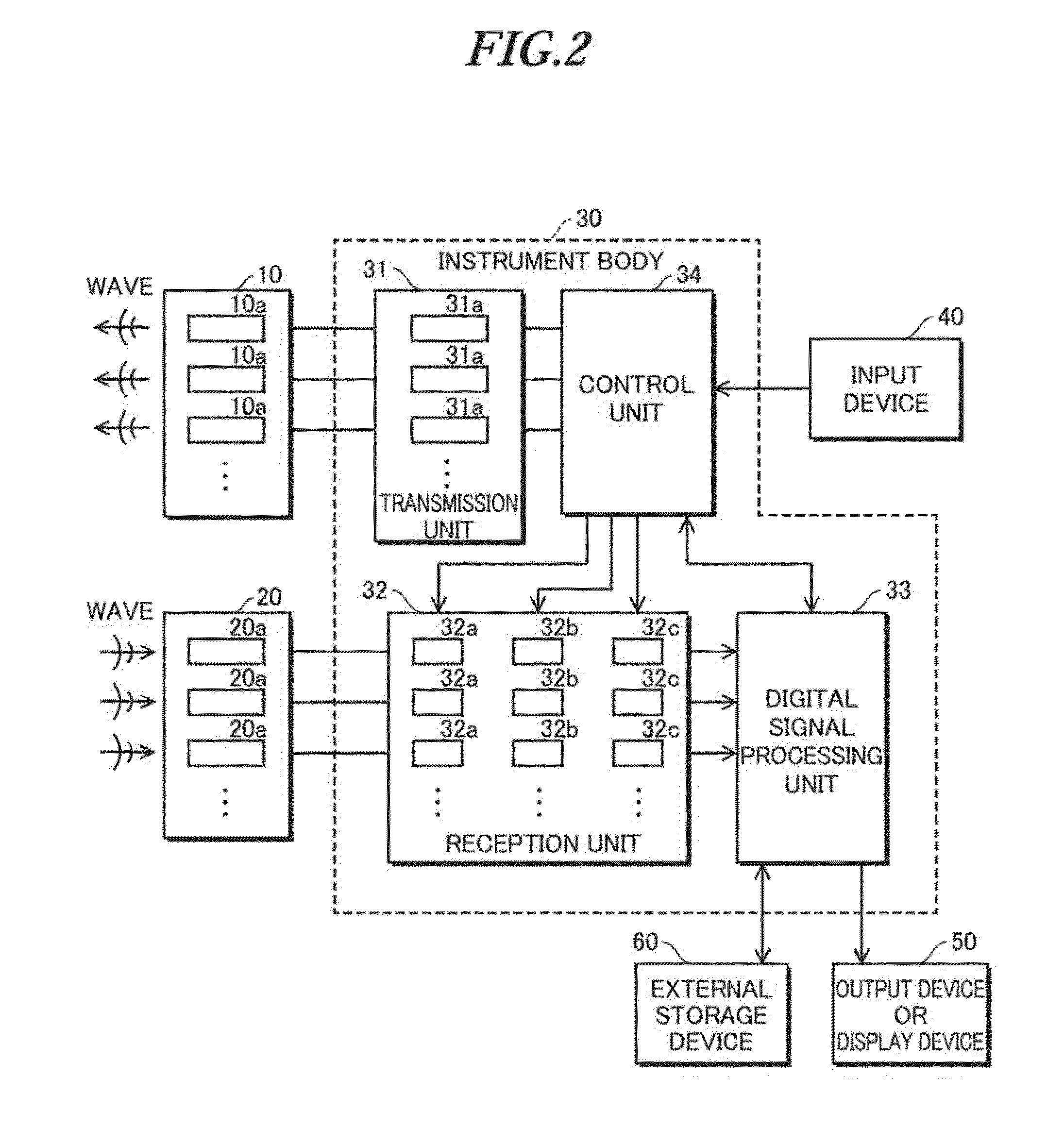

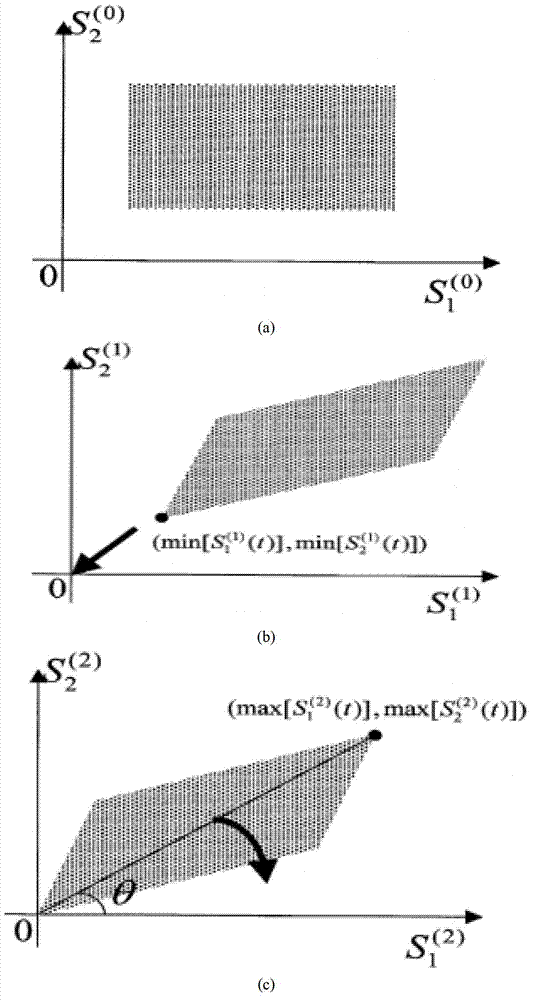

Beamforming method, measurement and imaging instruments, and communication instruments

Active | US20160157828A1 | Increase speed | Improve accuracy | Processing detected response signal | Catheter | Engineering | Wavenumber

A beamforming method that achieves high speed and high accuracy without approximate interpolation. The method includes step (a), which generates reception signals by receiving waves arriving from a measurement object, and step (b), which performs beamforming on the reception signals generated in step (a). Step (b) performs no wavenumber matching that involves approximate interpolation of the reception signals. Instead, the reception signals are Fourier transformed in the axial direction, and the result is multiplied by a complex exponential function, expressed using a wavenumber of the wave and a carrier frequency, to perform wavenumber matching in the lateral direction. The product is then Fourier transformed in the lateral direction, and the result is multiplied by a complex exponential function, from which the effect of the lateral wavenumber matching is removed, to perform wavenumber matching in the axial direction, thereby generating an image signal.

Owner:CHIKAYOSHI SUMI

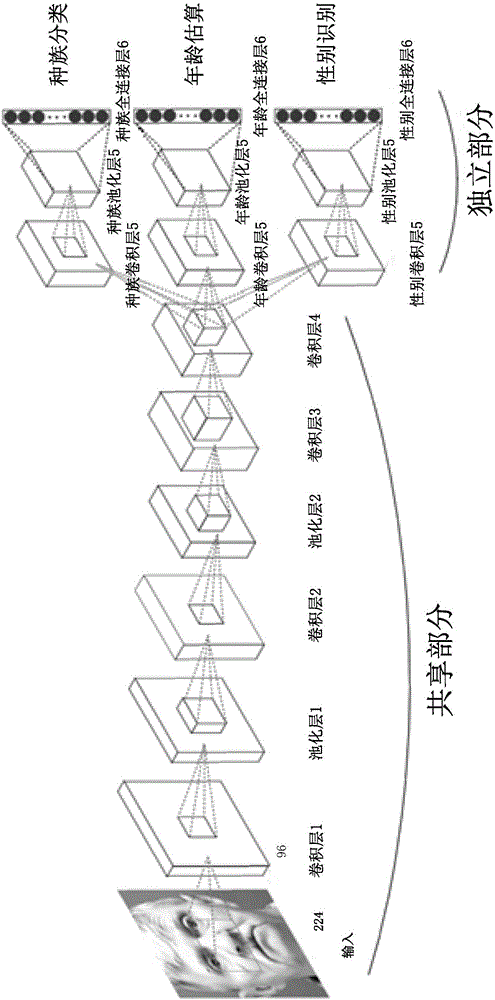

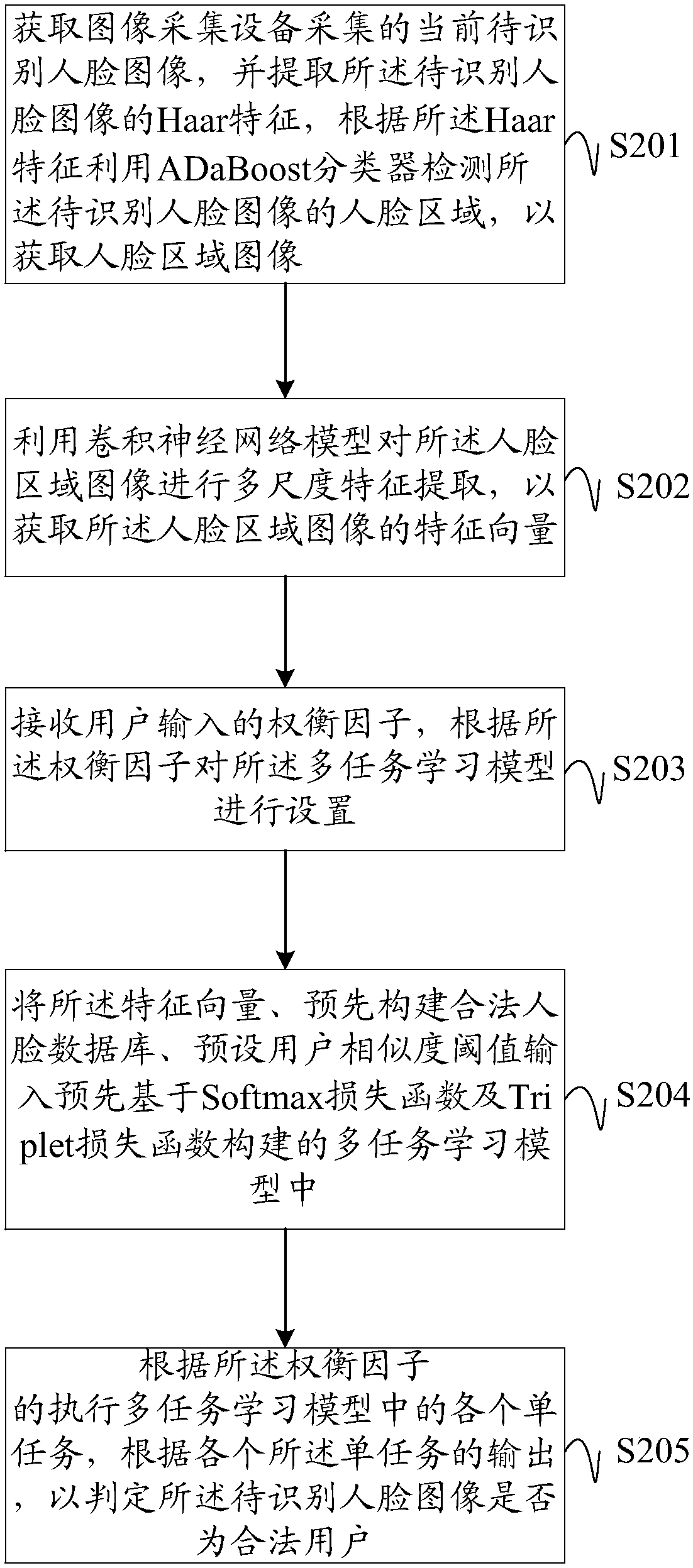

Multi-task learning convolutional neural network-based face attribute analysis method

Active | CN106529402A | Improve generalization ability | Calculation speed | Character and pattern recognition | Task network | Multi attribute analysis

The present invention discloses a multi-task learning convolutional neural network (CNN)-based face attribute analysis method. According to the method, based on a convolutional neural network, a multi-task learning method is adopted to carry out age estimation, gender identification and race classification on a face image simultaneously. In a traditional processing method, when face multi-attribute analysis is carried out, a plurality of times of calculation are required, and as a result, time can be wasted, and the generalization ability of a model is decreased. According to the method of the invention, three single-task networks are trained separately; the weight of a network with the lowest convergence speed is adopted to initialize the shared part of a multi-task network, and the independent parts of the multi-task network are initialized randomly; and the multi-task network is trained, so that a multi-task convolutional neural network (CNN) model can be obtained; and the trained multi-task convolutional neural network (CNN) model is adopted to carry out age, gender and race analysis on an inputted face image simultaneously, and therefore, time can be saved, and accuracy is high.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

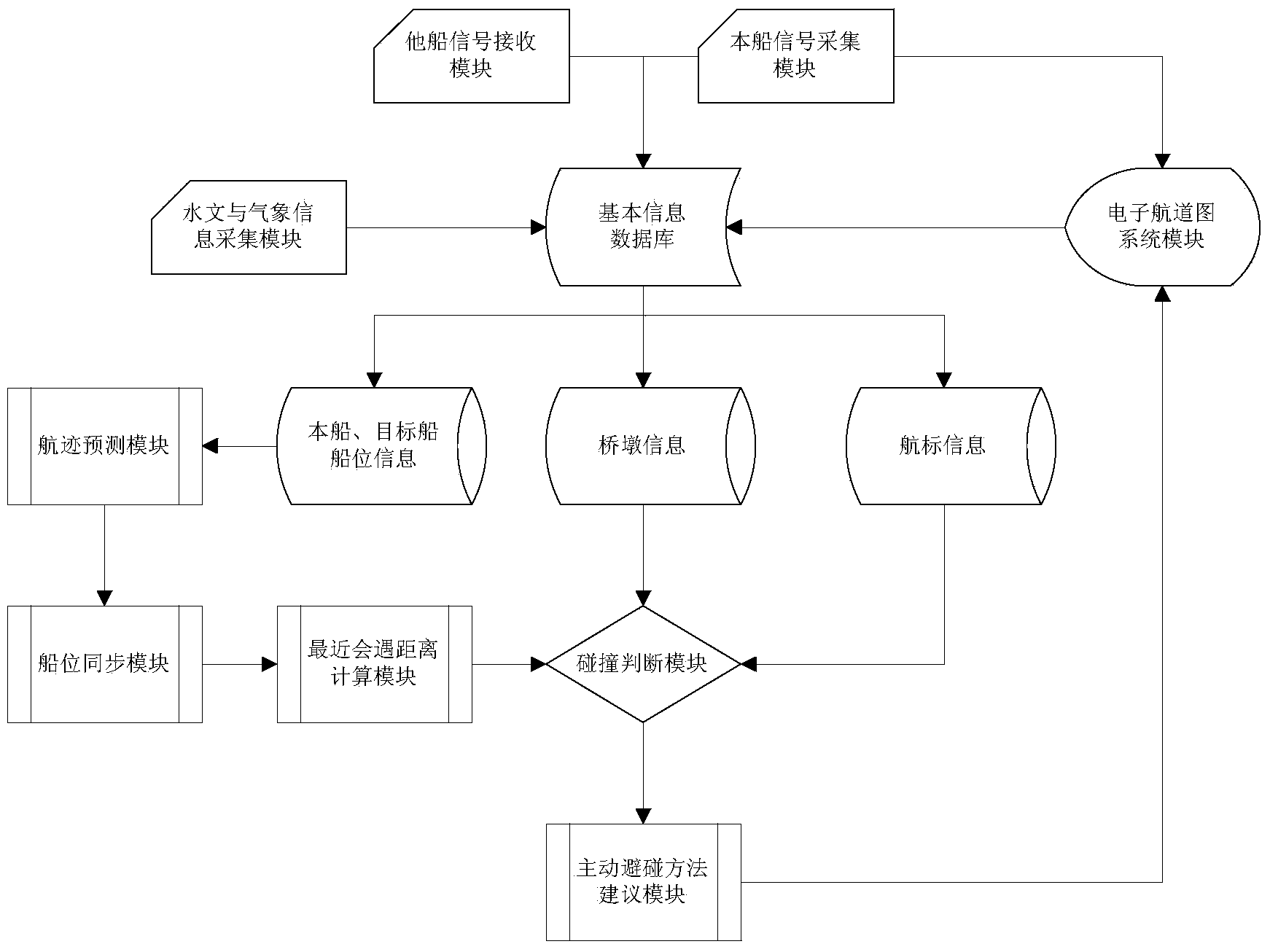

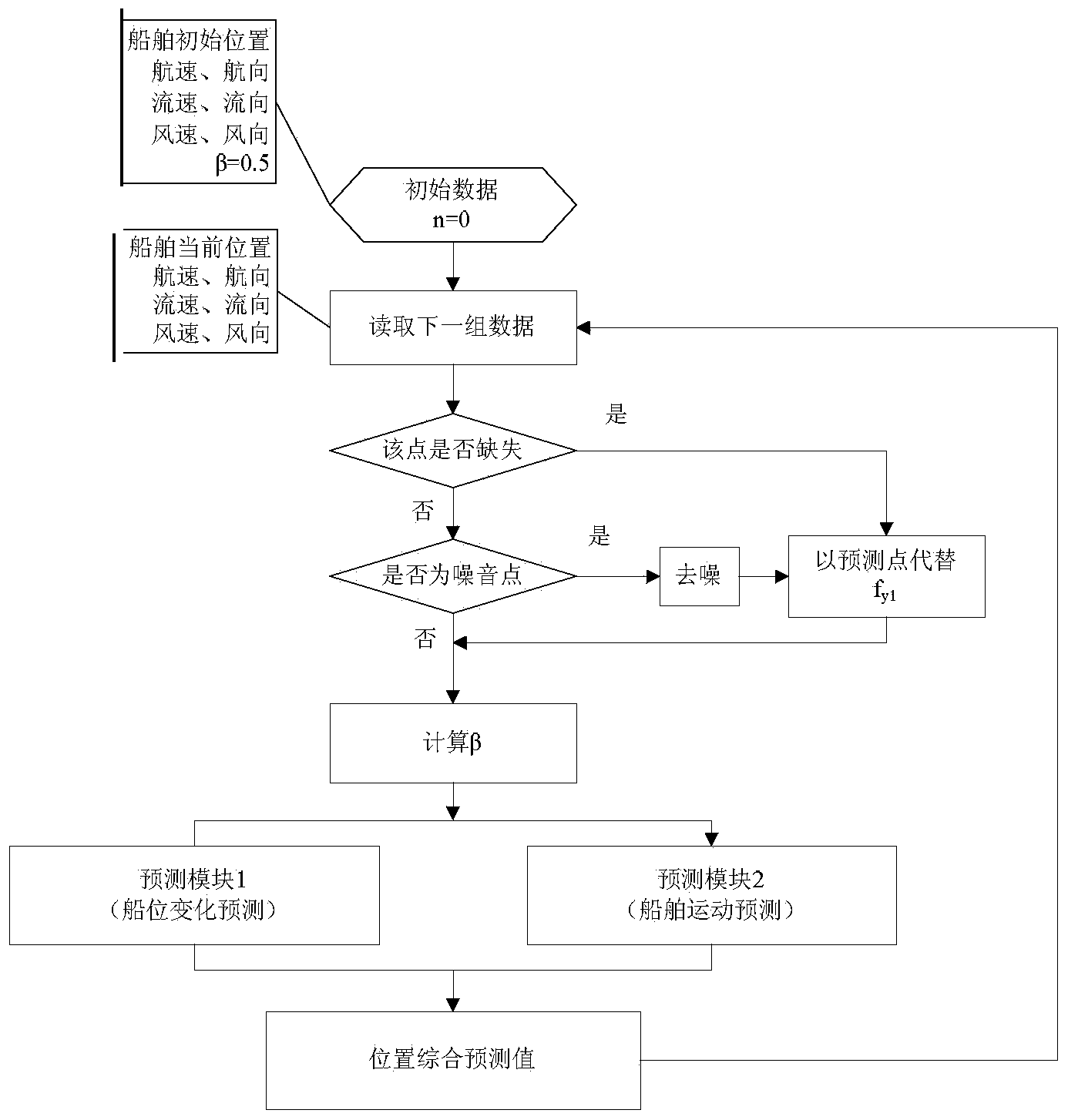

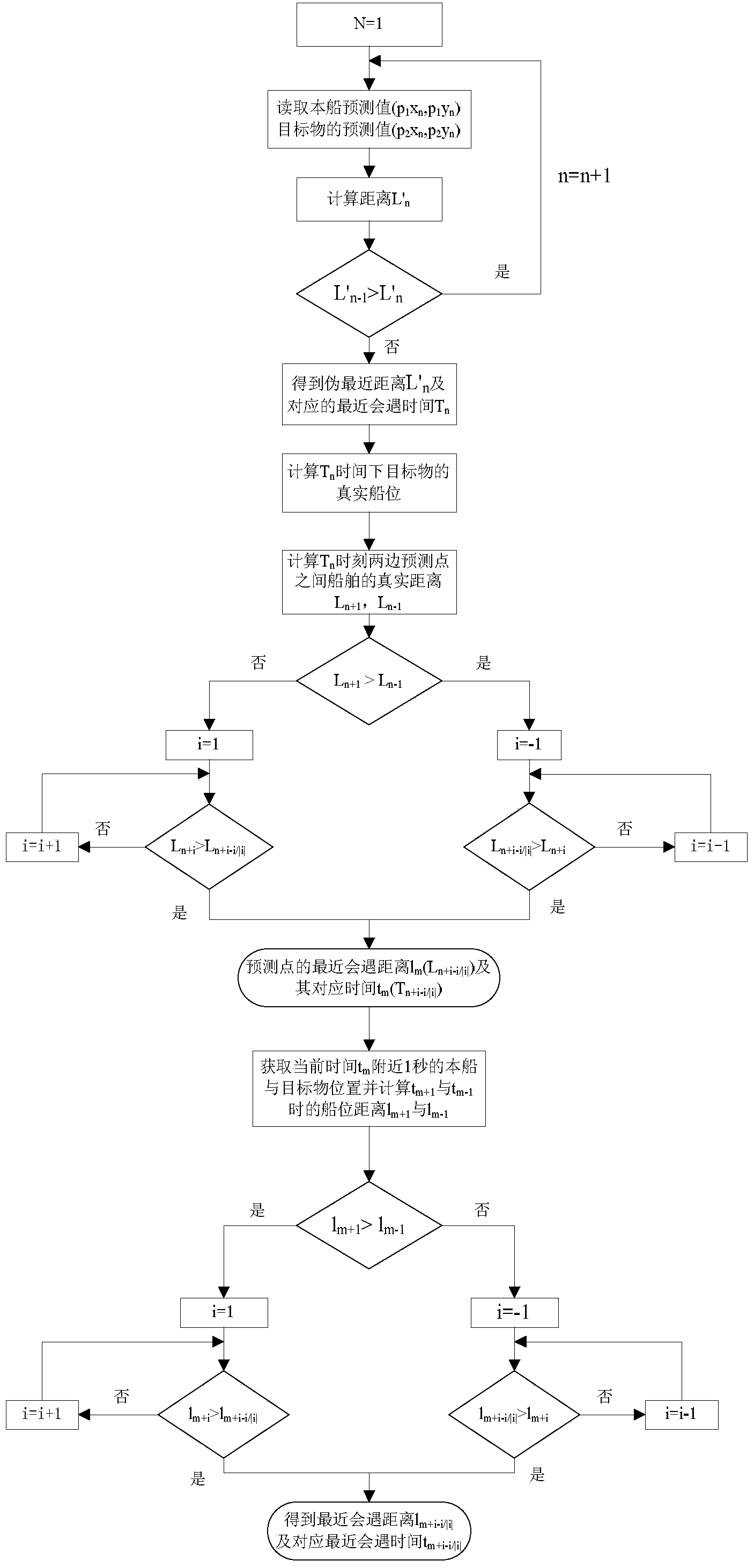

System and method for inland river bridge zone shipborne sailing active collision prevention

Active | CN103730031A | Guarantee the safety of navigation | Accurate ship track | Marine craft traffic control | Marine navigation | Collision prevention

The invention provides a method for inland river bridge zone shipborne sailing active collision prevention. The method comprises the steps of: collecting hydrological information, meteorological information, and ship information for the ship and nearby ships, automatically updating river map information online, and storing the data; forecasting the tracks of the ship and other ships from their obtained track information combined with the real-time hydrological and meteorological information, thereby obtaining track information for every ship; calculating the nearest meeting distance and the nearest meeting time of the ship and a target object from the obtained track information; and issuing collision pre-warnings and active collision prevention suggestions based on the nearest meeting distance and the nearest meeting time. By predicting ship sailing situations, the method overcomes the shortcomings of existing ship navigation aids, which cannot accurately obtain the position of the target object or accurately pre-judge the nearest meeting distance and the time at which it is reached.
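
The "nearest meeting distance" and "nearest meeting time" correspond to what navigators usually call the distance and time to the closest point of approach. The sketch below computes both under a simplifying assumption that is not in the abstract: each vessel holds a constant velocity in a flat 2-D coordinate frame. The patent instead forecasts tracks from hydrological and meteorological data, so the function name and model here are illustrative only.

```python
import math


def closest_approach(own_pos, own_vel, tgt_pos, tgt_vel):
    """Return (nearest_distance, time_to_nearest) for two constant-velocity
    vessels. Positions are (x, y) in metres, velocities in metres/second."""
    # Position and velocity of the target relative to the own ship.
    rx = tgt_pos[0] - own_pos[0]
    ry = tgt_pos[1] - own_pos[1]
    vx = tgt_vel[0] - own_vel[0]
    vy = tgt_vel[1] - own_vel[1]

    rel_speed_sq = vx * vx + vy * vy
    if rel_speed_sq == 0.0:
        # Same velocity: the separation never changes.
        return math.hypot(rx, ry), 0.0

    # Minimise |r + v t|^2 over t; clamp to 0 when the vessels are diverging.
    t = max(-(rx * vx + ry * vy) / rel_speed_sq, 0.0)
    return math.hypot(rx + vx * t, ry + vy * t), t
```

A warning rule could then compare the two returned values against distance and time thresholds; the thresholds themselves would be a design choice not stated in the source.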

Owner:湖南湘船重工股份有限公司

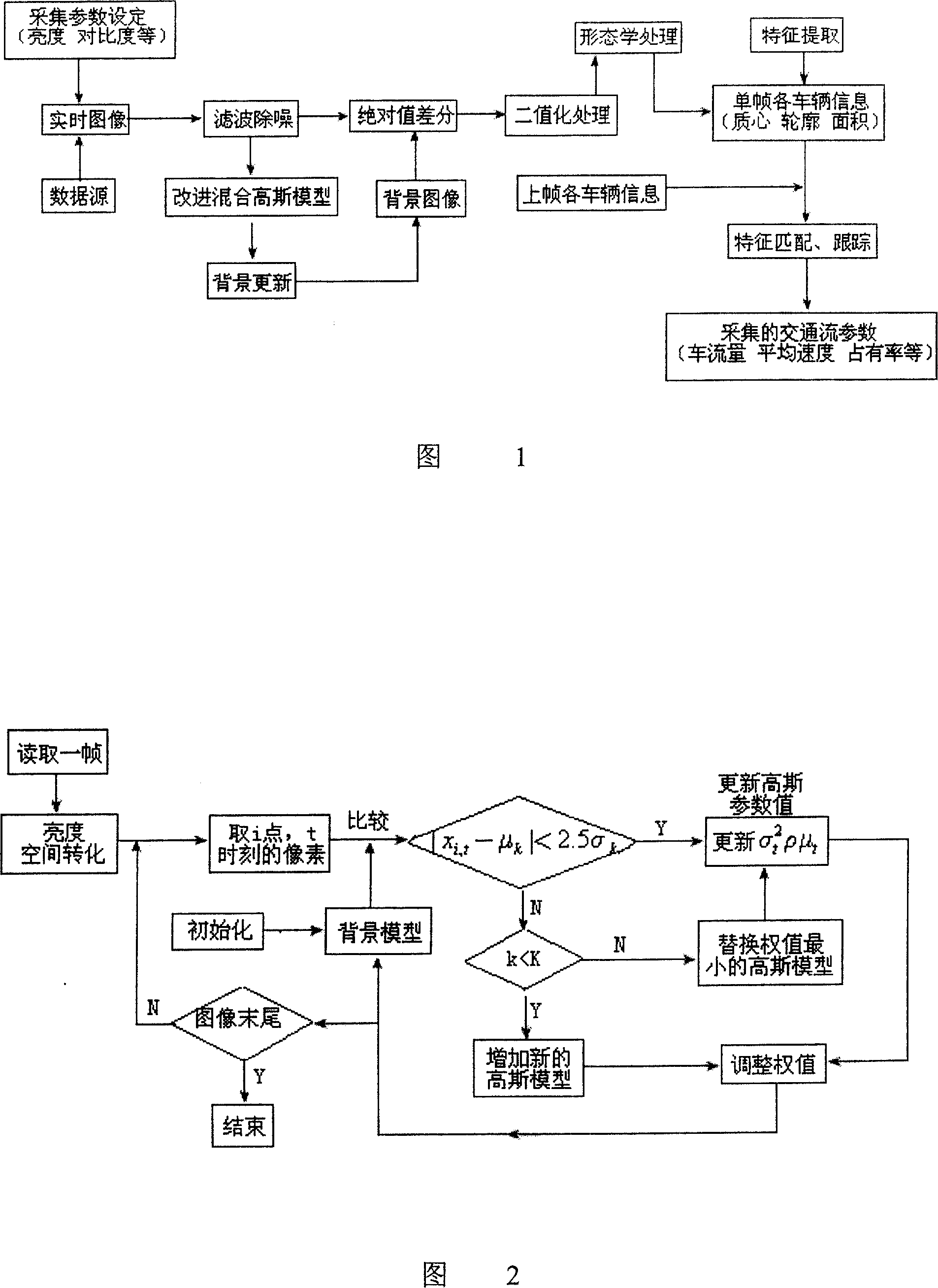

Method for collecting characteristics in telecommunication flow information video detection

Active | CN1984236A | Improve real-time performance | Calculation speed | Television system details | Image analysis | Traffic flow | Video image

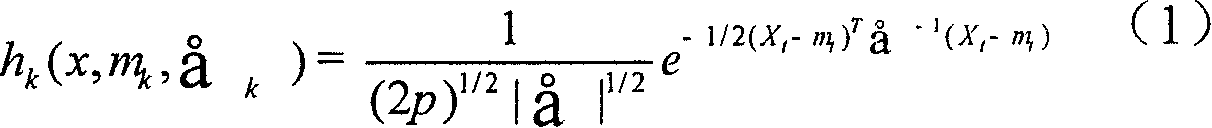

In the invention, the detection system comprises a video camera and a signal processor. An improved Gaussian mixture distribution model characterizes each pixel in an image frame, using only a brightness feature. When there is no moving object (vehicle), the video image is relatively static, and each pixel follows a statistical model over time. When a new image frame arrives, the Gaussian mixture distribution model is updated: if a pixel in the current image matches the model, the pixel is determined to be a background point; otherwise, it is determined to be a foreground point.
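
The match-then-update rule for a pixel can be sketched as follows. This is a deliberate simplification: it keeps a single Gaussian per pixel rather than the improved mixture the patent describes, and the learning rate `alpha` and match threshold `k` are assumed values, not taken from the source.

```python
def update_pixel(mean, var, brightness, alpha=0.05, k=2.5):
    """Classify one pixel and update its brightness model.

    Returns (is_background, new_mean, new_var). A pixel matches the model
    when its brightness lies within k standard deviations of the mean.
    """
    diff = brightness - mean
    is_background = diff * diff <= (k * k) * var
    if is_background:
        # Blend the new observation into the running mean and variance.
        mean = mean + alpha * diff
        var = (1.0 - alpha) * var + alpha * diff * diff
    # Foreground observations leave the background model unchanged here;
    # a full mixture model would instead replace its weakest component.
    return is_background, mean, var
```

Applied to every pixel of each new frame, the foreground flags form a mask of moving vehicles, while slow lighting changes are absorbed into the background statistics.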

Owner:ZHEJIANG UNIV OF TECH

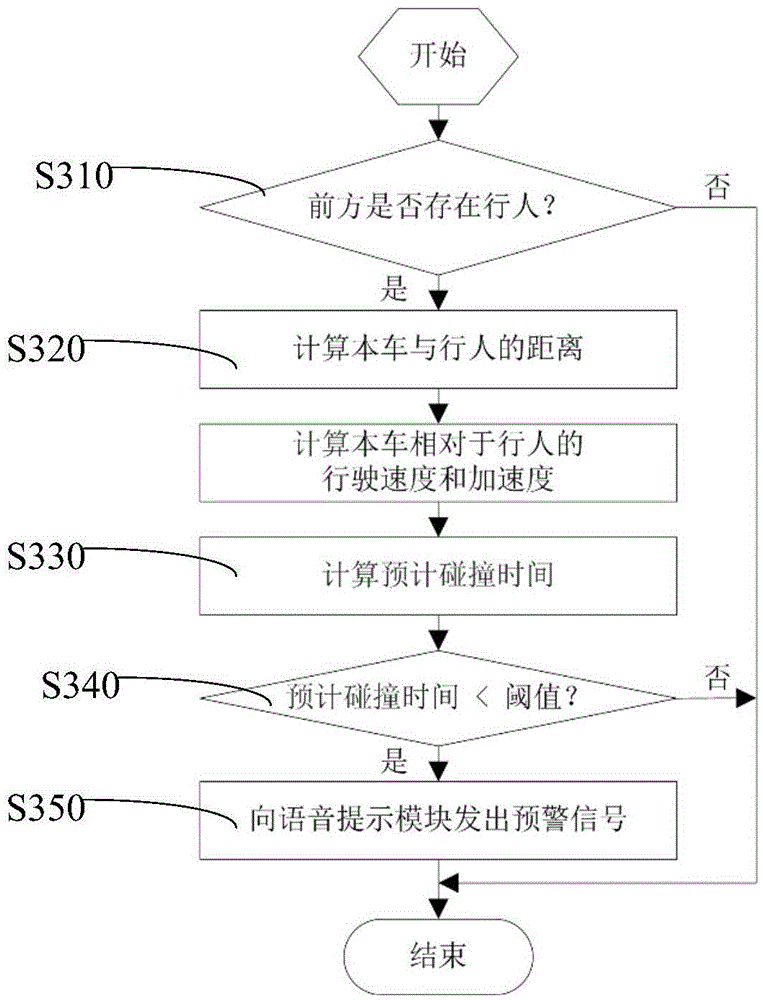

Driving assistance system and real-time warning and prompting method for vehicle

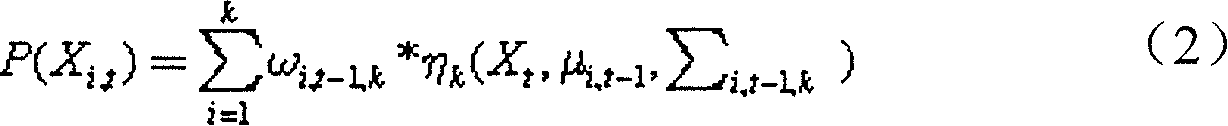

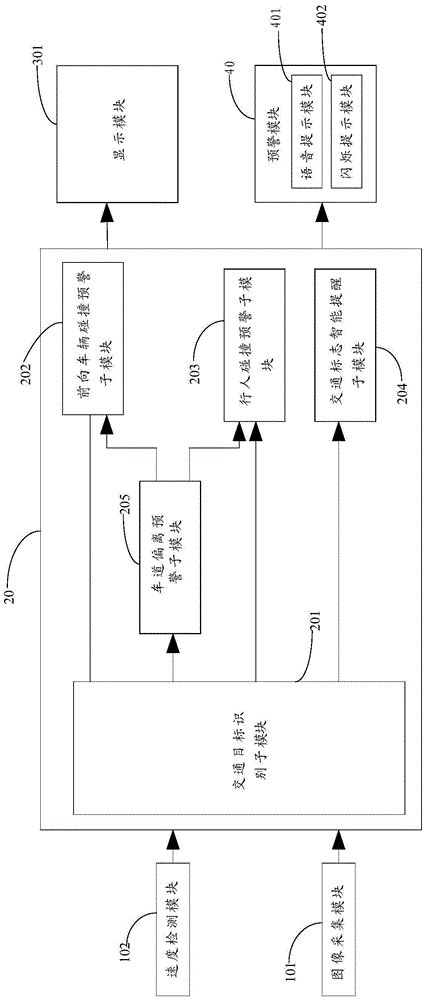

Active | CN105620489A | Reduce collection | Small amount of calculation | External condition input parameters | Computer module | Marking out

The invention relates to a driving assistance system and a real-time warning and prompting method for a vehicle. An image collection module collects image data of targets in front of the current vehicle, a traffic target recognition submodule recognizes a lane target in the image data, and a region of interest is marked out according to the recognized lane target and serves as the key recognition target. A data processing module then controls the image collection module to take the lane corresponding to the region of interest as its image collection target. Because detection concentrates on the region of interest, the image collection module can collect the image data the user needs more promptly without collecting all forward data. This lowers both the collection load of the image collection module and the processing load of the data processing module, so the calculation amount of the whole system is reduced, calculation speed is increased, and warning response time is shortened.

Owner:SHENZHEN MINIEYE INNOVATION TECH CO LTD

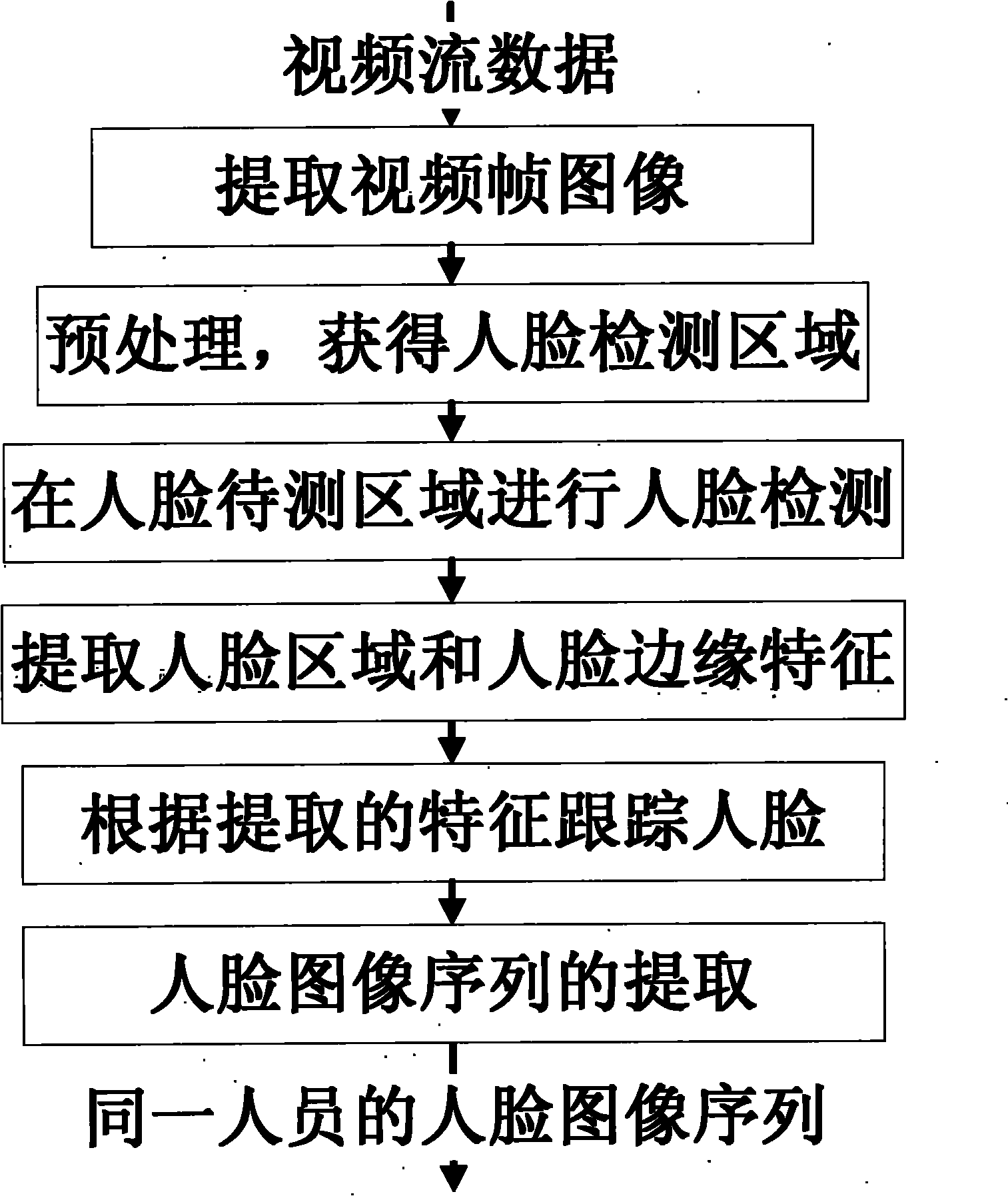

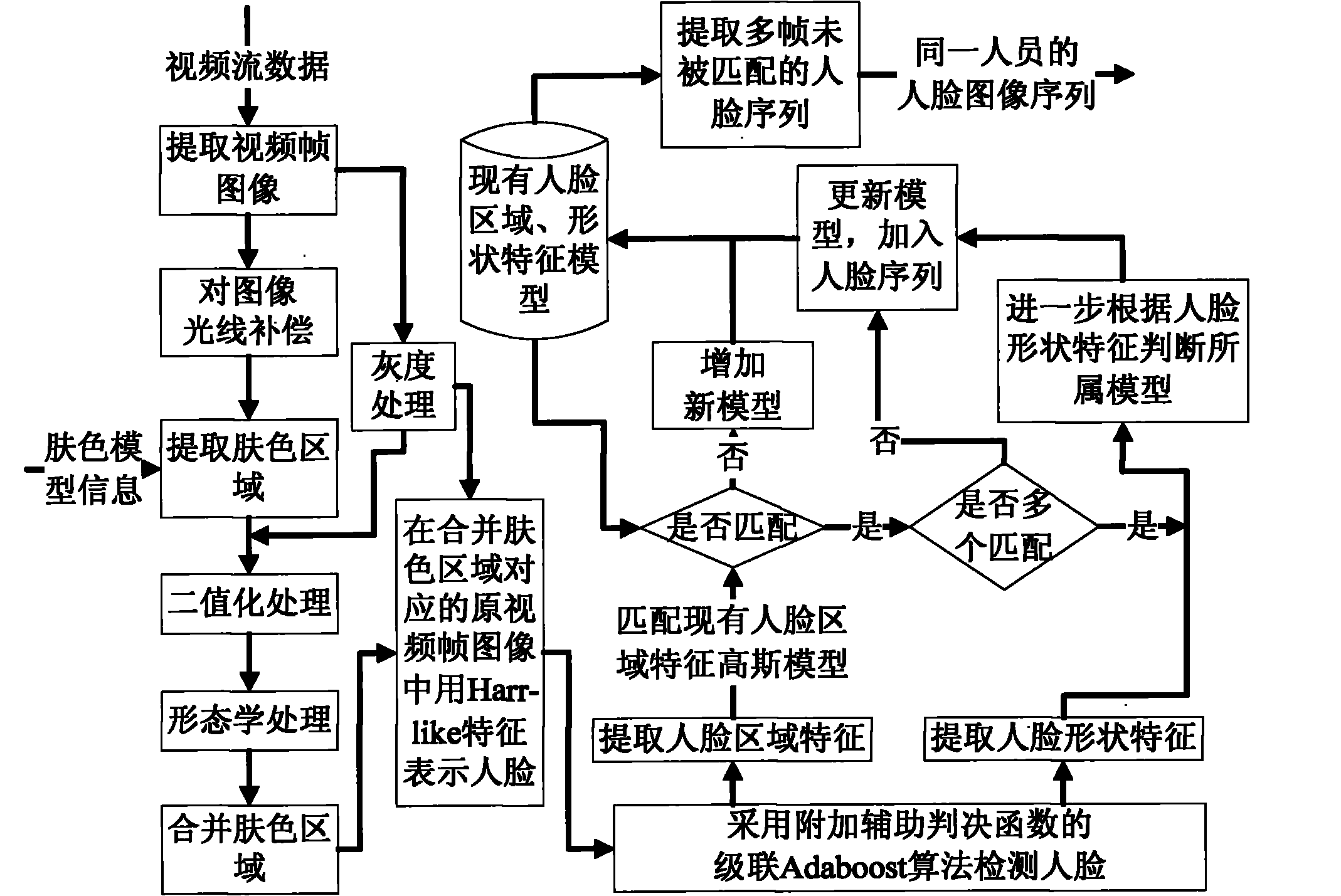

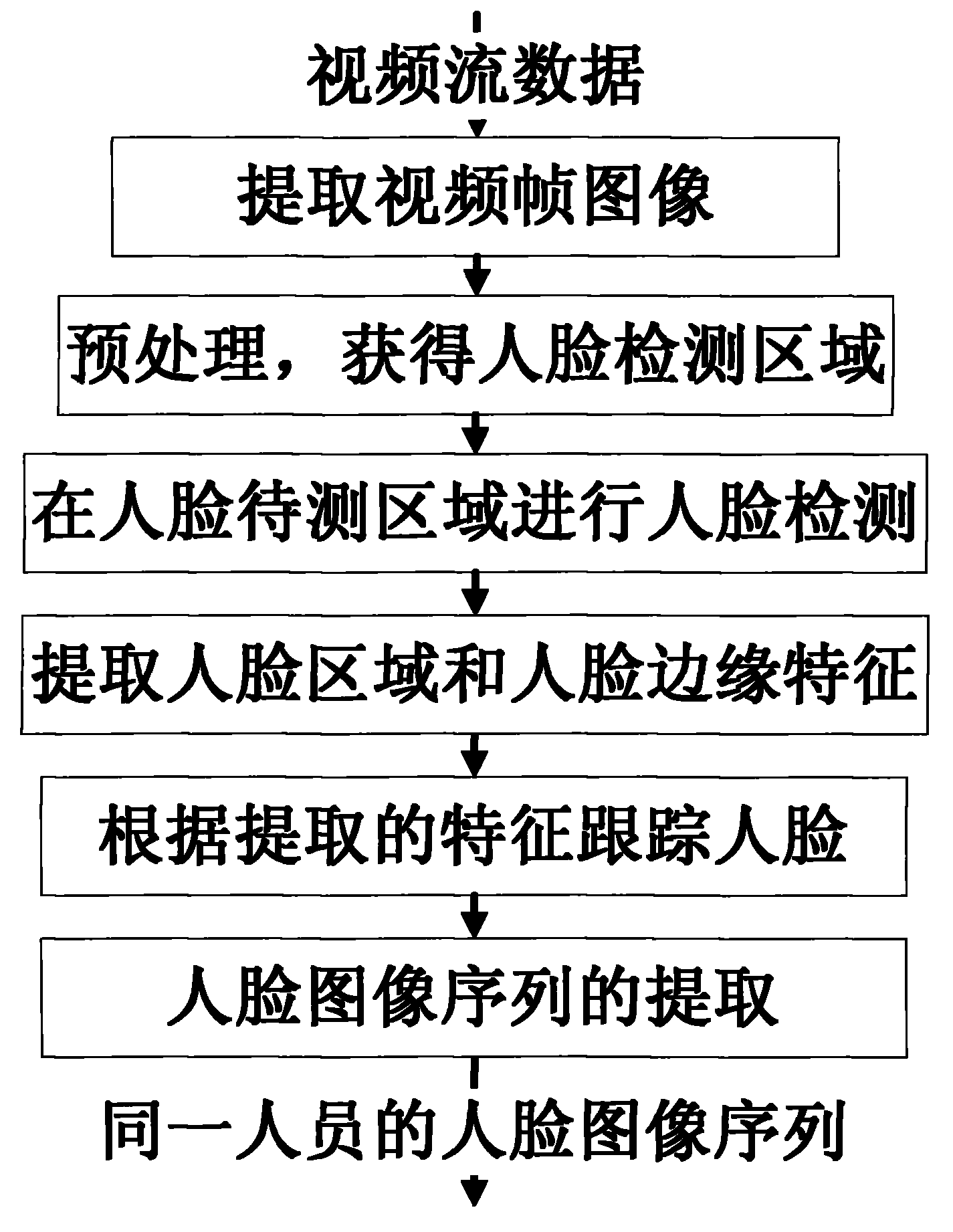

Method for quickly and accurately detecting and tracking human face based on video sequence

Inactive | CN102214291A | Quick calculation | Detection speed | Character and pattern recognition | Pattern recognition | Face detection

The invention discloses a method for quickly and accurately detecting and tracking a human face based on a video sequence, which relates to the technical field of pattern recognition. The method comprises the following steps: 1, extracting a video frame image from a video stream; 2, preprocessing the video frame image, namely compensating for lighting, extracting skin color areas, performing morphological processing, and merging the areas; 3, detecting the human face, namely representing the face with Haar-like features and detecting it with a cascaded AdaBoost algorithm that has an auxiliary decision function; 4, establishing the features of the human face, namely computing the area features of the detected face and the shape features of its edge profile; 5, tracking the human face, using a face area feature model when face areas do not intersect and matching further by the shape features of the edge profile when they do; and 6, extracting the sequence of face images. With this method, the human face can be detected and tracked quickly and accurately on the basis of the video sequence.

Owner:云南清眸科技有限公司

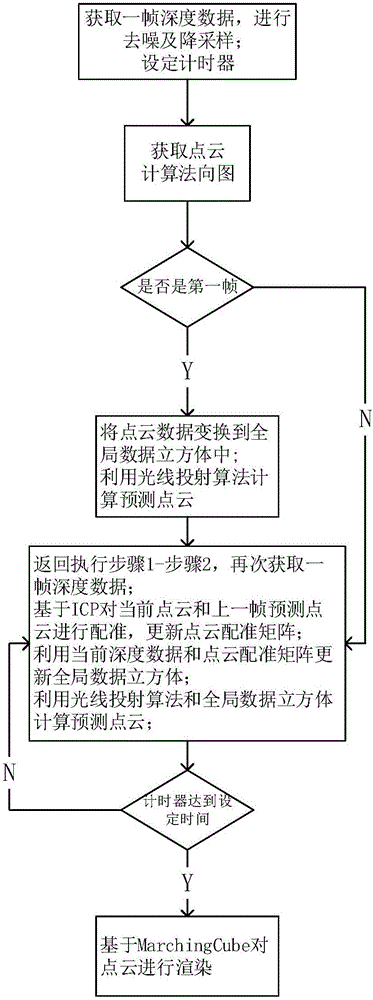

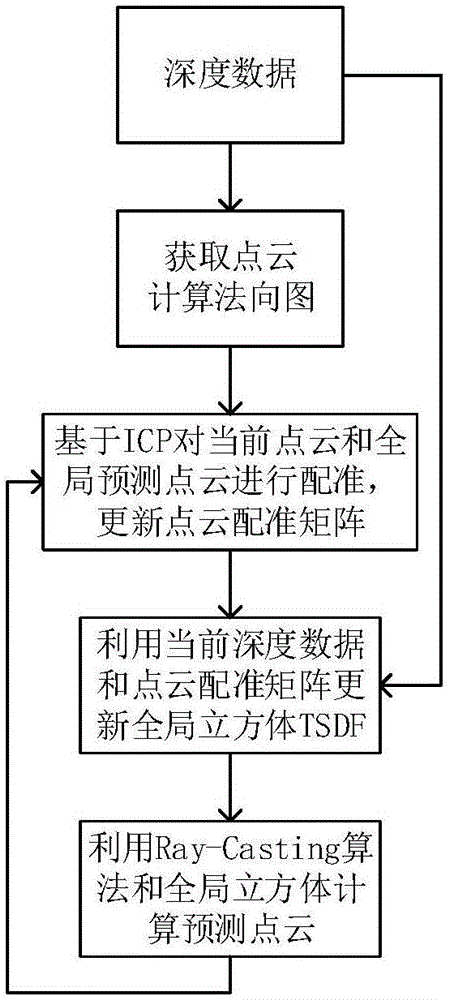

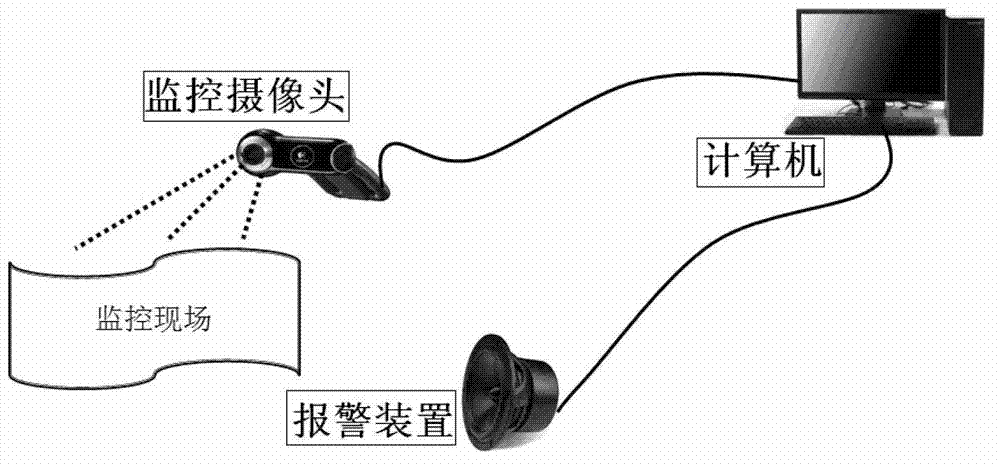

Indoor scene 3D reconstruction method based on Kinect

Active | CN106803267A | Less redundancy | Improve real-time performance | Details involving processing steps | Image enhancement | Point cloud | Ray casting

The invention discloses an indoor scene 3D reconstruction method based on Kinect, which addresses the technical problem of reconstructing an indoor scene 3D model in real time while avoiding excessive redundant points. The method comprises the steps of: obtaining the depth data of an object with Kinect, then denoising and down-sampling the depth data; obtaining the point cloud data of the current frame and calculating the normal vector of each point in the frame; using a TSDF algorithm to establish a global data cube, and using a ray casting algorithm to calculate predicted point cloud data; calculating a point cloud registration matrix from the ICP algorithm and the predicted point cloud data, fusing the point cloud data of each frame into the global data cube, and continuing frame by frame until a good fusion result is obtained; and rendering the point cloud data with an isosurface extraction algorithm to construct the 3D model of the object. The method improves registration speed and precision, fuses quickly, produces few redundant points, and can be used for real-time reconstruction of indoor scenes.

Owner:XIDIAN UNIV

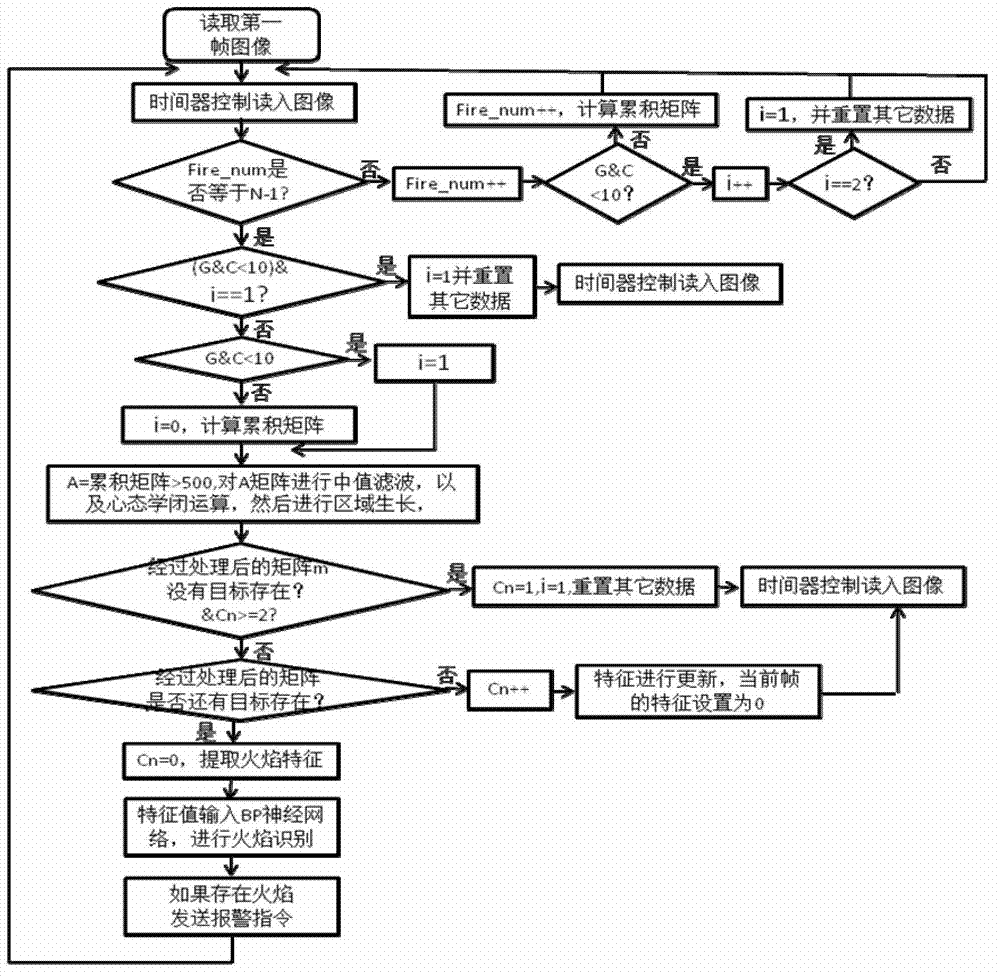

Video flame detecting method based on multi-feature fusion technology

Inactive | CN103116746A | Excellent capture quality | Choose from a wide range of applications | Character and pattern recognition | Decision model | Multi target tracking

The invention provides a video flame detecting method based on a multi-feature fusion technology. The video flame detecting method includes firstly using a cumulative geometrical independent component analysis (C-GICA) method to capture a moving target in combination with a flame color decision model, tracking moving targets in current and historical frames in combination with a multi-target tracking technology based on moving target areas, extracting color features, edge features, circularity degrees and textural features of the targets, inputting the features into a back propagation (BP) neural network, and further detecting flames after the decision of the BP neural network. According to the video flame detecting method, spatial-temporal features of the moving features, color features, textural features and the like are comprehensively applied, the defects of algorithms of existing video flame detecting technologies are overcome, and reliability and applicability of the video flame detecting method are effectively improved.

Owner:UNIV OF SCI & TECH OF CHINA

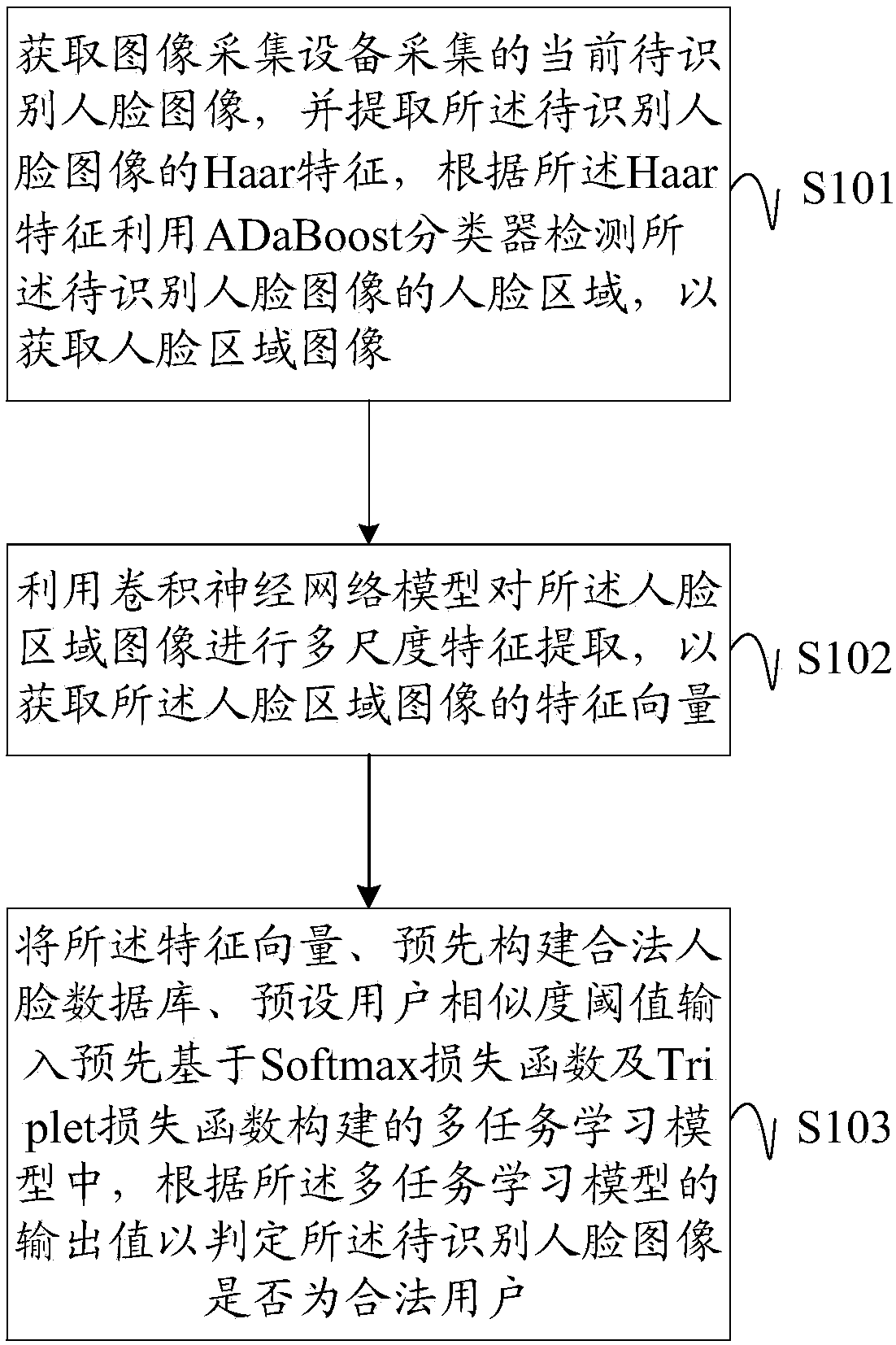

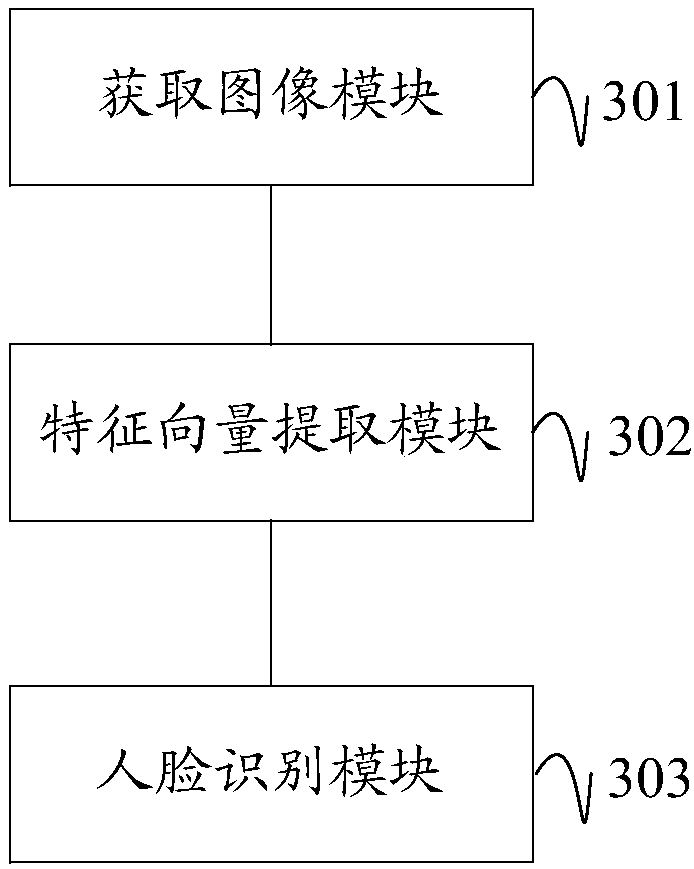

Face recognition method and device

Active | CN107423690A | Improve accuracy | Calculation speed | Character and pattern recognition | Feature vector | Feature extraction

The embodiment of the invention discloses a face recognition method and device. The method comprises the steps of: extracting the Haar features of the face image to be recognized, and detecting the face area of that image with an AdaBoost classifier to obtain a face region image; performing multi-scale feature extraction on the face region image with a convolutional neural network model to obtain a feature vector of the face region image; and inputting the feature vector, a pre-built legal face database, and a preset user similarity threshold into a multi-task learning model pre-constructed from a Softmax loss function and a Triplet loss function, then judging from the output of the multi-task learning model whether the face image to be recognized belongs to a legal user. The extracted features are robust and generalize well. Therefore, both the face recognition rate and the accuracy of face recognition are improved, as is the security of identity authentication.

Owner:GUANGDONG UNIV OF TECH

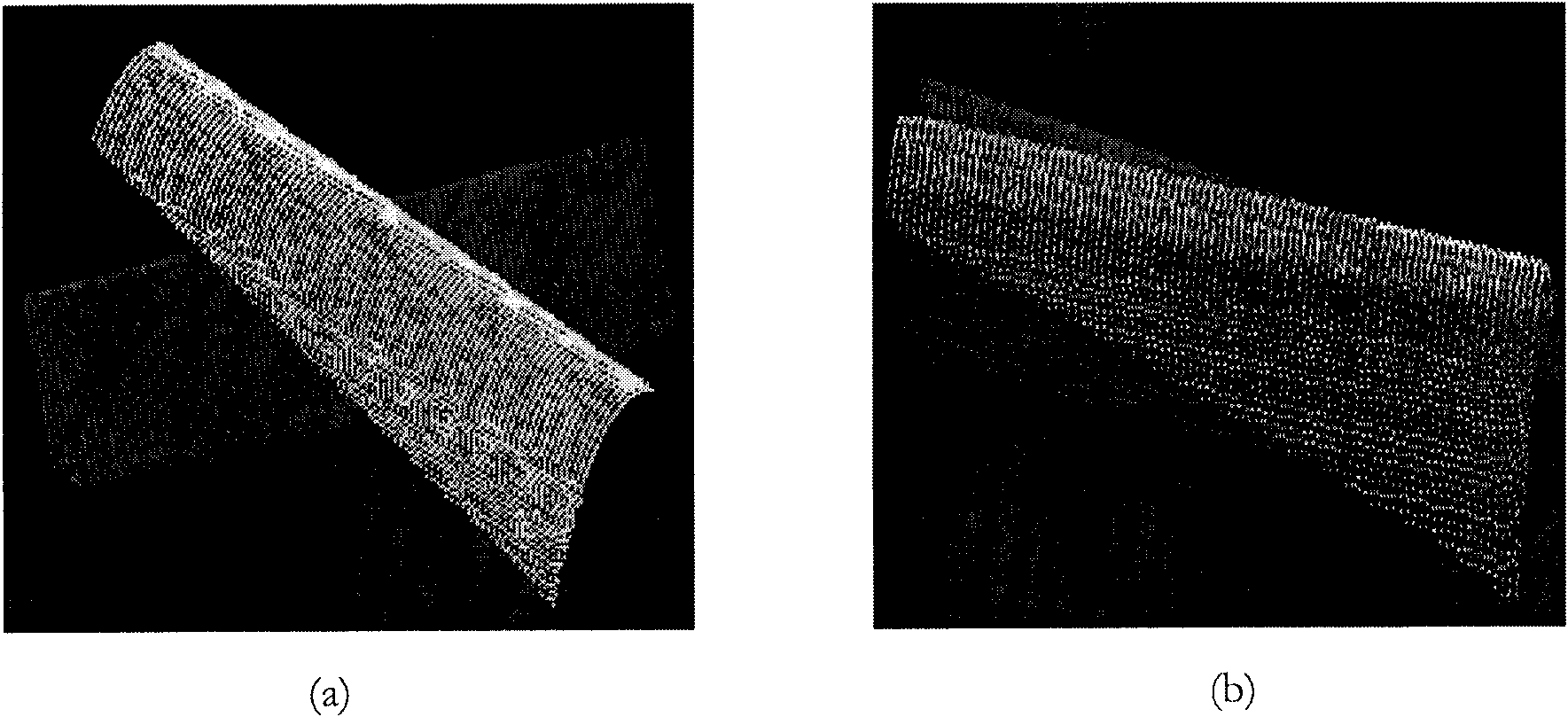

Precise registration method of multilook point cloud

Inactive | CN101645170A | Calculation speed | Improve registration accuracy | Image analysis | Match algorithms | Iterative closest point

The invention provides a precise registration method of multilook point cloud, comprising the following steps: selecting one approximately overlapping piece of point cloud from each of the two pieces of global point cloud to be registered, to serve as the target point cloud and the reference point cloud; using a principal direction bonding method to pre-register the target point cloud and the reference point cloud; using a principal direction test method to check and ensure that the pre-registered principal directions of the target point cloud and the reference point cloud are consistent; calculating the curvature of each point in the target point cloud and in the reference point cloud; obtaining characteristic matching point pairs P0 and Q0 according to curvature similarity; using the iterative closest point matching algorithm with the characteristic matching point pairs P0 and Q0 to precisely register the target point cloud and the reference point cloud; and completing the registration of the two pieces of global point cloud. The method offers high computation speed and high registration precision, and therefore achieves a good registration result.

Owner:BEIJING INFORMATION SCI & TECH UNIV

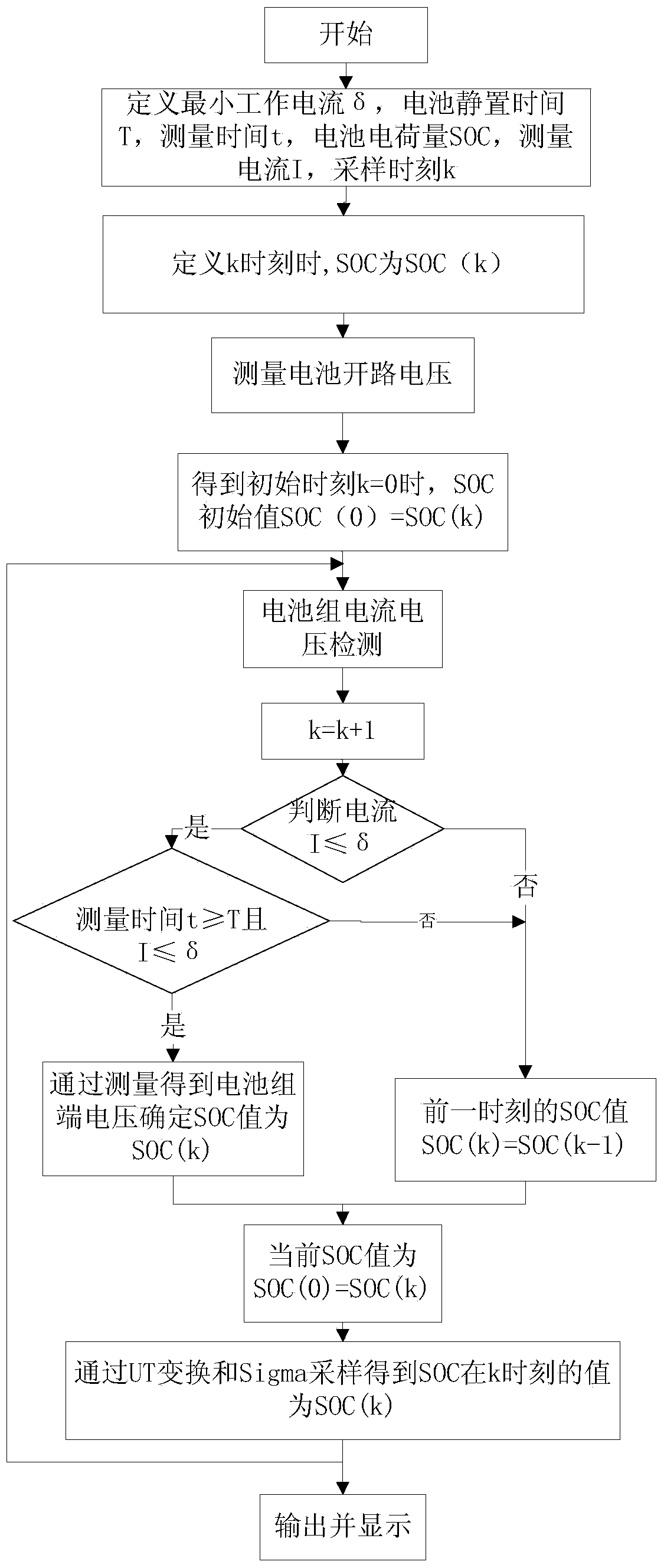

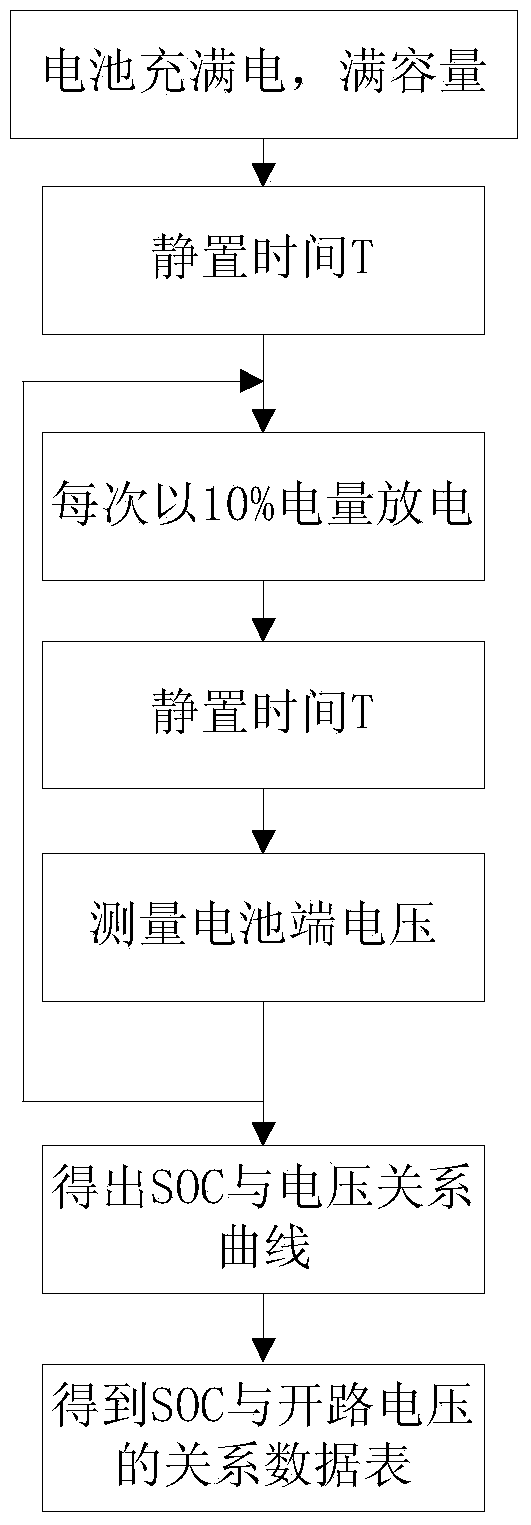

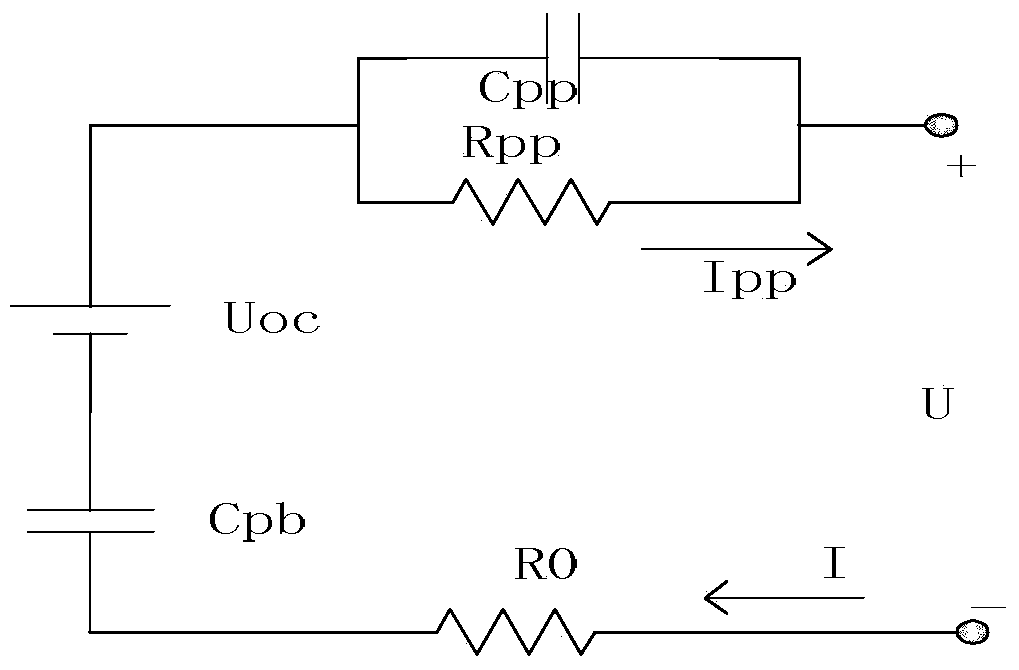

Power battery electric charge quantity estimation method

Active | CN103675706A | High precision | Improve calculation accuracy | Electrical testing | Sigma point | Linearization

The invention provides a power battery electric charge quantity estimation method. The method includes the following steps of firstly, obtaining the function relationship between the SOC and an open-circuit voltage through an open-circuit voltage method; secondly, measuring the initial value of the SOC; thirdly, sampling and obtaining the SOC estimated initial value of an unscented Kalman filter; fourthly, conducting Sigma point sampling of UT conversion of the unscented Kalman filter according to a battery state equation and an observation equation, obtaining an estimated value of the observation quantity, and estimating an SOC estimated value and a covariance of a power battery at the next moment. According to the method, the open-circuit voltage method and the unscented Kalman filter are used in cooperation for conducting SOC estimation, the estimation accuracy is high, the SOC initial value is obtained through the open-circuit voltage method, SOC estimation modification is conducted, the estimation accuracy is improved, and in the unscented Kalman filter non-linearization approximation process, errors are reduced, the calculation speed is high, and the SOC estimation efficiency is improved.
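
The sigma-point sampling at the heart of the unscented transform (UT) can be shown for a scalar state such as SOC. The sketch below uses the classic formulation with a single spread parameter `kappa`; that choice, the function name, and the linear open-circuit-voltage curve in the usage note are assumptions for illustration, since the abstract gives no battery model or filter parameters.

```python
import math


def unscented_transform(mean, var, f, kappa=2.0):
    """Propagate a scalar Gaussian (mean, var) through f using three
    sigma points and return the estimated (mean, variance) of f(x)."""
    n = 1  # state dimension; SOC is treated as a single scalar here
    spread = math.sqrt((n + kappa) * var)

    # One central point plus one point on each side of the mean.
    points = [mean, mean + spread, mean - spread]
    weights = [kappa / (n + kappa), 0.5 / (n + kappa), 0.5 / (n + kappa)]

    values = [f(p) for p in points]
    y_mean = sum(w * y for w, y in zip(weights, values))
    y_var = sum(w * (y - y_mean) ** 2 for w, y in zip(weights, values))
    return y_mean, y_var
```

For example, with a purely hypothetical observation curve `ocv = lambda soc: 3.0 + 1.2 * soc`, calling `unscented_transform(0.5, 0.01, ocv)` yields the predicted voltage and its variance that a filter would compare against the measured terminal voltage. Because the function is evaluated at the sigma points directly, no Jacobian of the battery model is needed, which is the usual argument for the unscented filter over explicit linearization.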

Owner:GUILIN UNIV OF ELECTRONIC TECH

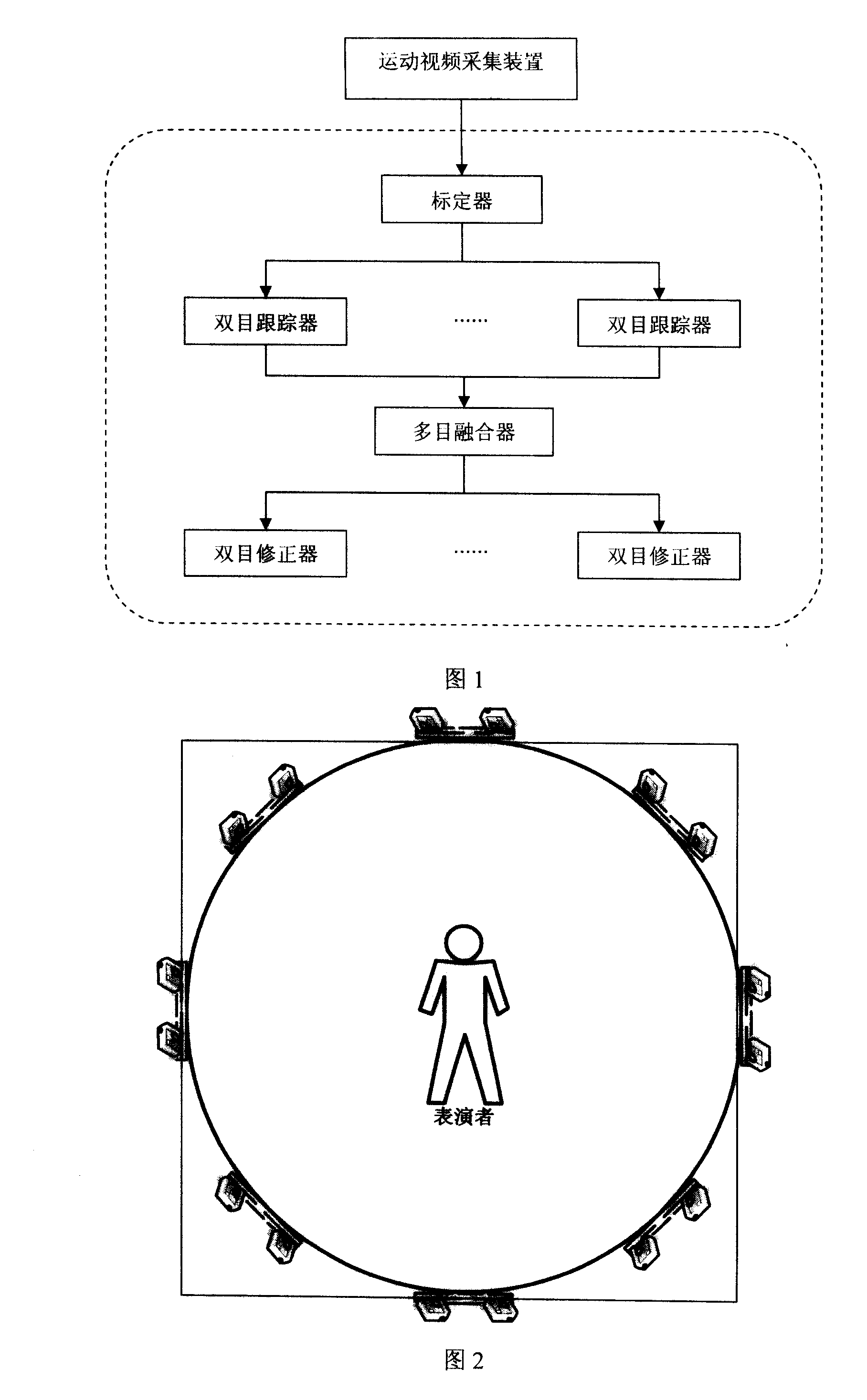

Method for capturing movement based on multiple binocular stereovision

The invention discloses a movement capturing method based on multiple binocular stereo vision. A movement video collecting device is constructed and used to collect human movement video sequences from different orientations. The multiocular movement video sequences shot by the cameras are calibrated. Each binocular tracker matches and tracks the marked points. The three-dimensional tracking results of the multiple binocular trackers are fused. The three-dimensional movement information of the marked points obtained by the multiocular fusion device is fed back to the binocular trackers to refine binocular tracking. Building on binocular three-dimensional tracking realized by binocular vision, the invention fuses multiple groups of binocular three-dimensional movement data, solves problems such as three-dimensional position acquisition, tracking, and track fusion for a plurality of marked points, increases the number of traceable marked points, and makes the tracking result comparable with that of three-dimensional movement acquisition devices that use multiple infrared cameras.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

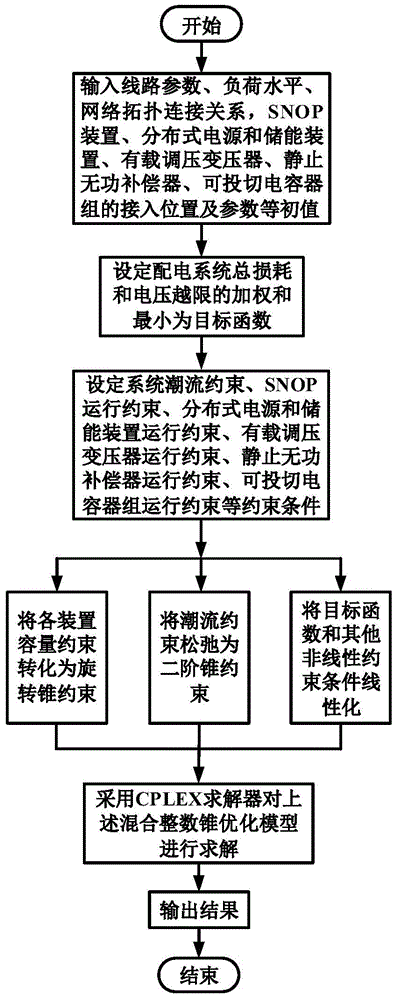

Mixed integer cone programming based intelligent distribution system synthetic voltage reactive power optimization method

Active | CN105740973A | Reduce the difficulty of solving | Easy to solve | Forecasting | Integer non linear programming | Distribution power system

The present invention provides a mixed integer cone programming based intelligent distribution system synthetic voltage reactive power optimization method. The method comprises: inputting a distribution system structure and a parameter of a distribution system; establishing a time sequence optimization model of a distribution system synthetic voltage reactive power control problem considering various types of adjustment means; converting the established model into a mixed integer second-order cone model; solving the obtained mixed integer second-order cone model by using a mathematical solver capable of solving mixed integer second-order cone programming; and outputting a solution result. The method provided by the present invention greatly reduces solving difficulty and facilitates performing solution by using a solving tool, so that a complex mixed integer non-linear programming problem can be solved, cumbersome iteration and a large number of tests are avoided, and the calculation speed is greatly improved.

Owner:天津白泽清源科技有限公司

Face detection method and device

Inactive | CN107871134A | Calculation speed | The classification prediction is accurate | Character and pattern recognition | False detection | Network model

The embodiment of the invention provides a face detection method and device. The method comprises the steps of: classifying a to-be-detected image with a pre-trained first convolutional neural network model and screening out at least one candidate region from the input regions of the image; classifying the candidate regions with a pre-trained second convolutional neural network model and screening out at least one selected region from them; and removing and clustering detection frames according to the selected regions to obtain a human face detection region. Because the input region of the first convolutional neural network is very small, the calculation speed of face detection is improved. In addition, because two convolutional neural network models of different depths are used, the candidate regions are classified a second time, which makes the classification prediction more accurate. Meanwhile, a large number of false detection samples are filtered out, and detection performance is improved.

Owner:BEIJING EYECOOL TECH CO LTD

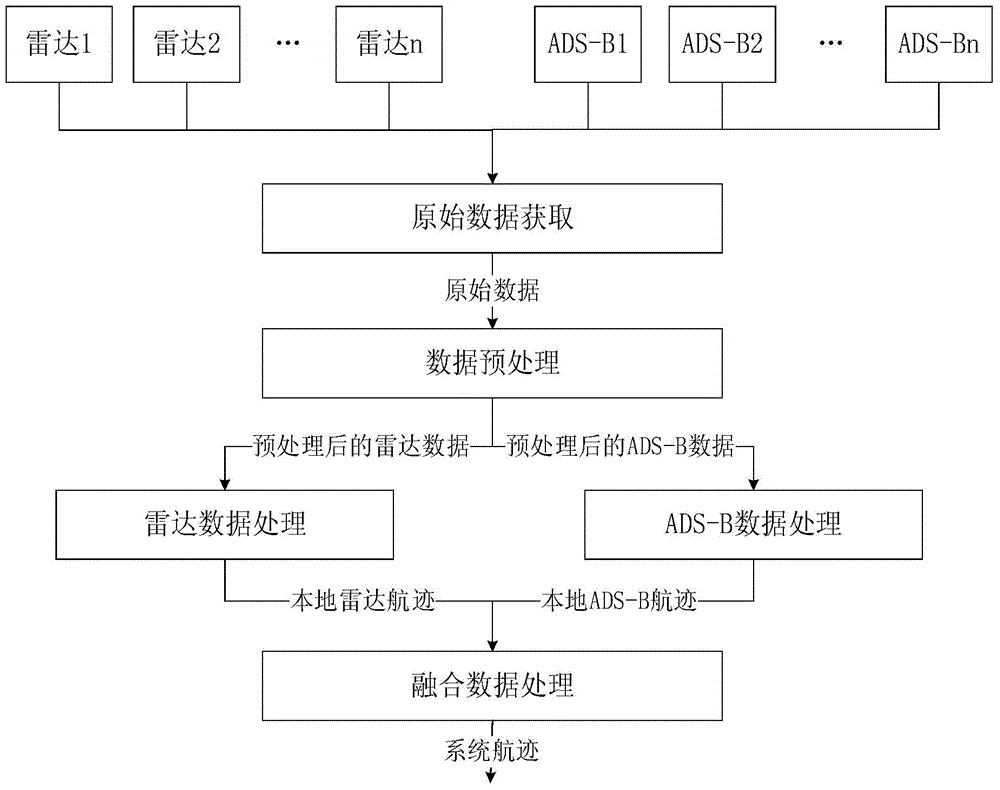

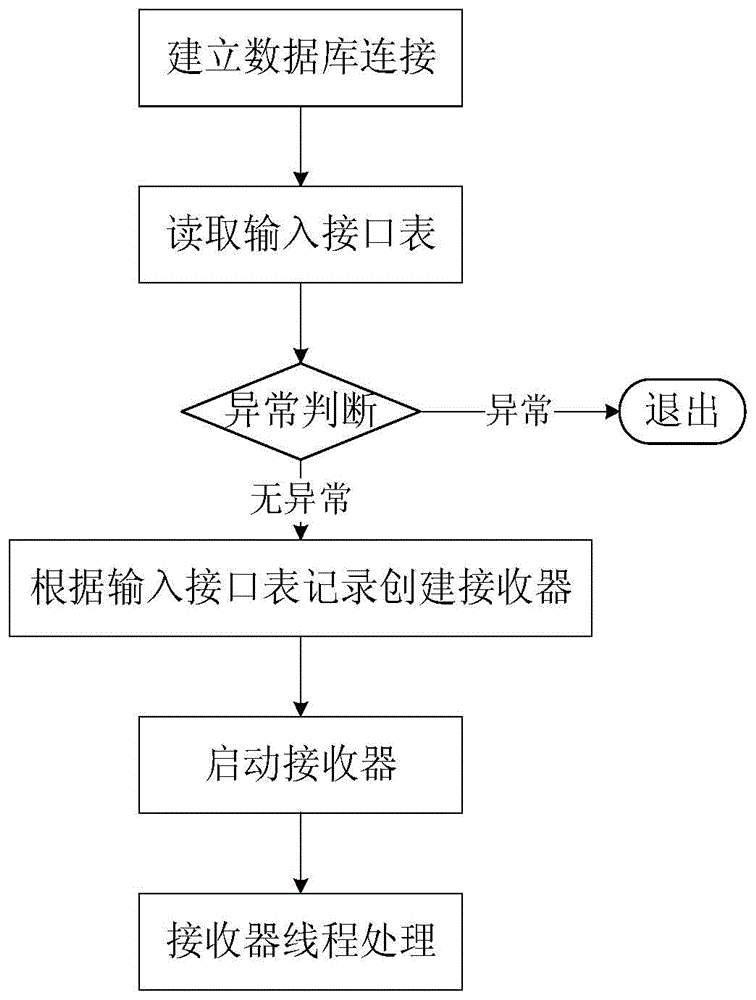

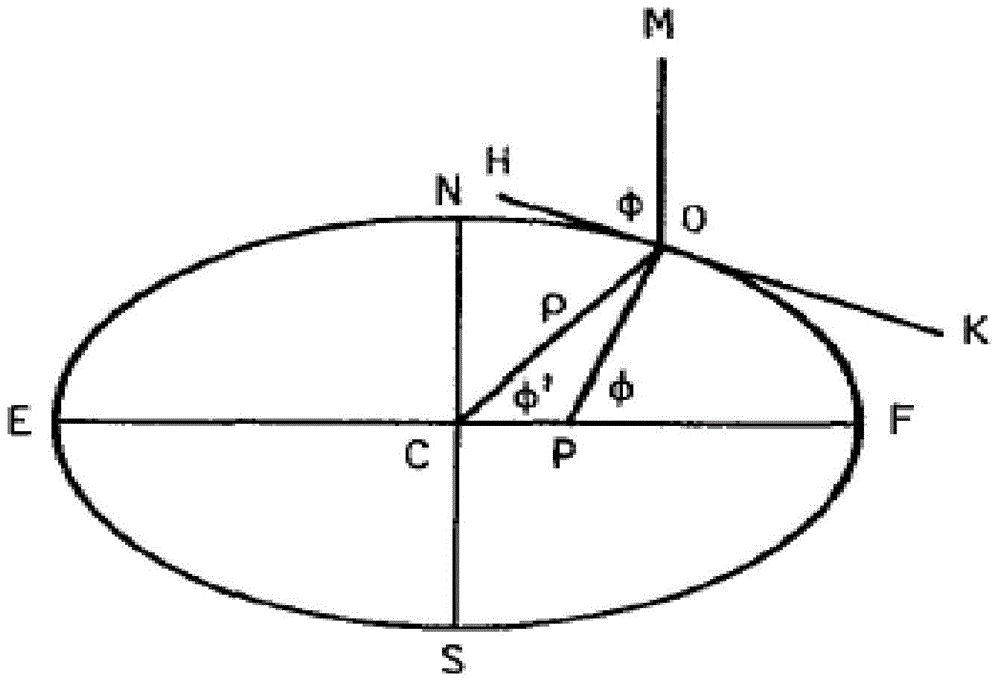

Multi-surveillance-source flying target parallel track processing method

Active | CN104808197A | Meet the requirements of real-time processing | Control quantity | Radio wave reradiation/reflection | Data processing system | High availability

The invention discloses a multi-surveillance-source flying target parallel track processing method. The method includes the steps: multi-surveillance-source data receiving; multi-surveillance-source data analysis; radar data processing; ADS-B (automatic dependent surveillance-broadcast) data processing; multi-surveillance-source data fusion. Surveillance of quality of data accessing to radar is realized by monitoring and analyzing quality of radar signals. In addition, real-time receive processing of the radar data is realized by means of multithreading, high safety, high reliability and high usability of a data processing system can be further guaranteed, and accuracy and quickness in track processing of flying targets in different data types from different surveillance sources can be realized.

Owner:四川九洲空管科技有限责任公司

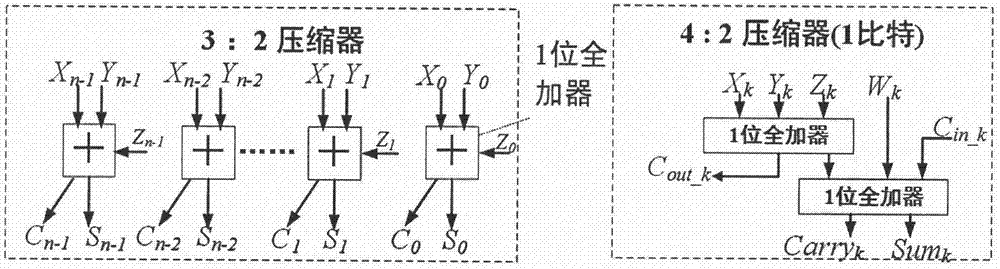

Approximate-computation-based binary weight convolution neural network hardware accelerator calculating module

Active | CN106909970A | Calculation speed | Reduce power consumption | Physical realisation | Approximate computing | Hardware acceleration

The invention discloses an approximate-computation-based binary weight convolution neural network hardware accelerator calculating module. The hardware accelerator calculating module is able to receive the input neural element data and binary convolution kernel data and conducts rapid convolution data multiplying, accumulating and calculating. The calculation module utilizes the complement data representation, and includes mainly an optimized approximation binary multiplier, a compressor tree, an innovative approximation adder, and a temporary register for the sum of the serially adding part. In addition, targeted to the optimized approximation binary multiplier, two error compensation schemes are proposed, which reduces or completely eliminates the errors brought about from the optimized approximation binary multiplier under the condition of only slightly increasing the hardware resource overhead expense. Through the optimized calculating units, the key paths for the binary weight convolution neural network hardware accelerator using the computation module are shortened considerably, and the size loss and power loss are also reduced, making the module suitable for a low power consuming embedded type system in need of using the convolution neural network.

Owner:南京风兴科技有限公司

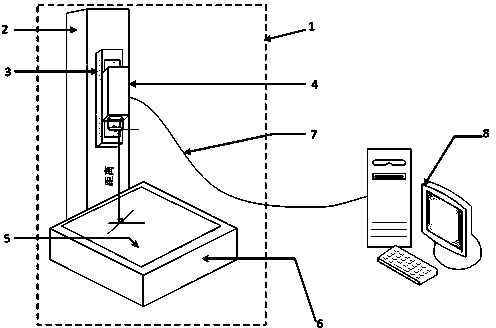

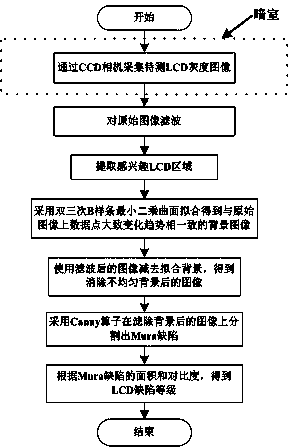

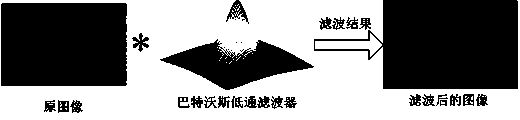

TFT-LCD Mura defect machine vision detecting method based on B spline surface fitting

Inactive | CN103792699A | Simplify the build process | Easy to operate | Optically investigating flaws/contamination | Non-linear optics | Machine vision | Ccd camera

The invention discloses a TFT-LCD Mura defect machine vision detecting method based on B spline surface fitting and belongs to the field of LCD display defect detection. The method includes: using a CCD camera to capture the grayscale image of a lit LCD under test; filtering the original image; extracting a region of interest; fitting the image background with a bicubic B-spline surface; subtracting the background image from the original image to obtain an image with the unevenly bright background removed; using a Canny operator to detect Mura defects; and determining the defect level. The method is reliable, highly accurate, and fast to compute.

Owner:SICHUAN ENTRY EXIT INSPECTION & QUARANTINE BUREAU OF THE PEOPLES REPUBLIC OF CHINA

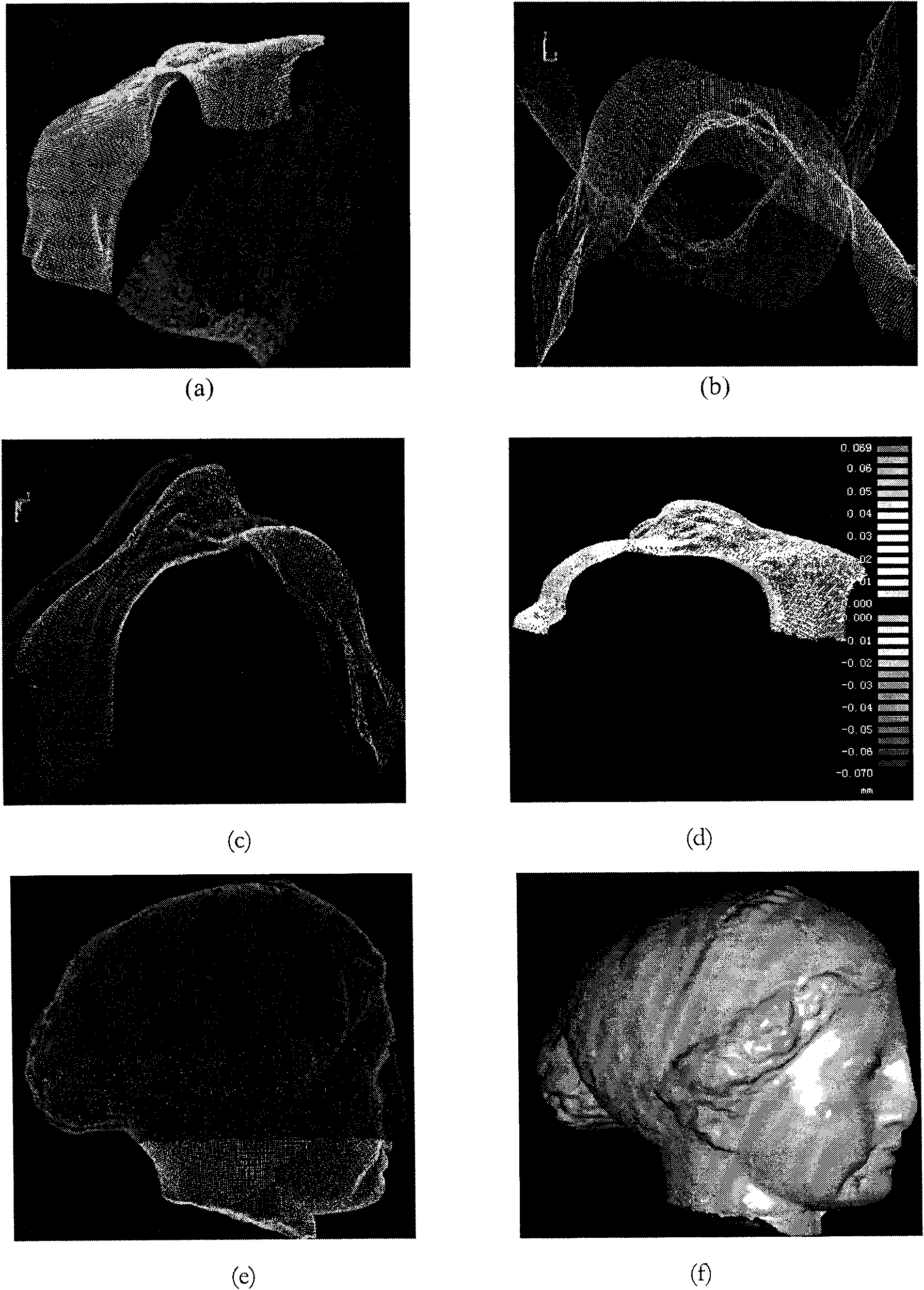

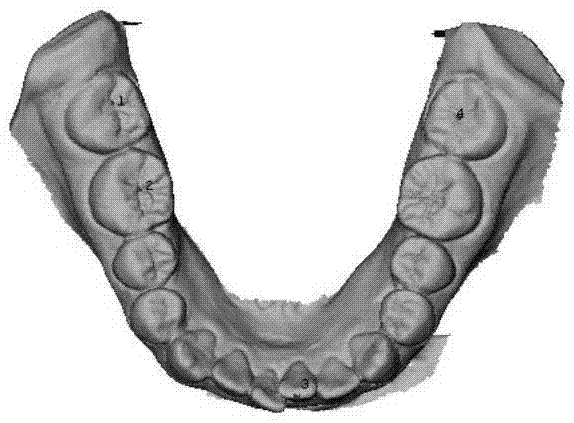

Dentition model generation method based on oral cavity scanning data and CBCT (Cone Beam Computed Tomography) data

Active | CN105447908A | Meet clinical requirements | Calculation speed | Image enhancement | Image analysis | Cone beam computed tomography | Dentition

The present invention discloses a dentition model generation method based on oral cavity scanning data and CBCT (Cone Beam Computed Tomography) data. The method comprises: reading a three-dimensional multi-source data model of an oral cavity; setting and registering a fixed model and a floating model, wherein the fixed model is a triangular mesh model created by means of CBCT, and the floating model is a triangular mesh model of intraoral scanning; manually picking up feature point pairs of the models on the fixed model and the floating model, correcting the feature point pairs according to point cloud curvature of the models, moving feature points to a point with the largest curvature in a surrounding neighborhood, and performing preliminary registration on the feature point pairs by using a point-to-point ICP (Iterative Closest Point) algorithm; calculating a point cloud error of the models after preliminary registration, and by combination with medical parameter requirements, setting a parameter of precise registration; performing precise registration by using an optimized point-to-surface ICP algorithm; and fusing the CBCT data and intraoral scanning data after registration, and outputting tooth root and crown models after registration and fusion. Rapid and precise registration and fusion of data of different devices or data of different postures of patients are implemented.

Owner:山东山大华天软件有限公司

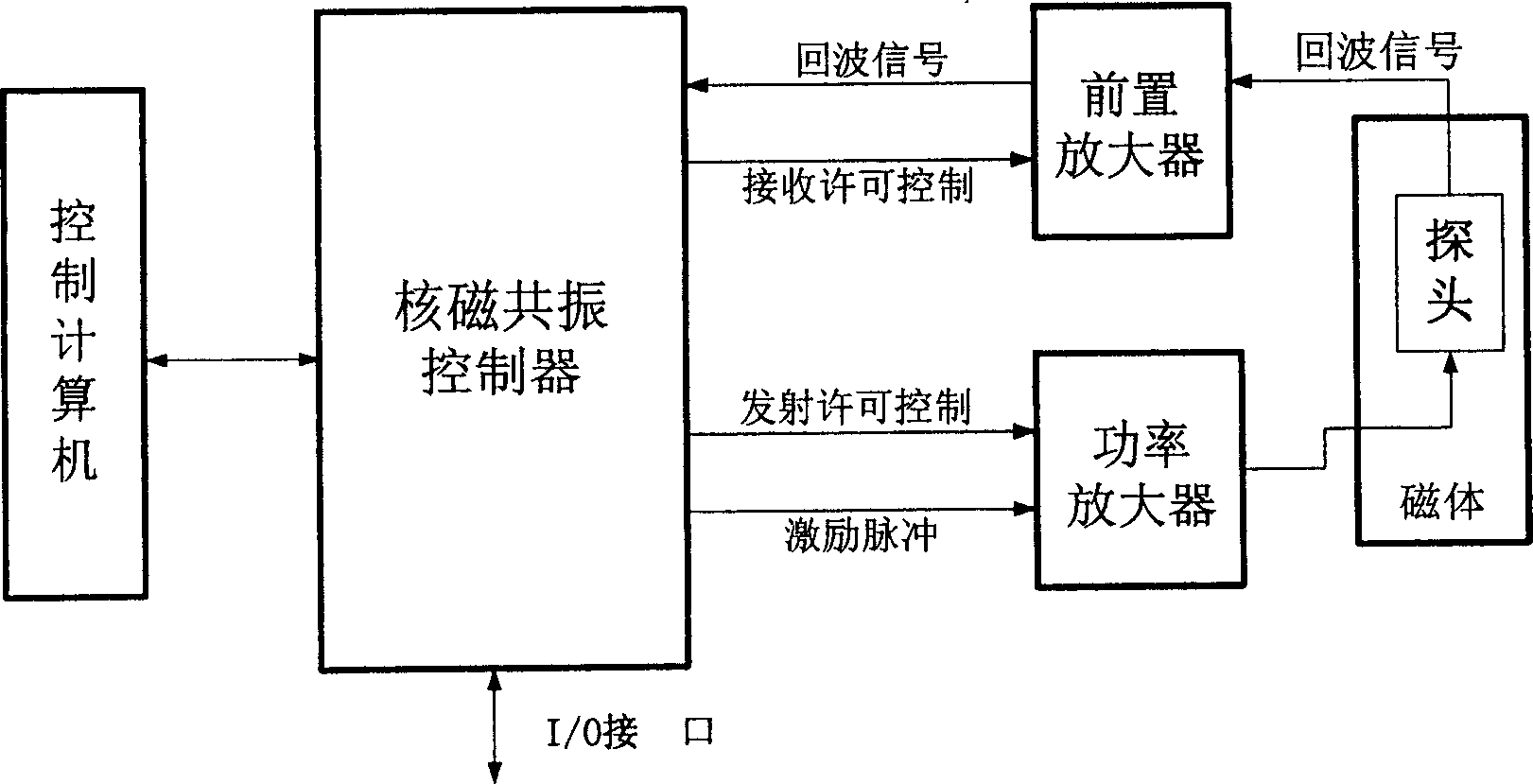

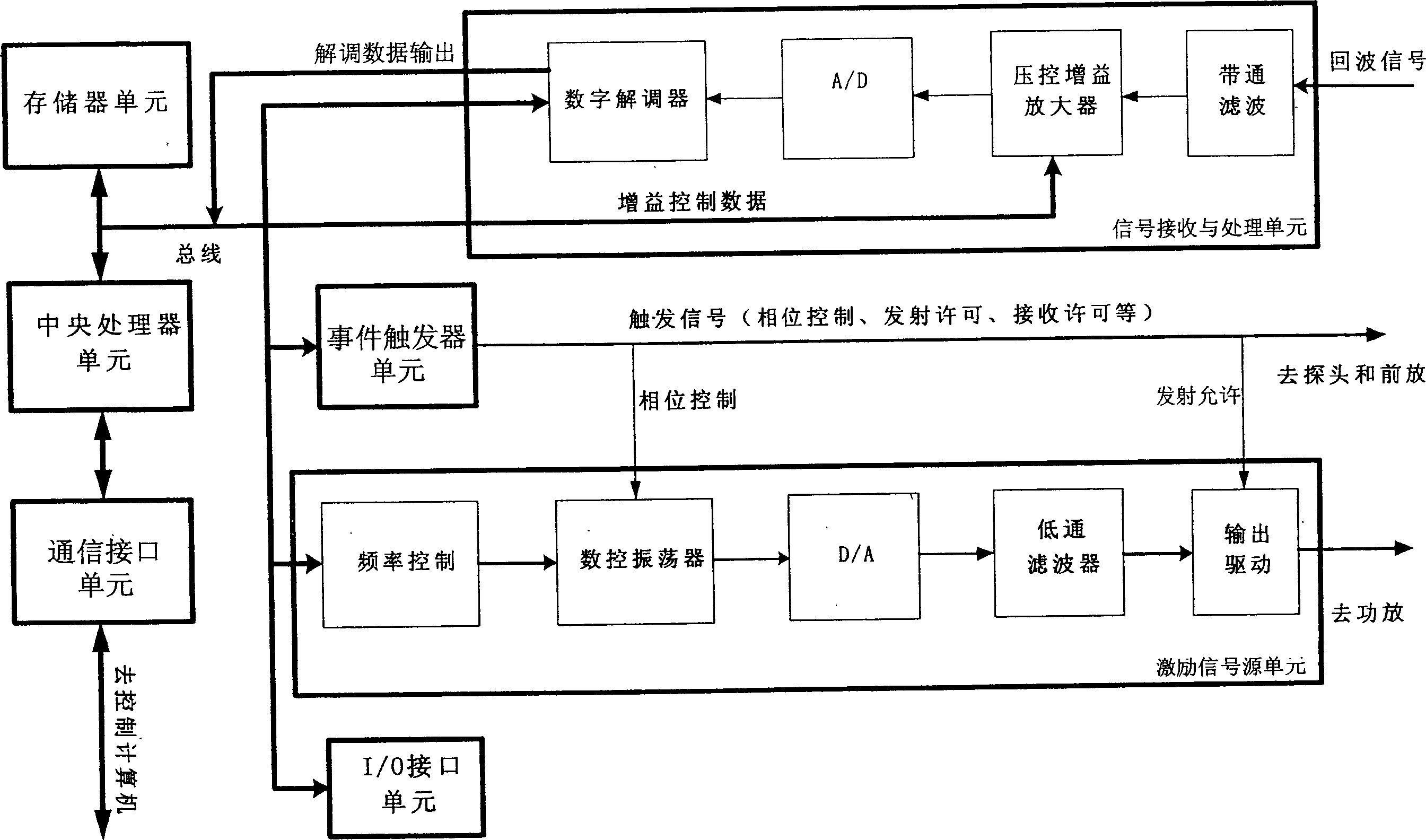

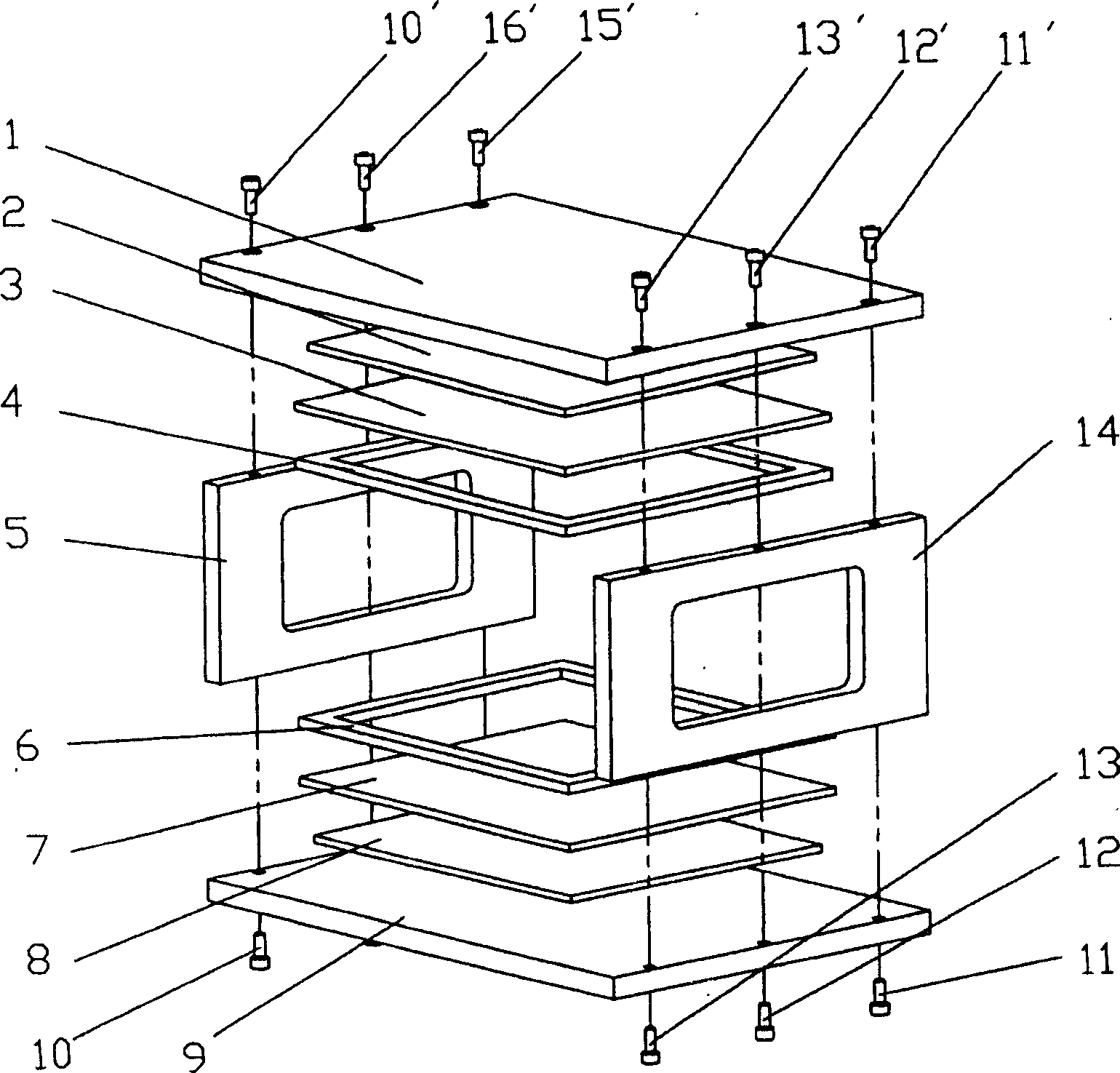

Apparatus and method for measuring stratum rock physical property by rock NMR relaxation signal

Inactive | CN1763563A | Calculation speed | High precision | Analysis using nuclear magnetic resonance | Detection using electron/nuclear magnetic resonance | Fluid saturation | NMR - Nuclear magnetic resonance

The invention discloses a device that measures the physical properties of formation rock from rock nuclear magnetic resonance (NMR) relaxation signals. The device comprises a magnet, a probe, a preamplifier, a power amplifier, an NMR controller, and a control computer. The NMR controller generates radio frequency excitation pulses of a specific frequency and waveform, which are amplified and sent to the NMR probe inside the magnet. The rock sample is placed in the probe and, once excited, produces an NMR echo signal. The probe receives the echo signal, which is amplified and sent to the NMR controller and finally to the computer. The invention produces directly usable parameters and can be applied to NMR well logging in oil fields.

Owner:PEKING UNIV

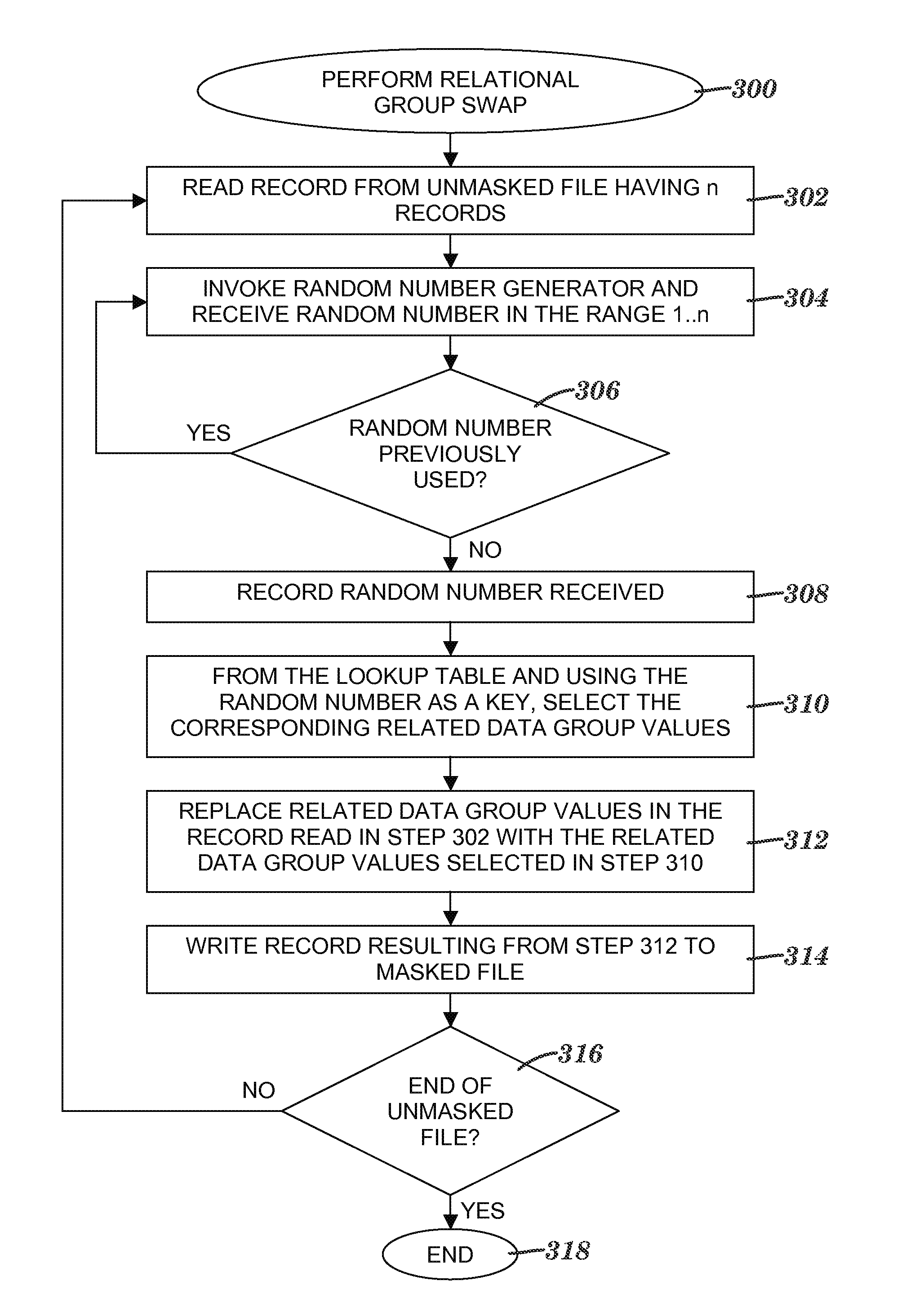

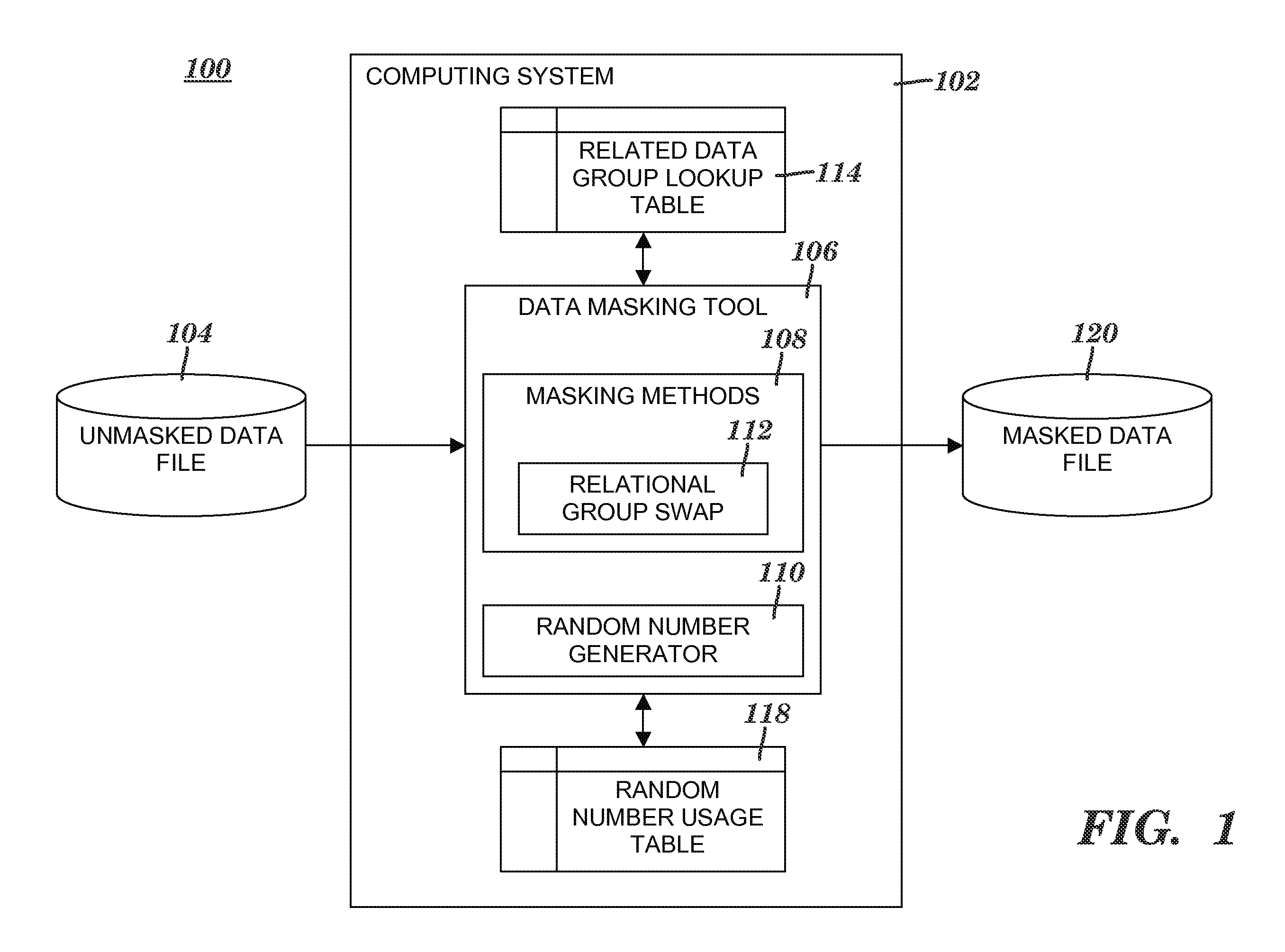

Masking related sensitive data in groups

Inactive | US7877398B2 | Precise processing | Calculation speed | Digital data processing details | Computer security arrangements | Data file | Lookup table

A method and system of masking a group of related data values. A record in an unmasked data file of n records is read. The record includes a first set of data values of data elements included in a related data group (RDG) and one or more data values of one or more data elements external to the RDG. A random number k is received. A second set of data values is retrieved from a lookup table that associates n key values with n sets of data values. Retrieving the second set of data values includes identifying that the second set of data values is associated with a key value of k. The n sets of data values are included in the unmasked data file's n records. The record is masked by replacing the first set of data values with the retrieved second set of data values.
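
The lookup-table masking described in the abstract can be sketched in a few lines of Python. Records are modelled as dictionaries, and the names `build_lookup`, `mask_records`, and `group_fields` are hypothetical; the abstract does not say how records or the table are represented.

```python
import random


def build_lookup(records, group_fields):
    """Map keys 1..n to the related-data-group values of each of the n records."""
    return {
        key: tuple(record[field] for field in group_fields)
        for key, record in enumerate(records, start=1)
    }


def mask_records(records, group_fields, rng):
    """Replace each record's related data group with the group stored under
    a random key k, leaving fields outside the group untouched."""
    lookup = build_lookup(records, group_fields)
    n = len(records)
    masked = []
    for record in records:
        k = rng.randint(1, n)  # random key in 1..n, inclusive
        replacement = dict(zip(group_fields, lookup[k]))
        masked.append({**record, **replacement})
    return masked
```

Calling `mask_records(rows, ["city", "state", "zip"], random.Random())` would swap whole city/state/zip triples between rows. Because each replacement triple comes from a real record, the masked values stay mutually consistent, which masking each field independently would not guarantee.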

Owner:INT BUSINESS MASCH CORP

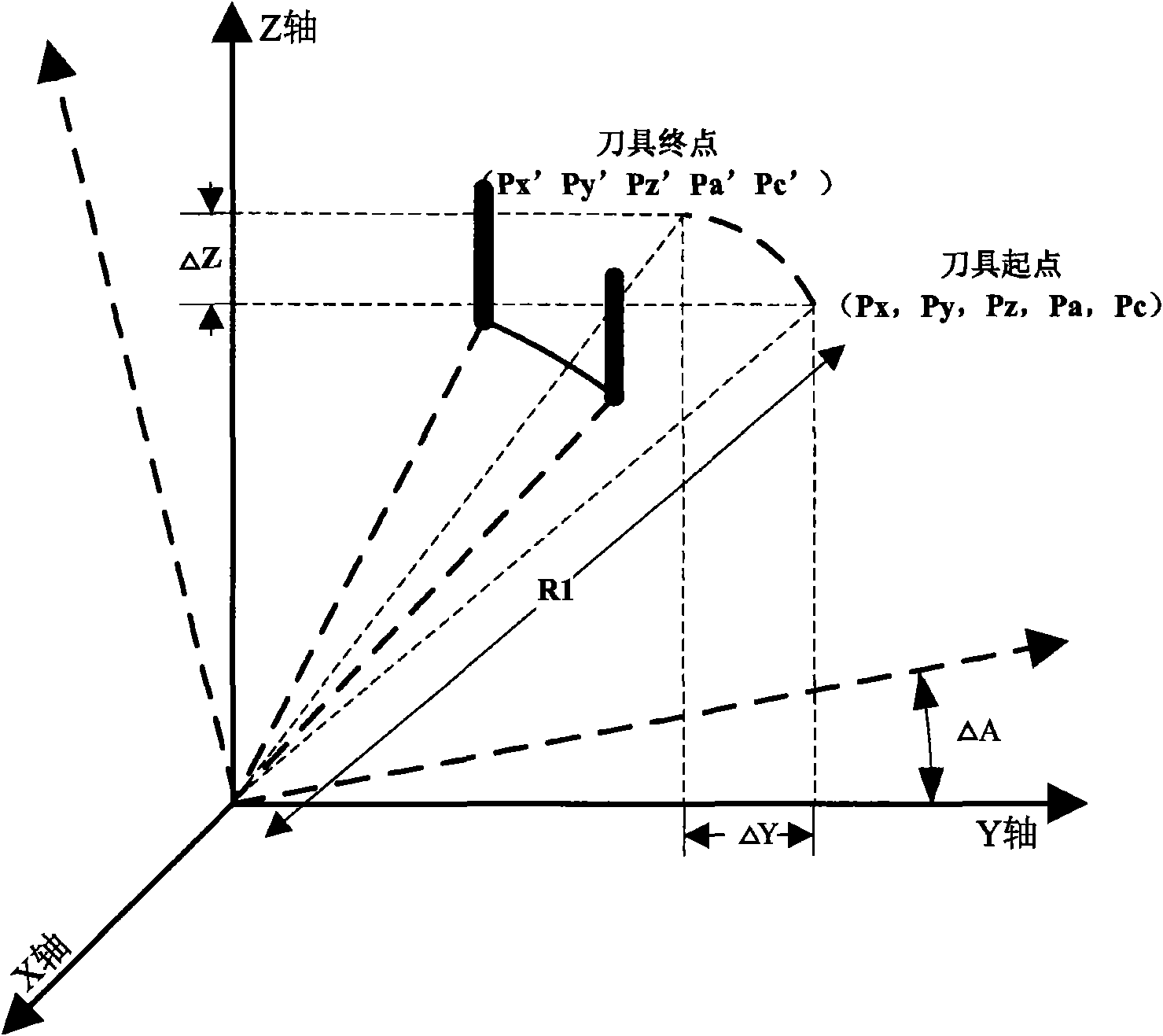

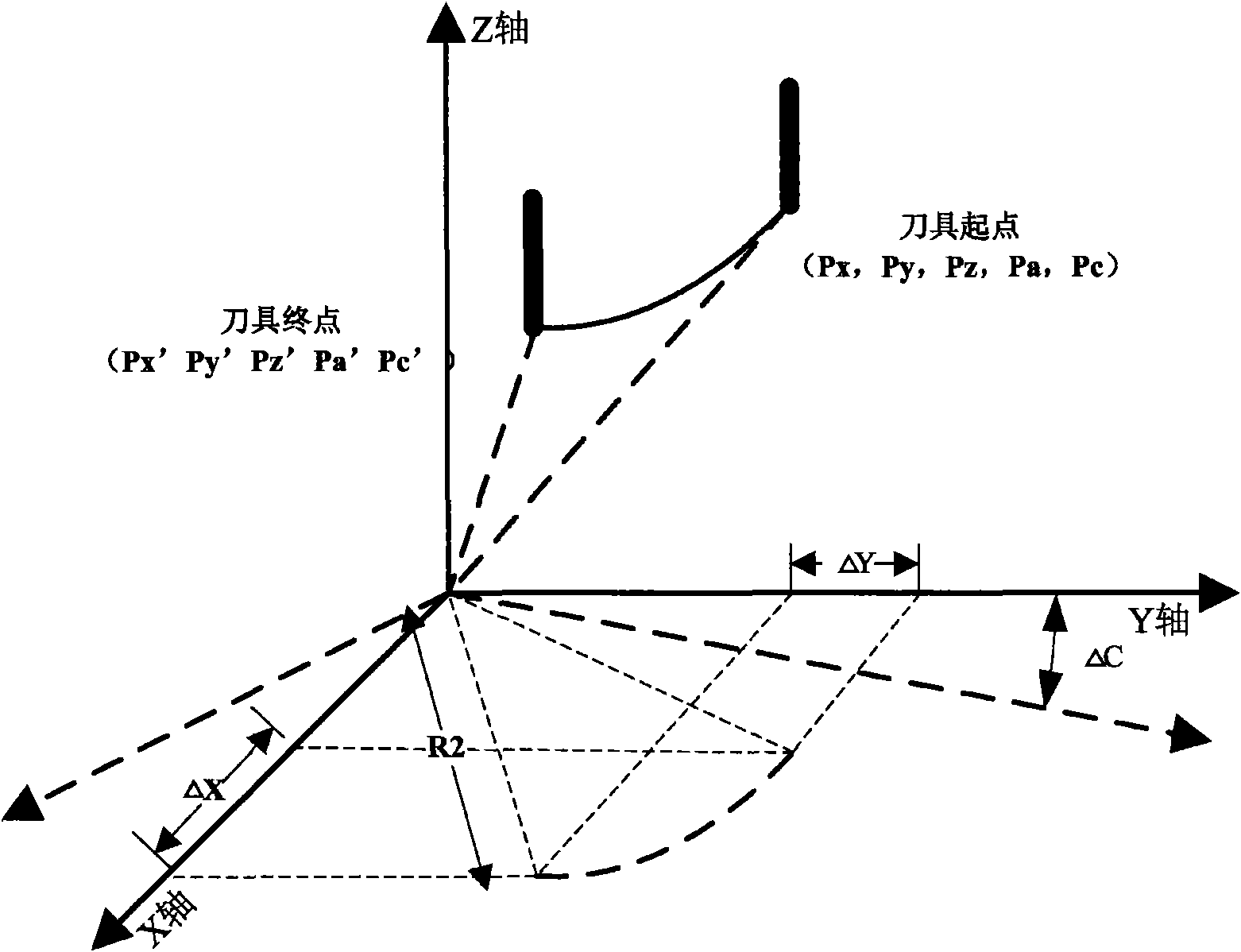

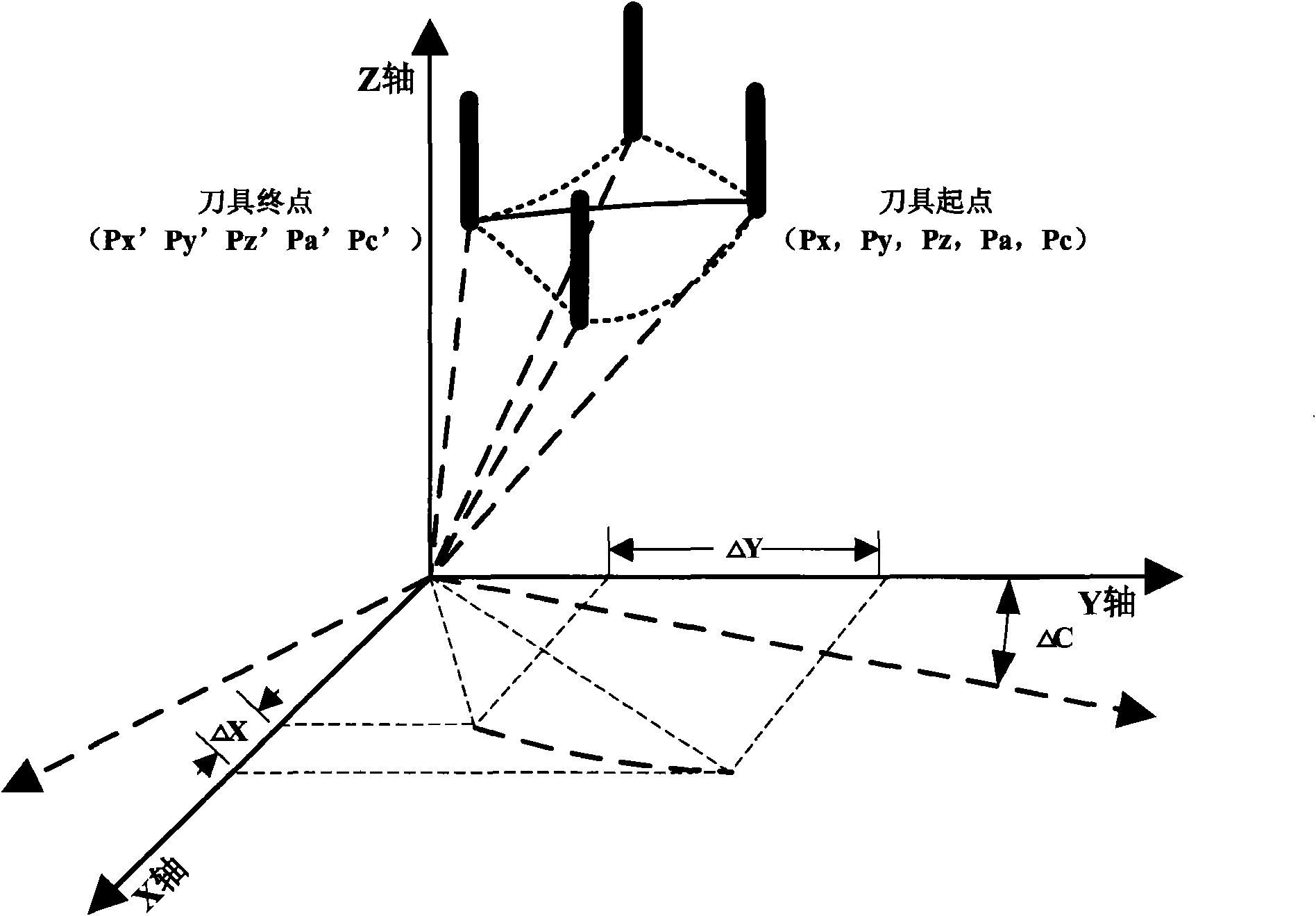

Method for compensating central point of double-turntable five-axis linked numerical control machining rotary tool

Inactive | CN101980091A | Solve the problem of nonlinear motion error | Improve performance | Programme control | Computer control | Linear motion | Nonlinear motion

The invention discloses a method for compensating a central point of a double-turntable five-axis linked numerical control machining rotary tool. The method is characterized by comprising the following steps of: when a tool trace of a double-turntable five-axis linked numerical control system which machines a spatial complex curved surface is a straight line, discretely approximating the movement trace of the central point of the tool through line interpolation, starting a real time control protocol (RTCP) module, obtaining a normal plane compensation vector and performing projection to obtain a central point compensation vector and output displacement by using the RTCP module, and inputting a number axis center distance parameter to realize the compensation of a nonlinear movement error during the machining of the double-turntable five-axis linked numerical control system. The method can well inhibit the nonlinear movement error, so that the running efficiency of the numerical control machining equipment is improved, the numerical control equipment can perform high-precision and high-efficiency machining, the machining quality of parts can be remarkably improved, and the method has excellent application prospect in the field of mechanical engineering.

Owner:RES INST OF XIAN JIAOTONG UNIV & SUZHOU

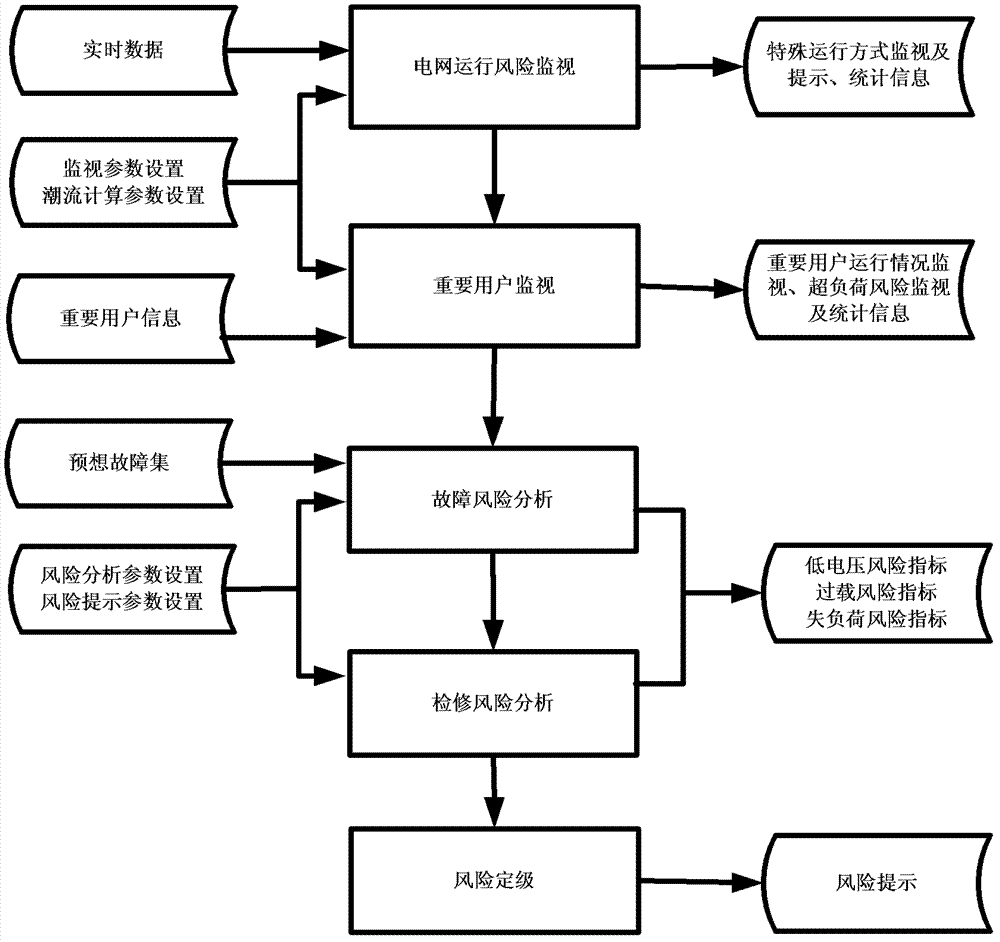

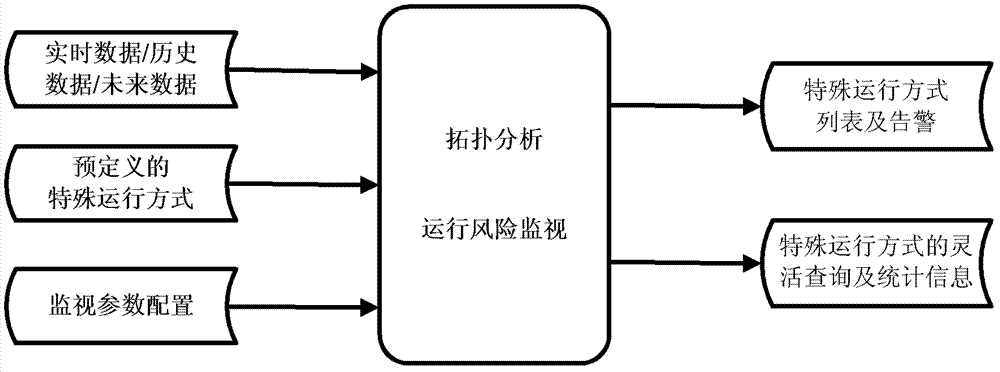

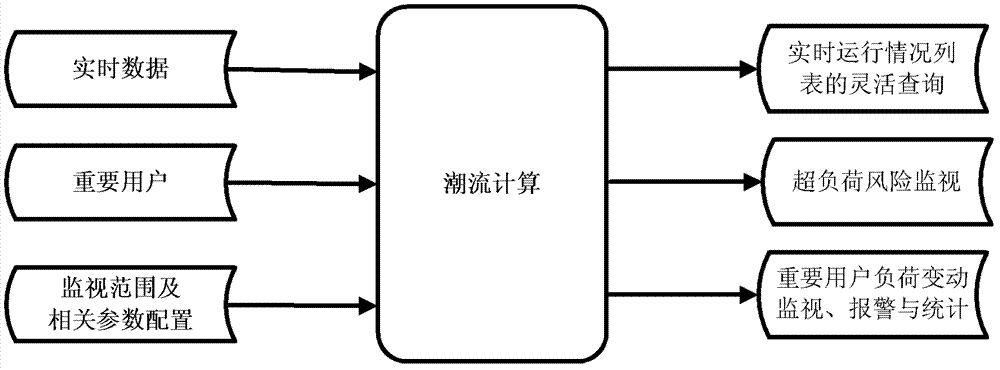

Online risk analysis system and method for regional power grid

The invention provides an online risk analysis system and an online risk analysis method for a regional power grid. The system comprises a display unit, a power grid running risk monitoring unit, an important user monitoring unit, an analysis unit, and a risk grading unit. The method comprises the following steps: (1) acquiring the power grid running data and system state; (2) selecting an expected fault set and performing fault analysis; (3) judging the consequences of each expected fault for the power grid, proceeding to step (4) if a power supply risk exists and otherwise returning to step (2); and (4) calculating risk indicators and issuing risk grade prompts according to the indicators. The system and method comprehensively consider the current state probability, fault possibility, and fault severity of the system, and help a dispatcher analyze and find hidden risks in the system.

Owner:CHINA ELECTRIC POWER RES INST +1

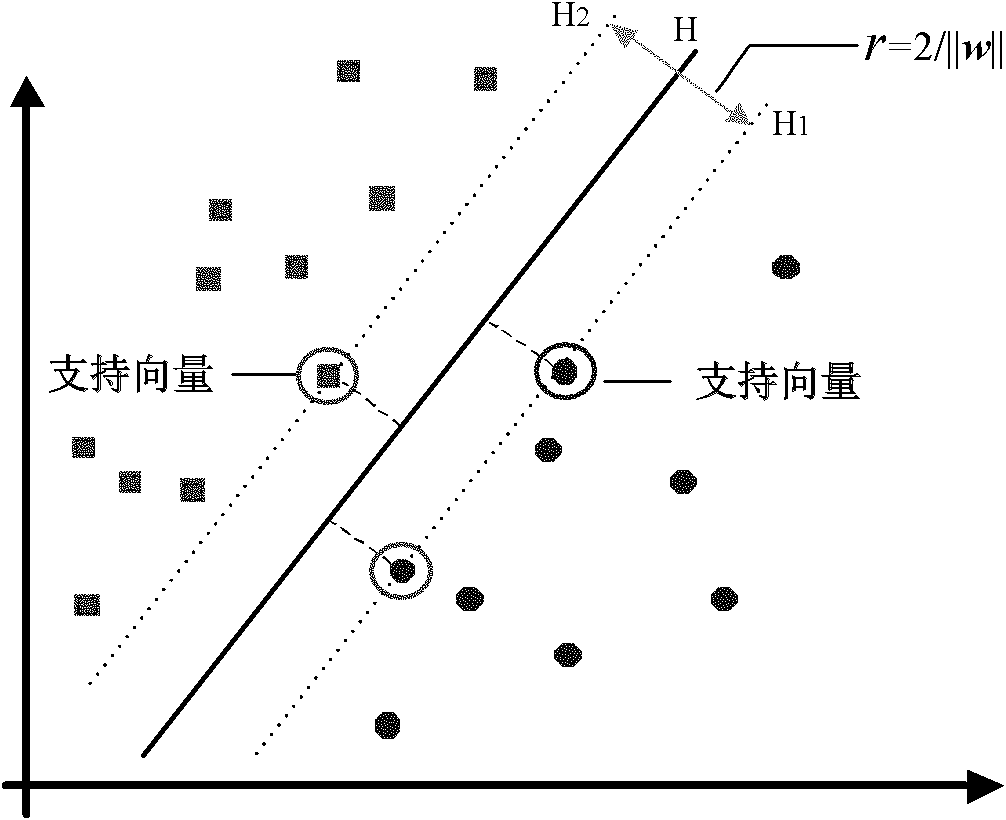

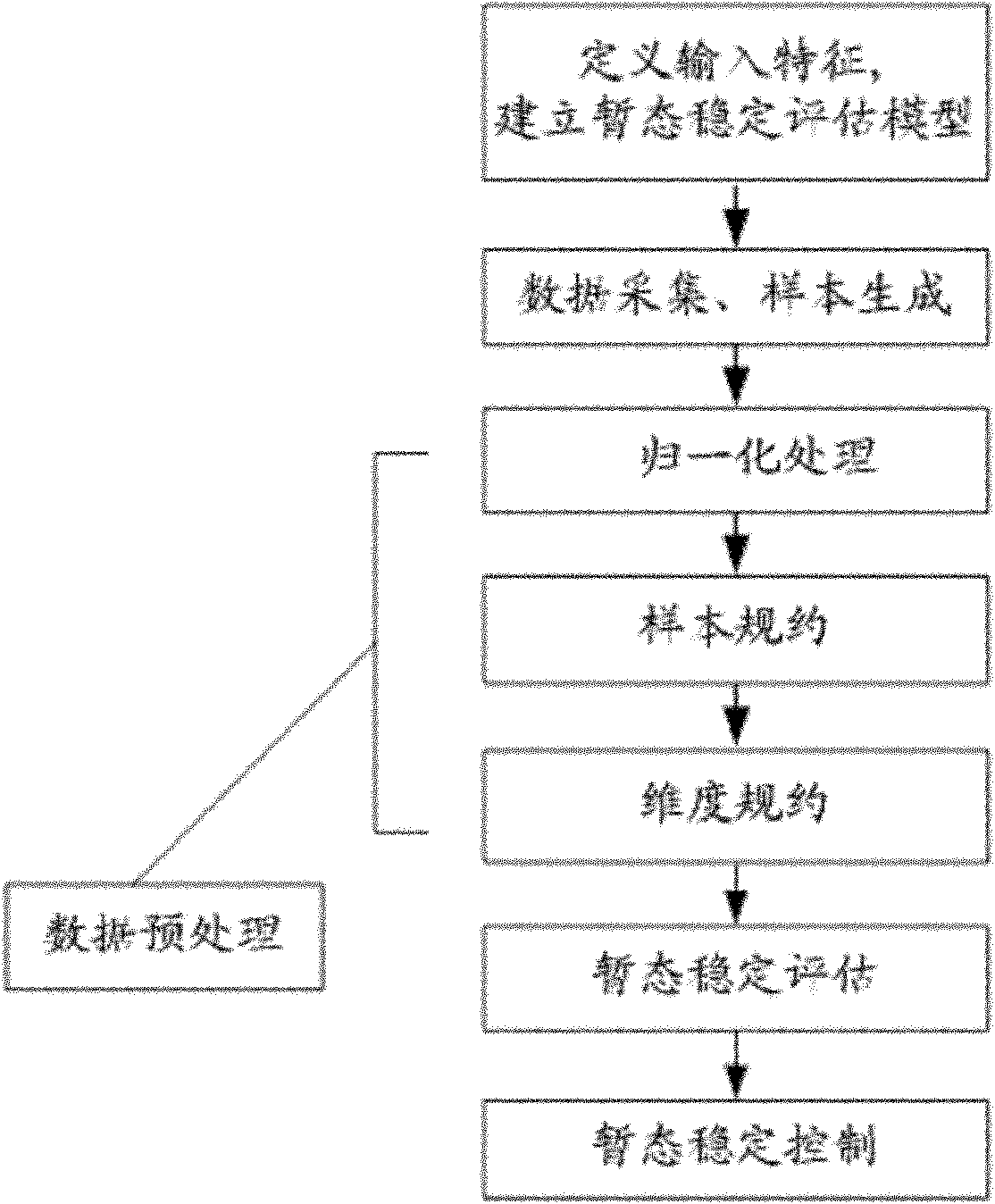

Method based on knowledge discovery technology for stability assessment and control of electric system

Active | CN102074955A | Guaranteed uptime | Improve data quality | Ac network circuit arrangements | Transient state | Simulation

The invention provides a method for transient stability assessment and real-time control of an electric system. The method introduces knowledge discovery technologies to realize the stability assessment and control of the electric system. On the basis of an established transient stability assessment model, the correspondence between the system running condition and the overall stability of the electric system is identified, the inherent operating patterns of the electric system are captured, and the stable running state of the electric system is assessed quickly, so that the actual running management of the electric system can be guided. The method has the advantage that a timely and effective stability control scheme can be established for a running state that loses stability after a large disturbance, and the safe and stable running of the system can be ensured at the lowest cost.

Owner:CHINA ELECTRIC POWER RES INST +1

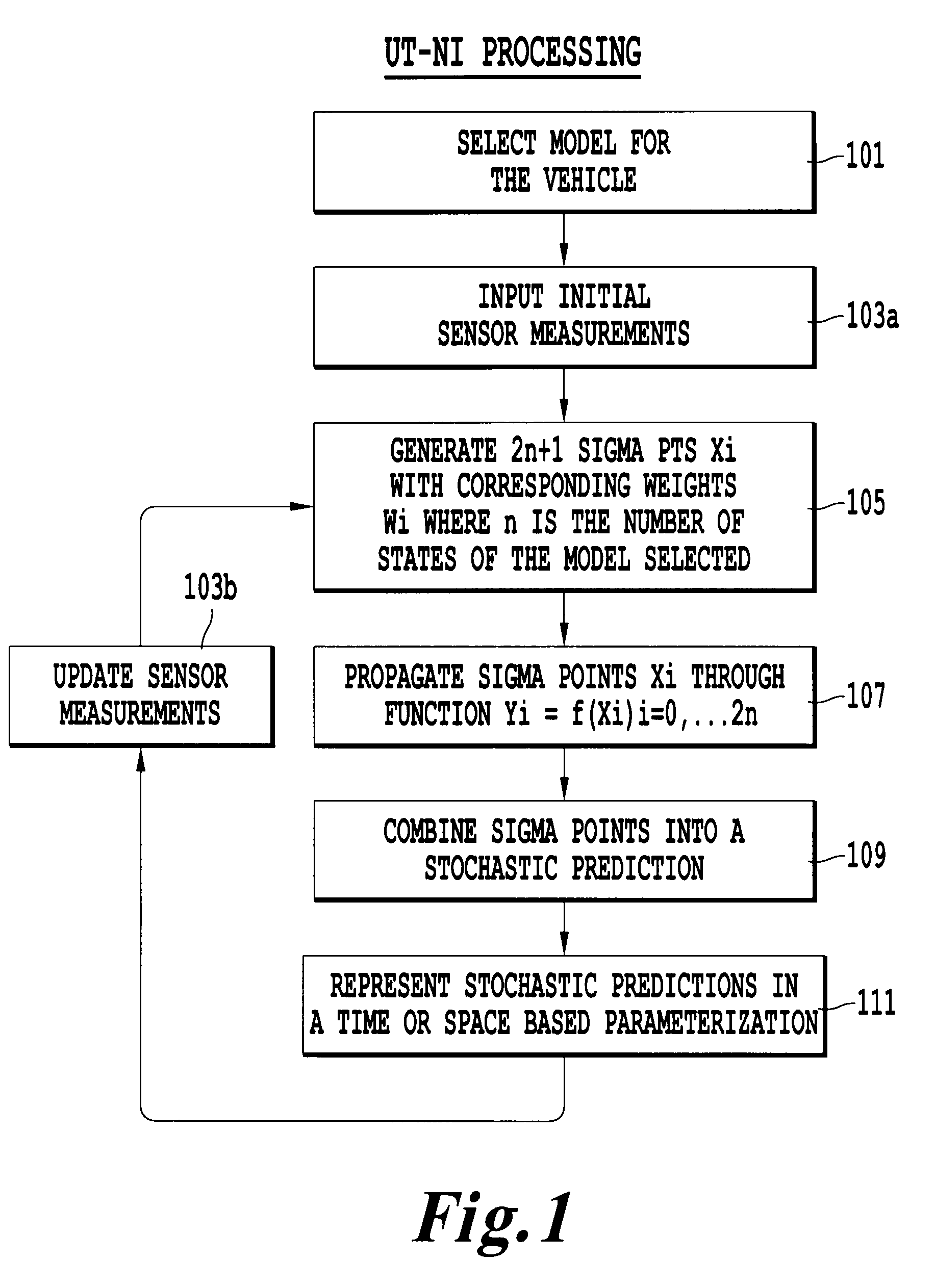

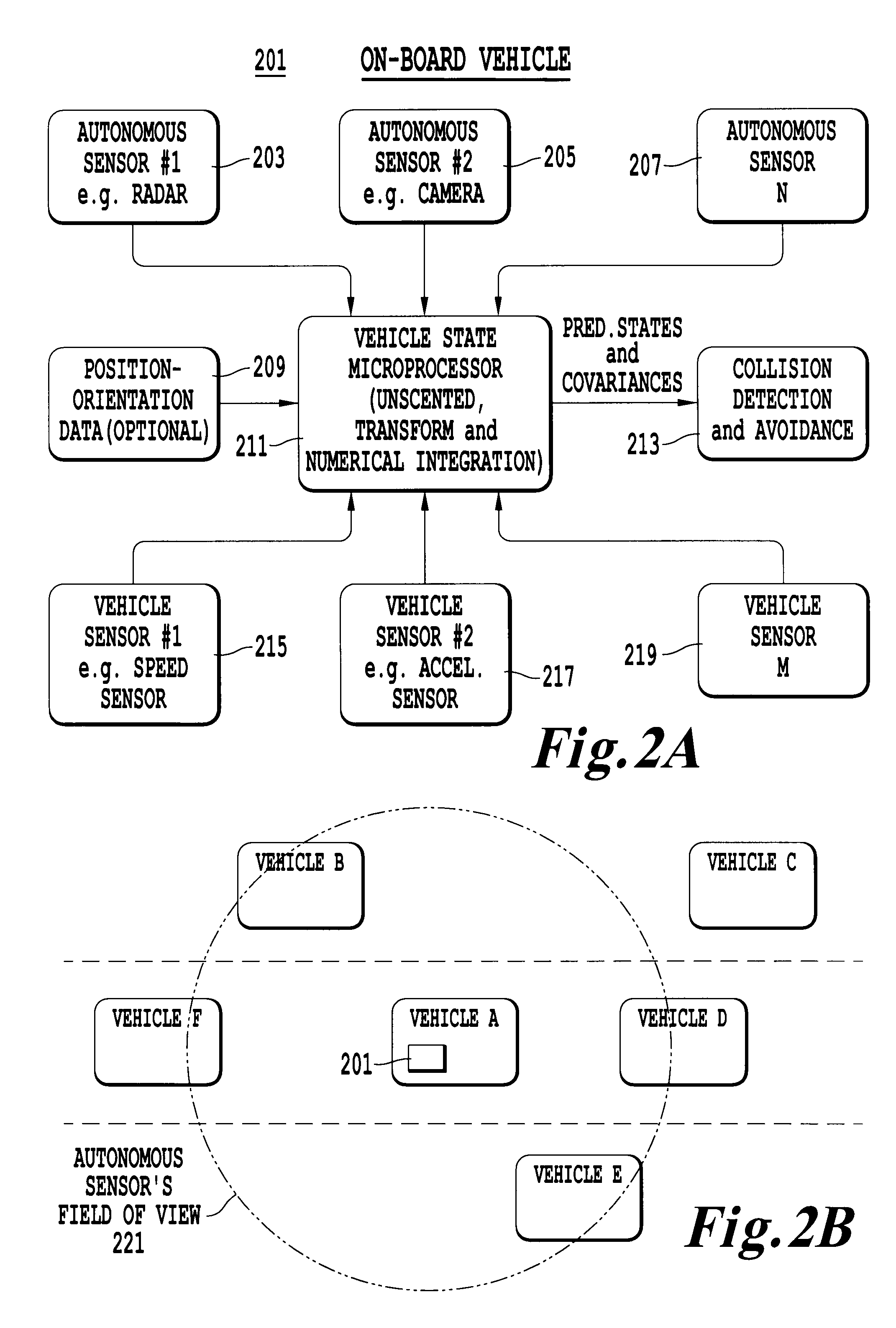

System and method for stochastically predicting the future states of a vehicle

Inactive | US20100057361A1 | Accurate prediction | Calculation speed | Analogue computers for traffic | Anti-collision systems | Sigma point | Engineering

Owner:TOYOTA MOTOR CO LTD

Automobile violation video monitoring method

Inactive | CN102332209A | Powerful | High degree of intelligence | Detection of traffic movement | High definition | Video camera

The invention discloses an automobile violation video monitoring method in which video is captured through a camera. The monitored video image is processed in the following steps: finding the number plate of a vehicle in the image, and locating the video image according to the number plate; setting a violation monitoring area on the image; tracking the motion track of the number plate of the vehicle in the monitored video through the number plate location technique; and detecting whether the location of the number plate passes through the set violation monitoring area to determine whether a violation occurs. The monitoring method can achieve intelligent monitoring and evidence snapshots of different violation behaviors by using only one high definition camera in combination with different video monitoring function modules, which removes the need to connect and install other detection sensors. The method offers powerful functions, a high degree of intelligence, simple equipment and high integrity, and is flexible to set up.

Owner:王志清
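
The final detection step of the violation monitoring method above, checking whether a tracked number plate passes through the configured violation area, can be sketched in Python as follows. This is an illustration under stated assumptions: the abstract does not say how the area is represented, so a polygon in image coordinates and a standard ray-casting test are assumed, and the names `point_in_polygon` and `first_violation_frame` are hypothetical. Plate detection and tracking are taken as given.

```python
def point_in_polygon(point, polygon):
    """Ray-casting test: True if the point lies inside the polygon.

    `polygon` is a list of (x, y) vertices in image coordinates.
    """
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through the point count.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def first_violation_frame(track, zone):
    """Return the index of the first frame whose plate center is in the zone.

    `track` is the per-frame list of plate center points produced by an
    assumed upstream plate tracker; None means no violation was detected.
    """
    for frame_index, center in enumerate(track):
        if point_in_polygon(center, zone):
            return frame_index
    return None
```

For example, with a square zone `[(0, 0), (10, 0), (10, 10), (0, 10)]` and a track that moves left to right along `y = 5`, the function reports the first frame in which the plate center crosses the left edge of the zone.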

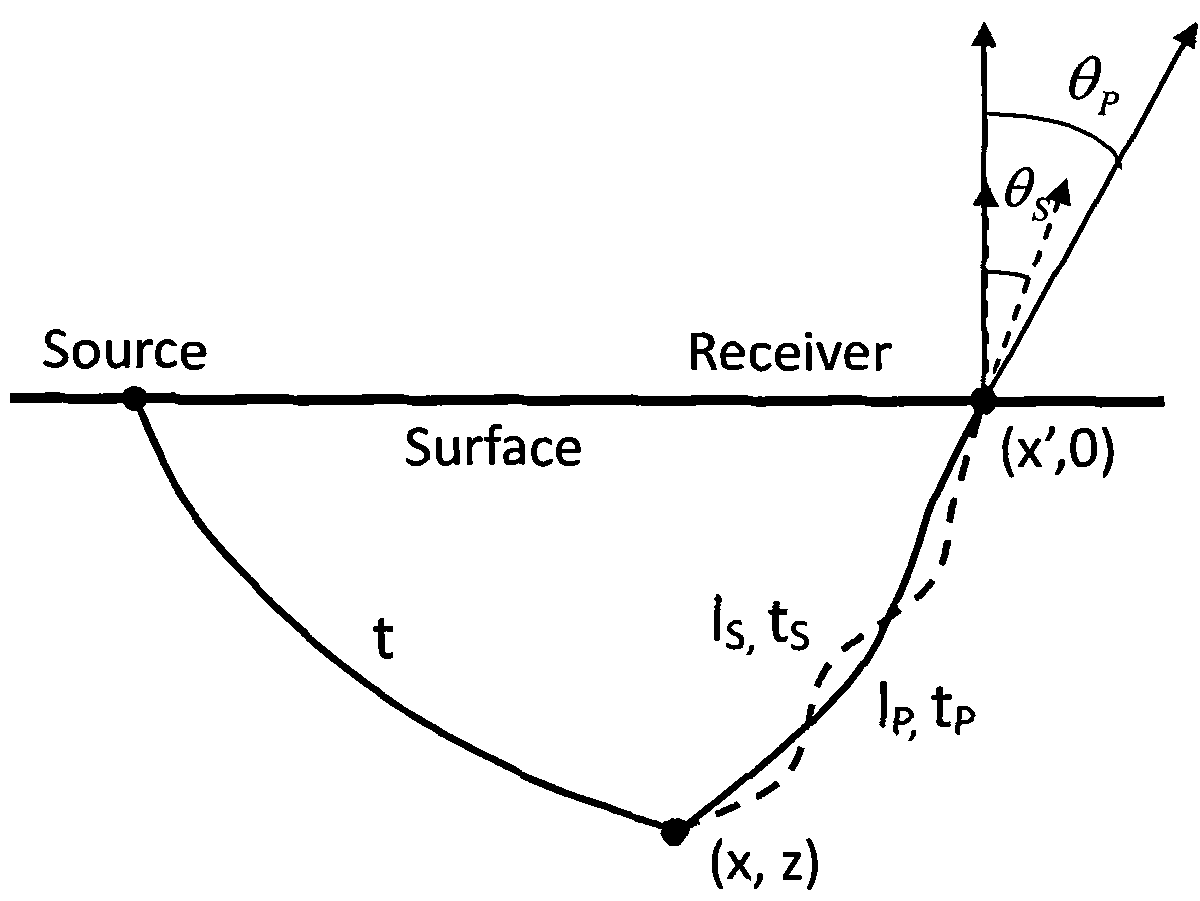

Pre-stack depth migration method

Active | CN101937100A | Accurate homing | Implement multi-component migration | Seismic signal processing | Image resolution | Wave field

The invention relates to the field of seismic prospecting, in particular to a pre-stack depth migration method. The method comprises the following steps: performing migration velocity analysis on seismic data; calculating travel time, arc length, emergence angle and incidence angle by ray tracing; performing migration aperture calculation; and performing pre-stack depth migration by using a Kirchhoff integration vector migration formula. The method integrates migration velocity analysis, migration aperture selection, ray tracing and the Kirchhoff integration formula, does not need wave field separation, and realizes simultaneous multi-component migration and accurate positioning of converted waves. On practical exploration data, the pre-stack depth migration method gives a good surface imaging effect and high imaging resolution, improves the continuity of deep reflection events on the converted wave imaging section, and images the geological structure more clearly.

Owner:INST OF GEOLOGY & GEOPHYSICS CHINESE ACAD OF SCI +1

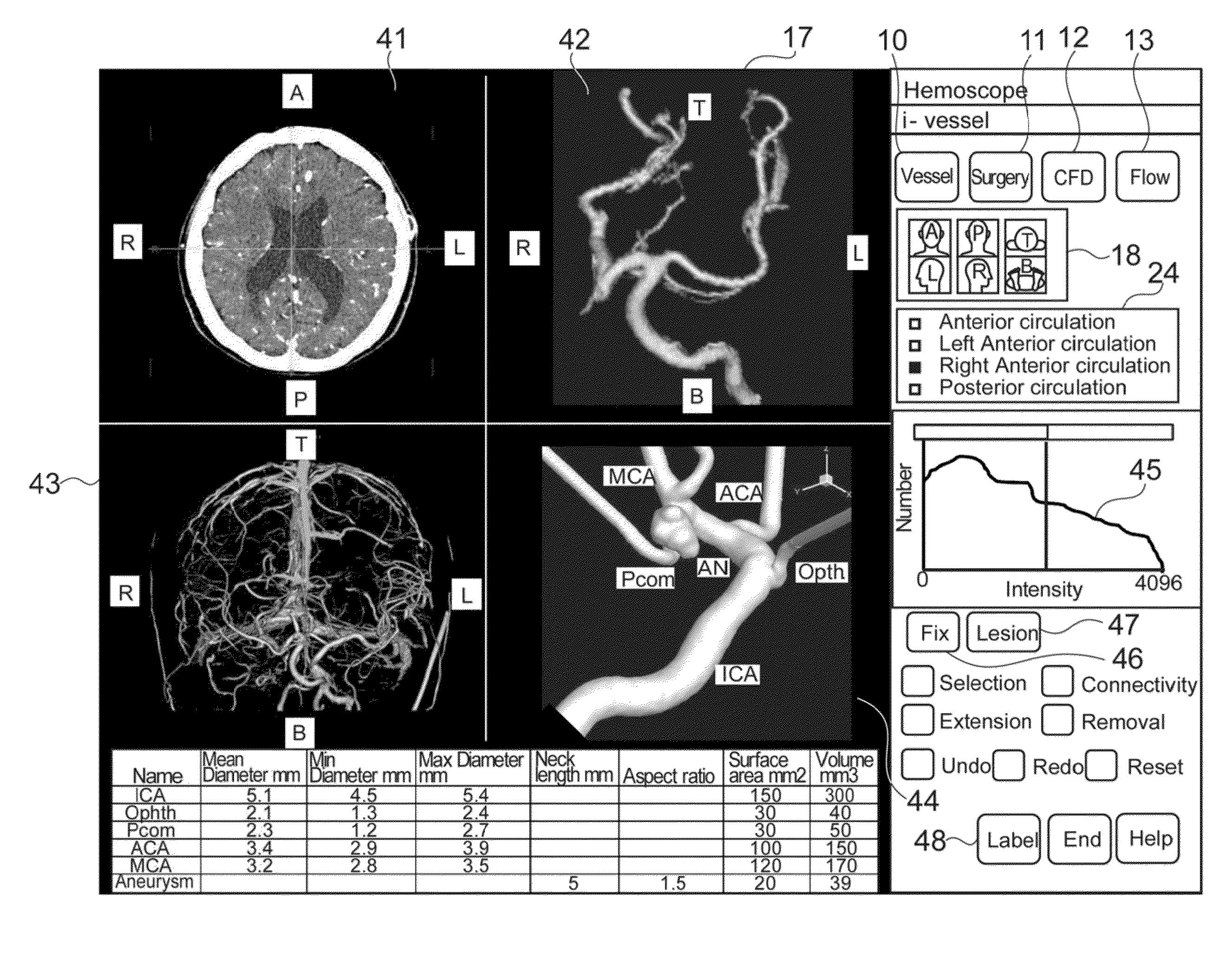

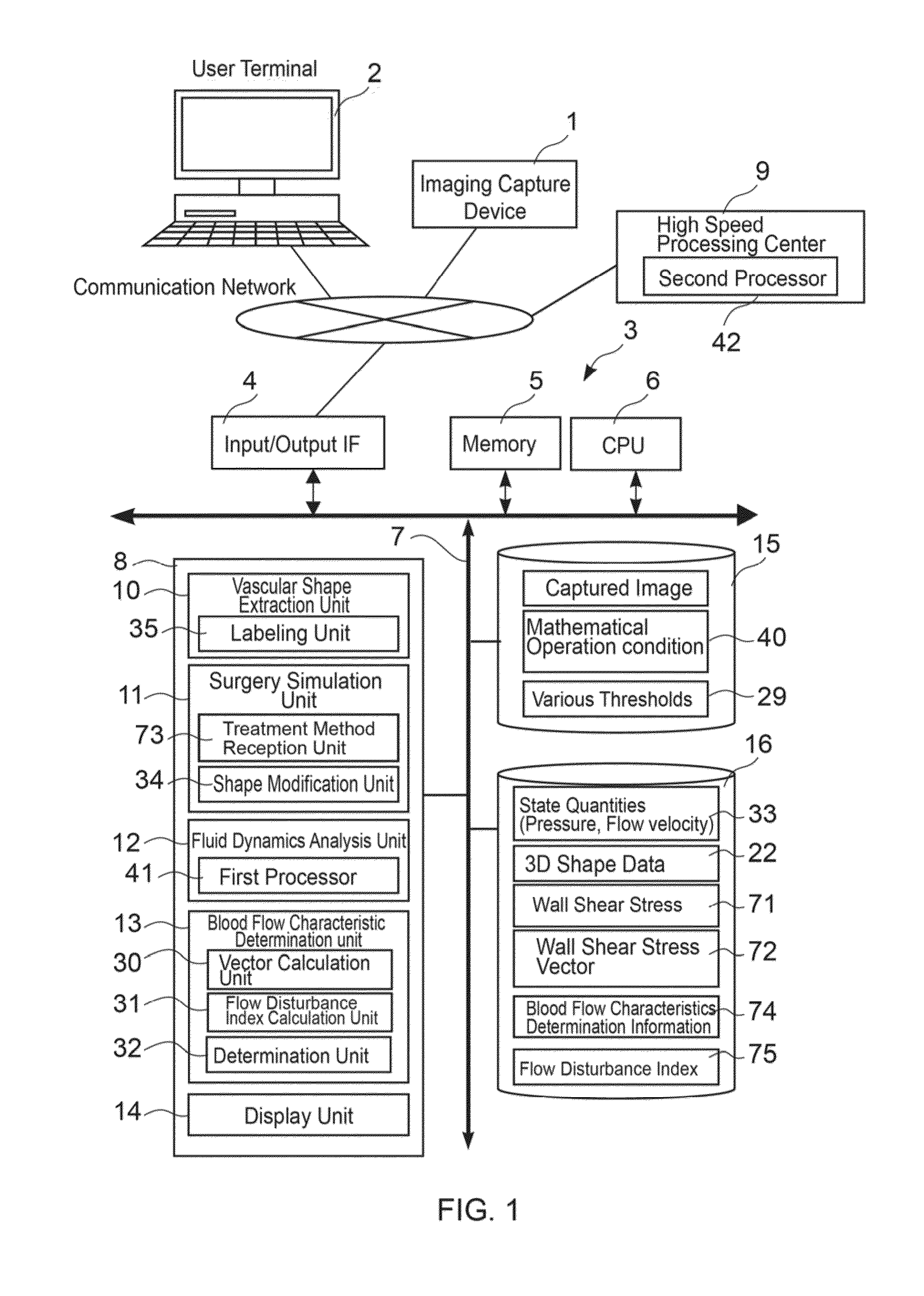

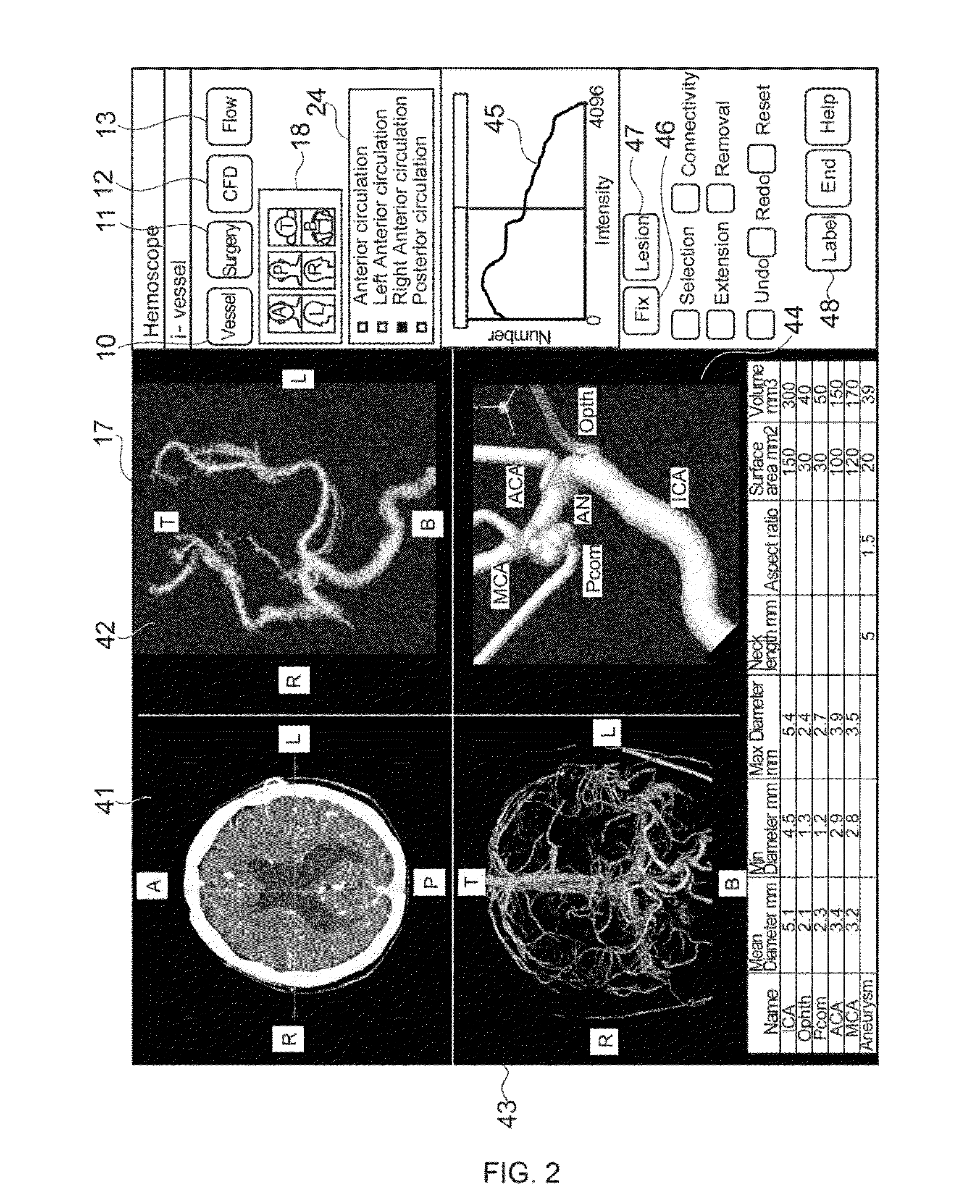

System for diagnosing bloodflow characteristics, method thereof, and computer software program

Active | US20140316758A1 | Calculation speed | Improve calculation accuracy | Medical imaging | Mechanical/radiation/invasive therapies | Graphics | Wall shear

This system is a computer-based system for analyzing a blood flow at a target vascular site of a subject by means of a computer simulation, having a three-dimensional shape extraction unit, by a computer, for reading a captured image at the target vascular site and generating three-dimensional data representing a shape of a lumen of the target vascular site; a fluid dynamics analysis unit, by a computer, for determining state quantities (pressure and flow velocity) of blood flow at each position of the lumen of the target vascular site by means of computation by imposing boundary conditions relating to blood flow to the three-dimensional shape data; a blood flow characteristic determination unit for determining, from the state quantities of the blood flow determined by the fluid dynamics analysis unit, a wall shear stress vector at each position of the lumen wall surface of the target vascular site, determining relative relationship between a direction of the wall shear stress vector at a specific wall surface position and directions of wall shear stress vectors at wall surface positions surrounding the specific wall surface position, and from the morphology thereof, determining characteristics of the blood flow at the specific wall surface position and outputting the same as a determined result; and a display unit, by a computer, for displaying the determined result of the blood flow characteristic which is graphically superposed onto a three-dimensional shape model.

Owner:EBM

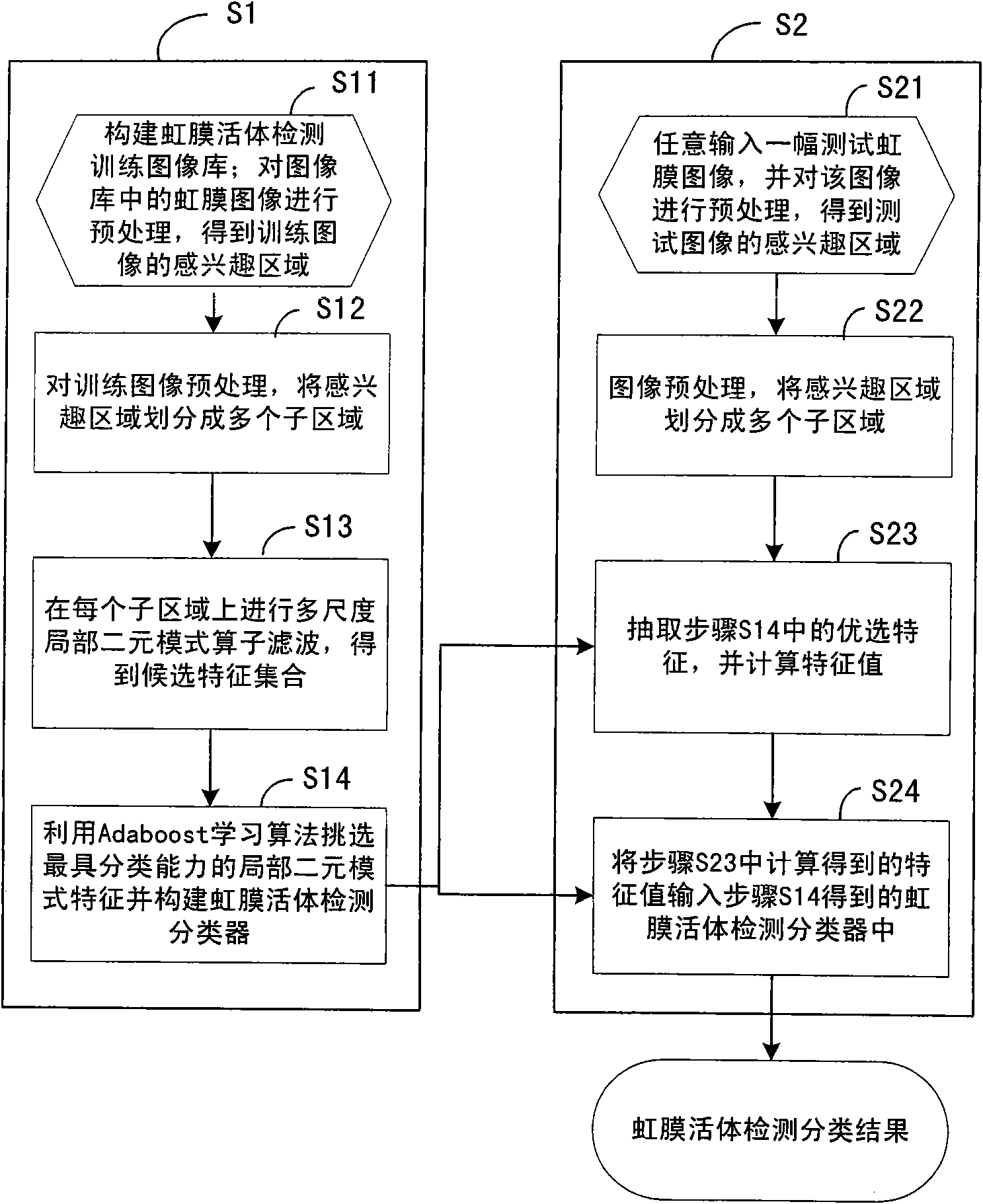

Living iris detection method

Active | CN101833646A | High precision | Improve security | Character and pattern recognition | Pattern recognition | Medicine

The invention relates to a living iris detection method, which comprises the following steps: S1, preprocessing the living iris images and artificial iris images in a training image library, extracting multi-scale local binary pattern (LBP) features in the resulting region of interest, selecting the preferred features from the candidate features by using an adaptive boosting (AdaBoost) learning algorithm, and establishing a classifier for living iris detection; and S2, preprocessing an arbitrary input test iris image, calculating the preferred local binary pattern features in the resulting region of interest, inputting the calculated feature values into the classifier obtained in step S1, and judging from the output of the classifier whether the test image comes from a living iris. The invention can perform effective anti-forgery detection and alarming for iris images and reduce the error rate of iris recognition. It is widely applicable to identification and security systems that use iris recognition.

Owner:BEIJING IRISKING
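
The local binary pattern features named in the living iris detection abstract above can be illustrated with a small Python sketch. It shows only the basic single-scale, 8-neighbor operator on a grayscale image given as a list of rows; the multi-scale extraction, feature selection and classifier training described in the abstract are not reproduced, and the function names `lbp_code` and `lbp_histogram` as well as the bit ordering are assumptions made for illustration.

```python
# Clockwise 8-neighborhood offsets, starting at the top-left pixel.
NEIGHBOR_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
                    (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(image, row, col):
    """Basic 3x3 local binary pattern code (0..255) of one interior pixel.

    Each neighbor contributes one bit, set when the neighbor is at least
    as bright as the center pixel.
    """
    center = image[row][col]
    code = 0
    for bit, (dr, dc) in enumerate(NEIGHBOR_OFFSETS):
        if image[row + dr][col + dc] >= center:
            code |= 1 << bit
    return code


def lbp_histogram(image):
    """256-bin histogram of LBP codes over all interior pixels of the region."""
    hist = [0] * 256
    for r in range(1, len(image) - 1):
        for c in range(1, len(image[0]) - 1):
            hist[lbp_code(image, r, c)] += 1
    return hist
```

A histogram like this, computed over the region of interest, is the kind of candidate feature vector from which a boosting-style learner could select a subset.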

Masking related sensitive data in groups

Inactive | US20090132575A1 | Precise processing | Calculation speed | Digital data processing details | Computer security arrangements | Data file | Data element

A method and system of masking a group of related data values. A record in an unmasked data file of n records is read. The record includes a first set of data values of data elements included in a related data group (RDG) and one or more data values of one or more data elements external to the RDG. A random number k is received. A second set of data values is retrieved from a lookup table that associates n key values with n sets of data values. Retrieving the second set of data values includes identifying that the second set of data values is associated with a key value of k. The n sets of data values are included in the unmasked data file's n records. The record is masked by replacing the first set of data values with the retrieved second set of data values.

Owner:IBM CORP
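
The group masking procedure described in the abstract above can be sketched in Python. This is a minimal illustration, not the patented implementation: records are assumed to be dictionaries, the lookup table is assumed to be keyed 1 to n, and the names `build_lookup`, `mask_record` and the example field names are hypothetical.

```python
import random


def build_lookup(records, group_fields):
    """Lookup table mapping keys 1..n to the RDG value sets of the n records."""
    return {key: tuple(rec[field] for field in group_fields)
            for key, rec in enumerate(records, start=1)}


def mask_record(record, group_fields, lookup, rng):
    """Replace the record's RDG values with the set stored under a random key k.

    Fields outside the related data group are left unchanged, and the
    replacement values come from a single source record, so the group
    stays internally consistent.
    """
    k = rng.randint(1, len(lookup))  # random number k in 1..n
    replacement = lookup[k]
    masked = dict(record)
    masked.update(zip(group_fields, replacement))
    return masked


records = [
    {"name": "A", "city": "Austin", "state": "TX", "zip": "73301"},
    {"name": "B", "city": "Boston", "state": "MA", "zip": "02108"},
    {"name": "C", "city": "Denver", "state": "CO", "zip": "80202"},
]
group = ("city", "state", "zip")
lookup = build_lookup(records, group)
masked = mask_record(records[0], group, lookup, random.Random(0))
```

Because the whole group is swapped at once, a masked record never pairs a city with a state or postal code taken from a different record.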

© 2025 PatSnap. All rights reserved.