Method for sharing GPU (graphics processing unit) by multiple tasks based on CUDA (compute unified device architecture)

A method for implementing multi-task sharing of a GPU, applied in the fields of multiprogramming arrangements and resource allocation. It addresses the problem that no method for multi-task GPU sharing had previously been found, and achieves simple multi-task sharing, simplified programming work, and good performance.

Embodiment Construction

[0059] The present invention is further illustrated below with a specific example. Note that the embodiments are disclosed to aid understanding of the invention; those skilled in the art will understand that various substitutions and modifications are possible without departing from the spirit and scope of the invention and the appended claims. The invention should therefore not be limited to the content disclosed in the embodiments, and the scope of protection is that defined by the claims.

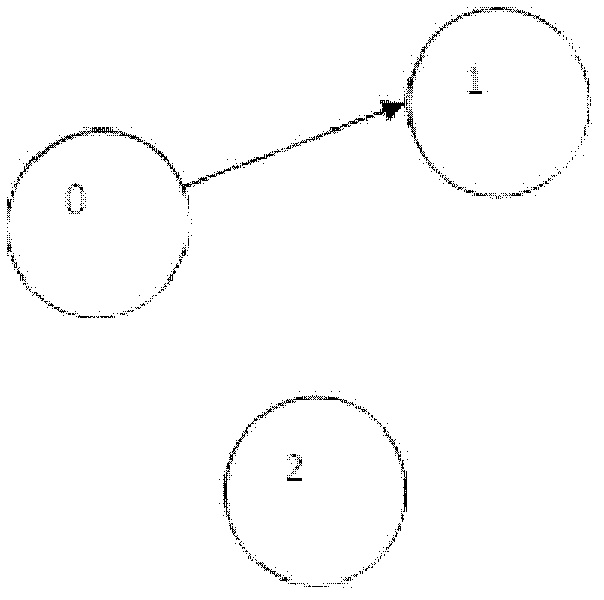

[0060] The specific example consists of three computing tasks (the content of the individual tasks is irrelevant here).

[0061] The tasks are subject to the following constraints: task 1 must run after task 0 has completed, because task 1 uses the result of task 0; task 2 has no constraint relationship with either task 0 or task 1. (Figure 3(a), the circle repr...
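The constraints in [0061] can be written down as a small dependency table. The sketch below is only an illustration of that constraint structure, not the sharing mechanism of the invention: the names `DEPENDENCIES` and `schedule_in_waves` are hypothetical, and the grouping into "waves" is an assumption made here to show which tasks are free to run side by side.

```python
# Hypothetical encoding of the constraints in [0061]:
# each task number maps to the tasks whose results it needs.
DEPENDENCIES = {0: [], 1: [0], 2: []}


def schedule_in_waves(dependencies):
    """Group tasks into waves; tasks in the same wave do not constrain each other."""
    # Copy into sets so the caller's table is not modified.
    remaining = {task: set(deps) for task, deps in dependencies.items()}
    waves = []
    while remaining:
        # A task is ready once all tasks it depends on have been scheduled.
        ready = sorted(t for t, deps in remaining.items() if not deps)
        if not ready:
            raise ValueError("cyclic constraints: no task is ready")
        waves.append(ready)
        for t in ready:
            del remaining[t]
        # Mark the scheduled tasks as satisfied for everything still waiting.
        for deps in remaining.values():
            deps.difference_update(ready)
    return waves


if __name__ == "__main__":
    print(schedule_in_waves(DEPENDENCIES))  # prints [[0, 2], [1]]
```

For the three tasks of the example, task 0 and task 2 fall into the first wave because neither waits on anything, and task 1 falls into the second wave because it needs the result of task 0.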
