3935 results for "Memory address" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

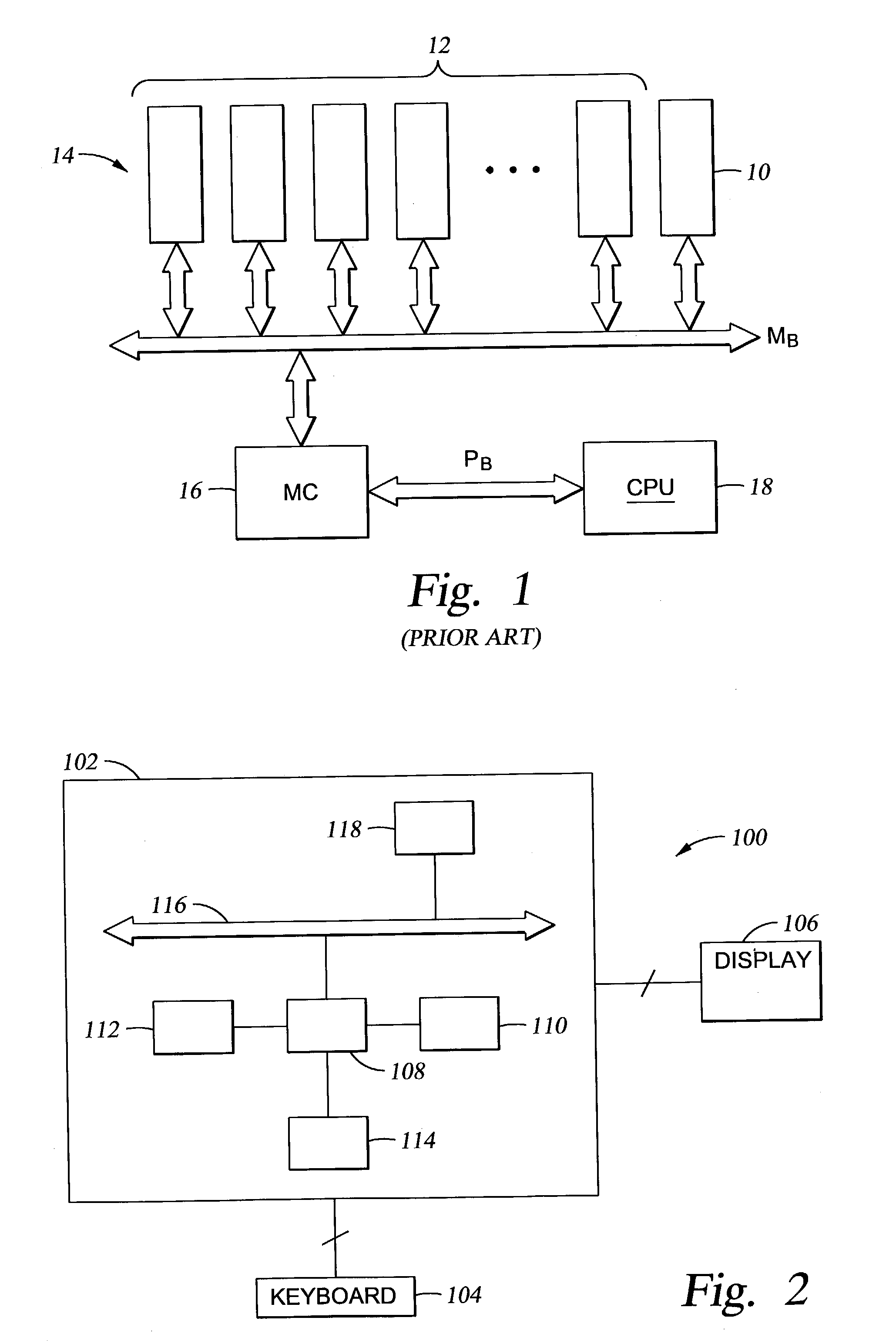

In computing, a memory address is a reference to a specific memory location, used at various levels by software and hardware. Memory addresses are fixed-length sequences of digits, conventionally displayed and manipulated as unsigned integers. This numerical treatment rests on features of the CPU (such as the instruction pointer and incremental address registers) and on the array-like view of memory supported by various programming languages.
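
The array view can be made concrete with a small Python sketch using the standard `ctypes` module: it takes the address of a C-style array of four 32-bit integers and reaches each element with plain integer arithmetic, `base + index * element_size`. The helper `element_address` is a hypothetical name used only for illustration, and the printed addresses will differ from run to run.

```python
import ctypes


def element_address(base: int, index: int, elem_size: int) -> int:
    """Byte address of element `index` in a contiguous array starting at `base`."""
    return base + index * elem_size


# Four 32-bit unsigned integers laid out contiguously in memory.
values = (ctypes.c_uint32 * 4)(10, 20, 30, 40)
base = ctypes.addressof(values)        # the array's memory address, as a plain int
size = ctypes.sizeof(ctypes.c_uint32)  # 4 bytes per element

for i in range(len(values)):
    addr = element_address(base, i, size)
    # Reinterpret the bytes stored at `addr` as a uint32 to read the element back.
    read_back = ctypes.c_uint32.from_address(addr).value
    print(f"values[{i}] @ {addr:#x} -> {read_back}")
```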

Memory addressing controlled by PTE fields

Active | US7805587B1 | Reduce access; Memory addressing/allocation/relocation; Computer security arrangements; Memory address; DRAM memory

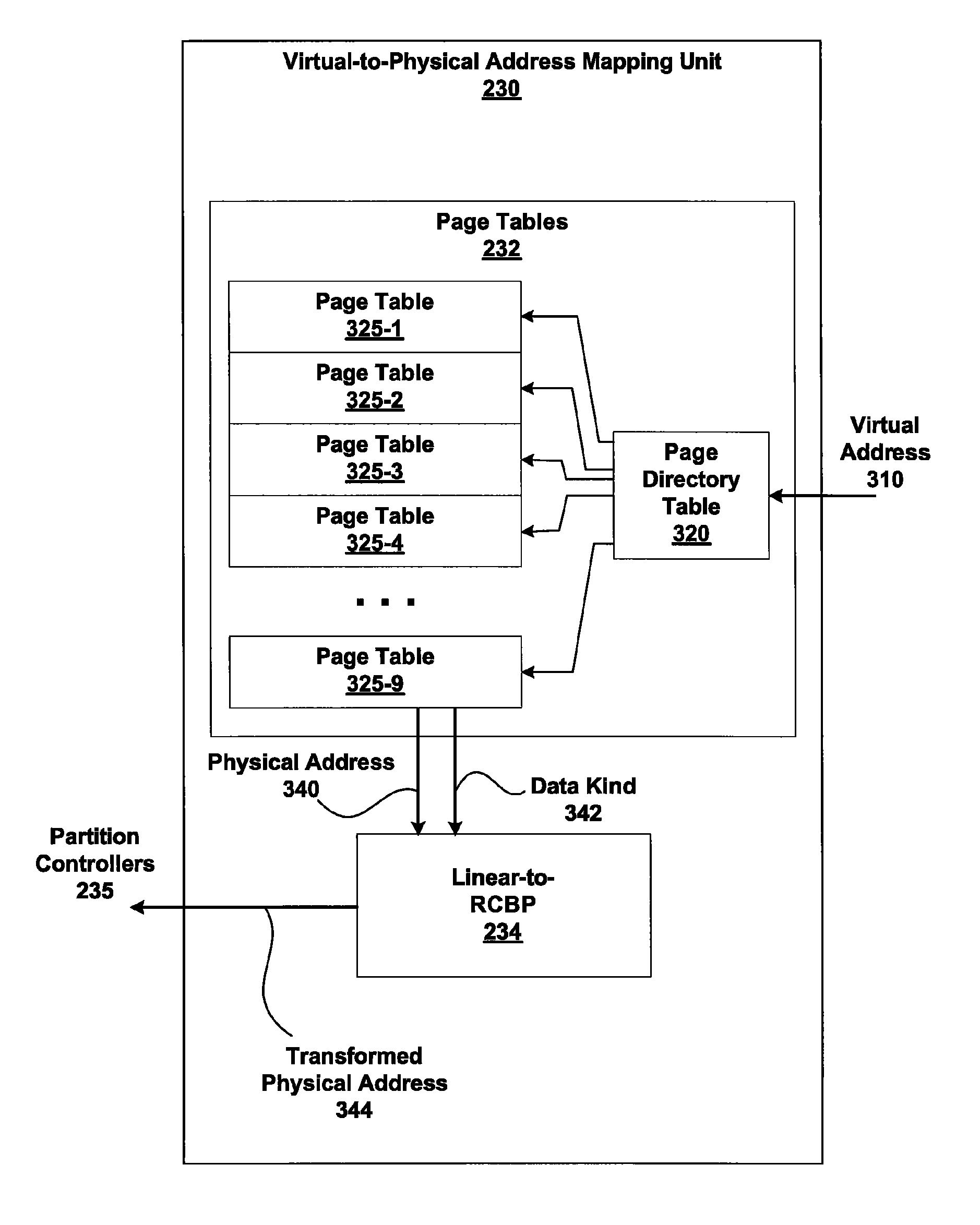

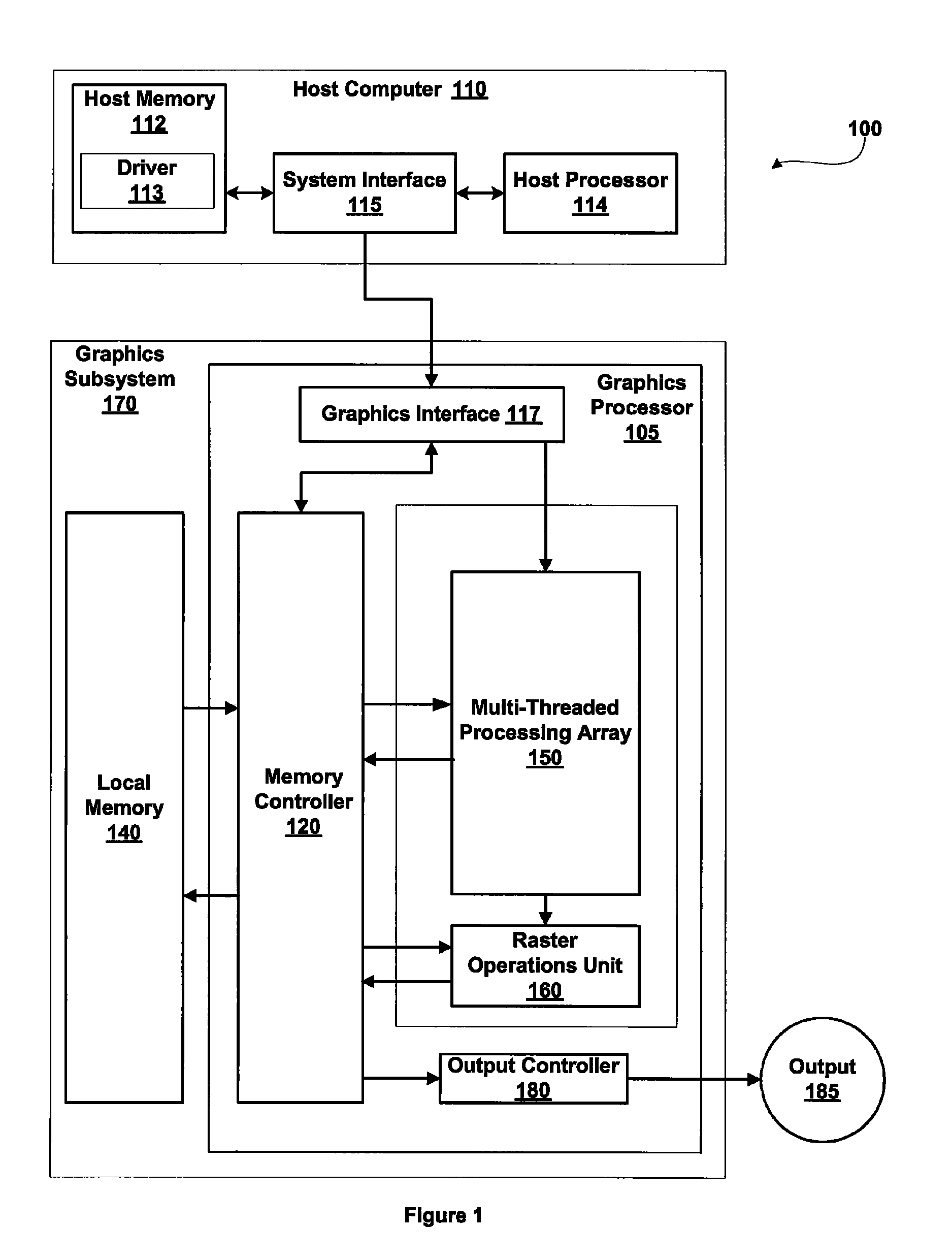

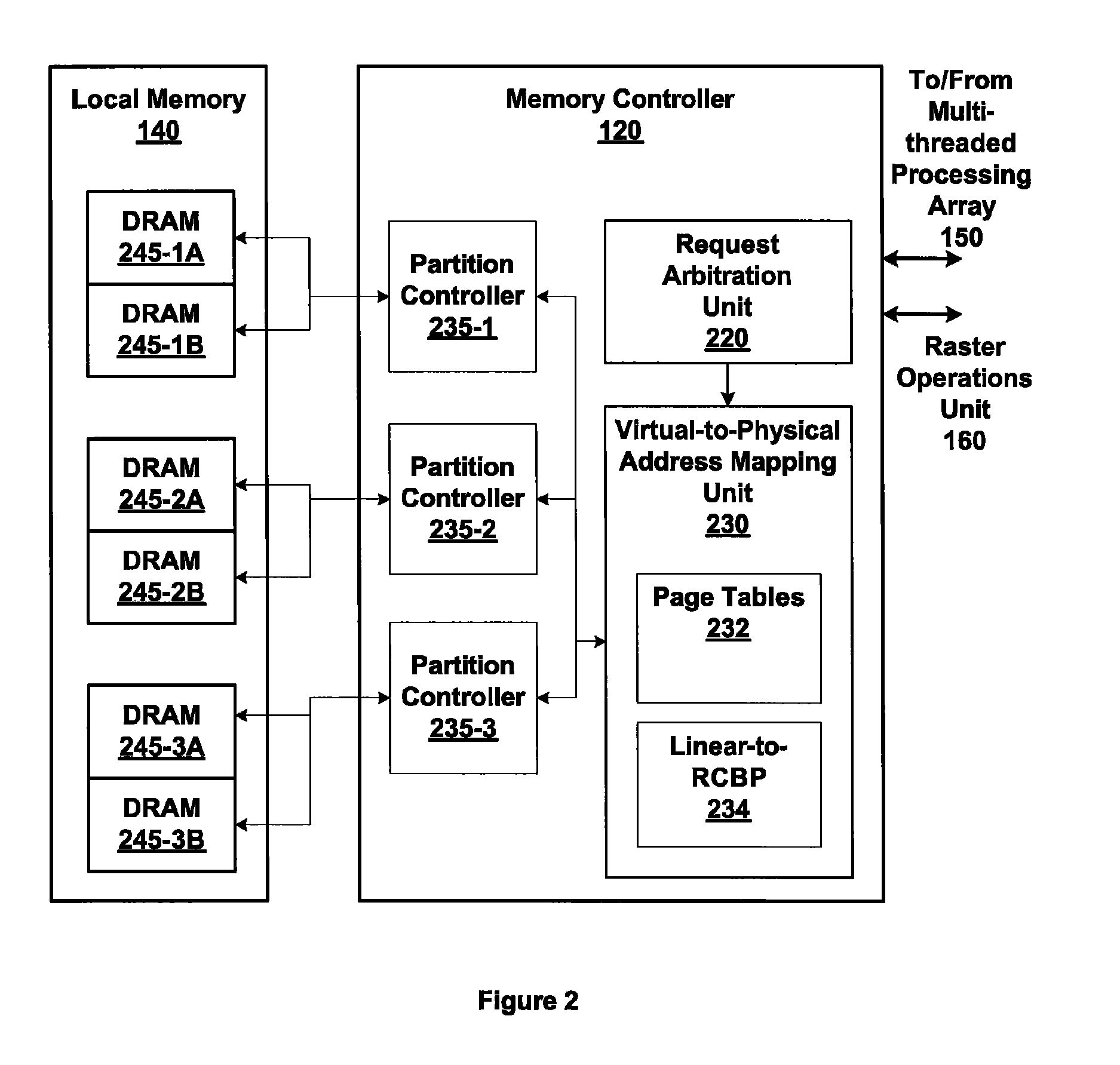

Embodiments of the present invention enable virtual-to-physical memory address translation using optimized bank and partition interleave patterns, which improve memory bandwidth by distributing data accesses over multiple banks and multiple partitions. Each virtual page has a corresponding page table entry that specifies the physical address of the virtual page in linear physical address space. The page table entry also includes a data kind field that is used to guide and optimize the mapping from the linear physical address space to the DRAM physical address space, which is used to access one or more DRAM devices directly. The DRAM physical address space consists of row, bank and column addresses. The data kind field is also used to optimize the starting partition number and the partition interleave pattern, which together define how the selected physical page of memory is organized within the DRAM memory system.

Owner:NVIDIA CORP
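
The translation pipeline in the NVIDIA abstract above ends with a mapping from a linear physical address to a partition, bank, row and column, steered by the page's data kind. The Python sketch below shows one way such a kind-dependent interleave could work. All geometry constants, the `kind` encoding and the XOR bank swizzle are hypothetical assumptions; the abstract does not disclose the actual patterns.

```python
from dataclasses import dataclass

# Hypothetical geometry; the abstract does not give real values.
NUM_PARTITIONS = 4   # DRAM partitions (independent memory channels)
NUM_BANKS = 8        # banks per DRAM device
CHUNK_BYTES = 256    # bytes placed in one partition before moving to the next
ROW_BYTES = 1024     # bytes per DRAM row within one bank
CHUNKS_PER_ROW = ROW_BYTES // CHUNK_BYTES


@dataclass(frozen=True)
class DramLocation:
    partition: int
    bank: int
    row: int
    column: int  # byte offset within the row


def map_linear_to_dram(addr: int, kind: int) -> DramLocation:
    """Map a linear physical address to a DRAM location, steered by `kind`.

    In this sketch `kind` picks the starting partition, and odd kinds also
    enable an XOR bank swizzle; both choices are illustrative assumptions.
    """
    chunk, offset = divmod(addr, CHUNK_BYTES)
    # Partition interleave: consecutive chunks rotate across partitions,
    # starting from a partition chosen by the data kind.
    partition = (chunk + kind) % NUM_PARTITIONS
    local_chunk = chunk // NUM_PARTITIONS        # chunk index inside that partition
    bank_row, chunk_in_row = divmod(local_chunk, CHUNKS_PER_ROW)
    row, bank = divmod(bank_row, NUM_BANKS)      # fill every bank before the next row
    if kind % 2 == 1:
        bank ^= row % NUM_BANKS                  # spread same-row conflicts over banks
    column = chunk_in_row * CHUNK_BYTES + offset
    return DramLocation(partition, bank, row, column)
```

With these assumed constants, consecutive 256-byte chunks of a kind-0 page land on partitions 0, 1, 2, 3, while a kind-1 page starts at partition 1. The mapping stays one-to-one for either kind because every step is invertible.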

Processor with Kernel Mode Access to User Space Virtual Addresses

Active | US20130132702A1 | Enhanced kernel mode memory capacity; Increase memory capacity; Memory addressing/allocation/relocation; Unauthorized memory use protection; Memory address; Parallel computing

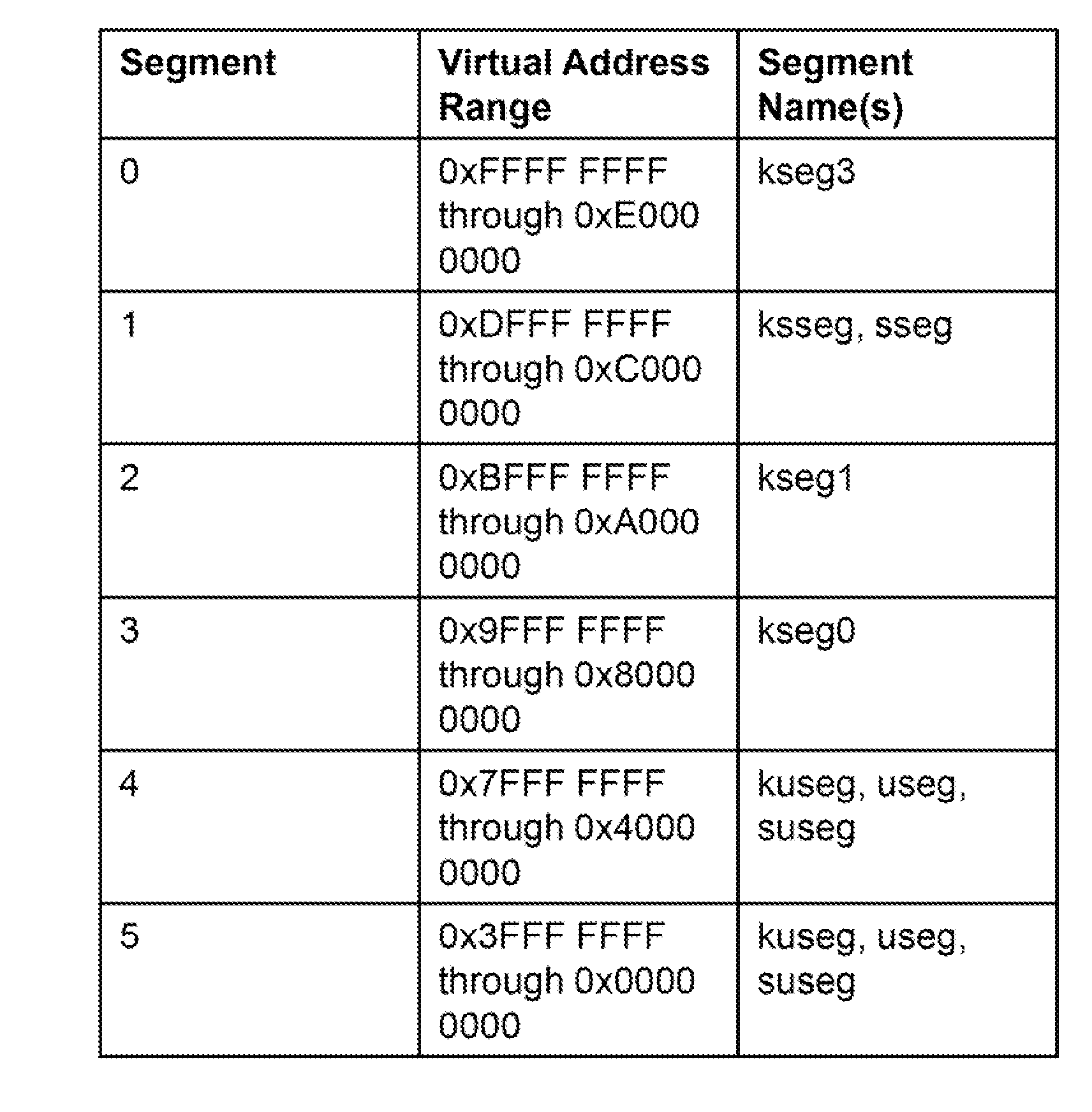

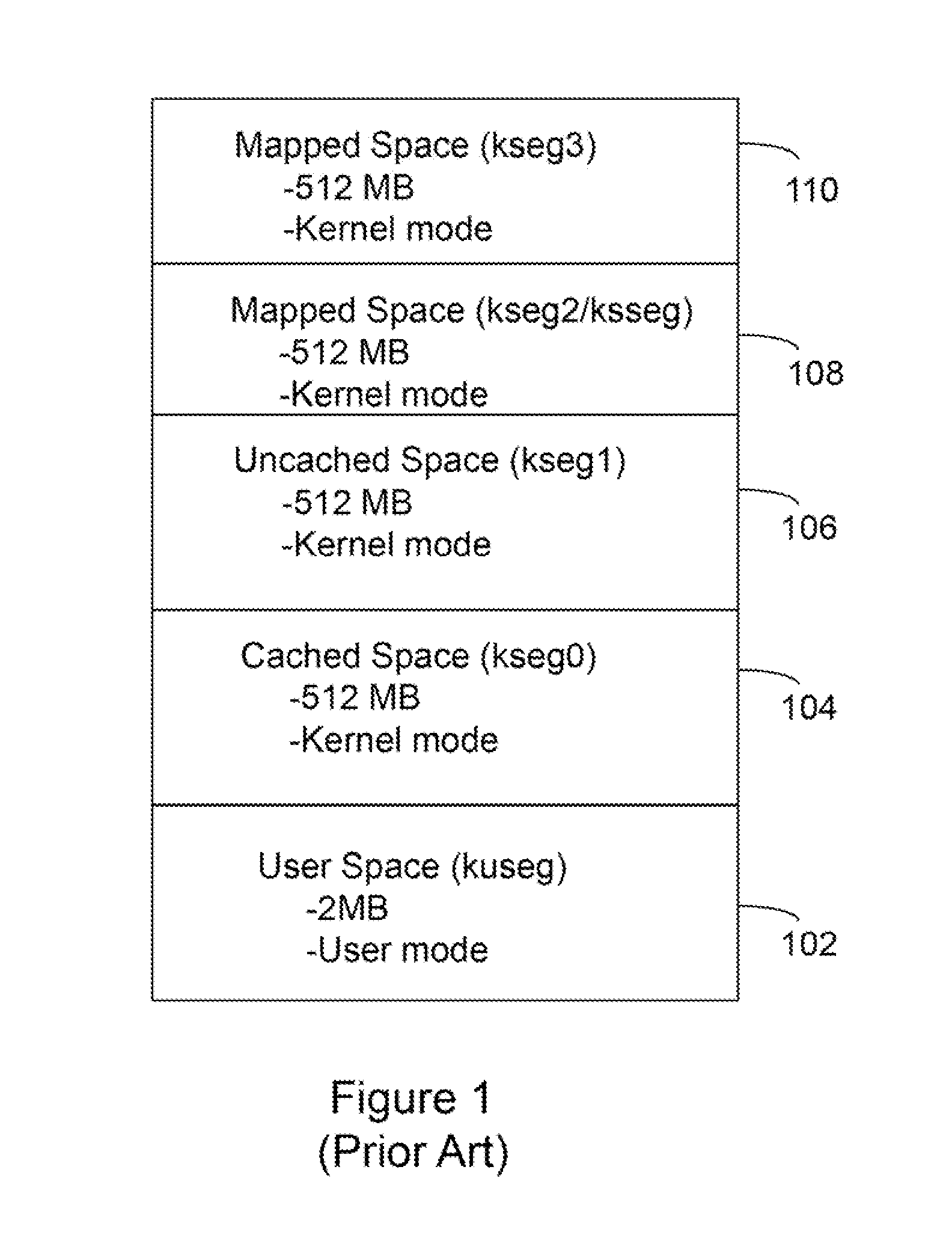

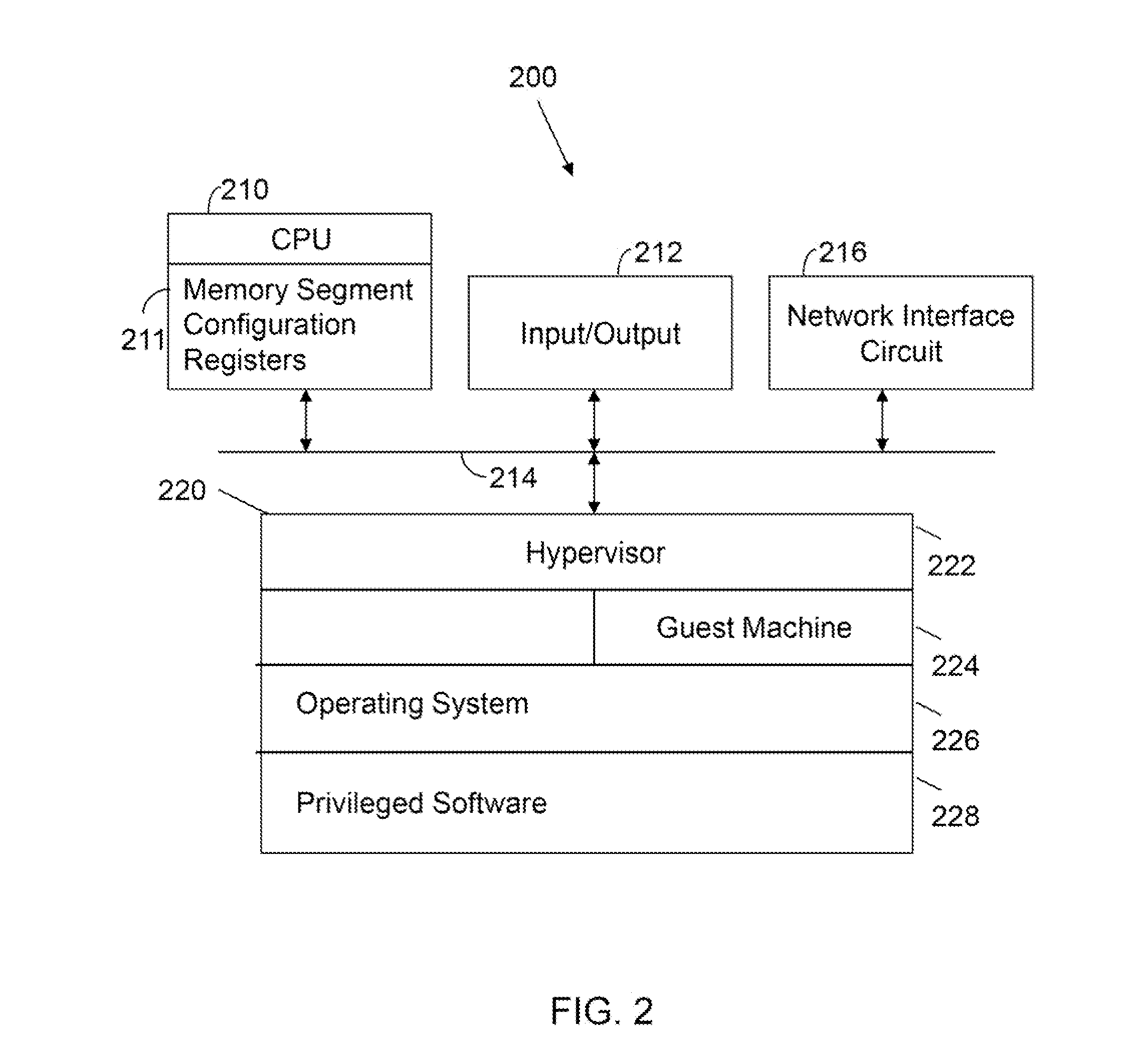

A computer includes a memory and a processor connected to the memory. The processor includes memory segment configuration registers to store defined memory address segments and defined memory address segment attributes such that the processor operates in accordance with the defined memory address segments and defined memory address segment attributes to allow kernel mode access to user space virtual addresses for enhanced kernel mode memory capacity.

Owner:MIPS TECH INC

Technique for memory imprint reliability improvement

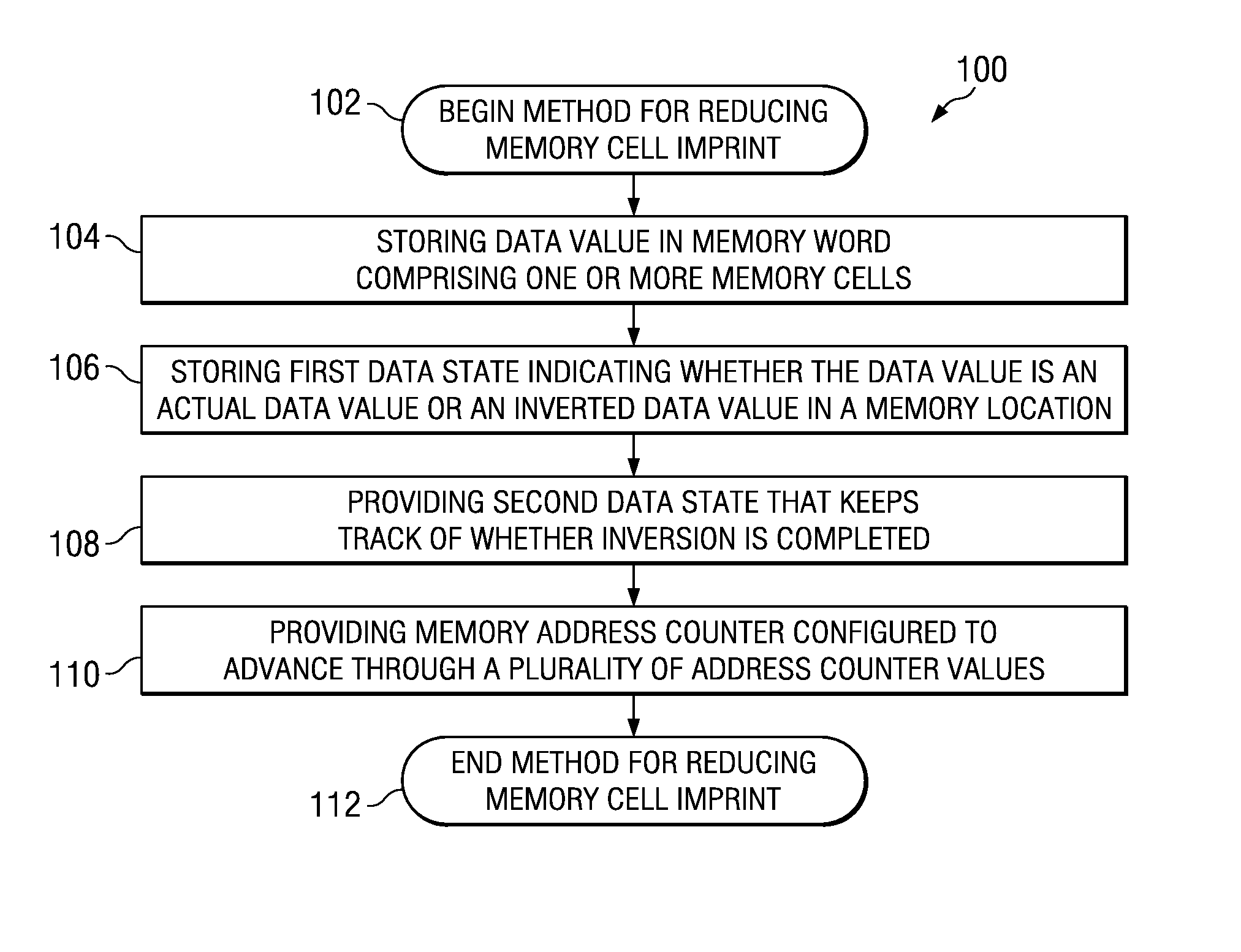

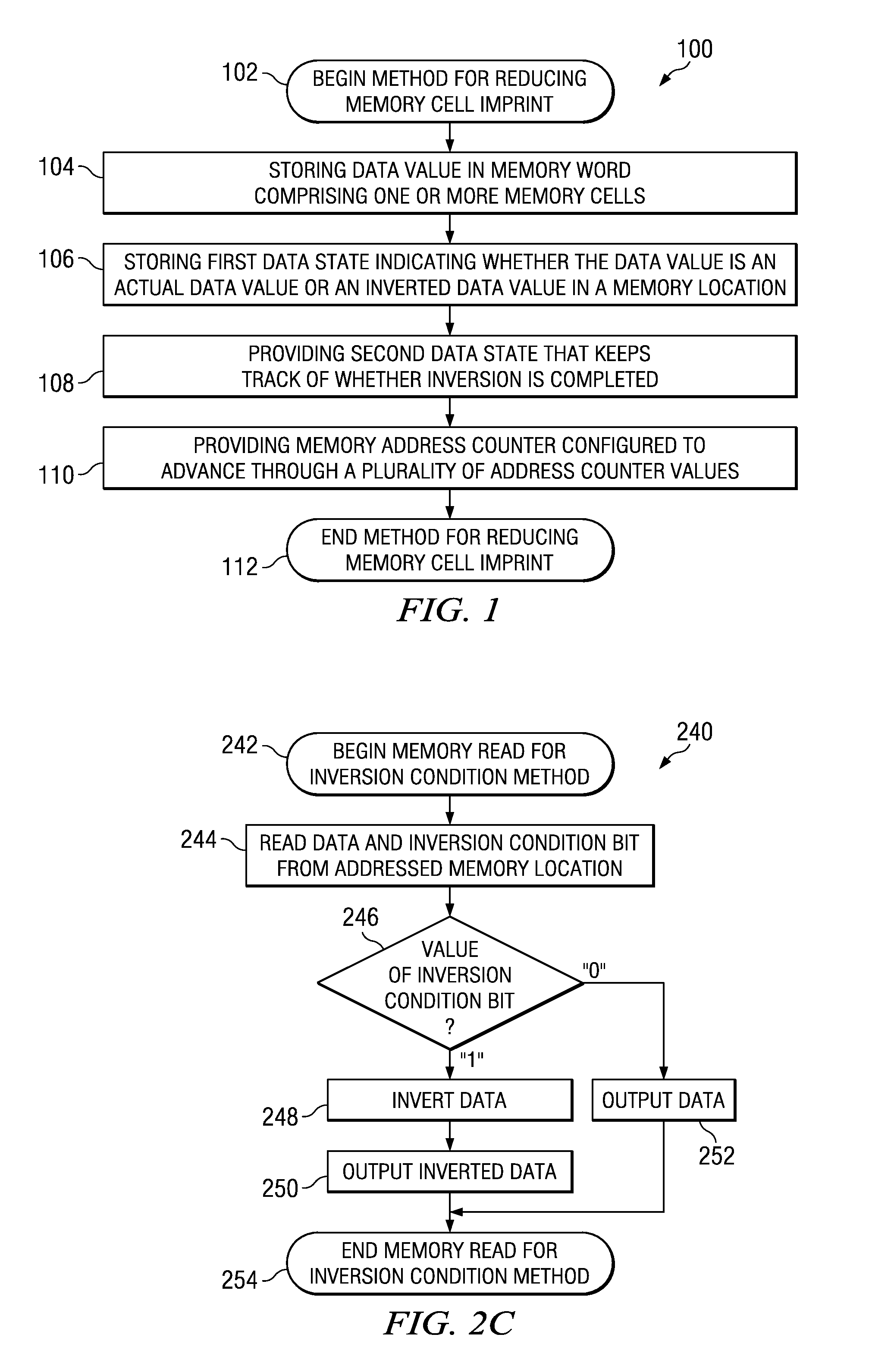

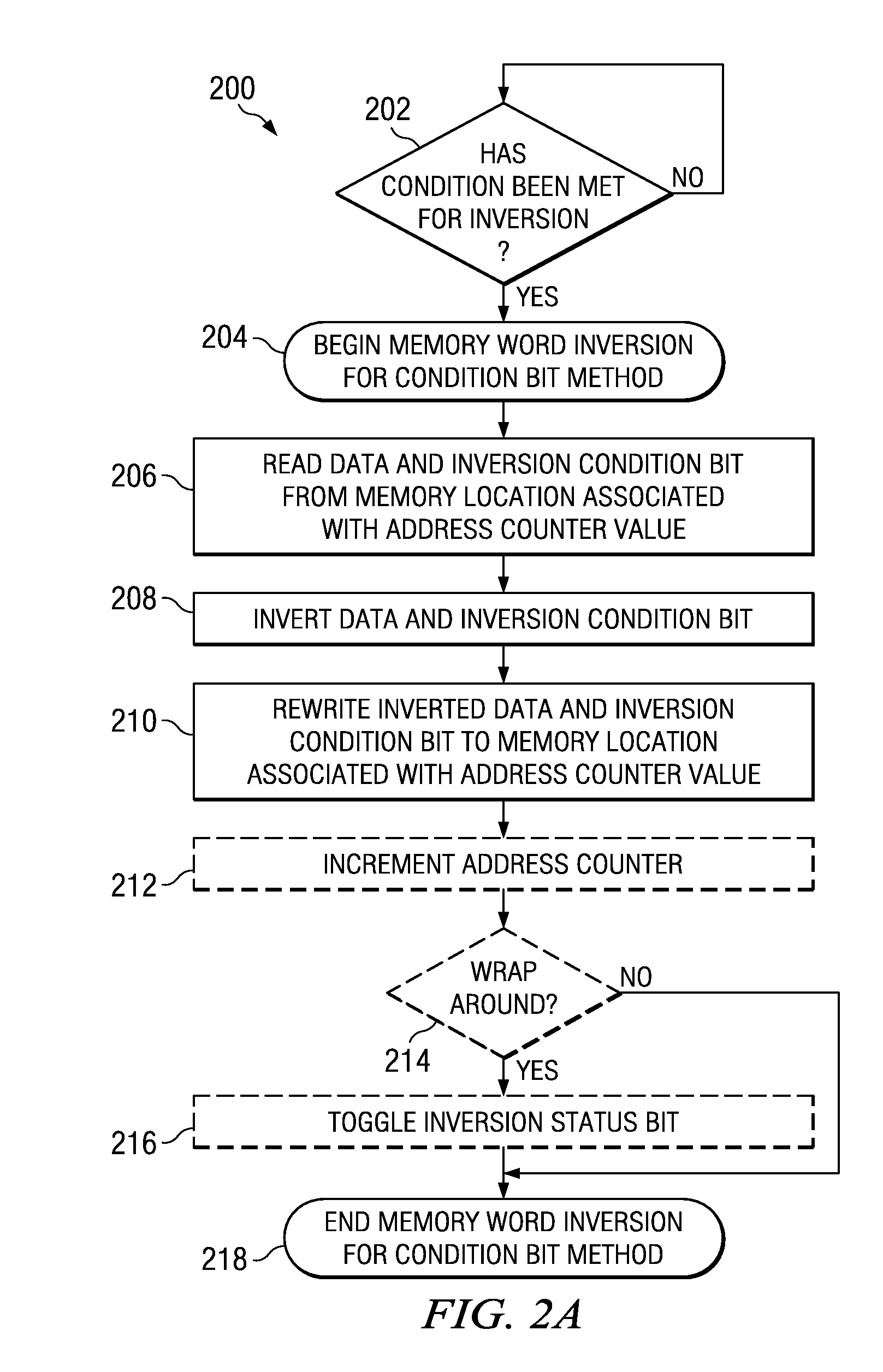

One embodiment of the present invention relates to a method of reducing imprint of a memory cell. The method comprises adding an inversion condition bit operably associated with one or more memory cells storing a memory word. The inversion condition bit indicates whether the memory word represents the actual payload or an inversion of the actual payload. Control circuitry selectively toggles the inversion condition bit and the memory word. Inversion is performed by reading the inversion condition bit and the memory word, then rewriting the memory word back to the one or more memory cells in an inverted or non-inverted state, depending on the inversion condition bit. The inversion condition bit is then written with the inversion status bit value. The memory address is incremented, and the inversion status is toggled once the address counter has addressed the entire memory array. Other methods and circuits are also disclosed.

Owner:TEXAS INSTR INC
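
The inversion sweep described in the Texas Instruments abstract above can be modeled in a few lines of Python. In this sketch the 8-bit word width, the class and method names, and the choice to start the first sweep by writing inverted data are assumptions for illustration; reads always return the true payload because the inversion condition bit is applied on the way out.

```python
WORD_MASK = 0xFF  # hypothetical 8-bit memory words


class ImprintResistantMemory:
    """Memory array where each word carries an inversion condition bit."""

    def __init__(self, size: int):
        self.cells = [0] * size          # physically stored words
        self.inverted = [False] * size   # inversion condition bit per word
        self.status = True               # polarity the current sweep writes
        self.counter = 0                 # address counter for the sweep

    def read(self, addr: int) -> int:
        """Return the actual payload, undoing any stored inversion."""
        word = self.cells[addr]
        return word ^ WORD_MASK if self.inverted[addr] else word

    def write(self, addr: int, payload: int) -> None:
        """Store a payload in whatever polarity the word currently uses."""
        self.cells[addr] = payload ^ WORD_MASK if self.inverted[addr] else payload

    def sweep_step(self) -> None:
        """Rewrite one word in the current polarity, then advance the counter."""
        addr = self.counter
        payload = self.read(addr)
        self.inverted[addr] = self.status   # condition bit takes the status value
        self.write(addr, payload)           # rewrite inverted or non-inverted
        self.counter += 1
        if self.counter == len(self.cells):  # whole array covered:
            self.counter = 0
            self.status = not self.status    # toggle polarity for the next sweep
```

After one full sweep every stored word is the complement of its payload, and after a second sweep the words are back in true form, so no cell holds the same physical state indefinitely.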

SIMD processor and addressing method

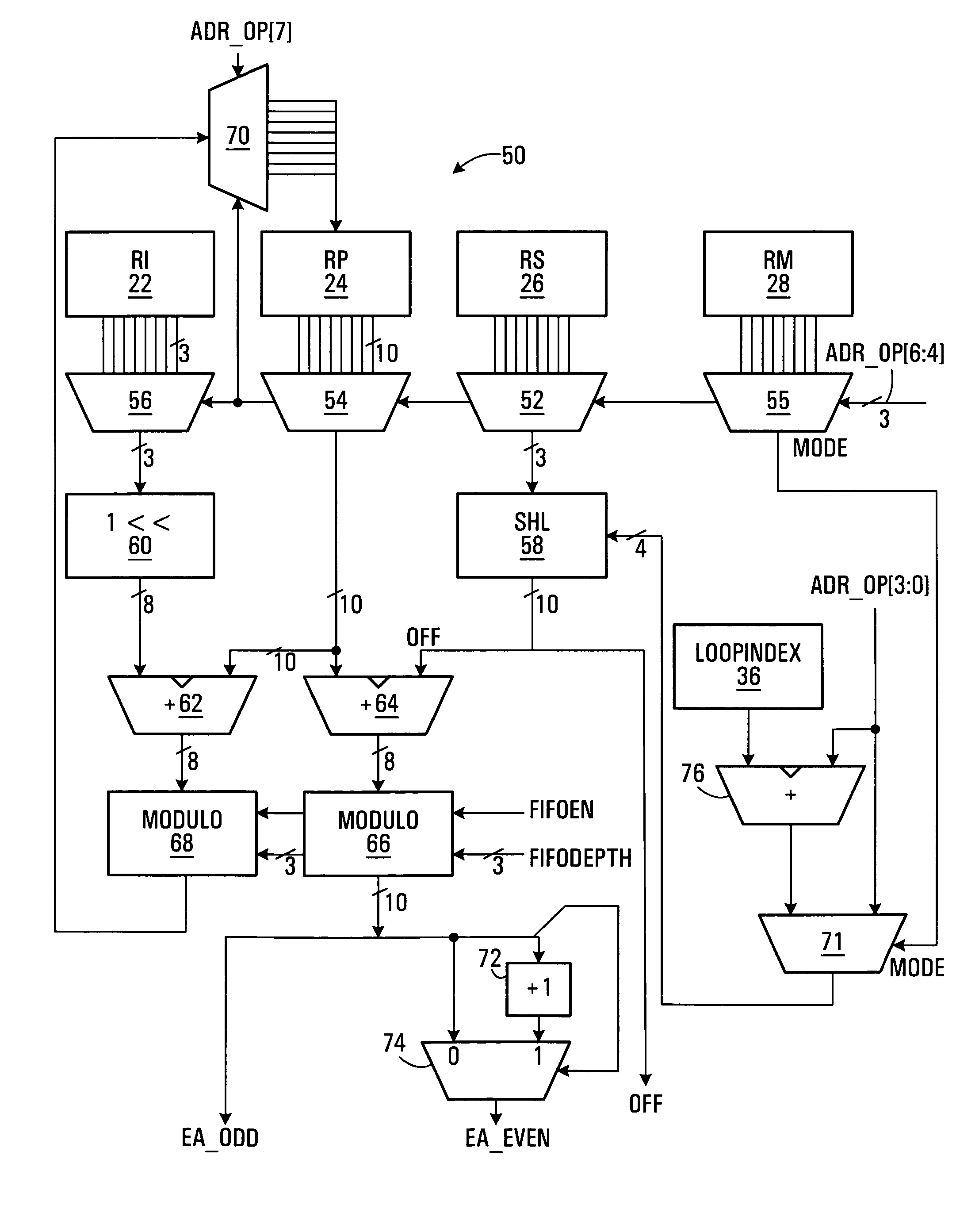

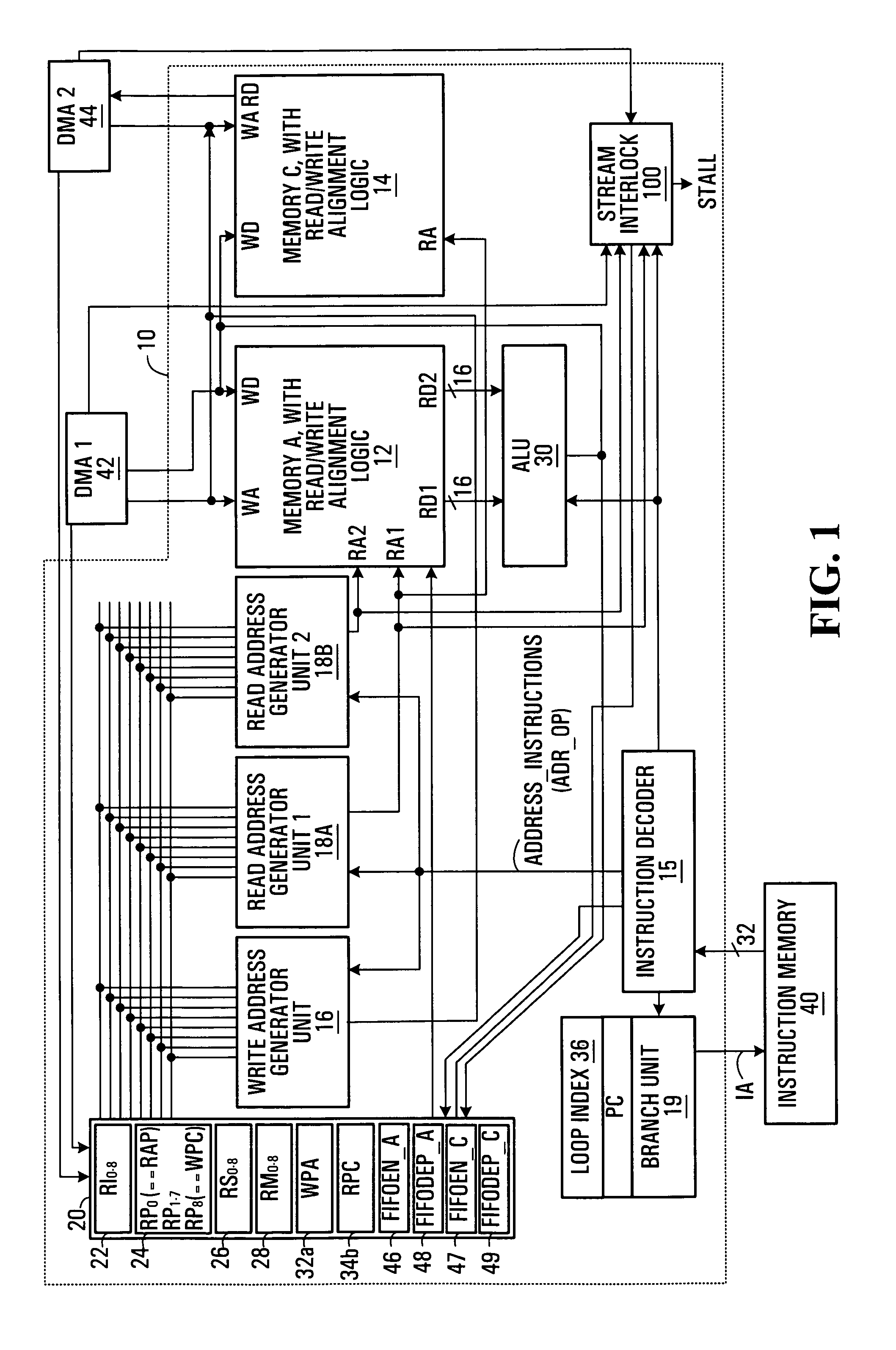

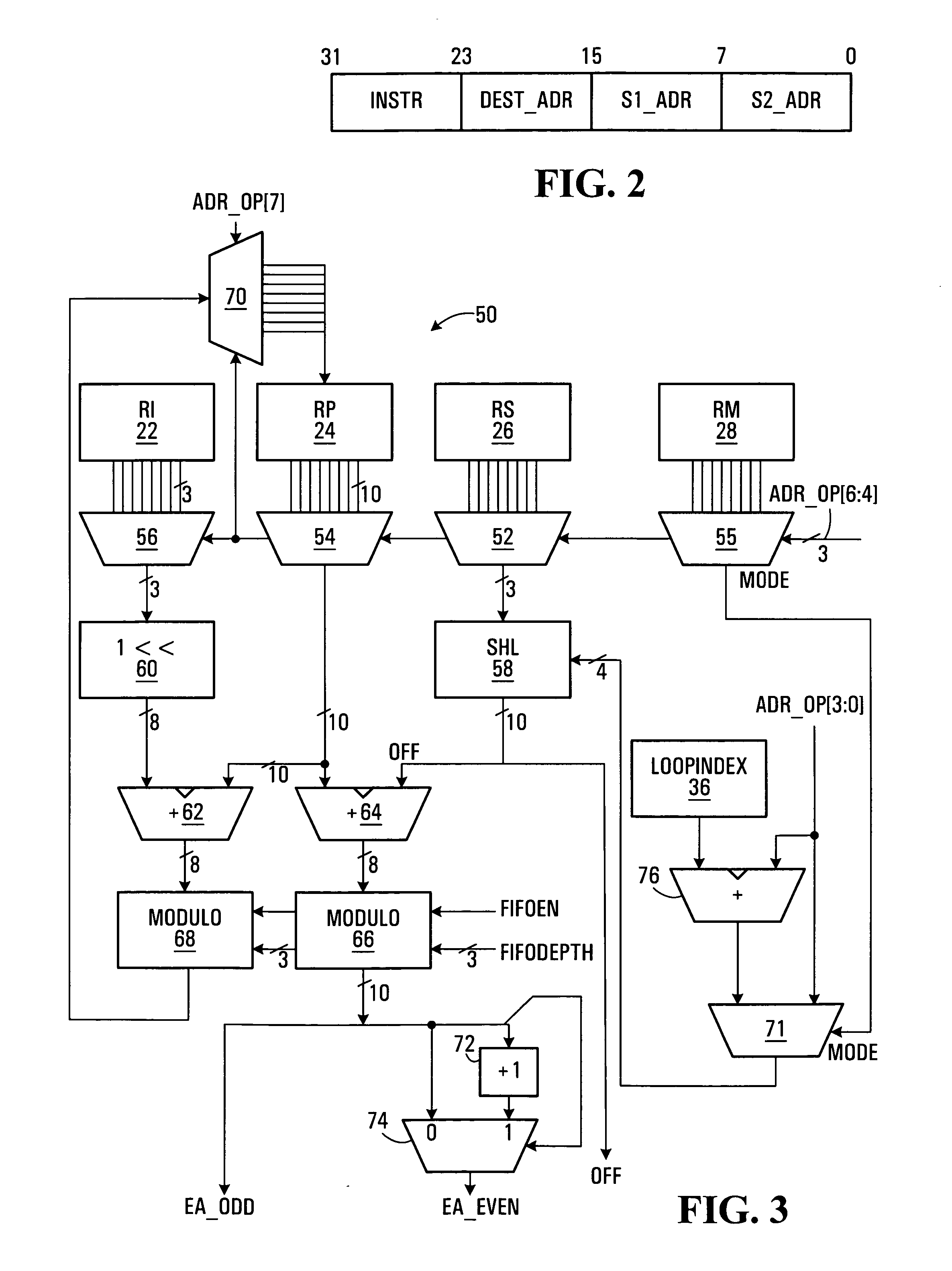

Inactive | US20060047937A1 | Without unduly consuming processor resources; Memory addressing/allocation/relocation; Micro-instruction address formation; Memory address; Processor register

A single instruction, multiple data (SIMD) processor is disclosed that includes a plurality of addressing register sets used to flexibly calculate effective source and destination operand memory addresses. Two or more address generators calculate effective addresses using the register sets. Each register set includes a pointer register and a scale register. An address generator forms an effective address from the pointer register and scale register of a selected register set, plus an offset. For example, the effective memory address may be formed by multiplying the scale value by the offset value and adding the result to the pointer value.

Owner:AVAGO TECH INT SALES PTE LTD
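
The effective-address rule in the SIMD abstract above, pointer plus scale times offset, is small enough to state directly in code. The Python sketch below assumes a 32-bit address space with wrap-around; the register-set class and function names are hypothetical.

```python
from dataclasses import dataclass

ADDRESS_BITS = 32  # assumed address width; results wrap modulo 2**32


@dataclass
class AddressRegisterSet:
    pointer: int  # base address
    scale: int    # multiplier applied to the offset


def effective_address(regs: AddressRegisterSet, offset: int) -> int:
    """Effective address = pointer + scale * offset, truncated to the address width."""
    return (regs.pointer + regs.scale * offset) & ((1 << ADDRESS_BITS) - 1)


# Two address generators, one per operand, each using its own register set.
source = AddressRegisterSet(pointer=0x1000, scale=4)       # 4-byte elements
destination = AddressRegisterSet(pointer=0x2000, scale=8)  # 8-byte elements
for i in range(4):
    print(hex(effective_address(source, i)), hex(effective_address(destination, i)))
```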

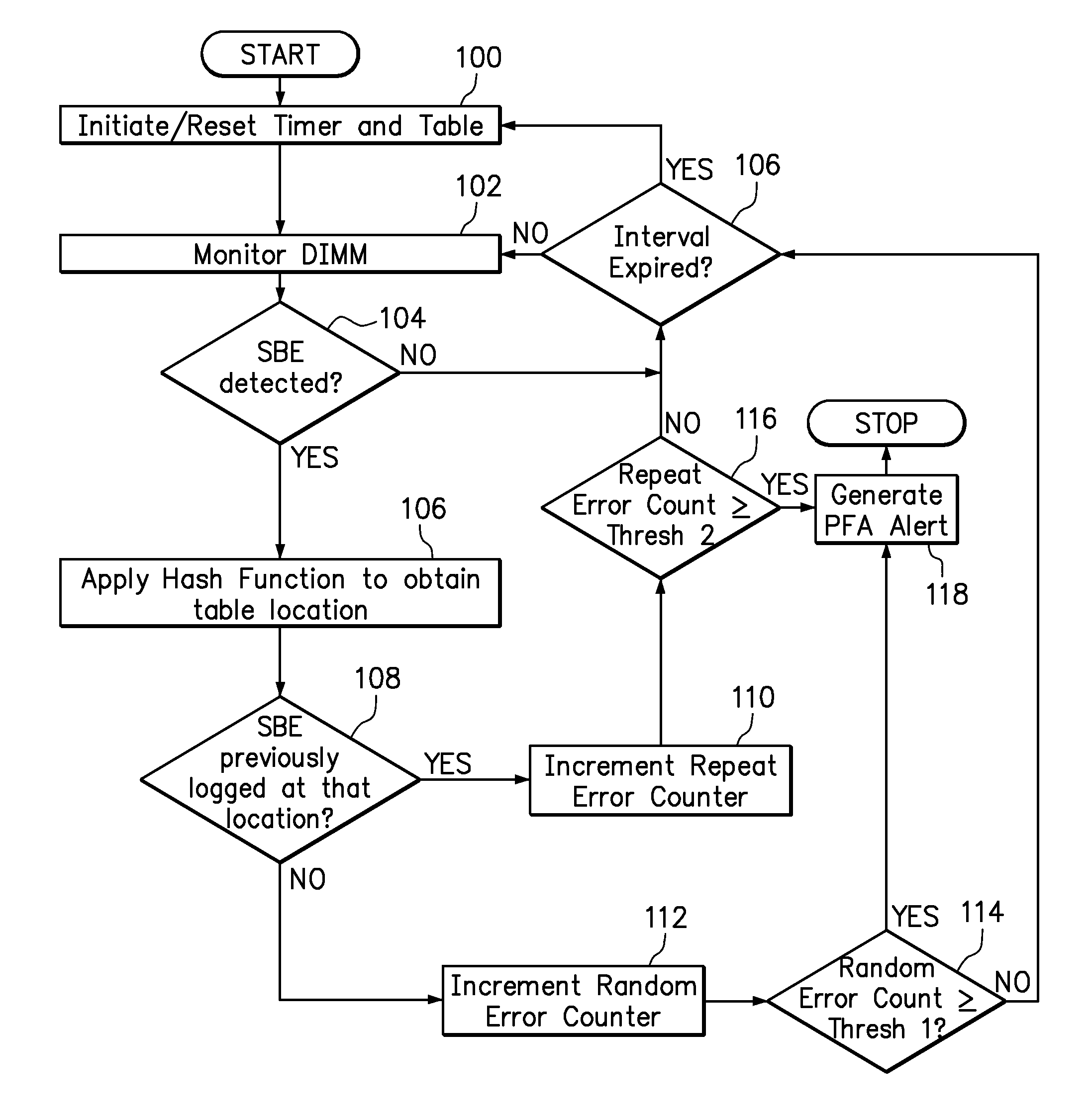

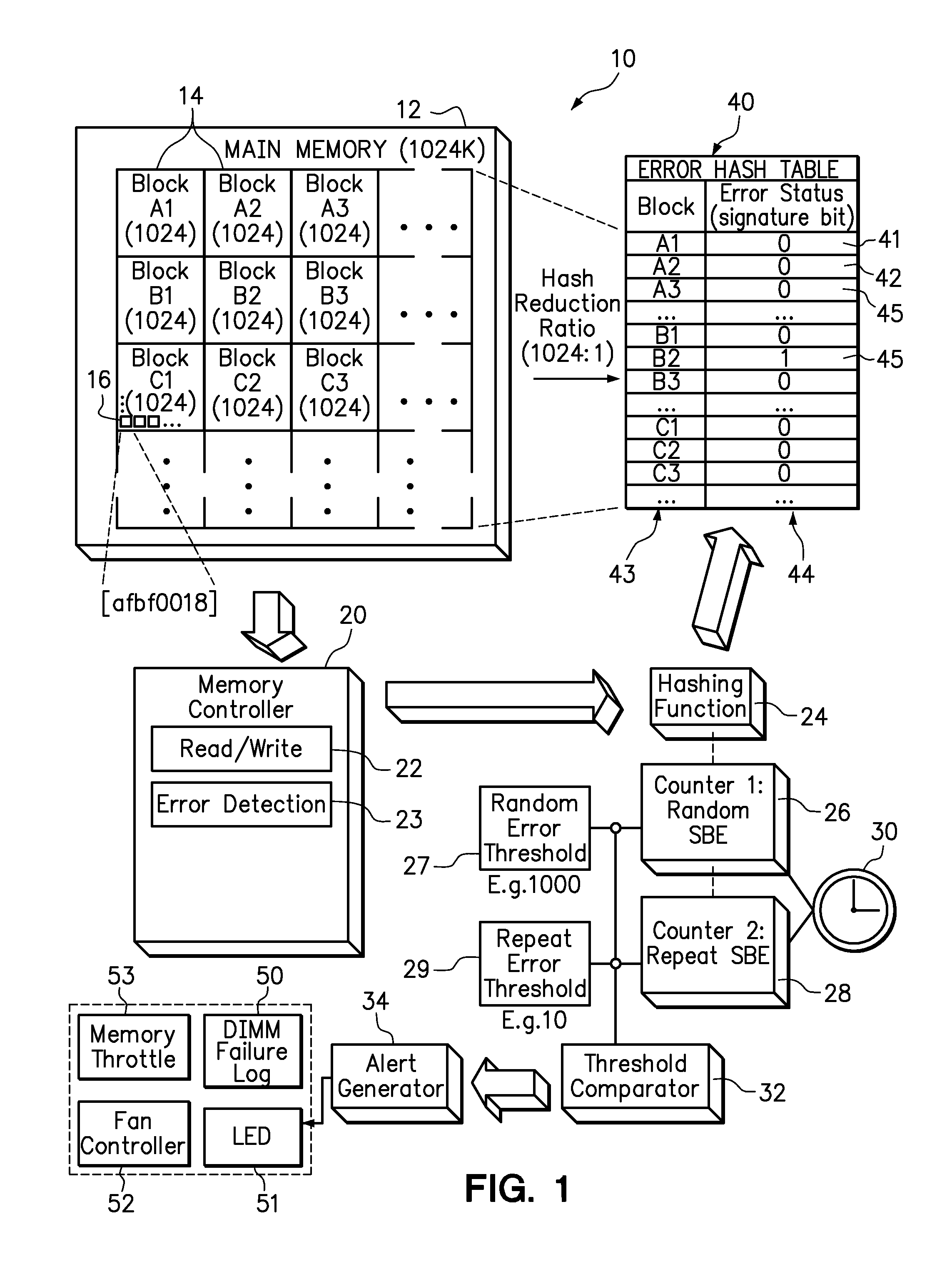

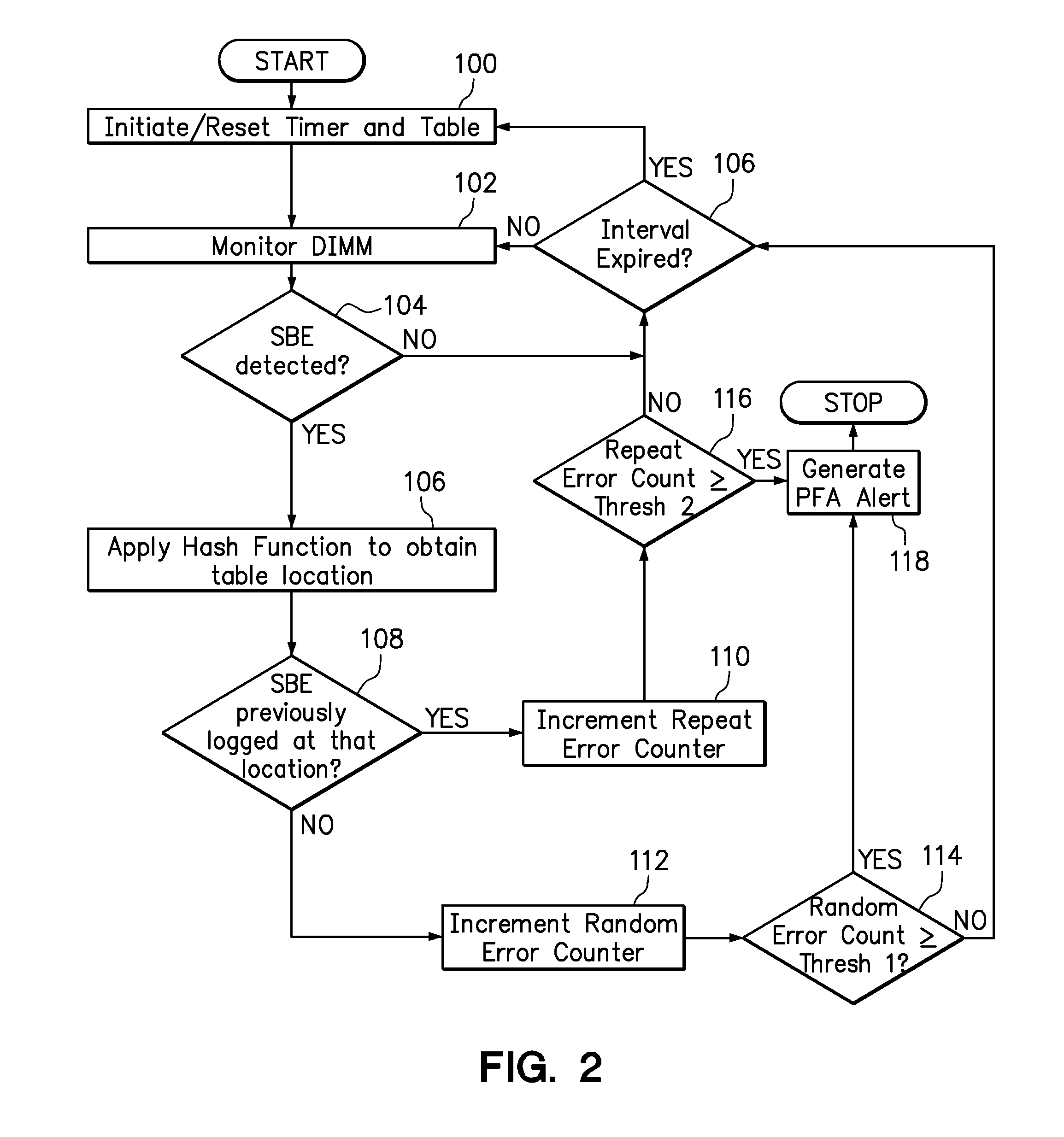

Use of hashing function to distinguish random and repeat errors in a memory system

One embodiment provides an error detection method wherein single-bit errors in a memory module are detected and each is identified as either a random error or a repeat error. Identified random errors and identified repeat errors occurring in a predefined time interval are counted separately. An alert is generated when, during that interval, the number of identified random errors reaches a random-error threshold or the number of identified repeat errors reaches a repeat-error threshold. The repeat-error threshold is set lower than the random-error threshold. A hashing process may be applied to the memory address of each detected error to map the location of the error in the memory system to a corresponding location in an electronic table.

Owner:LENOVO GLOBAL TECH INT LTD
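
The Lenovo abstract above leaves the classification rule implicit. One plausible reading, sketched below in Python as an assumption, is that an error whose hashed address lands in a table slot already marked during the current interval counts as a repeat error, while an error in an unmarked slot counts as random. The multiplicative hash, table size and threshold values are hypothetical, and hash collisions can occasionally misclassify a random error as a repeat.

```python
class SingleBitErrorMonitor:
    """Classify single-bit errors as random or repeat using a hashed table."""

    def __init__(self, table_bits: int = 10, random_threshold: int = 8,
                 repeat_threshold: int = 2):
        if repeat_threshold >= random_threshold:
            raise ValueError("repeat threshold must be lower than random threshold")
        self.table_bits = table_bits
        self.random_threshold = random_threshold
        self.repeat_threshold = repeat_threshold
        self.start_interval()

    def start_interval(self) -> None:
        """Clear the table and both counters at the start of each time interval."""
        self.seen = [False] * (1 << self.table_bits)
        self.random_errors = 0
        self.repeat_errors = 0

    def _slot(self, address: int) -> int:
        # Multiplicative (Fibonacci) hash of the low 32 address bits into the table.
        return ((address * 0x9E3779B1) & 0xFFFFFFFF) >> (32 - self.table_bits)

    def record(self, address: int) -> tuple:
        """Record one error; return (classification, alert_raised)."""
        slot = self._slot(address)
        if self.seen[slot]:
            self.repeat_errors += 1   # same table location hit again: repeat error
            kind = "repeat"
        else:
            self.seen[slot] = True
            self.random_errors += 1   # first error at this location: random error
            kind = "random"
        alert = (self.random_errors >= self.random_threshold
                 or self.repeat_errors >= self.repeat_threshold)
        return kind, alert
```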

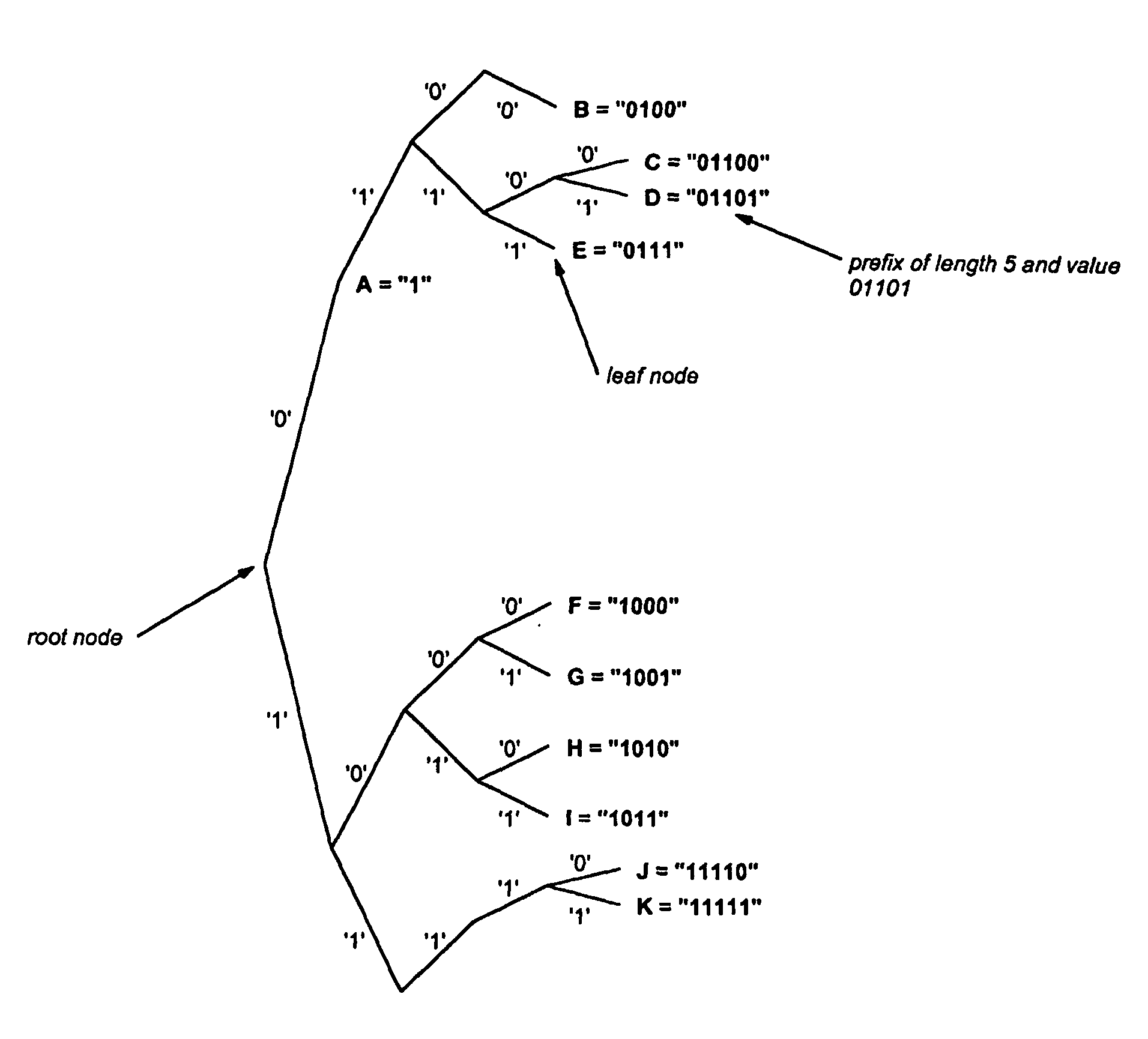

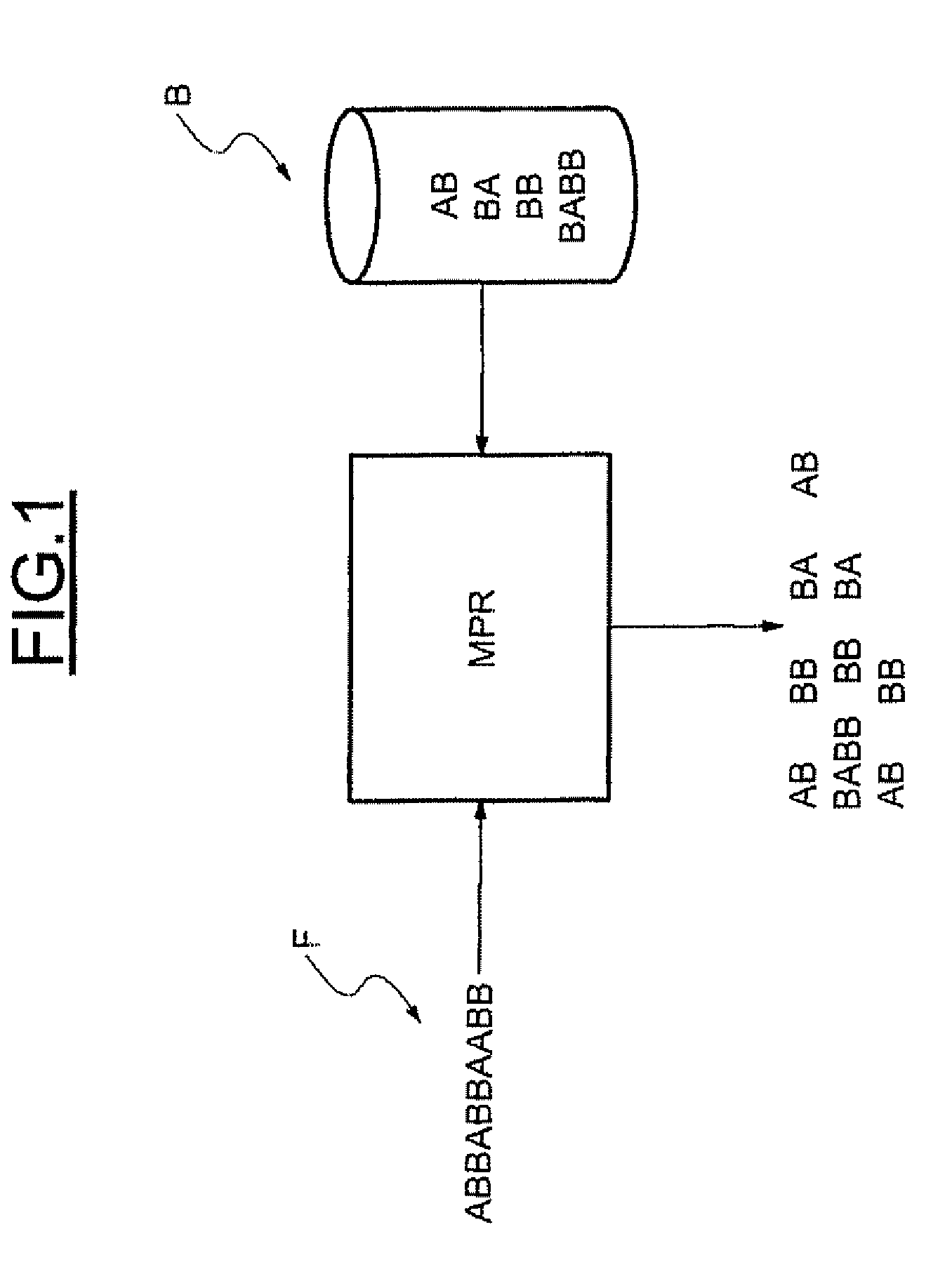

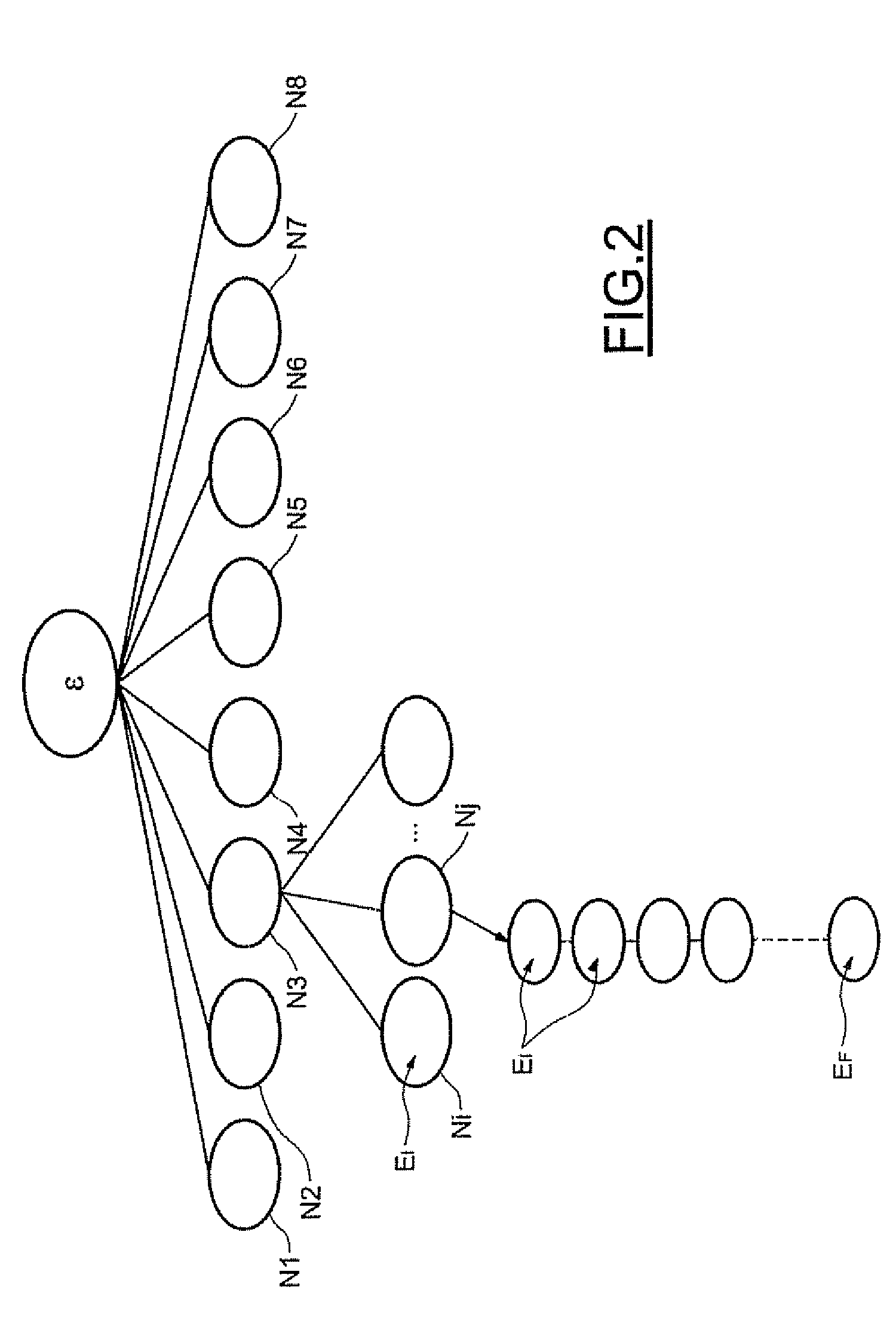

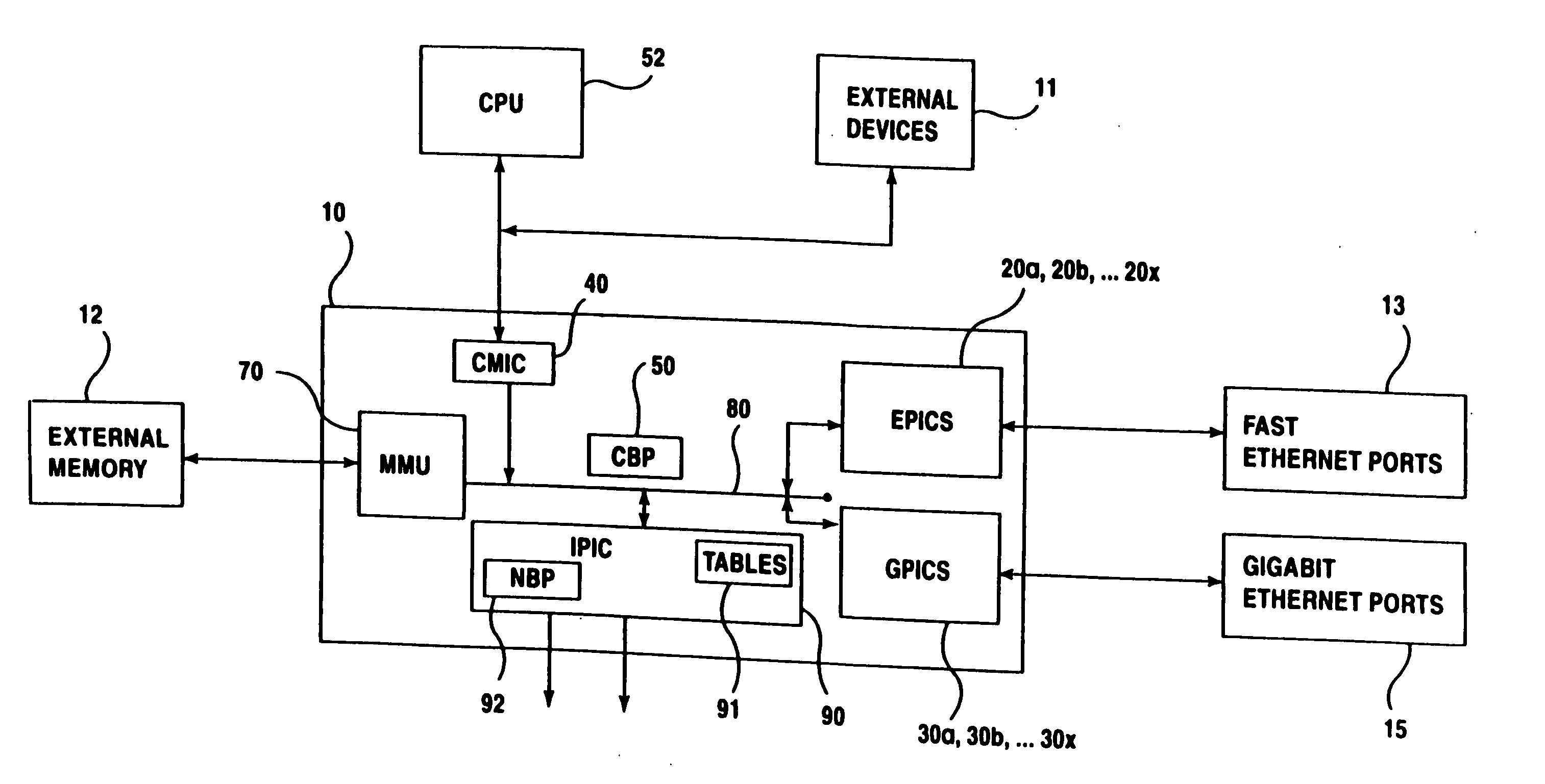

Efficient ipv4/ipv6 best matching prefix method and apparatus

Inactive | US20050171959A1 | Efficient search; Data processing applications; Digital data processing details; Memory address; Theoretical computer science

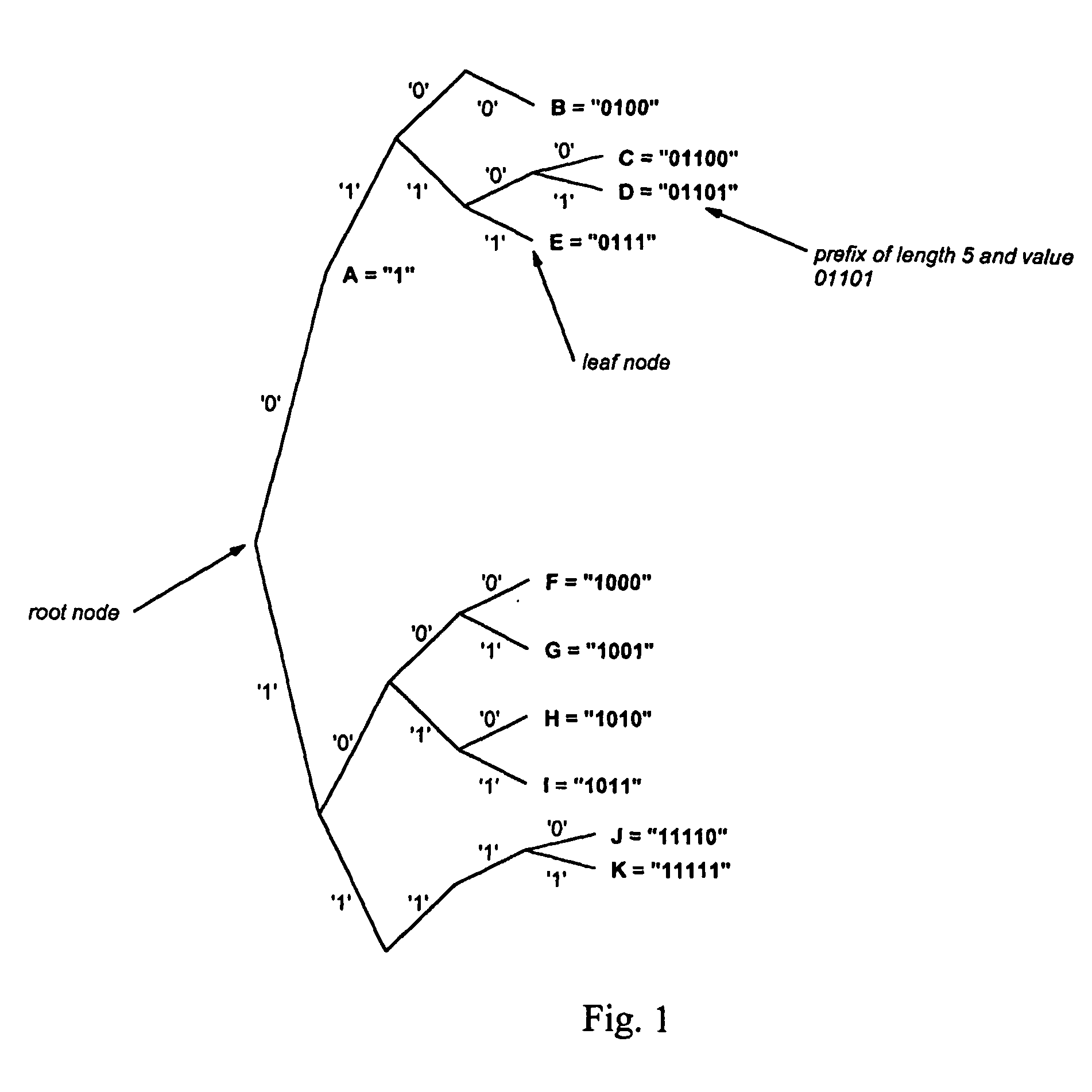

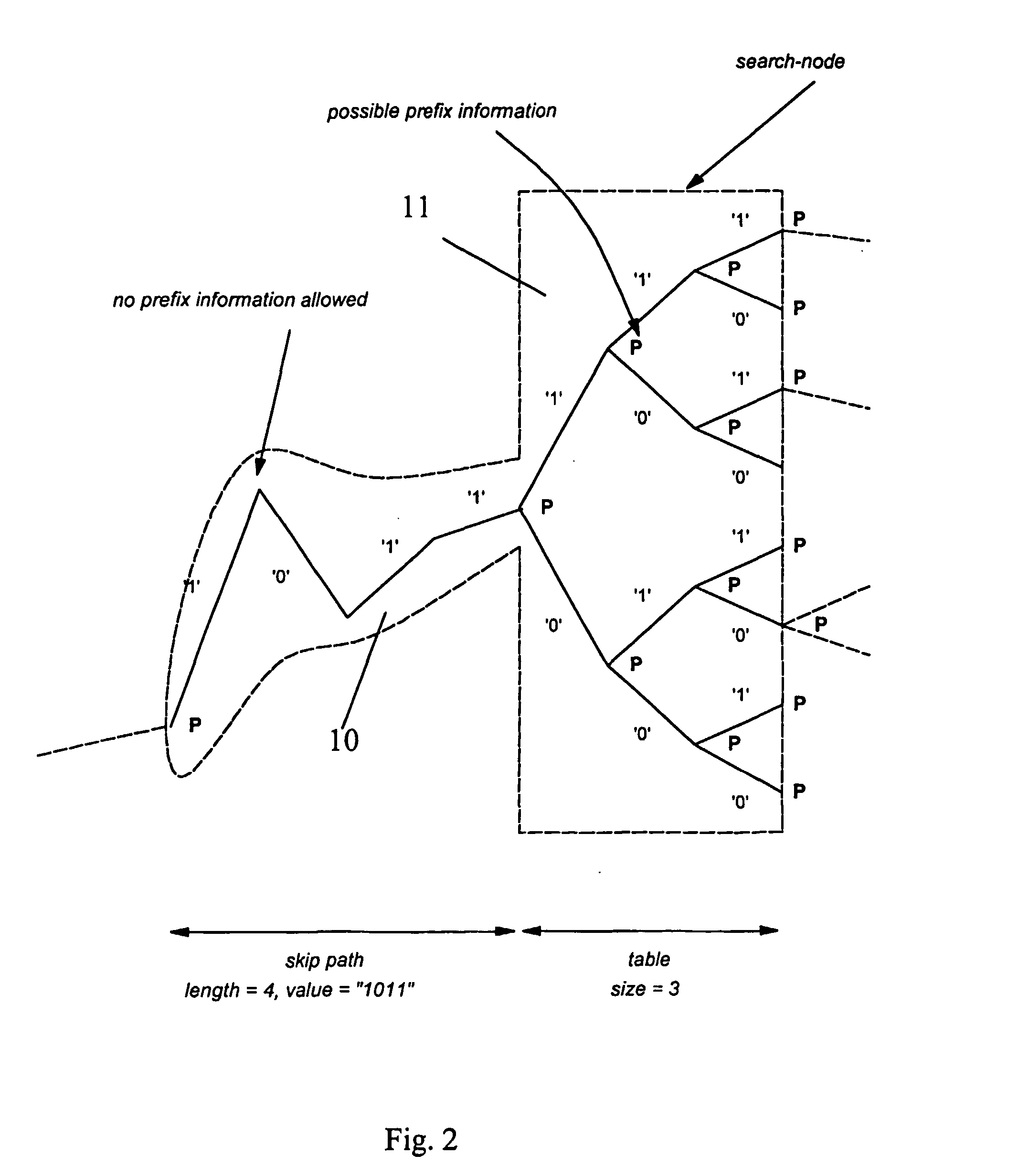

The present invention provides a data structure for storing a search database, techniques for building this data structure from a list of prefixes (P), and techniques for efficiently searching the database for the best matching prefix of an address D. The data structure can be stored in standard memory (14), where values are stored at memory address locations. The data structure includes representations of addressable linked tables (FIG. 3b). The representations are related to a binary search trie (FIG. 1), and each linked table (T) has at least one entry. Entries in a table span more than one level of the binary search trie. This spanning compresses the binary search trie into a finite number of levels (and hence tables), which is smaller than the number of levels in the binary search trie. As a result, the search algorithm needs only a finite, predetermined number of table accesses to obtain a best-match result.

Owner:TRANSWITCH
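
The TranSwitch abstract above describes compressing a binary trie into a fixed number of linked tables, each spanning several trie levels. A common way to get that bound is a fixed-stride multibit trie with controlled prefix expansion; the Python sketch below implements that generic technique, not the specific table representation claimed in the patent. The 16-8-8 stride split for IPv4 and all names are assumptions chosen for illustration.

```python
class MultibitTrie:
    """Fixed-stride multibit trie for longest (best) matching prefix lookup.

    Each level is one table indexed by `stride` address bits, so a lookup
    touches at most len(strides) tables regardless of prefix length.
    """

    def __init__(self, strides=(16, 8, 8), addr_bits=32):
        if sum(strides) != addr_bits:
            raise ValueError("strides must cover the whole address")
        self.strides = strides
        self.addr_bits = addr_bits
        self.root = self._new_table(0)

    def _new_table(self, level):
        # Entry layout: [next_hop, length of the prefix that set it, child table]
        return [[None, -1, None] for _ in range(1 << self.strides[level])]

    def _chunk(self, value, consumed, stride):
        # The `stride` bits of `value` that follow its first `consumed` bits.
        return (value >> (self.addr_bits - consumed - stride)) & ((1 << stride) - 1)

    def insert(self, prefix, length, next_hop):
        table, level, consumed = self.root, 0, 0
        while True:
            stride = self.strides[level]
            chunk = self._chunk(prefix, consumed, stride)
            if length <= consumed + stride:
                # Controlled prefix expansion: a short prefix fills a run of entries.
                free_bits = consumed + stride - length
                first = chunk & ~((1 << free_bits) - 1)
                for i in range(first, first + (1 << free_bits)):
                    if length >= table[i][1]:  # longer prefixes take precedence
                        table[i][0], table[i][1] = next_hop, length
                return
            entry = table[chunk]
            if entry[2] is None:
                entry[2] = self._new_table(level + 1)
            table, level, consumed = entry[2], level + 1, consumed + stride

    def lookup(self, addr):
        best, table, level, consumed = None, self.root, 0, 0
        while table is not None:
            stride = self.strides[level]
            entry = table[self._chunk(addr, consumed, stride)]
            if entry[0] is not None:
                best = entry[0]  # deeper tables hold longer, better matches
            table, level, consumed = entry[2], level + 1, consumed + stride
        return best


trie = MultibitTrie()
trie.insert(0x00000000, 0, "default")  # 0.0.0.0/0
trie.insert(0x0A000000, 8, "A")        # 10.0.0.0/8
trie.insert(0x0A010000, 16, "B")       # 10.1.0.0/16
trie.insert(0x0A010100, 24, "C")       # 10.1.1.0/24
print(trie.lookup(0x0A010105))         # 10.1.1.5 falls under the /24
```

Any IPv4 lookup here costs at most three table reads, which mirrors the abstract's point that the number of search accesses is fixed in advance; the price is memory spent on expanded entries.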

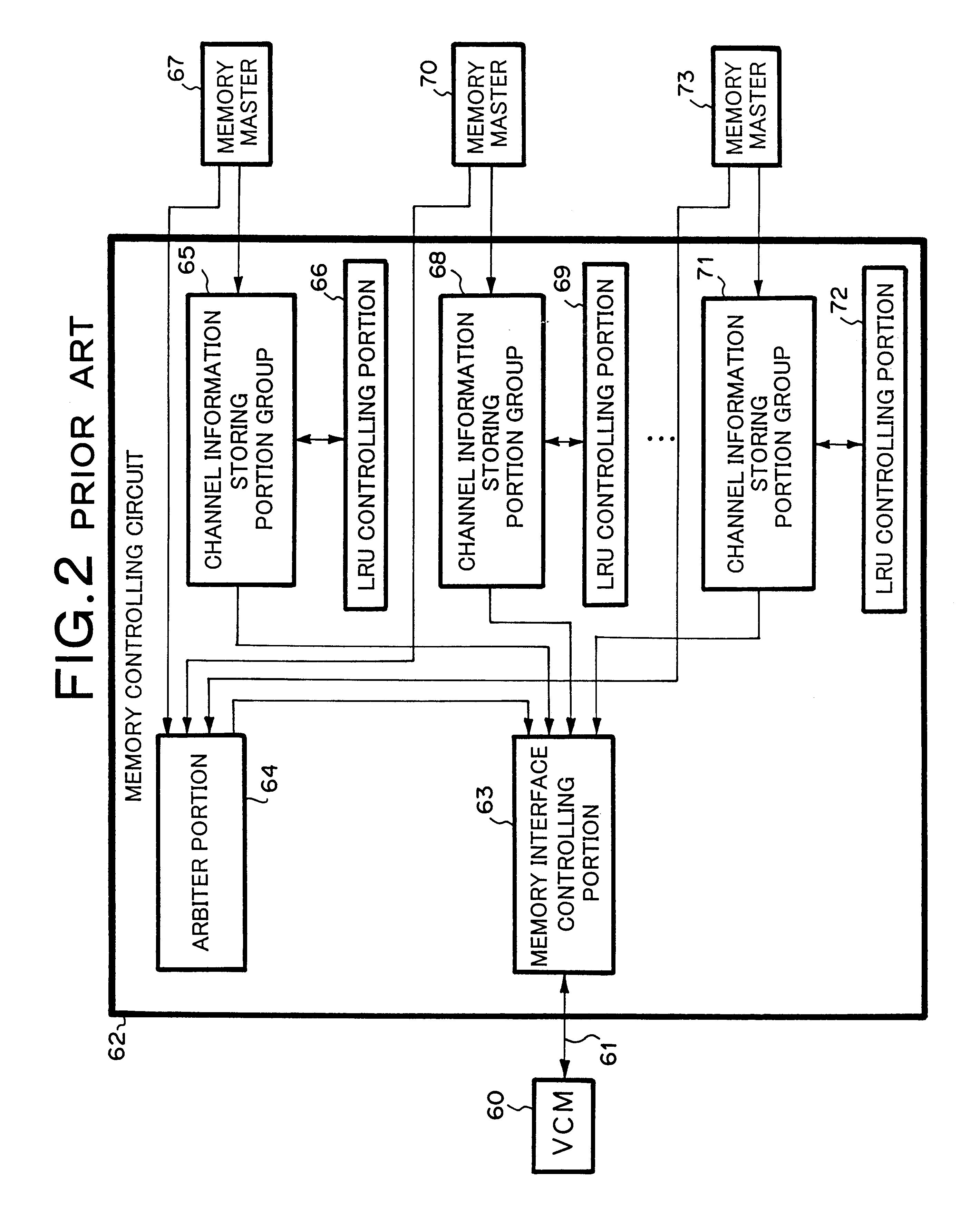

Virtual channel memory access controlling circuit

Inactive | US6505287B2 | Memory addressing/allocation/relocation; Digital storage; Memory address; Parallel computing

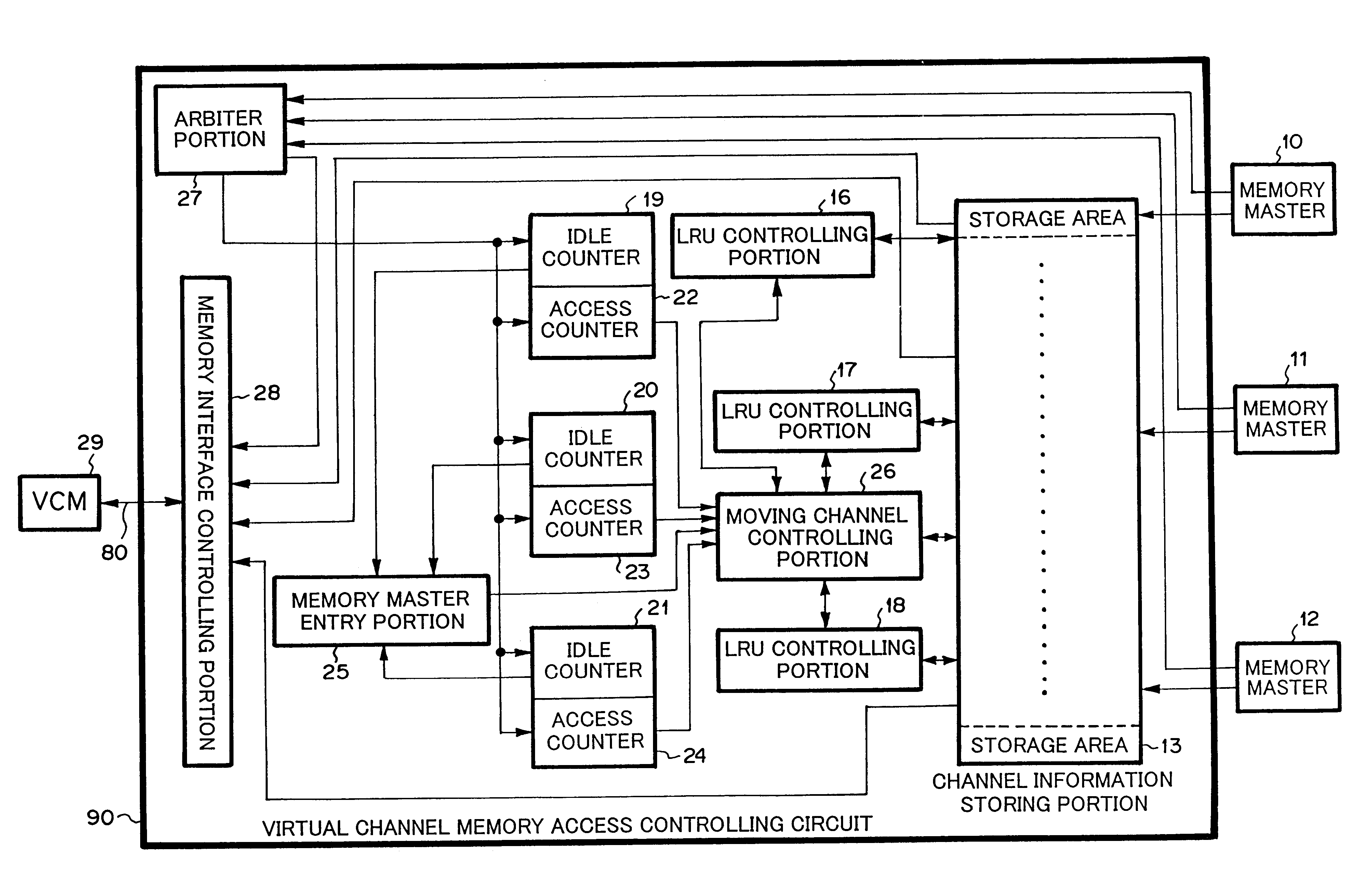

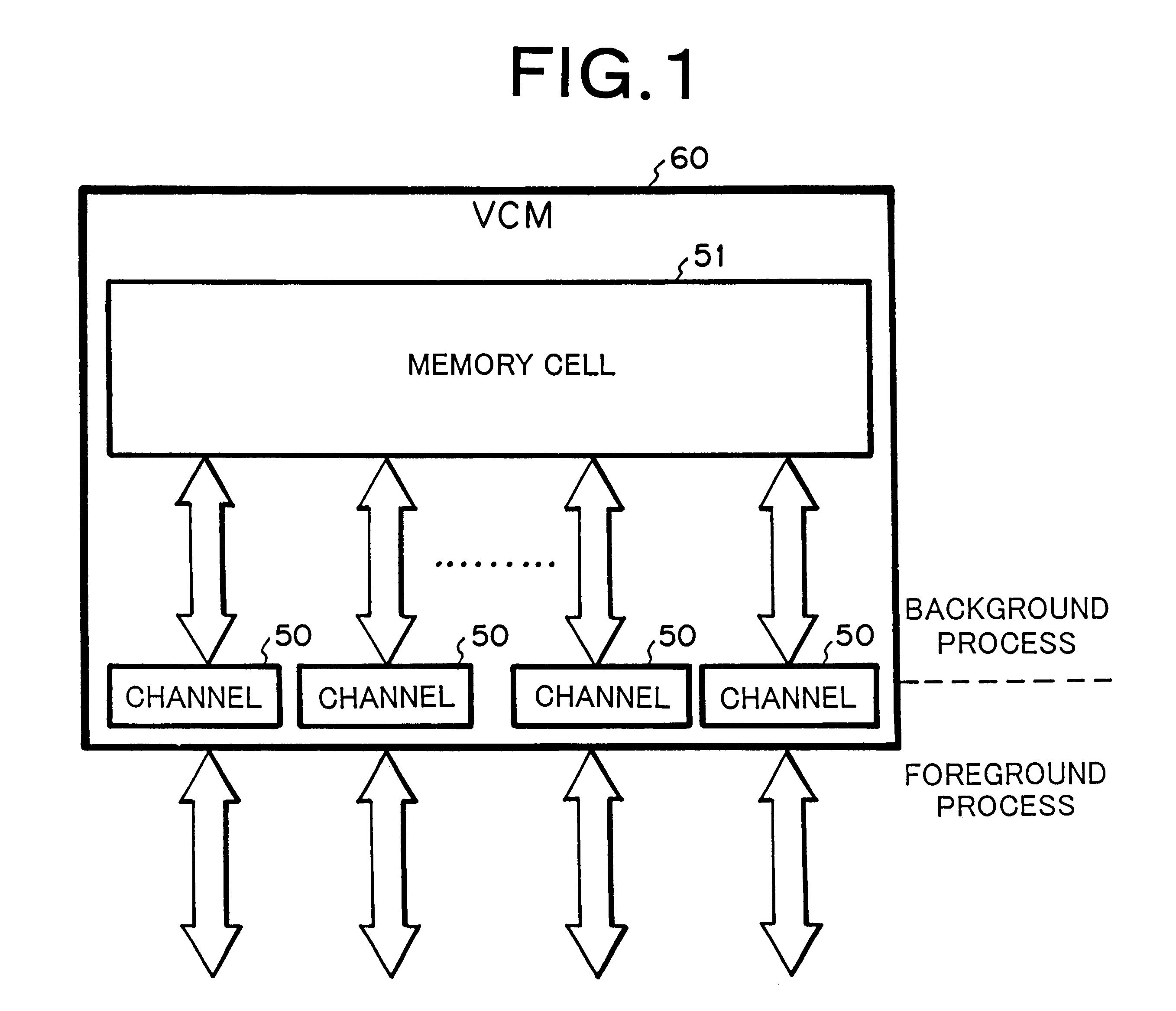

Disclosed is a virtual channel memory access controlling circuit for controlling accesses from a plurality of memory masters to a virtual channel memory having a plurality of channels. The circuit comprises: a channel information storing portion having a plurality of storage areas, each assigned to one of the memory masters and each corresponding to one of the channels, where each storage area holds a channel number identifying a channel and a memory address to be sent to the virtual channel memory; a detector for detecting when the assignment of storage areas between memory masters needs to change; and a changer for dynamically changing that assignment between memory masters.

Owner:HTC CORP

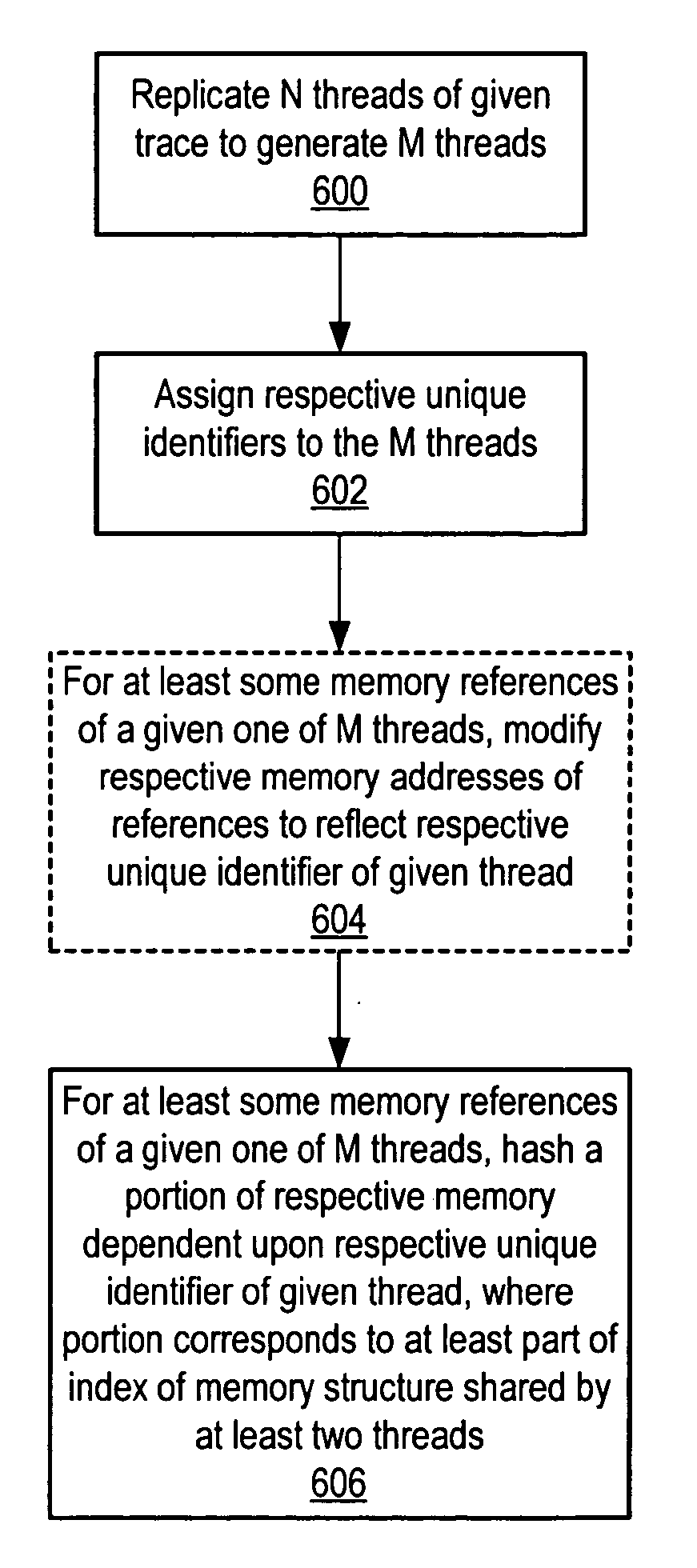

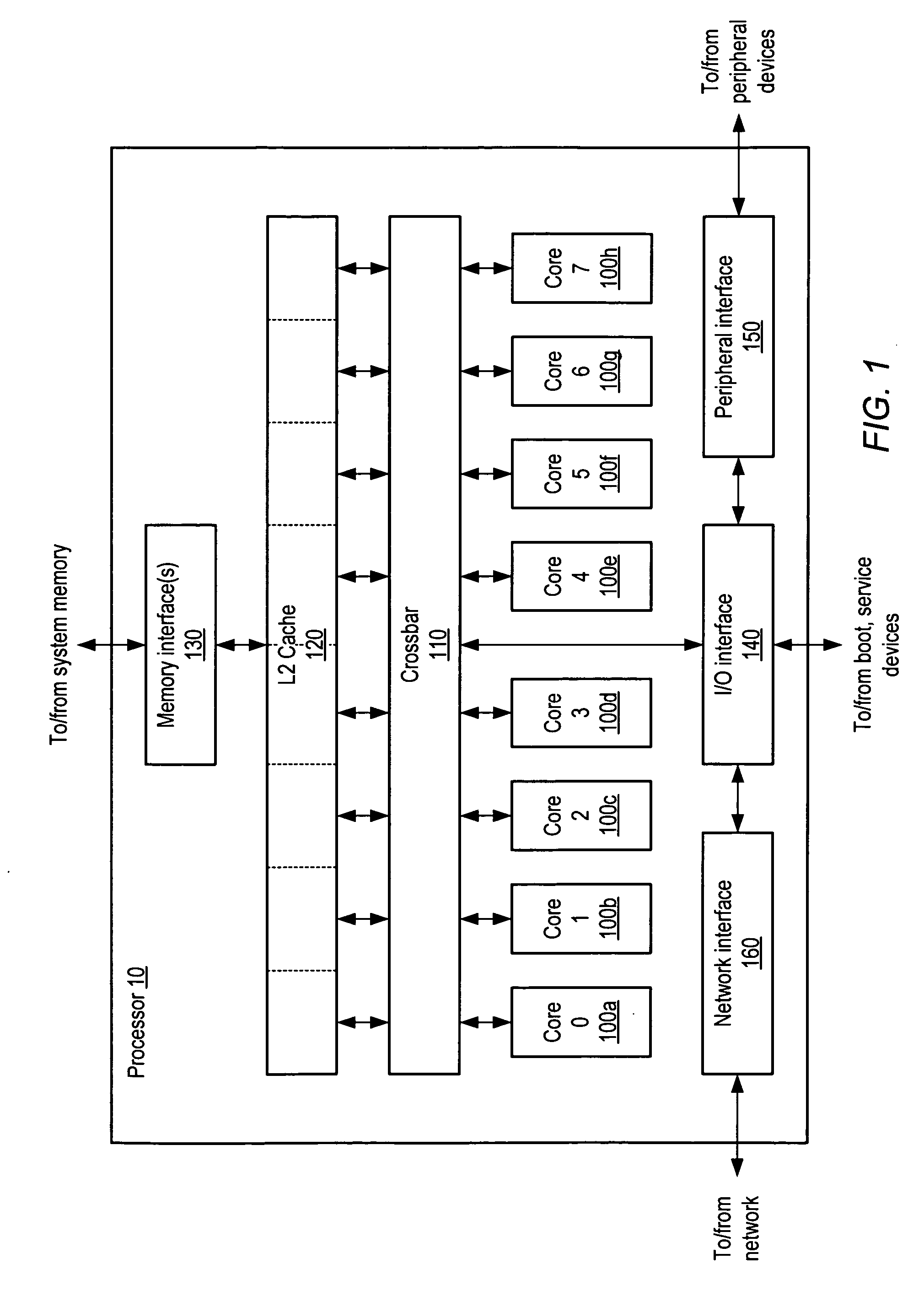

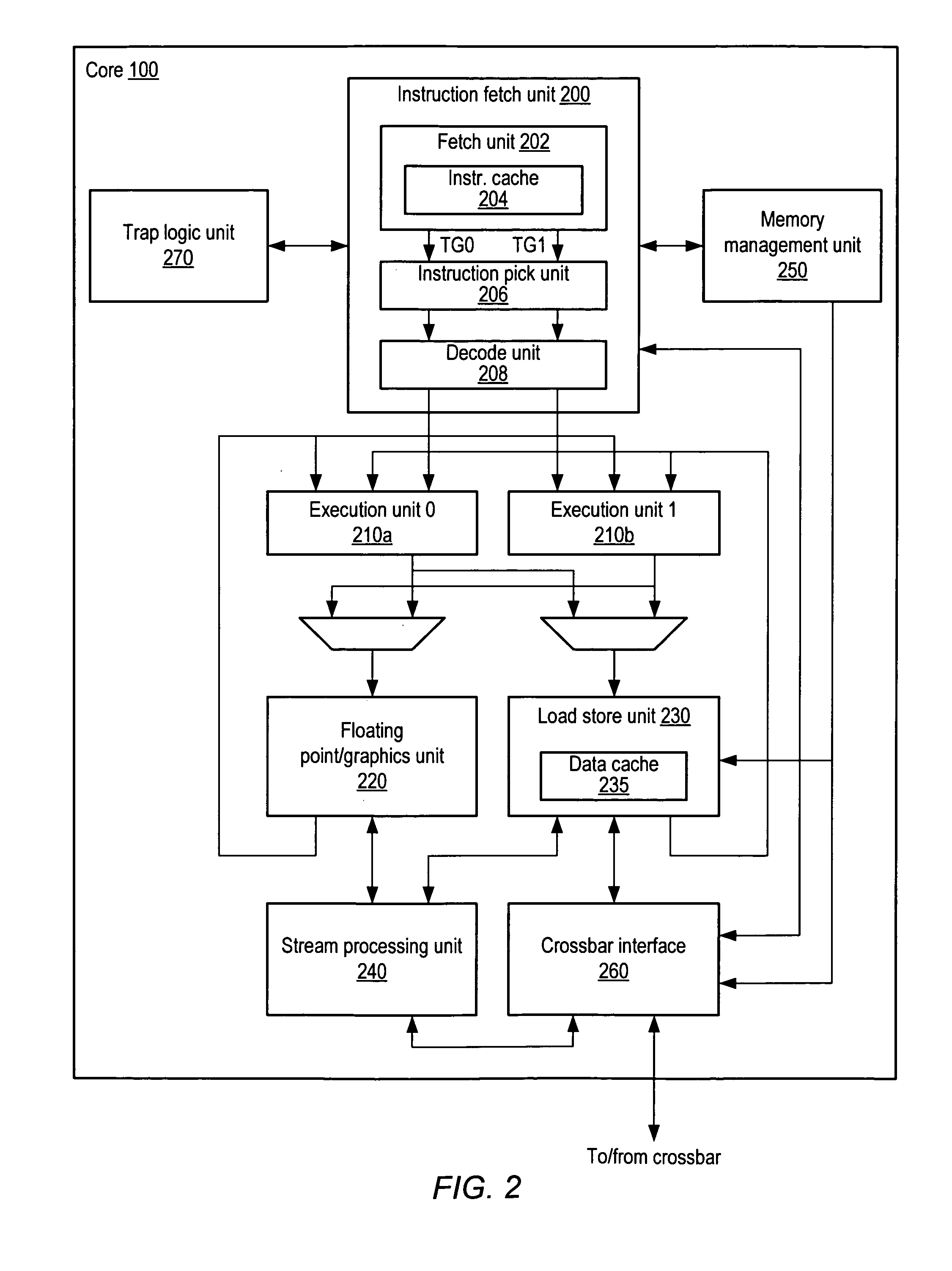

Method and system for trace generation using memory index hashing

Active | US20070234016A1 | Digital computer details; Specific program execution arrangements; Memory address; Parallel computing

A method and system for trace generation using memory index hashing. A method may include generating an extended trace representative of M threads of instruction execution from a trace representative of N threads of instruction execution, where N and M are integers, N ≥ 1 and M > N, and where each of the N threads of the trace includes memory references to respective memory addresses. Generating the extended trace from the trace may include replicating the N threads to generate the M threads, assigning a respective identifier to each of the M threads, and, for a given one of the M threads, hashing a first portion of each of the respective addresses dependent upon the respective identifier of the given thread, where the first portion of each of the respective addresses corresponds to at least part of an index of a memory structure shared by at least two of the M threads.

Owner:ORACLE INT CORP
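
The Oracle trace-generation abstract above describes extending an N-thread trace to M threads by replicating threads and hashing the part of each address that indexes a shared structure, keyed by thread ID, so the copies do not all collide in the same sets. The Python sketch below assumes a cache with 64-byte lines and 1024 sets and uses an XOR with a multiple of an odd constant as the hash; these parameters and function names are hypothetical.

```python
LINE_OFFSET_BITS = 6   # assumed 64-byte cache lines
INDEX_BITS = 10        # assumed 1024-set shared cache
INDEX_MASK = ((1 << INDEX_BITS) - 1) << LINE_OFFSET_BITS
THREAD_SALT = 0x9E37   # odd, so thread IDs below 2**INDEX_BITS get distinct XOR values


def hash_index(addr: int, thread_id: int) -> int:
    """XOR the cache-index field of `addr` with a value derived from the thread ID."""
    index = (addr & INDEX_MASK) >> LINE_OFFSET_BITS
    new_index = (index ^ (thread_id * THREAD_SALT)) & ((1 << INDEX_BITS) - 1)
    return (addr & ~INDEX_MASK) | (new_index << LINE_OFFSET_BITS)


def extend_trace(trace: list, m: int) -> list:
    """Build an M-thread trace from an N-thread trace (N >= 1, M > N)."""
    n = len(trace)
    if n < 1 or m <= n:
        raise ValueError("need N >= 1 and M > N")
    extended = []
    for tid in range(m):
        source = trace[tid % n]  # replicate the original threads round-robin
        extended.append([hash_index(addr, tid) for addr in source])
    return extended
```

Thread 0 keeps its original addresses because its XOR value is zero, and every other thread differs only in the index field, so tag and line-offset bits are preserved.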

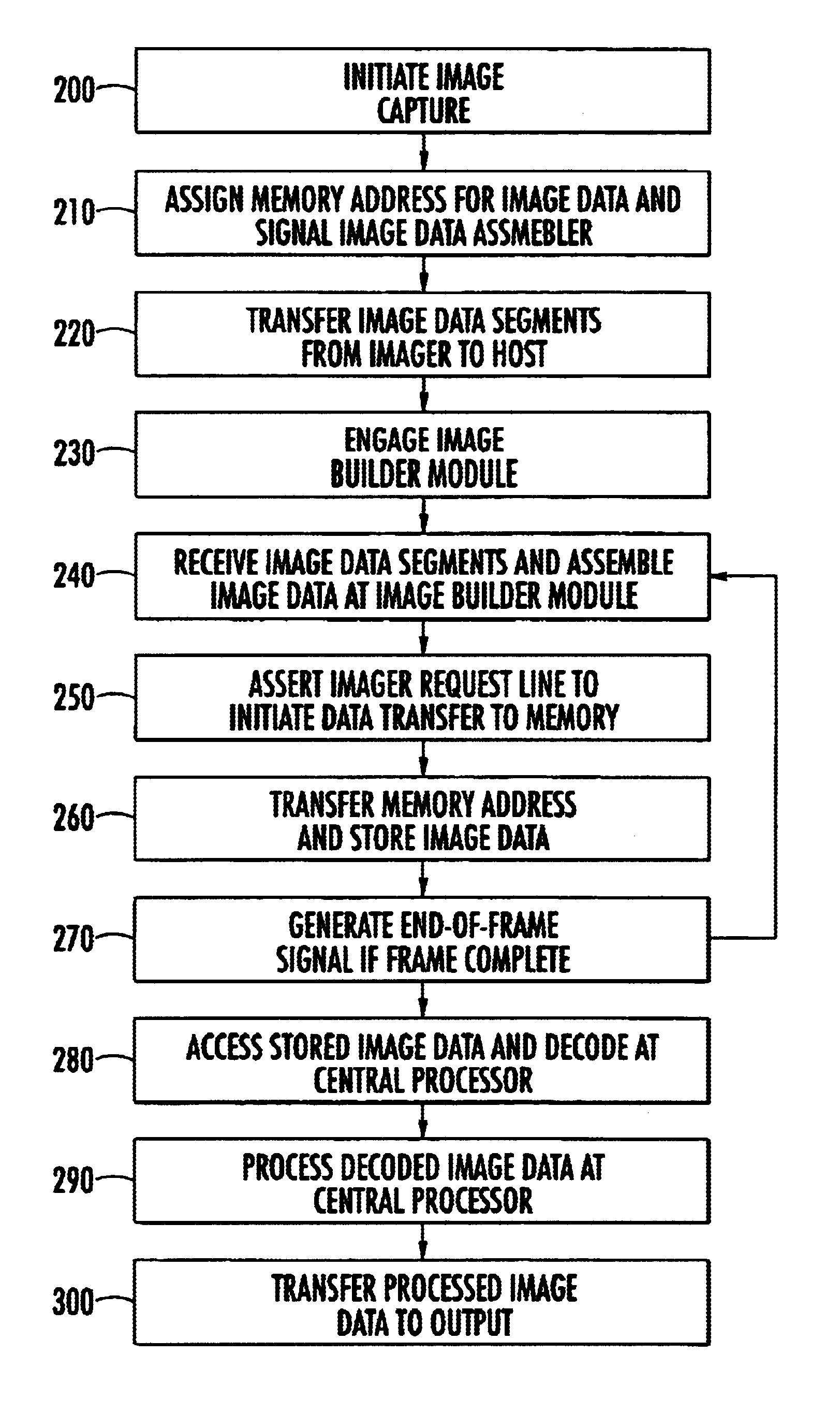

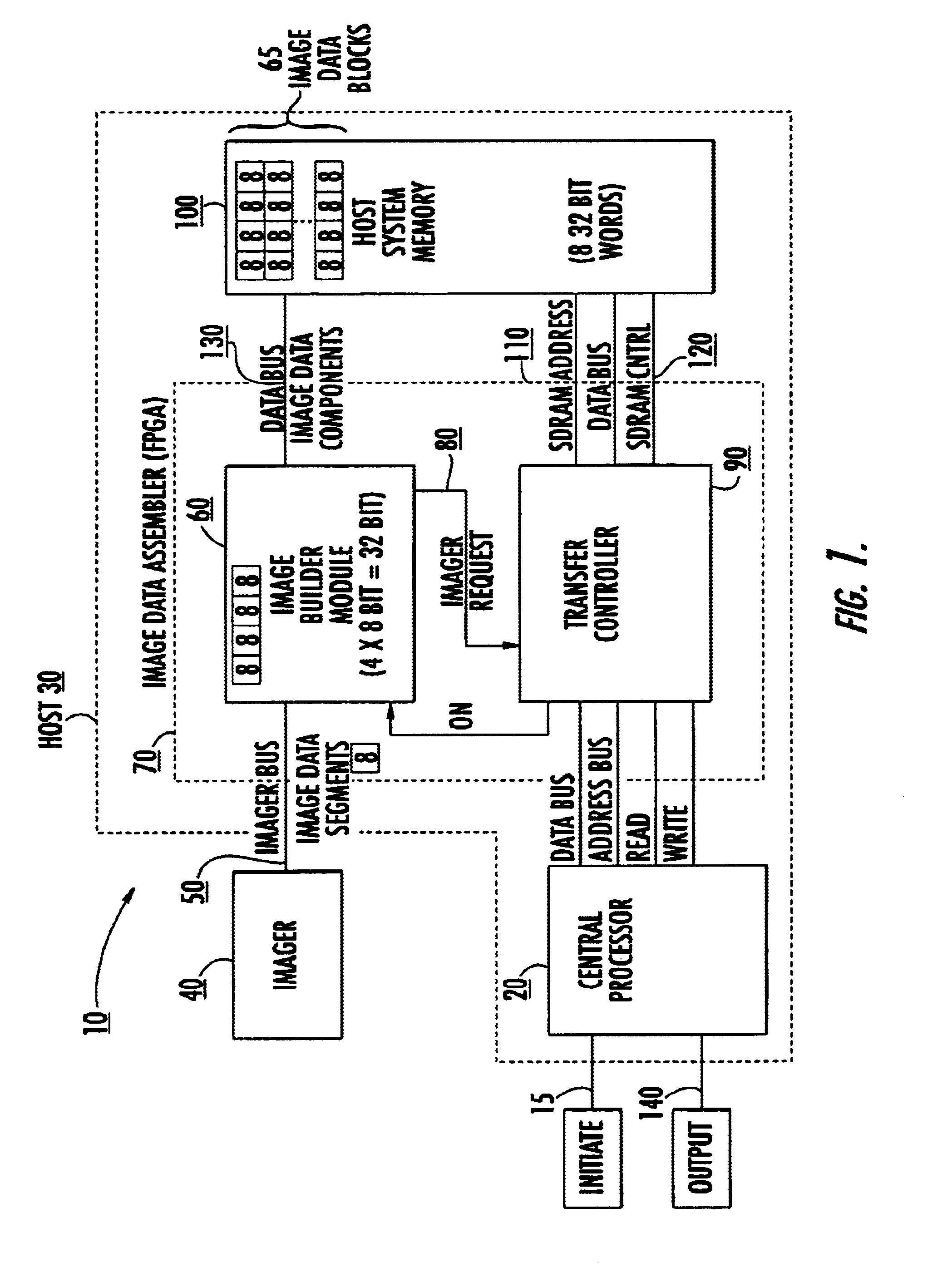

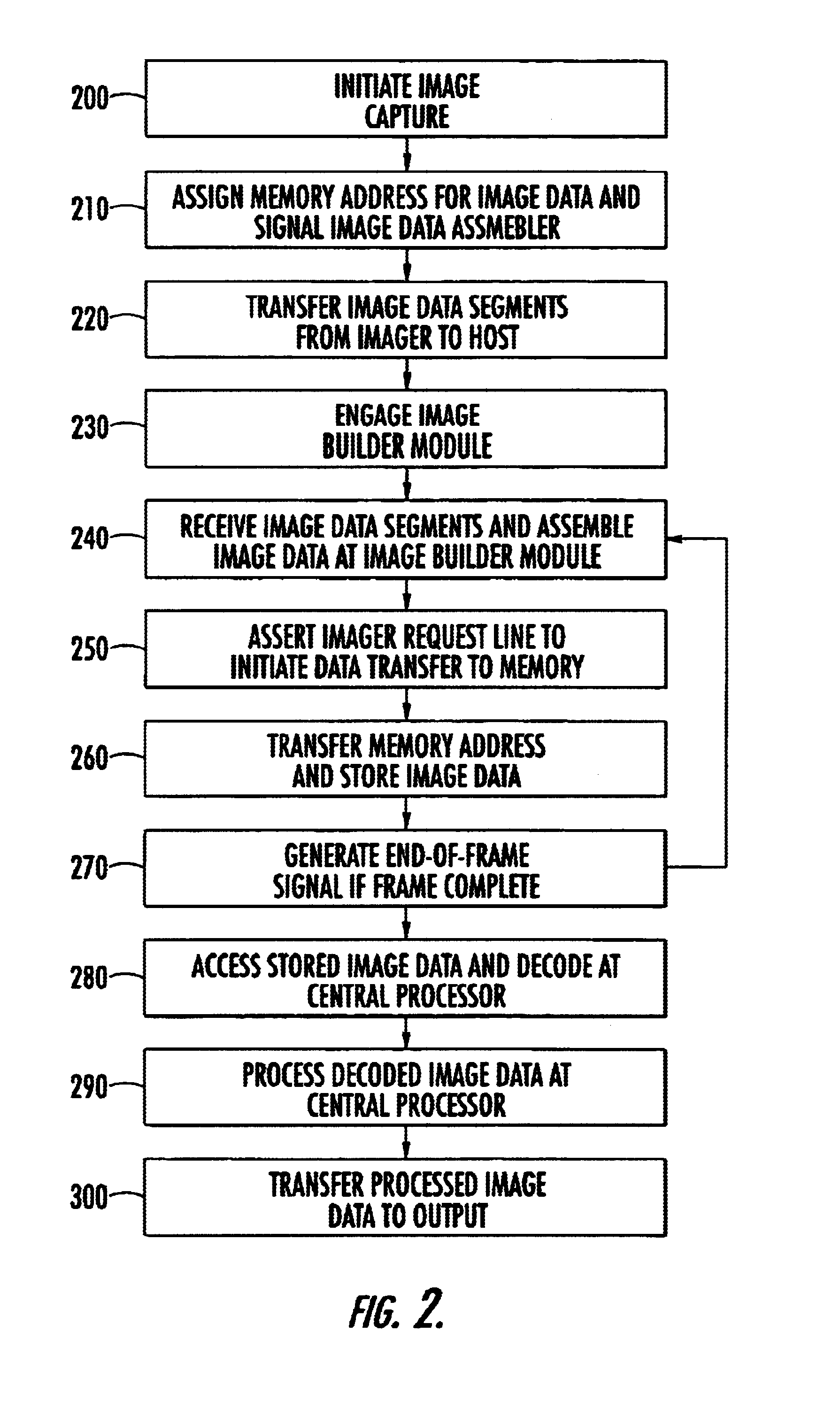

Methods and apparatus for image capture and decoding in a centralized processing unit

Inactive | US6947612B2 | Timely and efficient; Eliminate need; Character and pattern recognition; Sensing record carriers; Memory address; Digital signal processing

An improved method and device for capturing image data benefits from having a single central processor execute the operating system, and the image capture, decode and processing programs. A method for capturing of image data comprises transmitting image data from an imager, assembling the image data, assigning a memory address to the assembled image data and transferring the assembled image data into system memory. This method is capable of central processing whereby the capturing of image data is executed via the main processor without having to invoke a dedicated processor or incorporate external components, such as additional PCBs, external digital signal processing or external data storage. Additionally, an imaging device comprises an image builder module that receives image data from the imager bus and assembles the data, and a transfer controller that initiates the image builder module and controls the transfer of image data into and out of memory.

Owner:HAND HELD PRODS

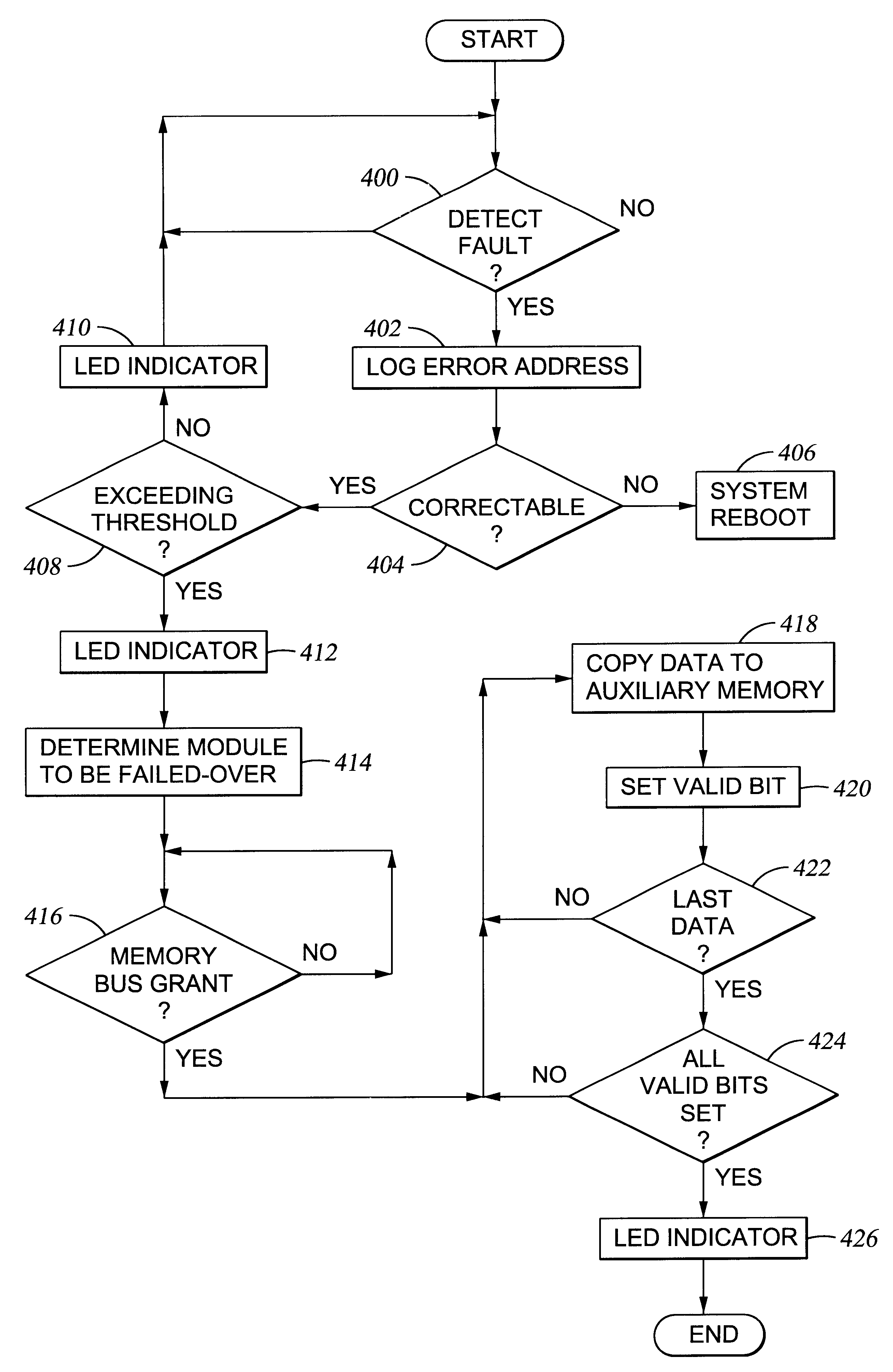

Fail-over of multiple memory blocks in multiple memory modules in computer system

Inactive | US20040073829A1 | Error detection/correction; Emergency protective arrangements for automatic disconnection; Failover; Memory address

A computer system has a memory controller for controlling accesses to multiple memory modules, each having multiple memory blocks, and a fail-over circuit for failing-over individual memory blocks from multiple memory modules. The digital information stored in an individual memory block that has experienced memory errors in excess of a permissible threshold is copied to an auxiliary memory location. The memory accesses directed to the failed-over memory block are intercepted and redirected to the auxiliary memory location. Tags are stored to identify failed-over memory modules and corresponding auxiliary memory modules, so a tag look-up for an accessed memory address can generate a hit signal when the memory access is to a failed-over memory module and cause the auxiliary memory module to respond to the memory access.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP
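
The fail-over path in the abstract above amounts to a per-block error counter plus a tag lookup on every access. The Python sketch below models memory as a `bytearray` and the auxiliary memory as per-block copies; the class name, block size and threshold are hypothetical, and real hardware would perform the copy and redirection in the memory controller.

```python
class BlockFailover:
    """Redirect accesses to blocks whose error count exceeded a threshold."""

    def __init__(self, memory: bytearray, block_size: int, error_threshold: int):
        self.memory = memory
        self.block_size = block_size
        self.error_threshold = error_threshold
        self.error_counts = {}  # block number -> errors observed
        self.tags = {}          # block number -> auxiliary copy (the tag store)

    def report_error(self, addr: int) -> None:
        block = addr // self.block_size
        self.error_counts[block] = self.error_counts.get(block, 0) + 1
        if self.error_counts[block] > self.error_threshold and block not in self.tags:
            start = block * self.block_size
            # Copy the block's contents to auxiliary memory and record a tag.
            self.tags[block] = bytearray(self.memory[start:start + self.block_size])

    def _route(self, addr: int):
        block, offset = divmod(addr, self.block_size)
        aux = self.tags.get(block)  # tag look-up: a hit means the block failed over
        return (aux, offset) if aux is not None else (self.memory, addr)

    def read(self, addr: int) -> int:
        buf, i = self._route(addr)
        return buf[i]

    def write(self, addr: int, value: int) -> None:
        buf, i = self._route(addr)
        buf[i] = value
```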

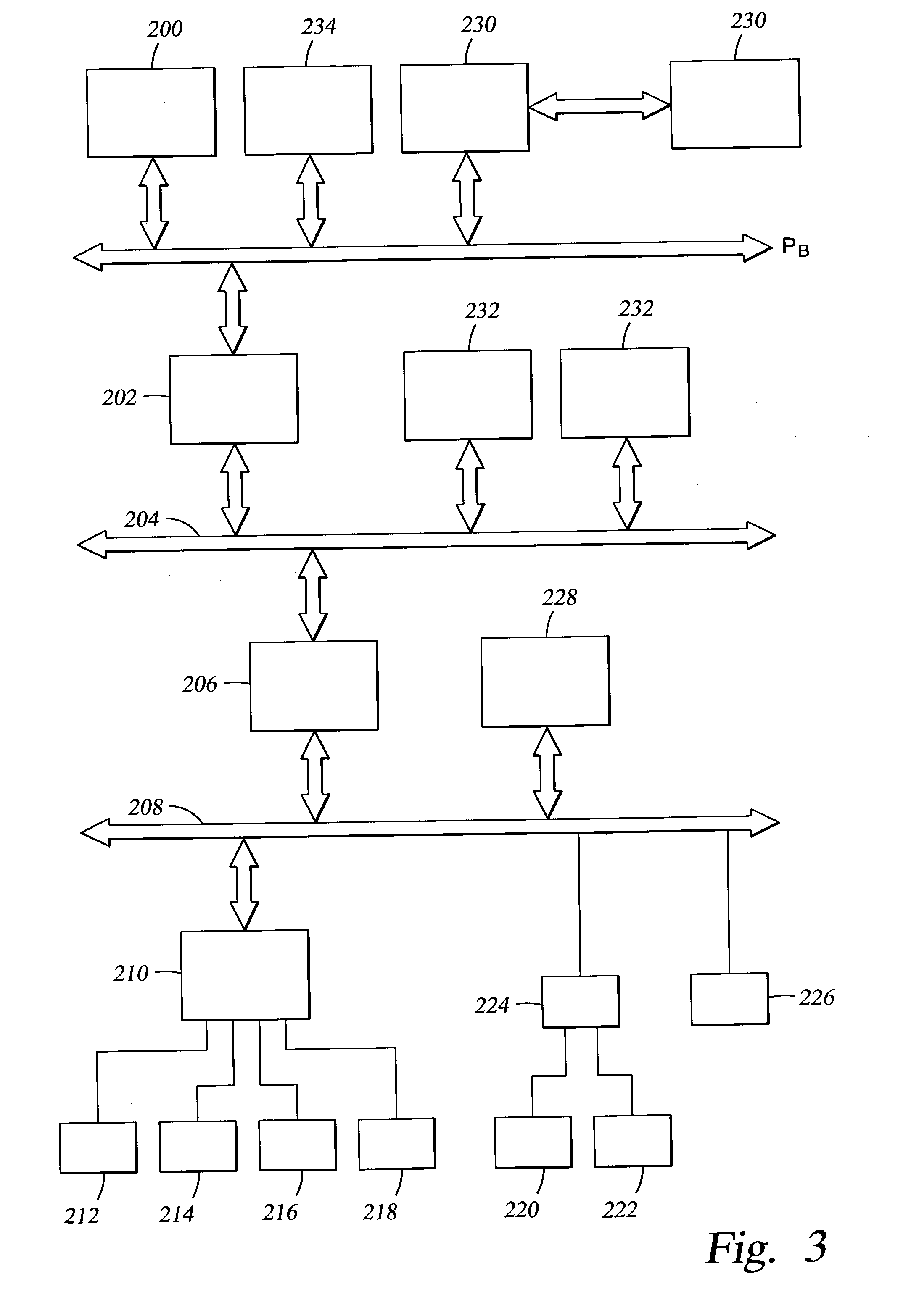

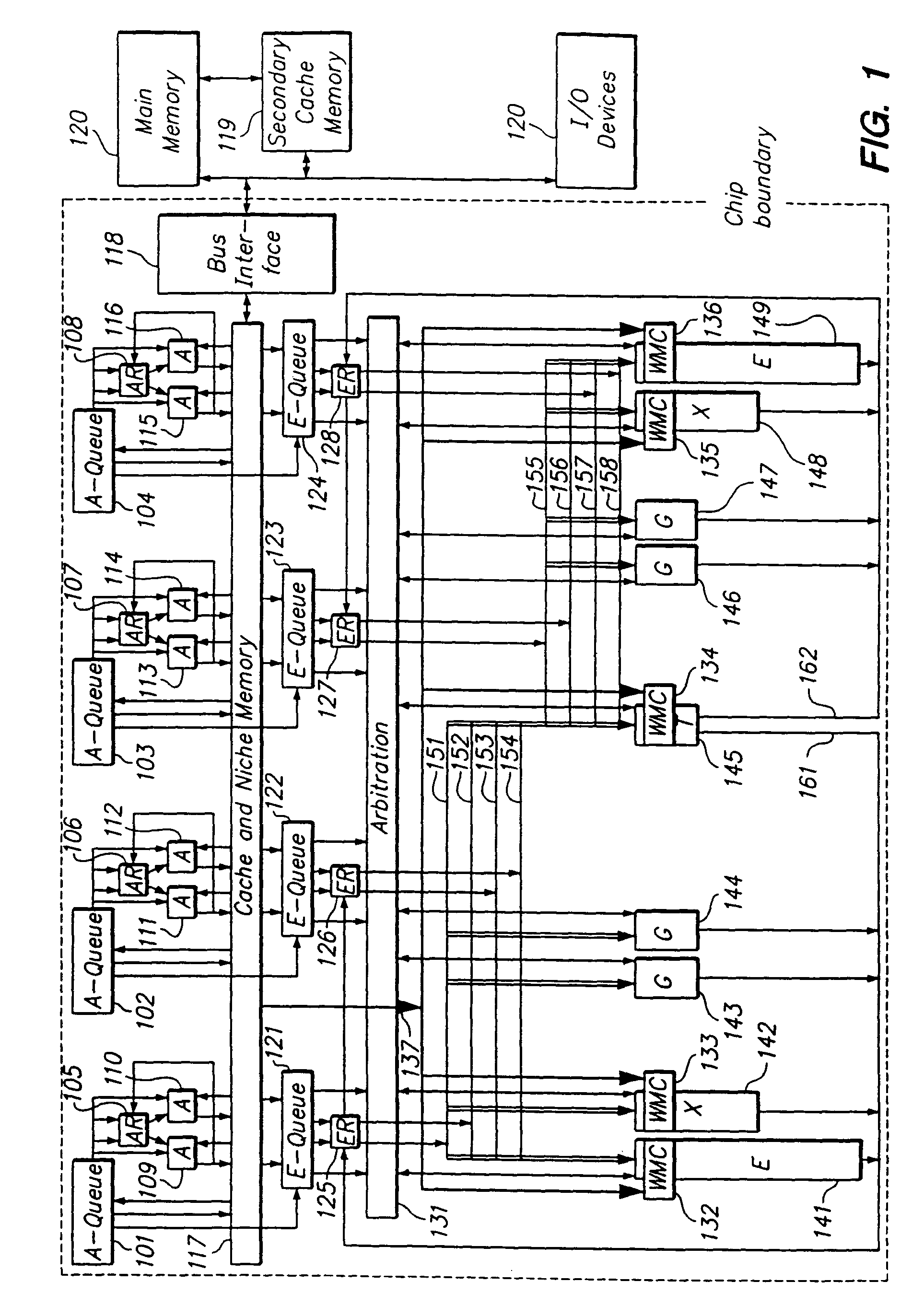

Programmable processor and method with wide operations

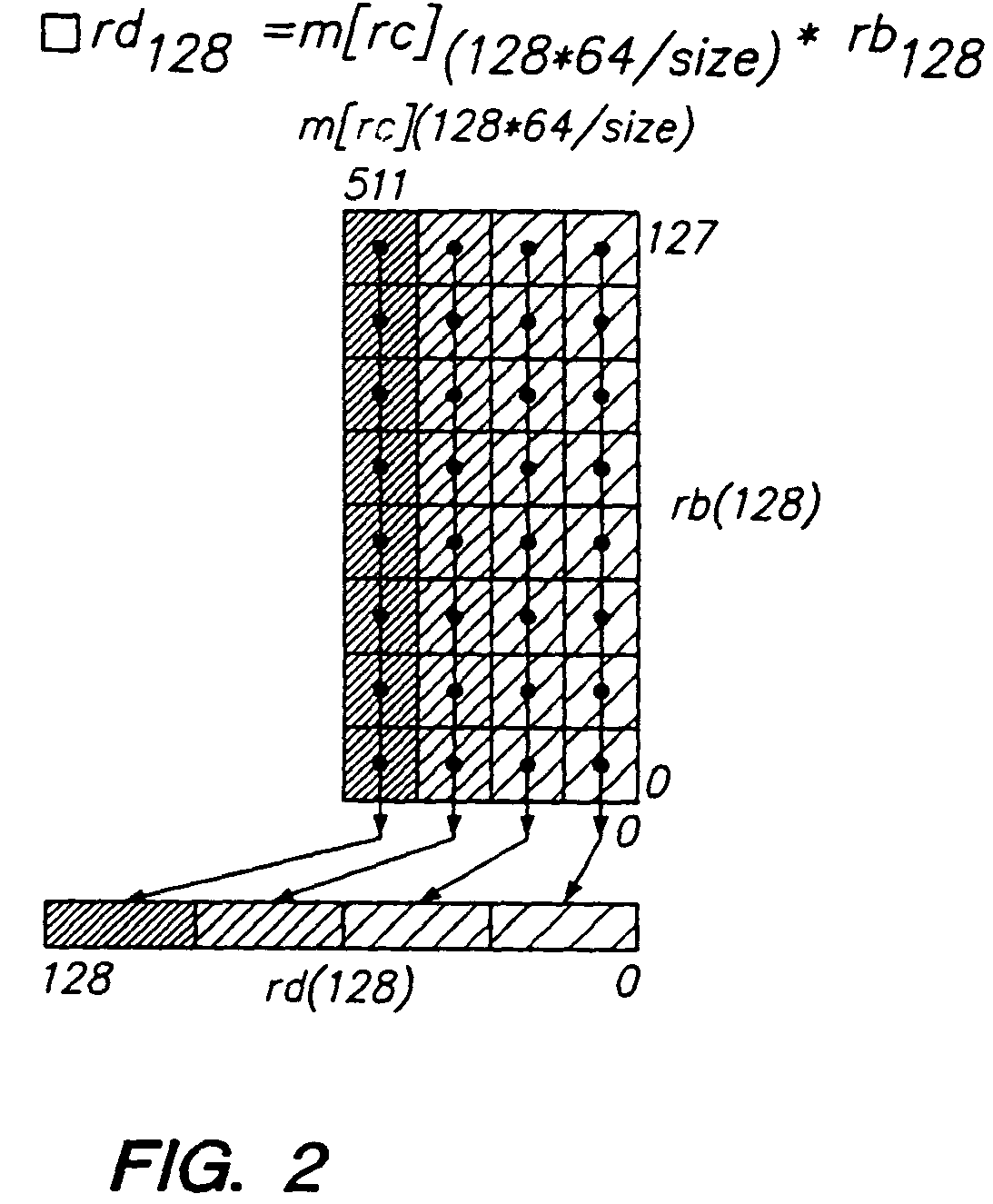

Inactive | US7301541B2 | Improve performance; Enhancing processor flexibility; Energy efficient ICT; Code conversion; Memory address; Path width

A programmable processor and method for improving the performance of processors by expanding at least two source operands, or a source and a result operand, to a width greater than the width of either the general purpose register or the data path width. The present invention provides operands which are substantially larger than the data path width of the processor by using the contents of a general purpose register to specify a memory address at which a plurality of data path widths of data can be read or written, as well as the size and shape of the operand. In addition, several instructions and apparatus for implementing these instructions are described which obtain performance advantages if the operands are not limited to the width and accessible number of general purpose registers.

Owner:MICROUNITY

Fail-over of multiple memory blocks in multiple memory modules in computer system

Inactive | US6505305B1 | Error detection/correction; Emergency protective arrangements for automatic disconnection; Failover; Memory address

A computer system has a memory controller for controlling accesses to multiple memory modules, each having multiple memory blocks, and a fail-over circuit for failing-over individual memory blocks from multiple memory modules. The digital information stored in an individual memory block that has experienced memory errors in excess of a permissible threshold is copied to an auxiliary memory location. The memory accesses directed to the failed-over memory block are intercepted and redirected to the auxiliary memory location. Tags are stored to identify failed-over memory modules and corresponding auxiliary memory modules, so a tag look-up for an accessed memory address can generate a hit signal when the memory access is to a failed-over memory module and cause the auxiliary memory module to respond to the memory access.

Owner:HEWLETT PACKARD DEV CO LP

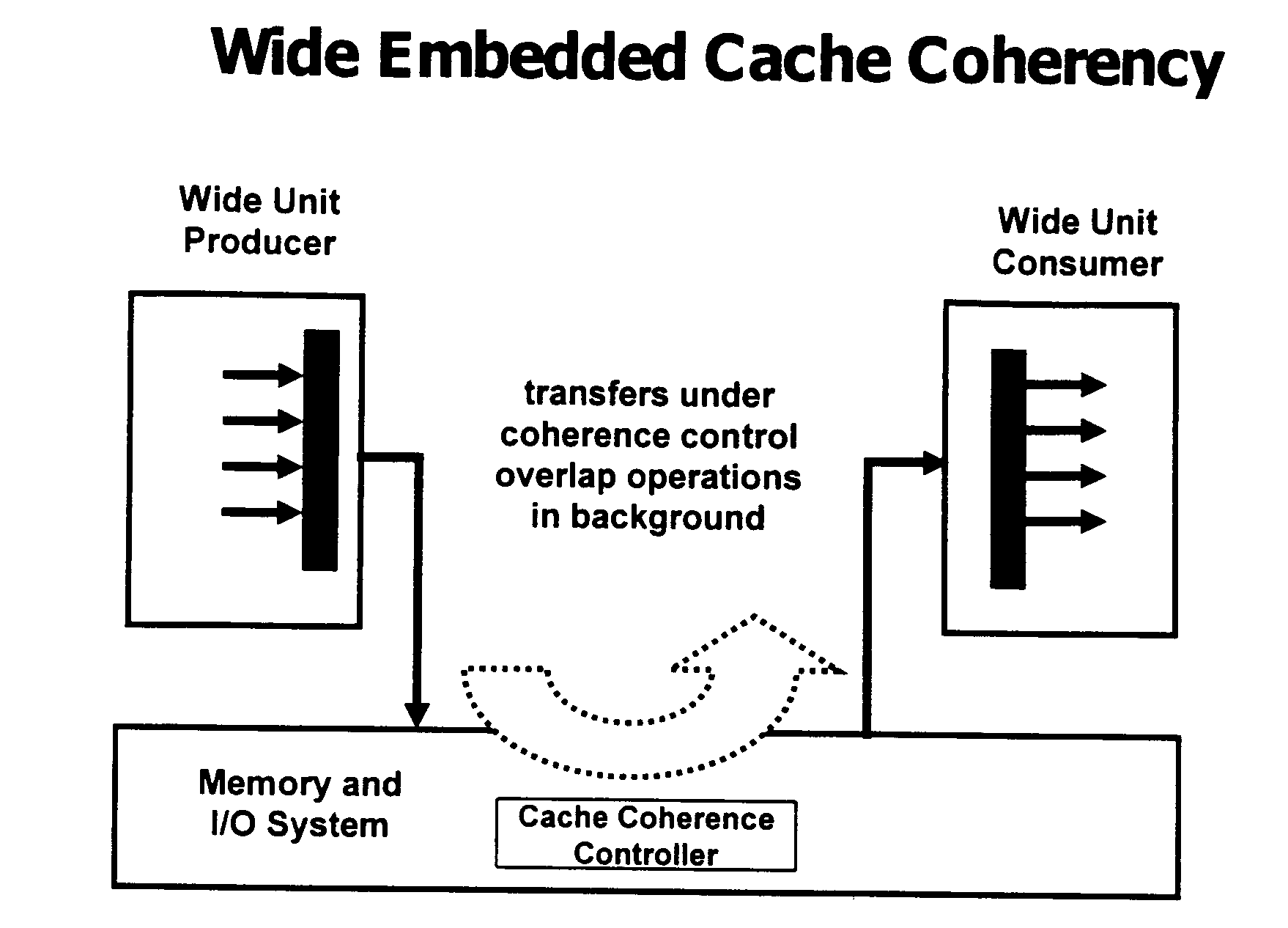

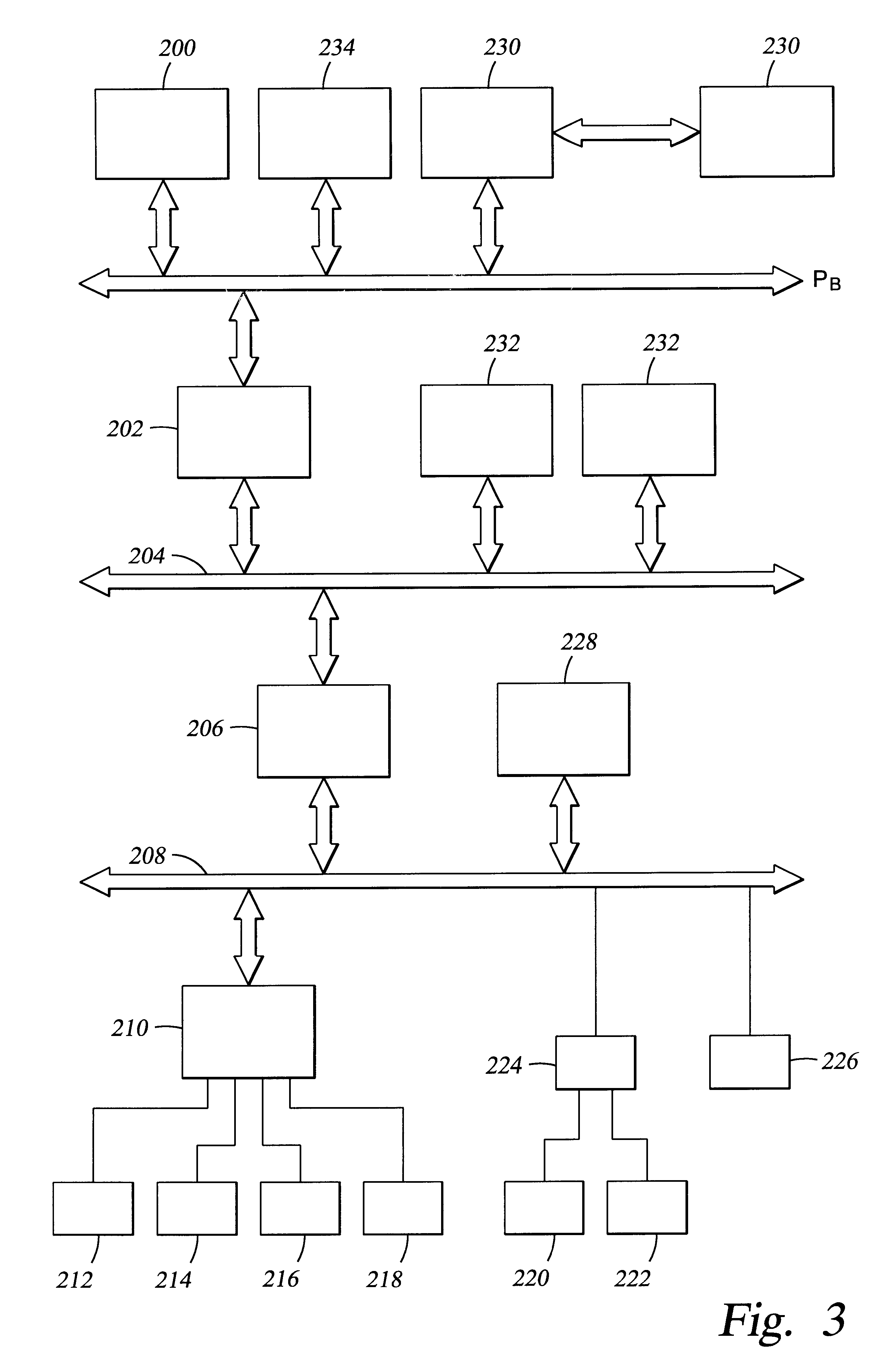

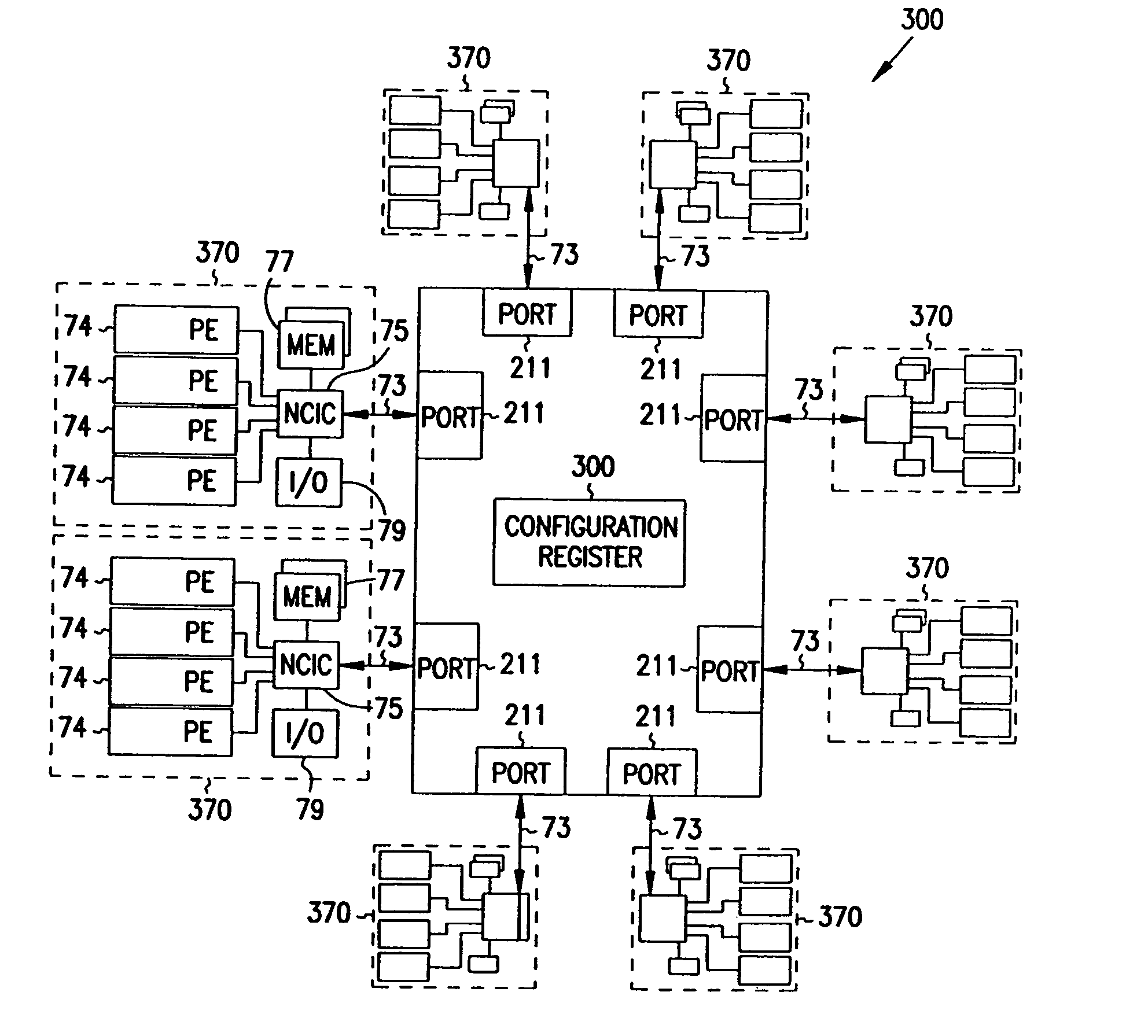

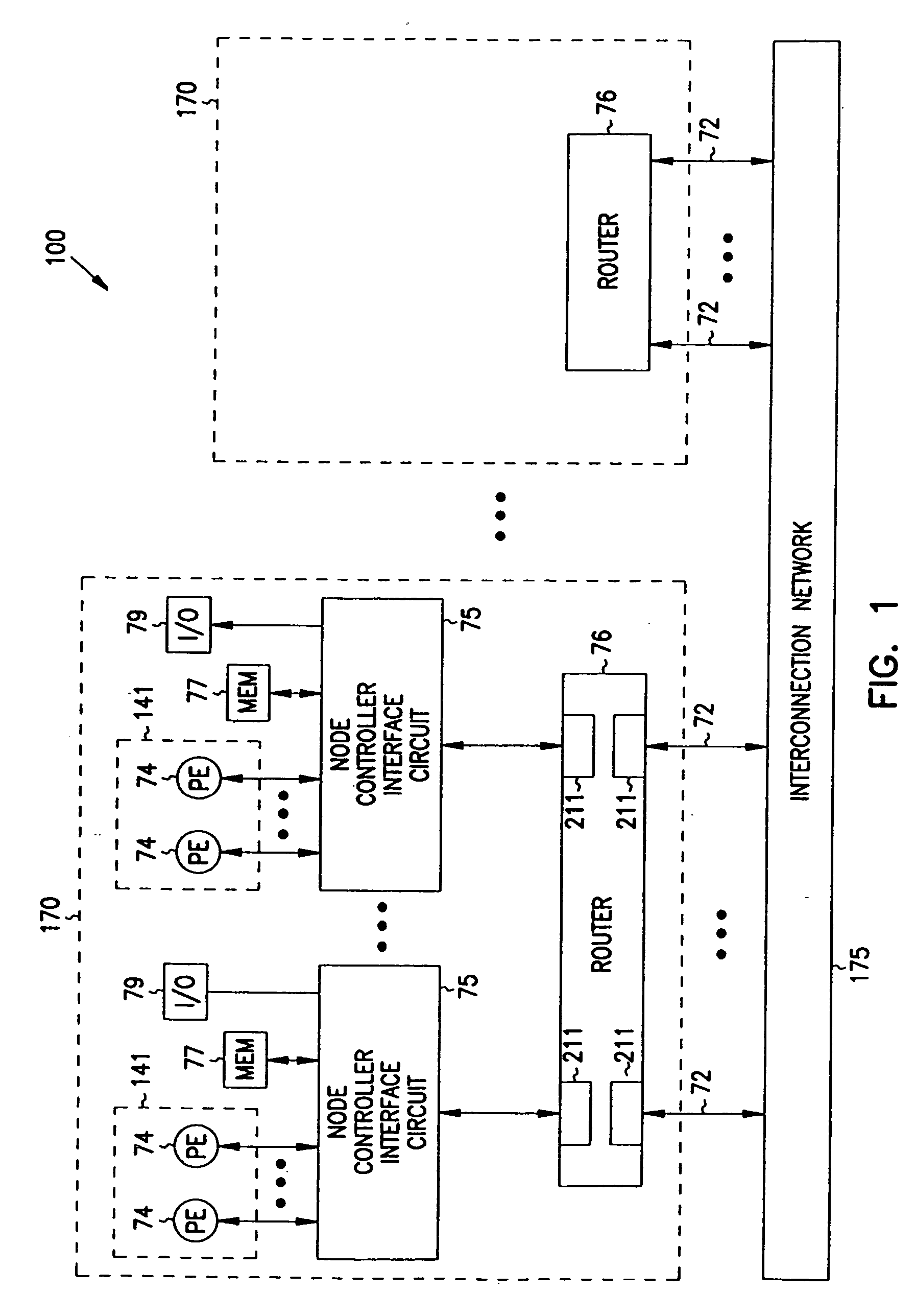

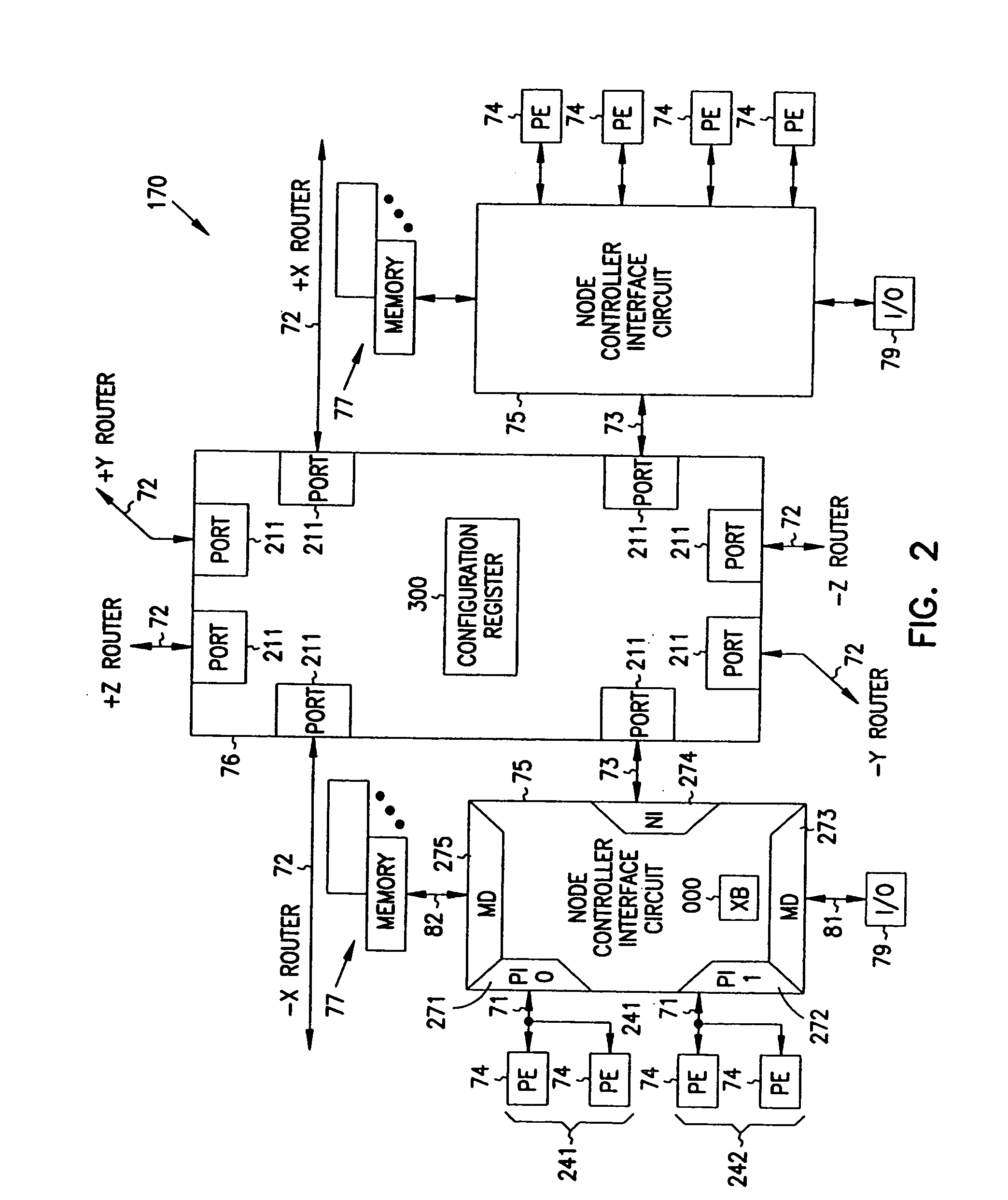

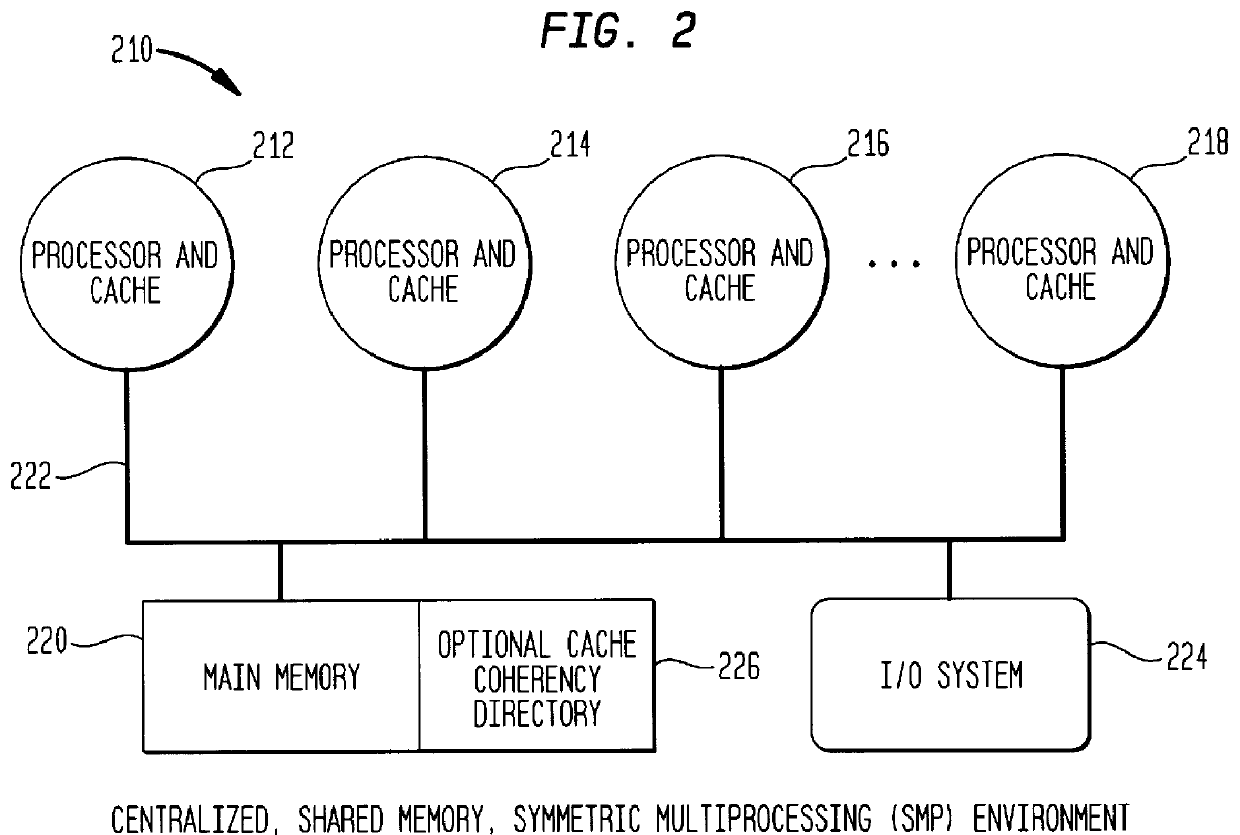

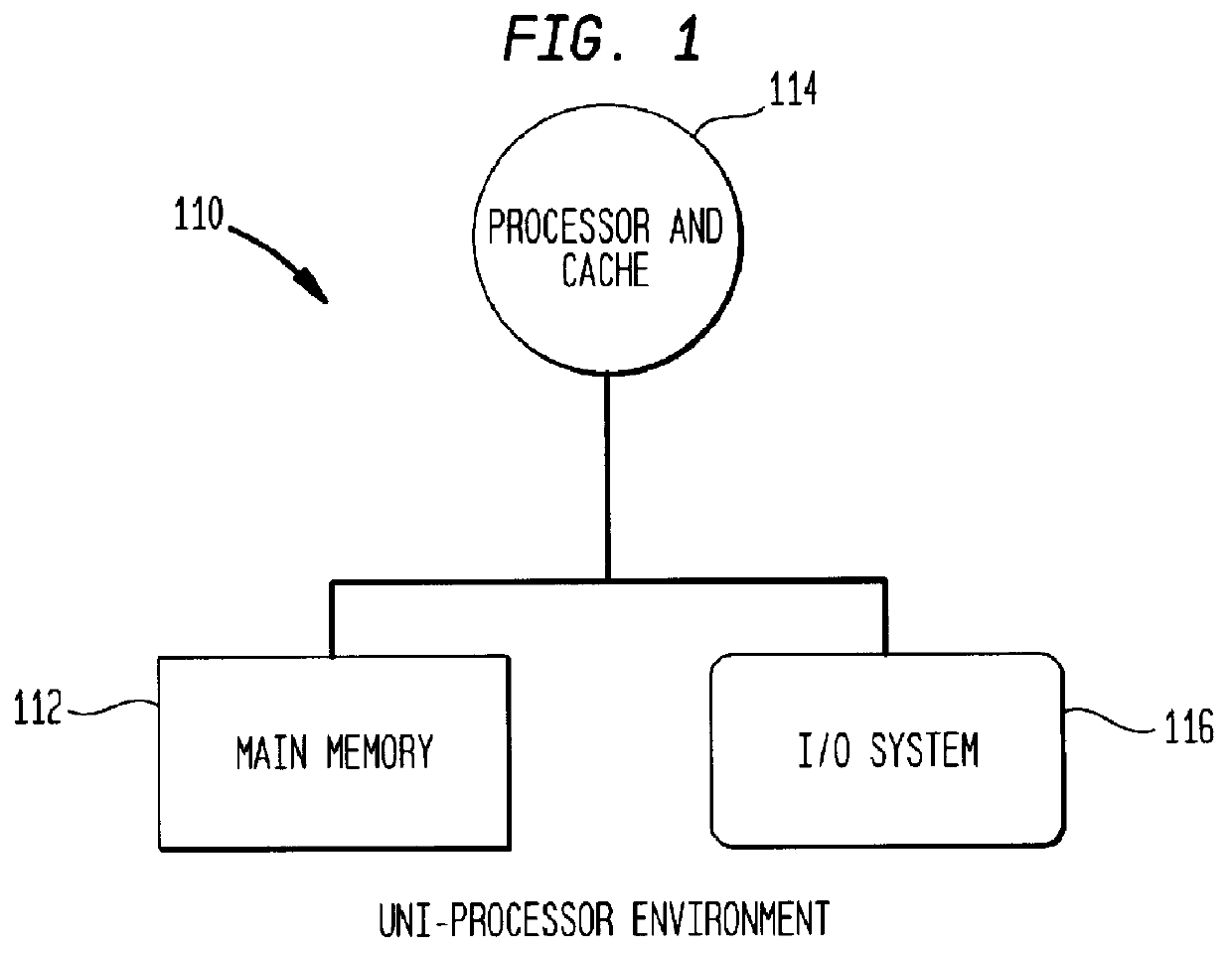

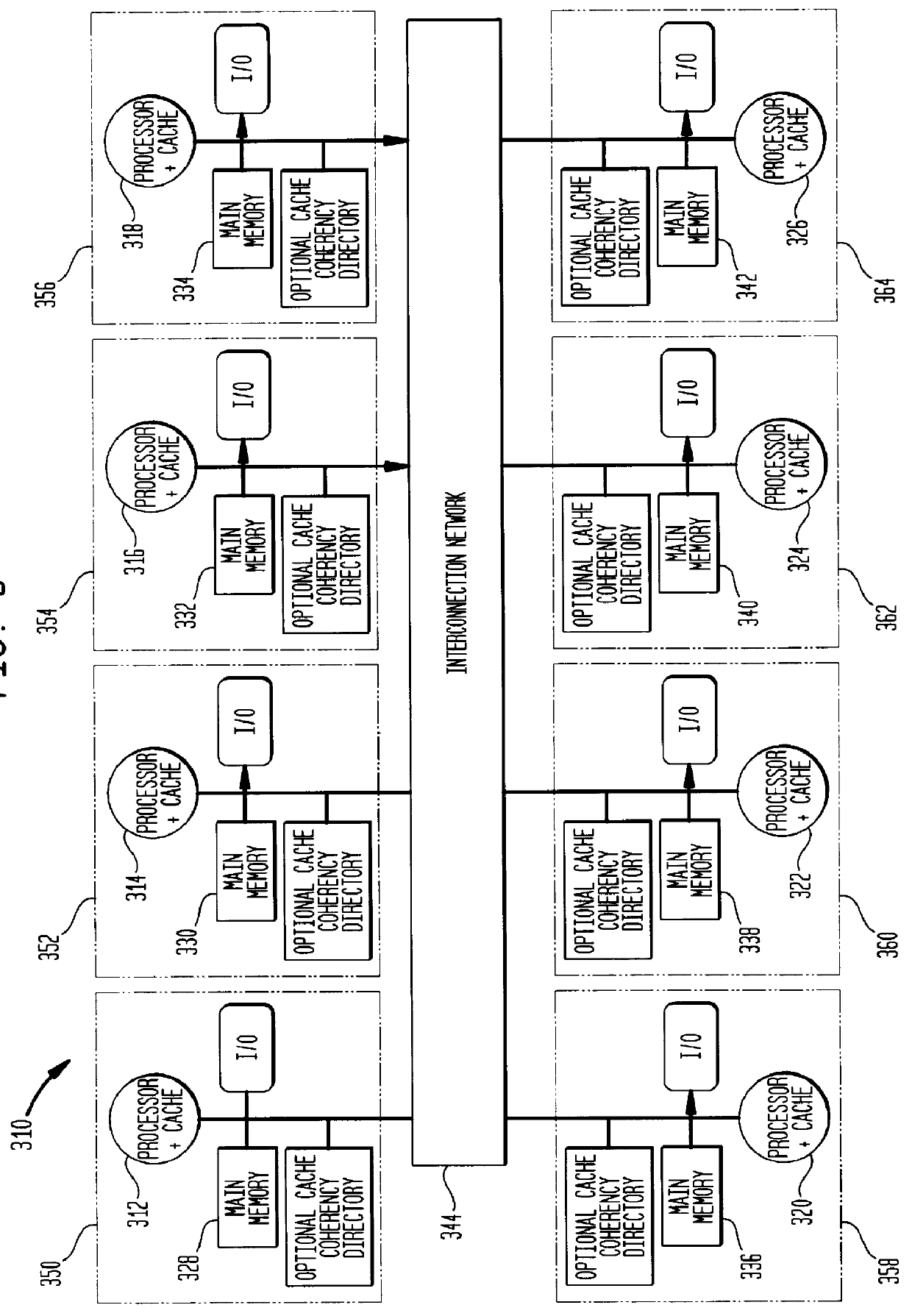

Multiprocessor node controller circuit and method

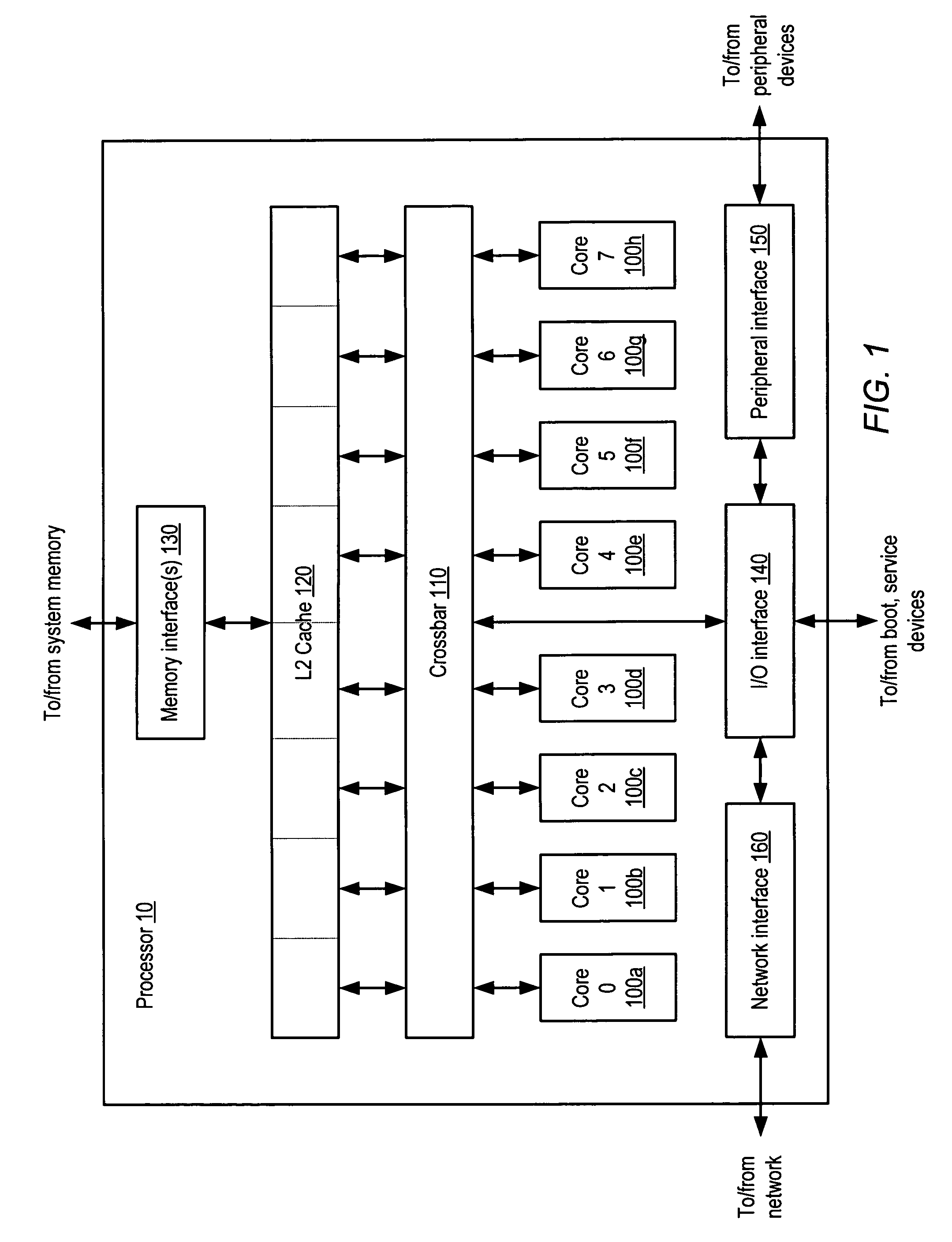

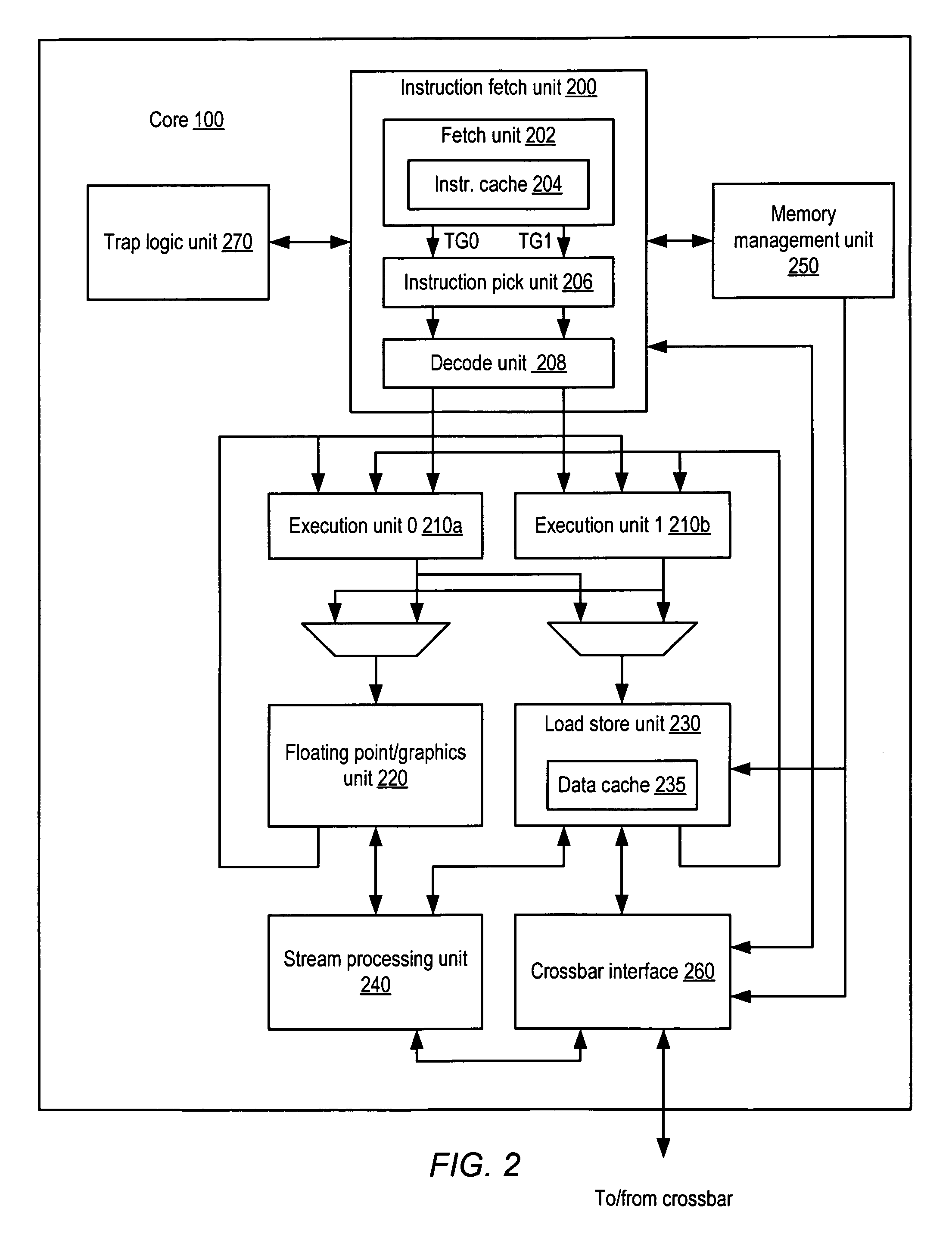

Inactive | US20050053057A1 | Ease of parallel processing; Improve welfare; Multiplex system selection arrangements; Memory addressing/allocation/relocation; Memory address; Crossbar switch

Improved method and apparatus for parallel processing. One embodiment provides a multiprocessor computer system that includes a first and a second node controller, a number of processors connected to each node controller, a memory connected to each controller, a first input/output system connected to the first node controller, and a communications network connected between the node controllers. The first node controller includes a crossbar unit to which are connected a memory port, an input/output port, a network port, and a plurality of independent processor ports. A first and a second processor port are connected between the crossbar unit and a first subset and a second subset, respectively, of the processors. In some embodiments of the system, the first node controller is fabricated onto a single integrated-circuit chip. Optionally, the memory is packaged on pluggable memory/directory cards, wherein each card includes a plurality of memory chips, including a first subset dedicated to holding memory data and a second subset dedicated to holding directory data. Further, the memory port includes a memory data port, including a memory data bus and a memory address bus coupled to the first subset of memory chips, and a directory data port, including a directory data bus and a directory address bus coupled to the second subset of memory chips. In some such embodiments, the ratio of memory data space to directory data space on each card is set to a value based on the size of the multiprocessor computer system.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1

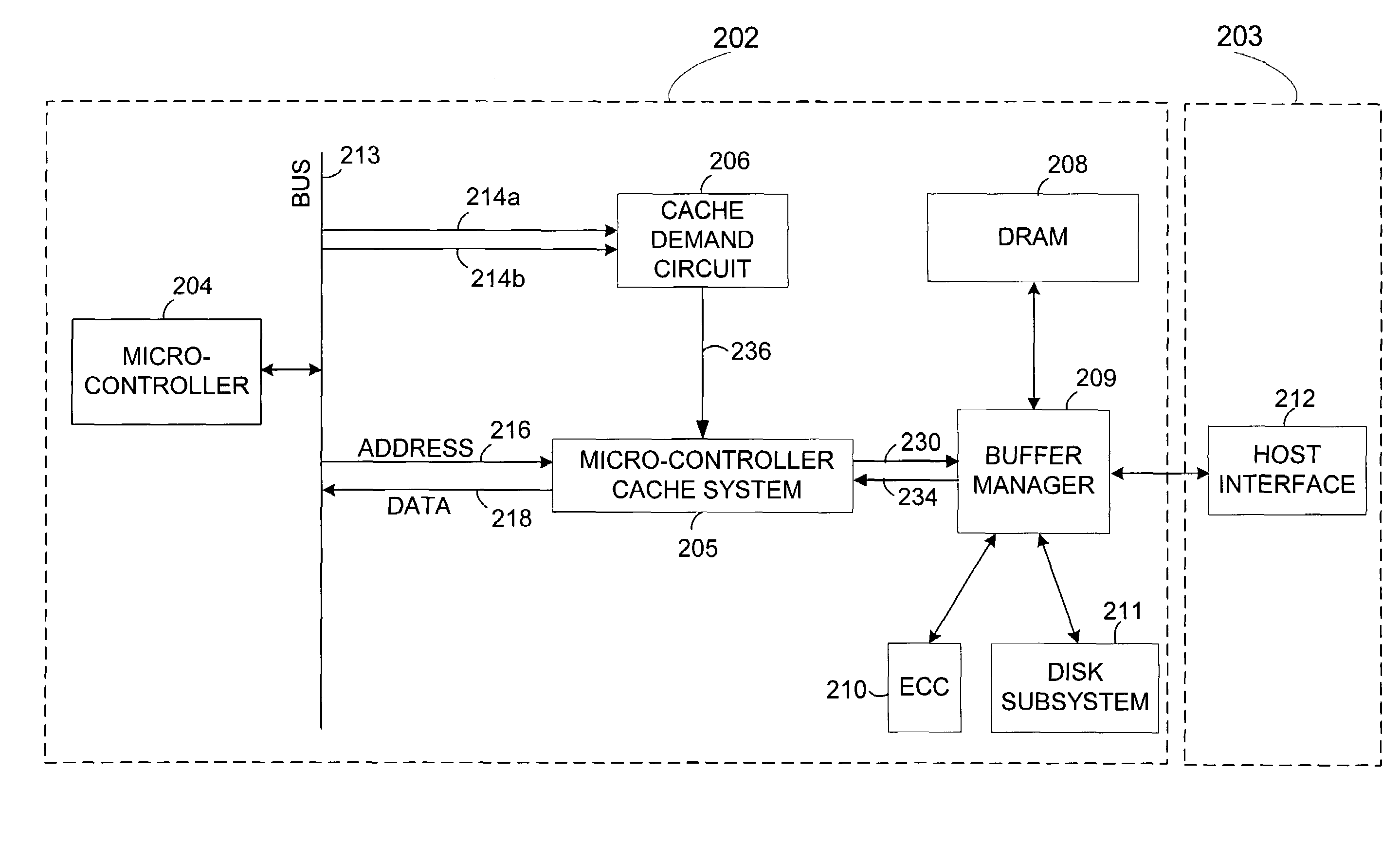

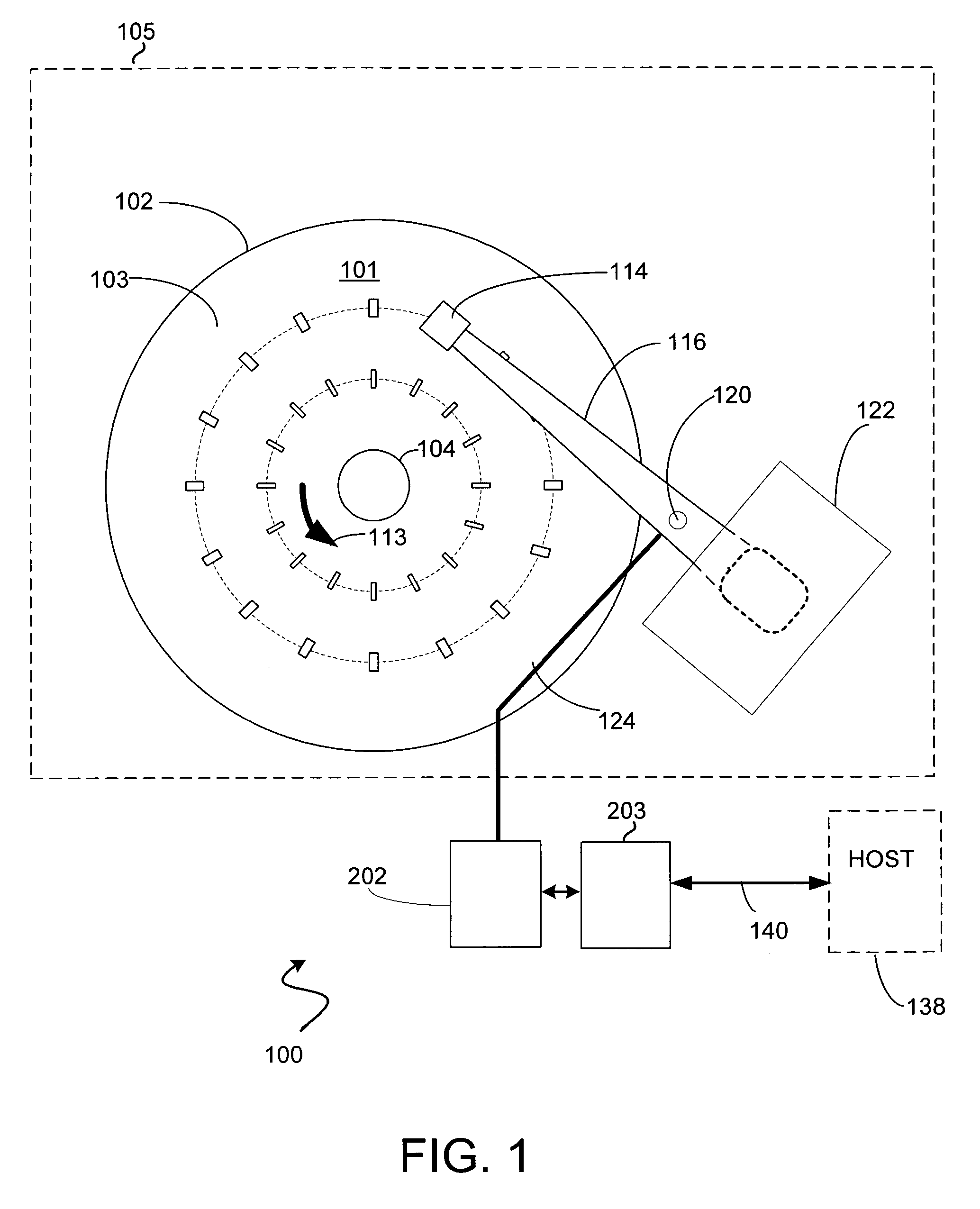

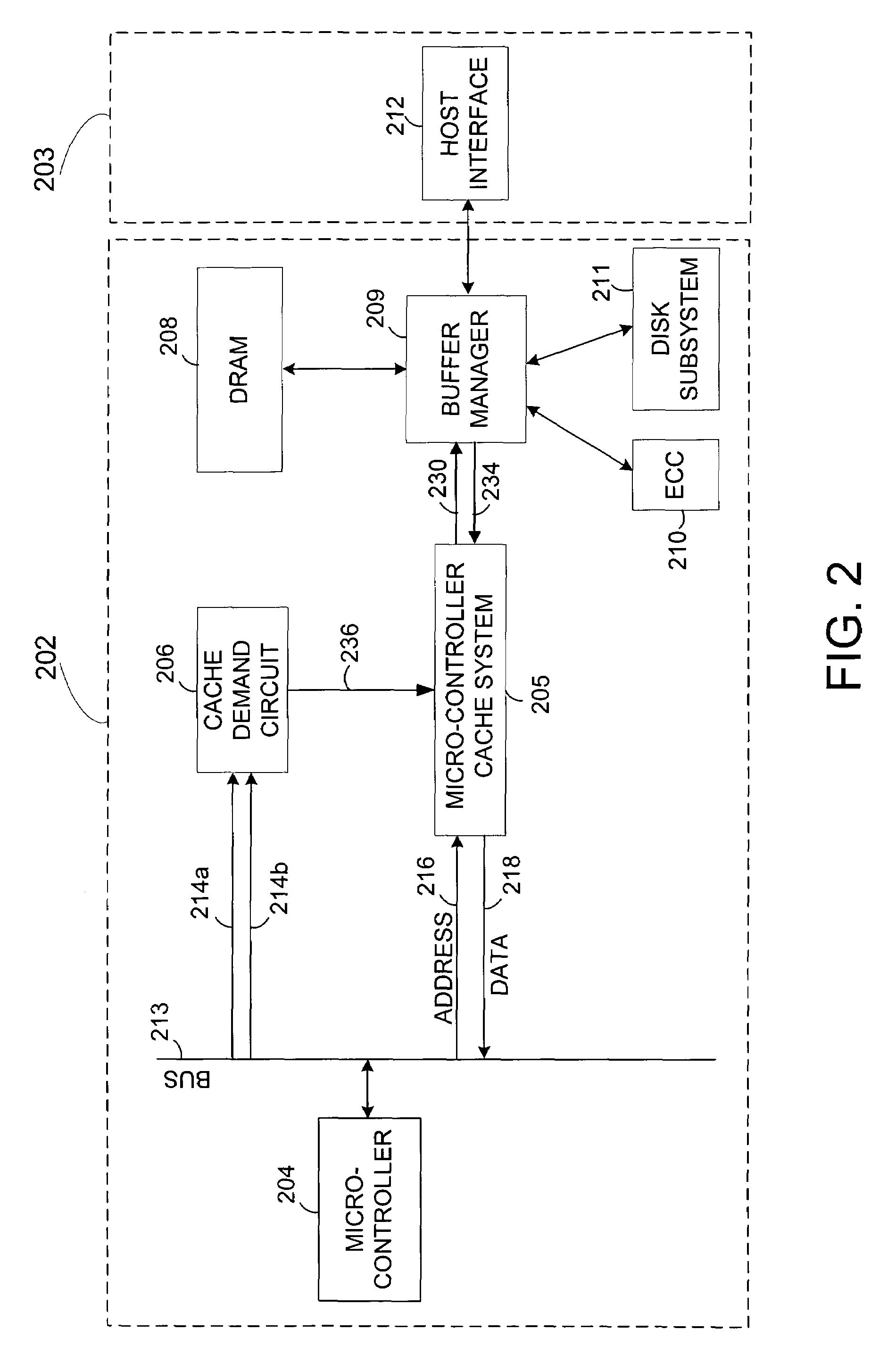

Instruction prefetch caching for remote memory

Active | US7240161B1 | Digital computer details; Concurrent instruction execution; Memory address; Control system

A disk drive control system comprising a micro-controller, a micro-controller cache system adapted to store micro-controller data for access by the micro-controller, a buffer manager adapted to provide the micro-controller cache system with micro-controller requested data stored in a remote memory, and a cache demand circuit adapted to: a) receive a memory address and a memory access signal, and b) cause the micro-controller cache system to fetch data from the remote memory via the buffer manager based on the received memory address and memory access signal prior to a micro-controller request.

Owner:WESTERN DIGITAL TECH INC

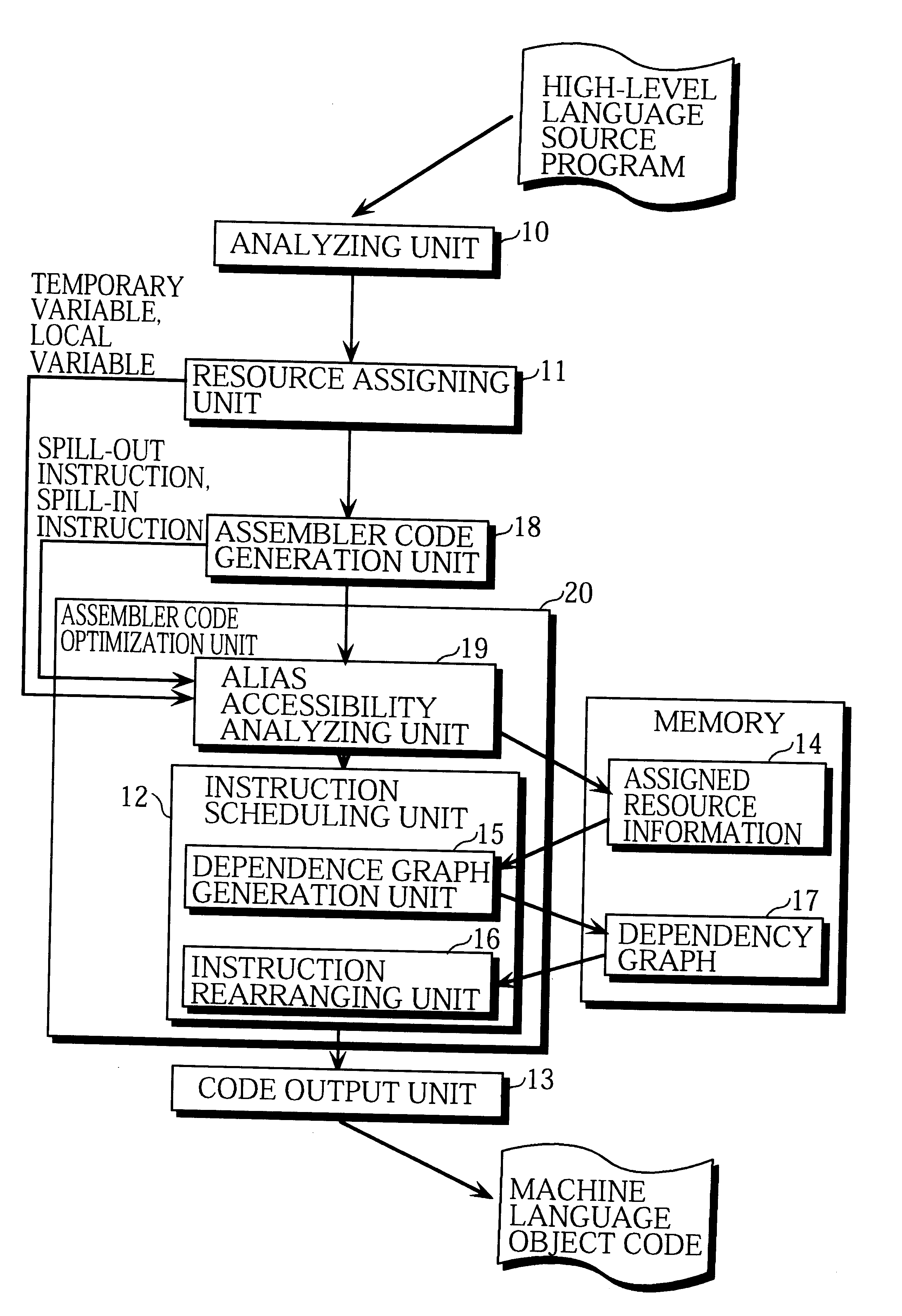

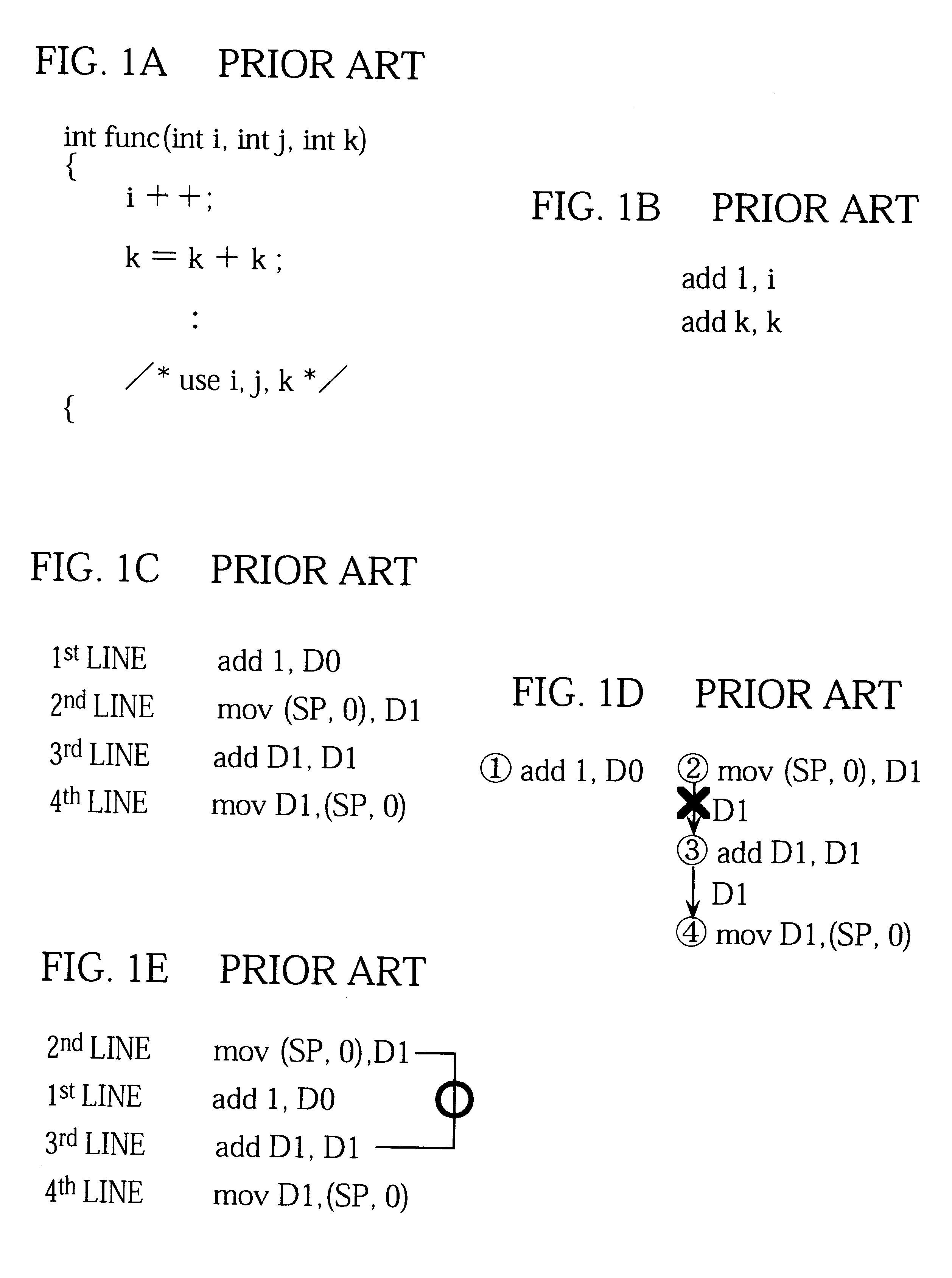

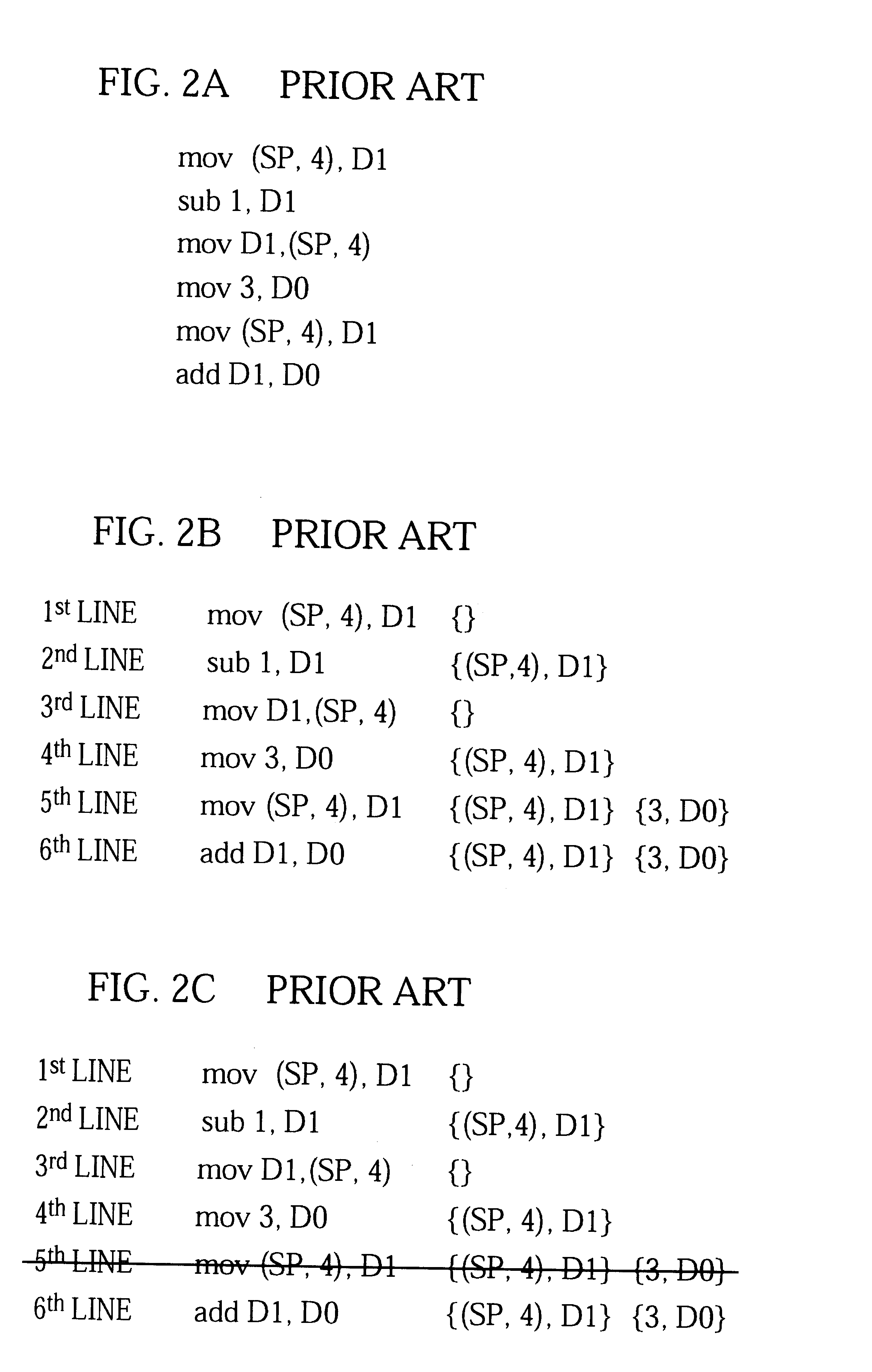

Compiler for optimizing memory instruction sequences by marking instructions not having multiple memory address paths

Internal variables generated by a compiler are assigned to machine resources such as registers and memory by the resource assigning unit 11. When the assembler code generation unit 18 has output an instruction sequence, the alias accessibility analyzing unit 19 registers each memory access instruction in the sequence in the assigned resource information 14, according to whether the instruction may access memory through an alias. The assembler code optimization unit 20 refers to the assigned resource information 14 and performs optimization at the assembler level, thereby reducing the program size and execution time of the instruction sequence.

Owner:SOCIONEXT INC

System and method for maintaining translation look-aside buffer (TLB) consistency

Inactive | US6105113A | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Virtual memory; Memory address

A system and method for maintaining consistency between translation look-aside buffers (TLBs) and page tables. A TLB has a TLB table for storing a list of virtual-to-physical memory address translations, or page table entries (PTEs), and a hardware-based controller for invalidating a translation stored in the TLB table when the corresponding page table entry changes. The TLB table includes a virtual memory (VM) page tag and a page table entry address tag for indexing the list of translations. The VM page tag can be searched for VM pages that are referenced by a process. If a referenced VM page is found, the associated physical address is retrieved for use by the processor. The TLB controller includes a snooping controller for snooping a cache-memory interconnect for activity that affects PTEs. The page table entry address tag can be searched by a search engine in the TLB controller for snooped page table entry addresses. The TLB controller includes an updating module for invalidating or updating translations associated with snooped page table entry addresses. Translations in TLBs are thus updated or invalidated in hardware when the operating system changes a PTE, without intervention by the operating system or other software.

Owner:RPX CORP +1
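
The TLB consistency scheme above indexes each cached translation twice: by virtual page for lookups and by the address of its page table entry for snooping. The Python sketch below captures that dual tagging under simplifying assumptions: 4 KiB pages, exact-address matching of snooped writes (real hardware would typically match at cache-line granularity), and hypothetical class and method names.

```python
PAGE_SHIFT = 12  # assumed 4 KiB pages


class SnoopingTLB:
    """TLB whose entries are tagged both by virtual page and by PTE address."""

    def __init__(self):
        self.by_vpage = {}     # VM page tag -> (physical page, PTE address)
        self.by_pte_addr = {}  # PTE address tag -> VM page

    def fill(self, vpage: int, ppage: int, pte_addr: int) -> None:
        self.invalidate_vpage(vpage)  # drop any stale entry for this page first
        self.by_vpage[vpage] = (ppage, pte_addr)
        self.by_pte_addr[pte_addr] = vpage

    def invalidate_vpage(self, vpage: int) -> None:
        old = self.by_vpage.pop(vpage, None)
        if old is not None:
            self.by_pte_addr.pop(old[1], None)

    def translate(self, vaddr: int):
        """Return the physical address, or None on a TLB miss."""
        entry = self.by_vpage.get(vaddr >> PAGE_SHIFT)
        if entry is None:
            return None
        offset = vaddr & ((1 << PAGE_SHIFT) - 1)
        return (entry[0] << PAGE_SHIFT) | offset

    def snoop_write(self, addr: int) -> None:
        """Called for each write seen on the interconnect; kill matching entries."""
        vpage = self.by_pte_addr.get(addr)
        if vpage is not None:
            self.invalidate_vpage(vpage)
```

No software call is needed to keep the TLB coherent in this model: the write that changes the PTE is itself what removes the stale translation.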

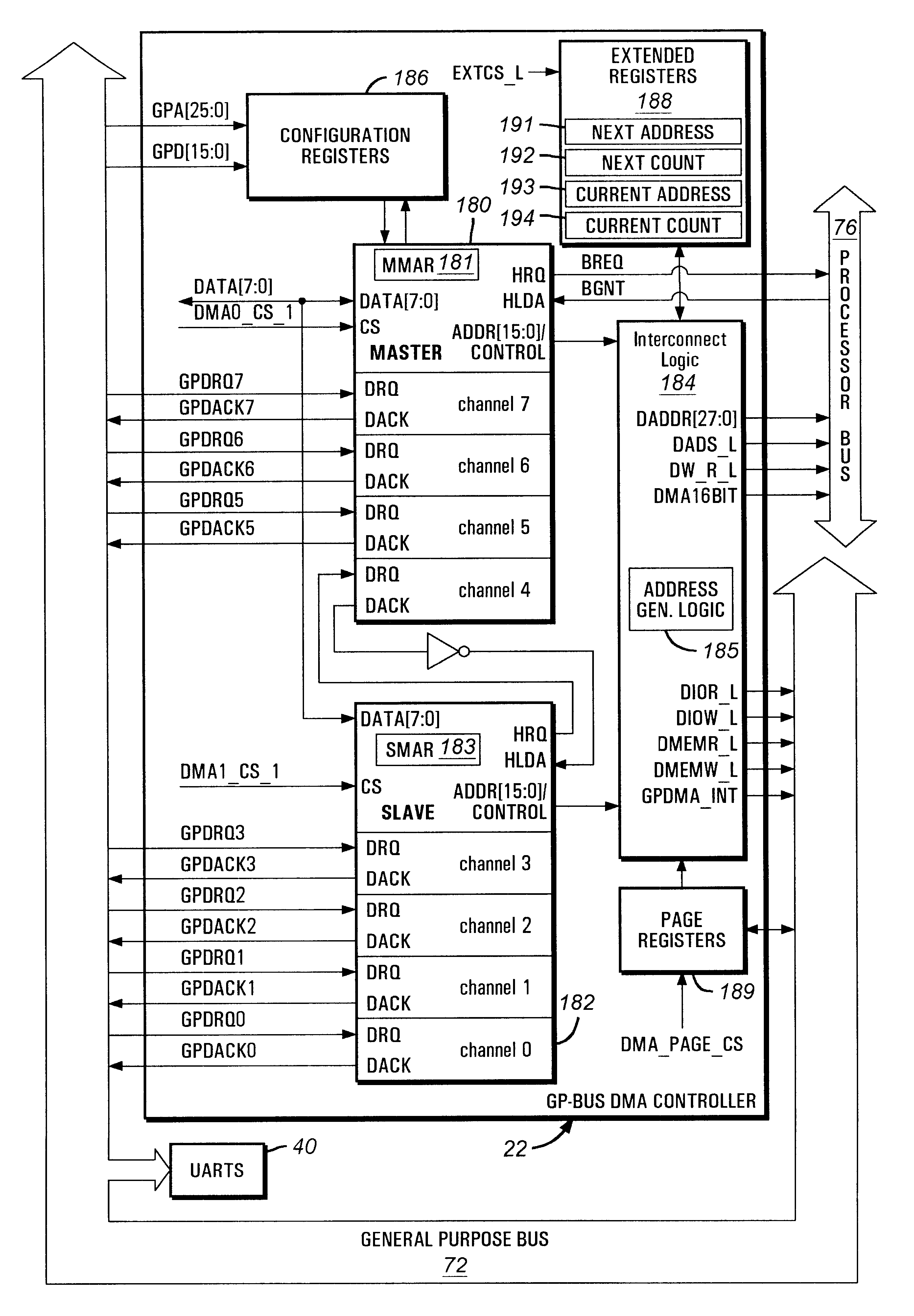

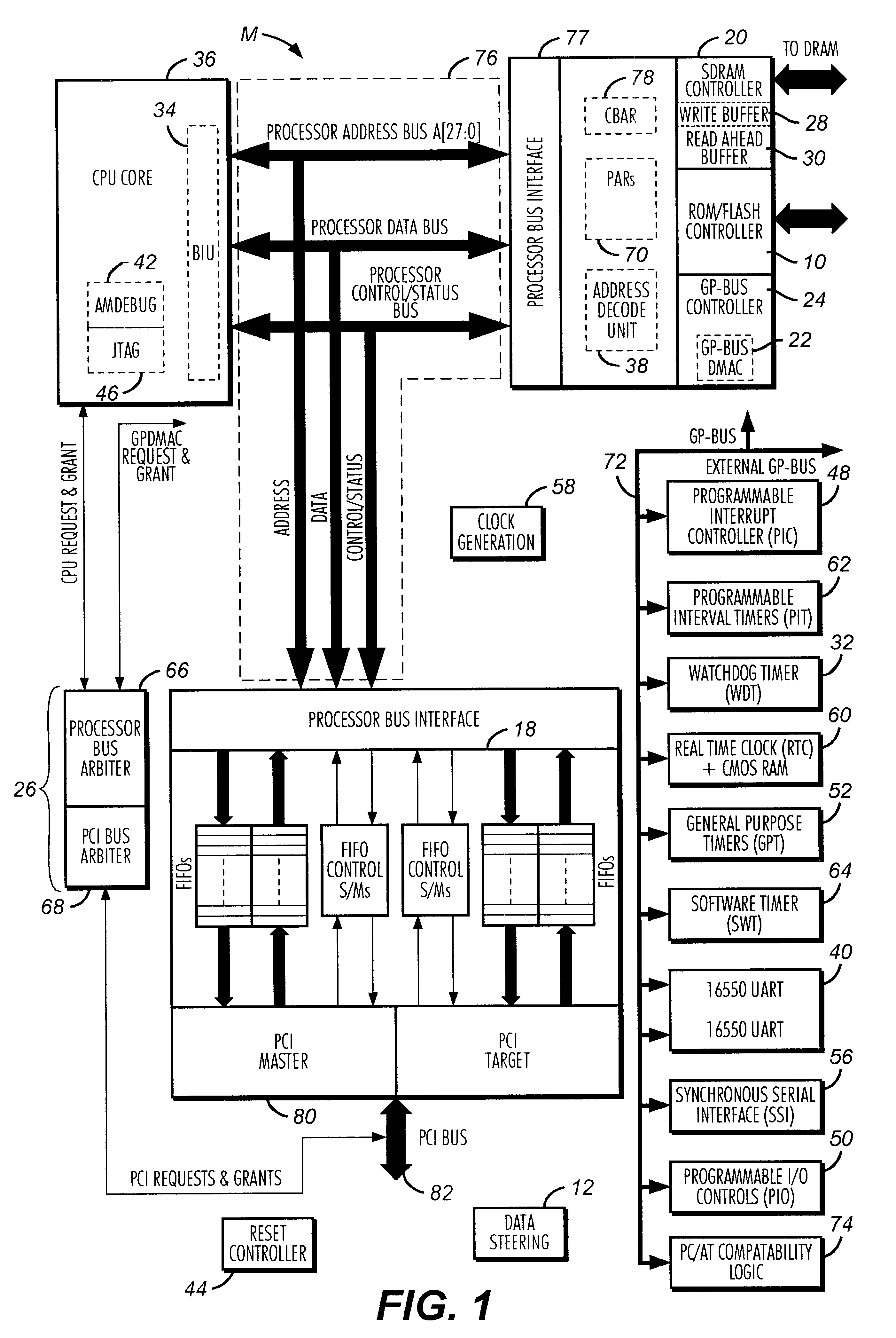

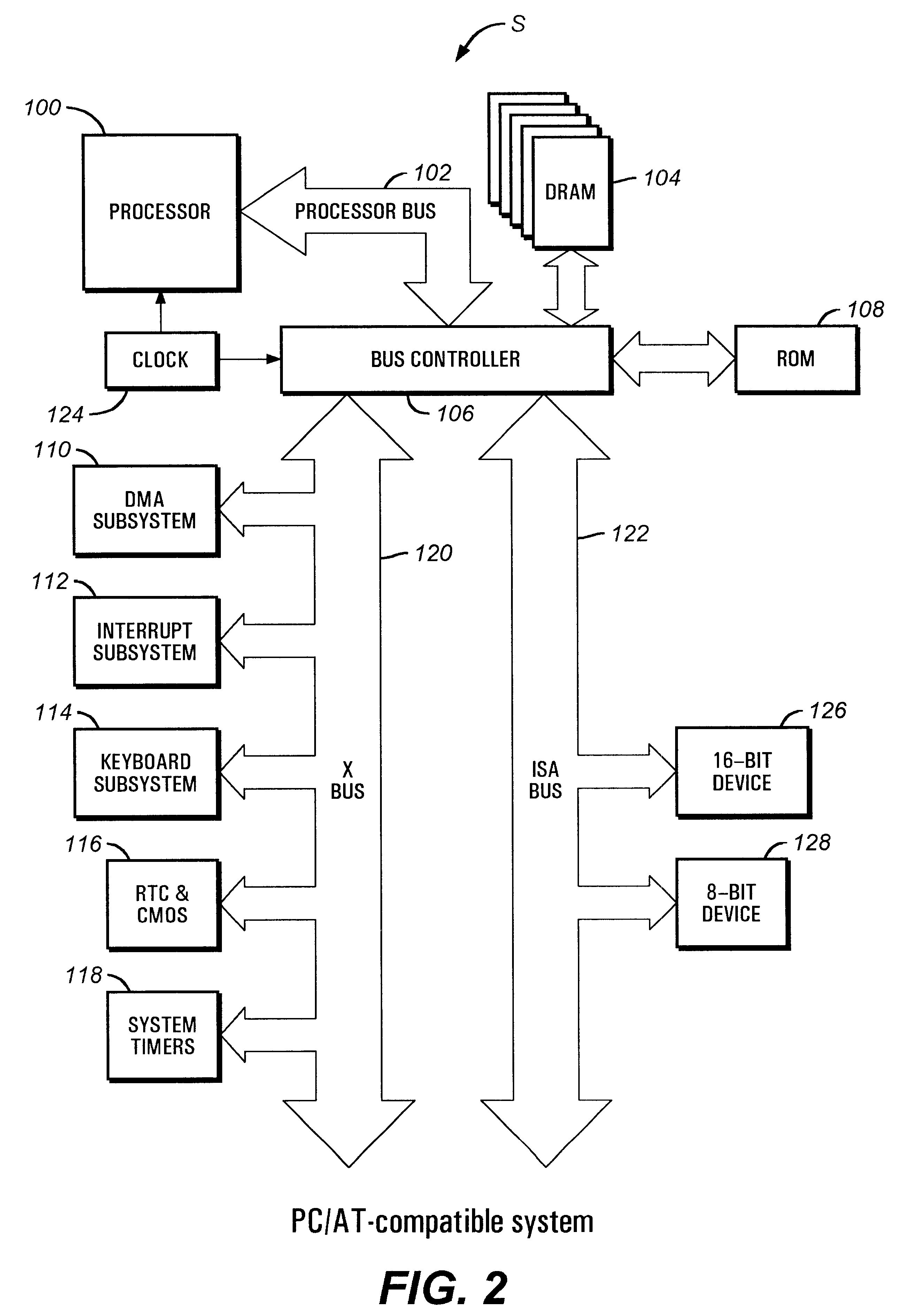

Direct memory access controller with channel width configurability support

A direct memory access (DMA) controller provides seven DMA channels configurable for a PC/AT compatible mode or an enhanced mode. In the enhanced mode, three DMA master channels on a master DMA controller and a DMA channel on a slave DMA controller are individually configurable as either 8-bit or 16-bit DMA channels. In addition, in the enhanced mode, a memory address can increment or decrement across a memory page boundary. The DMA controller includes a transfer count register selectively configured for 16-bit or 24-bit operation. The DMA controller also includes address generation logic selectively configured for 24-bit or 28-bit operation. In the PC/AT compatible mode, the DMA controller supports three 16-bit channels and four 8-bit channels. The DMA controller thus provides DMA channel width configurability.

Owner:GLOBALFOUNDRIES INC

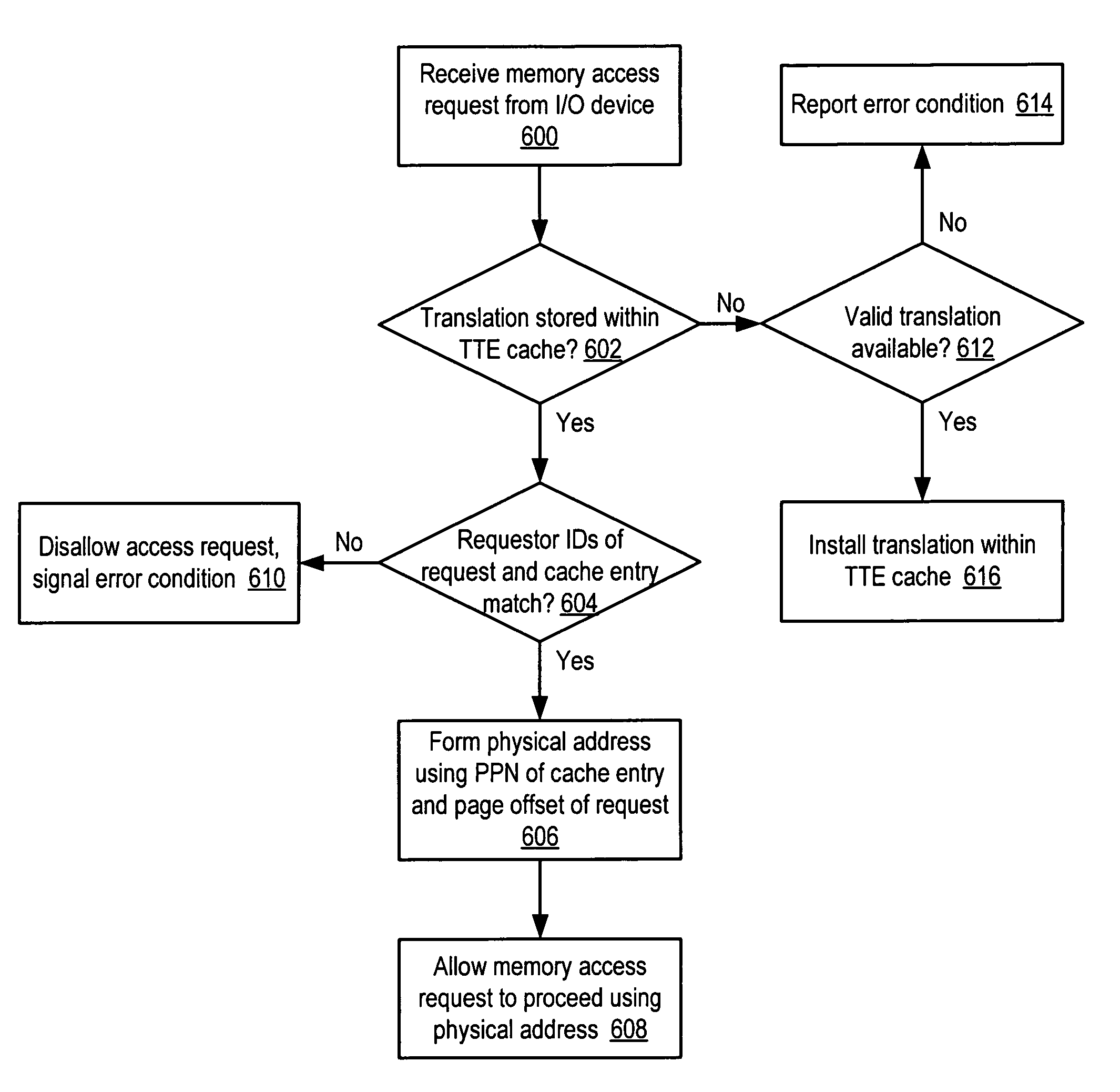

Processor and method for device-specific memory address translation

Active | US7487327B1 | Memory systems; Input/output processes for data processing; Memory address; Management unit

A processor employing device-specific memory address translation. In one embodiment, a processor may include a device interface configured to receive a memory access request from an input/output (I/O) device, where the request specifies a virtual memory address and a first requestor identifier (ID) that identifies the I/O device. The processor may also include an I/O memory management unit coupled to the device interface and configured to determine whether a virtual-to-physical memory address translation corresponding to the virtual memory address is stored within an I/O memory translation buffer. The I/O memory management unit may be further configured to determine whether a second requestor ID, stored within the I/O memory translation buffer and corresponding to the memory address translation, matches the first requestor ID. If the first and second requestor IDs do not match, the I/O memory management unit may disallow the memory access request and signal an error condition.

Owner:ORACLE INT CORP
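
The requestor-ID check in the device-specific translation abstract above can be sketched as a lookup that returns a translation only when the stored ID matches the requesting device. In this Python sketch the 4 KiB page size, the exception type and the method names are hypothetical, and a miss simply returns `None` where a real I/O MMU would walk its translation tables.

```python
PAGE_SIZE = 4096  # assumed I/O page size


class IOMMUFault(Exception):
    """Raised when a device uses a translation that belongs to another device."""


class IOMemoryManagementUnit:
    def __init__(self):
        # I/O translation buffer: virtual page -> (physical page, owning requestor ID)
        self.buffer = {}

    def install(self, vpage: int, ppage: int, requestor_id: int) -> None:
        self.buffer[vpage] = (ppage, requestor_id)

    def translate(self, vaddr: int, requestor_id: int):
        """Translate a device request; None signals a miss (table walk not modeled)."""
        vpage, offset = divmod(vaddr, PAGE_SIZE)
        entry = self.buffer.get(vpage)
        if entry is None:
            return None
        ppage, owner_id = entry
        if owner_id != requestor_id:
            # Requestor IDs differ: disallow the access and signal an error.
            raise IOMMUFault(f"device {requestor_id:#x} may not use page {vpage:#x}")
        return ppage * PAGE_SIZE + offset
```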

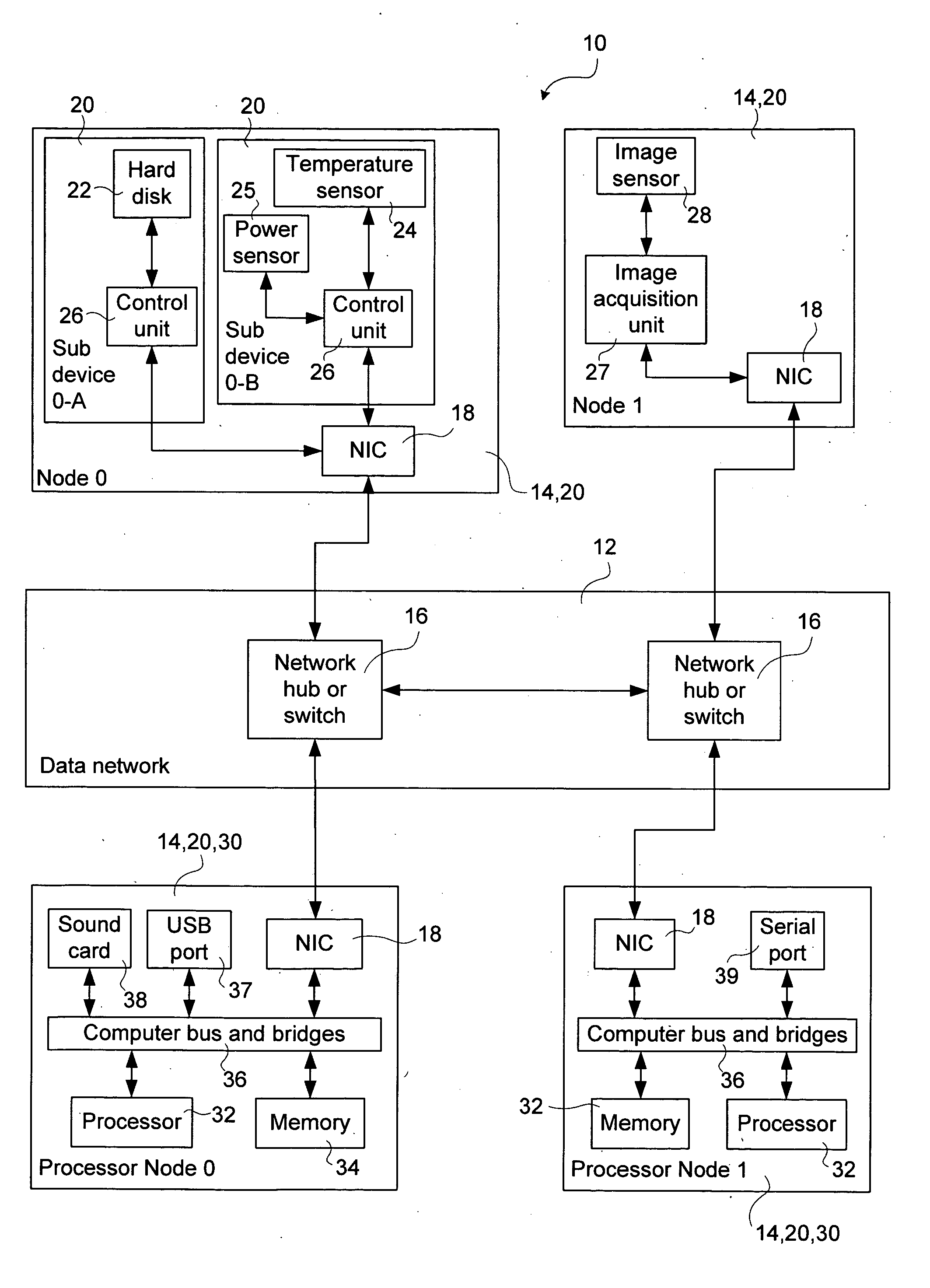

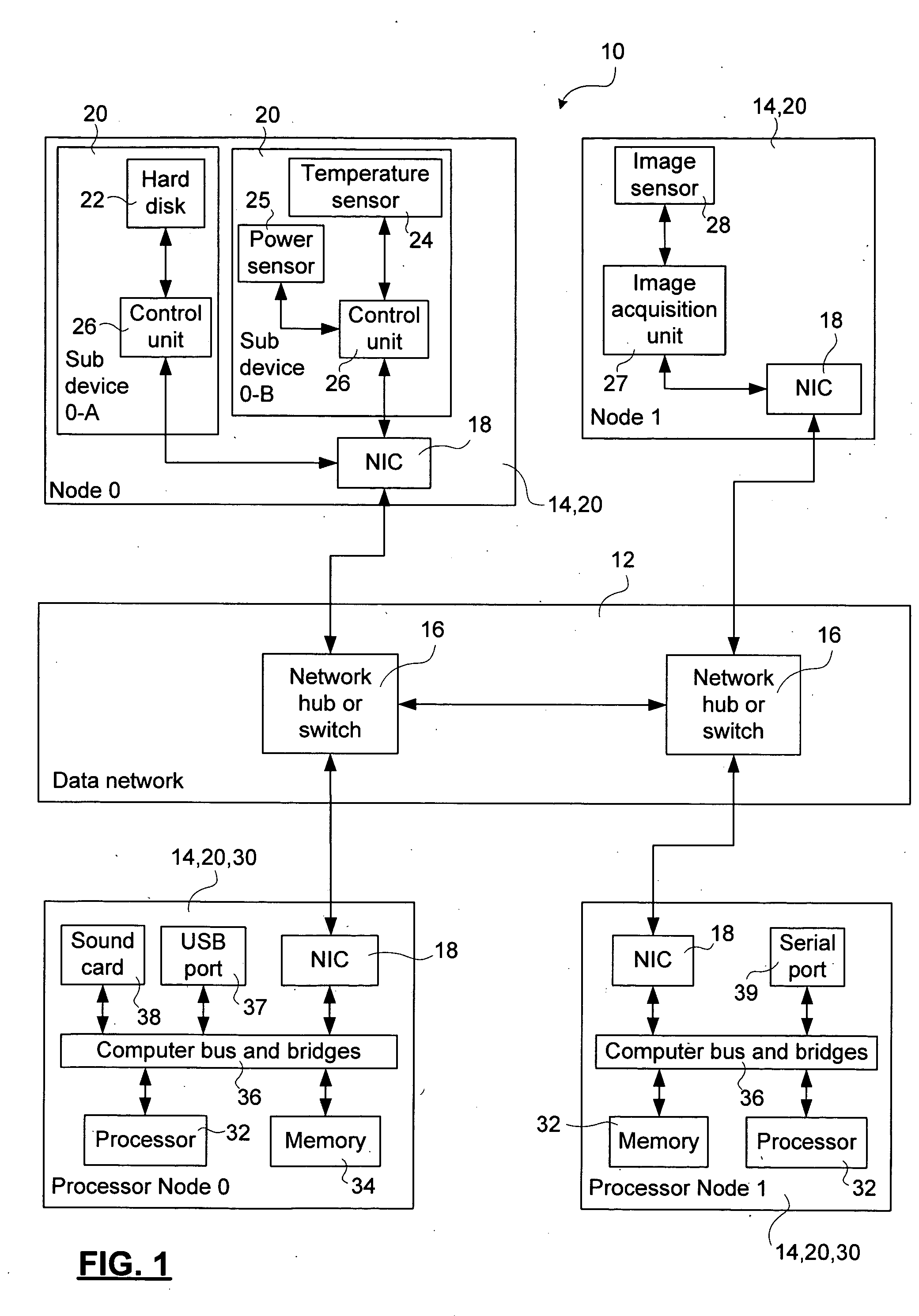

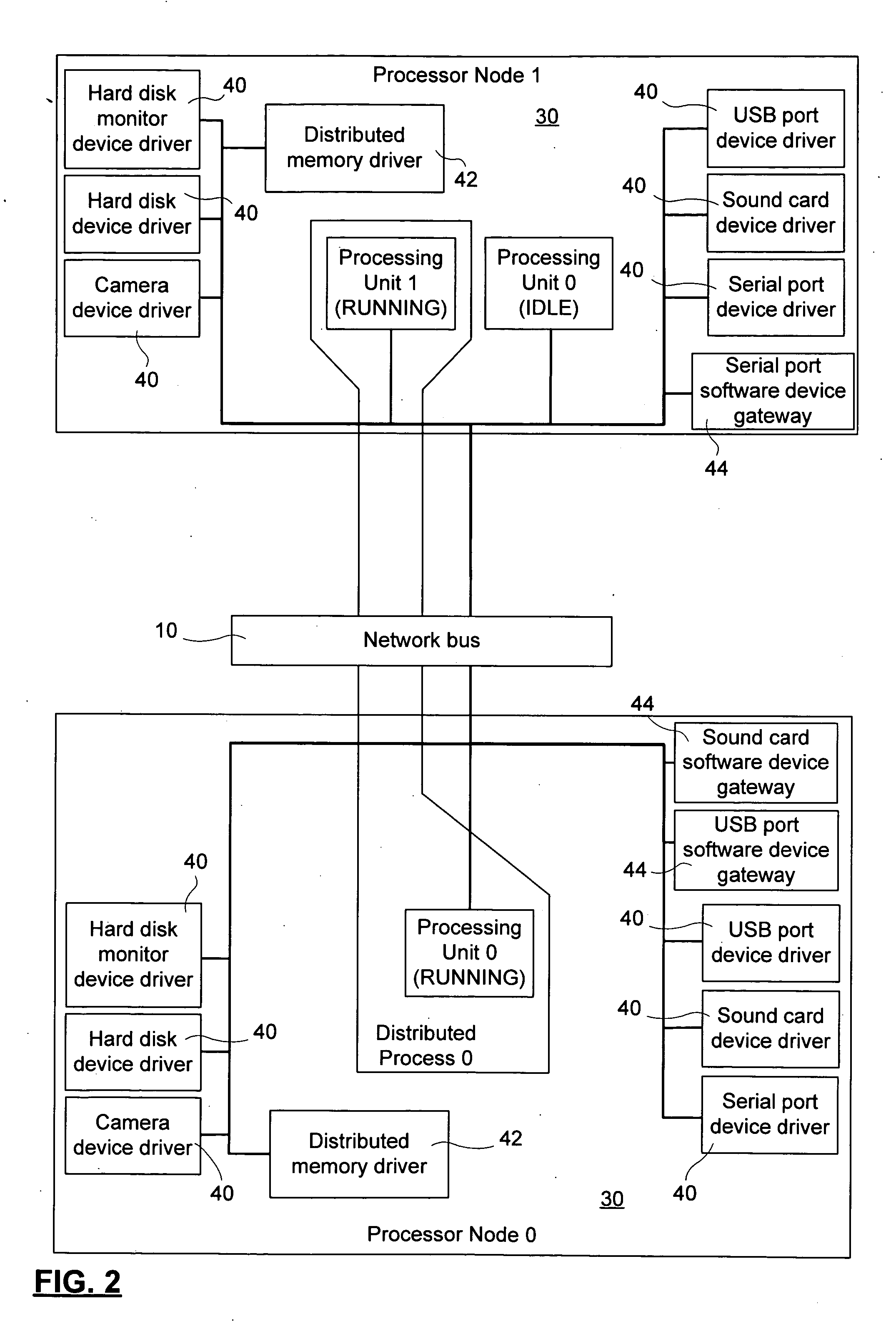

Methods and apparatus for enabling bus connectivity over a data network

Active | US20060059287A1 | Facilitate communication; Selectively leverage operating system capabilities; Program synchronisation; Memory address; Abstraction layer

A method and system for interconnecting peripherals, processor nodes, and hardware devices to a data network to produce a network bus, which provides OS functionality for managing the hardware devices connected to it, involves defining a network bus driver at each of the processor nodes that couples hardware device drivers to a network hardware abstraction layer of the processor node. The network bus can be constructed to account for the hot-swappable nature of the hardware devices by using a device monitoring function, and plug-and-play functionality for adding and removing device driver instances. The network bus can be used to provide a distributed processing system by defining a shared memory space at each processor node. Distributed memory pages are given bus-network-wide unique memory addresses, and a distributed memory manager is added to ensure consistency of the distributed memory pages and to provide a library of functions for user-mode applications.

Owner:PLEORA TECH

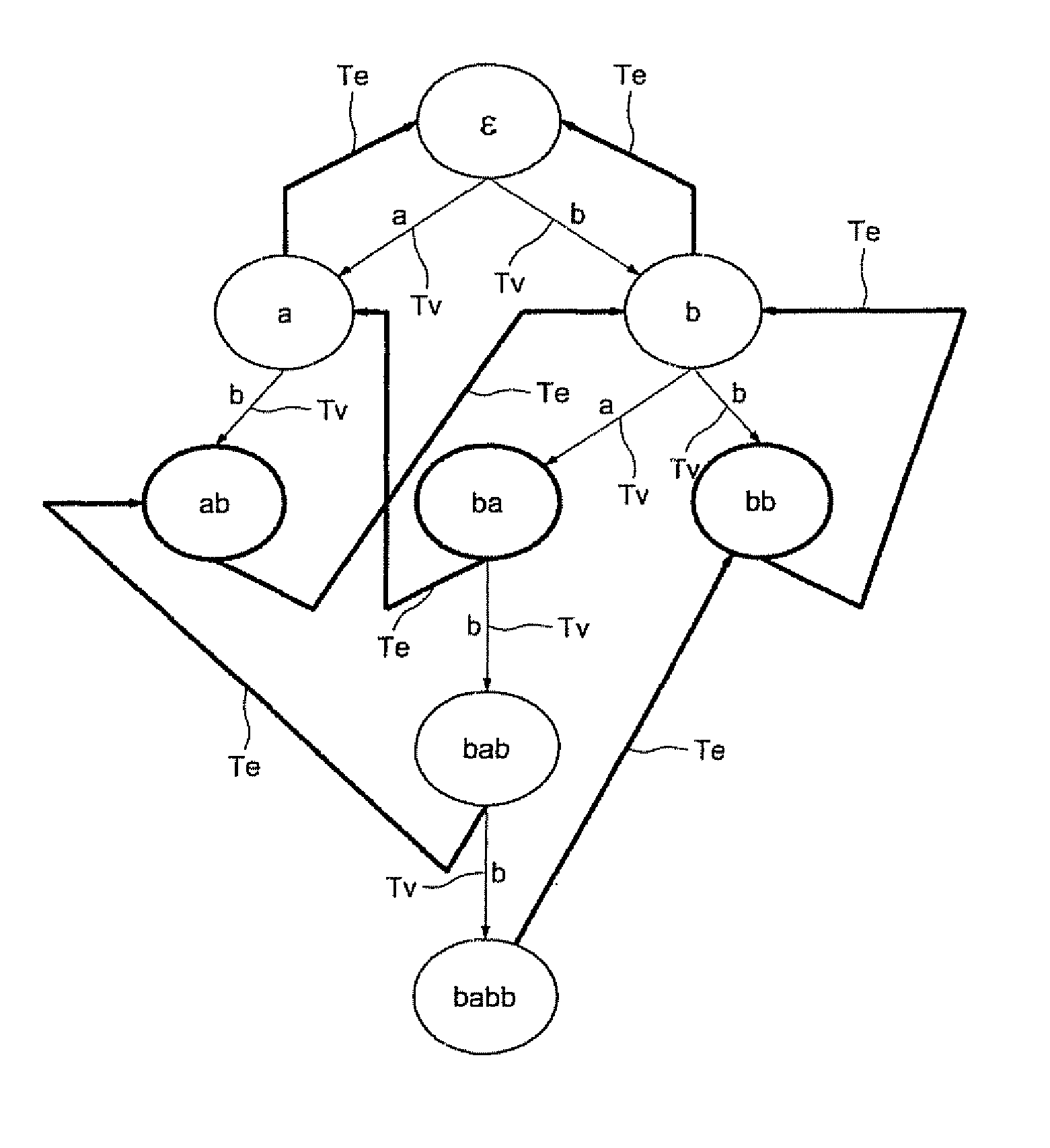

Memory circuit for Aho-Corasick type character recognition automaton and method of storing data in such a circuit

Active | US20070075878A1 | Number of bit; Digital data information retrieval; Code conversion; Memory address; Data stream

A memory circuit for an Aho-Corasick type character recognition automaton uses a node tree to recognize predetermined character strings in an incoming data stream. Recognition is based on successive transitions in the node tree stored in memory, in which each node corresponds to a recognized sequence of a character string. At least some of the nodes are related to a consecutive node by a valid transition, from an initial state to terminal states, each terminal state corresponding to a recognized character string. This memory circuit includes first sets of consecutive memory addresses, each defining a string of consecutive nodes that are accessed sequentially during successive transitions to a terminal state, and second sets of memory addresses defining multiple nodes, each pointing to several states.

Owner:STMICROELECTRONICS SRL

Vector register addressing

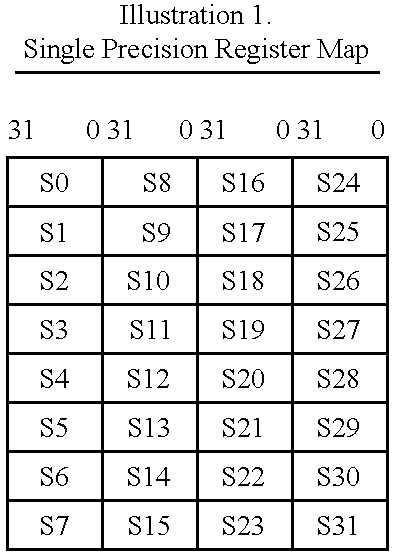

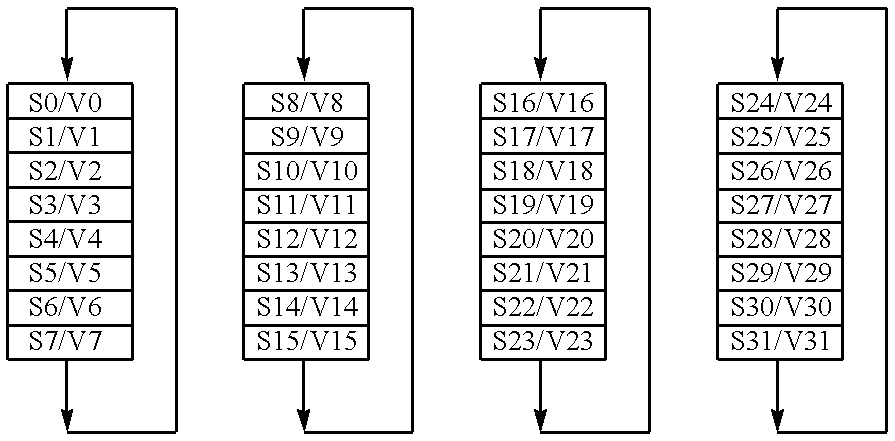

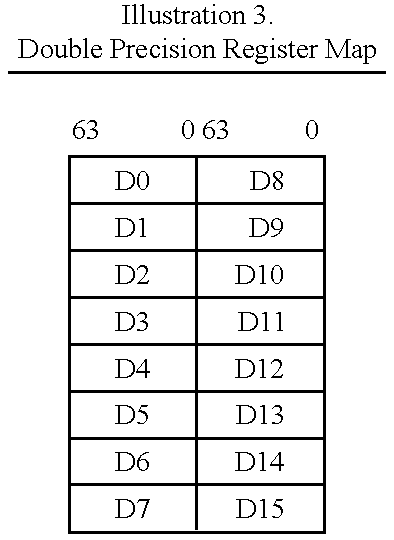

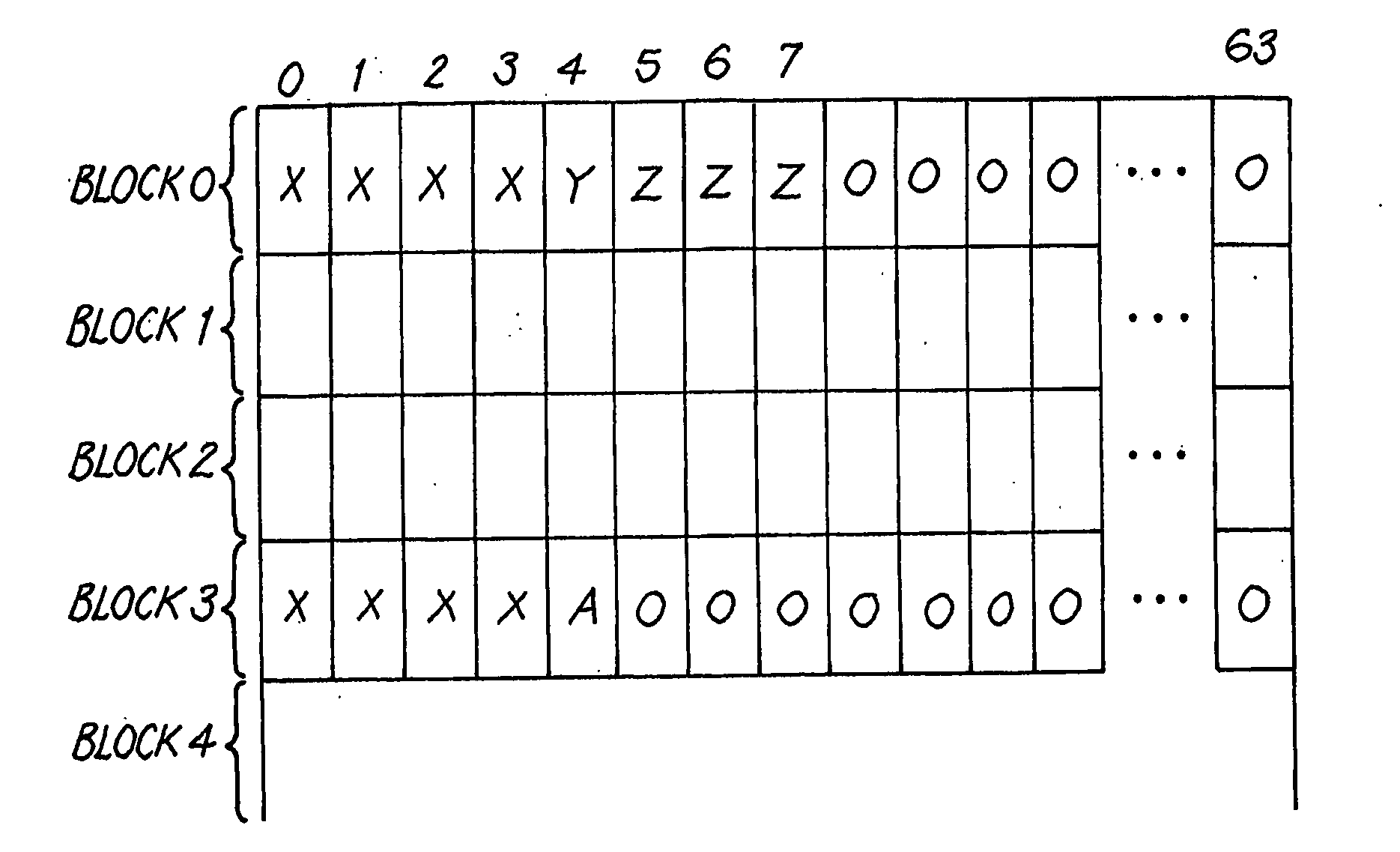

Inactive | US6332186B1 | Without complexity; Without cost; Register arrangements; Instruction analysis; Memory address; Processing Instruction

A floating point unit 26 is provided with a register bank 38 comprising 32 registers that may be used as either vector registers V or scalar registers S. Data values are transferred between memory 30 and the registers within the register bank 38 using contiguous block memory access instructions. Vector processing instructions specify a sequence of processing operations to be performed upon data values within a sequence of registers. The register address is incremented between each operation by an amount controlled by a stride value. Accordingly, the register address can be incremented by values such as 0, 1, 2 or 4 between each iteration. This provides a mechanism for retaining block memory access instructions to contiguous memory addresses whilst supporting vector matrix and/or complex operations in which the data values needed for each iteration are not adjacent to one another in the memory 30.

Owner:ARM LTD
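
The ARM abstract above pairs contiguous block transfers with strided register access. The Python sketch below loads interleaved complex numbers into consecutive registers with one block transfer and then uses a stride of 2 to visit only the real or only the imaginary parts. Function names are hypothetical, and wrapping the register number modulo the 32-register bank is a simplifying assumption; the abstract does not say how a sequence wraps.

```python
NUM_REGISTERS = 32  # register bank size from the abstract


def register_sequence(start: int, length: int, stride: int) -> list:
    """Register numbers visited by a vector op: start, start + stride, ...

    Wrapping modulo the bank size is an assumption made for this sketch.
    """
    return [(start + i * stride) % NUM_REGISTERS for i in range(length)]


def block_load(registers: list, first_reg: int, memory: list, addr: int, count: int) -> None:
    """Contiguous block load: memory[addr + i] -> register first_reg + i."""
    for i in range(count):
        registers[(first_reg + i) % NUM_REGISTERS] = memory[addr + i]


# Complex numbers stored interleaved (re, im, re, im, ...) in contiguous memory.
memory = [1.0, 10.0, 2.0, 20.0, 3.0, 30.0]
regs = [0.0] * NUM_REGISTERS
block_load(regs, 8, memory, 0, len(memory))                  # one contiguous transfer
real_parts = [regs[r] for r in register_sequence(8, 3, 2)]   # S8, S10, S12
imag_parts = [regs[r] for r in register_sequence(9, 3, 2)]   # S9, S11, S13
```

A stride of 0 repeats the same register, which is how a scalar operand can be reused across every iteration of a vector operation.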

Portable Data Storage Device Using a Memory Address Mapping Table

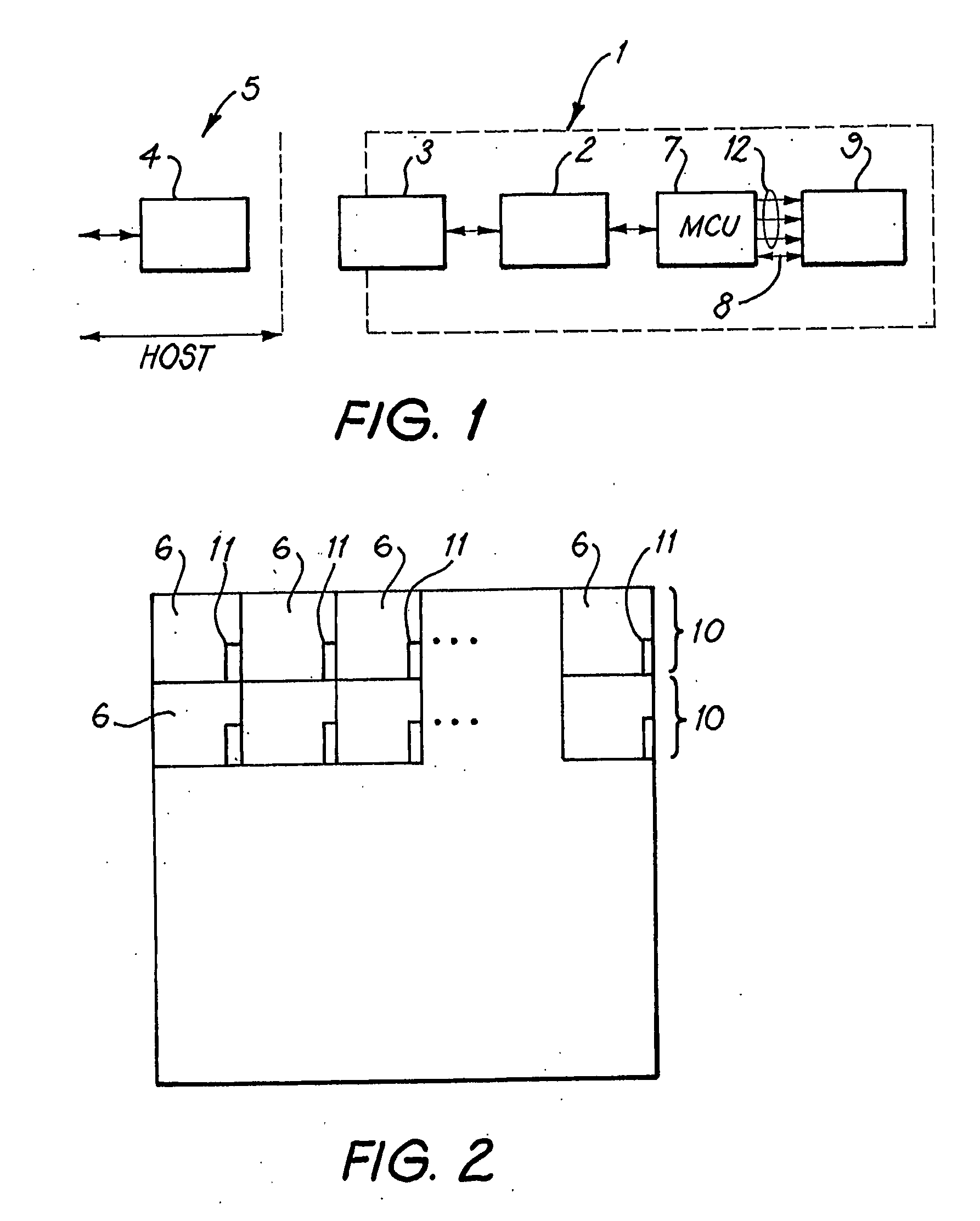

Inactive | US20080228995A1 | Reduce in quantity; Fast operation; Memory architecture accessing/allocation; Combination recording; Memory address; USB

A portable data storage device includes a USB controller, a master control unit and a NAND flash memory device. The master control unit receives data to be written to logical addresses, and instructions to read data from logical addresses. It uses a memory address mapping table to associate the logical addresses with the physical addresses in the memory device, and writes data to or reads data from the physical address corresponding to the logical address. The mapping is changed at intervals, so that different ones of the physical address regions are associated at different times with the logical addresses. This increases the speed of the device, and also means that no physical addresses are rapidly worn out by being permanently associated with logical addresses to which data is written relatively often.

Owner:TREK TECH SINGAPORE PTE
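
The Trek abstract above says only that the logical-to-physical mapping is changed at intervals. The Python sketch below assumes the simplest such policy, rotating every logical block to the next physical block after a fixed number of writes and migrating the data, to show how repeated writes to one logical address get spread over several physical blocks. The class name, policy and interval are hypothetical.

```python
class RemappedFlash:
    """Logical-to-physical block mapping that rotates every `remap_interval` writes."""

    def __init__(self, num_blocks: int, remap_interval: int):
        self.blocks = [None] * num_blocks      # physical block contents
        self.table = list(range(num_blocks))   # logical block -> physical block
        self.host_writes = [0] * num_blocks    # host writes per physical block
        self.remap_interval = remap_interval
        self.total_writes = 0

    def read(self, logical: int):
        return self.blocks[self.table[logical]]

    def write(self, logical: int, data) -> None:
        physical = self.table[logical]
        self.blocks[physical] = data
        self.host_writes[physical] += 1
        self.total_writes += 1
        if self.total_writes % self.remap_interval == 0:
            self._rotate()

    def _rotate(self) -> None:
        # Shift every logical block to the next physical block, migrating its data.
        # (Migration copies also wear the flash; this sketch does not count them.)
        n = len(self.table)
        moved = [None] * n
        for logical, physical in enumerate(self.table):
            target = (physical + 1) % n
            moved[target] = self.blocks[physical]
            self.table[logical] = target
        self.blocks = moved
```

With four blocks and an interval of 10, a hundred writes to logical block 0 are spread as 30, 30, 20 and 20 writes over the physical blocks instead of 100 writes to one block.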

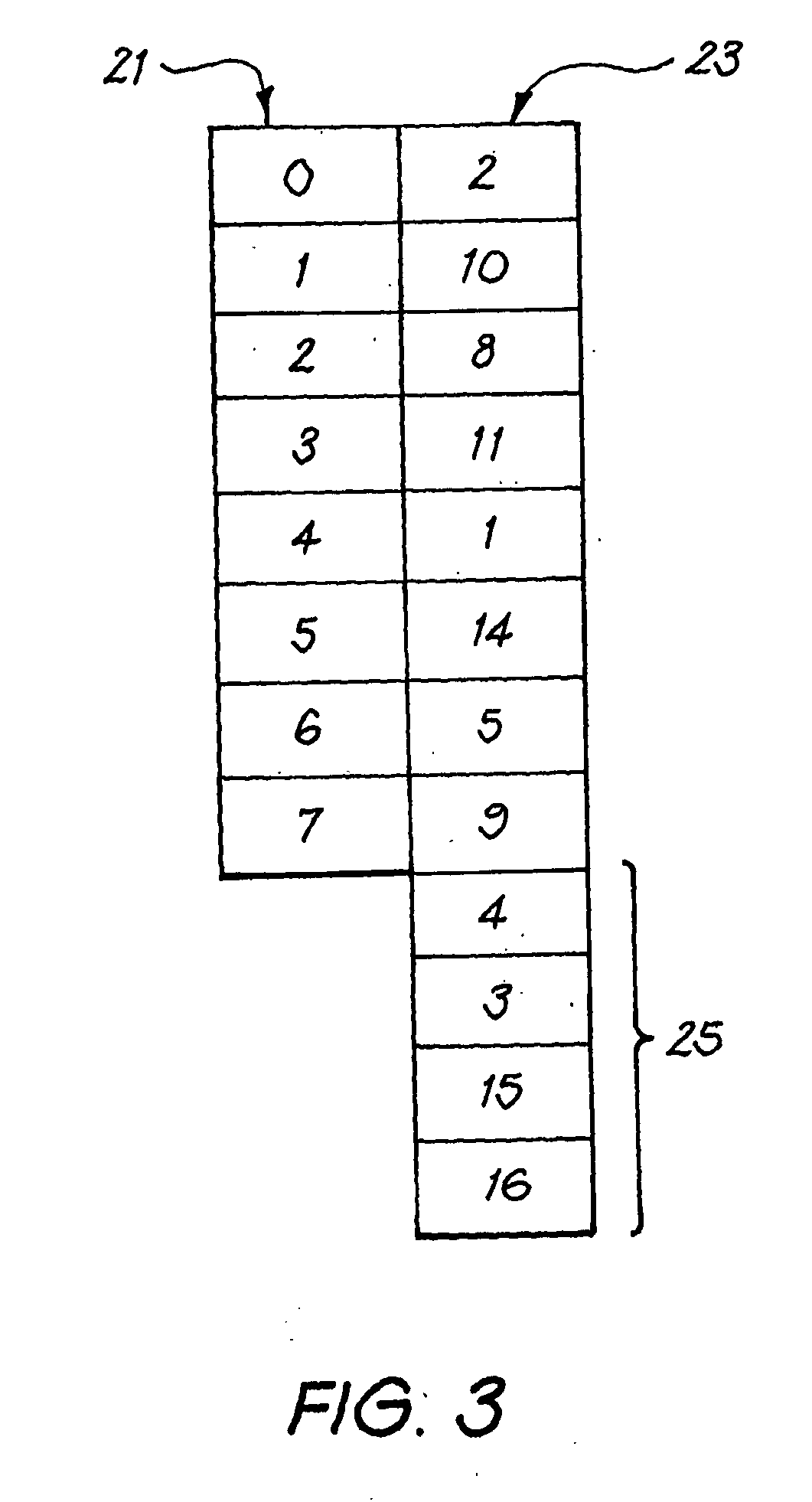

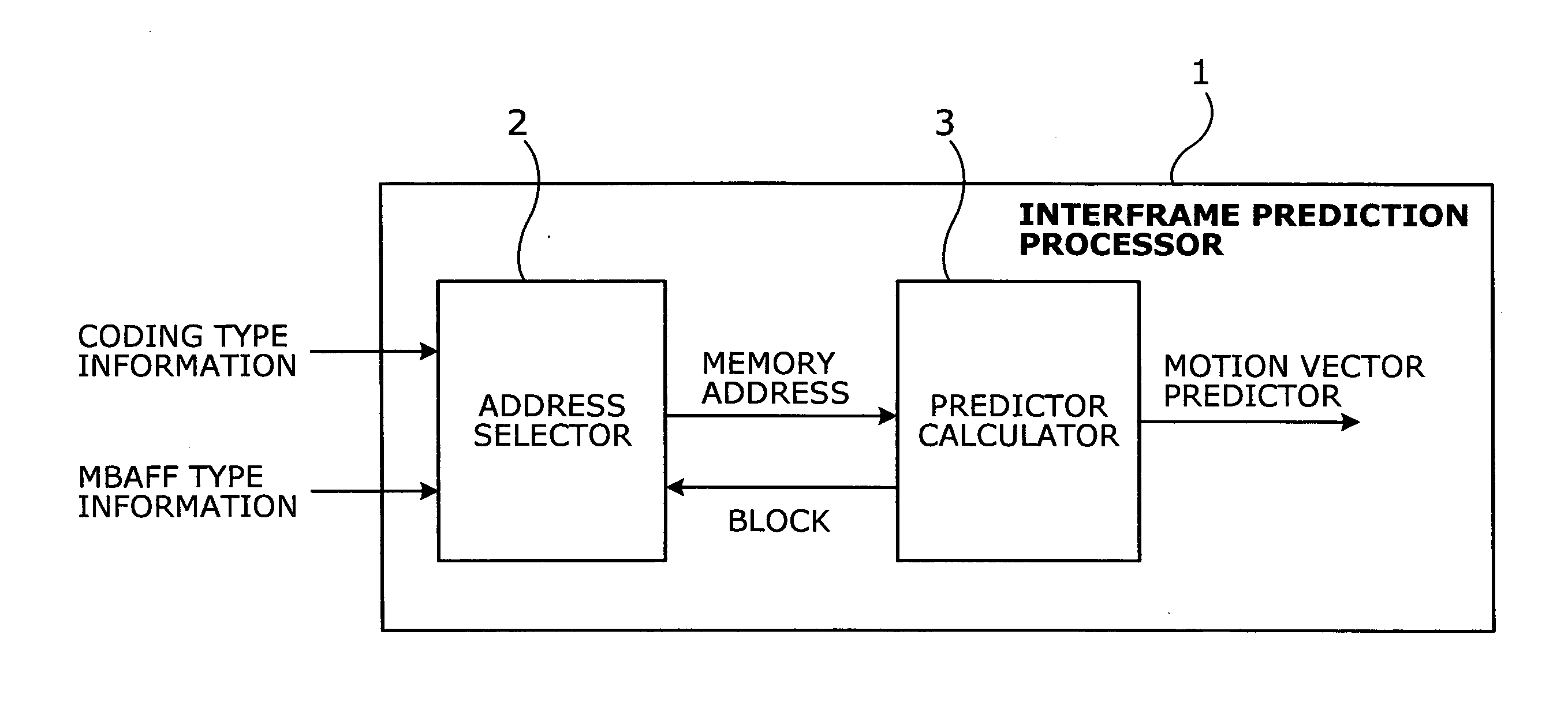

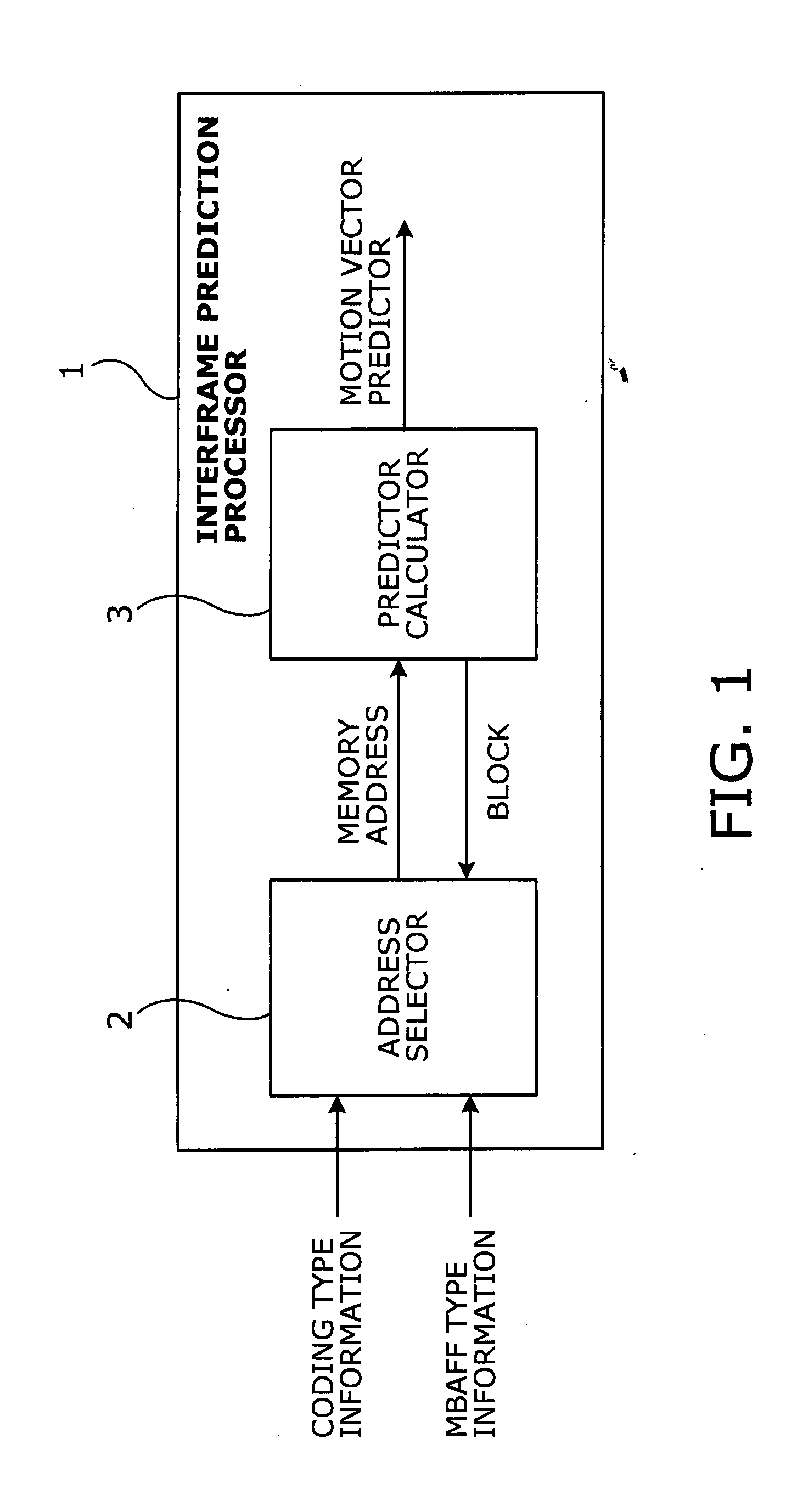

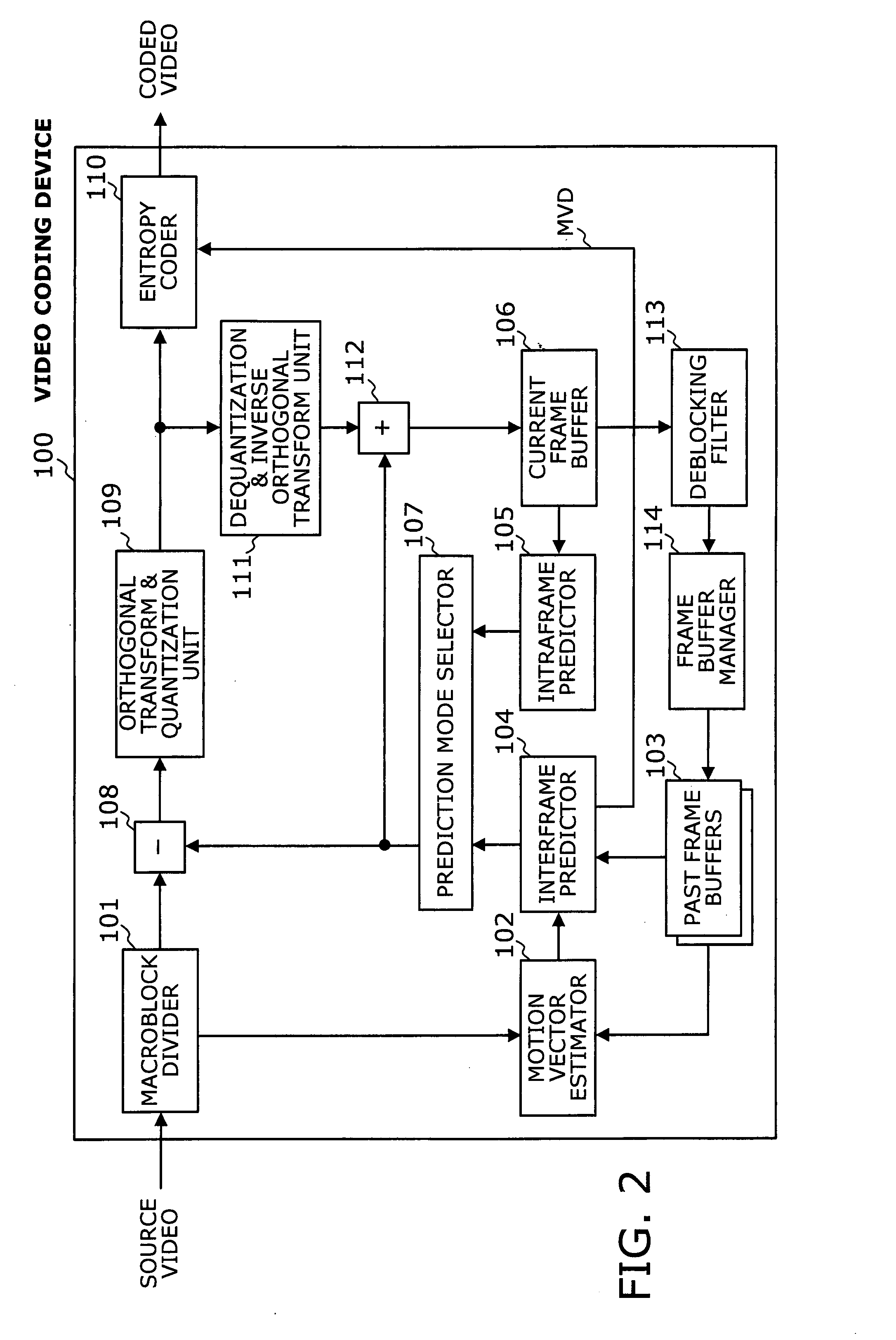

Interframe prediction processor with mechanism for providing locations of reference motion vectors used in macroblock adaptive field/frame mode

Inactive | US20080043843A1 | Increase speed; Color television with pulse code modulation; Color television with bandwidth reduction; Memory address; Motion vector

An interframe prediction processor designed for high-speed video coding and decoding is disclosed. The interframe prediction processor has an address selector and a predictor calculator. The address selector receives information about the MBAFF (macroblock adaptive field/frame) type and the coding type. When motion compensation is performed for a given MBAFF picture with reference to blocks surrounding a current block, the address selector provides the memory addresses of reference motion vectors according to the coding type of the current block pair as well as the coding type of the reference blocks. The predictor calculator determines a motion vector predictor for each constituent block of the current block pair based on the reference motion vectors read from the provided memory addresses.

Owner:SOCIONEXT INC

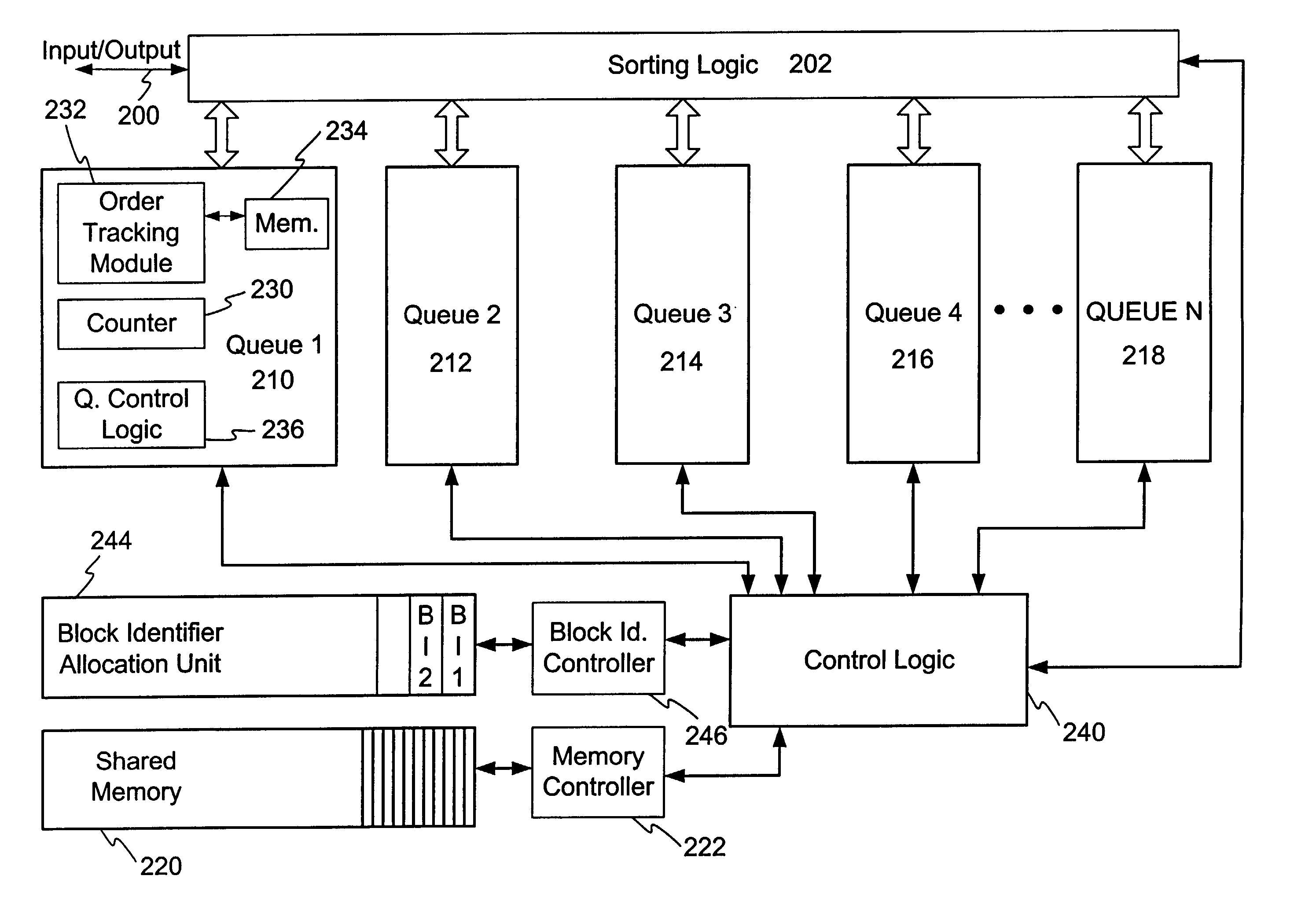

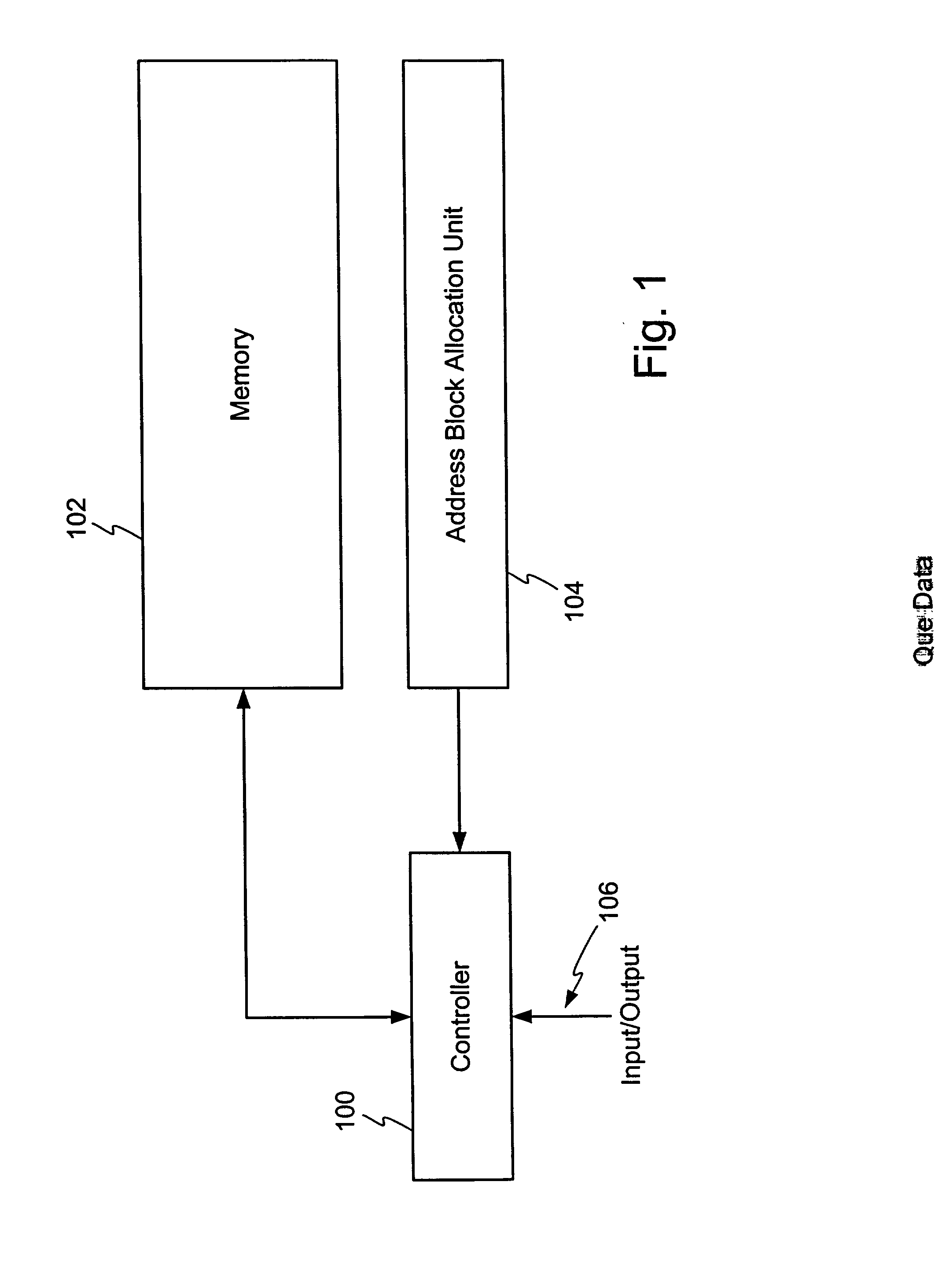

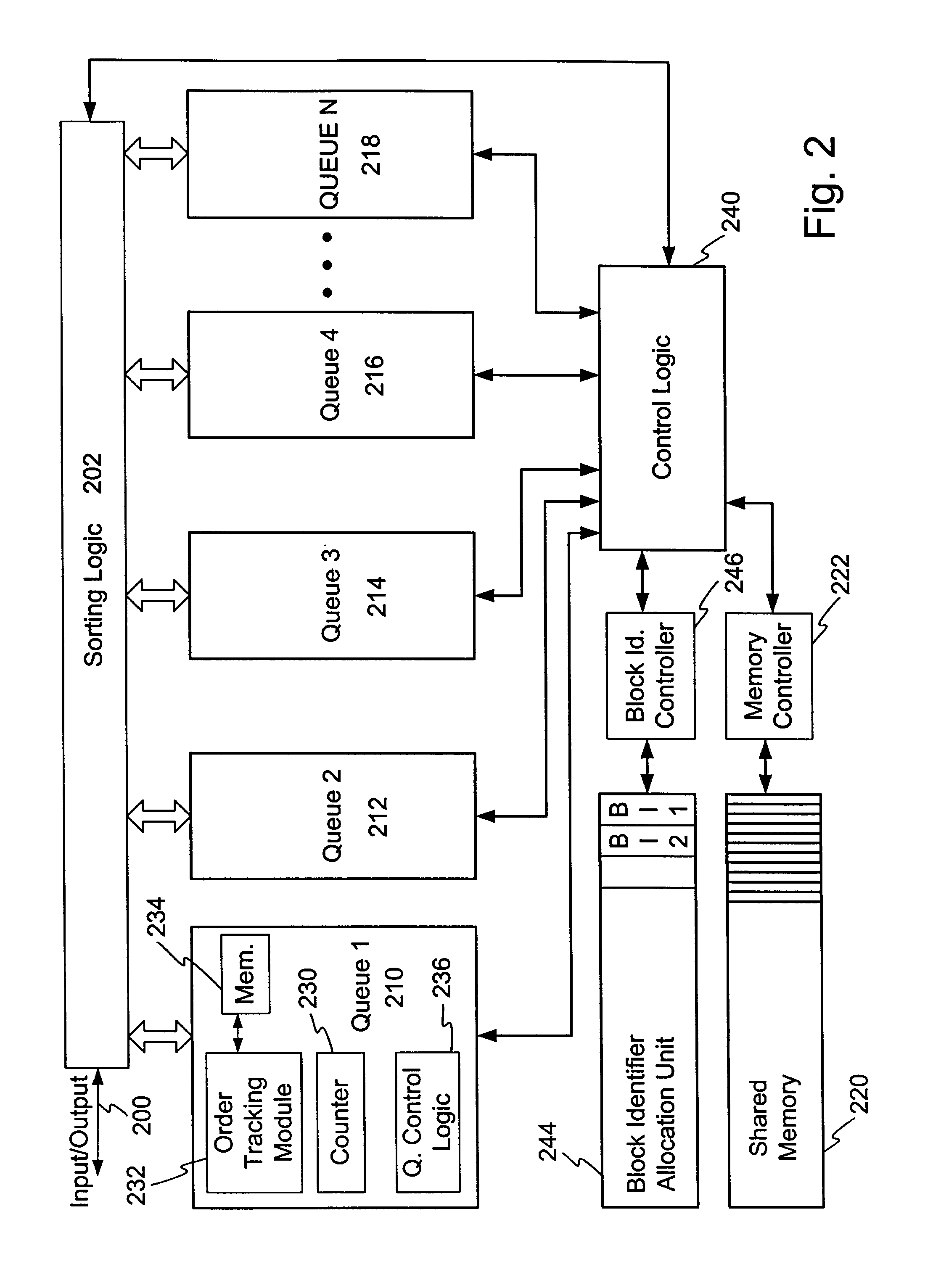

Shared memory

Inactive | US20020126673A1 | Increase speed; Speed advantage; Data switching by path configuration; Memory address; Distributed computing

A system and method for tracking data using a shared memory is disclosed. In one embodiment the system comprises a plurality of queues, each configured to track the order of receipt of data items. The queues use a shared memory instead of each queue having its own dedicated memory. Memory addresses are dynamically allocated and de-allocated based on the needs of each queue. As a queue uses up all its originally assigned addresses, additional memory addresses may be allocated to it. Likewise, as a queue outputs its contents, unused memory addresses are de-allocated so they may be used by other queues. In one embodiment, the addresses are allocated in blocks, each identified by a block identifier consisting of a single memory address. One or more counters in each queue increment and decrement the block identifier to access different memory locations. In one embodiment each queue includes an order tracking module that tracks the order of receipt of each data item based on the address at which the data item is stored.

Owner:ENTRIDIA
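
The block-based sharing in the Entridia abstract above can be sketched in Python as a pool of fixed-size blocks, each identified by a single number, with per-queue read and write counters that step through the current block. Block size, class names and the FIFO discipline are assumptions for illustration; the abstract's order tracking module is not modeled.

```python
from collections import deque


class SharedBlockMemory:
    """One memory shared by many queues, handed out in fixed-size blocks."""

    def __init__(self, num_blocks: int, block_size: int):
        self.block_size = block_size
        self.cells = [None] * (num_blocks * block_size)
        self.free_blocks = deque(range(num_blocks))  # block identifiers not in use


class BlockQueue:
    """FIFO queue whose storage is borrowed from a SharedBlockMemory."""

    def __init__(self, shared: SharedBlockMemory):
        self.shared = shared
        self.blocks = deque()          # block identifiers owned, oldest first
        self.head = 0                  # read counter within the oldest block
        self.tail = shared.block_size  # write counter within the newest block

    def push(self, item) -> None:
        size = self.shared.block_size
        if not self.blocks or self.tail == size:
            if not self.shared.free_blocks:
                raise MemoryError("shared memory exhausted")
            self.blocks.append(self.shared.free_blocks.popleft())  # allocate a block
            self.tail = 0
        self.shared.cells[self.blocks[-1] * size + self.tail] = item
        self.tail += 1

    def pop(self):
        if not self.blocks:
            raise IndexError("pop from empty queue")
        size = self.shared.block_size
        item = self.shared.cells[self.blocks[0] * size + self.head]
        self.head += 1
        drained = self.head == size or (len(self.blocks) == 1 and self.head == self.tail)
        if drained:
            # Return the emptied block so other queues can use its addresses.
            self.shared.free_blocks.append(self.blocks.popleft())
            self.head = 0
            if not self.blocks:
                self.tail = size
        return item
```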

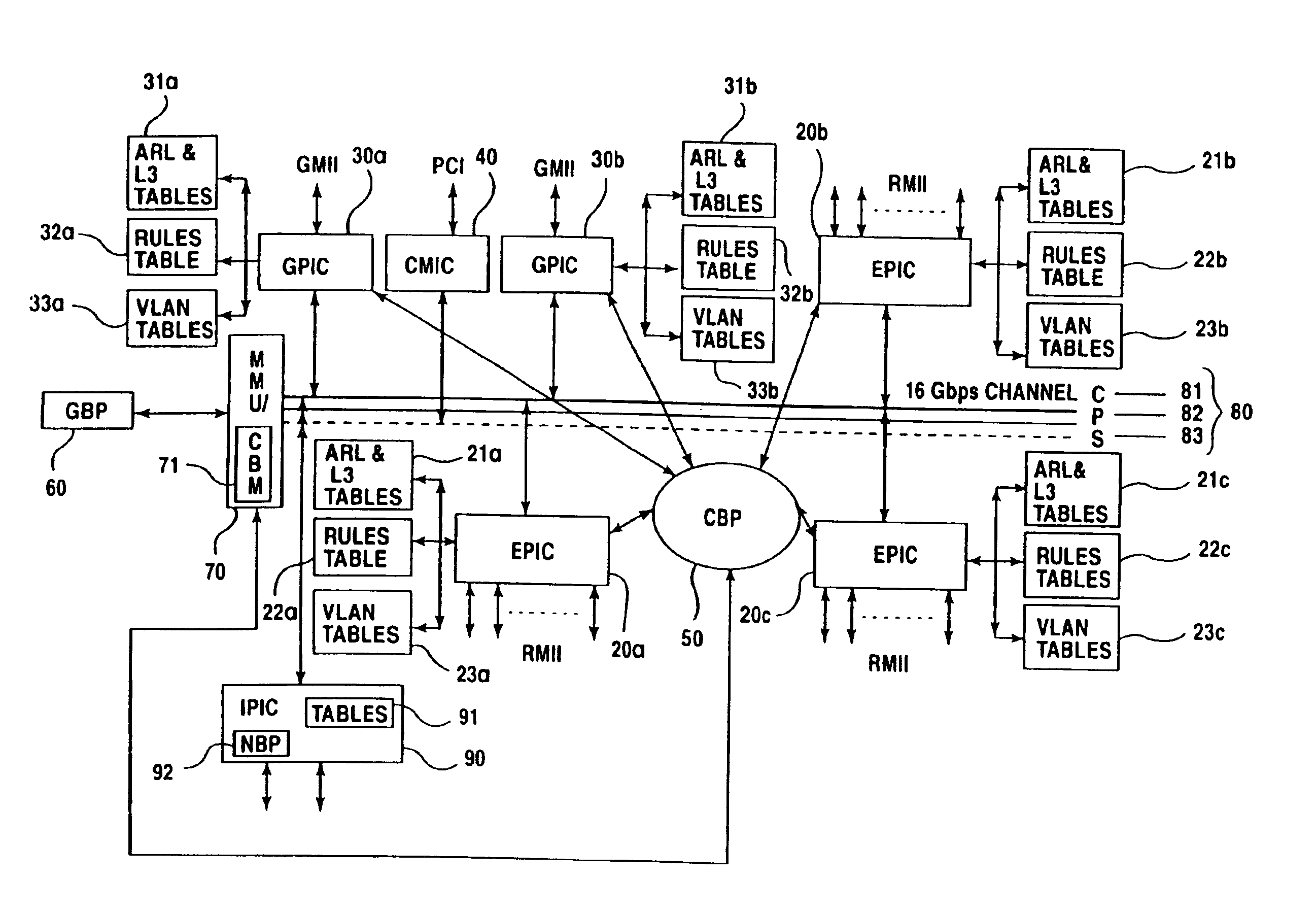

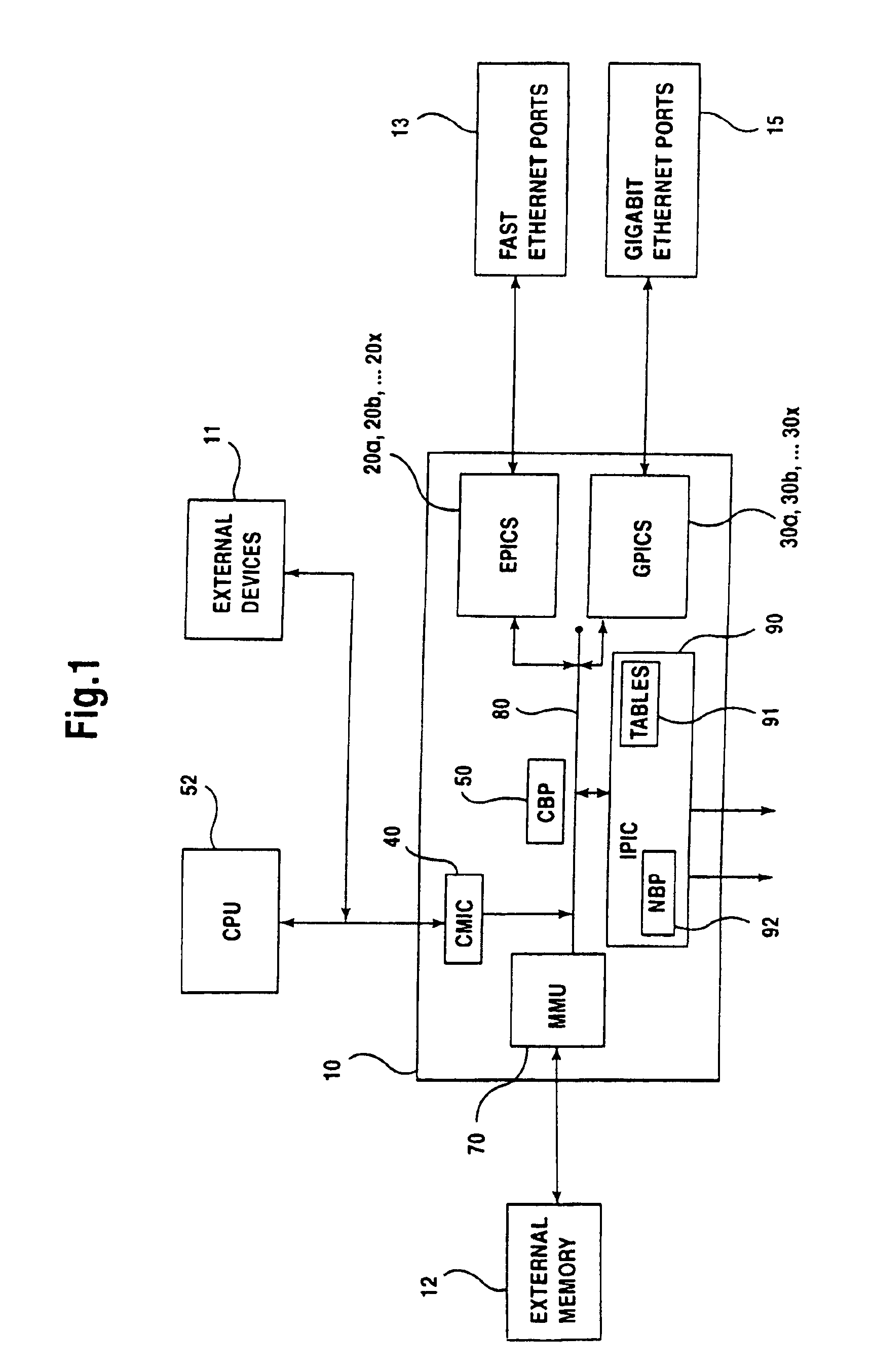

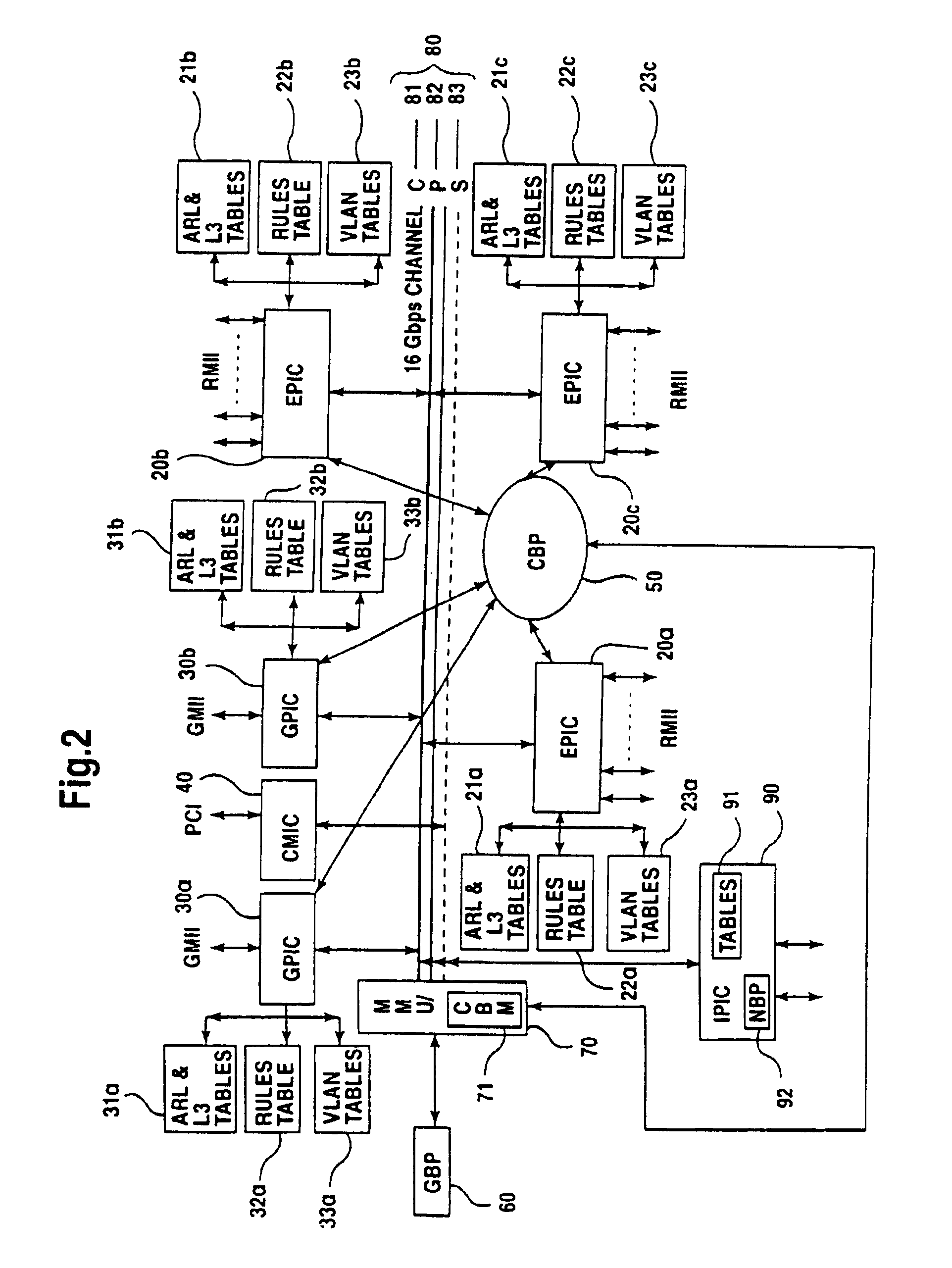

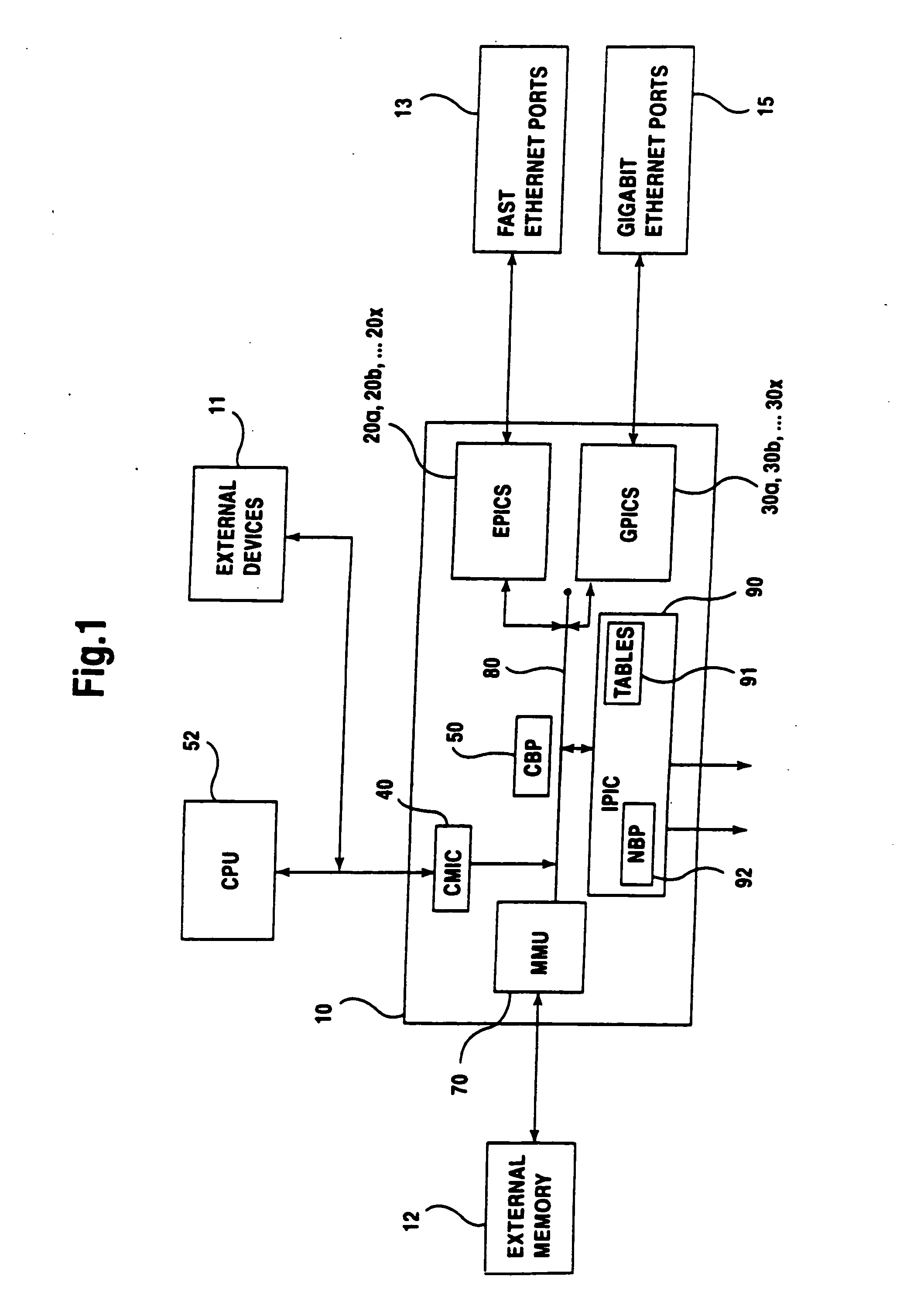

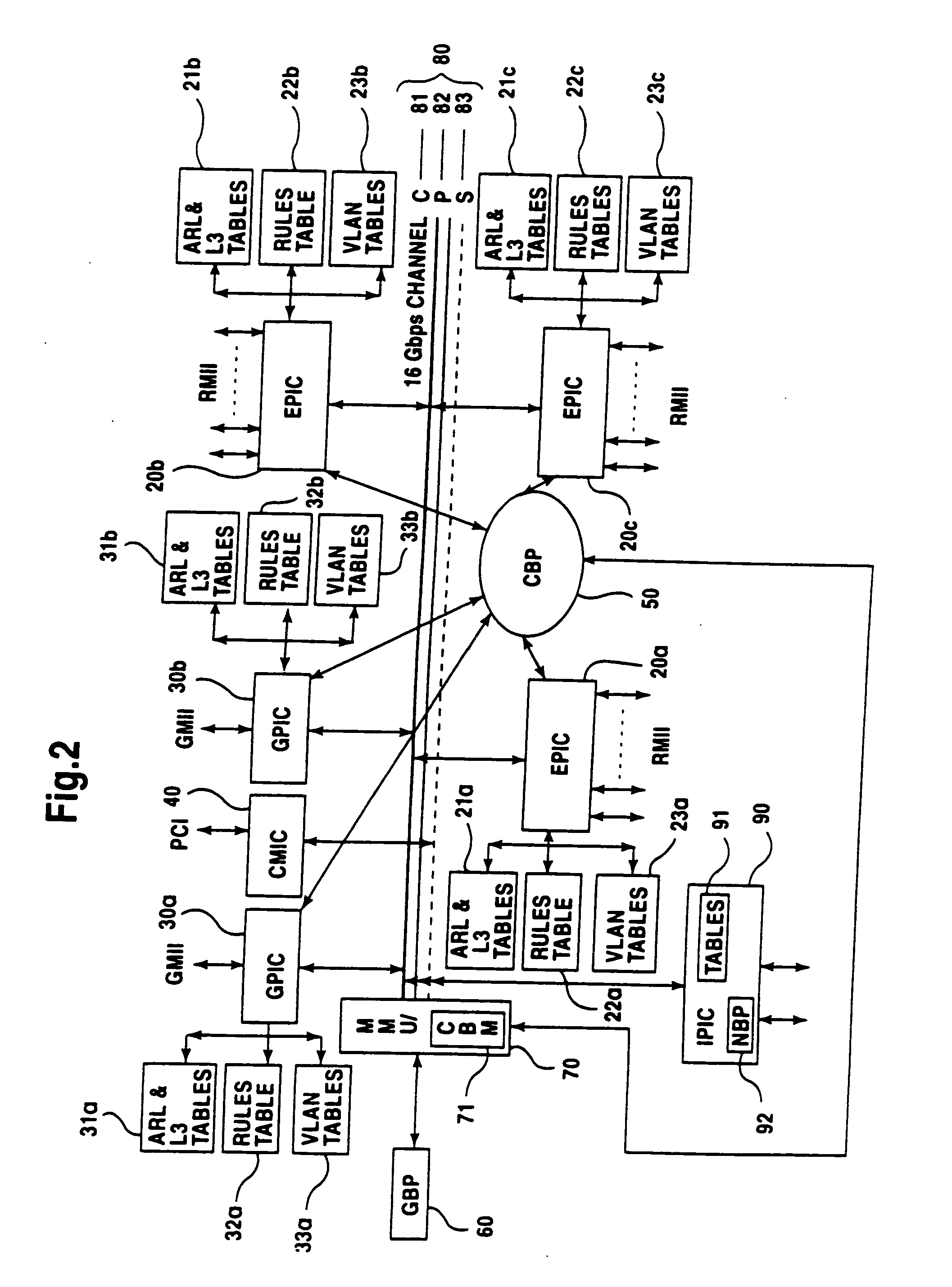

Apparatus and method for managing memory in a network switch

Inactive | US6912637B1 | Special service provision for substation; Input/output to record carriers; Memory address; Parallel computing

The present invention relates to a method and apparatus for managing memory in a network switch. The method includes providing a memory having a plurality of memory locations configured to store data, and providing a memory address pool having a plurality of available memory addresses, each corresponding to a specific memory location. The method further includes providing a memory address pointer that indicates the next available memory address in the memory address pool, and reading available memory addresses from the memory address pool in last-in, first-out order. The method also includes writing released memory addresses into the memory address pool and adjusting the position of the memory address pointer upon each read or write operation on the memory address pool.

Owner:AVAGO TECH INT SALES PTE LTD
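
The address pool in the network-switch abstract above is essentially a stack of free addresses managed through a single pointer. The Python sketch below is a minimal illustration with hypothetical names; it raises exceptions on exhaustion or overflow, which the abstract does not specify.

```python
class MemoryAddressPool:
    """LIFO pool of free memory addresses with a single position pointer."""

    def __init__(self, addresses):
        self.slots = list(addresses)    # fixed-size pool storage
        self.pointer = len(self.slots)  # count of available addresses (top of stack)

    def allocate(self) -> int:
        """Read the next available address and move the pointer down."""
        if self.pointer == 0:
            raise MemoryError("address pool exhausted")
        self.pointer -= 1
        return self.slots[self.pointer]

    def release(self, address: int) -> None:
        """Write a released address back and move the pointer up."""
        if self.pointer == len(self.slots):
            raise ValueError("address pool already full")
        self.slots[self.pointer] = address
        self.pointer += 1
```

Because reads and writes both happen at the pointer, the most recently released address is the next one handed out.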

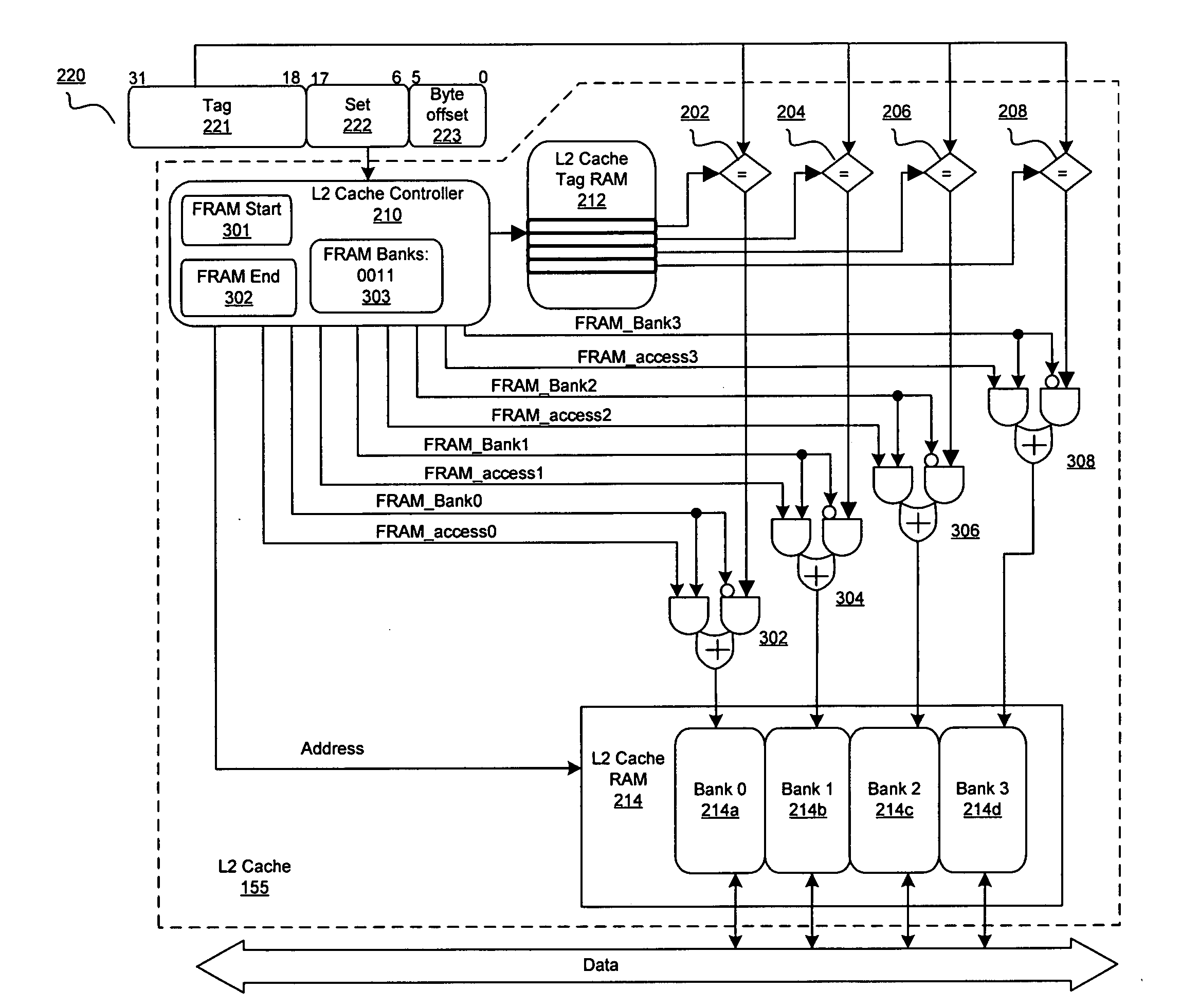

Method and system for on-chip configurable data ram for fast memory and pseudo associative caches

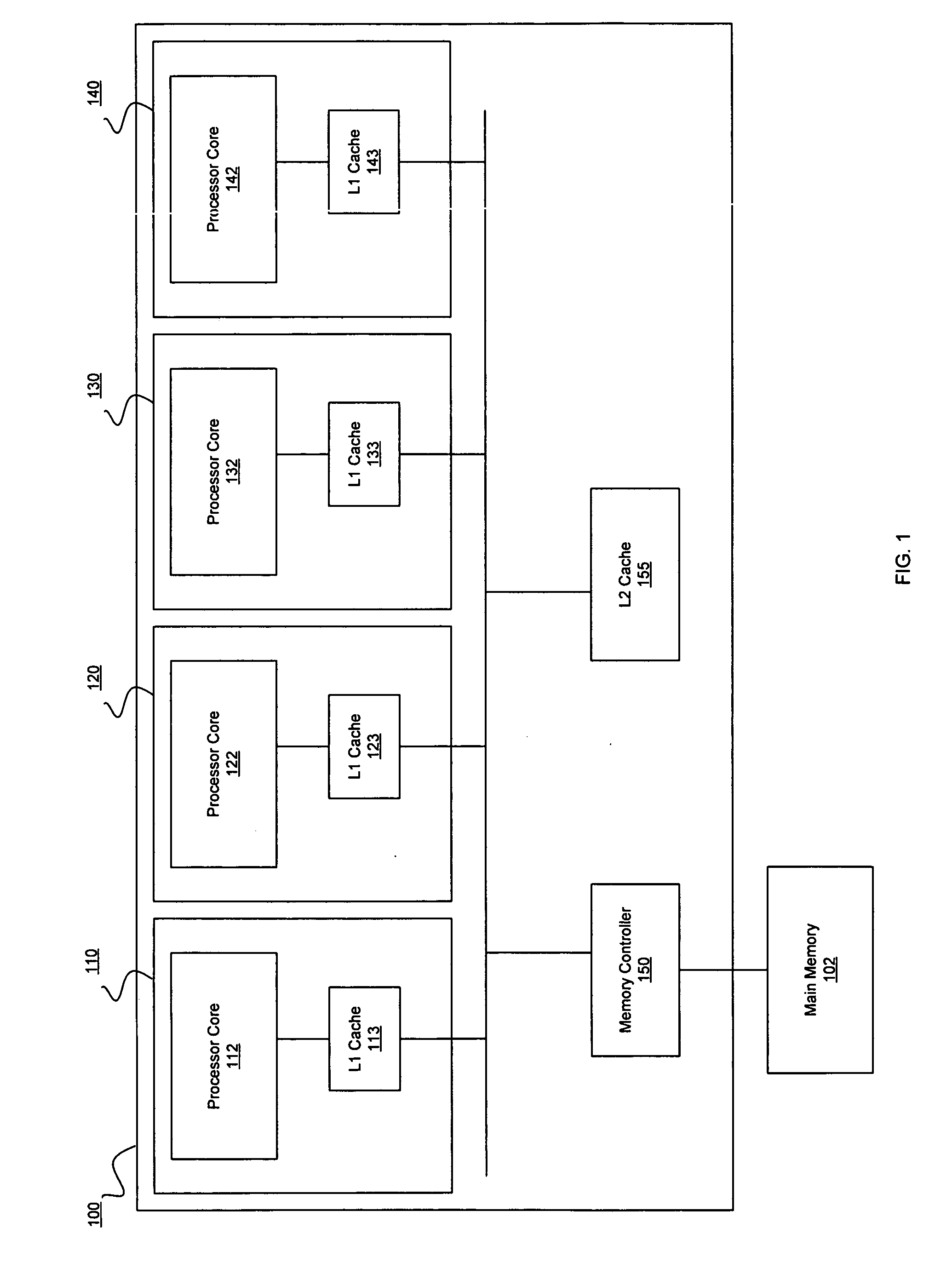

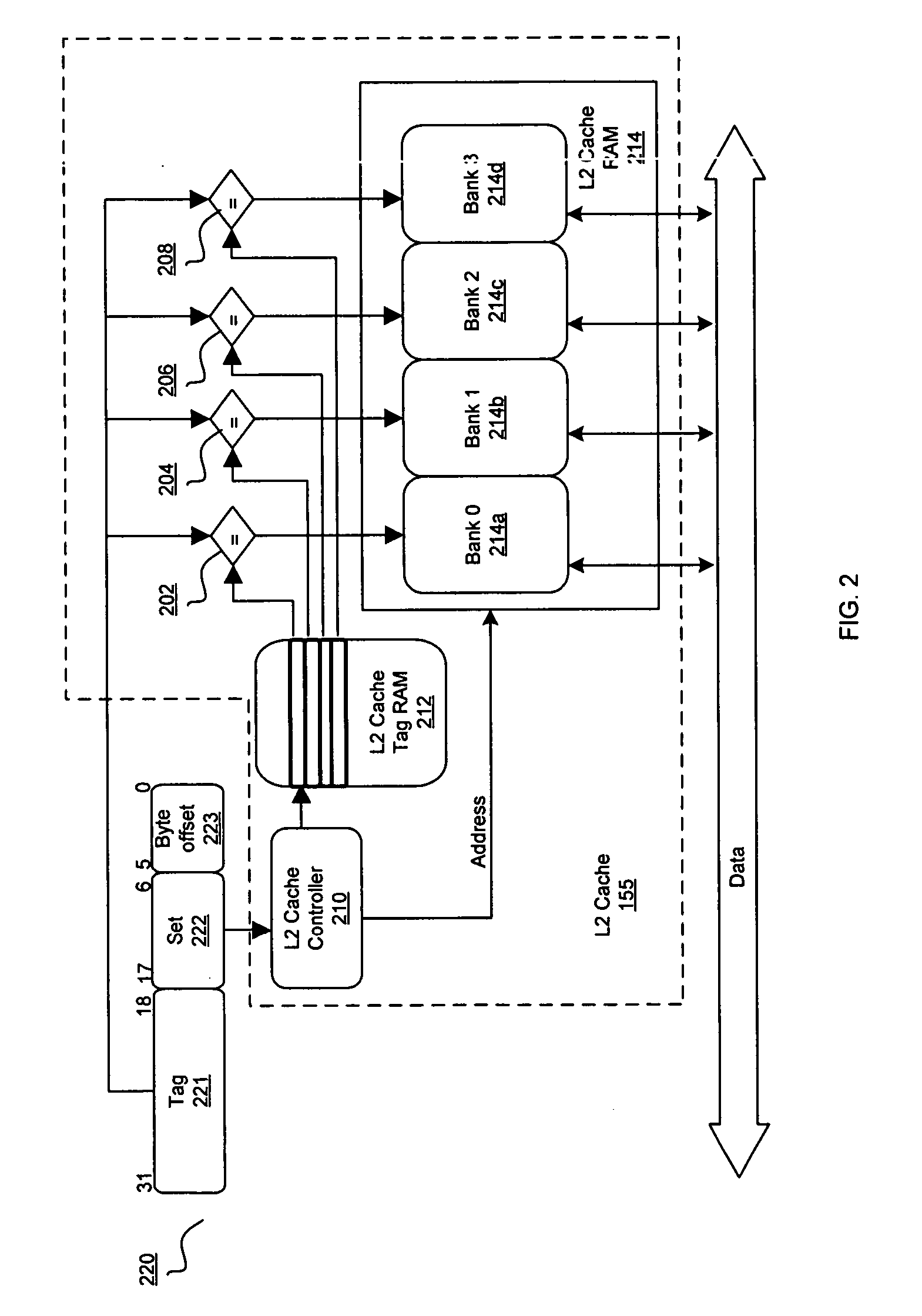

Inactive | US20060277365A1 | Memory architecture accessing/allocation; Energy efficient ICT; Memory address; Memory bank

Aspects of a method and system for an on-chip configurable data RAM for fast memory and pseudo associative caches are provided. Memory banks of configurable data RAM integrated within a chip may be configured to operate as fast on-chip memory or as on-chip level 2 cache memory. The set associativity of the on-chip level 2 cache memory may be the same after the memory banks are configured as it was before. The configuring may occur during initialization of the memory banks and may adjust the amount of on-chip level 2 cache. The memory banks configured to operate as on-chip level 2 cache memory or as fast on-chip memory may be dynamically enabled by a memory address.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

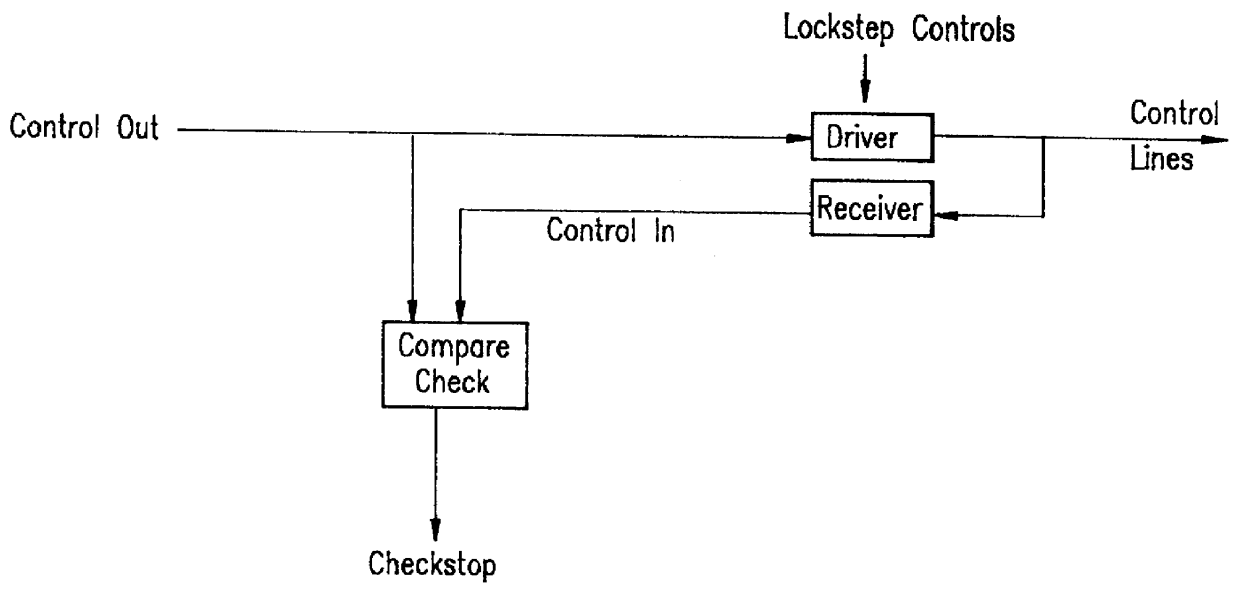

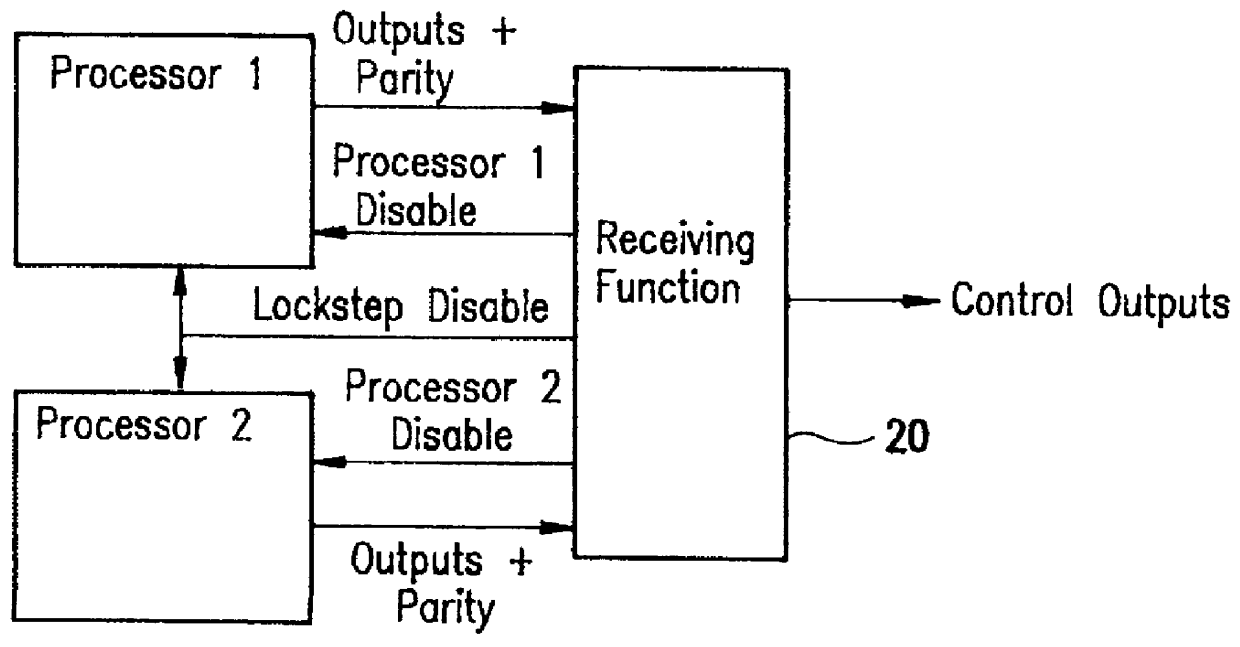

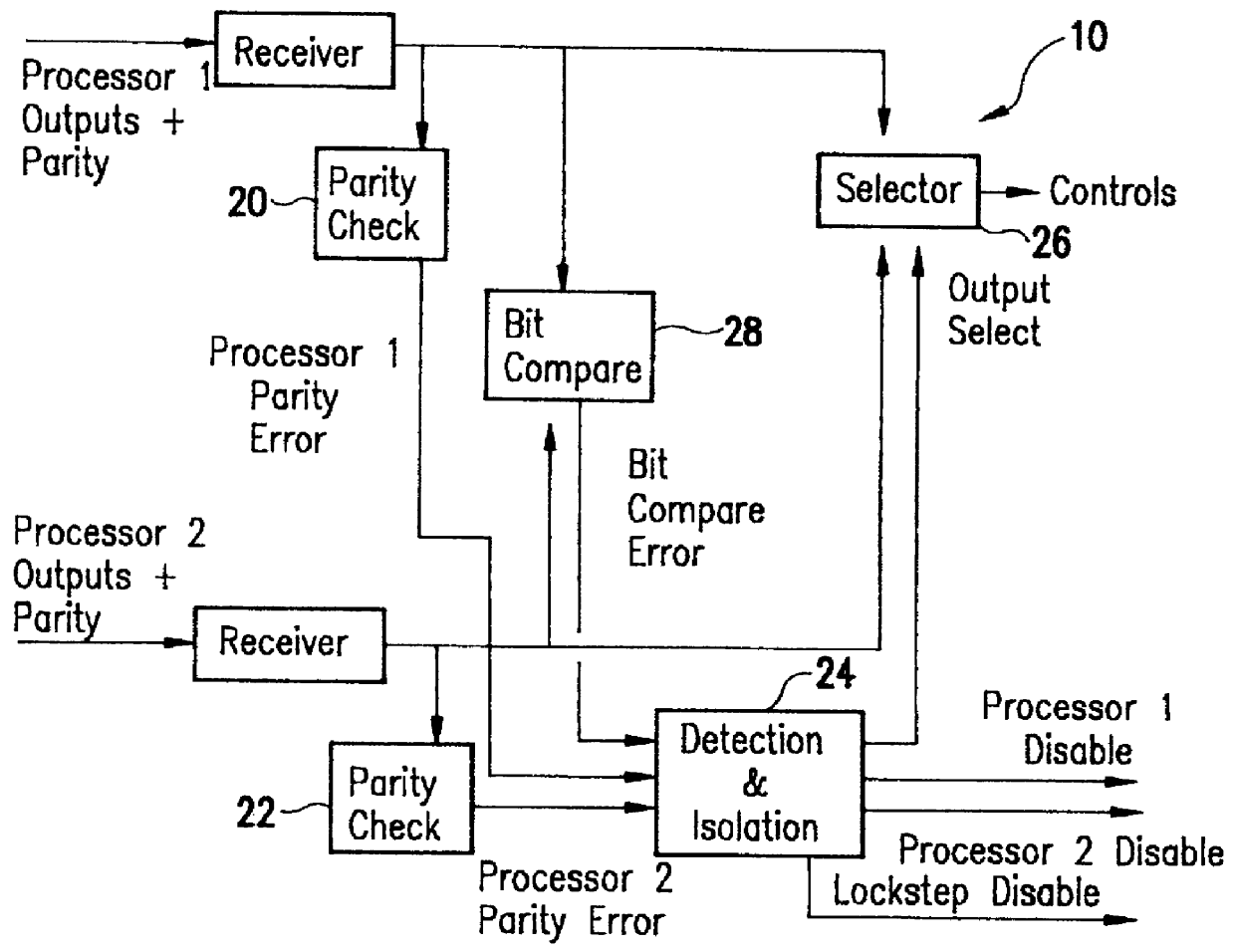

Error detection and fault isolation for lockstep processor systems

Inactive | US6065135A | Redundant data error correction; Redundant hardware error correction; Memory address; Address bus

Owner:BAE SYST INFORMATION & ELECTRONICS SYST INTERGRATION INC

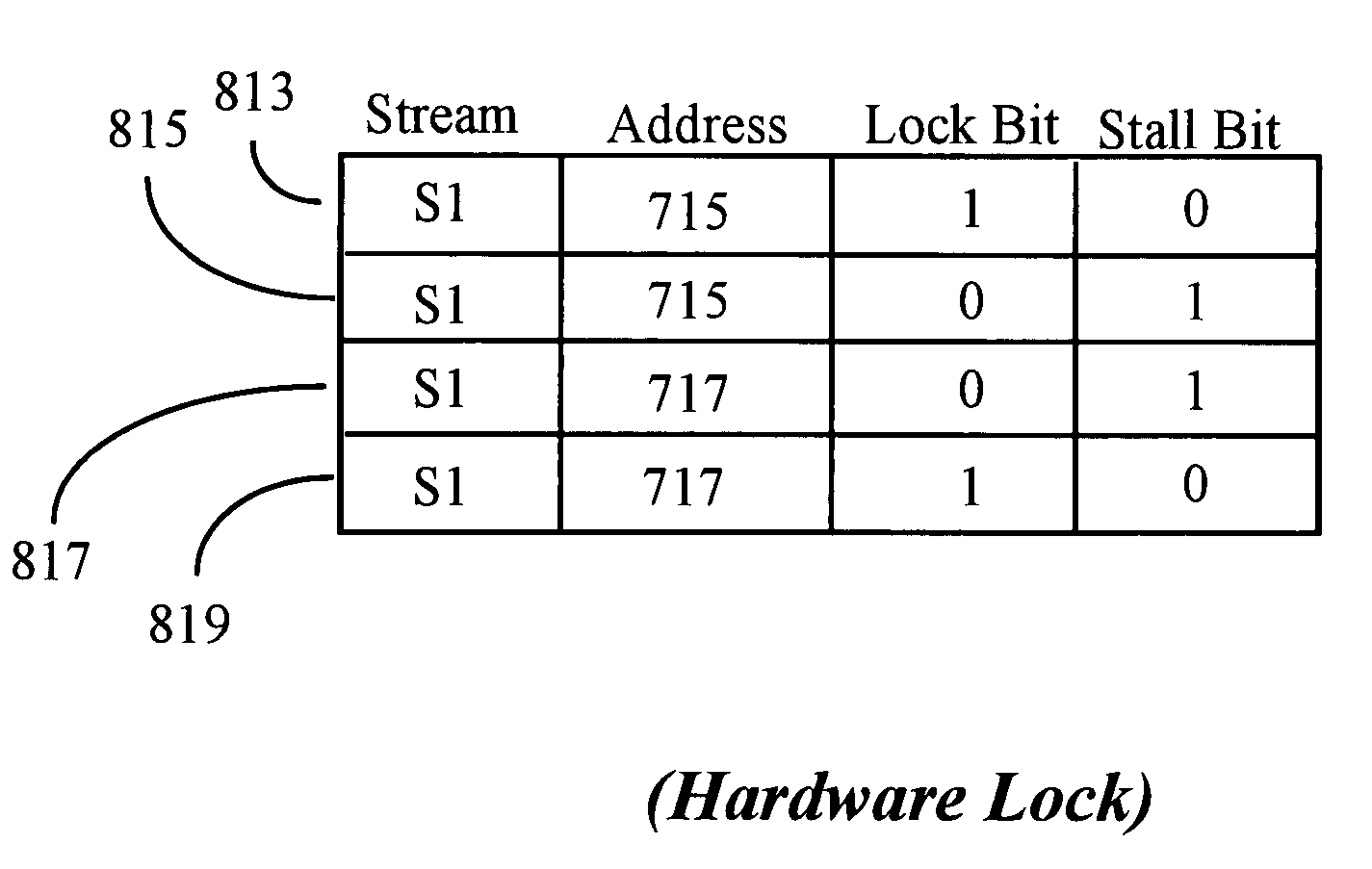

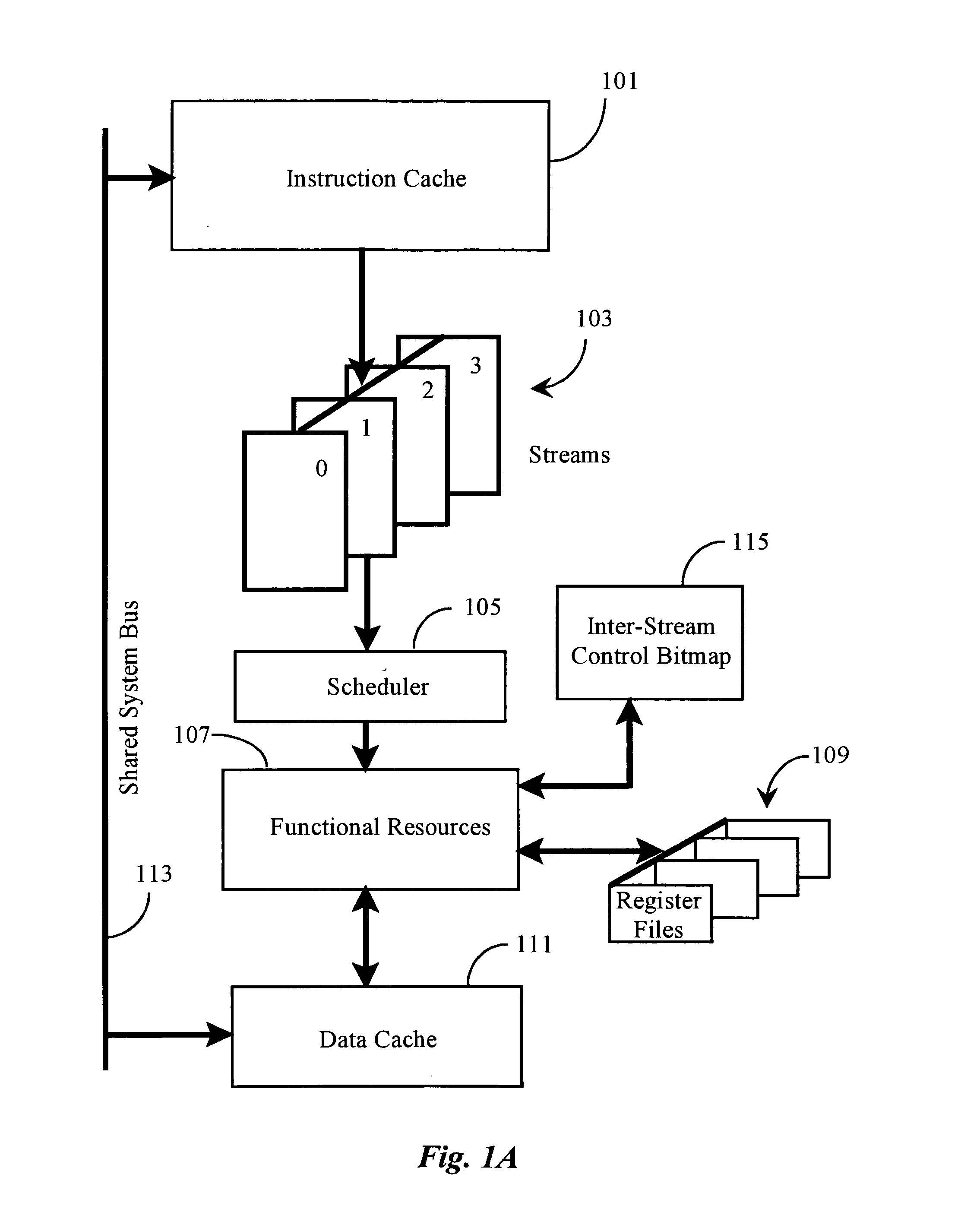

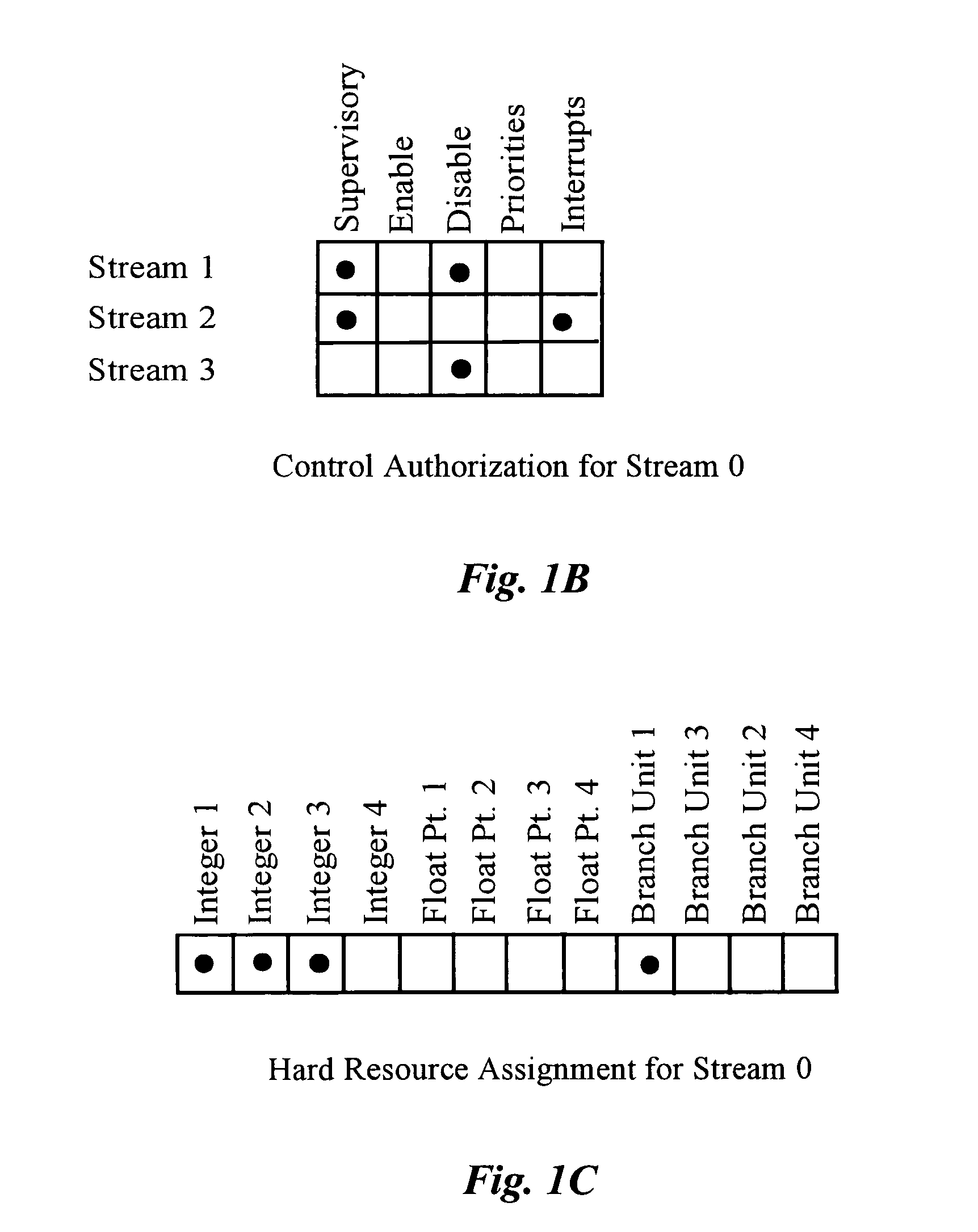

Method and apparatus for implementing atomicity of memory operations in dynamic multi-streaming processors

Inactive | US7257814B1 | Digital computer details; Unauthorized memory use protection; Memory address; Locking mechanism

A multi-streaming processor has a plurality of streams for streaming one or more instruction threads, a set of functional resources for processing instructions from the streams, and a lock mechanism for locking selected memory locations shared by the streams of the processor. The hardware lock mechanism sets a lock when an atomic memory sequence is started and clears the lock when the atomic memory sequence is completed. In preferred embodiments the lock mechanism comprises one or more storage locations associated with each stream of the processor, each storage location able to store a memory address, a lock bit, and a stall bit. Methods for practicing the invention using the apparatus are also taught.

Owner:ARM FINANCE OVERSEAS LTD

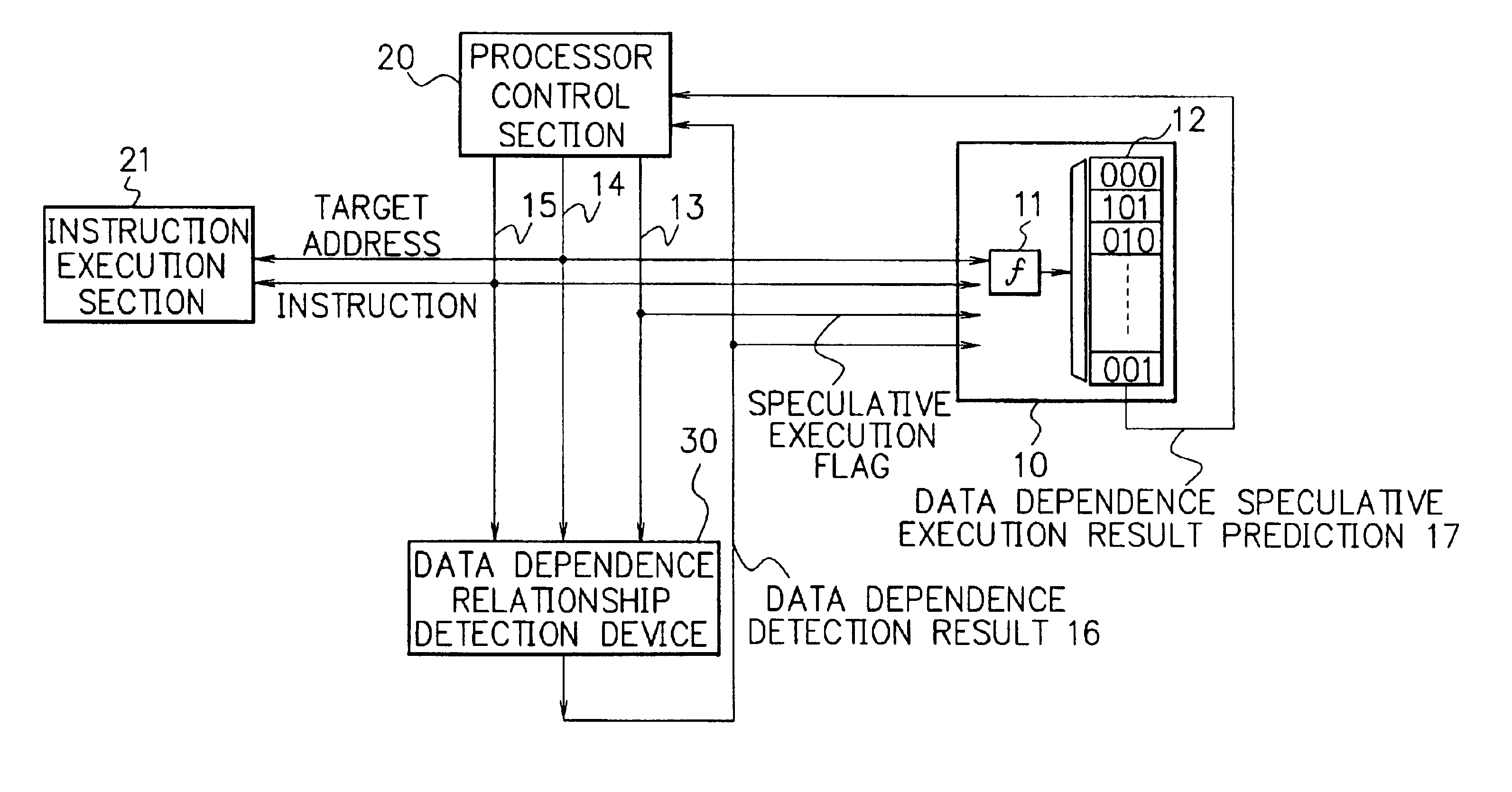

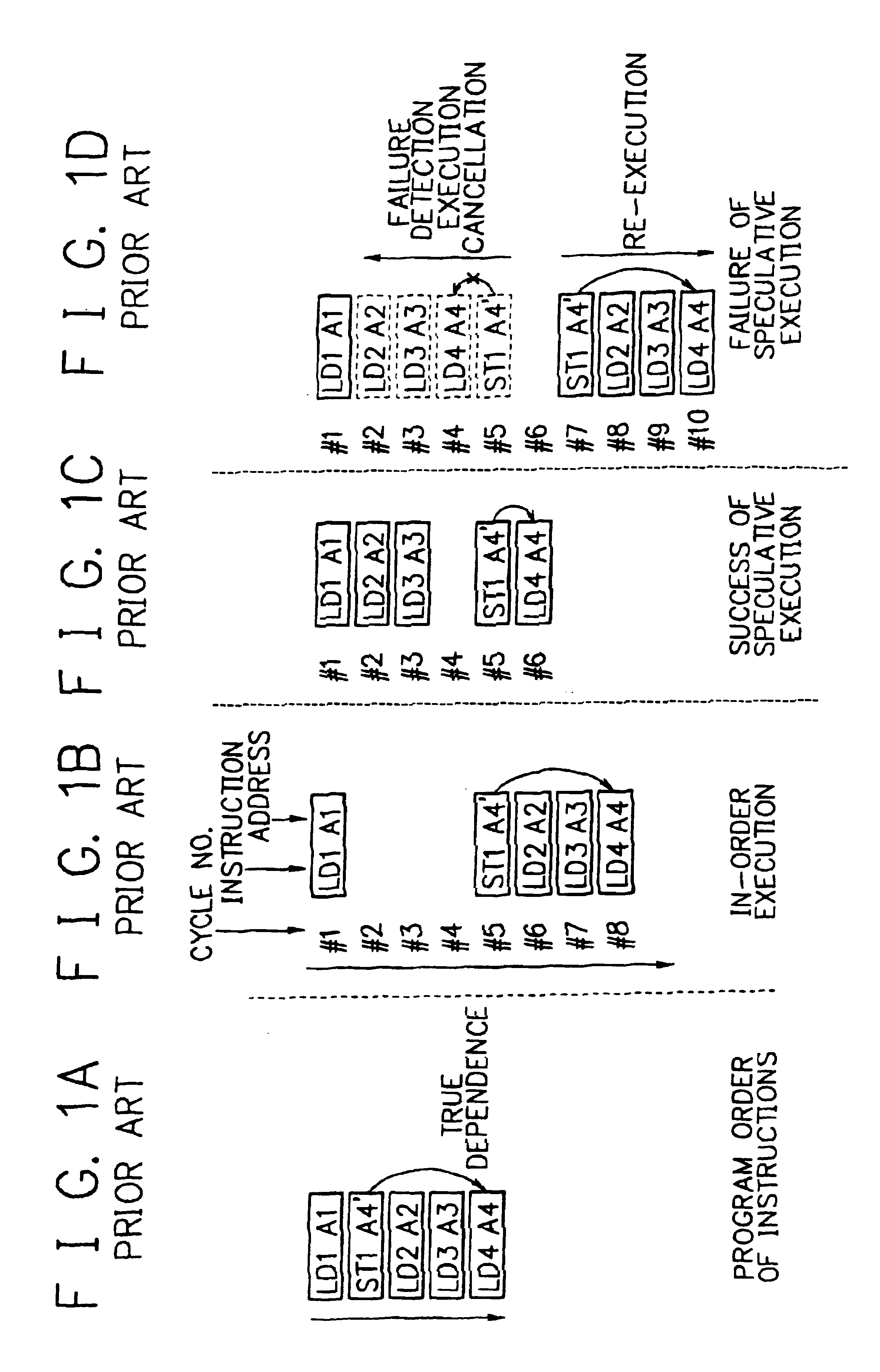

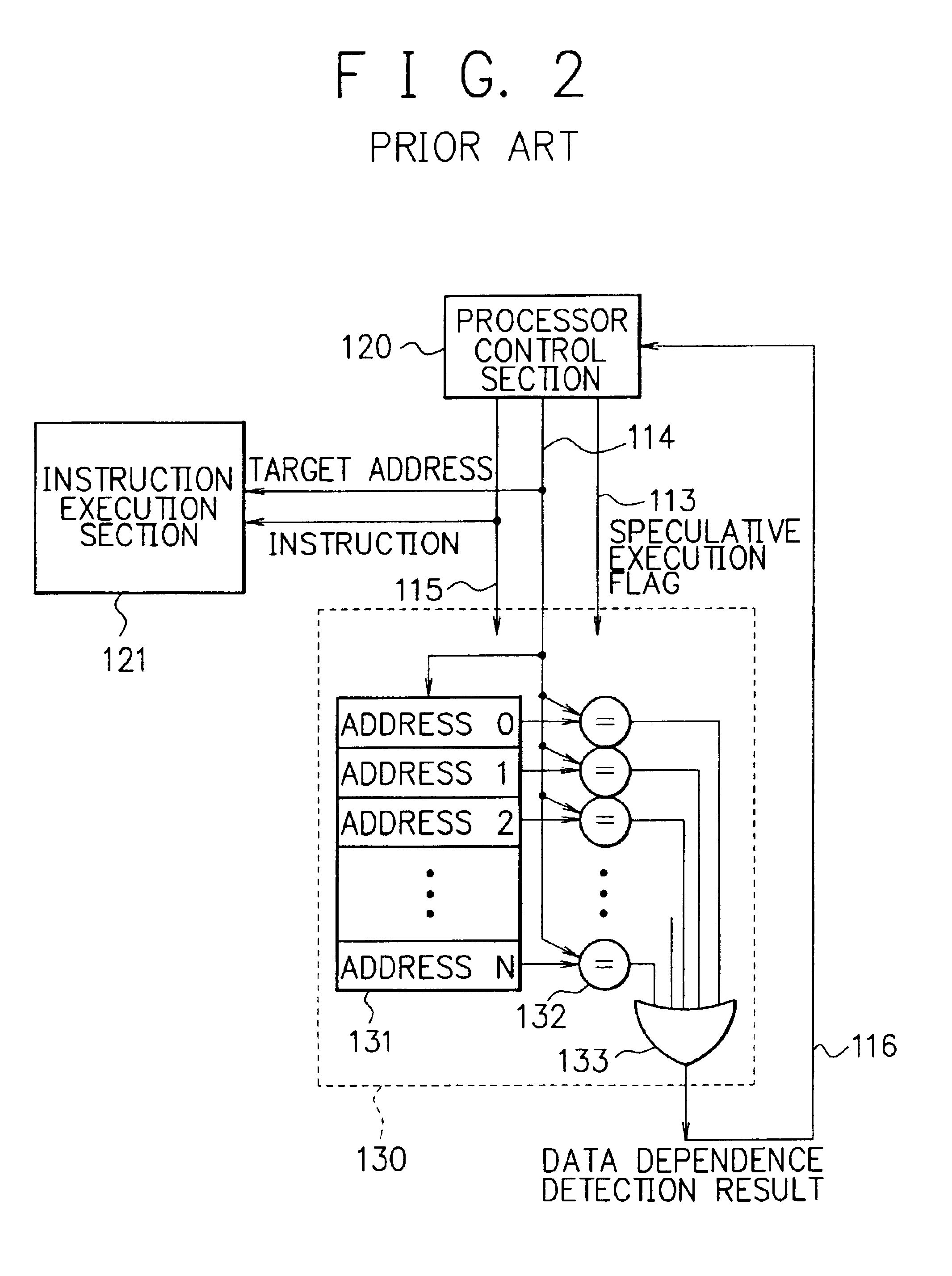

Processor, multiprocessor system and method for speculatively executing memory operations using memory target addresses of the memory operations to index into a speculative execution result history storage means to predict the outcome of the memory operation

Inactive · US6970997B2 · Low failure rate · Improve execution performance · Digital computer details · Concurrent instruction execution · Memory address · Speculative execution

When a processor executes a memory operation instruction by data-dependence speculative execution, it consults a speculative execution result history table, which stores the success/failure results of past speculative executions of memory operation instructions, to predict whether the speculative execution will succeed or fail. For the prediction, a hash function circuit converts the target address of the memory operation instruction into an entry number of the speculative execution result history table (aliases are allowed), and the entry designated by that number is read. If the prediction is “success”, the memory operation instruction is executed speculatively out of order (with respect to the data dependence relationships between instructions). If the prediction is “failure”, the speculative execution is cancelled and the memory operation instruction is executed later, non-speculatively, in program order. Whether the speculative execution of the memory operation instructions succeeded or failed is judged by detecting the data dependence relationships between them, and the speculative execution result history table is updated with that judgment.
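The prediction loop described above can be sketched in Python as follows. The abstract only says that the table holds success/failure history and that a hash circuit maps the target address to an entry number; the 2-bit saturating counter per entry and the XOR-fold hash are assumptions, and all names are hypothetical.

```python
from __future__ import annotations


class SpeculationHistoryTable:
    """Hypothetical model of a speculative execution result history table."""

    def __init__(self, index_bits: int = 10) -> None:
        self.index_bits = index_bits
        self.mask = (1 << index_bits) - 1
        # Assumption: each entry is a 2-bit saturating counter, initialised to 2 (weakly "success").
        self.counters = [2] * (1 << index_bits)

    def index(self, address: int) -> int:
        """XOR-fold the target address into an entry number; different addresses may alias."""
        if address < 0:
            raise ValueError("address must be non-negative")
        h = 0
        while address:
            h ^= address & self.mask
            address >>= self.index_bits
        return h

    def predict_success(self, address: int) -> bool:
        return self.counters[self.index(address)] >= 2

    def update(self, address: int, succeeded: bool) -> None:
        """Record the outcome once the data dependences have been checked."""
        i = self.index(address)
        if succeeded:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


def issue_mode(table: SpeculationHistoryTable, address: int) -> str:
    """Speculate out of order on a predicted success; otherwise wait for program order."""
    return "speculative" if table.predict_success(address) else "in_order"
```

With a 4-bit index, addresses `0x1234` and `0x4` fold to the same entry, so a failure recorded for one also makes the other issue in order. This is the aliasing the abstract accepts in exchange for a small table.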

Owner:NEC CORP

Apparatus and method for managing memory in a network switch

Inactive · US20050207423A1 · Special service provision for substation · Store-and-forward switching systems · Memory address · Parallel computing

The present invention relates to a method and apparatus for managing memory in a network switch. The method includes providing a memory that contains a plurality of memory locations configured to store data, and providing a memory address pool in which a plurality of available memory addresses are arranged, each corresponding to a specific memory location. The method further includes providing a memory address pointer that indicates the next available memory address in the memory address pool, and reading available memory addresses from the pool using a last-in, first-out operation. The method also includes writing released memory addresses back into the memory address pool and adjusting the position of the memory address pointer upon each read from or write to the pool.
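A last-in, first-out address pool of this kind behaves like a stack of free addresses. The following is a minimal Python sketch under that reading. The class name, the `base`/`location_size` parameters, and the error handling are hypothetical; `top` plays the role of the memory address pointer and always indexes the next available address.

```python
class AddressPool:
    """Hypothetical LIFO pool of free memory addresses for a switch packet buffer."""

    def __init__(self, base: int, num_locations: int, location_size: int) -> None:
        # Every memory location starts out available.
        self.slots = [base + i * location_size for i in range(num_locations)]
        # Memory address pointer: index of the next available address (-1 when empty).
        self.top = num_locations - 1

    def allocate(self) -> int:
        """Read the next available address and move the pointer down."""
        if self.top < 0:
            raise MemoryError("address pool exhausted")
        address = self.slots[self.top]
        self.top -= 1
        return address

    def release(self, address: int) -> None:
        """Write a released address back and move the pointer up."""
        if self.top == len(self.slots) - 1:
            raise ValueError("pool already full; address released twice?")
        self.top += 1
        self.slots[self.top] = address
```

Because the most recently released address is handed out first, allocation and release are each a single pointer adjustment plus one pool access, with no search for a free location.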

Owner:AVAGO TECH INT SALES PTE LTD

© 2025 PatSnap. All rights reserved.