118 results about "Distributed shared memory" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

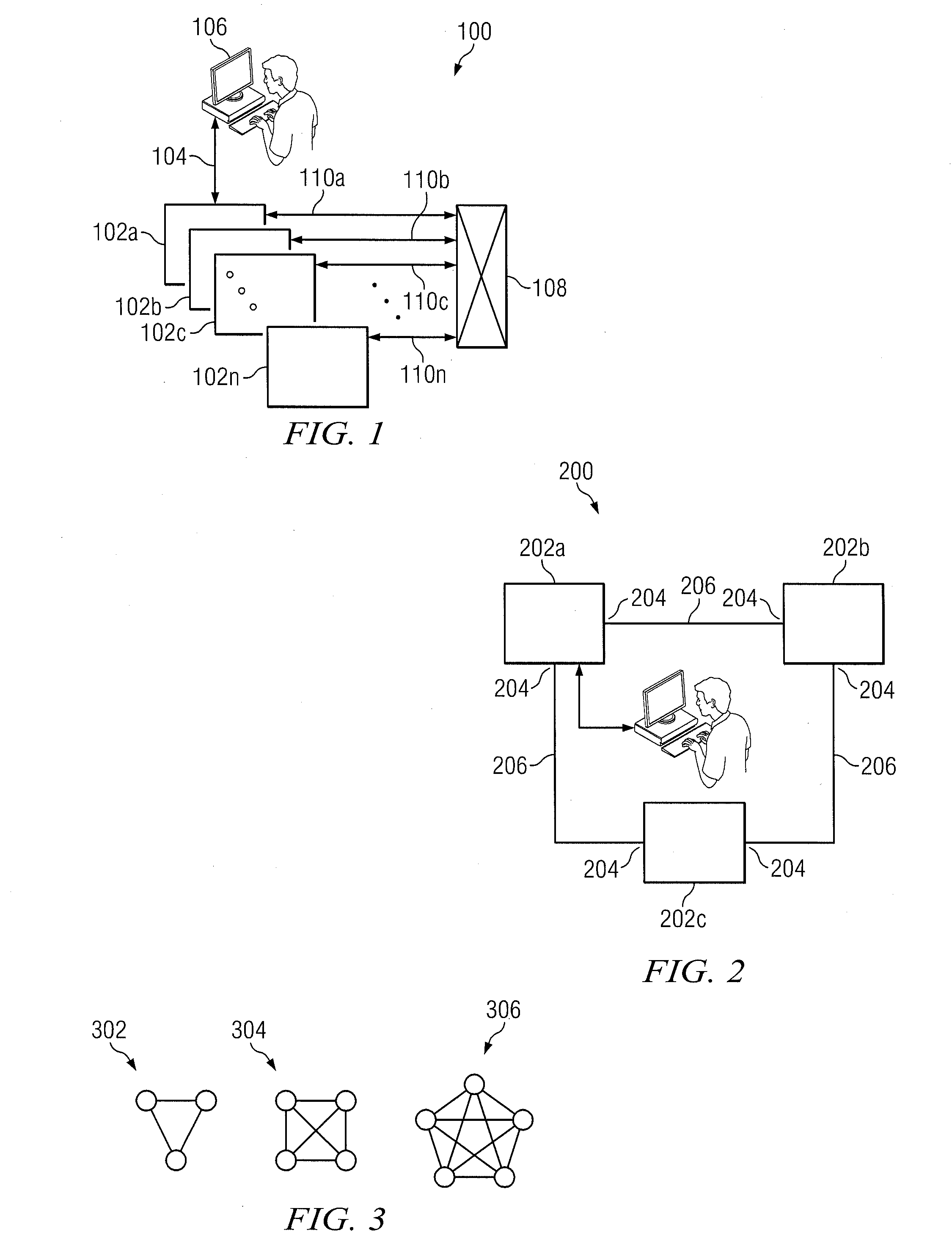

Inventor

In computer science, distributed shared memory (DSM) is a form of memory architecture where physically separated memories can be addressed as one logically shared address space. Here, the term "shared" does not mean that there is a single centralized memory, but that the address space is shared: the same physical address on two processors refers to the same location in memory. Distributed global address space (DGAS) is a similar term for a wide class of software and hardware implementations in which each node of a cluster has access to shared memory in addition to its own non-shared private memory.
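
To make the definition concrete, here is a minimal Python sketch of one logical address space laid over physically separate node memories. The `Node` and `GlobalAddressSpace` names, the byte-granular layout, and the contiguous placement of nodes are all assumptions made for illustration; real DSM systems typically work at page or cache-line granularity and add a coherence protocol on top.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One machine contributing `size` bytes to the shared address space."""
    size: int
    memory: bytearray = field(init=False)

    def __post_init__(self):
        self.memory = bytearray(self.size)


class GlobalAddressSpace:
    """Maps one logical address range onto separate node memories."""

    def __init__(self, nodes):
        self.nodes = nodes

    def locate(self, addr):
        """Return (node_index, local_offset) for a global address."""
        if addr < 0:
            raise IndexError(f"address {addr} is outside the shared space")
        base = 0
        for i, node in enumerate(self.nodes):
            if addr < base + node.size:
                return i, addr - base
            base += node.size
        raise IndexError(f"address {addr} is outside the shared space")

    def write(self, addr, value):
        i, off = self.locate(addr)
        self.nodes[i].memory[off] = value

    def read(self, addr):
        i, off = self.locate(addr)
        return self.nodes[i].memory[off]


space = GlobalAddressSpace([Node(4), Node(4)])
space.write(5, 42)  # global address 5 lands in the second node's memory
```

Every caller uses the same global address regardless of which node holds the byte, which is the sense of "shared" in the definition above.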

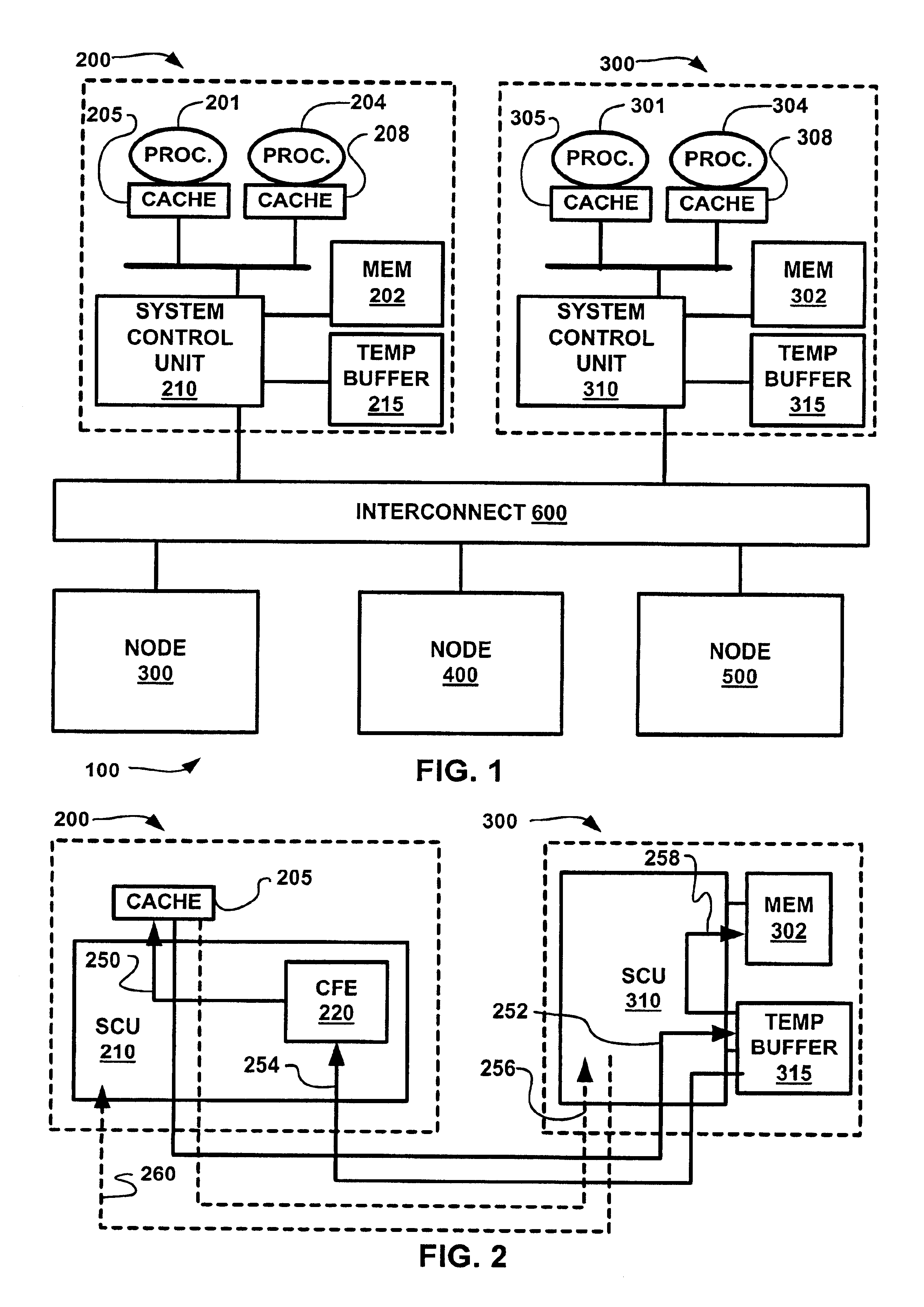

Memory controller for controlling memory accesses across networks in distributed shared memory processing systems

Inactive | US6044438A | More efficient cache coherent system | Data processing applications | Memory addressing/allocation/relocation | Remote memory access | Remote direct memory access

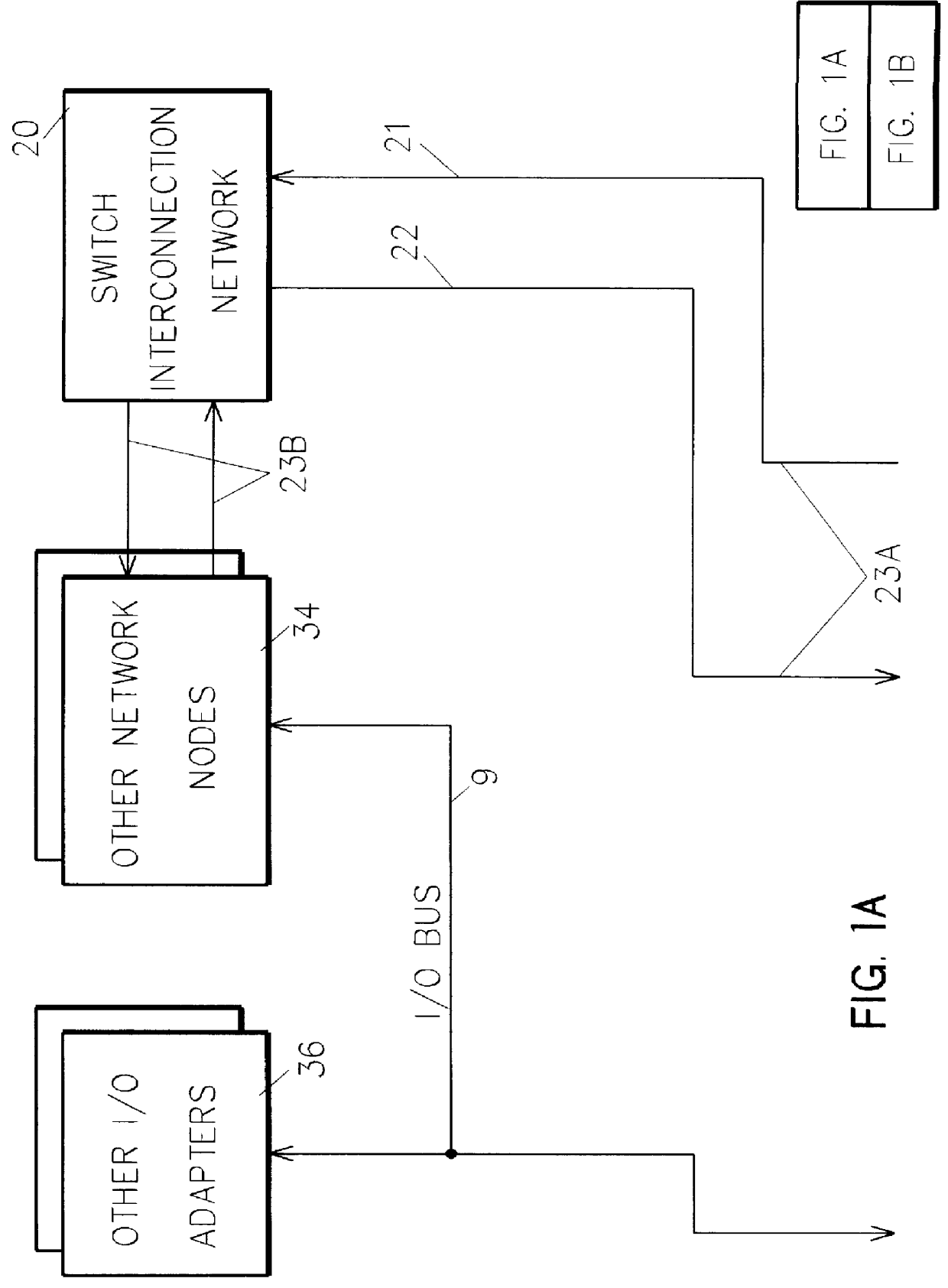

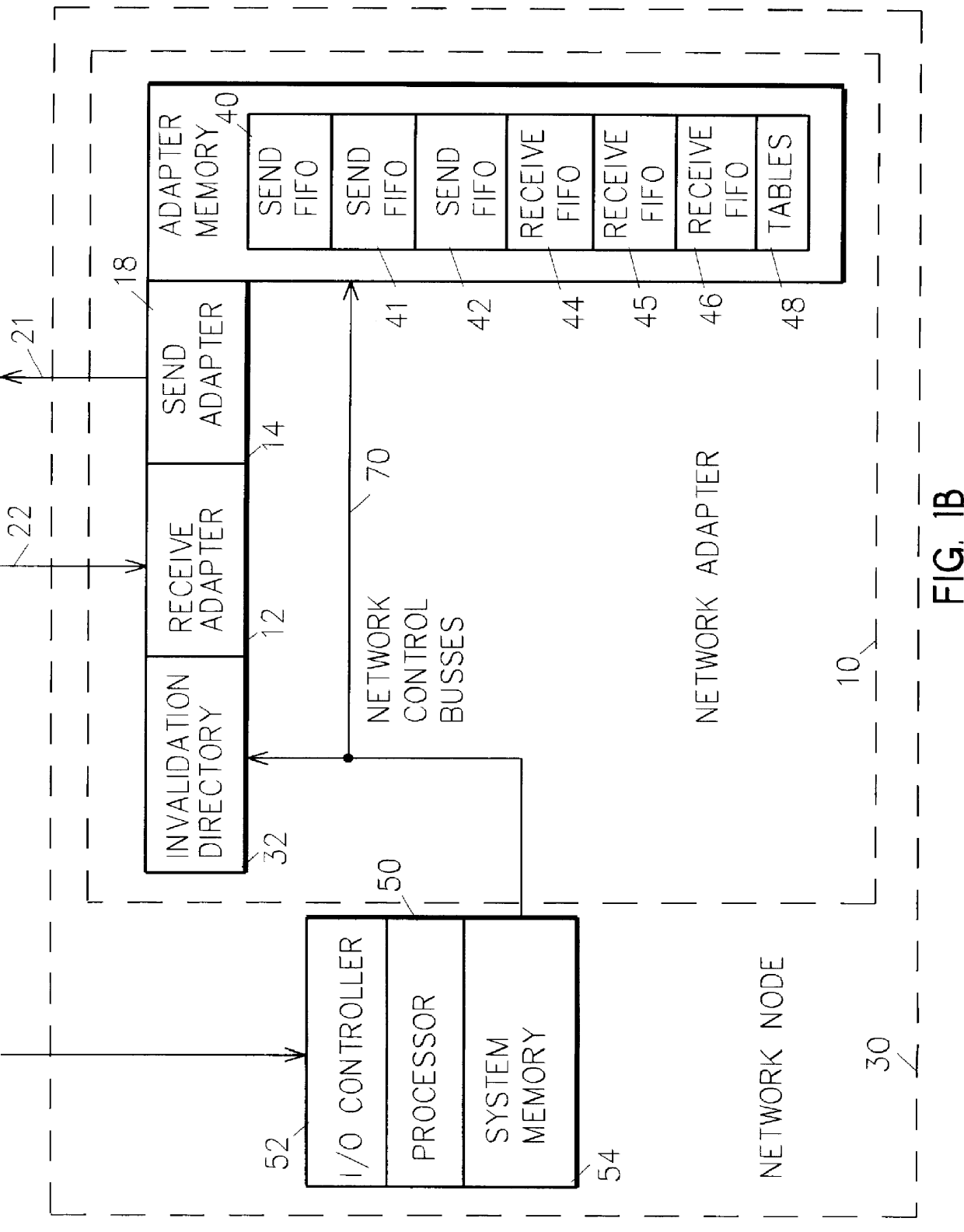

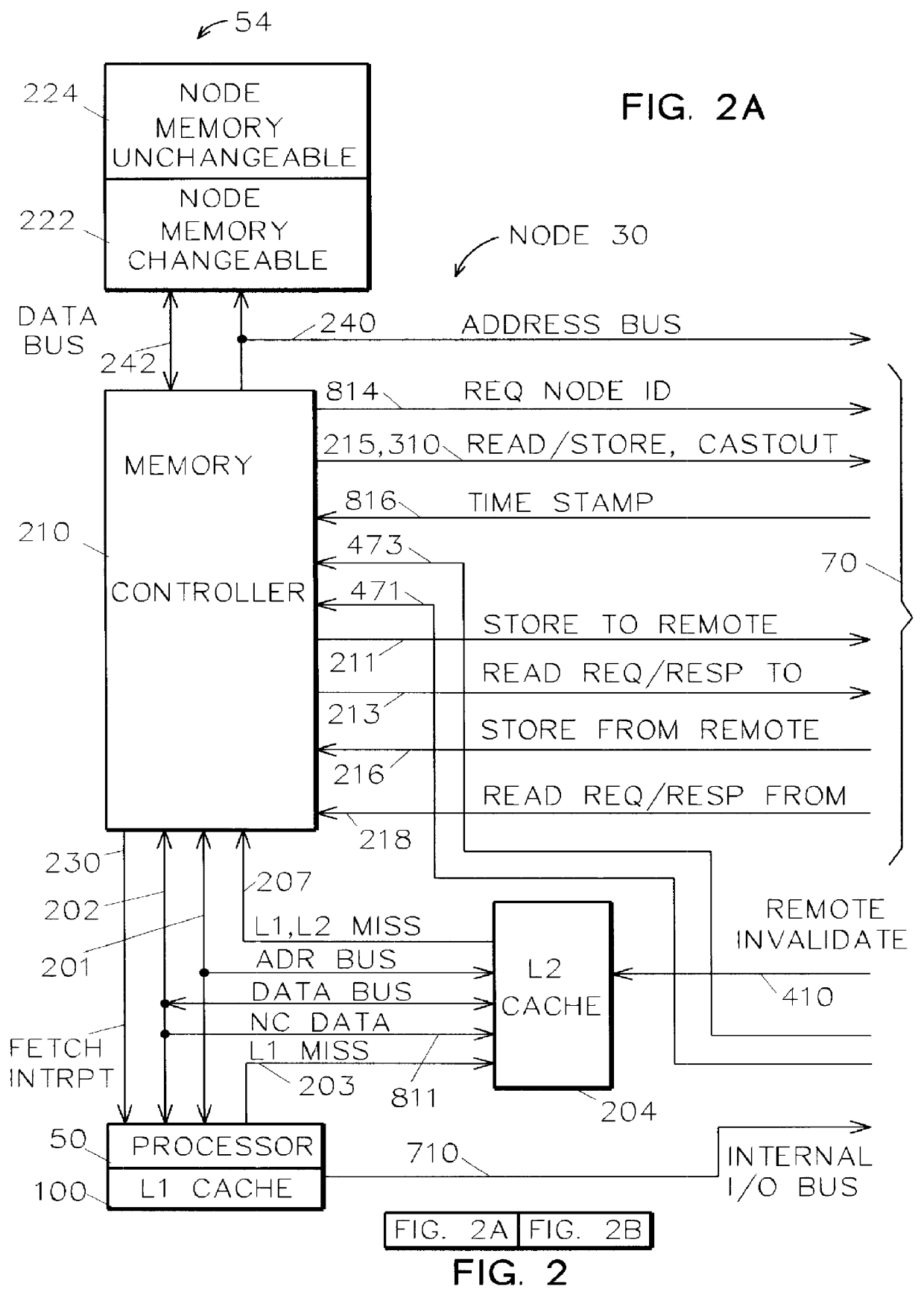

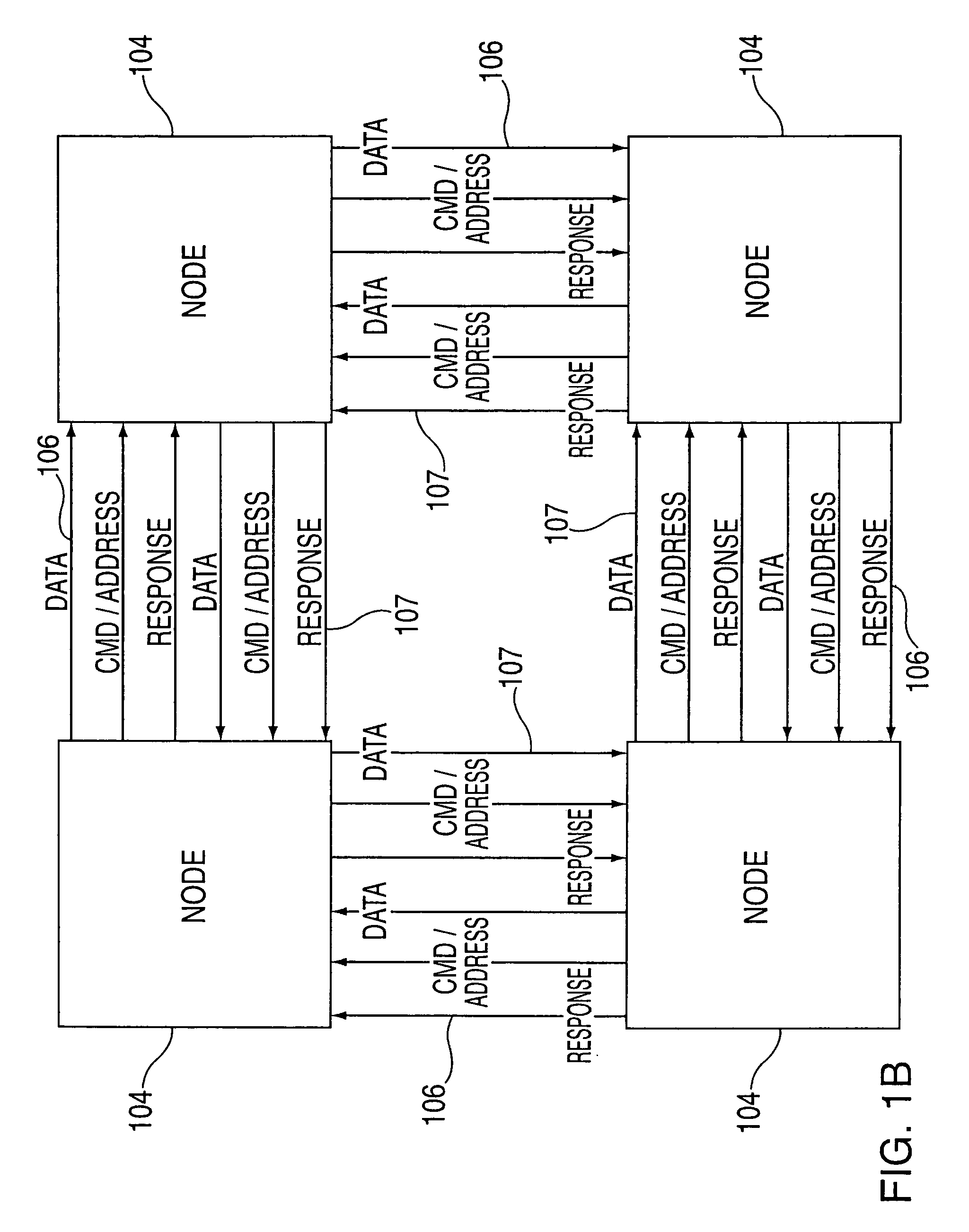

A shared memory parallel processing system interconnected by a multi-stage network combines new system configuration techniques with special-purpose hardware to provide remote memory accesses across the network, while controlling cache coherency efficiently across the network. The system configuration techniques include a systematic method for partitioning and controlling the memory in relation to local versus remote accesses and changeable versus unchangeable data. Most of the special-purpose hardware is implemented in the memory controller and network adapter, which implements three send FIFOs and three receive FIFOs at each node to segregate and handle efficiently invalidate functions, remote stores, and remote accesses requiring cache coherency. The segregation of these three functions into different send and receive FIFOs greatly facilitates the cache coherency function over the network. In addition, the network itself is tailored to provide the best efficiency for remote accesses.

Owner:IBM CORP

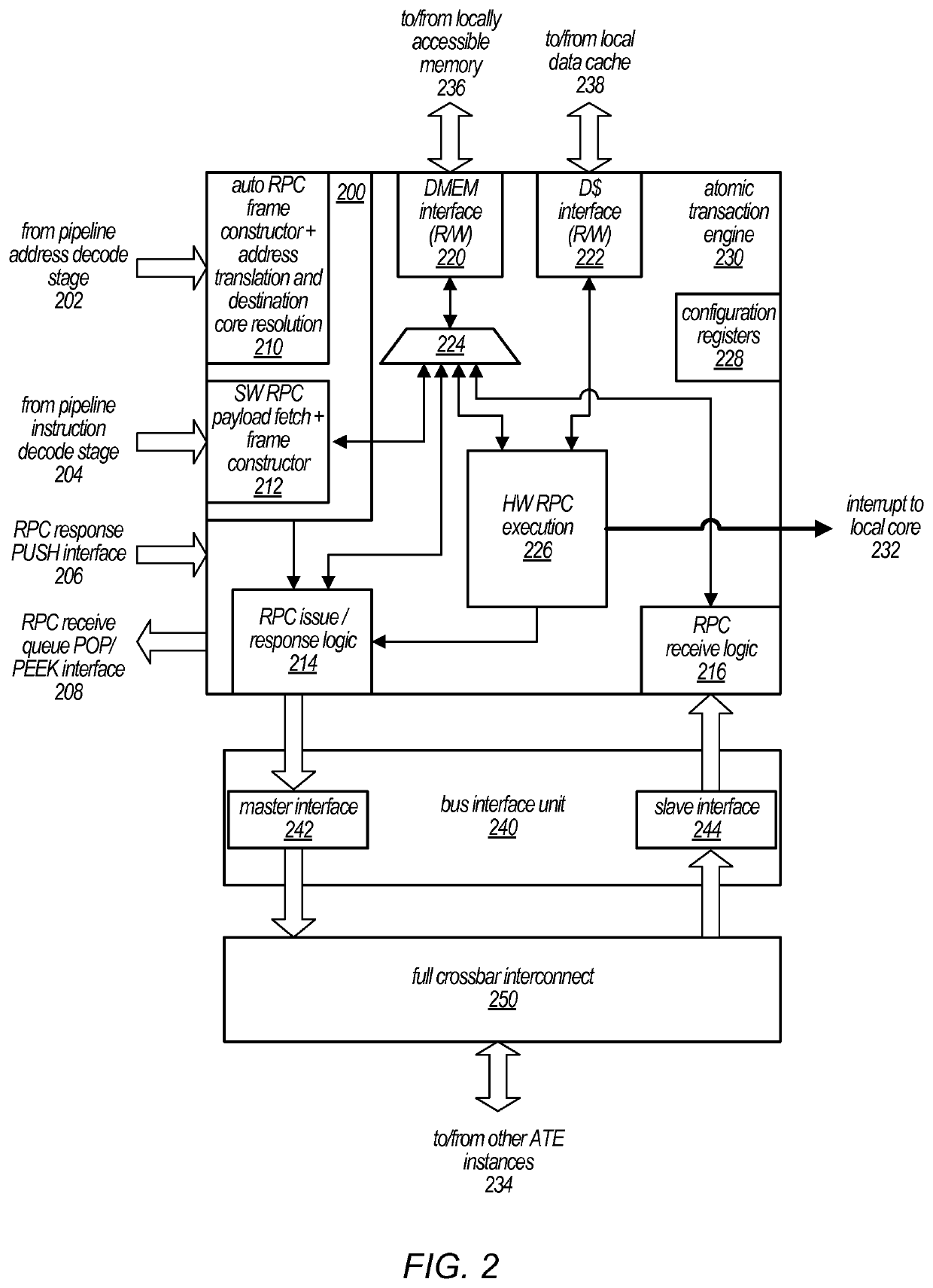

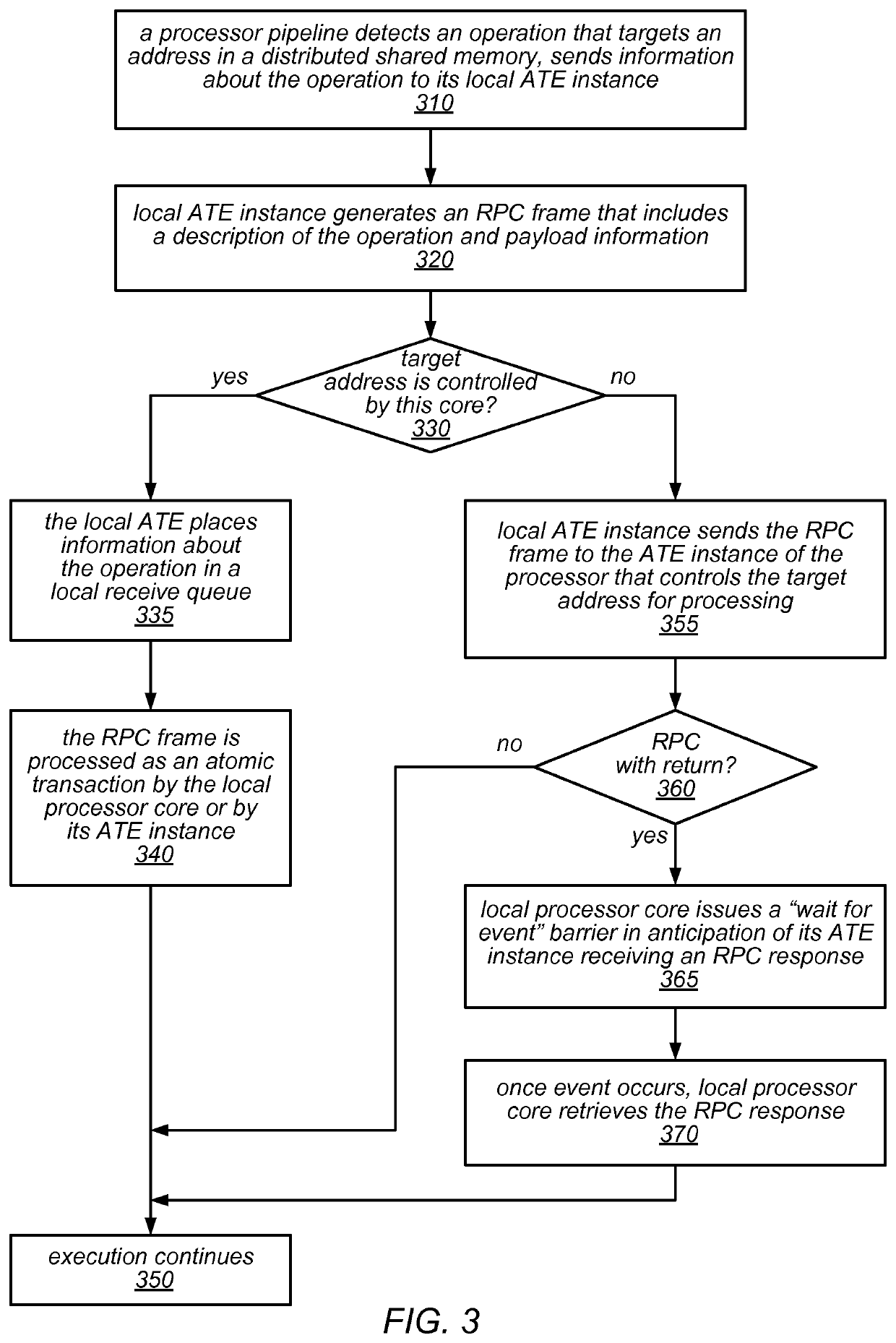

Distributed shared memory using interconnected atomic transaction engines at respective memory interfaces

Active | US10732865B2 | Light weight | Less complex | Input/output to record carriers | Program synchronisation | Computer architecture | Memory interface

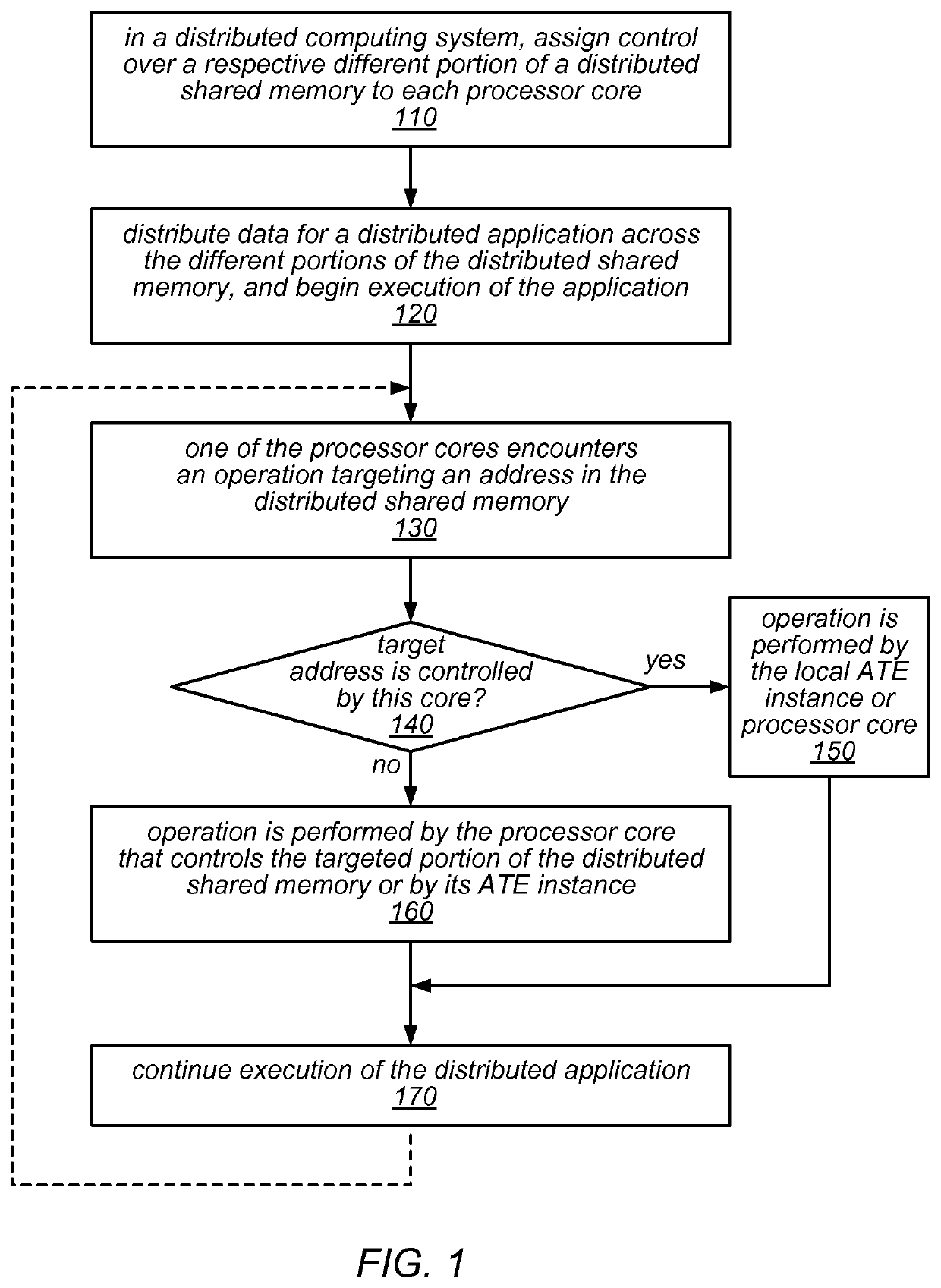

A hardware-assisted Distributed Memory System may include software configurable shared memory regions in the local memory of each of multiple processor cores. Accesses to these shared memory regions may be made through a network of on-chip atomic transaction engine (ATE) instances, one per core, over a private interconnect matrix that connects them together. For example, each ATE instance may issue Remote Procedure Calls (RPCs), with or without responses, to an ATE instance associated with a remote processor core in order to perform operations that target memory locations controlled by the remote processor core. Each ATE instance may process RPCs (atomically) that are received from other ATE instances or that are generated locally. For some operation types, an ATE instance may execute the operations identified in the RPCs itself using dedicated hardware. For other operation types, the ATE instance may interrupt its local processor core to perform the operations.

Owner:ORACLE INT CORP
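
The idea in the abstract above, one engine per core that alone operates on that core's shared region and receives requests as RPCs, can be sketched in software. This is a rough analogy only: the `ATE` and `Interconnect` classes, the `"add"` and `"swap"` operation names, and the use of a lock to stand in for hardware atomicity are all assumptions, not details taken from the patent.

```python
import threading


class ATE:
    """One engine per core; only it touches that core's shared region."""

    def __init__(self, core_id, size):
        self.core_id = core_id
        self.region = [0] * size
        self._lock = threading.Lock()  # serializes RPCs so each is atomic

    def handle(self, op, offset, operand):
        """Execute one RPC atomically and return the previous value."""
        with self._lock:
            old = self.region[offset]
            if op == "add":
                self.region[offset] = old + operand
            elif op == "swap":
                self.region[offset] = operand
            else:
                raise ValueError(f"unknown op {op!r}")
            return old


class Interconnect:
    """Routes an RPC to the engine that owns the target memory."""

    def __init__(self, engines):
        self.engines = {e.core_id: e for e in engines}

    def rpc(self, target_core, op, offset, operand):
        return self.engines[target_core].handle(op, offset, operand)


net = Interconnect([ATE(0, 4), ATE(1, 4)])
first = net.rpc(1, "add", 2, 5)   # previous value at core 1, offset 2
second = net.rpc(1, "add", 2, 3)  # sees the result of the first RPC
```

Because every update to a location goes through its owning engine, requesters never need to lock remote memory themselves.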

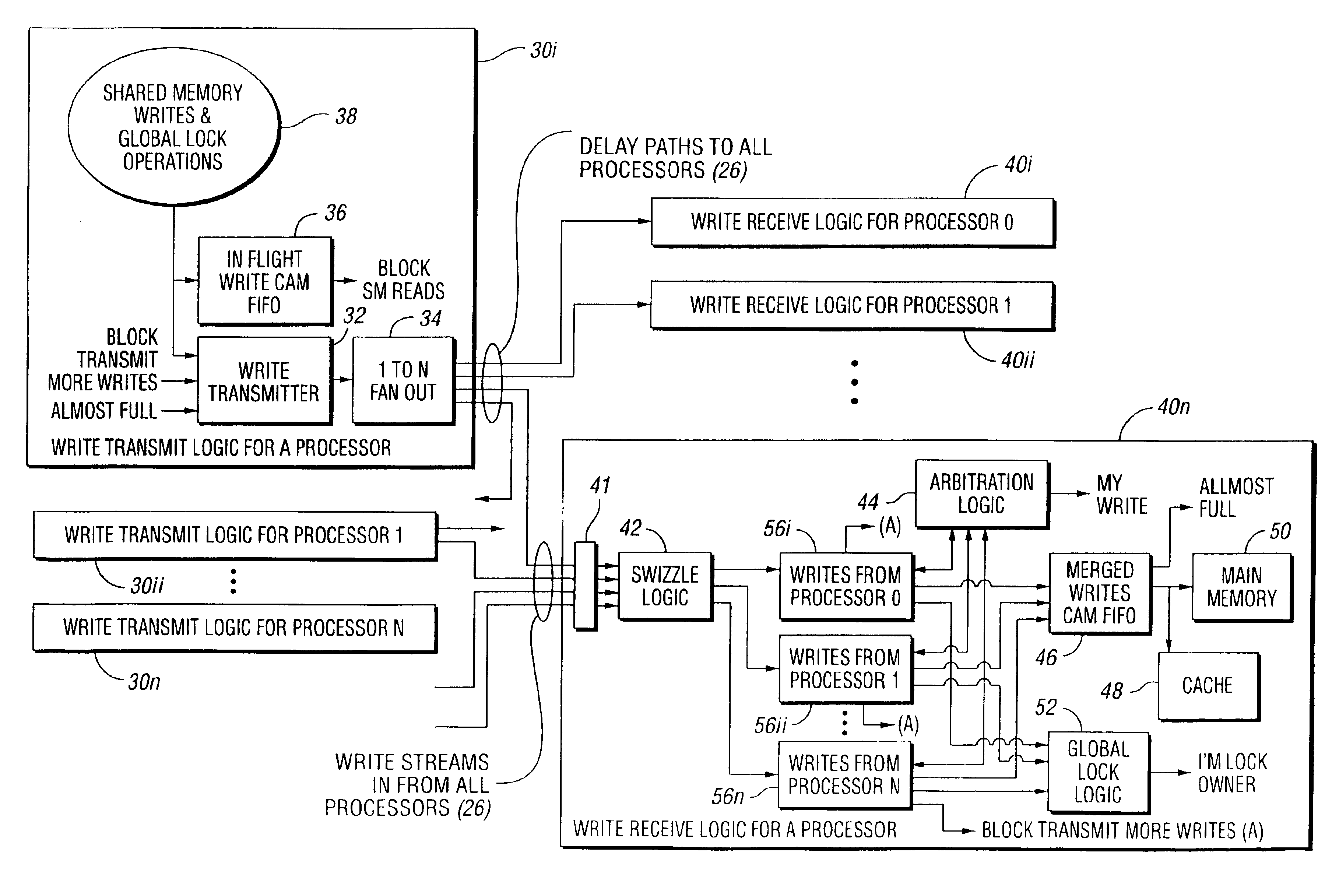

System and method for a distributed shared memory

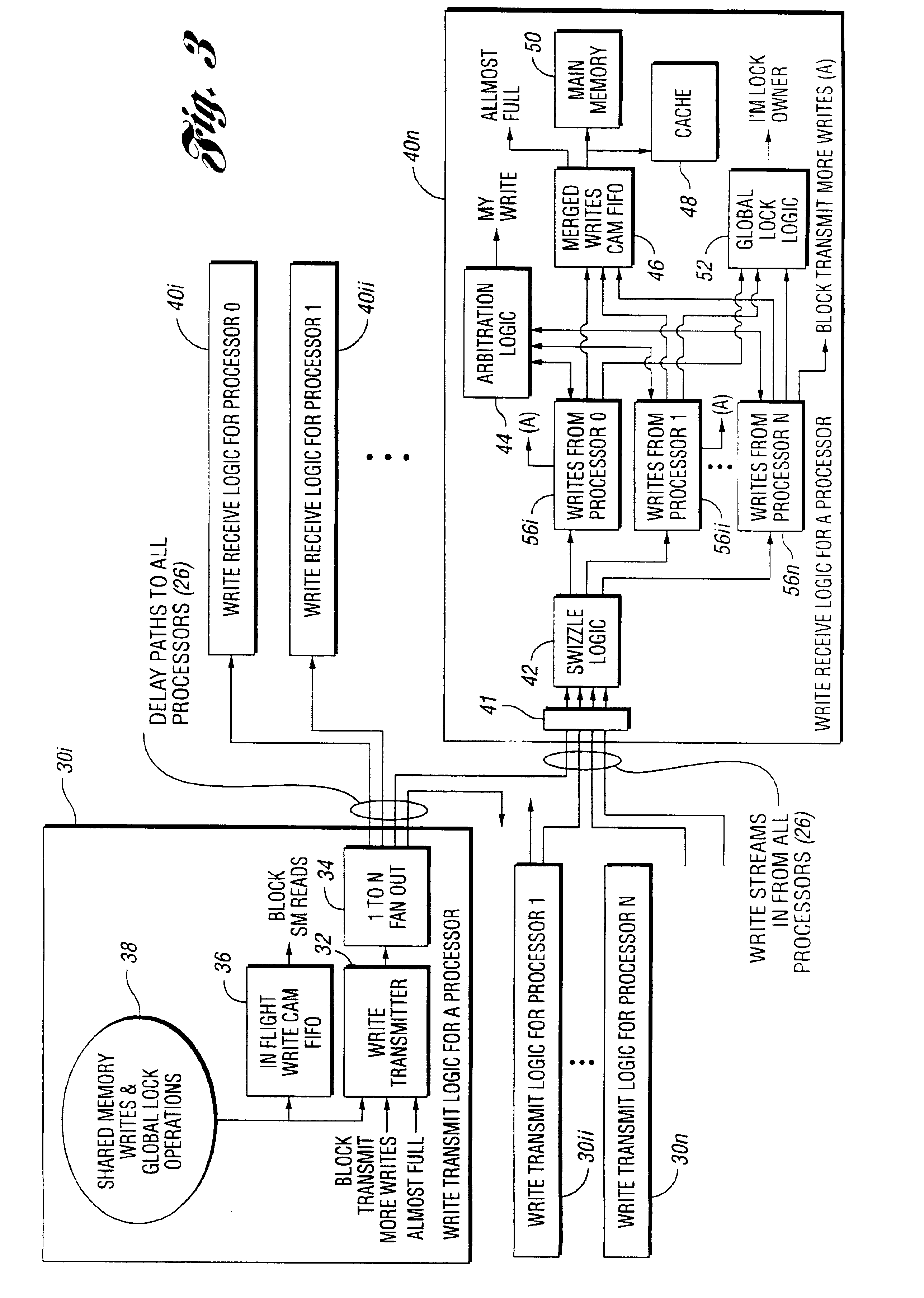

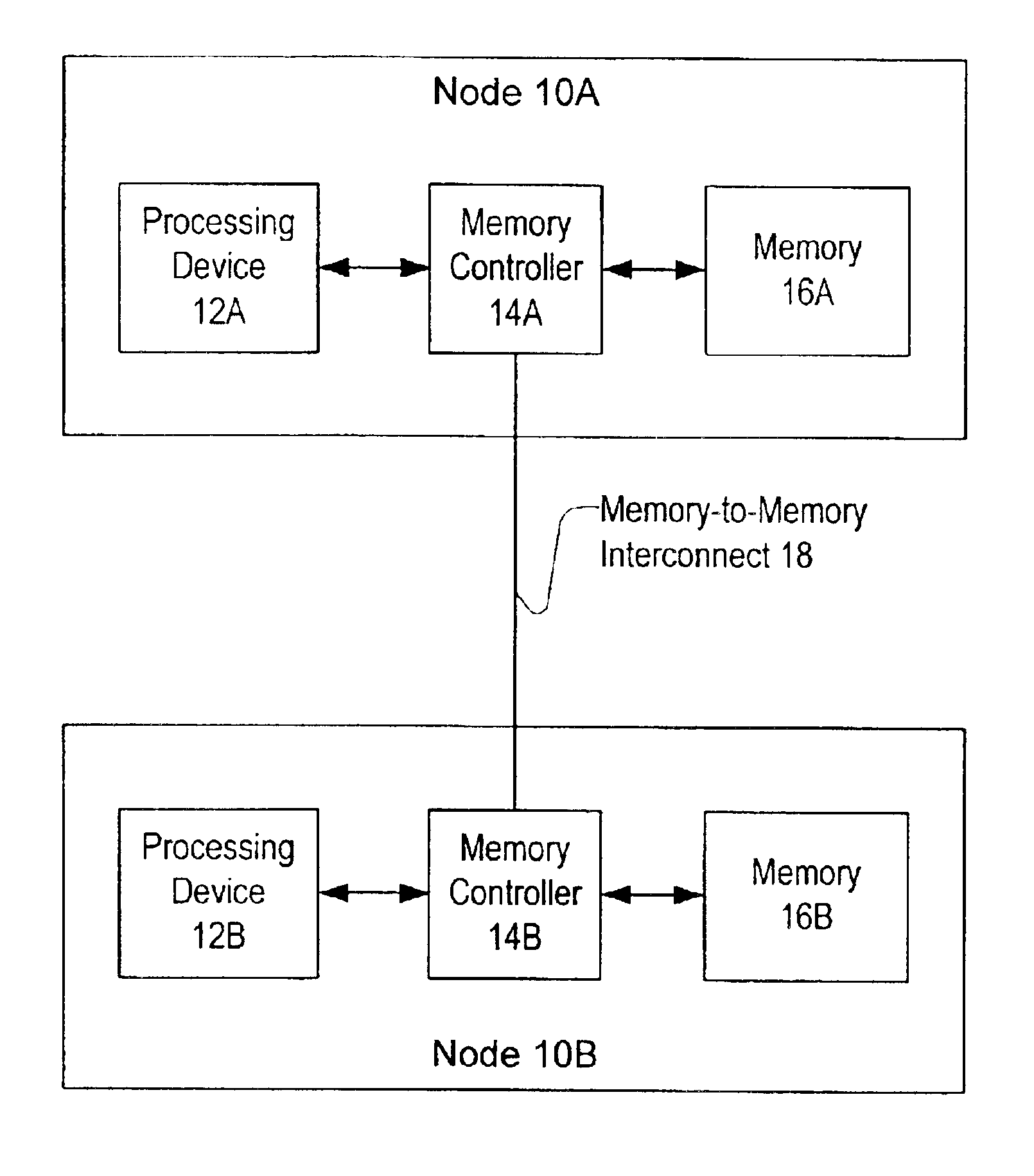

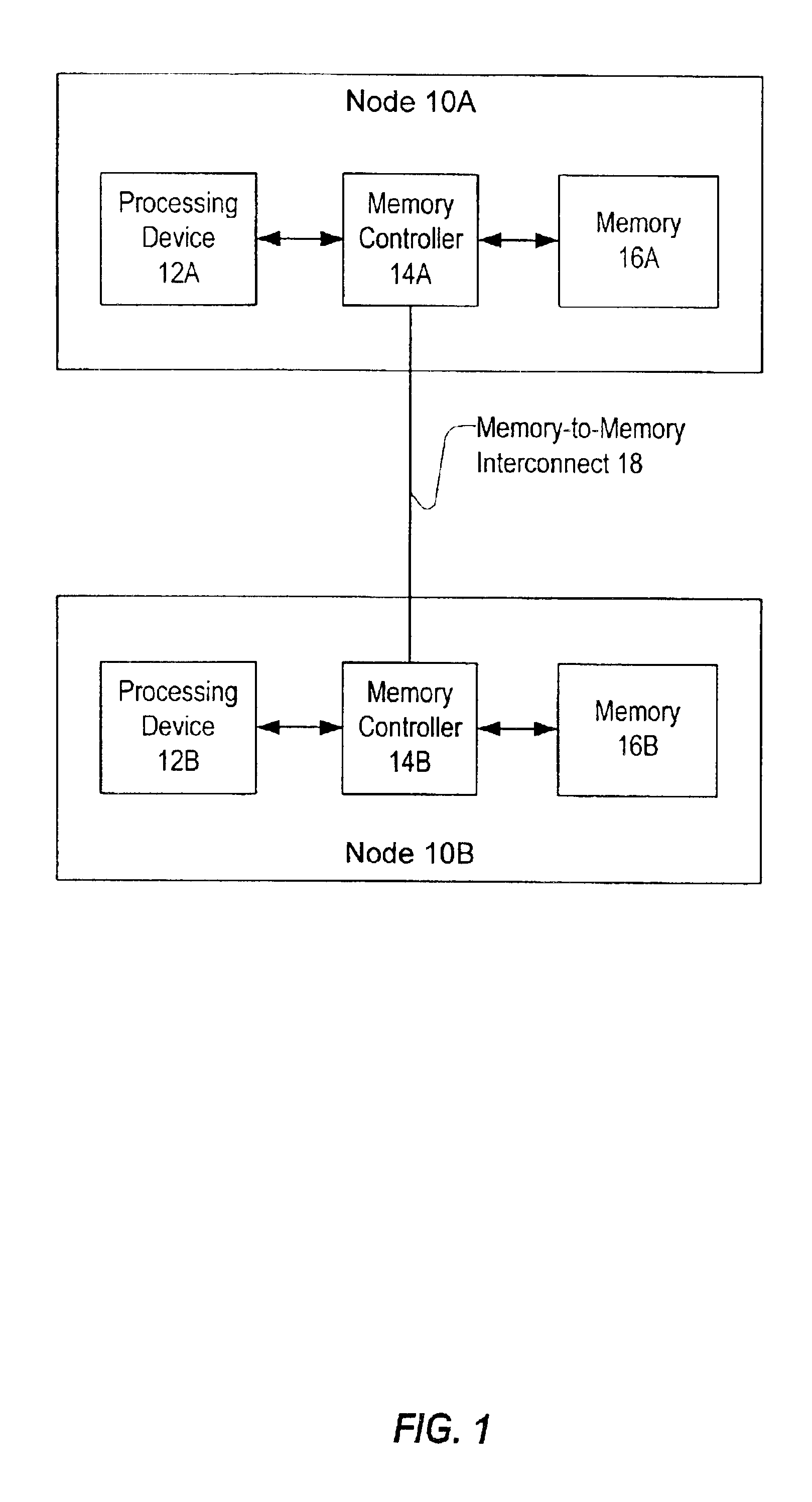

A system and method for a distributed shared memory. The system includes multiple processors, each processor transmitting write commands issued therefrom concerning a shared memory to each of the processors, such that each processor receives each shared memory write command transmitted. The system also includes multiple local memories, each local memory associated with one of the processors and having a copy of the shared memory, wherein each processor completes each received shared memory write command at its associated local memory such that the copies of the shared memory remain consistent at all times. The method includes transmitting write commands concerning the shared memory to each of the processors, such that each processor receives each shared memory write command transmitted, and completing each received shared memory write command at the associated local memory such that the copies of the shared memory remain consistent at all times.

Owner:ORACLE INT CORP
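
A minimal Python sketch of the write-broadcast scheme described above: every processor keeps a full copy of the shared memory and applies every write it receives. The `Processor` and `WriteBus` names are hypothetical, and the sketch assumes that a single bus object delivers all writes in one global order, which is what keeps the copies identical here.

```python
class Processor:
    """A processor with a full local copy of the shared memory."""

    def __init__(self, pid, size):
        self.pid = pid
        self.local = [0] * size

    def apply(self, addr, value):
        self.local[addr] = value


class WriteBus:
    """Delivers every write to every processor in one global order."""

    def __init__(self, processors):
        self.processors = processors
        self.log = []  # (source_pid, addr, value) in delivery order

    def write(self, source_pid, addr, value):
        self.log.append((source_pid, addr, value))
        # The issuer receives its own write through the same path as
        # everyone else, so all copies see the same sequence of writes.
        for p in self.processors:
            p.apply(addr, value)


procs = [Processor(i, size=8) for i in range(3)]
bus = WriteBus(procs)
bus.write(source_pid=0, addr=2, value=7)
bus.write(source_pid=2, addr=2, value=9)  # the later write wins everywhere
```

Reads are served from the local copy with no network traffic; the cost is that every write is sent to every node.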

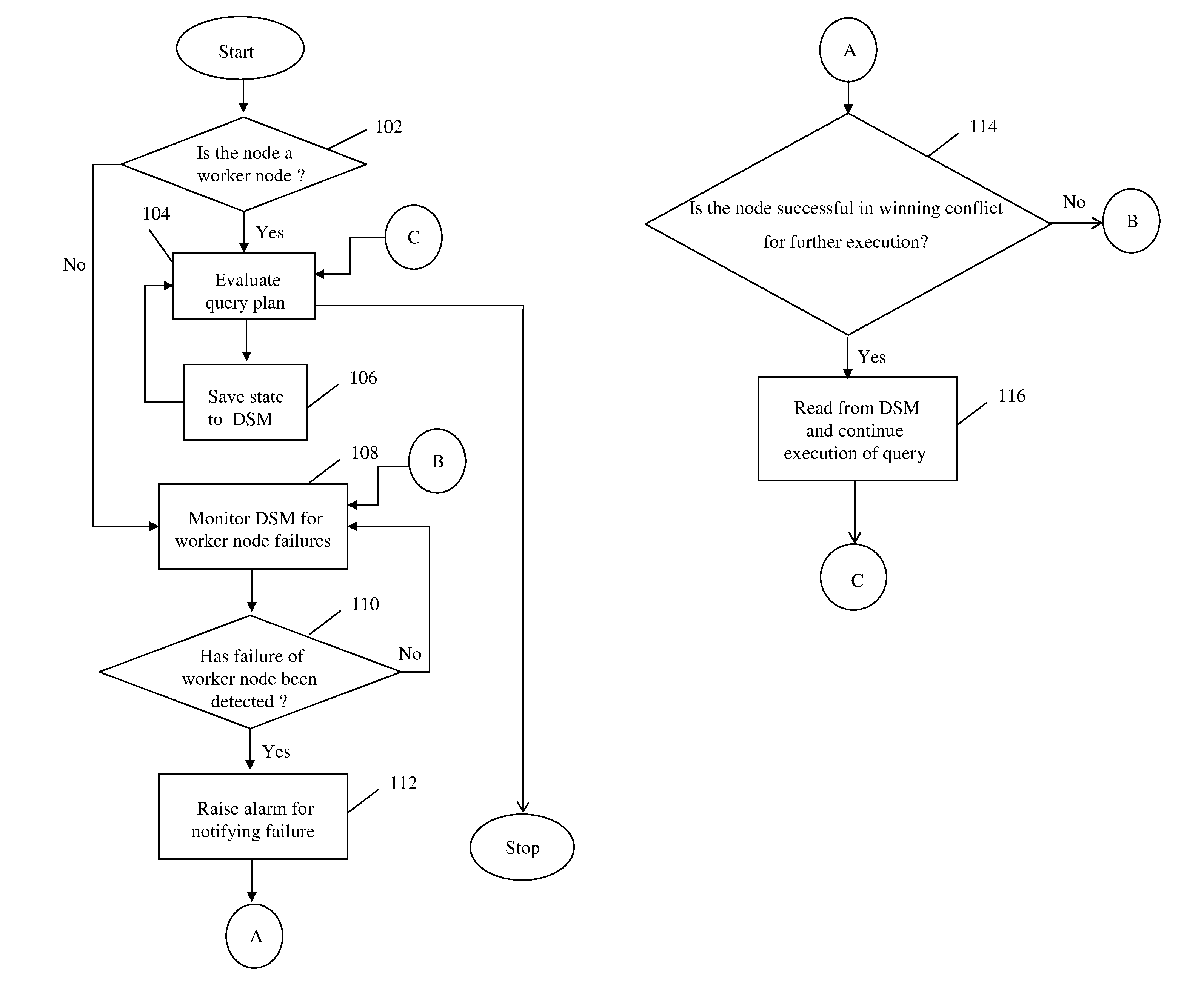

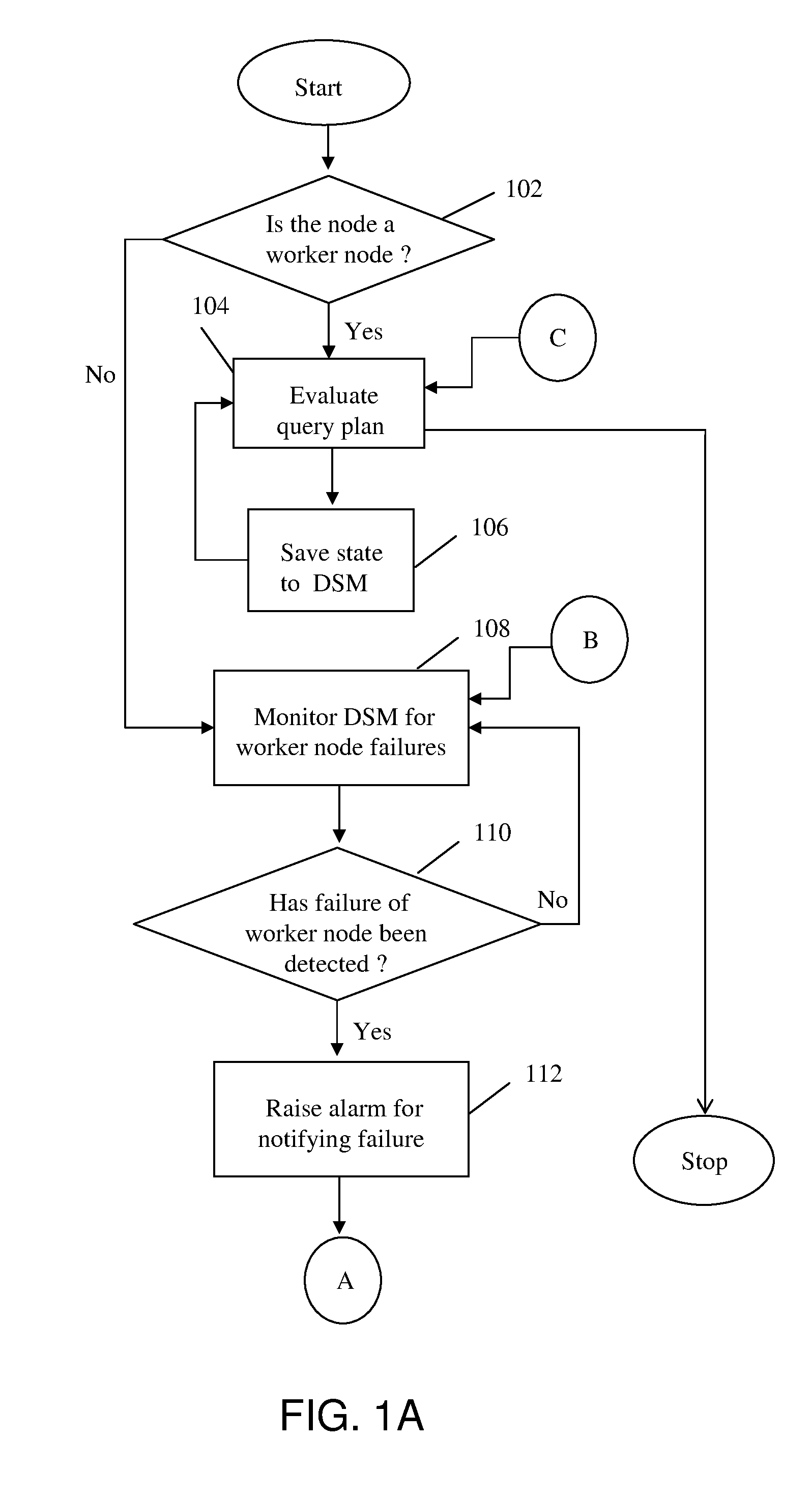

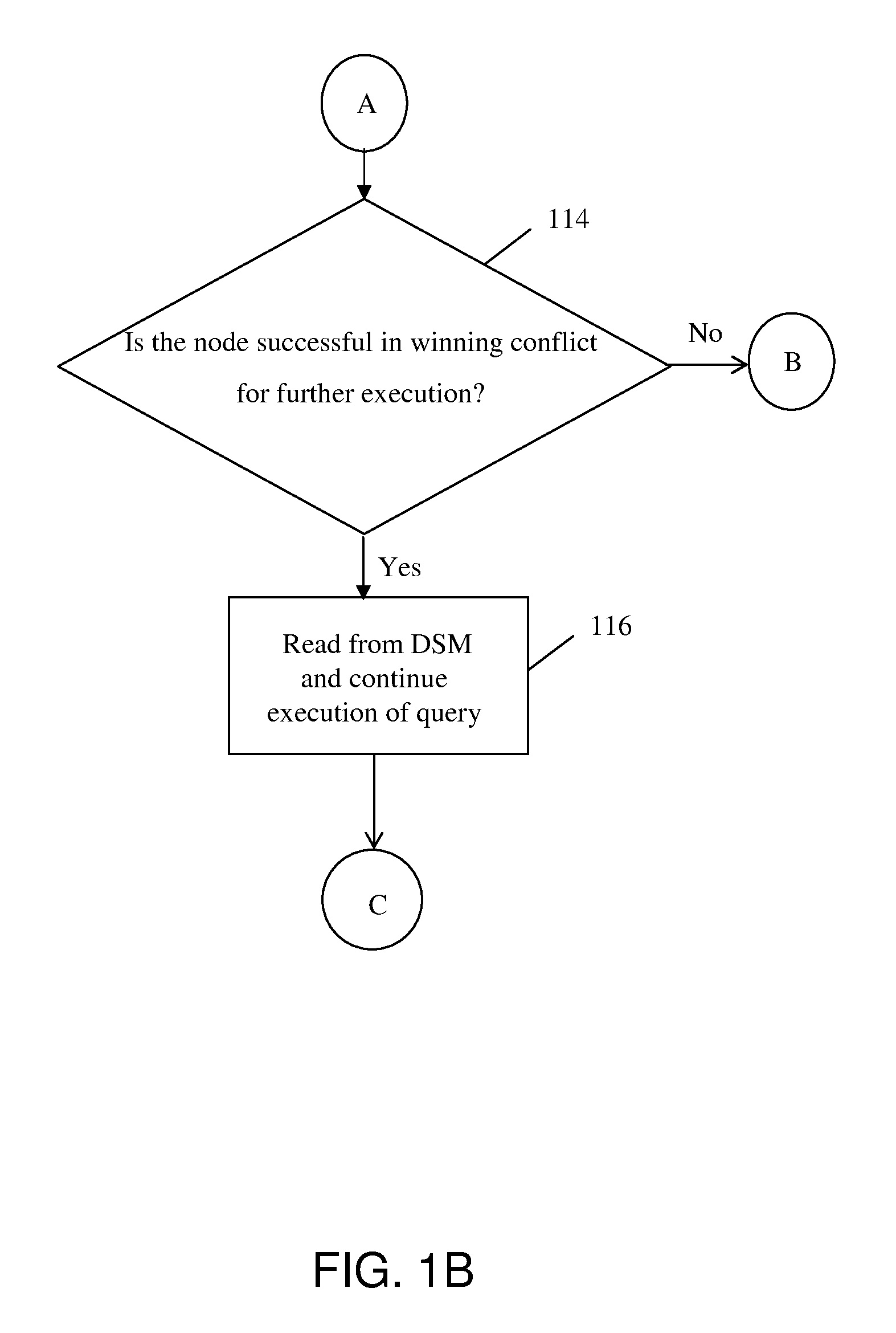

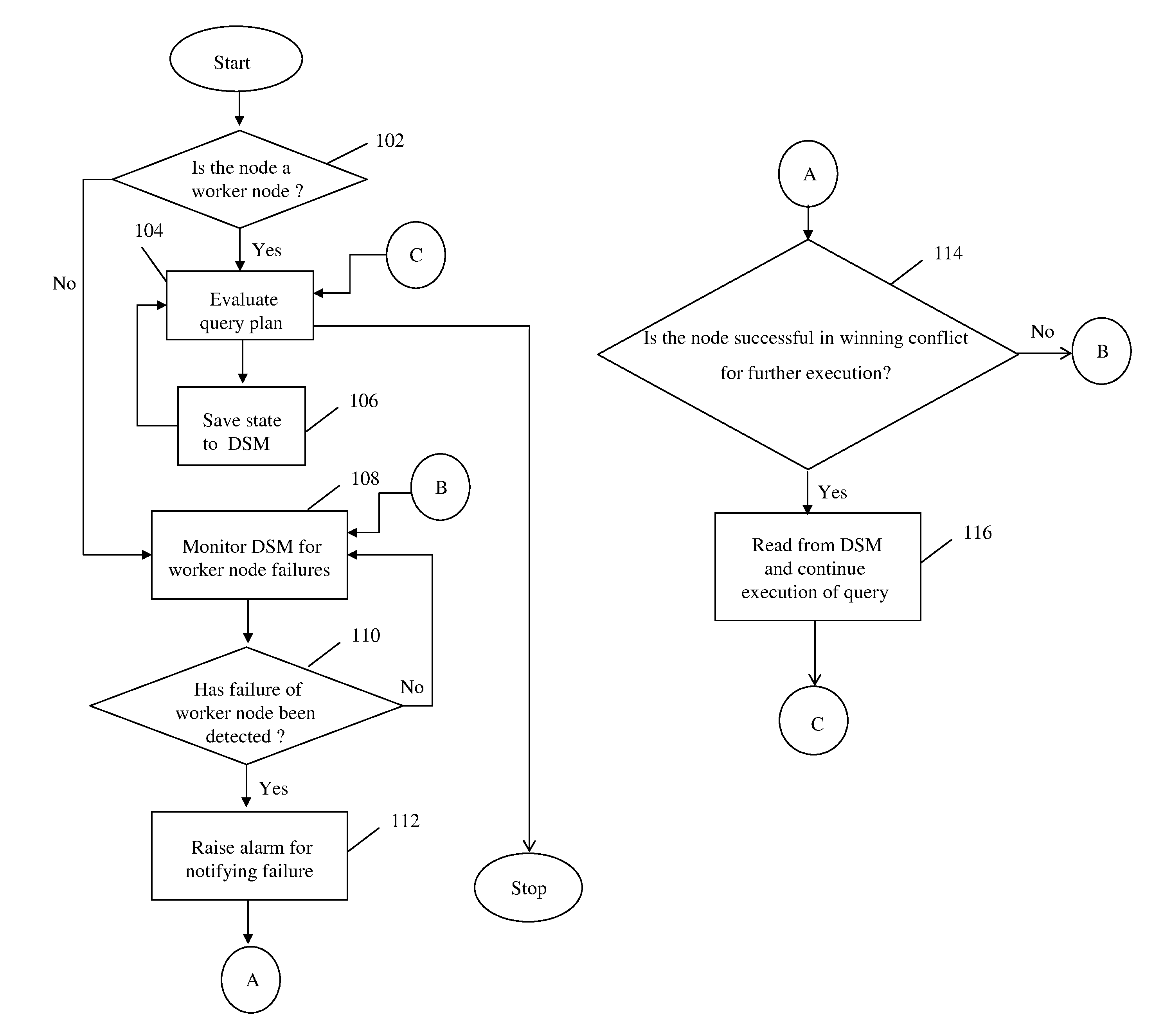

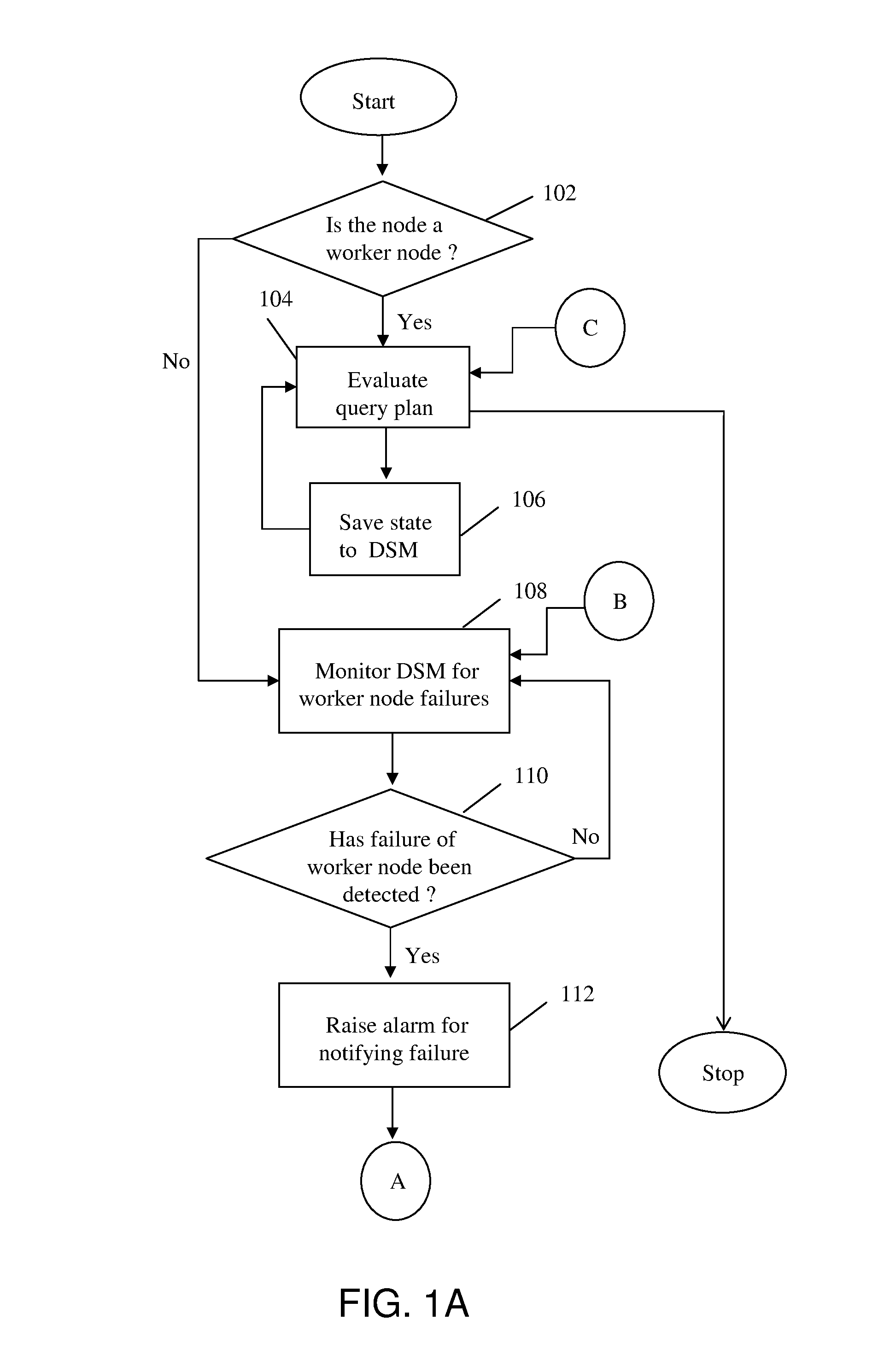

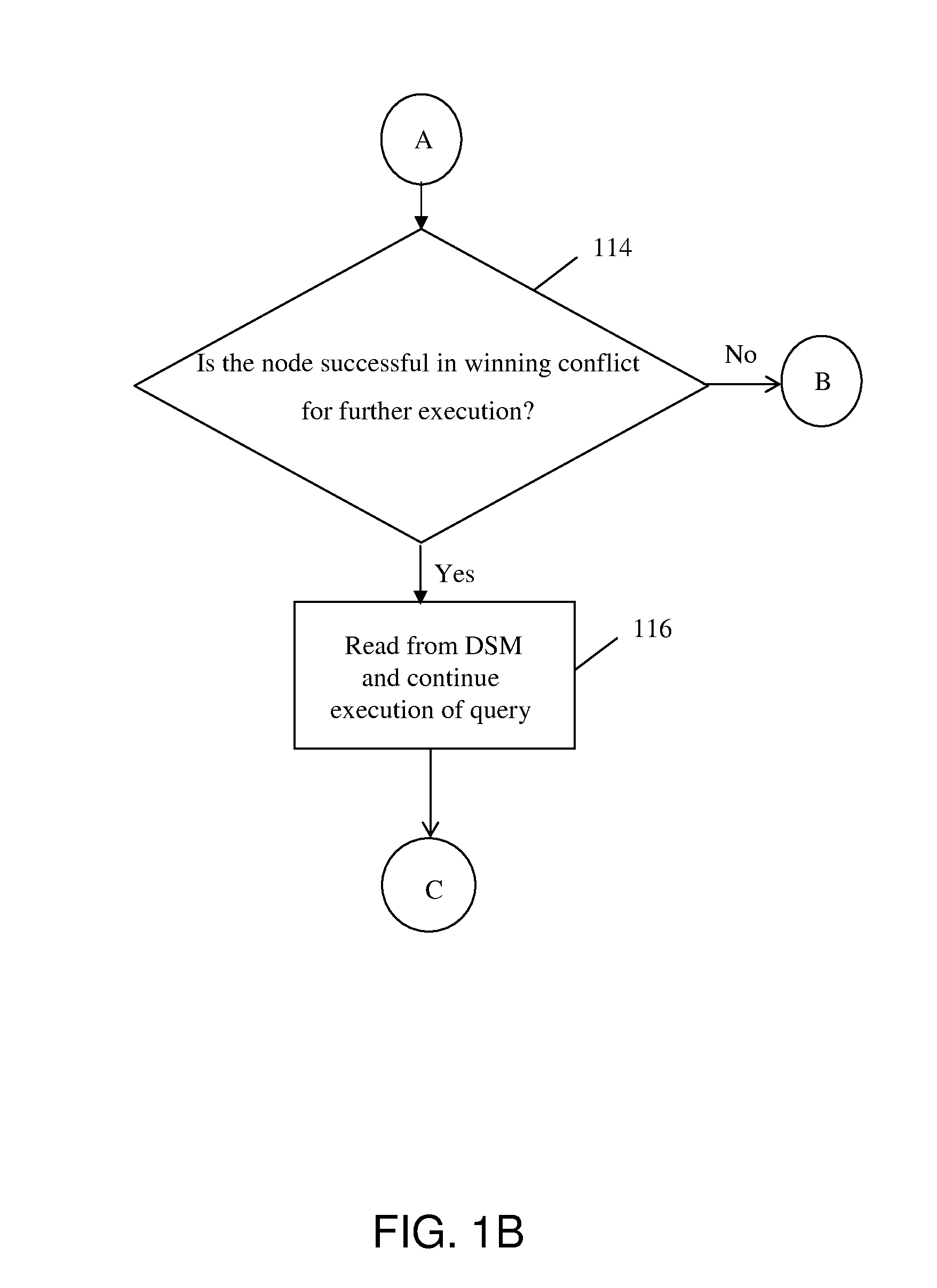

Method and system for automatic failover of distributed query processing using distributed shared memory

A method and system for implementing automatic recovery from failure of resources in a grid-based distributed database are provided. The method includes determining the category of each node in a subgroup of nodes, where the determination identifies each node as at least one of a worker node and an idle node. The method further includes saving the state of each worker node engaged in execution of a task in a shared memory at pre-determined time intervals. Each worker node is monitored by one or more idle nodes in each subgroup. Upon detection of no change in the state of a worker node for a pre-determined period of time, a failure notification is raised by the one or more idle nodes that have detected the failure of the worker node.

Owner:INFOSYS LTD
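
The detection rule in this abstract (a worker whose saved state has not changed for a set period is treated as failed) can be sketched as follows. The `SharedState` class, the `detect_failures` function, and the explicit `now` timestamps are assumptions chosen to keep the example deterministic; a real system would use wall-clock time and an actual shared memory.

```python
class SharedState:
    """Stand-in for the shared memory that holds worker checkpoints."""

    def __init__(self):
        self.entries = {}  # worker id -> (state, time the state last changed)

    def save(self, worker, state, now):
        previous = self.entries.get(worker)
        # Only a changed state refreshes the timestamp.
        if previous is None or previous[0] != state:
            self.entries[worker] = (state, now)


def detect_failures(shared, now, timeout):
    """Run by an idle node: report workers whose state has not changed."""
    return sorted(
        worker
        for worker, (_, changed_at) in shared.entries.items()
        if now - changed_at >= timeout
    )


shared = SharedState()
shared.save("w1", {"rows_done": 100}, now=0)
shared.save("w2", {"rows_done": 50}, now=0)
shared.save("w1", {"rows_done": 200}, now=5)  # w1 is still making progress
shared.save("w2", {"rows_done": 50}, now=5)   # w2 saved an unchanged state
failed = detect_failures(shared, now=10, timeout=10)
```

Here only `w2` is reported, because its state last changed at time 0 while `w1` advanced at time 5.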

Network with distributed shared memory

Inactive | US20090144388A1 | Memory addressing/allocation/relocation | Digital computer details | Control data | Term memory

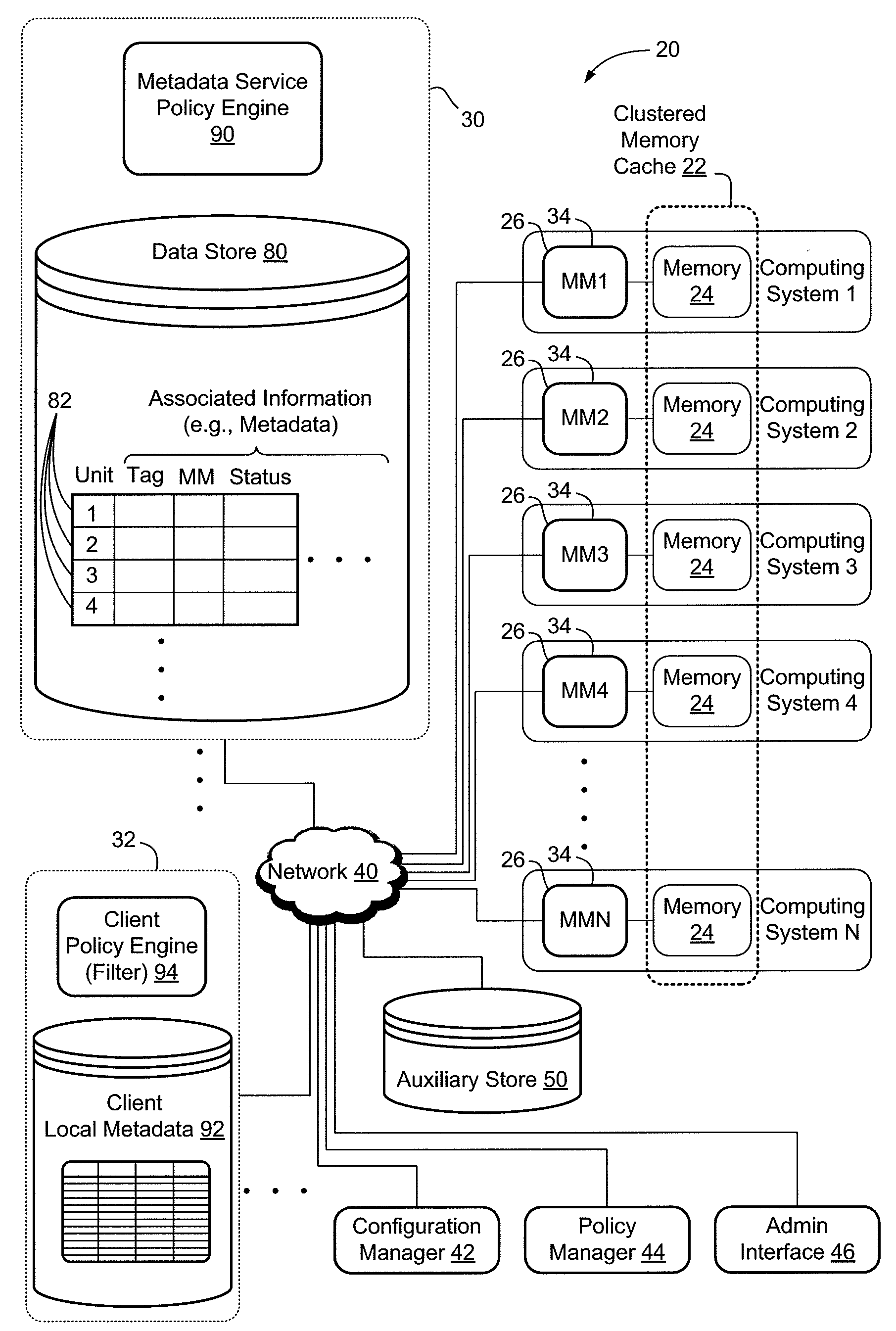

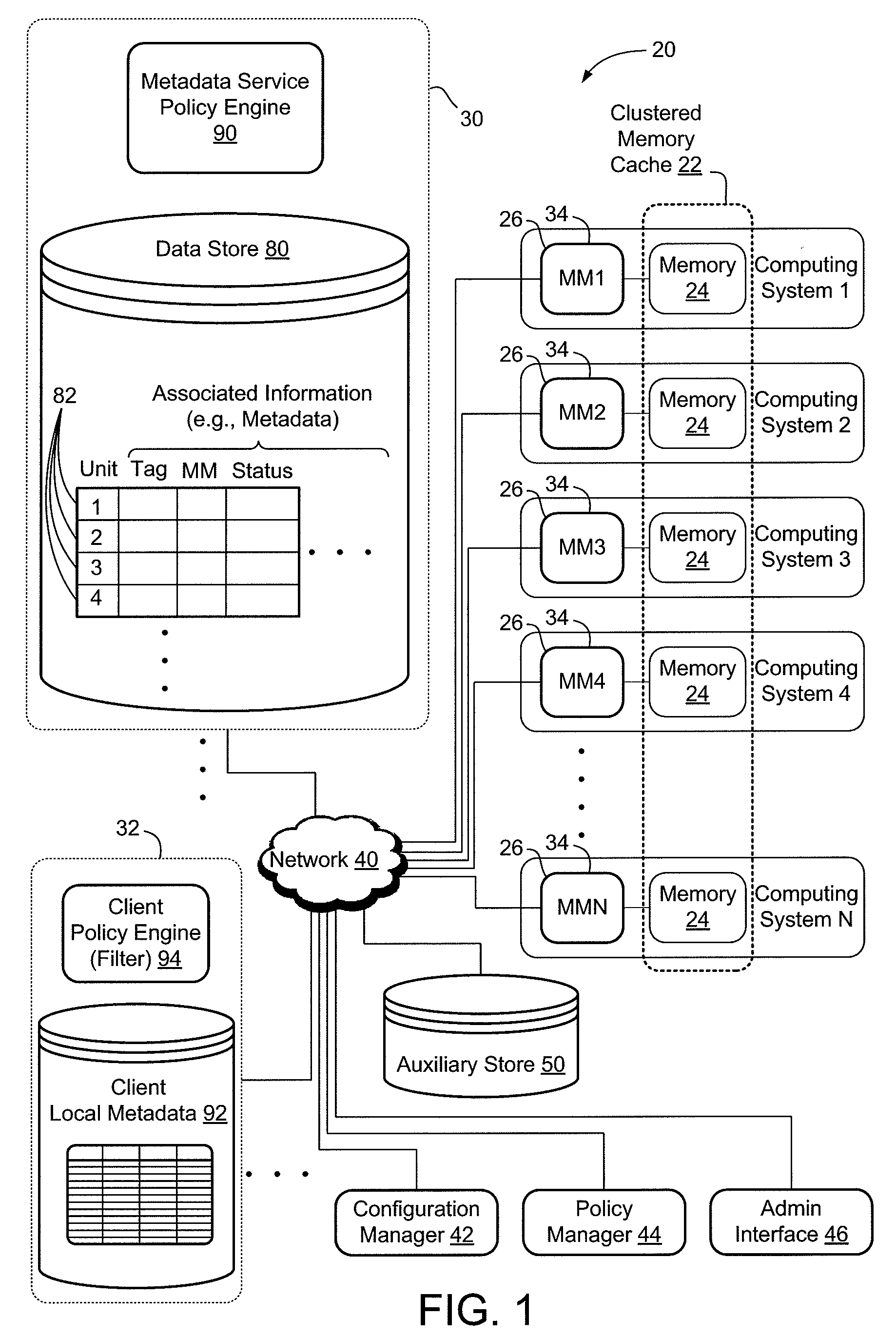

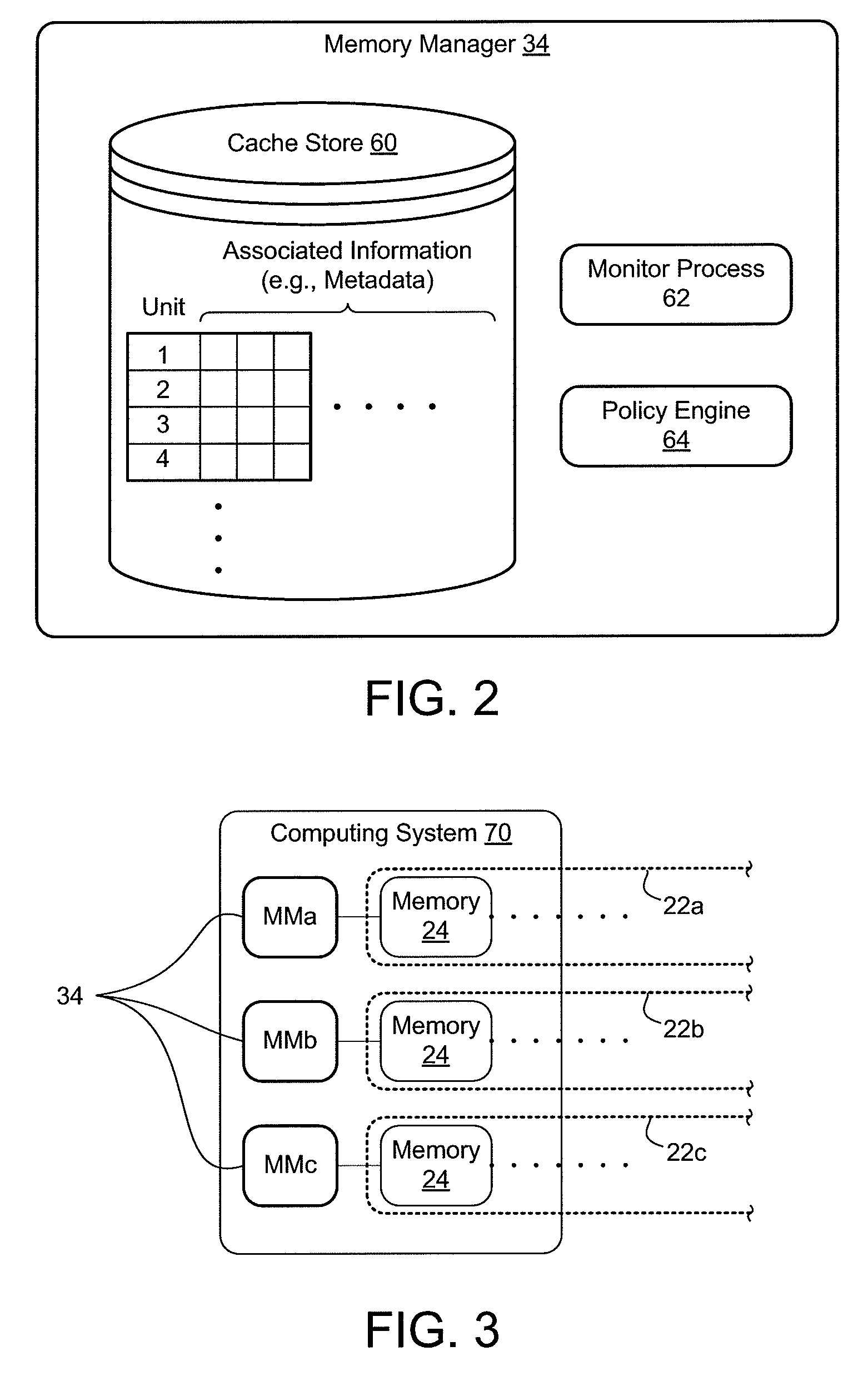

A computer network with distributed shared memory, including a clustered memory cache aggregated from and comprised of physical memory locations on a plurality of physically distinct computing systems. The clustered memory cache is accessible by a plurality of clients on the computer network and is configured to perform page caching of data items accessed by the clients. The network also includes a policy engine operatively coupled with the clustered memory cache, where the policy engine is configured to control where data items are cached in the clustered memory cache.

Owner:DELL PROD LP

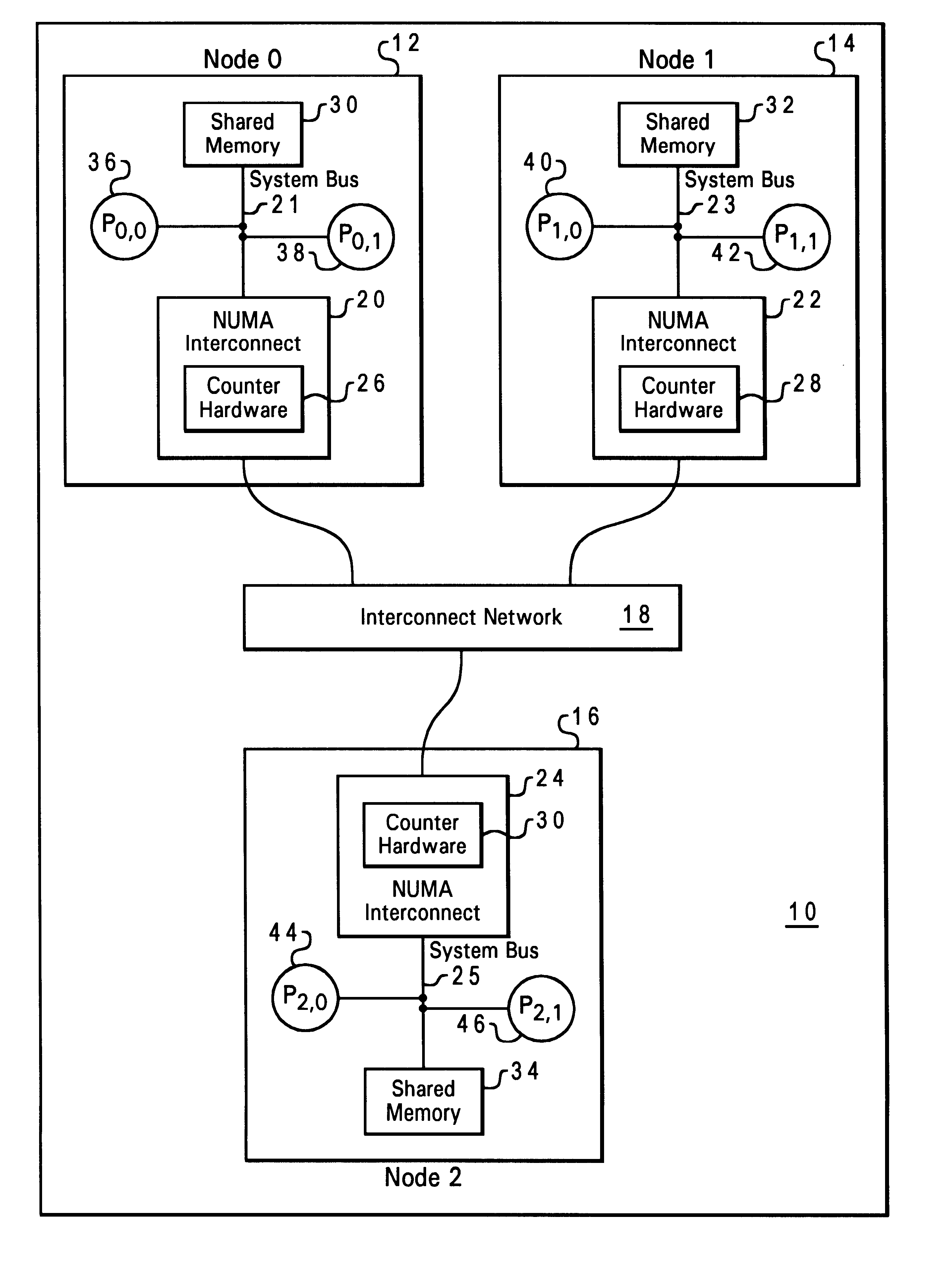

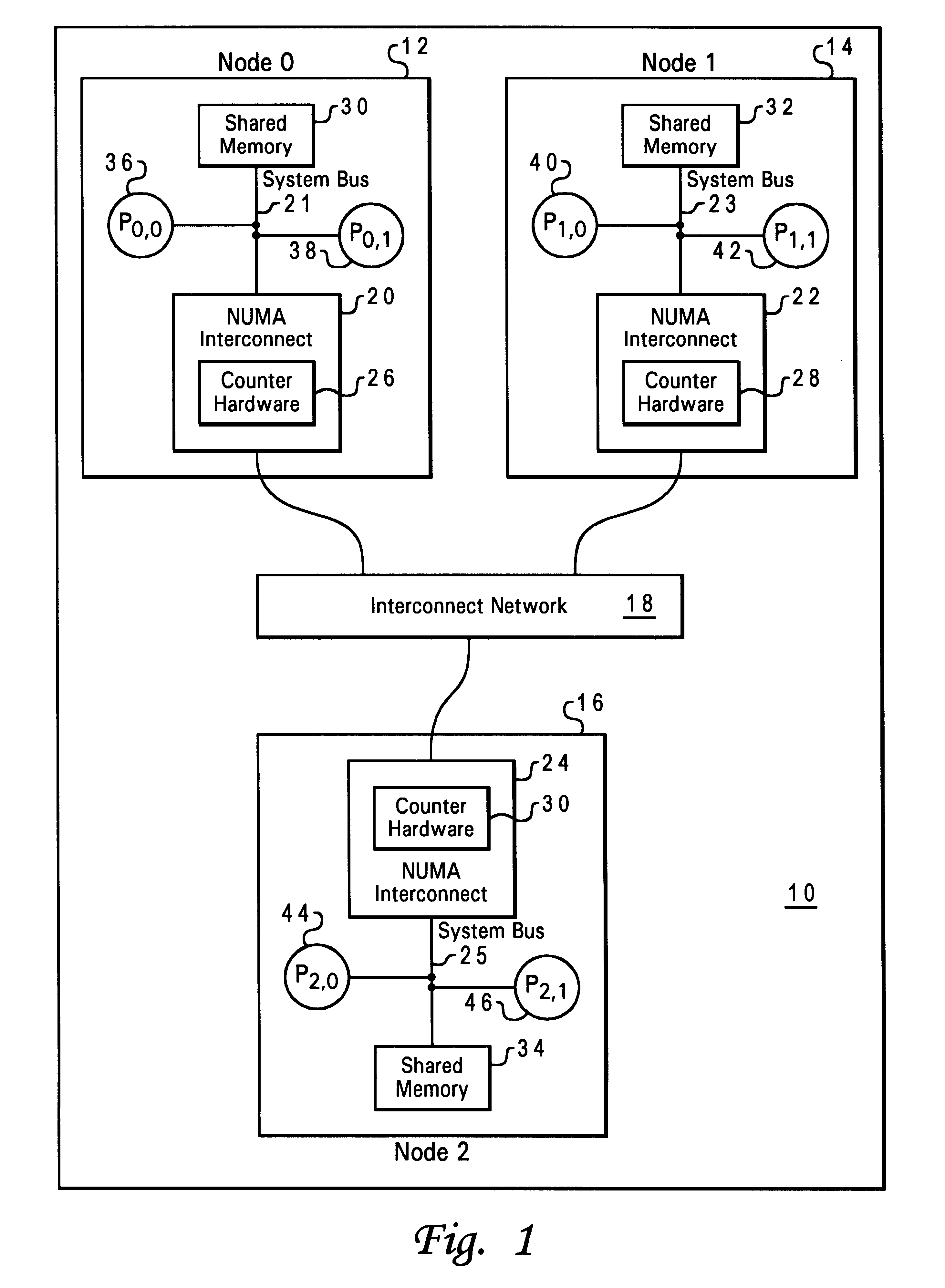

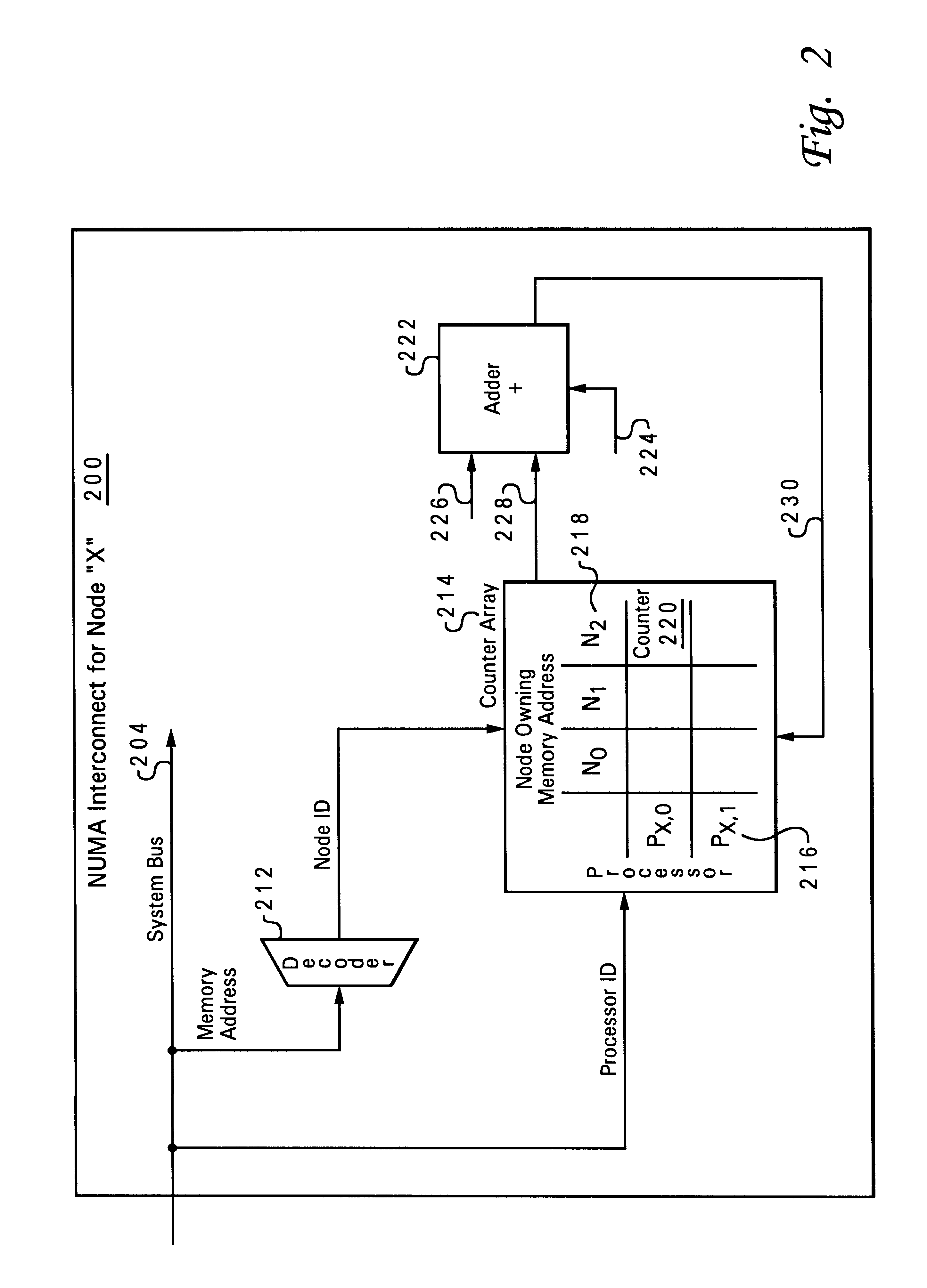

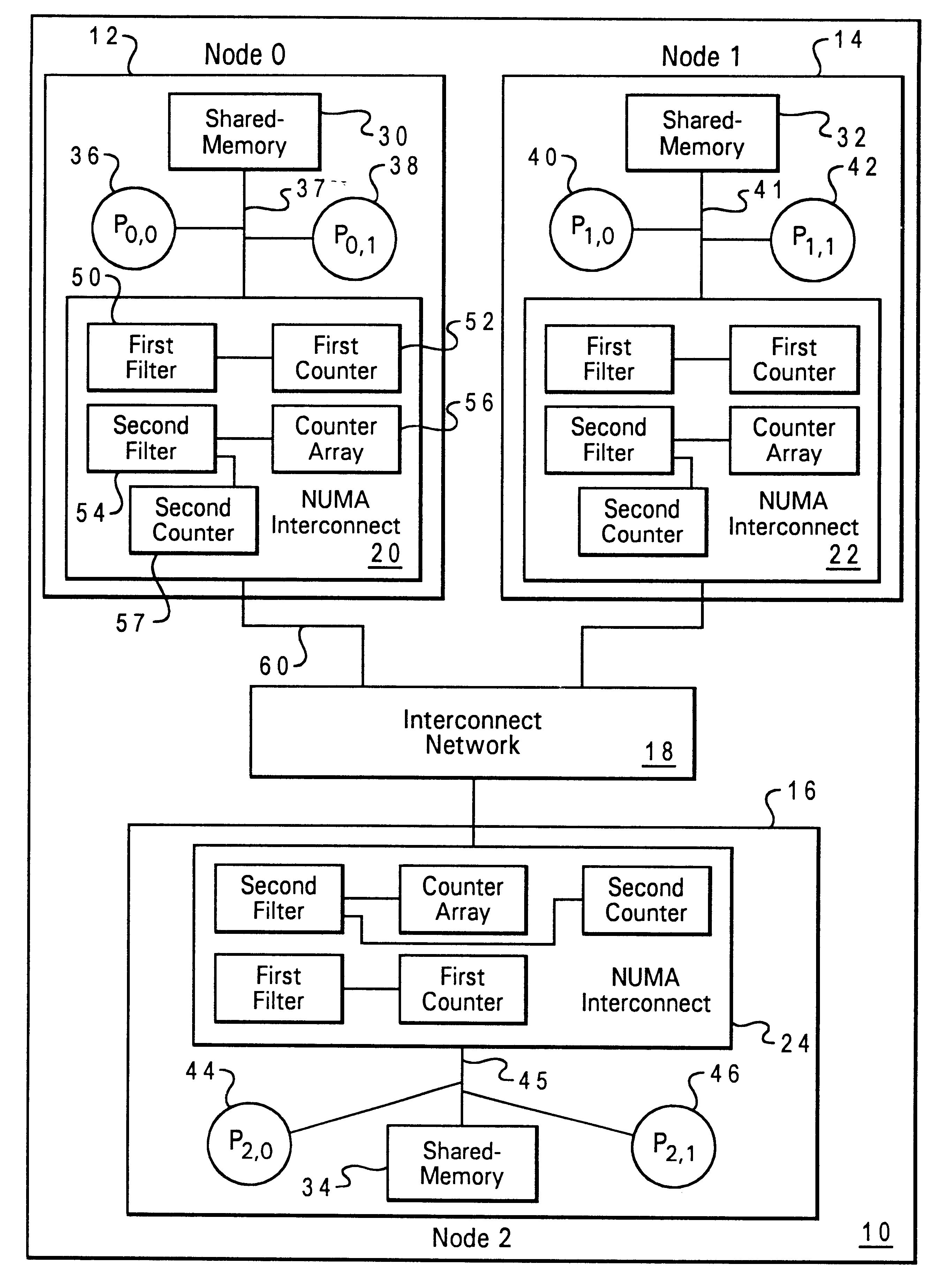

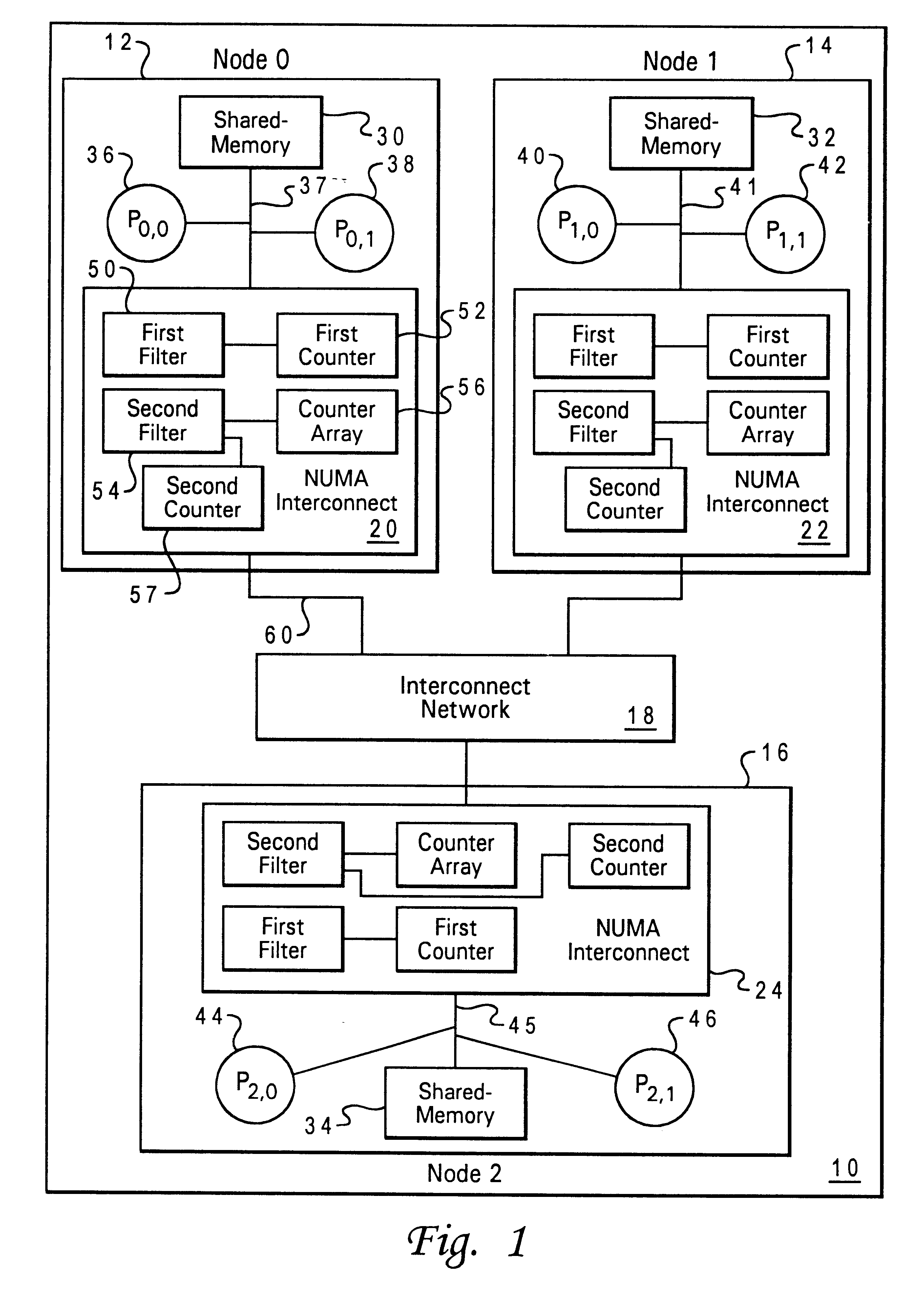

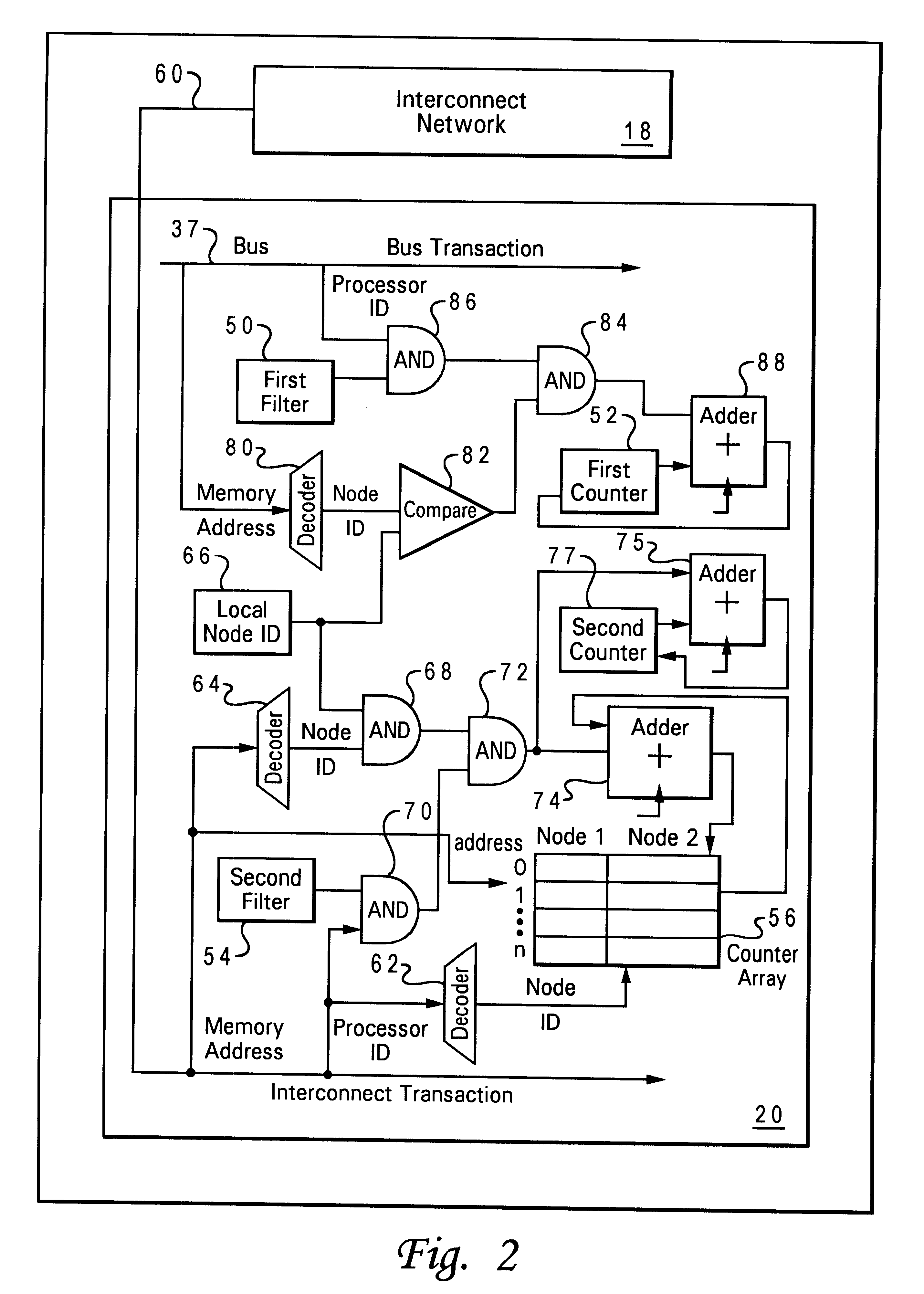

Method and system in a distributed shared-memory data processing system for determining utilization of nodes by each executed thread

Inactive | US6266745B1 | Digital computer details | Hardware monitoring | Data processing system | Operational system

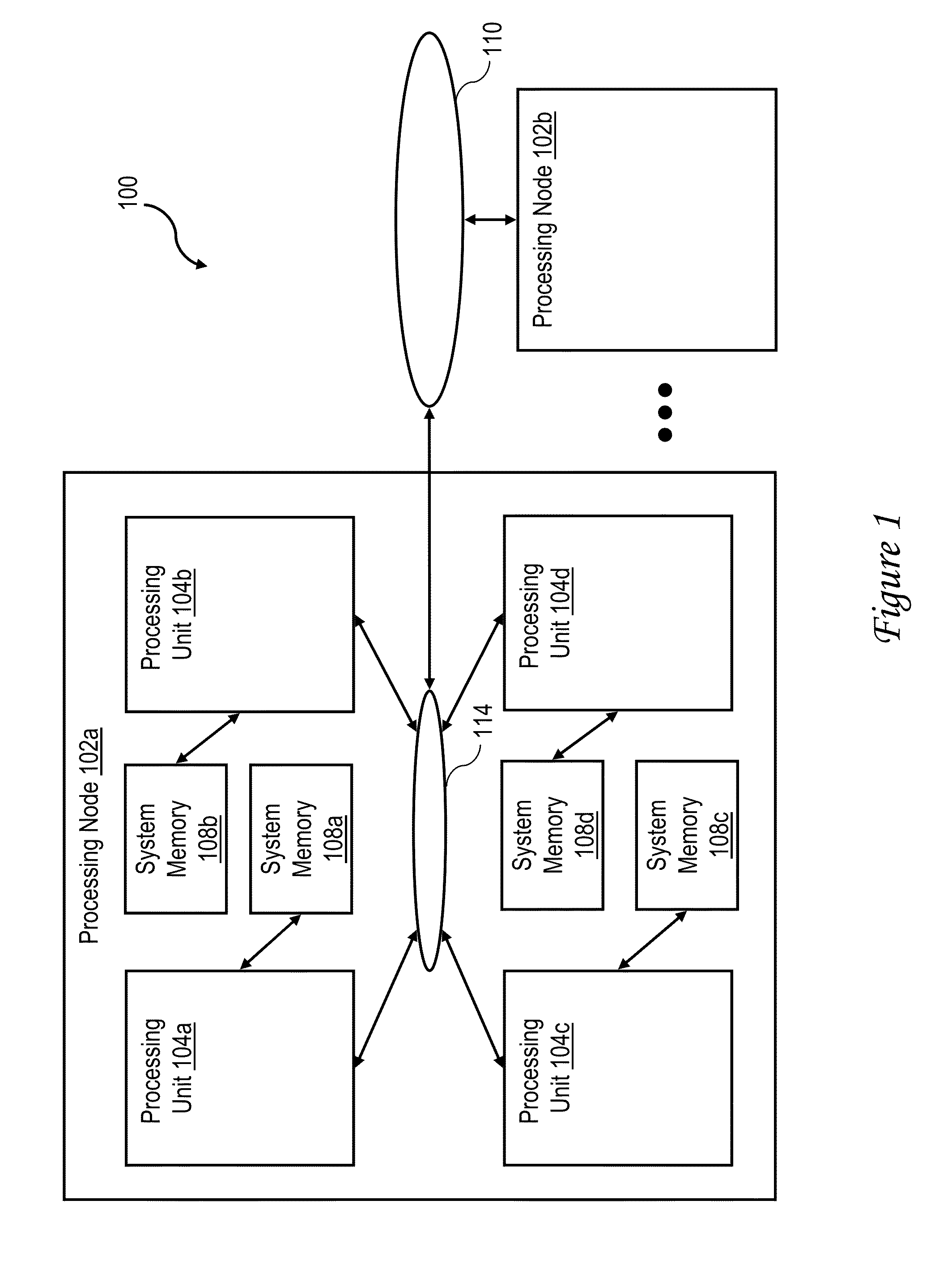

A method and system in a distributed shared-memory data processing system are disclosed for determining a utilization of each of a plurality of coupled processing nodes by one of a plurality of executed threads. The system includes a single operating system being executed simultaneously by a plurality of processors included within each of the processing nodes. The operating system processes one of the plurality of threads utilizing one of the plurality of nodes. During the processing, for each of the nodes, a quantity of times the one of the plurality of threads accesses a shared-memory included with each of the plurality of nodes is determined.

Owner:IBM CORP
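
As a rough illustration of the per-thread accounting described above, the sketch below counts how many times each thread touches each node's shared memory. The `AccessMonitor` class is hypothetical, and the mapping of an address to a node by integer division on a fixed `node_size` is an assumption, not something the abstract specifies.

```python
from collections import Counter, defaultdict


class AccessMonitor:
    """Counts, per thread, how often each node's shared memory is accessed."""

    def __init__(self, node_size):
        self.node_size = node_size
        self.counts = defaultdict(Counter)  # thread id -> Counter(node -> hits)

    def record(self, thread, addr):
        node = addr // self.node_size  # assumed contiguous node layout
        self.counts[thread][node] += 1

    def utilization(self, thread):
        return dict(self.counts[thread])


mon = AccessMonitor(node_size=1024)
for addr in (0, 10, 2048):
    mon.record("t1", addr)
mon.record("t2", 1500)
```

A scheduler could use such counts to run a thread on the node whose memory it touches most.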

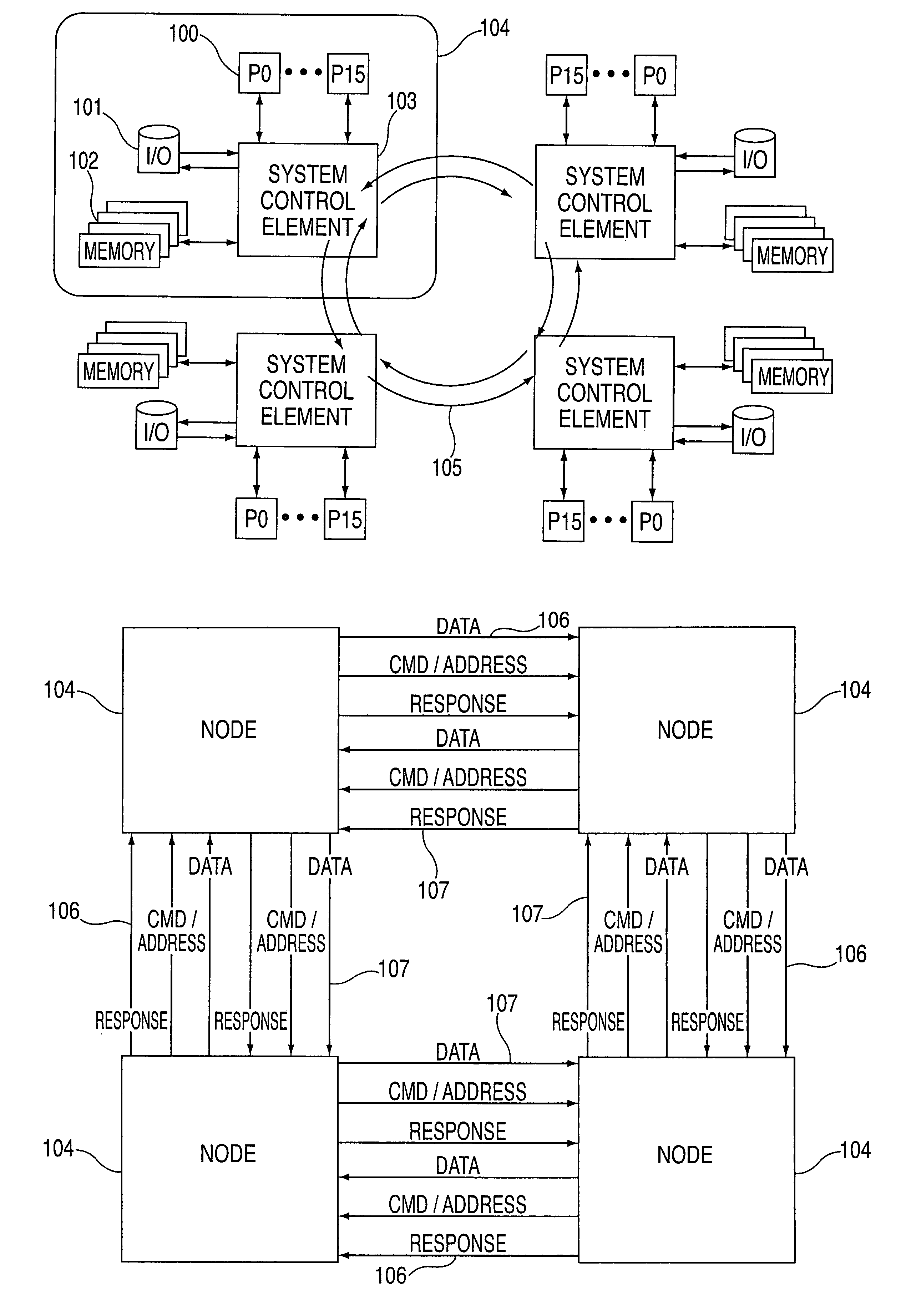

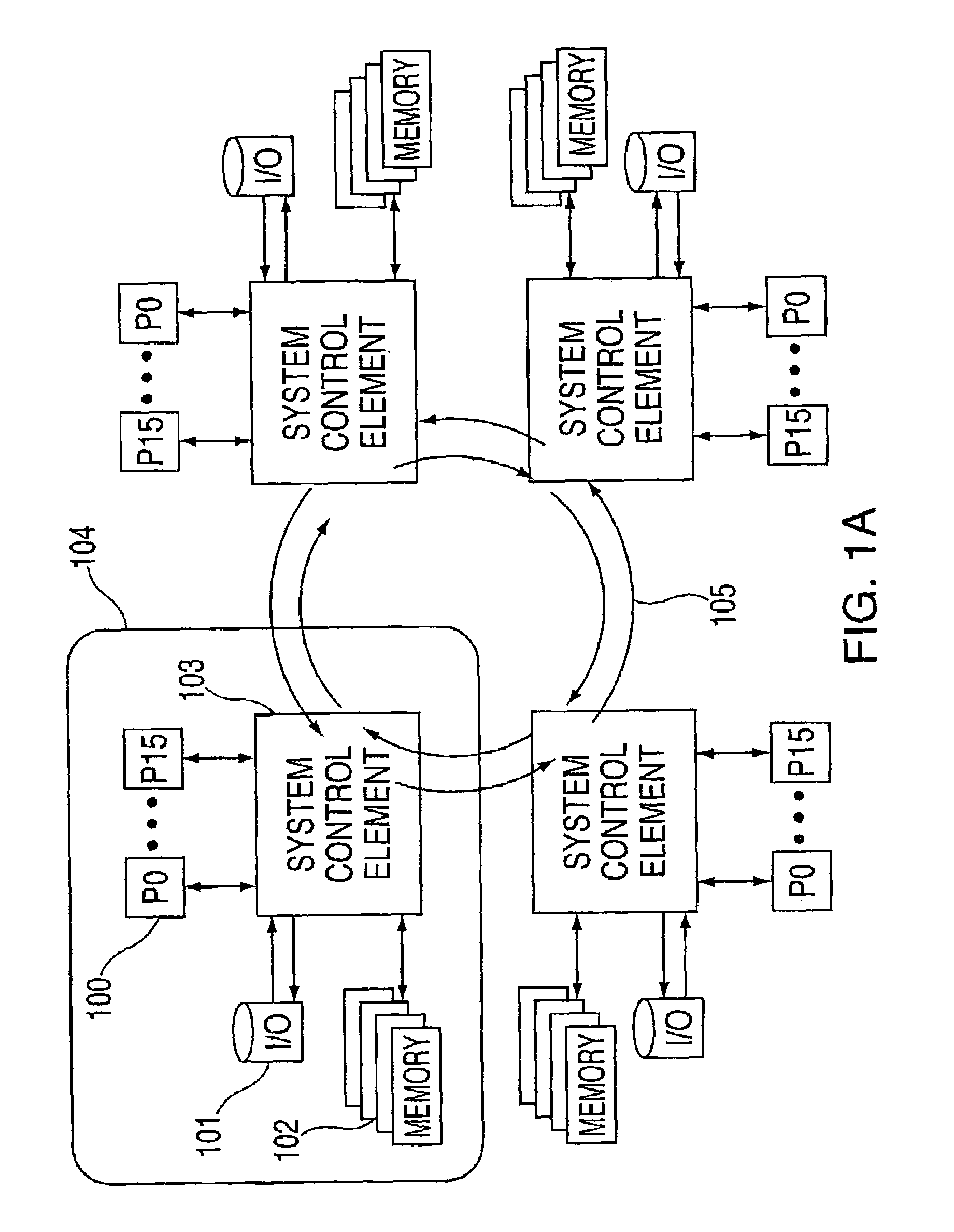

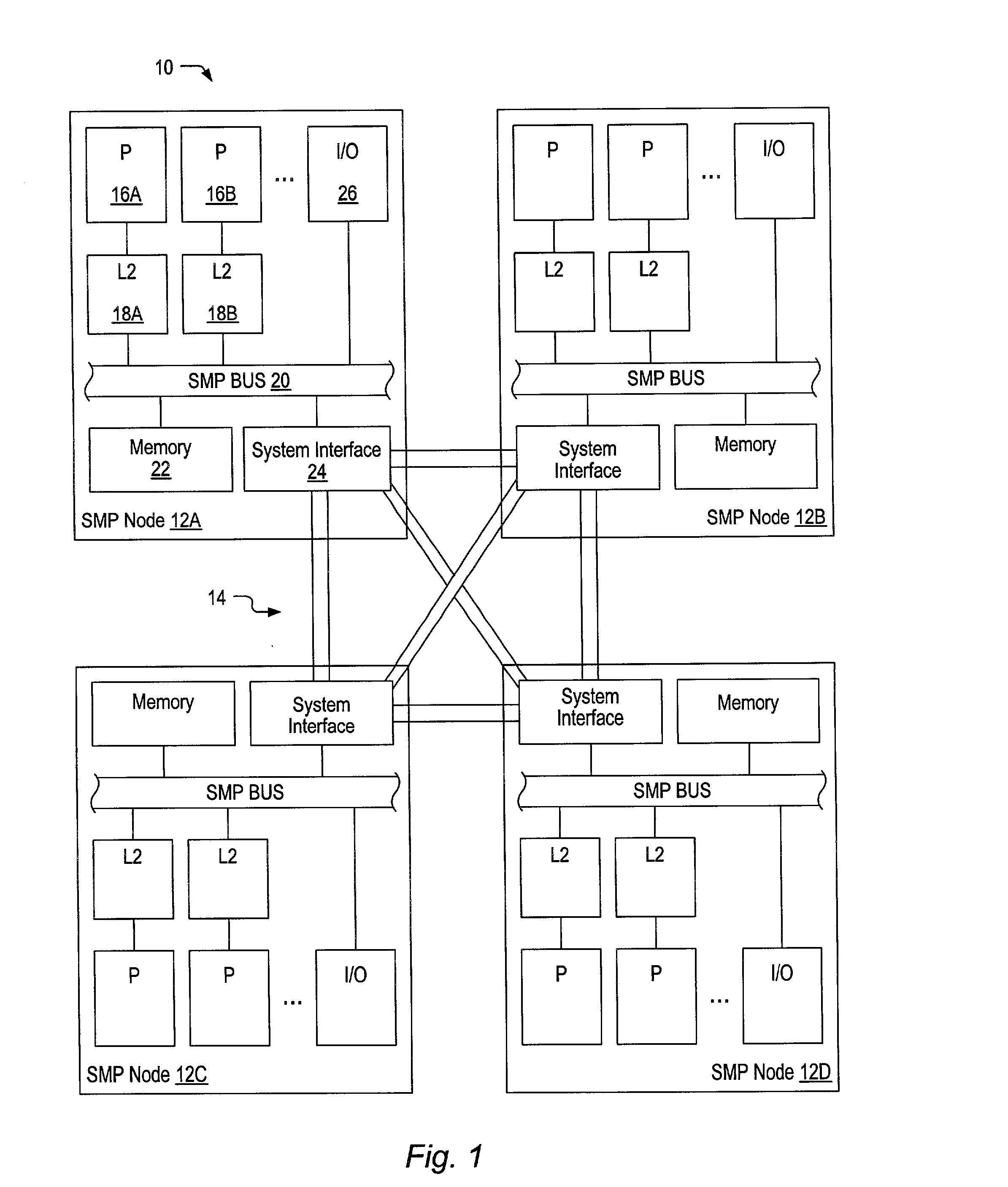

Coherency management for a "switchless" distributed shared memory computer system

Inactive | US7111130B2 | Reduce waiting time | Efficient packaging | Memory addressing/allocation/relocation | Computerized system | Consistency management

A shared memory symmetrical processing system including a plurality of nodes each having a system control element for routing internodal communications. A first ring and a second ring interconnect the plurality of nodes, wherein data in said first ring flows in opposite directions with respect to said second ring. A receiver receives a plurality of incoming messages via the first or second ring and merges a plurality of incoming message responses with a local outgoing message response to provide a merged response. Each of the plurality of nodes includes any combination of the following: at least one processor, cache memory, a plurality of I/O adapters, and main memory. The system control element includes a plurality of controllers for maintaining coherency in the system.

Owner:IBM CORP

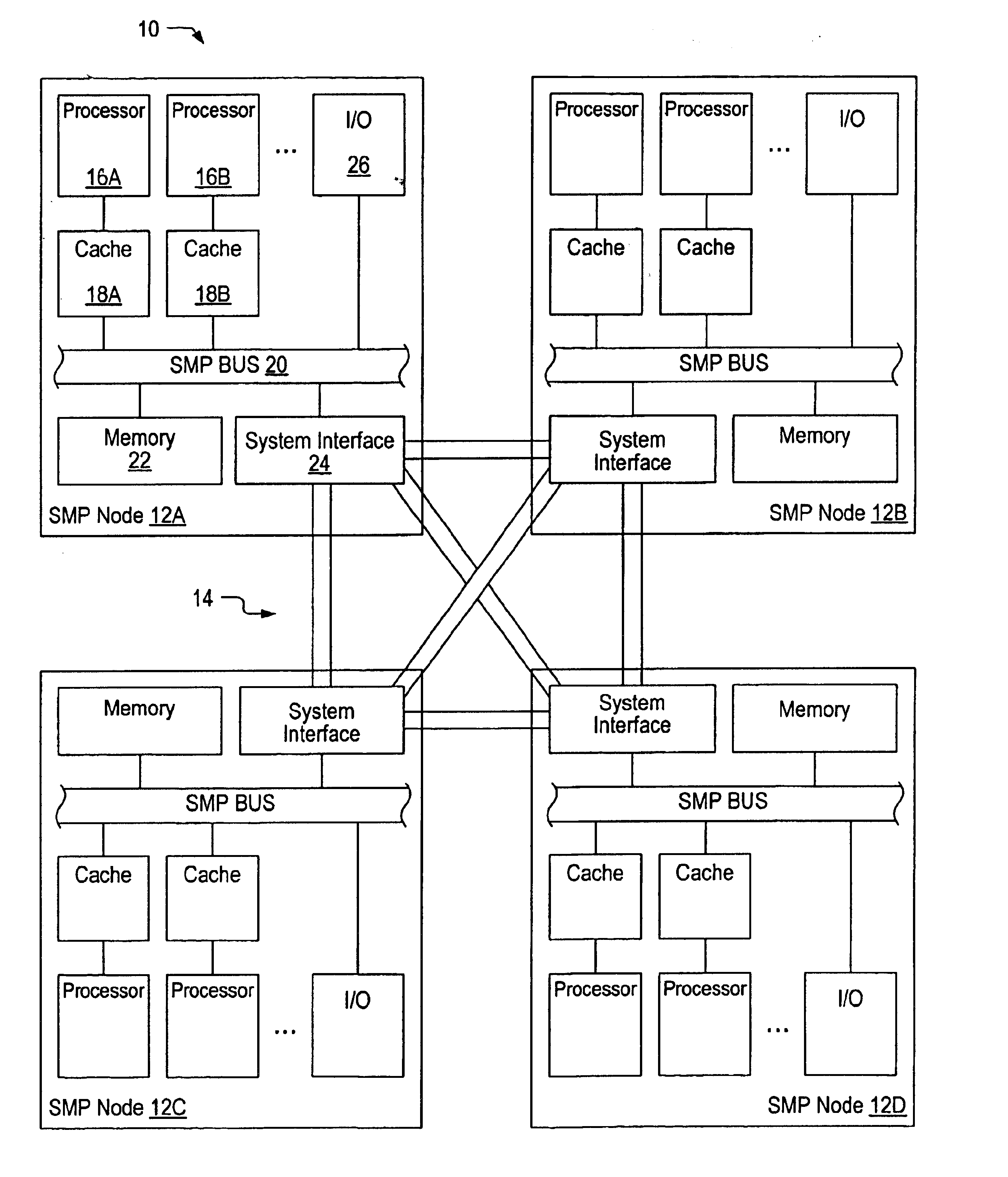

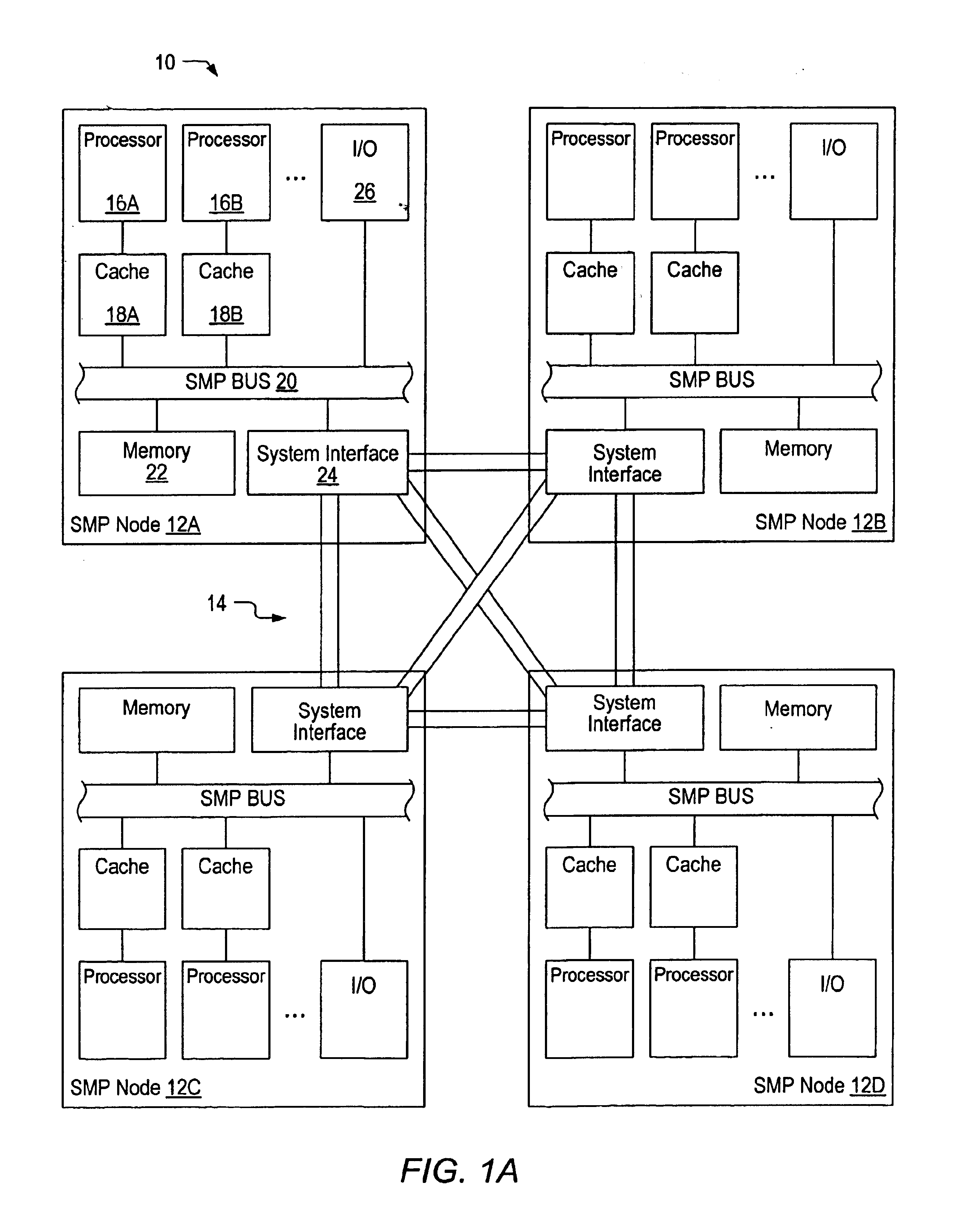

Distributed symmetric multiprocessing computing architecture

Active | US20110125974A1 | Shorten the progress | Memory addressing/allocation/relocation | Digital computer details | Supercomputer | Global address space

Example embodiments of the present invention include systems and methods for implementing a scalable symmetric multiprocessing (shared memory) computer architecture using a network of homogeneous multi-core servers. The level of processor and memory performance achieved is suitable for running applications that currently require cache coherent shared memory mainframes and supercomputers. The architecture combines new operating system extensions with a high-speed network that supports remote direct memory access to achieve an effective global distributed shared memory. A distributed thread model allows a process running in a head node to fork threads in other (worker) nodes that run in the same global address space. Thread synchronization is supported by a distributed mutex implementation. A transactional memory model allows a multi-threaded program to maintain global memory page consistency across the distributed architecture. A distributed file access implementation supports non-contentious file I/O for threads. These and other functions provide a symmetric multiprocessing programming model consistent with standards such as Portable Operating System Interface for Unix (POSIX).

Owner:ANDERSON RICHARD S

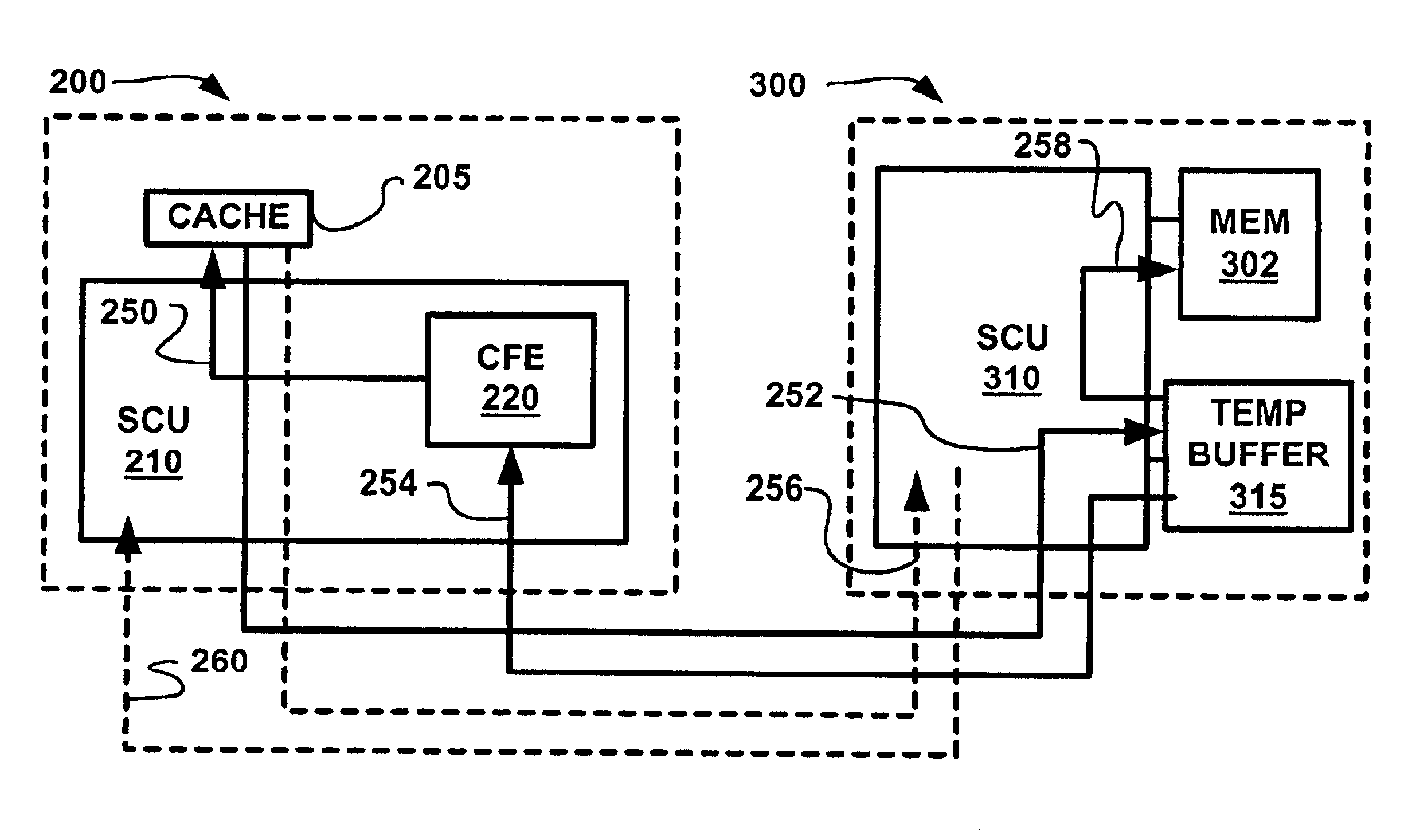

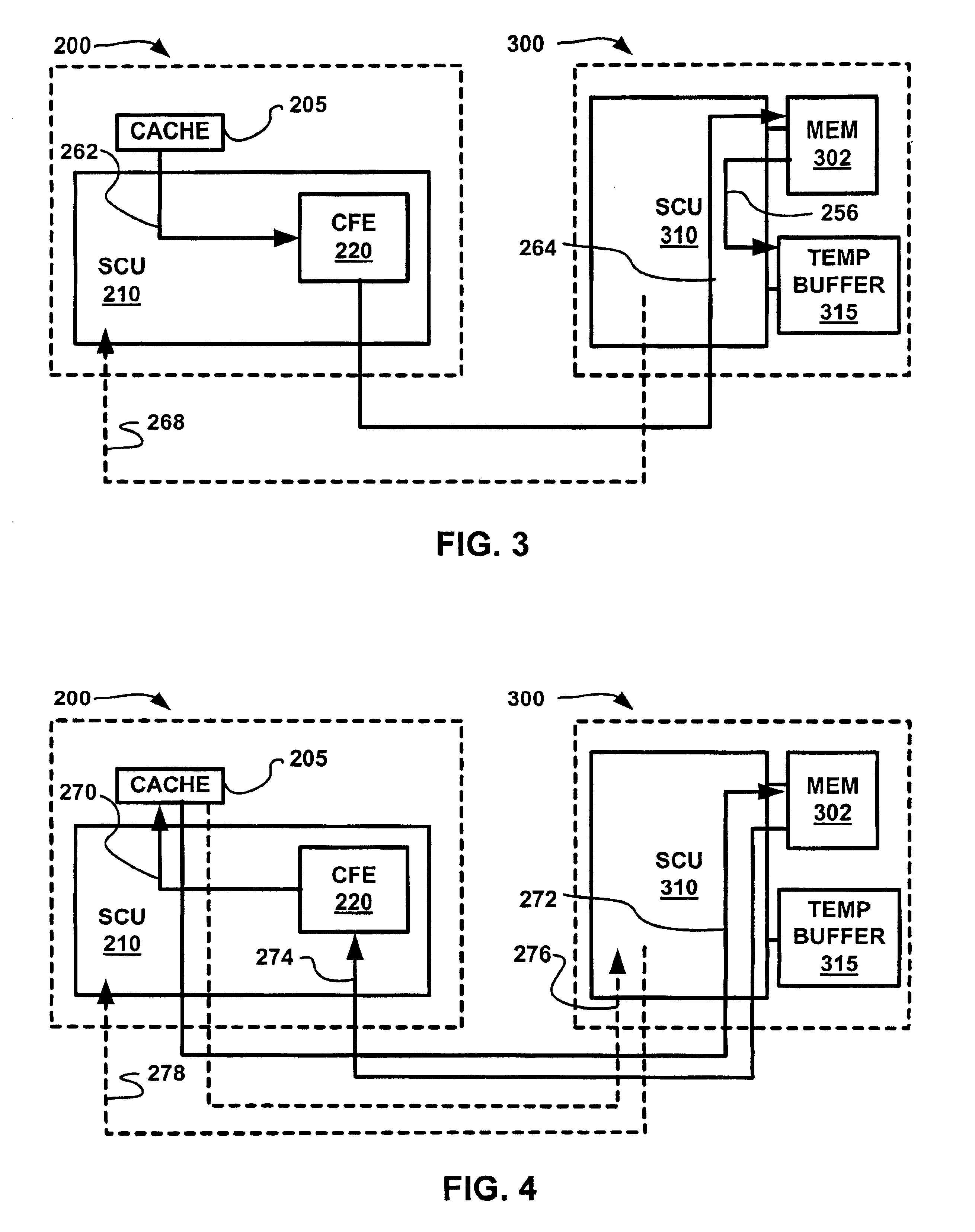

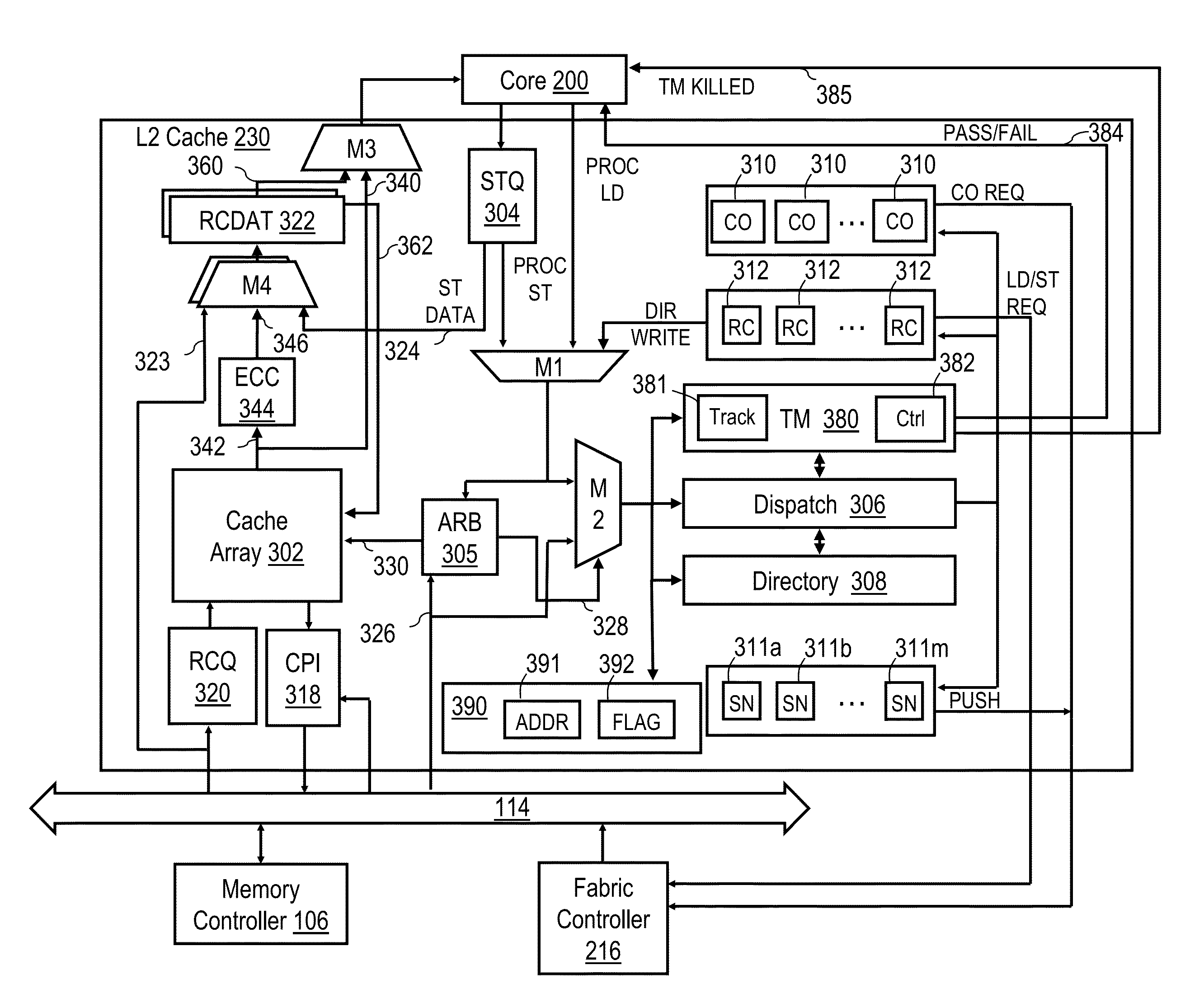

Multi-processor computer system with transactional memory

Inactive | US6880045B2 | Data processing applications | Memory addressing/allocation/relocation | Multi processor | Semantics

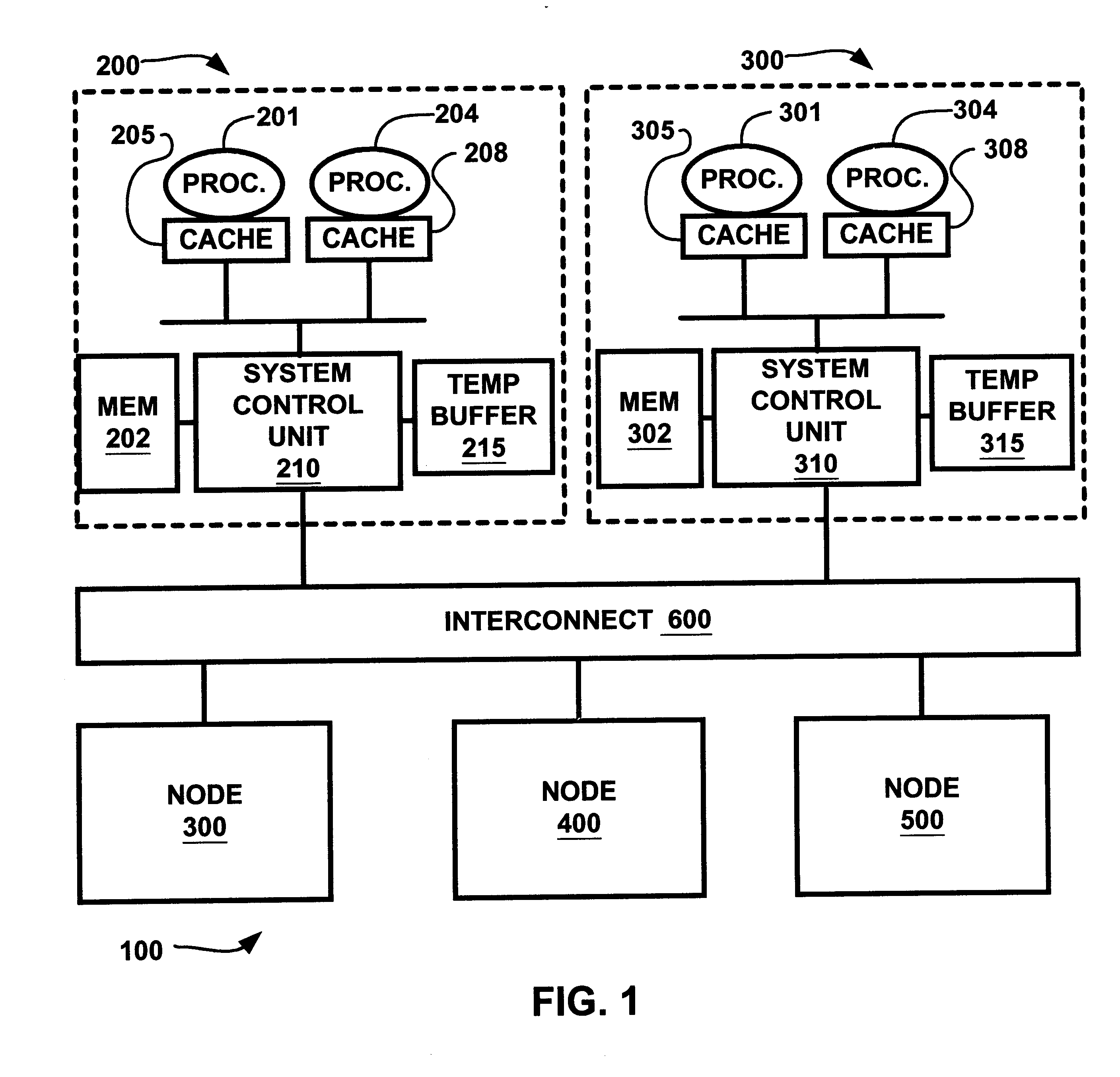

A cache coherent distributed shared memory multi-processor computer system is provided which supports transactional memory semantics. A cache flushing engine and temporary buffer allow selective forced write-backs of dirty cache lines to the home memory. A flush can be performed from the updated cache to the temporary buffer and then to the home memory after confirmation of receipt or from the updated cache to the home memory directly with the temporary buffer holding the old data until confirmation that the home memory contains the update.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

Transactional Processing for Clustered File Systems

Inactive | US20100049718A1 | Reduce file system access | Memory architecture accessing/allocation | Digital data processing details | Data segment | Granularity

Systems and methods for transactional processing within a clustered file system wherein user defined transactions operate on data segments of the file system data. The users are provided with an interface for using a transactional mechanism, namely services for opening, writing and rolling back transactions. A distributed shared memory technology is utilized to facilitate efficient and coherent cache management within the clustered file system based on the granularity of data segments (rather than files).

Owner:IBM CORP

Method and system in a distributed shared-memory data processing system for determining utilization of shared-memory included within nodes by a designated application

Inactive | US6336170B1 | Resource allocation | Memory addressing/allocation/relocation | Data processing system | Operational system

A method and system in a distributed shared-memory data processing system are disclosed having a single operating system being executed simultaneously by a plurality of processors included within a plurality of coupled processing nodes for determining a utilization of each memory location included within a shared-memory included within each of the plurality of nodes by each of the plurality of nodes. The operating system processes a designated application utilizing the plurality of nodes. During the processing, for each of the plurality of nodes, a determination is made of a quantity of times each memory location included within a shared-memory included within each of the plurality of nodes is accessed by each of the plurality of nodes.

Owner:IBM CORP

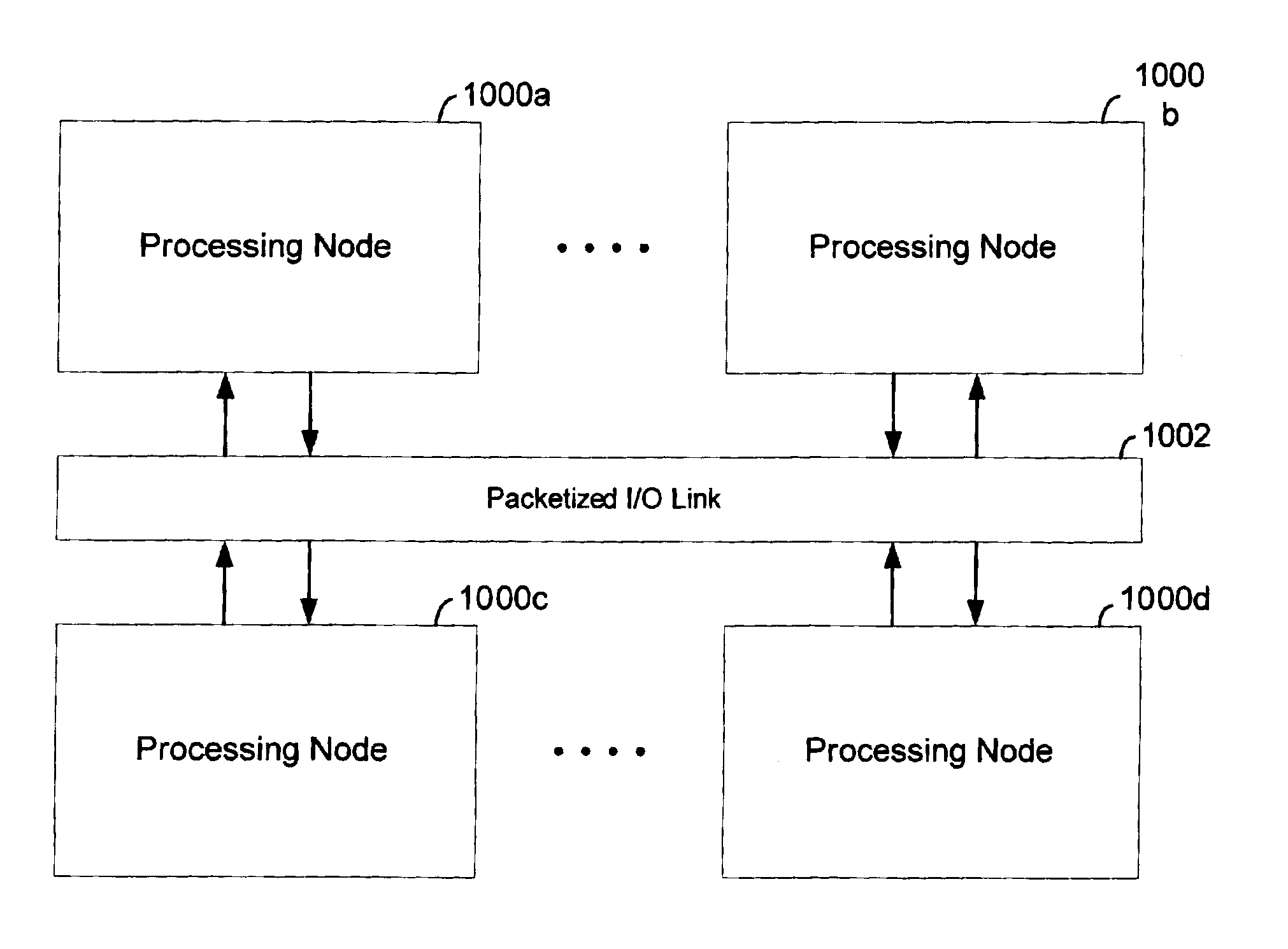

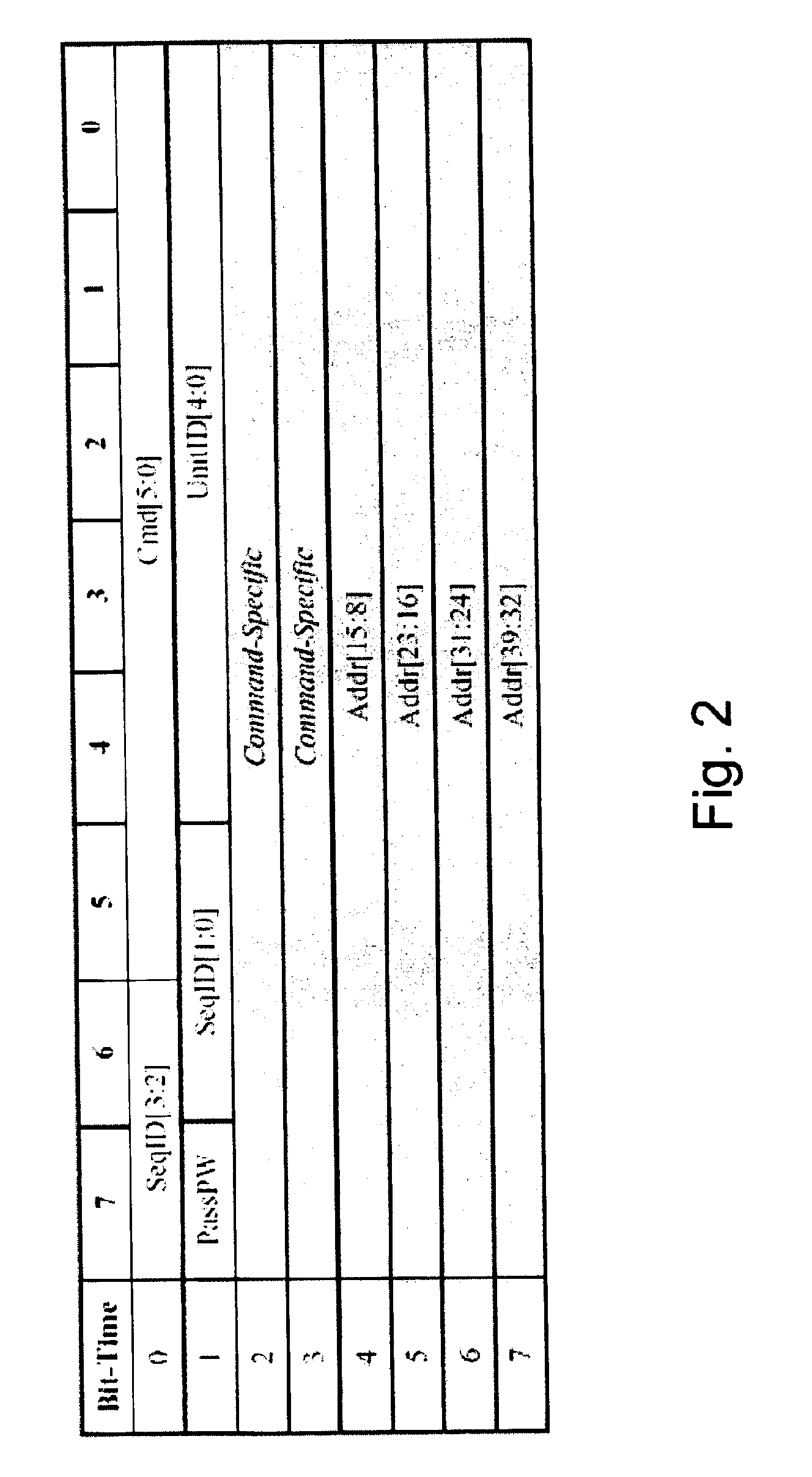

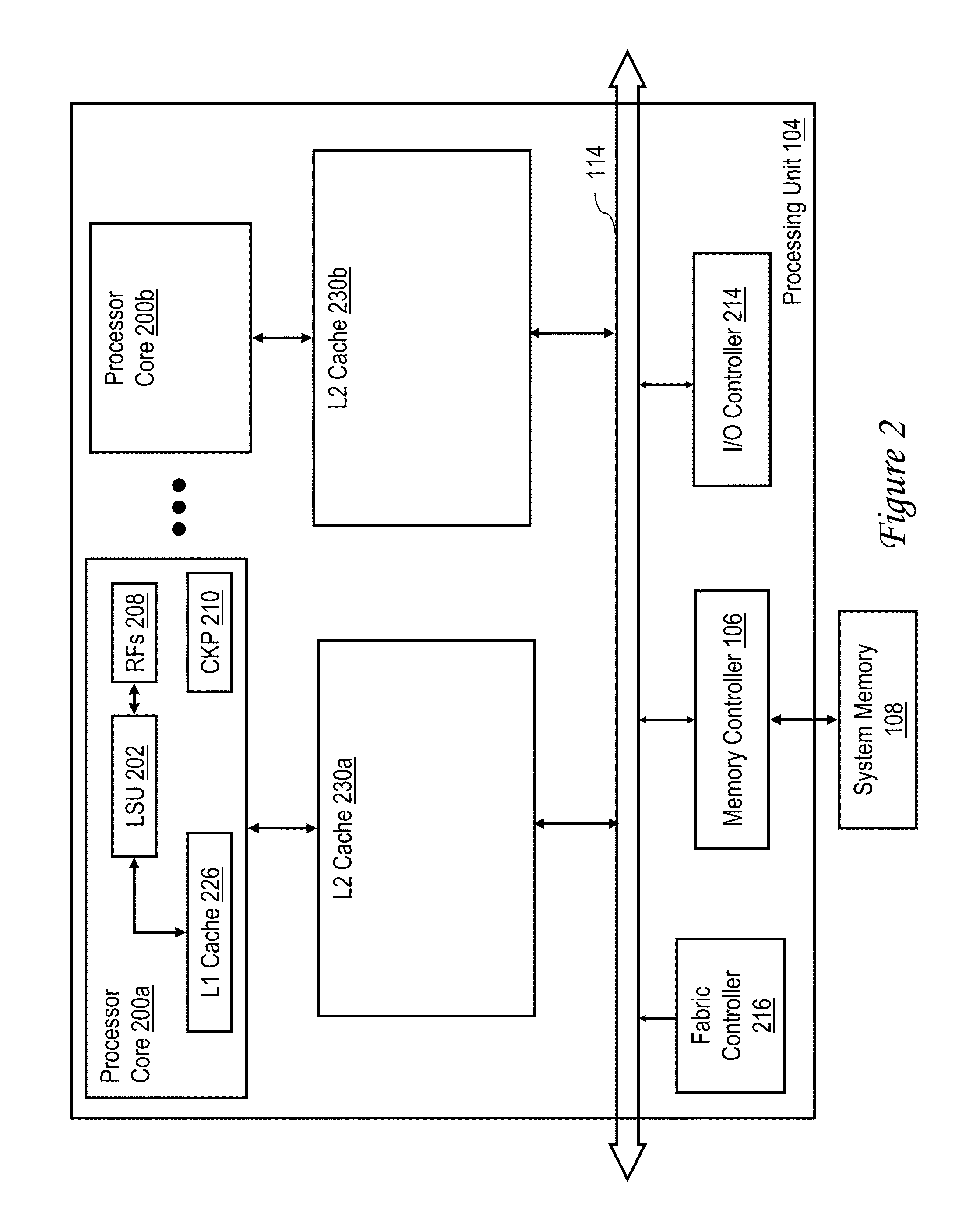

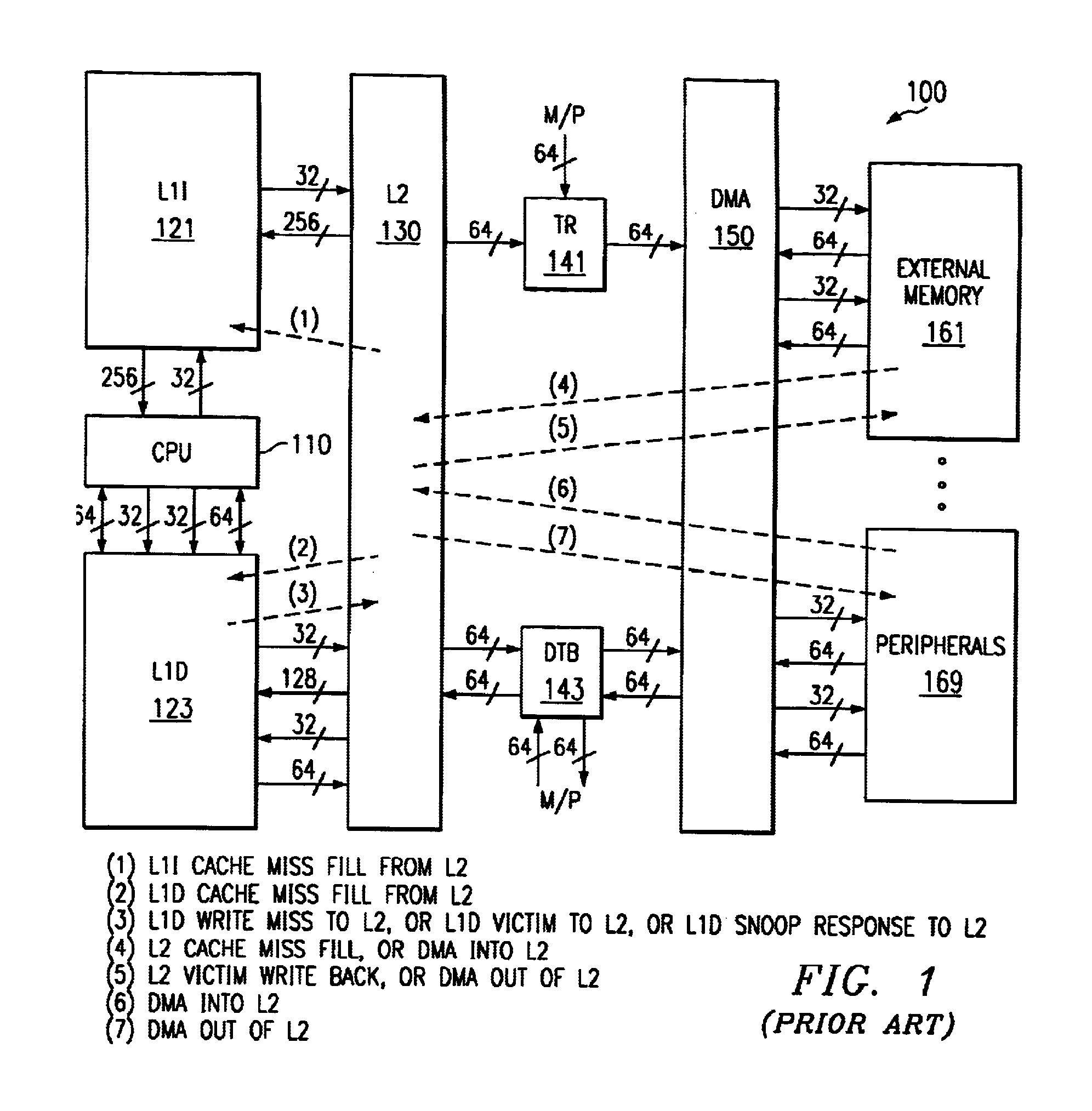

Scalable cache coherent distributed shared memory processing system

A packetized I/O link such as the HyperTransport protocol is adapted to transport memory coherency transactions over the link to support cache coherency in distributed shared memory systems. The I/O link protocol is adapted to include additional virtual channels that can carry command packets for coherency transactions over the link in a format that is acceptable to the I/O protocol. The coherency transactions support cache coherency between processing nodes interconnected by the link. Each processing node may include processing resources that themselves share memory, such as a symmetrical multiprocessor configuration. In this case, coherency will have to be maintained at both the intranode level and the internode level. A remote line directory is maintained by each processing node so that it can track the state and location of all of the lines from its local memory that have been provided to other remote nodes. A node controller initiates transactions over the link in response to local transactions initiated within itself, and initiates transactions over the link based on local transactions initiated within itself. Flow control is provided for each of the coherency virtual channels either by software through credits or through a buffer free command packet that is sent to a source node by a target node indicating the availability of virtual channel buffering for that channel.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
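
The flow-control idea at the end of this abstract can be illustrated with a generic credit scheme: the source may send on a virtual channel only while it holds a credit, and the target returns a credit when it frees a buffer. This is a generic sketch, not the HyperTransport packet format; the `VirtualChannel` class and the packet names are invented for illustration.

```python
from collections import deque


class VirtualChannel:
    """Credit-based flow control for one virtual channel."""

    def __init__(self, credits):
        self.credits = credits  # free buffer slots at the target
        self.target_buffer = deque()

    def send(self, packet):
        """Source side: transmit only if the target has a free buffer."""
        if self.credits == 0:
            return False
        self.credits -= 1
        self.target_buffer.append(packet)
        return True

    def consume(self):
        """Target side: drain one packet and hand a credit back."""
        packet = self.target_buffer.popleft()
        self.credits += 1  # stands in for the "buffer free" notification
        return packet


vc = VirtualChannel(credits=2)
results = [vc.send(p) for p in ("probe", "ack", "fill")]
drained = vc.consume()
retry = vc.send("fill")  # succeeds now that a buffer has been freed
```

Keeping a separate credit count per virtual channel prevents one class of coherency traffic from blocking another.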

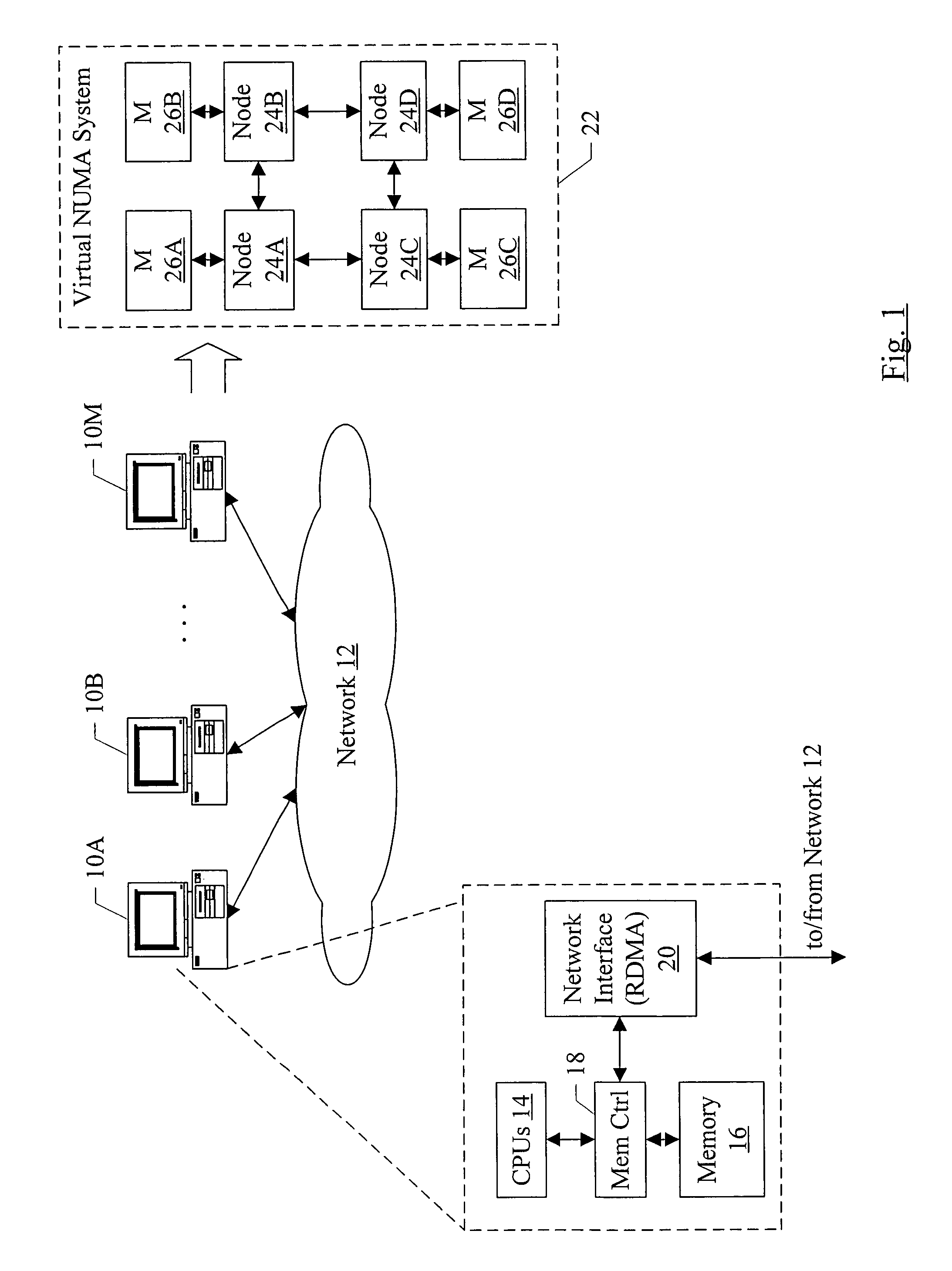

Supporting a weak ordering memory model for a virtual physical address space that spans multiple nodes

Inactive | US7702743B1 | Memory architecture accessing/allocation | Digital computer details | Computerized system | Ethernet

In one embodiment, a virtual NUMA system may be formed from multiple computer systems coupled to a network such as InfiniBand, Ethernet, etc. Each computer includes one or more software modules which present the resources of the computers as a virtual NUMA machine. The virtual machine is a non-uniform memory access (NUMA) machine comprising a plurality of nodes, each node having memory that is part of a distributed shared memory. Additionally, the virtual machine is coherent with a weakly ordered memory model. When executed in a current owner node of a first block in response to an ownership transfer request from a requesting node of the plurality of nodes for the first block, the software modules perform a synchronization operation if the first block has been modified in the current owner node.

Owner:SYMANTEC OPERATING CORP

Rewind only transactions in a data processing system supporting transactional storage accesses

Active | US20140040551A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data processing system | Parallel computing

In a multiprocessor data processing system having a distributed shared memory system, a memory transaction that is a rewind-only transaction (ROT) and that includes one or more transactional memory access instructions and a transactional abort instruction is executed. In response to execution of the one or more transactional memory access instructions, one or more memory accesses to the distributed shared memory system indicated by the one or more transactional memory access instructions are performed. In response to execution of the transactional abort instruction, execution results of the one or more transactional memory access instructions are discarded and control is passed to a fail handler.

Owner:IBM CORP
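
The abort behaviour described above (discard the transaction's results, then pass control to a fail handler) is a hardware mechanism, but a loose software analogy may help. In the sketch below, stores are buffered privately and applied only if the body finishes without aborting. The names `run_rot` and `TransactionAbort`, and the use of a dict as memory, are all assumptions.

```python
class TransactionAbort(Exception):
    """Stands in for the transactional abort instruction."""


def run_rot(memory, body, fail_handler):
    """Run `body` against a private write buffer; commit only on success."""
    writes = {}

    def store(addr, value):
        writes[addr] = value

    def load(addr):
        # A transaction sees its own buffered stores first.
        return writes.get(addr, memory.get(addr, 0))

    try:
        body(load, store)
    except TransactionAbort:
        return fail_handler()  # buffered stores are simply discarded
    memory.update(writes)
    return "committed"


def increment(load, store):
    store(0, load(0) + 1)


def overwrite_then_abort(load, store):
    store(0, 99)
    raise TransactionAbort()


mem = {0: 1}
r1 = run_rot(mem, increment, lambda: "failed")
r2 = run_rot(mem, overwrite_then_abort, lambda: "failed")
```

After both calls, memory holds the result of the committed increment only; the aborted store of 99 never becomes visible.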

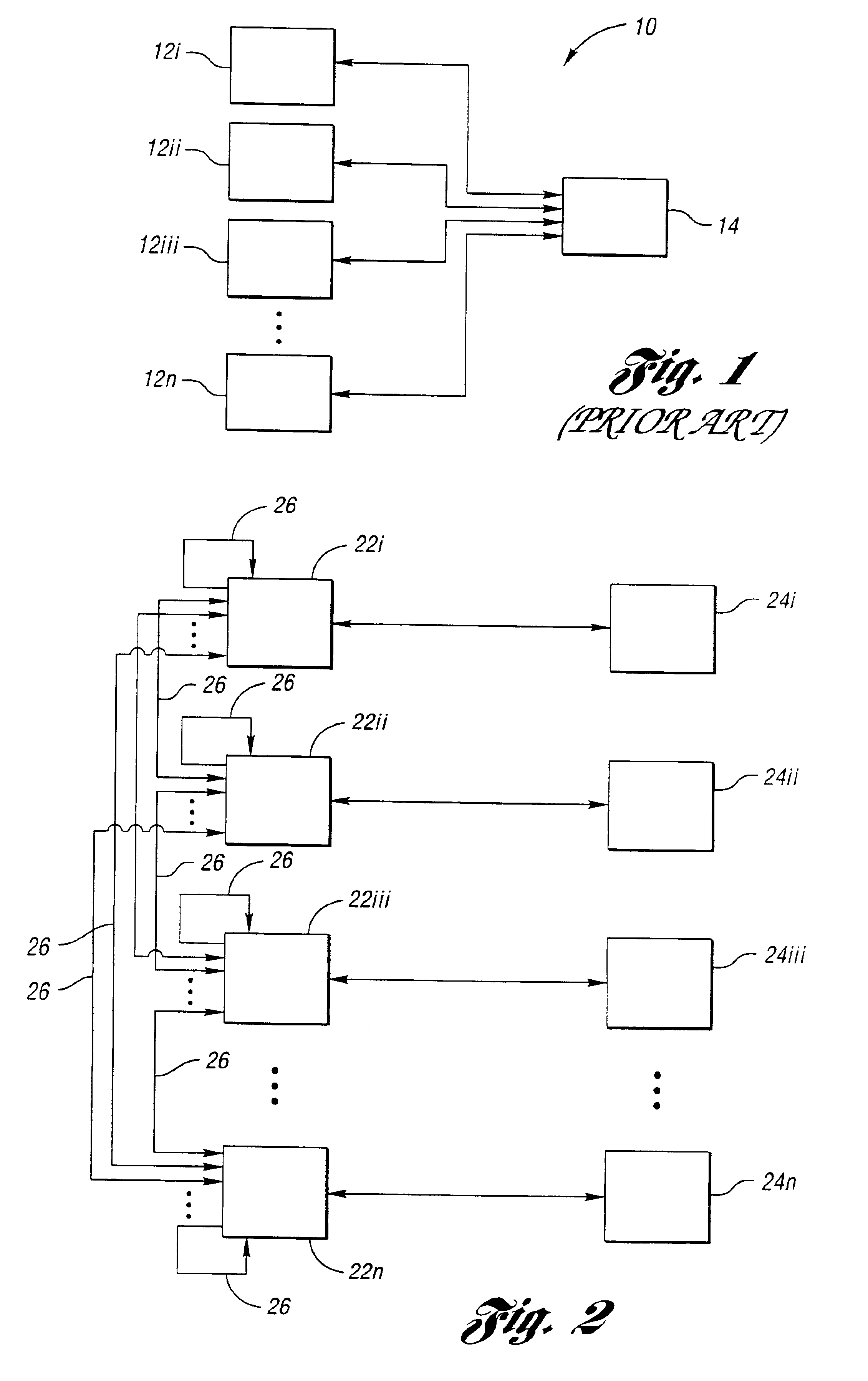

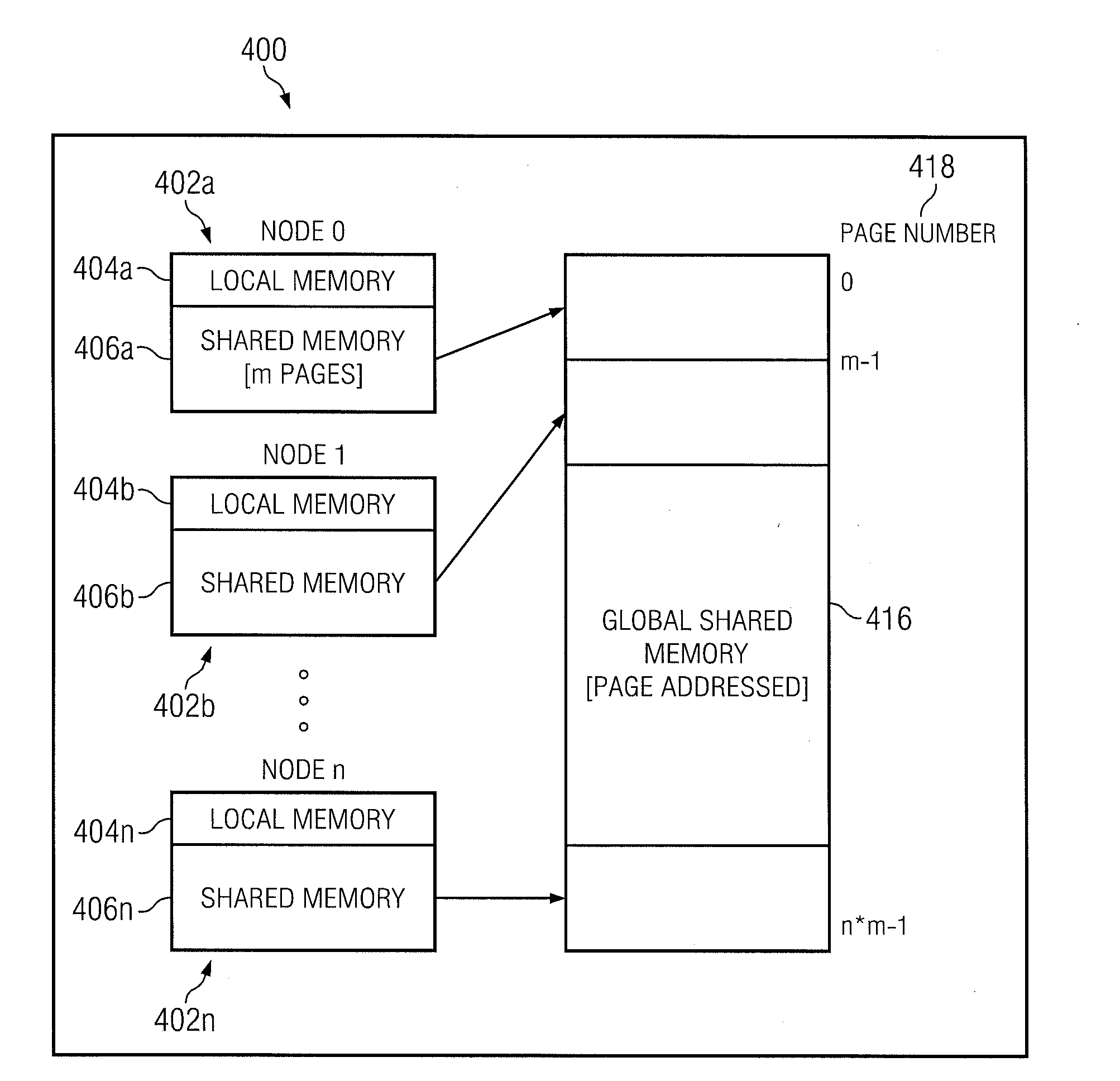

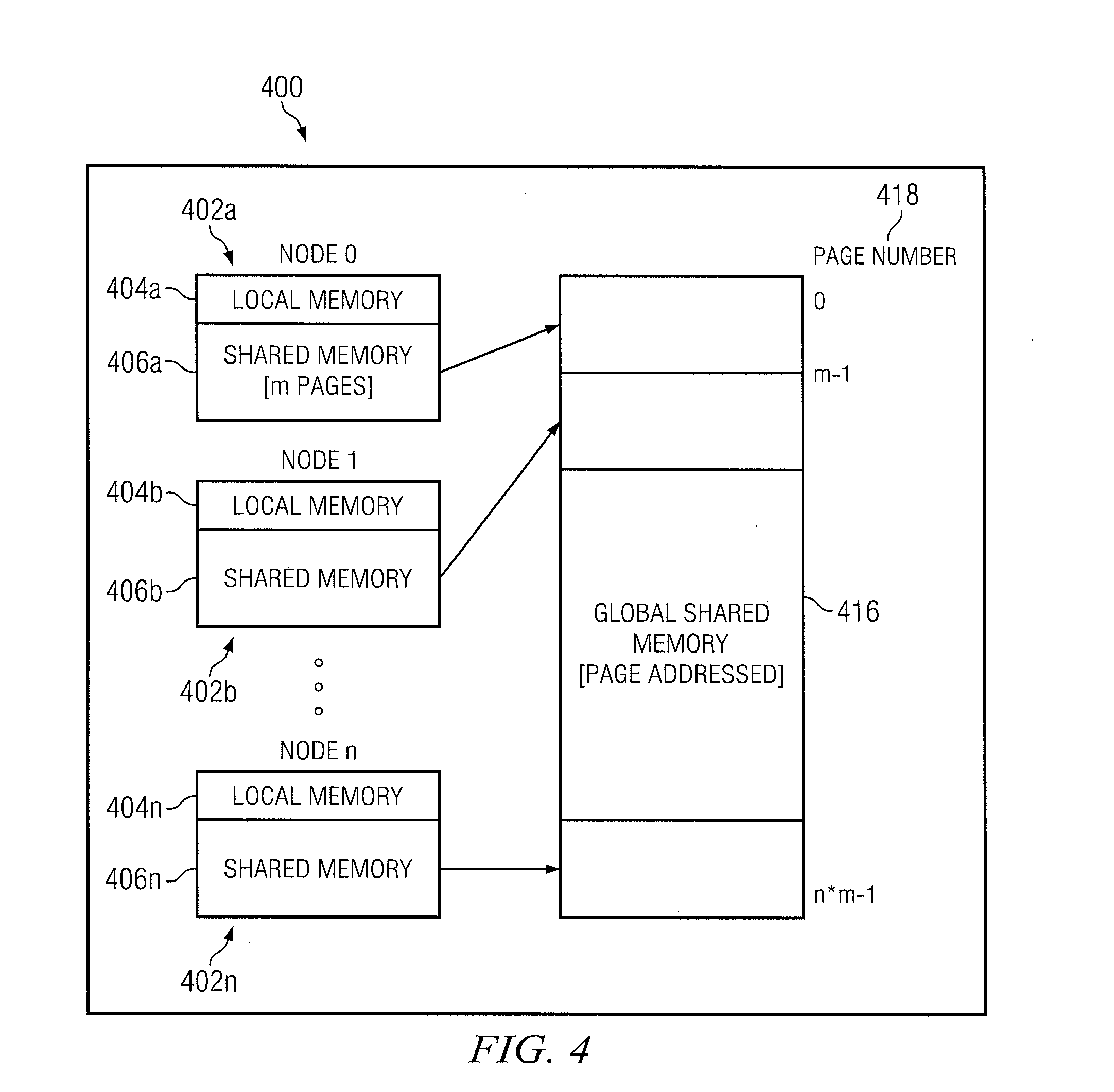

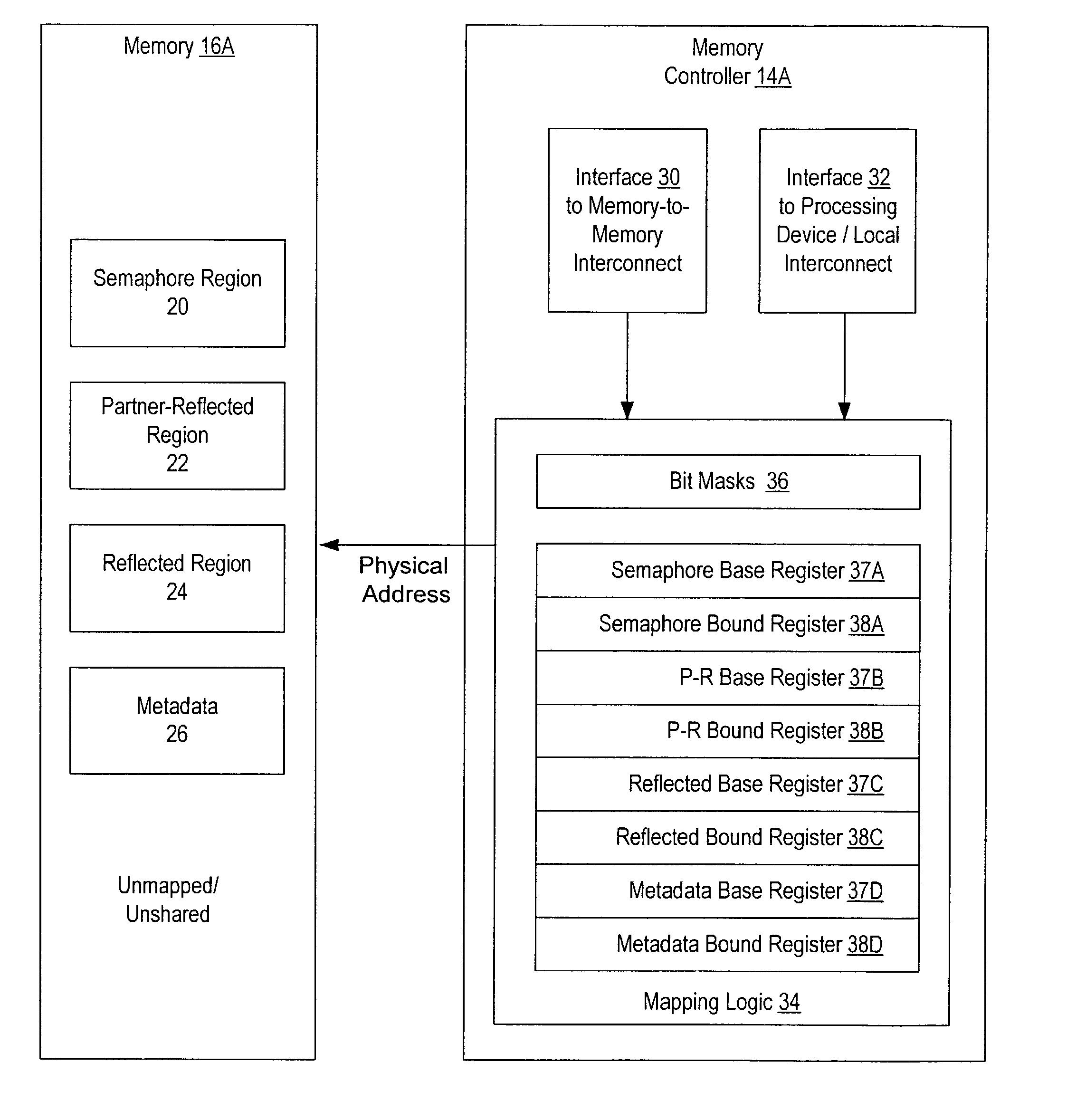

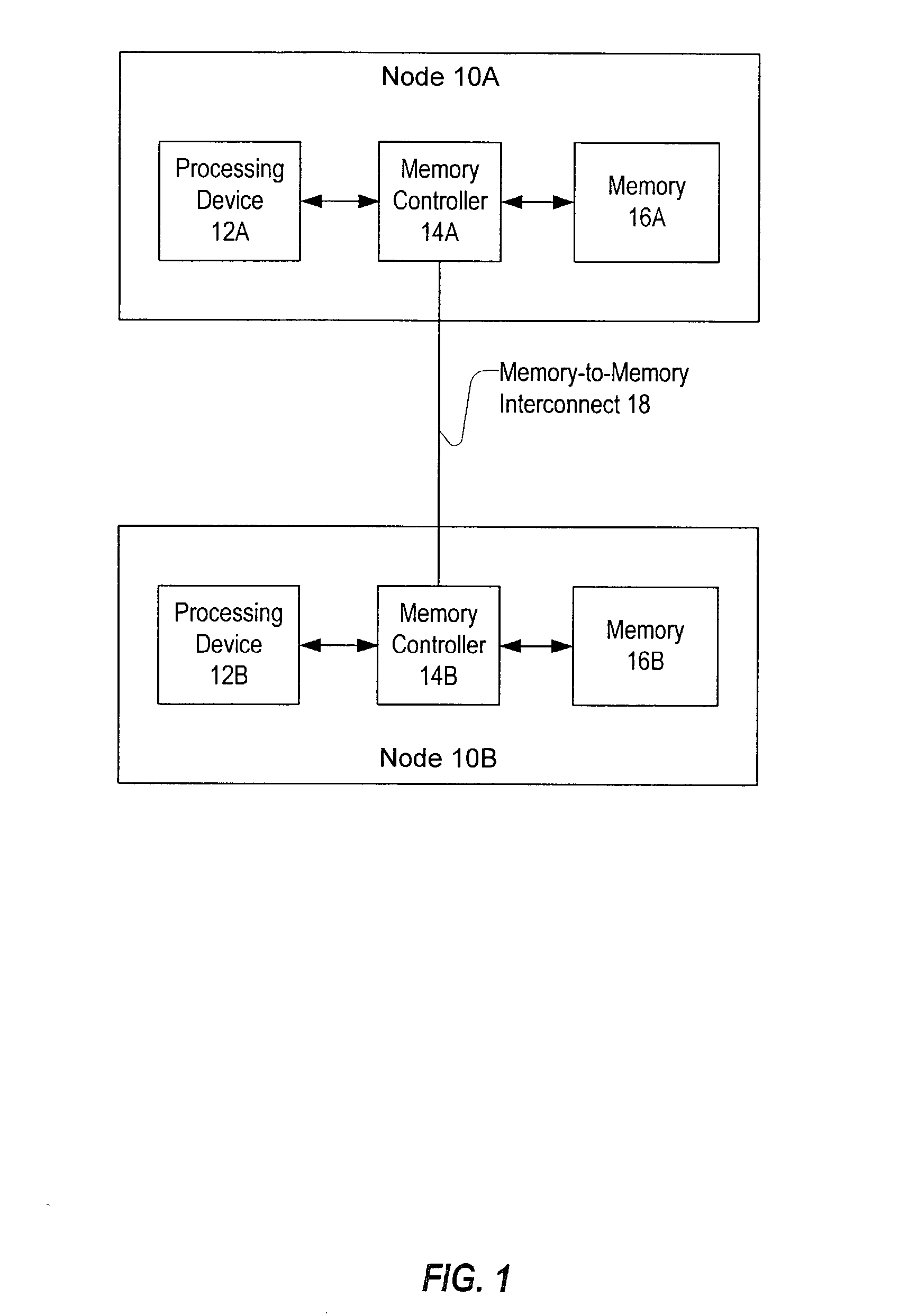

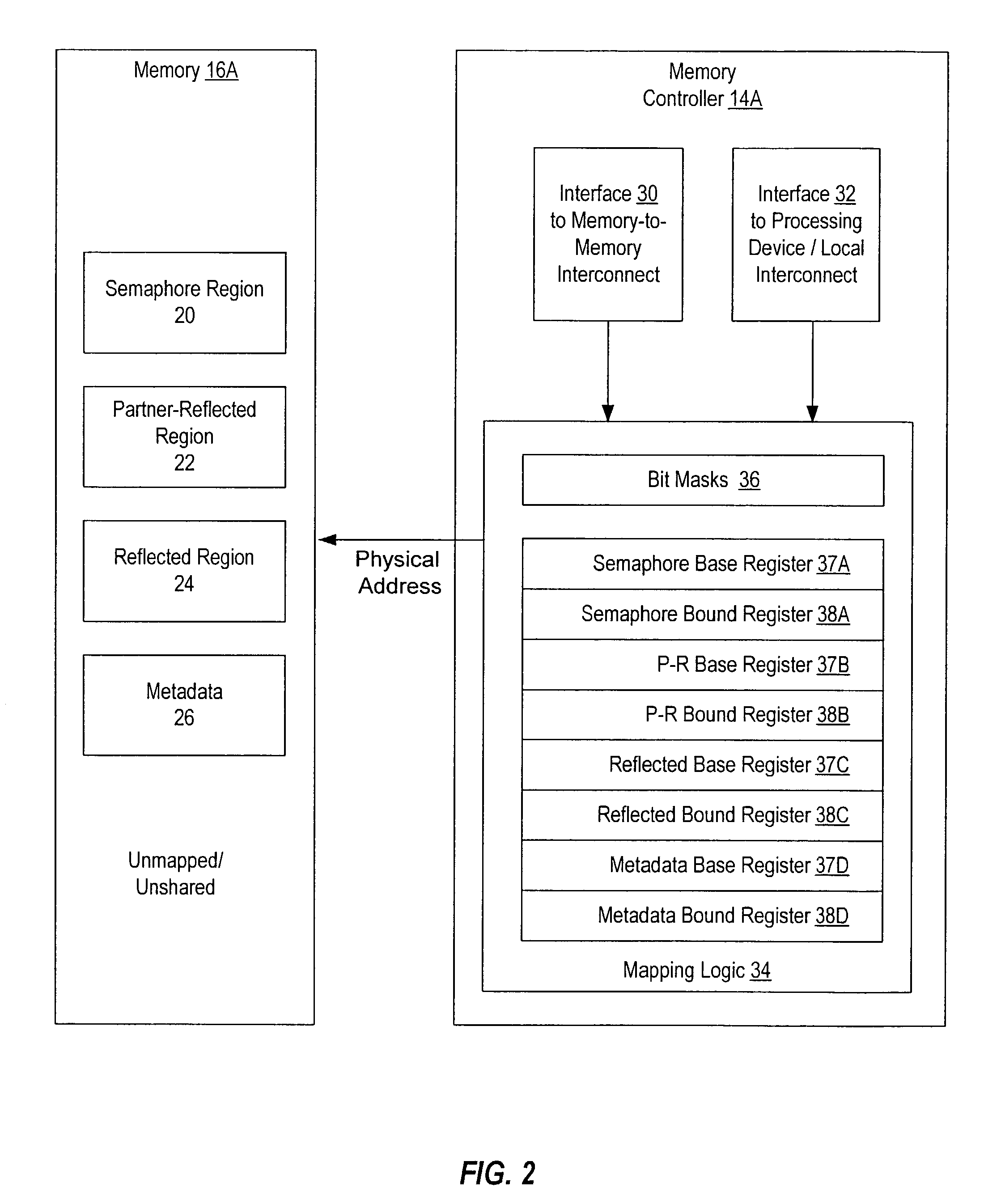

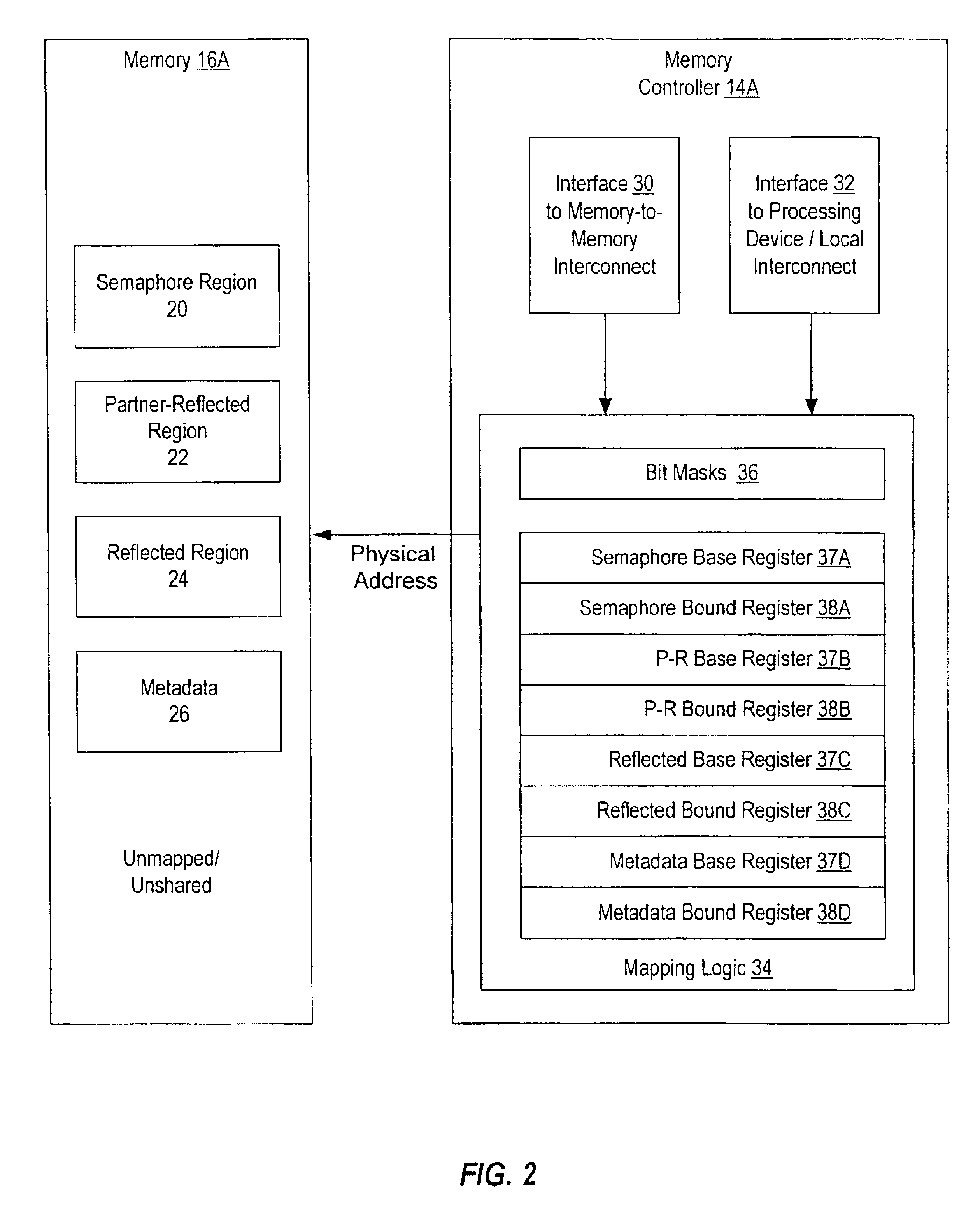

System and method for implementing shared memory regions in distributed shared memory systems

Active | US20040117579A1 | Memory addressing/allocation/relocation | Digital computer details | Shared memory | Distributed shared memory

Various embodiments of systems and methods for implementing shared memory regions in a distributed shared memory system may involve implementing several different shared memory regions in each distributed shared memory node. Each node may reflect write access requests targeting those shared memory regions to one or more other nodes, depending on which shared region is targeted (e.g., requests targeting one region may be reflected to a single other node while requests targeting other regions may be reflected to more than one other node). A node's completion of the requested write access locally may be dependent on the completion of the write access in the other nodes, depending on which shared memory region is targeted.

Owner:ORACLE INT CORP

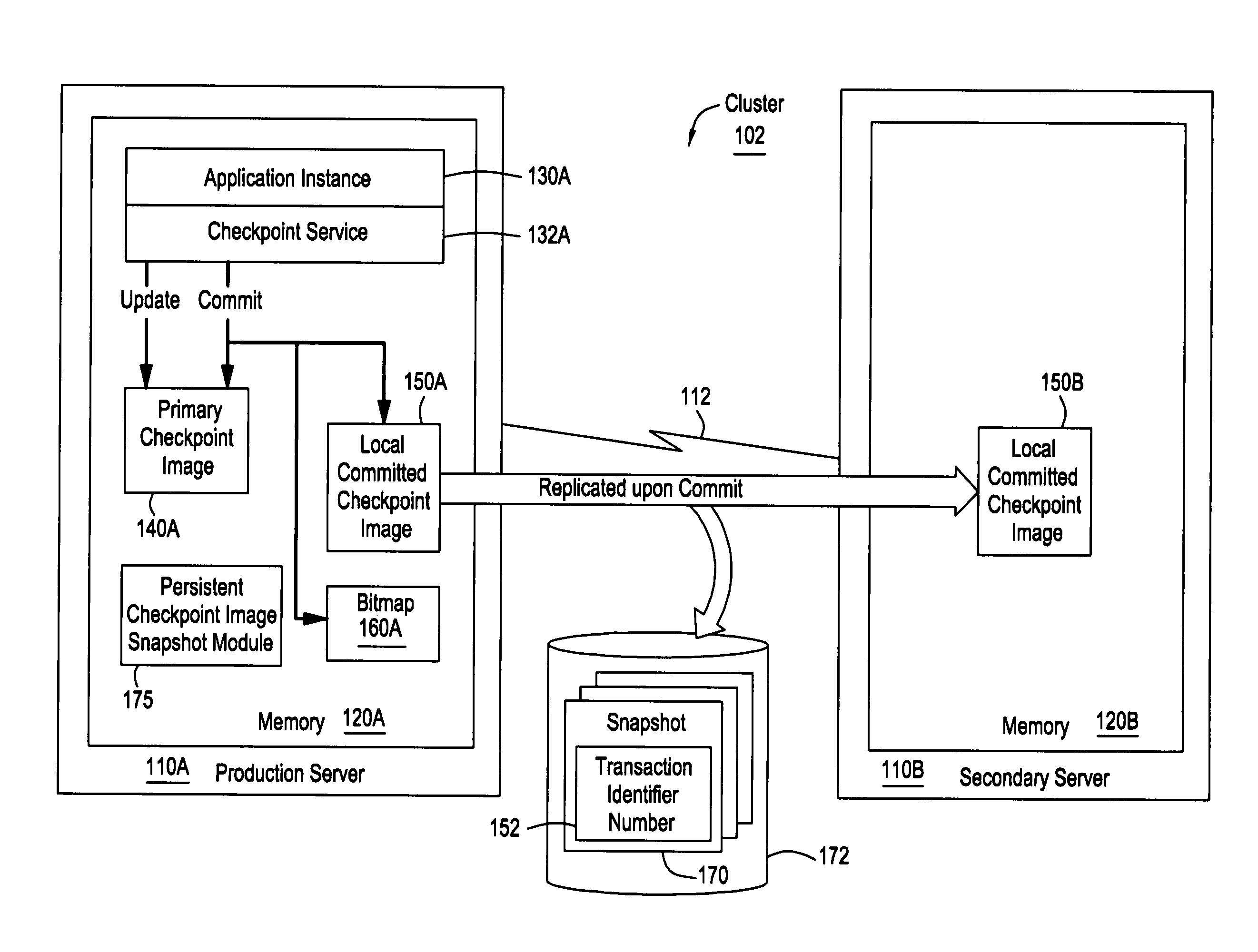

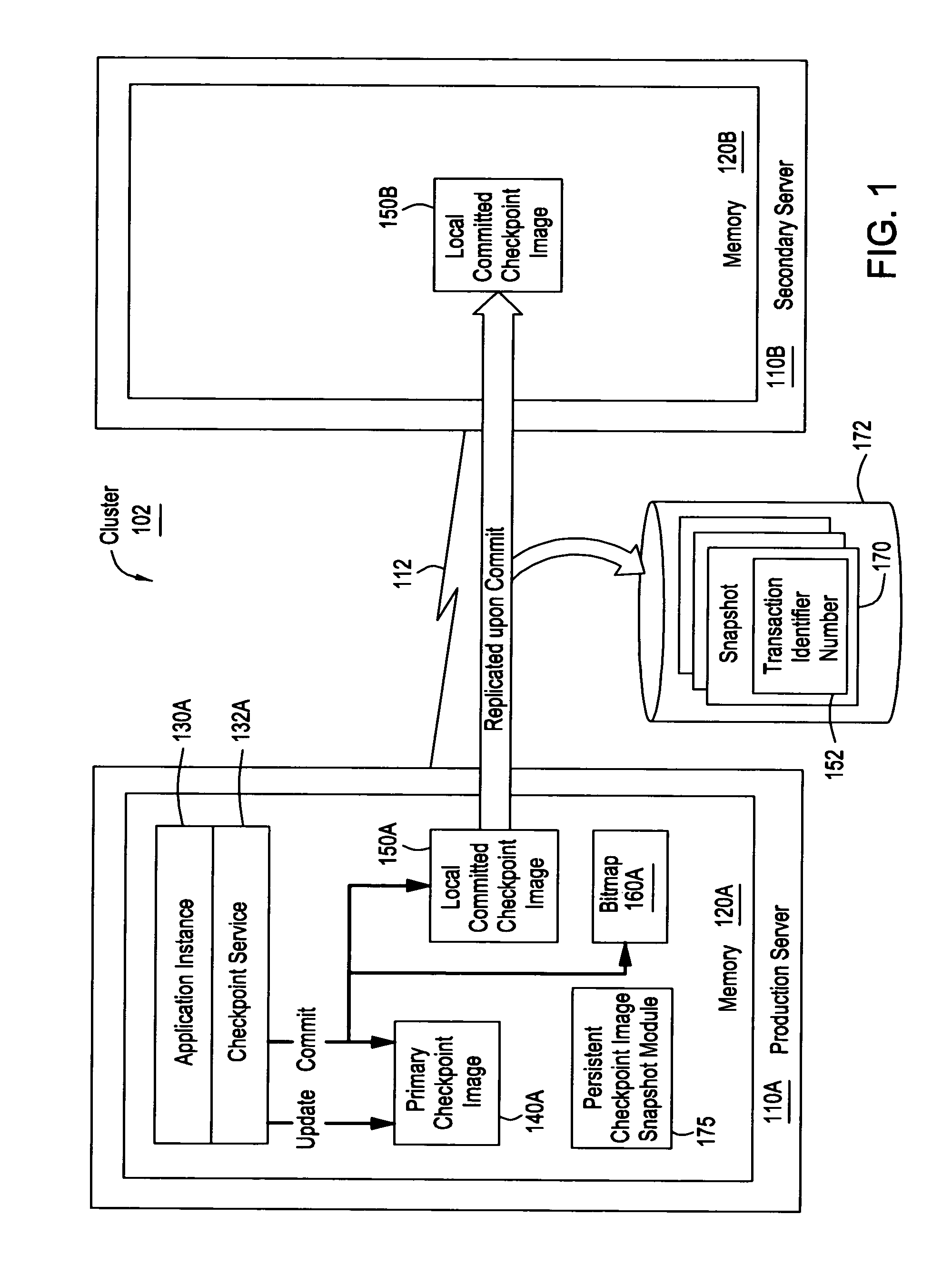

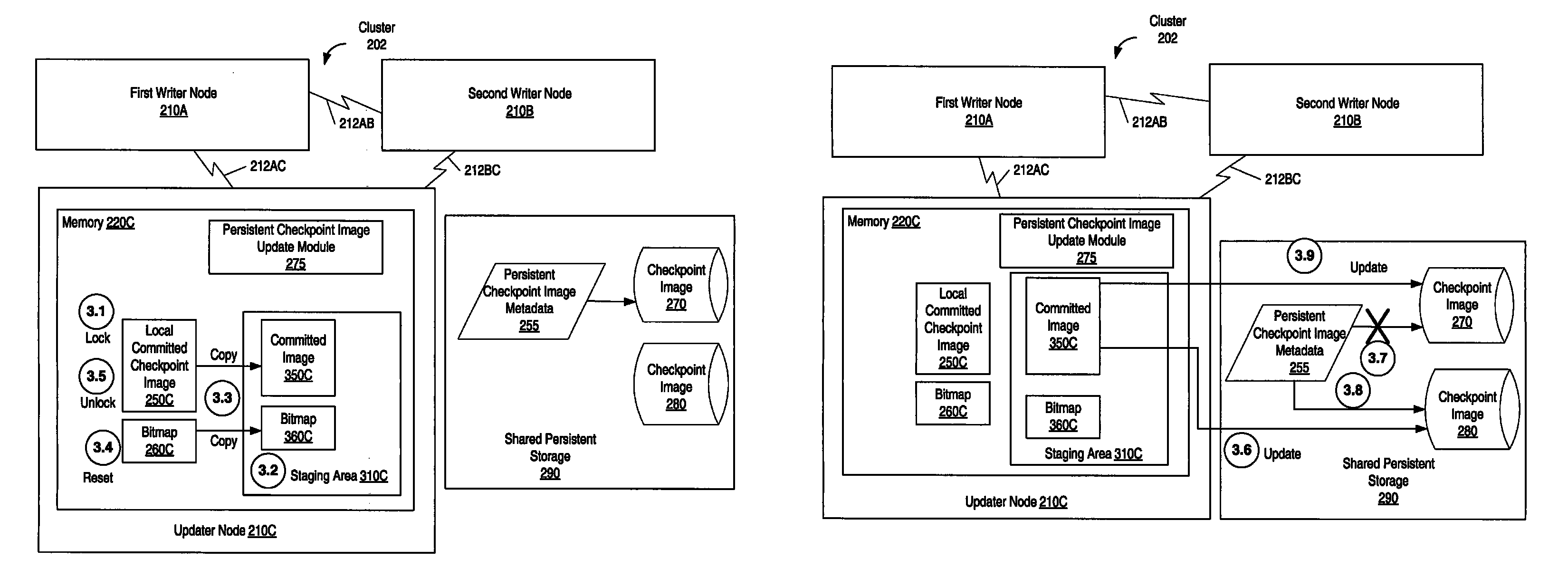

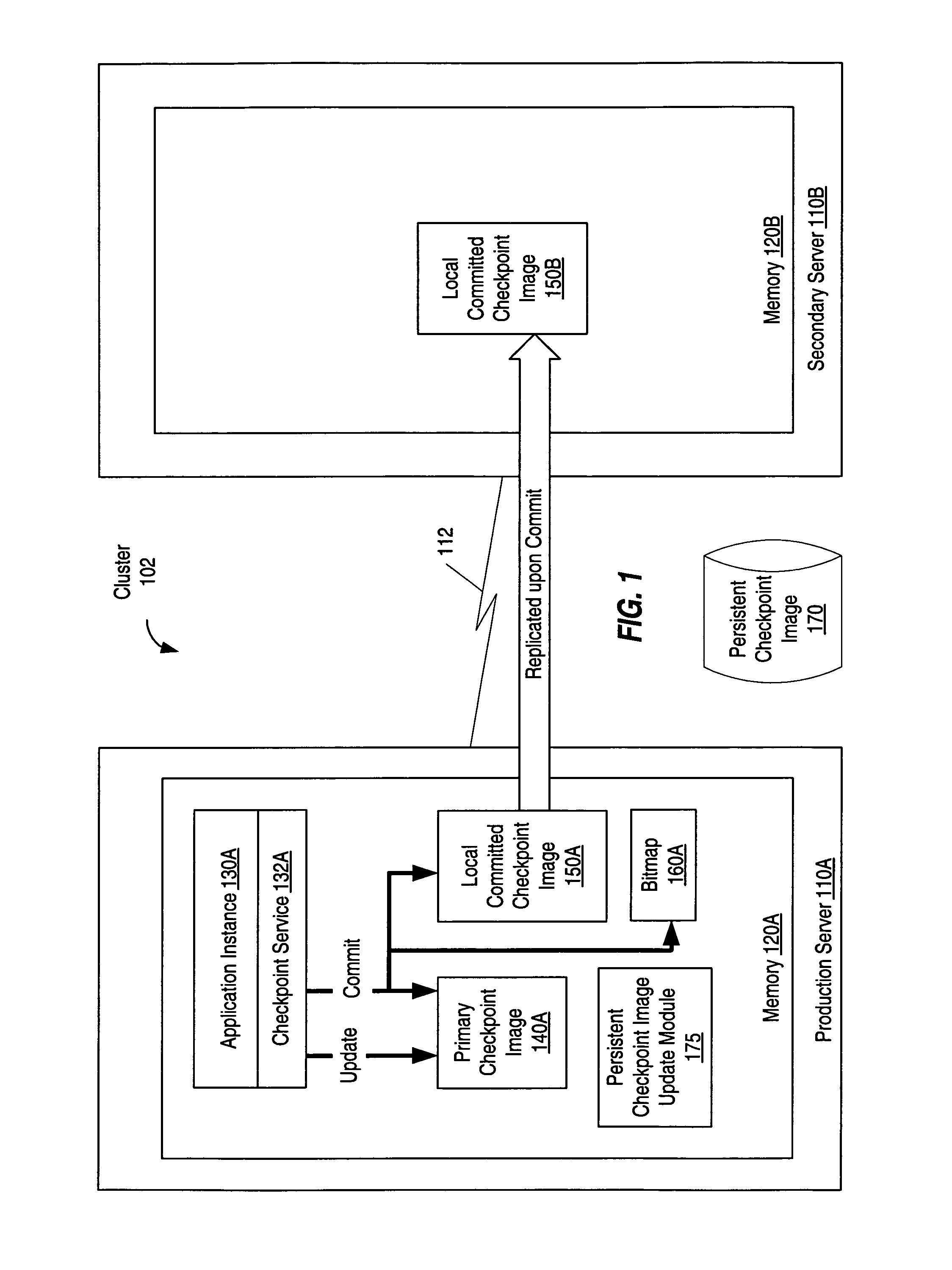

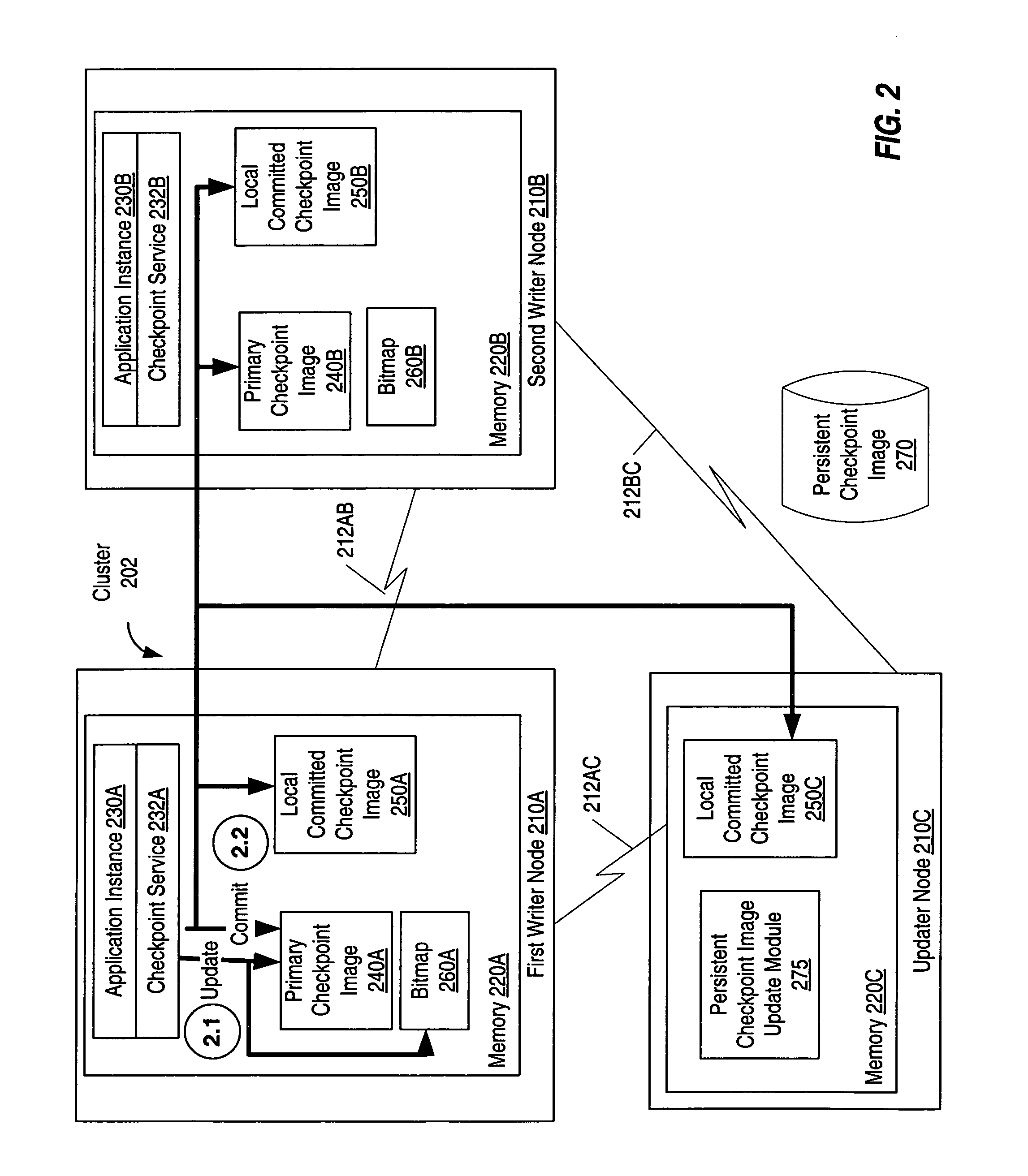

Method and apparatus for creating and using persistent images of distributed shared memory segments and in-memory checkpoints

Inactive | US7779295B1 | Promote recovery | Effect application performance | Error detection/correction | Telecommunications link | Distributed Computing Environment

A method and apparatus that enable quick recovery from failure or restoration of an application state of one or more nodes, applications, and/or communication links in a distributed computing environment, such as a cluster. Recovery or restoration is facilitated by regularly saving persistent images of the in-memory checkpoint data and/or of distributed shared memory segments using snapshots of the committed checkpoint data. When one or more nodes fail, the snapshots can be read and used to restart the application in the most recently saved state prior to the failure or roll back the application to an earlier state.

Owner:SYMANTEC OPERATING CORP
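
A minimal sketch of the snapshot-and-restore cycle described above, assuming a single node, JSON-serializable state, and a local file as the persistent image. The function names and file layout are hypothetical. Writing to a temporary file and renaming it with `os.replace` is one common way to keep a half-written image from replacing the last good one.

```python
import json
import os
import tempfile


def save_snapshot(path, committed_state):
    """Persist committed checkpoint data without exposing a partial file."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(committed_state, f)
    os.replace(tmp, path)  # replaces the old image in one step


def restore(path):
    """Read the most recently saved image back into memory."""
    with open(path) as f:
        return json.load(f)


with tempfile.TemporaryDirectory() as directory:
    image = os.path.join(directory, "checkpoint.json")
    save_snapshot(image, {"step": 3, "total": 42})
    state = {"step": 4, "total": 50}  # in-memory progress, never committed
    recovered = restore(image)        # what a restart after a crash would see
```

The restarted application resumes from step 3, the last committed checkpoint, and the uncommitted step 4 is lost.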

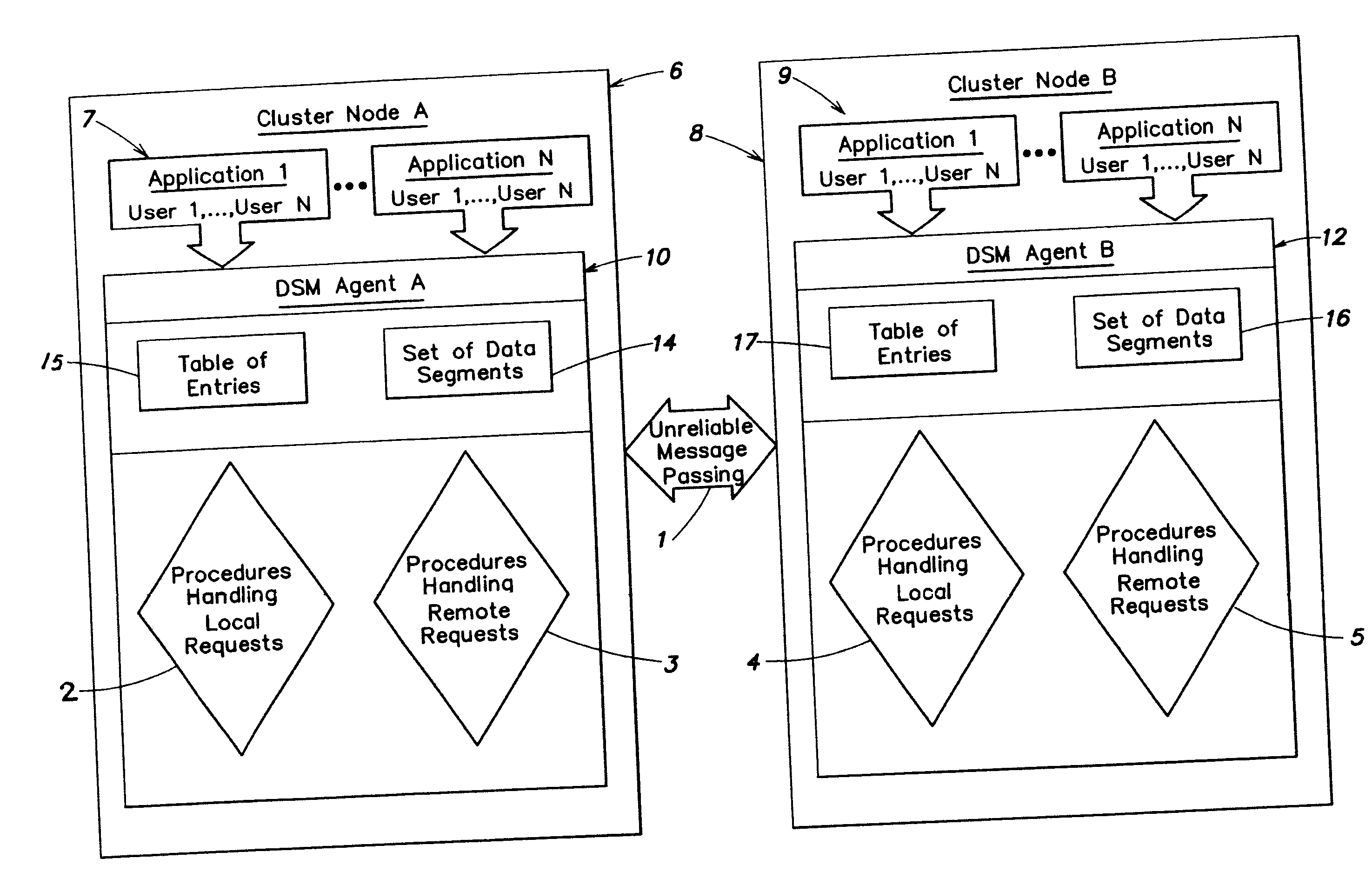

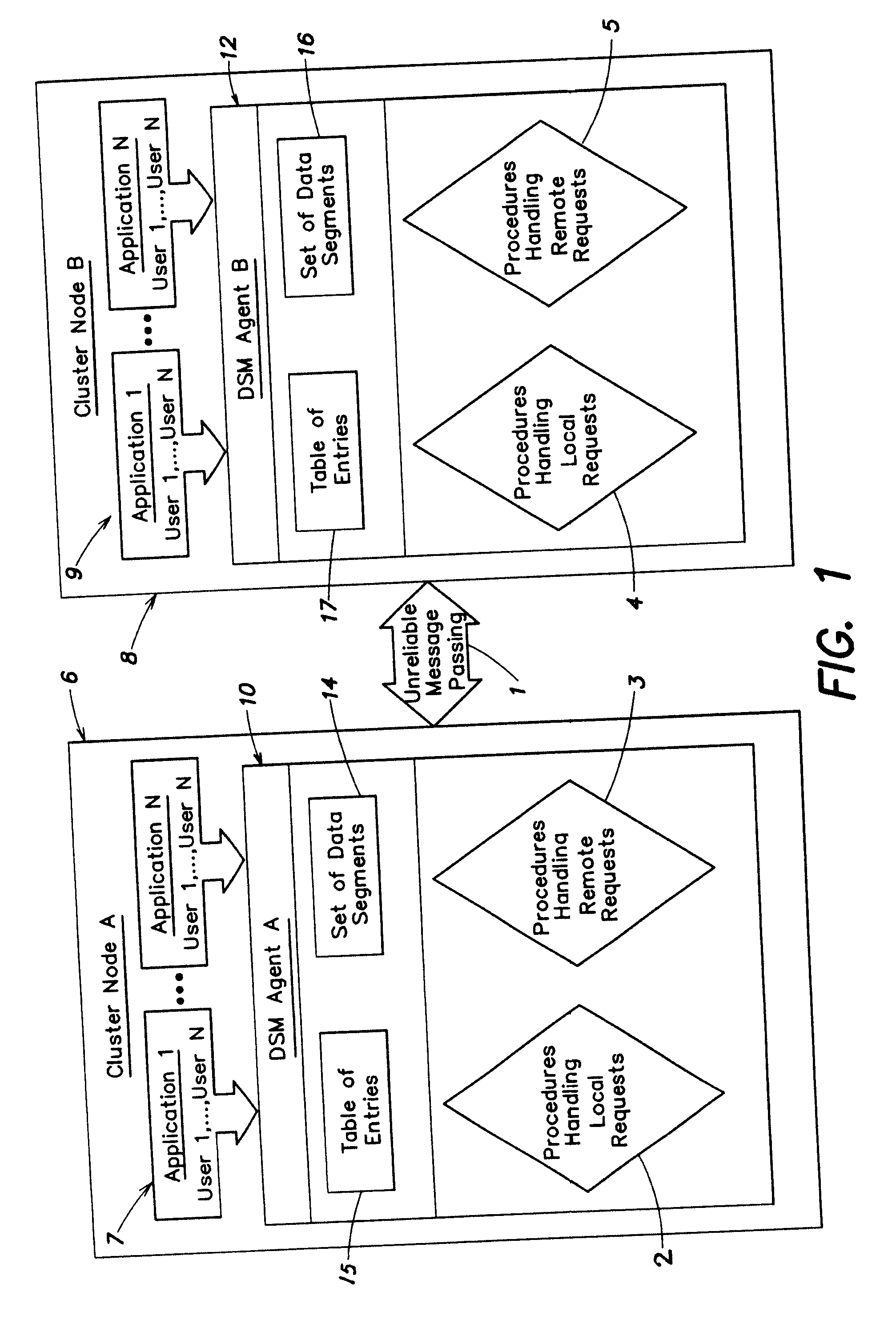

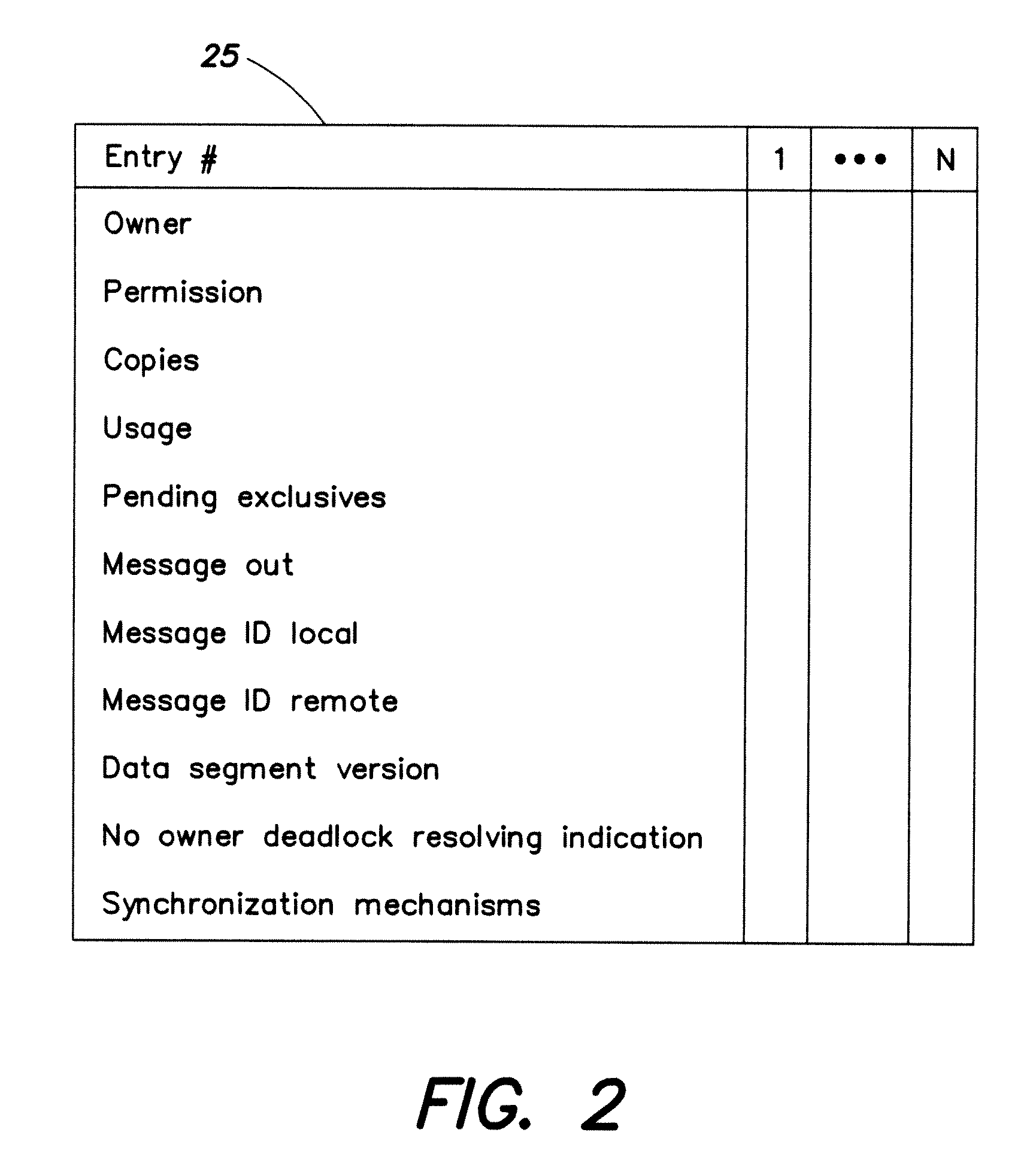

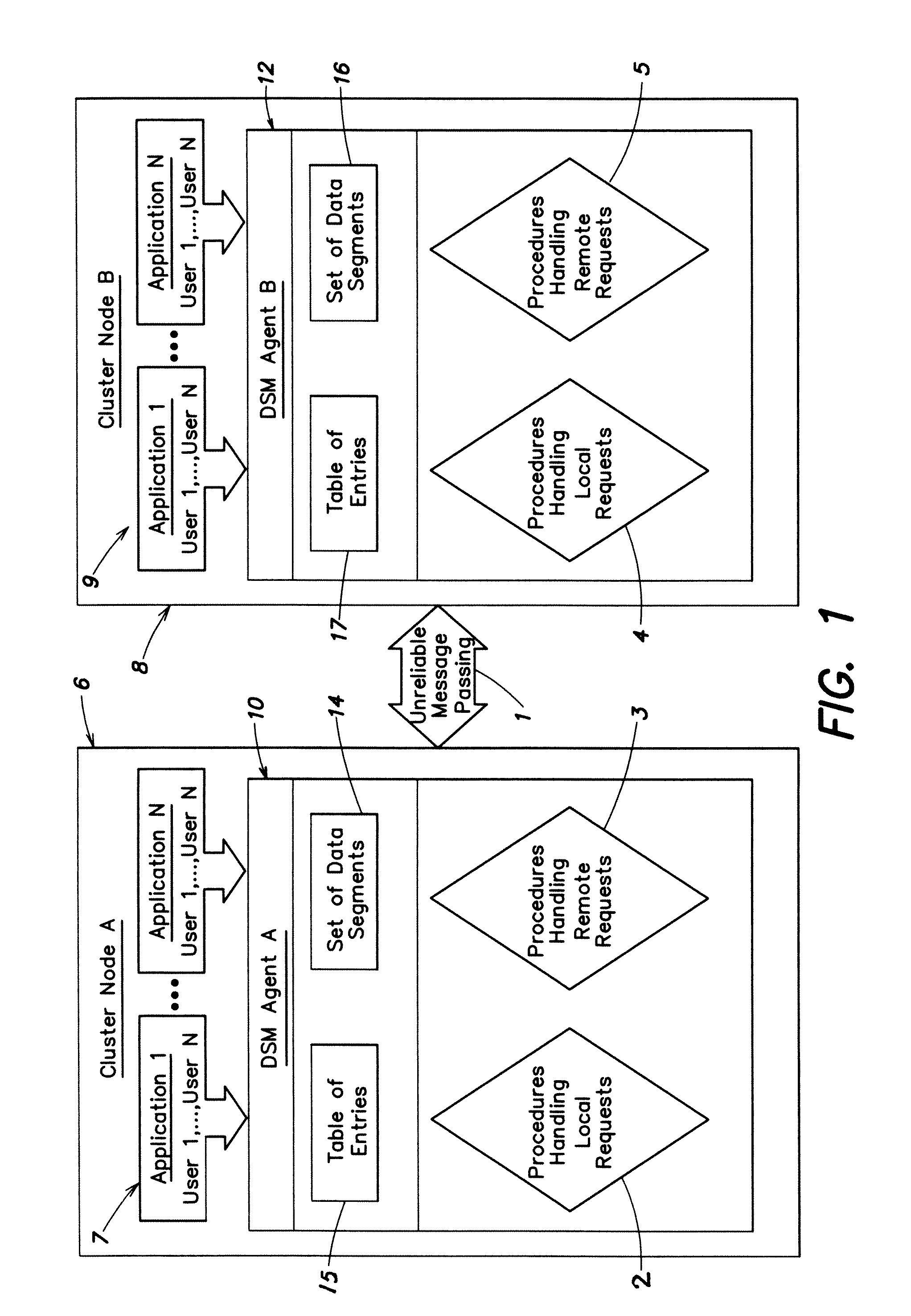

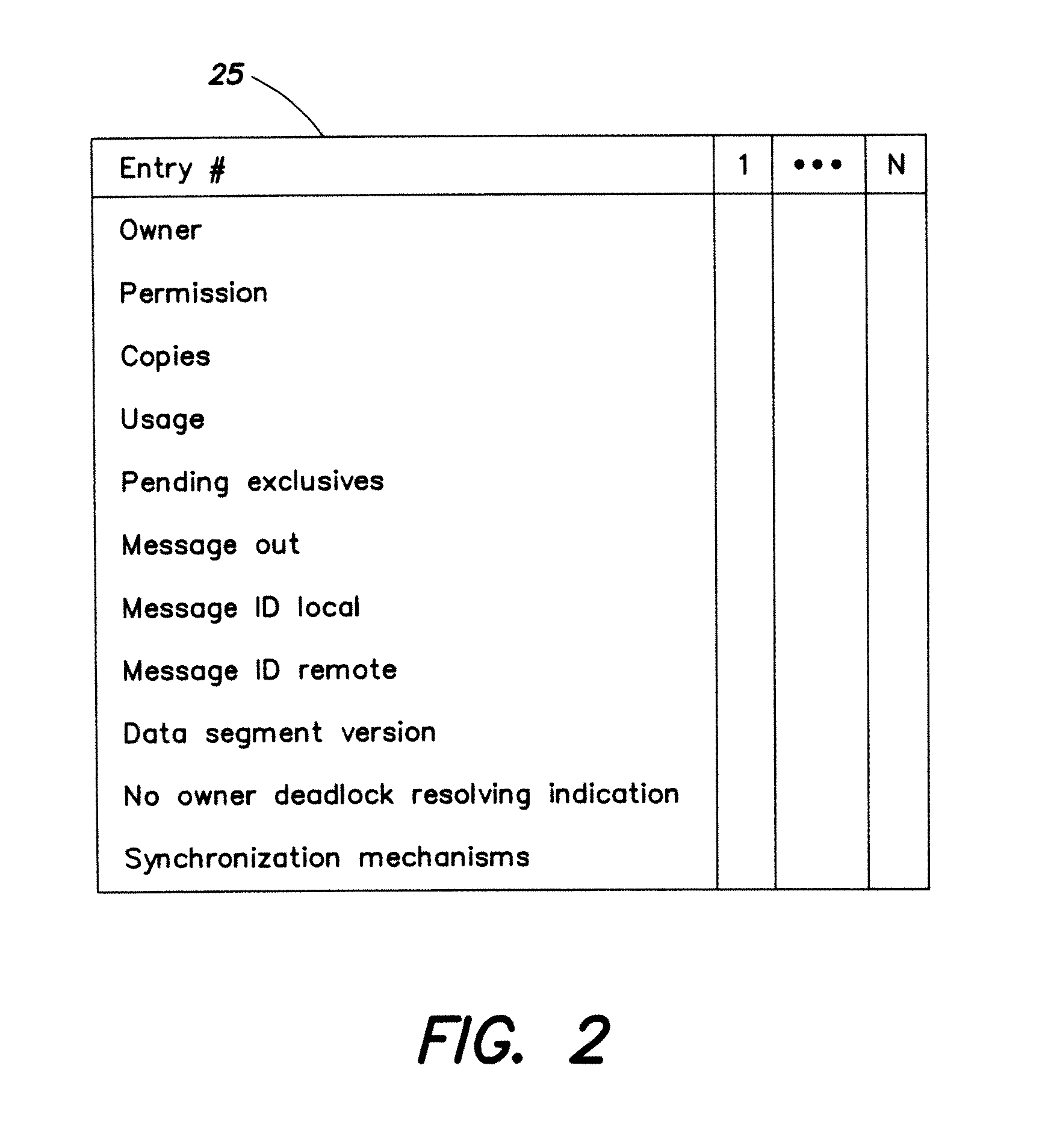

Distributed shared memory

Inactive | US20120272012A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data segment | Computer cluster

Systems and methods for implementing a distributed shared memory (DSM) in a computer cluster in which an unreliable underlying message passing technology is used, such that the DSM efficiently maintains coherency and reliability. DSM agents residing on different nodes of the cluster process access permission requests of local and remote users on specified data segments via handling procedures, which provide for recovering of lost ownership of a data segment while ensuring exclusive ownership of a data segment among the DSM agents, detecting and resolving a no-owner messaging deadlock, pruning of obsolete messages, and recovery of the latest contents of a data segment whose ownership has been lost.

Owner:INT BUSINESS MASCH CORP

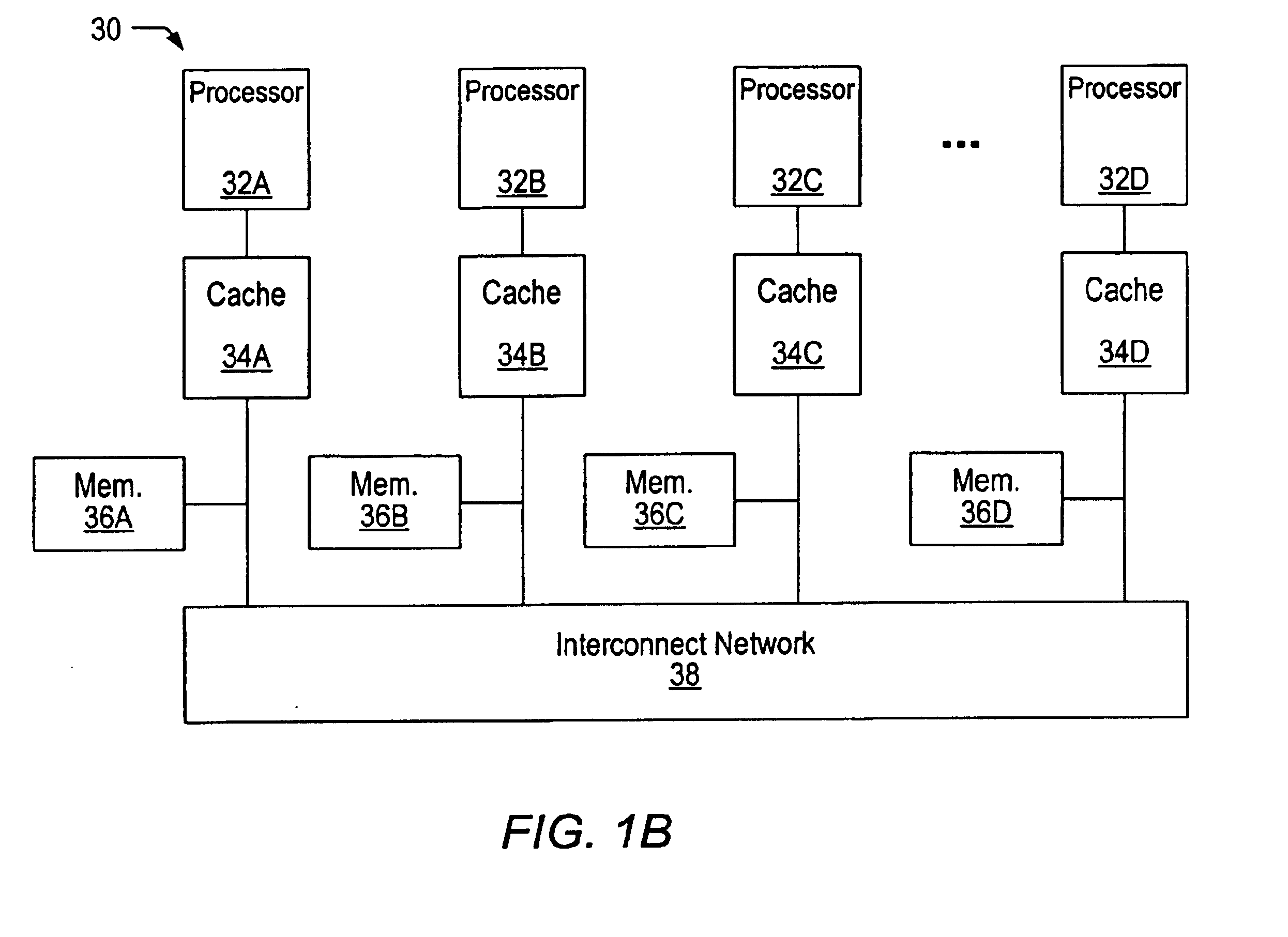

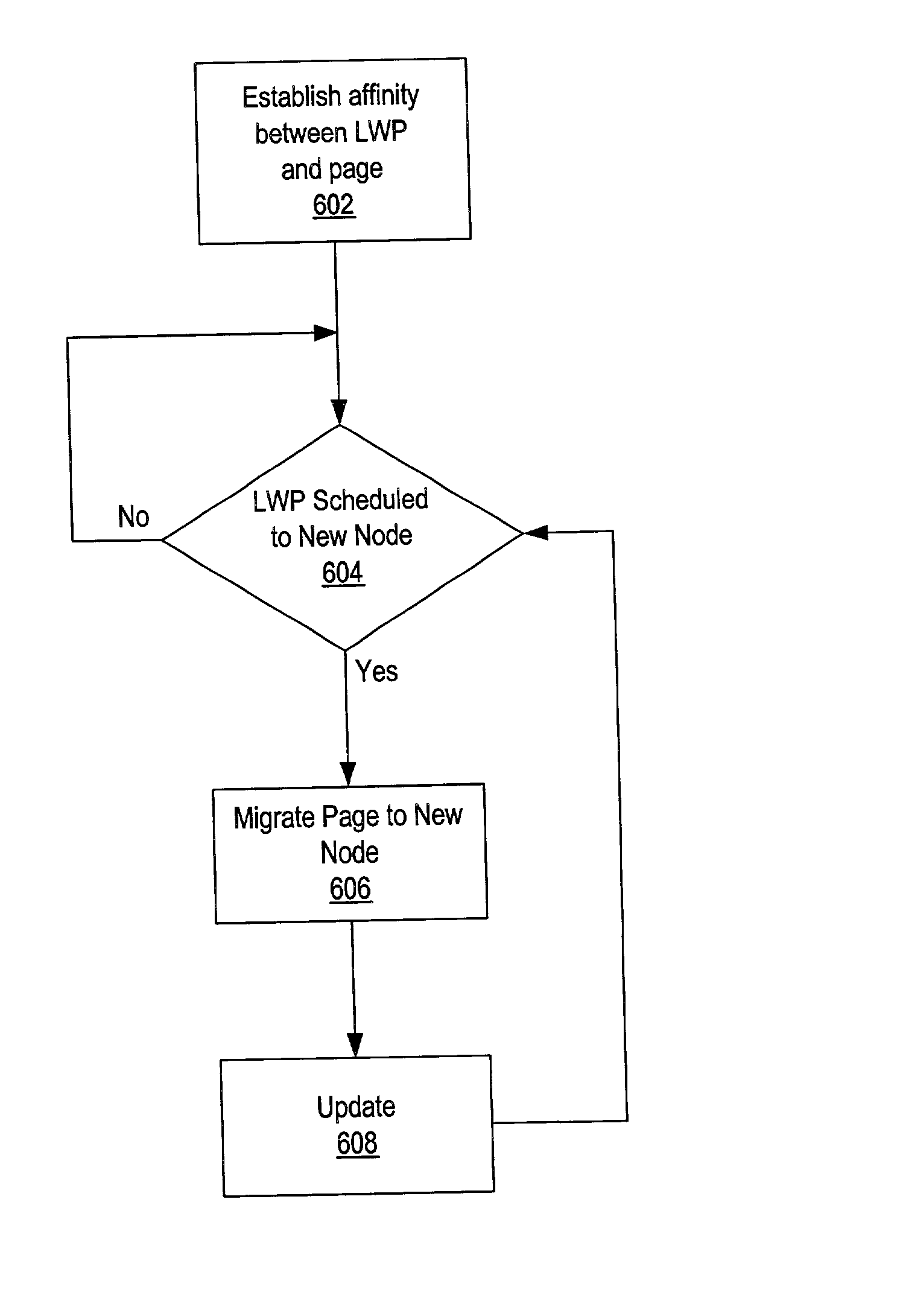

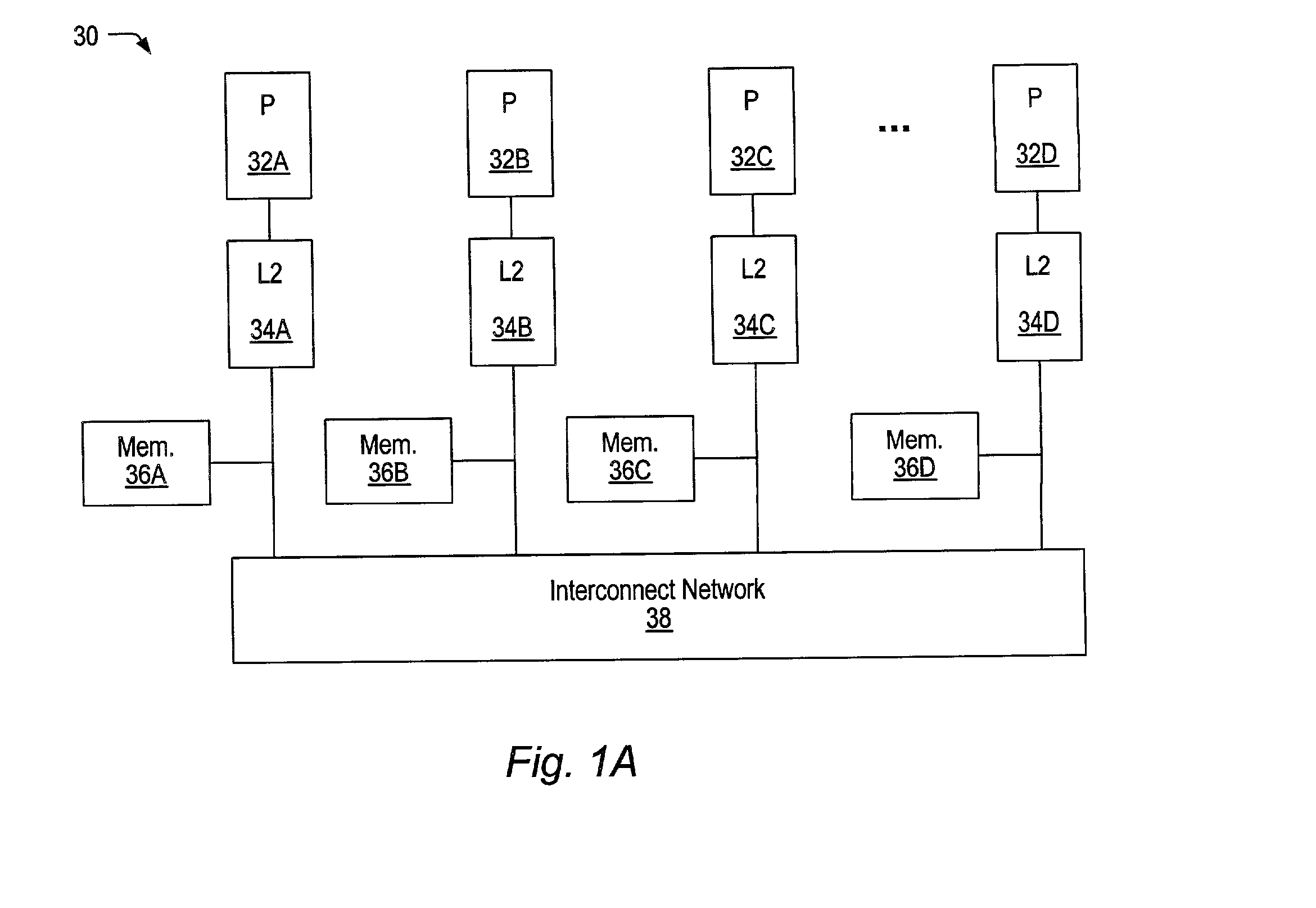

Dynamic memory placement policies for NUMA architecture

Inactive | US6871219B2 | Memory addressing/allocation/relocation | Multiple digital computer combinations | Light-weight process | Multi processor

A distributed shared memory multiprocessor computer system utilizes page placement policies to reduce data access latencies. Pages of memory are allocated to nodes in a distributed shared memory multiprocessor computer system. A placement policy database is maintained which indicates a placement policy for each page in the system. Upon an access to a page, the placement policy corresponding to the accessed page is determined and the indicated policy is acted upon. A Migrate on Next Touch policy provides that the next access to a page with this policy will cause the page to migrate to the node of the accessing CPU. A Memory Follows Lightweight Process (LWP) policy ensures that pages within a given address range are always local to a specified LWP. A Migrate on Every Touch policy provides that pages within a given address range are migrated to an accessing CPU on every touch. A Replicate on Remote Touch policy provides for replication of a page in the memory of each accessing CPU's domain. Finally, a Replicate on All policy provides that upon an access to a given page, that page is replicated on all nodes in the system.

Owner:ORACLE INT CORP
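
Three of the placement policies named in this abstract can be sketched as a small dispatch on each page access. The `Policy`, `Page`, and `touch` names are hypothetical, and the sketch assumes that Migrate on Next Touch is a one-shot policy that is cleared after it fires; the abstract does not say what policy the page has afterwards.

```python
from enum import Enum, auto


class Policy(Enum):
    NONE = auto()
    MIGRATE_ON_NEXT_TOUCH = auto()
    MIGRATE_ON_EVERY_TOUCH = auto()
    REPLICATE_ON_REMOTE_TOUCH = auto()


class Page:
    def __init__(self, home, policy=Policy.NONE):
        self.home = home       # node that currently holds the page
        self.replicas = set()  # nodes holding additional copies
        self.policy = policy


def touch(page, node):
    """Apply the page's placement policy when `node` accesses it."""
    if page.policy is Policy.MIGRATE_ON_NEXT_TOUCH:
        page.home = node
        page.policy = Policy.NONE  # assumed one-shot behaviour
    elif page.policy is Policy.MIGRATE_ON_EVERY_TOUCH:
        page.home = node
    elif page.policy is Policy.REPLICATE_ON_REMOTE_TOUCH and node != page.home:
        page.replicas.add(node)


p = Page(home=0, policy=Policy.MIGRATE_ON_NEXT_TOUCH)
touch(p, 2)  # migrates to node 2 and clears the policy
touch(p, 3)  # no effect: the policy has already fired

q = Page(home=0, policy=Policy.REPLICATE_ON_REMOTE_TOUCH)
touch(q, 1)  # remote access creates a replica on node 1
touch(q, 0)  # local access changes nothing
```

The policy lookup here is a field on the page; the abstract instead describes a separate placement policy database consulted on each access.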

Dynamic memory placement policies for NUMA architecture

Inactive | US20020129115A1 | Flexible placement | Memory addressing/allocation/relocation | Multiple digital computer combinations | Light-weight process | Multi processor

A distributed shared memory multiprocessor computer system utilizes page placement policies to reduce data access latencies. Pages of memory are allocated to nodes in a distributed shared memory multiprocessor computer system. A placement policy database is maintained which indicates a placement policy for each page in the system. Upon an access to a page, the placement policy corresponding to the accessed page is determined and the indicated policy is acted upon. A Migrate on Next Touch policy provides that the next access to a page with this policy will cause the page to migrate to the node of the accessing CPU. A Memory Follows Lightweight Process (LWP) policy ensures that pages within a given address range are always local to a specified LWP. A Migrate on Every Touch policy provides that pages within a given address range are migrated to an accessing CPU on every touch. A Replicate on Remote Touch policy provides for replication of a page in the memory of each accessing CPU's domain. Finally, a Replicate on All policy provides that upon an access to a given page, that page is replicated on all nodes in the system.

Owner:ORACLE INT CORP

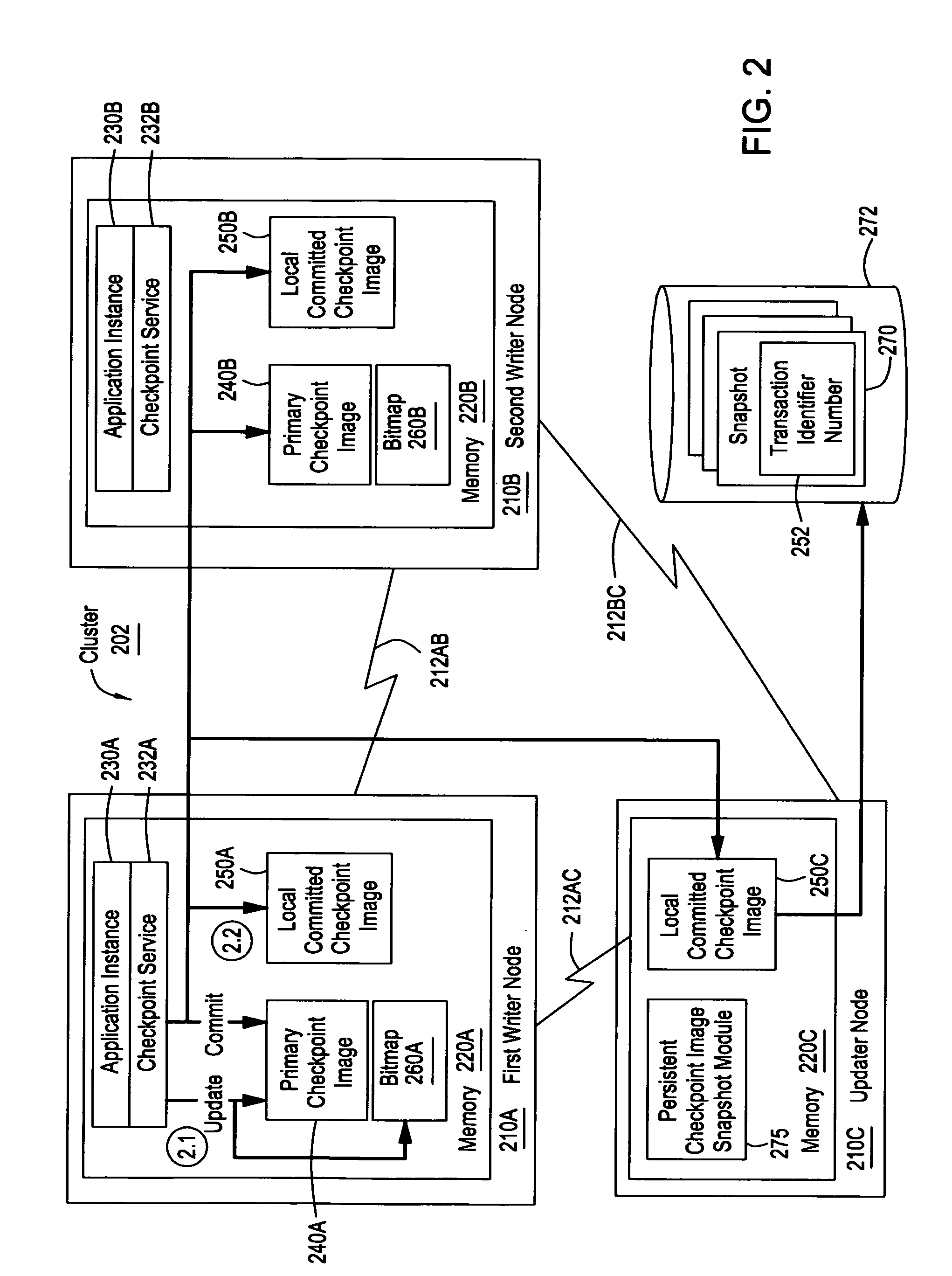

Persistent images of distributed shared memory segments and in-memory checkpoints

Active | US8099627B1 | Promote recovery | Effect application performance | Error detection/correction | Telecommunications link | Distributed Computing Environment

A method, system, computer system, and computer-readable medium that enable quick recovery from failure of one or more nodes, applications, and/or communication links in a distributed computing environment, such as a cluster. Recovery is facilitated by regularly saving persistent images of the in-memory checkpoint data and/or of distributed shared memory segments. The persistent checkpoint images are written asynchronously so that applications can continue to write data even during creation and/or updating of the persistent image and with minimal effect on application performance. Furthermore, multiple updater nodes can simultaneously update the persistent checkpoint image using normal synchronization operations. When one or more nodes fail, the persistent checkpoint image can be read and used to restart the application in the most recently saved state prior to the failure. The persistent checkpoint image can also be used to initialize the state of the application in a new node joining the distributed computing environment.

Owner:SYMANTEC OPERATING CORP

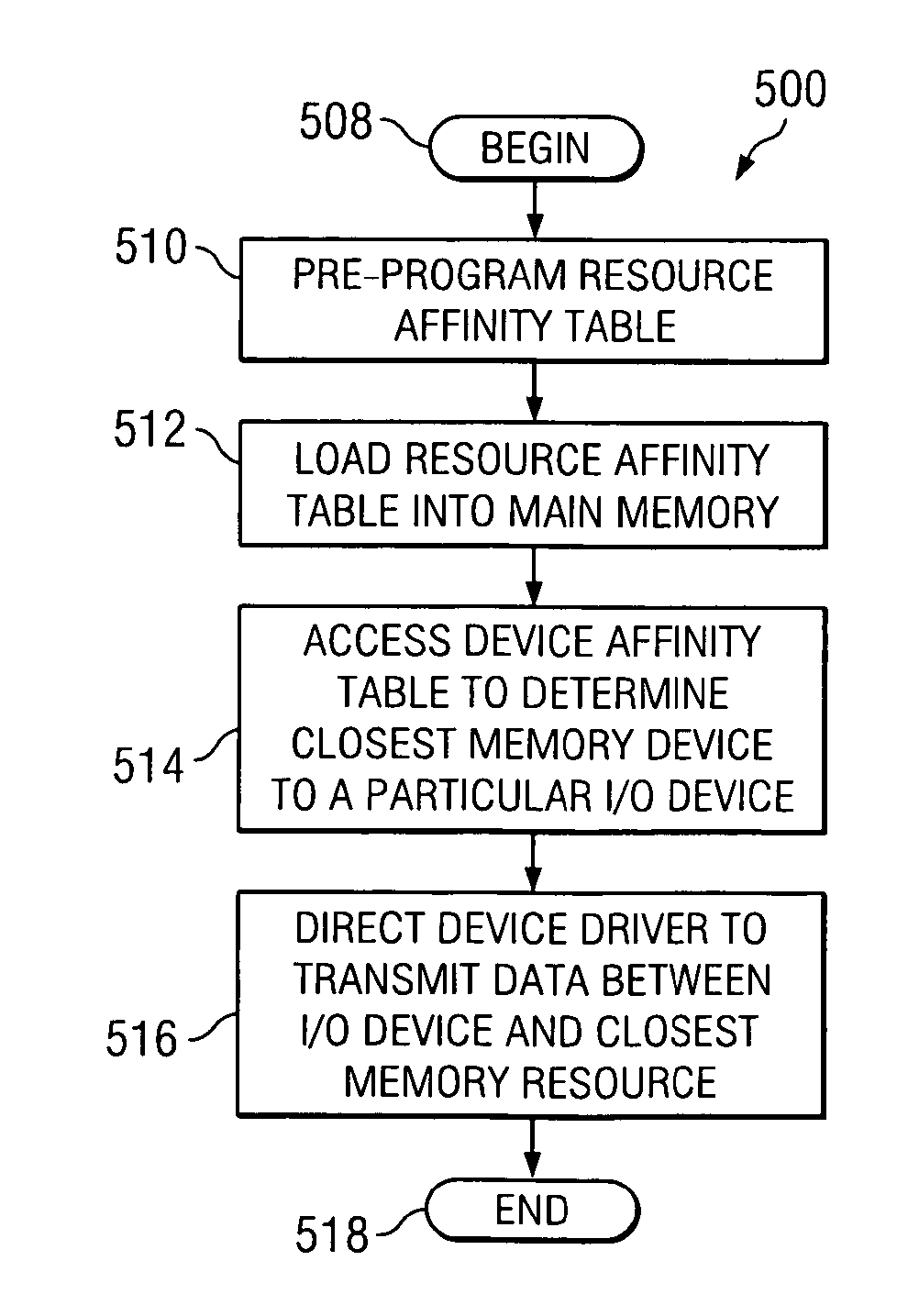

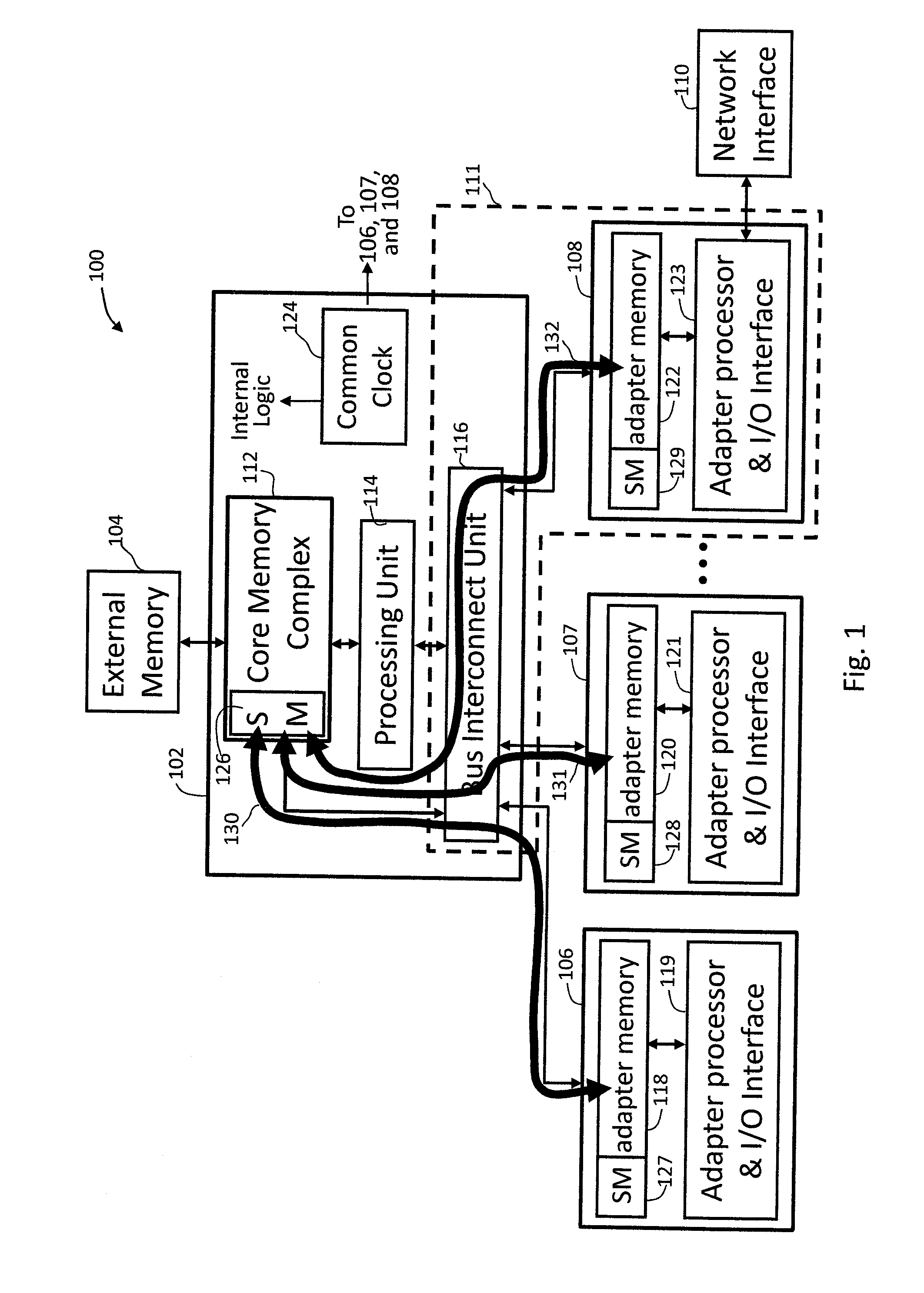

Optimized memory allocator for a multiprocessor computer system

Active | US20070233967A1 | Maximize total data transmitted | Improving I/O performance measure | Memory architecture accessing/allocation | Memory systems | Access time | Multi processor

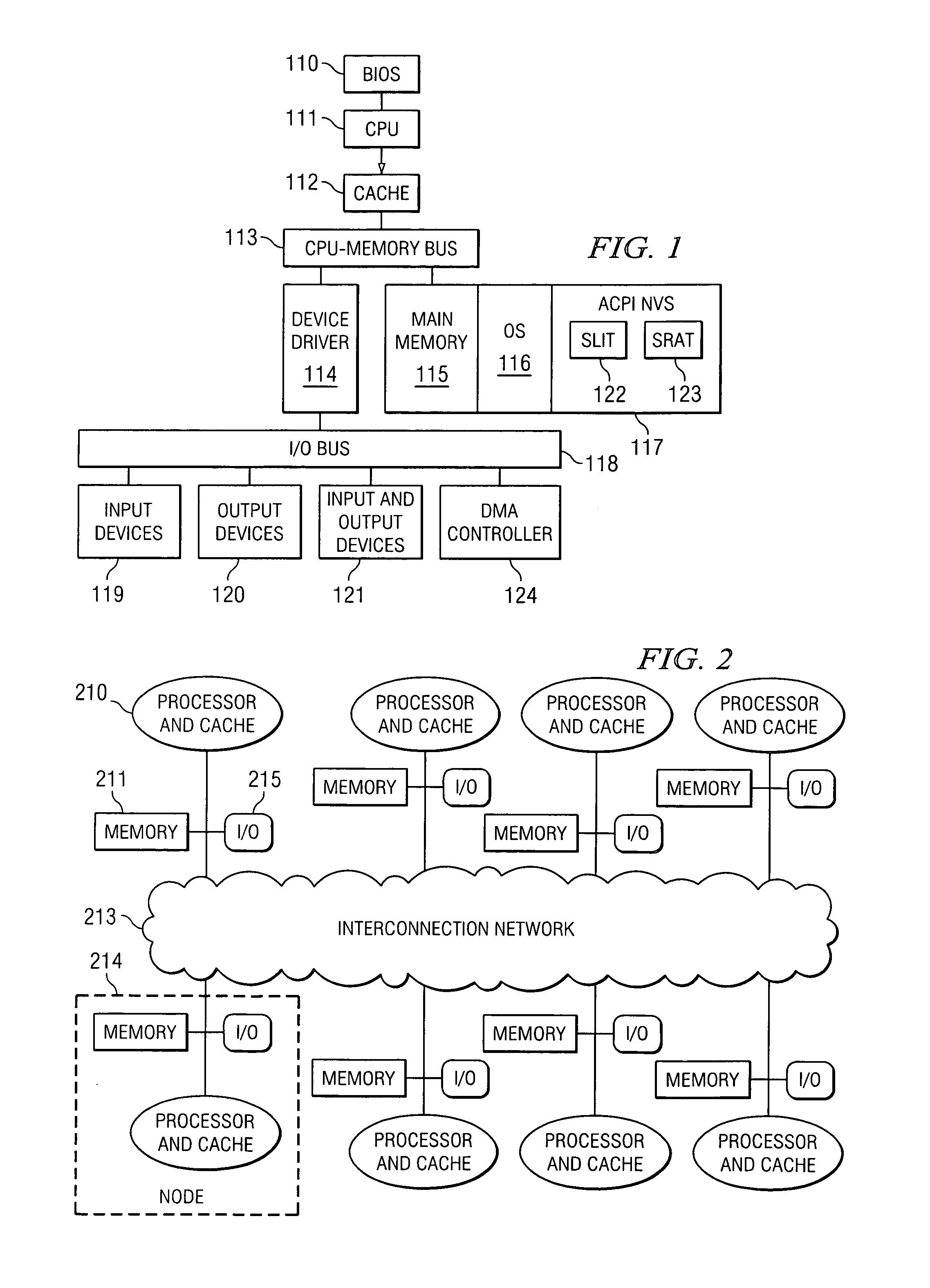

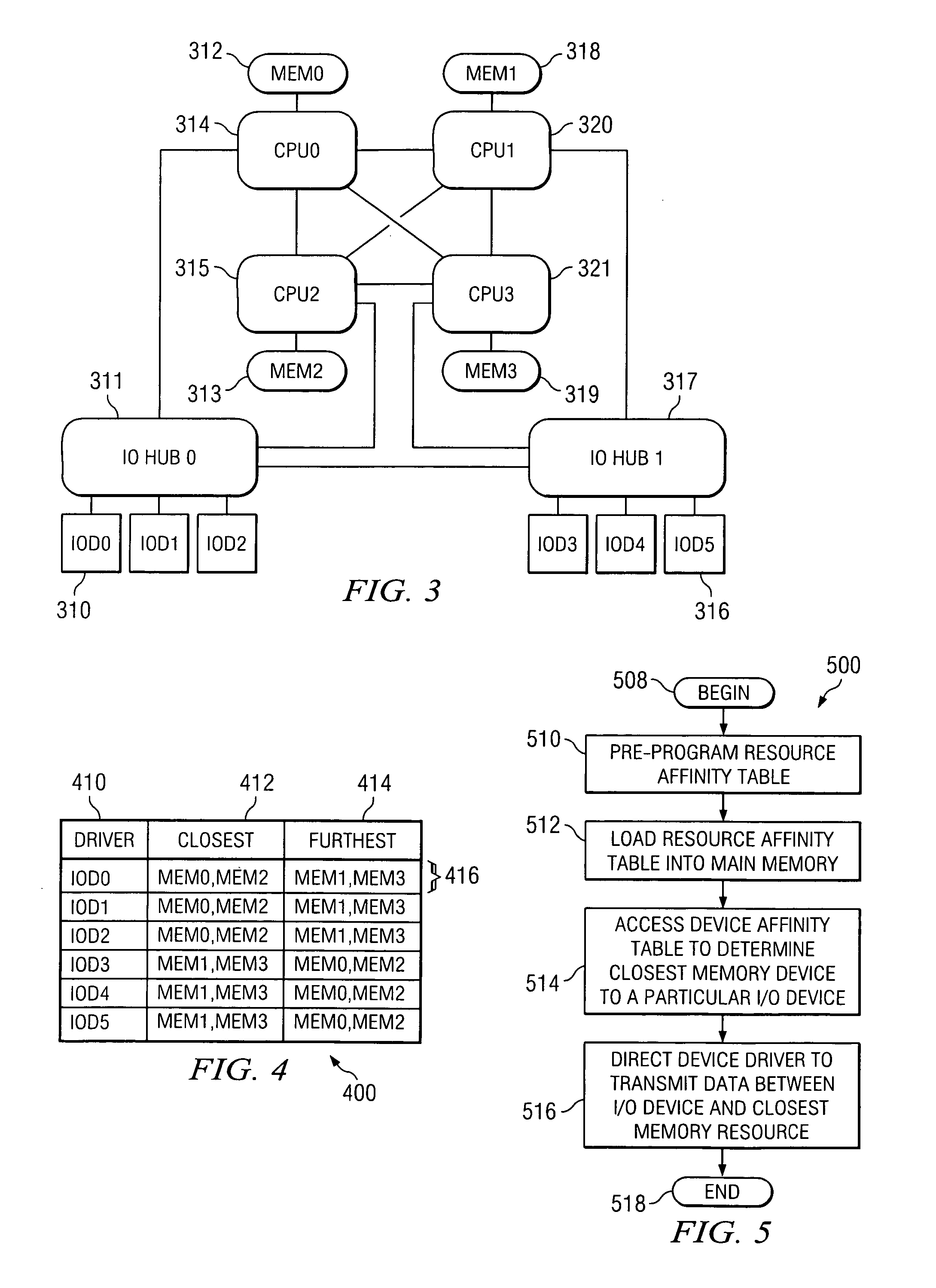

The present disclosure describes systems and methods for allocating memory in a multiprocessor computer system such as a non-uniform memory access (NUMA) machine having distributed shared memory. The systems and methods include allocating memory to input-output devices (I/O devices) based at least in part on which memory resource is physically closest to a particular I/O device. Through these systems and methods, memory is allocated more efficiently in a NUMA machine. For example, allocating memory to an I/O device that is on the same node as a memory resource reduces memory access time, thereby maximizing data transmission. The present disclosure further describes a system and method for improving performance in a multiprocessor computer system by utilizing a pre-programmed device affinity table. The system and method include listing the memory resources physically closest to each I/O device and accessing the device table to determine the closest memory resource to a particular I/O device. The system and method further include directing a device driver to transmit data between the I/O device and the closest memory resource.

Owner:DELL PROD LP
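
The device affinity table described above can be pictured as a lookup from each I/O device to memory nodes ordered by physical distance. The table contents, the device names, and the fallback to the next-closest node when the nearest one is full are all assumptions made for this sketch.

```python
# Hypothetical affinity table: I/O device -> memory nodes, closest first.
DEVICE_AFFINITY = {
    "nic0": [0, 1],
    "hba0": [1, 0],
}


def closest_memory(device, free_bytes, needed):
    """Pick the nearest memory node to `device` with enough free space."""
    for node in DEVICE_AFFINITY[device]:
        if free_bytes.get(node, 0) >= needed:
            return node
    raise MemoryError(f"no node can satisfy {needed} bytes for {device}")


free = {0: 4096, 1: 65536}
small = closest_memory("nic0", free, 1024)  # fits on the local node
large = closest_memory("nic0", free, 8192)  # falls back to the remote node
```

A driver would then place the device's buffers in the returned node so that transfers avoid cross-node memory traffic where possible.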

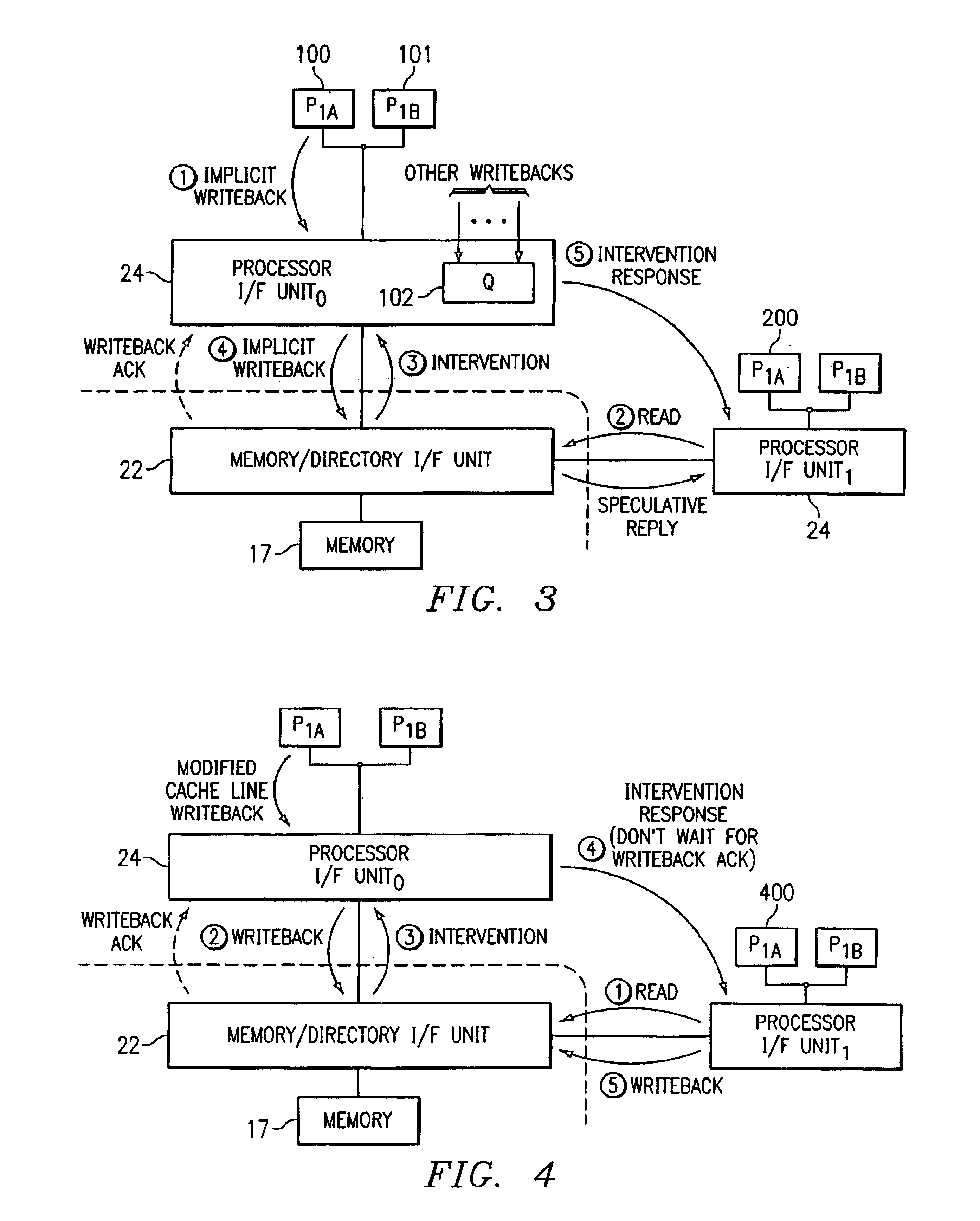

System and method for handling updates to memory in a distributed shared memory system

Inactive | US6915387B1 | Readily apparent | Eliminate and reduce disadvantage | Memory addressing/allocation/relocation | Parallel computing | Computerized system

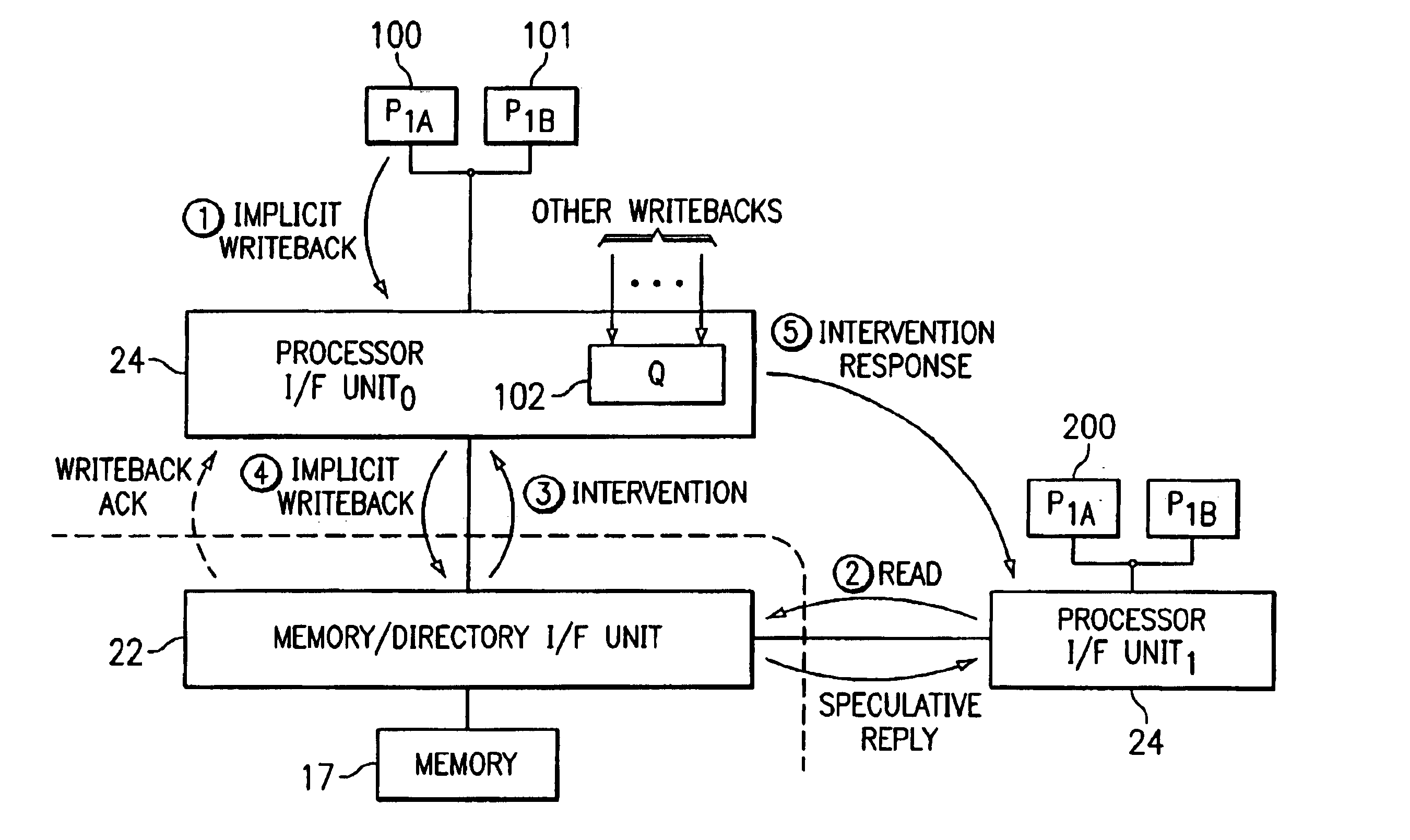

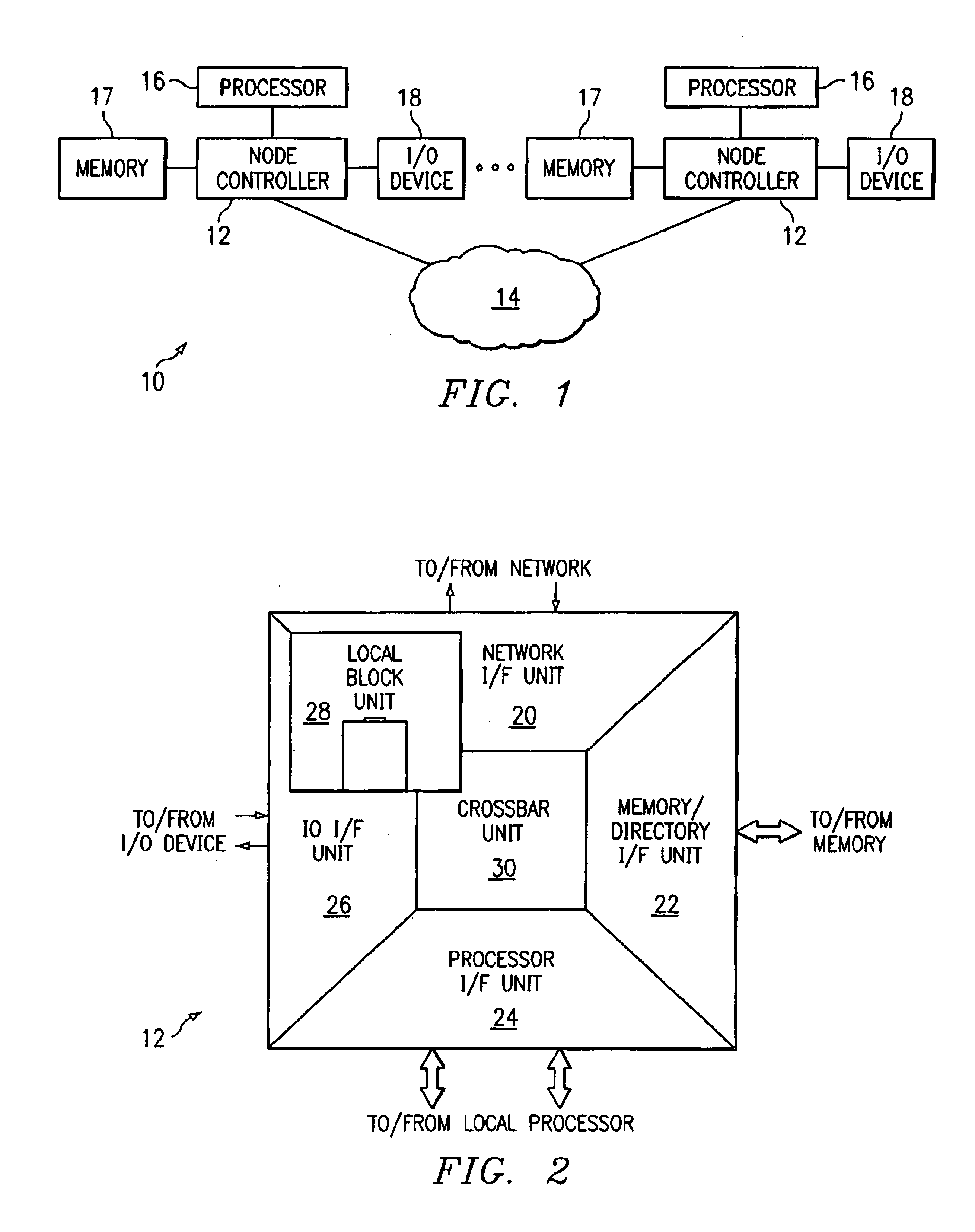

A processor (100) in a distributed shared memory computer system (10) receives ownership of data and initiates an initial update to memory request. A front side bus processor interface (24) forwards the initial update to memory request to a memory directory interface unit (22). The front side bus processor interface (24) may receive subsequent update to memory requests for the data from processors co-located on the same local bus. The front side bus processor interface (24) maintains a most recent subsequent update to memory in a queue (102). Once the data has been updated in its home memory (17), the memory directory interface unit (22) sends a writeback acknowledge to the front side bus processor interface (24). The most recent subsequent update to memory request in the queue (102) is then forwarded by the front side bus processor interface (24) to the memory directory interface unit (22) for processing.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
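
The queueing behaviour in this abstract, forwarding the first update, keeping only the newest later update, and forwarding it once the writeback is acknowledged, can be sketched as below. The class and attribute names are hypothetical, and the single-slot `pending` field models the queue for one data item only.

```python
class FrontSideBusInterface:
    """Keeps only the newest pending update while a writeback is in flight."""

    def __init__(self):
        self.in_flight = None  # update sent to the directory, not yet acked
        self.pending = None    # most recent subsequent update, if any
        self.sent = []         # updates forwarded to the directory unit

    def update(self, data):
        if self.in_flight is None:
            self.in_flight = data
            self.sent.append(data)
        else:
            self.pending = data  # older queued updates are overwritten

    def writeback_ack(self):
        """Directory reports that home memory now holds the in-flight data."""
        self.in_flight = None
        if self.pending is not None:
            data, self.pending = self.pending, None
            self.update(data)


fsb = FrontSideBusInterface()
fsb.update("v1")
fsb.update("v2")  # queued behind v1
fsb.update("v3")  # replaces v2 in the queue
fsb.writeback_ack()
```

Only `v1` and `v3` reach the directory unit; the intermediate `v2` is never written to home memory.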

Cache-flushing engine for distributed shared memory multi-processor computer systems

Inactive | US6874065B1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Multi processor | Parallel computing

A cache coherent distributed shared memory multi-processor computer system is provided with a cache-flushing engine which allows selective forced write-backs of dirty cache lines to the home memory. After a request is posted in the cache-flushing engine, a “flush” command is issued which forces the owner cache to write-back the dirty cache line to be flushed. Subsequently, a “flush request” is issued to the home memory of the memory block. The home node will acknowledge when the home memory is successfully updated. The cache-flushing engine operation will be interrupted when all flush requests are complete.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

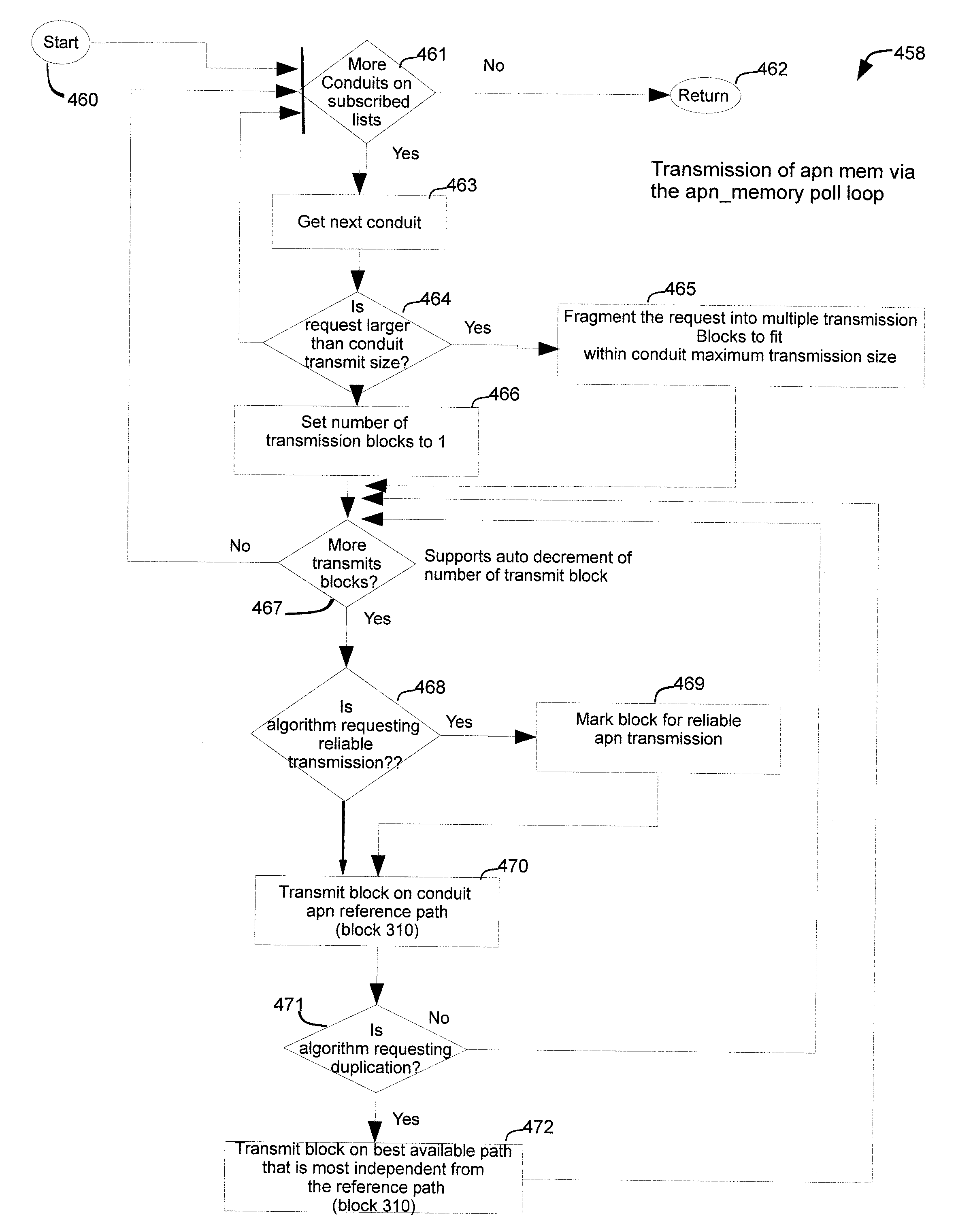

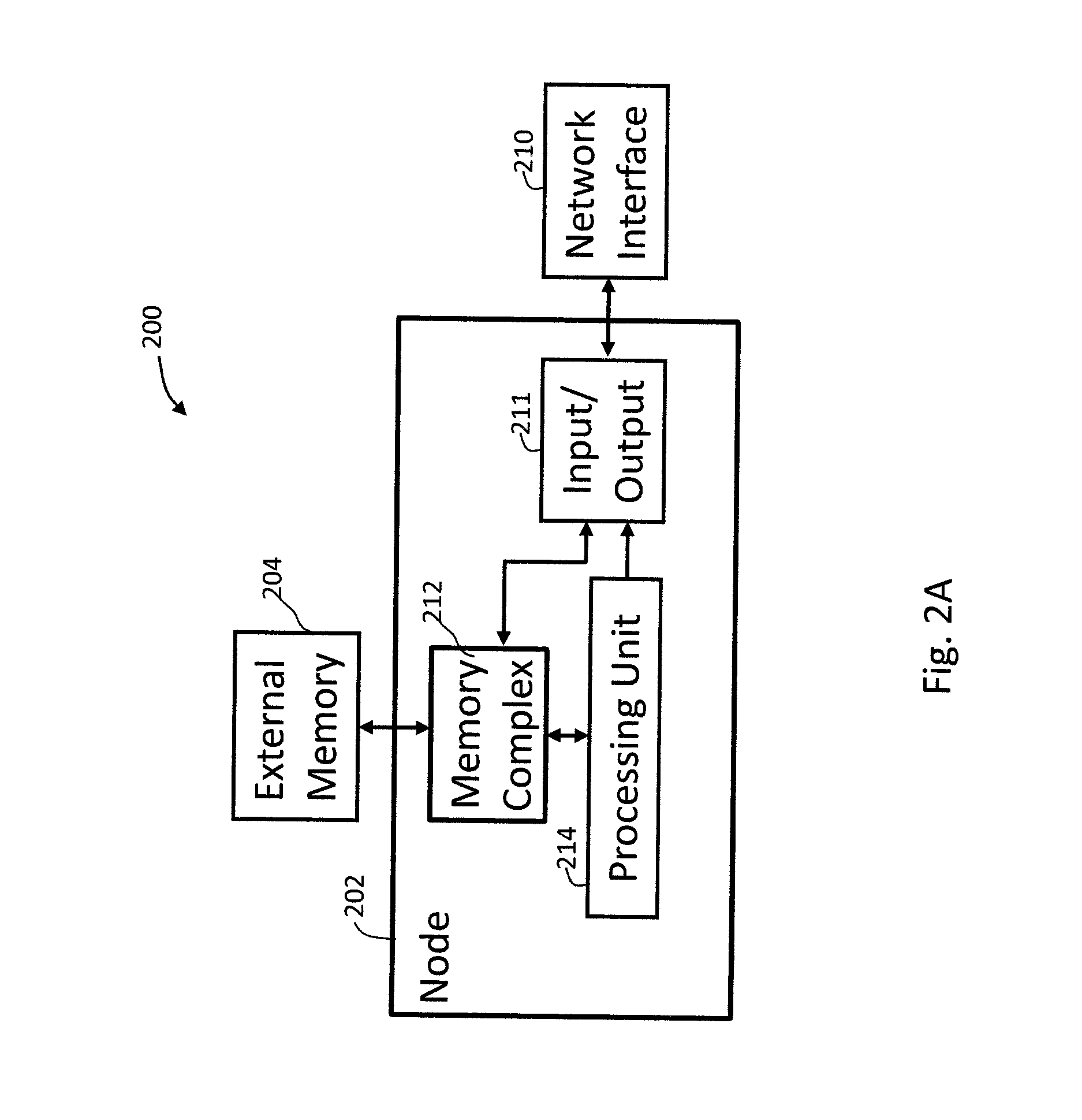

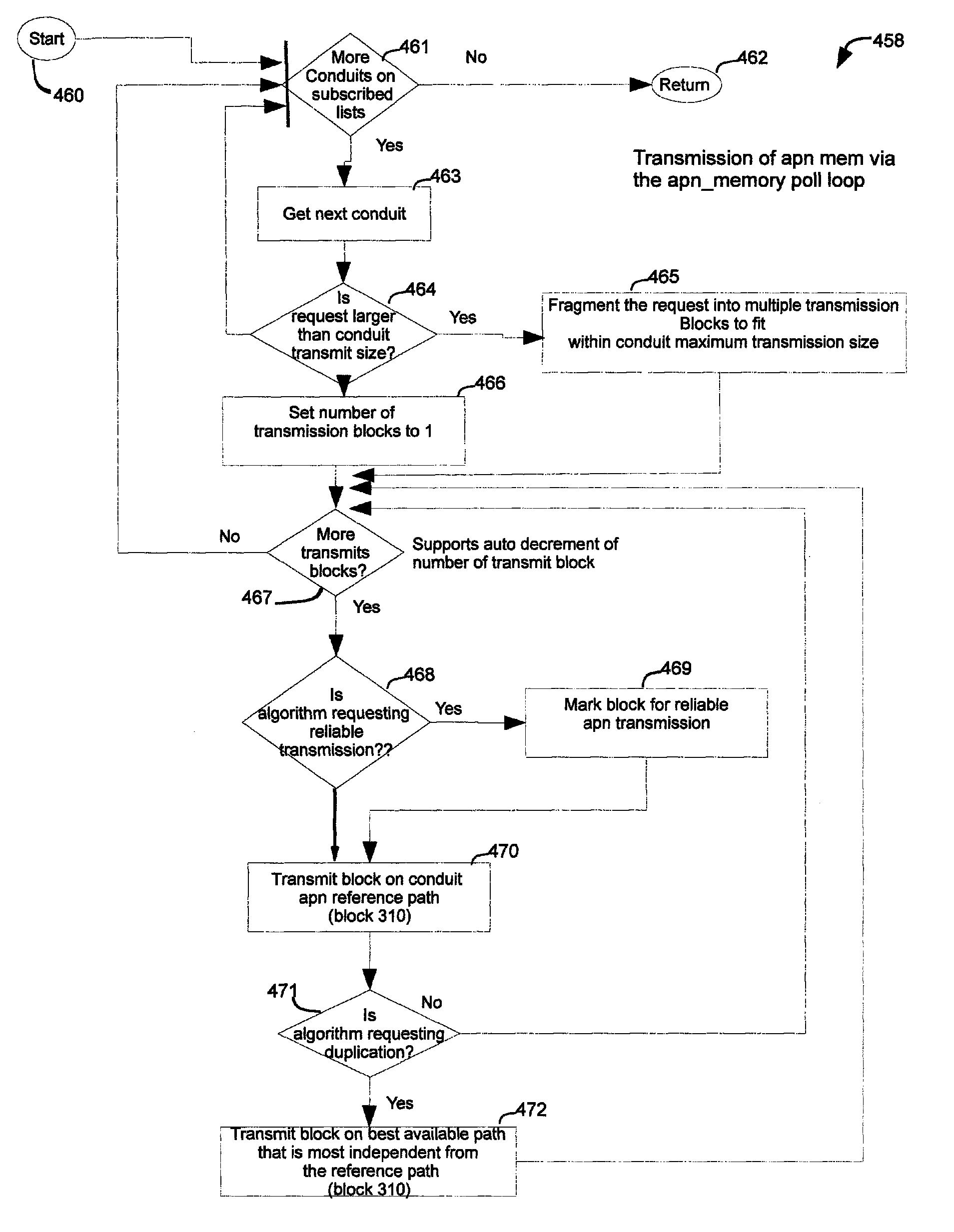

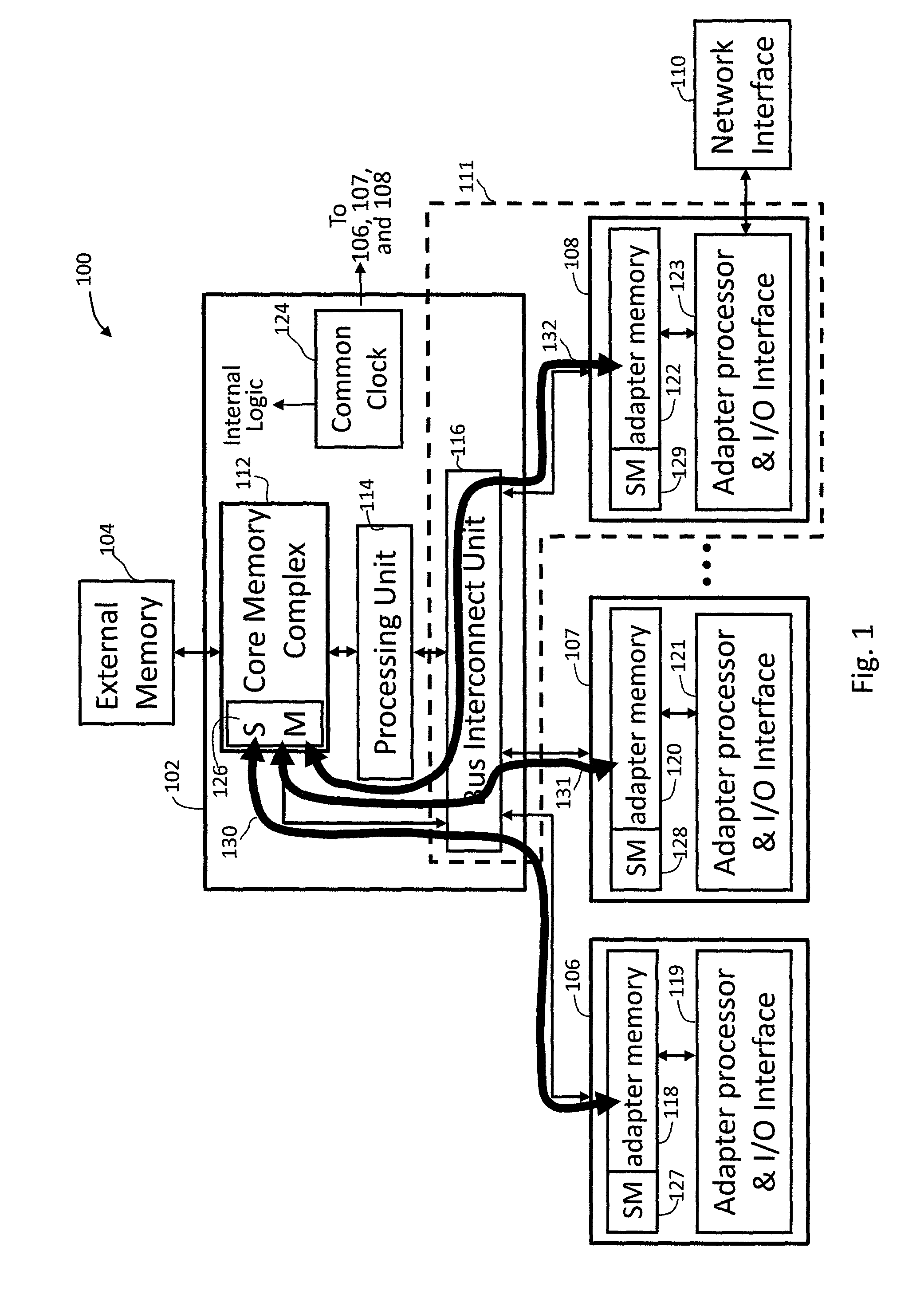

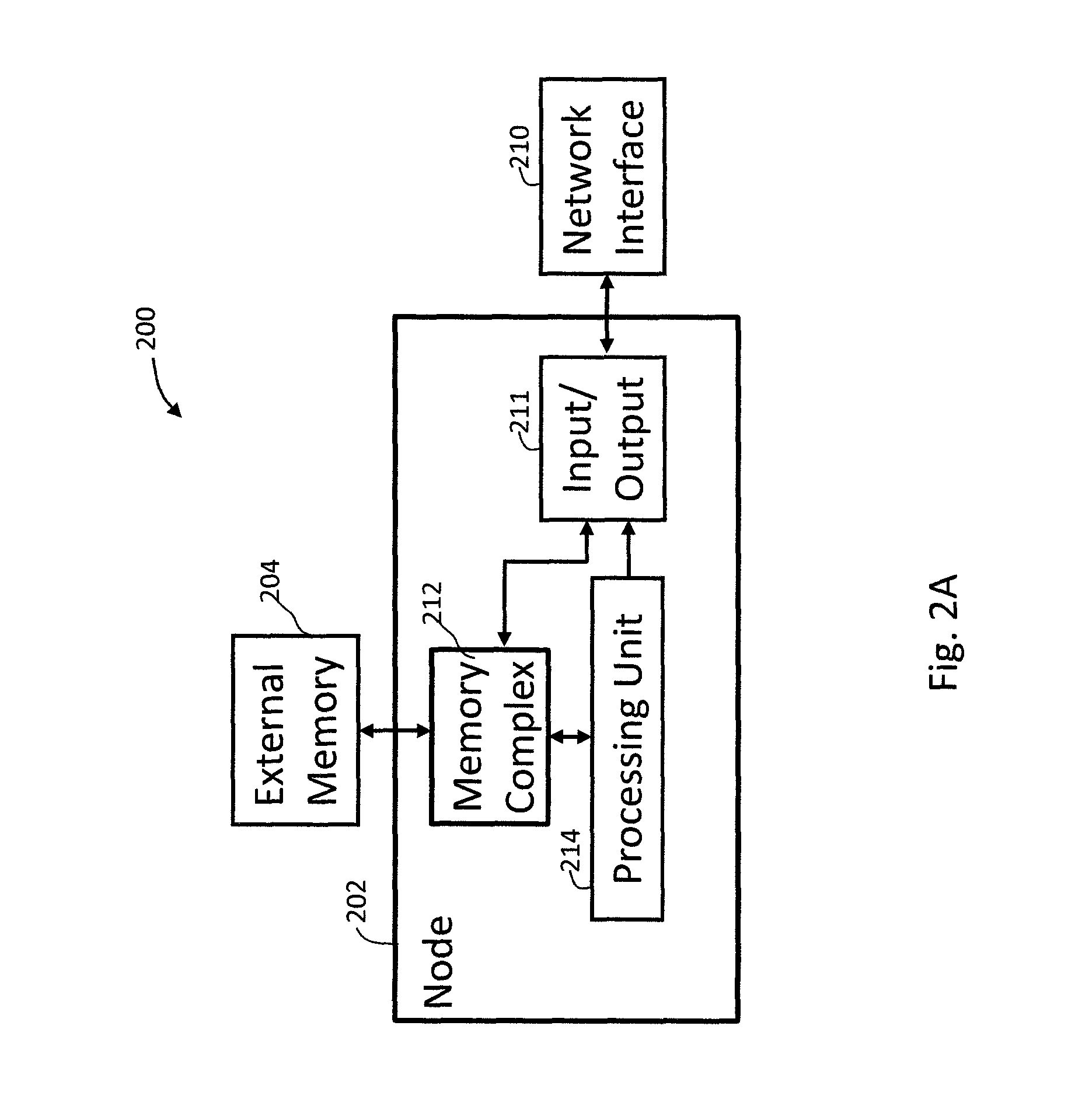

Adaptive Private Network Asynchronous Distributed Shared Memory Services

Active | US20120042032A1 | Improve performance | Improve reliability | Synchronisation information channels | Digital computer details | Packet loss | Distributed memory

A highly predictable quality shared distributed memory process is achieved using less than predictable public and private internet protocol networks as the means for communications within the processing interconnect. An adaptive private network (APN) service provides the ability for the distributed memory process to communicate data via an APN conduit service, to use high throughput paths by bandwidth allocation to higher quality paths avoiding lower quality paths, to deliver reliability via fast retransmissions on single packet loss detection, to deliver reliability and timely communication through redundancy transmissions via duplicate transmissions on a best path and on a most independent path from the best path, to lower latency via high resolution clock synchronized path monitoring and high latency path avoidance, to monitor packet loss and provide loss prone path avoidance, and to avoid congestion by use of high resolution clock synchronized enabled congestion monitoring and avoidance.

Owner:TALARI NETWORKS

Adaptive private network asynchronous distributed shared memory services

Active | US8452846B2 | Improve performance and reliability and predictability | Synchronisation information channels | Multiple digital computer combinations | Packet loss | Distributed memory

A highly predictable quality shared distributed memory process is achieved using less than predictable public and private internet protocol networks as the means for communications within the processing interconnect. An adaptive private network (APN) service provides the ability for the distributed memory process to communicate data via an APN conduit service, to use high throughput paths by bandwidth allocation to higher quality paths avoiding lower quality paths, to deliver reliability via fast retransmissions on single packet loss detection, to deliver reliability and timely communication through redundancy transmissions via duplicate transmissions on a best path and on a most independent path from the best path, to lower latency via high resolution clock synchronized path monitoring and high latency path avoidance, to monitor packet loss and provide loss prone path avoidance, and to avoid congestion by use of high resolution clock synchronized enabled congestion monitoring and avoidance.

Owner:TALARI NETWORKS

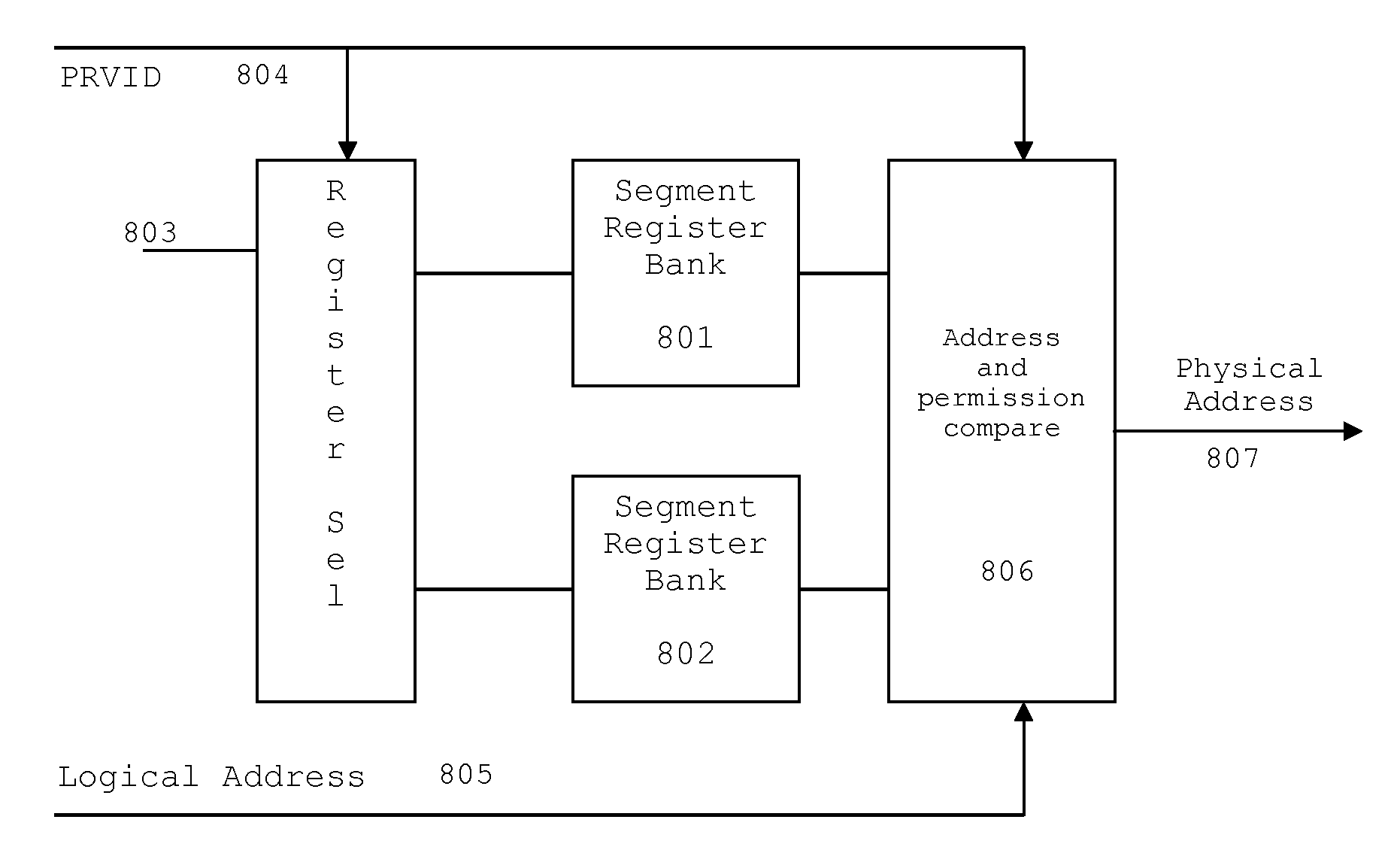

Device Security Features Supporting a Distributed Shared Memory System

Active | US20120191933A1 | Memory architecture accessing/allocation | Energy efficient ICT | Chain of trust | Protection system

A memory management and protection system that incorporates device security features that support a distributed, shared memory system. The concept of secure regions of memory and secure code execution is supported, and a mechanism is provided to extend a chain of trust from a known, fixed secure boot ROM to the actual secure code execution. Furthermore, the system keeps a secure address threshold that is only programmable by a secure supervisor, and will only allow secure access requests that are above this threshold.

Owner:TEXAS INSTR INC
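
The threshold rule at the end of this abstract can be sketched as a simple guard. The `SecureRegionGuard` class and its keyword flags are hypothetical. The sketch assumes that "above" means strictly greater than the threshold and that non-secure requests are checked by some other mechanism, neither of which the abstract spells out.

```python
class SecureRegionGuard:
    """Checks secure access requests against a protected address threshold."""

    def __init__(self, threshold):
        self._threshold = threshold

    def set_threshold(self, value, *, caller_is_secure_supervisor):
        if not caller_is_secure_supervisor:
            raise PermissionError(
                "only a secure supervisor may program the threshold"
            )
        self._threshold = value

    def allow(self, addr, *, secure_request):
        """Secure requests are honored only above the threshold."""
        if secure_request:
            return addr > self._threshold
        return True  # assumption: non-secure requests are policed elsewhere


guard = SecureRegionGuard(threshold=0x8000)
high = guard.allow(0x9000, secure_request=True)
low = guard.allow(0x1000, secure_request=True)
```

In hardware the supervisor check would come from the processor's privilege state rather than a flag supplied by the caller.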

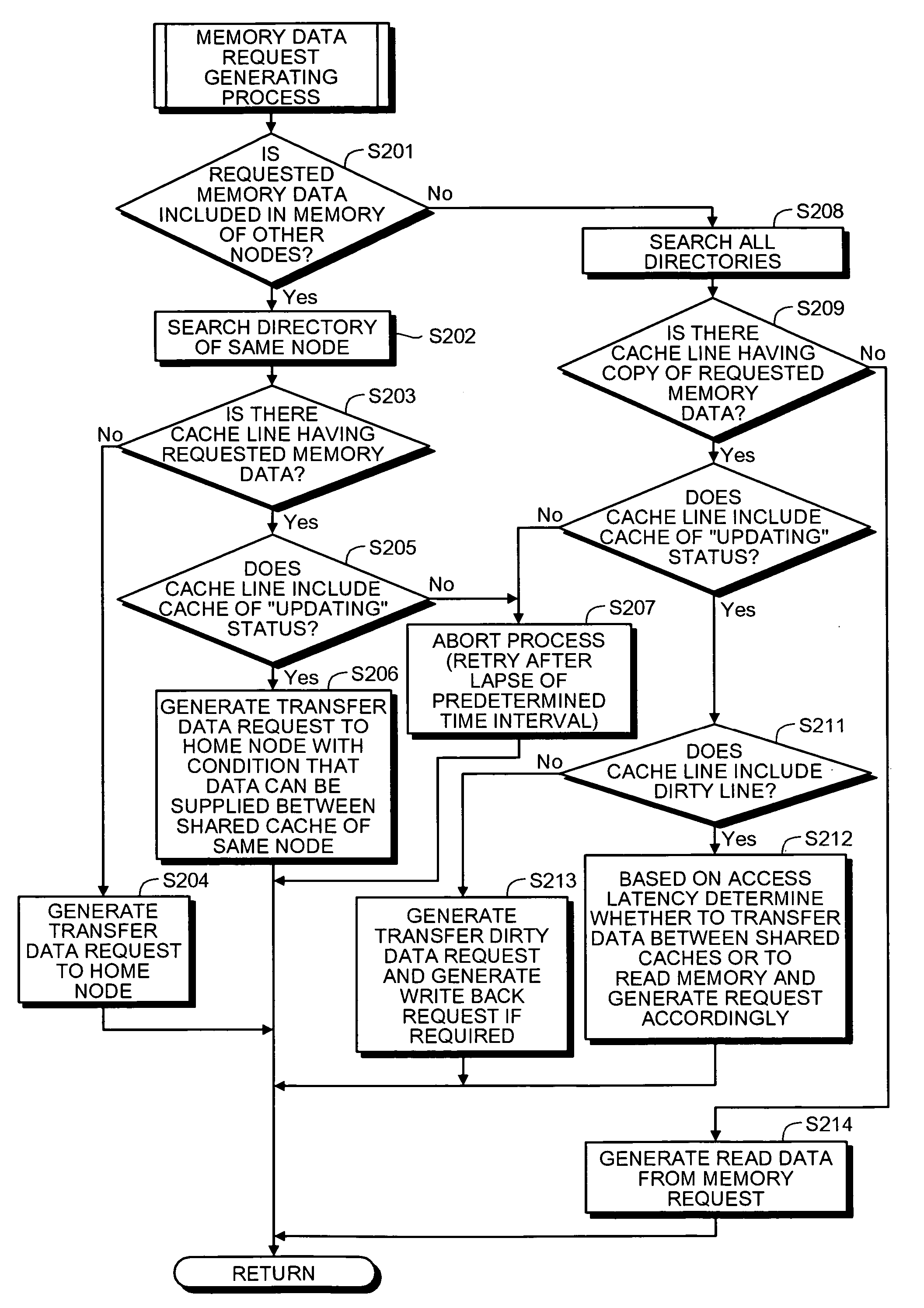

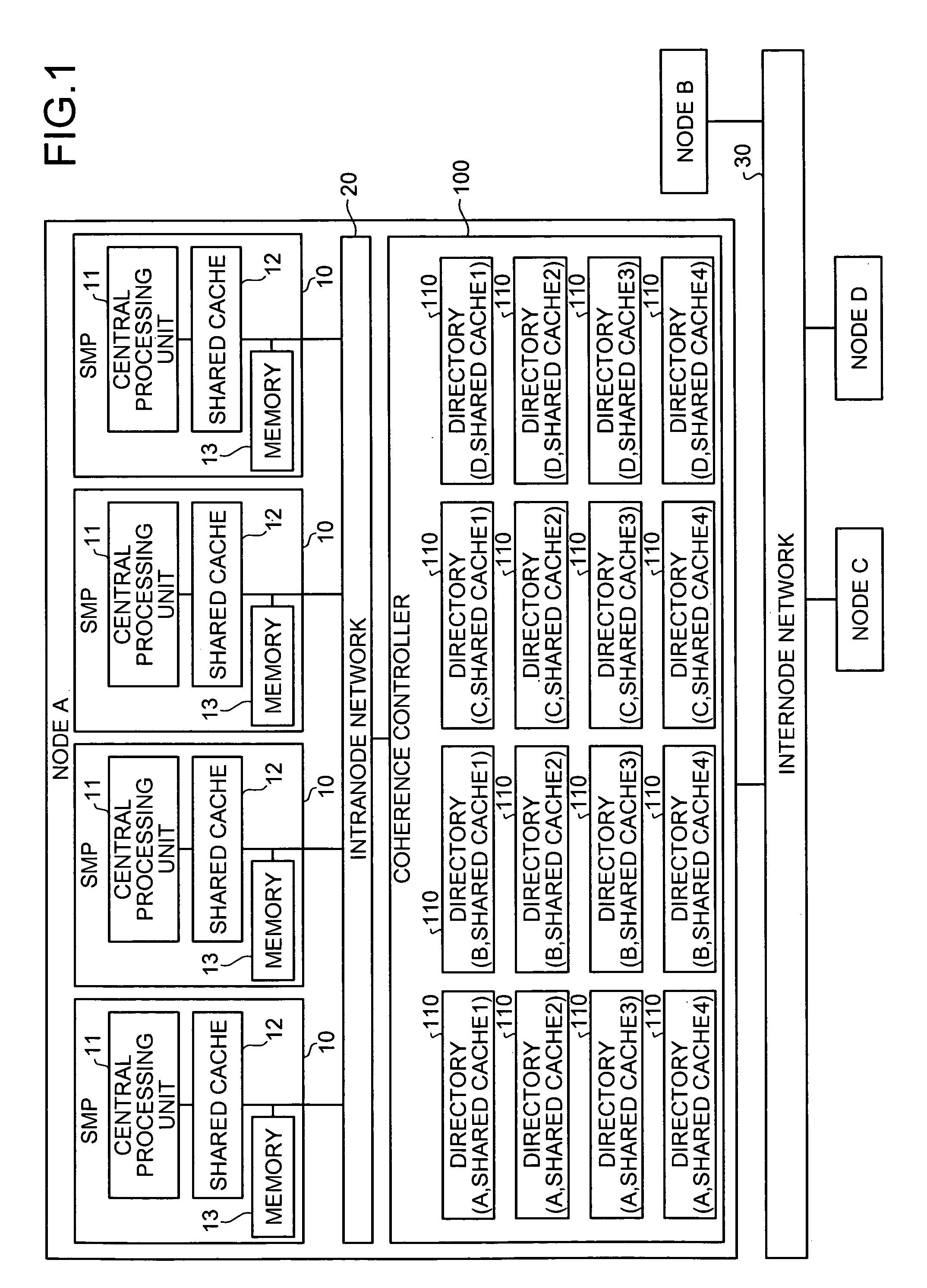

Method and system for maintaining cache coherence of distributed shared memory system

A distributed shared memory system includes a plurality of nodes. Each of the nodes includes a plurality of shared multiprocessors. Each of the shared multiprocessors includes a processor, a shared cache, and a memory. Each of the nodes includes a coherence maintaining unit that maintains cache coherence based on a plurality of directories, each of which corresponds to one of the shared caches included in the distributed shared memory system.

Owner:FUJITSU LTD

Split sparse directory for a distributed shared memory multiprocessor system

Inactive | US6560681B1 | Reduce the amount required | Small size | Memory addressing/allocation/relocation | Multiple digital computer combinations | Multi processor | Parallel computing

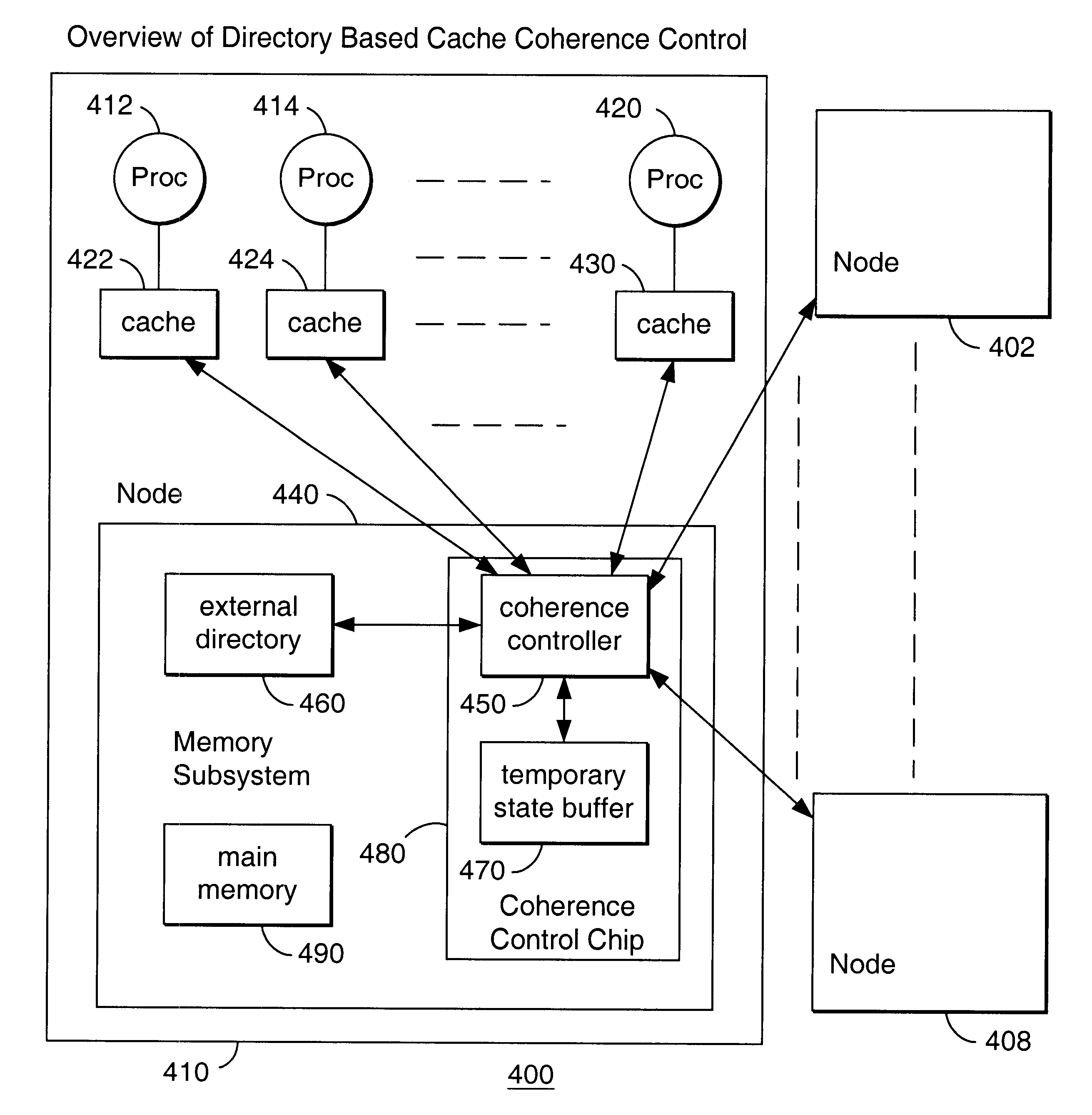

A split sparse directory for a distributed shared memory multiprocessor system with multiple nodes, each node including a plurality of processors, each processor having an associated cache. The split sparse directory is in a memory subsystem which includes a coherence controller, a temporary state buffer and an external directory. The split sparse directory stores information concerning the cache lines in the node, with the temporary state buffer holding state information about transient cache lines and the external directory holding state information about non-transient cache lines.

Owner:FUJITSU LTD
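
The split described in this abstract, transient line states in a small temporary buffer and settled states in an external directory, can be sketched with two dictionaries. The class name, method names, and state labels such as `"PENDING_EXCLUSIVE"` are invented for illustration and are not taken from the patent.

```python
class SplitSparseDirectory:
    """Tracks cache-line state in two places, split by whether it is transient."""

    def __init__(self):
        self.temporary_buffer = {}    # lines with a transaction in progress
        self.external_directory = {}  # lines in a settled state

    def begin_transition(self, line, transient_state):
        self.temporary_buffer[line] = transient_state

    def finish_transition(self, line, stable_state):
        self.temporary_buffer.pop(line, None)
        self.external_directory[line] = stable_state

    def lookup(self, line):
        # A transient entry takes precedence over the settled one.
        if line in self.temporary_buffer:
            return self.temporary_buffer[line]
        return self.external_directory.get(line, "INVALID")


directory = SplitSparseDirectory()
directory.finish_transition(0x40, "SHARED")
directory.begin_transition(0x40, "PENDING_EXCLUSIVE")
during = directory.lookup(0x40)
directory.finish_transition(0x40, "EXCLUSIVE")
after = directory.lookup(0x40)
```

Because few lines are in transition at any moment, the temporary buffer can stay small while the larger external directory holds the bulk of the entries.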
