174 results about "Data coherency" patented technology

Data coherency: data is coherent when it is the same across the network. In other words, if data is coherent, the data on the server and on all clients is synchronized.

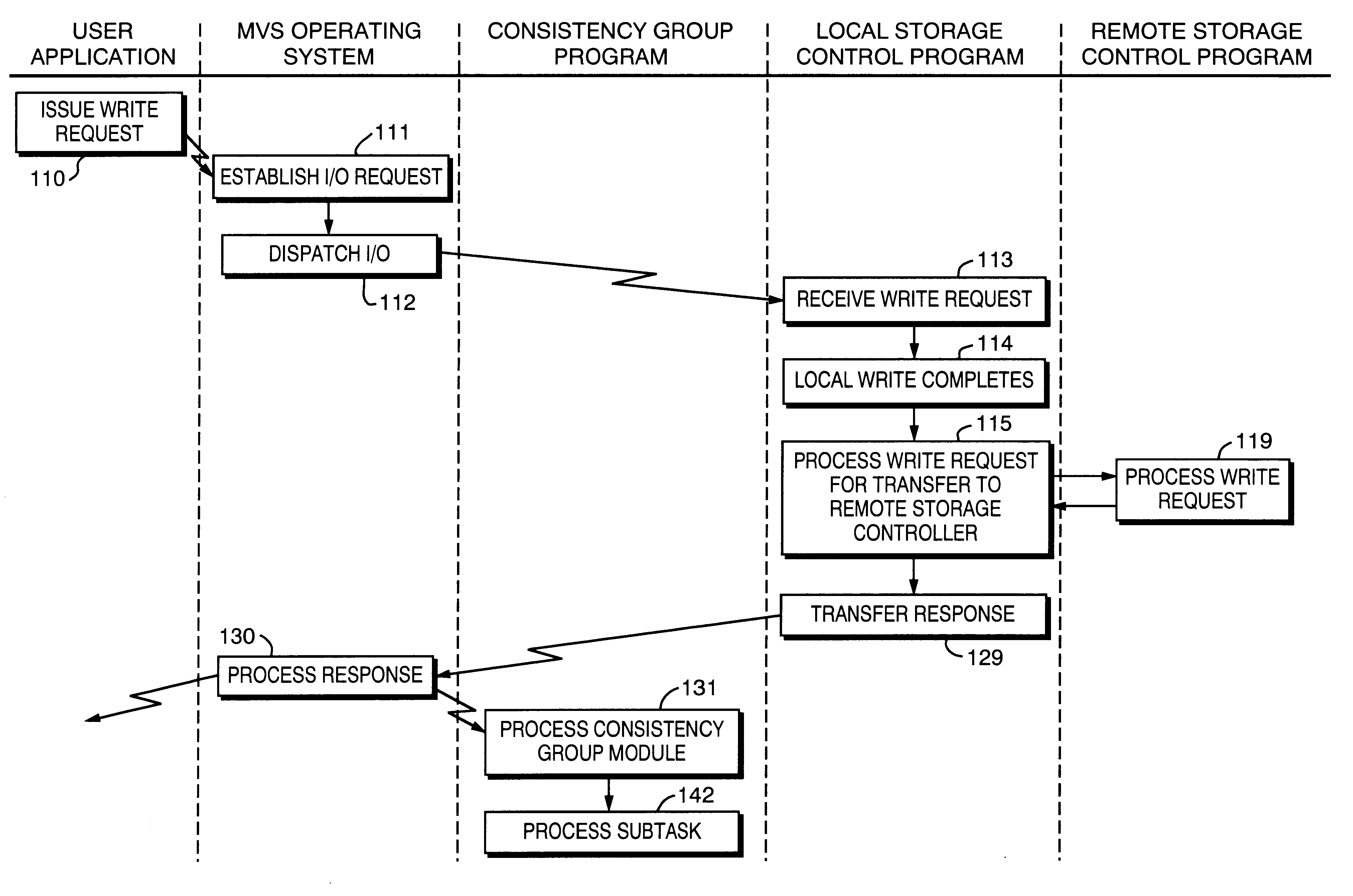

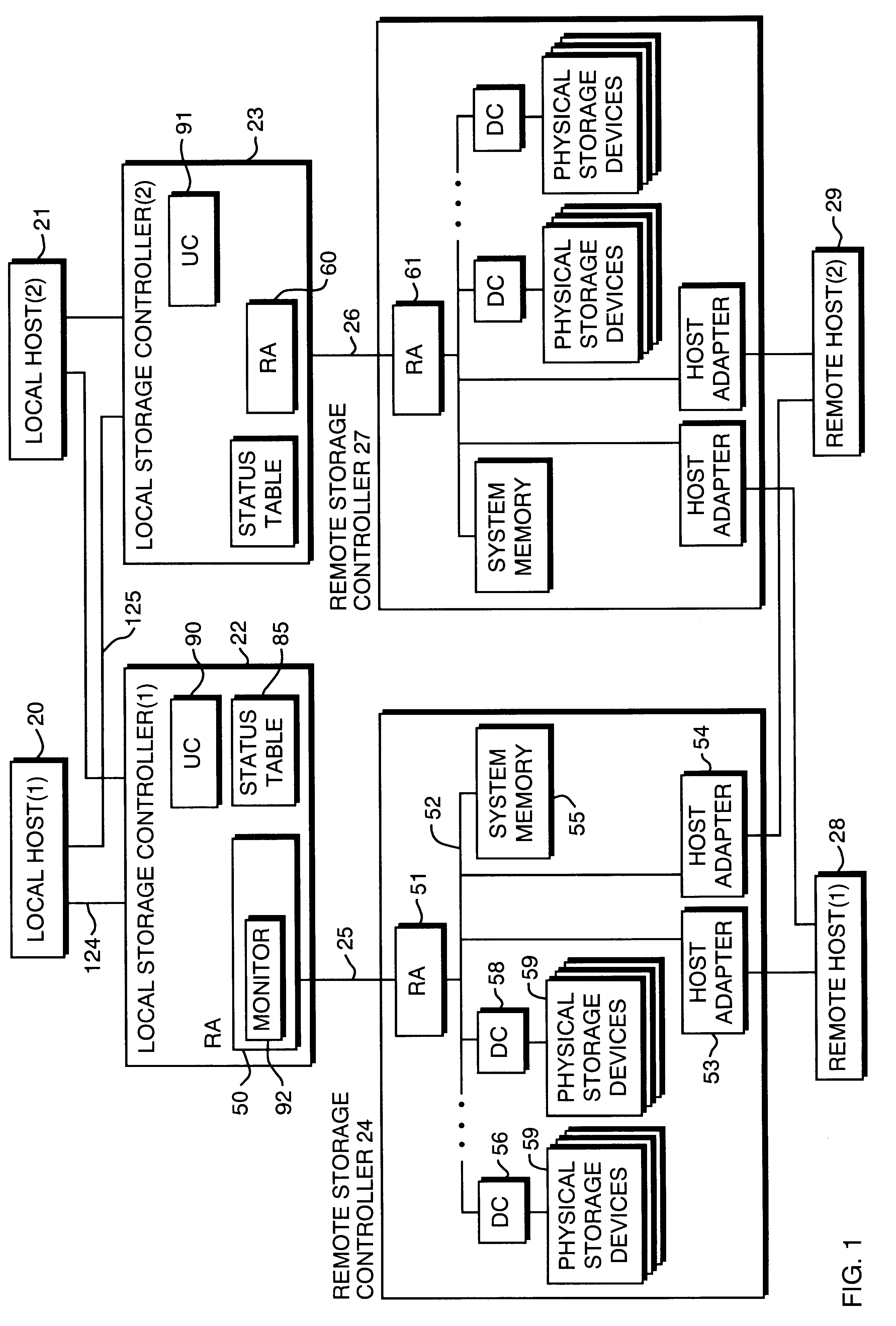

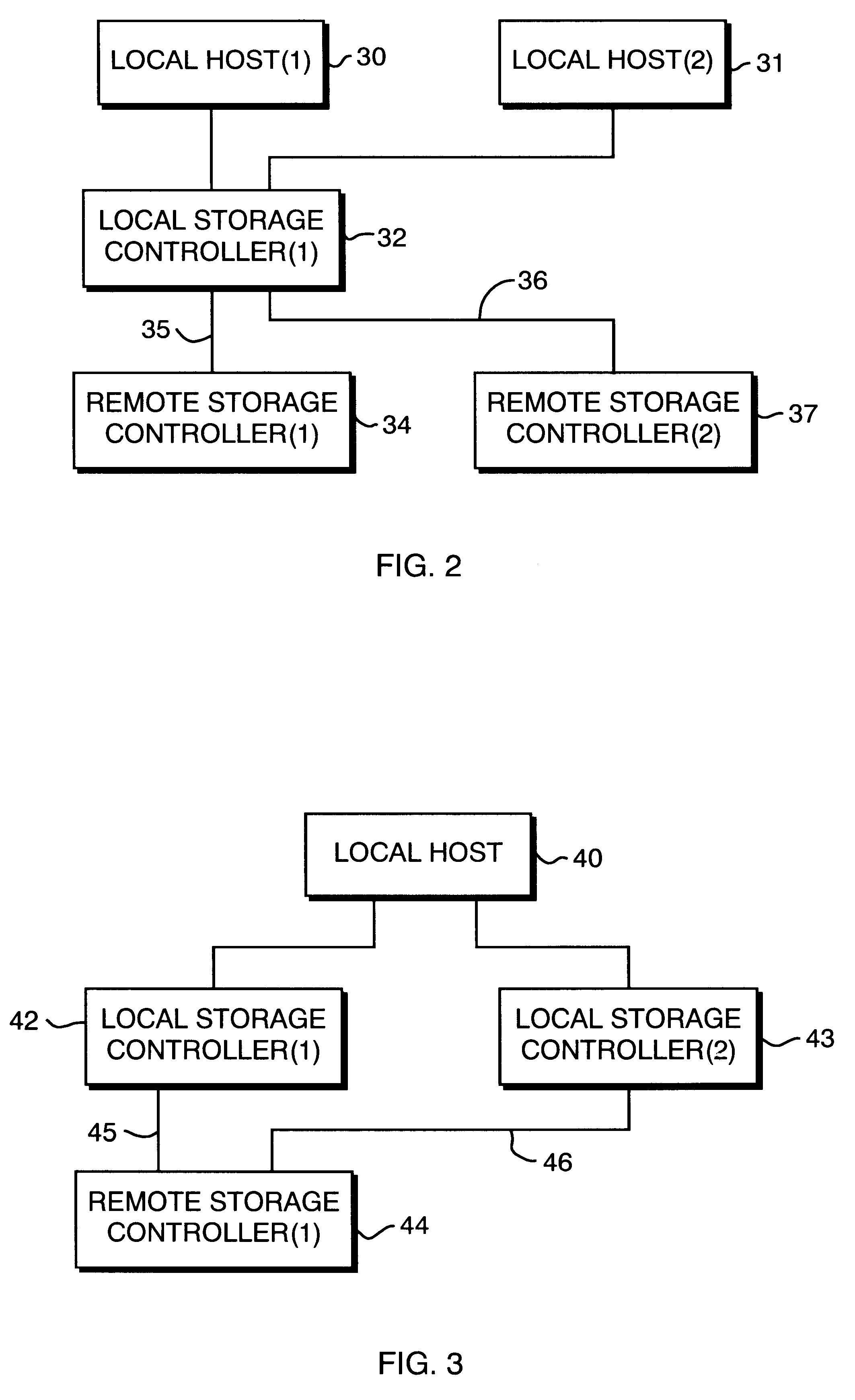

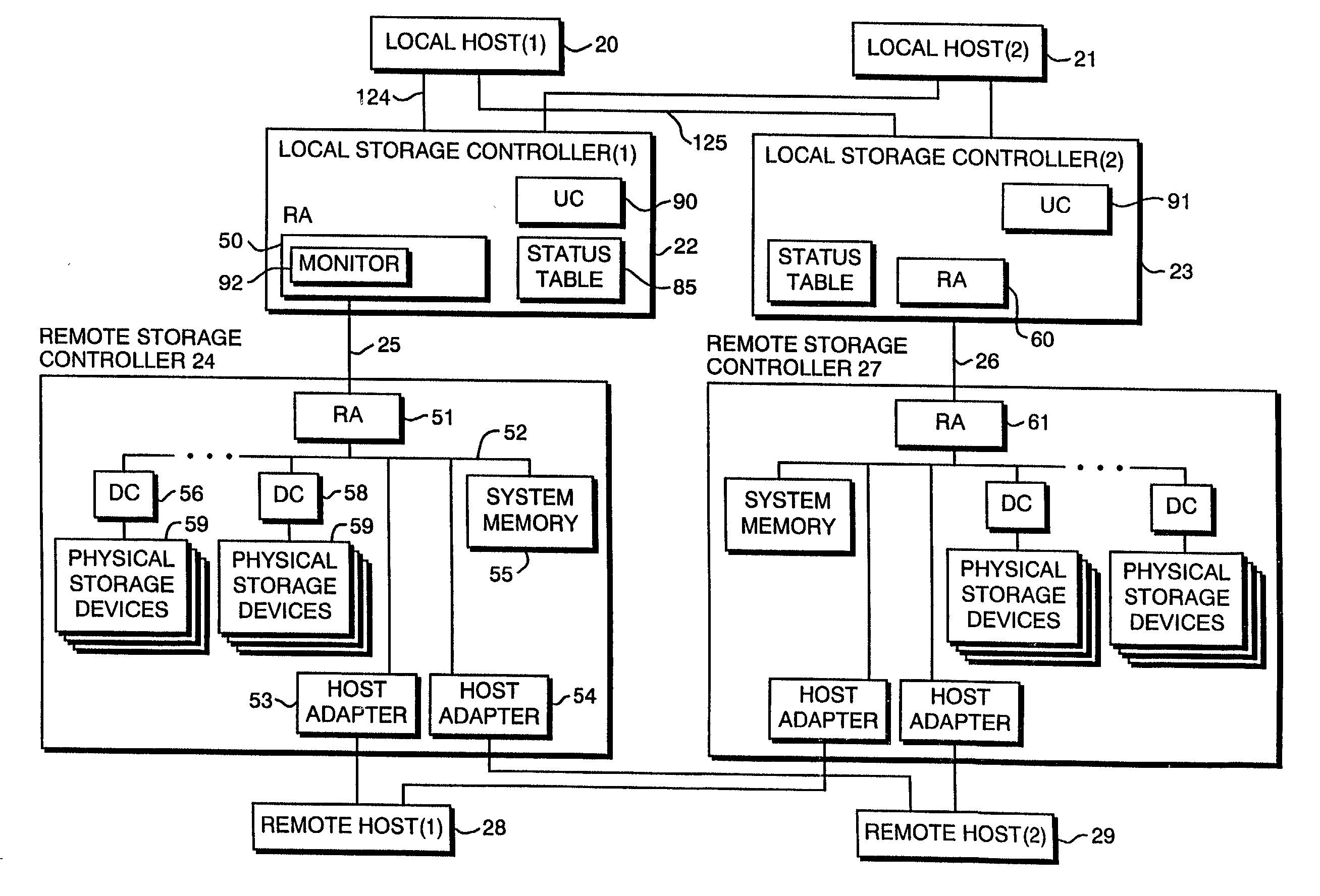

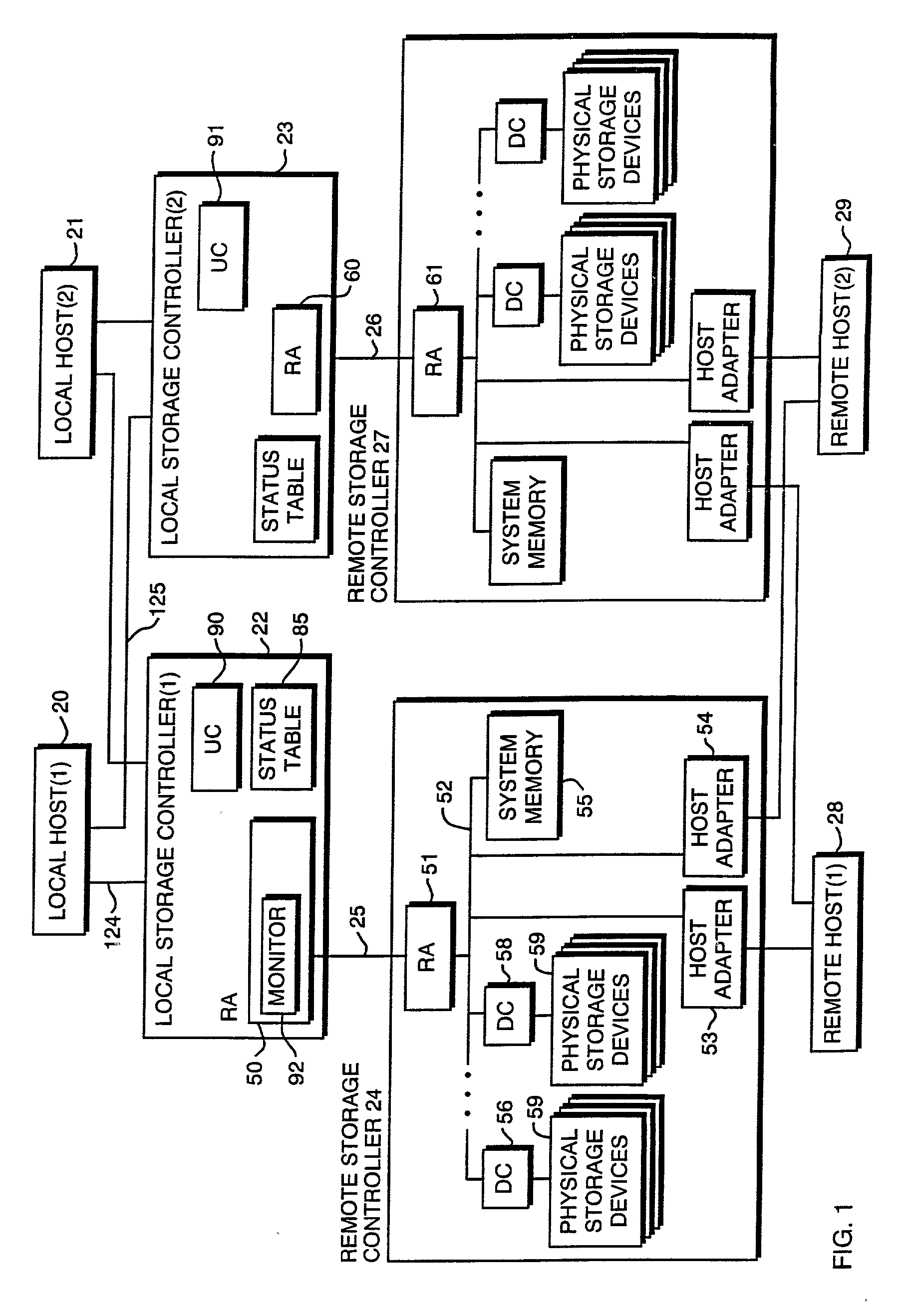

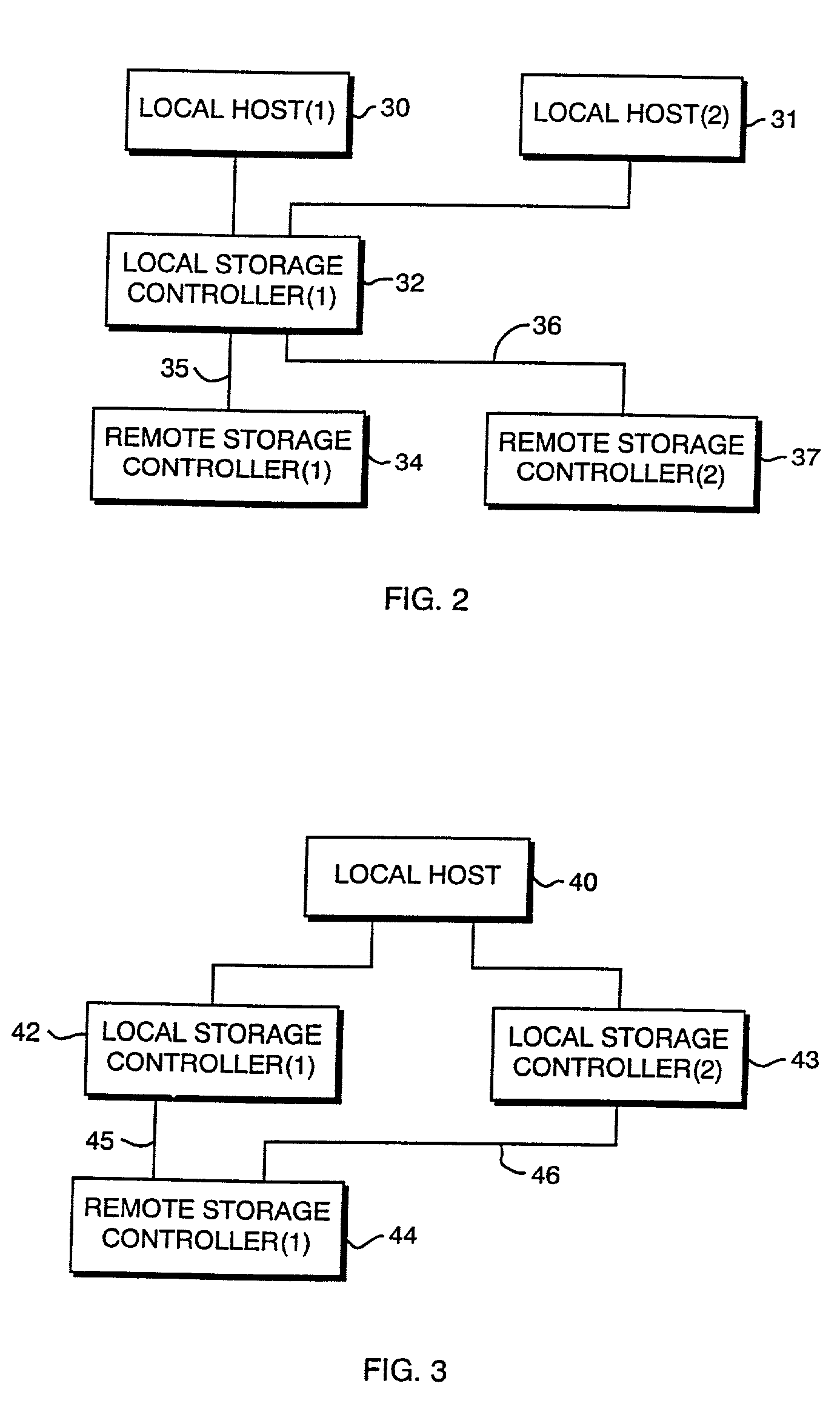

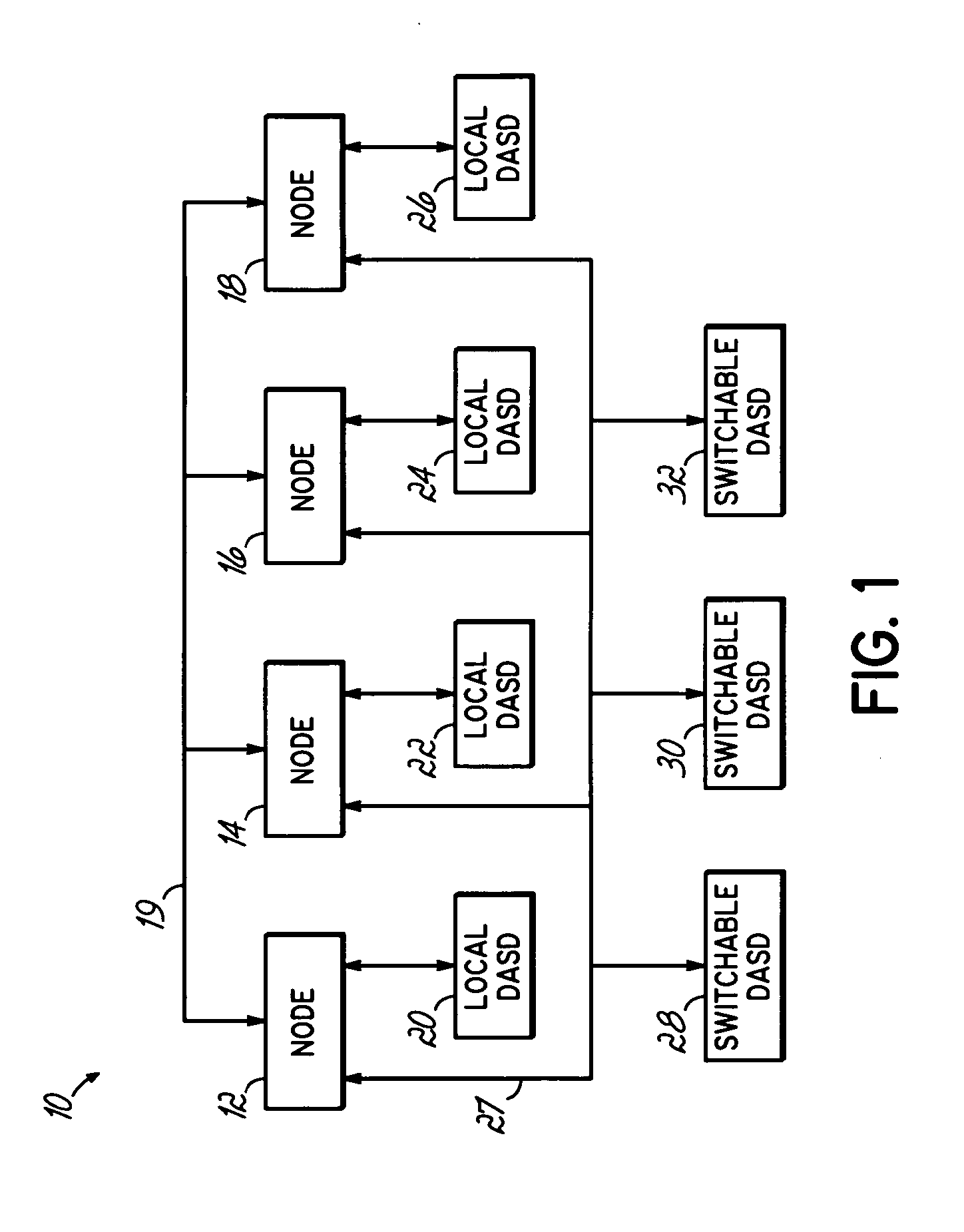

Method and apparatus for maintaining data coherency

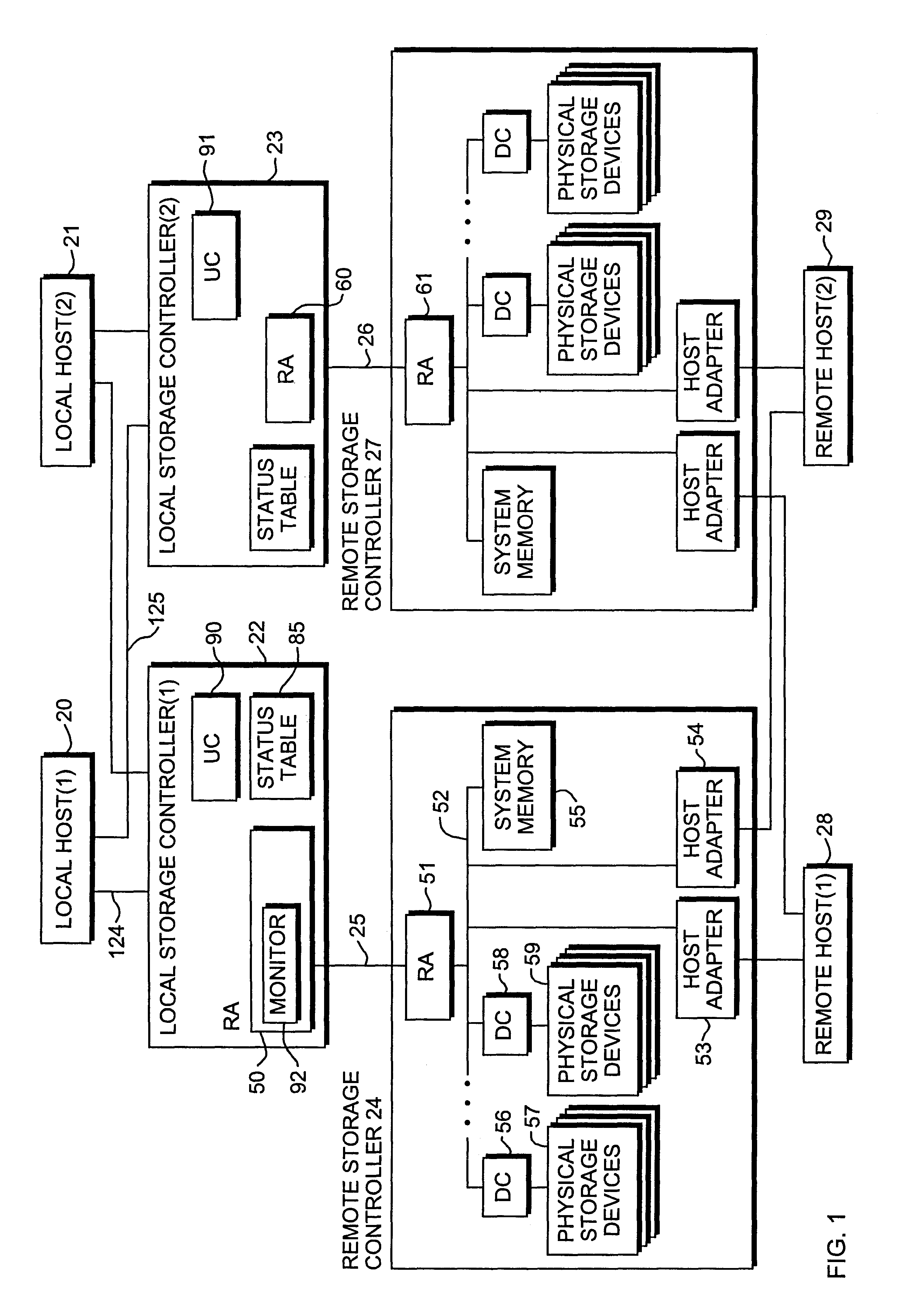

A method and apparatus for assuring data consistency in a data processing network including local and remote data storage controllers interconnected by independent communication paths. The remote storage controller or controllers normally act as a mirror for the local storage controller or controllers. If, for any reason, transfers over one of the independent communication paths are interrupted, transfers over all the independent communication paths to predefined devices in a group are suspended, thereby assuring the consistency of the data at the remote storage controller or controllers. When the cause of the interruption has been corrected, the local storage controllers are able to transfer data modified since the suspension occurred to their corresponding remote storage controllers, thereby reestablishing synchronism and consistency for the entire dataset.

Owner:EMC IP HLDG CO LLC
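
The suspend-and-resynchronize behavior described in this abstract can be illustrated with a small model. This is a minimal sketch under stated assumptions, not the patented implementation: the class `ConsistencyGroup`, the `path_ok` flag, and the per-path dictionaries standing in for storage controllers are all hypothetical names introduced for illustration.

```python
class ConsistencyGroup:
    """Toy model of mirrored controllers grouped for consistency (hypothetical)."""

    def __init__(self, path_names):
        # One local and one remote store per independent communication path.
        self.local = {p: {} for p in path_names}
        self.remote = {p: {} for p in path_names}
        # Keys modified while mirroring is suspended, tracked per path.
        self.dirty = {p: set() for p in path_names}
        self.suspended = False

    def write(self, path, key, value, path_ok=True):
        """Apply a write locally and mirror it unless the group is suspended."""
        self.local[path][key] = value
        if not path_ok:
            # One interrupted path suspends transfers on every path in the group.
            self.suspended = True
        if self.suspended:
            self.dirty[path].add(key)
        else:
            self.remote[path][key] = value

    def resume(self):
        """Transfer only the data modified since the suspension, then resume."""
        for path, keys in self.dirty.items():
            for key in keys:
                self.remote[path][key] = self.local[path][key]
            keys.clear()
        self.suspended = False
```

Suspending every path at once keeps the remote side at a single point-in-time image: no remote store runs ahead of another while one link is down.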

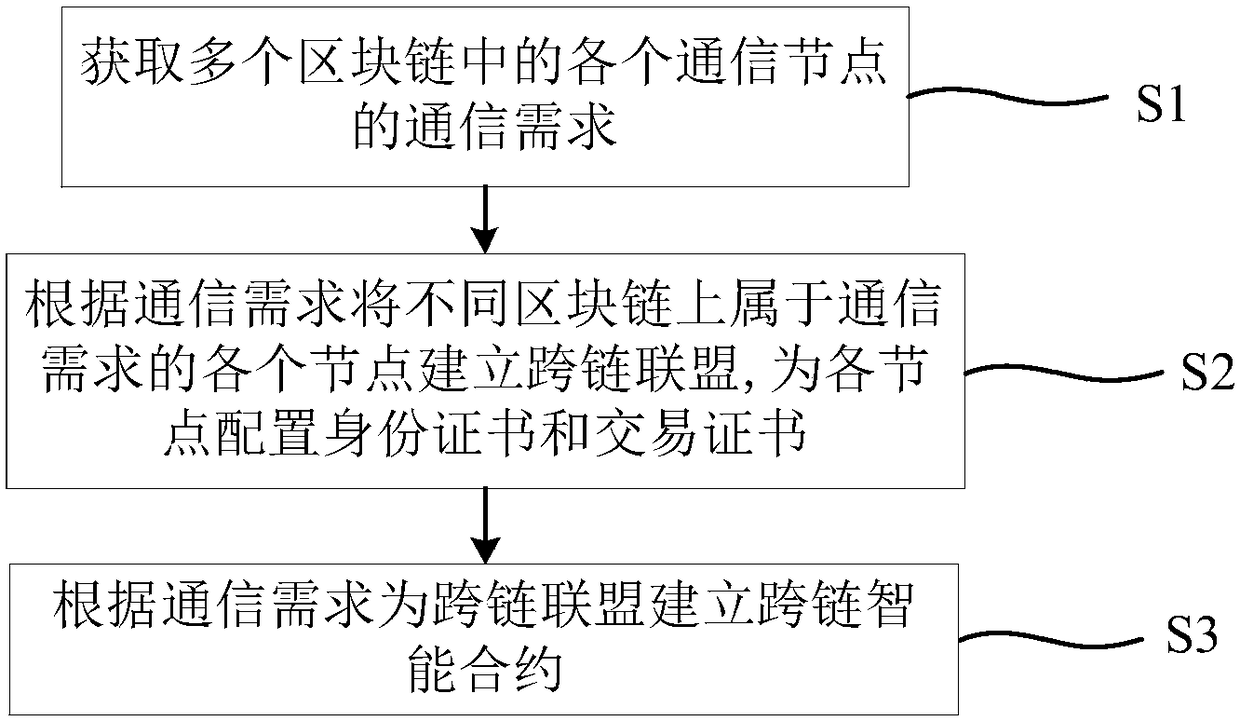

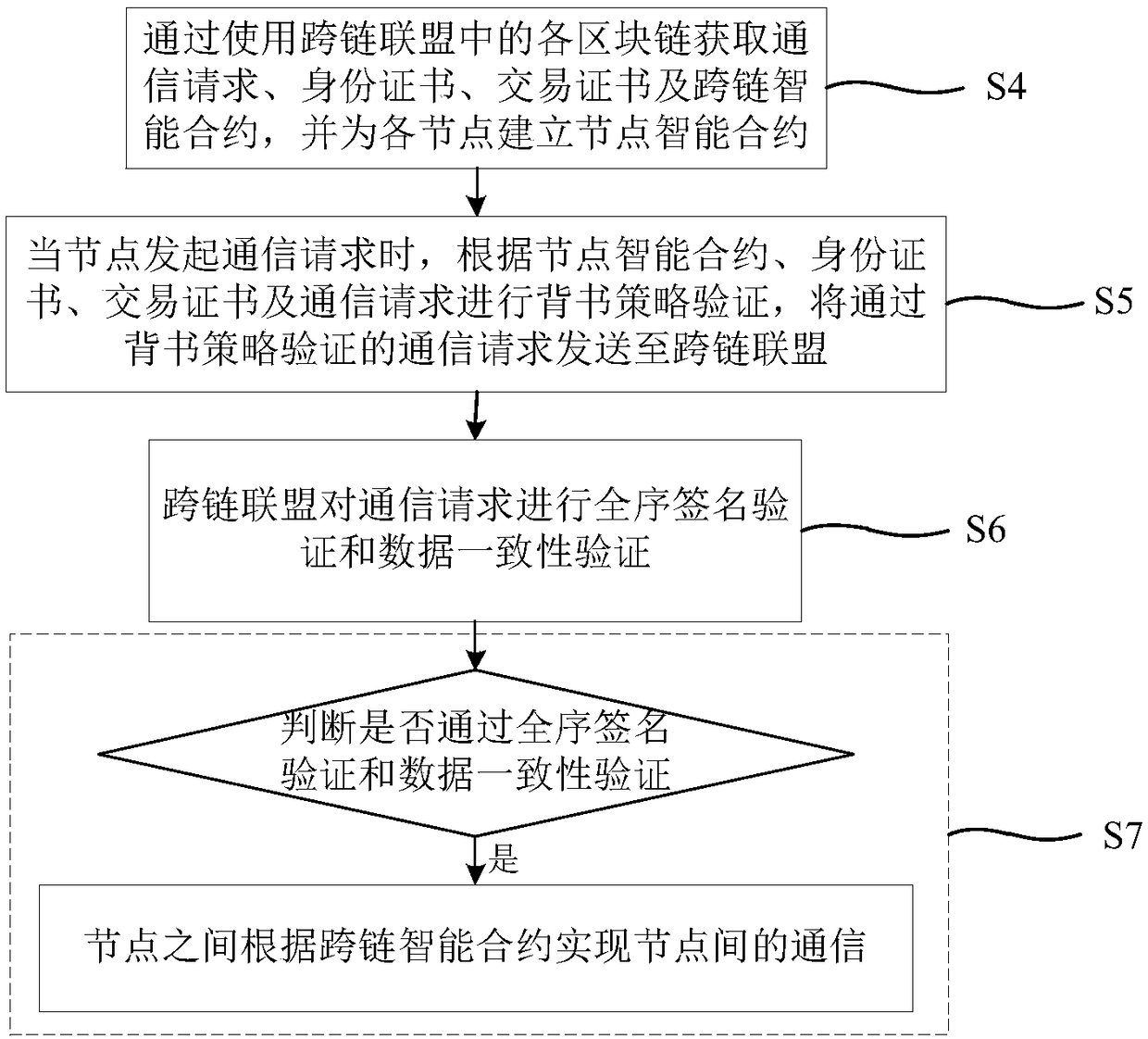

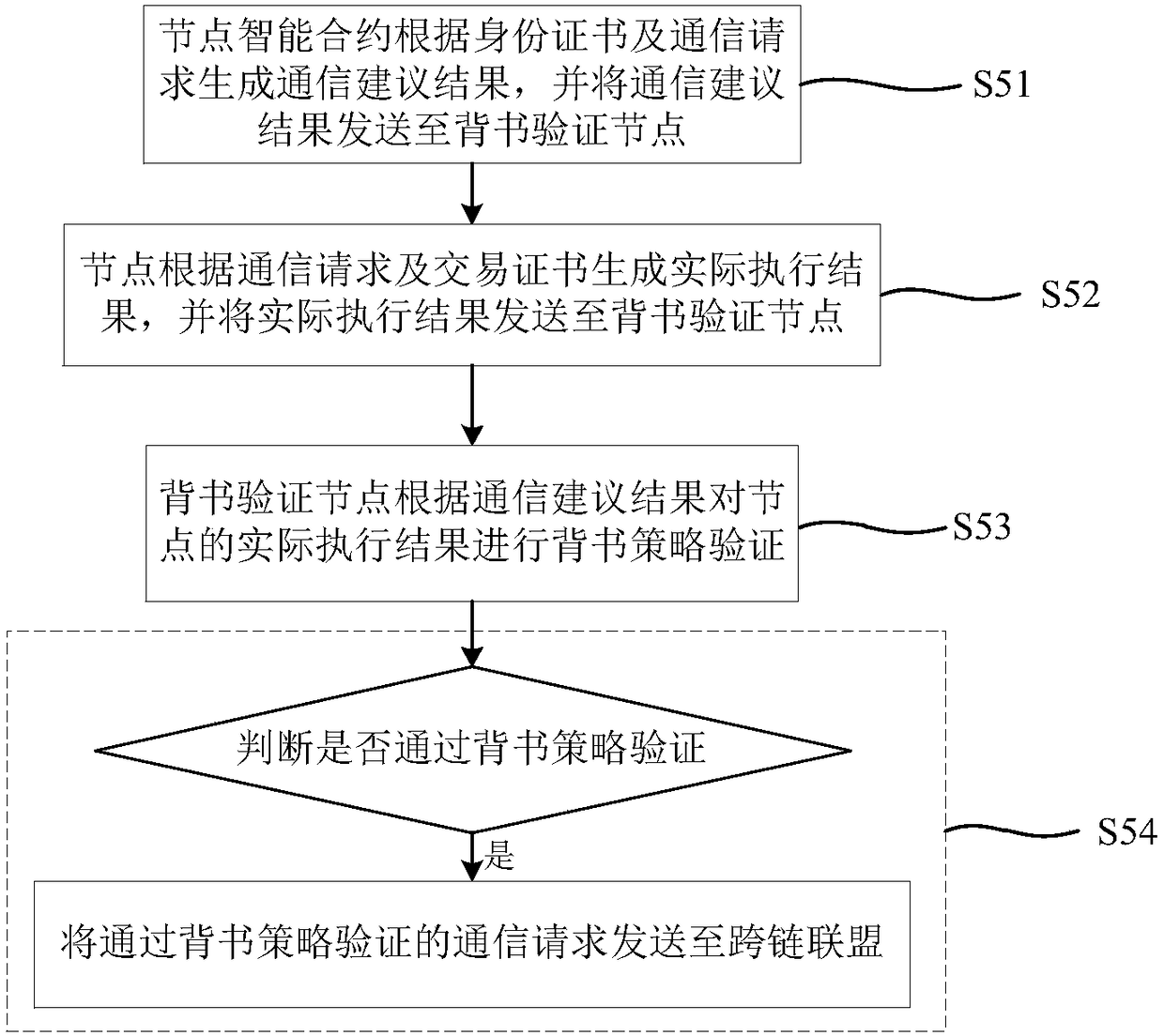

Method for building cross-chain alliance among block chains and block chain cross-chain communication method and system

Active | CN108256864A | Realize the establishment | Achieve sharing | Transmission | Protocol authorisation | Resource utilization | Smart contract

The invention provides a method for building a cross-chain alliance among block chains and a block chain cross-chain communication method and system. The method for building the cross-chain alliance includes: acquiring the communication requirements of various communication nodes in multiple block chains; using the nodes that have the communication requirements to build the cross-chain alliance, and configuring identity certificates and transaction certificates; and building a cross-chain smart contract. The block chain cross-chain communication method includes: using the various block chains in the cross-chain alliance to acquire a communication request, and building a node smart contract for each node; if the communication request is initiated, performing endorsement strategy verification on the communication request, performing total order signature verification and data consistency verification, and using the cross-chain smart contract to achieve node communication when the communication request passes the verification. By using the method to build the cross-chain alliance, cross-chain communication among different block chains is achieved, data resources on different block chains can be shared, and resource utilization efficiency is increased.

Owner:无锡三聚阳光知识产权服务有限公司

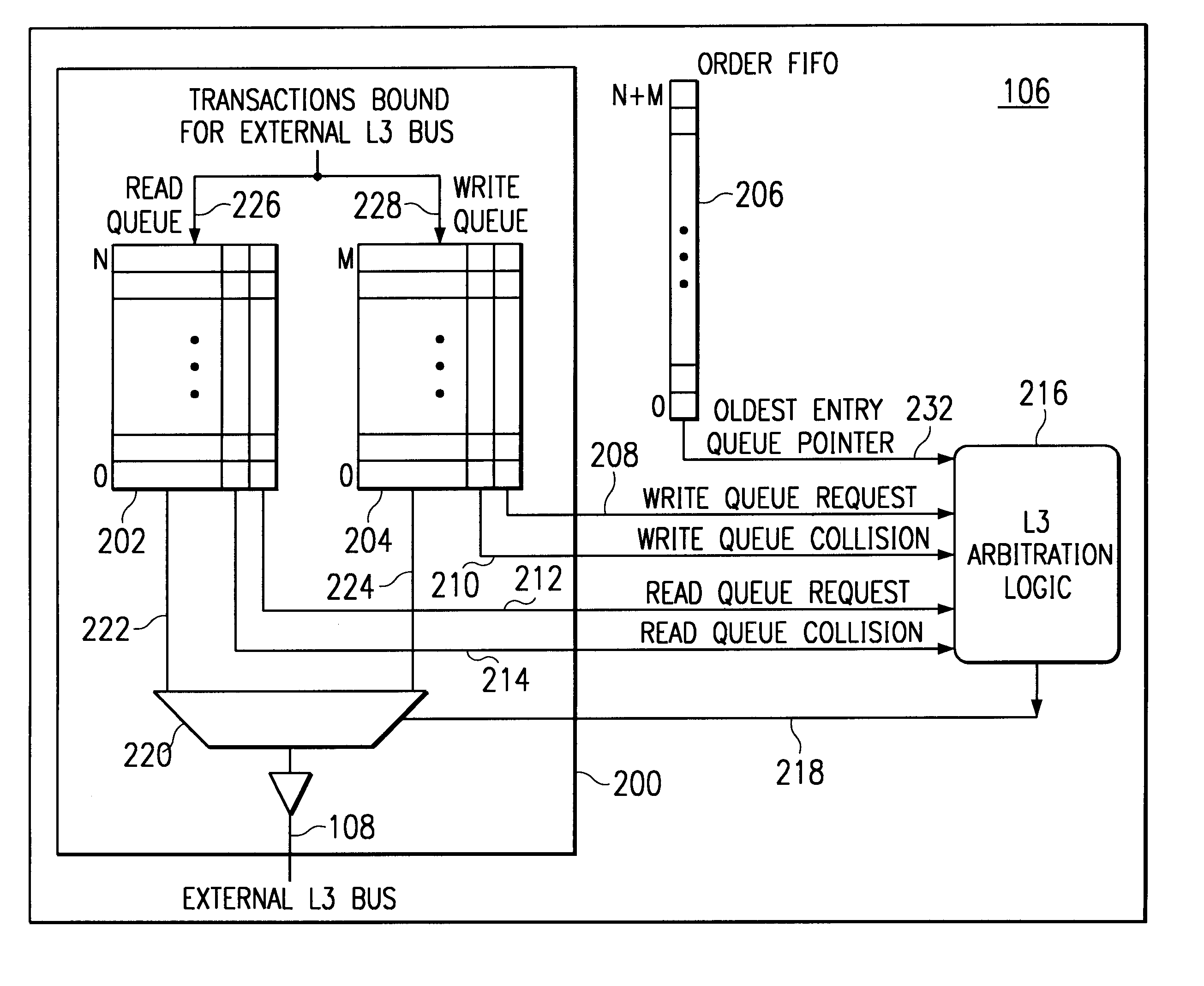

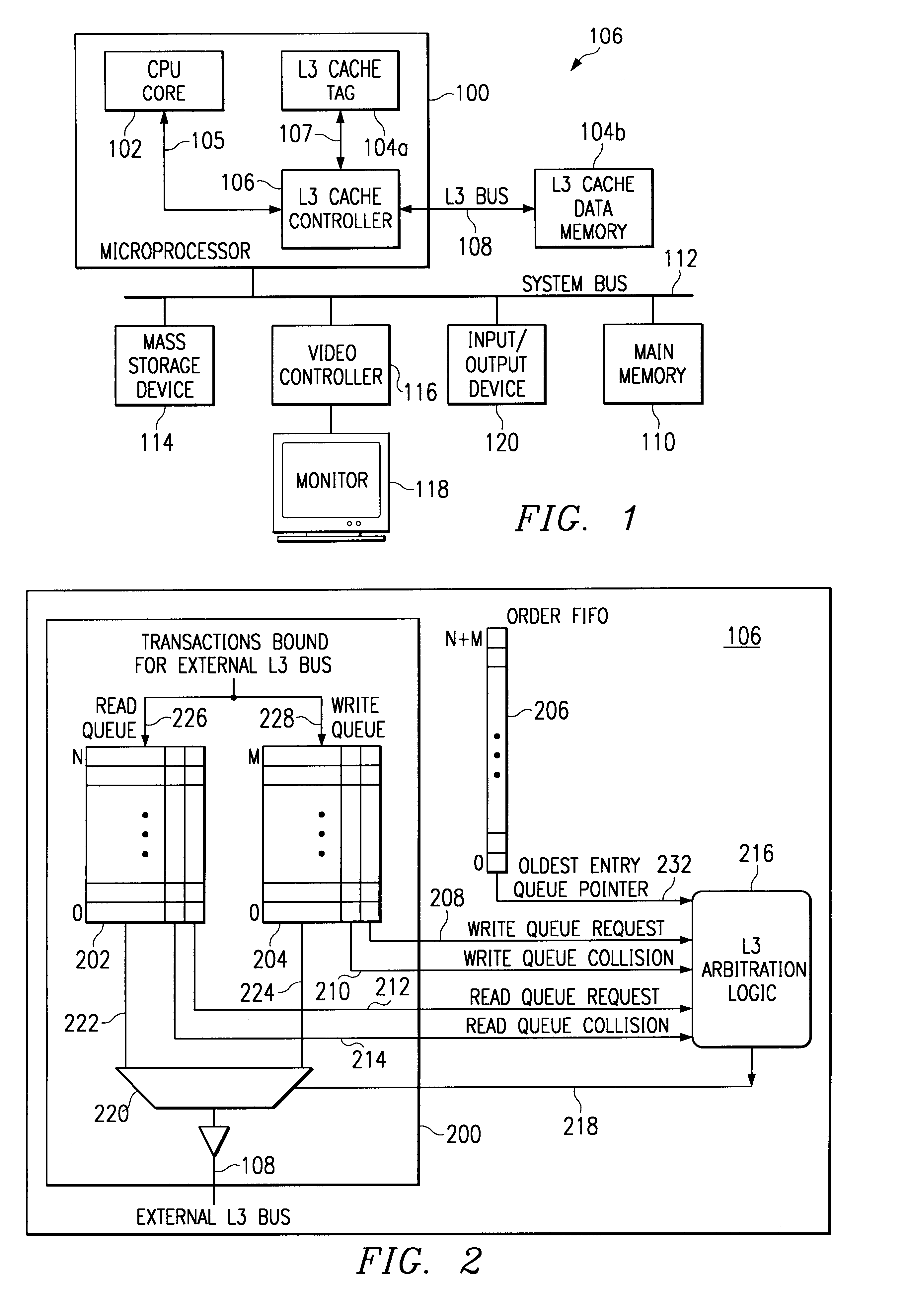

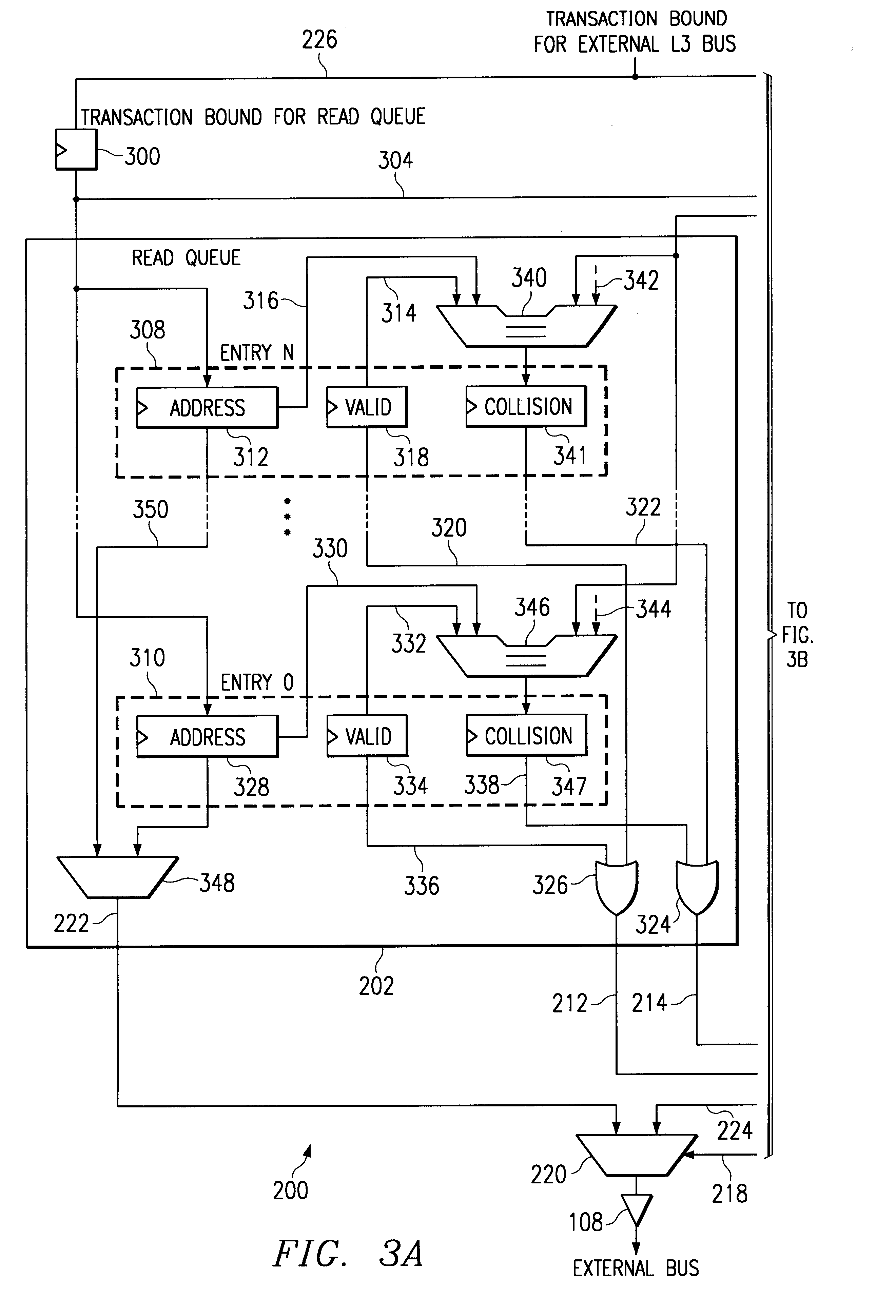

Bus optimization with read/write coherence including ordering responsive to collisions

Inactive | US6256713B1 | Optimizing bus utilization | Maintaining read | Fuel lighters | Memory addressing/allocation/relocation | Operating system | Data coherency

Owner:IBM CORP +1

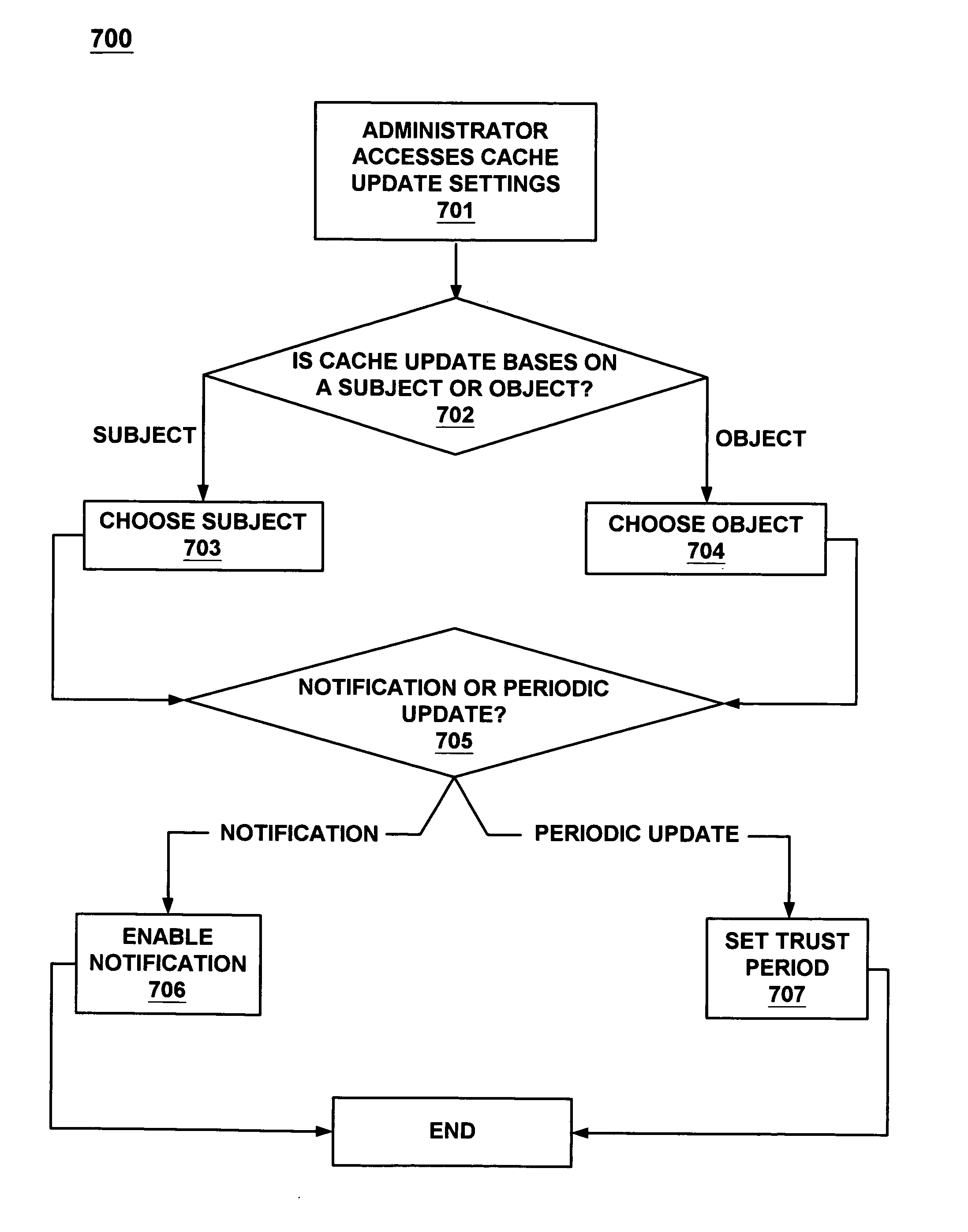

Hybrid system and method for updating remote cache memory with user defined cache update policies

Inactive | US7020750B2 | Digital data information retrieval | Memory addressing/allocation/relocation | Graphics | Graphical user interface

A hybrid system for updating cache including a first computer system coupled to a database accessible by a second computer system, said second computer system including a cache, a cache update controller for concurrently implementing a user defined cache update policy, including both notification based cache updates and periodic based cache updates, wherein said cache updates enforce data coherency between said database and said cache, and a graphical user interface for selecting between said notification based cache updates and said periodic based cache updates.

Owner:ORACLE INT CORP

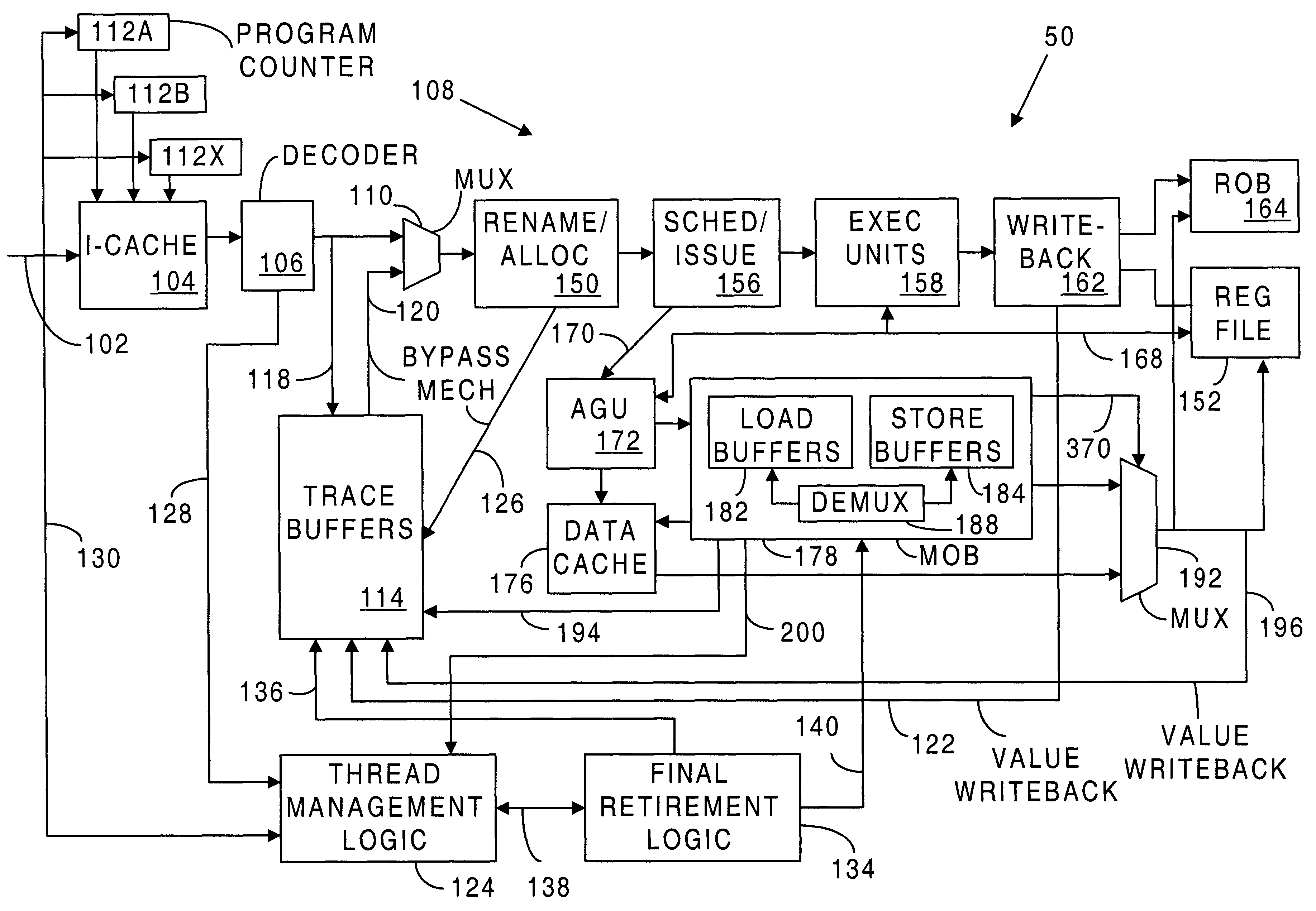

Memory system for ordering load and store instructions in a processor that performs multithread execution

Inactive | US6463522B1 | Memory addressing/allocation/relocation | Digital computer details | Load instruction | Parallel computing

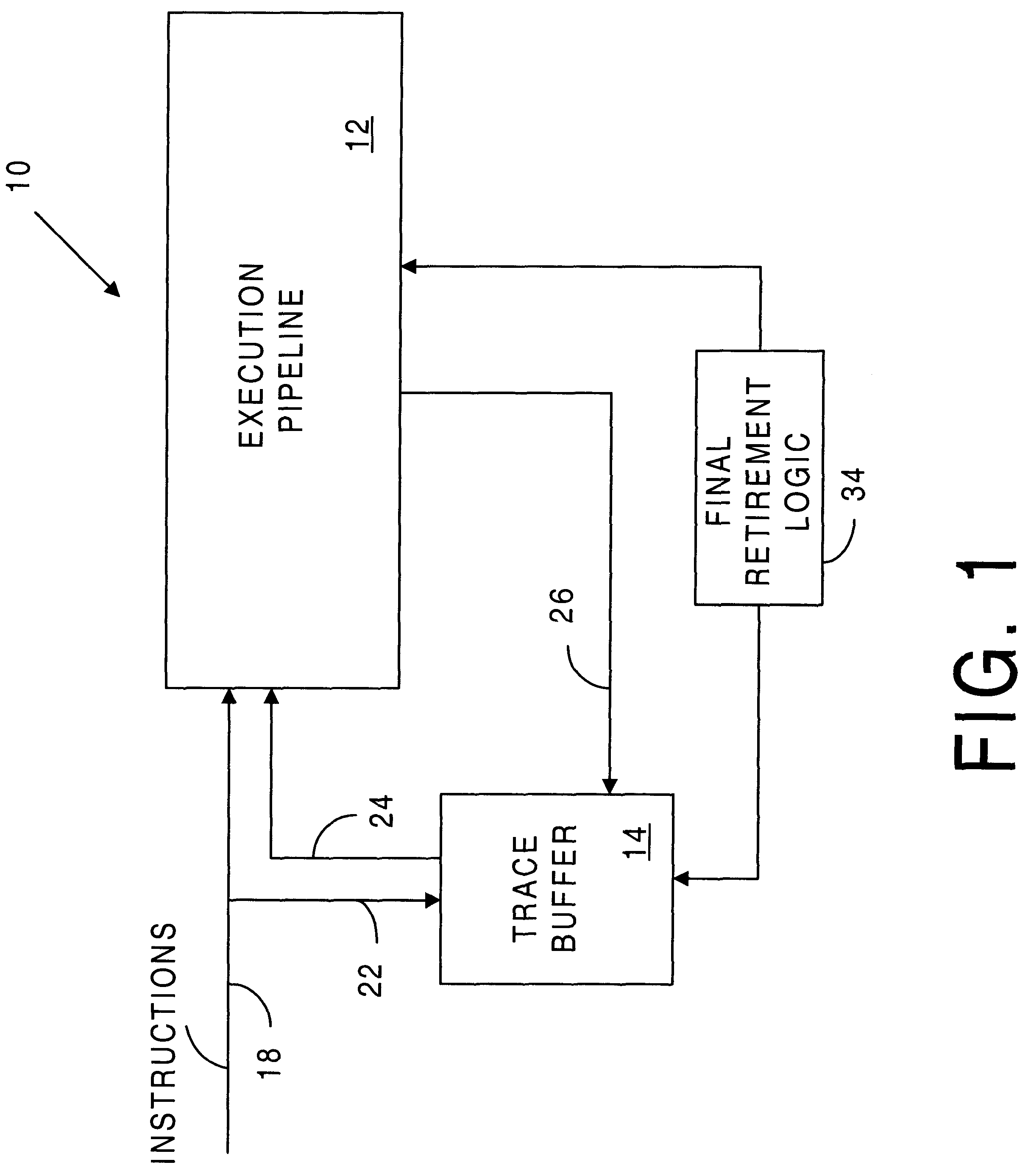

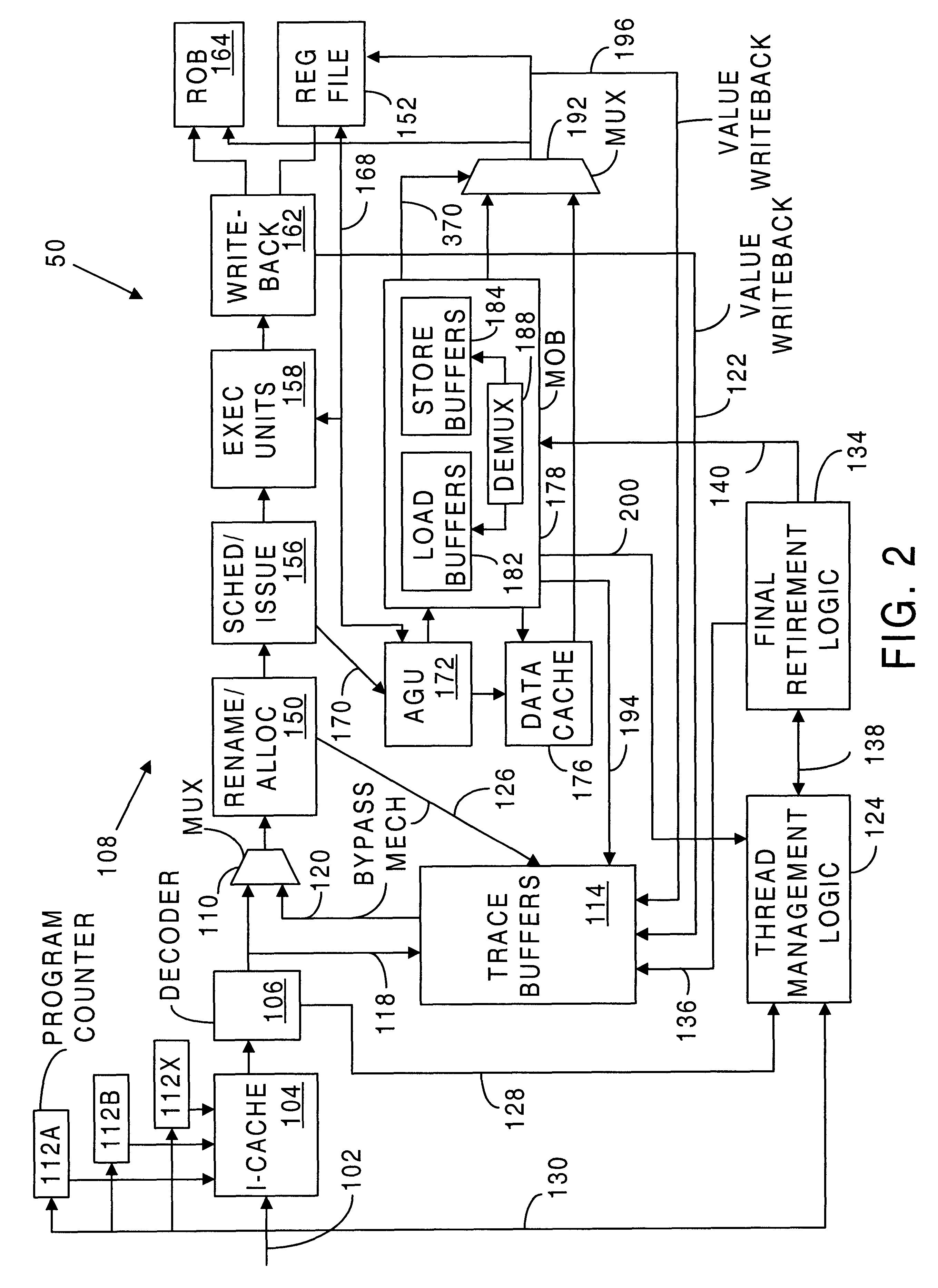

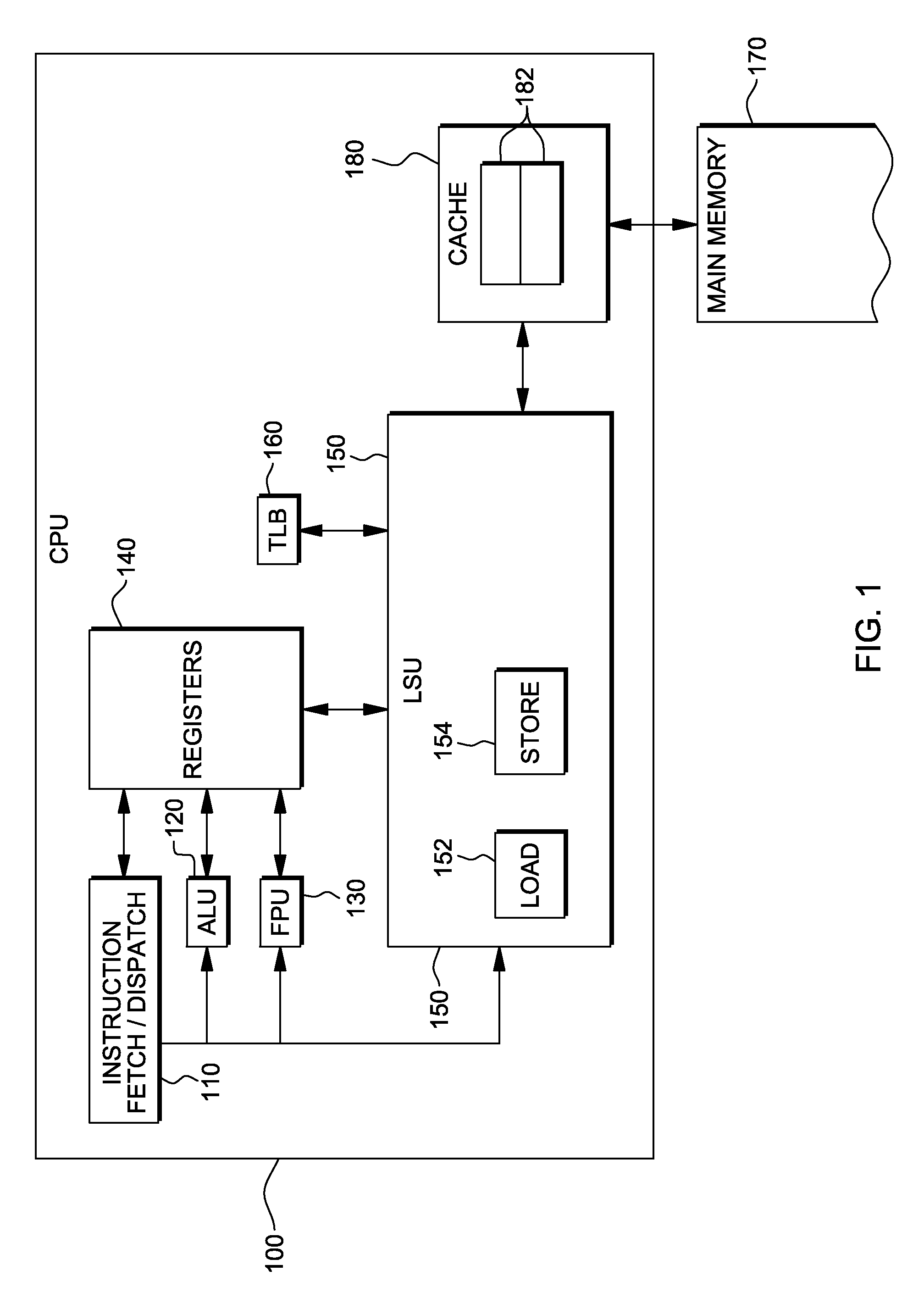

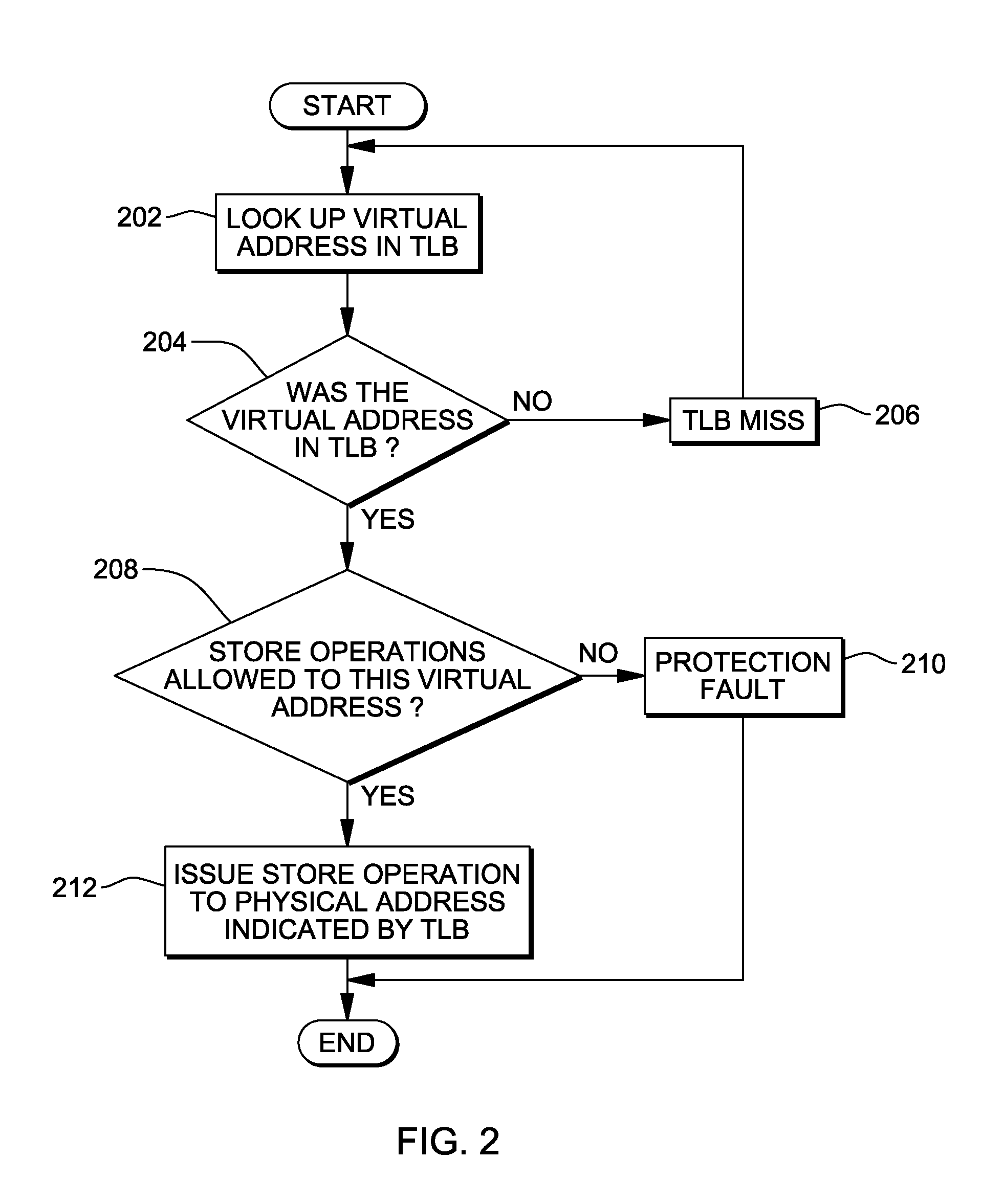

In one embodiment of the invention, a processor includes a memory order buffer (MOB) including load buffers and store buffers, wherein the MOB orders load and store instructions so as to maintain data coherency between load and store instructions in different threads, wherein at least one of the threads is dependent on at least another one of the threads. In another embodiment of the invention, a processor includes an execution pipeline to concurrently execute at least portions of threads, wherein at least one of the threads is dependent on at least another one of the threads, the execution pipeline including a memory order buffer that orders load and store instructions. The processor also includes detection circuitry to detect speculation errors associated with load instructions in a load buffer.

Owner:INTEL CORP

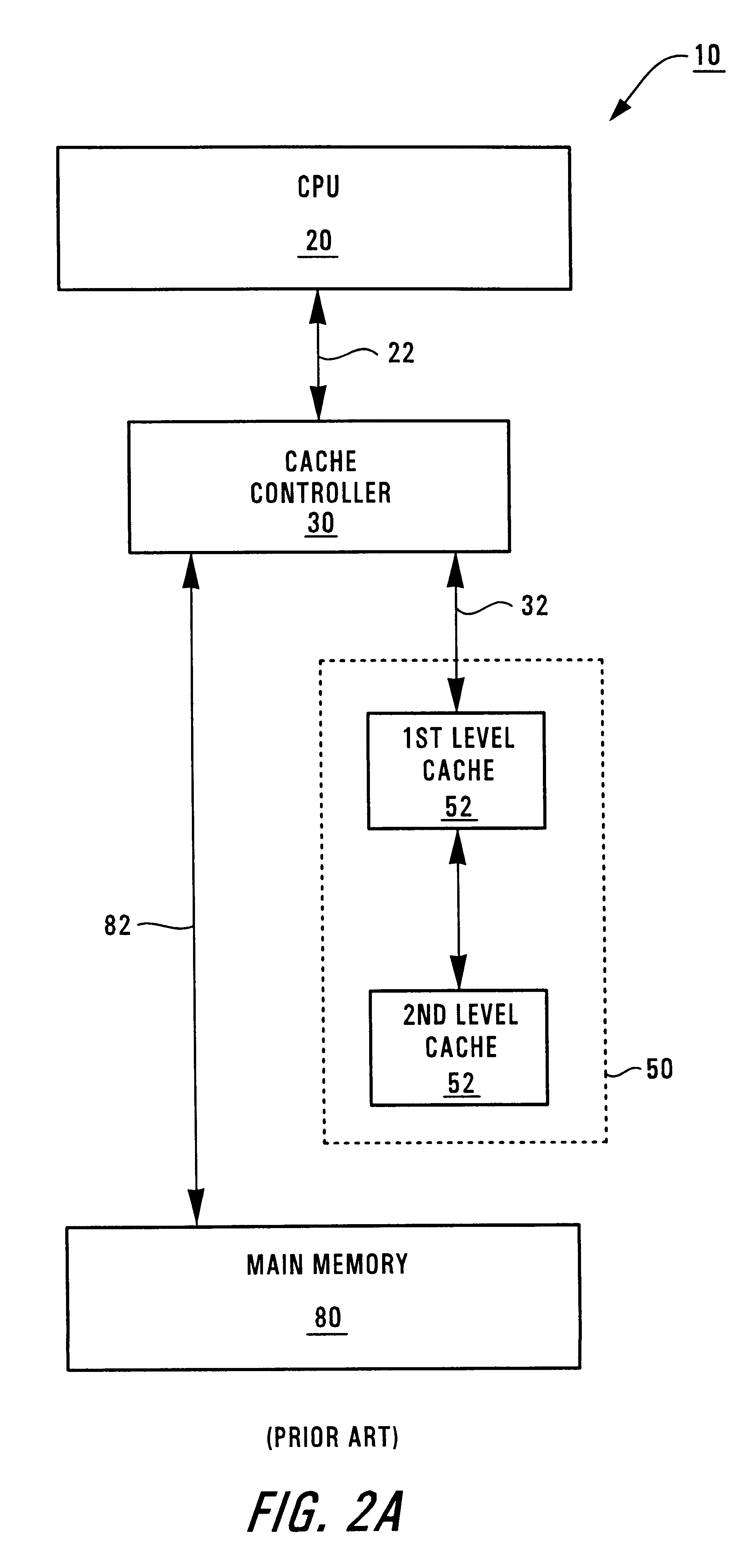

Method and architecture for data coherency in set-associative caches including heterogeneous cache sets having different characteristics

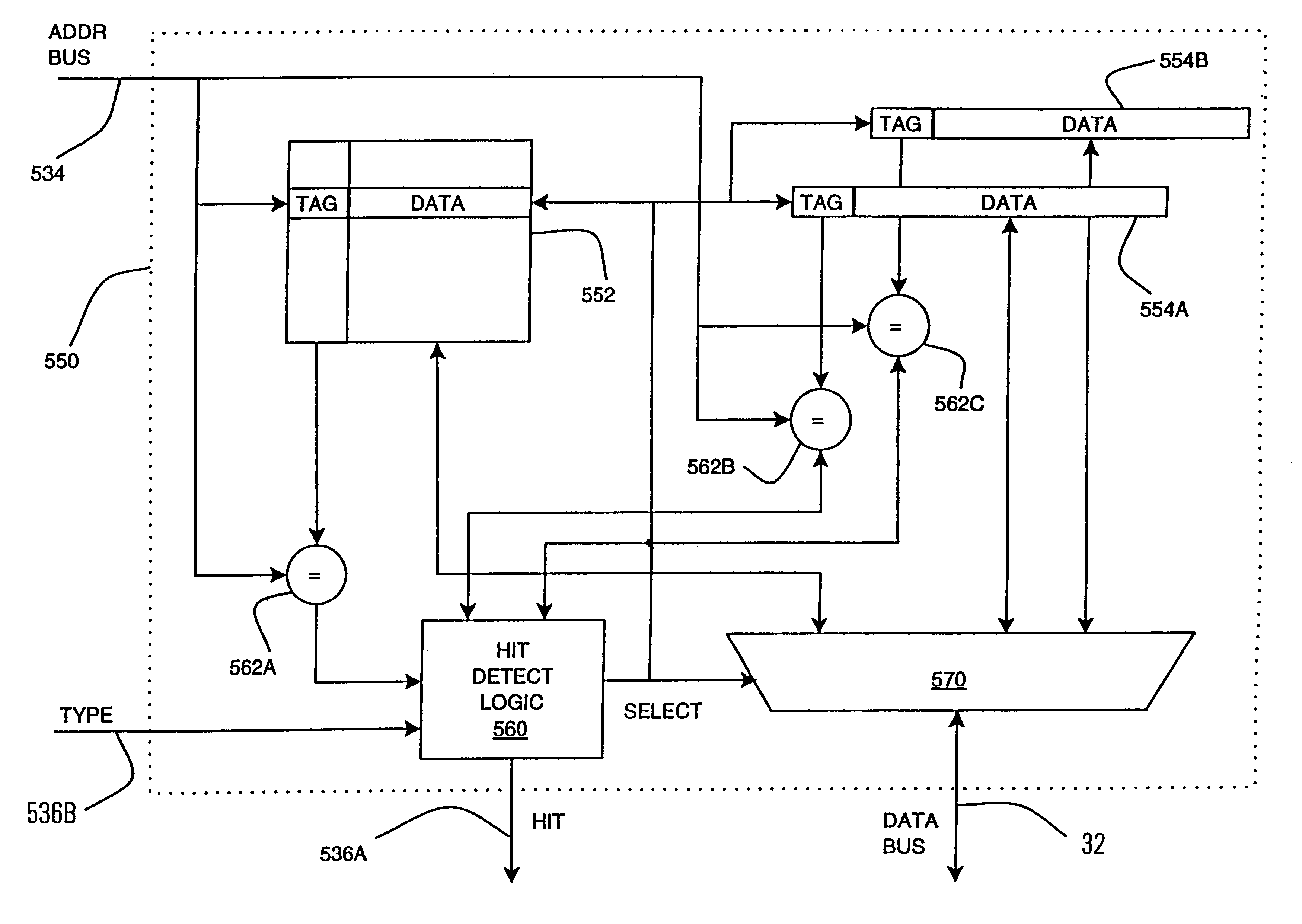

A processor architecture and method are shown which involve a cache having heterogeneous cache sets. An address value of a data access request from a CPU is compared to all cache sets within the cache regardless of the type of data and the type of data access indicated by the CPU to create a unitary interface to the memory hierarchy of the architecture. Data is returned to the CPU from the cache set having the shortest line length of the cache sets containing the data corresponding to the address value of the data request. Modified data replaced in a cache set having a line length that is shorter than other cache sets is checked for matching data resident in the cache sets having longer lines and the matching data is replaced with the modified data. All the cache sets at the cache level of the memory hierarchy are accessed in parallel resulting in data being retrieved from the fastest memory source available, thereby improving memory performance. The unitary interface to a memory hierarchy having multiple cache sets maintains data coherency, simplifies code design and increases resilience to coding errors.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

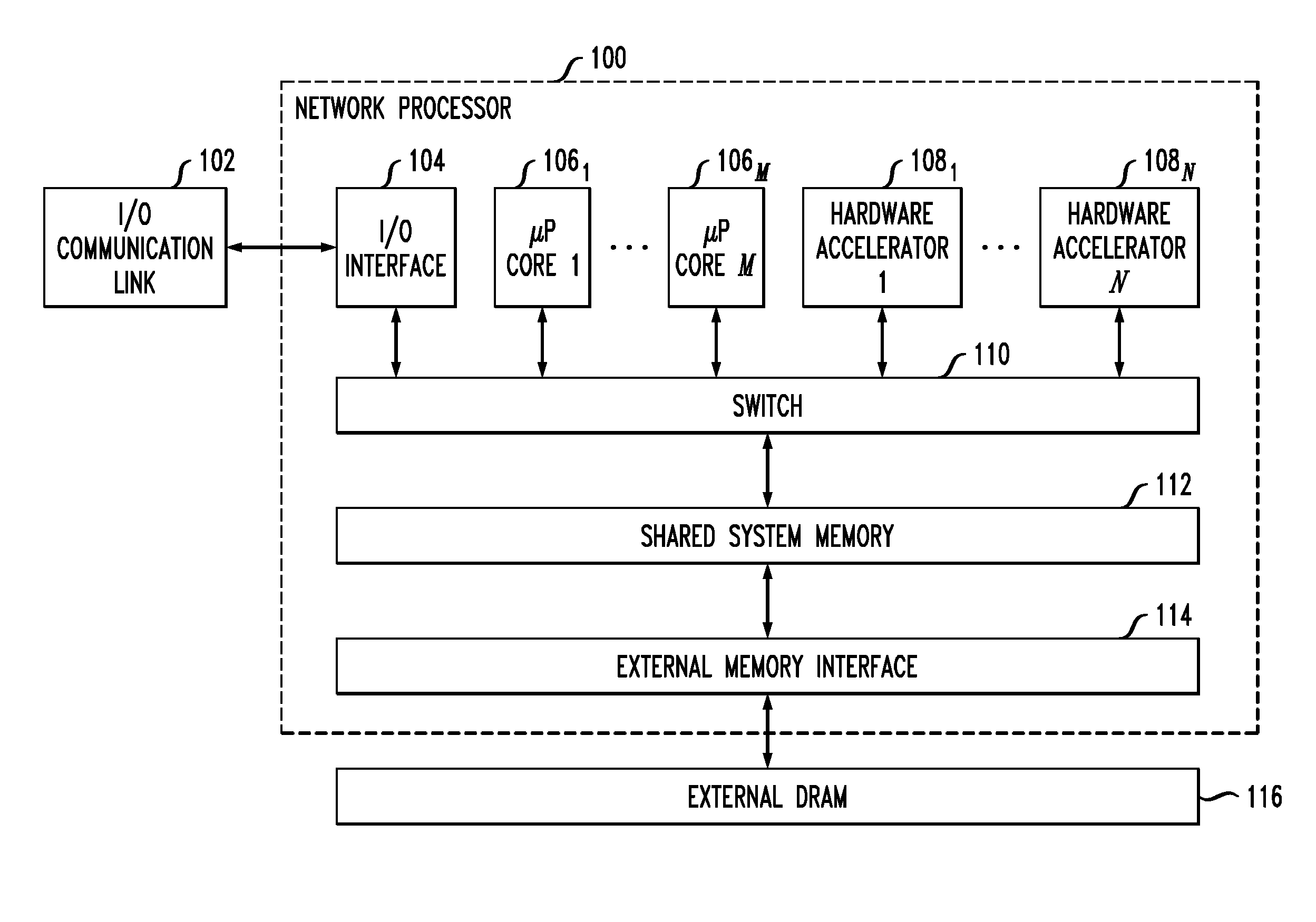

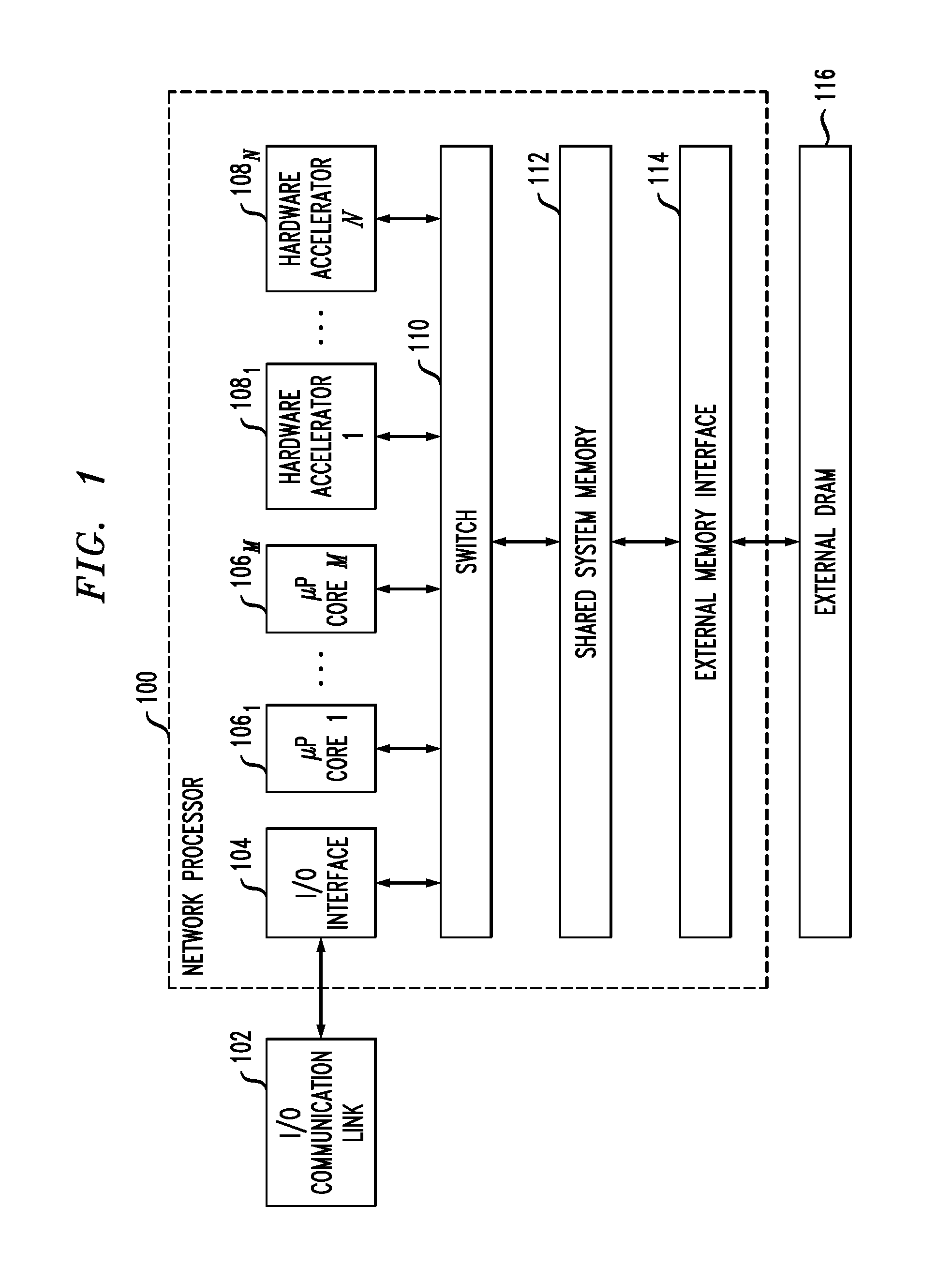

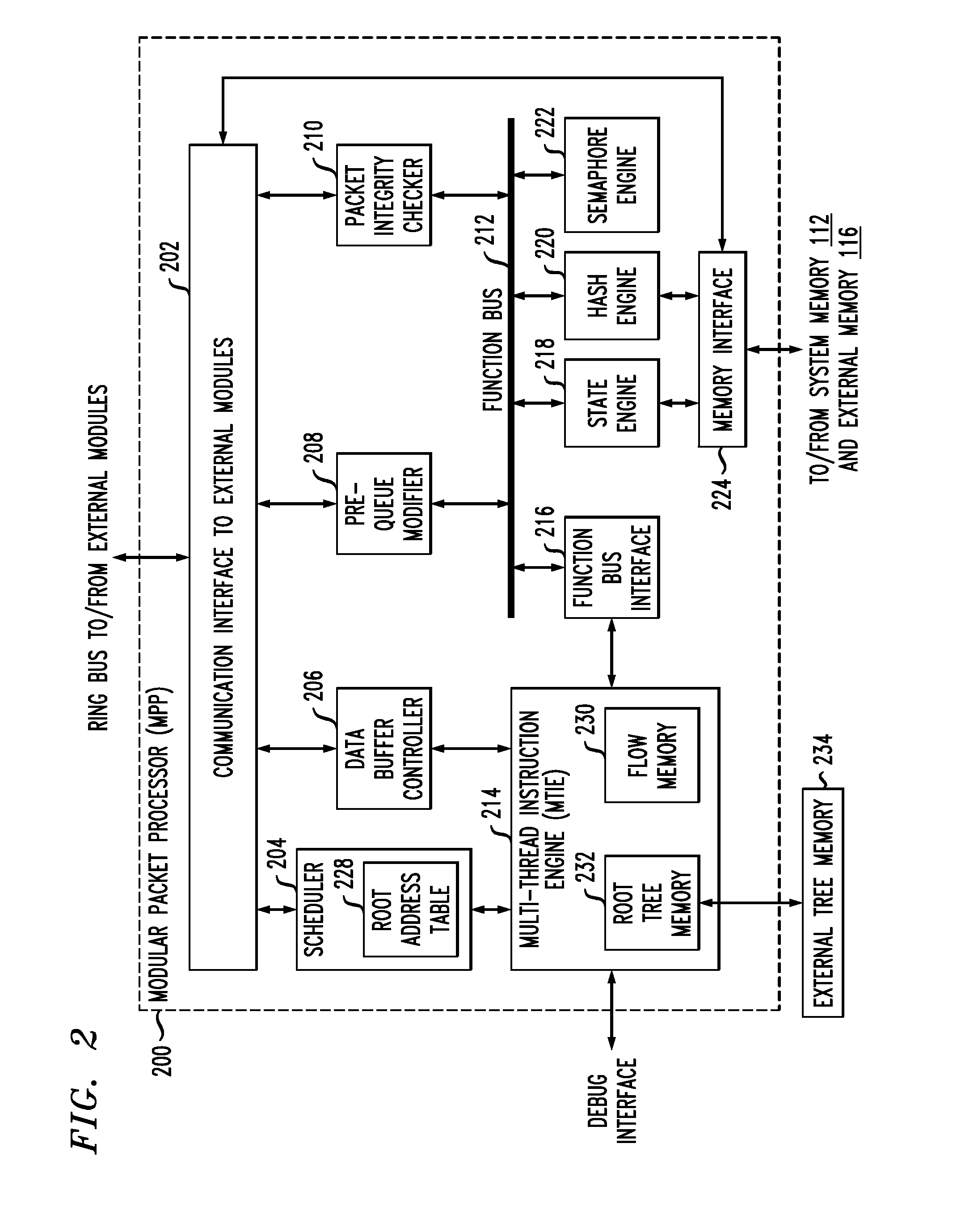

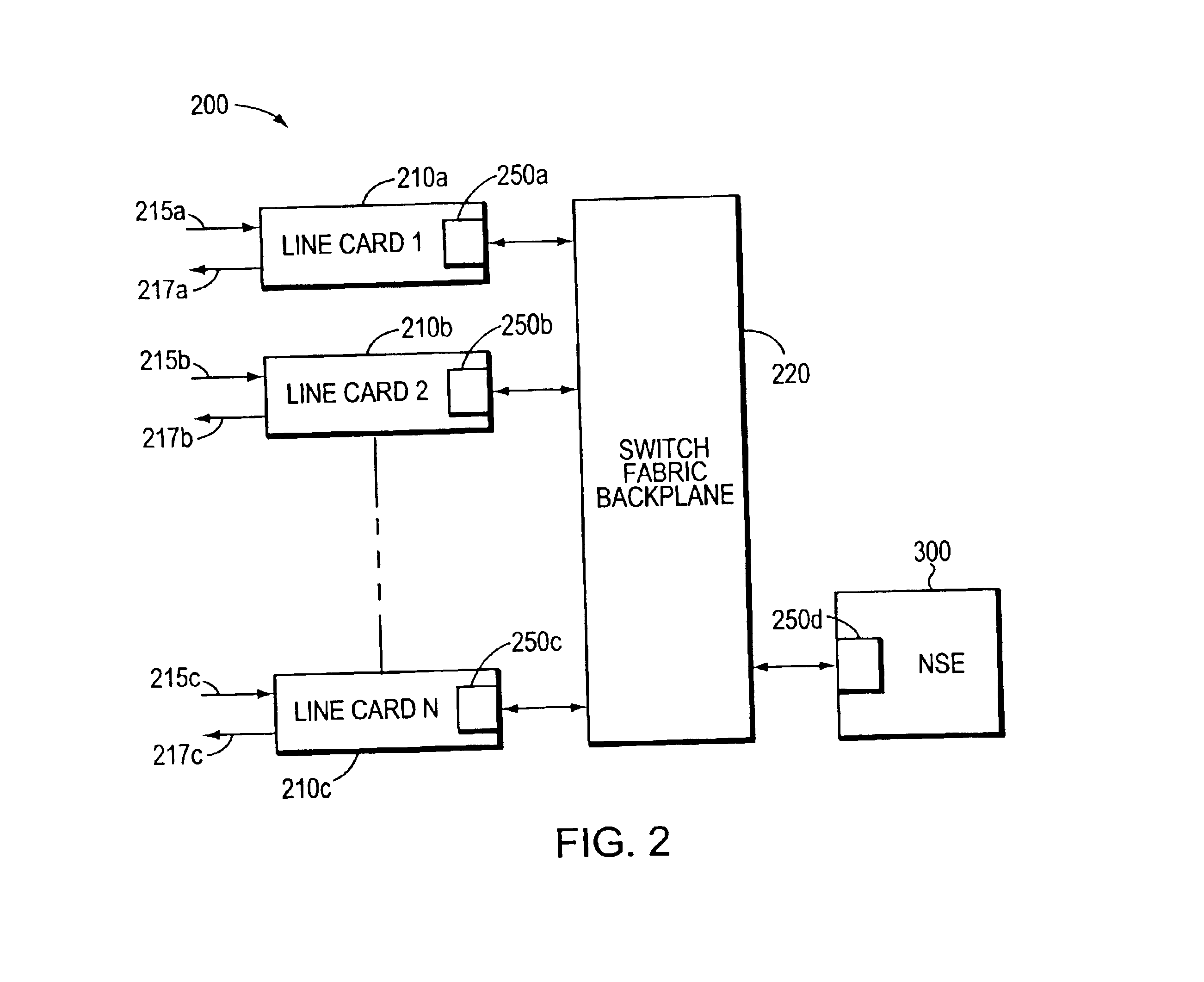

Concurrent, coherent cache access for multiple threads in a multi-core, multi-thread network processor

Inactive | US20110225372A1 | Well formed | Memory addressing/allocation/relocation | Digital computer details | Data pack | Computer architecture

Described embodiments provide a packet classifier of a network processor having a plurality of processing modules. A scheduler generates a thread of contexts for each task generated by the network processor corresponding to each received packet. The thread corresponds to an order of instructions applied to the corresponding packet. A multi-thread instruction engine processes the threads of instructions. A state engine operates on instructions received from the multi-thread instruction engine, the instructions including a cache access request to a local cache of the state engine. A cache line entry manager of the state engine translates between a logical index value of data corresponding to the cache access request and a physical address of data stored in the local cache. The cache line entry manager manages data coherency of the local cache and allows one or more concurrent cache access requests to a given cache data line for non-overlapping data units.

Owner:INTEL CORP

Method and apparatus for maintaining data coherency

A method and apparatus for assuring data consistency in a data processing network including local and remote data storage controllers interconnected by independent communication paths. The remote storage controller or controllers normally act as a mirror for the local storage controller or controllers. If, for any reason, transfers over one of the independent communication paths are interrupted, transfers over all the independent communication paths to predefined devices in a group are suspended, thereby assuring the consistency of the data at the remote storage controller or controllers. When the cause of the interruption has been corrected, the local storage controllers are able to transfer data modified since the suspension occurred to their corresponding remote storage controllers, thereby reestablishing synchronism and consistency for the entire dataset.

Owner:DELL EMC

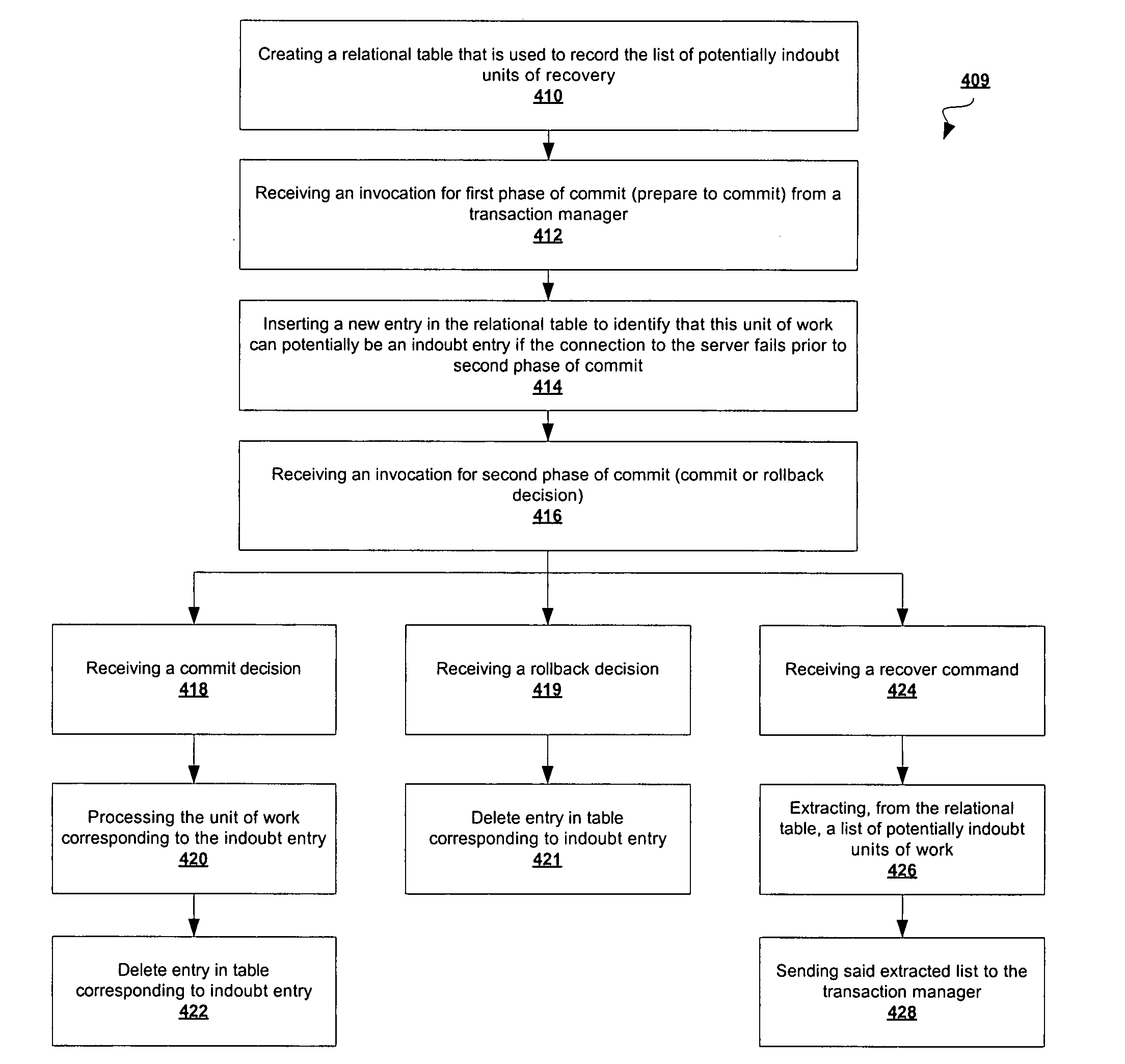

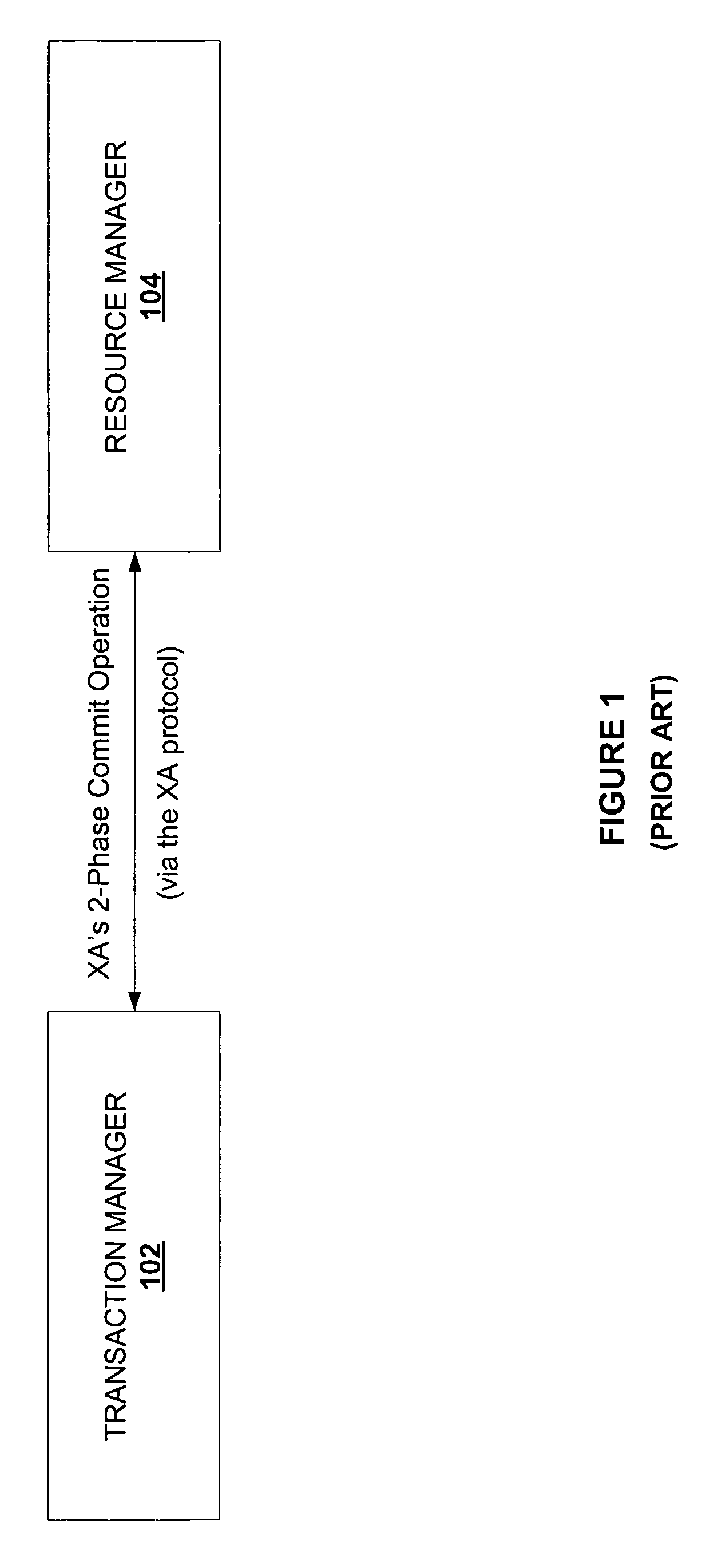

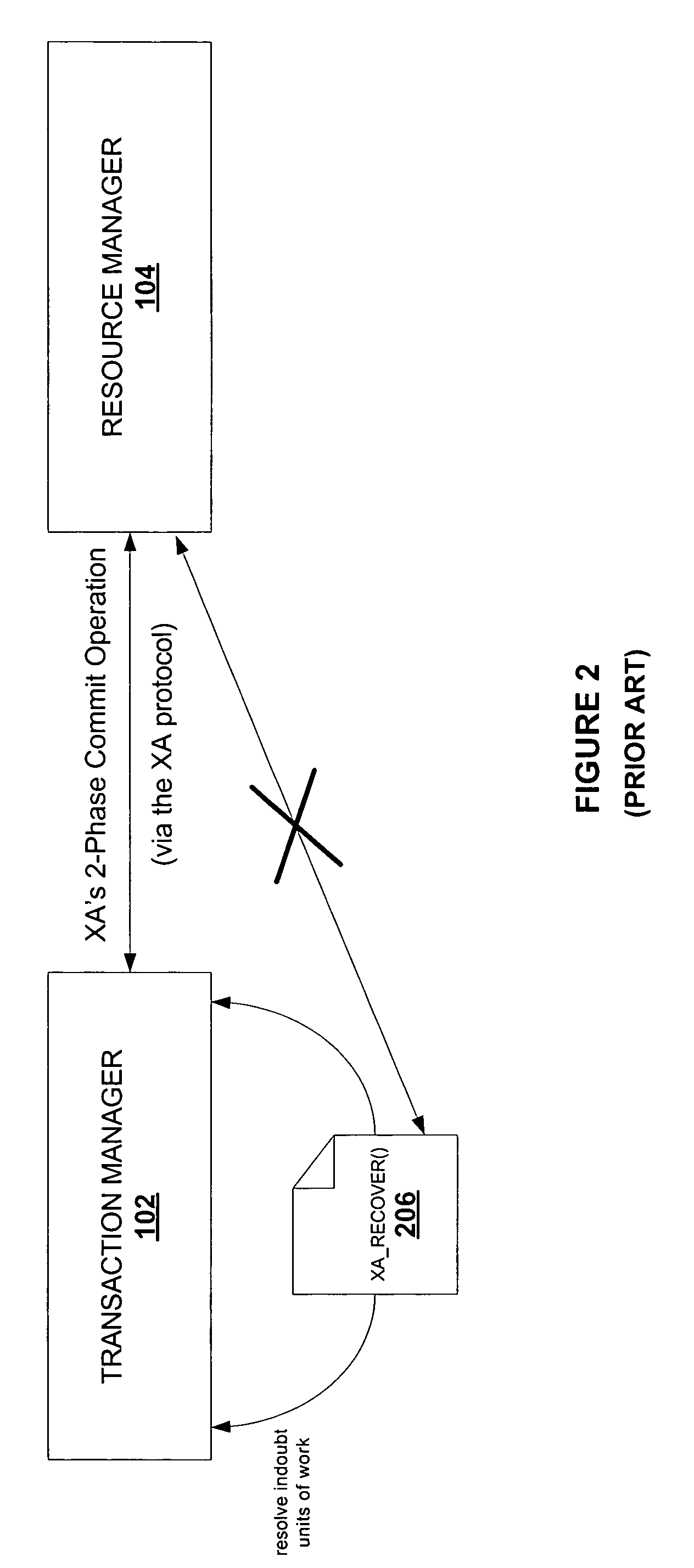

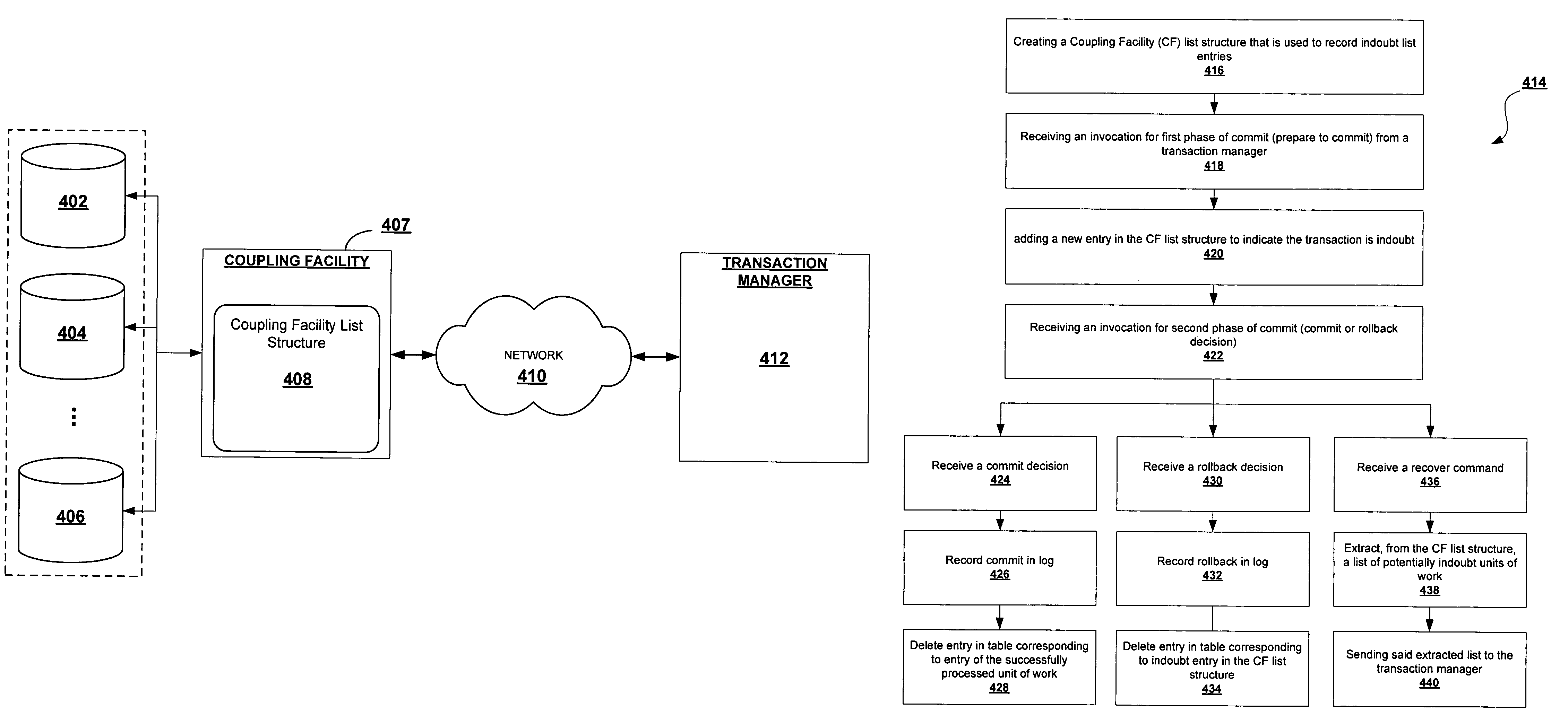

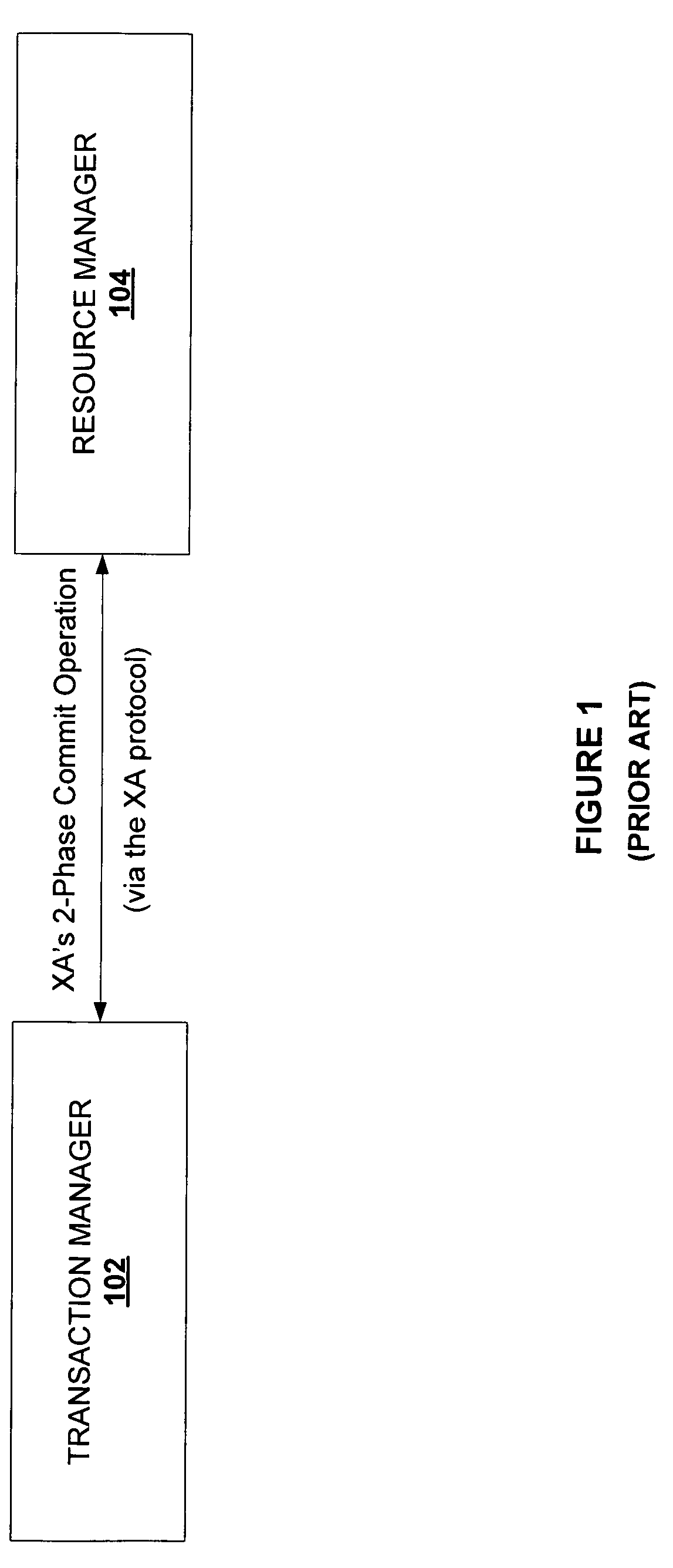

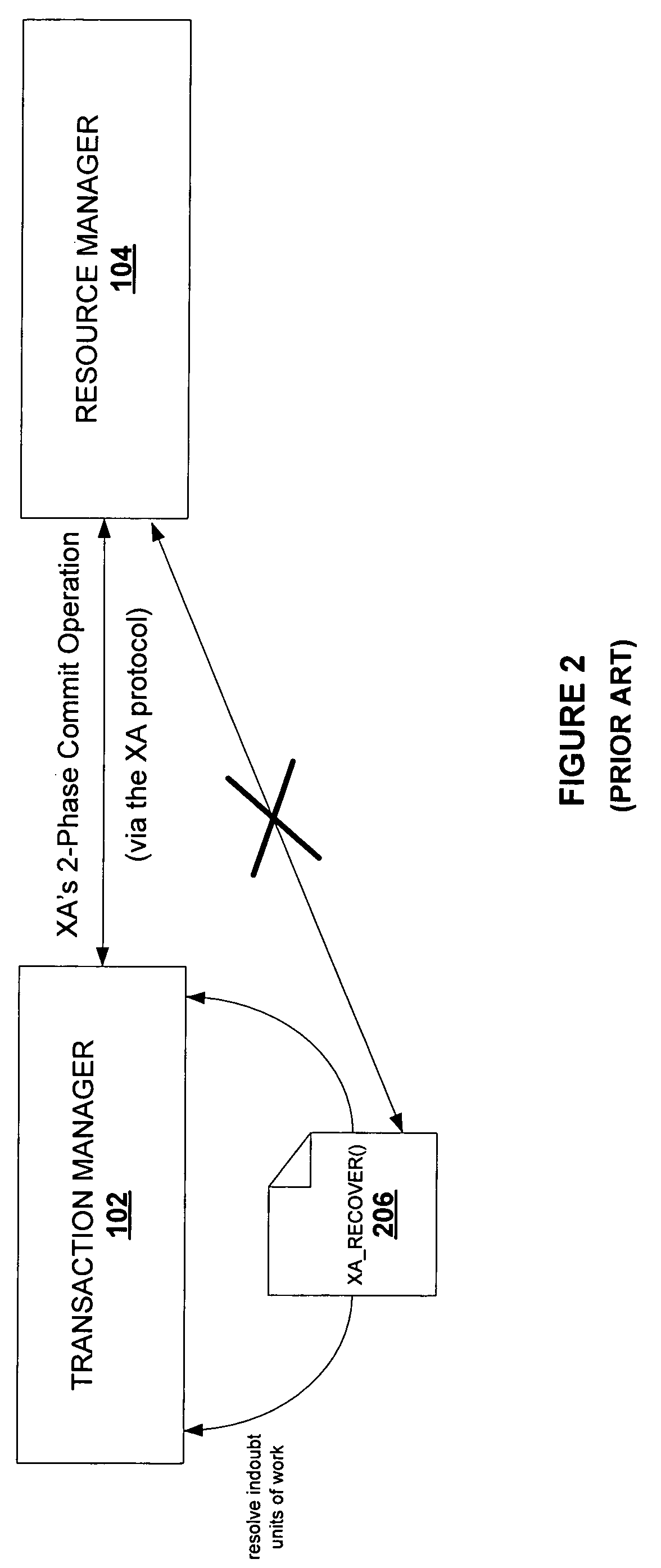

System and method for supporting XA 2-phase commit protocols with a loosely coupled clustered database server

Inactive | US20050144299A1 | Eliminate need | Relational databases | Multiple digital computer combinations | Database server | Running time

Indoubt transaction entries are recorded for each member of a database cluster, thereby avoiding the CPU cost and elapsed-time impact of persisting this information to a disk (either via a log write or a relational table I/O). This implementation allows for full read/write access and data coherency for concurrent access by all the members in the database cluster. At any given point in time, a full list of indoubt transactions is maintained for the entire database cluster in a relational table (e.g., an SQL table).

Owner:IBM CORP

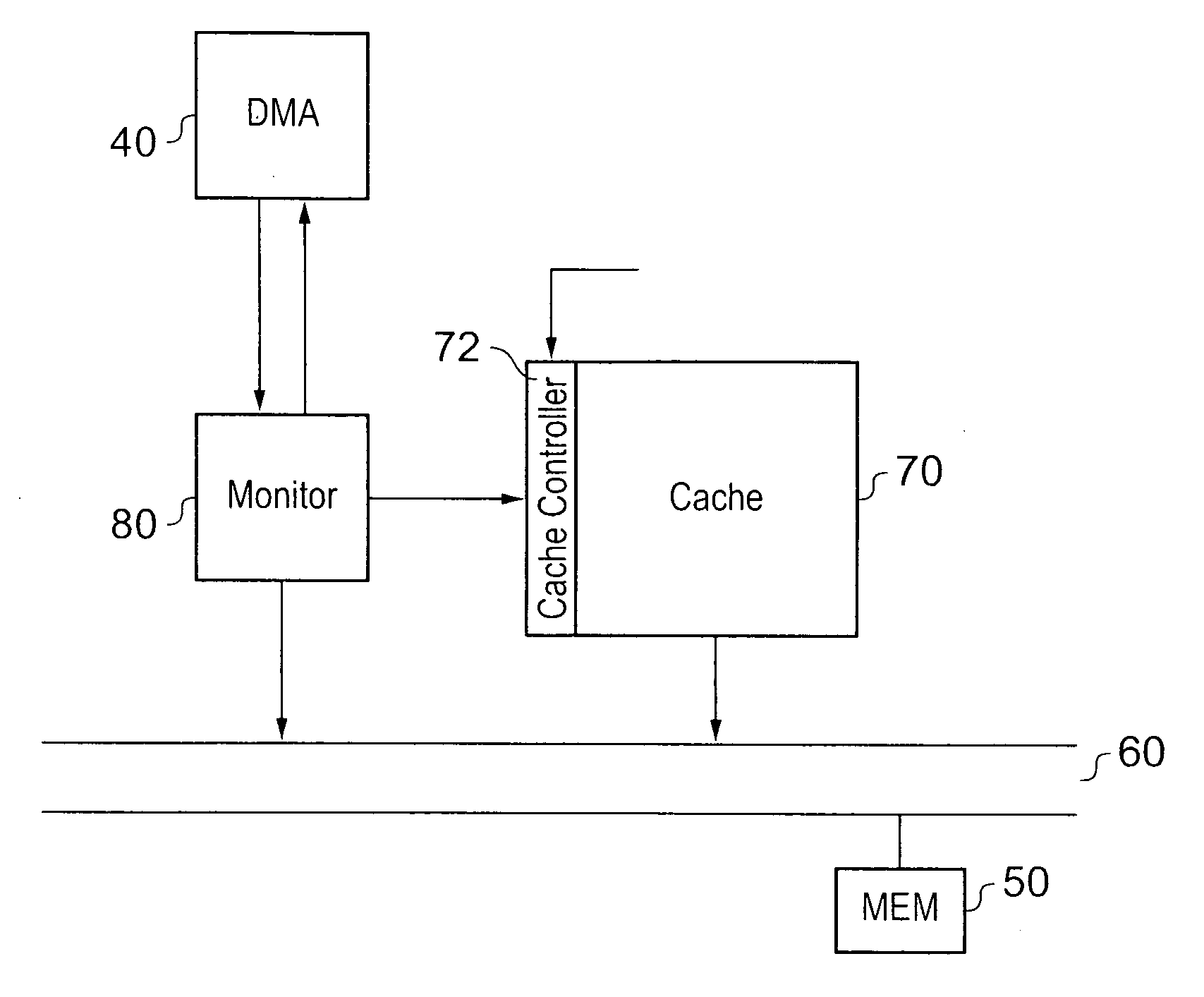

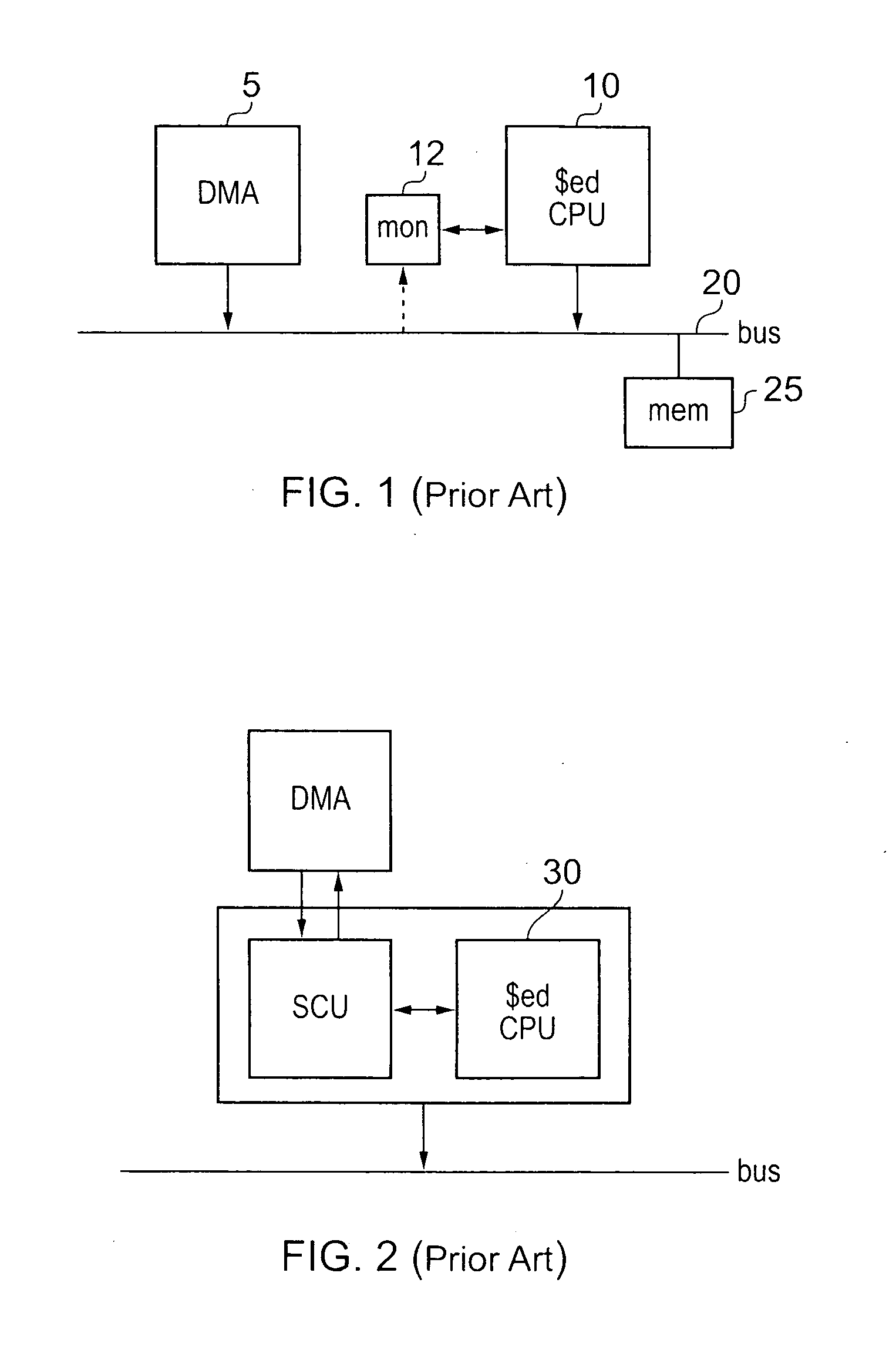

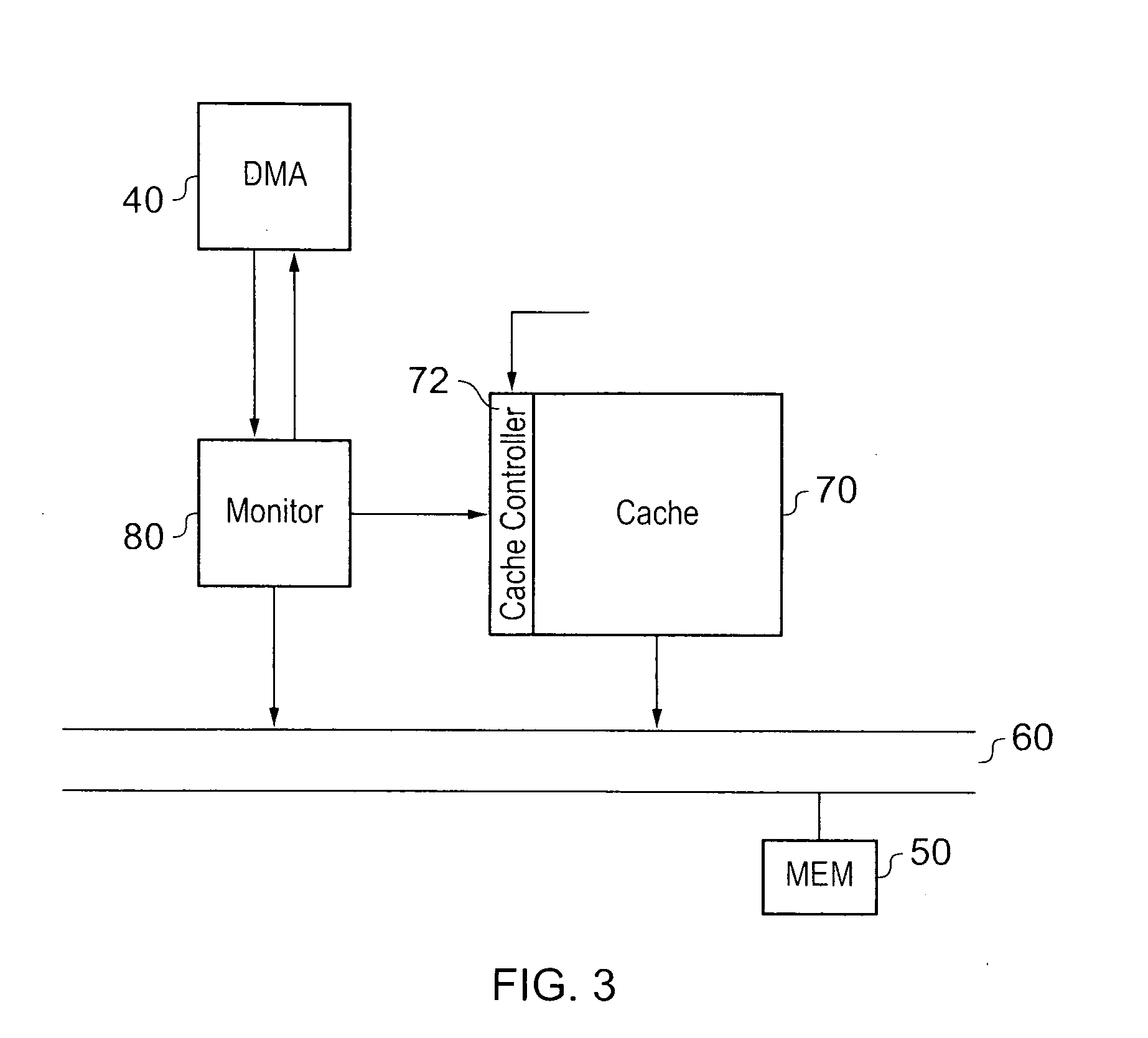

Area and power efficient data coherency maintenance

Active | US20110191543A1 | Easy to monitor | Facilitate transmission | Memory architecture accessing/allocation | Energy efficient ICT | Power efficient | Operating system

An apparatus for storing data that is being processed is disclosed. The apparatus comprises: a cache associated with a processor and for storing a local copy of data items stored in a memory for use by the processor, monitoring circuitry associated with the cache for monitoring write transaction requests to the memory initiated by a further device, the further device being configured not to store data in the cache. The monitoring circuitry is responsive to detecting a write transaction request to write a data item, a local copy of which is stored in the cache, to block a write acknowledge signal transmitted from the memory to the further device indicating the write has completed and to invalidate the stored local copy in the cache and on completion of the invalidation to send the write acknowledge signal to the further device.

Owner:ARM LTD

Facilitating data coherency using in-memory tag bits and tag test instructions

Inactive | US20120296877A1 | Reduce the number of times | Good data consistency | Memory architecture accessing/allocation | Digital data processing details | Original data | Data mining

Fine-grained detection of data modification of original data is provided by associating separate guard bits with granules of memory storing original data from which translated data has been obtained. The guard bits indicate whether the original data stored in the associated granule is protected for data coherency. The guard bits are set and cleared by special-purpose instructions. Responsive to attempting access to translated data obtained from the original data, the guard bit(s) associated with the original data are checked to determine whether the guard bit(s) fail to indicate coherency of the original data, and if so, discarding of the translated data is initiated to facilitate maintaining data coherency between the original data and the translated data.

Owner:IBM CORP
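
The guard-bit check described in this abstract can be sketched in software. This is only an illustrative model under assumptions: the 16-byte granule size, the class names `GuardedMemory` and `TranslationCache`, and the rule that an ordinary store clears the guard bits of the granules it touches are not taken from the source, which only says the bits are set and cleared by special-purpose instructions.

```python
GRANULE = 16  # hypothetical granule size in bytes; the abstract gives no size


class GuardedMemory:
    """Byte memory with one guard bit per granule (illustrative model)."""

    def __init__(self, size):
        self.data = bytearray(size)
        self.guard = [False] * ((size + GRANULE - 1) // GRANULE)

    def _granules(self, addr, length):
        # Indices of every granule overlapped by [addr, addr + length).
        return range(addr // GRANULE, (addr + length - 1) // GRANULE + 1)

    def set_guard(self, addr, length):
        for g in self._granules(addr, length):
            self.guard[g] = True

    def guards_ok(self, addr, length):
        return all(self.guard[g] for g in self._granules(addr, length))

    def store(self, addr, payload):
        # Assumption: a store clears the guard bit of each granule it modifies.
        self.data[addr:addr + len(payload)] = payload
        for g in self._granules(addr, len(payload)):
            self.guard[g] = False


class TranslationCache:
    """Caches translated data and discards it when a guard bit is cleared."""

    def __init__(self, mem, translate):
        self.mem = mem
        self.translate = translate
        self.cache = {}
        self.translations = 0  # counts how often translation actually ran

    def get(self, addr, length):
        key = (addr, length)
        # Check the guard bits before using translated data; discard if stale.
        if key in self.cache and not self.mem.guards_ok(addr, length):
            del self.cache[key]
        if key not in self.cache:
            original = bytes(self.mem.data[addr:addr + length])
            self.cache[key] = self.translate(original)
            self.translations += 1
            self.mem.set_guard(addr, length)
        return self.cache[key]
```

Tracking guards per granule rather than per page means a write elsewhere on the same page does not force a retranslation.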

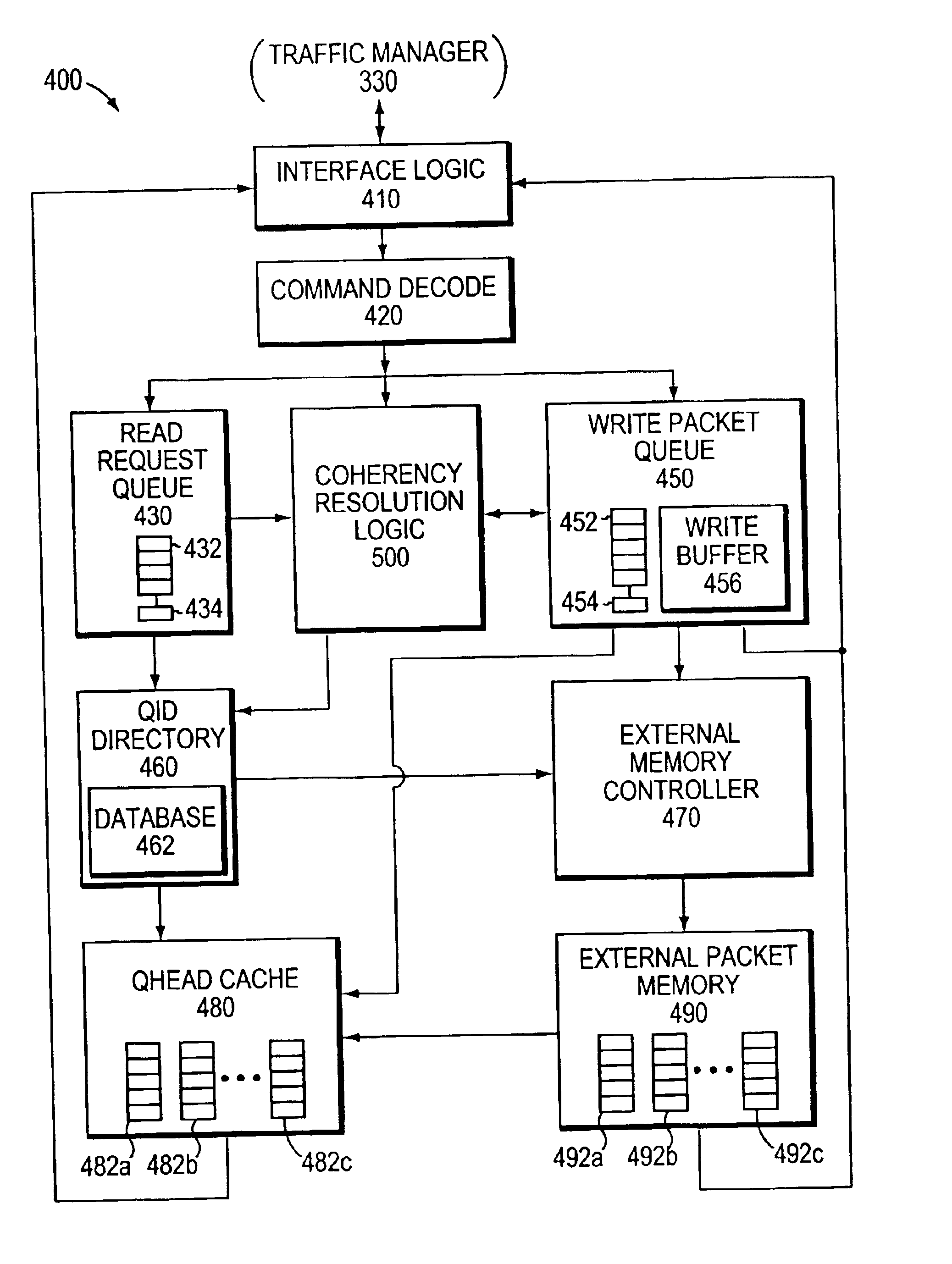

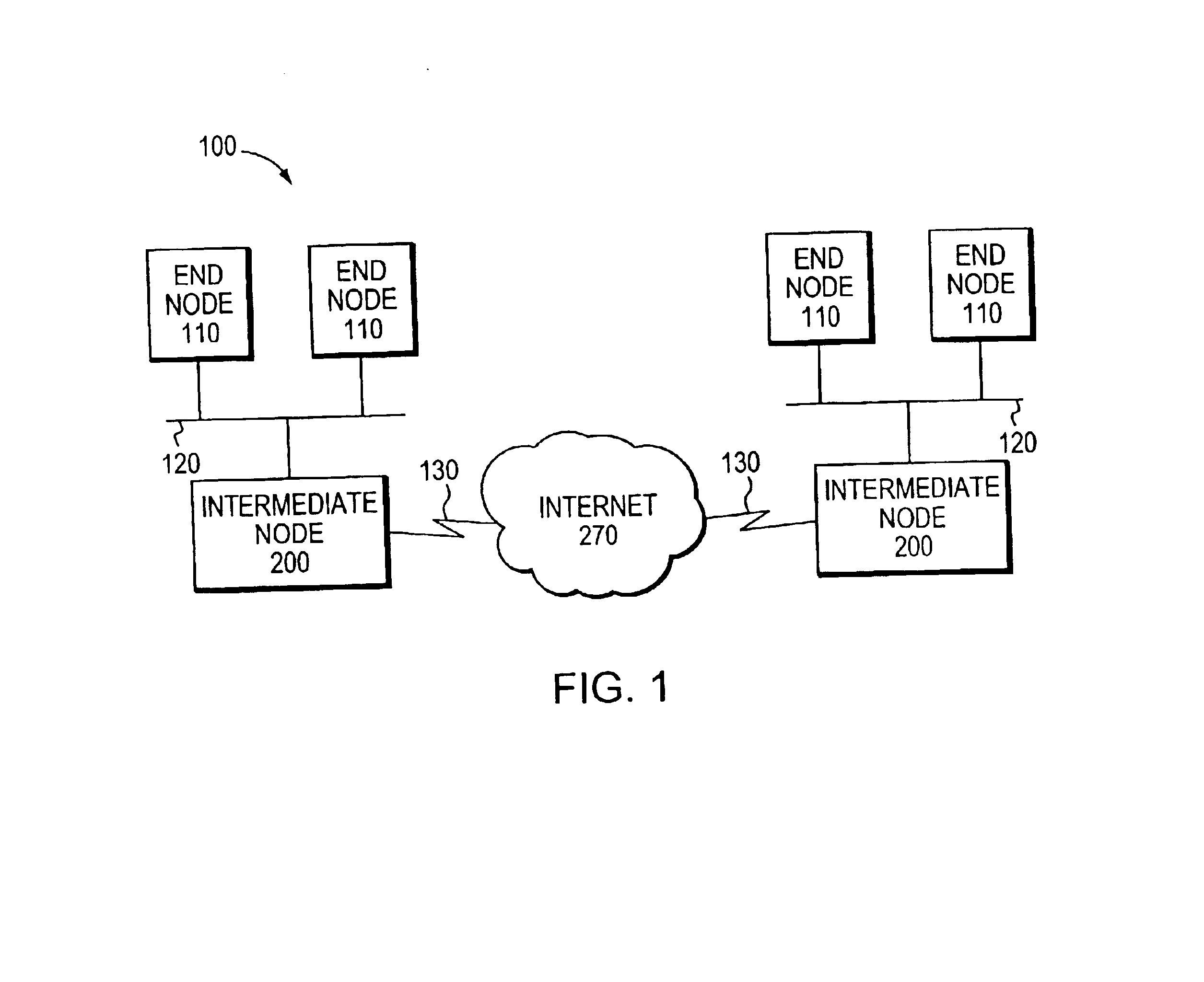

Coherency coverage of data across multiple packets varying in sizes

Active | US6850999B1 | Simplifies data coherency | Reduce the impact | Memory addressing/allocation/relocation | Digital computer details | Write buffer | Network packet

A coherency resolution technique enables efficient resolution of data coherency for packet data associated with a service queue of an intermediate network node. The packet data is enqueued on a write buffer prior to being stored on an external packet memory of a packet memory system. The packet data may be interspersed among other packets of data from different service queues, wherein the packets are of differing sizes. In response to a read request for the packet data, a coherency operation is performed by coherency resolution logic on the data in the write buffer to determine if any of its enqueued data can be used to service the request.

Owner:CISCO TECH INC
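
The read-time coherency check against the write buffer can be illustrated as follows. This is a minimal sketch, assuming byte-addressed memory and a byte-by-byte overlay of pending writes; the class `PacketMemory` and its method names are hypothetical and the abstract does not describe the resolution logic at this level of detail.

```python
from collections import deque


class PacketMemory:
    """Toy packet memory with a write buffer in front of external memory."""

    def __init__(self):
        self.external = {}           # address -> byte value already written out
        self.write_buffer = deque()  # pending (queue_id, address, data) entries

    def enqueue_write(self, queue_id, addr, data):
        """Buffer packet data; packets from different queues may interleave."""
        self.write_buffer.append((queue_id, addr, bytes(data)))

    def drain_one(self):
        """Move the oldest buffered write into external memory."""
        if self.write_buffer:
            _, addr, data = self.write_buffer.popleft()
            for i, byte in enumerate(data):
                self.external[addr + i] = byte

    def read(self, addr, length):
        """Serve a read, using buffered data wherever it overlaps the request."""
        out = [self.external.get(addr + i, 0) for i in range(length)]
        # Oldest entry first, so the newest pending write wins on overlap.
        for _, waddr, data in self.write_buffer:
            for i, byte in enumerate(data):
                offset = waddr + i - addr
                if 0 <= offset < length:
                    out[offset] = byte
        return bytes(out)
```

Because the overlay works on address ranges, it does not matter that the buffered packets differ in size or belong to other service queues.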

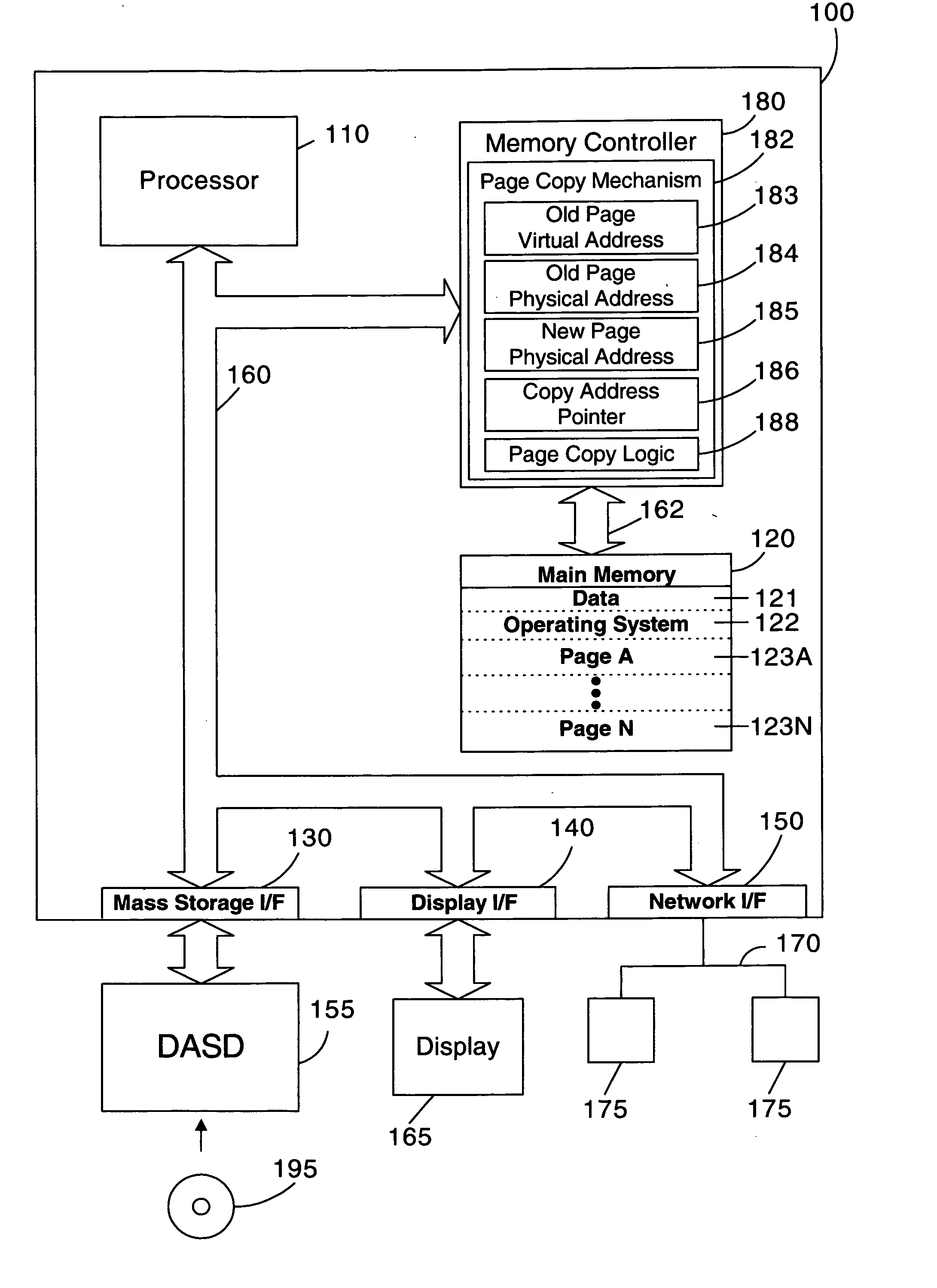

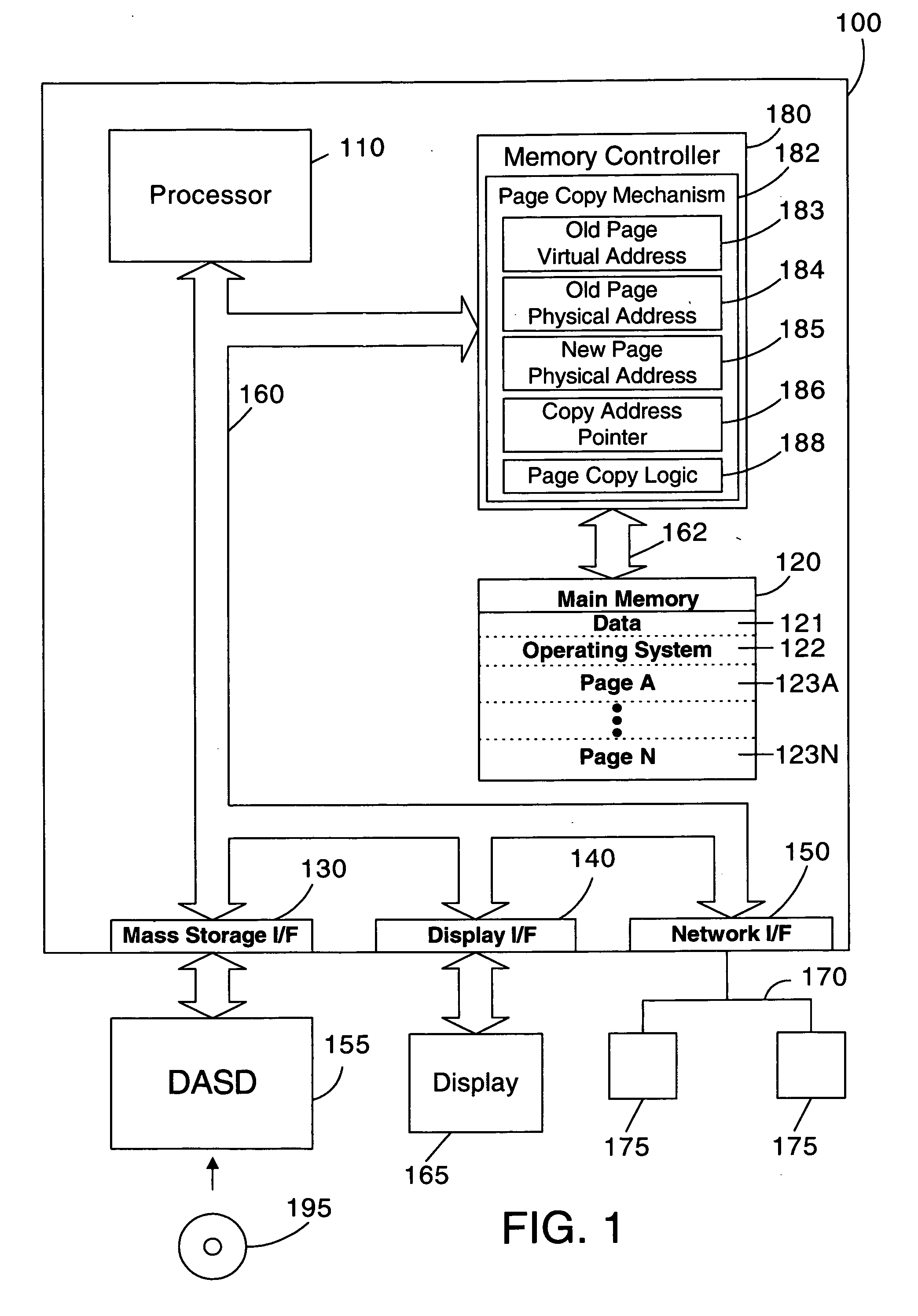

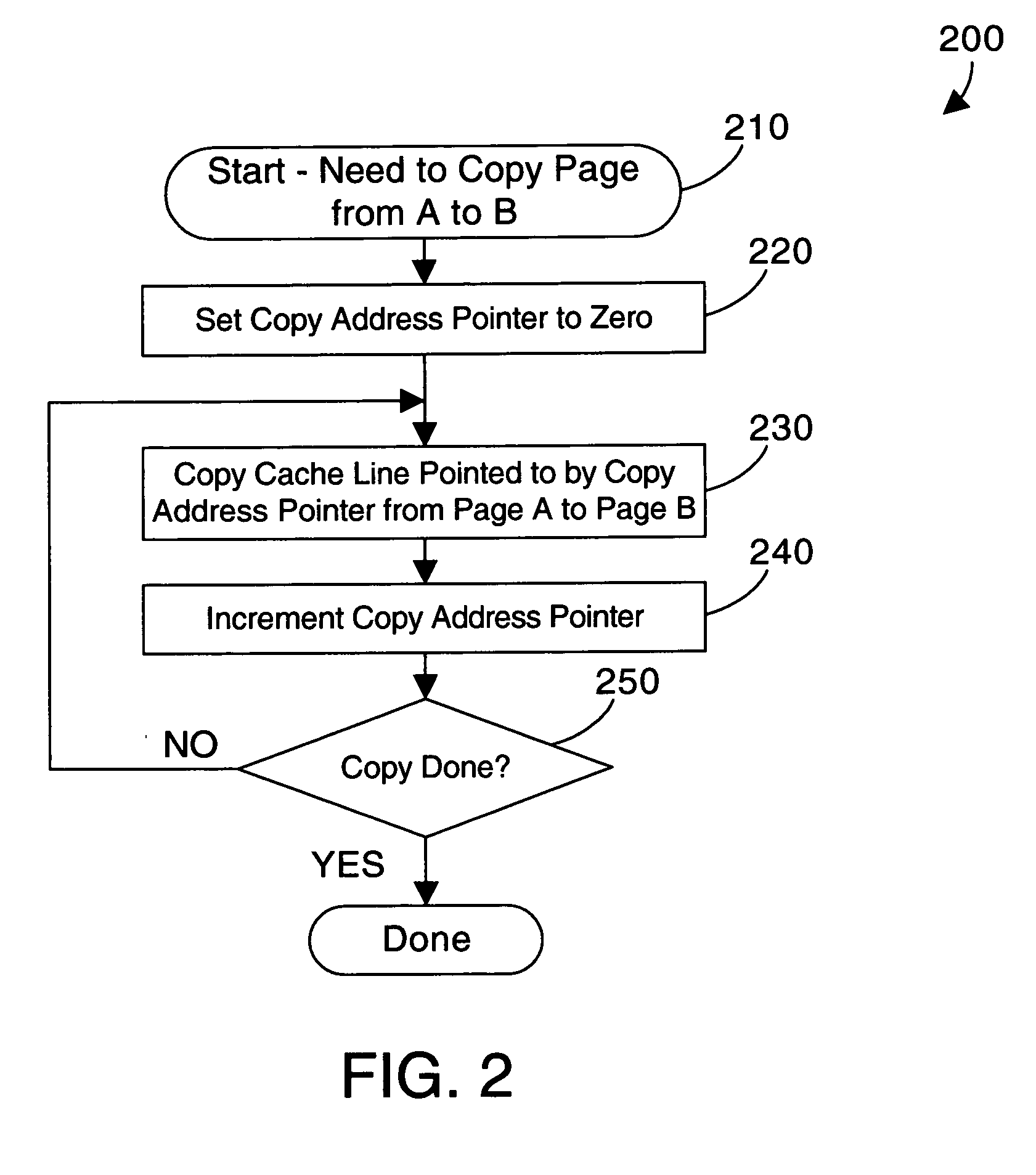

Memory controller and method for handling DMA operations during a page copy

A memory controller provides page copy logic that assures data coherency when a DMA operation to a page occurs during the copying of the page by the memory controller. The page copy logic compares the page index of the DMA operation to a copy address pointer that indicates the location currently being copied. If the page index of the DMA operation is less than the copy address pointer, the portion of the page that would be written to by the DMA operation has already been copied, so the DMA operation is performed to the physical address of the new page. If the page index of the DMA operation is greater than the copy address pointer, the portion of the page that would be written to by the DMA operation has not yet been copied, so the DMA operation is performed to the physical address of the old page.

Owner:LENOVO GLOBAL TECH INT LTD
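
The routing rule in this abstract, which compares the DMA page index with the copy address pointer, can be sketched as below. This is an illustrative model, not the controller's logic: `PAGE_SIZE`, the `PageCopy` class, and the word-at-a-time `step` are assumptions, and the abstract does not say what happens when the index equals the pointer, so this sketch treats that location as not yet copied.

```python
from dataclasses import dataclass, field

PAGE_SIZE = 8  # hypothetical page size in words, kept tiny for illustration


@dataclass
class PageCopy:
    """Models a page copy in progress while DMA writes arrive."""

    old_page: list
    new_page: list = field(default_factory=lambda: [None] * PAGE_SIZE)
    copy_ptr: int = 0  # index of the location currently being copied

    def step(self):
        """Copy one word from the old page to the new page."""
        if self.copy_ptr < PAGE_SIZE:
            self.new_page[self.copy_ptr] = self.old_page[self.copy_ptr]
            self.copy_ptr += 1

    def dma_write(self, index, value):
        """Route a DMA write by comparing its page index with the copy pointer."""
        if index < self.copy_ptr:
            # Already copied: write the new page so the update is not lost.
            self.new_page[index] = value
            return "new"
        # Not yet copied (equality is an assumption here): write the old page
        # and let the ongoing copy carry the value across.
        self.old_page[index] = value
        return "old"
```

Either way the write reaches the new page exactly once, so the copy never has to be restarted.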

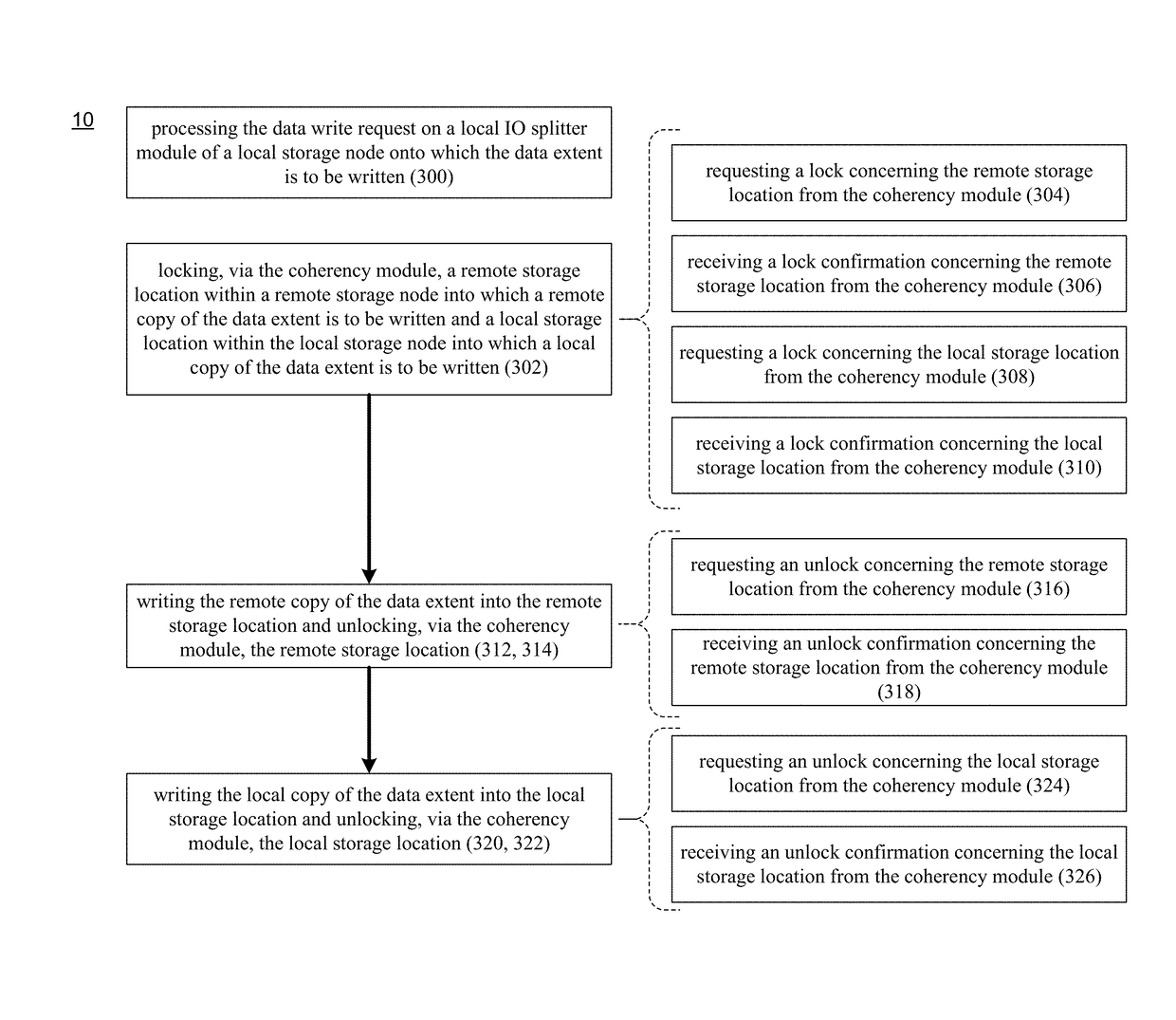

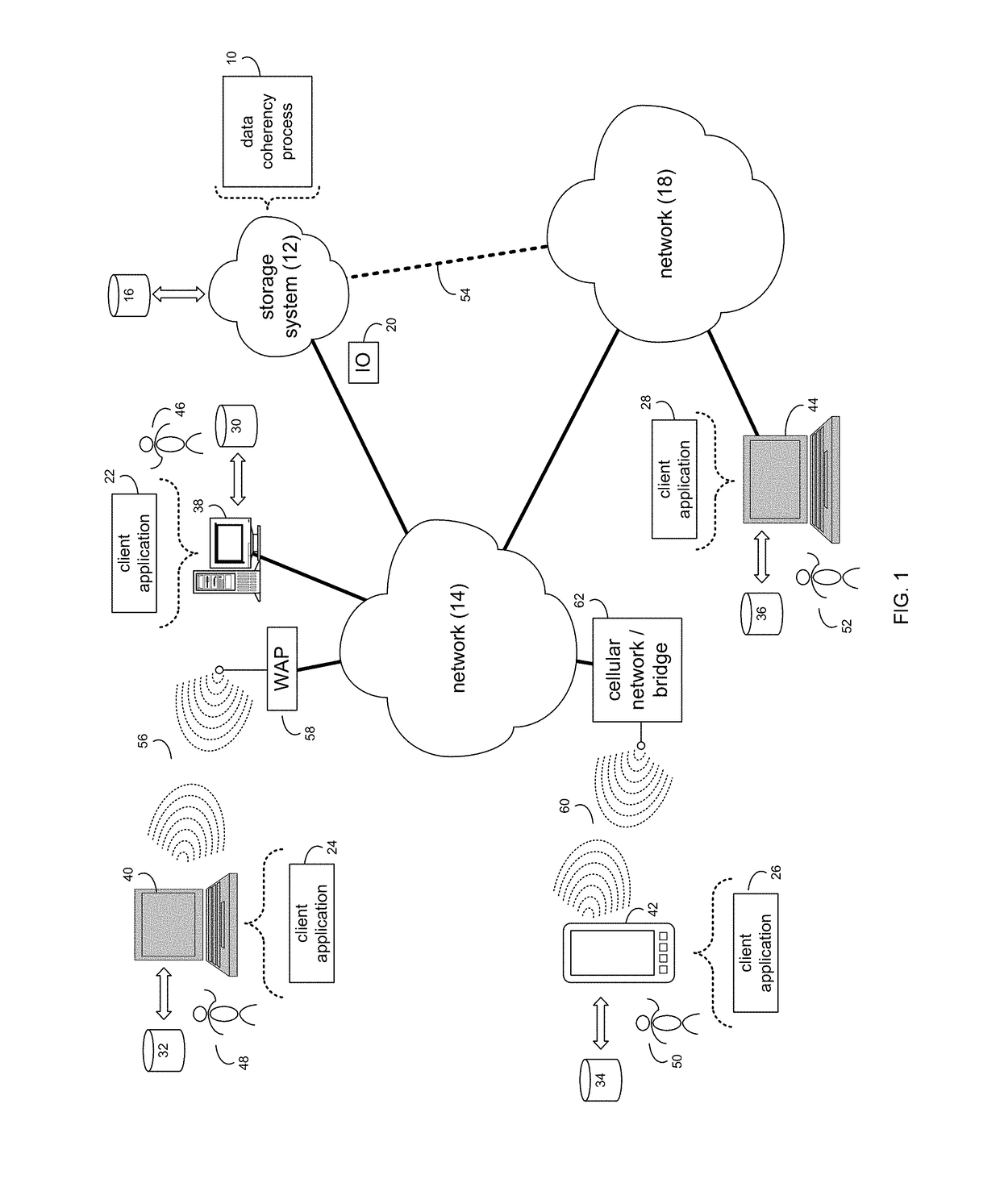

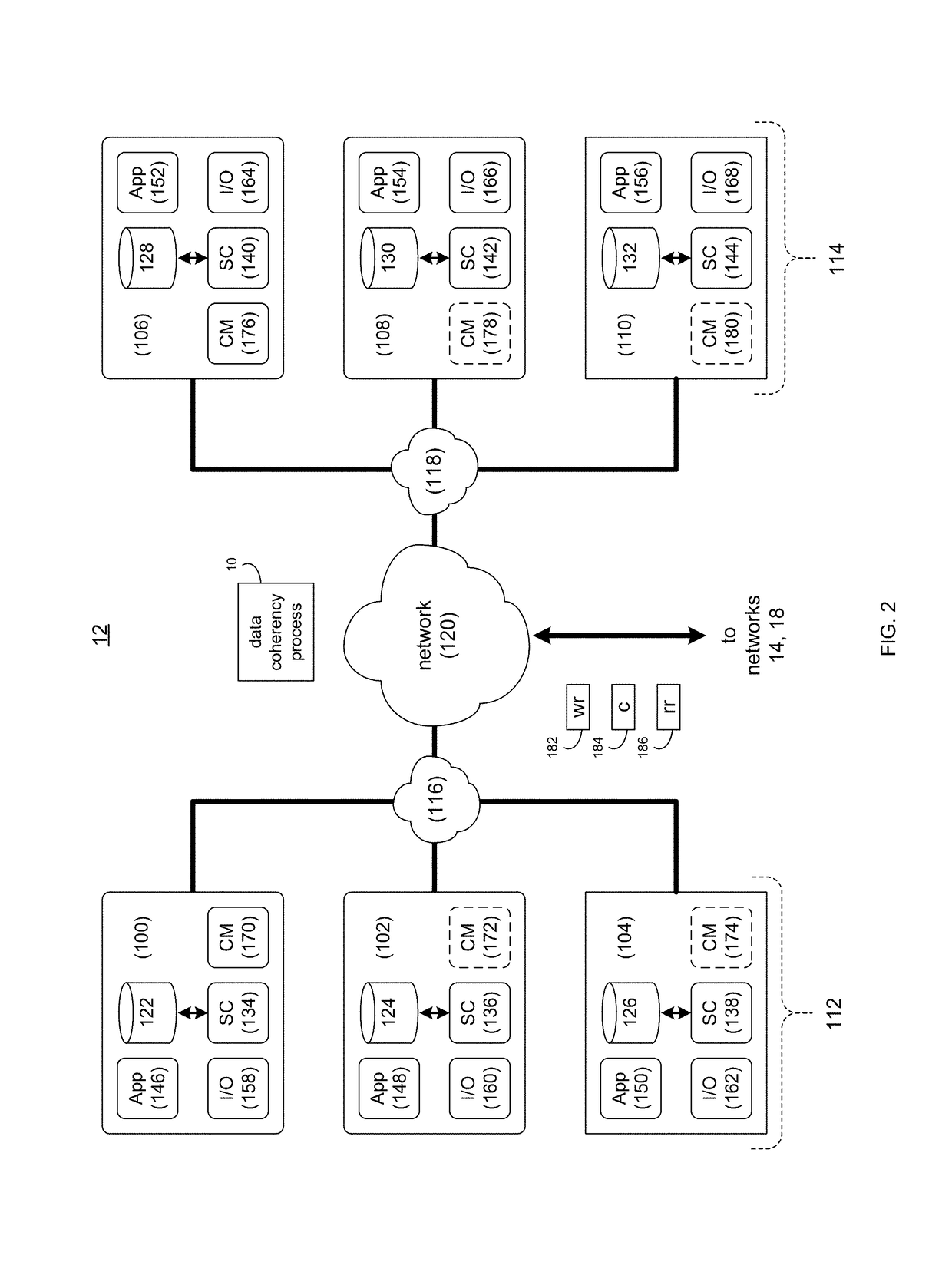

Data coherency system and method

Active | US9946649B1 | Memory architecture accessing/allocation | Input/output to record carriers | Computer science | Data coherency

A method, computer program product, and computing system for defining an IO splitter module within each of a plurality of nodes included within a hyper-converged storage environment. A coherency module is defined on at least one of the plurality of nodes. A data request is received.

Owner:EMC IP HLDG CO LLC

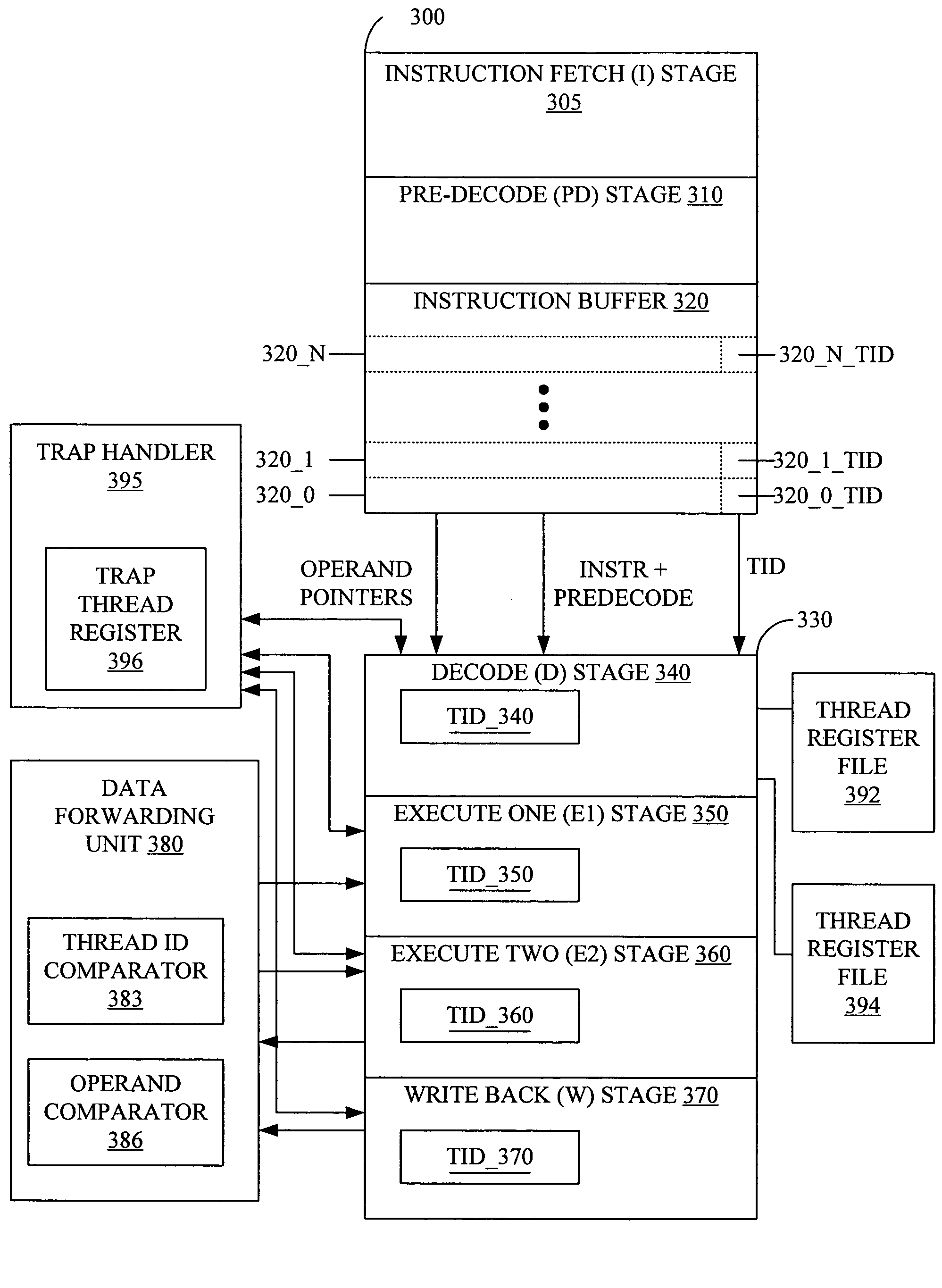

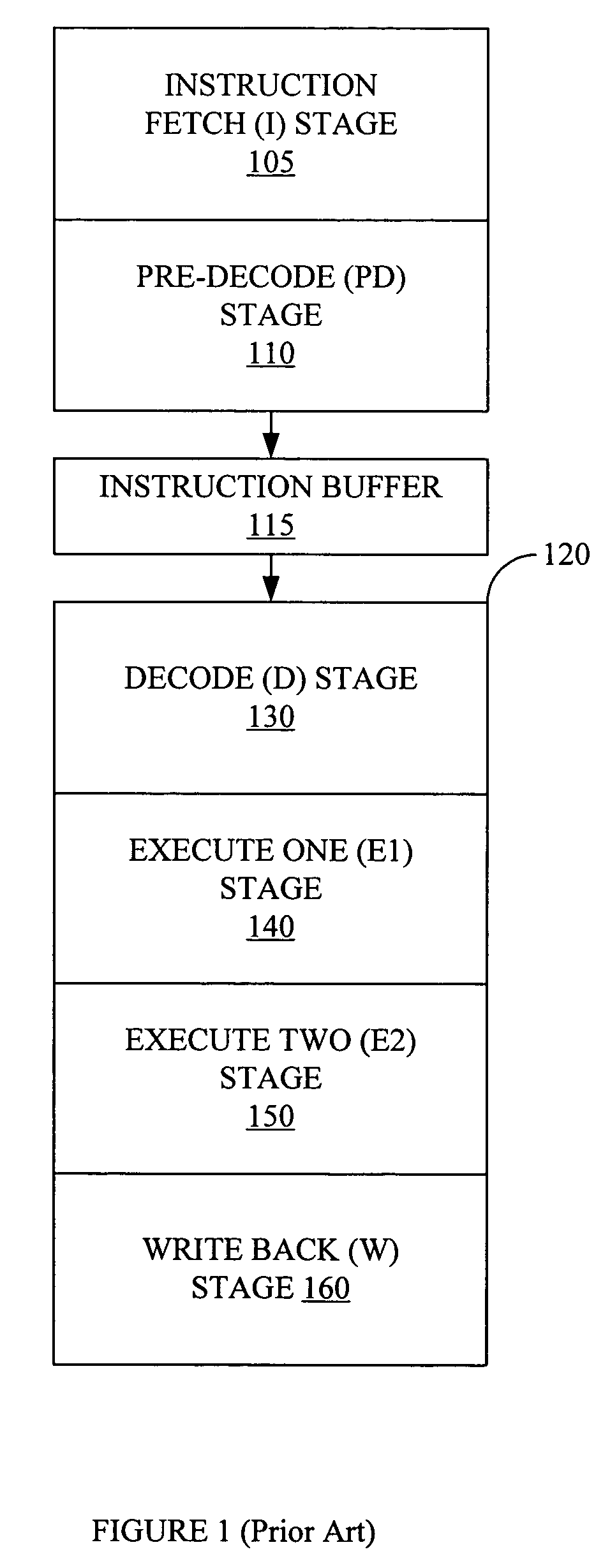

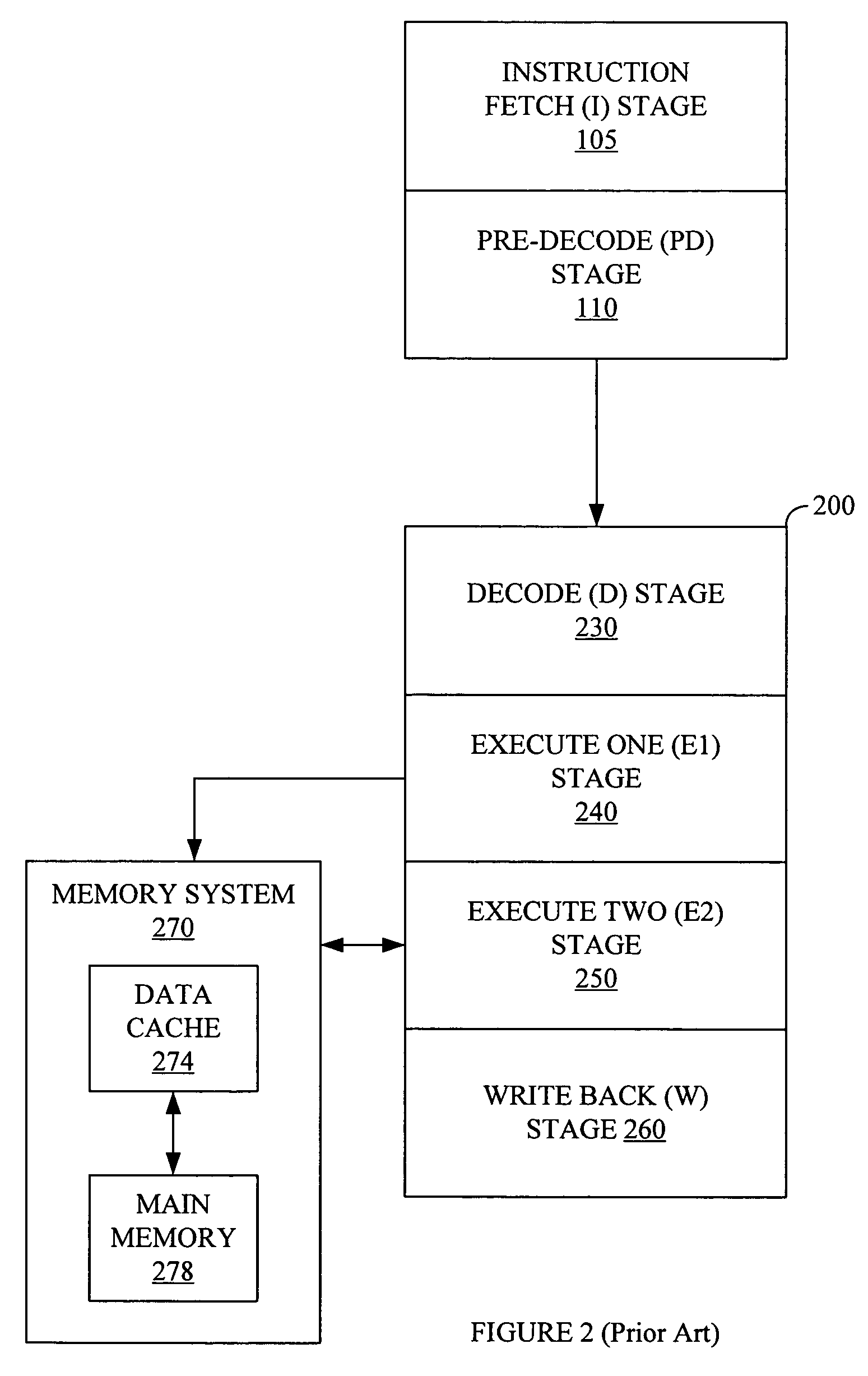

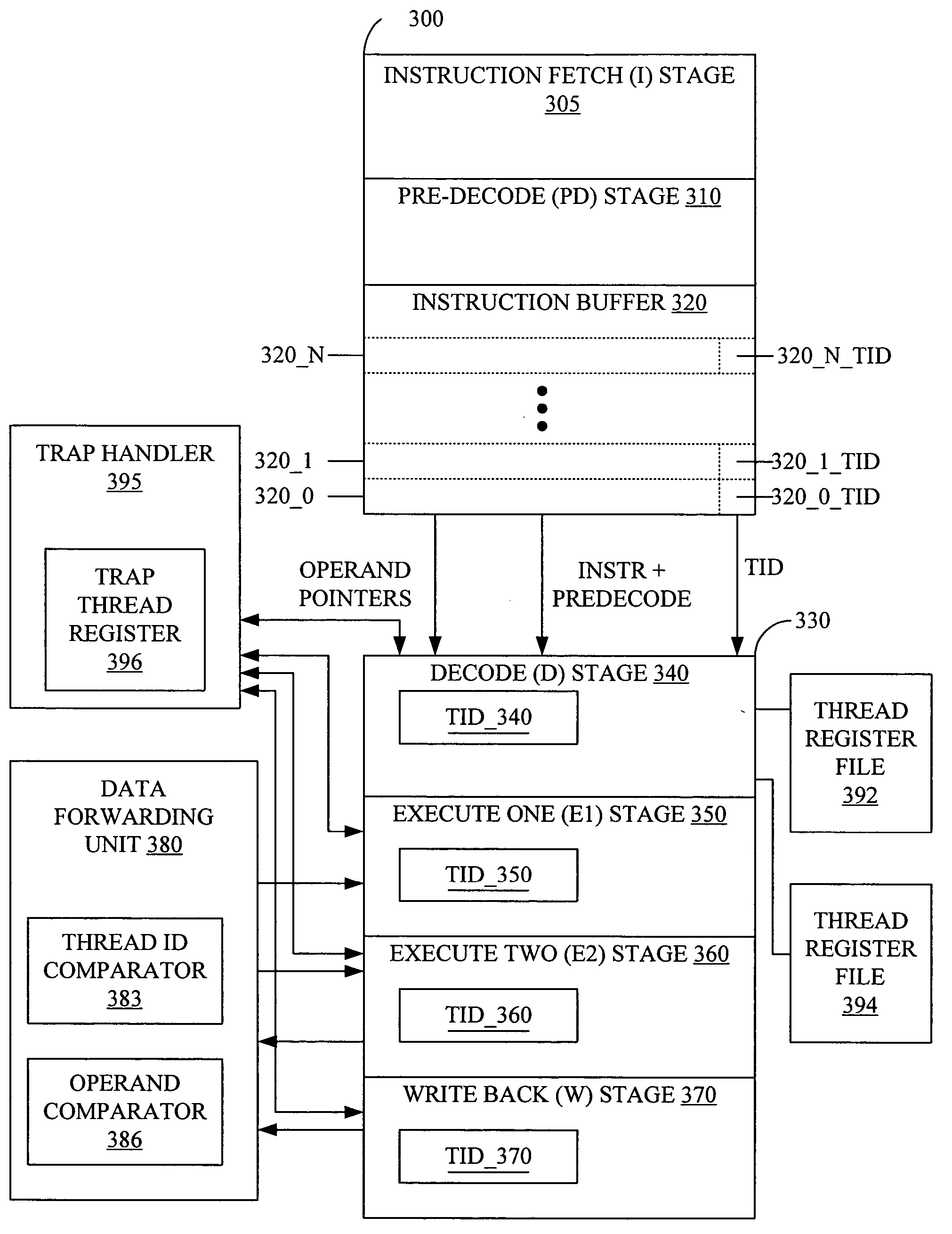

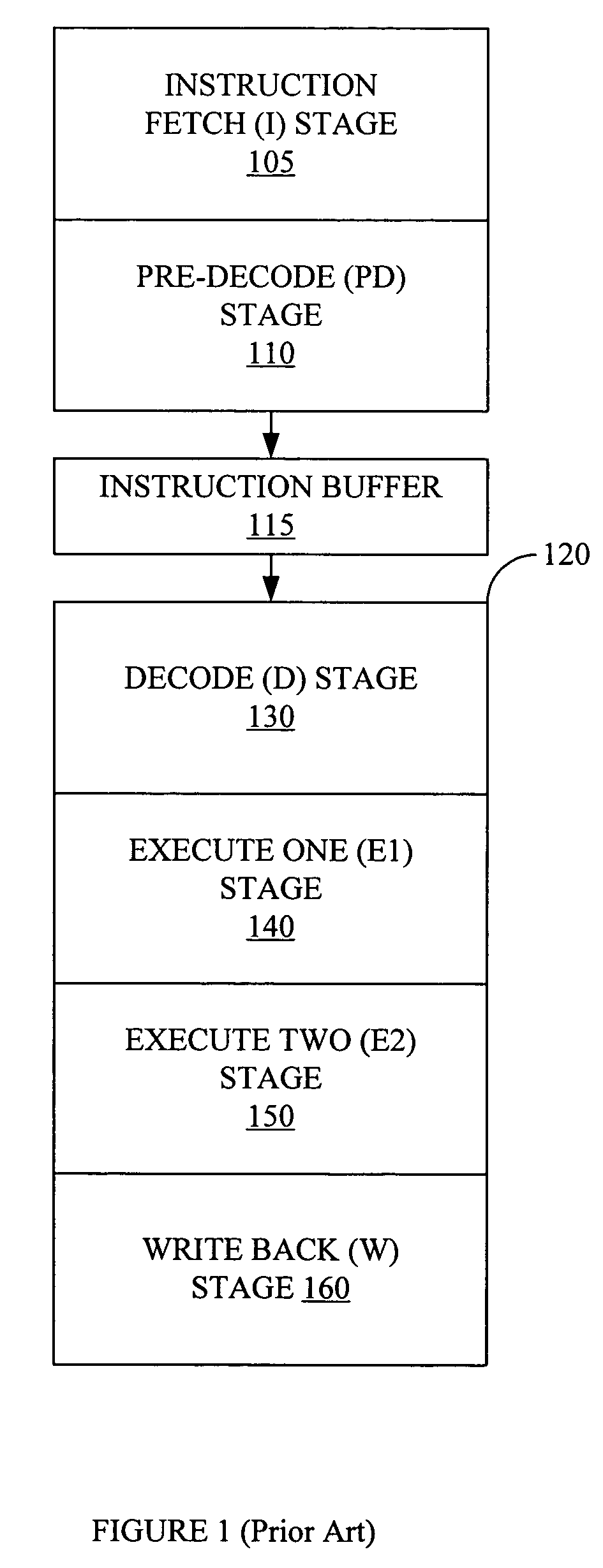

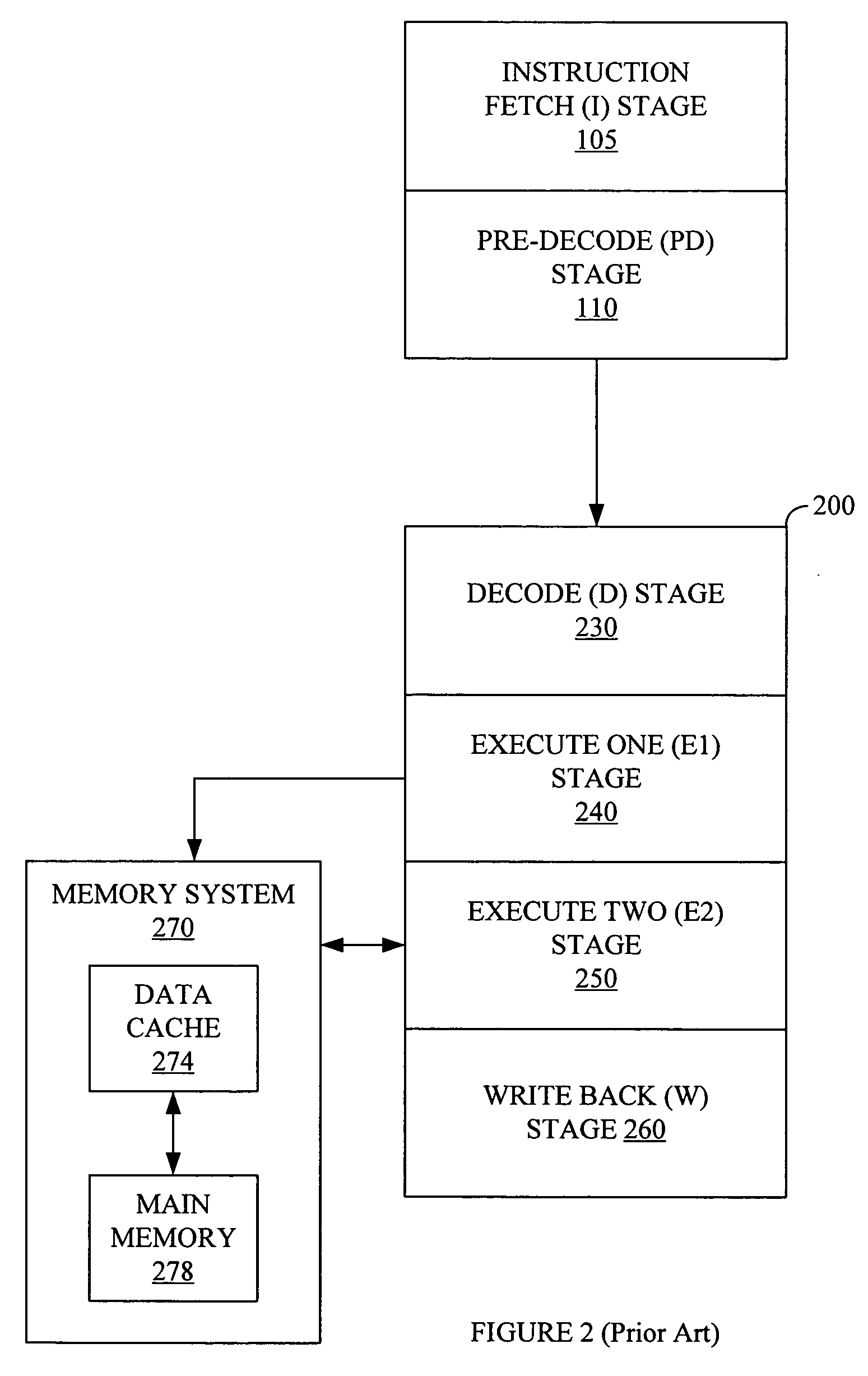

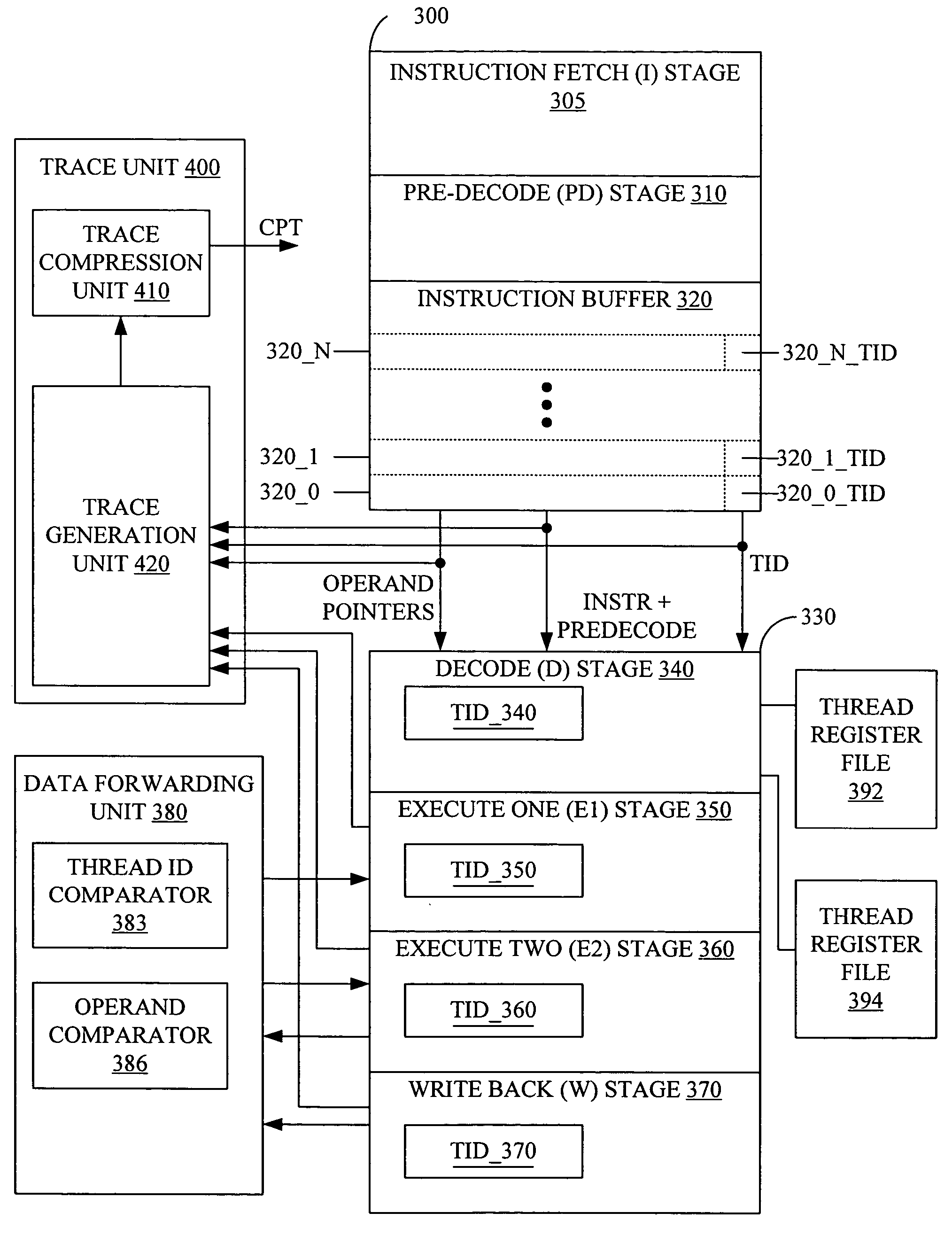

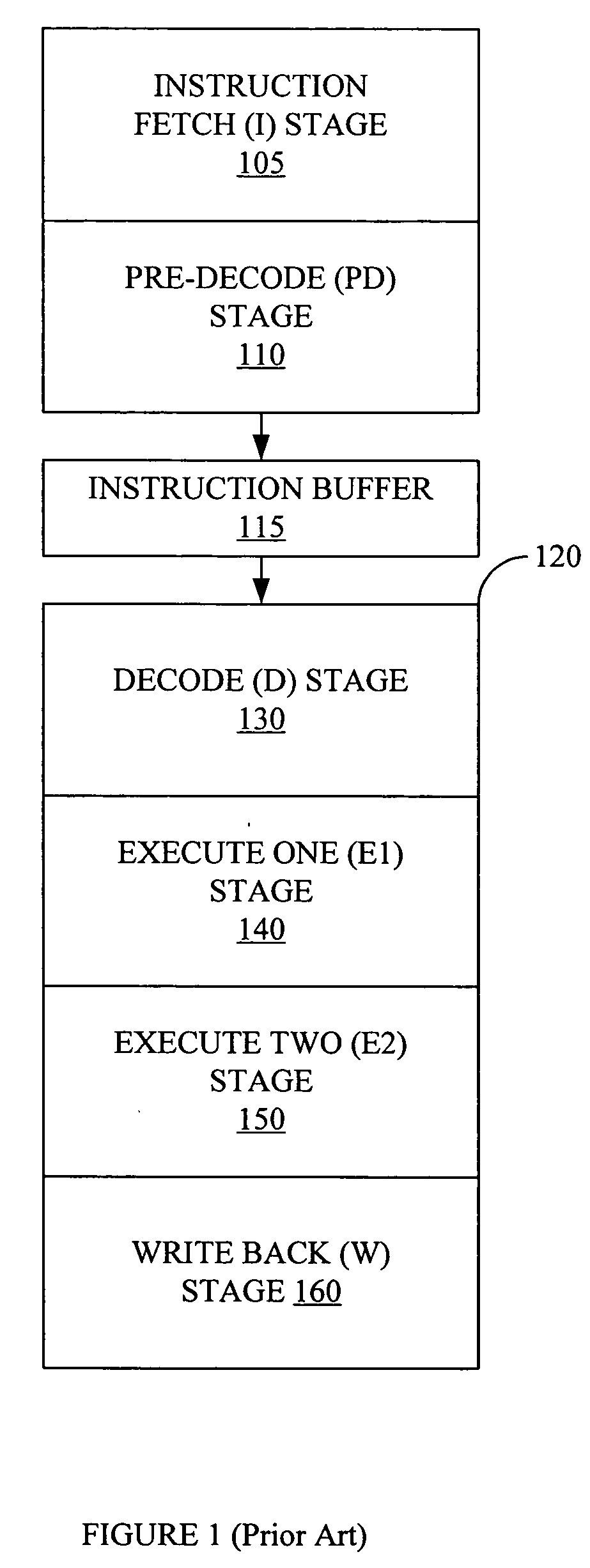

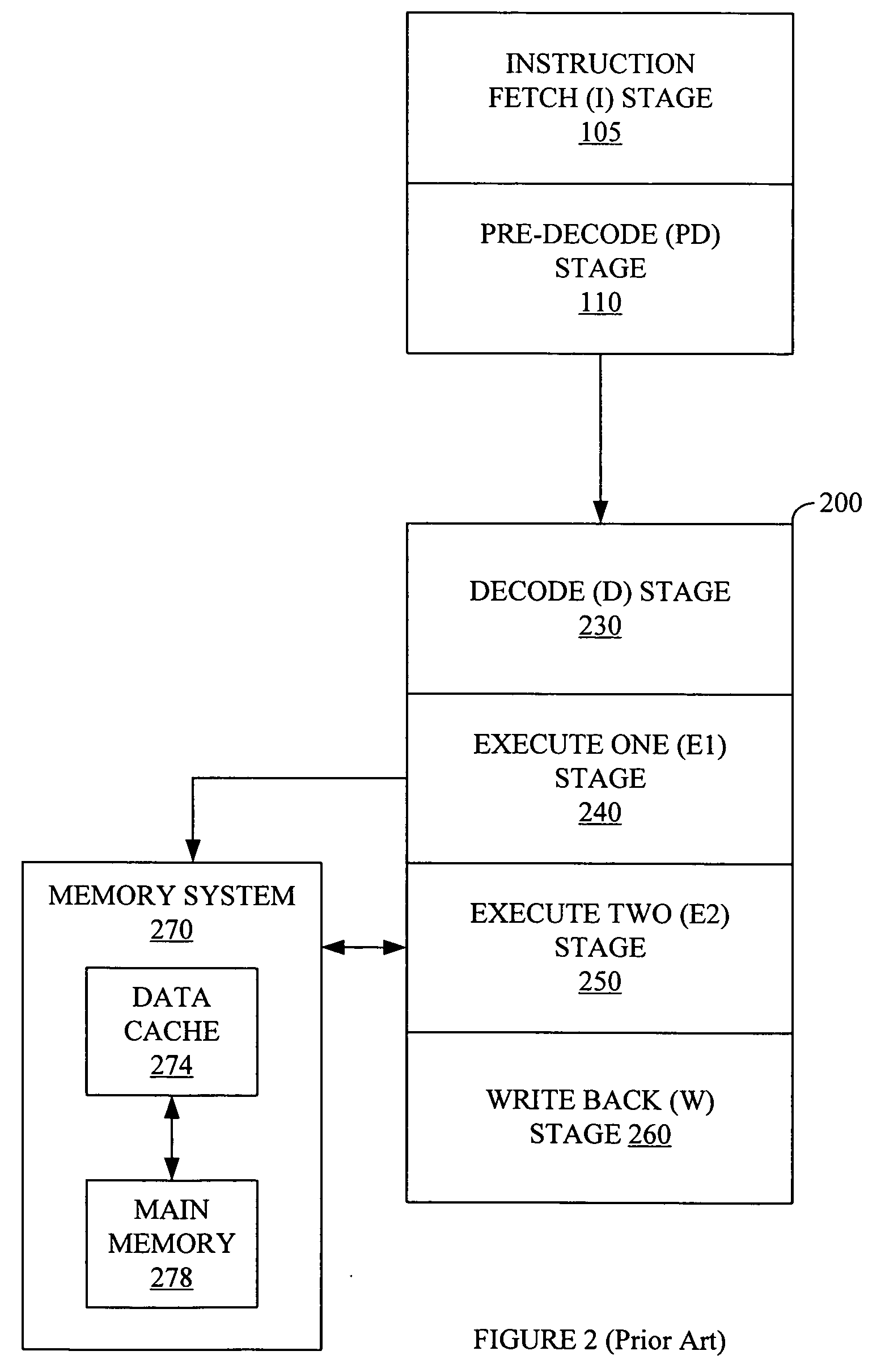

Thread ID in a multithreaded processor

Active | US7263599B2 | Easy maintenance | Hinder resolution | Program initiation/switching | Digital computer details | Operand | Program trace

A multithreaded processor includes a thread ID for each set of fetched bits in an instruction fetch and issue unit. The thread ID attaches to the instructions and operands of the set of fetched bits. Pipeline stages in the multithreaded processor store the thread ID associated with each operand or instruction in the pipeline stage. The thread IDs are used to maintain data coherency and to generate program traces that include thread information for the instructions executed by the multithreaded processor.

Owner:INFINEON TECH AG

High performance support for XA protocols in a clustered shared database

Active | US7260589B2 | Low cost | Avoid impact | Digital data information retrieval | Data processing applications | Coupling | Relational table

A shared memory device called the Coupling Facility (CF) is used to record the indoubt transaction entries for each member of the database cluster, avoiding the CPU cost and elapsed time impact of persisting this information to disk (either via a log write or a relational table I/O). The CF provides full read/write access and data coherency for concurrent access by all the members in the database cluster. At any given point in time, the CF will contain the full list of indoubt transactions for the entire database cluster. CF duplexing is used to guarantee the integrity of the CF structure used for the indoubt list. In the event of complete loss of both CF structures (which will not happen except in major disaster situations), data sharing group restart processing can reconstruct the CF structures from the individual member logs.

Owner:INT BUSINESS MASCH CORP

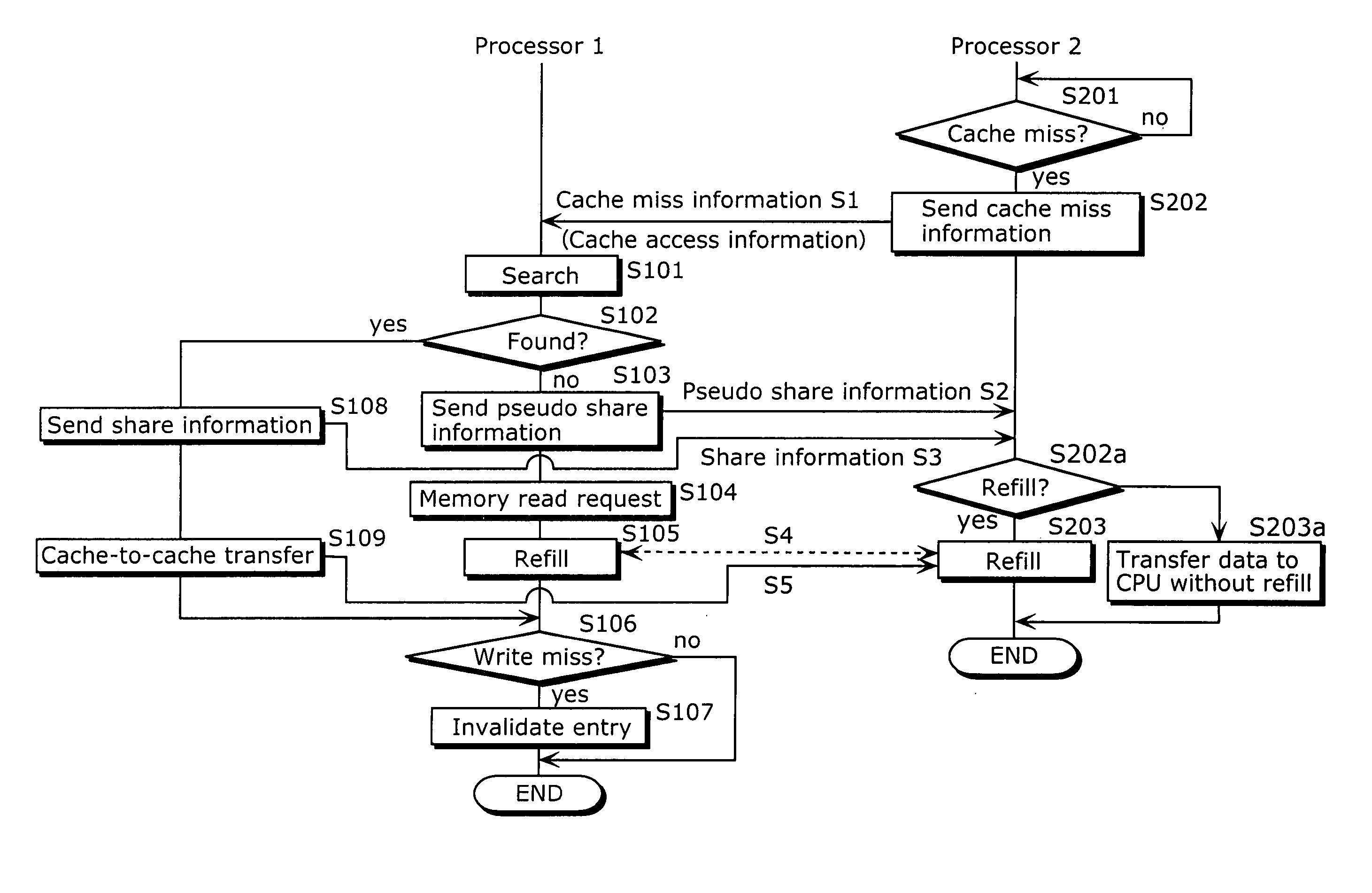

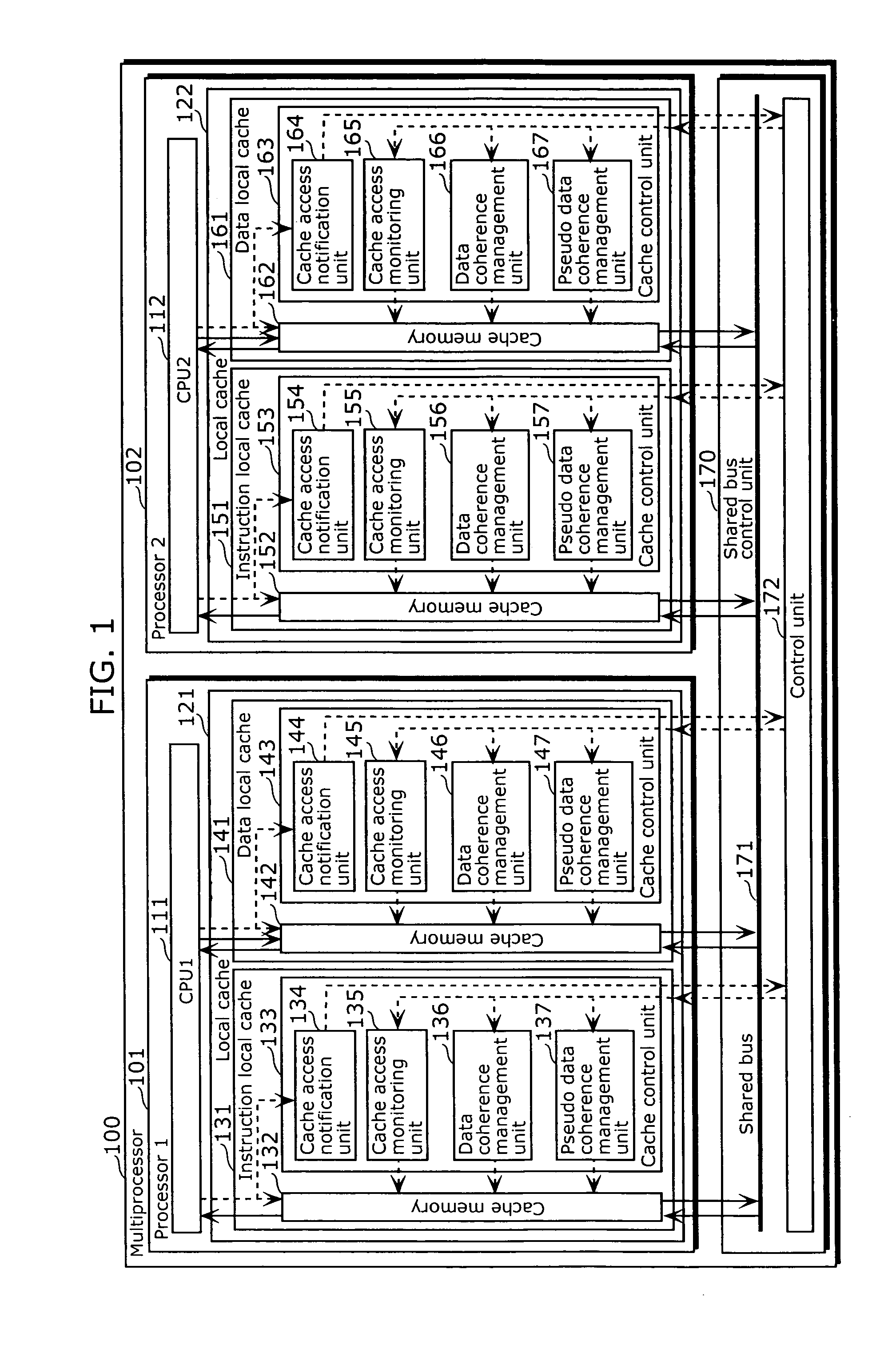

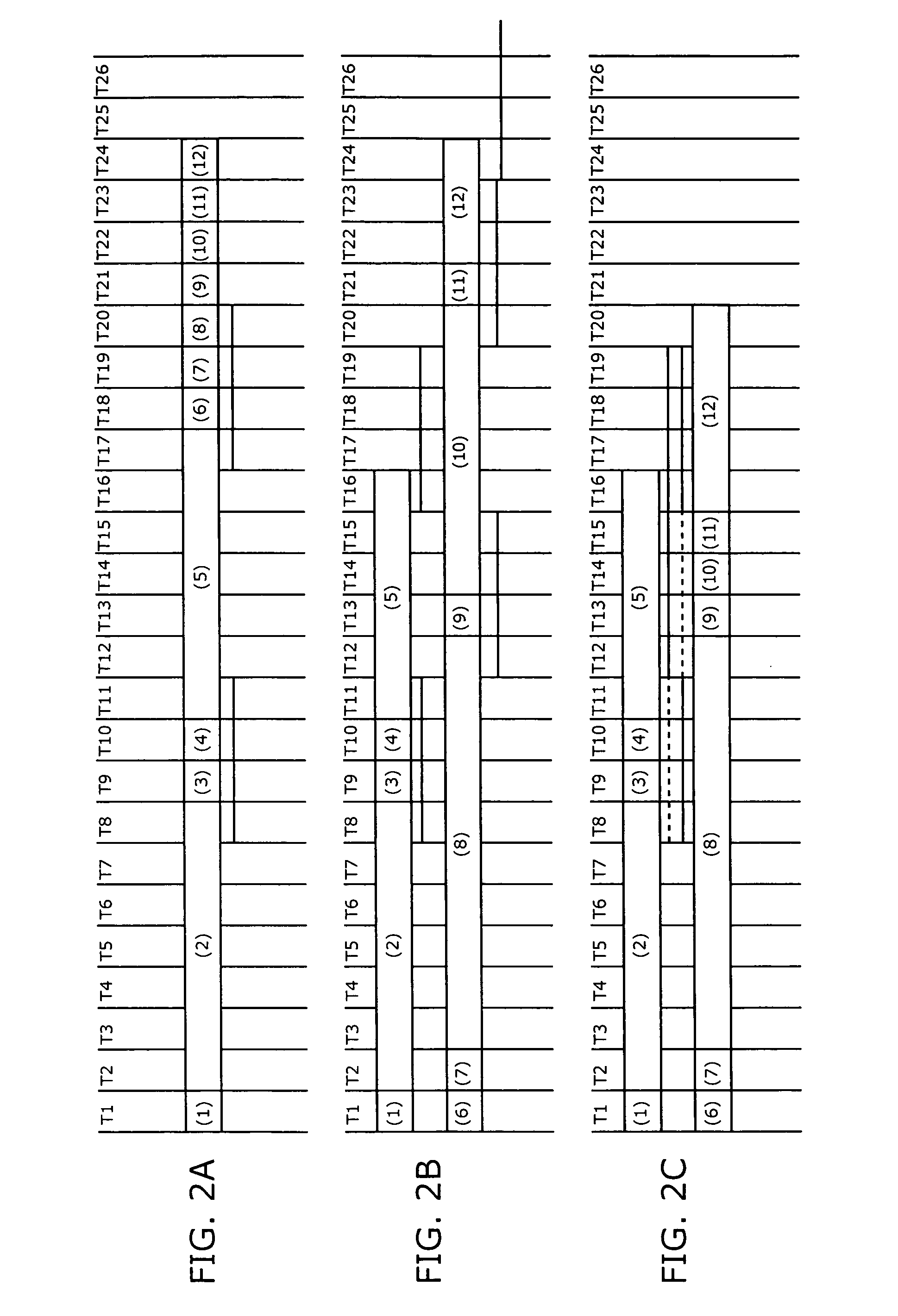

Multiprocessing apparatus

Inactive | US20060059317A1 | Miss occurrence ratio | Reducing bus contention | Memory systems | Computer architecture | Control cell

The multiprocessing apparatus of the present invention includes a plurality of processors, a shared bus, and a shared bus controller. Each of the processors includes a central processing unit (CPU) and a local cache; each of the local caches includes a cache memory and a cache control unit that controls the cache memory; and each of the cache control units includes a data coherence management unit that manages data coherence between the local caches by controlling data transfer carried out, via the shared bus, between the local caches. At least one of the cache control units (a) monitors a local cache access signal, outputted from another one of the processors, for notifying an occurrence of a cache miss, and (b) notifies pseudo information to that other processor via the shared bus controller, the pseudo information indicating that data corresponding to the local cache access signal is stored in the cache memory of the local cache that includes the at least one of the cache control units, even in the case where the data corresponding to the local cache access signal is not actually stored.

Owner:PANASONIC CORP

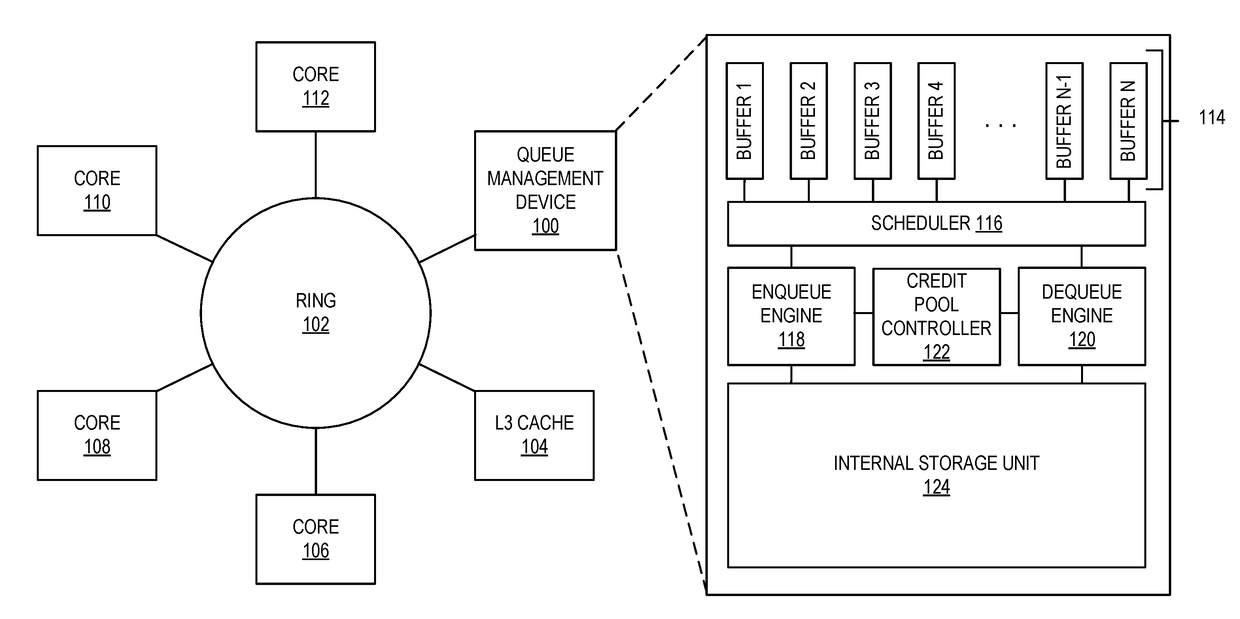

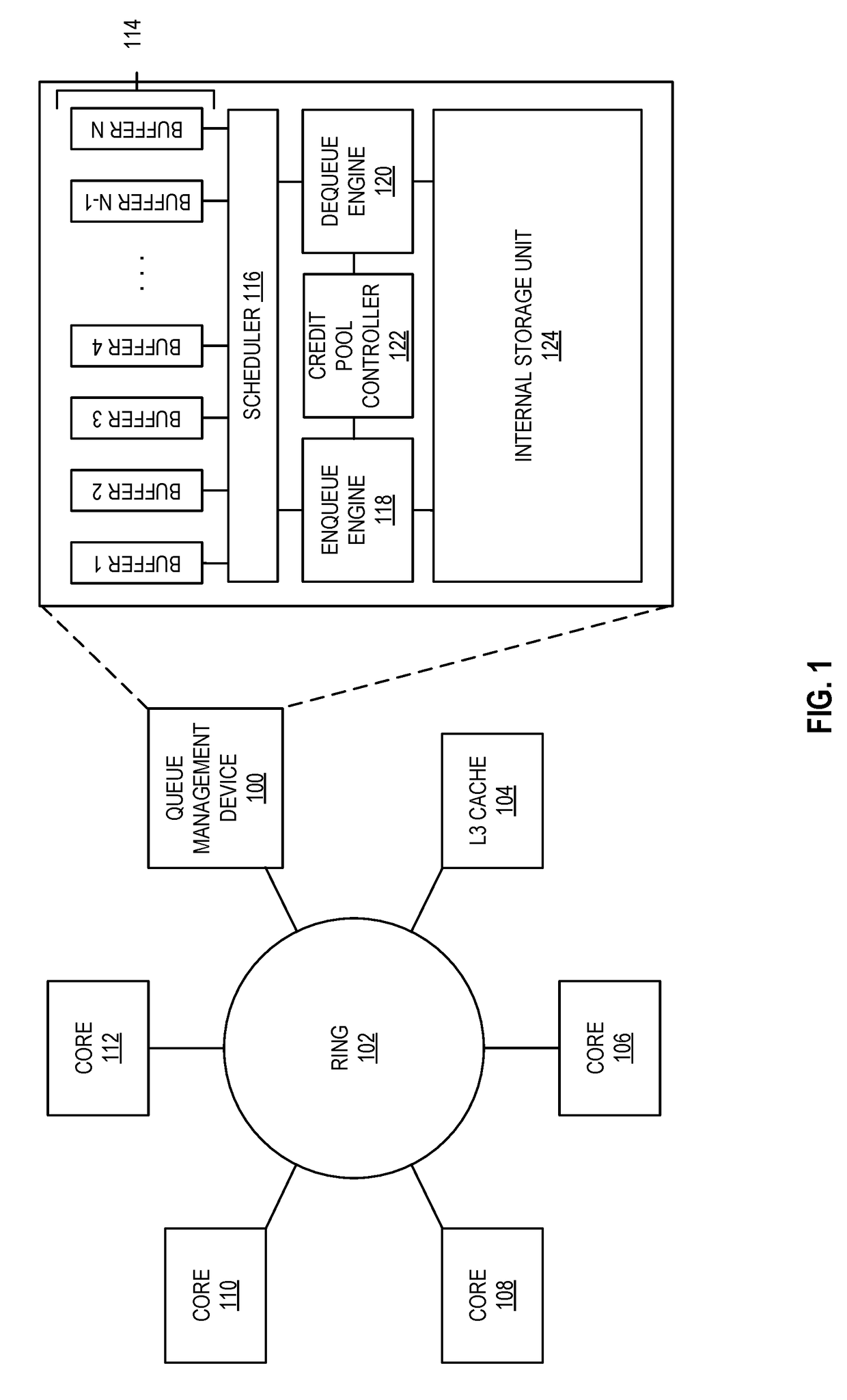

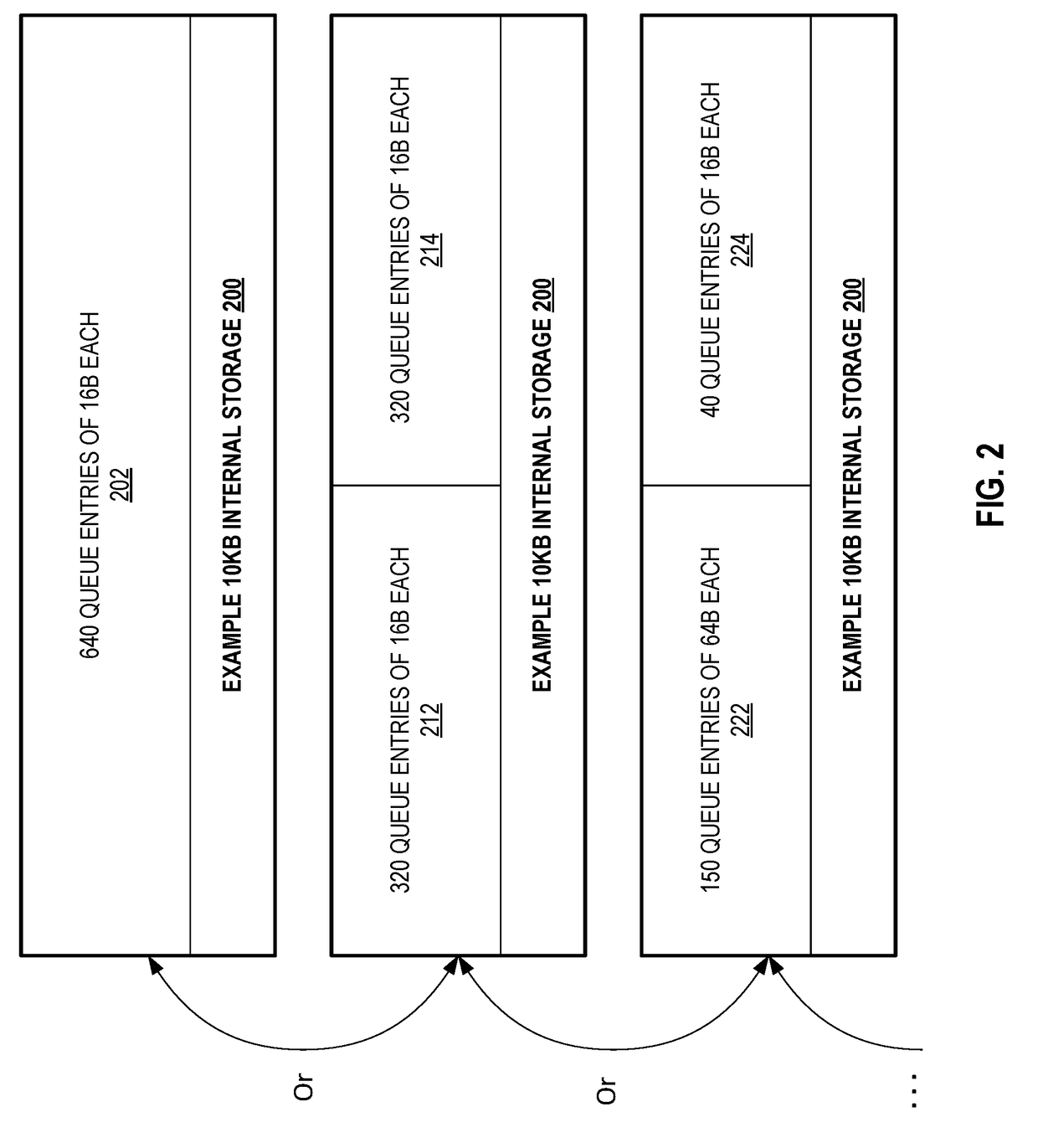

Multi-core communication acceleration using hardware queue device

Active | US20170192921A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Resource Management System | Data transmission

Apparatus and methods implementing a hardware queue management device for reducing inter-core data transfer overhead by offloading request management and data coherency tasks from the CPU cores. The apparatus includes multi-core processors, a shared L3 or last-level cache ("LLC"), and a hardware queue management device to receive, store, and process inter-core data transfer requests. The hardware queue management device further comprises a resource management system to control the rate at which the cores may submit requests, to reduce core stalls and dropped requests. Additionally, software instructions are introduced to optimize communication between the cores and the queue management device.

Owner:INTEL CORP

Method and apparatus for maintaining data coherency

A method and apparatus for assuring data consistency in a data processing network including local and remote data storage controllers interconnected by independent communication paths. The remote storage controller or controllers normally act as a mirror for the local storage controller or controllers. If, for any reason, transfers over one of the independent communication paths are interrupted, transfers over all the independent communication paths to predefined devices in a group are suspended, thereby assuring the consistency of the data at the remote storage controller or controllers. When the cause of the interruption has been corrected, the local storage controllers are able to transfer data modified since the suspension occurred to their corresponding remote storage controllers, thereby reestablishing synchronism and consistency for the entire dataset.

Owner:EMC CORP

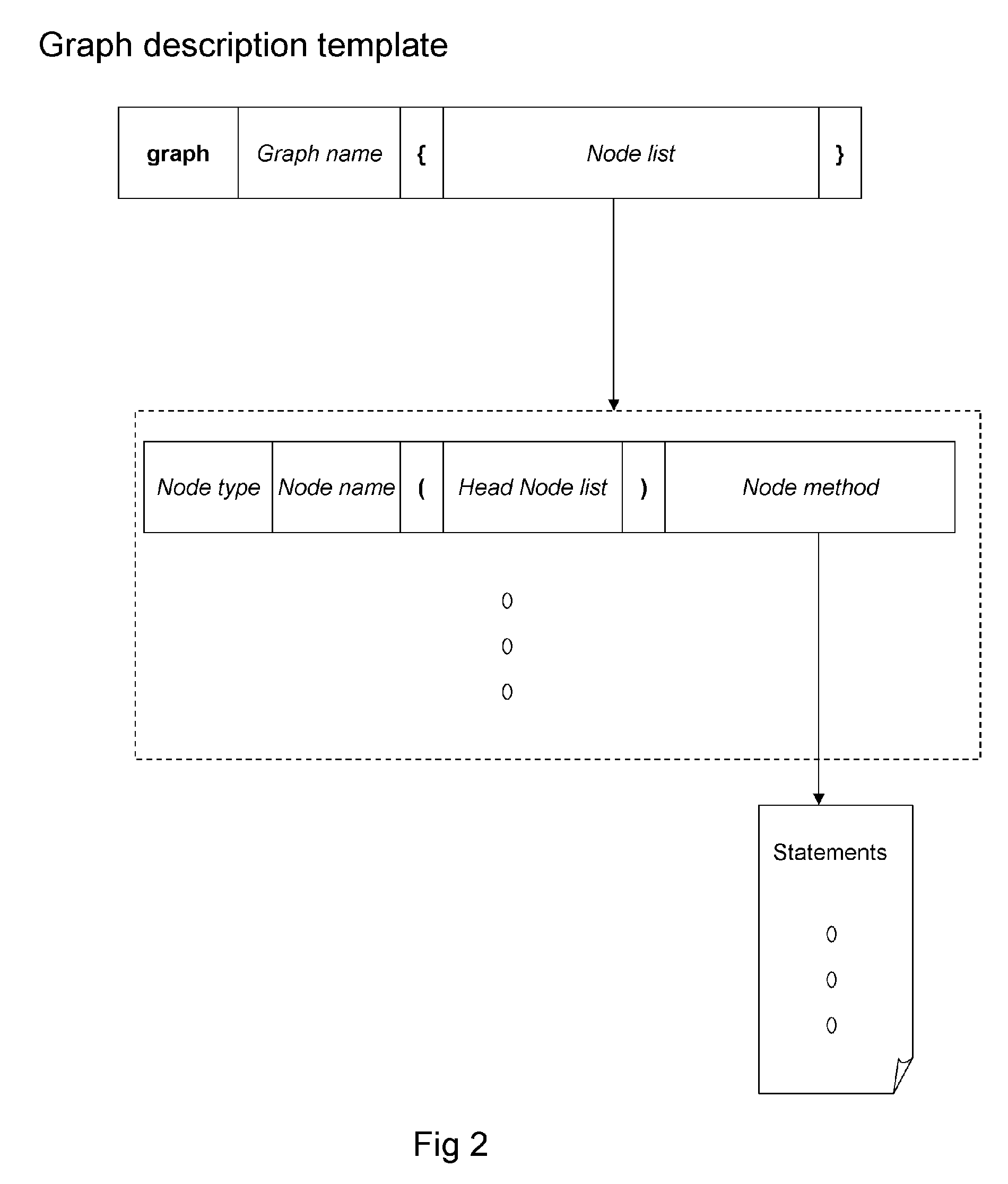

A method of event driven computation using data coherency of graph

Inactive | US20060294499A1 | Expressed easily and applied | Inhibition formation | Requirement analysis | Specific program execution arrangements | Directed graph | Theoretical computer science

Continuous streams of multiple concurrent events are handled by a system using a computation dependency graph. The computation dependency graph is derived from the mathematical concept of a directed graph. It has a plurality of nodes representing typed or non-typed variables, and arcs connecting a tail node to a head node that represent a dependency of computation. An instance of a graph has a plurality of node instances, and any node instance may be associated with an external stream of events, where a single event from the event stream changes the value of the node instance. The computation of a node is performed when one or more of its tail node instance values change. The end result of the computation from tail to head makes the nodes connected by arcs coherent. The computation dependency graph has a plurality of graph instances whose topology, which represents the computation path, may be reconfigured during the traversal of the node instances. The system understands a grammar for producing easy-to-read descriptions of the computation dependency graph. By parsing descriptions that conform to the grammar, the system produces data structures and instruction sets for the node computation. The system has a computation engine that correctly traverses the graph instances described by the graph descriptions to process events in an optimal manner. This invention includes various techniques and tools which can be used in combination or independently.

Owner:SHIM JOHN
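
The tail-to-head recomputation described in this abstract can be sketched with a small event-driven graph. This is a simplified illustration under assumptions: the `DependencyGraph` class, its method names, and the rule that nodes must be added after their tails (so insertion order doubles as a topological order) are hypothetical, and the grammar, graph instances, and topology reconfiguration from the abstract are left out.

```python
class DependencyGraph:
    """Event-driven recomputation over a directed dependency graph (sketch)."""

    def __init__(self):
        self.values = {}  # node name -> current value
        self.rules = {}   # head name -> (tail names, compute function)

    def add_input(self, name, value):
        """Register a node whose value is changed by an external event stream."""
        self.values[name] = value

    def add_node(self, name, tails, fn):
        """Register a computed head node; all of its tails must already exist."""
        missing = [t for t in tails if t not in self.values]
        if missing:
            raise KeyError(f"unknown tail nodes: {missing}")
        self.rules[name] = (tuple(tails), fn)
        self.values[name] = fn(*(self.values[t] for t in tails))

    def on_event(self, name, value):
        """Apply one event and recompute only the heads whose tails changed."""
        if self.values[name] == value:
            return []
        self.values[name] = value
        changed = {name}
        recomputed = []
        # Dicts preserve insertion order, which is a topological order here.
        for head, (tails, fn) in self.rules.items():
            if changed.intersection(tails):
                new_value = fn(*(self.values[t] for t in tails))
                recomputed.append(head)
                if new_value != self.values[head]:
                    self.values[head] = new_value
                    changed.add(head)
        return recomputed
```

After `on_event` returns, every head is consistent with its tails, which is the coherency property the abstract describes for nodes connected by arcs.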

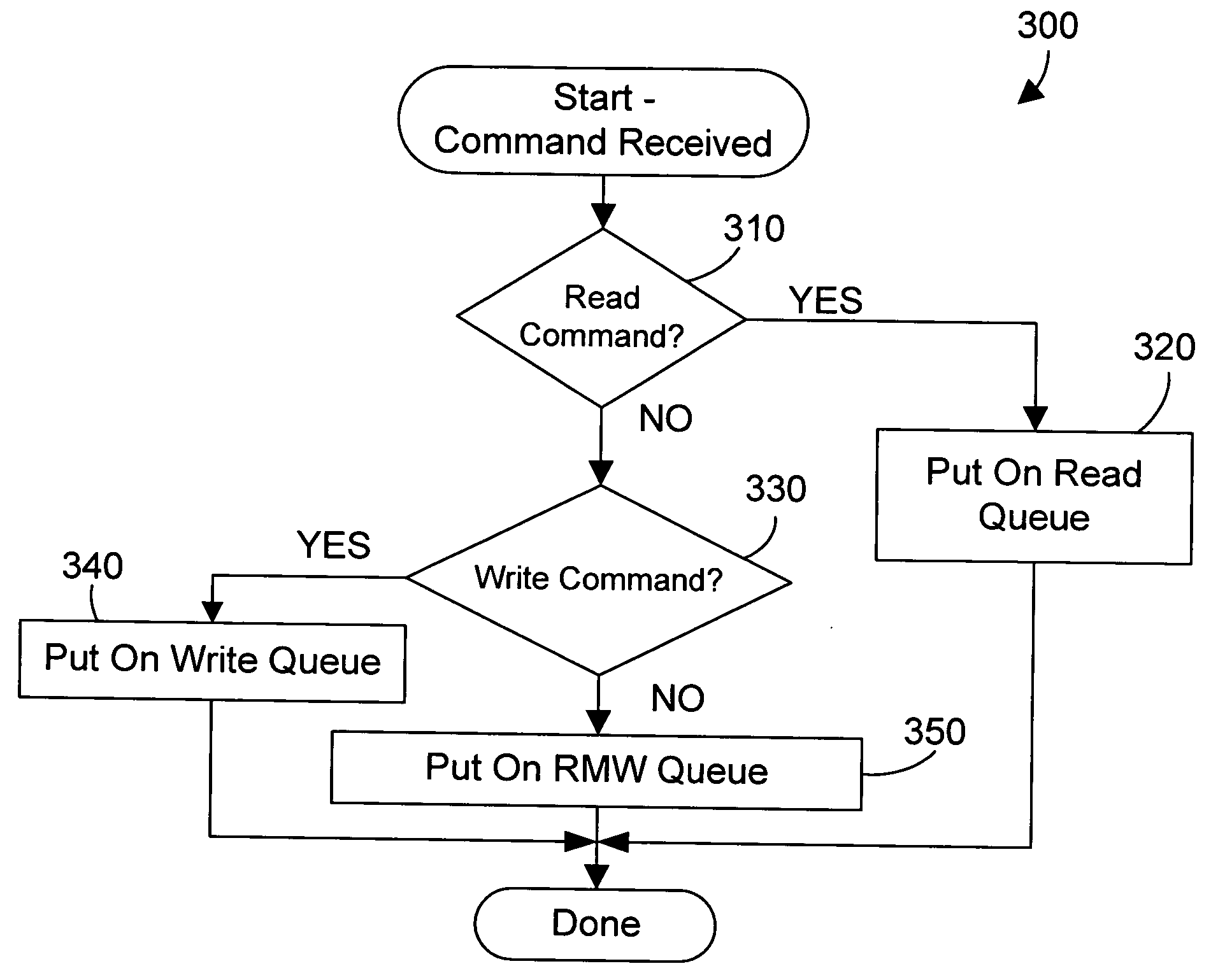

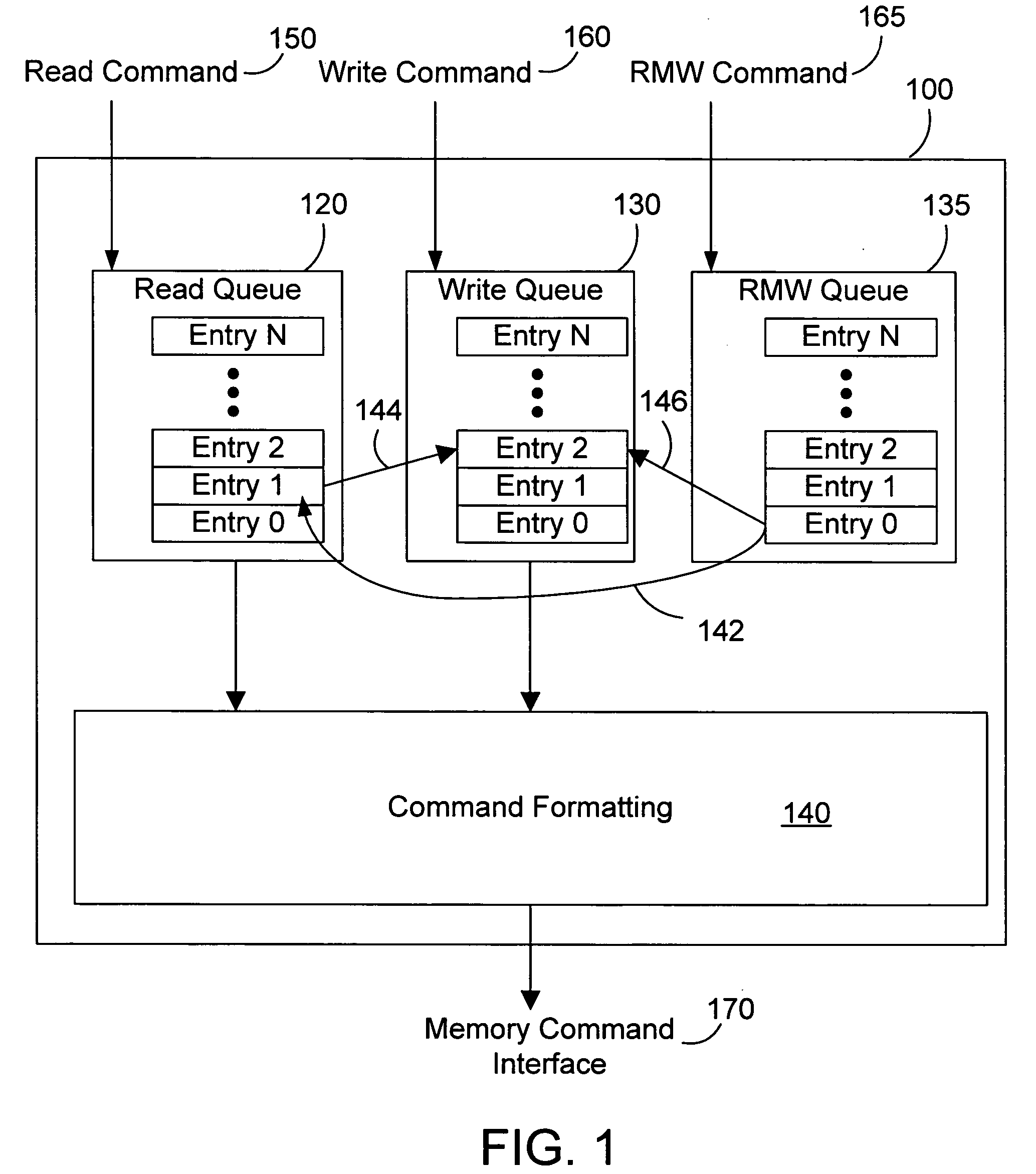

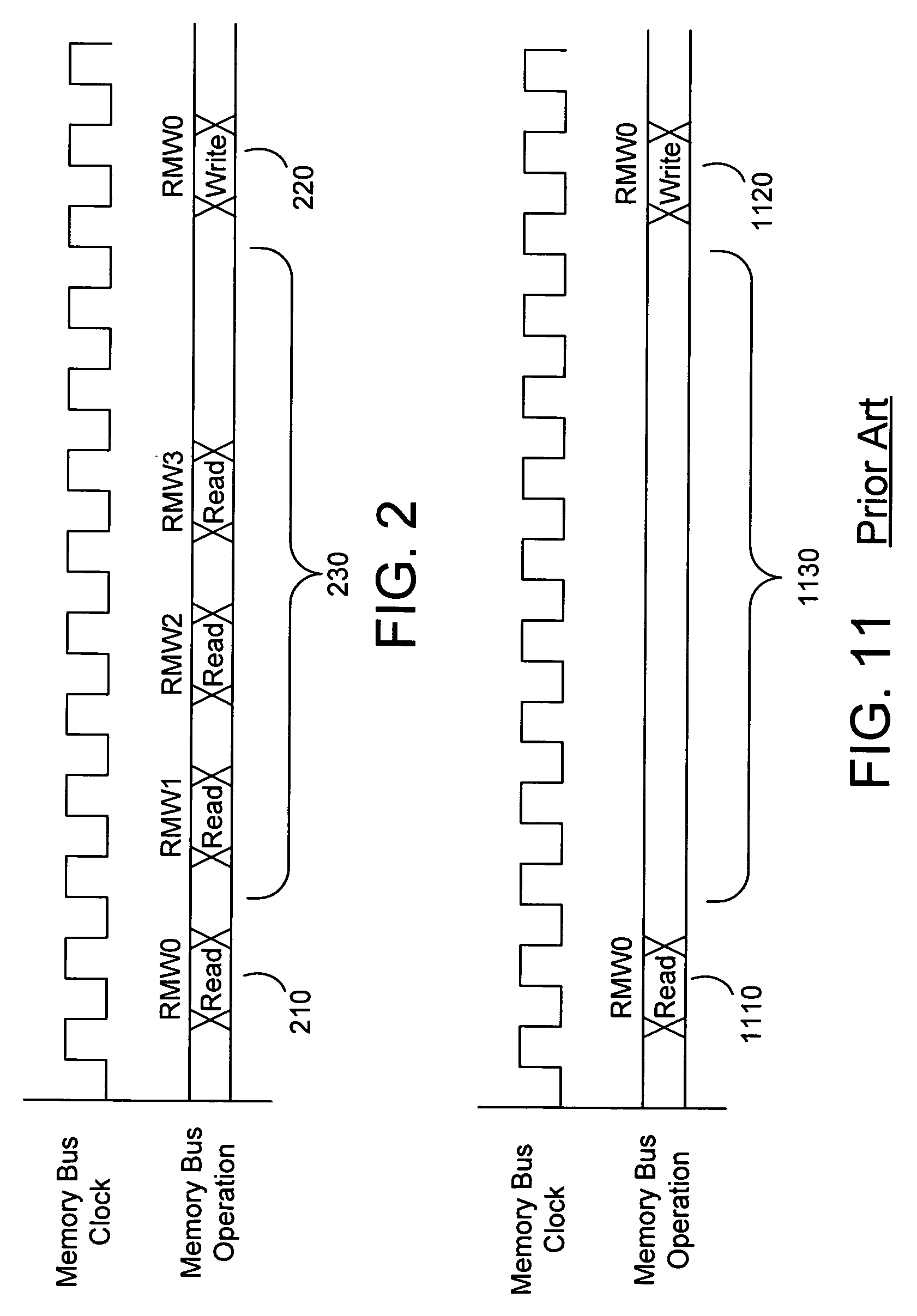

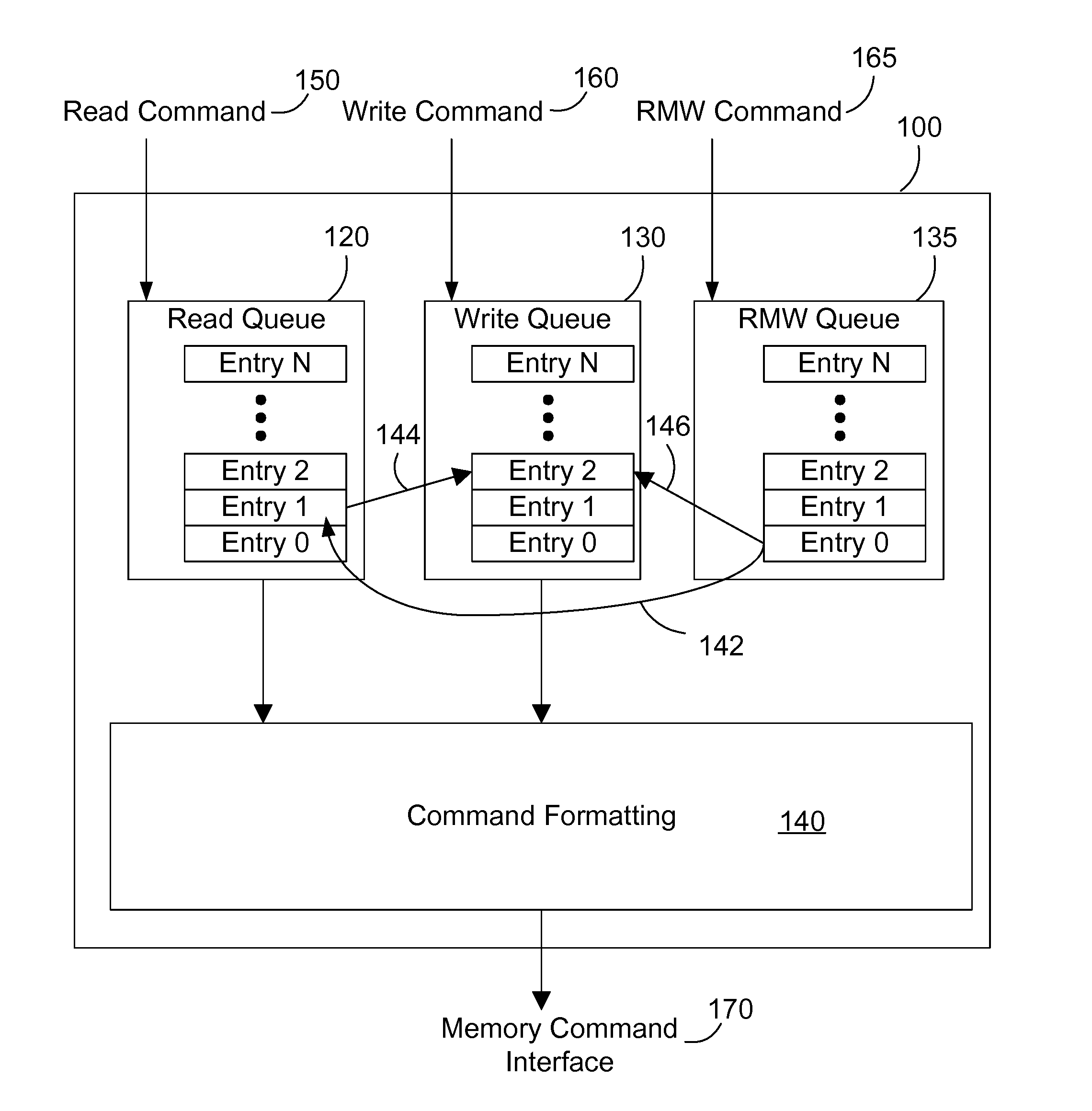

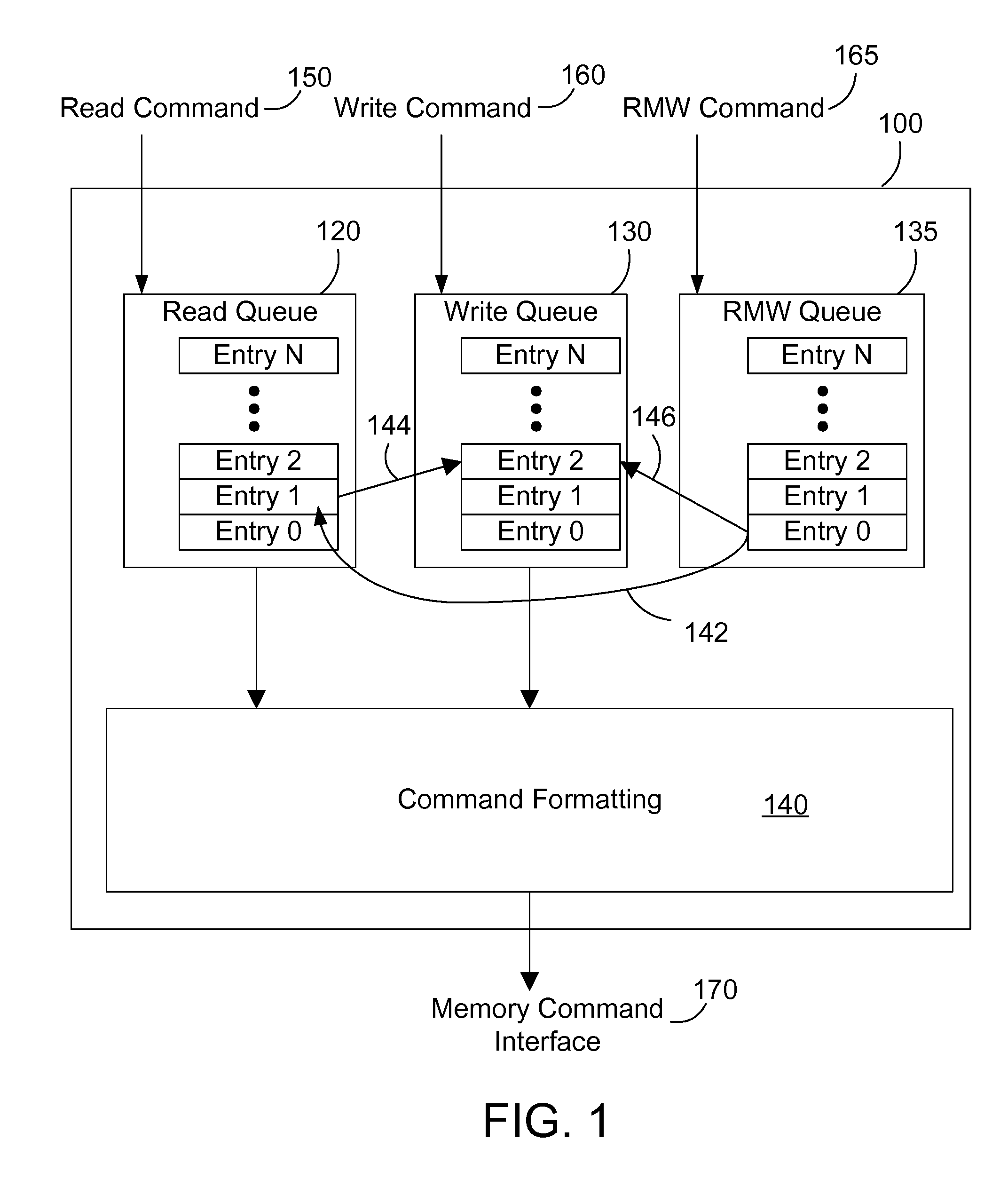

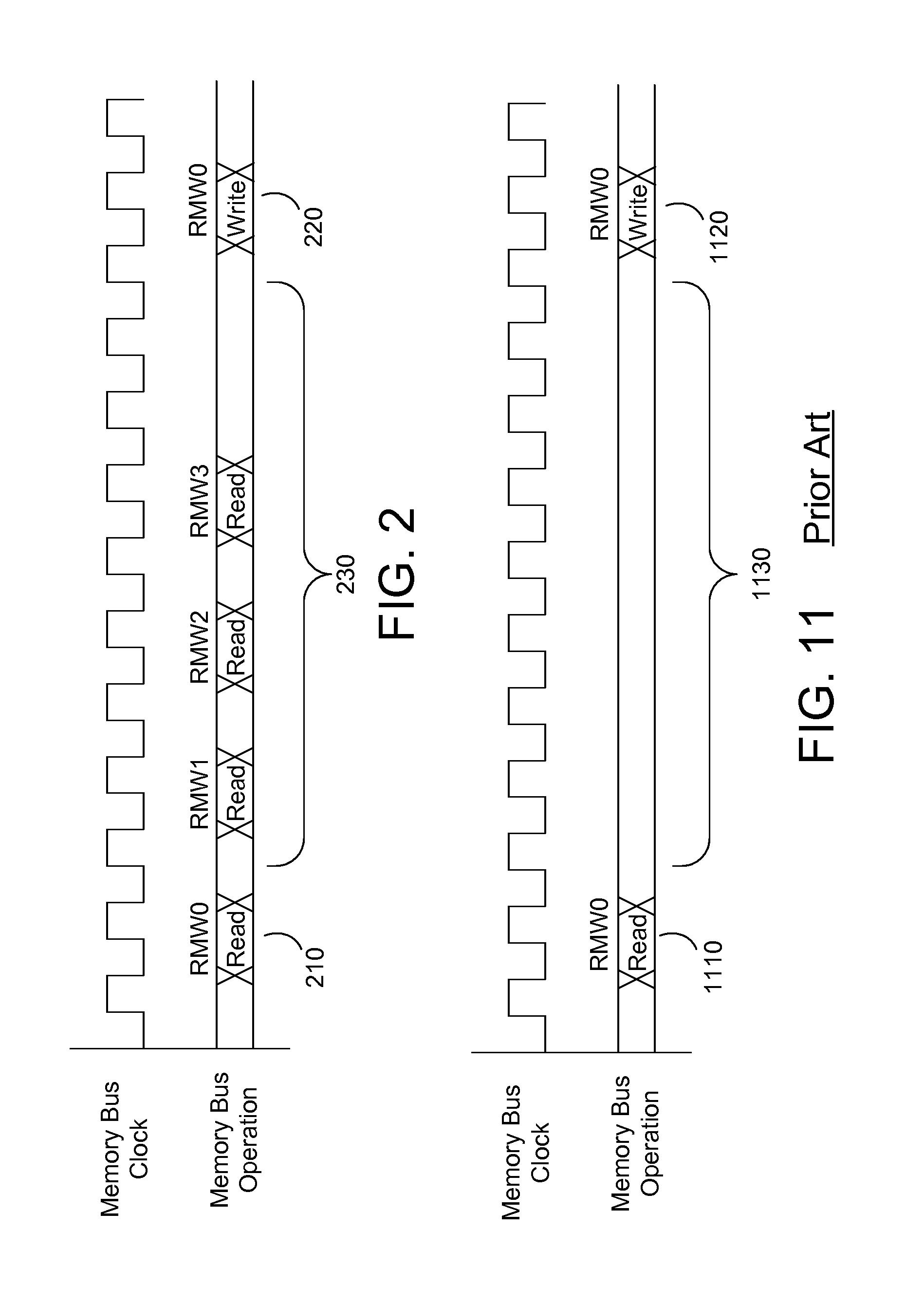

Memory controller and method for optimized read/modify/write performance

Inactive | US20060090044A1 | Easy to implement | Program control | Static storage | Memory controller | Operating system

A memory controller optimizes execution of a read/modify/write (RMW) command by breaking the RMW command into separate and unique read and write commands that do not need to be executed together, but just need to be executed in the proper sequence. The most preferred embodiments use a separate RMW queue in the controller in conjunction with the read queue and write queue. In other embodiments, the controller places the read and write portions of the RMW into the read and write queues, and the write queue has a dependency indicator associated with the RMW write command to ensure the controller maintains the proper execution sequence. The embodiments allow the memory controller to translate RMW commands into read and write commands with the proper sequence of execution to preserve data coherency.

Owner:IBM CORP
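
The second embodiment in this abstract, where the write half of an RMW sits in the write queue with a dependency indicator, can be modeled roughly as below. This is a sketch under assumptions, not the controller design: the `Controller` class, the integer read IDs used as dependency indicators, and the `modify` callback are all hypothetical.

```python
from collections import deque


class Controller:
    """Toy memory controller that splits RMW into a read and a dependent write."""

    def __init__(self, memory):
        self.memory = memory    # address -> value
        self.read_q = deque()   # pending (read_id, address) entries
        self.write_q = deque()  # pending write entries (dicts)
        self.done_reads = {}    # read_id -> value returned by the read
        self.next_id = 0

    def submit_rmw(self, addr, modify):
        """Queue the read part and a write part that depends on that read."""
        read_id = self.next_id
        self.next_id += 1
        self.read_q.append((read_id, addr))
        self.write_q.append({"addr": addr, "depends_on": read_id, "modify": modify})
        return read_id

    def submit_write(self, addr, value):
        """Queue an ordinary write with no dependency indicator."""
        self.write_q.append({"addr": addr, "depends_on": None, "value": value})

    def issue_read(self):
        """Execute the oldest queued read, if any."""
        if not self.read_q:
            return False
        read_id, addr = self.read_q.popleft()
        self.done_reads[read_id] = self.memory[addr]
        return True

    def issue_write(self):
        """Execute the oldest queued write unless its read has not completed."""
        if not self.write_q:
            return False
        entry = self.write_q[0]
        dep = entry["depends_on"]
        if dep is None:
            value = entry["value"]
        elif dep in self.done_reads:
            value = entry["modify"](self.done_reads.pop(dep))
        else:
            return False  # dependency not satisfied: hold the write back
        self.write_q.popleft()
        self.memory[entry["addr"]] = value
        return True
```

The two halves can be scheduled at different times, and the dependency check is what keeps the write from running ahead of its read.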

Thread ID in a multithreaded processor

Active | US20050177703A1 | Easy maintenance | Hinder resolution | Program initiation/switching | Digital computer details | Operand | Program trace

A multithreaded processor includes a thread ID for each set of fetched bits in an instruction fetch and issue unit. The thread ID attaches to the instructions and operands of the set of fetched bits. Pipeline stages in the multithreaded processor store the thread ID associated with each operand or instruction in the pipeline stage. The thread IDs are used to maintain data coherency and to generate program traces that include thread information for the instructions executed by the multithreaded processor.

Owner:INFINEON TECH AG

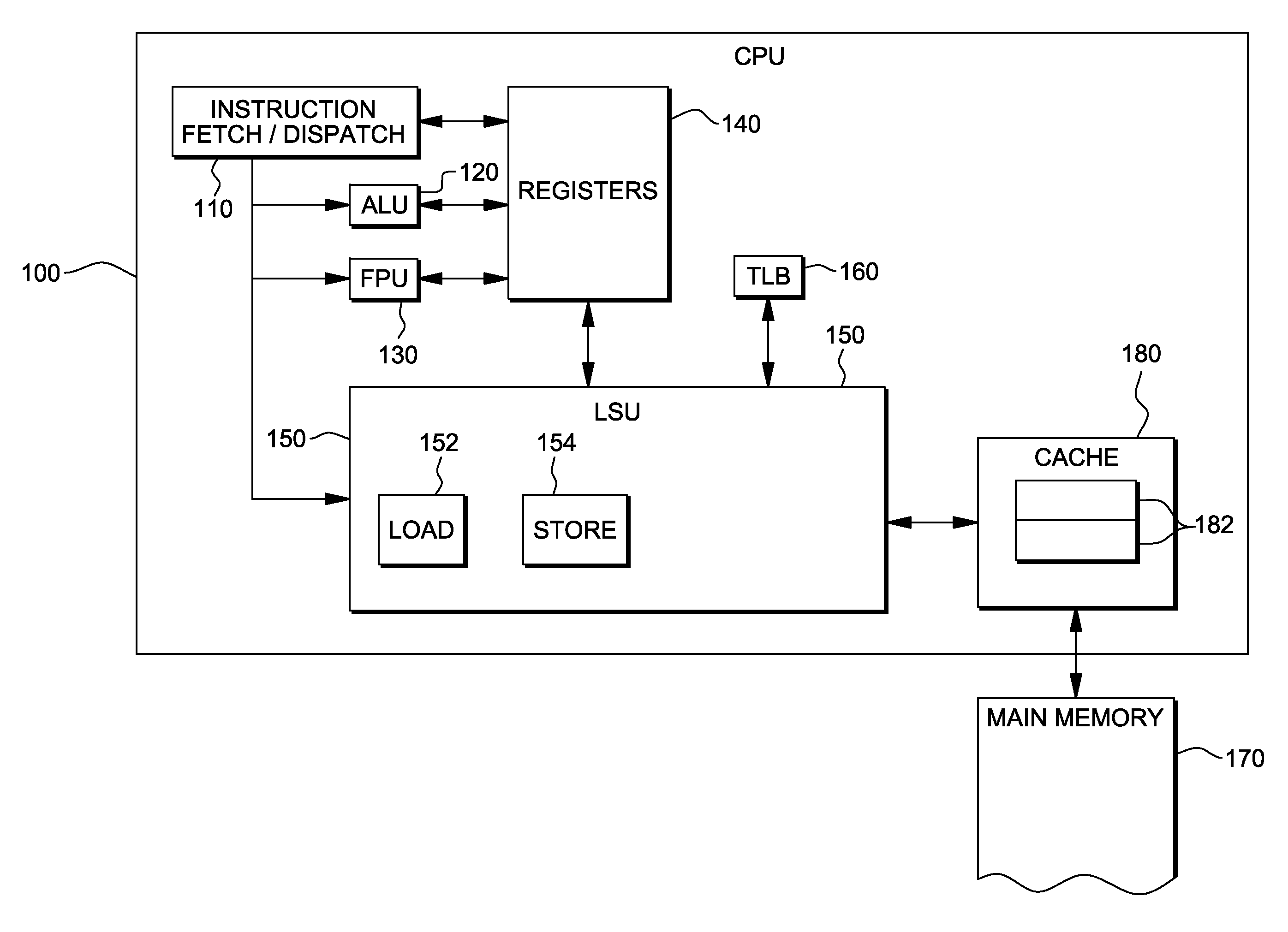

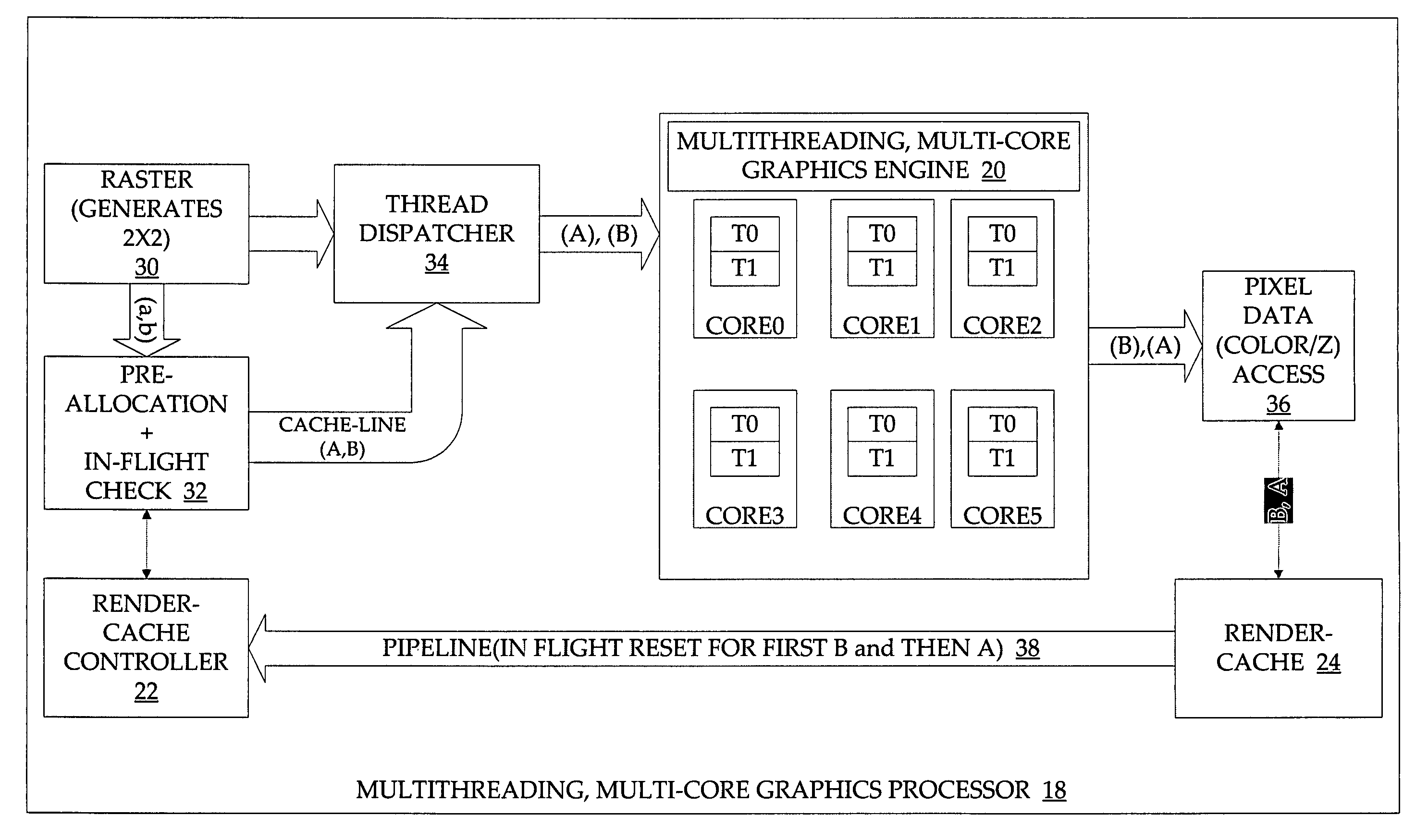

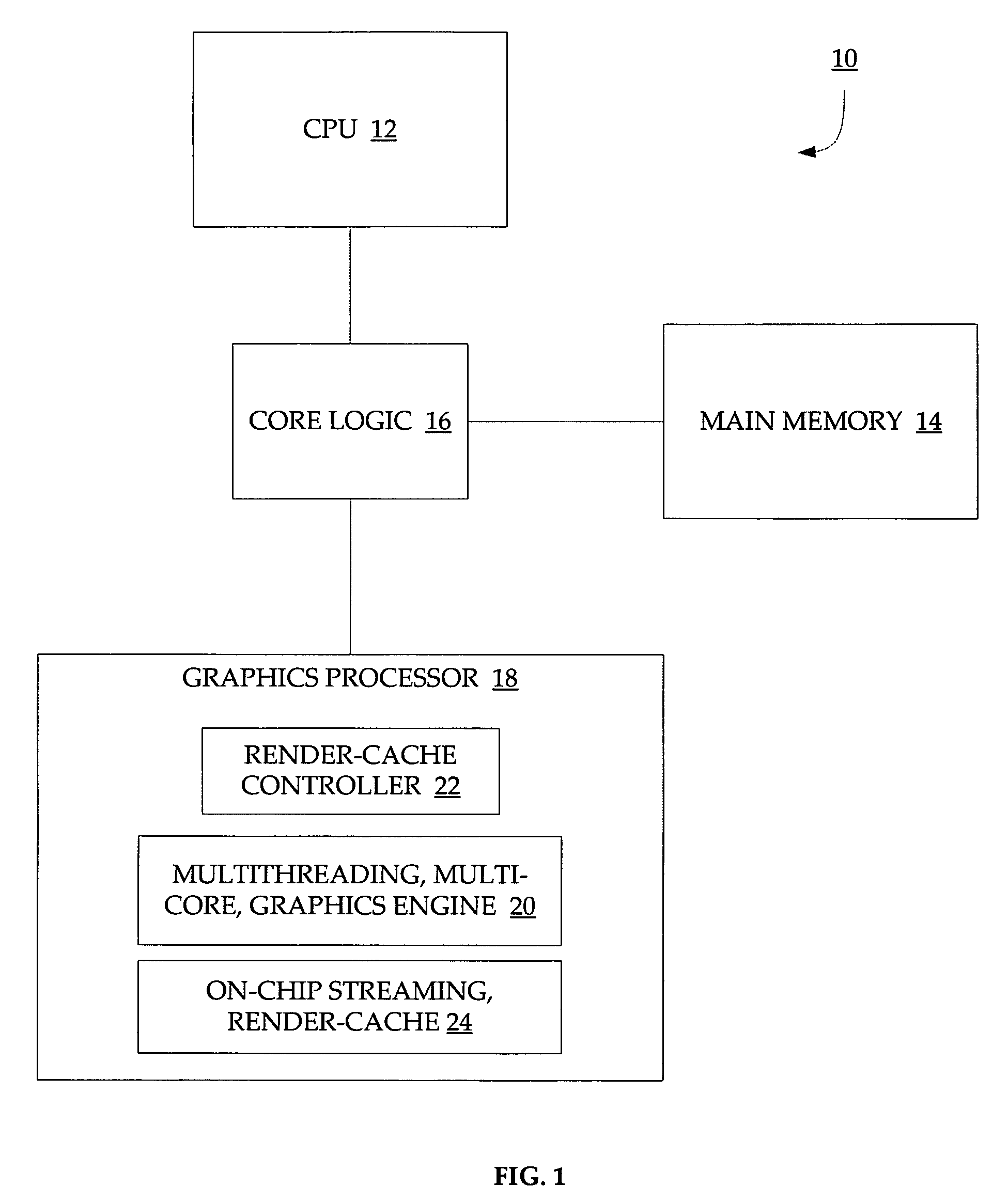

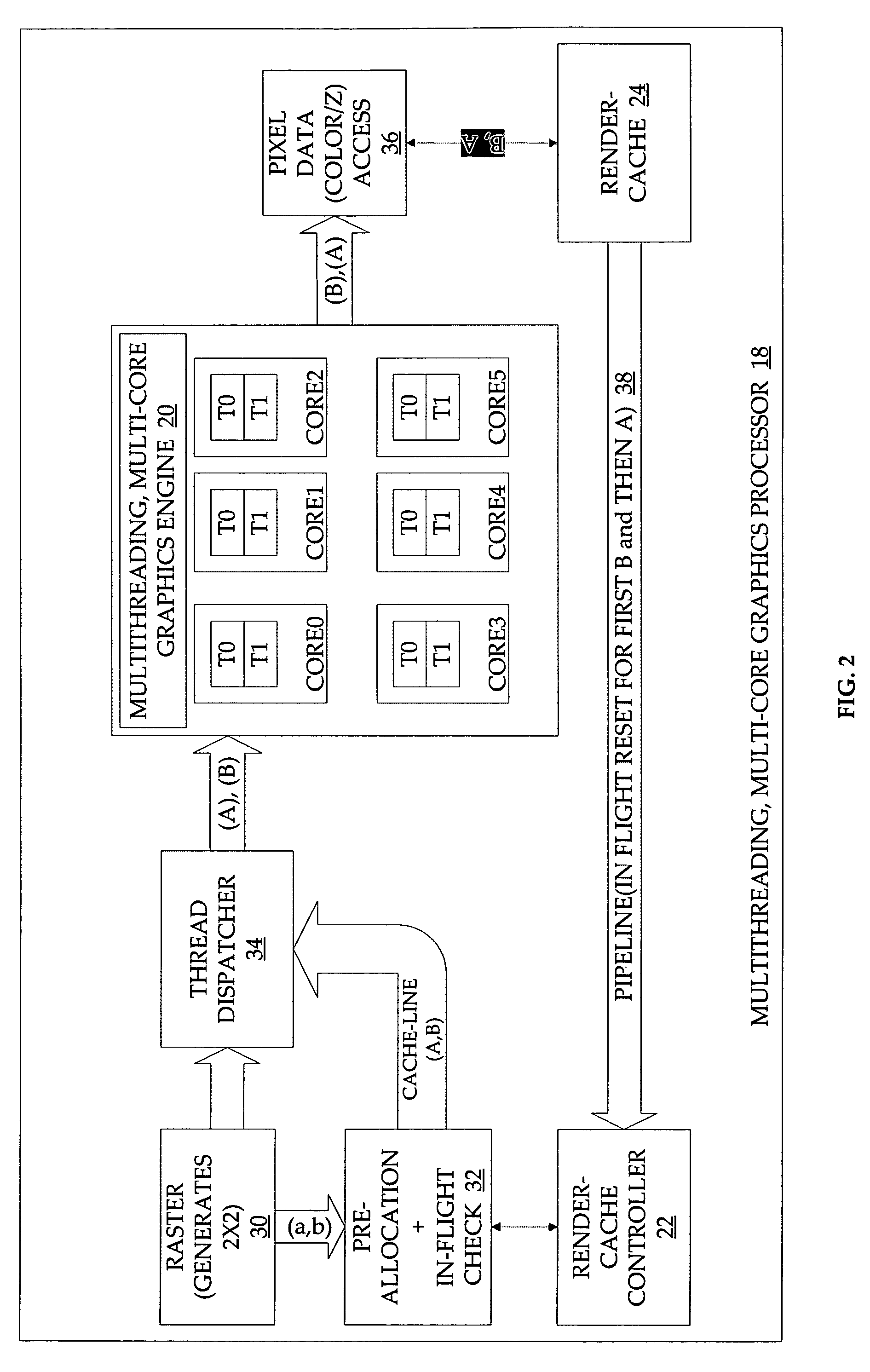

Render-cache controller for multithreading, multi-core graphics processor

Active | US7719540B2 | Memory addressing/allocation/relocation | Digital computer details | Computational science | Three dimensional graphics

A method and apparatus for rendering three-dimensional graphics using a streaming render-cache with a multi-threading, multi-core graphics processor are disclosed. The graphics processor includes a streaming render-cache and render-cache controller to maintain the order in which threads are dispatched to the graphics engine, and to maintain data coherency between the render-cache and the main memory. The render-cache controller blocks threads from being dispatched to the graphics engine out of order by only allowing one sub-span to be in-flight at any given time.

Owner:TAHOE RES LTD

Memory controller and method for optimized read/modify/write performance

Inactive | US20080016294A1 | Easy to implement | Program control | Static storage | Memory controller | Operating system

A memory controller optimizes execution of a read/modify/write (RMW) command by breaking the RMW command into separate and unique read and write commands that do not need to be executed together, but just need to be executed in the proper sequence. The most preferred embodiments use a separate RMW queue in the controller in conjunction with the read queue and write queue. In other embodiments, the controller places the read and write portions of the RMW into the read and write queues, and the write queue has a dependency indicator associated with the RMW write command to ensure the controller maintains the proper execution sequence. The embodiments allow the memory controller to translate RMW commands into read and write commands with the proper sequence of execution to preserve data coherency.

Owner:IBM CORP

Program tracing in a multithreaded processor

Active | US20050177819A1 | Easy maintenance | Hinder resolution | Program initiation/switching | Concurrent instruction execution | Operand | Program trace

A multithreaded processor includes a thread ID for each set of fetched bits in an instruction fetch and issue unit. The thread ID attaches to the instructions and operands of the set of fetched bits. Pipeline stages in the multithreaded processor store the thread ID associated with each operand or instruction in the pipeline stage. The thread IDs are used to maintain data coherency and to generate program traces that include thread information for the instructions executed by the multithreaded processor.

Owner:INFINEON TECH AG

Facilitating data coherency using in-memory tag bits and tag test instructions

Inactive | US20120297146A1 | Reduce the number of times | Good data consistency | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Original data | Data mining

A method is provided for fine-grained detection of data modification of original data by associating separate guard bits with granules of memory storing original data from which translated data has been obtained. The guard bits indicate whether the original data stored in the associated granule is protected for data coherency. The guard bits are set and cleared by special-purpose instructions. Responsive to attempting access to translated data obtained from the original data, the guard bit(s) associated with the original data are checked to determine whether the guard bit(s) fail to indicate coherency of the original data, and if so, discarding of the translated data is initiated to facilitate maintaining data coherency between the original data and the translated data.

Owner:IBM CORP

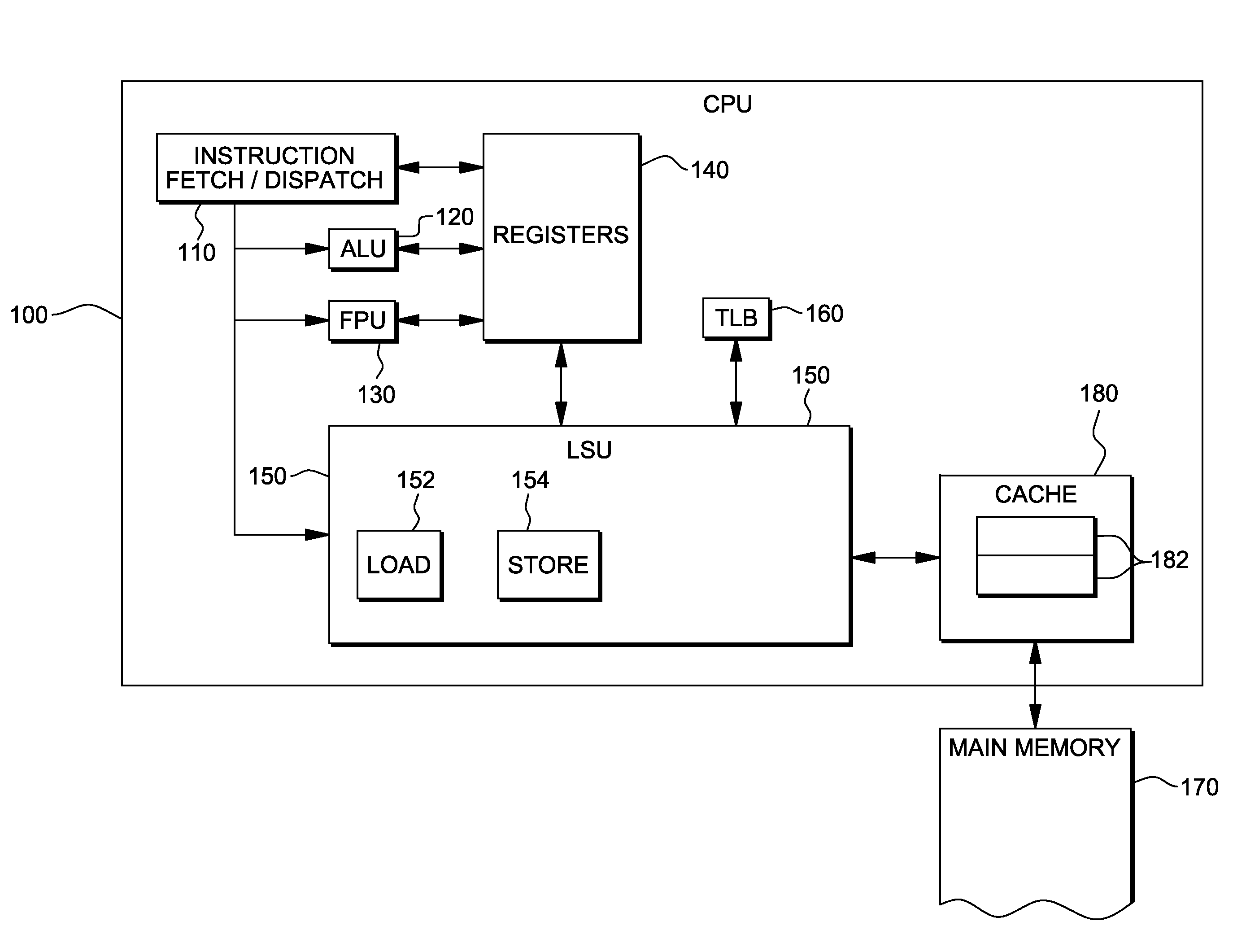

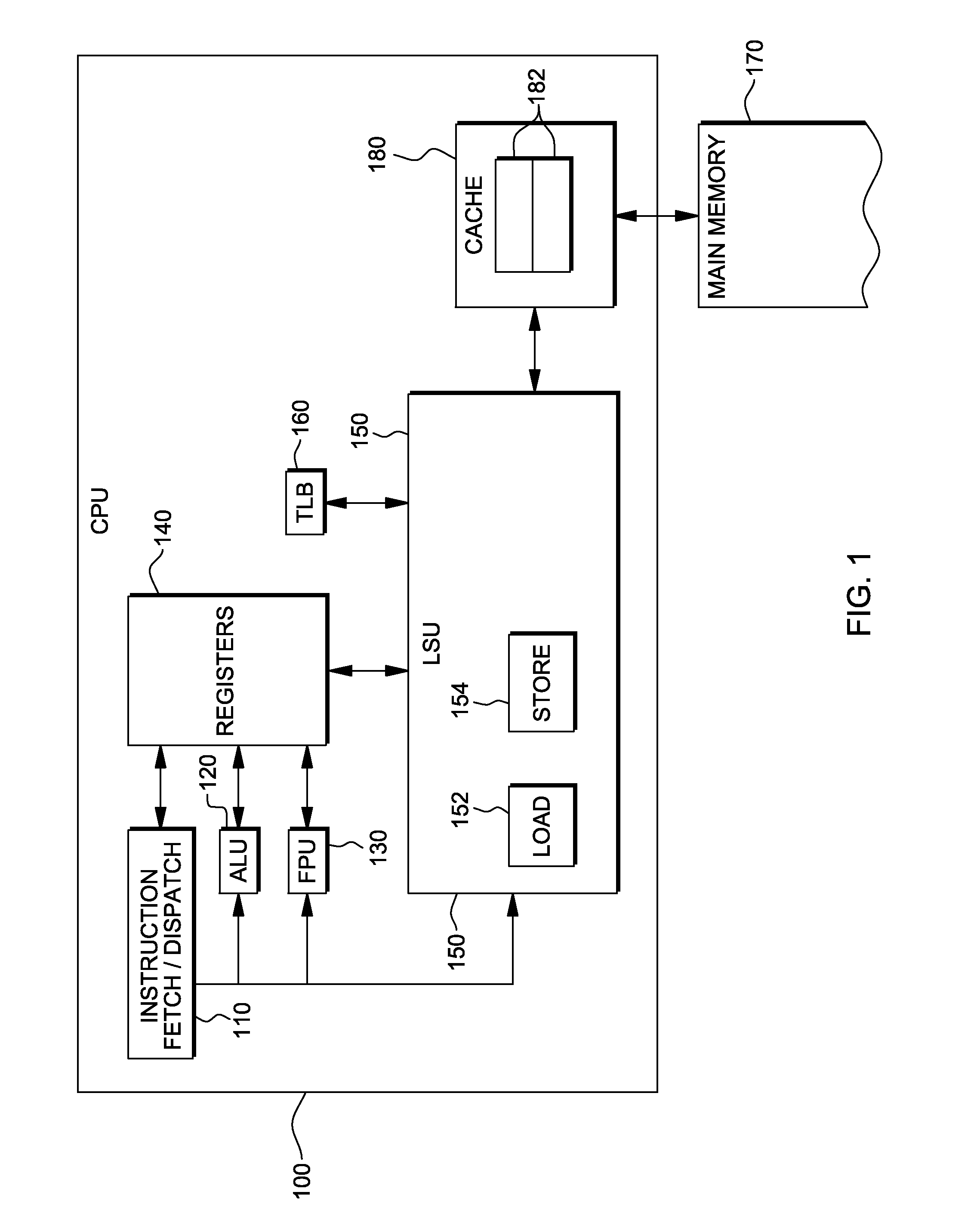

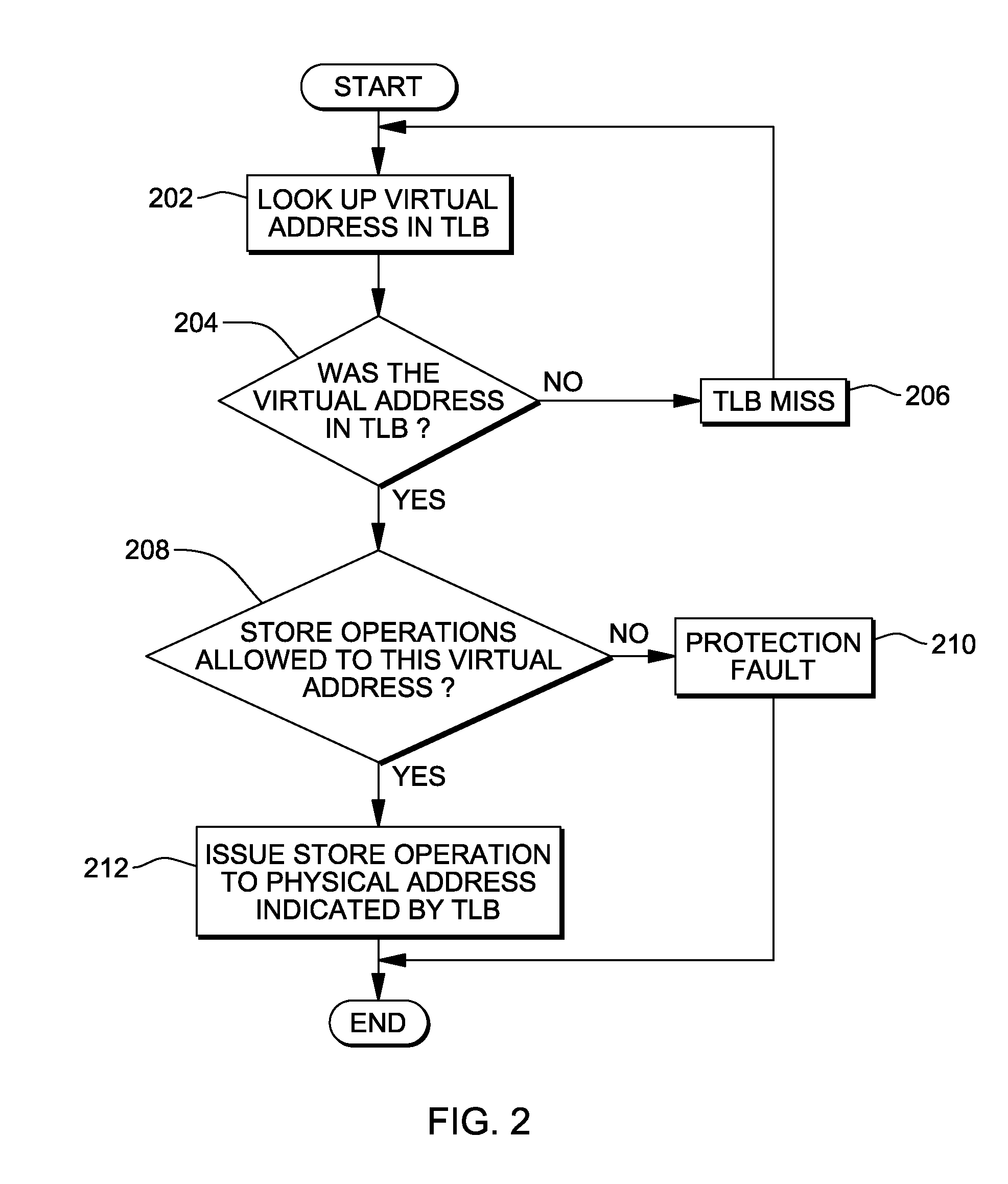

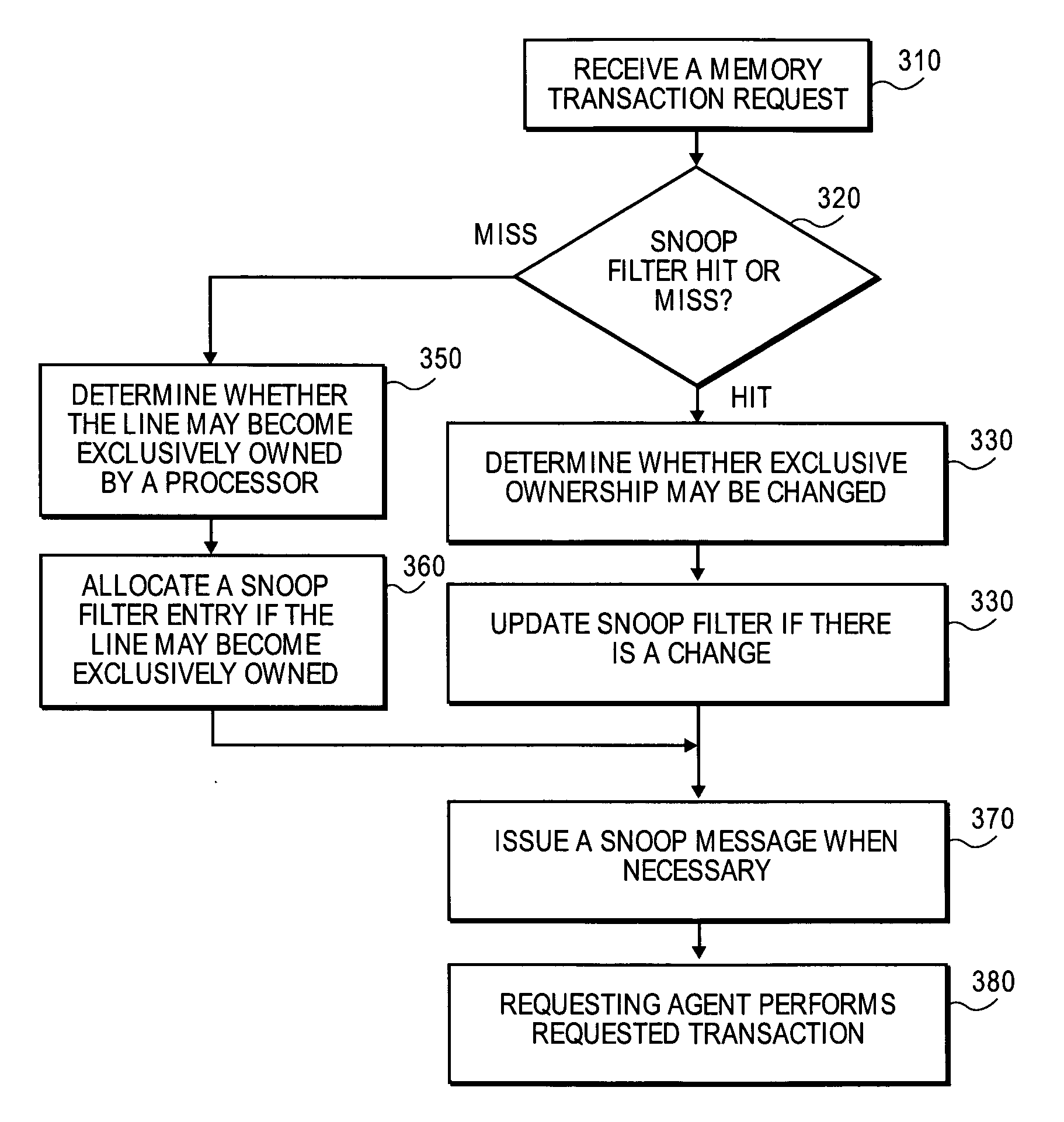

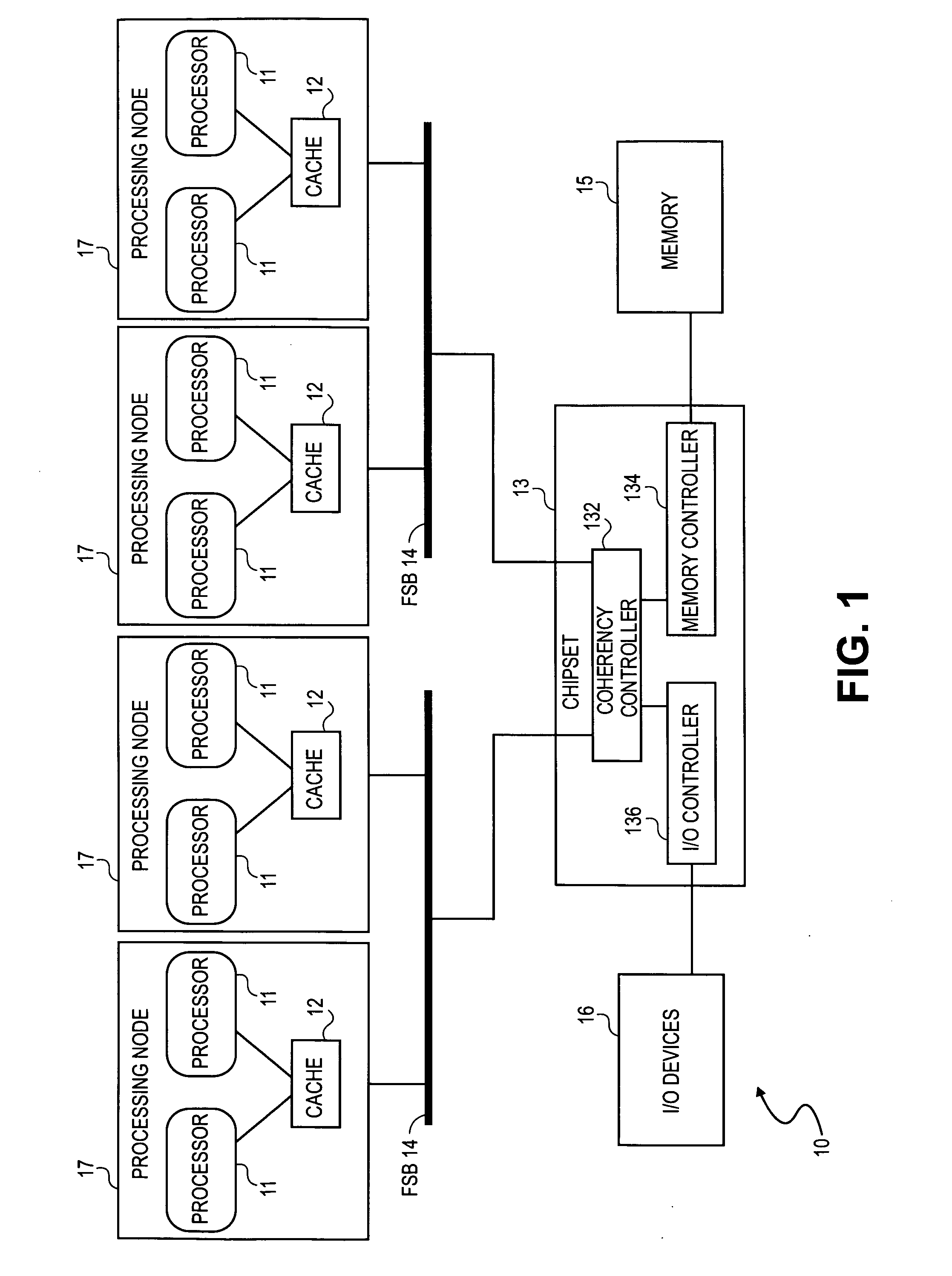

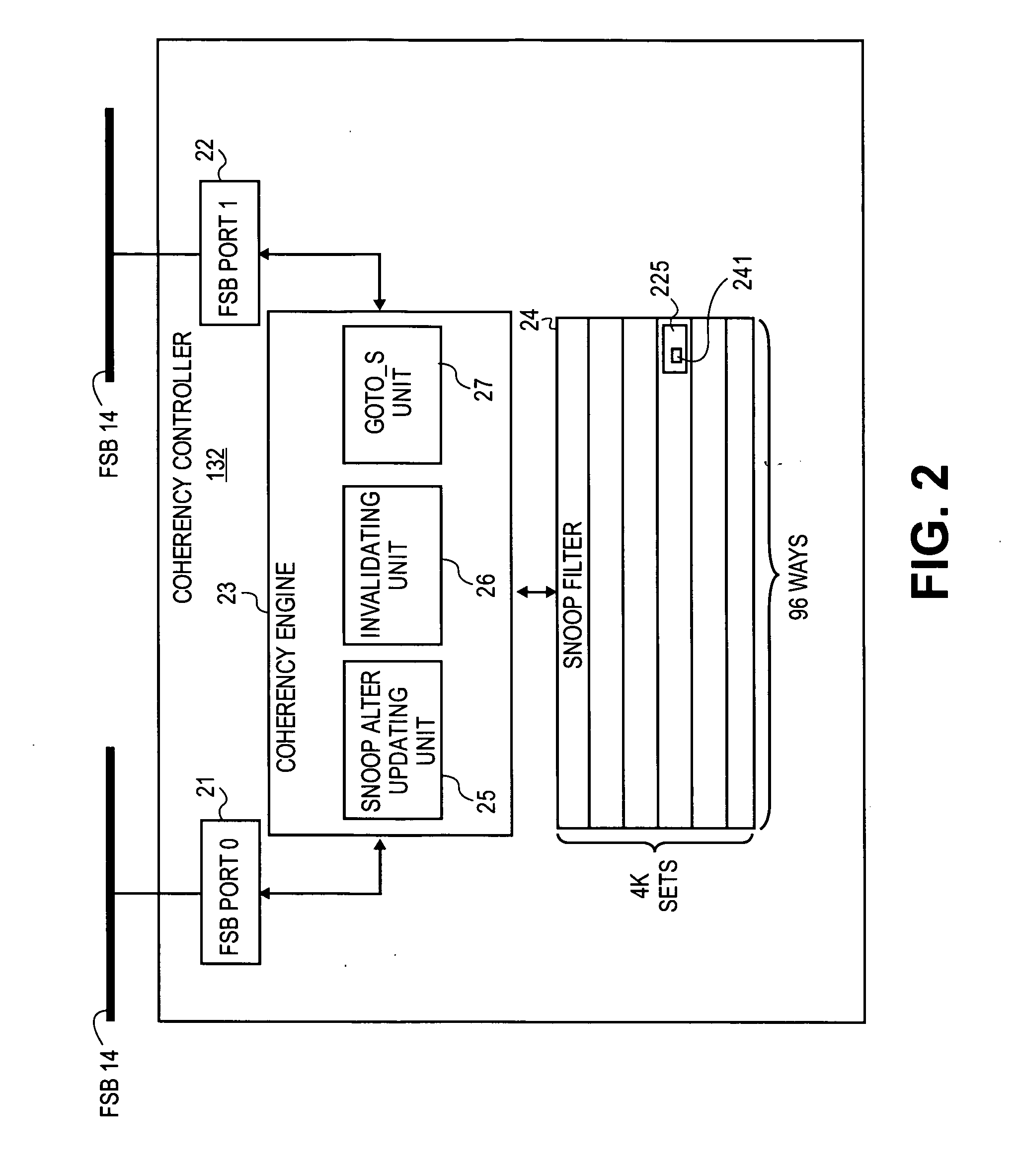

Exclusive ownership snoop filter

A snoop filter maintains data coherency information for multiple caches in a multi-processor system. The Exclusive Ownership Snoop Filter only stores entries that are exclusively owned by a processor. A coherency engine updates the entries in the snoop filter such that an entry is removed from the snoop filter if the entry exits the exclusive state. To ensure data coherency, the coherency engine implements a sequencing rule that decouples a read request from a write request.

Owner:INTEL CORP

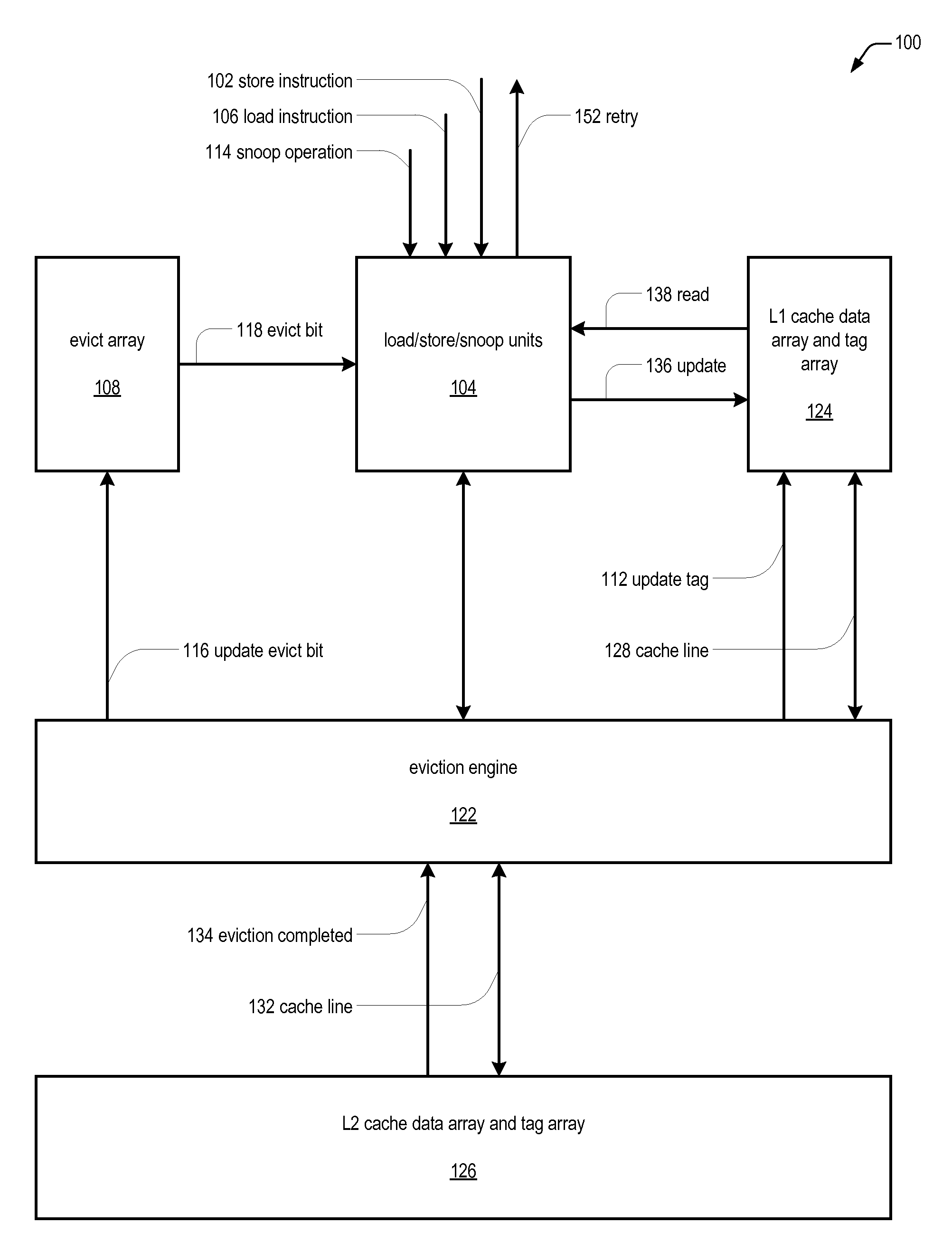

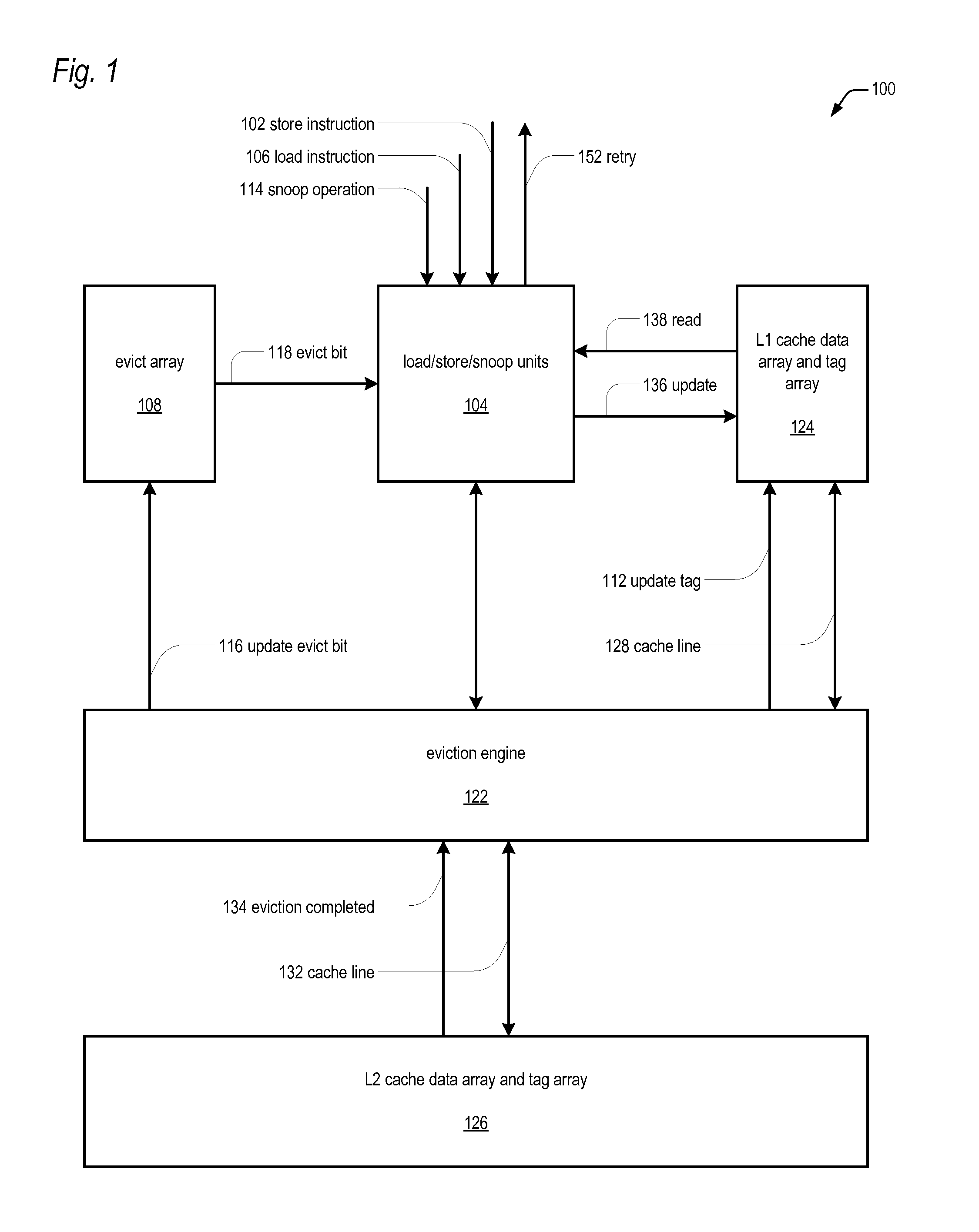

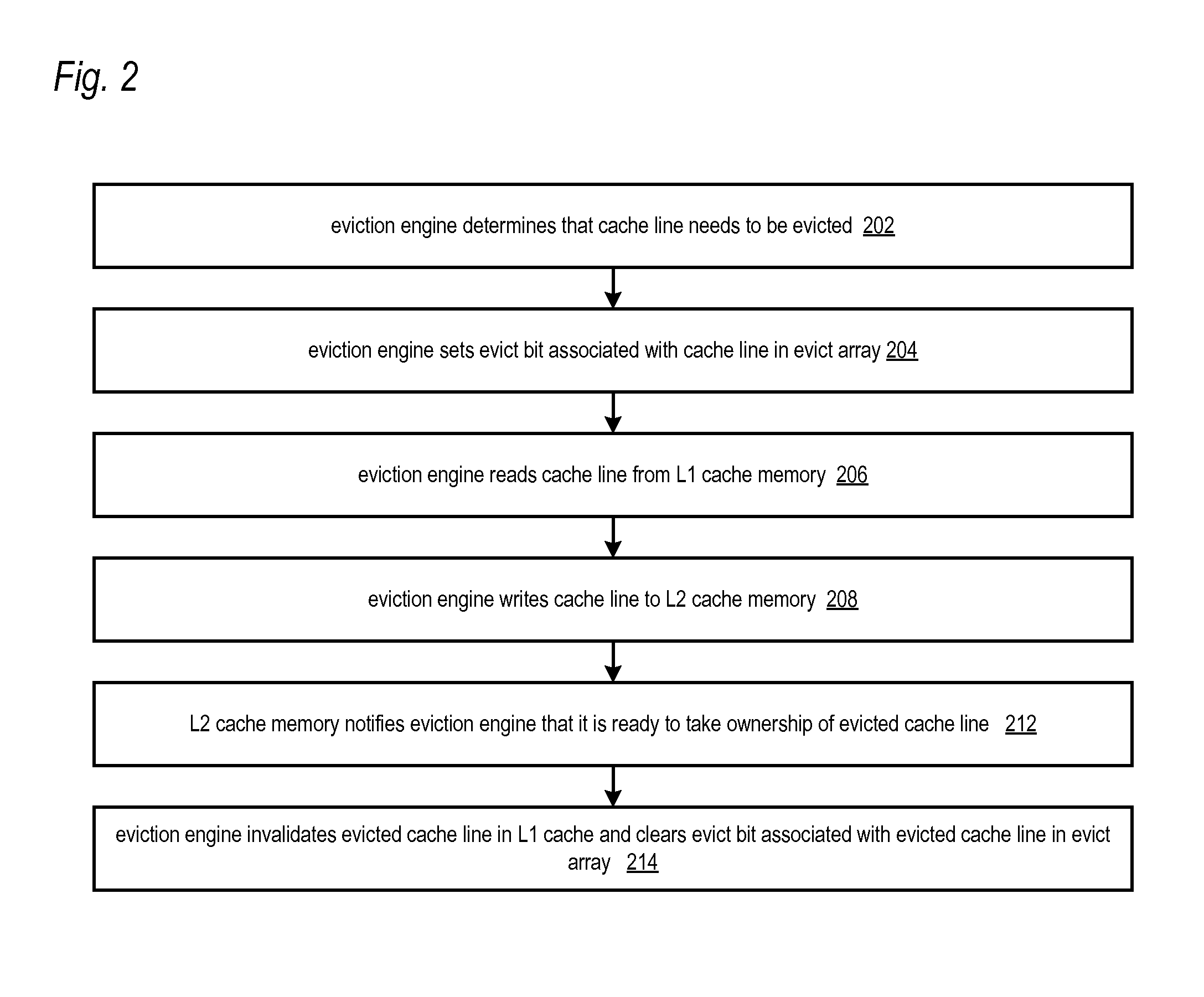

Microprocessor cache line evict array

Active | US20100064107A1 | Lower requirement | Reduce time pressure | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Memory hierarchy | Parallel computing

An apparatus for ensuring data coherency within a cache memory hierarchy of a microprocessor during an eviction of a cache line from a lower-level memory to a higher-level memory in the hierarchy includes an eviction engine and an array of storage elements. The eviction engine is configured to move the cache line from the lower-level memory to the higher-level memory. The array of storage elements is coupled to the eviction engine. Each storage element is configured to store an indication for a corresponding cache line stored in the lower-level memory. The indication indicates whether or not the eviction engine is currently moving the cache line from the lower-level memory to the higher-level memory.

Owner:VIA TECH INC

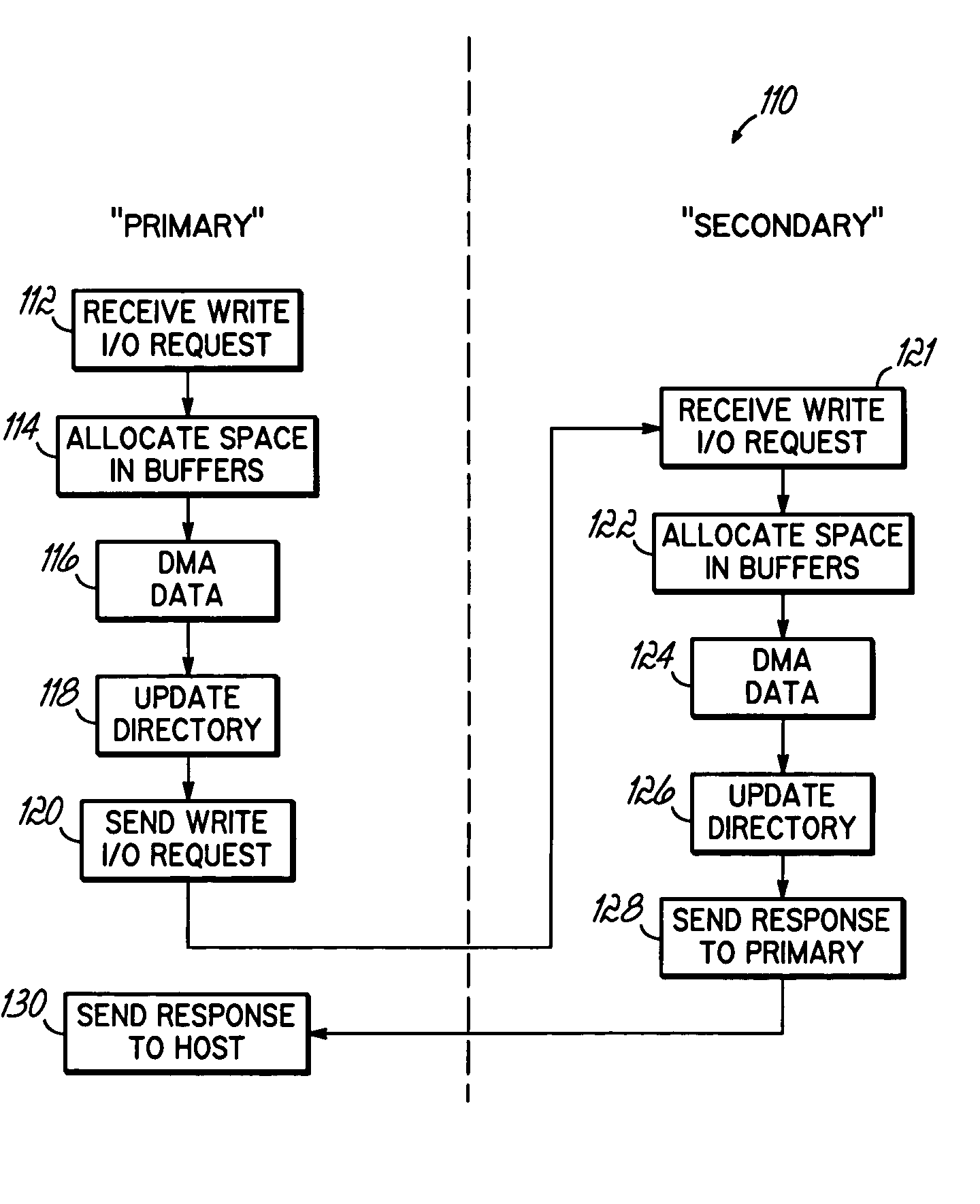

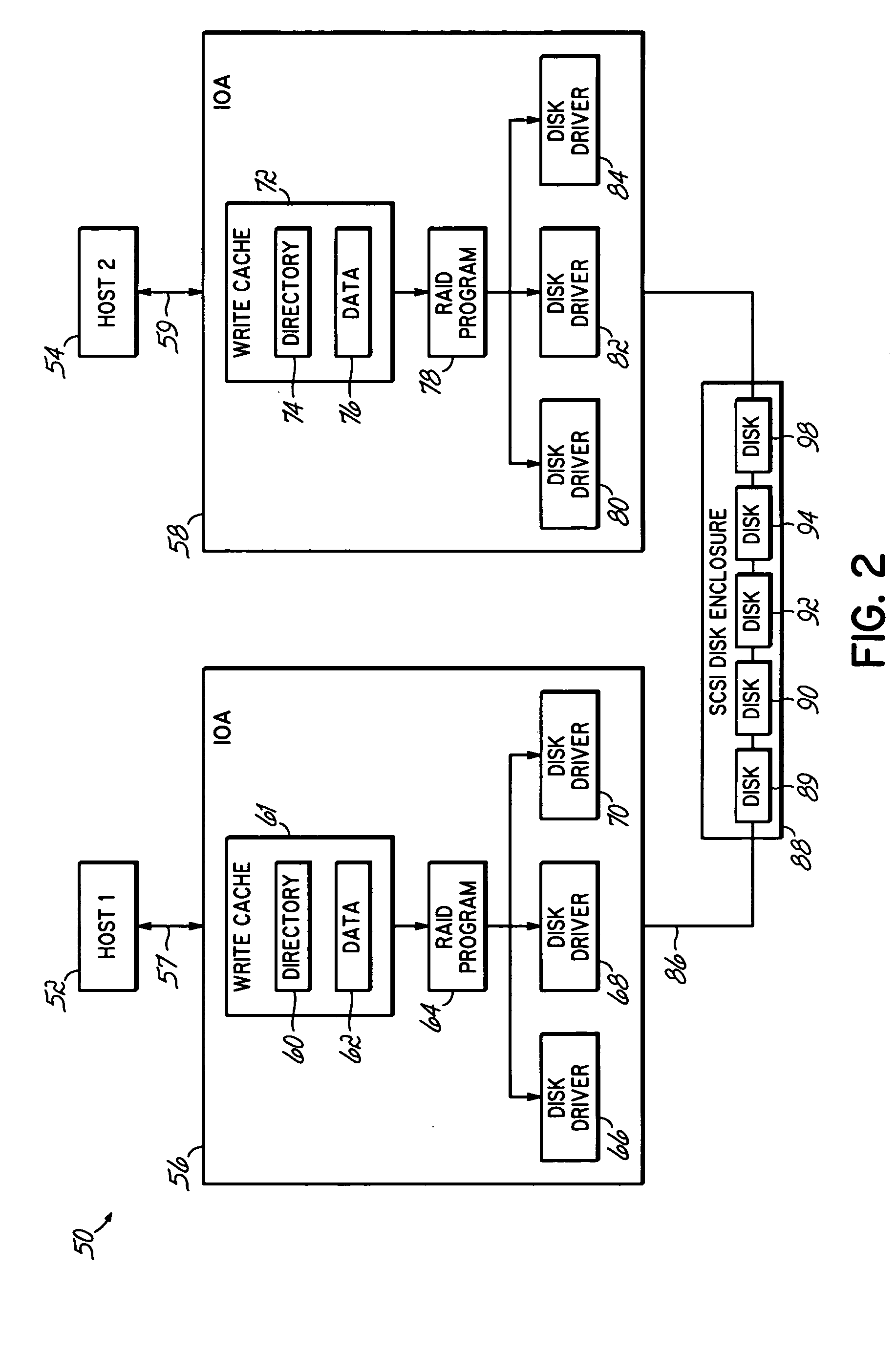

Commingled write cache in dual input/output adapter

Inactive | US20050198411A1 | Efficiently and reliably mirroring | Memory architecture accessing/allocation | Memory systems | Data store | Data coherency

An apparatus, program product and method maintain data coherency between paired I/O adapters by commingling primary and backup data within the respective write caches of the I/O adapters. Such commingling allows the data to be dynamically allocated in a common pool without regard to dedicated primary and backup regions. As such, primary and backup data may be maintained within the cache of a secondary adapter at a different relative location(s) than is the corresponding data stored in the cache of the primary adapter. In any case, however, the same data is updated in both respective write caches such that data coherence is maintained.

Owner:IBM CORP

Data coherency management

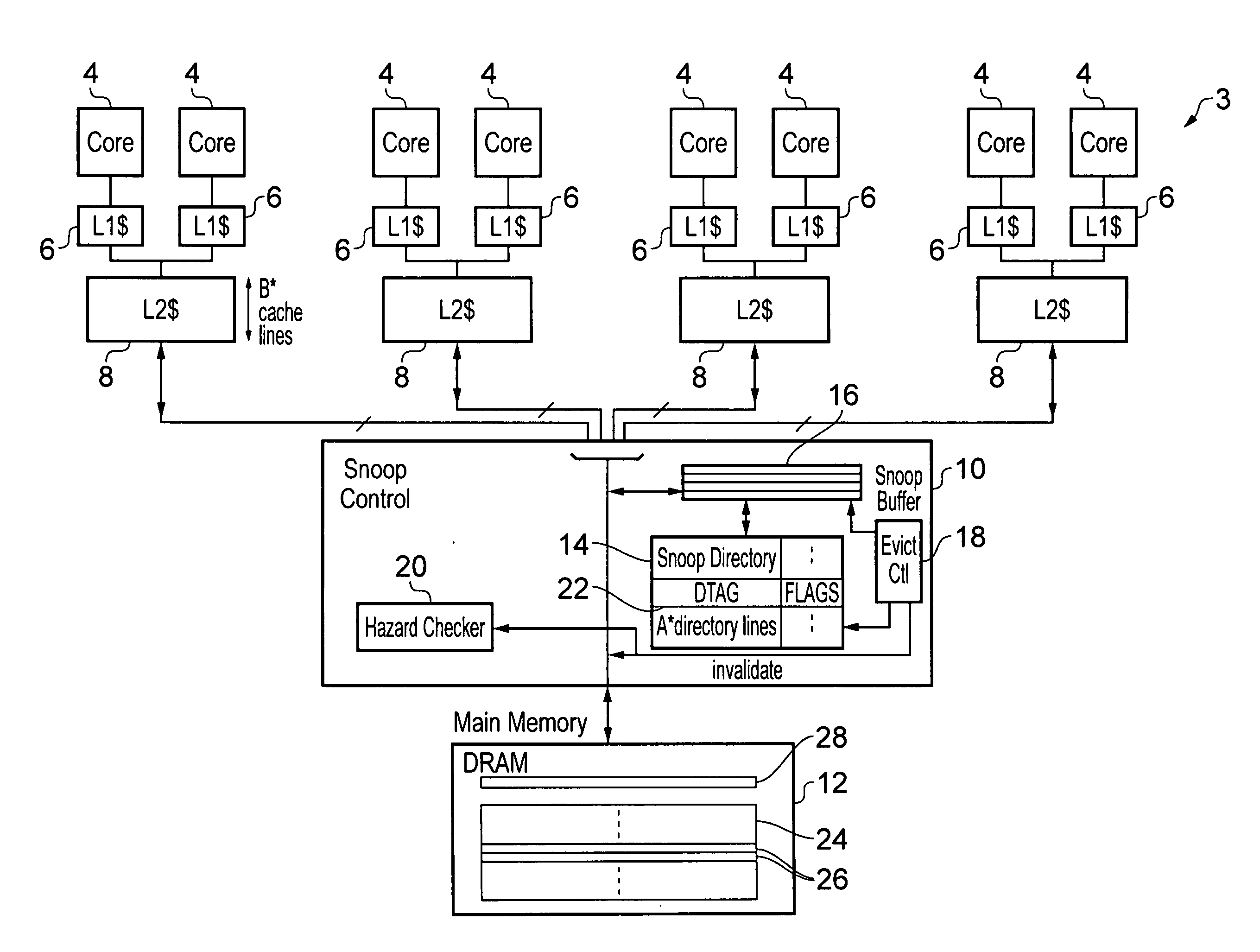

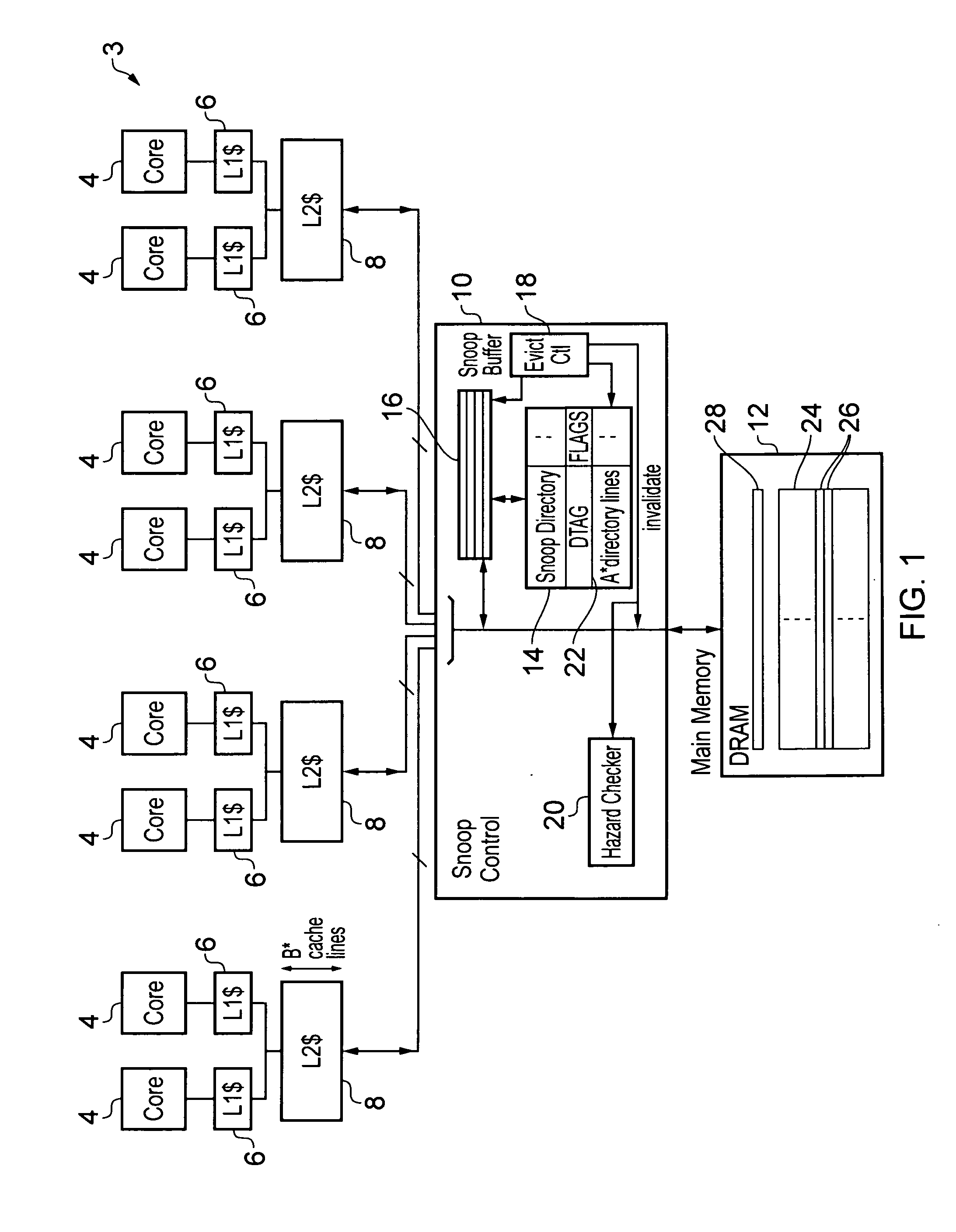

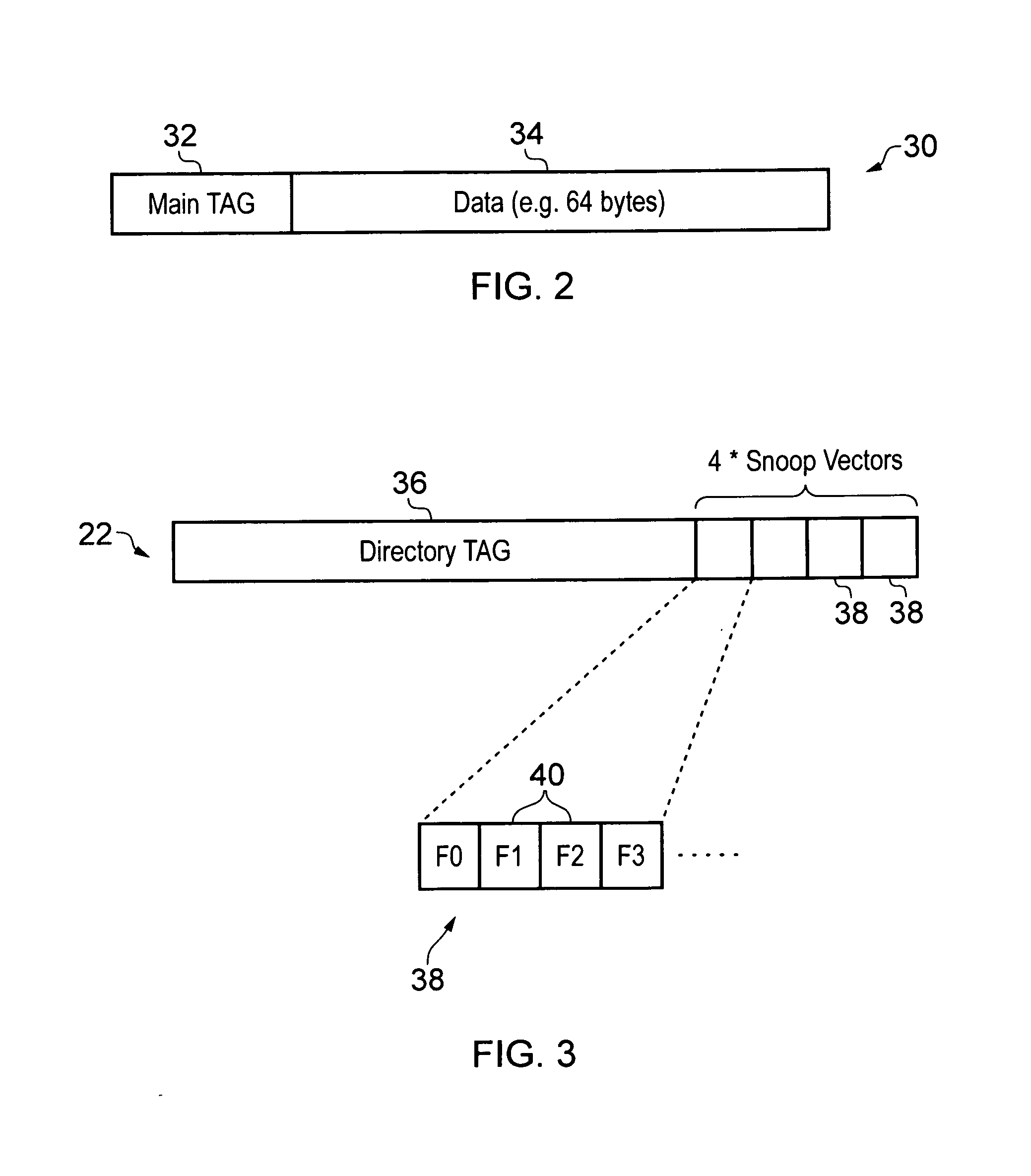

Active | US20140281180A1 | Raise the possibility | Reduce area | Memory addressing/allocation/relocation | Memory address | Data processing system

A data processing system employing a coherent memory system comprises multiple main cache memories. An inclusive snoop directory memory stores directory lines. Each directory line includes a directory tag and multiple snoop vectors. Each snoop vector relates to a span of memory addresses corresponding to the cache line size within the main cache memories.

Owner:ARM LTD

© 2025 PatSnap. All rights reserved.