Adaptive cache prefetching based on competing dedicated prefetch policies in dedicated cache sets to reduce cache pollution

An adaptive technique in the caching domain, used to reduce cache misses. It addresses problems such as increased hardware overhead.


Embodiment Construction

[0024] Referring now to the figures, several exemplary aspects of the invention are described. The word "exemplary" is used herein to mean "serving as an example, instance, or illustration." Any aspect described herein as "exemplary" is not necessarily to be construed as preferred or advantageous over other aspects.

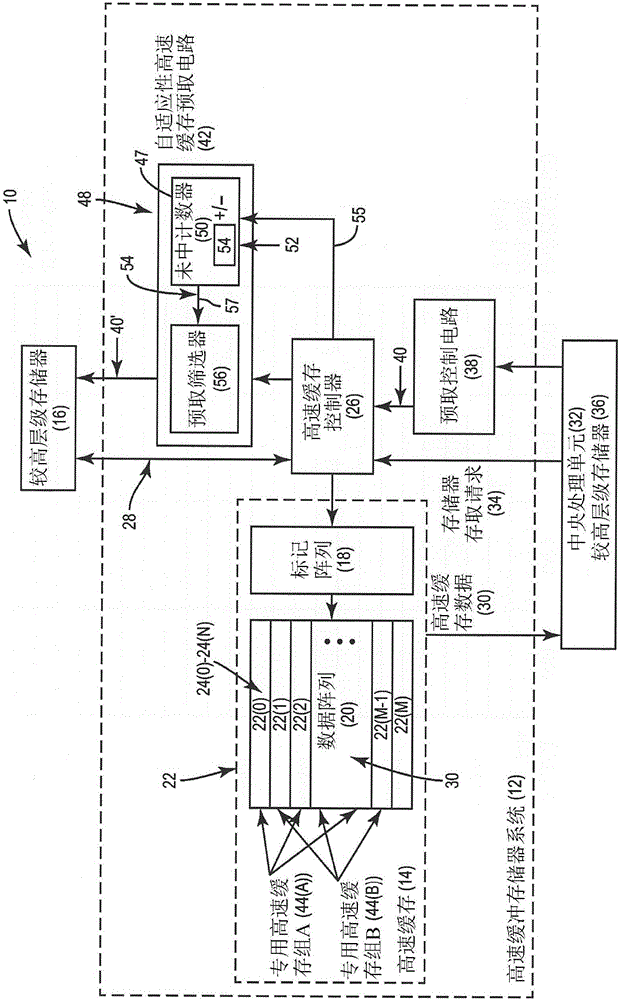

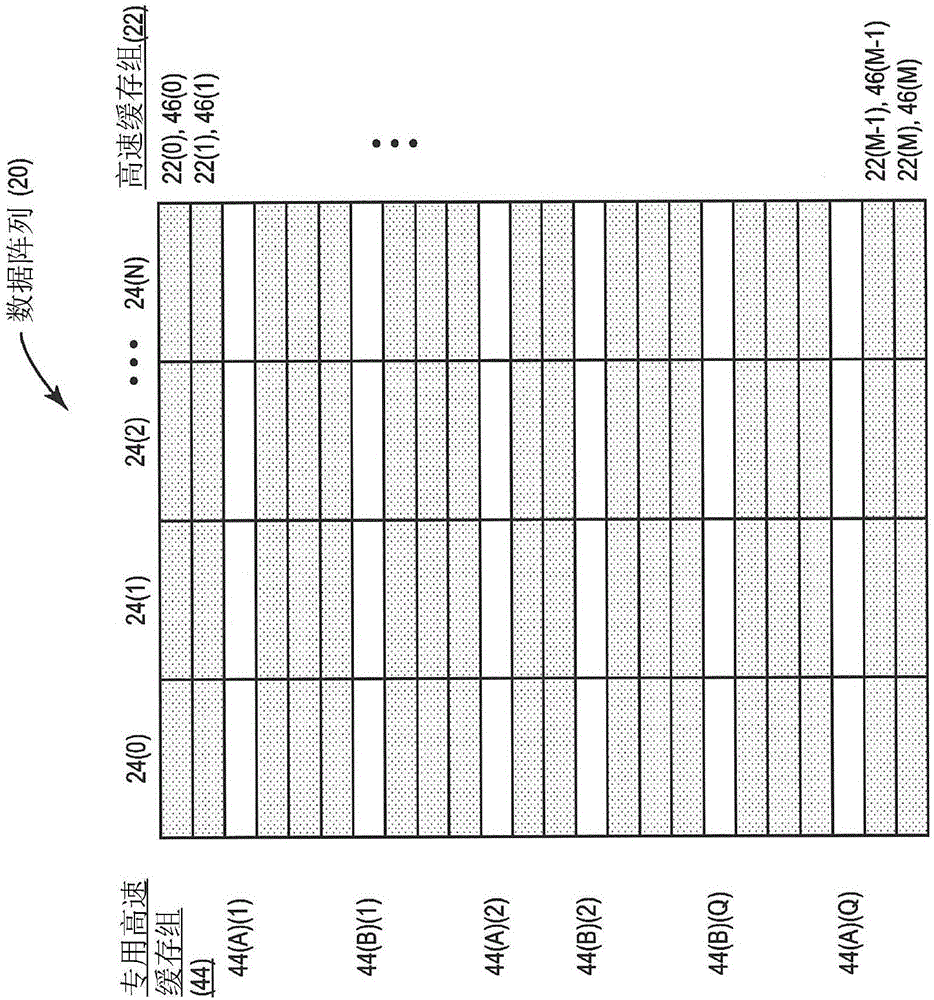

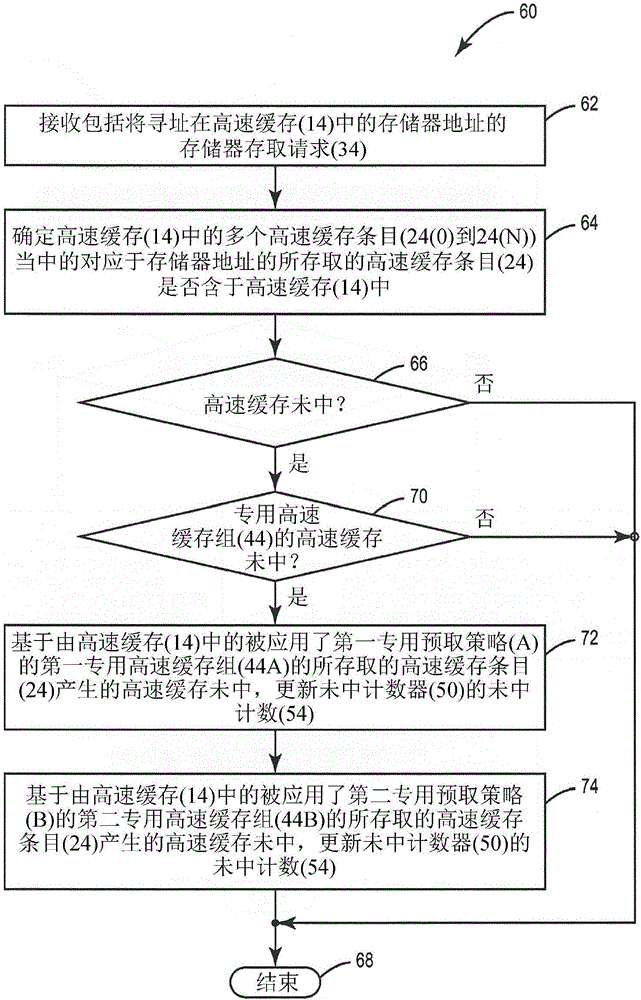

[0025] Aspects disclosed in the detailed description include adaptive cache prefetching based on competing dedicated prefetch policies in dedicated cache sets to reduce cache pollution. In one aspect, an adaptive cache prefetch circuit for prefetching data into a cache is provided. Instead of attempting to determine the best replacement policy for the cache, the adaptive cache prefetch circuit is configured to determine a prefetch policy based on the results of competing dedicated prefetch policies applied to dedicated cache sets in the cache. In this regard, a subset of the cache sets in the cache is allocated as "dedicated" cache sets. Other non-dedicat...
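The mechanism in [0025] resembles the set-dueling technique known from adaptive replacement policies, applied here to prefetch policies instead. The paragraph is truncated before it explains how the "results" of the competing policies are compared, so the following is only a minimal Python sketch under stated assumptions, not the patented circuit. It assumes two hypothetical policies (`Policy.A`, `Policy.B`), a hypothetical modulo mapping that pins every `dedicated_stride`-th set to one policy, and a single saturating counter that compares misses in the two groups of dedicated sets. The class `AdaptivePrefetchSelector` and its parameters are illustrative names, not from the source.

```python
from enum import Enum
from typing import Optional


class Policy(Enum):
    """Two competing prefetch policies (hypothetical placeholders)."""
    A = "A"
    B = "B"


class AdaptivePrefetchSelector:
    """Hypothetical set-dueling selector for prefetch policies.

    Assumed mapping: sets with index 0 mod `dedicated_stride` are dedicated
    to policy A, sets with index 1 mod `dedicated_stride` are dedicated to
    policy B, and all other sets are followers.
    """

    def __init__(self, num_sets: int, dedicated_stride: int = 32, counter_bits: int = 10):
        # Stride must leave room for at least one follower set per stride.
        if dedicated_stride < 3 or num_sets < dedicated_stride or counter_bits < 1:
            raise ValueError("invalid cache geometry or counter width")
        self.num_sets = num_sets
        self.stride = dedicated_stride
        self.max_count = (1 << counter_bits) - 1
        self.midpoint = 1 << (counter_bits - 1)
        # Saturating counter: rises on misses in A's dedicated sets,
        # falls on misses in B's dedicated sets.
        self.counter = self.midpoint

    def dedicated_policy(self, set_index: int) -> Optional[Policy]:
        """Return the policy a dedicated set is pinned to, or None for a follower."""
        if not 0 <= set_index < self.num_sets:
            raise IndexError("set index out of range")
        offset = set_index % self.stride
        if offset == 0:
            return Policy.A
        if offset == 1:
            return Policy.B
        return None

    def policy_for_set(self, set_index: int) -> Policy:
        """Policy the prefetcher applies when prefetching into this set."""
        pinned = self.dedicated_policy(set_index)
        if pinned is not None:
            return pinned
        # A counter above the midpoint means A's dedicated sets miss more,
        # so followers switch to B; ties default to A.
        return Policy.B if self.counter > self.midpoint else Policy.A

    def record_miss(self, set_index: int) -> None:
        """Update the counter on a miss; misses in follower sets are ignored."""
        pinned = self.dedicated_policy(set_index)
        if pinned is Policy.A:
            self.counter = min(self.counter + 1, self.max_count)
        elif pinned is Policy.B:
            self.counter = max(self.counter - 1, 0)


if __name__ == "__main__":
    sel = AdaptivePrefetchSelector(num_sets=64, dedicated_stride=32, counter_bits=4)
    for _ in range(5):
        sel.record_miss(0)  # misses in a set dedicated to policy A
    print(sel.policy_for_set(5))  # follower set 5 now uses Policy.B
```

In this sketch, a single saturating counter replaces two separate miss counters, so choosing a policy for a follower set is one threshold comparison. The counter width bounds how far the selection can drift in favor of one policy, and therefore how quickly it can swing back after the workload changes.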
