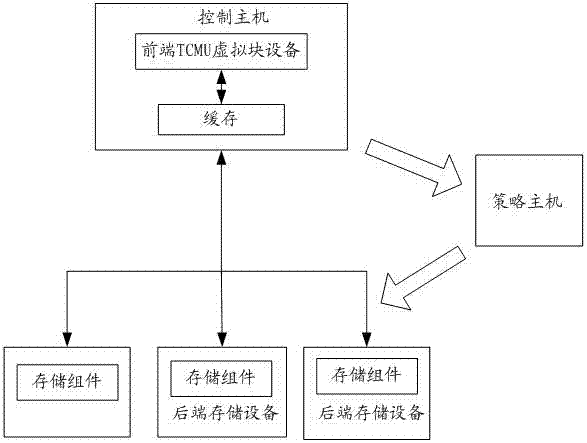

Cache data access method and system based on TCMU virtual block device

A cache data access technique for virtual block devices, applied in fields such as memory systems, electrical digital data processing, and instruments. It addresses the low read and write performance of TCMU, improving read and write performance and preventing cache pollution.


Examples

Embodiment 1

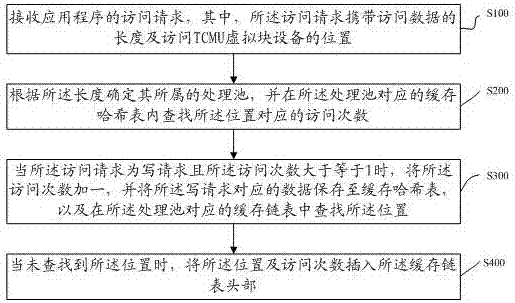

[0086] This embodiment provides a cache data access method based on the TCMU virtual block device, where the access is a write access. As shown in Figures 4 and 5, the method includes:

[0087] S10. When receiving a write request carrying (offset, length, data), distribute the write request to its corresponding processing pool according to the length, where each processing pool corresponds one-to-one with one of 4K, 8K, 16K, 32K, 64K, and 128K;

[0088] S20. Search for the offset in the cache hash table corresponding to the processing pool, if not found, execute step S30, and if found, execute step S40;

[0089] S30. Use the offset as the key to hash into the hash table, record its count as 1, and execute step S80, wherein the cache hash table does not cache the data;

[0090] S40. Add 1 to the access count corresponding to the offset, and cache the data in the cache hash table;

[0091] S50. Send offset and count to the cache linked list corresponding to the processin...
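Steps S10 to S40 can be sketched roughly as follows. This is a minimal illustration, not the patented implementation: the names `pool_for`, `WritePool`, and `handle_write` are hypothetical, and the rule of choosing the smallest pool size that fits the request is an assumption, since the source only says requests are distributed "according to the length". Steps from S50 onward are truncated in the source and are not modeled.

```python
# Pool sizes named in S10: 4K, 8K, 16K, 32K, 64K, 128K (in bytes).
POOL_SIZES = [4096, 8192, 16384, 32768, 65536, 131072]


def pool_for(length):
    """Pick the smallest pool size that fits the request (assumed rule)."""
    for size in POOL_SIZES:
        if length <= size:
            return size
    raise ValueError("requests larger than 128K are outside this sketch")


class WritePool:
    """One processing pool with its own cache hash table (hypothetical class)."""

    def __init__(self):
        # offset -> {"count": access count, "data": cached bytes or None}
        self.table = {}

    def write(self, offset, data):
        entry = self.table.get(offset)  # S20: look up the offset
        if entry is None:
            # S30: first access, record count = 1 but do not cache the data
            entry = {"count": 1, "data": None}
            self.table[offset] = entry
        else:
            # S40: repeated access, increment the count and cache the data
            entry["count"] += 1
            entry["data"] = data
        return entry


pools = {size: WritePool() for size in POOL_SIZES}


def handle_write(offset, length, data):
    """S10: dispatch by length, then run S20 to S40 in the chosen pool."""
    return pools[pool_for(length)].write(offset, data)
```

Recording only the count on first access means a block written once never occupies cache space, which is one way to read the "prevent cache pollution" goal stated in the summary.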

Embodiment 2

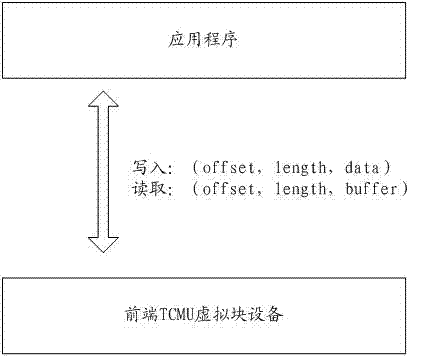

[0096] This embodiment provides a cache data access method based on a TCMU virtual block device, where the access is a read access. As shown in Figures 4 and 6, the method includes:

[0097] M10. When receiving a read request carrying (offset, length, buffer), distribute the read request to its corresponding processing pool according to the length, where each processing pool corresponds one-to-one with one of 4K, 8K, 16K, 32K, 64K, and 128K;

[0098] M20. Search for the offset in the cache hash table corresponding to the processing pool; if not found, execute step M30, and if found, execute step M40;

[0099] M30. Read the data from the back-end storage device through the network, hash it into the hash table with the offset as the key, and record the access count for that offset as 1, wherein the cache hash table does not cache the data;

[0100] M40. Compare the access count with 1; if it is equal to 1, the execution is not M50, if it is gre...
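The miss path of the read flow (M20 and M30) might look like the sketch below for a single processing pool. It rests on stated assumptions: `ReadPool` and the `read_backend` callable are hypothetical stand-ins (the latter for the network read from the back-end storage device), and the hit path is left unimplemented because step M40 onward is truncated in the source.

```python
class ReadPool:
    """One processing pool on the read path (hypothetical class)."""

    def __init__(self, read_backend):
        # read_backend(offset, length) -> bytes is an assumed interface
        # standing in for the network read from back-end storage.
        self.read_backend = read_backend
        # offset -> access count; data is deliberately not stored on first read
        self.table = {}

    def read(self, offset, length):
        if offset not in self.table:
            # M20 miss -> M30: fetch from the back end, record count = 1,
            # and do not cache the data itself.
            data = self.read_backend(offset, length)
            self.table[offset] = 1
            return "miss", data
        # M20 hit -> M40: the comparison of count with 1 and what follows
        # are truncated in the source, so this sketch only reports the hit.
        return "hit", None
```

A caller would pass in whatever function actually reaches the storage back end; in a test, a simple function returning fixed bytes is enough to exercise the miss path.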

