Memory system cache mechanism based on flash memory

A flash memory and cache technology, applied in the field of memory system cache mechanisms. It addresses the inability to identify individual cache lines and the reduction of overall system performance and service life. Its effects are fewer write requests, lower time overhead, and a small overhead of its own.

Embodiment Construction

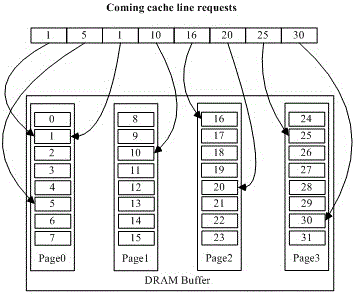

[0024] Figure 1 is a schematic diagram of unbalanced access to the cache lines within each page. In this example, each page is 512 B and contains 8 cache lines (64 B per cache line).
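The page geometry in this example can be illustrated with a little address arithmetic. The following is a minimal sketch, assuming byte addressing and equally sized cache lines; the function name `split_address` is hypothetical and does not come from the patent.

```python
PAGE_SIZE = 512                          # bytes per page, from the Figure 1 example
LINES_PER_PAGE = 8                       # cache lines per page, from the Figure 1 example
LINE_SIZE = PAGE_SIZE // LINES_PER_PAGE  # 64 bytes per cache line (derived)


def split_address(addr: int) -> tuple[int, int, int]:
    """Split a byte address into (page number, line index in page, byte offset in line).

    Assumption: flat byte addressing; the patent text does not specify the address layout.
    """
    page, in_page = divmod(addr, PAGE_SIZE)
    line, offset = divmod(in_page, LINE_SIZE)
    return page, line, offset
```

With this layout, two accesses that fall in the same page can still touch different cache lines, which is what makes per-line access counts uneven.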

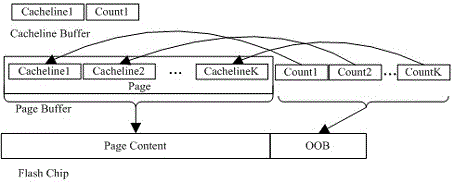

[0025] Figure 2 is an architecture and workflow diagram of the flash-memory-based memory system cache mechanism adopted in the present invention. The DRAM cache comprises a page buffer area and a cache line buffer area.
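A DRAM cache split into two areas can be sketched as a pair of lookup tables. This is only an illustration under stated assumptions: the class name `TwoRegionCache`, the lookup order (cache line area first, then page area), and the LRU replacement in each area are all hypothetical choices, since the text here does not specify them.

```python
from collections import OrderedDict


class TwoRegionCache:
    """Hypothetical DRAM cache with a page area and a cache line area."""

    def __init__(self, page_capacity: int, line_capacity: int):
        self.pages = OrderedDict()  # page number -> page data
        self.lines = OrderedDict()  # (page number, line index) -> line data
        self.page_capacity = page_capacity
        self.line_capacity = line_capacity

    def lookup(self, page: int, line: int):
        """Return 'line' or 'page' for the area that hits, or None on a miss."""
        if (page, line) in self.lines:   # assumed order: line area first
            self.lines.move_to_end((page, line))
            return "line"
        if page in self.pages:
            self.pages.move_to_end(page)
            return "page"
        return None

    @staticmethod
    def _put(area: OrderedDict, capacity: int, key, data):
        """Insert into one area; return the evicted (key, data) pair, if any."""
        area[key] = data
        area.move_to_end(key)
        if len(area) > capacity:
            return area.popitem(last=False)  # assumed LRU eviction
        return None

    def insert_page(self, page: int, data):
        return self._put(self.pages, self.page_capacity, page, data)

    def insert_line(self, page: int, line: int, data):
        return self._put(self.lines, self.line_capacity, (page, line), data)
```

Keeping the two areas separate lets a single cache line stay resident after the page that contained it has been evicted.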

[0026] Figure 3 is a schematic diagram of the history-aware hot spot identification mechanism. The access records of the cache lines can be stored in the out-of-band area of the flash memory when the page is written back to the flash memory.
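One way to picture such an access record is as a small per-page array of counters that is serialized on write-back and restored when the page is loaded again. The sketch below assumes one saturating 8-bit counter per cache line and a caller-chosen hotness threshold; the record format, the class name `PageAccessRecord`, and the threshold rule are hypothetical and are not taken from the patent.

```python
LINES_PER_PAGE = 8  # from the Figure 1 example


class PageAccessRecord:
    """Hypothetical per-page access history, one counter per cache line."""

    def __init__(self, counts=None):
        self.counts = list(counts) if counts is not None else [0] * LINES_PER_PAGE

    def touch(self, line: int) -> None:
        """Count one access to a cache line, saturating at 255 (fits one byte)."""
        self.counts[line] = min(self.counts[line] + 1, 255)

    def to_oob(self) -> bytes:
        """Serialize the counters for storage alongside the page (assumed format)."""
        return bytes(self.counts)

    @classmethod
    def from_oob(cls, raw: bytes) -> "PageAccessRecord":
        """Restore the history from previously stored out-of-band bytes."""
        return cls(raw[:LINES_PER_PAGE])

    def hot_lines(self, threshold: int) -> list[int]:
        """Return indices of lines whose access count reaches the threshold."""
        return [i for i, c in enumerate(self.counts) if c >= threshold]
```

Because the history travels with the page, a line that was hot before eviction can be recognized as hot as soon as the page is read back.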

[0027] Figure 4 is a schematic diagram of the principle of the delayed refresh mechanism, in which a dirty flag is set for each data page and each cache line. The specific execution process is:

[0028] In the first step, the DRAM cache space is divided into a page cache area and a cache line cache are...
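The dirty flags described for Figure 4 can be illustrated with a minimal sketch. It assumes that write-back is deferred until eviction and that only lines marked dirty need to be written; this policy, the class name `CachedPage`, and its methods are hypothetical, since the execution steps are not fully available here.

```python
class CachedPage:
    """Hypothetical cached page with a page-level and per-line dirty flag."""

    def __init__(self, lines_per_page: int = 8):
        self.page_dirty = False
        self.line_dirty = [False] * lines_per_page

    def write_line(self, line: int) -> None:
        """Record a write in DRAM only; nothing is sent to flash yet."""
        self.line_dirty[line] = True
        self.page_dirty = True

    def evict(self) -> list[int]:
        """Return the line indices that must be written back, then clear all flags.

        Assumption: a clean page is dropped without any flash write.
        """
        if not self.page_dirty:
            return []
        dirty = [i for i, d in enumerate(self.line_dirty) if d]
        self.line_dirty = [False] * len(self.line_dirty)
        self.page_dirty = False
        return dirty
```

Repeated writes to the same line set its flag only once, so several updates collapse into a single deferred write-back.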
