953 results about "Majorization minimization" patented technology

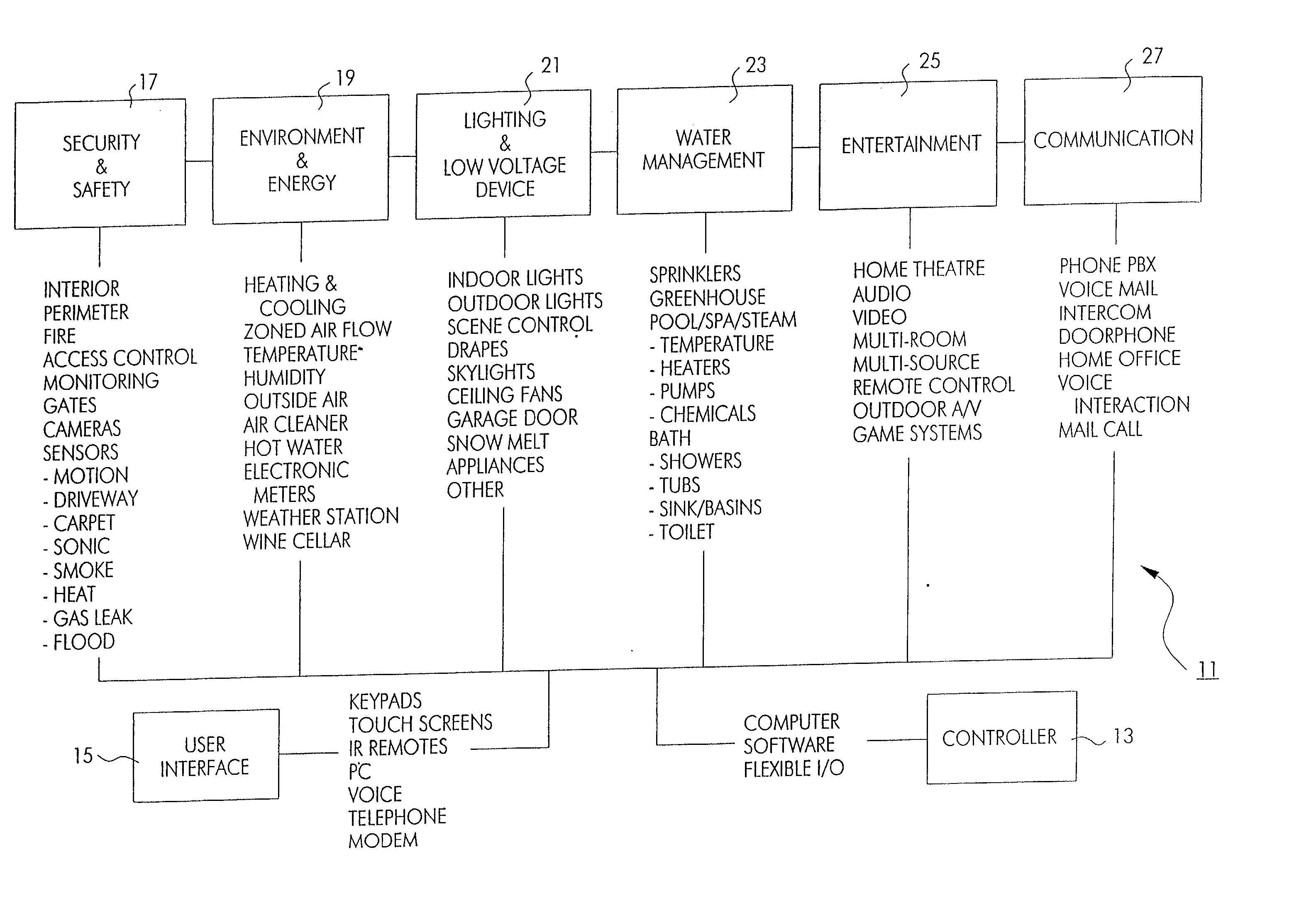

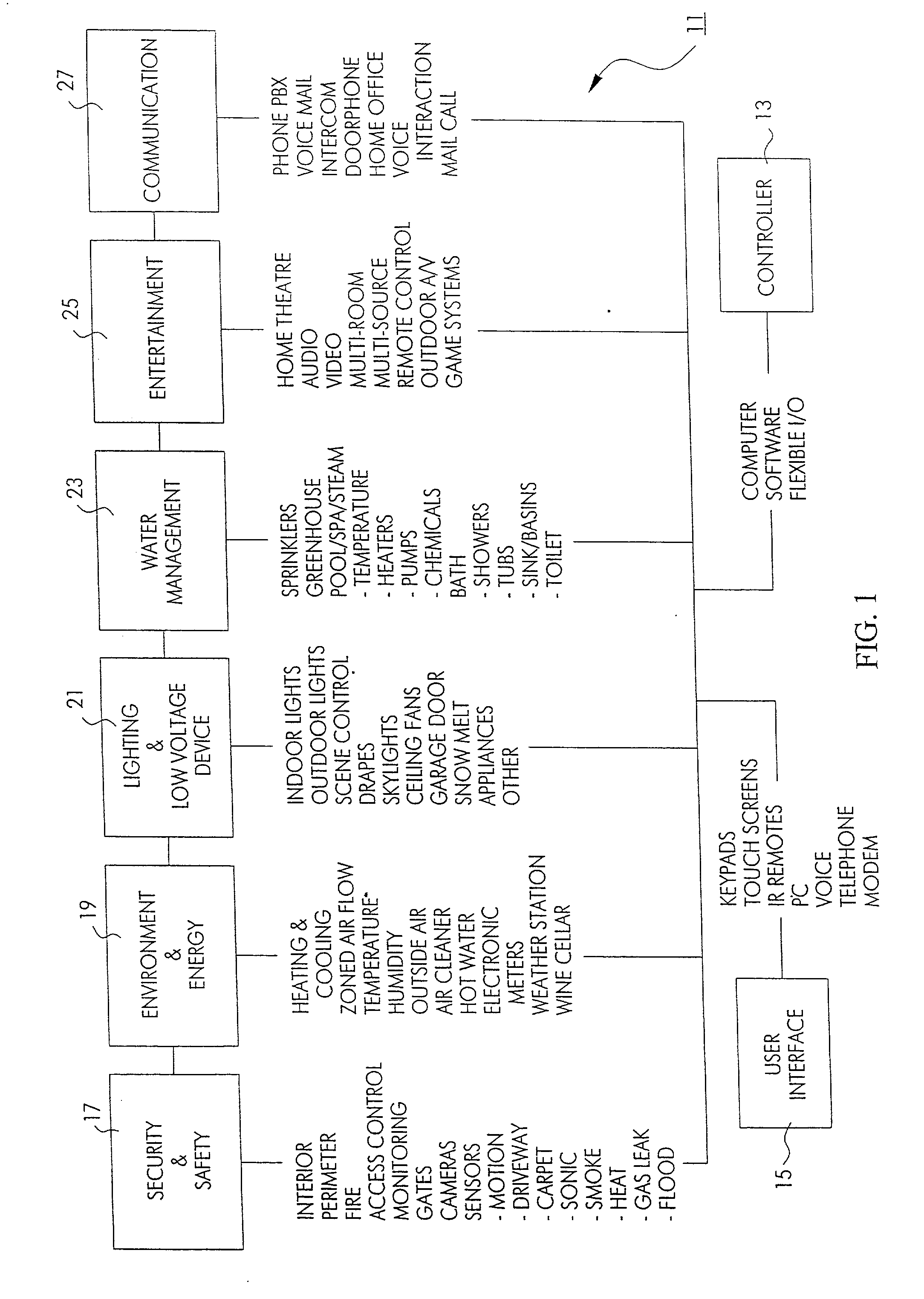

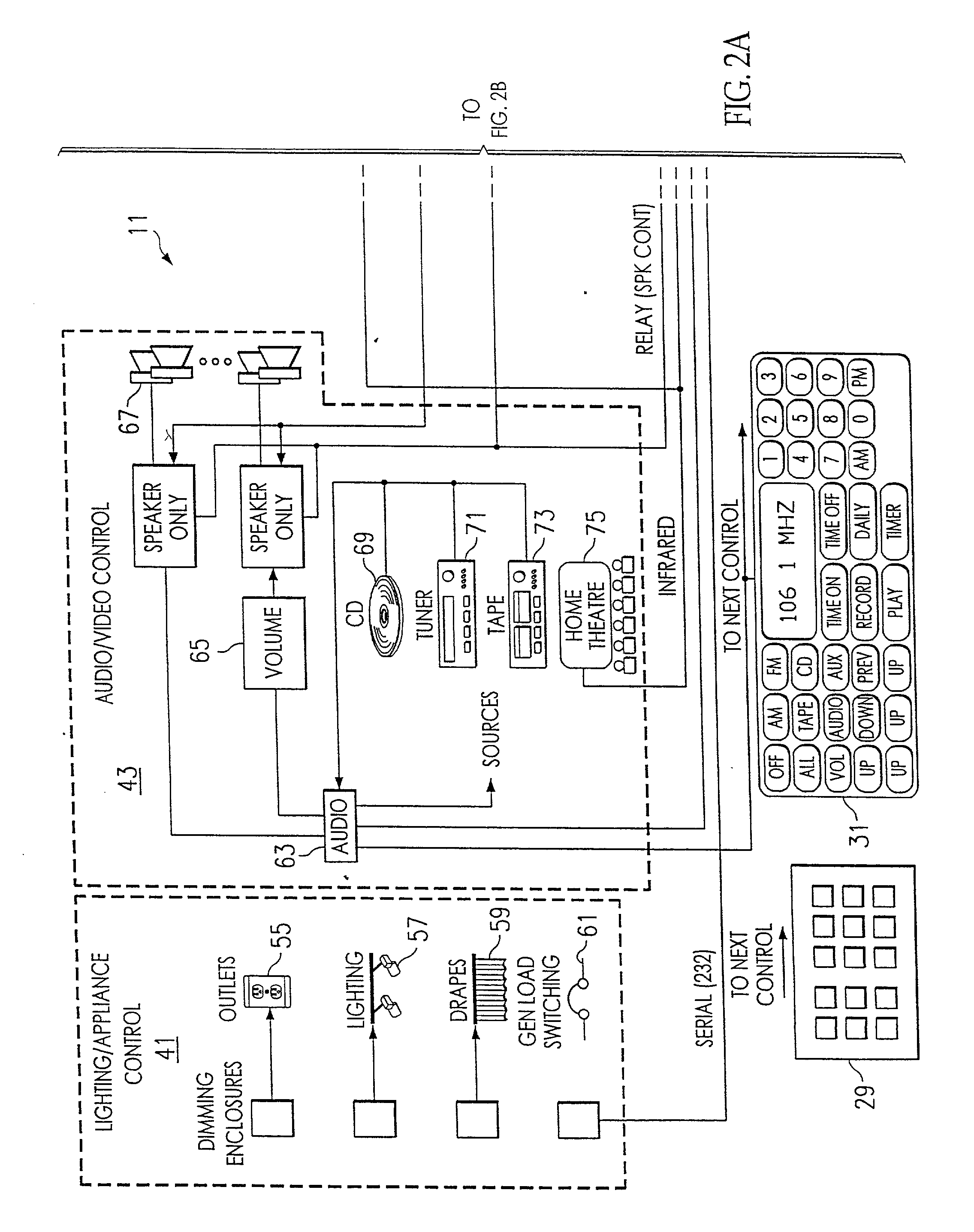

Method and apparatus for improved building automation

Inactive | US20020016639A1 | Large modularity | Communication | Sampled-variable control systems | Computer control | Modularity | Engineering

The improved building automation system of the present invention is modular in the extreme. This diminishes the amount of custom programming required in order to effect control of a particular building. It allows for a relatively open architecture which can accommodate a variety of unique control applications which are scripted for a particular building. By modularizing many of the common processes utilized in the automation system, the custom programming required to control any particular building is minimized. This modularity in design allows for uniform and coordinated control over a plurality of automation subsystems which may be incompatible with one another at the device or machine level, but which can be controlled using a relatively small and uniform set of "interprocess control commands". These commands define an interprocess control protocol that is used in the relatively high-level scripts and control applications which may be written for a particular building.

Owner:UNIDEN AMERICA

Method and system for minimizing the connection set up time in high speed packet switching networks

Inactive | US6934249B1 | Minimize delay | Minimize in in to select | Error prevention | Frequency-division multiplex details | Traffic capacity | Packet switched

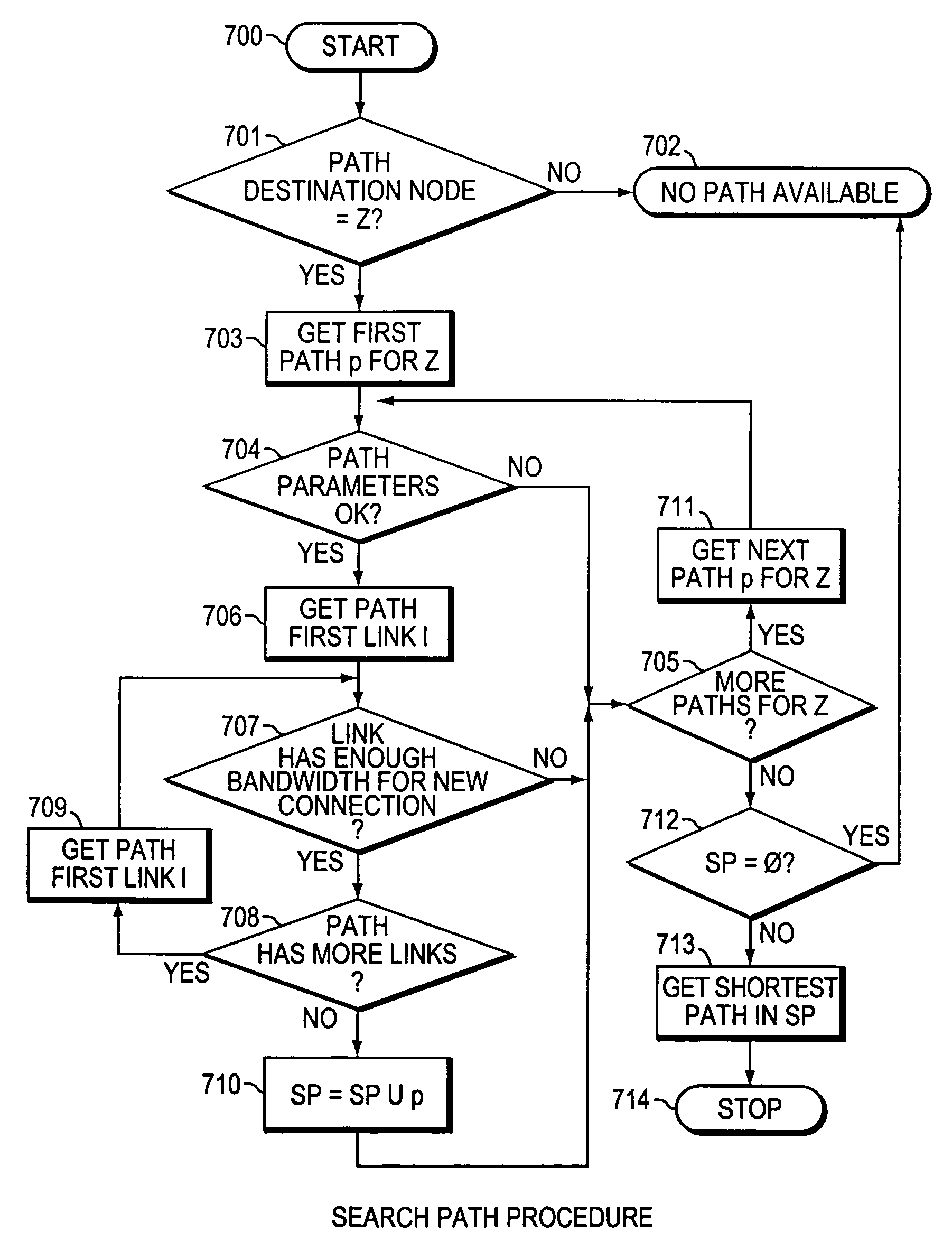

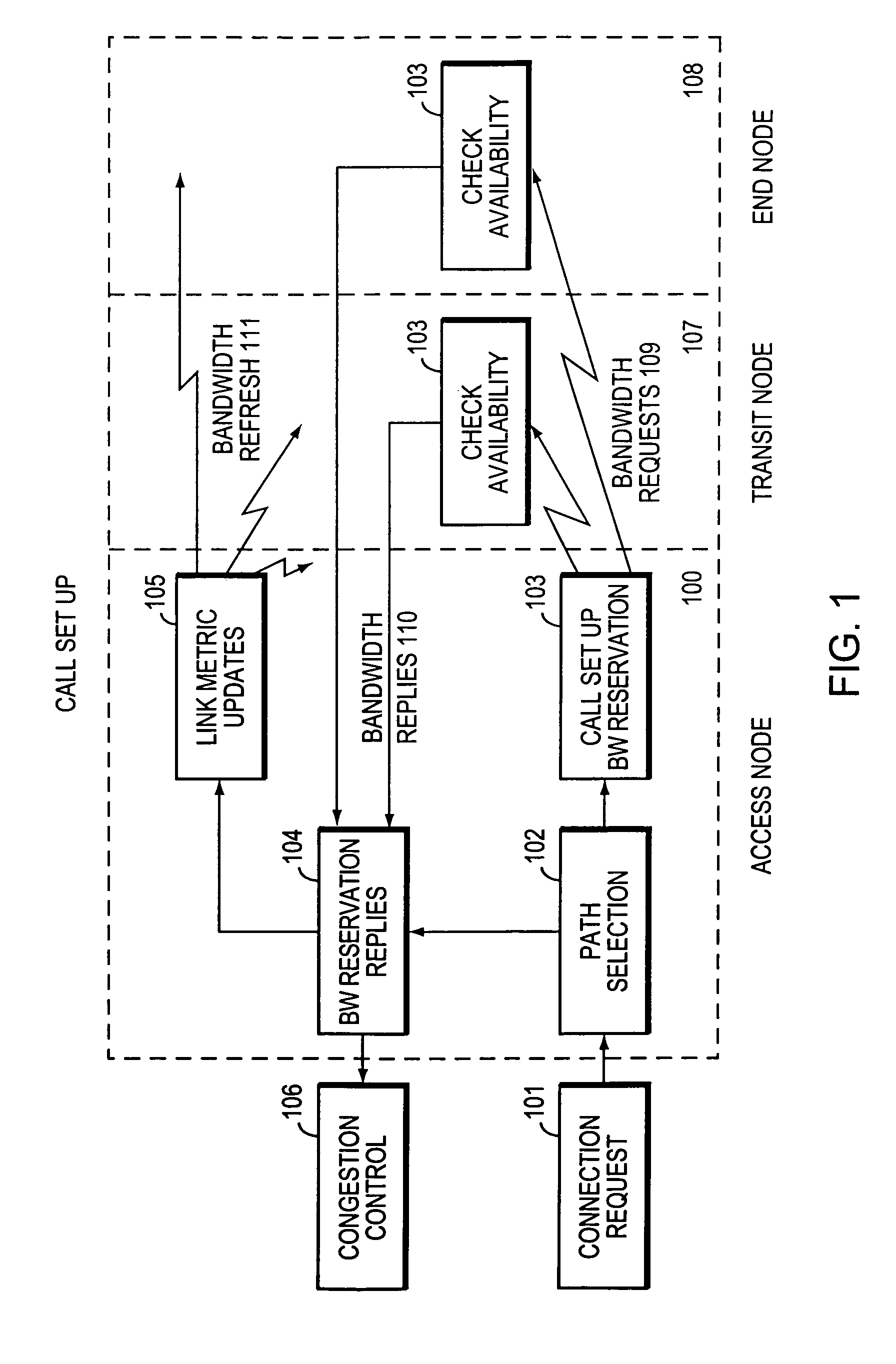

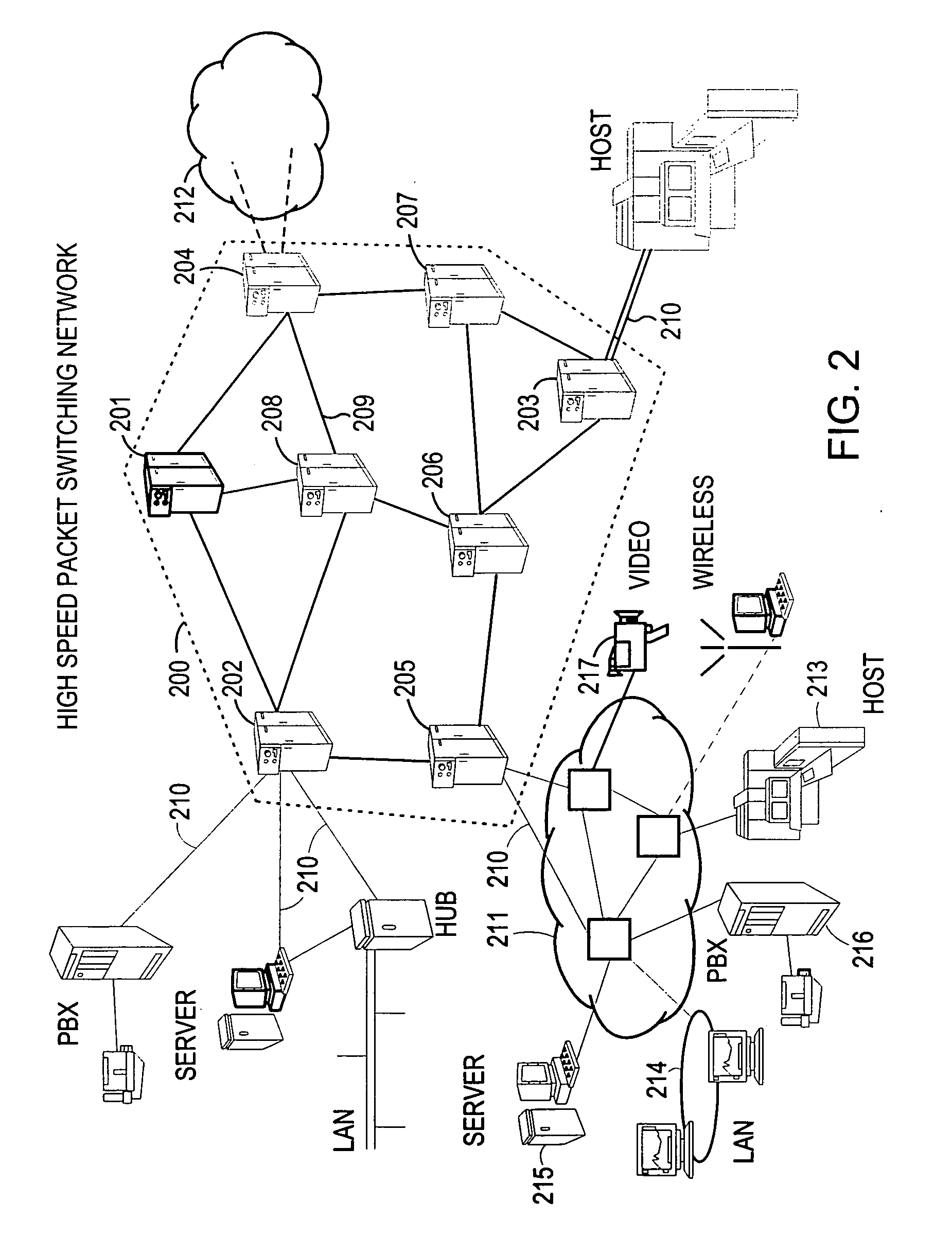

The present invention is directed to a high speed packet switching network and, in particular, to a method and system for minimizing the time to establish a connection between an origin and a destination node. Because traffic on transmission links is highly dynamic, it is important to select a routing path according to fully up-to-date information on all network resources. The simplest approach is to calculate a new path for each new connection request. This solution may be very time consuming because there are as many path selection operations as connection set up operations. On the other hand, calculating paths by exhaustively exploring the network topology is a complex operation which may also take an inordinate amount of resources in large networks. Many connections originating from a network node flow to the same destination network node. It is therefore possible to benefit substantially from reusing already calculated paths for several connections towards the same node. The path calculated at the time the connection is requested is recorded in a Routing Database and updated each time a modification occurs in the network. Furthermore, alternate paths for supporting non-disruptive path switch on failure or preemption, and new paths towards potential destination nodes, can be calculated and stored when the connection set up process is idle. These last operations are executed in the background with a low processing priority and in the absence of a connection request.

Owner:CISCO TECH INC
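
The path-reuse idea in the abstract above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the patented method: the `RoutingDatabase` class, its link-cost dictionary format, and the choice of Dijkstra's algorithm are hypothetical, and the cache is simply cleared on a topology change rather than updated in place.

```python
import heapq


class RoutingDatabase:
    """Hypothetical cache of computed paths, keyed by (origin, destination)."""

    def __init__(self, links):
        # links: {node: {neighbor: cost}} (assumed topology format)
        self.links = links
        self.cache = {}
        self.computations = 0  # number of full path calculations performed

    def _shortest_path(self, origin, dest):
        """Plain Dijkstra search; returns a node list or None if unreachable."""
        self.computations += 1
        best = {origin: 0}
        prev = {}
        heap = [(0, origin)]
        while heap:
            cost, node = heapq.heappop(heap)
            if node == dest:
                break
            if cost > best.get(node, float("inf")):
                continue  # stale heap entry
            for nxt, weight in self.links.get(node, {}).items():
                new_cost = cost + weight
                if new_cost < best.get(nxt, float("inf")):
                    best[nxt] = new_cost
                    prev[nxt] = node
                    heapq.heappush(heap, (new_cost, nxt))
        if dest not in best:
            return None
        path = [dest]
        while path[-1] != origin:
            path.append(prev[path[-1]])
        return path[::-1]

    def path_for(self, origin, dest):
        """Reuse a stored path when one exists; compute it only on a miss."""
        key = (origin, dest)
        if key not in self.cache:
            self.cache[key] = self._shortest_path(origin, dest)
        return self.cache[key]

    def update_link(self, a, b, cost):
        """Record a topology change and drop every cached path (a simplification)."""
        self.links.setdefault(a, {})[b] = cost
        self.cache.clear()


db = RoutingDatabase({"A": {"B": 1, "C": 5}, "B": {"C": 1}, "C": {}})
first = db.path_for("A", "C")
second = db.path_for("A", "C")  # served from the cache, no new calculation
```

Repeated connection requests to the same destination cost one path calculation instead of one per request, which is the saving the abstract describes.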

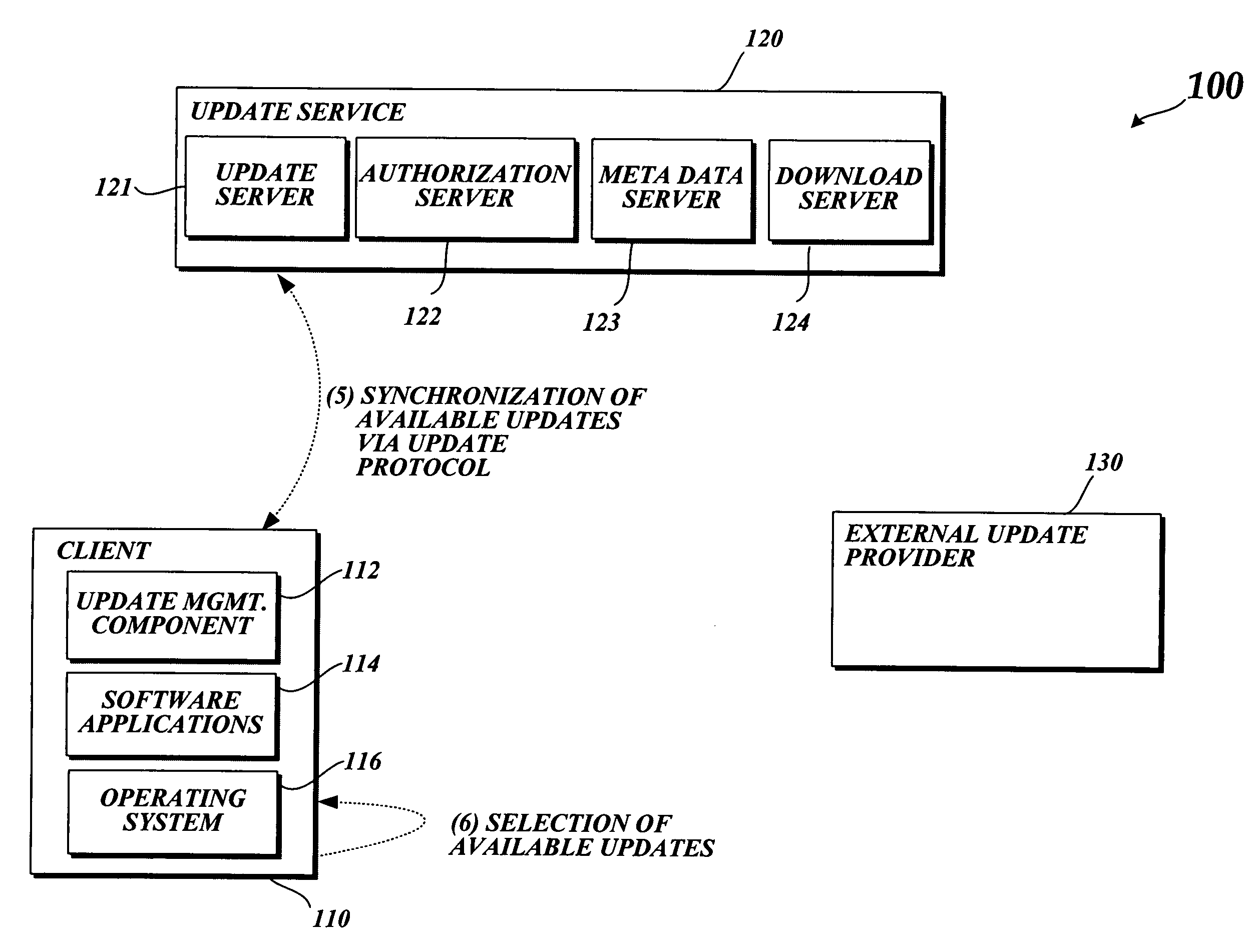

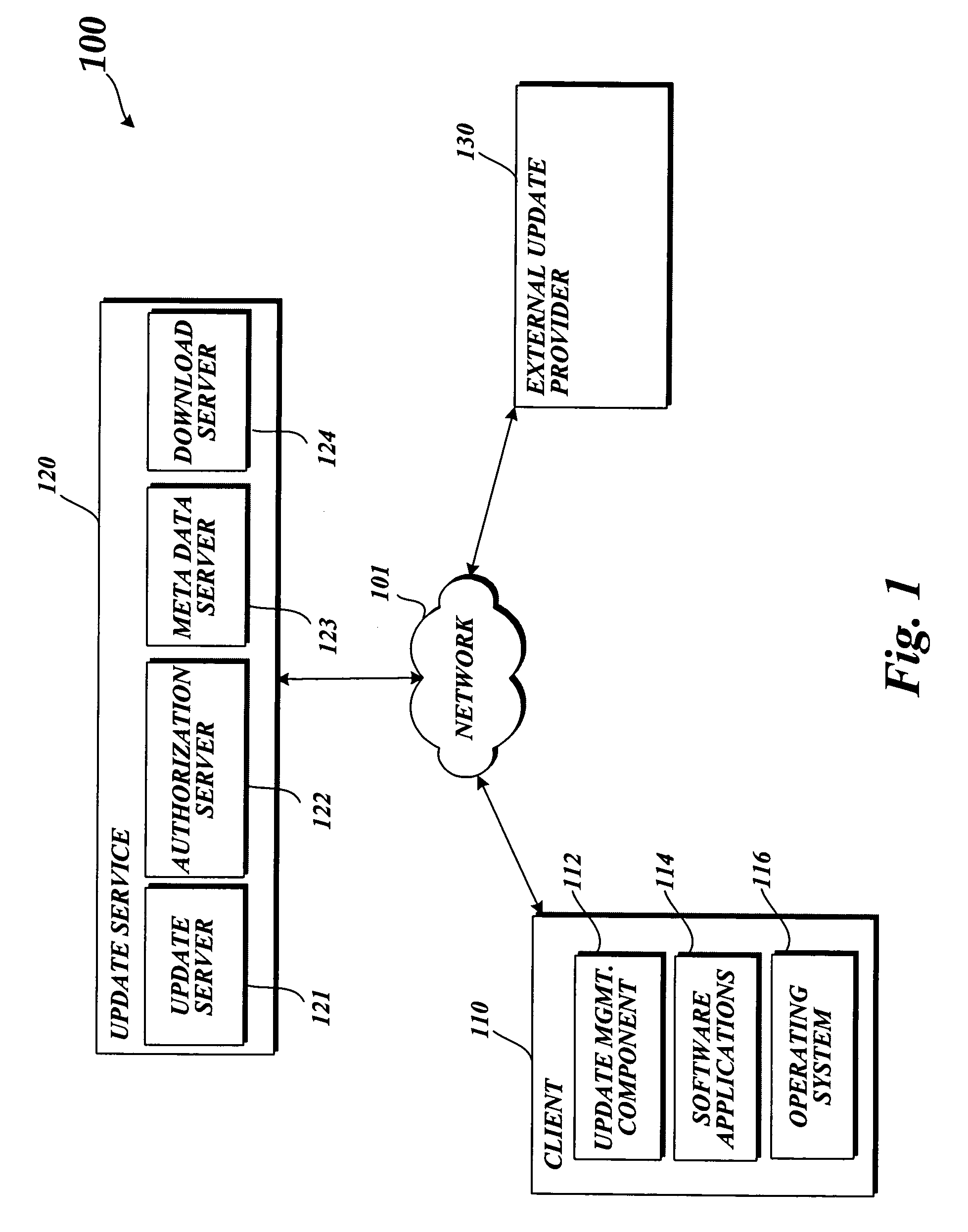

Method and system for downloading updates

Active | US7716660B2 | Minimize time | Reduce the amount required | Software maintenance/management | Multiple digital computer combinations | Anti virus | Software update

Embodiments of the present invention provide the ability for a software provider to distribute software updates to several different recipients utilizing a peer-to-peer environment. The invention described herein may be used to update any type of software, including, but not limited to, operating software, programming software, anti-virus software, database software, etc. The use of a peer-to-peer environment with added security provides the ability to minimize download time for each peer and also reduce the amount of egress bandwidth that must be provided by the software provider to enable recipients (peers) to obtain the update.

Owner:MICROSOFT TECH LICENSING LLC

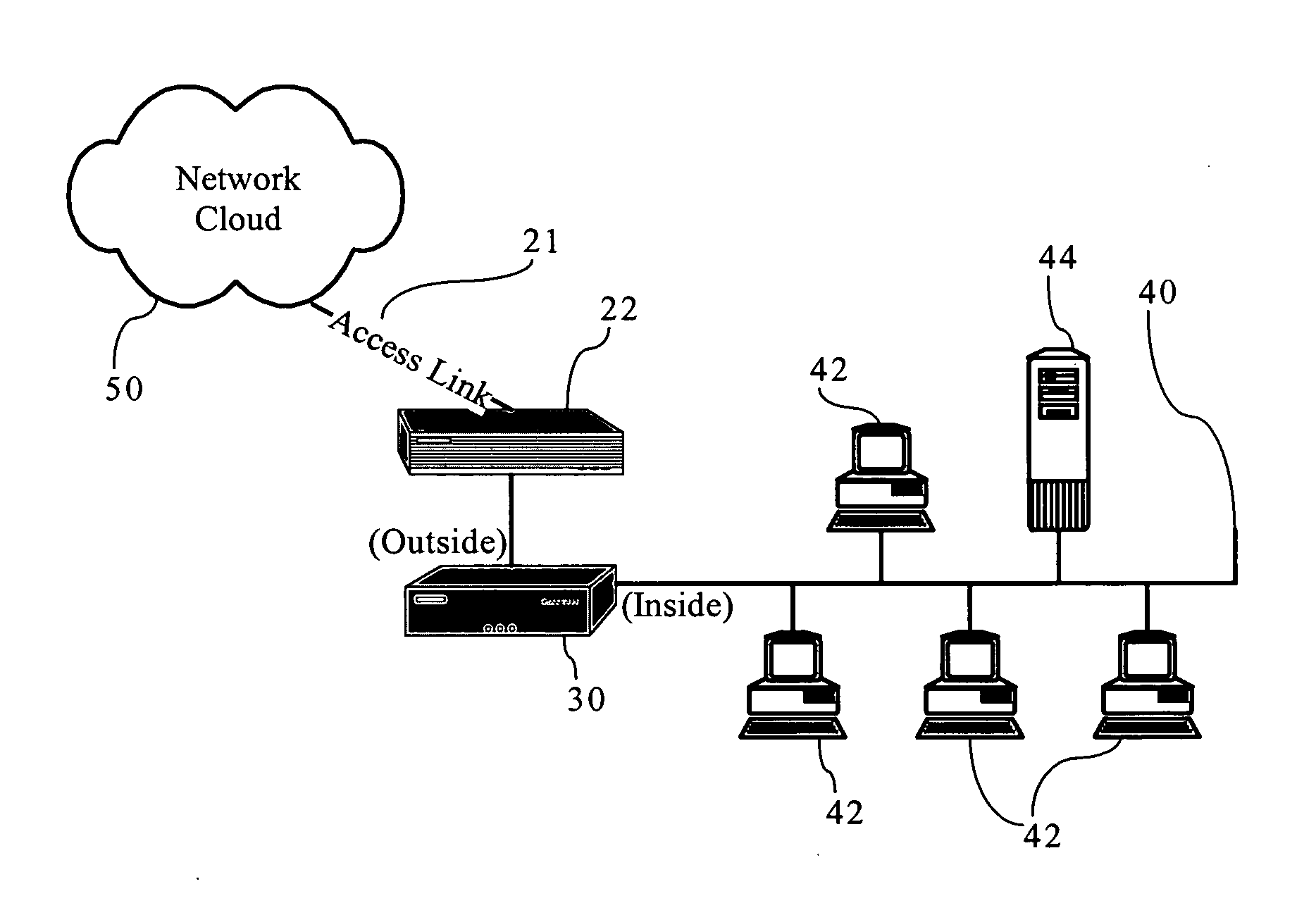

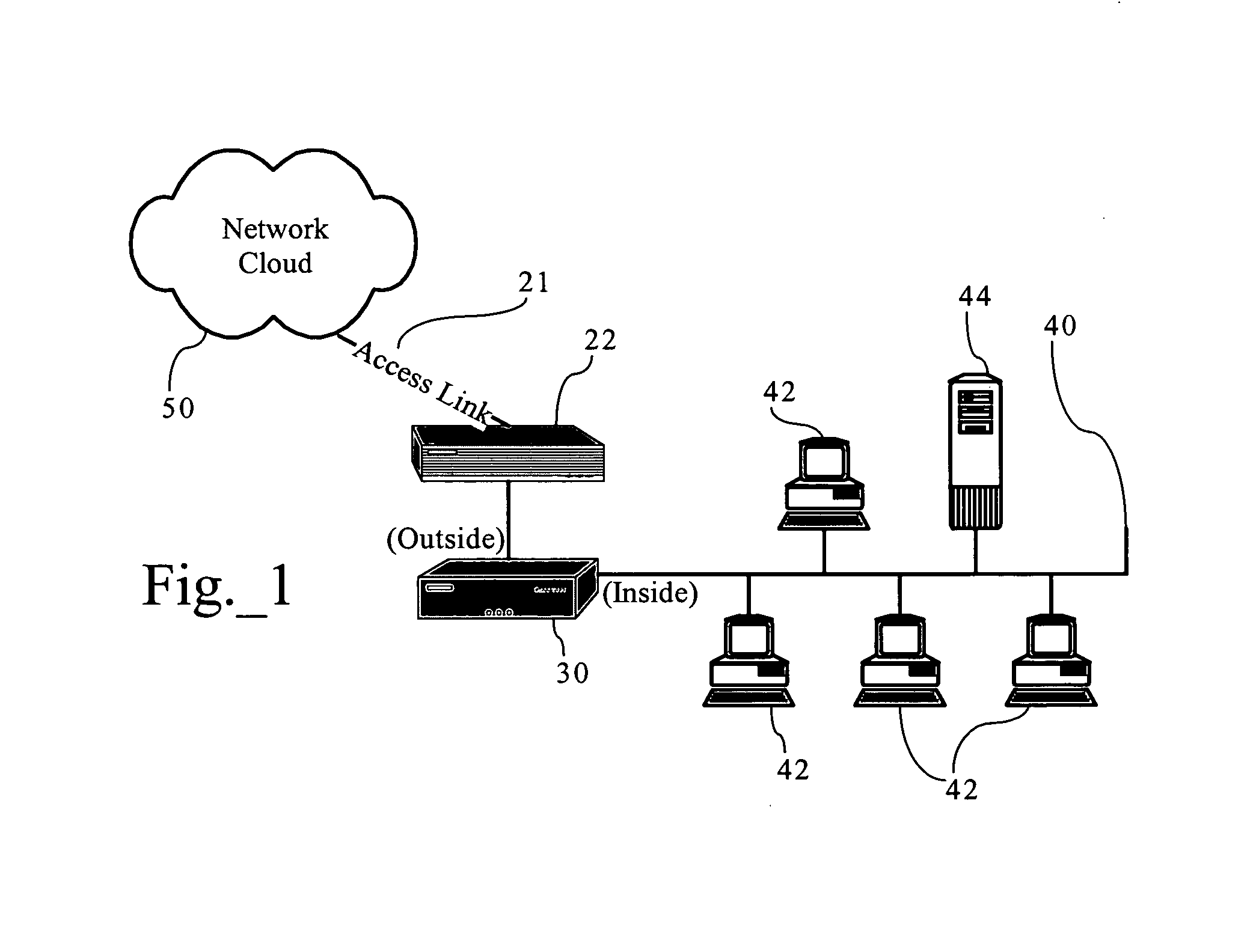

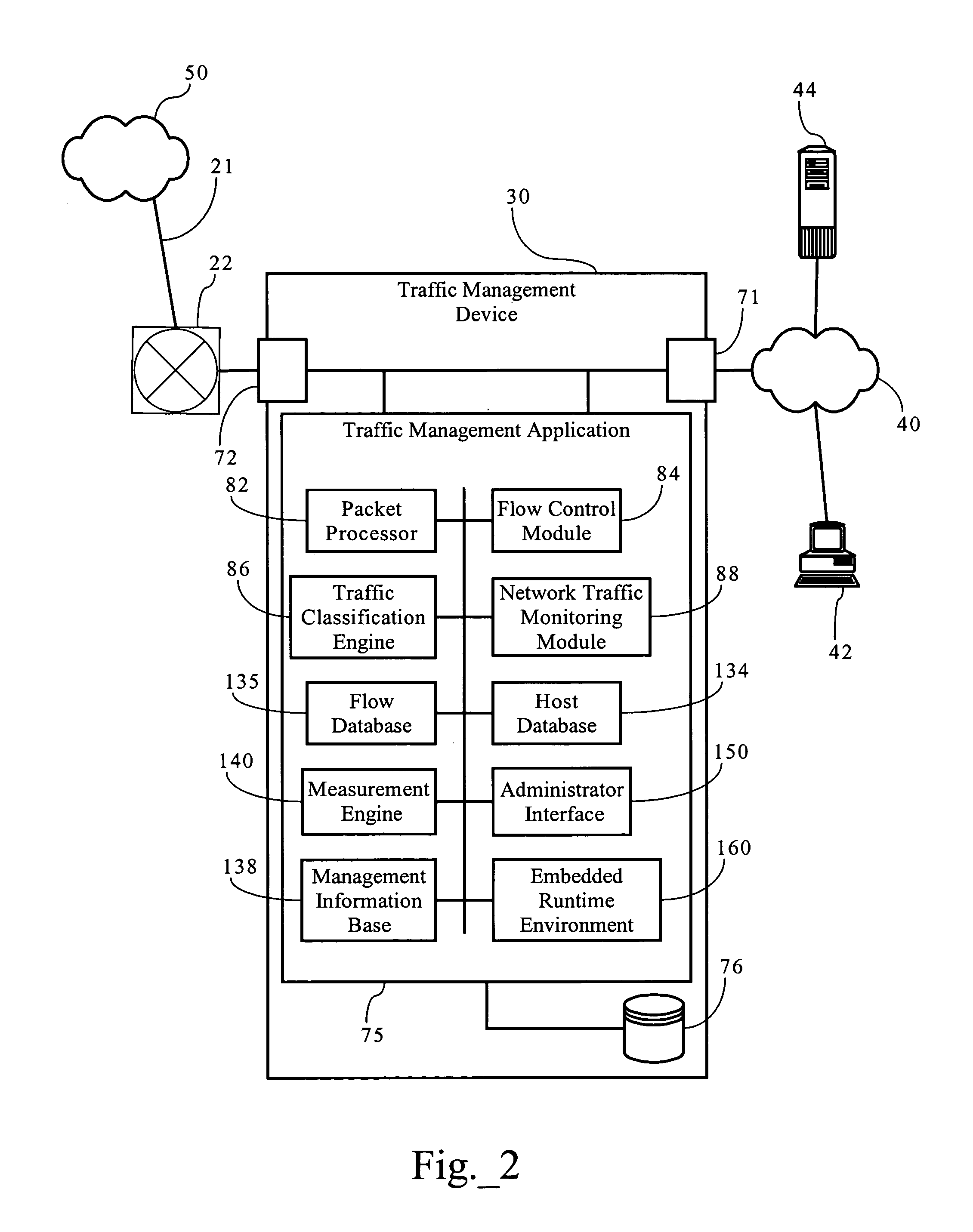

Cost-aware, bandwidth management systems adaptive to network conditions

Active | US8489720B1 | Improve load conditions | Low cost | Multiple digital computer combinations | Data switching networks | Network conditions | Application software

Methods, apparatuses, and systems directed to cost-aware bandwidth management schemes that are adaptive to monitored network or application performance attributes. In one embodiment, the present invention supports bandwidth management systems that adapt to network conditions, while managing tradeoffs between bandwidth costs and application performance. One implementation of the present invention tracks bandwidth usage over an applicable billing period and applies a statistical model to allow for bursting to address increased network loading conditions that degrade network or application performance. One implementation allows for bursting at selected time periods based on computations minimizing cost relative to an applicable billing model. One implementation of the present invention is also application-aware, monitoring network application performance and increasing bandwidth allocations in response to degradations in the performance of selected applications.

Owner:CA TECH INC

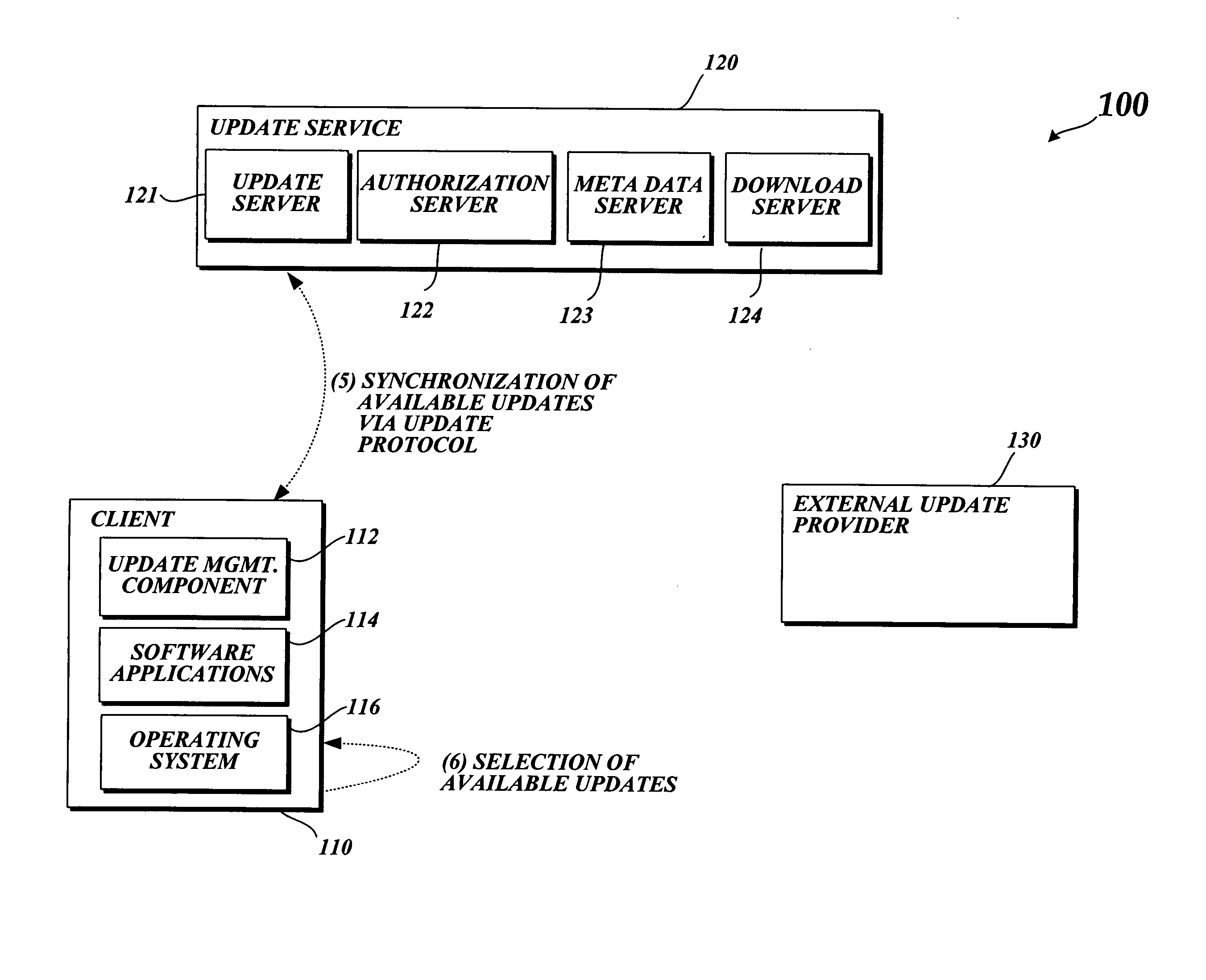

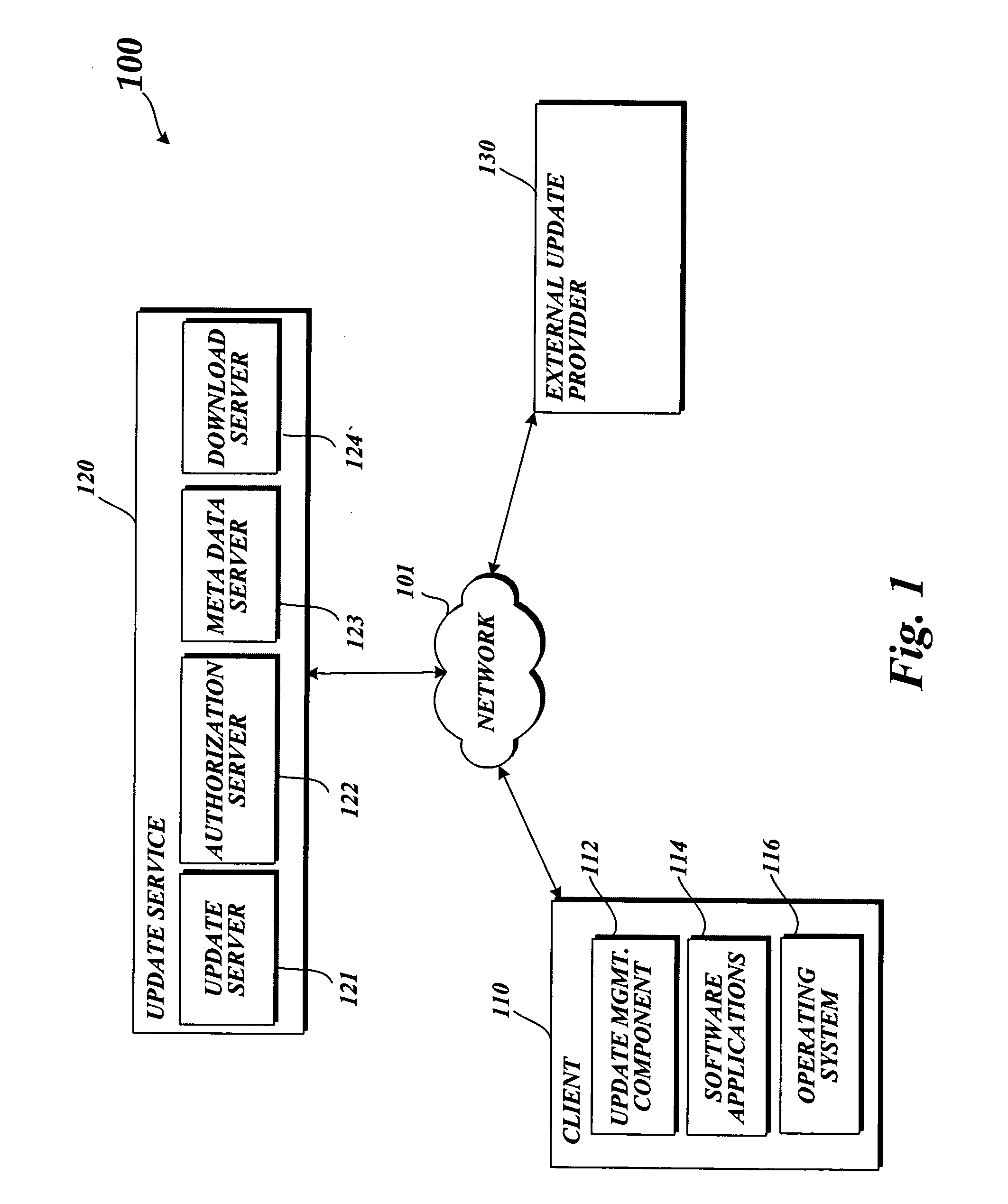

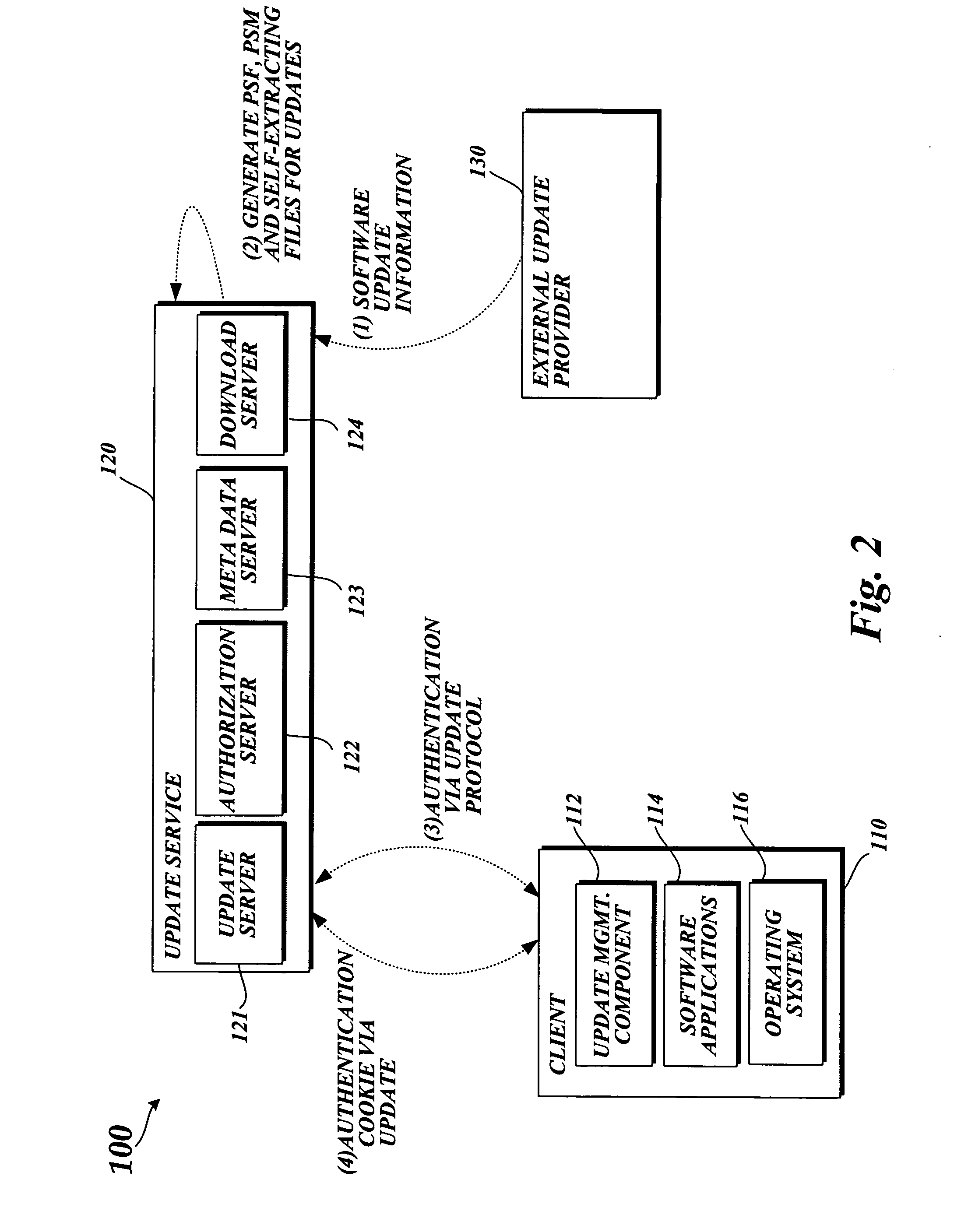

System and method for a software distribution service

Active | US20050132349A1 | Facilitating selection and implementation | Minimizing bandwidth | Link editing | Multiple digital computer combinations | Software distribution | Service control

The present invention is directed to a system and method for managing software updates. More specifically, the present invention is directed to a system and method for facilitating the selection and implementation of software updates while minimizing the bandwidth and processing resources required to select and implement the software updates. In accordance with an aspect of the present invention, a software update service controls access to software updates stored on servers. In accordance with another aspect, the software update service synchronizes with client machines to identify applicable updates.

Owner:MICROSOFT TECH LICENSING LLC

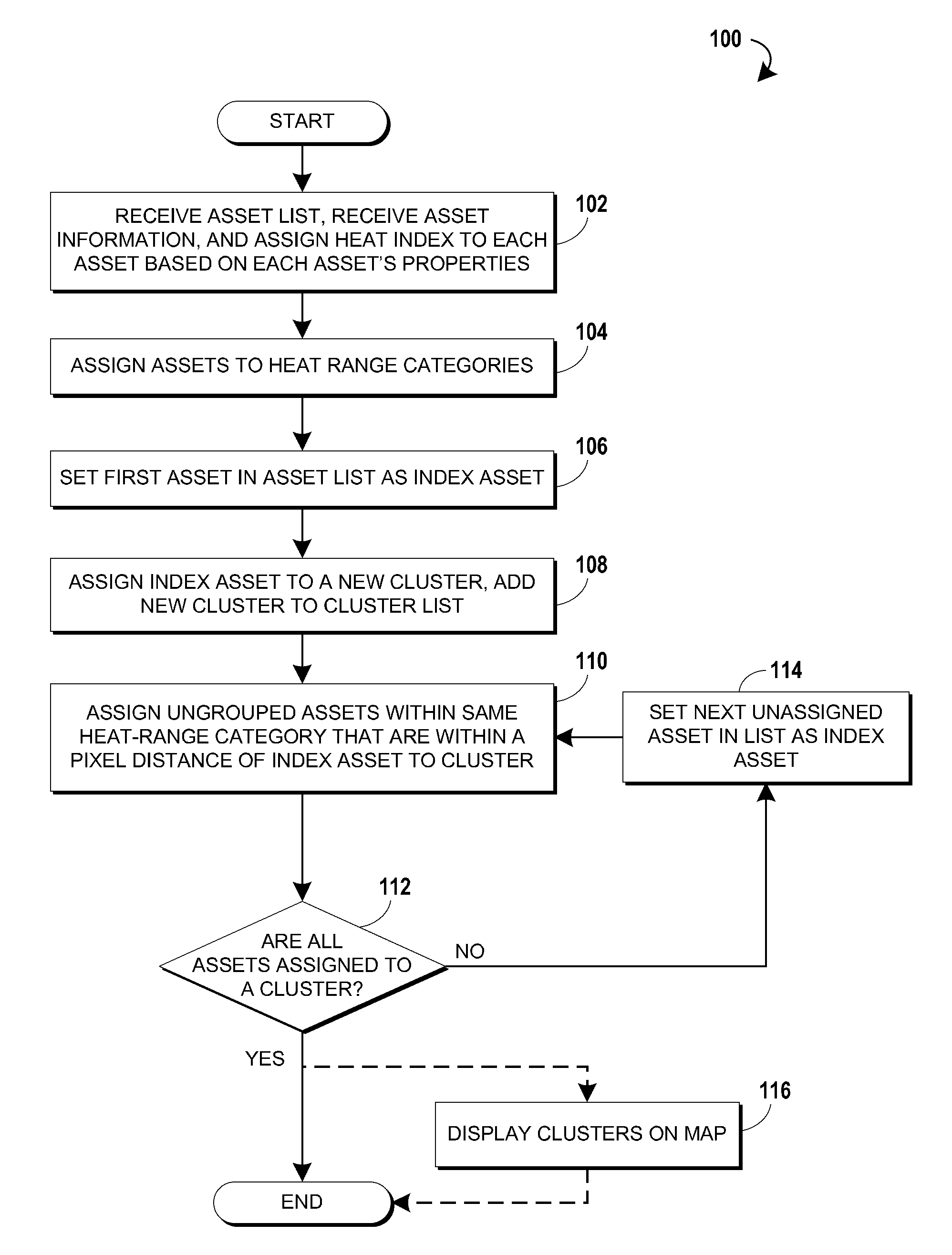

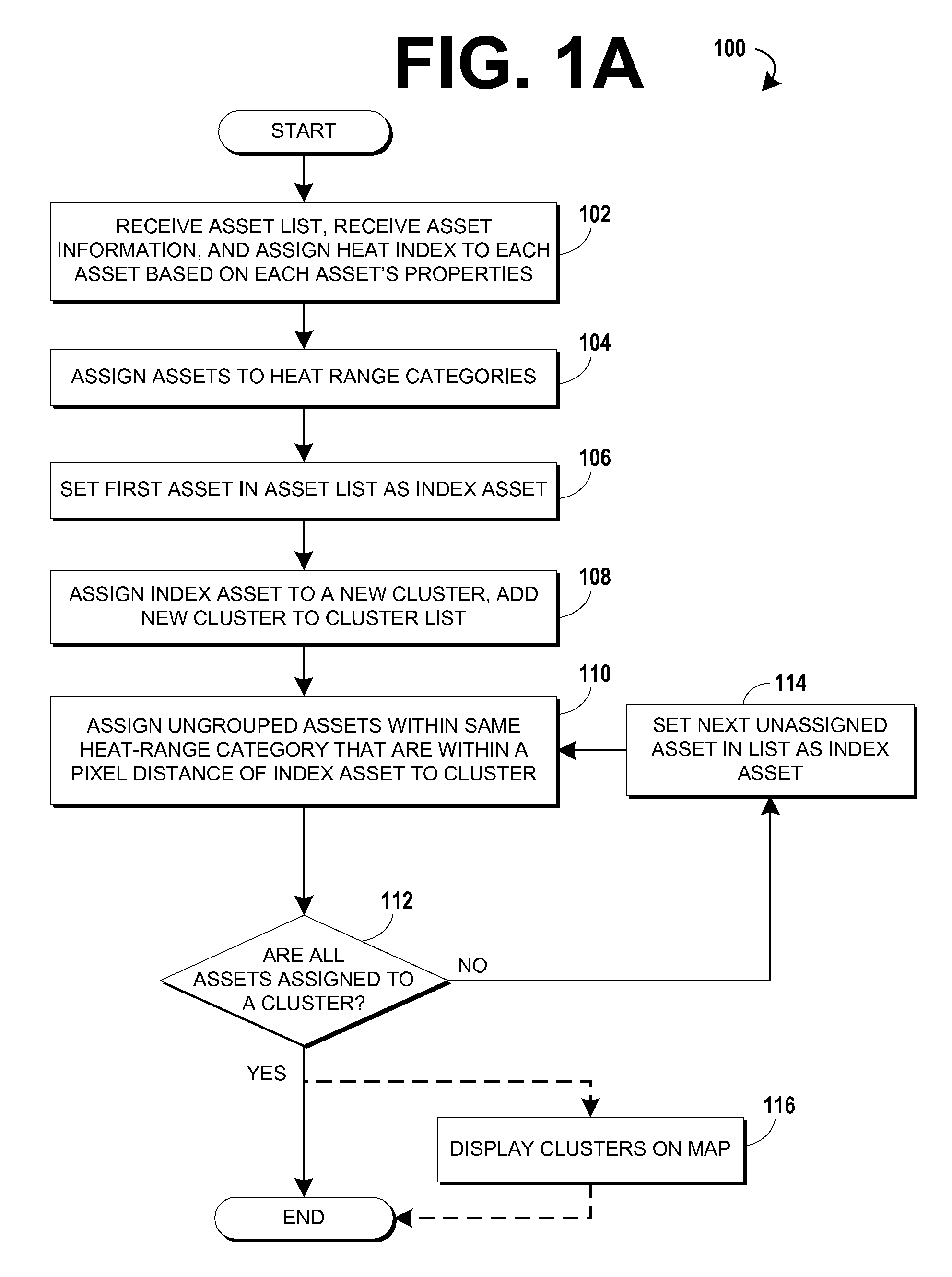

Cluster mapping to highlight areas of electrical congestion

Inactive | US20130016106A1 | Minimize time | Low cost | Drawing from basic elements | Maps/plans/charts | Heat map | Power grid

Methods of generating heat maps of assets using clustering of assets are disclosed. Some methods include receiving a list of assets, assigning the assets to one or more heat range categories based on the status of the assets, assigning assets operating within a zone to a zone cluster, and assigning the assets of the zone cluster to category clusters based on the heat range categories assigned to the assets. The positions of the clusters may be calculated for mapping, and may be displayed on a map. Some embodiments of these methods allow a user to quickly detect and locate non-standard assets on a map while standard assets are consolidated to clusters that are less prominent to the user. This leads to minimizing the time required to form responses to de-load hotspots in an electrical grid, minimizing the cost of assets by reducing the need for hardware redundancy, and minimizing equipment outages.

Owner:ENGIE STORAGE SERVICES NA LLC

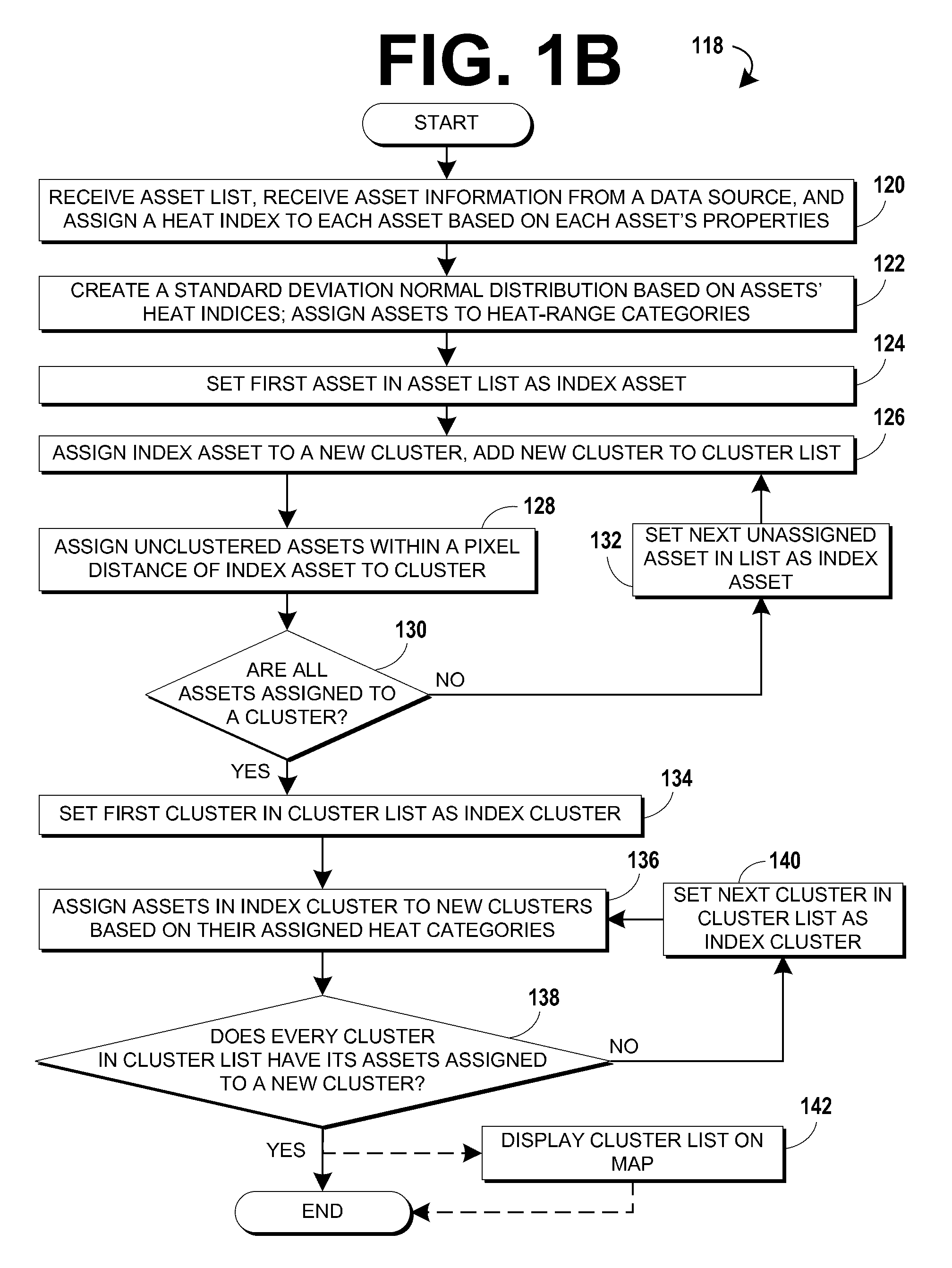

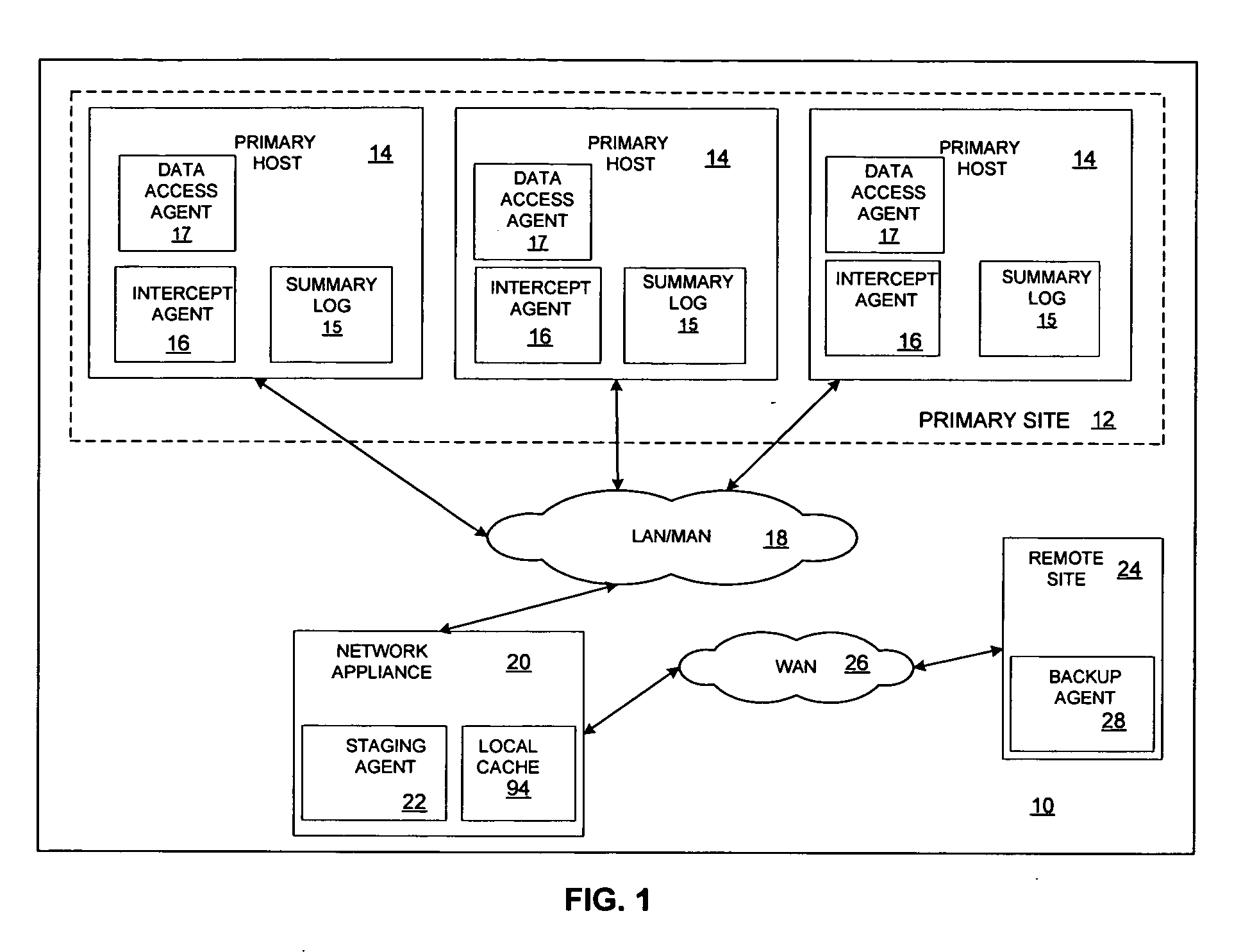

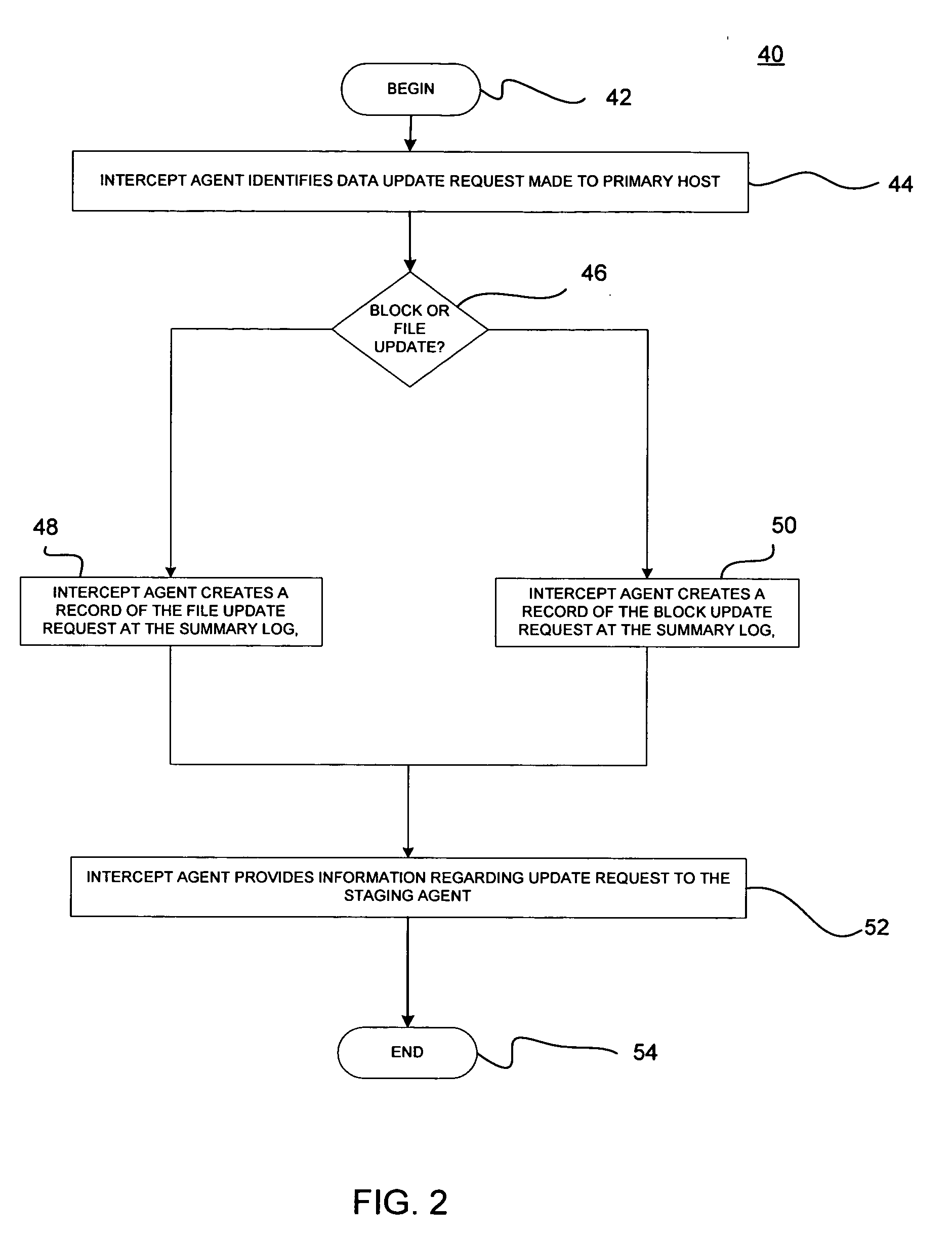

Minimizing resynchronization time after backup system failures in an appliance-based business continuance architecture

Active | US20050273654A1 | Minimize resynchronization time | Minimize time | Memory systems | Redundant hardware error correction | Downtime | System failure

A system for minimizing downtime in an appliance-based business continuance architecture is provided. The system includes at least one primary data storage and at least one primary host machine. The system includes an intercept agent to intercept primary host machine data requests, and to collect information associated with the intercepted data requests. Moreover, at least one business continuance appliance in communication with the primary host machine and in communication with a remote backup site is provided. The appliance receives information associated with the intercepted data requests from the intercept agent. In addition, a local cache is included within the business continuance appliance. The local cache maintains copies of primary data storage according to the information received. Furthermore, the remote site is provided with the intercepted data requests via the business continuance appliance, wherein the remote site maintains a backup of the primary data storage.

Owner:LENOVO PC INT

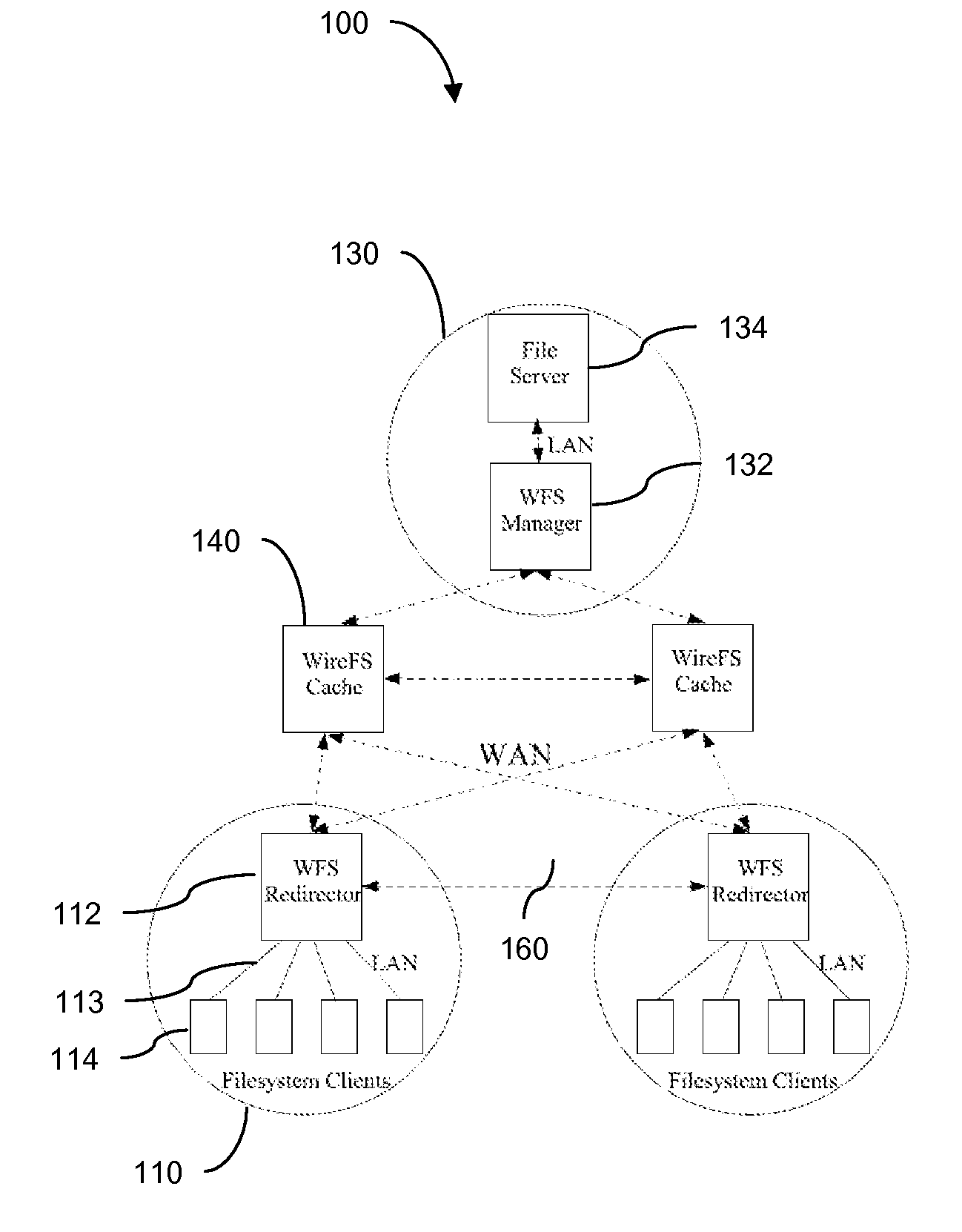

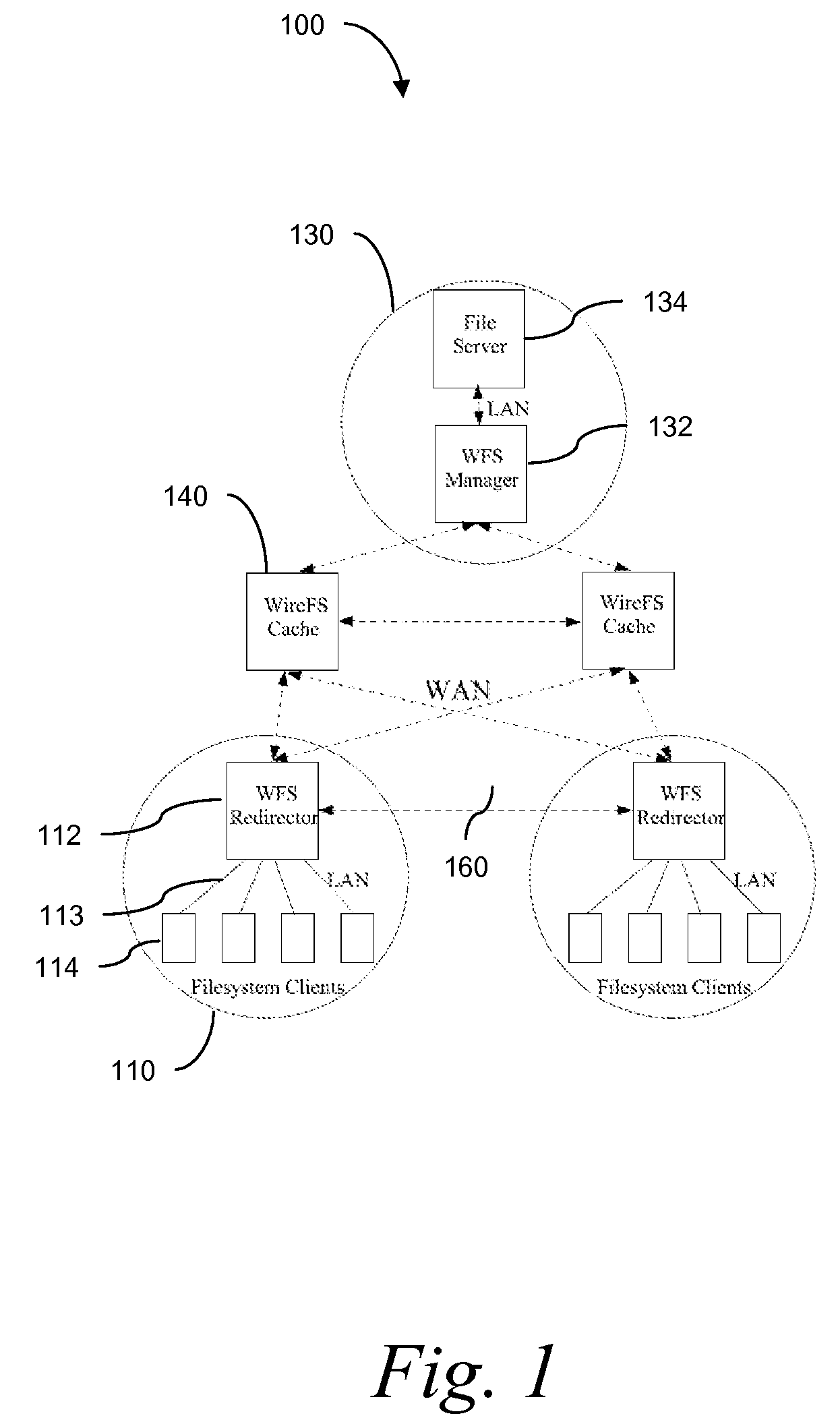

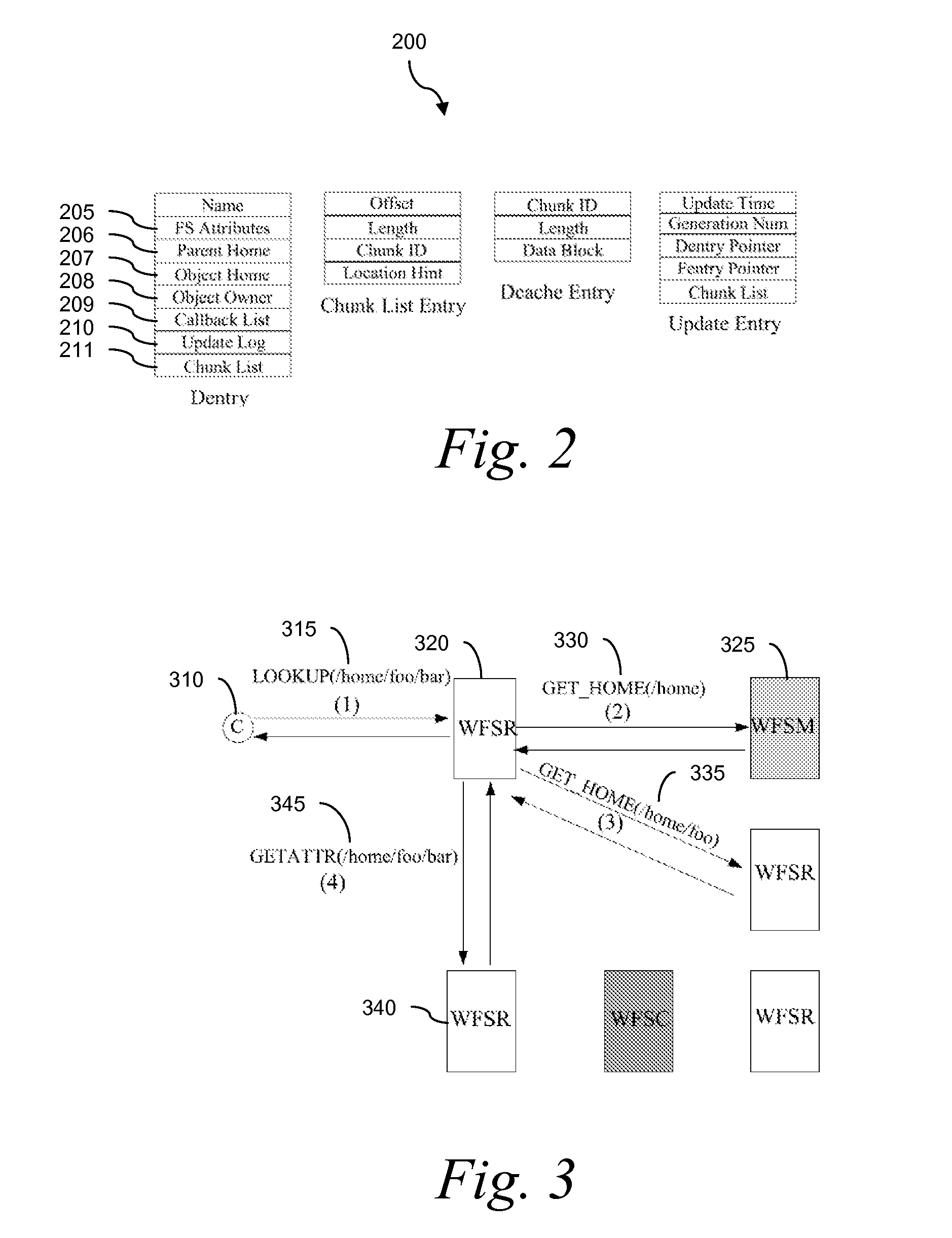

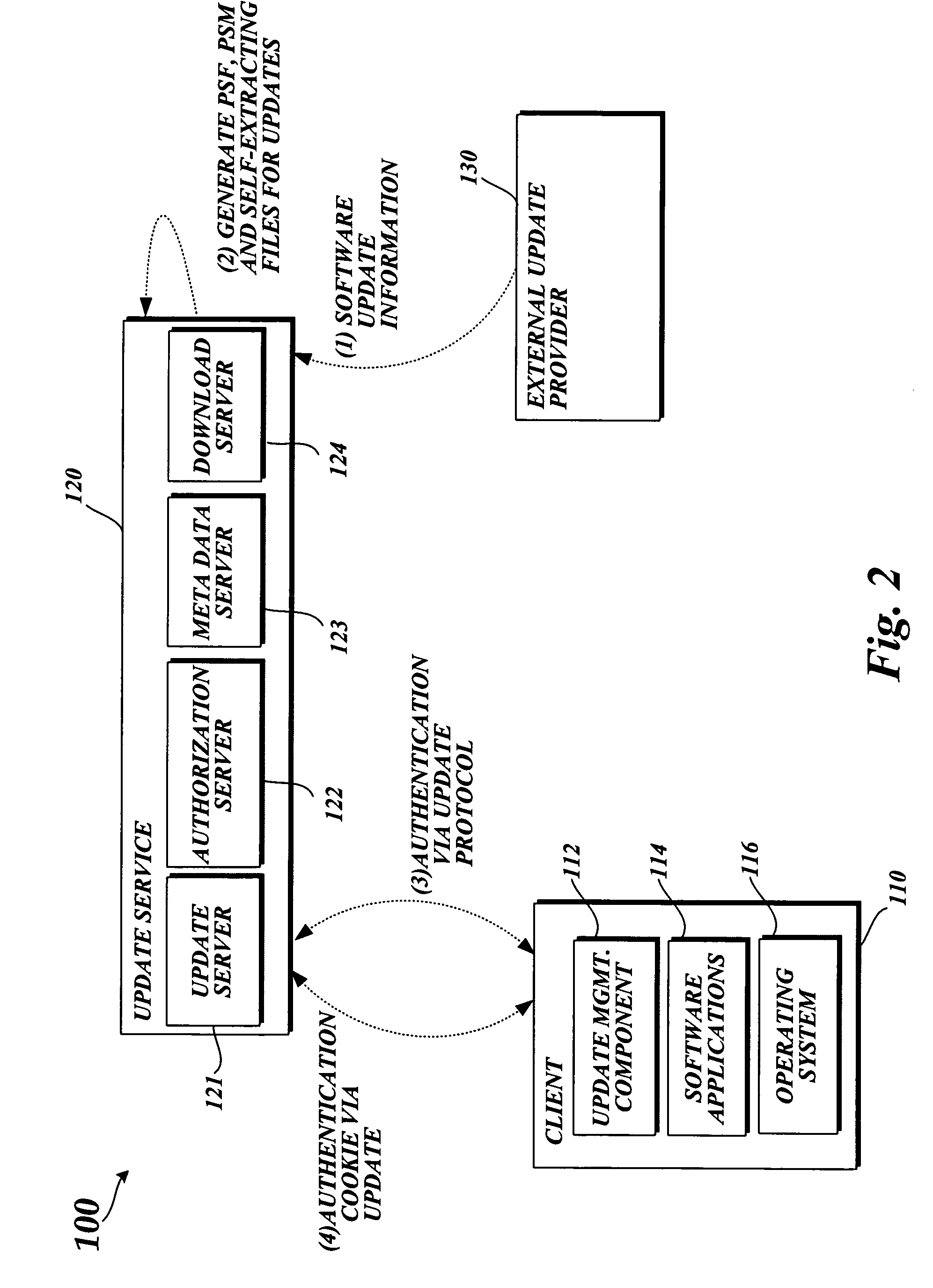

Wide Area Networked File System

Inactive | US20070162462A1 | Eliminate overhead | Alleviates the bottleneck at the central server | Special data processing applications | Memory systems | Wide area | Randomized algorithm

Traditional networked file systems like NFS do not extend to wide-area due to network latency and dynamics introduced in the WAN environment. To address that problem, a wide-area networked file system is based on a traditional networked file system (NFS / CIFS) and extends to the WAN environment by introducing a file redirector infrastructure residing between the central file server and clients. The file redirector infrastructure is invisible to both the central server and clients so that the change to NFS is minimal. That minimizes the interruption to the existing file service when deploying WireFS on top of NFS. The system includes an architecture for an enterprise-wide read / write wide area network file system, protocols and data structures for metadata and data management in this system, algorithms for history based prefetching for access latency minimization in metadata operations, and a distributed randomized algorithm for the implementation of global LRU cache replacement scheme.

Owner:NEC CORP

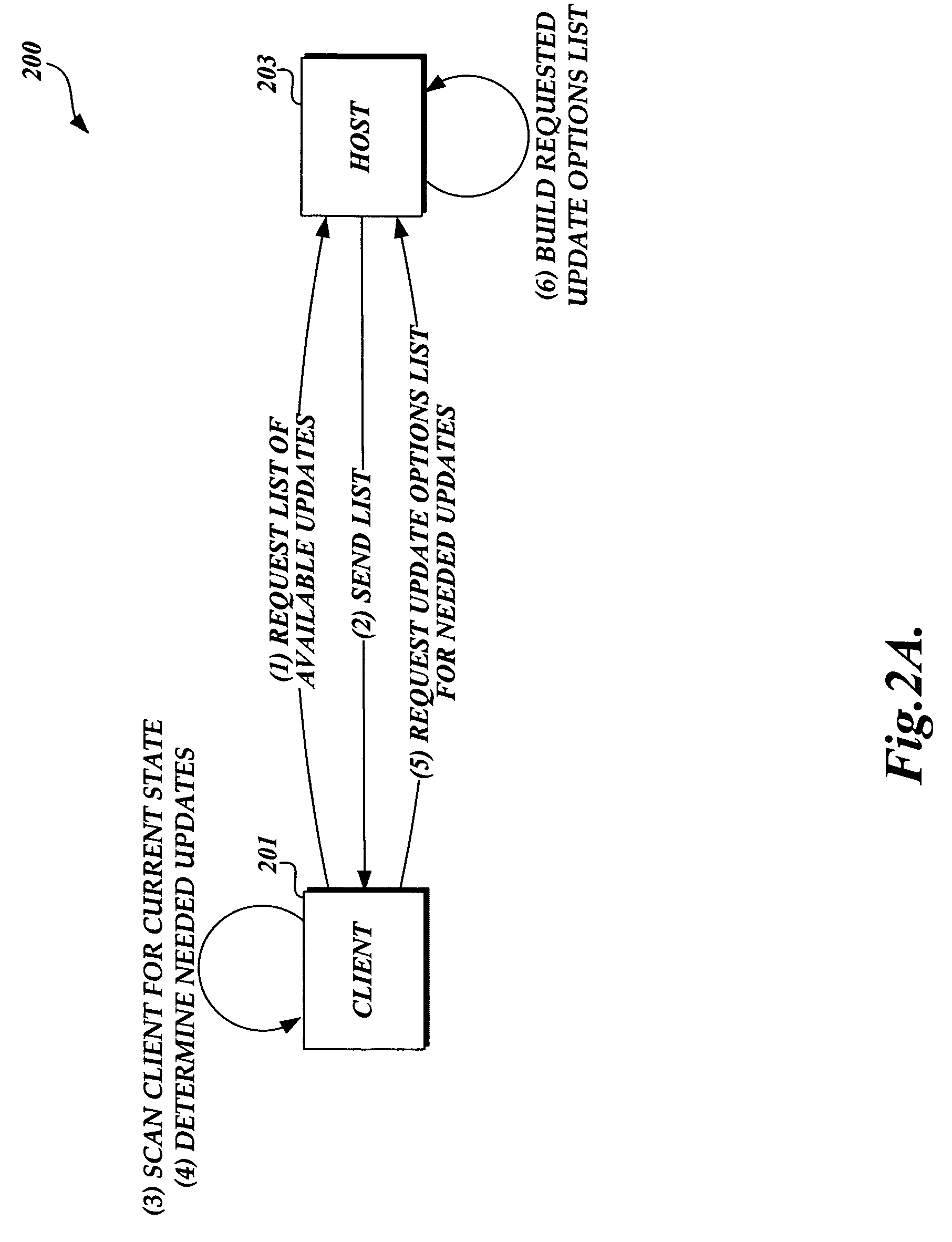

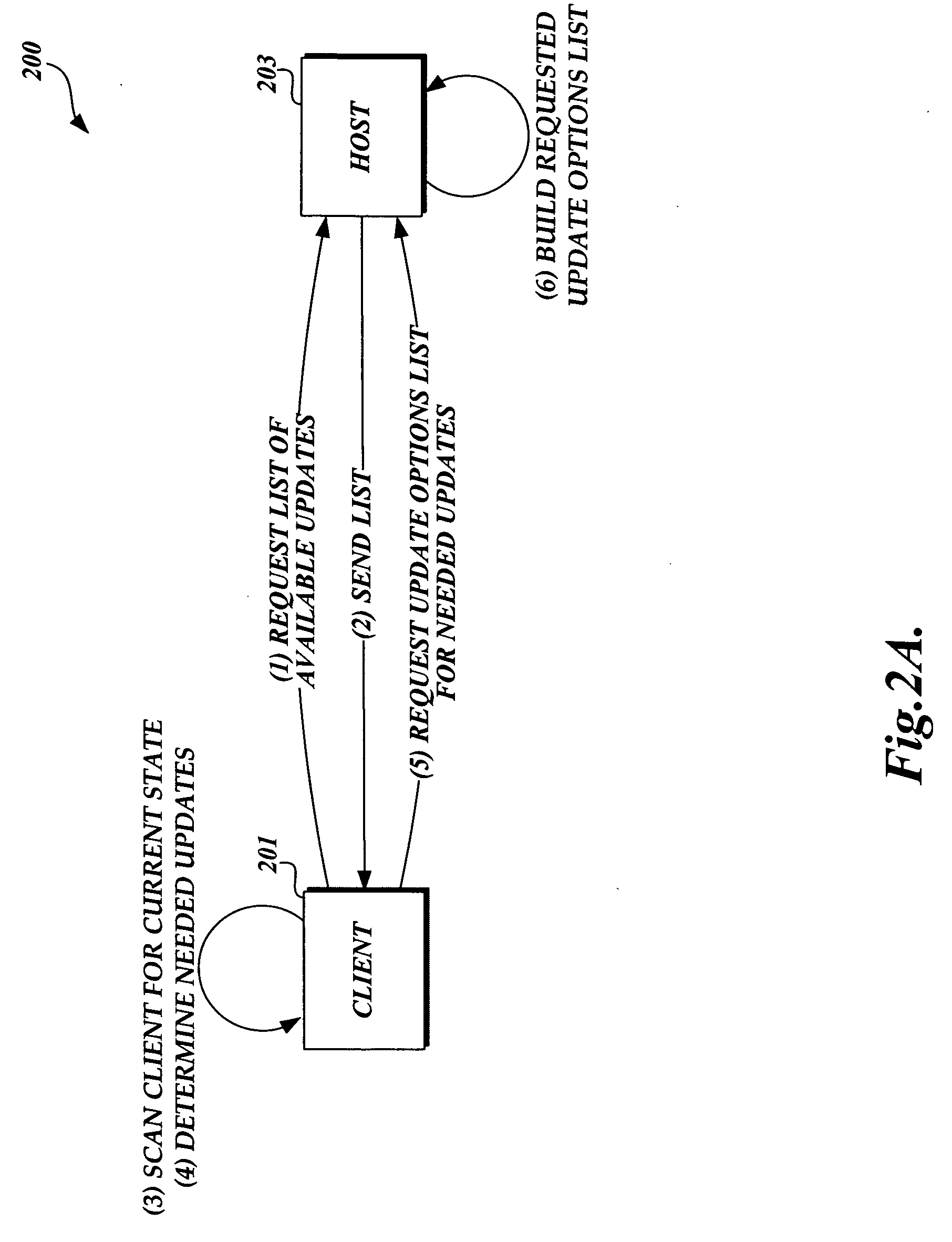

Managing software updates and a software distribution service

Active | US7478381B2 | Facilitating selection and implementation | Minimizing bandwidth | Link editing | Multiple digital computer combinations | Software distribution | Service control

The present invention is directed to a system and method for managing software updates. More specifically, the present invention is directed to a system and method for facilitating the selection and implementation of software updates while minimizing the bandwidth and processing resources required to select and implement the software updates. In accordance with an aspect of the present invention, a software update service controls access to software updates stored on servers. In accordance with another aspect, the software update service synchronizes with client machines to identify applicable updates.

Owner:MICROSOFT TECH LICENSING LLC

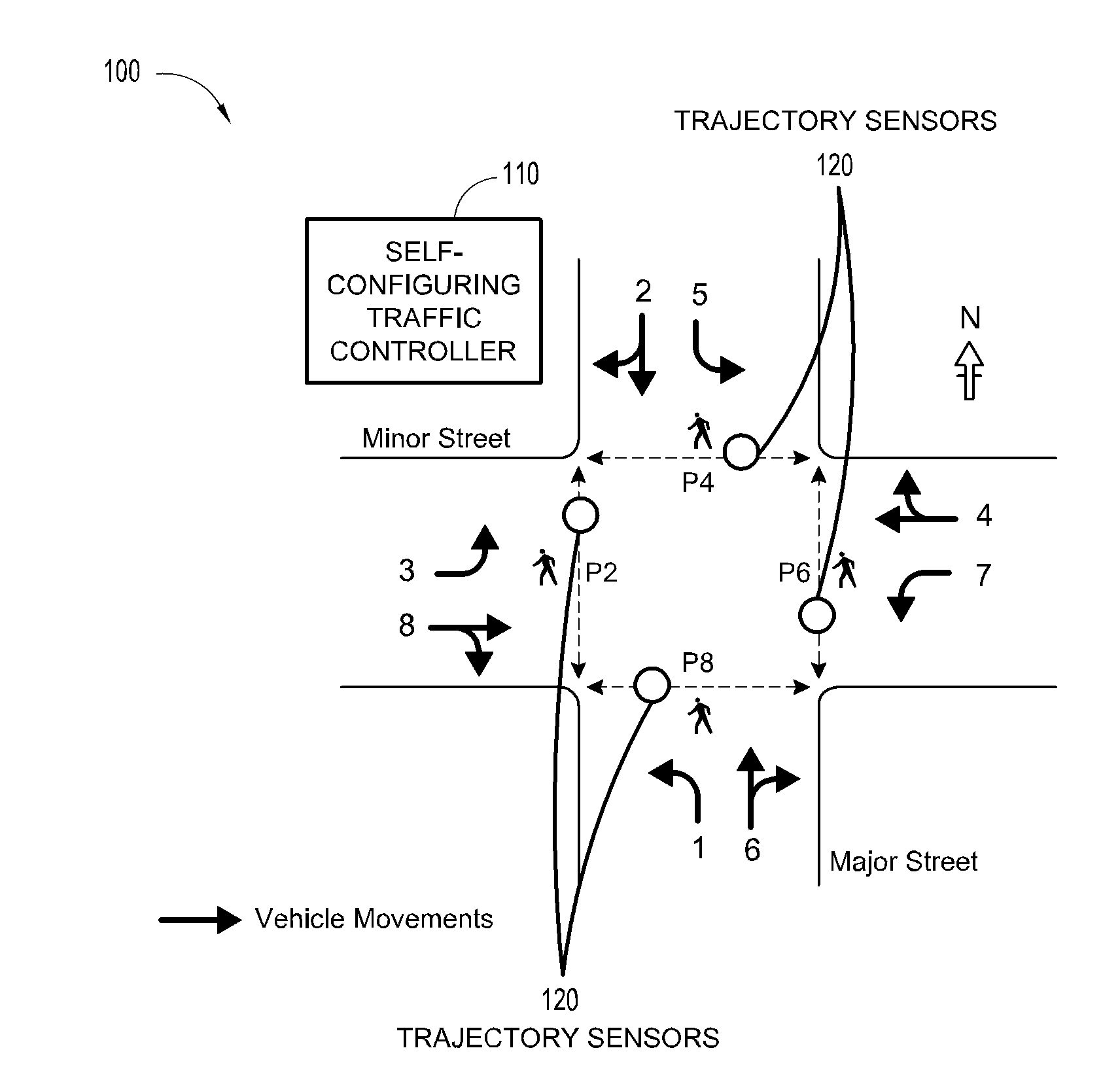

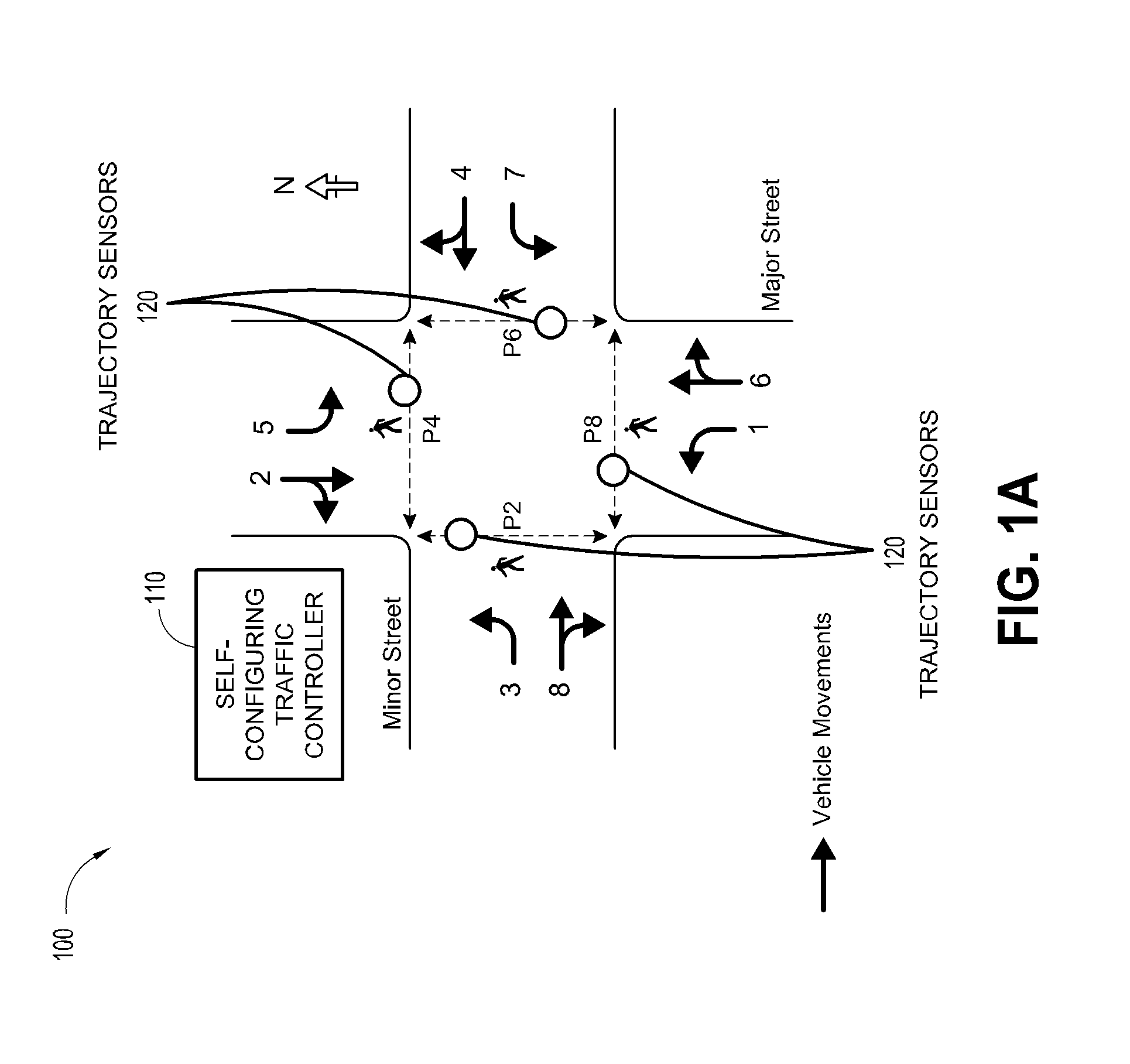

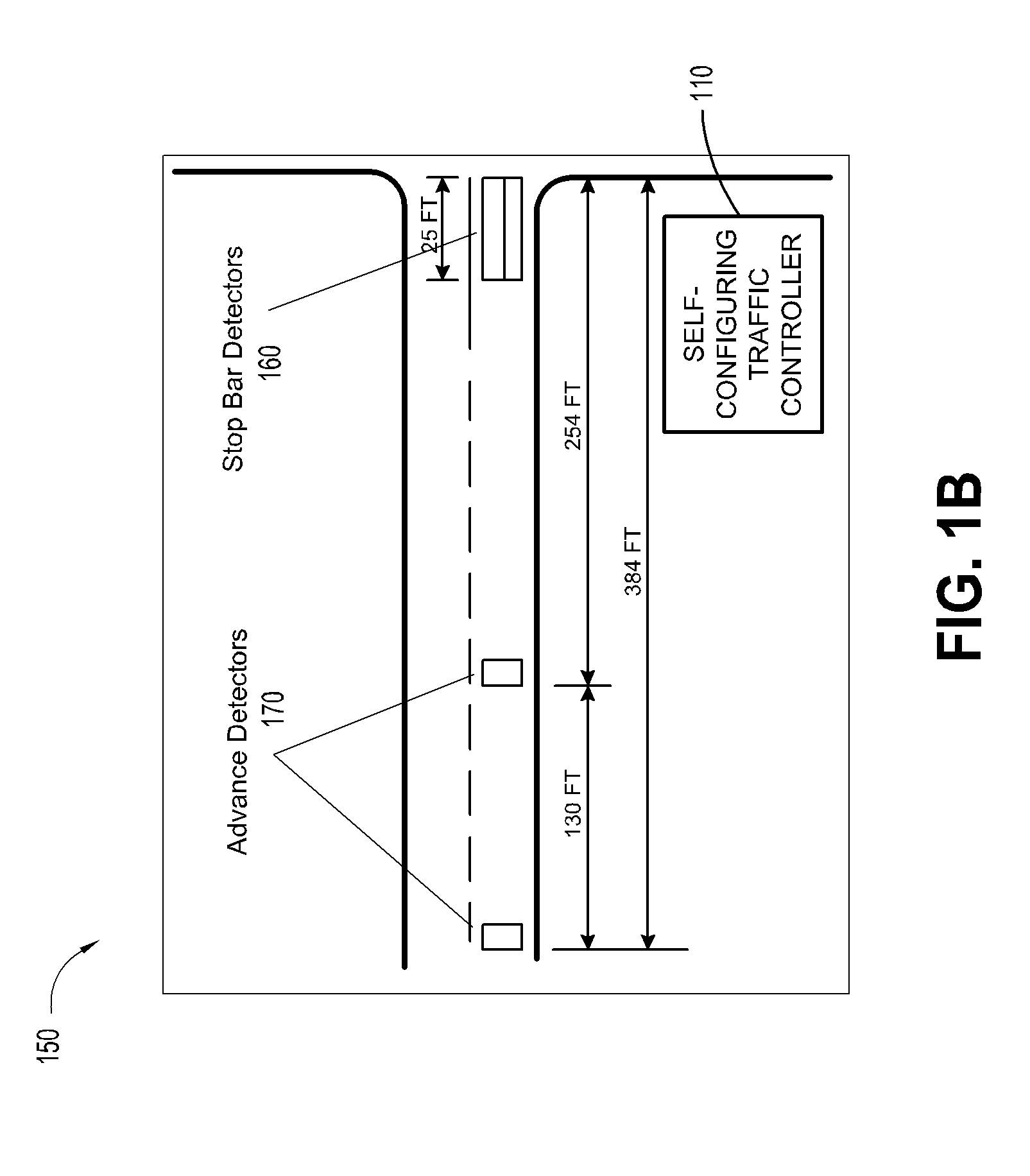

Self-configuring traffic signal controller

Embodiments describe new mechanisms for signalized intersection control. Embodiments expand inputs beyond traditional traffic control methods to include awareness of agency policies for signalized control, industry standardized calculations for traffic control parameters, geometric awareness of the roadway and / or intersection, and / or input of vehicle trajectory data relative to this intersection geometry. In certain embodiments, these new inputs facilitate a real-time, future-state trajectory modeling of the phase timing and sequencing options for signalized intersection control. Phase selection and timing can be improved or otherwise optimized based upon modeling the signal's future state impact on arriving vehicle trajectories. This improvement or optimization can be performed to reduce or minimize the cost basis of a user definable objective function.

Owner:ECONOLITE GRP

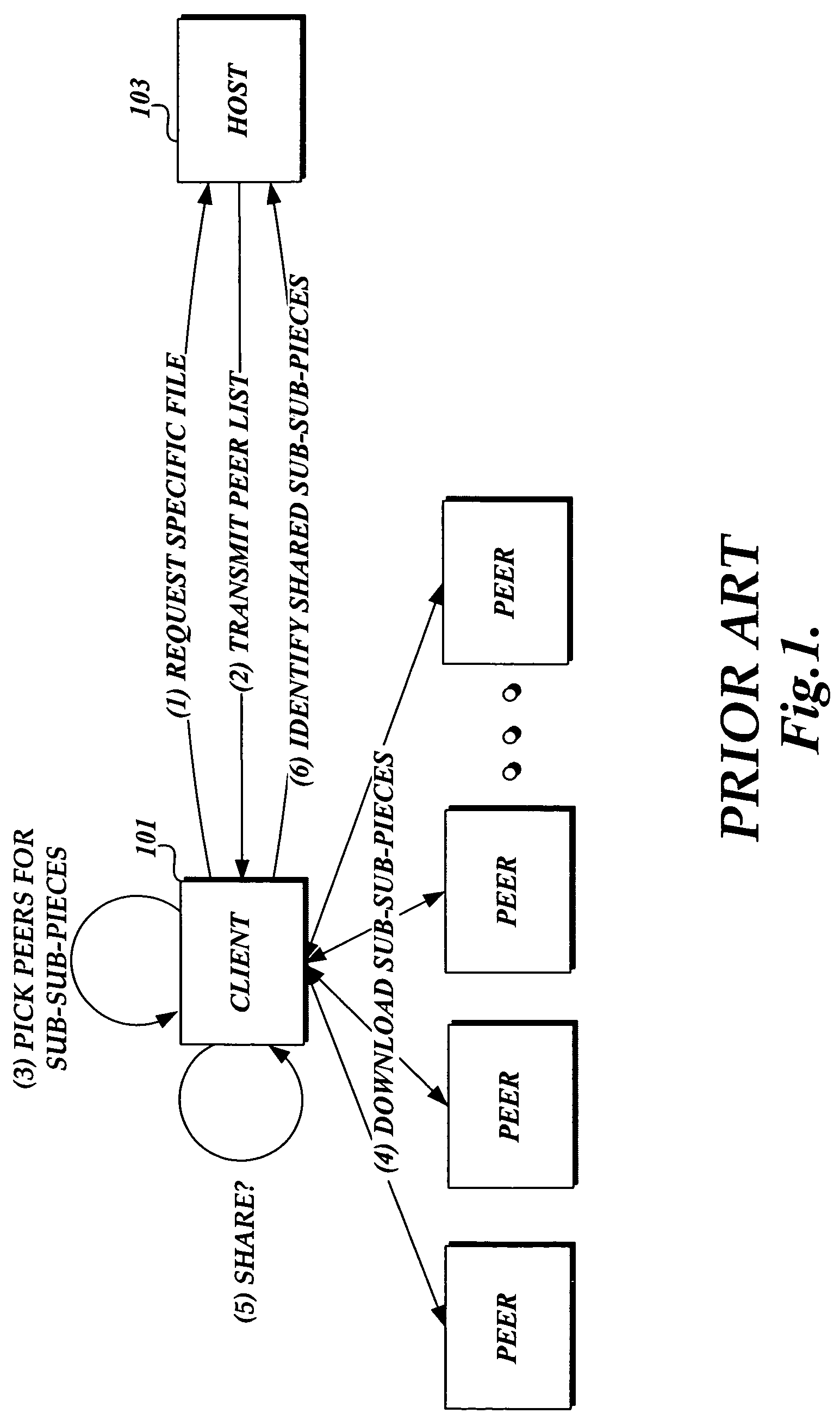

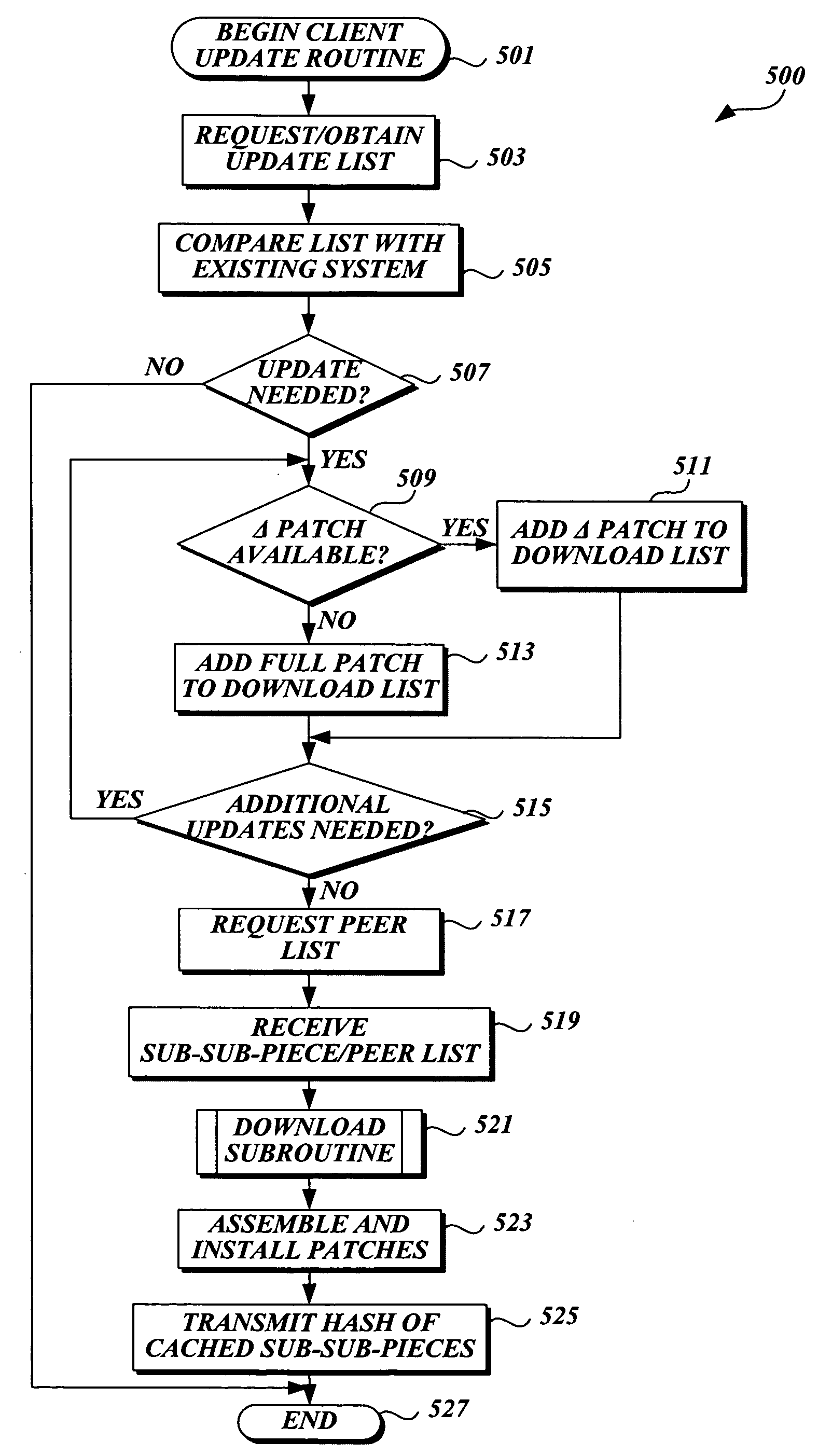

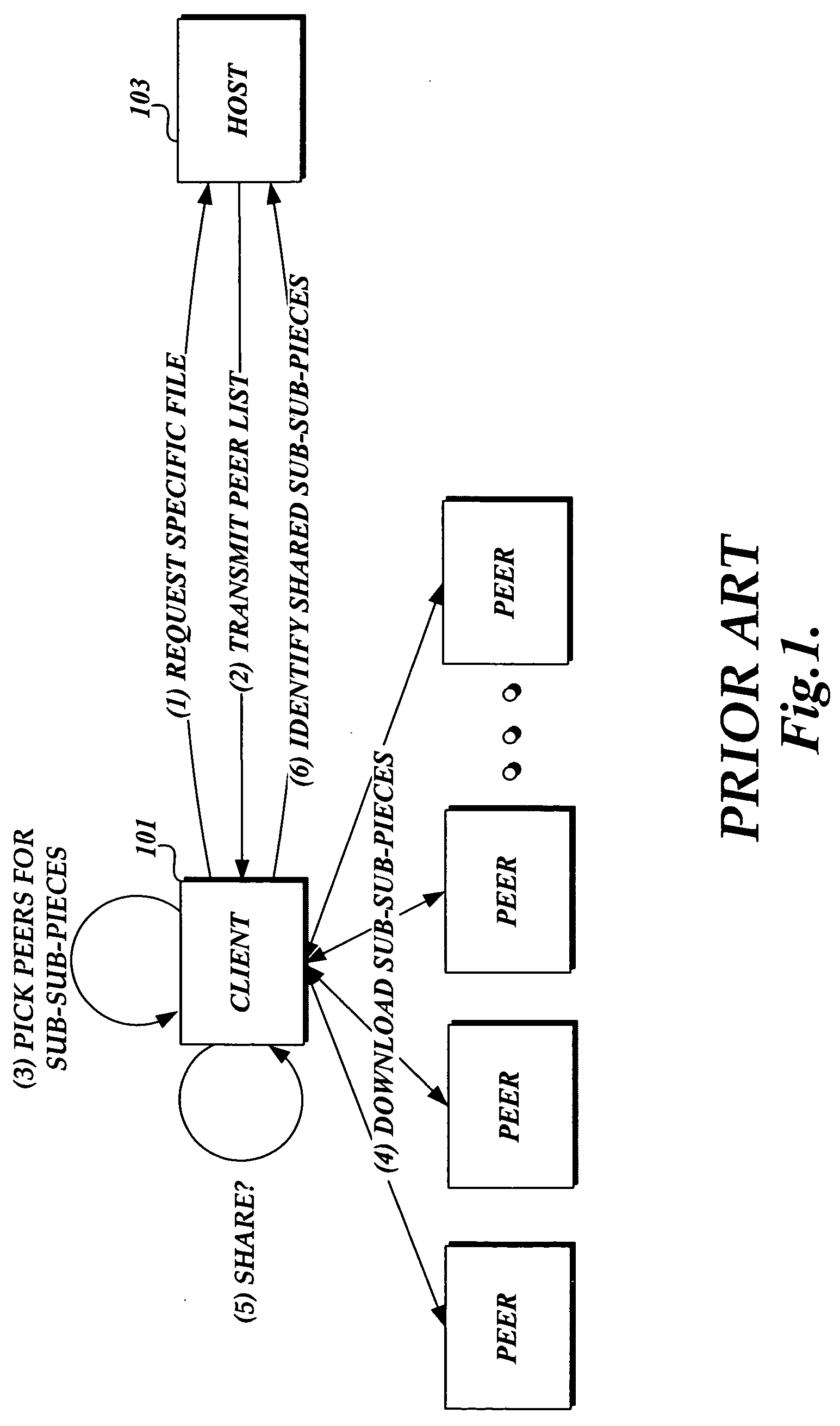

Method and system for downloading updates

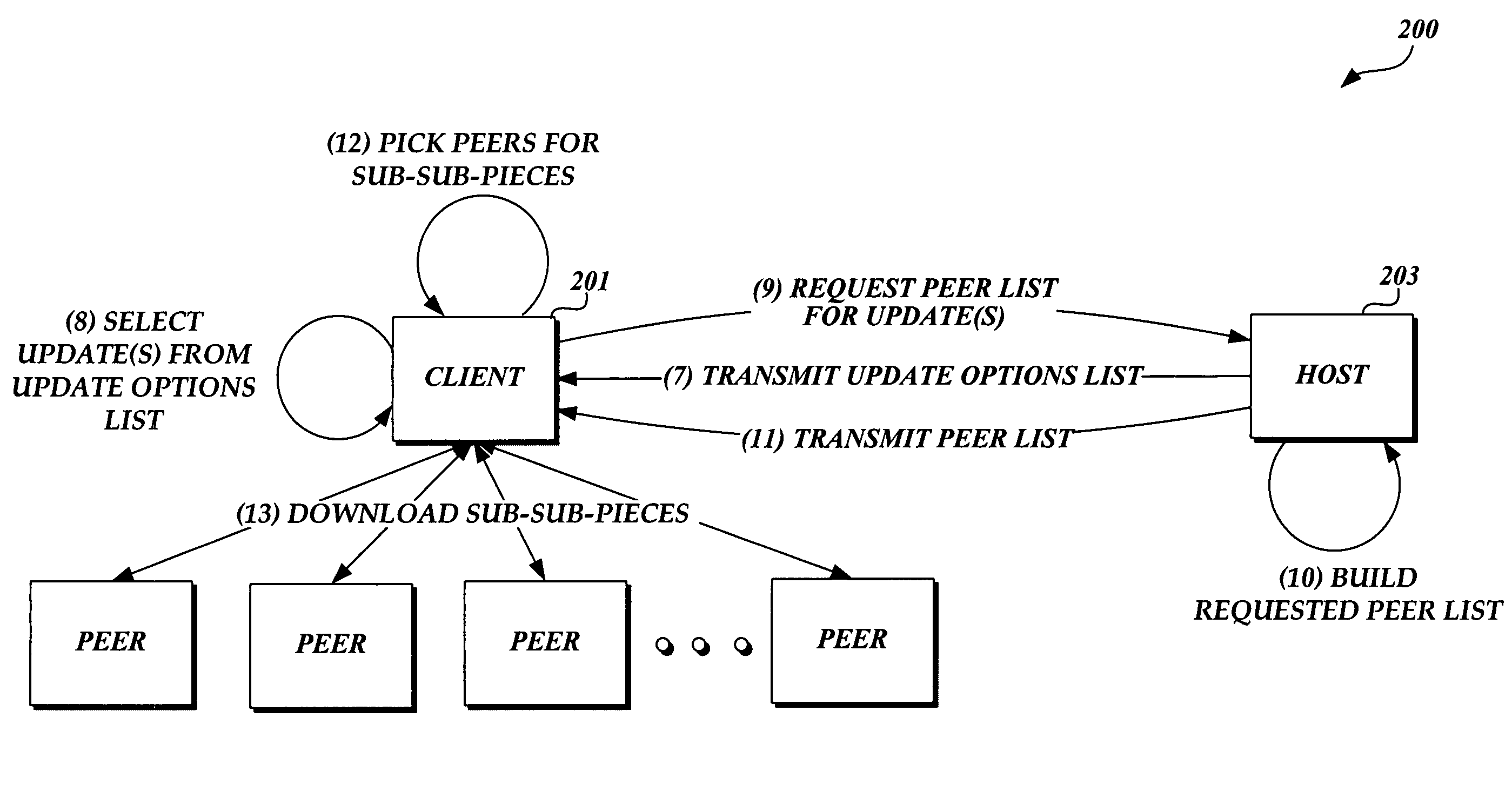

Active | US20060130037A1 | Minimizing download time | Reduce the amount required | Software maintenance/management | Multiple digital computer combinations | Anti virus | Software update

Embodiments of the present invention provide the ability for a software provider to distribute software updates to several different recipients utilizing a peer-to-peer environment. The invention described herein may be used to update any type of software, including, but not limited to, operating software, programming software, anti-virus software, database software, etc. The use of a peer-to-peer environment with added security provides the ability to minimize download time for each peer and also reduce the amount of egress bandwidth that must be provided by the software provider to enable recipients (peers) to obtain the update.

Owner:MICROSOFT TECH LICENSING LLC

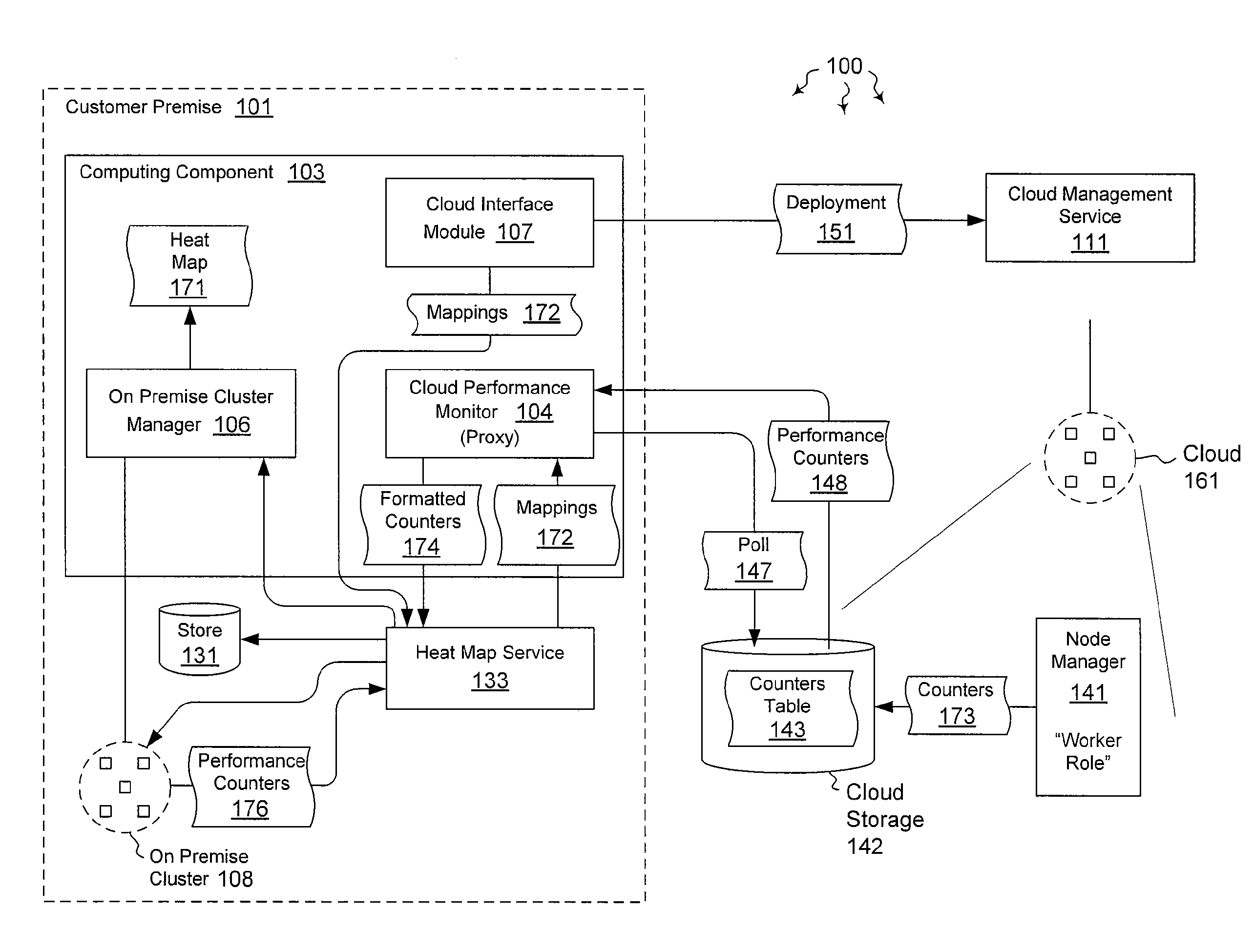

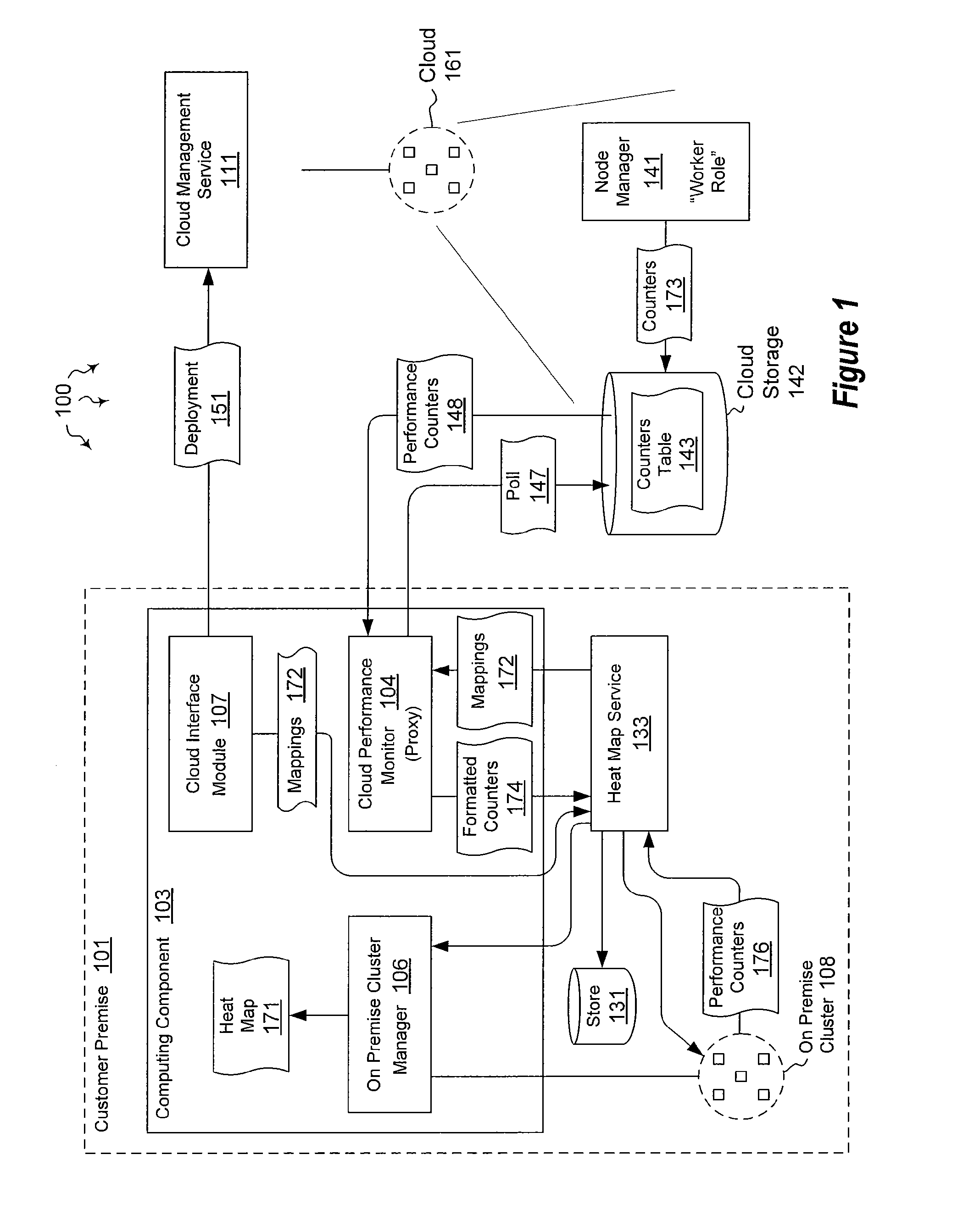

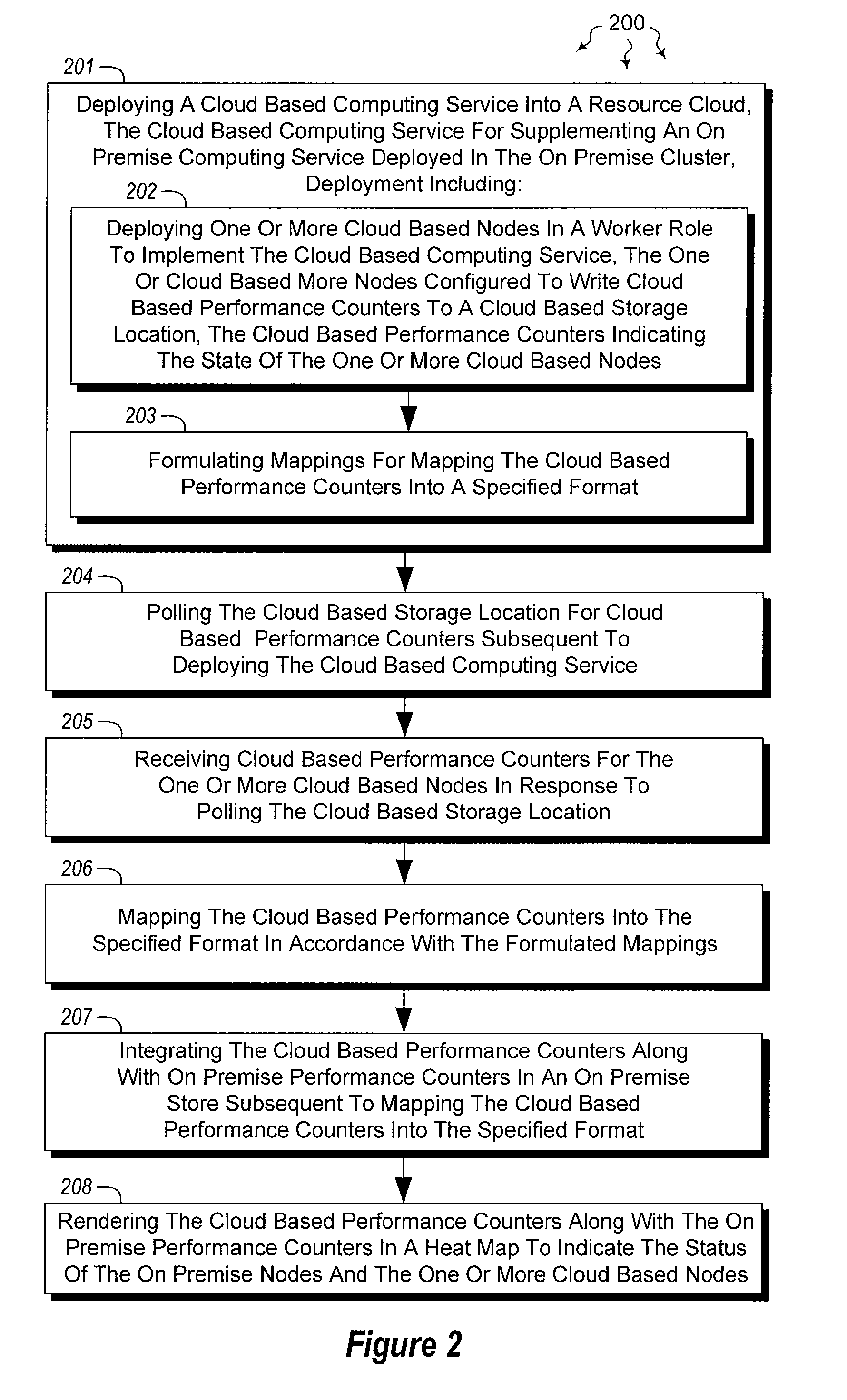

Integrating external and cluster heat map data

Active | US20120072578A1 | Well formed | Error detection/correction | Multiple digital computer combinations | Timestamp | Heat map

The present invention extends to methods, systems, and computer program products for integrating external and cluster heat map data. Embodiments of the invention include a proxy service that manages (e.g., asynchronous) communication with cloud nodes. The proxy service simulates packets to on-premise services to simplify the integration with an existing heat map infrastructure. The proxy maintains a cache of performance counter mappings and timestamps on the on-premise head node to minimize the impact of latency into heat map infrastructure. In addition, data transfer is minimized by mapping a fixed set of resource based performance counters into a variable set of performance counters compatible with the on premise heat map infrastructure.

Owner:MICROSOFT TECH LICENSING LLC

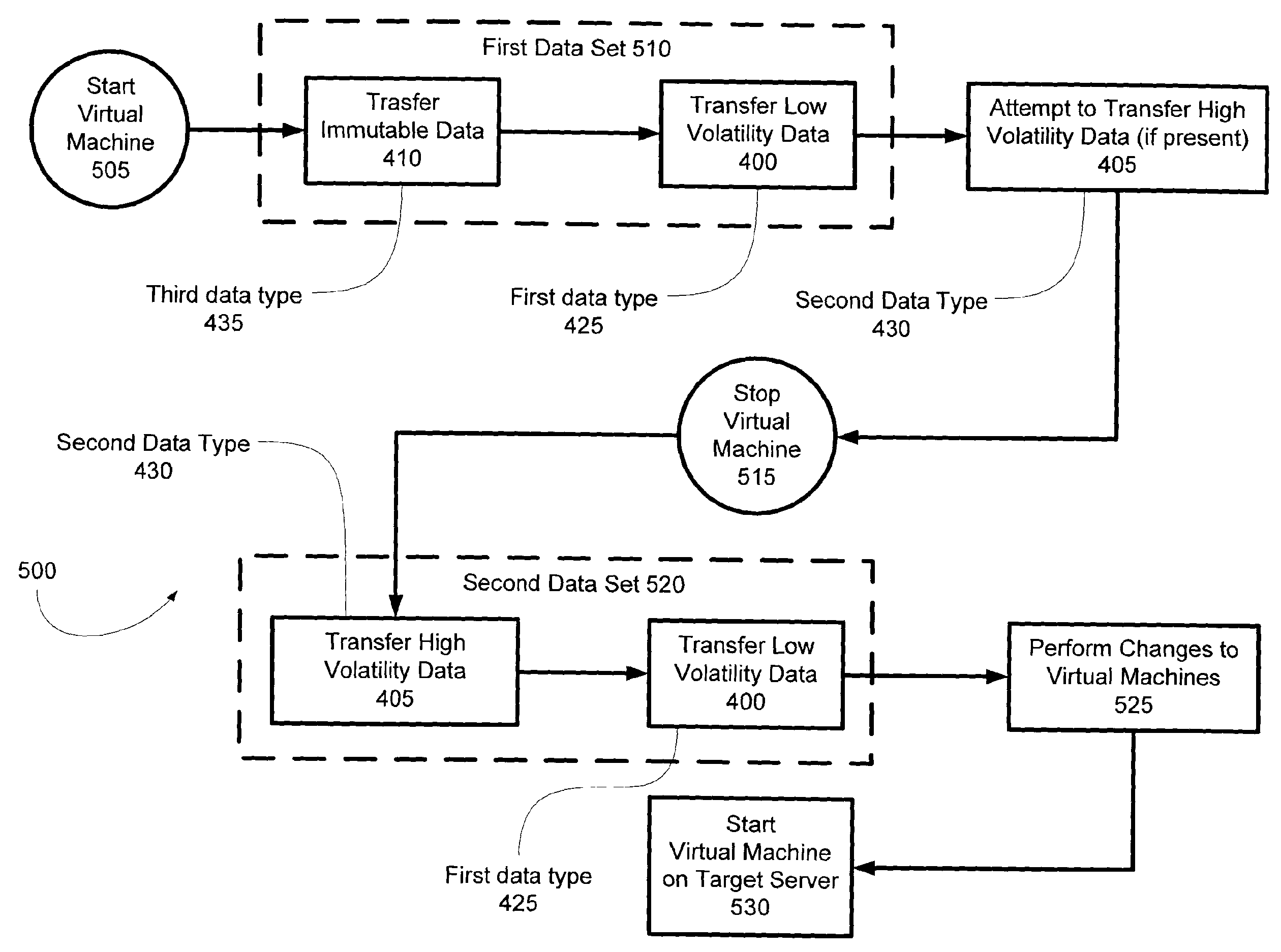

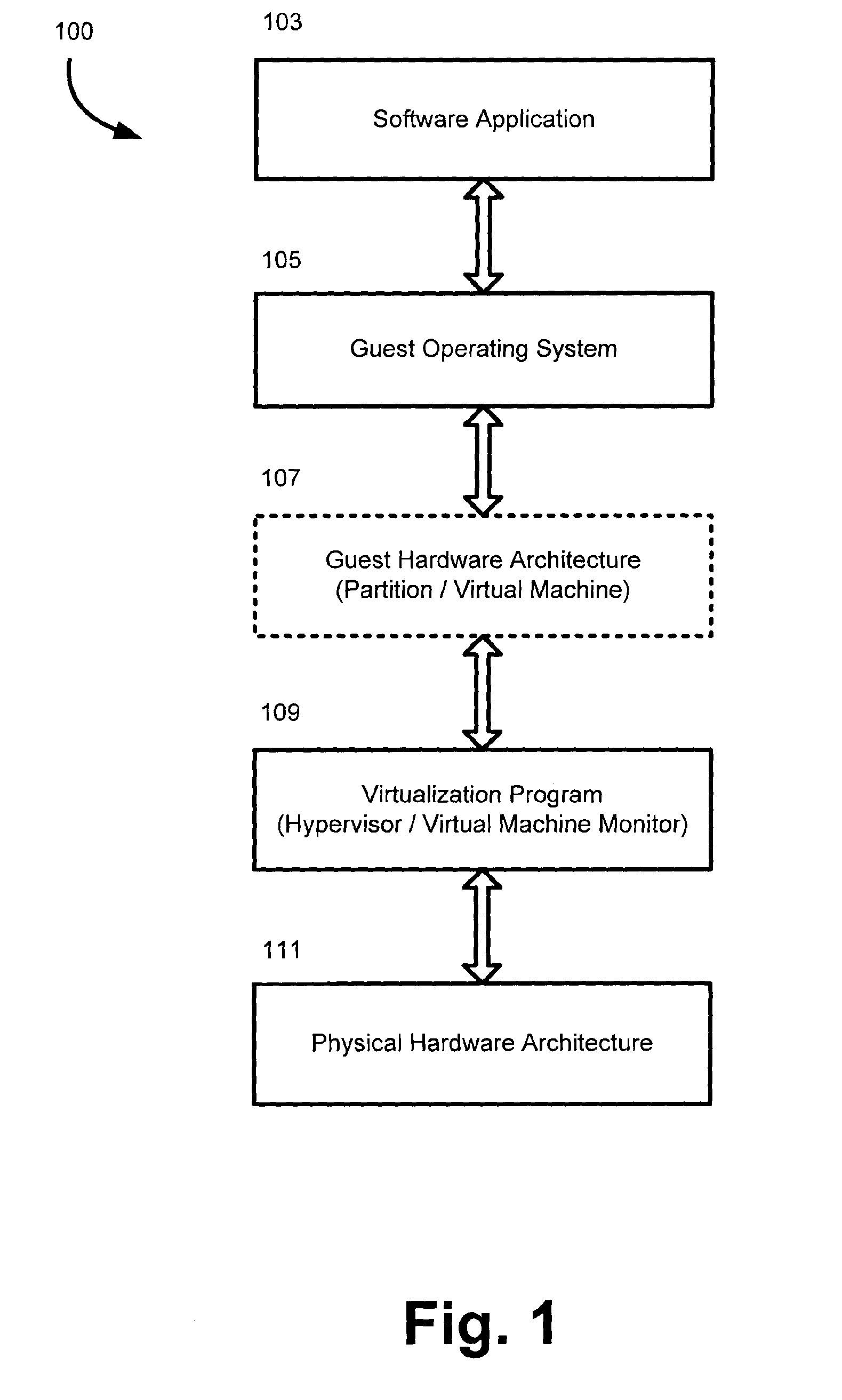

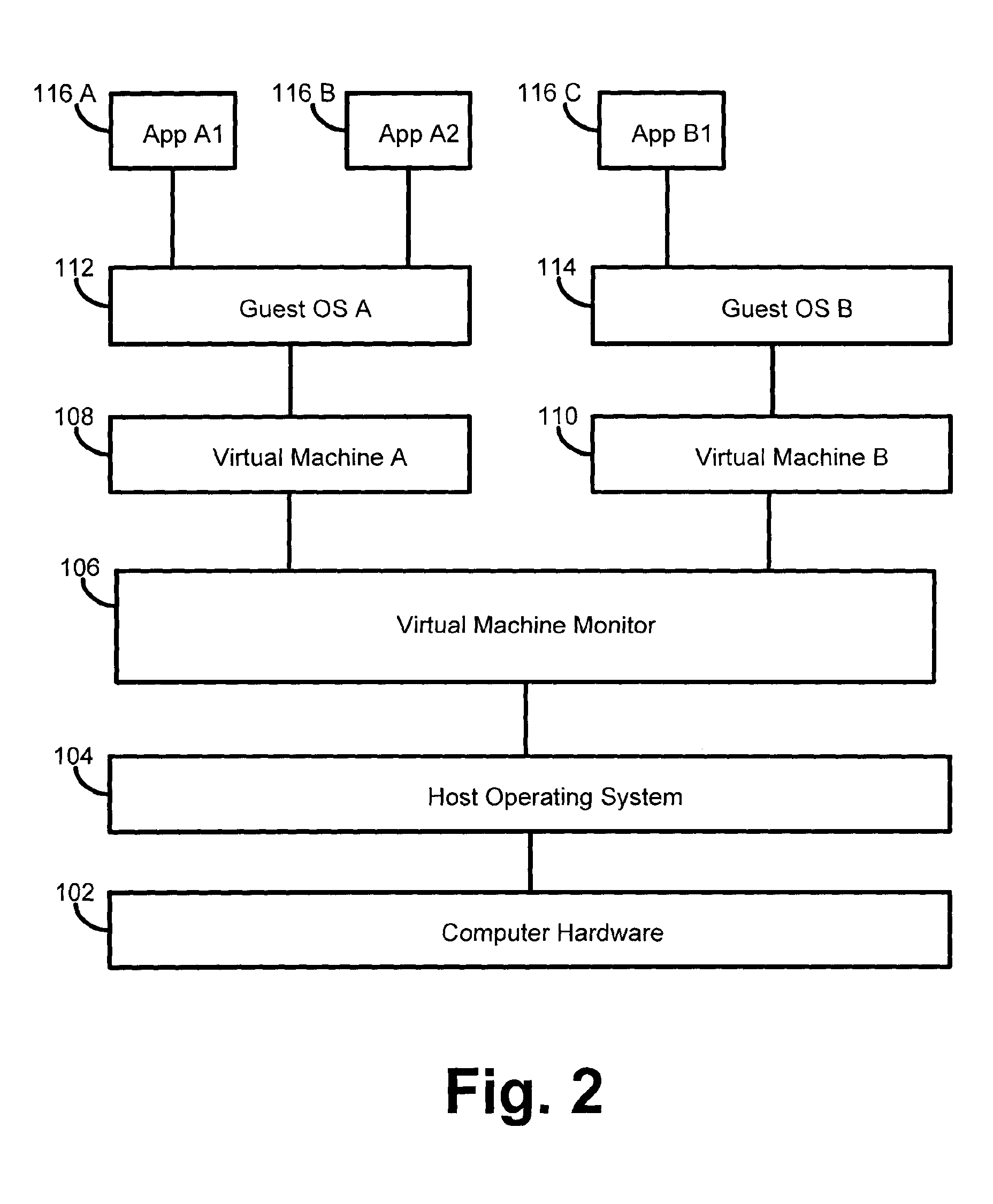

Virtual Machine Smart Migration

Active | US20090007106A1 | Reduce downtime | Maximizing consistent state | Interprogram communication | Software simulation/interpretation/emulation | Random access memory | Data storing

Migration mechanisms are disclosed herein that smartly transfer data among virtual machines, minimizing the down time of migration of such machines but maximizing the consistent state of data stored thereon. Specifically, data can be classified into three types: low volatility data (such as hard disk data), high volatility data (such as random access memory data), and immutable data (such as read only data). This data can be migrated from a source virtual machine to a target virtual machine by sending the immutable data along with the low volatility data first, before the source virtual machine has stopped itself for the migration process. Then, after the source virtual machine has stopped, high volatility data and (again) low volatility data can be sent from the source to the target. In this latter case, only differences between the low volatility data may be sent (or alternatively, new low volatility data may be sent).

Owner:MICROSOFT TECH LICENSING LLC
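
The two-phase ordering in the abstract above can be illustrated with a small sketch. It rests on assumptions that are not in the source: the virtual machine state is modelled as three plain dictionaries with distinct keys, and `pre_copy` and `final_copy` are hypothetical names. Deleted keys are not handled.

```python
def pre_copy(source, target):
    """Phase 1, source still running: send immutable and low-volatility data."""
    target.update(source["immutable"])
    target.update(source["low_volatility"])
    # Remember what was sent so that phase 2 can send only the differences.
    return dict(source["low_volatility"])


def final_copy(source, target, sent_snapshot):
    """Phase 2, source stopped: send high-volatility data and low-volatility changes."""
    target.update(source["high_volatility"])
    delta = {
        key: value
        for key, value in source["low_volatility"].items()
        if key not in sent_snapshot or sent_snapshot[key] != value
    }
    target.update(delta)
    return delta


source_vm = {
    "immutable": {"rom": "v1"},
    "low_volatility": {"disk0": "a", "disk1": "b"},
    "high_volatility": {"ram": "x"},
}
target_vm = {}

snapshot = pre_copy(source_vm, target_vm)
# The source keeps running for a while and changes some of its state.
source_vm["low_volatility"]["disk1"] = "b2"
source_vm["high_volatility"]["ram"] = "y"
# The source is now stopped; only the remaining data has to cross the wire.
delta = final_copy(source_vm, target_vm, snapshot)
```

Downtime in this model is proportional to the size of the high-volatility data plus `delta`, not to the full disk contents.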

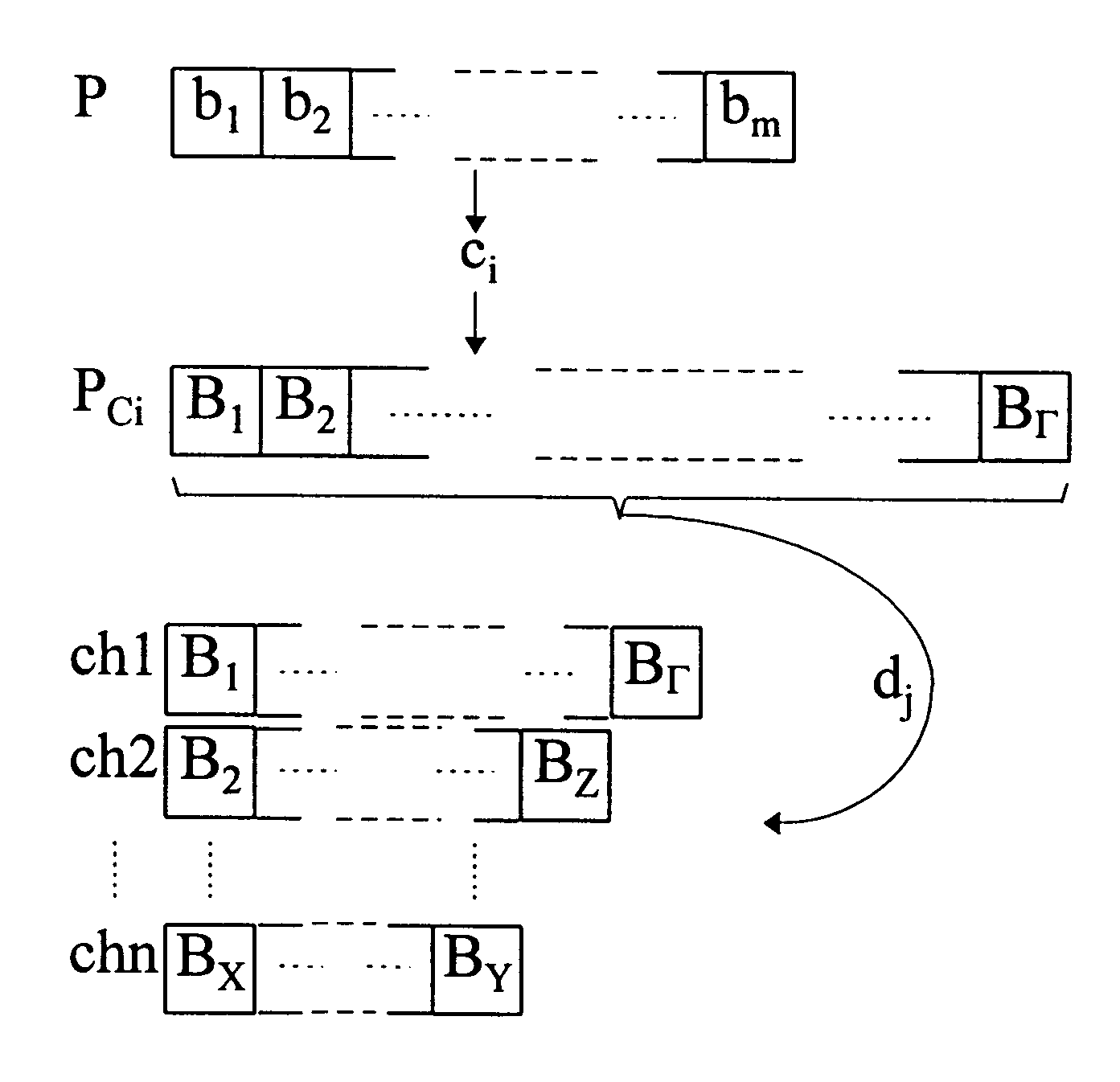

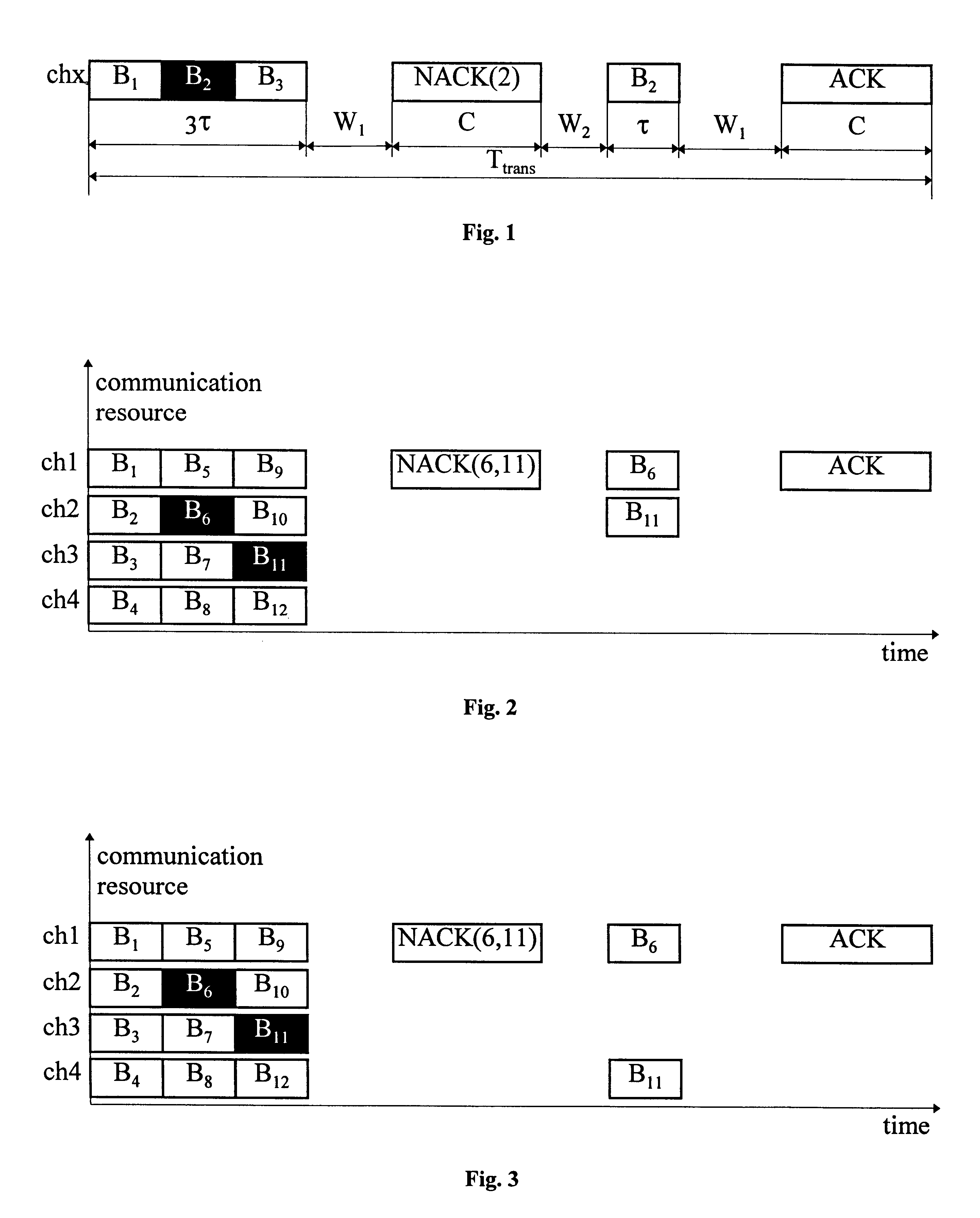

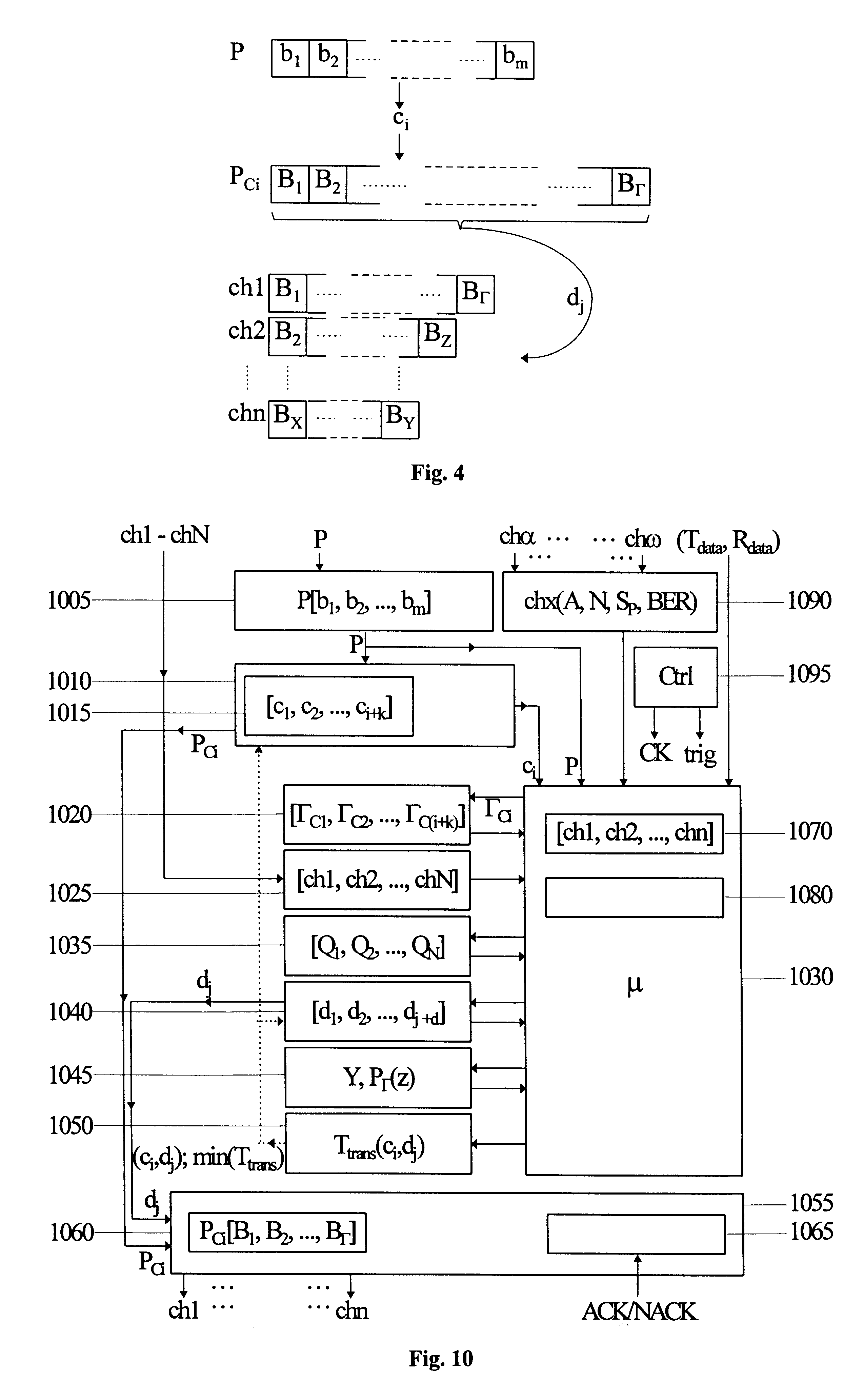

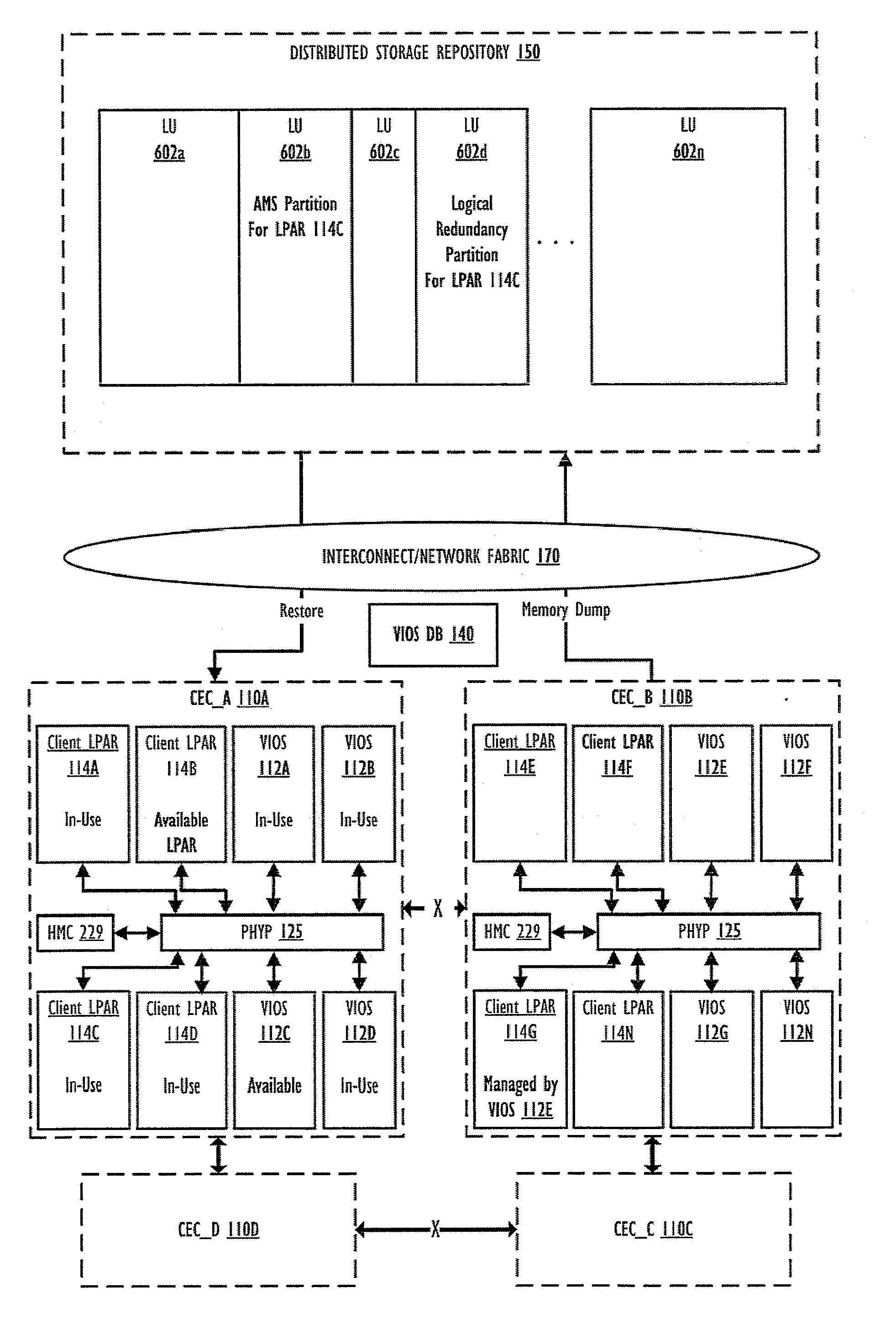

Digital telecommunication system with selected combination of coding schemes and designated resources for packet transmission based on estimated transmission time

Inactive | US6363425B1 | Quantity minimization | Air interface efficiently | Error prevention/detection by using return channel | Code conversion | Forward error correction | Majorization minimization

The present invention relates to a method and an arrangement for communicating packet information in a digital telecommunications system. The invention selects a set of designated communication resources (ch1-chn) from an available amount of resources. Every packet (P) is forward error correction encoded into an encoded packet (Pci), via one of at least two different coding schemes (ci), prior to being transmitted to a receiving party over the designated communication resources (ch1-chn). An estimated transmission time is calculated for all combinations of coding scheme (ci) and relevant distribution (dj) of the encoded data blocks (B1-BΓ) in the encoded packet (Pci) over the set of designated communication resources (ch1-chn), and the combination (ci,dj) which minimises the estimated transmission time is selected.

Owner:TELEFON AB LM ERICSSON (PUBL)
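
The selection step in the abstract above, choosing the combination (ci, dj) with the smallest estimated transmission time, can be sketched as an exhaustive search. The time model here is an assumption for illustration only: each hypothetical scheme has a code rate and an `expected_attempts` factor standing in for channel quality, channels transmit in parallel, and each channel has a fixed per-block time. None of these parameters come from the patent.

```python
from math import ceil


def distributions(n_blocks, n_channels):
    """Yield every way to split n_blocks across n_channels as a tuple of counts."""
    if n_channels == 1:
        yield (n_blocks,)
        return
    for first in range(n_blocks + 1):
        for rest in distributions(n_blocks - first, n_channels - 1):
            yield (first,) + rest


def estimated_time(dist, block_times, expected_attempts):
    """Channels run in parallel, so the slowest channel determines the time."""
    slowest = max(count * t for count, t in zip(dist, block_times))
    return expected_attempts * slowest


def select_combination(payload_blocks, schemes, block_times):
    """Return (time, scheme_name, distribution) with the smallest estimated time."""
    best = None
    for name, (rate, expected_attempts) in schemes.items():
        encoded_blocks = ceil(payload_blocks / rate)  # lower rate, more blocks
        for dist in distributions(encoded_blocks, len(block_times)):
            t = estimated_time(dist, block_times, expected_attempts)
            if best is None or t < best[0]:
                best = (t, name, dist)
    return best


# Hypothetical inputs: c1 is a strong code (rate 1/2) that rarely needs resending,
# c2 is uncoded (rate 1) but is assumed to need three attempts on average.
schemes = {"c1": (0.5, 1.0), "c2": (1.0, 3.0)}
block_times = [1.0, 2.0]  # seconds per block on each of two channels
best = select_combination(4, schemes, block_times)
```

Exhaustive enumeration grows quickly with the number of blocks and channels, so it is only practical for small cases like this one.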

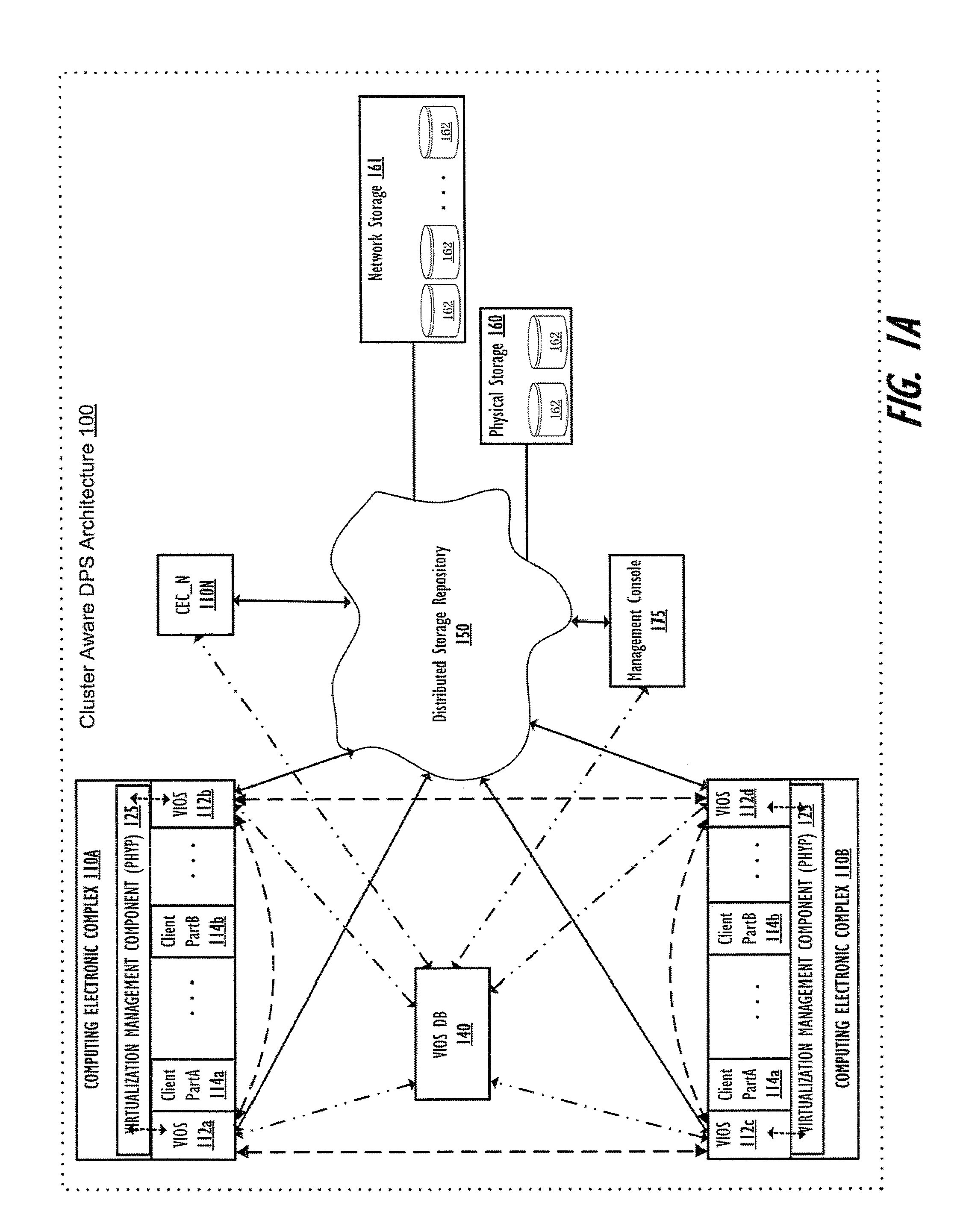

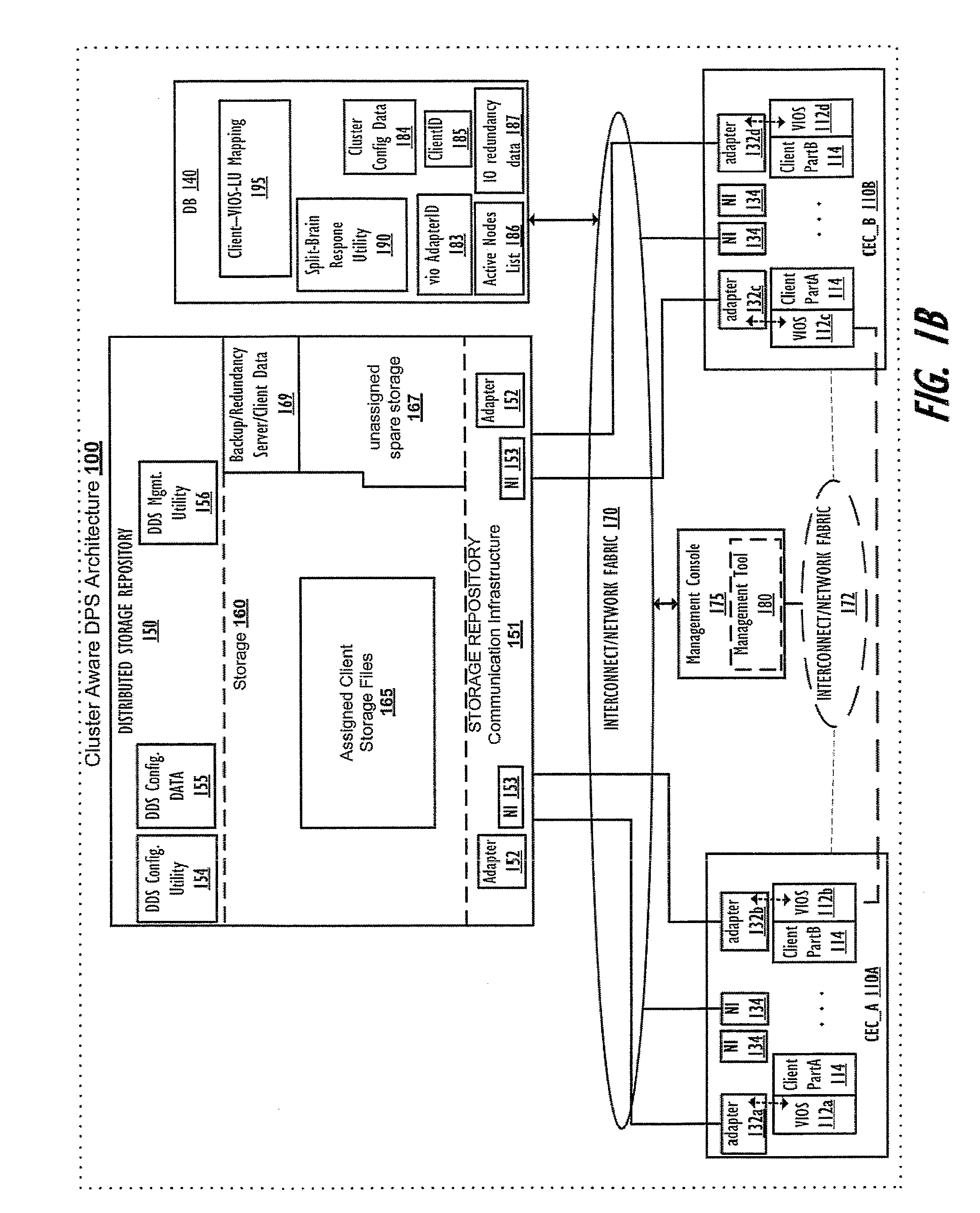

Supporting autonomous live partition mobility during a cluster split-brained condition

Inactive | US20120179771A1 | Avoiding and minimizing any downtime | Error prevention | Transmission systems | Data processing system | Downtime

A method, data processing system, and computer program product autonomously migrate clients serviced by a first VIOS to other VIOSes in the event of a VIOS cluster “split-brain” scenario generating a primary sub-cluster and a secondary sub-cluster, where the first VIOS is in the secondary sub-cluster. The VIOSes in the cluster continually exchange keep-alive information to provide each VIOS with an up-to-date status of other VIOSes within the cluster and to notify the VIOSes when one or more nodes lose connection to or are no longer communicating with other nodes within the cluster, as occurs with a cluster split-brain event / condition. When this event is detected, a first sub-cluster assumes a primary sub-cluster role and one or more clients served by one or more VIOSes within the secondary sub-cluster are autonomously migrated to other VIOSes in the primary sub-cluster, thus minimizing downtime for clients previously served by the unavailable / uncommunicative VIOSes.

Owner:IBM CORP

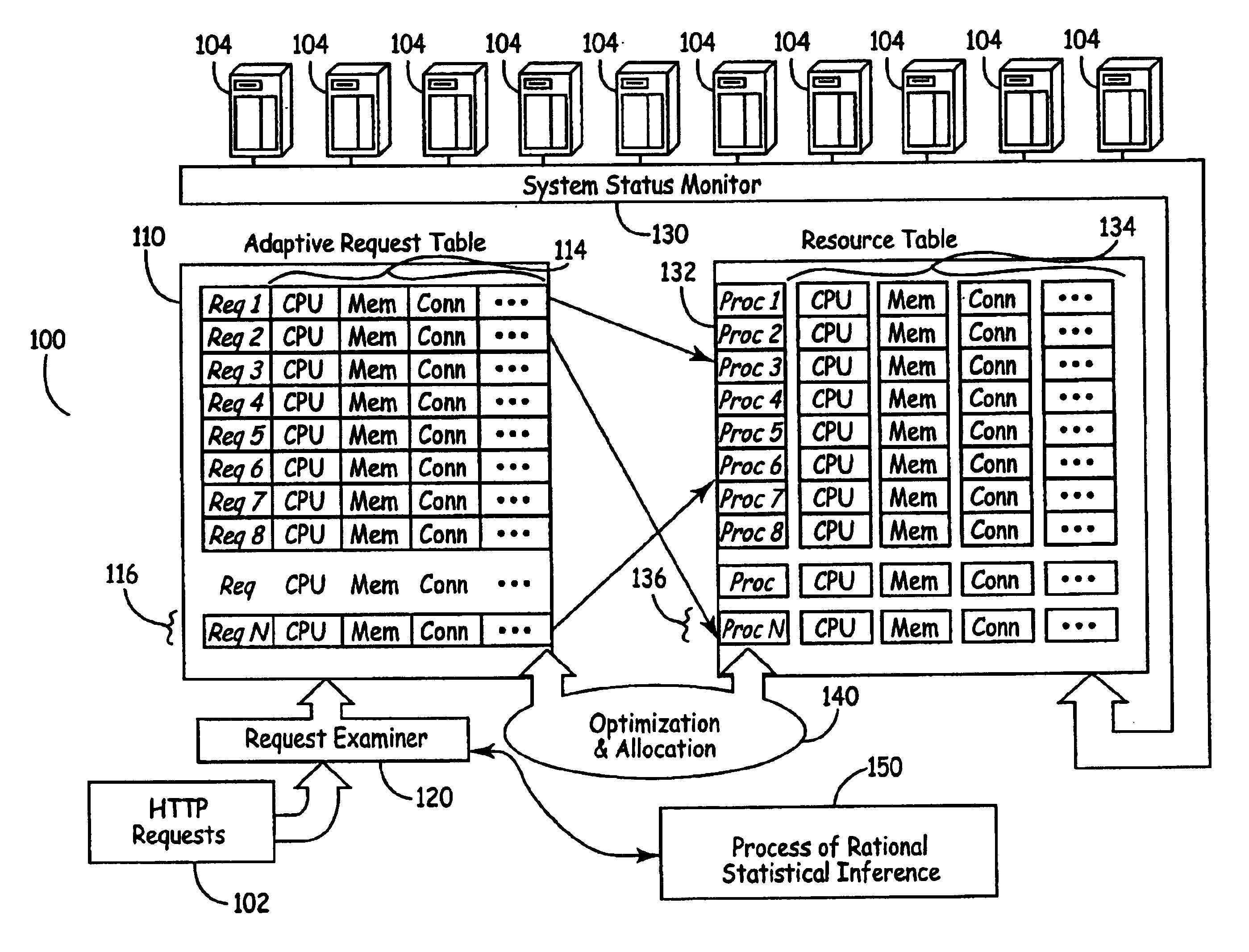

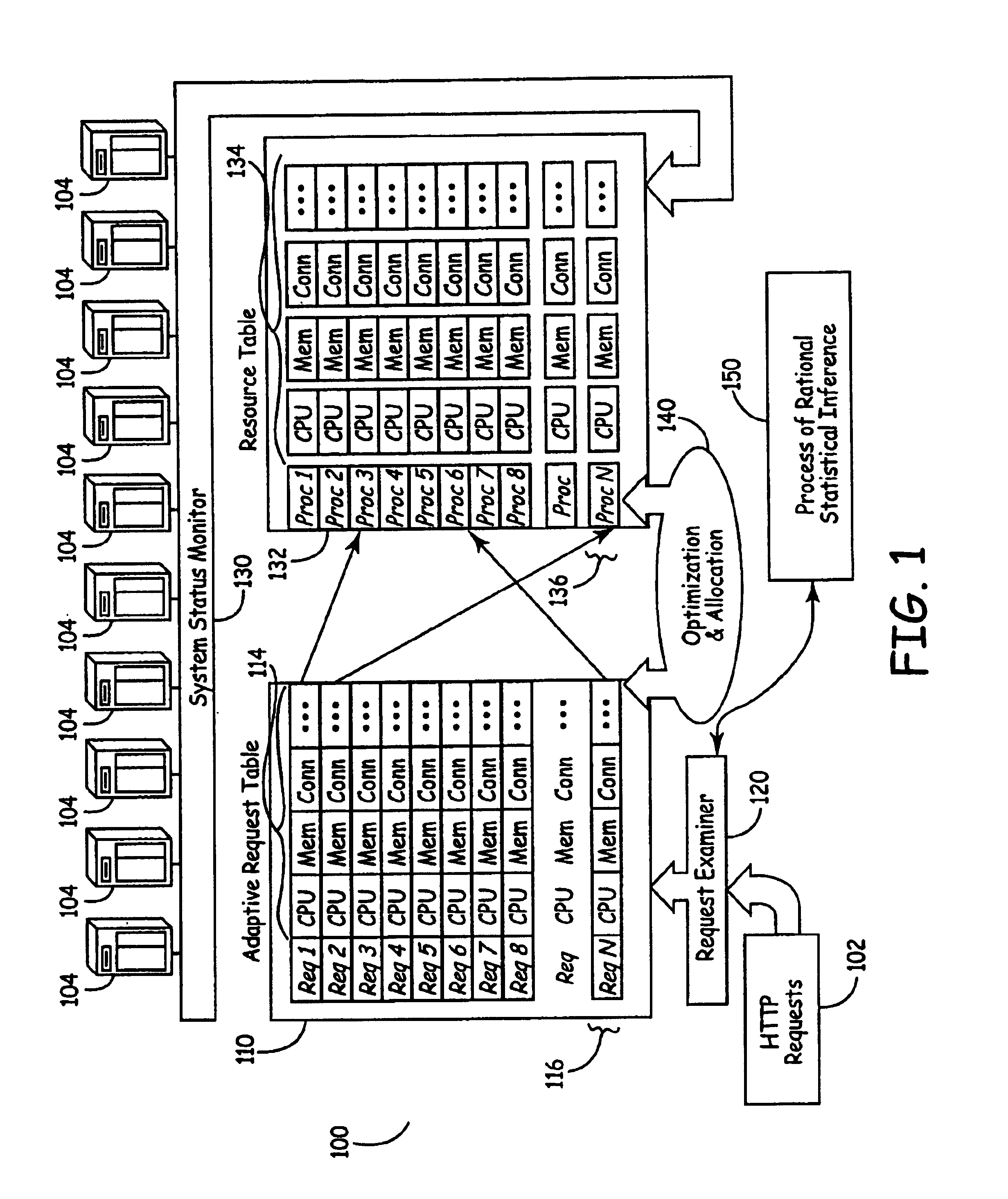

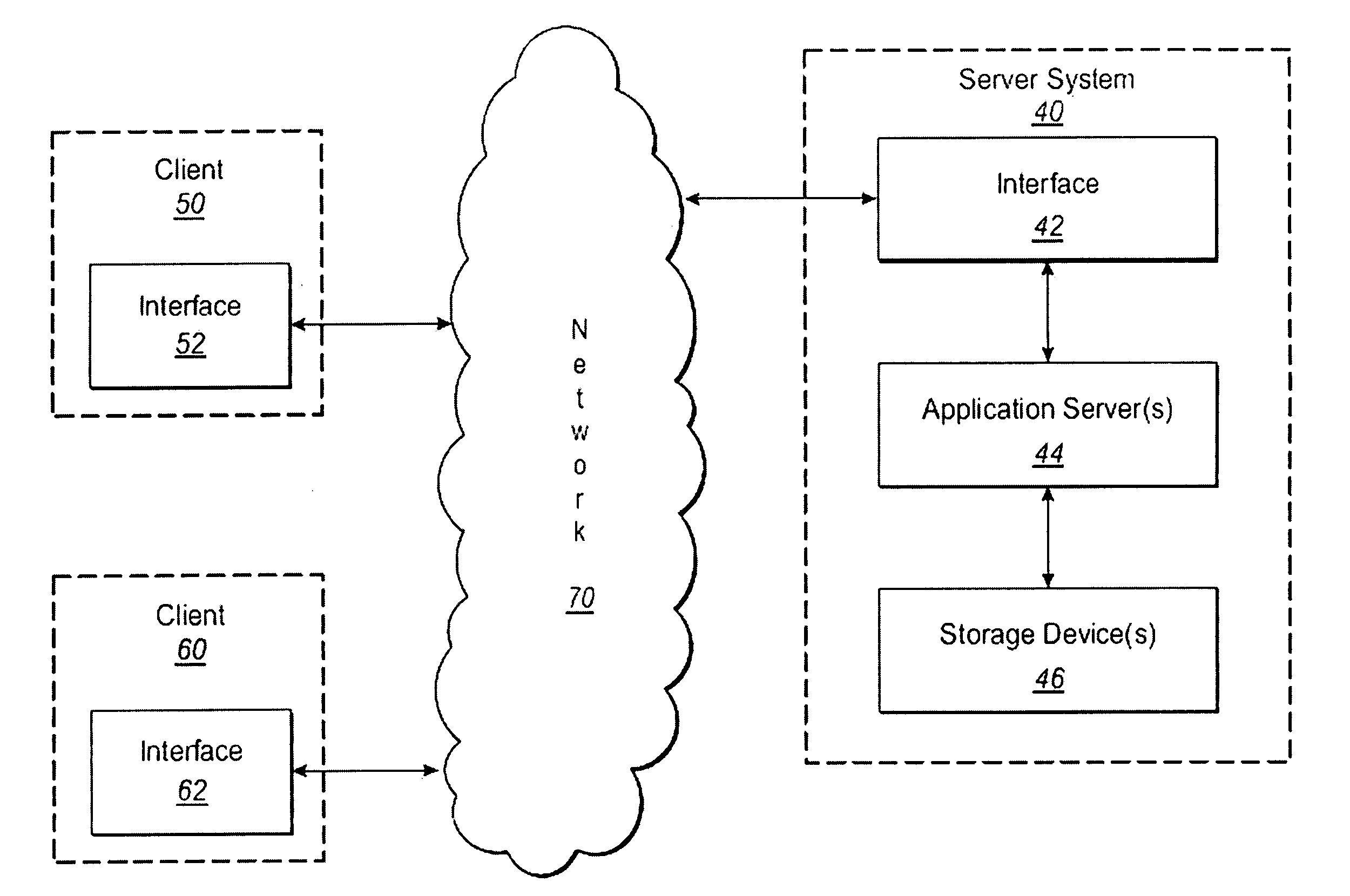

System for balance distribution of requests across multiple servers using dynamic metrics

Inactive | US6938256B2 | Improve performance | Maximizing number | Resource allocation | Hardware monitoring | Dynamic metrics | Relational database

A system for distributing incoming client requests across multiple servers in a networked client-server computer environment processes all requests that occur within a given time interval as a set, and collects information on both the attributes of the requests and the resource capability of the servers to dynamically allocate the requests in a set to the appropriate servers upon the completion of the time interval. Preferably, the system includes a request table to collect at least two requests incoming within a predetermined time interval. A request examiner routine analyzes each collected request with respect to at least one attribute. A system status monitor collects resource capability information of each server in a resource table. An optimization and allocation process distributes collected requests in the request table across the multiple servers upon completion of said time interval based on an optimization of potential pairings of the requests in the request table with the servers in the resource table. The optimization and allocation process preferably analyzes metrics maintained in the request table and resource table as part of a relational database to allocate requests to servers based on a minimization of the metric distance between pairings of requests and servers. Preferably, the request table is part of a dynamic, relational database, and a process of statistical inference for ascertaining expected demand patterns involving said attributes adds predictive information about client requests as part of the request examiner routine.

Owner:RPX CORP +1
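
A toy version of the allocation step described above, pairing a batch of requests with servers so that the total metric distance is minimized, might look like the following. The two-component attribute vectors, the Euclidean metric, the one-request-per-server restriction, and the `allocate` function name are all assumptions made for this sketch. The brute-force search stands in for whatever optimization the patent actually uses.

```python
from itertools import permutations
from math import dist


def allocate(requests, servers):
    """Return (total_distance, {request_id: server_id}) with the smallest total.

    requests / servers: {id: (cpu, memory)} attribute vectors (assumed format).
    Each request gets its own server, so len(requests) <= len(servers).
    """
    request_ids = list(requests)
    best_cost, best_pairing = float("inf"), {}
    for chosen in permutations(servers, len(request_ids)):
        cost = sum(
            dist(requests[r], servers[s]) for r, s in zip(request_ids, chosen)
        )
        if cost < best_cost:
            best_cost, best_pairing = cost, dict(zip(request_ids, chosen))
    return best_cost, best_pairing


# Requests collected during one time interval, and current server capabilities.
request_table = {"r1": (1, 1), "r2": (4, 4)}
resource_table = {"s1": (4, 5), "s2": (1, 2)}
total, pairing = allocate(request_table, resource_table)
```

Trying every permutation is factorial in the batch size. For larger batches an assignment algorithm such as the Hungarian method would be the usual replacement.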

Method of creating core-tile-switch mapping architecture in on-chip bus and computer-readable medium for recording the method

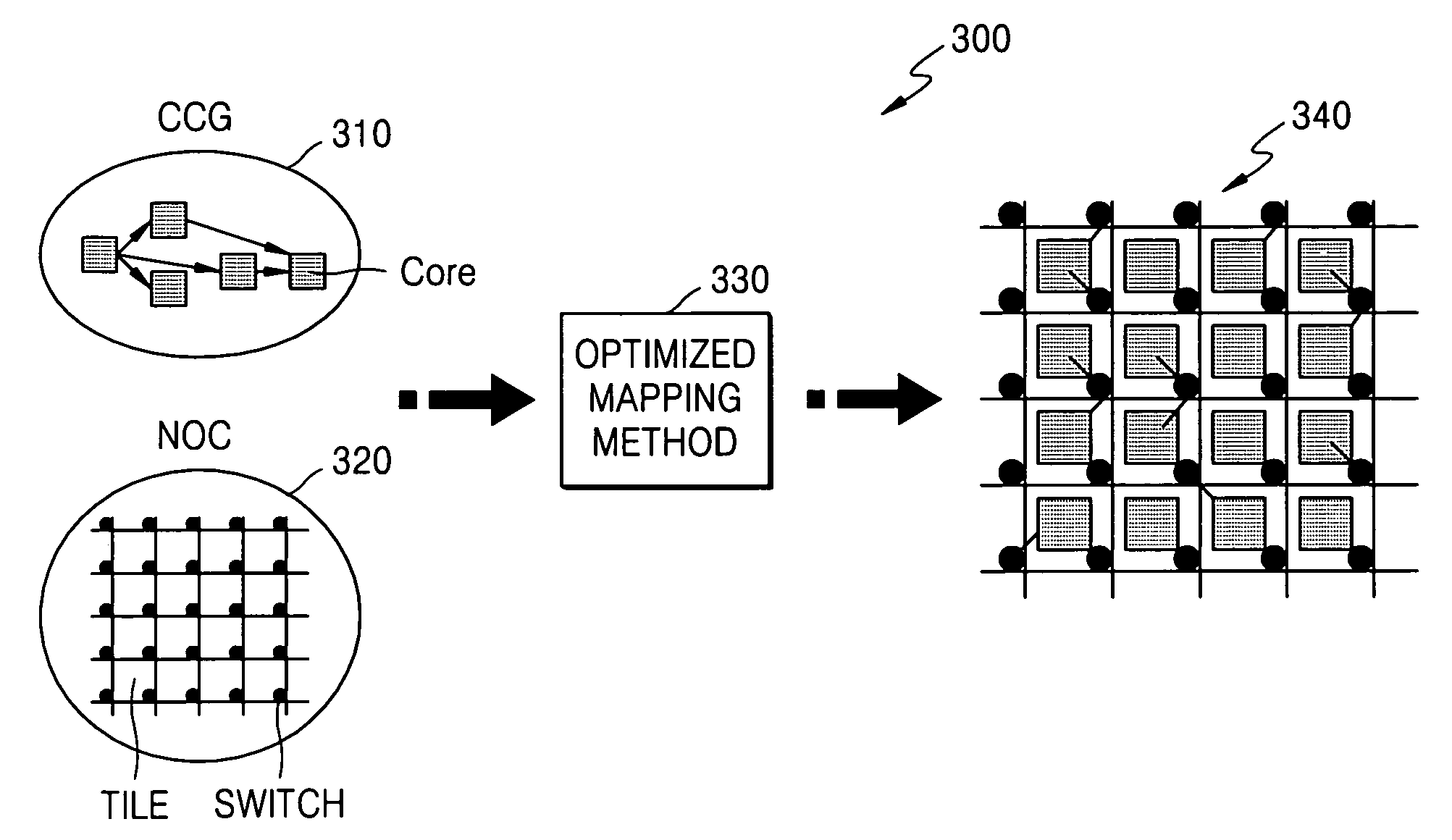

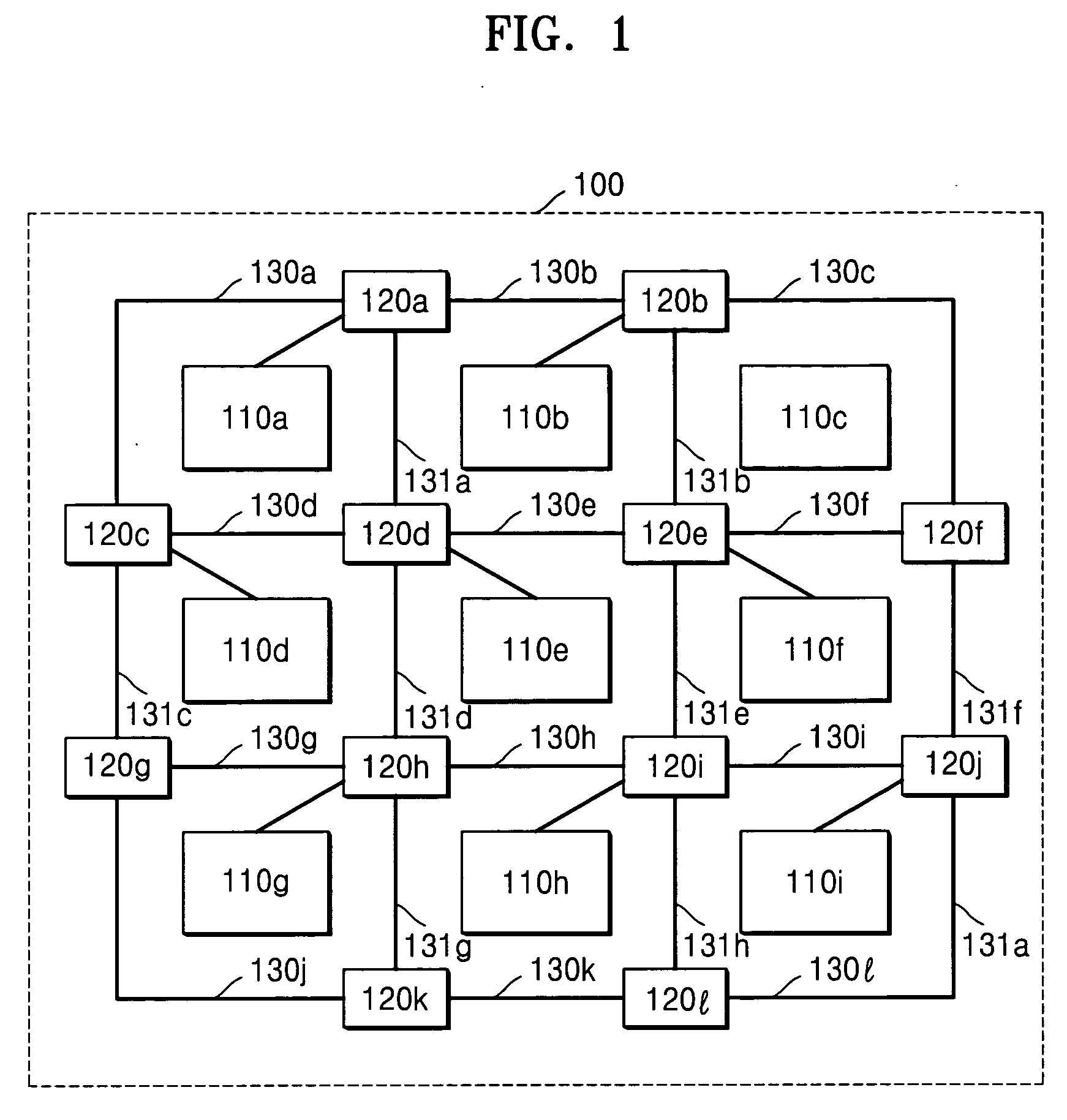

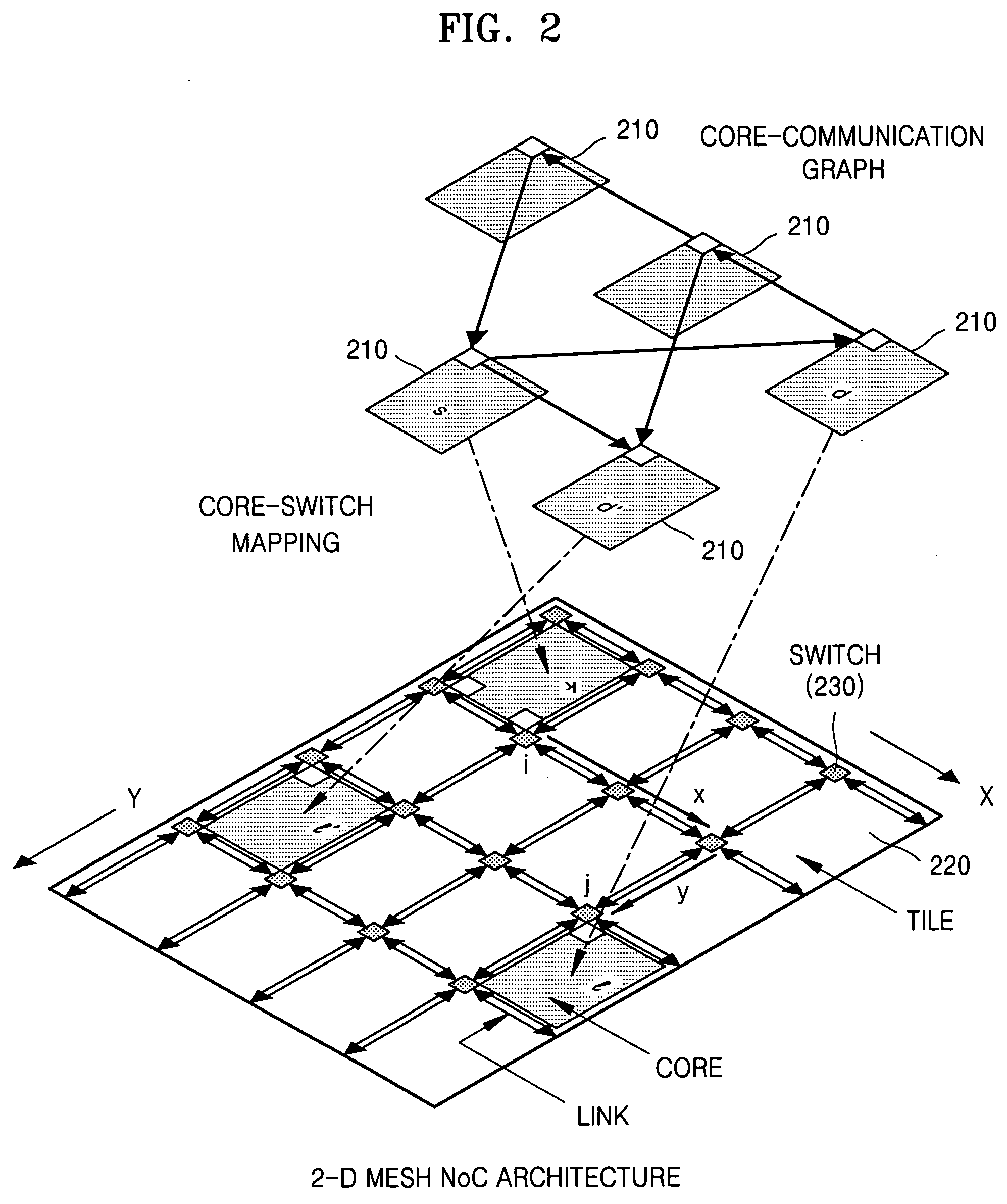

There are provided a method of creating an optimized core-tile-switch mapping architecture in an on-chip bus and a computer-readable recording medium for recording the method. The core-tile-switch mapping architecture creating method includes: creating a core communication graph representing the connection relationship between arbitrary cores; creating a Network-on-chip (NOC) architecture including a plurality of switches, a plurality of tiles, and a plurality of links interconnecting the plurality of switches; and mapping the cores to the tiles using a predetermined optimized mapping method to thereby create the optimized core-tile-switch mapping architecture. The optimized mapping method includes first, second, and third calculating steps. According to the optimized core-tile-switch mapping architecture creating method and the computer-readable recording medium for recording the method, since the hop distance between cores is minimized, it is possible to minimize energy consumption and communication delay time in an on-chip bus. Furthermore, the optimized mapping architecture presents a standard for comparing the optimization of other mapping architectures.

Owner:SAMSUNG ELECTRONICS CO LTD

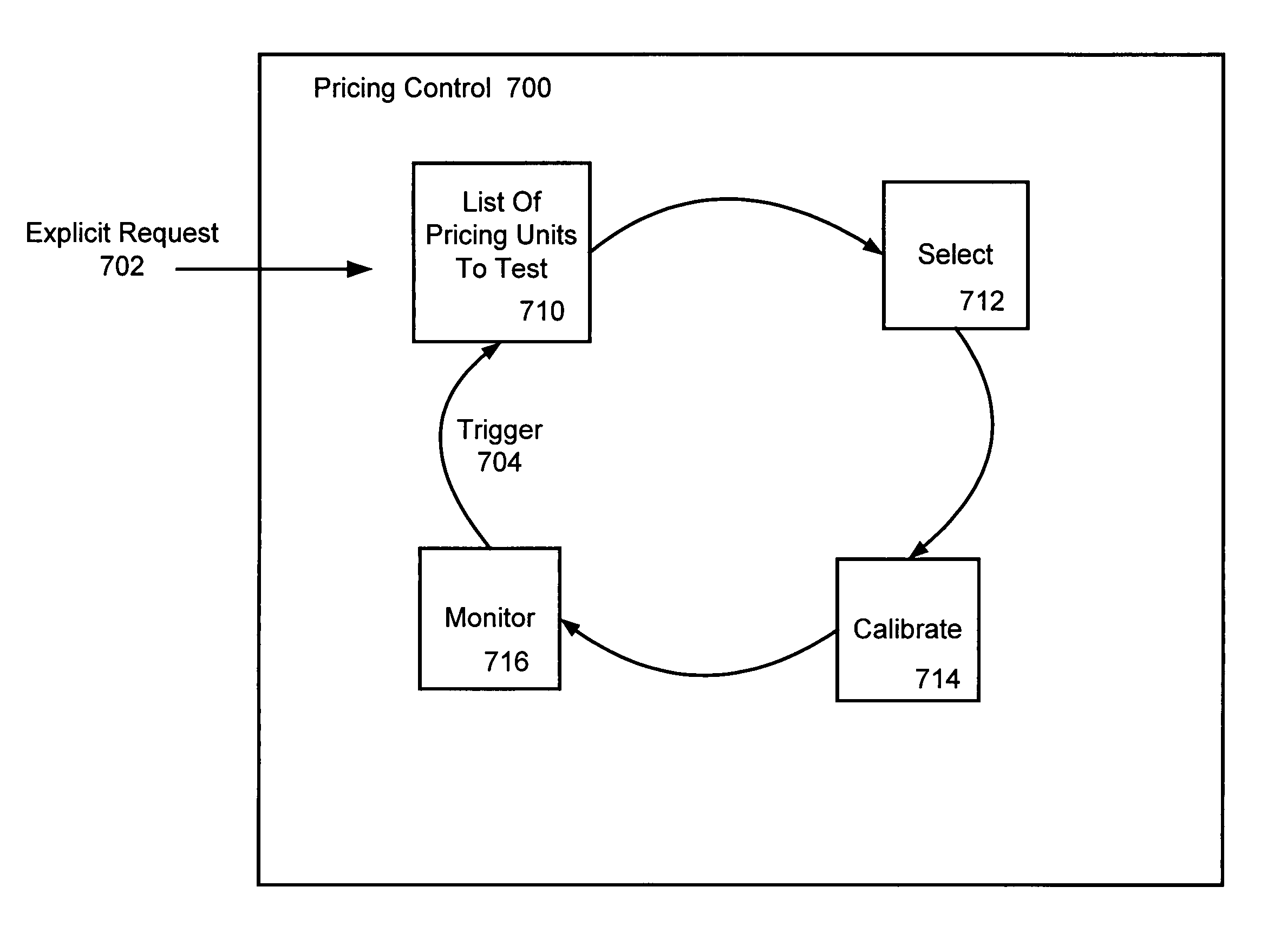

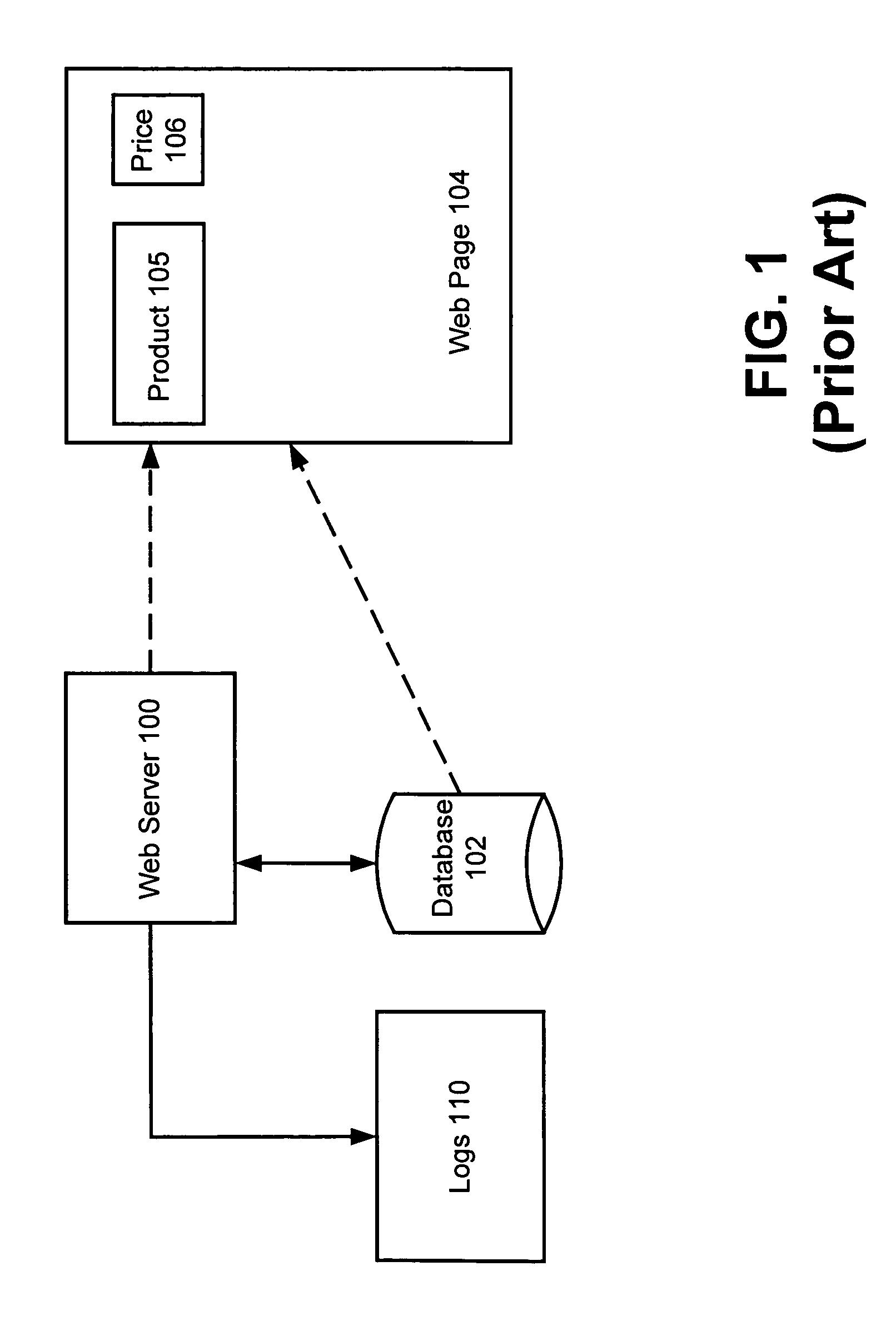

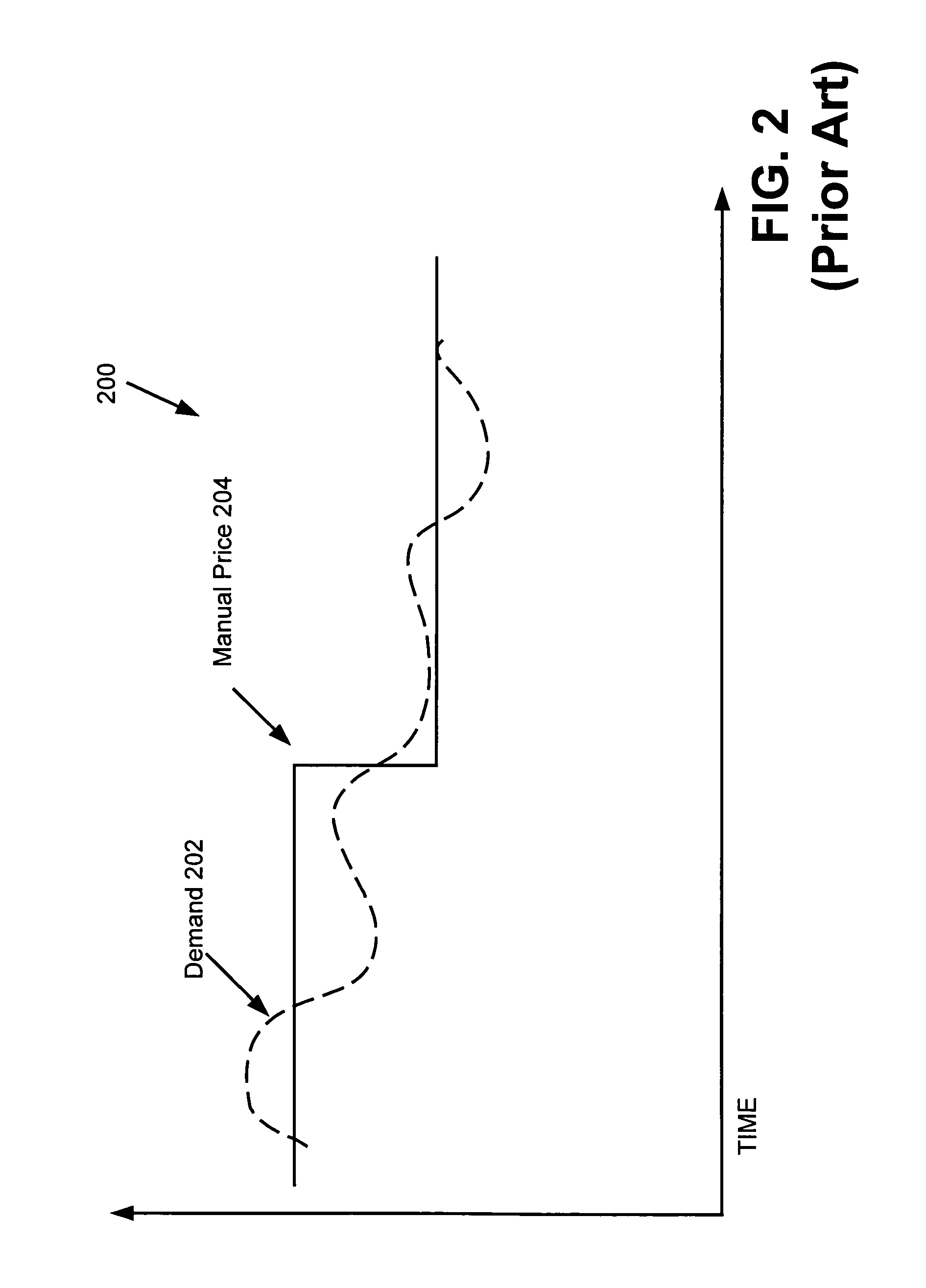

Method and apparatus for automatic pricing in electronic commerce

Inactive | US7970713B1 | Increased cost of testing | Reduce testing costs | Market predictions | Buying/selling/leasing transactions | E-commerce | Majorization minimization

Owner:OIP TECH

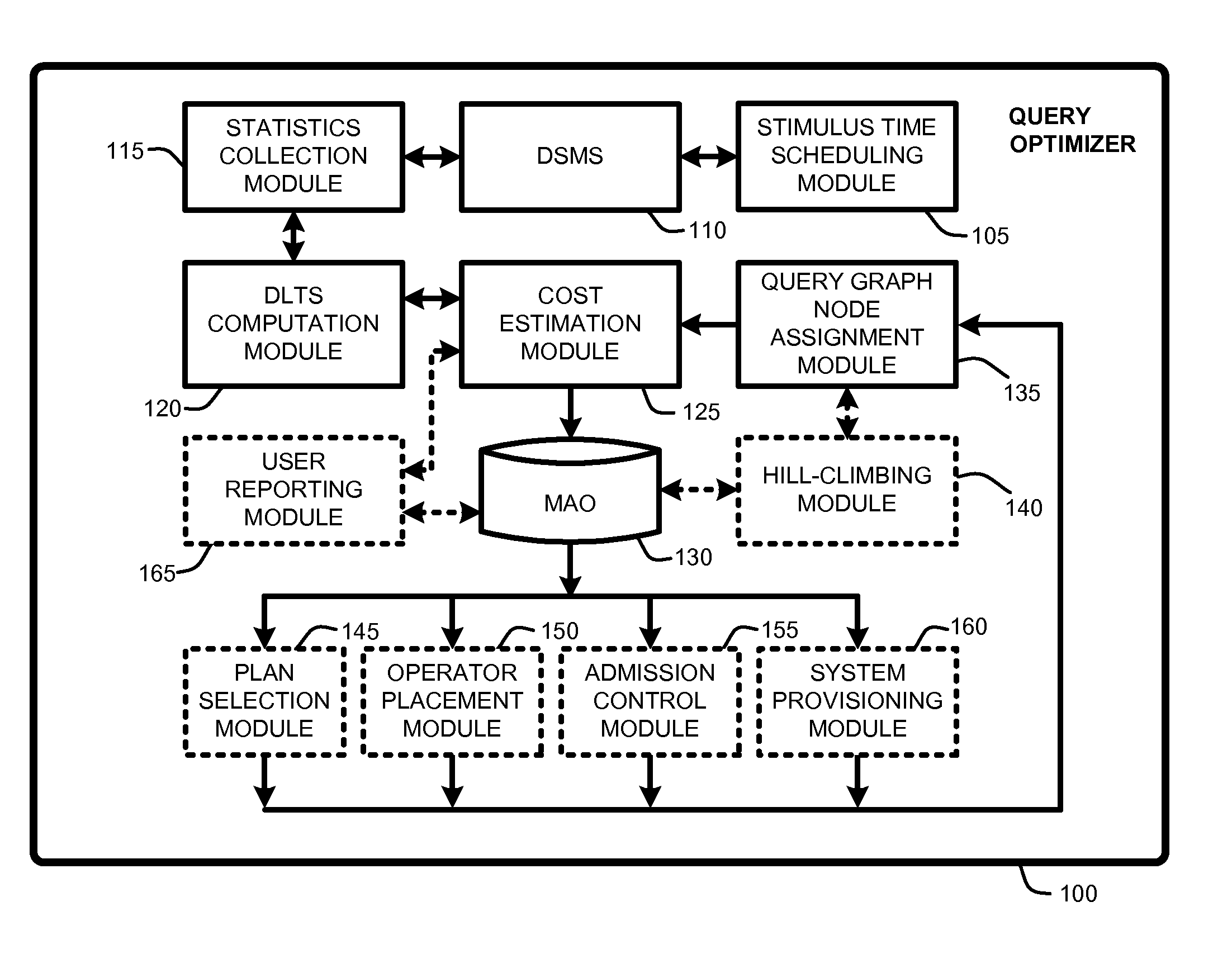

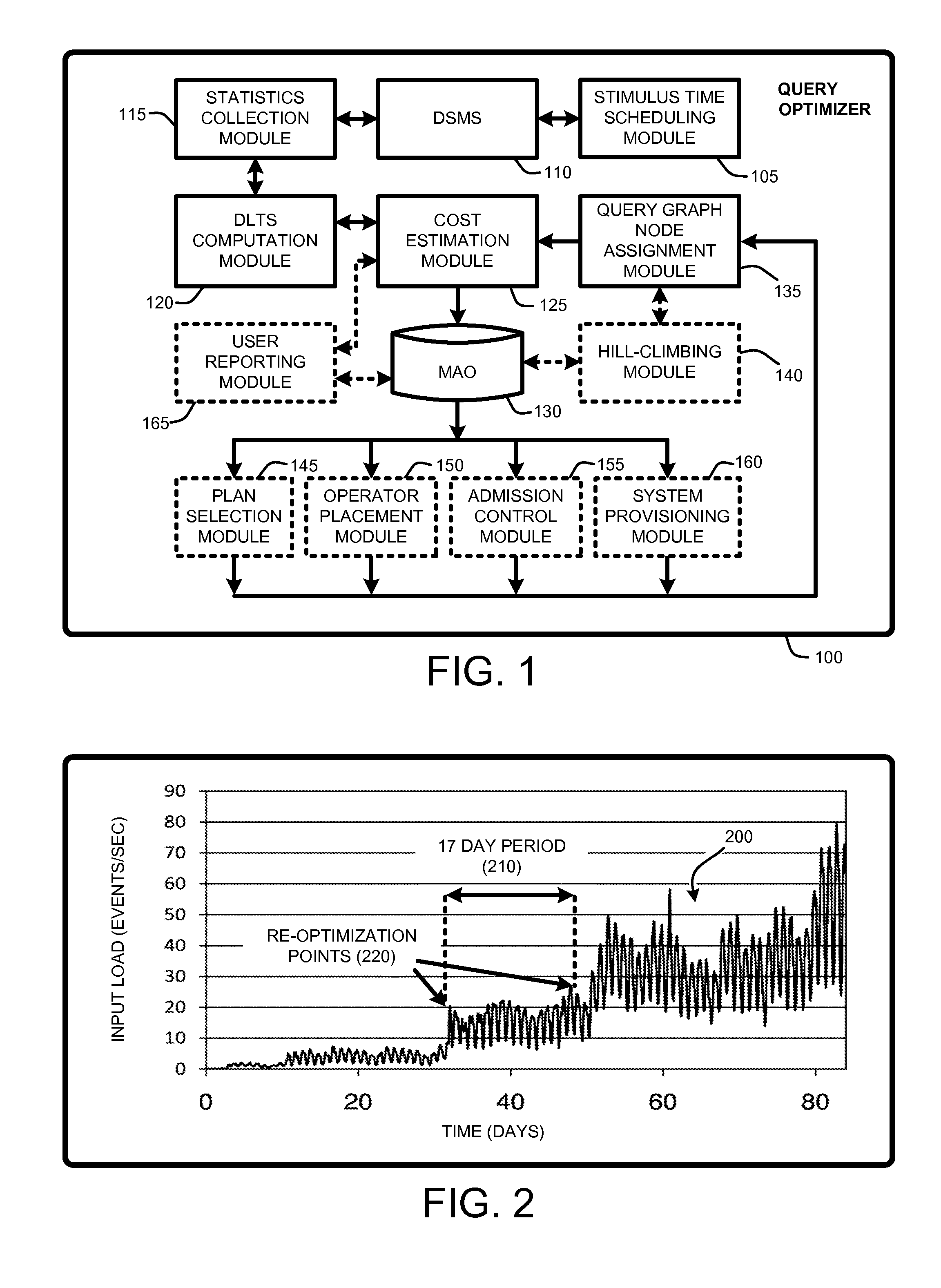

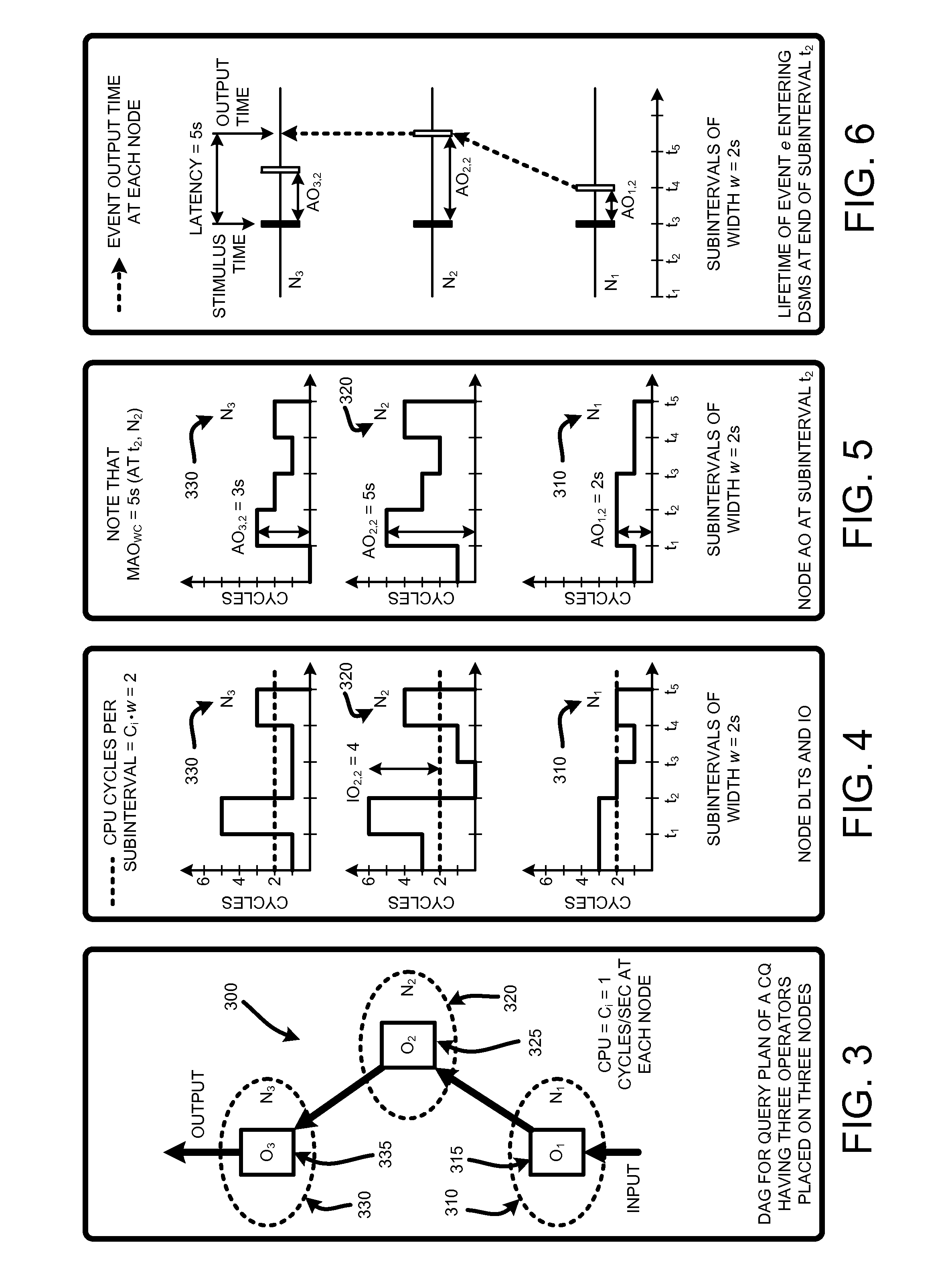

Estimating latencies for query optimization in distributed stream processing

Inactive | US20100030896A1 | Easy to merge | Reduce the number | Digital data information retrieval | Digital computer details | Query optimization | Workload

A “Query Optimizer” provides a cost estimation metric referred to as “Maximum Accumulated Overload” (MAO). MAO is approximately equivalent to maximum system latency in a data stream management system (DSMS). Consequently, MAO is directly relevant for use in optimizing latencies in real-time streaming applications running multiple continuous queries (CQs) over high data-rate event sources. In various embodiments, the Query Optimizer computes MAO given knowledge of original operator statistics, including “operator selectivity” and “cycles / event” in combination with an expected event arrival workload. Beyond use in query optimization to minimize worst-case latency, MAO is useful for addressing problems including admission control, system provisioning, user latency reporting, operator placements (in a multi-node environment), etc. In addition, MAO, as a surrogate for worst-case latency, is generally applicable beyond streaming systems, to any queue-based workflow system with control over the scheduling strategy.

Owner:MICROSOFT TECH LICENSING LLC

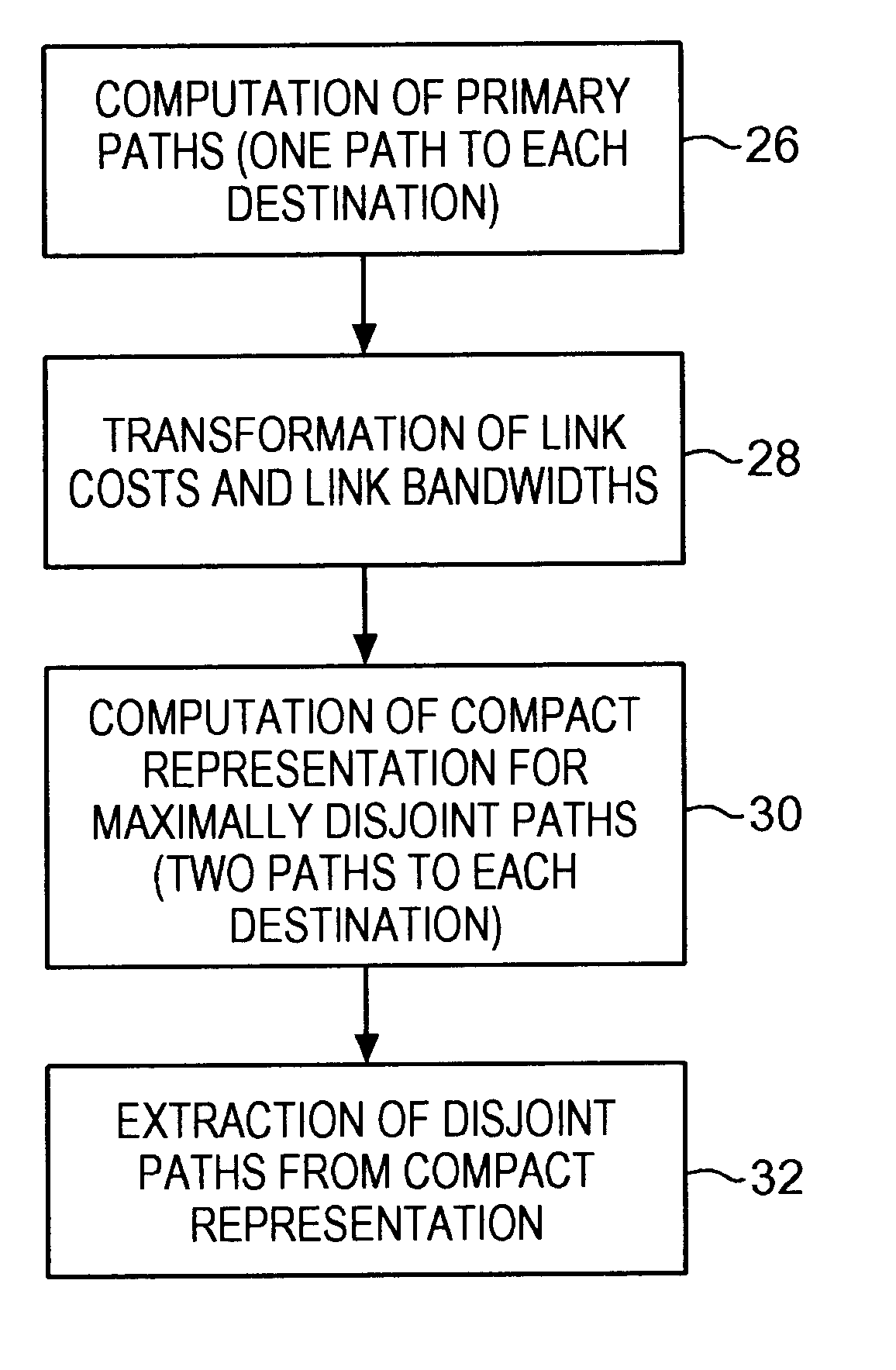

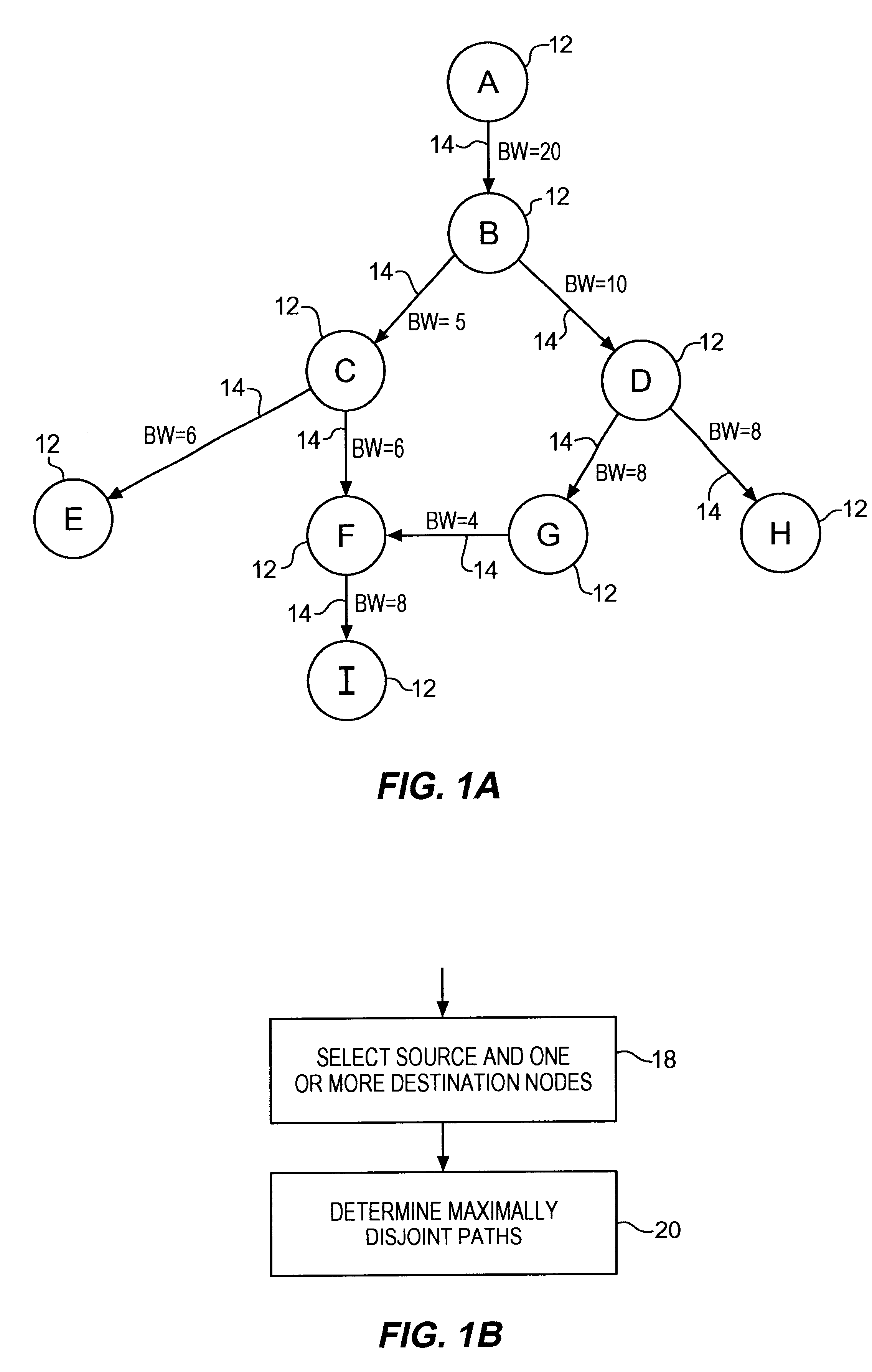

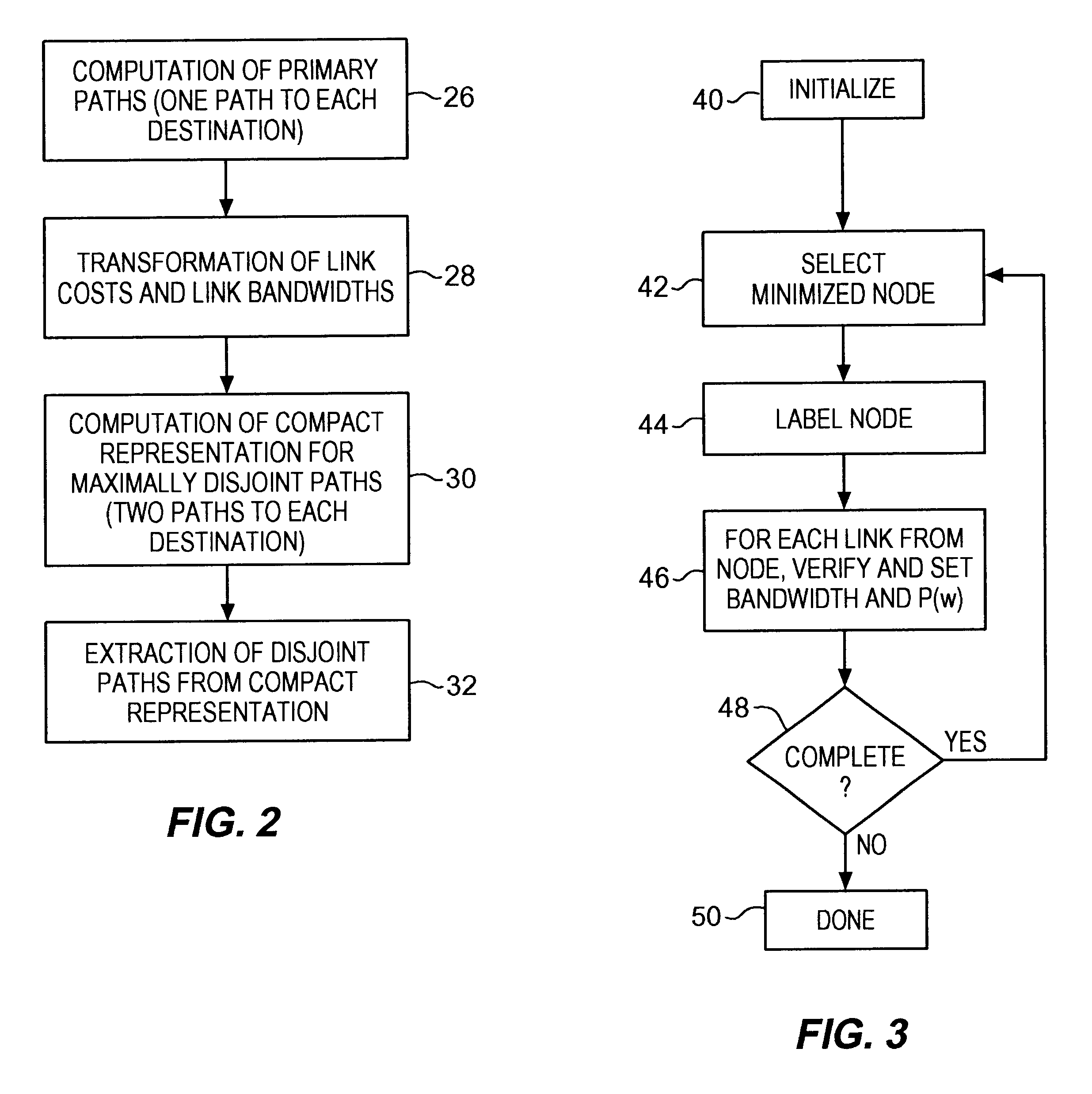

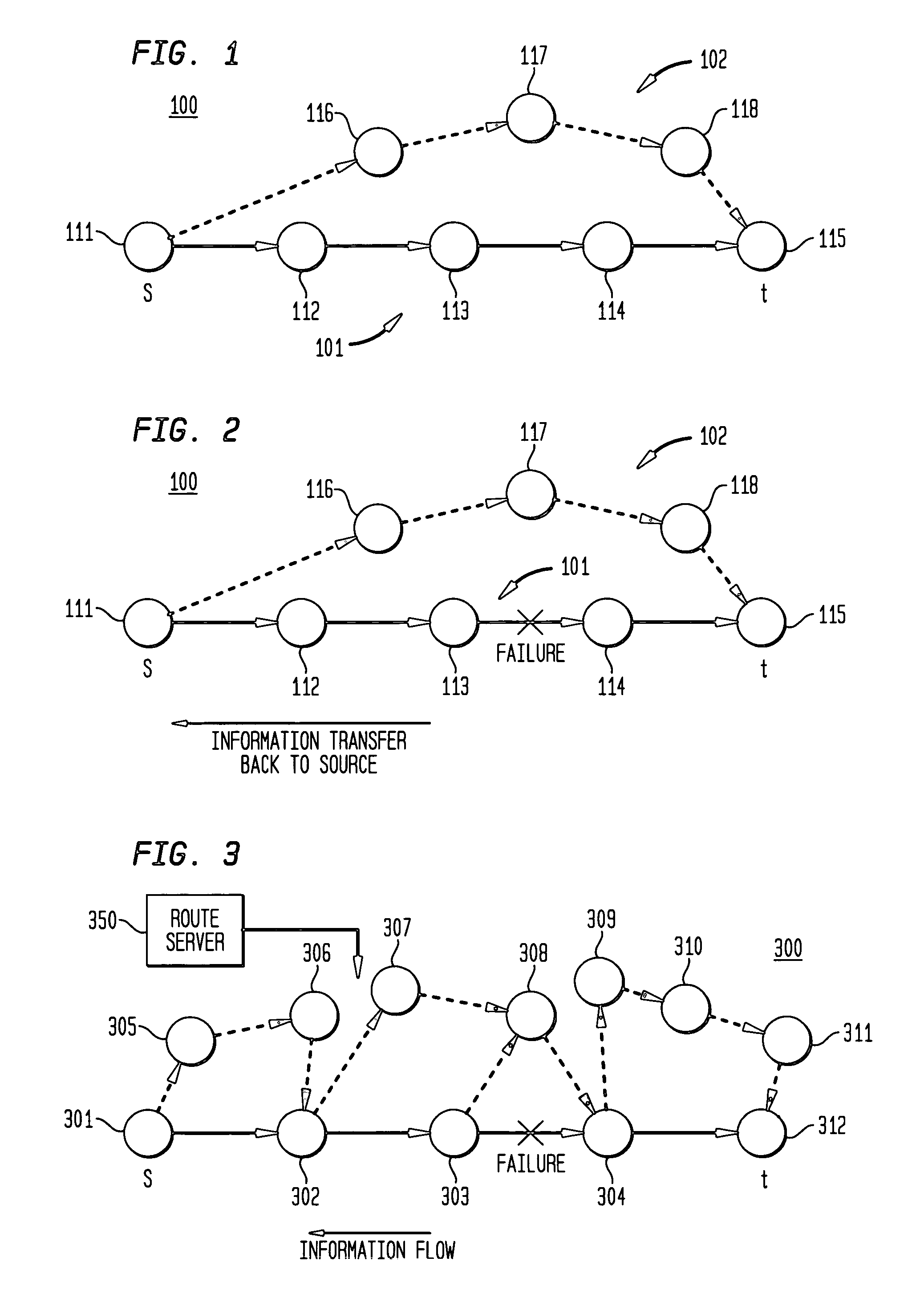

Communications network system and method for routing based on disjoint pairs of path

Inactive | US6542469B1 | Raise the possibility | Minimizes probability | Error prevention | Frequency-division multiplex details | PathPing | High bandwidth

Methods for determining at least two pre-computed paths to a destination in a communications network are provided. The two paths are maximally disjoint. Maximally disjoint paths are paths where the number of links or nodes common to the two paths is minimized. This minimization is given a priority over other path considerations, such as bandwidth or cost metrics. By pre-computing a maximally disjoint pair of paths, the probability that an inoperable link or node is in both paths is minimized. The probability that the inoperable link or node blocks a transfer of data is minimized. Additionally, a pair of maximally disjoint paths is determined even if absolutely disjoint paths are not possible. The communications network may include at least four nodes, and maximally disjoint pairs of paths are pre-computed from each node to each other node. A third path from each node to each other node may also be computed as a function of bandwidth or a cost metric. Therefore, the advantages of the maximally disjoint pair of paths are provided as discussed above and a path associated with a higher bandwidth or lower cost is provided to more likely satisfy the user requirements of a data transfer.

Owner:SPRINT CORPORATION
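
The notion of a maximally disjoint pair in the abstract above can be made concrete with a brute-force sketch for small graphs. This is not the patented procedure: enumerating all simple paths, counting shared links instead of shared nodes, and breaking ties by total path length are assumptions, and the function names are hypothetical.

```python
from itertools import combinations


def simple_paths(graph, src, dst, path=None):
    """Yield every loop-free path from src to dst (depth-first enumeration)."""
    path = (path or []) + [src]
    if src == dst:
        yield path
        return
    for nxt in graph[src]:
        if nxt not in path:
            yield from simple_paths(graph, nxt, dst, path)


def links(path):
    """The set of undirected links a path uses."""
    return {frozenset(pair) for pair in zip(path, path[1:])}


def maximally_disjoint_pair(graph, src, dst):
    """Pick the pair of paths sharing the fewest links; shorter pairs win ties."""
    paths = list(simple_paths(graph, src, dst))
    if len(paths) < 2:
        return None
    return min(
        combinations(paths, 2),
        key=lambda pq: (len(links(pq[0]) & links(pq[1])), len(pq[0]) + len(pq[1])),
    )


# A-B is a bridge, so no two paths from A to D can be absolutely disjoint.
bridged = {
    "A": ["B"],
    "B": ["A", "C", "E"],
    "C": ["B", "D"],
    "E": ["B", "D"],
    "D": ["C", "E"],
}
p, q = maximally_disjoint_pair(bridged, "A", "D")
shared = links(p) & links(q)  # only the unavoidable A-B link
```

The sort key puts the shared-link count first, which mirrors the abstract's statement that disjointness takes priority over other path considerations.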

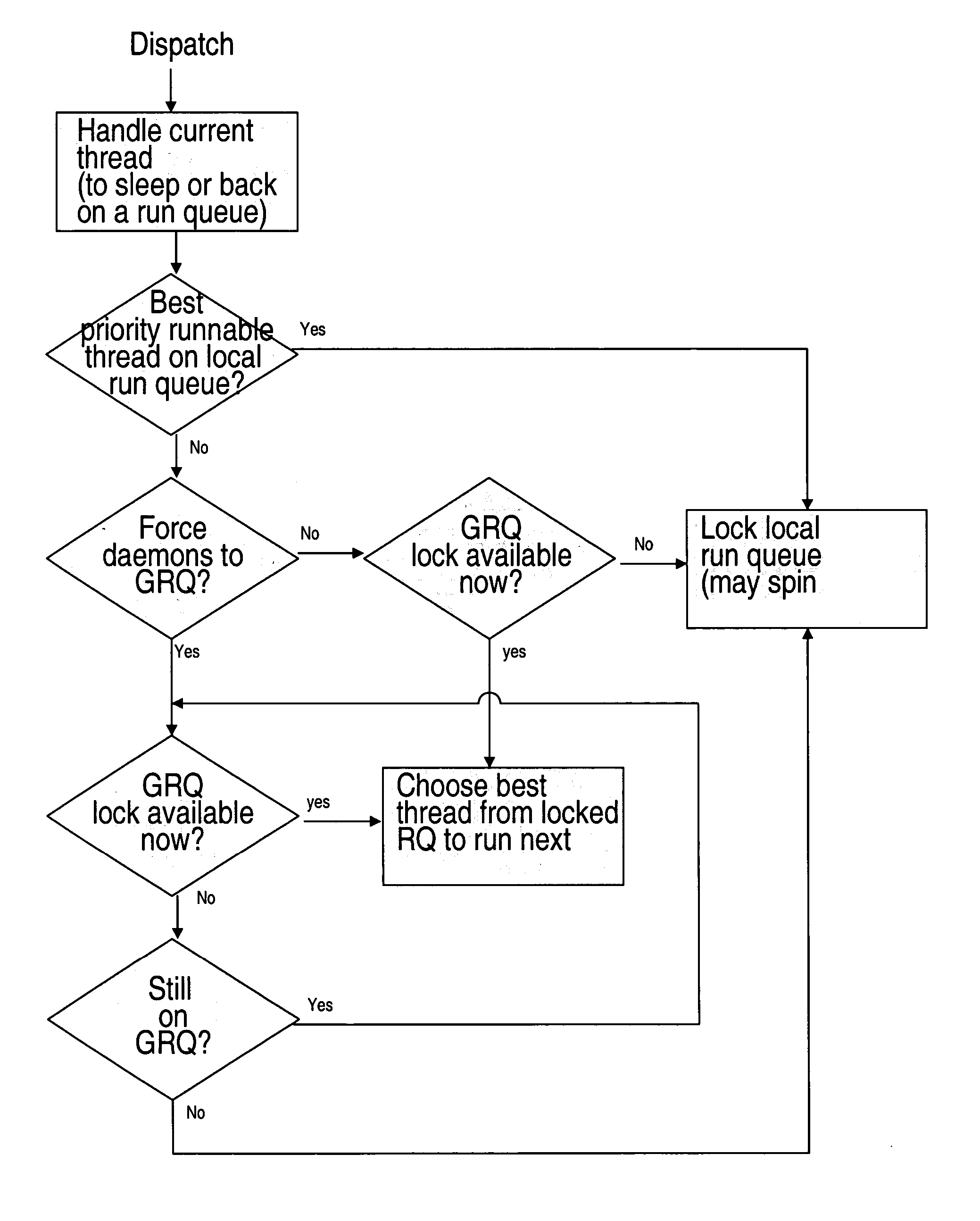

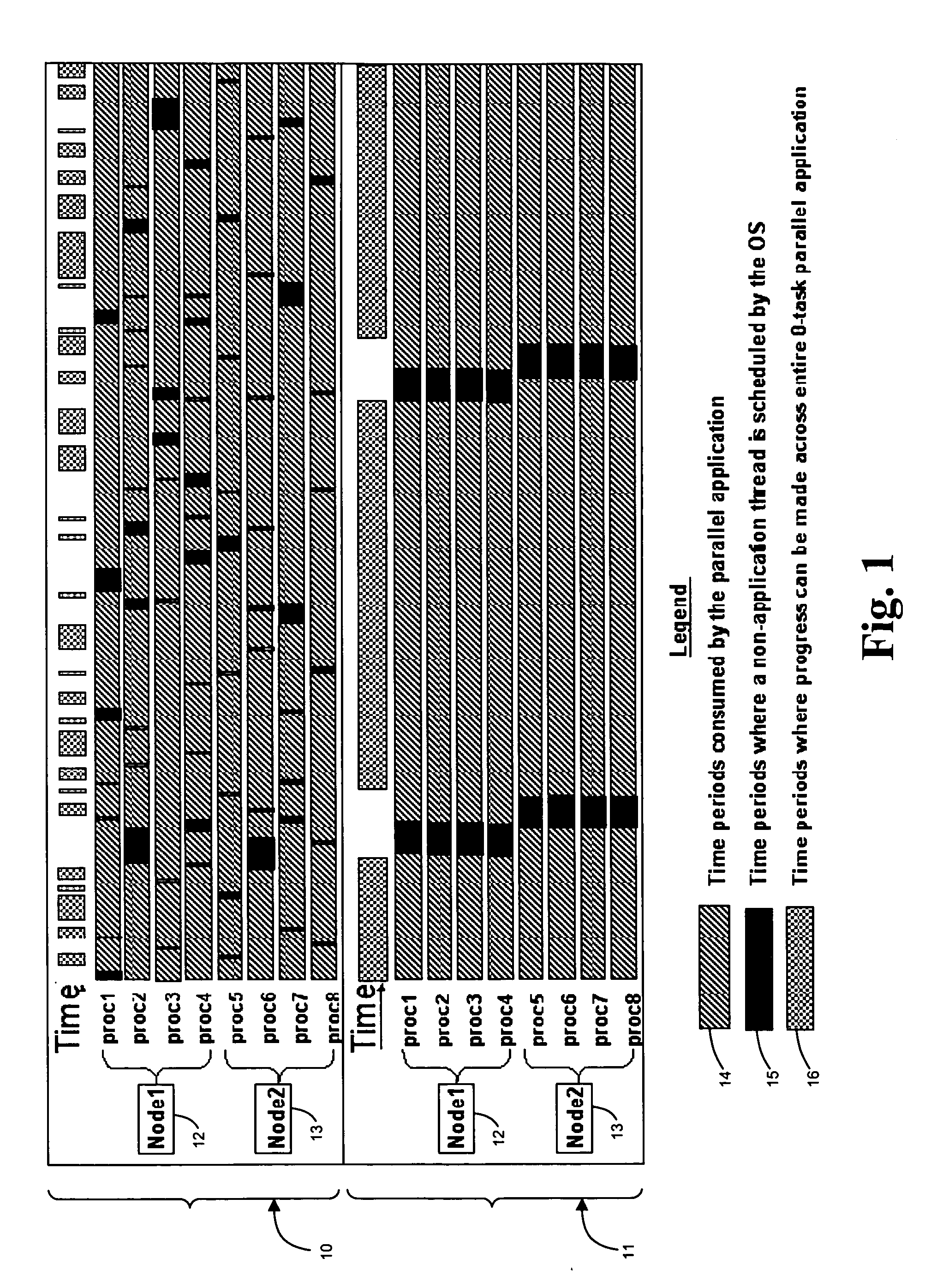

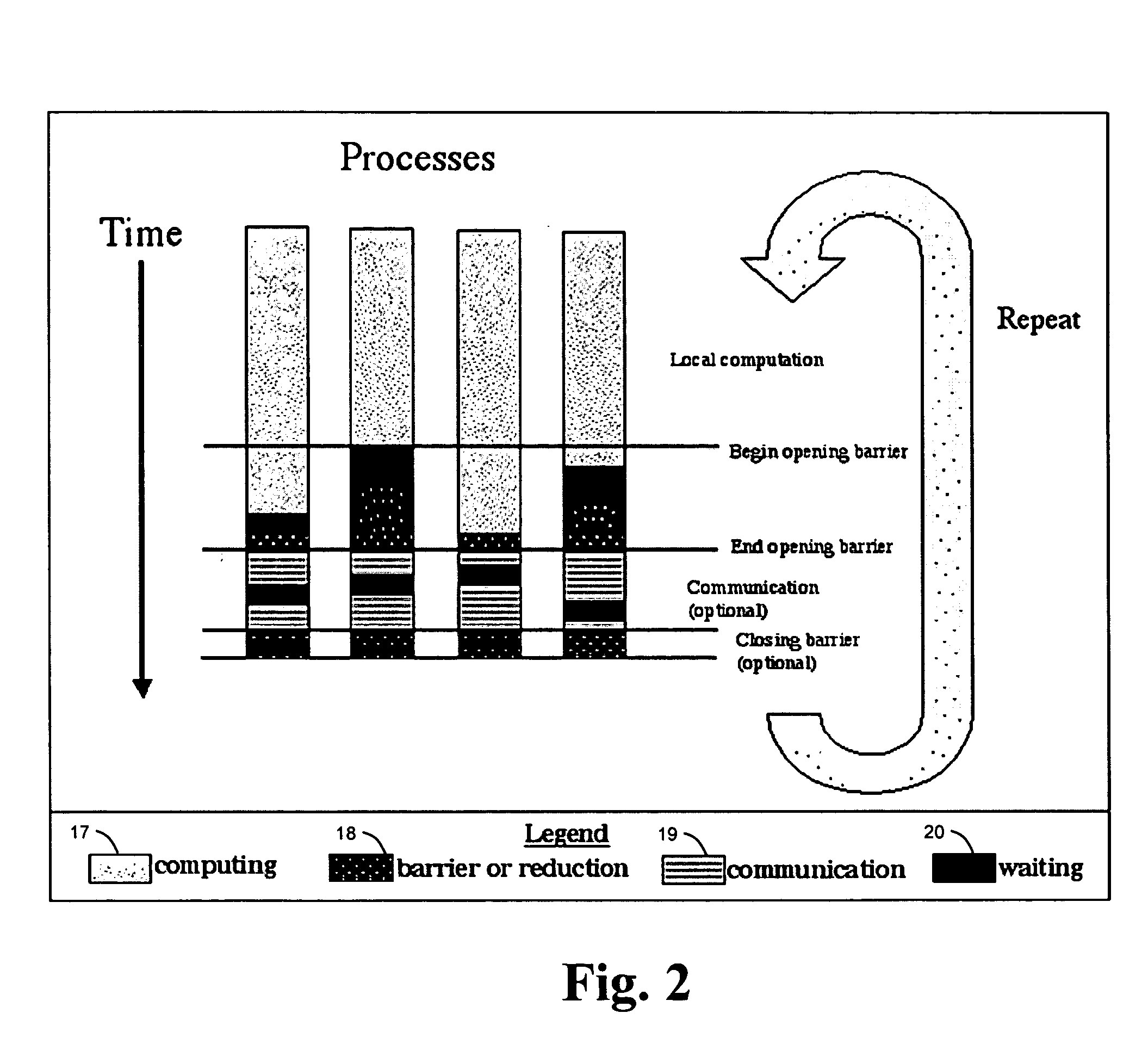

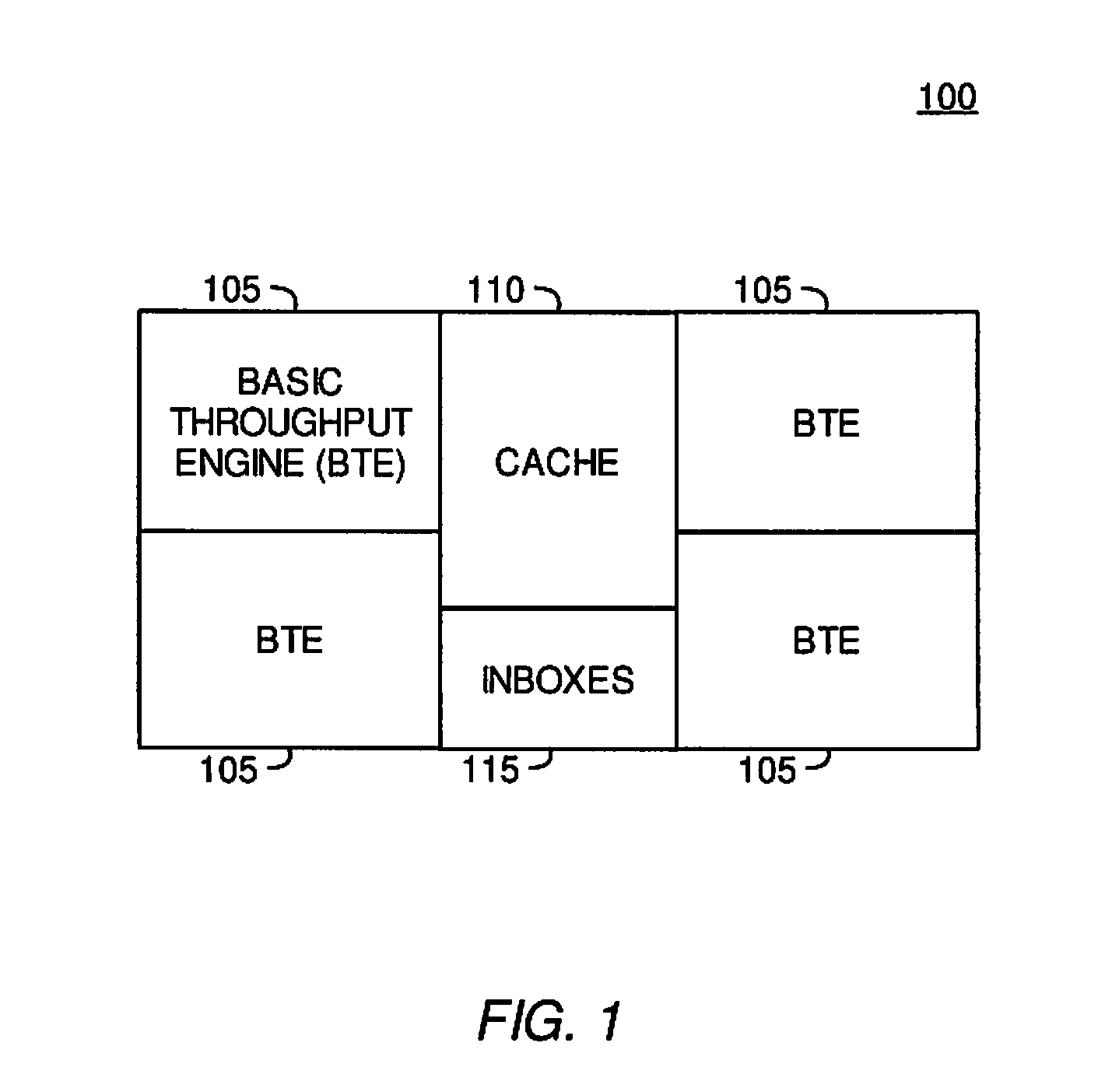

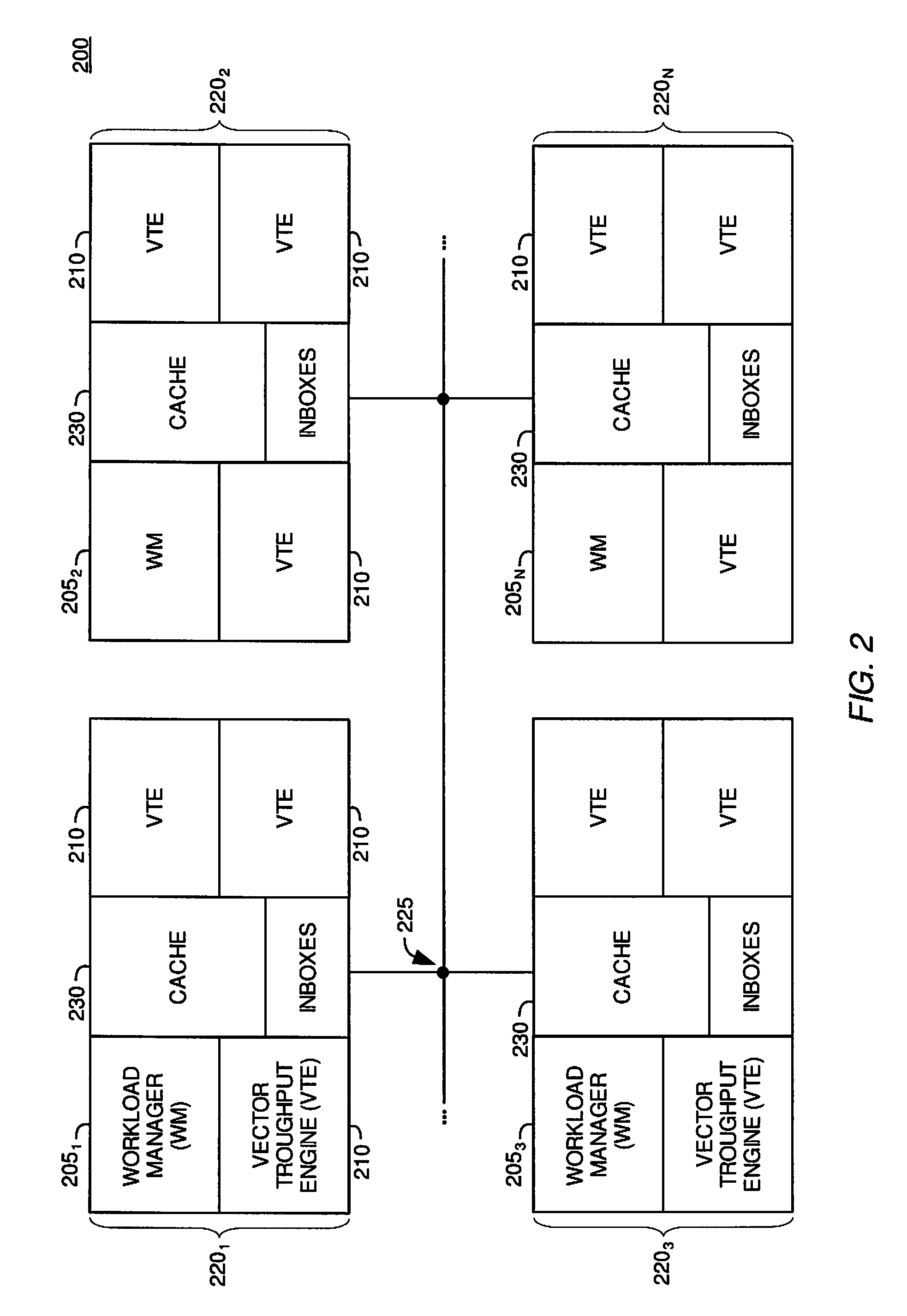

Parallel-aware, dedicated job co-scheduling method and system

Inactive | US20050131865A1 | Improve performance | Improve scalability | Program initiation/switching | Special data processing applications | Extensibility | Operational system

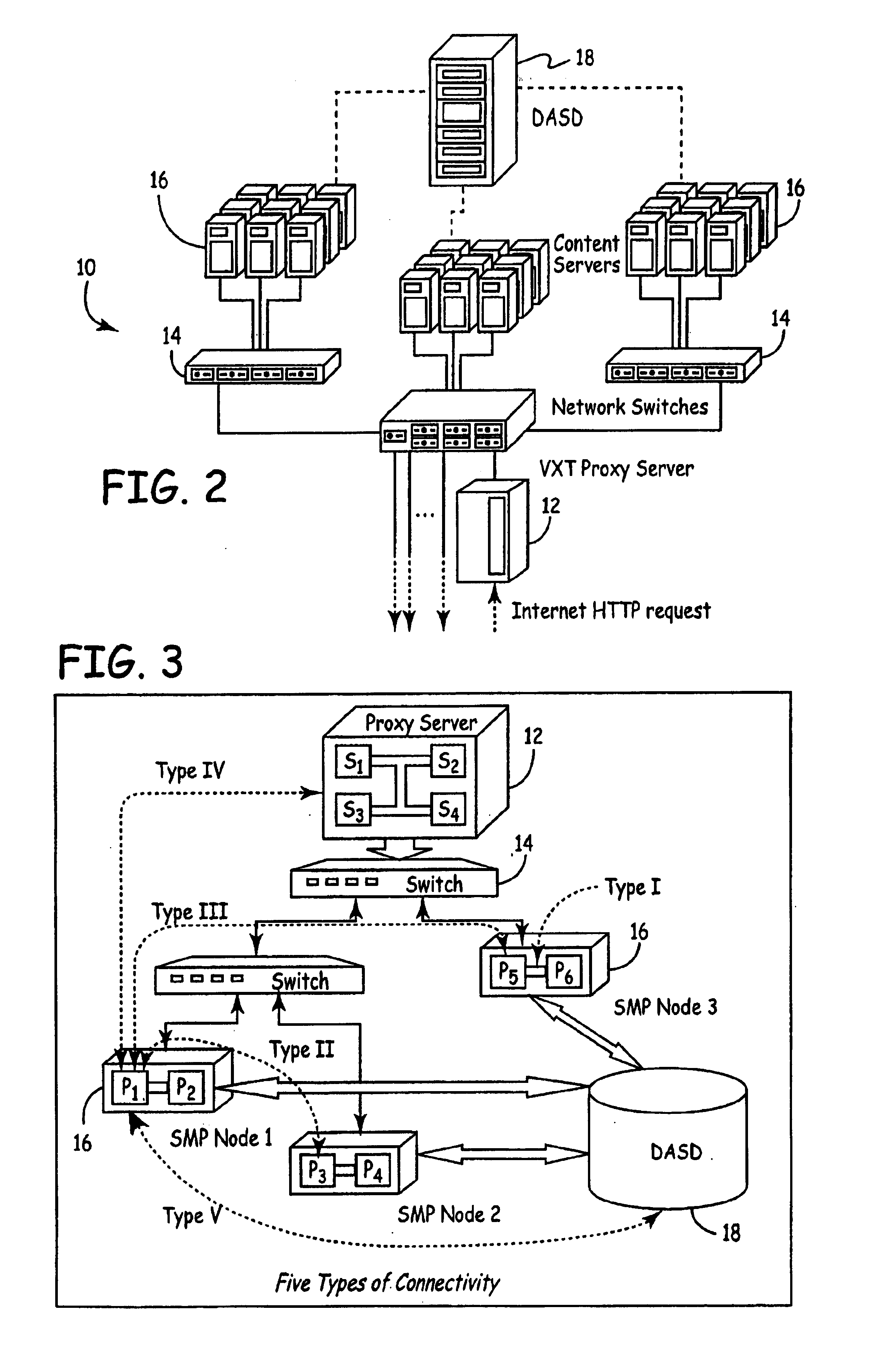

In a parallel computing environment comprising a network of SMP nodes each having at least one processor, a parallel-aware co-scheduling method and system for improving the performance and scalability of a dedicated parallel job having synchronizing collective operations. The method and system uses a global co-scheduler and an operating system kernel dispatcher adapted to coordinate interfering system and daemon activities on a node and across nodes to promote intra-node and inter-node overlap of said interfering system and daemon activities as well as intra-node and inter-node overlap of said synchronizing collective operations. In this manner, the impact of random short-lived interruptions, such as timer-decrement processing and periodic daemon activity, on synchronizing collective operations is minimized on large processor-count SPMD bulk-synchronous programming styles.

Owner:LAWRENCE LIVERMORE NAT SECURITY LLC

Systems and methods for dynamically updating computer systems

Inactive | US20060130045A1 | Minimize impact | Specific program execution arrangements | Memory systems | Software update | Client-side

Systems and methods for intelligently trickle-feeding a computer system with needed software without over-consuming available bandwidth to allow the software update to occur in the background and to minimize the impact of the update on a user of the computer system. A determination is made as to whether or not the software update is needed. The type of network is also determined, including the amount of bandwidth that is available for transmission of the software update to the client computer device. An intelligent determination is made relating to how much of the update to send at a time and the rate of sending information chunks in order to trickle-feed the needed update to the client computer device. Confirmation information is exchanged relating to a completed update. If an unsuccessful or partial update occurs, a retransmission of only the needed chunks is performed to minimize the amount of data transfer needed to rectify the problem or completely install the update.

Owner:FATPOT TECH

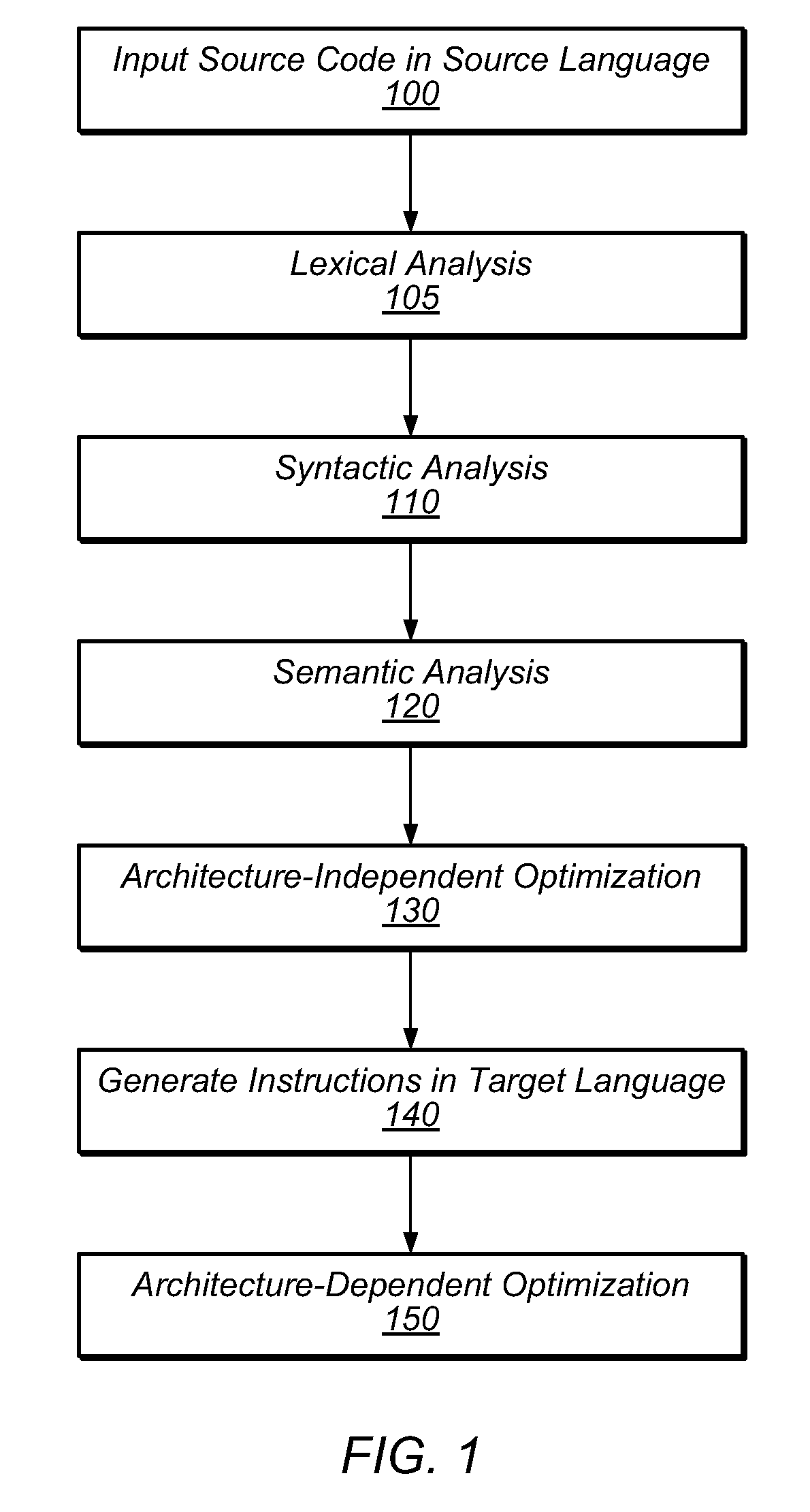

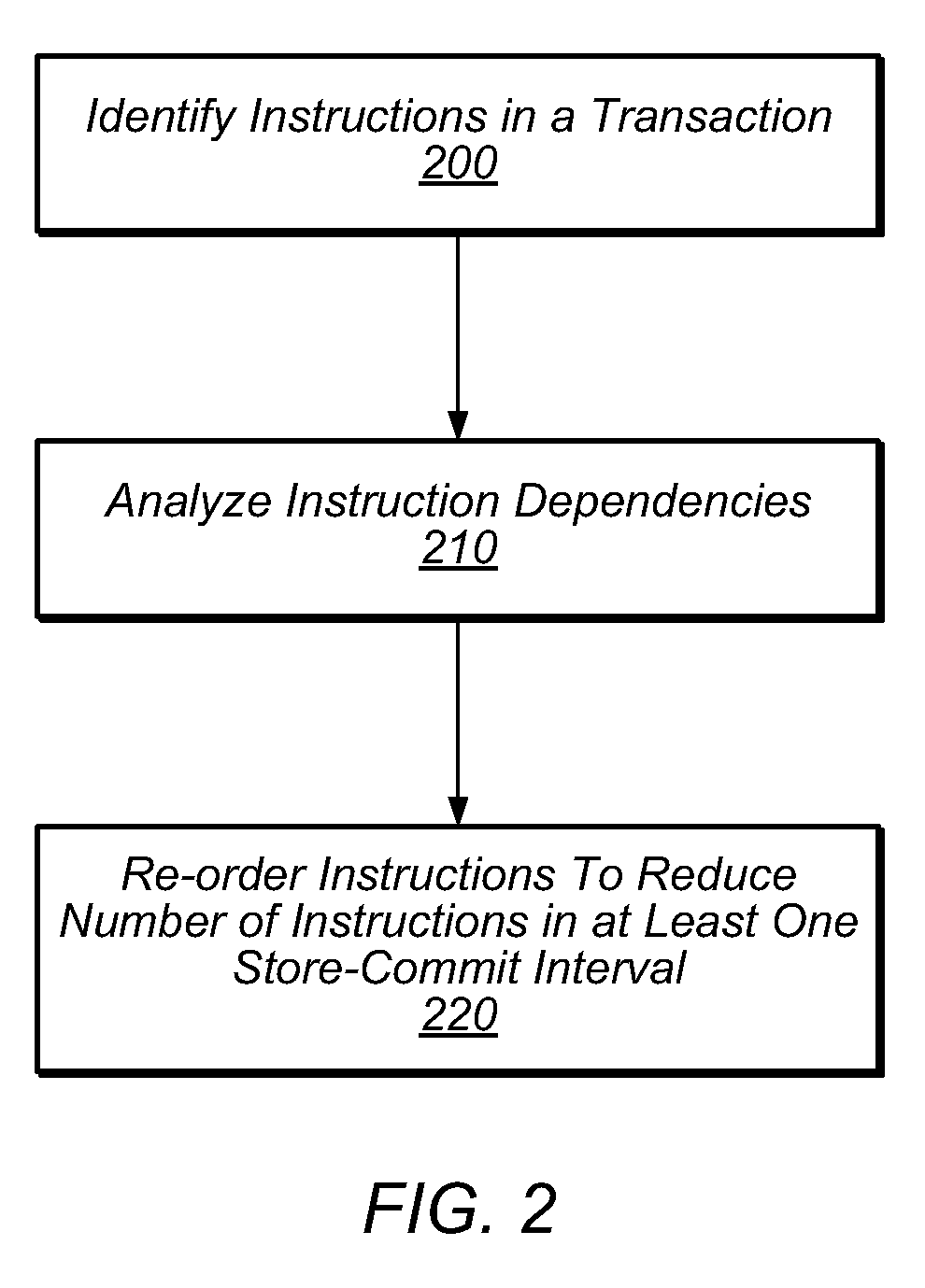

System and Method for Reducing Transactional Abort Rates Using Compiler Optimization Techniques

Active | US20100169870A1 | Reduce probability | Reduce transactional aborts | Software engineering | Specific program execution arrangements | Long latency | Transactional memory

In transactional memory systems, transactional aborts due to conflicts between concurrent threads may cause system performance degradation. A compiler may attempt to minimize runtime abort rates by performing one or more code transformations and / or other optimizations on a transactional memory program in an attempt to minimize one or more store-commit intervals. The compiler may employ store deferral, hoisting of long-latency operations from within a transaction body and / or store-commit interval, speculative hoisting of long-latency operations, and / or redundant store squashing optimizations. The compiler may perform optimizing transformations on source code and / or on any intermediate representation of the source code (e.g., parse trees, un-optimized assembly code, etc.). In some embodiments, the compiler may preemptively avoid naïve target code constructions. The compiler may perform static and / or dynamic analysis of a program in order to determine which, if any, transformations should be applied and / or may dynamically recompile code sections at runtime, based on execution analysis.

Owner:SUN MICROSYSTEMS INC

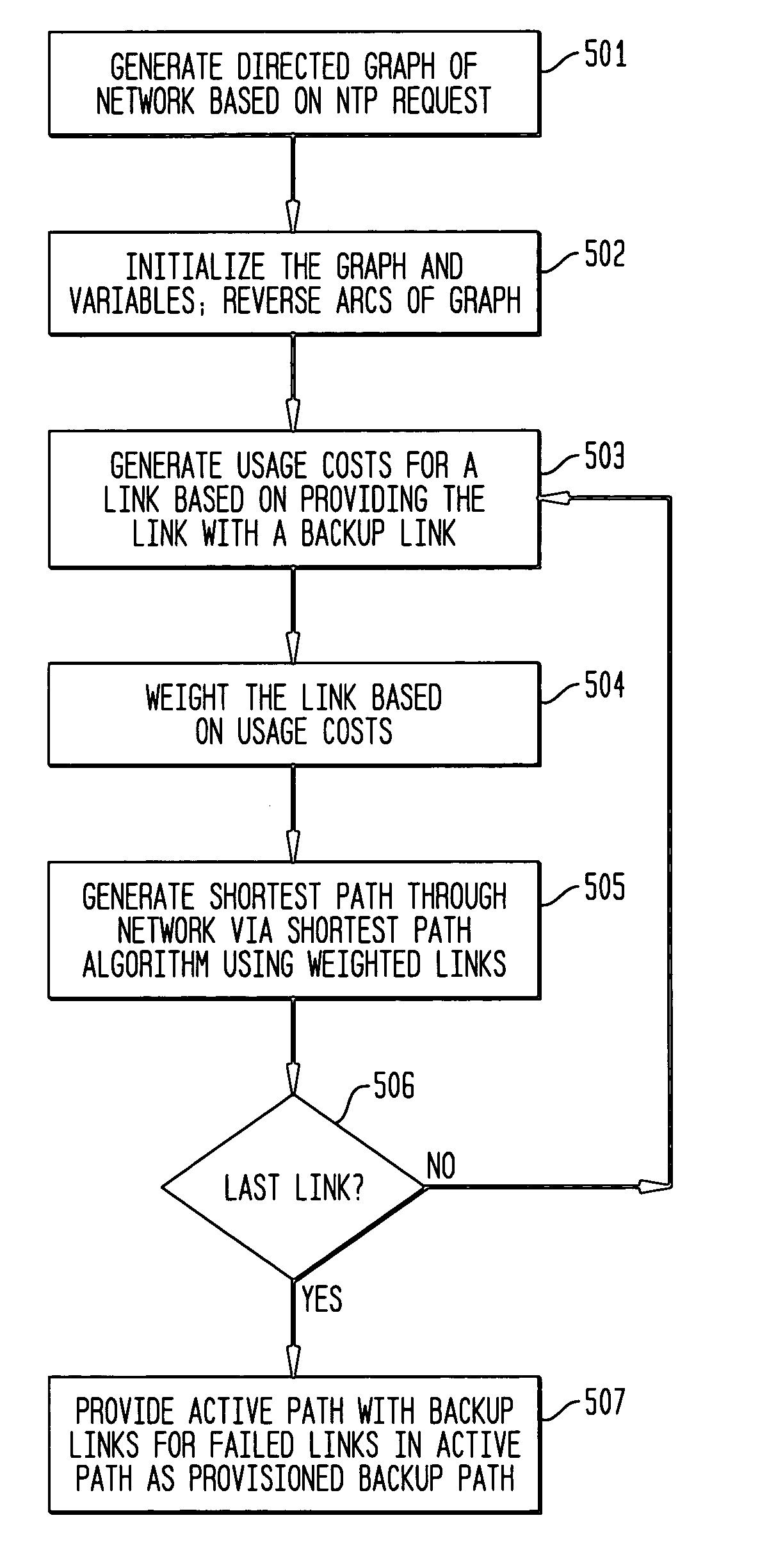

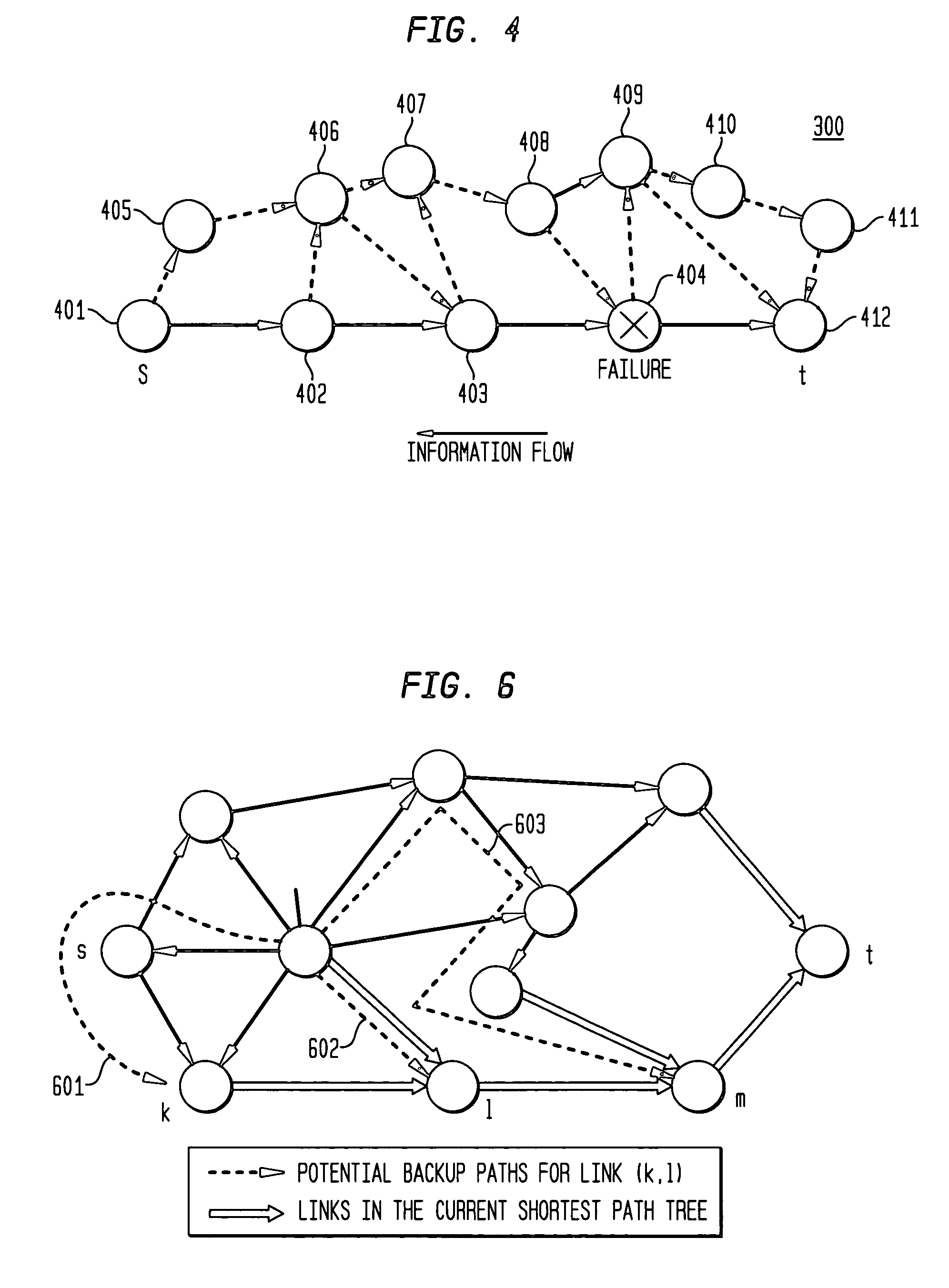

Dynamic backup routing of network tunnel paths for local restoration in a packet network

A packet network of interconnected nodes employing dynamic backup routing of a Network Tunnel Path (NTP) allocates an active and backup path to the NTP based upon detection of a network failure. Dynamic backup routing employs local restoration to determine the allocation of, and, in operation, to switch between, a primary / active path and a secondary / backup path. Switching from the active path is based on a backup path determined with iterative shortest-path computations with link weights assigned based on the cost of using a link to backup a given link. Costs may be assigned based on single-link failure or single element (node or link) failure. Link weights are derived by assigning usage costs to links for inclusion in a backup path, and minimizing the costs with respect to a predefined criterion.

Owner:LUCENT TECH INC

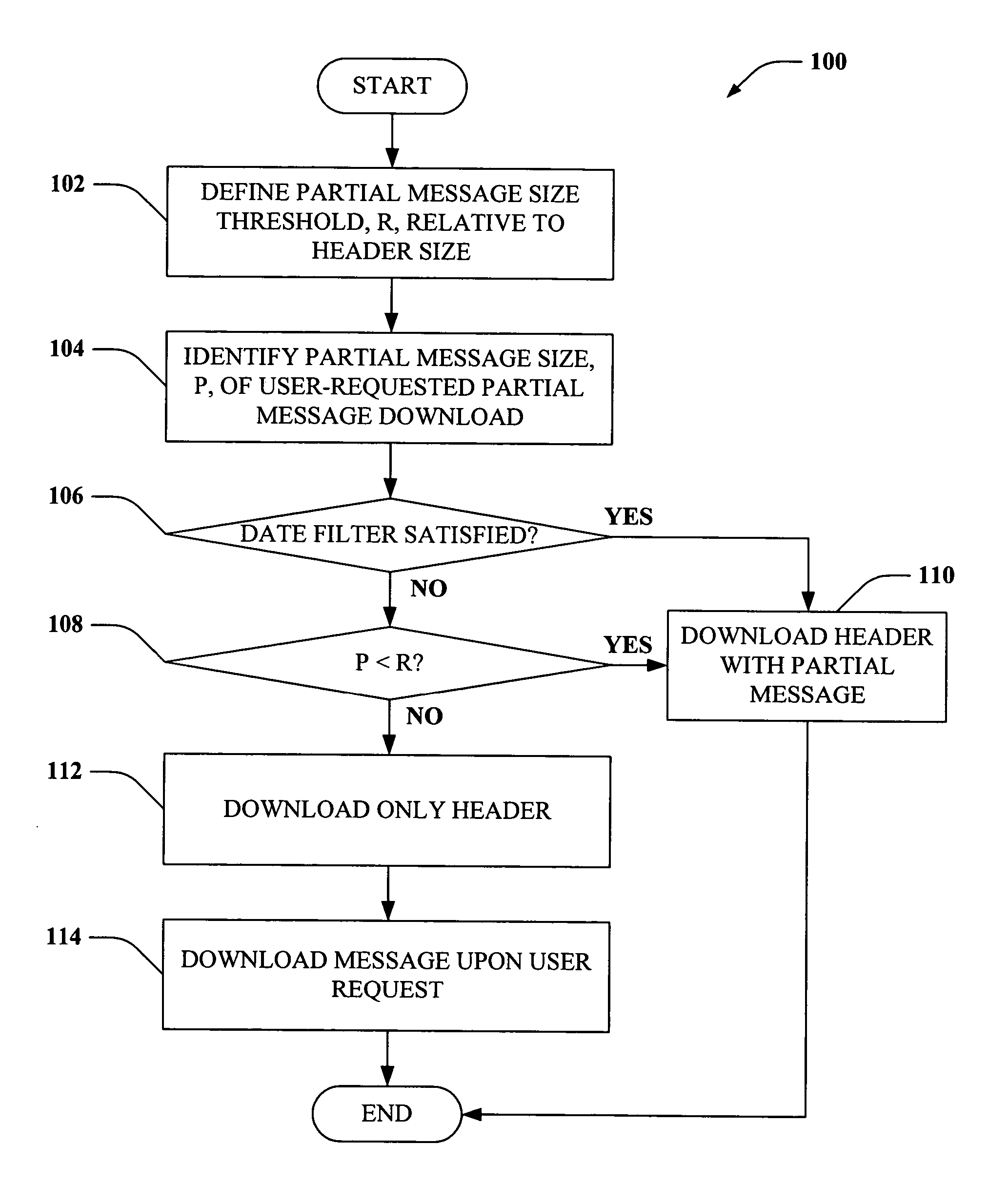

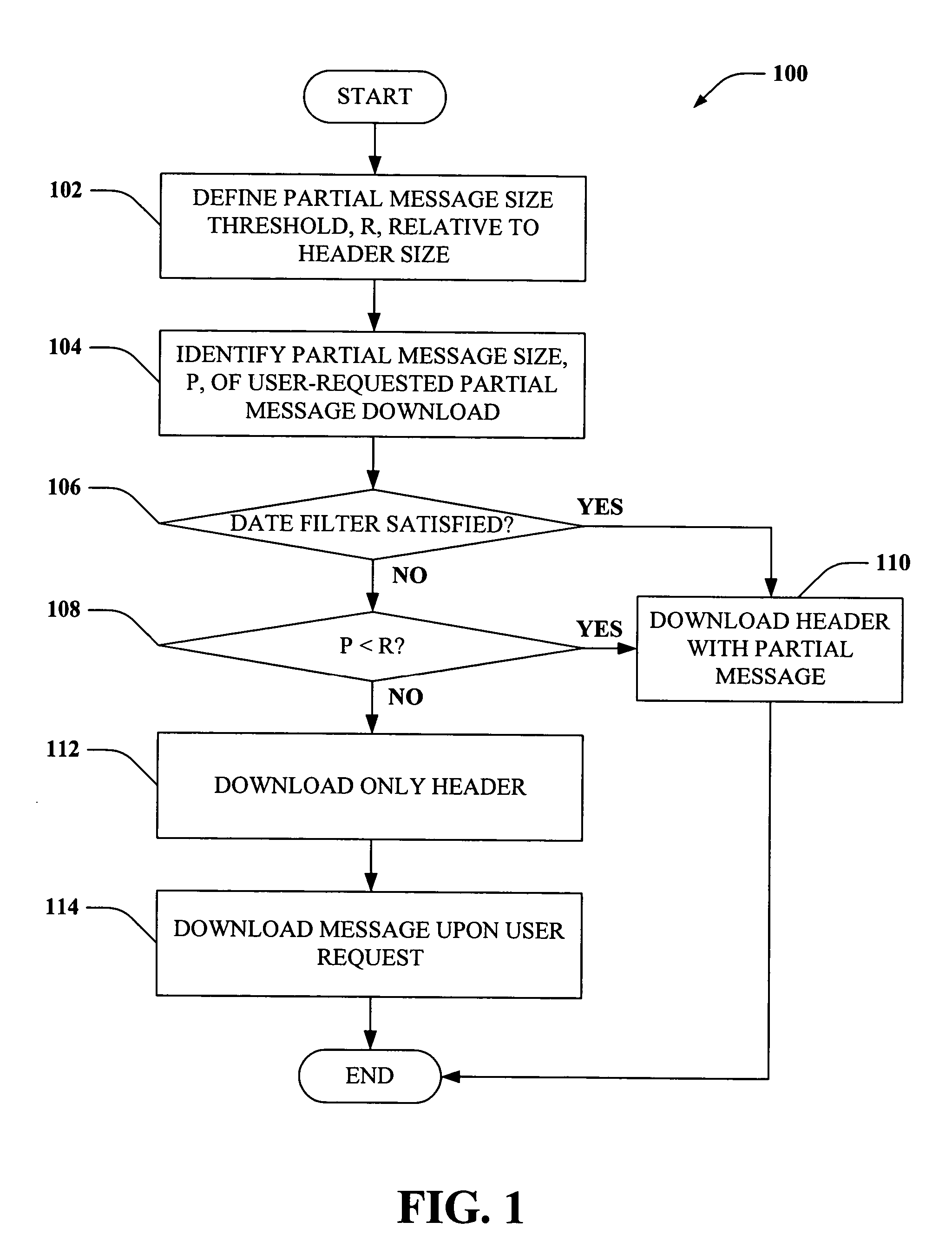

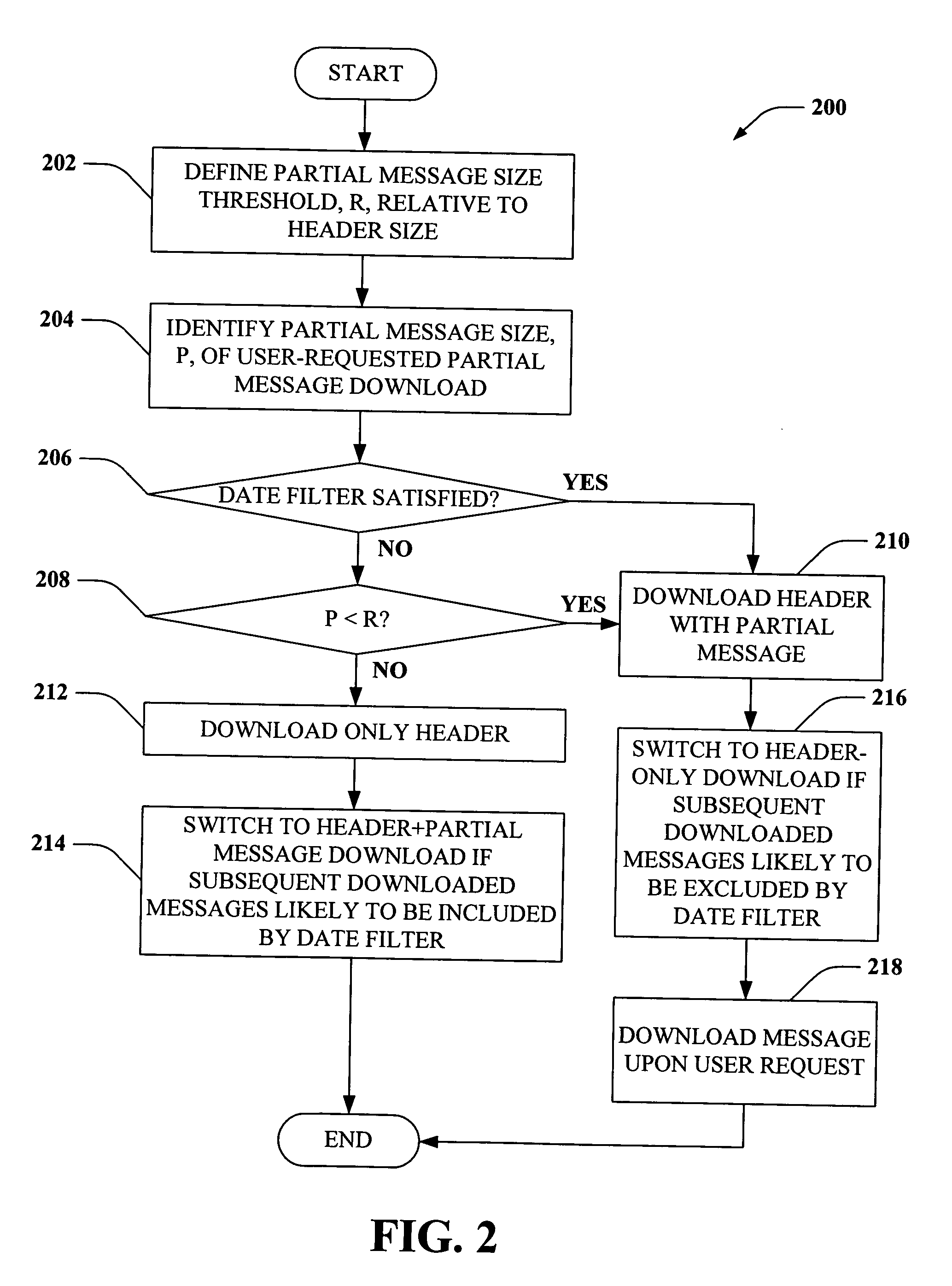

Minimizing data transfer from POP3 servers

Inactive | US20060277257A1 | Minimize bandwidth | Minimize storage requirement | Multiple digital computer combinations | Data switching networks | Date Range | Body size

Systems and methods are disclosed that facilitate minimizing data transfer from a post office protocol (POP) server to a client device by employing a date filter with a predefined date range and applying a message body size threshold above which only a message header will be downloaded to conserve bandwidth and / or memory space on the client device. A user can request download of a message for which only a message header was originally downloaded if the header comprises information of interest to the user.

Owner:MICROSOFT TECH LICENSING LLC
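
The filtering rule in the POP3 abstract above reduces to a per-message decision, sketched below. The `plan_download` function, its parameter names, and the `(sent_date, body_size)` message format are assumptions for illustration, and no actual server communication is shown.

```python
from datetime import date, timedelta


def plan_download(messages, today, max_age_days, body_size_limit):
    """Decide for each message whether to skip it, fetch its header, or fetch it whole.

    messages: {message_id: (sent_date, body_size_in_bytes)} (assumed format).
    """
    cutoff = today - timedelta(days=max_age_days)
    plan = {}
    for message_id, (sent, size) in messages.items():
        if sent < cutoff:
            plan[message_id] = "skip"    # outside the predefined date range
        elif size > body_size_limit:
            plan[message_id] = "header"  # large body: header only for now
        else:
            plan[message_id] = "full"
    return plan


mailbox = {
    "a": (date(2024, 1, 30), 1_000),
    "b": (date(2024, 1, 30), 50_000),
    "c": (date(2023, 12, 1), 10),
}
plan = plan_download(
    mailbox, today=date(2024, 1, 31), max_age_days=14, body_size_limit=20_000
)
```

In POP3 terms, a "header" decision would correspond to the `TOP` command and a "full" decision to `RETR`. A later user request for message "b" would issue a `RETR` for it.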

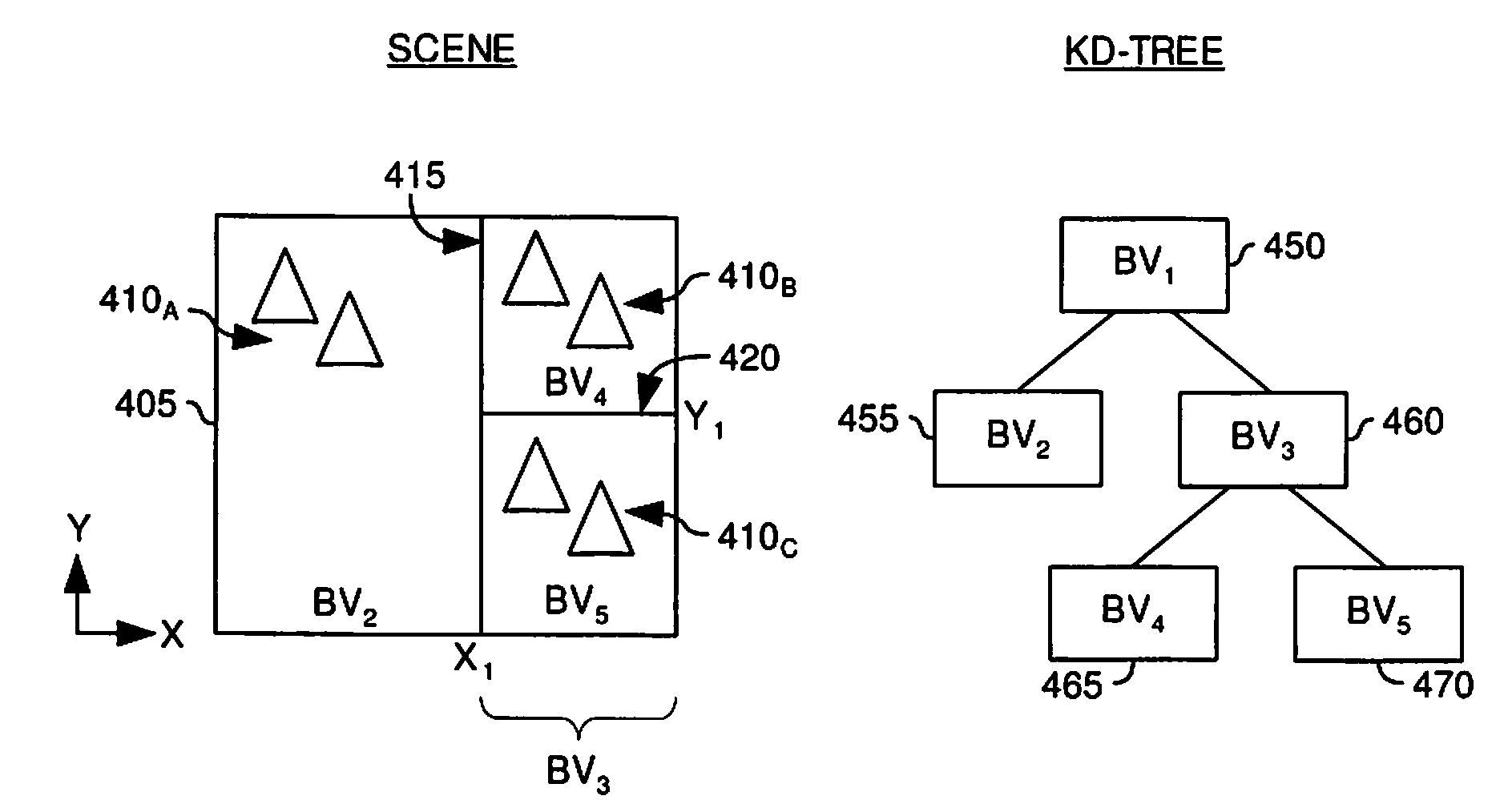

Cache Utilization Optimized Ray Traversal Algorithm with Minimized Memory Bandwidth Requirements

Embodiments of the invention provide methods and apparatus for recording the traversal history of a ray through a spatial index structure and utilizing the recorded traversal history. An image processing system may initially determine which nodes a ray intersects as it traverses through a spatial index. Results of the node intersection determinations may be recorded as the ray traverses the spatial index, and the recorded determinations may be associated with the ray. Furthermore, the image processing system may decide upon a traversal path based upon some probability of striking primitives corresponding to the nodes which make up the spatial index. This traversal path may also be recorded and associated with the ray. If the image processing system needs to re-traverse the spatial index at a later time, the recorded traversal history may be used to eliminate the need to recalculate ray-node intersections, and eliminate incorrect traversal path determinations.

Owner:IBM CORP

Cost-efficient repair for storage systems using progressive engagement

Active | US20150303949A1 | Minimal cost | Minimize the number | Error detection/correction | Code conversion | Node count | Distributed database

An apparatus or method for minimizing the total accessing cost, such as repair bandwidth, delay, or the number of hops, includes minimizing the number of nodes engaged in the recovery process using a polynomial-time solution that determines the optimal number of participating nodes and the optimal set of nodes to engage for recovering lost data. In a distributed database storage system, for example a dynamic system in which the accessing cost or even the number of available nodes is subject to change, the optimal number of participating nodes takes different values. An MDS code is included which can be reused when the number of participating nodes varies, without having to change the entire code structure and the content of the nodes.

Owner:RGT UNIV OF CALIFORNIA
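
A polynomial-time choice of helper nodes can be sketched as follows. This is an illustration under stated assumptions, not the method claimed in the patent: it assumes each surviving node `i` has a per-unit access cost `costs[i]`, and it borrows the textbook minimum-storage regenerating (MSR) model, in which each of `d` helpers sends `file_size / (k * (d - k + 1))` units. The function name `choose_repair_nodes` and its parameters are hypothetical.

```python
from typing import List, Sequence, Tuple


def choose_repair_nodes(
    costs: Sequence[float], k: int, file_size: float
) -> Tuple[List[int], float]:
    """Pick the number and set of helper nodes that minimize total repair cost.

    costs[i] is the assumed cost of downloading one unit from surviving node i.
    With d helpers, each sends file_size / (k * (d - k + 1)) units (MSR model),
    so for a fixed d the d cheapest nodes are optimal; we then scan over d.
    Runs in O(n log n) time for n surviving nodes.
    """
    n = len(costs)
    if not 1 <= k <= n:
        raise ValueError("need 1 <= k <= number of surviving nodes")

    order = sorted(range(n), key=lambda i: costs[i])  # cheapest first
    running = sum(costs[i] for i in order[: k - 1])   # cost sum of first k-1

    best_nodes: List[int] = []
    best_cost = float("inf")
    for d in range(k, n + 1):
        running += costs[order[d - 1]]                # now sum of d cheapest
        per_helper = file_size / (k * (d - k + 1))    # units sent per helper
        total = per_helper * running
        if total < best_cost:
            best_nodes, best_cost = order[:d], total
    return best_nodes, best_cost
```

For example, with costs `[1, 1, 1, 100]`, `k = 2`, and `file_size = 2`, engaging the three cheap nodes costs 1.5, which beats both the minimum of two helpers (cost 2) and engaging the expensive fourth node. When the costs or the set of available nodes change, rerunning the function can yield a different optimal number of helpers, which is the situation the abstract describes.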

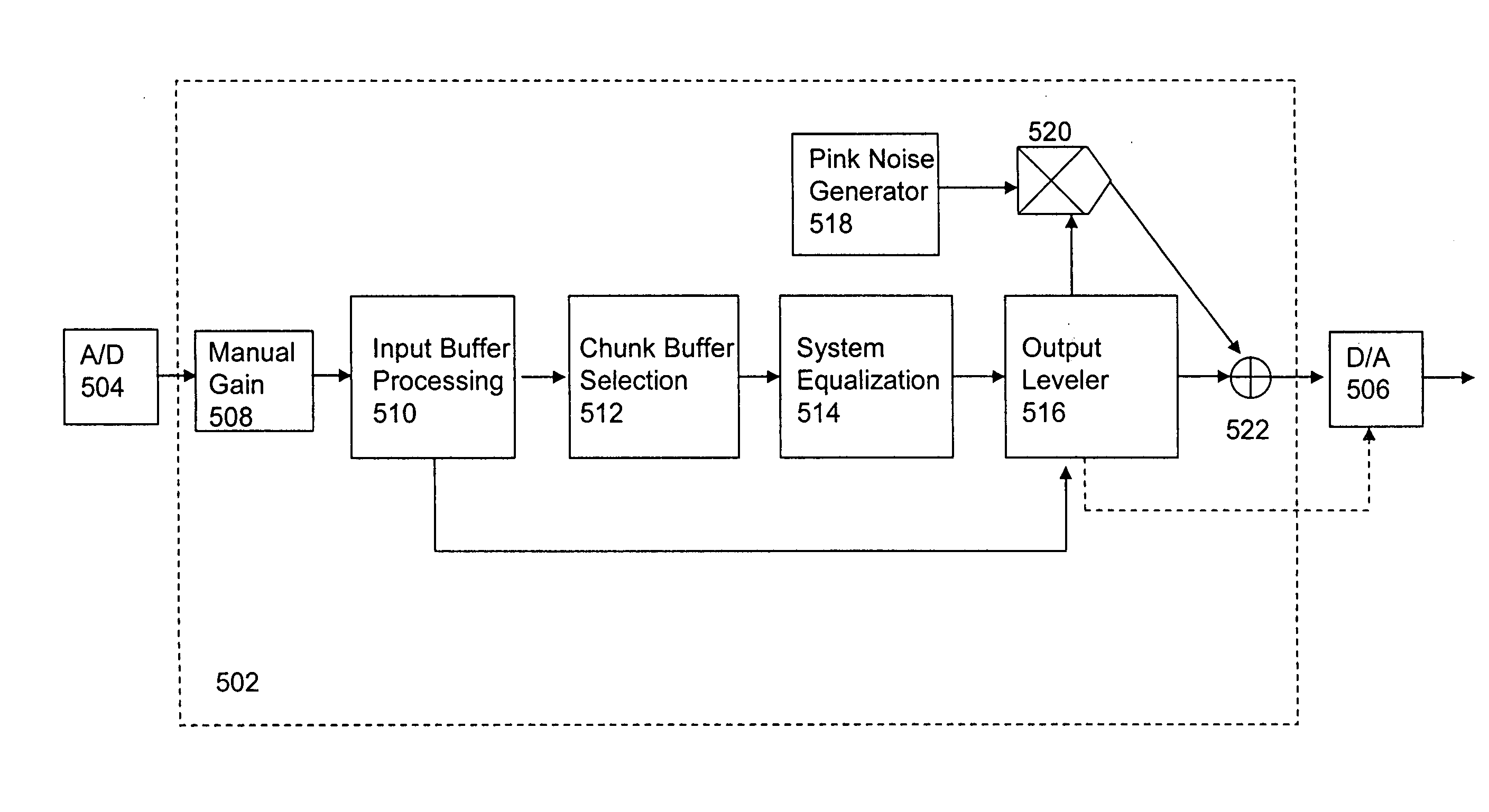

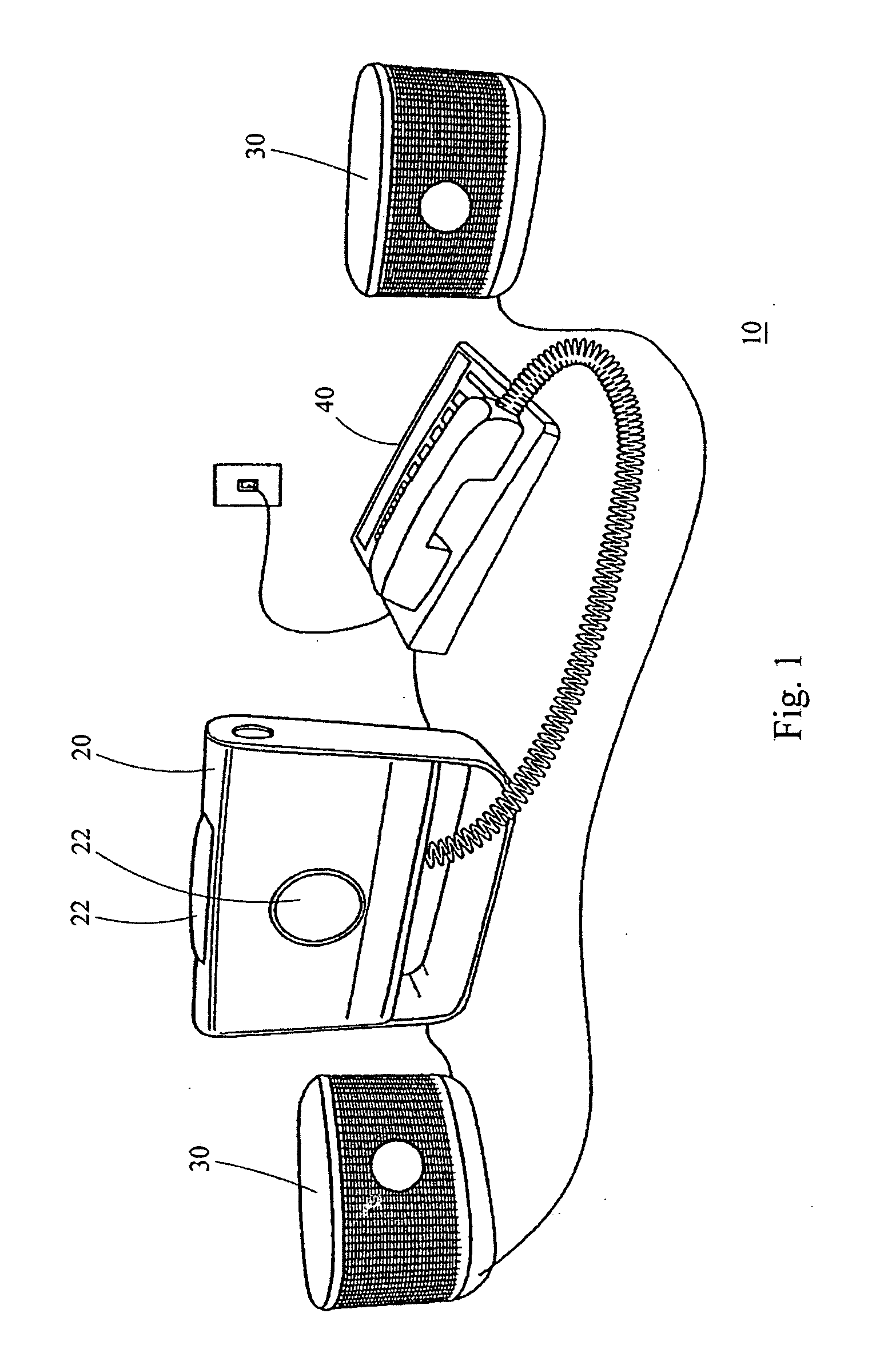

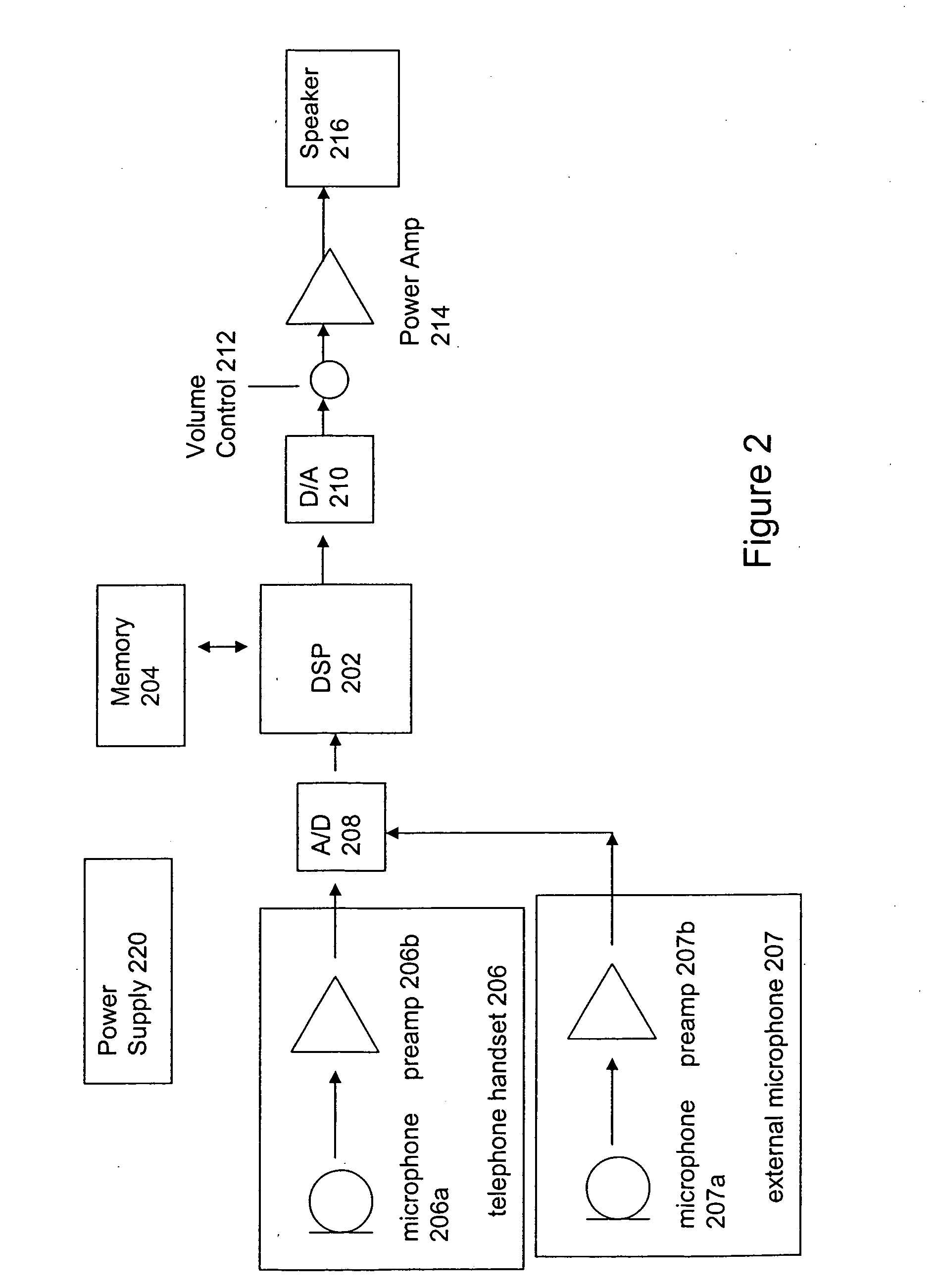

Method and apparatus for speech privacy

Inactive | US20060247919A1 | Reduce capacity | Lose ability | Ear treatment | Gain control | Internet privacy | Auditory system

A privacy apparatus adds a privacy sound based on a speaker's own voice into the environment, thereby confusing listeners as to which of the sounds is the real source. This permits disruption of the ability to understand the source speech of the user by eliminating segregation cues that the auditory system uses to interpret speech. The privacy apparatus minimizes segregation cues. The privacy apparatus is relatively quiet and thus easily acceptable in a typical open floor design office space. The privacy apparatus contains an A/D converter that converts the speech into a digital signal, a DSP that converts the digital signal into a privacy signal with pre-recorded speech fragments of the person speaking, a D/A converter that converts the privacy signal into an output signal, and one or more loudspeakers from which the output signal is emitted.

Owner:HERMAN MILLER INC

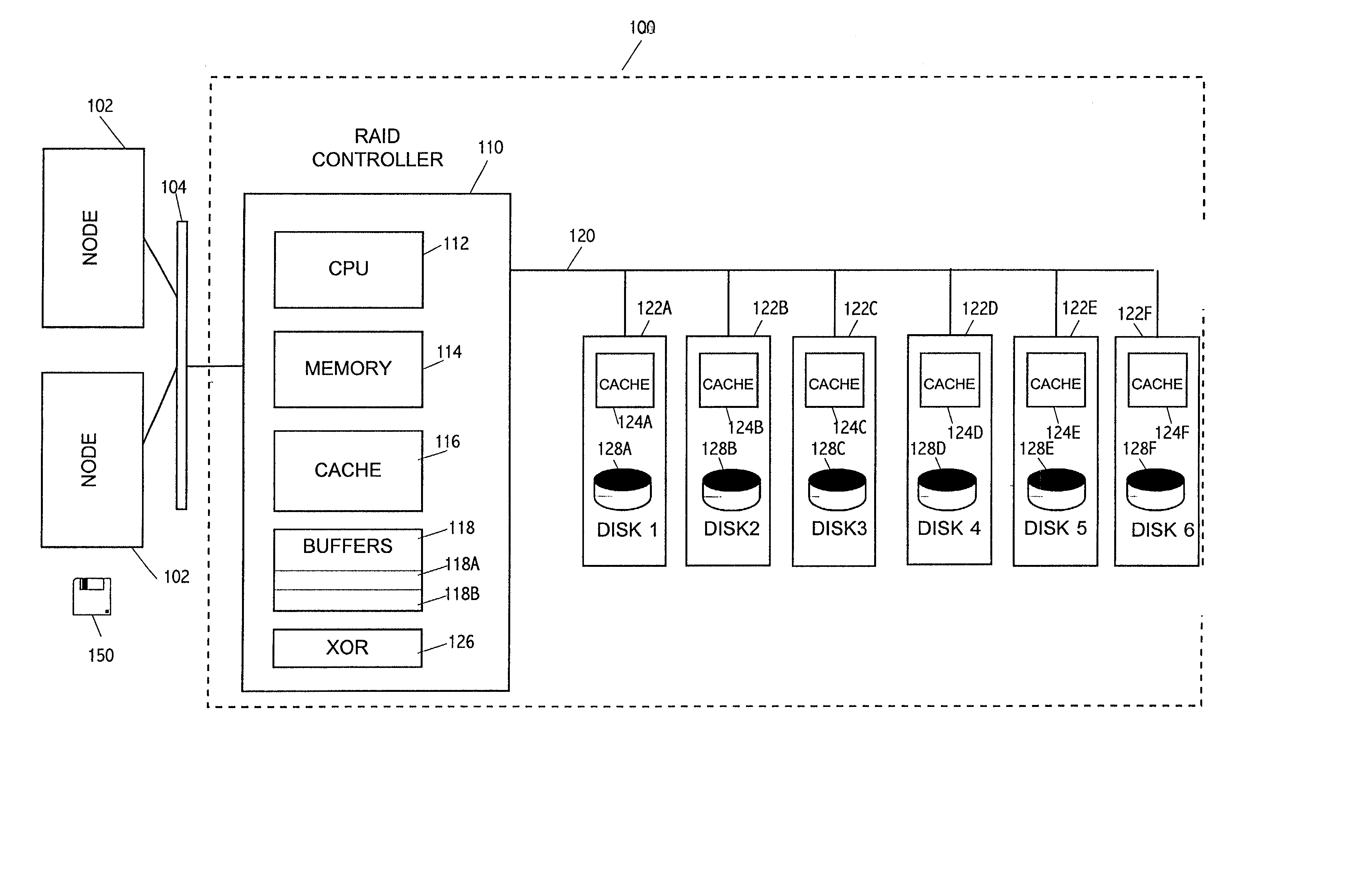

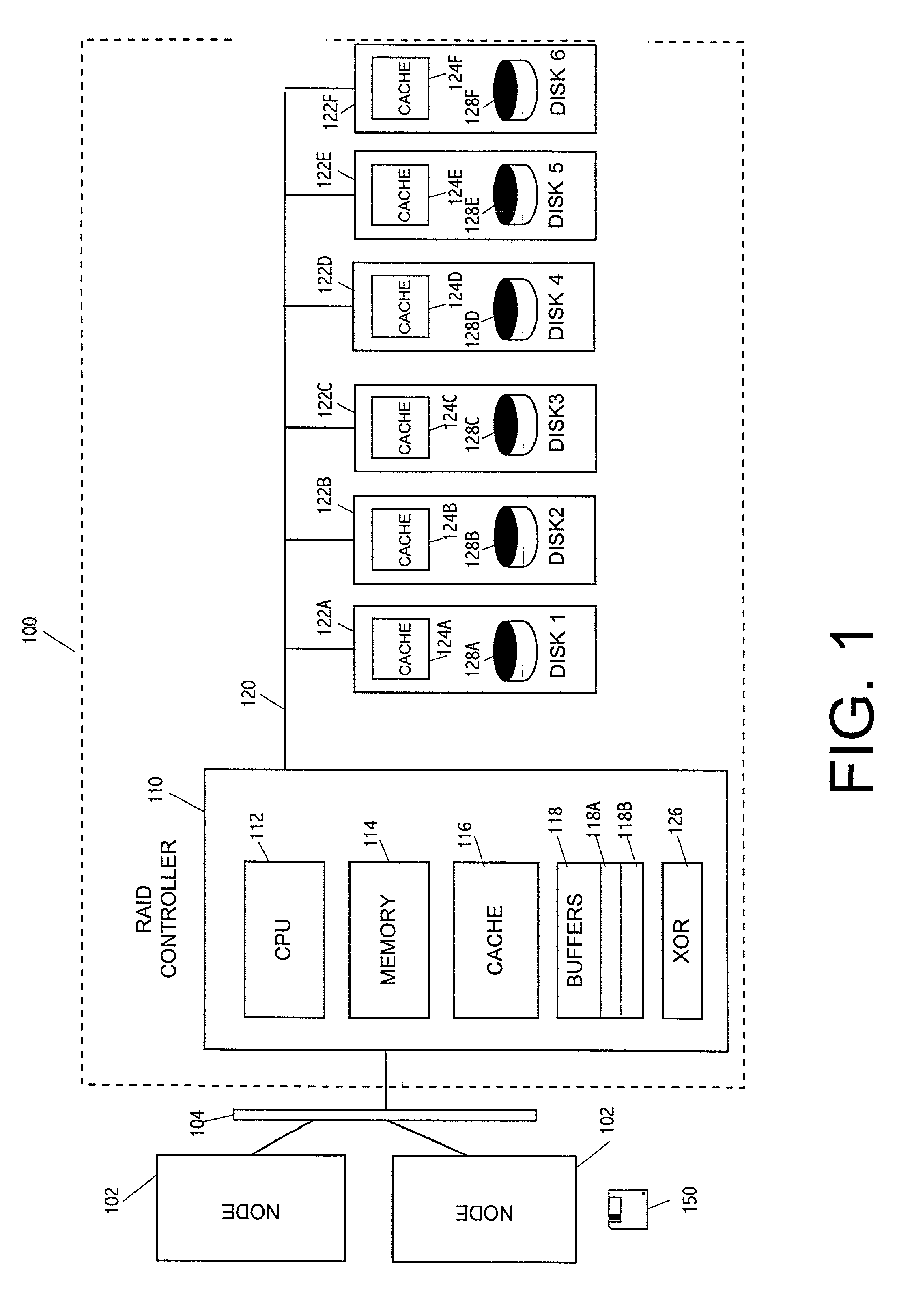

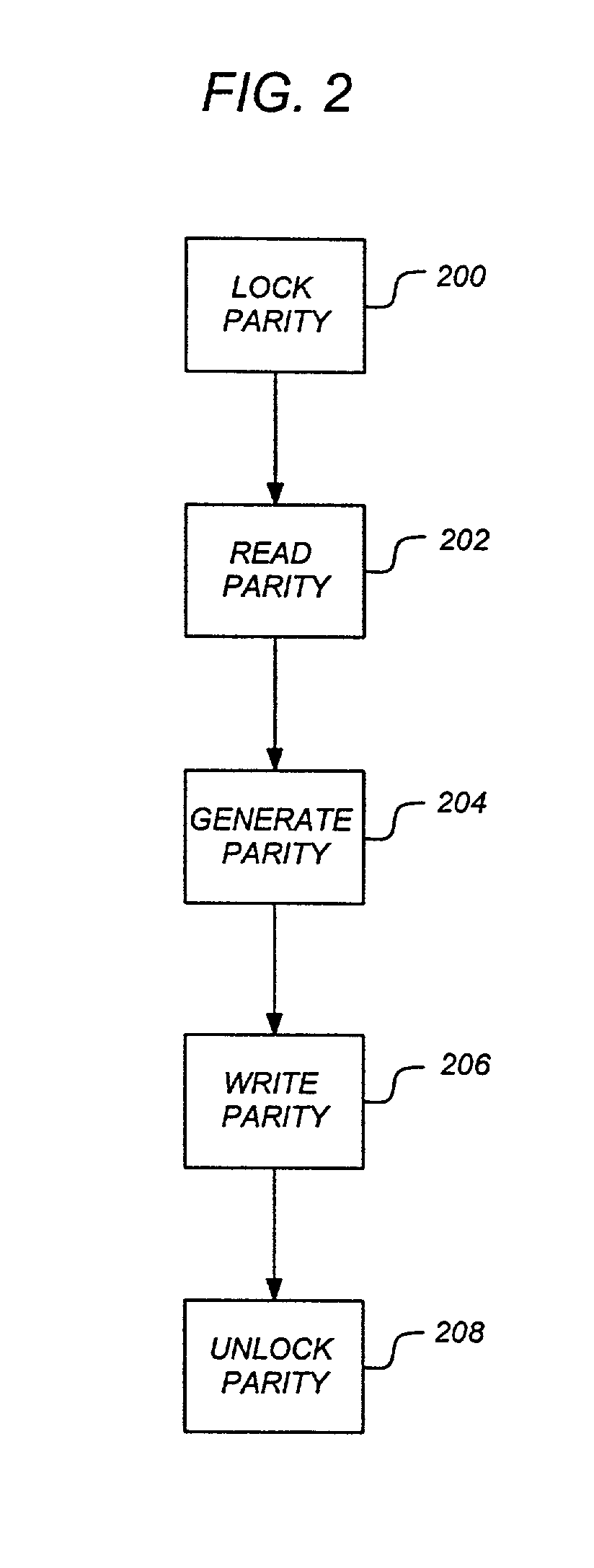

Method and apparatus for supporting parity protected raid in a clustered environment

To address the requirements described above, the present invention discloses a method, apparatus, article of manufacture, and a locking structure for supporting parity protected RAID in a clustered environment. When updating parity, the parity is locked so that other nodes cannot access or modify the parity. Accordingly, the parity is locked, read, generated, written, and unlocked by a node. An enhanced protocol may combine the lock and read functions and the write and unlock functions. Further, the SCSI RESERVE and RELEASE commands may be utilized to lock/unlock the parity data. By locking the parity in this manner, overhead is minimized and does not increase as the number of nodes increases.

Owner:IBM CORP
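
The lock, read, generate, write, unlock sequence can be sketched in a single process as follows. This is only an analogy under stated assumptions: a `threading.Lock` stands in for the cluster-wide parity lock (the abstract mentions SCSI RESERVE and RELEASE, which are not used here), the `ParityStripe` class is hypothetical, and each data block is assumed to be written by only one node at a time. Parity is generated with the usual XOR read-modify-write rule.

```python
import threading
from functools import reduce
from typing import List


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


class ParityStripe:
    """Hypothetical stripe: several data blocks plus one XOR parity block."""

    def __init__(self, data_blocks: List[bytes]):
        self.data = list(data_blocks)
        self.parity = reduce(xor_bytes, self.data)
        # Stand-in for the cluster-wide lock on the parity block.
        self._parity_lock = threading.Lock()

    def update_block(self, index: int, new_data: bytes) -> None:
        """Update one data block and the parity it contributes to."""
        # Assumption: no other node writes this same data block concurrently.
        old_data = self.data[index]
        delta = xor_bytes(old_data, new_data)
        with self._parity_lock:                         # lock
            old_parity = self.parity                    # read
            new_parity = xor_bytes(old_parity, delta)   # generate
            self.parity = new_parity                    # write
        # Leaving the `with` block releases the lock    # unlock
        self.data[index] = new_data

    def parity_is_consistent(self) -> bool:
        """True when parity equals the XOR of all data blocks."""
        return self.parity == reduce(xor_bytes, self.data)
```

Because only the short read-generate-write step on the parity is serialized, nodes updating different data blocks in the same stripe contend only for that step in this sketch.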

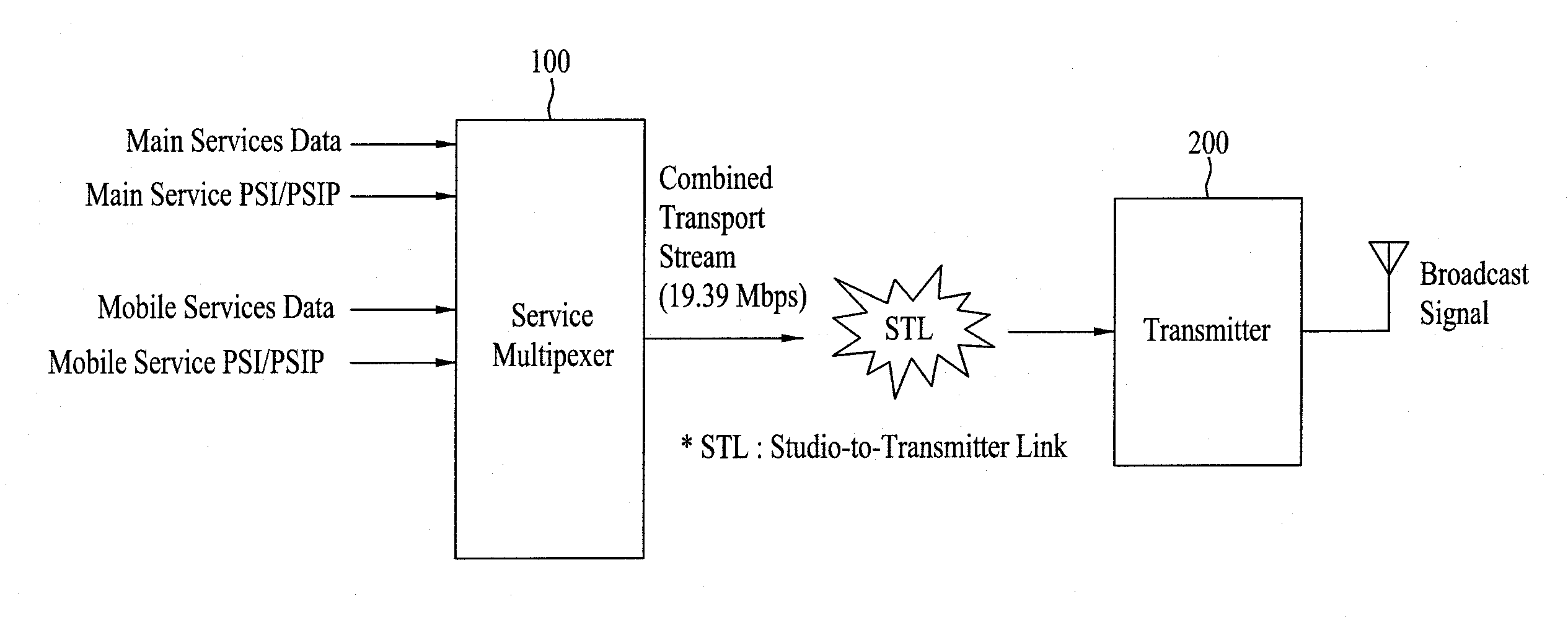

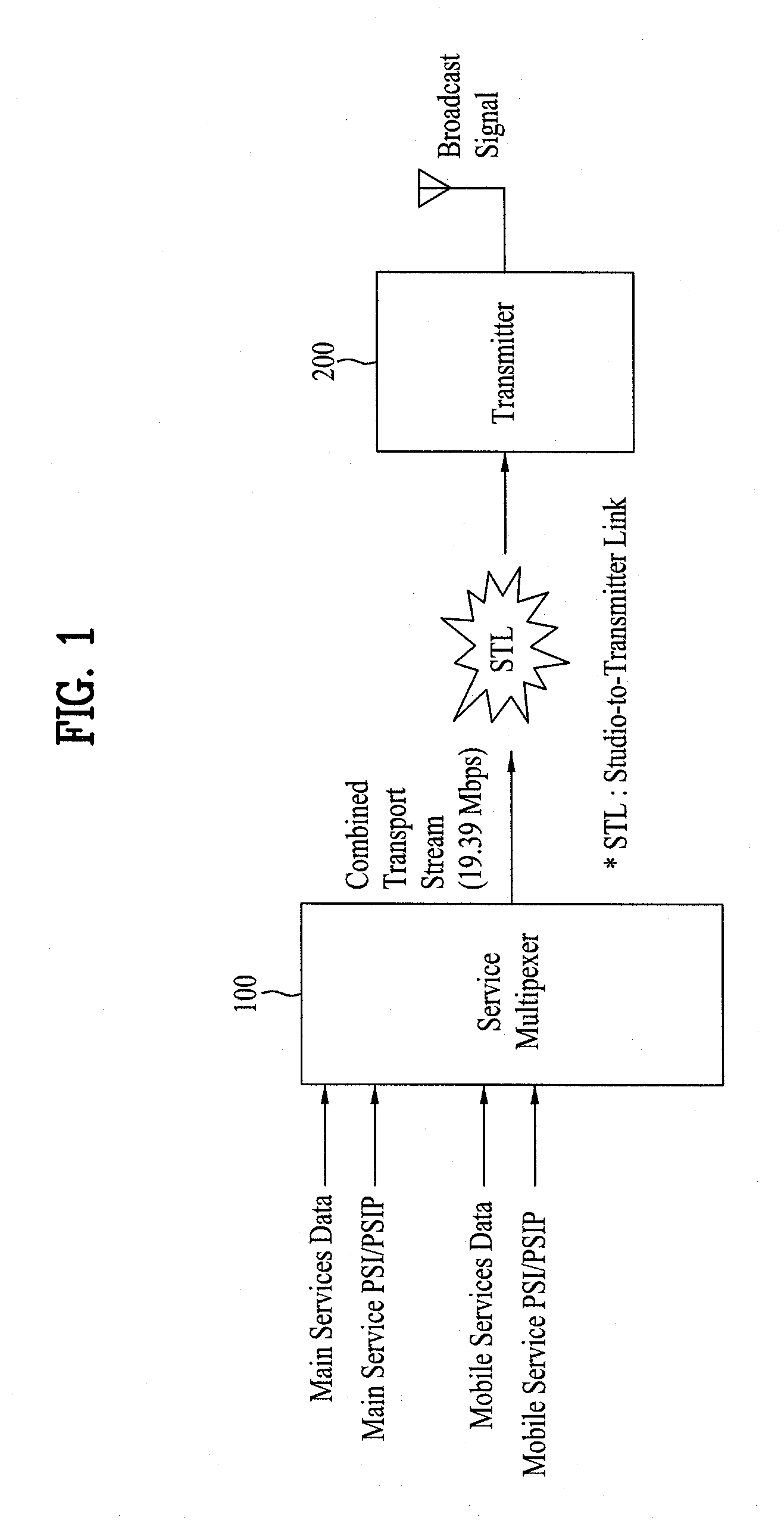

Method of controlling and apparatus of receiving mobile service data

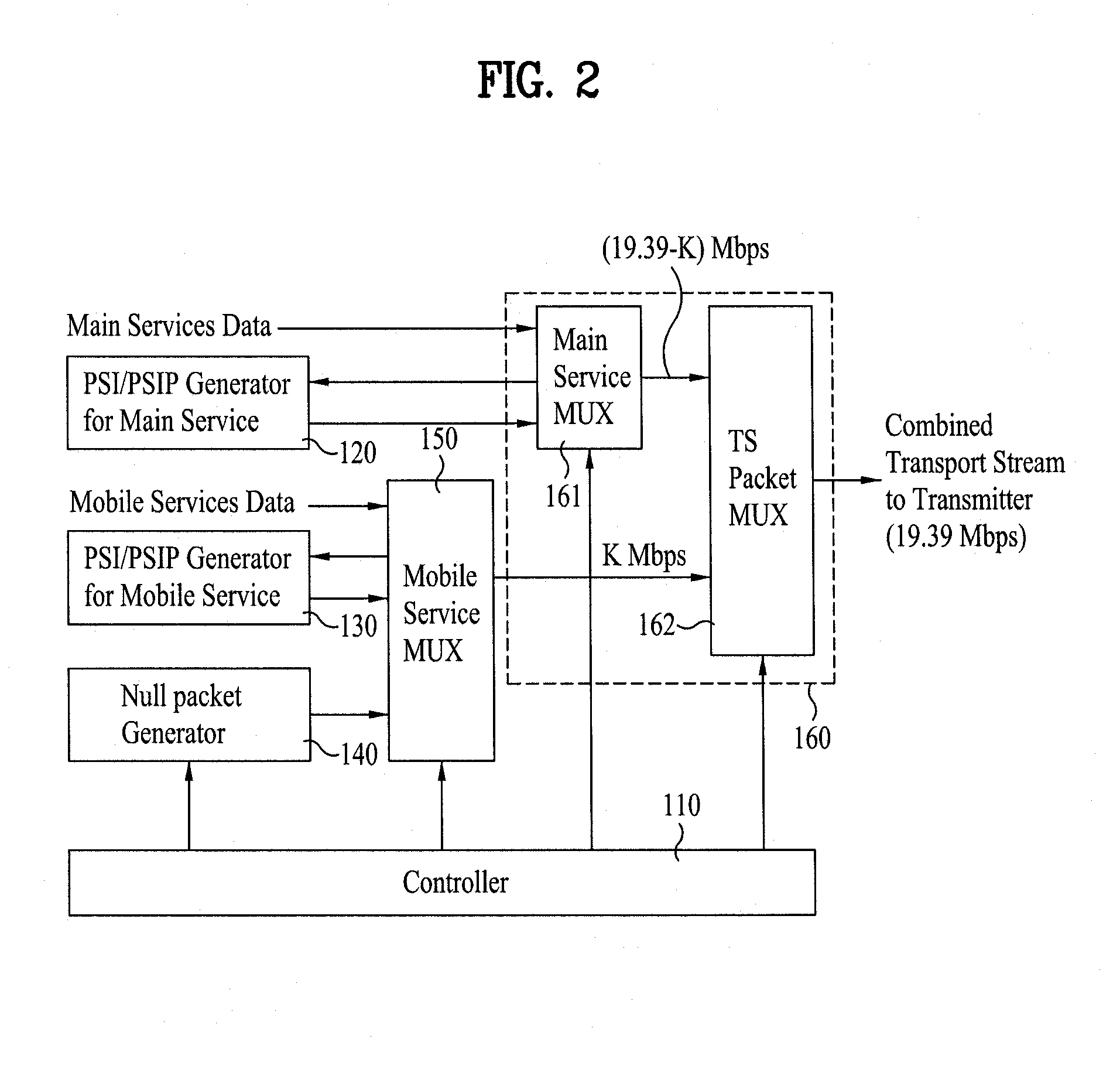

Active | US20080271077A1 | Strong resistance | Improve performance | Television system details | Broadcast specific applications | Mobile business | Mobile context

A data transmission system for minimizing the number of errors when transmitting and receiving (Tx/Rx) mobile service data in mobile environments, and a data processing method for the same, are disclosed. The system additionally codes the mobile service data and transmits the resulting coded mobile service data. As a result, the mobile service data is strongly resistant to noise and channel variation, and can cope quickly with rapid channel variation.

Owner:LG ELECTRONICS INC

© 2025 PatSnap. All rights reserved. Legal | Privacy policy | Modern Slavery Act Transparency Statement | Sitemap | About US | Contact US: help@patsnap.com