114 results about "Long latency" patented technology

Latency period is the time between exposure to something that can cause disease, like asbestos, and presentation of symptoms in patients. For mesothelioma specifically, there is a long latency period, which is directly related to the poor prognosis that is typical of the disease.

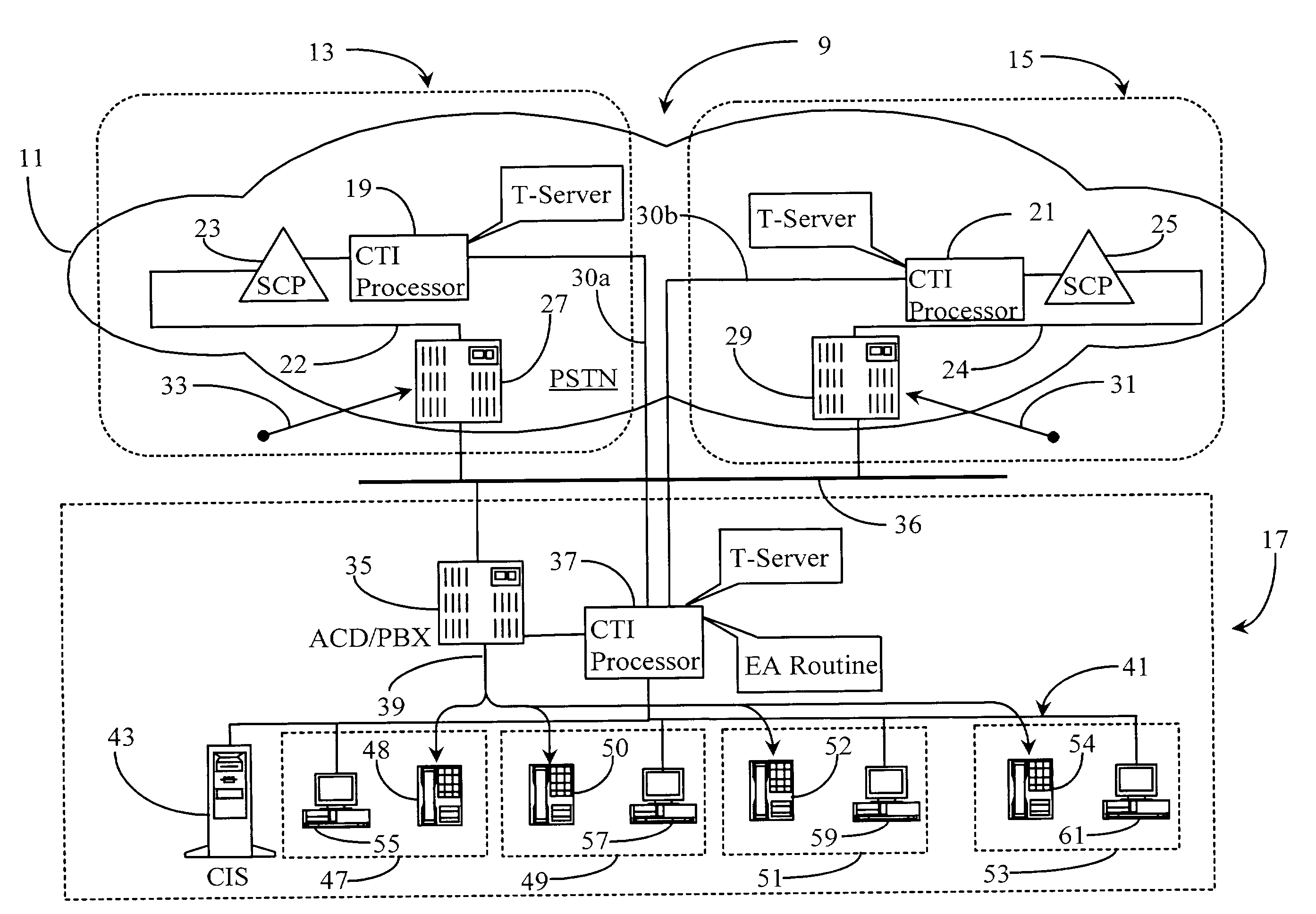

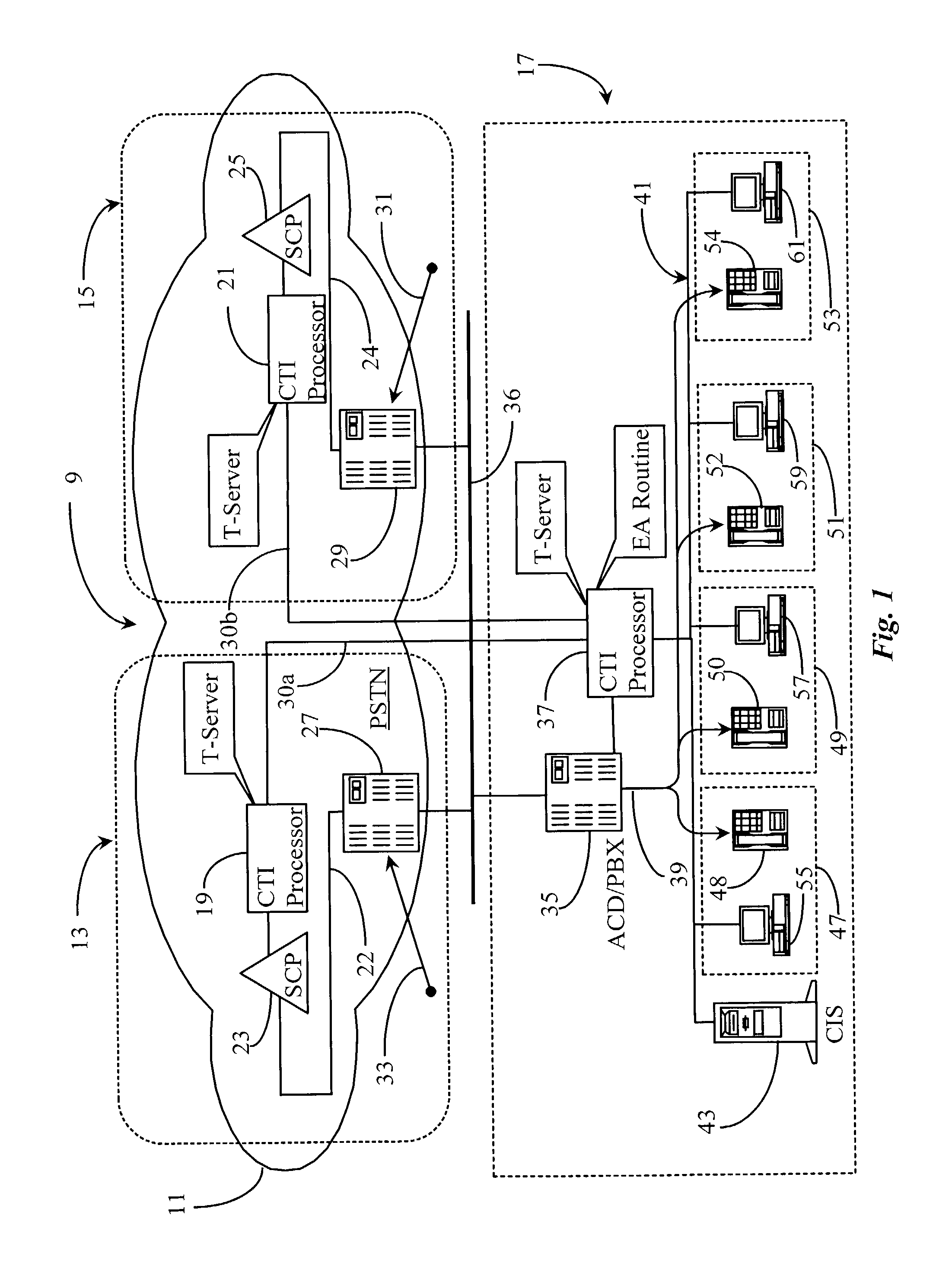

Method and apparatus for providing fair access to agents in a communication center

Inactive | US7236584B2 | Long latency | Short incubation period | Intelligent networks | Special service for subscribers | Timer

A system for granting access to agents at a communication center in response to requests for connection from network-level entities starts a fairness timer having a fairness time period when a first request is received for an agent, monitors any other requests for the same agent during the fairness time, and executes an algorithm at the end of the fairness time to select the network-level entity to which the request should be granted. In a preferred embodiment the fairness time is set to be equal to or greater than twice the difference between the network round-trip latencies of the longest-latency and shortest-latency routers requesting service from the communication center. In some embodiments an agent reservation timer is set at the same point as the fairness timer to prevent calls to the same agent, and has a period longer than the fairness timer by a time sufficient for a connection to be made to the agent station once access is granted, and for notification of the connection to be made to network-level entities.

Owner:GENESYS TELECOMM LAB INC AS GRANTOR +3
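
The fairness-time rule in this abstract can be sketched in a few lines. This is a minimal illustration, not the patented algorithm: the `Request` type, the router names, and the selection policy (grant to the entity that most likely sent its request first, estimated by subtracting half the round trip from the arrival time) are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Request:
    router: str        # hypothetical name of the requesting network-level entity
    arrival_ms: float  # time the request reached the communication center


def fairness_period_ms(round_trip_ms, factor=2.0):
    """Lower bound from the abstract: twice the spread between the
    longest and shortest router round-trip latencies."""
    spread = max(round_trip_ms.values()) - min(round_trip_ms.values())
    return factor * spread


def select_request(requests, round_trip_ms):
    """Collect requests that arrive inside the fairness window opened by
    the first request, then pick one with an assumed policy."""
    first = min(requests, key=lambda r: r.arrival_ms)
    window_end = first.arrival_ms + fairness_period_ms(round_trip_ms)
    contenders = [r for r in requests if r.arrival_ms <= window_end]
    # Assumed policy: estimate when each request was sent (arrival minus
    # one-way latency) and grant access to the earliest sender.
    return min(contenders, key=lambda r: r.arrival_ms - round_trip_ms[r.router] / 2)
```

With round trips of 20 ms and 120 ms the window is 200 ms, long enough for a request from the slower router to arrive and still be considered even though it reaches the center later.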

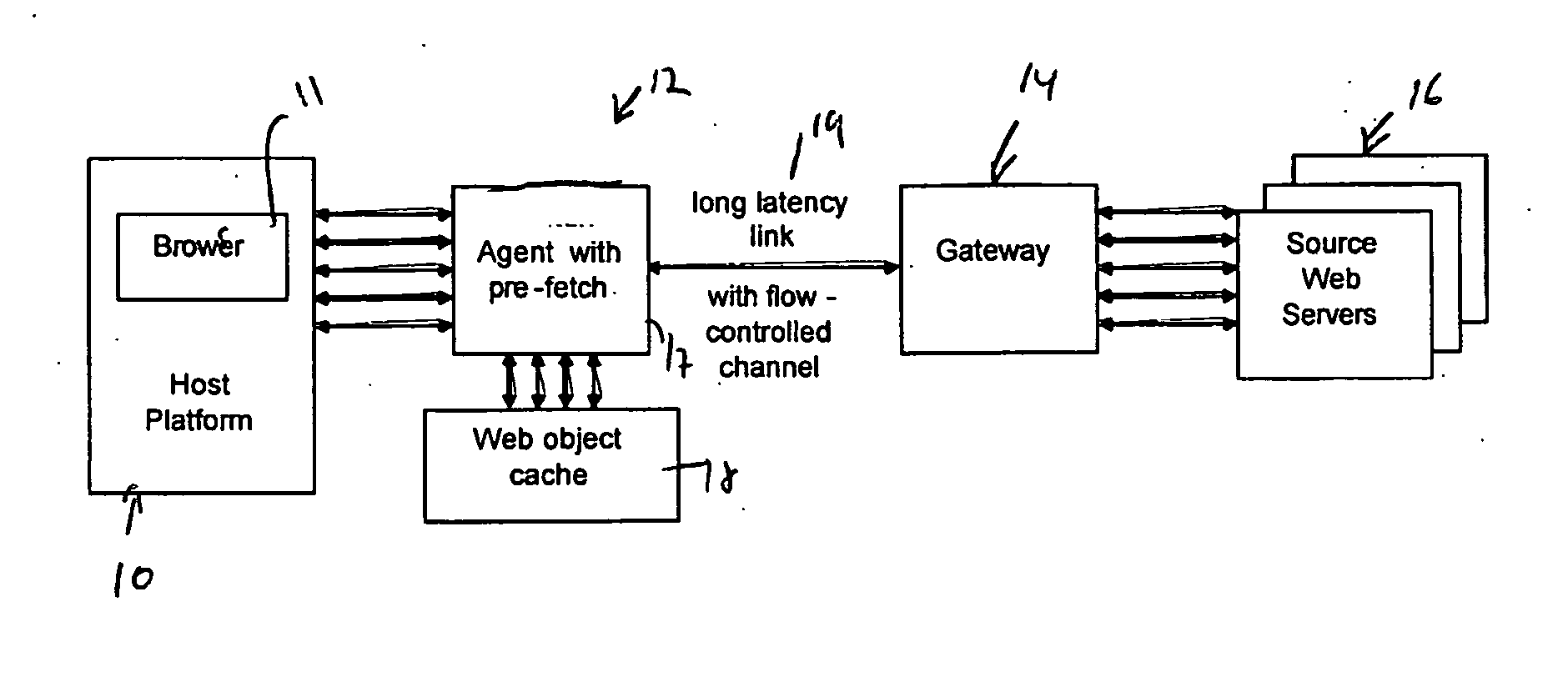

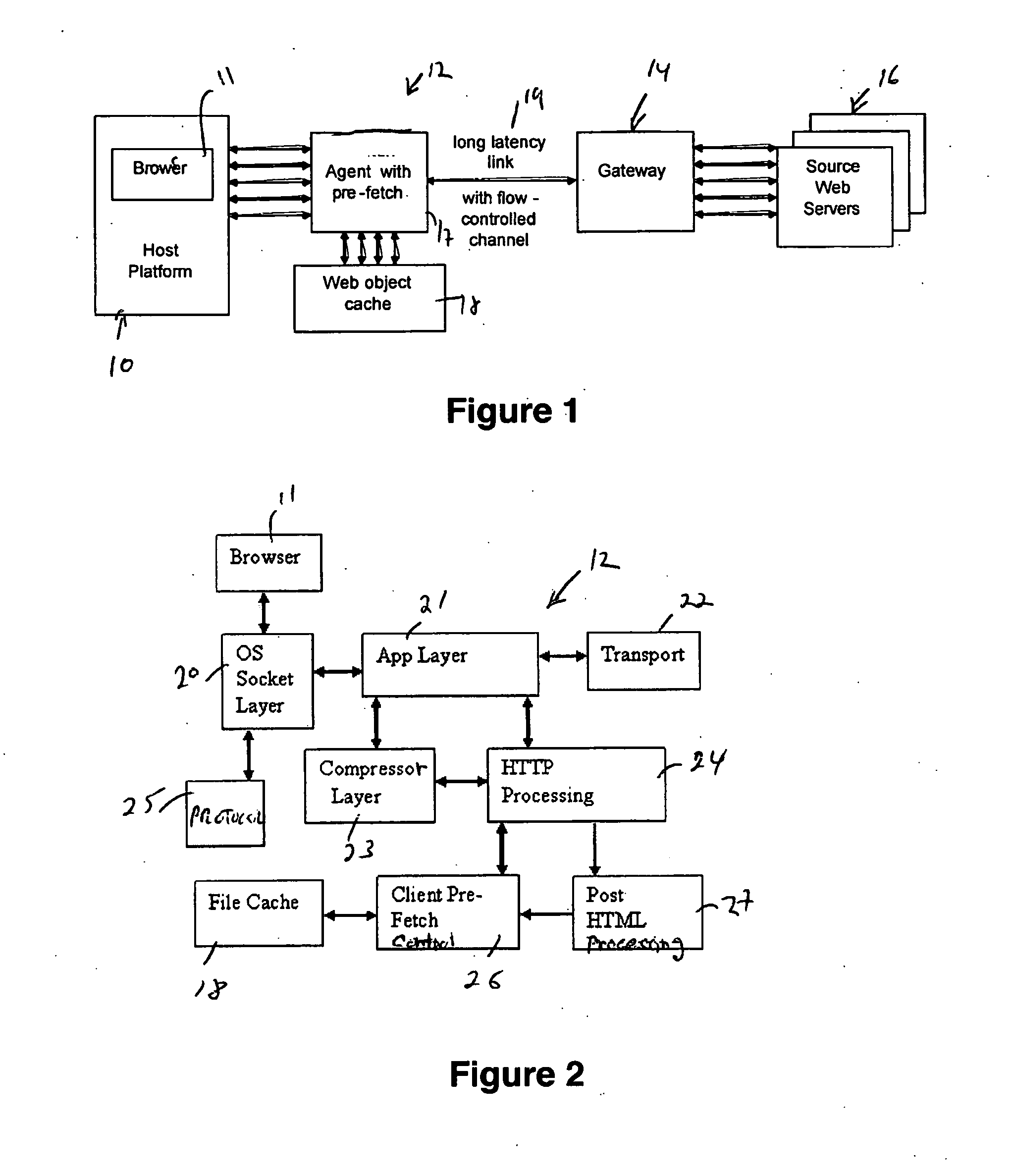

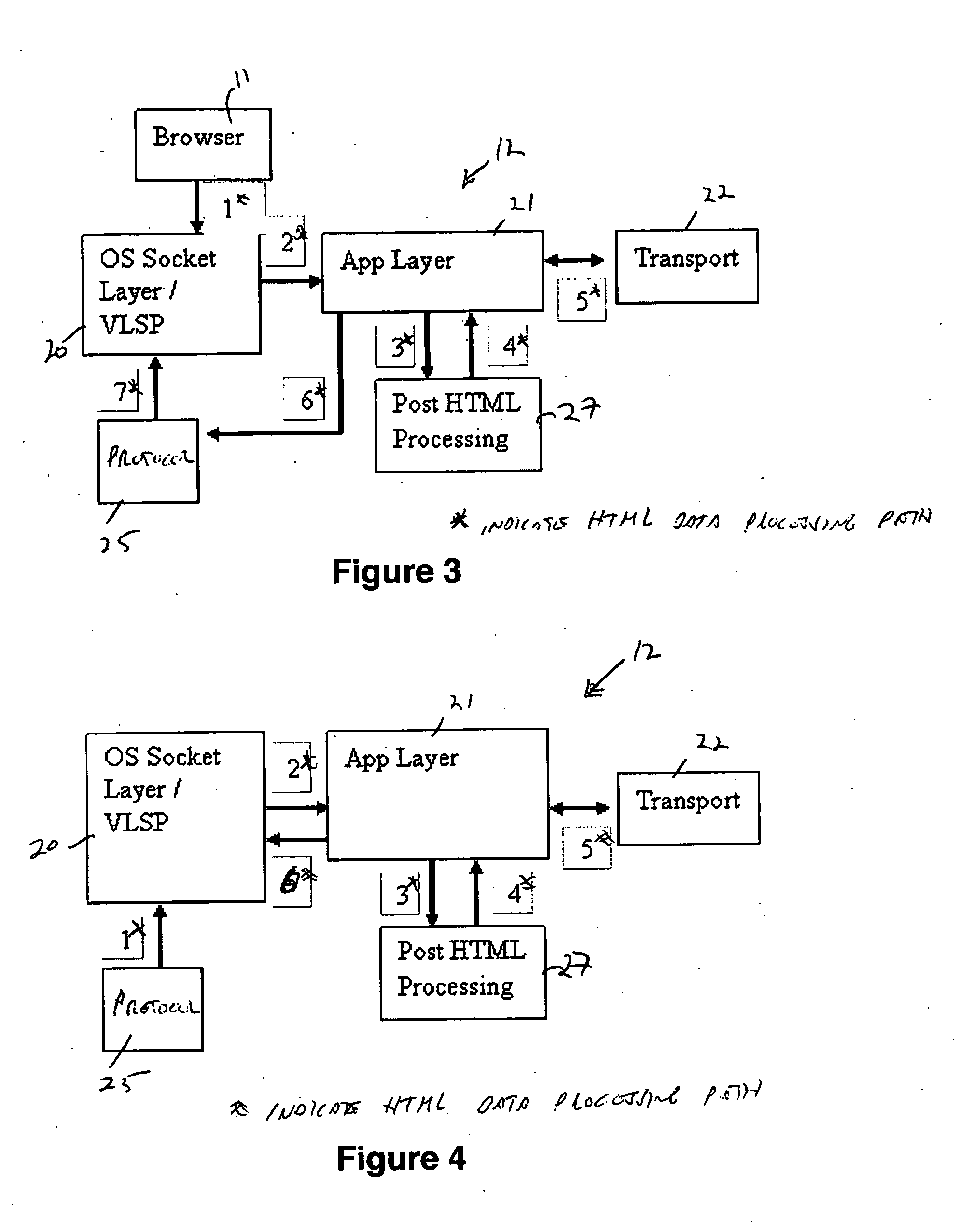

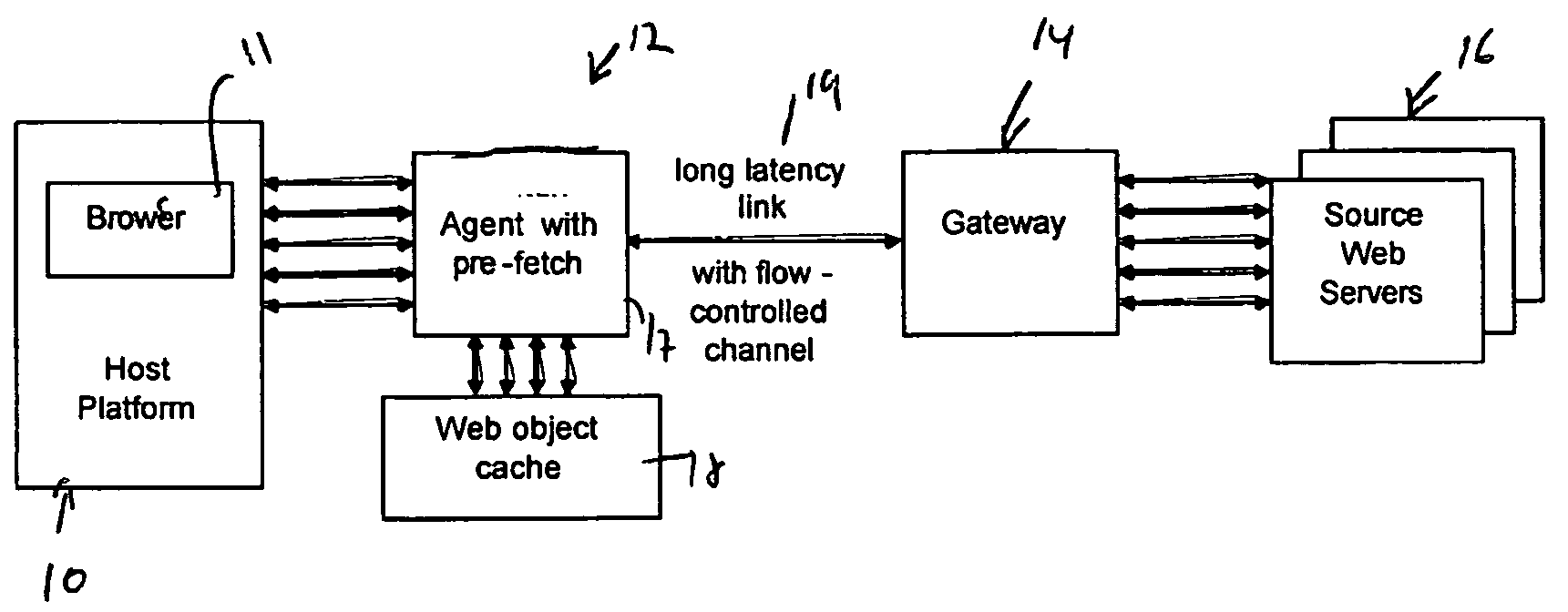

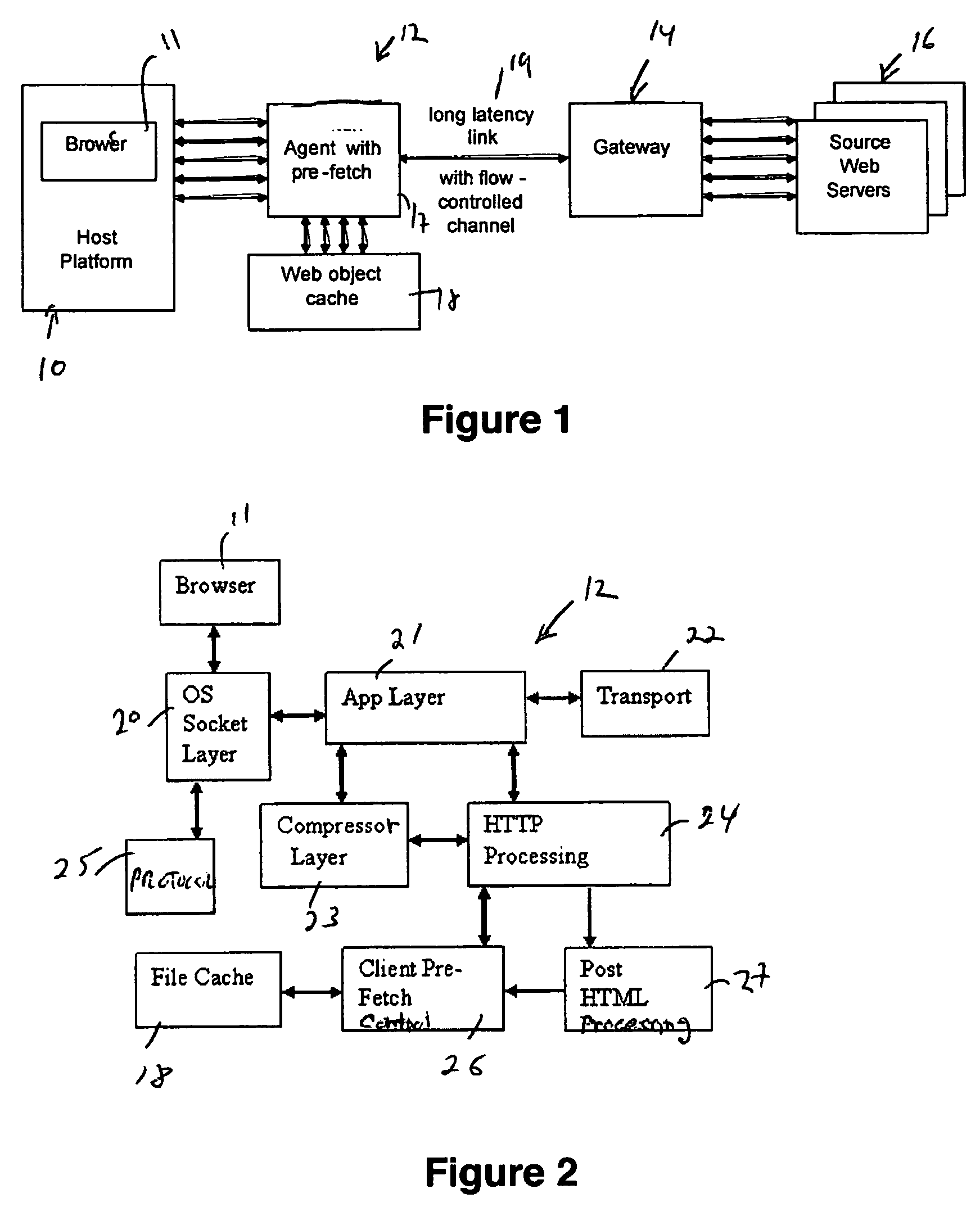

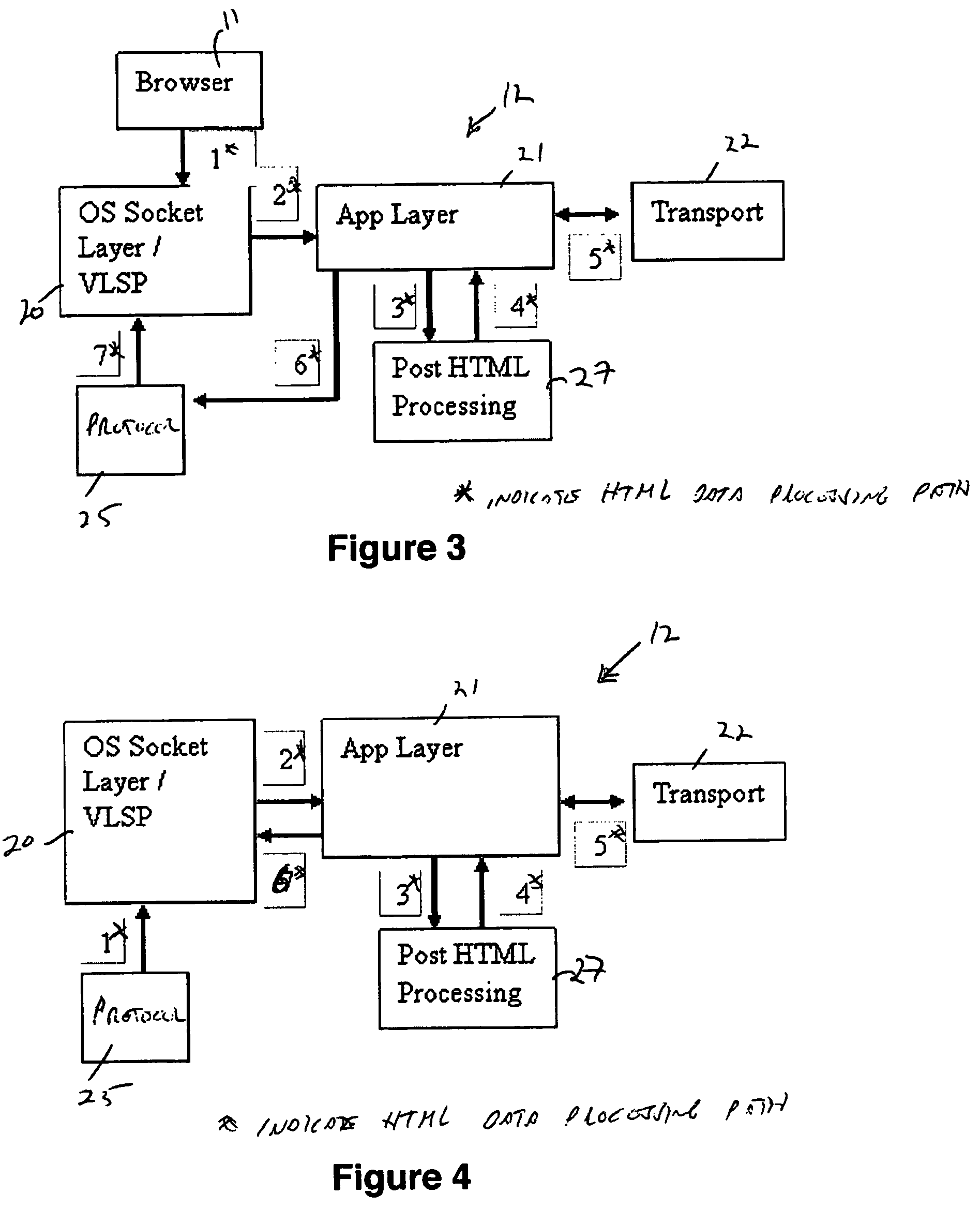

Method and apparatus for increasing performance of HTTP over long-latency links

Active | US20060253546A1 | Reduce negative impact | Improve parallelism | Digital data information retrieval | Multiprogramming arrangements | Web site | Web browser

The invention increases performance of HTTP over long-latency links by pre-fetching objects concurrently via aggregated and flow-controlled channels. An agent and gateway together assist a Web browser in fetching HTTP contents faster from Internet Web sites over long-latency data links. The gateway and the agent coordinate the fetching of selective embedded objects in such a way that an object is ready and available on a host platform before the resident browser requires it. The seemingly instantaneous availability of objects to a browser enables it to complete processing the object to request the next object without much wait. Without this instantaneous availability of an embedded object, a browser waits for its request and the corresponding response to traverse a long delay link.

Owner:VENTURI WIRELESS
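
As a rough sketch of the pre-fetching idea, the snippet below extracts embedded object URLs from a page and fetches them concurrently over a bounded number of channels, so that every object is already cached when the browser asks for it. The regular expression, the `fetch` callable, and the use of a thread pool as a stand-in for "aggregated and flow-controlled channels" are assumptions for illustration, not details from the patent.

```python
import re
from concurrent.futures import ThreadPoolExecutor


def embedded_urls(html):
    """Find src/href targets in the page (a deliberately naive parser)."""
    return re.findall(r'(?:src|href)="([^"]+)"', html)


def prefetch(html, fetch, max_channels=4):
    """Fetch all embedded objects concurrently and return a URL -> body cache.

    `fetch` is a caller-supplied function (hypothetical here) that retrieves
    one URL over the long-latency link; `max_channels` caps concurrency as a
    crude form of flow control.
    """
    urls = embedded_urls(html)
    with ThreadPoolExecutor(max_workers=max_channels) as pool:
        bodies = list(pool.map(fetch, urls))
    return dict(zip(urls, bodies))
```

Because the requests overlap, the total wait is closer to one round trip than to one round trip per object.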

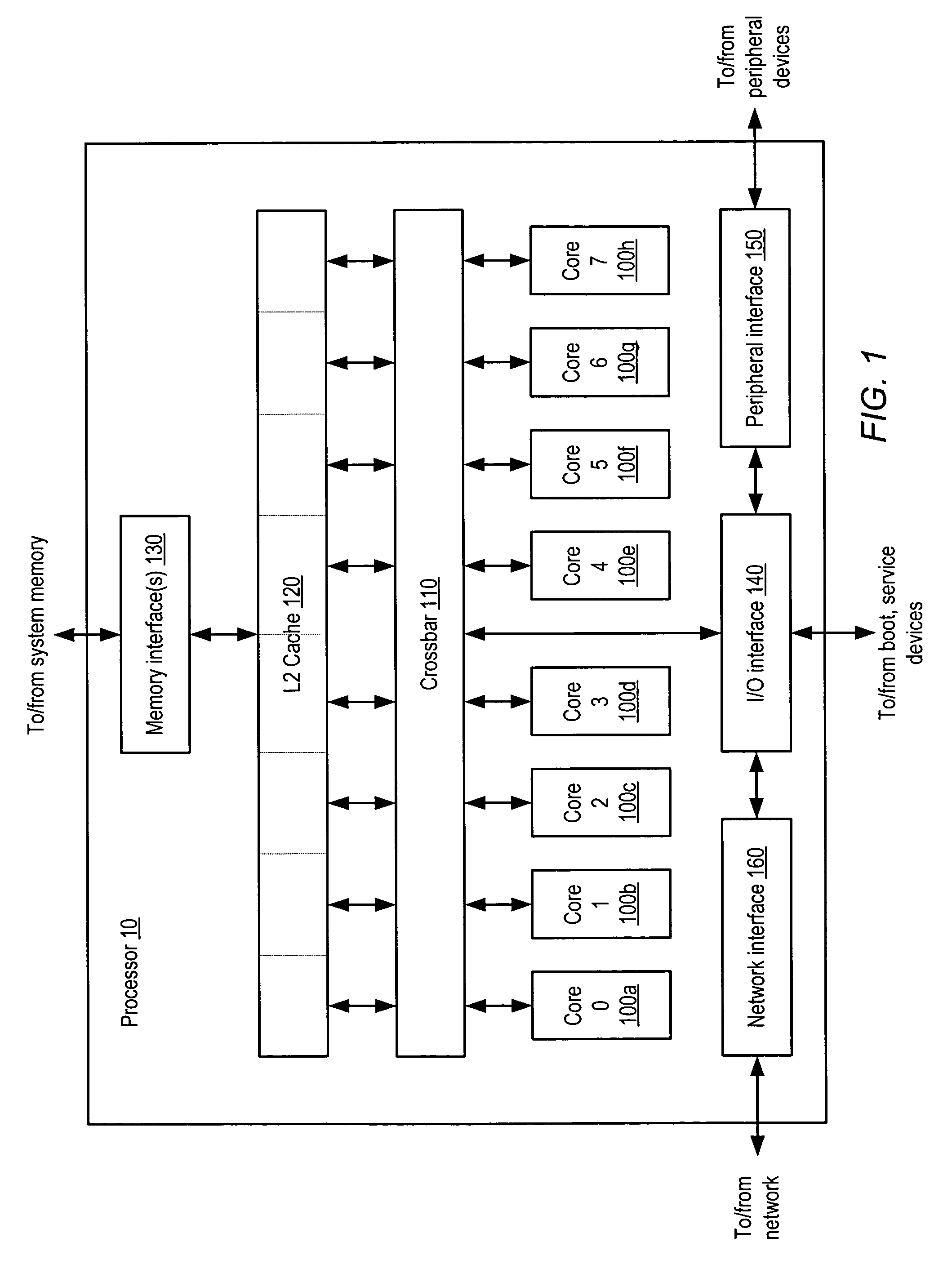

Detecting long latency pipeline stalls for thread switching

Inactive | US6016542A | General purpose stored program computer | Concurrent instruction execution | Long latency | Level structure

An apparatus is provided that operates in conjunction with a processor having registers and associated caches and a memory. A load management module monitors loads that return data to the registers, including bus requests generated in response to loads that miss in one or more of the caches. A cache miss register includes entries, each of which is associated with one of the registers. A mapping module maps a bus request to a register and sets a bit in a cache miss register entry associated with the register when the bus request is directed to a higher level structure in the memory system.

Owner:INTEL CORP

Method and apparatus for increasing performance of HTTP over long-latency links

Active | US7694008B2 | Reduce negative impact | No latency | Digital data information retrieval | Multiprogramming arrangements | Web site | Web browser

The invention increases performance of HTTP over long-latency links by pre-fetching objects concurrently via aggregated and flow-controlled channels. An agent and gateway together assist a Web browser in fetching HTTP contents faster from Internet Web sites over long-latency data links. The gateway and the agent coordinate the fetching of selective embedded objects in such a way that an object is ready and available on a host platform before the resident browser requires it. The seemingly instantaneous availability of objects to a browser enables it to complete processing the object to request the next object without much wait. Without this instantaneous availability of an embedded object, a browser waits for its request and the corresponding response to traverse a long delay link.

Owner:VENTURI WIRELESS

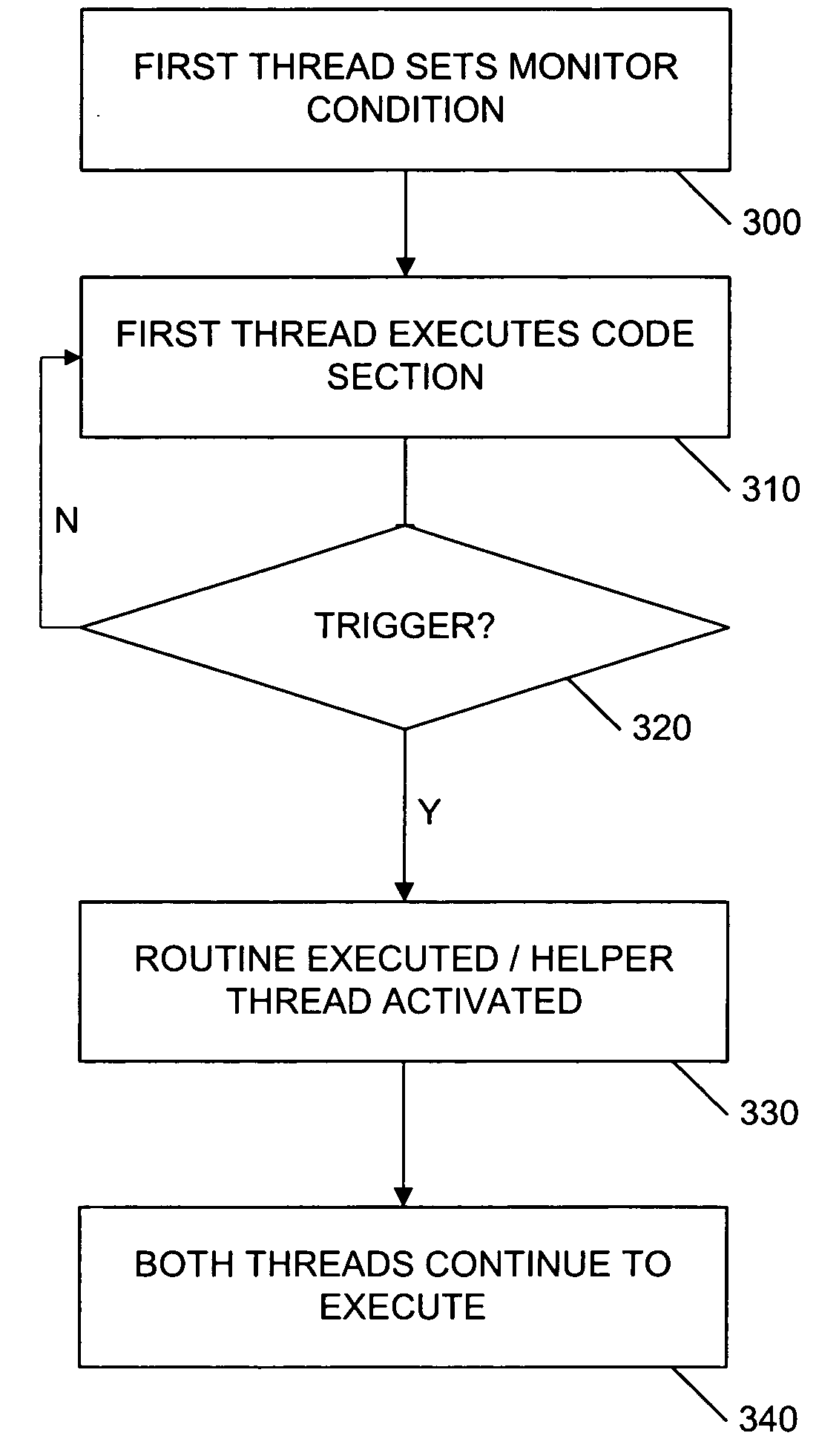

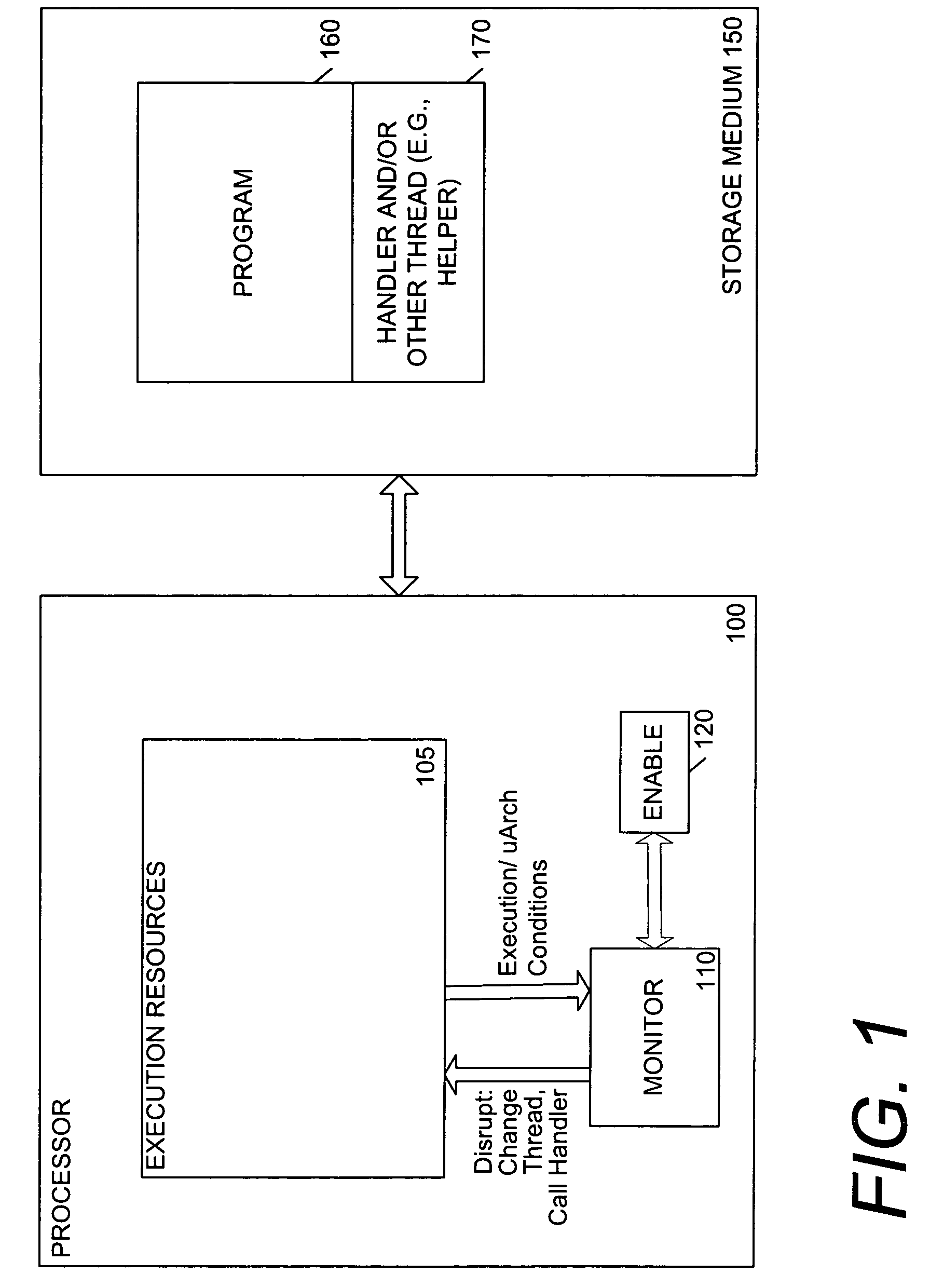

Mechanism to exploit synchronization overhead to improve multithreaded performance

Method, apparatus, and program means for a programmable event driven yield mechanism that may activate other threads. In one embodiment, an apparatus includes execution resources to execute a plurality of instructions and an event detector to detect a long latency event associated with a synchronization object. The event detector can cause a first thread switch in response to the long latency event associated with the synchronization object. The apparatus may also include a spin detector to detect that the synchronization object is a contended synchronization object. The spin detector can cause a second thread switch in response to the detection of the contended synchronization object to enable a spin detect response.

Owner:INTEL CORP

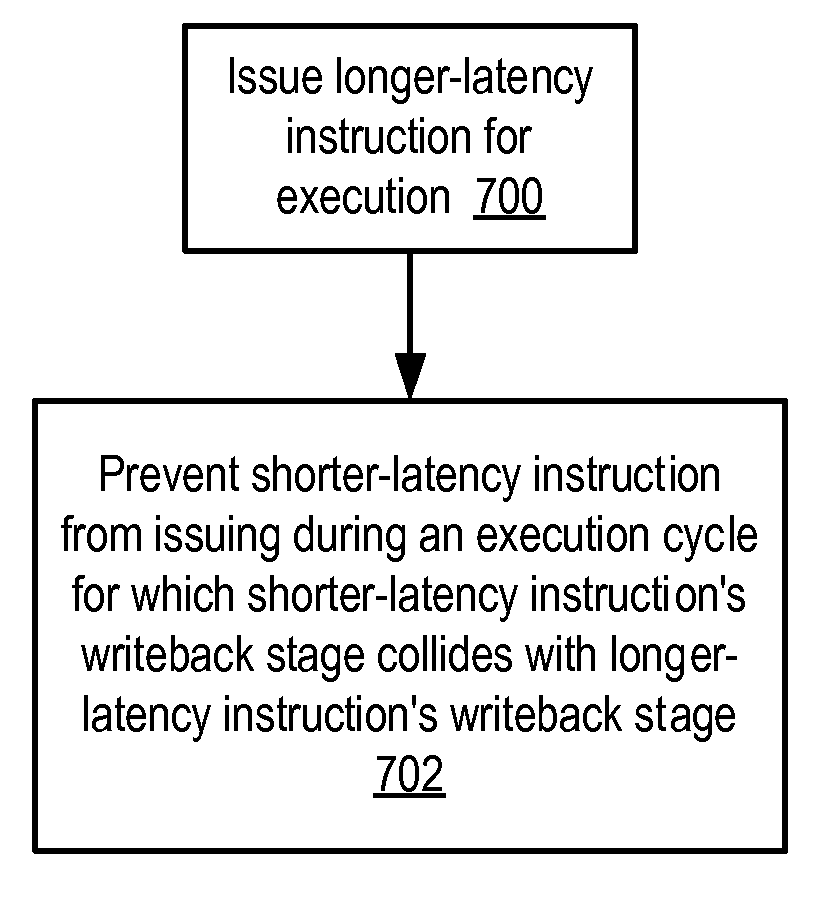

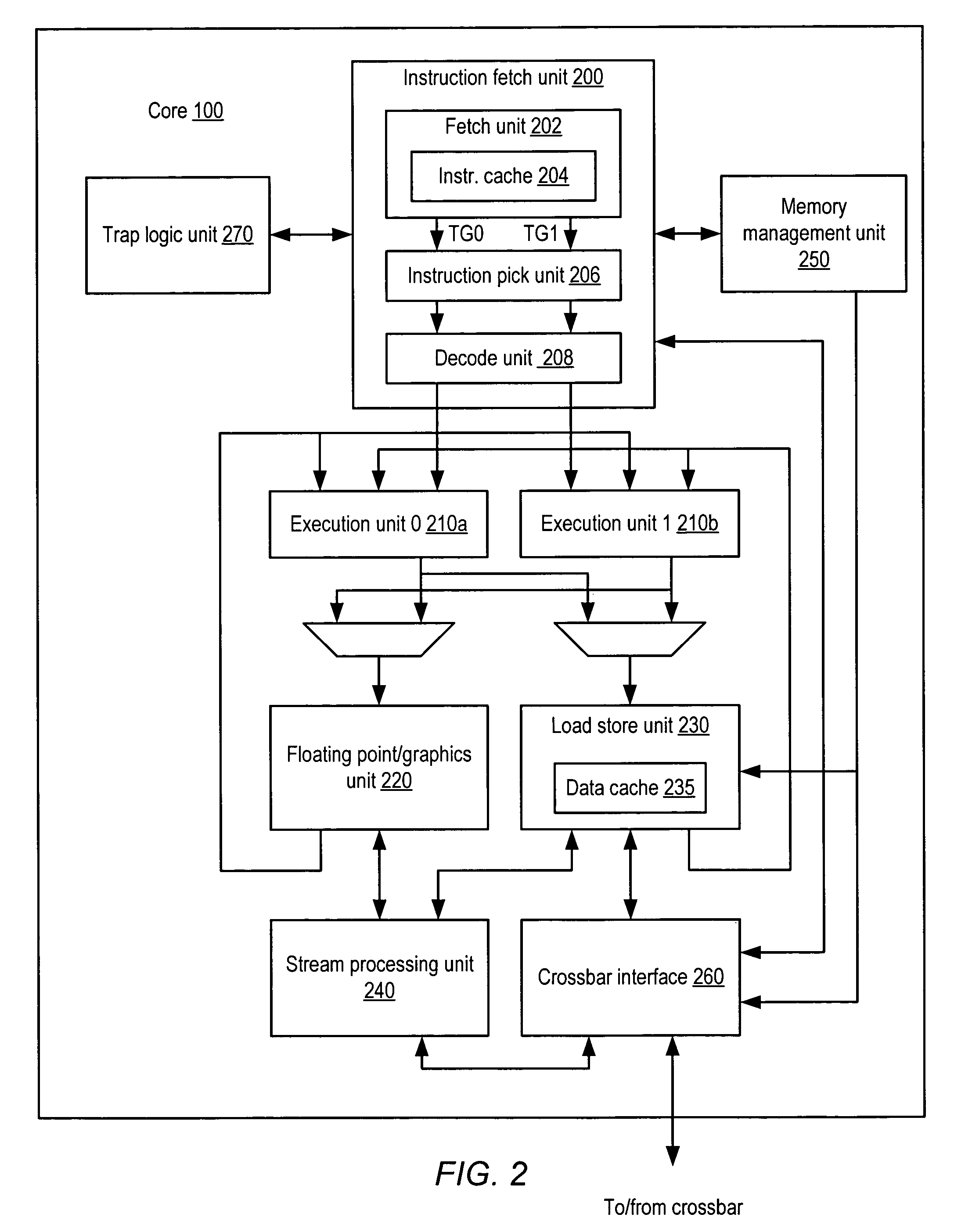

Apparatus and method to support pipelining of differing-latency instructions in a multithreaded processor

Active | US7478225B1 | Easy access | Avoid issuing | Digital computer details | Memory systems | Long latency | Parallel computing

An apparatus and method to support pipelining of variable-latency instructions in a multithreaded processor. In one embodiment, a processor may include instruction fetch logic configured to issue a first and a second instruction from different ones of a plurality of threads during successive cycles. The processor may also include first and second execution units respectively configured to execute shorter-latency and longer-latency instructions and to respectively write shorter-latency or longer-latency instruction results to a result write port during a first or second writeback stage. The first writeback stage may occur a fewer number of cycles after instruction issue than the second writeback stage. The instruction fetch logic may be further configured to guarantee result write port access by the second execution unit during the second writeback stage by preventing the shorter-latency instruction from issuing during a cycle for which the first writeback stage collides with the second writeback stage.

Owner:ORACLE INT CORP
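
The issue rule described above (hold back a shorter-latency instruction whenever its writeback would land on a cycle already claimed by a longer-latency instruction) can be modelled with a small scheduler. The latencies of 1 and 3 cycles and the `"S"`/`"L"` encoding are assumptions chosen for the example.

```python
def issue_cycles(instrs, short_lat=1, long_lat=3):
    """Return the issue cycle of each instruction in program order.

    instrs is a sequence of "S" (shorter-latency) or "L" (longer-latency).
    A long instruction reserves the shared result write port for its
    writeback cycle; a short instruction stalls until its own writeback
    cycle is free of such reservations.
    """
    reserved = set()  # writeback cycles claimed by long-latency instructions
    cycle = 0
    issued = []
    for kind in instrs:
        if kind == "L":
            reserved.add(cycle + long_lat)
        else:
            while cycle + short_lat in reserved:
                cycle += 1  # stall: the write port is taken that cycle
        issued.append(cycle)
        cycle += 1
    return issued
```

For the sequence `L, S, S, S` the second short instruction slips by one cycle, because issuing it at cycle 2 would collide with the long instruction's writeback at cycle 3.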

Controller for multiple instruction thread processors

Inactive | US6931641B1 | Efficient comprehensive utilization | Program initiation/switching | Program synchronisation | Fist | Long latency

A mechanism controls a multi-thread processor so that when a first thread encounters a latency event for a first predefined time interval, temporary control is transferred to an alternate execution thread for the duration of the first predefined time interval and then back to the original thread. The mechanism grants full control to the alternate execution thread when a latency event for a second predefined time interval is encountered. The first predefined time interval is termed a short latency event, whereas the second time interval is termed a long latency event.

Owner:INTEL CORP

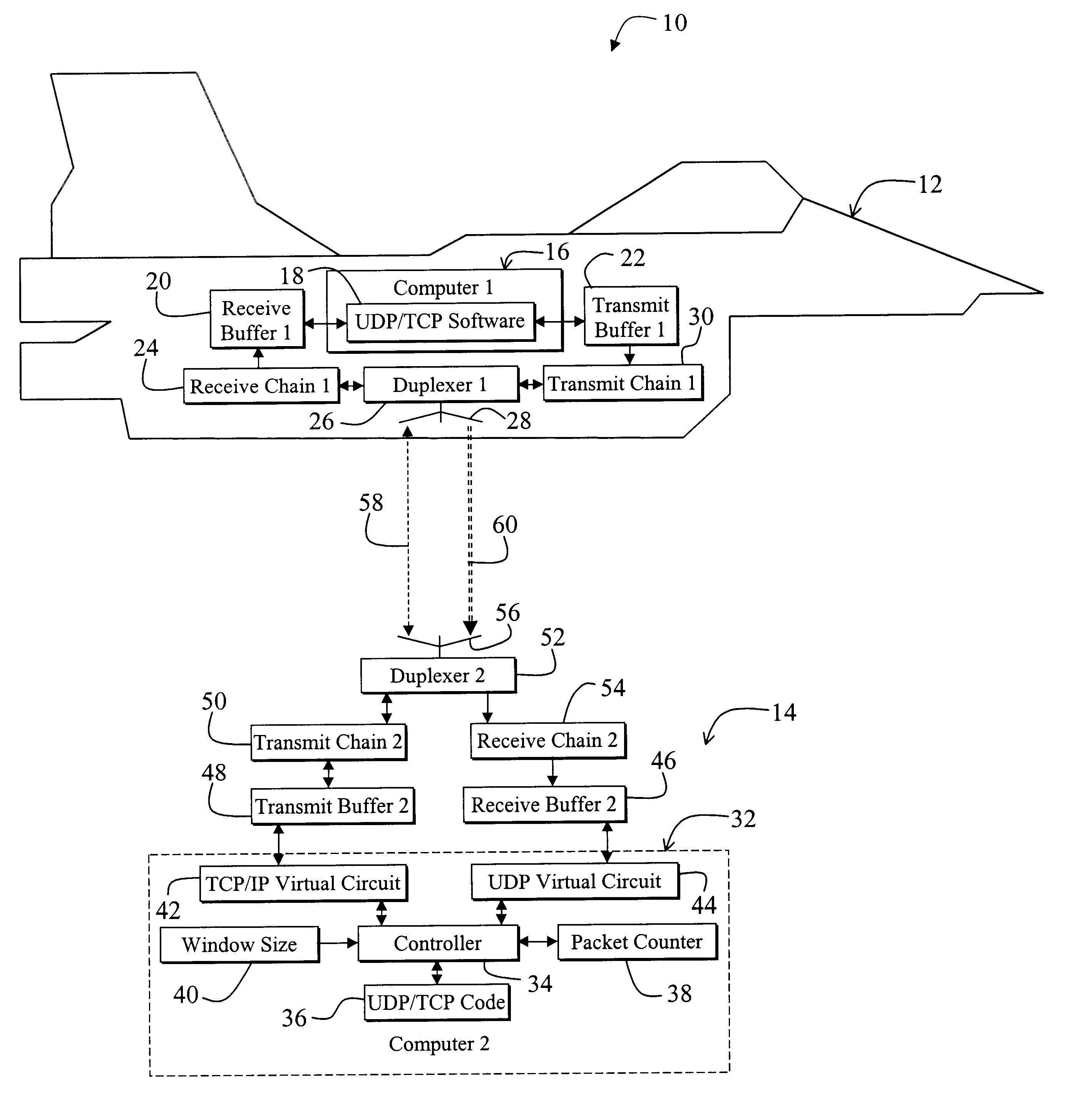

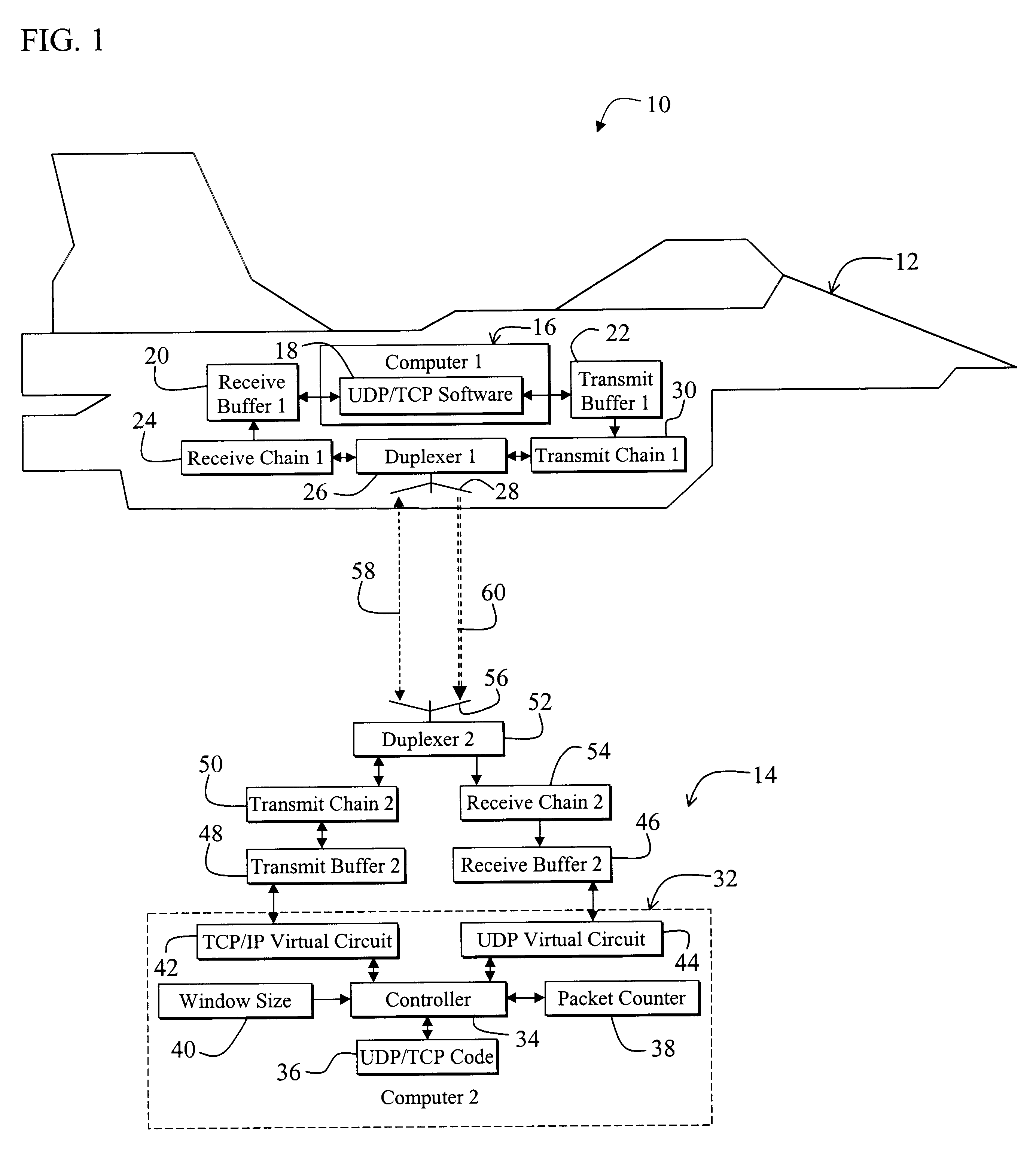

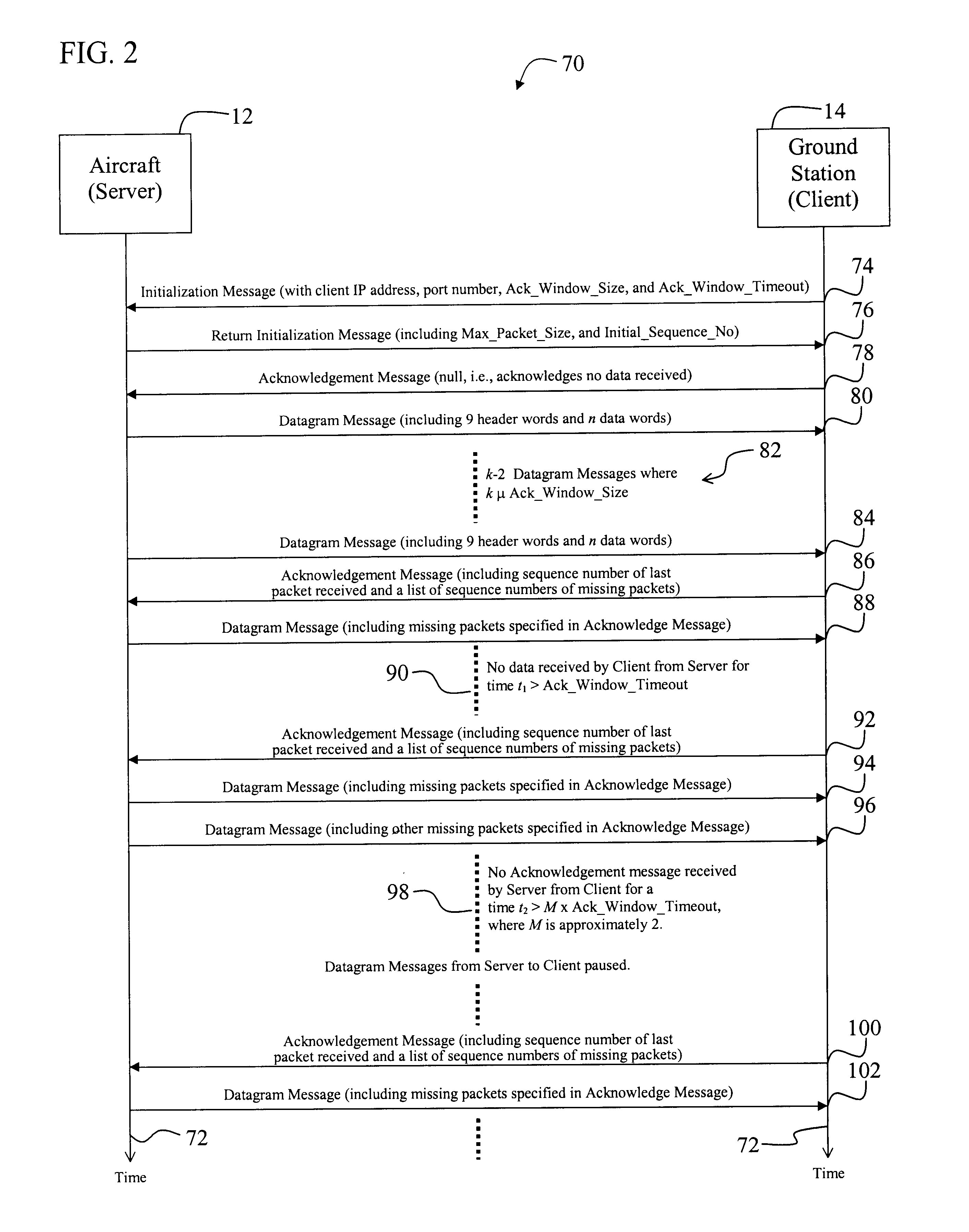

Effective protocol for high-rate, long-latency, asymmetric, and bit-error prone data links

Inactive | US6831912B1 | Error prevention/detection by using return channel | Transmission systems | Long latency | Telecommunications link

A system for efficiently and reliably communicating over a high-speed asymmetric communications link. The system includes a first mechanism for connecting a first device to a second device via a channel. A second mechanism delivers data packets over the channel from the first device to the second device. Each packet is associated with a window of packets. A third mechanism selectively employs the second mechanism to re-send data packets not received by the second device after each window of packets. The window of packets is sized in accordance with the bandwidth of the communications link between the first device and the second device, and the round trip delay time. In a specific embodiment, the first mechanism includes Transmission Control Protocol/Internet Protocol (TCP/IP) functionality on the first device and the second device for establishing a first TCP/IP link from the second device to the first device. The first mechanism also includes User Datagram Protocol (UDP) functionality on the first device and the second device for transferring UDP packets from the first device to the second device. The third mechanism sends acknowledgement messages from the second device to the first device specifying the packets not received by the second device. The system further includes a fourth mechanism for selectively disabling the second mechanism when the first device does not receive an acknowledgement message after a predetermined time interval. The predetermined time interval is a function of a window timeout variable. The function is M × (window timeout), where M is approximately 2. The window timeout is greater than N multiplied by the number of packets included in the window of packets divided by the data rate of the communications link between the first device and the second device, where N is an integer greater than 1. N is between 3 and 10.

Owner:RAYTHEON CO
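
The timing relationships in this abstract reduce to a little arithmetic, sketched below. The function names are invented for the example, the data rate is expressed in packets per second so that the units work out, and sizing the window as a bandwidth-delay product is one plausible reading of "sized in accordance with the bandwidth and the round trip delay", not a confirmed detail.

```python
import math


def window_size_packets(bandwidth_bps, rtt_s, packet_bits):
    """Packets needed to keep the link busy for one round trip
    (bandwidth-delay product, an assumed sizing rule)."""
    return math.ceil(bandwidth_bps * rtt_s / packet_bits)


def window_timeout_lower_bound_s(window_packets, packets_per_s, n=3):
    """The window timeout must exceed N * packets / data rate (N in 3..10)."""
    return n * window_packets / packets_per_s


def disable_interval_s(window_timeout_s, m=2.0):
    """Stop sending if no acknowledgement arrives within M * window timeout."""
    return m * window_timeout_s


def packets_to_resend(window_ids, received_ids):
    """Selective retransmission: only the packets the receiver reports missing."""
    return sorted(set(window_ids) - set(received_ids))
```

For an 8 Mbit/s link with a 0.5 s round trip and 8000-bit packets, the window holds 500 packets; at 1000 packets per second and N = 3 the timeout must exceed 1.5 s, and the sender gives up after about 3 s of silence.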

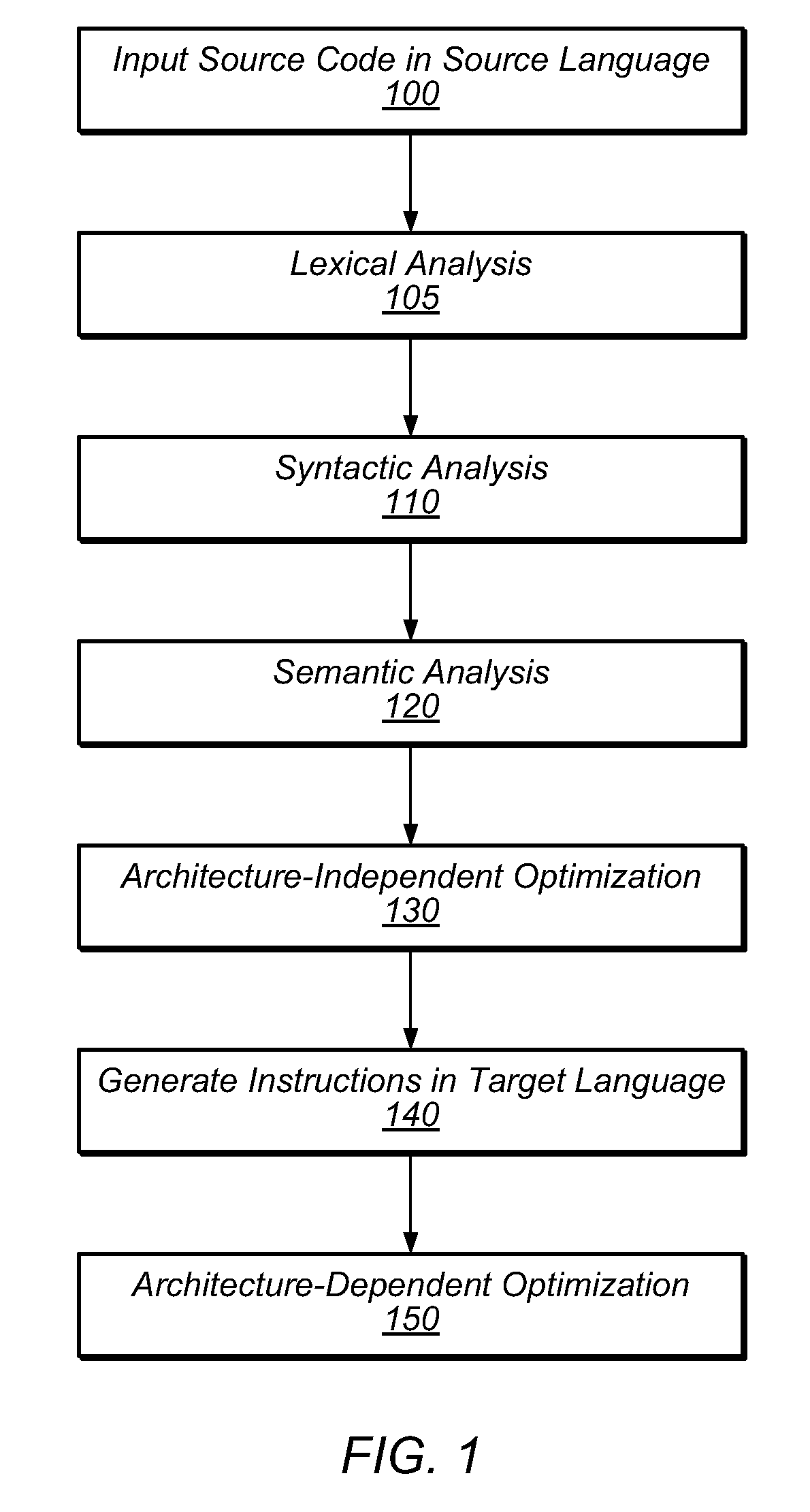

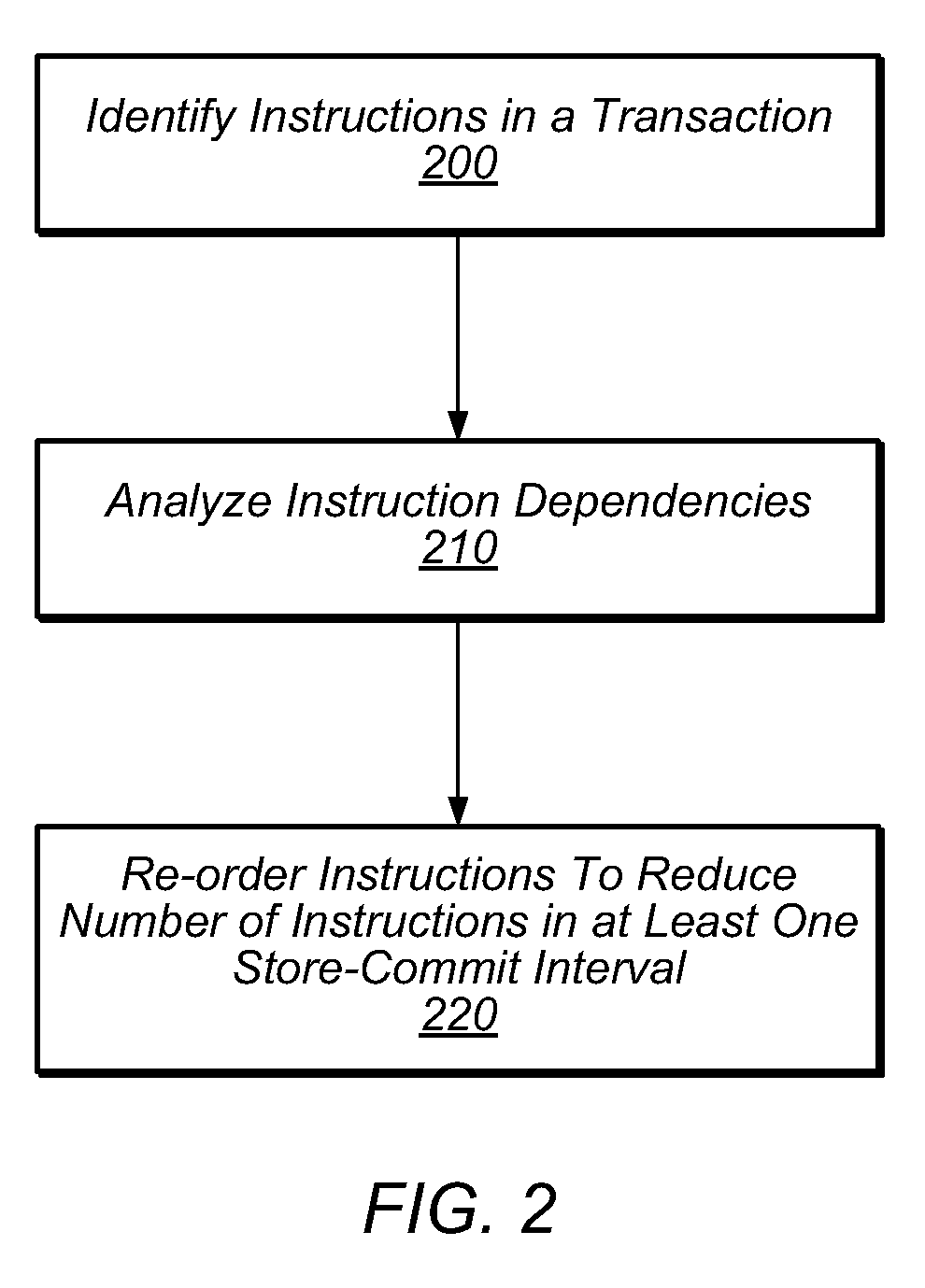

System and Method for Reducing Transactional Abort Rates Using Compiler Optimization Techniques

Active | US20100169870A1 | Reduce probability | Reduce transactional aborts | Software engineering | Specific program execution arrangements | Long latency | Transactional memory

In transactional memory systems, transactional aborts due to conflicts between concurrent threads may cause system performance degradation. A compiler may attempt to minimize runtime abort rates by performing one or more code transformations and/or other optimizations on a transactional memory program in an attempt to minimize one or more store-commit intervals. The compiler may employ store deferral, hoisting of long-latency operations from within a transaction body and/or store-commit interval, speculative hoisting of long-latency operations, and/or redundant store squashing optimizations. The compiler may perform optimizing transformations on source code and/or on any intermediate representation of the source code (e.g., parse trees, un-optimized assembly code, etc.). In some embodiments, the compiler may preemptively avoid naïve target code constructions. The compiler may perform static and/or dynamic analysis of a program in order to determine which, if any, transformations should be applied and/or may dynamically recompile code sections at runtime, based on execution analysis.

Owner:SUN MICROSYSTEMS INC

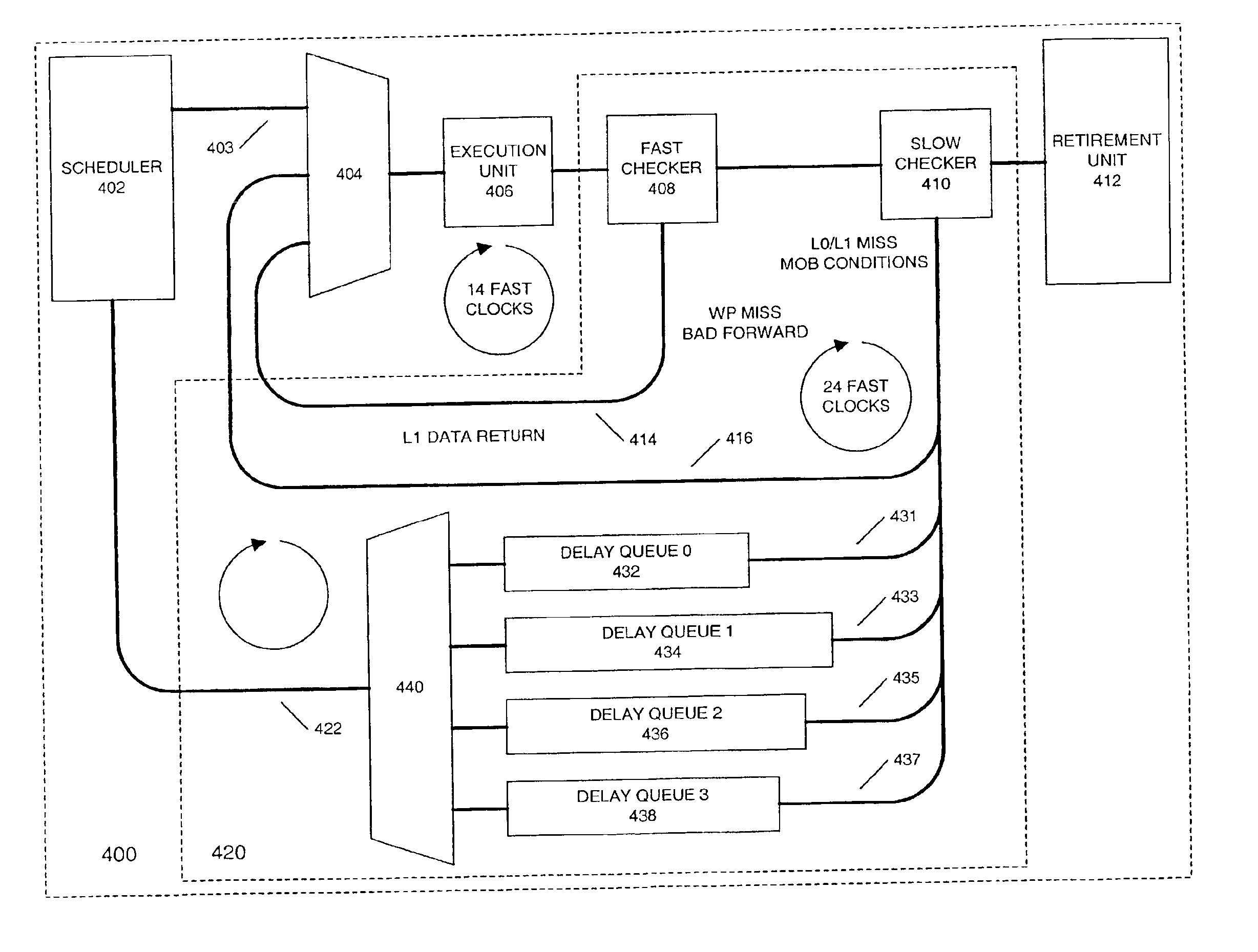

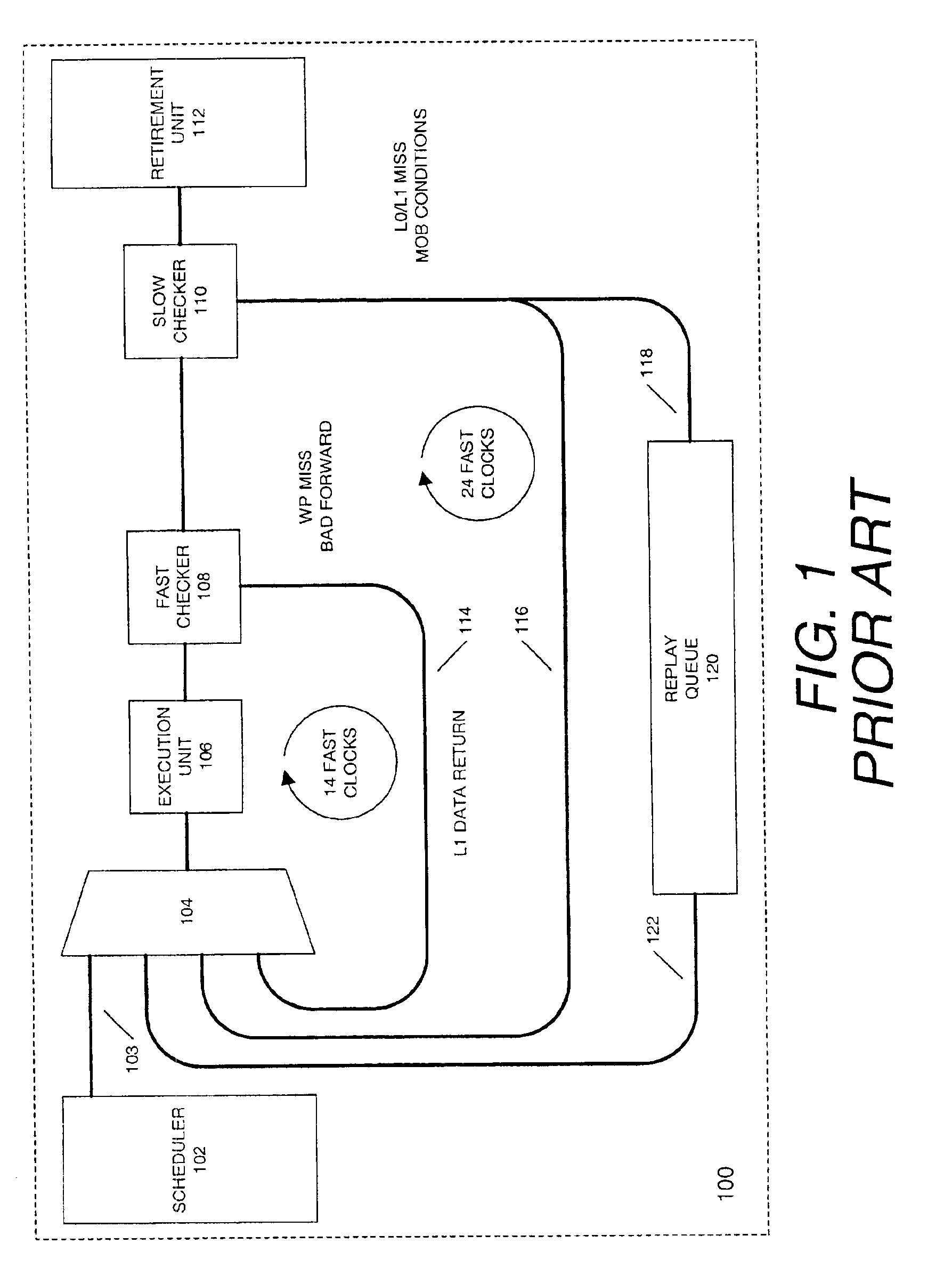

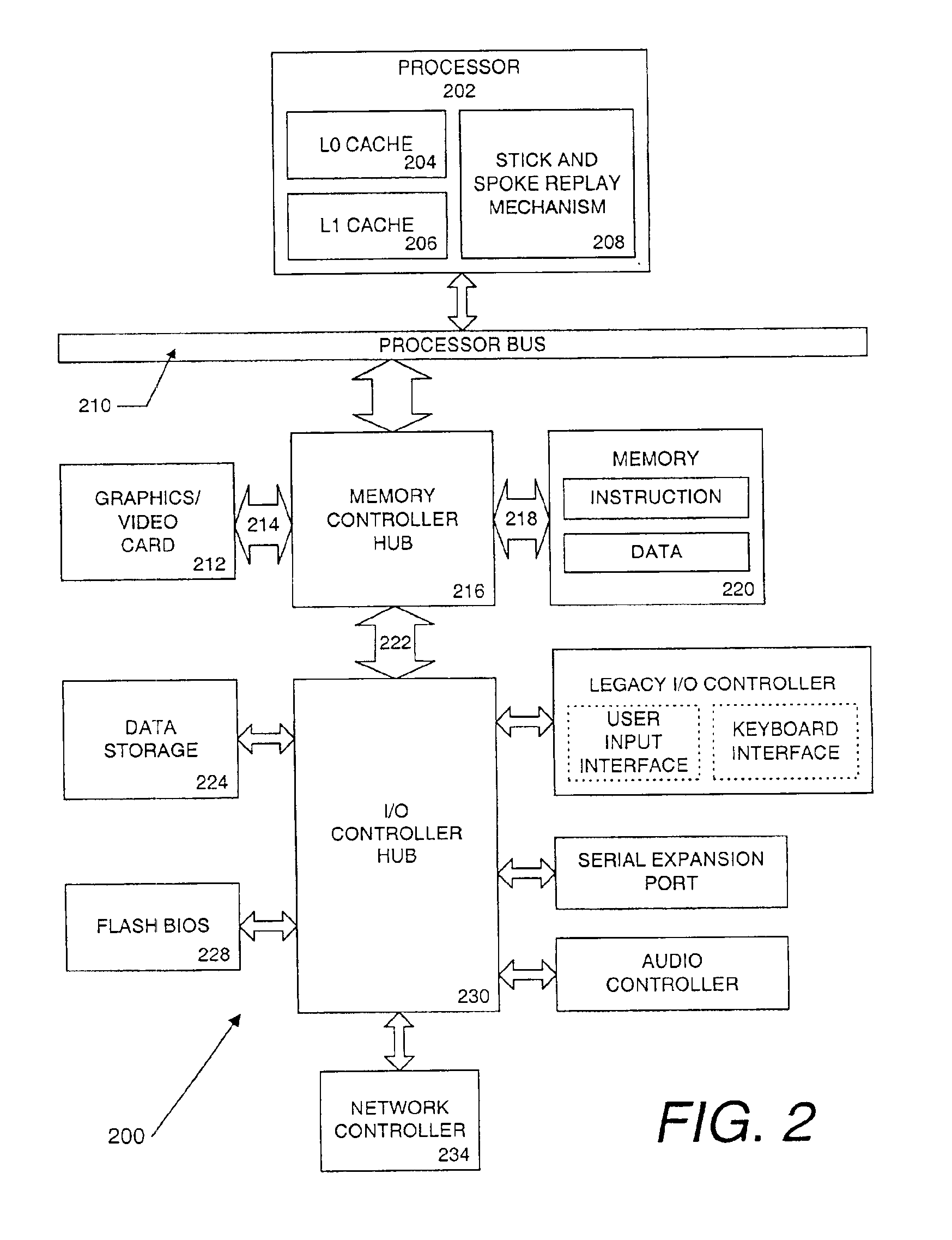

Stick and spoke replay with selectable delays

Inactive | US6912648B2 | Digital computer details | Concurrent instruction execution | Long latency | Parallel computing

A method for stick and spoke replay in a processor. The method of one embodiment comprises dispatching an instruction for execution. The instruction is speculatively executed. It is determined whether the instruction executed correctly. The instruction is routed to a replay mechanism if the instruction did not execute correctly. It is determined whether incorrect execution of the instruction is due to a long latency operation. The instruction is routed for immediate re-execution if the incorrect execution is not due to the long latency operation. The routing of the instruction for re-execution is delayed if the incorrect execution is due to the long latency operation. The instruction is re-executed if the instruction did not execute correctly. The instruction is retired if the instruction executed correctly.

Owner:INTEL CORP
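
The routing decision in this abstract is a three-way branch, which the following sketch makes explicit. The function name, the return convention, and the 20-cycle delay are assumptions for illustration; the patent abstract does not give a delay value.

```python
def replay_delay(executed_correctly, due_to_long_latency, long_delay_cycles=20):
    """Decide what happens to a speculatively executed instruction.

    Returns None when the instruction can retire, 0 when it should be
    re-executed immediately, or a positive cycle count when re-execution
    is delayed because a long latency operation caused the failure.
    """
    if executed_correctly:
        return None
    return long_delay_cycles if due_to_long_latency else 0
```

Delaying the replay in the long-latency case avoids re-executing an instruction repeatedly while the data it needs is still in flight.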

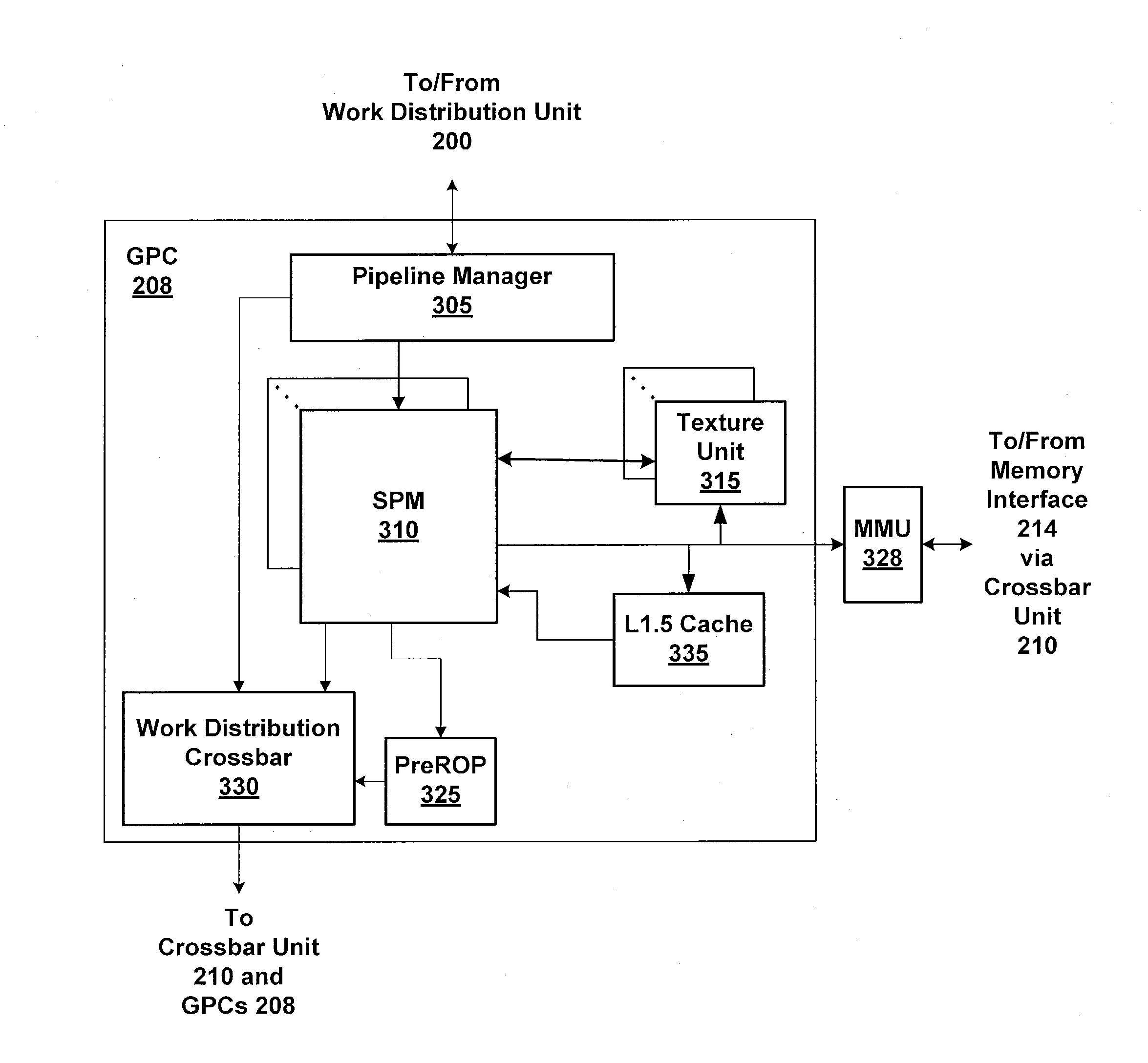

Two-Level Scheduler for Multi-Threaded Processing

Active | US20120079503A1 | Reduce power consumption | Reduce area | Program initiation/switching | Memory systems | Long latency | Local memories

One embodiment of the present invention sets forth a technique for scheduling thread execution in a multi-threaded processing environment. A two-level scheduler maintains a small set of active threads called strands to hide function unit pipeline latency and local memory access latency. The strands are a sub-set of a larger set of pending threads that is also maintained by the two-level scheduler. Pending threads are promoted to strands and strands are demoted to pending threads based on latency characteristics. The two-level scheduler selects strands for execution based on strand state. The longer latency of the pending threads is hidden by selecting strands for execution. When the latency for a pending thread has expired, the pending thread may be promoted to a strand and begin (or resume) execution. When a strand encounters a latency event, the strand may be demoted to a pending thread while the latency is incurred.

Owner:NVIDIA CORP
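
A toy model of the promote/demote cycle described above is shown below. The class and method names, the round-robin selection among strands, and the per-cycle `tick` are assumptions made to keep the example small; the abstract only says selection is "based on strand state".

```python
from collections import deque


class TwoLevelScheduler:
    """Keeps a small active set (strands) and a larger pending set."""

    def __init__(self, threads, max_strands):
        self.max_strands = max_strands
        self.strands = deque()
        self.pending = {}  # thread -> remaining latency in cycles
        for t in threads:
            if len(self.strands) < max_strands:
                self.strands.append(t)
            else:
                self.pending[t] = 0

    def select(self):
        """Pick the next strand to execute (assumed round-robin policy)."""
        if not self.strands:
            return None
        t = self.strands.popleft()
        self.strands.append(t)
        return t

    def long_latency_event(self, thread, cycles):
        """Demote a strand to pending while its latency is incurred."""
        self.strands.remove(thread)
        self.pending[thread] = cycles

    def tick(self):
        """Advance one cycle and promote ready threads into free slots."""
        for t in list(self.pending):
            self.pending[t] = max(0, self.pending[t] - 1)
            if self.pending[t] == 0 and len(self.strands) < self.max_strands:
                del self.pending[t]
                self.strands.append(t)
```

With three threads and two strand slots, demoting one strand frees a slot that the waiting thread fills on the next tick, so the execution units always have a ready strand to choose from.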

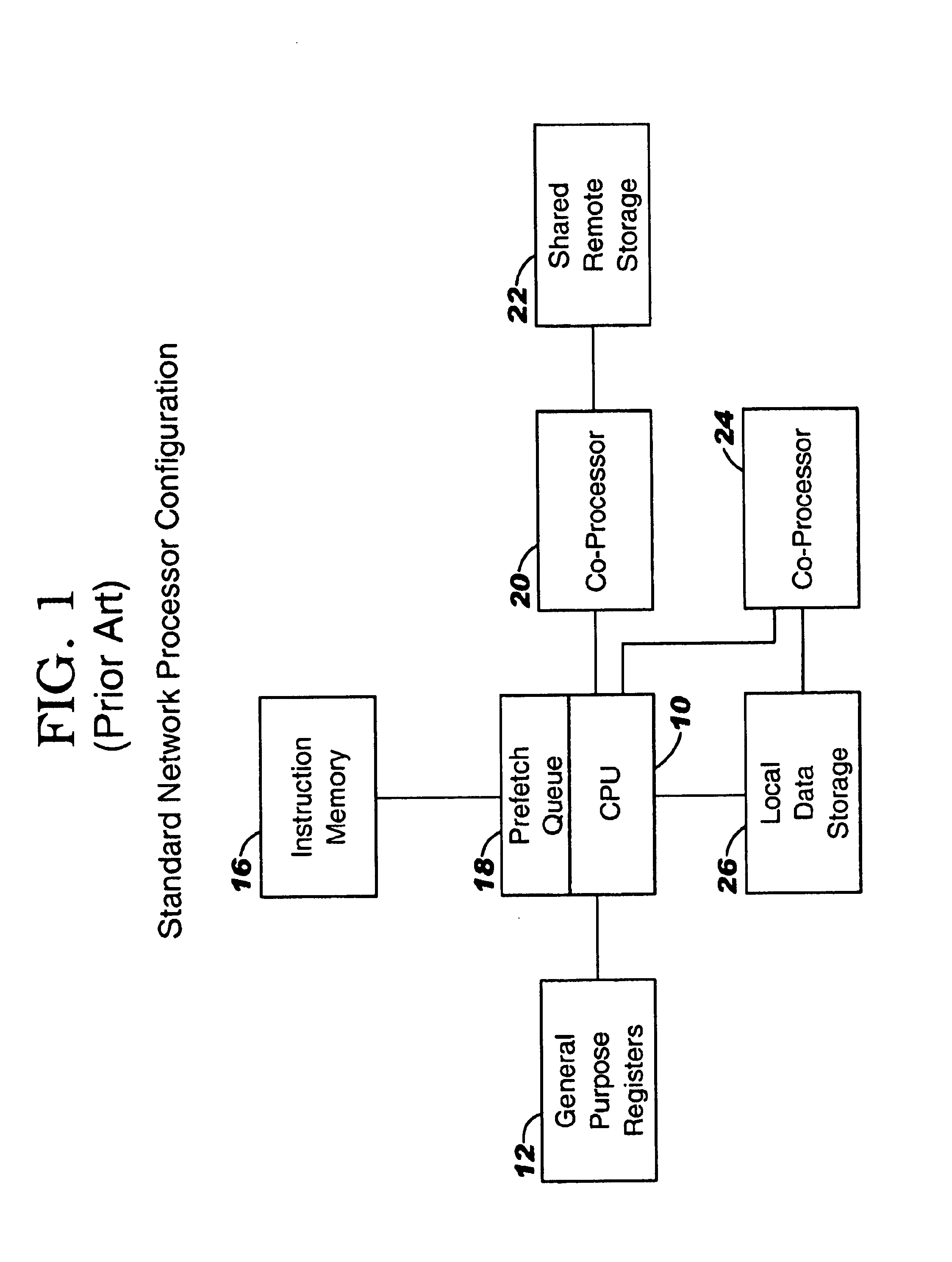

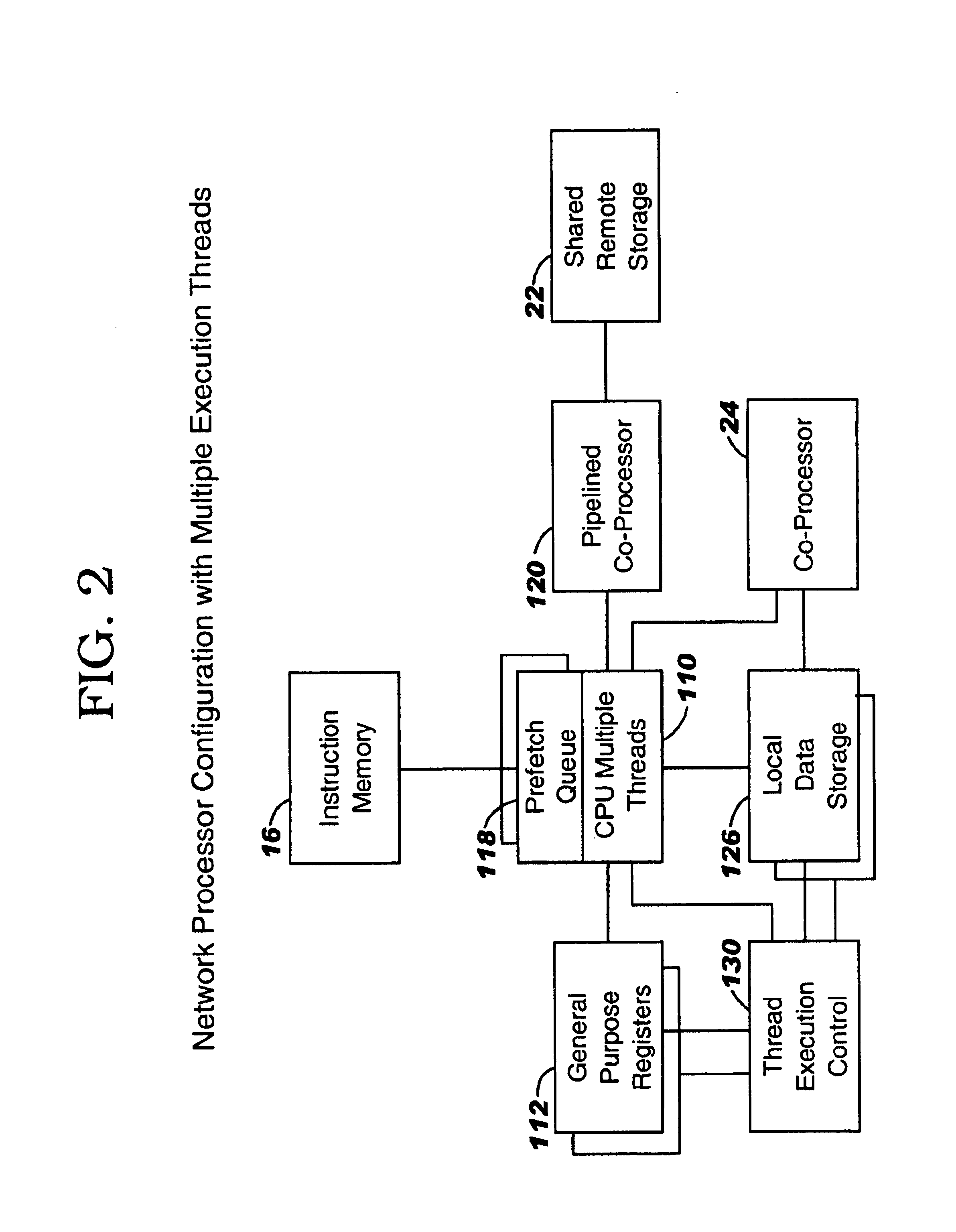

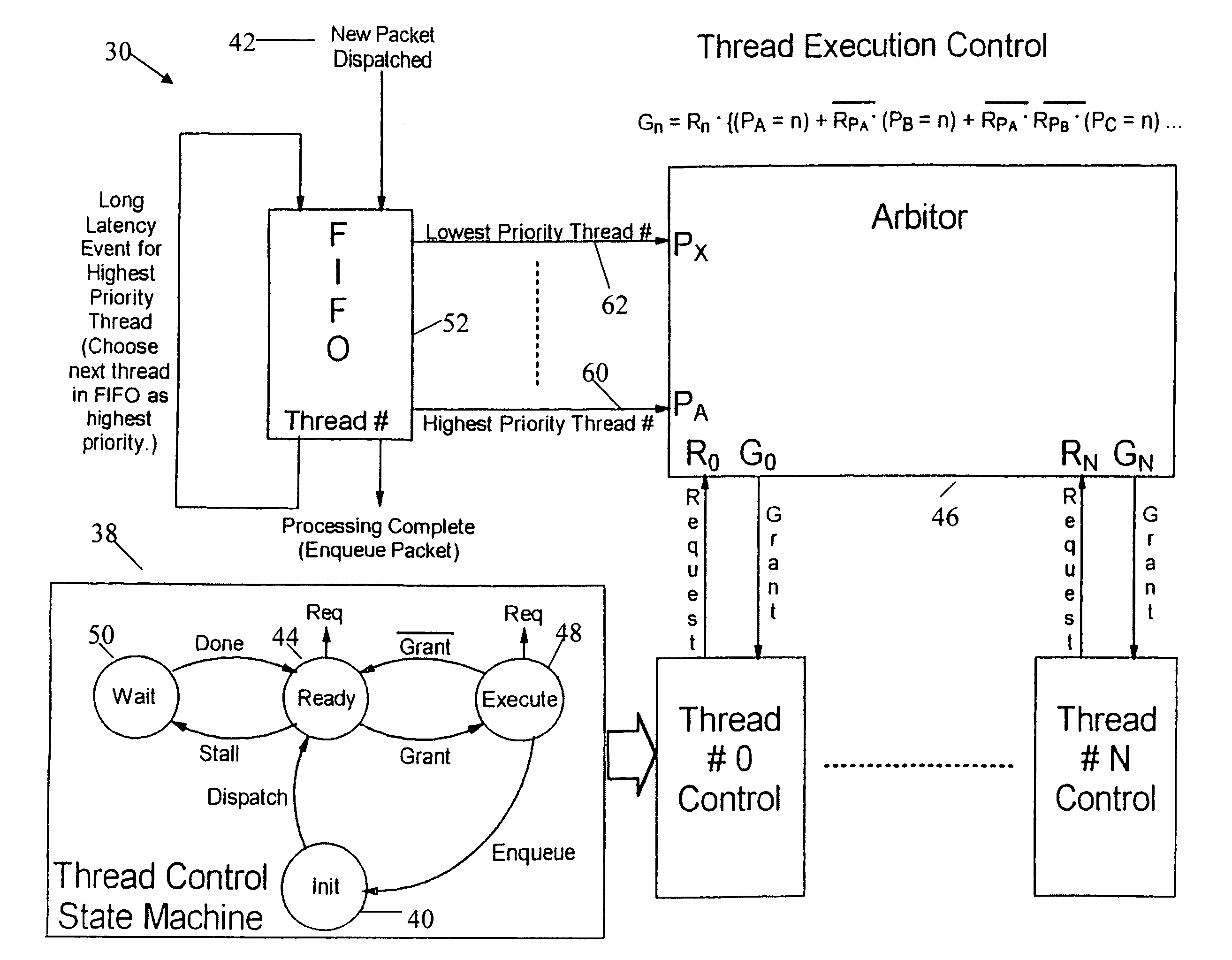

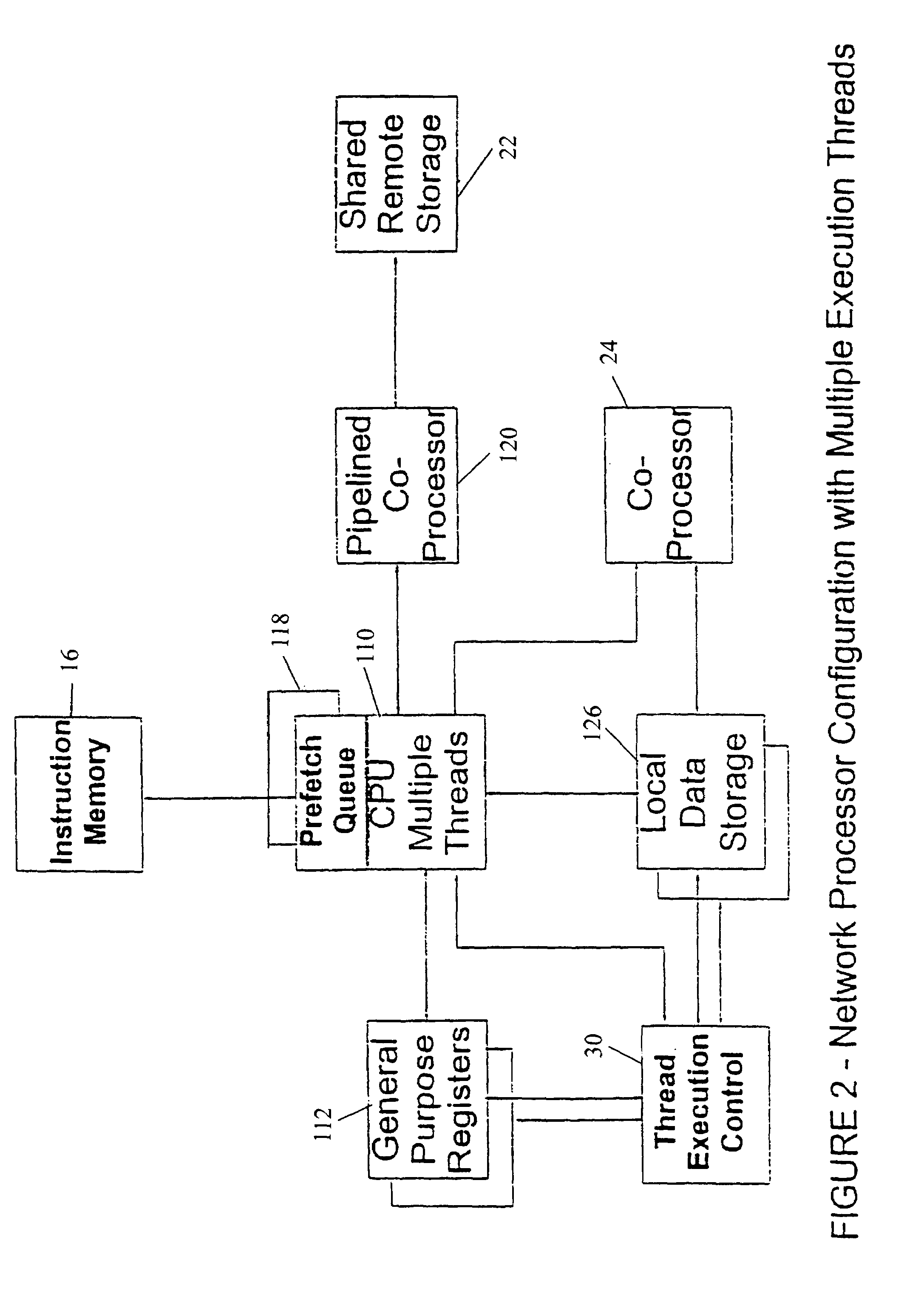

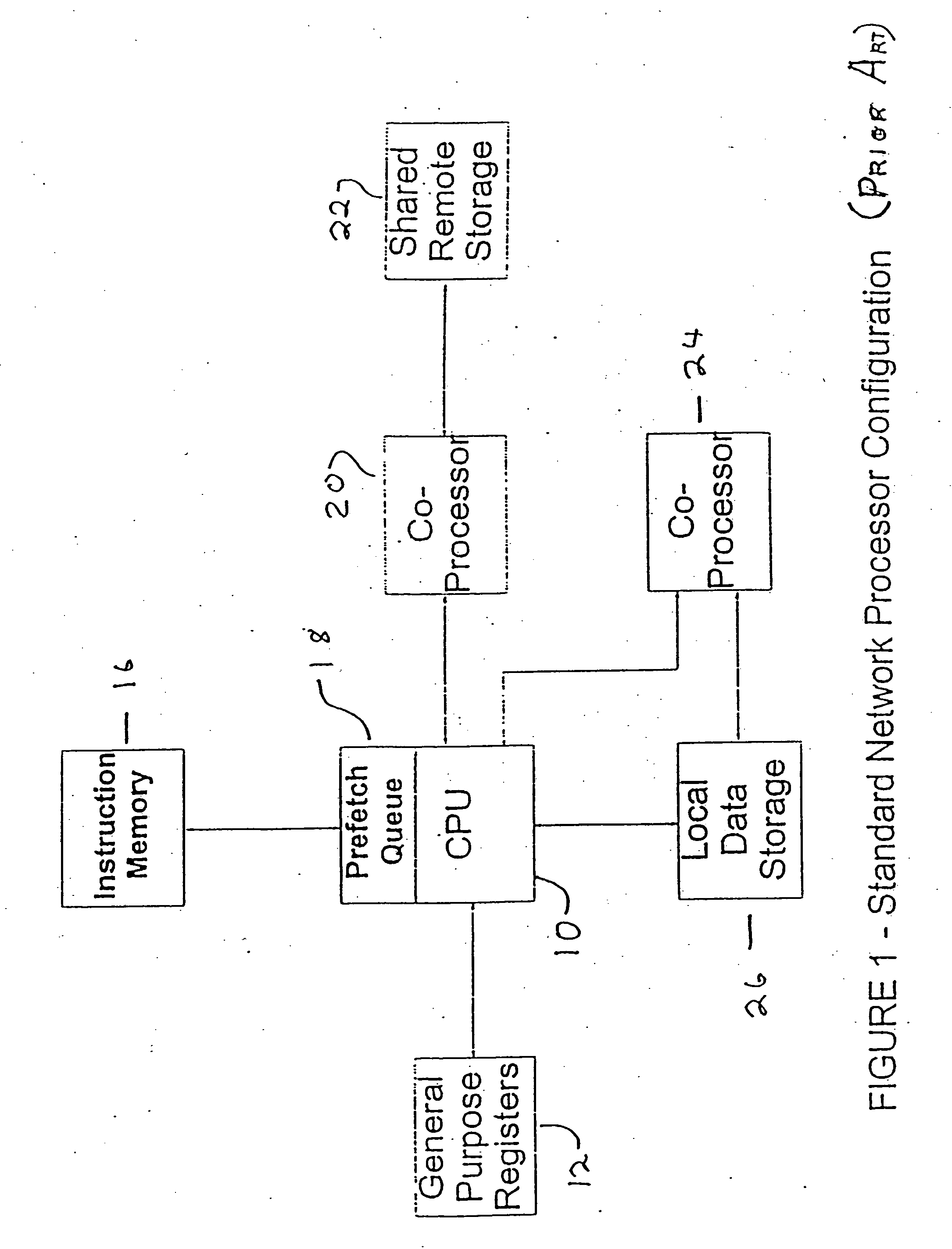

Network processor which makes thread execution control decisions based on latency event lengths

Inactive | US7093109B1 | Easy to use | Zero overhead | Digital computer details | Multiprogramming arrangements | Long latency | Coprocessor

A control mechanism is established between a network processor and a tree search coprocessor to deal with latencies in accessing the data such as information formatted in a tree structure. A plurality of independent instruction execution threads are queued to enable them to have rapid access to the shared memory. If execution of a thread becomes stalled due to a latency event, full control is granted to the next thread in the queue. The grant of control is temporary when a short latency event occurs or full when a long latency event occurs. Control is returned to the original thread when a short latency event is completed. Each execution thread utilizes an instruction prefetch buffer that collects instructions for idle execution threads when the instruction bandwidth is not fully utilized by an active execution thread. The thread execution control is governed by the collective functioning of a FIFO, an arbiter and a thread control state machine.

Owner:INTEL CORP
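
The difference between a temporary and a full grant of control can be illustrated with a small queue model. The class below is a hypothetical sketch: it uses a double-ended queue in place of the FIFO, arbiter, and state machine named in the abstract, and its method names are invented for the example.

```python
from collections import deque


class ThreadController:
    """queue[0] owns the processor; `running` is the thread executing now."""

    def __init__(self, threads):
        self.queue = deque(threads)
        self.running = self.queue[0]

    def short_latency_event(self):
        # Temporary grant: the next thread runs, ownership is unchanged.
        if len(self.queue) > 1:
            self.running = self.queue[1]

    def short_latency_done(self):
        # Control returns to the original thread.
        self.running = self.queue[0]

    def long_latency_event(self):
        # Full grant: the stalled owner moves to the back of the queue.
        self.queue.rotate(-1)
        self.running = self.queue[0]
```

After a short event the original thread resumes as soon as its data arrives, whereas after a long event it must wait its turn behind the other queued threads.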

Pre-fetch communication systems and methods

Active | US7359395B2 | Increase speed | Improve efficiency | Data switching by path configuration | Multiple digital computer combinations | Long latency | Communications system

The present invention relates to telecommunications devices, systems and methods for providing improved performance over long latency communications links. Some embodiments selectively pre-fetch and transmit information over the link using improved protocol and pre-fetch mechanisms. One system includes a first gateway (430) adapted to communicate with a client (410), the first gateway including a processor coupled to a storage medium. The storage medium includes code for receiving a request for retrieving a web object, code for forwarding the request to a second gateway (440) over the long latency link, and code for receiving a pre-fetch announcement and response data for the web object from the second gateway. The pre-fetch announcement is received prior to receiving the response data.

Owner:CA TECH INC

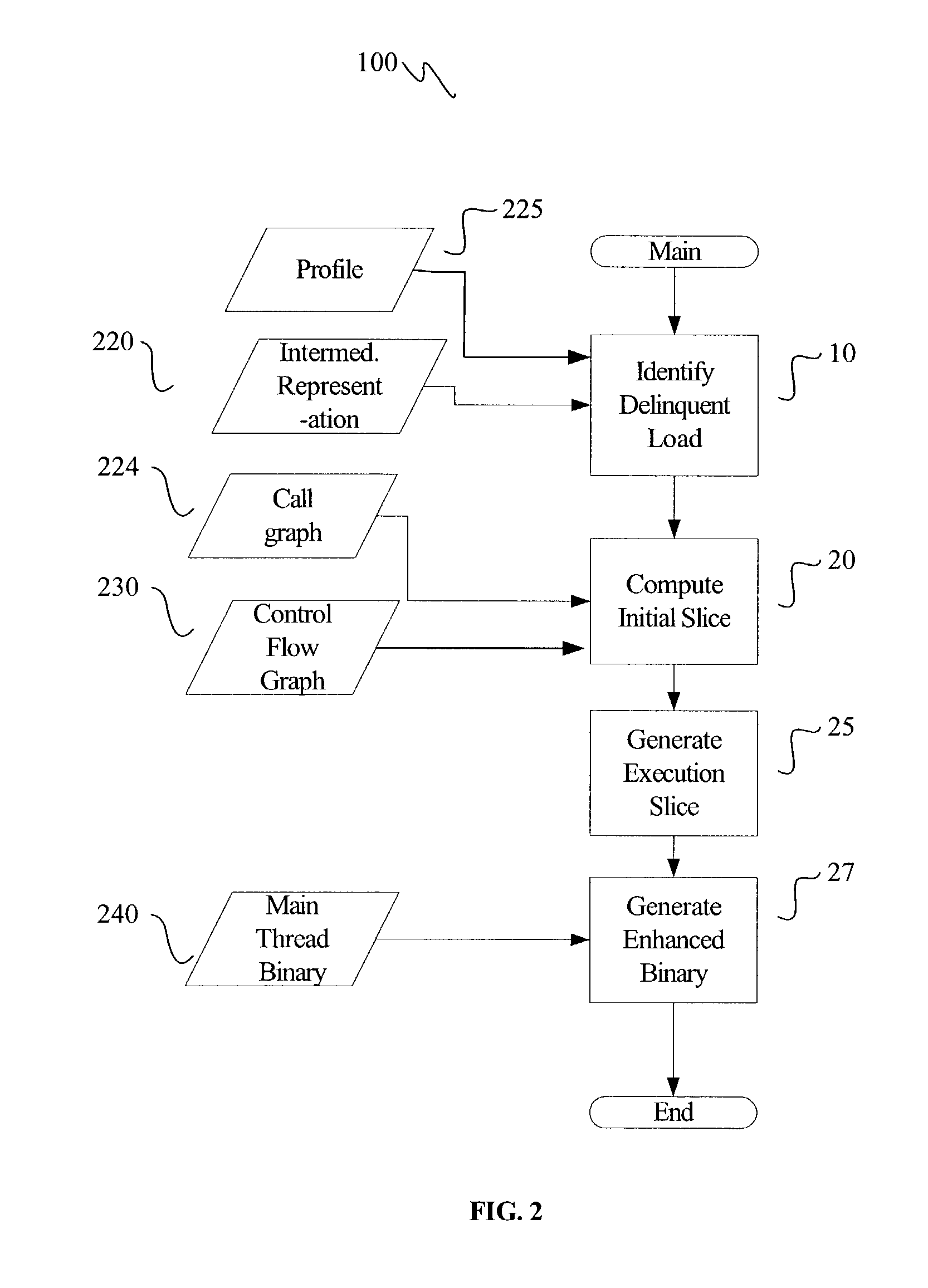

Post-pass binary adaptation for software-based speculative precomputation

Active | US20040054990A1 | Software engineering | Specific program execution arrangements | Long latency | Precomputation

The latencies associated with cache misses or other long-latency instructions in a main thread are decreased through the use of a simultaneous helper thread. The helper thread is a speculative prefetch thread to perform a memory prefetch for the main thread. The instructions for the helper thread are dynamically incorporated into the main thread binary during post-pass operation of a compiler.

Owner:INTEL CORP

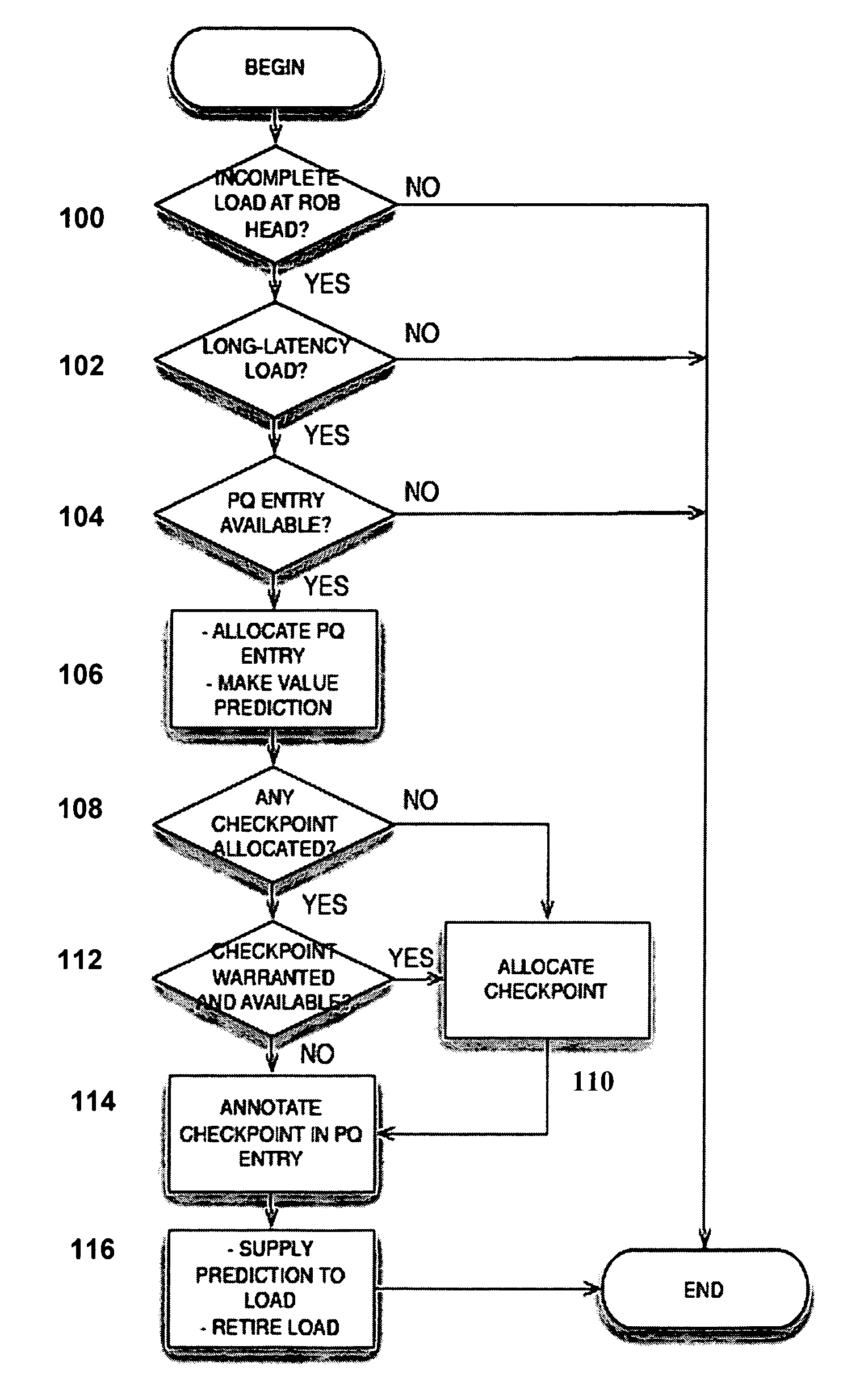

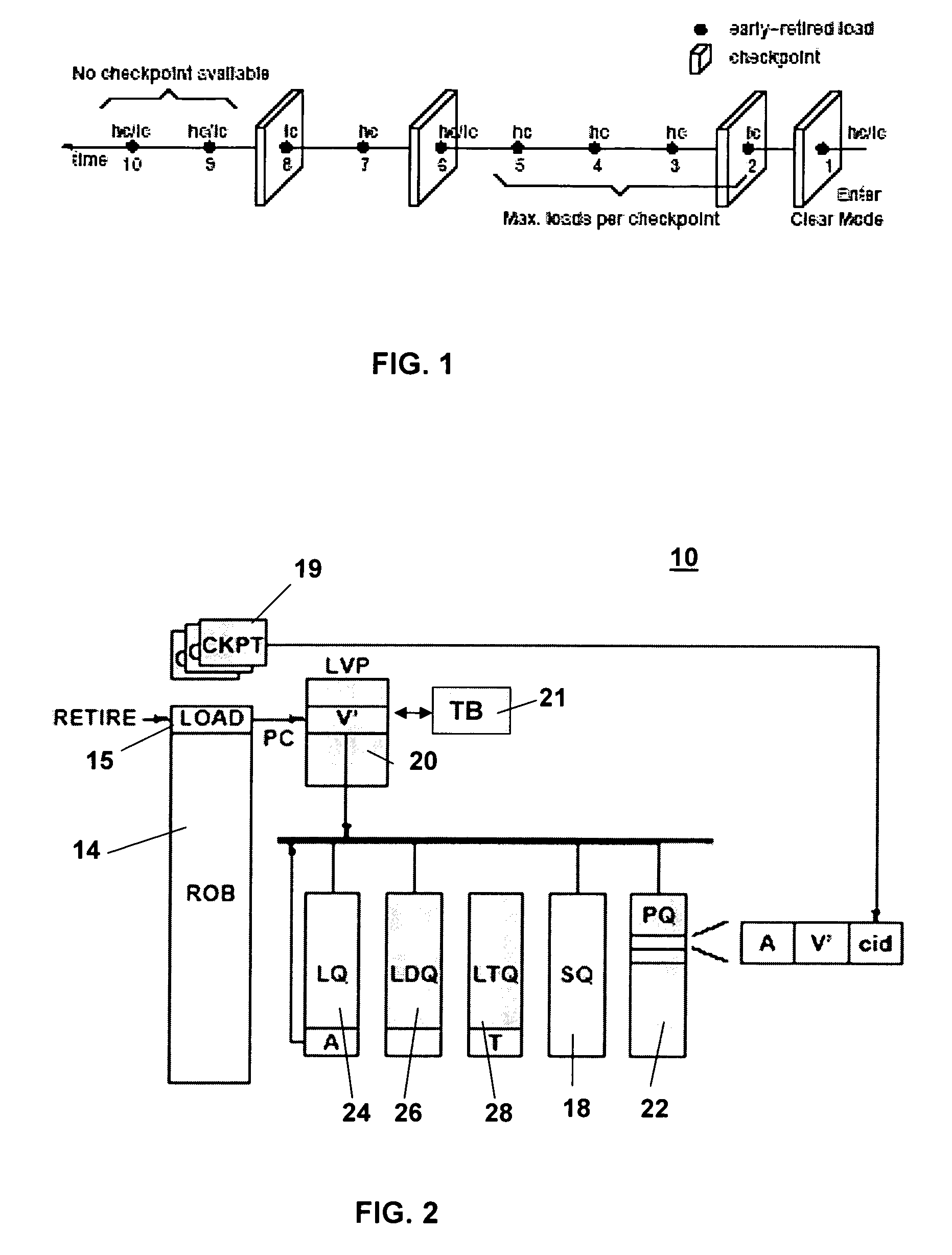

Method and apparatus for early load retirement in a processor system

Active | US20070074006A1 | Fast progress | Digital computer details | Specific program execution arrangements | Long latency | Parallel computing

Owner:CORNELL RES FOUNDATION INC

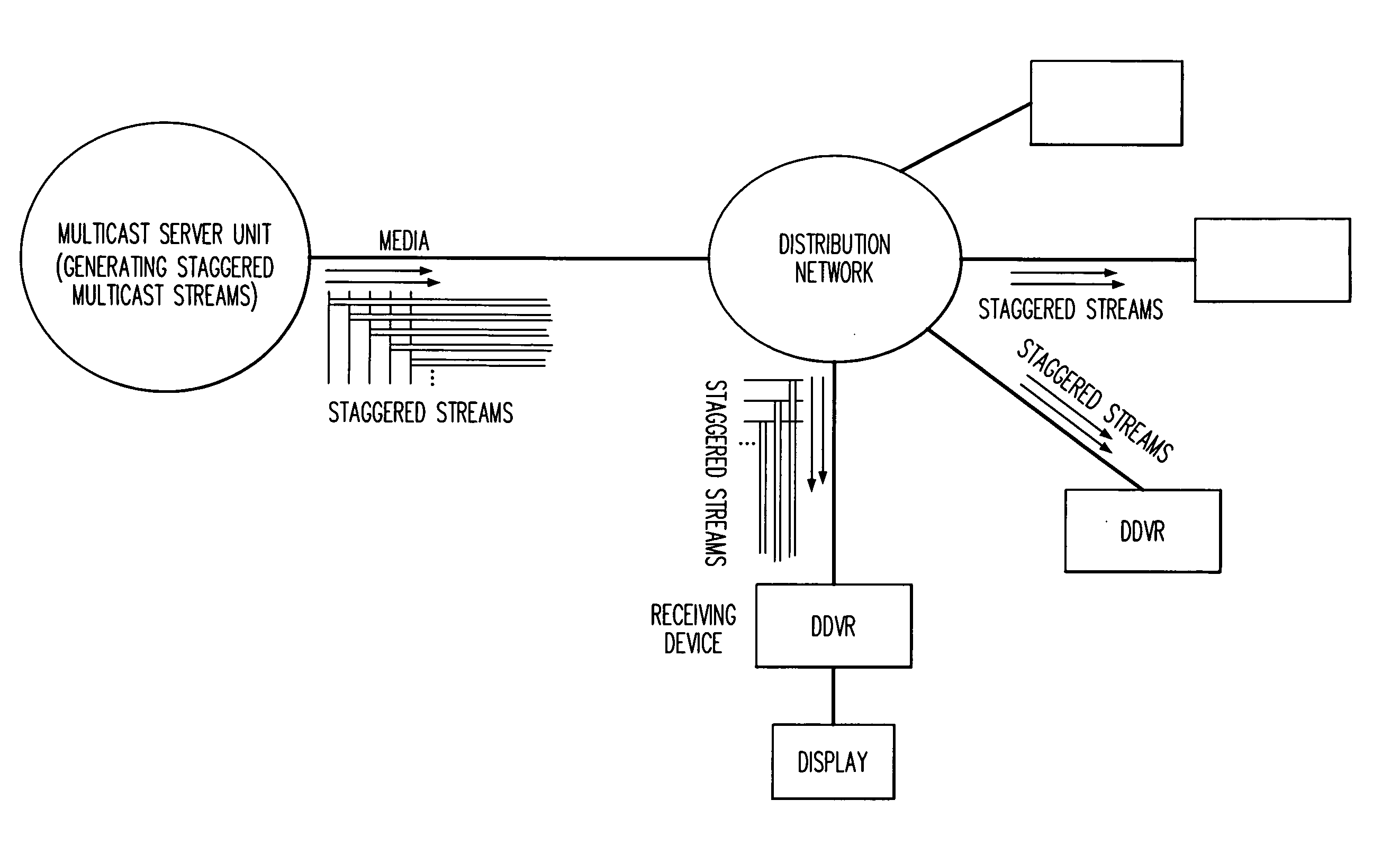

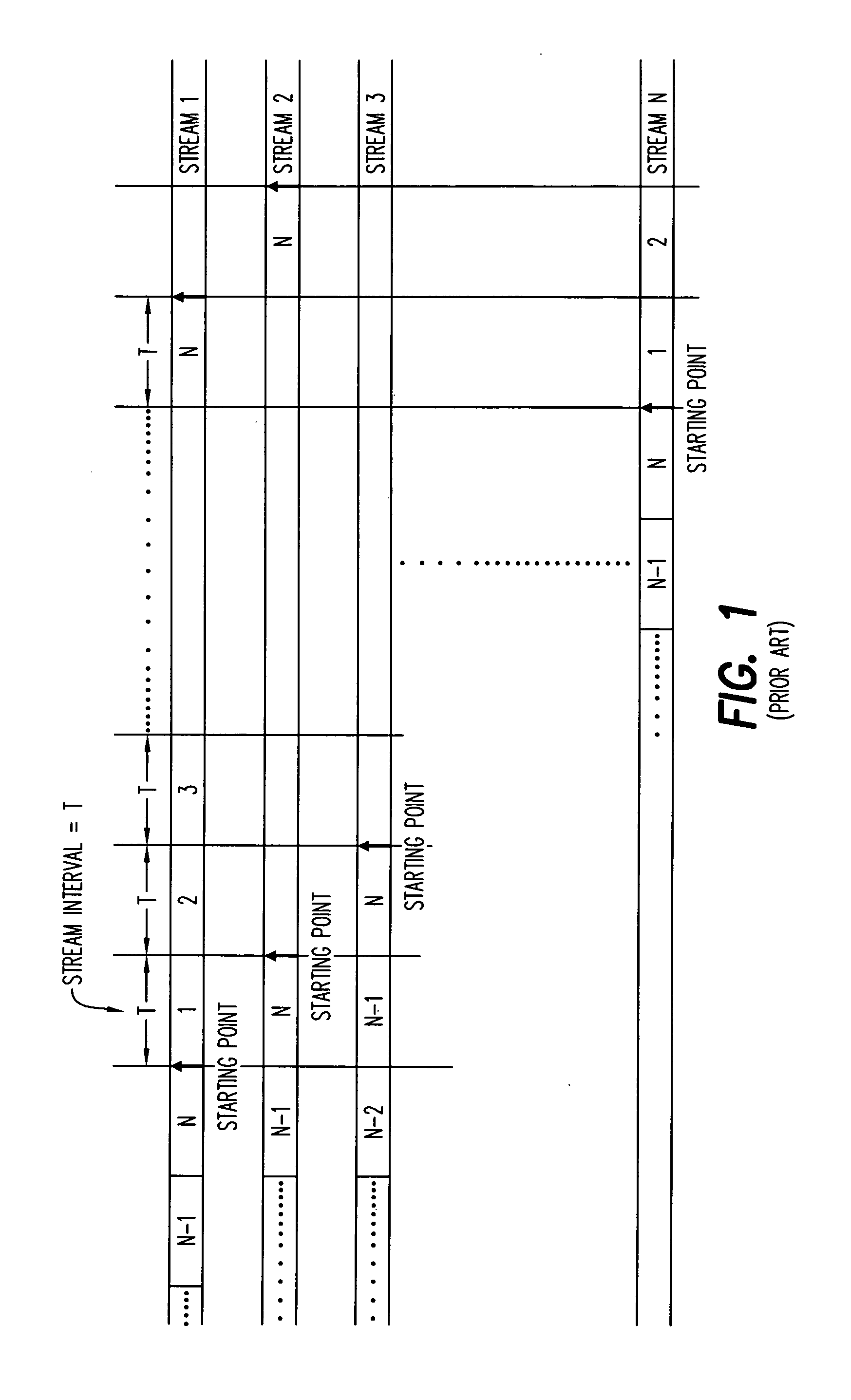

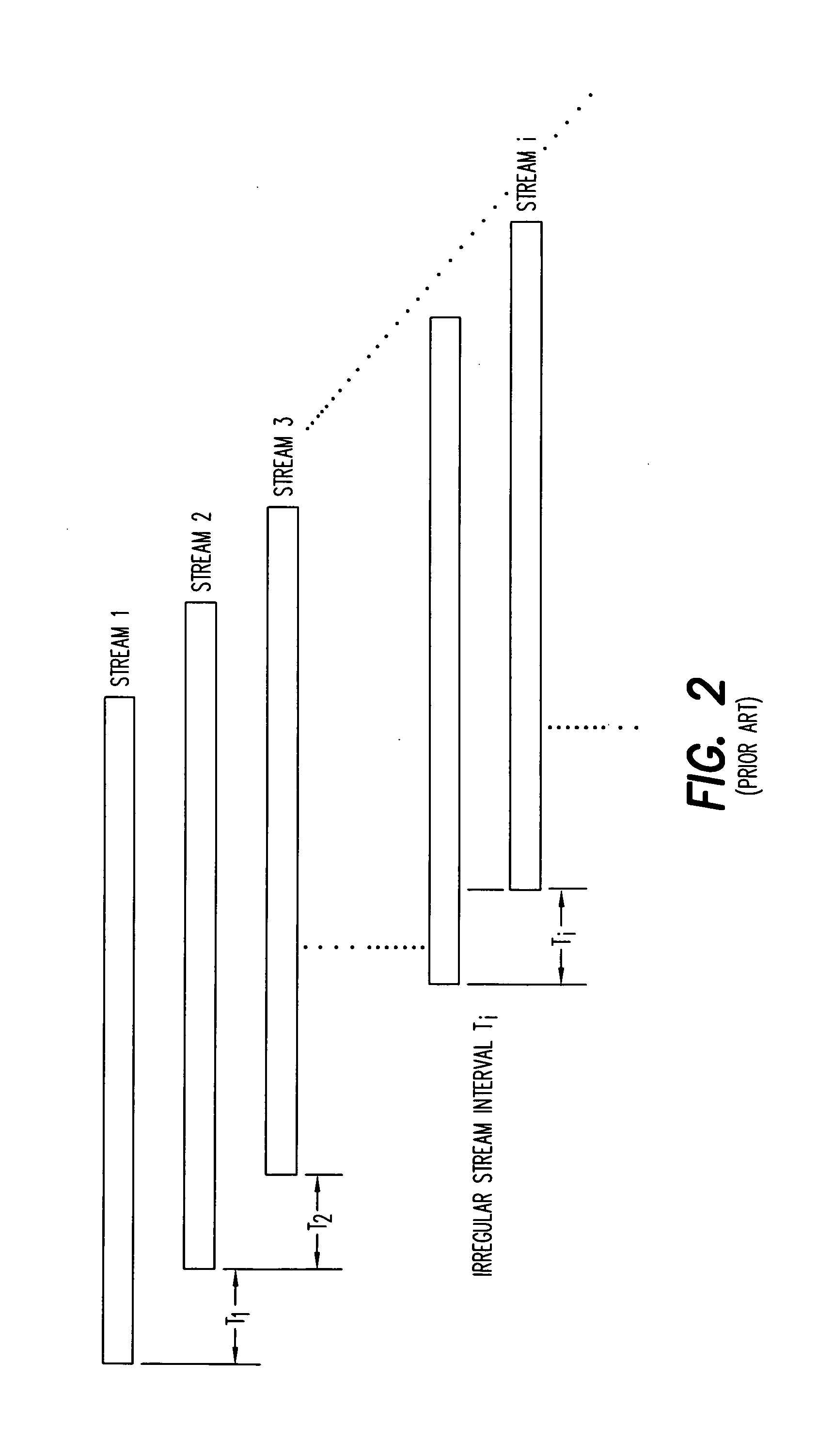

Method for delivering large amounts of data with interactivity in an on-demand system

Inactive | US7174384B2 | Convenience to merge | Television system details | Error prevention | Data stream | Long latency

A method and system for delivering data over a network to a large number of clients, which may be suitable for building large-scale Video-on-Demand (VOD) systems. In current VOD systems, the client may suffer from a long latency before starting to receive requested data that is capable of providing sufficient interactive functions, or the reverse, without significantly increasing the network load. The method utilizes two groups of data streams, one responsible for minimizing latency while the other provides the required interactive functions. In the anti-latency data group, uniform, or non-uniform or hierarchical staggered stream intervals may be used. The system may have a relatively small startup latency while users may enjoy most of the interactive functions that are typical of video recorders including fast-forward, forward-jump, and so on. Furthermore, the system can maintain the number of data streams, and therefore the bandwidth, required.

Owner:DINASTECH IPR

Controller for multiple instruction thread processors

Inactive | US20050022196A1 | Efficient comprehensive utilization | Program initiation/switching | Program synchronisation | Long latency | Waiting time

A mechanism controls a multi-thread processor so that when a first thread encounters a latency event for a first predefined time interval, temporary control is transferred to an alternate execution thread for the duration of the first predefined time interval and then back to the original thread. The mechanism grants full control to the alternate execution thread when a latency event for a second predefined time interval is encountered. The first predefined time interval is termed a short latency event, whereas the second time interval is termed a long latency event.

Owner:INTEL CORP

Inkjet ink

The present invention pertains to inkjet ink with long latency and, more particularly, to an aqueous inkjet ink comprising a self-dispersing pigment and certain water soluble vehicle components which, in combination, provide long latency.

Owner:EI DU PONT DE NEMOURS & CO

Using value speculation to break constraining dependencies in iterative control flow structures

One embodiment of the present invention provides a system that uses value speculation to break constraining dependencies in loops. The system operates by first identifying a loop within a computer program, and then identifying a dependency on a long-latency operation within the loop that is likely to constrain execution of the loop. Next, the system breaks the dependency by modifying the loop to predict a value that will break the dependency, and then using the predicted value to speculatively execute subsequent loop instructions.

Owner:ORACLE INT CORP

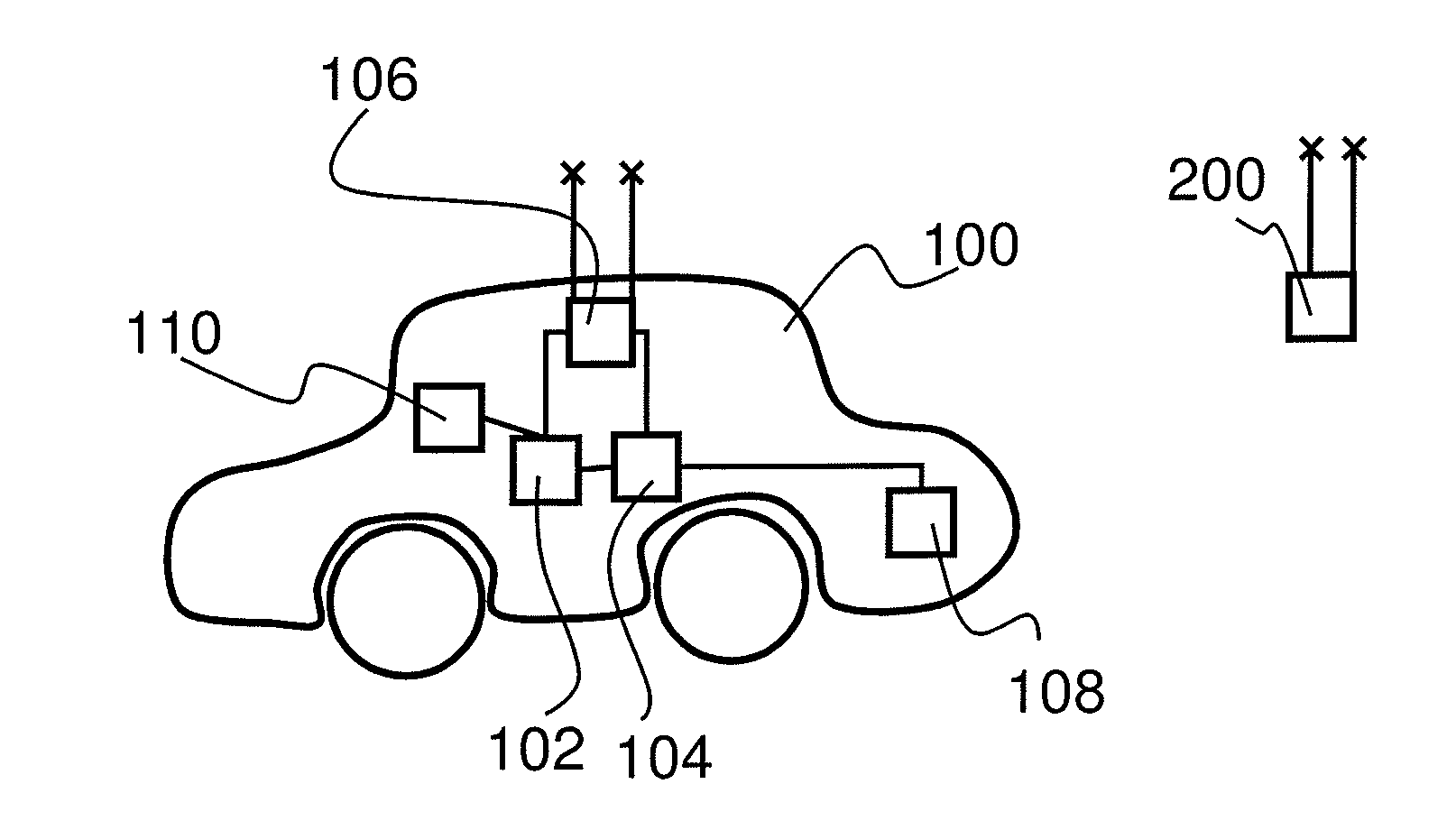

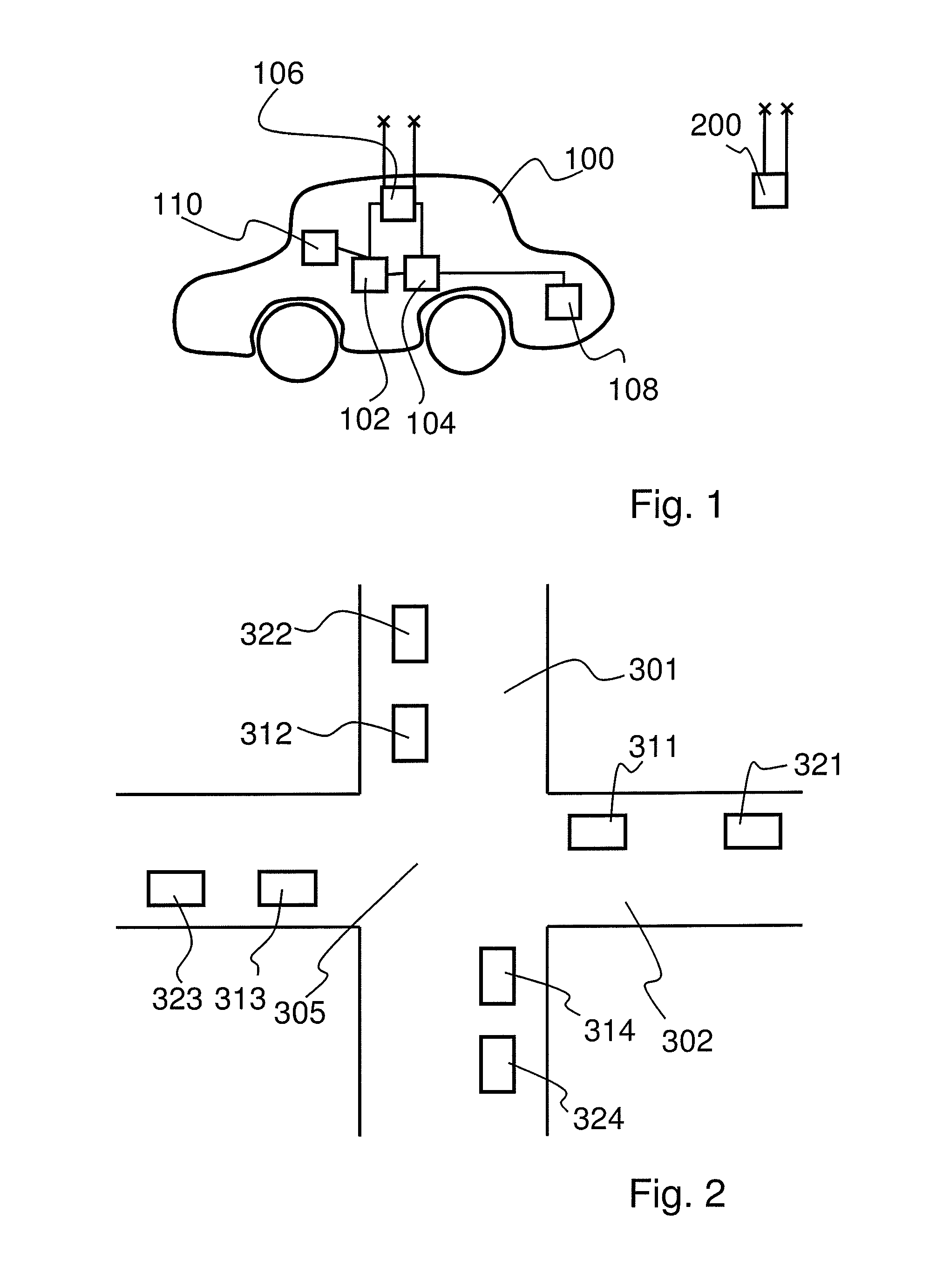

Device and method for c2x communication

Active | US20130120159A1 | Less period | Relatively large bandwidth | Anti-collision systems | Transmission | Market penetration | Long latency

A method for the communication of a vehicle with at least one further vehicle and/or for the communication of a vehicle with an infrastructure device (C2X communication), wherein the communication serves to transmit at least one information item for application in a driver assistance system and/or a safety system of the vehicle. In order to ensure good safety and reliability of the driver assistance system or safety system in the case of low market penetration of C2X communication, the at least one information item is transmitted via a first communication channel and/or via a second communication channel in dependence on its nature, wherein the first communication channel has a longer latency period than the second communication channel. The invention also describes a corresponding communication device, a corresponding driver assistance system or a corresponding safety system, a corresponding vehicle, a program element and a computer-readable medium.

Owner:CONTINENTAL TEVES AG & CO OHG

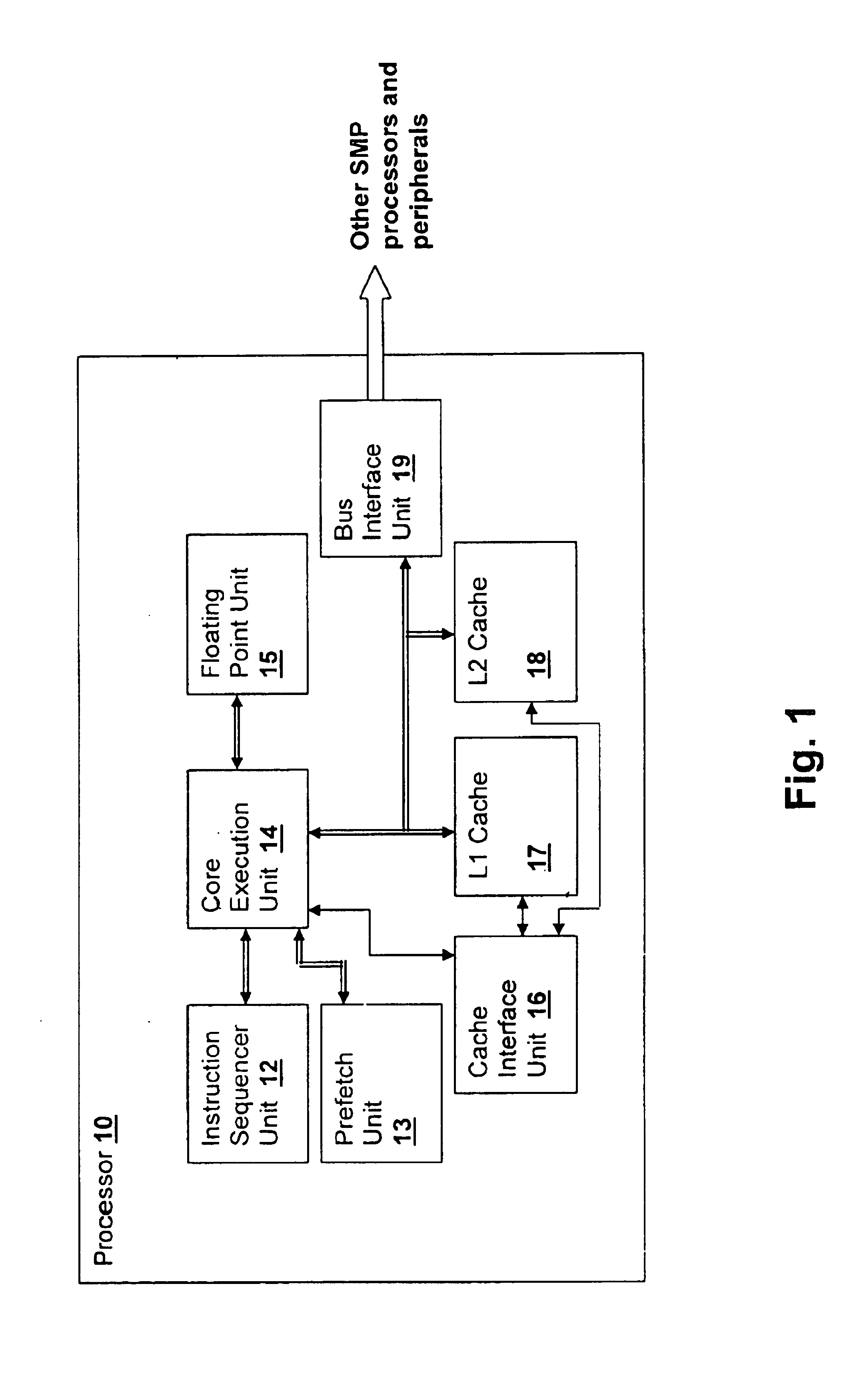

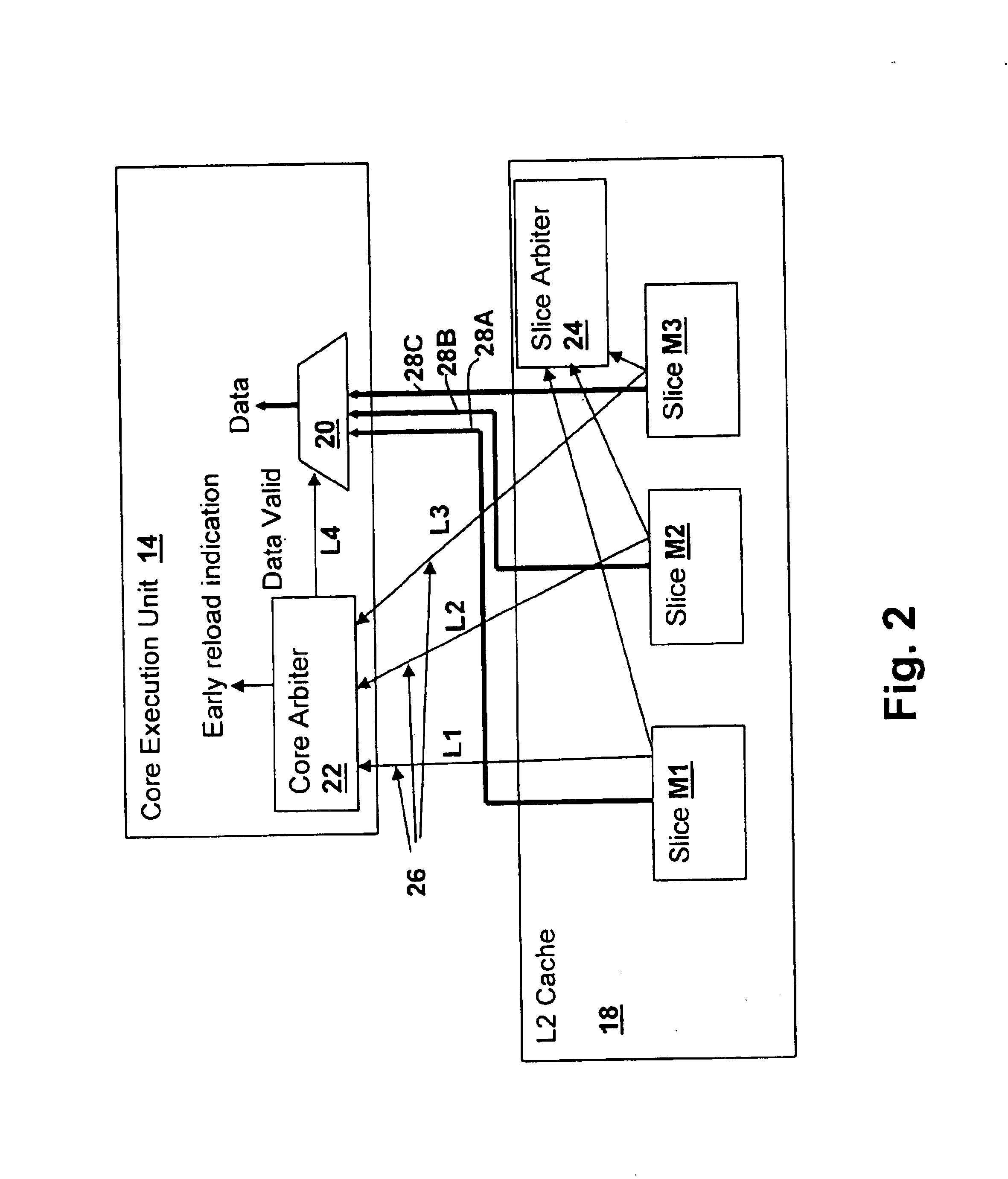

Method and system for managing distributed arbitration for multicycle data transfer requests

Inactive | US6950892B2 | Improved multicycle data transfer operation | Increase delay | Memory addressing/allocation/relocation | Long latency | Latency (engineering)

A method and system for managing distributed arbitration for multi-cycle data transfer requests provides improved performance in a processing system. A multi-cycle request indicator is provided to a slice arbiter and if a multi-cycle request is present, only one slice is granted its associated bus. The method further blocks any requests from other requesting slices having a lower latency than the first slice until the latency difference between the other requesting slices and the longest latency slice added to a predetermined cycle counter value has expired. The method also blocks further requests from the first slice until the predetermined cycle counter value has elapsed and blocks requests from slices having a higher latency than the first slice until the predetermined cycle counter value less the difference in latencies for the first slice and for the higher latency slice has elapsed.

Owner:INT BUSINESS MASCH CORP

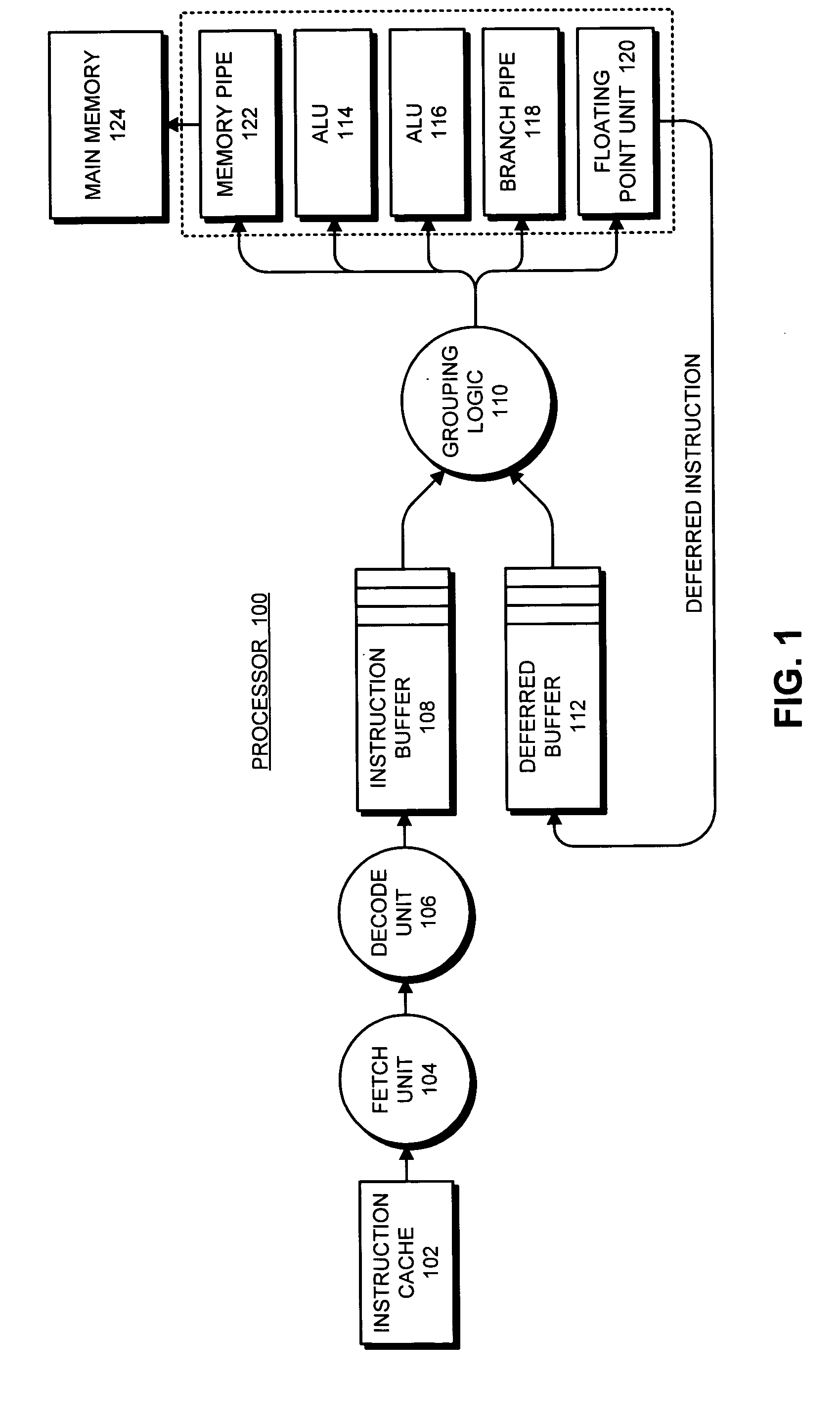

Selective execution of deferred instructions in a processor that supports speculative execution

Active | US20060010309A1 | Digital computer details | Specific program execution arrangements | Speculative execution | Long latency

One embodiment of the present invention provides a system which selectively executes deferred instructions following a return of a long-latency operation in a processor that supports speculative execution. During normal-execution mode, the processor issues instructions for execution in program order. When the processor encounters a long-latency operation, such as a load miss, the processor records the long-latency operation in a long-latency scoreboard, wherein each entry in the long-latency scoreboard includes a deferred buffer start index. Upon encountering an unresolved data dependency during execution of an instruction, the processor performs a checkpointing operation and executes subsequent instructions in an execute-ahead mode, wherein instructions that cannot be executed because of the unresolved data dependency are deferred into a deferred buffer, and wherein other non-deferred instructions are executed in program order. Upon encountering a deferred instruction that depends on a long-latency operation within the long-latency scoreboard, the processor updates a deferred buffer start index associated with the long-latency operation to point to the position in the deferred buffer occupied by the deferred instruction. When a long-latency operation returns, the processor executes instructions in the deferred buffer starting at the deferred buffer start index for the returning long-latency operation.

Owner:ORACLE INT CORP

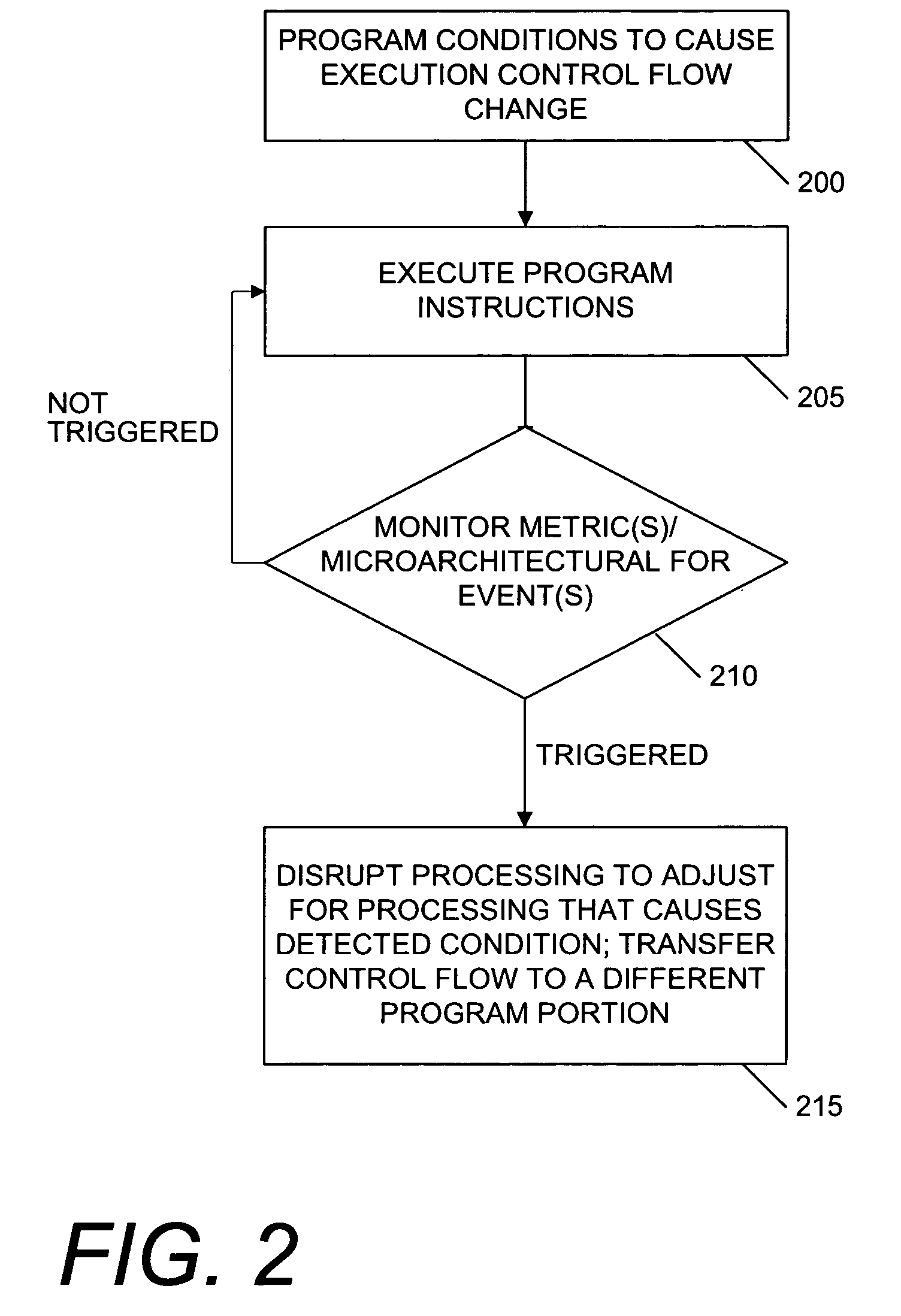

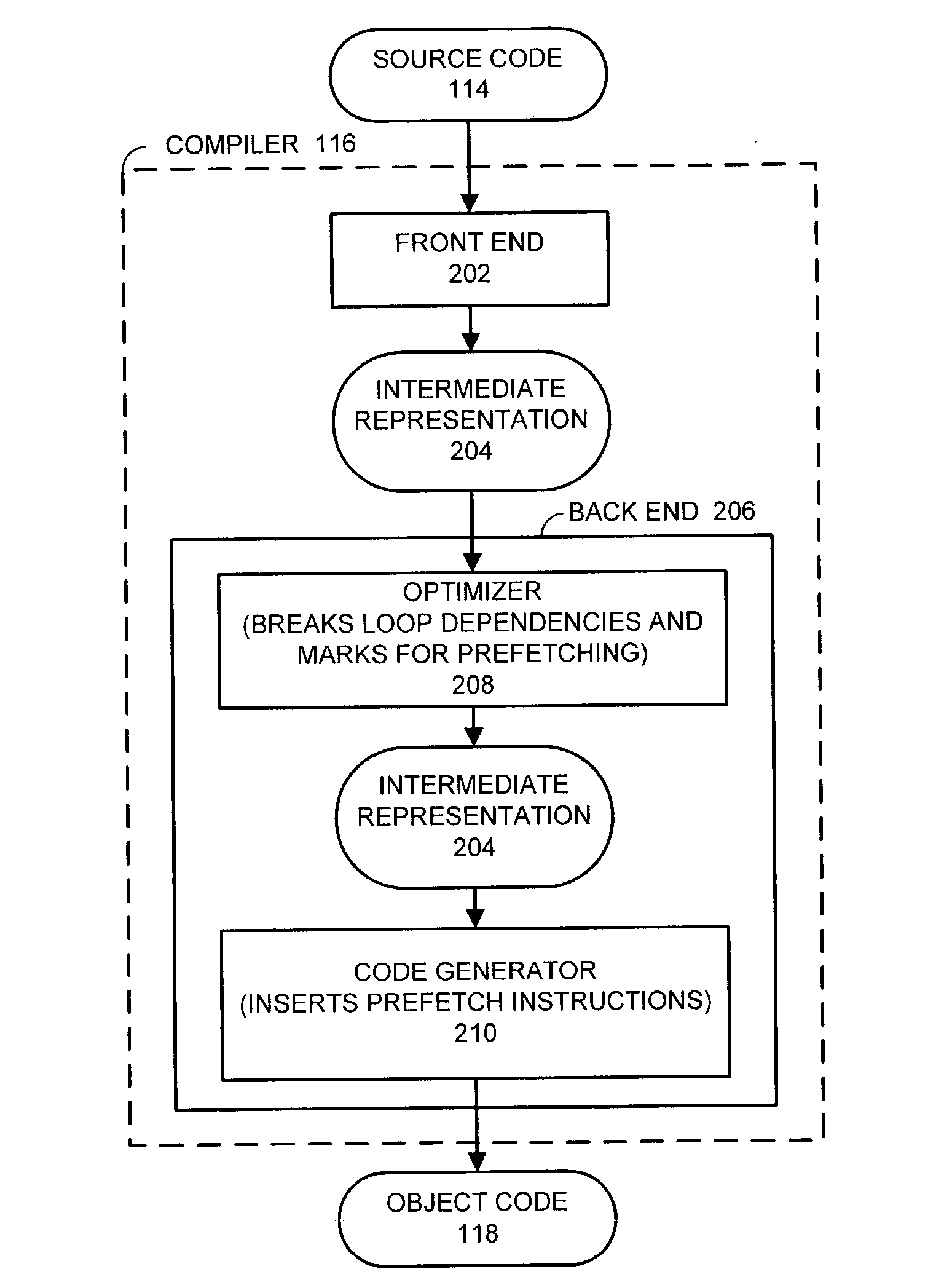

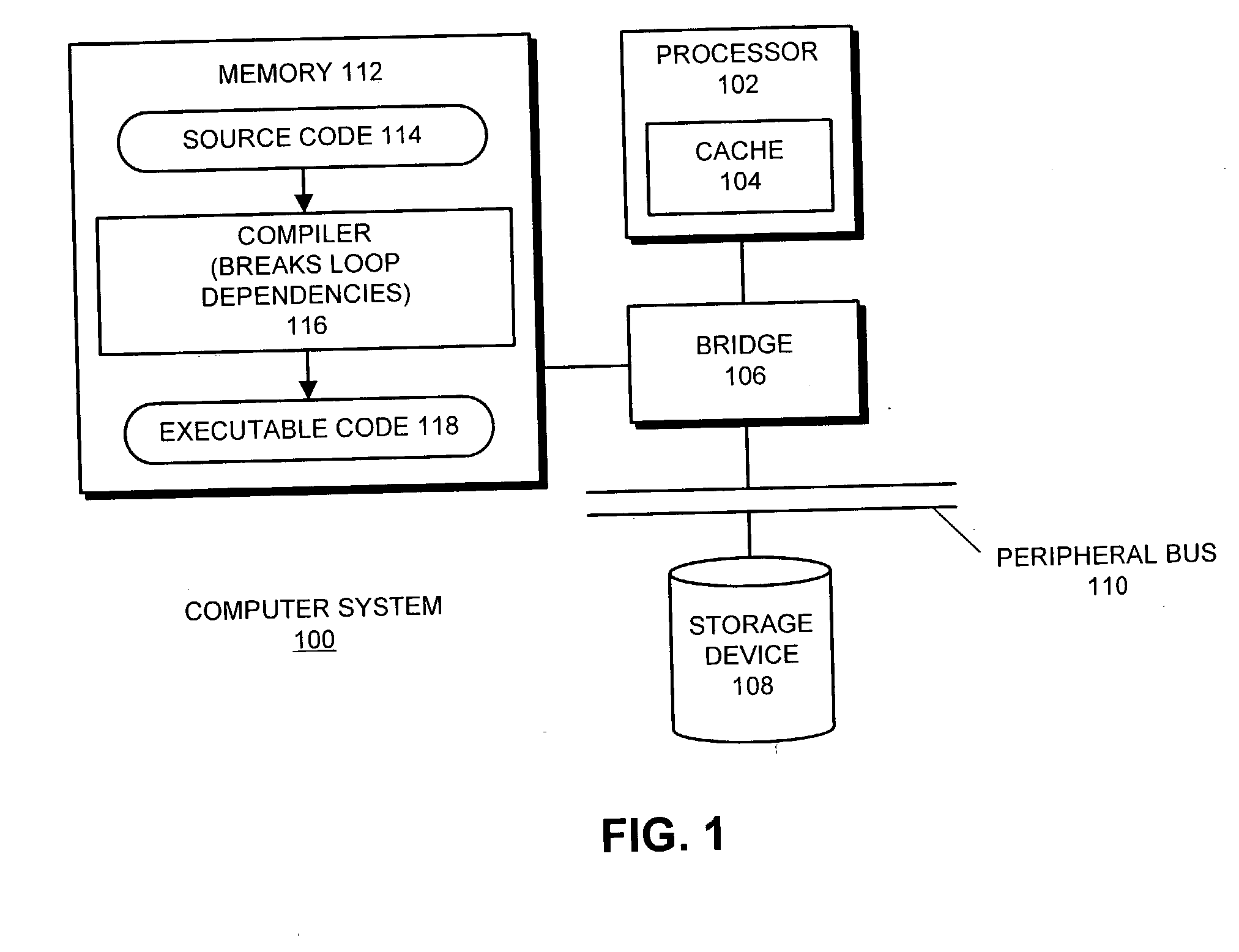

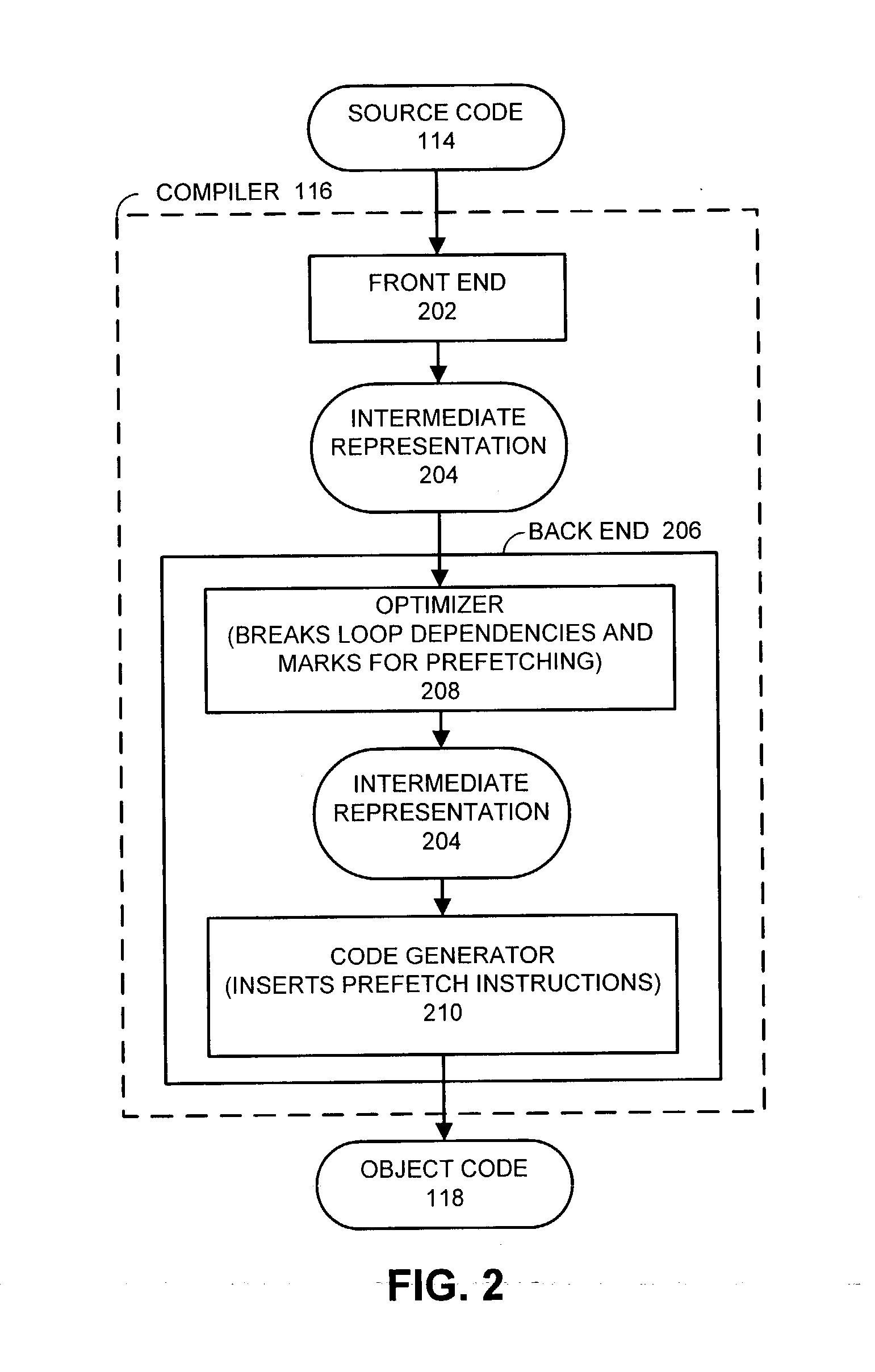

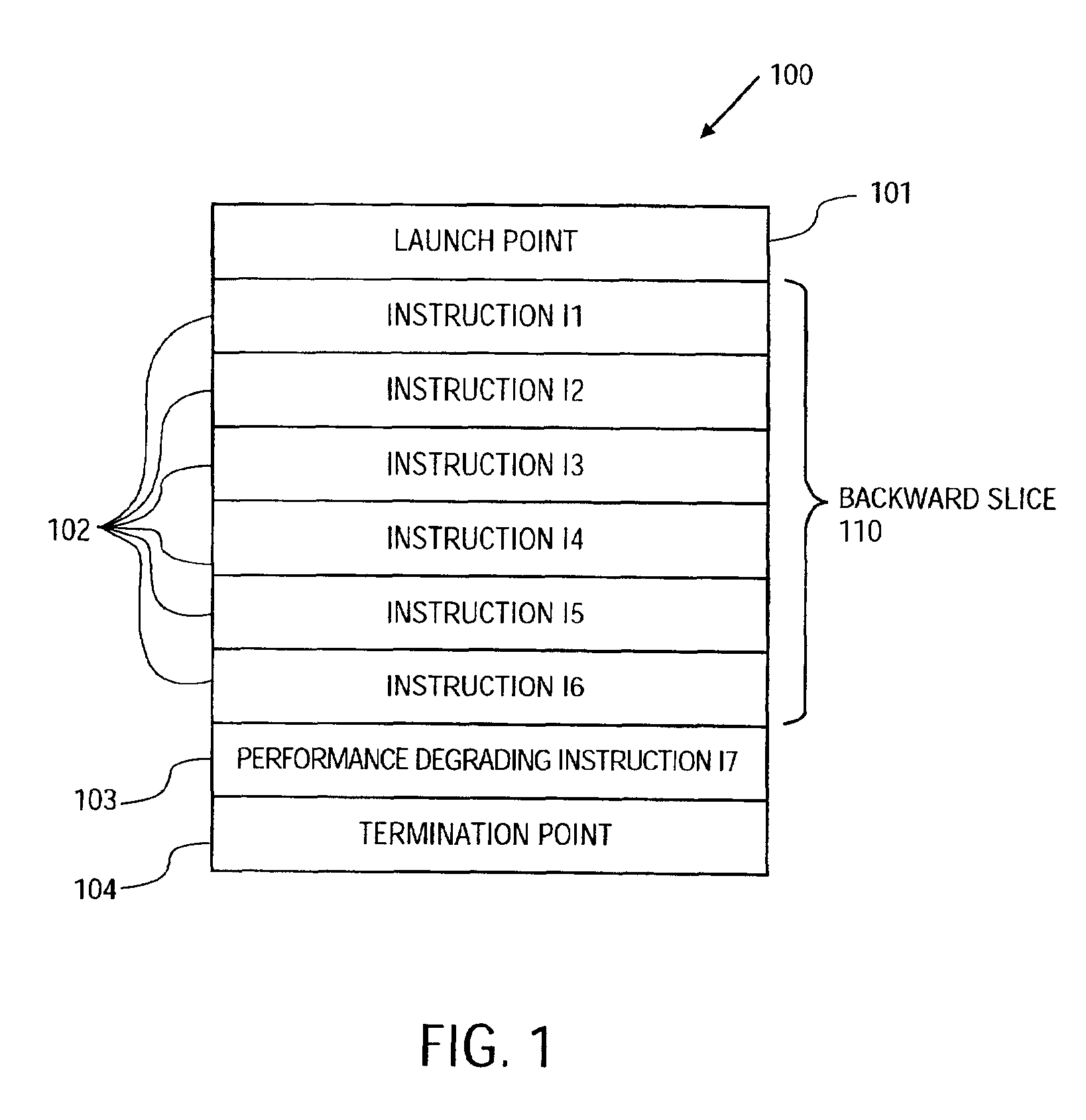

Compiler-directed speculative approach to resolve performance-degrading long latency events in an application

A compiler-directed speculative approach to resolve performance-degrading long latency events in an application is described. One or more performance-degrading instructions are identified from multiple instructions to be executed in a program. A set of instructions prefetching the performance-degrading instruction is defined within the program. Finally, at least one speculative bit of each instruction of the identified set of instructions is marked to indicate a predetermined execution of the instruction.

Owner:INTEL CORP

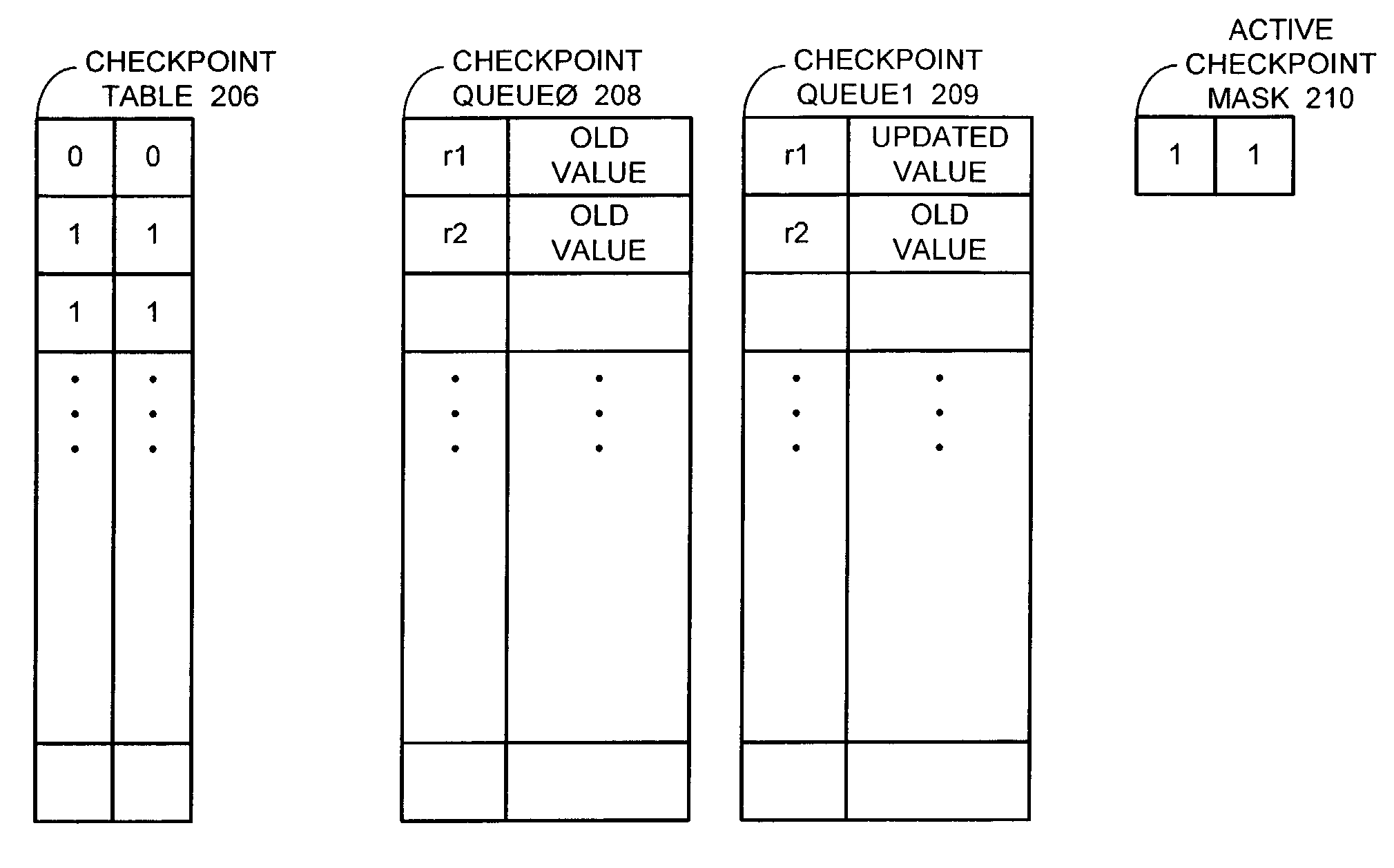

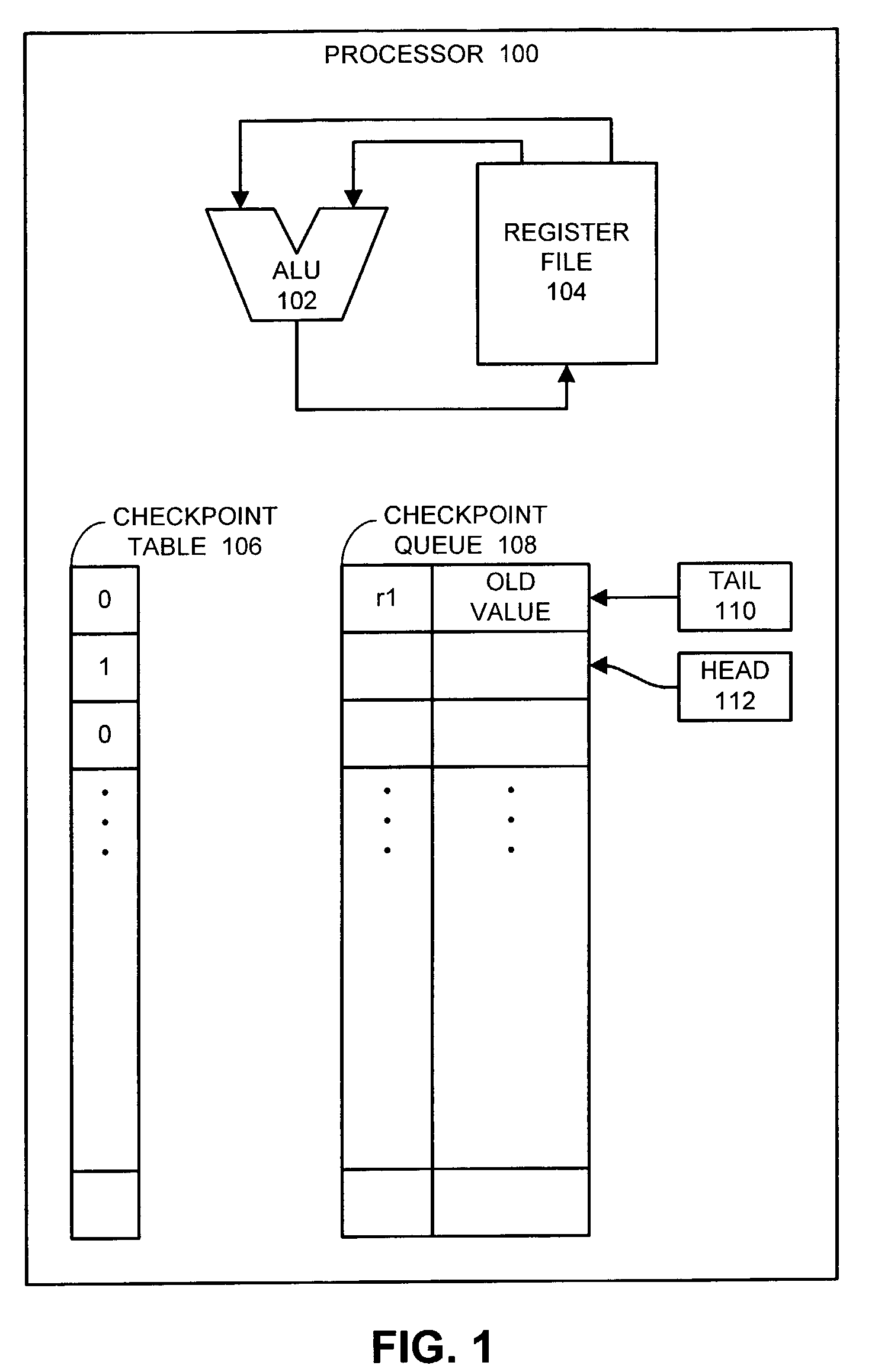

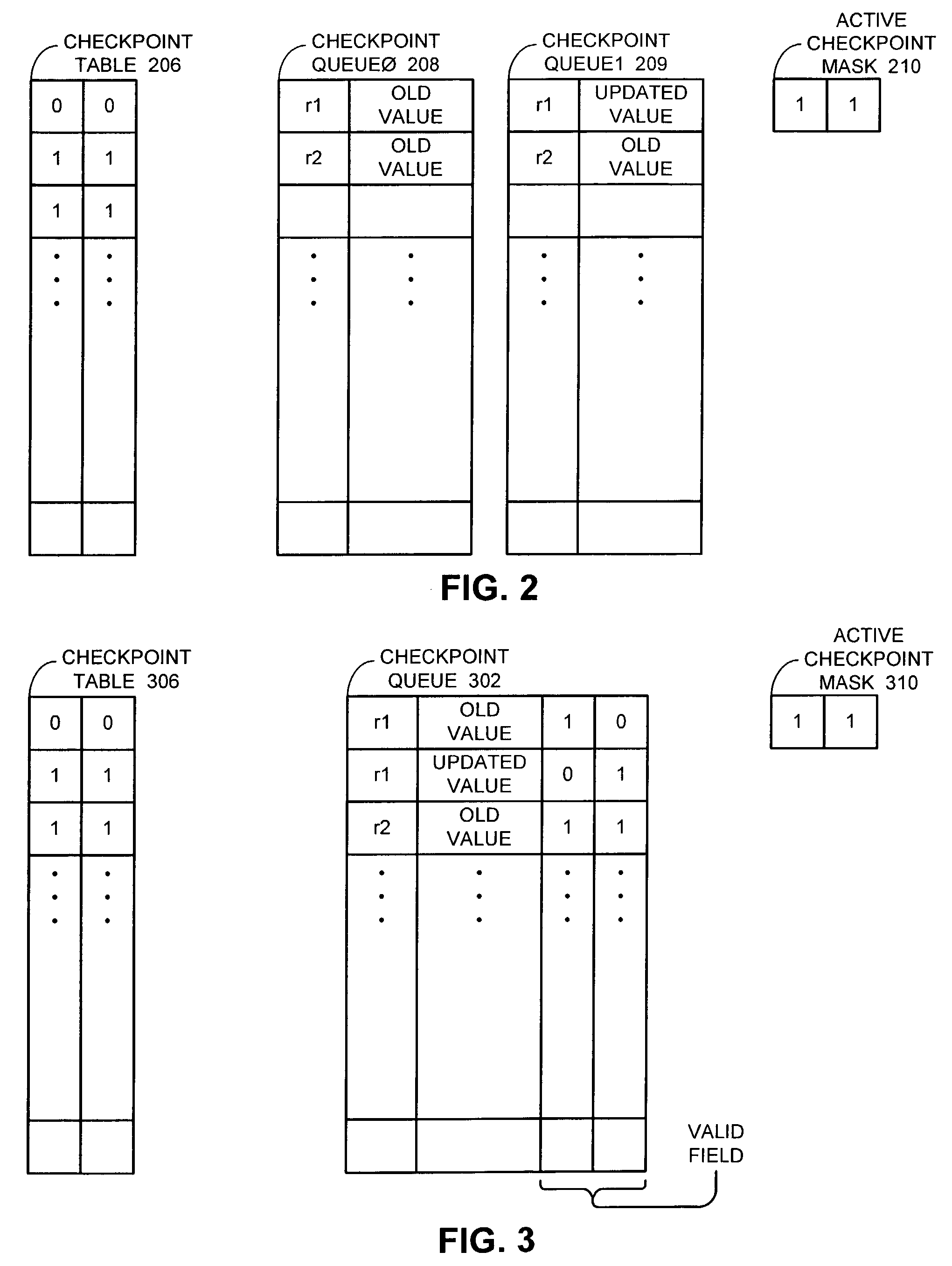

Method and apparatus for performing register file checkpointing to support speculative execution within a processor

Active | US7475230B2 | Improve system performance | Minimize the number | Digital computer details | Concurrent instruction execution | Speculative execution | Long latency

One embodiment of the present invention provides a system that performs register file checkpointing to support speculative execution within a processor. During operation, the system commences speculative execution of a program from a point of speculation, at which the outcome of a long latency instruction is speculatively predicted. During this speculative execution, registers are updated by checkpointing an old value of the register, if the register has not already been checkpointed, and then updating the architectural state of the register with the new value. In this way, only registers that are updated during the speculative execution are checkpointed, instead of checkpointing all of the architectural registers prior to commencing speculative execution.

Owner:ORACLE INT CORP
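
The lazy checkpointing described here (save a register's old value only the first time it is overwritten during speculation) maps naturally onto a dictionary keyed by register index. The class and method names below are assumptions for illustration, and the `rollback` and `commit` steps are the obvious complements to the abstract rather than details it states.

```python
class RegisterFile:
    """Architectural registers with checkpoint-on-first-write speculation."""

    def __init__(self, count):
        self.regs = [0] * count
        self.checkpoint = None  # None means not speculating

    def begin_speculation(self):
        self.checkpoint = {}

    def write(self, index, value):
        # Save the old value once per register, then update in place.
        if self.checkpoint is not None and index not in self.checkpoint:
            self.checkpoint[index] = self.regs[index]
        self.regs[index] = value

    def commit(self):
        """Speculation succeeded: discard the saved values."""
        self.checkpoint = None

    def rollback(self):
        """Speculation failed: restore only the registers that changed."""
        for index, old in self.checkpoint.items():
            self.regs[index] = old
        self.checkpoint = None
```

Only registers actually written during speculation cost any checkpoint storage, which is the saving the abstract claims over copying the whole register file up front.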

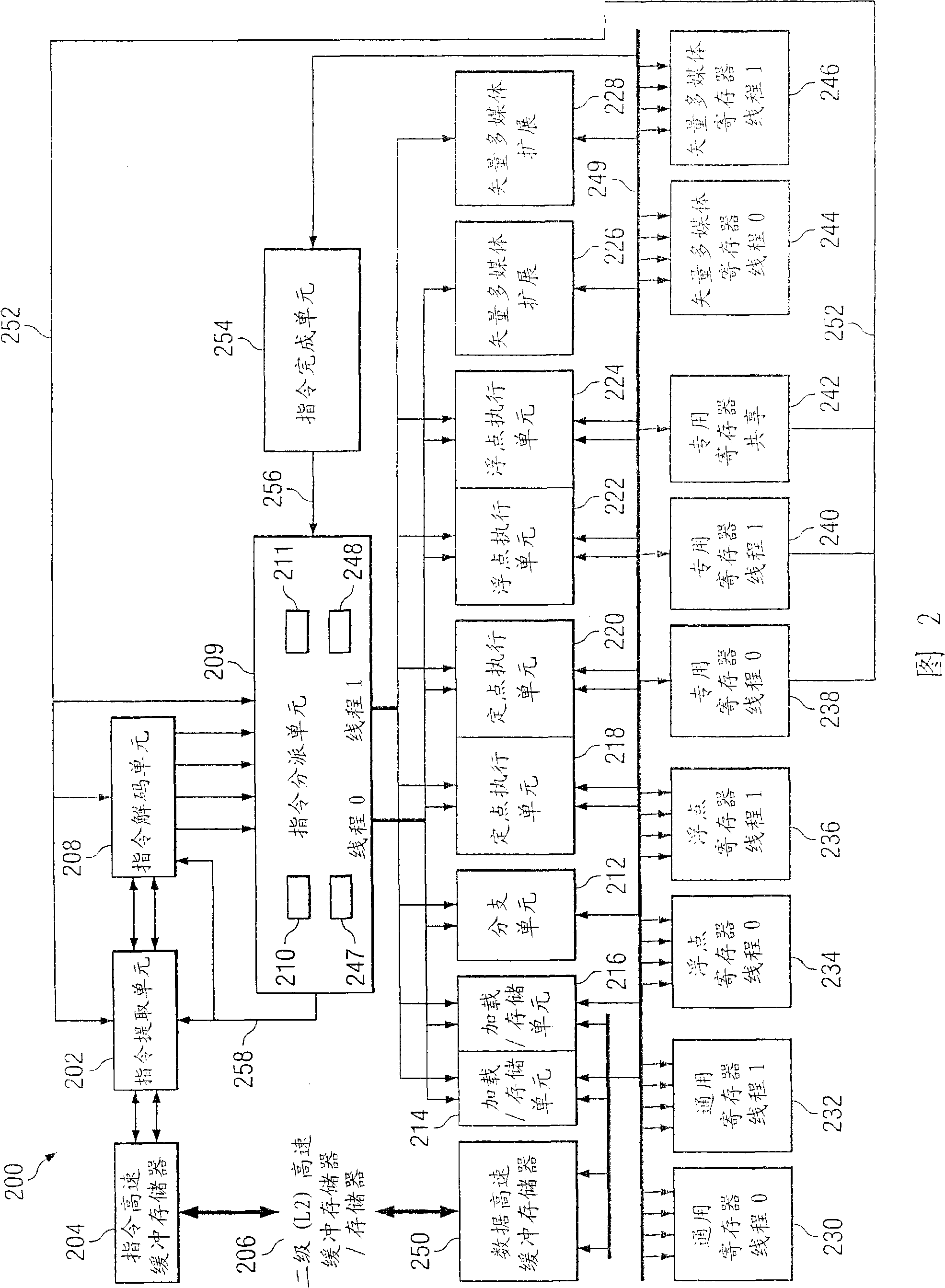

Method and system for performing independent loading for reinforcement processing unit

Inactive | CN101324840A | Improve abilities | Improve performance | Register arrangements | Concurrent instruction execution | Long latency | Load instruction

A method, system, and computer program product are provided for enhancing the execution of independent loads in a processing unit. A processing unit detects if a long-latency miss associated with a load instruction has been encountered. Responsive to a long-latency miss, the processing unit enters a load lookahead mode. Responsive to entering the load lookahead mode, the processing unit dispatches each instruction from a first set of instructions from a first buffer with an associated vector. The processing unit determines if the first set of instructions from the first buffer have completed execution. Responsive to completed execution of the first set of instructions from the first buffer, the processing unit copies the set of vectors from a first vector array to a second vector array. Then the processing unit dispatches a second set of instructions from a second buffer with an associated vector from the second vector array.

Owner:INT BUSINESS MASCH CORP

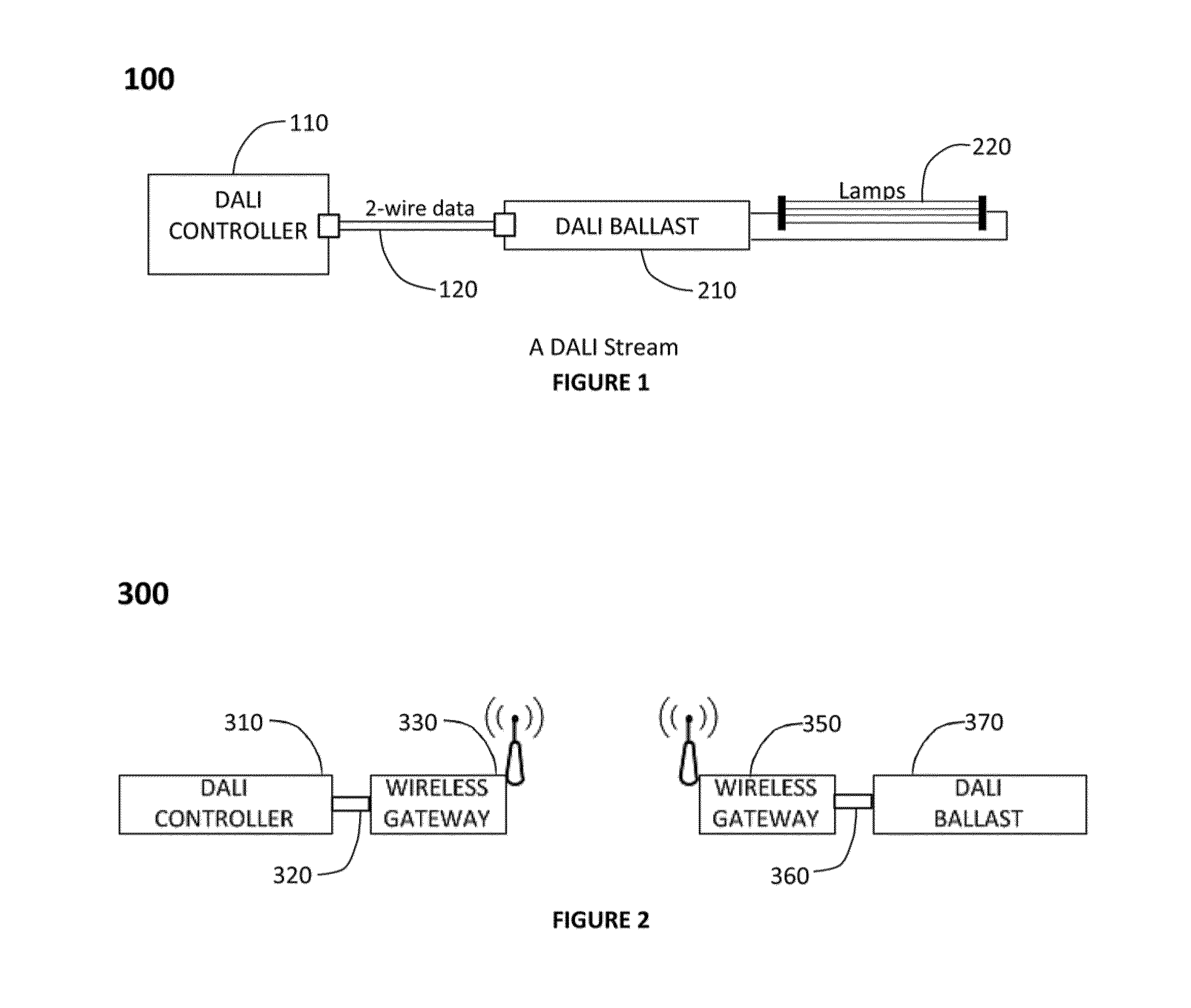

Method of supporting DALI protocol between DALI controllers and devices over an intermediate communication protocol

Inactive | US20130264971A1 | Save bandwidth | Improving data age | Electrical apparatus | Electric light circuit arrangement | Long latency | Wifi network

Methods of supporting DALI protocol between DALI controllers and devices over an intermediate communication protocol are disclosed. We will describe an implementation of the invention using the mesh wireless network prescribed by IEEE 802.15.4 as an intermediate protocol, where its long latency and highly variable delay characteristics pose a significant challenge to maintaining adherence to the DALI specification. For practitioners skilled in the art it is obvious that the invention is also applicable to many other communication mediums and protocols, including wired networking such as Ethernet and Wifi networking such as IEEE 802.11 a/b/g/n. Additionally, the invention is applicable to transporting other control protocols where the specification limits the duration between forward messages and associated reverse replies.

Owner:VERIFIED ENERGY

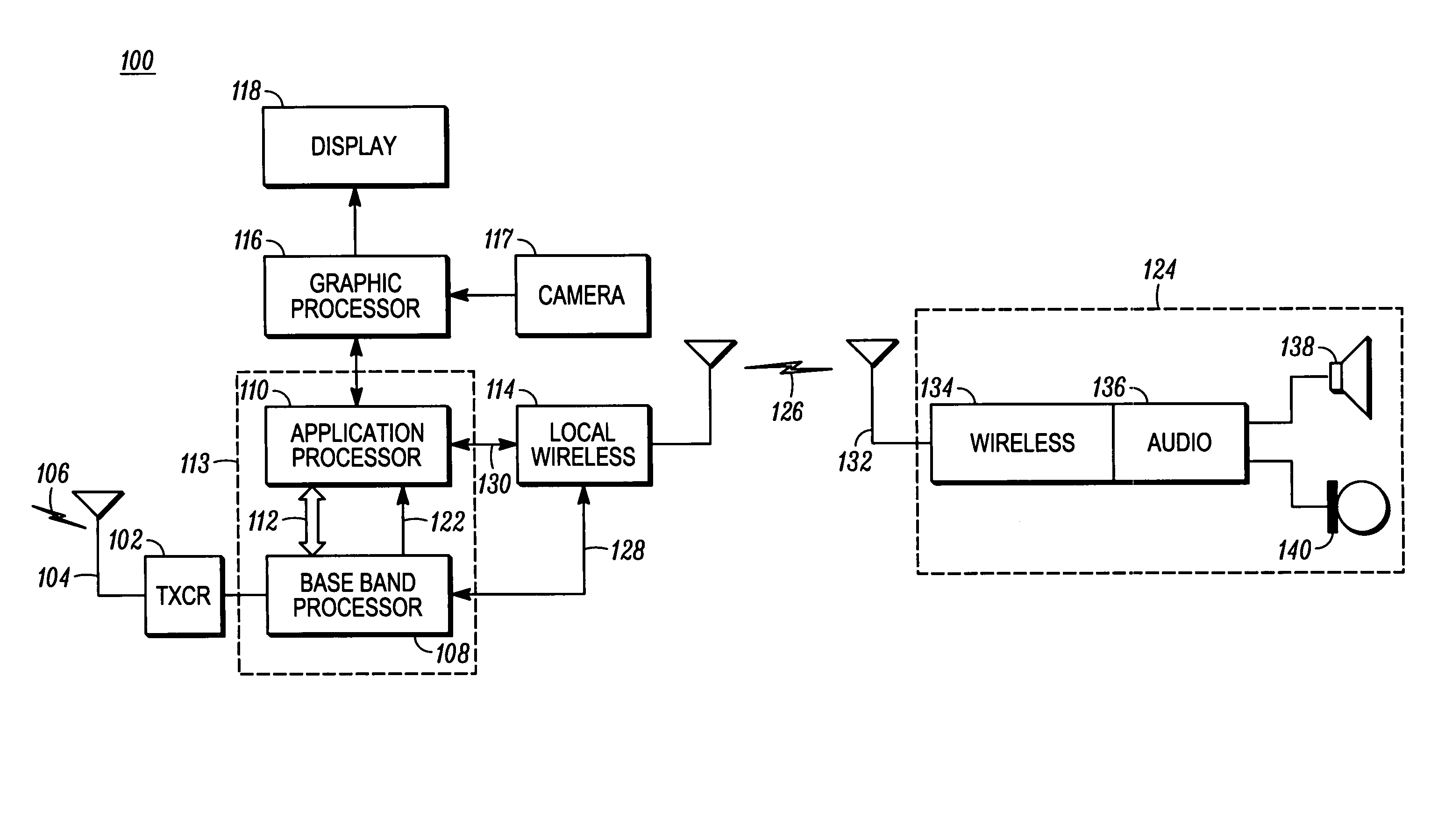

Method and apparatus for establishing an audio link to a wireless earpiece in reduced time

A mobile communication device (100) contains a local wireless controller (114) for operating with a remote audio processor (124). The remote audio processor is used for listening to and speaking with remote parties via the mobile communication device, and operates in a mode having a relatively long latency in communicating with the mobile communication device. Upon receiving a dispatch call (306), a baseband processor (108) of the mobile communication device notifies an application processor (110) with a high priority dispatch notification (122). The high priority dispatch notification causes an active link to be established between the mobile communication device and remote audio processor sooner than would occur with ordinary call processing.

Owner:GOOGLE TECH HLDG LLC

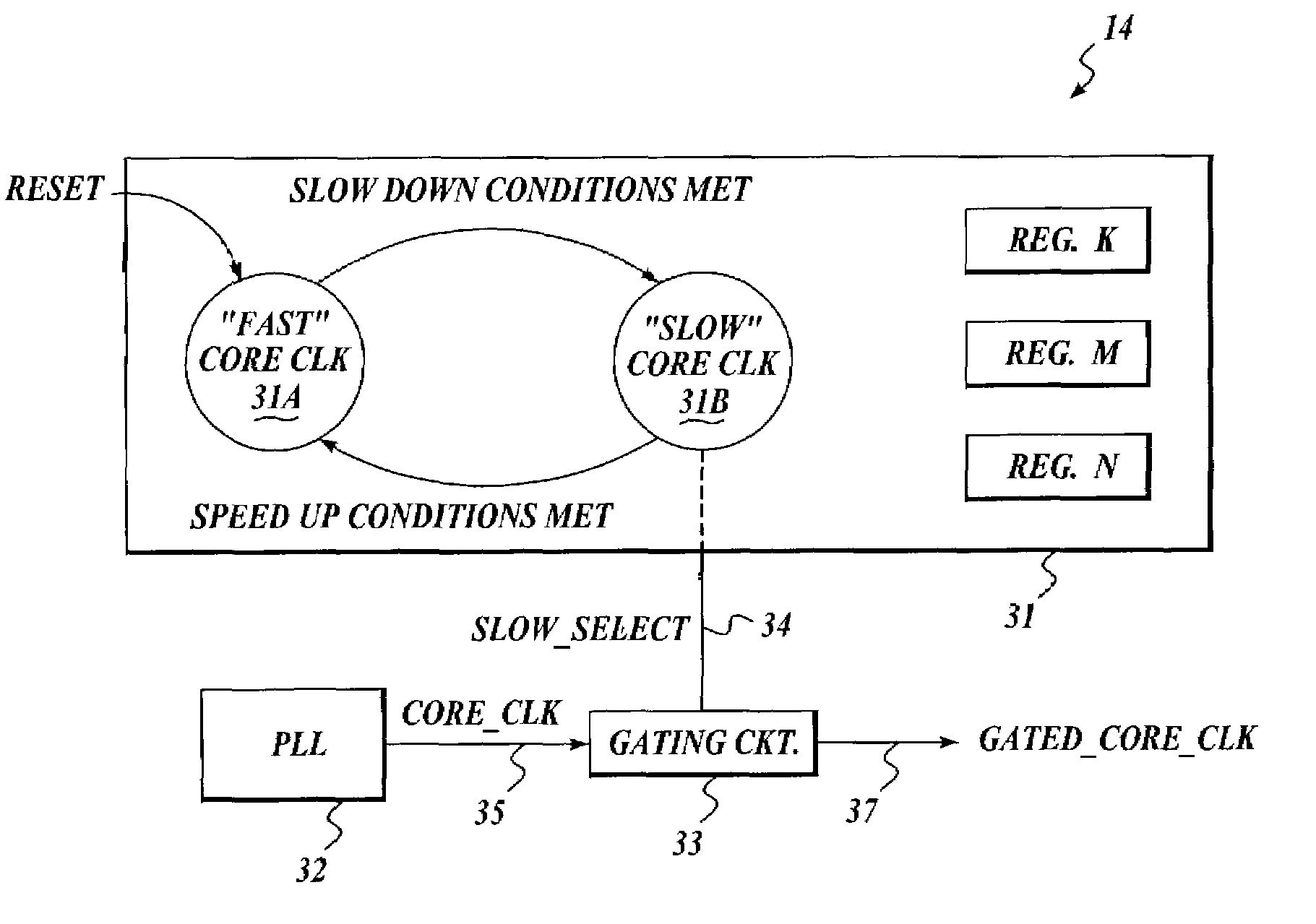

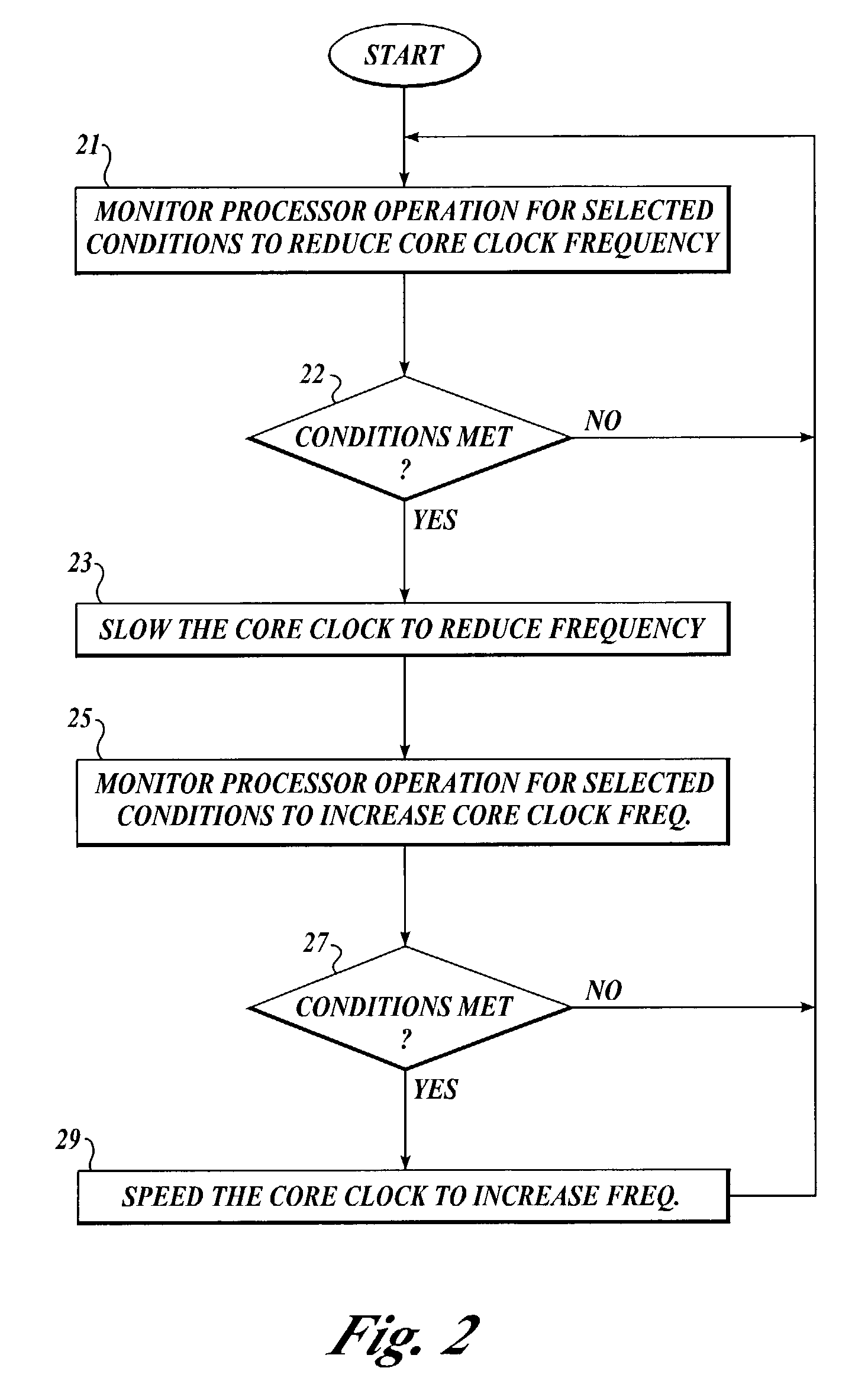

Method and apparatus for reducing clock frequency during low workload periods

A clock frequency control unit for an integrated circuit (IC) includes a clock generator, a finite state machine (FSM), and a gating circuit (GC). The FSM has at least first and second states corresponding to non-low workload and low workload states, respectively. In the first state, the GC provides a clock signal to functional units of the IC with the same frequency as the clock generator output. In the second state, the GC reduces the frequency of the clock signal. In one embodiment, the GC masks out selected cycles of the clock generator output to reduce the clock signal frequency. The FSM monitors the operation of the IC to transition from the first state to the second state when selected “low workload” conditions are detected (e.g., long latency cache miss). Similarly, the FSM transitions from the second state to the first state when selected “non-low workload” conditions are detected.

Owner:INTEL CORP
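
The two-state machine and cycle masking described above can be simulated per clock cycle. In this sketch the event names `"miss"` and `"return"` and the `keep_every` masking ratio are assumptions chosen for the example; the abstract does not specify which cycles are masked.

```python
def gated_clock(events, keep_every=2):
    """Return, for each generator cycle, whether the clock edge is passed on.

    events holds one entry per cycle: "miss" (a low-workload condition such
    as a long latency cache miss), "return" (a non-low-workload condition),
    or None. In the low-workload state only every keep_every-th cycle
    passes through the gating circuit.
    """
    low_workload = False
    count = 0
    passed = []
    for event in events:
        if event == "miss":
            low_workload, count = True, 0
        elif event == "return":
            low_workload = False
        if low_workload:
            passed.append(count % keep_every == 0)
            count += 1
        else:
            passed.append(True)
    return passed
```

With `keep_every=2` the functional units see half the generator frequency while the miss is outstanding and the full frequency again as soon as the data returns.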

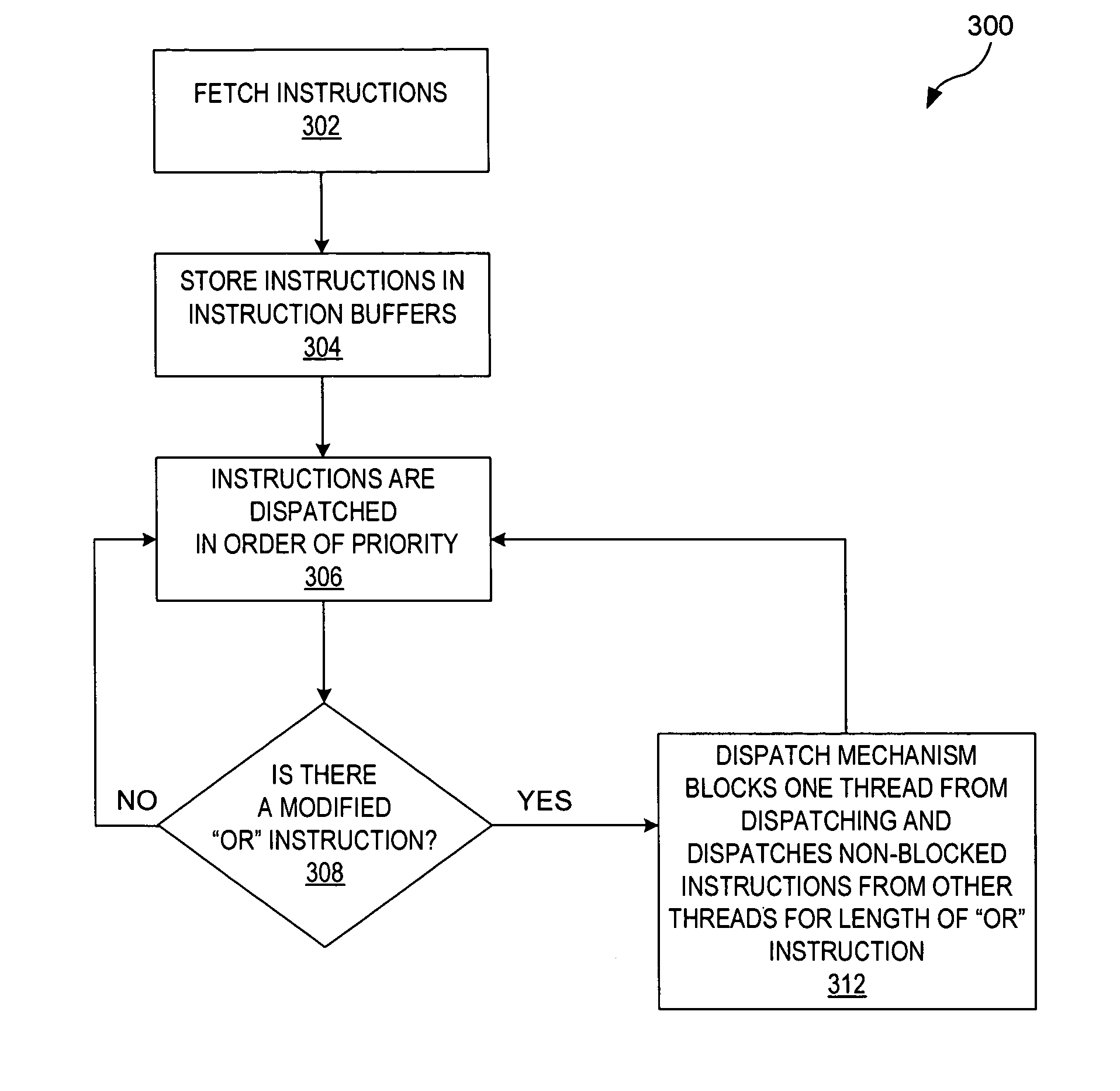

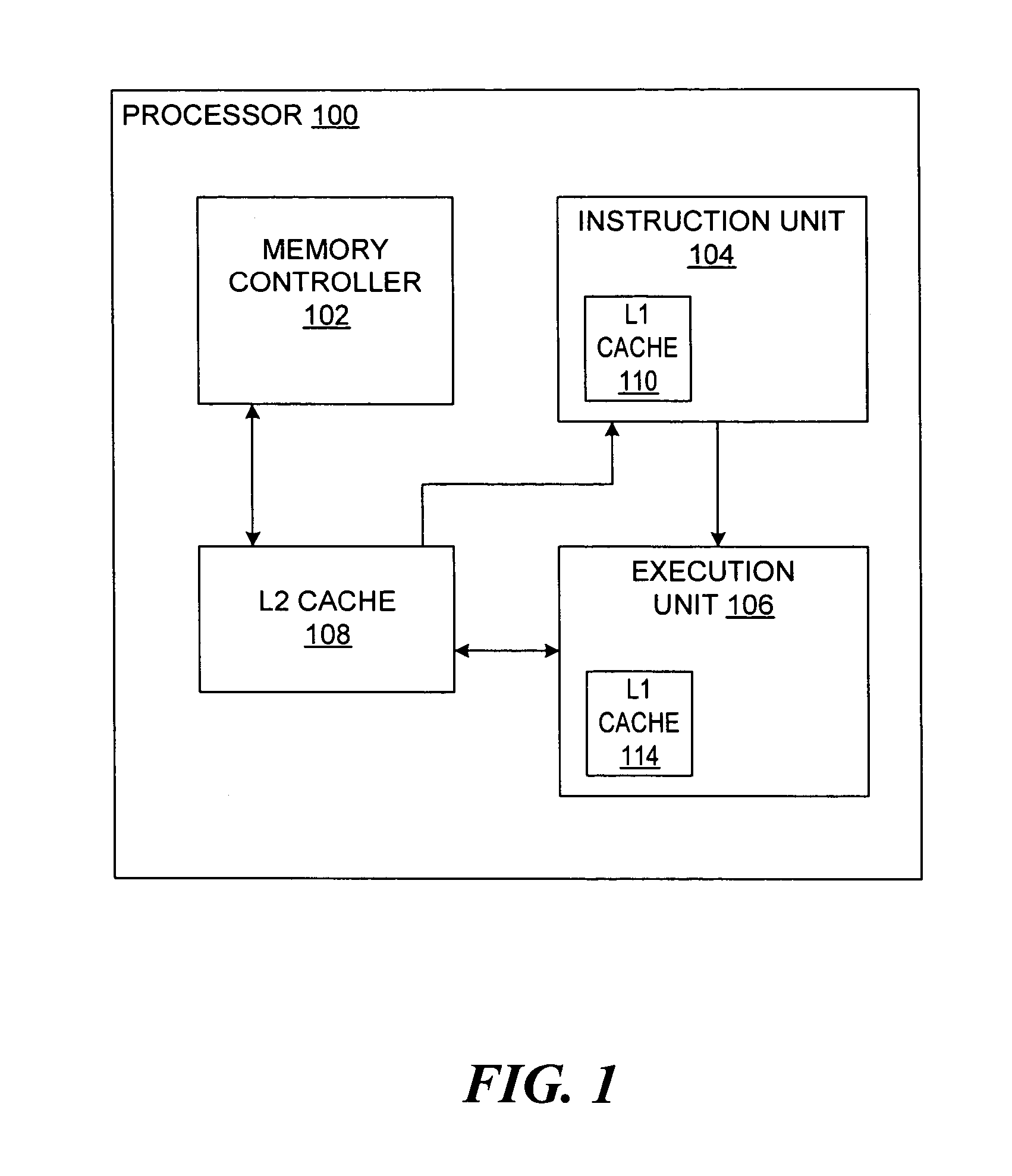

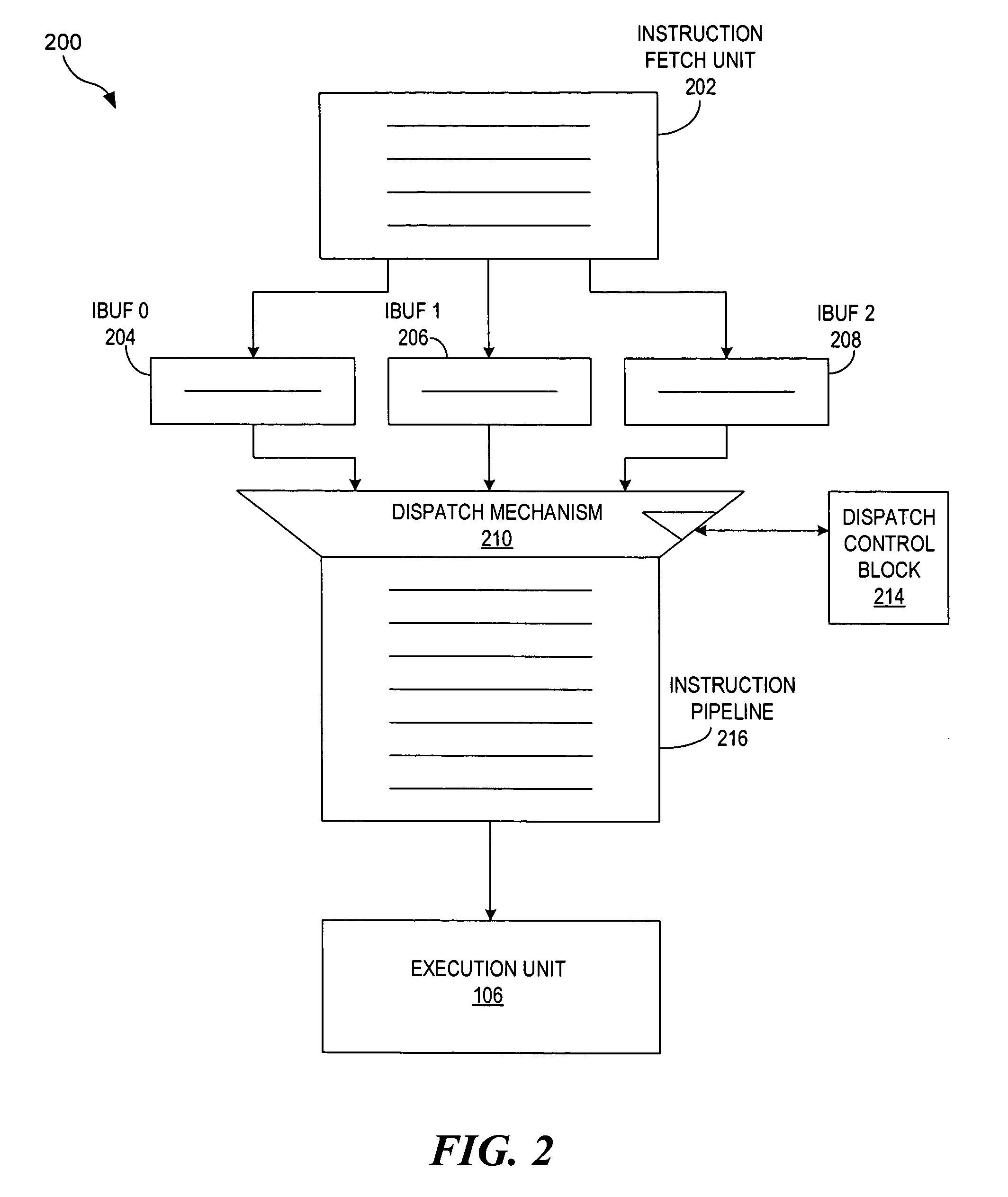

Fine grained multi-thread dispatch block mechanism

Inactive | US7313673B2 | Fine-grained control of thread performance | Digital computer details | Concurrent instruction execution | Long latency | Thread scheduling

The present invention provides a method, a computer program product, and an apparatus for blocking a thread at dispatch in a multi-thread processor for fine-grained control of thread performance. Multiple threads share a pipeline within a processor. Therefore, a long latency condition for an instruction on one thread can stall all of the threads that share the pipeline. A dispatch-block signaling instruction blocks the thread containing the long latency condition at dispatch. The length of the block matches the length of the latency, so the pipeline can dispatch instructions from the blocked thread after the long latency condition is resolved. In one embodiment the dispatch-block signaling instruction is a modified OR instruction and in another embodiment it is a Nop instruction. By blocking one thread at dispatch, the processor can dispatch instructions from the other threads during the block.

Owner:GOOGLE LLC
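
To illustrate how blocking one thread at dispatch leaves the shared pipeline free for the others, the sketch below dispatches round-robin and skips any thread whose block has not yet expired. The function name, the `block_until` mapping, and the round-robin order are assumptions for the example.

```python
def dispatch_order(threads, block_until, cycles):
    """Return which thread dispatches in each cycle (None if all are blocked).

    block_until maps a thread to the first cycle in which it may dispatch
    again, standing in for the length of its long latency condition.
    """
    order = []
    next_index = 0
    for cycle in range(cycles):
        chosen = None
        for offset in range(len(threads)):
            candidate = threads[(next_index + offset) % len(threads)]
            if cycle >= block_until.get(candidate, 0):
                chosen = candidate
                next_index = (next_index + offset + 1) % len(threads)
                break
        order.append(chosen)
    return order
```

With thread `A` blocked for three cycles, thread `B` takes every dispatch slot until the block expires, after which the two alternate again.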
