12,655 results for "Waiting time" patented technology

In a healthcare setting, a patient's waiting time is measured across a series of back-to-back events intended to take the patient from referral to treatment in the shortest possible time. At each stage of this process, the waiting-time clock starts and stops so that the time the patient has waited so far can be calculated accurately.
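
The start/stop behaviour of the waiting-time clock can be sketched as an accumulator of completed intervals plus the interval still running. This is a minimal Python sketch under assumed semantics: the class `WaitingTimeClock` and its methods are hypothetical, a repeated start is ignored, and a stop on a clock that is not running does nothing.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class WaitingTimeClock:
    """Hypothetical referral-to-treatment clock that can be stopped and restarted."""

    started_at: Optional[datetime] = None                  # set while the clock runs
    elapsed: timedelta = field(default_factory=timedelta)  # sum of completed intervals

    def start(self, when: datetime) -> None:
        # Assumption: starting an already running clock has no effect.
        if self.started_at is None:
            self.started_at = when

    def stop(self, when: datetime) -> None:
        # Close the running interval and add it to the accumulated total.
        if self.started_at is not None:
            self.elapsed += when - self.started_at
            self.started_at = None

    def waited(self, now: datetime) -> timedelta:
        # Total wait so far = completed intervals + the interval still running.
        running = now - self.started_at if self.started_at is not None else timedelta()
        return self.elapsed + running
```

For example, a clock started on 1 January, stopped on 11 January and restarted on 20 January reports 15 days when queried on 25 January: the 9-day pause is not counted.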

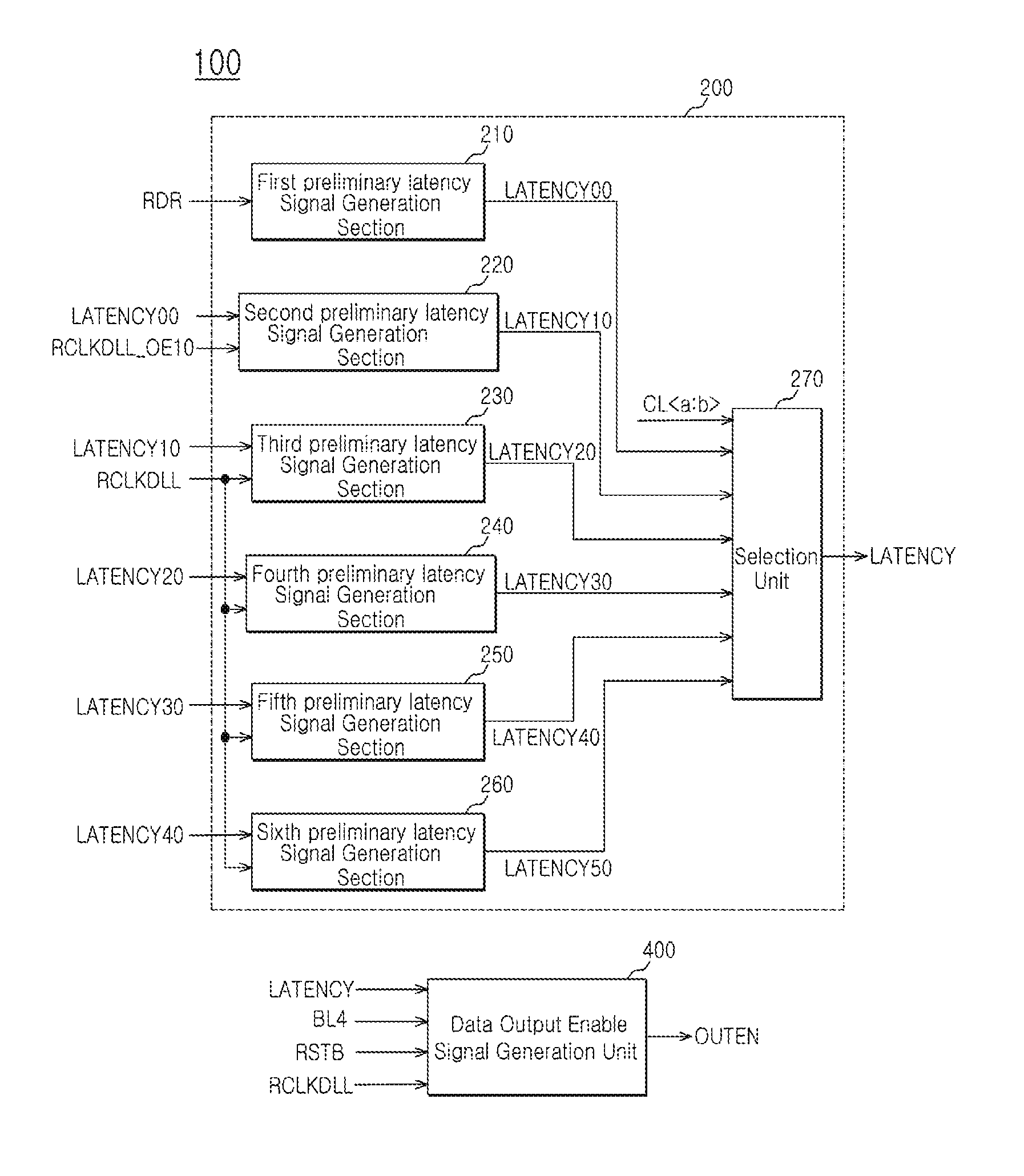

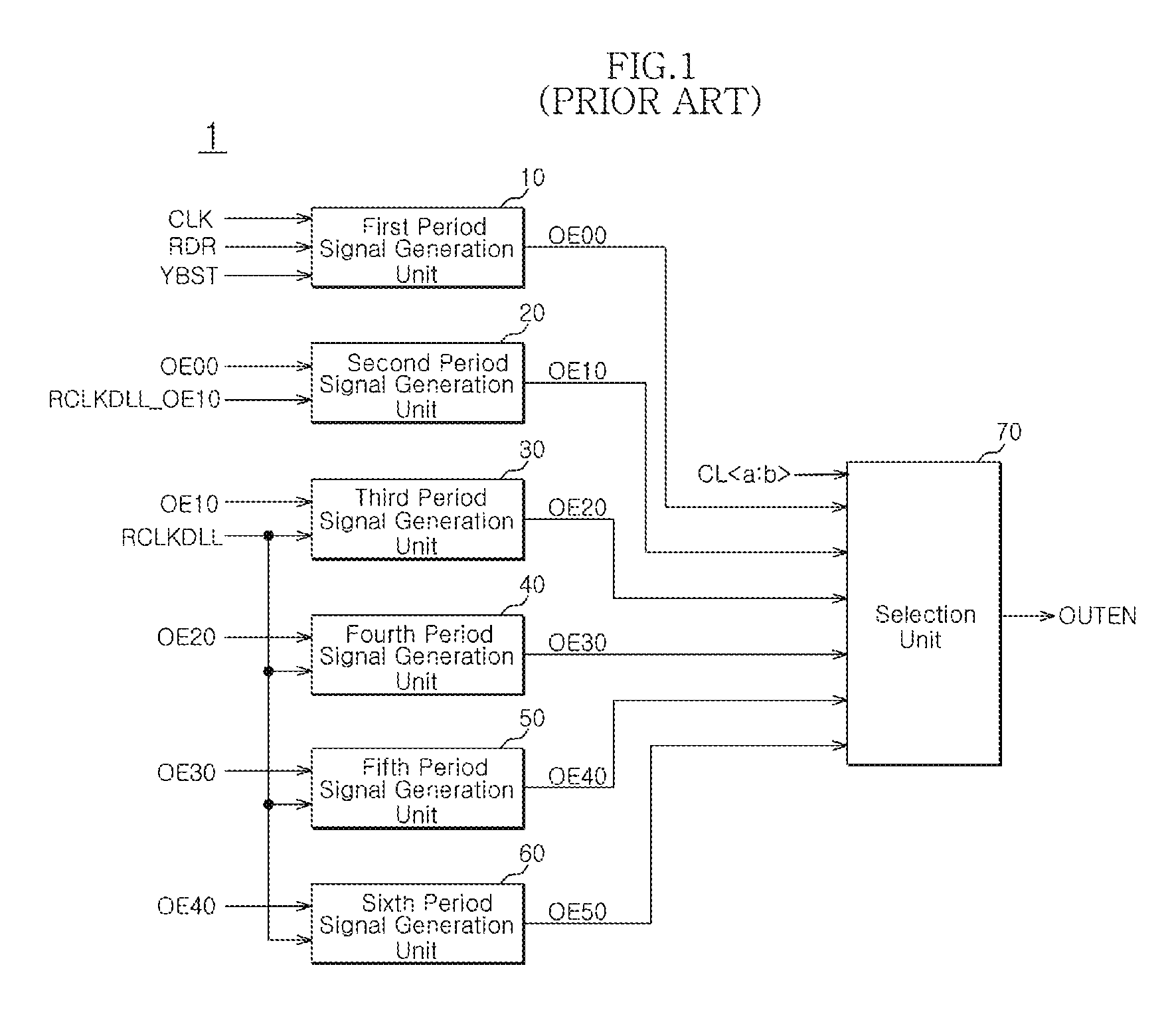

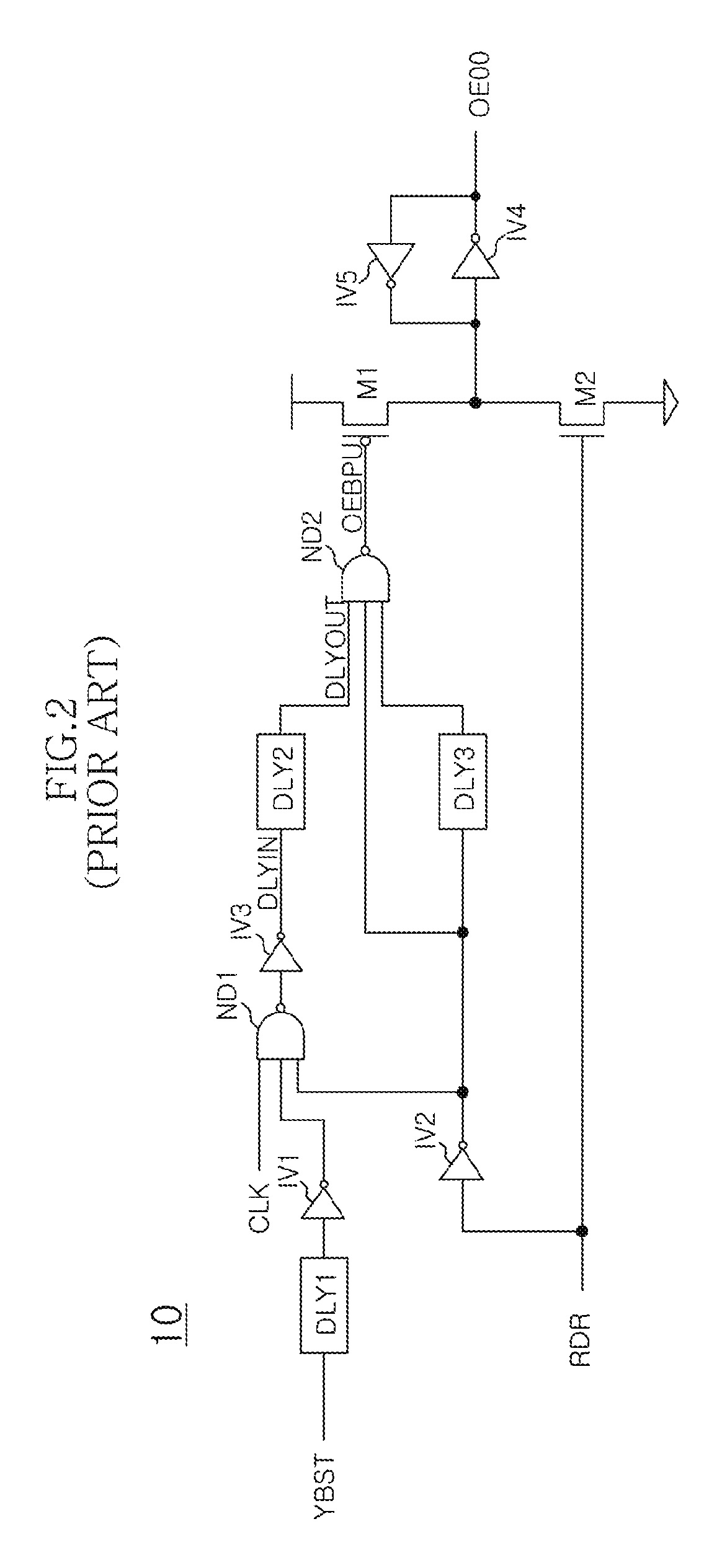

Output enable signal generation circuit of semiconductor memory

An output enable signal generation circuit of a semiconductor memory includes: a latency signal generation unit configured to generate a latency signal for designating activation timing of a data output enable signal in response to a read signal and a CAS latency signal; and a data output enable signal generation unit configured to control the activation timing and deactivation timing of the data output enable signal in response to the latency signal and a signal generated by shifting the latency signal based on a burst length (BL).

Owner:SK HYNIX INC

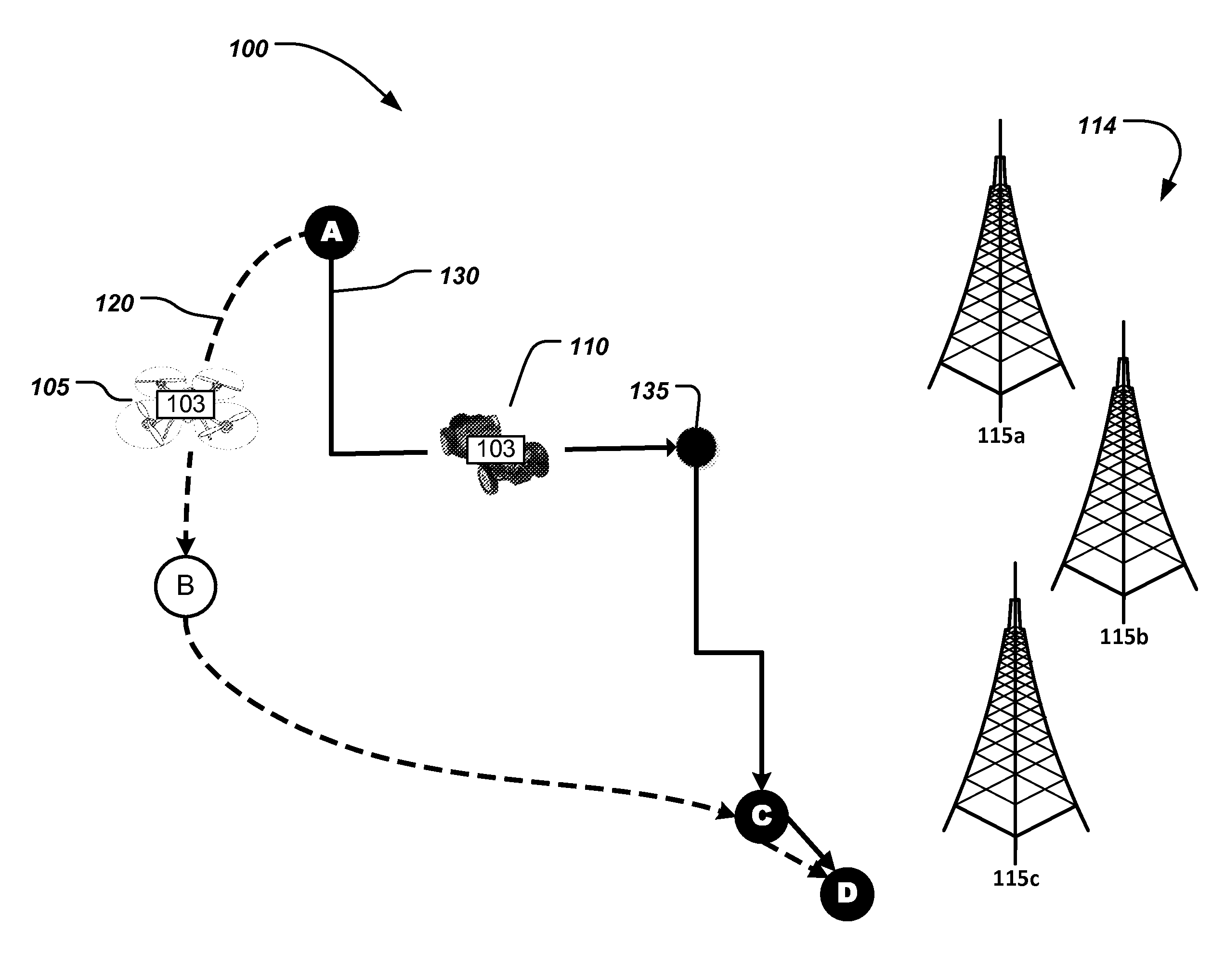

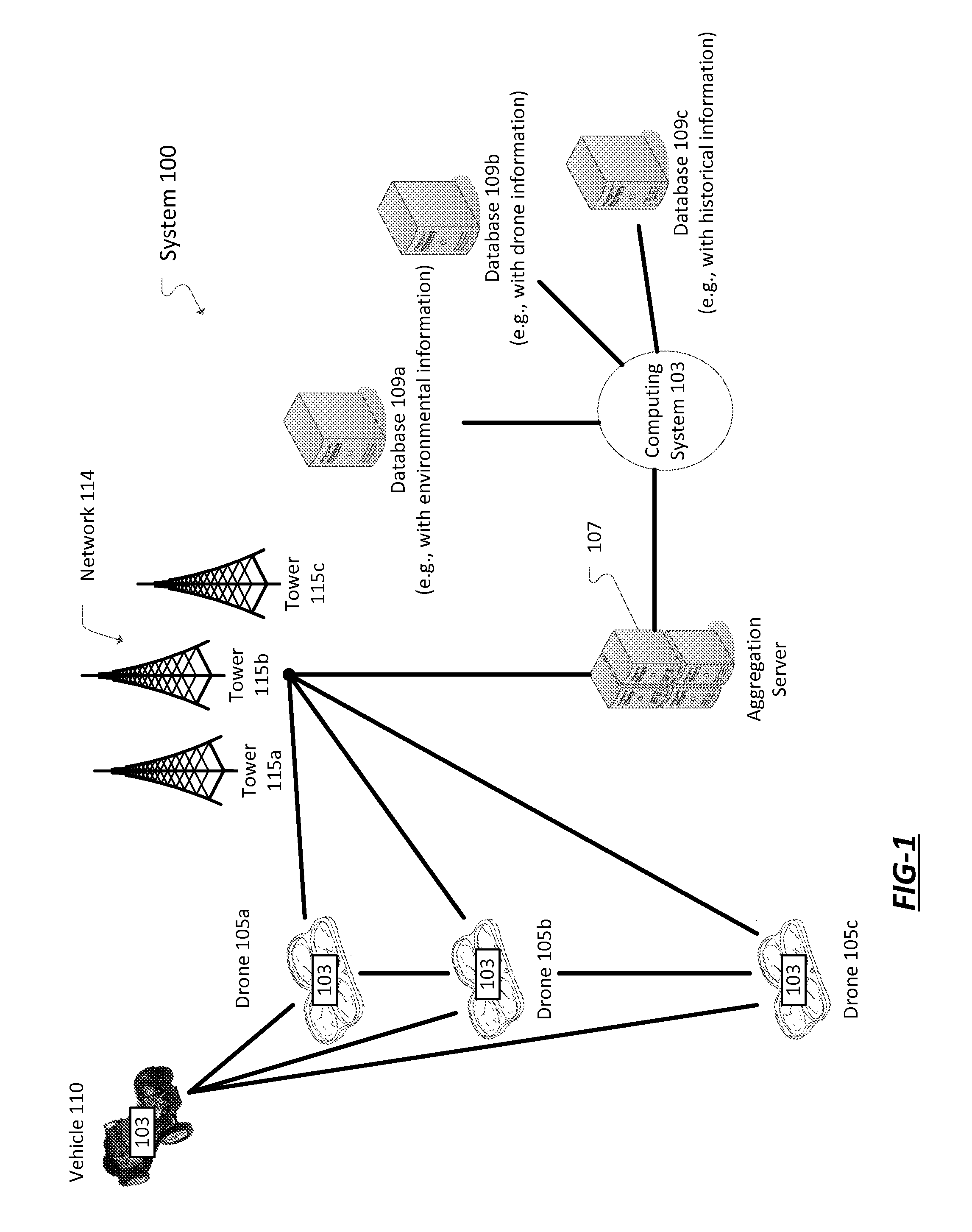

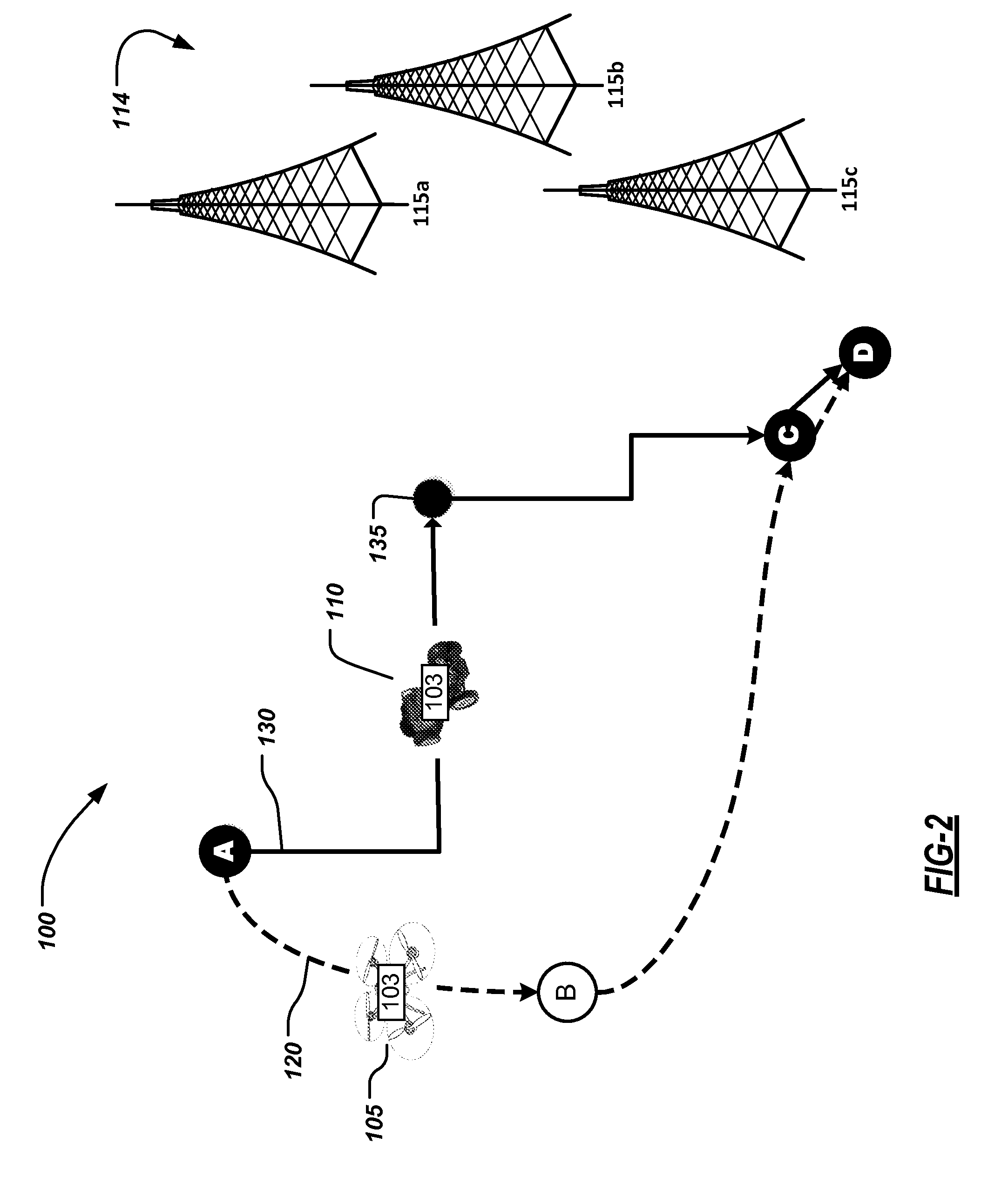

Method and system for drone deliveries to vehicles in route

Status: Active | Publication: US20150370251A1 | Tags: Aircraft components, Navigational calculation instruments, Transceiver, Uncrewed vehicle

A system comprises a server configured to communicate vehicle information with a vehicle transceiver of a vehicle moving along a vehicle route and to communicate drone information with a drone transceiver of a drone moving along a drone route. A computing device with a memory and a processor may be configured to communicatively connect with the server, process the vehicle information and the drone information, identify a plurality of pickup locations based in part on the vehicle information and drone information, select at least one of the pickup locations based in part on a priority score associated with the travel time to, or the wait time at, each pickup location, and update the drone route based in part on the selected pickup location.

Owner:VERIZON PATENT & LICENSING INC
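
The pickup-selection step can be illustrated with a weighted score over travel time and wait time. This is a minimal sketch, not the patented method: the `PickupLocation` fields, the weights, and the choice to treat the priority score as a cost where lower is better are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PickupLocation:
    name: str
    travel_min: float  # estimated drone travel time to the location (hypothetical field)
    wait_min: float    # estimated time the drone waits there for the vehicle (hypothetical field)


def pickup_cost(loc: PickupLocation, travel_weight: float = 1.0, wait_weight: float = 0.5) -> float:
    # Hypothetical priority score expressed as a weighted cost: lower cost = higher priority.
    return travel_weight * loc.travel_min + wait_weight * loc.wait_min


def select_pickup(candidates: list[PickupLocation], travel_weight: float = 1.0,
                  wait_weight: float = 0.5) -> PickupLocation:
    # Pick the candidate with the lowest weighted cost.
    if not candidates:
        raise ValueError("no candidate pickup locations")
    return min(candidates, key=lambda loc: pickup_cost(loc, travel_weight, wait_weight))
```

Raising `wait_weight` shifts the choice toward locations where the drone idles less, which is one way the travel-time versus wait-time trade-off could be tuned. Updating the drone route afterwards is not shown.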

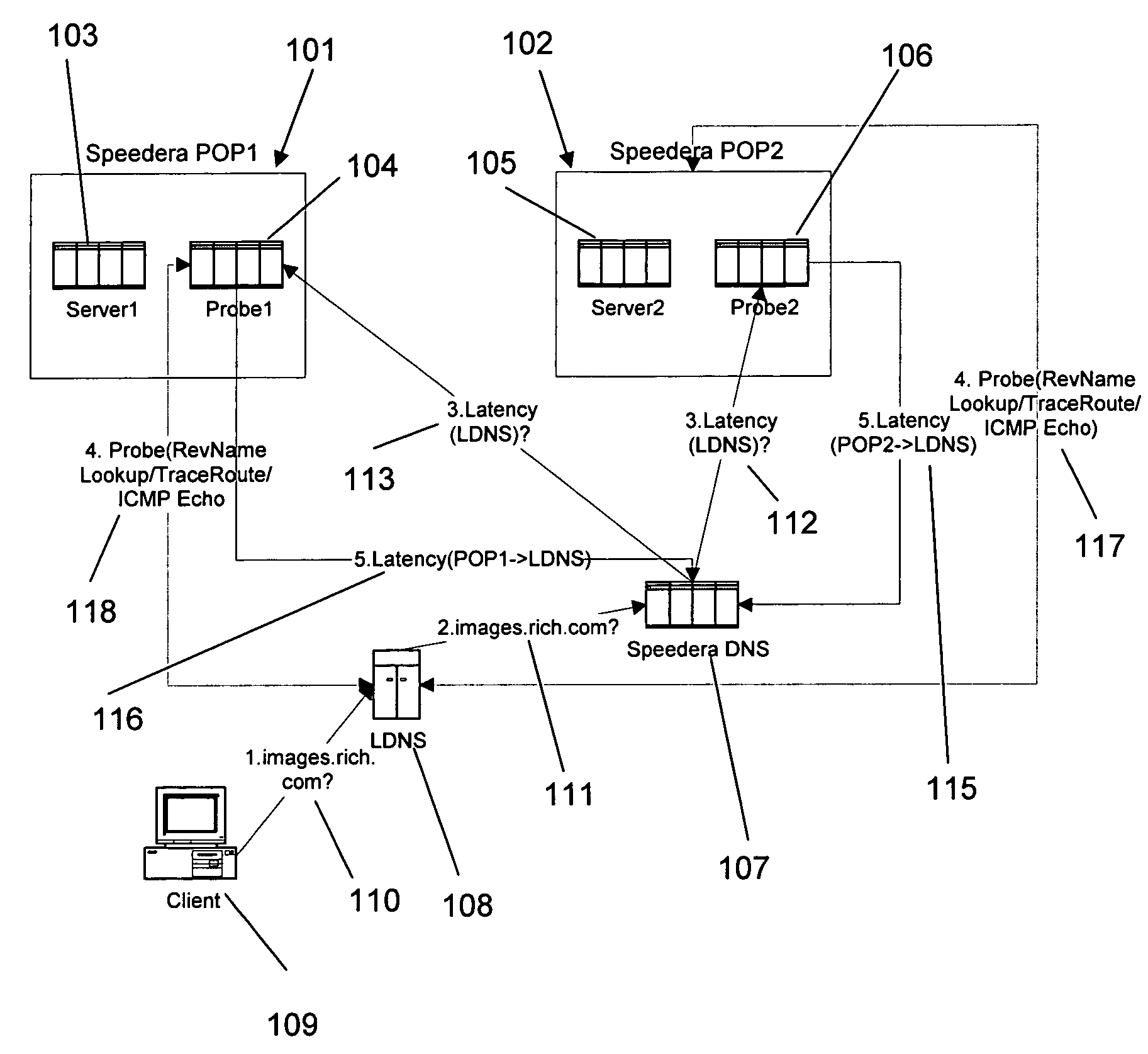

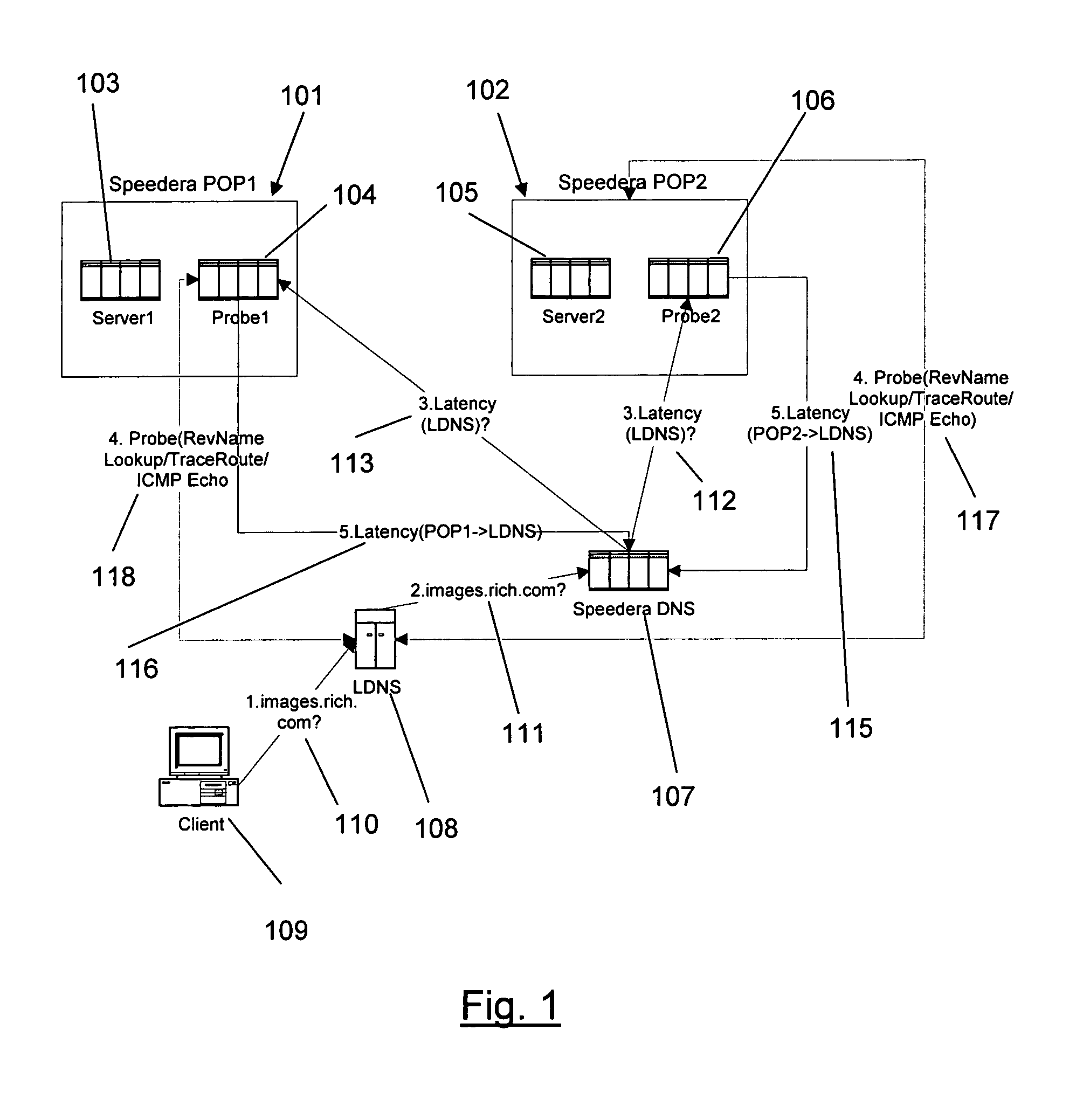

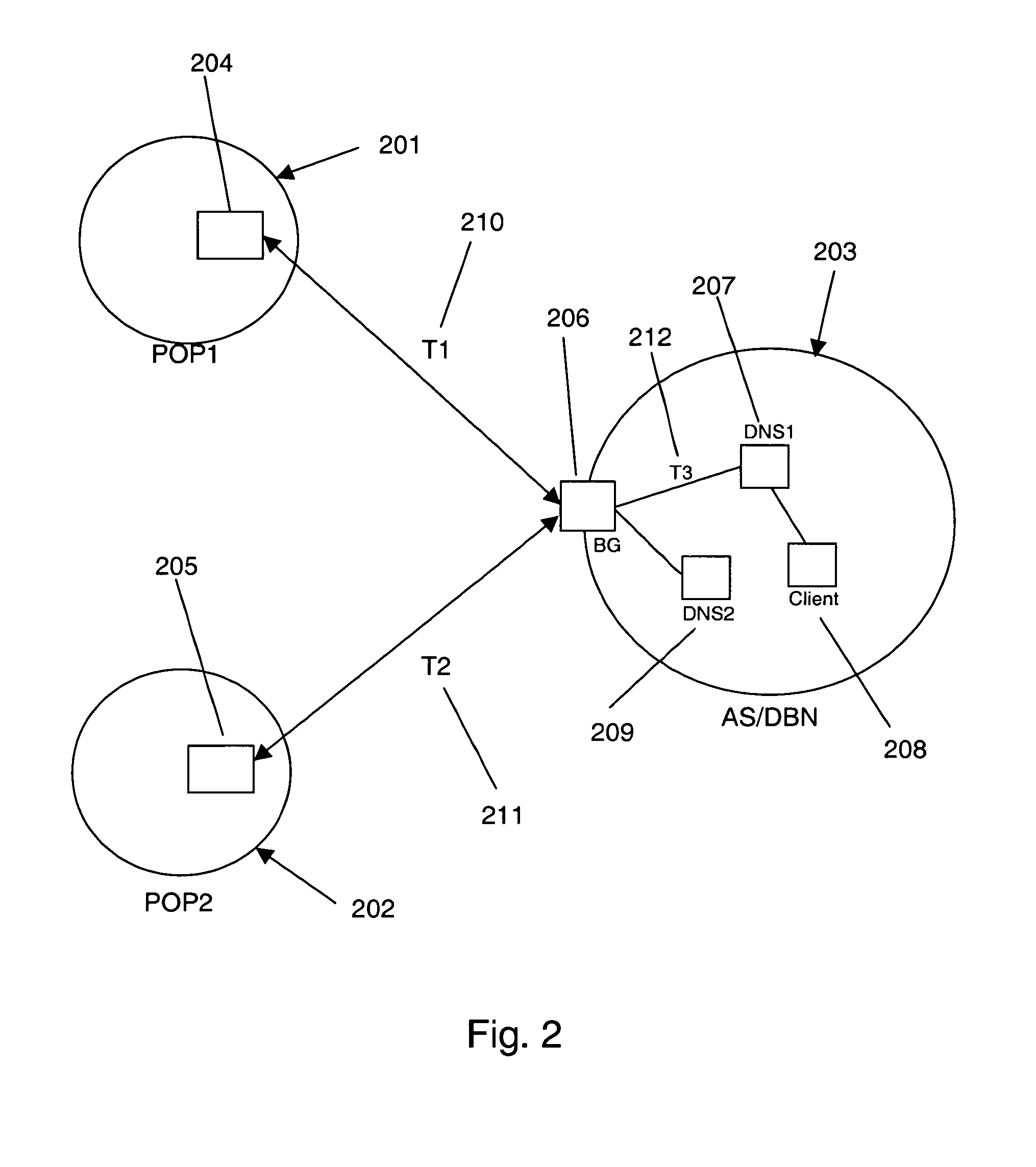

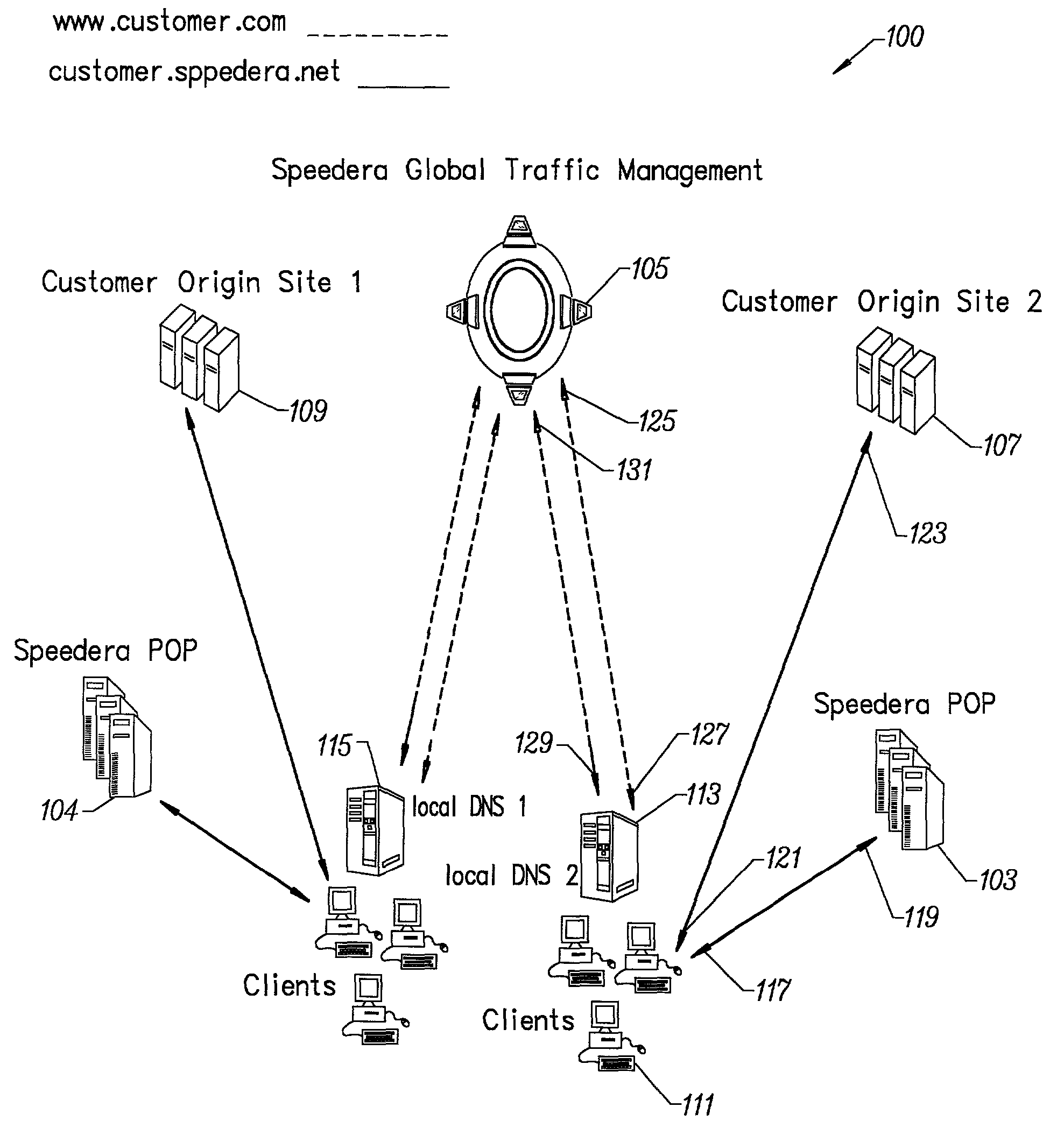

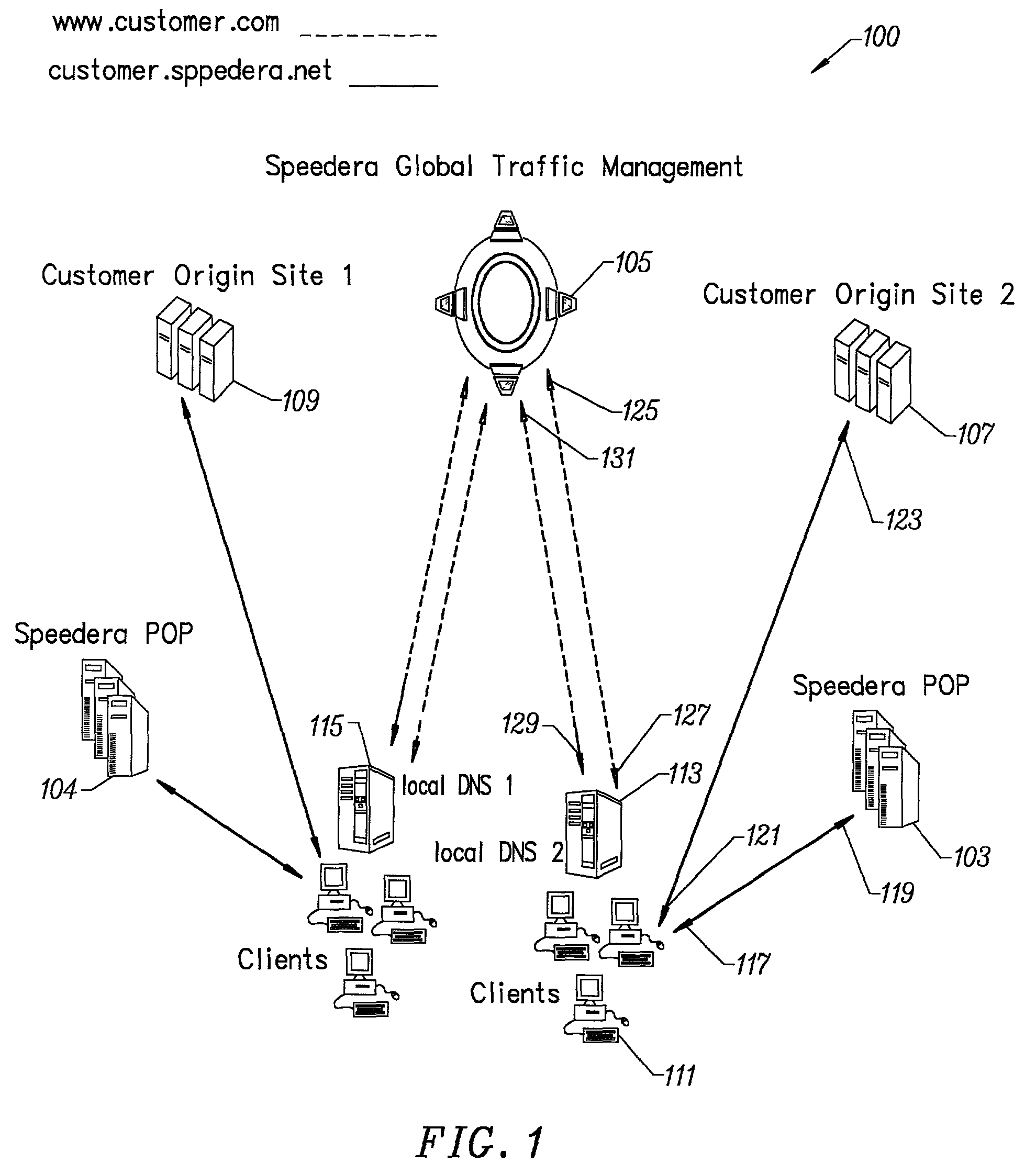

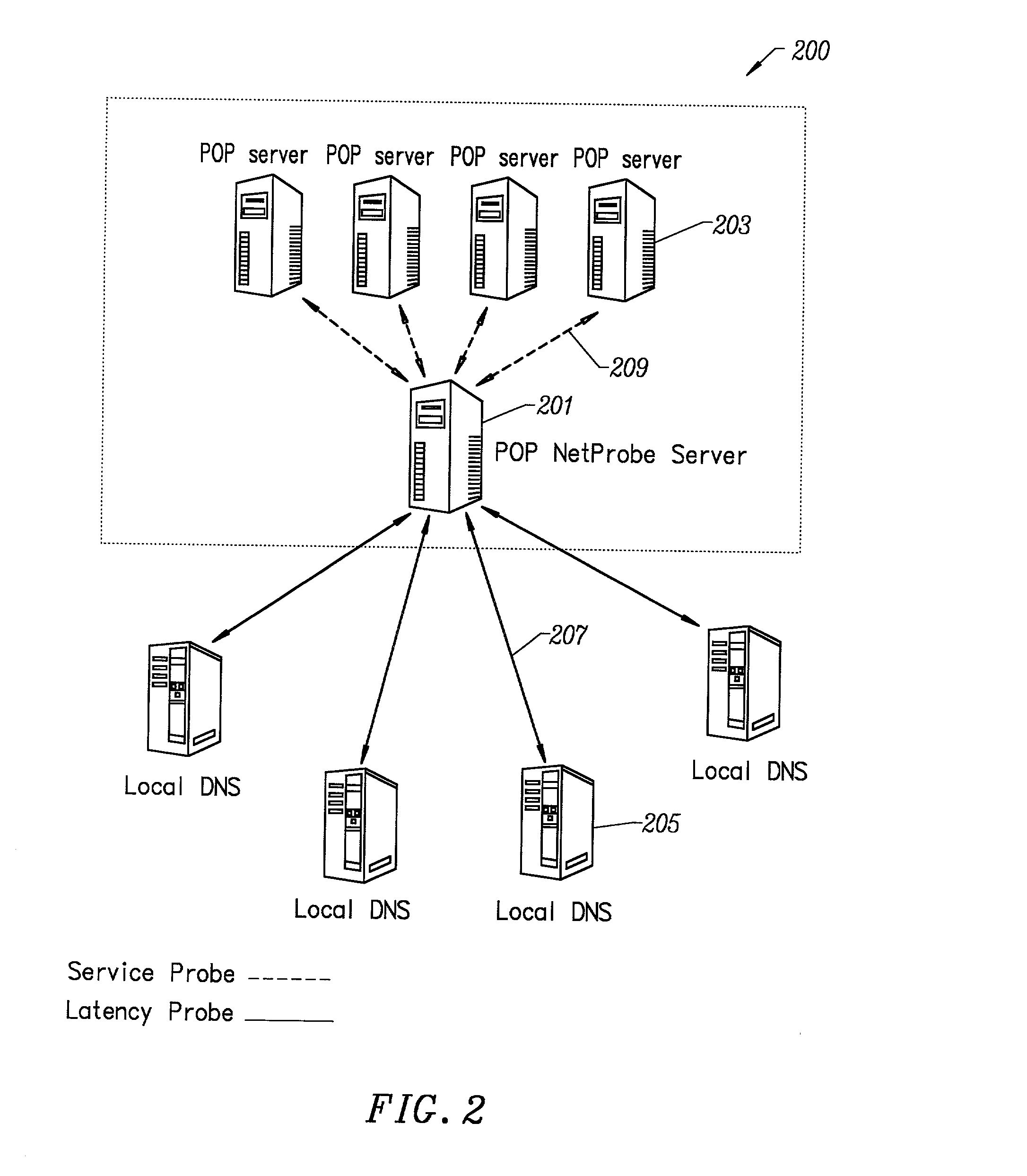

Method and apparatus for determining latency between multiple servers and a client

Status: Inactive | Publication: US7058706B1 | Tags: Reduce network traffic, Accurately determine, Digital computer details, Data switching networks, Traffic capacity, Name server

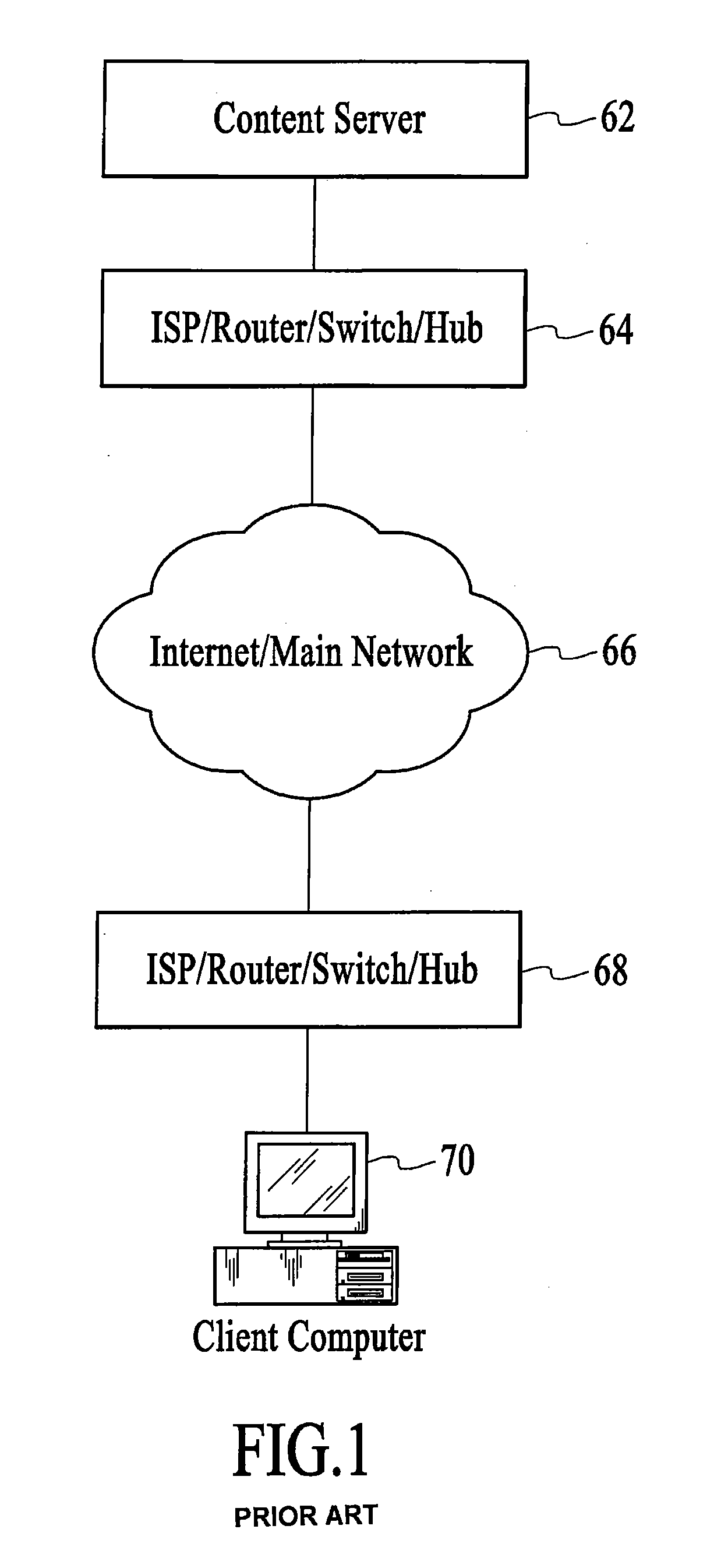

A method and apparatus for determining latency between multiple servers and a client receives requests for content server addresses from local domain name servers (LDNS). The POPs that can serve the content are determined and are sent latency metric requests. The content server receives the request for latency metrics and looks up the latency metric for the requesting client. Periodic latency probes are sent to the IP addresses in a Latency Management Table. The IP addresses of clients are masked so that the latency probes are sent to higher-level servers, reducing traffic across the network. The hop count and latency data in the packets sent in response to the latency probes are stored in the Latency Management Table and are used to determine the latency metric from the resident POP to the requesting client before the latency metric is sent to the requesting server. On the first request for an IP address, the BGP hop count in the Latency Management Table is used as the latency metric; for subsequent requests, the latency metric is calculated from the hop count and RTT data in the Latency Management Table. Latency metrics from the POPs are collected, and the inverse of the hop counts, in a weighted combination with the RTT, is used to determine which latency metric indicates the optimal POP. The address of the optimal POP is then sent to the requesting LDNS.

Owner:AKAMAI TECH INC
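
The latency-metric logic (BGP hop count on the first request, then a weighted combination of inverse hop count and RTT) can be sketched as a scoring function. This is one possible reading, not Akamai's formula: the `LatencyEntry` fields, the equal weights, and treating RTT inversely so that higher scores are better are all hypothetical assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LatencyEntry:
    """Hypothetical Latency Management Table row for one (masked) client address."""
    bgp_hops: int                     # available before any probe response arrives
    probe_hops: Optional[int] = None  # hop count taken from the latency probe response
    rtt_ms: Optional[float] = None    # round-trip time measured by the latency probe


def latency_score(entry: LatencyEntry, hop_weight: float = 0.5, rtt_weight: float = 0.5) -> float:
    """Higher is better. The weights and the 1/x form are assumptions."""
    if entry.probe_hops is None or entry.rtt_ms is None:
        # First request for this address: only the BGP hop count is known.
        return 1.0 / max(entry.bgp_hops, 1)
    # Later requests: weighted combination of inverse hop count and inverse RTT.
    return hop_weight / max(entry.probe_hops, 1) + rtt_weight / max(entry.rtt_ms, 1e-3)


def optimal_pop(scores: dict[str, float]) -> str:
    # The POP reporting the best score is the one returned to the requesting LDNS.
    return max(scores, key=lambda pop: scores[pop])
```

With these weights, a POP two hops away at 40 ms (score 0.2625) beats one four hops away at 20 ms (score 0.15), showing how hop count and RTT trade off.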

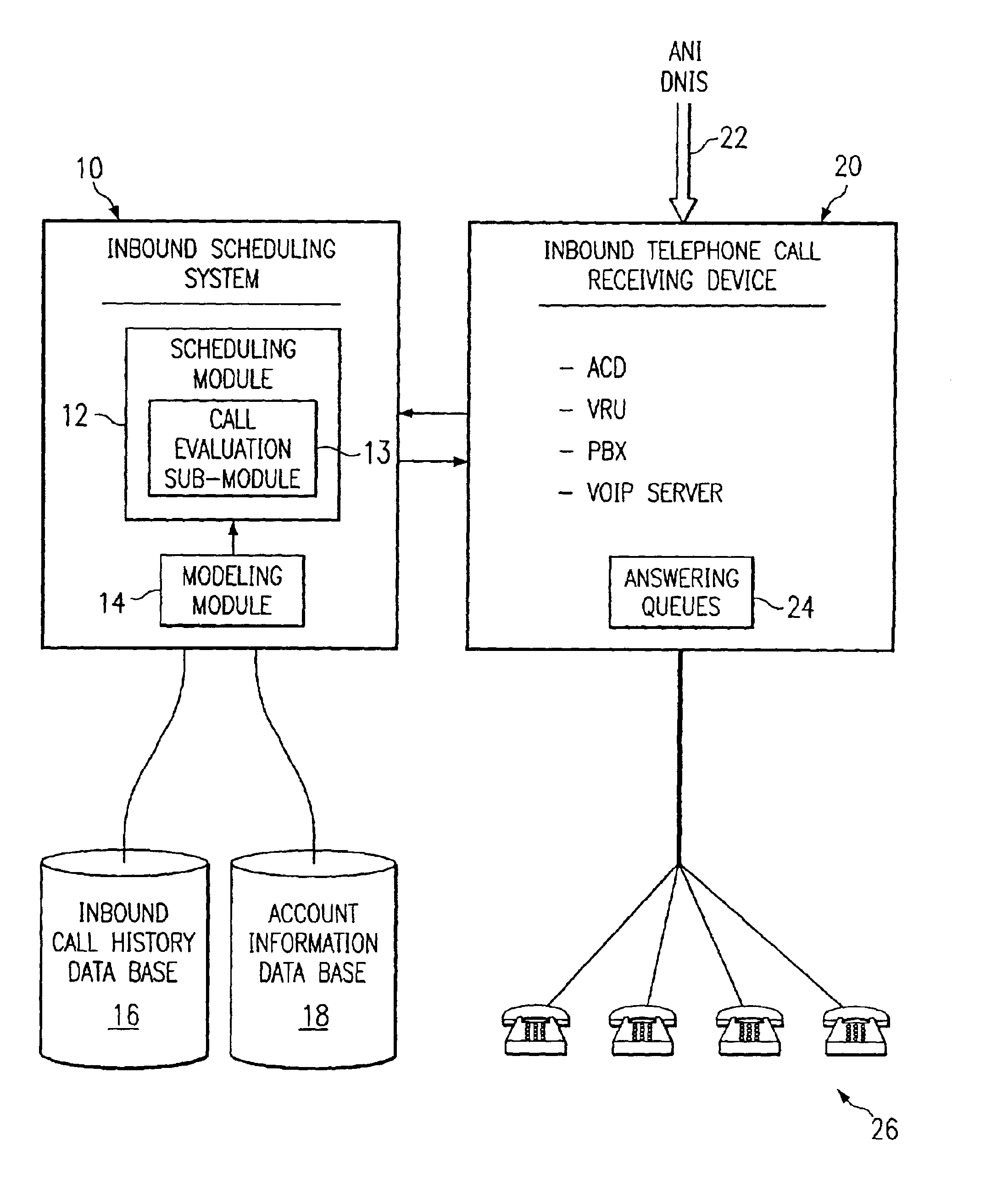

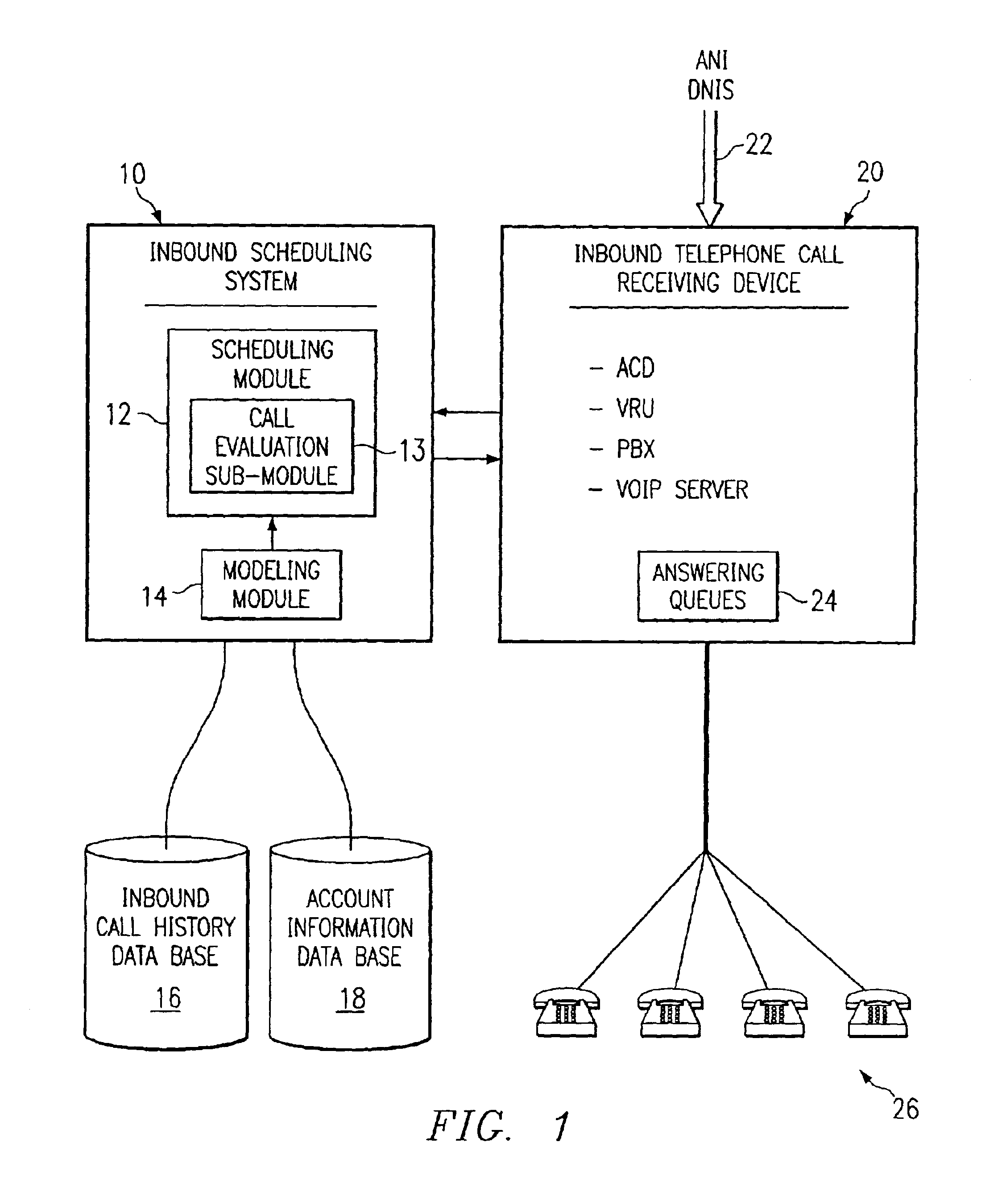

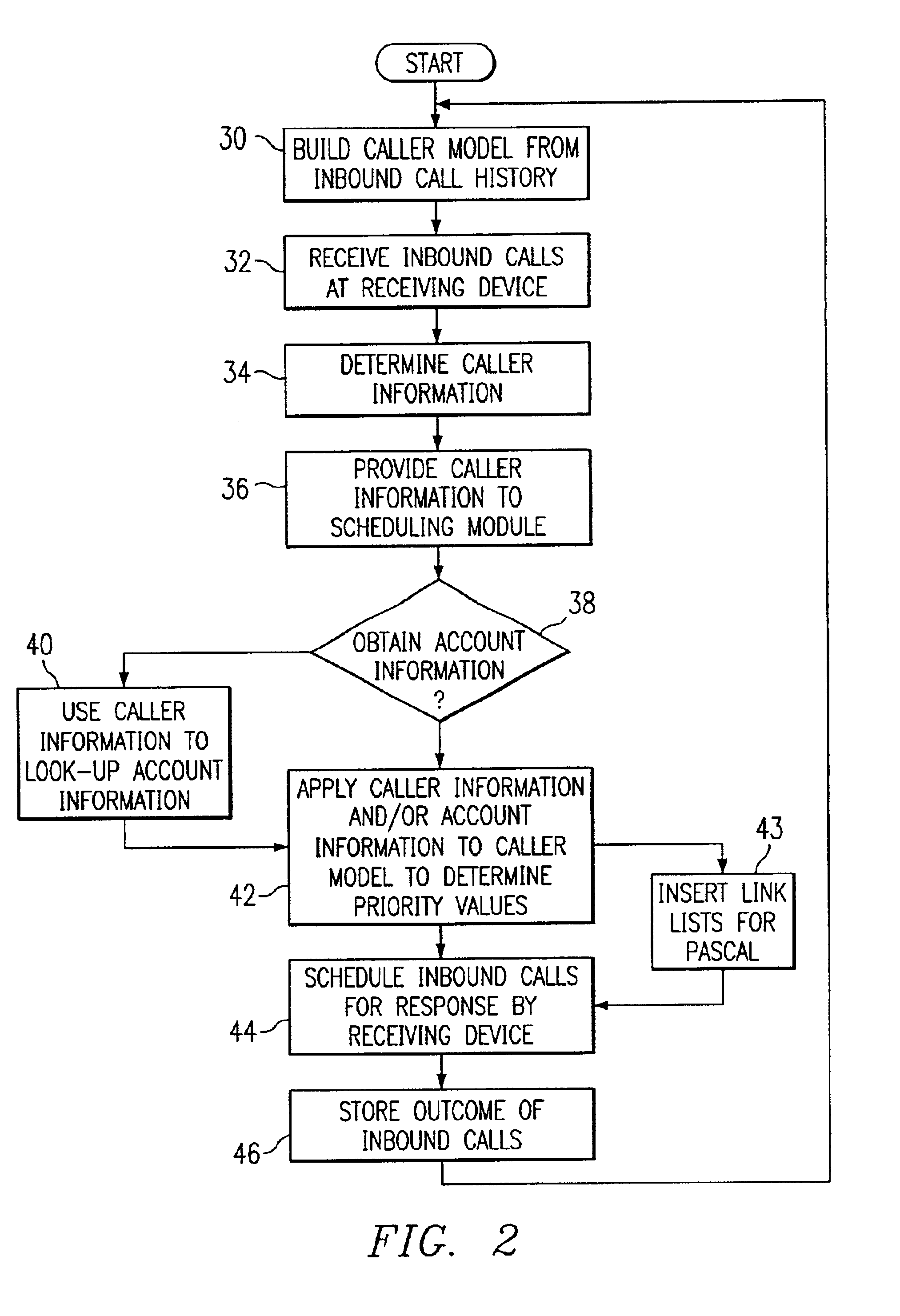

Method and system for self-service scheduling of inbound inquiries

Status: Inactive | Publication: US6859529B2 | Tags: Accurate modeling, Maximize use, Special service for subscribers, Manual exchanges, Production rate, Regression analysis

A method and system schedule inbound inquiries, such as inbound telephone calls, for response by agents in an order based in part on the forecasted outcome of each inquiry. A scheduling module applies inquiry information to a model to forecast the outcome of an inbound inquiry, and the forecasted outcome is used to set a priority value for ordering the inquiry. The priority value may be determined by solving a constrained optimization problem that maximizes an objective function, such as an agent's productivity in producing sales, or that minimizes inbound call attrition. A modeling module generates models that forecast inquiry outcomes based on historical and inquiry information. Statistical analysis, such as regression analysis, determines the model that relates the outcome to the nature of the inquiry. Operator wait time is regulated by forcing low-priority and/or highly tolerant inbound inquiries to self-service.
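
Ordering inquiries by a priority value and diverting low-priority or highly tolerant ones to self-service can be sketched with a heap. This is a simplified illustration, not the patented optimization: the priority formula `forecast * (1 - tolerance)`, the threshold of 0.2, and the function name `schedule_inquiries` are hypothetical, and the forecast is taken as an input instead of coming from a regression model.

```python
import heapq


def schedule_inquiries(inquiries, self_service_threshold: float = 0.2):
    """Split inquiries into an agent queue (highest priority first) and a self-service list.

    inquiries: iterable of (inquiry_id, forecast_value, wait_tolerance), where both
    values are assumed to lie in [0, 1].
    """
    agent_heap = []
    self_service = []
    for inquiry_id, forecast, tolerance in inquiries:
        # Hypothetical priority: valuable outcomes from callers unwilling to wait long.
        priority = forecast * (1.0 - tolerance)
        if priority < self_service_threshold:
            self_service.append(inquiry_id)  # low priority or highly tolerant
        else:
            heapq.heappush(agent_heap, (-priority, inquiry_id))  # negate for a max-heap
    agent_order = [heapq.heappop(agent_heap)[1] for _ in range(len(agent_heap))]
    return agent_order, self_service
```

An inquiry with a good forecast but high tolerance (for example forecast 0.3, tolerance 0.8) falls below the threshold and is sent to self-service, freeing agents for inquiries that are both valuable and impatient.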

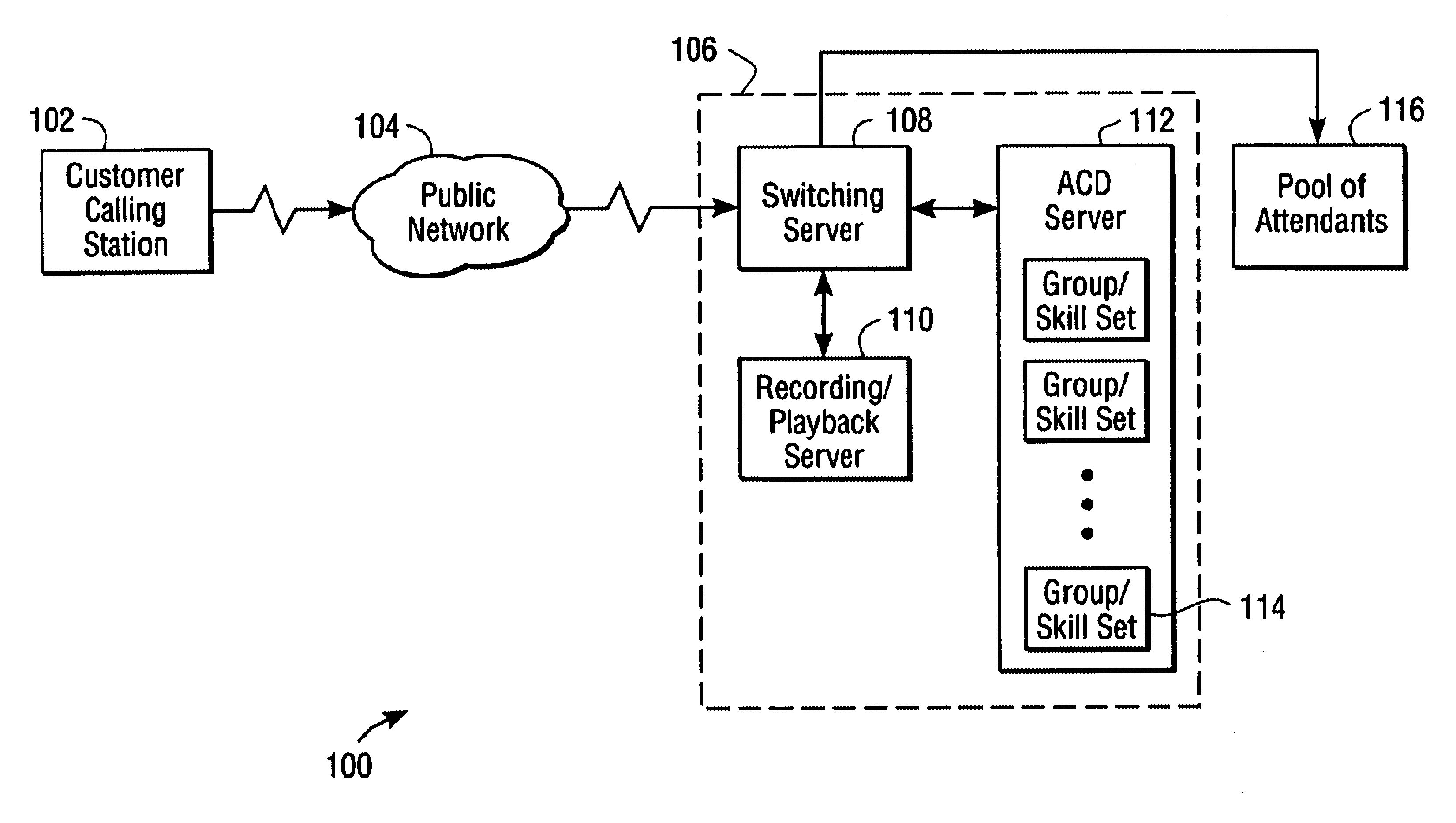

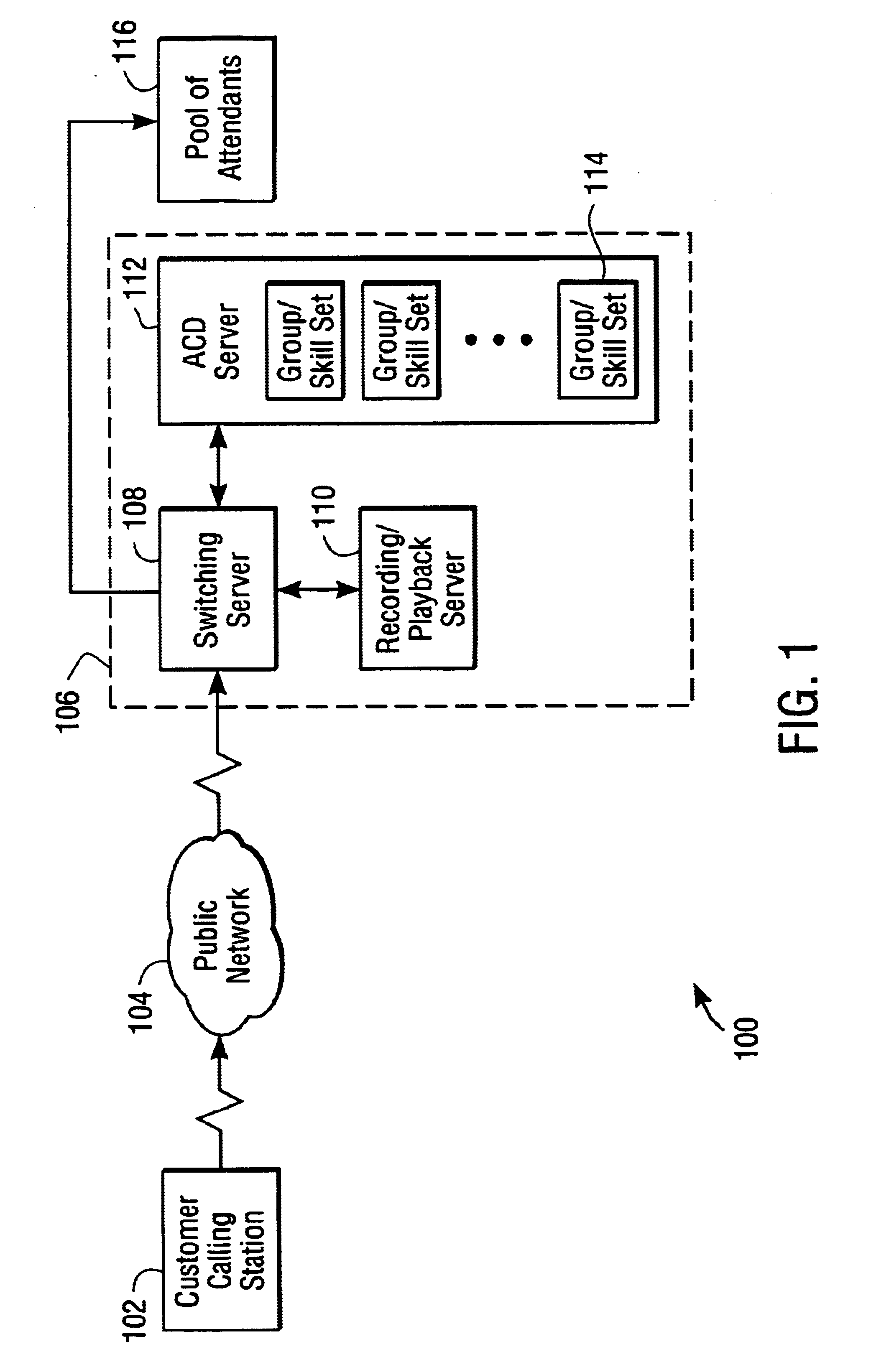

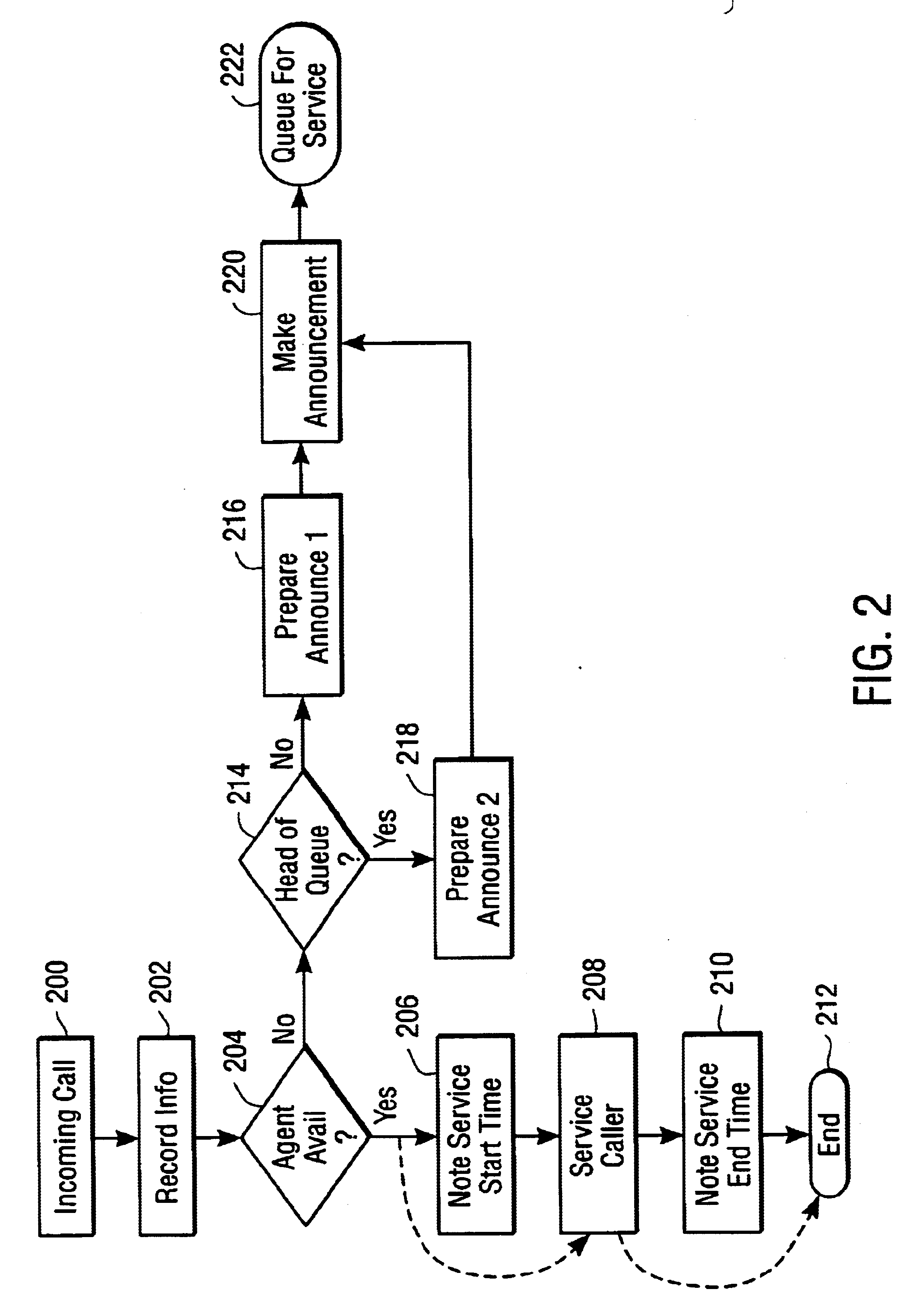

System and method for implementing wait time estimation in automatic call distribution queues

Status: Inactive | Publication: US6714643B1 | Tags: Flexibility advantage, Automatic call-answering/message-recording/conversation-recording, Special service for subscribers, Skill sets, Arrival time

A system and method for predicting the wait time of a caller to a call center is disclosed. The call center associates the caller with a set of agents to which the caller may be queued. The set of agents selected may depend on the skills each agent possesses, the type of service requested by the caller, caller priority, time of day, day of week and other conditions. An initial wait time estimate may then be given to the caller who has just been queued. Because a caller's conditions may change dynamically, the caller's position in the queue may change, as may the pool of available agents, so periodic wait time estimate updates may also be given to the queued caller. A caller's wait time may be estimated from the mean inter-arrival time of recent calls into the call center, with an average inter-arrival time calculated over the last several calls. Alternatively, a caller's wait time may be estimated from calls that were recently queued and dequeued. A table of values W_nj is maintained, where each value denotes the jth most recent wait time of calls that arrived with n calls already in the queue. An average value W_n over all W_nj is calculated for each n, and the caller's estimated wait time is the W_n matching the number of calls in the queue at the time of calling.

Owner:ENTERPRISE SYST TECH S A R L
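
The W_nj table and its per-n averages W_n can be kept with one bounded deque per queue length. This is a minimal sketch under stated assumptions: the class name `WaitTimeEstimator`, the default history of 10 samples per n, and returning `None` when no history exists for a given n are hypothetical choices; the inter-arrival-time alternative is not shown.

```python
from collections import defaultdict, deque
from typing import Optional


class WaitTimeEstimator:
    """Keeps W_nj, the j most recent waits of calls that arrived with n calls queued."""

    def __init__(self, history: int = 10):
        # One bounded deque per queue length n; the oldest samples drop off automatically.
        self._waits = defaultdict(lambda: deque(maxlen=history))

    def record(self, calls_ahead: int, wait_seconds: float) -> None:
        # Called when a call is dequeued, with the queue length it saw on arrival.
        self._waits[calls_ahead].append(wait_seconds)

    def estimate(self, calls_ahead: int) -> Optional[float]:
        # W_n: mean of the recent waits for calls that found n calls ahead of them.
        samples = self._waits.get(calls_ahead)
        if not samples:
            return None
        return sum(samples) / len(samples)
```

With `history=3`, recording waits of 30, 60, 90 and 120 seconds for n = 2 keeps only the last three, so a new caller who finds two calls queued is quoted 90 seconds.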

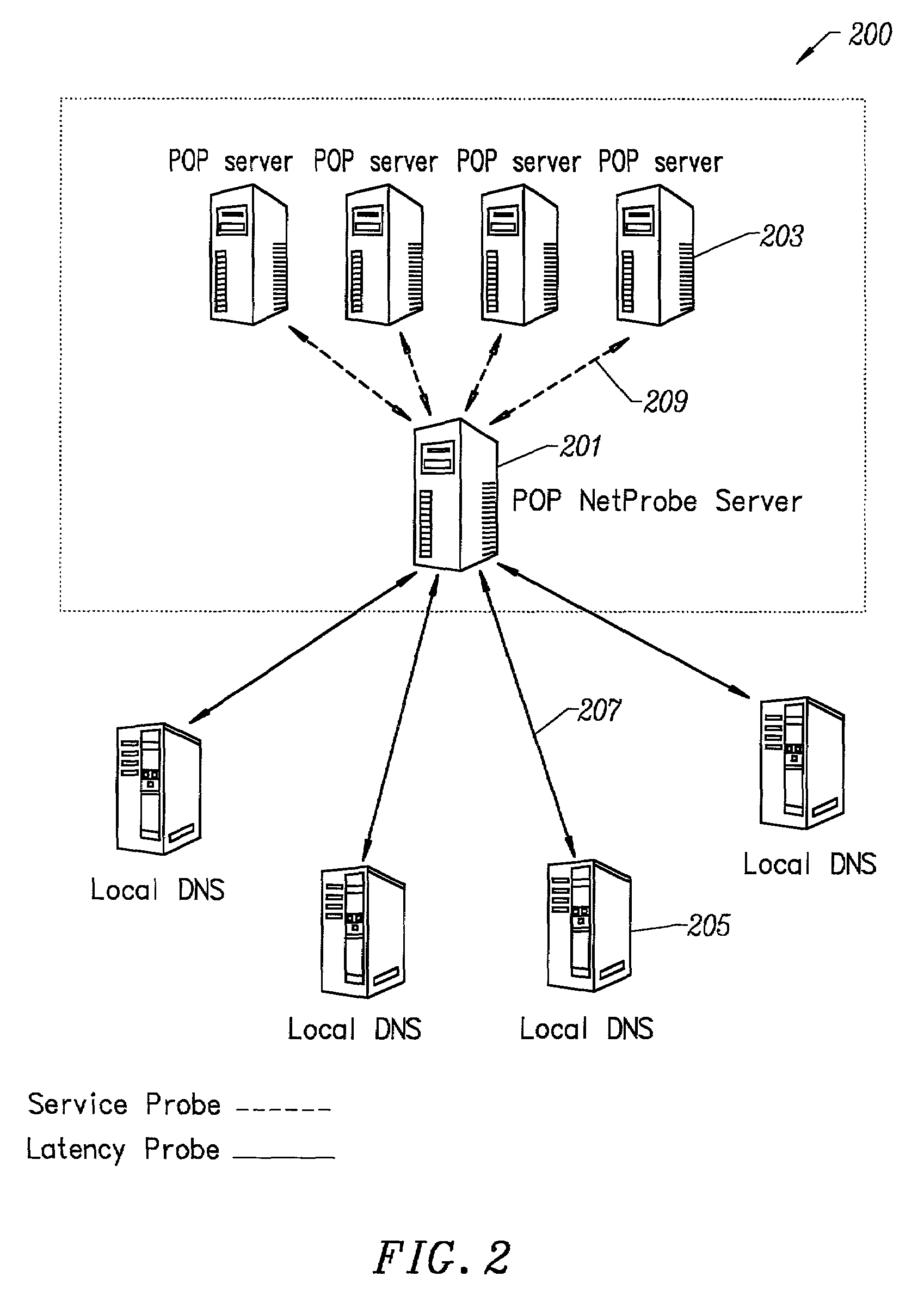

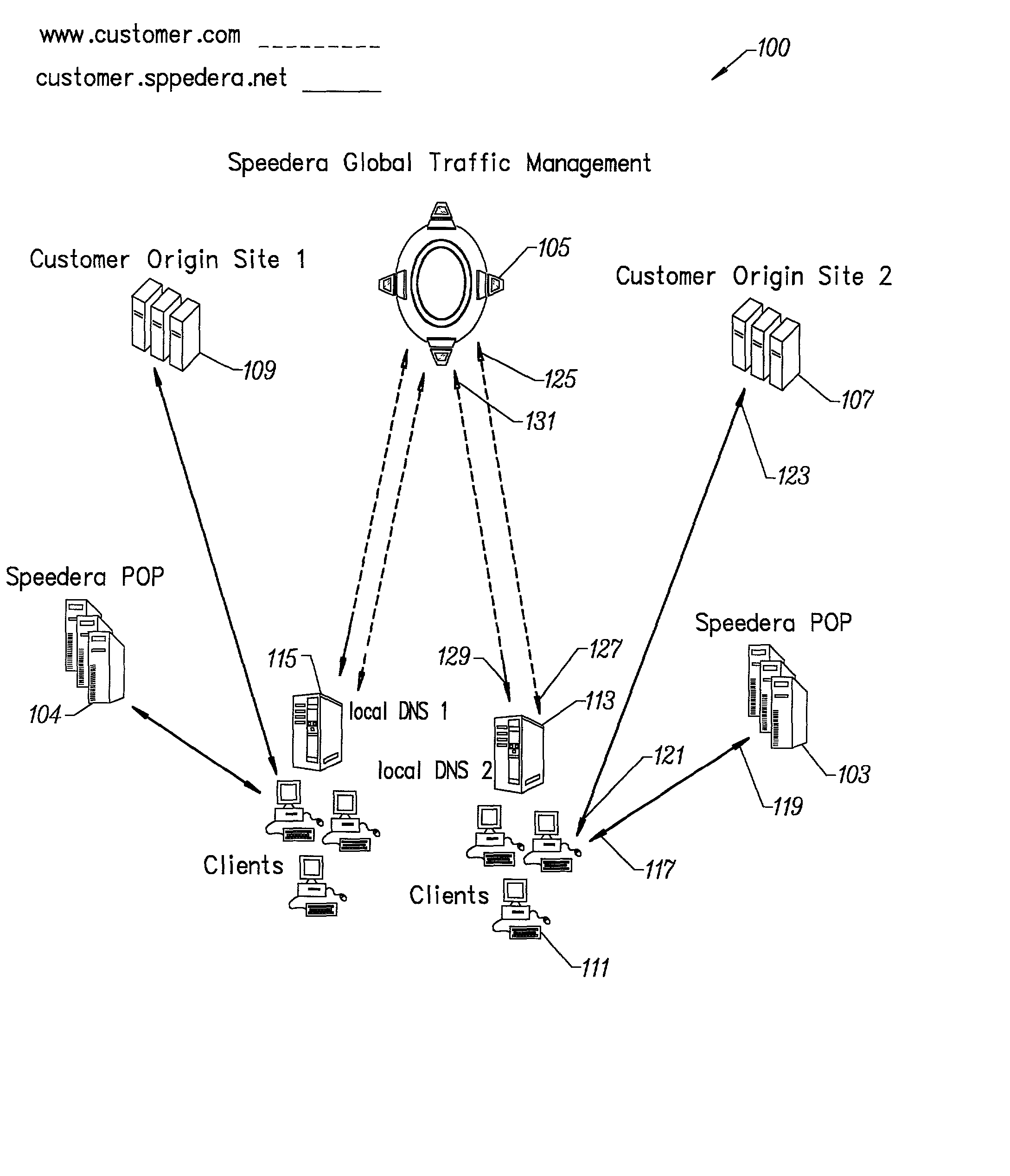

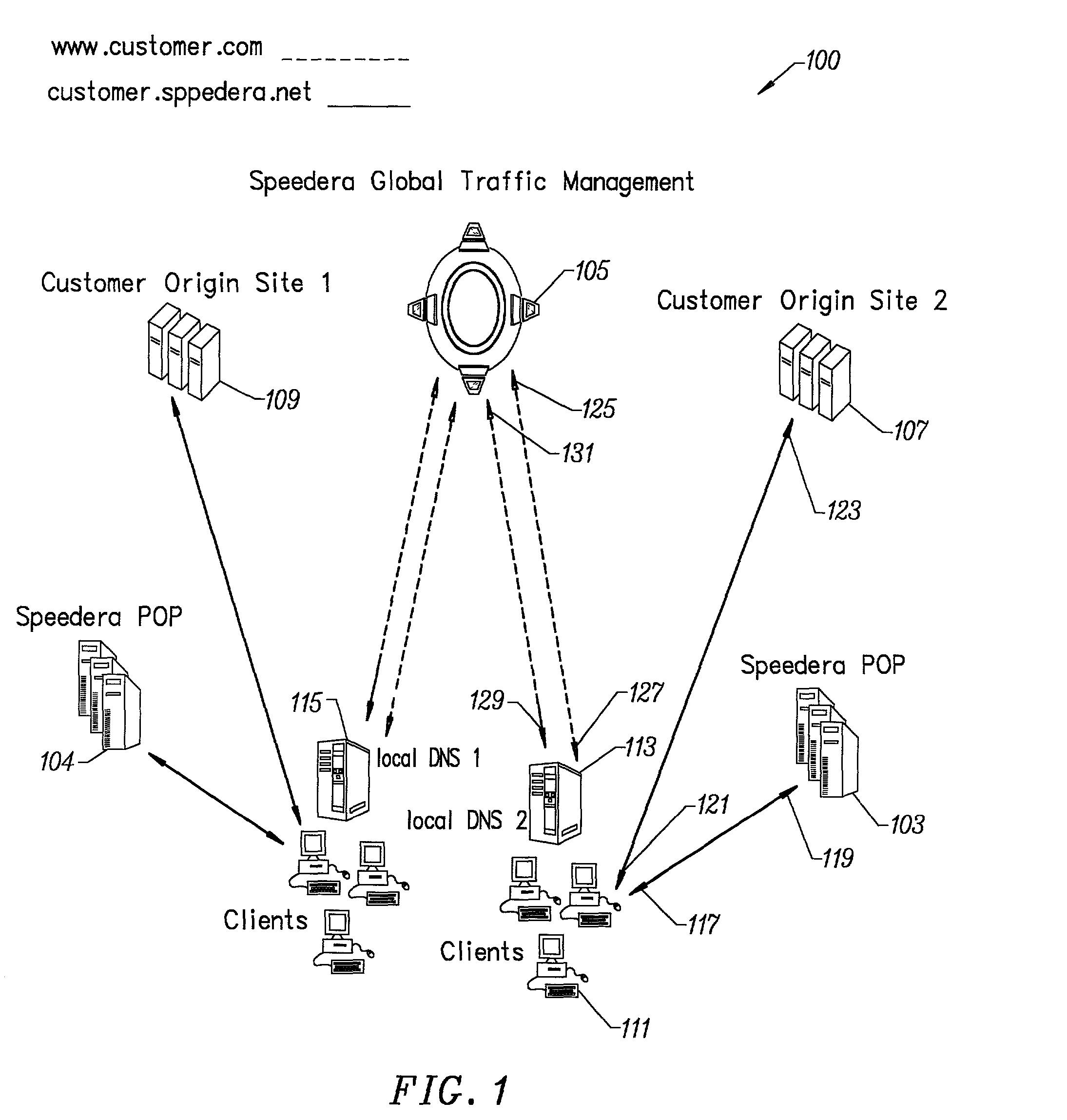

Method for determining metrics of a content delivery and global traffic management network

Status: Active | Publication: US7523181B2 | Tags: Efficient execution, Metering/charging/billing arrangements, Error prevention, Data pack, Configfs

A method for determining metrics of a content delivery and global traffic management network provides service metric probes that determine the service availability and metric measurements of the types of services provided by a content delivery machine. Latency probes are also provided for determining the latency of various servers within a network. Service metric probes consult a configuration file containing each DNS name in their area and the set of services. Each server in the network has a metric test associated with each service it supports; the service metric probes periodically perform these metric tests and record the results, which are periodically sent to all of the DNS servers in the network. DNS servers use the test result updates to determine the best server to return for a given DNS name. The latency probe calculates the latency from its location to a client's location using the round-trip time for sending a packet to the client. The latency probe updates the DNS servers with the clients' latency data, and the DNS servers use these latency updates to determine the closest server to a client.

Owner:AKAMAI TECH INC

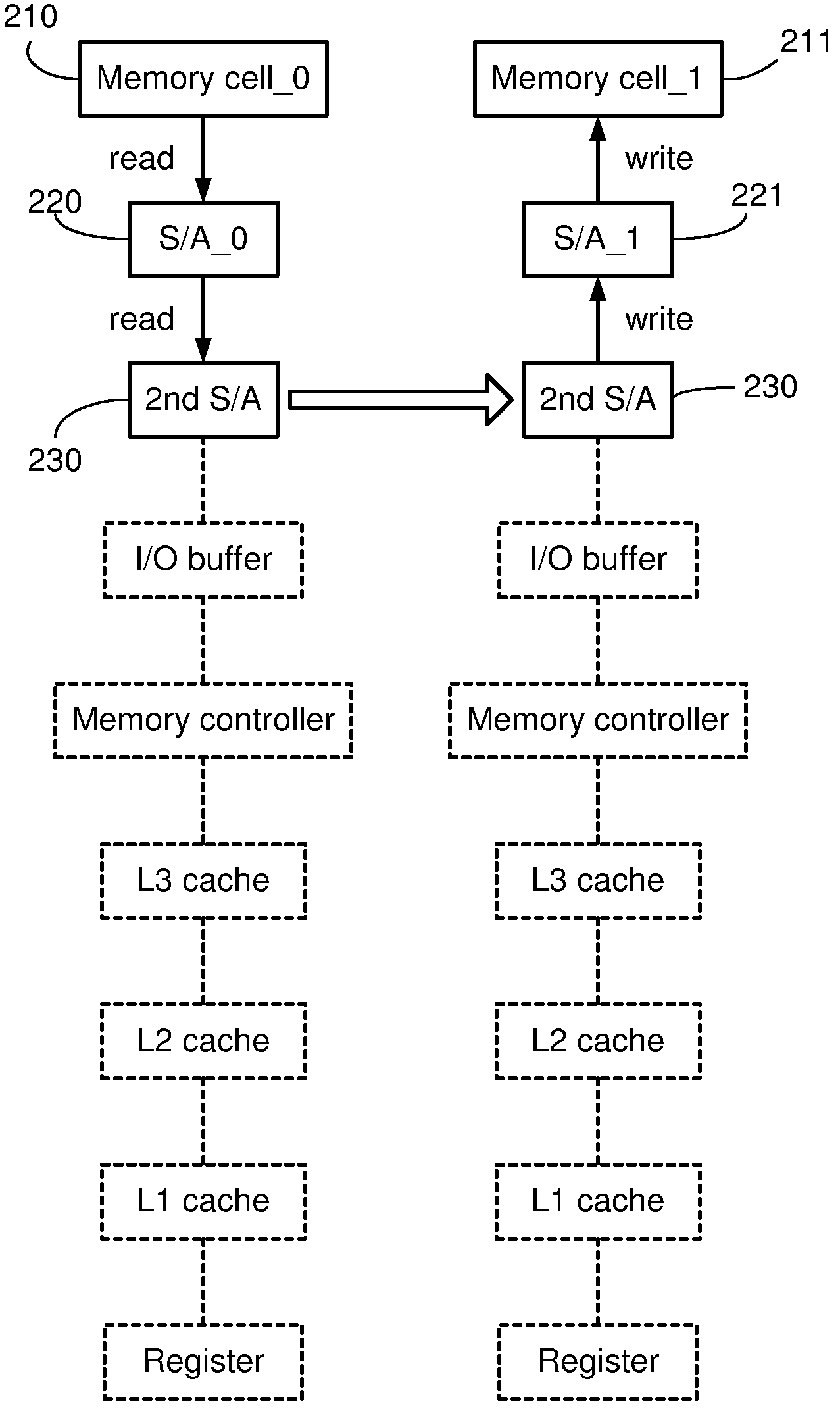

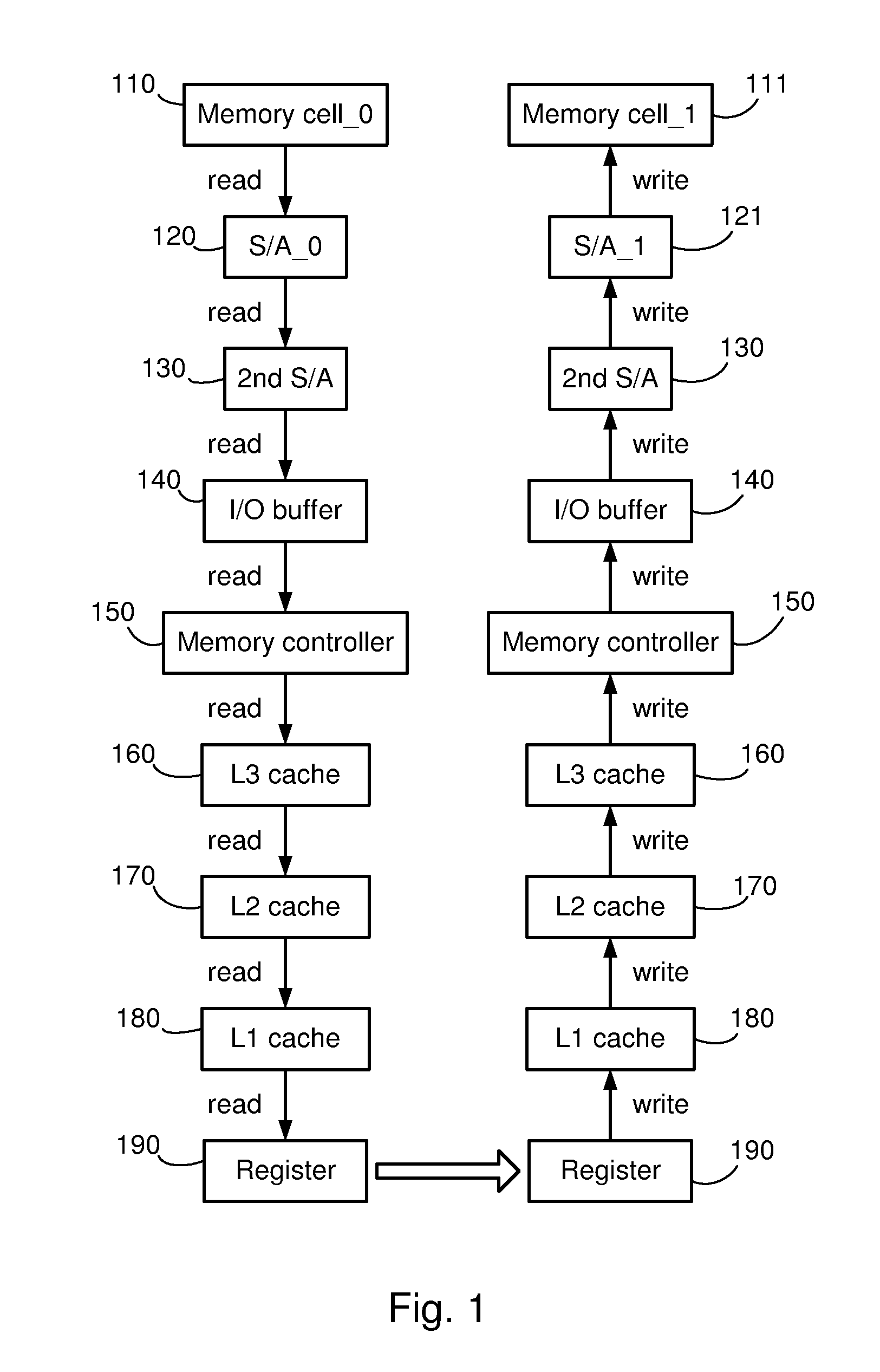

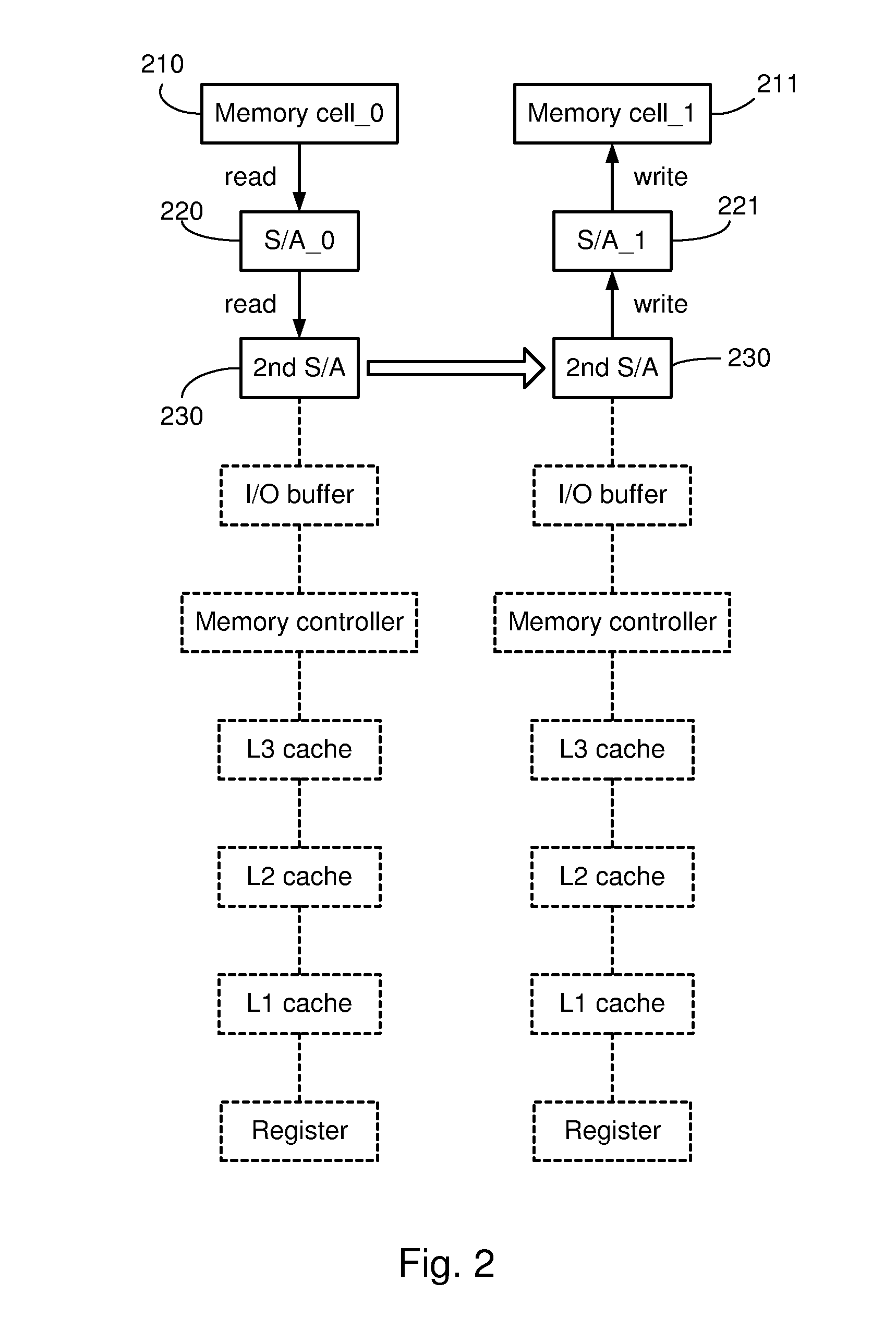

Systems and methods for data transfers between memory cells

Systems and methods are provided for reducing the latency of data transfers between memory cells by enabling data to be transferred directly between sense amplifiers in the memory system. In one embodiment, a memory system uses a conventional DRAM memory structure having a pair of first-level sense amplifiers, a second-level sense amplifier and control logic for the sense amplifiers. Each of the sense amplifiers is configured to be selectively coupled to a data line. In a direct data transfer mode, the control logic generates control signals that cause the sense amplifiers to transfer data from a first one of the first-level sense amplifiers (a source sense amplifier) to the second-level sense amplifier, and from there to a second one of the first-level sense amplifiers (a destination sense amplifier). The structure of these sense amplifiers is conventional; the operation of the system is enabled by modified control logic.

Owner:TOSHIBA AMERICA ELECTRONICS COMPONENTS

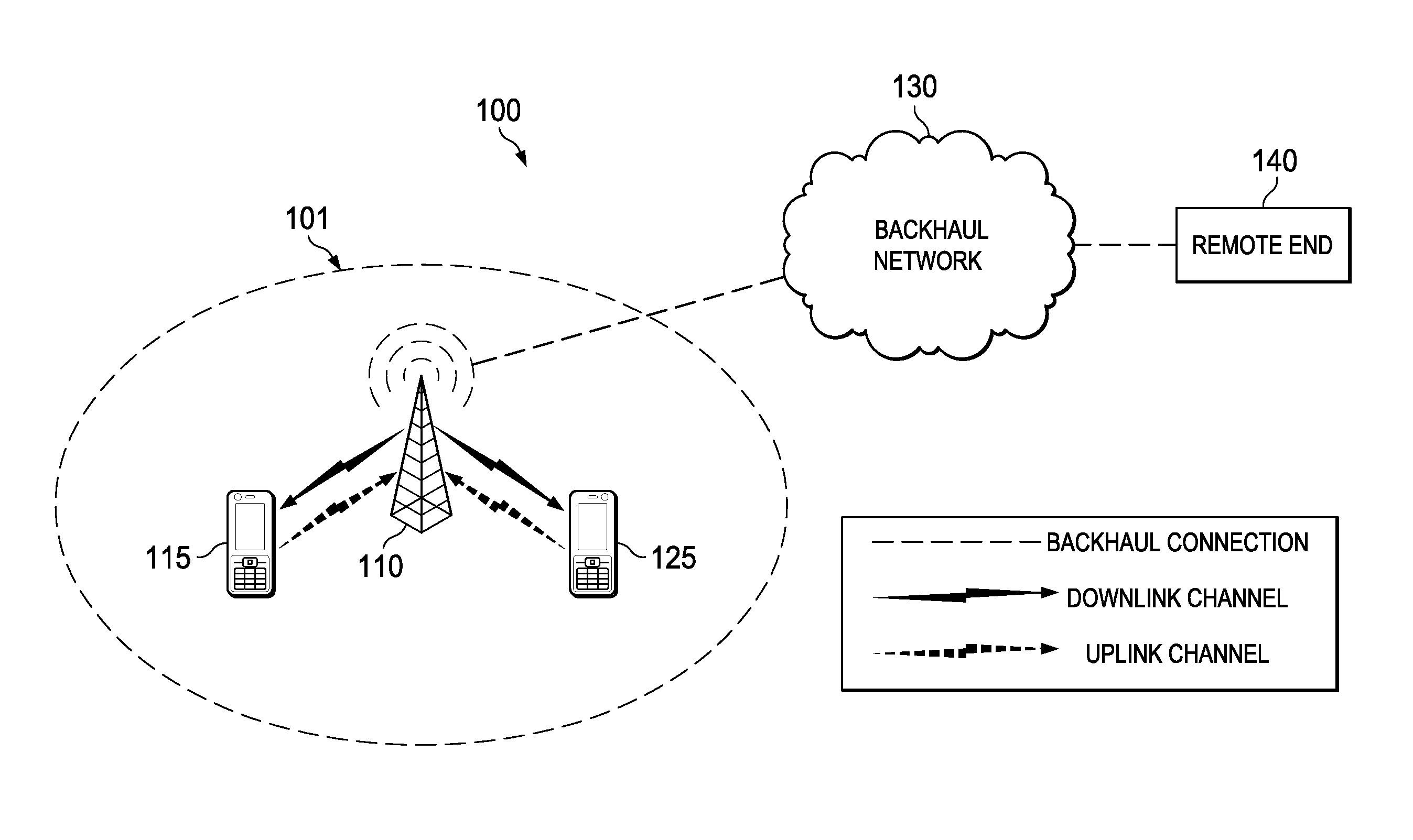

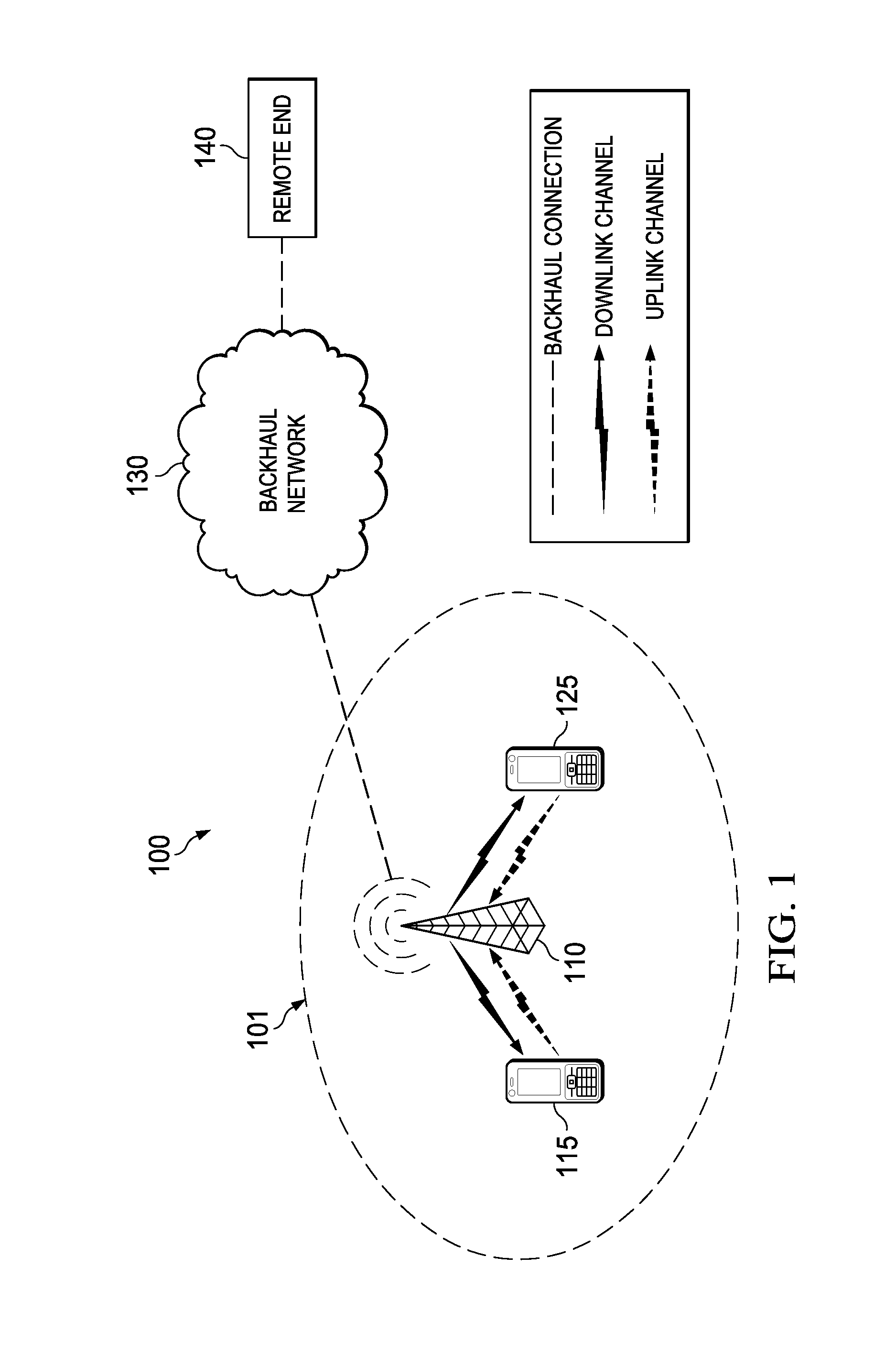

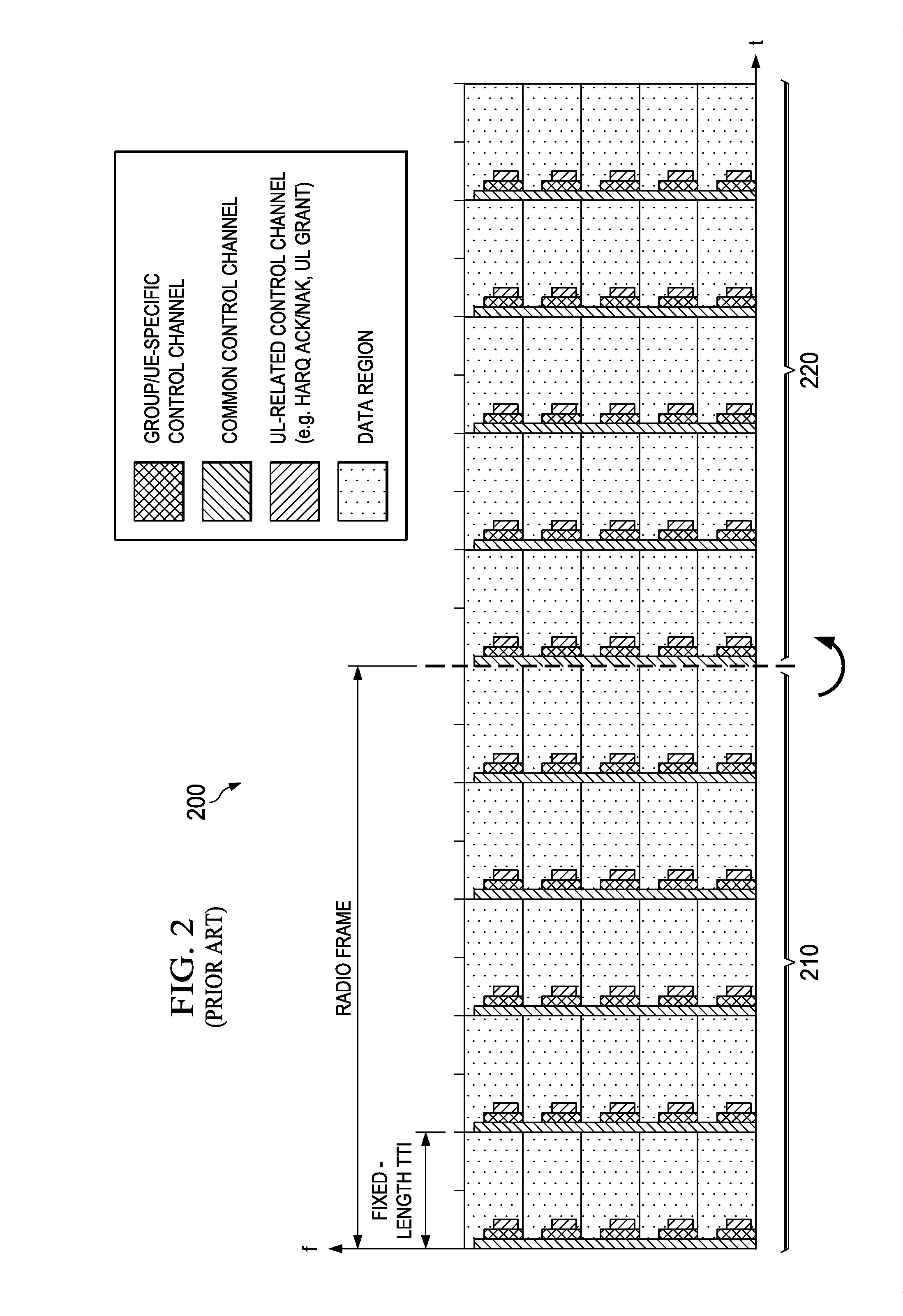

System and Method for Adaptive Transmission Time Interval (TTI) Structure

Status: Active | Publication: US20140071954A1 | Tags: Length be adapt, Network traffic/resource management, Time-division multiplex, Quality of service, Structure of Management Information

Methods and devices are provided for communicating data in a wireless channel. In one example, a method includes adapting the transmission time interval (TTI) length of a transport container for transmitting data in accordance with one or more criteria. The criteria may include (but are not limited to) a latency requirement of the data, a buffer size associated with the data, and a mobility characteristic of a device that will receive the data. The TTI lengths may be manipulated for a variety of reasons, such as to reduce overhead, satisfy quality of service (QoS) requirements, or maximize network throughput. In some embodiments, TTIs having different TTI lengths may be carried in a common radio frame. In other embodiments, the wireless channel may be partitioned into multiple bands, each of which carries (exclusively or otherwise) TTIs of a certain TTI length.

Owner:HUAWEI TECH CO LTD
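
Adapting the TTI length to a latency requirement, buffer size and mobility can be sketched as a small selection policy. Everything specific here is a hypothetical assumption rather than Huawei's scheme: the set of TTI lengths (0.5, 1 and 5 ms), the 100 kB "large buffer" threshold, and the rule that only TTIs no longer than the latency budget are feasible.

```python
SUPPORTED_TTI_MS = (0.5, 1.0, 5.0)  # hypothetical set of available TTI lengths


def select_tti_ms(latency_budget_ms: float, buffer_bytes: int, high_mobility: bool,
                  large_buffer_bytes: int = 100_000) -> float:
    # Assumed feasibility rule: a TTI longer than the latency budget cannot meet it.
    feasible = [t for t in SUPPORTED_TTI_MS if t <= latency_budget_ms]
    if not feasible:
        # Nothing fits the budget; fall back to the shortest TTI available.
        return min(SUPPORTED_TTI_MS)
    if high_mobility or buffer_bytes < large_buffer_bytes:
        # Fast-changing channels and small backlogs favour short TTIs.
        return min(feasible)
    # A large backlog with a relaxed budget: a long TTI amortizes per-TTI overhead.
    return max(feasible)
```

Under this policy a large, delay-tolerant transfer to a stationary device gets 5 ms TTIs, while the same transfer to a fast-moving device drops to 0.5 ms.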

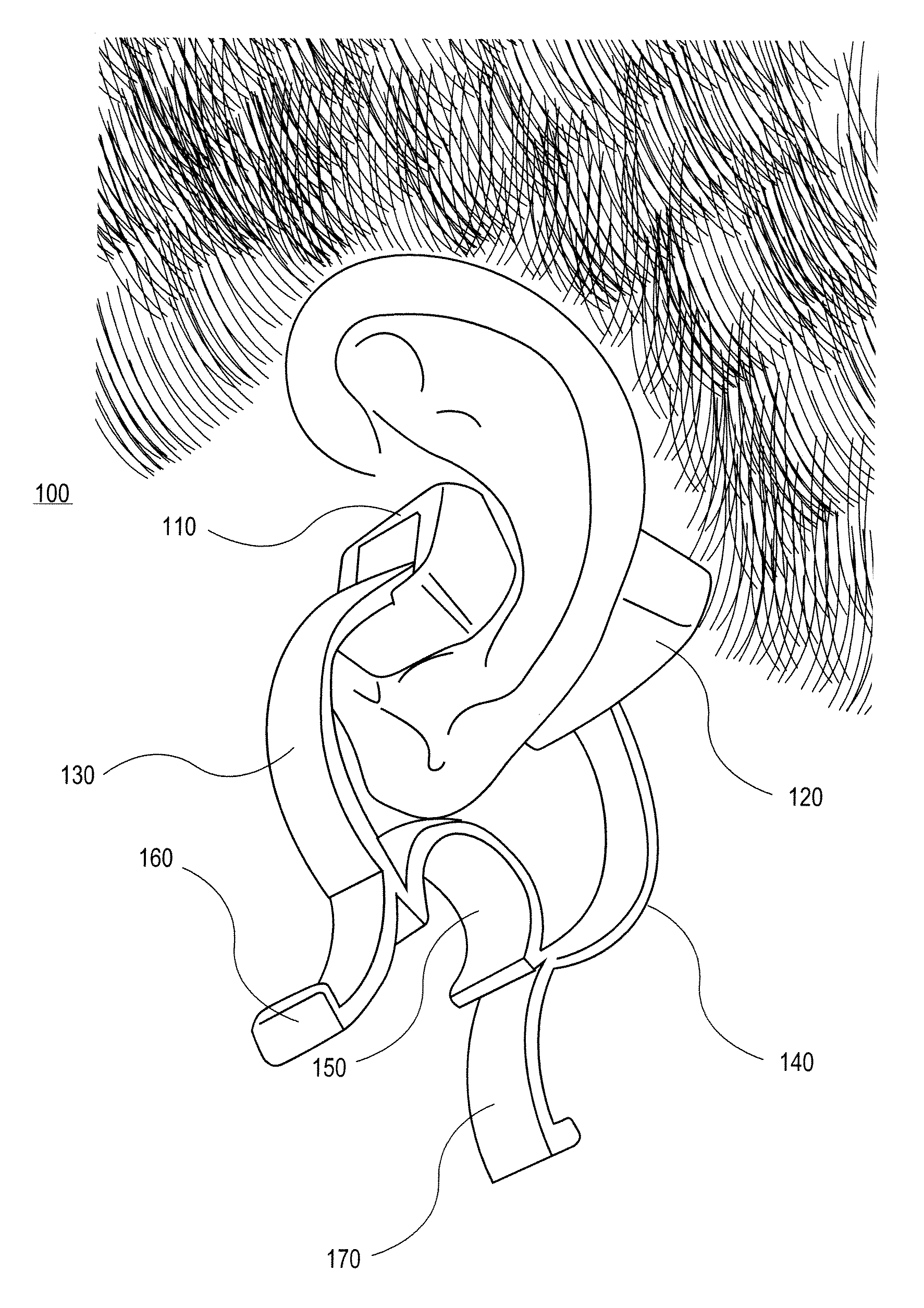

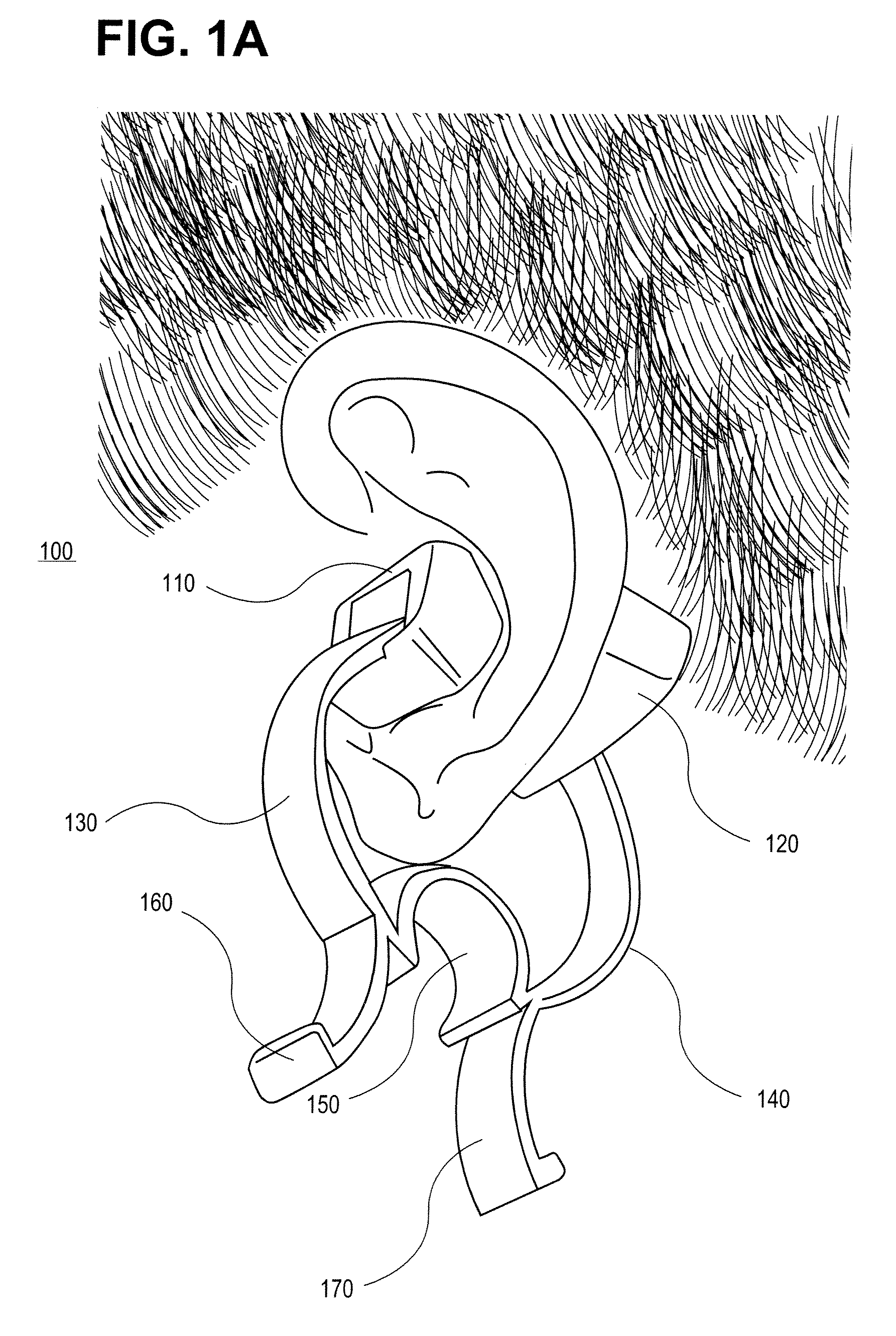

External ear-placed non-invasive physiological sensor

Status: Active | Publication: US20090275813A1 | Tags: Lower latency, Fast track, Diagnostic recording/measuring, Sensors, External ears, Medicine

Owner:RGT UNIV OF CALIFORNIA +1

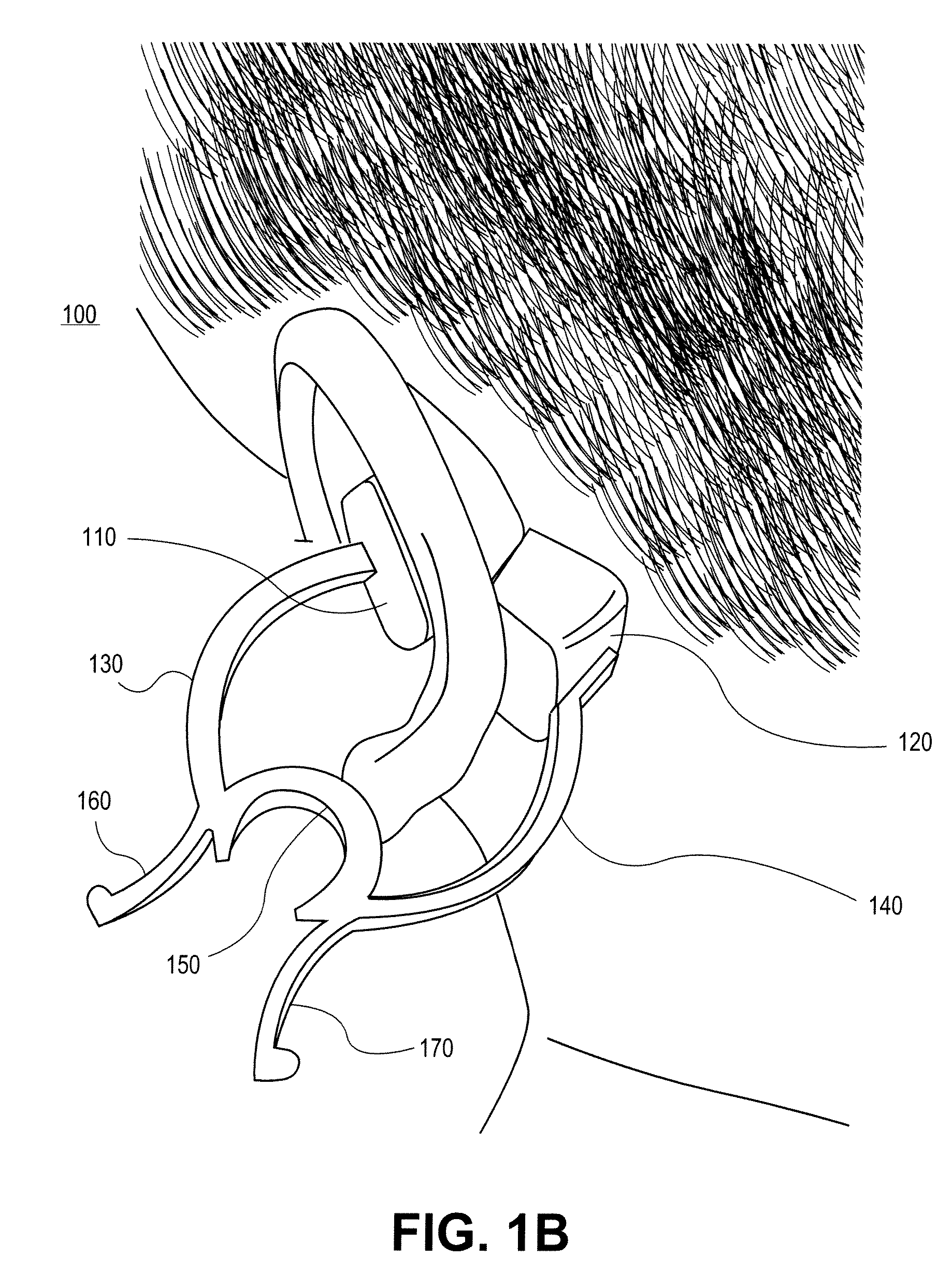

Determining client latencies over a network

Status: Inactive | Publication: US7676570B2 | Tags: Multiple digital computer combinations, Transmission, Time information, Network communication

A network latency estimation apparatus estimates latency in network communications between a server and a client. The apparatus comprises an event observer for observing occurrences of pre-selected events associated with the communication that occur at the server; a logging device, associated with the event observer, for logging the occurrences of the events into a data store together with their respective time information; and a latency estimator, associated with the logging device, that uses the logged occurrences and their time information to estimate the client's latency for the communication.

Owner:ZARBANA DIGITAL FUND
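
Estimating client latency purely from server-side event logs can be sketched by timing the gap between a response leaving the server and the next request arriving. This is a hypothetical illustration: the event names `response_sent` and `request_received`, the single-connection log format, and the use of the median gap are assumptions, and the gap also includes client processing time, so it overstates pure network latency.

```python
from statistics import median
from typing import Optional


def estimate_client_latency(log: list[tuple[float, str]]) -> Optional[float]:
    """log: chronologically ordered (timestamp_s, event_name) pairs for one client connection."""
    gaps = []
    pending_sent = None
    for ts, event in log:
        if event == "response_sent":
            pending_sent = ts
        elif event == "request_received" and pending_sent is not None:
            # Time from our response leaving to the client's follow-up request arriving.
            gaps.append(ts - pending_sent)
            pending_sent = None
    # The median is less sensitive than the mean to one slow client-side step.
    return median(gaps) if gaps else None
```

A page response followed 80 ms later by a request for an embedded object, then a 60 ms gap for the next object, yields an estimate of about 70 ms.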

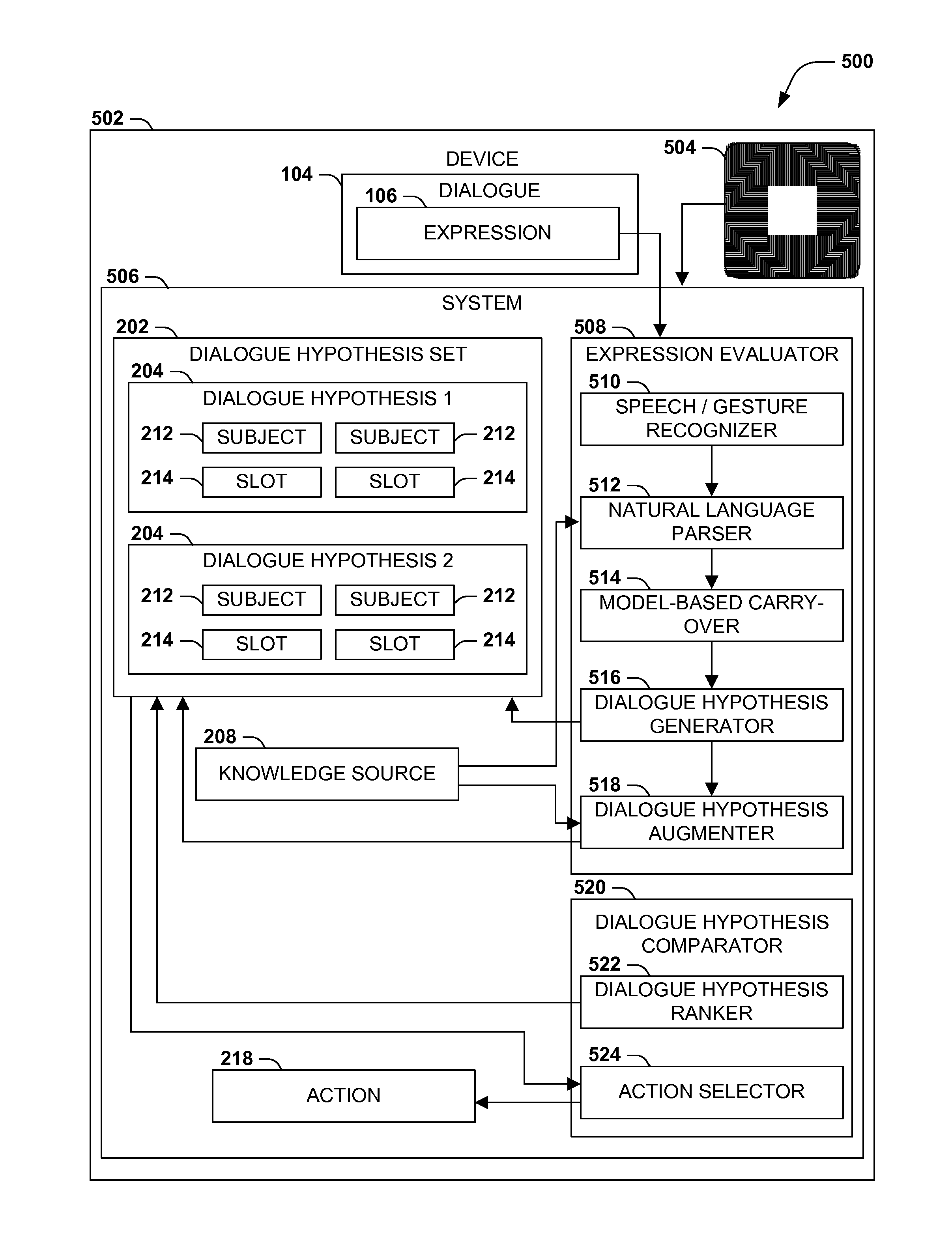

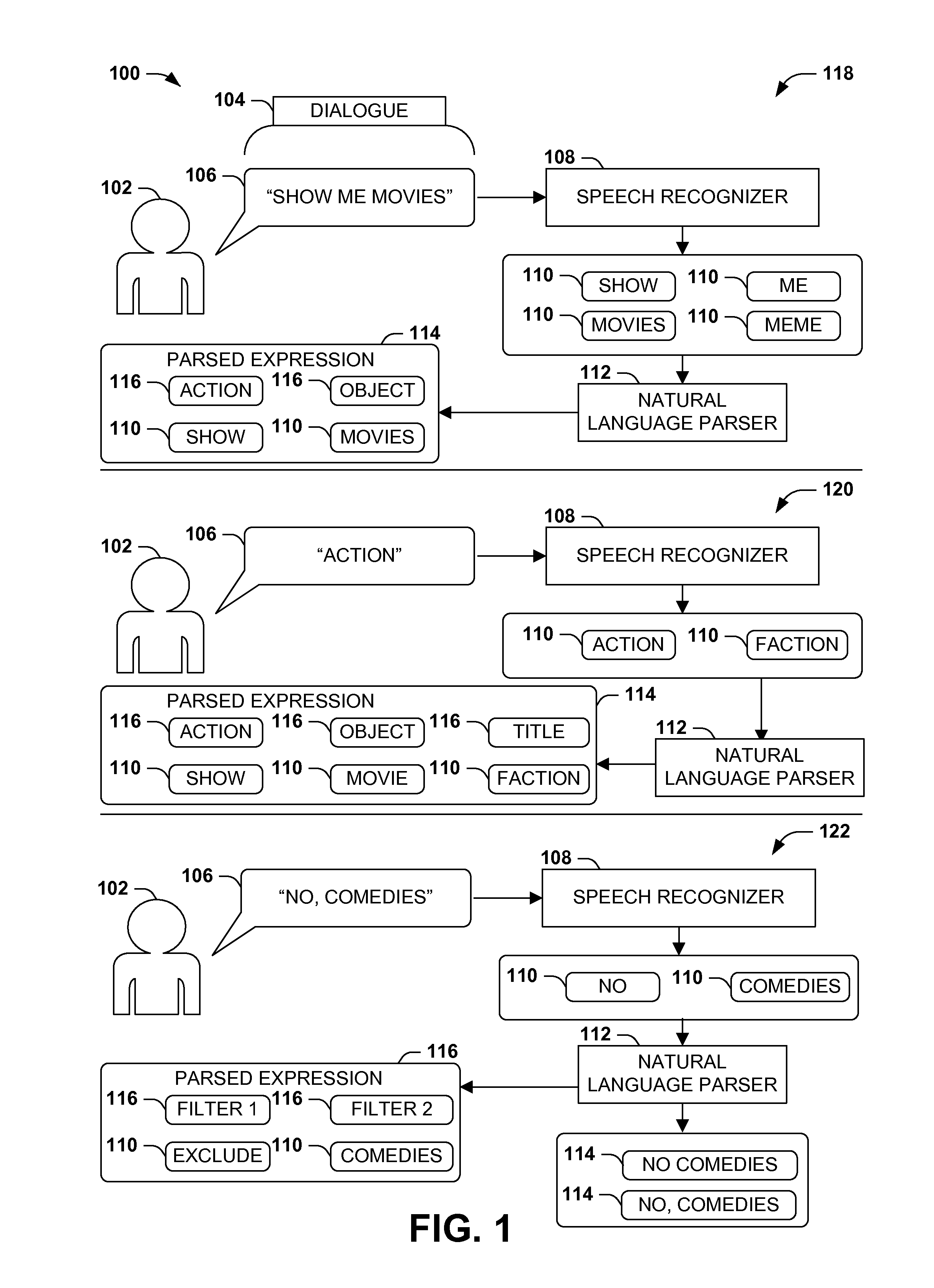

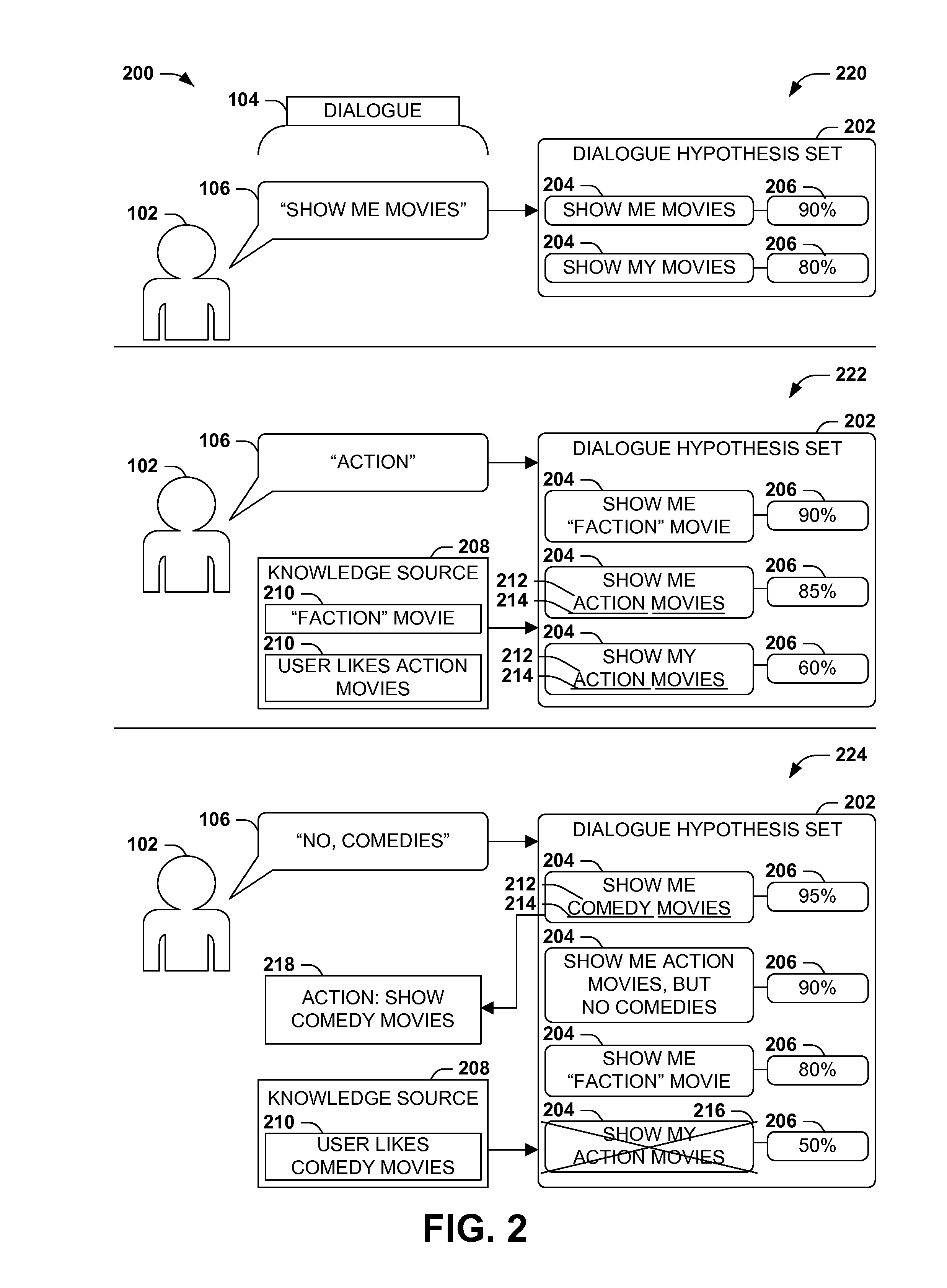

Dialogue evaluation via multiple hypothesis ranking

Status: Active | Publication: US20150142420A1 | Tags: Reduce settings, Natural language data processing, Speech recognition, Knowledge sources, Multiple hypothesis

In language evaluation systems, user expressions are often evaluated by speech recognizers and language parsers, and among several possible translations, the highest-probability translation is selected and added to a dialogue sequence. However, such systems may exhibit inadequacies by discarding alternative translations that initially have a lower probability but may have a higher probability when evaluated in the full context of the dialogue, including subsequent expressions. Presented herein are techniques for communicating with a user by formulating a dialogue hypothesis set that identifies hypothesis probabilities for a set of dialogue hypotheses, using generative and/or discriminative models, and repeatedly re-ranking the dialogue hypotheses based on subsequent expressions. Additionally, knowledge sources may inform the models with a pre-knowledge fetch that facilitates pruning of the hypothesis search space at an early stage, thereby enhancing the accuracy of language parsing while also reducing the latency of expression evaluation and economizing computing resources.

Owner:MICROSOFT TECH LICENSING LLC

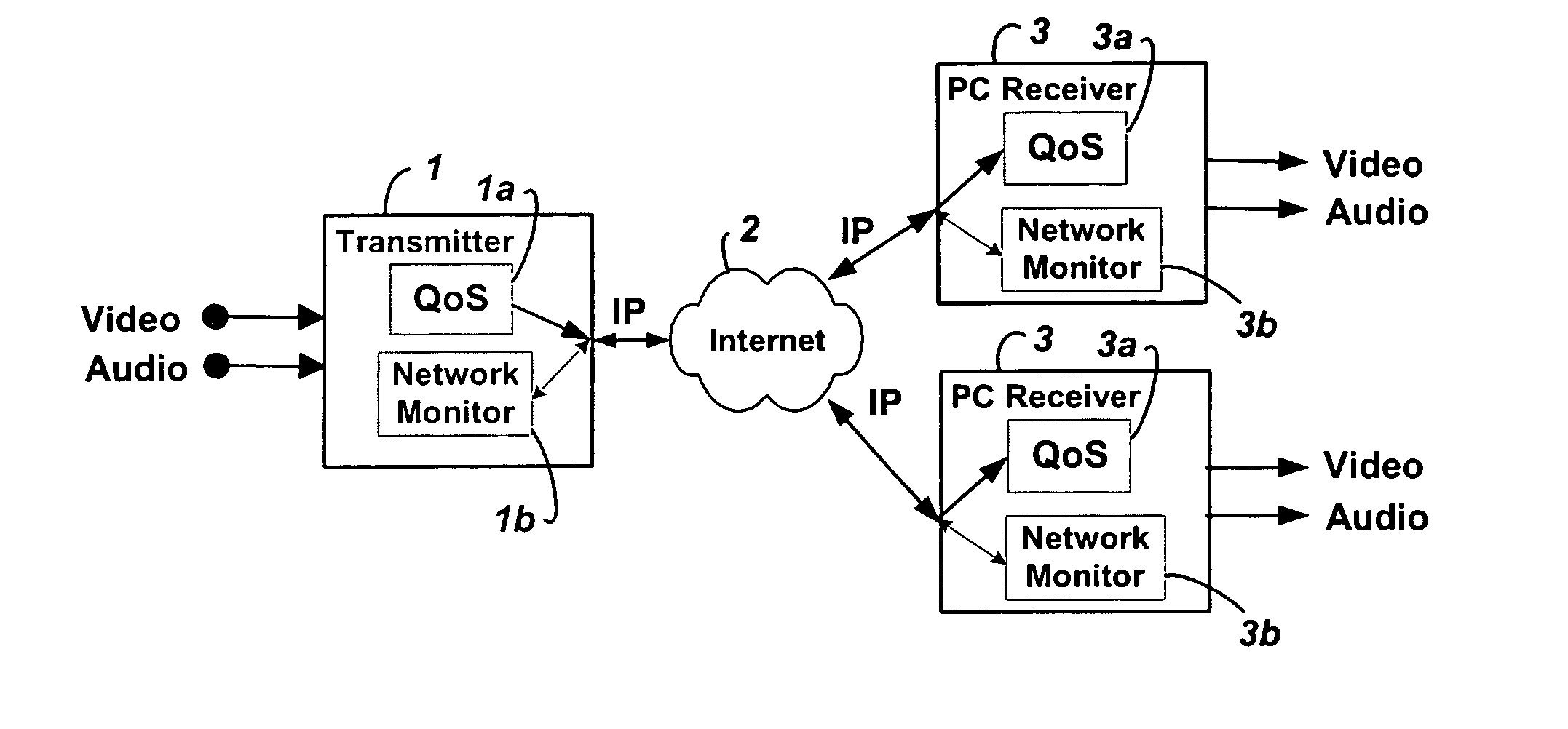

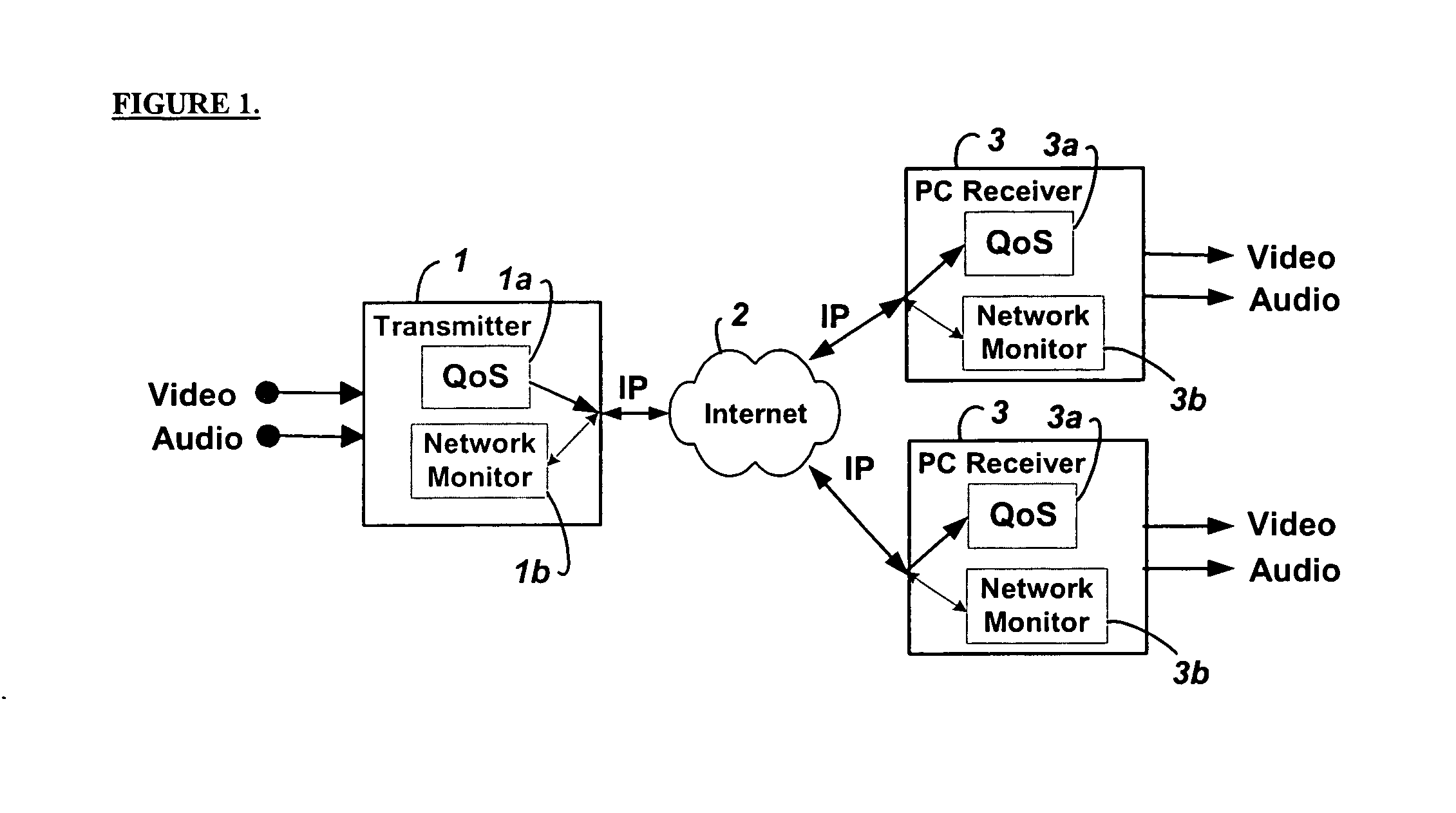

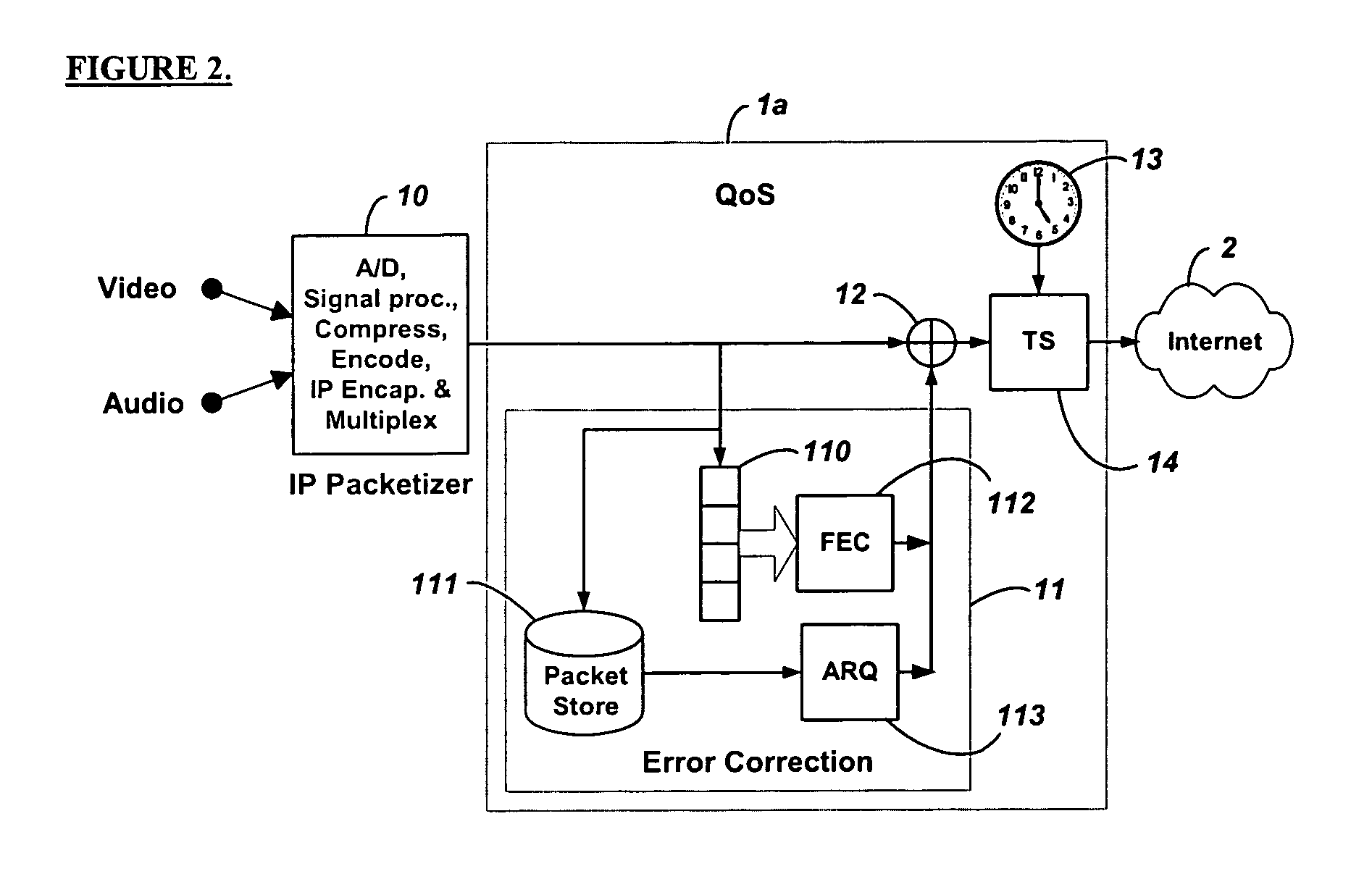

Method and system for providing site independent real-time multimedia transport over packet-switched networks

Status: Inactive | Publication: US20060007943A1 | Tags: Minimal latency site-independence, Achieve independence, Time-division multiplex, Data switching by path configuration, Data pack, Timestamp

Embodiments of the invention enable minimum-latency, site-independent real-time video transport over packet-switched networks. Examples of real-time video transport include video conferencing and real-time or live video streaming. In one embodiment of the invention, a network node transmits live or real-time audio and video signals, encapsulated as Internet Protocol (IP) data packets, to one or more nodes on the Internet or another IP network. One embodiment of the invention allows a user to move to different nodes, or to move nodes to different locations, thereby providing site independence. Site independence is achieved by measuring and accounting for the jitter and delay between a transmitter and a receiver based on the particular path between them, independent of site location. The transmitter inserts timestamps and sequence numbers into packets and then transmits them. A receiver uses these timestamps to recover the transmitter's clock. The receiver stores the packets in a buffer that orders them by sequence number, and the packets stay in the buffer for a fixed latency to compensate for possible network jitter and/or packet reordering. The combination of timestamp packet processing, remote clock recovery and synchronization, fixed-latency receiver buffering, and error correction mechanisms helps preserve the quality of the received video despite the significant network impairments generally encountered throughout the Internet and wireless networks.

Owner:QVIDIUM TECH
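
The fixed-latency receiver buffer that reorders packets by sequence number can be sketched with a min-heap. This is a minimal sketch assuming the transmitter's timestamps have already been mapped onto the receiver's clock (the clock-recovery step is not shown); the class name `FixedLatencyJitterBuffer` and its methods are hypothetical, and error correction is omitted.

```python
import heapq


class FixedLatencyJitterBuffer:
    """Holds each packet for a fixed delay after its send timestamp, releasing in sequence order."""

    def __init__(self, latency_s: float):
        self.latency_s = latency_s
        self._heap: list[tuple[int, float, bytes]] = []  # (sequence number, send time, payload)

    def push(self, seq: int, send_time_s: float, payload: bytes) -> None:
        # Keying the heap on sequence number undoes reordering in the network.
        heapq.heappush(self._heap, (seq, send_time_s, payload))

    def pop_ready(self, now_s: float) -> list[tuple[int, bytes]]:
        # Release packets, lowest sequence number first, once send time + latency has passed.
        ready = []
        while self._heap and self._heap[0][1] + self.latency_s <= now_s:
            seq, _, payload = heapq.heappop(self._heap)
            ready.append((seq, payload))
        return ready
```

Because every packet is played exactly `latency_s` after it was sent, network jitter smaller than that delay is absorbed completely; the cost is a constant added delay, which is the trade-off a fixed-latency buffer makes.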

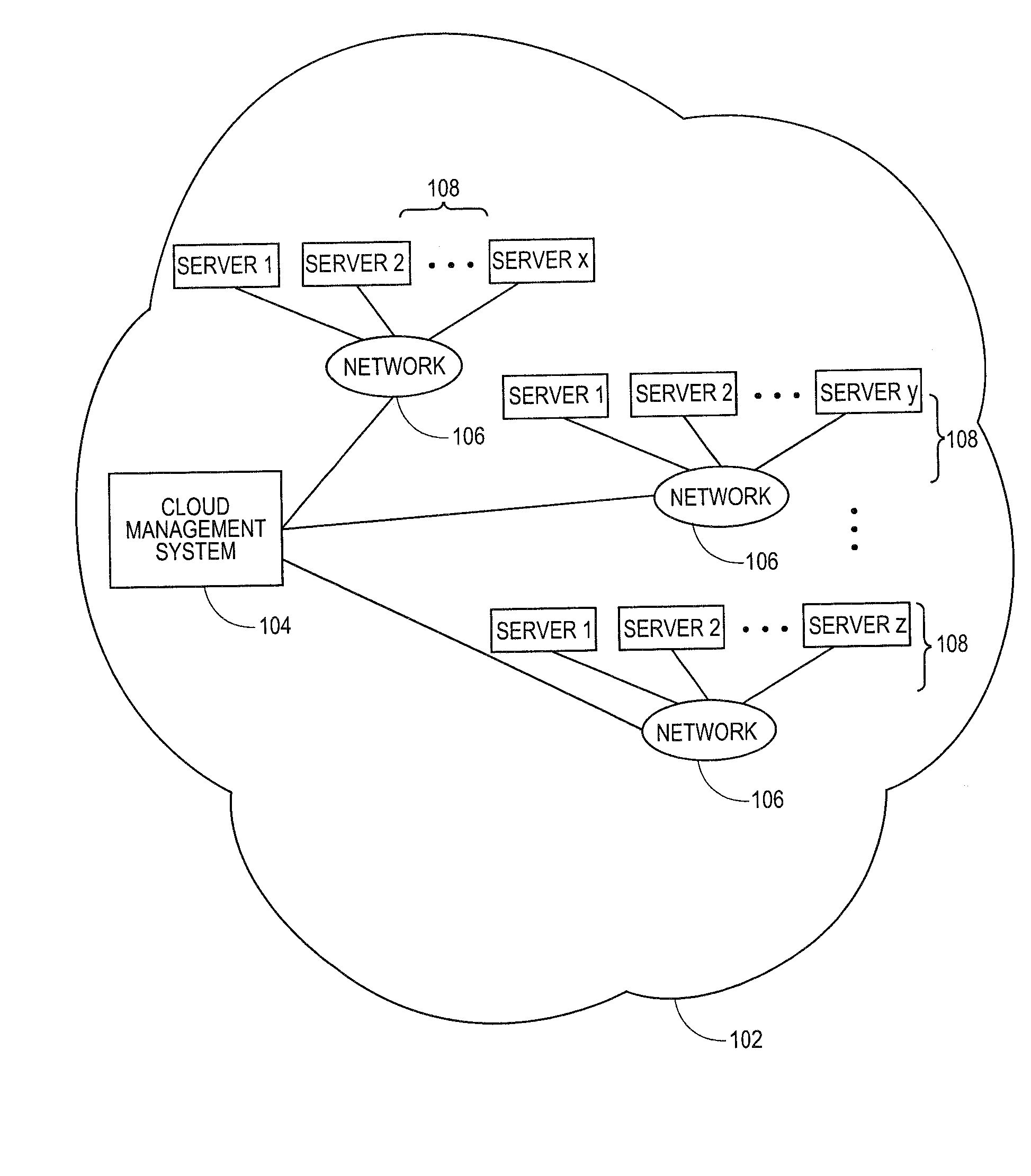

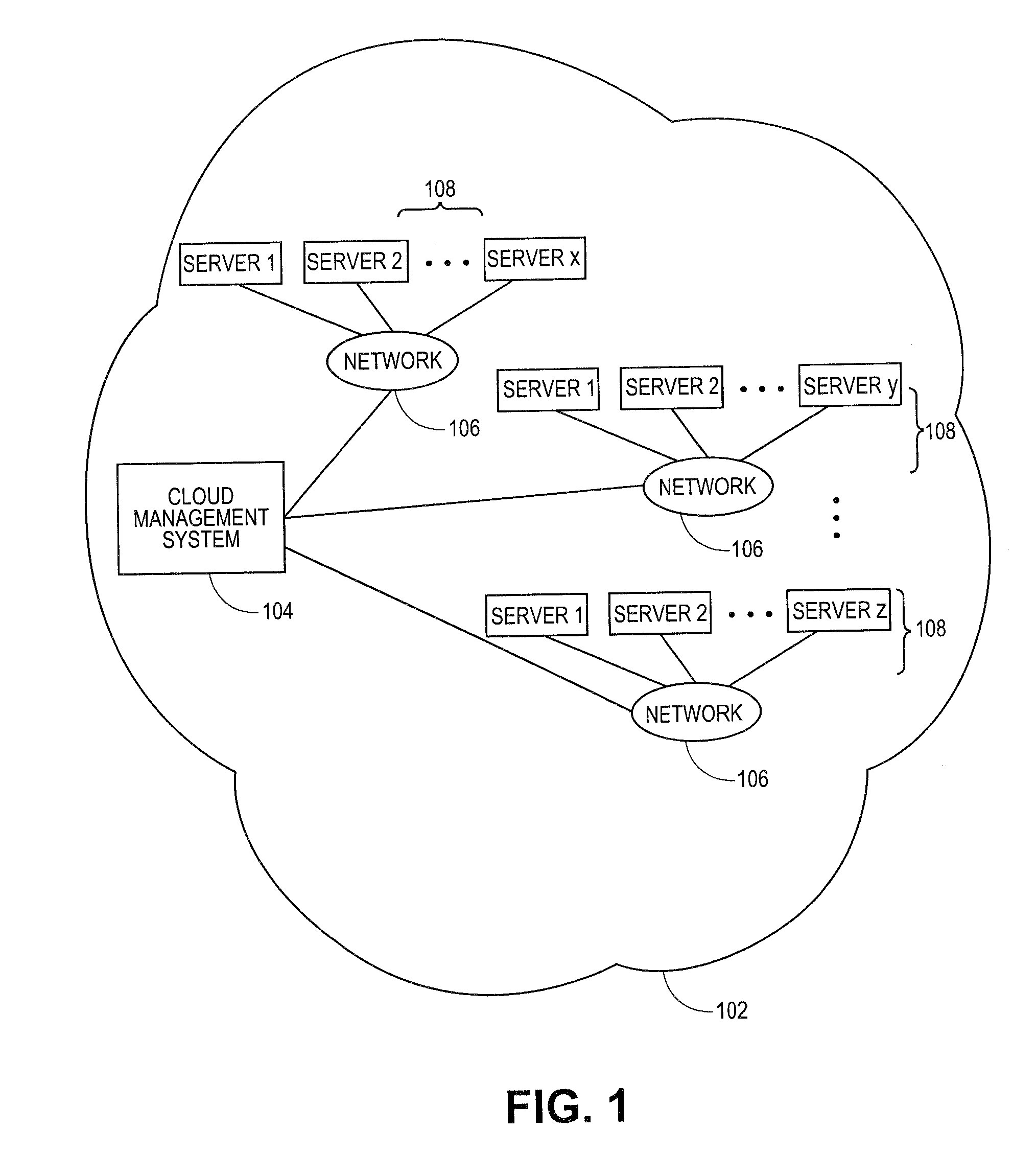

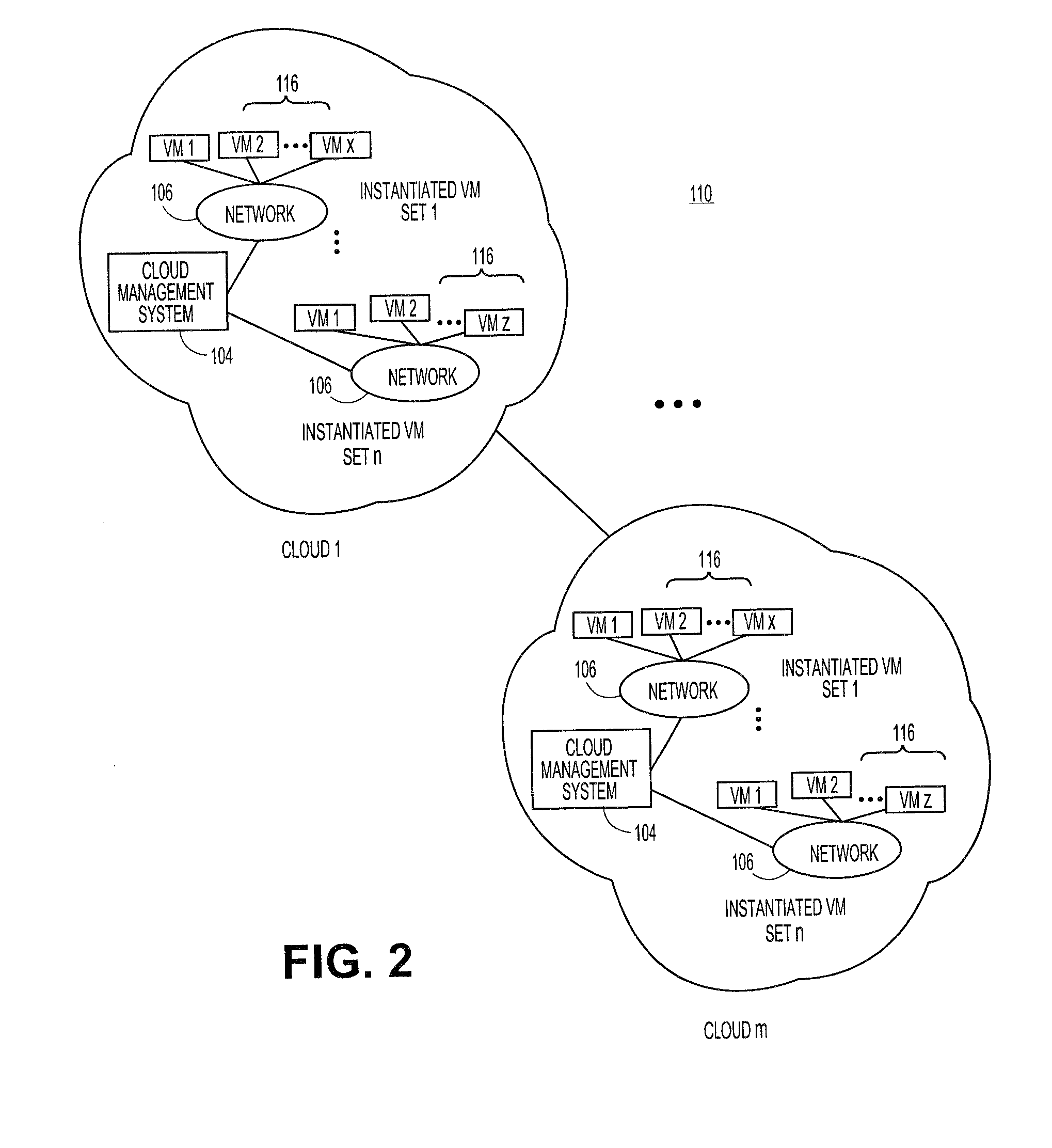

Systems and methods for embedding a cloud-based resource request in a specification language wrapper

Embodiments relate to systems and methods for embedding a cloud-based resource request in a specification language wrapper. In embodiments, a set of applications and/or a set of appliances can be registered to be instantiated in a cloud-based network. Each application or appliance can have an associated set of specified resources with which the user wishes to instantiate it. For example, a user may specify a maximum latency for input/output of the application or appliance, a geographic location of the supporting cloud resources, a processor throughput, or another resource specification for instantiating the desired object. According to embodiments, the set of requested resources can be embedded in a specification language wrapper, such as an XML object. The specification language wrapper can be transmitted to a marketplace to solicit responses from available clouds that can support the application or appliance according to the specifications it contains.

Owner:RED HAT

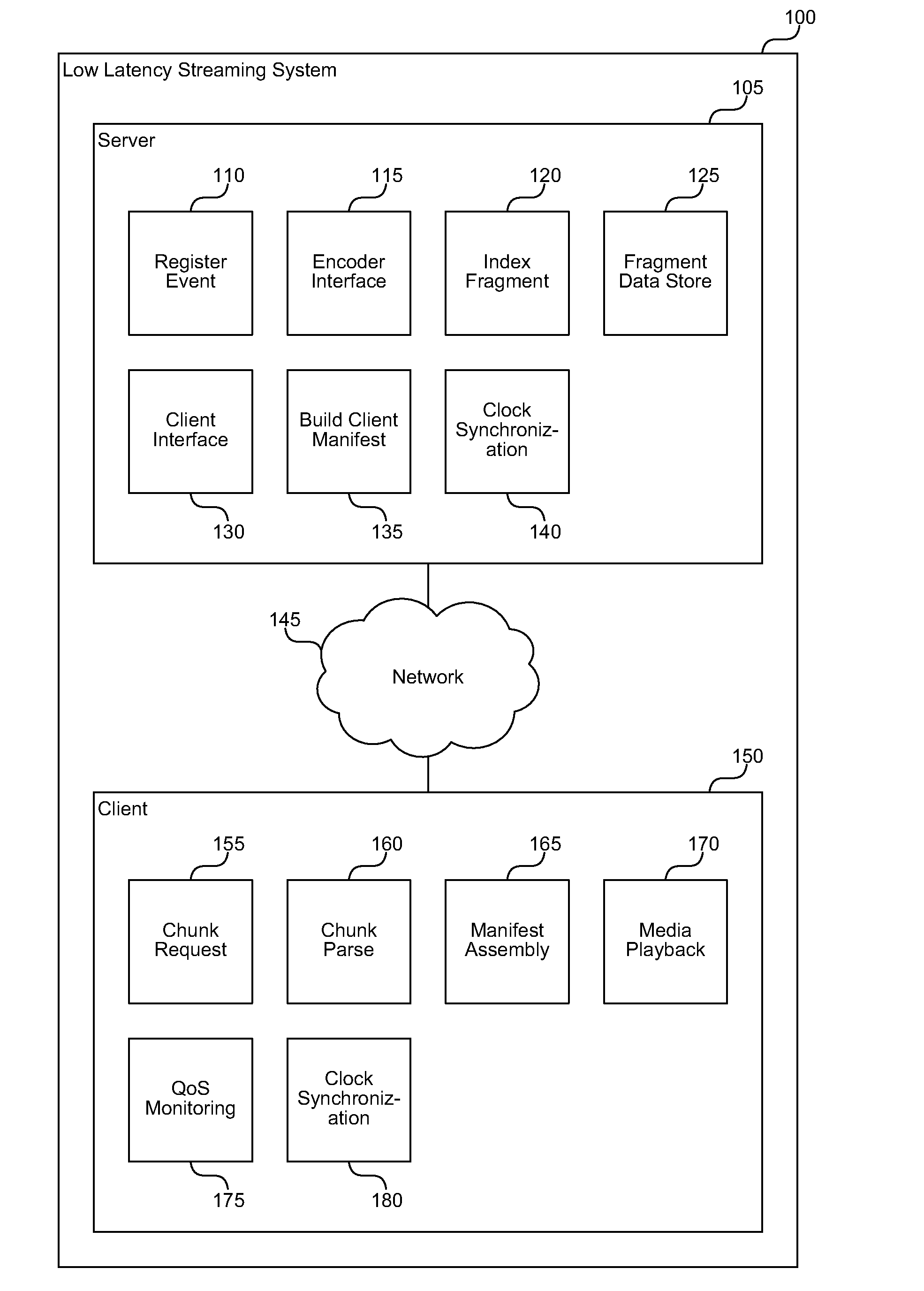

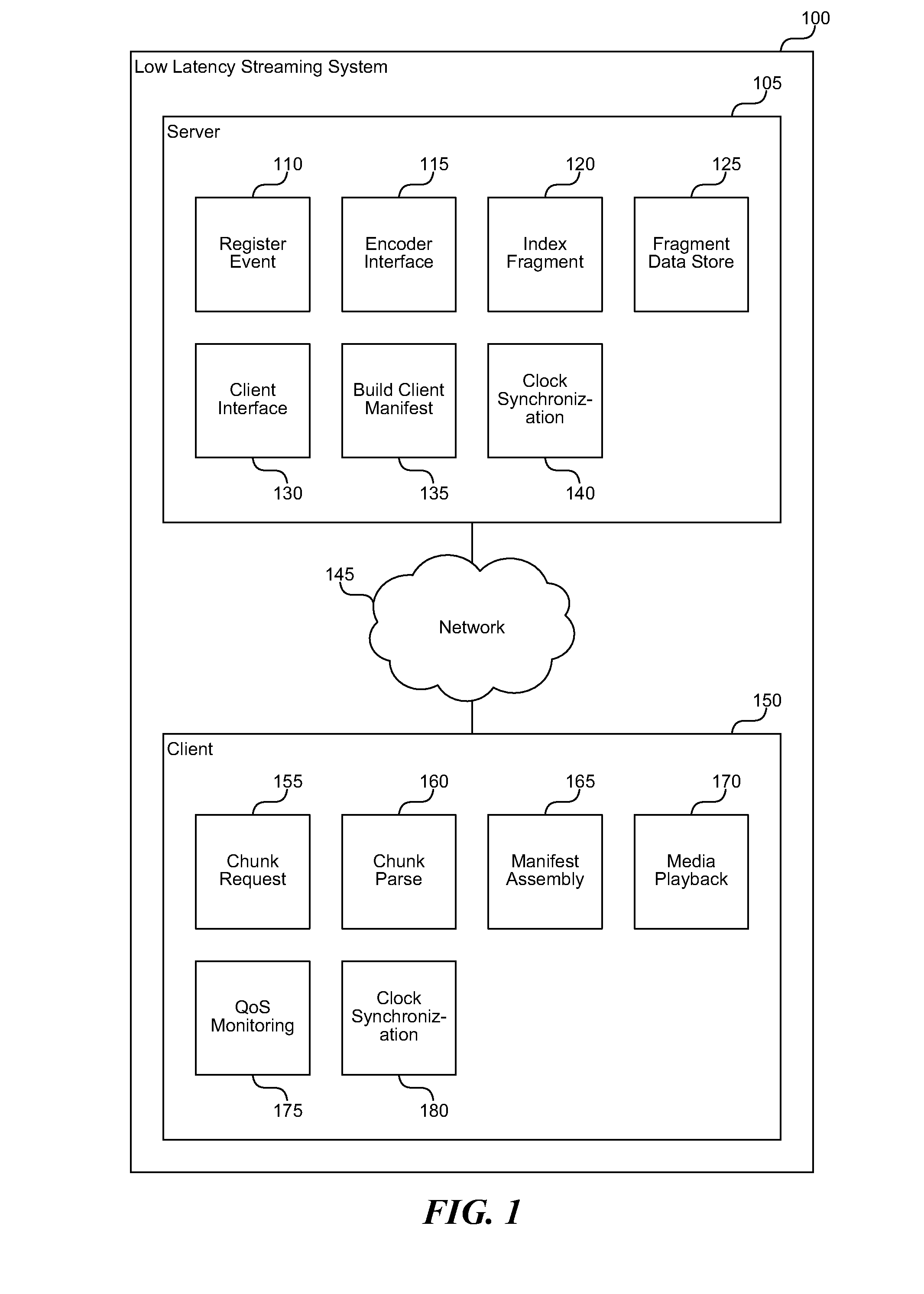

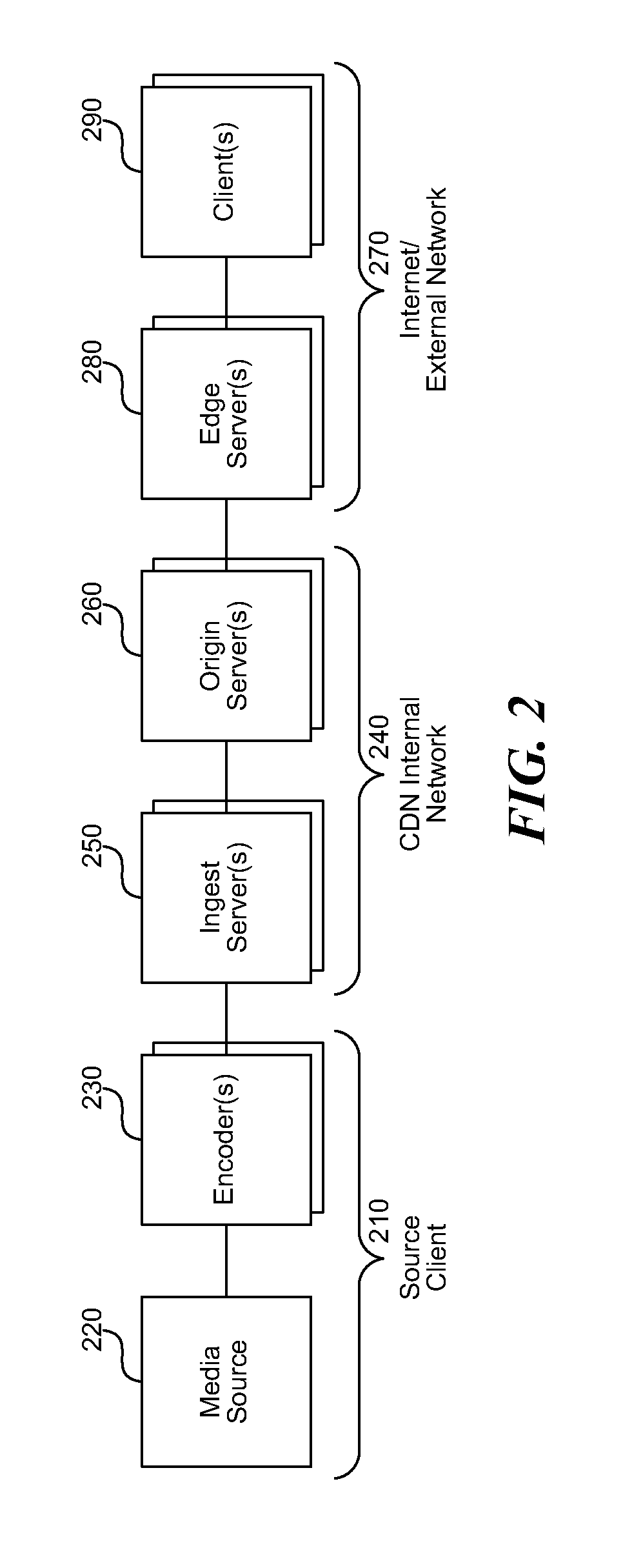

Low latency cacheable media streaming

Status: Active | Publication: US20110080940A1 | Tags: Lower latency, Raise the possibility, Picture reproducers using cathode ray tubes, Picture reproducers with optical-mechanical scanning, Group of pictures, Cache server

A low-latency streaming system provides a stateless protocol between a client and a server with reduced latency. The server embeds incremental information in media fragments, which eliminates the need for a typical control channel. In addition, the server provides uniform media fragment responses to media fragment requests, allowing existing Internet cache infrastructure to cache streaming media data. Each fragment has a distinct Uniform Resource Locator (URL) that allows it to be identified and cached by both Internet cache servers and the client's browser cache. The system reduces latency using various techniques, such as sending fragments that contain less than a full group of pictures (GOP), encoding media without dependencies on subsequent frames, and allowing clients to request subsequent frames with only information about previous frames.

Owner:MICROSOFT TECH LICENSING LLC

Method for determining metrics of a content delivery and global traffic management network

Status: Active | Publication: US20030065763A1 | Tags: Metering/charging/billing arrangements, Error prevention, Traffic capacity, Network packet

Owner:AKAMAI TECH INC

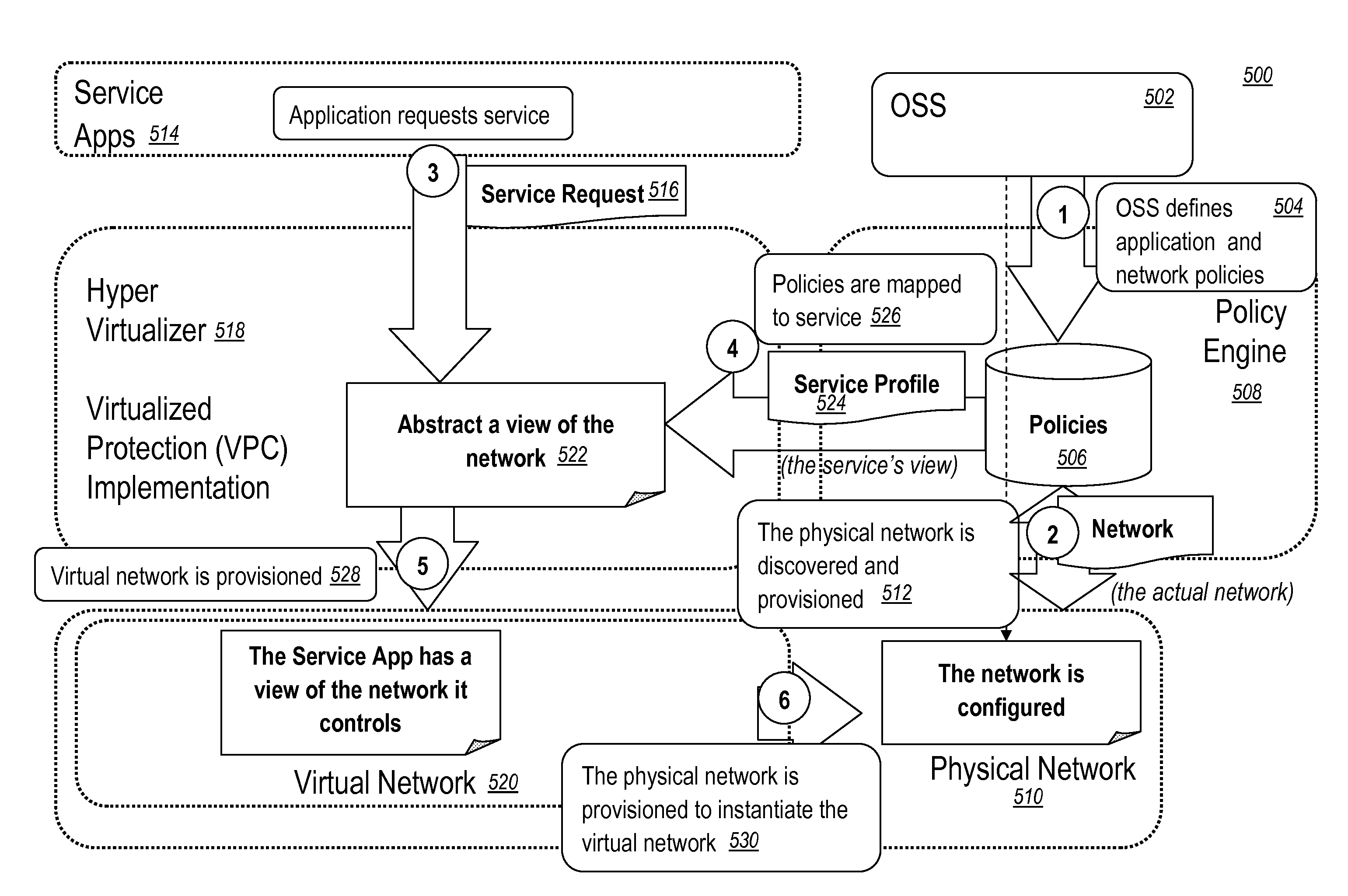

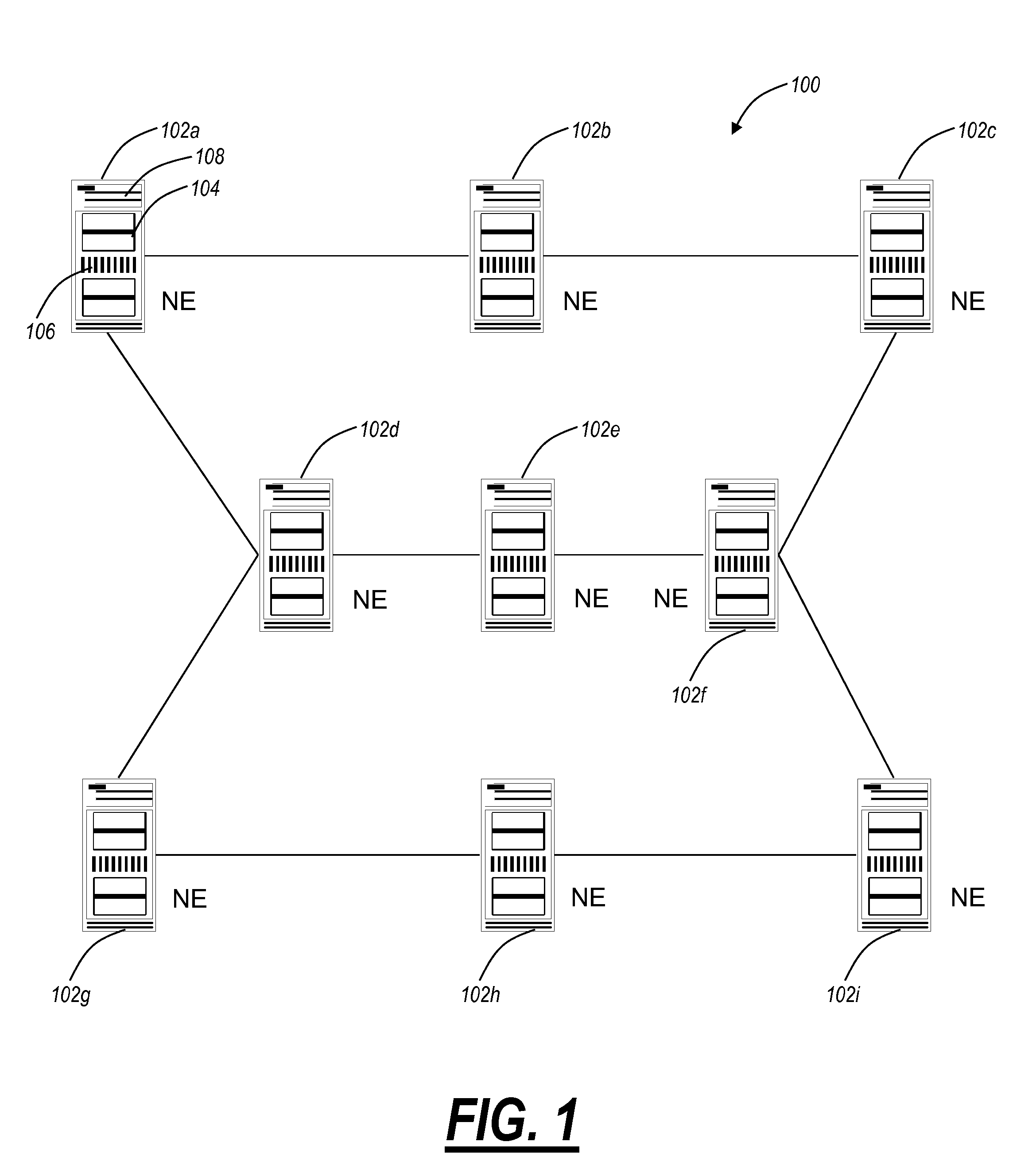

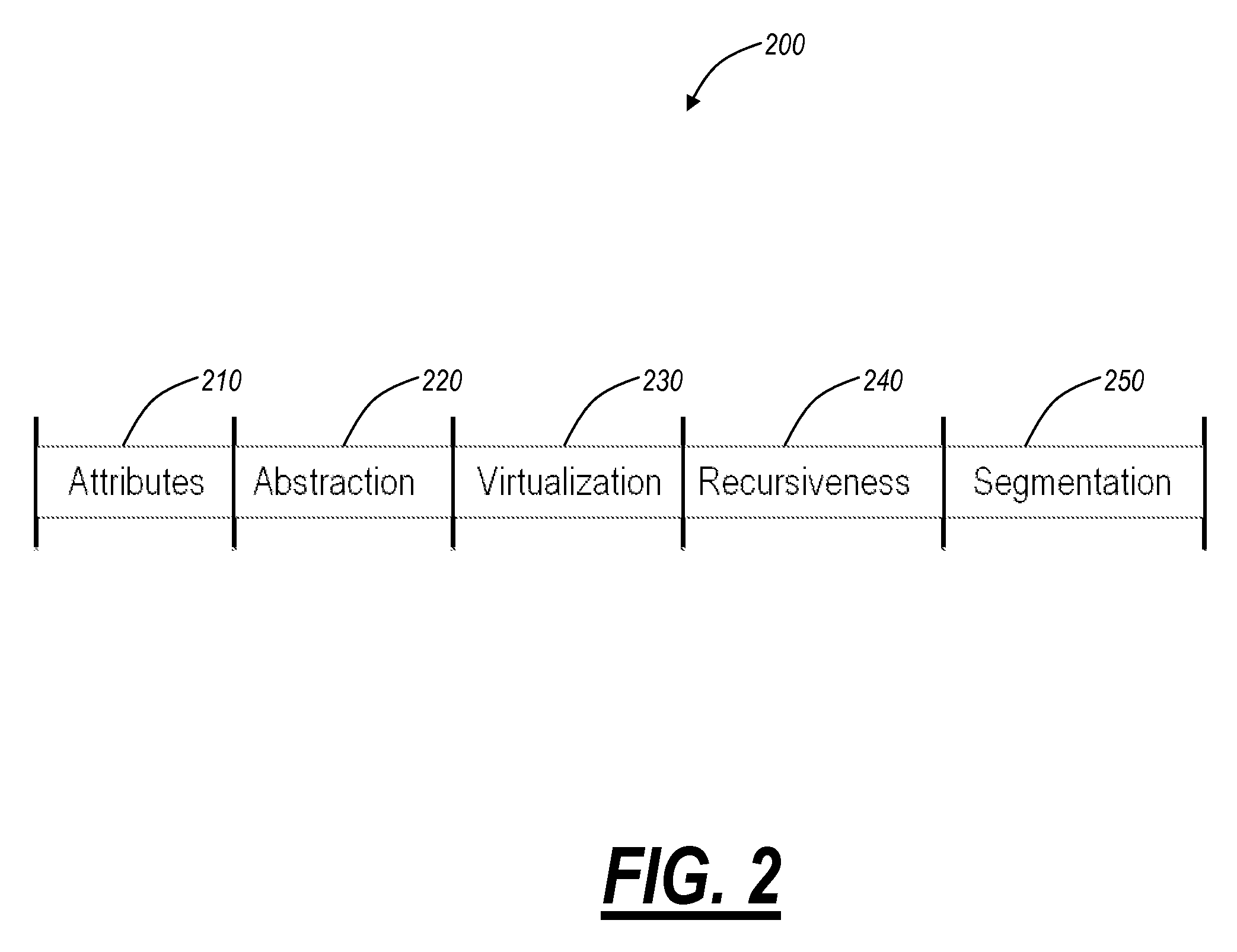

Virtualized shared protection capacity

The present disclosure relates to a network, a network element, a system, and a method that provide efficient allocation of protection capacity for network connections and/or services. These may be services within a given Virtual Private Network (VPN) or Virtual Machine (VM) instance flow. Network ingress/egress ports are designed to be VM-instance aware, while transit ports may or may not be, depending on network element capability or configuration. A centralized policy management and a distributed control plane are used to discover and allocate resources to and among the VPNs or VM instances. Algorithms for efficient allocation and release of protection capacity may be coordinated between the centralized policy management and the distributed control plane. Additionally coupling attributes such as latency may enable more sophisticated path selection algorithms, including efficient sharing of protection capacity.

Owner:CIENA

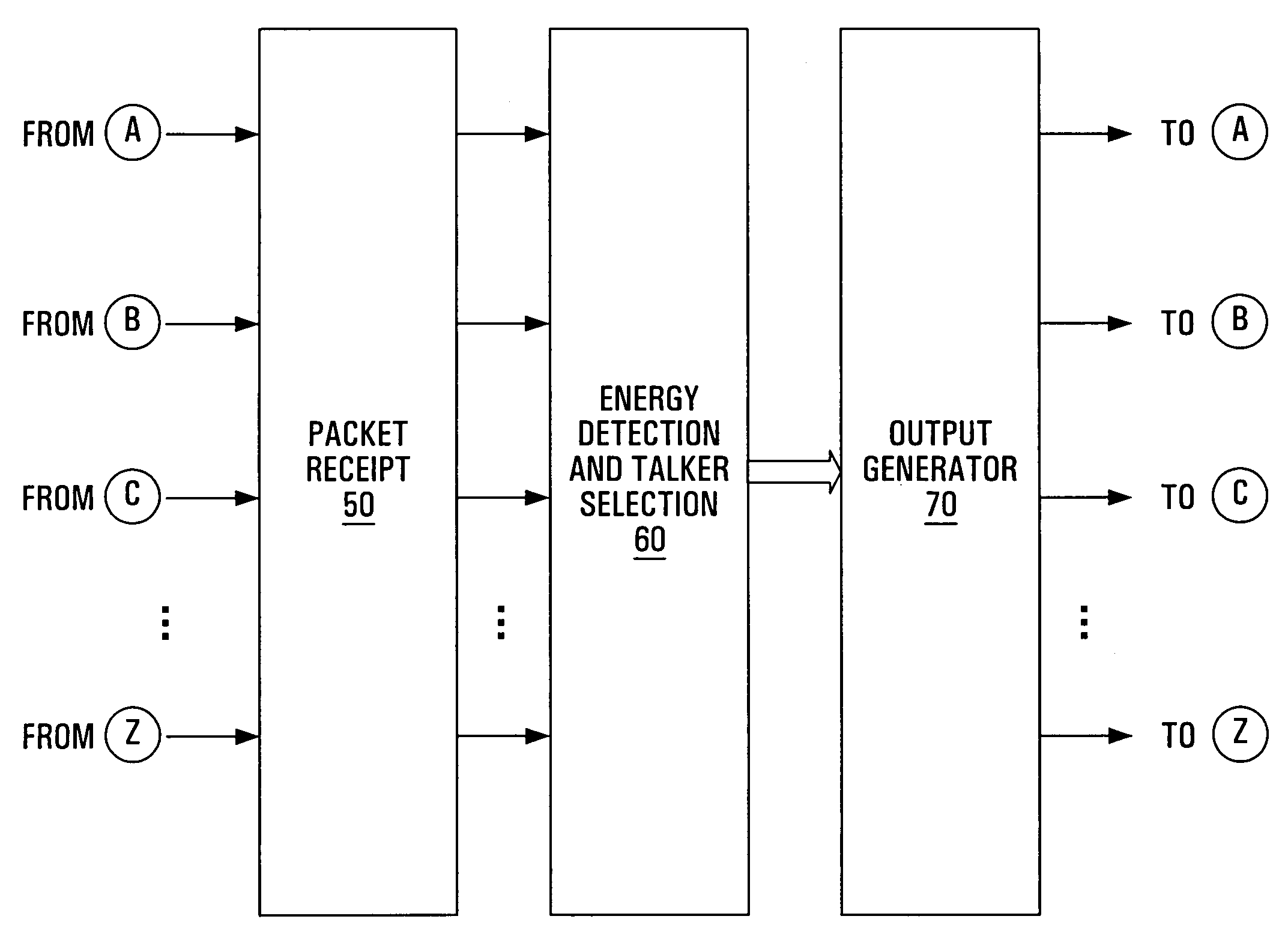

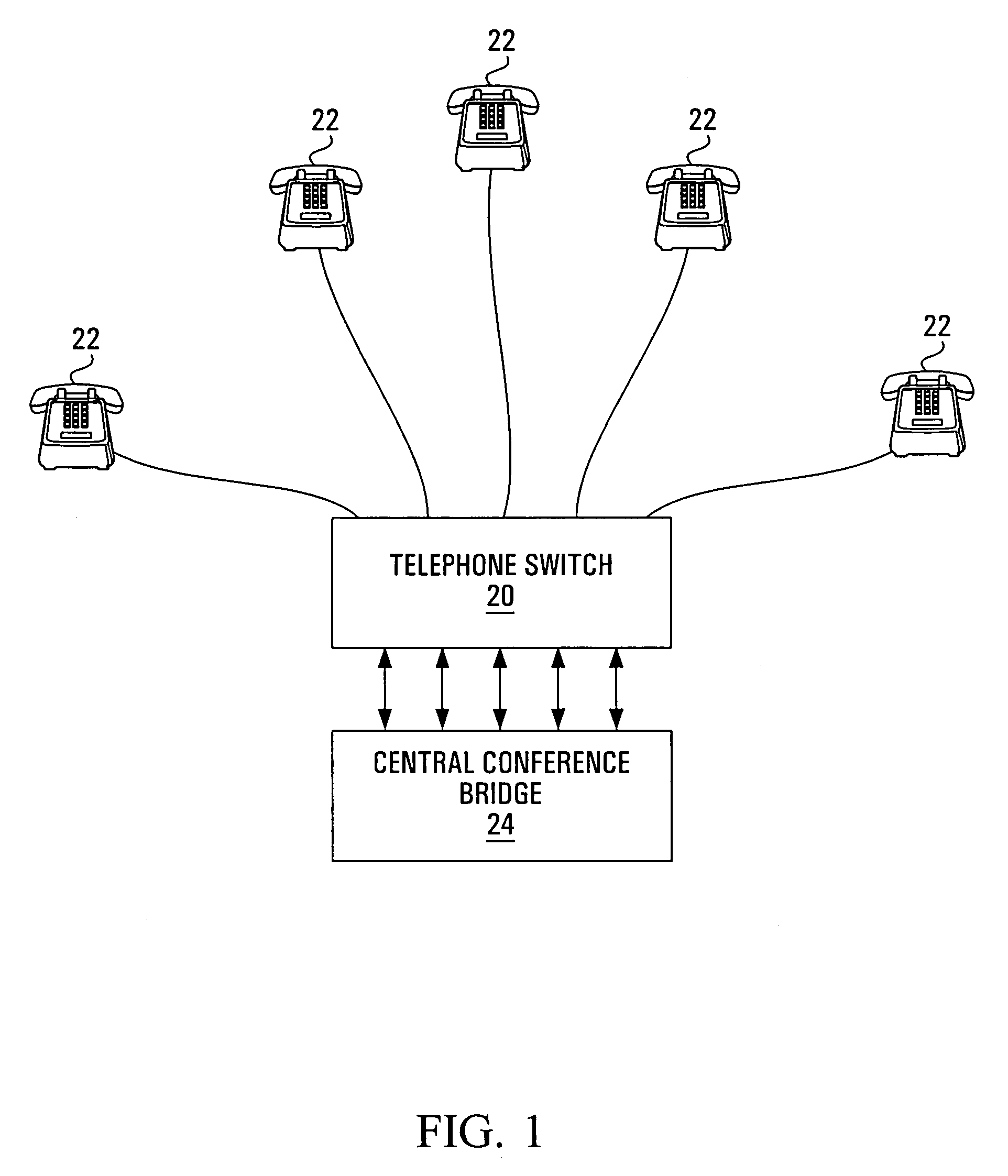

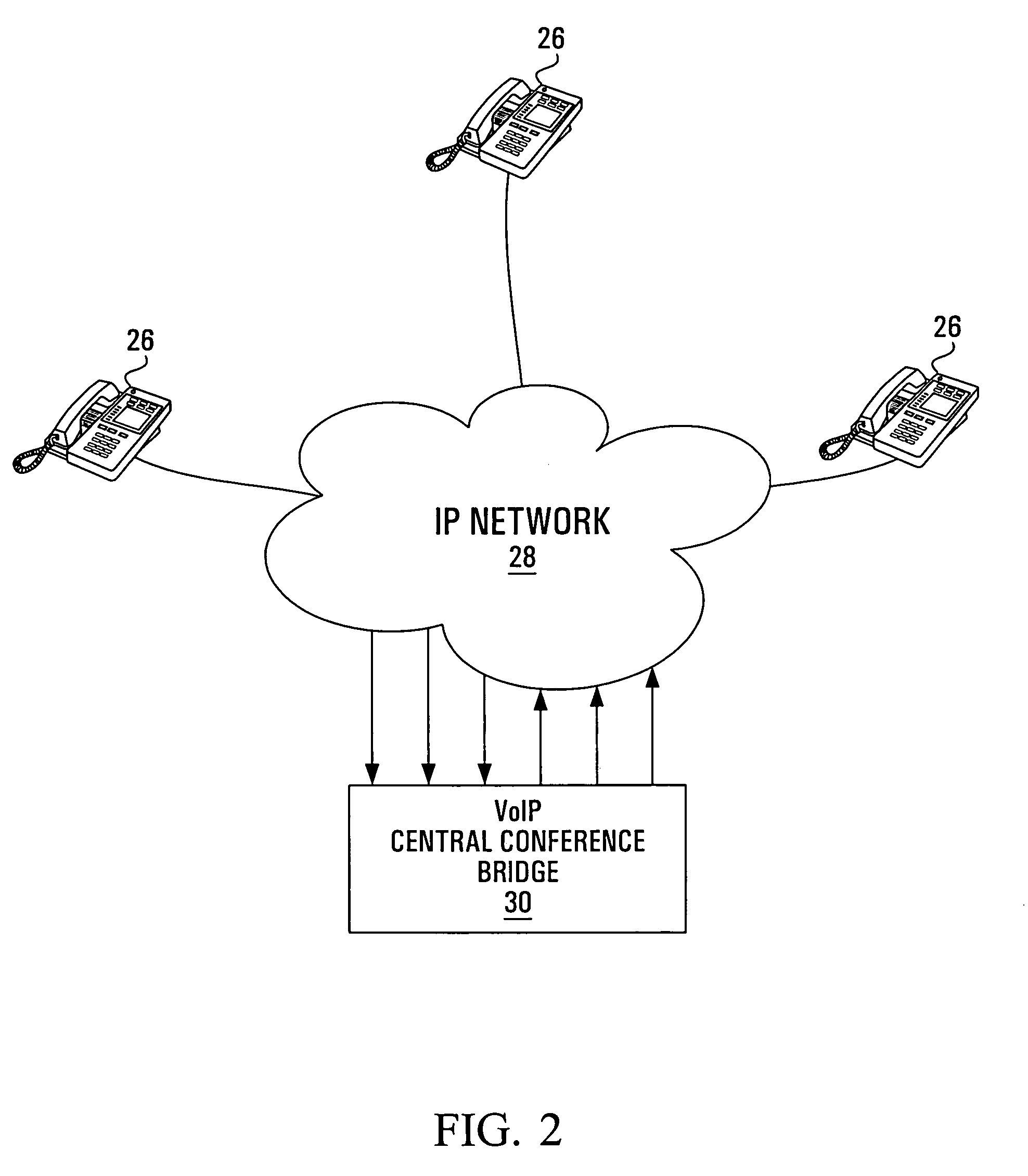

Apparatus and method for packet-based media communications

Status: Inactive | Publication: US6940826B1 | Tags: Reduction in transcoding, Reduce latency, Special service provision for substation, Multiplex system selection arrangements, Signal quality, Voice communication

Packet-based central conference bridges, packet-based network interfaces and packet-based terminals are used for voice communications over a packet-based network. Modifications to these apparatuses can reduce latency and signal processing requirements while increasing signal quality, both within a voice conference and in point-to-point communications. For instance, selecting the talkers before the voice signals are decompressed can decrease latency and increase signal quality within the voice conference, because in some circumstances the decompression and subsequent recompression operations in a conference bridge become unnecessary and can be removed. Further, removing the jitter buffers from the conference bridges and moving the mixing operation to the individual terminals and/or network interfaces are modifications that can lower latency and reduce transcoding within the voice conference.

Owner:RPX CLEARINGHOUSE

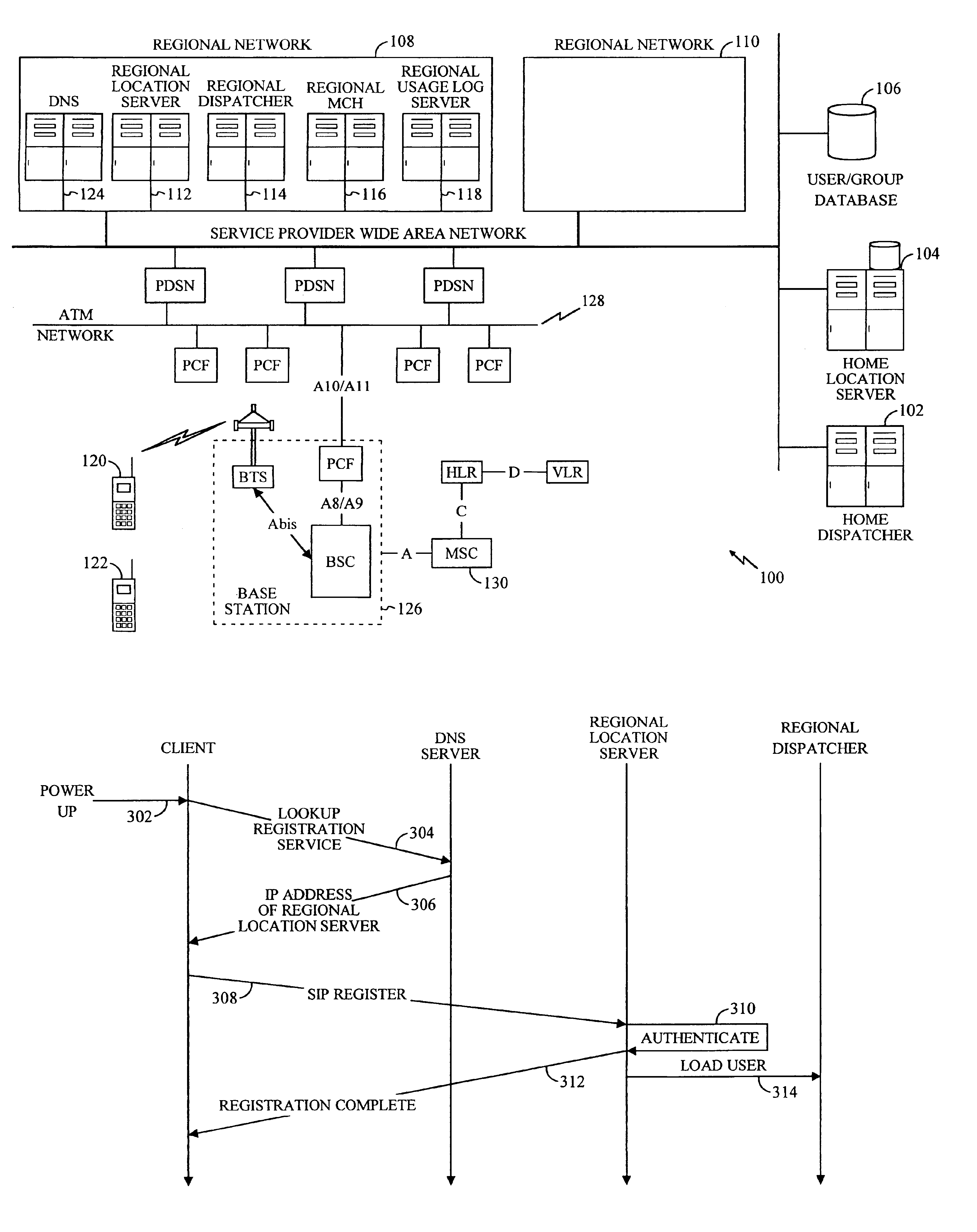

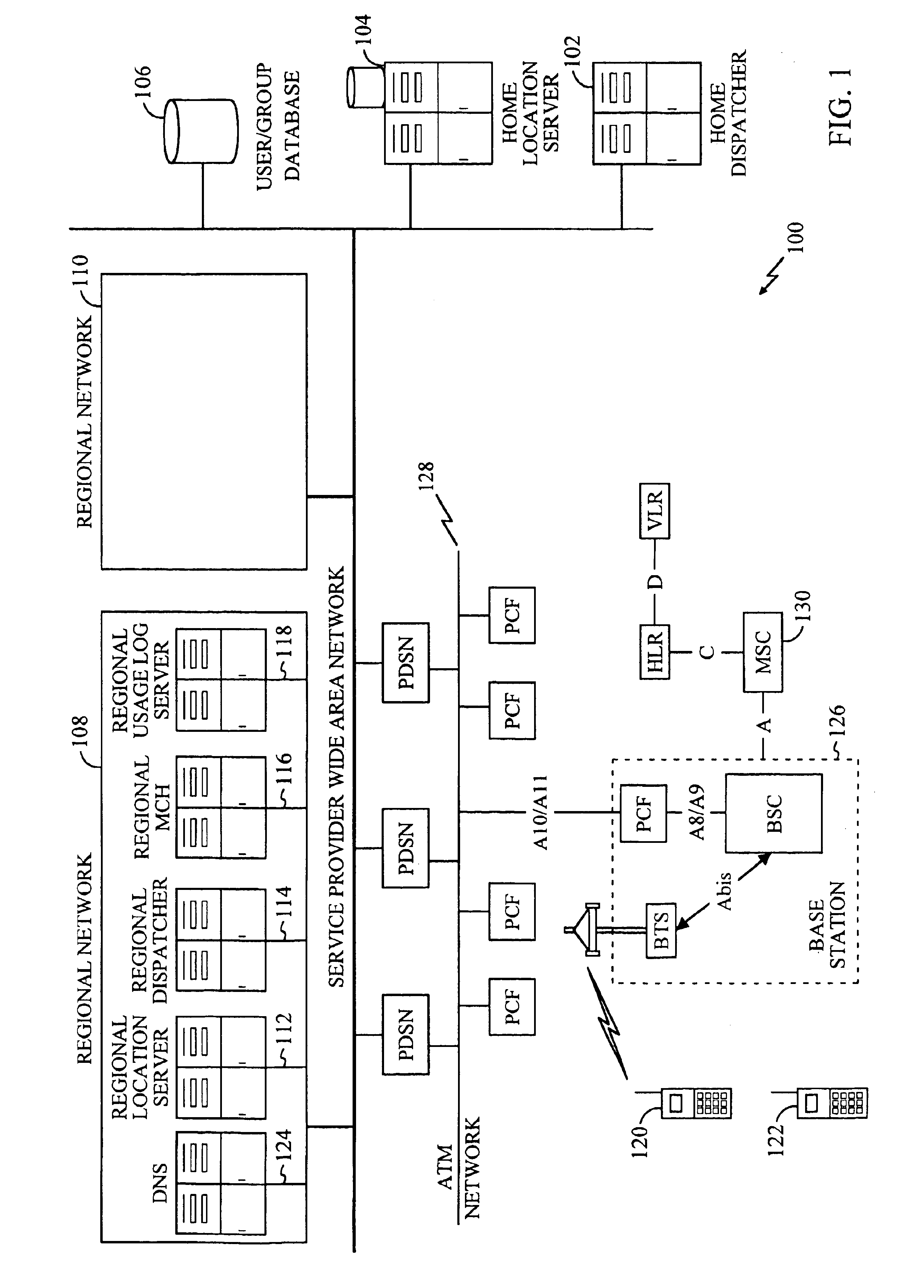

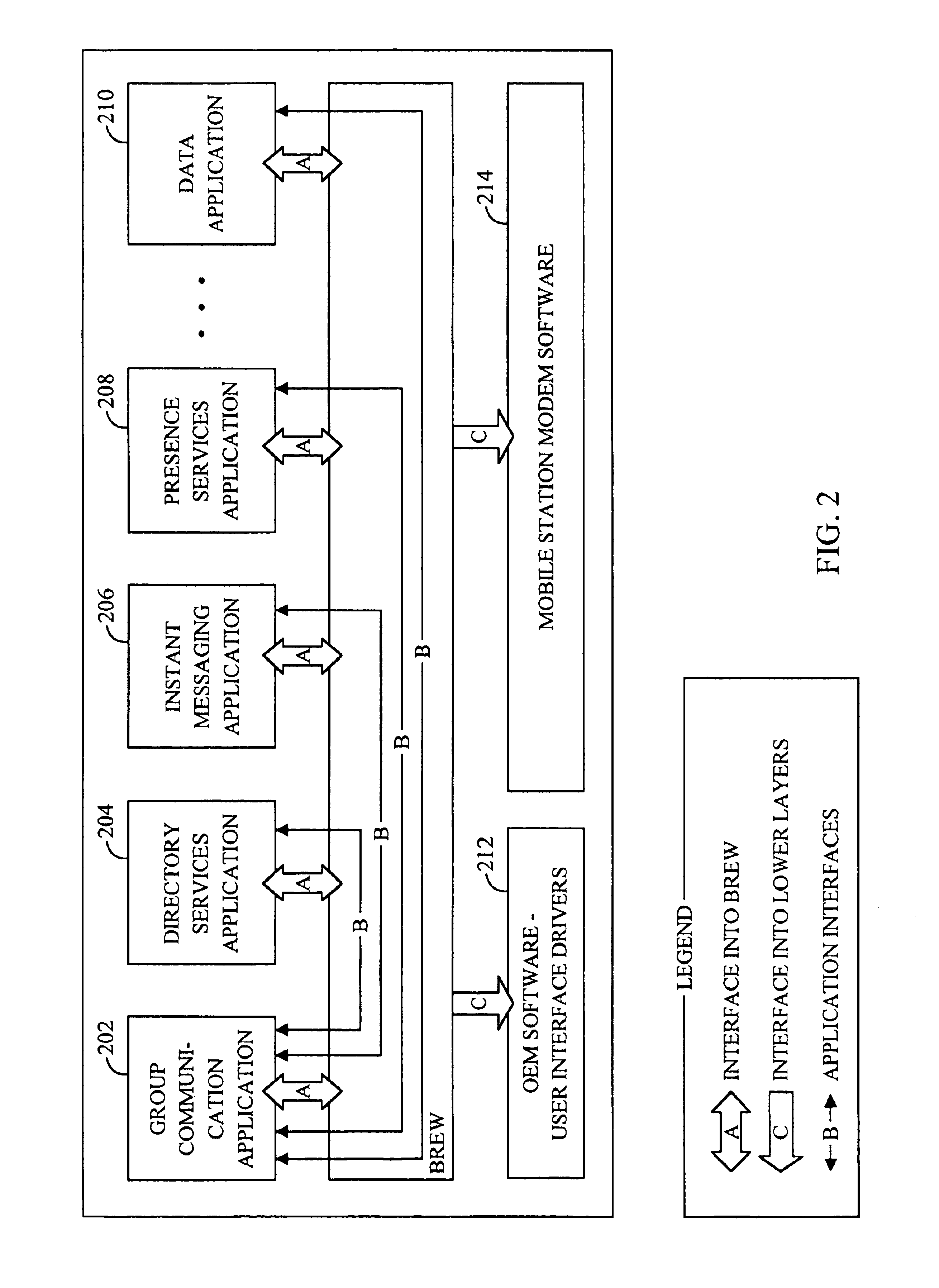

Method and an apparatus for adding a new member to an active group call in a group communication network

Status: Inactive | Publication: US6873854B2 | Tags: Multiplex system selection arrangements, Special service provision for substation, Telecommunications, Mobile station

A method and apparatus for adding a member to an active call in a group communication network provide for receiving a member list from a user and sending a request to a server to add the member list to the active group call. The method and apparatus further provide for announcing to each member in the member list that they are being added to the group call, receiving an acknowledgement from each member who wishes to participate in the group call, and forwarding media to that member. The method and apparatus also provide a significant reduction in the actual total dormancy wake-up time and latency by exchanging group call signaling even when mobiles are dormant and no traffic channel is active.

Owner:QUALCOMM INC

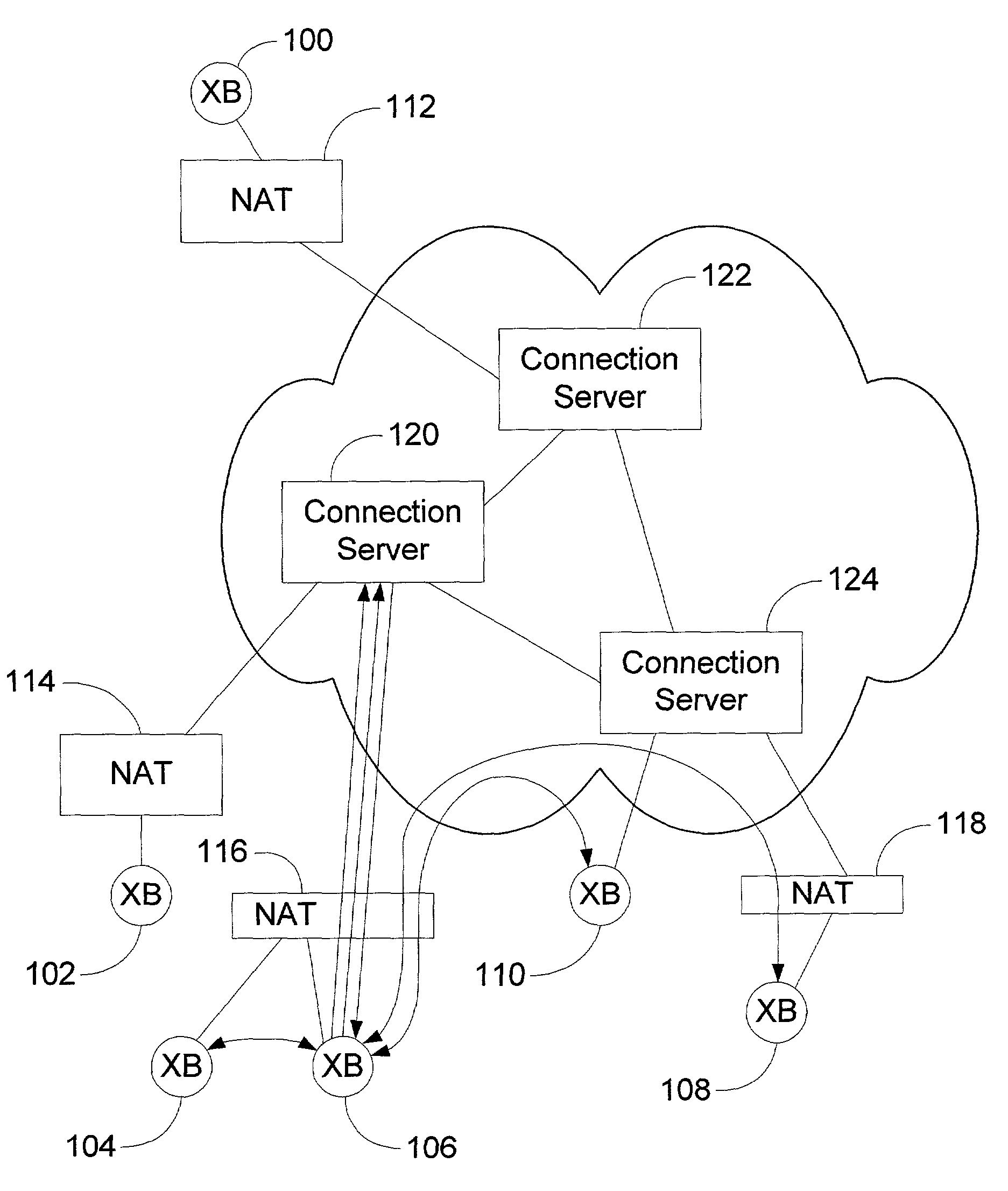

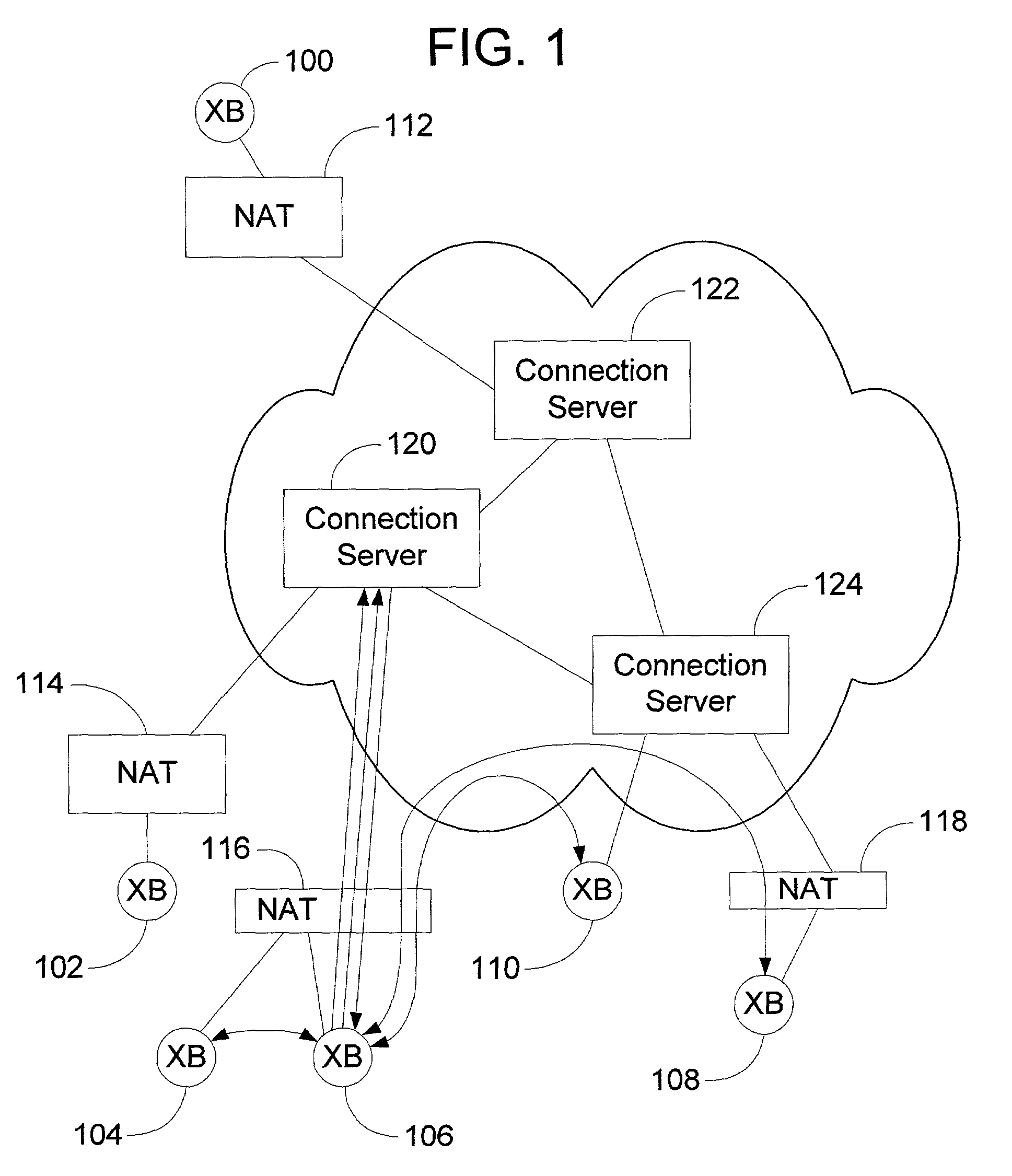

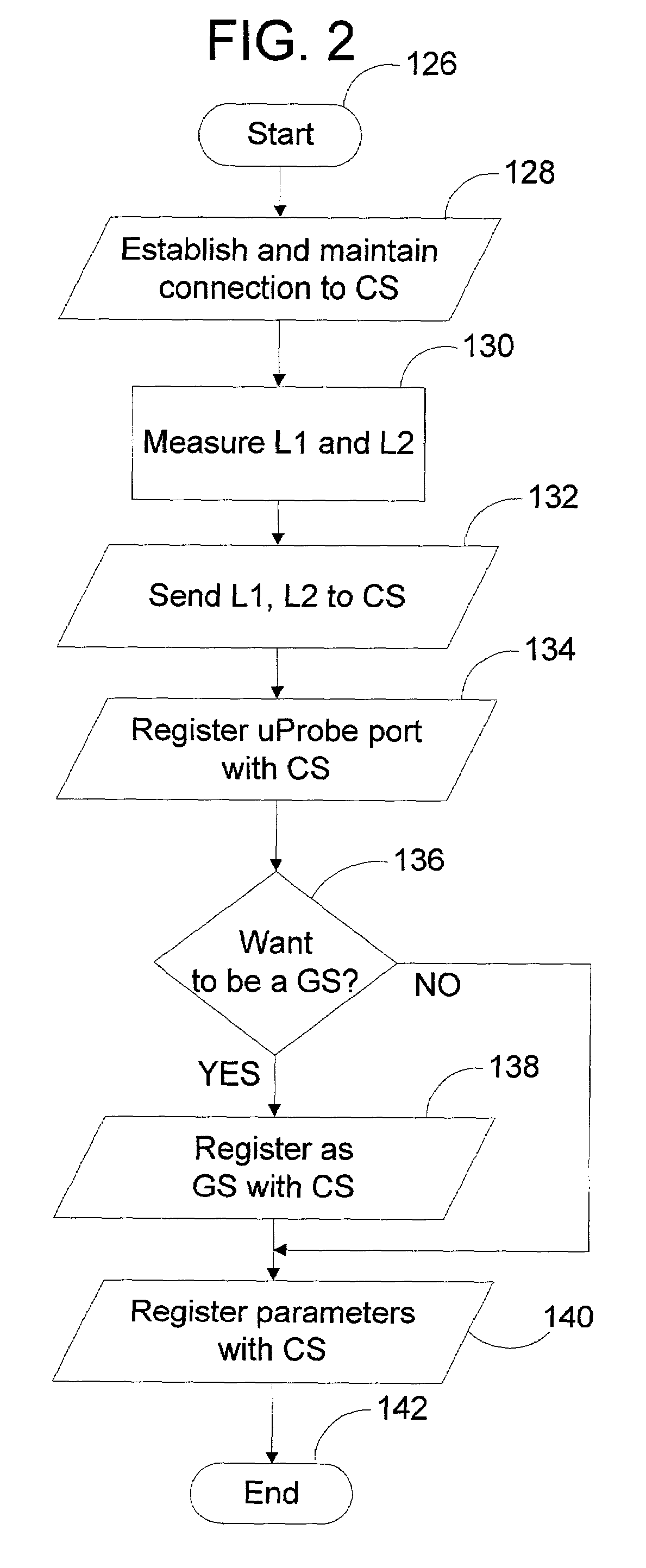

Peer-to-peer method of quality of service (QoS) probing and analysis and infrastructure employing same

Status: Inactive | Publication: US7133368B2 | Tags: Improve gaming experience, Improve performance, Error prevention, Transmission systems, Quality of service, Packet loss

A peer-to-peer (P2P) probing / network quality of service (QoS) analysis system utilizes a UDP-based probing tool for determining latency, bandwidth, and packet loss ratio between peers in a network. The probing tool enables network QoS probing between peers that connect through a network address translator. The list of peers to probe is provided by a connection server based on prior probe results and an estimate of the network condition. The list includes those peers which are predicted to have the best QoS with the requesting peer. Once the list is obtained, the requesting peer probes the actual QoS to each peer on the list, and returns these results to the connection server. P2P probing in parallel using a modified packet-pair scheme is utilized. If anomalous results are obtained, a hop-by-hop probing scheme is utilized to determine the QoS of each link. In such a scheme, differential destination measurement is utilized.

Owner:MICROSOFT TECH LICENSING LLC
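
Packet-pair probing rests on a simple relation: two packets sent back to back leave the slowest link spaced by the time that link needs to transmit one packet, so the packet size divided by the arrival spacing (the dispersion) estimates the bottleneck bandwidth. The sketch below shows the classic scheme, not the modified variant the abstract refers to; the function names and the use of a median over several pairs are assumptions.

```python
from statistics import median


def packet_pair_bandwidth_bps(packet_size_bytes: int, first_arrival_s: float,
                              second_arrival_s: float) -> float:
    # Bottleneck bandwidth ~ packet size / dispersion between back-to-back arrivals.
    dispersion = second_arrival_s - first_arrival_s
    if dispersion <= 0:
        raise ValueError("second packet must arrive after the first")
    return packet_size_bytes * 8 / dispersion


def estimate_bandwidth_bps(packet_size_bytes: int, pairs: list[tuple[float, float]]) -> float:
    # The median over several pairs damps samples distorted by cross-traffic.
    return median(packet_pair_bandwidth_bps(packet_size_bytes, a, b) for a, b in pairs)
```

For 1,000-byte packets whose pairs arrive 0.5 s, 0.25 s and 0.5 s apart, the per-pair estimates are 16, 32 and 16 kbit/s, and the median discards the compressed outlier.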

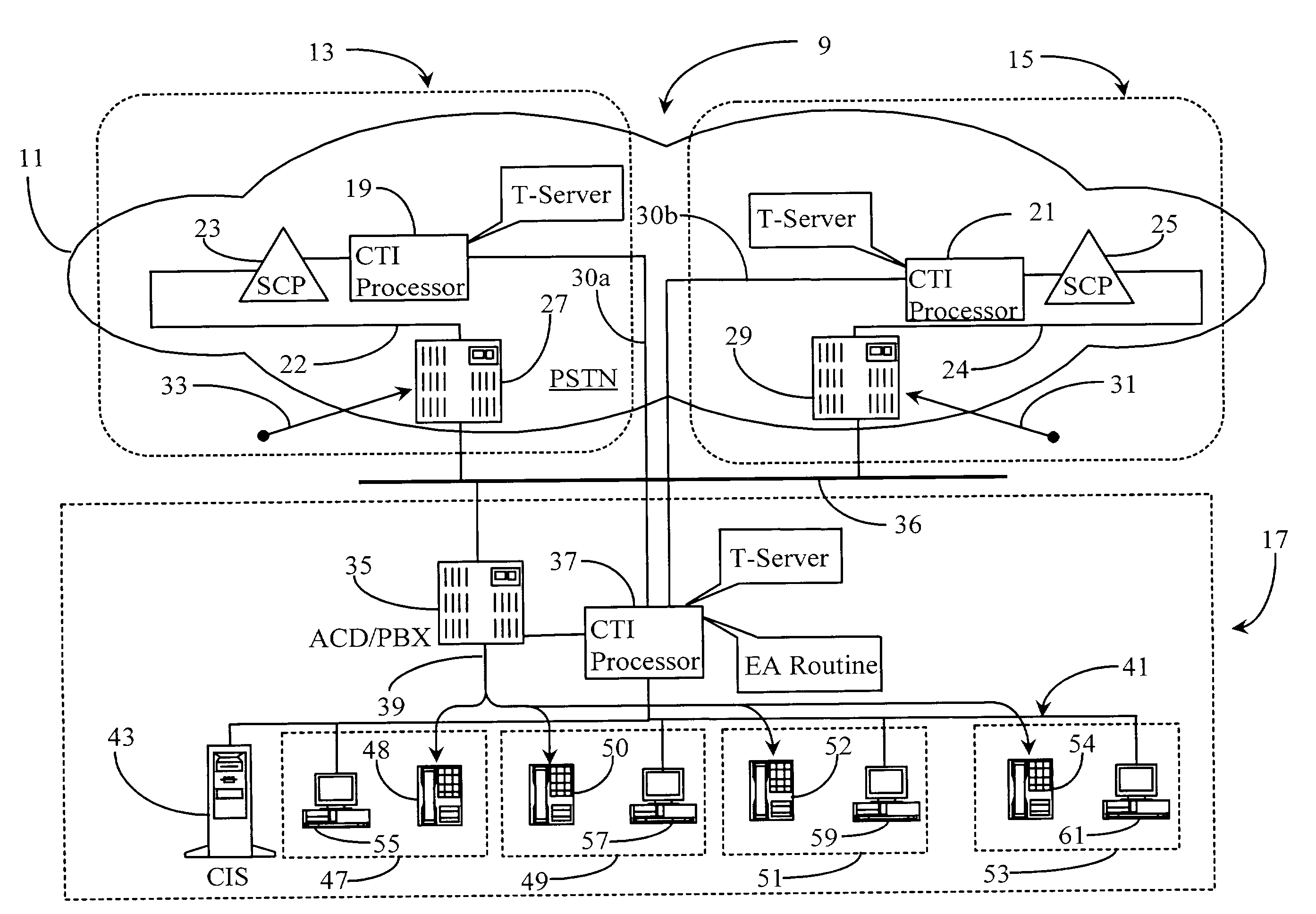

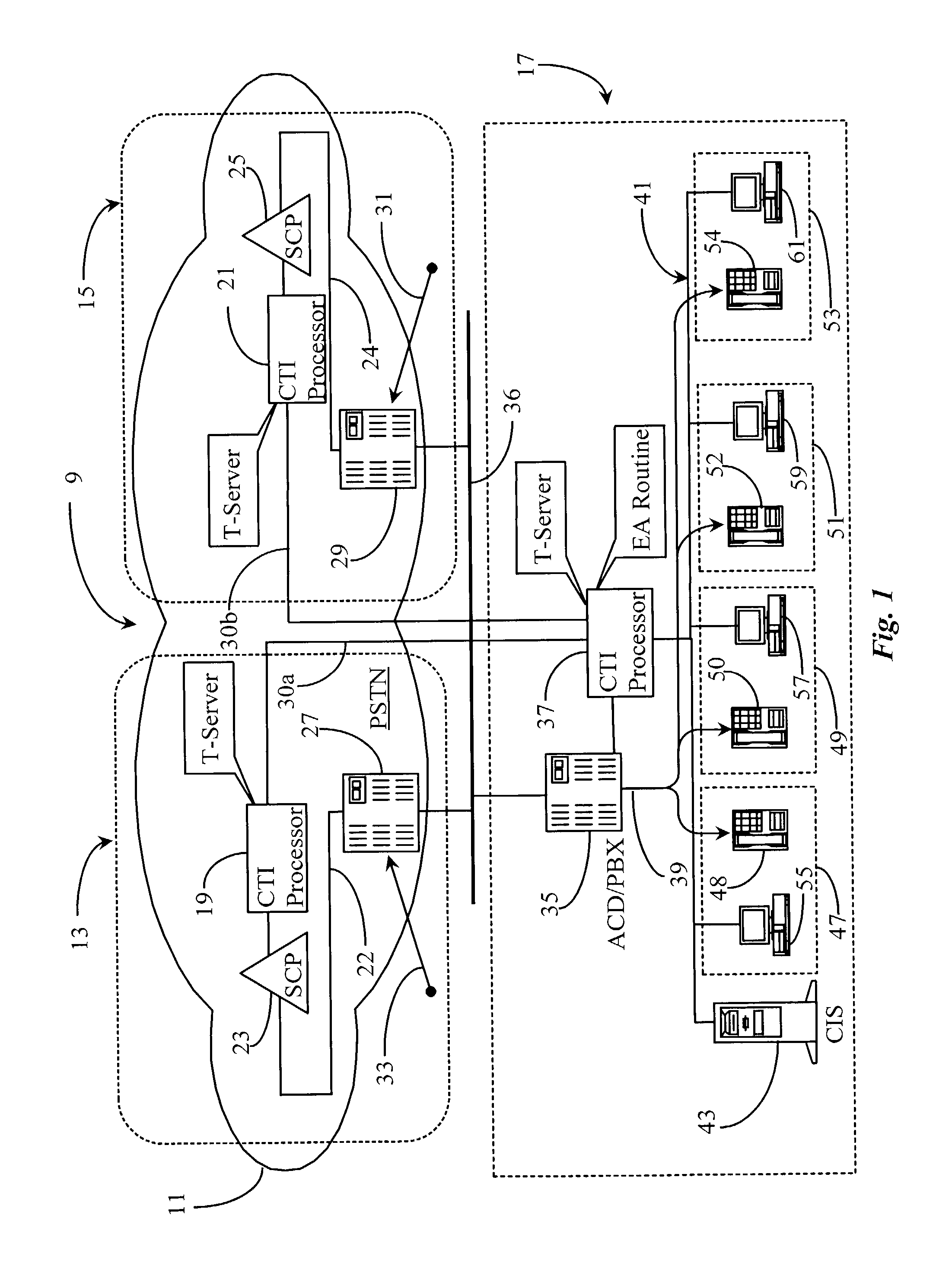

Method and apparatus for providing fair access to agents in a communication center

Status: Inactive | Publication: US7236584B2 | Tags: Long latency, Short incubation period, Intelligent networks, Special service for subscribers, Timer

A system for granting access to agents at a communication center in response to requests for connection from network-level entities starts a fairness timer with a fairness time period when a first request is received for an agent, monitors any other requests for the same agent during the fairness time, and executes an algorithm at the end of the fairness time to select the network-level entity to which the request should be granted. In a preferred embodiment, the fairness time is set equal to or greater than twice the difference between the network round-trip latencies of the longest-latency and shortest-latency routers requesting service from the communication center. In some embodiments, an agent reservation timer is started at the same point as the fairness timer to prevent calls to the same agent; its period is longer than the fairness timer by enough time for a connection to be made to the agent station once access is granted and for network-level entities to be notified of the connection.

Owner:GENESYS TELECOMM LAB INC AS GRANTOR +3
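
The timer arithmetic from the preferred embodiment (fairness time of at least twice the spread between the longest and shortest router round-trip latencies, and a reservation timer longer by the connect-and-notify time) can be written down directly. The selection rule applied at the end of the window is not specified in the abstract, so the one below, which favours the request estimated to have been issued first, is a hypothetical assumption, as are all function names.

```python
def fairness_period_ms(round_trip_ms: dict[str, float]) -> float:
    # Lower bound from the preferred embodiment: 2 x (longest RTT - shortest RTT).
    return 2.0 * (max(round_trip_ms.values()) - min(round_trip_ms.values()))


def reservation_period_ms(fairness_ms: float, connect_and_notify_ms: float) -> float:
    # The reservation outlasts the fairness window by the time to connect and notify.
    return fairness_ms + connect_and_notify_ms


def select_requester(requests: list[tuple[str, float]], round_trip_ms: dict[str, float]) -> str:
    """requests: (router, arrival time in ms at the center). The selection rule is hypothetical."""
    window = fairness_period_ms(round_trip_ms)
    first_arrival = min(arrival for _, arrival in requests)
    # Only requests arriving inside the fairness window compete.
    contenders = [(router, arrival) for router, arrival in requests
                  if arrival - first_arrival <= window]
    # Favour the request probably issued first: arrival minus half the router's RTT.
    return min(contenders, key=lambda r: r[1] - round_trip_ms[r[0]] / 2)[0]
```

With router RTTs of 20, 80 and 50 ms, the window is 120 ms; a request from the slow router arriving 25 ms after the fast router's request still wins, because it was most likely sent earlier.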

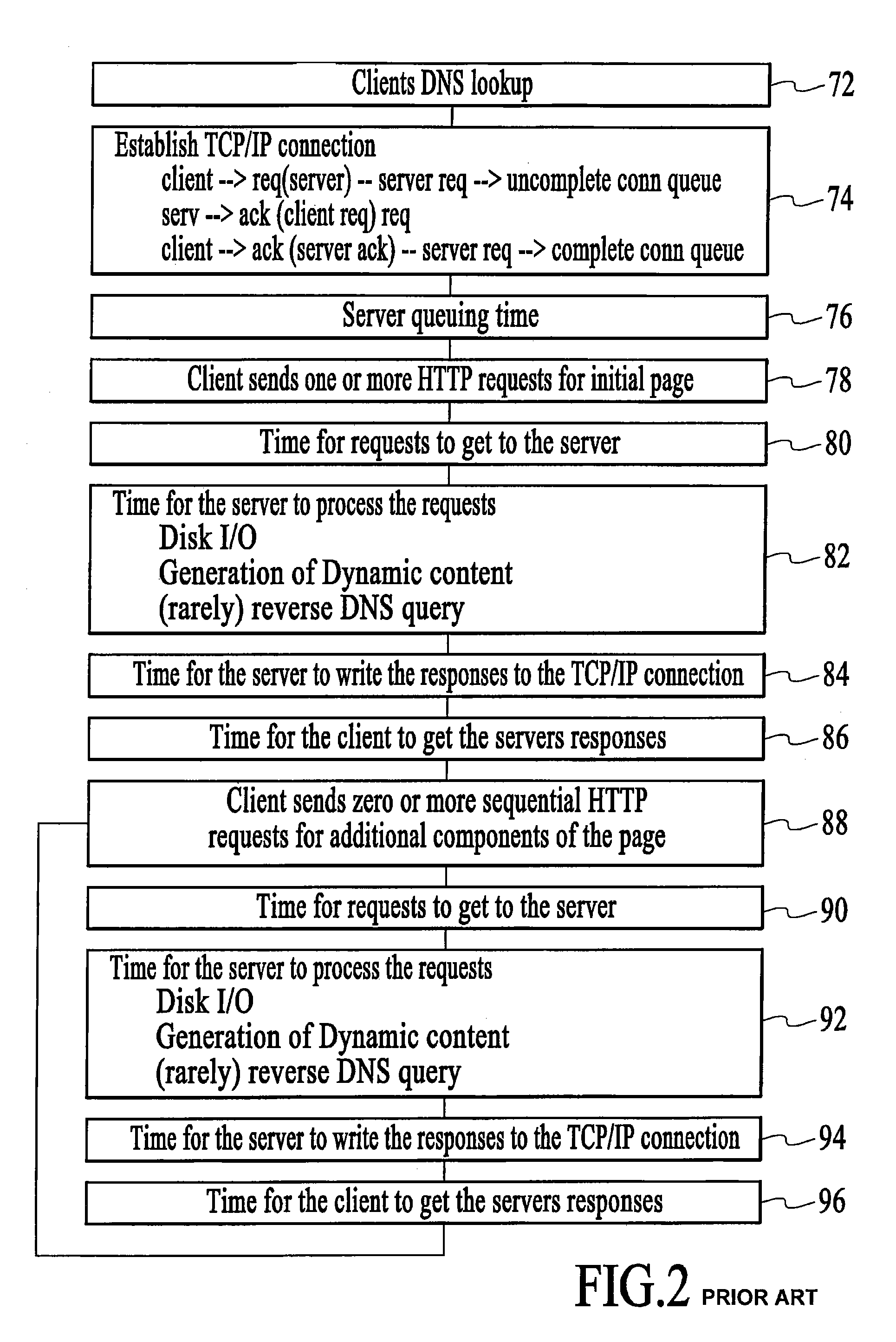

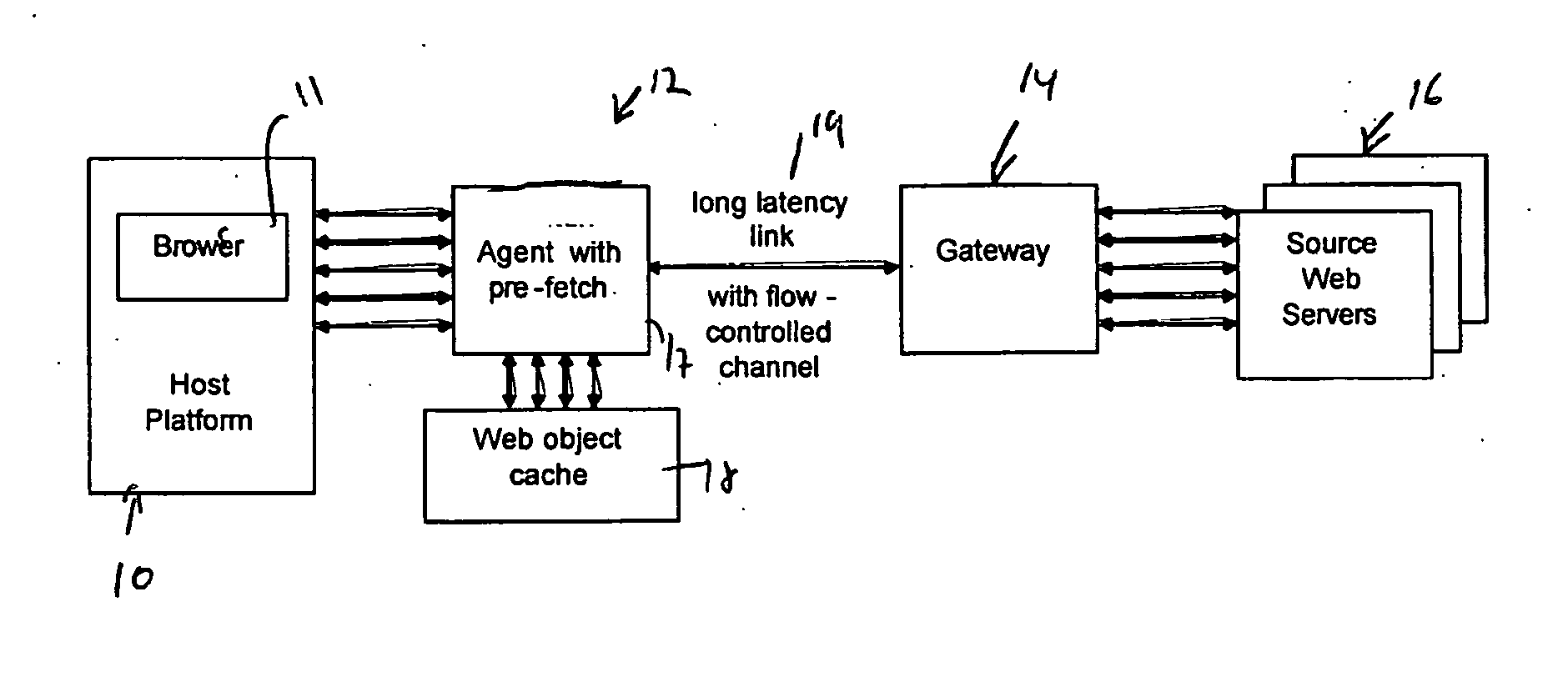

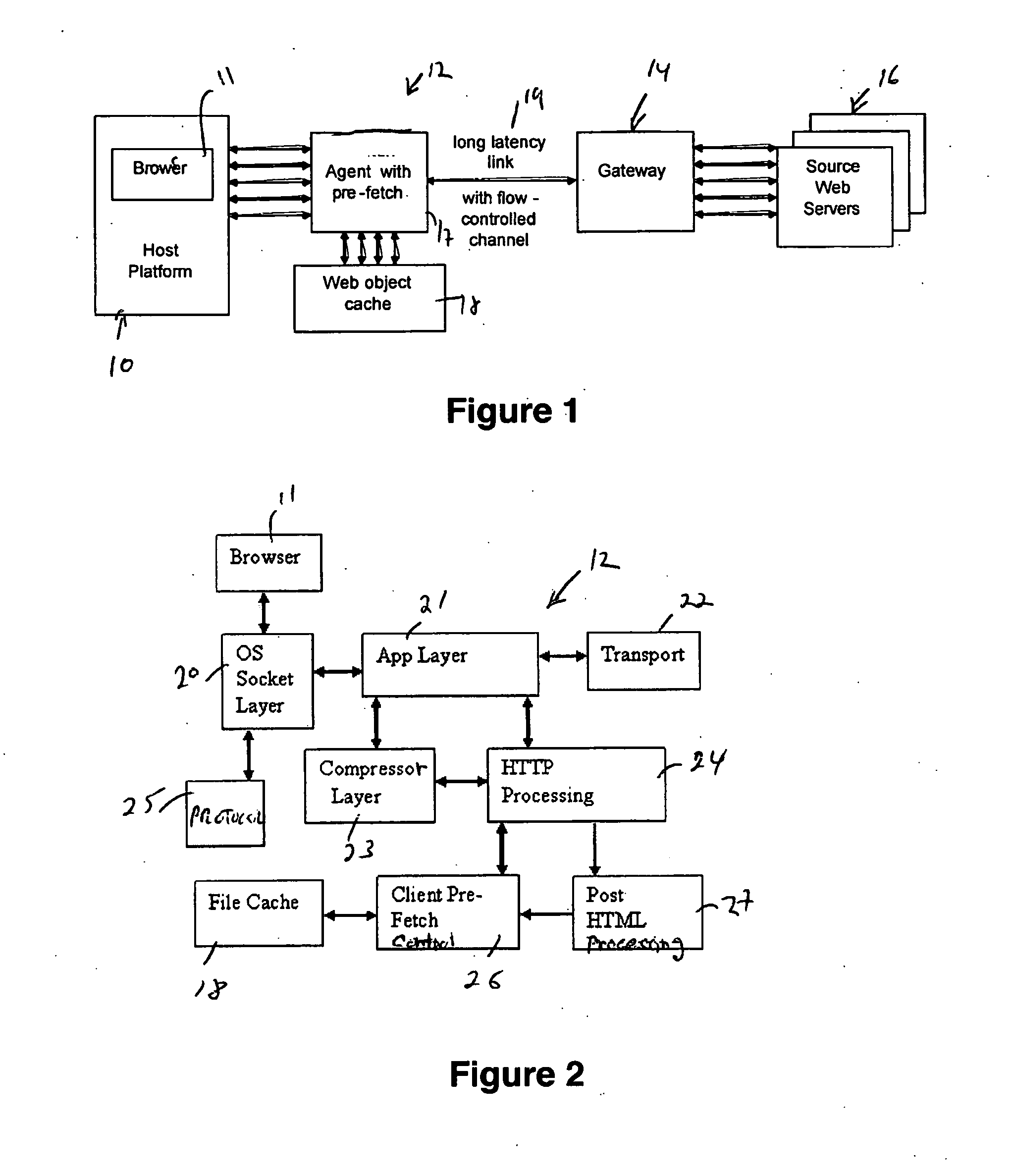

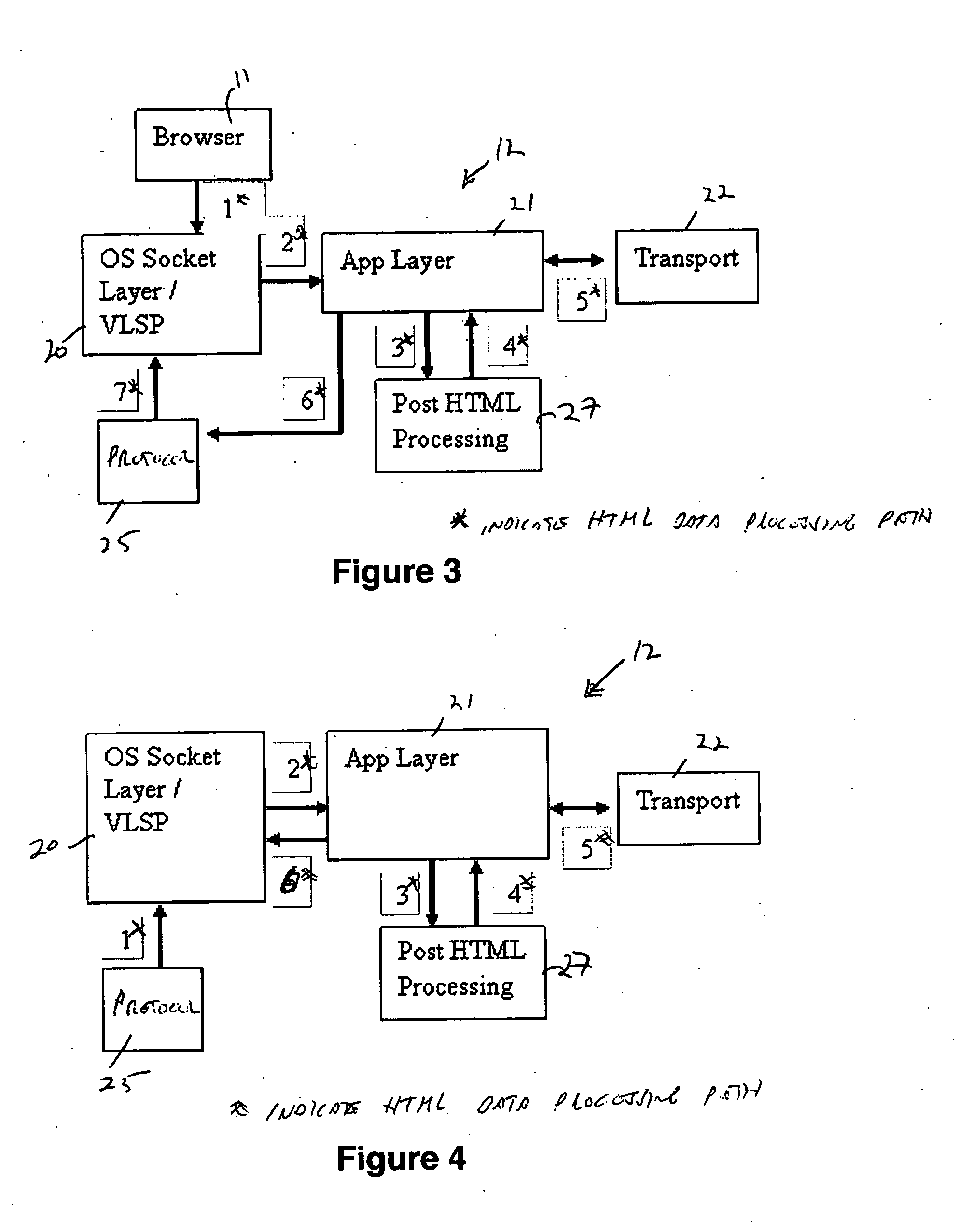

Method and apparatus for increasing performance of HTTP over long-latency links

Status: Active | Publication: US20060253546A1 | Tags: Reduce negative impact, Improve parallelism, Digital data information retrieval, Multiprogramming arrangements, Web site, Web browser

The invention increases the performance of HTTP over long-latency links by pre-fetching objects concurrently via aggregated and flow-controlled channels. An agent and a gateway together help a Web browser fetch HTTP content from Internet Web sites faster over long-latency data links. The gateway and the agent coordinate the fetching of selected embedded objects so that an object is ready and available on the host platform before the resident browser requires it. This seemingly instantaneous availability lets the browser finish processing one object and request the next without much waiting. Without it, the browser must wait for each request and the corresponding response to traverse the long-delay link.

Owner:VENTURI WIRELESS

System and method for reducing latency in call setup and teardown

Status: Active | Publication: US8144591B2 | Tags: Reduce signaling, Lower latency, Error prevention, Frequency-division multiplex details, Distributed computing, Call setup

Systems and methods for reducing latency in call setup and teardown are provided. A network device with integrated functionalities and a cache is provided that stores policy information to reduce the amount of signaling that is necessary to setup and teardown sessions. By handling various aspects of the setup and teardown within a network device, latency is reduced and the amount of bandwidth needed for setup signaling is also reduced.

Owner:CISCO TECH INC

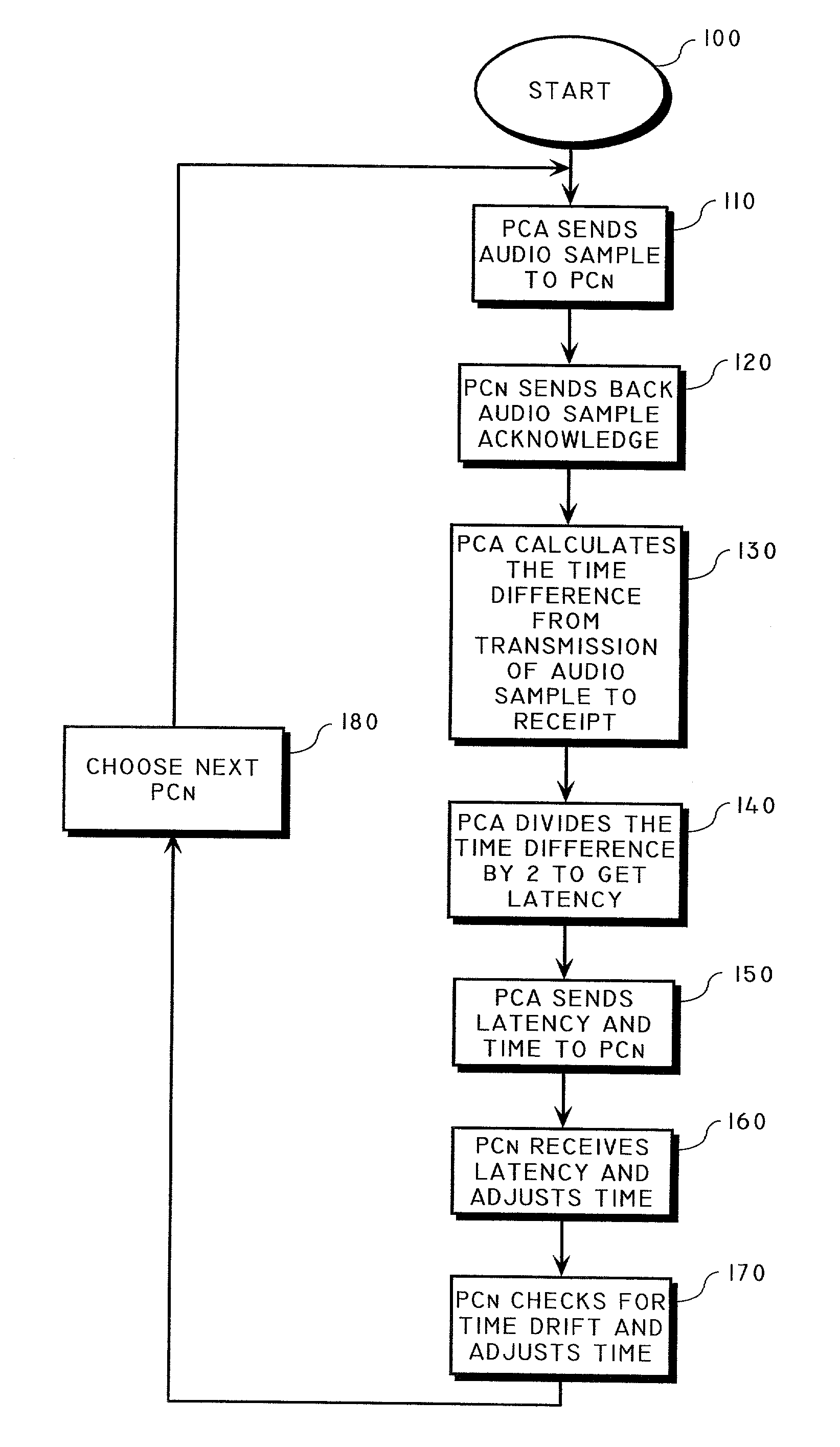

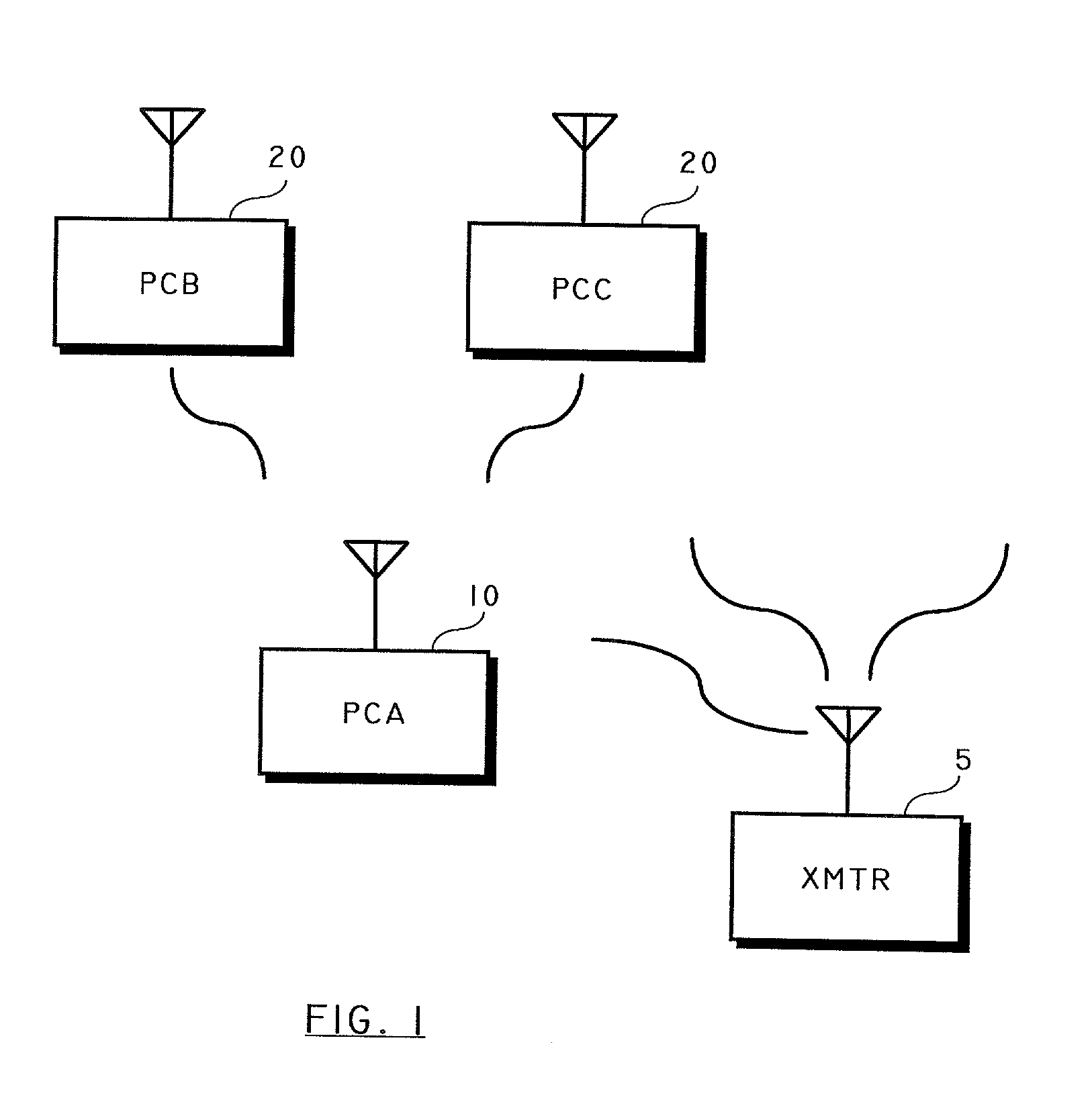

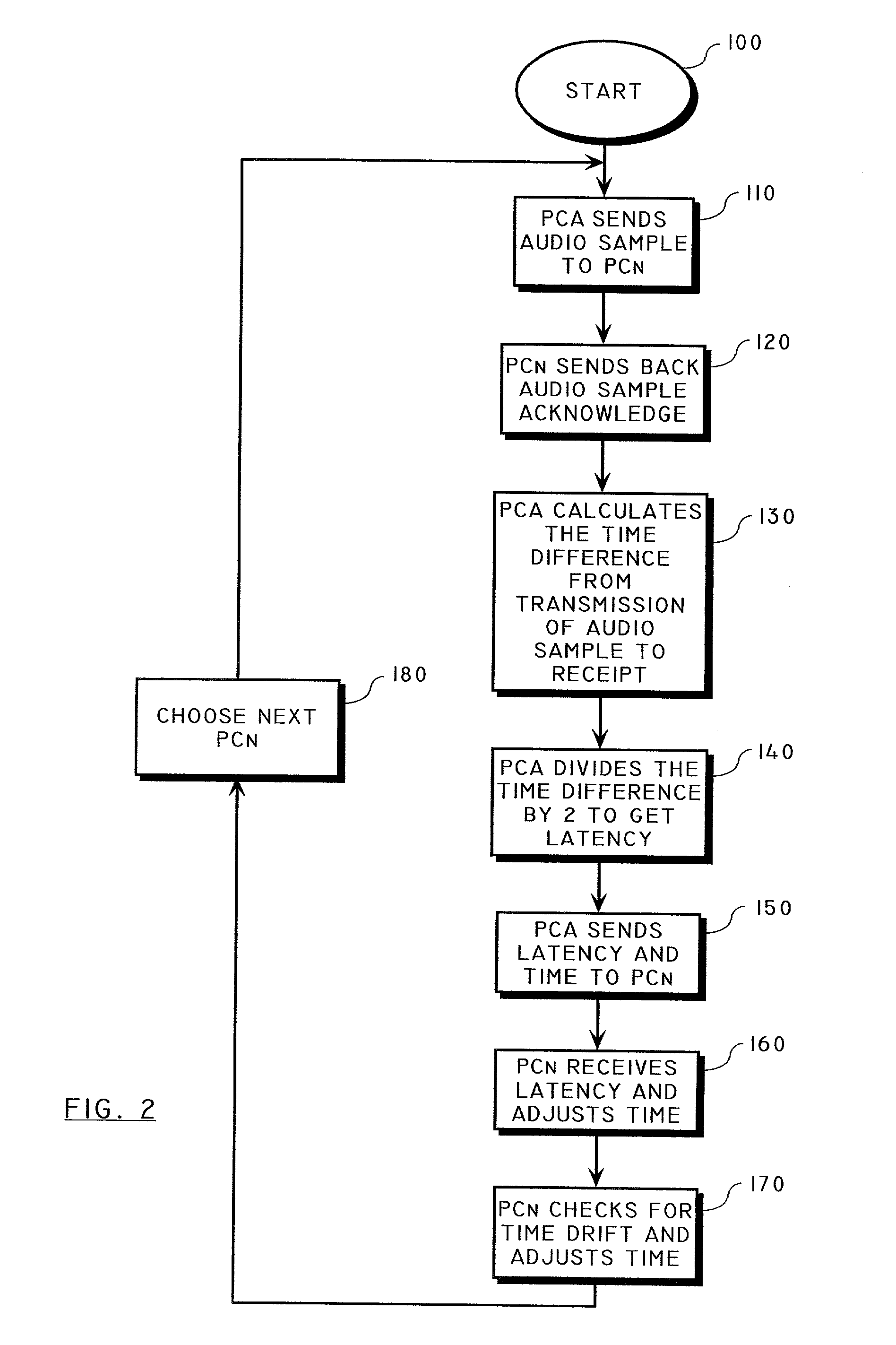

Method of synchronizing the playback of a digital audio broadcast using an audio waveform sample

Status: Active | Publication: US7392102B2 | Tags: Time-division multiplex, Special data processing applications, Network output, Digital audio broadcasting

A method is provided for synchronizing the playback of a digital audio broadcast on a plurality of network output devices by inserting an audio waveform sample in an audio stream of the digital audio broadcast. The method includes the steps of outputting, as part of an audio signal, a first unique signal that has unique identifying characteristics and occurs regularly; outputting a second unique signal such that the time between the first and second unique signals is significantly greater than the latency between sending and receiving devices; and coordinating audio playback by means of the audio waveform sample so that the audio signal is output simultaneously from multiple devices. An algorithm implemented in hardware, software, or a combination of the two identifies the audio waveform sample in the audio stream. As a result, the digital audio broadcast from multiple receivers does not present any audible delay or echo effect to a listener.

Owner:GATEWAY

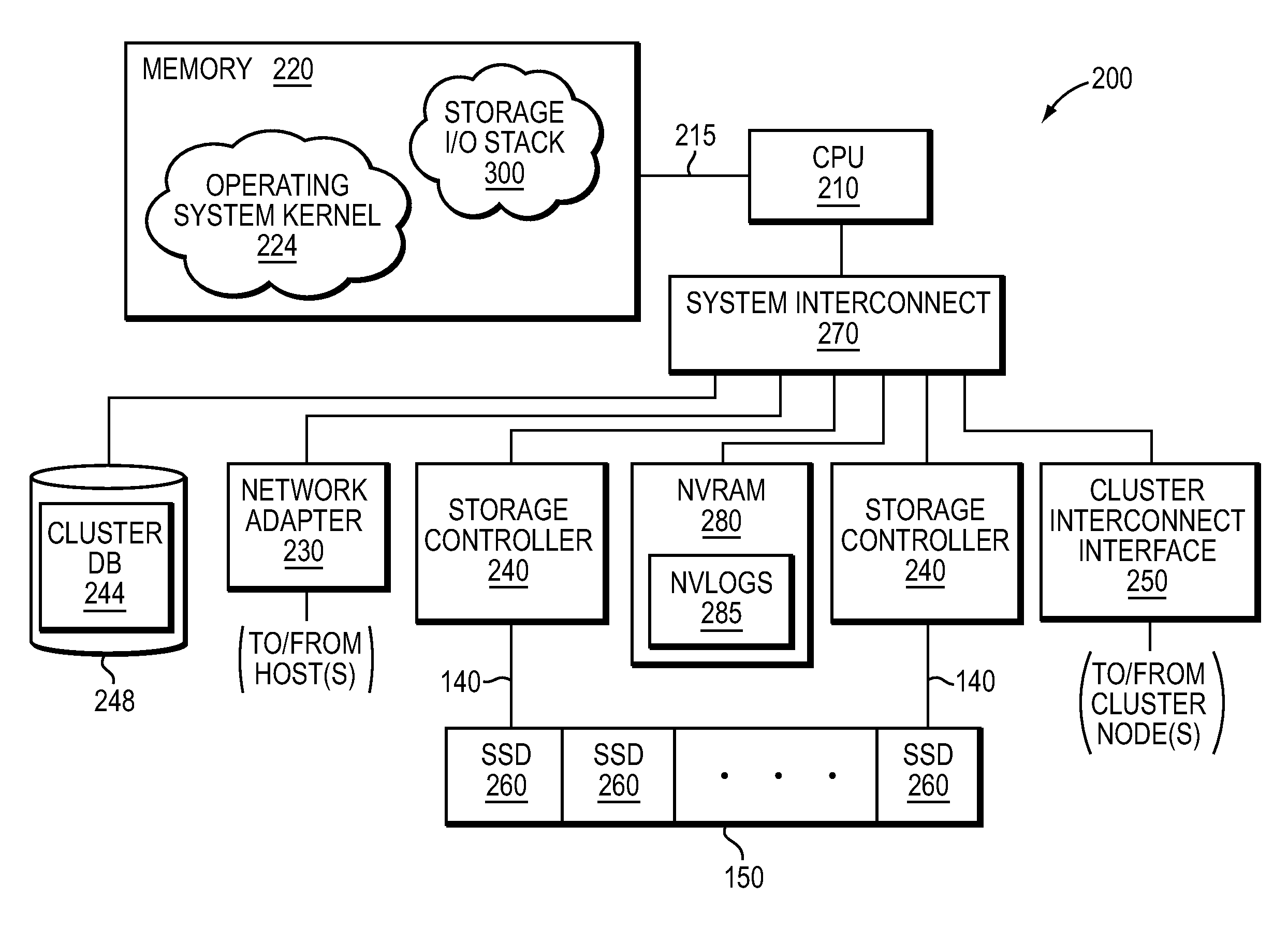

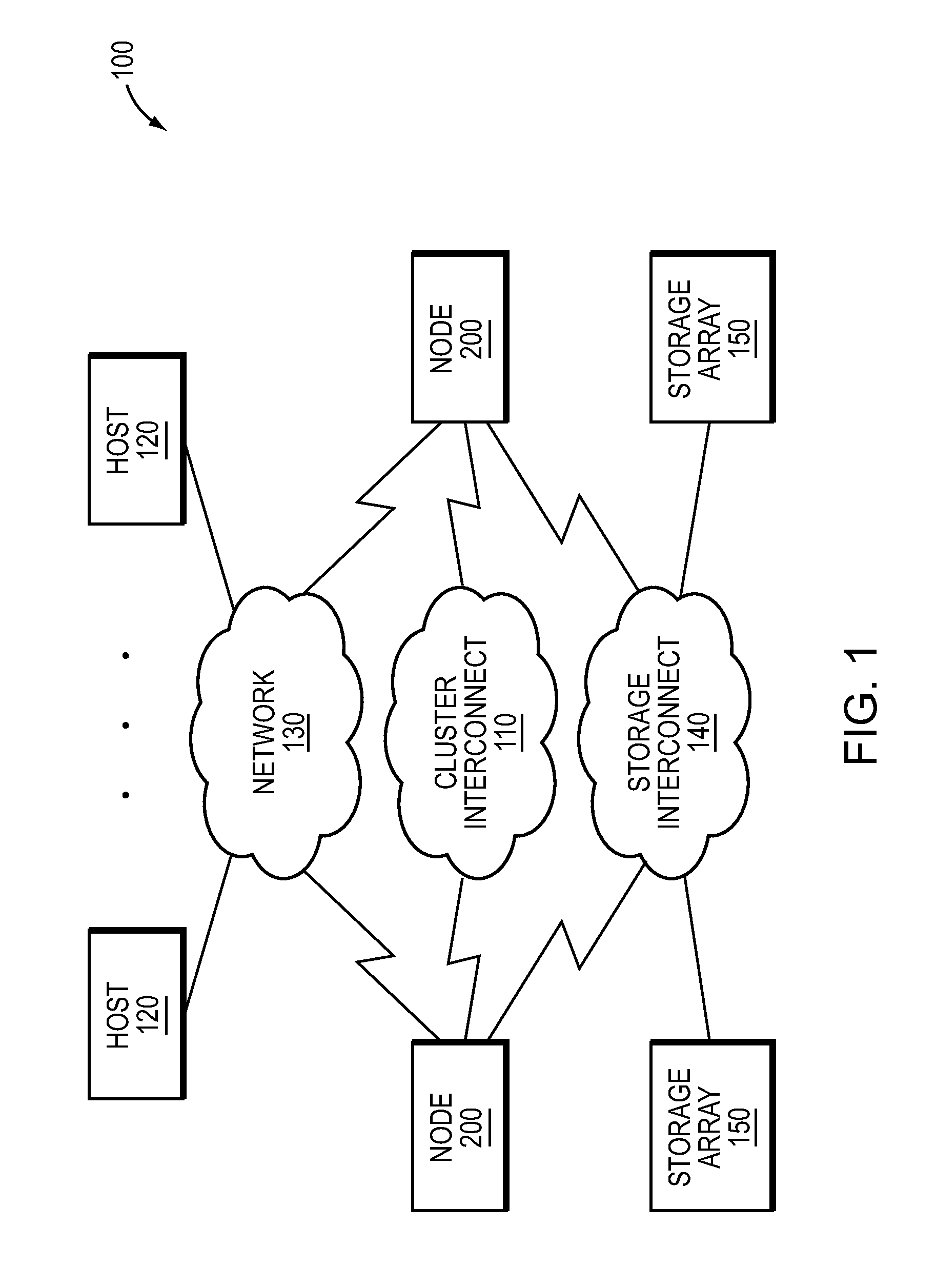

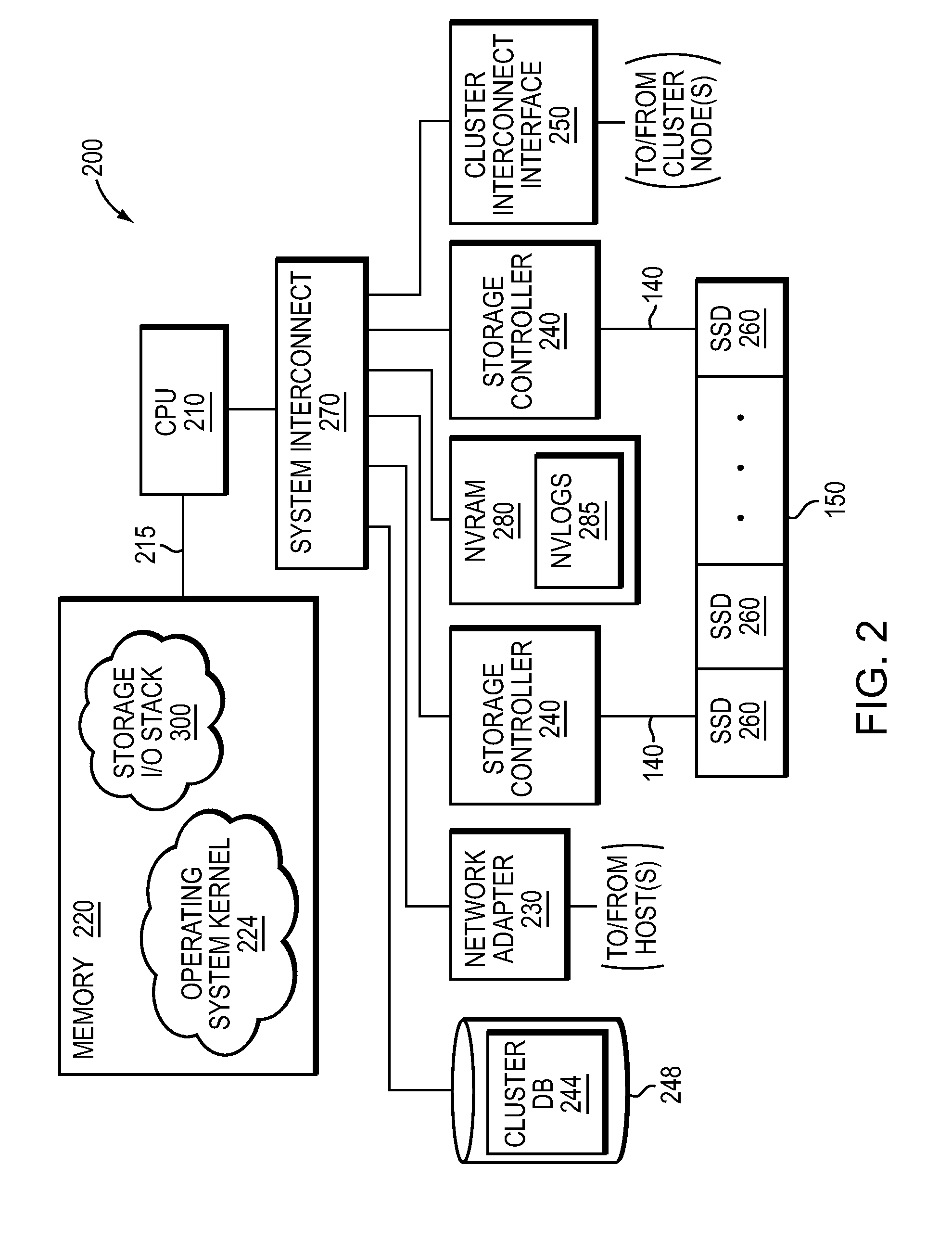

NVRAM caching and logging in a storage system

Status: Active | Publication: US8898388B1 | Tags: Memory architecture accessing/allocation, Input/output to record carriers, Latency (engineering), Solid-state drive

In one embodiment, non-volatile random access memory (NVRAM) caching and logging delivers low-latency acknowledgements of input/output (I/O) requests, such as write requests, while avoiding loss of data. Write data may be stored in a portion of an NVRAM configured as, e.g., a persistent write-back cache, while parameters of the request may be stored in another portion of the NVRAM configured as one or more logs, e.g., NVLogs. The write data may be organized into separate variable-length blocks or extents and “written back” out of order from the write-back cache to storage devices, such as solid state drives (SSDs). The write data may be preserved in the write-back cache, so that it survives events such as a power loss, until each extent is safely and successfully stored on SSD or until the operations associated with the write request are sufficiently logged on NVLog.

Owner:NETWORK APPLIANCE INC

Quality of service support for A/V streams

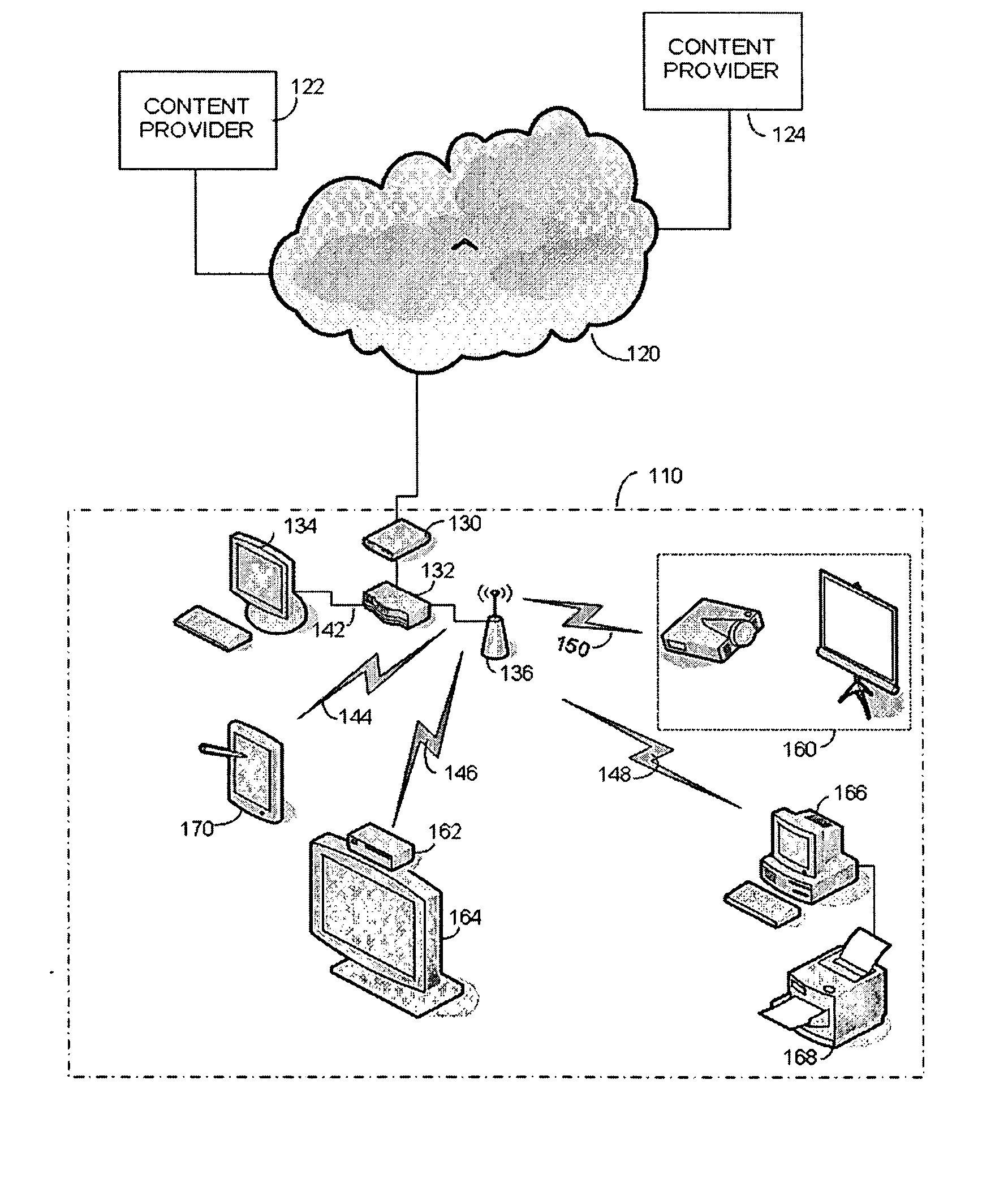

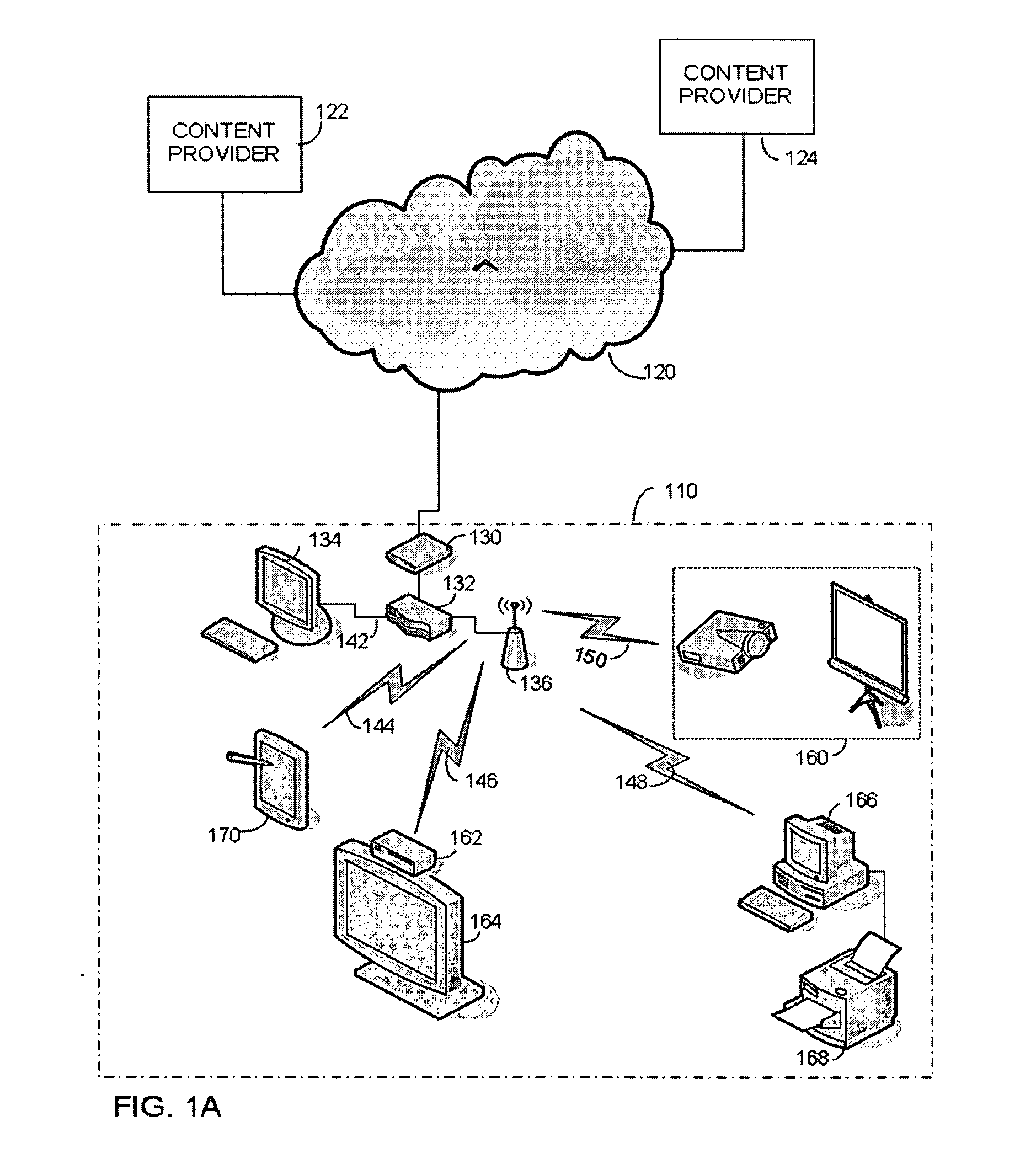

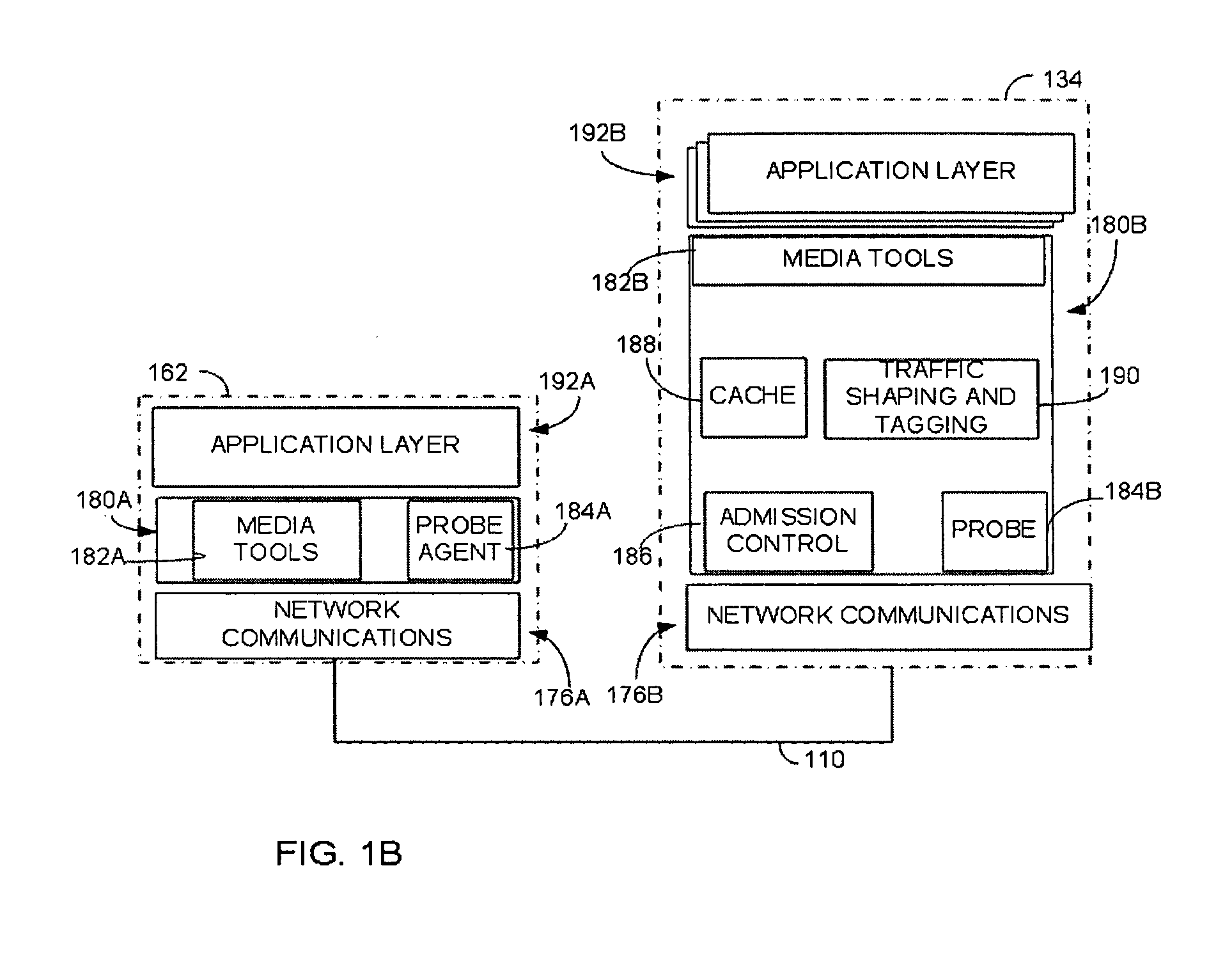

Status: Active | Publication: US20070248100A1 | Tags: Speed up the process, Lower latency, Data switching by path configuration, Wireless communication services, Quality of service, Data stream

An access control mechanism is provided in a network connecting one or more sink devices to a server that provides audio/visual (A/V) data in streams. When a sink device requests access, the server measures the available bandwidth to the sink device. If the measurement completes before the sink device requests an A/V stream, the measured available bandwidth is used to set the transmission parameters of the data stream in accordance with a Quality of Service (QoS) policy. If the measurement has not completed when the data stream is requested, the data stream is transmitted anyway. In this case, it may be transmitted using parameters computed from a cached measurement of the available bandwidth to the sink device; if no cached measurement is available, the data stream is transmitted at low priority until a measurement can be made. Once the measurement is available, the transmission parameters of the data stream are reset. With this access control mechanism, A/V streams can be provided with low latency while their transmission parameters are still set accurately in accordance with the QoS policy.

Owner:MICROSOFT TECH LICENSING LLC
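
The fallback order described above (fresh measurement, then cached measurement, then low priority) maps onto a small decision function. This is a sketch under stated assumptions: the `StreamParams` record, the priority labels, and the QoS policy of sending at 75% of the available bandwidth are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StreamParams:
    priority: str              # "qos" when a bandwidth figure is known, else "low"
    rate_bps: Optional[float]  # target send rate; None when no bandwidth figure exists
    source: str                # where the bandwidth figure came from


def choose_stream_params(measured_bps: Optional[float], cached_bps: Optional[float],
                         policy_fraction: float = 0.75) -> StreamParams:
    # Prefer a completed measurement for this sink device.
    if measured_bps is not None:
        return StreamParams("qos", measured_bps * policy_fraction, "measured")
    # Otherwise start streaming immediately using a cached measurement.
    if cached_bps is not None:
        return StreamParams("qos", cached_bps * policy_fraction, "cached")
    # No figure at all: stream at low priority until a measurement completes.
    return StreamParams("low", None, "none")
```

Re-setting the parameters once the measurement finishes amounts to calling the function again with `measured_bps` filled in, so the stream never waits for the measurement to start.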

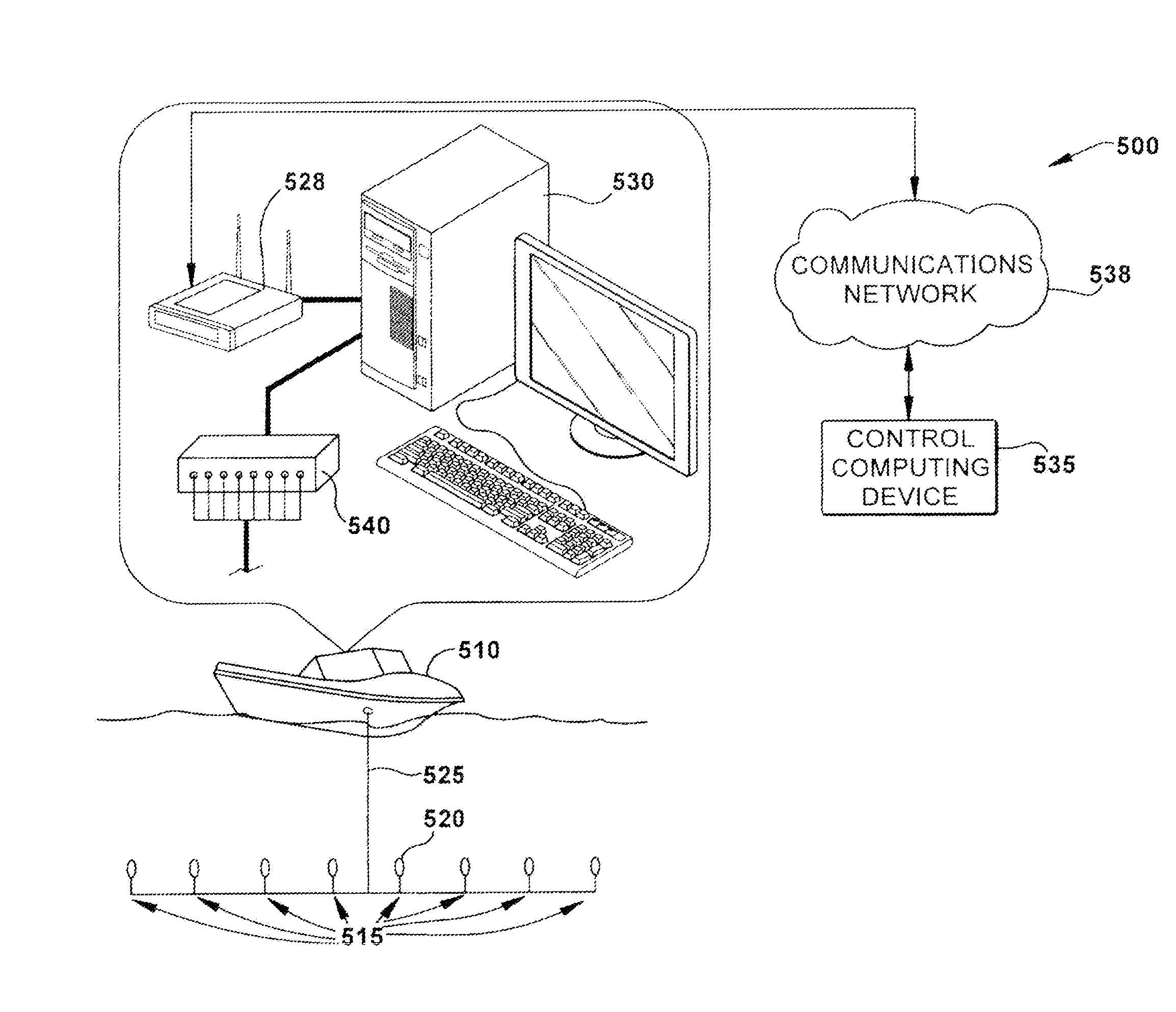

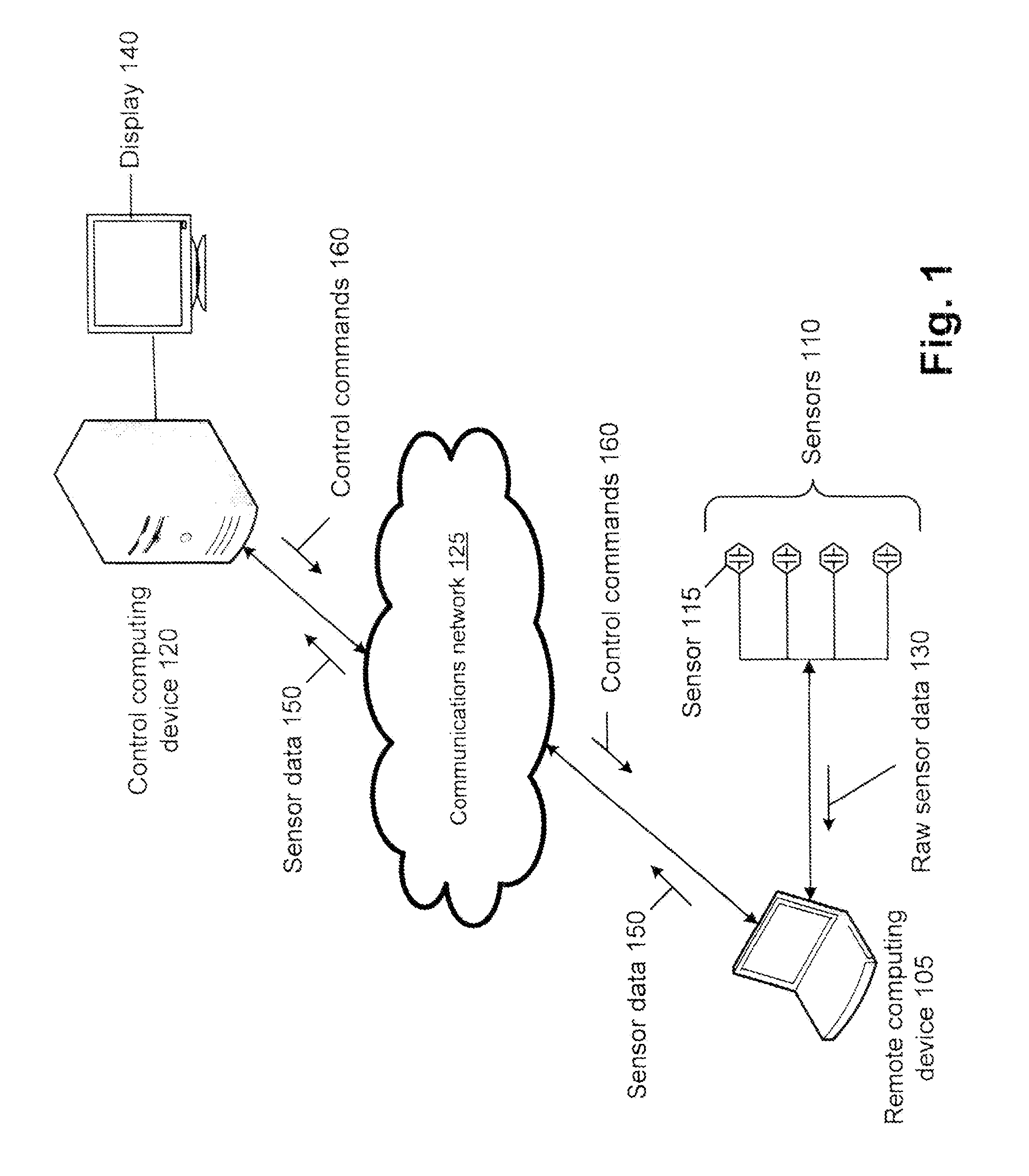

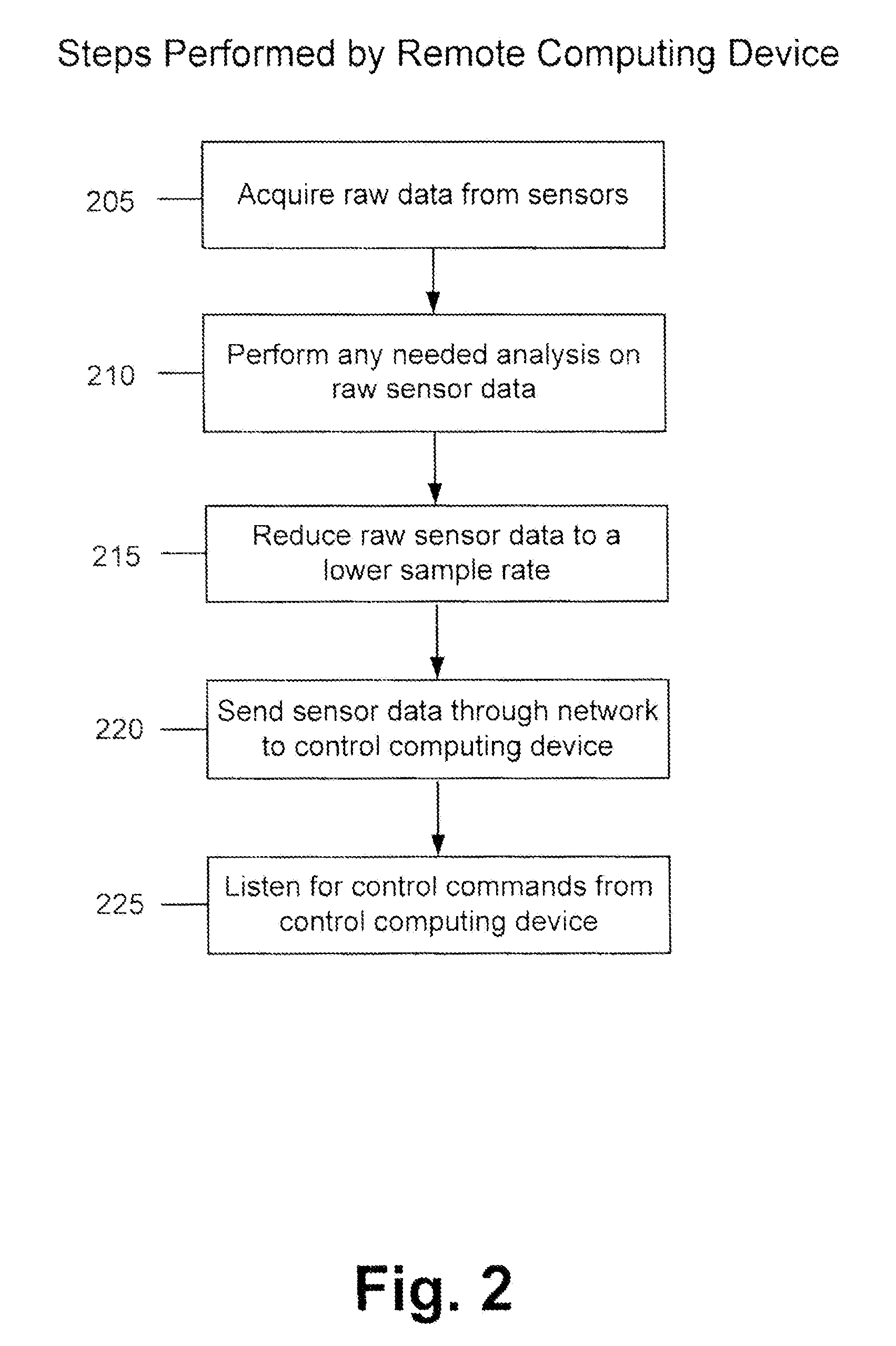

Method and apparatus for adaptive transmission of sensor data with latency controls

Status: Active | Publication: US20100278086A1 | Tags: High bandwidth, Data latency is minimized, Network traffic/resource management, Network topologies, Automatic control, Throughput

Disclosed is a method and apparatus to continuously transmit high bandwidth, real-time data, on a communications network (e.g., wired, wireless, and a combination of wired and wireless segments). A control computing device uses user or application requirements to dynamically adjust the throughput of the system to match the bandwidth of the communications network being used, so that data latency is minimized. An operator can visualize the instantaneous characteristic of the link and, if necessary, make a tradeoff between the latency and resolution of the data to help maintain the real-time nature of the system and better utilize the available network resources. Automated control strategies have also been implemented into the system to enable dynamic adjustments of the system throughput to minimize latency while maximizing data resolution. Several applications have been cited in which latency minimization techniques can be employed for enhanced dynamic performance.

Owner:STEVENS INSTITUTE OF TECHNOLOGY

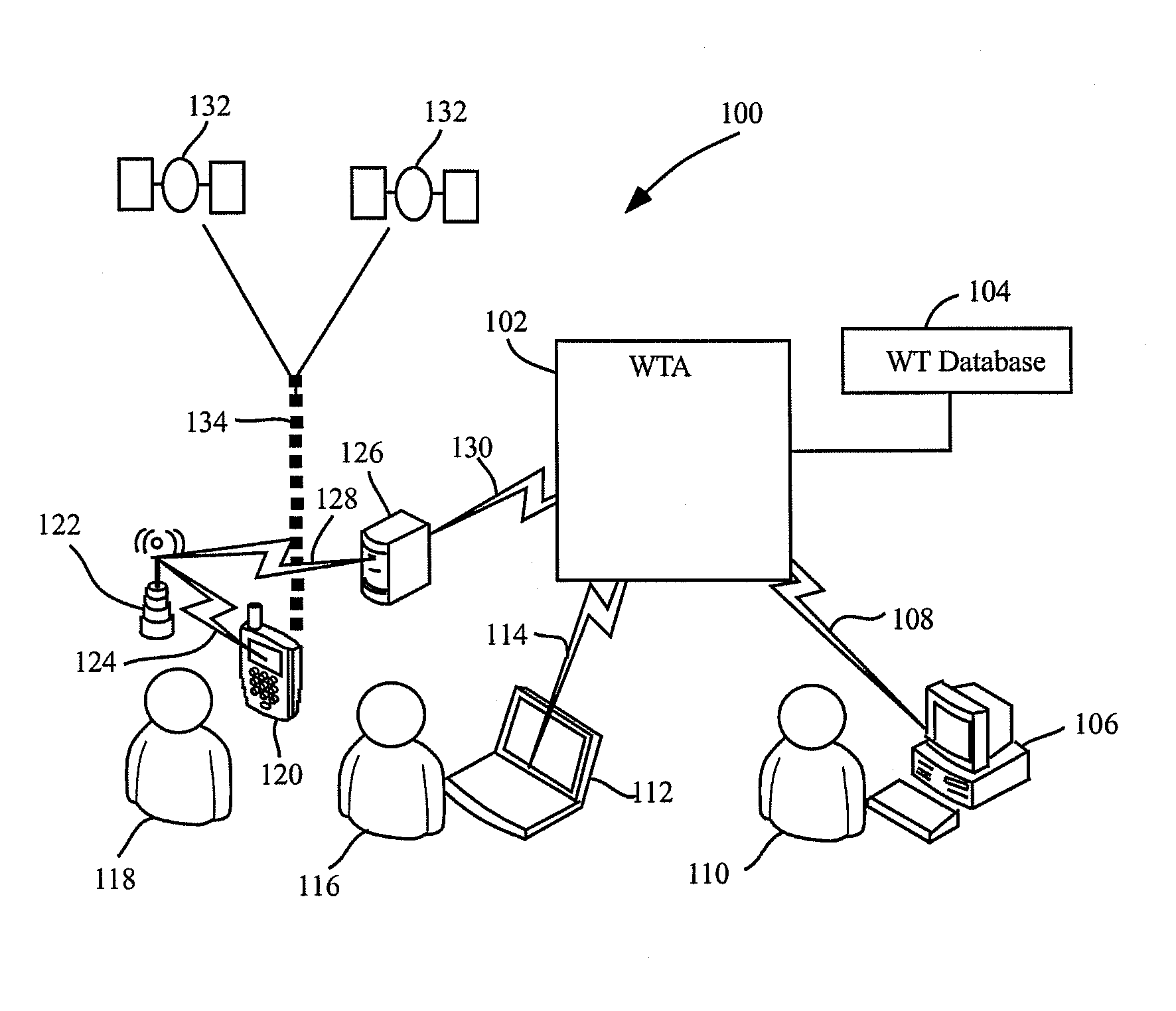

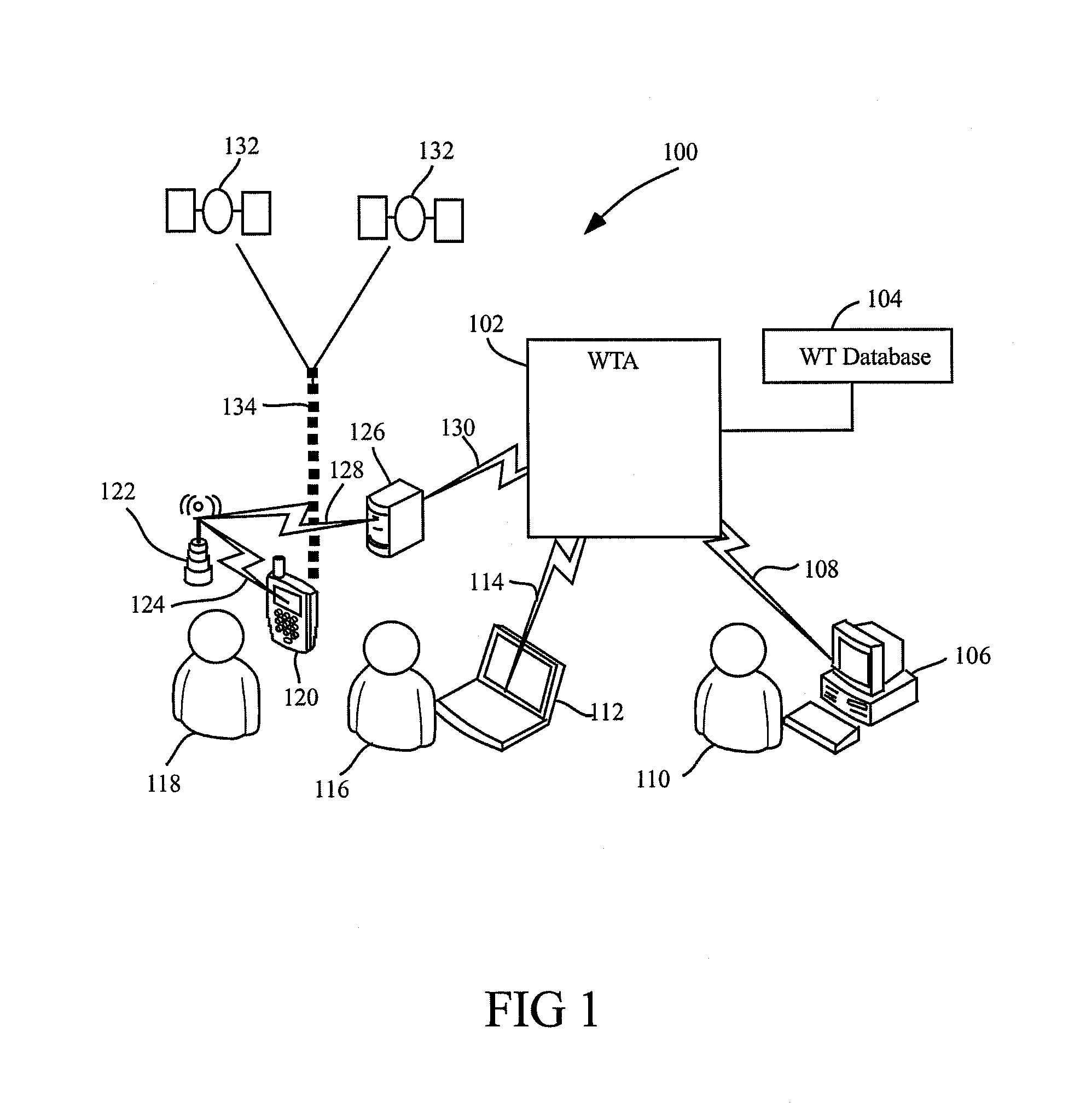

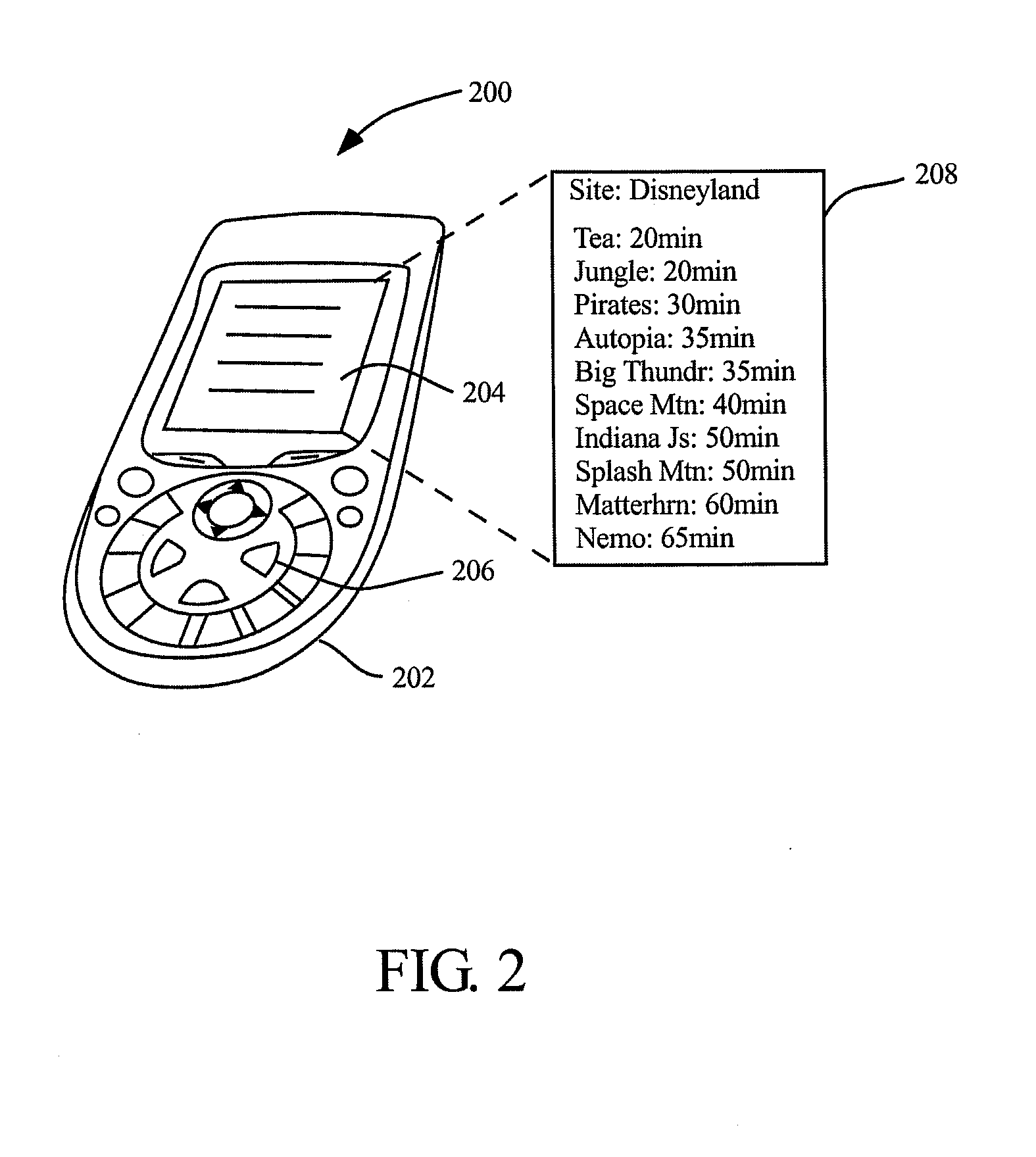

Attraction wait-time inquiry apparatus, system and method

Status: Inactive | Publication: US20080267107A1 | Tags: Broadcast specific applications, Broadcast transmission systems, Computer science, Amusement park

Methods and apparatus are disclosed that enable a plurality of users of portable computing devices to each individually request and receive wait-time indications for each of a plurality of attractions within a physical establishment. In certain embodiments, the attractions are rides and the physical establishments are amusement parks. In this way, users of portable computing devices, such as mobile phones, may individually request and receive wait-time indications for each of a plurality of rides within at least one of a plurality of amusement parks. The methods and apparatus may be configured to maintain and access a database of wait-time values for a plurality of attractions within one or more physical establishments. In certain embodiments, request messages are received as SMS text messages from mobile phones, and response messages are sent as SMS text messages to mobile phones.

Owner:OUTLAND RES
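The request/response flow above can be sketched as a lookup against a wait-time table. This is an illustrative sketch only: the `WAIT <park> <attraction>` message syntax, the in-memory dictionary standing in for the database, and the reply wording are all hypothetical, and real SMS delivery is out of scope.

```python
def make_db(entries):
    """Build a wait-time table keyed by (park, attraction), case-insensitive.

    `entries` is an iterable of (park, attraction, minutes) tuples.
    """
    return {(park.lower(), ride.lower()): minutes for park, ride, minutes in entries}


def handle_request(message: str, db) -> str:
    """Answer a hypothetical SMS request of the form 'WAIT <park> <attraction>'.

    The park is a single word; the attraction may contain spaces.
    """
    parts = message.strip().split(maxsplit=2)
    if len(parts) != 3 or parts[0].upper() != "WAIT":
        return "Usage: WAIT <park> <attraction>"
    _, park, attraction = parts
    minutes = db.get((park.lower(), attraction.lower()))
    if minutes is None:
        return f"No wait-time data for {attraction} at {park}."
    return f"{attraction} at {park}: about {minutes} min wait."


if __name__ == "__main__":
    db = make_db([("Sunnyland", "Log Flume", 25), ("Sunnyland", "Coaster", 40)])
    print(handle_request("WAIT Sunnyland Coaster", db))
```

Because each request is answered independently from the shared table, many users can query different rides in parallel; updating the table (e.g. from ride operators) is a separate concern not shown here.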

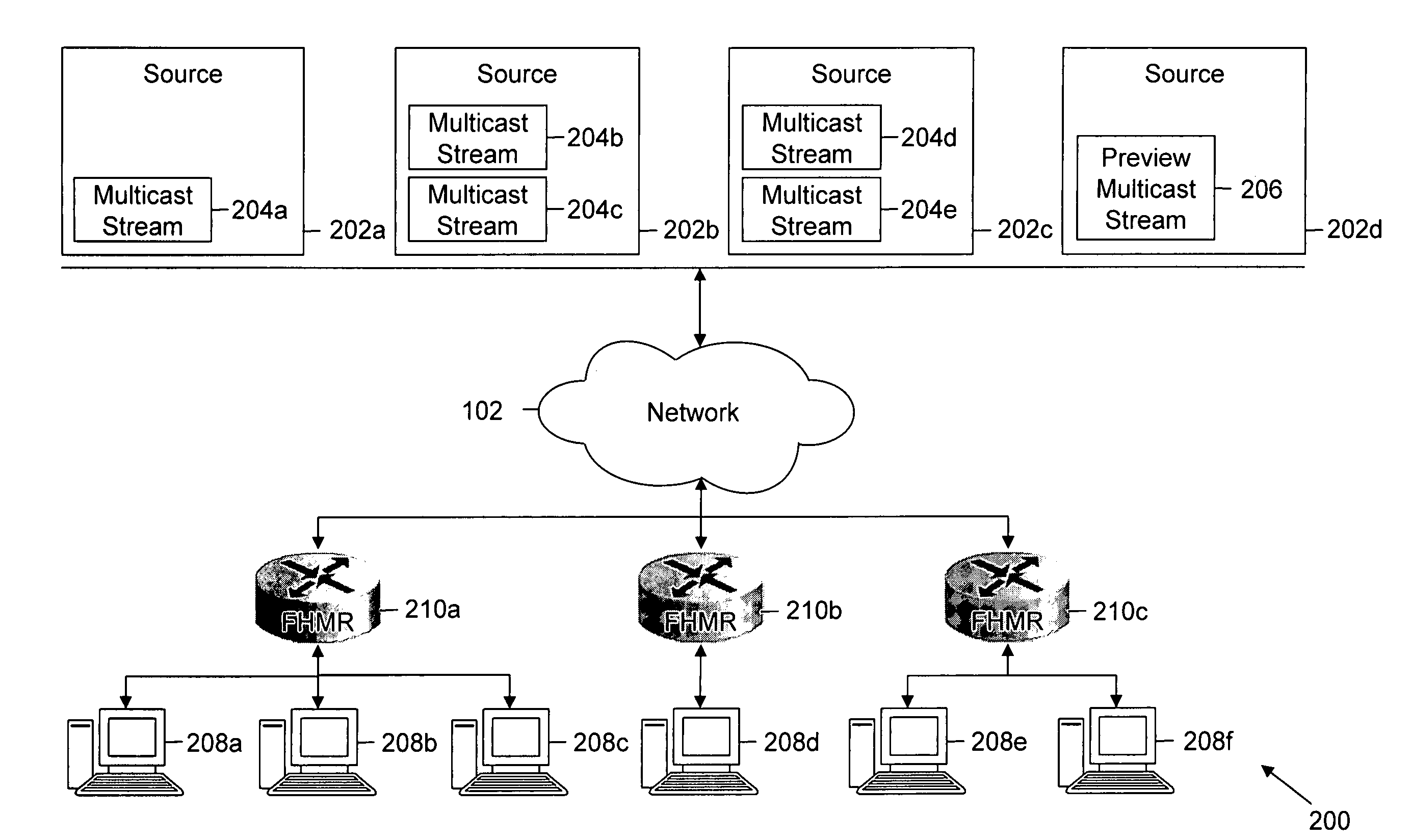

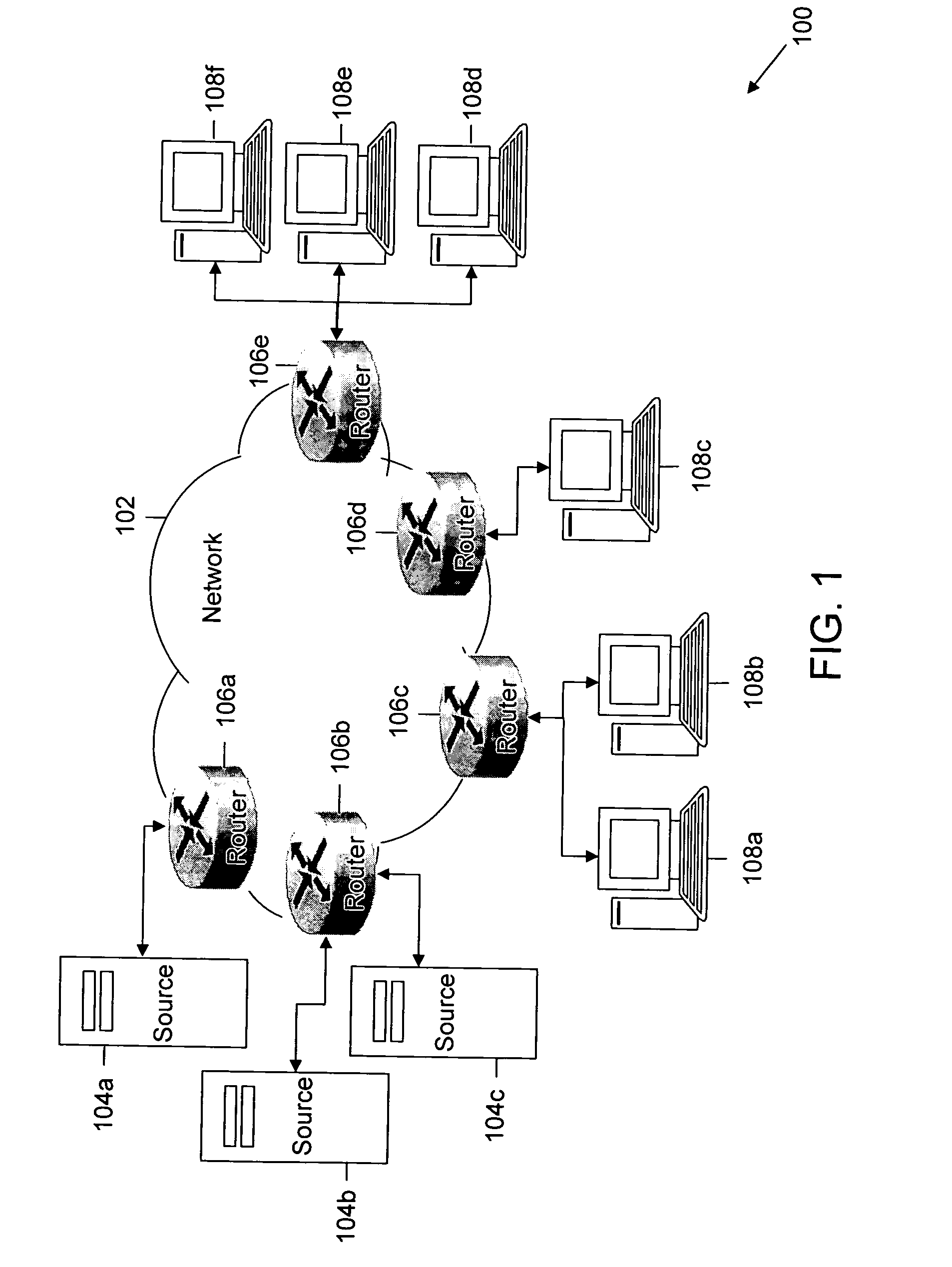

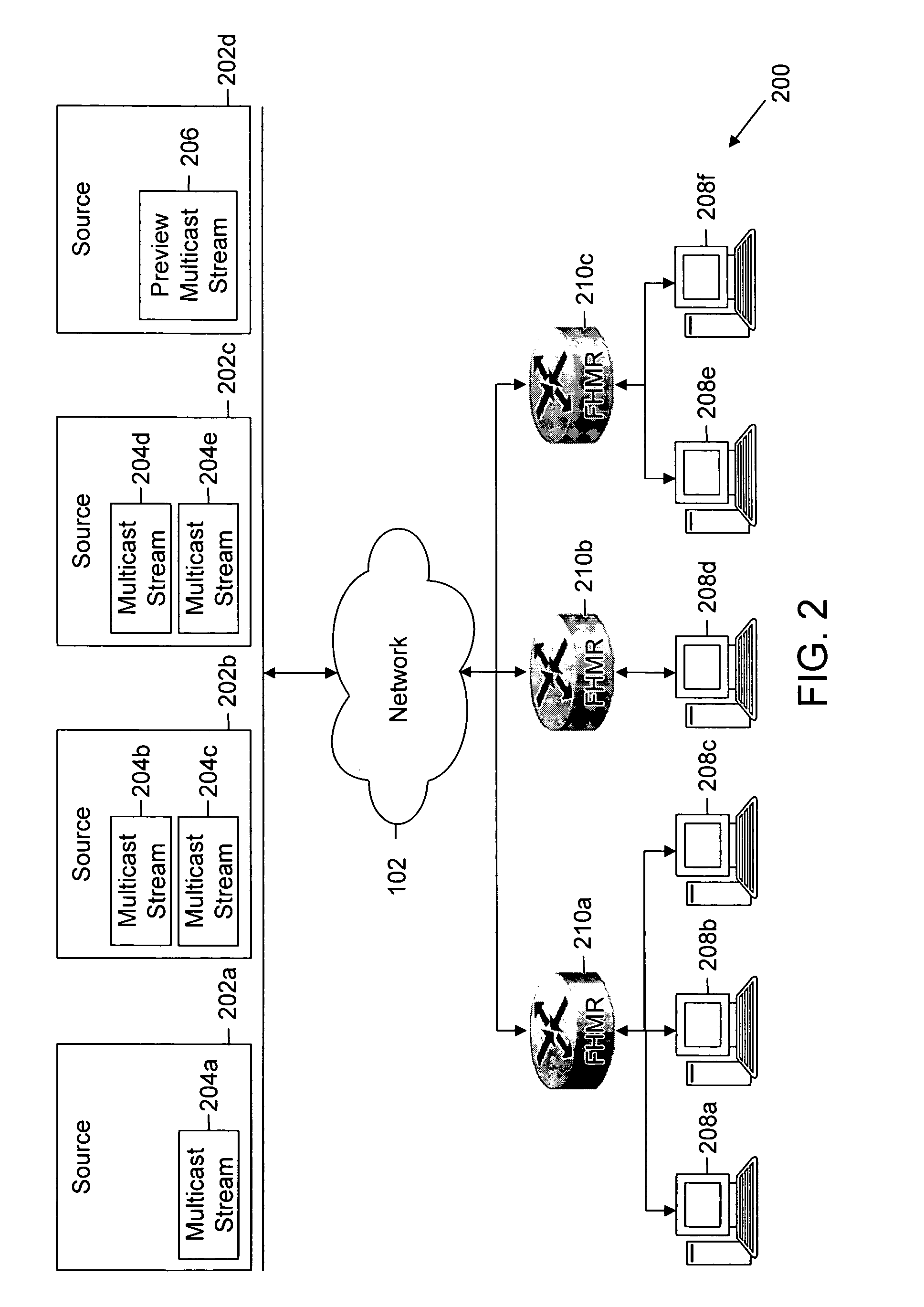

Method and system for reducing latency in a multi-channel multicast streaming environment in content-delivery networks

Active · US20080025304A1 · Lower latency · Delay minimization · Television system details · Color television details · Distribution tree · Waiting time

Methods, systems and apparatus for reducing apparent latency in content-delivery networks are provided. Sources multicast certain "preview multicast streams" to multiple subscribers. These preview multicast streams carry pre-recorded content of the multicast streams. When a subscriber switches to a desired multicast stream, pre-recorded content of that stream is reconstructed from a preview multicast stream. The pre-recorded content is then played while the new multicast distribution tree is being set up, minimizing apparent latency. Once the distribution tree is set up, live content of the desired multicast stream is made available to the subscriber.

Owner:CISCO TECH INC
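The channel-switch behavior described above can be modeled as a playout timeline. This is a simplified sketch, not Cisco's implementation: it assumes the subscriber keeps a small per-channel buffer of recent preview segments (`PreviewCache` and its `max_segments` size are hypothetical), and it counts tree set-up time in whole segment "ticks".

```python
from collections import deque


class PreviewCache:
    """Keeps the most recent segments received on each channel's preview stream."""

    def __init__(self, max_segments: int = 5):
        self.max_segments = max_segments
        self._buffers = {}

    def on_preview_segment(self, channel: str, segment: str) -> None:
        # deque(maxlen=...) silently drops the oldest segment when full
        buf = self._buffers.setdefault(channel, deque(maxlen=self.max_segments))
        buf.append(segment)

    def segments(self, channel: str):
        return list(self._buffers.get(channel, ()))


def playout_after_switch(cache: PreviewCache, channel: str, setup_ticks: int, live_segments):
    """Return what the subscriber sees after switching to `channel`.

    For each tick of distribution-tree set-up, play a cached preview
    segment (or record a stall if the cache runs out); afterwards,
    play the live segments delivered over the new tree.
    """
    preview = cache.segments(channel)
    timeline = []
    for tick in range(setup_ticks):
        if tick < len(preview):
            timeline.append(("preview", preview[tick]))
        else:
            timeline.append(("stall", None))
    timeline.extend(("live", seg) for seg in live_segments)
    return timeline


if __name__ == "__main__":
    cache = PreviewCache(max_segments=3)
    for i in range(5):
        cache.on_preview_segment("news", f"n{i}")
    for event in playout_after_switch(cache, "news", 4, ["L0", "L1"]):
        print(event)
```

The sketch shows why the cache size matters: if tree set-up takes longer than the buffered preview lasts, the viewer still sees a stall.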

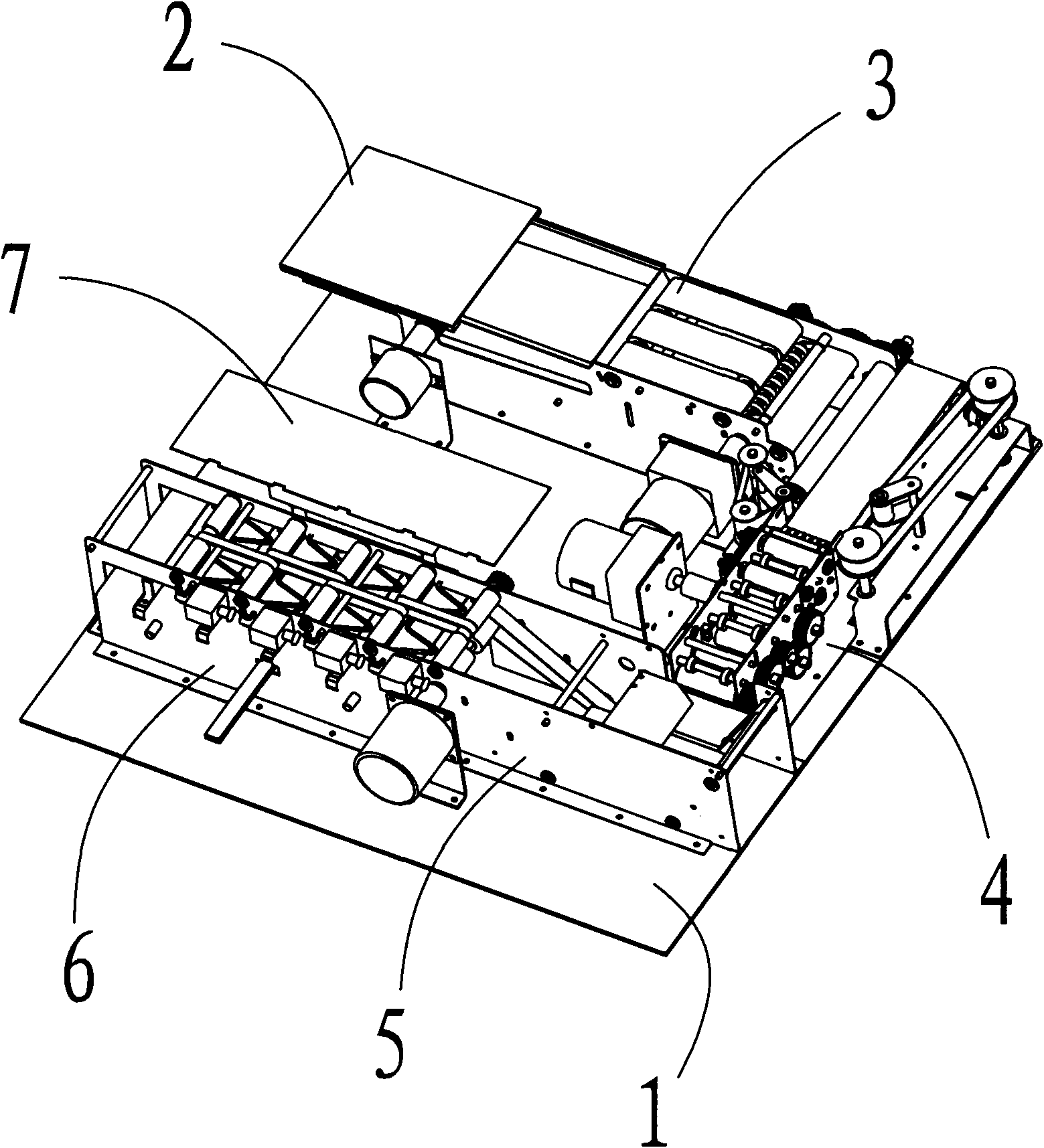

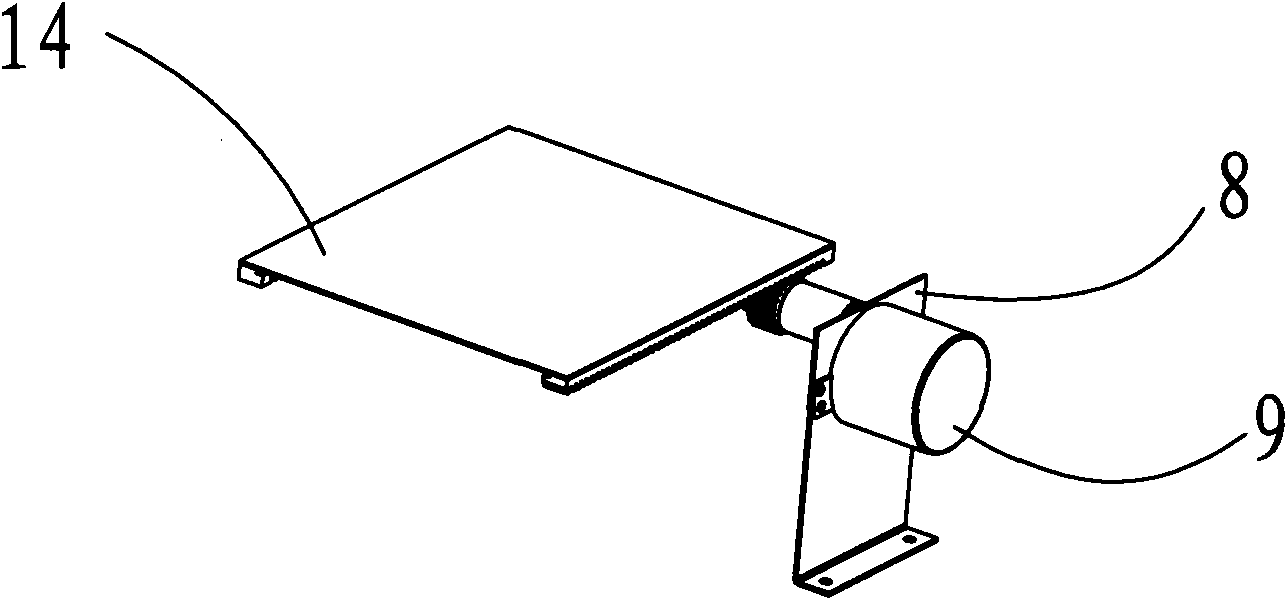

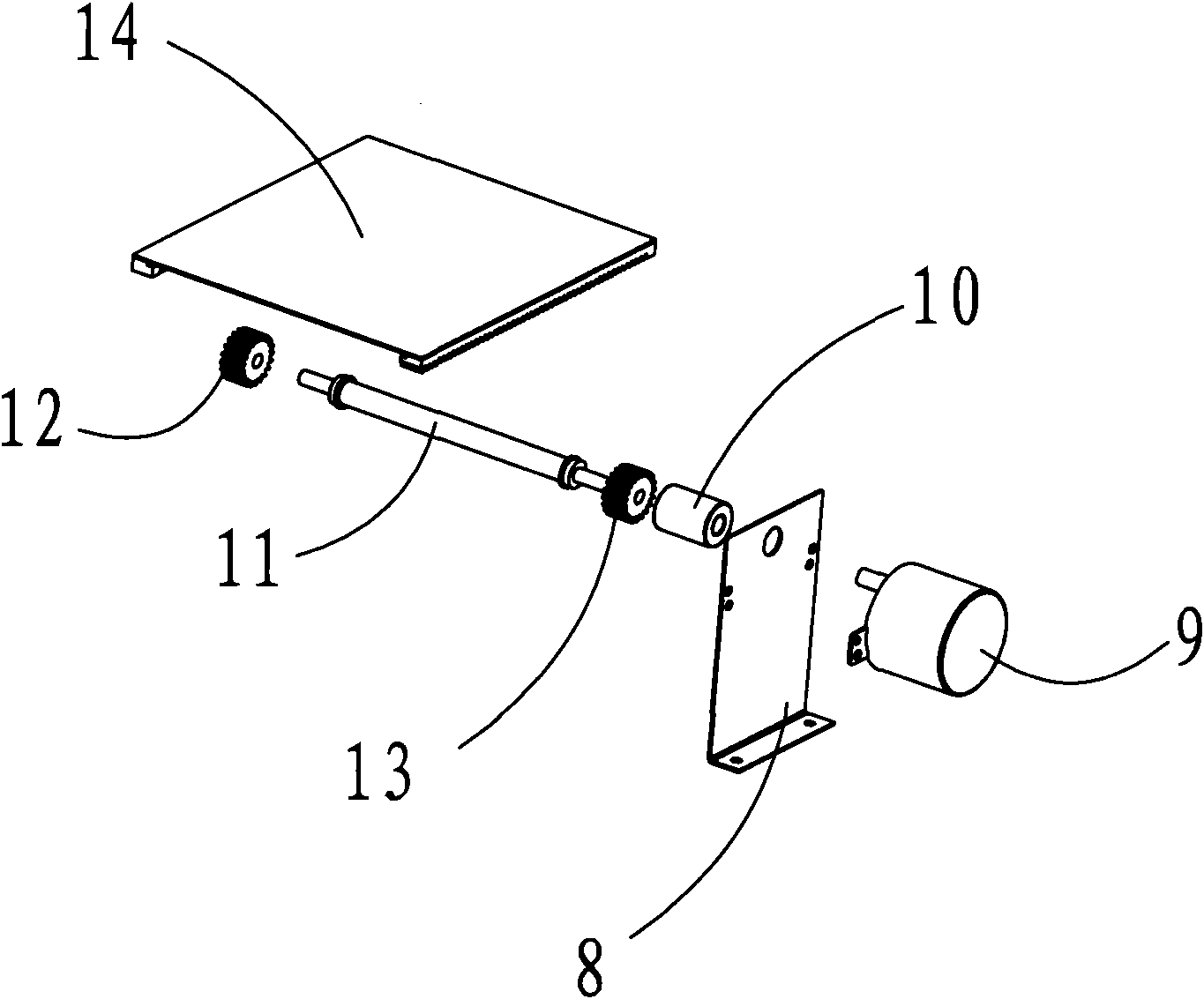

Full-automatic poker machine

Inactive · CN102580307A · Avoid misidentification · High degree of full automation · Card games · Engineering · Waiting time

The invention relates to a fully automatic poker machine comprising a mounting base plate and a plurality of support legs. A card inlet is disposed on the mounting base plate; card-cutting and primary card-distributing equipment is arranged at one end of the card inlet; card-sorting and secondary card-distributing equipment is arranged at the other end of the card-cutting and primary card-distributing equipment; card-flipping and dealing equipment is disposed at the other end of the card-sorting and secondary card-distributing equipment; card-pushing equipment is arranged at the other end of the card-flipping and dealing equipment; and card-lifting equipment is disposed on one side of the card-pushing equipment. In operation, the card-cutting and primary card-distributing equipment first separates the disordered cards; the card-sorting and secondary card-distributing equipment then orients them in the same direction and separates them from one another; the card-flipping and dealing equipment turns individual cards so that they all face up or all face down and deals them; the card-pushing equipment pushes the cards to specified positions; and the card-lifting equipment finally lifts them to the tabletop, completing the cutting and dealing actions. The machine reduces the manual labor of running the game, shortens waiting time, and raises the degree of automation.

Owner:陈雄兵 (Chen Xiongbing)

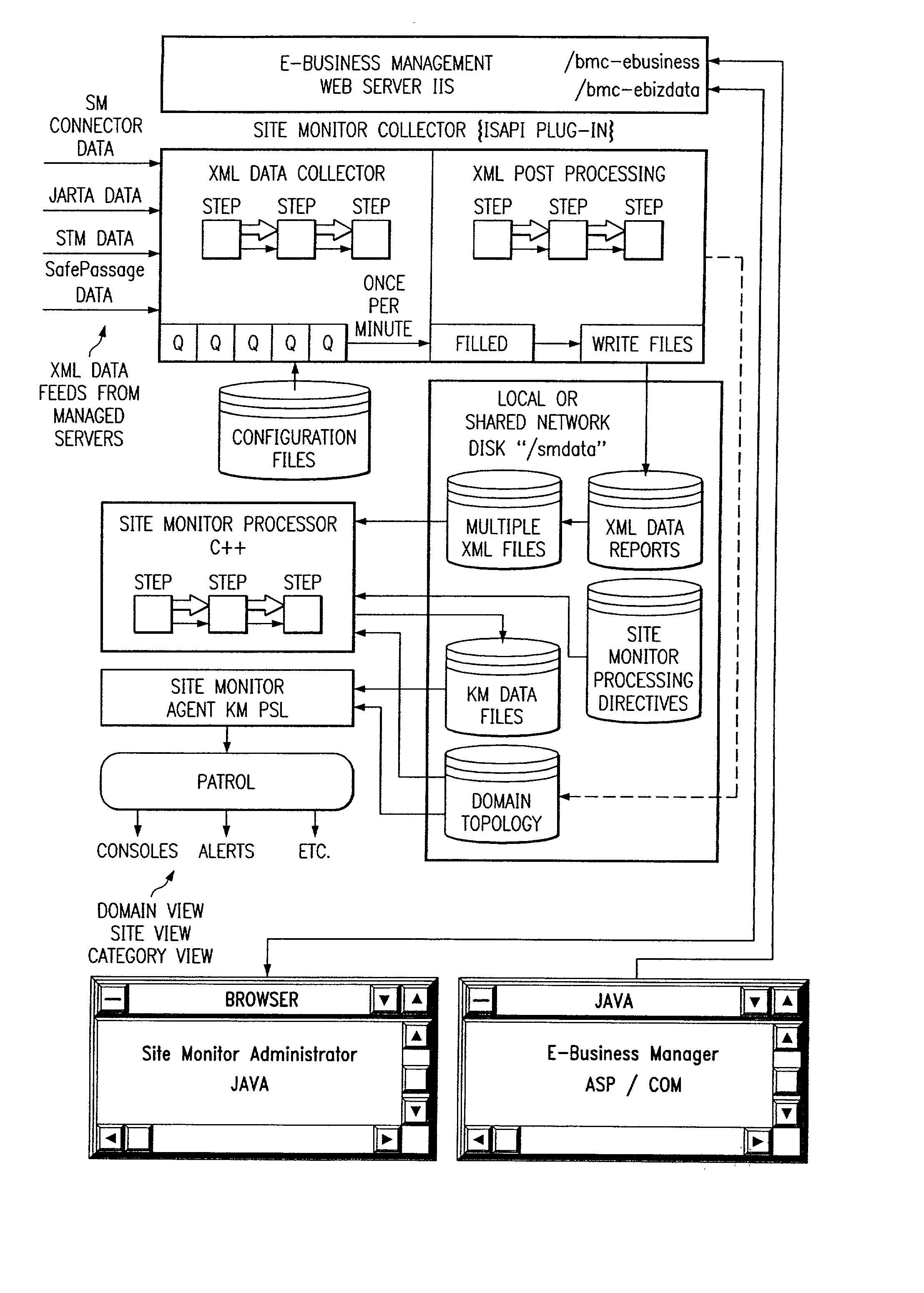

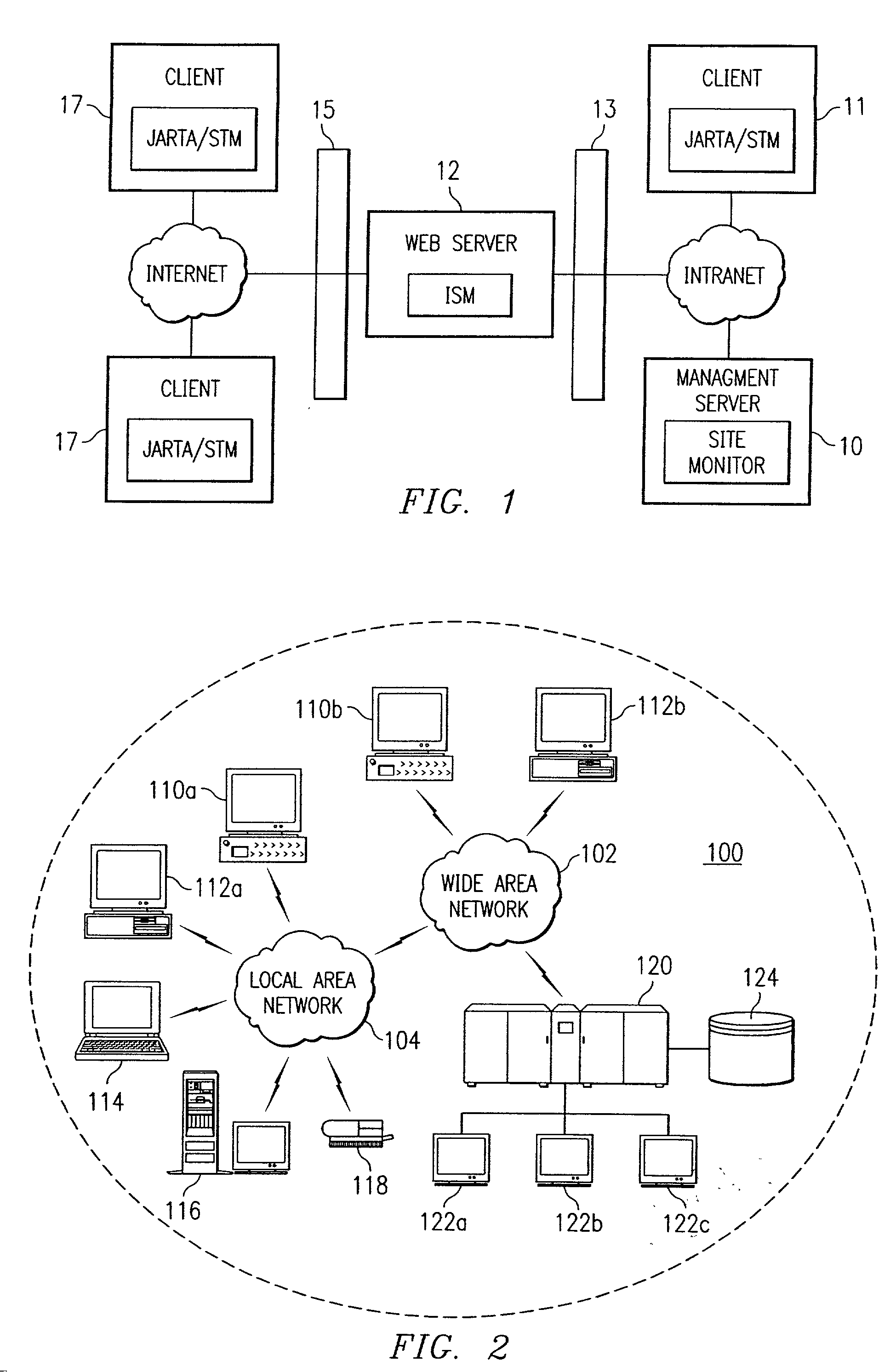

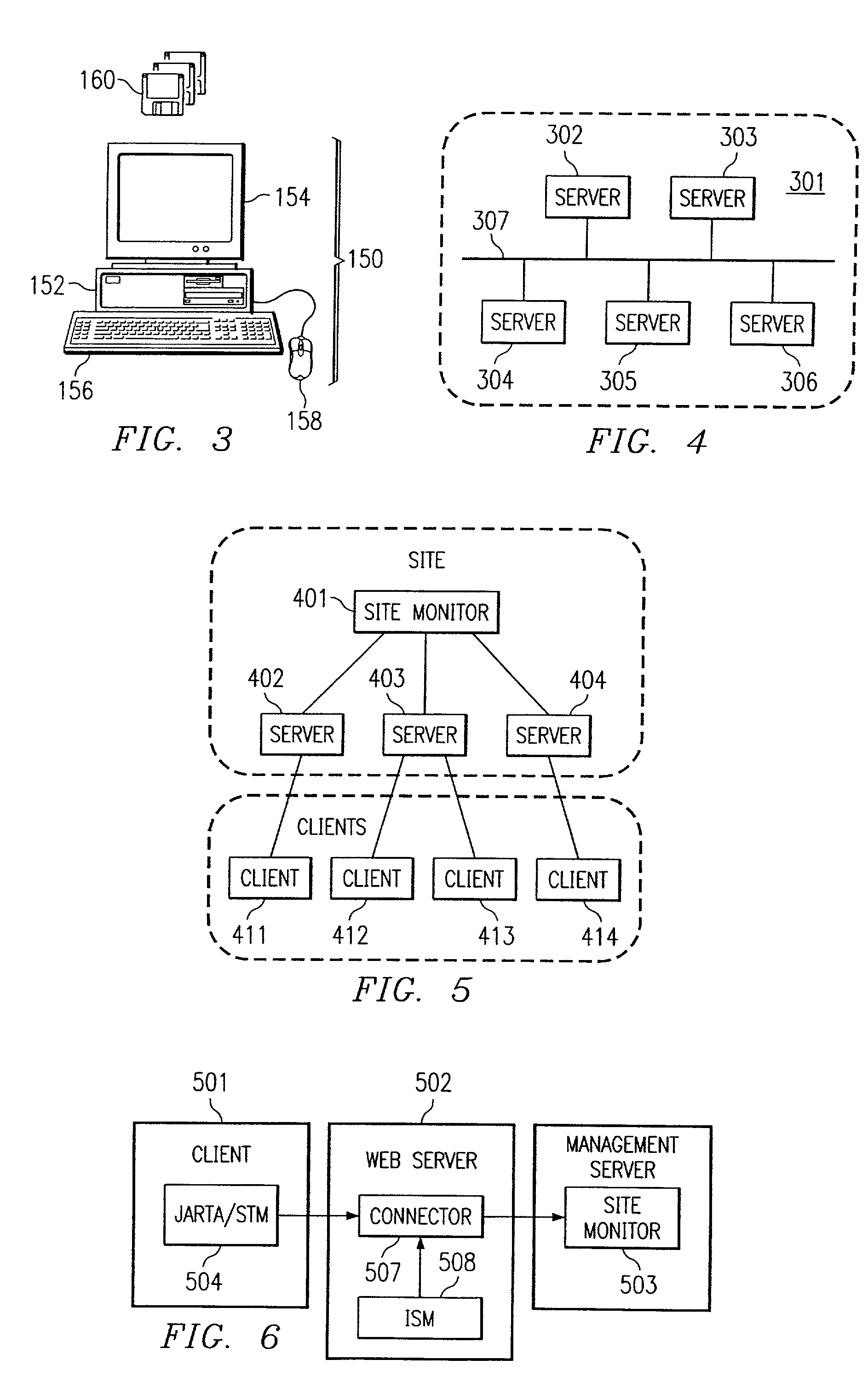

Site monitor

Systems and methods for network service management, wherein the internet service management system includes one or more components that collectively enable an administrator to obtain a site-wide view of network activity on servers such as web servers, FTP servers, e-mail servers, domain name servers, etc. In addition to collecting information on web server latency and processing time, the internet service management system may collect actual user transaction information and system information from end users on client computers. It may provide summary information for a domain, or management information organized by "categories" that reflect how a site manager wants to view and manage the business, e.g., by line of business (books, auctions, music, etc.), by site function (searches, shopping cart, support, quotes, sales automation), or by almost any other categorization customers may choose.

Owner:BMC SOFTWARE
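The category-based view described above amounts to grouping transaction measurements by a site manager's categories and summarizing each group. The sketch below is a hypothetical illustration, not BMC's product: it assumes transactions arrive as `(url, latency_ms)` pairs and that categories are defined by URL-prefix rules (the rules and sample data are invented for the example).

```python
from collections import defaultdict
from statistics import mean


def categorize(url: str, rules) -> str:
    """Map a URL to the first category whose prefix matches; rules are (prefix, category)."""
    for prefix, category in rules:
        if url.startswith(prefix):
            return category
    return "uncategorized"


def latency_by_category(transactions, rules):
    """Average observed latency (ms) per category for (url, latency_ms) records."""
    groups = defaultdict(list)
    for url, latency_ms in transactions:
        groups[categorize(url, rules)].append(latency_ms)
    return {category: mean(values) for category, values in groups.items()}


# Hypothetical "line of business" rules; a "site function" view would just use other rules.
RULES = [("/books", "books"), ("/auctions", "auctions"), ("/music", "music")]

if __name__ == "__main__":
    sample = [("/books/123", 120), ("/books/search", 180), ("/music/top", 90), ("/help", 50)]
    for category, avg in latency_by_category(sample, RULES).items():
        print(f"{category}: {avg} ms")
```

Keeping the categorization rules separate from the aggregation lets the same transaction data be viewed by line of business or by site function simply by swapping the rule list.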

© 2025 PatSnap. All rights reserved.