168 results about "Preemption" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

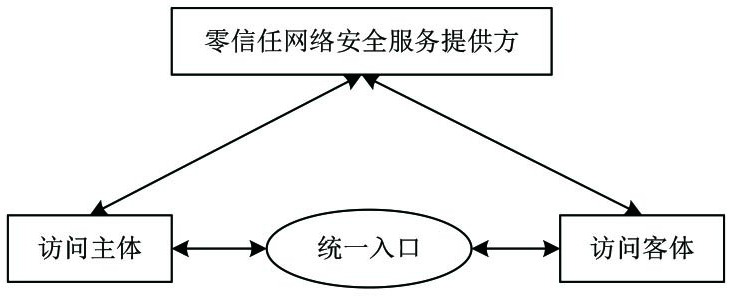

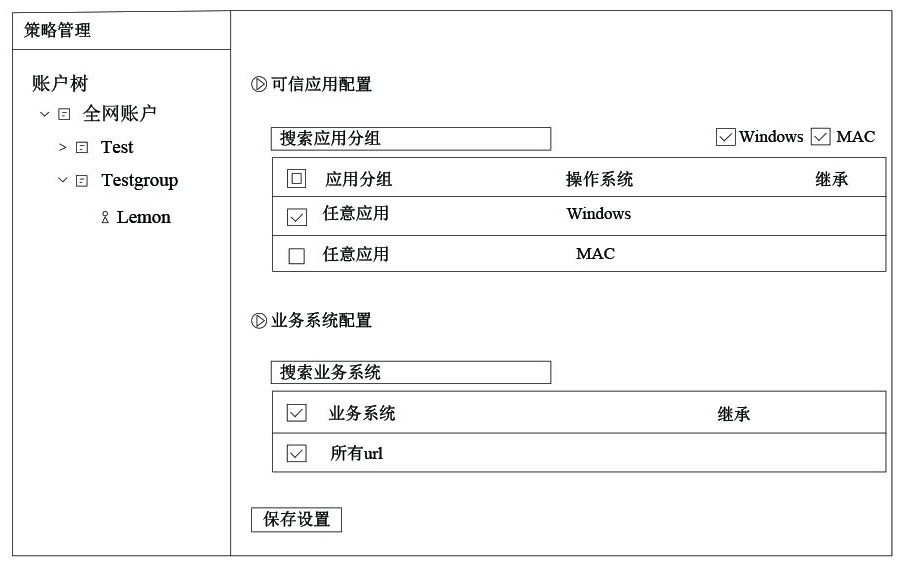

In computing, preemption is the act of temporarily interrupting a task being carried out by a computer system, without requiring its cooperation, and with the intention of resuming the task at a later time. Such changes of the executed task are known as context switches. It is normally carried out by a privileged task or part of the system known as a preemptive scheduler, which has the power to preempt, or interrupt, and later resume, other tasks in the system.

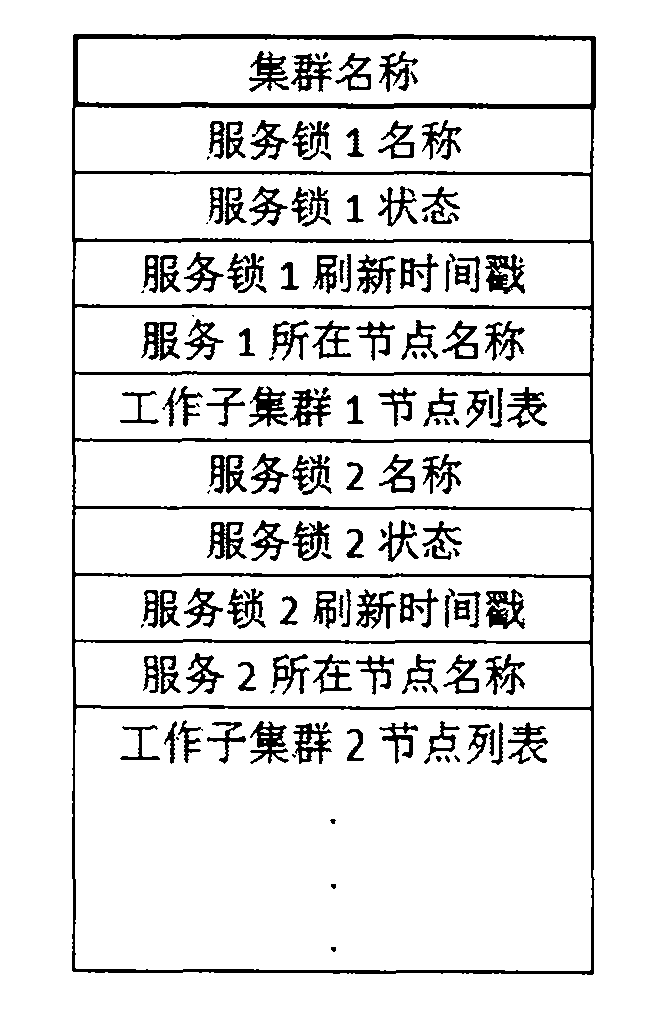

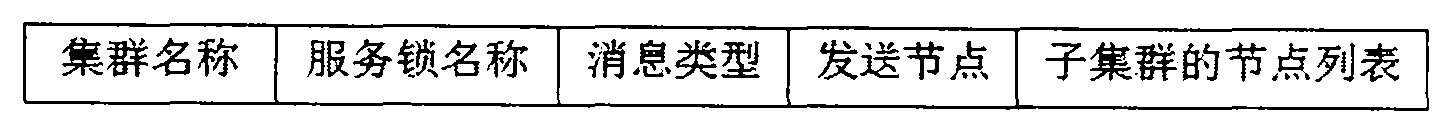

Arbitration-server-based method and device for preventing cluster split-brain

Active · CN103684941A · Avoid the risk of simultaneous launches · Improve resource usage efficiency · Networks interconnection · Split-brain · Cluster Node

The invention discloses an arbitration-server-based method and device for preventing split-brain in a cluster. It belongs to high-availability cluster split-brain prevention technology in the field of computer clusters and addresses the following problem: when the cluster heartbeat network is interrupted, nodes cannot accurately determine the state of other nodes and the services running on them, so services either cannot be taken over or end up running on two nodes at the same time. In this scheme, when the heartbeat network is interrupted, a cluster node that is not running a service may take the service over only after acquiring the corresponding service lock from an arbitration server, which avoids split-brain. After the service stops, the arbitration server reclaims the service lock and allows other cluster nodes to preempt it again. When multiple nodes compete to preempt the same service lock, only one node succeeds and starts the service, which prevents split-brain.

Owner:GUANGDONG ZHONGXING NEWSTART TECH CO LTD
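
The lock-based arbitration in this entry can be illustrated with a minimal in-process sketch. It assumes the arbitration server is a single object guarded by a mutex and that contending nodes are threads calling it directly; the names `ArbitrationServer`, `try_acquire`, `release`, and `take_over_on_heartbeat_loss` are hypothetical and do not come from the patent. A real deployment would reach a remote server over the network and would need lease timeouts, which are omitted here.

```python
import threading

# Illustrative sketch only: a hypothetical in-process model of an arbitration
# server, not the patented implementation (no networking, no lease expiry).


class ArbitrationServer:
    """Toy arbitration server: each service lock has at most one owner at a time."""

    def __init__(self):
        self._mutex = threading.Lock()
        self._owners = {}  # service name -> id of the node holding its lock

    def try_acquire(self, service, node):
        """Grant the lock if it is free; return True if `node` holds it afterwards."""
        with self._mutex:
            owner = self._owners.setdefault(service, node)
            return owner == node

    def release(self, service, node):
        """Reclaim the lock once the service stops so other nodes may preempt it."""
        with self._mutex:
            if self._owners.get(service) == node:
                del self._owners[service]


def take_over_on_heartbeat_loss(server, service, node):
    """A standby node may start the service only if it wins the service lock."""
    return server.try_acquire(service, node)
```

Because the check-and-set runs under one mutex, only one contending node can ever see `True` for a given service, which is the property the abstract relies on to prevent split-brain.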

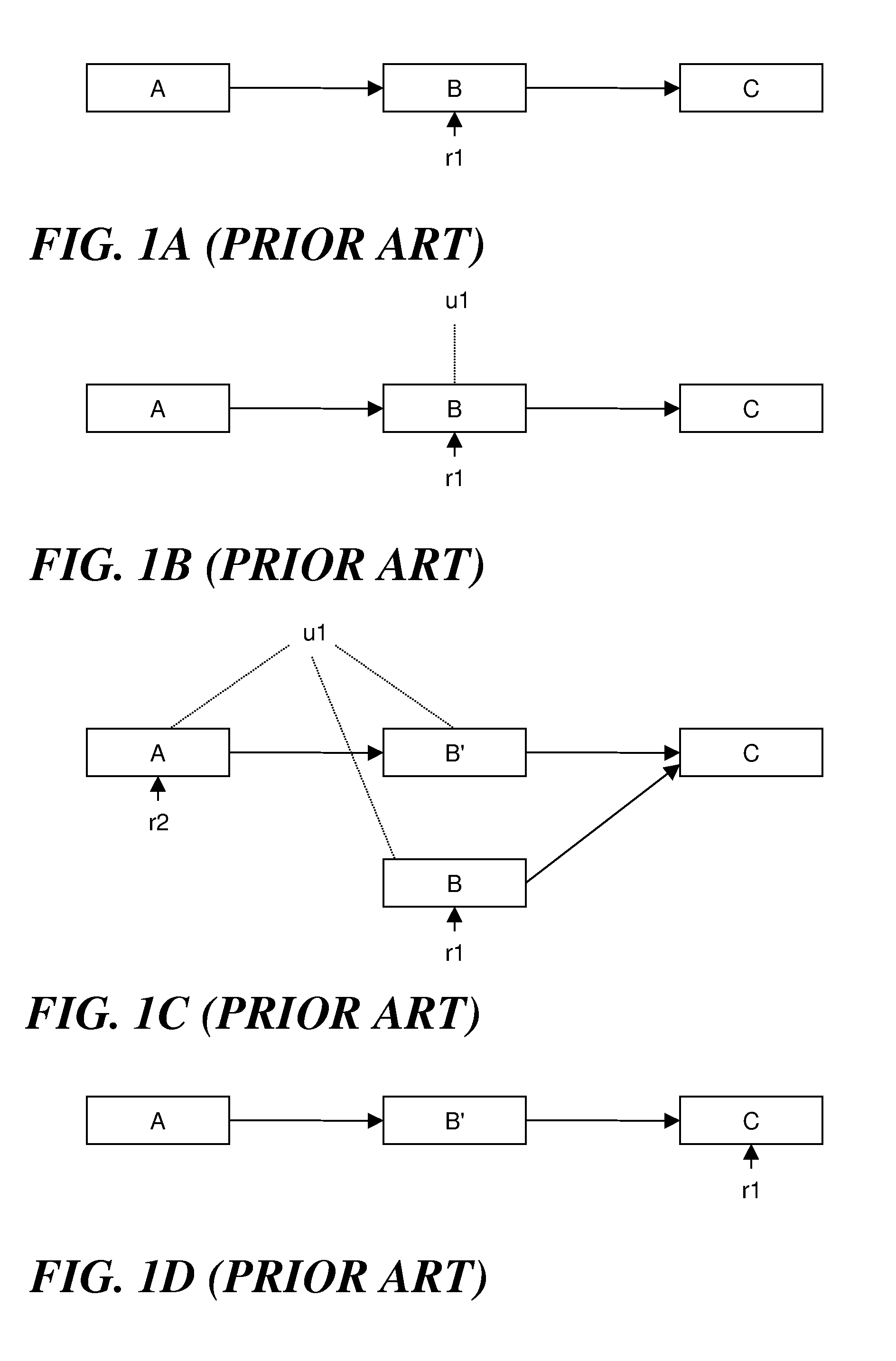

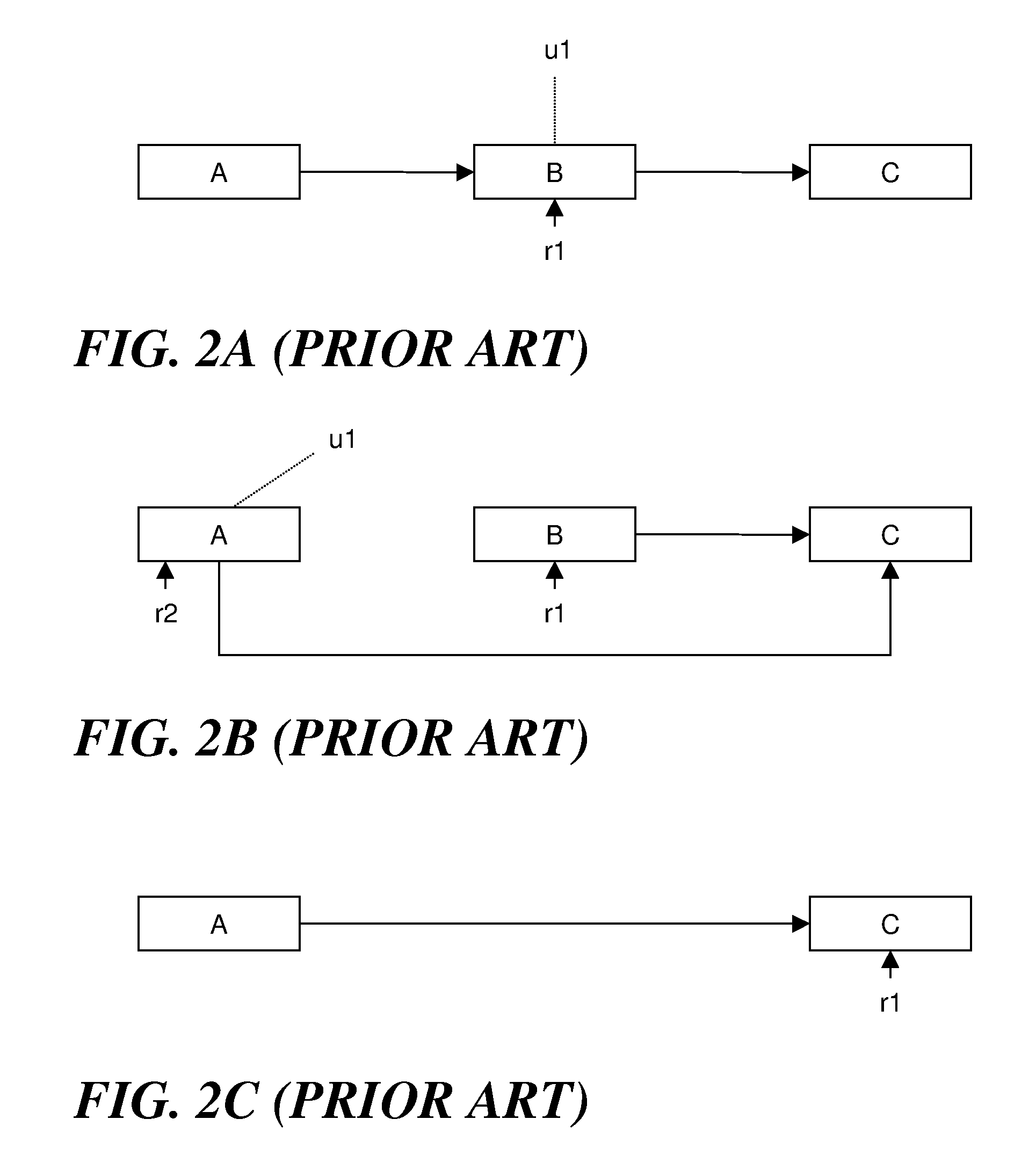

User-level read-copy update that does not require disabling preemption or signal handling

Inactive · US8020160B2 · Digital data processing details · Multiprogramming arrangements · Computer architecture · Read-copy-update

Owner:INT BUSINESS MASCH CORP

Use of rollback RCU with read-side modifications to RCU-protected data structures

Inactive · US20060130061A1 · Multiprogramming arrangements · Memory systems · Array data structure · Critical section

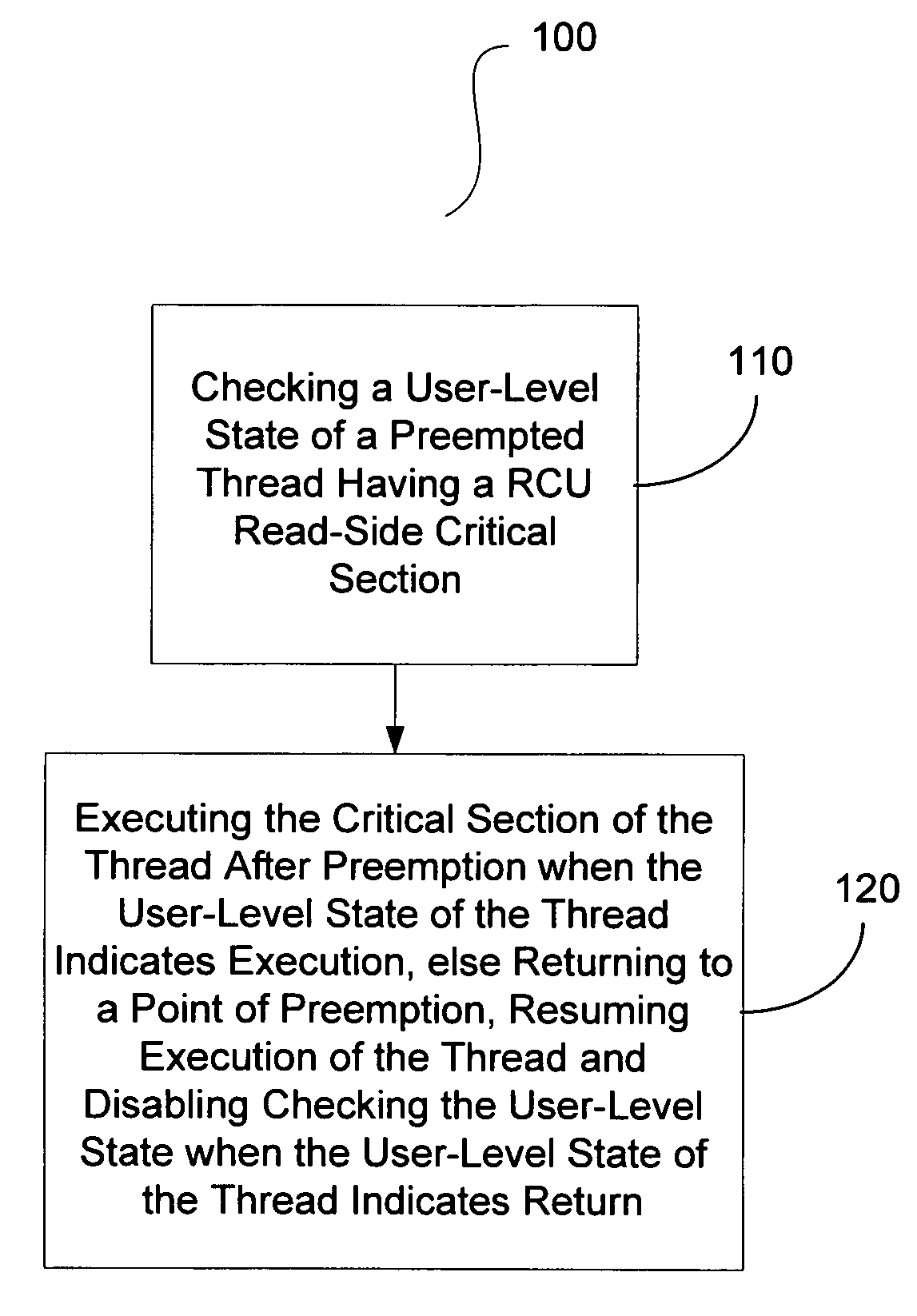

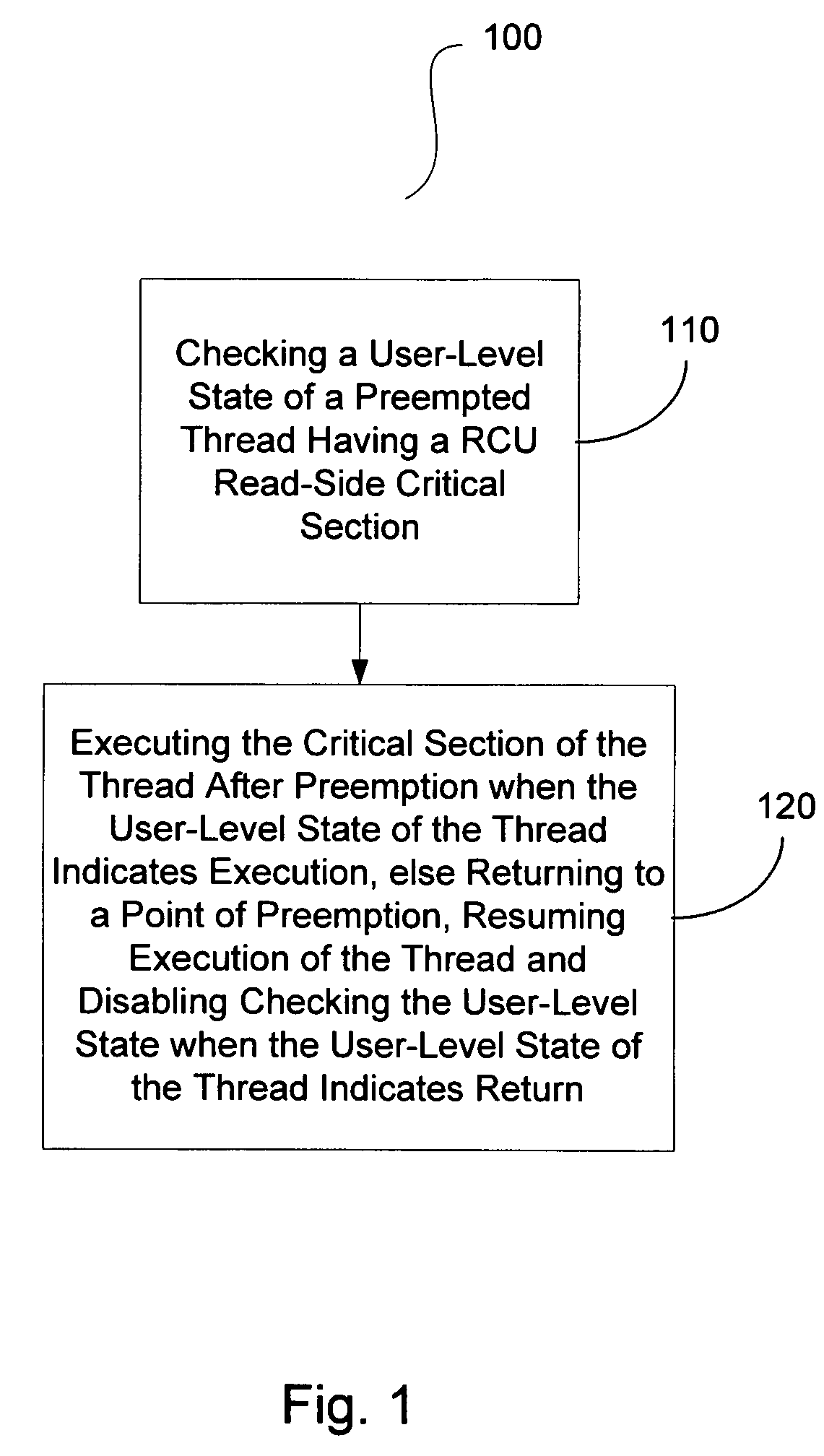

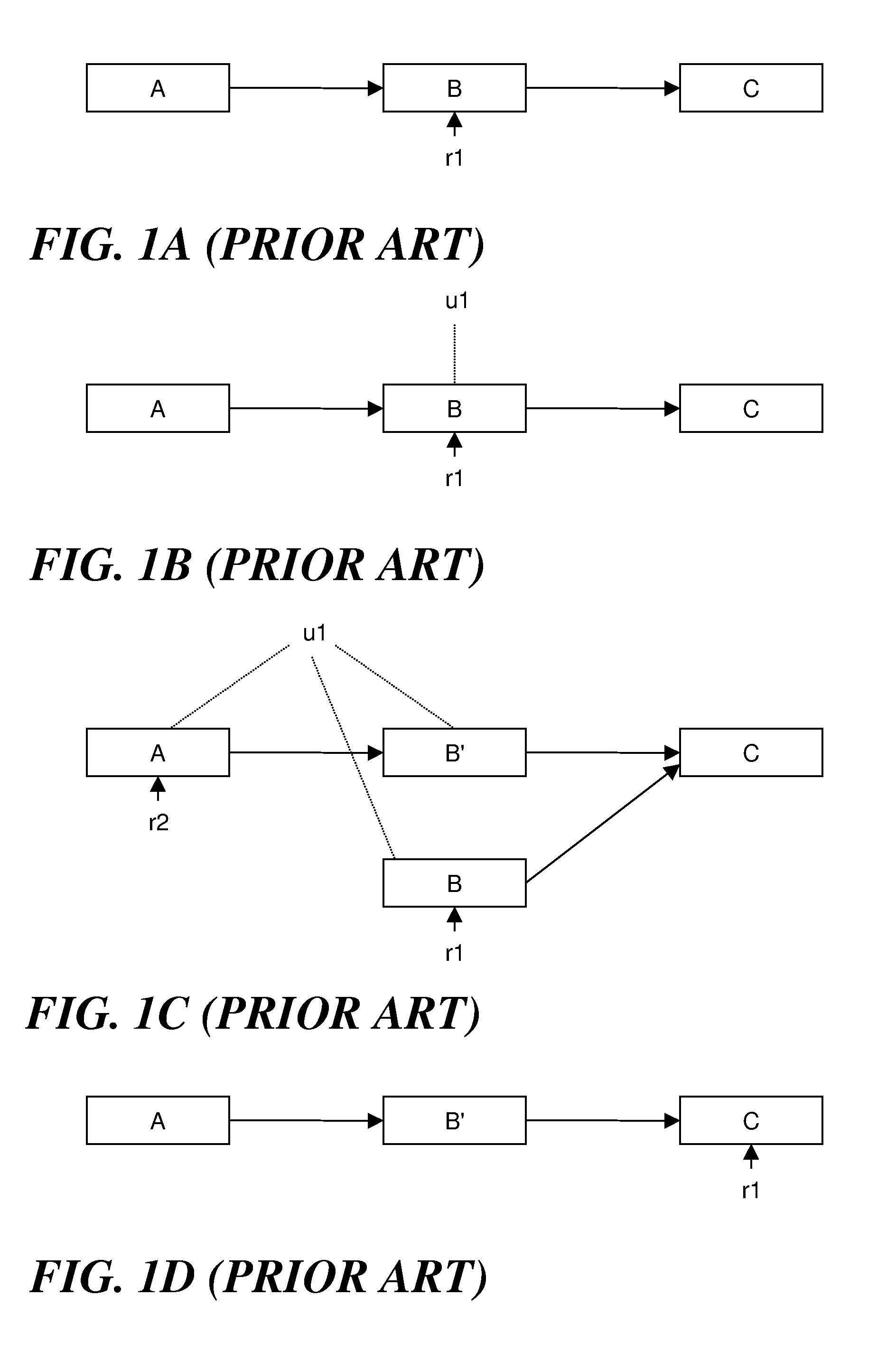

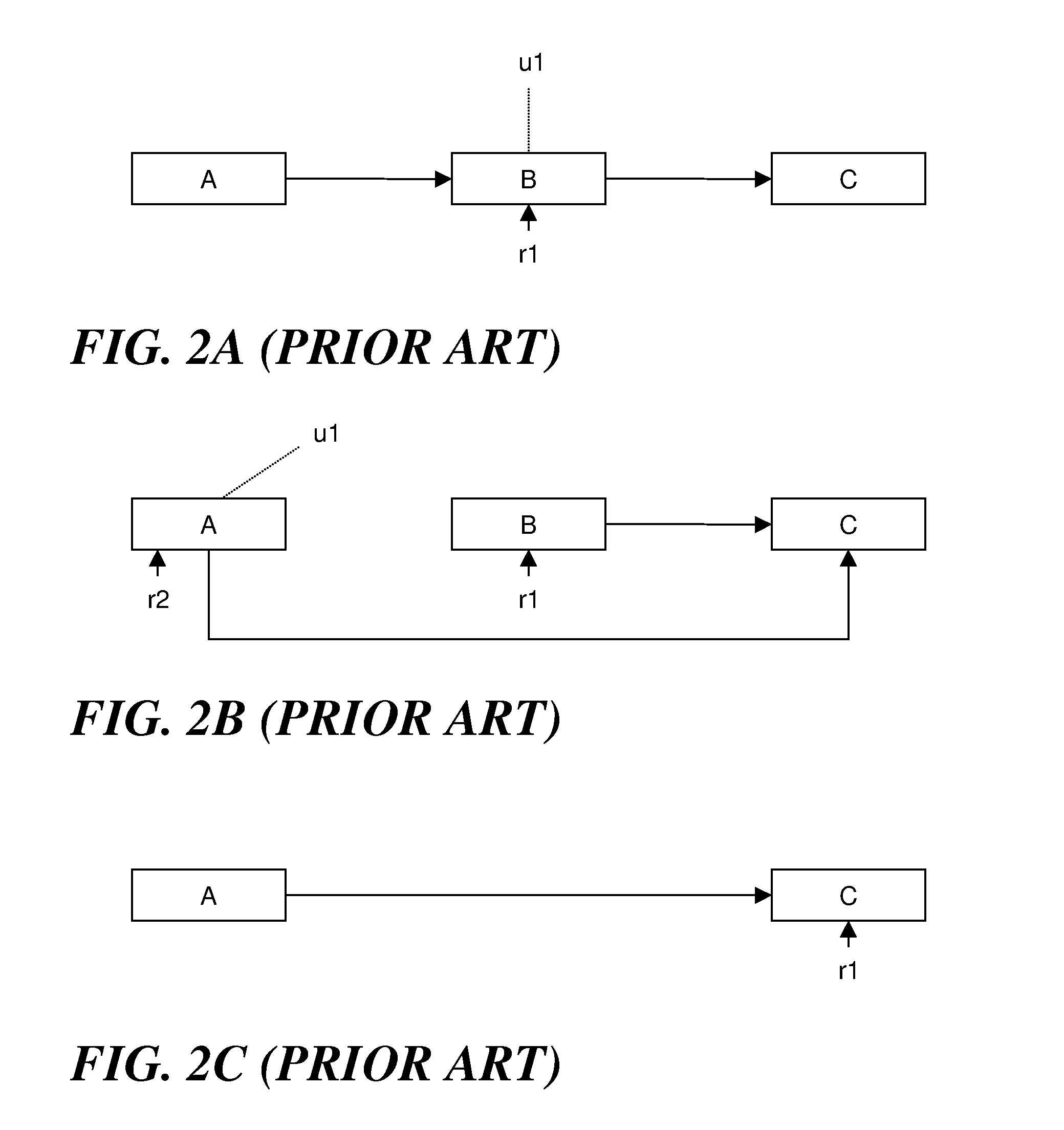

A method, apparatus, and program storage device for performing a return/rollback process for RCU-protected data structures is provided. It includes checking the user-level state of a preempted thread that has an RCU read-side critical section; when the user-level state indicates execution, the thread's critical section is executed after preemption; otherwise, when the user-level state indicates return, execution returns to the point of preemption, the thread resumes, and checking of the user-level state is disabled.

Owner:IBM CORP

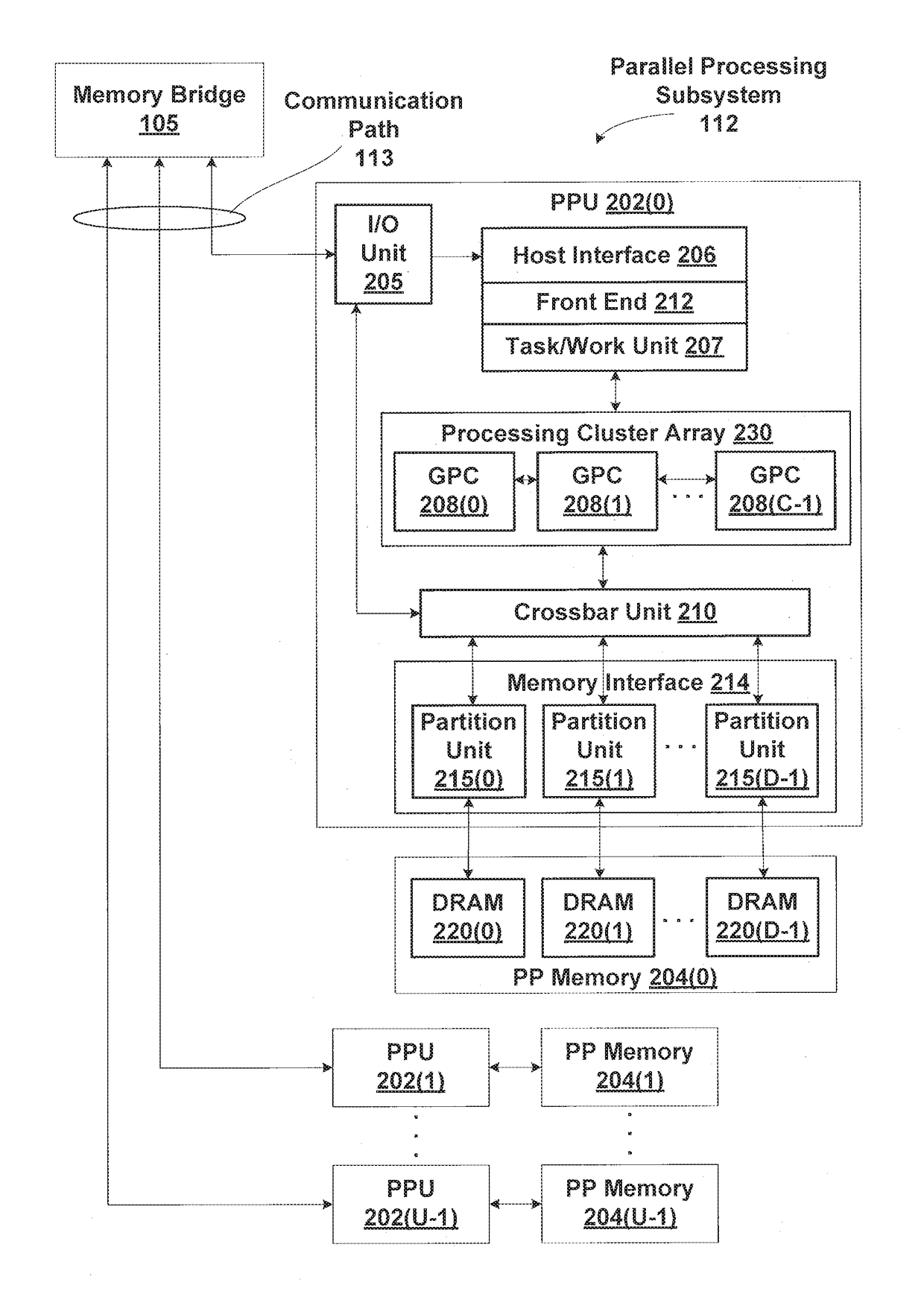

Instruction level execution preemption

Inactive · US20130124838A1 · Quantity minimization · Short amount of time · Digital computer details · Concurrent instruction execution · Granularity · Parallel computing

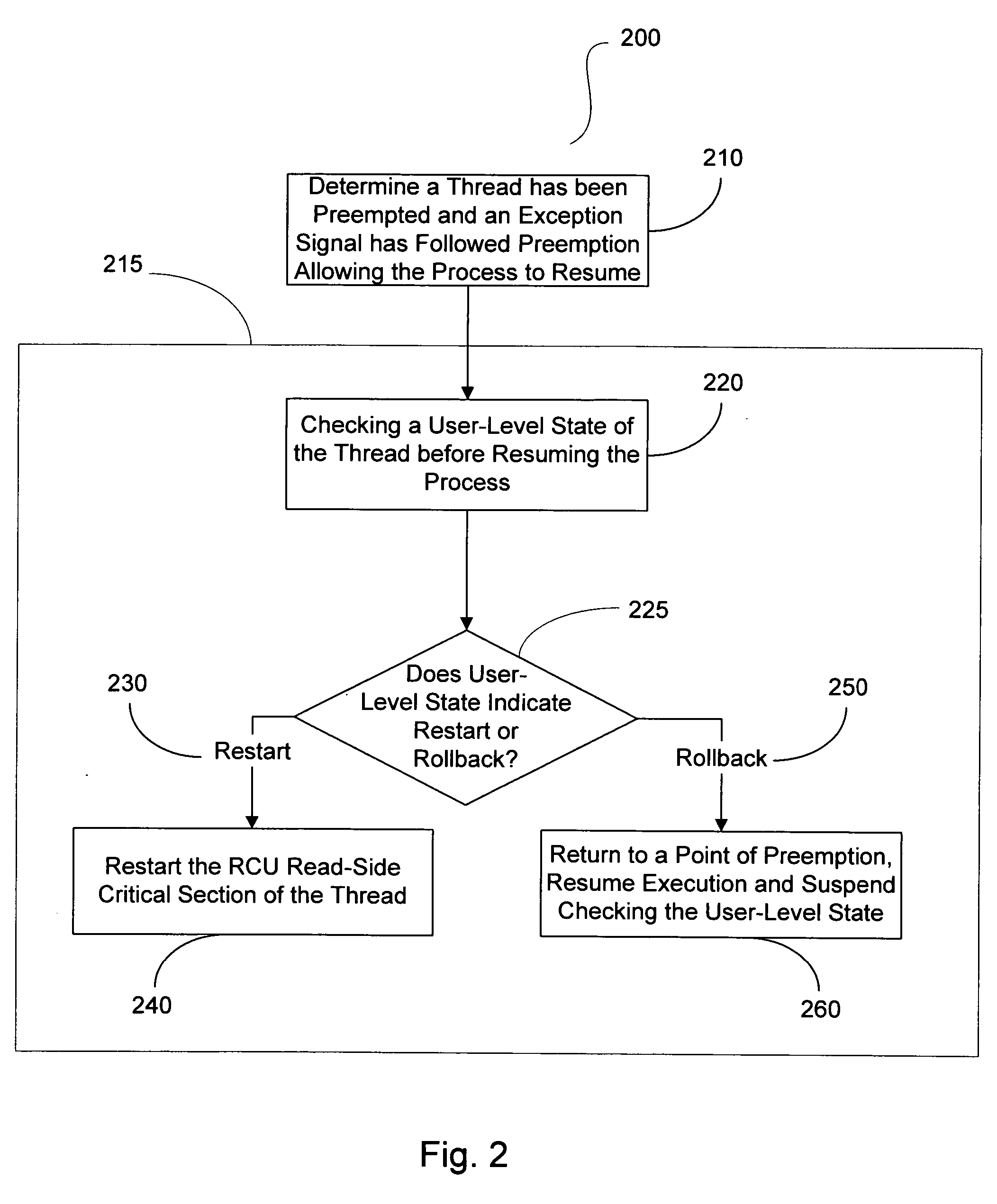

One embodiment of the present invention sets forth a technique for instruction-level and compute-thread-array-granularity execution preemption. Preempting at the instruction level does not require any draining of the processing pipeline: no new instructions are issued, and the context state is unloaded from the processing pipeline. When preemption is performed at a compute thread array boundary, the amount of context state to be stored is reduced, because execution units within the processing pipeline complete execution of in-flight instructions and become idle. If the amount of time needed to complete execution of the in-flight instructions exceeds a threshold, the preemption may dynamically change to be performed at the instruction level instead of at compute thread array granularity.

Owner:NVIDIA CORP
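
The dynamic choice between compute-thread-array (CTA) boundary preemption and instruction-level preemption described in this entry can be sketched as a small decision function. This is a toy model under stated assumptions: the input is a hypothetical list of cycles until each in-flight instruction would retire once issue stops, and the threshold is a caller-supplied cycle count; it says nothing about how the hardware actually measures these quantities.

```python
# Illustrative sketch only: a hypothetical software model of the granularity
# decision, not NVIDIA's hardware logic.


def choose_preemption(inflight_retire_cycles, threshold_cycles):
    """Return (granularity, cycles_until_context_unload).

    inflight_retire_cycles: hypothetical cycles until each in-flight
    instruction retires after issue stops.
    """
    # CTA-boundary preemption: stop issuing and let the pipeline drain to idle,
    # which leaves less context state to save.
    drain_cycles = max(inflight_retire_cycles, default=0)
    if drain_cycles <= threshold_cycles:
        return "cta_boundary", drain_cycles
    # Draining would exceed the threshold: switch to instruction-level
    # preemption and unload the context without waiting any longer.
    return "instruction_level", threshold_cycles
```

For example, in-flight work that retires within 5 cycles under a 10-cycle threshold is preempted at the CTA boundary, while a long-latency instruction needing 50 cycles triggers the fallback at cycle 10.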

Avoiding unnecessary RSVP-based preemptions

Inactive · US20060274650A1 · Solve insufficient resources · Avoids unnecessary preemption of resource reservation · Error prevention · Frequency-division multiplex details · Computer network · Resource Reservation Protocol

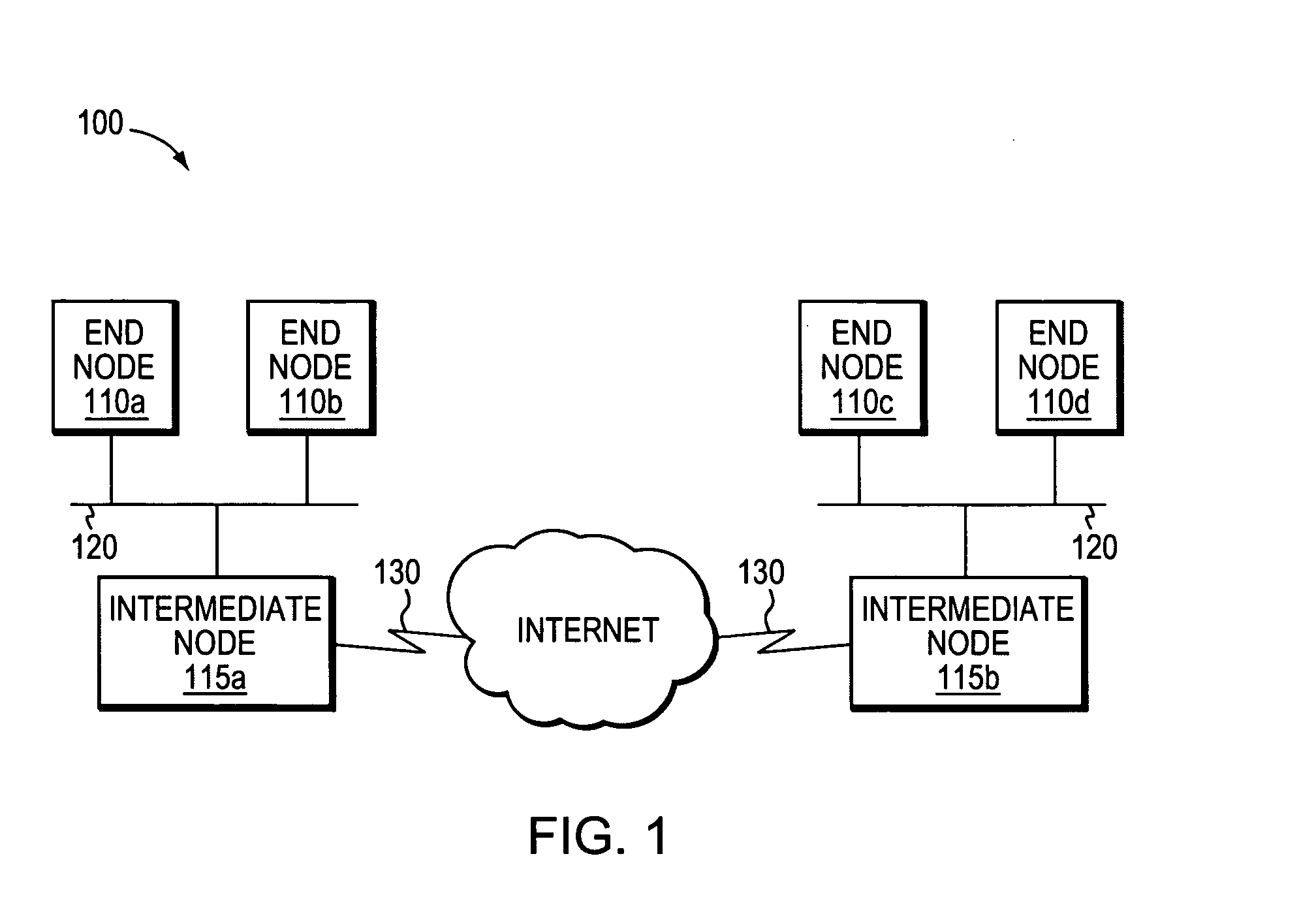

A technique avoids unnecessary preemption of resource reservations along a requested flow between nodes in a computer network. A node receives priority-based resource reservation requests and determines whether a reservation is eligible by comparing the requested resources with the amount of resources available at the node. Specifically, the node maintains a "held resources" state in which available requested resources are held before being assigned or reserved (confirmed) for the requested flow, e.g., during an initial Resource reSerVation Protocol (RSVP) Path message. The node includes the held resources in its calculation of available resources so that resources are not assigned or reserved if they would subsequently be preempted by a higher-priority request, or if an earlier request would use them first. The node (e.g., an end node) also prevents resources from being reserved or preempted for a duplex (bi-directional) reservation on a first flow in a first direction when the resources available for a second flow in the second direction indicate that the second flow will fail.

Owner:CISCO TECH INC

User-level read-copy update that does not require disabling preemption or signal handling

Inactive · US20100023946A1 · Easy to optimize · Digital data processing details · Multiprogramming arrangements · Registration status · Read-copy-update

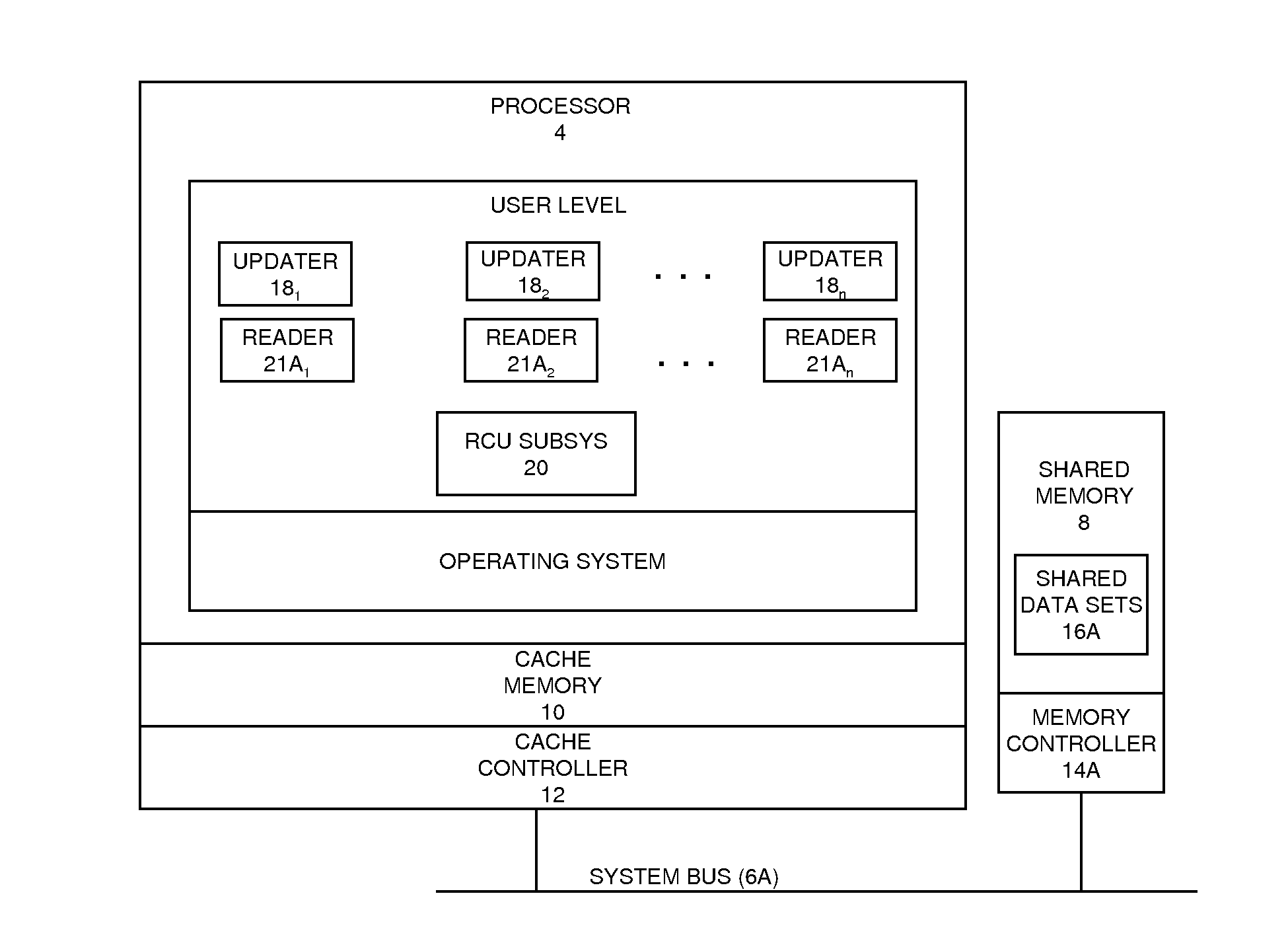

A user-level read-copy update (RCU) technique. A user-level RCU subsystem executes within threads of a user-level multithreaded application. The multithreaded application may include reader threads that read RCU-protected data elements in a shared memory and updater threads that update such data elements. The reader and updater threads may be preemptible and comprise signal handlers that process signals. Reader registration and unregistration components in the RCU subsystem respectively register and unregister the reader threads for RCU critical section processing. These operations are performed while the reader threads remain preemptible and with their signal handlers being operational. A grace period detection component in the RCU subsystem considers a registration status of the reader threads and determines when it is safe to perform RCU second-phase update processing to remove stale versions of updated data elements that are being referenced by the reader threads, or take other RCU second-phase update processing actions.

Owner:IBM CORP

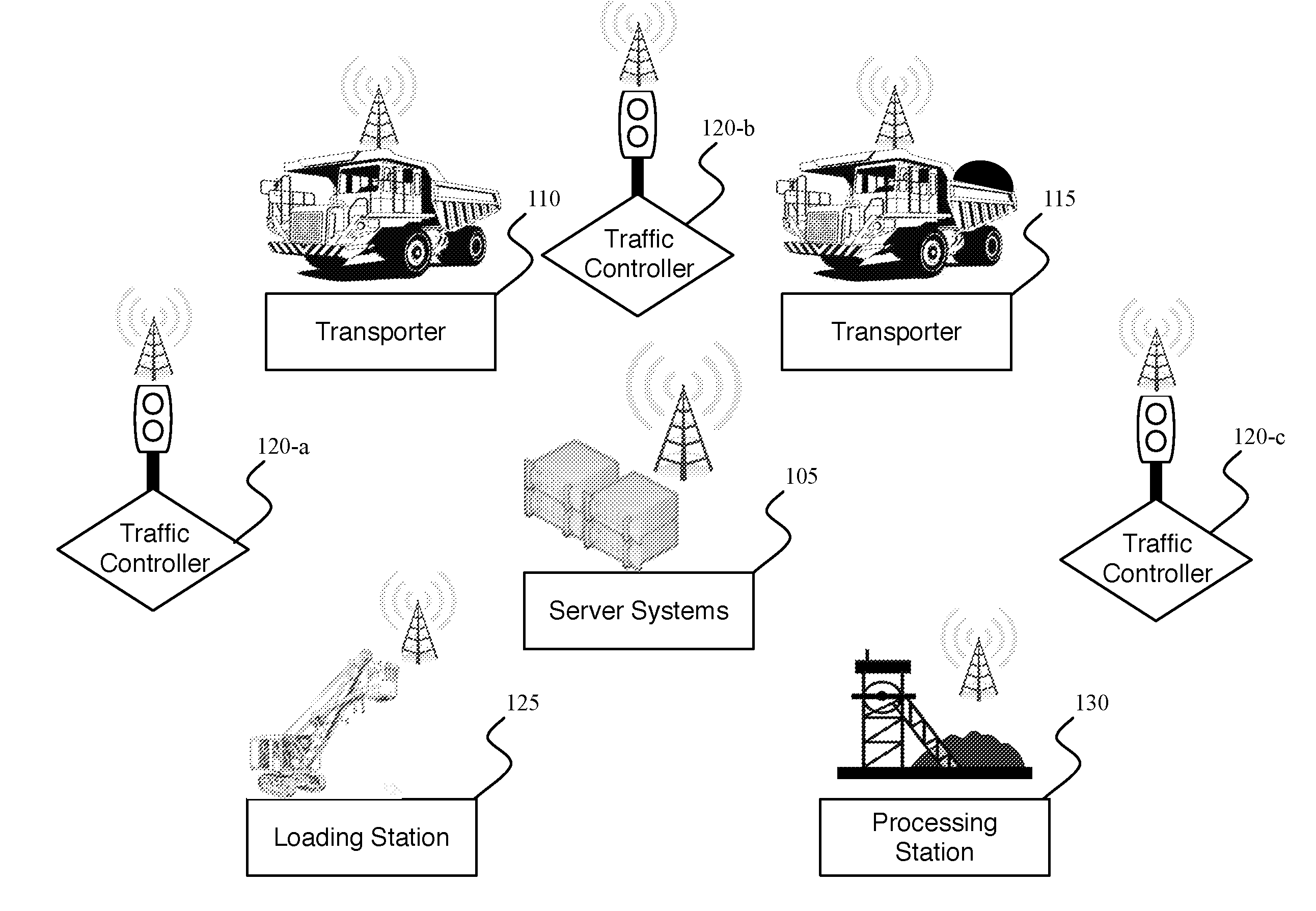

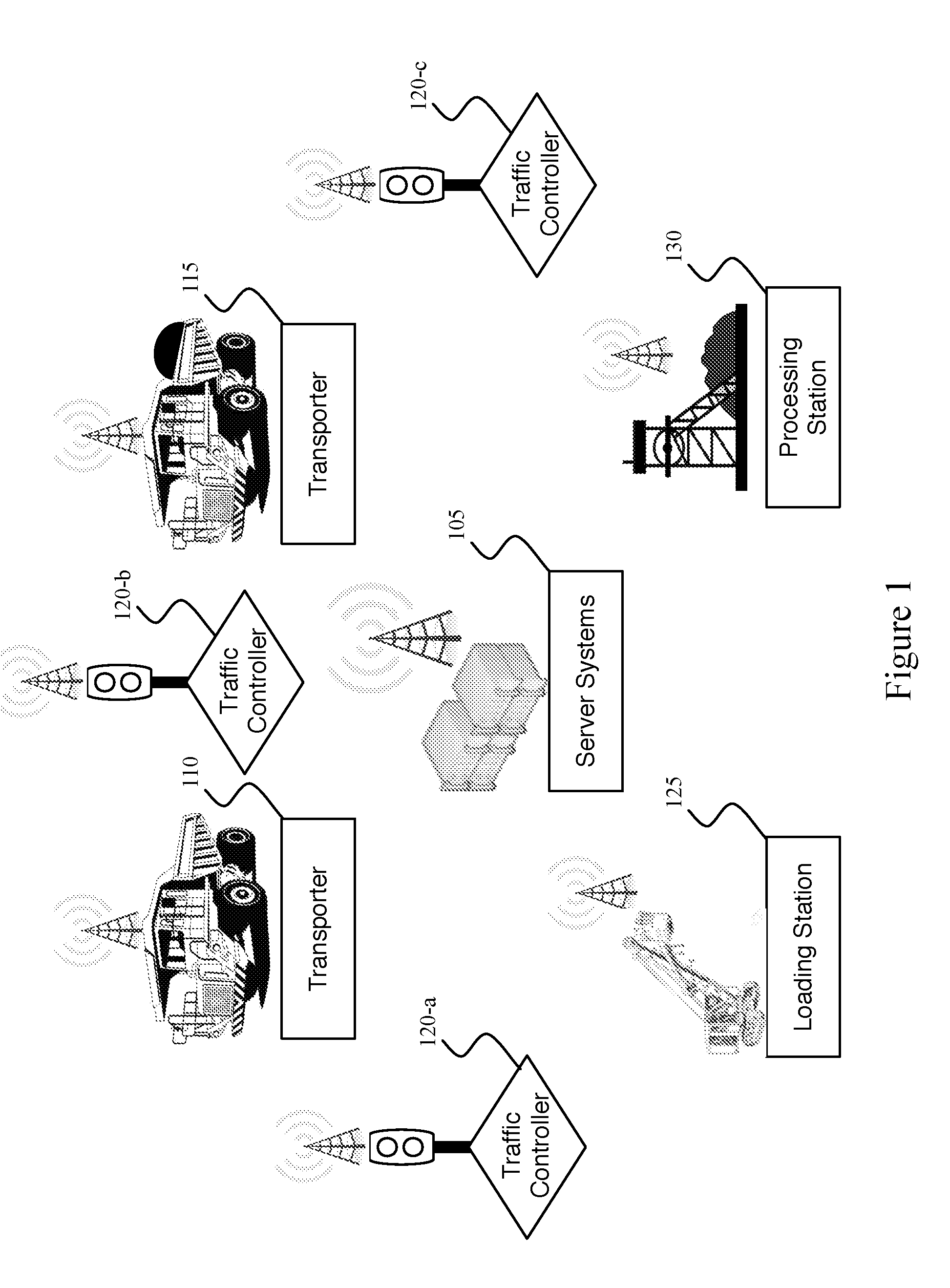

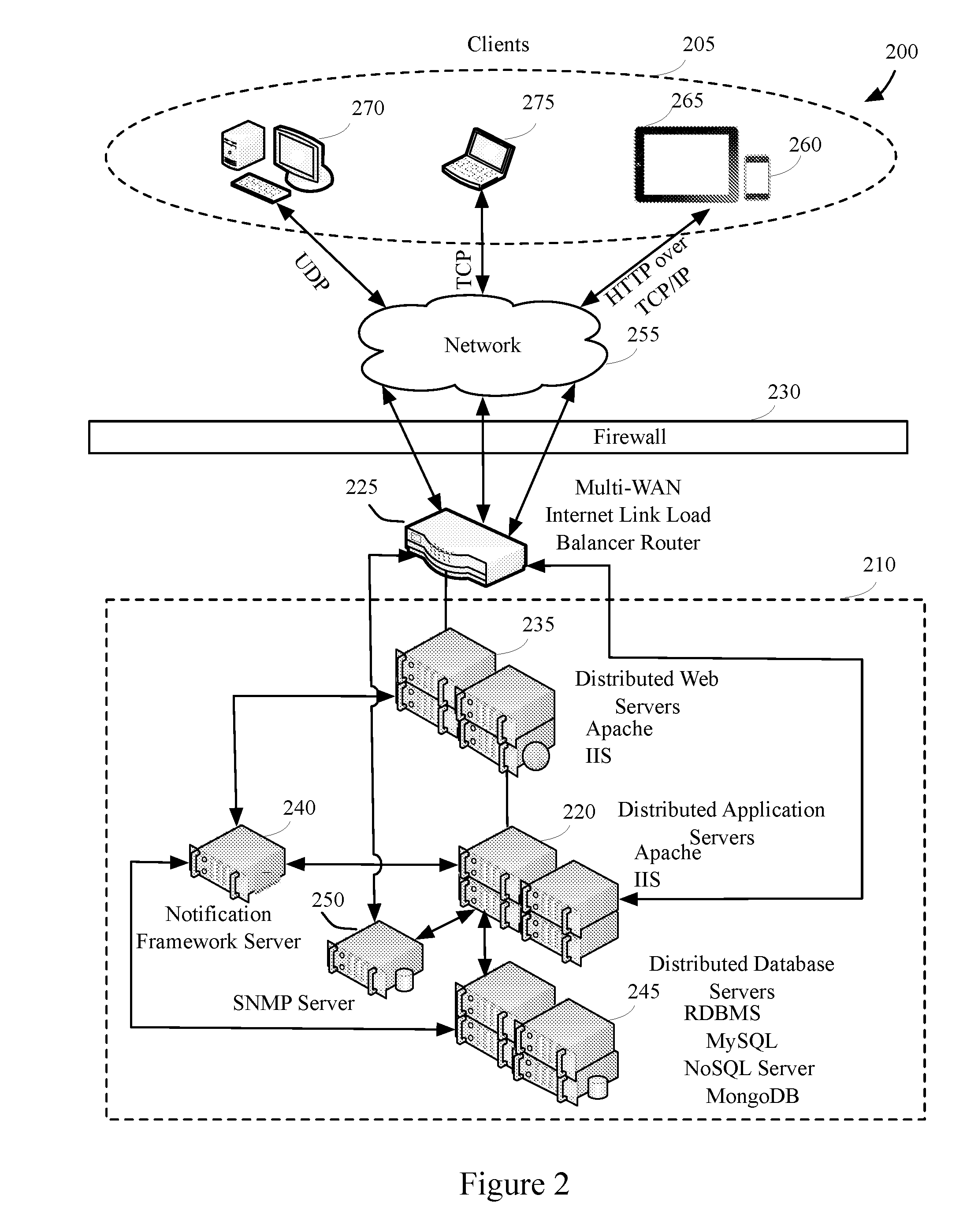

Traffic control system and method of use

Inactive · US20160379490A1 · Reduce and eliminate error · Reduce and eliminate and and limitation · Controlling traffic signals · Road traffic control · Centralized computing

Owner:HAWS CORP

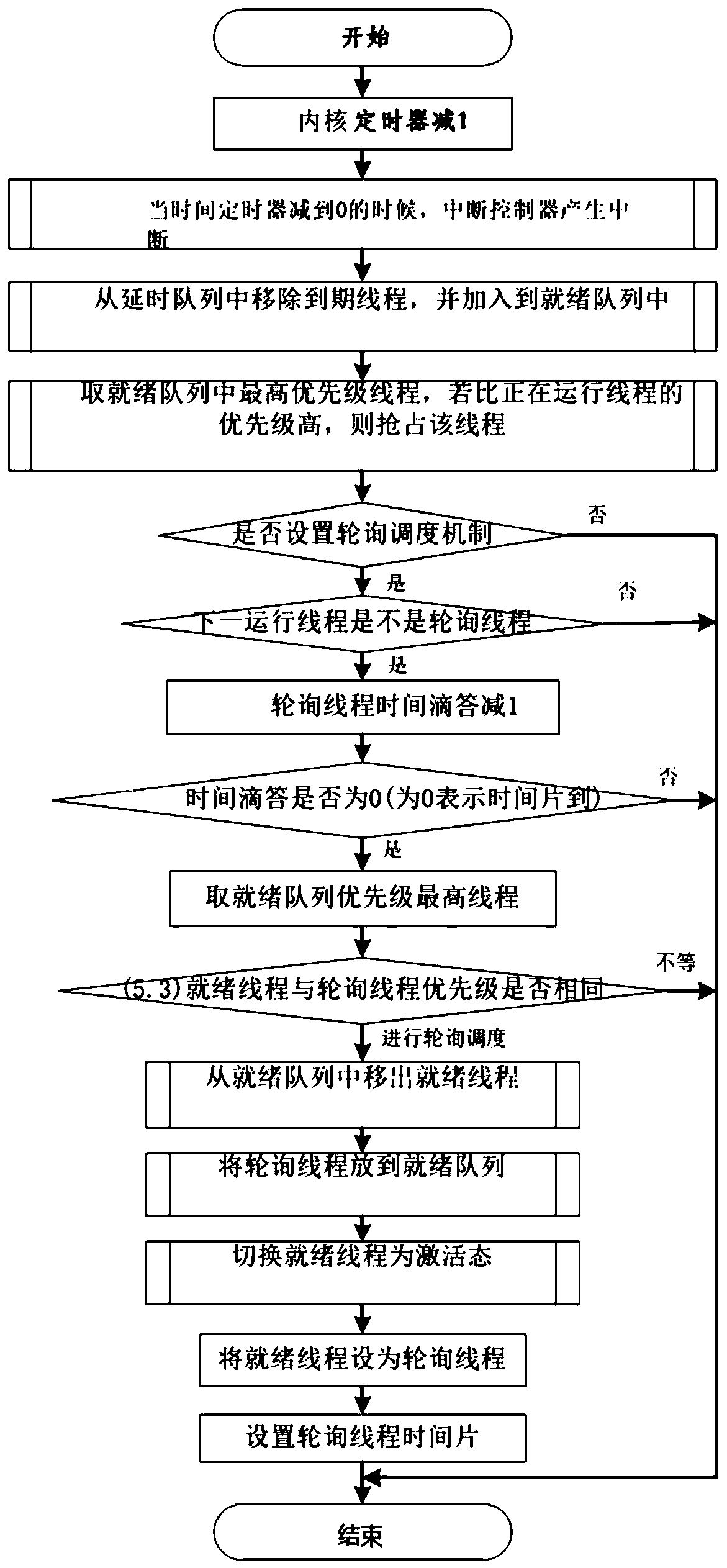

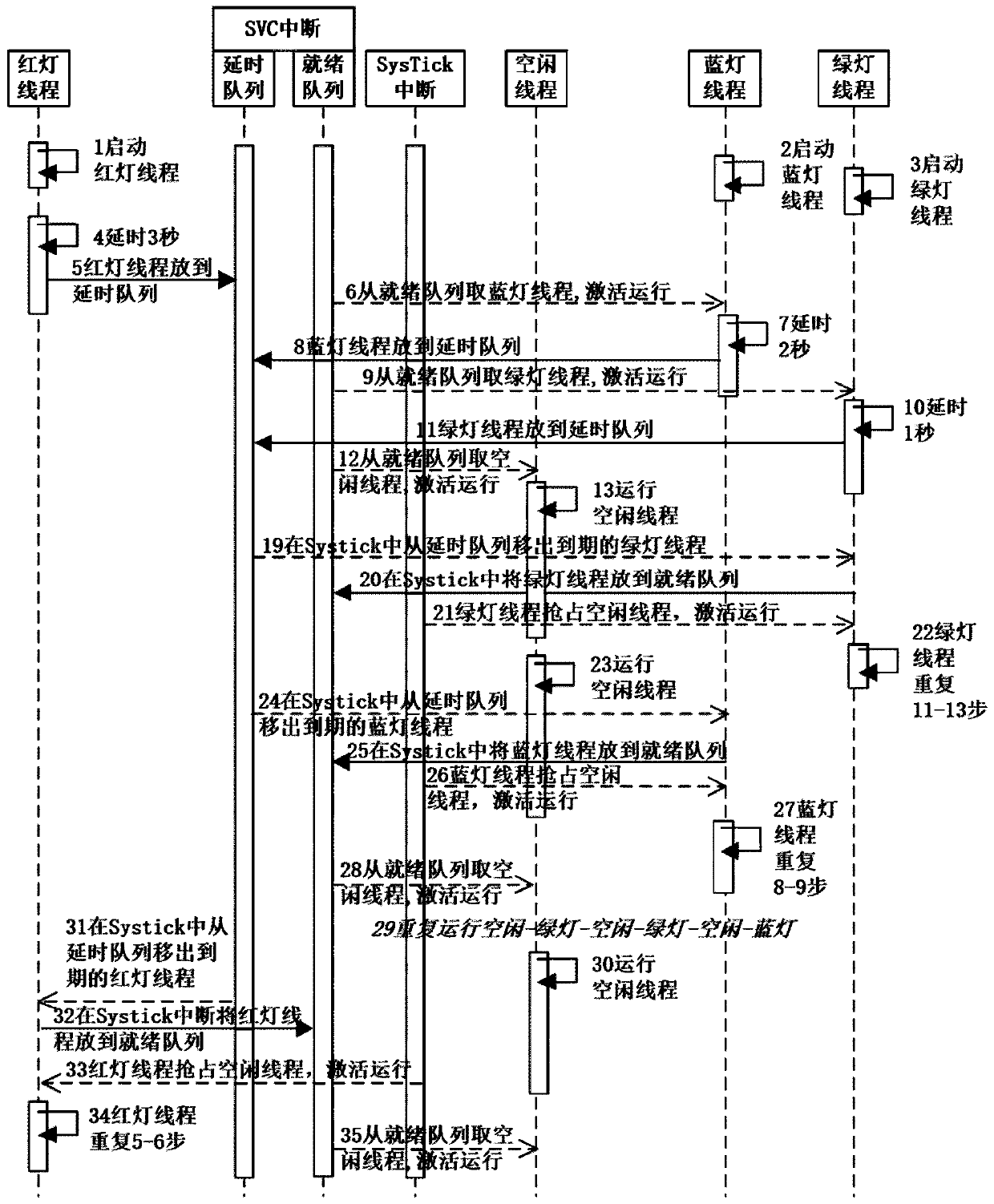

Task scheduling processing system and method for an embedded real-time operating system

The invention provides a task scheduling processing system and method for an embedded real-time operating system. The system comprises a processor together with a timer, an interrupt controller, a scheduler, and an SVC switching module arranged in the processor. The scheduler comprises a priority scheduler and a time-slice round-robin scheduler. Scheduling is based on priority preemption: the CPU is always allocated to the highest-priority task that is currently ready, and tasks of equal priority are scheduled in first-in, first-out order. Time-slice round-robin scheduling supplements priority-preemptive scheduling, which mitigates the loss of real-time performance that occurs when several ready tasks of the same priority share a processor and improves the handling of multiple ready high-priority threads.

Owner:SUZHOU UNIV
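
The combination of priority preemption with first-in, first-out time slicing among equal-priority tasks can be simulated in a few dozen lines. This is a hypothetical tick-driven model, not the patented system: larger numbers mean higher priority, each task carries a fixed amount of work in ticks, and a preempted task is returned to the head of its priority queue (an assumption; the abstract does not say where it goes).

```python
from collections import deque

# Illustrative sketch only: a hypothetical simulation, not the patented RTOS.


class PriorityRoundRobinScheduler:
    """Priority-preemptive scheduling with FIFO time slicing among equal priorities.

    Assumption: larger numbers mean higher priority.
    """

    def __init__(self, time_slice):
        self.time_slice = time_slice
        self.ready = {}       # priority -> deque of [name, remaining_ticks]
        self.current = None   # (priority, [name, remaining_ticks]) or None
        self.slice_used = 0

    def make_ready(self, name, priority, work):
        self.ready.setdefault(priority, deque()).append([name, work])
        # A newly ready task that outranks the running one preempts it at once.
        if self.current is not None and priority > self.current[0]:
            prio, task = self.current
            self.ready.setdefault(prio, deque()).appendleft(task)  # resumes first among peers
            self.current = None

    def _pick(self):
        # Highest priority first; FIFO within a priority level.
        for prio in sorted(self.ready, reverse=True):
            if self.ready[prio]:
                self.current = (prio, self.ready[prio].popleft())
                self.slice_used = 0
                return

    def tick(self):
        """Run one timer tick; return the name of the task that ran, or None if idle."""
        if self.current is None:
            self._pick()
            if self.current is None:
                return None
        prio, task = self.current
        task[1] -= 1
        self.slice_used += 1
        if task[1] == 0:
            self.current = None               # task finished
        elif self.slice_used >= self.time_slice:
            self.ready[prio].append(task)     # slice expired: rotate to the tail
            self.current = None
        return task[0]
```

With `time_slice=2`, two equal-priority tasks alternate every two ticks, and a higher-priority arrival runs immediately until it finishes, after which the interrupted task resumes first.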

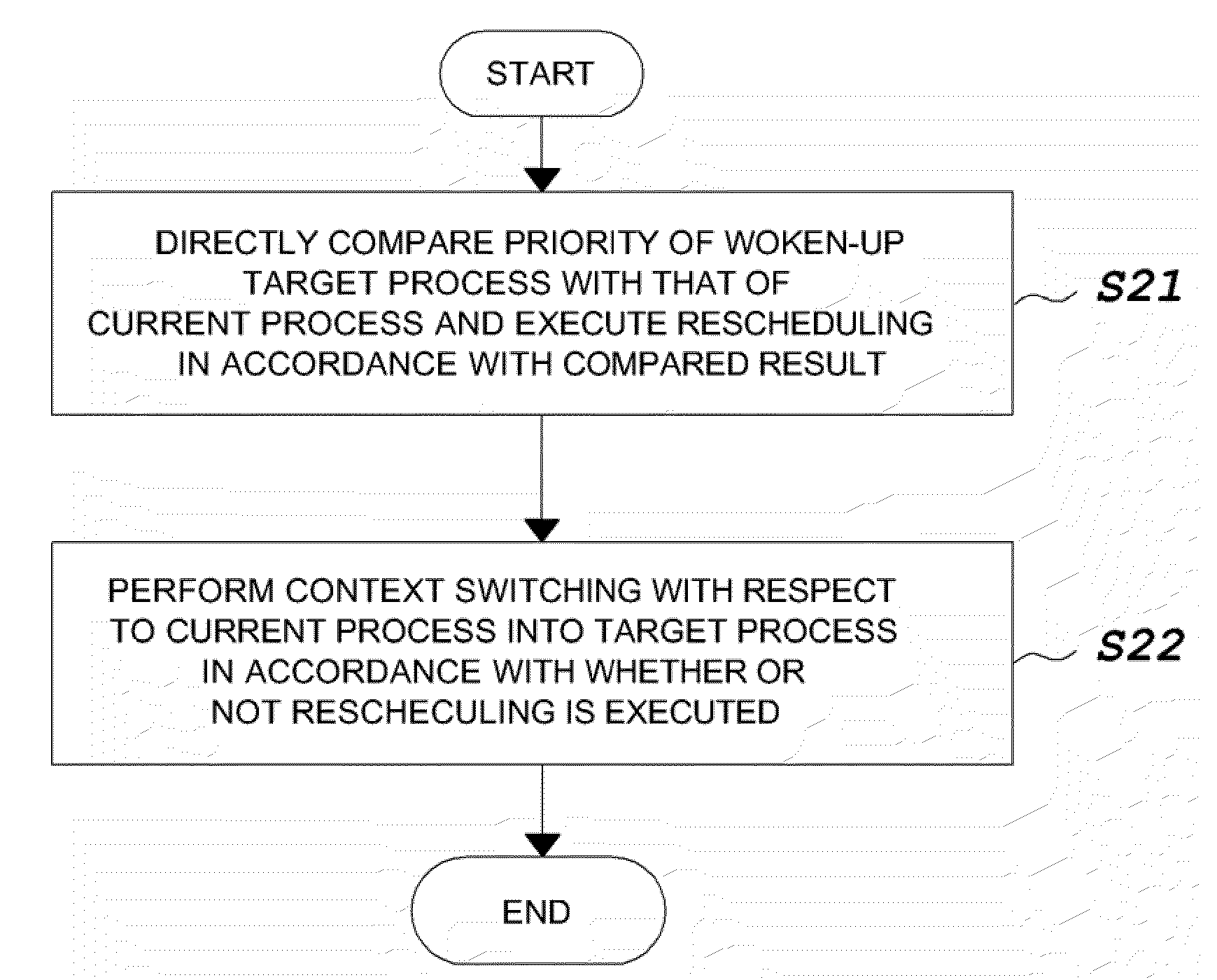

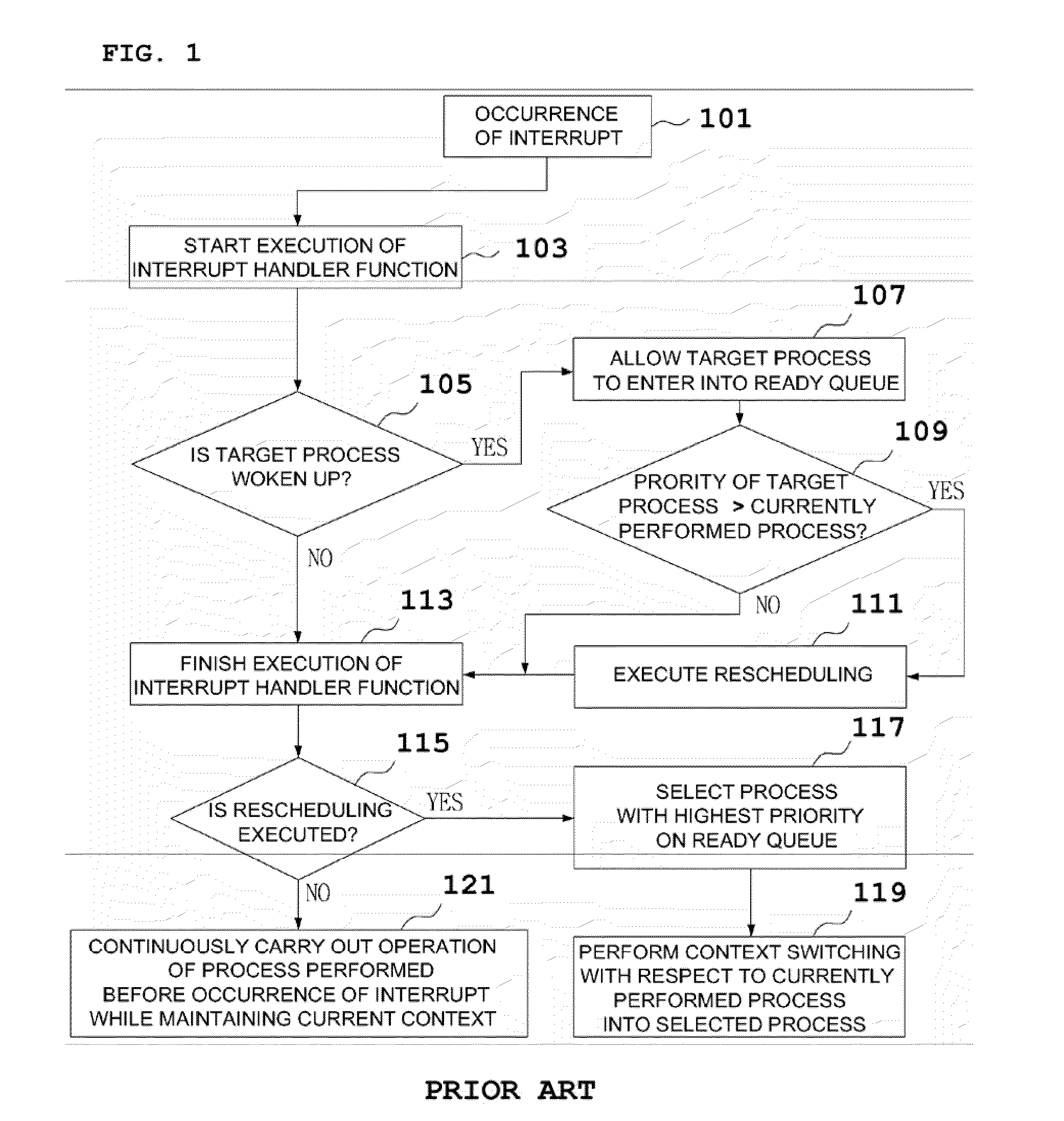

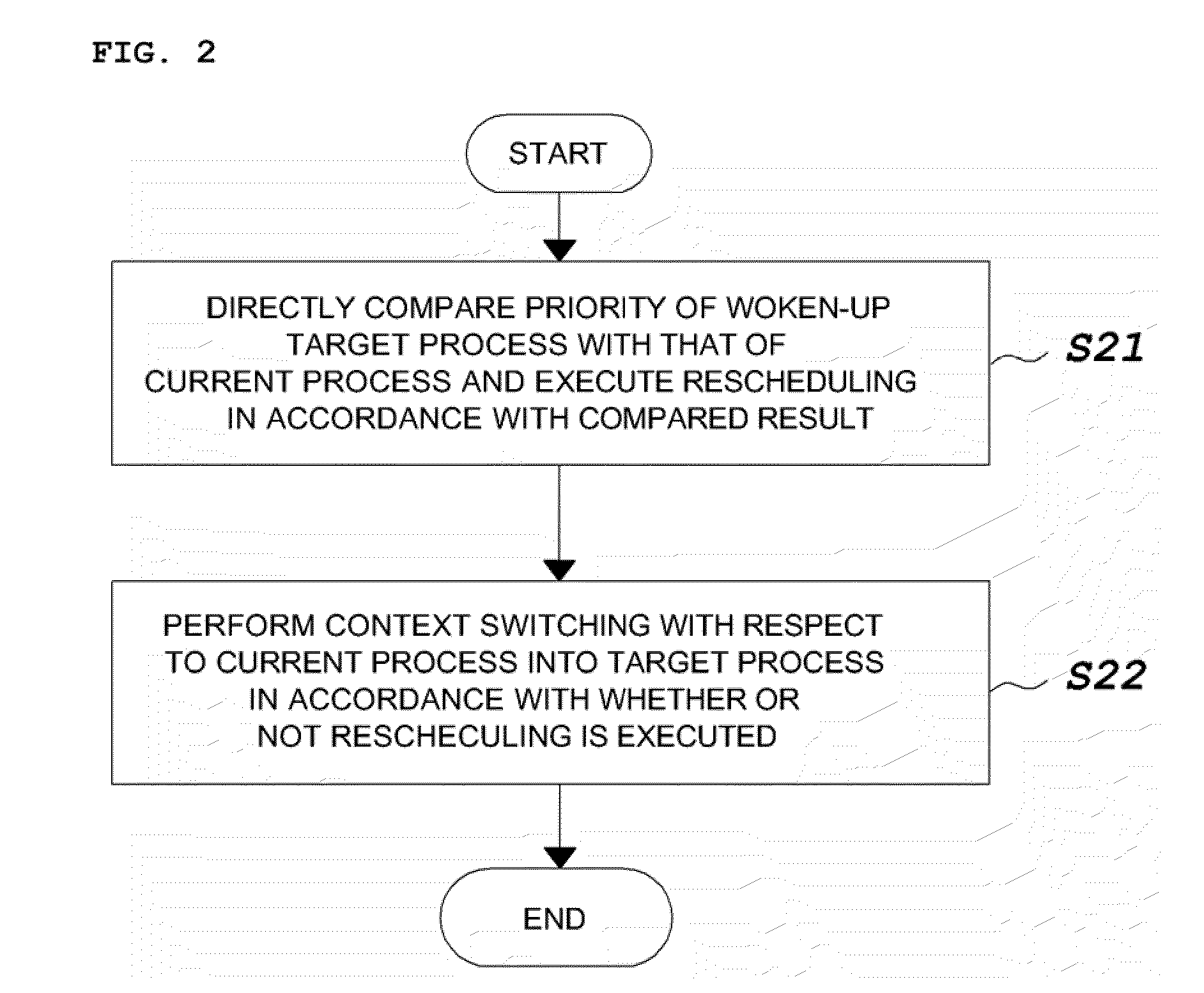

Method of interrupt scheduling

Inactive · US20090292846A1 · Miniaturization · Delay minimization · Program control using stored programs · Multiprogramming arrangements · Operational system · Computerized system

A method of interrupt scheduling is provided. Without placing the target process woken up by an interrupt into the ready queue, the method directly compares the priority of the woken-up target process with that of the current process that was running before the interrupt occurred, performs rescheduling according to the result, and, depending on whether rescheduling is performed, switches context directly from the current process to the target process. By omitting both the insertion of the woken-up target process into the ready queue and the selection of the highest-priority process from the ready queue, the method can minimize the preemption latency caused by interrupts in the operating system of the computer system.

Owner:KOREA ADVANCED INST OF SCI & TECH
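
The shortcut this method takes on interrupt wake-up, comparing the woken process against the interrupted one instead of going through the ready queue, can be sketched as below. Tasks are hypothetical `(name, priority)` tuples with larger numbers winning, and placing the losing task in the ready queue is an assumption made so the example is complete.

```python
# Illustrative sketch only: hypothetical task tuples and queue handling.


def on_interrupt_wakeup(current, woken, ready_queue):
    """Choose what runs after an interrupt wakes `woken` while `current` was running.

    Tasks are (name, priority) tuples; a larger priority wins. The woken task is
    compared with the interrupted task directly instead of being queued first and
    then found again by a search of the ready queue.
    """
    if woken[1] > current[1]:
        ready_queue.append(current)  # current is preempted and waits
        return woken                 # direct context switch to the woken task
    ready_queue.append(woken)        # no reschedule; woken task waits its turn
    return current
```

The saving comes from skipping one enqueue and one highest-priority search on the hot path when the woken task should run immediately.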

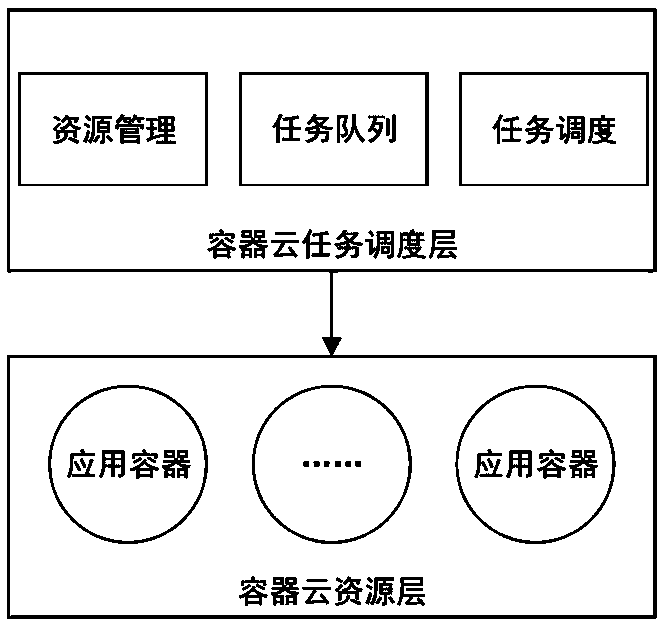

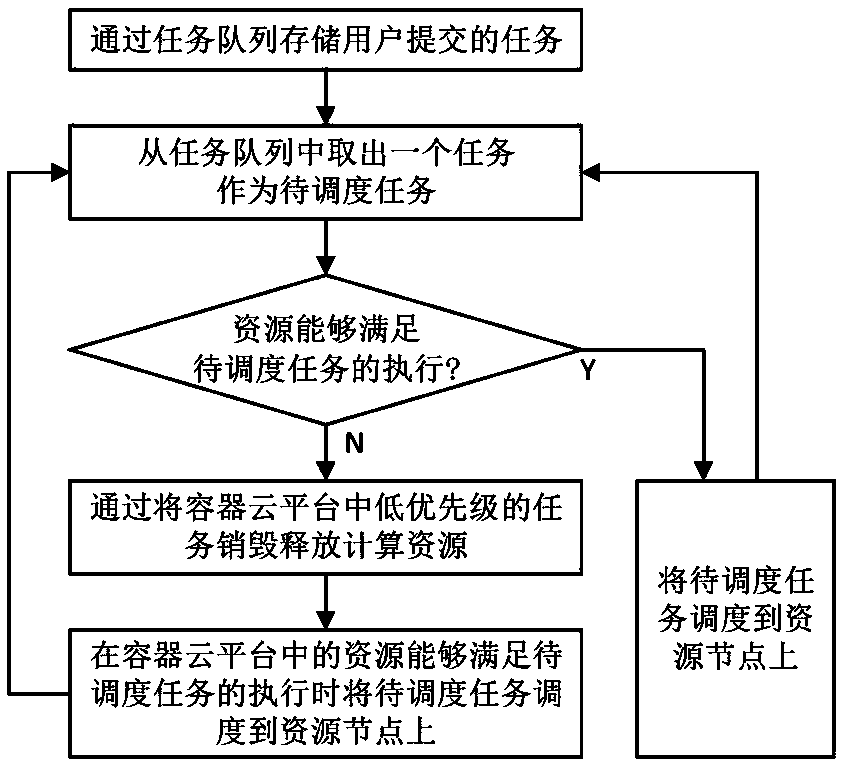

Container cloud-oriented task preemption scheduling method and system

Active · CN111399989A · Reduce the number of tasks · Improve task scheduling efficiency · Program initiation/switching · Resource allocation · Parallel computing · Engineering

The invention discloses a container-cloud-oriented task preemption scheduling method and system. The method comprises: storing tasks submitted by users in a task queue; taking a task to be scheduled from the task queue; and determining whether the resources in the container cloud platform can satisfy that task's execution. If they can, the task is scheduled to a resource node; otherwise, low-priority tasks in the container cloud platform are destroyed to release computing resources, and the task is scheduled to a resource node once the platform's resources can satisfy its execution. Because low-priority tasks can be destroyed and their computing resources released when resources are fully loaded, high-priority tasks are executed in time. At the same time, because tasks execute smoothly, the number of tasks waiting in the task queue is greatly reduced, and task scheduling efficiency is effectively improved.

Owner:NAT UNIV OF DEFENSE TECH
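
The preempt-by-destroying step can be sketched as a single scheduling function over one resource dimension (CPU units). The task fields `name`, `priority`, and `cpu`, the lowest-priority-first eviction order, and the rule of evicting nothing when even all lower-priority tasks would not free enough capacity are all assumptions of this sketch, not details taken from the patent.

```python
# Illustrative sketch only: hypothetical task dicts and eviction policy.


def schedule_with_preemption(task, running, capacity):
    """Place `task` on a node with `capacity` CPU units, destroying lower-priority
    running tasks if necessary.

    Each task is a dict with 'name', 'priority' (larger = more important) and 'cpu'.
    Returns the names of destroyed tasks, or None if the task cannot be placed.
    `running` is modified in place.
    """
    free = capacity - sum(t["cpu"] for t in running)
    # Candidate victims: strictly lower priority, least important first.
    victims = sorted((t for t in running if t["priority"] < task["priority"]),
                     key=lambda t: t["priority"])
    if free + sum(t["cpu"] for t in victims) < task["cpu"]:
        return None  # even destroying every candidate would not free enough
    destroyed = []
    for victim in victims:
        if free >= task["cpu"]:
            break
        running.remove(victim)  # destroy the task and release its resources
        free += victim["cpu"]
        destroyed.append(victim["name"])
    running.append(task)
    return destroyed
```

Checking feasibility before destroying anything avoids killing low-priority work when the high-priority task would still not fit; in that case it simply stays in the queue.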

Placement of explicit preemption points into compiled code

Inactive · US20200272444A1 · Close in performance · Low cost · Program control · Code compilation · Control flow · PathPing

Improvements in the placement of explicit preemption points into compiled code are disclosed. A control flow graph is created, from executable code, that includes every control path in a function. From the control flow graph, an estimated execution time for each control path is determined. For each control path, it is determined whether an estimated execution time of a control path exceeds a preemption latency parameter, wherein the preemption latency parameter is a maximum allowable time between preemption points. When it is determined that the estimated execution time of a particular control path violates the preemption latency parameter, an explicit preemption point is placed into the executable code that satisfies the preemption latency parameter.

Owner:IBM CORP
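
The placement procedure can be sketched for an acyclic control flow graph in which each basic block has an estimated cost. The greedy rule used here, inserting a point at the start of the first block that would push a path past the latency budget, is one simple way to satisfy the preemption latency parameter and is an assumption, not necessarily IBM's algorithm; loops, which a real compiler must handle, are not modelled, and the function names are hypothetical.

```python
# Illustrative sketch only: hypothetical greedy placement on an acyclic CFG.


def enumerate_paths(cfg, entry, exit_):
    """All entry-to-exit paths of an acyclic CFG given as {block: [successors]}."""
    if entry == exit_:
        return [[entry]]
    return [[entry] + rest
            for succ in cfg.get(entry, [])
            for rest in enumerate_paths(cfg, succ, exit_)]


def place_preemption_points(cfg, cost, entry, exit_, max_latency):
    """Return the blocks that should start with an explicit preemption point.

    cost maps each block to its estimated execution time; max_latency is the
    preemption latency parameter (maximum time allowed between preemption points).
    """
    points = set()
    for path in enumerate_paths(cfg, entry, exit_):
        elapsed = 0  # estimated time since the last preemption point on this path
        for block in path:
            if block in points:
                elapsed = 0  # a point already placed (for another path) resets the clock
            elif elapsed > 0 and elapsed + cost[block] > max_latency:
                points.add(block)  # entering this block would exceed the budget
                elapsed = 0
            elapsed += cost[block]
    return points
```

Adding a point can only shorten the intervals on other paths, so paths checked earlier stay within the budget. A single block whose own cost exceeds the budget cannot be fixed by this rule and would need to be split.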

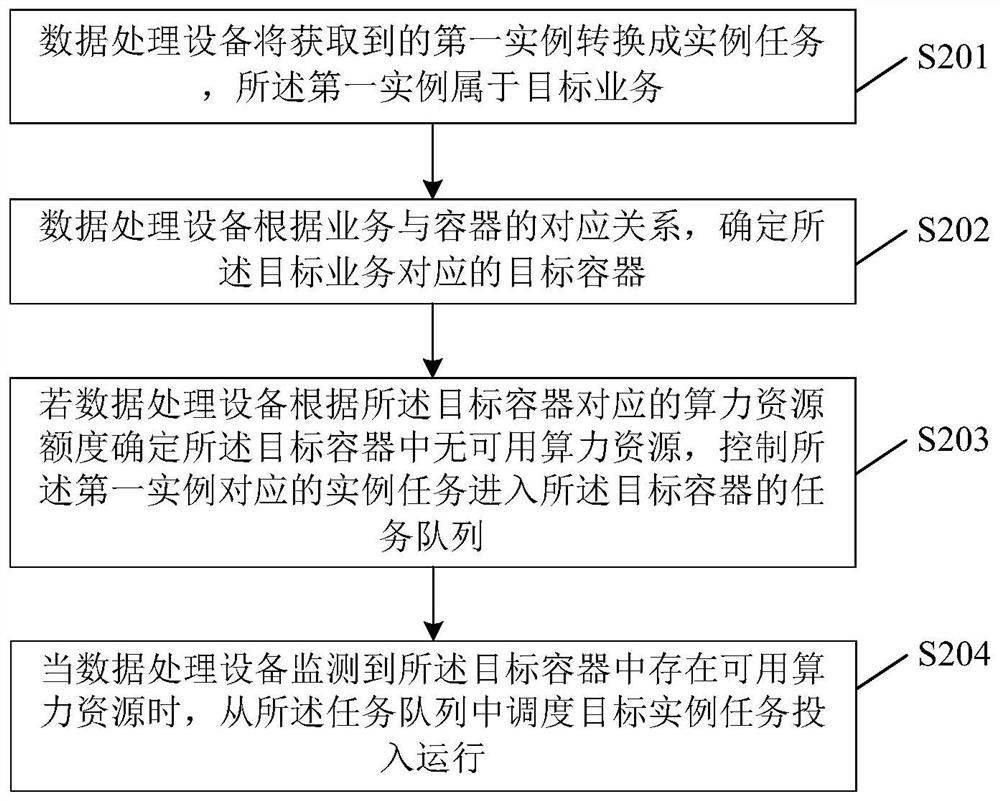

Computing power resource allocation method and device, equipment and storage medium

Pending · CN112380020A · Guarantee the quality of operation · Affect the experience · Resource allocation · Processor architectures/configuration · Engineering · Scheduling (computing)

Embodiments of the invention disclose a computing power resource allocation method, device, equipment, and storage medium. A container is configured for each business (service), and each container is configured with a computing power resource quota. When a first instance submitted for a target business is obtained, it is converted into an instance task, and the target container corresponding to the target business is determined from the correspondence between businesses and containers. If, according to the target container's computing power resource quota, no computing power resources are available in the target container, the instance task corresponding to the first instance is placed in the target container's task queue. When available computing power resources are detected in the target container, the target instance task is taken from the task queue and put into operation. Because different services are isolated by containers, instances of the same service share the computing power resources of the same container; even if priority preemption occurs during scheduling, it is confined to that container. This avoids computing power resource preemption between different services and improves the user experience.

Owner:TENCENT TECH (SHENZHEN) CO LTD

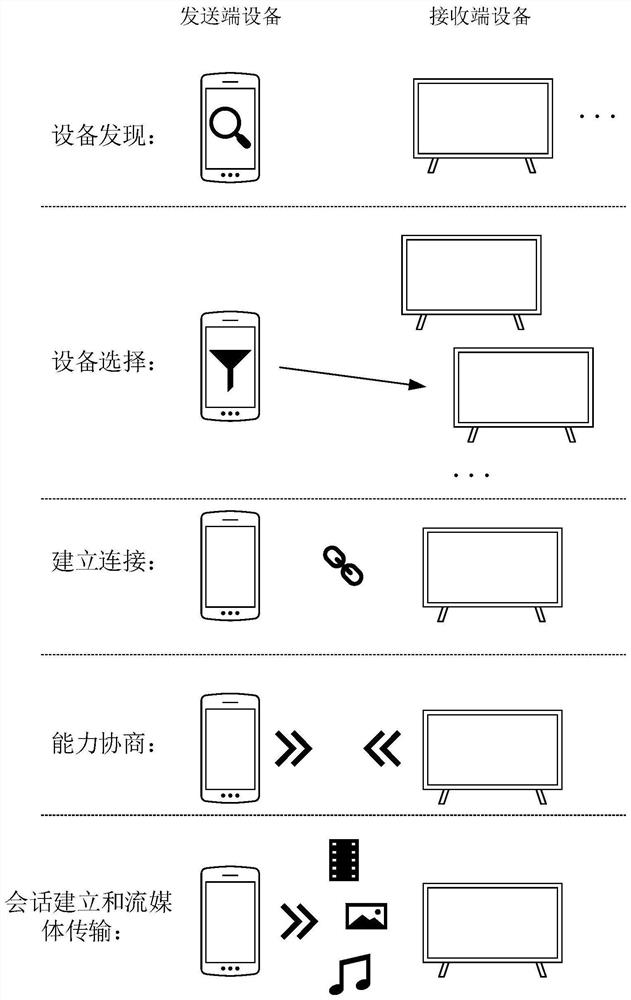

Projection screen connection control method and electronic equipment

Active · CN113360108A · Improve user experience · Avoid interference · Static indicating devices · Connection management · Computer hardware · User needs

The invention provides a screen projection connection control method and an electronic device. In the method, a sending-end device obtains preemption capability information of a receiving-end device, which indicates whether the receiving-end device supports preemptive screen projection connections. When the receiving-end device supports them, the sending-end device, in response to a user instruction, sends first configuration information to the receiving-end device so that the receiving-end device is configured not to support preemptive screen projection connections. With this scheme, the sending-end device learns the receiving-end device's preemption capability and can dynamically configure it according to that capability and the user's requirements. For example, if the user does not want the screen projection connection to be preempted, the sending-end device can configure the receiving-end device not to support preemption, so that the content the user is projecting to the receiving-end device is not interfered with or interrupted. This optimizes the preemption logic for streaming-media screen projection and improves the user experience.

Owner:HONOR DEVICE CO LTD

Data processing method, related device, equipment and storage medium

Pending · CN112506683A · Improve processing efficiency · Implement parallel processing · Interprogram communication · Data pack · Engineering

The invention discloses a data processing method based on storage and reading. The method comprises: acquiring the storage state of a ring queue; if the ring queue is full or non-empty, acquiring a first data packet from the ring queue according to the current count value of a consumer counter; performing an atomic increment on the consumer counter's current count value using a CAS (compare-and-swap) loop to obtain the next count value; and acquiring a second data packet from the ring queue according to the next count value. In addition, if the ring queue is in an idle or non-empty state, a data packet is added to the ring queue according to the current count value of a producer counter, and an atomic increment is performed on the producer counter's current count value to obtain the next count value. The invention further provides a related device. Introducing a non-blocking ring queue as the data packet buffer enables parallel processing of data packets, reduces how often tasks are preempted, and effectively alleviates cache thrashing.

Owner:TENCENT TECH (SHENZHEN) CO LTD
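
The counter-based ring queue can be sketched as follows. Python has no hardware compare-and-swap, so `AtomicCounter.compare_and_swap` is emulated with a lock; the sketch also assumes a single producer (so the producer counter is never contended) and unbounded counters, which rules out the ABA problem. Consumers claim a slot by CAS on the consumer counter and retry if another consumer got there first. All class and method names are hypothetical.

```python
import threading

# Illustrative sketch only: CAS is emulated with a lock, and a single producer
# is assumed; this is not Tencent's implementation.


class AtomicCounter:
    """Stand-in for a hardware atomic integer (CAS emulated with a lock)."""

    def __init__(self, value=0):
        self._value = value
        self._lock = threading.Lock()

    def load(self):
        return self._value

    def compare_and_swap(self, expected, new):
        with self._lock:
            if self._value == expected:
                self._value = new
                return True
            return False


class RingQueue:
    """Fixed-size ring buffer indexed by monotonically increasing counters."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.slots = [None] * capacity
        self.consumer = AtomicCounter()  # index of the next packet to read
        self.producer = AtomicCounter()  # index of the next free slot

    def try_push(self, packet):
        tail = self.producer.load()
        if tail - self.consumer.load() >= self.capacity:
            return False  # queue is full
        self.slots[tail % self.capacity] = packet
        # Publish the packet; with a single producer this CAS always succeeds.
        self.producer.compare_and_swap(tail, tail + 1)
        return True

    def try_pop(self):
        while True:  # CAS retry loop
            head = self.consumer.load()
            if head >= self.producer.load():
                return None  # queue is empty
            packet = self.slots[head % self.capacity]
            if self.consumer.compare_and_swap(head, head + 1):
                return packet  # this consumer claimed slot `head`
            # Another consumer advanced the counter first; retry with the new value.
```

A consumer that loses the CAS race never blocks; it rereads the counter and tries the next slot, which is what makes the structure non-blocking.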

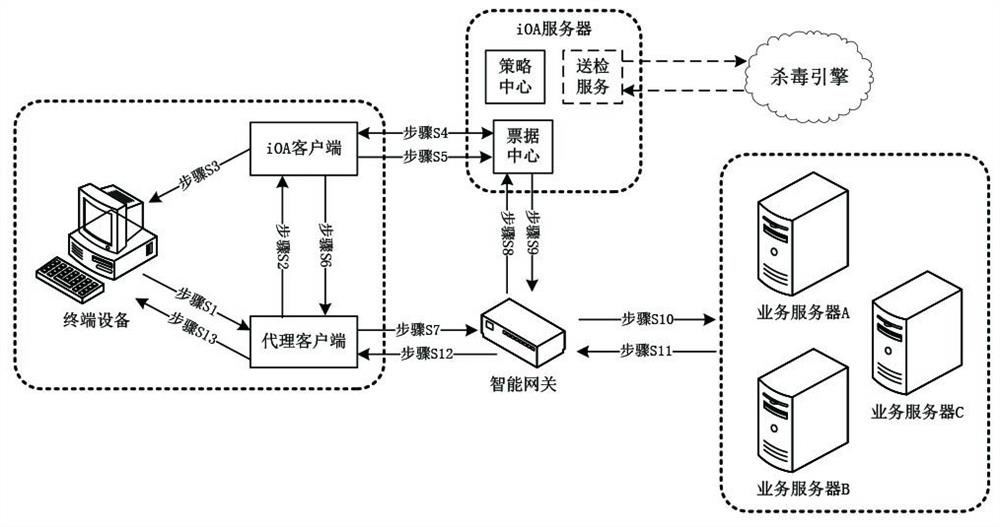

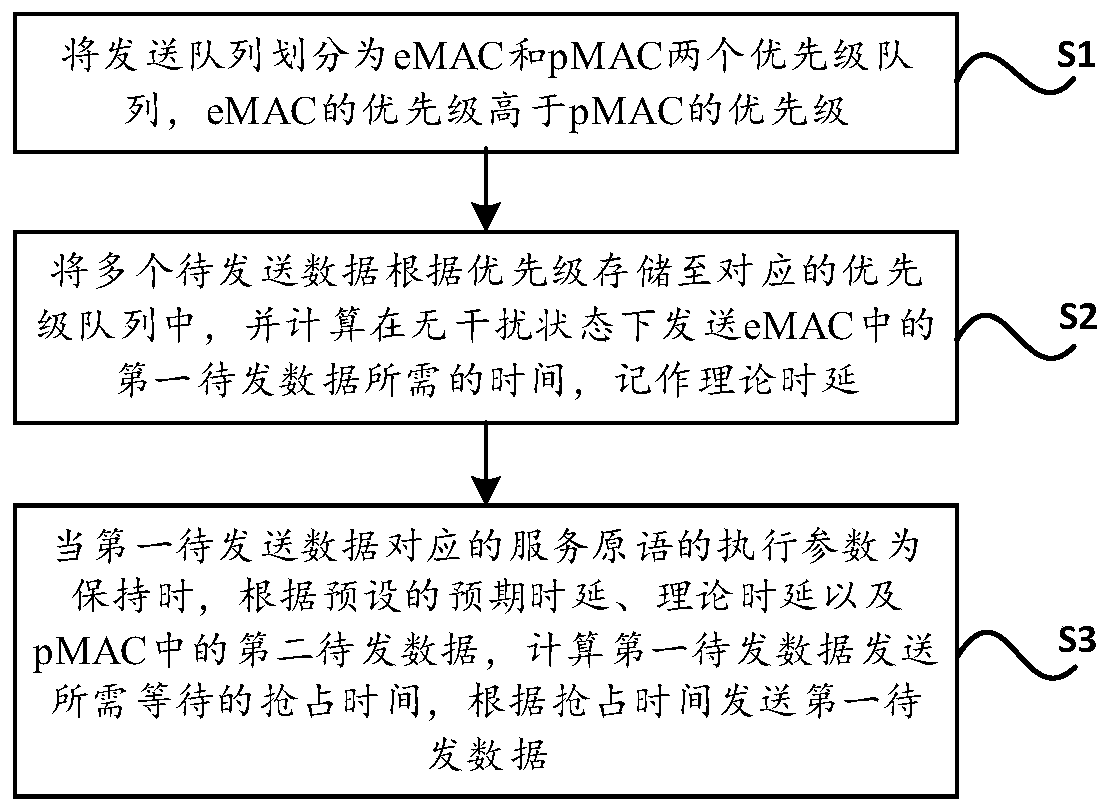

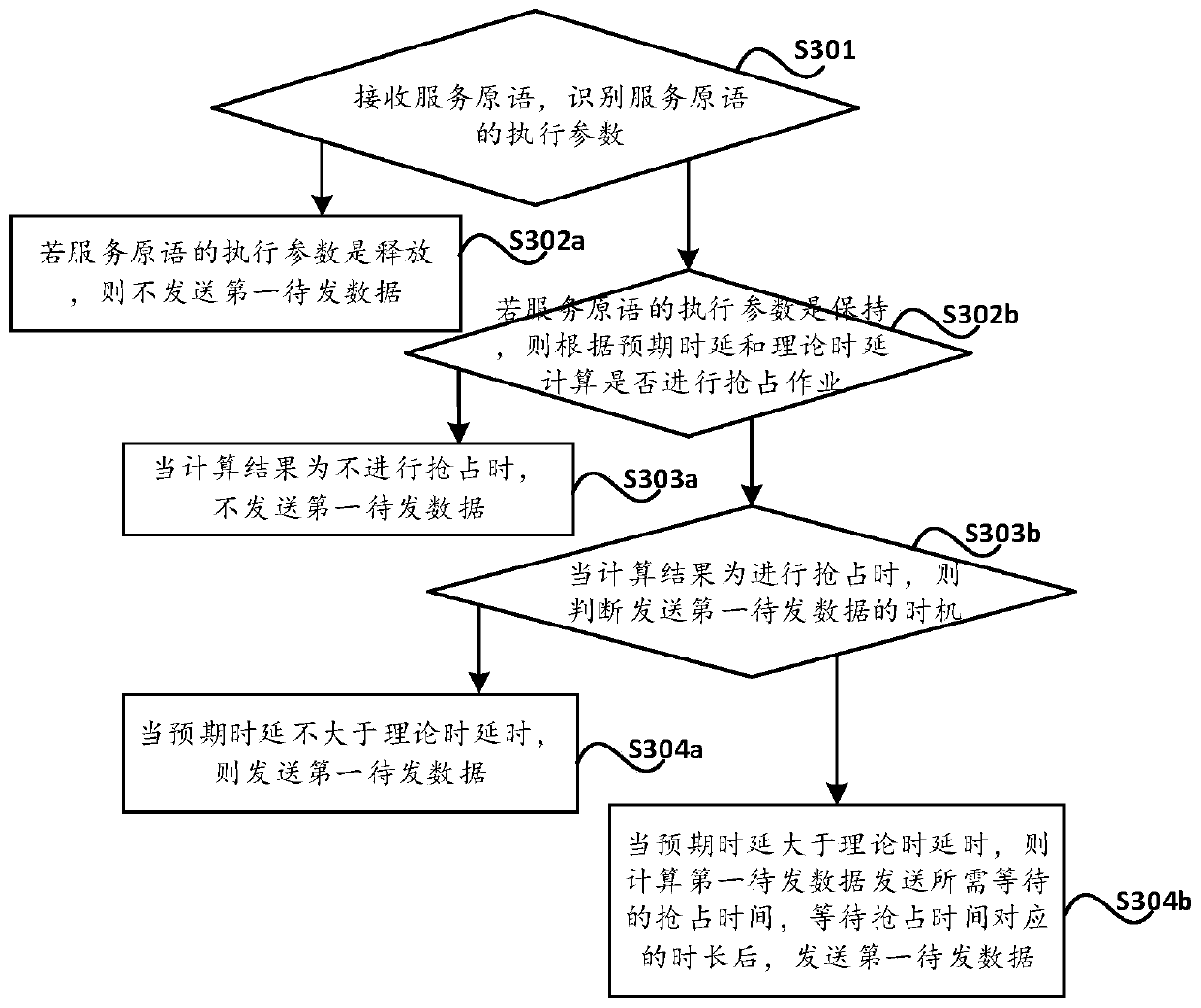

Data sending method and system based on time delay constraint

Active · CN110784418A · Reasonably arrange to send · Overcoming performance-degrading flaws · Data switching networks · Service flow · Time delays

The invention provides a data sending method and system based on a delay constraint. The method comprises: dividing the sending queue into an eMAC priority queue and a pMAC priority queue, where eMAC has higher priority than pMAC; storing the pieces of data to be sent in the corresponding priority queues according to their priorities; calculating the time required to send the first piece of to-be-sent data in the eMAC queue without interference, recorded as the theoretical delay; and, while the execution parameters of the service primitive corresponding to that first data are kept, calculating the preemption time to wait before sending it from a preset expected delay, the theoretical delay, and the second piece of to-be-sent data in the pMAC queue, then sending the first data according to that preemption time. While the delay constraint of high-priority frames is satisfied, low-priority data receives as many transmission opportunities as possible and its delay is reduced, which improves overall system performance and ensures relative fairness among service flows.

Owner:FENGHUO COMM SCI & TECH CO LTD

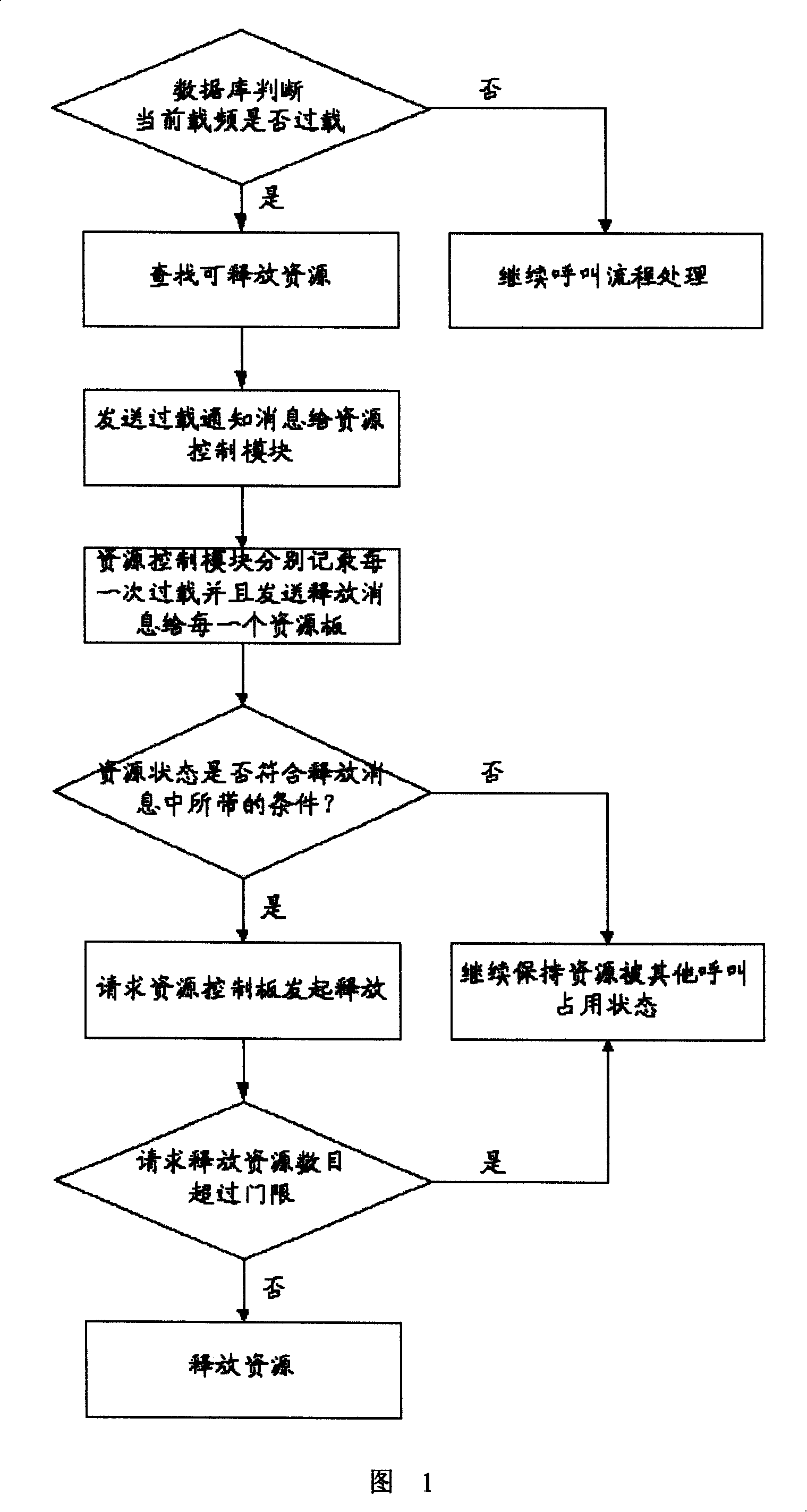

Overload control method for emergency calls and preemption-priority calls

Inactive · CN101141708A · Prevent excessive forward power · Avoid wasting system resources · Transmission control/equalising · Radio/inductive link selection arrangements · Push-to-talk · Priority call

The present invention relates to an overload control method for emergency calls and preemption-priority calls. When an emergency call or a preemption-priority call of a push-to-talk group call is accessed, a resource control module allocates the required resources following the non-overload flow. After the resources are allocated successfully, if power overload is detected on the frequency, the resource control module calculates a threshold for the number of resources that must be released, then searches for and releases other call resources that meet the release conditions, and stops releasing once the number of released resources reaches the threshold. When the current carrier power is overloaded, the method takes into account both system performance and other normal calls: it prevents excessive forward power and also avoids wasting system resources by releasing too many normal calls.

Owner:ZTE CORP
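
The threshold-then-release procedure can be sketched as below. The abstract does not say how the threshold is computed, so this sketch assumes every normal call contributes the same forward power and that releasable calls are supplied lowest-priority first; emergency and preemption-priority calls are simply never passed in as candidates. All names are hypothetical.

```python
import math

# Illustrative sketch only: the equal-power-per-call threshold model is an
# assumption, not ZTE's formula.


def calls_to_release(carrier_power, power_limit, power_per_call):
    """Threshold: number of normal calls to release to get back under the power limit."""
    excess = carrier_power - power_limit
    return max(0, math.ceil(excess / power_per_call))


def relieve_overload(carrier_power, power_limit, power_per_call, releasable_calls):
    """releasable_calls: ids of normal calls meeting the release condition, lowest
    priority first. Emergency and preemption-priority calls are never listed here.
    Releases calls until the threshold is reached, then stops."""
    threshold = calls_to_release(carrier_power, power_limit, power_per_call)
    released = []
    for call_id in releasable_calls:
        if len(released) >= threshold:
            break  # threshold reached: stop releasing
        released.append(call_id)
    return released
```

Stopping at the computed threshold is what keeps the method from over-releasing normal calls once the carrier is back within its power budget.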

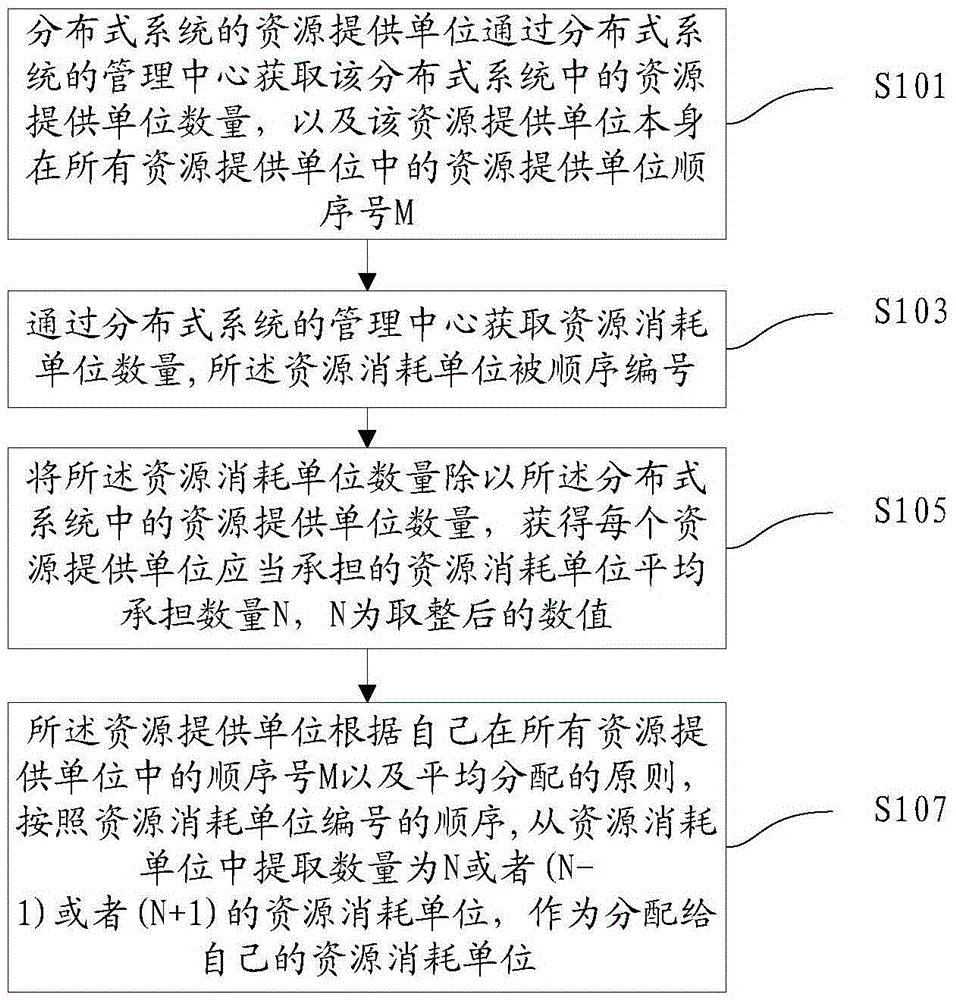

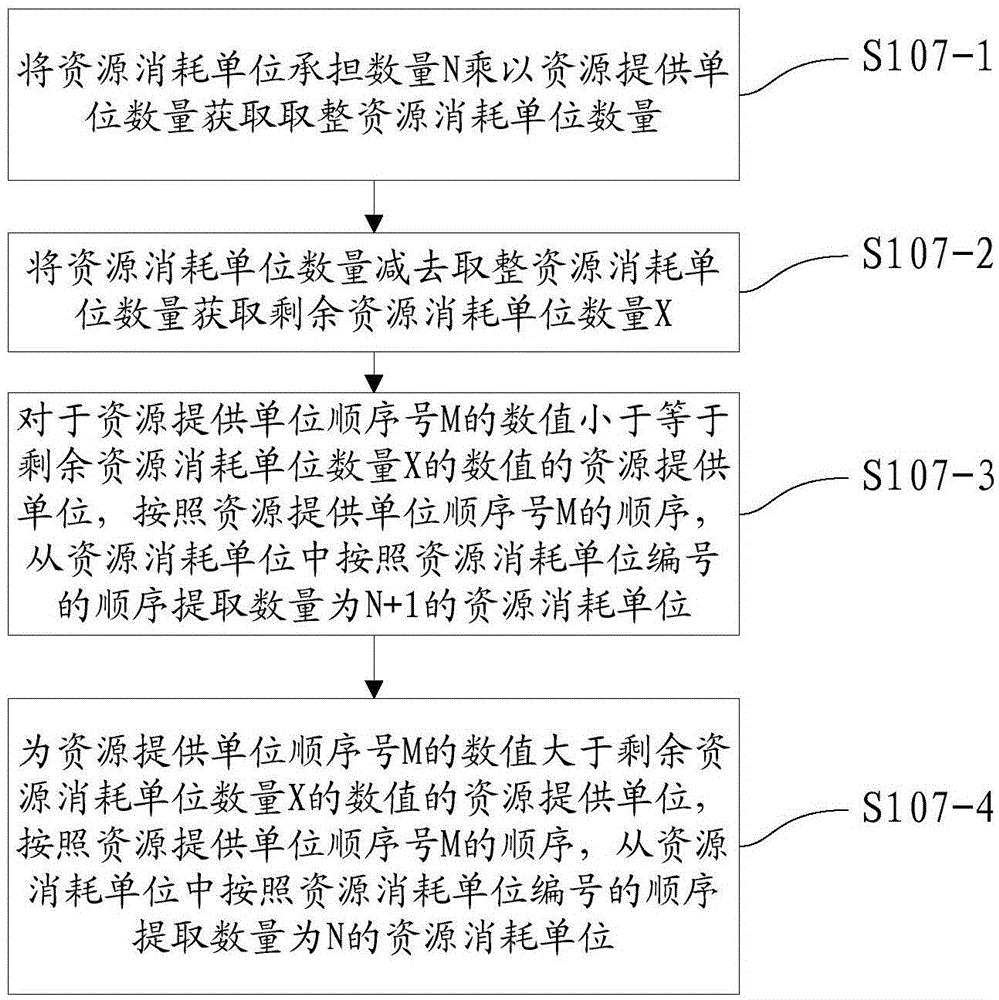

Distributed system scheduling method and device and electronic equipment

Inactive · CN106681824A · Solve the problem of slow allocation convergence and long allocation time · Resource allocation · Resource consumption · Parallel computing

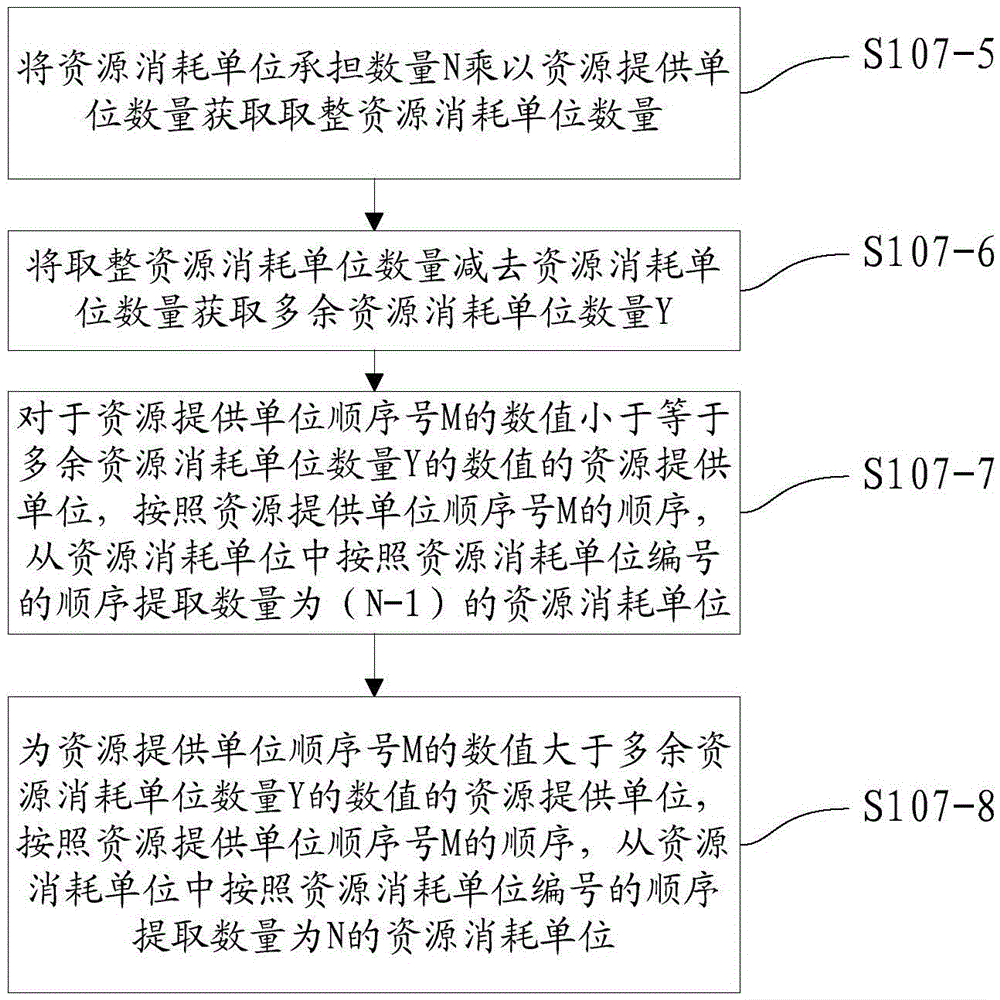

The invention discloses a distributed system scheduling method, device, and electronic equipment. In the method, the management center of the distributed system acquires the number of resource providing units and their sequence numbers M, and acquires the number of resource consumption units and their numbers. The number of resource consumption units is divided by the number of resource providing units to obtain N, the average number of resource consumption units borne by each resource providing unit. Then, following the order of the resource consumption units' numbers, N, N-1, or N+1 resource consumption units are extracted and allocated to each resource providing unit according to its sequence number M. This solves the low task-handling efficiency and possible deadlock caused by distributing tasks with the distributed locks of a distributed system, as well as the slow convergence and long allocation time caused by circular preemption.

Owner:CAINIAO SMART LOGISTICS HLDG LTD
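
The lock-free division of consumers among providers can be sketched as a pure function that each provider evaluates for its own sequence number. This sketch uses floor division, so every provider receives N or N+1 consumers; the patent also allows N-1, which would arise with a different rounding of the average. Function and parameter names are hypothetical.

```python
# Illustrative sketch only: one deterministic partitioning rule, assumed here.


def share_for(provider_index, consumer_ids, provider_count):
    """Consumers assigned to provider `provider_index` (0-based).

    Every provider sorts the same consumer ids and takes a contiguous block, so the
    blocks cover all consumers exactly once without any distributed lock.
    """
    ordered = sorted(consumer_ids)
    base, extra = divmod(len(ordered), provider_count)  # base plays the role of N
    # The first `extra` providers take one additional consumer (N + 1).
    start = provider_index * base + min(provider_index, extra)
    size = base + (1 if provider_index < extra else 0)
    return ordered[start:start + size]
```

For 10 consumers and 3 providers, the shares are `[0, 1, 2, 3]`, `[4, 5, 6]`, and `[7, 8, 9]`. Because every provider computes the same partition independently, no provider has to contend for a lock or repeatedly preempt work from another.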

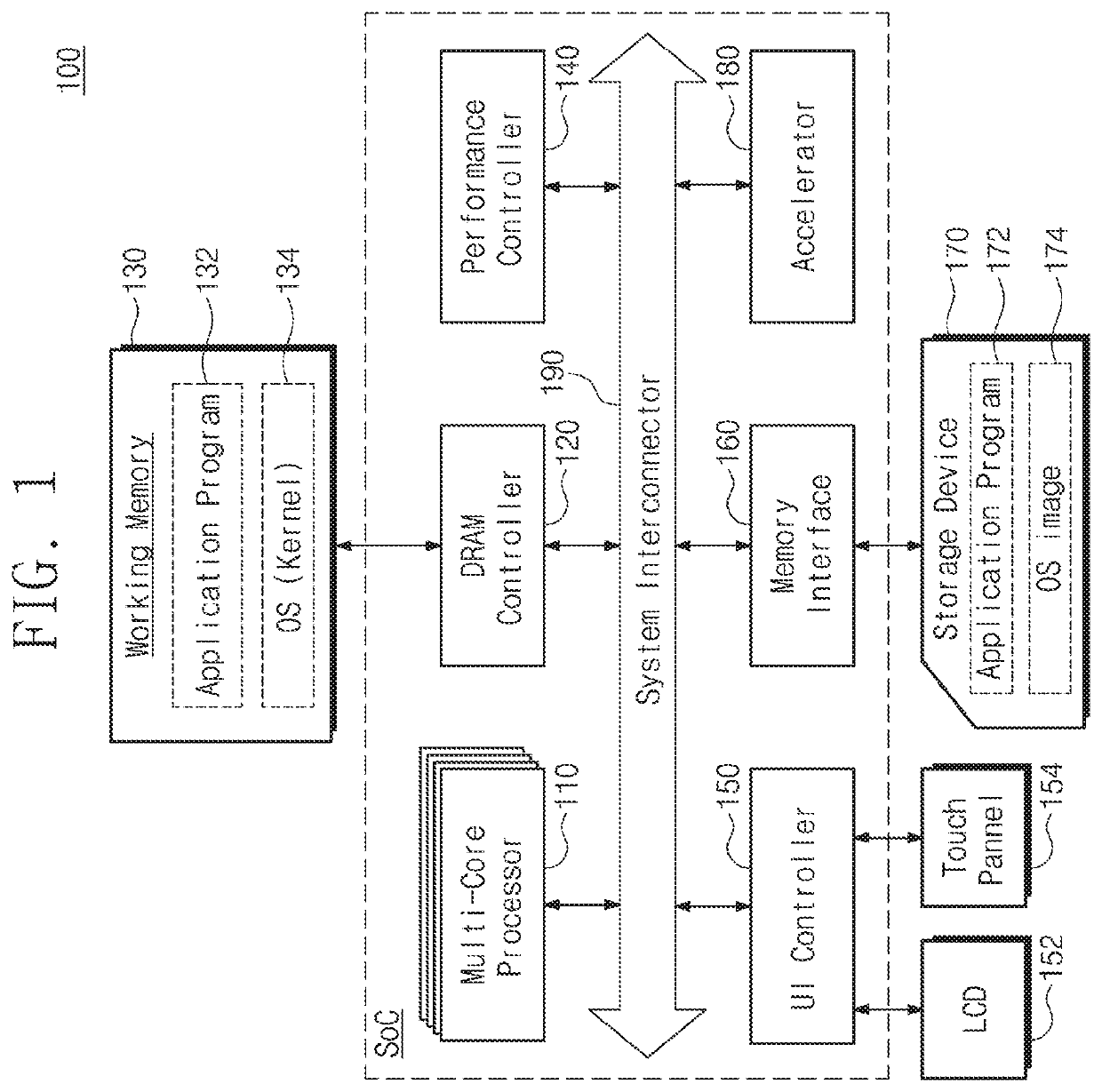

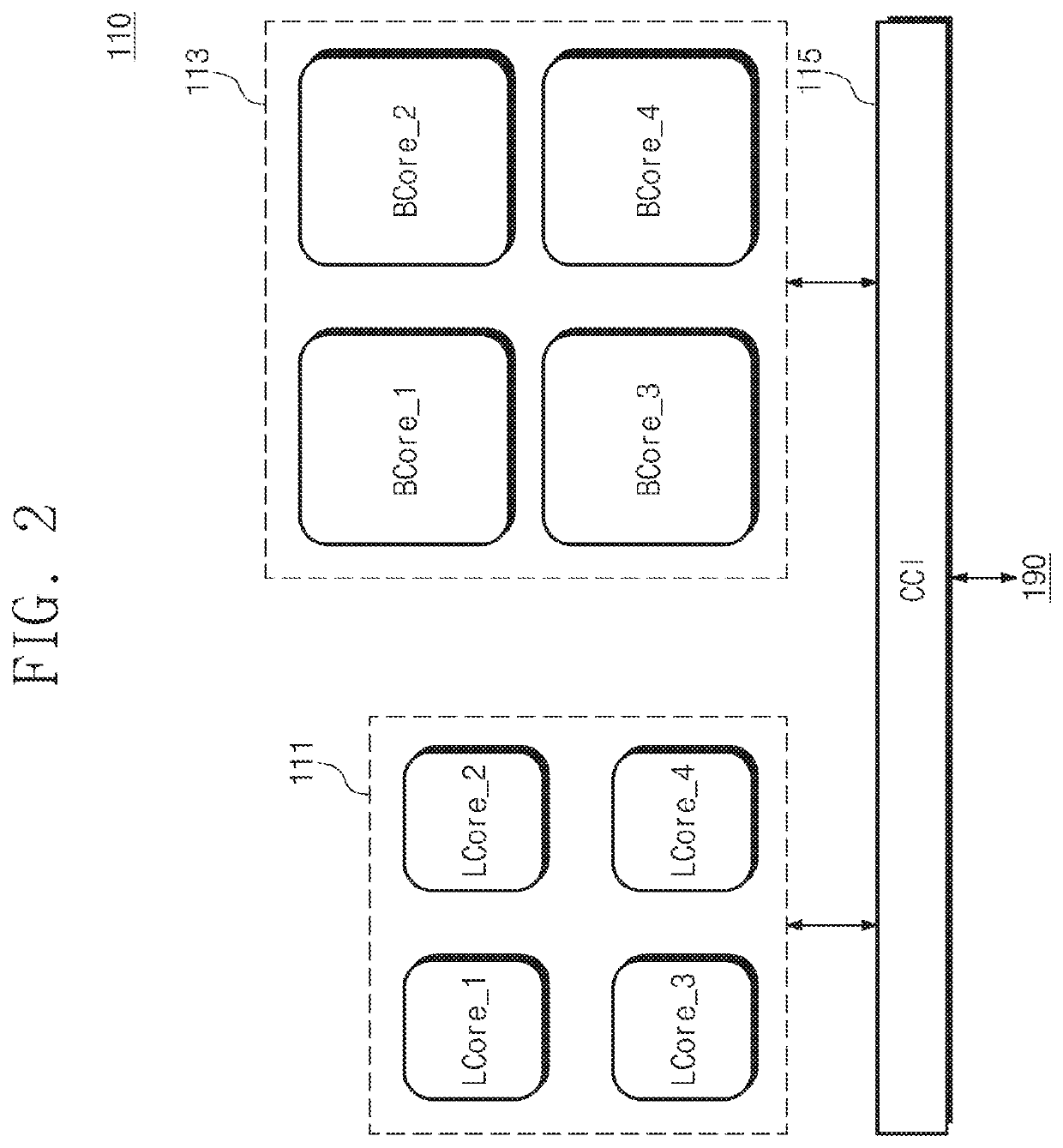

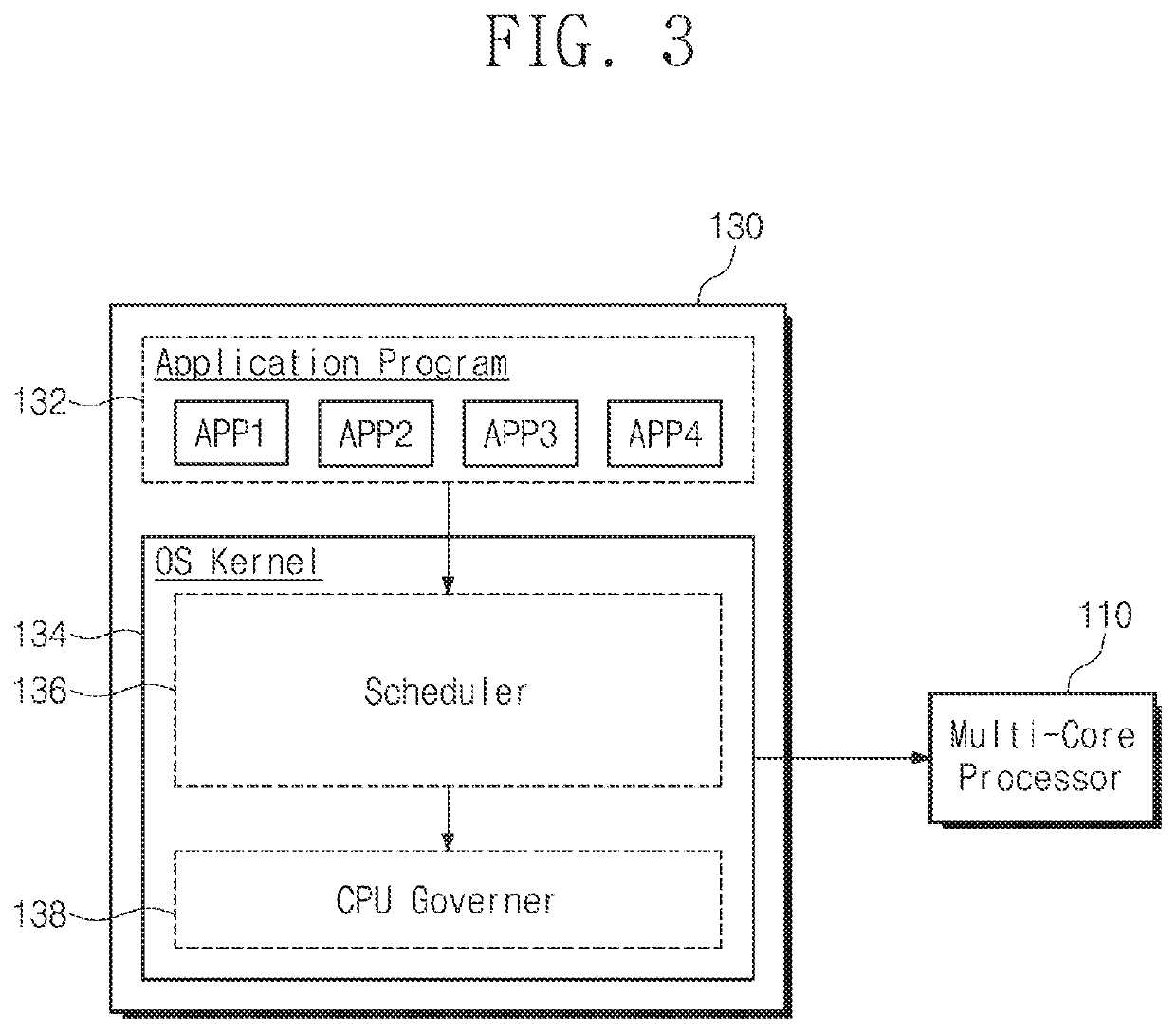

System on chip including a multi-core processor and task scheduling method thereof

A scheduling method of a system on chip including a multi-core processor includes receiving a schedule-requested task, converting a priority assigned to the schedule-requested task into a linear priority weight, selecting a plurality of candidate cores, to which the schedule-requested task will be assigned, from among cores of the multi-core processor, calculating a preemption compare index indicating a current load state of each of the plurality of candidate cores, comparing the linear priority weight with the preemption compare index of the each of the plurality of candidate cores to generate a comparison result, and assigning the schedule-requested task to one candidate core of the plurality of candidate cores depending on the comparison result.

Owner:SAMSUNG ELECTRONICS CO LTD

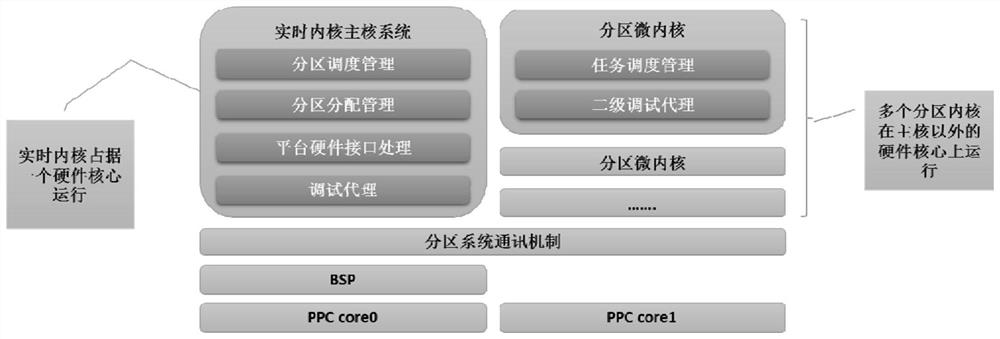

Embedded multi-core operating system scheduling method and scheduling device, electronic equipment and storage medium

Active · CN111796921A · Achieve organic unity · Improve efficiency · Program initiation/switching · Resource allocation · Operational system · Timer

The invention discloses a scheduling method and scheduling device for an embedded multi-core operating system, electronic equipment, and a storage medium. The scheduling method comprises: triggering periodic task scheduling through timer clock interrupts; implementing a basic scheduling strategy of time-sharing parallelism and multi-priority management of multiple tasks, with external interrupts triggering real-time preemption by key tasks; and providing a mechanism for time-sharing scheduling of multiple partition microcores, with priority-based round-robin task scheduling supported within each microcore. The method completes system task scheduling with strict timeliness requirements while also achieving securely isolated parallel data processing across multiple tasks; multi-task spatial isolation is ensured, and the security and reliability of the system are improved.

Owner:XIAN MICROELECTRONICS TECH INST

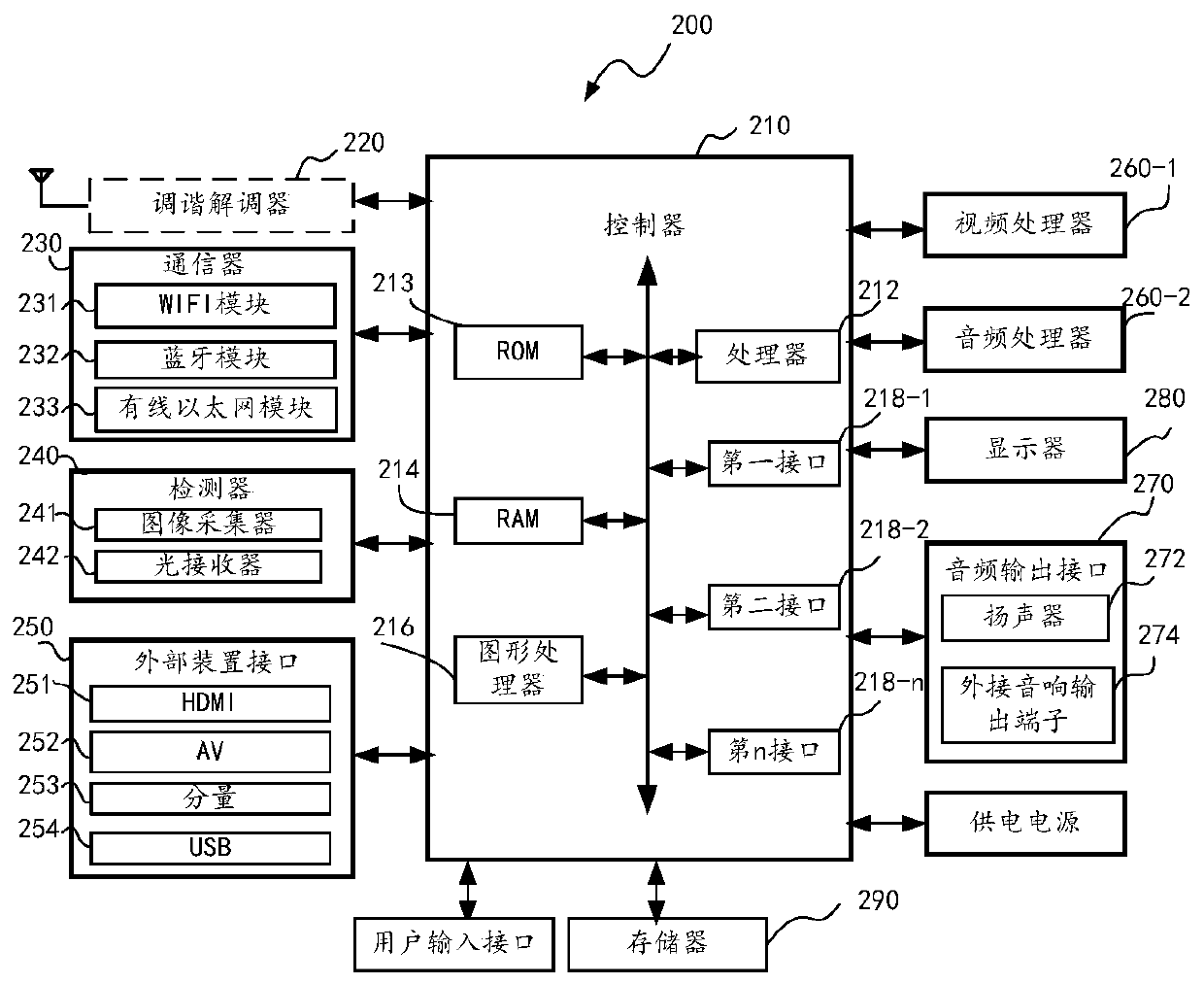

Display equipment and scheduling method for Bluetooth communication resources of display equipment

Active · CN111050199A · Avoid preemption · Reduce stuttering · Selective content distribution · Computer hardware · Display device

The invention discloses a display device and a method for scheduling its Bluetooth communication resources. The display device comprises a display, a controller, and a Bluetooth module that executes processing tasks under the controller's control. The controller is configured to: obtain the state of the Bluetooth module when an application's operation request for the Bluetooth module is received; if the Bluetooth module is working, determine the priority of the target processing task corresponding to the operation request and the priority of the Bluetooth module's current processing task; determine whether the target task's priority is higher than the current task's; and, if it is, control the Bluetooth module to suspend the current processing task and execute the target processing task. Applying the method and device avoids resource preemption, improves the success rate of device pairing and connection, reduces Bluetooth audio stuttering, and achieves reasonable scheduling and effective use of Bluetooth communication resources.

Owner:HISENSE VISUAL TECH CO LTD

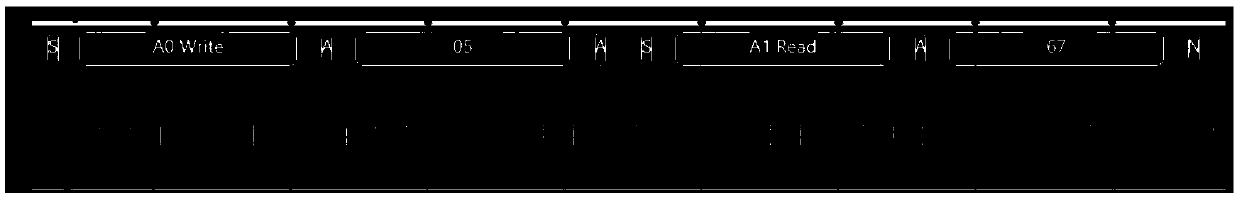

Hybrid control method and device based on software-simulated I2C and hardware I2C

Active · CN109656841A · No abnormal conditions such as instability · Electric digital data processing · Information control · Master controller

The invention discloses a hybrid control method using software-simulated I2C and hardware I2C. The method comprises the following steps: 1) an I2C master controller on an ARM CPU chip obtains attribute information and register address information of a display module from a host computer; 2) the I2C master controller loads software I2C signal driver software, outputs a simulated I2C signal to an I2C slave device, and performs the read timing sequence with the simulated I2C signal; and 3) the I2C master controller unloads the software I2C driver, loads the hardware I2C signal driver, and outputs a standard I2C signal, namely a standard I2C write timing signal, to the I2C slave device. The method addresses the limitation that hardware I2C, when used for read operations, can output only standard repeated-start (repstart) waveforms and cannot meet requirements for non-standard timing waveforms; at the same time, compared with using software-simulated I2C signals throughout, it avoids abnormal conditions such as unstable clock signals caused by CPU preemption interfering with the I2C signals.

Owner:WUHAN JINGLI ELECTRONICS TECH

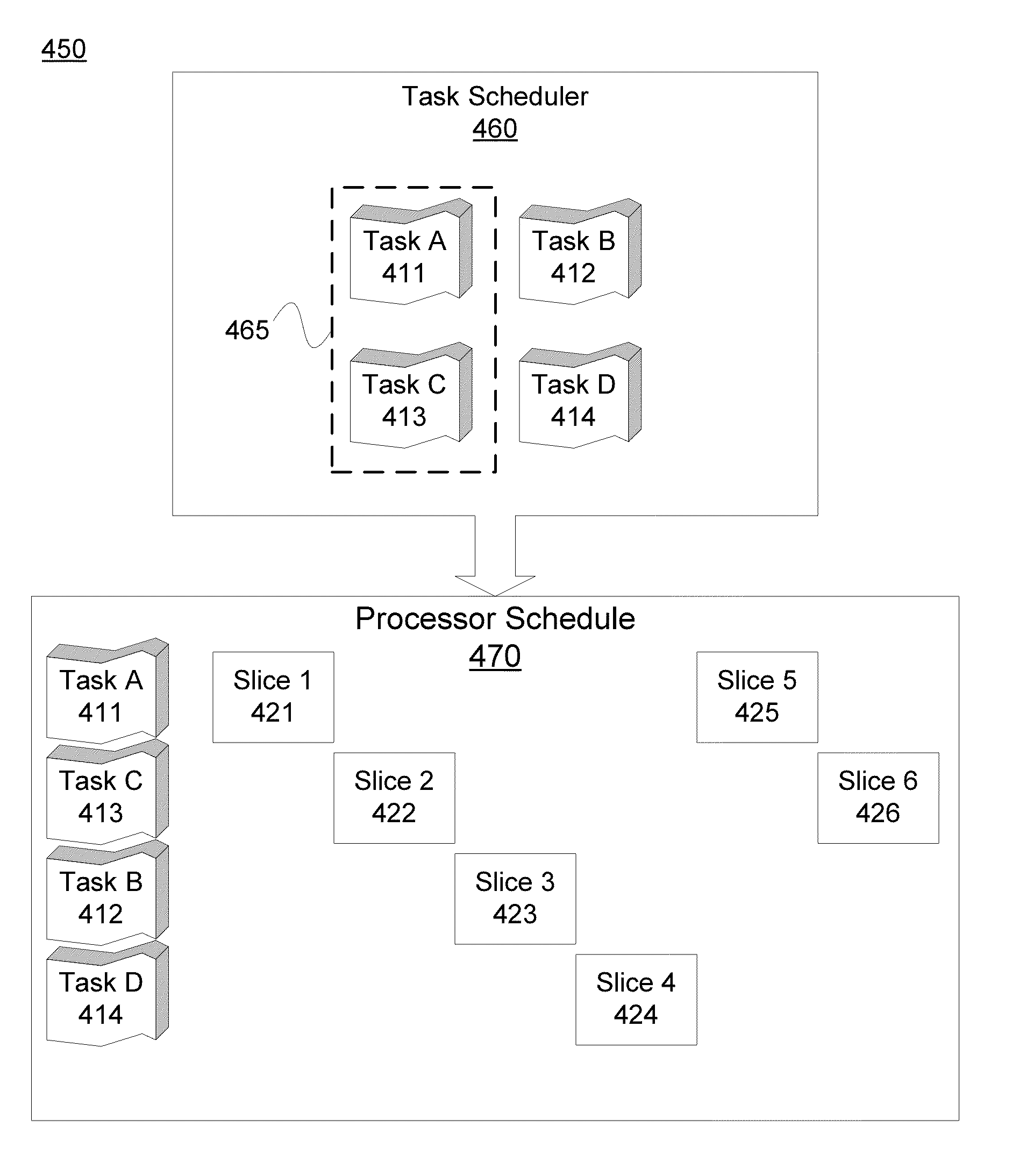

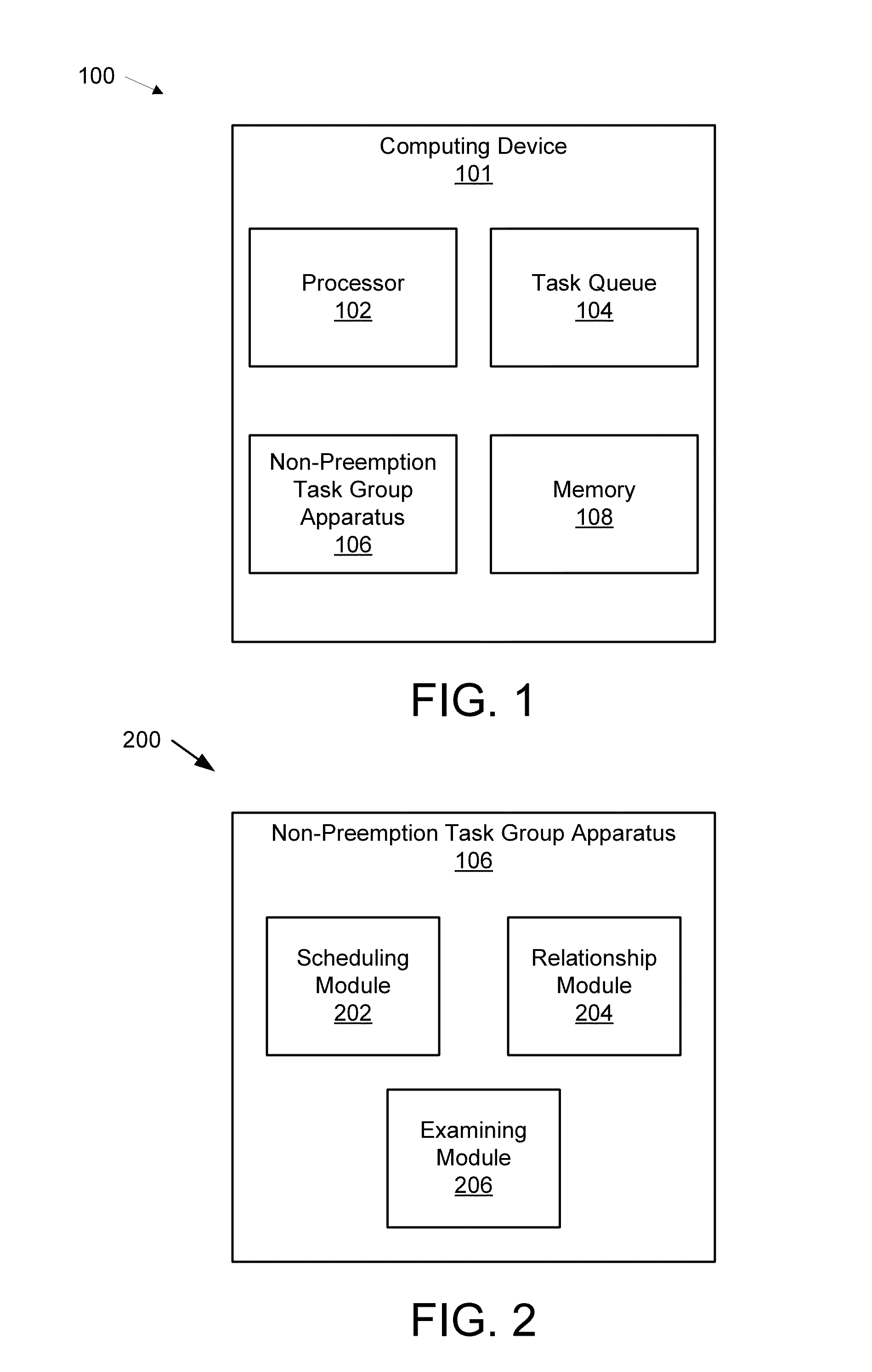

Non-preemption of a group of interchangeable tasks in a computing device

Inactive · US20150169368A1 · Avoid transmission · Program initiation/switching · Interprogram communication · Parallel computing · Computer engineering

A method for non-preemption of interchangeable tasks is disclosed. The method for non-preemption of interchangeable tasks includes identifying a first task assigned to a first time slice, identifying a second task assigned to a subsequent time slice, comparing the first task to the second task, identifying whether the first task and the second task are interchangeable tasks, and executing the first task during the subsequent time slice in response to the first task and the second task being interchangeable. The first task may be currently executing on a processor or may be scheduled to execute on the processor.

Owner:IBM CORP
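
The interchangeability check reduces to a small decision at each time-slice boundary. In this sketch the `interchangeable` predicate is supplied by the caller (here, tasks of the same hypothetical `kind`); how the patent determines interchangeability is not specified in the abstract, and the function names are illustrative only.

```python
# Illustrative sketch only: the task dicts and same_kind predicate are hypothetical.


def task_for_next_slice(running, scheduled_next, interchangeable):
    """Return the task to run in the next time slice.

    If the running task and the one scheduled next are interchangeable, keep the
    running task on the processor instead of preempting it for an equivalent task.
    """
    if scheduled_next is None or interchangeable(running, scheduled_next):
        return running      # no preemption, no context switch
    return scheduled_next   # ordinary time-slice switch


def same_kind(a, b):
    """Hypothetical interchangeability test: tasks of the same kind are equivalent."""
    return a["kind"] == b["kind"]
```

The benefit is avoiding a context switch (and the associated cache disturbance) whose only effect would be to replace one task with an equivalent one.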

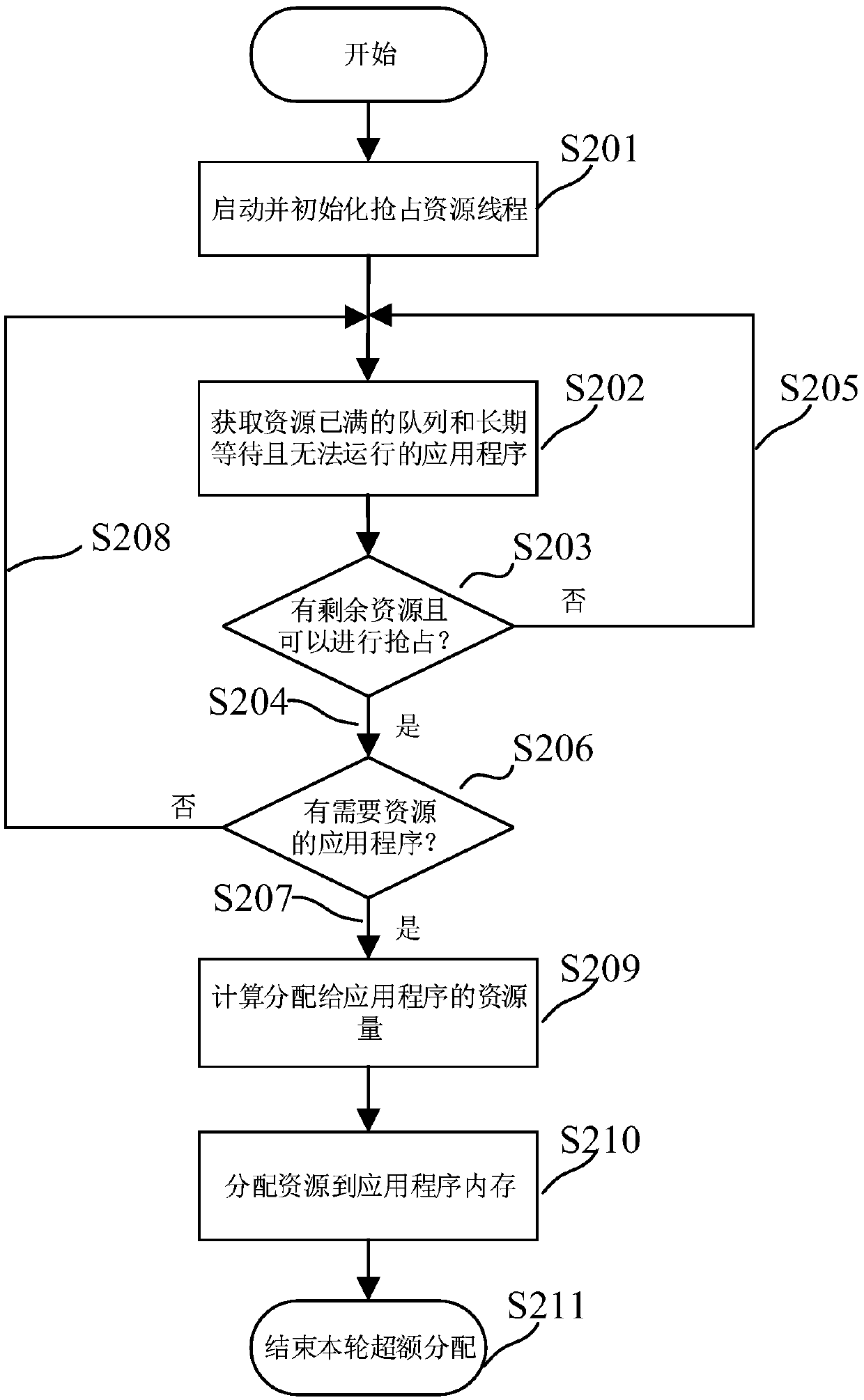

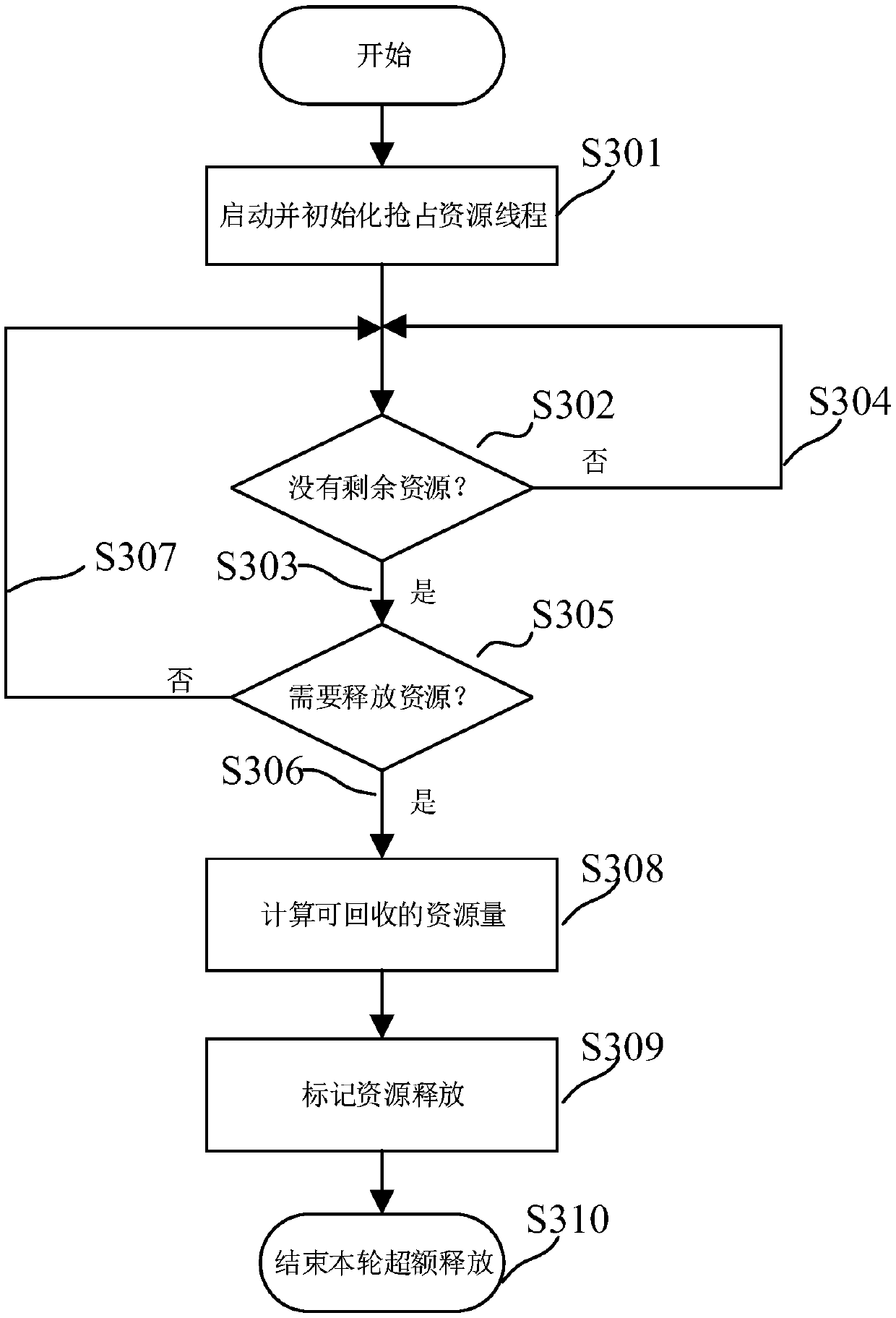

Resource scheduling method and device, electronic equipment and storage medium

Pending · CN111338785A · Improve work efficiency · Improve utilization efficiency · Resource allocation · Energy efficient computing · Resource allocation · Distributed computing

Embodiments of the invention provide a Hadoop-cluster-based resource scheduling method and device, electronic equipment, and a storage medium, relating to the technical field of big data. The resource scheduling method comprises: receiving a resource preemption request from a resource preemption thread; in response to the request, obtaining a target job that needs computing resources; determining, for the target job, whether the cluster as a whole has enough remaining computing resources and whether computing resources can be preempted; and, when the cluster's remaining computing resources are insufficient and computing resources can be preempted, over-allocating computing resources to the target job. Because the adjustment logic makes preemption independent of allocation, the cluster's resource allocation efficiency and the system's working efficiency are improved.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

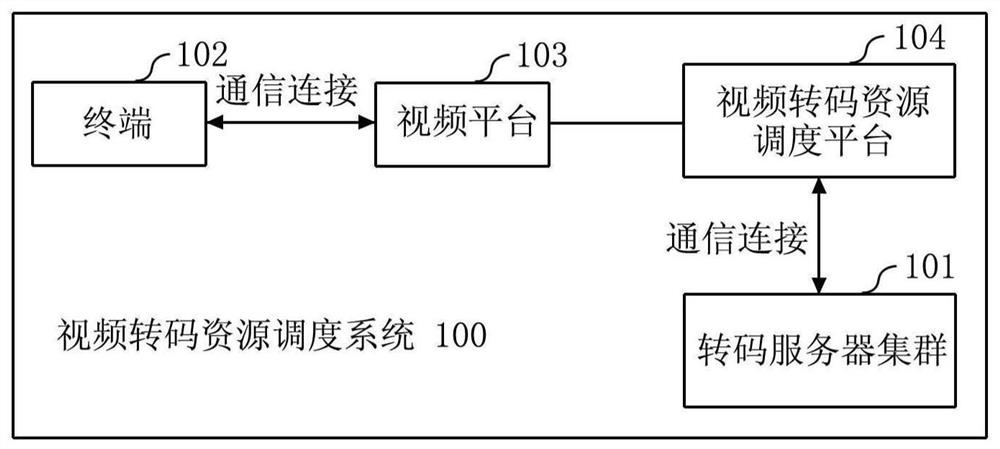

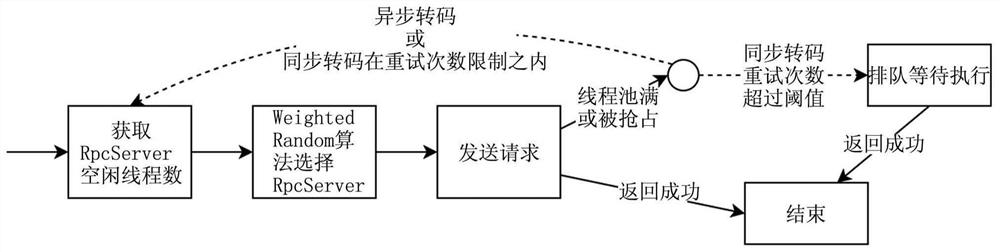

Video transcoding resource scheduling method and device

Active · CN112565774A · Improve resource utilization · Low cost · Digital video signal modification · Transcoding · Server

The invention provides a video transcoding resource scheduling method and device. The method comprises: receiving a first transcoding task; when no idle thread currently exists in the transcoding cluster and the preemption function is determined to be enabled, comparing the priority of the first transcoding task with the priorities of the transcoding tasks being executed; and, when a second transcoding task with a lower priority than the first exists among the tasks currently executing, controlling the server executing the second transcoding task to stop it and sending that server a request to execute the first transcoding task. The method and device improve the upload link's capacity to handle peak traffic, improve the stability and resource utilization of the transcoding cluster, and reduce server cost.

Owner:BEIJING DAJIA INTERNET INFORMATION TECH CO LTD

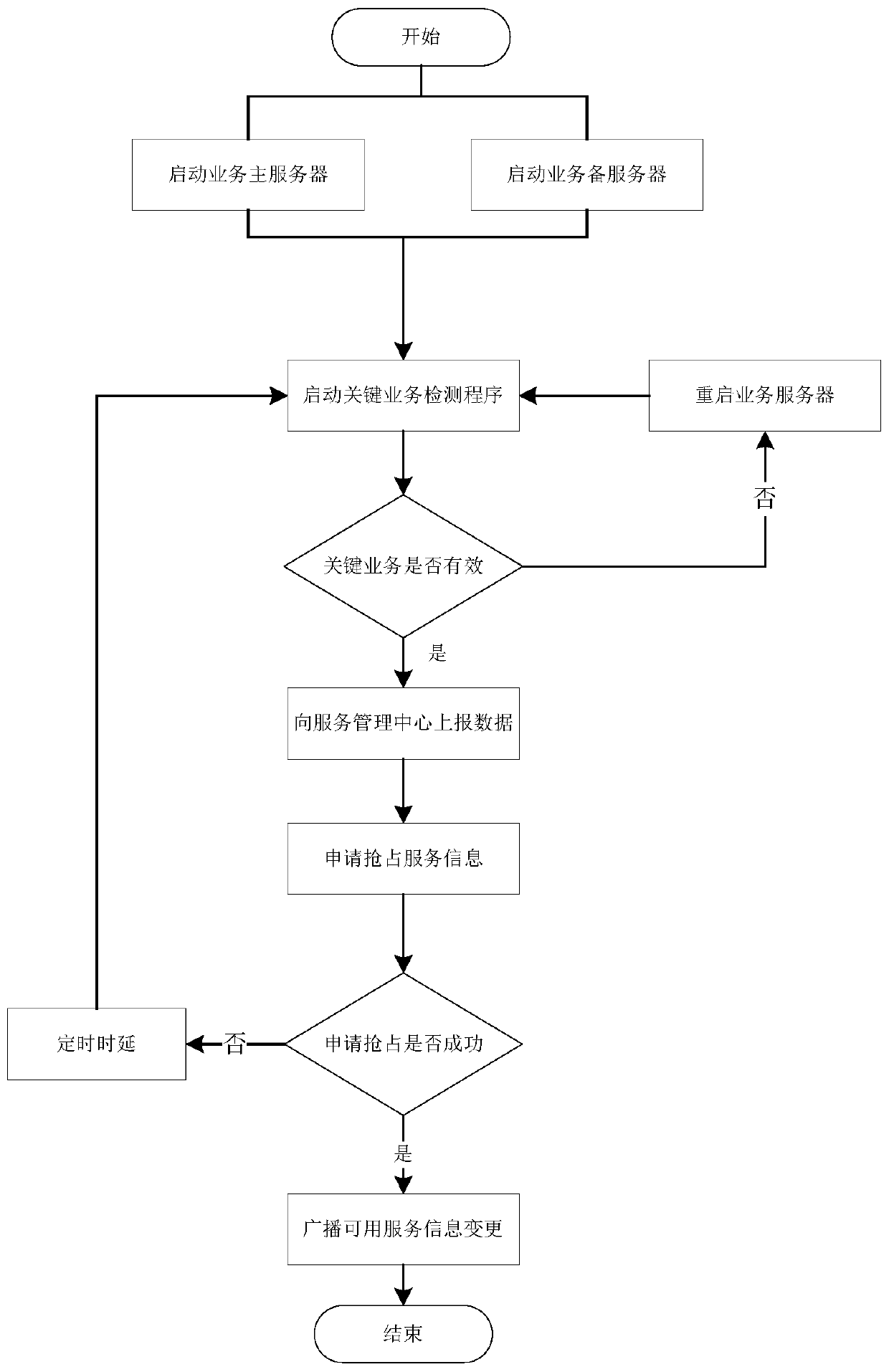

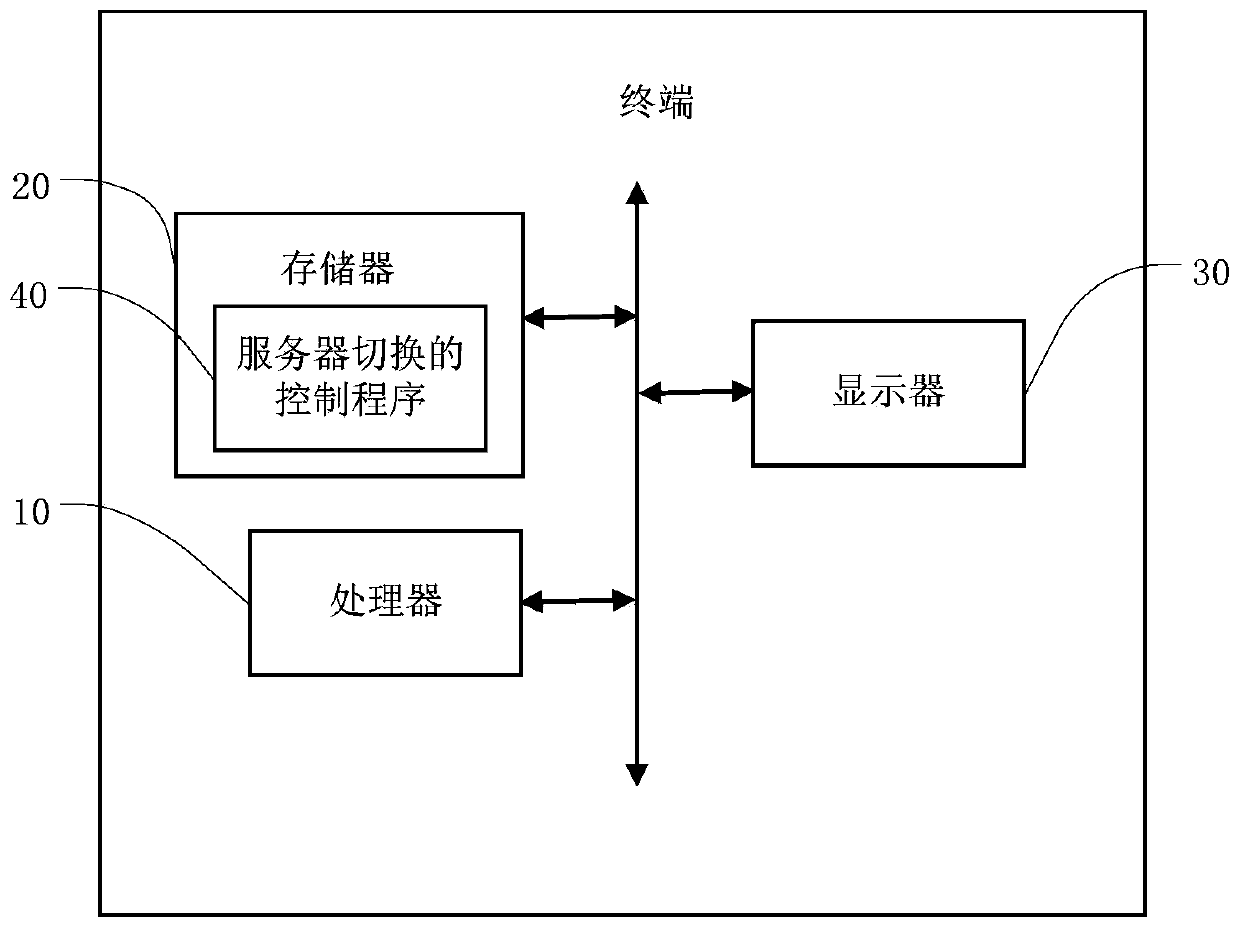

Server switching control method, terminal and storage medium

Active · CN111277373A · Increase the number of · Increase flexibility · Error prevention · Data switching networks · Service information · Broadcast service

The invention discloses a server switching control method, a terminal, and a storage medium. The method comprises: starting a main server and a standby server and controlling both to start a key-service detection program; after a key service is detected, determining whether it is valid and, if so, having the key-service detection program report service data to a service management center, where the detection program applies for a preemption service and the preemption service applies to preempt the main server; and determining whether the preemption succeeded and, if it did, broadcasting a service-information change message that notifies the service dependency relationships associated with the main server of the change. By detecting a server's key services to decide whether to switch servers, providing a preemption operation, and determining how the target server is switched, the method does not require the target server to be configured in advance, allows the number of servers to be increased dynamically, and offers greater flexibility.

Owner:GENEW TECH
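A minimal Python sketch of the preemption step, assuming the preemption service behaves like a mutually exclusive claim on the main-server role: the first node whose key business is valid wins the role, the change is broadcast to subscribers, and a holder whose key business becomes invalid releases the role. `PreemptionService`, `try_preempt`, `release` and `key_business_check` are hypothetical names; the abstract does not specify how the preemption service is implemented.

```python
import threading
from typing import Callable, List, Optional


class PreemptionService:
    """Hypothetical arbiter for the main-server role (illustrative only)."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.main: Optional[str] = None
        self._listeners: List[Callable[[str], None]] = []

    def subscribe(self, callback: Callable[[str], None]) -> None:
        # Components that depend on the main server register here.
        self._listeners.append(callback)

    def try_preempt(self, node: str) -> bool:
        # Atomically claim the main role; fails if another node already holds it.
        with self._lock:
            if self.main is not None:
                return False
            self.main = node
        # Broadcast the service-information change to every dependent component.
        for callback in self._listeners:
            callback(node)
        return True

    def release(self, node: str) -> None:
        with self._lock:
            if self.main == node:
                self.main = None


def key_business_check(service: PreemptionService, node: str, business_valid: bool) -> bool:
    """Run on every server; returns True if `node` ends up as the main server."""
    if not business_valid:
        service.release(node)  # give up the main role if we held it
        return False
    return service.try_preempt(node) or service.main == node
```

Holding the lock while claiming the role keeps the claim atomic, so when several standby servers apply at the same moment only one of them succeeds.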

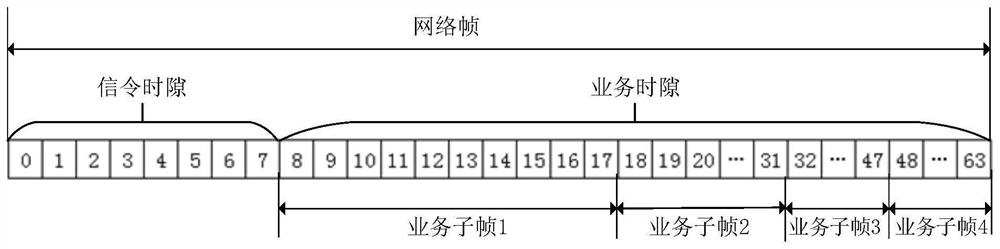

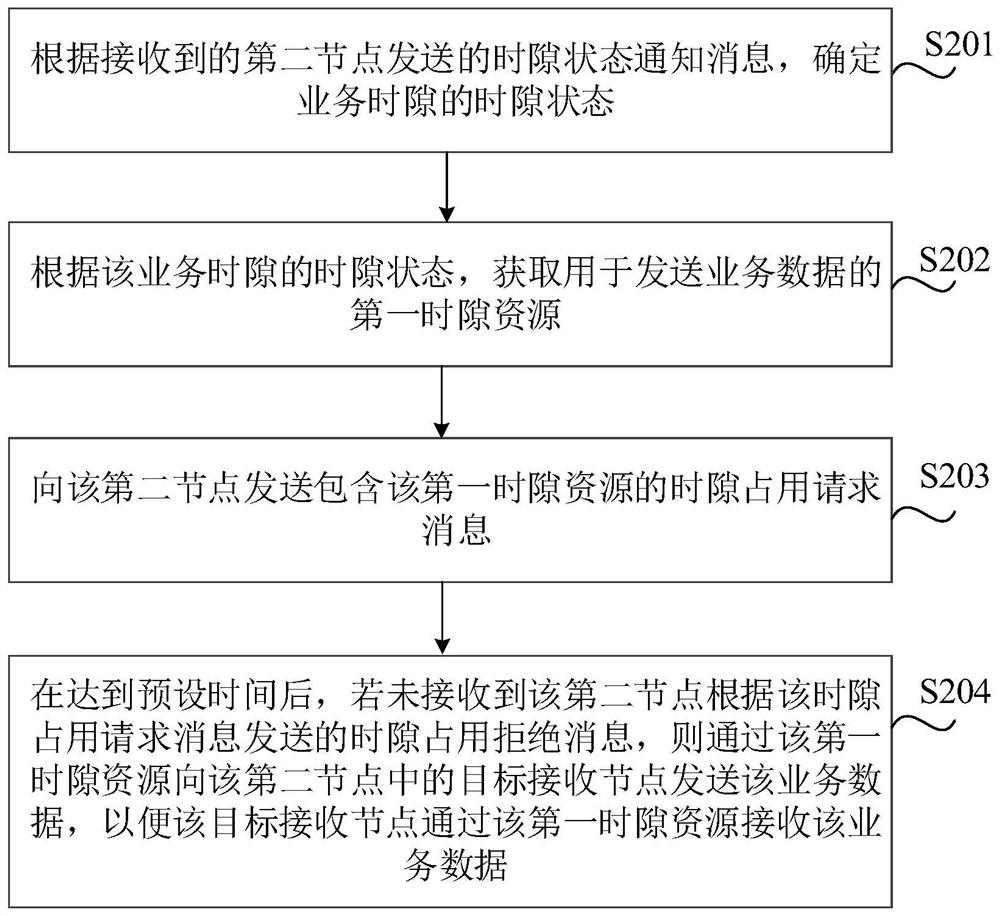

Data transmission method and device and electronic equipment

Active | CN113099538A | Realize distributed dynamic allocation | Avoid Data Transmission Conflicts | Network topologies | Signal allocation | Engineering | Data transmission

The invention relates to a data transmission method and device and electronic equipment. The method comprises: determining the state of each service time slot from the time-slot state notification messages received from neighbor nodes, and selecting, based on those states, a first time-slot resource for sending service data; sending a time-slot occupation request message containing the first time-slot resource to the neighbor nodes; and determining whether the first time-slot resource is available according to whether a time-slot occupation rejection message is received. That is, if no neighbor node has sent a rejection in response to the request by the time a preset period elapses, the first time-slot resource is deemed available, and the service data is sent through it to the target receiving node among the neighbor nodes. This enables distributed, dynamic allocation of service time-slot resources and avoids data transmission conflicts caused by resource preemption, improving the success rate and efficiency of data transmission.

Owner:Beijing Hefeng Technology Co., Ltd. (北京和峰科技有限公司)
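The reserve-then-listen rule in this abstract can be illustrated with a small Python sketch. Assumptions not stated in the source: slots are numbered `0..num_slots-1`, a slot counts as free only if no neighbor reports it occupied, the lowest-numbered free slot is chosen, and rejection messages arrive on a `queue.Queue` as the number of the rejected slot. All function names are hypothetical.

```python
import queue
import time
from enum import Enum
from typing import Dict, Iterable, Optional


class SlotState(Enum):
    FREE = 0
    OCCUPIED = 1


def merge_slot_states(notifications: Iterable[Dict[int, SlotState]],
                      num_slots: int) -> Dict[int, SlotState]:
    # A slot is free only if no neighbor's notification marks it occupied.
    states = {slot: SlotState.FREE for slot in range(num_slots)}
    for note in notifications:
        for slot, state in note.items():
            if state is SlotState.OCCUPIED:
                states[slot] = SlotState.OCCUPIED
    return states


def pick_slot(states: Dict[int, SlotState]) -> Optional[int]:
    # Assumption: take the lowest-numbered free slot (real schemes may randomize).
    free = sorted(slot for slot, state in states.items() if state is SlotState.FREE)
    return free[0] if free else None


def slot_available(slot: int, rejections: "queue.Queue[int]", wait_s: float) -> bool:
    # After sending the occupation request, listen until the preset time elapses.
    deadline = time.monotonic() + wait_s
    while True:
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return True  # no rejection arrived in time: the slot is ours
        try:
            rejected_slot = rejections.get(timeout=remaining)
        except queue.Empty:
            return True
        if rejected_slot == slot:
            return False  # a neighbor objected to this exact slot
```

Silence counts as consent here: a node only gives up a slot when a neighbor explicitly rejects it within the waiting window, which is what lets allocation proceed without a central coordinator.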

Software-assisted instruction level execution preemption

Active | US20170249152A1 | Quantity minimization | Quickly preempted | Concurrent instruction execution | Program saving/restoring | Computer architecture | Engineering

One embodiment of the present invention sets forth a technique for instruction level execution preemption. Preempting at the instruction level does not require any draining of the processing pipeline. No new instructions are issued and the context state is unloaded from the processing pipeline. Any in-flight instructions that follow the preemption command in the processing pipeline are captured and stored in a processing task buffer to be reissued when the preempted program is resumed. The processing task buffer is designated as a high priority task to ensure the preempted instructions are reissued before any new instructions for the preempted context when execution of the preempted context is restored.

Owner:NVIDIA CORP
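To make the replay idea concrete, here is a toy Python model (a simplification, not NVIDIA's design): the pipeline is a fixed-depth queue of in-flight instructions, preemption stops issue and moves every in-flight instruction into the context's replay buffer instead of draining, and on resume that buffer is issued before any new instruction of the context. `Context`, `Pipeline`, `replay_buffer` and the instruction strings are illustrative assumptions.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, List


@dataclass
class Context:
    program: Deque[str]  # instructions not yet issued
    replay_buffer: List[str] = field(default_factory=list)  # captured in-flight instructions


class Pipeline:
    def __init__(self, depth: int) -> None:
        self.depth = depth
        self.in_flight: Deque[str] = deque()
        self.retired: List[str] = []

    def step(self, ctx: Context) -> None:
        # Retire the oldest instruction when the pipeline is full.
        if len(self.in_flight) == self.depth:
            self.retired.append(self.in_flight.popleft())
        # Replayed instructions take priority over new ones from the program.
        if ctx.replay_buffer:
            self.in_flight.append(ctx.replay_buffer.pop(0))
        elif ctx.program:
            self.in_flight.append(ctx.program.popleft())

    def preempt(self, ctx: Context) -> None:
        # No draining: stop issuing and capture everything still in flight.
        ctx.replay_buffer = list(self.in_flight) + ctx.replay_buffer
        self.in_flight.clear()

    def run_until_idle(self, ctx: Context) -> None:
        while ctx.replay_buffer or ctx.program:
            self.step(ctx)
        # Let the remaining in-flight instructions complete.
        while self.in_flight:
            self.retired.append(self.in_flight.popleft())
```

Program order survives the switch because the captured instructions re-enter the pipeline ahead of the context's remaining program, mirroring the abstract's use of a high-priority task buffer.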

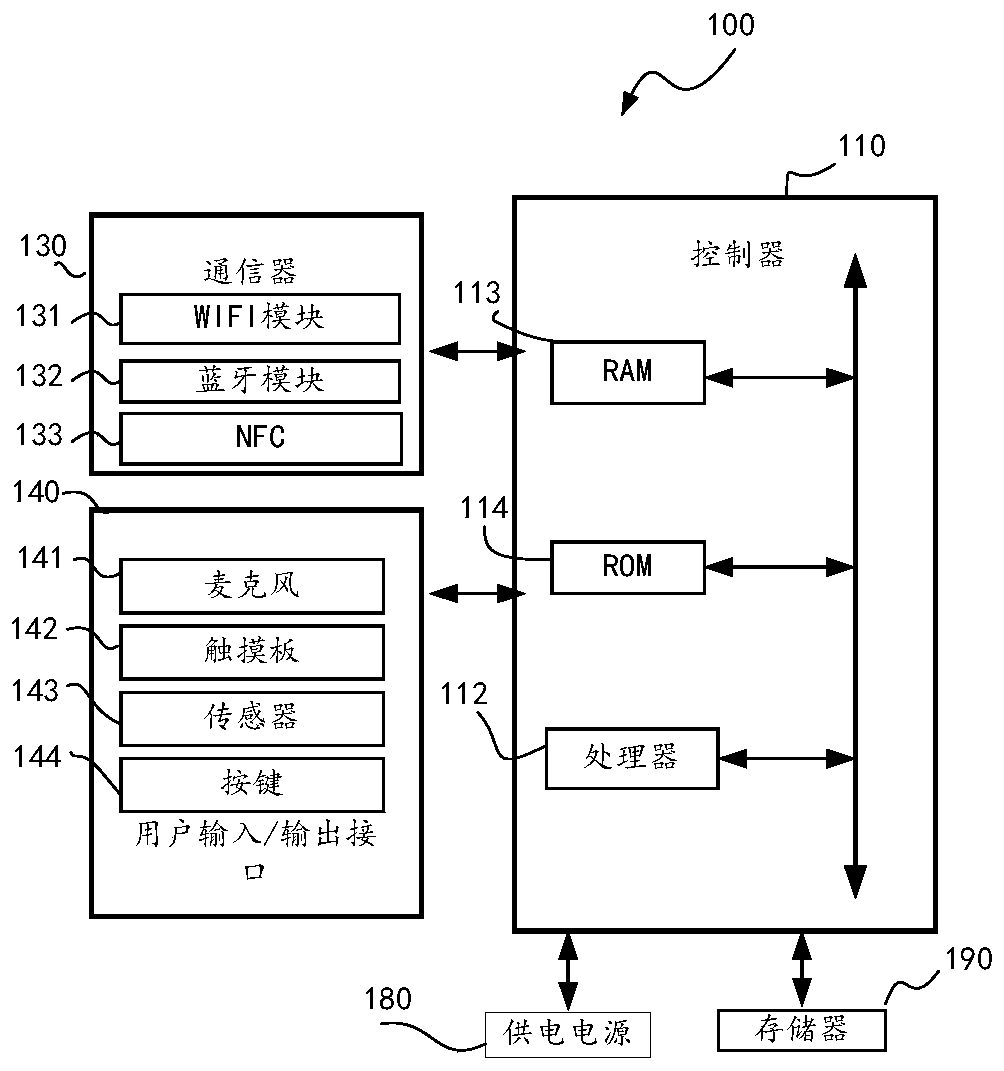

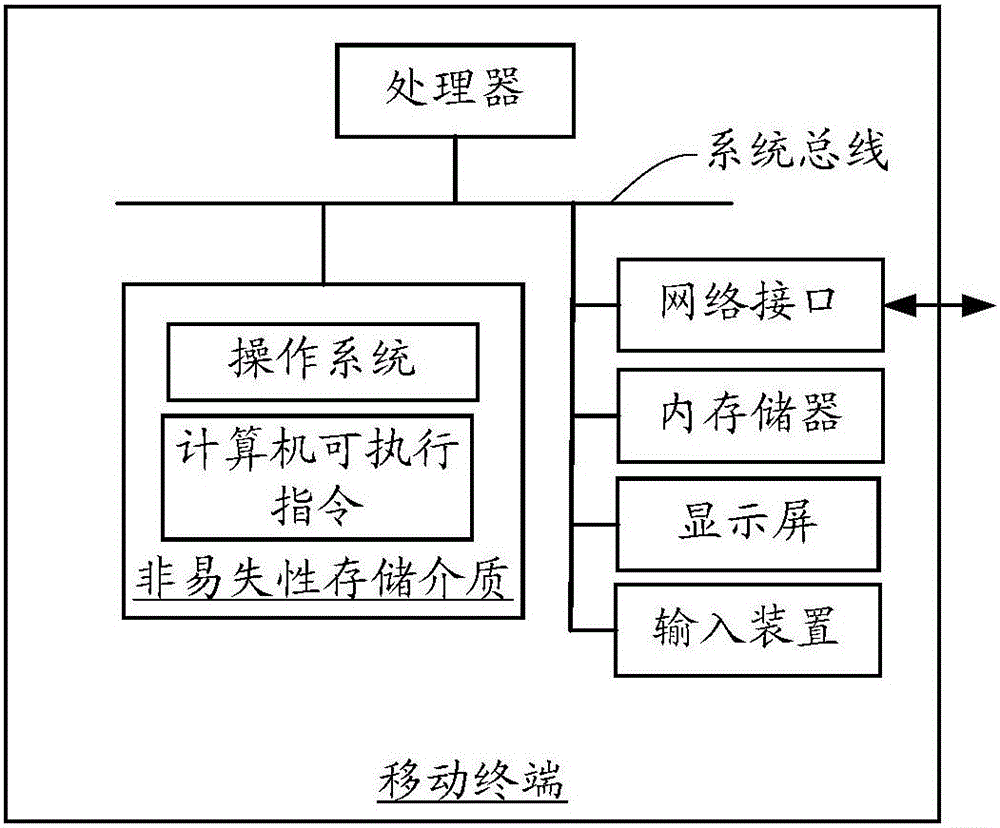

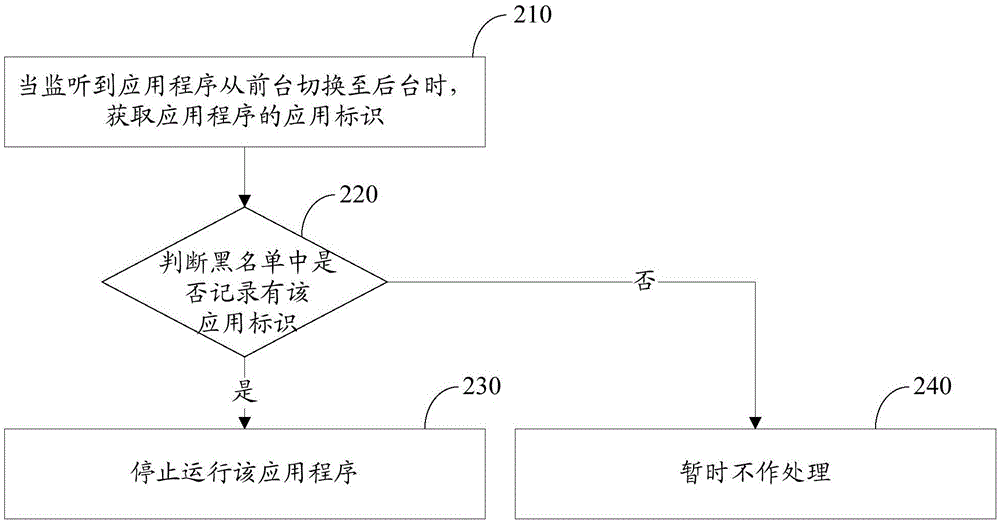

Application processing method and apparatus, mobile terminal and storage medium

Active | CN107526638A | Reduce lag | Resource allocation | Energy efficient computing | Application software | Black list

The invention relates to an application processing method and apparatus, a mobile terminal and a storage medium. The method comprises: obtaining the application identifier of an application when the application is detected switching from the foreground to the background; judging whether the identifier is recorded in a blacklist; and, if it is, stopping the application. The blacklist records the identifiers of applications that have been detected occupying the CPU abnormally a first preset number of consecutive times. The method effectively reduces stuttering of the foreground application caused by resource preemption.

Owner:GUANGDONG OPPO MOBILE TELECOMM CORP LTD
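A minimal Python sketch of the blacklist logic, assuming that "occupying the CPU abnormally" means a sampled CPU share above a fixed `cpu_limit` and that the streak resets on any normal sample. `BackgroundAppGuard`, `cpu_limit` and `stop_app` are hypothetical; the abstract defines neither the sampling method nor the threshold.

```python
from typing import Callable, Dict, Set


class BackgroundAppGuard:
    def __init__(self, threshold: int, cpu_limit: float,
                 stop_app: Callable[[str], None]) -> None:
        self.threshold = threshold  # the "first preset number" of consecutive hits
        self.cpu_limit = cpu_limit  # assumption: above this share counts as abnormal
        self.stop_app = stop_app    # hypothetical hook that stops an application
        self.streak: Dict[str, int] = {}
        self.blacklist: Set[str] = set()

    def record_cpu_sample(self, app_id: str, cpu_share: float) -> None:
        if cpu_share > self.cpu_limit:
            self.streak[app_id] = self.streak.get(app_id, 0) + 1
            if self.streak[app_id] >= self.threshold:
                self.blacklist.add(app_id)
        else:
            self.streak[app_id] = 0  # the abnormal hits must be consecutive

    def on_moved_to_background(self, app_id: str) -> bool:
        # Called when an app leaves the foreground; stop it only if blacklisted.
        if app_id in self.blacklist:
            self.stop_app(app_id)
            return True
        return False
```

Resetting the streak on a normal sample means that only sustained CPU hogging, not an occasional spike, gets an application stopped when it moves to the background.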

Parallel test task scheduling optimization method, device and computing equipment

Pending | CN112363913A | Convenient time | Guaranteed traceability | Software testing/debugging | Relational database | Parallel computing

One embodiment of the invention discloses a parallel test task scheduling optimization method, a device and computing equipment. The method comprises the following steps: S10, obtaining test parameters, which comprise the test task, the test items, the test resources, the predicted execution period, the parent-child relations between test items, and the preemption priority; S20, automatically entering the test parameters into a relational database configured as a test requirement form associated with the parameters, which stores, for each test item, its test resources, predicted execution period, parent-child relations and preemption priority; S30, establishing a simulation training task from the test requirement form to optimize the scheduling method, and forming a test result form from each task's entry time, exit time and actual execution period; and S40, forming an optimal task scheduling strategy from the test result form.

Owner:BEIJING INST OF ELECTRONICS SYST ENG
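Steps S20 and S30 can be sketched in Python with the standard-library `sqlite3` module standing in for the relational database. This is a simple greedy list-scheduling pass, not the patent's optimization method: items are taken in descending preemption priority, a child item may start only after its parent finishes, and each test resource runs one item at a time. Table and column names are assumptions.

```python
import sqlite3
from typing import Dict, Iterable, Optional, Tuple

Row = Tuple[str, str, int, Optional[str], int]  # item, resource, period, parent, priority


def build_requirement_form(rows: Iterable[Row]) -> sqlite3.Connection:
    # S20: store the test parameters in an in-memory relational table.
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE requirement (item TEXT PRIMARY KEY, resource TEXT, "
               "period INTEGER, parent TEXT, priority INTEGER)")
    db.executemany("INSERT INTO requirement VALUES (?, ?, ?, ?, ?)", rows)
    return db


def simulate(db: sqlite3.Connection) -> Dict[str, Tuple[int, int]]:
    # S30: greedy simulation producing item -> (entry time, exit time).
    pending = db.execute("SELECT item, resource, period, parent FROM requirement "
                         "ORDER BY priority DESC, item").fetchall()
    resource_free_at: Dict[str, int] = {}
    result: Dict[str, Tuple[int, int]] = {}
    while pending:
        # Highest-priority item whose parent (if any) is already scheduled.
        for i, (item, resource, period, parent) in enumerate(pending):
            if parent is None or parent in result:
                break
        else:
            raise ValueError("parent-child relations are cyclic or reference a missing item")
        pending.pop(i)
        ready = result[parent][1] if parent is not None else 0
        start = max(ready, resource_free_at.get(resource, 0))
        result[item] = (start, start + period)
        resource_free_at[resource] = start + period
    return result
```

Each item's actual execution period is its exit time minus its entry time; comparing such result forms across different priority settings is one possible way to approach step S40.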

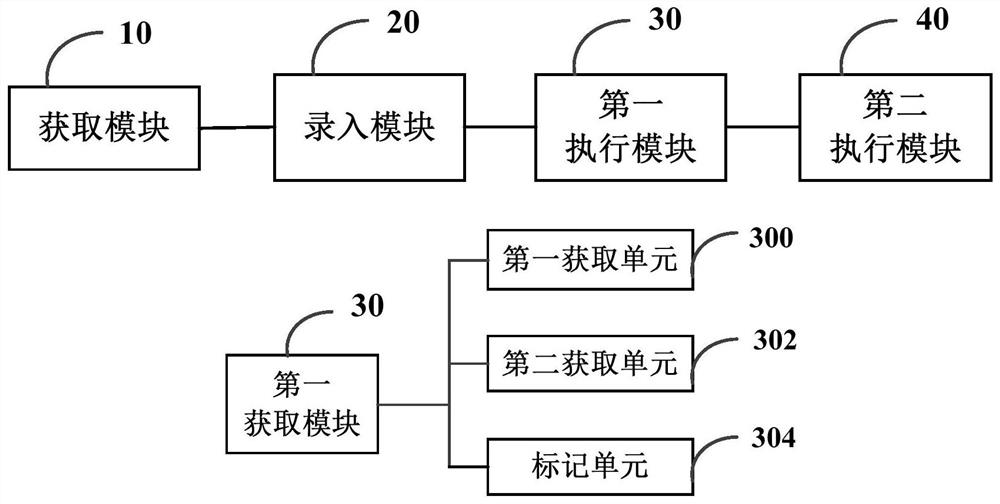

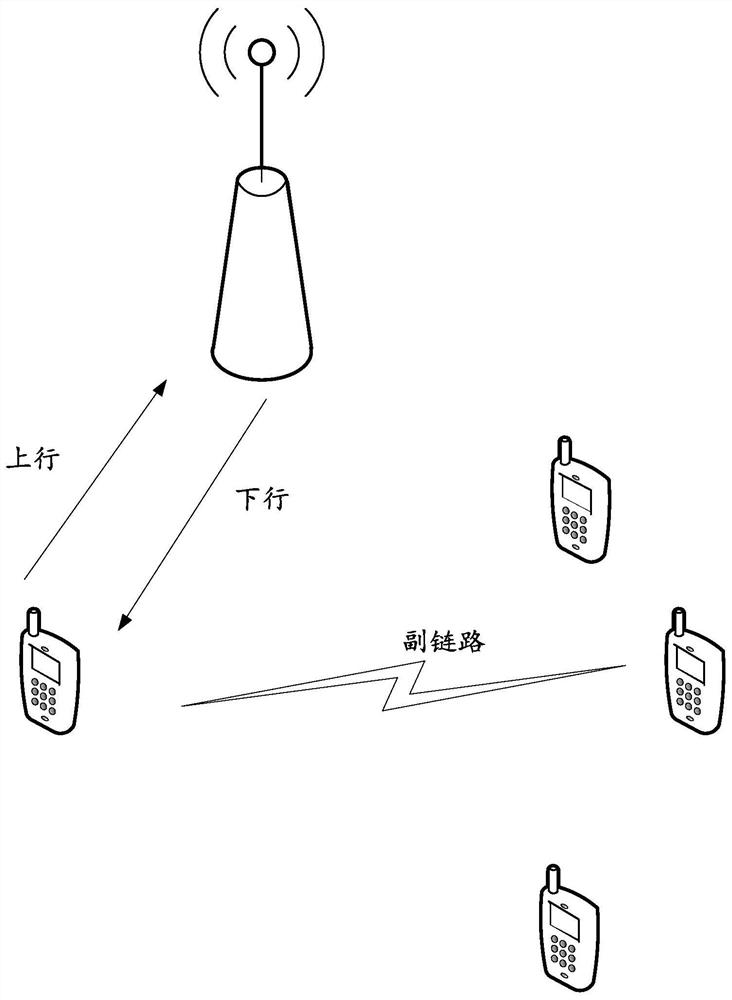

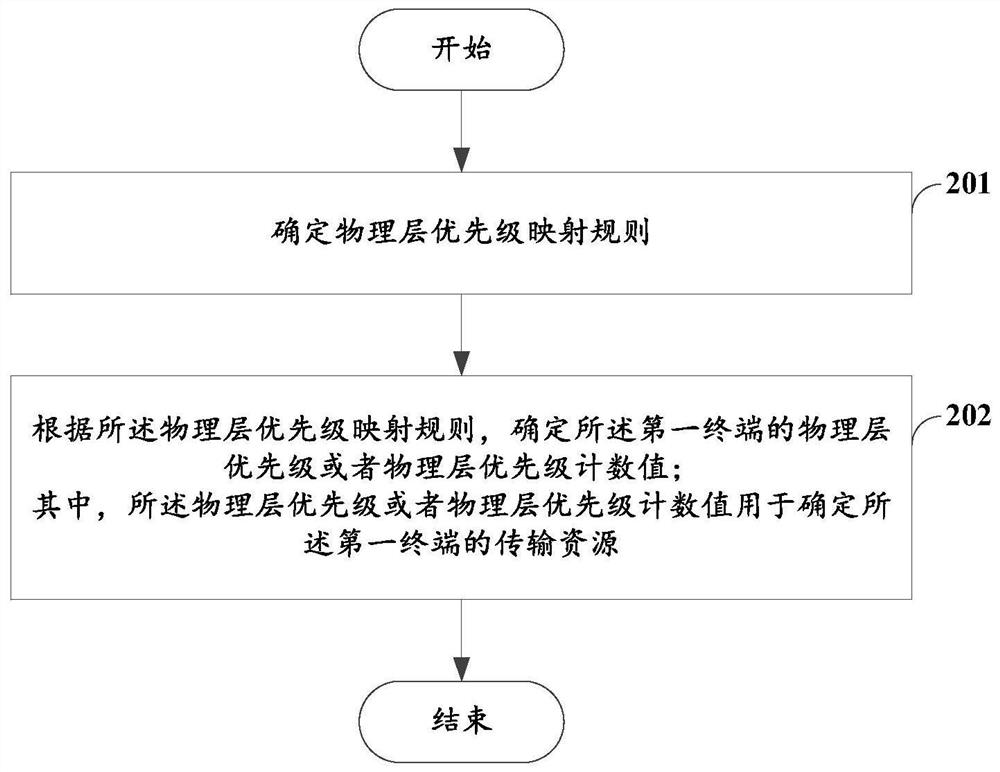

Congestion control method and equipment on side link

Active | CN112637895A | Reduce negative impact | Improve performance | Network traffic/resource management | Physical layer | Engineering

The embodiment of the invention provides a congestion control method and equipment on a sidelink. The method comprises: determining a physical-layer priority mapping rule; and determining, according to that rule, a physical-layer priority or physical-layer priority count value of a first terminal, which is used to determine the first terminal's transmission resources. By defining and using a physical-layer priority, the embodiment guarantees the transmission of low-latency, high-reliability services, reduces the negative impact of the resource preemption mechanism on low-priority terminals, and improves overall system performance.

Owner:VIVO MOBILE COMM CO LTD
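The abstract does not publish its mapping rule, so the following Python sketch uses an invented one purely for illustration: upper-layer priority values 1 to 8 (1 most urgent) are collapsed onto three physical-layer levels, and a terminal may preempt another terminal's resource only if its physical-layer level is strictly more urgent and within a preemption threshold. The boundaries, the threshold, and the names `phy_priority` and `may_preempt` are all assumptions.

```python
from bisect import bisect_left
from typing import Sequence

# Hypothetical mapping rule: upper-layer values <=2 -> level 0, <=5 -> level 1, else level 2.
BOUNDARIES = (2, 5)


def phy_priority(upper_priority: int, boundaries: Sequence[int] = BOUNDARIES) -> int:
    # Lower returned level = more urgent physical-layer priority.
    if not 1 <= upper_priority <= 8:
        raise ValueError("upper-layer priority must be in 1..8")
    return bisect_left(boundaries, upper_priority)


def may_preempt(own_upper: int, other_upper: int, threshold_level: int = 0) -> bool:
    # Preempt only for strictly more urgent traffic that also meets the threshold,
    # which limits how often low-priority terminals lose their resources.
    own, other = phy_priority(own_upper), phy_priority(other_upper)
    return own < other and own <= threshold_level
```

Collapsing many upper-layer values onto a few physical-layer levels means terminals whose traffic maps to the same level never preempt one another, which is one way to soften the impact of preemption on lower-priority terminals.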

© 2025 PatSnap. All rights reserved.