872 results about "Request queue" patented technology

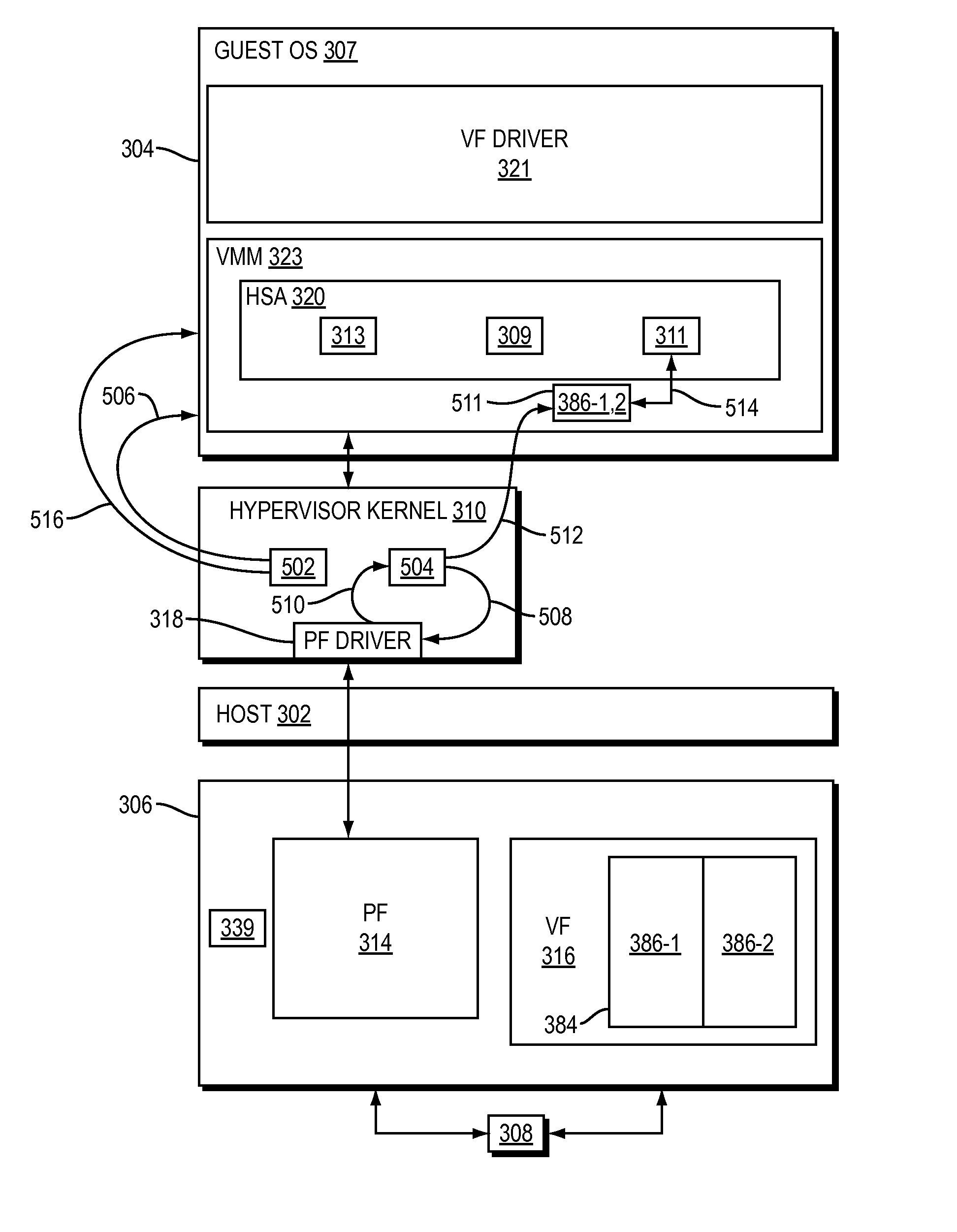

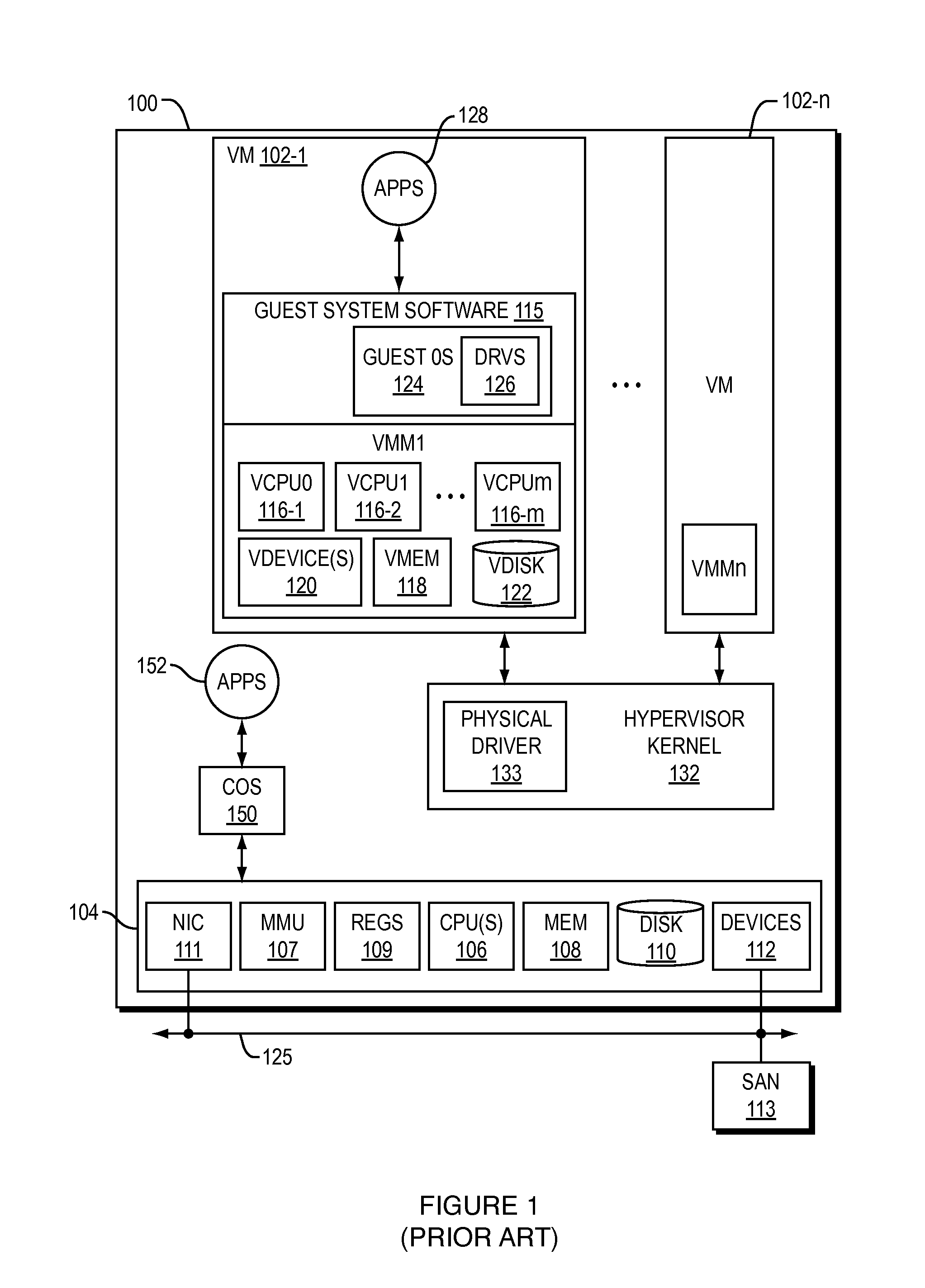

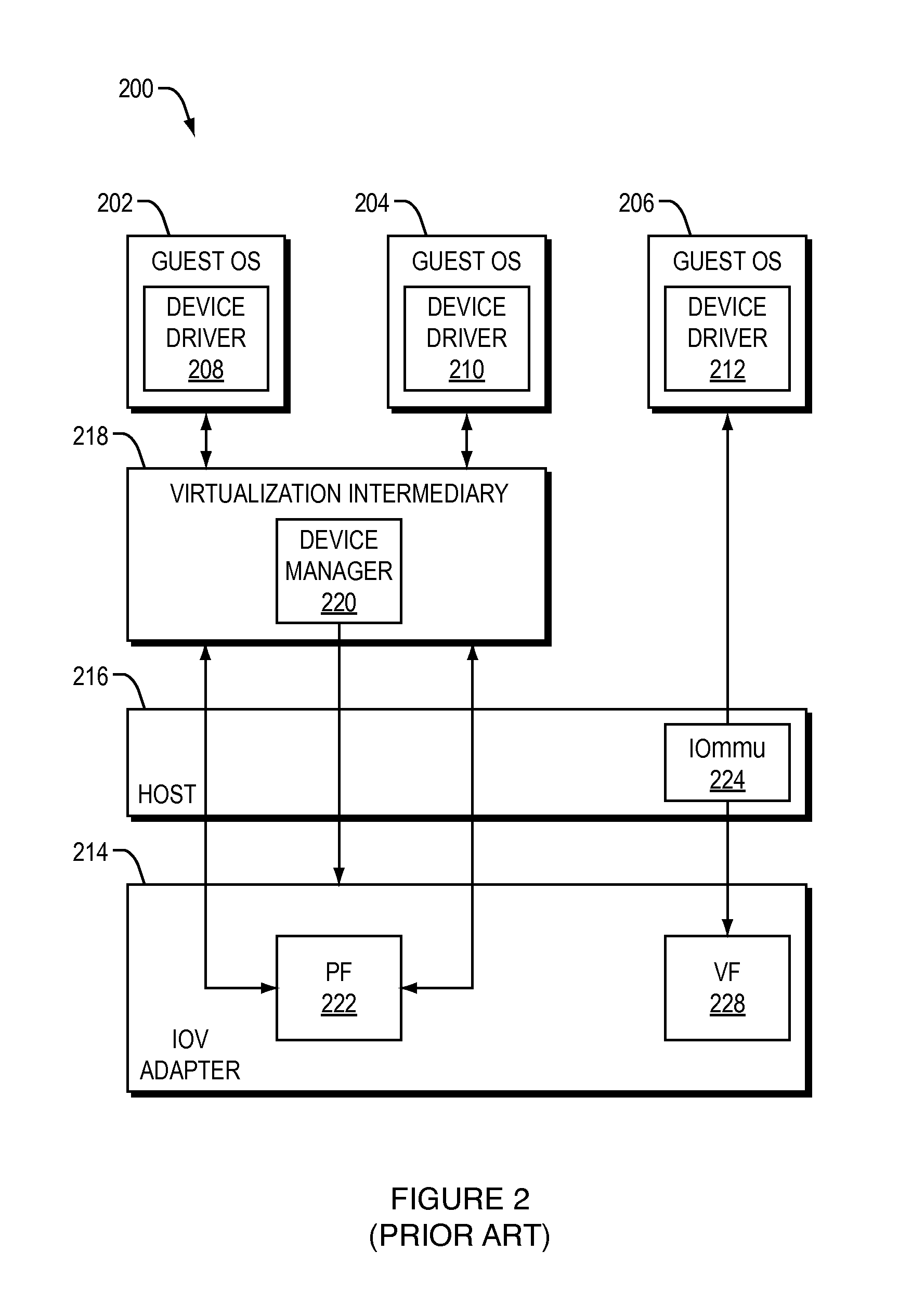

Live migration of virtual machine during direct access to storage over SR IOV adapter

Active | US20120042034A1 | Digital computer details | Computer security arrangements | Computer memory | Virtual machine

A method is provided to migrate a virtual machine from a source computing machine to a destination computing machine comprising: suspending transmission of requests from a request queue disposed in source computing machine memory associated with the VM from the request queue to a VF; while suspending the transmission of requests, determining when no more outstanding responses to prior requests remain to be received; in response to a determination that no more outstanding responses to prior requests remain to be received, transferring state information that is indicative of locations of requests inserted to the request queue from the VF to a PF and from the PF to a memory region associated with a virtualization intermediary of the source computing machine. After transferring the state information to source computing machine memory associated with a virtualization intermediary, resuming transmission of requests from locations of the request queue indicated by the state information to the PF; and transmitting the requests from the PF to the physical storage.

Owner:VMWARE INC

System and method for providing an actively invalidated client-side network resource cache

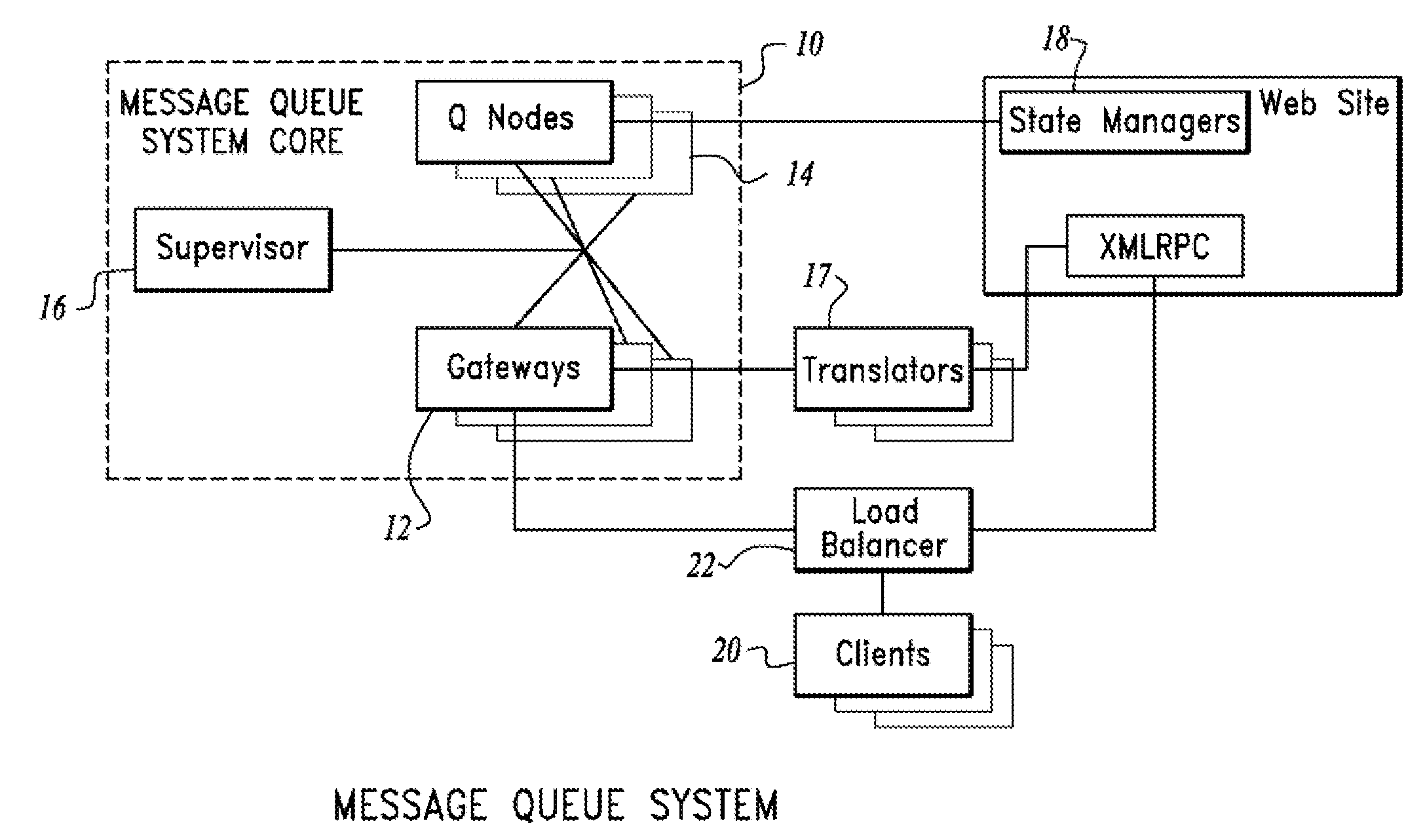

A system and method for providing an actively invalidated client-side network resource cache are disclosed. A particular embodiment includes: a client configured to request, for a client application, data associated with an identifier from a server; the server configured to provide the data associated with the identifier, the data being subject to subsequent change, the server being further configured to establish a queue associated with the identifier at a scalable message queuing system, the scalable message queuing system including a plurality of gateway nodes configured to receive connections from client systems over a network, a plurality of queue nodes containing subscription information about queue subscribers, and a consistent hash table mapping a queue identifier requested on a gateway node to a corresponding queue node for the requested queue identifier; the client being further configured to subscribe to the queue at the scalable message queuing system to receive invalidation information associated with the data; the server being further configured to signal the queue of an invalidation event associated with the data; the scalable message queuing system being configured to convey information indicative of the invalidation event to the client; and the client being further configured to re-request the data associated with the identifier from the server upon receipt of the information indicative of the invalidation event from the scalable message queuing system.
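The consistent hash table mentioned in the abstract, which maps a queue identifier to its queue node, can be illustrated with a small hash ring. This is only a sketch under assumptions: the `HashRing` class, the node names, the replica count, and the use of SHA-256 are hypothetical and are not taken from the patent.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a stable integer position on the ring."""
    return int(hashlib.sha256(key.encode("utf-8")).hexdigest(), 16)


class HashRing:
    """Hypothetical consistent-hash ring mapping queue IDs to queue nodes."""

    def __init__(self, nodes, replicas=64):
        # Each node gets several points on the ring to even out the load.
        self._points = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(replicas)
        )
        self._keys = [point for point, _ in self._points]

    def node_for(self, queue_id: str) -> str:
        # First ring point clockwise from the queue ID's hash, wrapping around.
        idx = bisect.bisect_right(self._keys, _hash(queue_id)) % len(self._points)
        return self._points[idx][1]


NODES = ["queue-node-a", "queue-node-b", "queue-node-c"]
ring = HashRing(NODES)
owner = ring.node_for("avatar:1234")  # any gateway computes the same owner
```

Because every gateway node derives the same owner from the queue identifier alone, subscriptions and invalidation events for one identifier meet at the same queue node, and removing an unrelated queue node leaves the mapping for that identifier unchanged.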

Owner:IMVU

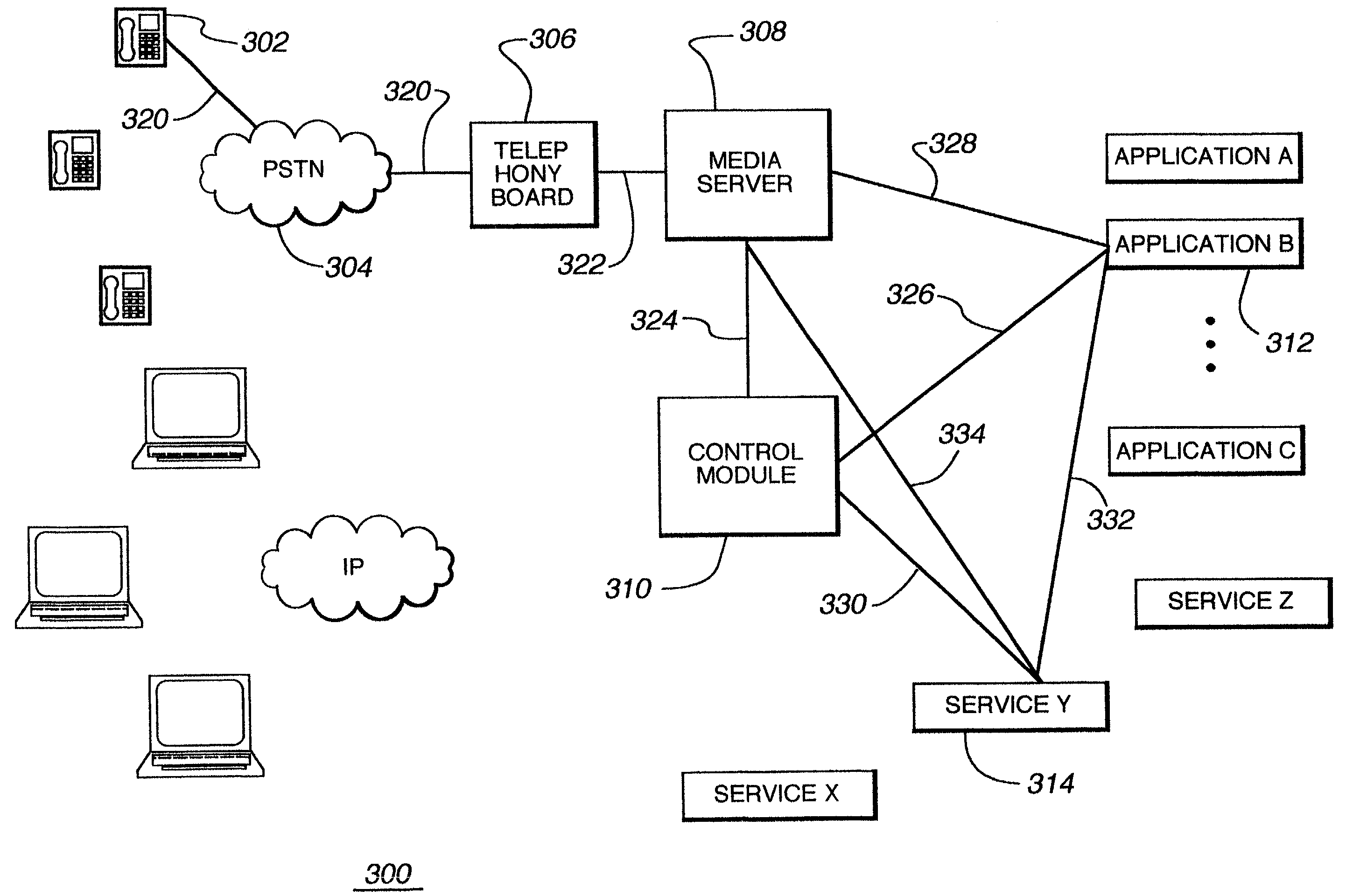

Media session framework using a control module to direct and manage application and service servers

Active | US7185094B2 | Multiple digital computer combinations | Securing communication | Multiplexing | Telecommunications link

The present invention provides for multiplexing applications. In particular, an access server receives a request from a user to access an application. Based on the received request, the access server establishes a communication link between itself and the user. The access request is stored in an input request queue until a communication path to the requested application is available. Once the communication path between the input request queue and the application is established, the stored request is removed from the queue and sent to the application.

Owner:SANDCHERRY NETWORKS INC

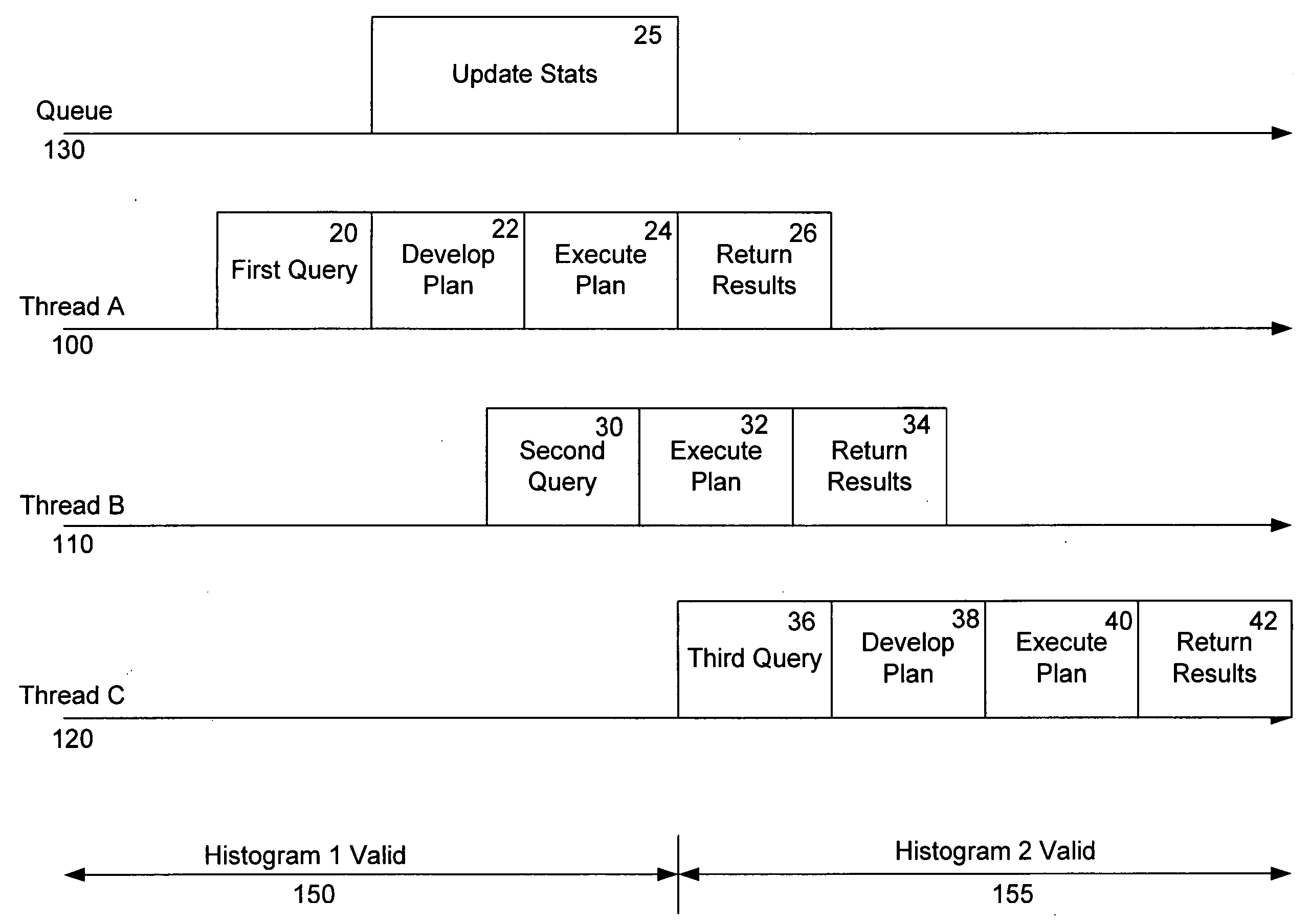

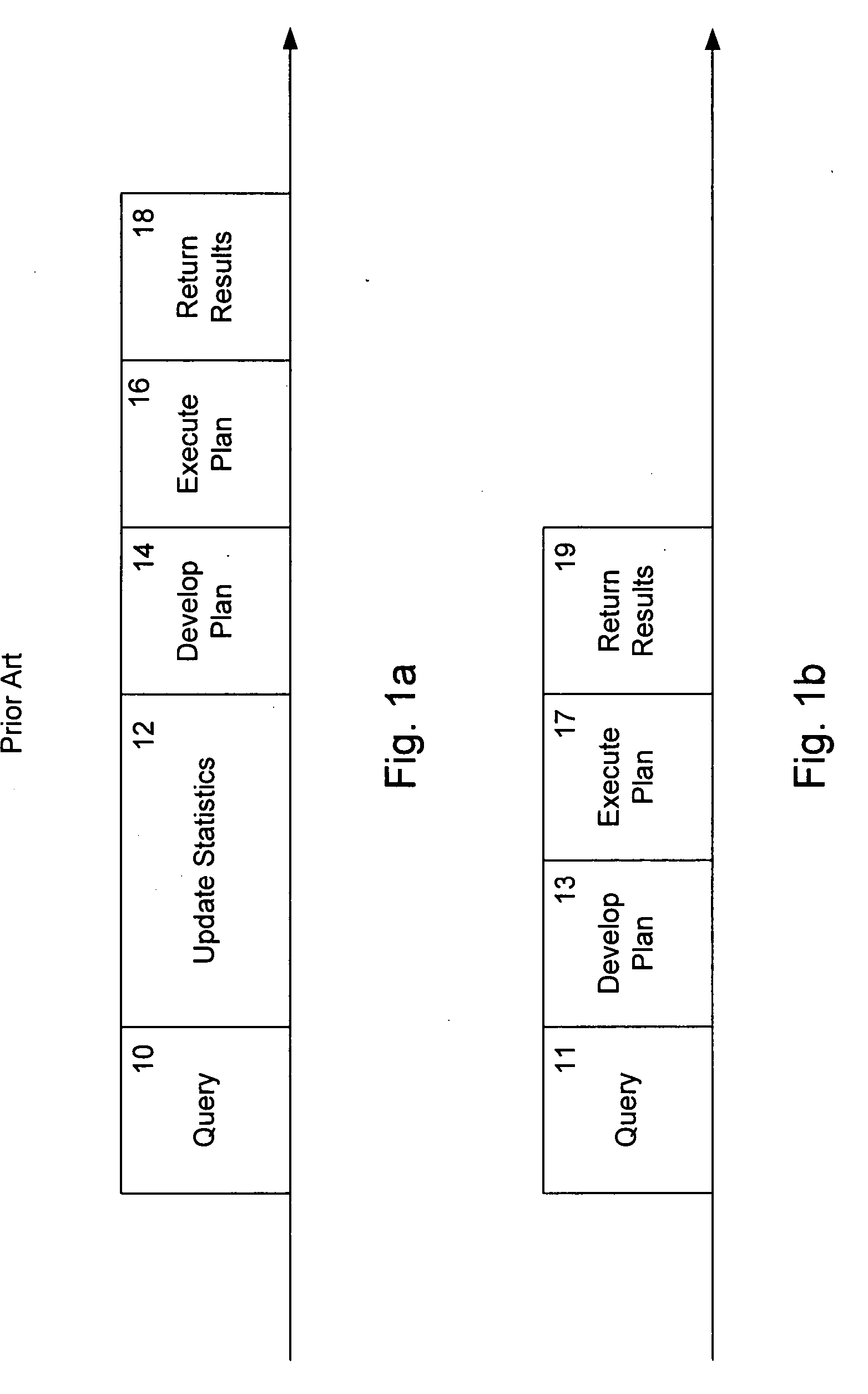

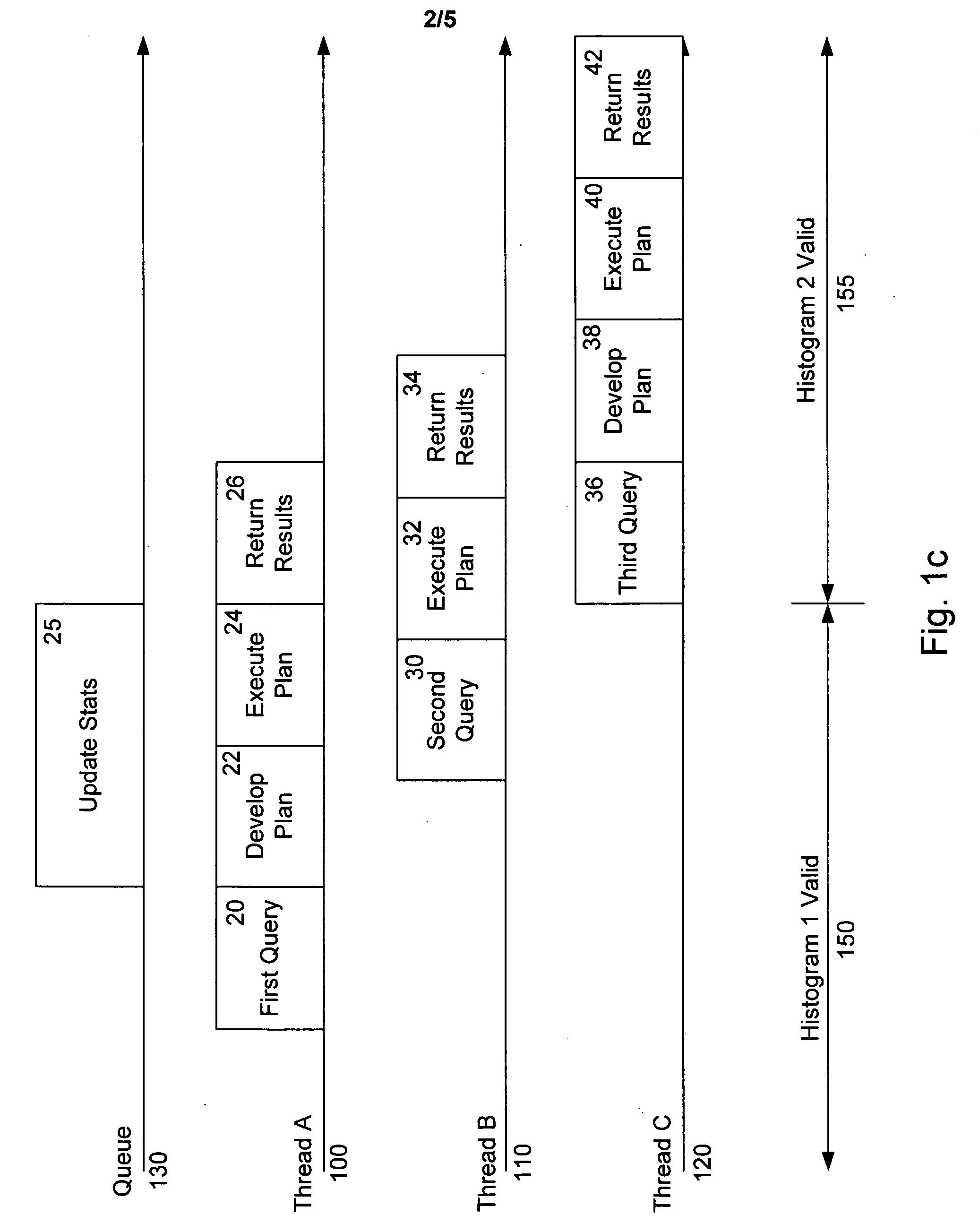

System and method for an asynchronous queue in a database management system

Inactive | US20060294058A1 | Resource optimization | Digital data information retrieval | Special data processing applications | Query plan | Data mining

A method for performing asynchronous statistics updates in a database management system includes receiving a first query against the database, determining if present statistics related to the first query are stale and entering on a queue a request to acquire updated statistics if the present statistics are stale. The queue jobs are executed asynchronously with respect to the query request. As a result, a first query plan may be developed using the present statistics related to the first query. Thus, no delay in processing the query due to statistics updates is incurred. The first query plan may be executed and results given to the requester. At some later time, the request to acquire updated statistics related to the first query is processed asynchronously from the query request. If subsequent queries are received, the queue can delete duplicate requests to update the same statistics. Those subsequent queries can benefit from the updated statistics.
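The flow in this abstract (plan with the statistics on hand, queue a refresh, drop duplicate refresh requests) can be sketched as follows. All names here (`StatsUpdateQueue`, `plan_query`, the `stale` callback) are hypothetical, and the sketch suppresses duplicates at enqueue time, which is one possible reading of "the queue can delete duplicate requests".

```python
from collections import deque


class StatsUpdateQueue:
    """Hypothetical queue of statistics-refresh requests with duplicate suppression."""

    def __init__(self):
        self._pending = deque()
        self._queued = set()

    def request_update(self, stats_key: str) -> bool:
        """Enqueue a refresh unless one for the same statistics is already pending."""
        if stats_key in self._queued:
            return False
        self._queued.add(stats_key)
        self._pending.append(stats_key)
        return True

    def run_next(self, refresh) -> bool:
        """Run one queued job; meant for a background worker, not the query path."""
        if not self._pending:
            return False
        stats_key = self._pending.popleft()
        self._queued.discard(stats_key)
        refresh(stats_key)
        return True


def plan_query(table, stats, stale, queue):
    """Plan with the statistics on hand; schedule a refresh if they are stale."""
    if stale(table):
        queue.request_update(table)
    return f"plan({table}, rows~{stats[table]})"


stats = {"orders": 100}
jobs = StatsUpdateQueue()
first = plan_query("orders", stats, lambda t: True, jobs)   # uses stale stats, no wait
second = plan_query("orders", stats, lambda t: True, jobs)  # duplicate refresh is dropped
jobs.run_next(lambda t: stats.__setitem__(t, 5000))         # asynchronous refresh runs later
third = plan_query("orders", stats, lambda t: False, jobs)  # benefits from fresh stats
```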

Owner:MICROSOFT TECH LICENSING LLC

Dynamic request priority arbitration

Owner:ORACLE INT CORP

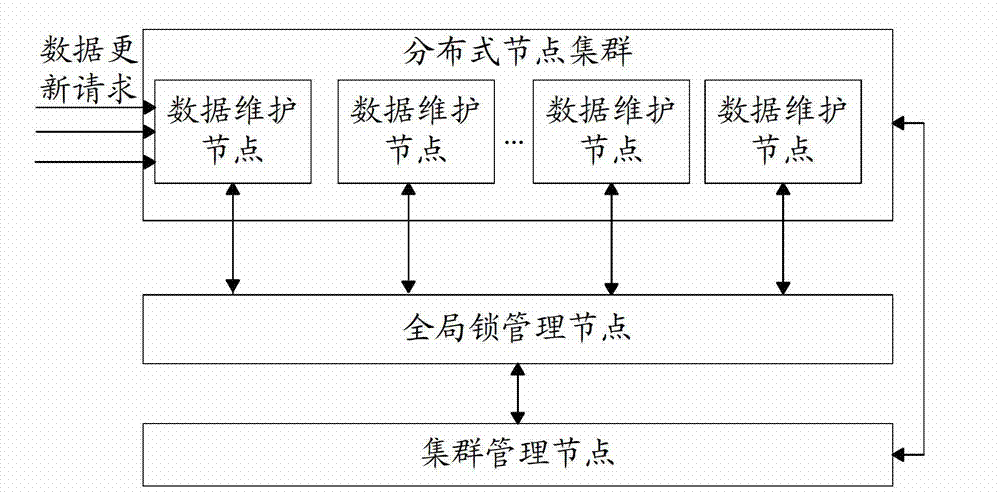

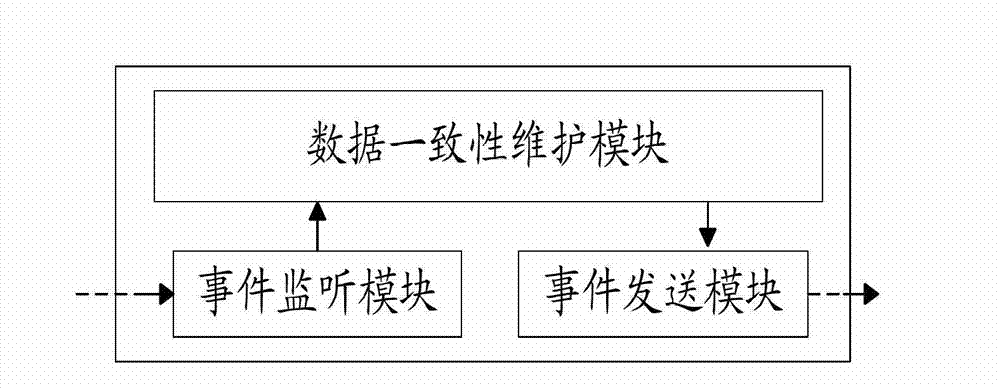

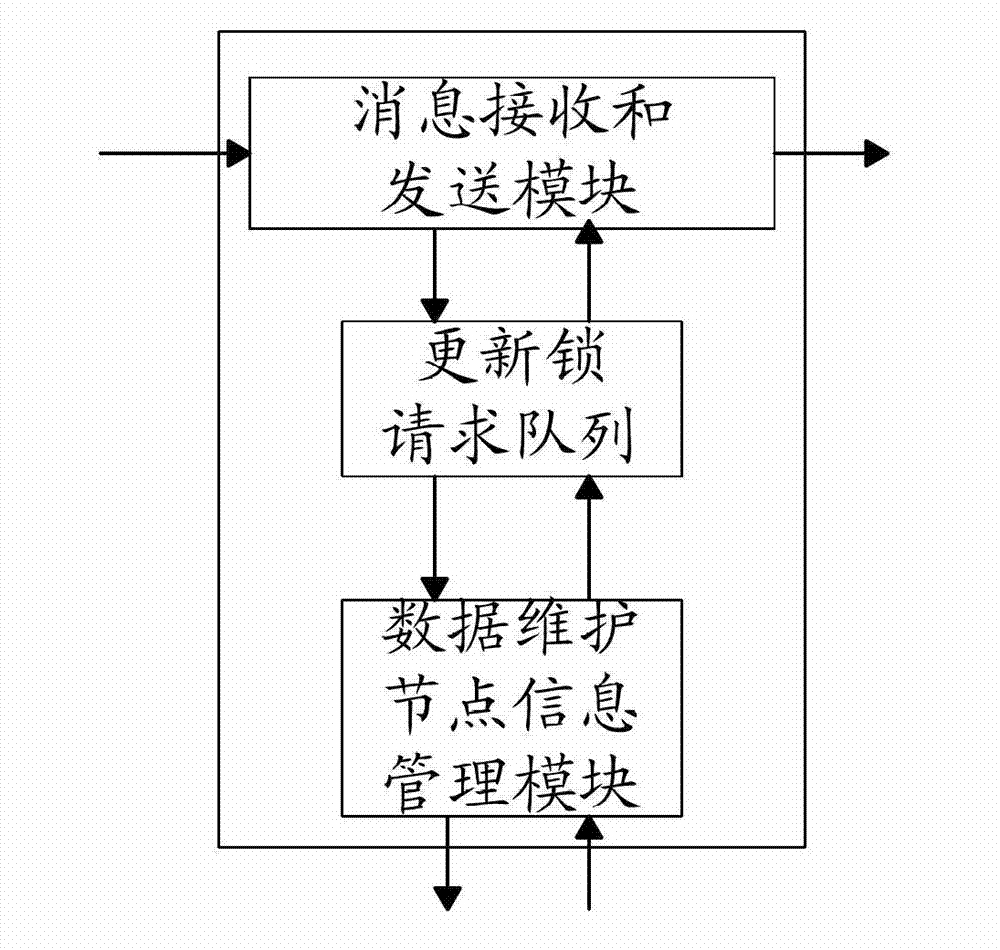

Consistency maintenance system and methods for distributed-type data

Inactive | CN103036717A | High working reliability | Improve work flexibility | Data switching networks | Cluster systems | Client-side

Provided are a consistency maintenance system and methods for distributed data in a distributed cluster system. The system is composed of a cluster management node, a global lock management node, and a plurality of data maintenance nodes scattered across the system, wherein the data maintenance nodes are isomorphic nodes, each suitable for storing one or more data replicas. Each data maintenance node has three modules: event monitoring, data consistency maintenance, and event sending. The global lock management node stores and manages the update locks for all data in the system and stores information about all data maintenance nodes. It has three modules: message receiving and sending, an update-lock request queue, and information management for the data maintenance nodes. The cluster management node manages the latest information about all nodes in the system and periodically tests the state of each node. The system and methods are reliable and flexible in operation, and a client can issue data updates to a plurality of targets at the same time. They also offer simple operation, low communication overhead, short update latency, and promising application prospects.

Owner:BEIJING UNIV OF POSTS & TELECOMM

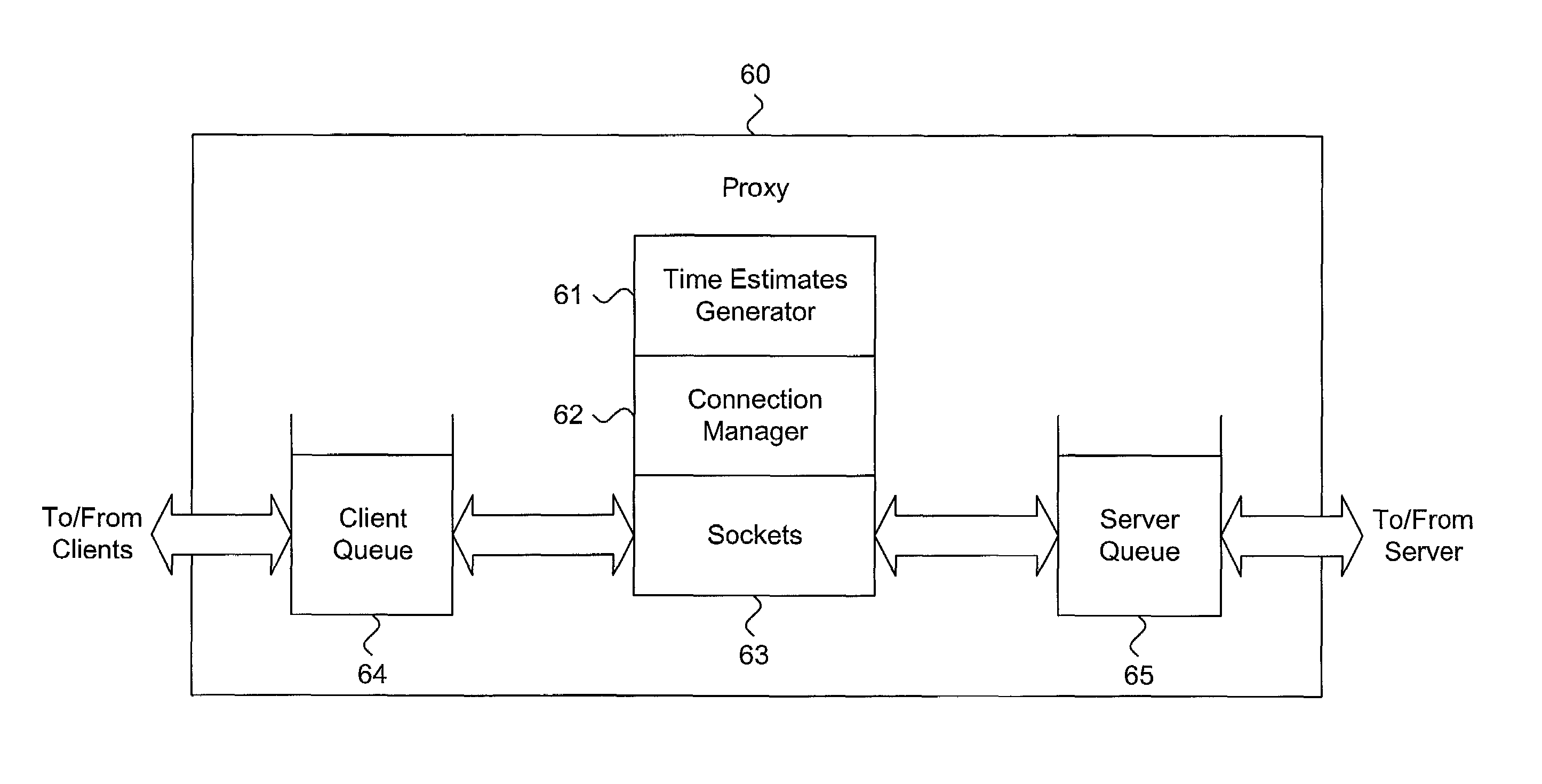

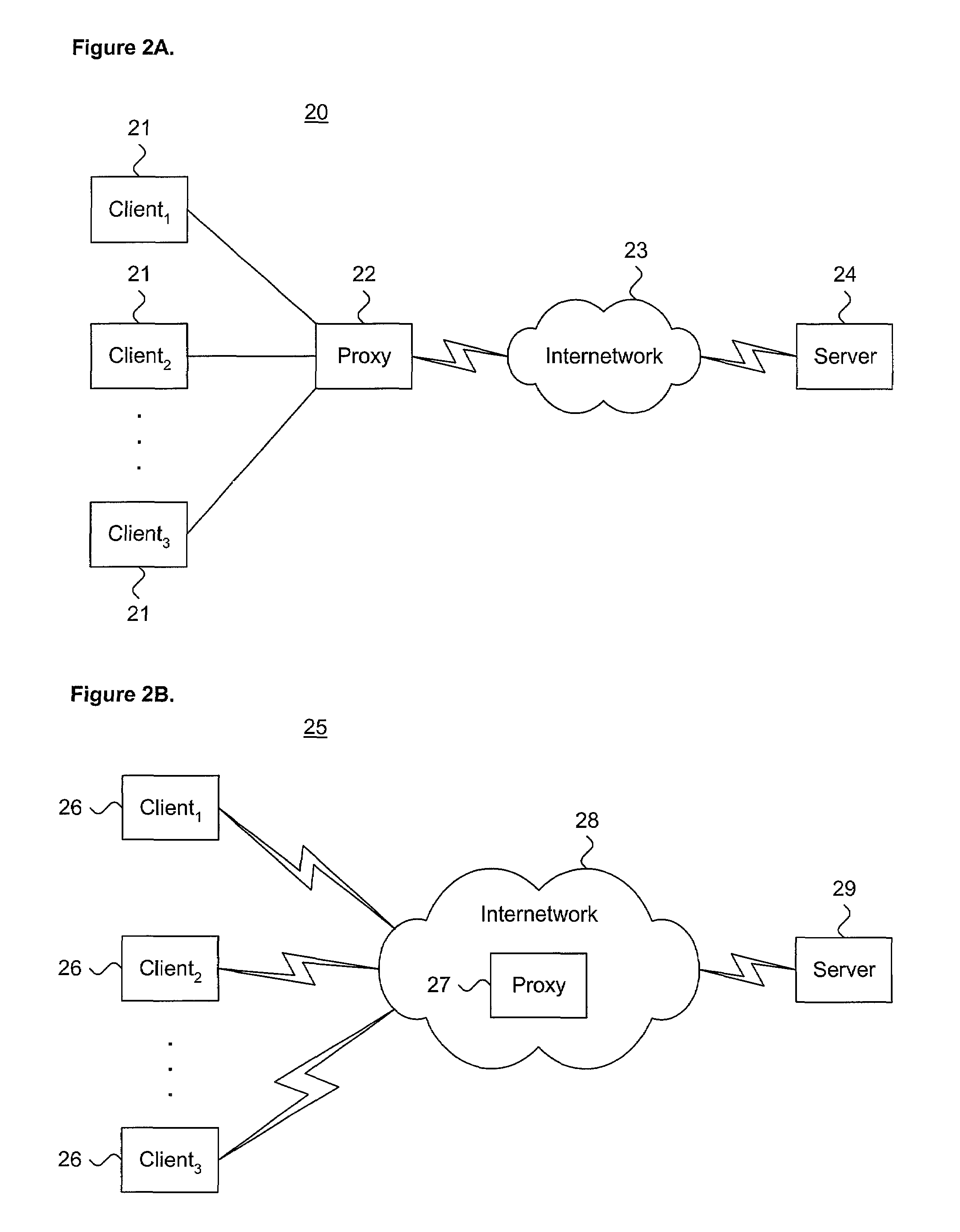

System and method for efficiently forwarding client requests from a proxy server in a TCP/IP computing environment

Inactive | US7003572B1 | Rapid response | Efficient forwarding | Multiprogramming arrangements | Multiple digital computer combinations | Slow-start | Client-side

A system and method for efficiently forwarding client requests from a proxy server in a TCP/IP computing environment is described. A plurality of transient requests are received from individual sending clients into a request queue. Each request is commonly addressed to an origin server. Time estimates of TCP overhead, slow start overhead, time-to-idle, and request transfer time for sending the requests over each of a plurality of managed connections to the origin server are dynamically calculated, concurrent to receiving and during processing of each request. The managed connection is chosen from, in order of preferred selection, a warm idle connection, an active connection with a time-to-idle less than a slow start overhead, a cold idle connection, an active connection with a time-to-idle less than a TCP overhead, a new managed connection, and an existing managed connection with a smallest time-to-idle. Each request is forwarded to the origin server over the selected managed connection.
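The preference order in this abstract reads naturally as a chain of checks. The sketch below is a hypothetical illustration: the `Conn` type, the `can_open_new` flag, and the idea of passing the overhead estimates in as plain numbers are assumptions, and the abstract does not say how the estimates themselves are computed.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Conn:
    name: str
    state: str                 # "warm_idle", "cold_idle", or "active"
    time_to_idle: float = 0.0  # estimated seconds until an active connection goes idle


def choose_connection(conns: List[Conn], slow_start_overhead: float,
                      tcp_overhead: float, can_open_new: bool) -> Optional[Conn]:
    """Apply the abstract's preference order; estimates are supplied by the caller."""
    def first(state: str) -> Optional[Conn]:
        return next((c for c in conns if c.state == state), None)

    # The active connection that is expected to go idle soonest.
    soonest = min((c for c in conns if c.state == "active"),
                  key=lambda c: c.time_to_idle, default=None)

    warm = first("warm_idle")
    if warm is not None:
        return warm
    if soonest is not None and soonest.time_to_idle < slow_start_overhead:
        return soonest
    cold = first("cold_idle")
    if cold is not None:
        return cold
    if soonest is not None and soonest.time_to_idle < tcp_overhead:
        return soonest
    if can_open_new:
        return Conn(name="new", state="new")  # placeholder for opening a connection
    return soonest  # None only if no connection exists and none can be opened


pool = [Conn("a", "active", 0.5), Conn("b", "cold_idle")]
picked = choose_connection(pool, slow_start_overhead=0.2, tcp_overhead=0.1,
                           can_open_new=True)
```

In this usage the active connection would take longer to free up than a slow start would cost, so the cold idle connection `b` is preferred.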

Owner:CA TECH INC

System and method for providing highly available processing of asynchronous service requests

Active | US7222148B2 | Overcome deficiencies | Data processing applications | Multiple digital computer combinations | Client-side | Application software

Highly-available processing of an asynchronous request can be accomplished in a single transaction. A distributed request queue receives a service request from a client application or application view client. A service processor is deployed on each node of a cluster containing the distributed request queue. A service processor pulls the service request from the request queue and invokes the service for the request, such as to an enterprise information system. If that service processor fails, another service processor in the cluster can service the request. The service processor receives a service response from the invoked service and forwards the service response to a distributed response queue. The distributed response queue holds the service response until the response is retrieved for the client application.

Owner:ORACLE INT CORP

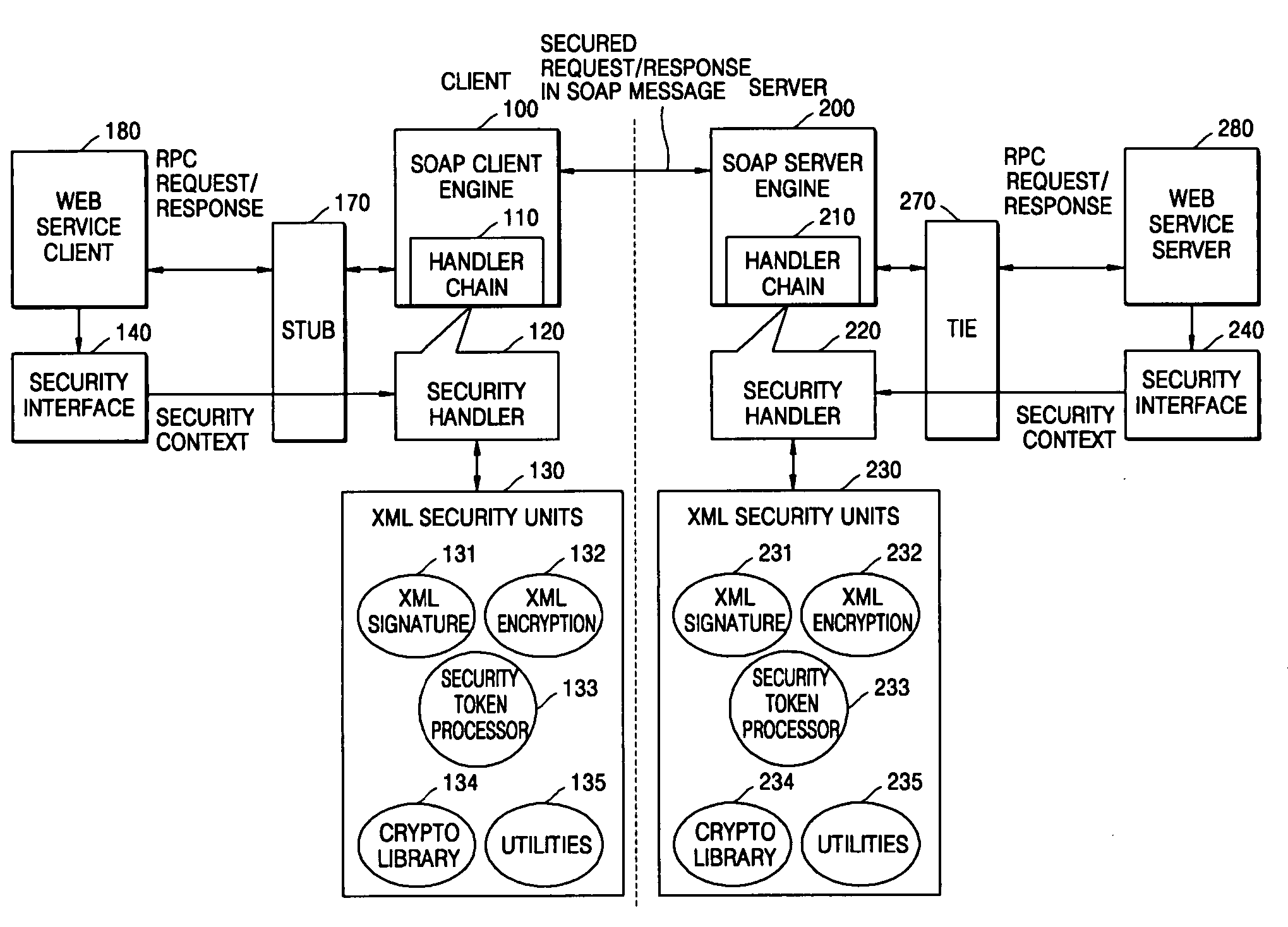

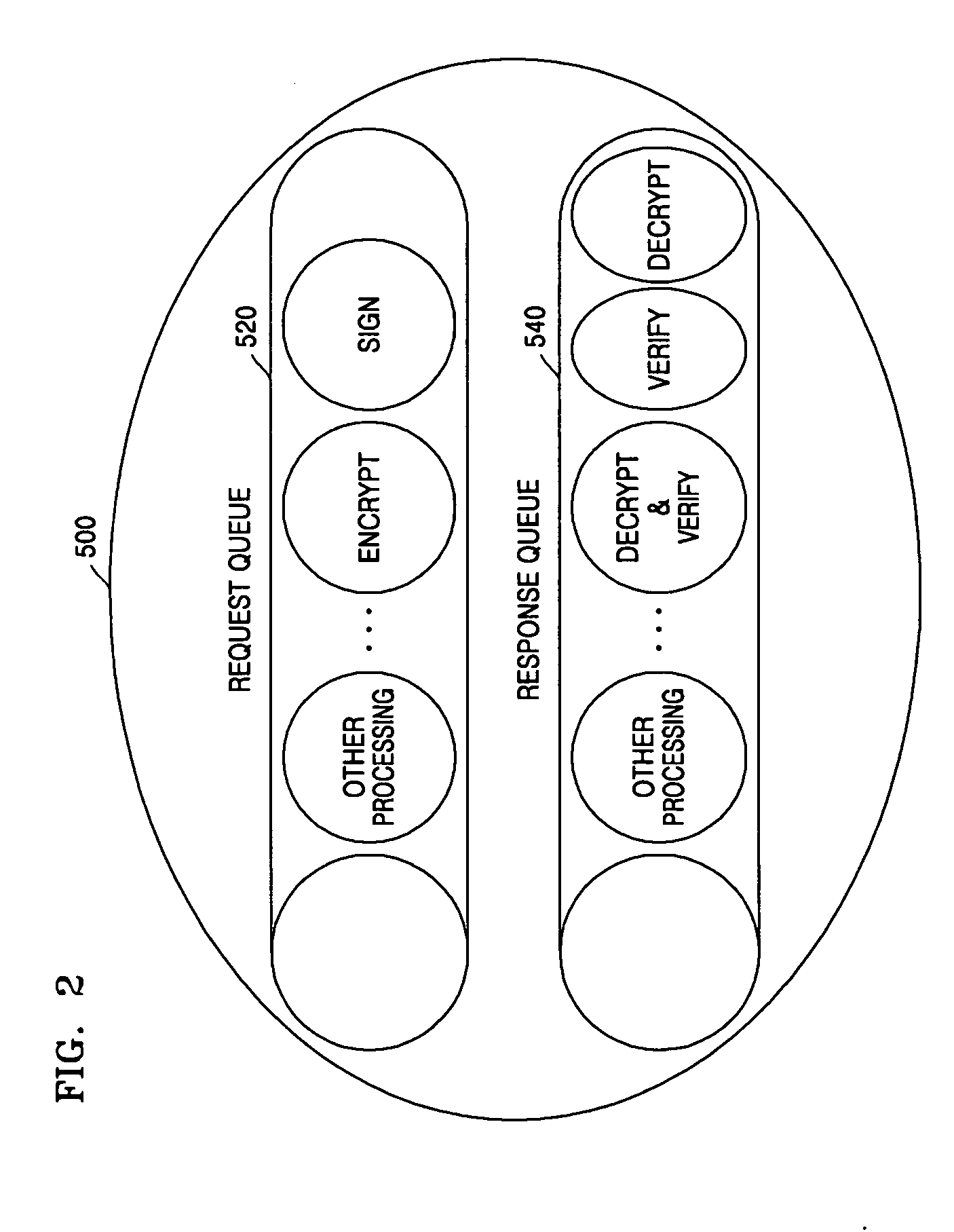

Message security processing system and method for web services

Inactive | US20050144457A1 | User identity/authority verification | Digital computer details | Timestamp | Web service

A message security processing system and method for Web services are provided. In the message security processing system in which messages are exchanged between a client and a server in SOAP-RPC format, each of the client and the server includes: a security interface allowing information related to digital signature, encryption, and timestamp insertion to be set in a security context object for an application program to meet security requirements of the client or the server; a security handler receiving the security context object from the security interface, and performing security processing of a request message by calling security objects stored in a request queue of the security context object one by one in order or performing security processing of a response message by calling security objects stored in a response queue of the security context object one by one in order; and an XML security unit supporting XML security functions when called by the security handler.

Owner:ELECTRONICS & TELECOMM RES INST

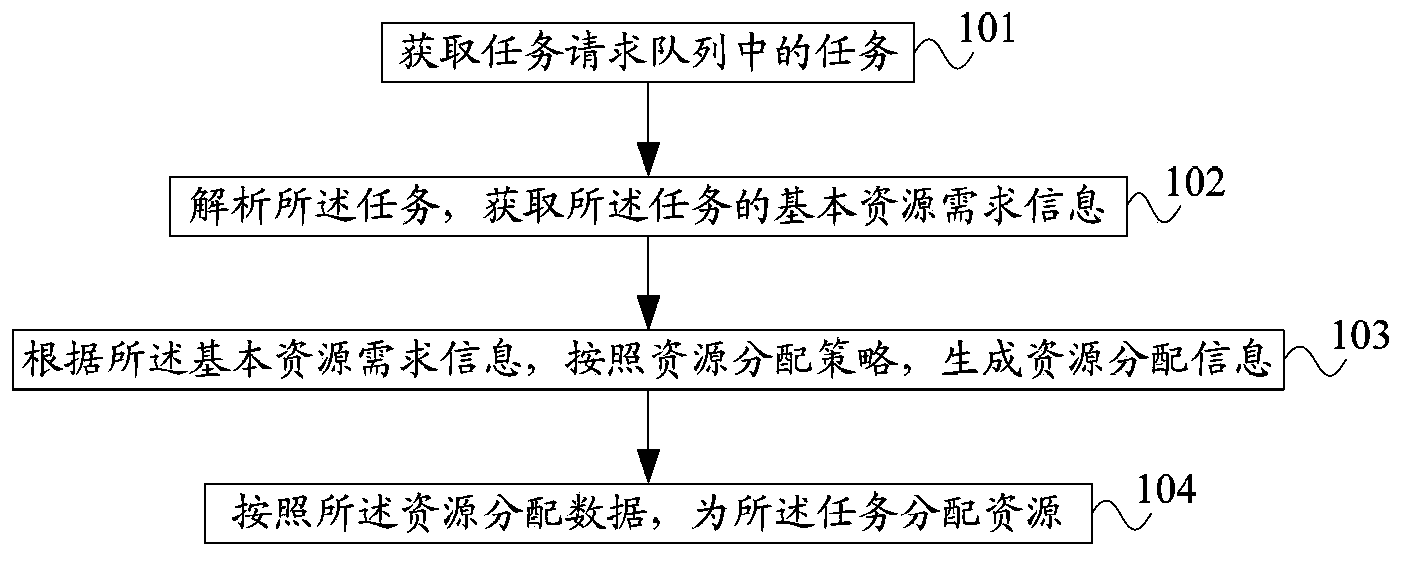

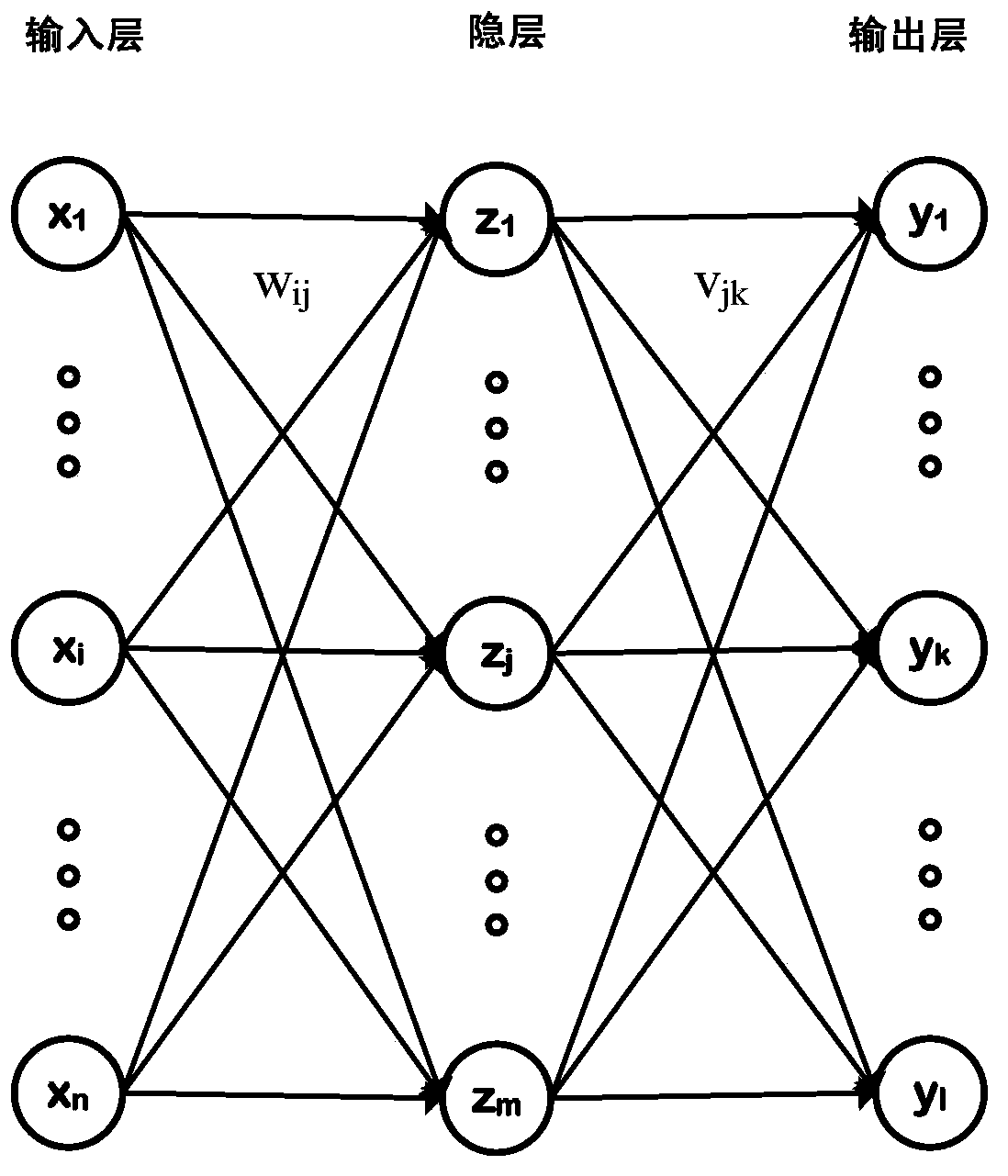

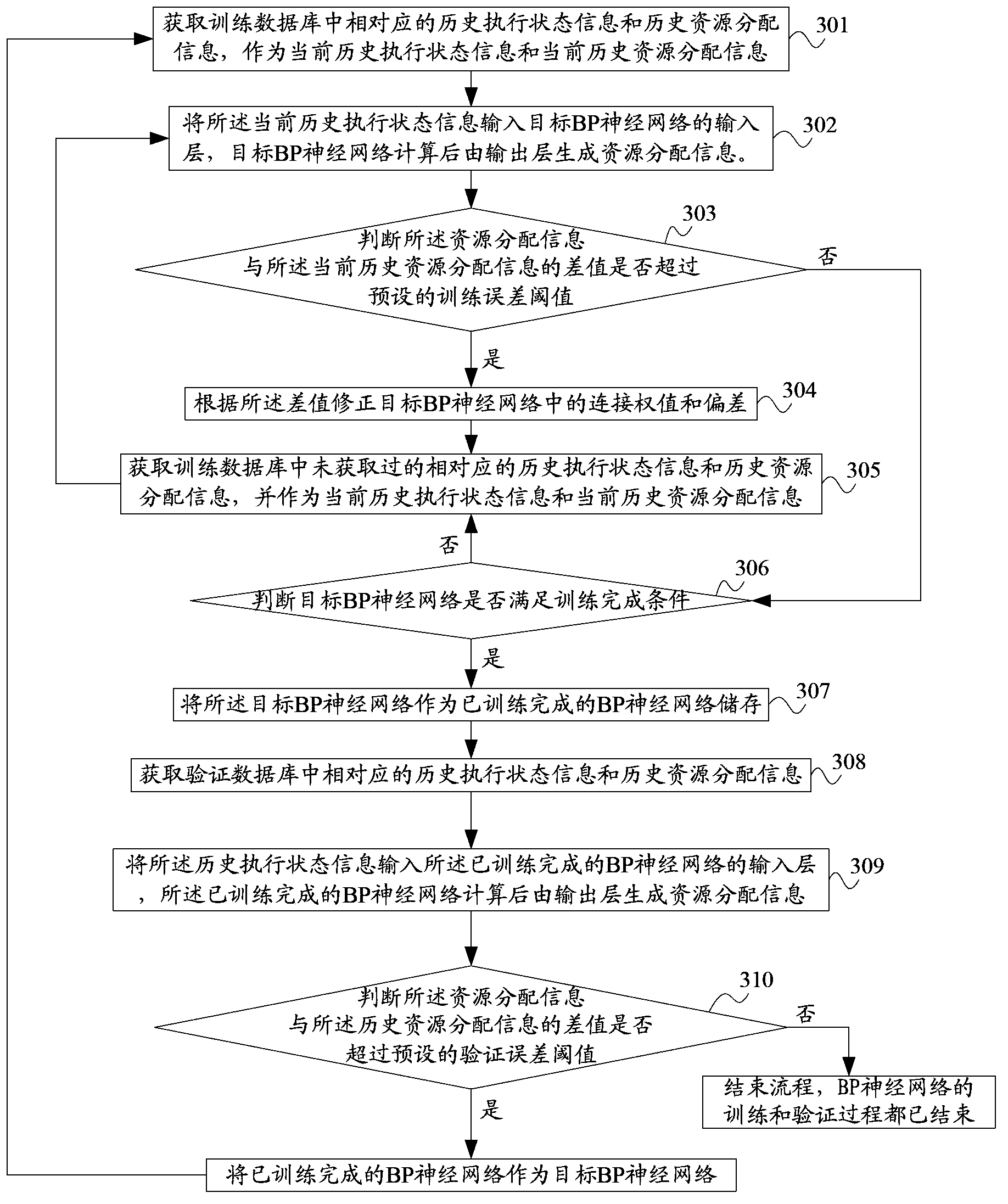

Method and device for cloud computing platform system to distribute resources to task

Active | CN103699440A | Improve resource utilization | Increase the amount of concurrent tasks | Resource allocation | Neural learning methods | Network generation | Resource utilization

The invention discloses a method and a device for a cloud computing platform system to distribute resources to a task. The method comprises the following steps: a task is obtained from a task request queue; the task is analyzed to obtain its basic resource requirement information; and resource distribution information is generated by a resource distribution strategy according to the basic resource requirement information. The resource distribution strategy is a trained back propagation (BP) neural network, trained as follows: historical execution state information is input into a target BP neural network; if the difference between the generated resource distribution information and the historical resource distribution information exceeds a preset training error threshold, the target BP neural network is revised until the difference between the resource distribution information output after training and the historical resource distribution information does not exceed the training error threshold. The resources are then distributed to the task according to the resource distribution information. Because the system distributes resources according to the resource distribution information generated by the BP neural network, the resource utilization rate of the system is improved, and the number of concurrent tasks and the task throughput are increased.

Owner:BEIJING SOHU NEW MEDIA INFORMATION TECH

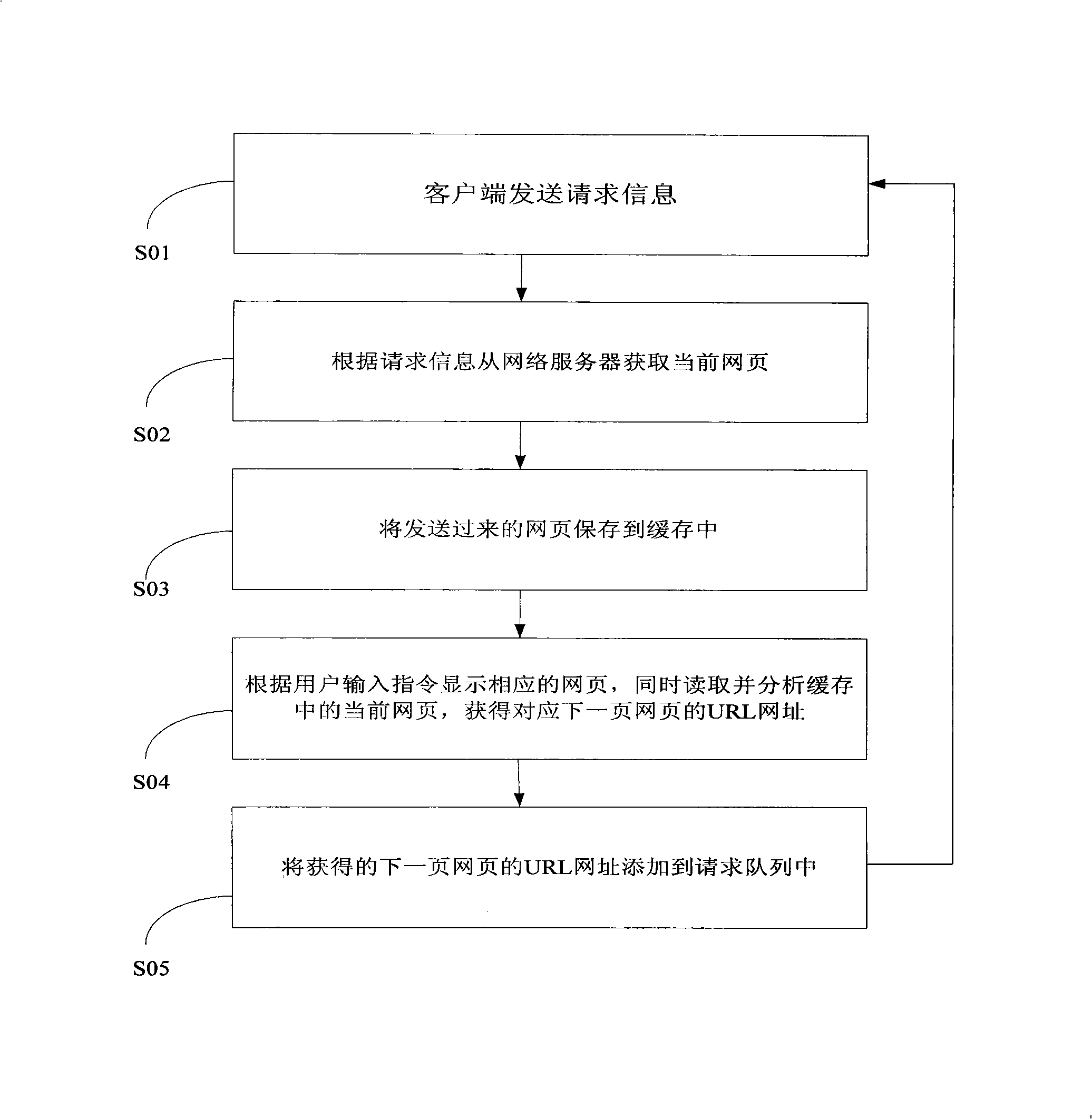

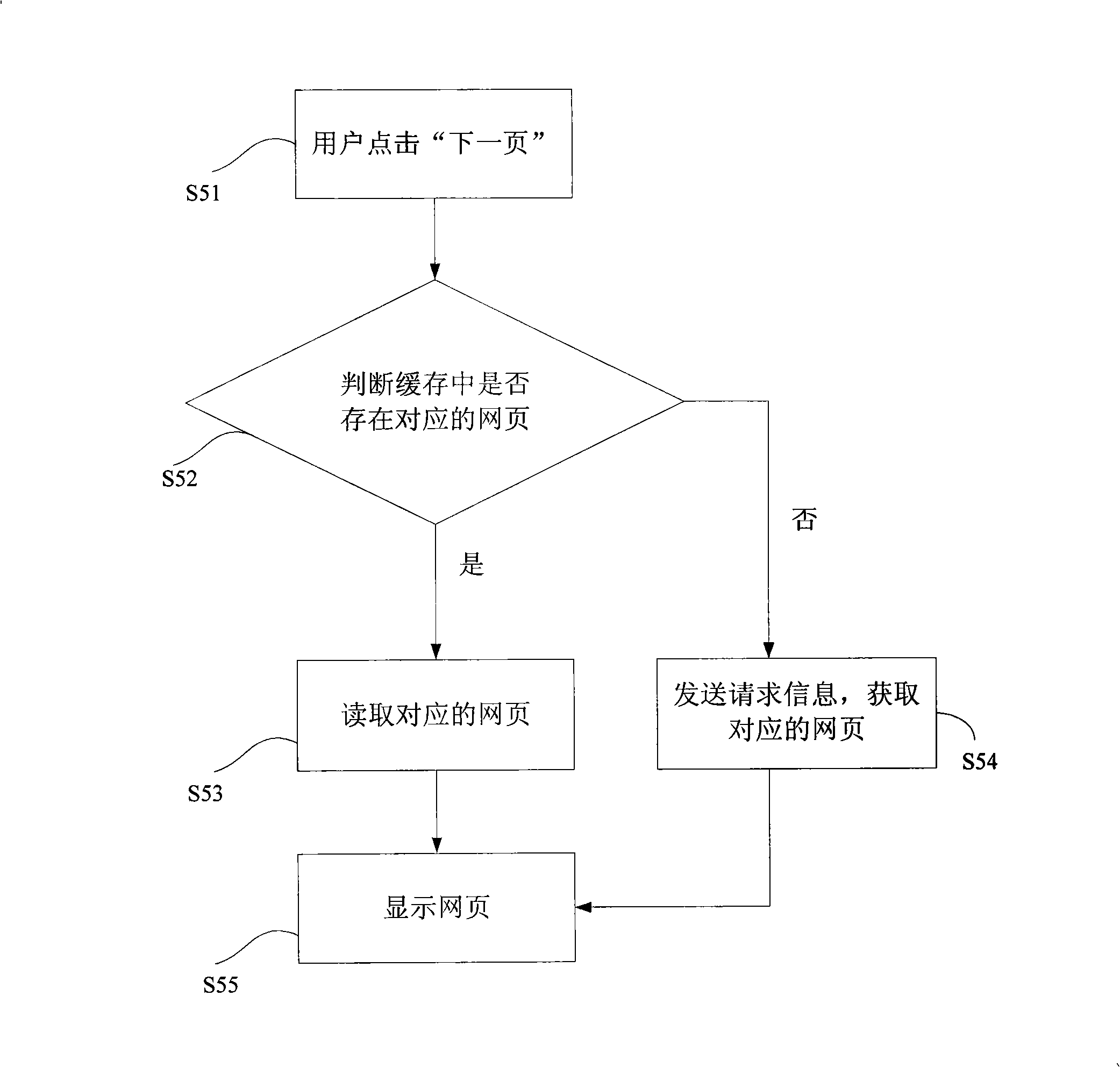

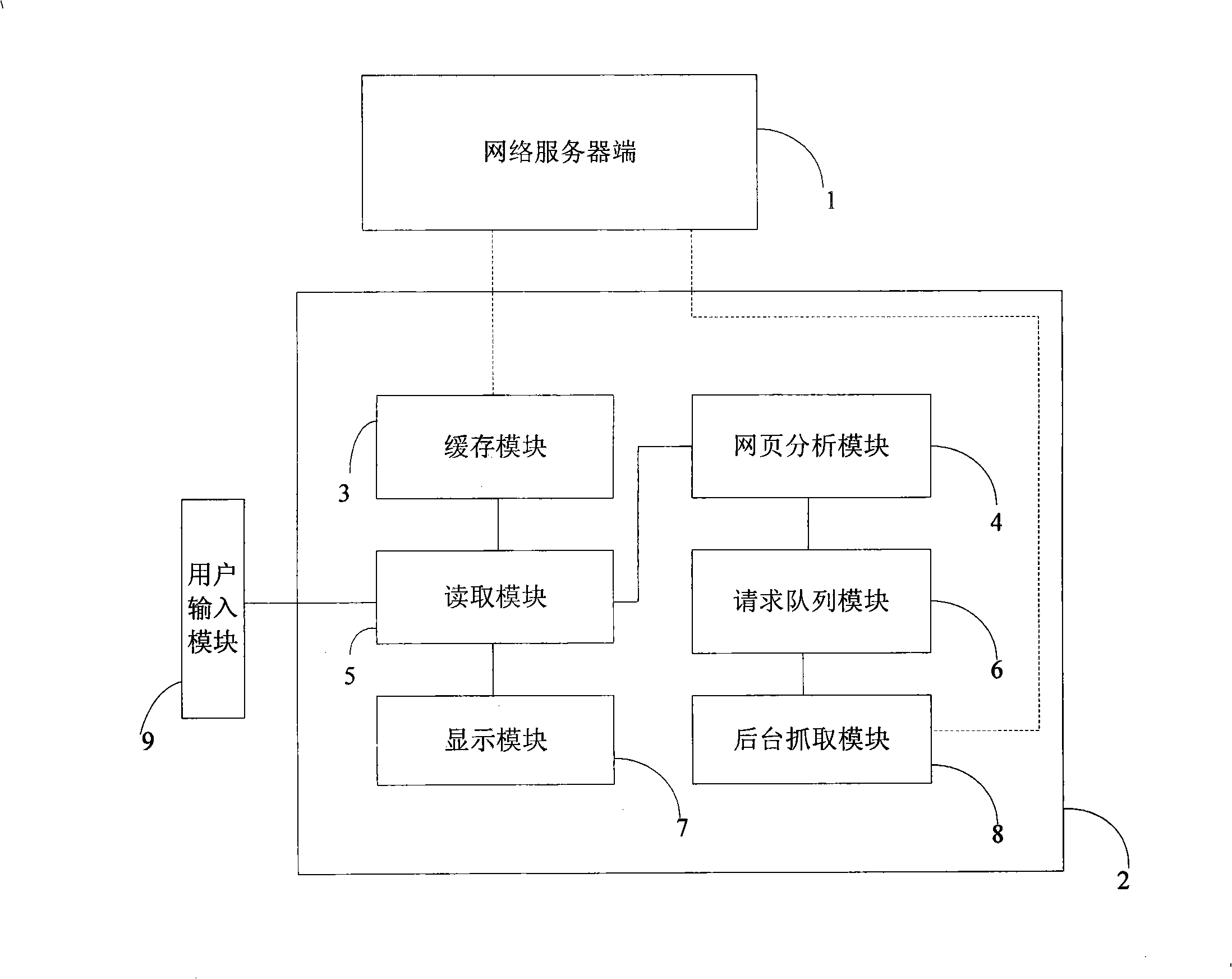

Method and system for pre-reading web page by micro-browser intelligently

Inactive | CN101325602A | Quick View | Improve the efficiency of reading and browsing the web | Radio/inductive link selection arrangements | Data switching networks | User input | Uniform resource locator

The invention provides a method and a system for a micro-browser to pre-read web pages intelligently. The method comprises the following steps: A. receiving a request message from a client; B. acquiring the current web page from a network server according to the request message; C. storing the acquired current web page in a buffer memory; D. according to the client's demand, displaying, reading, and analyzing the current web page in the buffer memory to acquire the URL address of the next corresponding web page; E. adding the acquired URL address to a request queue and returning to step A. The system comprises a network server and a client. The client consists of a web page analyzing module, a request queue module, a buffer memory module, a reading module, and a display module. By intelligently analyzing the web page to acquire the next corresponding web page and storing it in the buffer memory, the invention helps mobile phone users browse web pages faster.

Owner:GUANGZHOU UCWEB COMP TECH CO LTD

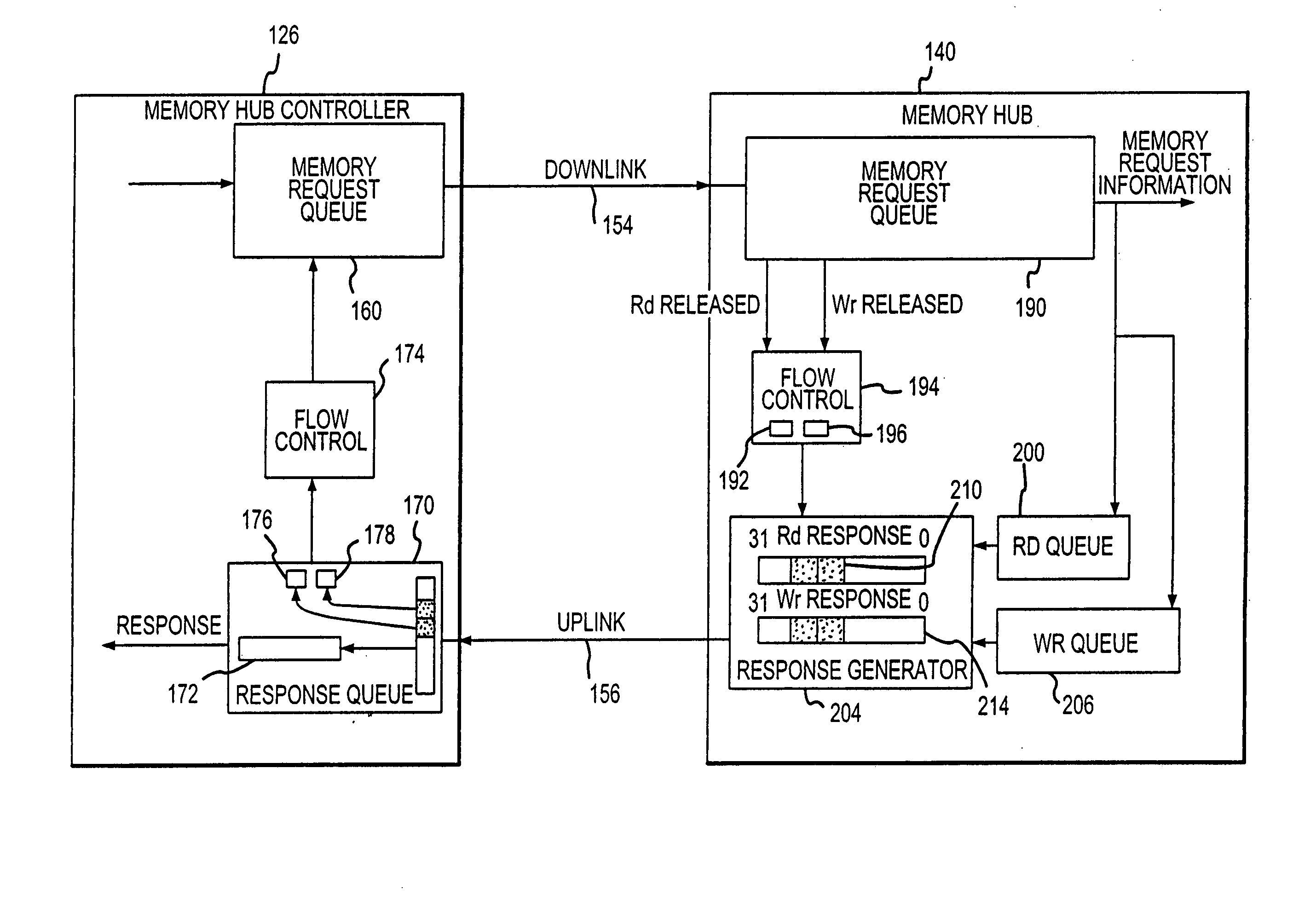

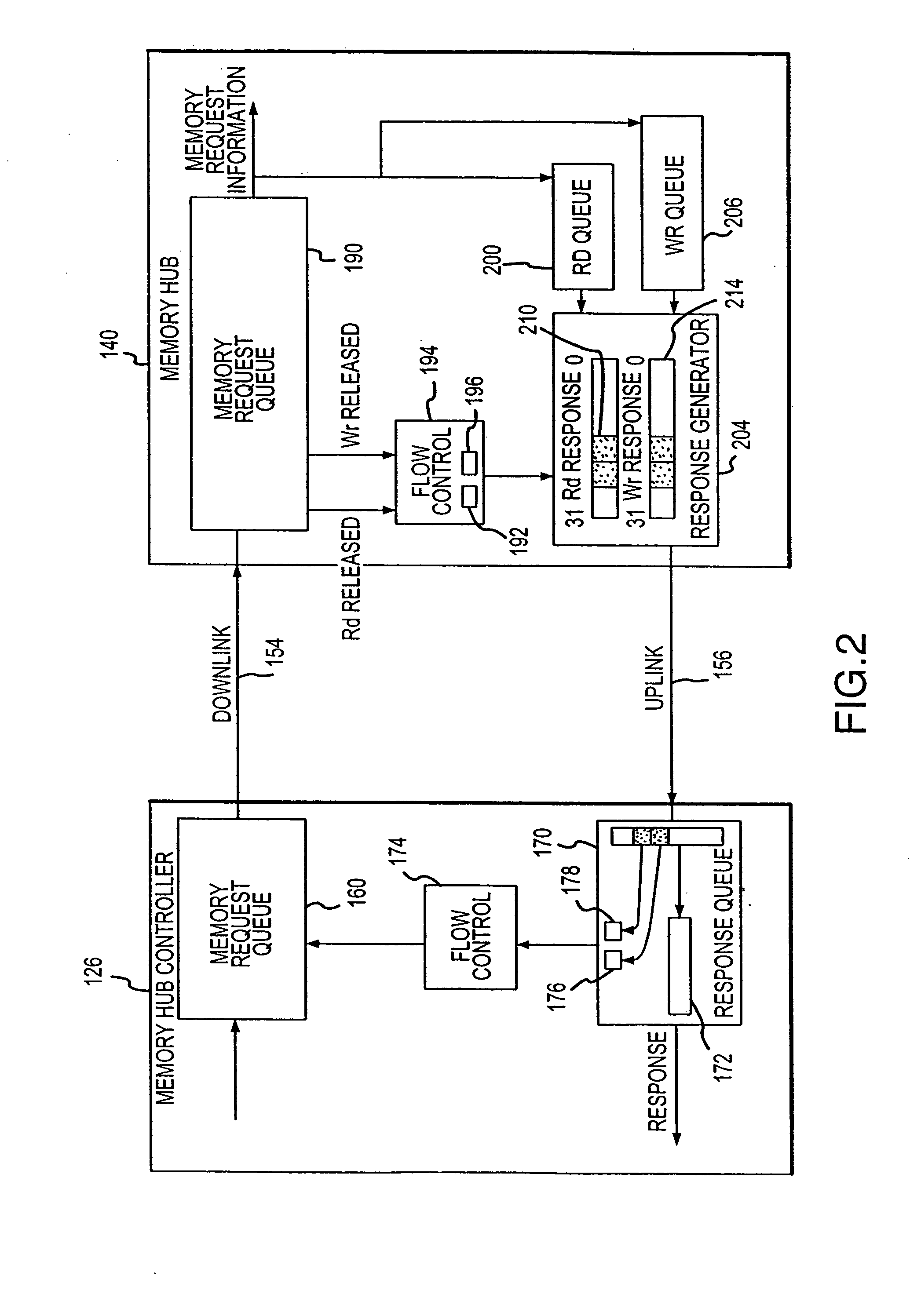

Method and system for controlling memory accesses to memory modules having a memory hub architecture

A computer system includes a memory hub controller coupled to a plurality of memory modules. The memory hub controller includes a memory request queue that couples memory requests and corresponding request identifier to the memory modules. Each of the memory modules accesses memory devices based on the memory requests and generates response status signals from the request identifier when the corresponding memory request is serviced. These response status signals are coupled from the memory modules to the memory hub controller along with or separate from any read data. The memory hub controller uses the response status signal to control the coupling of memory requests to the memory modules and thereby control the number of outstanding memory requests in each of the memory modules.

Owner:ROUND ROCK RES LLC

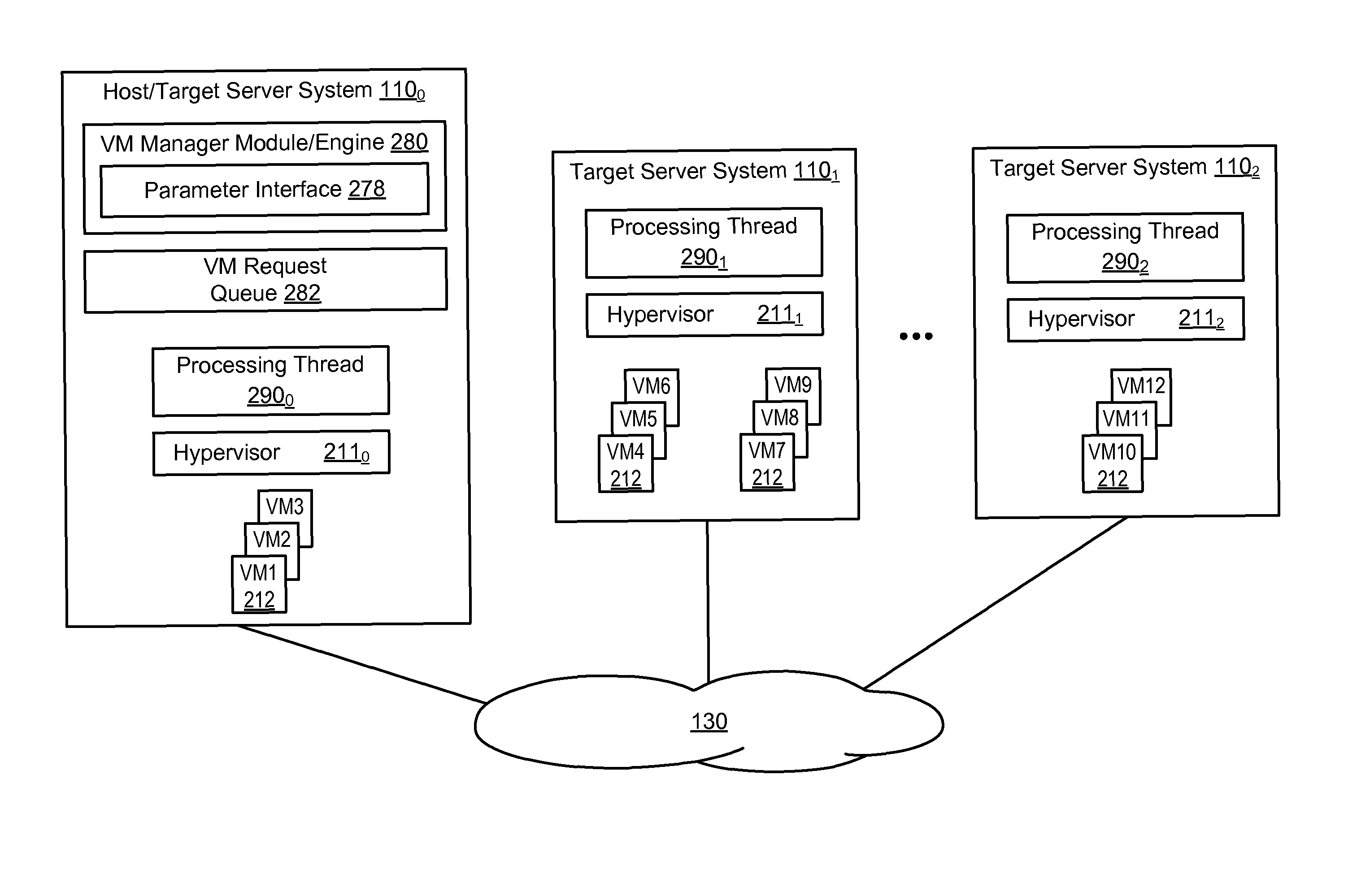

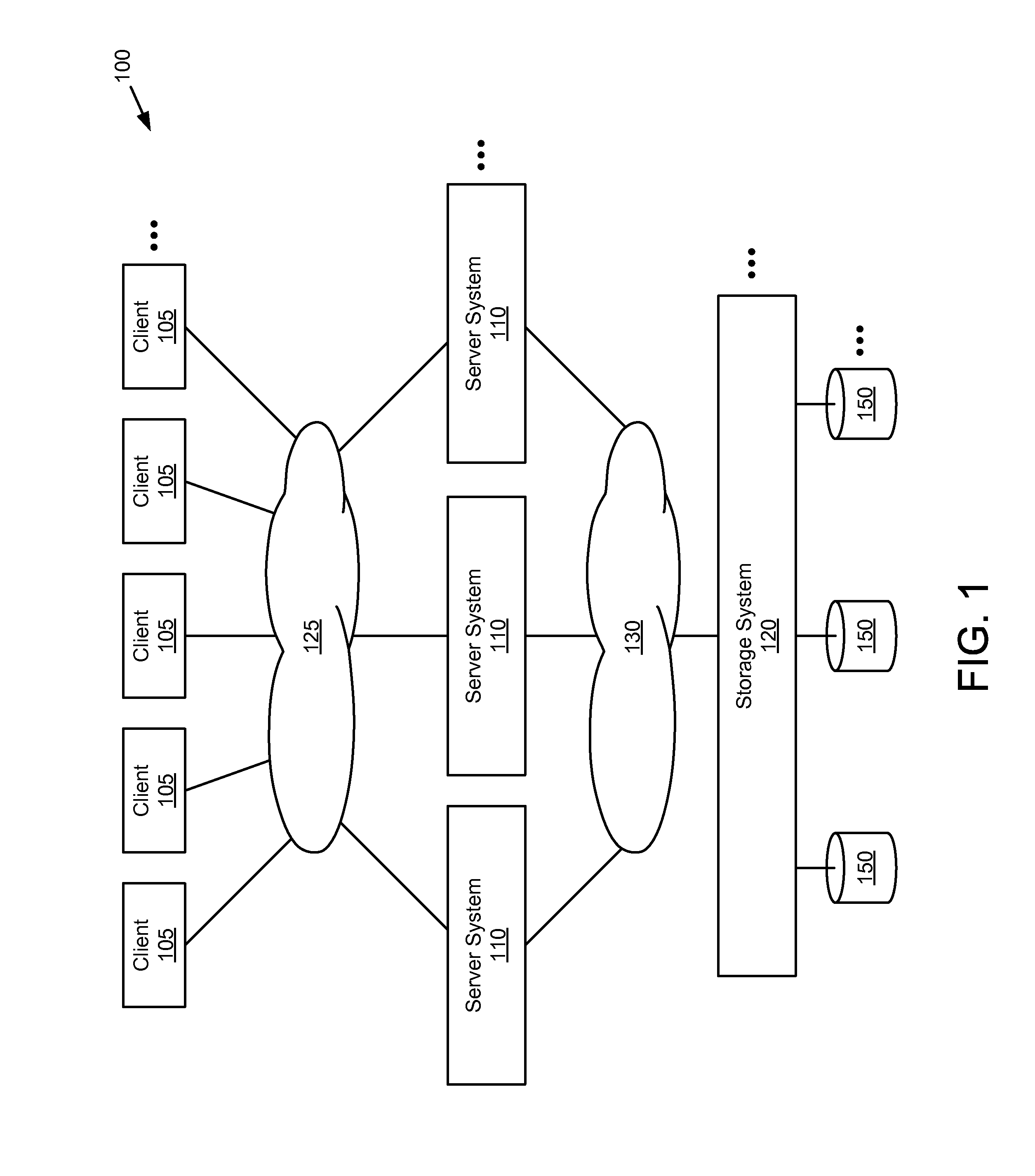

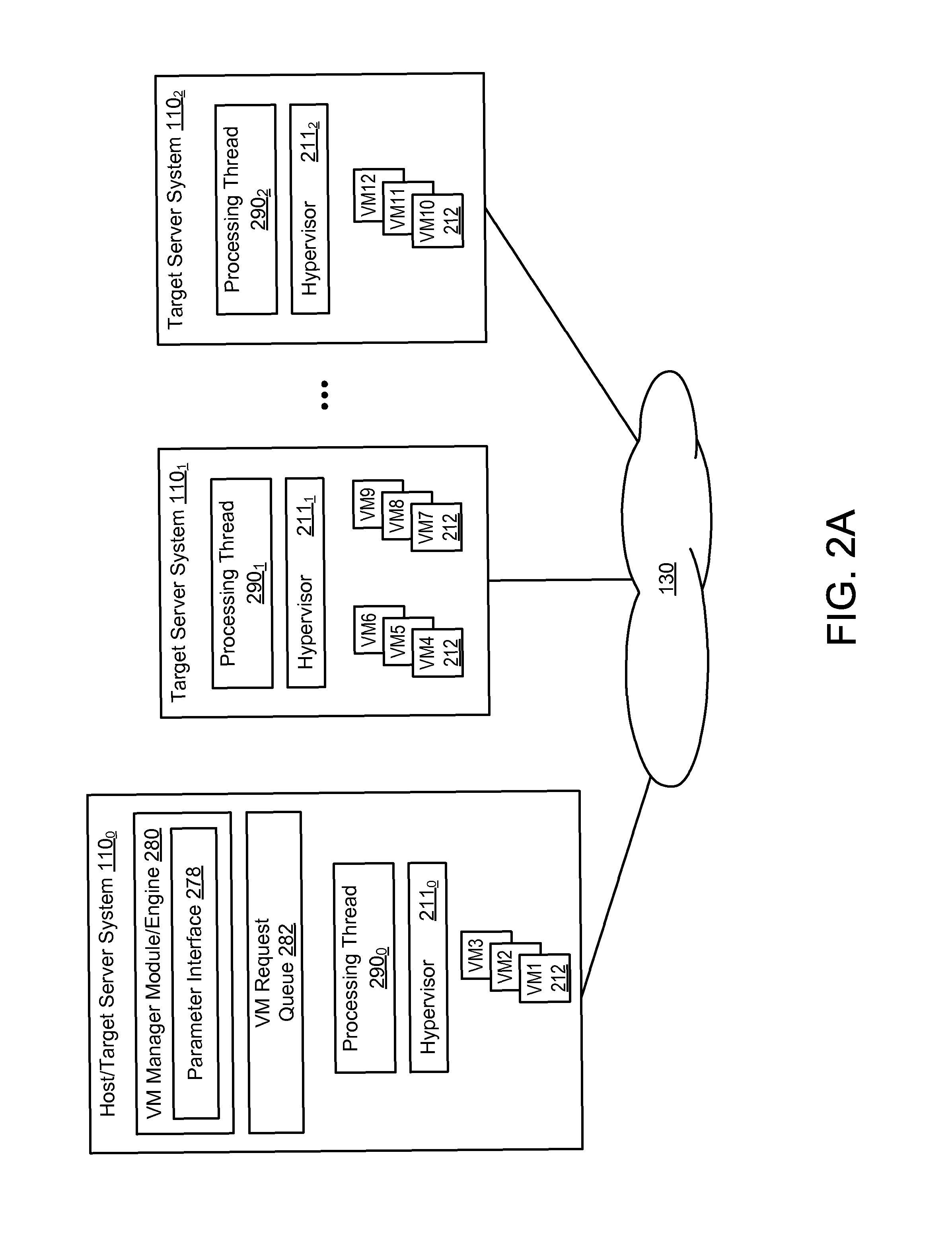

System and method for dynamic allocation of virtual machines in a virtual server environment

Active | US8261268B1 | Higher/faster performance capabilities | Software simulation/interpretation/emulation | Memory systems | Virtual servers | Natural selection

A system and method for dynamically producing virtual machines (VMs) across a plurality of servers in the virtual server environment is provided. A single VM request queue is produced comprising VM requests for producing the plurality of VMs. A processing thread is produced and assigned for each server and retrieves VM requests from the VM request queue and produces VMs only on the assigned server according to the retrieved VM requests. Each processing thread may be configured for retrieving VM requests and producing VMs without any programmed delays, whereby the rate at which a processing thread produces VMs on its assigned server is a function of the performance capabilities of the assigned server. This dynamic allocation of VMs based on such a “natural selection” technique may provide an appropriately balanced allocation of VMs based on the performance capabilities of each server in the virtual server environment.
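The "natural selection" idea in this abstract (one shared queue, one thread per server, no programmed delays) can be sketched with standard threads. The `produce_vms` function and the per-server `create` callables are hypothetical stand-ins; the sleeps only simulate servers of different speed.

```python
import queue
import threading
import time
from collections import Counter


def produce_vms(vm_requests, servers):
    """Drain one shared VM request queue with one worker thread per server."""
    pending = queue.Queue()
    for req in vm_requests:
        pending.put(req)

    placed = Counter()
    lock = threading.Lock()

    def worker(server):
        create = servers[server]  # stand-in for the real VM-creation call
        while True:
            try:
                req = pending.get_nowait()
            except queue.Empty:
                return  # queue drained, this server's thread is done
            create(req)  # a faster server returns sooner and pulls the next request
            with lock:
                placed[server] += 1

    threads = [threading.Thread(target=worker, args=(name,)) for name in servers]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return placed


# Simulated servers: the sleep stands in for how long VM creation takes.
servers = {
    "fast-server": lambda req: time.sleep(0.001),
    "slow-server": lambda req: time.sleep(0.005),
}
placed = produce_vms(range(40), servers)
```

No scheduler decides the split. Each thread simply takes the next request when its server is ready, so the faster server tends to end up with more VMs.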

Owner:NETWORK APPLIANCE INC

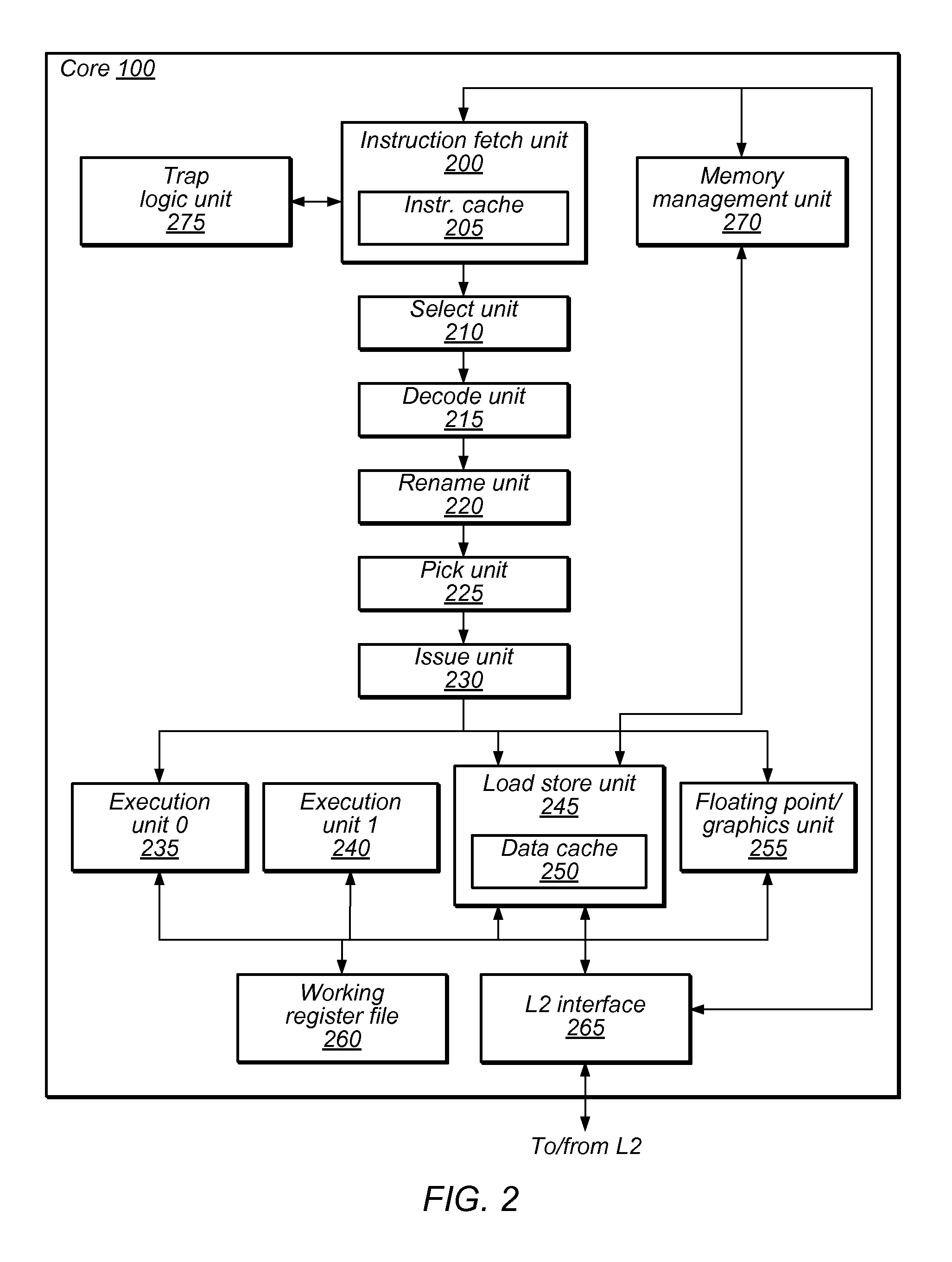

System and Method to Manage Address Translation Requests

Active | US20100332787A1 | Efficient management | Efficient recycling | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Management unit | Translation table

A system and method for servicing translation lookaside buffer (TLB) misses may manage separate input and output pipelines within a memory management unit. A pending request queue (PRQ) in the input pipeline may include an instruction-related portion storing entries for instruction TLB (ITLB) misses and a data-related portion storing entries for potential or actual data TLB (DTLB) misses. A DTLB PRQ entry may be allocated to each load/store instruction selected from the pick queue. The system may select an ITLB- or DTLB-related entry for servicing dependent on prior PRQ entry selection(s). A corresponding entry may be held in a translation table entry return queue (TTERQ) in the output pipeline until a matching address translation is received from system memory. PRQ and/or TTERQ entries may be deallocated when a corresponding TLB miss is serviced. PRQ and/or TTERQ entries associated with a thread may be deallocated in response to a thread flush.

Owner:ORACLE INT CORP

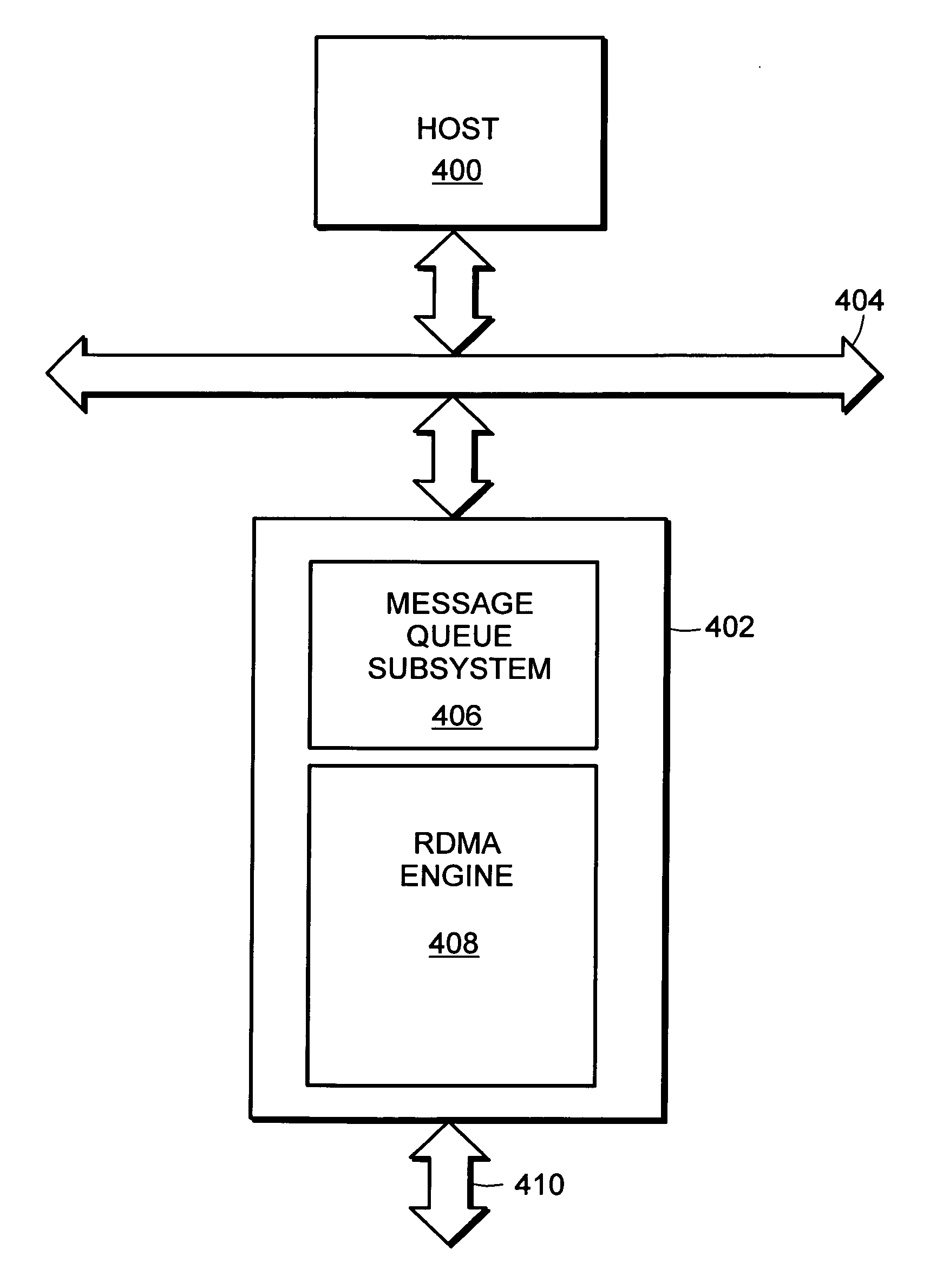

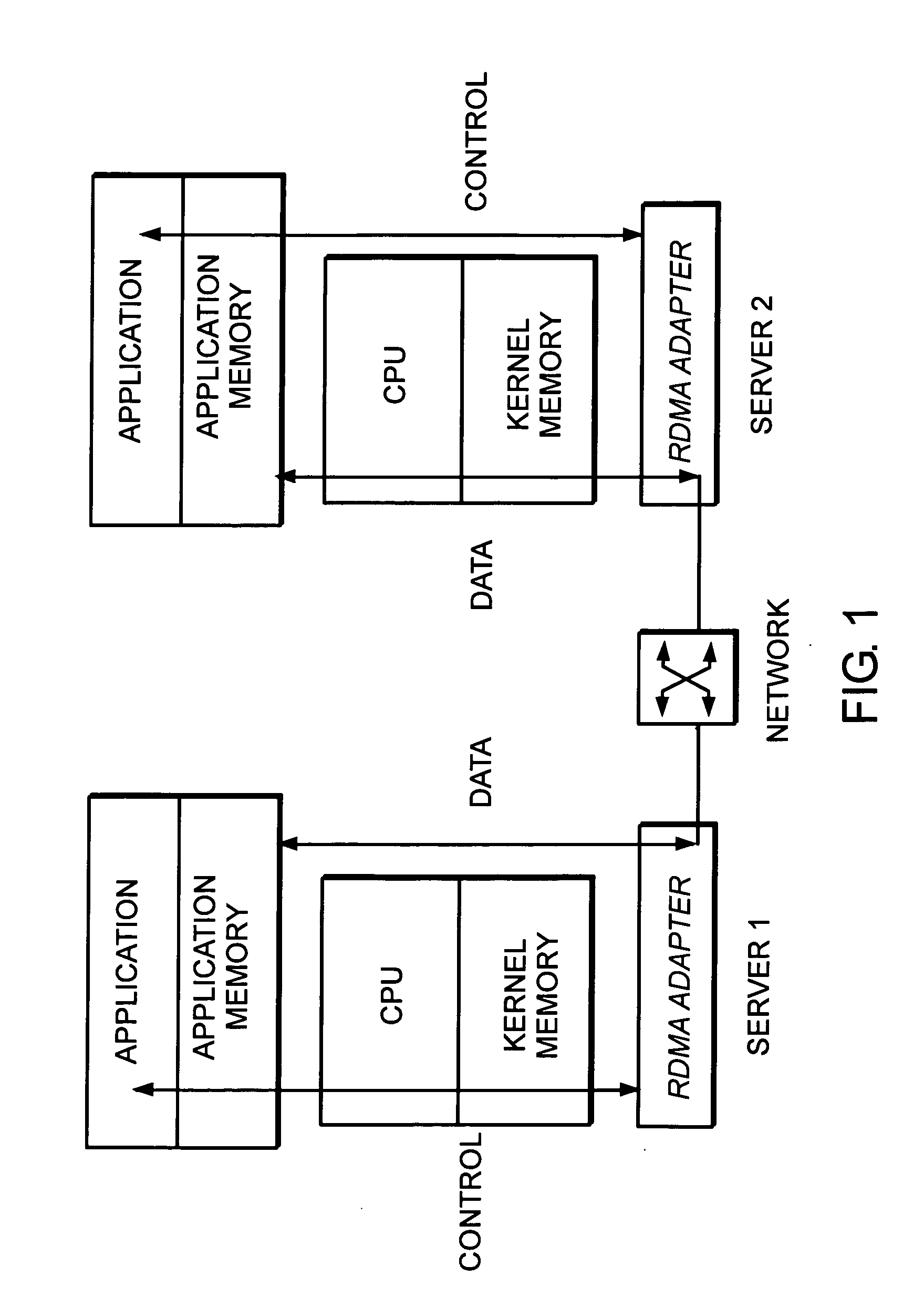

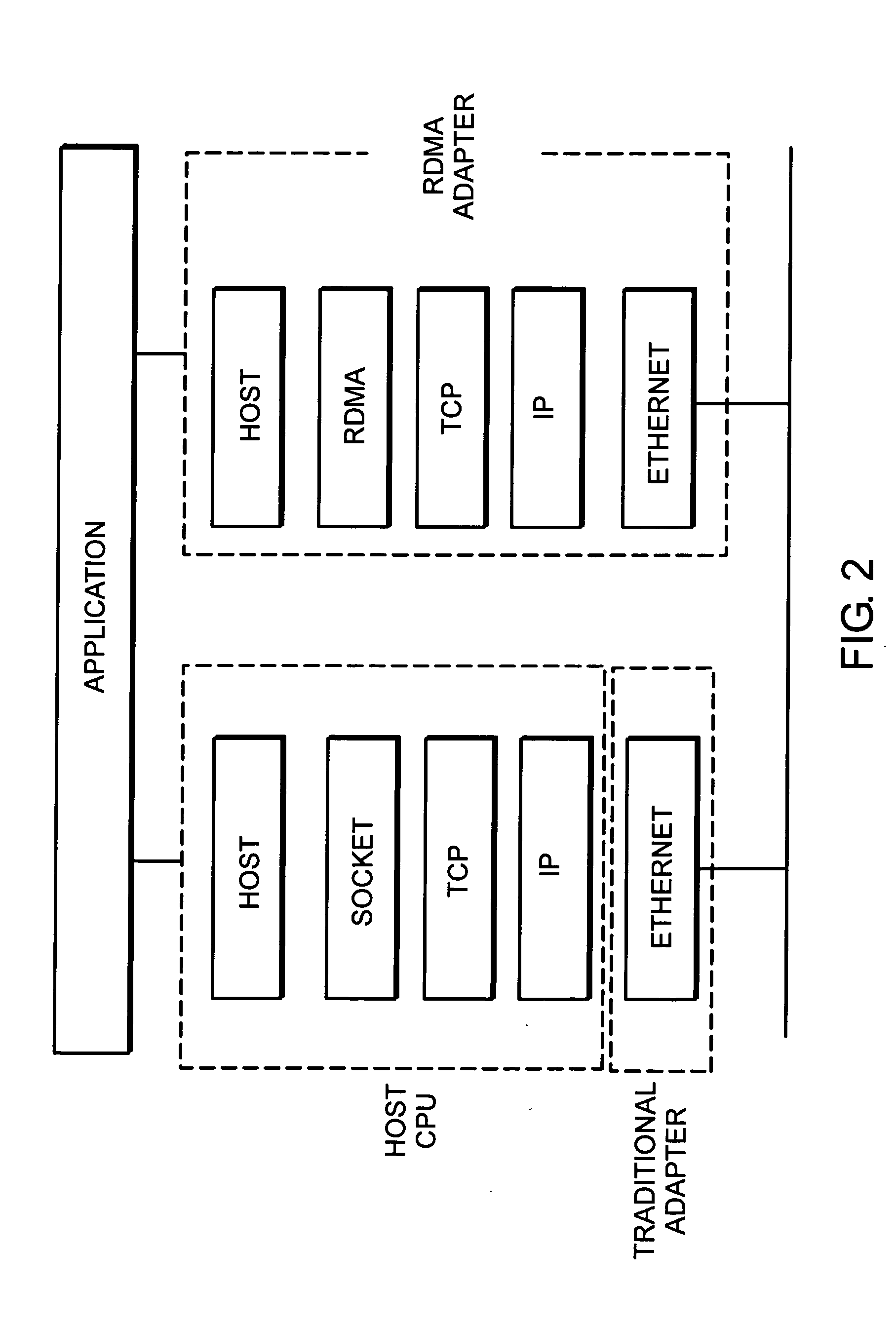

System and method for work request queuing for intelligent adapter

Inactive | US20050220128A1 | Effective support | Data switching by path configuration | Network connections | Message queue | Memory address

A system and method for work request queuing for an intelligent network interface card or adapter. More specifically, the invention provides a method and system that efficiently supports an extremely large number of work request queues. A virtual queue interface is presented to the host, and supported on the “back end” by a real queue shared among multiple virtual queues. A message queue subsystem for an RDMA capable network interface includes a memory mapped virtual queue interface. The queue interface has a large plurality of virtual message queues with each virtual queue mapped to a specified range of memory address space. The subsystem includes logic to detect work requests on a host interface bus to at least one of the specified address ranges corresponding to one of the virtual queues and logic to place the work requests into a real queue that is memory based and shared among at least some of the plurality of virtual queues, and wherein real queue entries include indications of the virtual queue to which the work request was addressed.
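A software model can make the address-range mapping concrete. In the sketch below, a write to an address inside a virtual queue's window is decoded to a virtual queue number and appended to one shared real queue. The class name, the window size `QUEUE_WINDOW`, and the base address are hypothetical; the patent describes adapter logic, not Python.

```python
from collections import deque

QUEUE_WINDOW = 0x1000  # assumed size of each virtual queue's address window


class SharedWorkQueue:
    """Hypothetical model: many virtual queues backed by one real queue."""

    def __init__(self, base_addr: int, num_virtual_queues: int):
        self.base = base_addr
        self.count = num_virtual_queues
        self.real_queue = deque()  # entries are (virtual_queue_id, work_request)

    def on_host_write(self, addr: int, work_request) -> bool:
        """Model the logic that watches host writes to the mapped address range."""
        offset = addr - self.base
        if not 0 <= offset < self.count * QUEUE_WINDOW:
            return False  # not addressed to any virtual queue
        vq_id = offset // QUEUE_WINDOW
        # Tag the entry with the virtual queue it was addressed to.
        self.real_queue.append((vq_id, work_request))
        return True


adapter = SharedWorkQueue(base_addr=0x10000, num_virtual_queues=1_000_000)
accepted = adapter.on_host_write(0x10000 + 5 * QUEUE_WINDOW + 8, "send A")
rejected = adapter.on_host_write(0x0FFF, "stray write")
```

Only the address decode scales with the number of virtual queues. Storage is needed only for requests actually posted, which is how a very large number of queues can be presented to the host.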

Owner:AMMASSO

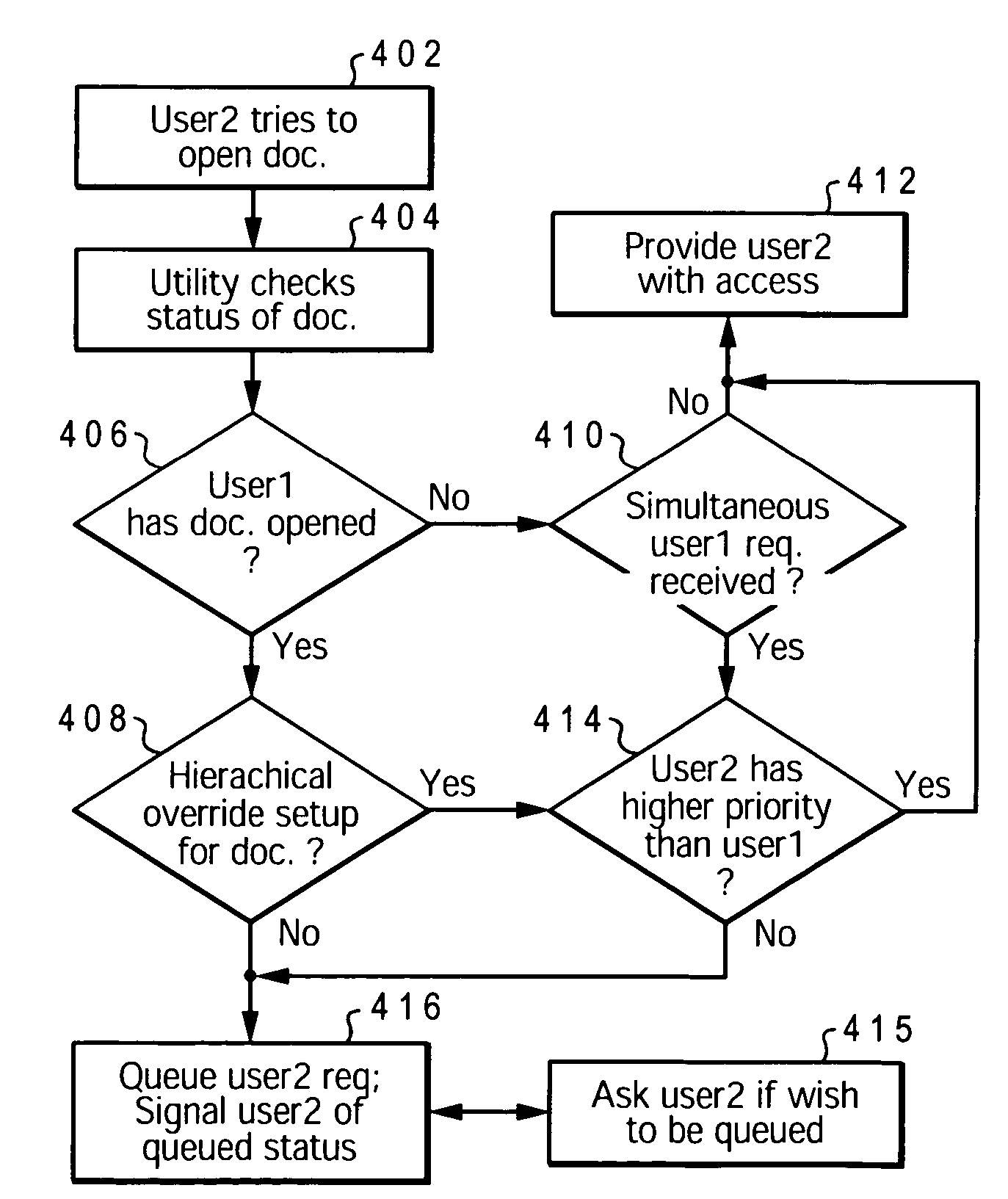

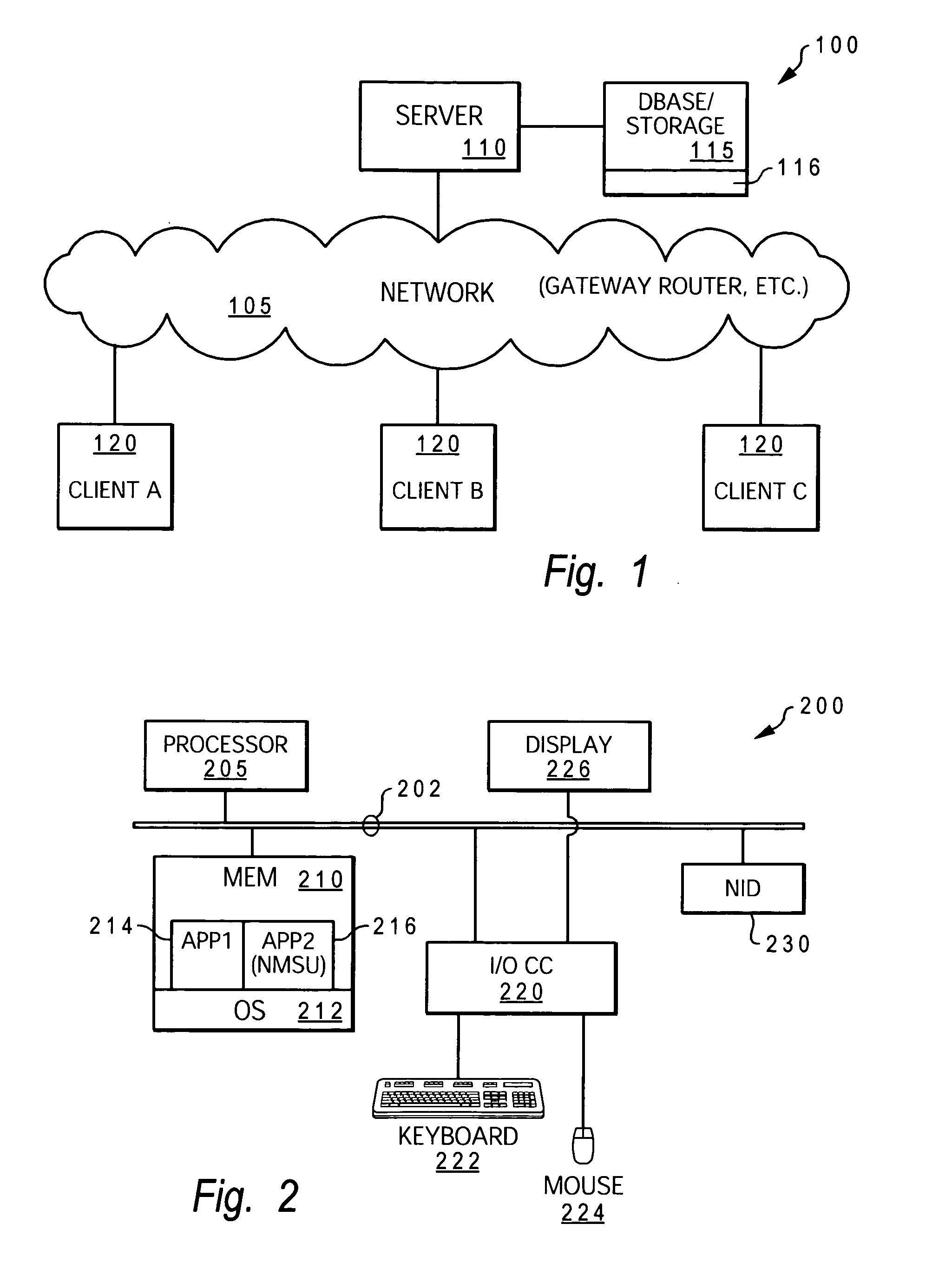

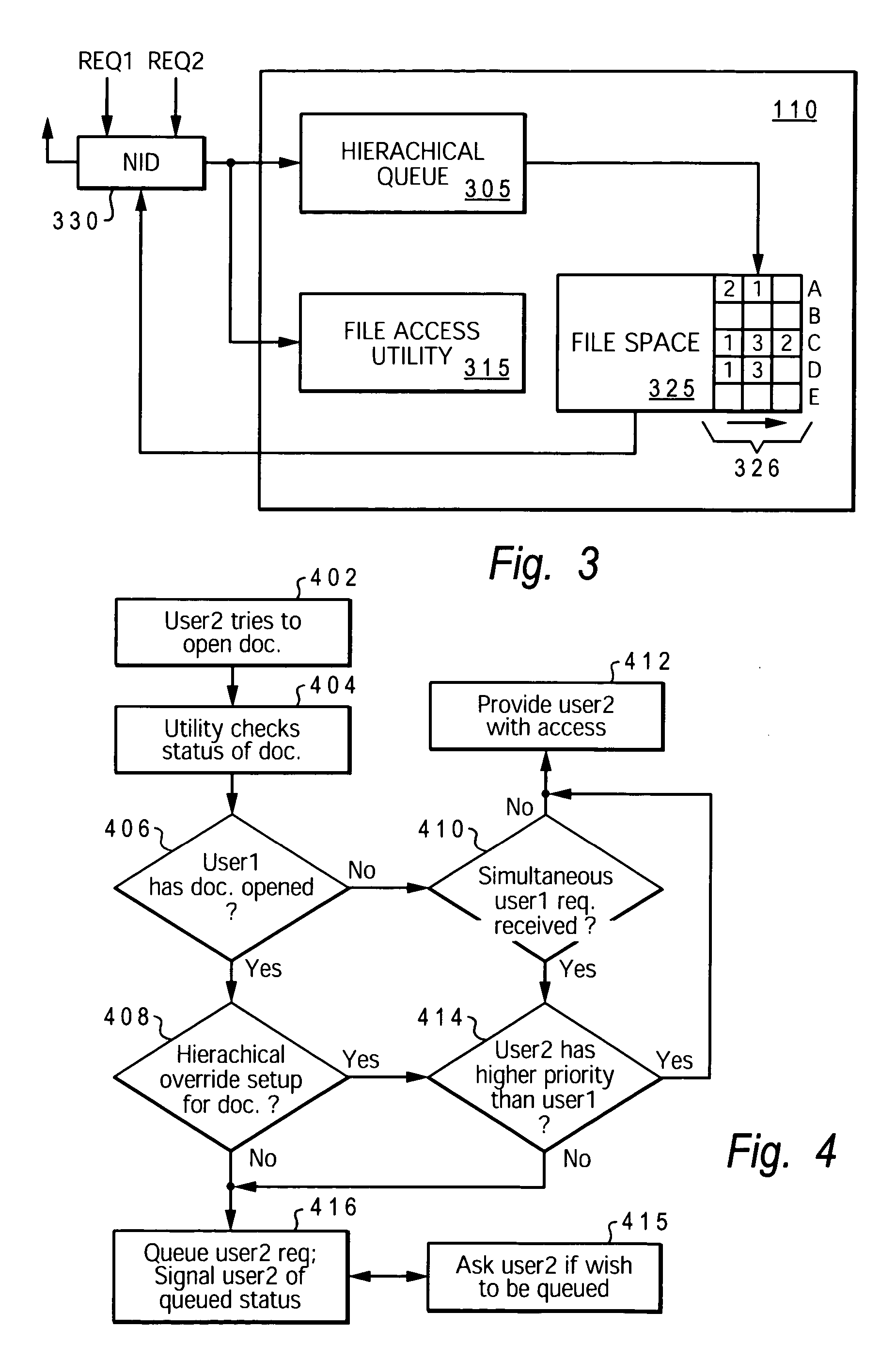

Managing hierarchical authority to access files in a shared database

Inactive | US20060155705A1 | Timely control | Special data processing applications | File systems | Network data | User identifier

A method, system, and program product that enable dynamic scheduling and arranging of access by multiple users to a single electronic file. A network-database access management utility (NAMU) is provided, which manages and schedules network-level access to the electronic file. NAMU includes an access-request queue that arranges the user identifiers (IDs) of the multiple users who have requested access to the file while the file was checked out to a previous user. When the first user completes access to the file and closes it, an alert is generated for the next user in the queue. This alert informs the next user that the first user has closed the file and that he or she may now access the electronic file.

Owner:IBM CORP

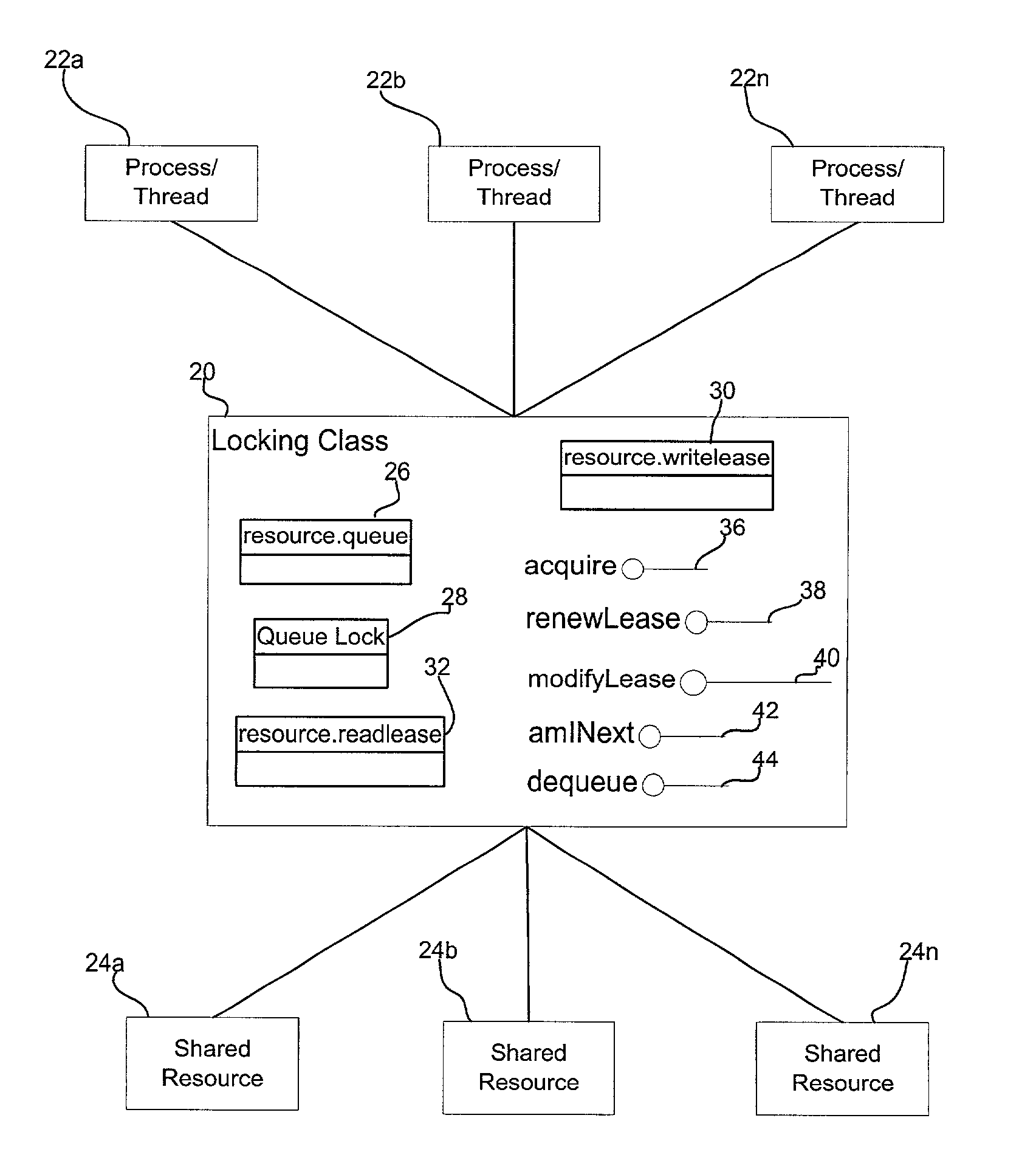

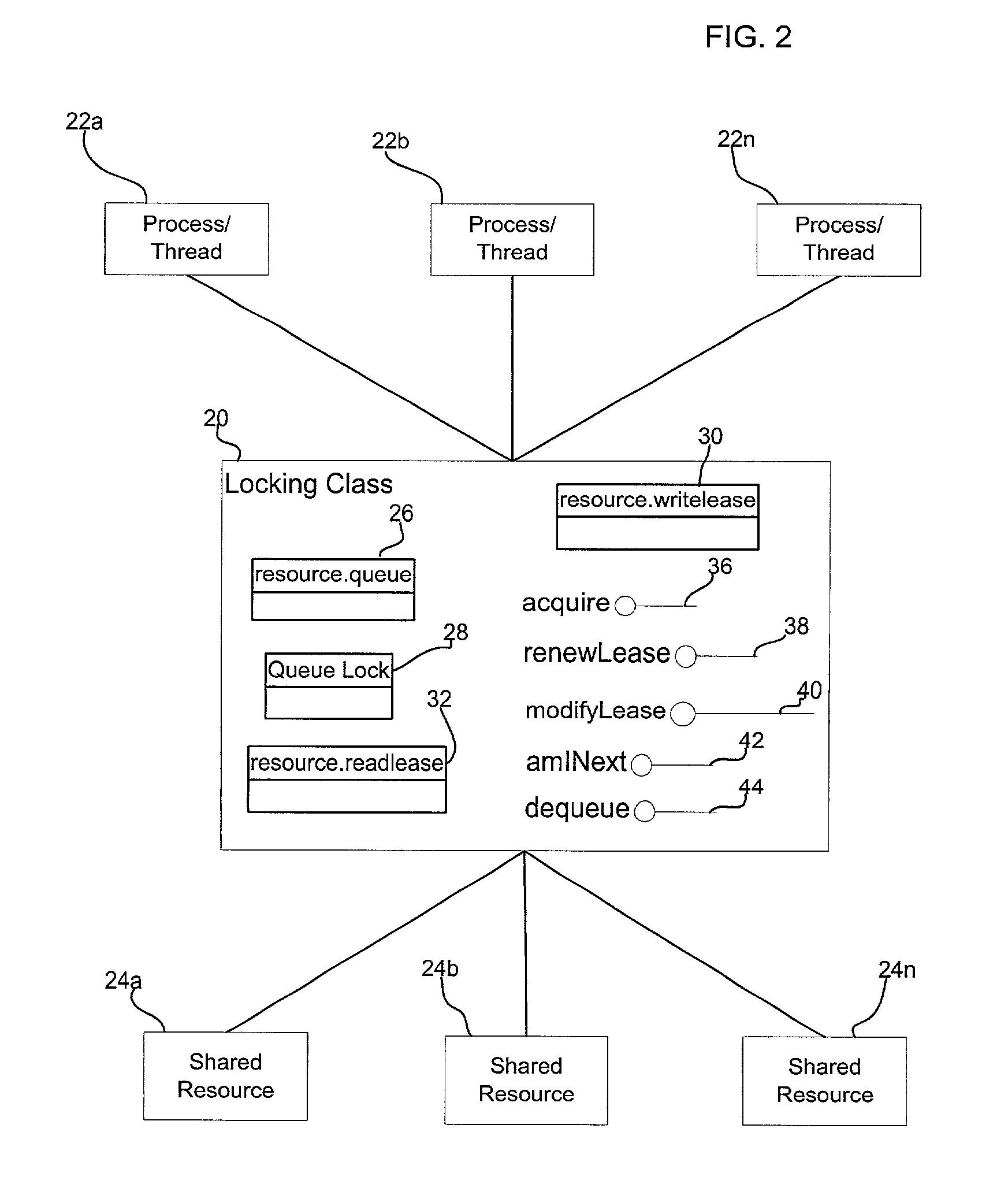

Method, system, program, and data structure for implementing a locking mechanism for a shared resource

Active | US7107267B2 | Data processing applications | Program synchronisation | Locking mechanism | Shared resource

Provided are a method, system, program, and data structure for implementing a locking mechanism to control access to a shared resource. A request is received to access the shared resource. A determination is made of whether a first file has a first name. The first file is renamed to a second name if the first file has the first name. A second file is updated to indicate the received request in a queue of requests to the shared resource if the first file is renamed to the second name. An ordering of the requests in the queue is used to determine whether access to the shared resource is granted to the request. The first file is renamed to the first name after the second file is updated.
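One way to read these steps is that the rename of the first file acts as an atomic guard around updates to the second file, which holds the request queue. The sketch below follows that reading using `os.rename`. The file names `guard.free`, `guard.busy`, and `queue.txt` and both function names are hypothetical, and the sketch assumes a file system on which rename is atomic.

```python
import os
import tempfile


def enqueue_request(directory: str, requester: str) -> bool:
    """Record a request in the queue file, guarded by renaming the first file.

    Returns True if the request was recorded, False if the guard was busy.
    """
    free = os.path.join(directory, "guard.free")  # first file under its "first name"
    busy = os.path.join(directory, "guard.busy")  # same file under its "second name"
    queue_file = os.path.join(directory, "queue.txt")  # the "second file"
    try:
        os.rename(free, busy)  # fails if another requester already renamed it
    except FileNotFoundError:
        return False
    try:
        with open(queue_file, "a", encoding="utf-8") as fh:
            fh.write(requester + "\n")
    finally:
        os.rename(busy, free)  # restore the first name after the update
    return True


def has_access(directory: str, requester: str) -> bool:
    """Grant access to whoever is first in the recorded queue order."""
    with open(os.path.join(directory, "queue.txt"), encoding="utf-8") as fh:
        waiting = fh.read().split()
    return bool(waiting) and waiting[0] == requester


workdir = tempfile.mkdtemp()
open(os.path.join(workdir, "guard.free"), "w").close()  # create the guard file
ok_a = enqueue_request(workdir, "host-a")
ok_b = enqueue_request(workdir, "host-b")
```

A requester that gets `False` would retry later. Removing a finished request from the queue file is omitted here and would use the same guard.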

Owner:ORACLE INT CORP

Request queue management

Inactive | US7146233B2 | Digital data information retrieval | Resource allocation | Queue management system | Virtual servers

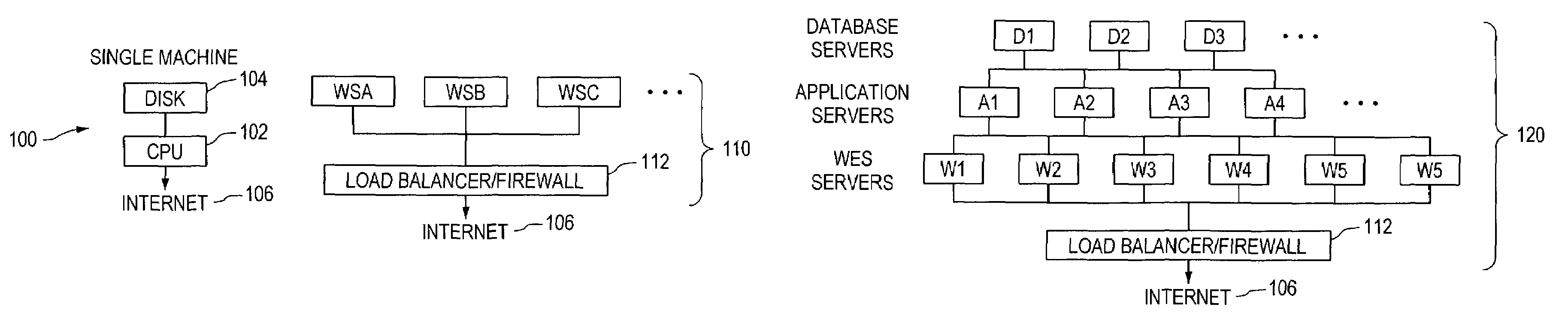

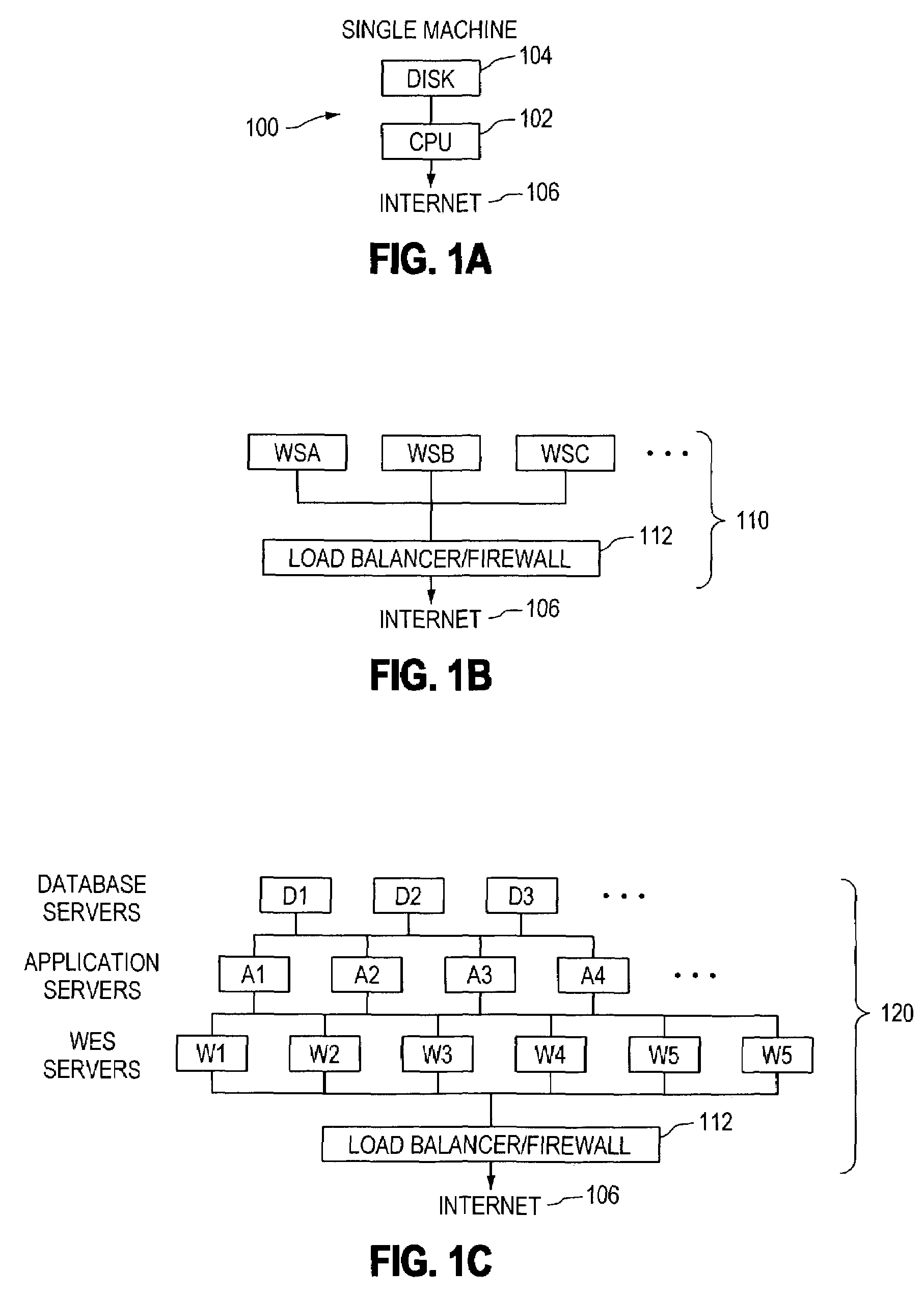

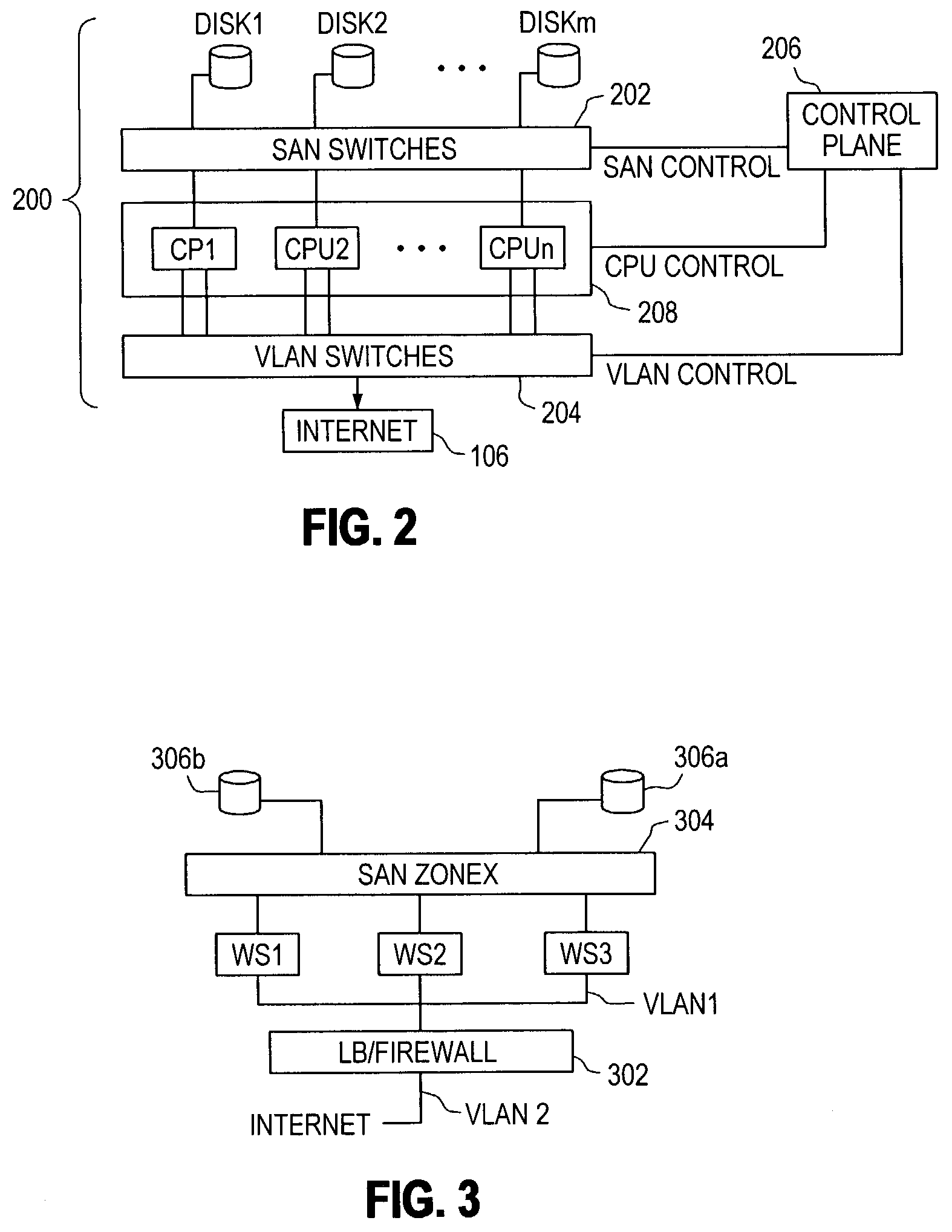

Methods and apparatus providing, controlling and managing a dynamically sized, highly scalable and available server farm are disclosed. A Virtual Server Farm (VSF) is created out of a wide scale computing fabric (“Computing Grid”) which is physically constructed once and then logically divided up into VSFs for various organizations on demand. Each organization retains independent administrative control of a VSF. A VSF is dynamically firewalled within the Computing Grid. Allocation and control of the elements in the VSF is performed by a control plane connected to all computing, networking, and storage elements in the computing grid through special control ports. The internal topology of each VSF is under control of the control plane. A request queue architecture is also provided for processing work requests that allows selected requests to be blocked until required human intervention is satisfied.

Owner:ORACLE INT CORP

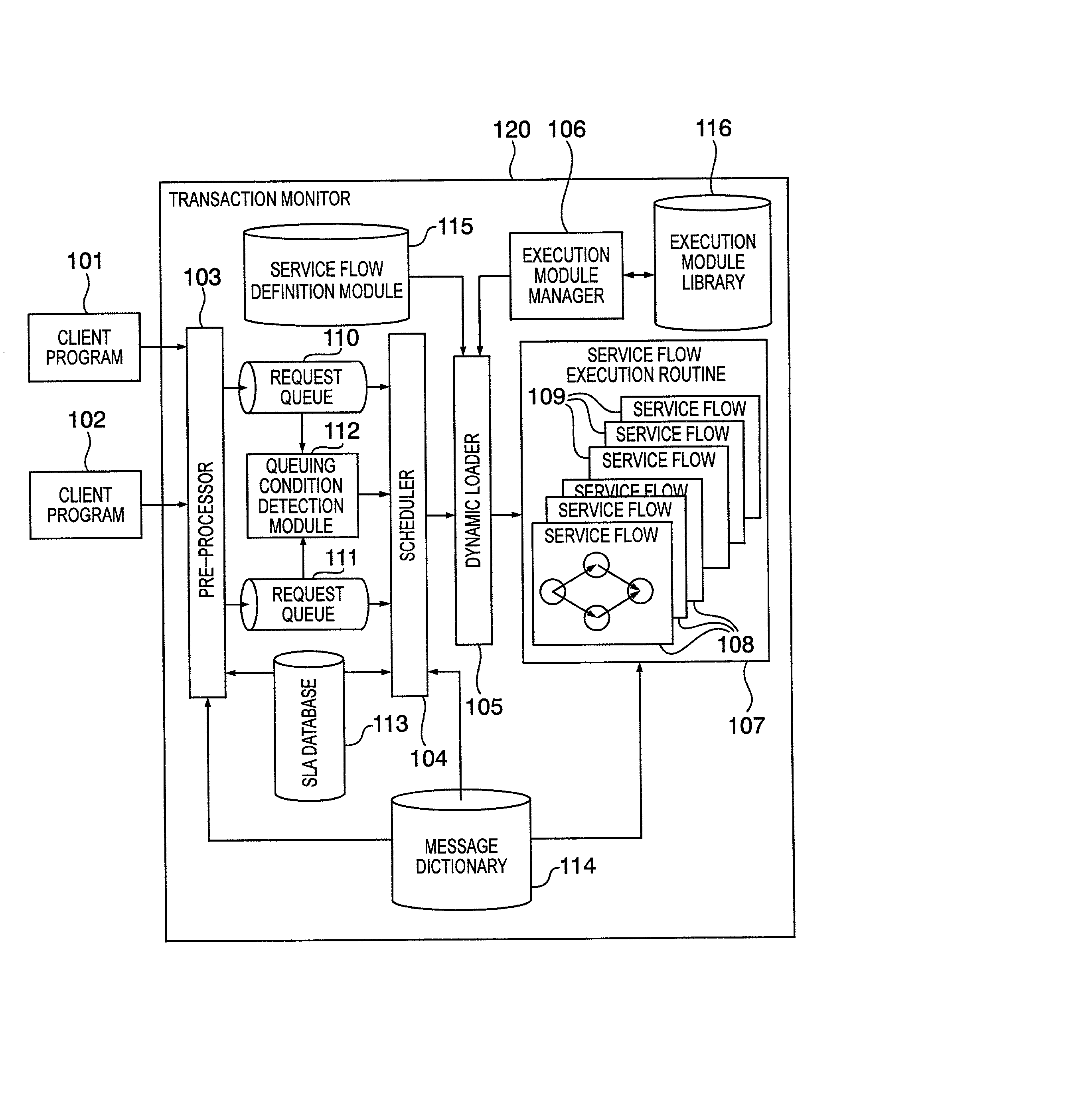

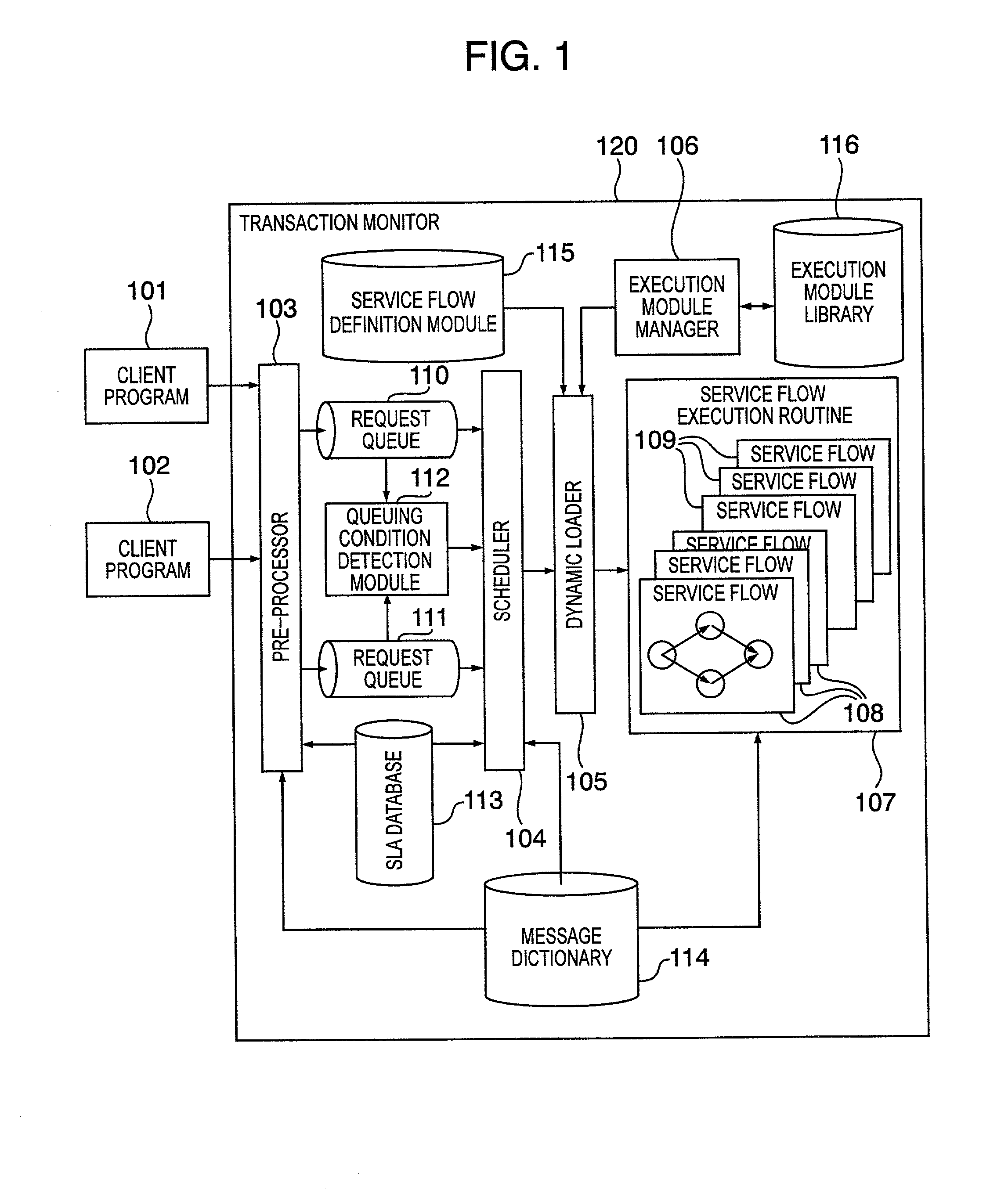

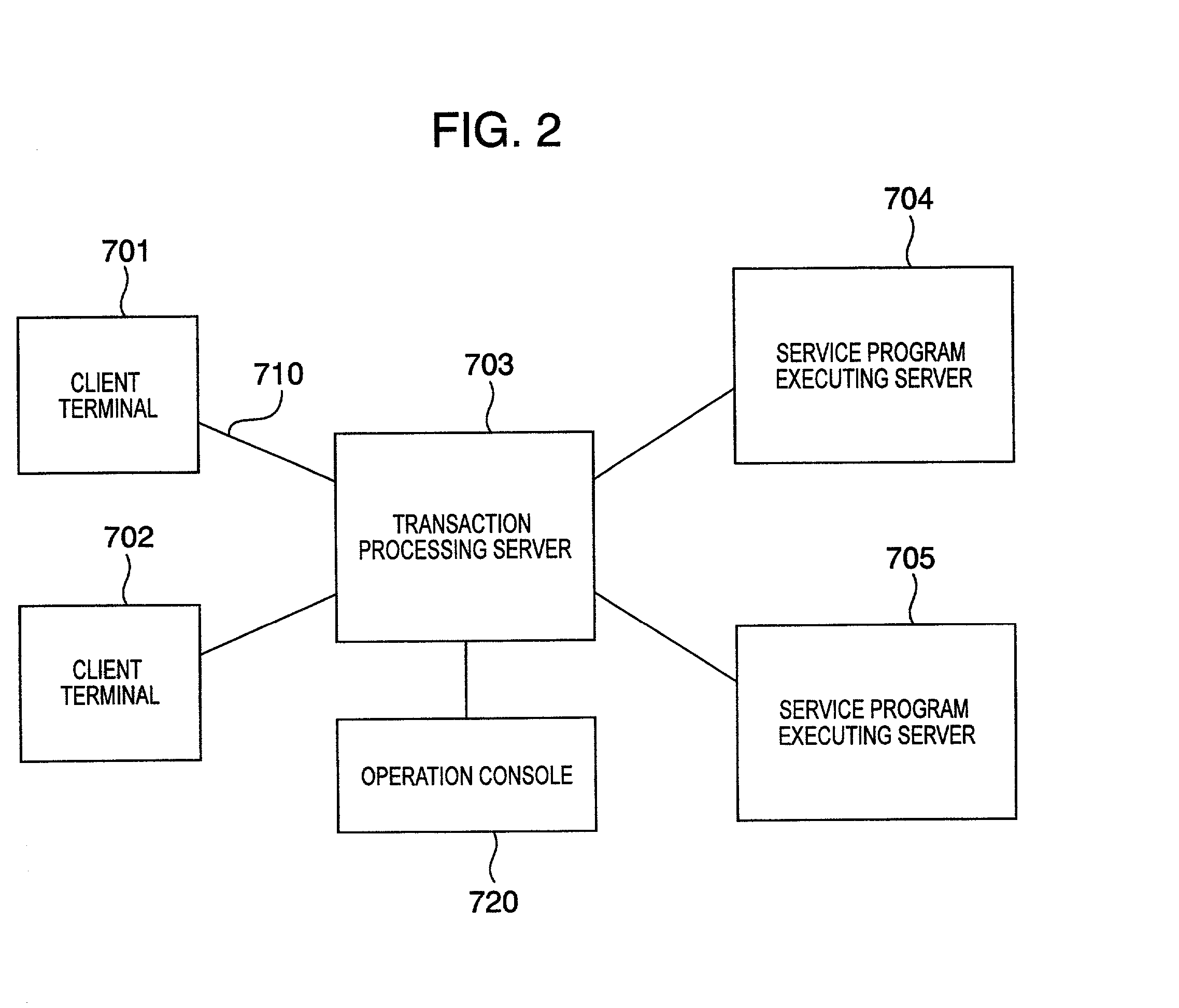

Transaction processing system having service level control capabilities

Inactive | US20020107743A1 | Improve processing efficiency | More transaction | Resource allocation | Digital computer details | Client-side | Transaction processing system

There is provided a transaction processing system for providing plural services according to service level contracts, the system comprising: an SLA database for storing contract conditions defined for each of the services provided; request queues for storing processing requests sent from clients for the services provided while putting the respective services into a particular order; a queuing condition detection module for obtaining waiting conditions of the processing requests stored in the request queues; and a scheduler for deciding priorities of the processing requests input from the client to the transaction processing system by referring to the contract conditions and the waiting conditions of the processing requests.

Owner:HITACHI LTD

Live migration of virtual machine during direct access to storage over SR IOV adapter

Active | US8489699B2 | Digital computer details | Computer security arrangements | Computer memory | Virtual machine

A method is provided to migrate a virtual machine from a source computing machine to a destination computing machine comprising: suspending transmission of requests from a request queue disposed in source computing machine memory associated with the VM from the request queue to a VF; while suspending the transmission of requests, determining when no more outstanding responses to prior requests remain to be received; in response to a determination that no more outstanding responses to prior requests remain to be received, transferring state information that is indicative of locations of requests inserted to the request queue from the VF to a PF and from the PF to a memory region associated with a virtualization intermediary of the source computing machine. After transferring the state information to source computing machine memory associated with a virtualization intermediary, resuming transmission of requests from locations of the request queue indicated by the state information to the PF; and transmitting the requests from the PF to the physical storage.

Owner:VMWARE INC

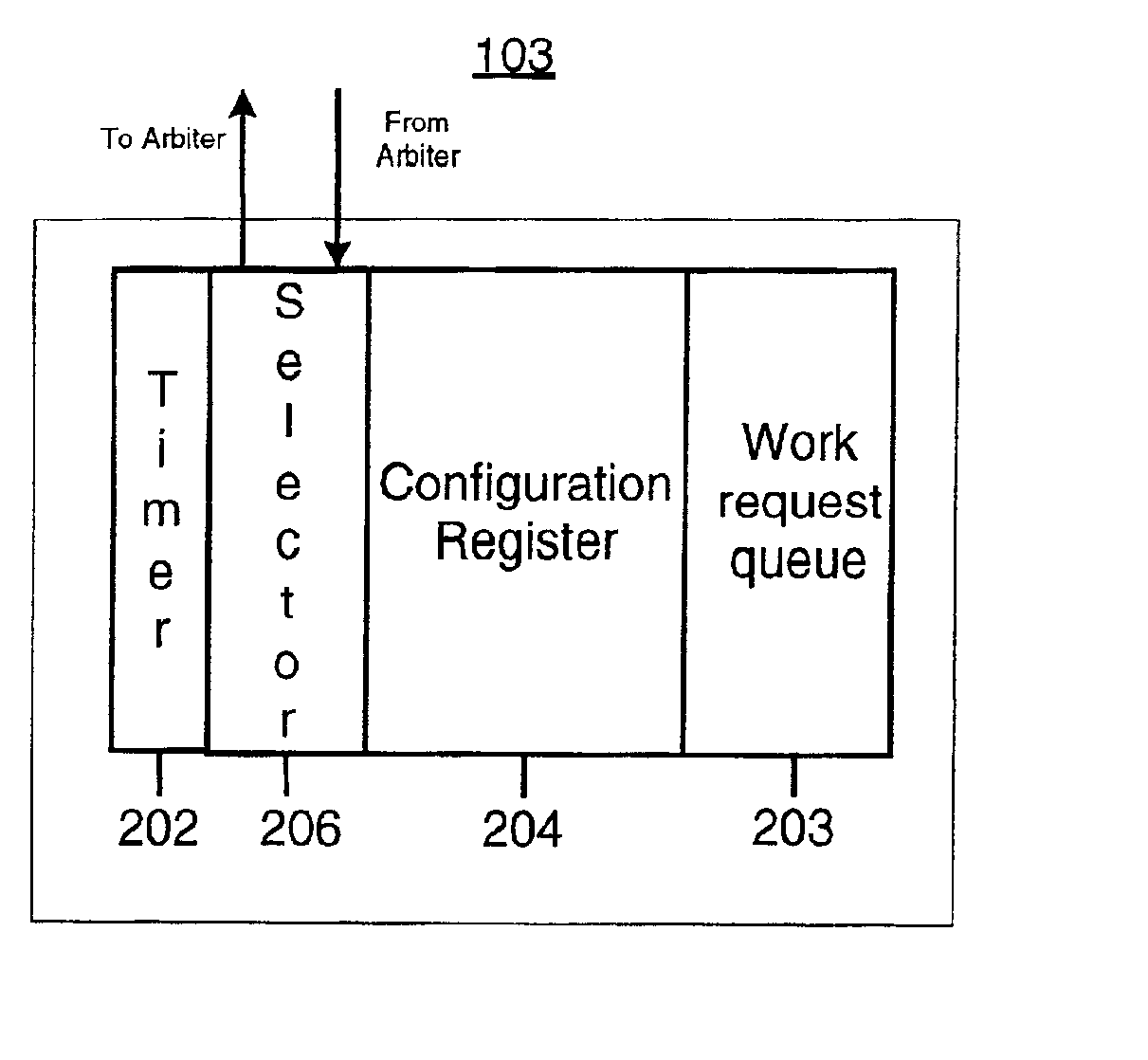

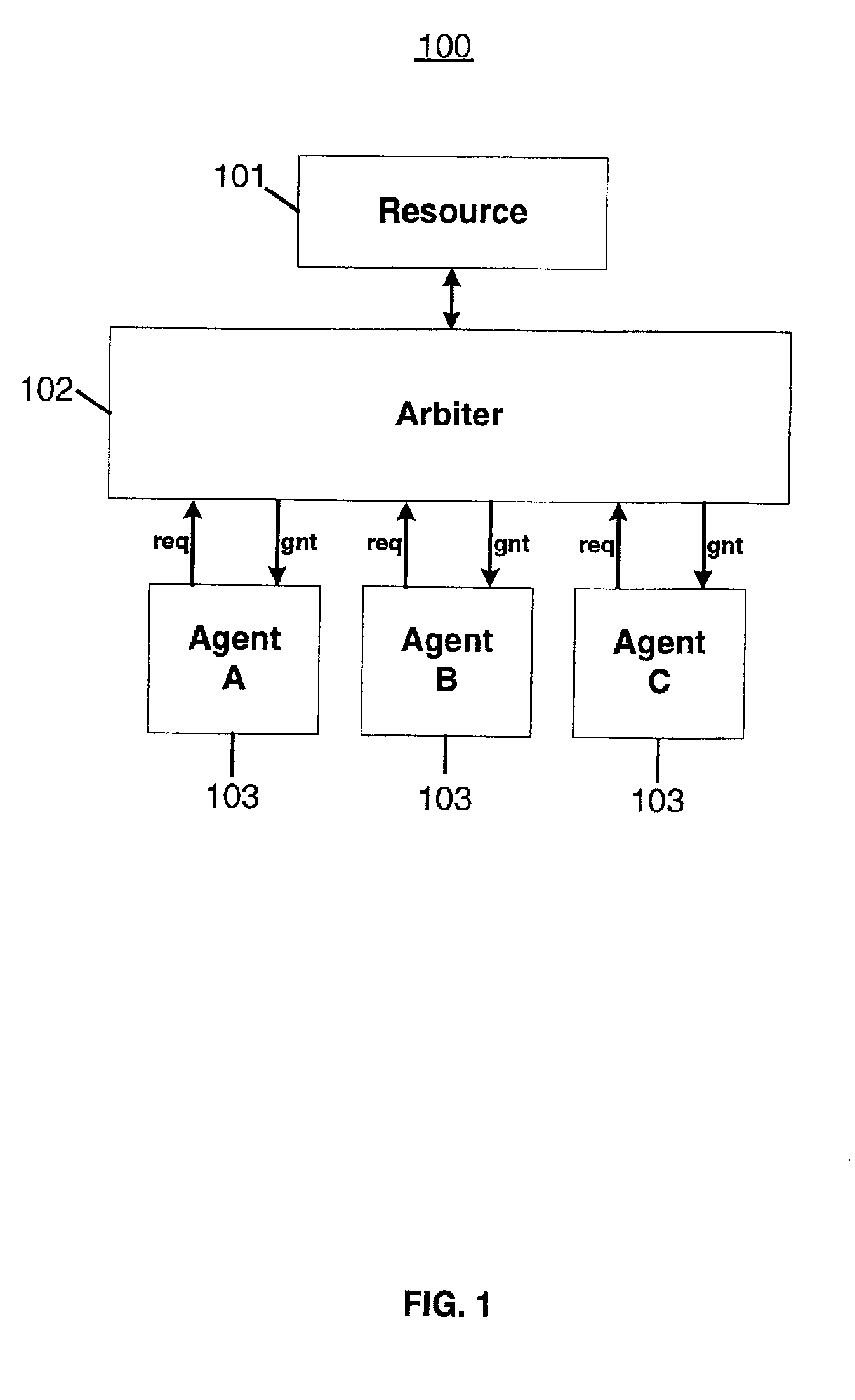

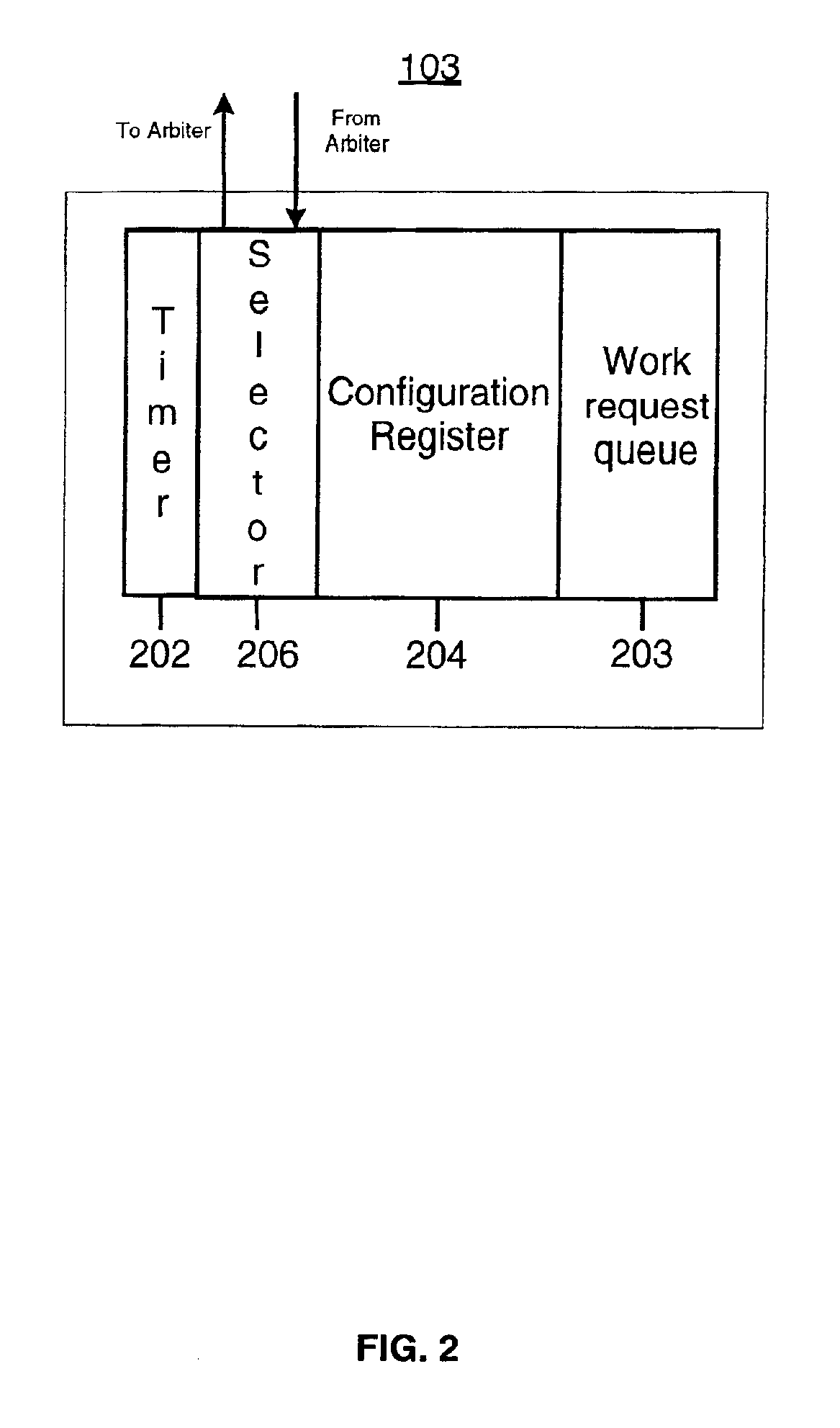

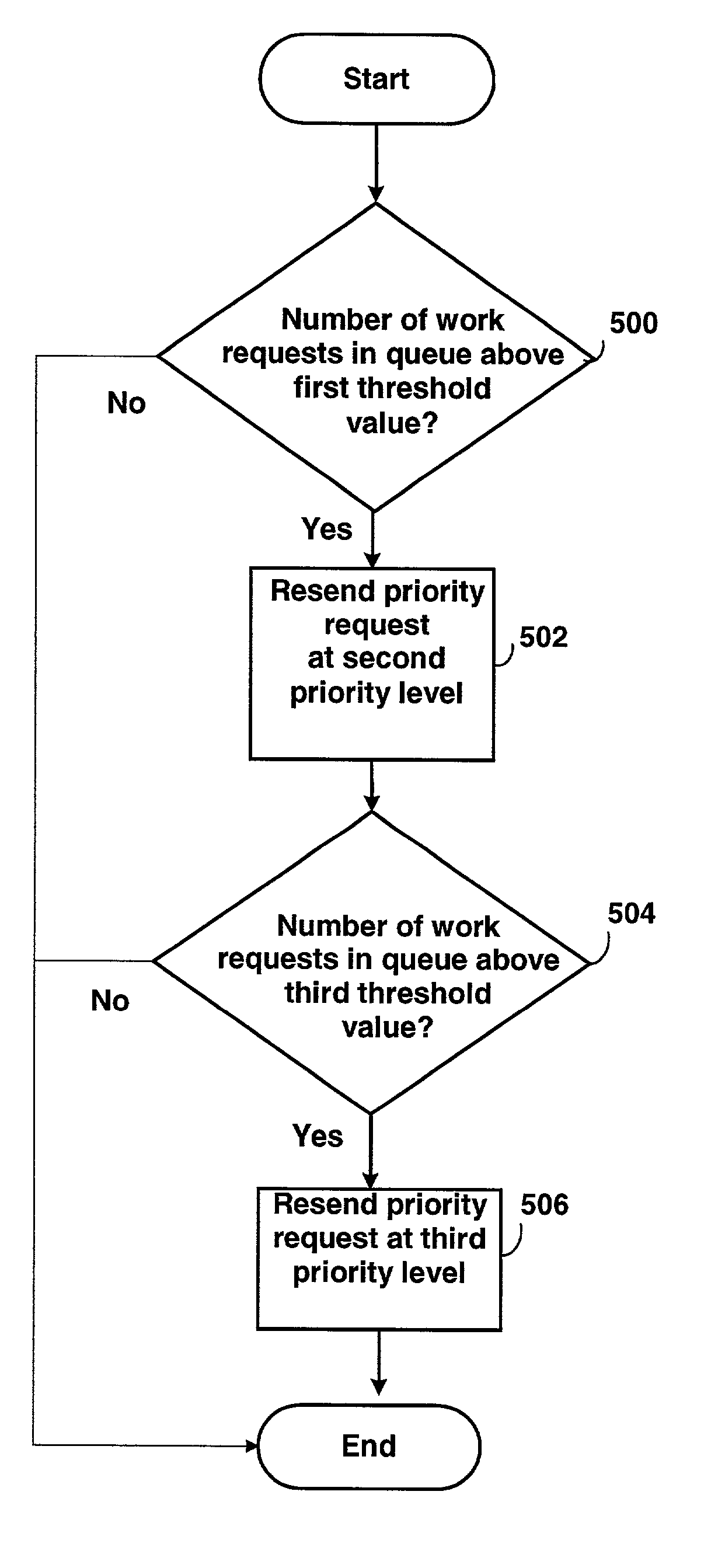

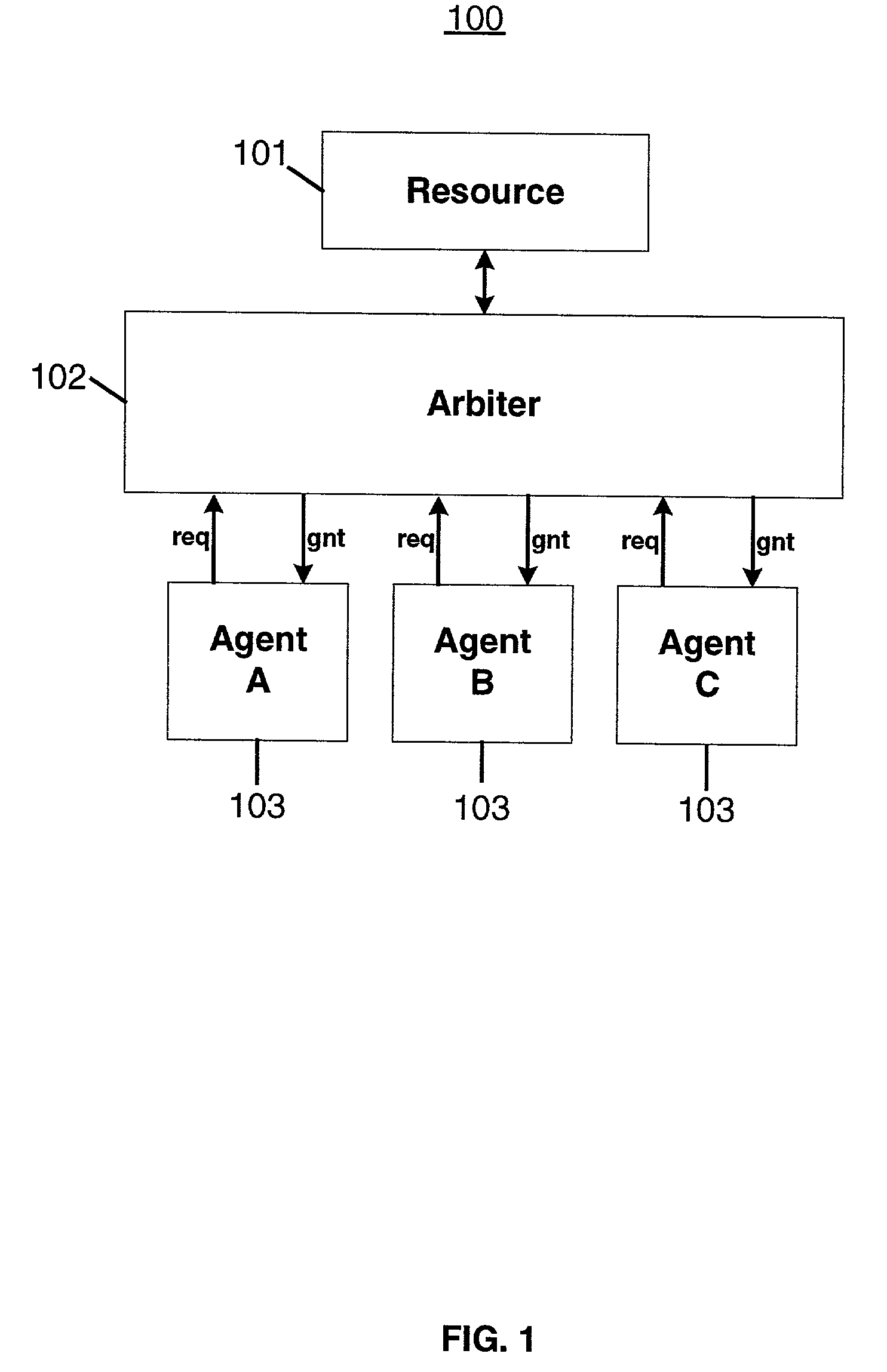

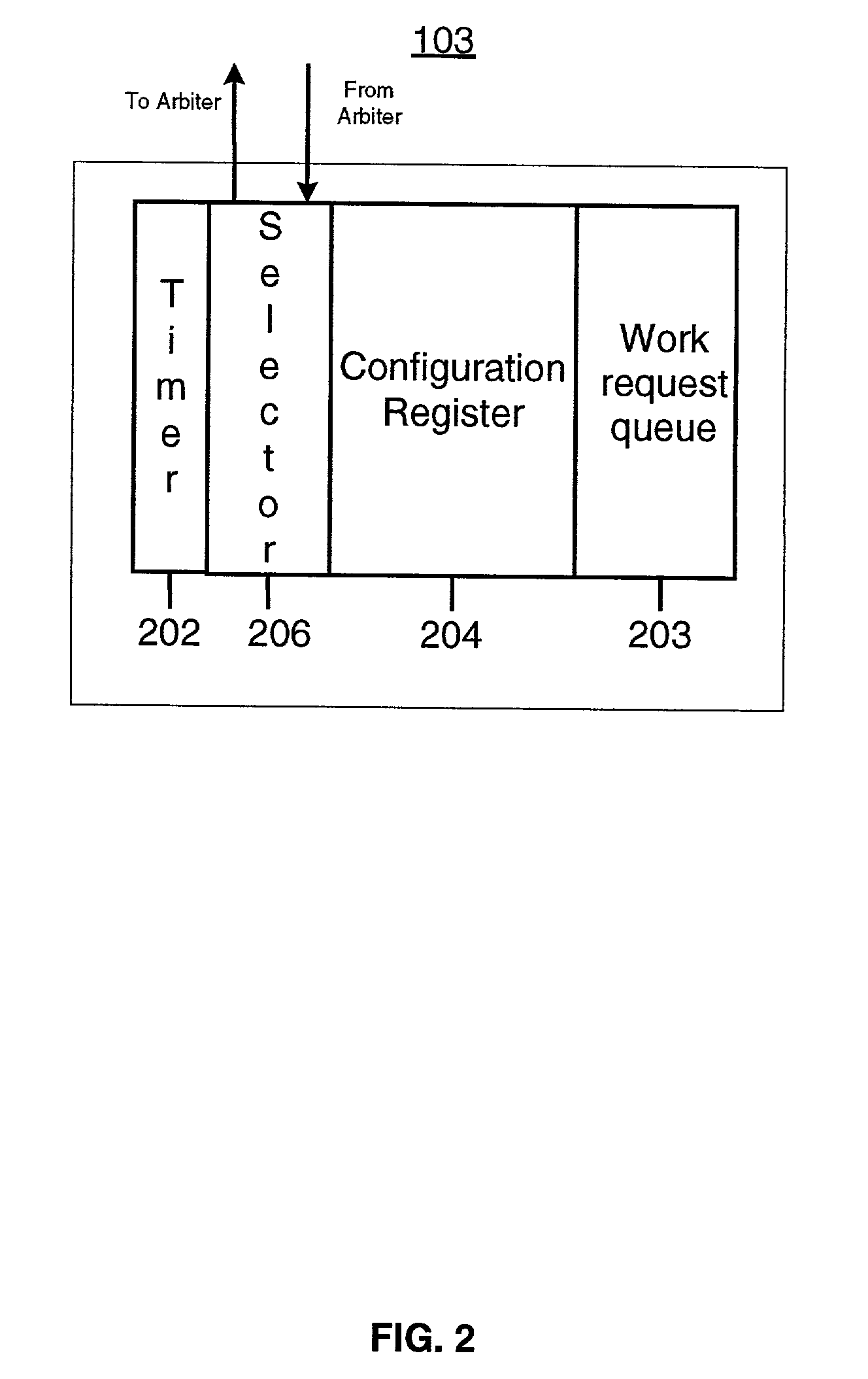

Dynamic request priority arbitration

A system and method are provided for dynamically determining the priority of requests for access to a resource taking into account changes in the access needs of a requesting agent over time. A requesting agent selects a priority level from a plurality of priority selections to include with a priority request to an arbiter. Work requests requiring the access to a resource may be stored in a work request queue. The priority level may be dynamic. The dynamic priority level enables the agent to sequentially increase or decrease the priority level of a priority request when threshold values representing the number of work requests in the work request queue are reached. The threshold values which cause the priority level to be increased may be higher than the threshold values which cause the priority level to be decreased to provide hysteresis. The dynamic priority level may, alternatively, enable the agent to start a timer for timing a pending priority request for a predetermined time period. The priority level of the priority request is increased if the priority request has not been granted before the timer expires.
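The threshold scheme with hysteresis can be sketched as a small state machine that steps the priority level by at most one per update. The class name and the threshold values are hypothetical, and the timer-based alternative from the abstract is not modeled.

```python
class DynamicPriority:
    """Hypothetical agent-side priority selector driven by work-queue depth."""

    def __init__(self, raise_at, lower_at):
        # raise_at[i]: queue depth at which level i is raised to level i + 1.
        # lower_at[i]: queue depth at which level i + 1 drops back to level i.
        if len(raise_at) != len(lower_at):
            raise ValueError("need one lower threshold per raise threshold")
        if any(lo >= hi for lo, hi in zip(lower_at, raise_at)):
            raise ValueError("hysteresis needs lower thresholds below raise thresholds")
        self.raise_at = raise_at
        self.lower_at = lower_at
        self.level = 0

    def update(self, depth: int) -> int:
        """Step the level up or down by at most one, based on current depth."""
        if self.level < len(self.raise_at) and depth >= self.raise_at[self.level]:
            self.level += 1
        elif self.level > 0 and depth <= self.lower_at[self.level - 1]:
            self.level -= 1
        return self.level


priority = DynamicPriority(raise_at=[8, 16], lower_at=[4, 12])
levels = [priority.update(depth) for depth in [3, 8, 6, 5, 4, 16, 17, 13, 12, 2]]
```

With these values the level rises to 1 at depth 8 but does not fall back until depth 4, so a queue hovering between 5 and 7 entries does not make the priority flap.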

Owner:ORACLE INT CORP

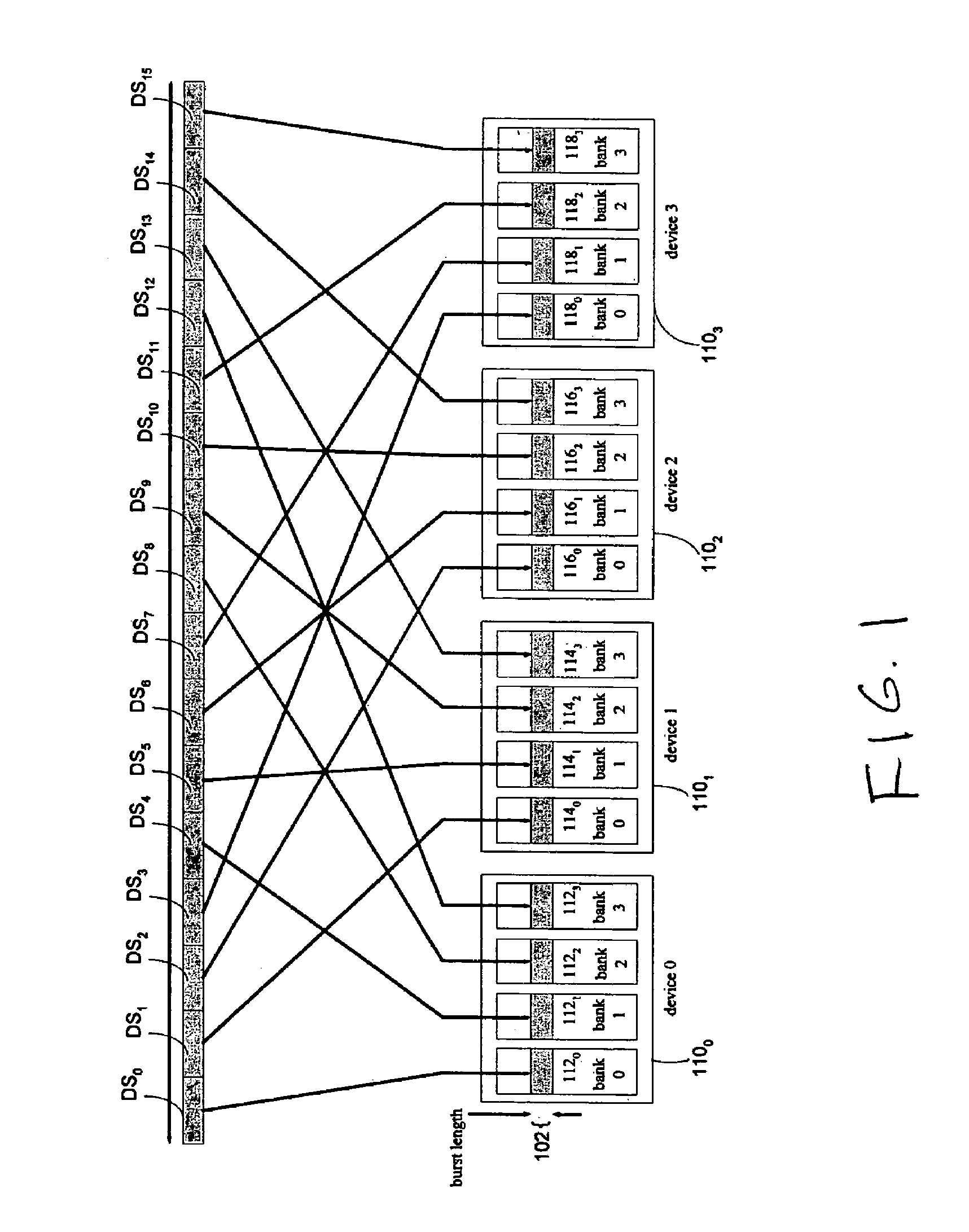

High bandwidth memory management using multi-bank DRAM devices

Inactive | US7296112B1 | Performance maximization | High bandwidth | Memory addressing/allocation/relocation | Hidden data | Data segment

The disclosure describes implementations for accessing in parallel a plurality of banks across a plurality of DRAM devices. These implementations are suited for operation within a parallel packet processor. A data word is partitioned into data segments which are stored in the plurality of banks in accordance with an access scheme that hides pre-charging of rows behind data transfers. A storage distribution control module is communicatively coupled to a memory comprising a plurality of storage request queues, and a retrieval control module is communicatively coupled to a memory comprising a plurality of retrieval request queues. In one example, each request queue may be implemented as a first-in-first-out (FIFO) memory buffer. The plurality of storage request queues are subdivided into sets as are the plurality of retrieval queues. Each set is associated with a respective DRAM device. A scheduler for each respective DRAM device schedules data transfer between its respective storage queue set and the DRAM device and between its retrieval queue set and the DRAM device independently of the scheduling of the other devices, but based on a shared criterion for queue service.

Owner:CISCO TECH INC

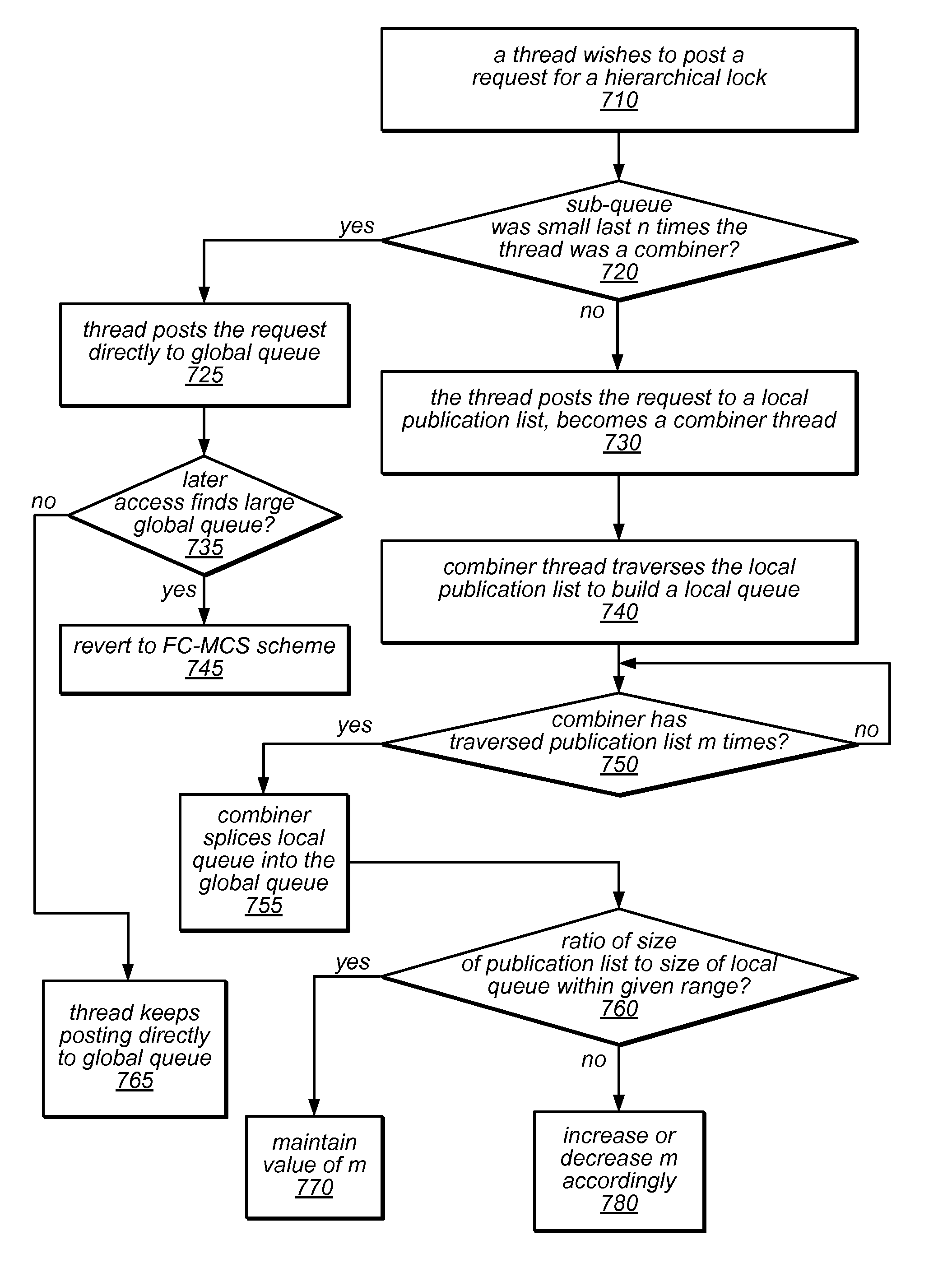

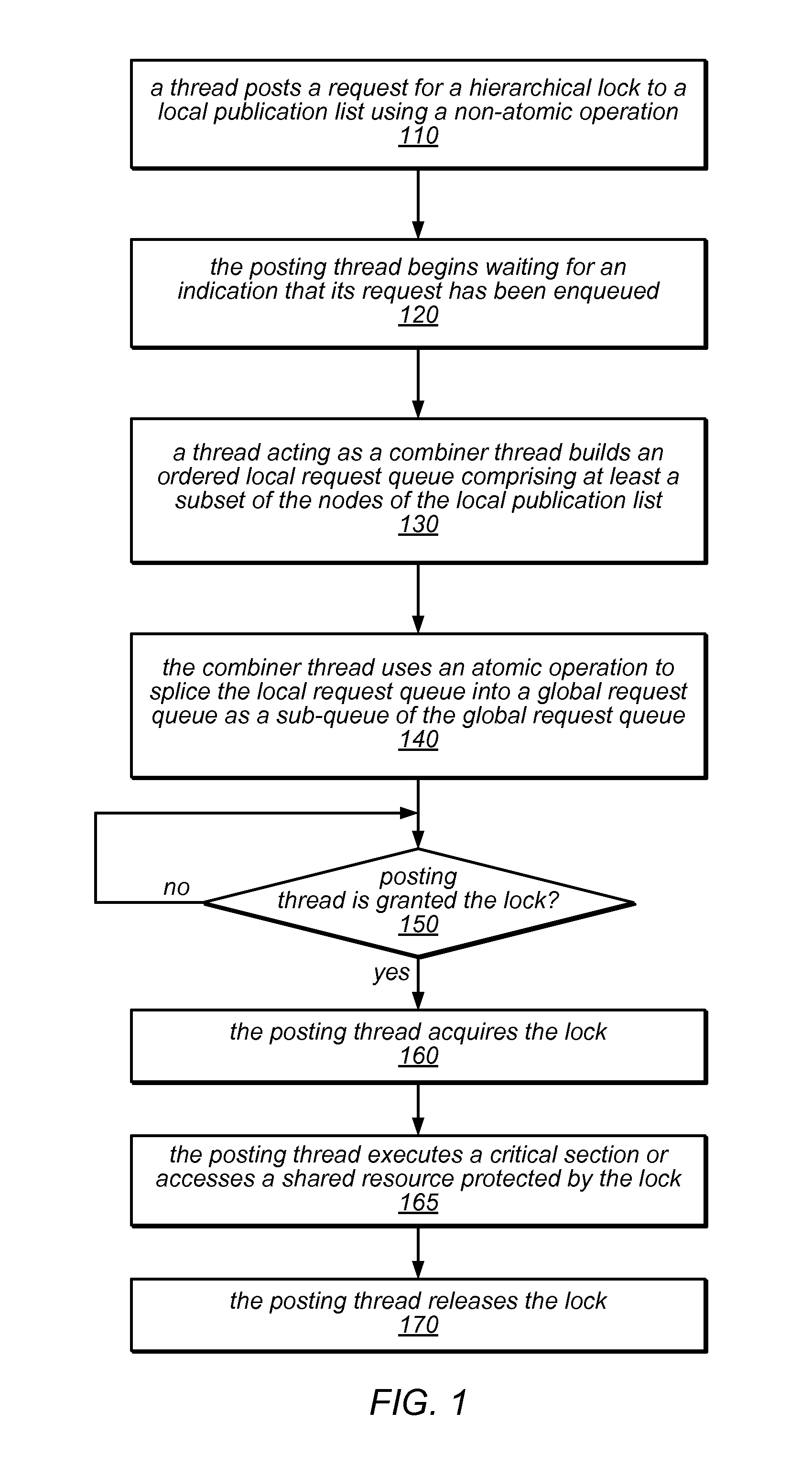

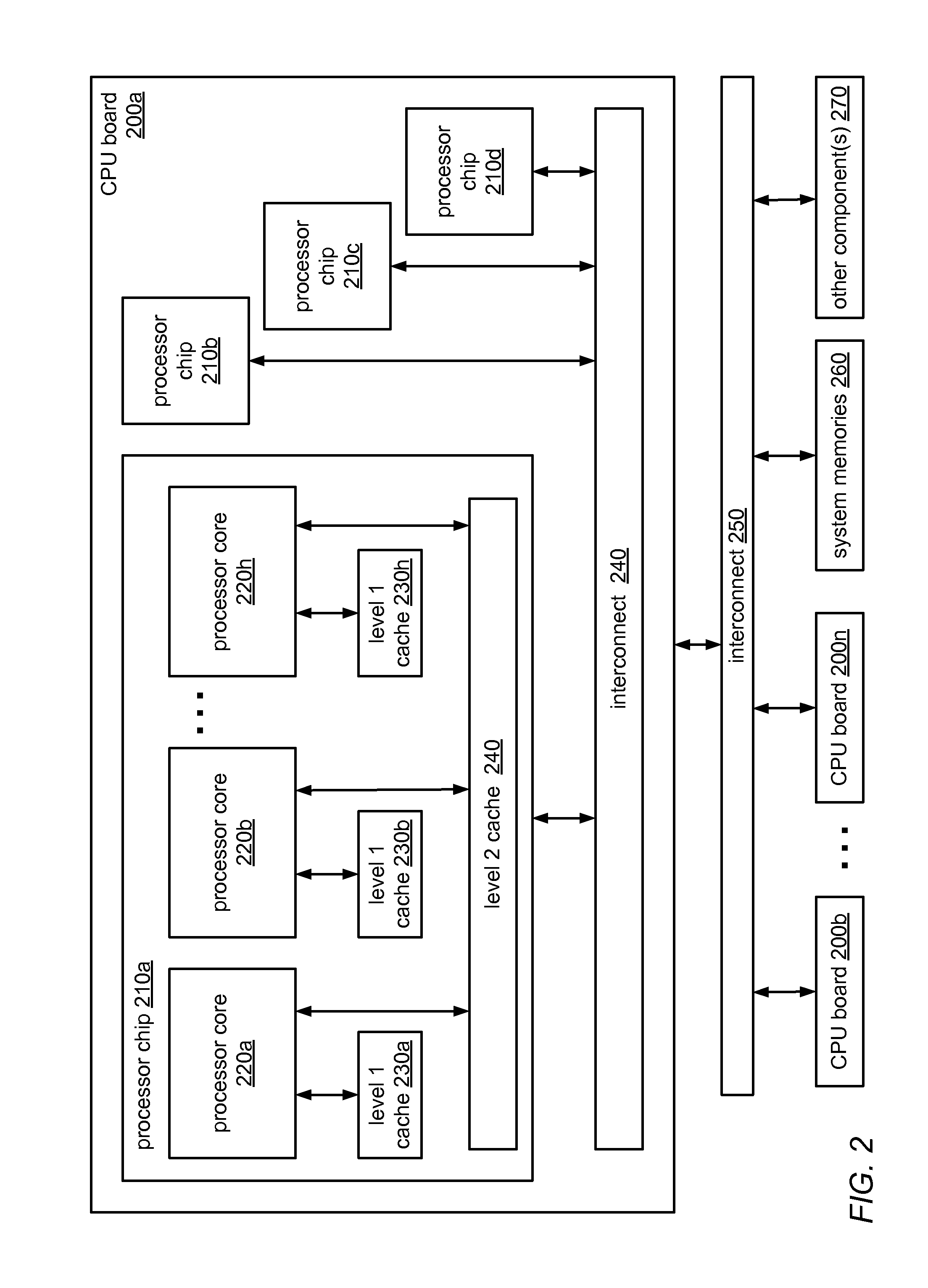

System and Method for Implementing Hierarchical Queue-Based Locks Using Flat Combining

Active | US20120311606A1 | Little interconnection traffic | Few costly synchronization operation | Multiprogramming arrangements | Memory systems | Distributed computing | Atomic operations

The system and methods described herein may be used to implement a scalable, hierarchical, queue-based lock using flat combining. A thread executing on a processor core in a cluster of cores that share a memory may post a request to acquire a shared lock in a node of a publication list for the cluster using a non-atomic operation. A combiner thread may build an ordered (logical) local request queue that includes its own node and nodes of other threads (in the cluster) that include lock requests. The combiner thread may splice the local request queue into a (logical) global request queue for the shared lock as a sub-queue. A thread whose request has been posted in a node that has been combined into a local sub-queue and spliced into the global request queue may spin on a lock ownership indicator in its node until it is granted the shared lock.

Owner:ORACLE INT CORP

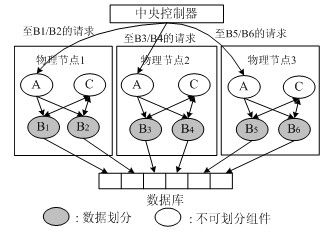

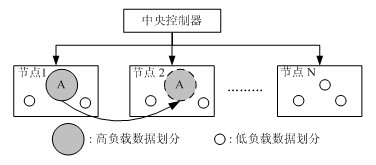

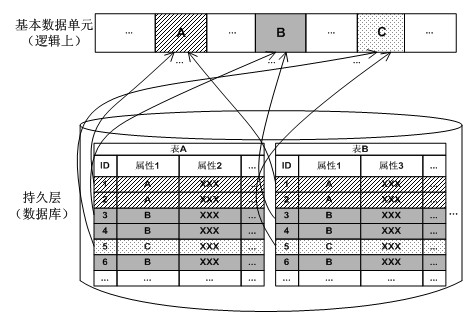

Method for achieving dynamic partitioning and load balancing of data-partitioning distributed environment

Active | CN102207891A | Solve the problem of load balancing timeliness | Wide applicability | Resource allocation | Granularity | Resource utilization

The invention discloses a method for achieving dynamic partitioning and load balancing of a data-partitioning distributed environment. The method achieves local load balancing through dynamic combination of basic data units, namely dynamic partitioning, so that the partition granularity meets the system's operating requirements. The method therefore solves the problem of load balancing time limitation resulting from the over-large granularity of a fixed partitioning method. The method has wide applicability because it uses a partition request queue and a scarce resource queue model to analyze node loads. A local monitor provided in the invention can dynamically adjust the number of partitions after the system achieves load balance, so as to achieve self-adaptive adjustment of the partitions inside nodes, thereby improving the resource utilization rate inside the nodes and further improving the work efficiency of the entire system.

Owner:ZHEJIANG UNIV

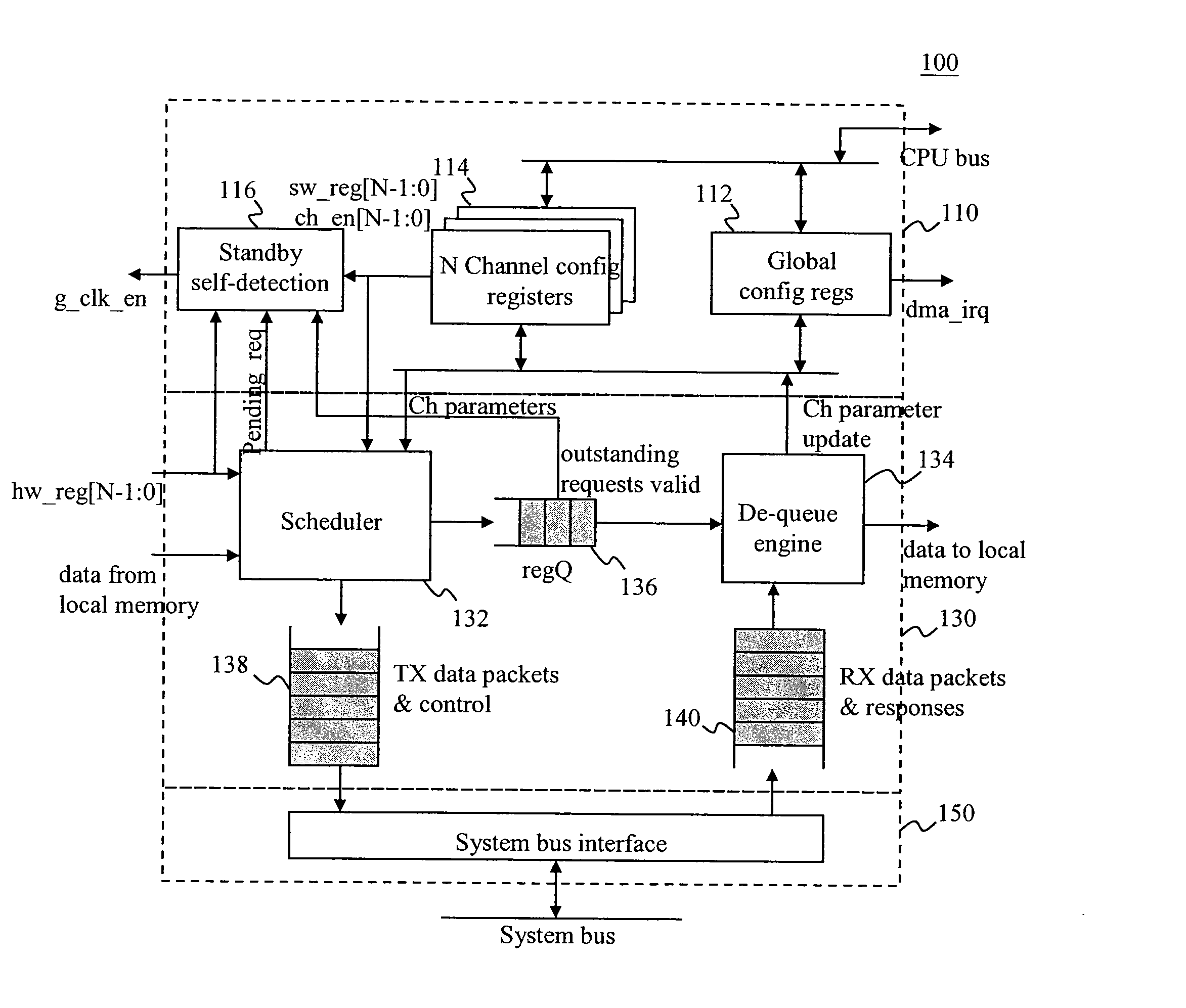

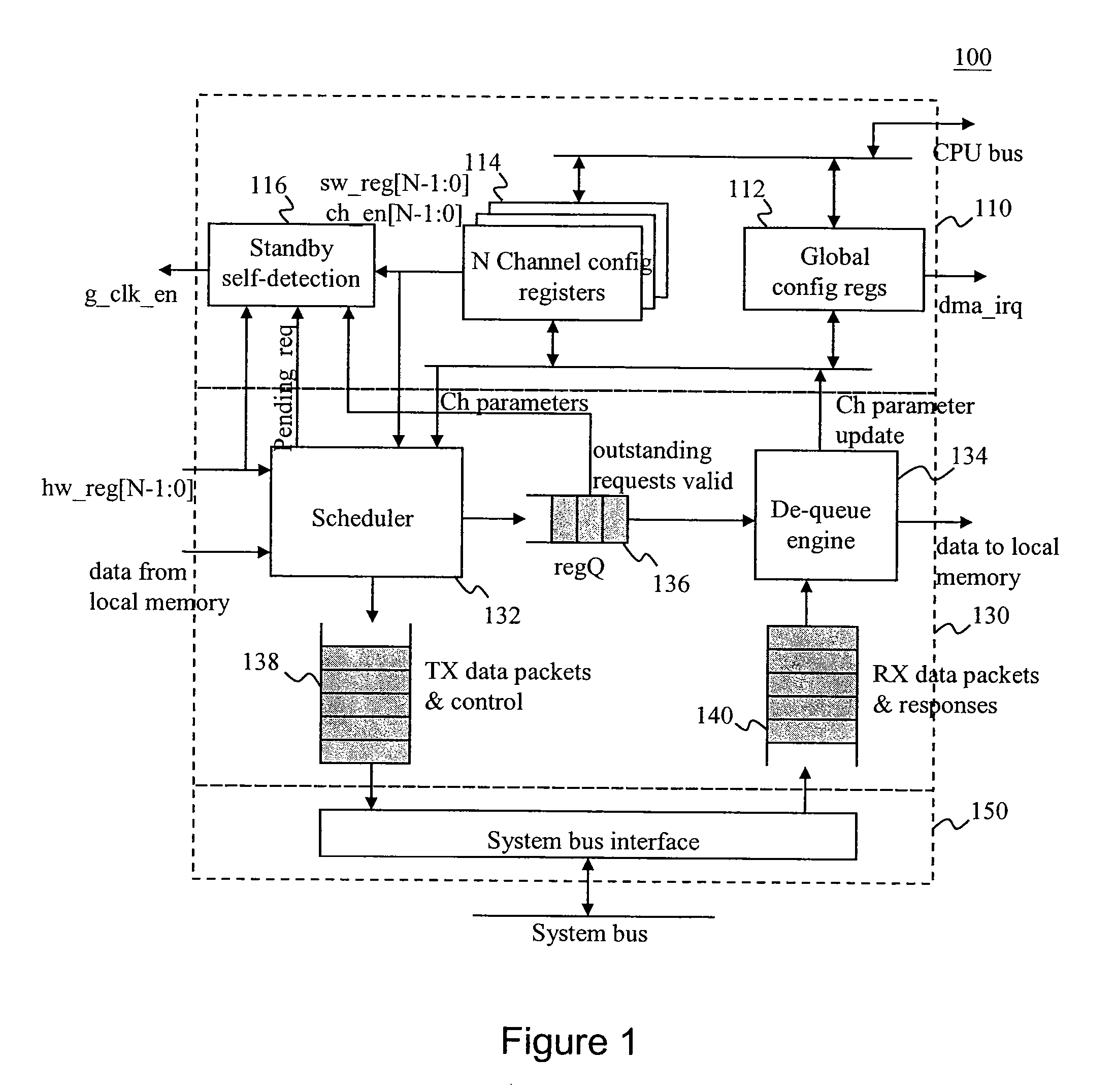

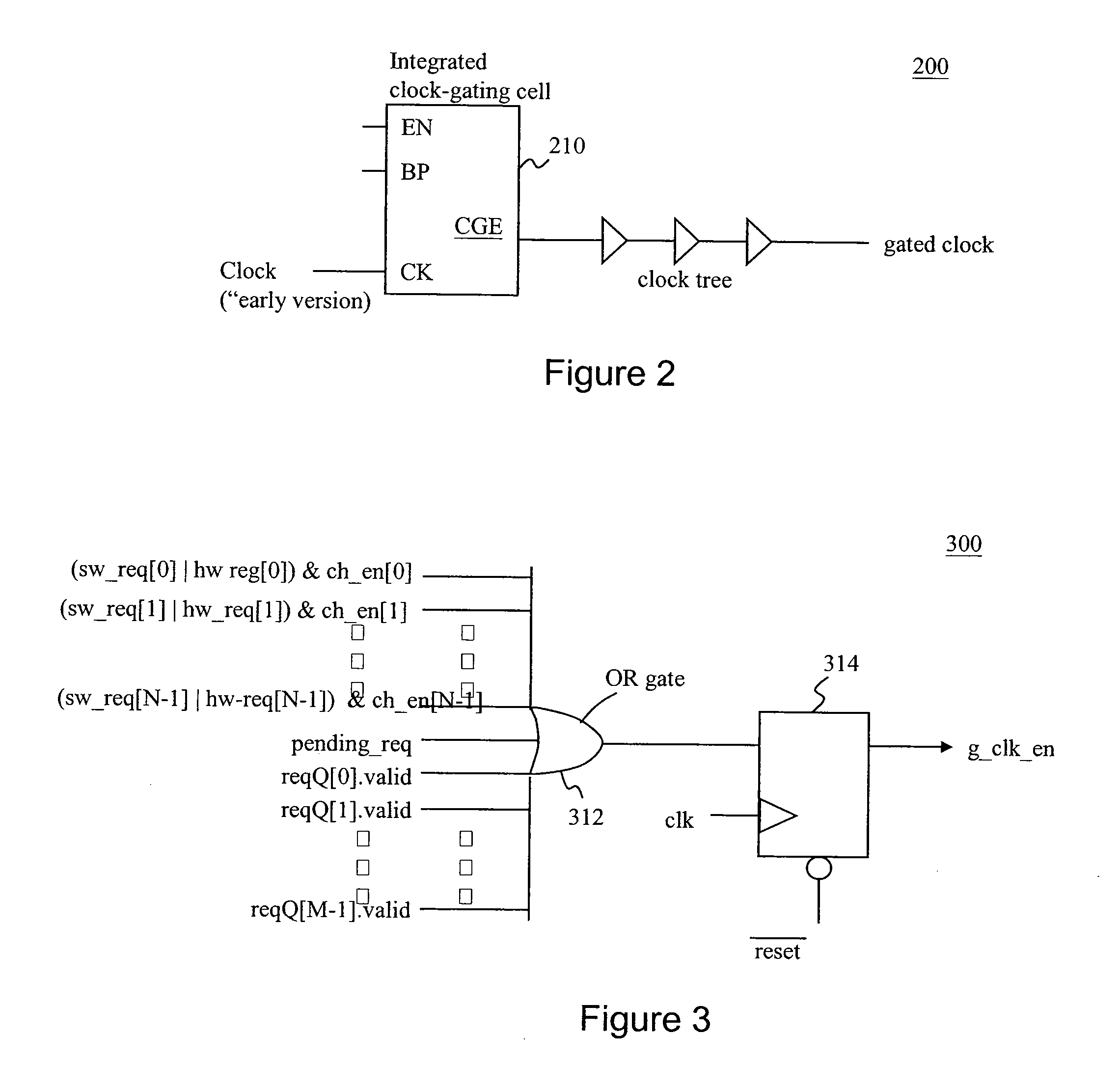

DMA Controller With Self-Detection For Global Clock-Gating Control

Inactive | US20070162648A1 | Reduce power consumption | Energy efficient ICT | Energy efficient computing | Cell scheduling | Clock tree

A standby self-detection mechanism in a DMA controller which reduces the power consumption by dynamically controlling the on/off states of at least one clock tree driven by global clock-gating circuitry is disclosed. The DMA controller comprises a standby self-detection unit, a scheduler, at least one set of channel configuration registers associated with at least one DMA channel, and an internal request queue which holds already scheduled DMA requests that are presently outstanding in the DMA controller. The standby self-detection unit drives a signal to a global clock-gating circuitry to selectively turn on or off at least one of the clock trees to the DMA controller, depending on whether the DMA controller is presently performing a DMA transfer.

Owner:VIA TECH INC

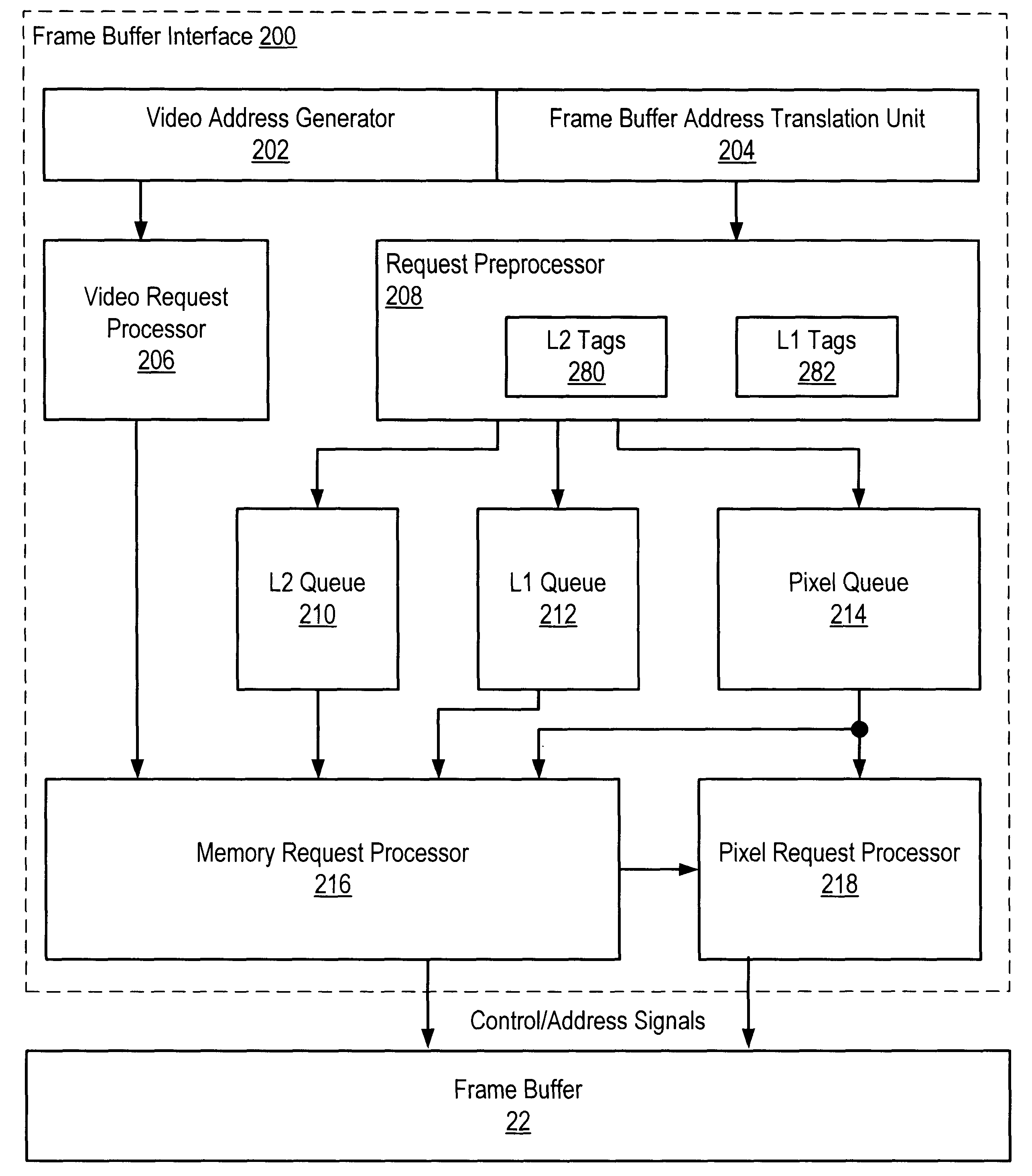

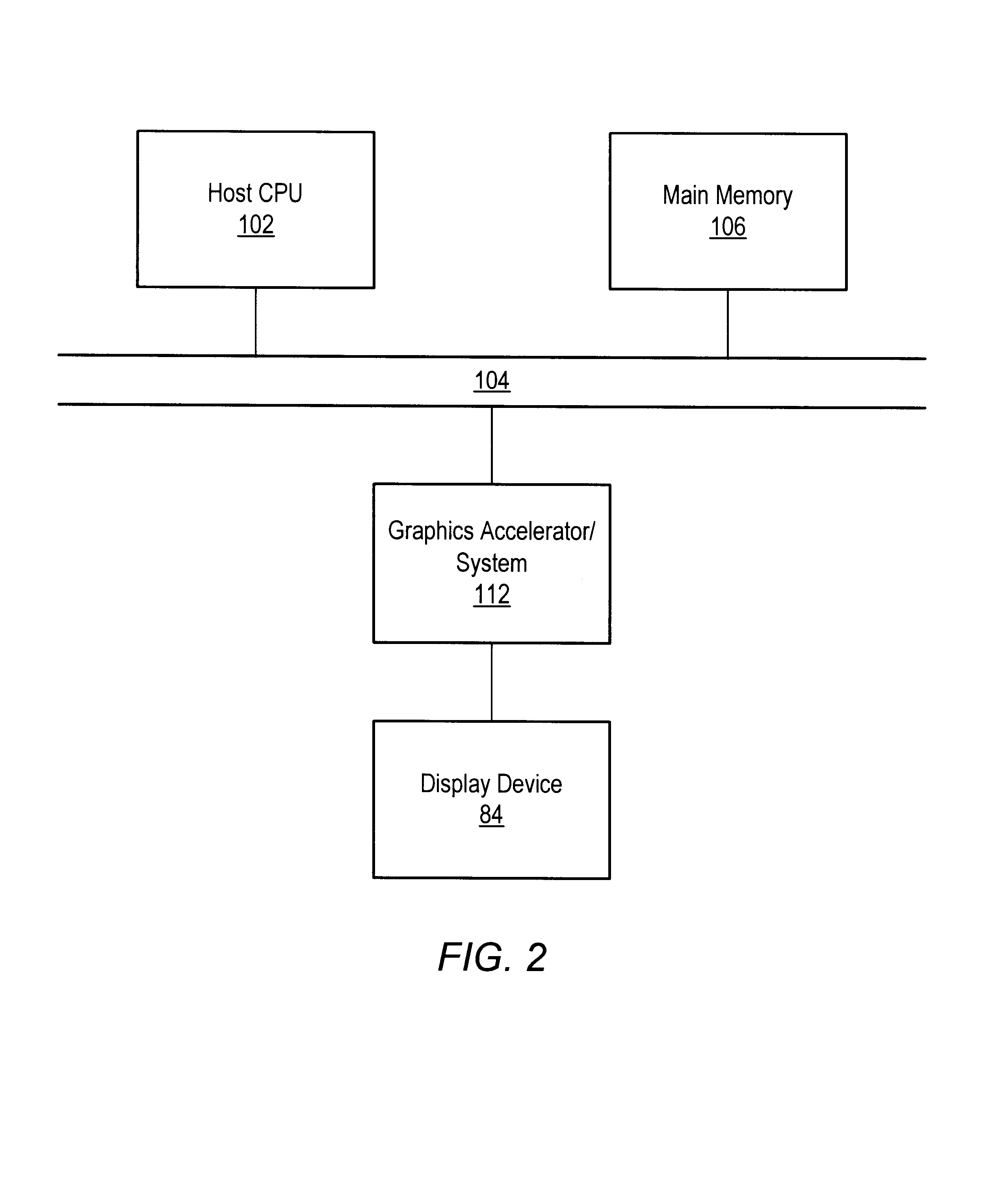

System and method for prefetching data from a frame buffer

A graphics system may include a frame buffer that includes several sets of one or more memory banks and a cache. The frame buffer may load data from one of the memory banks into the cache in response to receiving a cache fill request. Each set of memory banks is accessible independently of each other set of memory banks. A frame buffer interface coupled to the frame buffer includes a plurality of cache fill request queues. Each cache fill request queue is configured to store one or more cache fill requests targeting a corresponding one of the sets of memory banks. The frame buffer interface is configured to select a cache fill request from one of the cache fill request queues that stores cache fill requests targeting a set of memory banks that is not currently being accessed and to provide the selected cache fill request to the frame buffer.
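The selection rule in this abstract (one queue per set of memory banks, prefer a queue whose bank set is not currently being accessed) can be sketched as follows. The `FillRequestScheduler` class and its method names are hypothetical, and a real frame buffer interface would track busy bank sets in hardware rather than take one as an argument.

```python
from collections import deque


class FillRequestScheduler:
    """Hypothetical model of per-bank-set cache fill request queues."""

    def __init__(self, num_bank_sets: int):
        self.queues = [deque() for _ in range(num_bank_sets)]

    def enqueue(self, bank_set: int, request) -> None:
        """Store a cache fill request in the queue for its target bank set."""
        self.queues[bank_set].append(request)

    def select(self, busy_bank_set=None):
        """Pick a request whose target bank set is not currently being accessed."""
        for bank_set, pending in enumerate(self.queues):
            if pending and bank_set != busy_bank_set:
                return bank_set, pending.popleft()
        return None  # nothing can be issued right now


scheduler = FillRequestScheduler(num_bank_sets=2)
scheduler.enqueue(0, "fill A")
scheduler.enqueue(1, "fill B")
issued_first = scheduler.select(busy_bank_set=0)   # bank set 0 is busy, so take B
issued_second = scheduler.select(busy_bank_set=0)  # only A remains, but its set is busy
issued_third = scheduler.select()                  # no set busy, A can go
```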

Owner:ORACLE INT CORP
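
The selection rule described above (pick a cache fill request whose target set of memory banks is not currently being accessed) can be sketched as follows. The function name `select_cache_fill`, the dictionary layout, and the tie-breaking order among eligible queues are assumptions made for illustration; the abstract does not specify them.

```python
from collections import deque


def select_cache_fill(queues, busy_sets):
    """Return (bank_set, request) from the first non-empty queue whose
    bank set is idle, or None if no such queue exists.

    queues    -- dict mapping a bank-set id to a deque of pending requests
    busy_sets -- set of bank-set ids that are currently being accessed
    """
    for bank_set, pending in queues.items():
        if pending and bank_set not in busy_sets:
            return bank_set, pending.popleft()
    return None


# One queue per independently accessible set of memory banks.
queues = {
    0: deque(["fill A"]),
    1: deque(["fill B", "fill C"]),
}

first = select_cache_fill(queues, busy_sets={0})      # set 0 busy, take from set 1
second = select_cache_fill(queues, busy_sets={1})     # set 1 busy, take from set 0
third = select_cache_fill(queues, busy_sets={0, 1})   # everything busy
```

Because each queue targets its own bank set, a request that would stall on a busy set never blocks requests bound for idle sets.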

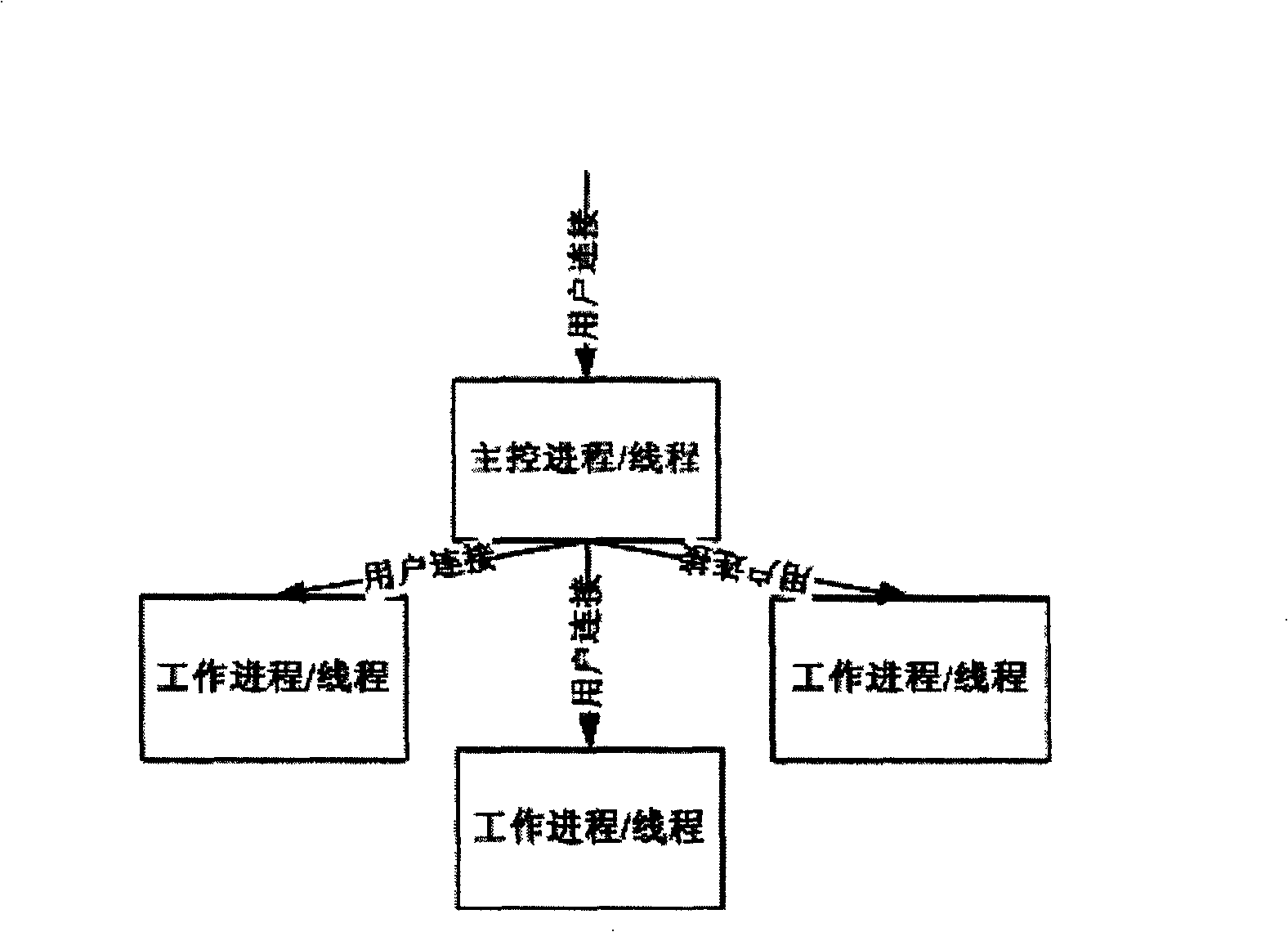

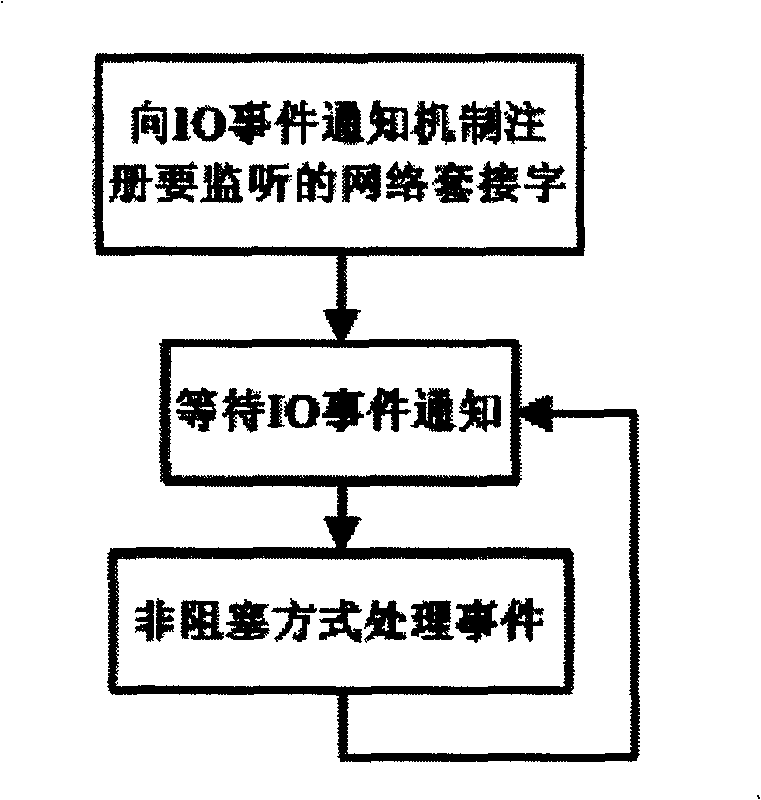

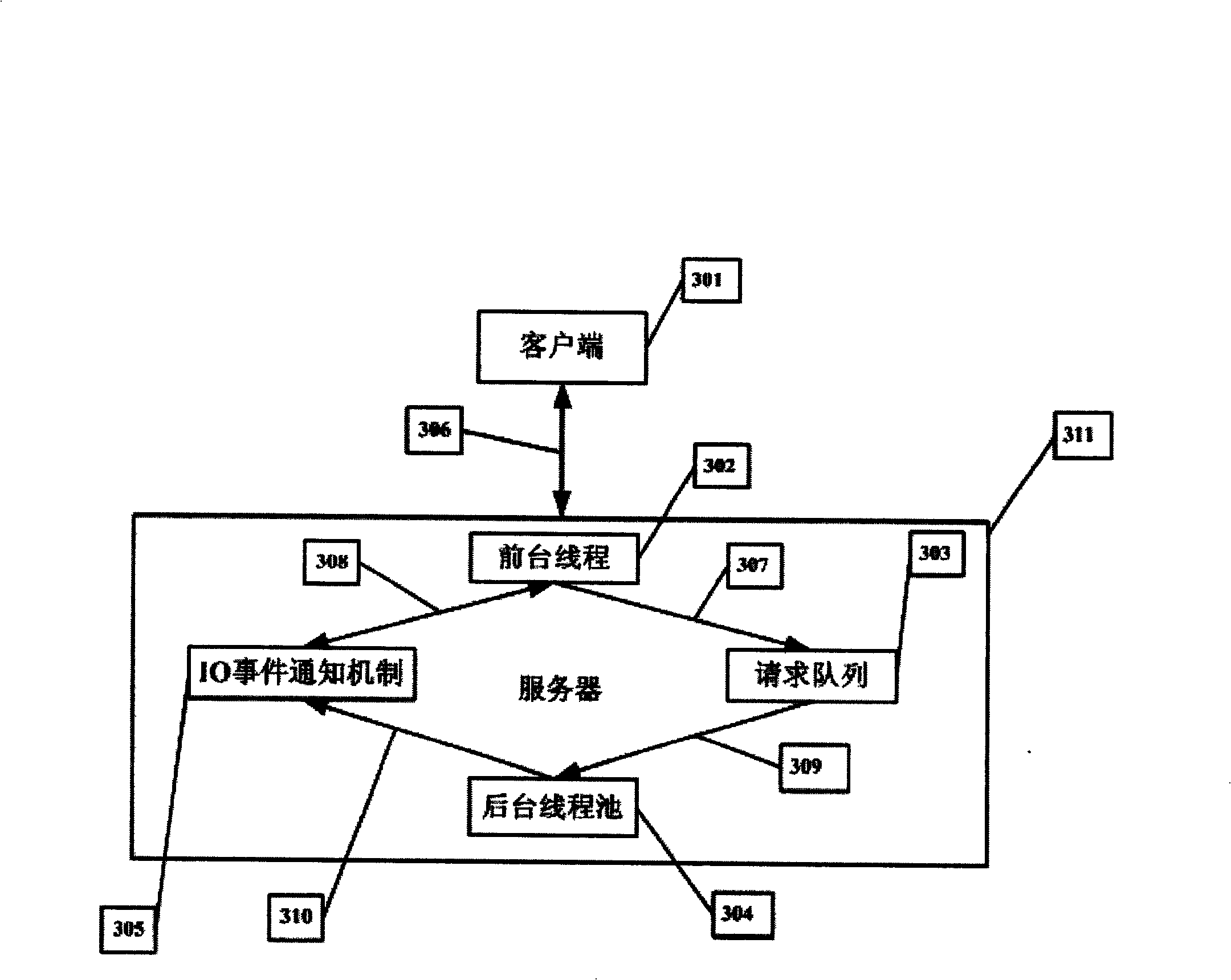

Single process contents server device and method based on IO event notification mechanism

Inactive | CN101256505A | Reduce overhead | Eliminate overhead | Multiprogramming arrangements | Transmission | Content distribution | Single process

The invention discloses a single-process, high-performance content server IO device based on an IO event notification mechanism, comprising a request queue, a foreground process, a background process pool, and an IO event notification mechanism. The foreground process is connected to the request queue and the IO event notification mechanism, both of which are also connected to the background process pool; the foreground process is also connected to an external client. The invention also discloses a method for implementing IO request processing. The invention not only reduces the cost of context switching, interprocess communication, and resource sharing, but also eliminates the cost of creating processes and makes the number of processes independent of the number of connections, thereby achieving high concurrency and high scalability and meeting the demands of content distribution services for large numbers of users.

Owner:INST OF ACOUSTICS CHINESE ACAD OF SCI
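
The arrangement in this abstract (a foreground handler, a request queue, a fixed background pool, and an IO event notification channel) can be approximated in a few lines. This is a rough sketch, not the patented device: threads stand in for the background processes, a socket pair stands in for the notification mechanism, and `serve`, the worker count, and the `upper()` "work" are all hypothetical.

```python
import queue
import selectors
import socket
import threading


def serve(requests, workers=2):
    """Hand requests to a fixed pool and collect results via notifications."""
    request_q = queue.Queue()   # foreground -> background
    done_q = queue.Queue()      # background -> foreground
    notify_r, notify_w = socket.socketpair()  # stand-in notification channel

    def background_worker():
        while True:
            item = request_q.get()
            if item is None:            # shutdown marker
                return
            done_q.put((item, item.upper()))  # stand-in for blocking IO
            notify_w.send(b"x")         # one byte per finished request

    pool = [threading.Thread(target=background_worker) for _ in range(workers)]
    for thread in pool:
        thread.start()
    for request in requests:
        request_q.put(request)

    sel = selectors.DefaultSelector()
    sel.register(notify_r, selectors.EVENT_READ)
    results = []
    while len(results) < len(requests):
        sel.select()                     # foreground sleeps until notified
        for _ in notify_r.recv(64):      # one result per notification byte
            results.append(done_q.get())

    for _ in pool:
        request_q.put(None)
    for thread in pool:
        thread.join()
    sel.close()
    notify_r.close()
    notify_w.close()
    return sorted(results)


served = serve(["a.txt", "b.txt", "c.txt"])
```

The pool size is fixed up front, so the number of workers does not grow with the number of requests, which is the property the abstract emphasizes.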

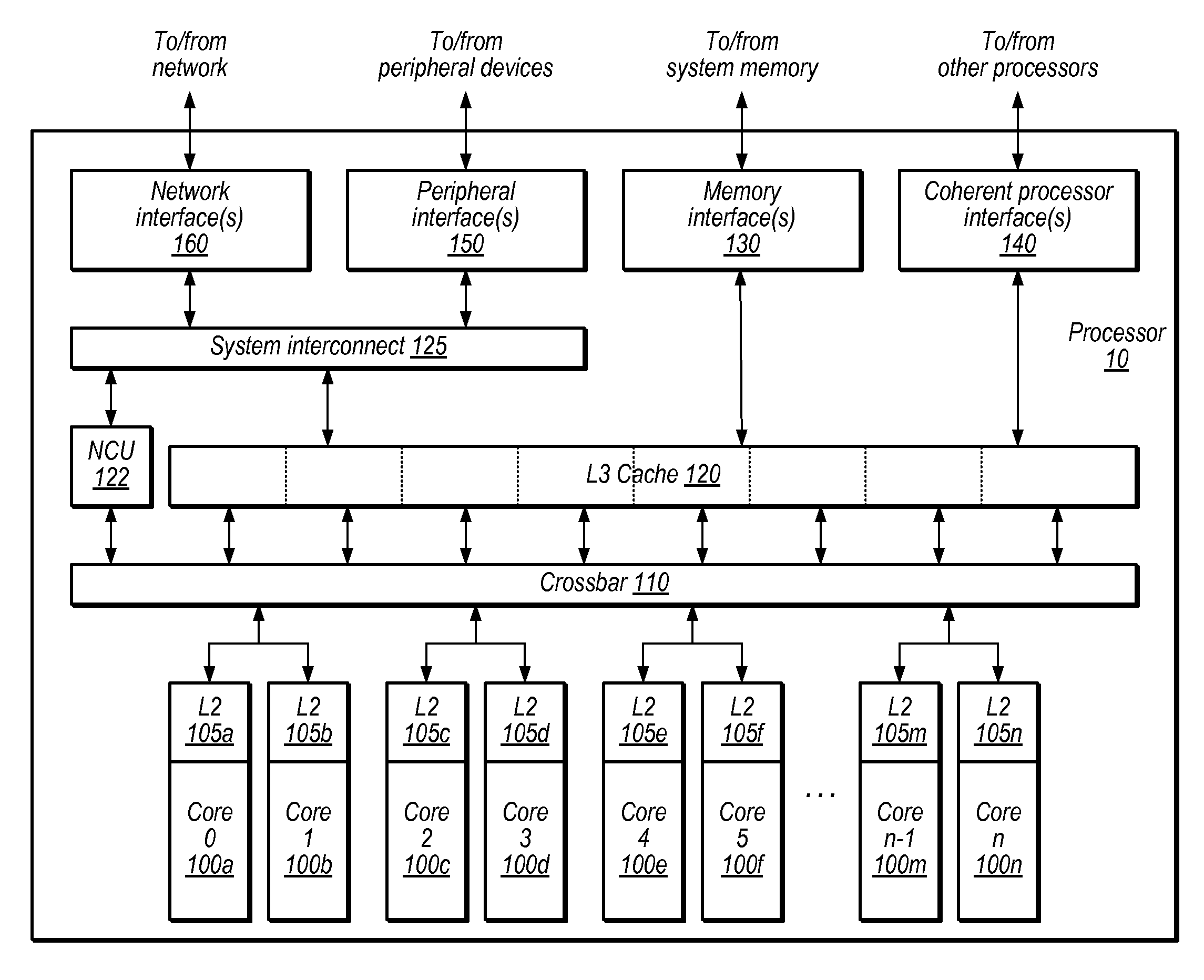

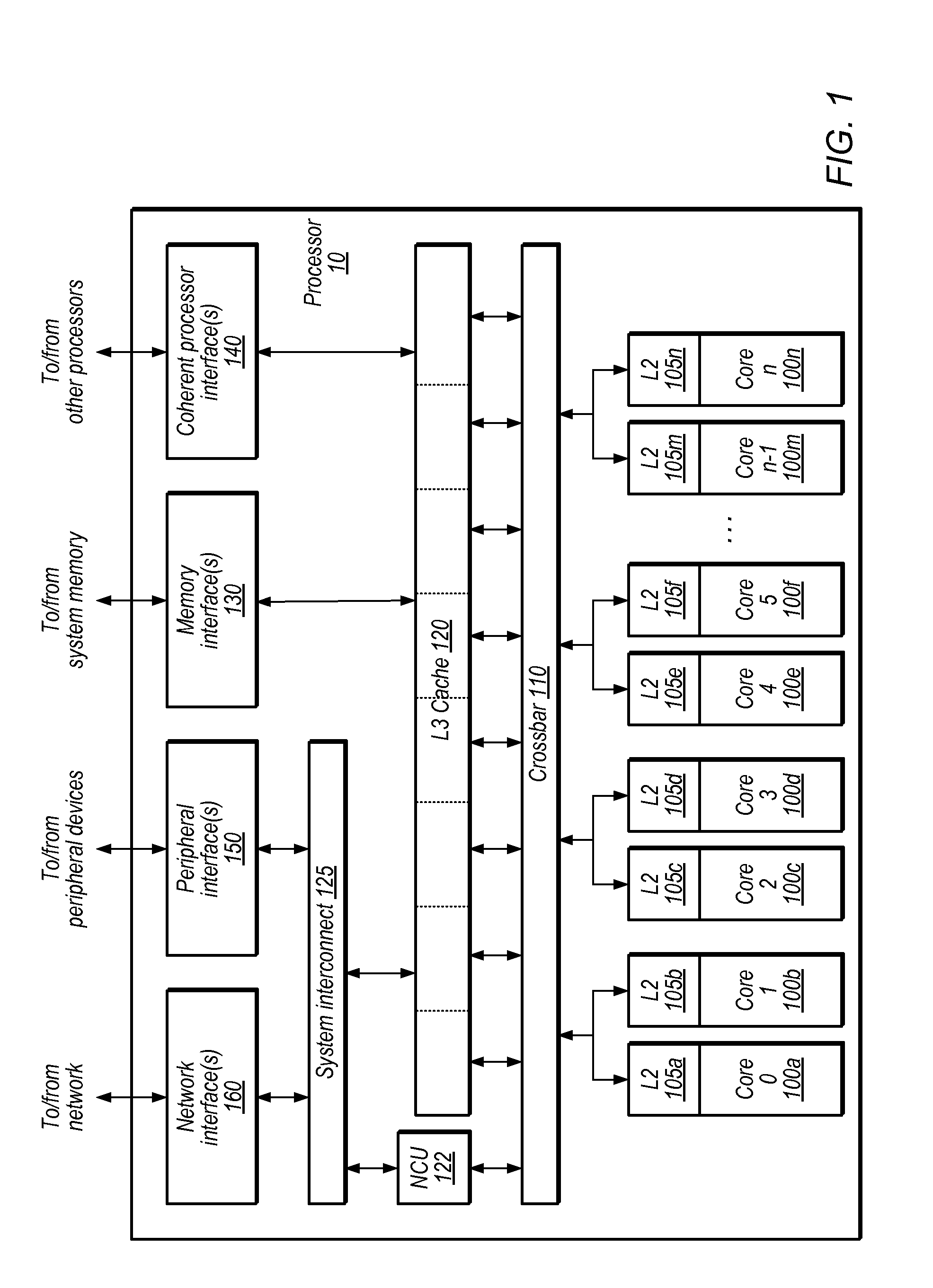

Load request scheduling in a cache hierarchy

Inactive | US20100268882A1 | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Cache hierarchy | Least recently frequently used

A system and method for tracking core load requests and providing arbitration and ordering of requests. When a core interface unit (CIU) receives a load operation from the processor core, a new entry is allocated in a queue of the CIU. In response to allocating the new entry in the queue, the CIU detects contention between the load request and another memory access request. In response to detecting contention, the load request may be suspended until the contention is resolved. Received load requests may be stored in the queue and tracked using a least recently used (LRU) mechanism. The load request may then be processed when the load request resides in a least recently used entry in the load request queue. The CIU may also suspend issuing an instruction unless a read claim (RC) machine is available. In another embodiment, the CIU may issue stored load requests in a specific priority order.

Owner:IBM CORP
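
A minimal sketch of the queueing behavior described above: load requests are tracked oldest-first, and the oldest entry is issued only when it is not in contention. The `LoadQueue` class, its method names, and the modeling of contention as a set of contended addresses are assumptions for illustration; the abstract does not describe the hardware at this level.

```python
from collections import OrderedDict


class LoadQueue:
    """Toy load request queue ordered from least to most recently used."""

    def __init__(self):
        self.entries = OrderedDict()  # tag -> address, oldest entry first

    def allocate(self, tag, address):
        """Allocate a new entry when a load arrives from the core."""
        self.entries[tag] = address

    def next_to_issue(self, contended_addresses):
        """Issue the least recently used entry, or return None if the queue
        is empty or that entry is suspended because of contention."""
        if not self.entries:
            return None
        tag, address = next(iter(self.entries.items()))
        if address in contended_addresses:
            return None               # suspended until contention clears
        del self.entries[tag]
        return tag


lq = LoadQueue()
lq.allocate("load0", 0x100)
lq.allocate("load1", 0x200)

blocked = lq.next_to_issue(contended_addresses={0x100})  # oldest is contended
issued = lq.next_to_issue(contended_addresses=set())     # contention resolved
```

The alternative embodiment mentioned in the abstract, issuing in a specific priority order, would replace the oldest-first choice with a priority comparison.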

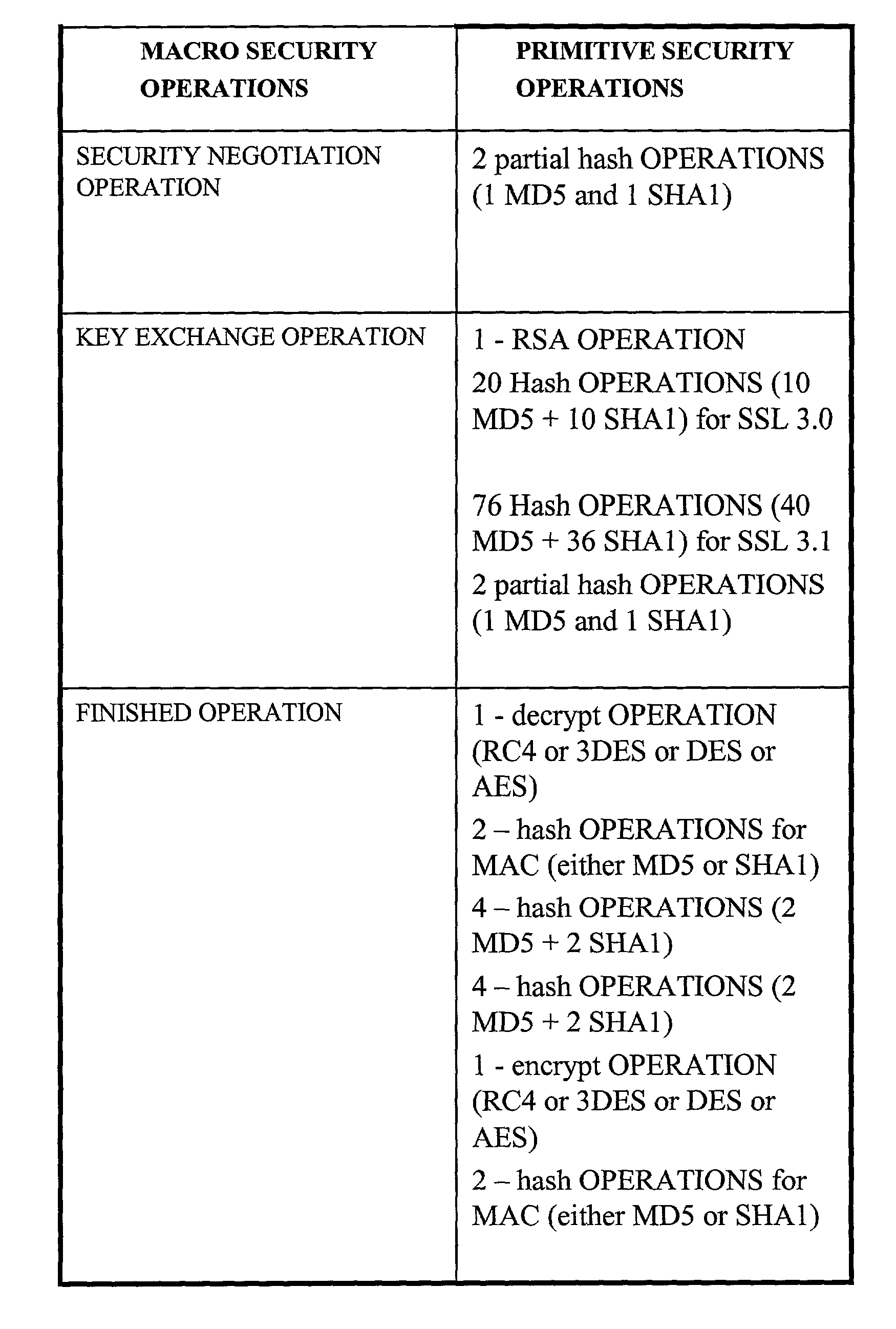

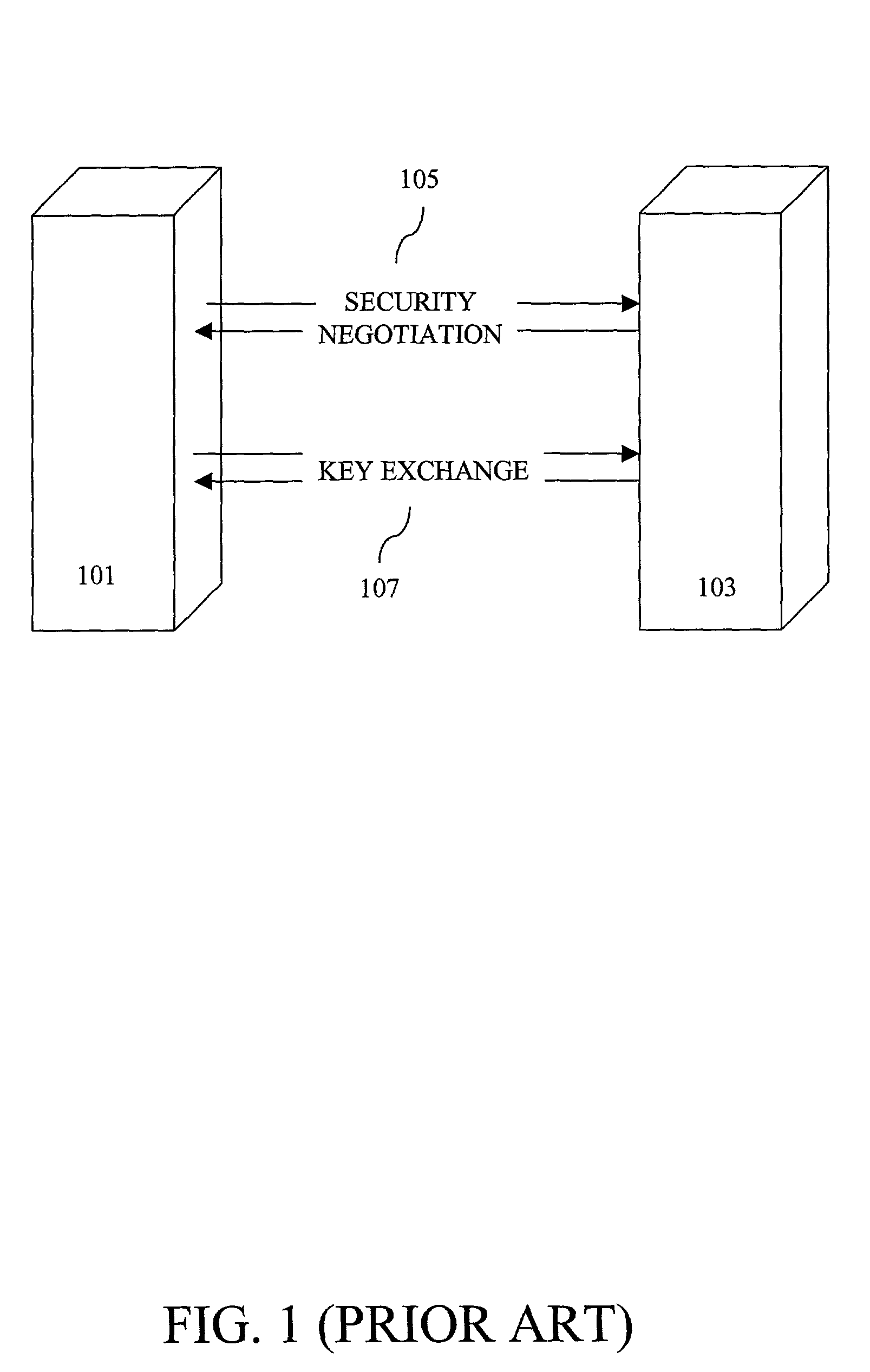

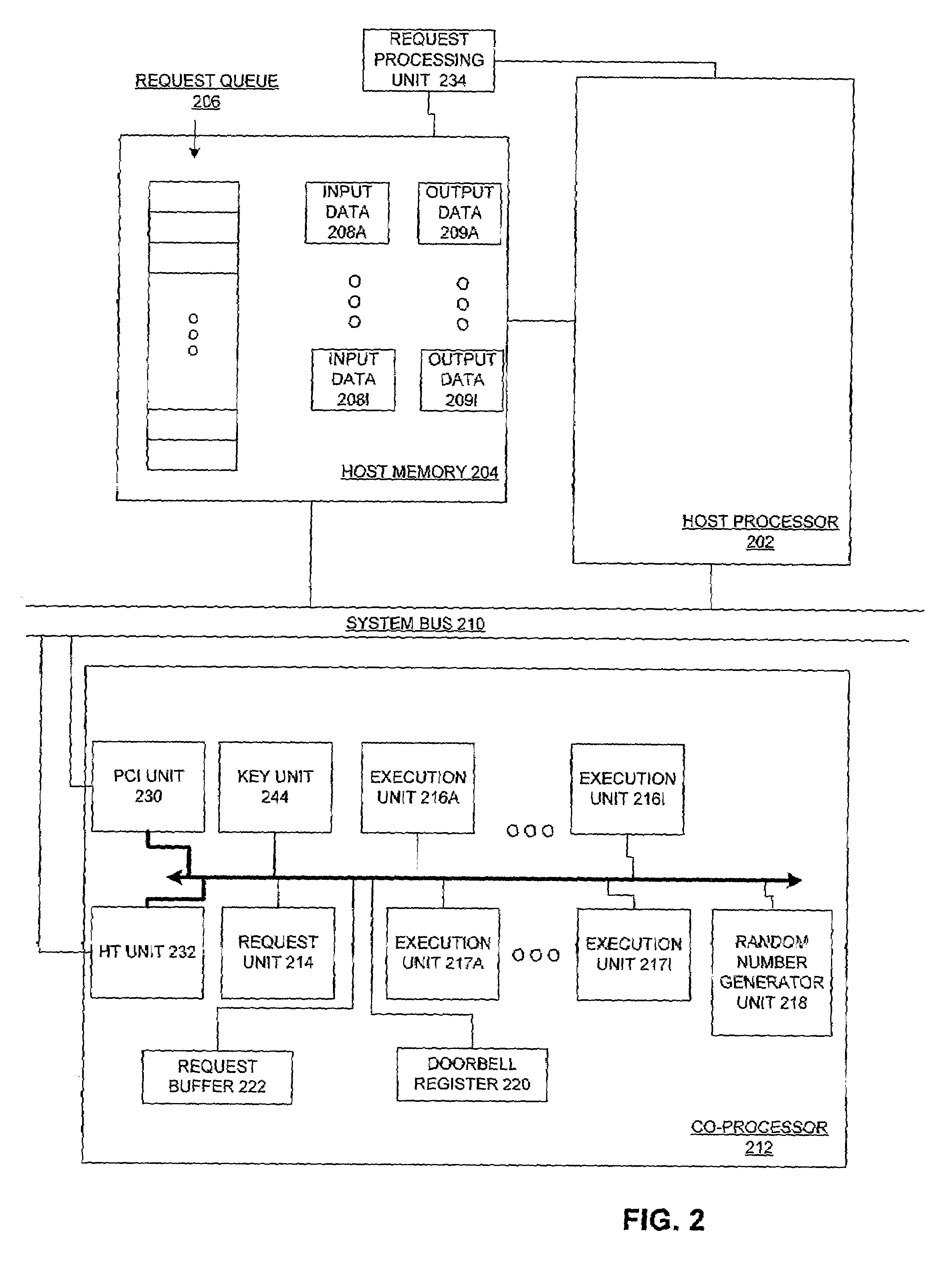

Method and apparatus for establishing secure sessions

Active | US7240203B2 | Key distribution for secure communication | User identity/authority verification | Parallel computing | Execution unit

A method and apparatus for processing security operations are described. In one embodiment, a processor includes a number of execution units to process a number of requests for security operations. The number of execution units are to output the results of the number of requests to a number of output data structures associated with the number of requests within a remote memory based on pointers stored in the number of requests. The number of execution units can output the results in an order that is different from the order of the requests in a request queue. The processor also includes a request unit coupled to the number of execution units. The request unit is to retrieve a portion of the number of requests from the request queue within the remote memory and associated input data structures for the portion of the number of requests from the remote memory. Additionally, the request unit is to distribute the retrieved requests to the number of execution units based on availability for processing by the number of execution units.

Owner:MARVELL ASIA PTE LTD
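
Why results can be written in an order different from the request queue order can be seen in a small simulation. It is a sketch under assumptions: `dispatch`, the per-request `cost` field, and the string "pointers" into an output dictionary are hypothetical stand-ins for the execution units, operation latencies, and output data structures named in the abstract.

```python
import heapq


def dispatch(requests, num_units):
    """Simulate handing queued requests to the first available unit.

    requests -- list of (result_slot, cost) in request-queue order
    Returns (outputs, completion_order): outputs maps each result slot to
    its finish time; completion_order lists slots as they finished.
    """
    unit_free_at = [0] * num_units      # min-heap of times units become free
    heapq.heapify(unit_free_at)
    finished = []
    for index, (slot, cost) in enumerate(requests):
        start = heapq.heappop(unit_free_at)   # earliest available unit
        finish = start + cost
        heapq.heappush(unit_free_at, finish)
        finished.append((finish, index, slot))

    outputs = {}
    completion_order = []
    for finish, _, slot in sorted(finished):
        outputs[slot] = finish          # written via the request's own slot
        completion_order.append(slot)
    return outputs, completion_order


# A slow operation at the head of the queue does not hold back later ones.
outputs, completion_order = dispatch([("a", 5), ("b", 1), ("c", 1)], num_units=2)
```

Because every request carries its own output location, the consumer can find each result regardless of when the corresponding unit finished.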

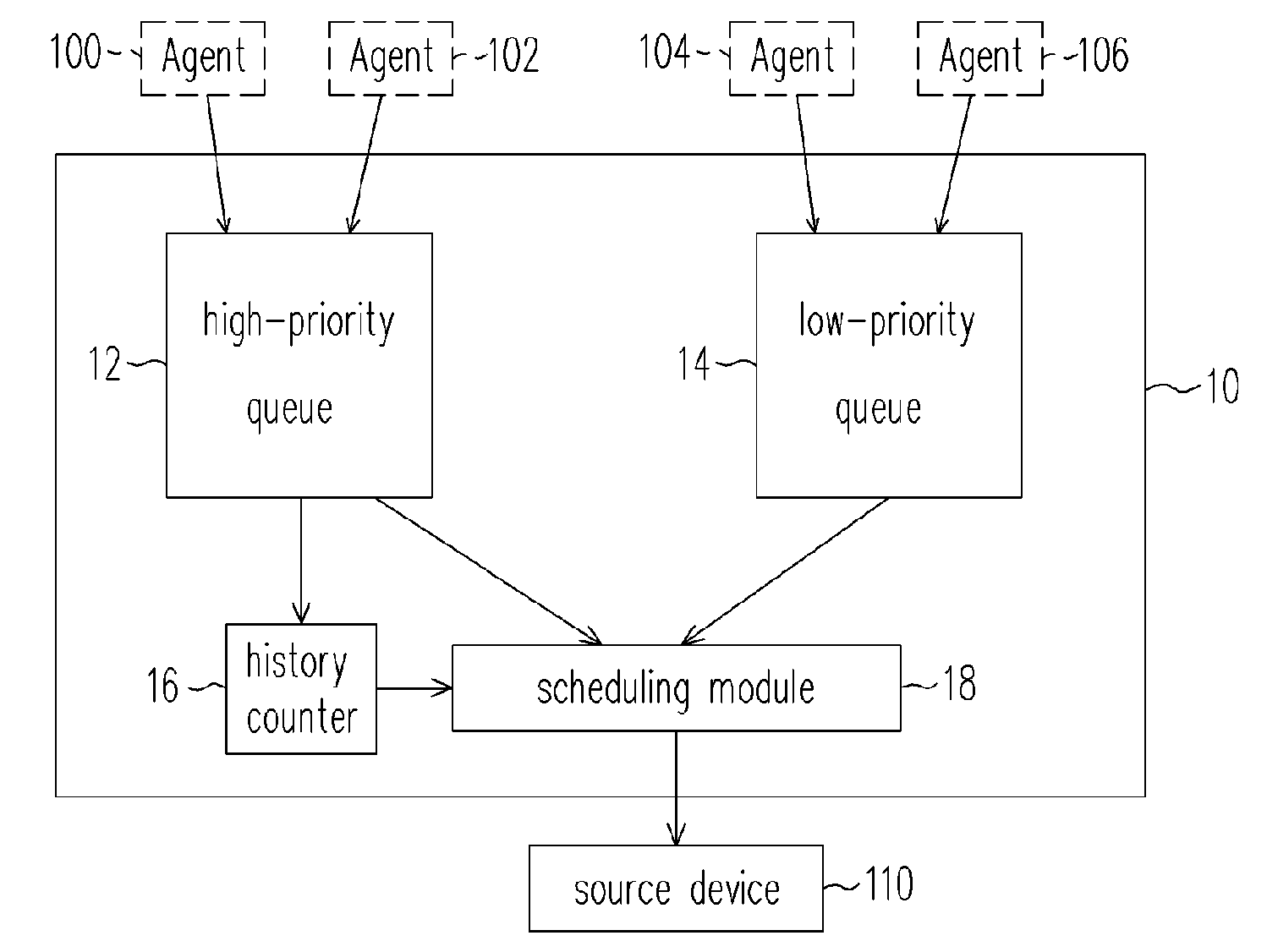

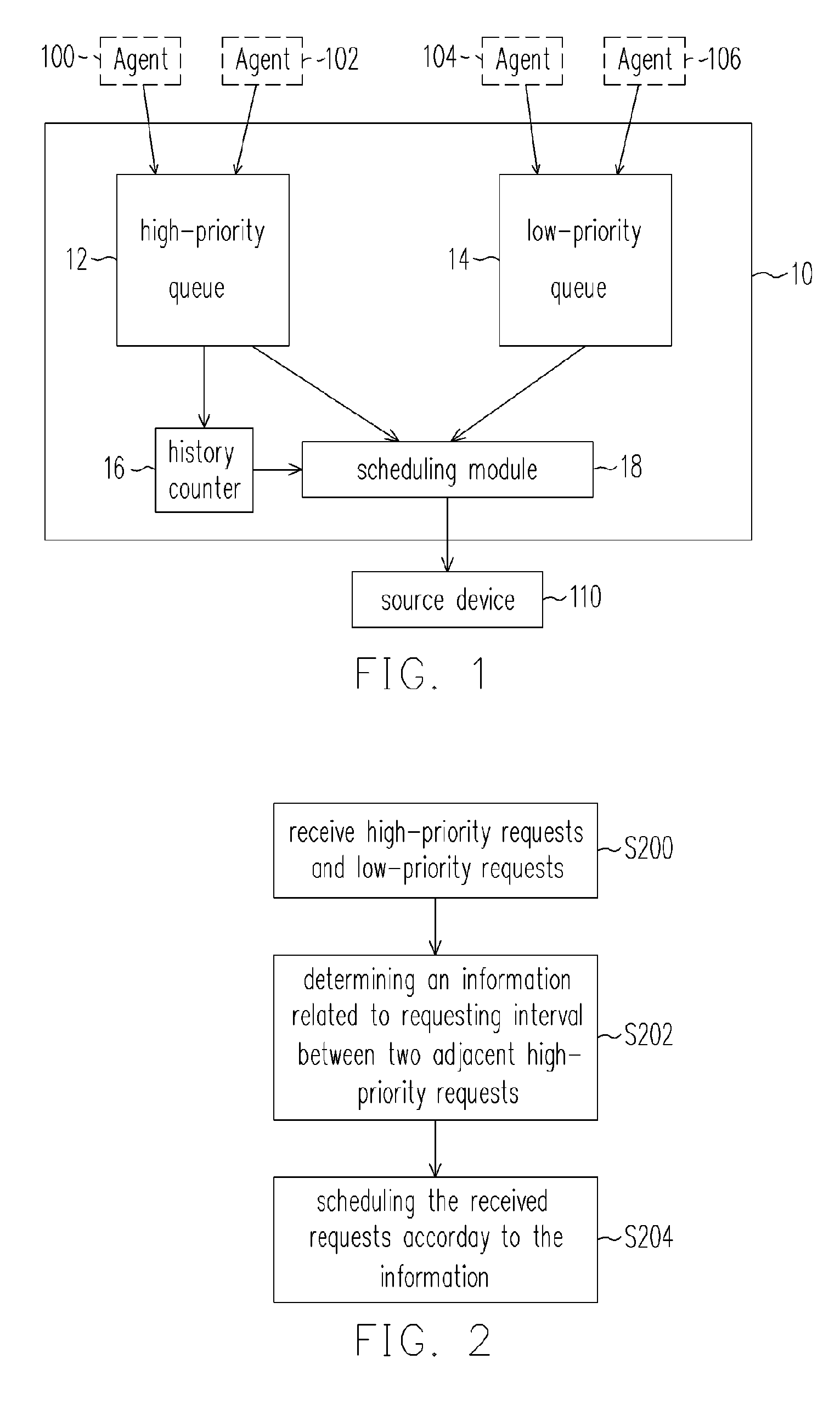

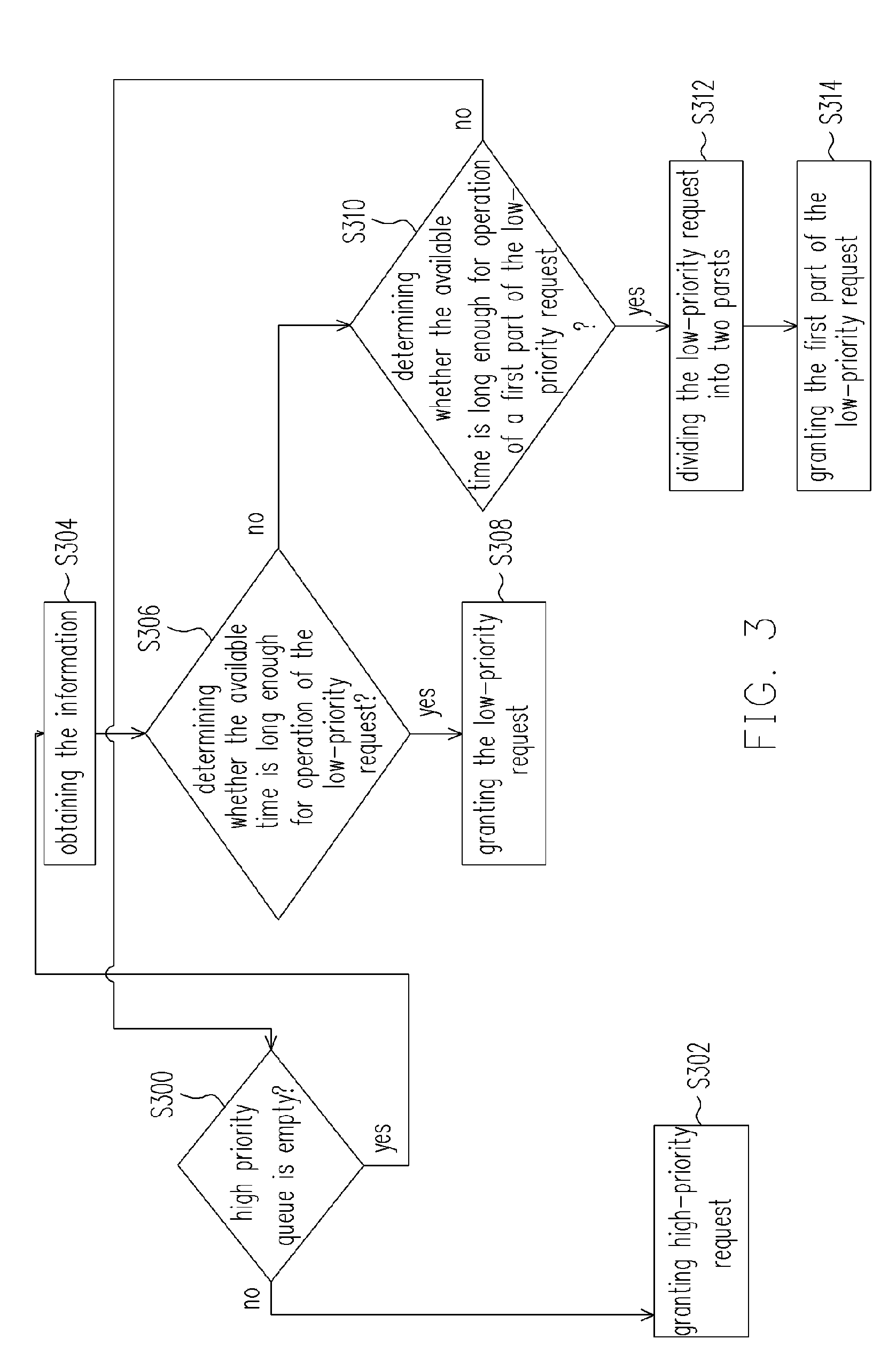

Apparatus and method for scheduling requests to source device

Inactive | US20060168383A1 | Opportunities decrease | Low-priority requests could be | Memory systems | Input/output processes for data processing | Distributed computing | Lower priority

An apparatus and method for scheduling requests to a source device is provided. The apparatus comprises a high-priority request queue for storing a plurality of high-priority requests to the source device; a low-priority request queue for storing a low-priority request to the source device, wherein the priority of each of the high-priority requests is higher than the priority of the low-priority request; a history counter for storing information related to at least one requesting interval between two adjacent high-priority requests; and a scheduling module for scheduling the high-priority requests and the low-priority request according to the information.

Owner:HIMAX TECH LTD
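
One way a scheduler might use the interval history is sketched below. The abstract only says that scheduling follows the stored interval information, so the concrete policy here is an assumption: a low-priority request is issued only if the predicted gap before the next high-priority request is long enough to serve it. `IntervalScheduler`, `low_service_time`, and all method names are hypothetical.

```python
from collections import deque


class IntervalScheduler:
    """Toy two-queue scheduler guided by the last high-priority interval."""

    def __init__(self, low_service_time):
        self.high = deque()
        self.low = deque()
        self.low_service_time = low_service_time
        self.last_high_arrival = None
        self.history_interval = None   # plays the role of the history counter

    def add_high(self, request, now):
        if self.last_high_arrival is not None:
            self.history_interval = now - self.last_high_arrival
        self.last_high_arrival = now
        self.high.append(request)

    def add_low(self, request):
        self.low.append(request)

    def next_request(self, now):
        if self.high:
            return self.high.popleft()     # high priority always goes first
        if not self.low:
            return None
        if self.history_interval is None:
            return self.low.popleft()      # no history yet, nothing to predict
        gap = self.last_high_arrival + self.history_interval - now
        # Assumed policy: issue if the predicted gap fits the low-priority
        # request, or if the prediction has already passed (avoids starvation).
        if gap <= 0 or gap >= self.low_service_time:
            return self.low.popleft()
        return None                        # hold back; high request expected soon


sched = IntervalScheduler(low_service_time=3)
sched.add_high("h1", now=0)
sched.add_high("h2", now=10)               # observed interval: 10
sched.add_low("l1")
sched.add_low("l2")

order = [sched.next_request(now=t) for t in (10, 11, 12, 18, 25)]
```

At time 18 the next high-priority request is predicted at time 20, which leaves too little room for a low-priority request, so the scheduler waits.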
