1119 results about "Mixed reality" patented technology

Mixed reality (MR) is the merging of real and virtual worlds to produce new environments and visualizations, where physical and digital objects co-exist and interact in real time. Mixed reality does not exclusively take place in either the physical or virtual world, but is a hybrid of reality and virtual reality, encompassing both augmented reality and augmented virtuality via immersive technology.

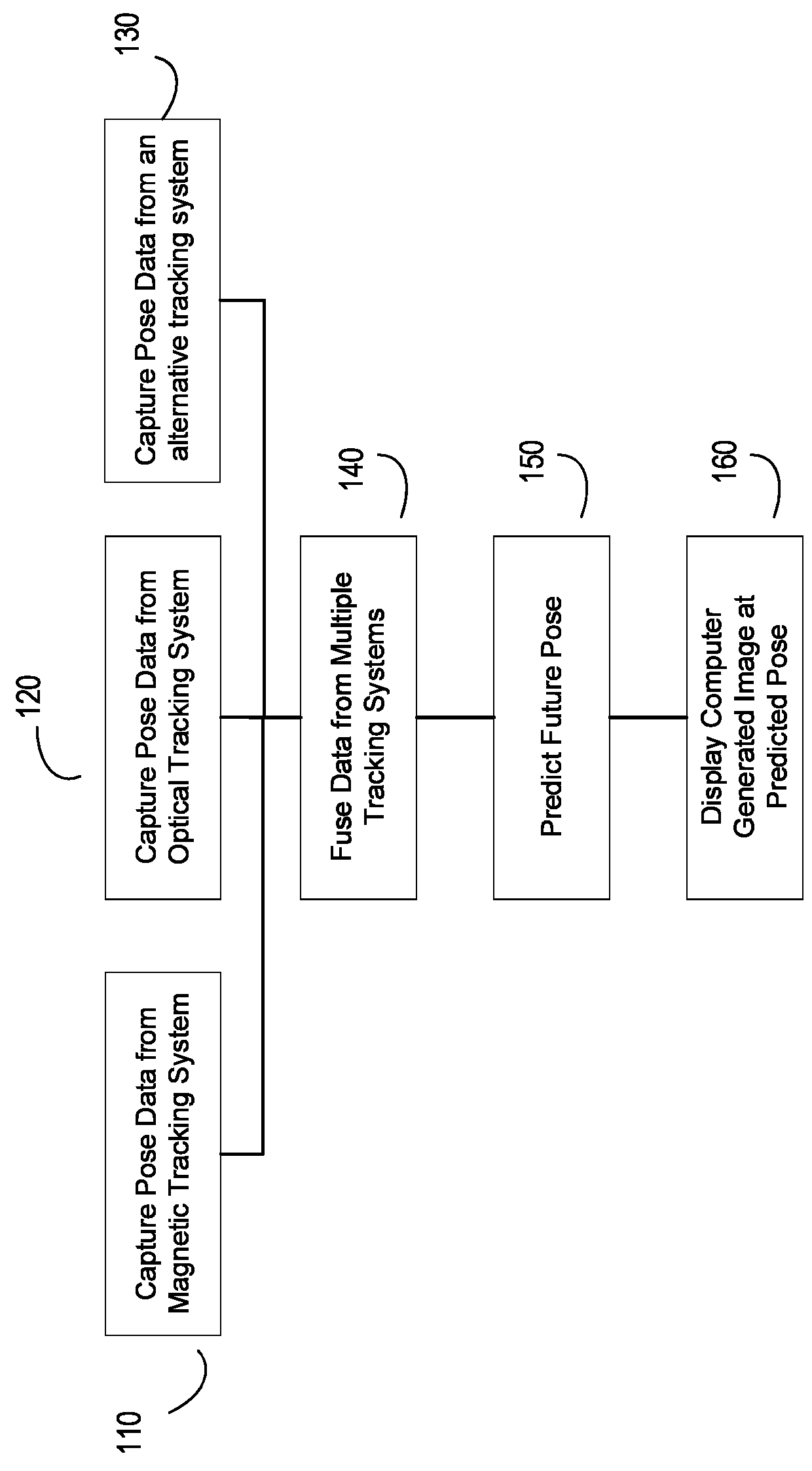

Low-latency fusing of virtual and real content

Active | US20120105473A1 | Introduce inherent latency | Image analysis | Cathode-ray tube indicators | Mixed reality | Latency (engineering)

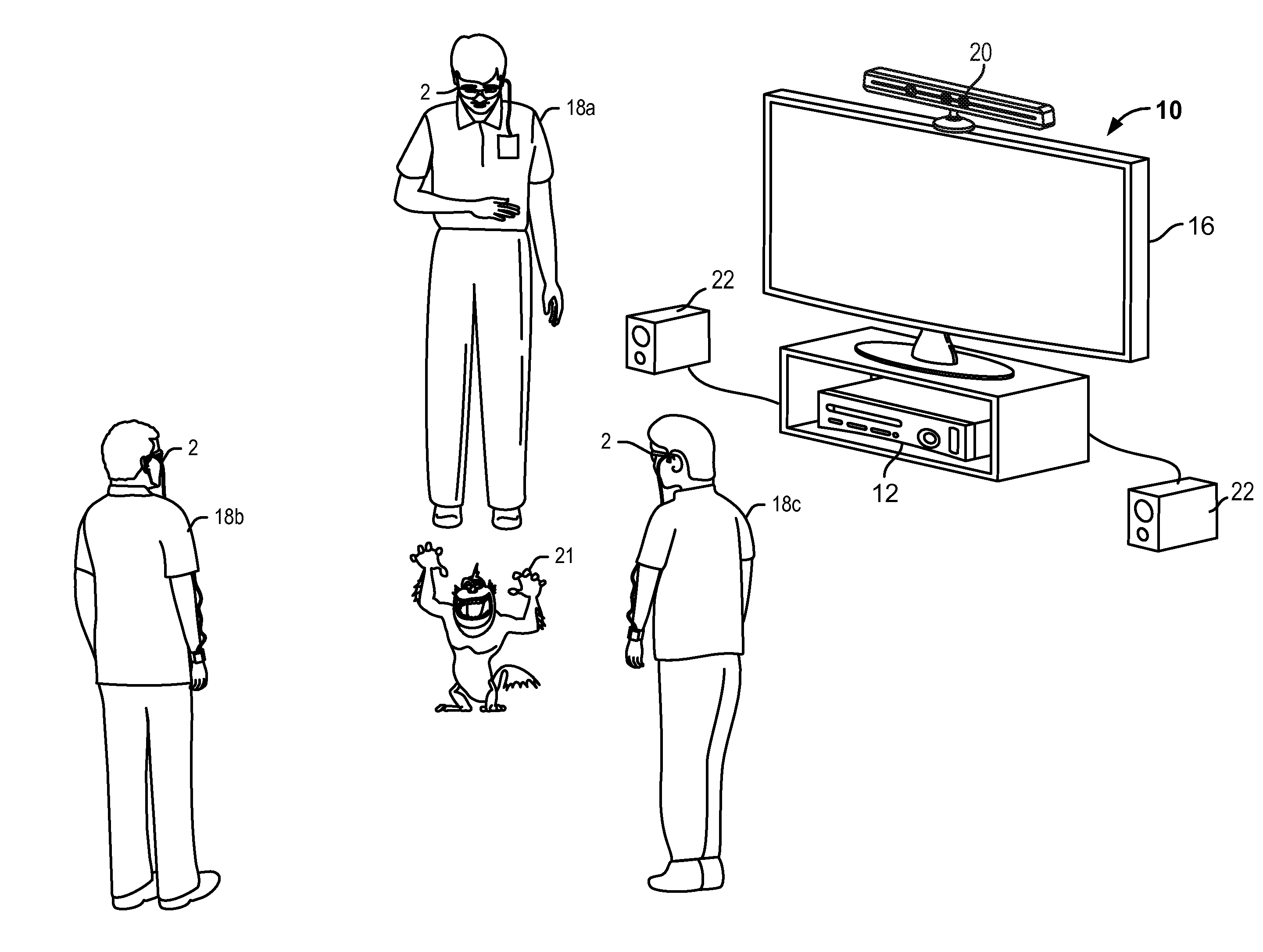

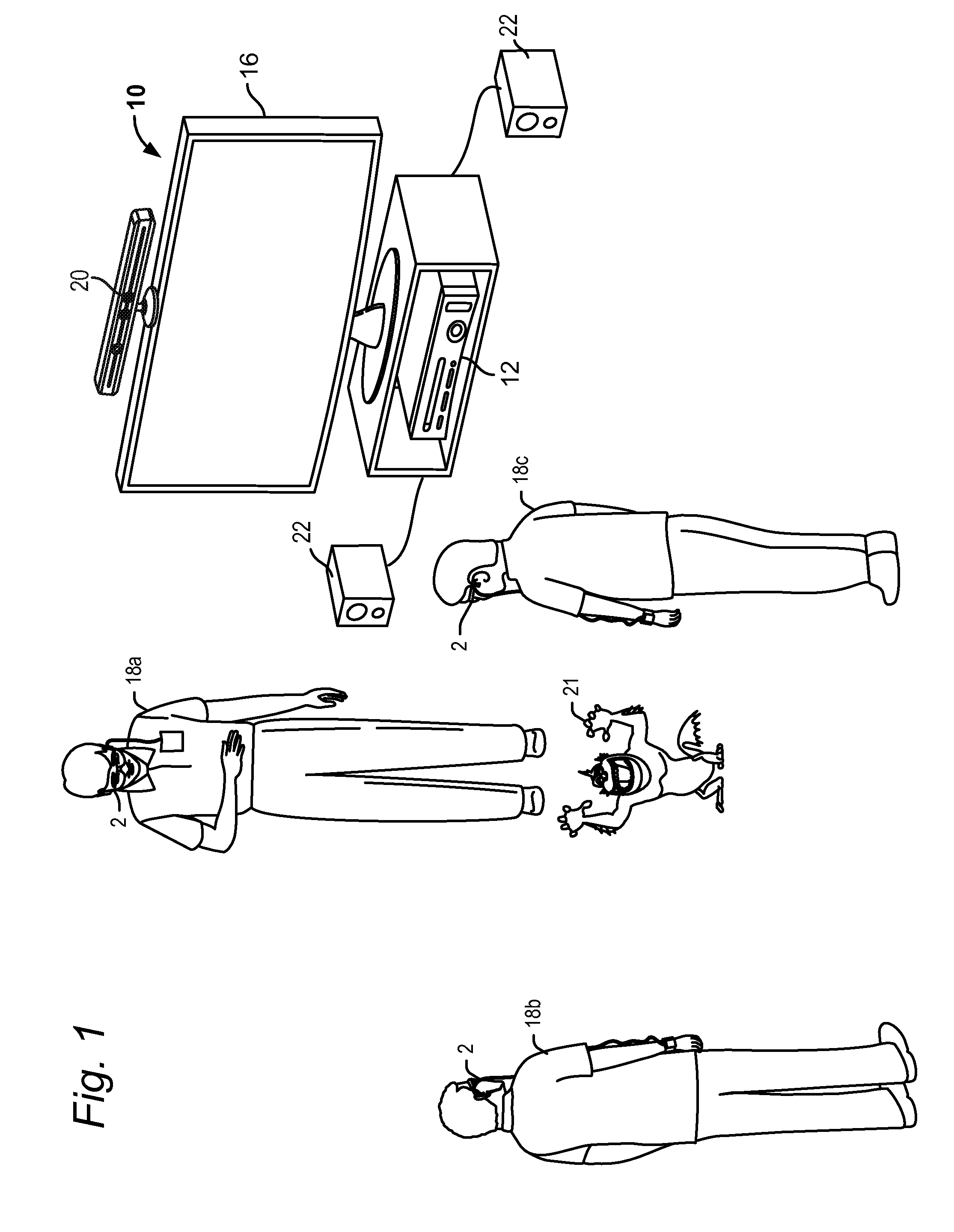

A system that includes a head mounted display device and a processing unit connected to the head mounted display device is used to fuse virtual content into real content. In one embodiment, the processing unit is in communication with a hub computing device. The processing unit and hub may collaboratively determine a map of the mixed reality environment. Further, state data may be extrapolated to predict a field of view for a user in the future at a time when the mixed reality is to be displayed to the user. This extrapolation can remove latency from the system.

Owner: MICROSOFT TECH LICENSING LLC
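The abstract above mentions extrapolating state data to predict the user's field of view at display time. A minimal sketch of that idea follows, assuming a constant angular velocity model for head yaw; the `HeadState` type, the `predict_yaw` function, and the sample numbers are hypothetical illustrations and are not taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class HeadState:
    """Hypothetical tracked state; field names are illustrative only."""
    t: float         # timestamp of the measurement, in seconds
    yaw_deg: float   # head yaw at time t, in degrees
    yaw_rate: float  # angular velocity, in degrees per second


def predict_yaw(state: HeadState, display_time: float) -> float:
    """Extrapolate yaw to display_time, assuming constant angular velocity."""
    dt = display_time - state.t
    # Wrap the result into the range [0, 360).
    return (state.yaw_deg + state.yaw_rate * dt) % 360.0


# Latest measurement, and a frame expected to reach the display 50 ms later.
latest = HeadState(t=0.0, yaw_deg=10.0, yaw_rate=90.0)
predicted = predict_yaw(latest, display_time=0.05)
```

Rendering for the predicted yaw rather than the measured one is what hides the pipeline delay from the user; a real system would likely extrapolate full position and orientation and filter the sensor data first.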

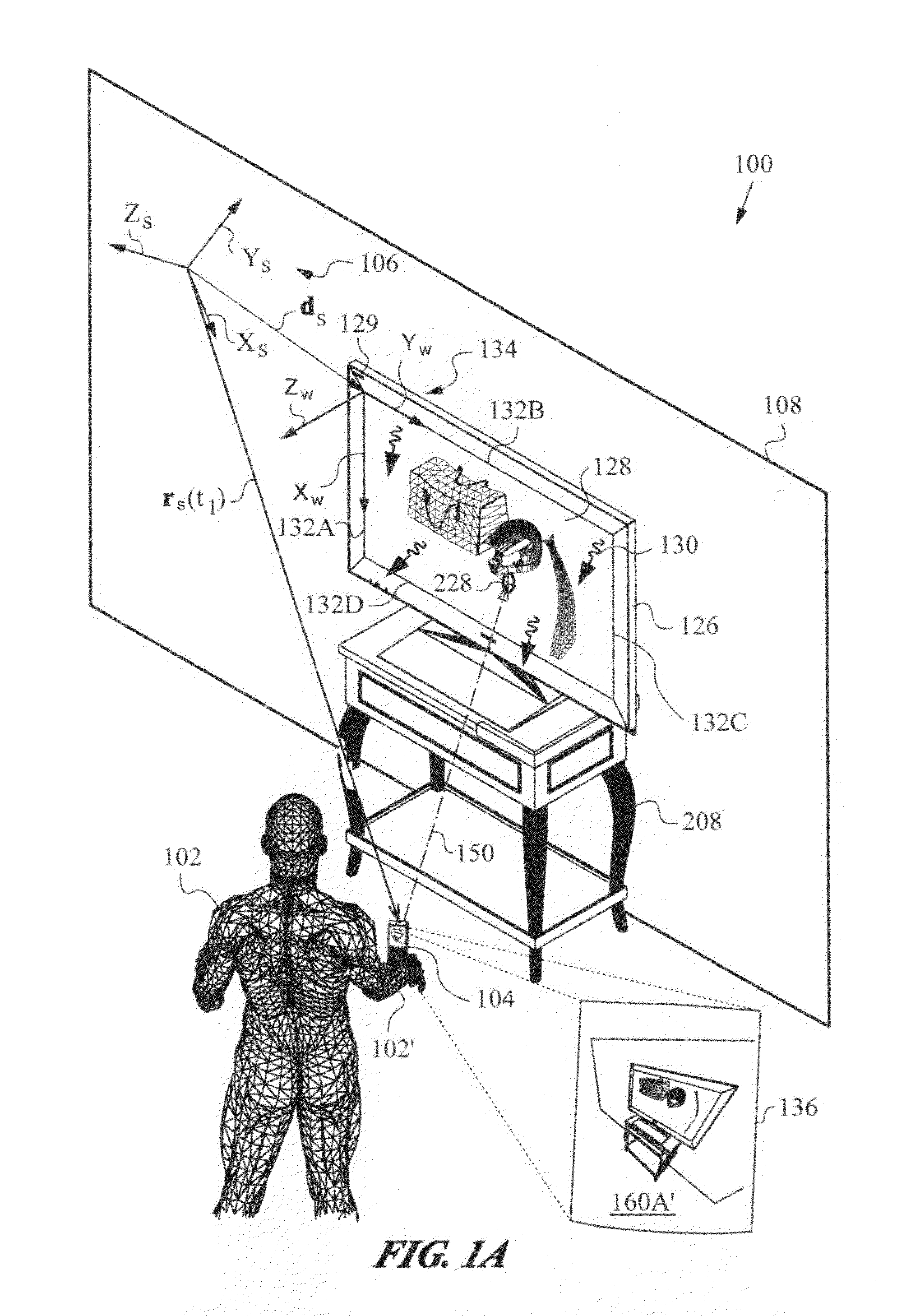

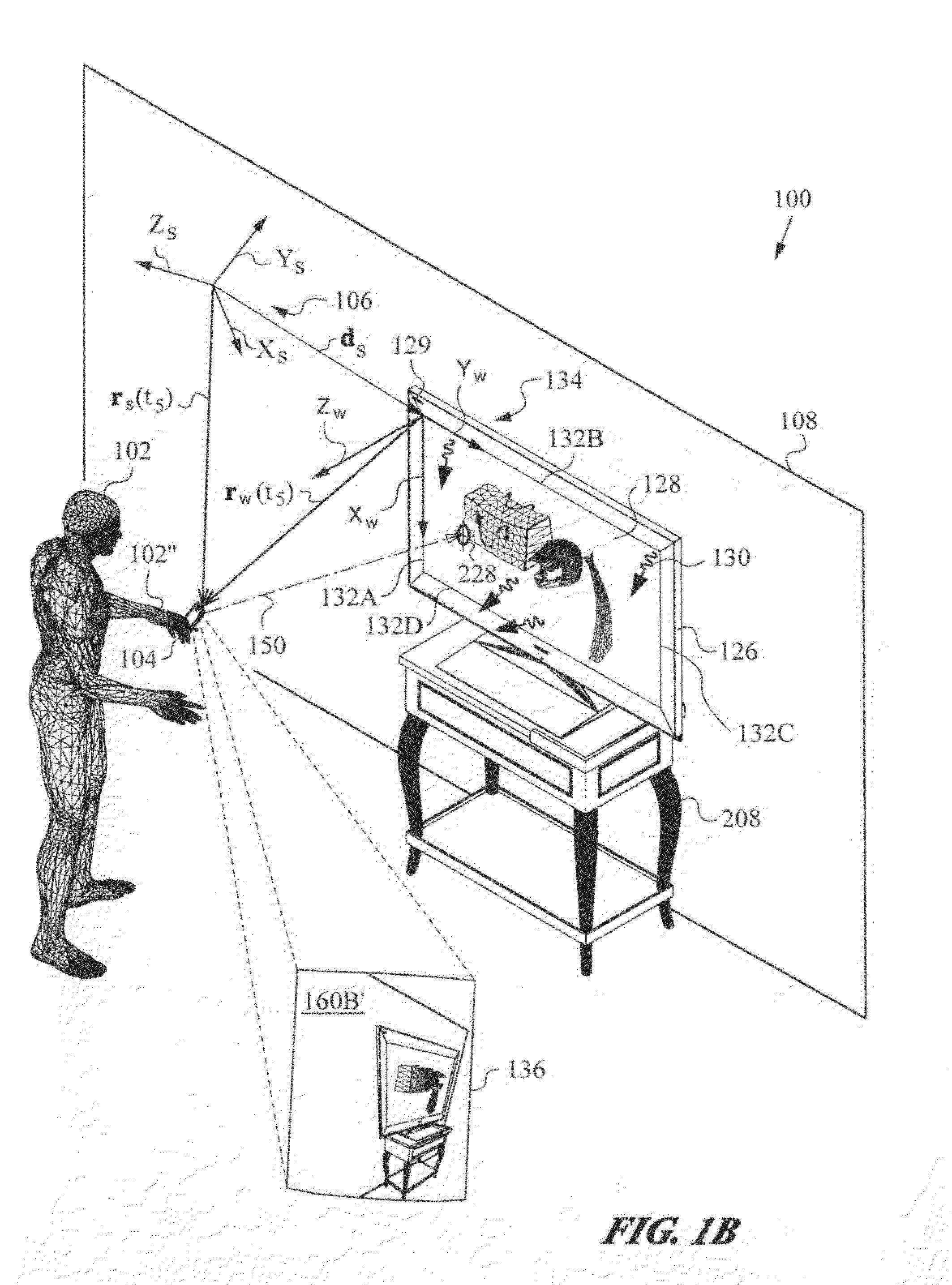

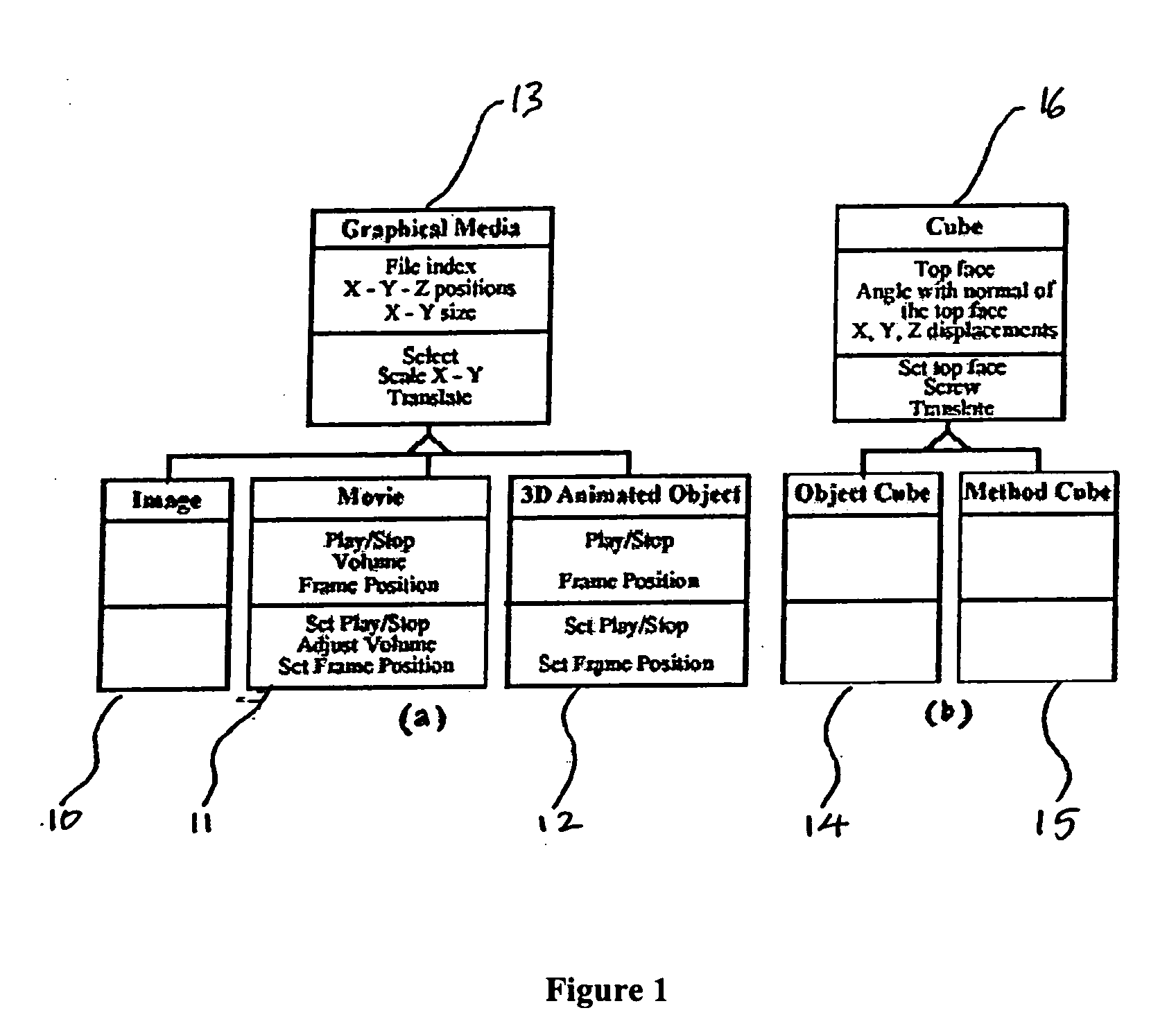

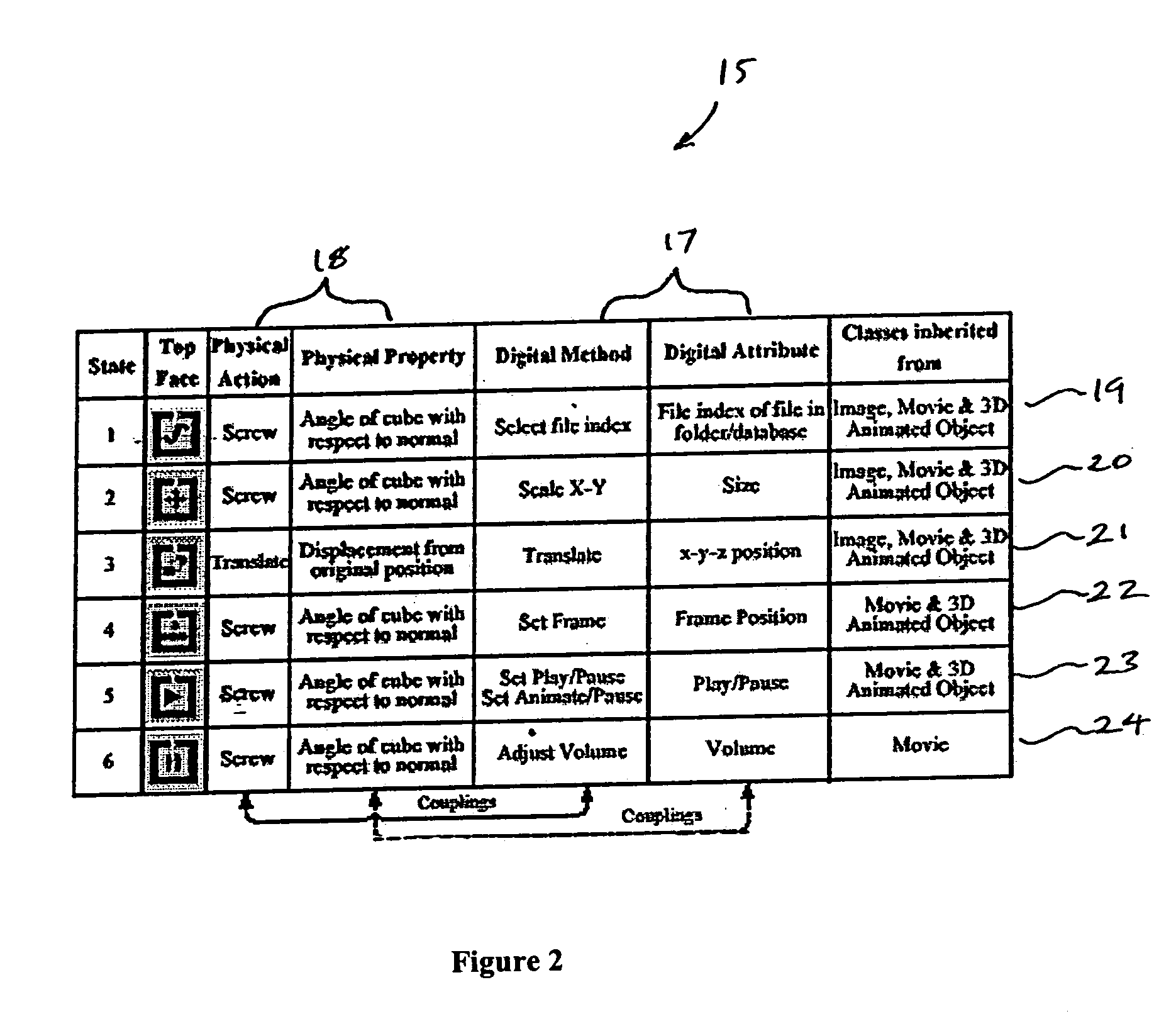

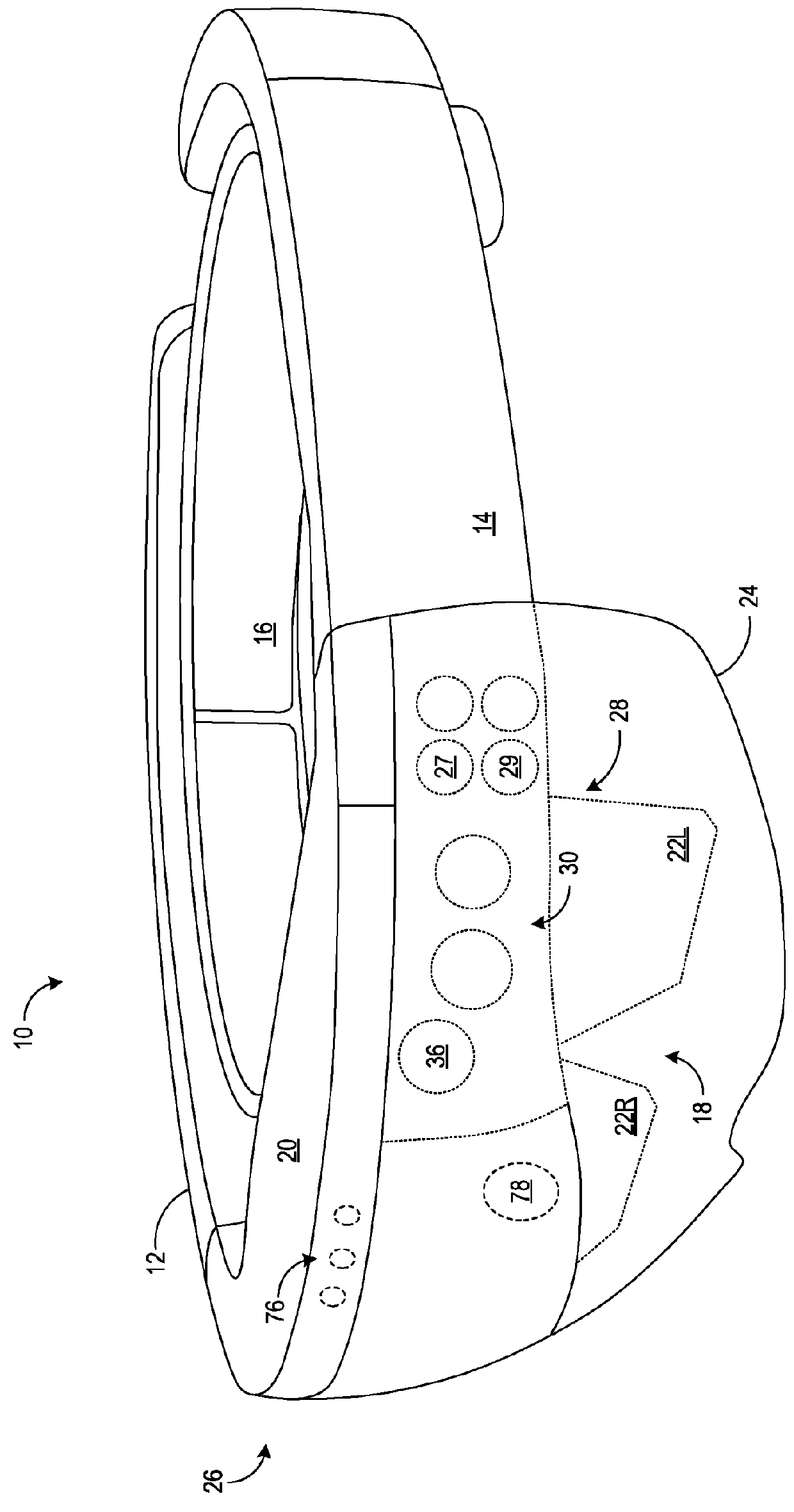

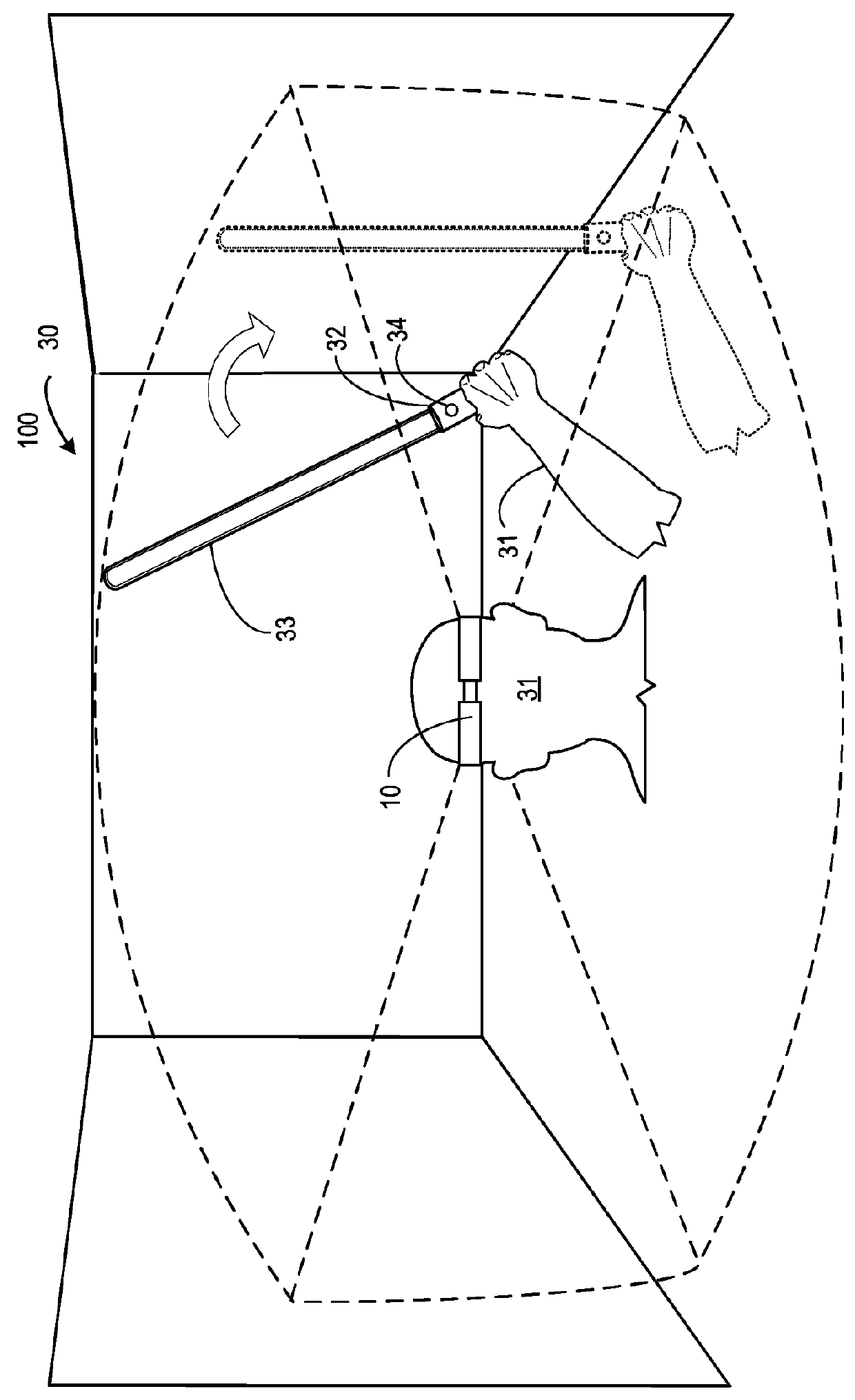

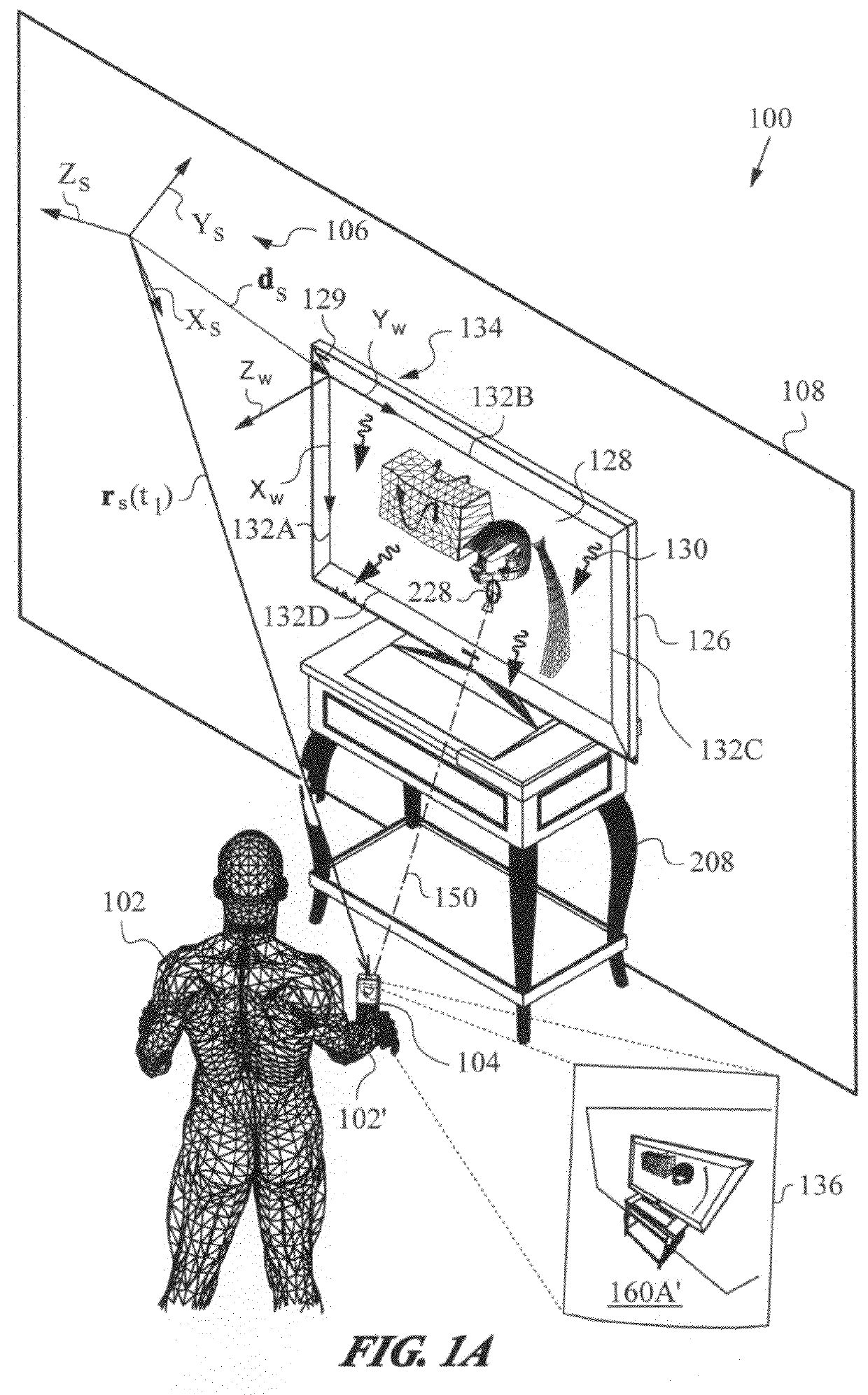

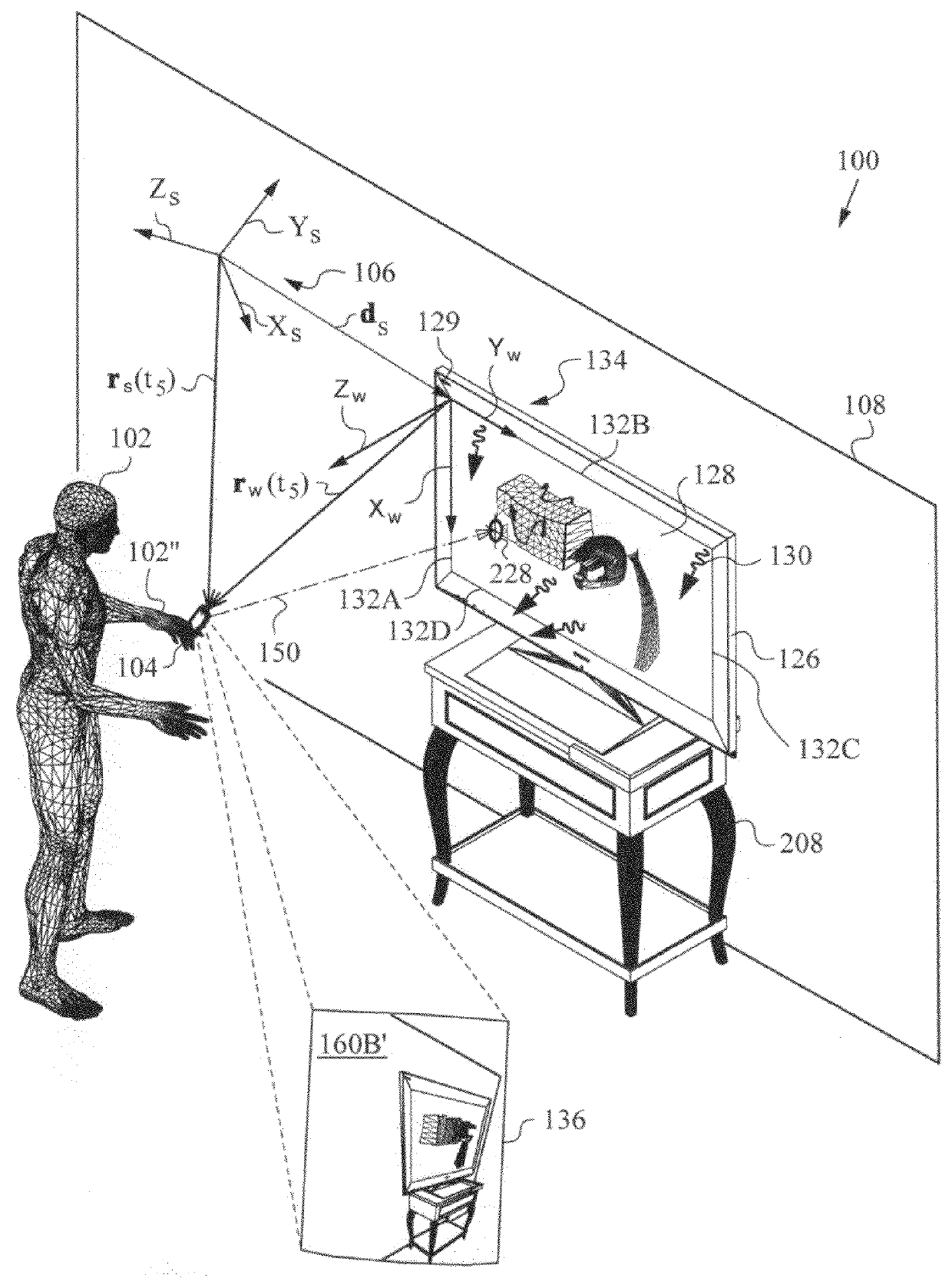

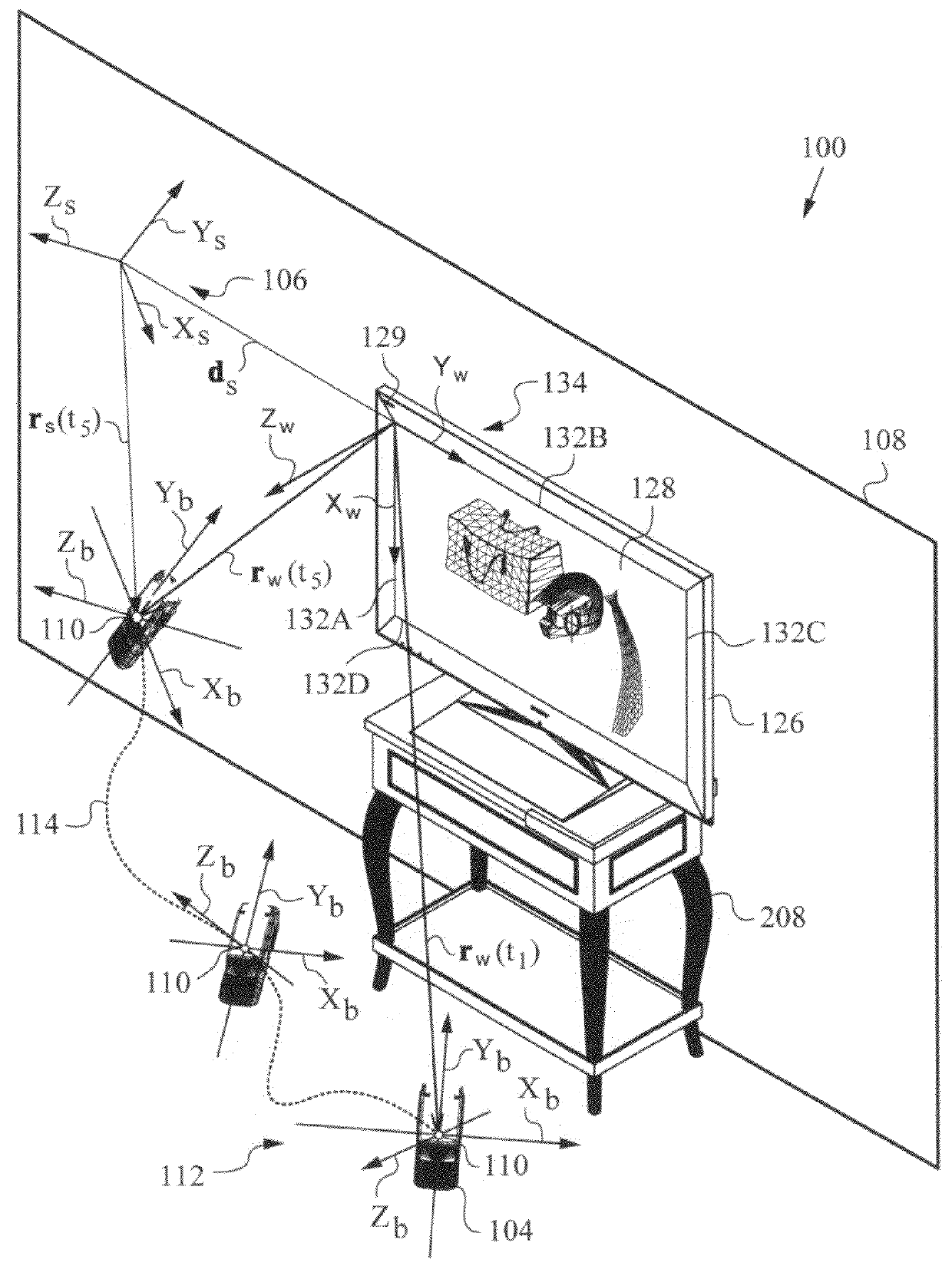

Deriving input from six degrees of freedom interfaces

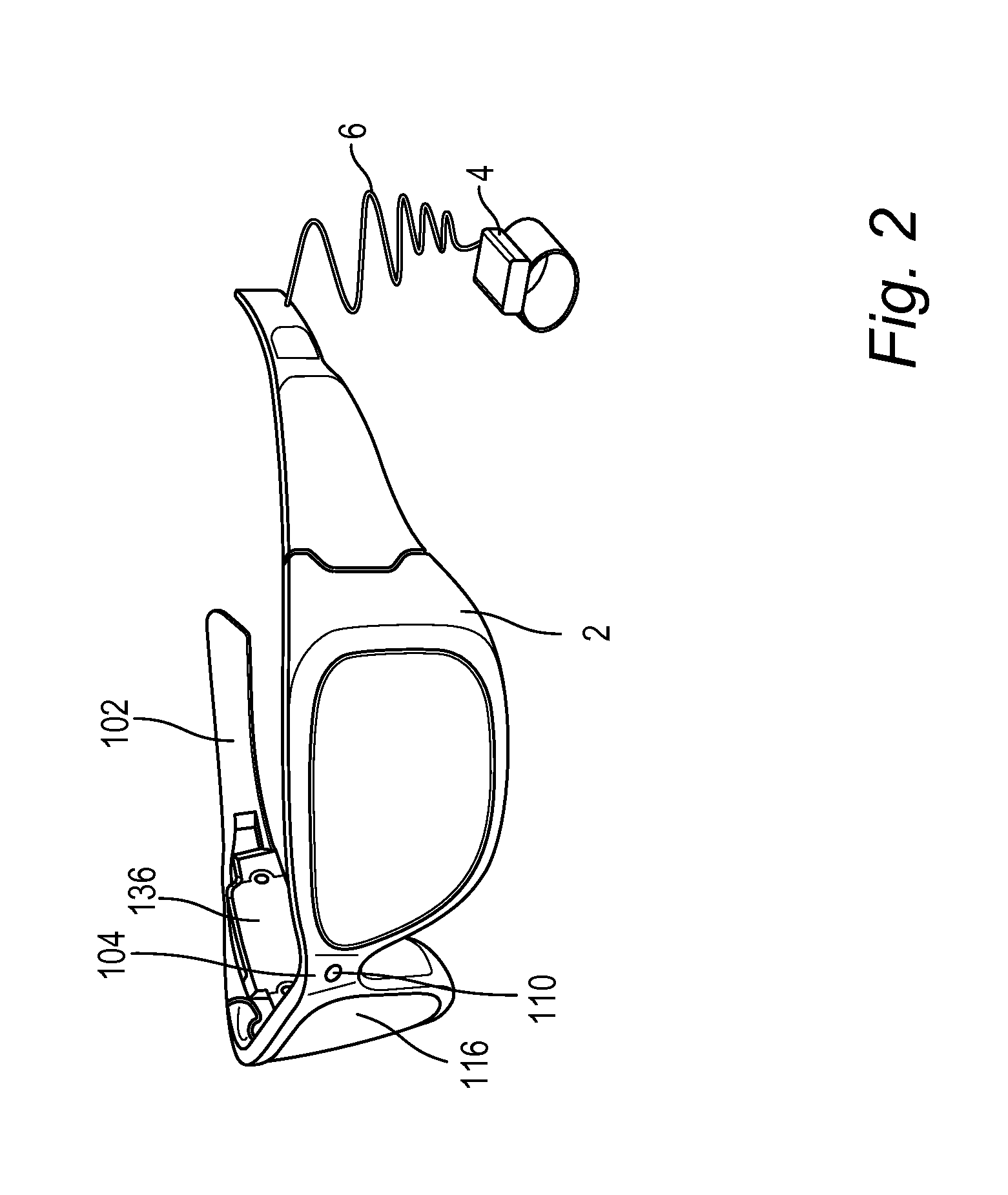

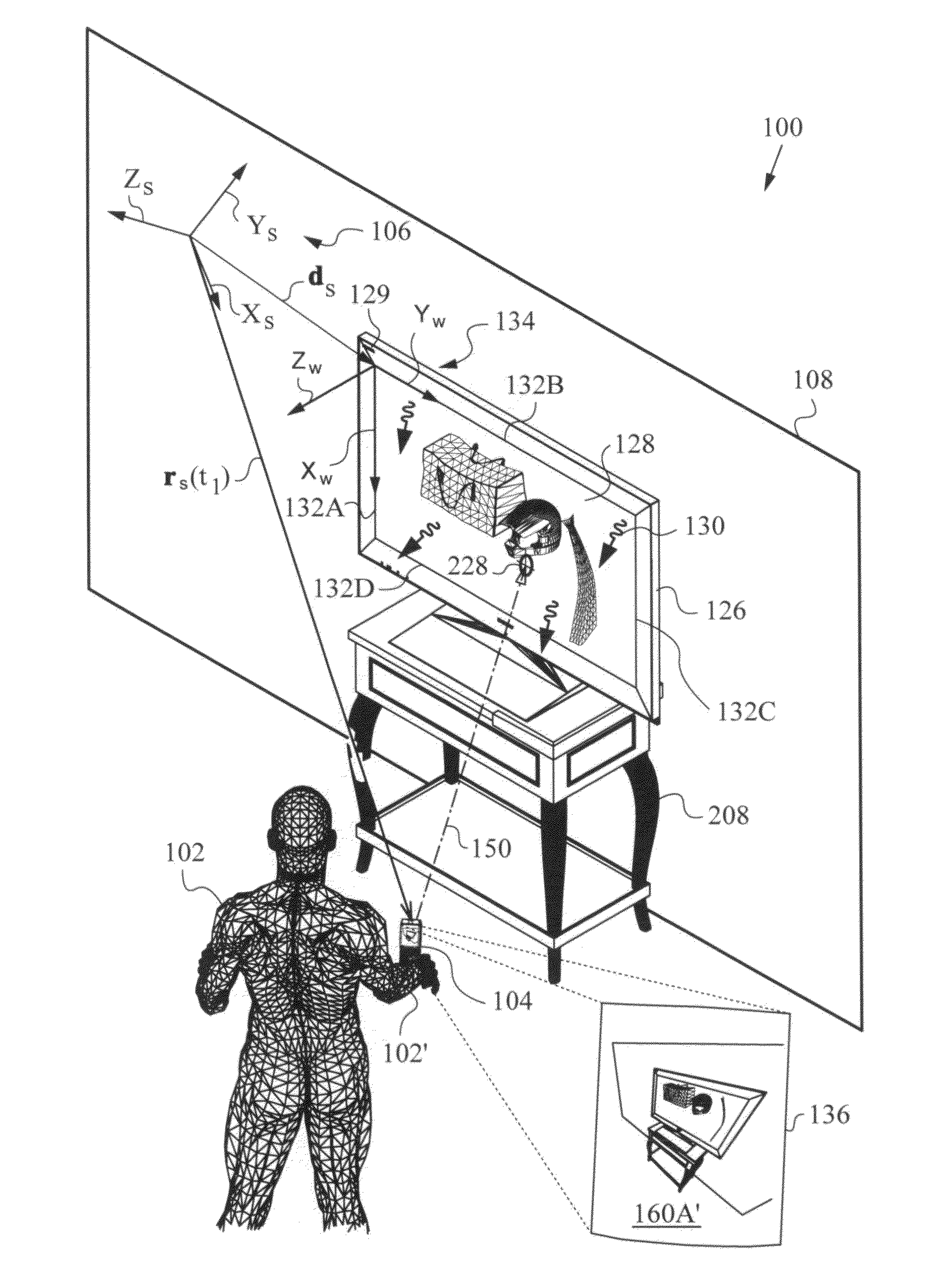

Active | US20120038549A1 | Cathode-ray tube indicators | Input/output processes for data processing | Mixed reality | Virtual space

The present invention relates to interfaces and methods for producing input for software applications based on the absolute pose of an item manipulated or worn by a user in a three-dimensional environment. Absolute pose in the sense of the present invention means both the position and the orientation of the item as described in a stable frame defined in that three-dimensional environment. The invention describes how to recover the absolute pose with optical hardware and methods, and how to map at least one of the recovered absolute pose parameters to the three translational and three rotational degrees of freedom available to the item to generate useful input. The applications that can most benefit from the interfaces and methods of the invention involve 3D virtual spaces including augmented reality and mixed reality environments.

Owner: ELECTRONICS SCRIPTING PRODS

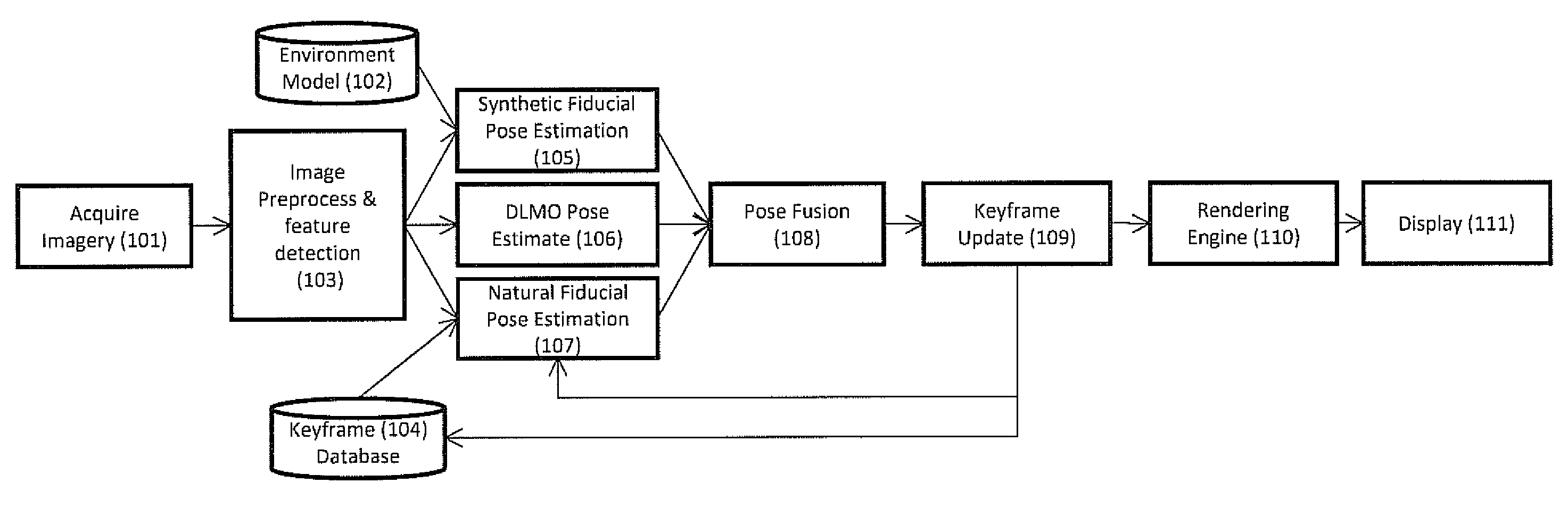

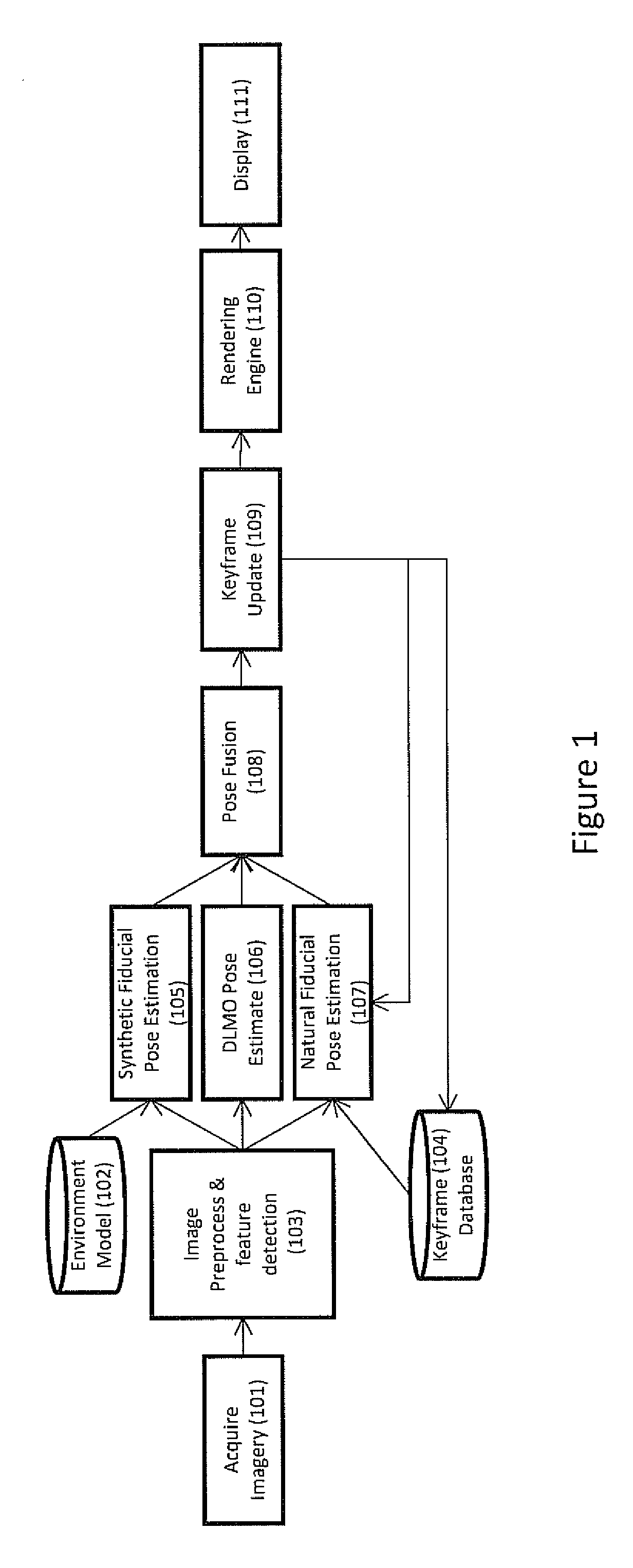

Automatic mapping of augmented reality fiducials

Inactive | US20100045701A1 | Configuration high | Image enhancement | Image analysis | Mixed reality | Computer science

Systems and methods expedite and improve the process of configuring an augmented reality environment. A method of pose determination according to the invention includes the step of placing at least one synthetic fiducial in a real environment to be augmented. A camera, which may include apparatus for obtaining directly measured camera location and orientation (DLMO) information, is used to acquire an image of the environment. The natural and synthetic fiducials are detected, and the pose of the camera is determined using a combination of the natural fiducials, the synthetic fiducial if visible in the image, and the DLMO information if determined to be reliable or necessary. The invention is not limited to architectural environments, and may be used with instrumented persons, animals, vehicles, and any other augmented or mixed reality applications.

Owner: CYBERNET SYST

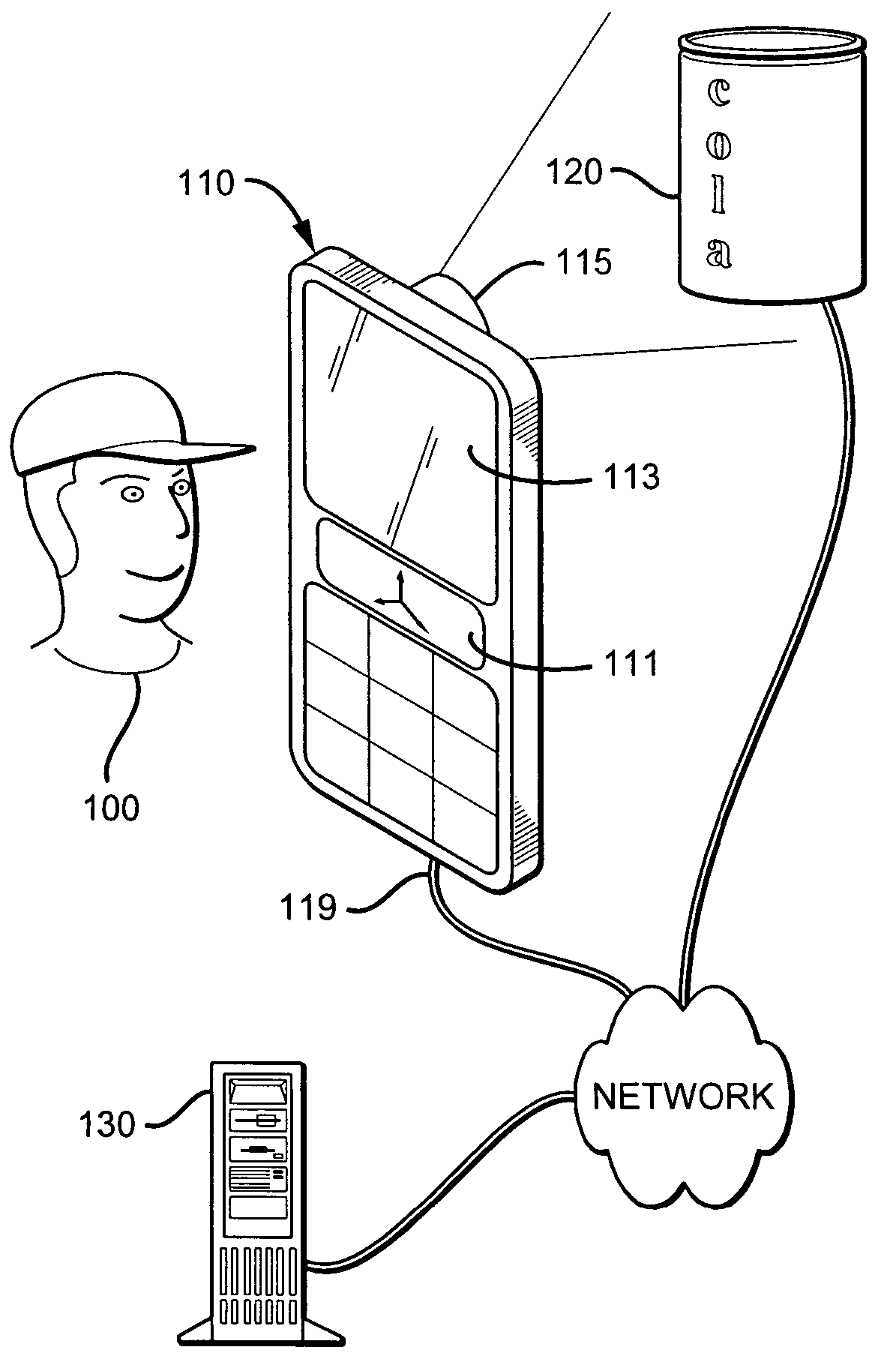

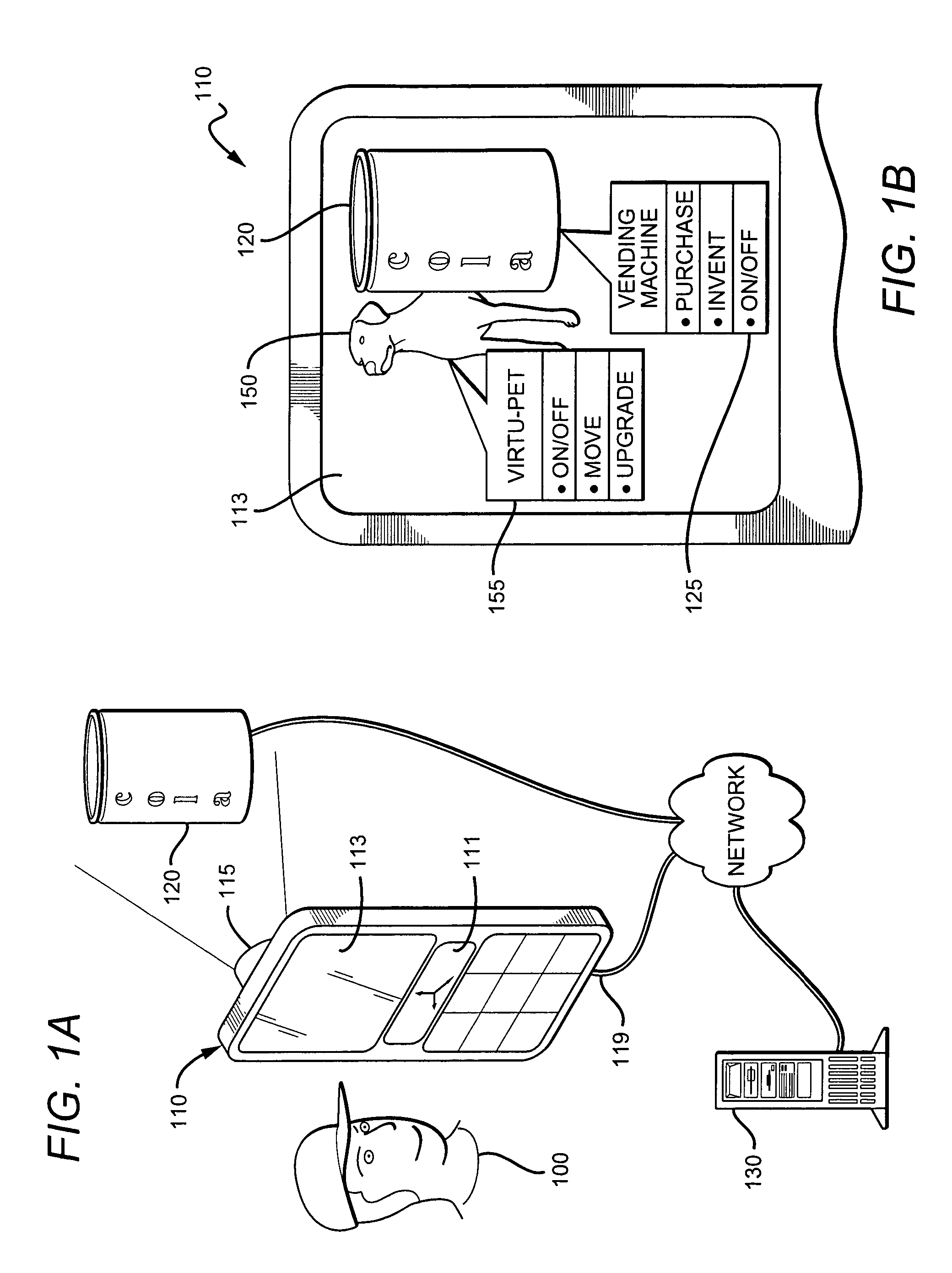

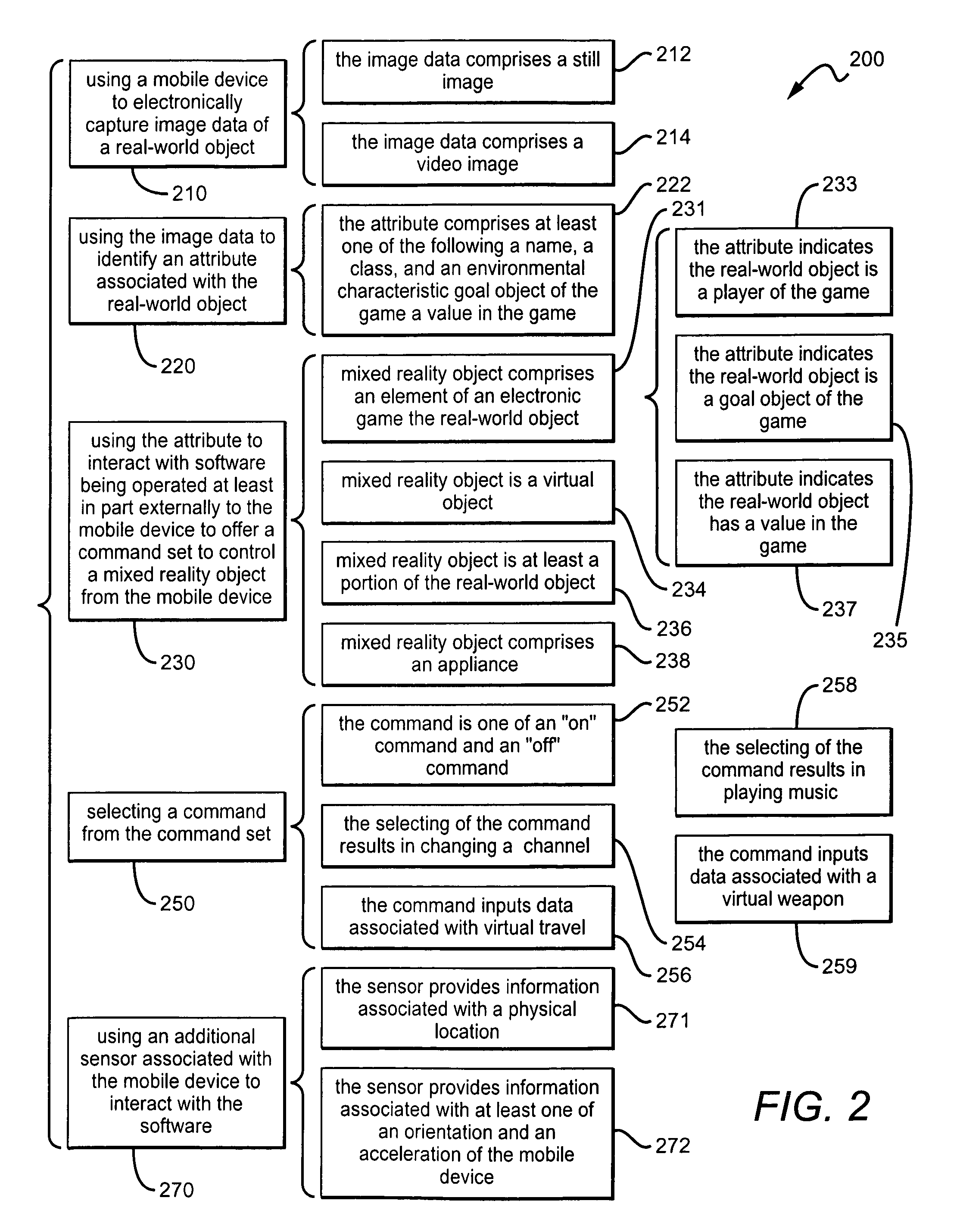

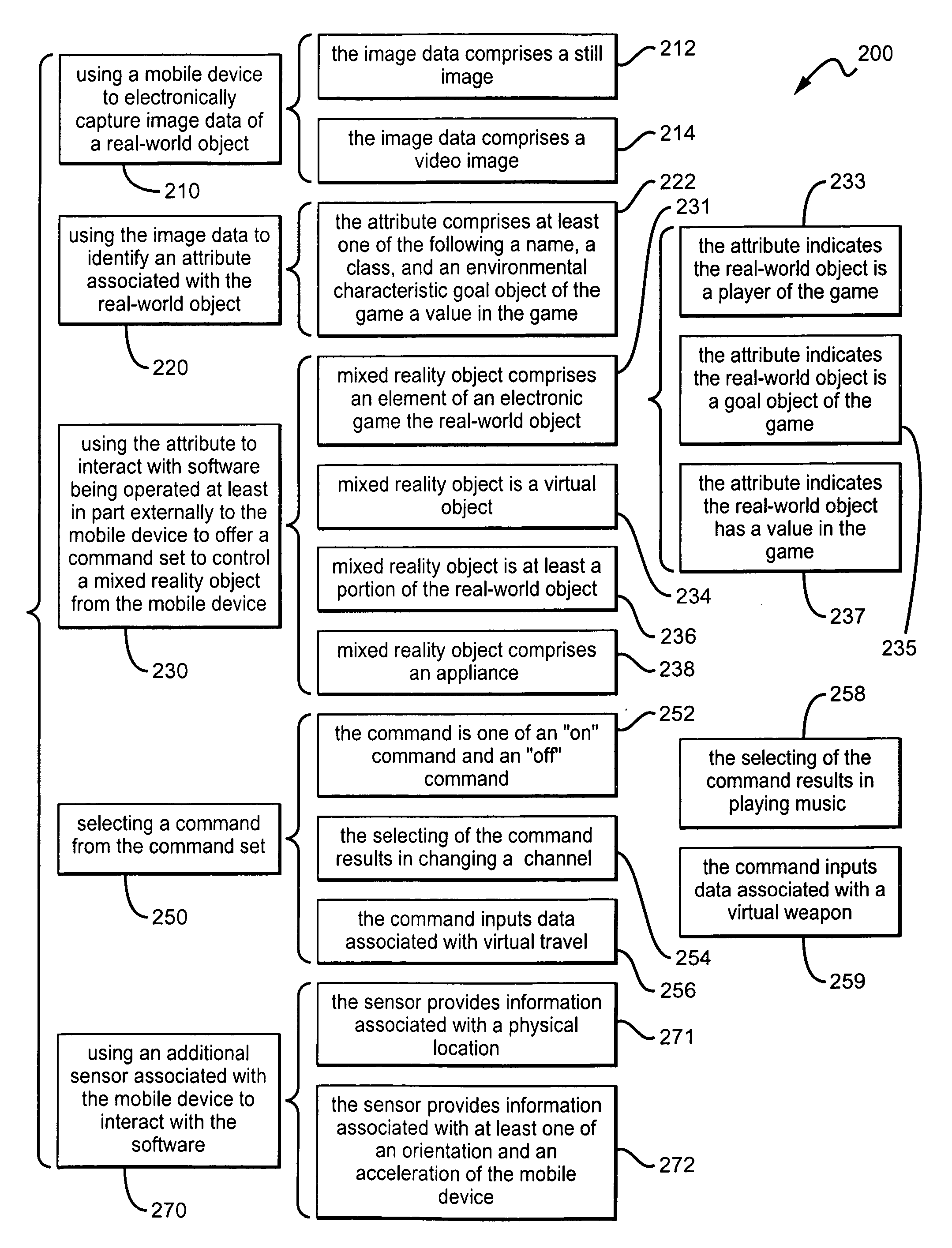

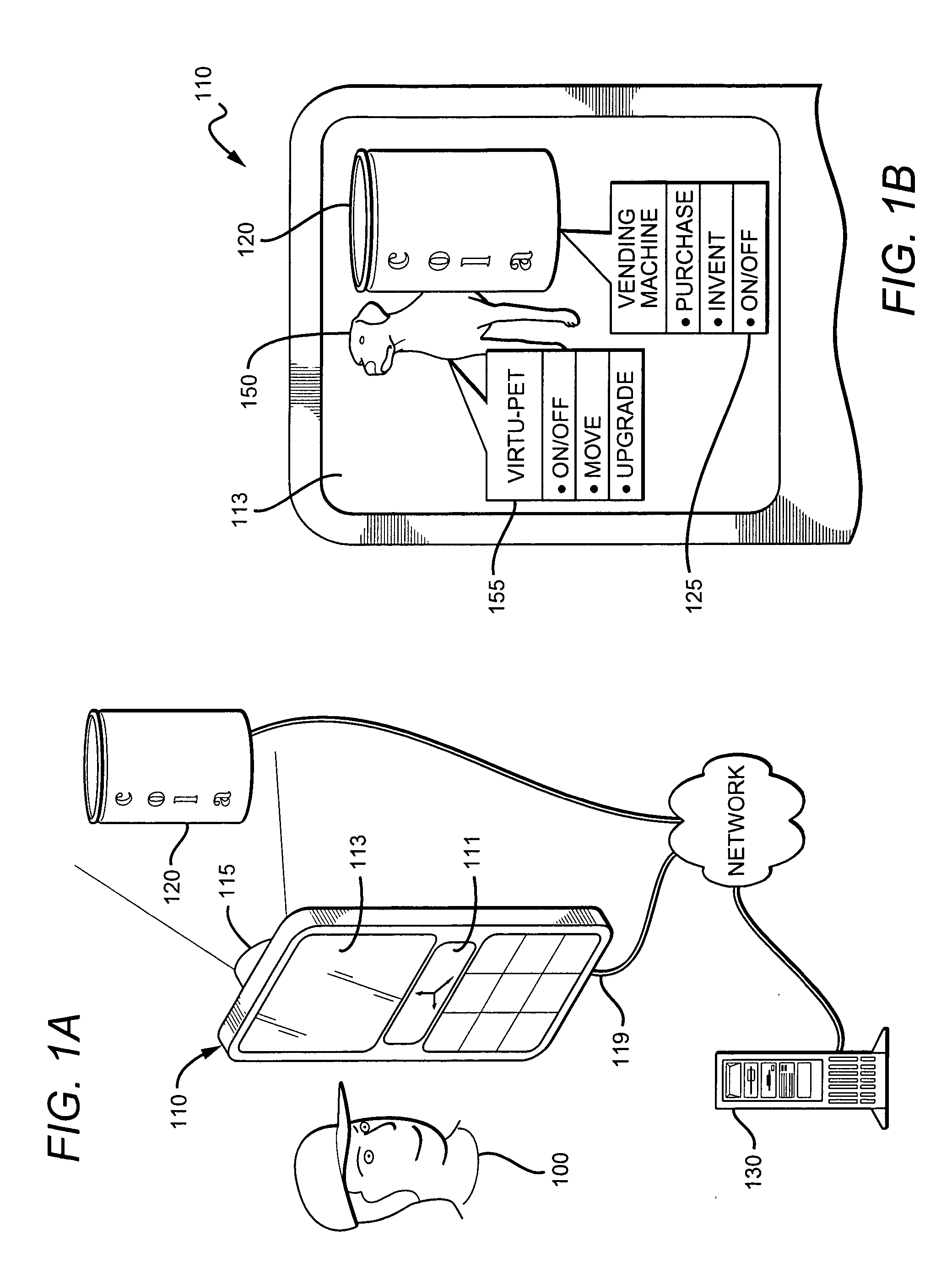

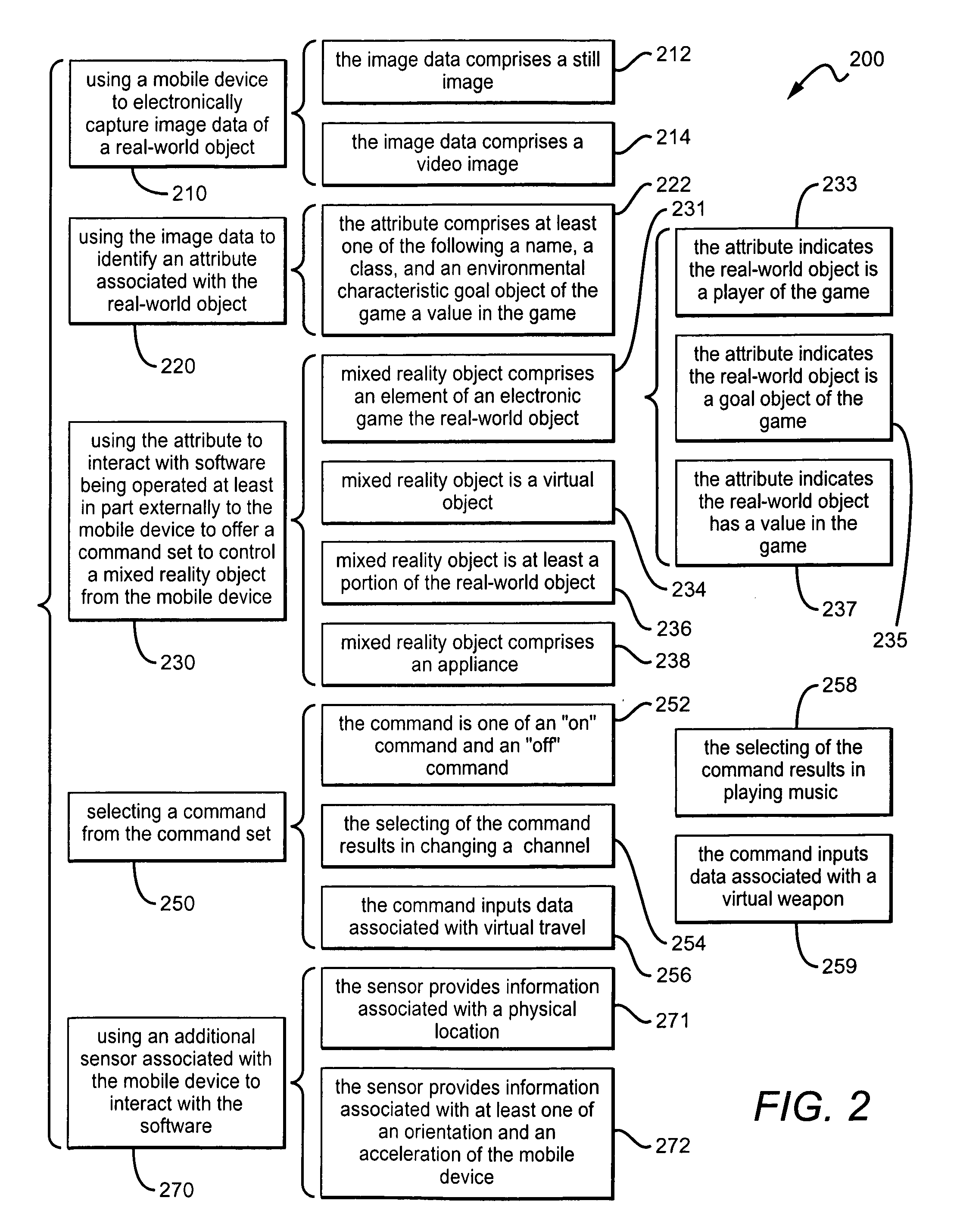

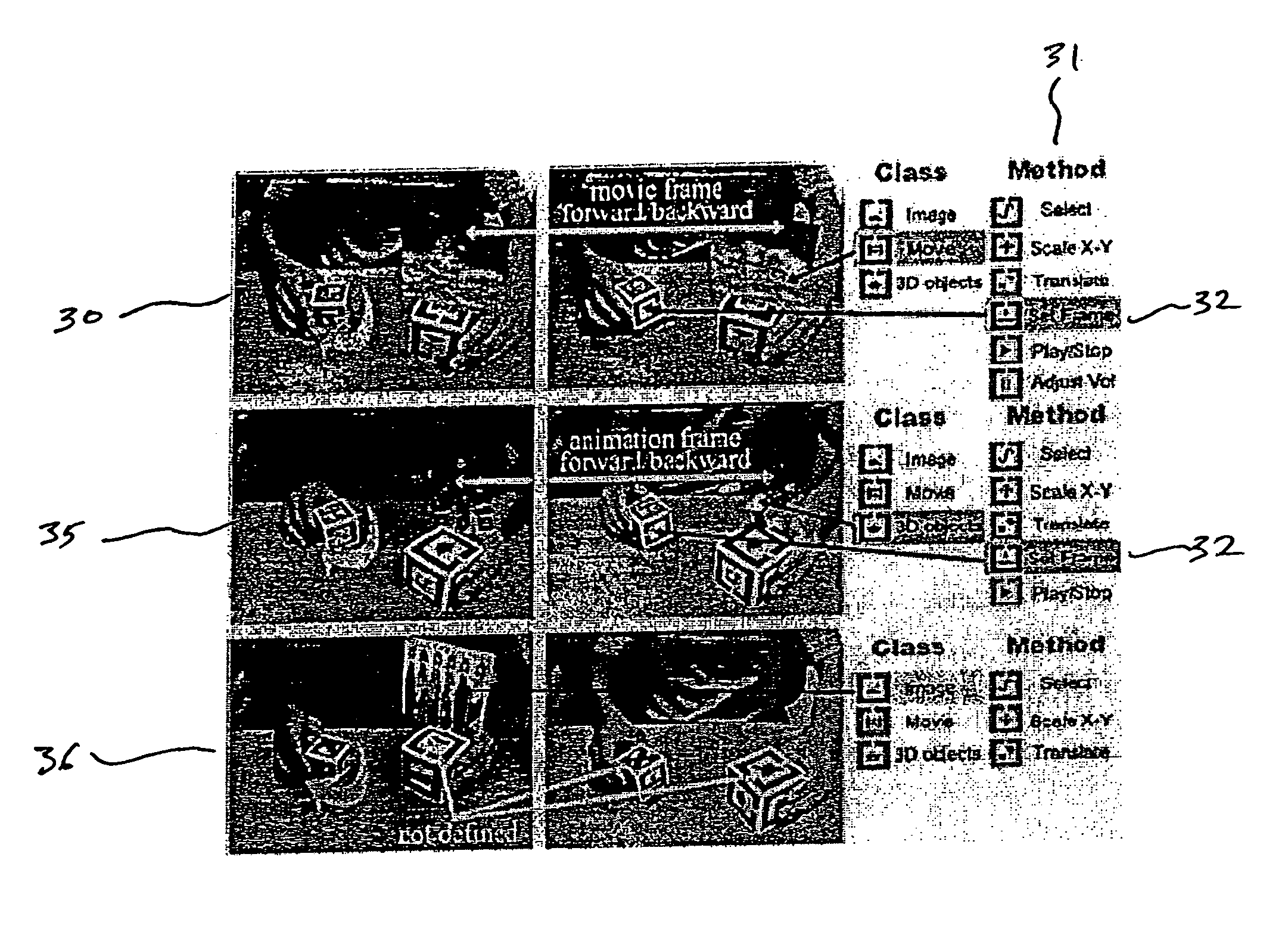

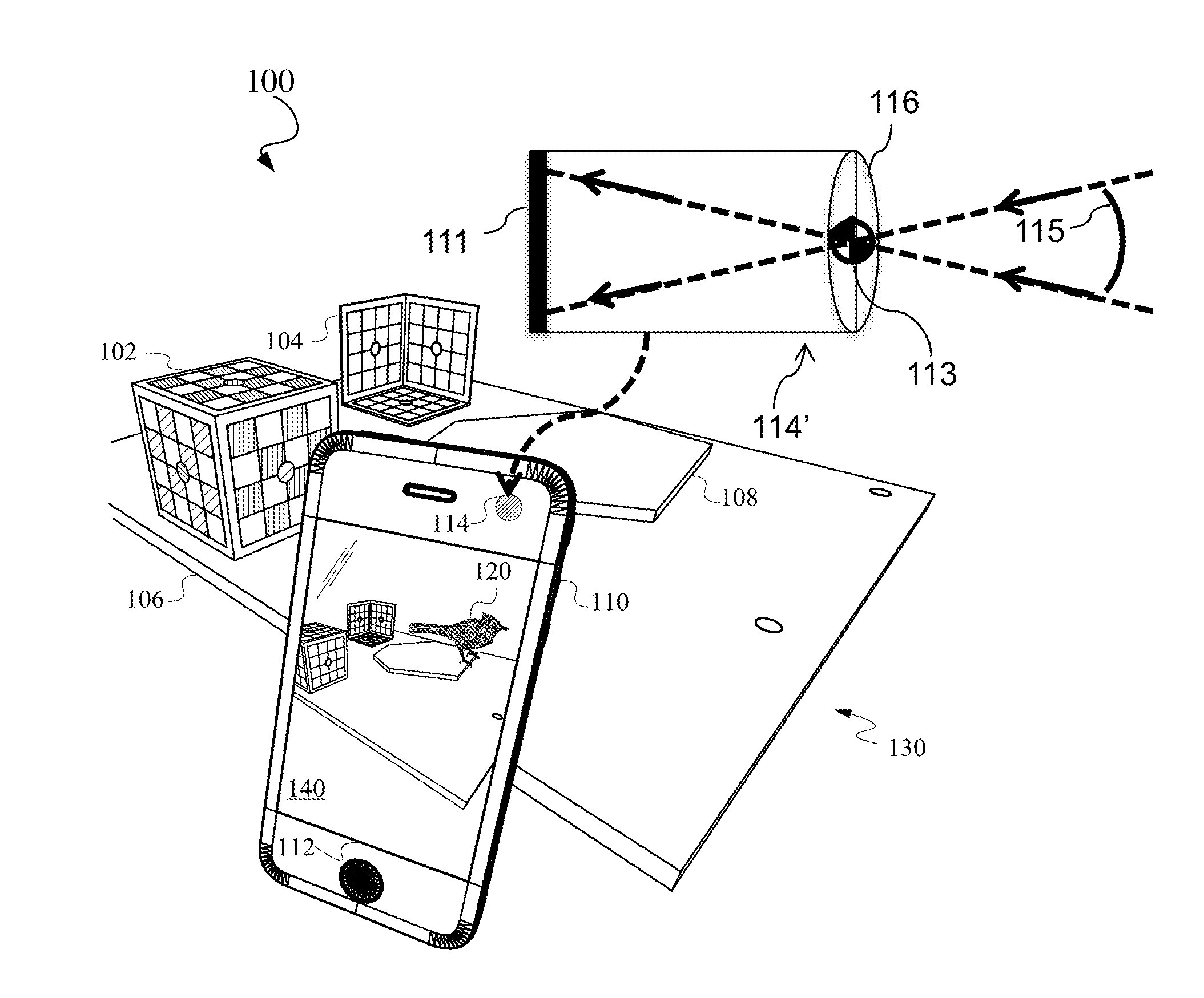

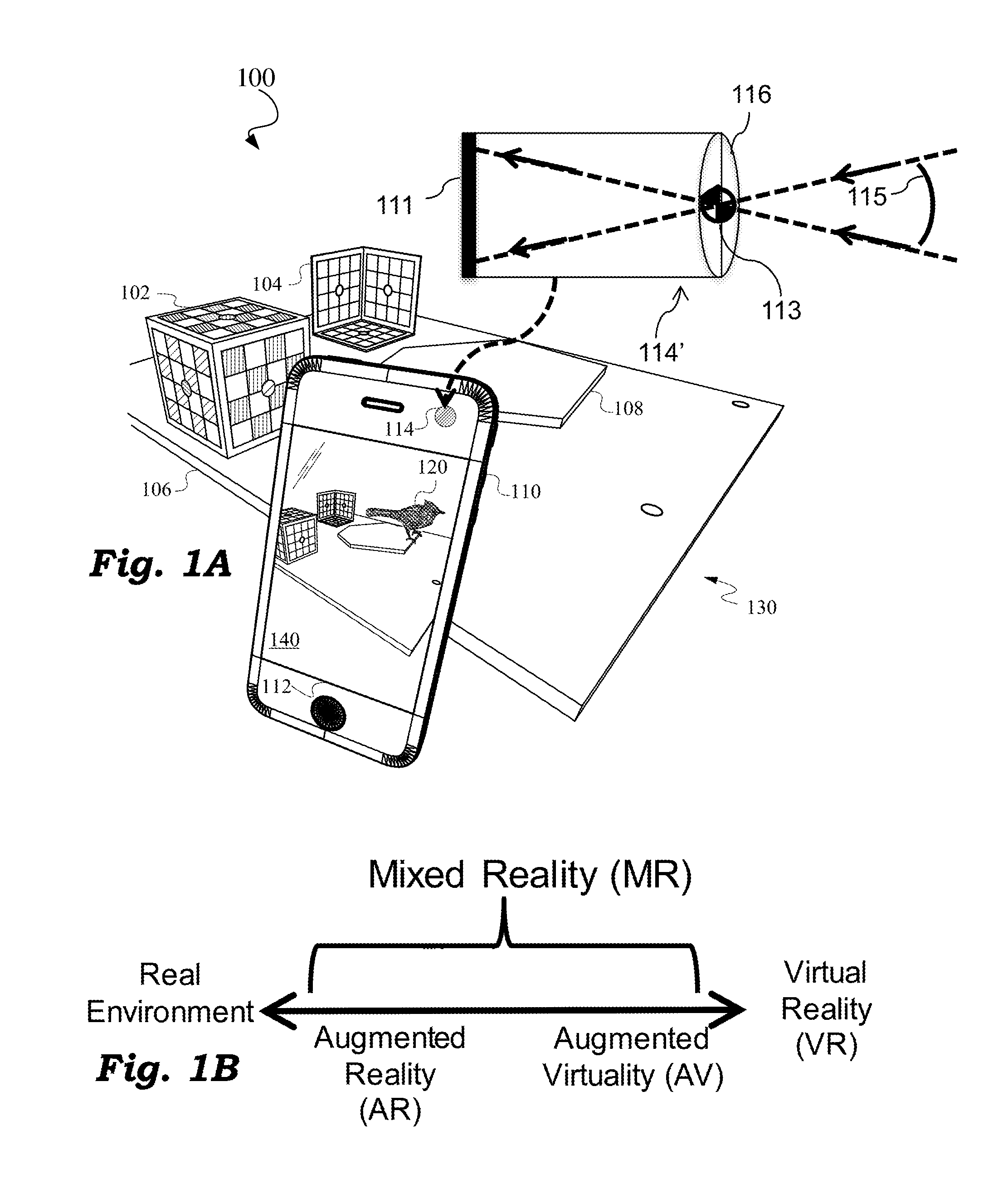

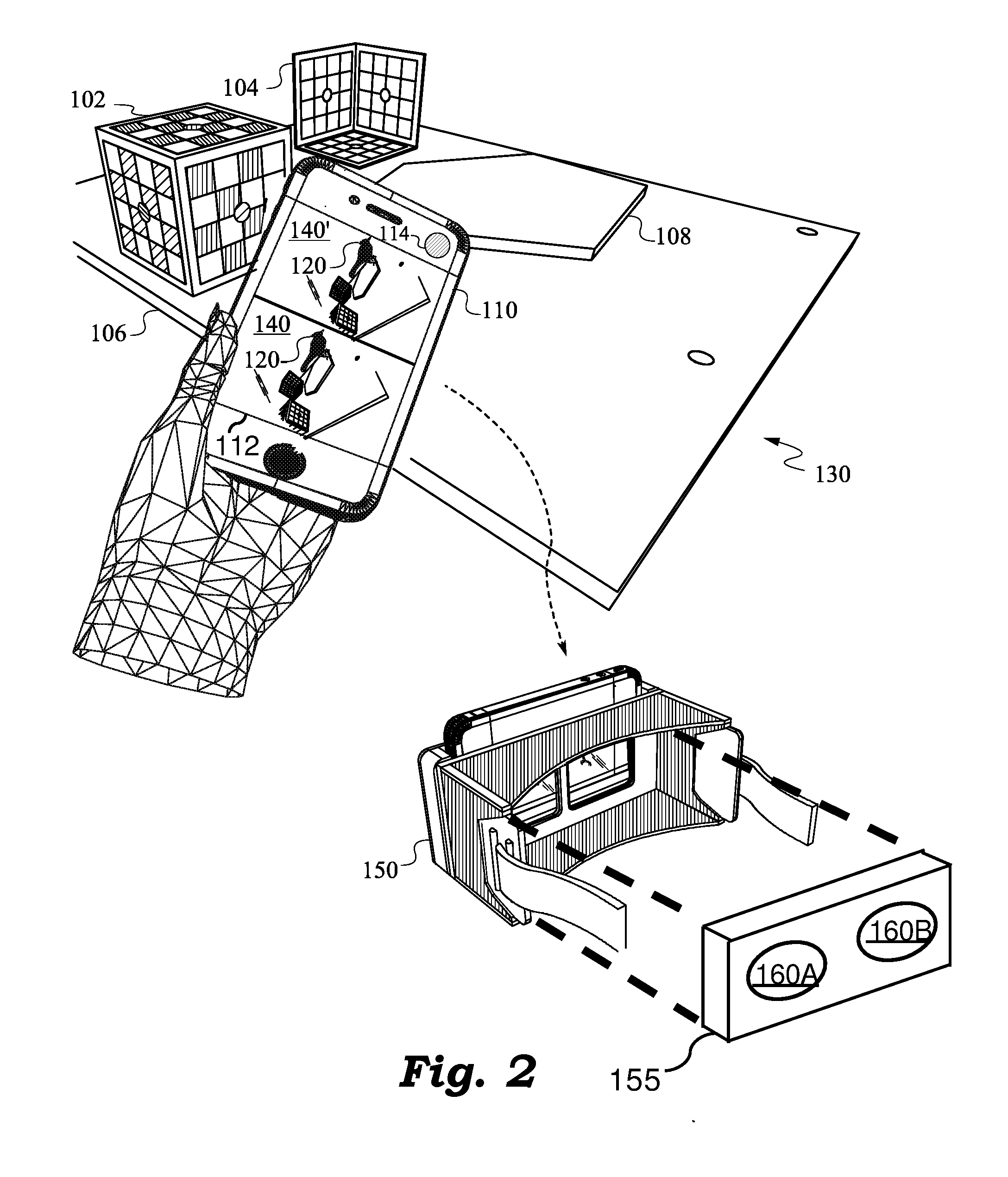

Interactivity with a mixed reality

Active | US7564469B2 | Input/output for user-computer interaction | Cathode-ray tube indicators | Mixed reality | Mobile device

Methods of interacting with a mixed reality are presented. A mobile device captures an image of a real-world object where the image has content information that can be used to control a mixed reality object through an offered command set. The mixed reality object can be real, virtual, or a mixture of both real and virtual.

Owner: NANT HLDG IP LLC

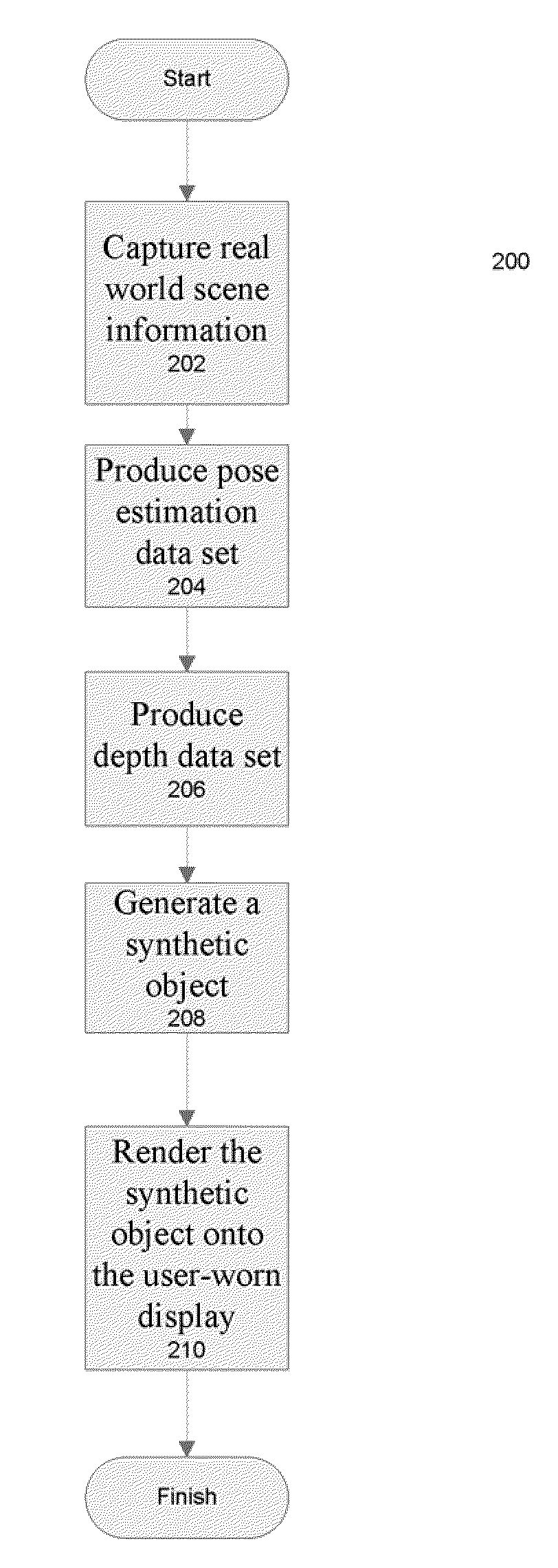

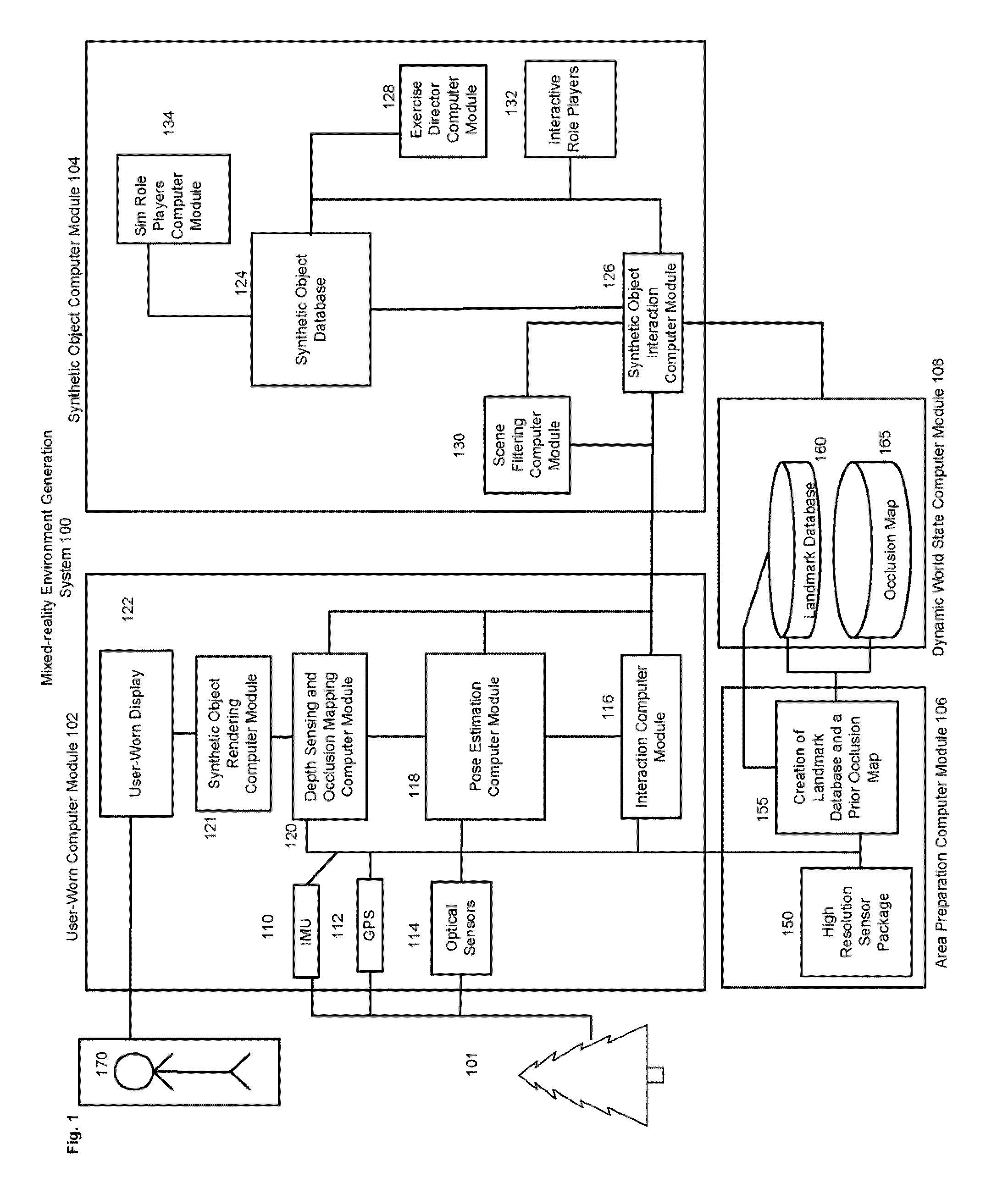

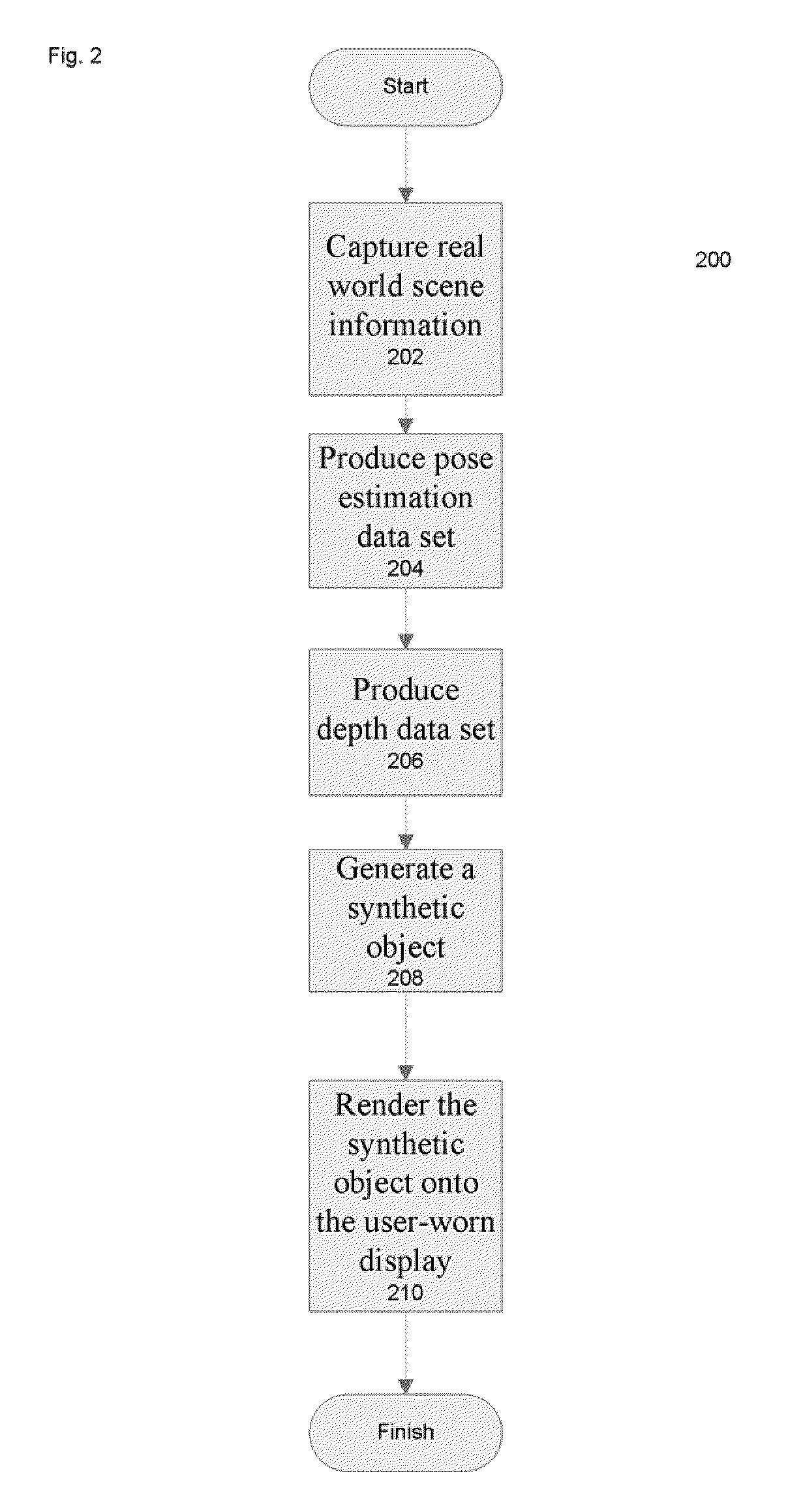

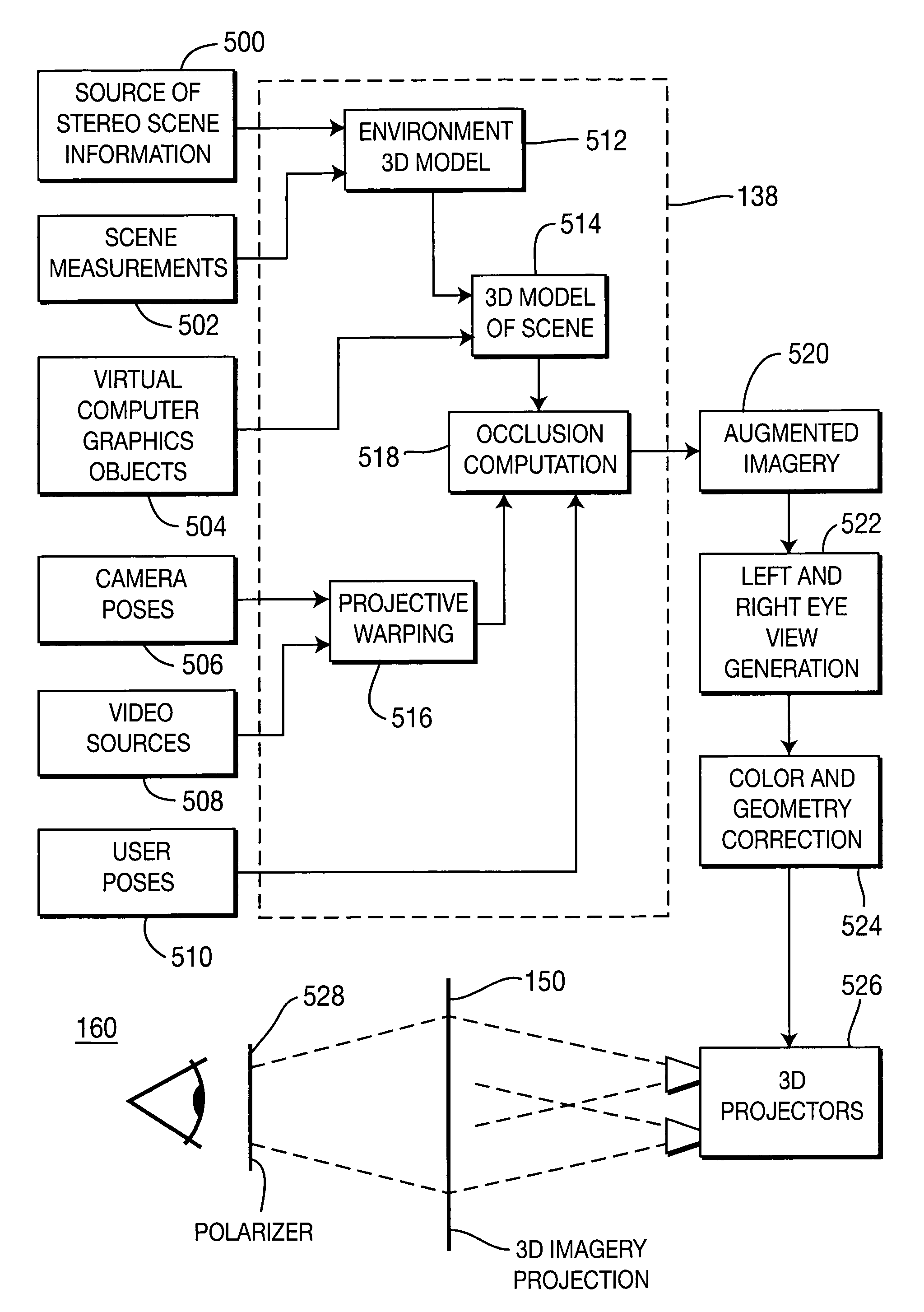

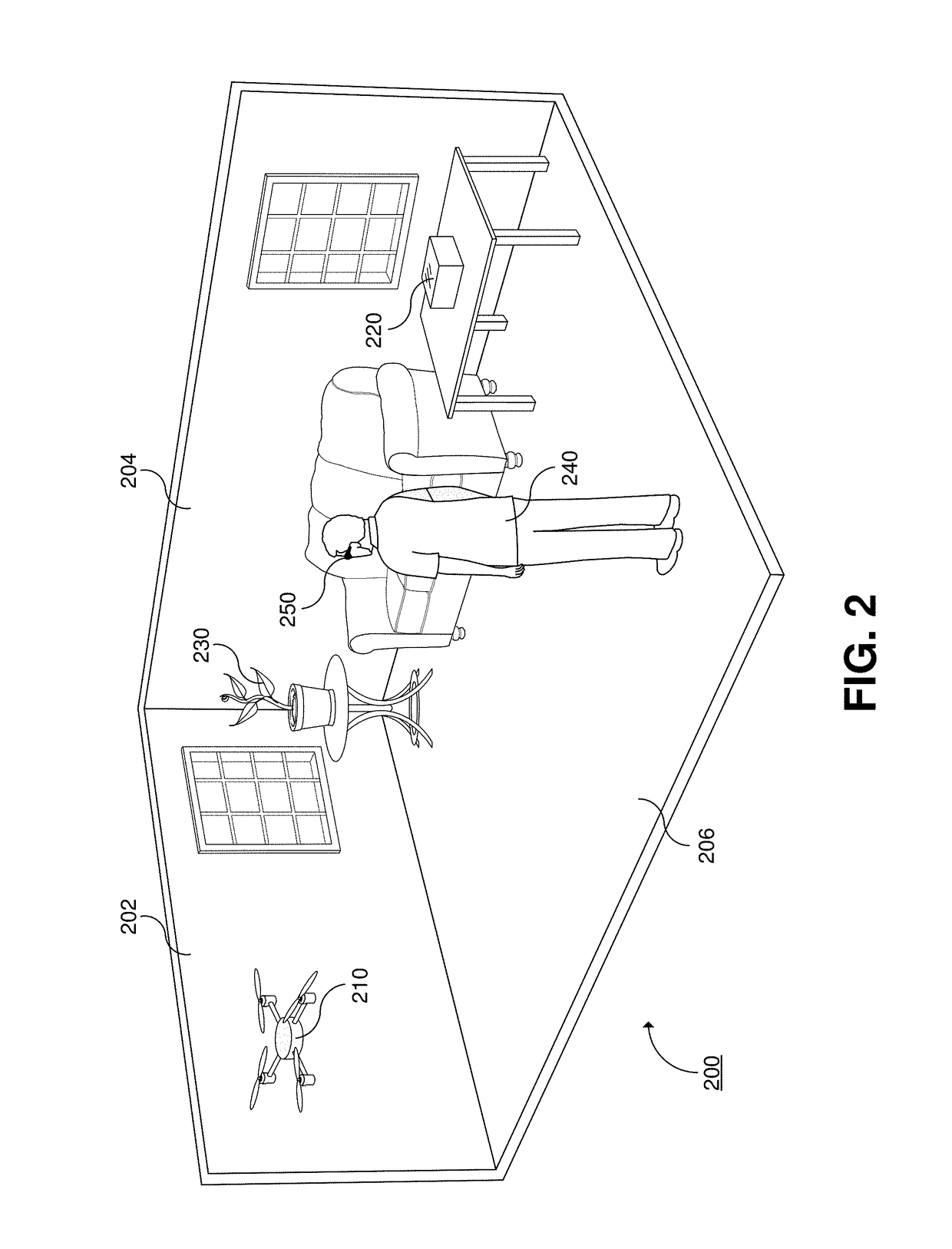

System and method for generating a mixed reality environment

Active | US20100103196A1 | Integrated precision | Improve latency | Cathode-ray tube indicators | Image data processing | Mixed reality | Object based

A system and method for generating a mixed-reality environment is provided. The system and method provide a user-worn sub-system communicatively connected to a synthetic object computer module. The user-worn sub-system may utilize a plurality of user-worn sensors to capture and process data regarding a user's pose and location. The synthetic object computer module may generate synthetic objects, and provide them to the user-worn sub-system, based on information that defines the user's real-world scene or environment and indicates the user's pose and location. The synthetic objects may then be rendered on a user-worn display, thereby inserting the synthetic objects into the user's field of view. Rendering the synthetic objects on the user-worn display creates the virtual effect for the user that the synthetic objects are present in the real world.

Owner: SRI INTERNATIONAL

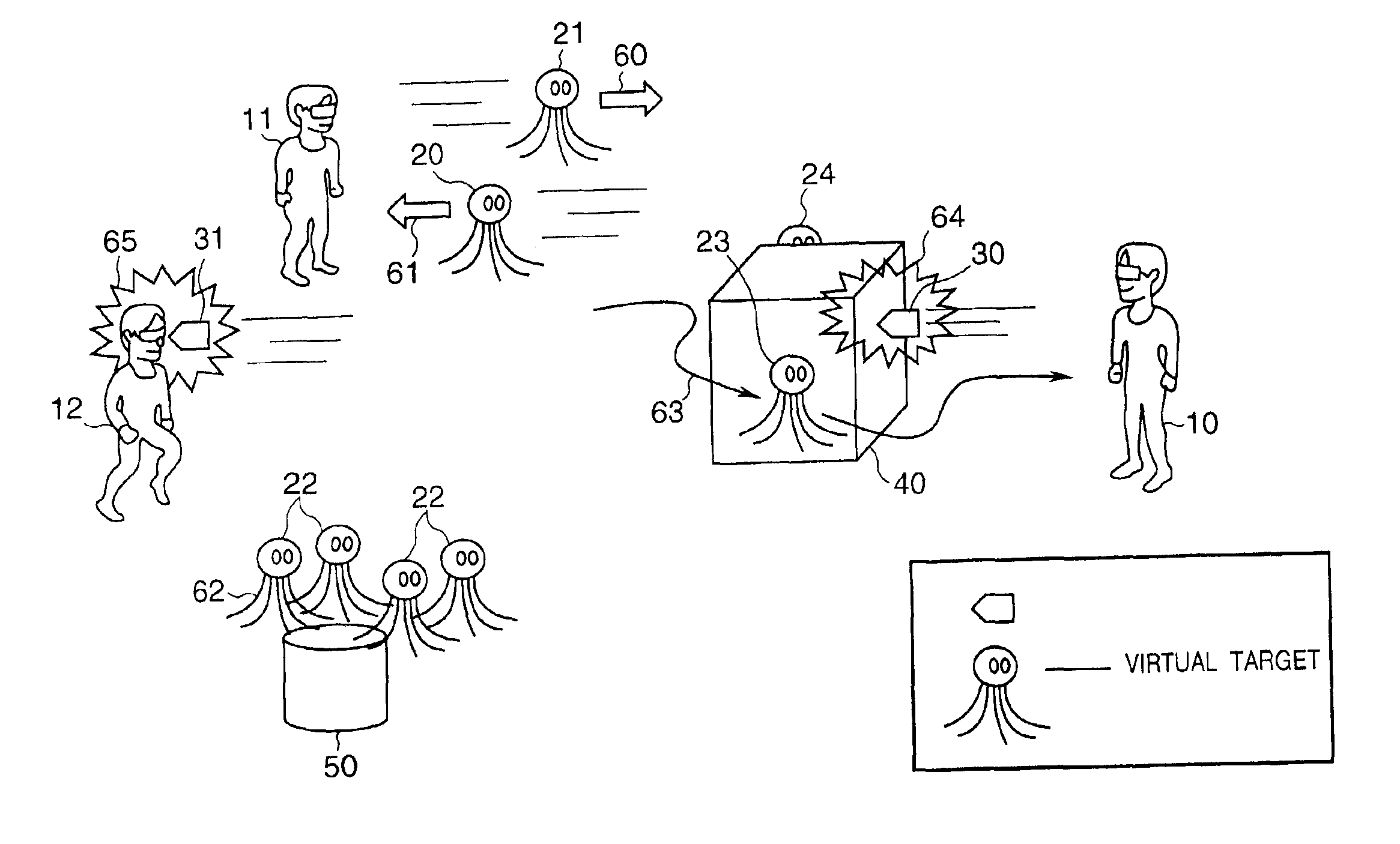

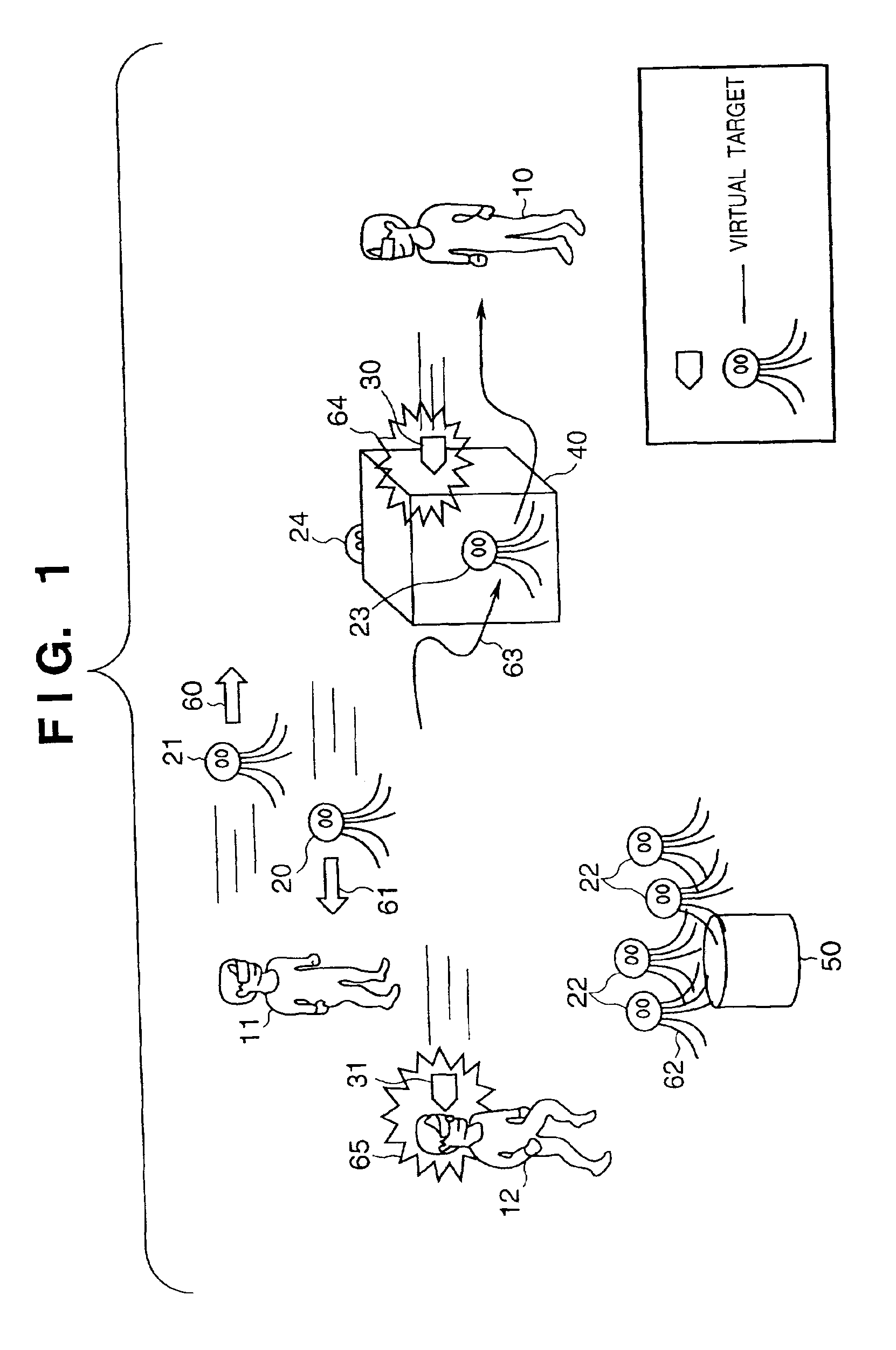

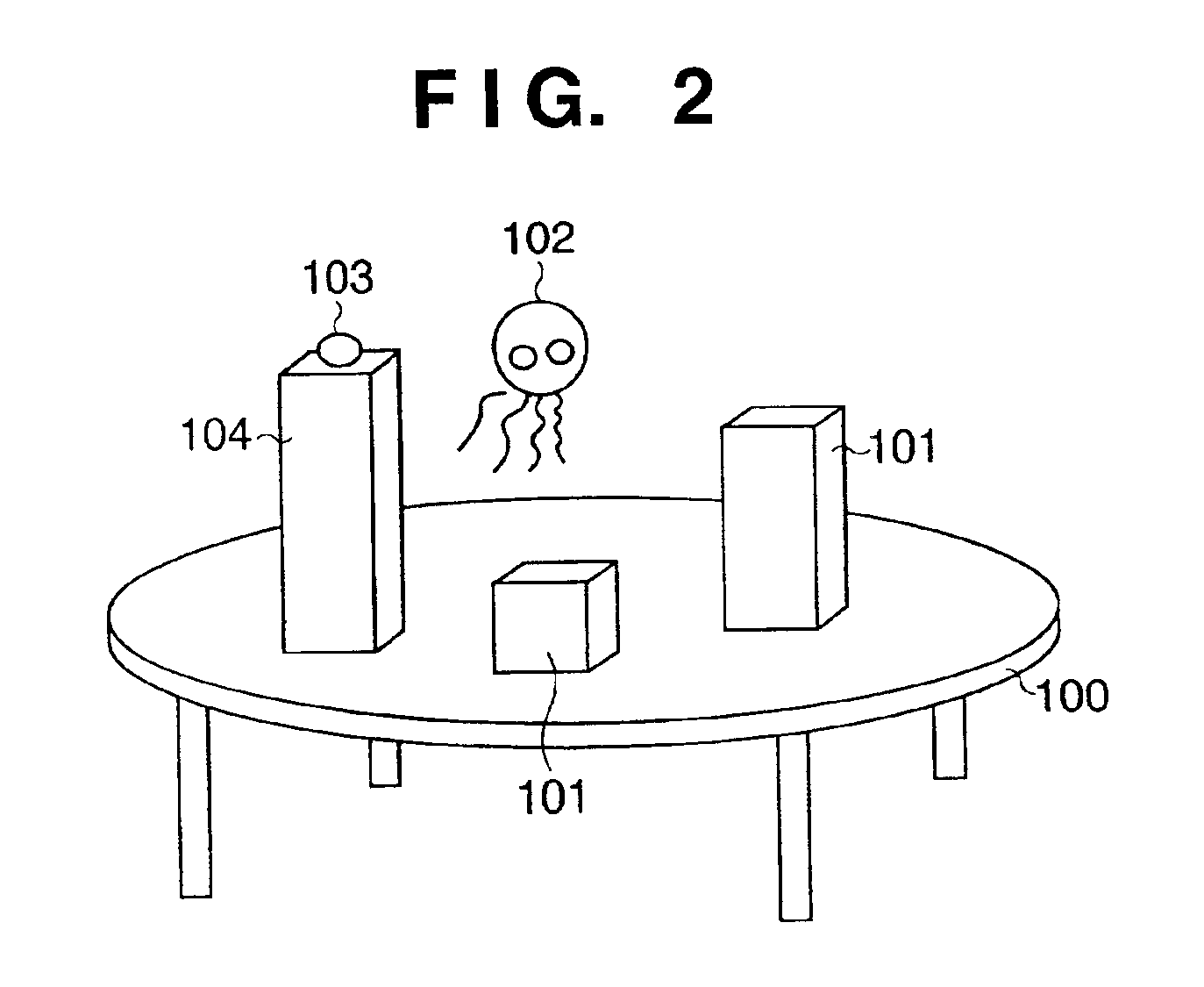

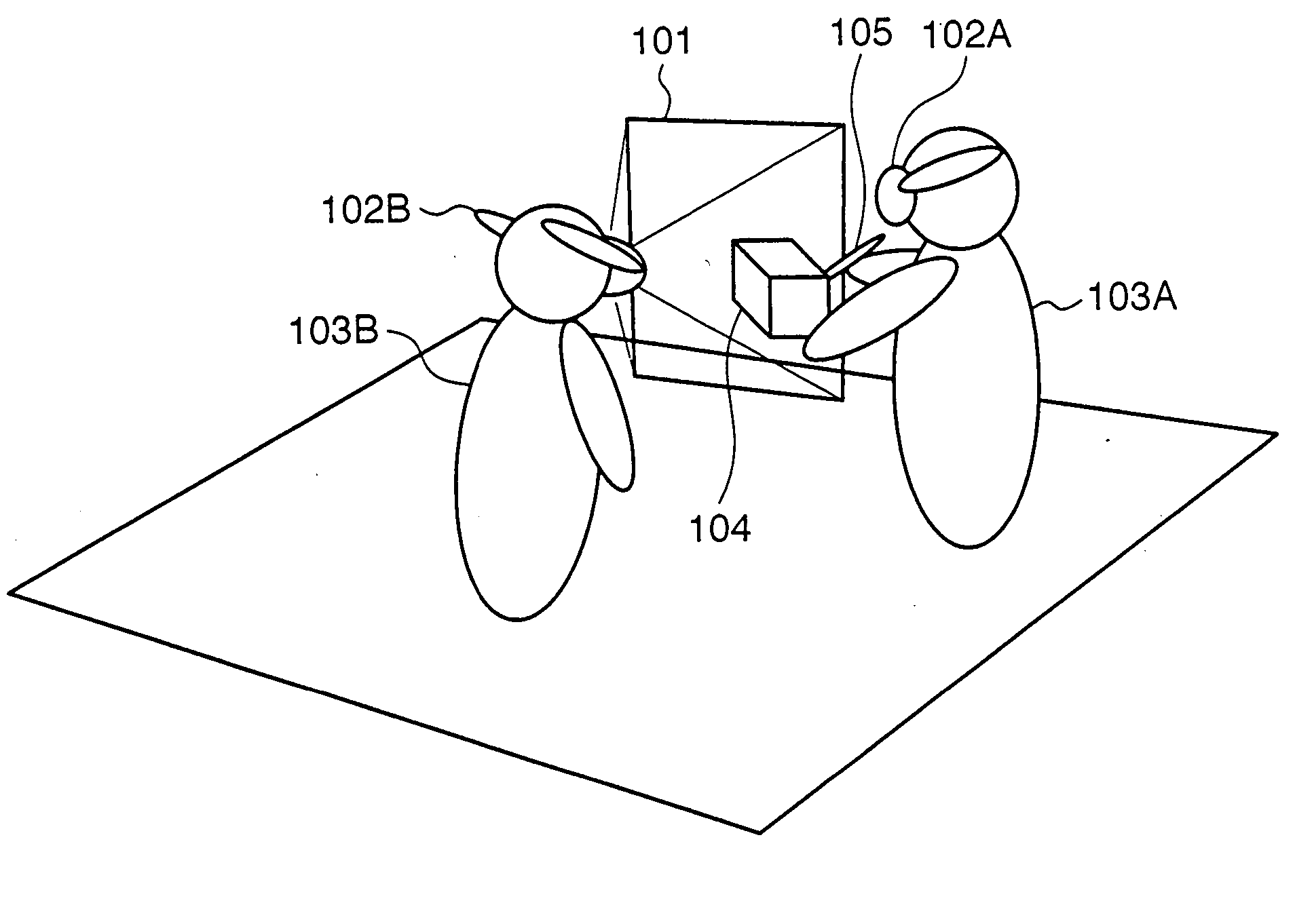

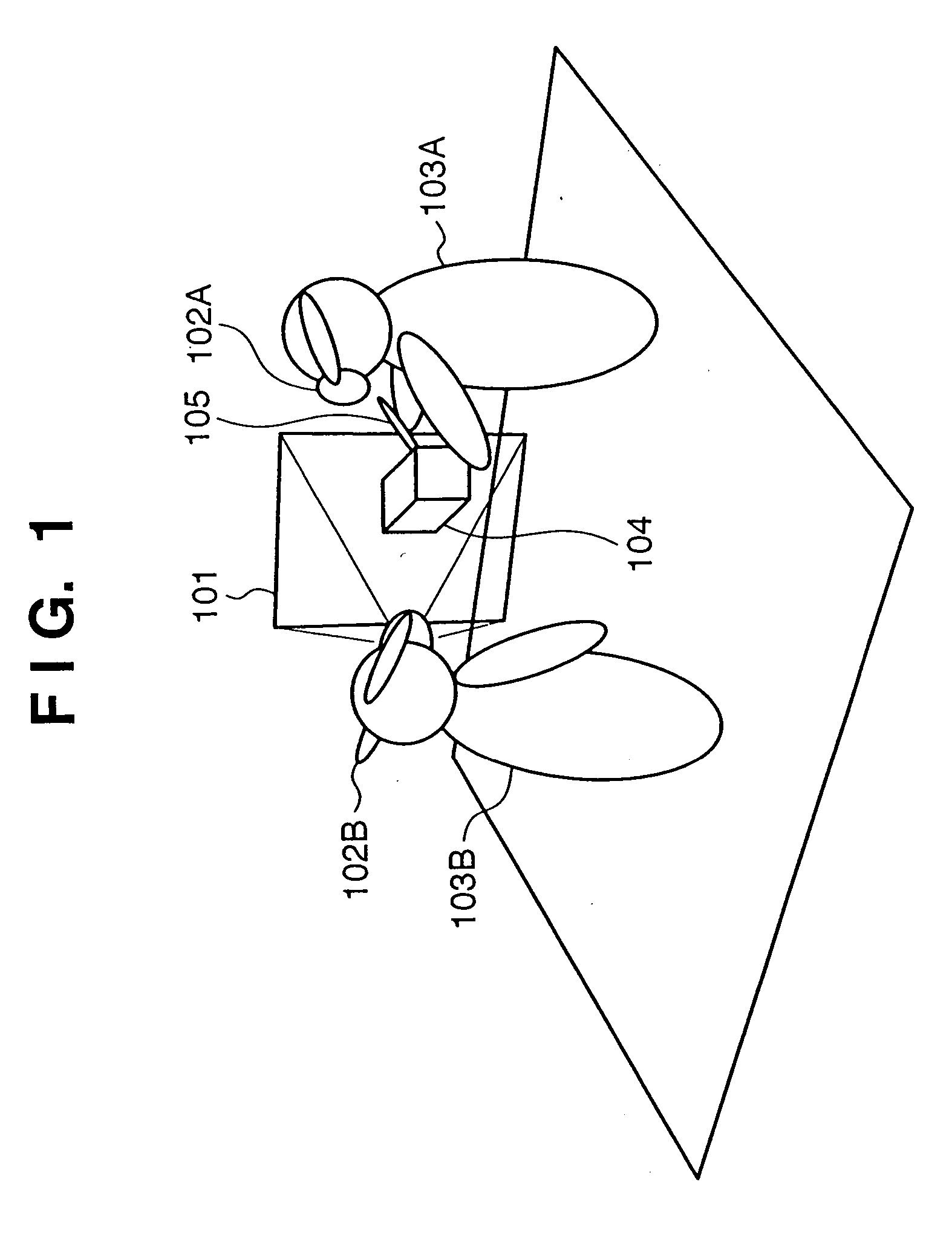

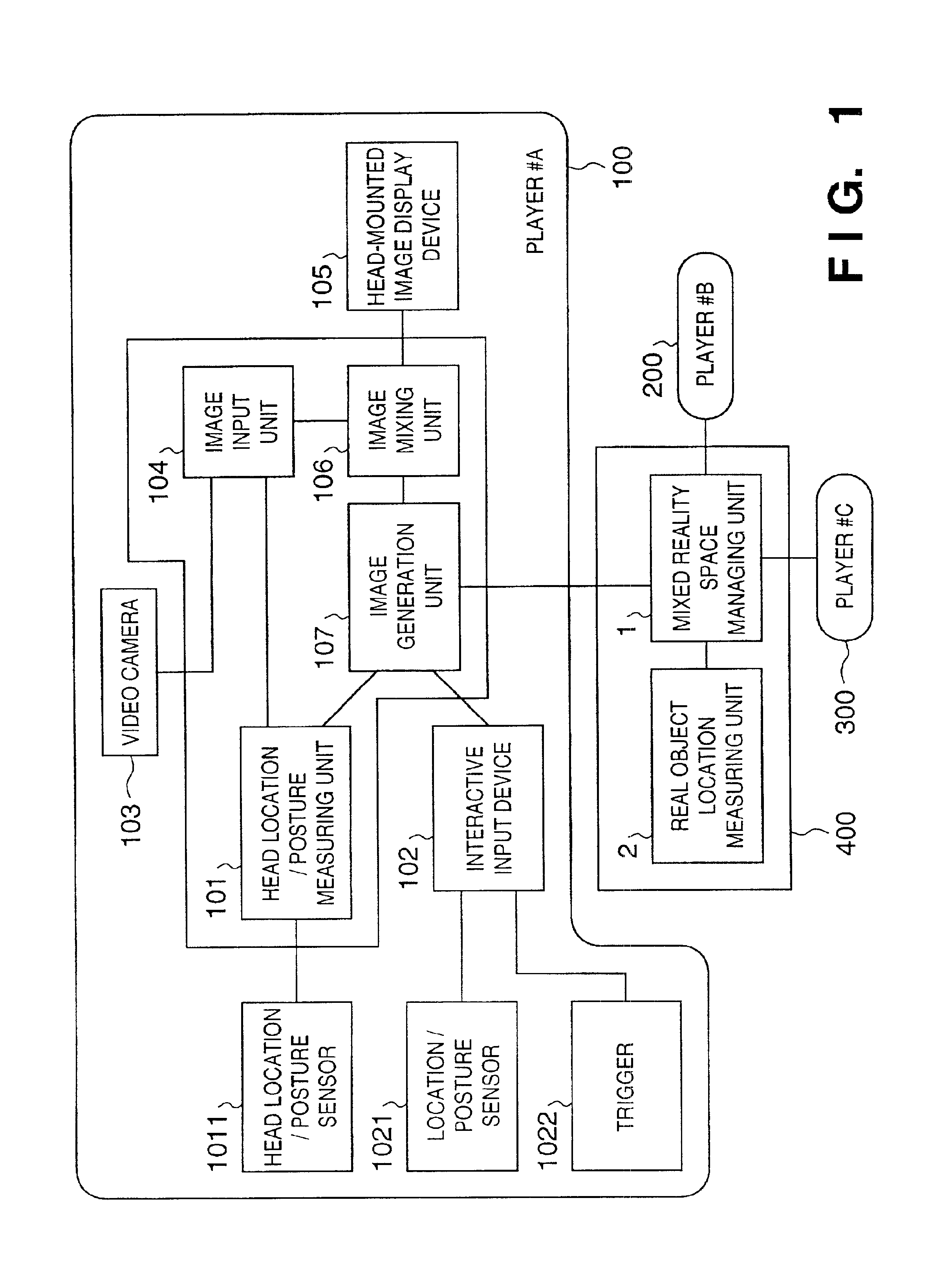

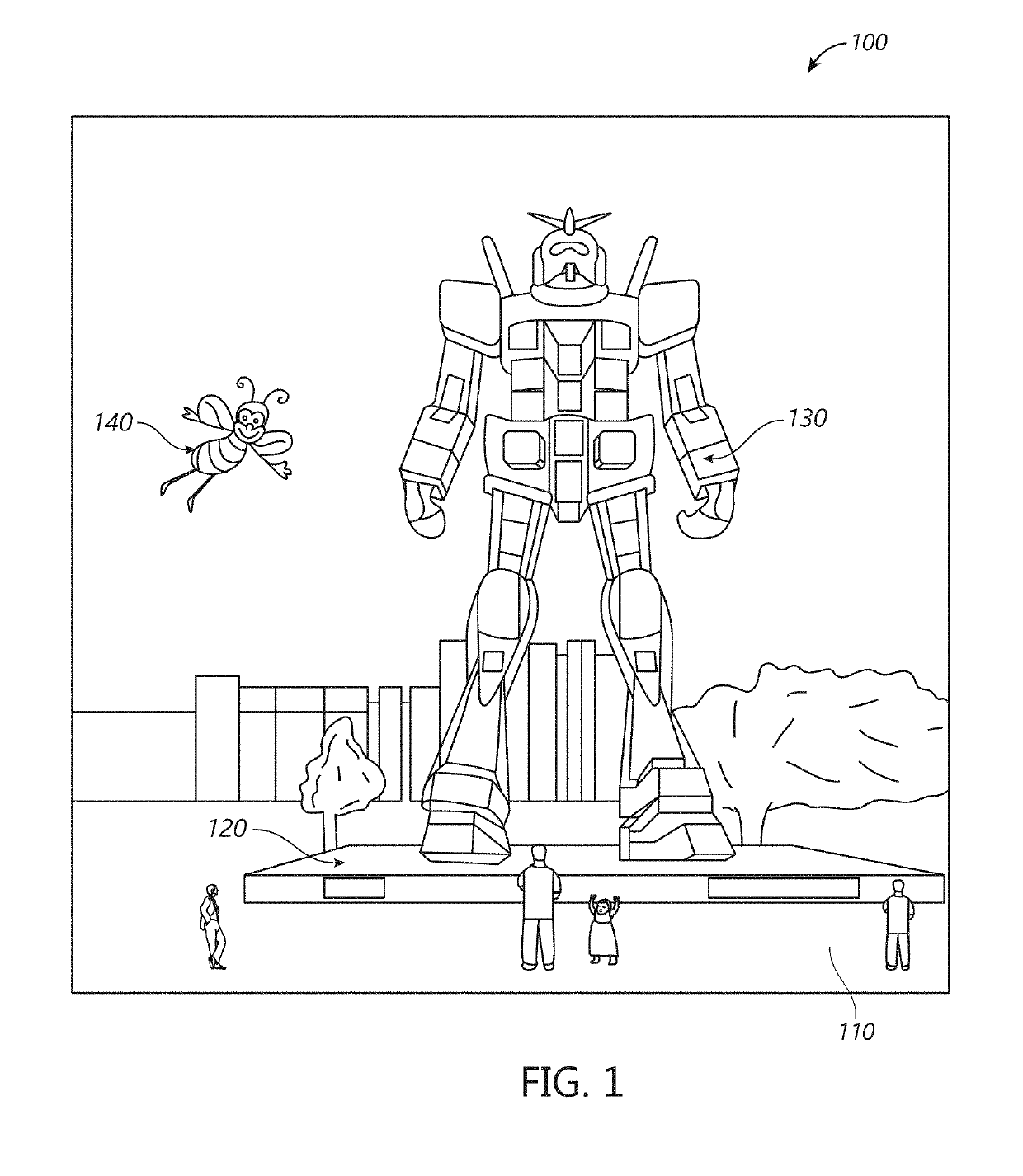

Game apparatus for mixed reality space, image processing method thereof, and program storage medium

Inactive | US6951515B2 | High shape accuracy | High precision | Video games | Special data processing applications | Imaging processing | Mixed reality

A game apparatus allows a virtual object to act in a mixed reality space as if it had its own will. A player can play a game with the virtual object. Rules for controlling the action patterns of the virtual object, based on the objective of the game and the relative positional relationship between the virtual object and the real object, are pre-stored. The next action pattern of the virtual object is determined based on an operator command, the stored rule(s), a simulation progress status, and geometric information of a real object(s).

Owner: CANON KK

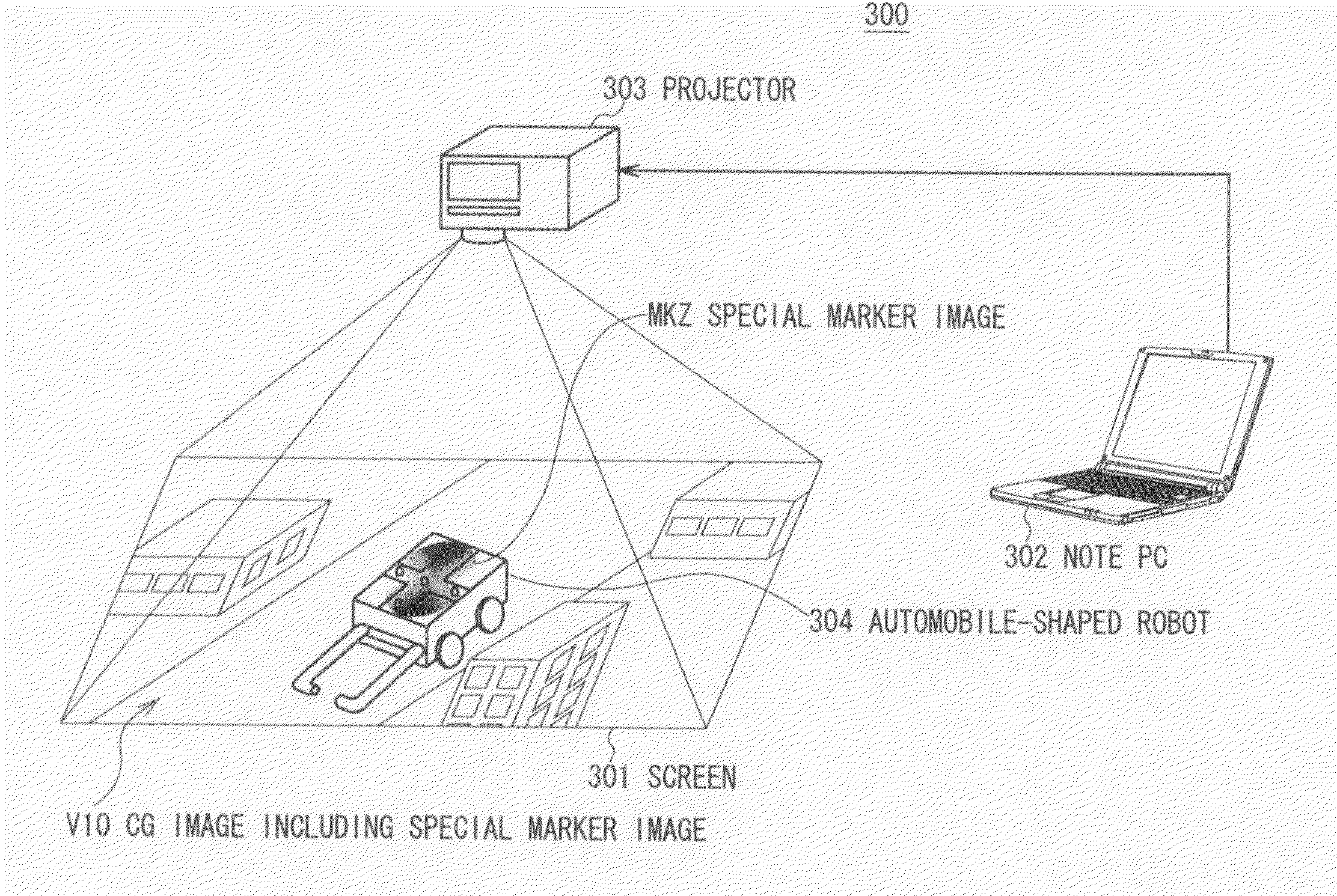

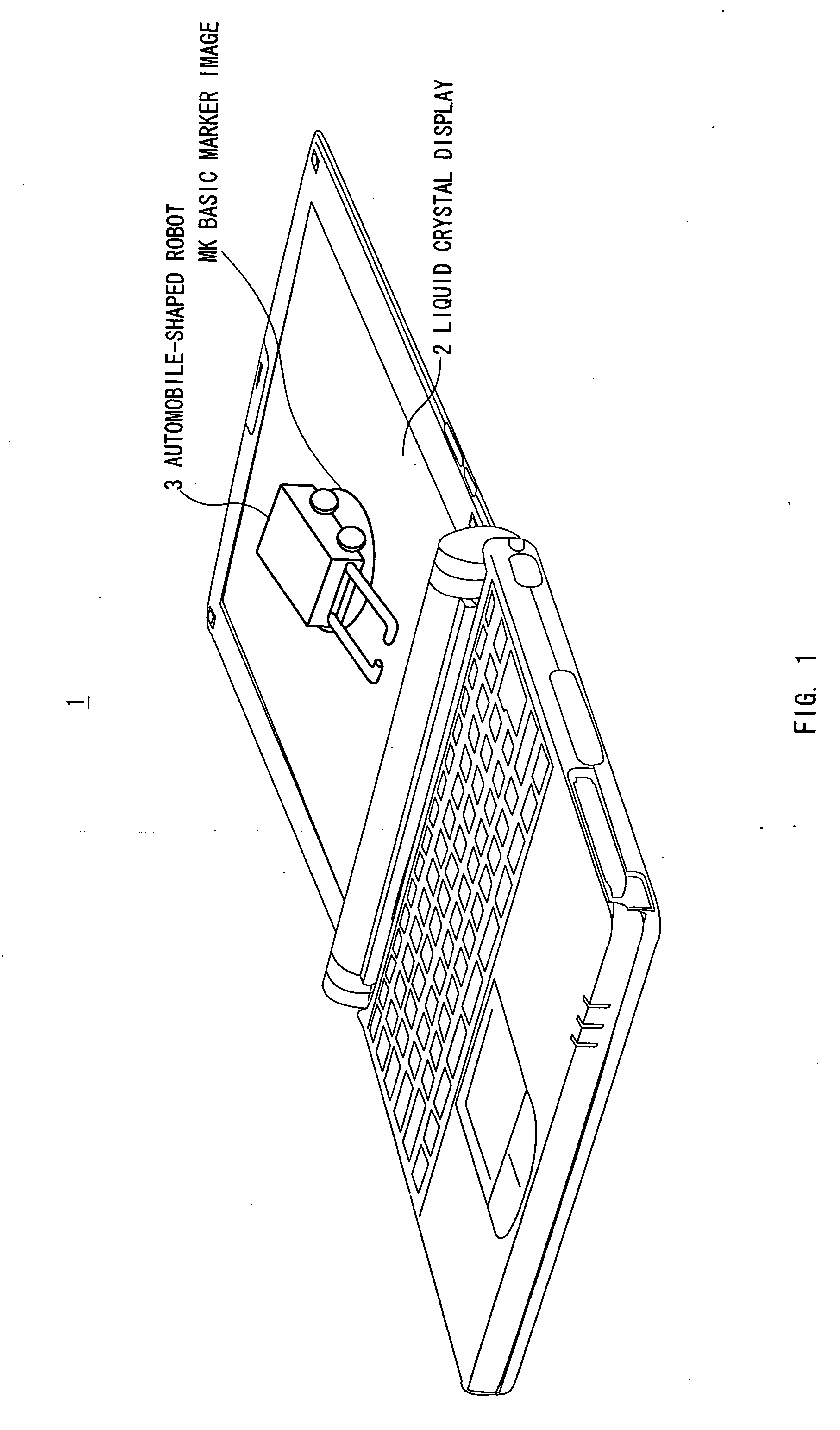

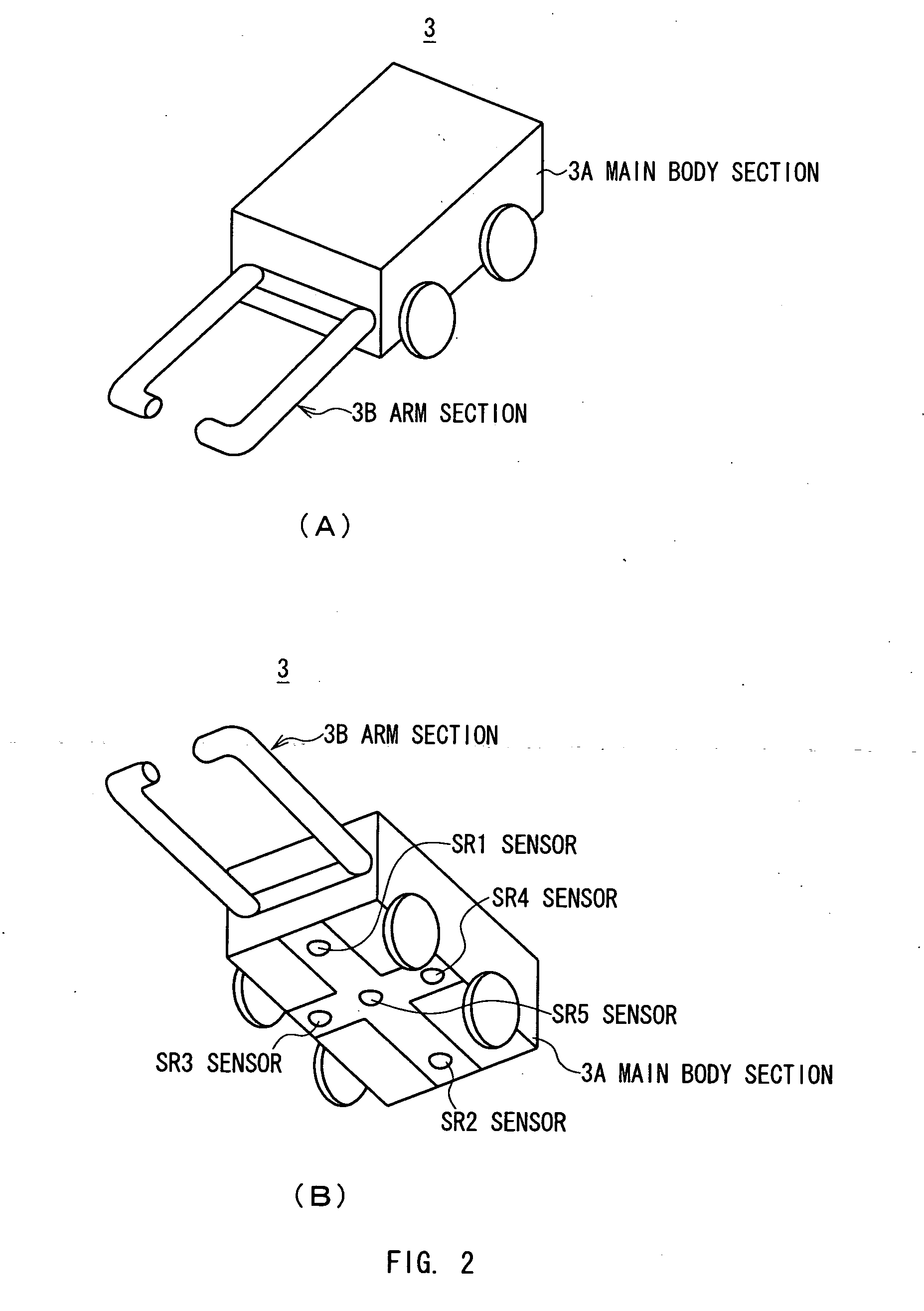

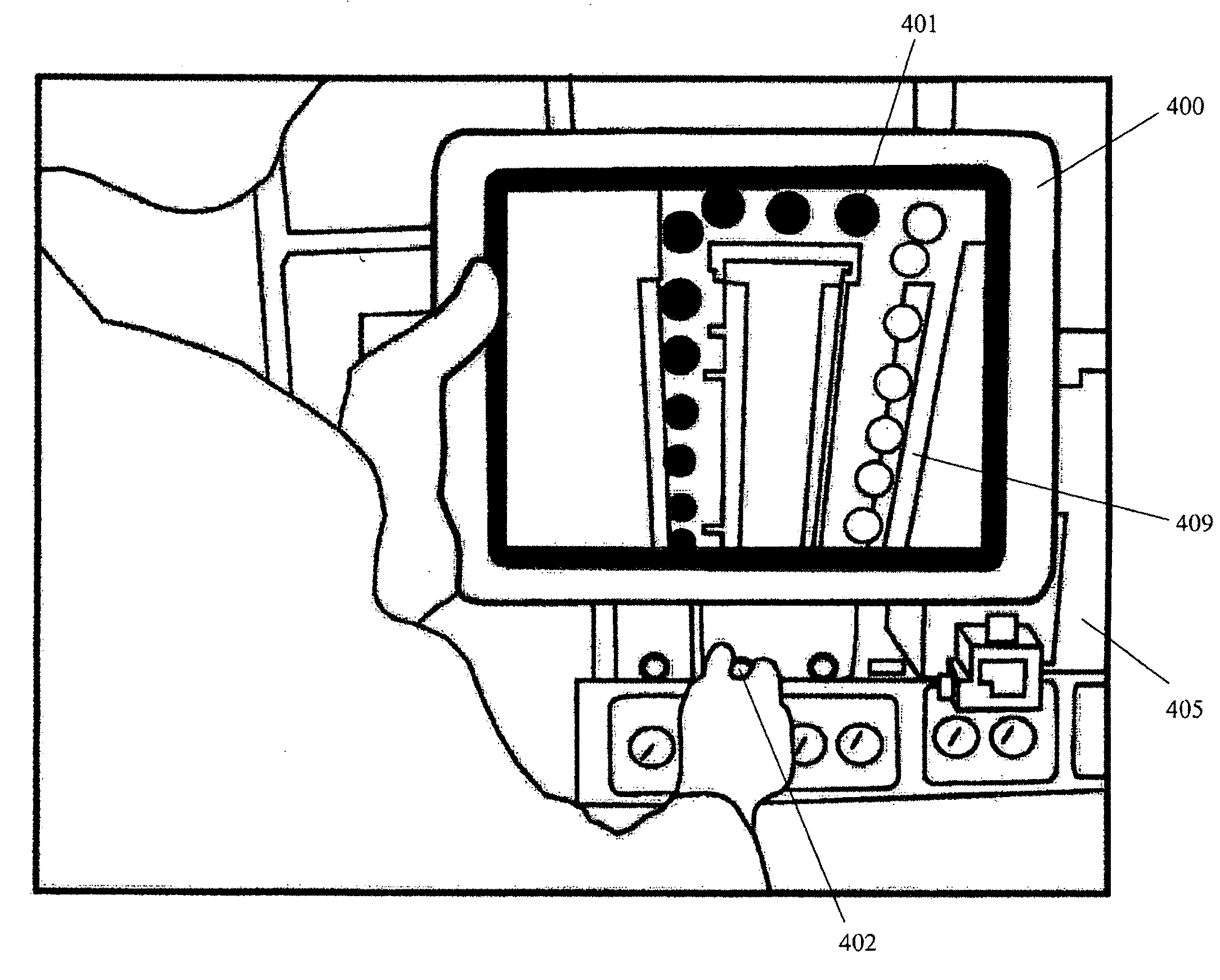

Position Tracking Device, Position Tracking Method, Position Tracking Program and Mixed Reality Providing System

Inactive | US20080267450A1 | Accurate detection | Image enhancement | Image analysis | Mixed reality | Liquid-crystal display

The present invention has a simpler structure than before and is designed to precisely detect the position of a real-environment target object on a screen. It generates a special marker image MKZ including a plurality of areas whose brightness levels gradually change in the X and Y directions, and displays MKZ on the screen of a liquid crystal display 2 such that it faces an automobile-shaped robot 3. Sensors SR1 to SR4 provided on the robot 3 detect the change of brightness level of position tracking areas PD1A, PD2A, PD3 and PD4 of MKZ in the X and Y directions. The position of the robot 3 on the screen is then detected by calculating, based on the change of brightness level, the change of relative coordinate value between MKZ and the robot 3.

Owner: UNIVERSITY OF ELECTRO-COMMUNICATIONS
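The position tracking abstract above converts a sensed change in brightness into a change in relative coordinates. The sketch below shows one way this could work along a single axis, assuming the marker's brightness varies linearly with position. The linear model, the function name, and the sample values are all assumptions for illustration; the abstract only says that brightness changes gradually.

```python
def displacement_from_brightness(before: float, after: float, gradient: float) -> float:
    """Convert a brightness change into a displacement along one axis.

    Assumes brightness = offset + gradient * position, where gradient is
    in brightness units per pixel (a hypothetical calibration value).
    """
    if gradient == 0:
        raise ValueError("gradient must be non-zero")
    return (after - before) / gradient


# One sensor reading per axis, before and after the robot moves.
dx = displacement_from_brightness(before=100.0, after=112.0, gradient=4.0)
dy = displacement_from_brightness(before=80.0, after=74.0, gradient=2.0)
```

With these sample values the robot is estimated to have moved 3 pixels in X and -3 pixels in Y relative to the marker image.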

Mobile platform

Inactive | US20050285878A1 | Provide experience | Increase the difference | Cathode-ray tube indicators | Substation equipment | Mixed reality | Image capture

A mobile platform for providing a mixed reality experience to a user via a mobile communications device of the user, the platform including an image capturing module to capture images of an item in a first scene, the item having at least one marker; a communications module to transmit the captured images to a server, and to receive images in a second scene from the server providing a mixed reality experience to the user. In addition, the second scene is generated by retrieving multimedia content associated with an identified marker, and superimposing the associated multimedia content over the first scene in a relative position to the identified marker.

Owner: NAT UNIV OF SINGAPORE

Generating and Displaying a Computer Generated Image on a Future Pose of a Real World Object

Methods and systems are provided for displaying a computer generated image corresponding to the pose of a real-world object in a mixed reality system. The system may include a head-mounted display (HMD) device, a magnetic tracking system and an optical system. Pose data detected by the two tracking systems can be synchronized by a timestamp that is embedded in an electromagnetic field transmitted by the magnetic tracking system. A processor may also be configured to calculate a future pose of the real-world object based on data from the two tracking systems and on a time offset reflecting the time needed by the HMD to calculate, buffer and generate display output, such that the relative location of the computer generated image (CGI) corresponds with the actual location of the real-world object relative to the real-world environment at the time the CGI actually appears in the display.

Owner: MICROSOFT TECH LICENSING LLC

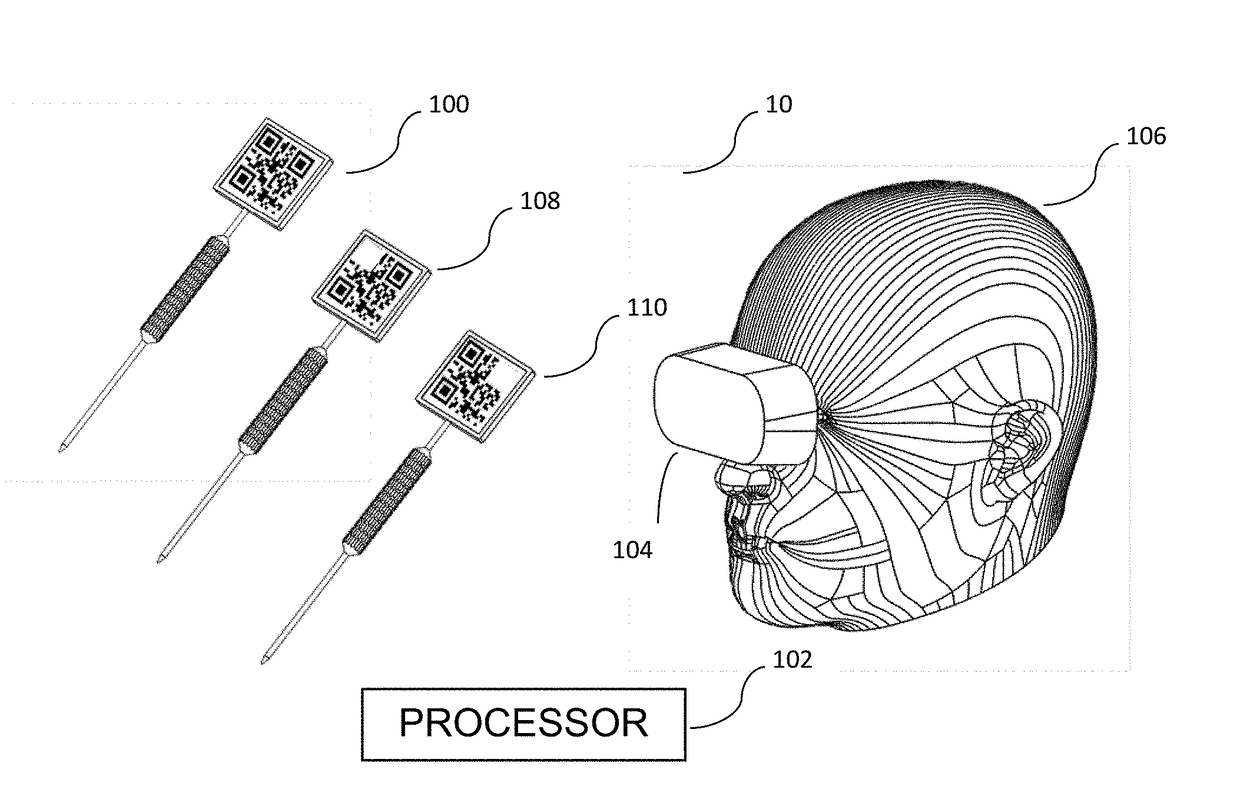

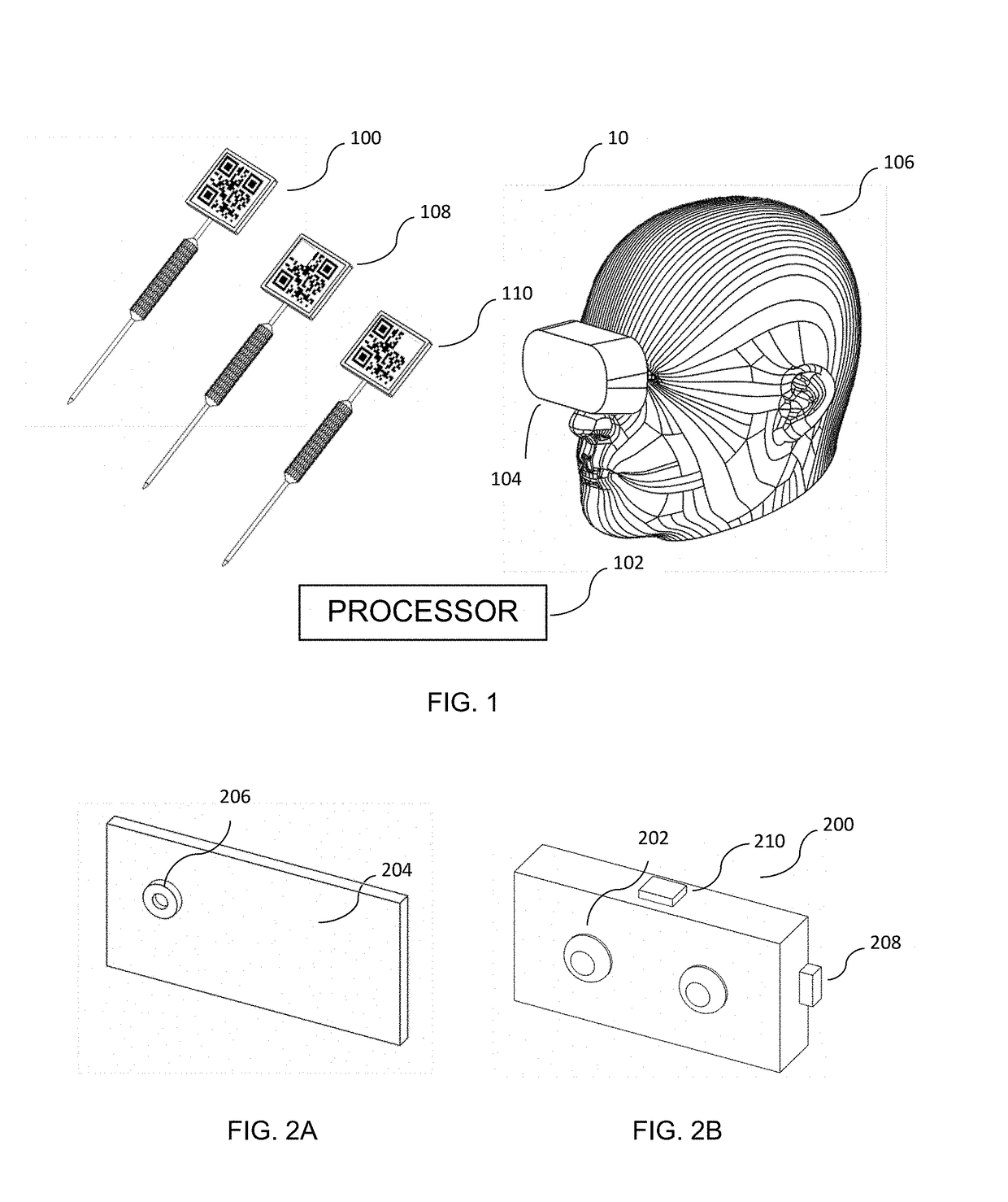

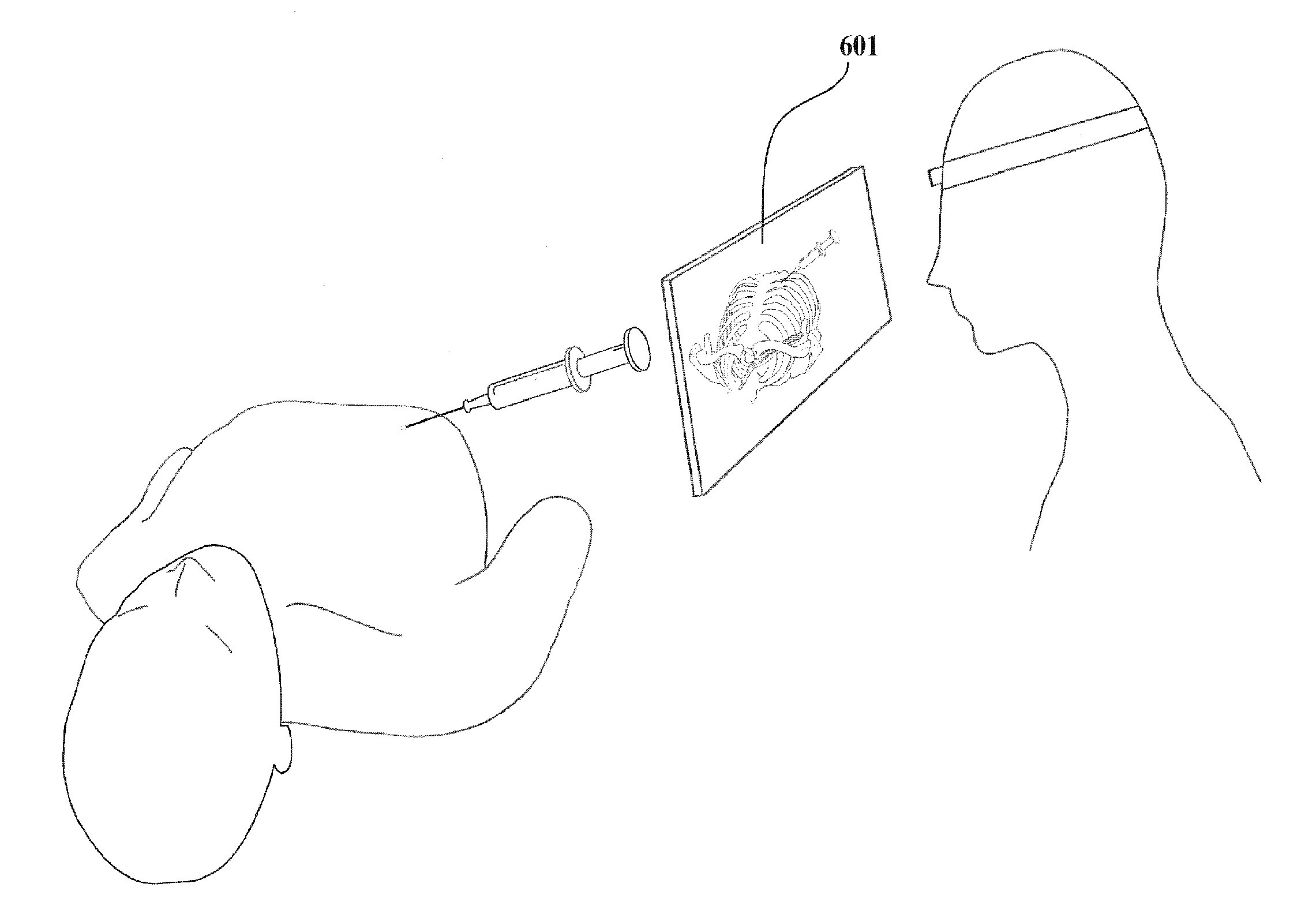

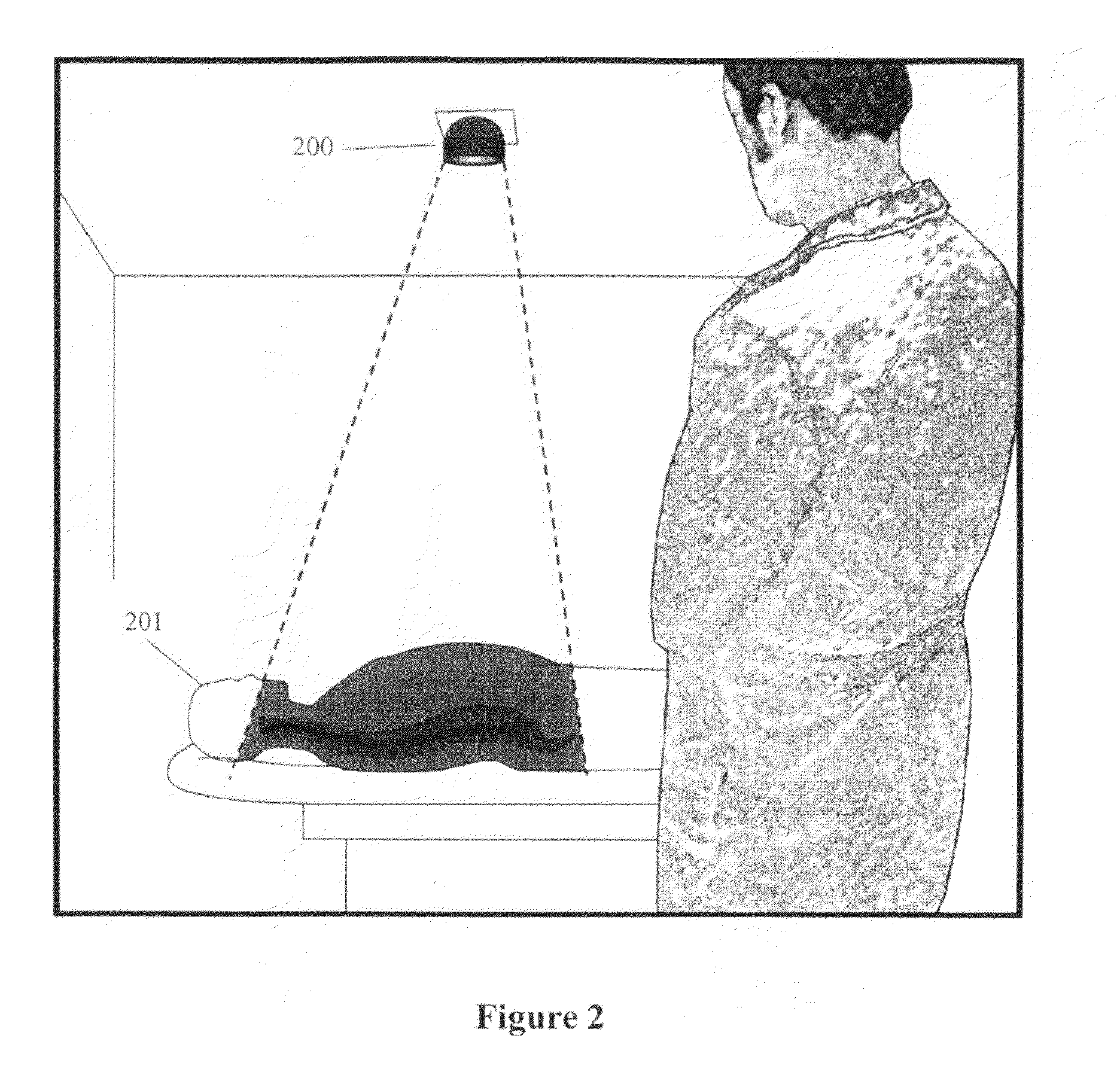

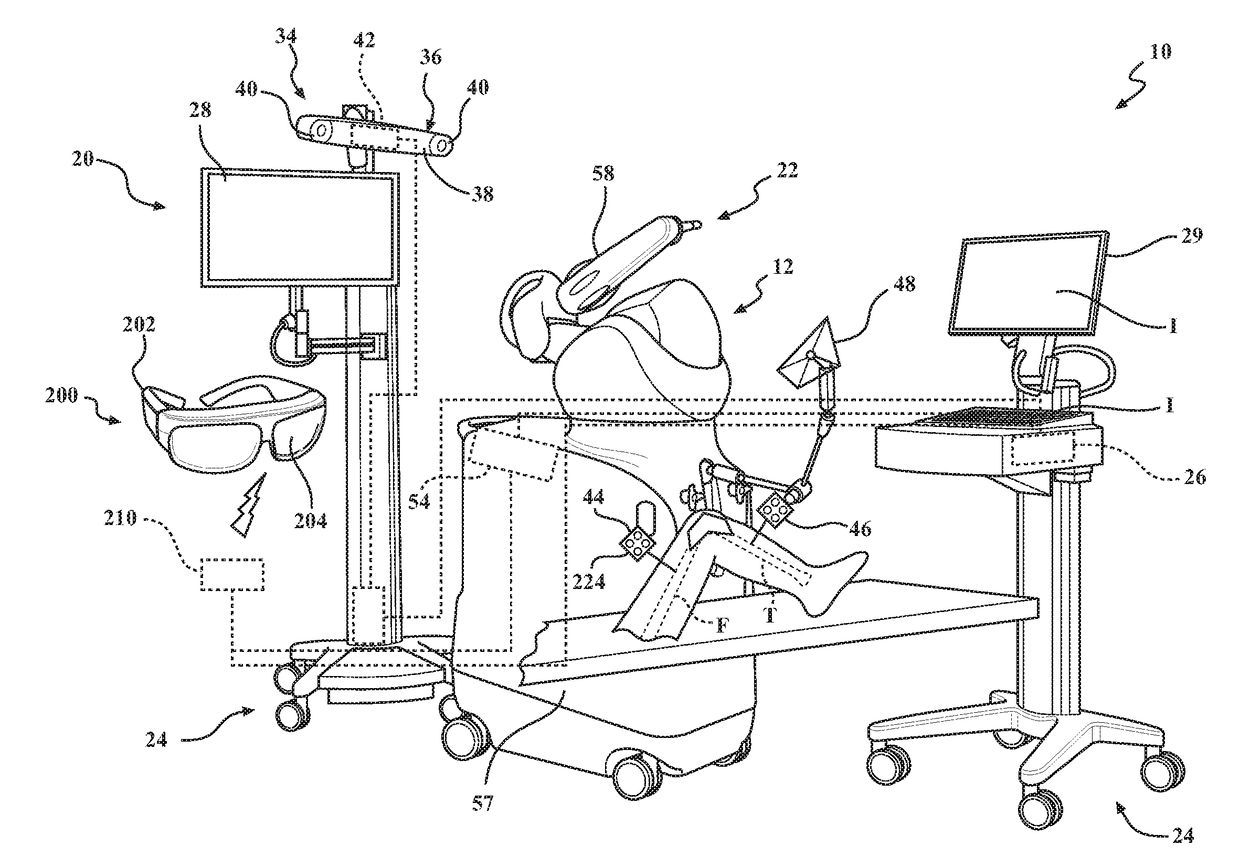

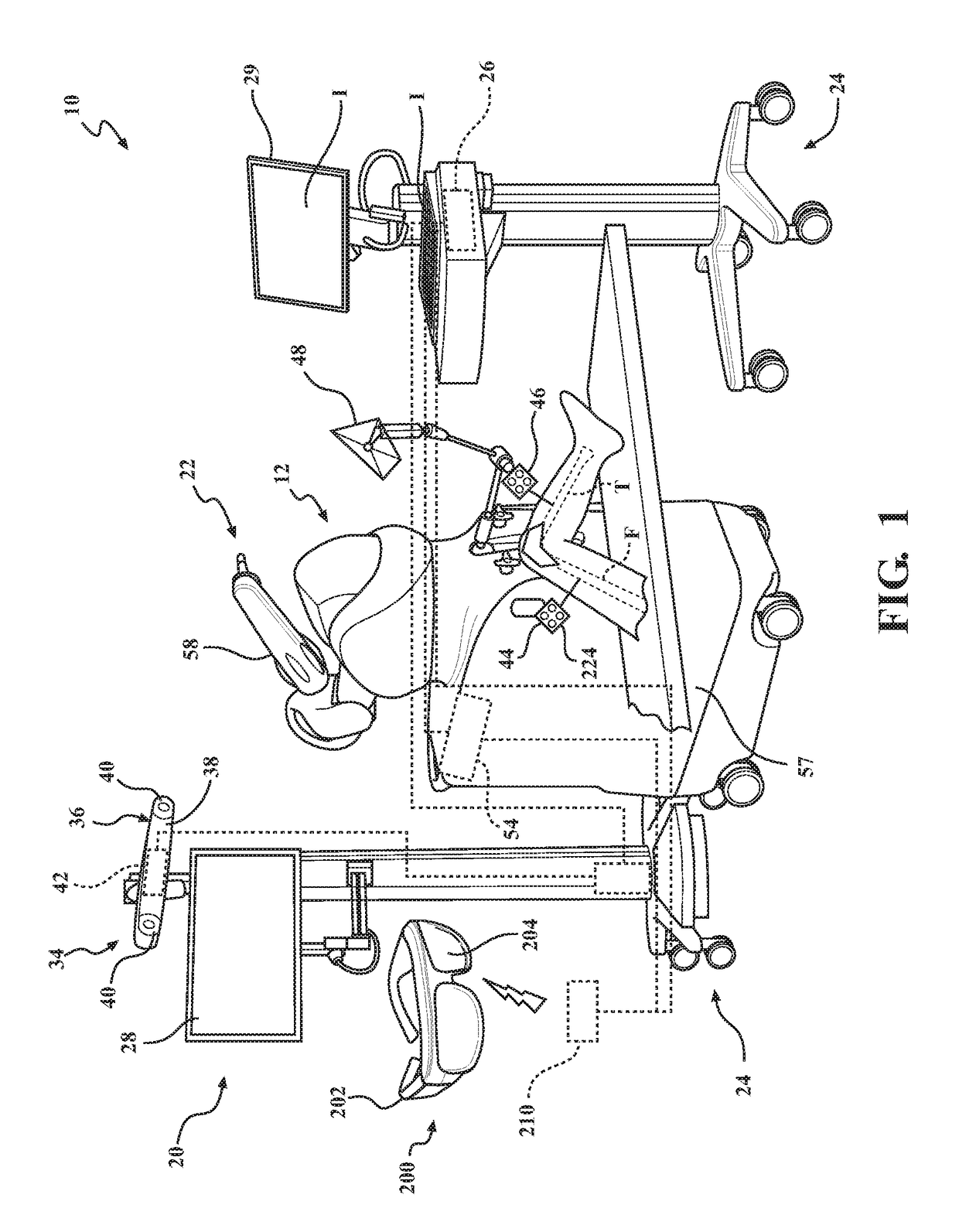

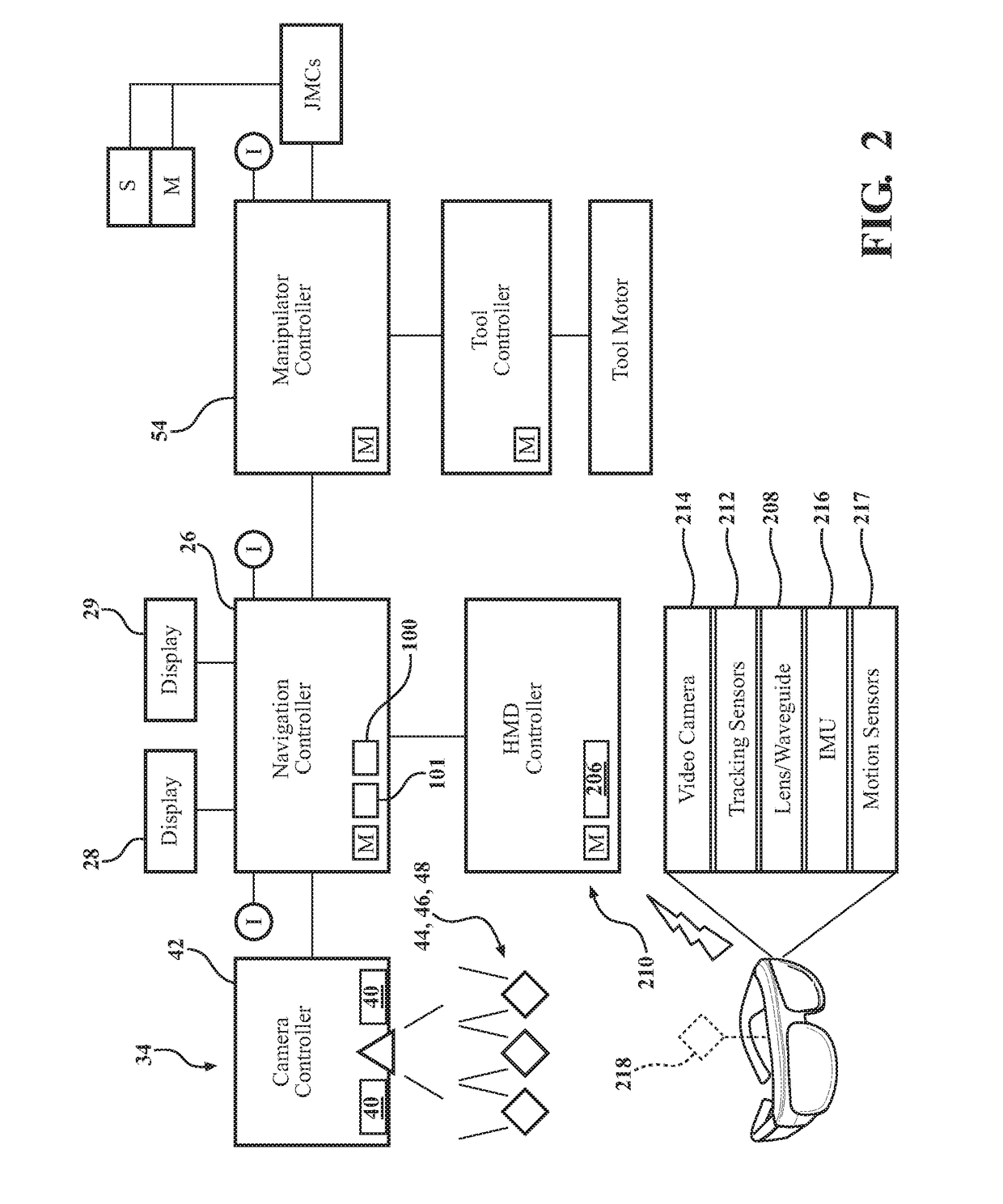

Systems and methods for sensory augmentation in medical procedures

InactiveUS20180049622A1Minimizes line of site obscuration issuePrecise positioningEndoscopesJoint implantsMixed realityGNSS augmentation

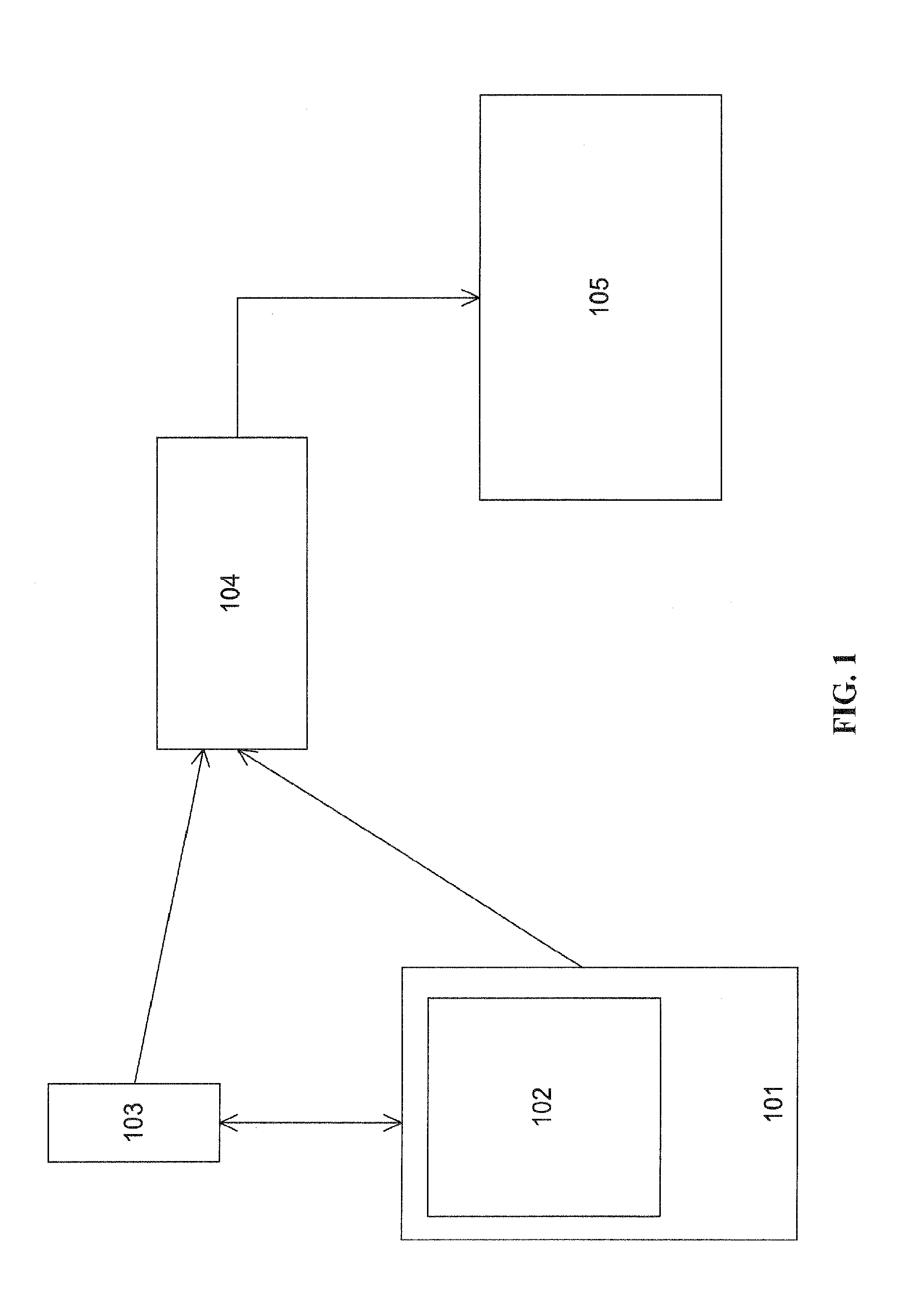

The present invention provides a mixed reality surgical navigation system (10) comprising: a display device (104) comprising a processor unit (102), a display generator (204), a sensor suite (210) having at least one camera (206); and at least one marker (600) fixedly attached to a surgical tool (608); wherein the system (10) maps three-dimensional surfaces of partially exposed surfaces of an anatomical object of interest (604); tracks a six-degree of freedom post of the surgical tool (608), and provides a mixed reality user interface comprising stereoscopic virtual images of desired features of the surgical tool (608) and desired features of the anatomical object (604) in the user's (106) field of view. The present invention also provides methods of using the system in various medical procedures.

Owner:INSIGHT MEDICAL SYST INC

Pleasant and Realistic Virtual/Augmented/Mixed Reality Experience

Inactive | US20160267720A1 | Modify their appearance | Reduced homography | Television system details | Color television details | Mixed reality | Viewpoints

The present invention discloses apparatus and methods for the viewing of a reality, and in particular a comfortable and pleasant viewing of the reality by a user. The reality is viewed by the user with a viewing mechanism that may involve optics. The reality viewed may be a virtual reality, an augmented reality or a mixed reality. A projection mechanism renders the scene for the user and modifies one or more virtual objects present in the scene. The modification performed is based on one or more properties of an inside-out camera. The modification and the associated property / properties of the inside-out camera are suitably chosen to fit an application need such as to provide a pleasant and comfortable viewing experience for the user. The inside-out camera may be attached to the viewing mechanism, which may be worn by the user. The reality viewed may be from the viewpoint of the user, or from the viewpoint of another device detached from the user.

Owner: ELECTRONICS SCRIPTING PRODS

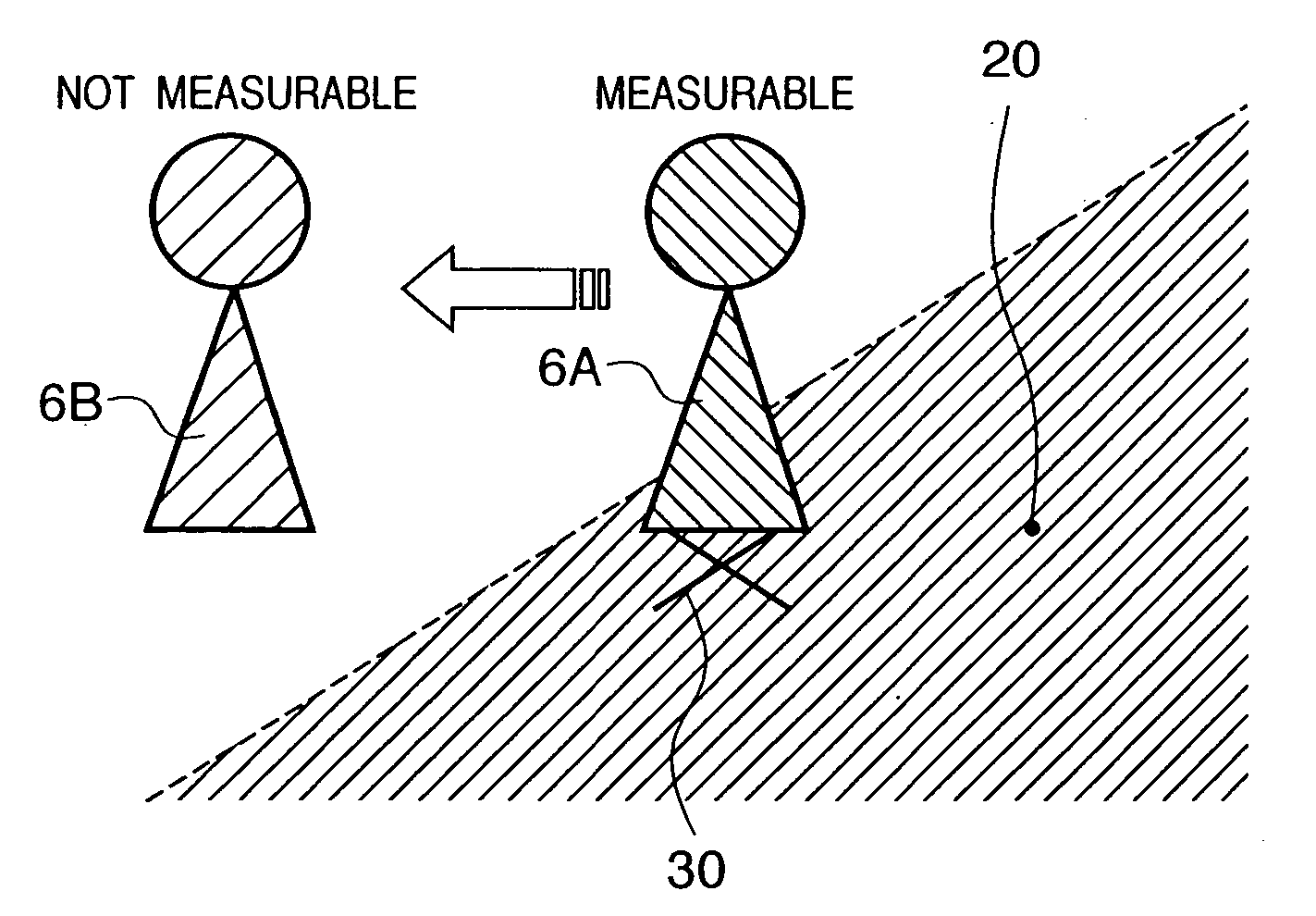

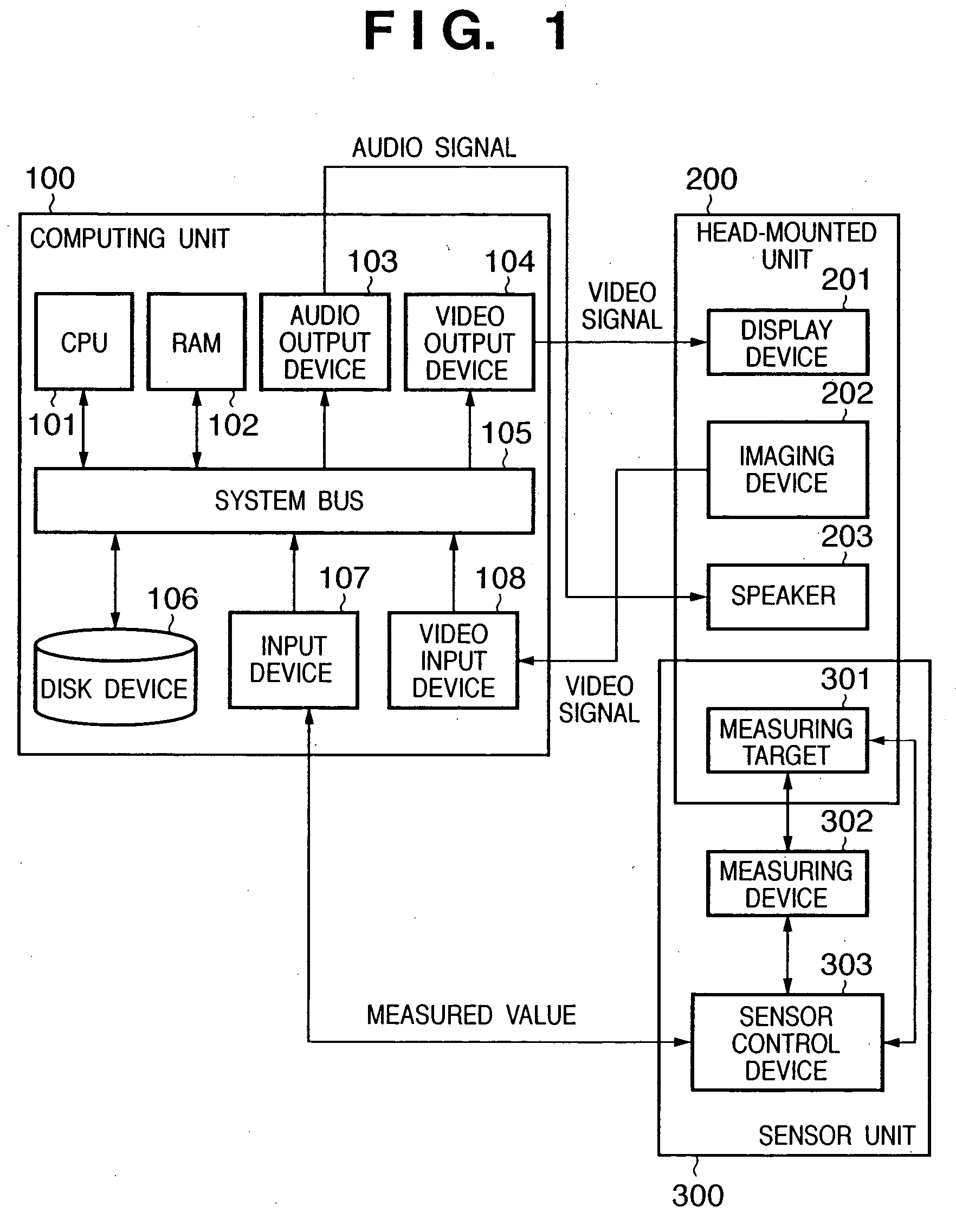

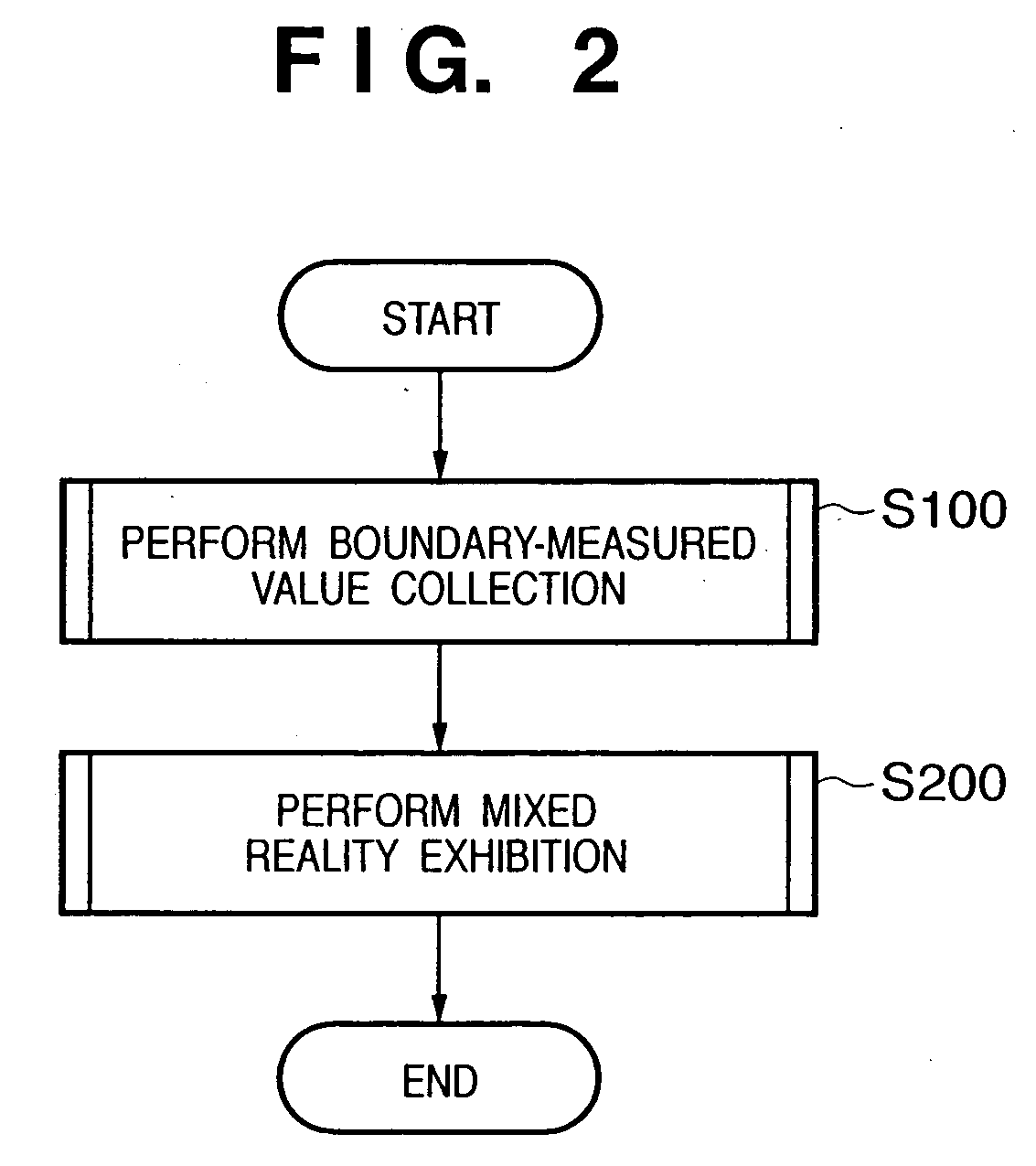

Mixed reality exhibiting method and apparatus

Active | US20050123171A1 | Input/output for user-computer interaction | Image analysis | Mixed reality | Viewpoints

In exhibition of a synthesized image which is obtained by synthesizing a virtual world image with a real world image observed from a viewpoint position and direction of a user, data representing a position and orientation of a user is acquired, a virtual image is generated based on the data representing the position and orientation of the user, and the virtual image is synthesized with a real image corresponding to the position and orientation of the user. Based on a measurable area of the position and orientation of the user, area data is set. Based on the data representing the position of the user and the area data, notification related to the measurable area is controlled.

Owner: CANON KK

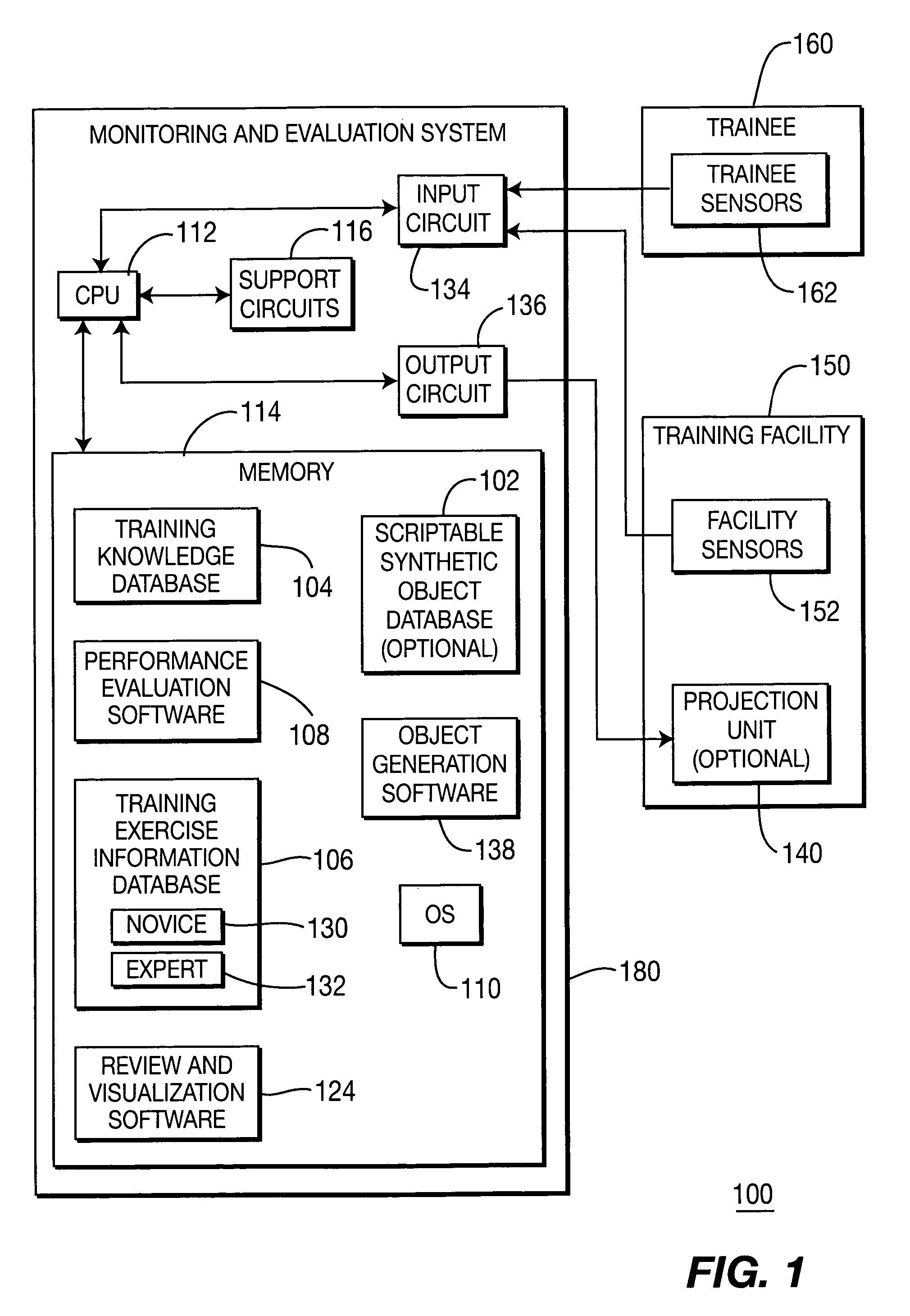

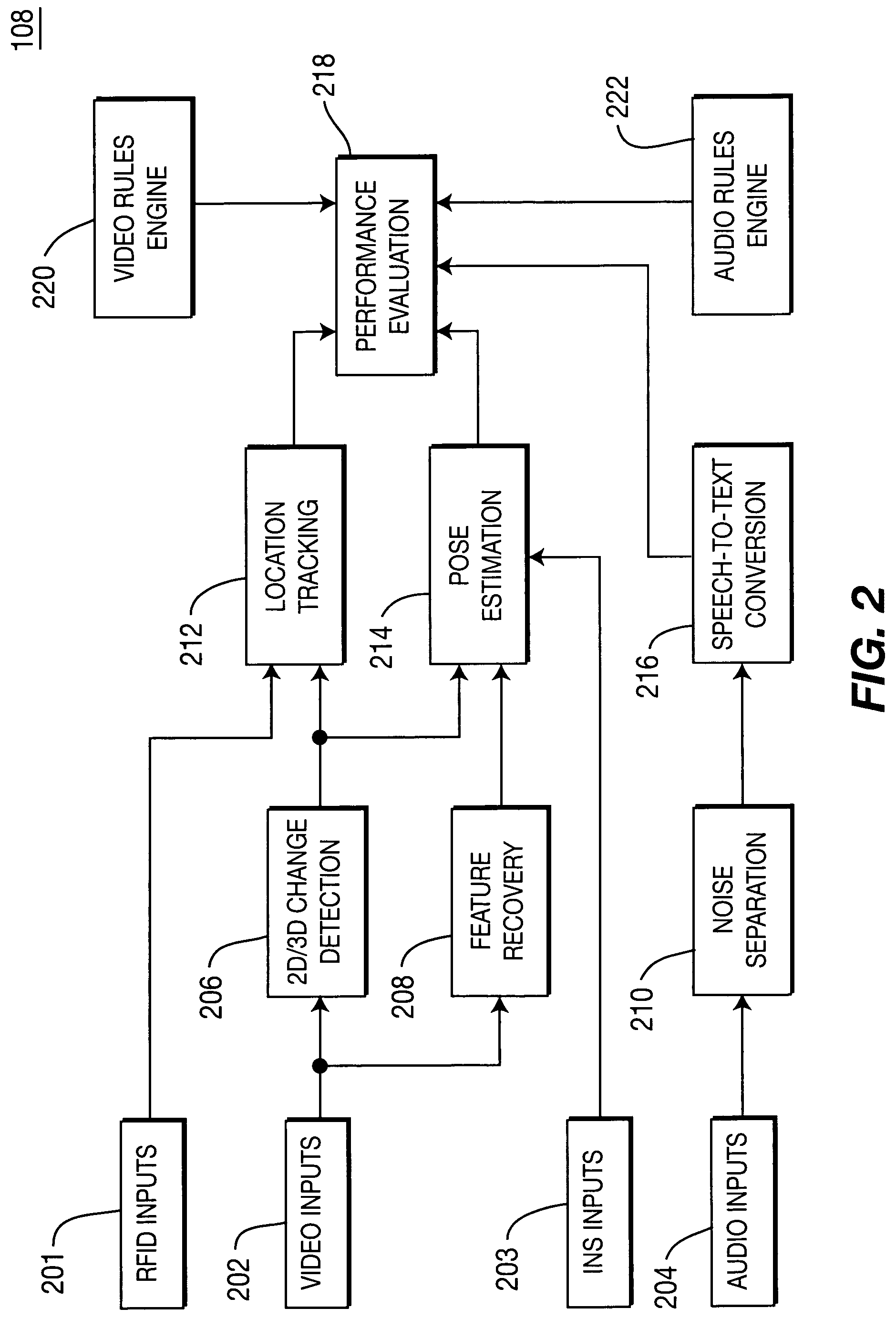

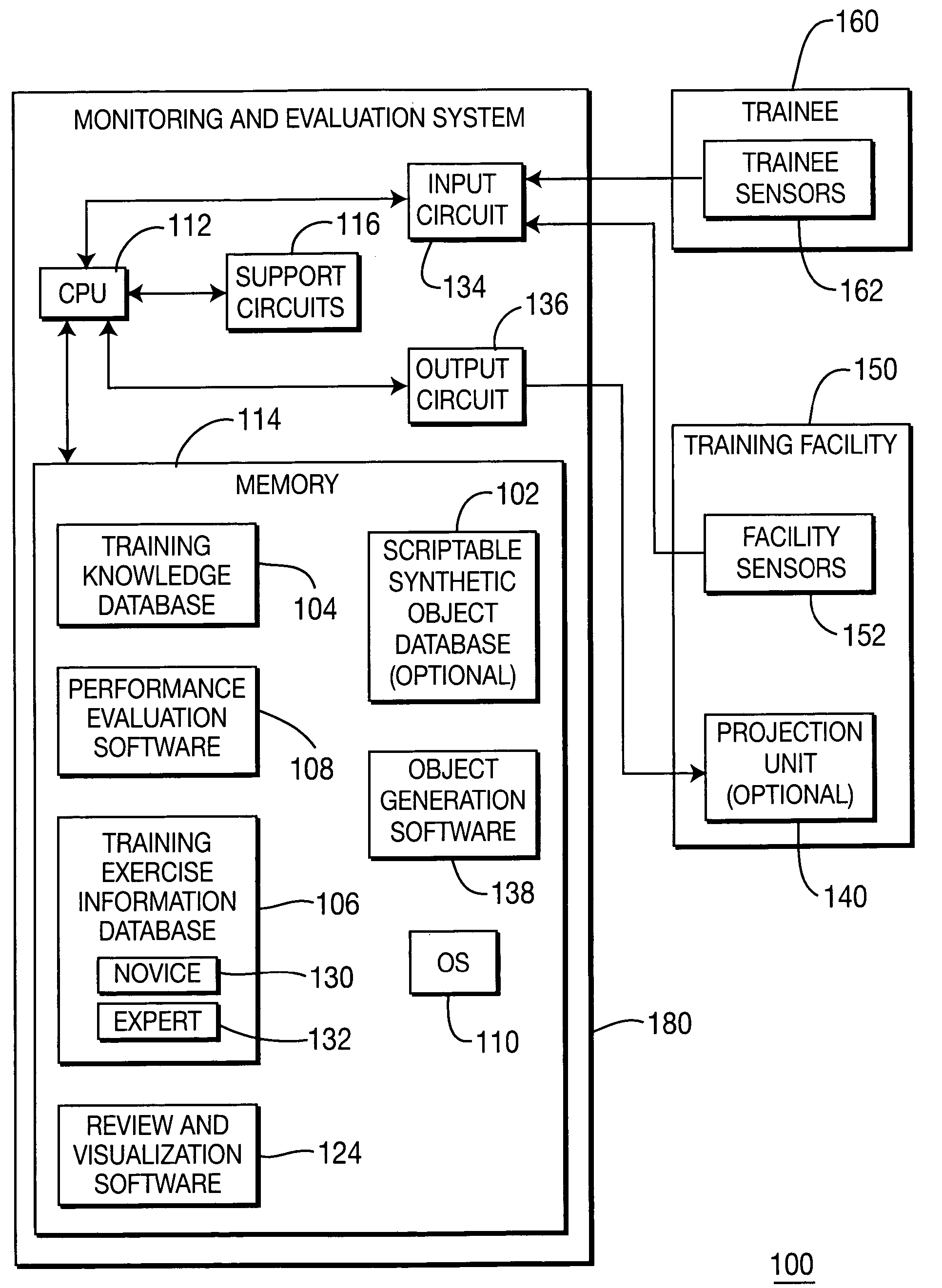

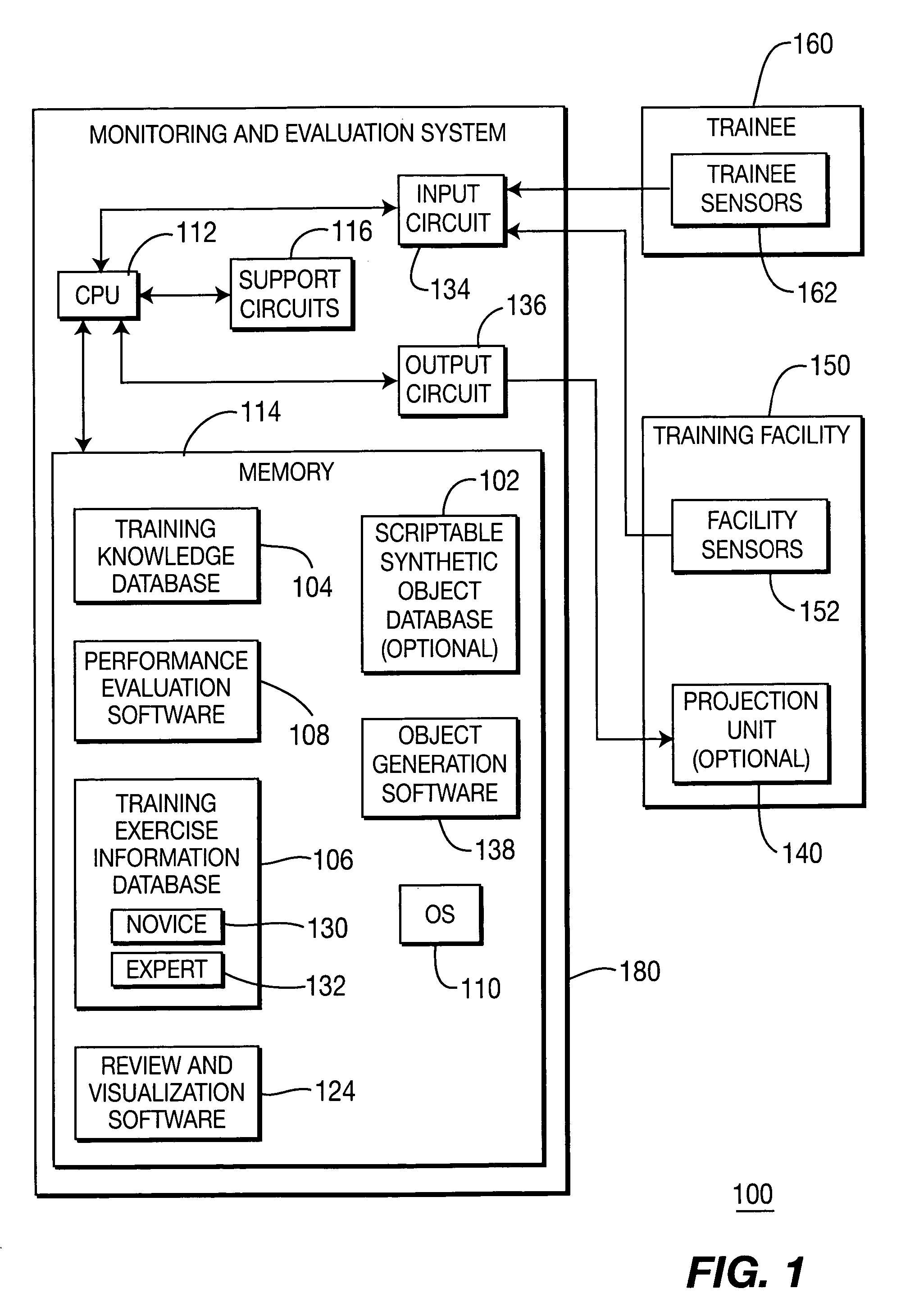

Automated trainee monitoring and performance evaluation system

Active | US7949295B2 | Cosmonautic condition simulations | Drawing from basic elements | Mixed reality | Simulation

Owner: SRI INTERNATIONAL

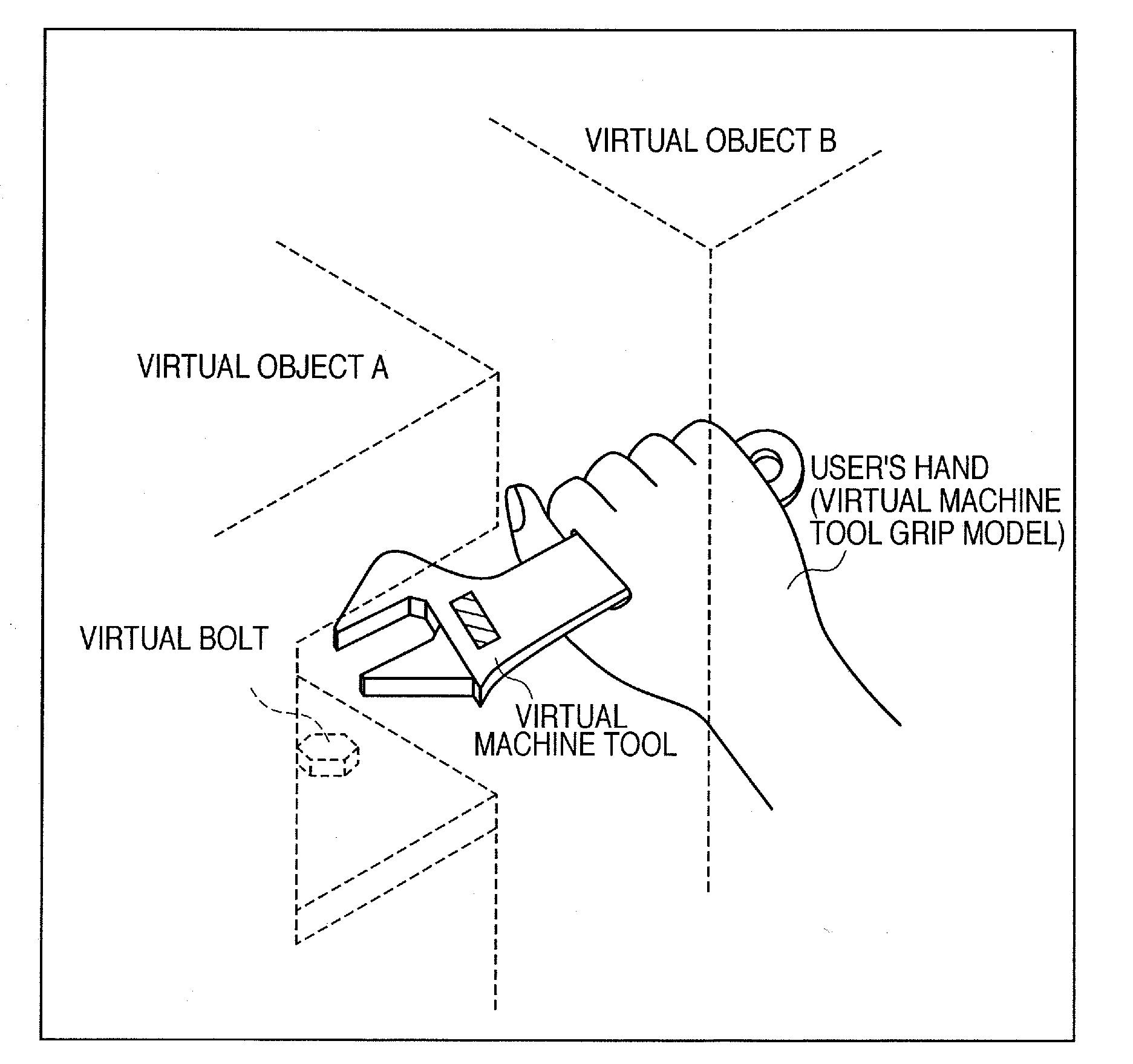

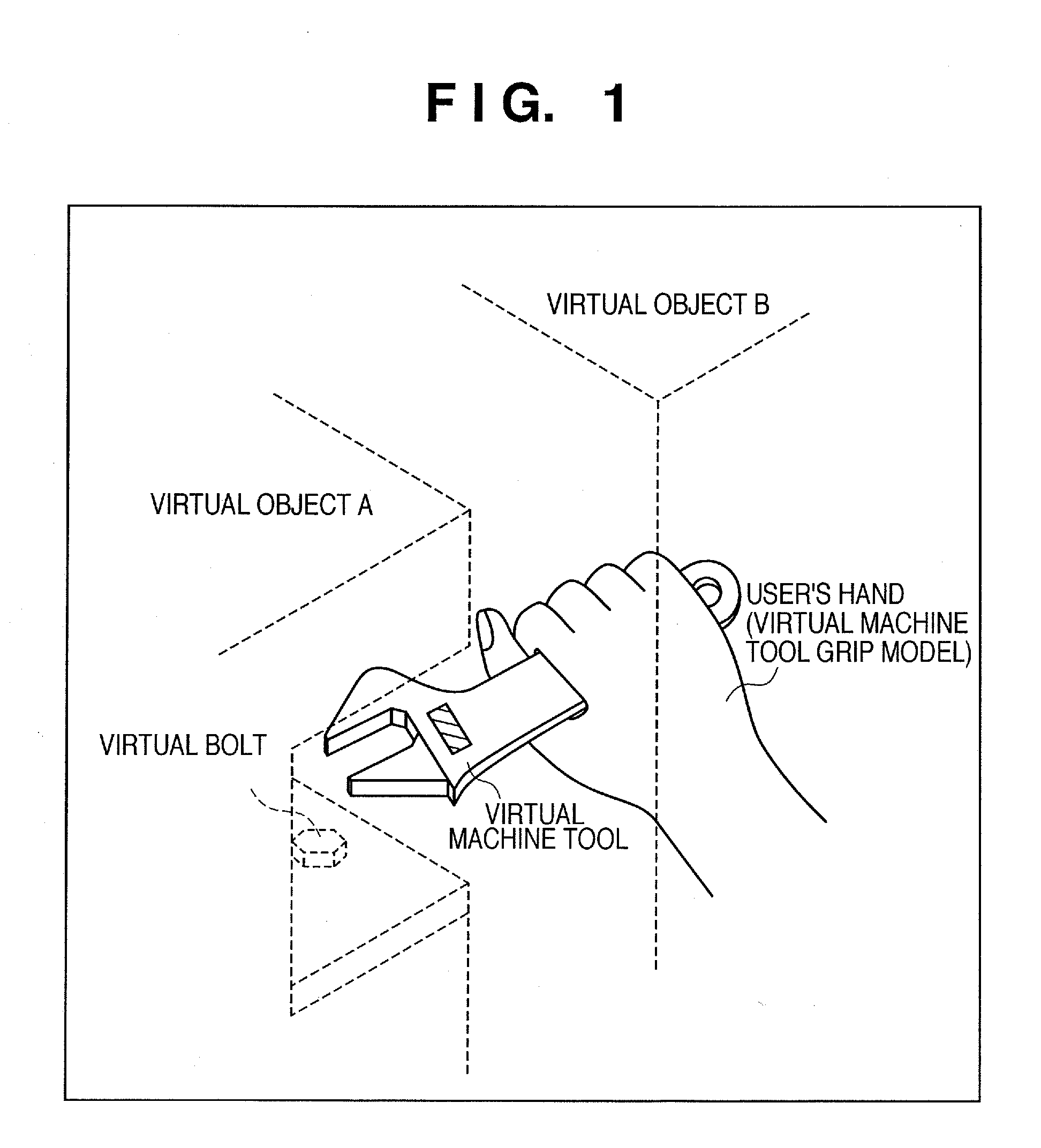

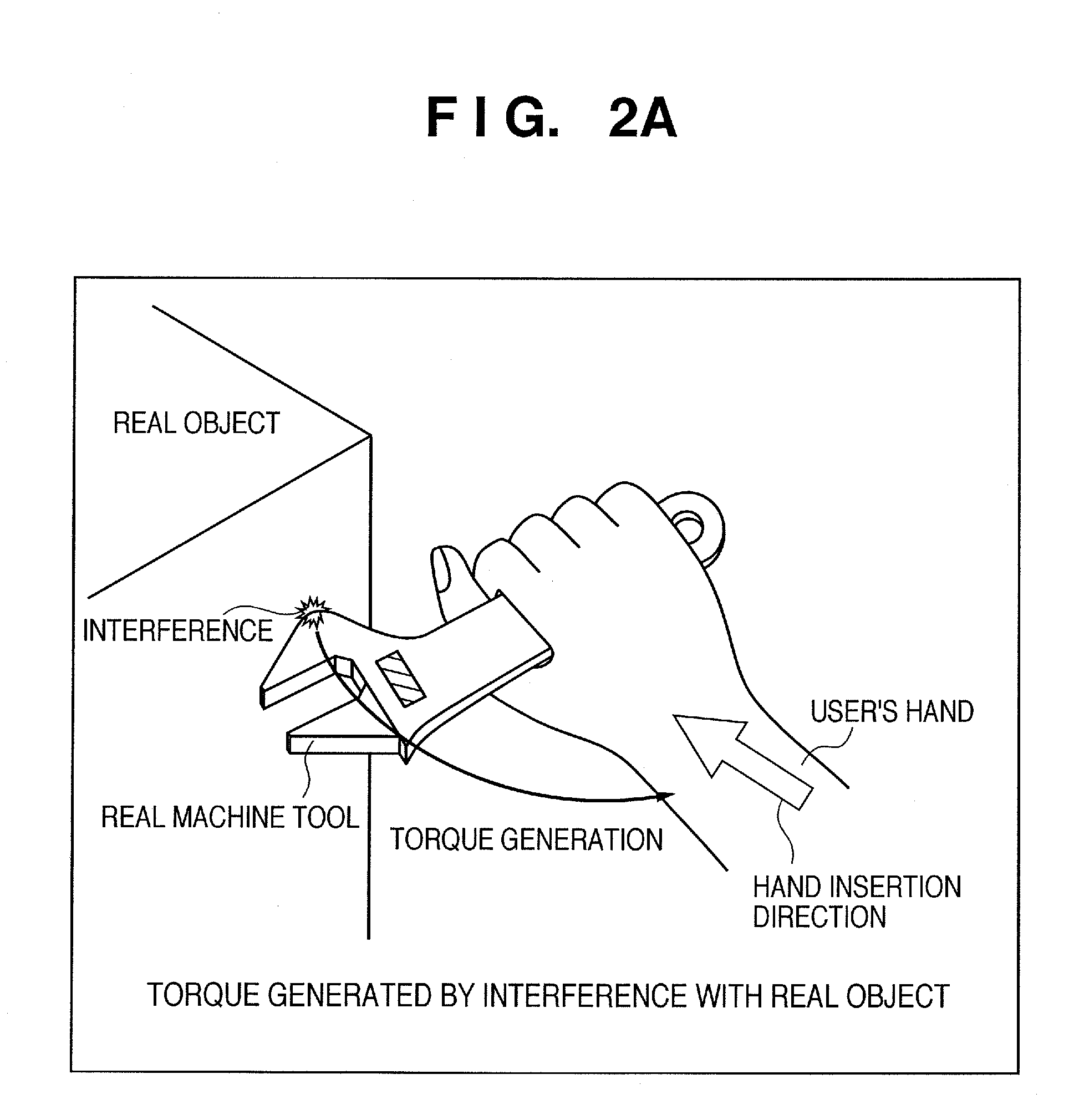

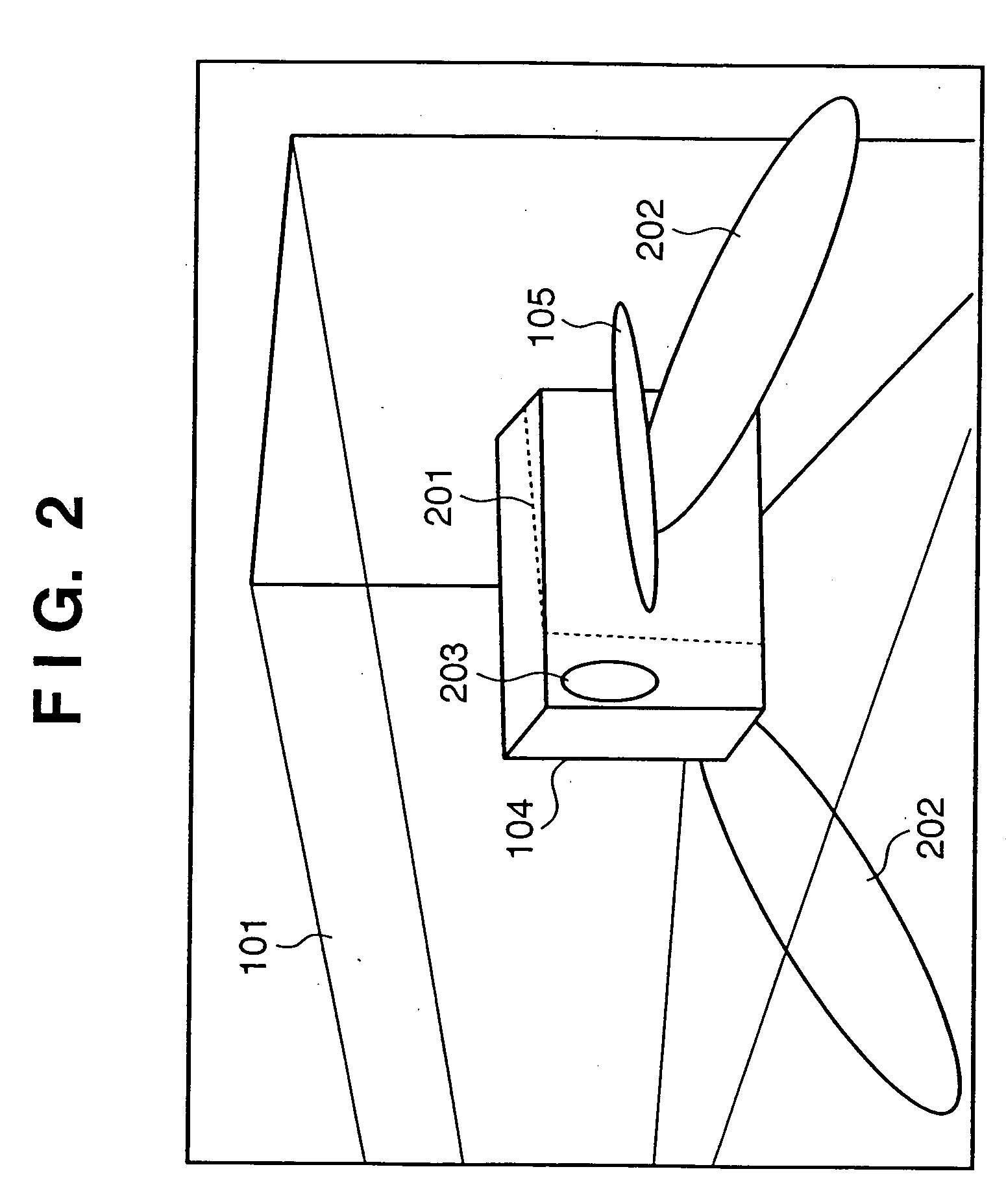

Force sense presentation device, mixed reality system, information processing method, and information processing apparatus

Inactive | US20080059131A1 | Easy to operate | Easy to present | Cathode-ray tube indicators | Analogue computers for heat flow | Information processing | Mixed reality

A force sense presentation device for presenting a sense of force in virtual space to a user, comprises: a fixed unit which is gripped by the user; a force sense presentation unit which presents a sense of force; an actuator which supplies a driving force and operates the force sense presentation unit relative to the fixed unit; a joint unit which is provided between the fixed unit and the force sense presentation unit, and guides the relative operation of the force sense presentation unit; and a force sense rendering unit which controls the relative operation of the force sense presentation unit by the actuator, wherein the force sense presentation device simulates a device which is gripped and used by the user, and the force sense rendering unit controls the relative operation of the force sense presentation unit based on a position and orientation of the device in the virtual space.

Owner: CANON KK

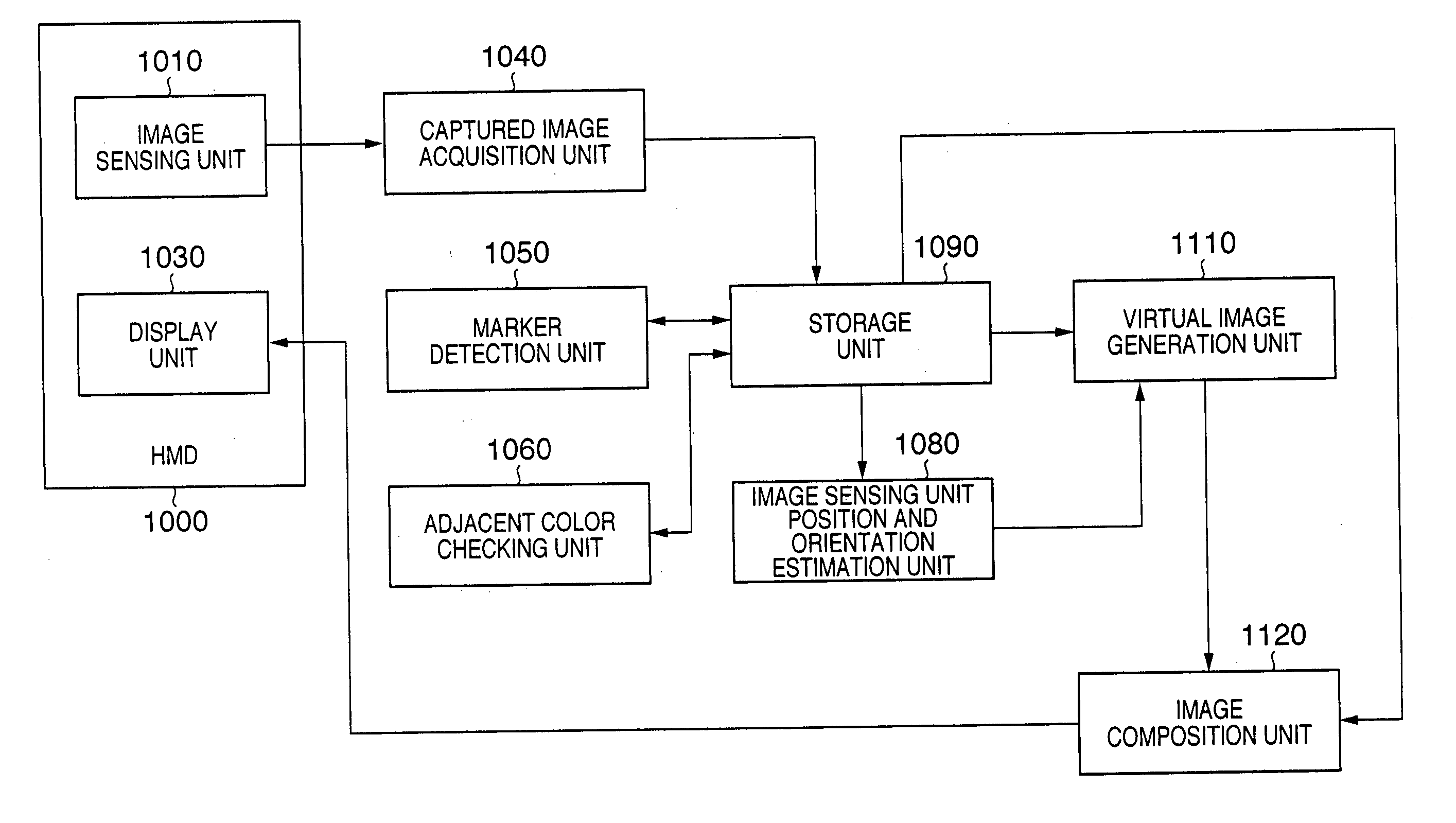

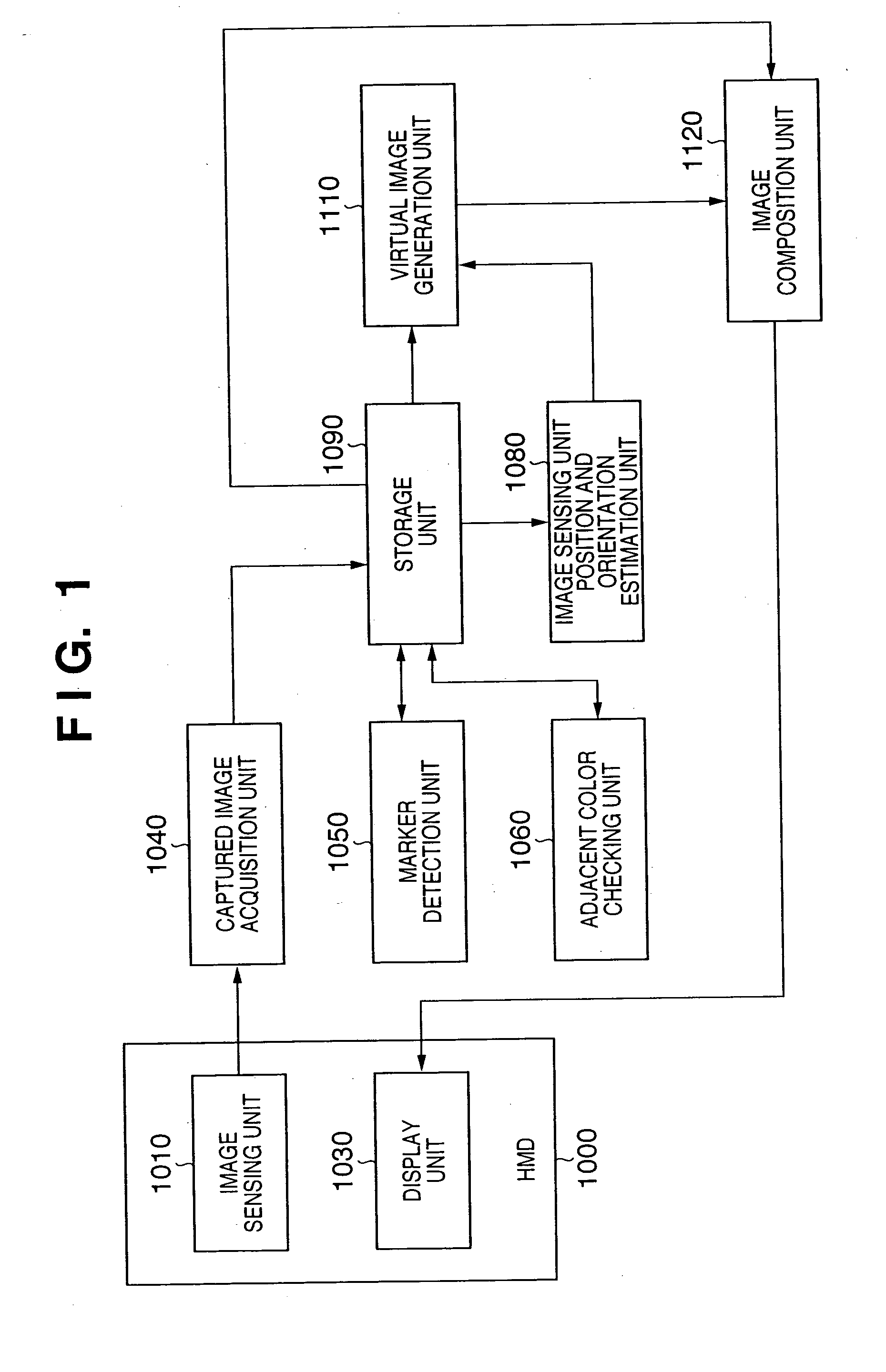

Marker detection method and apparatus, and position and orientation estimation method

Inactive | US20050234333A1 | Enhance the image | High precision | Image enhancement | Television system details | Mixed reality | Estimation methods

This invention relates to a mixed reality presentation apparatus for obtaining the position and orientation of an image sensing unit using markers. Whether or not a marker detected in a captured image has suspicion of partial occlusion is determined by checking if a region that neighbors a marker region includes a predetermined color, thus inhibiting information obtained from the marker with suspicion of partial occlusion from being used in position and orientation estimation of the image sensing unit. The precision of the obtained position and orientation can be improved.

Owner: CANON KK
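The marker detection abstract above flags a marker as possibly occluded when a region neighboring the marker contains a predetermined color. A minimal pure-Python sketch of such a check follows. The image layout (rows of RGB tuples), the one-pixel band width, the per-channel tolerance, and the sample skin-like color are all assumptions chosen for illustration, not details from the patent.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def is_near(pixel: Pixel, target: Pixel, tol: int) -> bool:
    """True if every RGB channel is within tol of the target color."""
    return all(abs(p - t) <= tol for p, t in zip(pixel, target))


def suspect_occlusion(image: Image, box: Tuple[int, int, int, int],
                      target: Pixel, tol: int = 30, margin: int = 1) -> bool:
    """Return True if any pixel in the band around the marker box matches target."""
    x0, y0, x1, y1 = box  # inclusive bounds of the detected marker region
    height, width = len(image), len(image[0])
    for y in range(max(0, y0 - margin), min(height, y1 + margin + 1)):
        for x in range(max(0, x0 - margin), min(width, x1 + margin + 1)):
            inside = x0 <= x <= x1 and y0 <= y <= y1
            if not inside and is_near(image[y][x], target, tol):
                return True
    return False


# A 5x5 white frame with a 3x3 black marker in the middle.
WHITE, BLACK, SKIN = (255, 255, 255), (0, 0, 0), (220, 170, 140)
frame = [[WHITE] * 5 for _ in range(5)]
for row in range(1, 4):
    for col in range(1, 4):
        frame[row][col] = BLACK

clear = suspect_occlusion(frame, (1, 1, 3, 3), SKIN)
frame[0][2] = (225, 175, 145)  # a skin-like pixel appears next to the marker
occluded = suspect_occlusion(frame, (1, 1, 3, 3), SKIN)
```

A marker for which the check returns True would simply be excluded from the position and orientation estimate, which is the filtering step the abstract describes.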

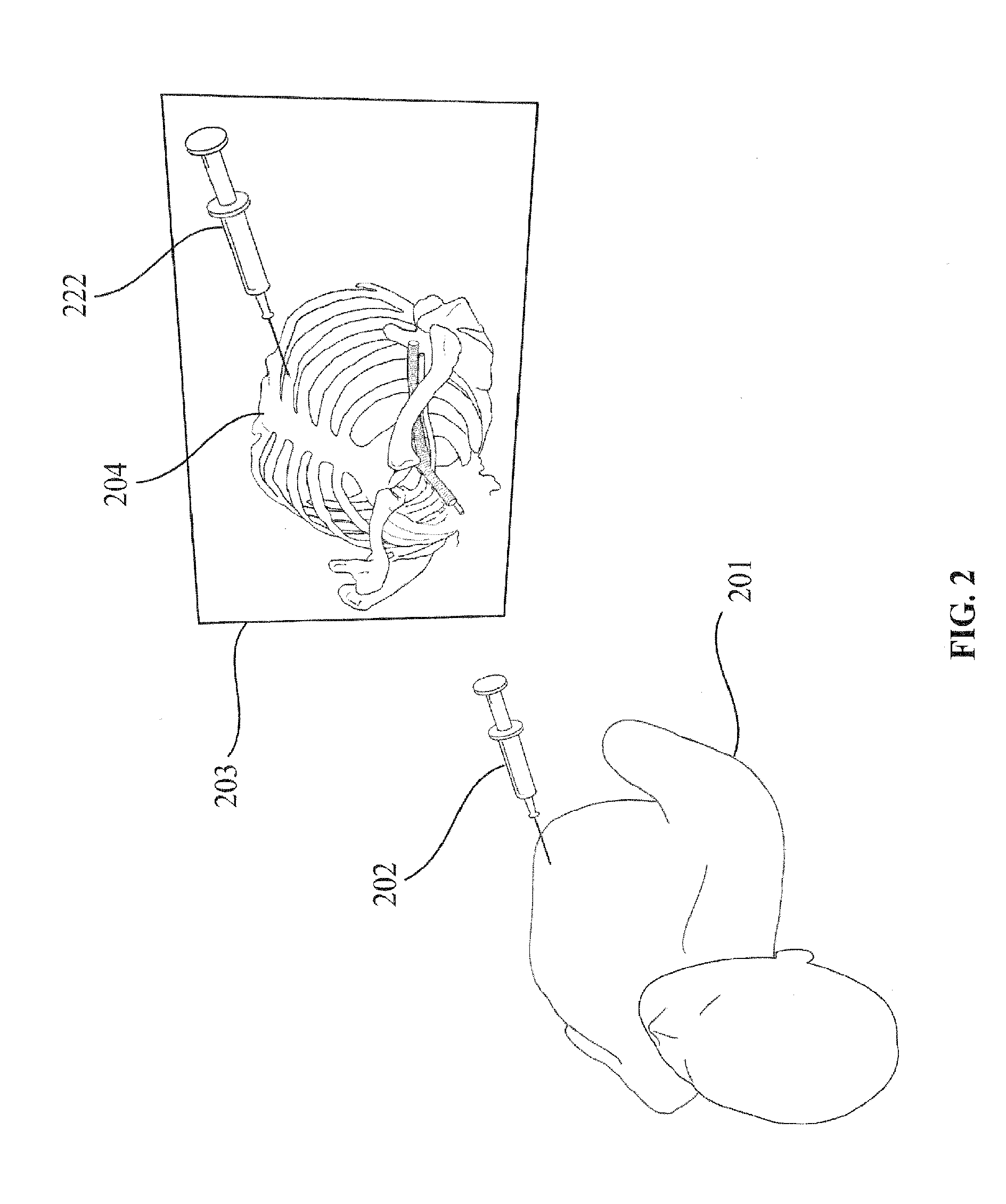

Interactive mixed reality system and uses thereof

Active | US20120280988A1 | Eliminate time | Additive manufacturing apparatus | Color television details | Mixed reality | Physical body

An interactive mixed reality simulator is provided that includes a virtual 3D model of internal or hidden features of an object; a physical model or object being interacted with; and a tracked instrument used to interact with the physical object. The tracked instrument can be used to simulate or visualize interactions with internal features of the physical object represented by the physical model. In certain embodiments, one or more of the internal features can be present in the physical model. In another embodiment, some internal features do not have a physical presence within the physical model.

Owner: UNIV OF FLORIDA RES FOUNDATION INC

Mixed Simulator and Uses Thereof

Inactive | US20100159434A1 | Correction of unevenness | Improve portability | Educational models | Simulators | Mixed reality | Display device

The subject invention provides mixed simulator systems that combine the advantages of both physical objects / simulations and virtual representations. In-context integration of virtual representations with physical simulations or objects can facilitate education and training. Two modes of mixed simulation are provided. In the first mode, a virtual representation is combined with a physical simulation or object by using a tracked display capable of displaying an appropriate dynamic virtual representation as a user moves around the physical simulation or object. In the second mode, a virtual representation is combined with a physical simulation or object by projecting the virtual representation directly onto the physical object or simulation. In further embodiments, user action and interaction can be tracked and incorporated within the mixed simulator system to provide a mixed reality after-action review. The subject mixed simulators can be used in many applications including, but not limited to healthcare, education, military, vocational schools, and industry.

Owner: UNIV OF FLORIDA RES FOUNDATION INC

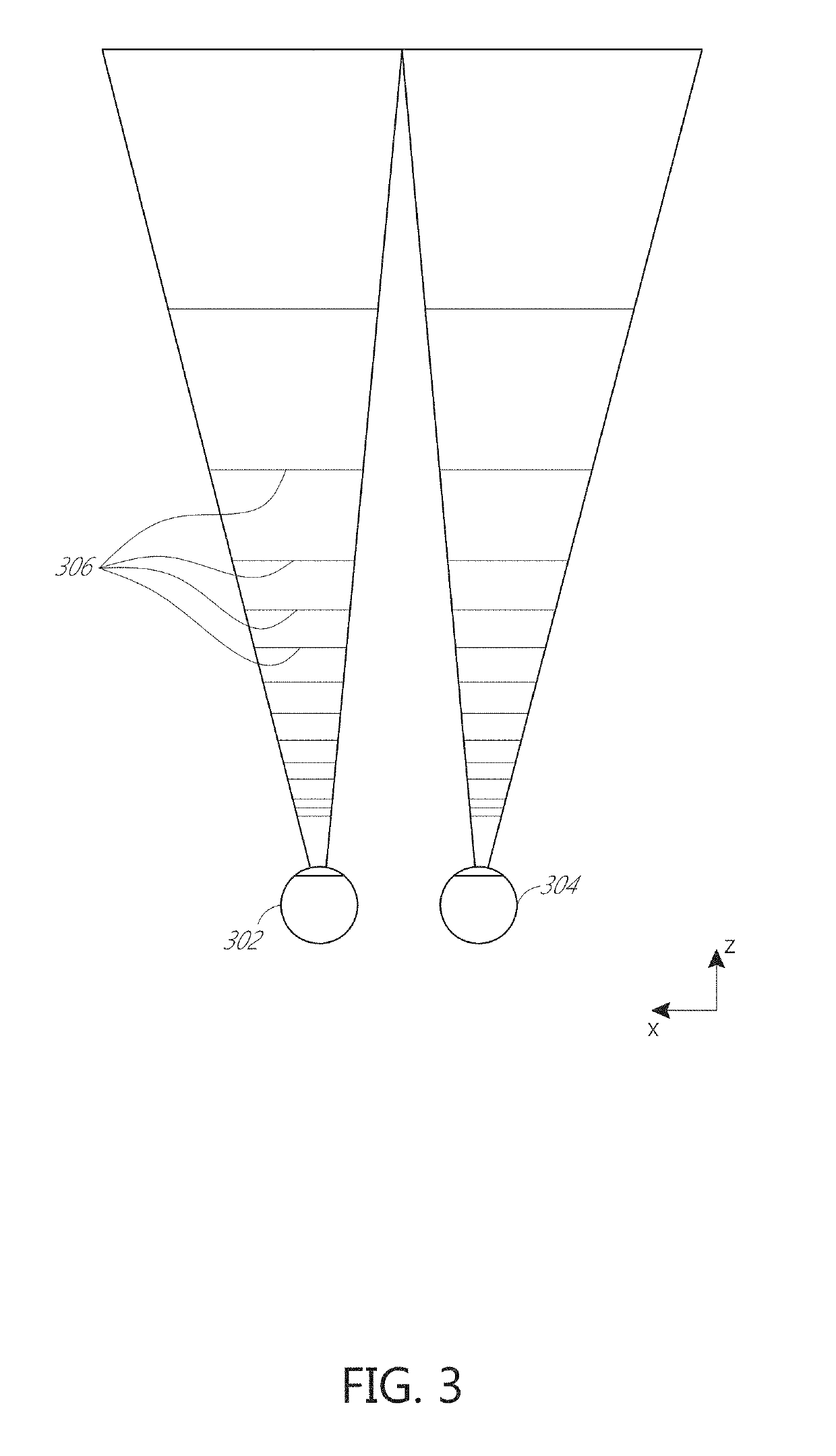

Image displaying method and apparatus

An image exhibiting method for exhibiting an image of a mixed reality space is provided. A three-dimensional visual-field area of an observer in the mixed reality space is calculated, a virtual visual-field object representing the visual-field area is generated, and the virtual visual-field object is superimposed on the image of the mixed reality space to be exhibited.

Owner: CANON KK

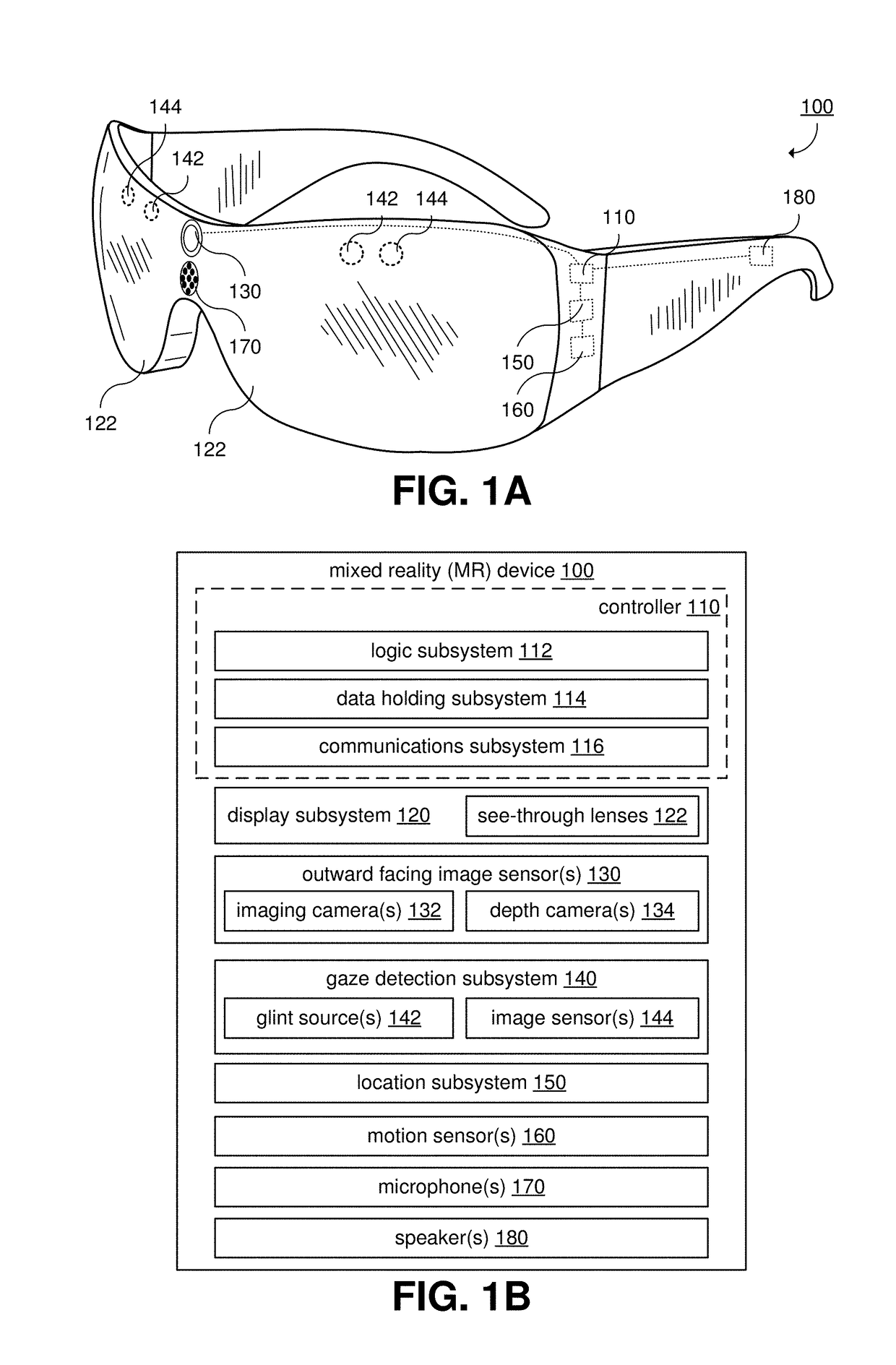

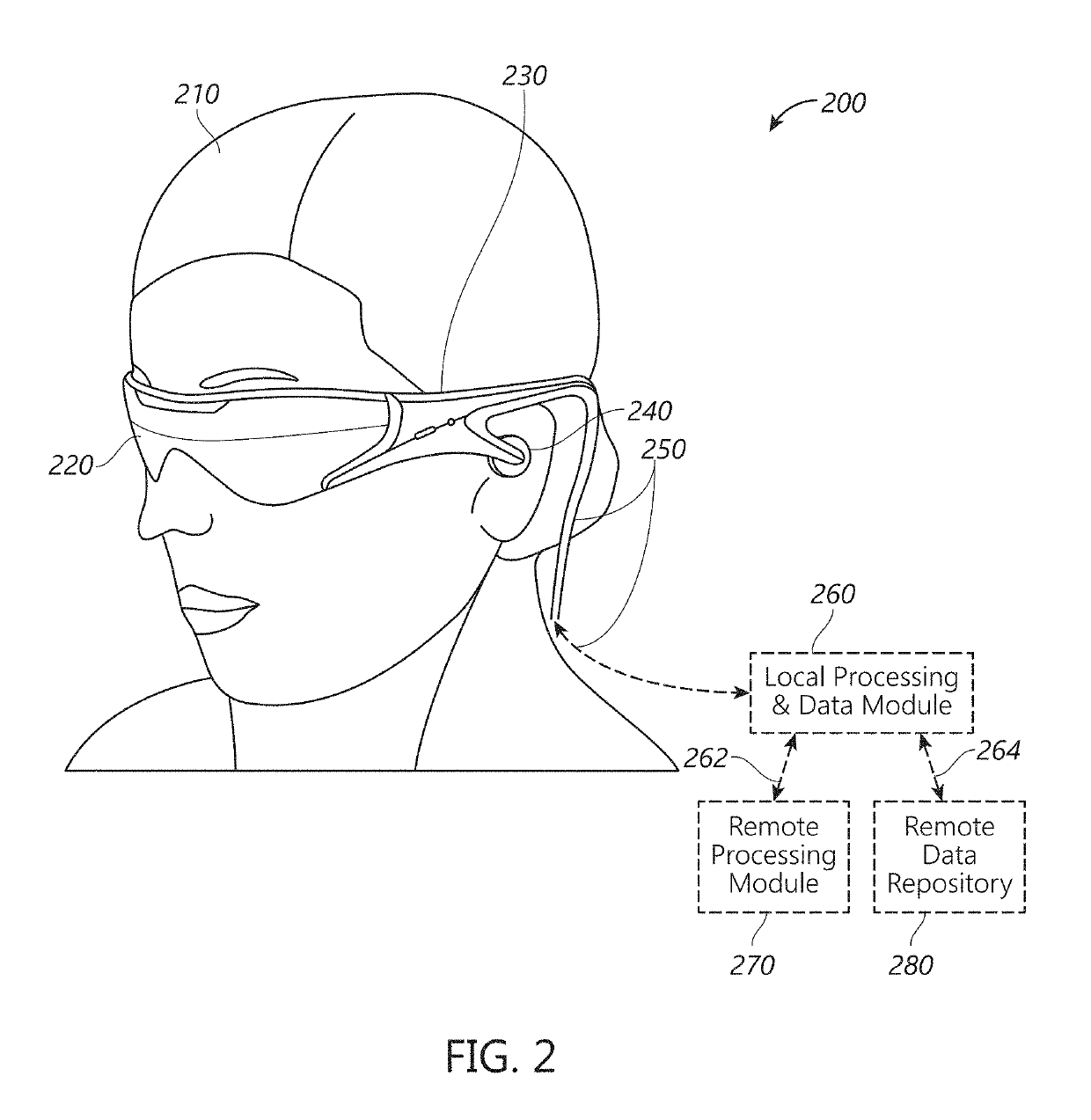

Sensory eyewear

Active | US20180075659A1 | Enhanced interaction | Input/output for user-computer interaction | Natural language translation | Mixed reality | Eyewear

A sensory eyewear system for a mixed reality device can facilitate a user's interactions with other people or with the environment. As one example, the sensory eyewear system can recognize and interpret a sign language, and present the translated information to a user of the mixed reality device. The wearable system can also recognize text in the user's environment, modify the text (e.g., by changing the content or display characteristics of the text), and render the modified text to occlude the original text.

Owner: MAGIC LEAP

Systems And Methods For Surgical Navigation

Active | US20180185100A1 | Reduce errors | Easy alignment | Diagnostics | Surgical navigation systems | Mixed reality | Navigation system

Systems and methods for surgical navigation providing mixed reality visualization are provided. The mixed reality visualization depicts virtual images in conjunction with real objects to provide improved visualization to users.

Owner: MAKO SURGICAL CORP

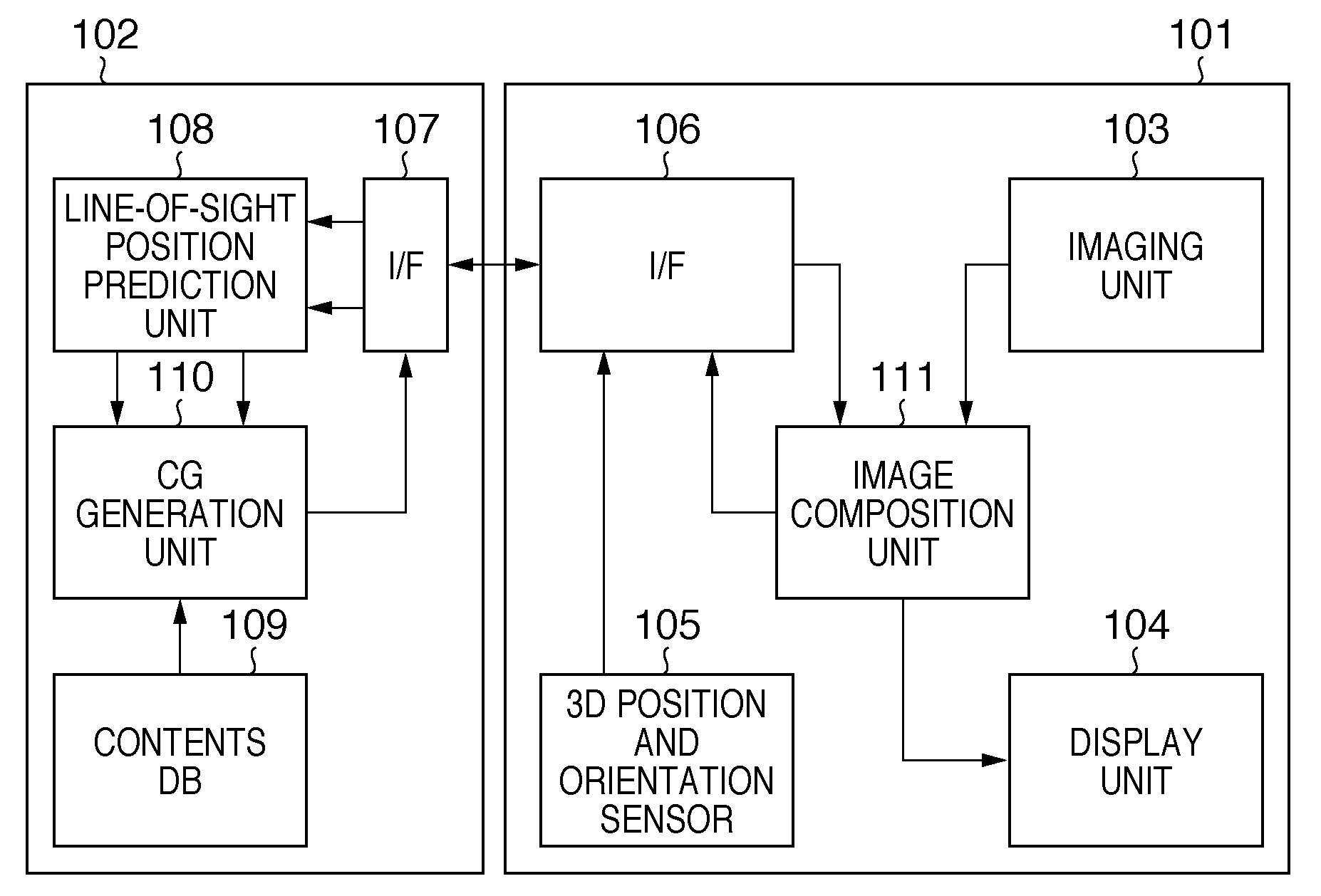

Mixed reality presentation system

Active | US20100026714A1 | Cathode-ray tube indicators | Image data processing | Pattern recognition | Mixed reality

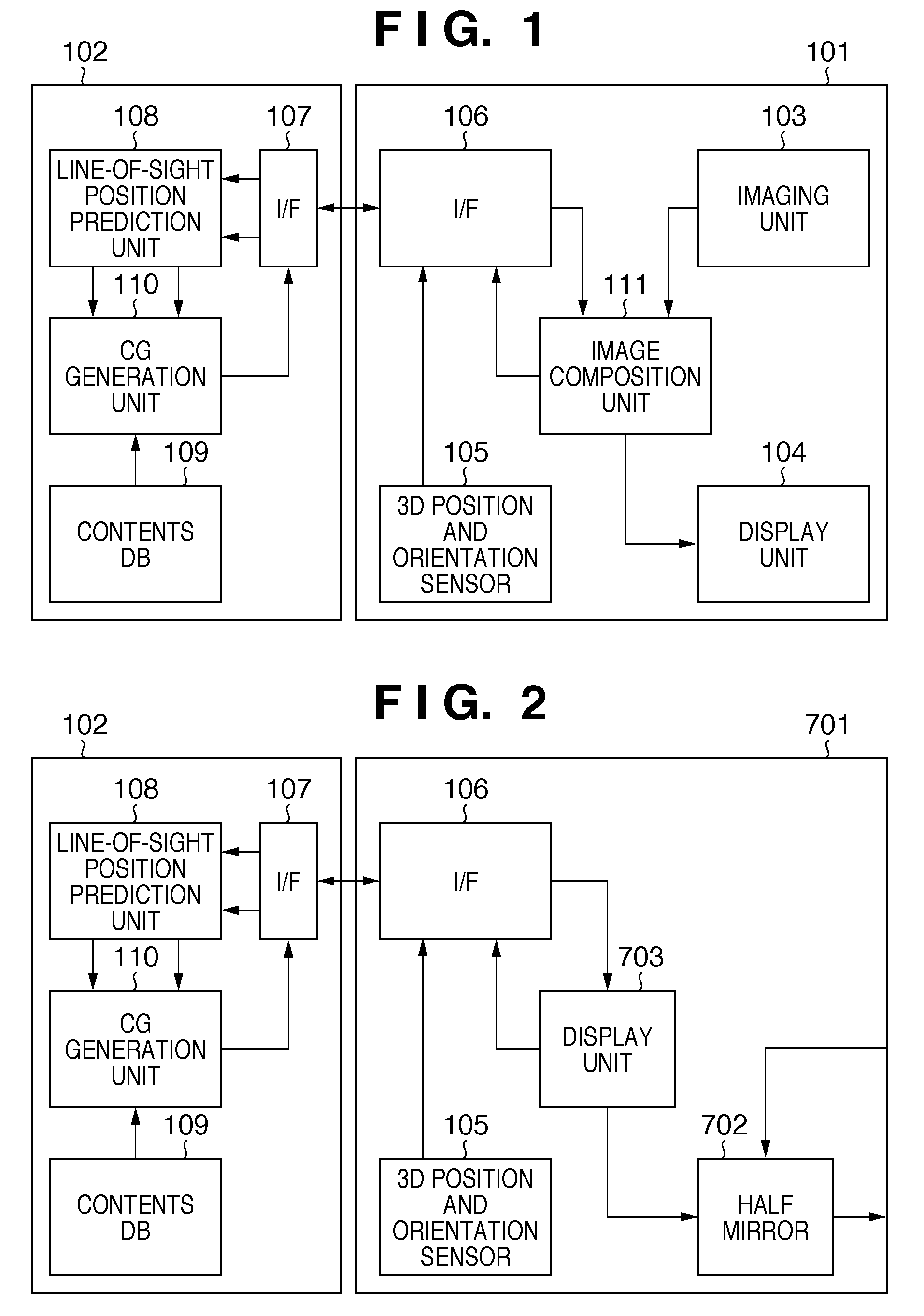

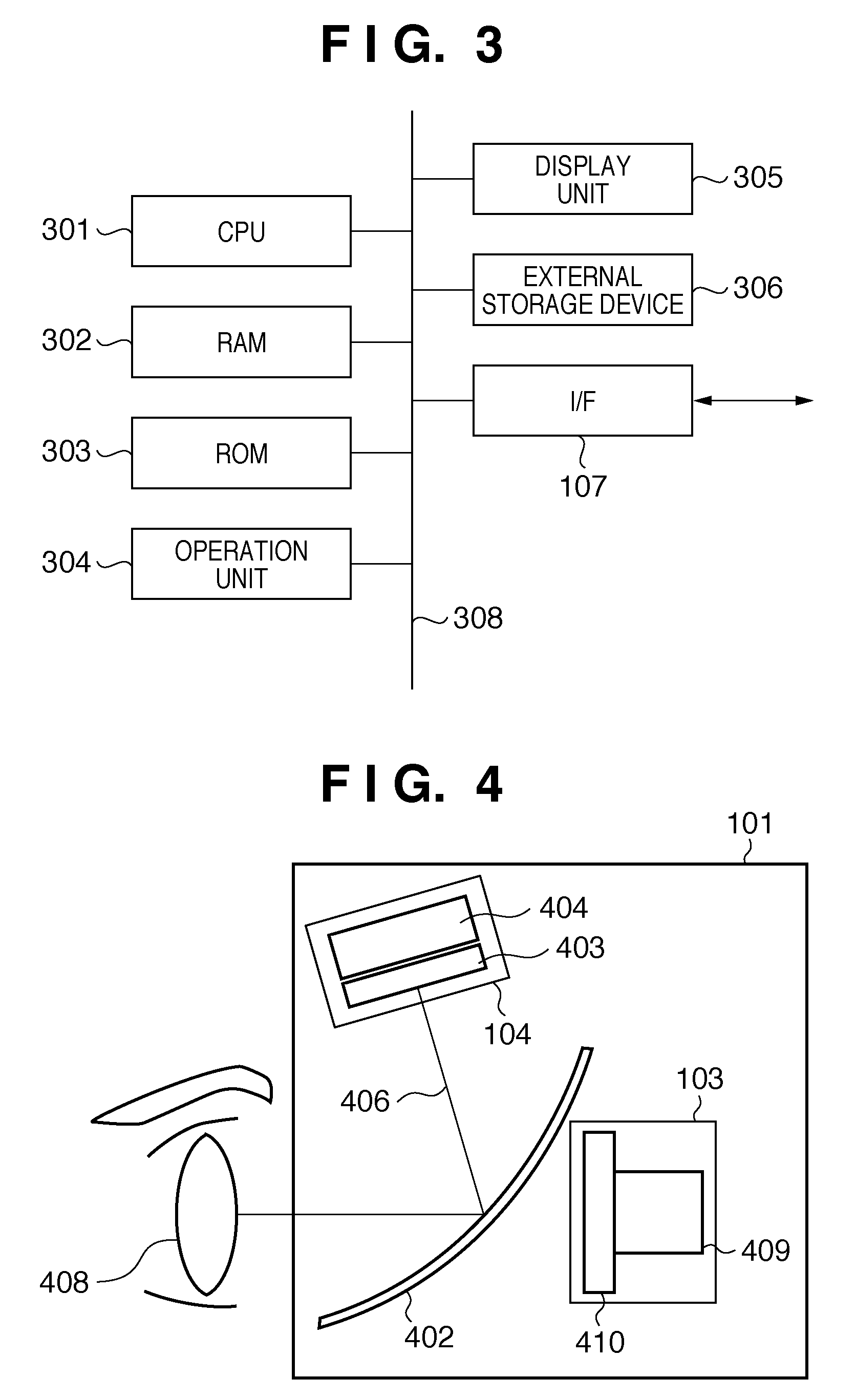

An image composition unit outputs a composition image of a physical space and virtual space to a display unit. The image composition unit calculates, as difference information, a half of the difference between an imaging time of the physical space and a generation completion predicted time of the virtual space. The difference information and acquired position and orientation information are transmitted to an image processing apparatus. A line-of-sight position prediction unit updates previous difference information using the received difference information, calculates, as the generation completion predicted time, a time ahead of a receiving time by the updated difference information, and predicts the position and orientation of a viewpoint at the calculated generation completion predicted time using the received position and orientation information. The virtual space based on the predicted position and orientation, and the generation completion predicted time are transmitted to a VHMD.

Owner: CANON KK
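The timing loop in the abstract above can be sketched in a few lines. The abstract states that the difference information is half of the difference between the imaging time and the predicted generation completion time, and that the predicted time lies ahead of the receiving time by the updated difference information. It does not state the sign convention or how the previous value is updated, so the sketch assumes "imaging time minus predicted time" and a simple additive update. All function names and sample values are hypothetical.

```python
def half_difference(imaging_time: float, predicted_completion_time: float) -> float:
    """Difference information: half the gap between the two times.

    Assumption: positive when the real image was captured later than the
    time the virtual image was predicted for.
    """
    return (imaging_time - predicted_completion_time) / 2.0


def update_lead(previous_lead: float, received_difference: float) -> float:
    """Assumption: the stored lead time is corrected additively."""
    return previous_lead + received_difference


def predicted_completion(receiving_time: float, lead: float) -> float:
    """The predicted completion time is ahead of the receiving time by the lead."""
    return receiving_time + lead


# Display side: compare the camera frame time with the previous prediction.
correction = half_difference(imaging_time=1.020, predicted_completion_time=1.000)

# Rendering side: correct the stored lead, then pick the time to predict for.
lead = update_lead(previous_lead=0.030, received_difference=correction)
target_time = predicted_completion(receiving_time=2.000, lead=lead)
```

Applying only half of the observed gap on each round moves the lead time toward the true pipeline delay gradually instead of jumping, which is one plausible reason for the halving described in the abstract.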

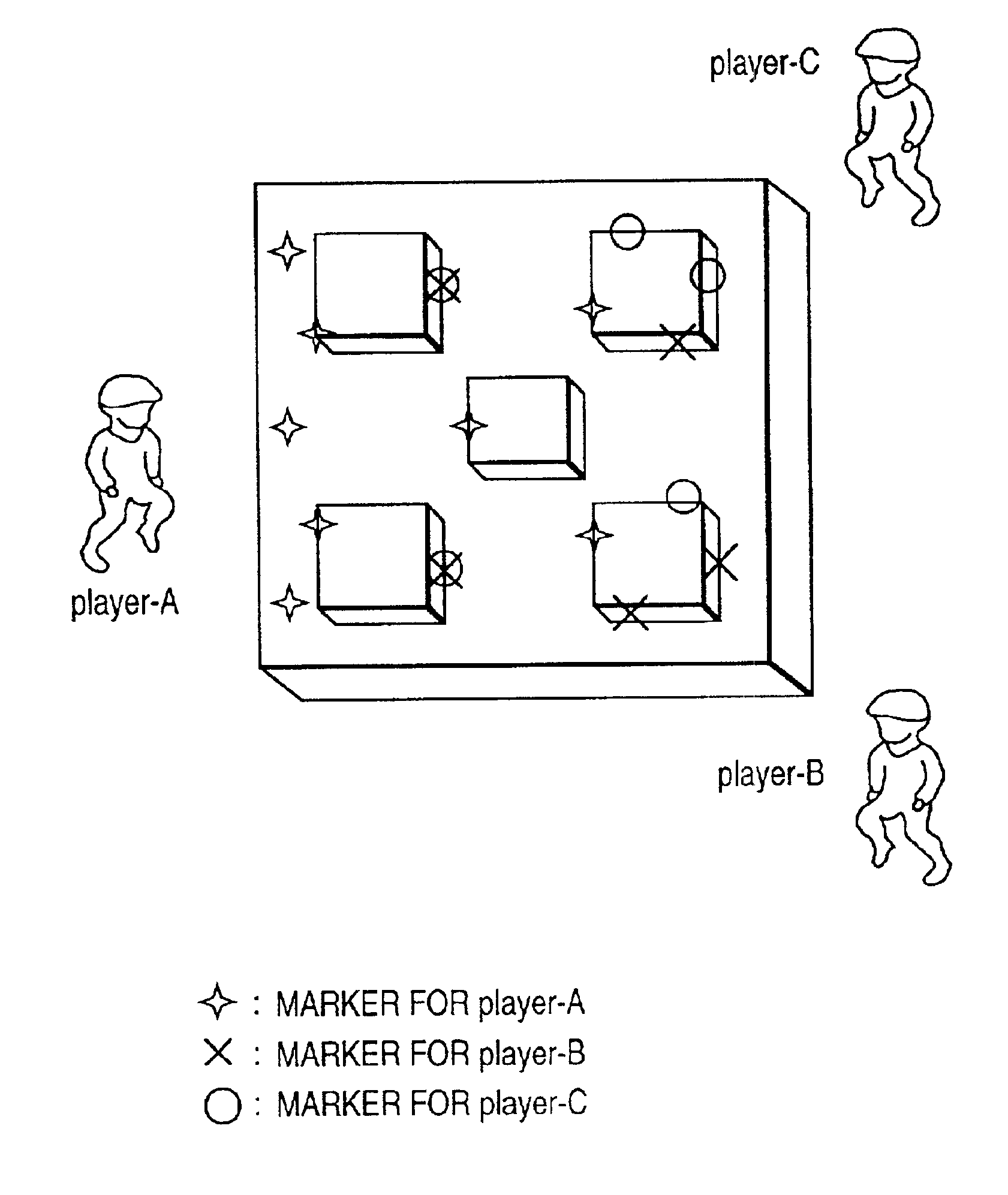

Marker layout method, mixed reality apparatus, and mixed reality space image generation method

Inactive | US7084887B1 | Reliably detect markers in units of players | Input/output for user-computer interaction | Image analysis | Mixed reality | Image generation

There is disclosed a marker layout method in a mixed reality space, which can reliably detect markers in units of players, even when a plurality of players share a common mixed reality space. According to this invention, markers to be used by only a given player are laid out at positions that cannot be seen from other players. Real objects used in an application that uses a mixed reality space may be used.

Owner: CANON KK

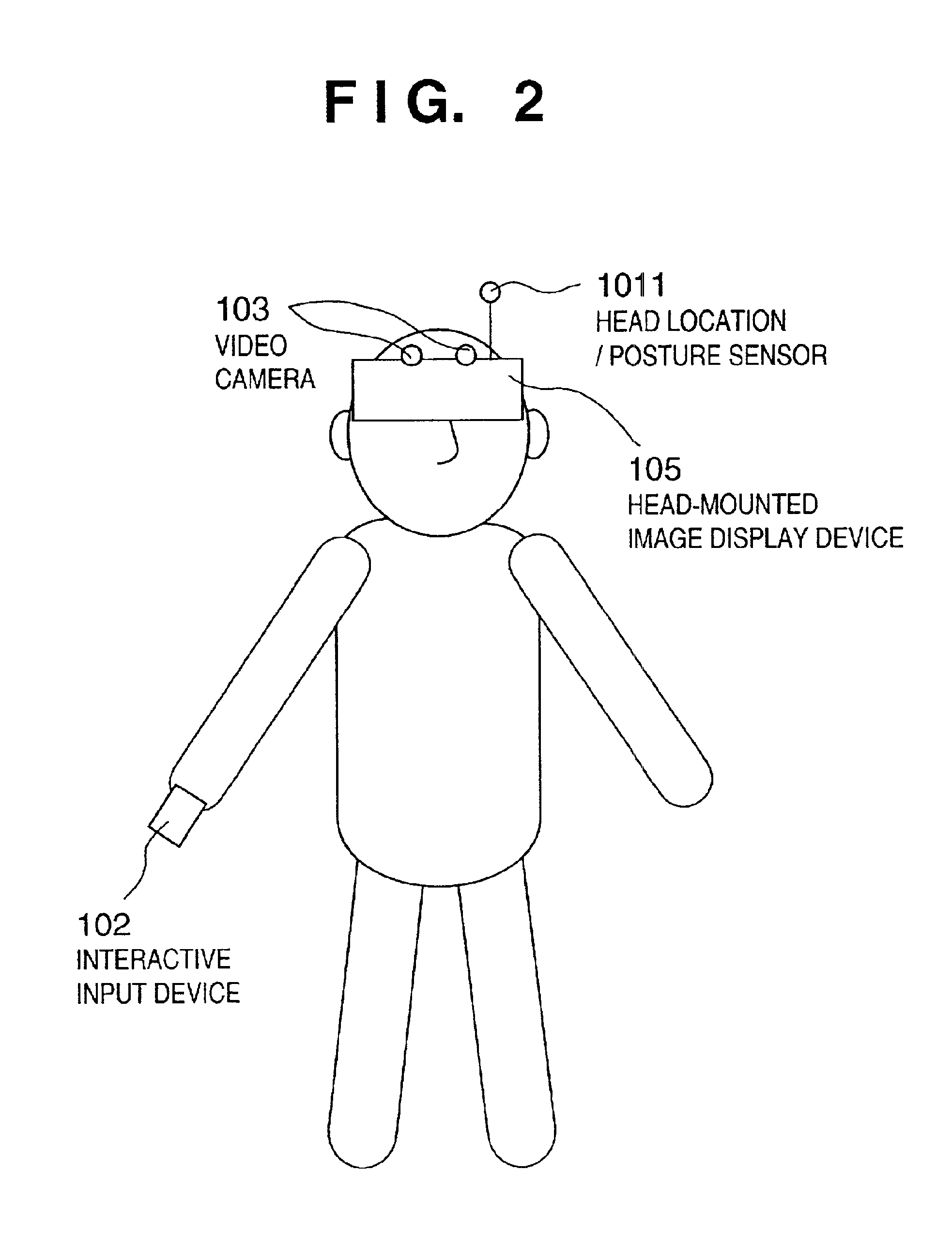

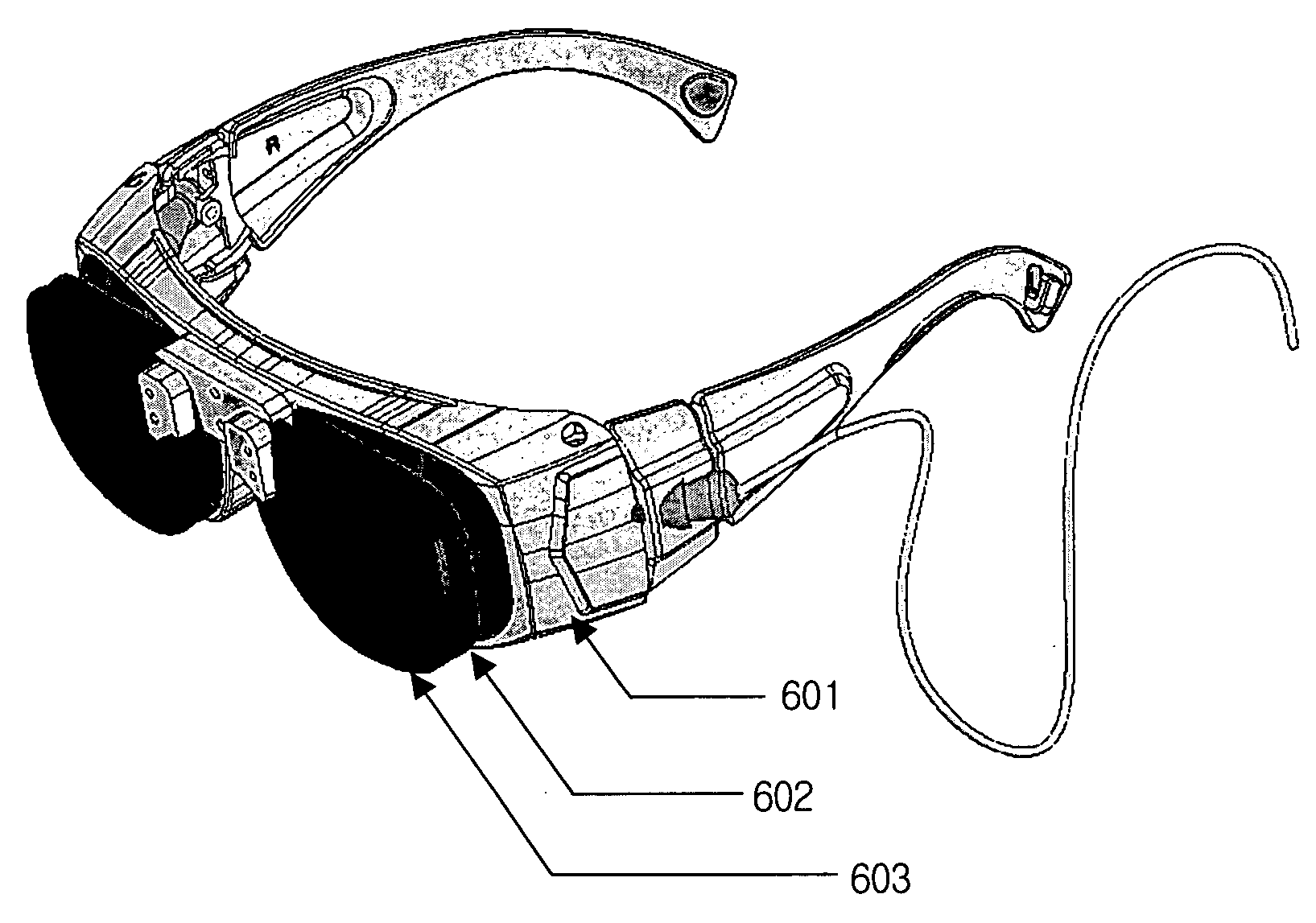

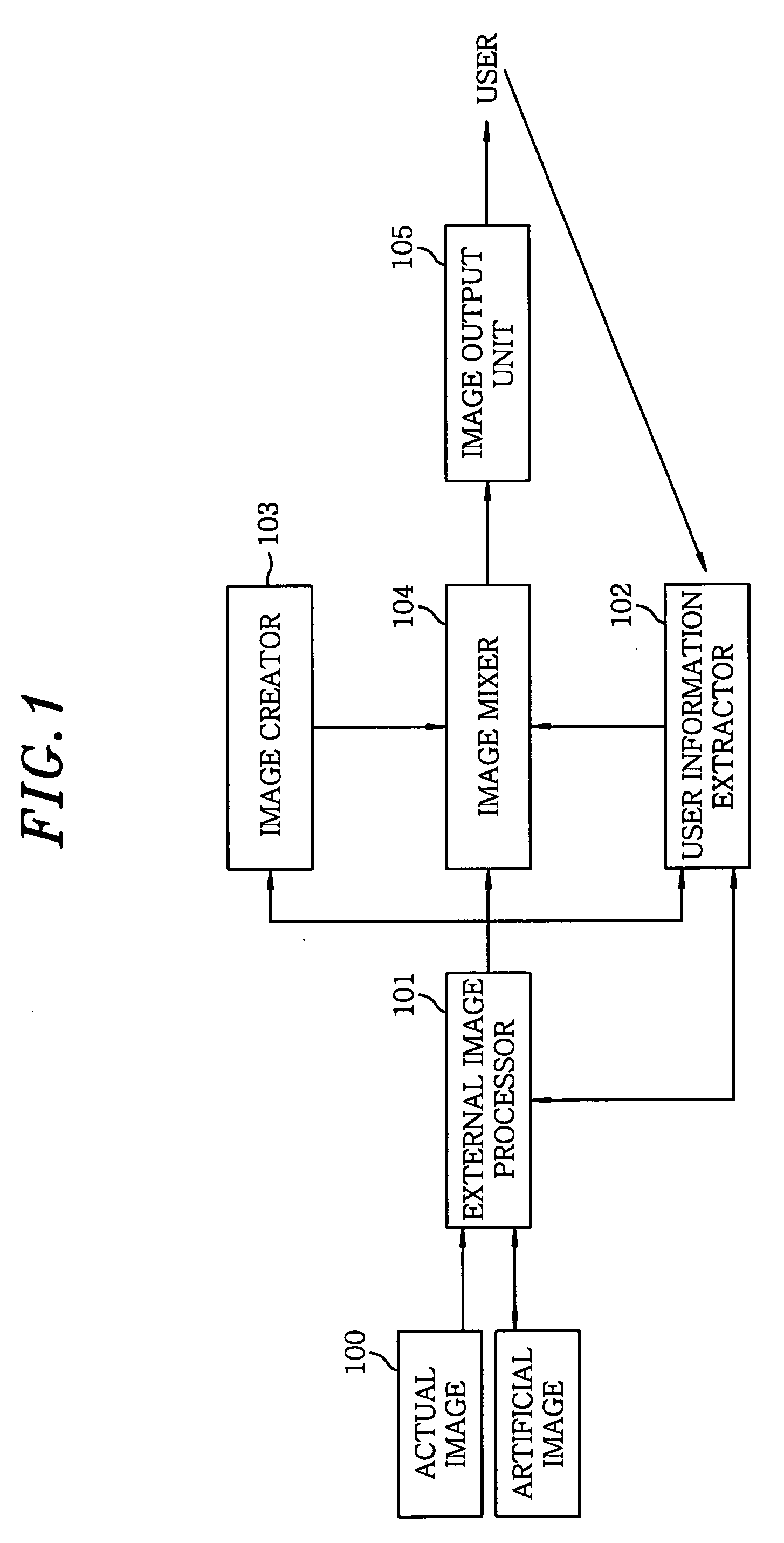

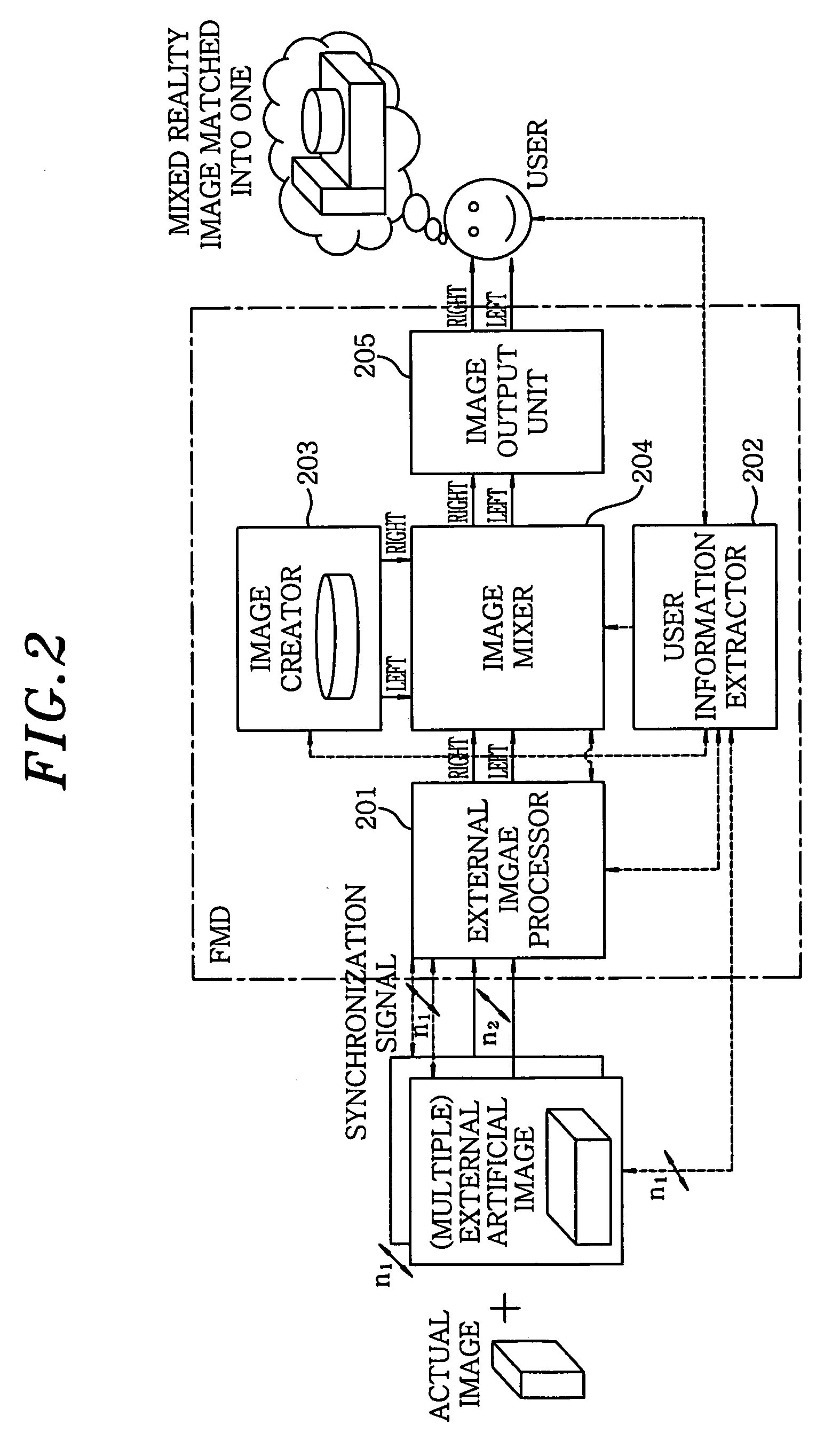

Face-mounted display apparatus for mixed reality environment

A display apparatus for a mixed reality environment includes an image processor for mixing an actual image of an object around a user and artificial stereo images to produce multiple external image signals; a user information extractor for extracting the user's sight line information, including the user's position, his/her eye position, direction of a sight line and focal distance; an image creator for creating a stereo image signal based on the extracted sight line information; an image mixer for synchronously mixing the multiple external image signals and the stereo image signal; and an image output unit for outputting the mixed image signal to the user.

Owner: ELECTRONICS & TELECOMM RES INST

Mixed reality space image generation method and mixed reality system

Inactive | US20050179617A1 | Image analysis | Cathode-ray tube indicators | Mixed reality | Computer graphics (images)

A mixed reality space image generation apparatus for generating a mixed reality space image formed by superimposing virtual space images onto a real space image obtained by capturing a real space, includes an image composition unit (109) which superimposes a virtual space image, which is to be displayed in consideration of occlusion by an object on the real space of the virtual space images, onto the real space image, and an annotation generation unit (108) which further imposes an image to be displayed without considering any occlusion of the virtual space images. In this way, a mixed reality space image which can achieve both natural display and convenient display can be generated.

Owner: CANON KK

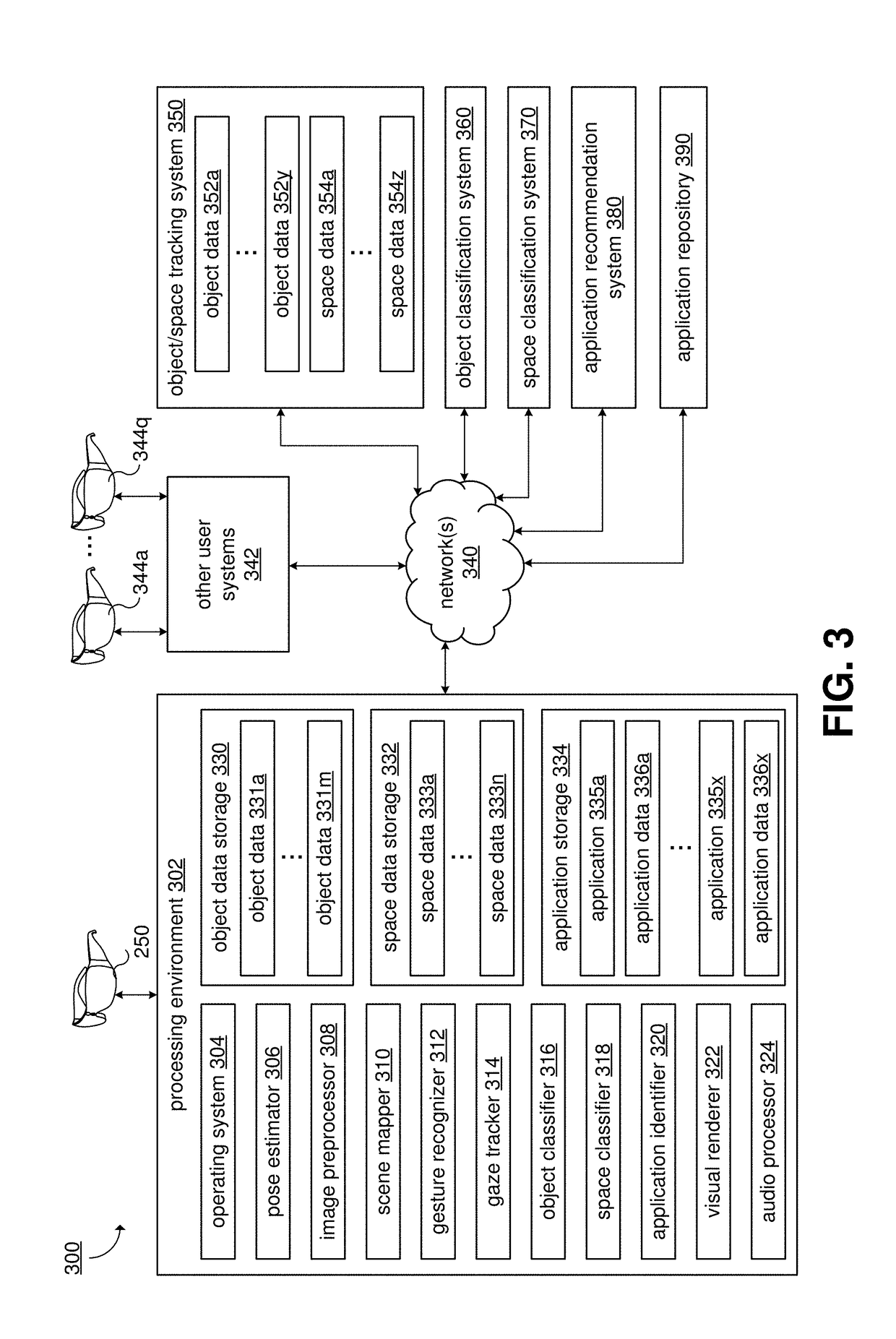

Object holographic augmentation

Active | US20180365898A1 | Well formed | Character and pattern recognition | Details for portable computers | Mixed reality | Physical space

Devices, systems, and methods for augmenting a real-world object using a mixed reality device, involving capturing image data for a real-world object included in a physical space observed by the mixed reality device; automatically classifying the real-world object as being associated with an object classification based on the image data; automatically identifying a software application based on the real-world object being associated with the object classification; associating the software application with the real-world object; and executing the software application on the mixed reality device in association with the real-world object.

Owner: MICROSOFT TECH LICENSING LLC

Eclipse cursor for mixed reality displays

Active | US20190237044A1 | More experience | More emphasis in the visual hierarchy | Input/output for user-computer interaction | Cathode-ray tube indicators | Mixed reality | Computer graphics (images)

Systems and methods for displaying a cursor and a focus indicator associated with real or virtual objects in a virtual, augmented, or mixed reality environment by a wearable display device are disclosed. The system can determine a spatial relationship between a user-movable cursor and a target object within the environment. The system may render a focus indicator (e.g., a halo, shading, or highlighting) around or adjacent objects that are near the cursor. The focus indicator may be emphasized in directions closer to the cursor and deemphasized in directions farther from the cursor. When the cursor overlaps with a target object, the system can render the object in front of the cursor (or not render the cursor at all), so the object is not occluded by the cursor. The cursor and focus indicator can provide the user with positional feedback and help the user navigate among objects in the environment.

Owner: MAGIC LEAP INC

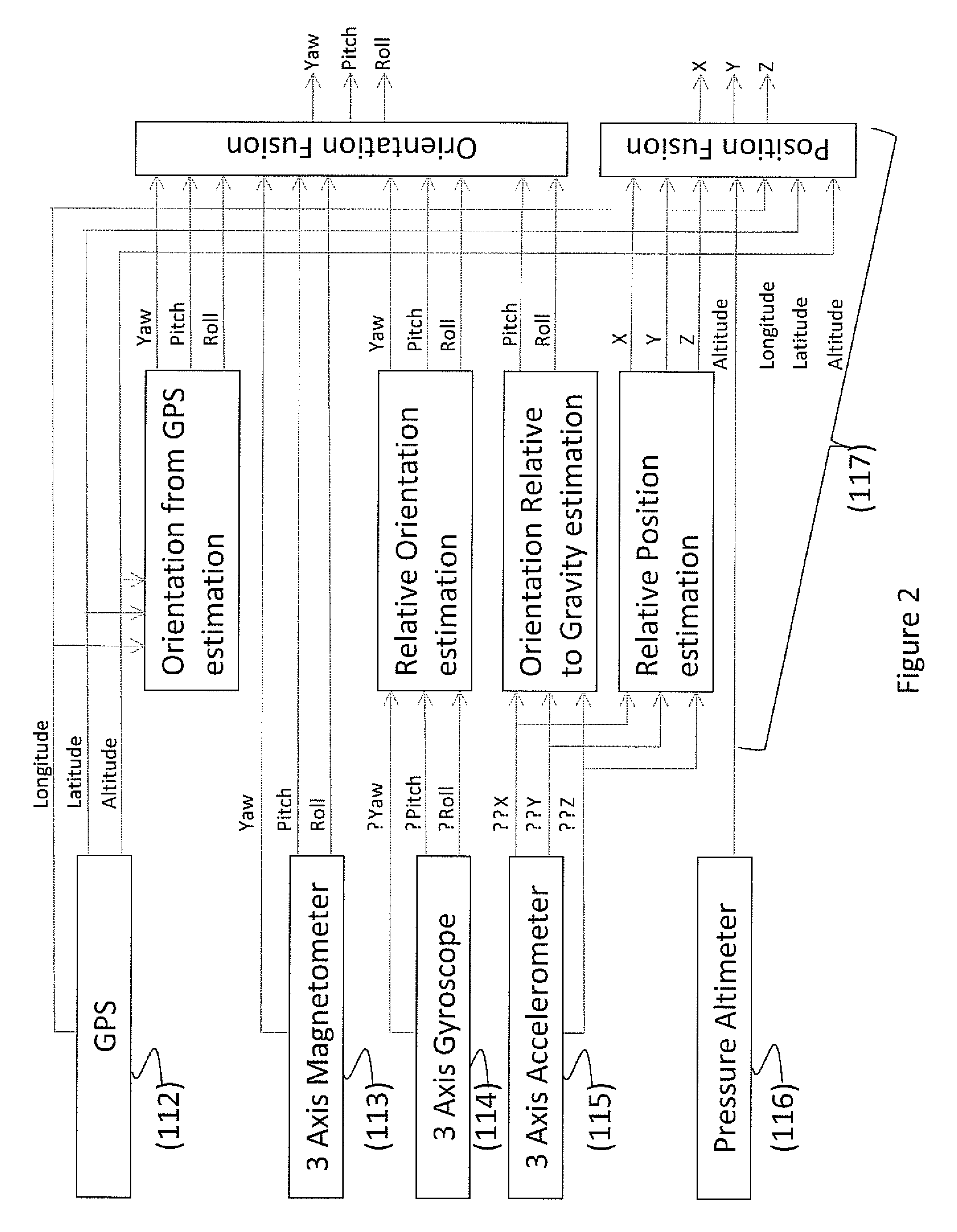

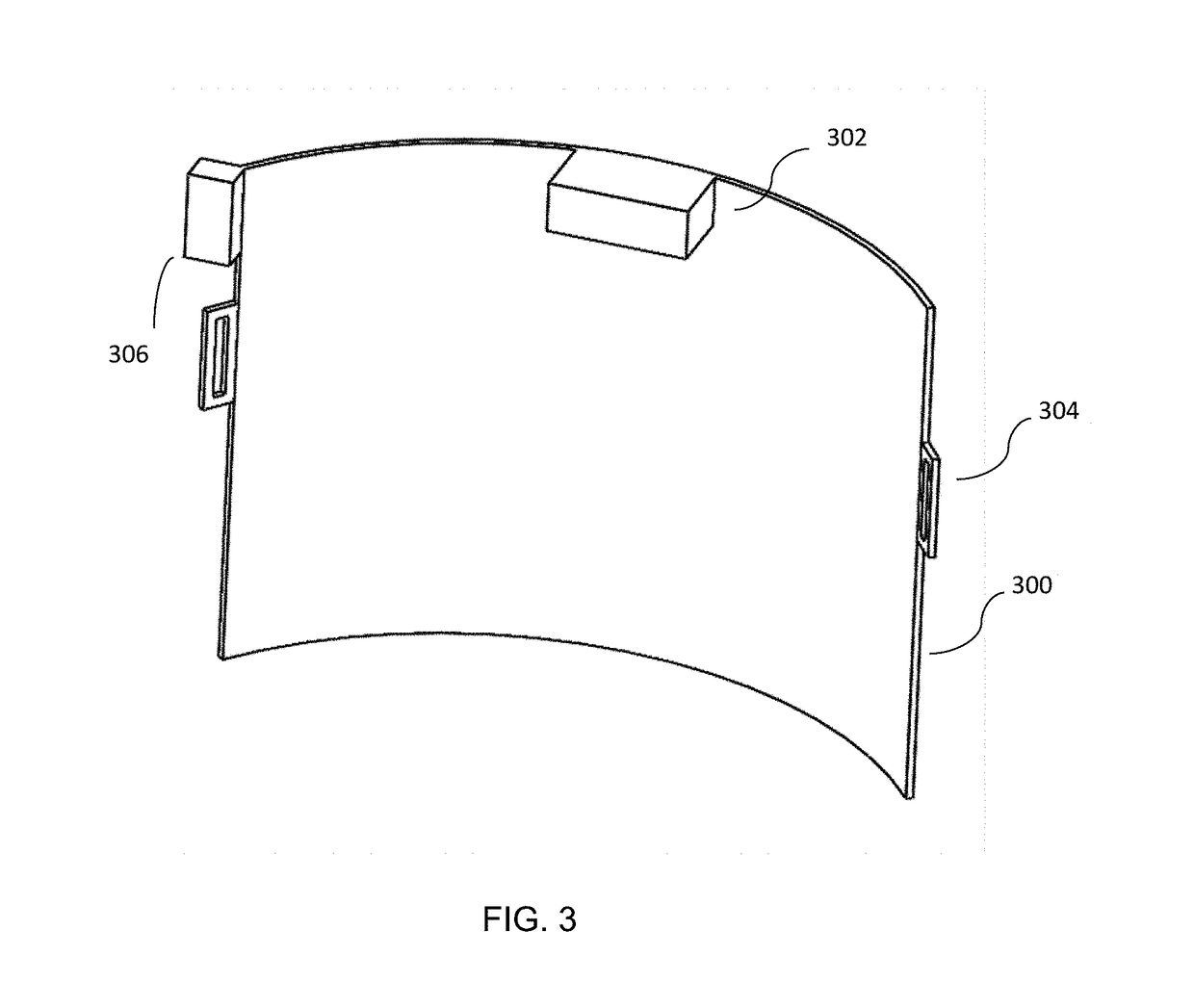

Deriving input from six degrees of freedom interfaces

Active | US9229540B2 | Input/output for user-computer interaction | Cathode-ray tube indicators | Mixed reality | Virtual space

The present invention relates to interfaces and methods for producing input for software applications based on the absolute pose of an item manipulated or worn by a user in a three-dimensional environment. Absolute pose in the sense of the present invention means both the position and the orientation of the item as described in a stable frame defined in that three-dimensional environment. The invention describes how to recover the absolute pose with optical hardware and methods, and how to map at least one of the recovered absolute pose parameters to the three translational and three rotational degrees of freedom available to the item to generate useful input. The applications that can most benefit from the interfaces and methods of the invention involve 3D virtual spaces including augmented reality and mixed reality environments.

Owner: ELECTRONICS SCRIPTING PRODS

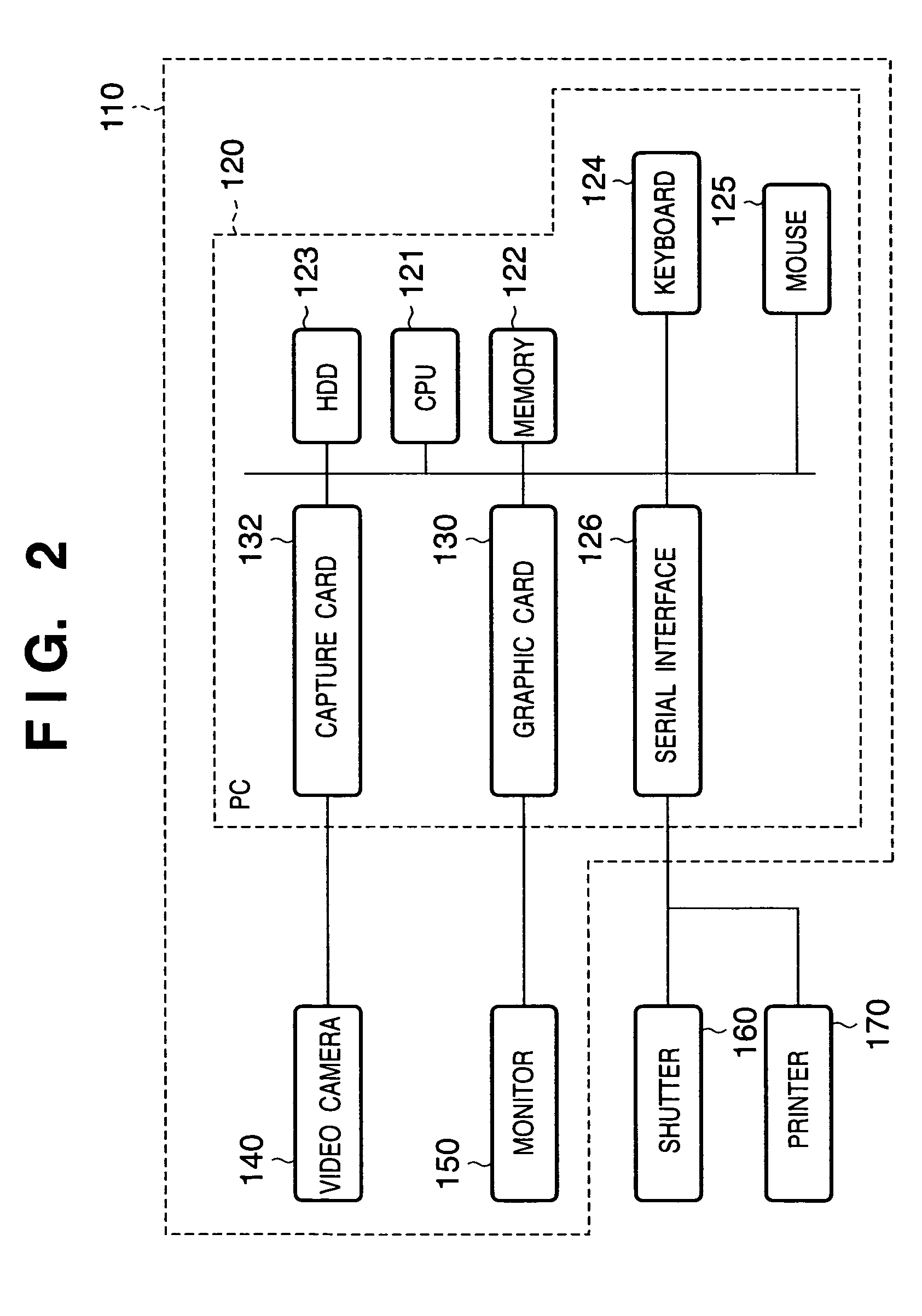

Image processing apparatus and image processing method

An image processing apparatus is connected to a print unit adapted to print an image, and can output an image to be printed to the print unit. The apparatus includes an image sensing unit adapted to capture a real space image, a generation unit adapted to generate a virtual space image according to a viewpoint position and orientation of the image sensing unit, and a composition unit adapted to obtain a mixed reality space image by combining the real space image captured by the image sensing unit and the virtual space image generated by the generation unit. In addition, a display unit is adapted to display a mixed reality space image obtained by the composition unit, and an output unit is adapted to output a mixed reality space image, which is obtained by the composition unit, to the print unit in response to an input of a print instruction. The generation unit generates a virtual space image to be displayed by the display unit according to a first procedure, and generates a virtual space image to be outputted by the output unit according to a second procedure which is different from the first procedure.

Owner: CANON KK

Automated trainee monitoring and performance evaluation system

Active | US20060073449A1 | Improve performance | Cosmonautic condition simulations | Drawing from basic elements | Mixed reality | Simulation

A method and system for performing automated training environment monitoring and evaluation. The training environment may include mixed reality elements to enhance a training experience.

Owner: SRI INTERNATIONAL
