697 results about "Response delay" patented technology

Delayed Reinforcement is a time delay between the desired response of an organism and the delivery of reward. In operant conditioning a conditioned response is the desired response that has been conditioned and elicits reinforcement.

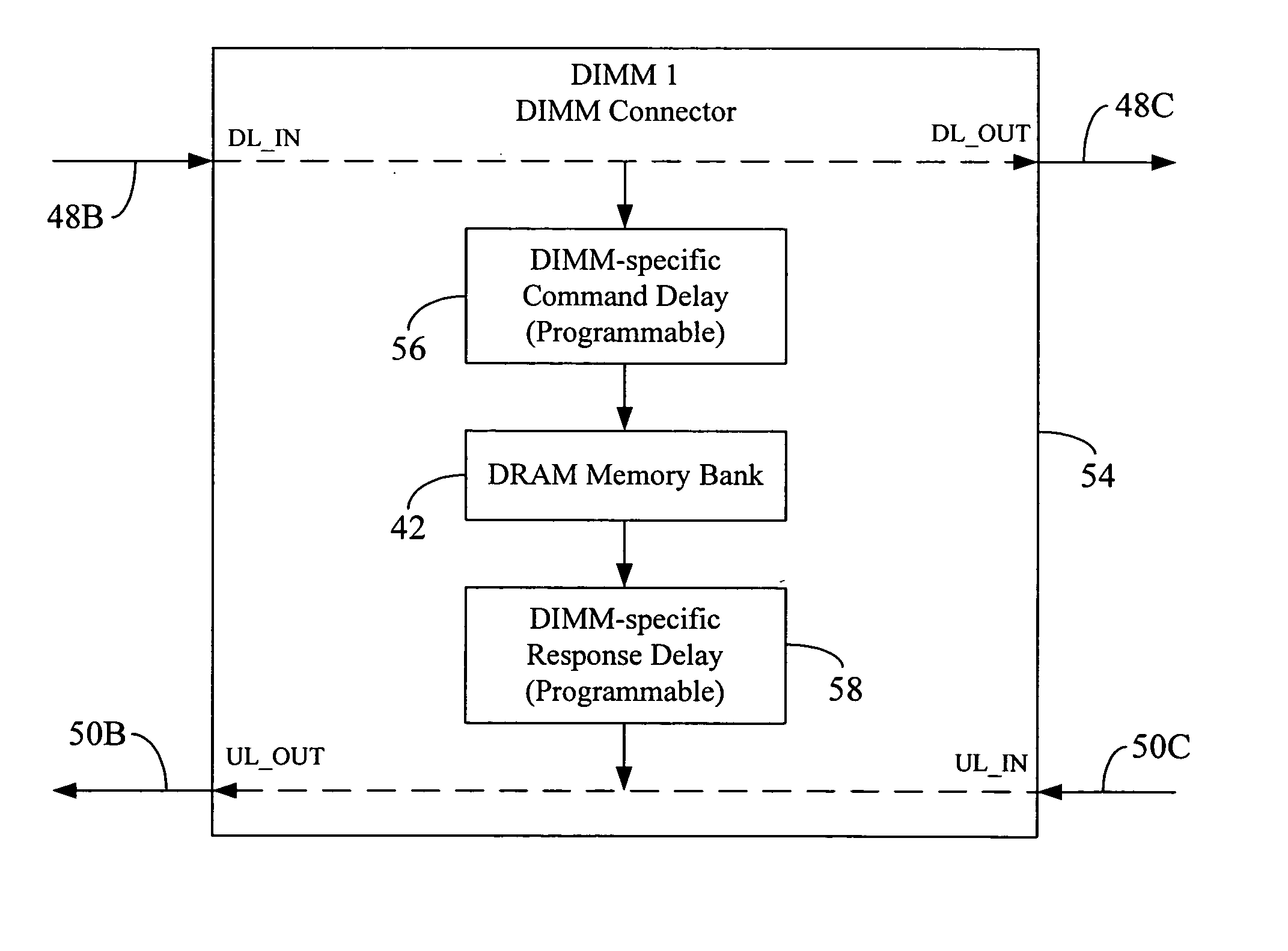

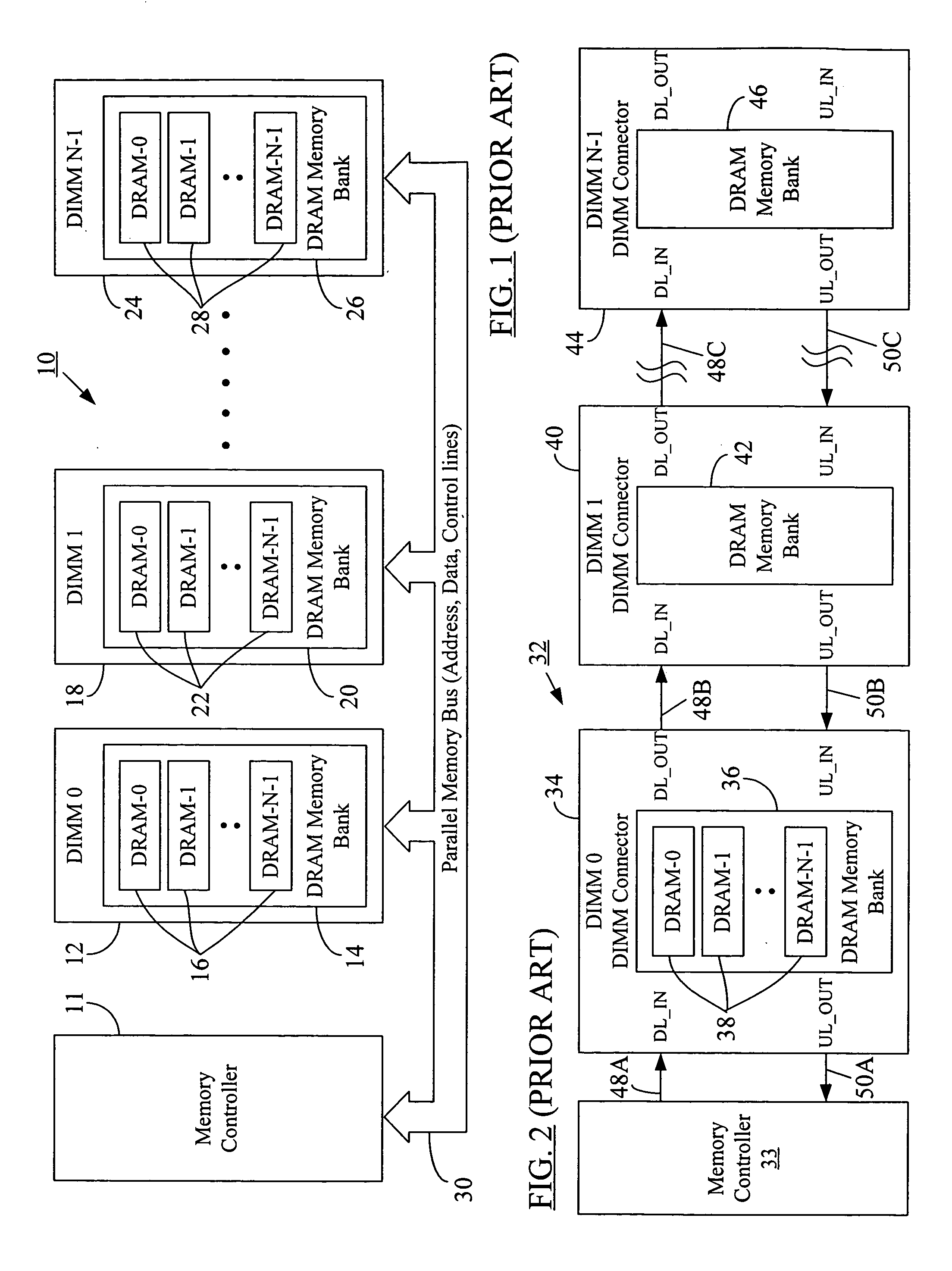

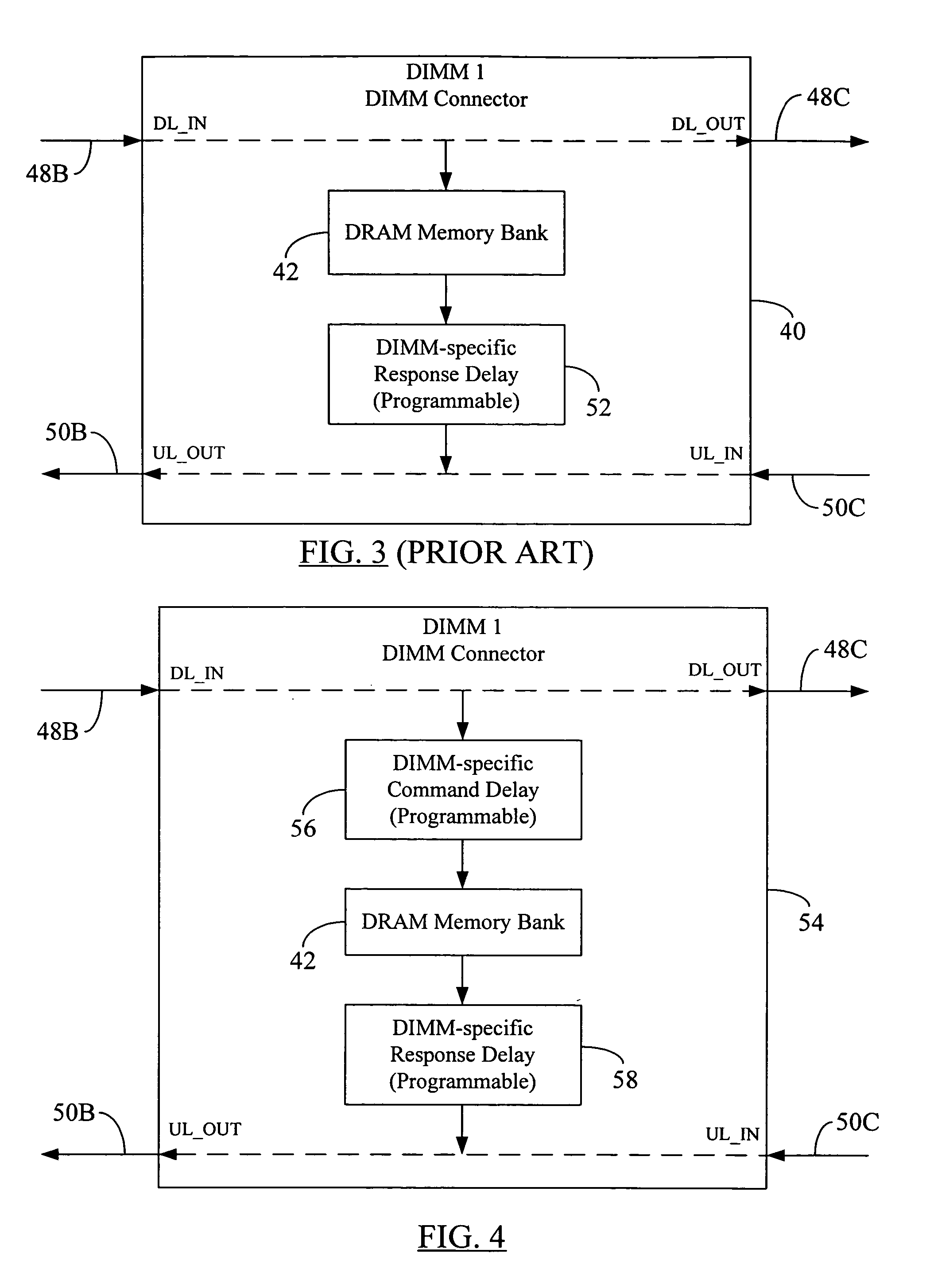

Memory command delay balancing in a daisy-chained memory topology

Inactive | US20060041730A1 | Effective prediction | Accurately ascertained | Energy efficient ICT | Digital storage | DIMM | Control power

A methodology for a daisy-chained memory topology wherein, in addition to the prediction of the timing of receipt of a response from a memory module (DIMM), the memory controller can effectively predict when a command sent by it will be executed by the addressee DIMM. By programming a DIMM-specific command delay in the DIMM's command delay unit, the command delay balancing methodology according to the present disclosure “normalizes” or “synchronizes” the execution of the command signal across all DIMMs in the memory channel. With such ability to predict command execution timing, the memory controller can efficiently control the power profile of all the DRAM devices (or memory modules) on a daisy-chained memory channel. A separate DIMM-specific response delay unit in the DIMM may also be programmed to provide DIMM-specific delay compensation in the response path, further allowing the memory controller to accurately ascertain the timing of receipt of a response thereat, and, hence, to better manage further processing of the response.

Owner:ROUND ROCK RES LLC
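
The command delay balancing described in this abstract can be illustrated with a minimal sketch. It assumes that each DIMM's command propagation time along the daisy chain is known in clock cycles; the function name, the DIMM labels, and the cycle counts are hypothetical and are not taken from the patent.

```python
def balance_command_delays(propagation_cycles):
    """Return a per-DIMM command delay so that every DIMM executes a
    command in the same cycle as the slowest-to-reach DIMM.

    propagation_cycles maps a DIMM label to the (assumed, hypothetical)
    number of cycles a command needs to reach that DIMM.
    """
    slowest = max(propagation_cycles.values())
    return {dimm: slowest - cycles for dimm, cycles in propagation_cycles.items()}


# Hypothetical three-DIMM channel: farther DIMMs see the command later.
propagation = {"DIMM0": 2, "DIMM1": 4, "DIMM2": 6}
delays = balance_command_delays(propagation)

# After adding the programmed delay, all DIMMs execute in the same cycle.
execution_cycle = {d: propagation[d] + delays[d] for d in propagation}
```

With a single, known execution cycle the controller can predict when any command takes effect, which is the property the abstract relies on for power management.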

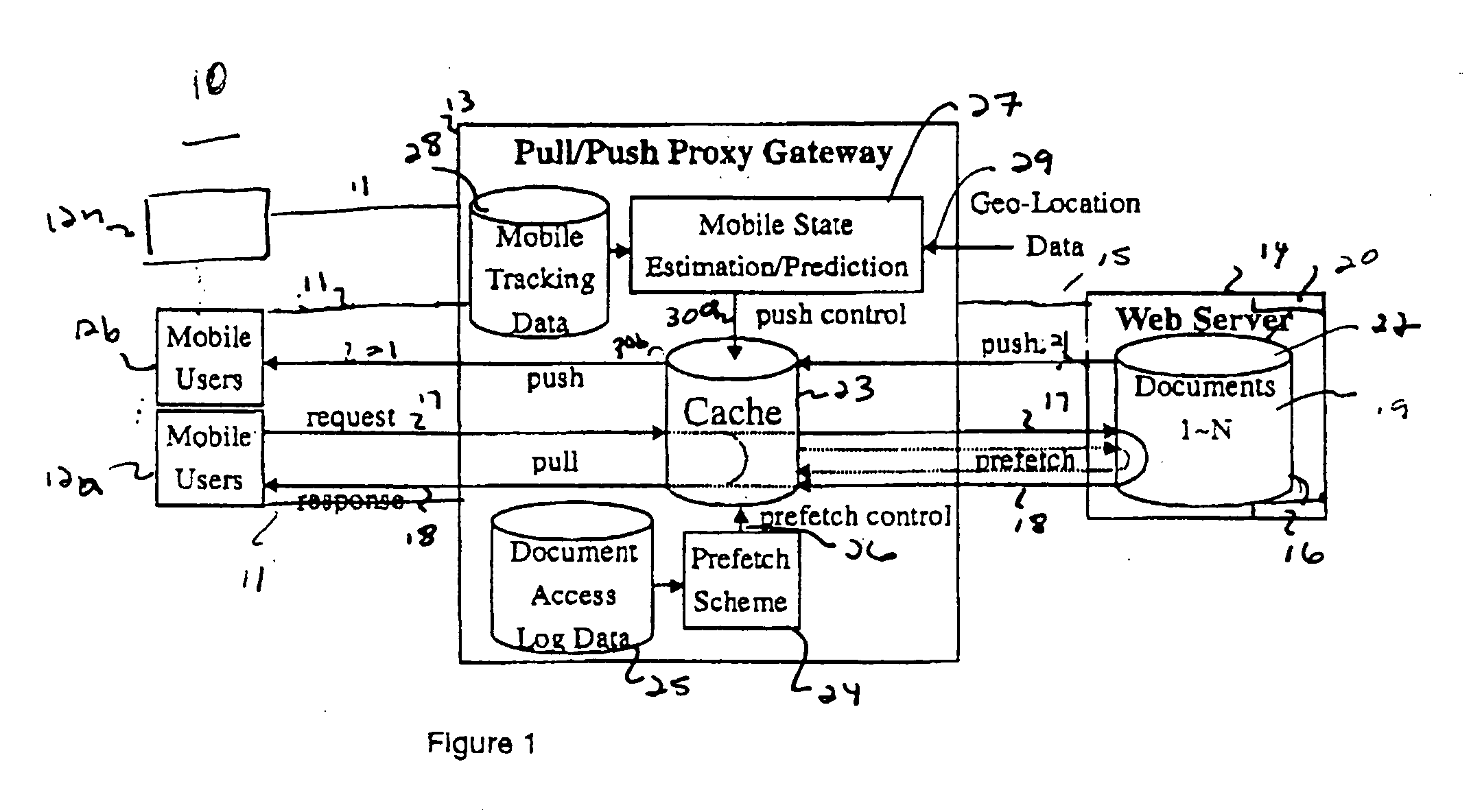

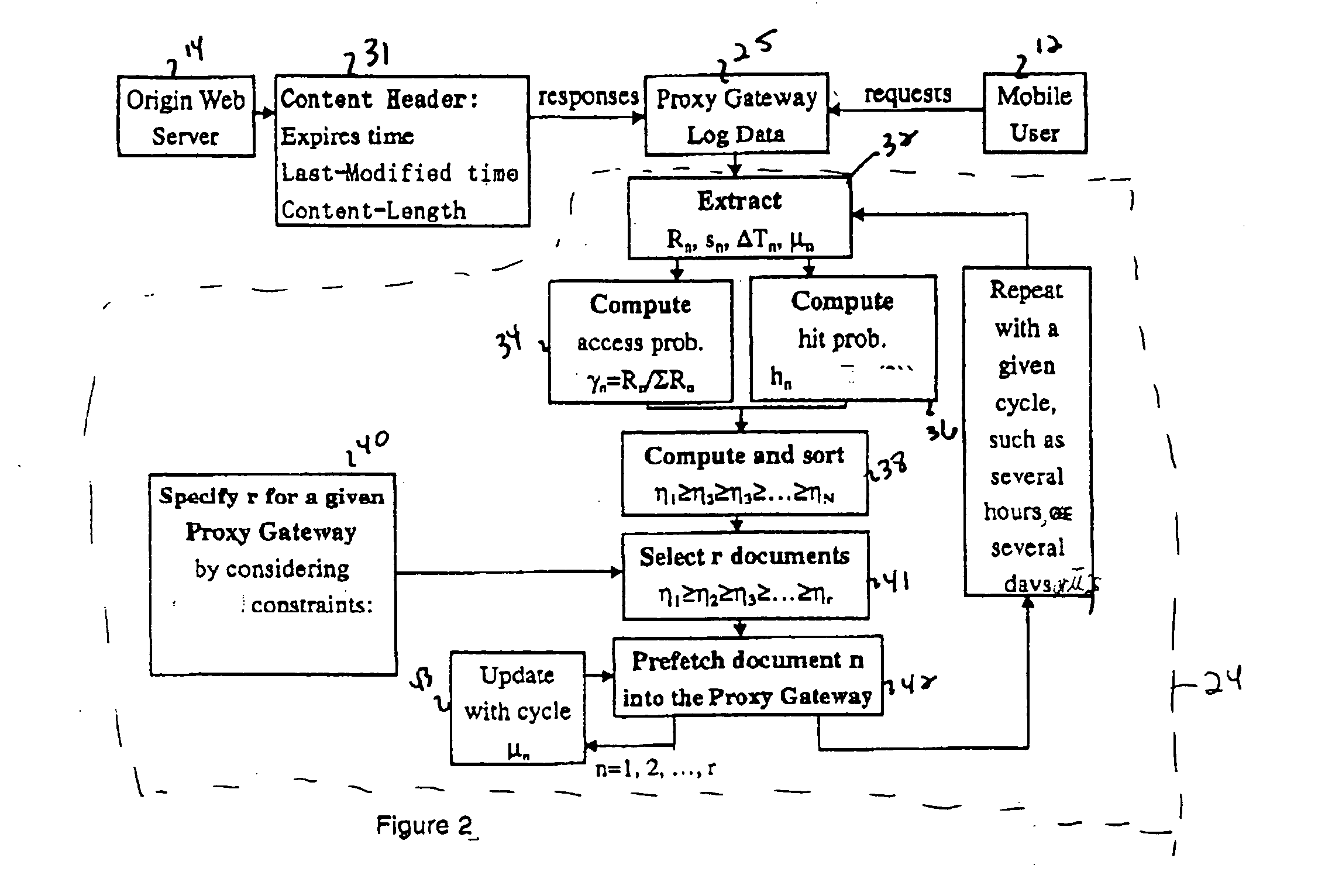

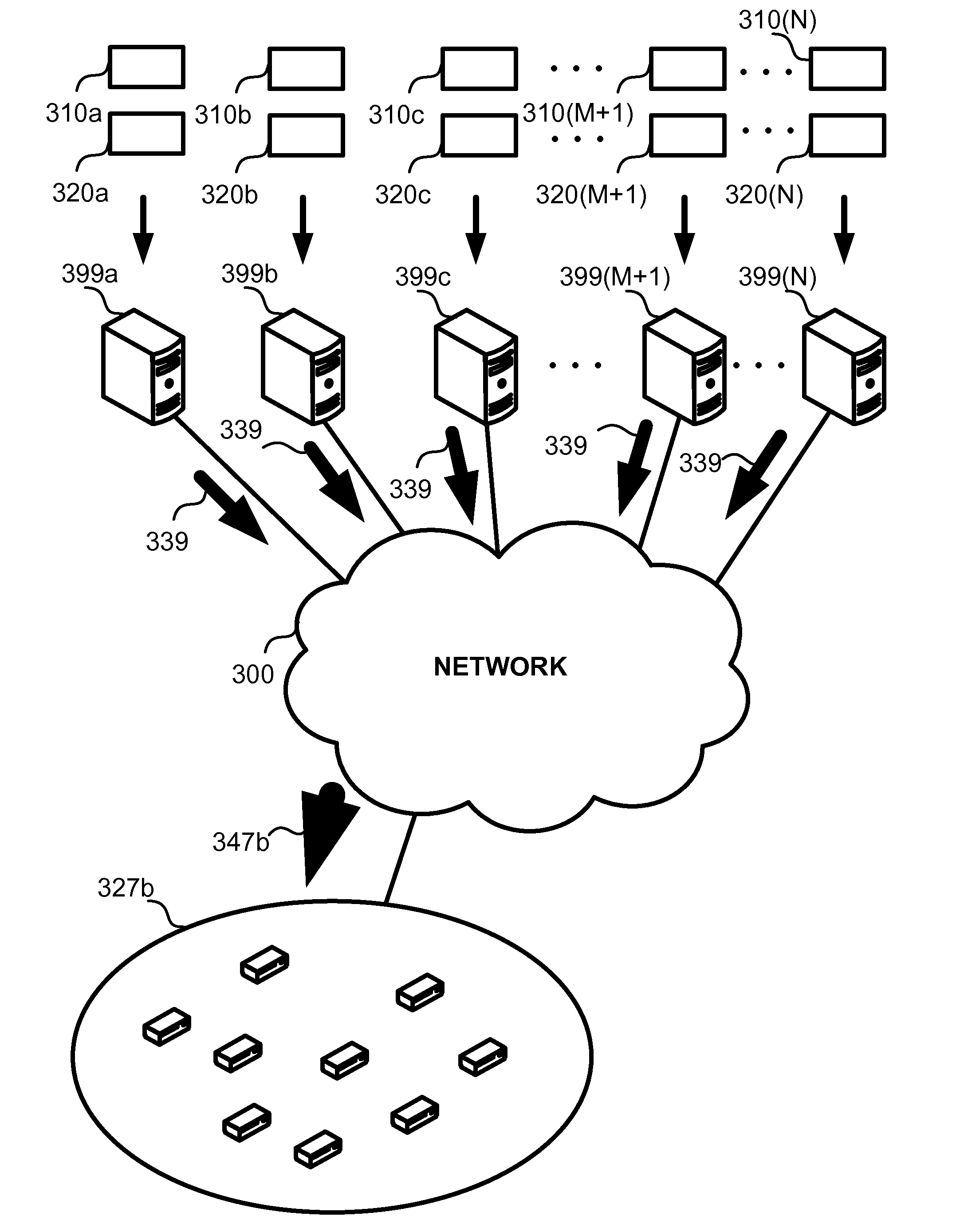

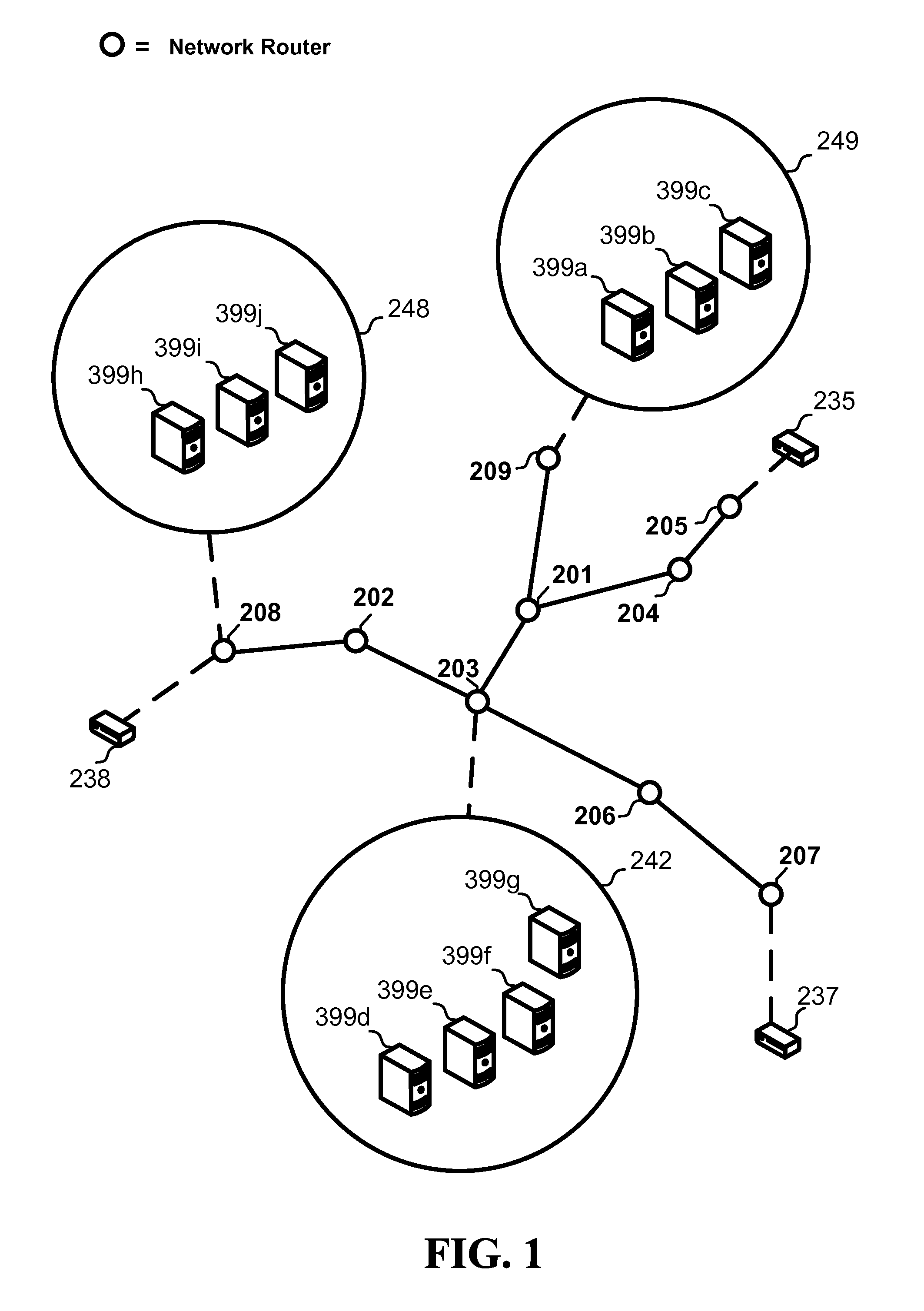

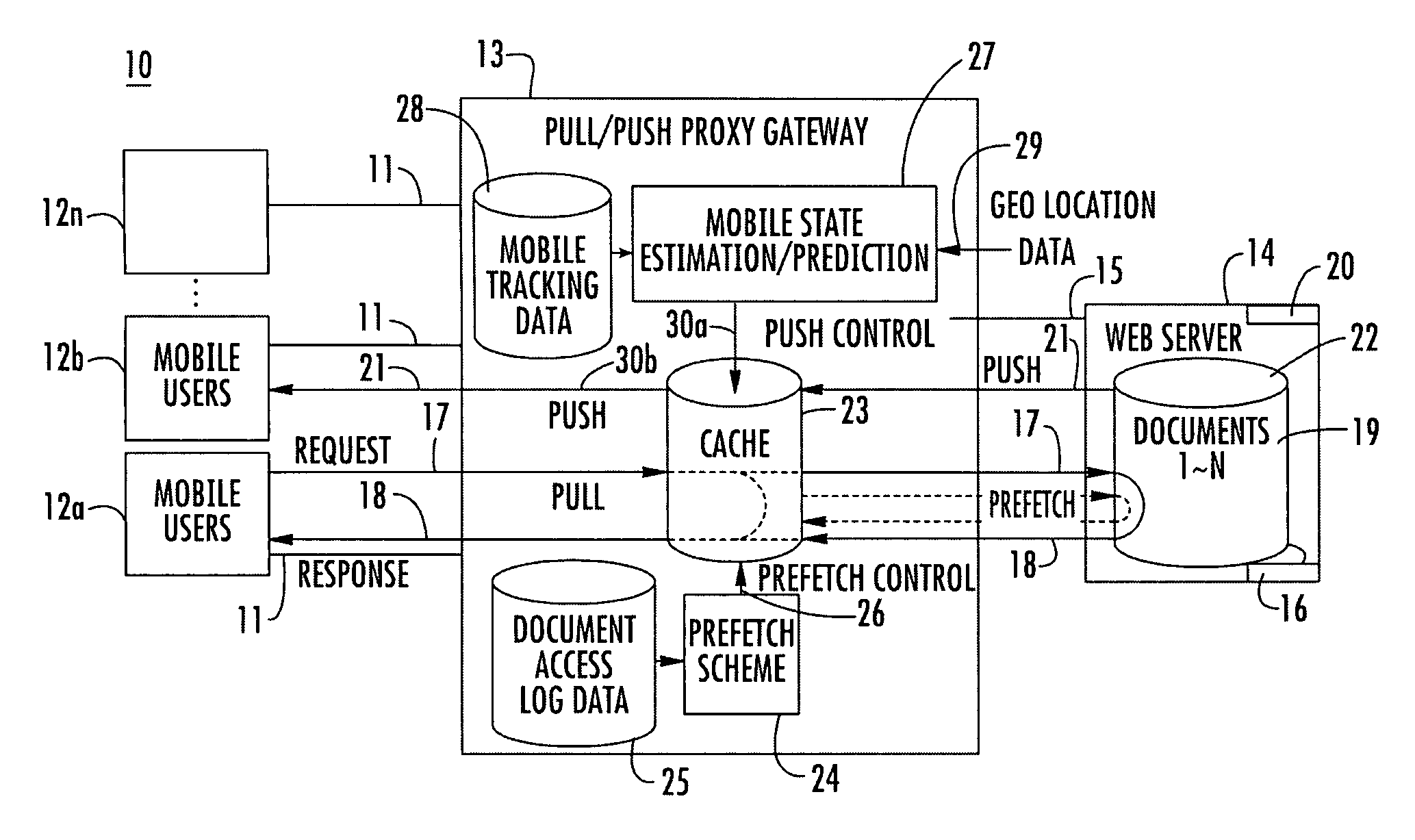

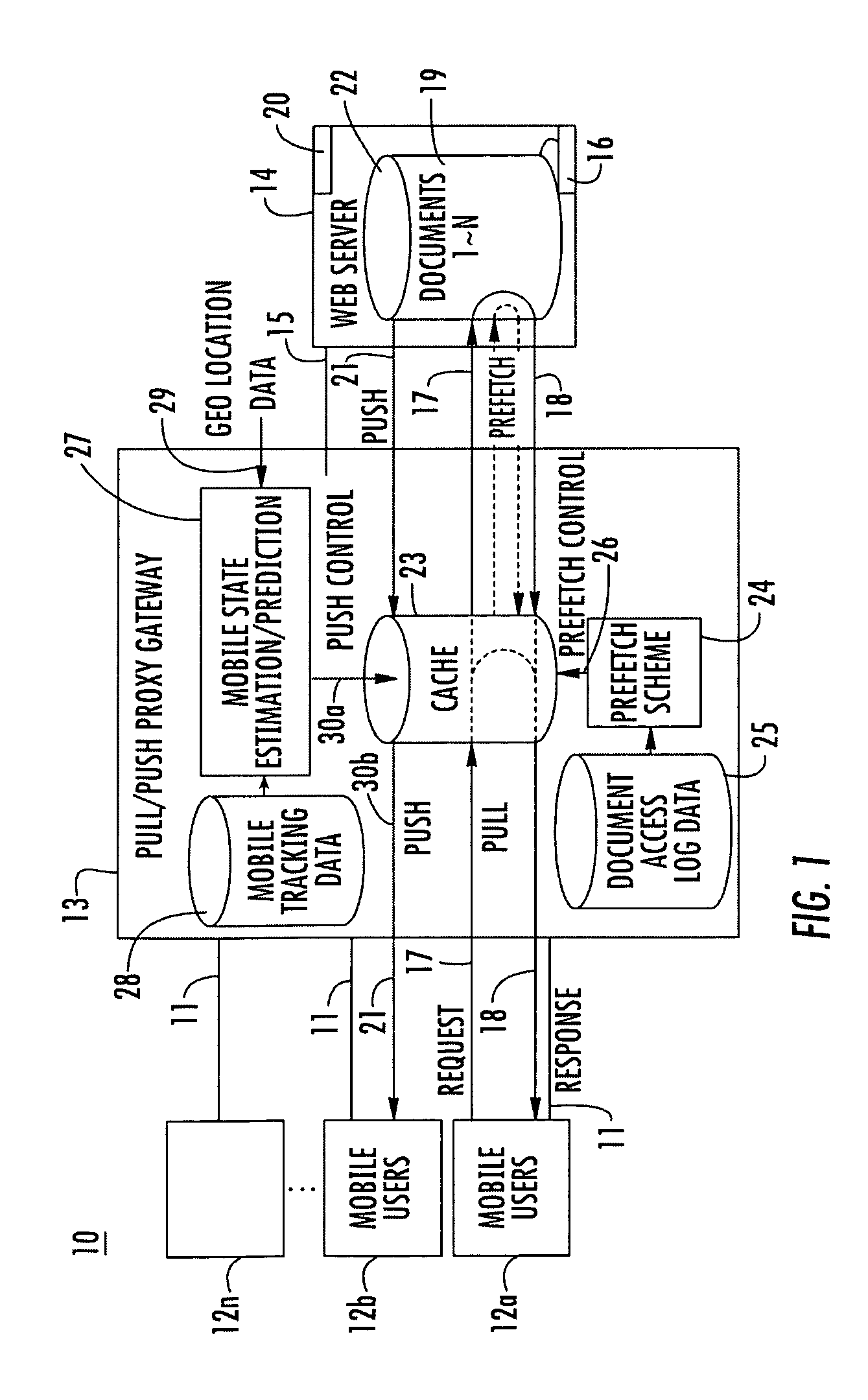

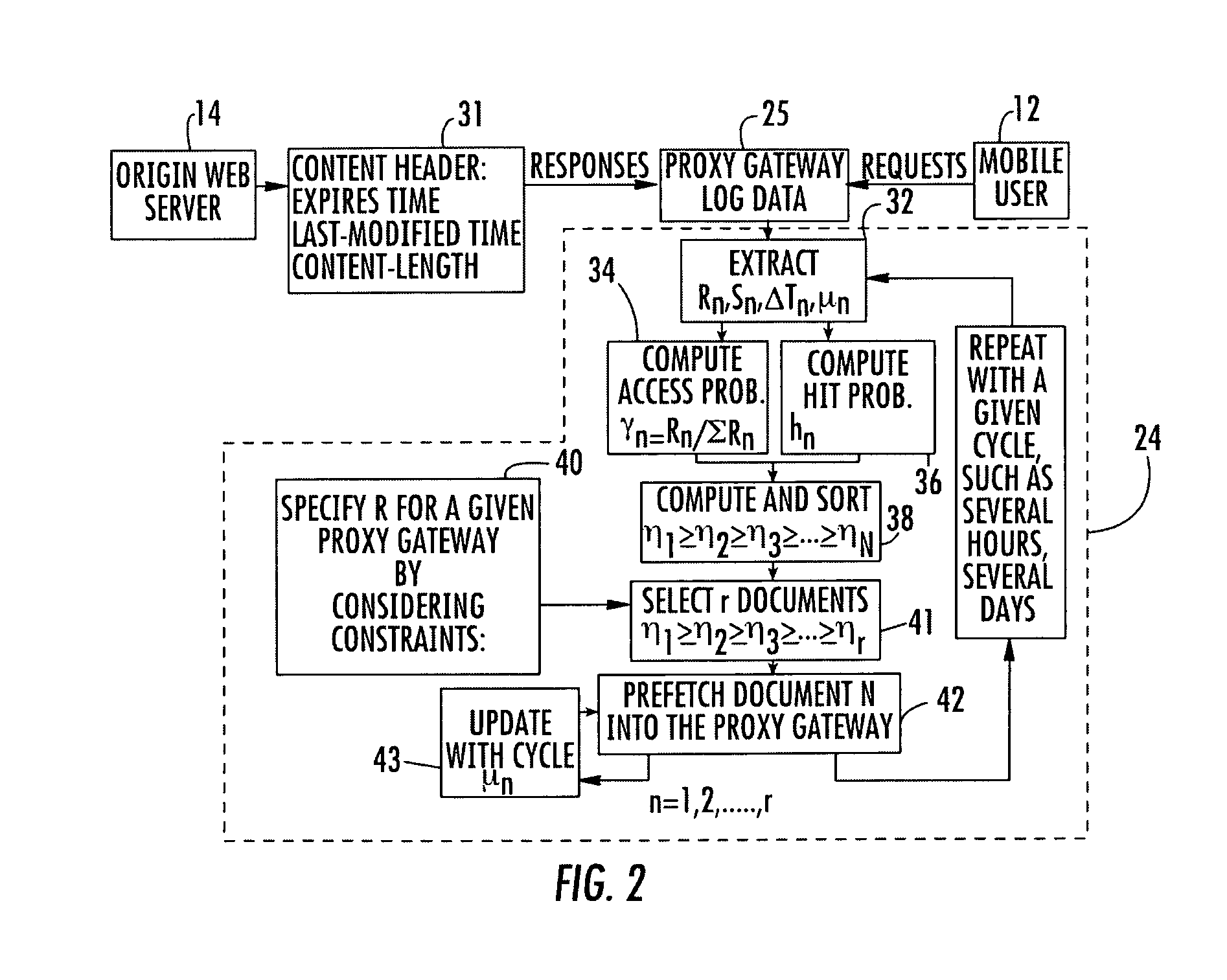

System for wireless push and pull based services

Inactive | US20050193096A1 | Improve performance | Reduce access latency | Telephonic communication | Multiple digital computer combinations | Push and pull | Response delay

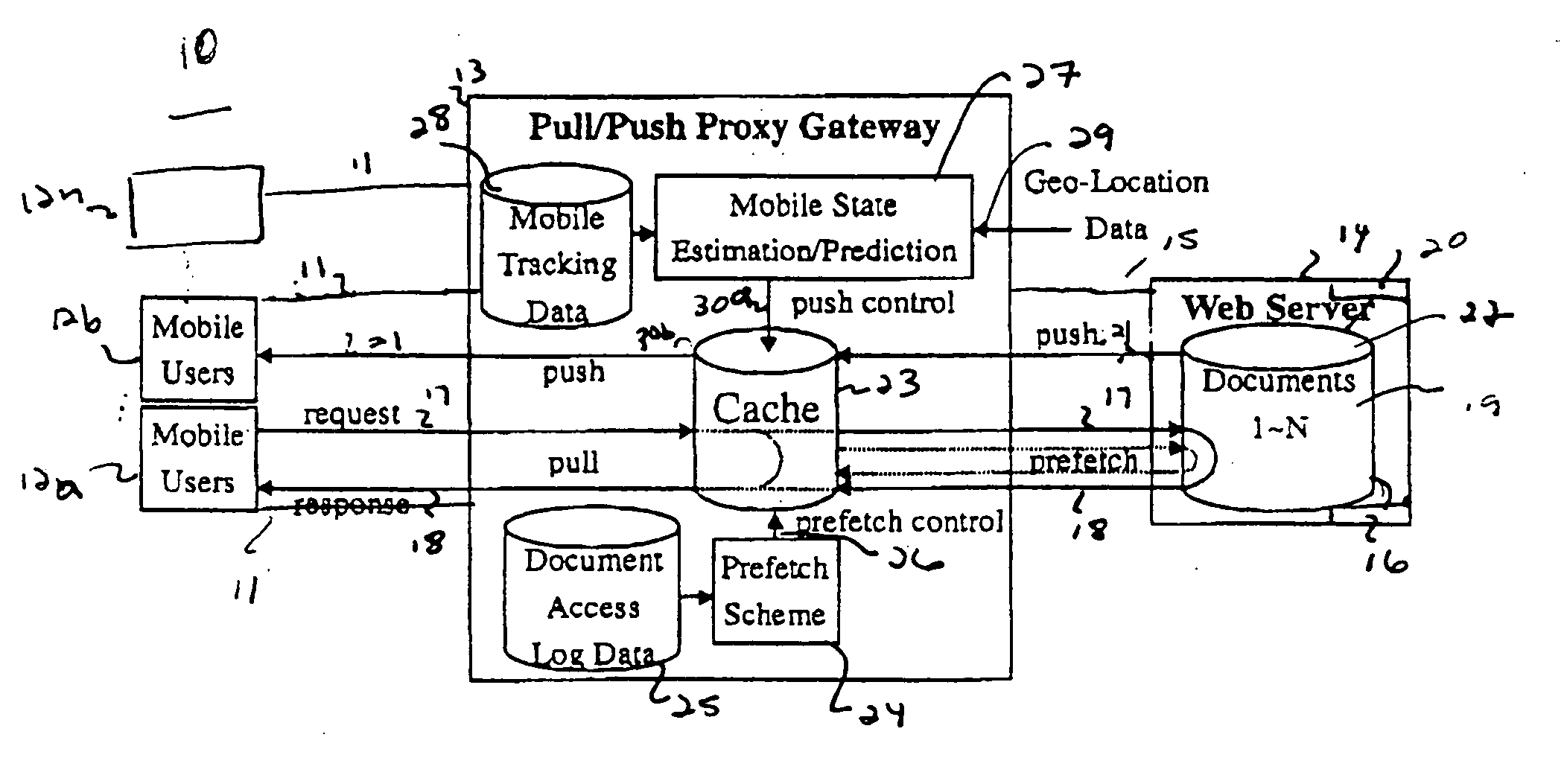

The present invention relates to a method and system for providing Web content from pull and push based services running on Web content providers to mobile users. A proxy gateway connects the mobile users to the Web content providers. A prefetching module is used at the proxy gateway to optimize performance of the pull services by reducing average access latency. The average access latency can be reduced by using at least three factors: first, the frequency of access to the pull content; second, the update cycle of the pull content determined by the Web content providers; and third, the response delay for fetching pull content from the content provider to the proxy gateway. Pull content, such as documents, having the greatest average access latency is sorted, and a predetermined number of the documents are prefetched into the cache. Push services are optimized by iteratively estimating a state of each of the mobile users to determine relevant push content to be forwarded to the mobile user.

Owner:PRINCETON UNIV
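
The prefetch selection in this abstract can be sketched as a ranking problem. The abstract names the three factors but not how they are combined, so the scoring formula below (access rate times response delay, divided by update cycle) is purely an assumption for illustration, as are the class and function names.

```python
import heapq
from dataclasses import dataclass


@dataclass
class PullDoc:
    name: str
    access_rate: float     # requests per hour (factor 1)
    update_cycle: float    # hours between provider updates (factor 2)
    response_delay: float  # seconds to fetch from the provider (factor 3)


def latency_score(doc):
    # Hypothetical combination: frequently requested, frequently updated,
    # slow-to-fetch documents contribute the most access latency.
    return doc.access_rate * doc.response_delay / doc.update_cycle


def choose_prefetch(docs, count):
    """Return the names of the `count` documents with the highest score."""
    return [d.name for d in heapq.nlargest(count, docs, key=latency_score)]


docs = [
    PullDoc("news", access_rate=10, update_cycle=1.0, response_delay=2.0),
    PullDoc("weather", access_rate=5, update_cycle=6.0, response_delay=1.2),
    PullDoc("stocks", access_rate=30, update_cycle=0.5, response_delay=0.5),
]
prefetched = choose_prefetch(docs, 2)
```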

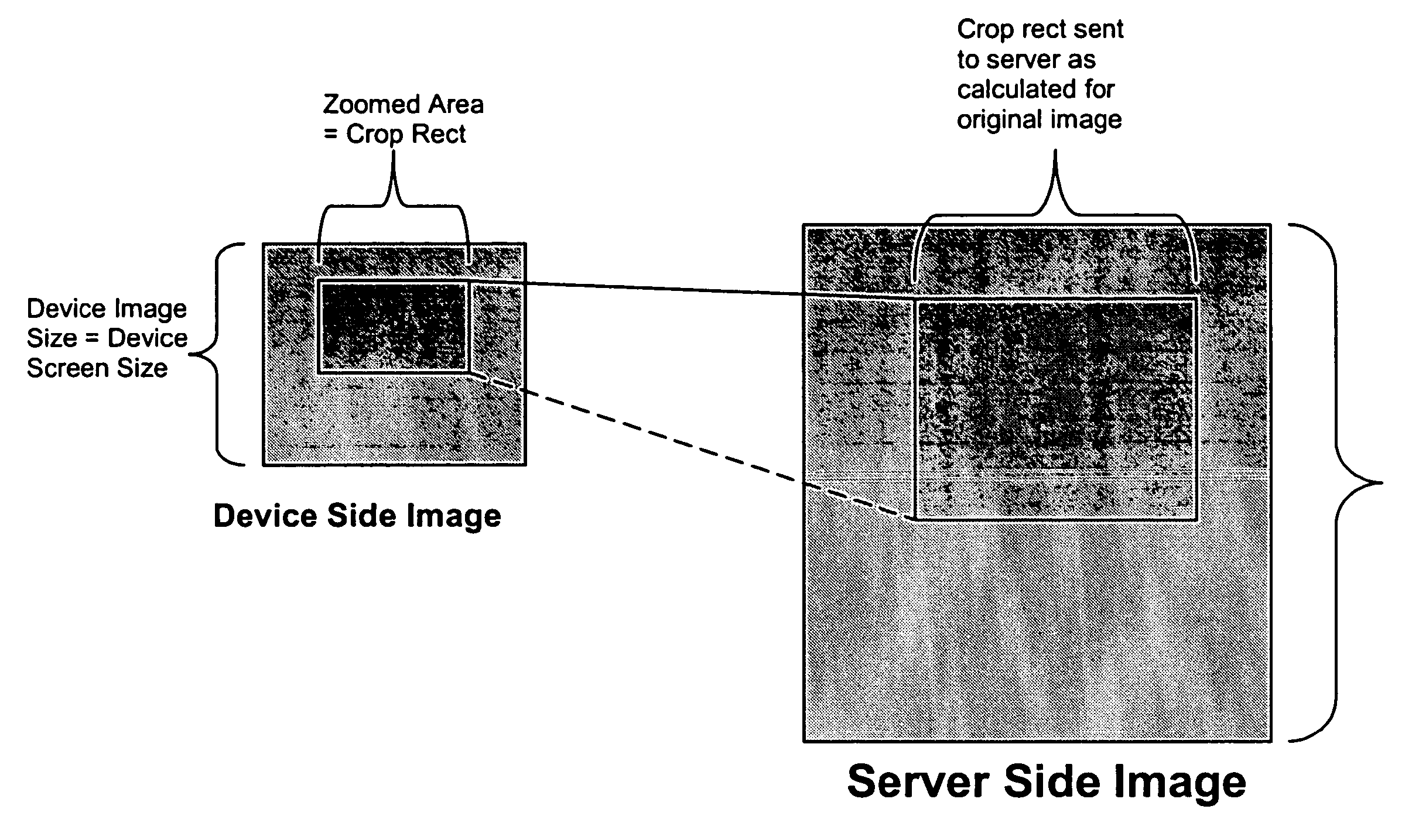

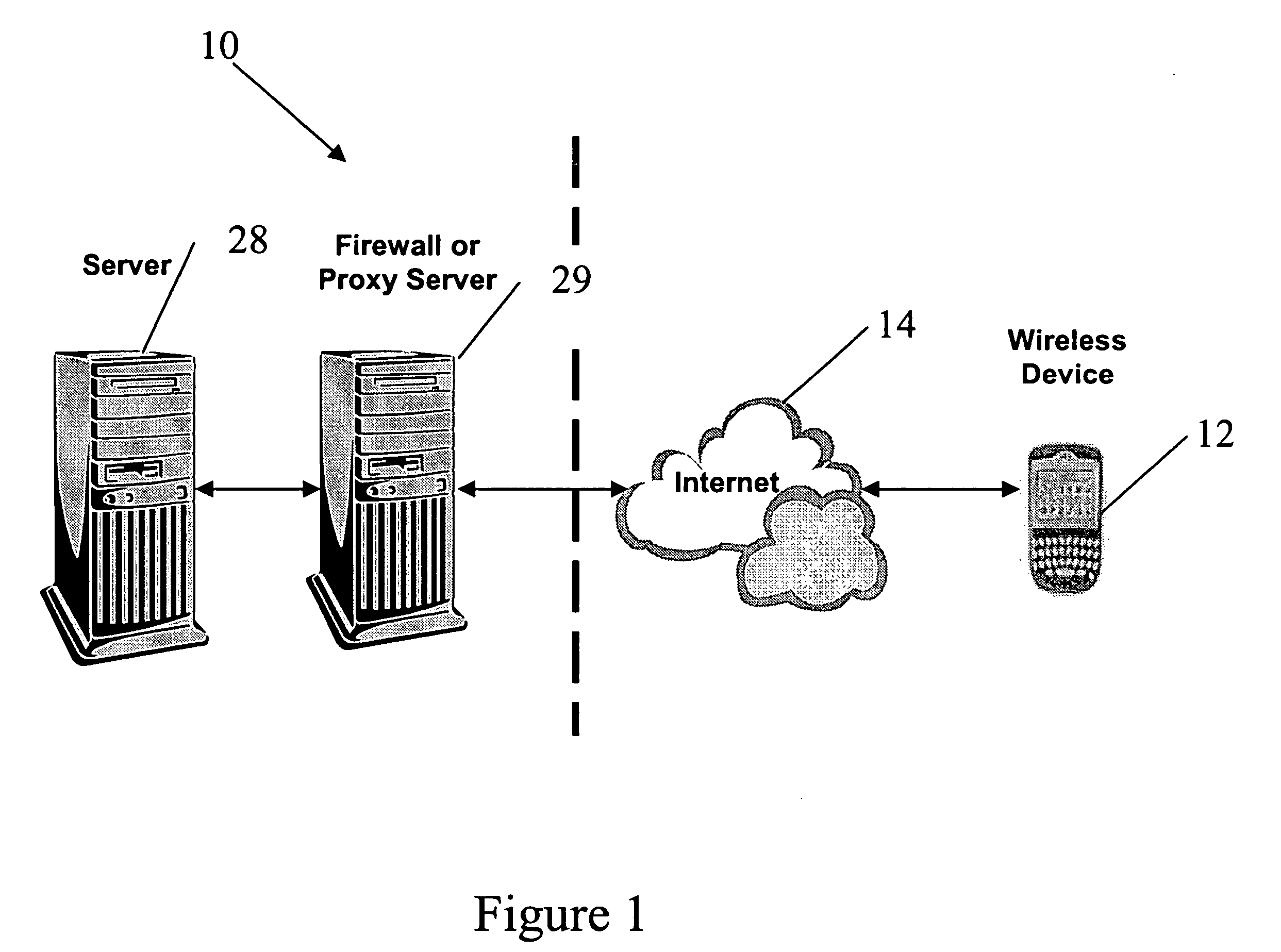

Method for requesting and viewing a zoomed area of detail from an image attachment on a mobile communication device

Active | US20060055693A1 | Reduce data volume | Reduce resolution | Geometric image transformation | Cathode-ray tube indicators | Level of detail | Response delay

A process is set forth for viewing an enlarged area of an image. The image is stored on a server and re-sized for viewing on a mobile communication device based on screen size and colour display capabilities of the device. The image is enlarged within the server by modifying binary raw data of the original image based on crop rectangle coordinates entered at the mobile communication device. The process allows users to quickly retrieve any relevant part of a large image attachment that has been resized by the server. This minimizes bandwidth usage, device memory / CPU consumption, and request / response latency while still allowing the user to view an image area in its original level of detail.

Owner:MALIKIE INNOVATIONS LTD

Latency based selection of fractional-storage servers

Inactive | US20100094970A1 | Reduce response delay | Other decoding techniques | Code conversion | Response delay | Erasure code

Latency based selection of fractional-storage servers, including the steps of identifying a first group of fractional-storage servers estimated to have low response latencies in relation to an assembling device. Retrieving, by the assembling device from a second group of fractional-storage servers, enough erasure-coded fragments for reconstructing approximately sequential segments of streaming content. While retrieving the fragments, identifying at least one server from the second group having latency higher than a certain threshold in response to a fragment pull protocol request. And using the fragment pull protocol to replace the identified server with at least one server selected from the first group.

Owner:PATENTVC
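
The replacement step in this abstract can be sketched as follows. This is a simplified illustration, assuming latencies have already been measured per server; the function name, server labels, and threshold are hypothetical, and the fragment pull protocol itself is not modeled.

```python
def replace_slow_servers(active, standby, latency_ms, threshold_ms):
    """Swap out servers whose measured latency exceeds the threshold.

    active:     servers currently supplying fragments (the "second group")
    standby:    low-latency candidates, best first (the "first group")
    latency_ms: measured response latency per active server
    """
    standby = list(standby)  # copy so the caller's list is not modified
    result = []
    for server in active:
        if latency_ms[server] > threshold_ms and standby:
            result.append(standby.pop(0))  # replace with the best candidate
        else:
            result.append(server)
    return result


active = ["s1", "s2", "s3"]
standby = ["f1", "f2"]
latency = {"s1": 40, "s2": 250, "s3": 90}
updated = replace_slow_servers(active, standby, latency, threshold_ms=100)
```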

System for wireless push and pull based services

Inactive | US7058691B1 | Improve performance | Reduce access latency | Multiple digital computer combinations | Program control | Push and pull | Response delay

The present invention relates to a method and system for providing Web content from pull and push based services running on Web content providers to mobile users. A proxy gateway connects the mobile users to the Web content providers. A prefetching module is used at the proxy gateway to optimize performance of the pull services by reducing average access latency. The average access latency can be reduced by using at least three factors: first, the frequency of access to the pull content; second, the update cycle of the pull content determined by the Web content providers; and third, the response delay for fetching pull content from the content provider to the proxy gateway. Pull content, such as documents, having the greatest average access latency is sorted, and a predetermined number of the documents are prefetched into the cache. Push services are optimized by iteratively estimating a state of each of the mobile users to determine relevant push content to be forwarded to the mobile user.

Owner:THE TRUSTEES FOR PRINCETON UNIV

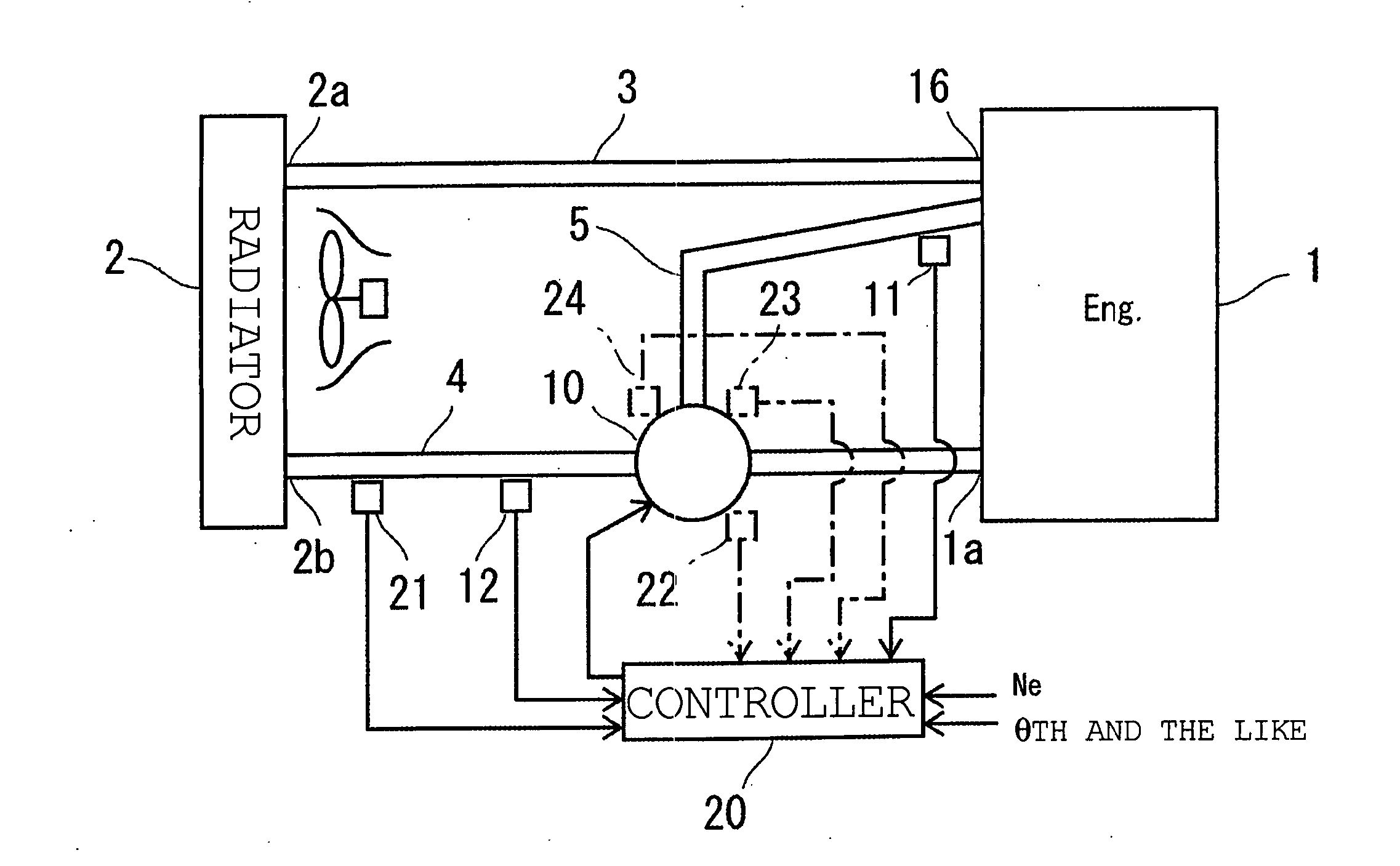

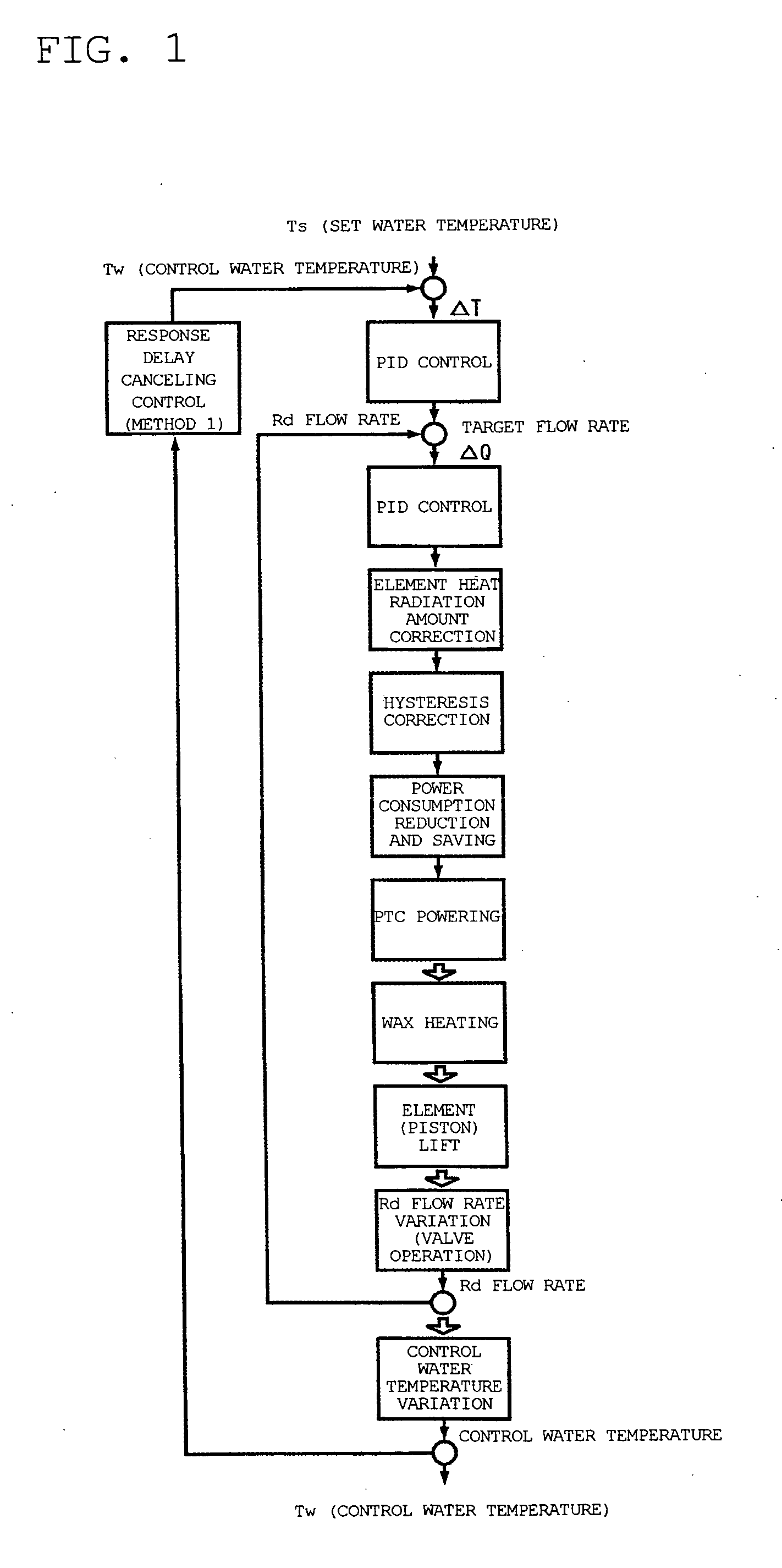

Method of controlling electronic controlled thermostat

Active | US20050006487A1 | Reliable improvement in fuel consumption | Poor temperature control | Temperature control without auxiliary power | Electrical control | Response delay | Engineering

An electronically controlled thermostat control method is provided that eliminates the response delay between the time the required cooling water temperature is set and the time the actual cooling water temperature reaches the set temperature by controlling the flow rate, and that achieves precise cooling water temperature tracking at low cost. The method applies to an electronically controlled thermostat that controls the cooling water temperature of an engine and comprises an actuator that can arbitrarily vary the degree of valve opening without depending only on the actual cooling water temperature. The actuator is controlled by a controller that has means for calculating the elapsed time from the powering of the actuator to the variation of the water temperature and for predicting the water temperature after that elapsed time when the cooling water temperature is controlled to an arbitrarily set water temperature. The actuator is controlled in advance in accordance with the predicted water temperature.

Owner:NIPPON THERMOSTAT CO LTD

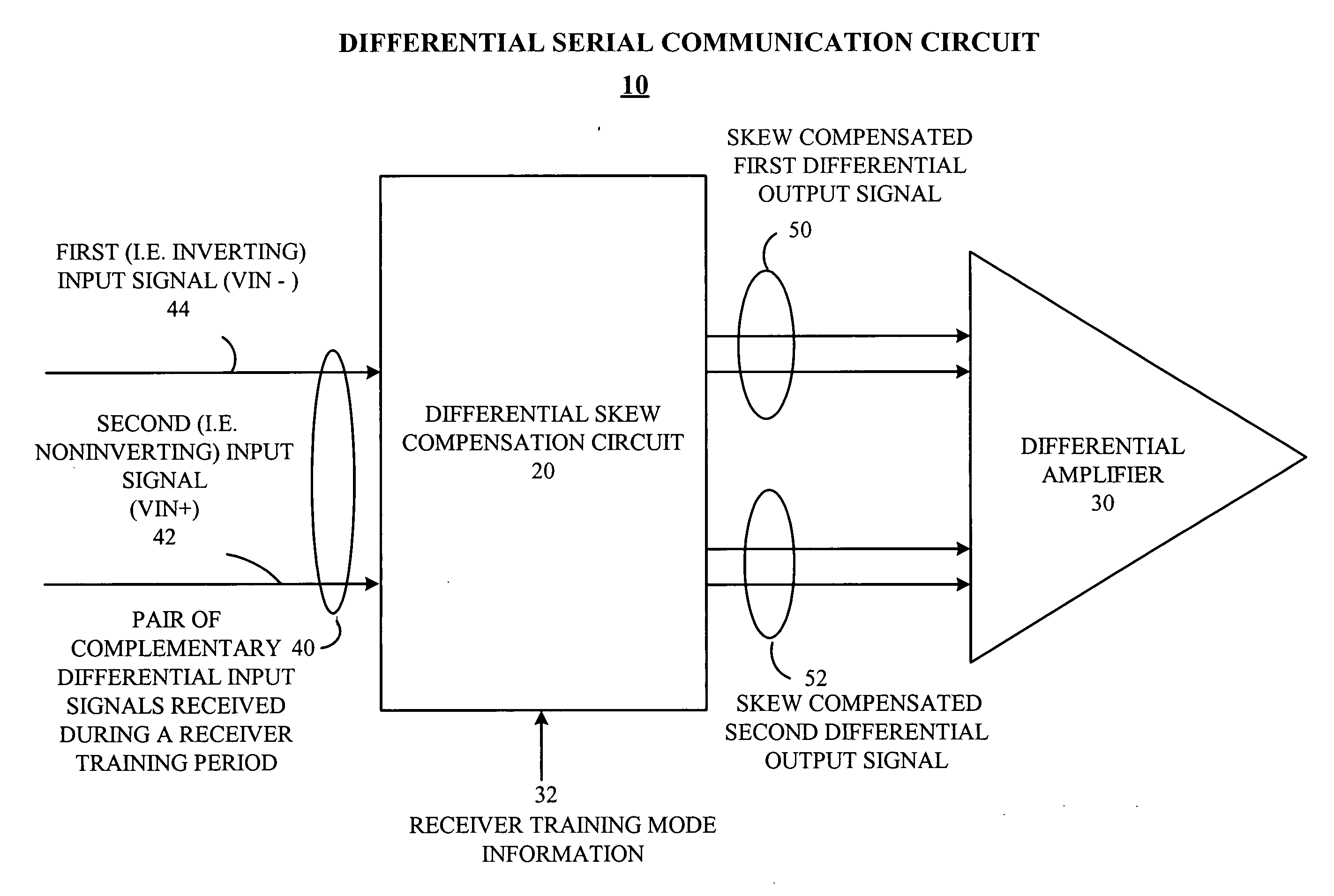

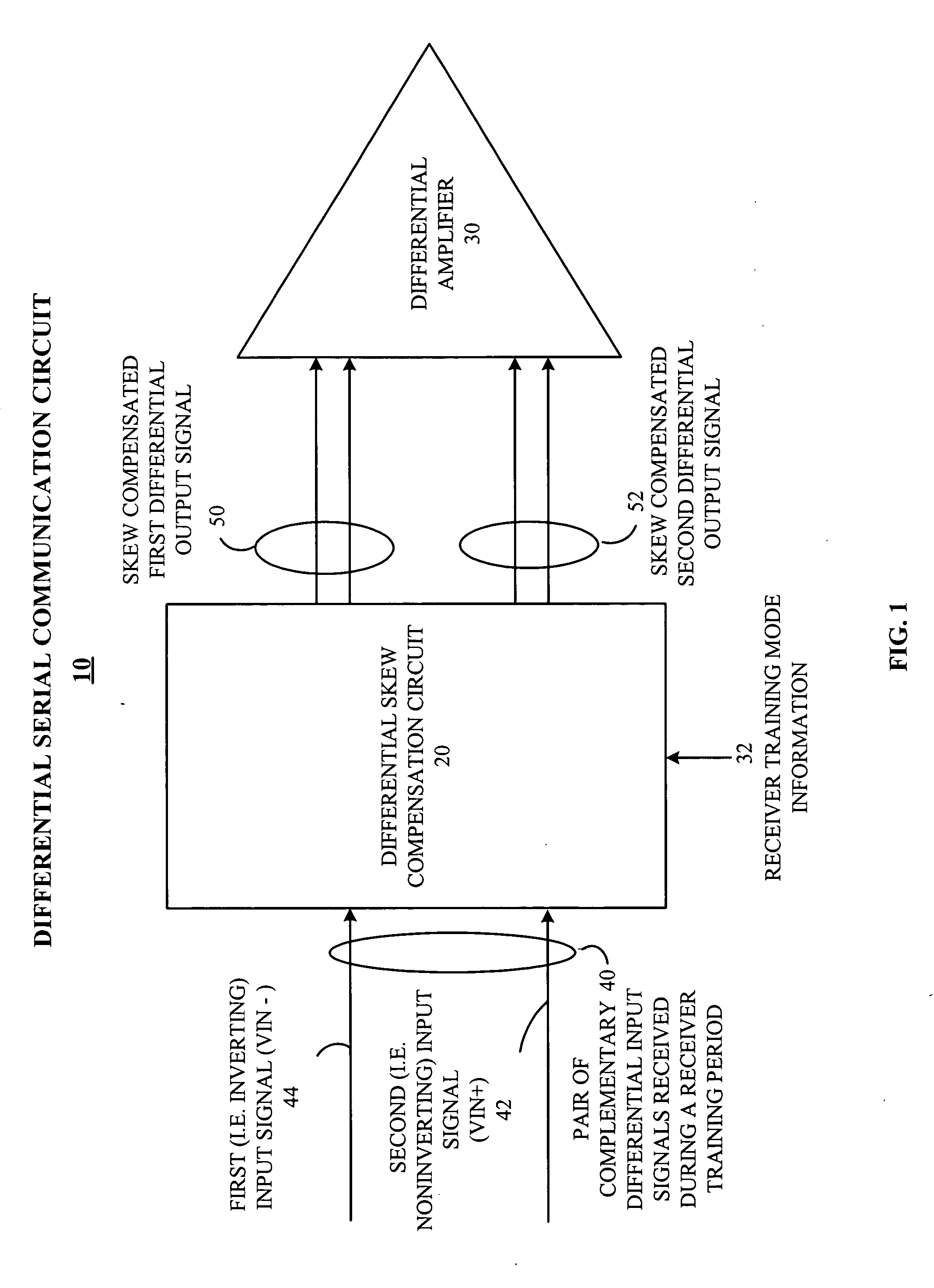

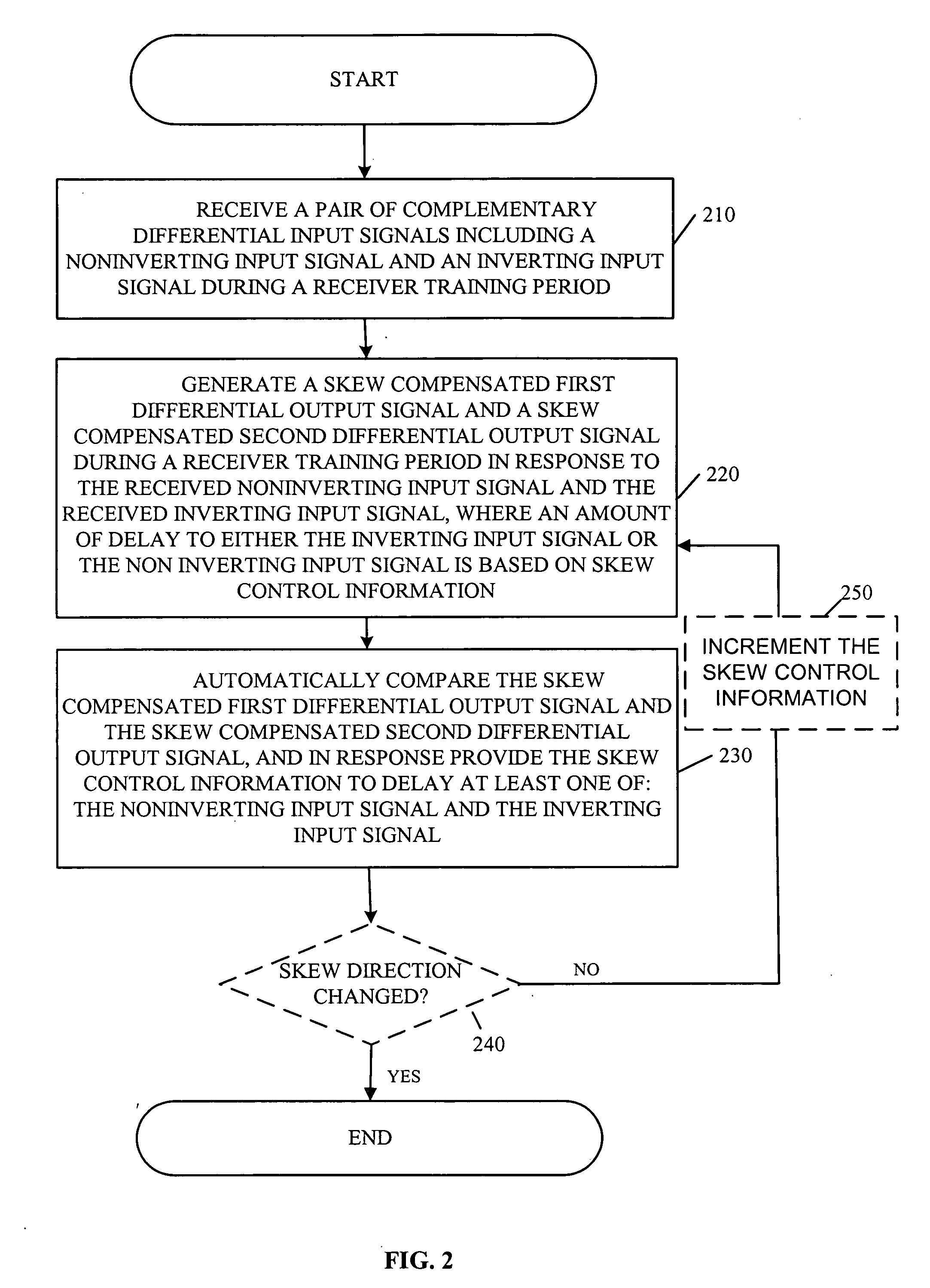

Intra-pair differential skew compensation method and apparatus for high-speed cable data transmission systems

Active | US20060244505A1 | Pulse automatic control | Manipulation where pulse delivered at different times | Training period | Telecommunications link

A differential serial communication receiver circuit automatically compensates for intrapair skew between received differential signals on a serial differential communication link, with deterministic skew adjustment set during a receiver training period. Intrapair skew refers to the skew within a pair of differential signals, and is hence interchangeable with the term differential skew in the context of this document. During the receiver training period, a training data pattern is received, such as alternating ones and zeros (e.g., a D10.2 pattern as is known in the art), rather than an actual data payload. The differential serial communication receiver circuit includes a differential skew compensation circuit to compensate for intrapair skew. The differential skew compensation circuit receives a pair of complementary differential input signals including a noninverting input signal and an inverting input signal, and in response generates a skew compensated first differential output signal and a skew compensated second differential output signal. The differential skew compensation circuit compares the relative delay of the skew compensated first differential output signal and the skew compensated second differential output signal, and in response delays at least one of the noninverting input signal or the inverting input signal to reduce intrapair skew.

Owner:ATI TECH INC

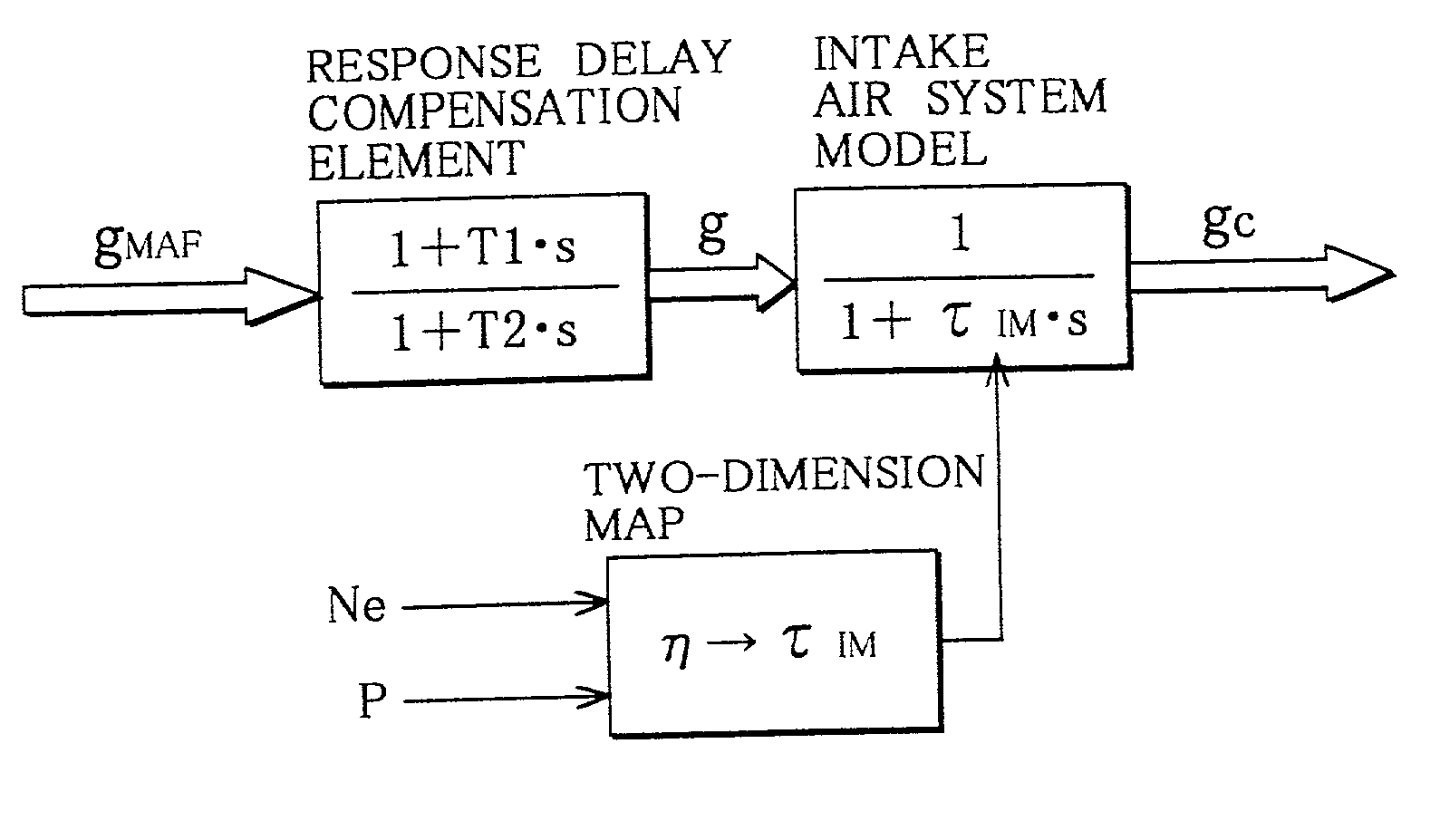

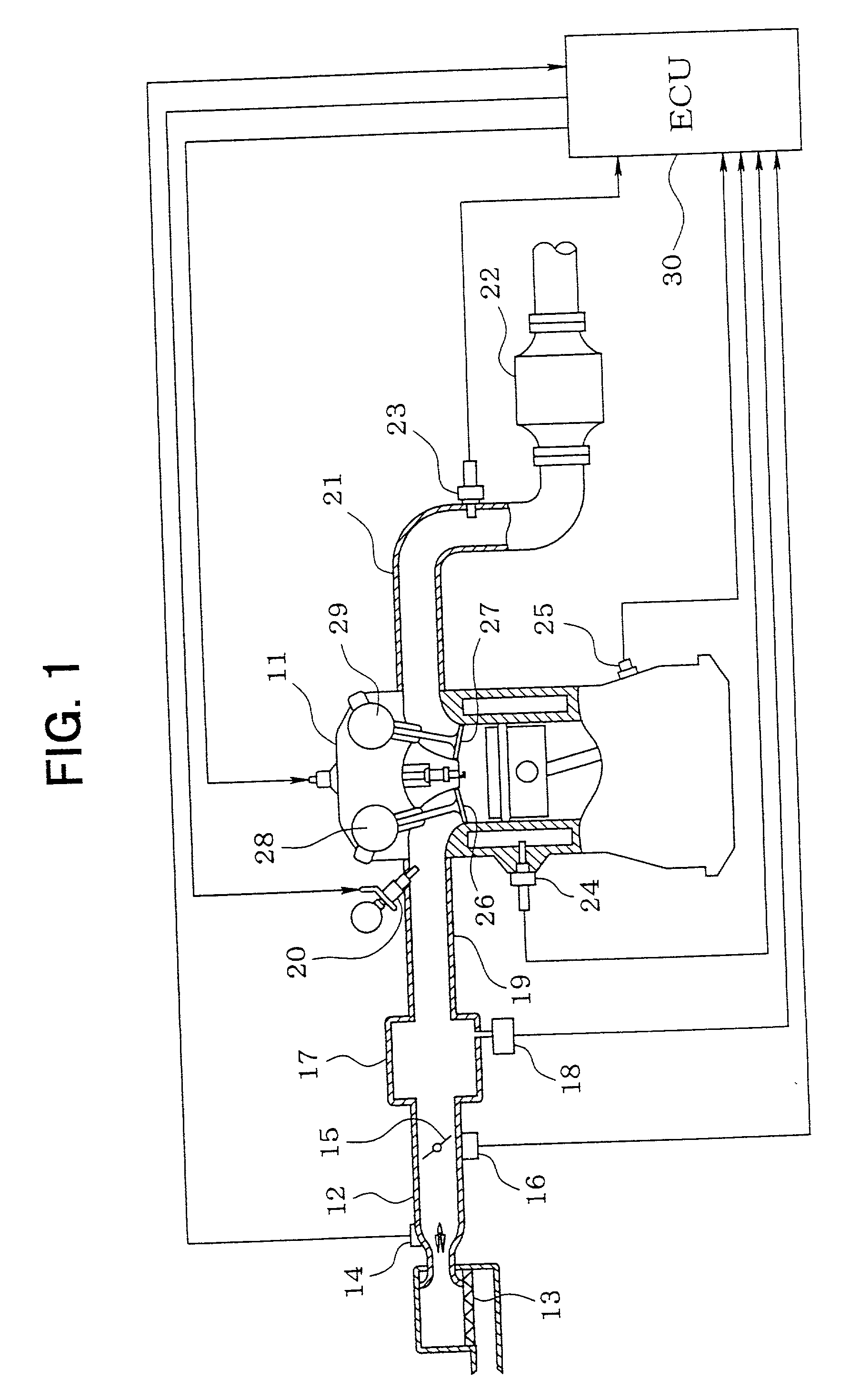

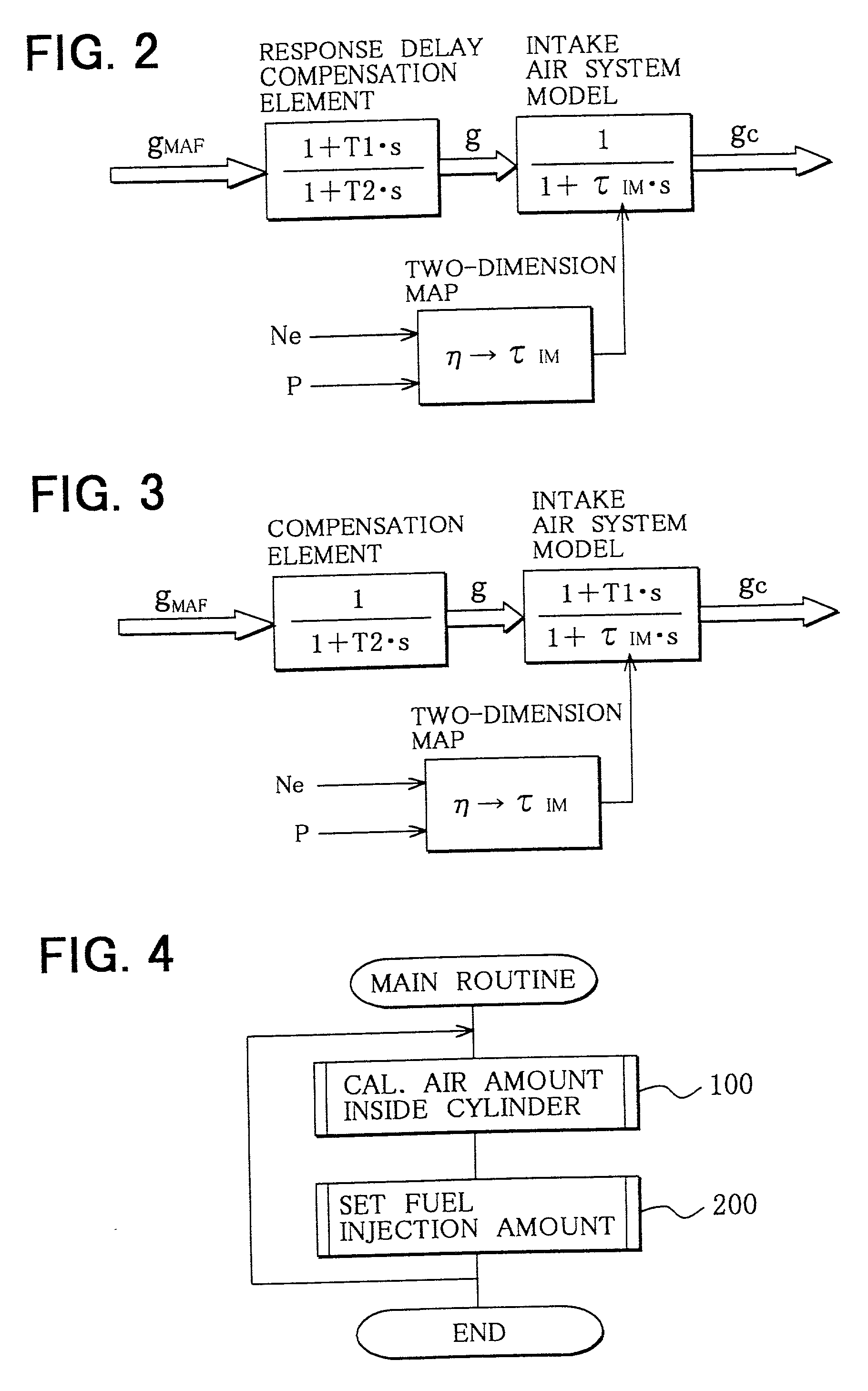

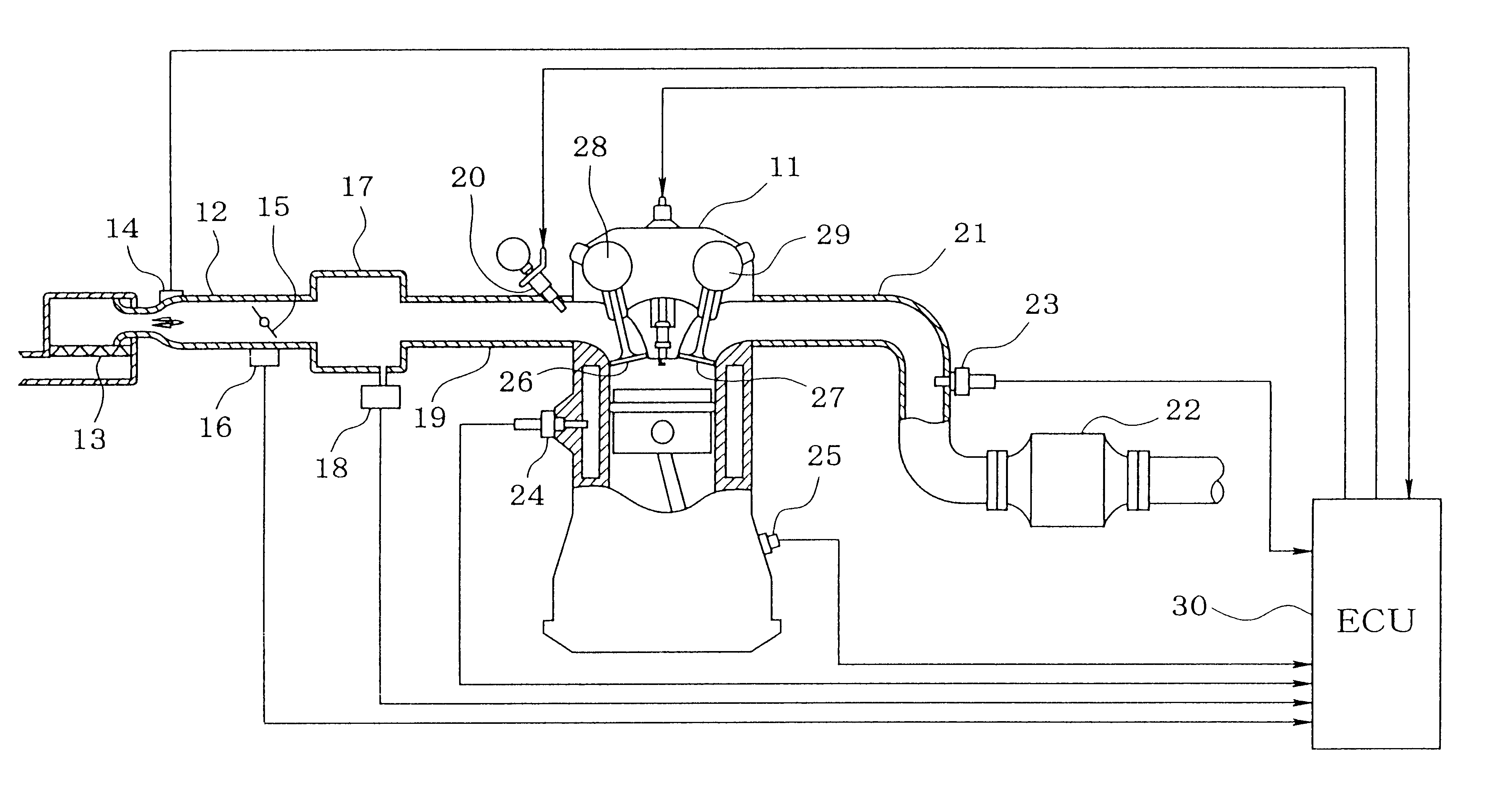

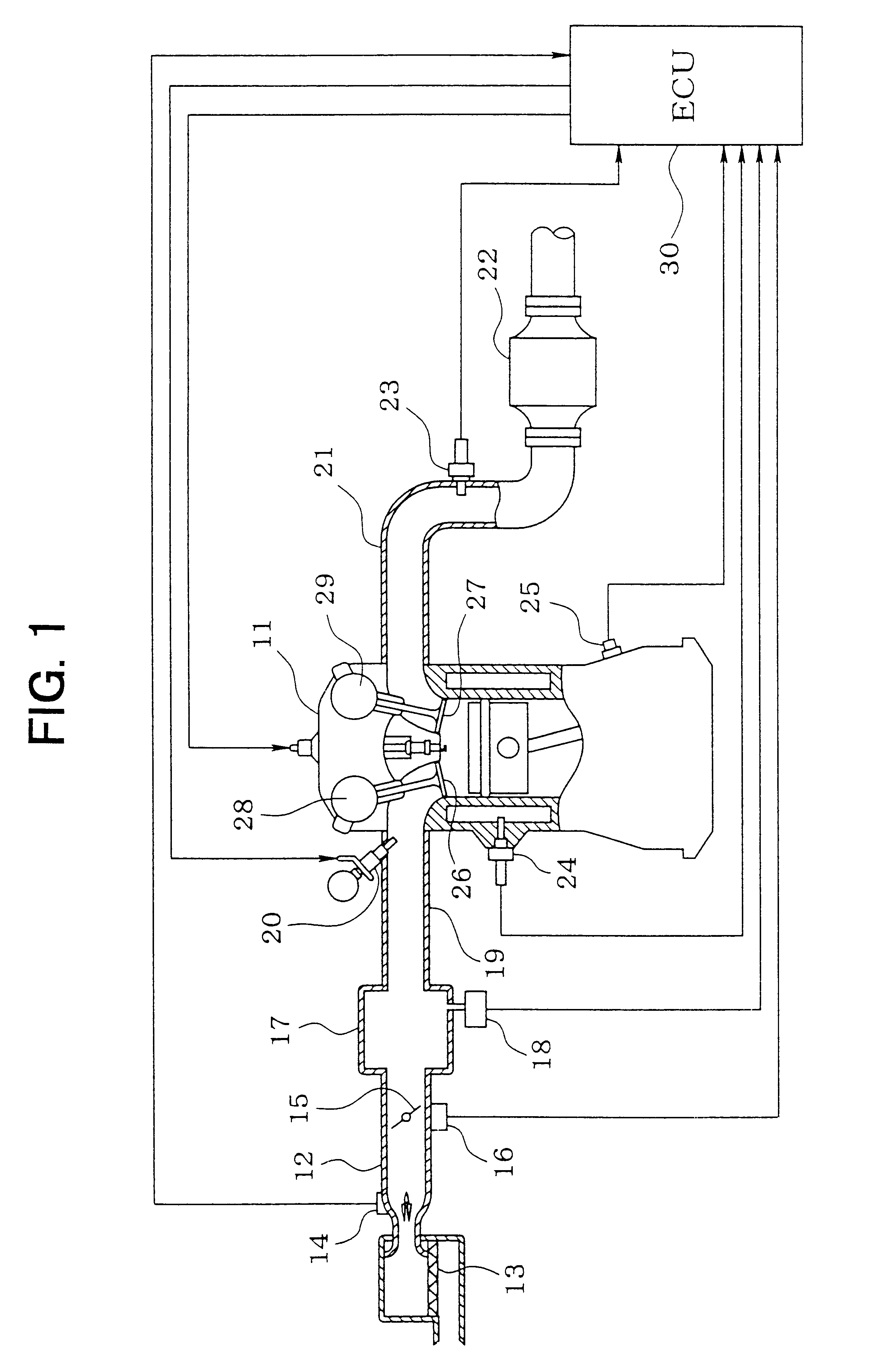

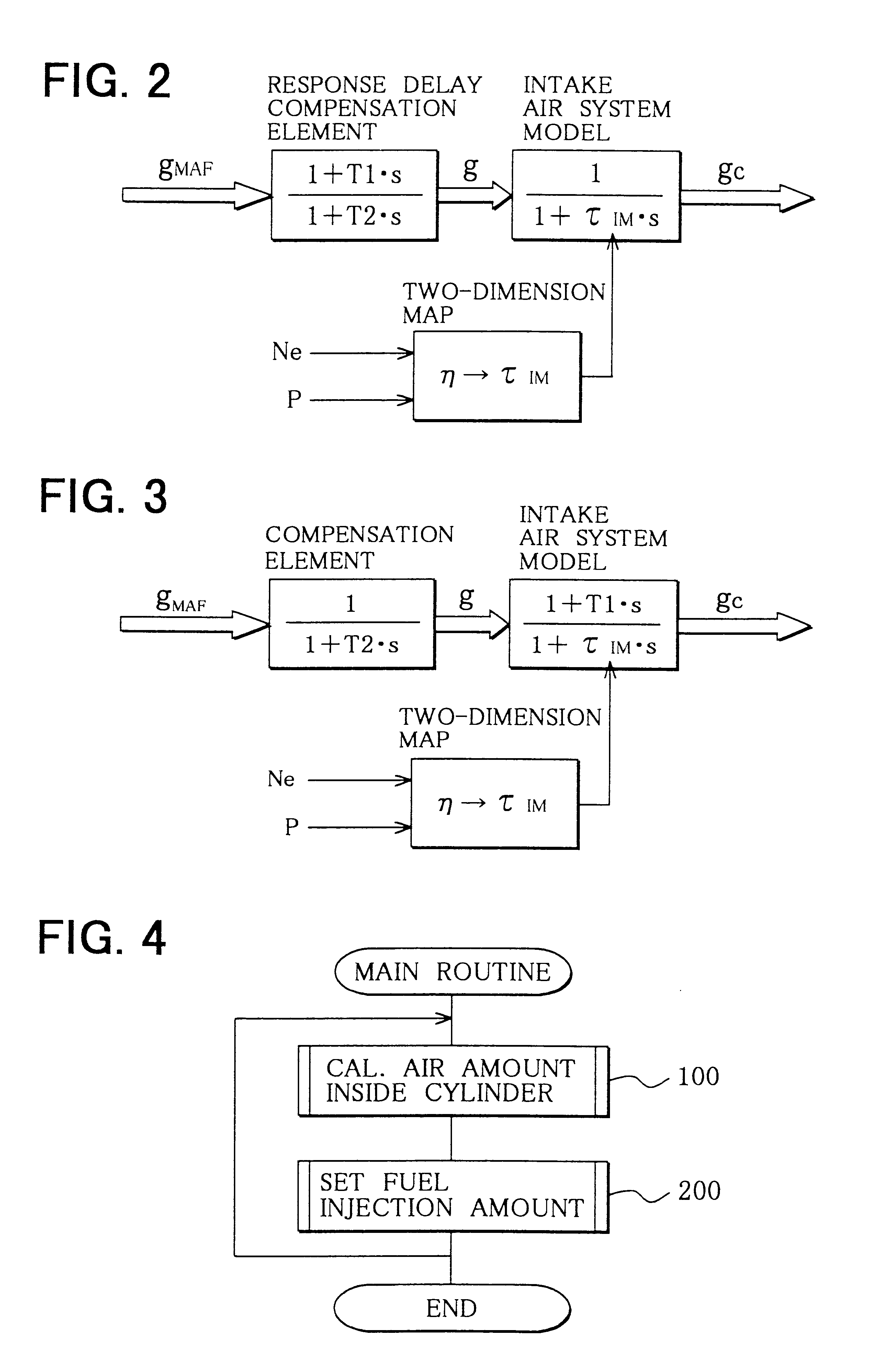

Air amount detector for internal combustion engine

Inactive | US20020107630A1 | Improve calculation accuracy | Analogue computers for vehicles | Electrical control | Response delay | Phase advance

A response delay compensation element for compensating a response delay of an output gMAF of an airflow meter by a phase advance compensation is provided so that an output g of the response delay compensation element is input to the intake air system model. A transfer function of the phase advance compensation is $g = \dfrac{1 + T_1 s}{1 + T_2 s}\, g_{MAF}$, where T1 and T2 are time constants of the phase advance compensation, which are set based on at least one of the output gMAF of the airflow meter, engine speed, an intake air pressure, and a throttle angle. The model time constant τIM of the intake air system model is calculated from variables including volumetric efficiency and the engine speed. The volumetric efficiency is calculated from a two-dimensional map having the engine speed and the intake air pressure as parameters.

Owner:DENSO CORP
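
The phase advance compensation g = (1 + T1·s) / (1 + T2·s) · gMAF from this abstract can be applied to sampled sensor data by discretizing it. The sketch below uses a backward-Euler discretization, which is an assumption made here for illustration; the abstract does not say how the compensation is implemented, and the function name and sample values are hypothetical.

```python
def phase_advance(samples, t1, t2, dt):
    """Apply g = (1 + T1*s) / (1 + T2*s) * gMAF to sampled airflow data.

    Backward-Euler form of  g + T2*g' = u + T1*u'  (u is the raw gMAF):
        g[k] = (T2*g[k-1] + (dt + T1)*u[k] - T1*u[k-1]) / (dt + T2)
    """
    out = []
    prev_u = prev_g = samples[0]  # start from steady state
    for u in samples:
        g = (t2 * prev_g + (dt + t1) * u - t1 * prev_u) / (dt + t2)
        out.append(g)
        prev_u, prev_g = u, g
    return out


# Hypothetical step in measured airflow; T1 > T2 gives phase lead,
# so the compensated output briefly overshoots the raw reading.
compensated = phase_advance([0.0, 1.0, 1.0, 1.0], t1=0.05, t2=0.01, dt=0.01)
```

Choosing T1 larger than T2 boosts the output during changes, which counteracts the lag of the airflow meter; a constant input passes through unchanged.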

Failure detecting device for supercharging-pressure control means in supercharging device of engine

Inactive | US20080022679A1 | Accurate detection | High frequency | Electrical control | Internal combustion piston engines | Wastegate | Response delay

A failure of the supercharging-pressure controller (a bypass valve, a wastegate, and a variable flap) of a turbocharger is determined on the basis of a delay coefficient α of the turbocharger. The determination is made when an engine load QC is equal to or larger than a predetermined value, an acceleration-state determiner determines that a turbine is in an acceleration state, and a supercharging-pressure-control-state determiner determines that the supercharging-pressure controller is in a maximum supercharging pressure control state (a closed valve state), i.e., when the delay coefficient α, calculated by a delay-coefficient calculator on the basis of an actual supercharging pressure πc and a convergent value πc* of the supercharging pressure calculated by a convergent-value calculator, indicates a value peculiar to the turbocharger. Thus, it is possible to secure a high failure detection accuracy and to increase the frequency of performing failure detection even when the engine load QC suddenly changes and causes a delay in the response of the supercharging pressure πc.

Owner:HONDA MOTOR CO LTD

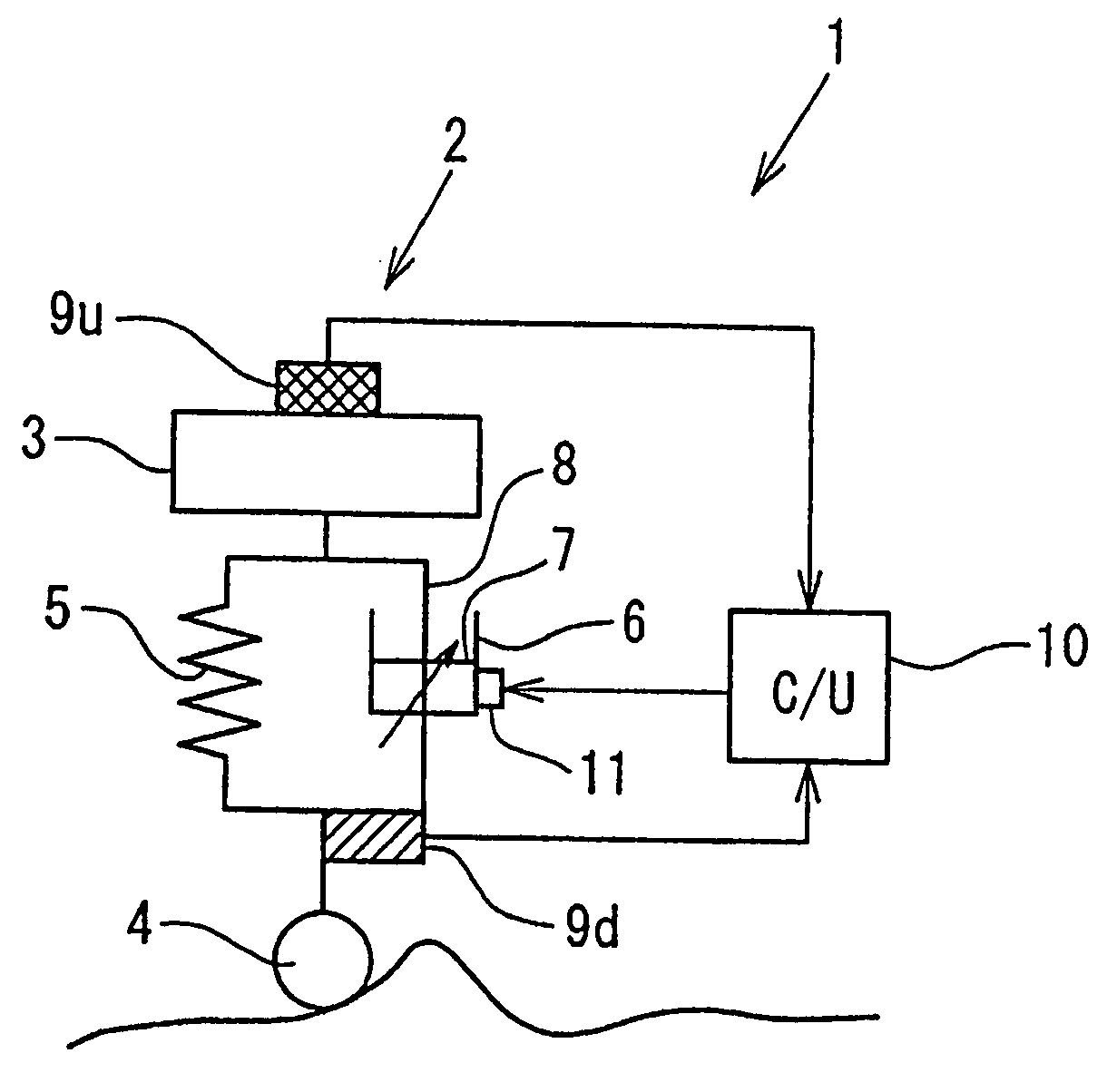

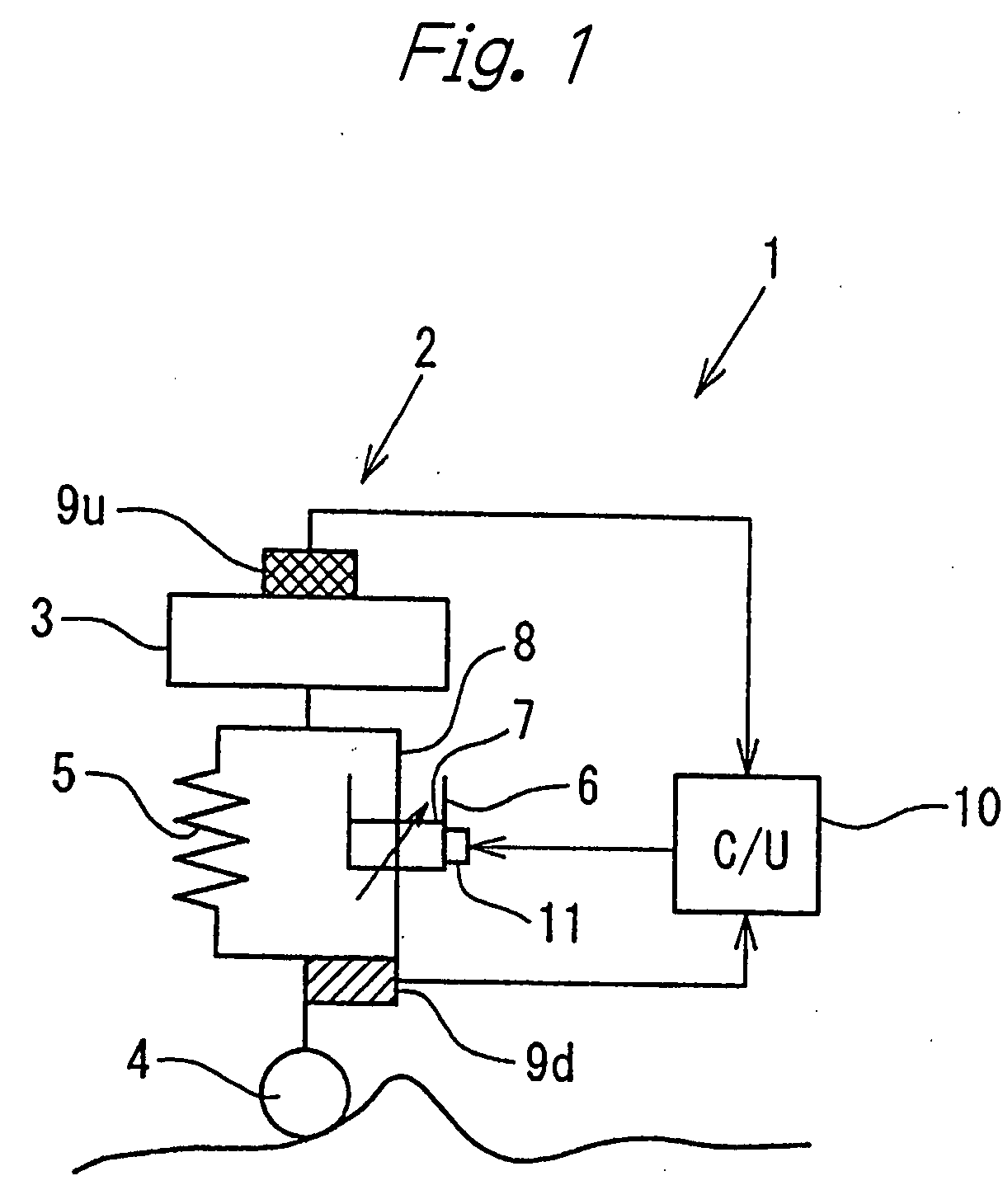

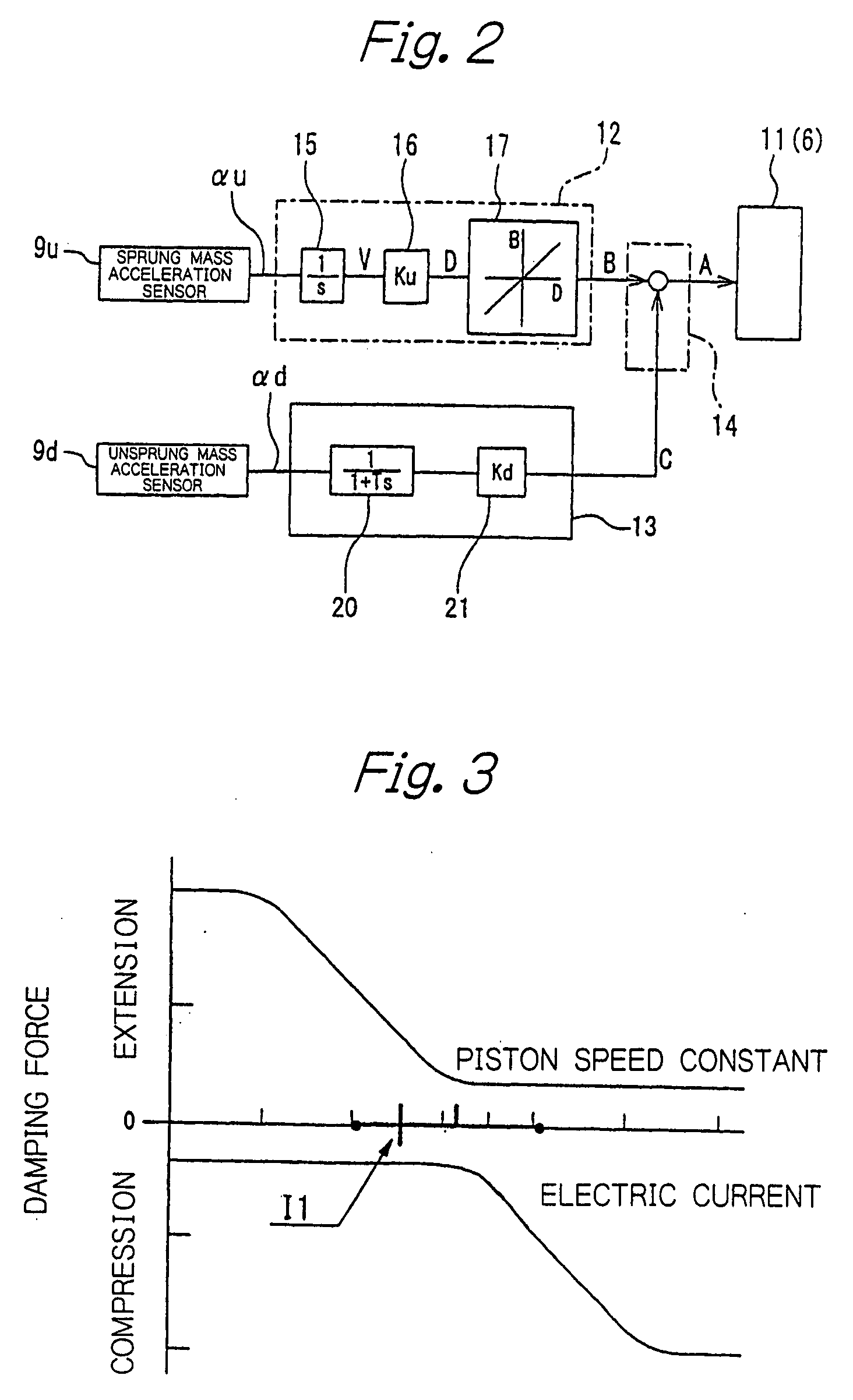

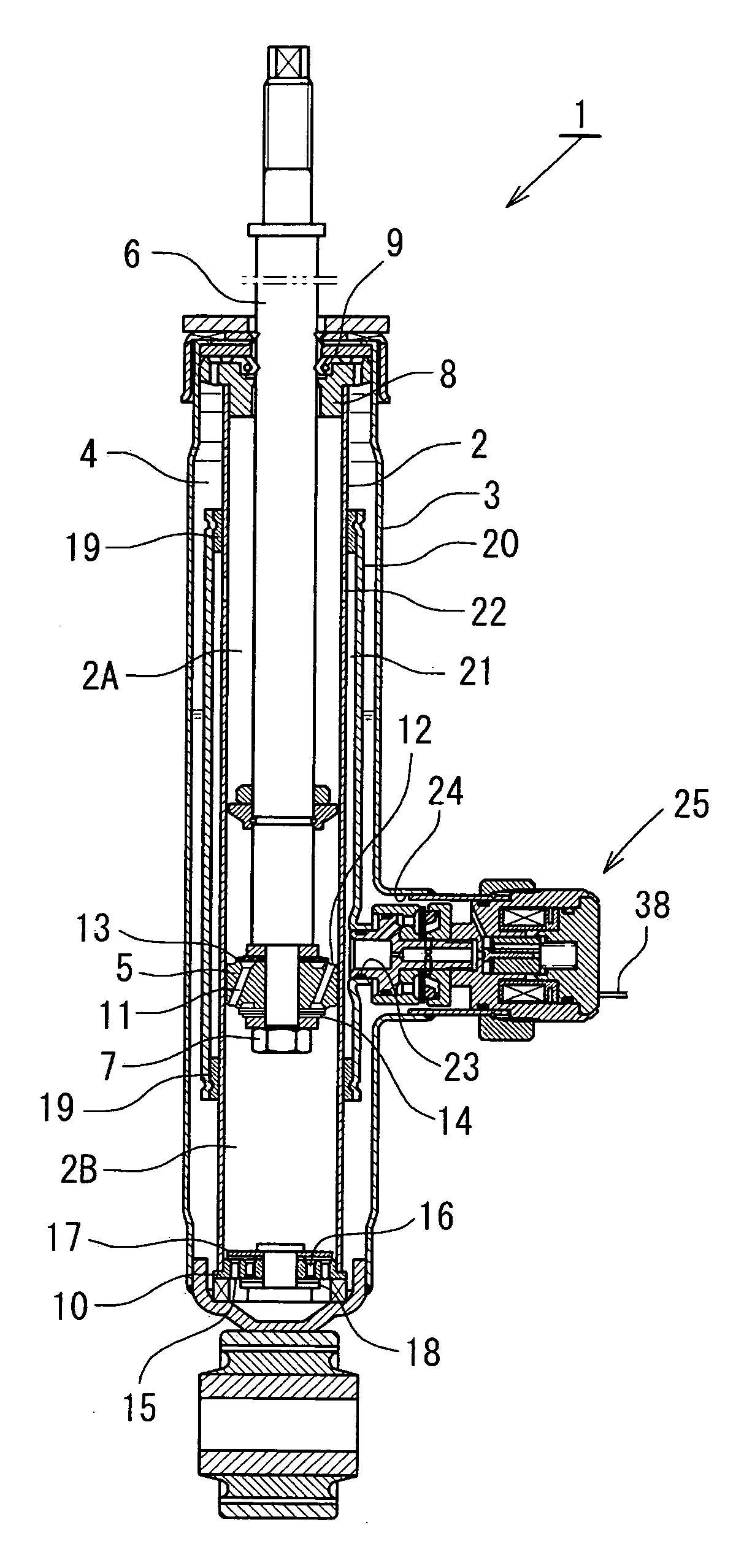

Suspension control apparatus

Inactive | US20050178628A1 | Improve efficiency | Compensation delay | Portable frames | Digital data processing details | Control signal | Control system

In a suspension control system according to the present invention, a sky-hook command signal B obtained from velocity data obtained by integrating a sprung mass acceleration αu from a sprung mass acceleration sensor 9u and an unsprung mass vibration damping command signal C obtained on the basis of an unsprung mass acceleration αd detected by an unsprung mass acceleration sensor 9d are added together to obtain a control signal A for a damping characteristic inverting type shock absorber 6. The control signal A reflects the unsprung mass acceleration αd, which leads the piston speed in phase by 90°. Accordingly, it is possible to compensate for a response delay due to an actuator 11, etc.

Owner:HITACHI LTD

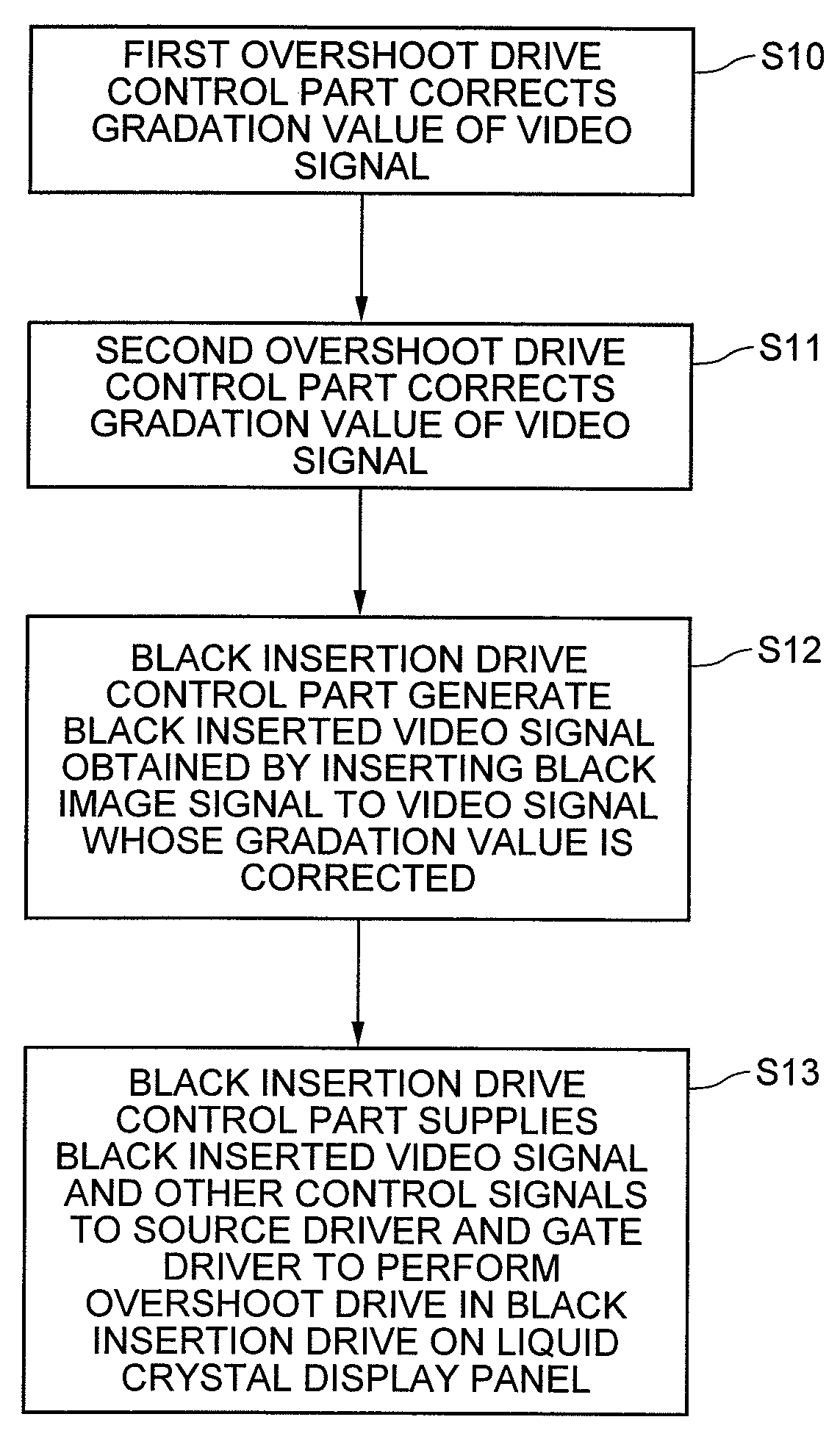

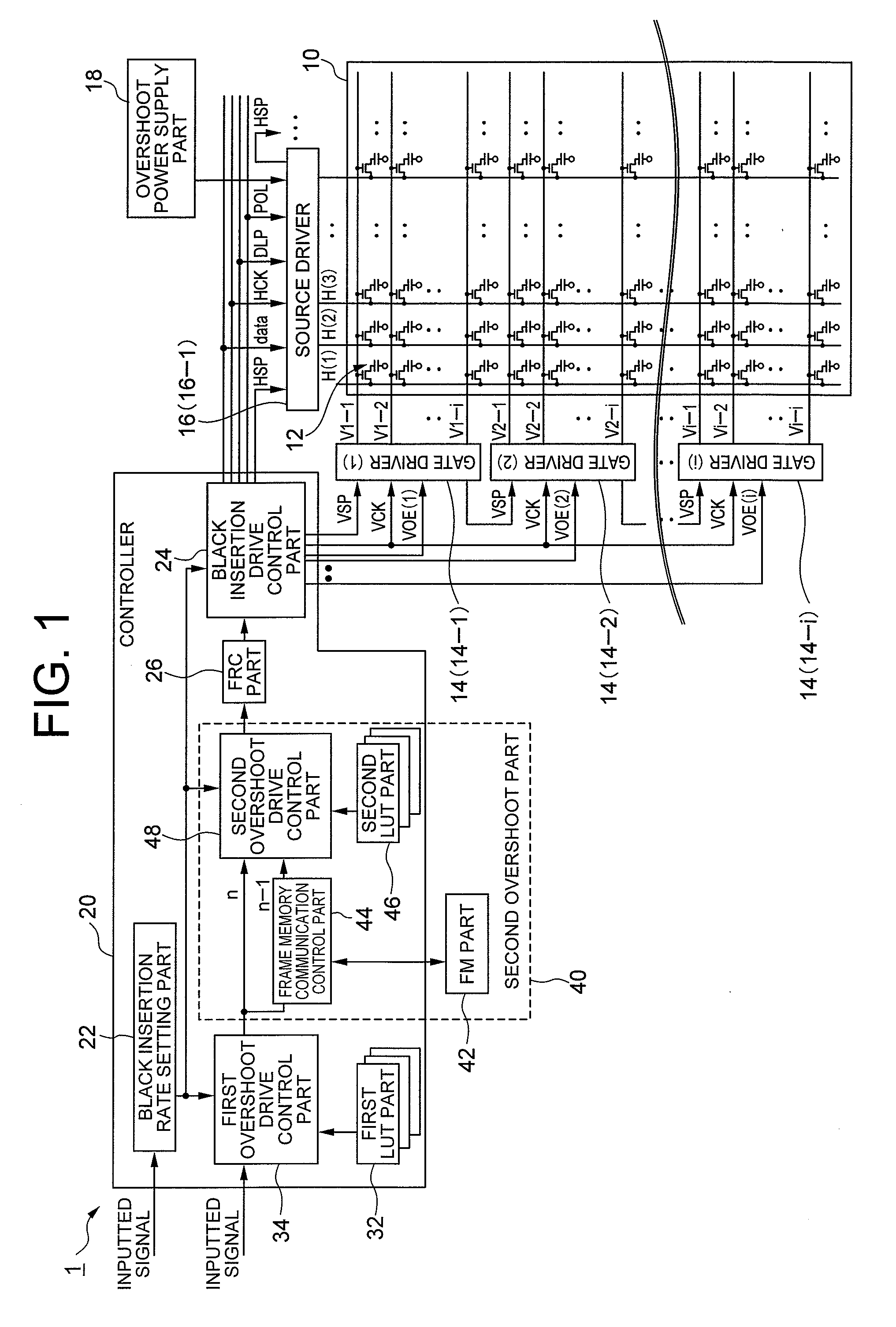

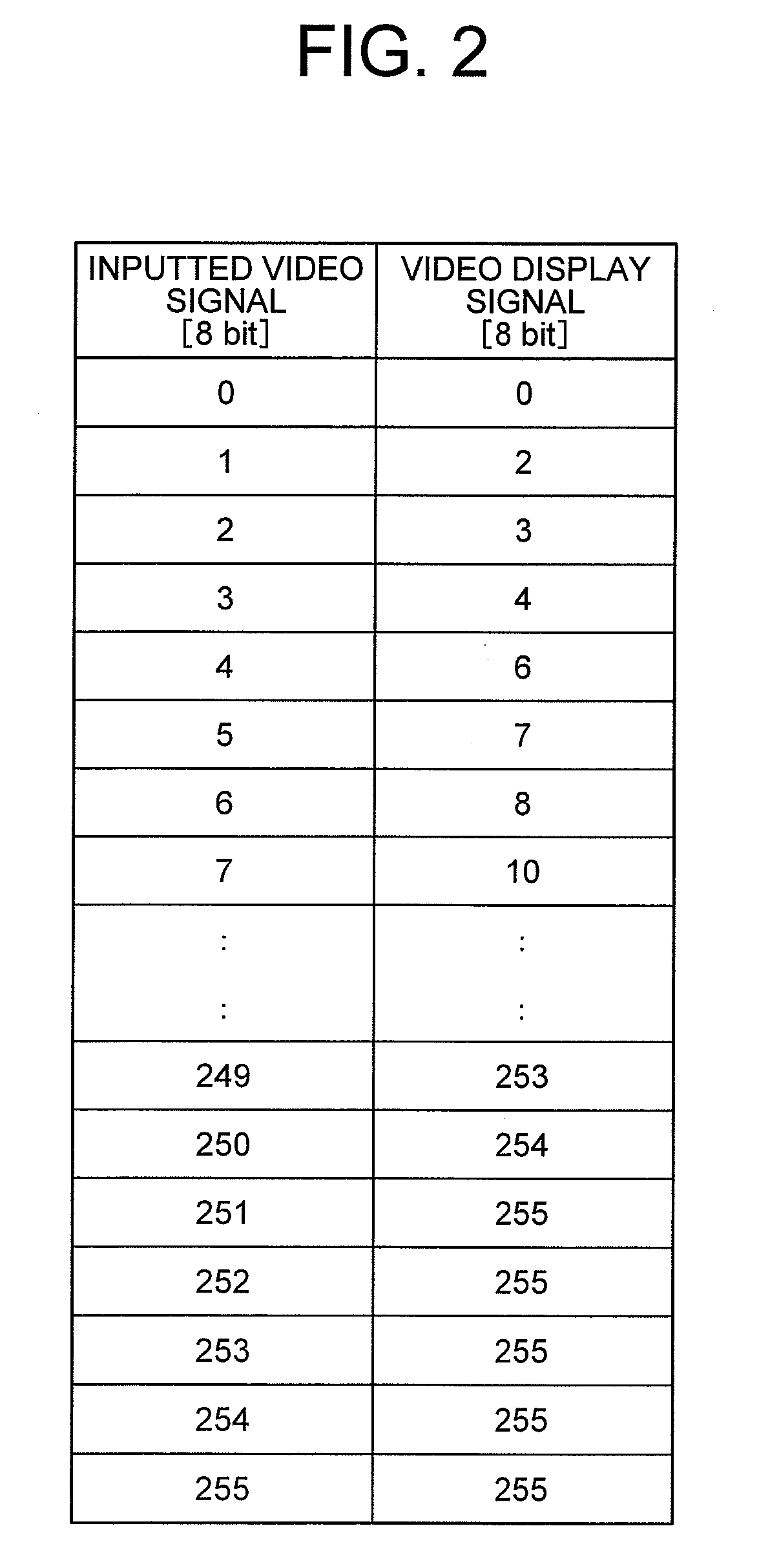

Display panel control device, liquid crystal display device, electronic appliance, display device driving method, and control program

Active | US20090109247A1 | Avoid problems | Avoid it happening again | Cathode-ray tube indicators | Input/output processes for data processing | Liquid-crystal display | Display device

To provide a display panel control device capable of preventing generation of step-like tailing and ghost when executing black insertion drive. A first correction device performs first correction on a gradation value of a video signal by considering response delay of the display panel when changing from a second gradation voltage to a first gradation voltage. A second correction device performs second correction on one of or both of the gradation value of the video signal and the gradation voltage of a monochrome image signal by considering accumulative luminance reaching delay of the video part caused due to a difference between each monochrome display luminance of each monochrome image part in different unit frame cycle periods, when the gradation value of the video signal changes from a unit frame cycle period to another unit frame cycle period. A monochrome image insertion drive control device generates the monochrome image inserted video signal including the video part and the monochrome image part to which the first correction or the second correction is performed, and controls the monochrome image insertion drive of the display panel.

Owner:NEC LCD TECH CORP

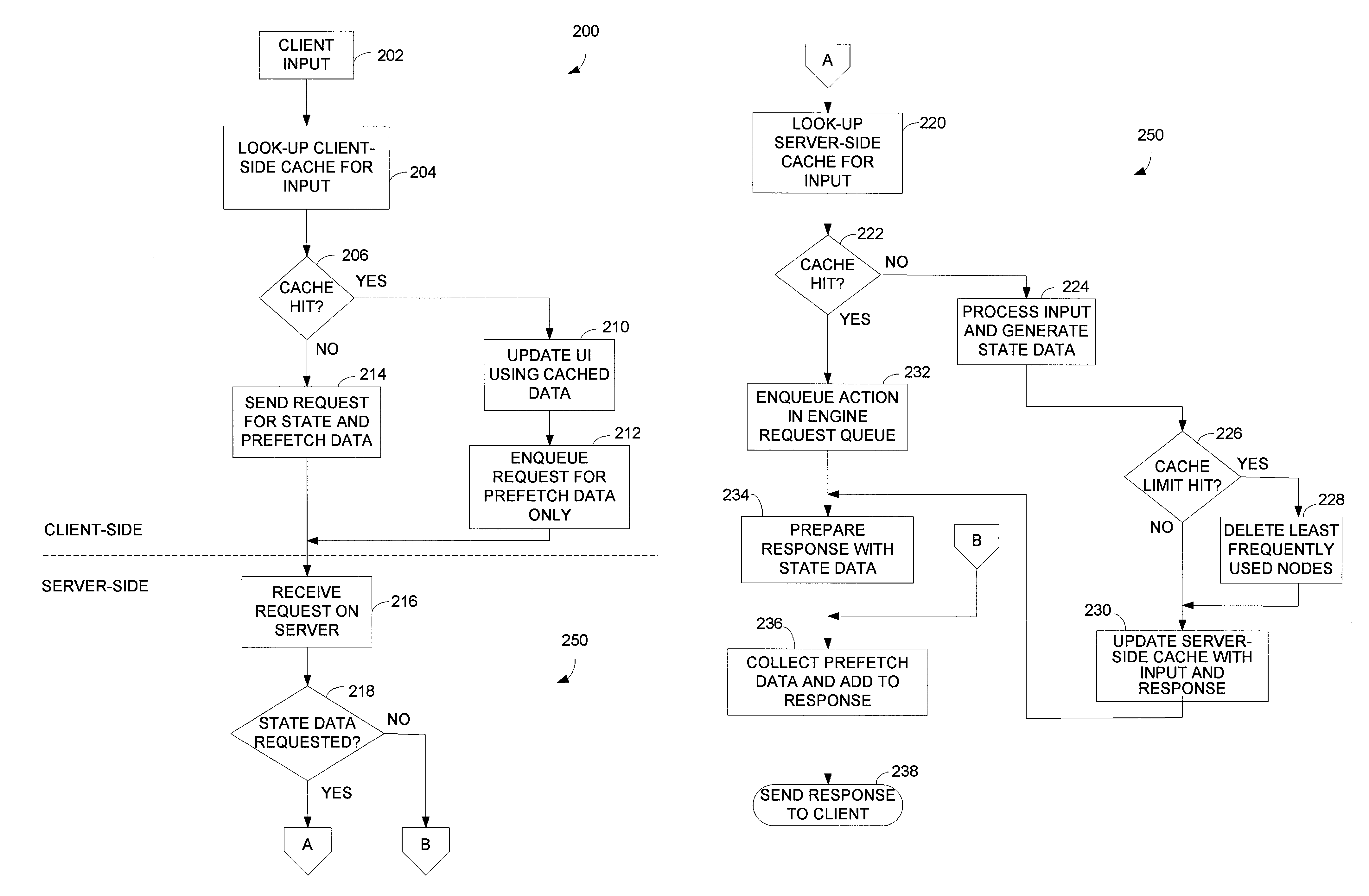

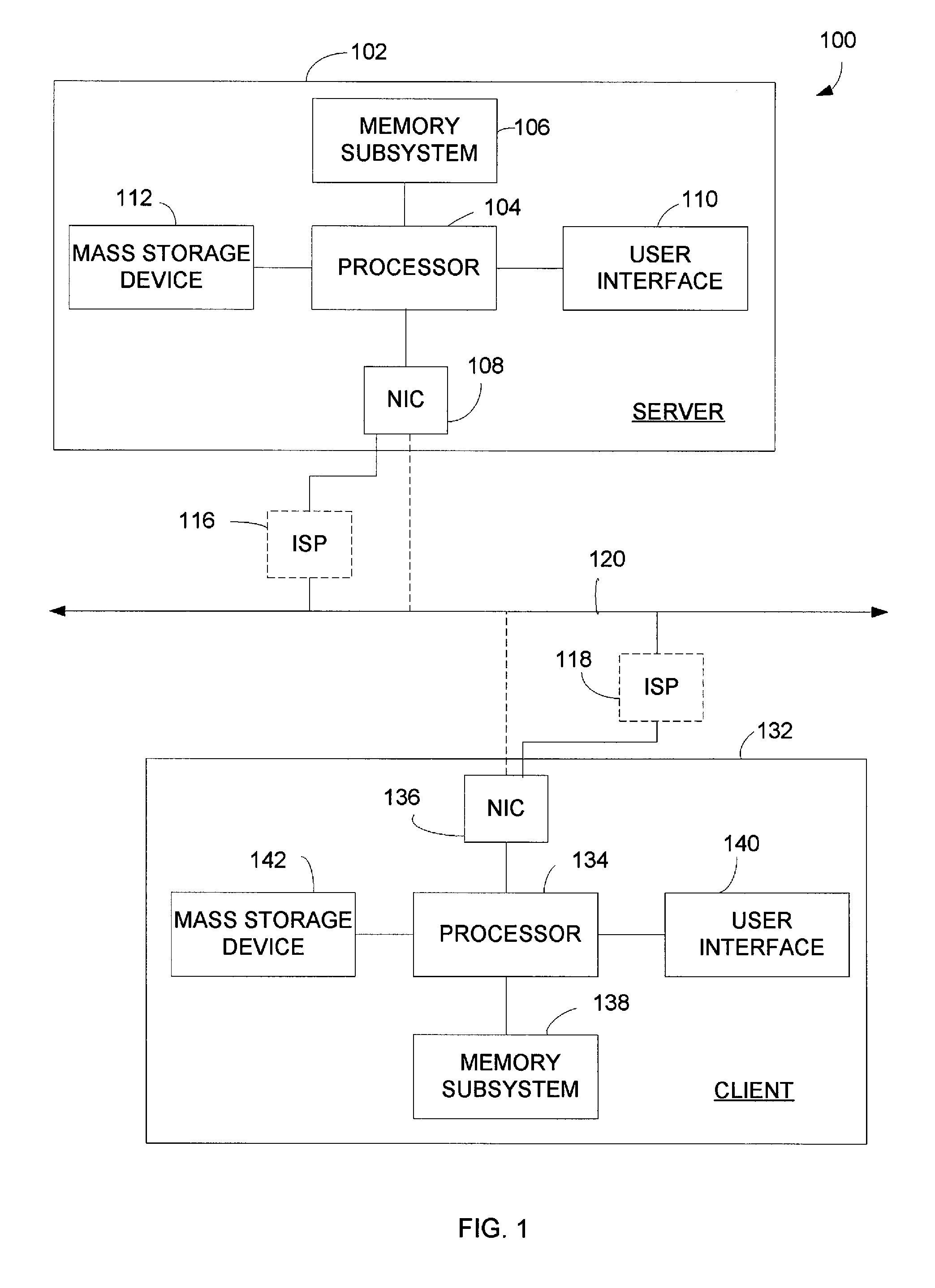

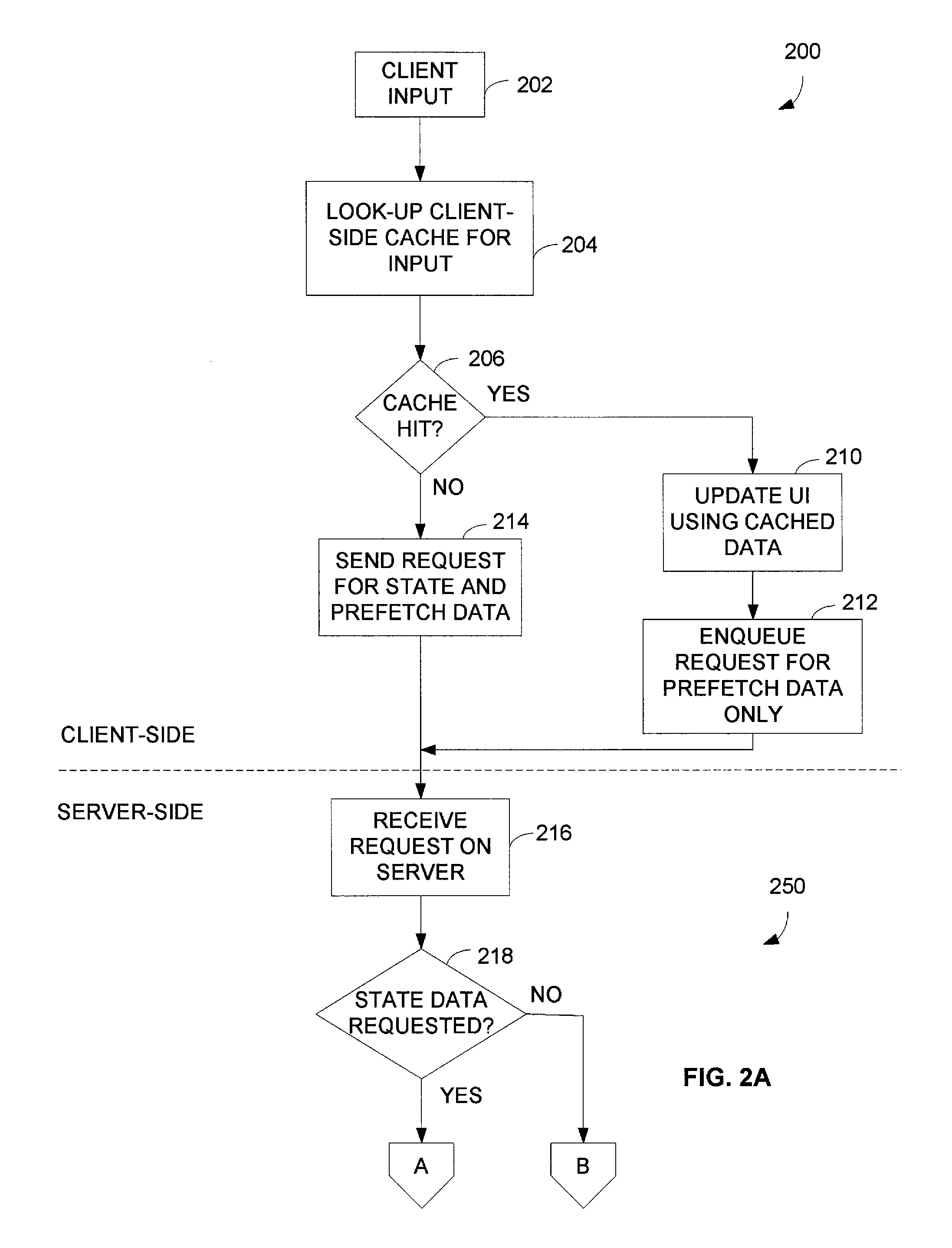

Application state server-side cache for a state-based client-server application

Active | US8019811B1 | Reduce delays | Avoid delay | Multiple digital computer combinations | Program control | Response delay | Client-side

Response delay associated with a state-based client-server application can be reduced with utilization of an application state server-side cache. A server caches data for a set of one or more possible states of a client-server application that may follow a current state of the application. The server rapidly responds to a client request with data that corresponds to an appropriate new state of the application in accordance with the application state server-side cache. The server determines that the application will transition to the appropriate new state from the current state of the application with the application state server-side cache based, at least in part, on an operation indicated by the client request.

Owner:VERSATA DEV GROUP
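
The idea in this abstract, caching data for the states that may follow the current one, can be sketched with a small state machine. The class, the transition table, and the `render` callback are hypothetical names introduced for illustration; the patent's actual data structures are not described in the abstract.

```python
class AppStateCache:
    """Server-side cache of data for states reachable from the current state."""

    def __init__(self, transitions, render, initial_state):
        self.transitions = transitions  # {state: {operation: next_state}}
        self.render = render            # expensive function: state -> data
        self.cache = {}
        self.enter(initial_state)

    def enter(self, state):
        """Make `state` current and precompute data for each possible successor."""
        self.current = state
        successors = self.transitions.get(state, {}).values()
        self.cache = {nxt: self.render(nxt) for nxt in successors}

    def handle(self, operation):
        """Answer a client request from the cache, then refill for the new state."""
        nxt = self.transitions[self.current][operation]
        data = self.cache.get(nxt)
        if data is None:  # fall back to computing on demand
            data = self.render(nxt)
        self.enter(nxt)
        return data


# Hypothetical shopping flow.
flow = {"cart": {"checkout": "payment", "edit": "cart_edit"},
        "payment": {"confirm": "done"}}
server = AppStateCache(flow, lambda state: "page:" + state, "cart")
reply = server.handle("checkout")
```

In a real server the refill in `enter` would run after the response has been sent, so that the precomputation does not add to the delay it is meant to hide.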

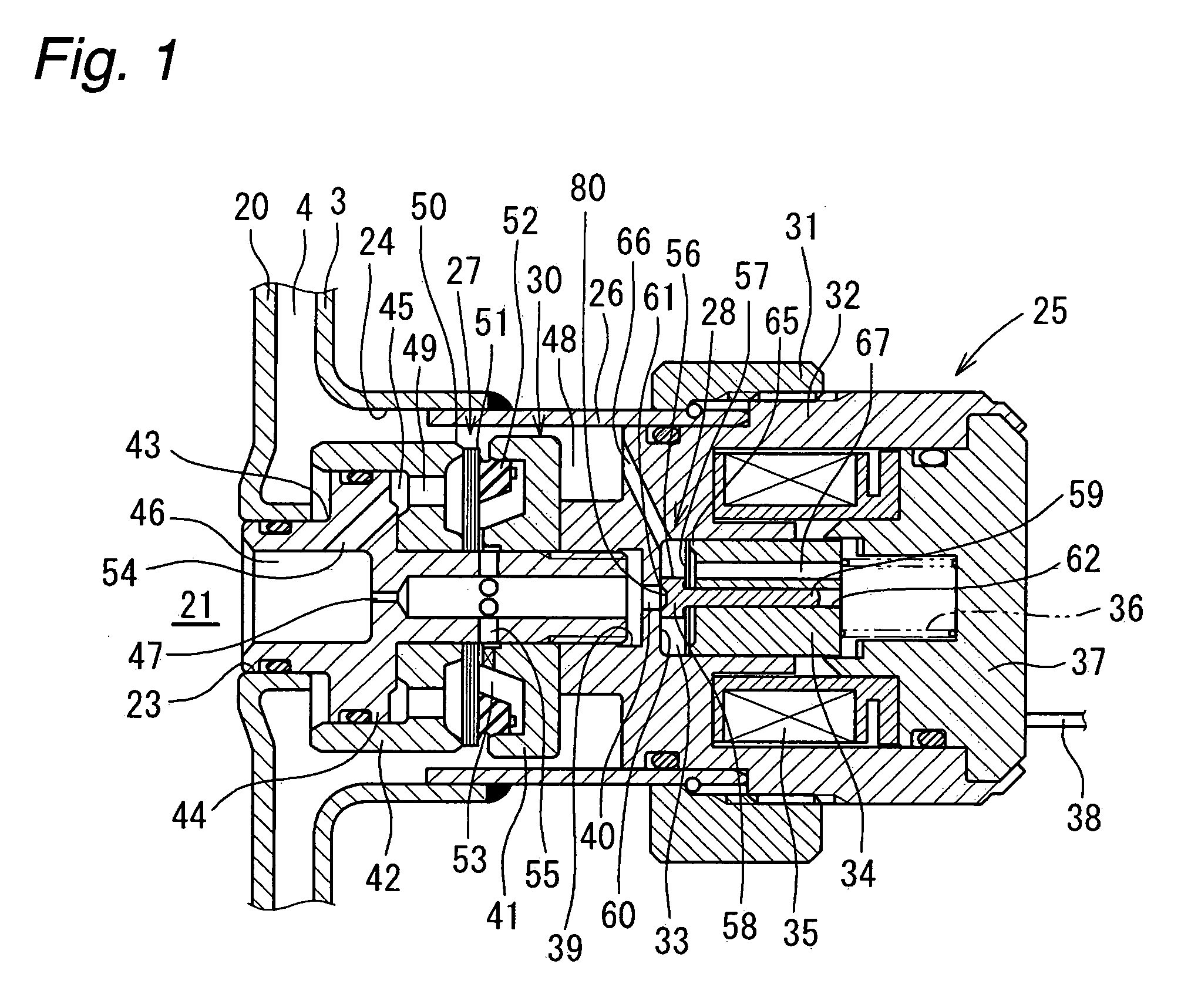

Damping force adjustable shock absorber

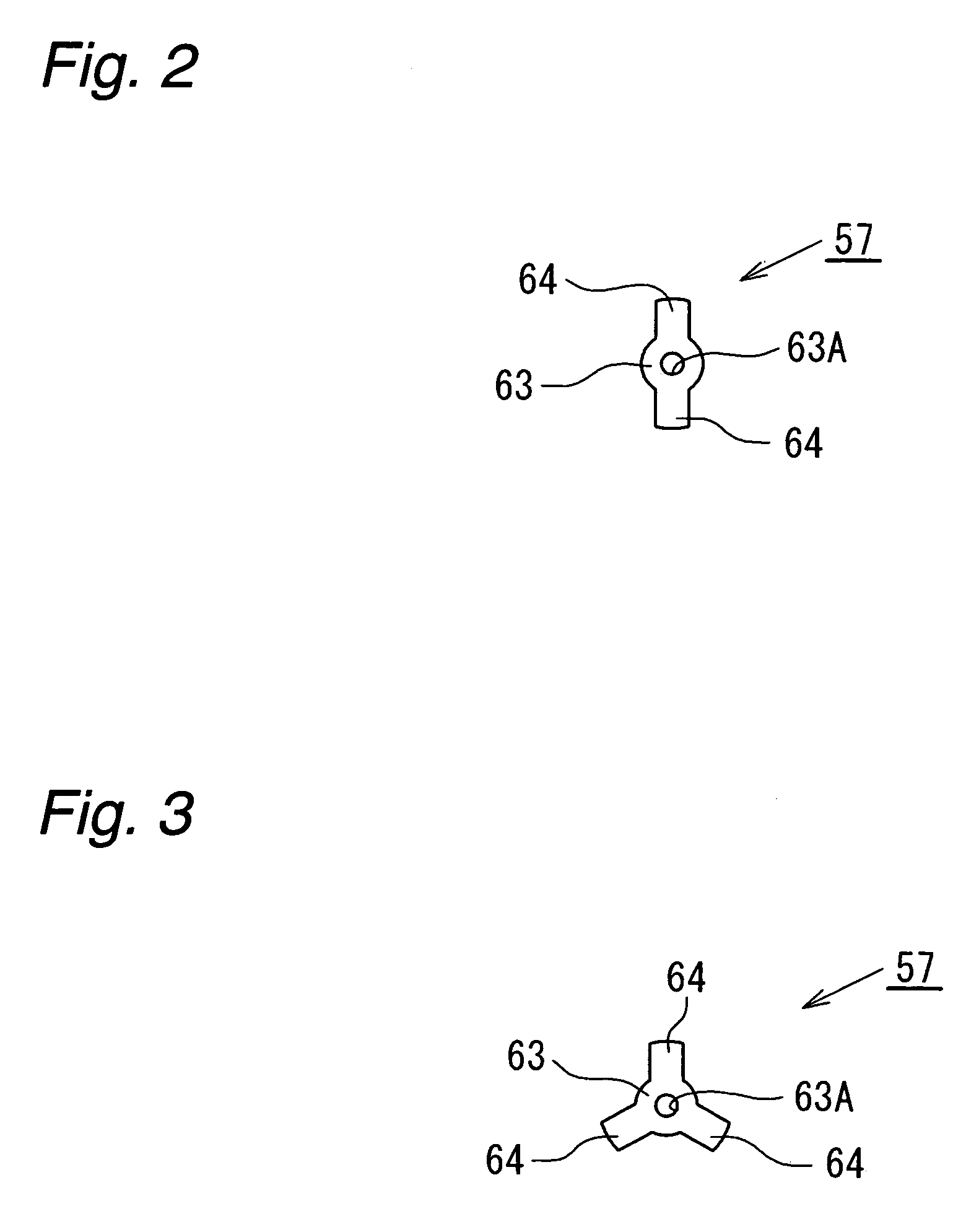

Active | US20090242339A1 | Avoid response delays | Improve responsiveness | Springs | Shock absorbers | Internal pressure | Self excited

An object of the present invention is to provide a damping force adjustable hydraulic shock absorber, in which response delay of a pressure control valve and self-excited vibration of a valve body can be prevented. A damping force is generated by controlling an oil flow between an annular oil passage 21 and a reservoir 4 generated by a sliding movement of a piston in a cylinder with use of a back-pressure type main valve 27 and a pressure control valve 28. The damping force is directly generated by the pressure control valve 28, and a valve-opening pressure of the main valve 27 is adjusted by adjusting an inner pressure of a back-pressure chamber 53. In the pressure control valve 28, a valve spring 57 is disposed between a valve body 56 and a plunger 34. A mass of the valve body 56 is sufficiently less than that of a plunger 34, and a spring stiffness of the valve spring 57 is higher than that of a plunger spring 36. As a result, it is possible to improve the responsiveness of the valve body 56 to prevent response delay in a damping force control. In addition, since the natural frequency of the valve body 56 is high, it is possible to prevent self-excited vibration of the valve body 56.

Owner:HITACHI ASTEMO LTD

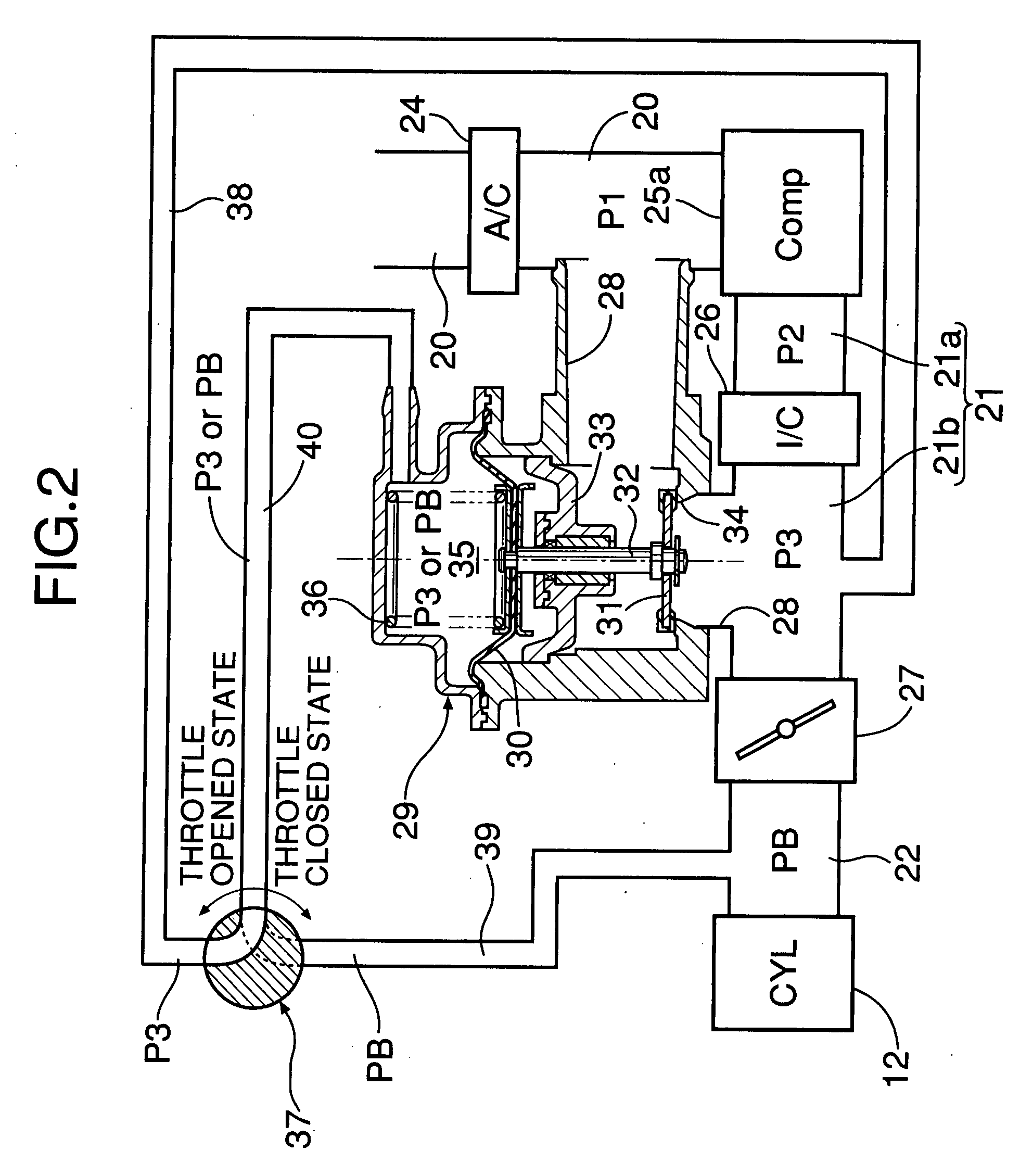

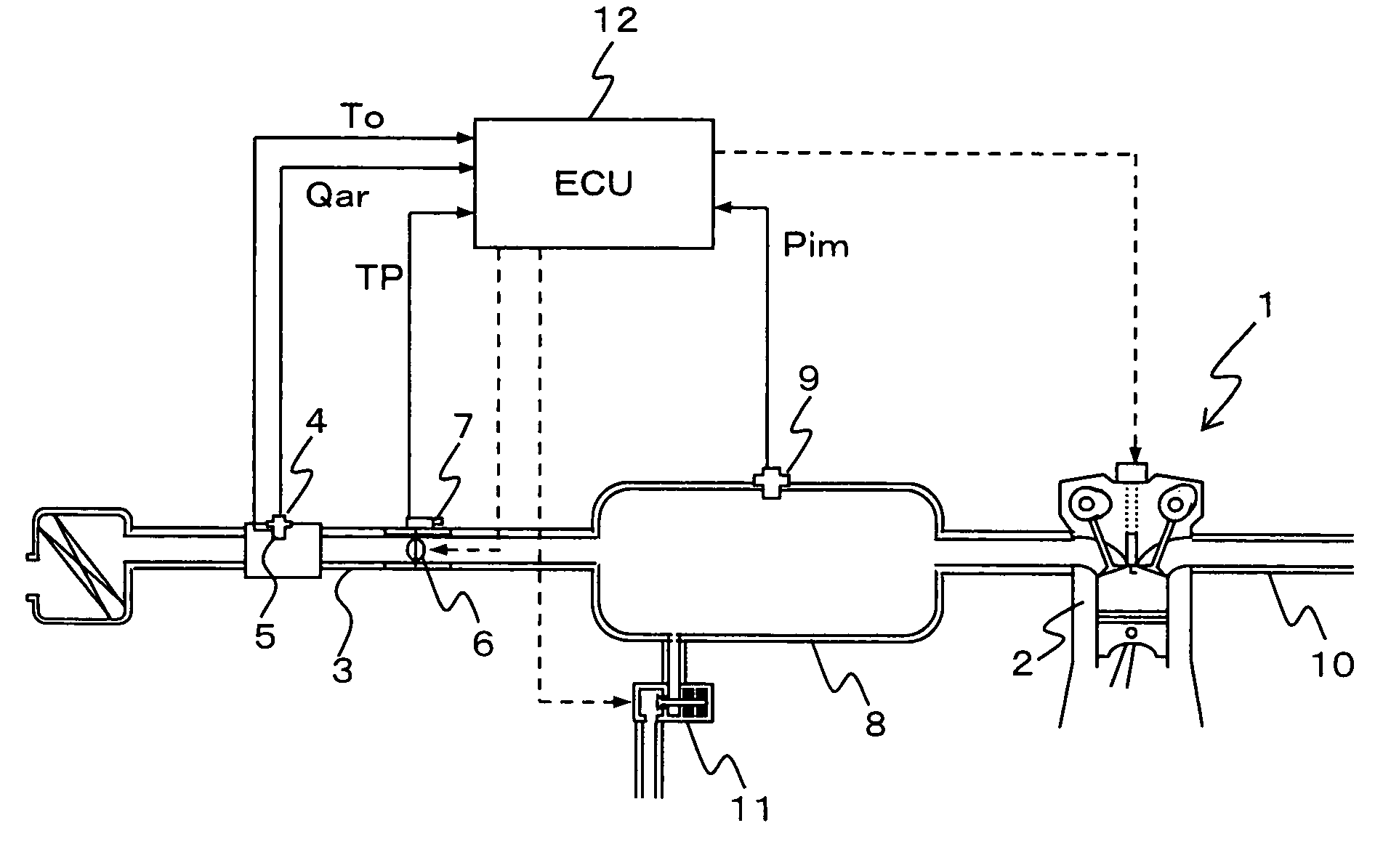

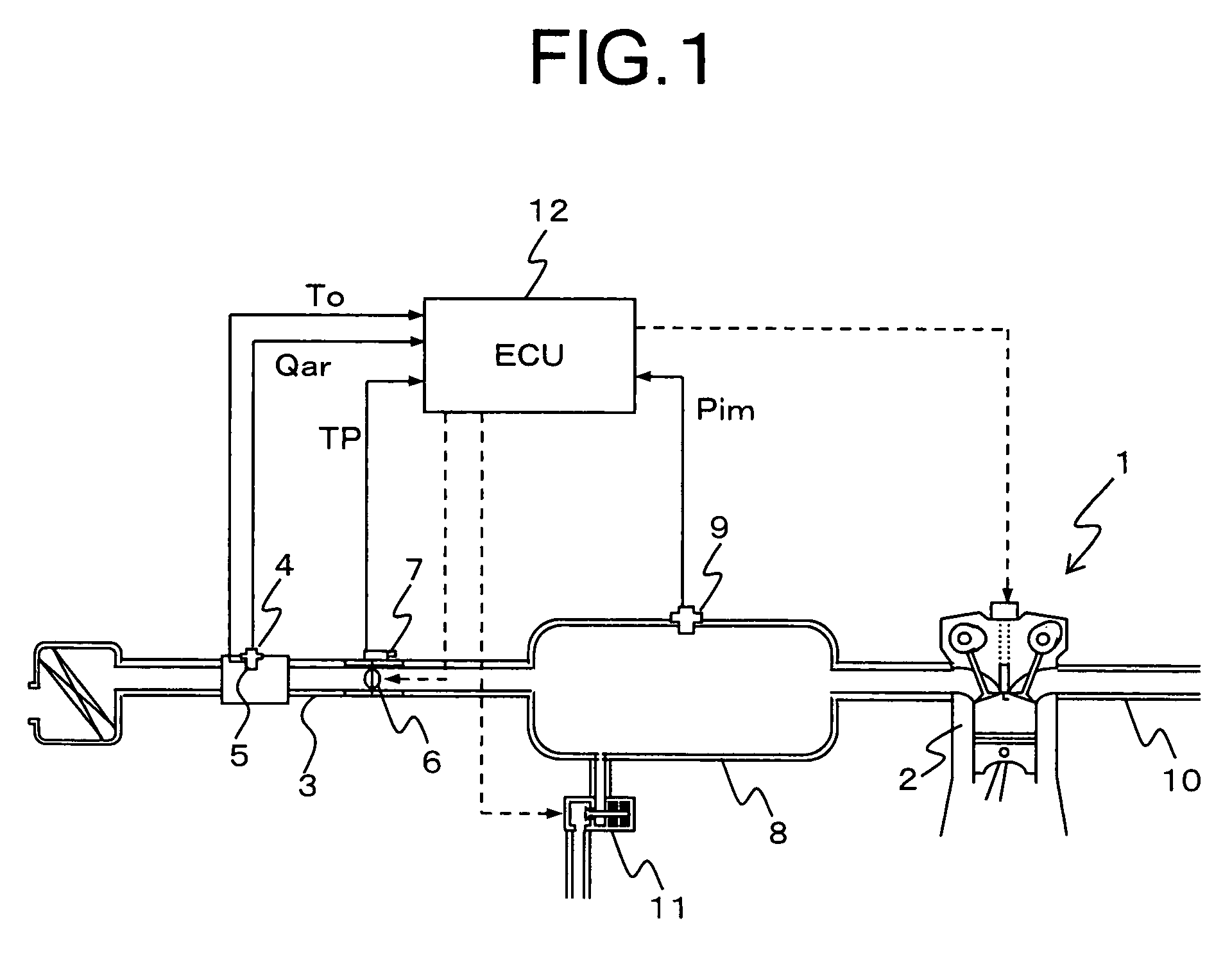

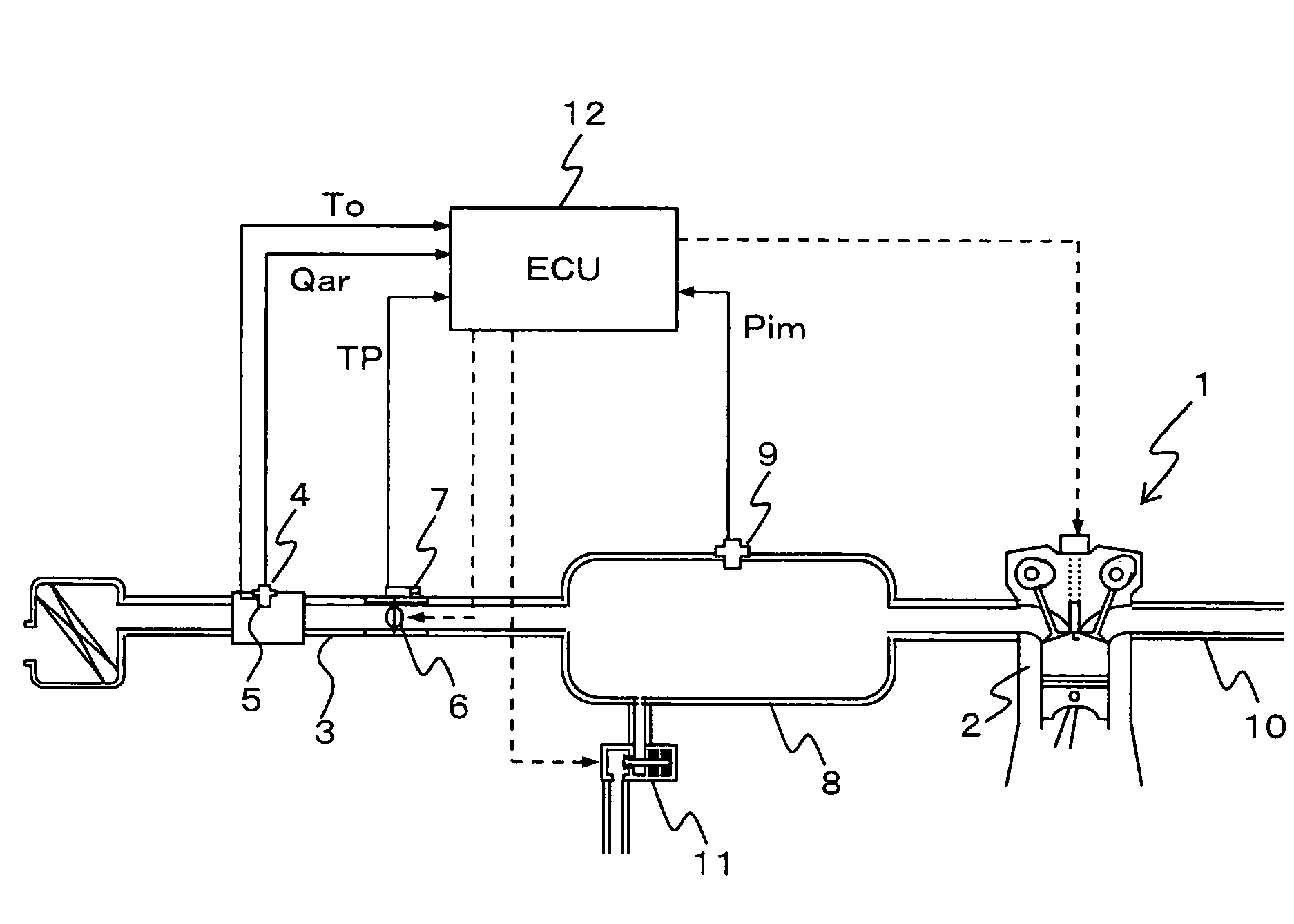

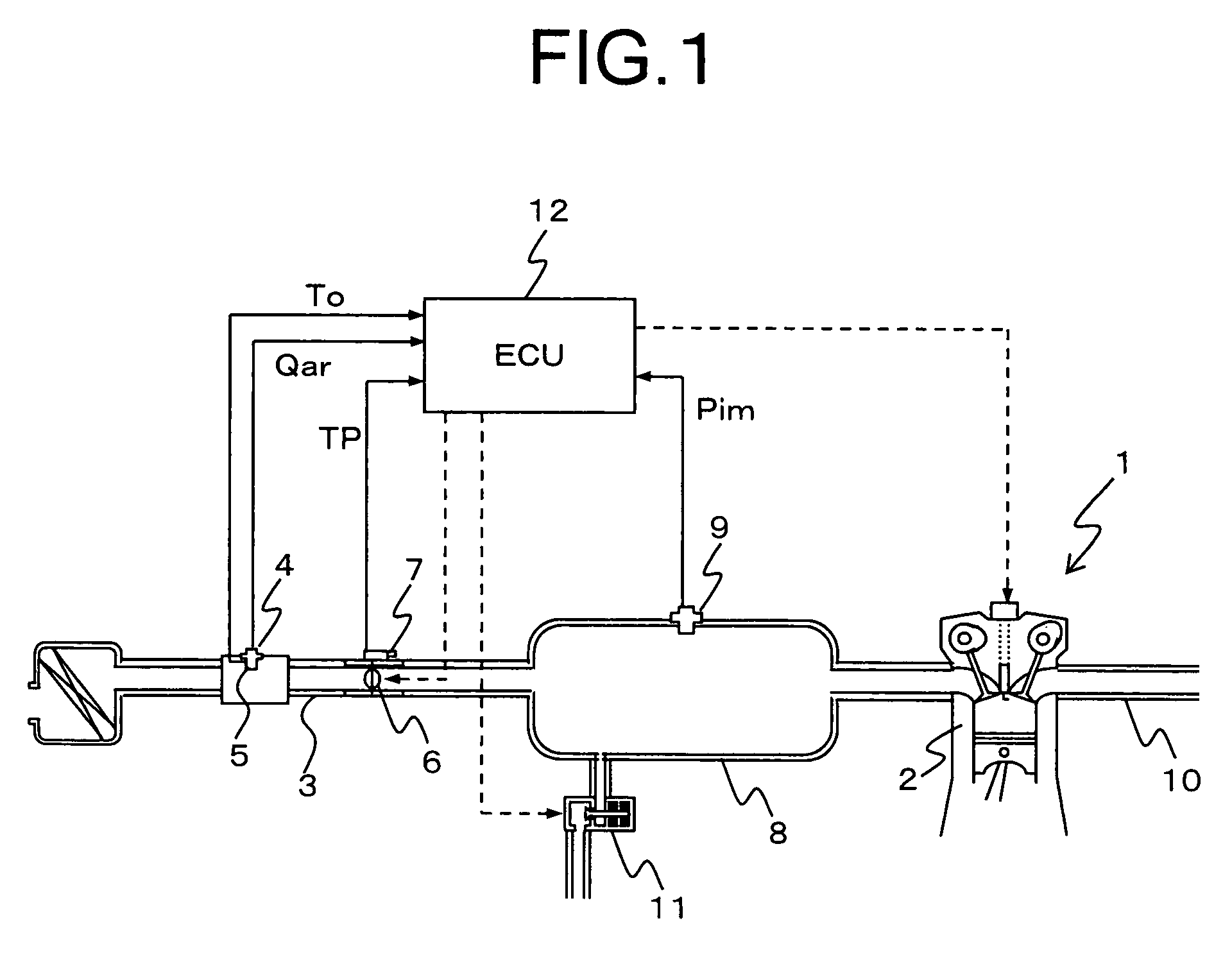

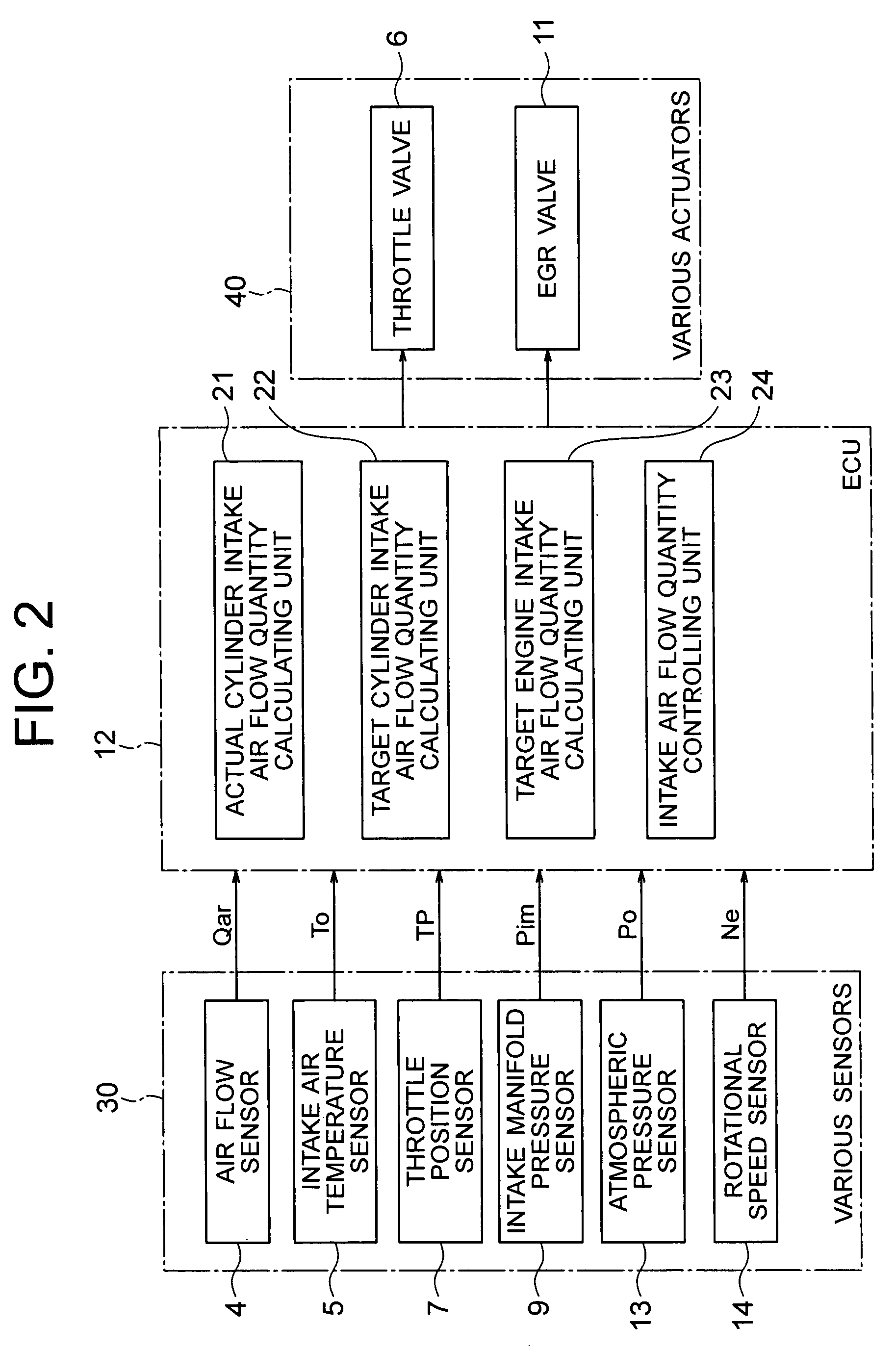

Control device for internal combustion engine

Active | US20080127938A1 | Reduce in quantity | Short calculation time | Electrical control | Internal combustion piston engines | Response delay | Throttle opening

Provided is a control device for an internal combustion engine, which is provided to allow a throttle opening degree to be controlled in accordance with a target engine intake air flow quantity even during transitional operation. The actual cylinder intake air flow quantity calculating unit (21) calculates a response delay model for an intake system from a volumetric efficiency equivalent value (Kv) calculated from a rotational speed (Ne) of an engine (1) and an intake manifold pressure (Pim), an intake pipe volume (Vs), and a displacement (Vc) of each of cylinders (2), and calculates an actual cylinder intake air amount (Qcr) from an actual engine intake air amount (Qar) obtained from an air flow sensor (4) and the response delay model. The intake air flow quantity controlling unit (24) controls the throttle opening degree (TP) in accordance with the target engine intake air amount (Qat).

Owner:MITSUBISHI ELECTRIC CORP
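
The abstract says the response delay model is built from Kv, Vs, and Vc but does not give its equations. The sketch below assumes one common first-order-lag form for an intake manifold, with filter constant K = Vs / (Vs + Kv·Vc); this form, the function names, and the numeric values are assumptions for illustration, not the patent's stated method.

```python
def filter_constant(kv, vs, vc):
    """Assumed first-order lag constant from volumetric efficiency equivalent
    value Kv, intake pipe volume Vs, and per-cylinder displacement Vc."""
    return vs / (vs + kv * vc)


def cylinder_air(qa_samples, kv, vs, vc, qc0=0.0):
    """Estimate cylinder intake air Qcr from measured engine intake air Qar.

    Each step blends the previous estimate with the new sensor reading:
        Qc[n] = K * Qc[n-1] + (1 - K) * Qa[n]
    """
    k = filter_constant(kv, vs, vc)
    qc = qc0
    out = []
    for qa in qa_samples:
        qc = k * qc + (1.0 - k) * qa
        out.append(qc)
    return out


# Hypothetical step in sensed airflow: the cylinder estimate lags behind it.
estimate = cylinder_air([1.0] * 20, kv=0.8, vs=3.0, vc=0.5)
```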

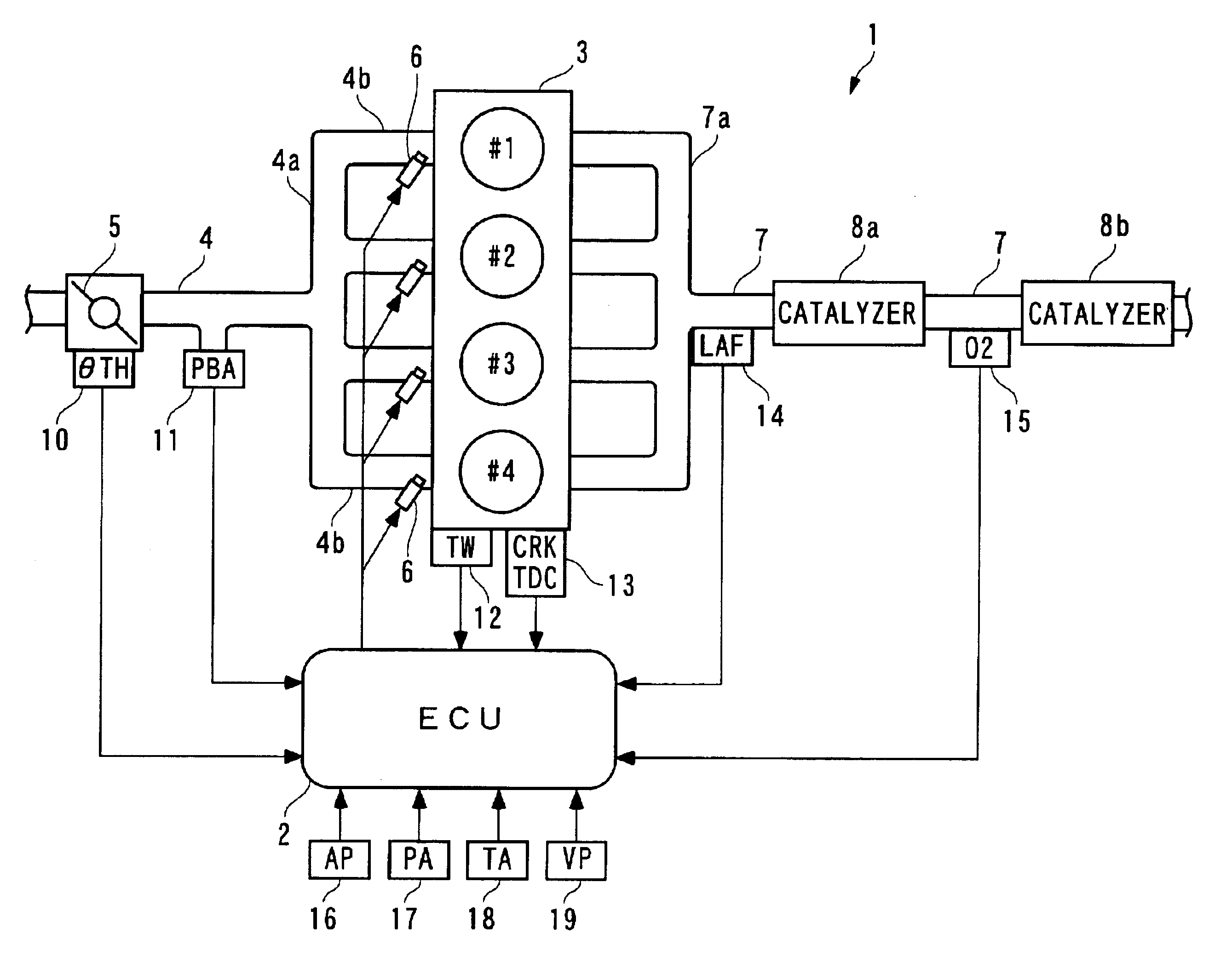

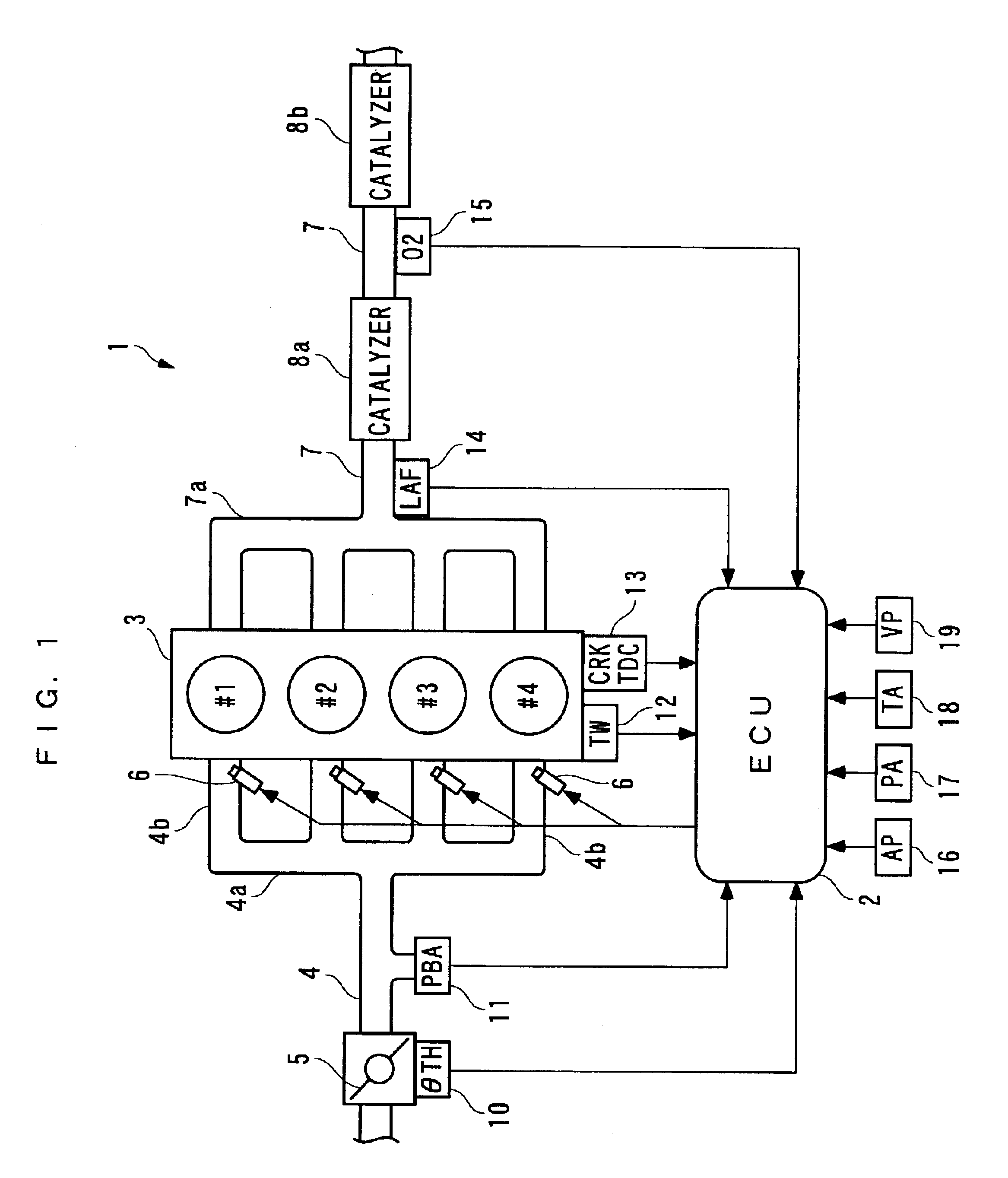

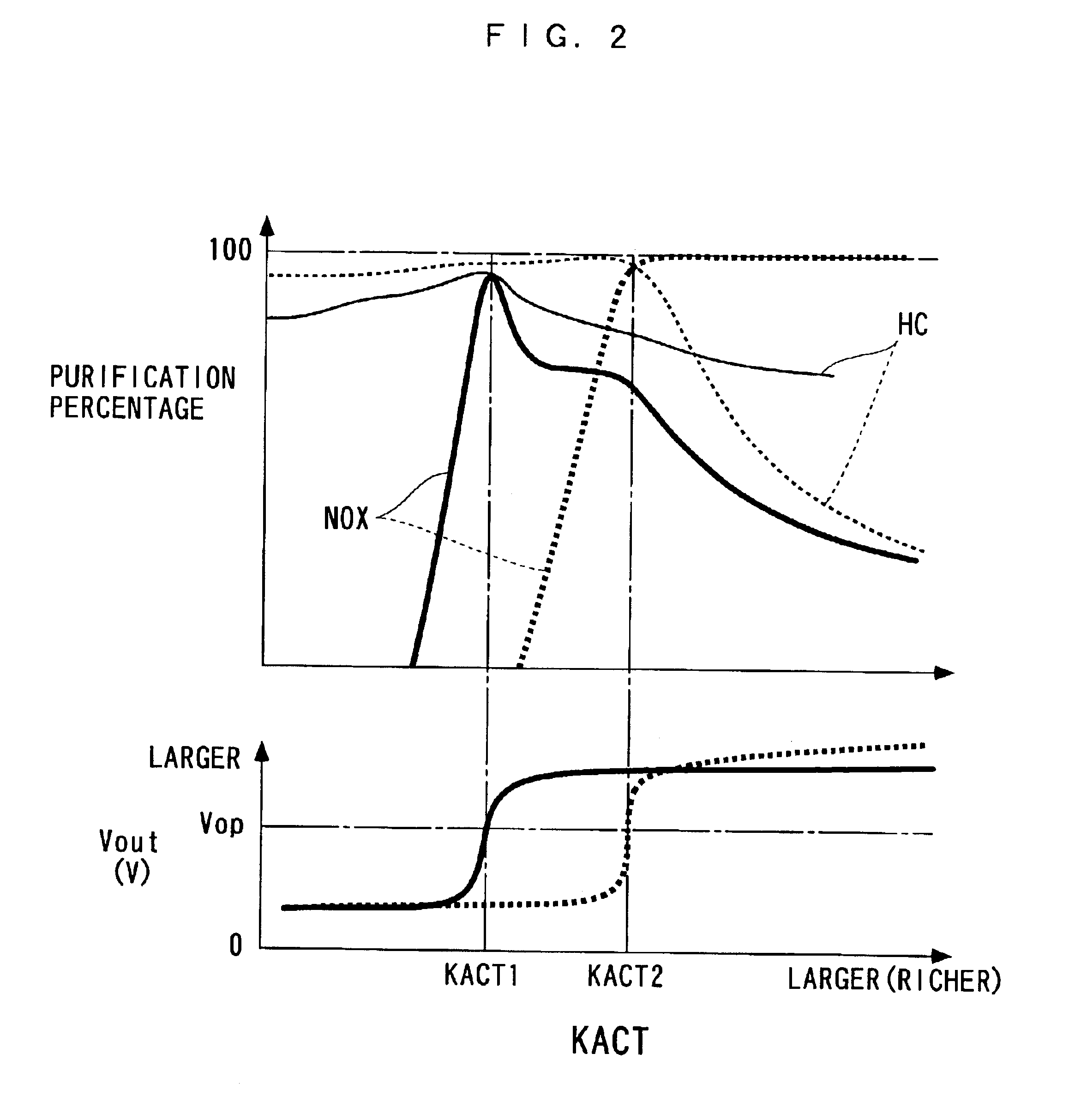

Control apparatus, control method, and engine control unit

Inactive | US6925372B2 | Analogue computers for vehicles | Electrical control | Dead time | External combustion engine

A control apparatus, a control method, and an engine control unit are provided for controlling an output of a controlled object which has a relatively large response delay and / or dead time to rapidly and accurately converge to a target value. When the output of the controlled object is chosen to be that of an air / fuel ratio sensor in an internal combustion engine, the output of the air / fuel ratio sensor can be controlled to rapidly and accurately converge to a target value even in an extremely light load operation mode.

Owner:HONDA MOTOR CO LTD

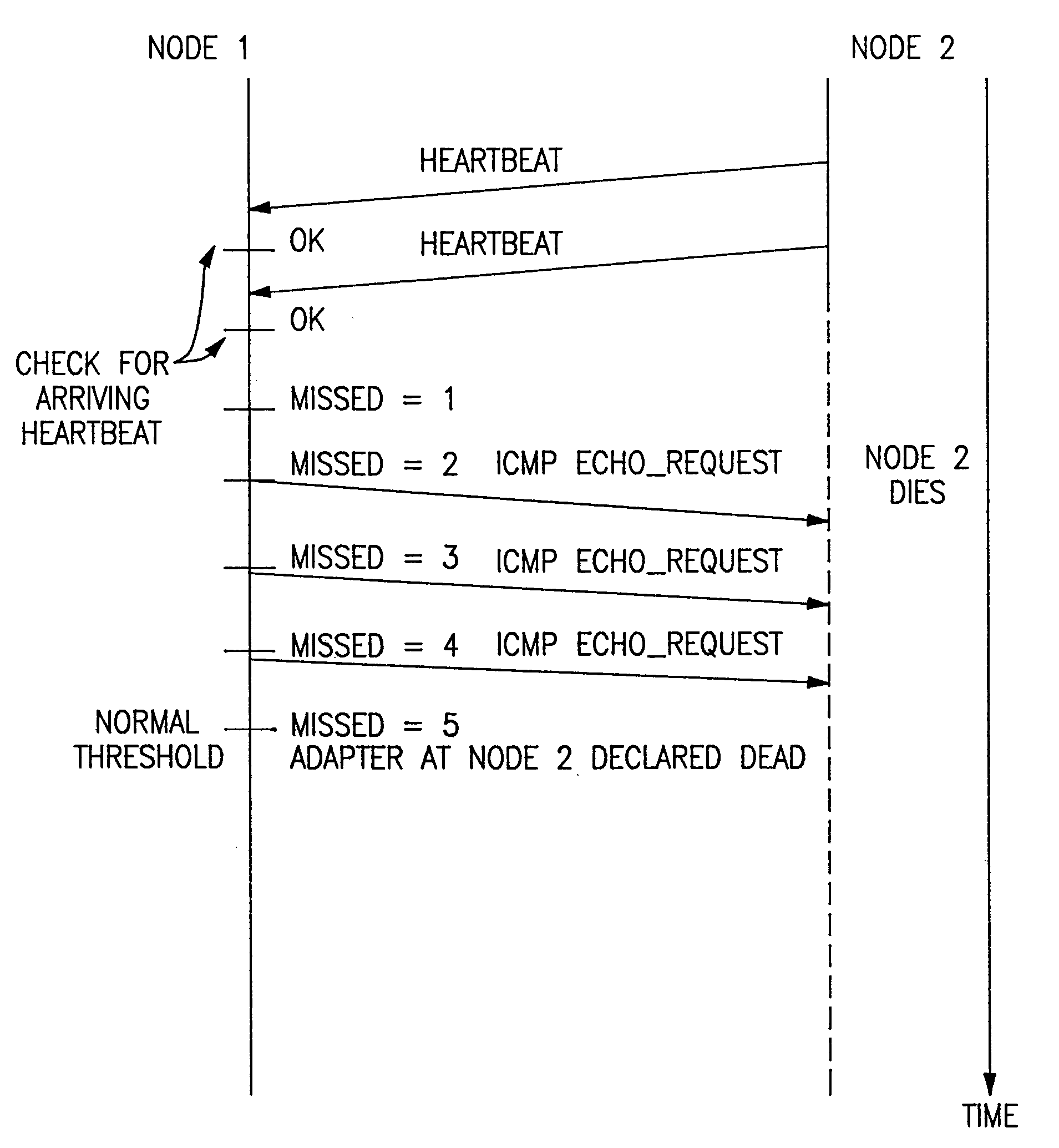

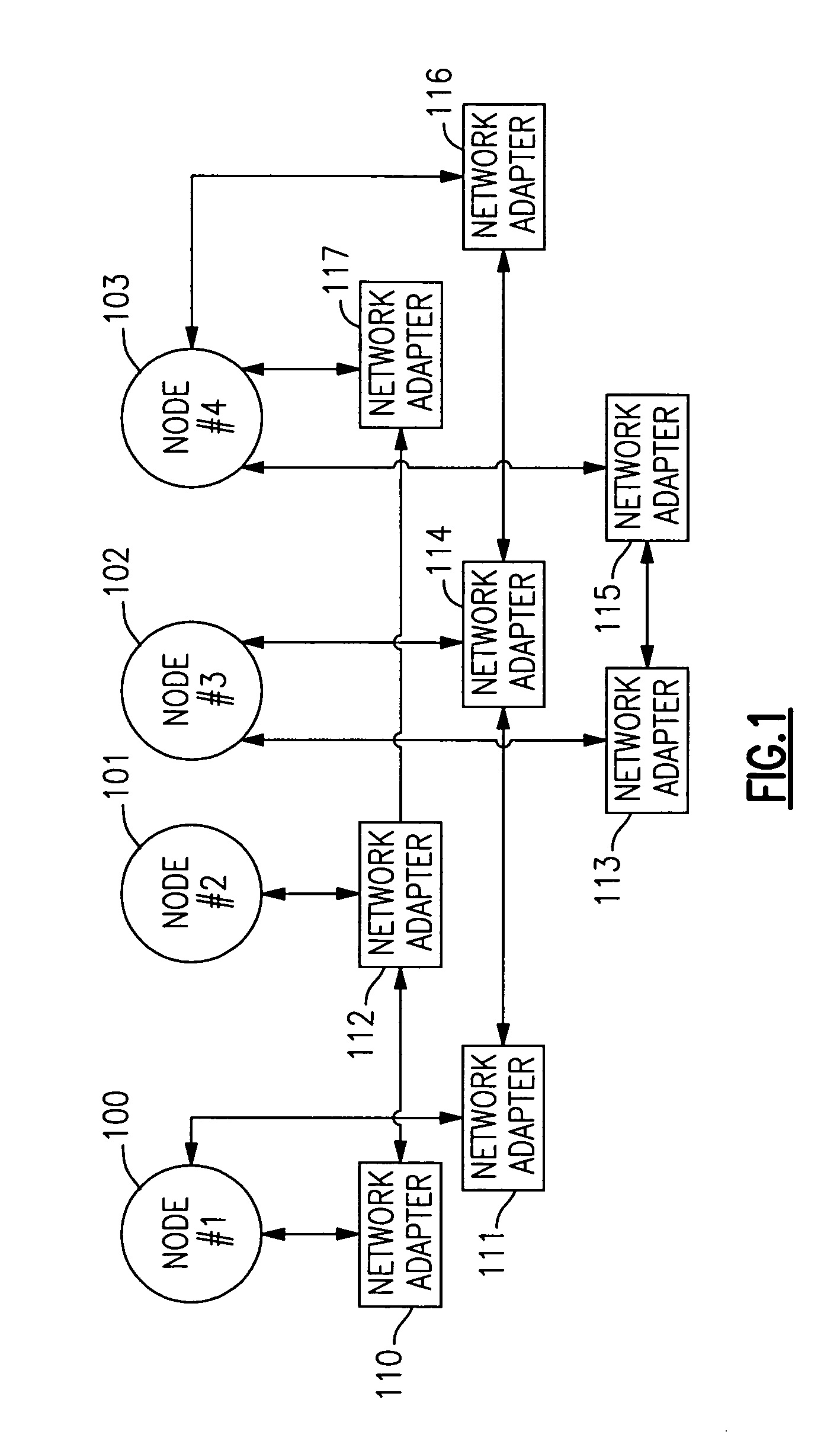

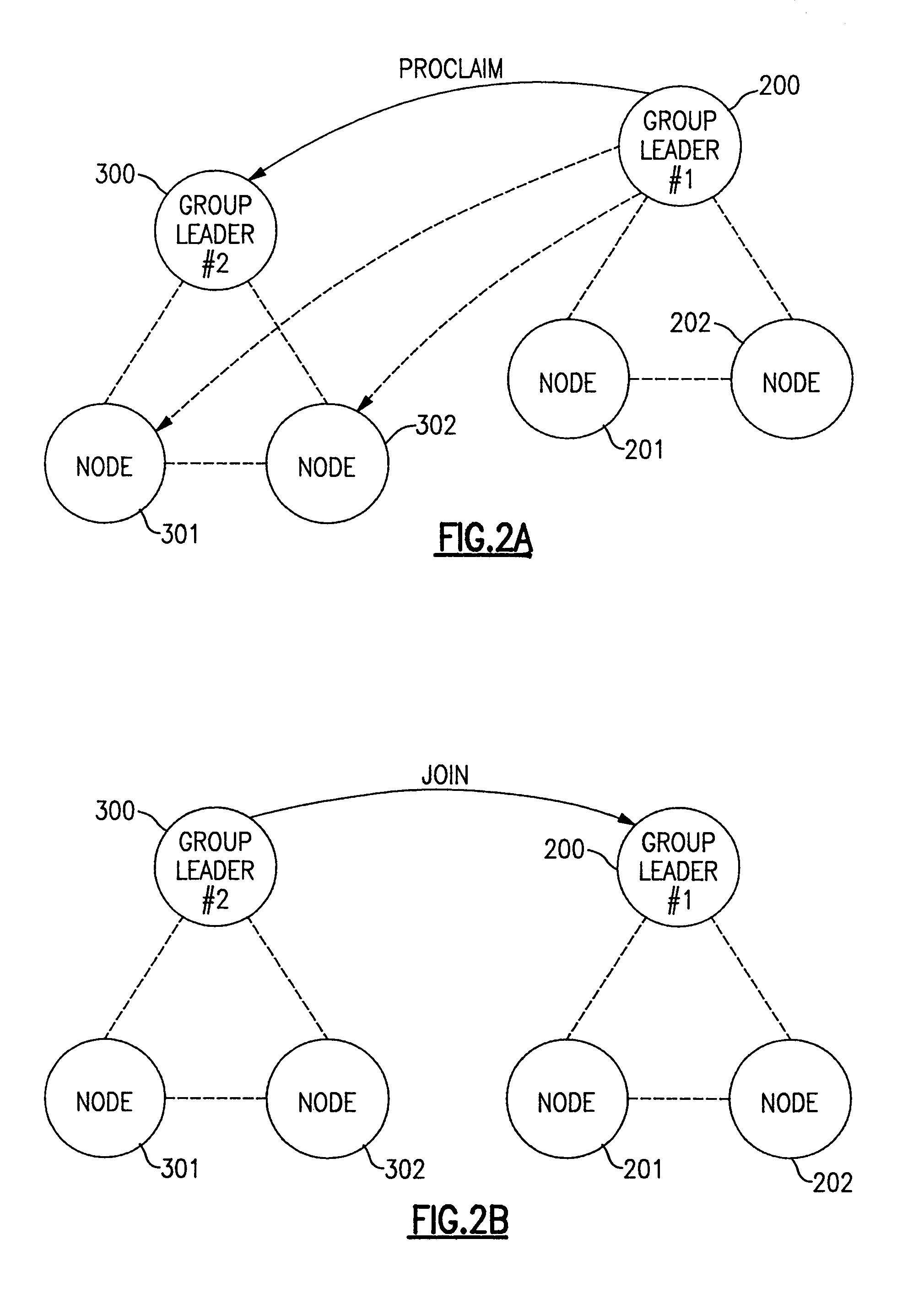

Method using two different programs to determine state of a network node to eliminate message response delays in system processing

Inactive | US7120693B2 | Increase elasticity | Improve usability | Program initiation/switching | Broadband local area networks | Data processing system | Liveness

The determination of node and / or adapter liveness in a distributed network data processing system is carried out via one messaging protocol that can be assisted by a second messaging protocol which is significantly less susceptible to delay, especially memory blocking delays encountered by daemons running on other nodes. The switching of protocols is accompanied by controlled grace periods for needed responses. This messaging protocol flexibility is also adapted for use as a mechanism for controlling the deliberate activities of node addition (birth) and node deletion (death).

Owner:IBM CORP

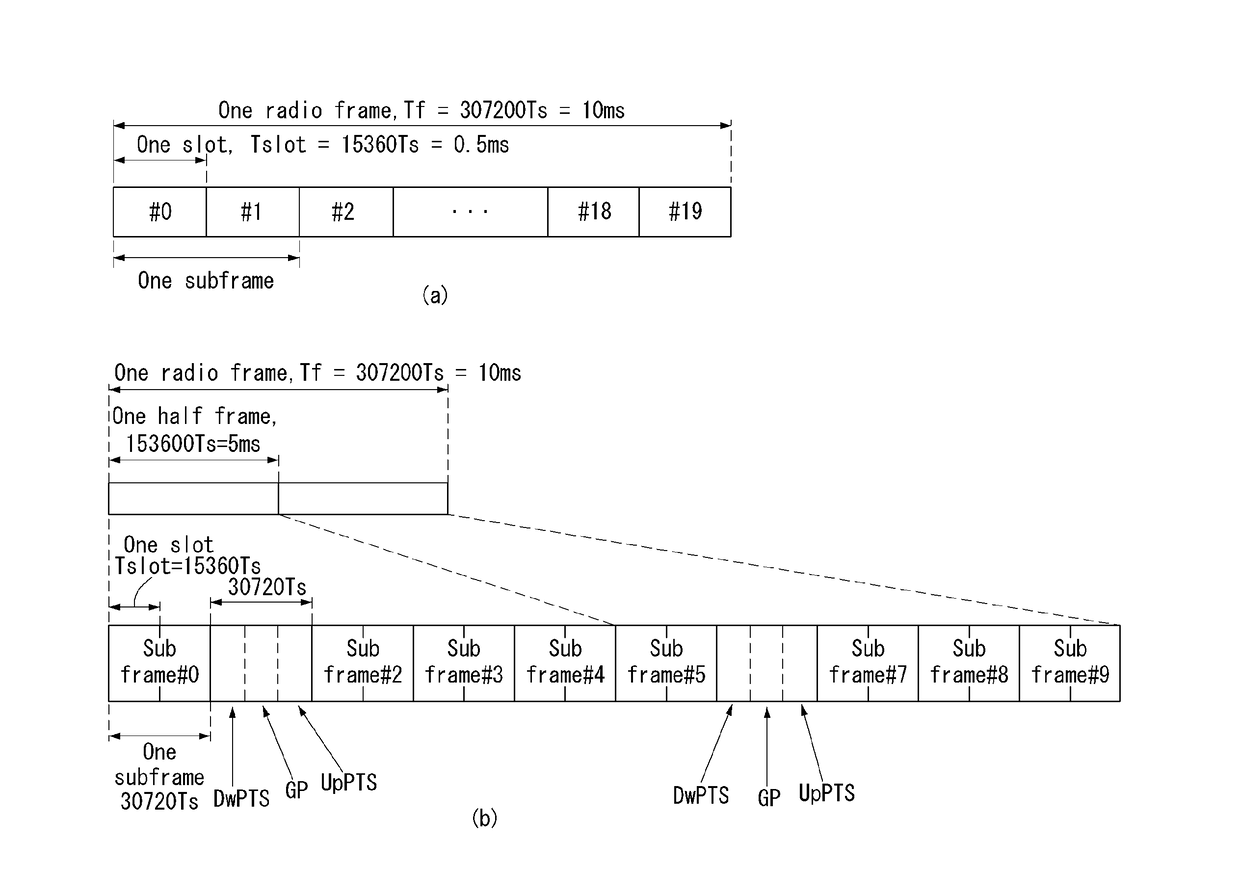

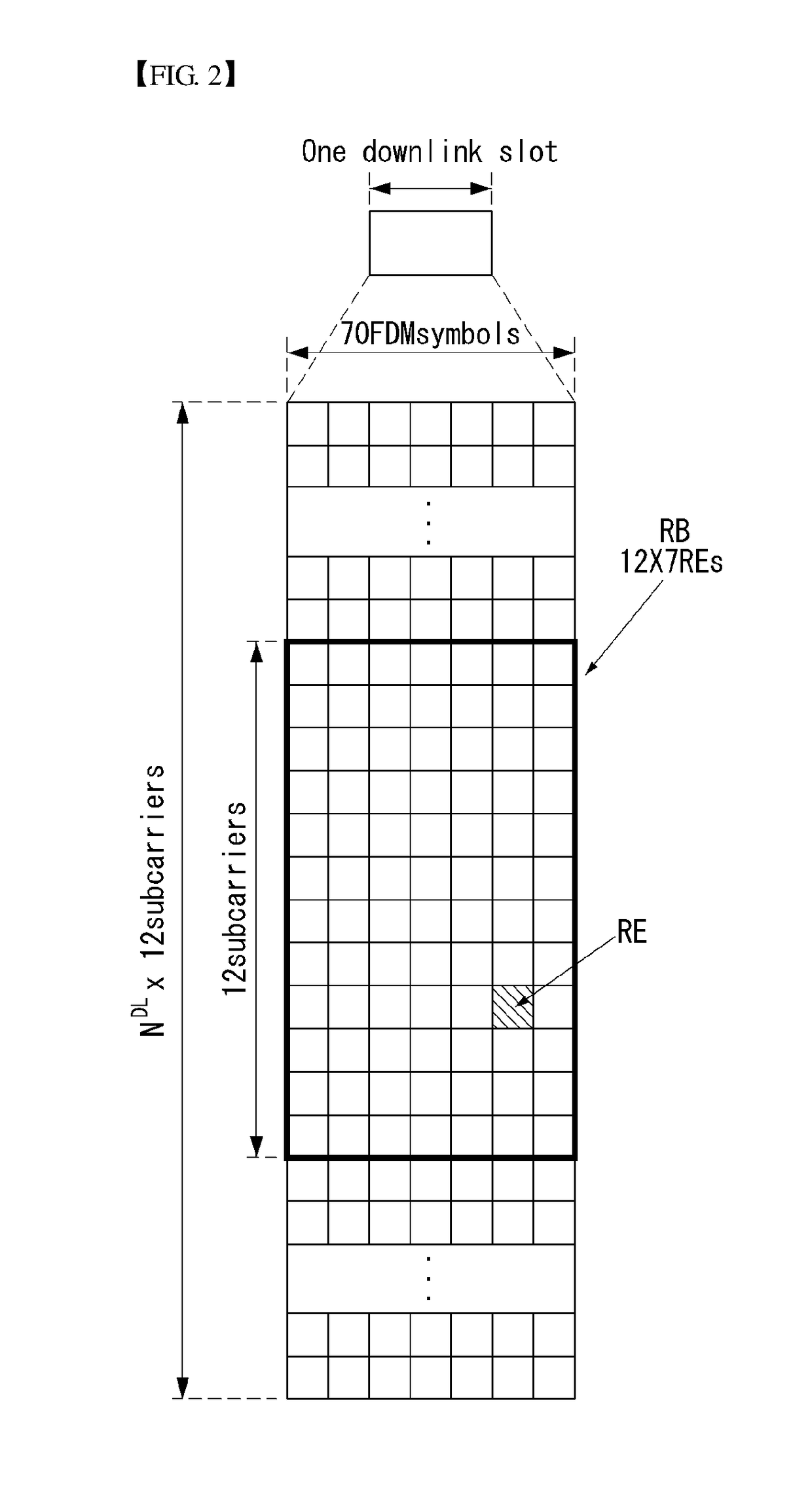

Method for discovering device in wireless communication system supporting device-to-device communication and apparatus for same

Active | US20170078863A1 | Minimizing resource conflict | Efficient search | Assess restriction | Pilot signal allocation | Communications system | Response delay

Disclosed are a terminal discovery method in a wireless communication system supporting device-to-device (D2D) communication, and a device therefor. The method for discovering a terminal in a wireless communication system supporting device-to-device (D2D) communication, includes transmitting, by a terminal, a discovery signal, and receiving, by the terminal, a response signal as a response with respect to the discovery signal from a different terminal, wherein the response signal is transmitted by the different terminal when a response delay time determined on the basis of a reception signal-to-interference noise ratio (SINR) terminates.

Owner:LG ELECTRONICS INC +1

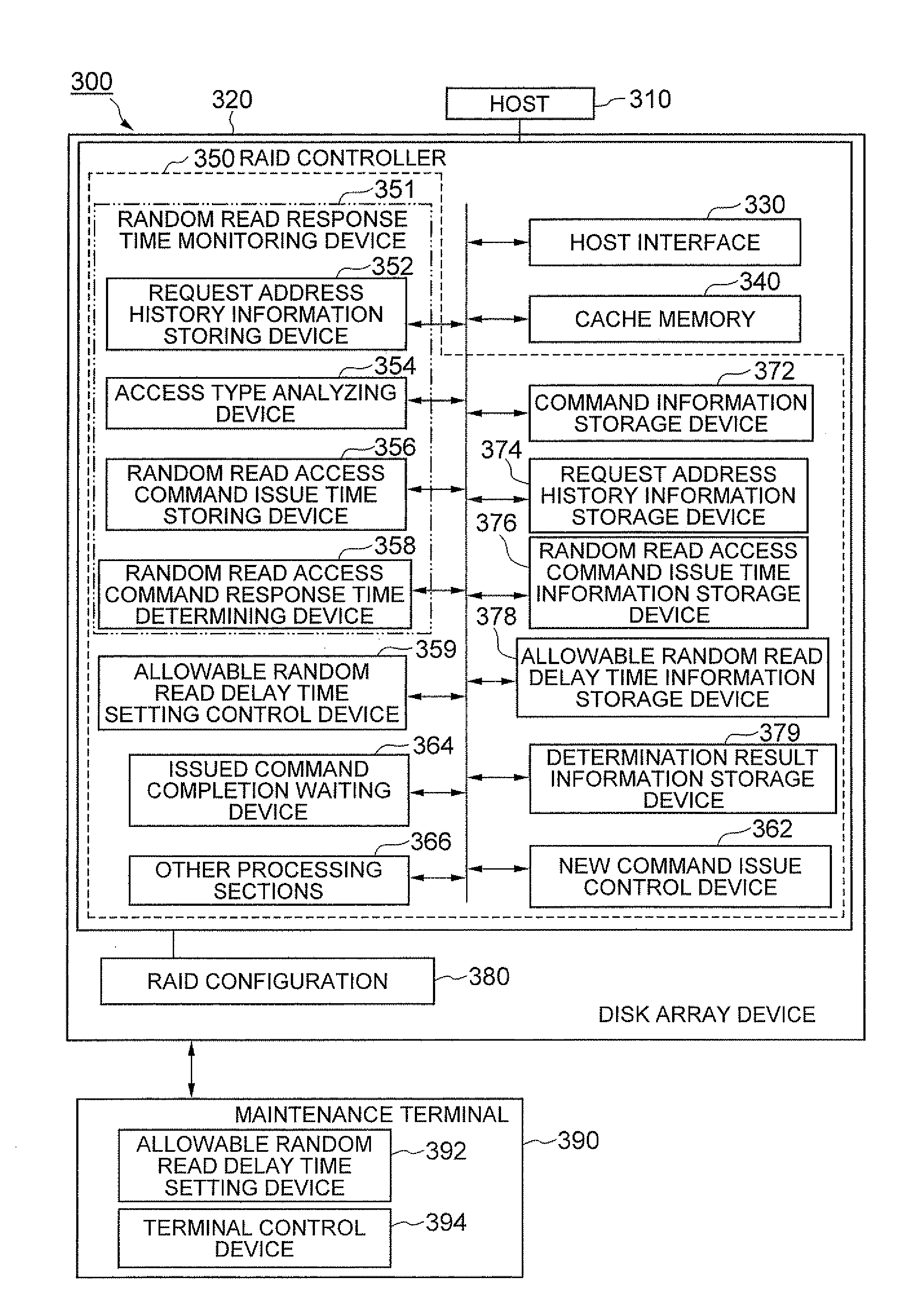

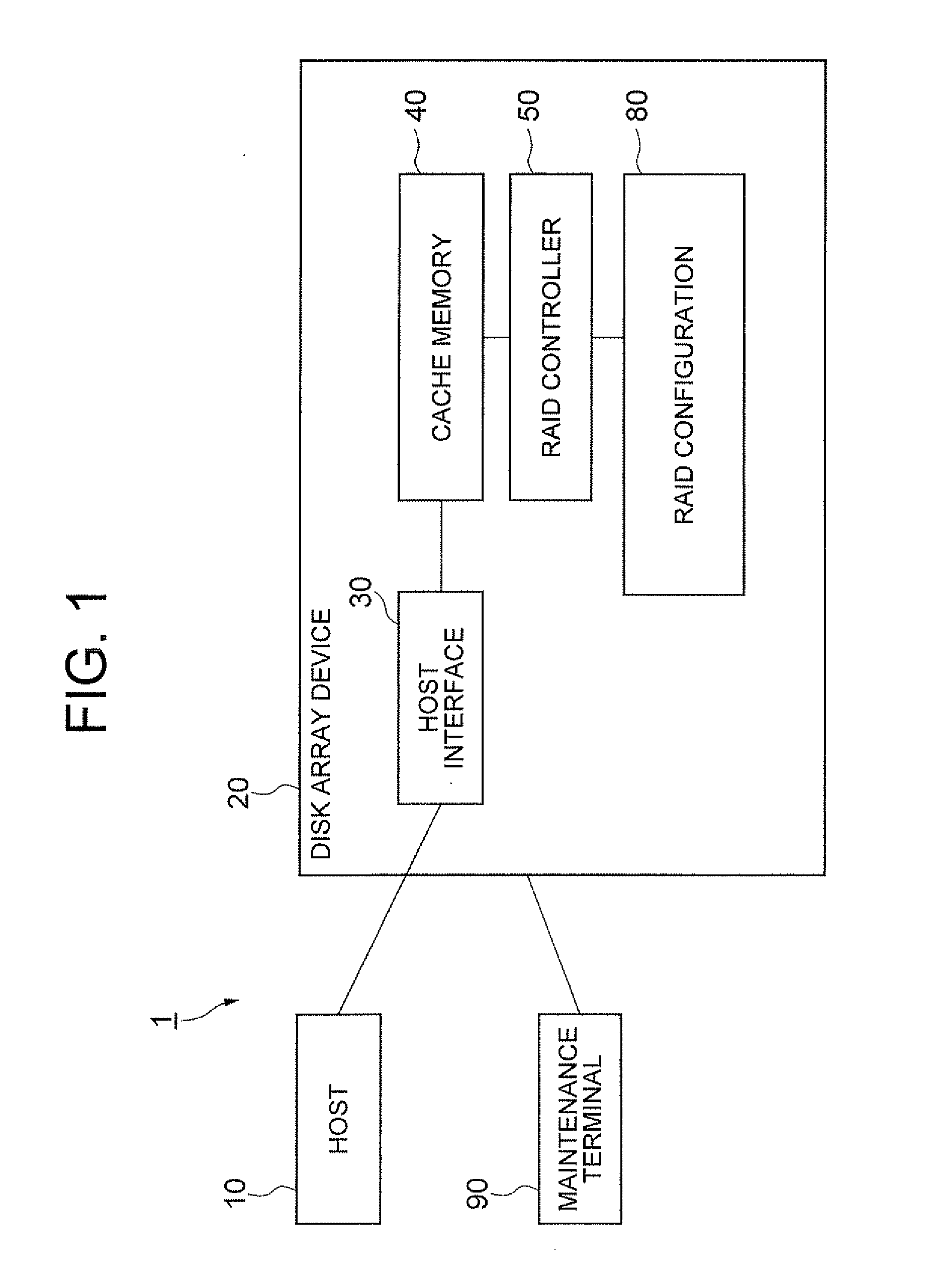

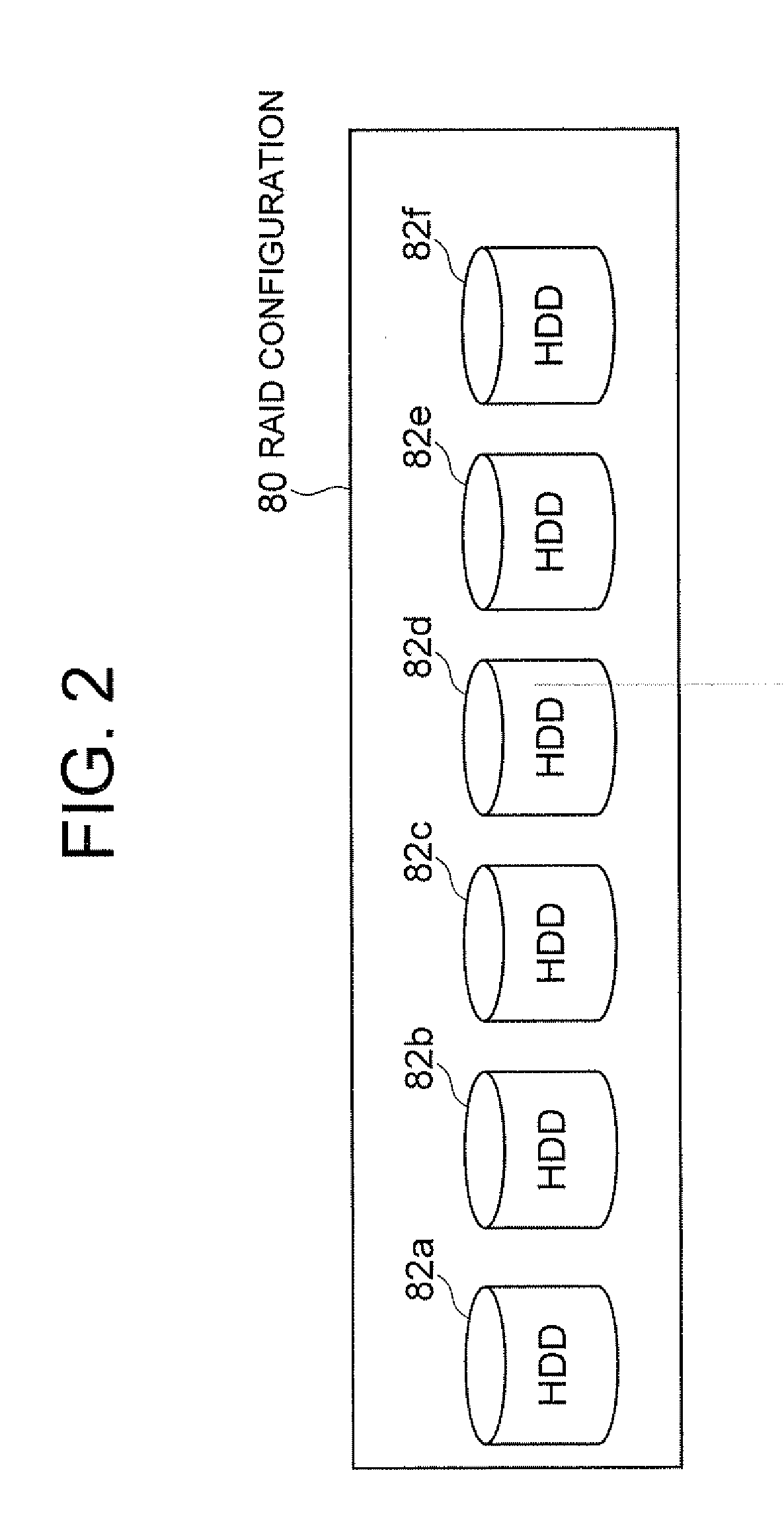

Storage medium control unit, data storage device, data storage system, method, and control program

Inactive | US20080282031A1 | Improve processing speed | Avoid residue | Memory addressing/allocation/relocation | Unauthorized memory use protection | Media controls | Delayed time

To prevent random access commands from remaining unprocessed even in the case of mixed sequential and random accesses. A storage medium control unit is used in a data storage device adapted to perform processing on a data storage medium based on multiple requests including sequential access requests and random access requests. The storage medium control unit includes: a request response delay monitoring device for monitoring the presence of delay in response to the requests based on whether or not the response time for each request exceeds a certain allowable delay time; and a request control device for preventing the rearrangement processing of the sequential access requests and controlling the processing of the requests to be performed in a certain request order if the allowable delay time is exceeded.

Owner:NEC CORP
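
The request control in this abstract can be sketched as a scheduler that normally favors sequential requests but falls back to a fixed order once any request has waited too long. The tuple layout, the "seq"/"rand" labels, and the choice of arrival order as the fallback are assumptions for illustration.

```python
def next_request(pending, now, allowable_delay):
    """Pick and remove the next request to process.

    pending: list of (arrival_time, kind, request_id) in arrival order,
             where kind is "seq" or "rand" (hypothetical labels).
    """
    overdue = any(now - arrival > allowable_delay for arrival, _, _ in pending)
    if overdue:
        # Delay detected: stop rearranging and serve in arrival order.
        choice = pending[0]
    else:
        # Normal case: rearrange so sequential requests are served first.
        sequential = [r for r in pending if r[1] == "seq"]
        choice = sequential[0] if sequential else pending[0]
    pending.remove(choice)
    return choice


queue = [(0, "rand", "r1"), (1, "seq", "s1"), (2, "seq", "s2")]
first = next_request(list(queue), now=3, allowable_delay=10)   # no delay yet
late = next_request(list(queue), now=20, allowable_delay=10)   # r1 is overdue
```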

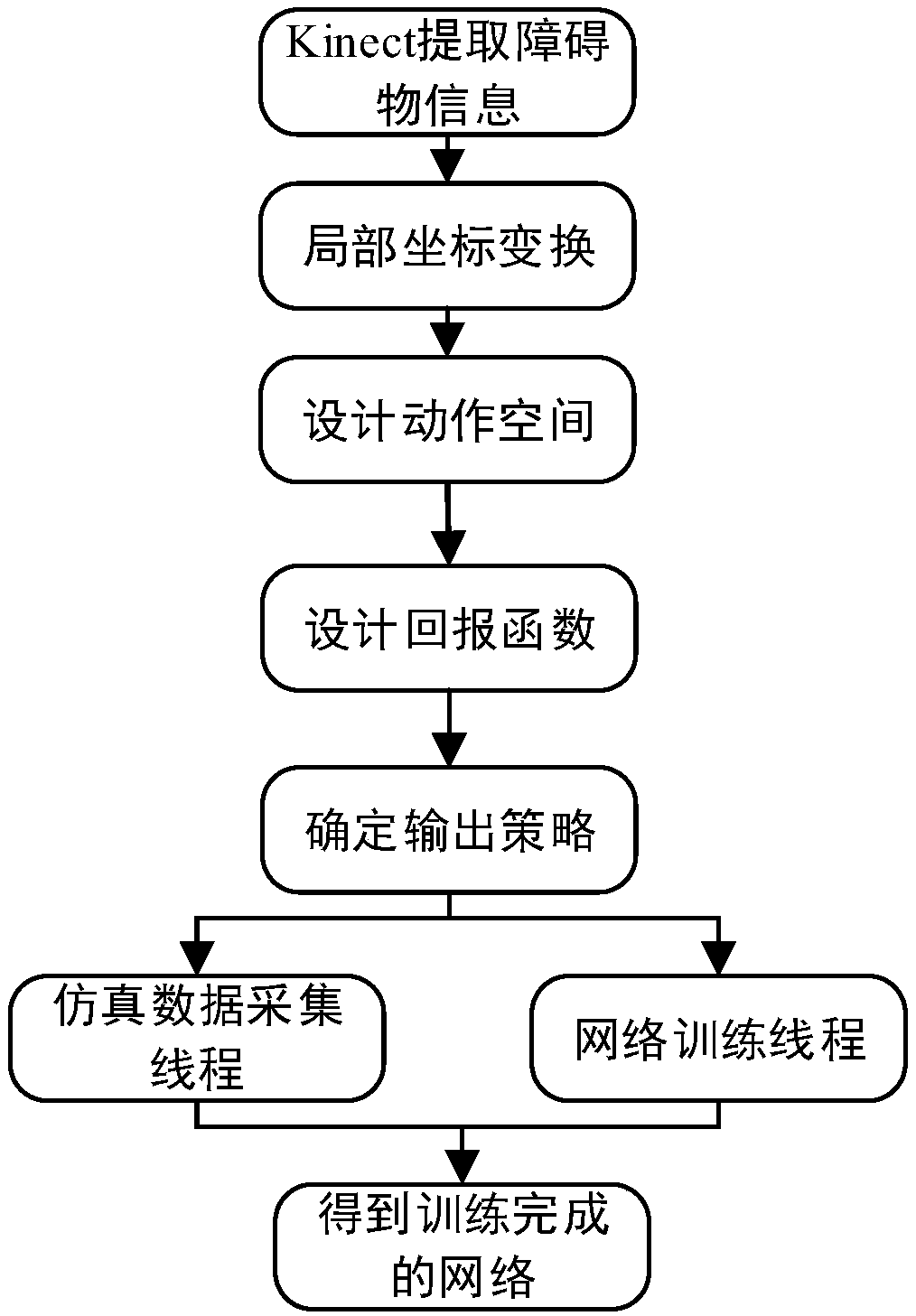

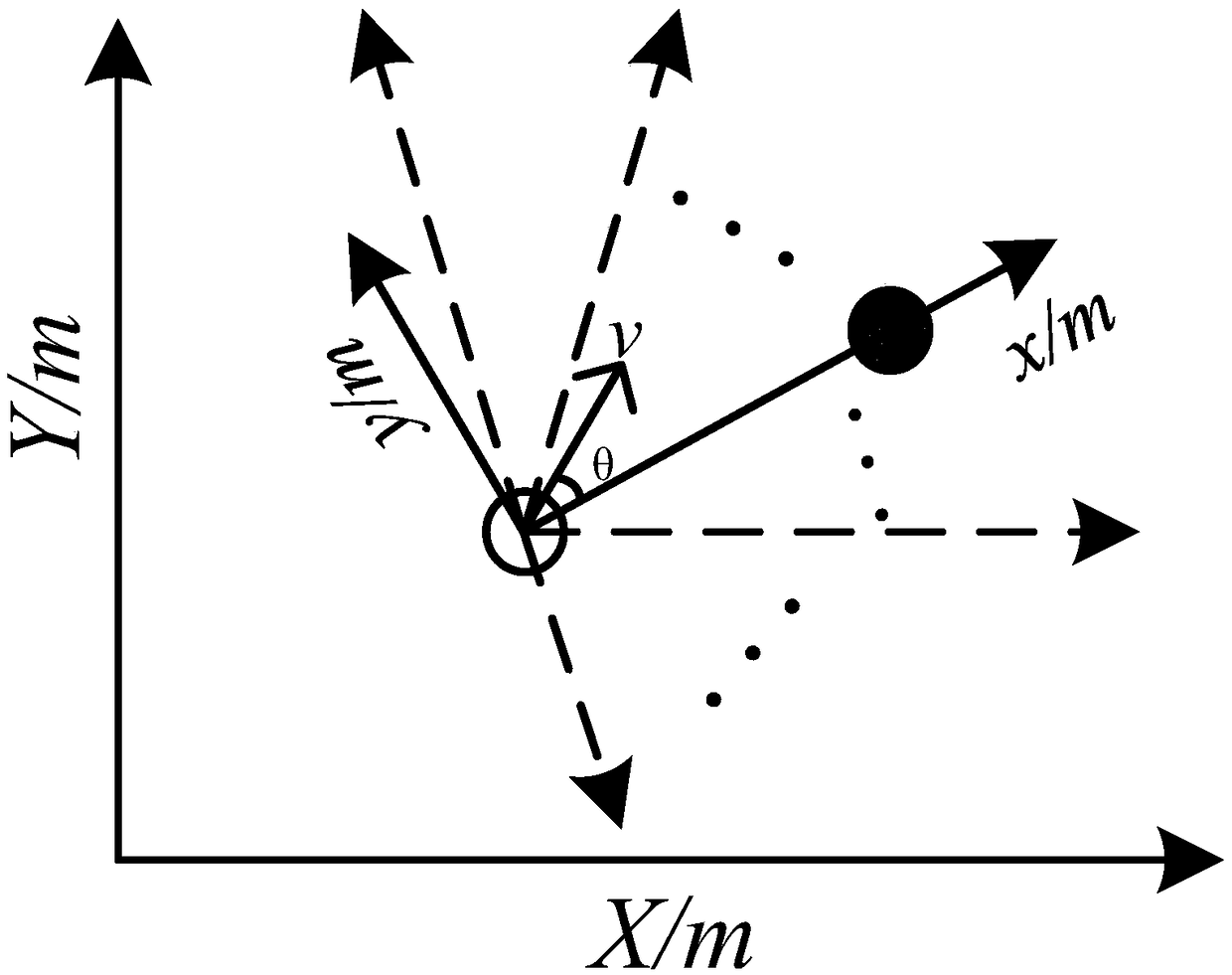

Mobile robot obstacle avoidance method based on DoubleDQN network and deep reinforcement learning

Active | CN109407676A | Overcoming success rate | Overcoming the problem of high response latency | Neural architectures | Position/course control in two dimensions | Data acquisition | Simulation

The invention, which belongs to the technical field of mobile robot navigation, provides a mobile robot obstacle avoidance method based on a Double DQN network and deep reinforcement learning, so that the problems of long response delay, long training time, and low obstacle avoidance success rate of existing deep reinforcement learning obstacle avoidance methods can be solved. A special decision action space and a reward function are designed; mobile robot trajectory data collection and Double DQN network training are performed in parallel in two threads, so that the training efficiency is improved effectively and the problem of the long training time needed by existing deep reinforcement learning obstacle avoidance methods is solved. According to the invention, unbiased estimation of an action value is carried out by using the Double DQN network, so that the problem of falling into a local optimum is solved and the problems of low success rate and high response delay of existing deep reinforcement learning obstacle avoidance methods are solved. Compared with the prior art, the mobile robot obstacle avoidance method has the following advantages: the network training time is shortened to below 20% of the time in the prior art, and a 100% obstacle avoidance success rate is maintained. The mobile robot obstacle avoidance method can be applied to the technical field of mobile robot navigation.

Owner:HARBIN INST OF TECH +1
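
The action-value estimation that the abstract attributes to the Double DQN network can be illustrated with the standard Double DQN target: the online network selects the next action and the target network evaluates it. The sketch below uses plain lists in place of network outputs; the numbers and the function name are hypothetical, and the patent's action space and reward function are not reproduced.

```python
def double_dqn_target(reward, next_q_online, next_q_target, gamma, done):
    """Standard Double DQN target for one transition.

    next_q_online: Q-values of the next state from the online network
    next_q_target: Q-values of the next state from the target network
    """
    if done:
        return reward
    # The online network chooses the action ...
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    # ... and the target network supplies its value.
    return reward + gamma * next_q_target[best_action]


online = [0.2, 0.9, 0.5]
target = [0.3, 0.4, 0.8]
y = double_dqn_target(1.0, online, target, gamma=0.5, done=False)
```

A plain DQN target would instead take the maximum of the target network's own values (0.8 here), which tends to overestimate; decoupling selection from evaluation reduces that bias.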

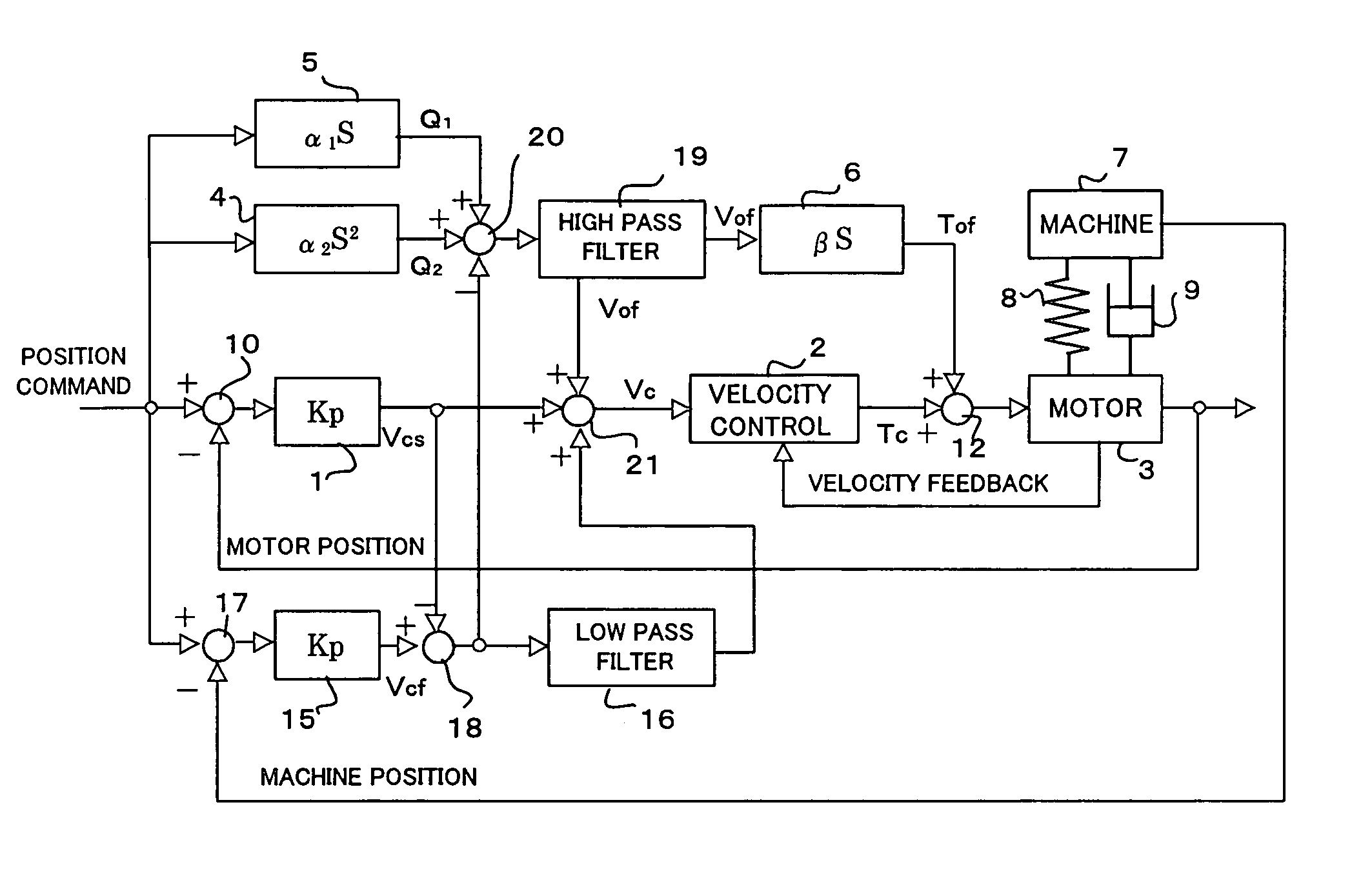

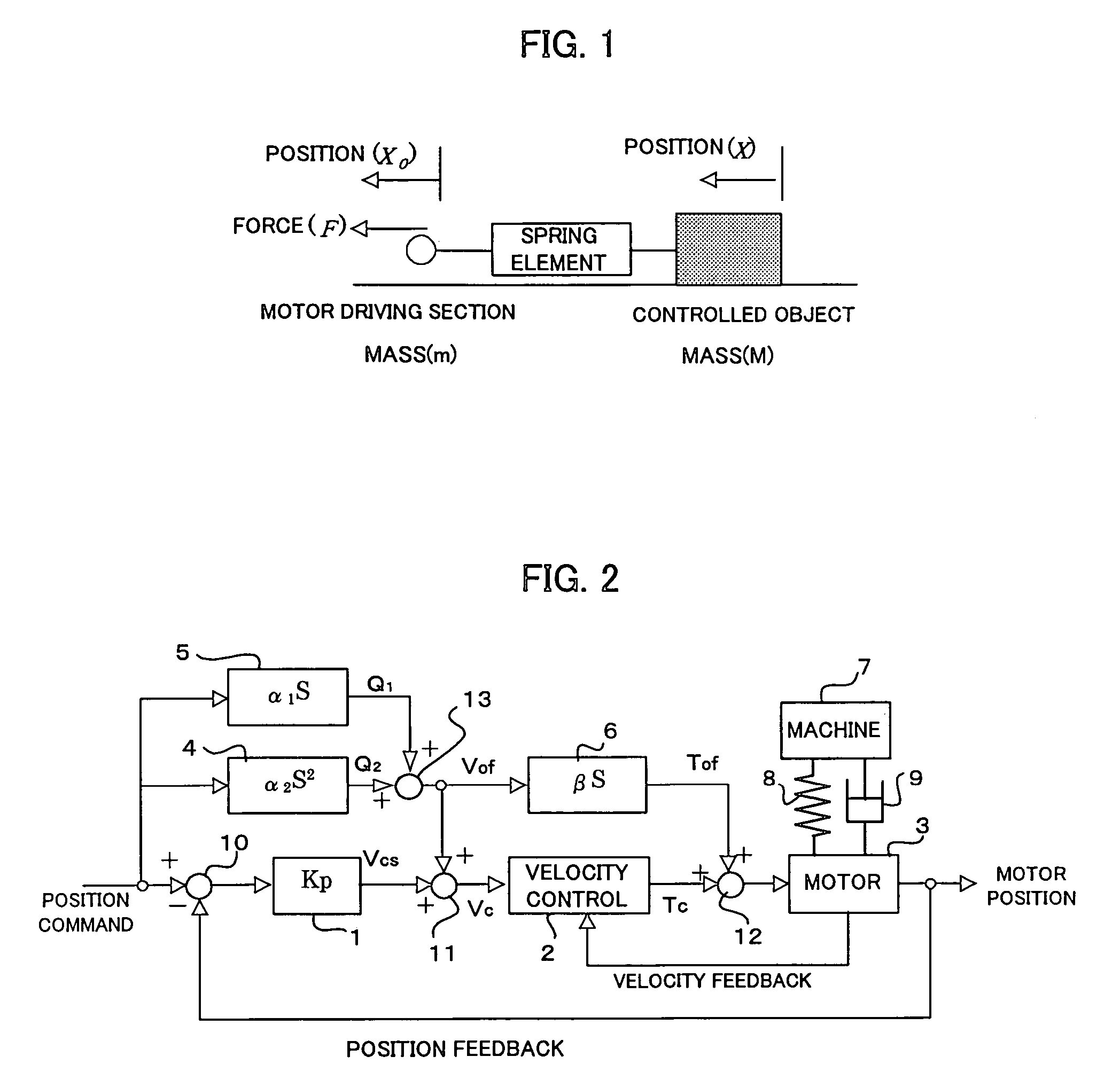

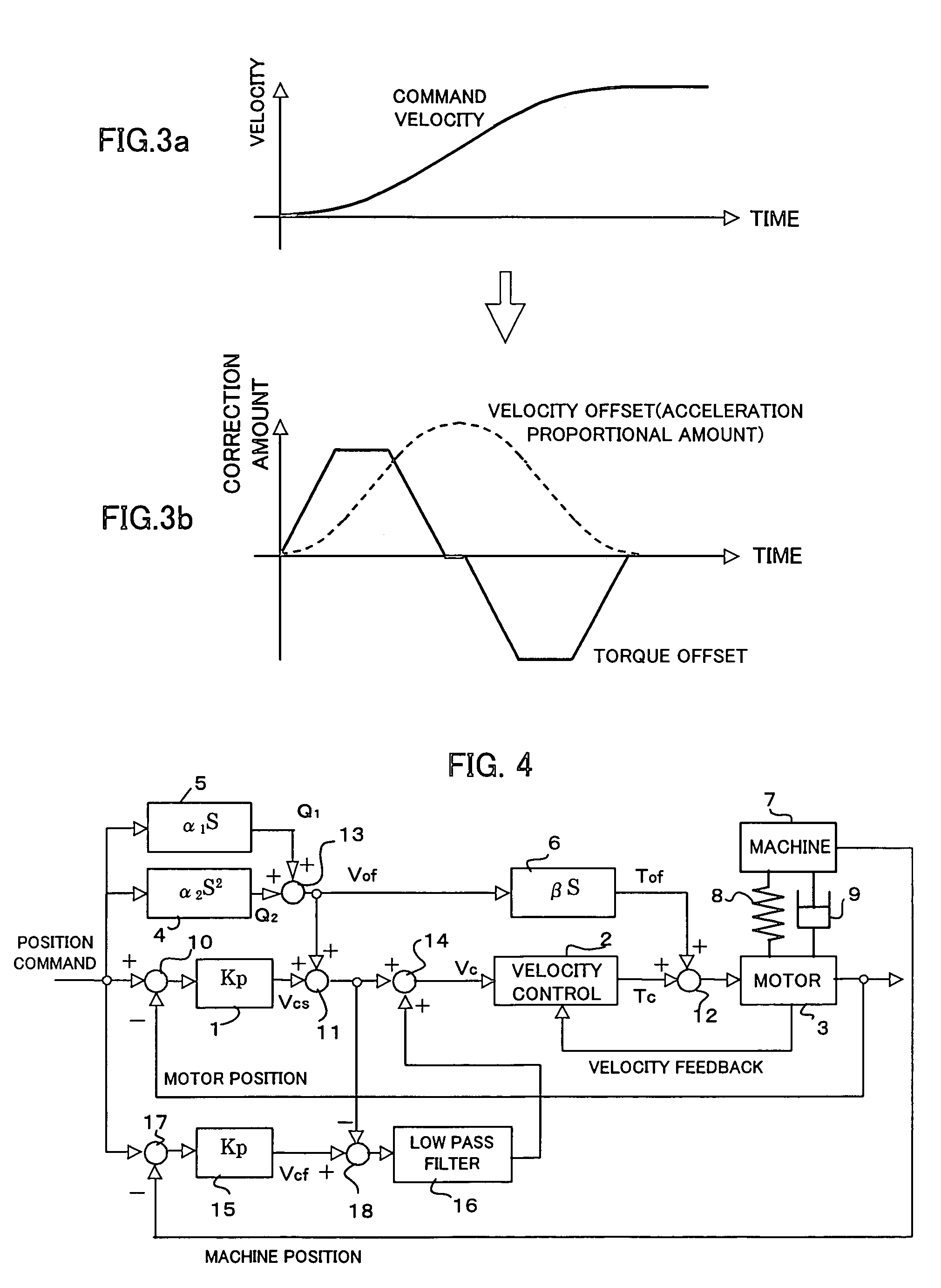

Controller

Active | US7030585B2 | Suppression delay | Reduce delays | Element comparison | Feeding apparatus | Loop control | Response delay

A controller capable of preventing response delay and generation of vibrations attributable thereto during position control of a movable part of a machine having low rigidity. A motor for driving a machine having low rigidity is subjected to position and velocity loop control. Compensation amount Q1 proportional to command velocity obtained by differentiating a position command and compensation amount Q2 proportional to second-order differentiated command acceleration are obtained. Compensation amounts Q1 and Q2 are added together, thus obtaining a velocity offset amount Vof corresponding to an estimated torsion amount. A differentiated value of the velocity offset amount is multiplied by coefficient β to obtain a torque offset amount Tof. The velocity offset amount Vof is added to a velocity command Vcs obtained by position loop control 1. The torque offset amount Tof is added to a torque command Tc outputted in velocity loop control 2, and the result is used as a drive command to the motor. Based on the velocity and torque offset amounts Vof and Tof, a torsion amount between motor 3 and the machine is controlled. The machine position and velocity are controlled with accuracy by regular position and velocity loop control.

Owner:FANUC LTD
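
The offset calculation in this abstract (Vof = Q1 + Q2, Tof = β · dVof/dt) can be sketched with finite differences over a sampled position command. The gains k1 and k2, the sampling approach, and the function name are assumptions for illustration; the abstract only states that Q1 and Q2 are proportional to command velocity and command acceleration.

```python
def feedforward_offsets(position_cmds, dt, k1, k2, beta):
    """Compute velocity offsets Vof and torque offsets Tof from position commands.

    Q1 = k1 * command velocity, Q2 = k2 * command acceleration,
    Vof = Q1 + Q2, Tof = beta * d(Vof)/dt, all by finite differences.
    """
    vel = [(b - a) / dt for a, b in zip(position_cmds, position_cmds[1:])]
    acc = [(b - a) / dt for a, b in zip(vel, vel[1:])]
    # Align each acceleration sample with the later of its two velocities.
    vof = [k1 * v + k2 * a for v, a in zip(vel[1:], acc)]
    tof = [beta * (b - a) / dt for a, b in zip(vof, vof[1:])]
    return vof, tof


# Hypothetical constant-velocity move: no acceleration, so Vof is constant
# and the torque offset is zero.
vof, tof = feedforward_offsets([0.0, 1.0, 2.0, 3.0, 4.0], dt=1.0,
                               k1=0.5, k2=0.1, beta=2.0)
```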

Control device for internal combustion engine

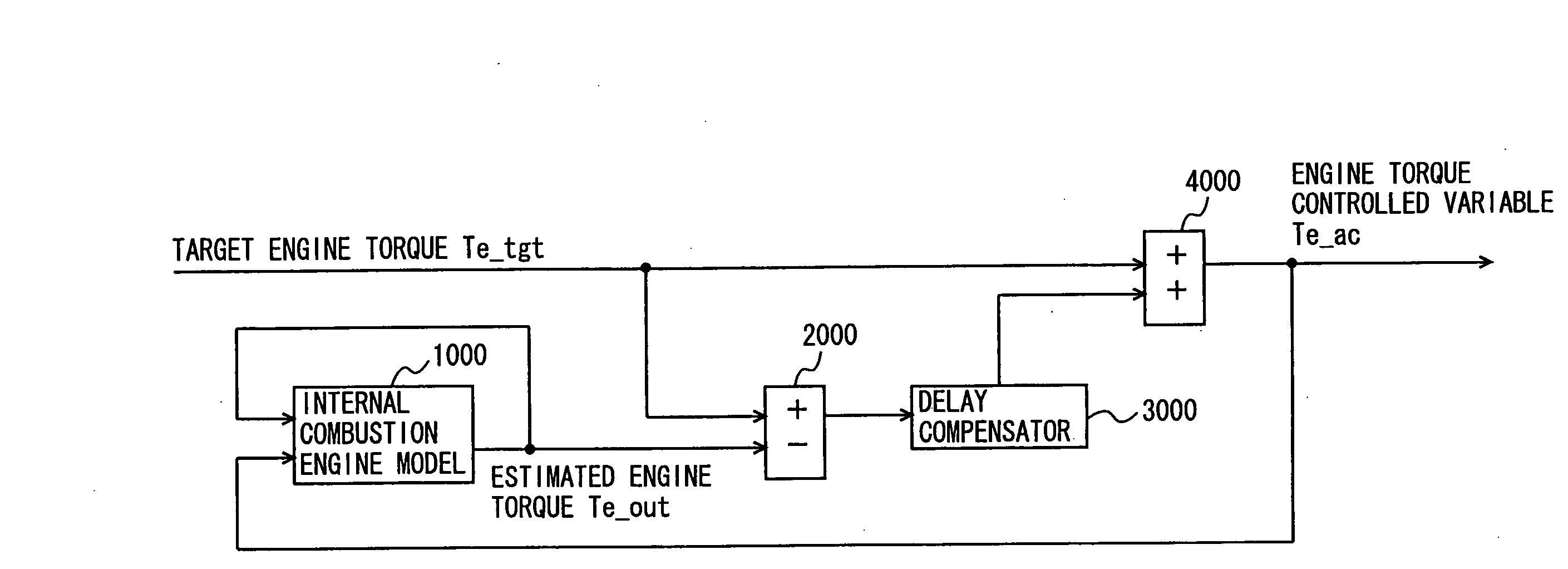

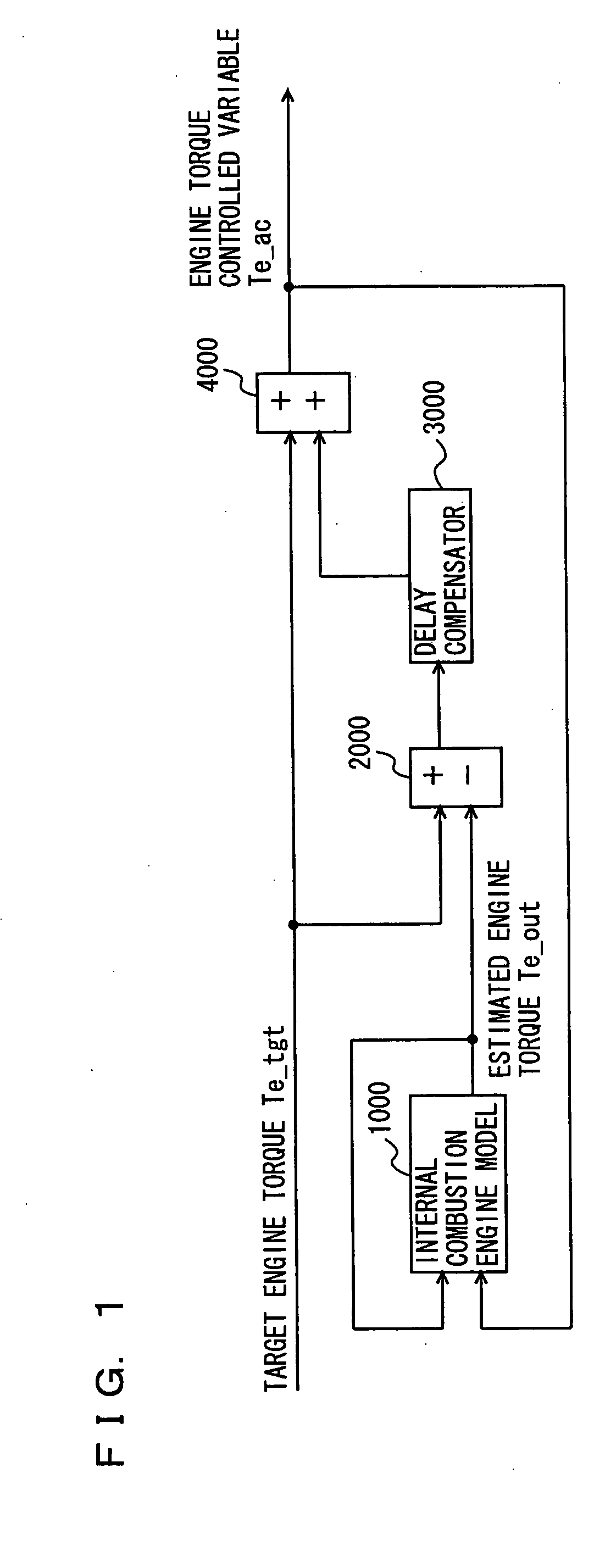

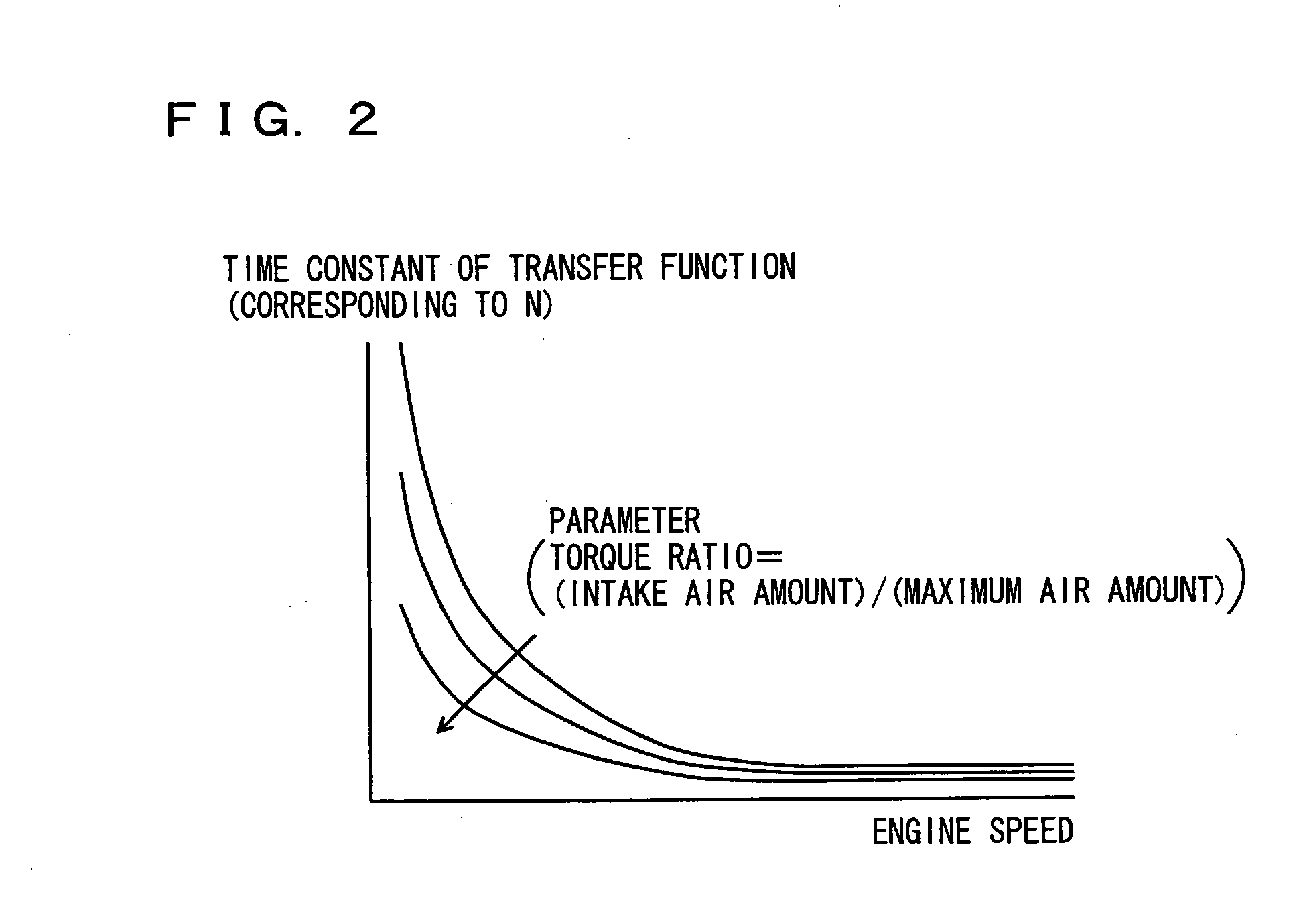

Inactive | US20090037066A1 | Improvement in control response characteristic control in control | Improve control stability | Analogue computers for vehicles | Electrical control | Control system | Response delay

Owner:TOYOTA JIDOSHA KK

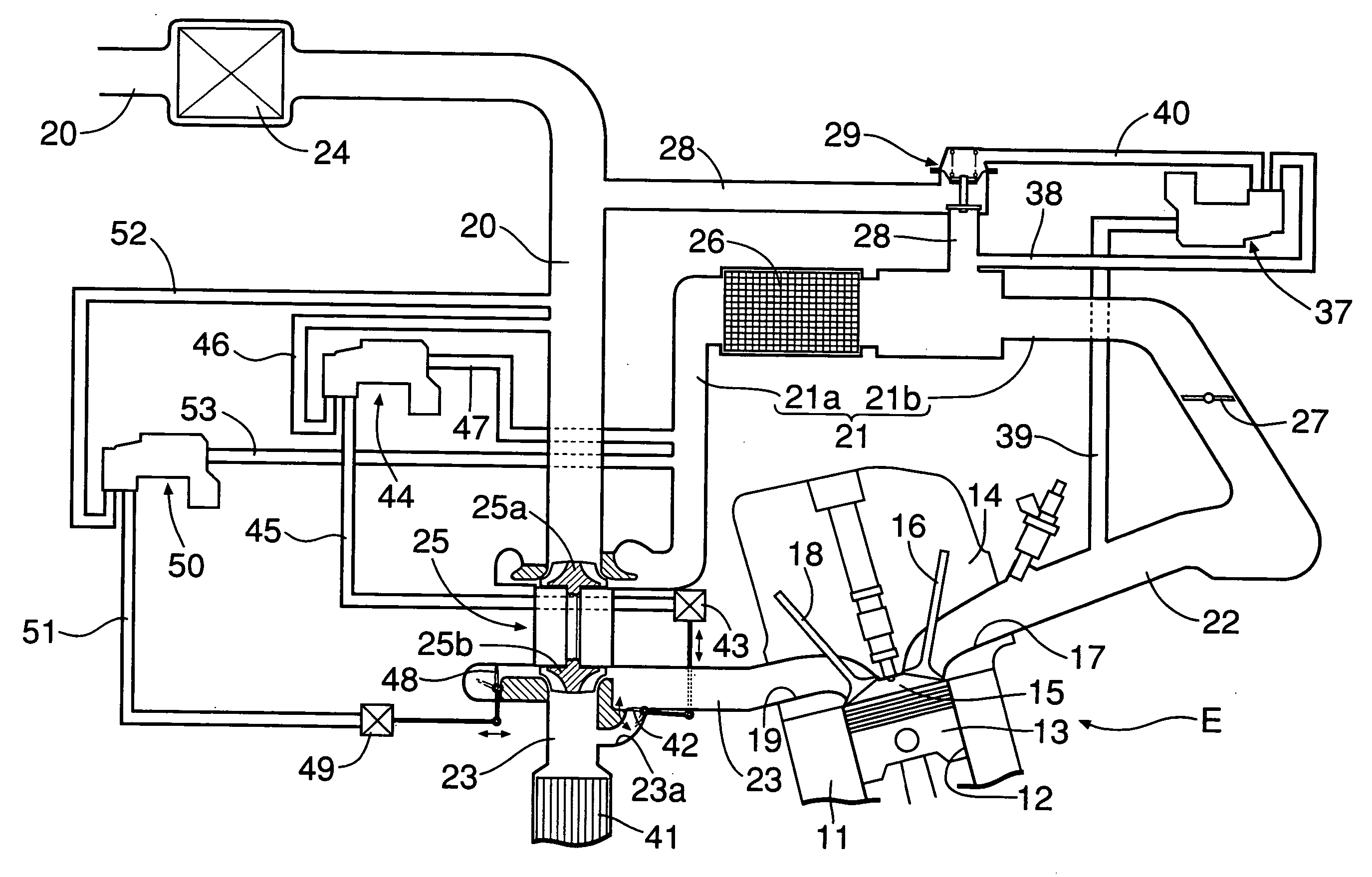

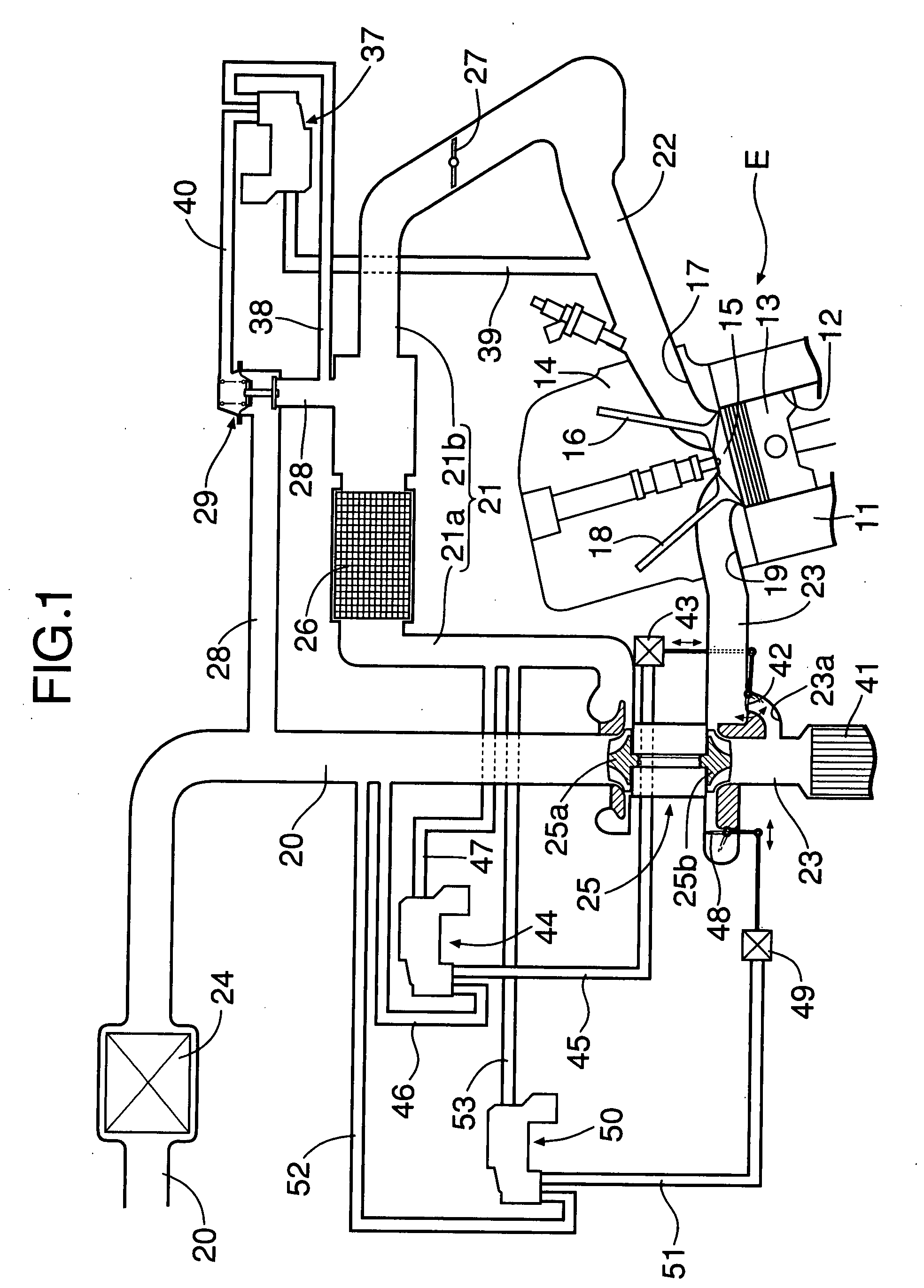

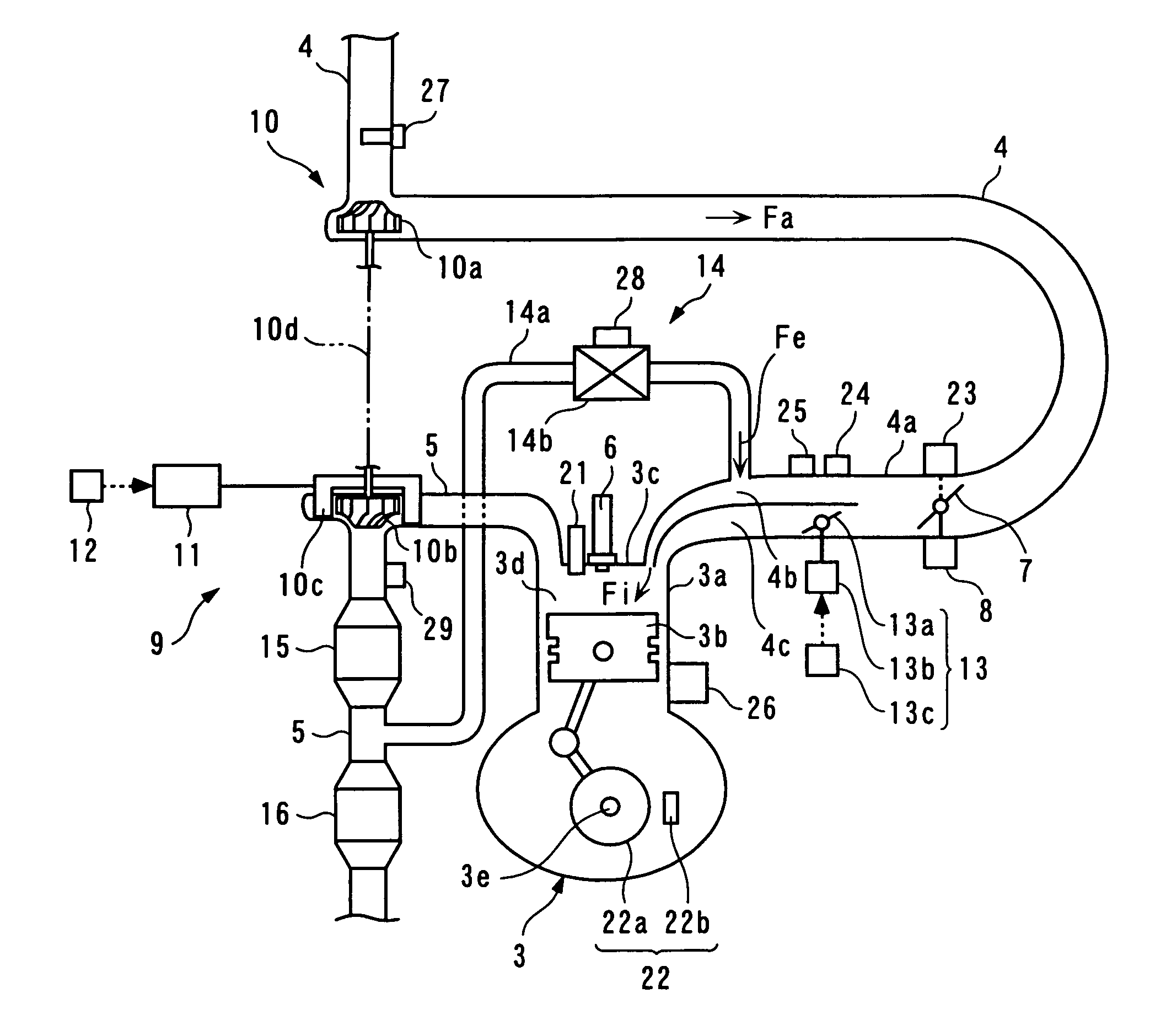

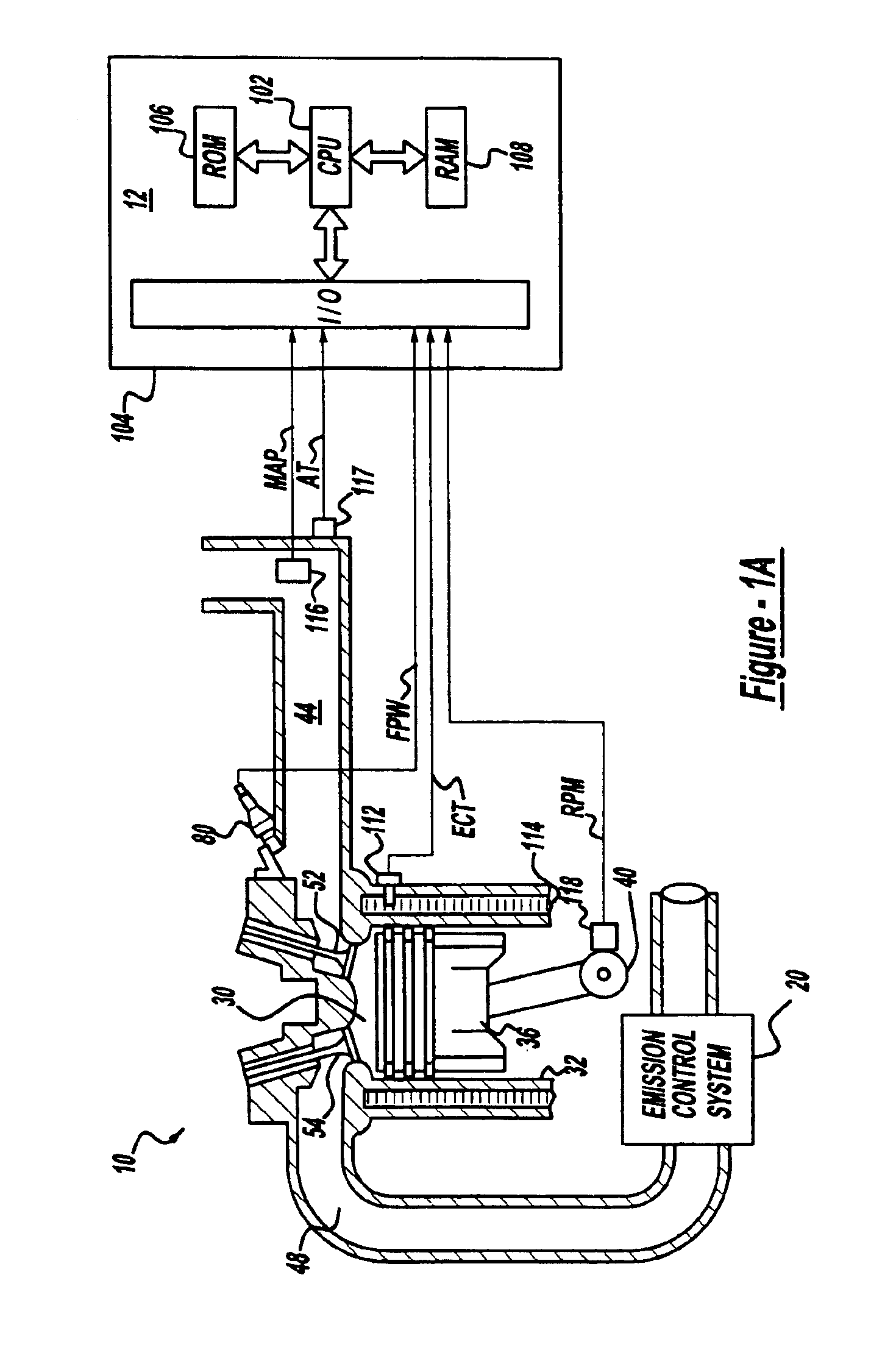

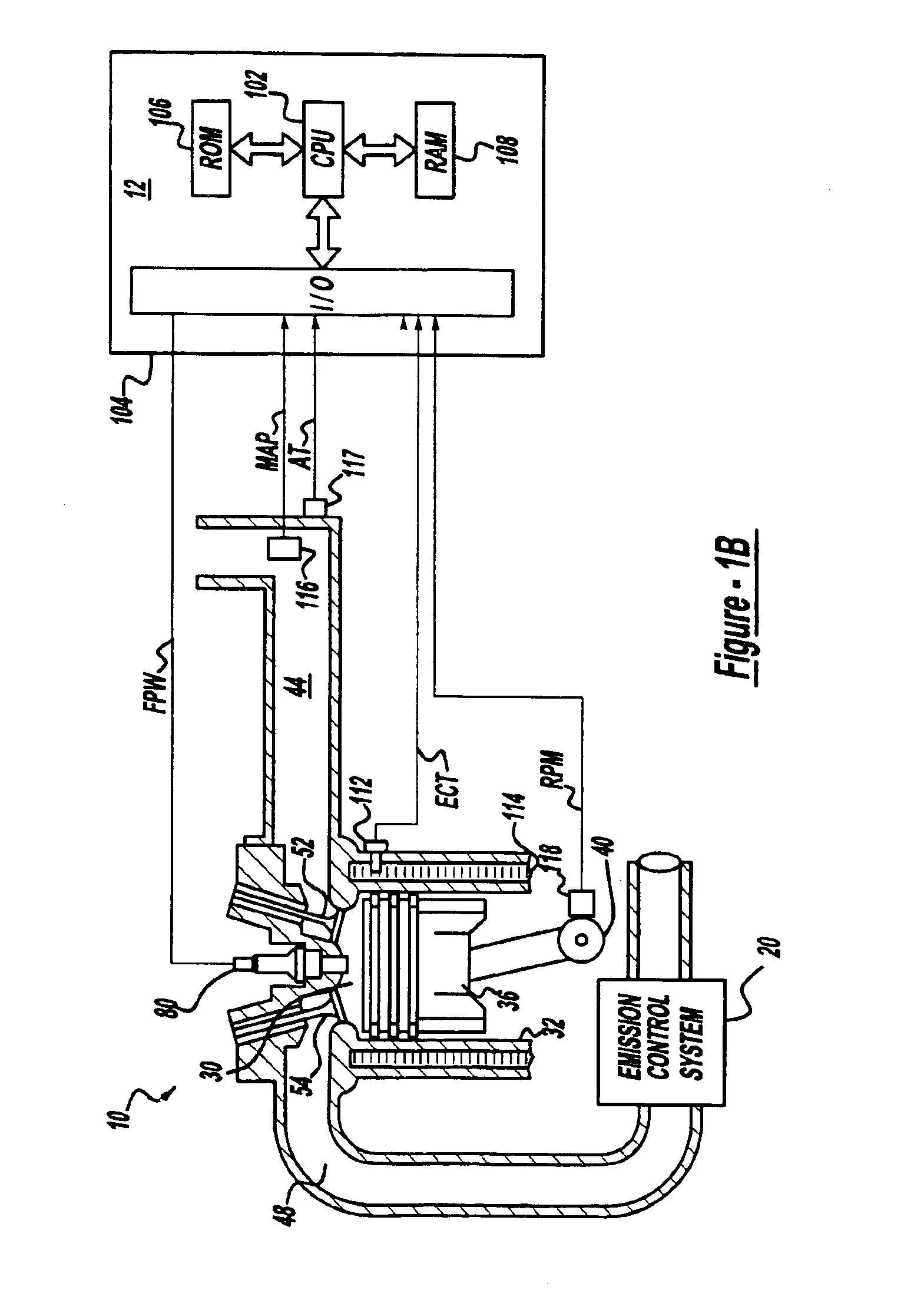

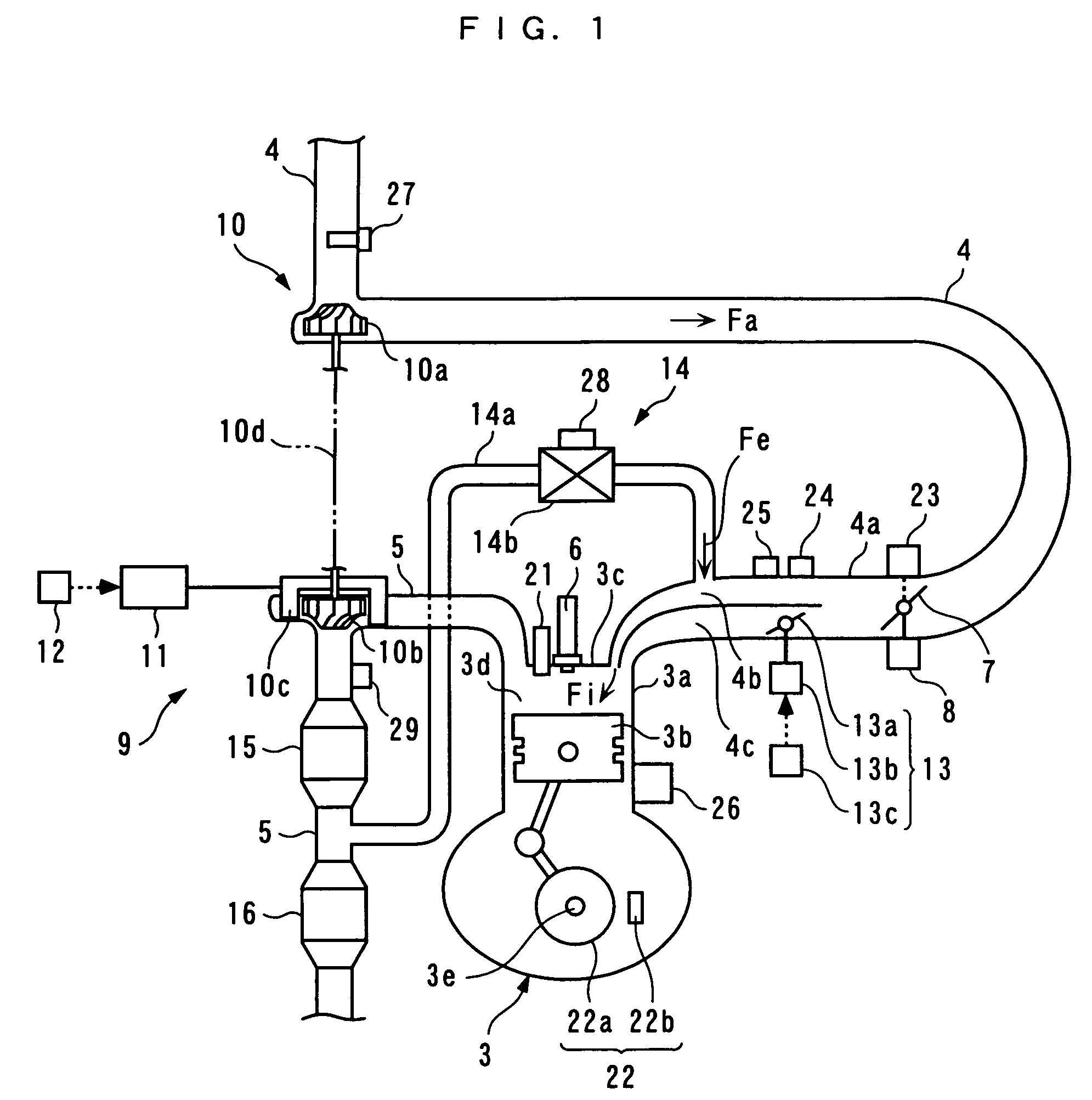

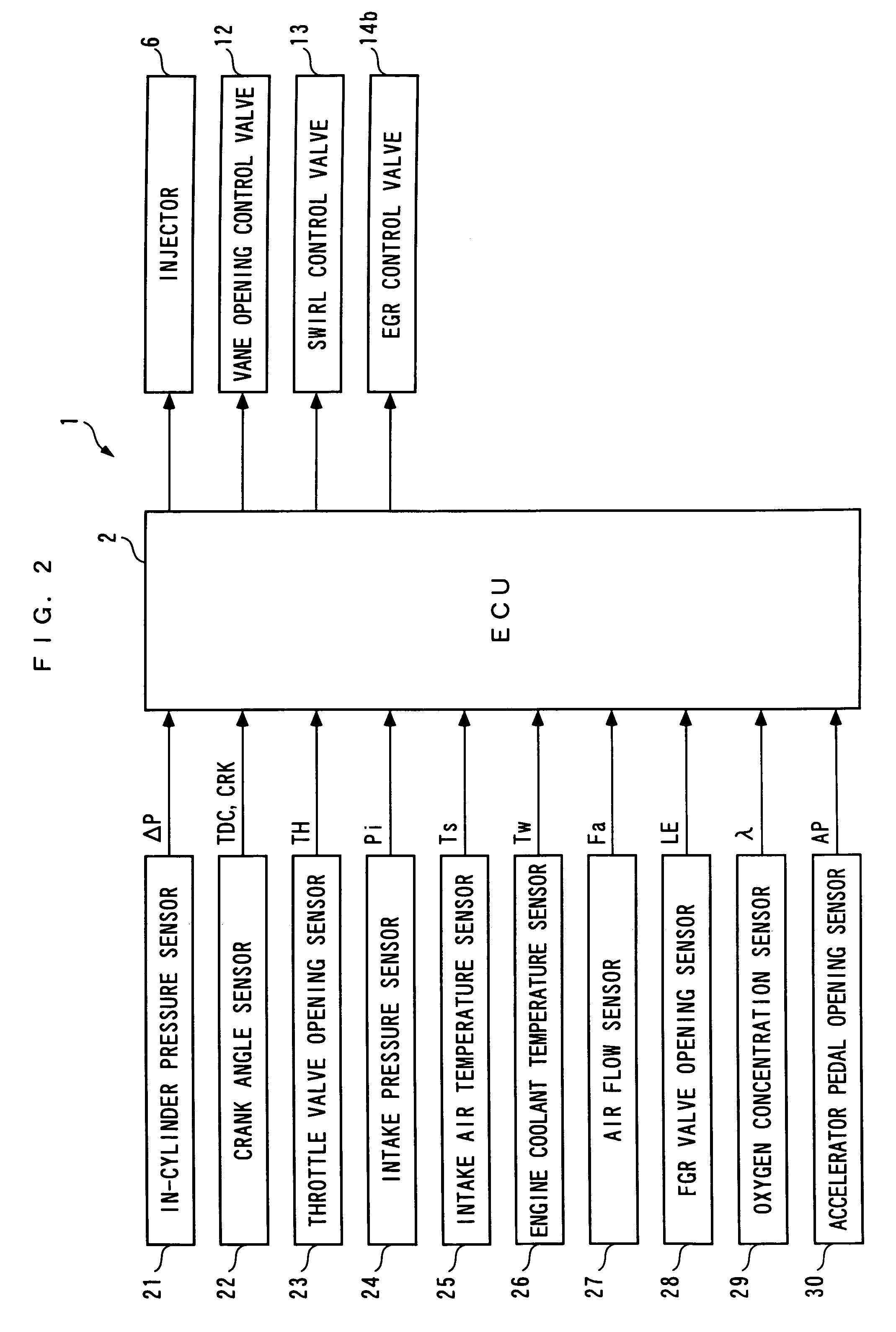

Control system for internal combustion engine

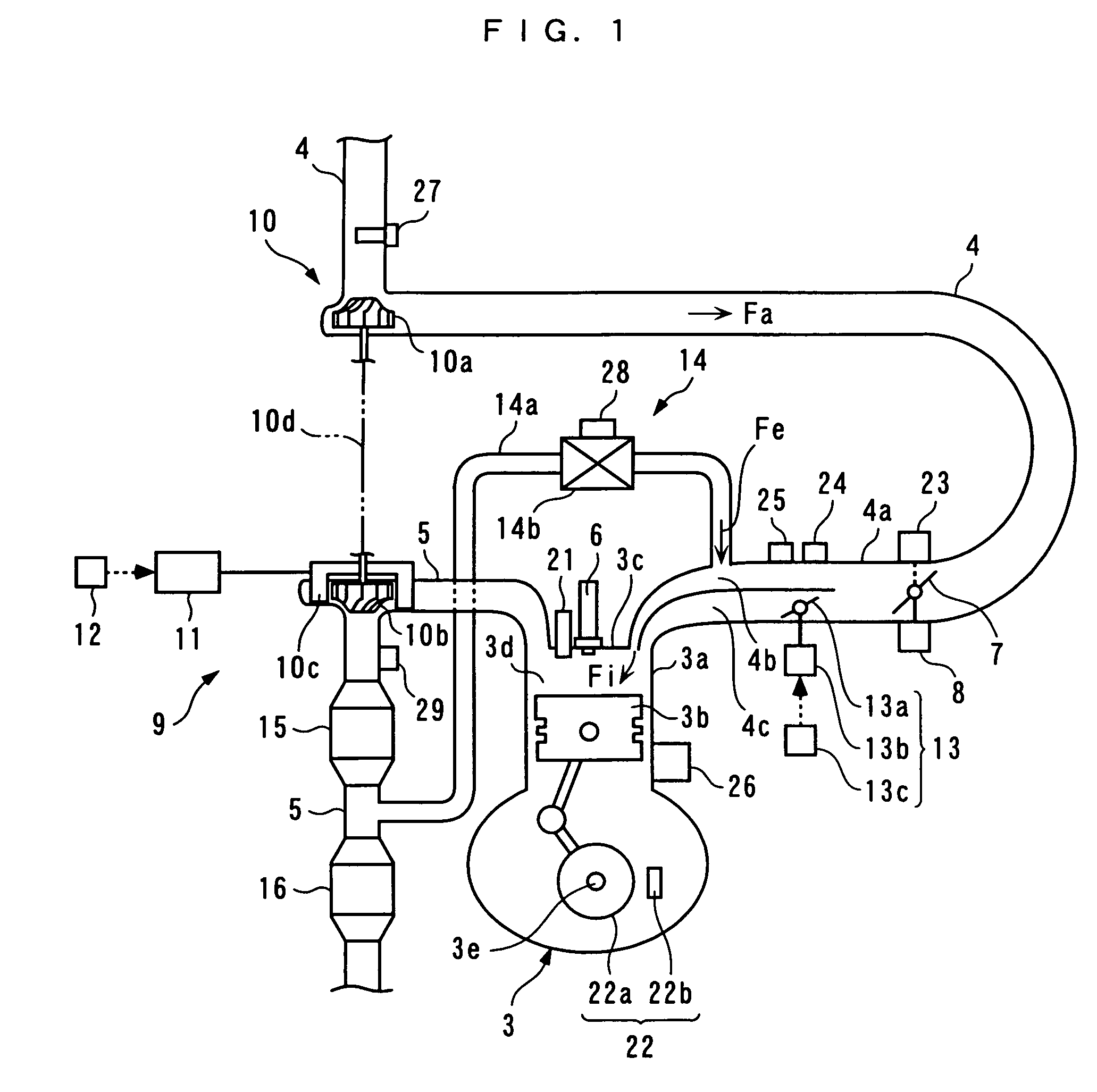

Active | US20060011180A1 | Easy to control | Suppress torque variation | Electrical control | Non-fuel substance addition to fuel | Transient state | Control system

A control system for an internal combustion engine, which is capable of optimally controlling fuel injection by an injector even in a transient state of the engine. An ECU 2 of the engine provided with an EGR system (14) controls the amount (Fa) of air drawn into cylinders (3a) via an intake system (4). An air flow sensor (27) detects the intake air amount (Fa). The ECU 2 estimates a flow rate (Fe_hat) of EGR gases according to response delay of recirculation of the EGR gases by the EGR system (14), estimates the amount (mo2) of oxygen existing in the cylinder (3a), based on the detected intake air amount (Fa) and the estimated flow rate (Fe_hat) of the EGR gases, determines a fuel injection parameter (Q*) based on the engine speed (Ne) and the estimated in-cylinder oxygen amount (mo2), and controls an injector (6) based on the determined fuel injection parameter (Q*).

Owner:HONDA MOTOR CO LTD

Air amount detector for internal combustion engine

Inactive | US6662640B2 | Improve calculation accuracy | Analogue computers for vehicles | Electrical control | Response delay | Phase advance

A response delay compensation element for compensating a response delay of an output gMAF of an airflow meter by a phase advance compensation is provided so that an output g of the response delay compensation element is input to the intake air system model. A transfer function of the phase advance compensation is $g = \dfrac{1 + T_1 s}{1 + T_2 s}\, g_{MAF}$, where T1 and T2 are time constants of the phase advance compensation, which are set based on at least one of the output gMAF of the airflow meter, engine speed, an intake air pressure, and a throttle angle. The model time constant τIM of the intake air system model is calculated from variables including volumetric efficiency and the engine speed. The volumetric efficiency is calculated from a two-dimensional map having the engine speed and the intake air pressure as parameters.

Owner:DENSO CORP

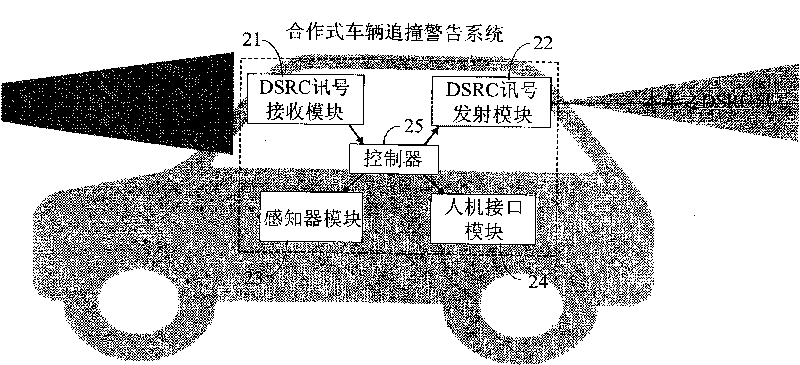

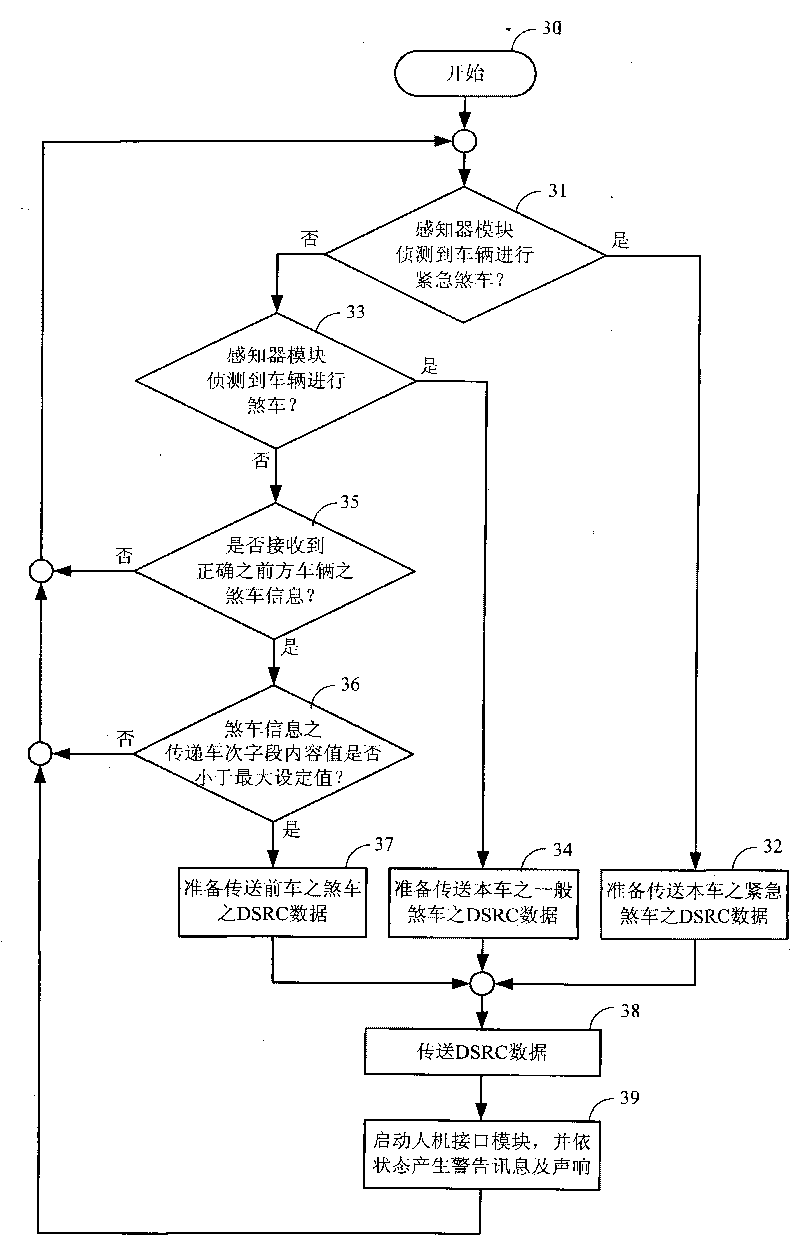

Cooperative vehicle overtaking collision warning system

Inactive | CN101707006A | Fast driving dynamics | Avoid occupying | Anti-collision systems | Control system | Shortest distance

The invention discloses a cooperative vehicle overtaking collision warning system. The warning system comprises a DSRC signal receiving module, a DSRC signal transmitting module, a sensor module, a human-computer interface module and a controller. The DSRC signal receiving module receives a DSRC signal transmitted by the vehicle ahead and converts it into traveling data for that vehicle; the DSRC signal transmitting module transmits the traveling data of the driver's own vehicle to the vehicle behind through the DSRC signal; the sensor module senses the traveling movement of vehicles; the human-computer interface module lets a user operate and configure the system and provides information display and audible alarms; and the controller processes the sensing results of the sensor module and controls the system operation flow, instructing the human-computer interface module to warn the driver through lights and sound in response to a brake event of the vehicle ahead or the vehicle behind, and instructing the DSRC signal transmitting module to warn the driver of the vehicle behind. The warning system uses a dedicated short-range wireless communication technology with directional propagation to pass traveling information rearward rapidly, eliminates the driver response delay time in the traffic stream, and thereby avoids overtaking collision accidents.

Owner:CHUNGHWA TELECOM CO LTD

Control device for internal combustion engine

Active | US7441544B2 | Short calculation time | Improve accuracy | Electrical control | Internal combustion piston engines | Response delay | Throttle opening

Provided is a control device for an internal combustion engine, which is provided to allow a throttle opening degree to be controlled in accordance with a target engine intake air flow quantity even during transitional operation. The actual cylinder intake air flow quantity calculating unit (21) calculates a response delay model for an intake system from a volumetric efficiency equivalent value (Kv) calculated from a rotational speed (Ne) of an engine (1) and an intake manifold pressure (Pim), an intake pipe volume (Vs), and a displacement (Vc) of each of cylinders (2), and calculates an actual cylinder intake air amount (Qcr) from an actual engine intake air amount (Qar) obtained from an air flow sensor (4) and the response delay model. The intake air flow quantity controlling unit (24) controls the throttle opening degree (TP) in accordance with the target engine intake air amount (Qat).

Owner:MITSUBISHI ELECTRIC CORP

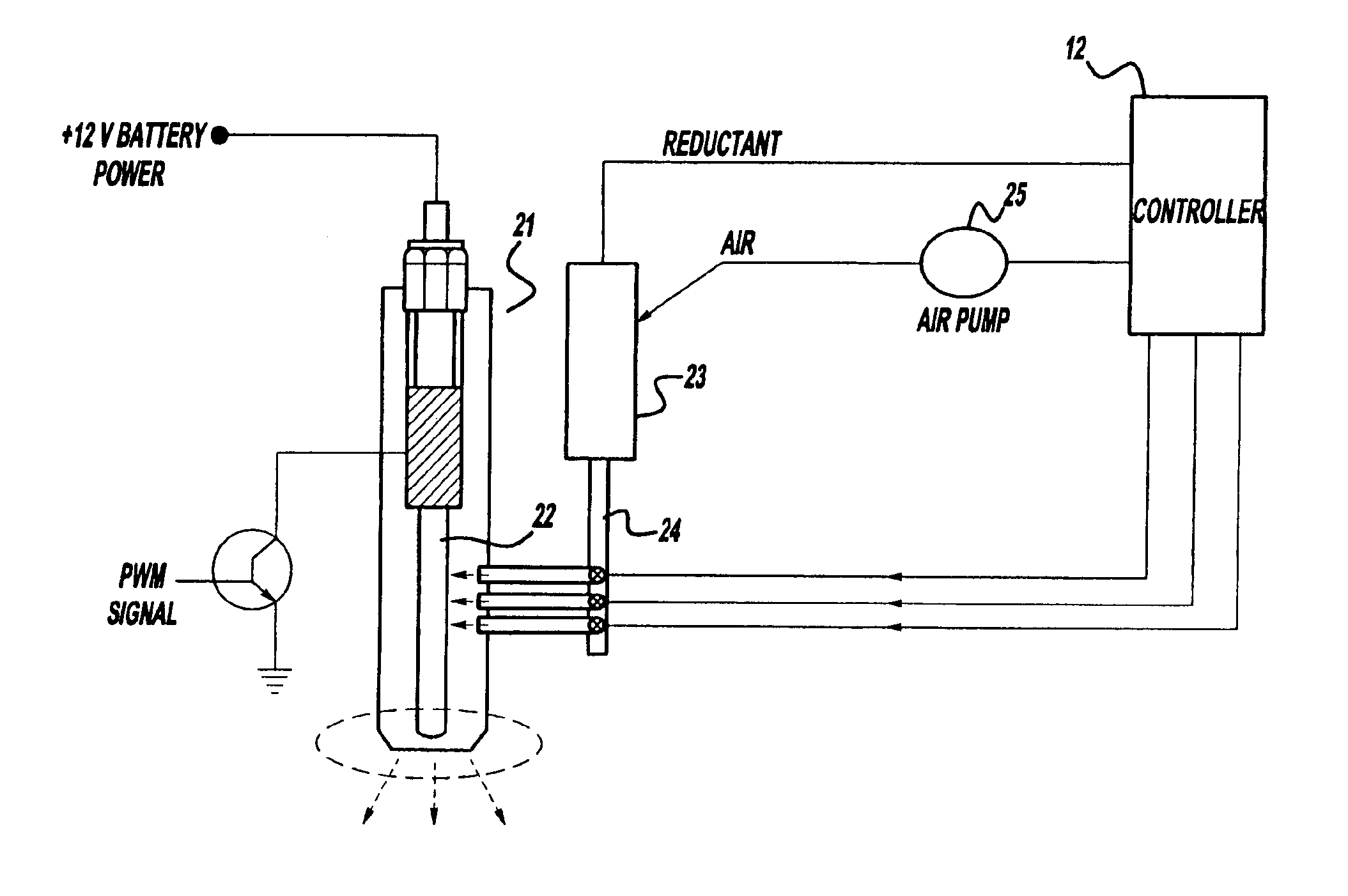

Diesel aftertreatment systems

Inactive | US6895747B2 | Readily apparent | Improve NOx conversion efficiency | Internal combustion piston engines | Exhaust apparatus | Response delay | Process engineering

A method and a system for improving conversion efficiency of a urea-based SCR catalyst coupled downstream of a diesel or other lean burn engine are presented. The system includes an electrically heated vaporizer unit into which a mixture of reductant and air is injected. The mixture is vaporized in the unit and introduced into the exhaust gas prior to its entering the SCR catalyst. Introducing the reductant mixed with air into the reductant delivery system prevents lacquering and soot deposits on the heated element housed inside the unit, and also speeds up the vaporization process, thus reducing system response delays and improving the device conversion efficiency. The reductant delivery system is further improved by adding a hydrolyzing catalyst to it, and by isolating the reductant and air mixture from the heating element.

Owner:FORD GLOBAL TECH LLC

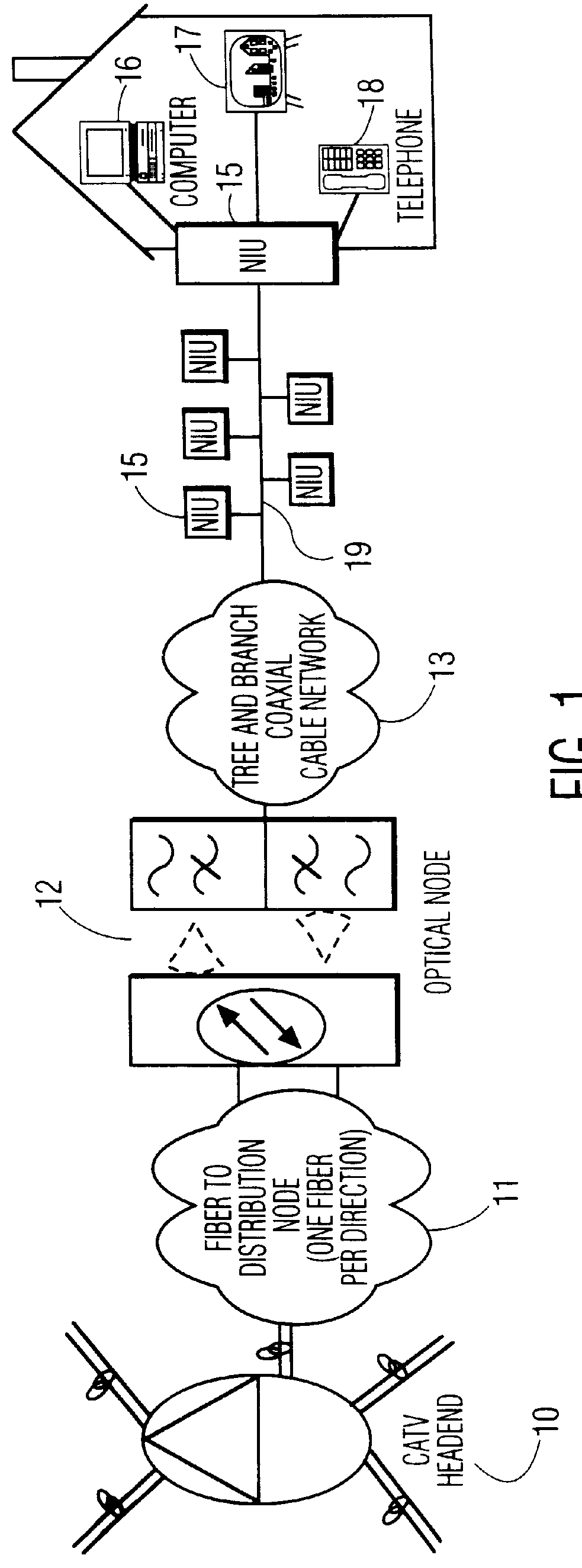

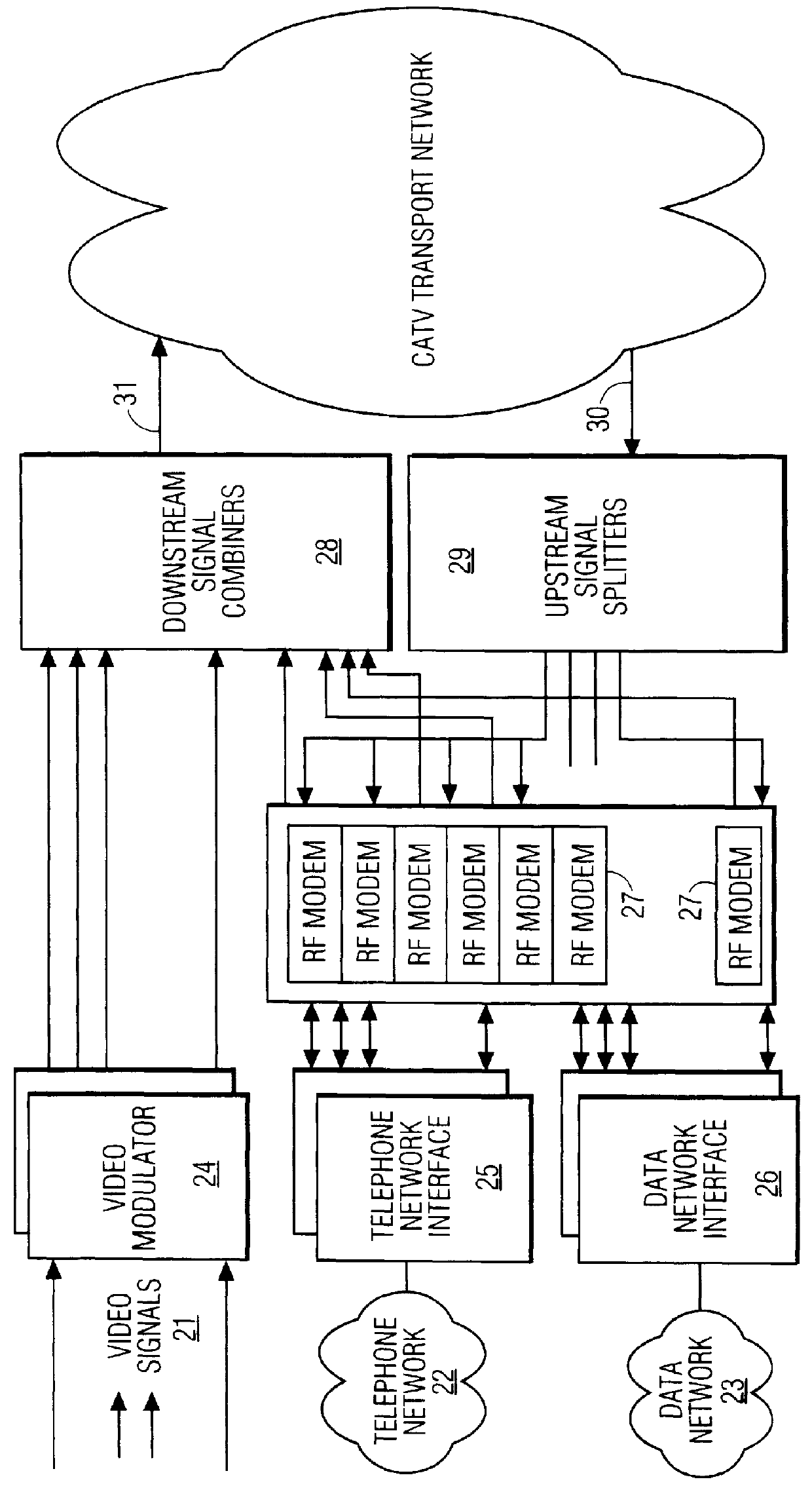

Method and apparatus for improved time division multiple access (TDMA) communication

Inactive | US6031846A | Time-division multiplex | Synchronisation signal speed/phase control | Time division multiple access | Time efficient

An improved method for Time Division Multiple Access (TDMA) communications particularly applicable to a network comprising transmitters having significantly different transmission paths to a common receiver. The common receiver measures the response time from each transmitter, relative to a common time reference, then instructs each transmitter to delay all subsequent responses by an amount specific to each transmitter, as determined from these measured response times. This imposed delay results in a more time efficient TDMA protocol, improved error detection capability, and improved security capabilities. This invention is particularly applicable to bi-directional communications to and from a provider of Cable TV, Telephony, and Data Services.

Owner:PHILIPS ELECTRONICS NORTH AMERICA
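
The delay assignment described in the abstract above can be sketched as follows. The abstract says only that each transmitter's delay is "determined from these measured response times"; aligning every transmitter to the slowest measured path is one plausible rule and is an assumption here, as are the function name and the sample numbers.

```python
def equalizing_delays(response_times):
    """Map each transmitter id to the extra delay it should apply.

    response_times: dict of transmitter id -> measured response time,
    all relative to the receiver's common time reference.
    ASSUMED rule: pad every transmitter up to the slowest measured path,
    so that all delayed responses arrive with the same total latency.
    """
    slowest = max(response_times.values())
    return {tx: slowest - t for tx, t in response_times.items()}


# Hypothetical measurements in microseconds.
measured = {"A": 12.0, "B": 30.0, "C": 21.5}
delays = equalizing_delays(measured)
```

With equal effective latency, slot boundaries at the receiver no longer need guard times sized for the worst-case path difference, which is one way to read the abstract's "more time efficient" claim.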

Control system for internal combustion engine

Active | US7163007B2 | Easy to control | Suppress torque variation | Electrical control | Internal combustion piston engines | Transient state | Control system

A control system for an internal combustion engine, which is capable of optimally controlling fuel injection by an injector even in a transient state of the engine. An ECU (2) of the engine provided with an EGR system (14) controls the amount (Fa) of air drawn into cylinders (3a) via an intake system (4). An air flow sensor (27) detects the intake air amount (Fa). The ECU (2) estimates a flow rate (Fe_hat) of EGR gases according to the response delay of recirculation of the EGR gases by the EGR system (14), estimates the amount (mo2) of oxygen existing in the cylinder (3a) based on the detected intake air amount (Fa) and the estimated flow rate (Fe_hat) of the EGR gases, determines a fuel injection parameter (Q*) based on the engine speed (Ne) and the estimated in-cylinder oxygen amount (mo2), and controls an injector (6) based on the determined fuel injection parameter (Q*).

Owner:HONDA MOTOR CO LTD
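
The first two estimation steps in the abstract above might look like the sketch below. The abstract does not give the delay model or the oxygen balance, so both are assumptions: the EGR flow estimate is modeled as a discrete first-order lag toward a commanded flow, and the oxygen amount as a weighted sum of fresh air and recirculated gas. The oxygen fraction of the EGR gas (`o2_egr`) is a made-up placeholder, and all function names are hypothetical.

```python
def estimate_egr_flow(fe_hat_prev, fe_cmd, dt, tau):
    """One update of the ASSUMED first-order lag for the EGR flow Fe_hat.

    fe_hat_prev: previous estimate
    fe_cmd: commanded (steady-state) EGR flow
    dt: sample period in seconds
    tau: assumed time constant of the recirculation path in seconds
    """
    alpha = dt / (tau + dt)
    return fe_hat_prev + alpha * (fe_cmd - fe_hat_prev)


def in_cylinder_oxygen(fa, fe_hat, o2_air=0.232, o2_egr=0.10):
    """ASSUMED oxygen balance: mo2 = o2_air * Fa + o2_egr * Fe_hat.

    o2_air is roughly the oxygen mass fraction of ambient air;
    o2_egr is a hypothetical oxygen mass fraction of the EGR gas.
    """
    return o2_air * fa + o2_egr * fe_hat


# Hypothetical transient: the EGR command steps from 0 to 10 units.
fe_hat = 0.0
for _ in range(5):
    fe_hat = estimate_egr_flow(fe_hat, fe_cmd=10.0, dt=0.01, tau=0.09)
mo2 = in_cylinder_oxygen(fa=100.0, fe_hat=fe_hat)
```

The final step in the abstract, determining Q* from Ne and mo2, would typically be a calibrated lookup and is left out because the abstract gives no detail about it.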

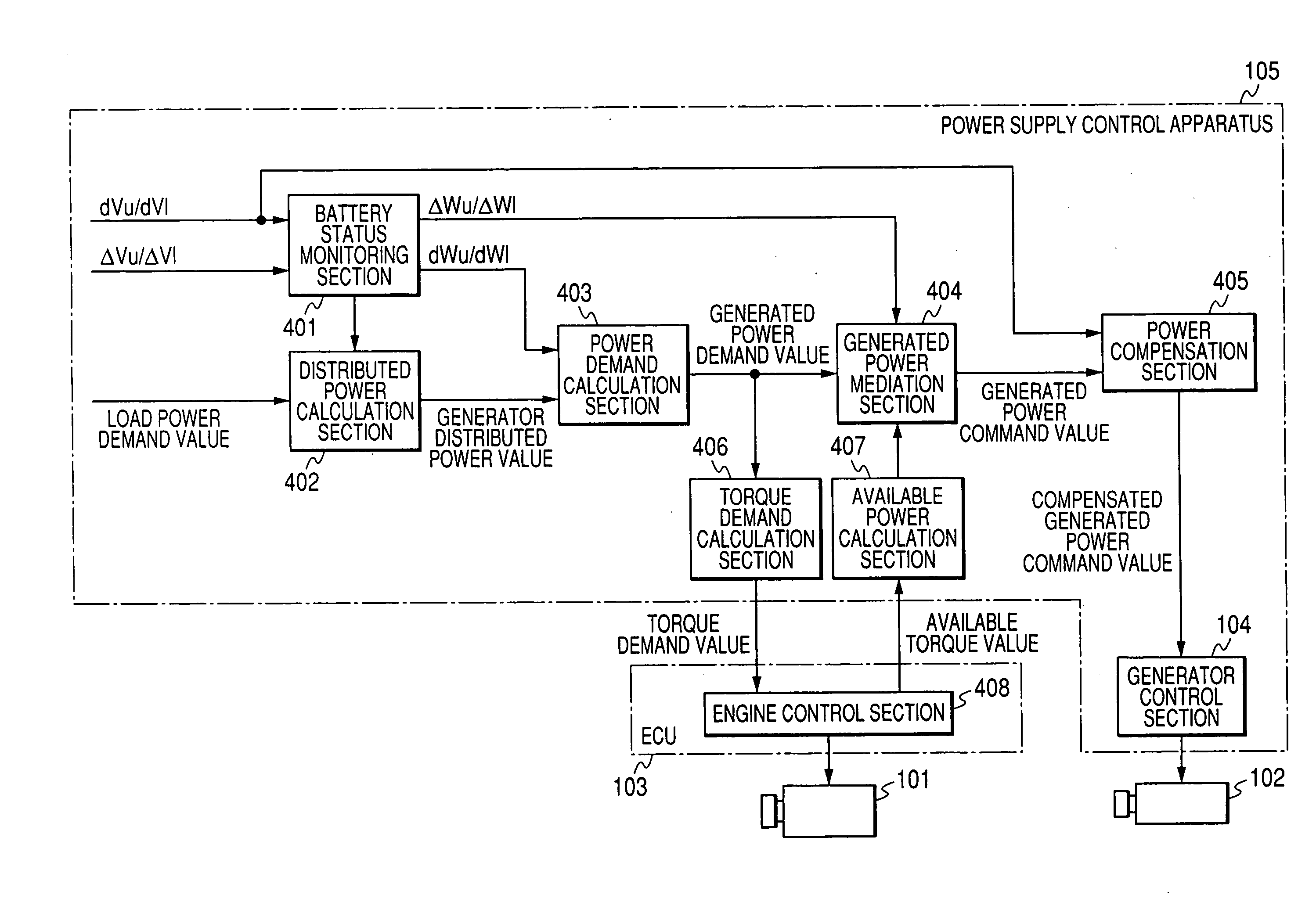

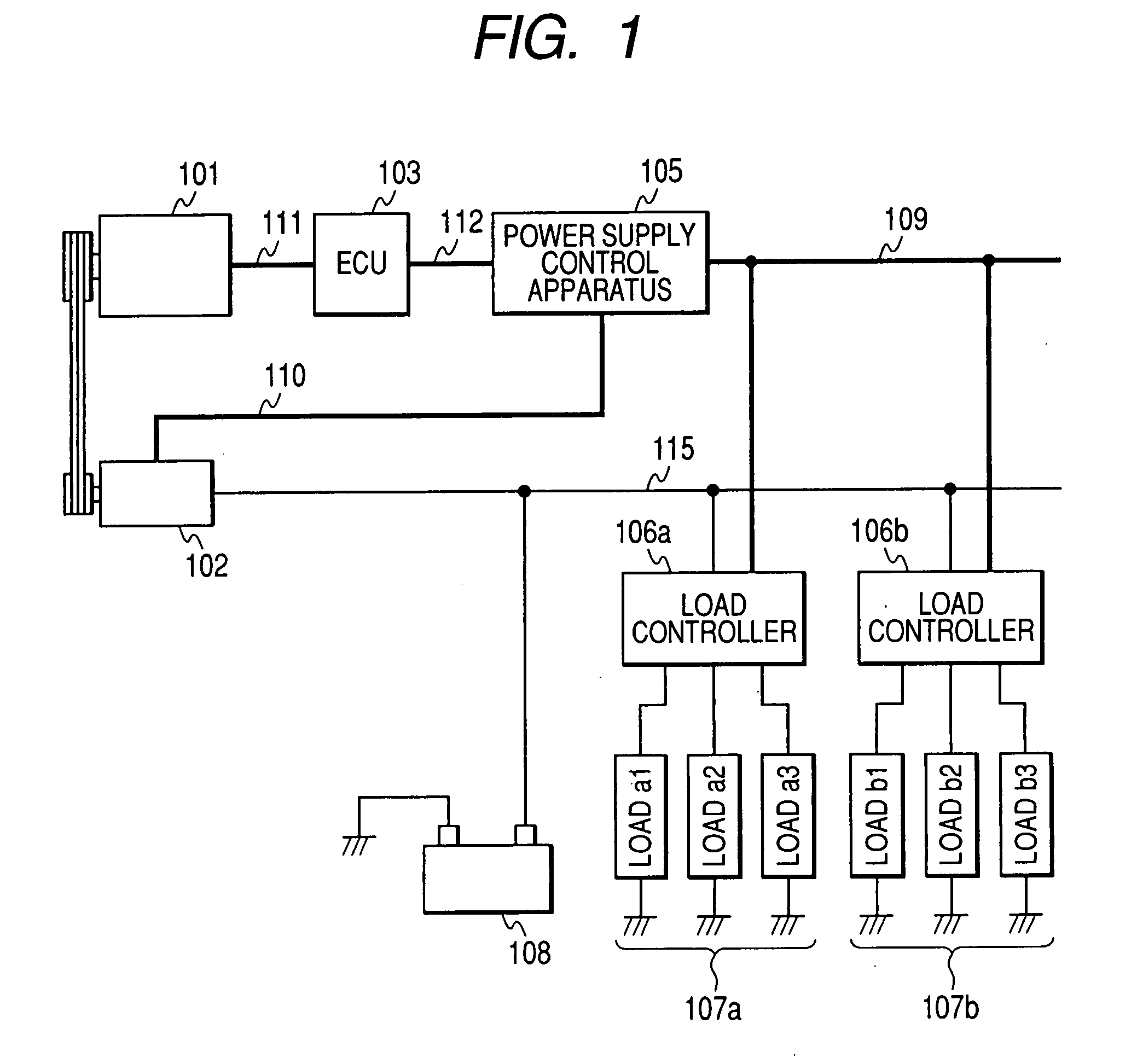

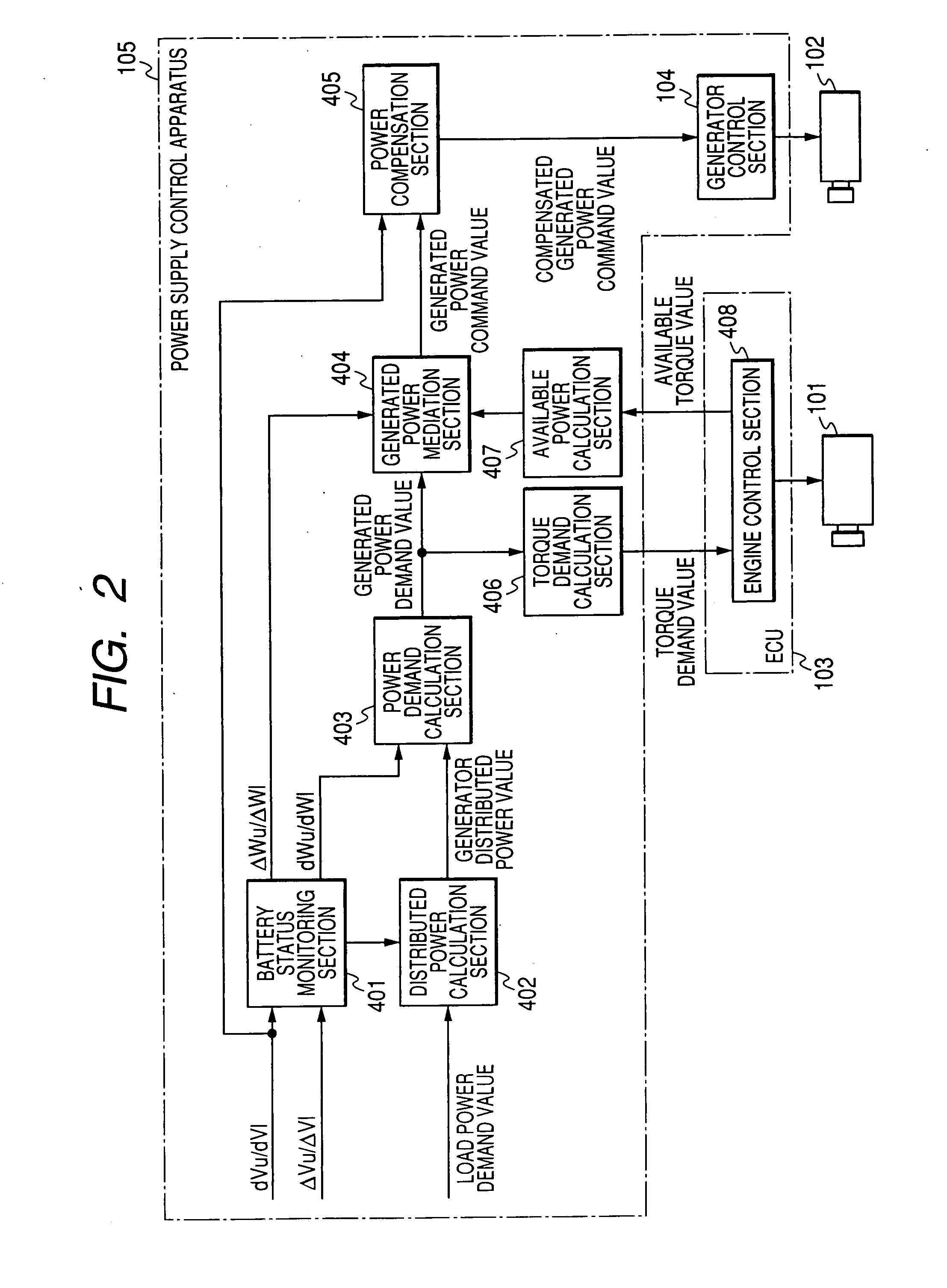

Electrical power supply system for motor vehicle

Active | US20080157539A1 | Suppressing amount of decrease | Reduce level of electrical | Batteries circuit arrangements | Electrical control | Electricity | Electrical battery

A power supply control apparatus for controlling an electric generator of a vehicle limits the rate of change of a power supply voltage to a predetermined variation rate range, when the change is caused by operations to control the charge condition of the vehicle battery, and controls the generated power to match the drive torque applied by the engine to the generator. When the electrical load demand changes, the generated power is controlled to limit a resultant momentary change in the power supply voltage caused by an engine response delay, while minimizing a resultant momentary amount of engine speed variation.

Owner:DENSO CORP
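
Limiting the rate of change of the supply voltage, as the abstract above describes, is commonly done with a slew-rate limiter. The sketch below shows that general technique with a symmetric limit. The function name, the symmetric range, and the sample values are assumptions; the abstract says only that the rate is held within "a predetermined variation rate range".

```python
def rate_limit(target, previous, max_rate, dt):
    """Move from `previous` toward `target`, changing at most max_rate * dt.

    target: requested supply voltage (V)
    previous: voltage setpoint from the last control step (V)
    max_rate: ASSUMED symmetric limit on the rate of change (V/s)
    dt: control period (s)
    """
    max_step = max_rate * dt
    step = max(-max_step, min(max_step, target - previous))
    return previous + step


# Hypothetical case: a 1 V jump is requested, but only 0.5 V/s is allowed.
setpoint = 13.5
for _ in range(3):
    setpoint = rate_limit(14.5, setpoint, max_rate=0.5, dt=0.1)
```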

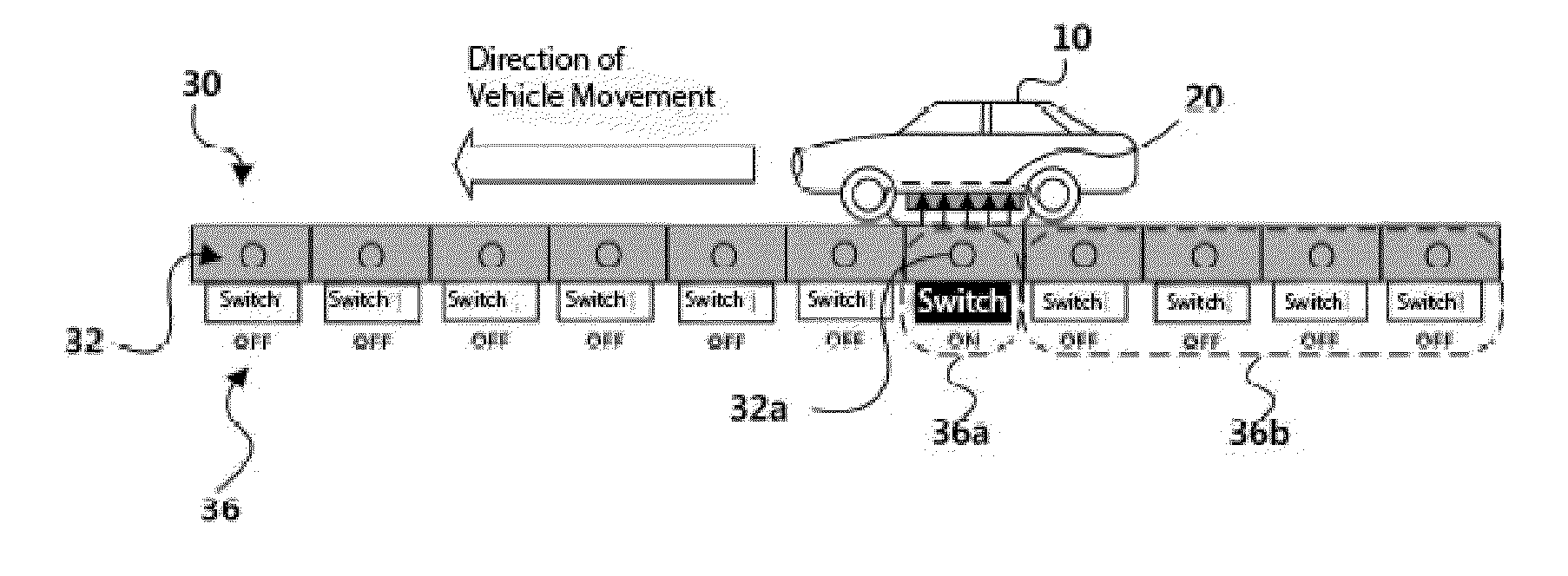

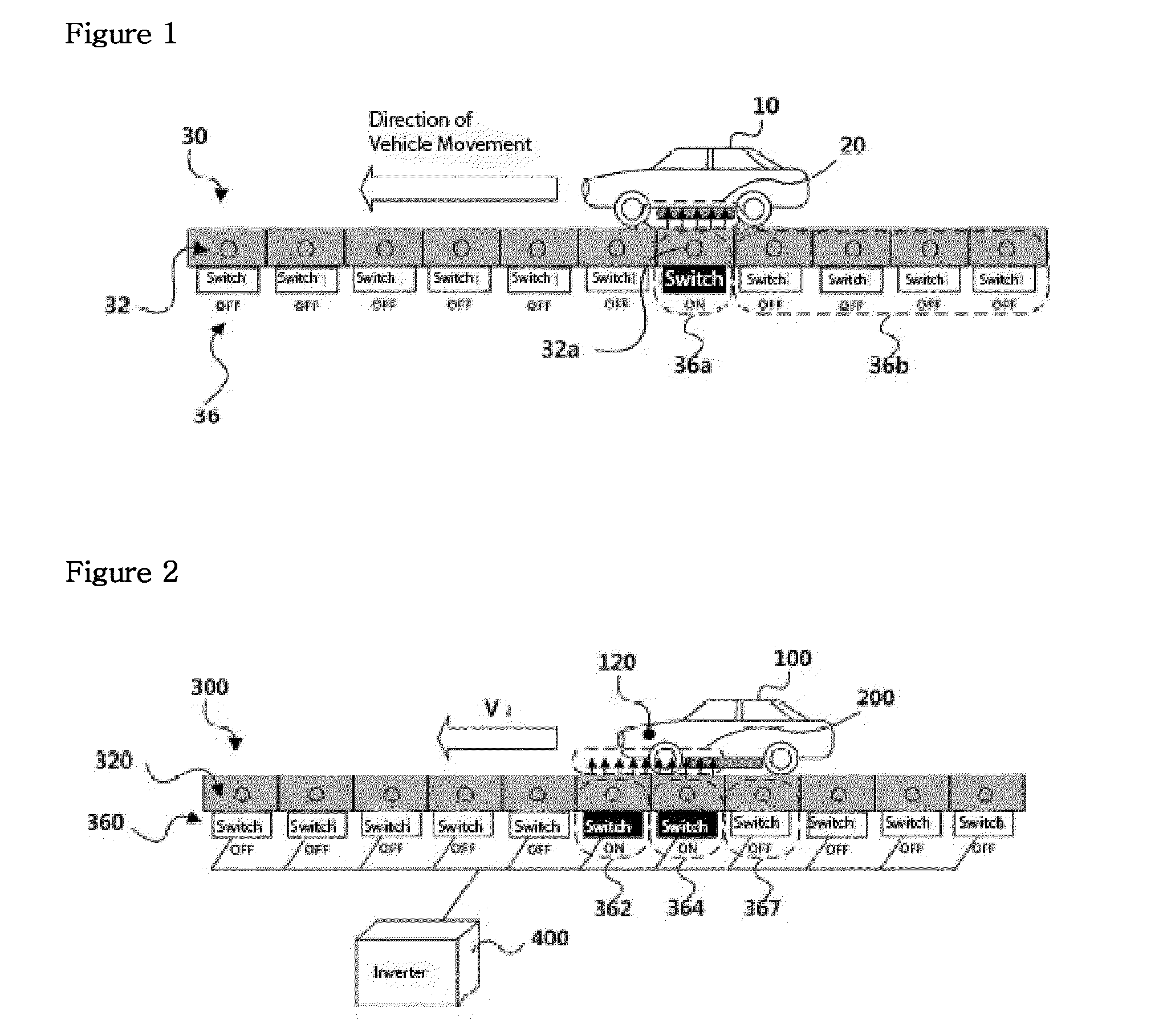

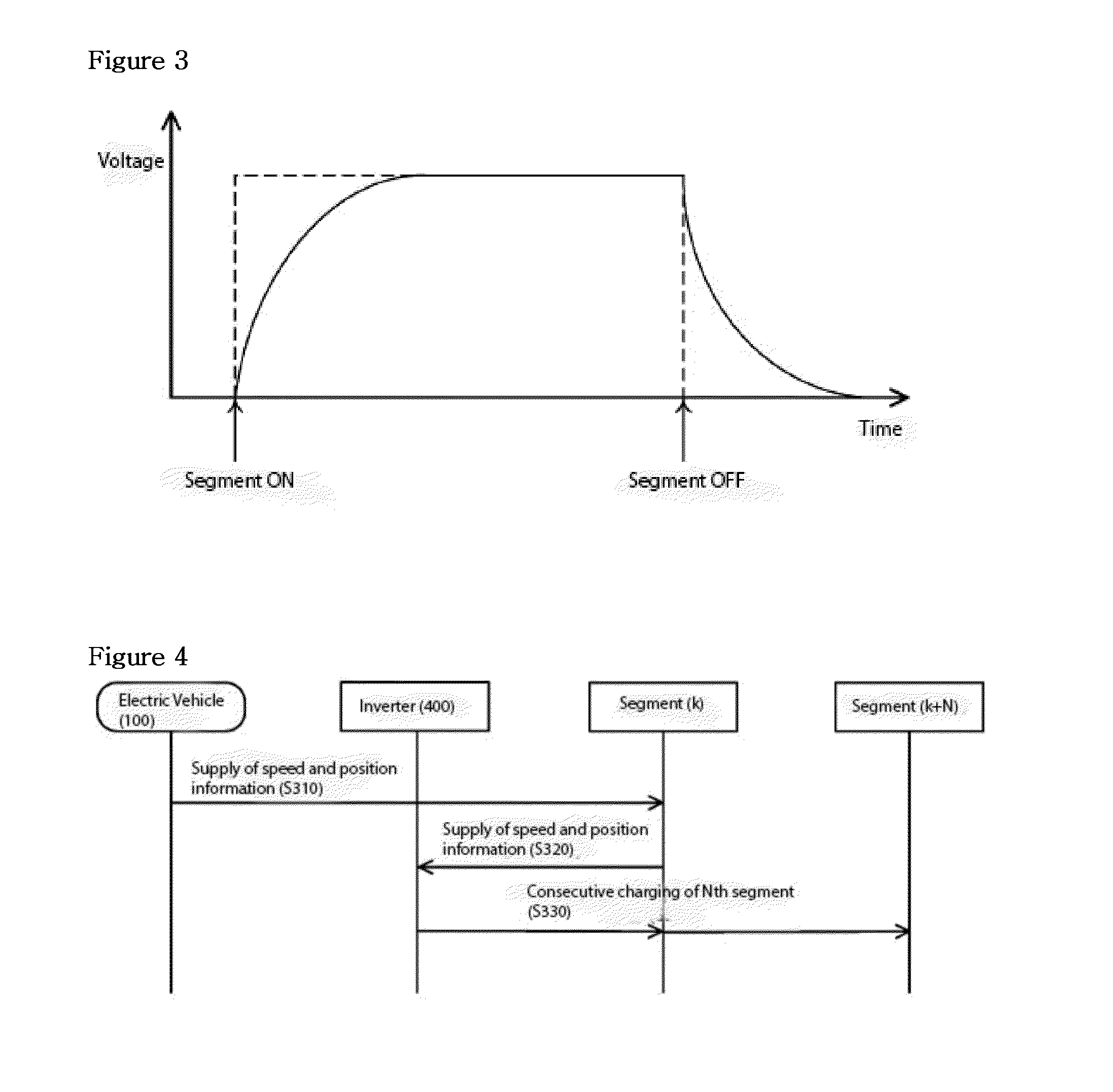

Method for controlling the charging of segments for an online electric vehicle

Inactive | US20140125286A1 | Reduce power waste | Shorten the life cycle | Batteries circuit arrangements | Charging stations | Response delay | Engineering

A method for controlling the charging of segments for an online electric vehicle is described. In some situations, the method comprises: (a) receiving, from segments, information on the speed and position of the vehicle entering the range of the power-supplying device; and (b) controlling the charging/discharging timing of the current segment from which the vehicle is leaving and the next segment into the range of which the vehicle is to enter, in accordance with the information on the speed and position of the vehicle. The charging/discharging response delay characteristics of the segments may be considered.

Owner:KOREA ADVANCED INST OF SCI & TECH
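
One way to read step (b) above is that the next segment should be switched on early enough to be fully energized when the vehicle arrives. The sketch below computes that lead time from the reported speed and position, assuming constant speed and a fixed per-segment response delay. The function name, the constant-speed assumption, and the numbers are illustrative and not taken from the patent.

```python
def switch_on_wait(distance_to_segment_m, speed_mps, response_delay_s):
    """Seconds to wait before commanding the next segment to charge.

    ASSUMES constant vehicle speed and a known, fixed response delay
    between the charge command and the segment being fully energized.
    Returns 0.0 when the command should be issued immediately.
    """
    if speed_mps <= 0:
        raise ValueError("vehicle must be moving toward the segment")
    time_to_arrival = distance_to_segment_m / speed_mps
    return max(0.0, time_to_arrival - response_delay_s)


# Hypothetical case: 20 m from the next segment at 10 m/s, 0.5 s delay.
wait_s = switch_on_wait(20.0, 10.0, 0.5)
```

The same calculation, applied to the point where the vehicle leaves the current segment, would give the time at which to command that segment to discharge.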


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com