54 results about "Memory barrier" patented technology

A memory barrier, also known as a membar, memory fence or fence instruction, is a type of barrier instruction that causes a central processing unit (CPU) or compiler to enforce an ordering constraint on memory operations issued before and after the barrier instruction. This typically means that operations issued prior to the barrier are guaranteed to be performed before operations issued after the barrier.
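The ordering guarantee in this definition can be illustrated with a small message-passing model. The sketch below is only a simulation under a stated assumption: without a barrier, each thread's two operations may be performed in either order, and with a barrier they are performed in program order. Real hardware memory models are more specific than this, and all names (`interleavings`, `run`, `outcomes`) are hypothetical.

```python
from itertools import permutations


def interleavings(a, b):
    """Yield every merge of lists a and b that keeps each list's own order."""
    if not a:
        yield list(b)
        return
    if not b:
        yield list(a)
        return
    for rest in interleavings(a[1:], b):
        yield [a[0]] + rest
    for rest in interleavings(a, b[1:]):
        yield [b[0]] + rest


def run(schedule):
    """Execute one global schedule and return (r_flag, r_data)."""
    mem = {"data": 0, "flag": 0}
    regs = {}
    for kind, var, arg in schedule:
        if kind == "store":
            mem[var] = arg          # arg is the value to store
        else:
            regs[arg] = mem[var]    # arg is the destination register
    return regs["r_flag"], regs["r_data"]


def thread_orders(ops, with_barrier):
    """Assumption: a barrier between the two ops pins them to program order."""
    return [tuple(ops)] if with_barrier else list(permutations(ops))


def outcomes(with_barrier):
    """Collect every (r_flag, r_data) result the model allows."""
    writer = [("store", "data", 1), ("store", "flag", 1)]
    reader = [("load", "flag", "r_flag"), ("load", "data", "r_data")]
    results = set()
    for w in thread_orders(writer, with_barrier):
        for r in thread_orders(reader, with_barrier):
            for schedule in interleavings(list(w), list(r)):
                results.add(run(schedule))
    return results
```

In this model the result `(1, 0)`, where the reader sees the flag set but still reads stale data, is reachable only when the barriers are absent.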

Software implementation of synchronous memory barriers

Inactive | US6996812B2 | Program synchronisation | Digital computer details | Processor register | Inter-processor interrupt

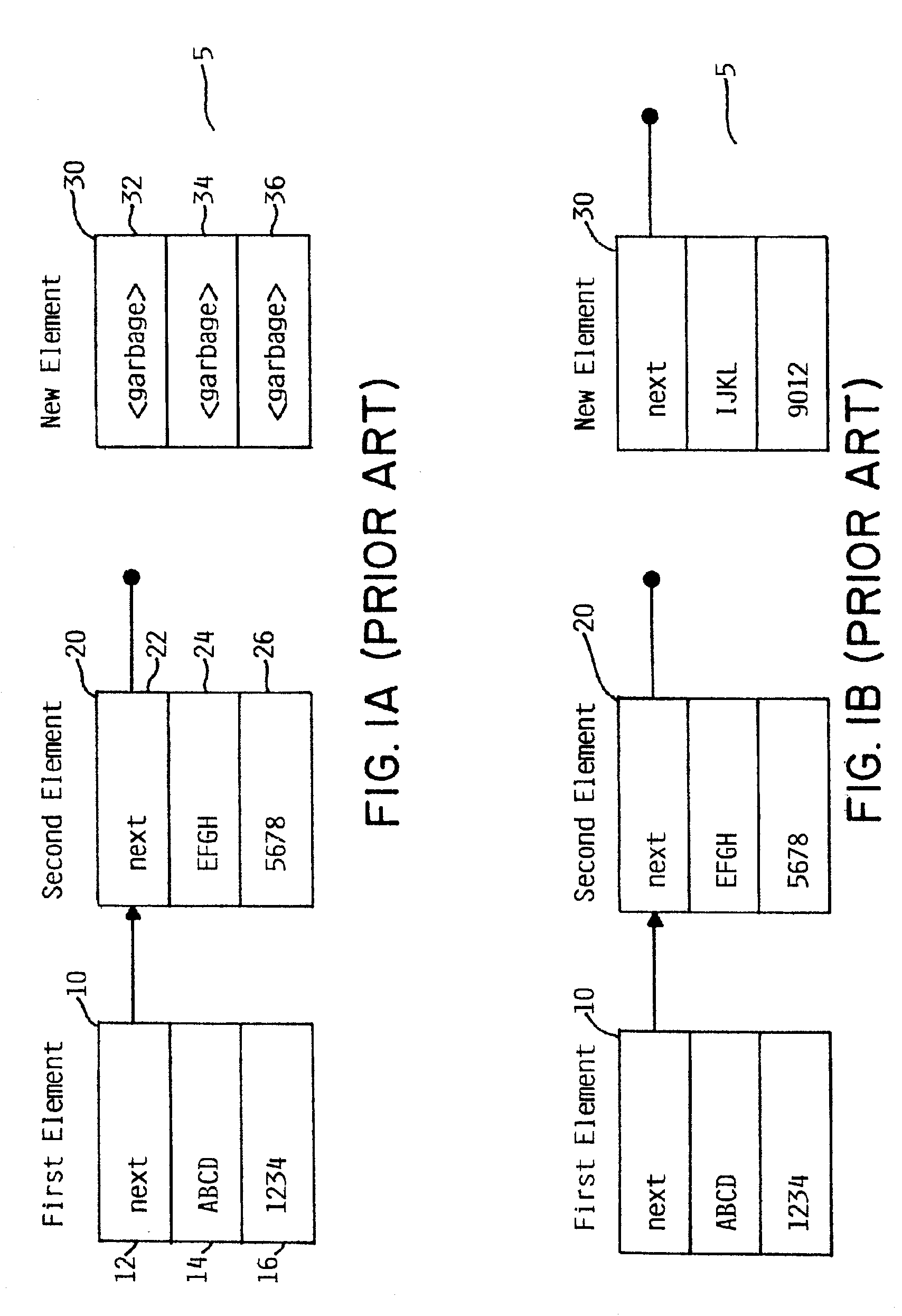

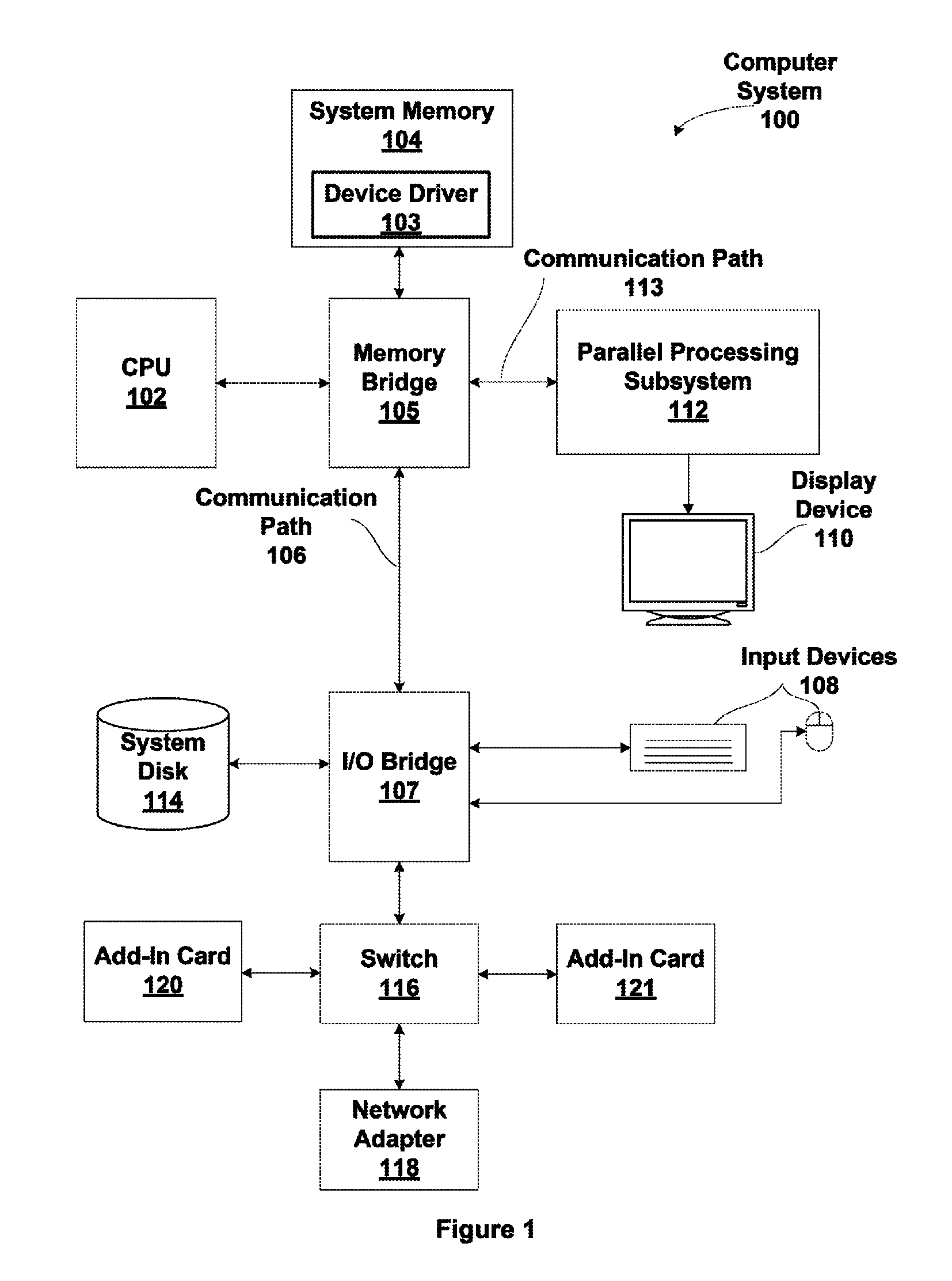

Selectively emulating sequential consistency in software improves efficiency in a multiprocessing computing environment. A writing CPU uses a high priority inter-processor interrupt to force each CPU in the system to execute a memory barrier. This step invalidates old data in the system. Each CPU that has executed a memory barrier instruction registers completion and sends an indicator to a memory location to indicate completion of the memory barrier instruction. Prior to updating the data, the writing CPU must check the register to ensure completion of the memory barrier execution by each CPU. The register may be in the form of an array, a bitmask, or a combining tree, or a comparable structure. This step ensures that all invalidates are removed from the system and that deadlock between two competing CPUs is avoided. Following validation that each CPU has executed the memory barrier instruction, the writing CPU may update the pointer to the data structure.

Owner:IBM CORP
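The completion register described in the abstract above can be sketched as a bitmask with one bit per CPU. This is a minimal single-threaded model, not the patented implementation: the class and function names are hypothetical, and the inter-processor interrupt and the barrier instruction itself are not modeled.

```python
class BarrierRegister:
    """Bitmask with one bit per CPU; a set bit means that CPU ran its barrier."""

    def __init__(self, num_cpus):
        self.num_cpus = num_cpus
        self.mask = 0

    def record_completion(self, cpu_id):
        """Called on behalf of a CPU after it has executed the barrier."""
        if not 0 <= cpu_id < self.num_cpus:
            raise ValueError("unknown CPU id")
        self.mask |= 1 << cpu_id

    def all_complete(self):
        """True once every CPU has registered completion."""
        return self.mask == (1 << self.num_cpus) - 1


def publish(register, shared, new_value):
    """Update the shared pointer only after every CPU has reported in."""
    if not register.all_complete():
        return False
    shared["pointer"] = new_value
    return True
```

The abstract also mentions an array or a combining tree as alternatives; the bitmask is used here only because it is the shortest to show.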

Memory barriers primitives in an asymmetric heterogeneous multiprocessor environment

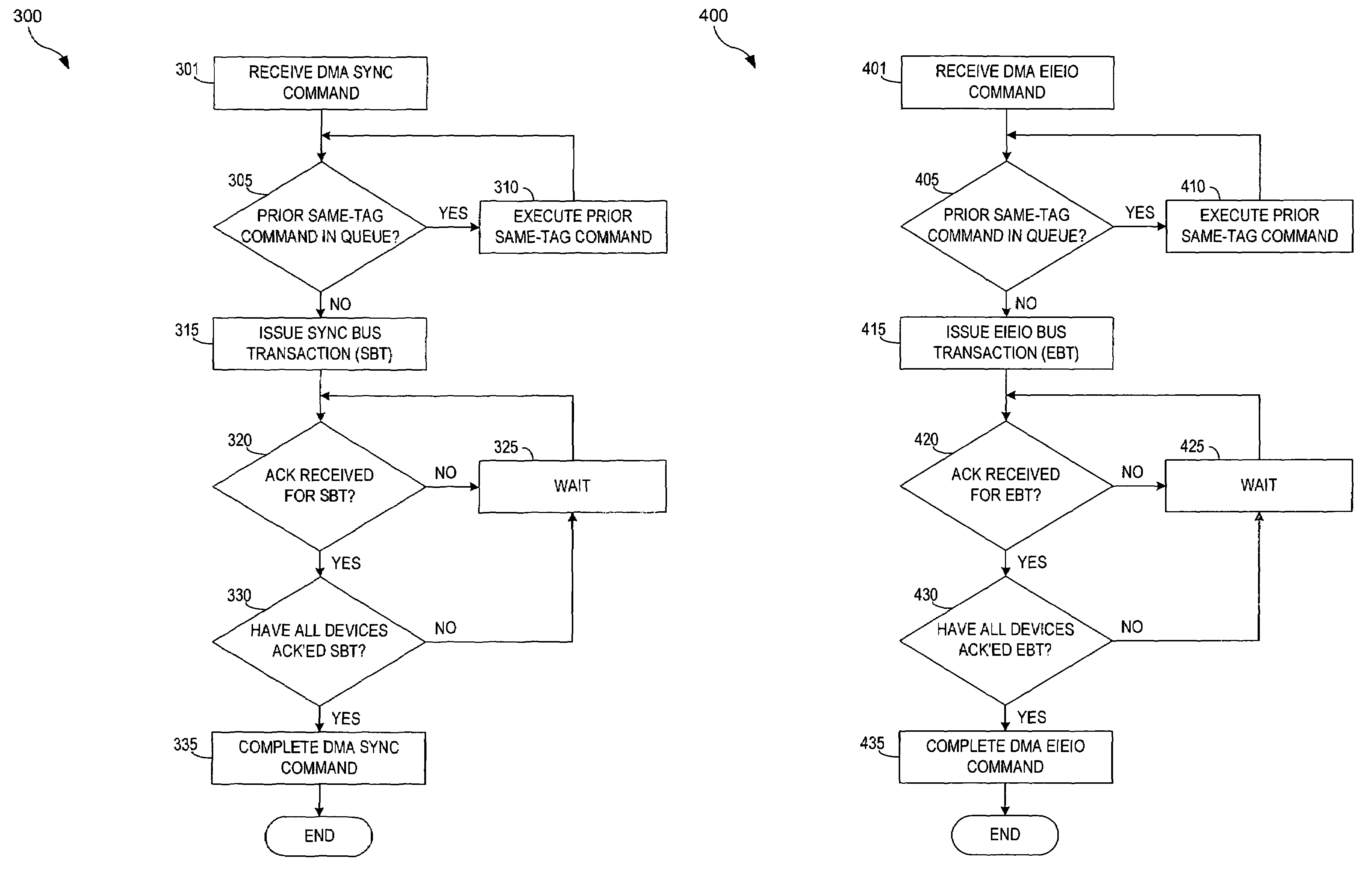

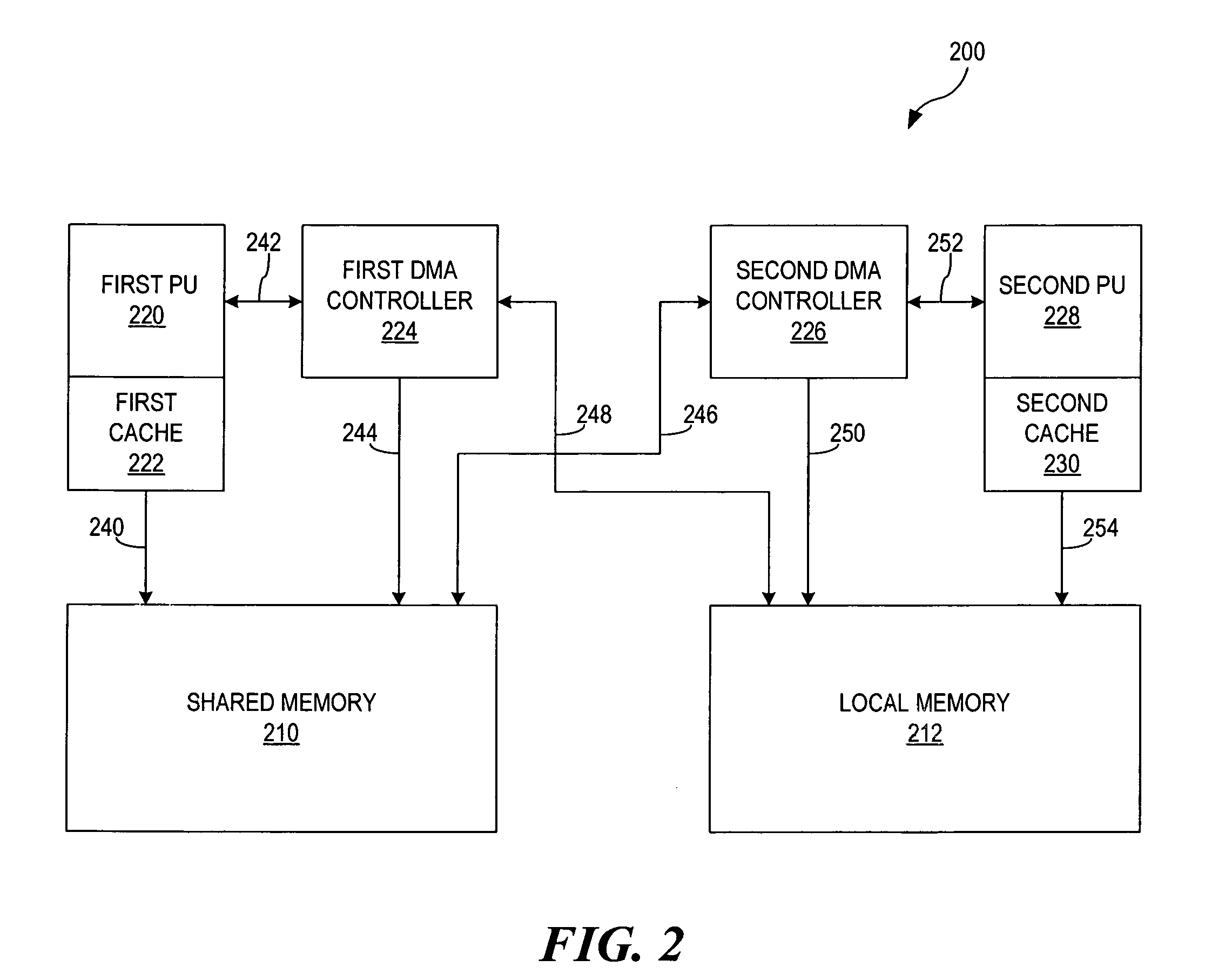

The present invention provides a method and apparatus for creating memory barriers in a Direct Memory Access (DMA) device. A memory barrier command is received and a memory command is received. The memory command is executed based on the memory barrier command. A bus operation is initiated based on the memory barrier command. A bus operation acknowledgment is received based on the bus operation. The memory barrier command is executed based on the bus operation acknowledgment. In a particular aspect, memory barrier commands are direct memory access sync (dmasync) and direct memory access enforce in-order execution of input / output (dmaeieio) commands.

Owner:IBM CORP +1

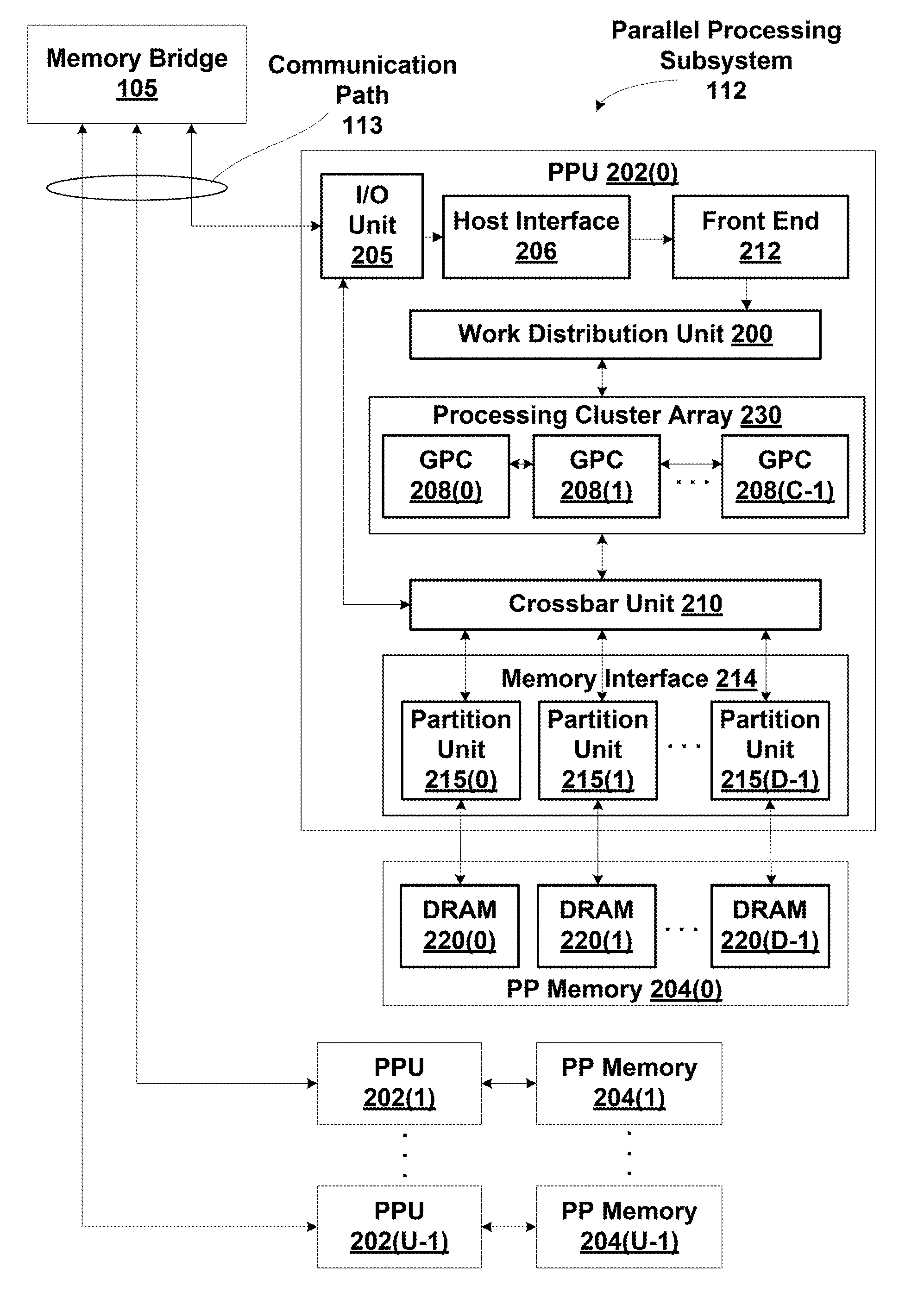

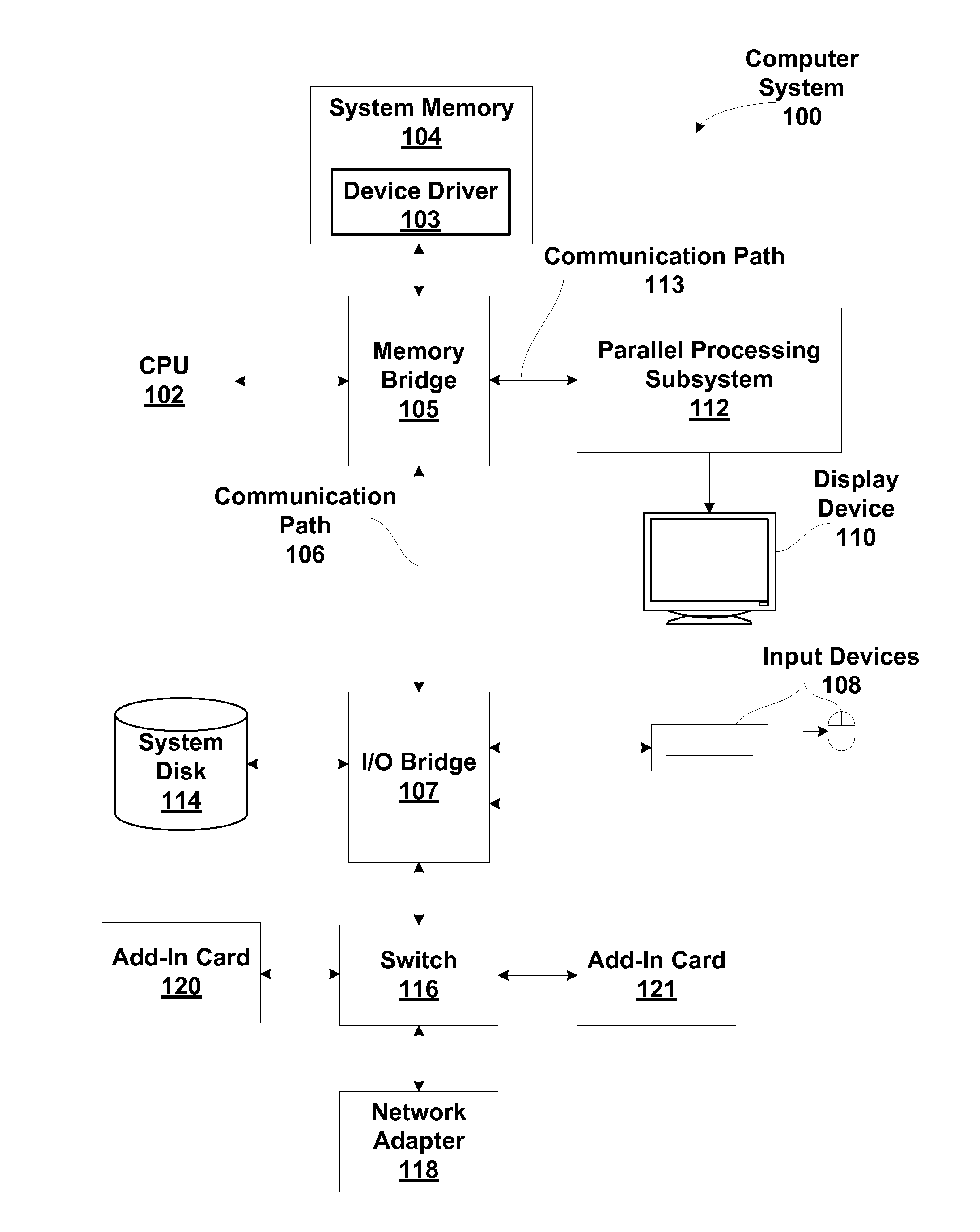

Coalescing memory barrier operations across multiple parallel threads

Active | US20110078692A1 | Expensive to operate | Reduce the impact | Multiprogramming arrangements | Memory systems | Parallel computing | System level

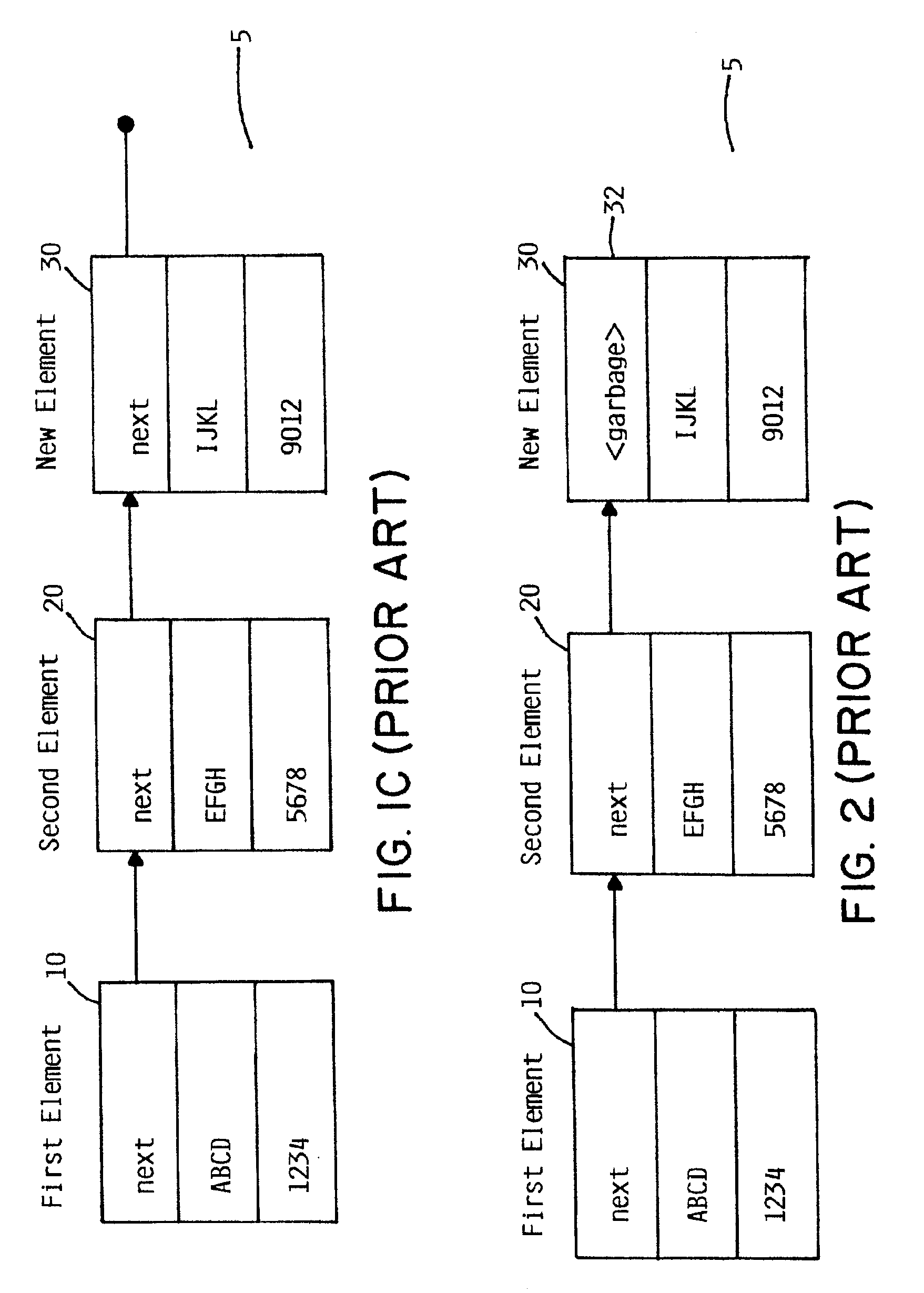

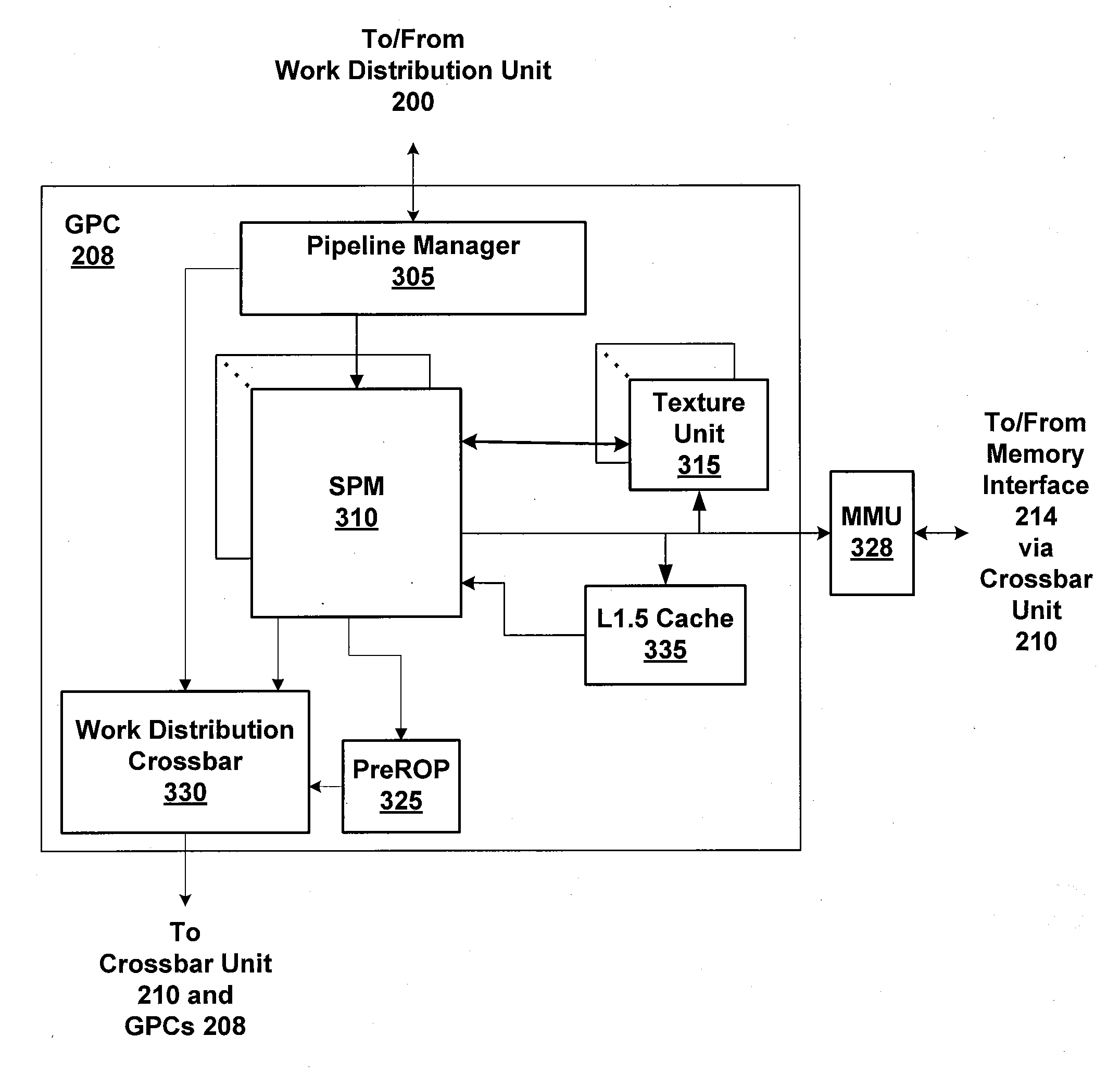

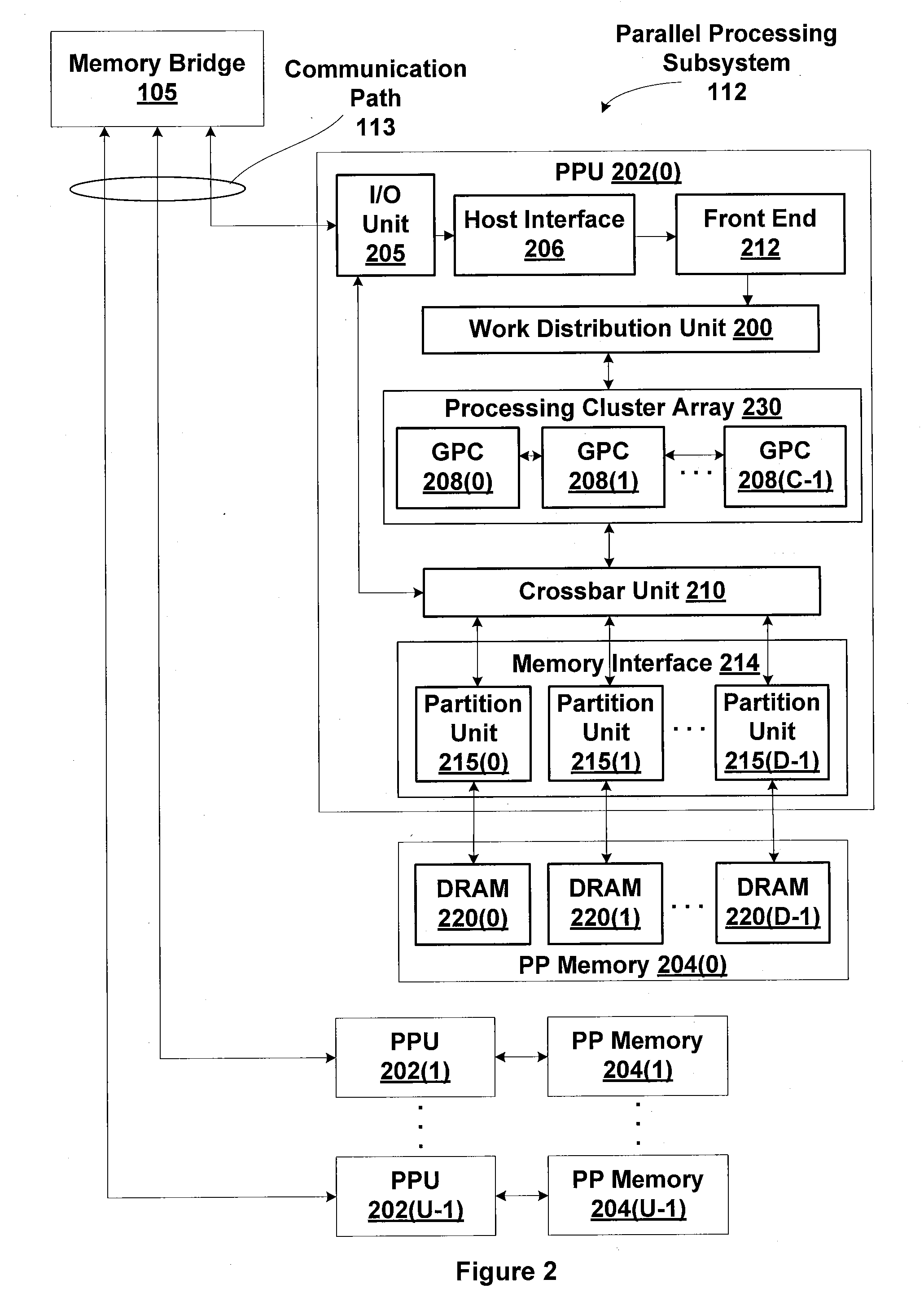

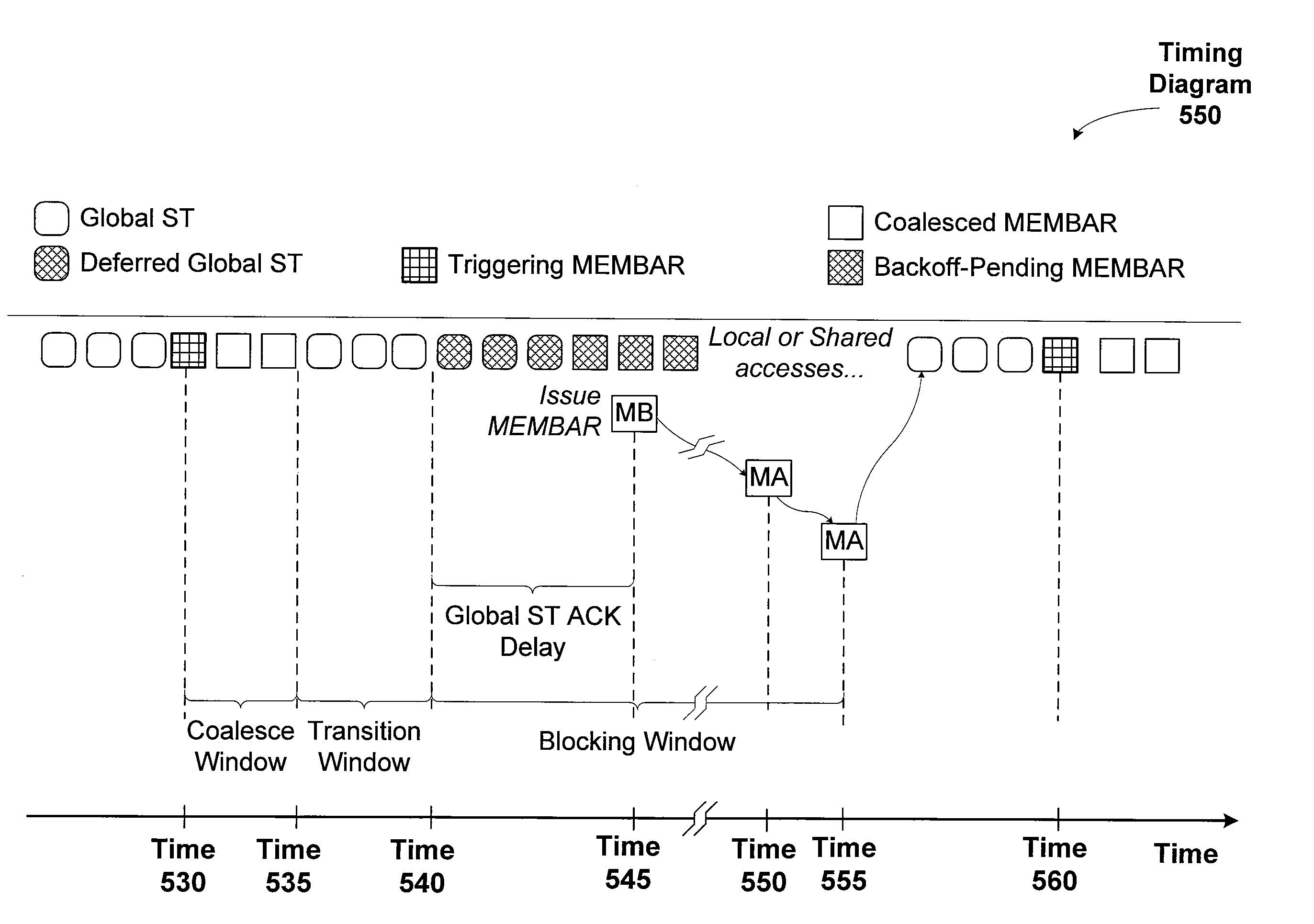

One embodiment of the present invention sets forth a technique for coalescing memory barrier operations across multiple parallel threads. Memory barrier requests from a given parallel thread processing unit are coalesced to reduce the impact to the rest of the system. Additionally, memory barrier requests may specify a level of a set of threads with respect to which the memory transactions are committed. For example, a first type of memory barrier instruction may commit the memory transactions to a level of a set of cooperating threads that share an L1 (level one) cache. A second type of memory barrier instruction may commit the memory transactions to a level of a set of threads sharing a global memory. Finally, a third type of memory barrier instruction may commit the memory transactions to a system level of all threads sharing all system memories. The latency required to execute the memory barrier instruction varies based on the type of memory barrier instruction.

Owner:NVIDIA CORP

Memory coherency in graphics command streams and shaders

Active | US20110063313A1 | Simple technology | Cathode-ray tube indicators | Processor architectures/configuration | Delayed Memory | Parallel computing

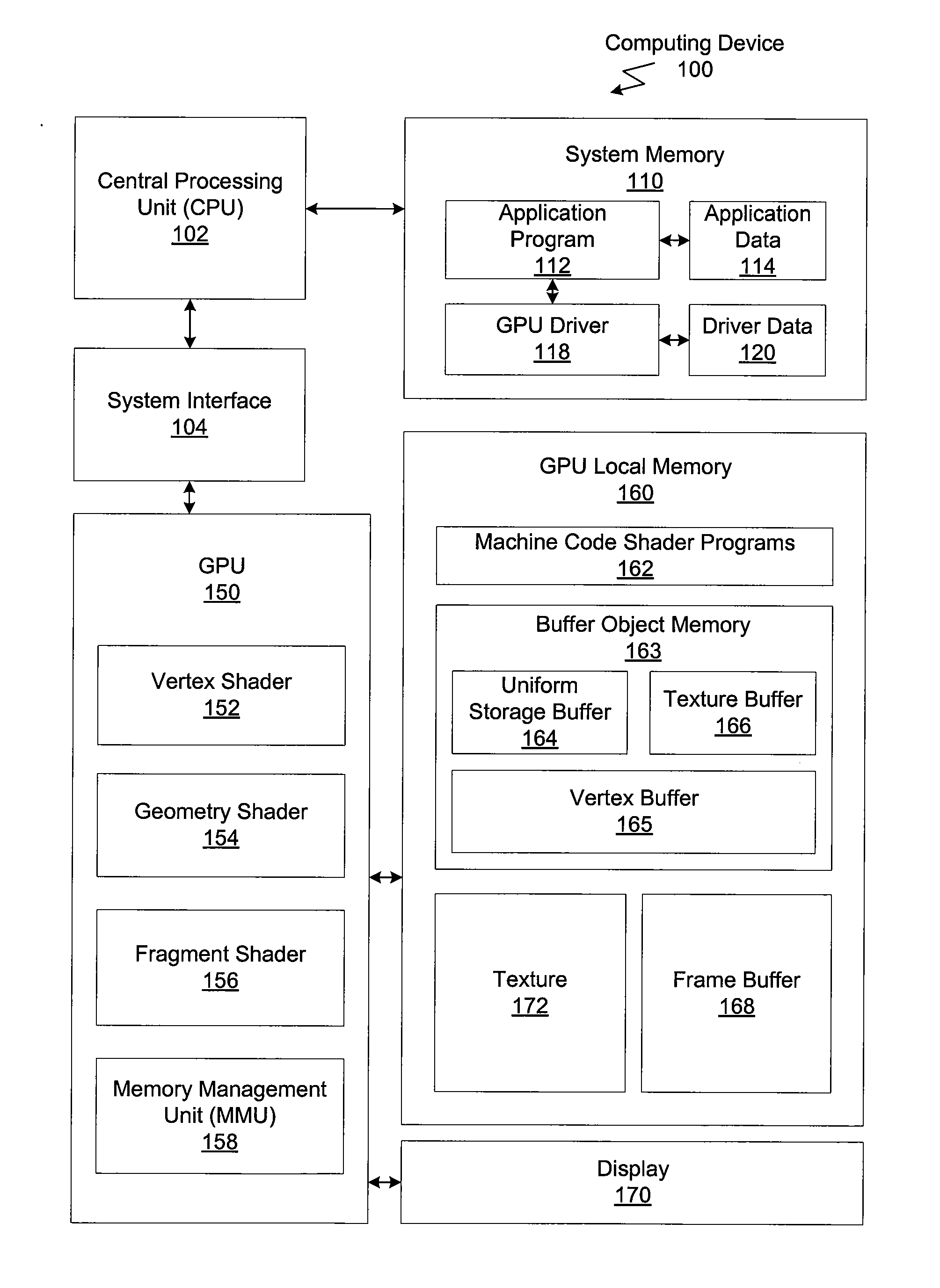

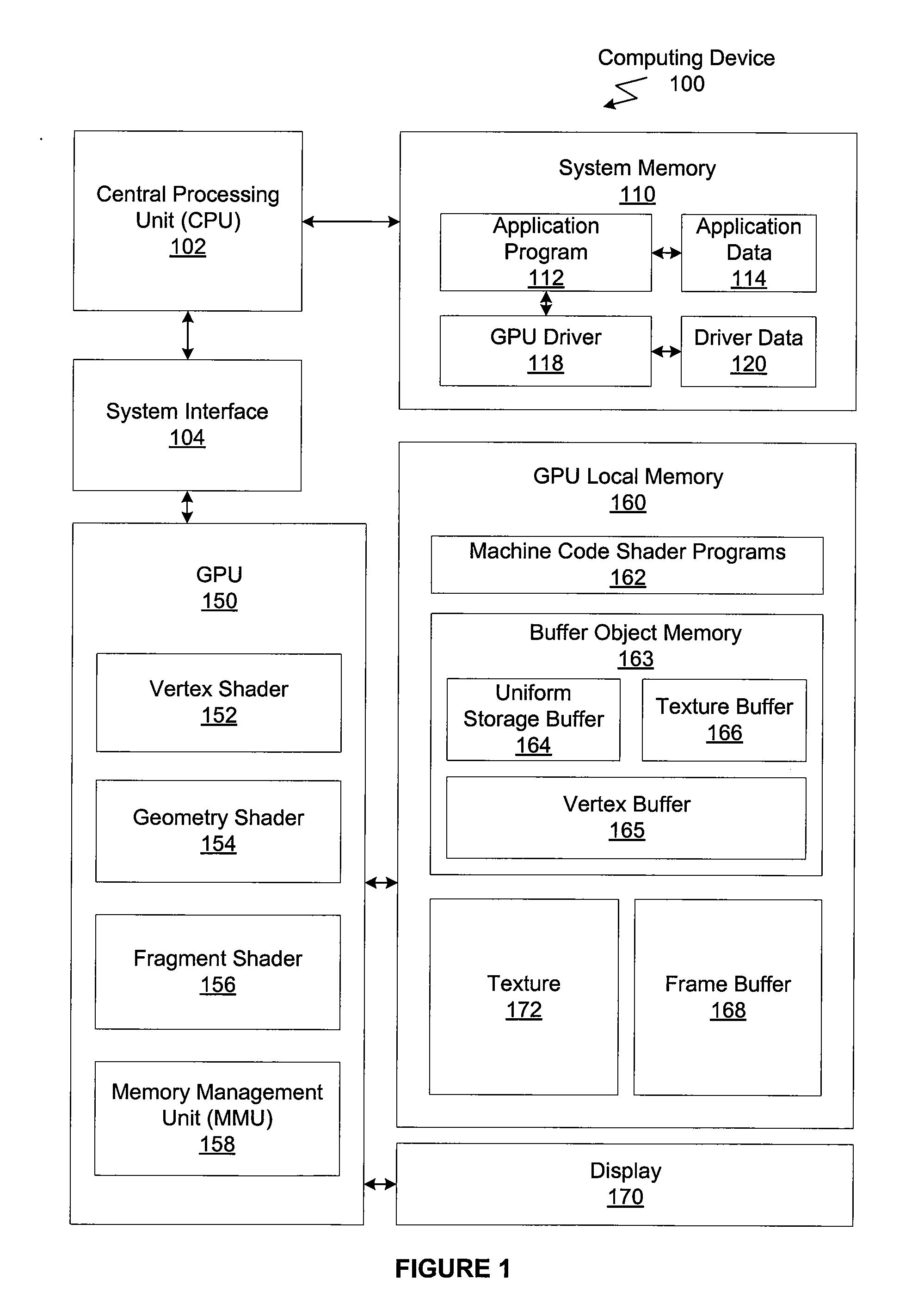

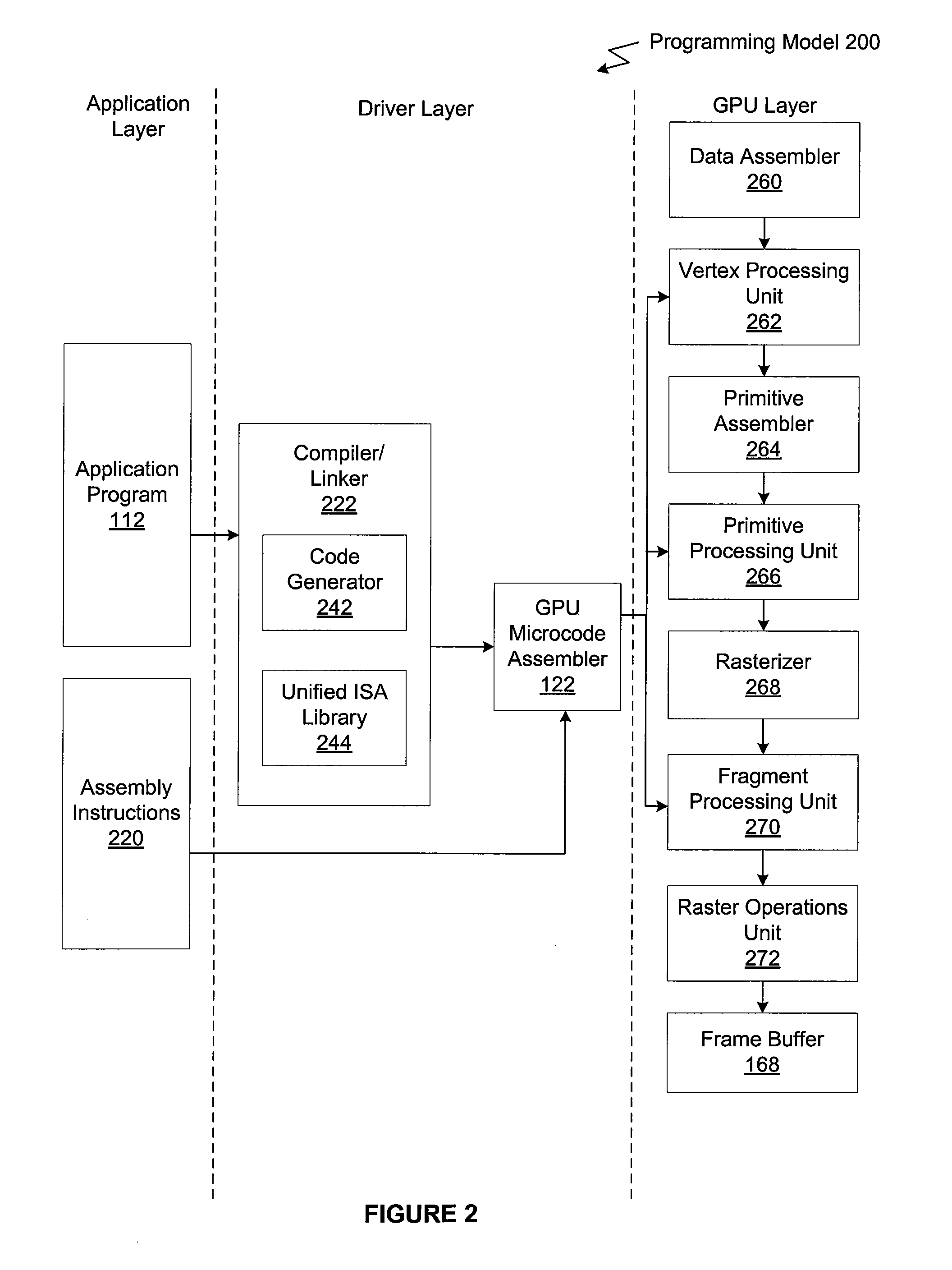

One embodiment of the present invention sets forth a technique for performing a computer-implemented method that controls memory access operations. A stream of graphics commands includes at least one memory barrier command. Each memory barrier command in the stream of graphics commands delays memory access operations scheduled for any command specified after the memory barrier command until all memory access operations scheduled for commands specified prior to the memory barrier command have completely executed.

Owner:NVIDIA CORP

Fused Store Exclusive/Memory Barrier Operation

Active | US20110208915A1 | Reduce steps | Lower latency | Runtime instruction translation | Memory addressing/allocation/relocation | Cache hierarchy | Data memory

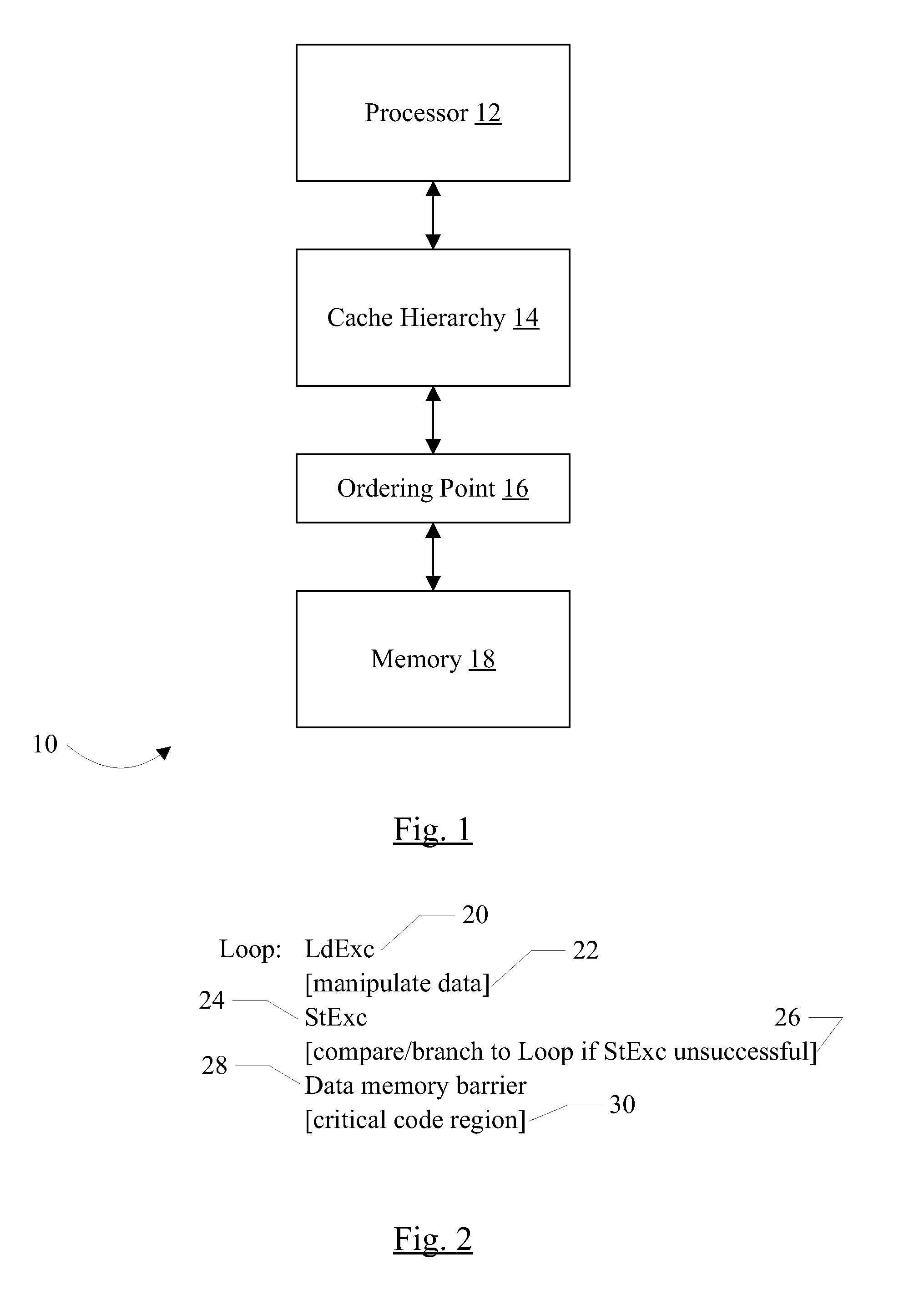

In an embodiment, a processor may be configured to detect a store exclusive operation followed by a memory barrier operation in a speculative instruction stream being executed by the processor. The processor may fuse the store exclusive operation and the memory barrier operation, creating a fused operation. The fused operation may be transmitted and globally ordered, and the processor may complete both the store exclusive operation and the memory barrier operation in response to the fused operation. As the fused operation progresses through the processor and one or more other components (e.g. caches in the cache hierarchy) to the ordering point in the system, the fused operation may push previous memory operations to effect the memory barrier operation. In some embodiments, the latency for completing the store exclusive operation and the subsequent data memory barrier operation may be reduced if the store exclusive operation is successful at the ordering point.

Owner:APPLE INC

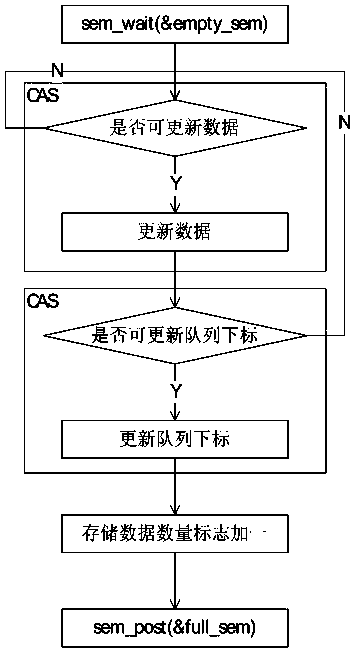

Method for achieving unlocked concurrence message processing mechanism

Inactive | CN104077113A | Guaranteed atomicity | Improve efficiency | Concurrent instruction execution | Computer hardware | Message processing

The invention discloses a method for implementing a lock-free concurrent message processing mechanism. A ring array is used as the data buffer, which is friendly to caching and prefetching and avoids the drawback of a linked-list structure, in which memory must be allocated or released on every node operation, so efficiency is improved. To handle concurrency control in the multiple-producer, single-consumer mode, CAS and a memory barrier are used to guarantee mutual exclusion instead of locking, which avoids the performance degradation caused by inefficient locks. To address the ABA problem common in lock-free techniques, a double-insurance CAS technique is used. To address false sharing, cache-line padding is inserted between the head pointer, the tail pointer and the capacity so that they do not sit in the same cache line. In addition, the array length is set to a power of two, and a bitwise AND is used to compute the array subscript, which improves overall efficiency.

Owner:CSIC WUHAN LINCOM ELECTRONICS
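The power-of-two indexing mentioned in the abstract above can be shown in isolation. The sketch below is a single-threaded ring buffer with hypothetical names; it deliberately leaves out the CAS operations, memory barriers and cache-line padding, which Python cannot express directly, and shows only how a bitwise AND replaces a modulo when the capacity is a power of two.

```python
class RingBuffer:
    """Fixed-size ring buffer whose capacity must be a power of two."""

    def __init__(self, capacity):
        if capacity <= 0 or capacity & (capacity - 1):
            raise ValueError("capacity must be a power of two")
        self.mask = capacity - 1       # e.g. capacity 8 gives mask 0b111
        self.slots = [None] * capacity
        self.head = 0                  # count of items read so far
        self.tail = 0                  # count of items written so far

    def push(self, item):
        """Store item and return True, or return False if the buffer is full."""
        if self.tail - self.head == len(self.slots):
            return False
        self.slots[self.tail & self.mask] = item   # AND instead of modulo
        self.tail += 1
        return True

    def pop(self):
        """Return the oldest item, or None if the buffer is empty."""
        if self.head == self.tail:
            return None
        item = self.slots[self.head & self.mask]
        self.head += 1
        return item
```

Because `head` and `tail` only ever grow, the mask is applied at access time, and the difference `tail - head` gives the fill level without a separate counter.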

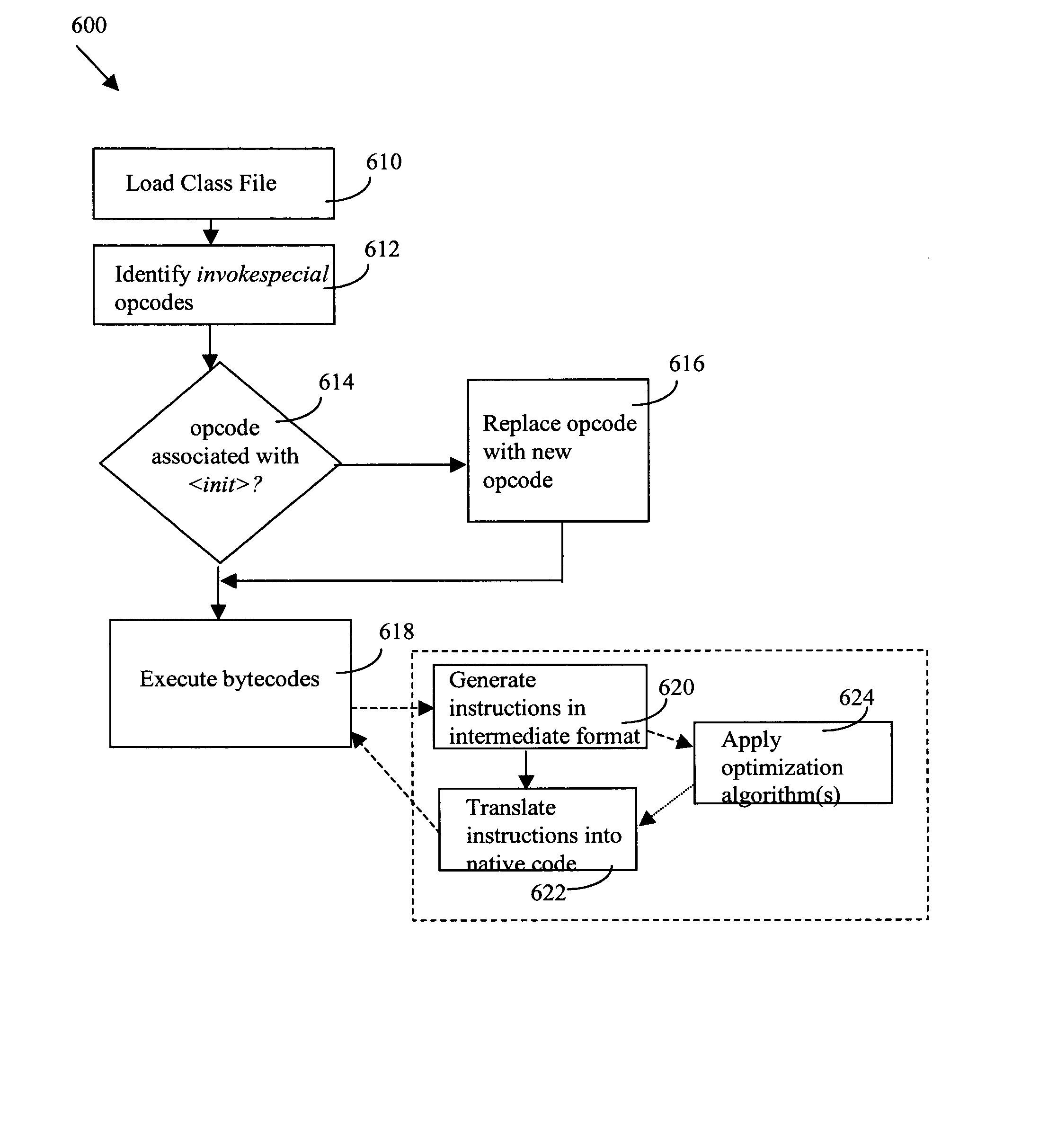

Method and apparatus to guarantee type and initialization safety in multithreaded programs

Inactive | US20050050528A1 | Initialization safety is also guaranteed | Improve performance | Memory loss protection | Specific program execution arrangements | Multithreading | Type safety

A method and apparatus to guarantee type safety in multithreaded programs, and to guarantee initialization safety in well-behaved multithreaded programs. A plurality of bytecodes representing a program are received and examined to identify bytecodes defining object creation operations and object initialization operations. Upon execution of the plurality of bytecodes, memory barrier operations are performed subsequent to the performance of both the object creation operations and the object initialization operations. This guarantees type safety, and further guarantees initialization safety if the program is well-behaved. Optimization algorithms may also be applied in the compilation of bytecodes to improve performance.

Owner:IBM CORP

N-way memory barrier operation coalescing

Active | US20120198214A1 | Digital computer details | Specific program execution arrangements | Parallel computing | Memory operation

One embodiment sets forth a technique for N-way memory barrier operation coalescing. When a first memory barrier is received for a first thread group, execution of subsequent memory operations for the first thread group is suspended until the first memory barrier is executed. Subsequent memory barriers for different thread groups may be coalesced with the first memory barrier to produce a coalesced memory barrier that represents memory barrier operations for multiple thread groups. When the coalesced memory barrier is being processed, execution of subsequent memory operations for the different thread groups is also suspended. However, memory operations for other thread groups that are not affected by the coalesced memory barrier may be executed.

Owner:NVIDIA CORP
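The coalescing behaviour in the abstract above can be modeled with a set of affected thread groups. This is a simplified sketch with hypothetical function names: it only decides which pending memory operations may proceed while a coalesced barrier is in flight, and does not model how the hardware executes the barrier.

```python
def coalesce_barriers(requesting_groups):
    """Merge barrier requests from several thread groups into one barrier,
    represented here as the set of thread groups it covers."""
    return frozenset(requesting_groups)


def runnable_ops(memory_ops, coalesced_barrier):
    """Return the (group, op) pairs that may still execute, i.e. those whose
    thread group is not covered by the coalesced barrier."""
    return [(group, op) for group, op in memory_ops
            if group not in coalesced_barrier]
```

For example, if groups 0 and 2 have issued barriers, operations from groups 1 and 3 remain runnable while those from groups 0 and 2 are held back.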

Multithreaded lock management

Apparatus, systems, and methods may operate to construct a memory barrier to protect a thread-specific use counter by serializing parallel instruction execution. If a reader thread is new and a writer thread is not waiting to access data to be read by the reader thread, the thread-specific use counter is created and associated with a read data structure and a write data structure. The thread-specific use counter may be incremented if a writer thread is not waiting. If the writer thread is waiting to access the data after the thread-specific use counter is created, then the thread-specific use counter is decremented without accessing the data by the reader thread. Otherwise, the data is accessed by the reader thread and then the thread-specific use counter is decremented. Additional apparatus, systems, and methods are disclosed.

Owner:MICRO FOCUS SOFTWARE INC

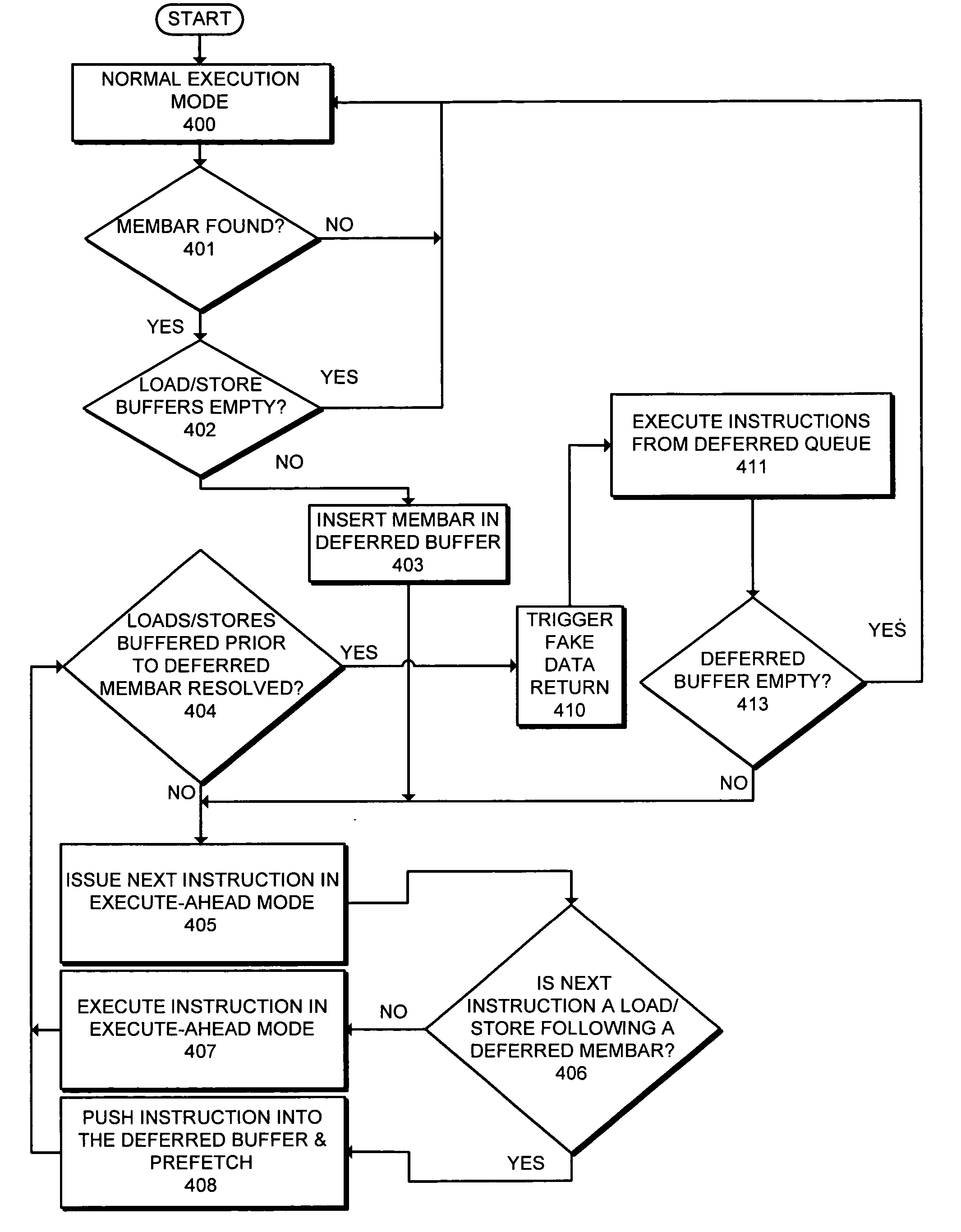

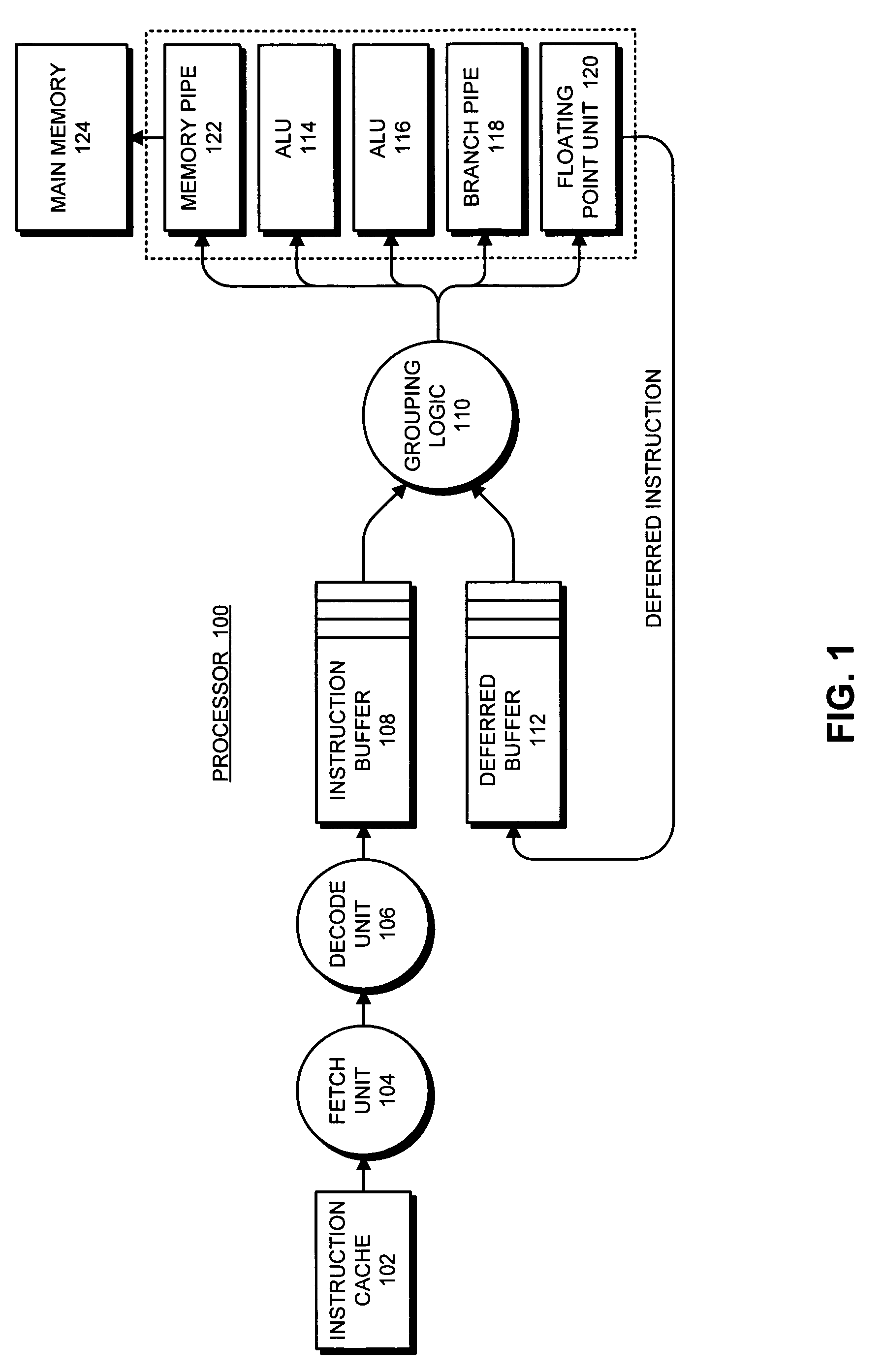

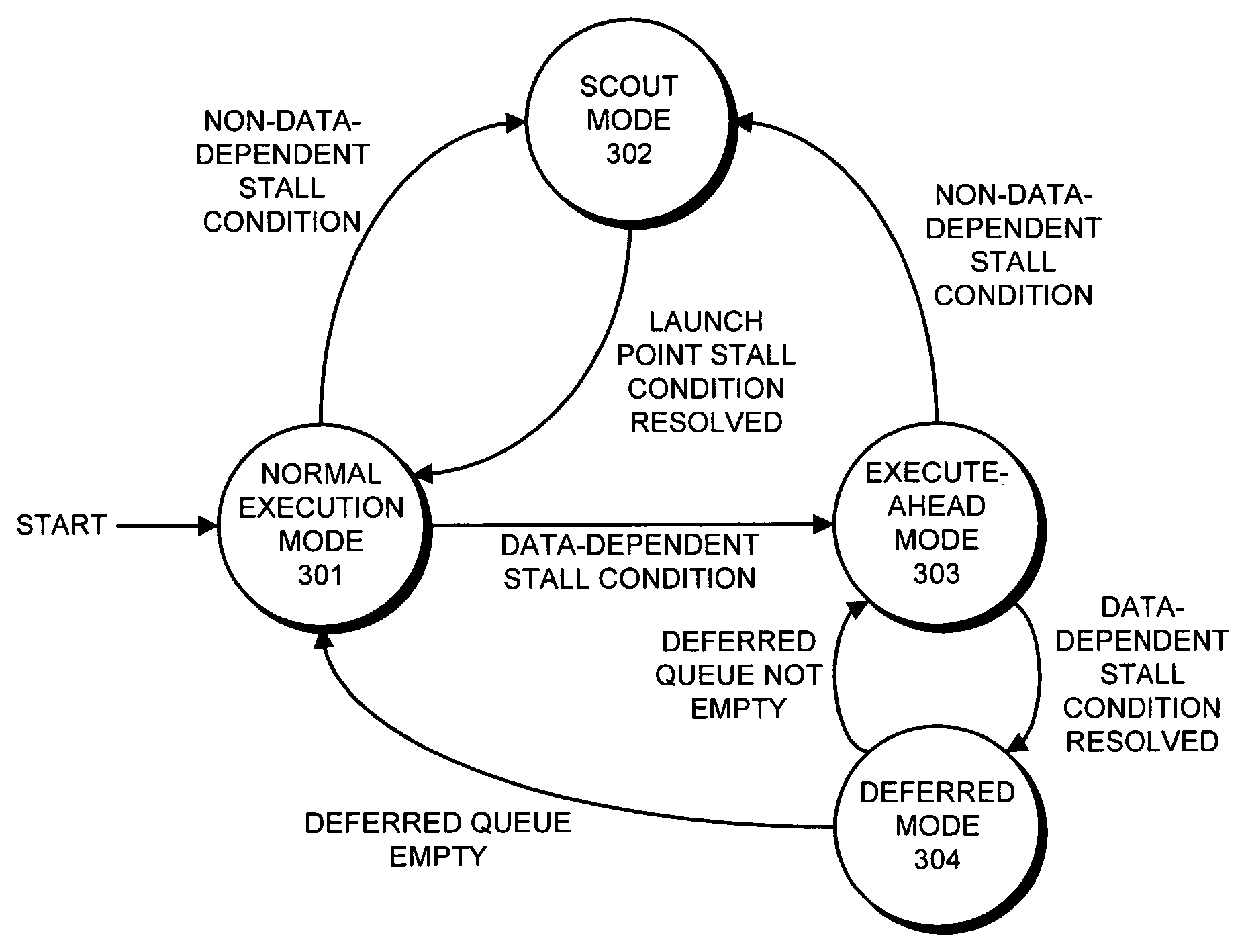

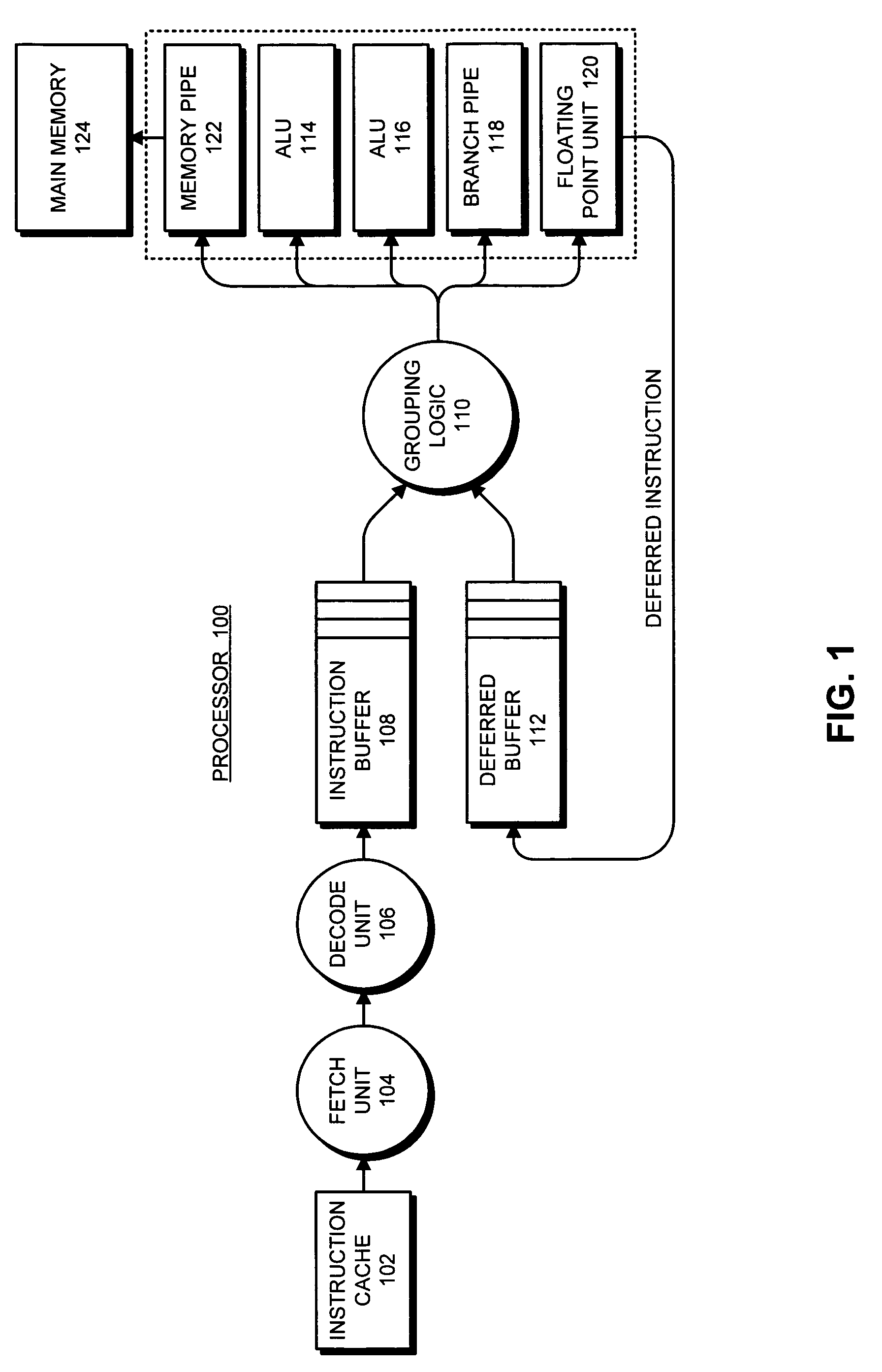

Method and apparatus for enforcing membar instruction semantics in an execute-ahead processor

Active | US20050273583A1 | Memory addressing/allocation/relocation | Digital computer details | Program instruction | Semantics

One embodiment of the present invention provides a system that facilitates executing a memory barrier (membar) instruction in an execute-ahead processor, wherein the membar instruction forces buffered loads and stores to complete before allowing a following instruction to be issued. During operation in a normal-execution mode, the processor issues instructions for execution in program order. Upon encountering a membar instruction, the processor determines if the load buffer and store buffer contain unresolved loads and stores. If so, the processor defers the membar instruction and executes subsequent program instructions in execute-ahead mode. In execute-ahead mode, instructions that cannot be executed because of an unresolved data dependency are deferred, and other non-deferred instructions are executed in program order. When all stores and loads that precede the membar instruction have been committed to memory from the store buffer and the load buffer, the processor enters a deferred mode and executes the deferred instructions, including the membar instruction, in program order. If all deferred instructions have been executed, the processor returns to the normal-execution mode and resumes execution from the point where the execute-ahead mode left off.

Owner:ORACLE INT CORP

Instructions and logic to provide memory fence and store functionality

Instructions and logic provide memory fence and store functionality. Some embodiments include a processor having a cache to store cache coherent data in cache lines for one or more memory addresses of a primary storage. A decode stage of the processor decodes an instruction specifying a source data operand, one or more memory addresses as destination operands, and a memory fence type. Responsive to the decoded instruction, one or more execution units may enforce the memory fence type, then store data from the source data operand to the one or more memory addresses, and ensure that the stored data has been committed to primary storage. For some embodiments, the primary storage may comprise persistent memory. For some embodiments, cache lines corresponding to the memory addresses may be flushed, or marked for persistent write back to primary storage. Alternatively the cache may be bypassed, e.g. by performing a streaming vector store.

Owner:INTEL CORP

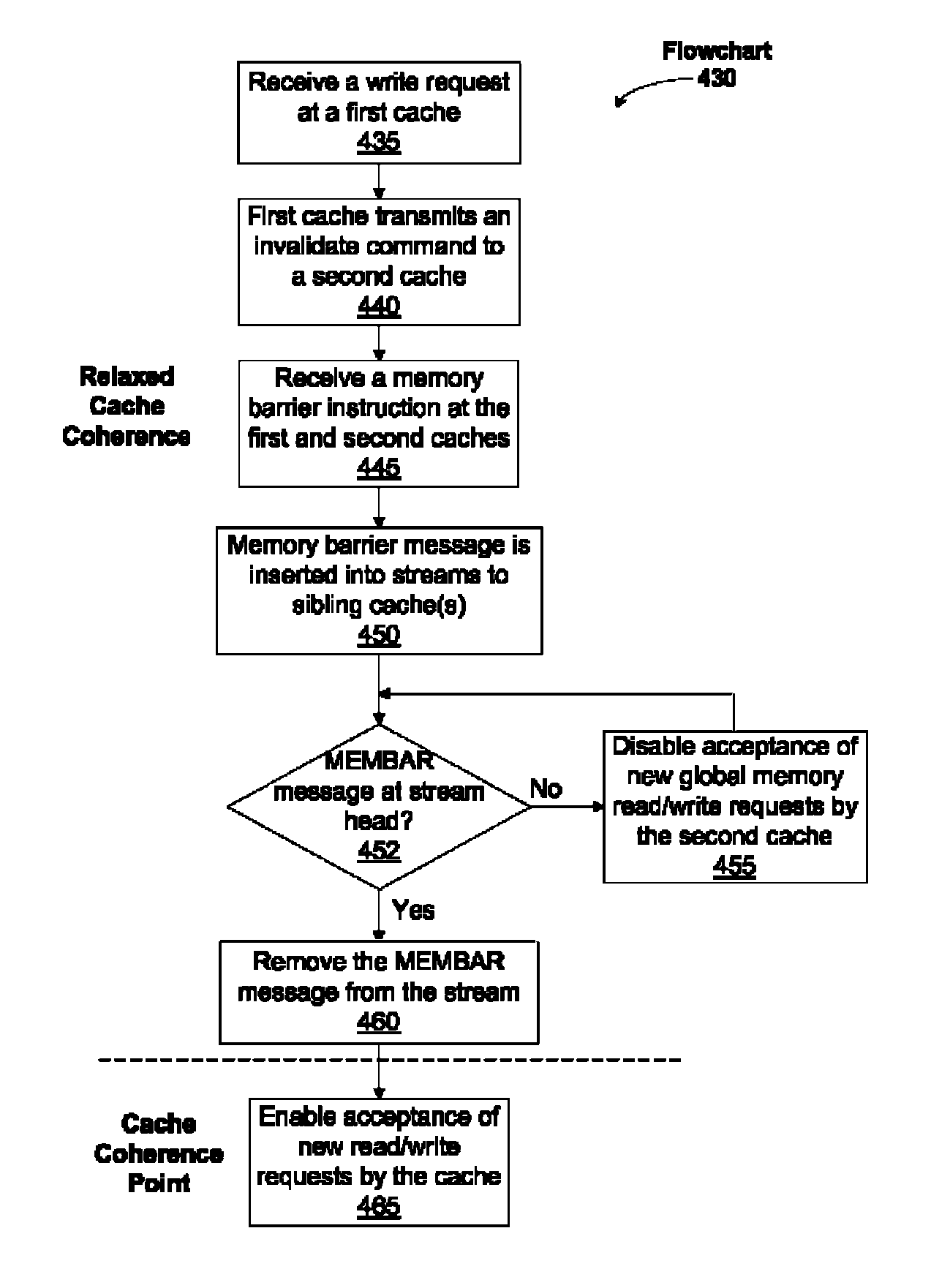

Relaxed coherency between different caches

One embodiment sets forth a technique for ensuring relaxed coherency between different caches. Two different execution units may be configured to access different caches that may store one or more cache lines corresponding to the same memory address. During time periods between memory barrier instructions, relaxed coherency is maintained between the different caches. More specifically, writes to a cache line in a first cache that corresponds to a particular memory address are not necessarily propagated to a cache line in a second cache before the second cache receives a read or write request that also corresponds to the particular memory address. Therefore, the first and second caches are not necessarily coherent during time periods of relaxed coherency. Execution of a memory barrier instruction ensures that the different caches will be coherent before a new period of relaxed coherency begins.

Owner:NVIDIA CORP
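The relaxed-coherency periods described in the abstract above can be modeled with two dictionaries standing in for caches. The sketch below is a toy model with hypothetical names. It assumes that writes stay local until a barrier and that, when both caches wrote the same address, the most recent write wins; the abstract does not specify either detail.

```python
class RelaxedCaches:
    """Two caches that become coherent only when barrier() is called."""

    def __init__(self):
        self.caches = [{}, {}]
        self.pending = []              # (address, value) writes not yet shared

    def write(self, cache_id, address, value):
        """Write to one cache only; the other cache is not updated yet."""
        self.caches[cache_id][address] = value
        self.pending.append((address, value))

    def read(self, cache_id, address):
        """Read whatever this cache currently holds (0 if never written)."""
        return self.caches[cache_id].get(address, 0)

    def barrier(self):
        """Propagate every pending write to both caches, oldest first."""
        for address, value in self.pending:
            for cache in self.caches:
                cache[address] = value
        self.pending.clear()
```

Between barriers a reader on the second cache may see a stale value; after `barrier()` both caches return the same value for every address.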

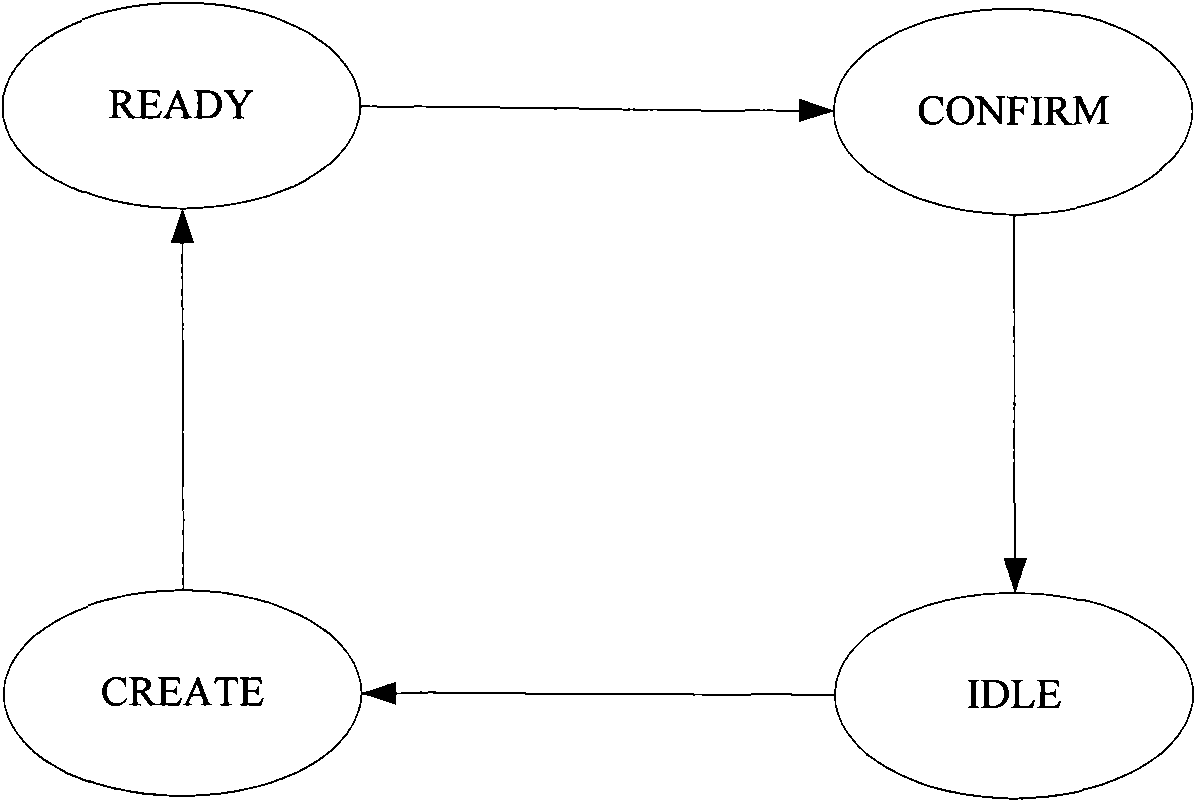

Method for concurrently processing join in multi-core systems

The invention discloses a method for concurrently processing a join in multi-core systems. The method is used for effectively controlling a join life cycle and join state conversion through the comprehensive use of a fine-grained read-write lock, a spin lock, a join reference counter and a memory barrier so that the multi-core systems provide the integrity and consistency of data when sharing the same join, and the correctness and the performance of the communication and control process of multi-core data are ensured.

Owner:BEIJING TOPSEC NETWORK SECURITY TECH

Consistency evaluation of program execution across at least one memory barrier

Inactive | US8301844B2 | Memory addressing/allocation/relocation | Program control | Load instruction | Program instruction

Multi-processor systems and methods are disclosed. One embodiment may comprise a multi-processor system including a processor that executes program instructions across at least one memory barrier. A request engine may provide an updated data fill corresponding to an invalid cache line. The invalid cache line may be associated with at least one executed load instruction. A load compare component may compare the invalid cache line to the updated data fill to evaluate the consistency of the at least one executed load instruction.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP

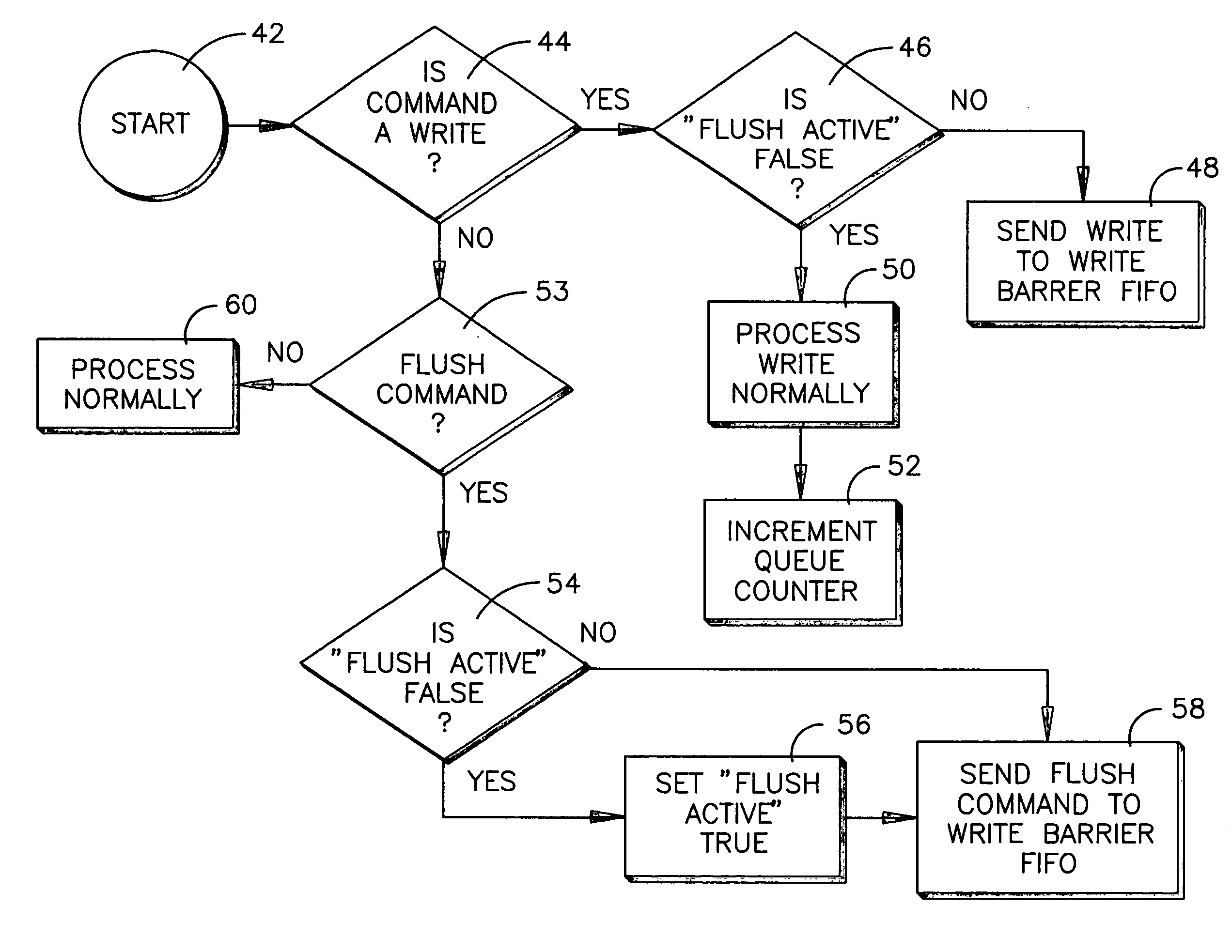

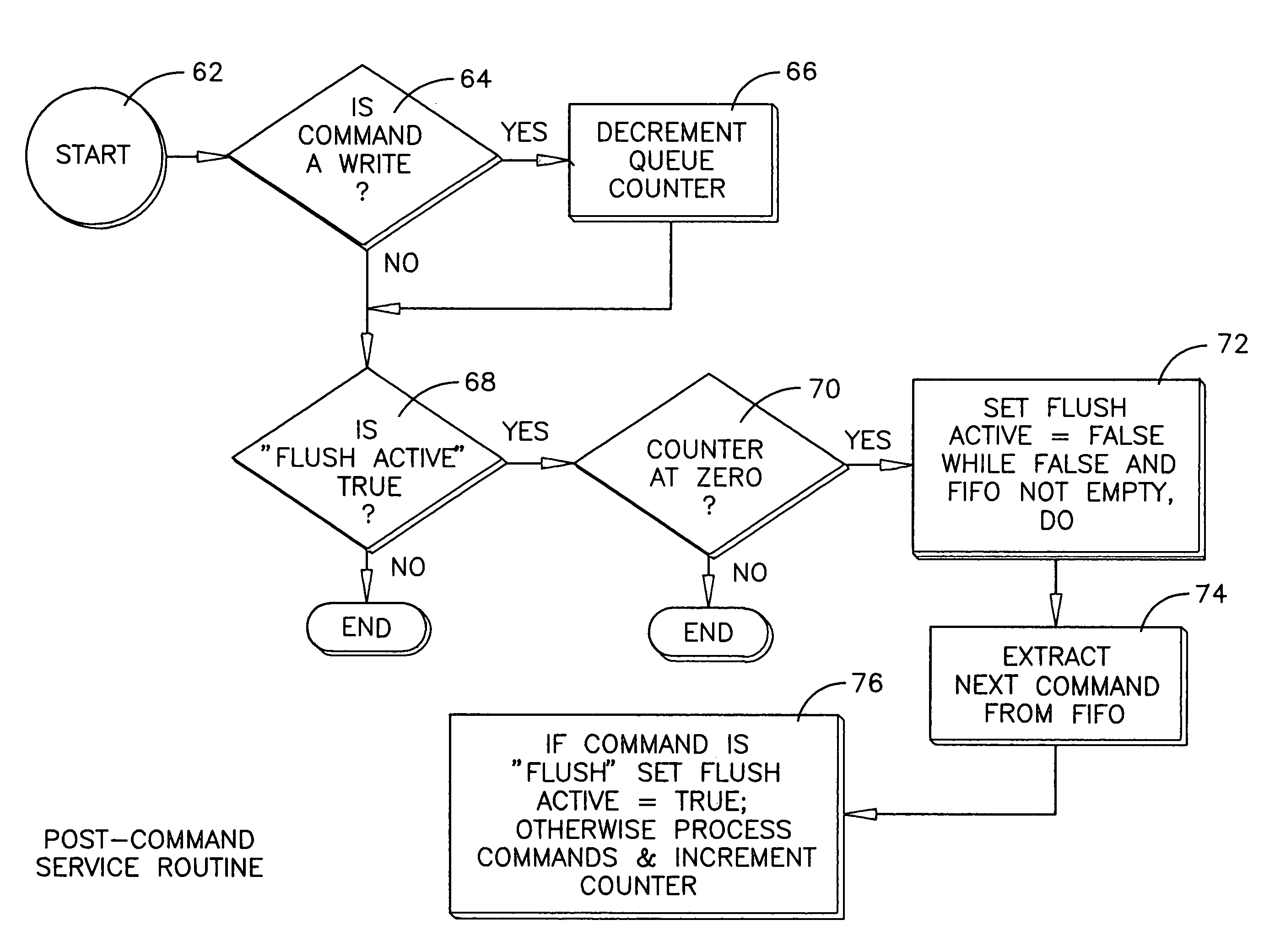

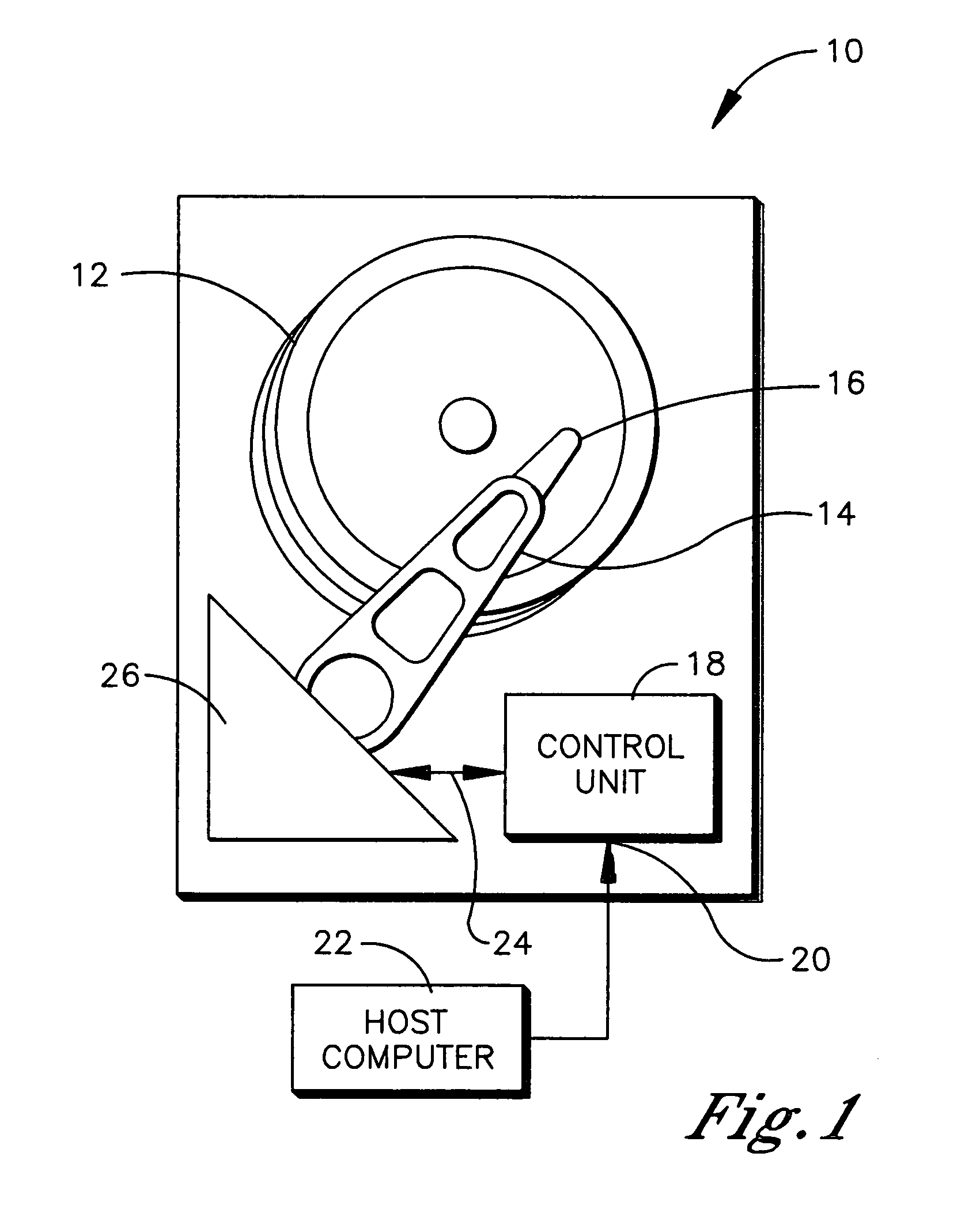

Transforming flush queue command to memory barrier command in disk drive

Inactive | US20070168626A1 | Lower performance requirements | Improve performance | Memory systems | Computer hardware | Operation mode

In an HDD, the flush queue (cache) command is transformed into a memory barrier command. The HDD thus has an operation mode in which flush commands do not cause the pending commands to be executed immediately, but instead simply introduce a constraint on the command reordering algorithms that prevents commands sent after the flush command from being executed before commands sent prior to the flush command. The constraint may be applied only on write commands.

Owner:WESTERN DIGITAL TECH INC
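The reordering constraint in the abstract above can be sketched as a scheduler that treats each flush as a fence between segments of the command queue. This is a minimal model under assumptions: the function name is hypothetical, and sorting each segment by logical block address is only a stand-in for whatever reordering algorithm a real drive uses.

```python
def schedule(commands):
    """Reorder queued commands without crossing a flush.

    commands is a list of ("write", lba) tuples and ("flush",) markers in
    arrival order. Commands inside one flush-delimited segment are sorted by
    LBA (assumed policy); segments themselves keep their arrival order.
    """
    ordered, segment = [], []
    for command in commands:
        if command[0] == "flush":
            # The flush acts as a barrier: close the current segment.
            ordered.extend(sorted(segment, key=lambda c: c[1]))
            segment = []
        else:
            segment.append(command)
    ordered.extend(sorted(segment, key=lambda c: c[1]))
    return ordered
```

The flush itself does not force immediate execution in this model; it only stops later commands from moving ahead of earlier ones.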

Transforming flush queue command to memory barrier command in disk drive

Inactive | US7574565B2 | Lower performance requirements | Improve performance | Memory systems | Computer hardware | Operation mode

In an HDD, the flush queue (cache) command is transformed into a memory barrier command. The HDD thus has an operation mode in which flush commands do not cause the pending commands to be executed immediately, but instead simply introduce a constraint on the command reordering algorithms that prevents commands sent after the flush command from being executed before commands sent prior to the flush command. The constraint may be applied only on write commands.

Owner:WESTERN DIGITAL TECH INC

Method and apparatus for enforcing membar instruction semantics in an execute-ahead processor

Active | US7689813B2 | Memory addressing/allocation/relocation | Digital computer details | Parallel computing | Semantics

Embodiments of the present invention provide a system that facilitates executing a memory barrier (membar) instruction in an execute-ahead processor, wherein the membar instruction forces buffered loads and stores to complete before allowing a following instruction to be issued.

Owner:ORACLE INT CORP

N-way memory barrier operation coalescing

Active | US8997103B2 | Program synchronisation | Multiple digital computer combinations | Parallel computing | Memory operation

Owner:NVIDIA CORP

Coalescing memory barrier operations across multiple parallel threads

Active | US9223578B2 | Reduce impact | Expensive to operate | Multiprogramming arrangements | Concurrent instruction execution | Parallel computing | System level

One embodiment of the present invention sets forth a technique for coalescing memory barrier operations across multiple parallel threads. Memory barrier requests from a given parallel thread processing unit are coalesced to reduce the impact to the rest of the system. Additionally, memory barrier requests may specify a level of a set of threads with respect to which the memory transactions are committed. For example, a first type of memory barrier instruction may commit the memory transactions to a level of a set of cooperating threads that share an L1 (level one) cache. A second type of memory barrier instruction may commit the memory transactions to a level of a set of threads sharing a global memory. Finally, a third type of memory barrier instruction may commit the memory transactions to a system level of all threads sharing all system memories. The latency required to execute the memory barrier instruction varies based on the type of memory barrier instruction.

Owner:NVIDIA CORP

Generic shared memory barrier

Inactive | US8065681B2 | Efficient execution | General purpose stored program computer | Multiprogramming arrangements | Information processing | Theoretical computer science

A method, information processing node, and a computer program storage product are provided for performing synchronization operations between participants of a program. Each participant includes at least one of a set of processes and a set of threads. Each participant in a first subset of participants of a program updates a portion of a first local vector that is local to the respective participant. Each participant in a second subset of participants of the program updates a portion of a second local vector that is local to the respective participant. The participants in the second subset exit the synchronization barrier in response to determining that all of the participants in the first subset have reached the synchronization barrier.

Owner:DARPA

Efficient execution of memory barrier bus commands

Active | CN101395574A | Program synchronisation | Concurrent instruction execution | Order processing | Handling system

The disclosure is directed to a weakly-ordered processing system and a method of executing memory barriers in a weakly-ordered processing system. The processing system includes memory and a master device configured to issue memory access requests, including memory barriers, to the memory. The processing system also includes a slave device configured to provide the master device access to the memory, the slave device being further configured to produce a signal indicating that an ordering constraint imposed by a memory barrier issued by the master device will be enforced, the signal being produced before the execution of all memory access requests issued by the master device to the memory before the memory barrier.

Owner:QUALCOMM INC

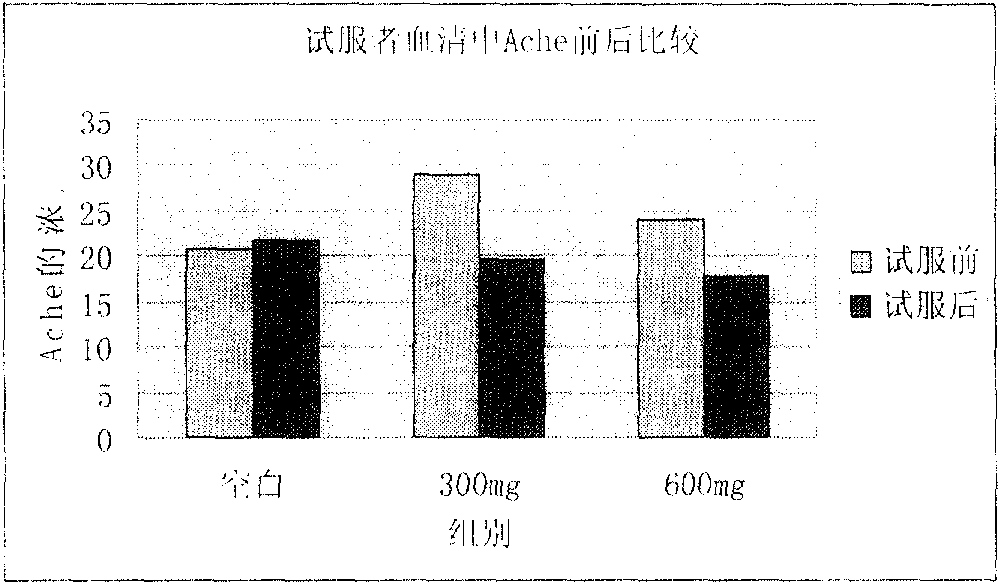

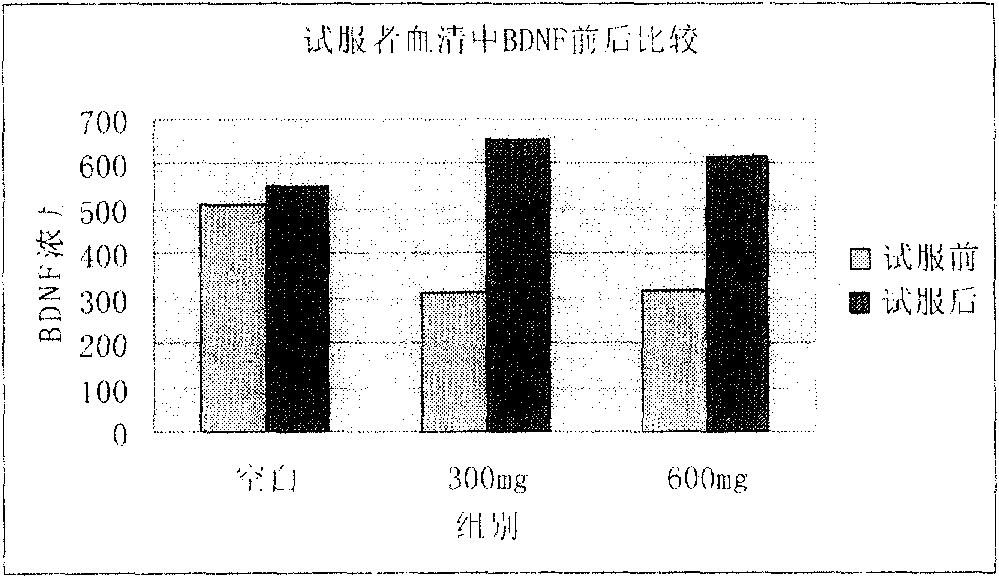

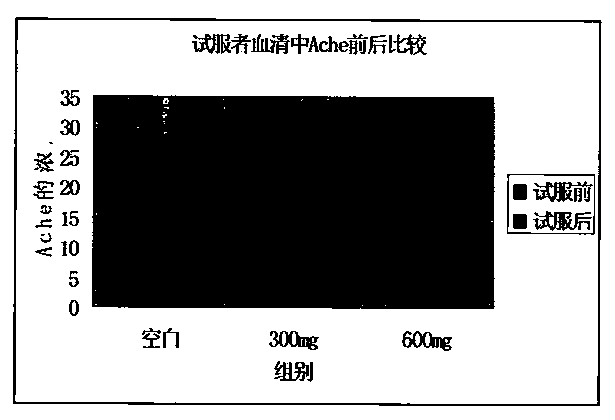

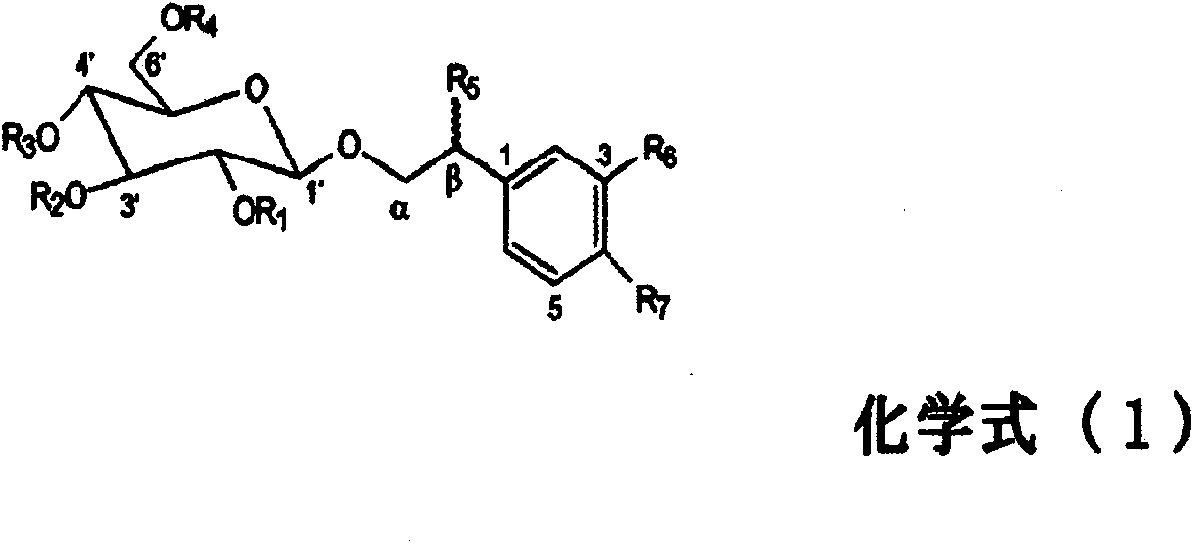

Novel health-care food or drug with function of improving memory

Active | CN102499322A | Inhibitory activity | Enhance memory | Organic active ingredients | Nervous disorder | Decomposition | Acetylcholinesterase

The invention provides a novel health-care food or drug with the function of improving memory. The health-care food or drug can reduce the content of acetylcholinesterase, further reduce the decomposition of acetylcholine, increase the content of BDNF (brain-derived neurotrophic factor), and improve memory. Moreover, even when the food or drug is taken by healthy people who have no memory barriers, the inhibition of acetylcholinesterase and the increased BDNF content can improve memory; mild cognitive impairment resulting from declining memory in healthy people can be effectively prevented, and senile dementia can also be prevented.

Owner:SINPHAR TIAN LI PHARMA

Method and apparatus to guarantee type and initialization safety in multithreaded programs

Inactive | US7434212B2 | Initialization safety is also guaranteed | Improve performance | Memory loss protection | Specific program execution arrangements | Type safety | Byte

A method and apparatus to guarantee type safety in multithreaded programs, and to guarantee initialization safety in well-behaved multithreaded programs. A plurality of bytecodes representing a program are received and examined to identify bytecodes defining object creation operations and object initialization operations. Upon execution of the plurality of bytecodes, memory barrier operations are performed subsequent to the performance of both the object creation operations and the object initialization operations. This guarantees type safety, and further guarantees initialization safety if the program is well-behaved. Optimization algorithms may also be applied in the compilation of bytecodes to improve performance.

Owner:INT BUSINESS MASCH CORP

Relaxed coherency between different caches

One embodiment sets forth a technique for ensuring relaxed coherency between different caches. Two different execution units may be configured to access different caches that may store one or more cache lines corresponding to the same memory address. During time periods between memory barrier instructions, relaxed coherency is maintained between the different caches. More specifically, writes to a cache line in a first cache that corresponds to a particular memory address are not necessarily propagated to a cache line in a second cache before the second cache receives a read or write request that also corresponds to the particular memory address. Therefore, the first and second caches are not necessarily coherent during time periods of relaxed coherency. Execution of a memory barrier instruction ensures that the different caches will be coherent before a new period of relaxed coherency begins.

Owner:NVIDIA CORP

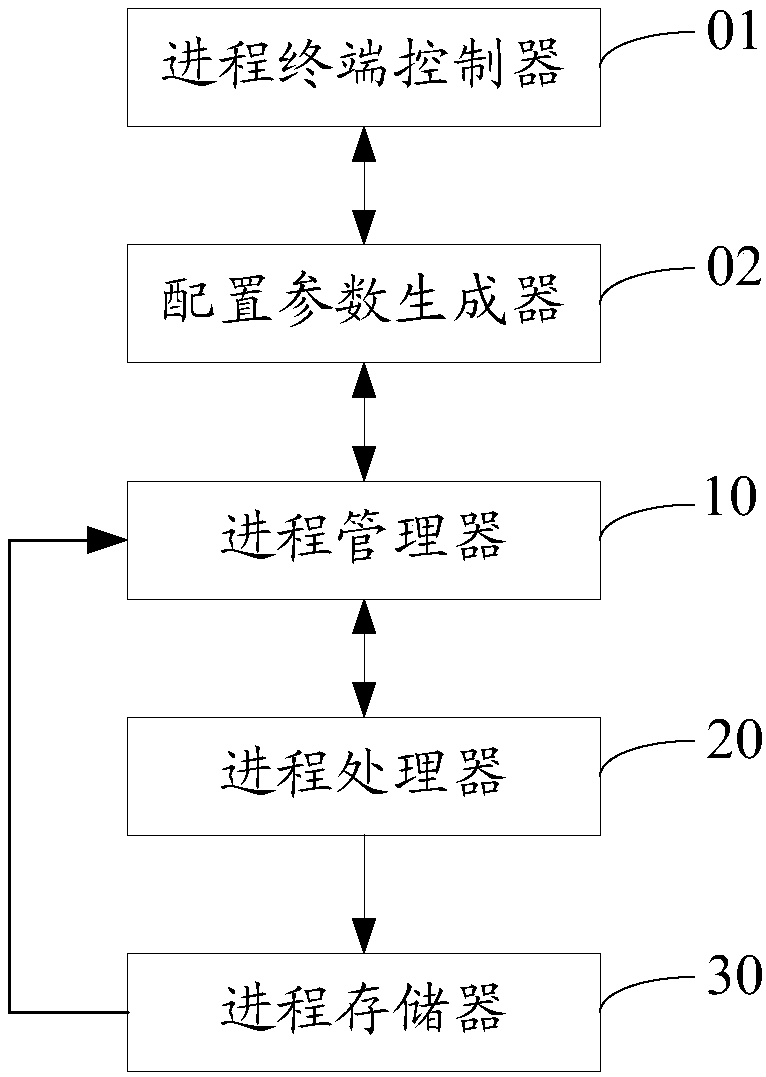

Process pool system and method

Active | CN107656804A | Improve processing speed | Improve processing efficiency | Program initiation/switching | Program loading/initiating | Process memory | Multi processor

The invention discloses a process pool system and method. The process pool system comprises a process manager, a process processor and a process memory, wherein the process manager responds to a process starting instruction, obtains a work task to be processed, and sends the work task to be processed to the process processor; the process processor carries out lock-free processing on a to-be-processed work process corresponding to the work task to be processed, generates a work process processing result and sends the work process processing result to the process memory; and the process memory adopts a memory barrier to store the work process processing result. The process pool system supports a symmetrical multi-processor framework. The work process is subjected to lock-free processing to improve its processing speed and efficiency, the caching of the work process is kept consistent through the memory barrier, and the resource use ratio and the processing ability of the work process are improved.

Owner:深圳金融电子结算中心有限公司

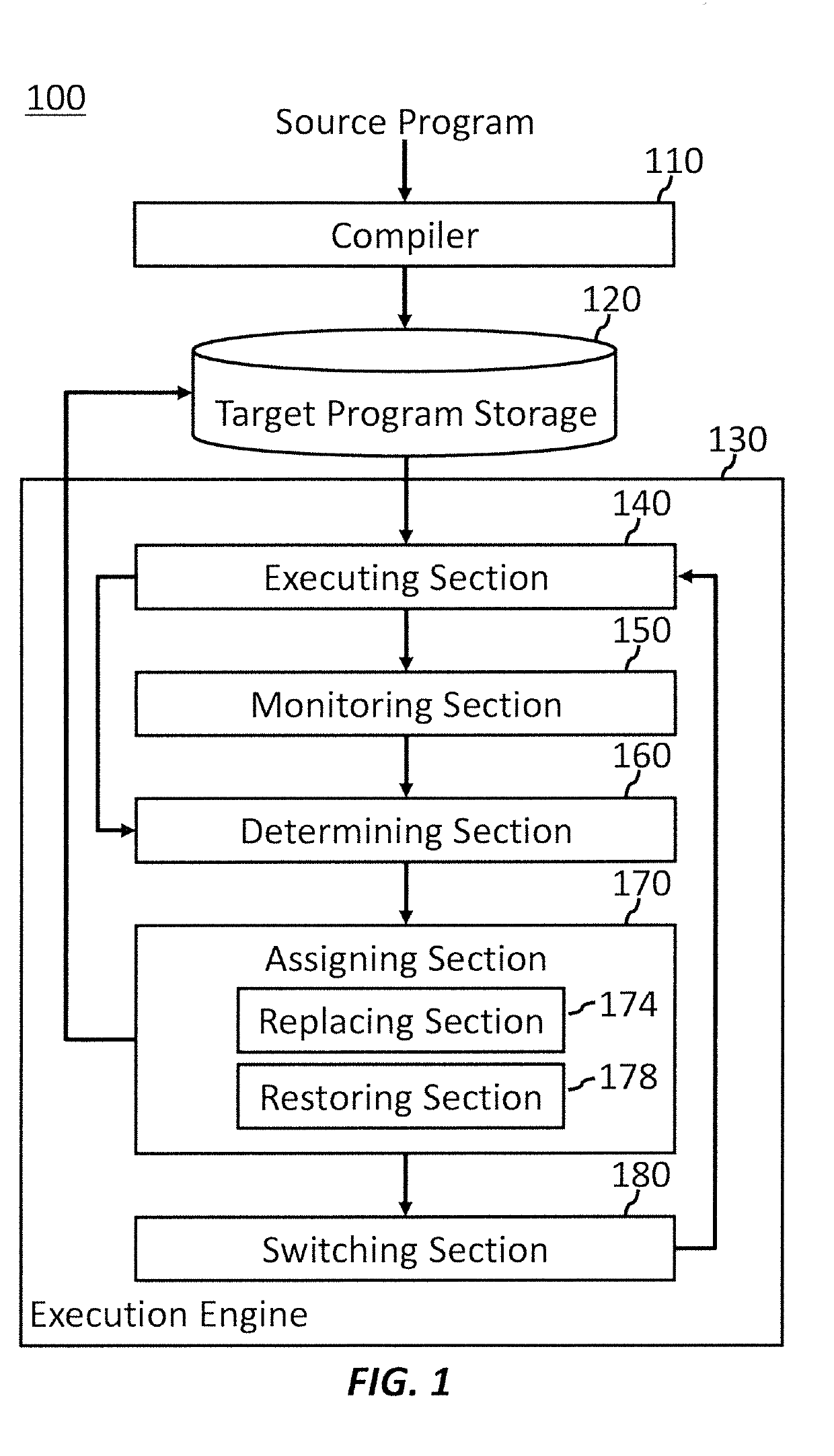

Optimizing memory fences based on workload

A method, computer program product, and apparatus for optimizing memory fences based on workload are provided. The method includes determining whether to execute a target program on a single hardware thread or a plurality of hardware threads. The method also includes assigning one of a light-weight memory fence and a heavy-weight memory fence as a memory fence in the target program based on whether to execute the target program on the single hardware thread or the plurality of hardware threads. The method further includes assigning the light-weight memory fence in response to determining to execute the target program on the single hardware thread, and the heavy-weight memory fence is assigned in response to determining to execute the target program on the plurality of hardware threads.

Owner:IBM CORP
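The selection rule in the abstract above reduces to a single decision on the number of hardware threads. The sketch below uses a hypothetical function name and returns a label rather than emitting a real fence instruction, since which instructions count as light-weight or heavy-weight depends on the target architecture.

```python
def choose_fence(hardware_threads):
    """Pick the fence kind for a target program from its hardware thread count."""
    if hardware_threads < 1:
        raise ValueError("a program needs at least one hardware thread")
    # One hardware thread: a light-weight fence suffices per the abstract.
    # Several hardware threads: the heavy-weight fence is assigned.
    return "light-weight" if hardware_threads == 1 else "heavy-weight"
```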

Fused store exclusive/memory barrier operation

Active | US8285937B2 | Reduce steps | Lower latency | Runtime instruction translation | Memory addressing/allocation/relocation | Cache hierarchy | Term memory

In an embodiment, a processor may be configured to detect a store exclusive operation followed by a memory barrier operation in a speculative instruction stream being executed by the processor. The processor may fuse the store exclusive operation and the memory barrier operation, creating a fused operation. The fused operation may be transmitted and globally ordered, and the processor may complete both the store exclusive operation and the memory barrier operation in response to the fused operation. As the fused operation progresses through the processor and one or more other components (e.g. caches in the cache hierarchy) to the ordering point in the system, the fused operation may push previous memory operations to effect the memory barrier operation. In some embodiments, the latency for completing the store exclusive operation and the subsequent data memory barrier operation may be reduced if the store exclusive operation is successful at the ordering point.

Owner:APPLE INC

Memory barriers primitives in an asymmetric heterogeneous multiprocessor environment

The present invention provides a method and apparatus for creating memory barriers in a Direct Memory Access (DMA) device. A memory barrier command is received and a memory command is received. The memory command is executed based on the memory barrier command. A bus operation is initiated based on the memory barrier command. A bus operation acknowledgment is received based on the bus operation. The memory barrier command is executed based on the bus operation acknowledgment. In a particular aspect, memory barrier commands are direct memory access sync (dmasync) and direct memory access enforce in-order execution of input / output (dmaeieio) commands.

Owner:INT BUSINESS MASCH CORP +1

Novel health-care food or drug with function of improving memory

Active | CN102499322B | Inhibitory activity | Enhance memory | Organic active ingredients | Nervous disorder | Decomposition | Acetylcholinesterase

Owner:SINPHAR TIAN LI PHARMA

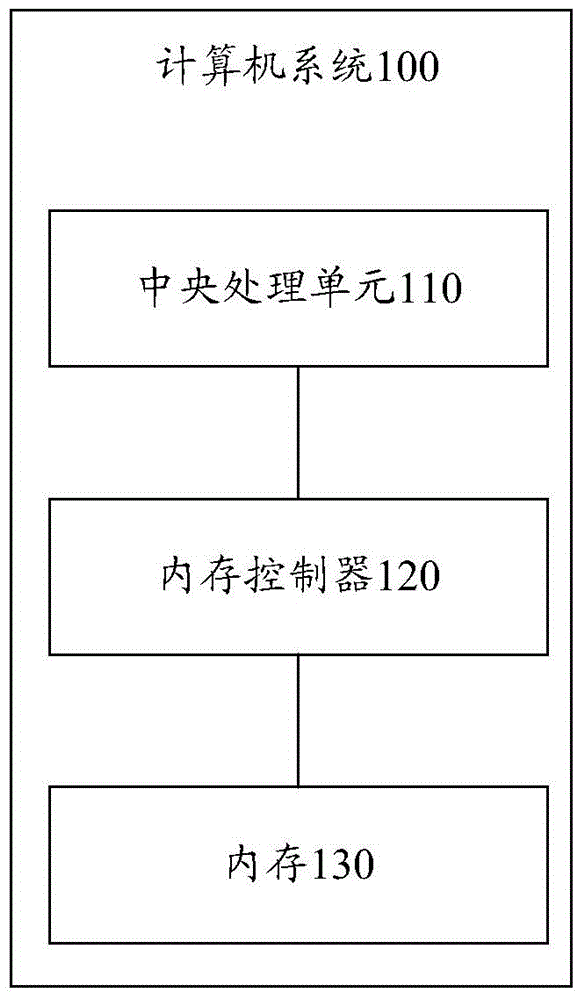

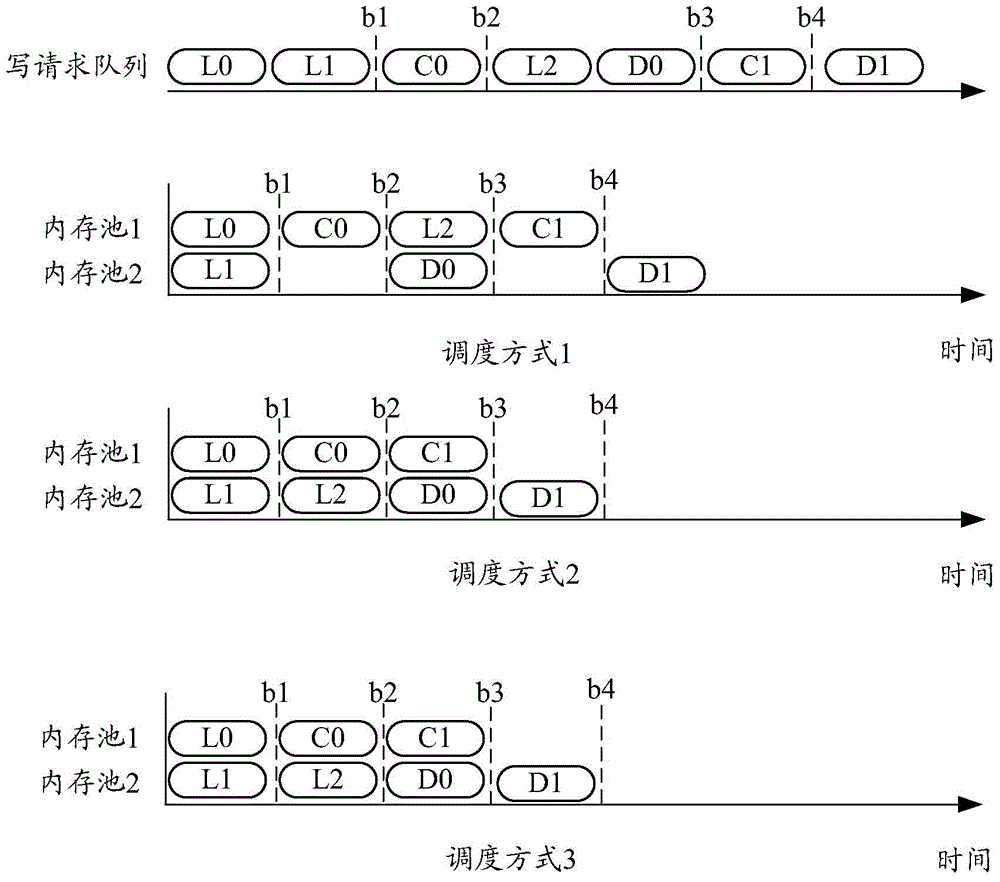

Write request processing method and memory controller

Active | CN106293491A | Improve processing efficiency | Increase profit | Input/output to record carriers | Program synchronisation | Memory controller | Computer engineering

The invention provides a write request processing method and a memory controller. The method comprises: determining that the number of write requests in a first write request set to be scheduled is less than the number of unoccupied storage units in a memory, wherein a first memory barrier exists between the write requests in the first write request set and other write requests in a write request queue; determining a second write request set, wherein the write requests in the second write request set are log write requests, the write requests in the second write request set are located behind the first memory barrier in the write request queue, and the sum of the number of the write requests in the second write request set and the number of the write requests in the first write request set is not greater than the number of the unoccupied storage units in the memory; and sending the write requests in the first write request set and the write requests in the second write request set in parallel into the unoccupied different storage units of the memory. The invention increases the efficiency of processing write requests.

Owner:HUAWEI TECH CO LTD +1
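The selection step in the abstract above can be sketched as follows. The queue encoding and the function name are hypothetical. The sketch assumes that log writes are taken in queue order from anywhere behind the first barrier, and that when the first set does not fit, only as many of its requests as there are free units are sent; the abstract does not specify either case.

```python
def select_parallel_writes(queue, free_units):
    """Choose write requests to send to the memory in parallel.

    queue holds ("data", id), ("log", id) and ("barrier",) entries in arrival
    order. Returns (first_set, second_set): the writes ahead of the first
    barrier, plus log writes from behind it that fit in the spare units.
    """
    kinds = [entry[0] for entry in queue]
    split = kinds.index("barrier") if "barrier" in kinds else len(queue)
    first_set = queue[:split]
    if len(first_set) >= free_units:
        # Assumed fallback: no spare units, so nothing crosses the barrier.
        return first_set[:free_units], []
    spare = free_units - len(first_set)
    behind = queue[split + 1:]
    second_set = [entry for entry in behind if entry[0] == "log"][:spare]
    return first_set, second_set
```

With two data writes ahead of the barrier and four free storage units, two log writes from behind the barrier are sent in the same batch, so no unit sits idle.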

© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com