142 results about "Program Thread" patented technology

Technically, a thread is defined as an independent stream of instructions that can be scheduled to run as such by the operating system. To the software developer, the concept of a "procedure" that runs independently from its main program may best describe a thread.
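
The following minimal Python sketch illustrates this definition using the standard `threading` module. Each `threading.Thread` runs the function `procedure` (a hypothetical name chosen for illustration) as its own instruction stream, scheduled by the operating system. The main program carries on until it calls `join()`.

```python
import threading

results = []                     # shared list that both workers append to
results_lock = threading.Lock()  # serializes appends from the two threads

def procedure(name: str, count: int) -> None:
    """A 'procedure' that runs independently of the main program."""
    for i in range(count):
        with results_lock:
            results.append((name, i))

# Each Thread is a separate instruction stream scheduled by the operating system.
workers = [threading.Thread(target=procedure, args=(f"worker-{n}", 3)) for n in range(2)]
for w in workers:
    w.start()

# The main program keeps running here; join() blocks until each worker finishes.
for w in workers:
    w.join()

print(len(results))  # 6
```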

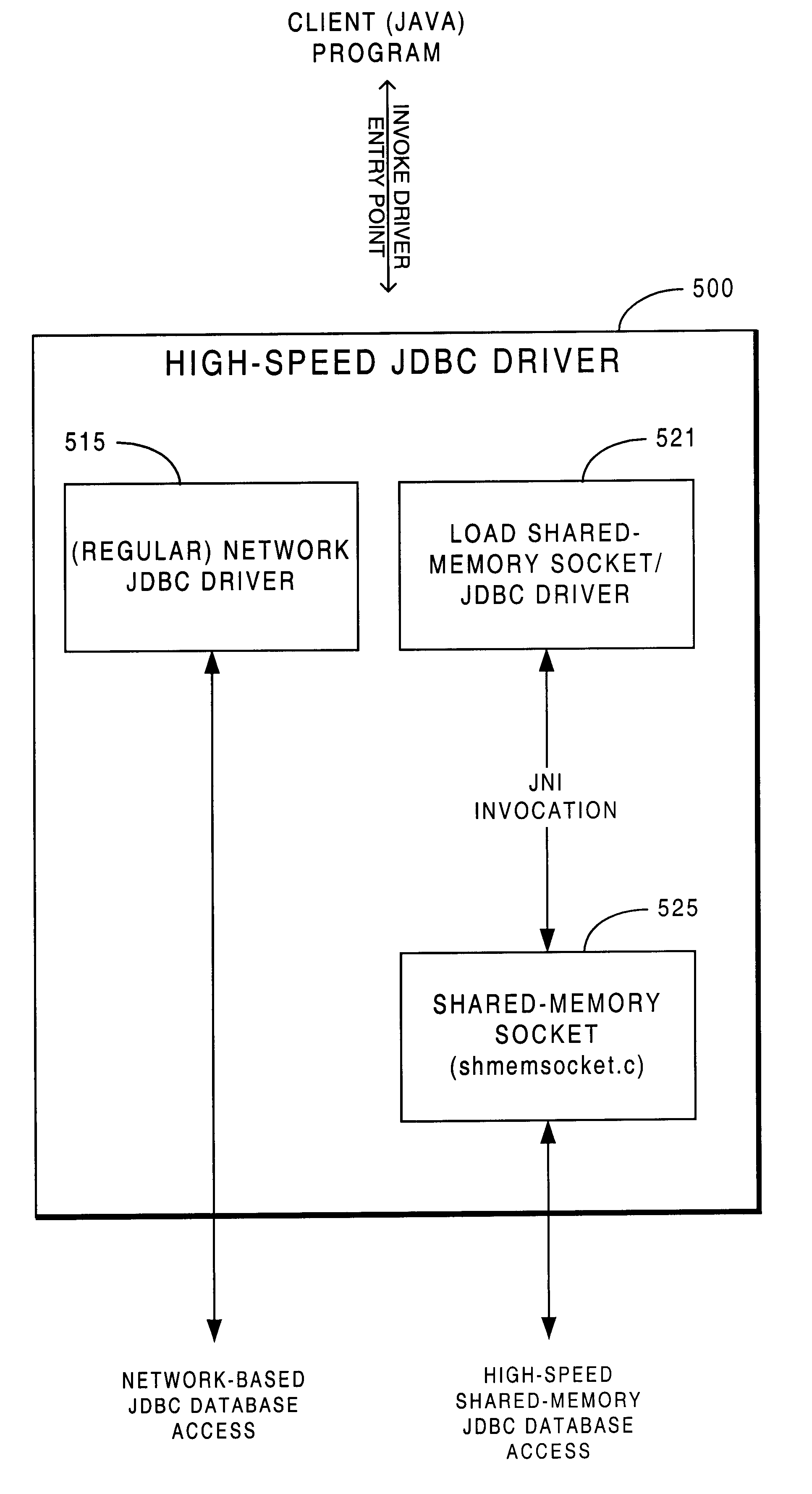

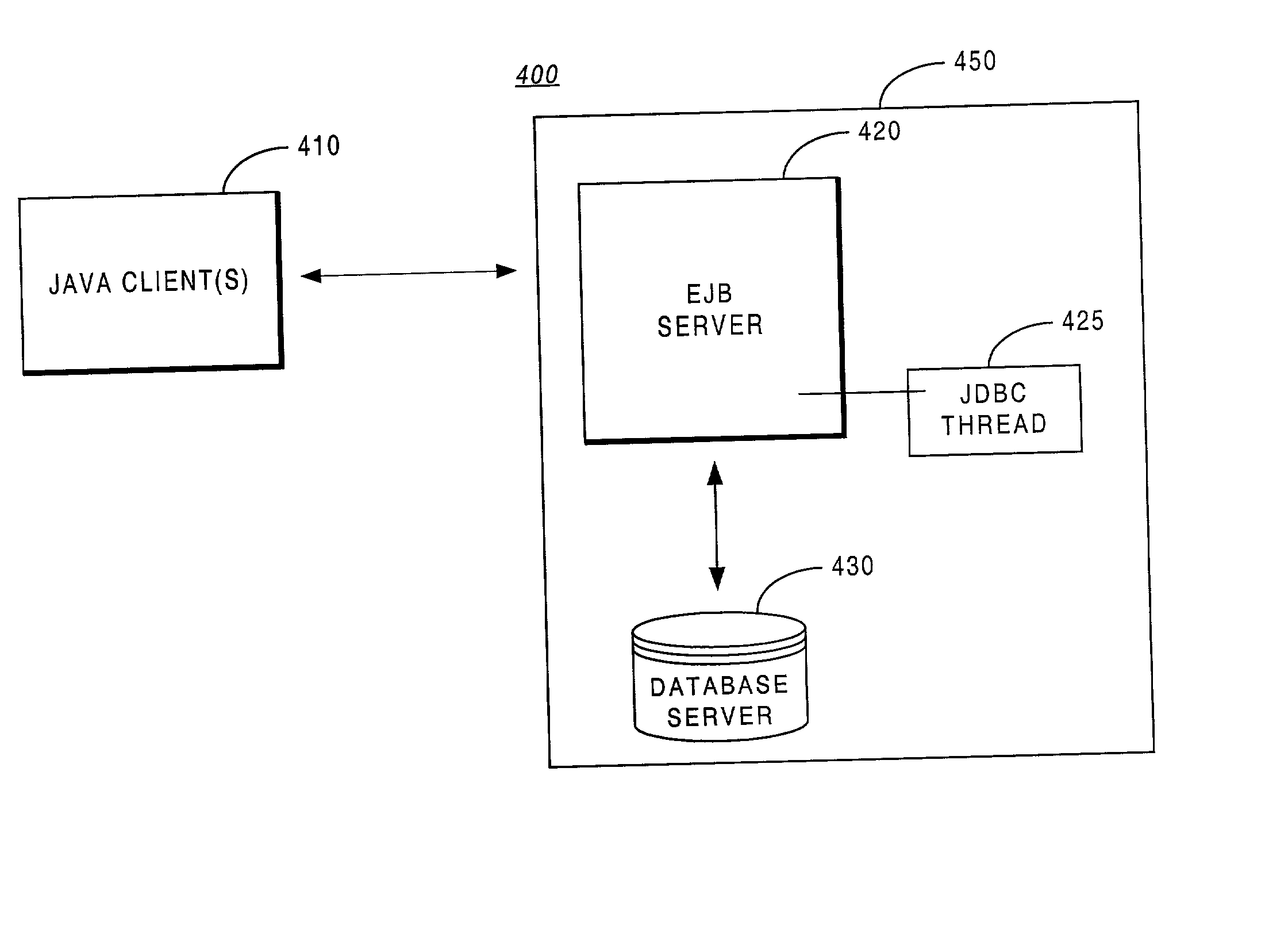

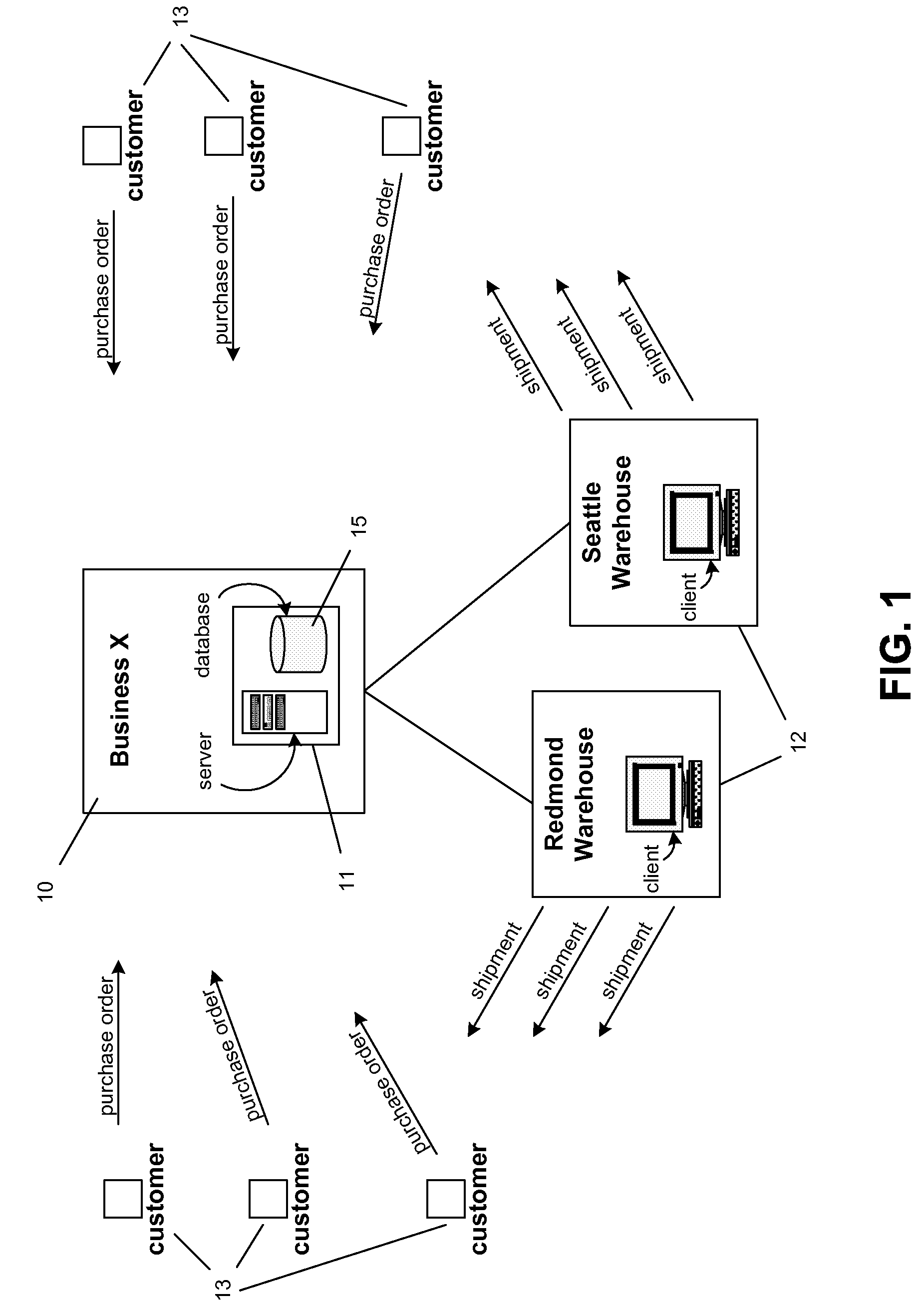

Methodology providing high-speed shared memory access between database middle tier and database server

Inactive | US6687702B2 | Data processing applications; Interprogram communication; Application server; Database server

A multi-tier database system is modified such that a middle-tier application server (EJB server) and a database server run on the same host computer and communicate via shared-memory interprocess communication. The system includes a database (e.g., JDBC) driver thread that attaches to the database server, specifically by attaching to the database server's shared memory segment. Operation of the JDBC driver is modified to provide direct access between the middle tier (i.e., EJB server) and the database server, when the two are operating on the same host computer.

Owner:SYBASE INC
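
The "attach to the server's shared memory segment" step can be sketched with Python's `multiprocessing.shared_memory`. This module stands in for the database server's segment and is not the Sybase or JDBC API. For brevity, both the "server" and the "driver" run in one process; in the described system they are separate processes on the same host.

```python
from multiprocessing import shared_memory

# "Database server" side: create a shared-memory segment and place a result in it.
server_seg = shared_memory.SharedMemory(create=True, size=64)
payload = b"row:42"
server_seg.buf[:len(payload)] = payload

# "Driver" side: attach to the existing segment by name instead of using a socket.
driver_seg = shared_memory.SharedMemory(name=server_seg.name)
received = bytes(driver_seg.buf[:len(payload)])
print(received)  # b'row:42'

# Detach both handles; only the creator unlinks (destroys) the segment.
driver_seg.close()
server_seg.close()
server_seg.unlink()
```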

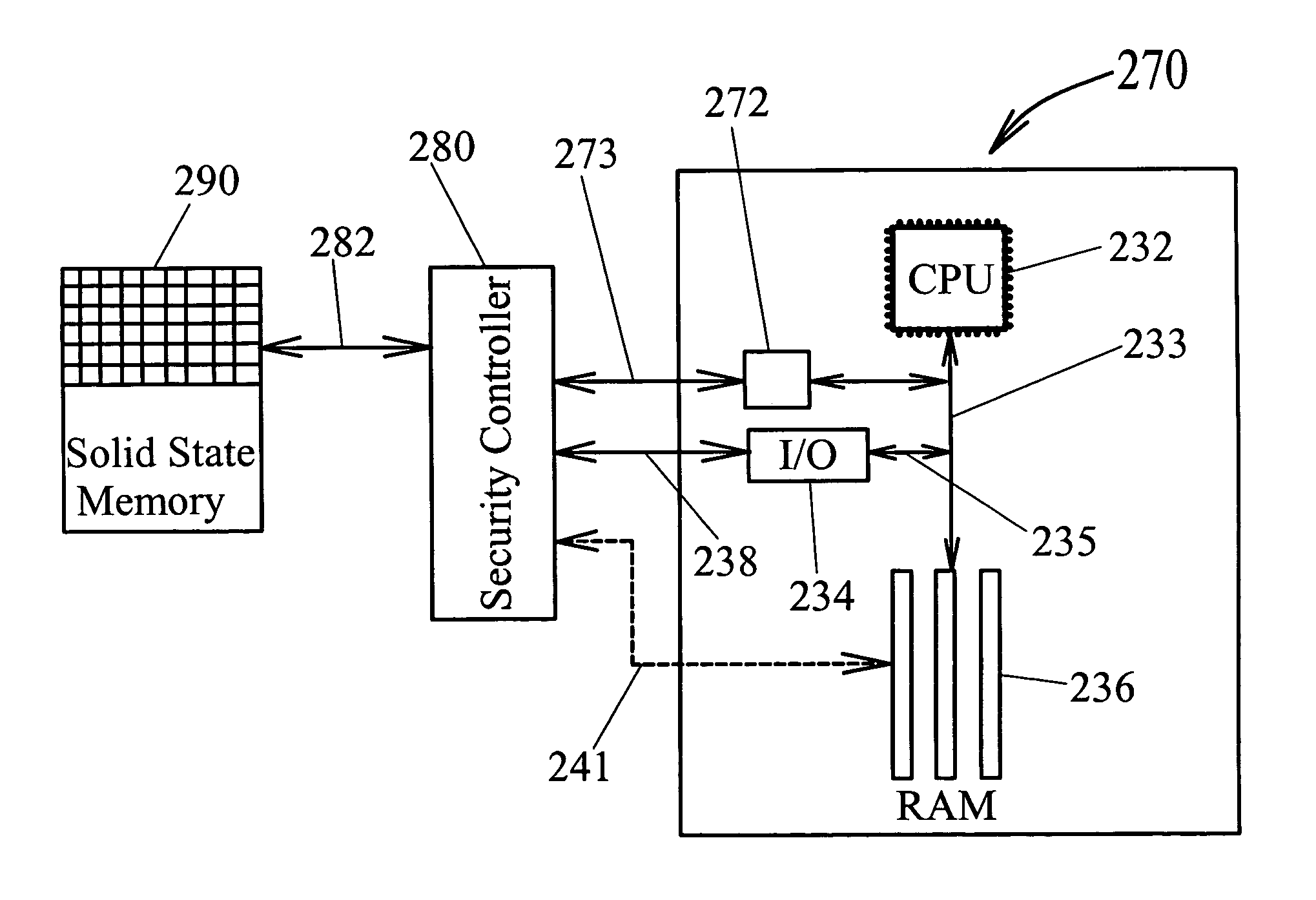

Dynamic associative storage security for long-term memory storage devices

Inactive | US7814554B1 | Improve protection; Significant to use; Digital data processing details; Unauthorized memory use protection; Storage security; Program Thread

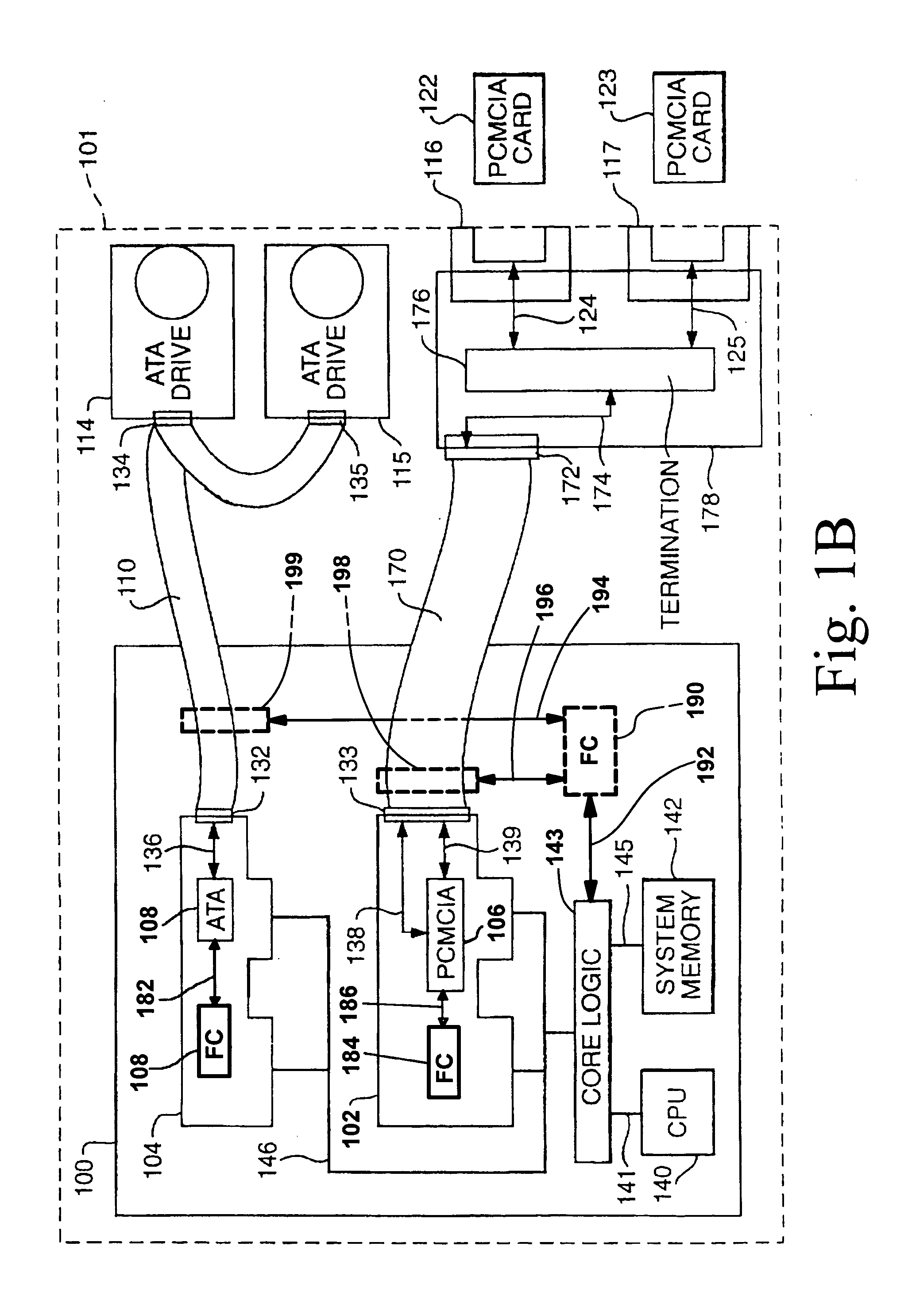

A hierarchical folder security system for mapping files into and out of alias directories and/or real directories depending on: 1) the directory of the specific file being accessed, 2) the program thread requesting access to a memory storage device (114), and 3) the type of access request being made (e.g., load, save, run). For write access requests (e.g., save, modify, paste, delete, cut, move, rename), security controller (180) determines whether the requested folder access address (150a) is associated with the requesting program's folder address (168). If it is, the file is written at absolute address (156b) on disk platter (160) through real folder map (152). If requested address (150a) is not associated with program folder address (168), an alias directory address is created and the file is written at alias address (156a) through alias folder address map (154).

Owner:RAGNER GARY DEAN
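
The write-redirection rule can be sketched as pure path mapping. The function `resolve_write`, the `alias_root` parameter, and the layout that mirrors redirected paths under a per-program alias folder are all hypothetical, since the abstract does not specify them. Read and run requests are not modeled.

```python
from pathlib import PurePosixPath

def resolve_write(requested: str, program_folder: str, alias_root: str) -> str:
    """Return the path a write request is actually directed to (hypothetical rule).

    Writes inside the requesting program's own folder go through the real
    folder map; any other write is redirected into an alias directory.
    """
    req = PurePosixPath(requested)
    prog = PurePosixPath(program_folder)
    if req == prog or prog in req.parents:
        return str(req)  # associated with the program's folder: real path
    # Not associated: mirror the requested path under a per-program alias root.
    relative = req.relative_to(req.anchor) if req.is_absolute() else req
    return str(PurePosixPath(alias_root) / prog.name / relative)

print(resolve_write("/apps/editor/settings.ini", "/apps/editor", "/alias"))  # /apps/editor/settings.ini
print(resolve_write("/etc/hosts", "/apps/editor", "/alias"))                 # /alias/editor/etc/hosts
```

Comparing path components through `parents` prevents a sibling folder such as `/apps/editor2` from being treated as inside `/apps/editor`, which a plain string-prefix test would do.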

Virus scanning prioritization using pre-processor checking

Active | US7188367B1 | Promote quick completion; Memory loss protection; Unauthorized memory use protection; Parallel computing; Program Thread

A virus scanner is provided in which a pool of pre-processor threads and a queue are interposed between the event filter and the pool of scanner threads. The pre-processor threads perform operations that can be completed quickly to determine whether an object of a scan request needs to be scanned. The pre-processor threads gather characteristics about the scan requests and place them in the queue in a priority order based on those characteristics. The scanner threads select a scan request from the queue based on the priority order. Alternatively, the scan request is selected based on the scan request's characteristics as compared to the characteristics of the scan requests whose objects are currently being scanned by other scanner threads in the pool.

Owner:MCAFEE LLC
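
The pre-processor/queue/scanner pipeline can be sketched with Python's `queue.PriorityQueue`. The extension-based checks and priority values are hypothetical stand-ins for the "characteristics" the abstract mentions. The event filter and the alternative selection strategy (comparing against in-progress scans) are not modeled.

```python
import queue
import threading

# Hypothetical quick checks standing in for the pre-processor's "characteristics".
SKIP_EXTENSIONS = {".txt", ".log"}
HIGH_RISK_EXTENSIONS = {".exe", ".dll"}

def preprocess(path):
    """Cheap check: return a priority (lower runs sooner) or None to skip the scan."""
    dot = path.rfind(".")
    ext = path[dot:] if dot != -1 else ""
    if ext in SKIP_EXTENSIONS:
        return None
    return 0 if ext in HIGH_RISK_EXTENSIONS else 1

work = queue.PriorityQueue()   # the queue between pre-processors and scanners
scanned = []
scanned_lock = threading.Lock()

def pre_processor(batch):
    for seq, path in batch:
        priority = preprocess(path)
        if priority is not None:
            work.put((priority, seq, path))  # seq breaks ties between equal priorities

def scanner():
    while True:
        _, _, path = work.get()
        if path is None:          # sentinel: no more requests for this scanner
            return
        with scanned_lock:
            scanned.append(path)  # a real scanner would run the full scan here

requests = list(enumerate(["a.txt", "b.exe", "c.doc", "d.dll", "e.log"]))
pre_pool = [threading.Thread(target=pre_processor, args=(requests[i::2],)) for i in range(2)]
for t in pre_pool:
    t.start()
for t in pre_pool:
    t.join()

scan_pool = [threading.Thread(target=scanner) for _ in range(2)]
for n in range(len(scan_pool)):
    # Sentinels sort after every real request, so they are dequeued last.
    work.put((float("inf"), len(requests) + n, None))
for t in scan_pool:
    t.start()
for t in scan_pool:
    t.join()

print(sorted(scanned))  # ['b.exe', 'c.doc', 'd.dll']
```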

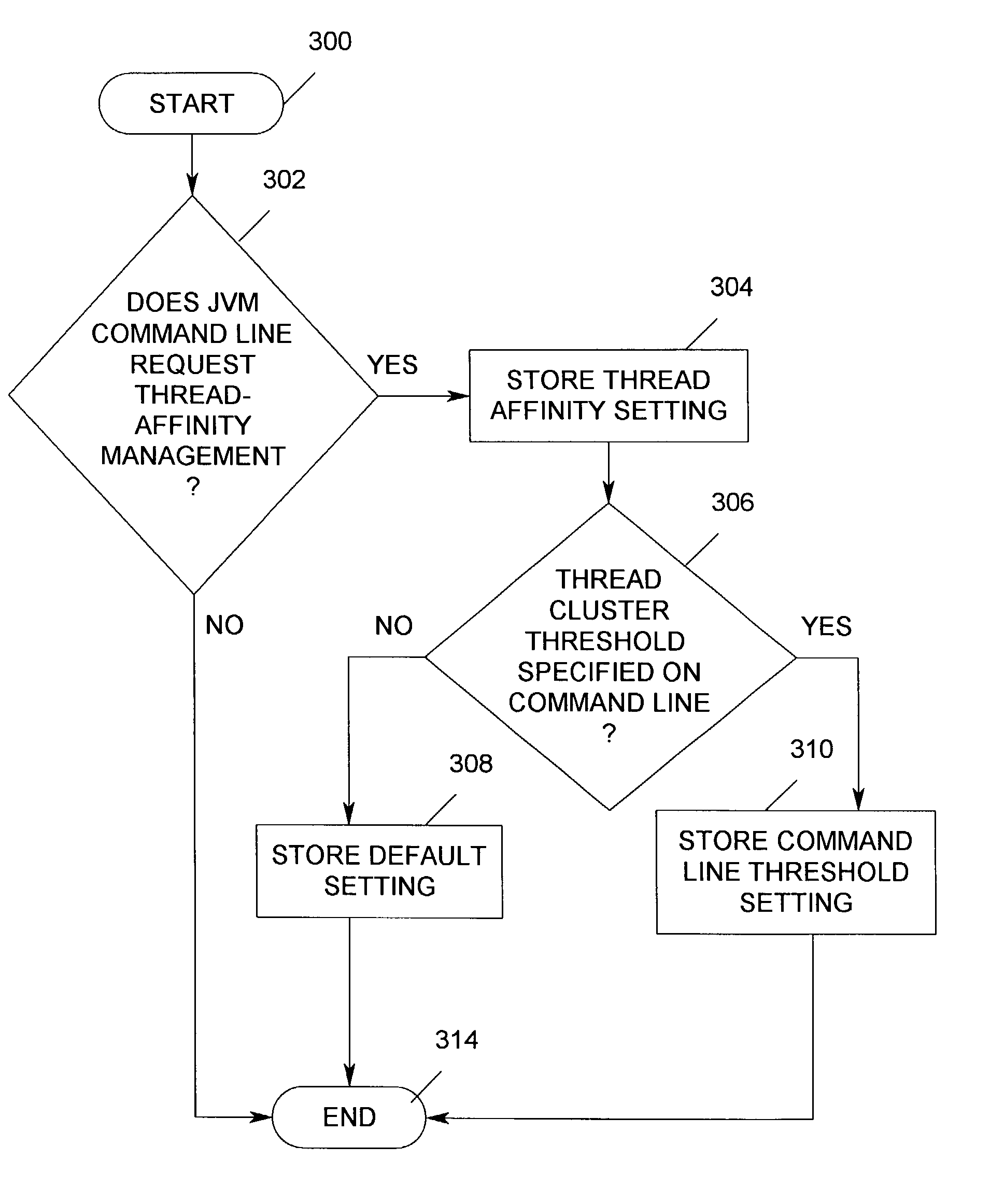

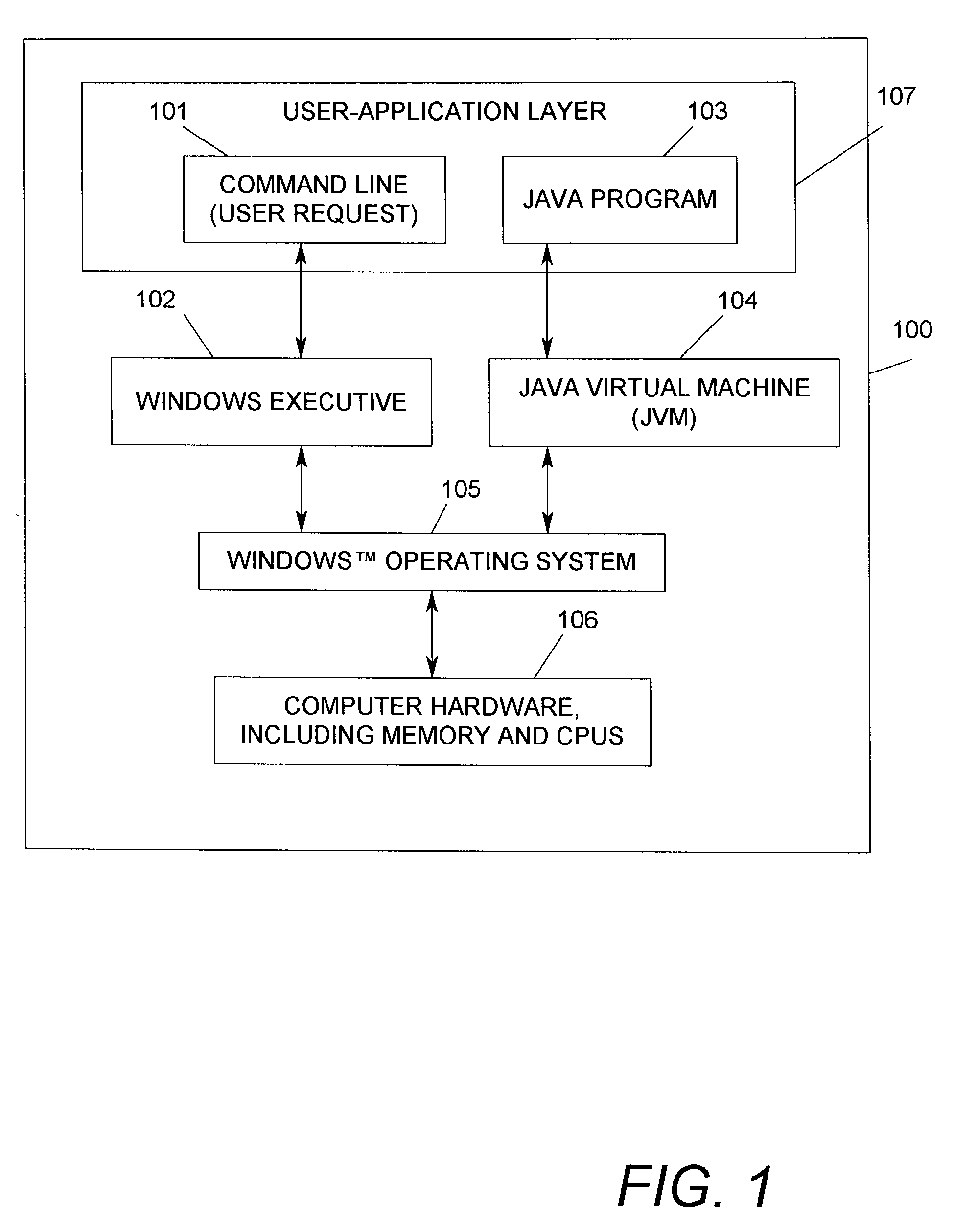

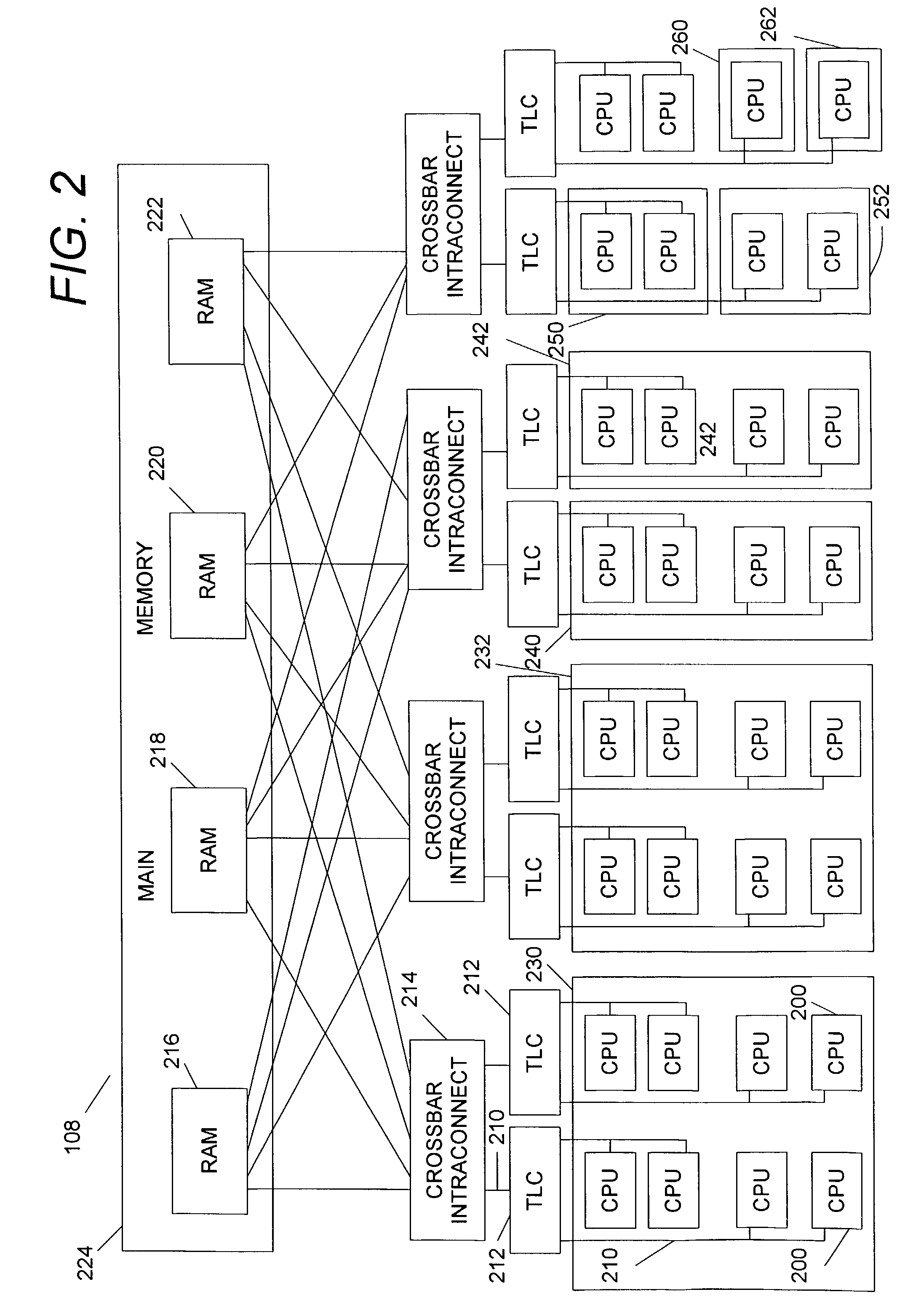

Method and system for managing distribution of computer-executable program threads between central processing units in a multi-central processing unit computer system

Active | US7093258B1 | Minimize sharing of data; Increase the number of; Program initiation/switching; Resource allocation; Operational system; Access time

A method and a system are disclosed for managing distribution of computer-executable program threads between a plurality of central processing units (CPUs) administered by an operating system in a multi-CPU computer system having a plurality of memory caches shared amongst the CPUs. The method includes assigning the CPUs to a plurality of CPU-groups of a predetermined group-size, selecting a CPU-group from the CPU-groups, setting a predetermined threshold for the selected CPU-group, and affinitizing a program thread to the selected CPU-group based on the predetermined threshold, wherein the operating system distributes the program threads among the CPU-groups based on the affinitizing. In this way, the memory access time delays associated with transferring data amongst the CPU-groups can be reduced, while the total number of available CPUs can be used more effectively to process the program threads.

Owner:UNISYS CORP
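
The grouping-and-threshold logic can be sketched as pure Python bookkeeping. The "first group below threshold, else least loaded" policy is an assumption, since the abstract does not say how a group is chosen once all groups reach the threshold. On Linux, the chosen group could then be applied with `os.sched_setaffinity`, which this sketch does not call.

```python
def make_cpu_groups(cpus, group_size):
    """Partition CPU ids into consecutive groups of `group_size`."""
    return [cpus[i:i + group_size] for i in range(0, len(cpus), group_size)]

def affinitize(thread_counts, threshold):
    """Pick a CPU-group for a new thread and record the assignment.

    Assumed policy: the first group whose thread count is below `threshold`;
    if every group is at or above it, the least-loaded group.
    """
    for idx, count in enumerate(thread_counts):
        if count < threshold:
            thread_counts[idx] += 1
            return idx
    idx = min(range(len(thread_counts)), key=thread_counts.__getitem__)
    thread_counts[idx] += 1
    return idx

groups = make_cpu_groups(list(range(8)), group_size=4)
counts = [0] * len(groups)
placement = [affinitize(counts, threshold=2) for _ in range(5)]
print(groups, placement)  # [[0, 1, 2, 3], [4, 5, 6, 7]] [0, 0, 1, 1, 0]
```

Filling one group up to the threshold before spilling into the next keeps related threads on CPUs that share caches, which is the source of the reduced transfer delays the abstract describes.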

Integrated mechanism for suspension and deallocation of computational threads of execution in a processor

Inactive | US20050050305A1 | Minimize overhead; Minimal overhead; Program initiation/switching; Software engineering; Program Thread; Computer science

A mechanism for processing in a processor enabled to support and execute multiple program threads includes a parameter for scheduling a program thread and an instruction disposed within the program thread and enabled to access the parameter. When the parameter equals a first value, the instruction, when issued by a program thread, reschedules the program thread in accordance with one or more conditions encoded within the parameter.

Owner:MIPS TECH INC

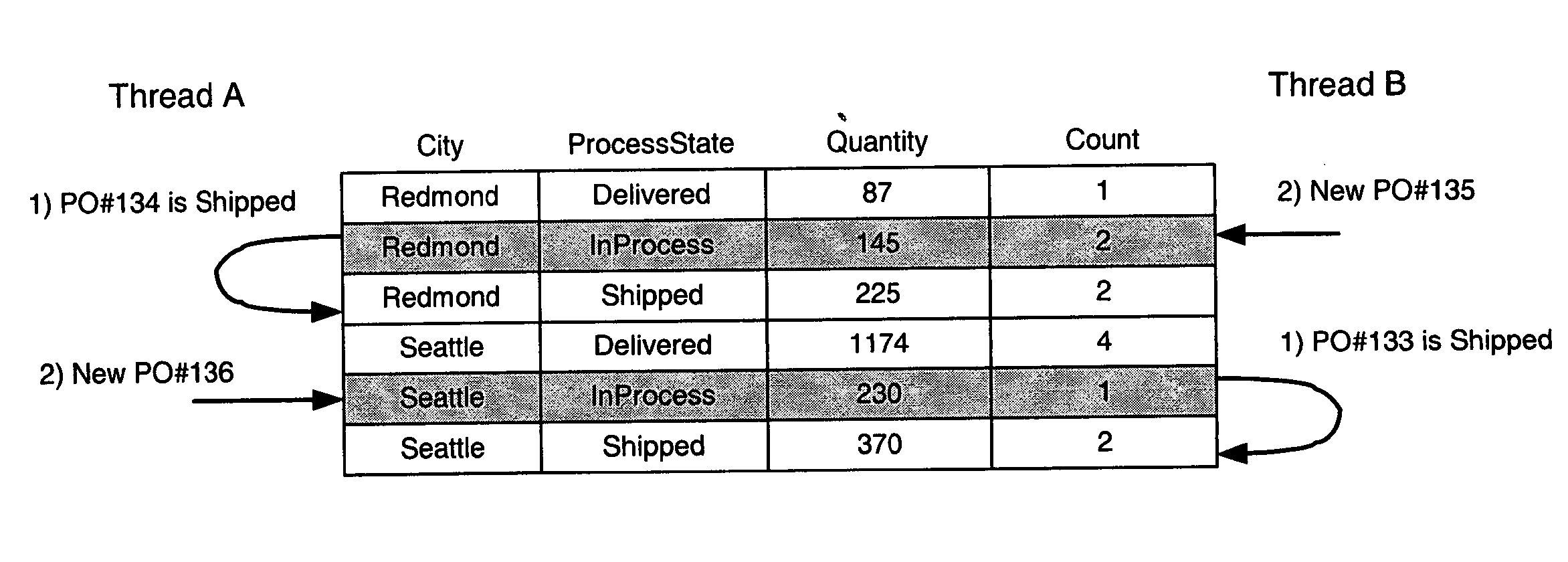

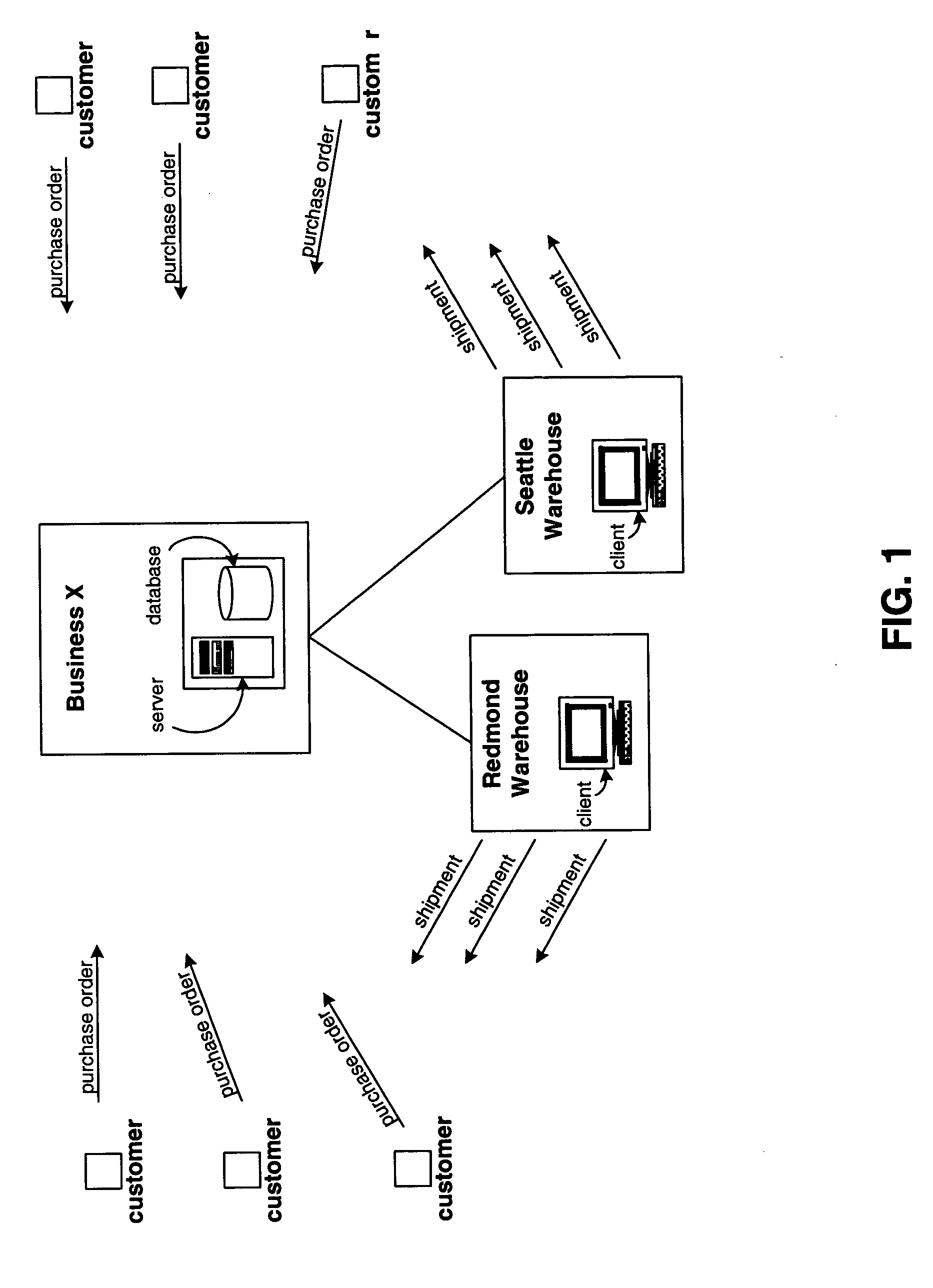

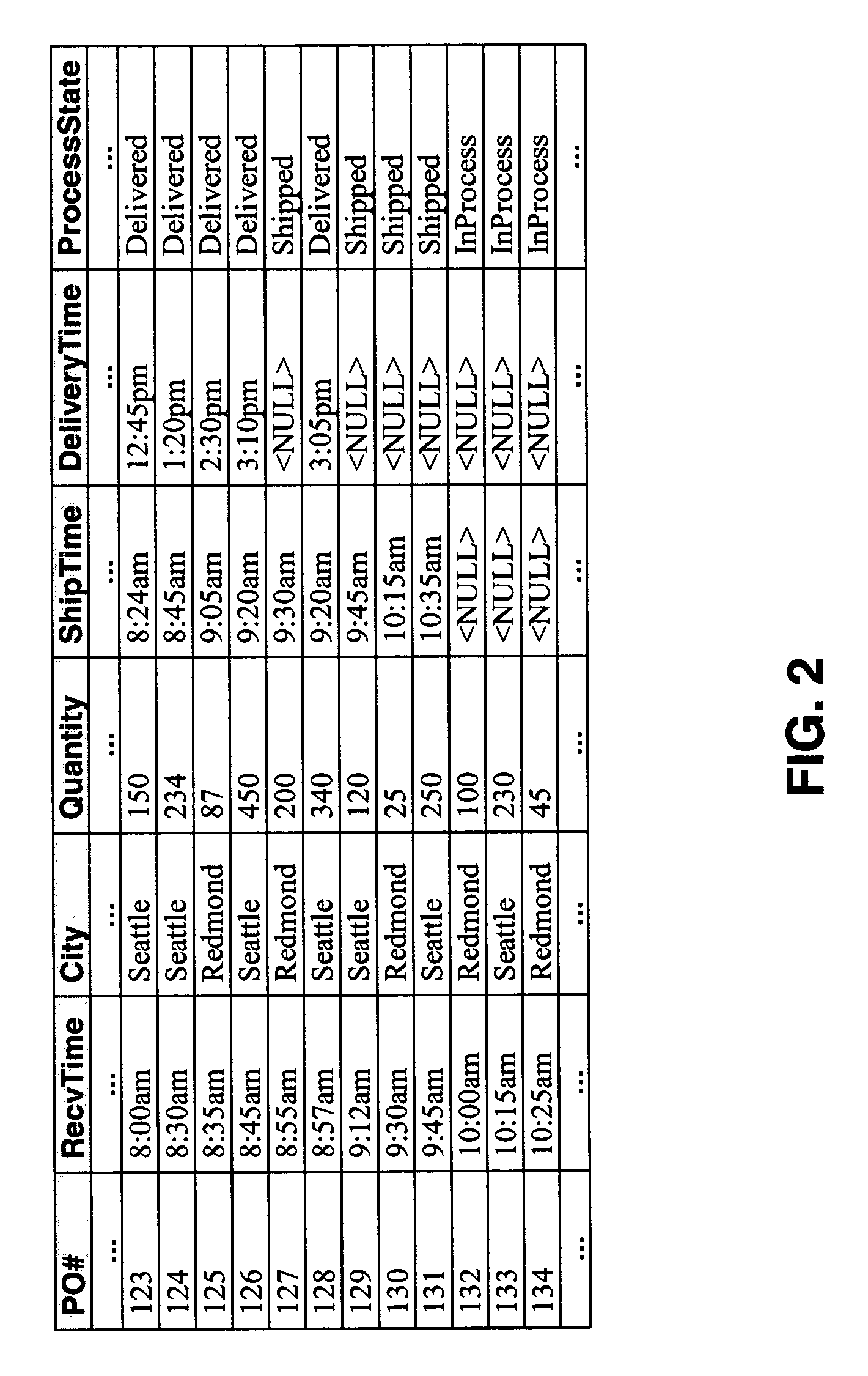

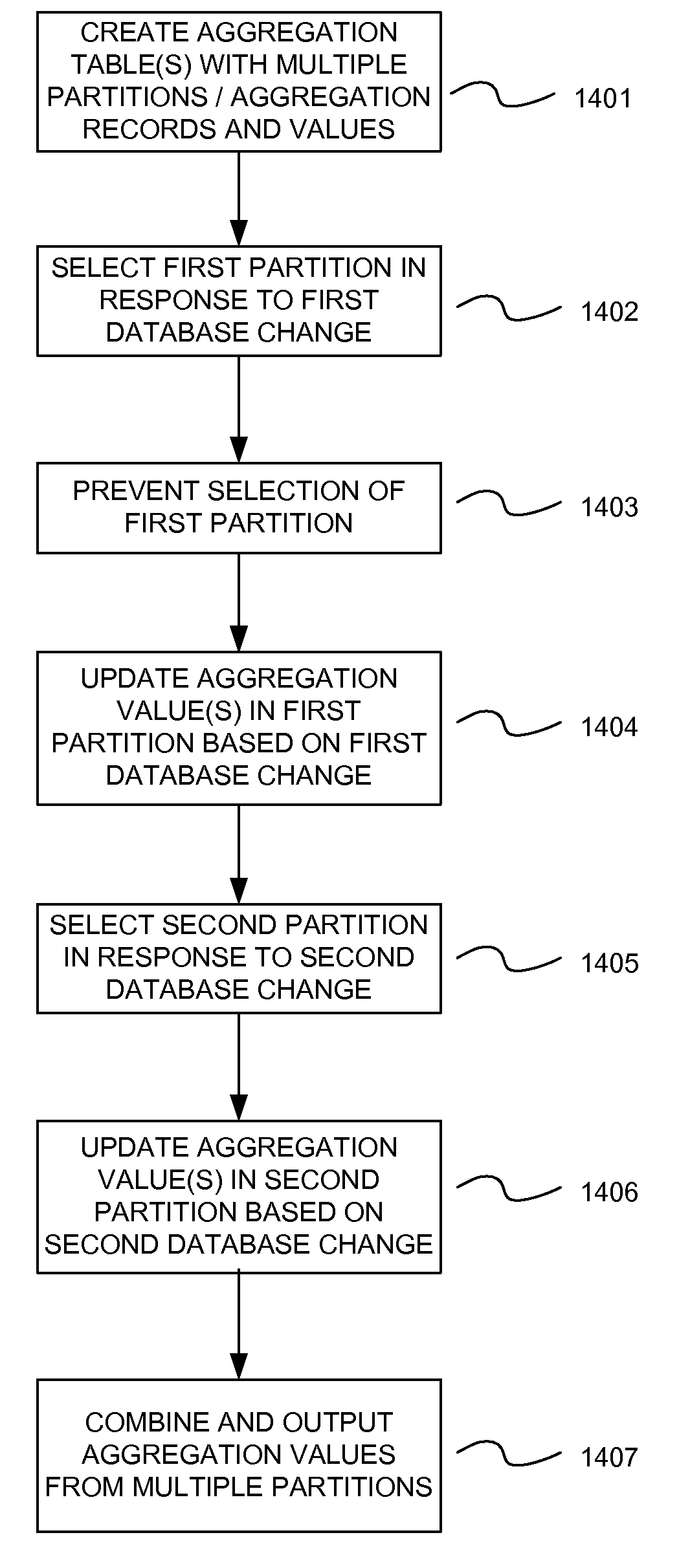

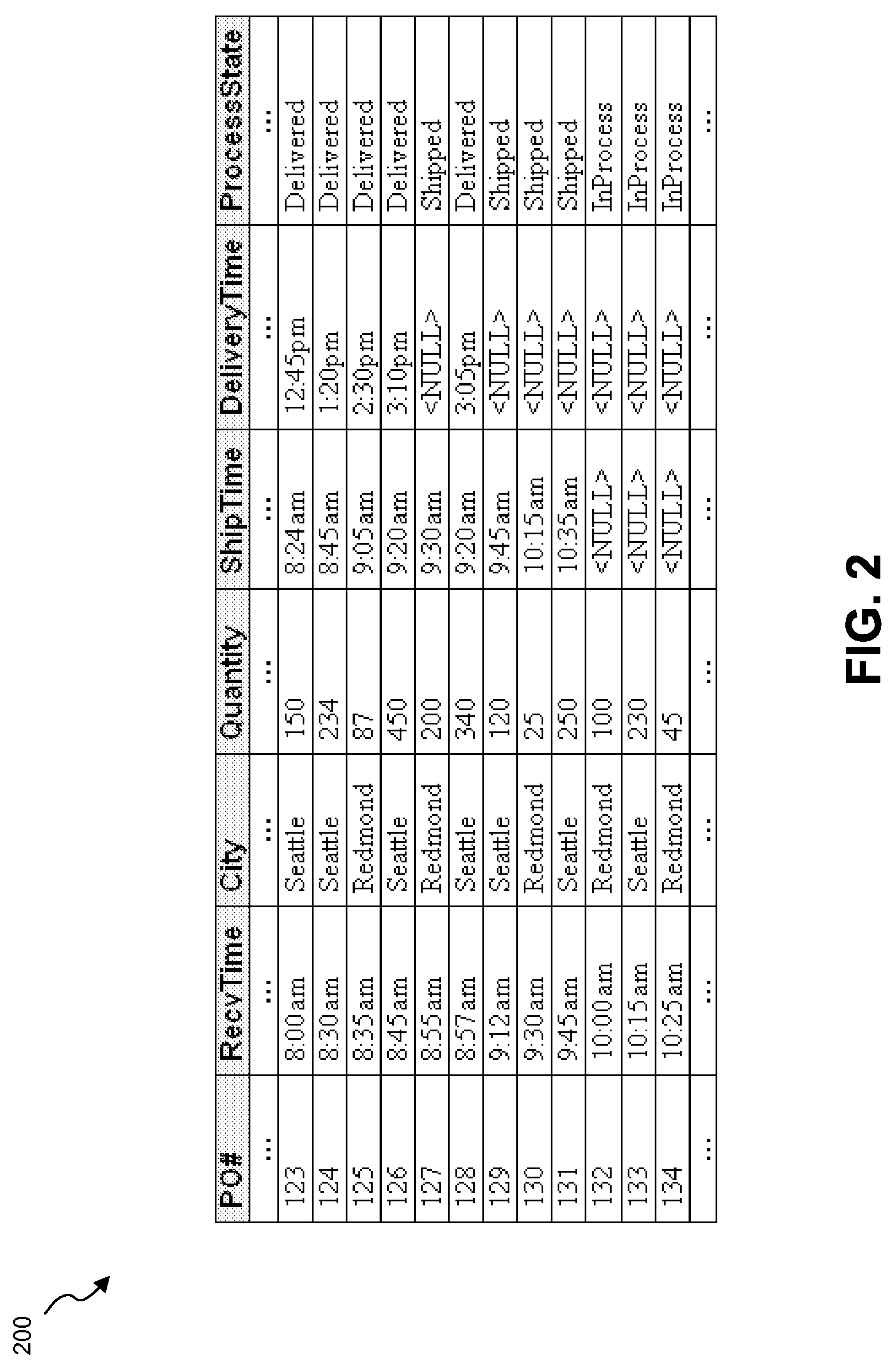

Self-maintaining real-time data aggregations

Inactive | US20050071320A1 | Readily apparent; Data processing applications; Digital data information retrieval; Real-time data; Data mining

Multiple aggregation groups, which can be multiple partitions in an aggregated data table, are formed. Each group includes multiple aggregation records; each aggregation record includes an aggregation of values contained by a different subset of multiple database records. While an aggregation group is accessed by a single program thread during an aggregation group update transaction, no other threads are allowed to access that group. The aggregation groups are combined into a single table of aggregation records. Each of the multiple database records may correspond to an instance of an organizational activity and include a field having a value indicating the corresponding instance to be in one of several process states. Each aggregation group may further include time-sorted aggregation records, each time-sorted aggregation record containing an aggregation value for instances in one of the several process states during a time period associated with the time-sorted aggregation record. Aggregation records corresponding to instances completed outside of a preselected time window are deleted.

Owner:MICROSOFT TECH LICENSING LLC
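
The "one thread per aggregation group at a time" rule can be sketched with one `threading.Lock` per group. Partitioning records by `record_id % N_GROUPS` is a hypothetical choice. Time-sorted records and the deletion of records outside the time window are not modeled.

```python
import threading
from collections import Counter

class AggregationGroups:
    """Aggregation table split into groups (partitions), each with its own lock."""

    def __init__(self, n_groups):
        self.counts = [Counter() for _ in range(n_groups)]   # state -> count
        self.locks = [threading.Lock() for _ in range(n_groups)]

    def update(self, group, state, delta=1):
        # While one thread holds a group's lock, no other thread may touch that group;
        # other groups remain available for concurrent updates.
        with self.locks[group]:
            self.counts[group][state] += delta

    def combined(self):
        """Combine every group into a single table keyed by process state."""
        total = Counter()
        for lock, counts in zip(self.locks, self.counts):
            with lock:
                total.update(counts)
        return total

N_GROUPS = 4
records = [(rid, "open" if rid % 3 else "closed") for rid in range(30)]
table = AggregationGroups(N_GROUPS)

def worker(chunk):
    for rid, state in chunk:
        table.update(rid % N_GROUPS, state)   # hypothetical: partition by record id

threads = [threading.Thread(target=worker, args=(records[i::3],)) for i in range(3)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(table.combined())
```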

Integrated mechanism for suspension and deallocation of computational threads of execution in a processor

Active | US20050125795A1 | Valuable opcode space; Little overhead; Program initiation/switching; Software engineering; Operand; Program Thread

A yield instruction for execution in a multithreaded microprocessor is disclosed. The yield instruction includes an operand. If the operand is zero, the microprocessor terminates the program thread that includes the yield instruction. If the operand is −1, the microprocessor unconditionally reschedules the program thread. If the operand is a positive integer, the microprocessor treats the operand as a bit vector specifying one or more yield qualifier inputs, such as interrupt signals, and conditionally reschedules the thread based on the qualifier inputs and bit vector values. The microprocessor also includes a mask register that specifies a bit vector of the qualifier inputs. If the operand specifies a qualifier input not also specified in the mask register, an exception to the instruction is raised. The instruction returns a value specifying the values of the qualifier inputs qualified by the mask register value.

Owner:MIPS TECH INC
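
The operand semantics in the abstract above can be modeled as a small Python function. The condition used for positive operands (keep running if any selected qualifier input is asserted, otherwise reschedule) is an assumption. The abstract only says the reschedule is "based on the qualifier inputs and bit vector values". Negative operands other than −1 are not described, so the sketch rejects them.

```python
class YieldException(Exception):
    """Operand selects a qualifier input that the mask register does not enable."""

def yield_instr(operand, qualifier_inputs, mask):
    """Model one YIELD decision. Returns (action, value).

    action is "terminate", "reschedule", or "continue"; value is the qualifier
    inputs ANDed with the mask register (what the instruction returns).
    """
    value = qualifier_inputs & mask
    if operand == 0:
        return "terminate", value
    if operand == -1:
        return "reschedule", value
    if operand > 0:
        if operand & ~mask:
            raise YieldException(f"unmasked qualifier bits {operand & ~mask:#x}")
        # Assumed condition: keep running if any selected input is asserted,
        # otherwise give up the issue slot and wait.
        return ("continue" if operand & qualifier_inputs else "reschedule"), value
    raise ValueError("other negative operands are not described in the abstract")

print(yield_instr(0b0100, 0b0100, 0b1111))  # ('continue', 4)
print(yield_instr(0b0100, 0b0000, 0b1111))  # ('reschedule', 0)
```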

Methodology providing high-speed shared memory access between database middle tier and database server

Inactive | US20030014552A1 | Data processing applications; Interprogram communication; Application server; Database server

A multi-tier database system is modified such that a middle-tier application server (EJB server) and a database server run on the same host computer and communicate via shared-memory interprocess communication. The system includes a database (e.g., JDBC) driver thread that attaches to the database server, specifically by attaching to the database server's shared memory segment. Operation of the JDBC driver is modified to provide direct access between the middle tier (i.e., EJB server) and the database server, when the two are operating on the same host computer.

Owner:SYBASE INC

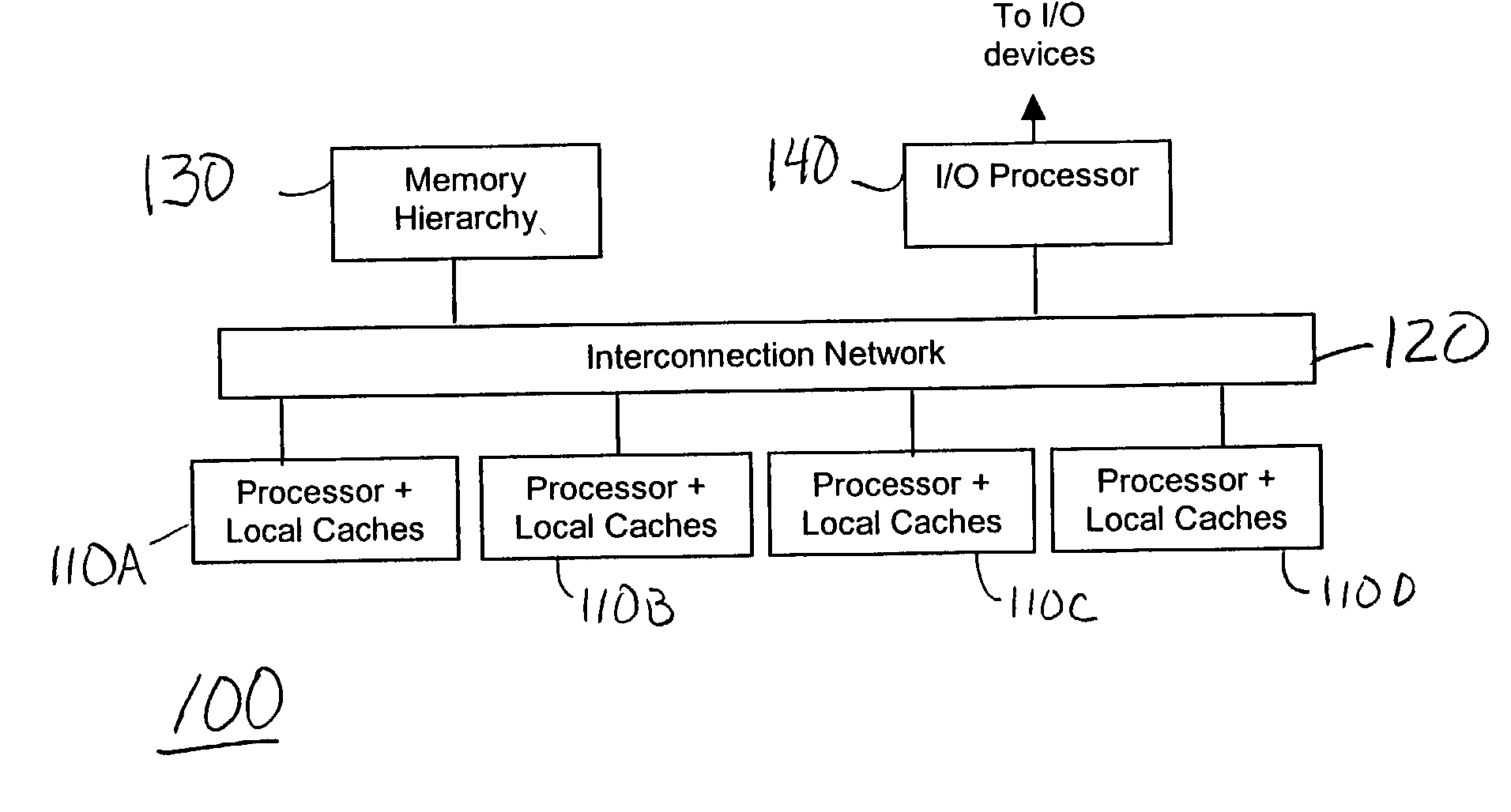

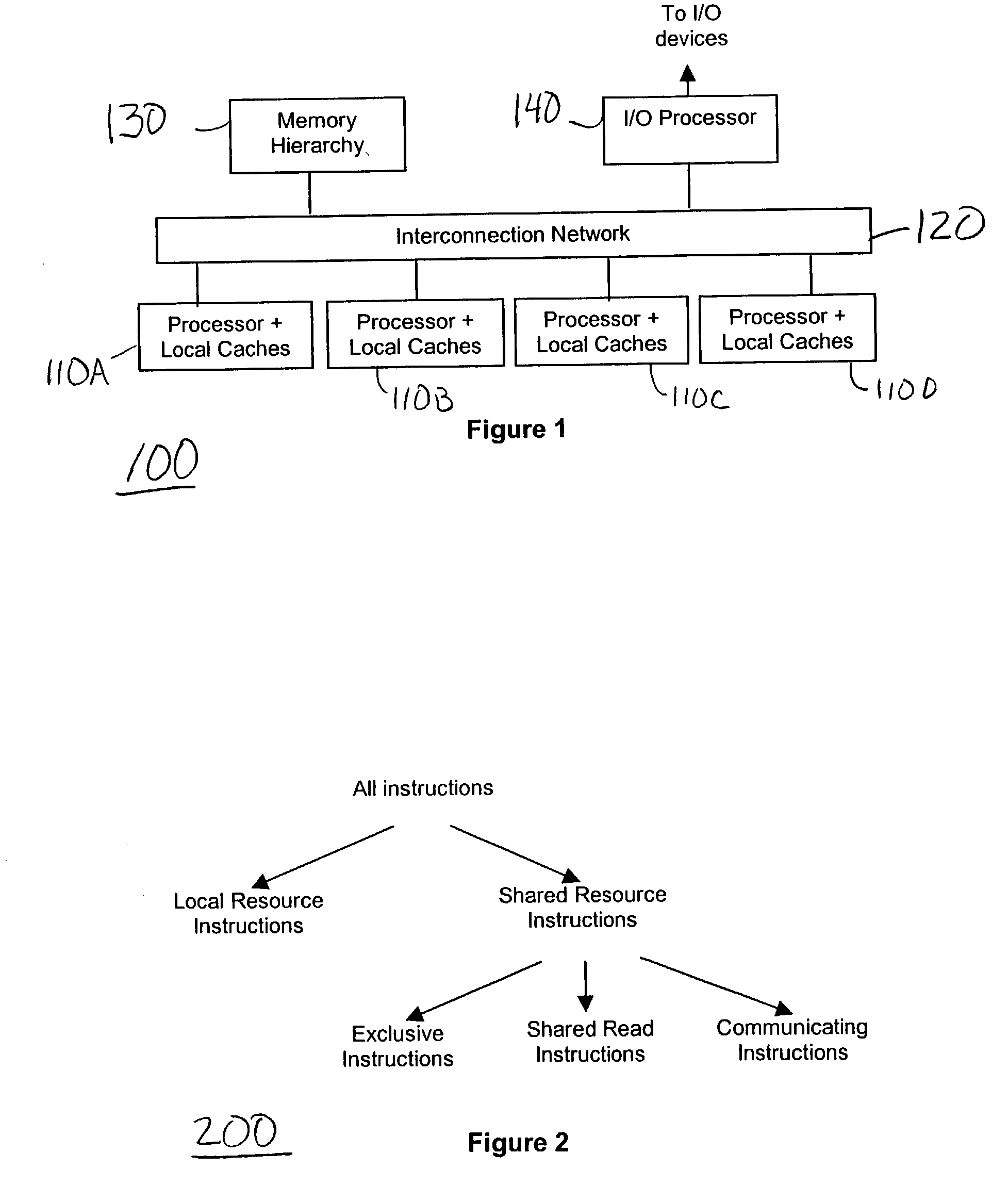

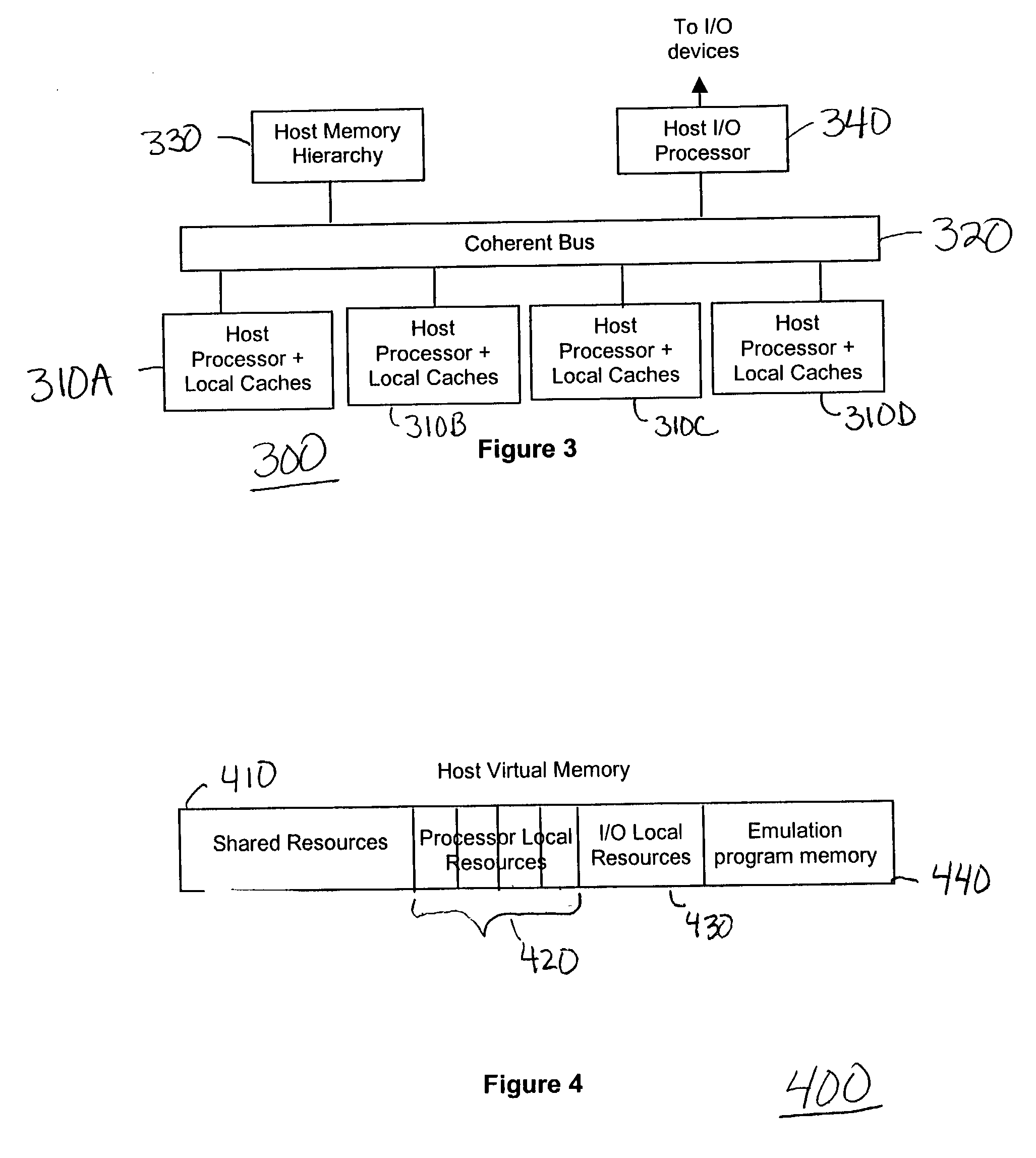

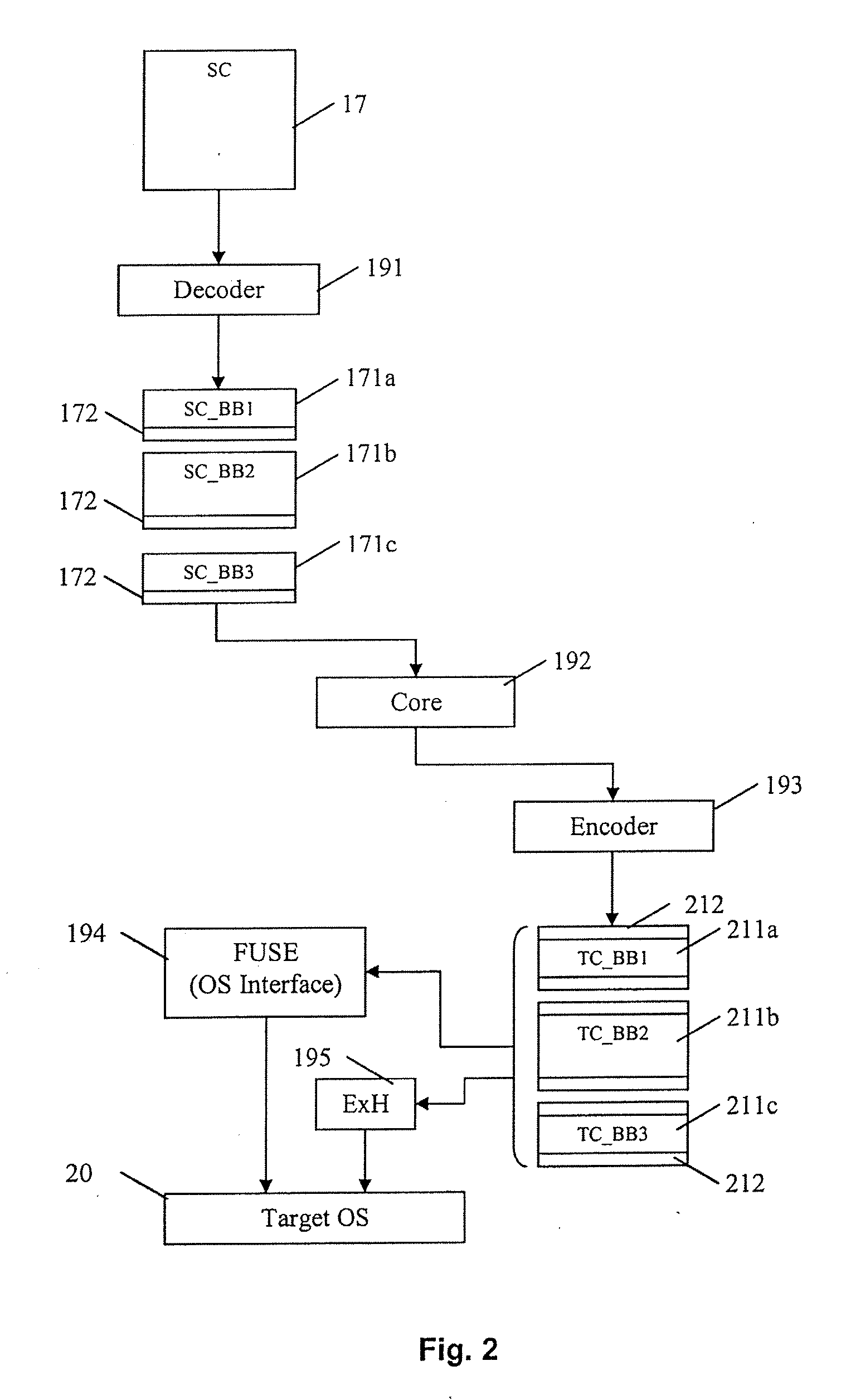

Method and system for multiprocessor emulation on a multiprocessor host system

Inactive | US20040054517A1 | Improve performance; Efficient execution; Resource allocation; Concurrent instruction execution; Multi processor; Program Thread

A method (and system) for executing a multiprocessor program written for a target instruction set architecture on a host computing system having a plurality of processors designed to process instructions of a second instruction set architecture, includes representing each portion of the program designed to run on a processor of the target computing system as one or more program threads to be executed on the host computing system.

Owner:IBM CORP

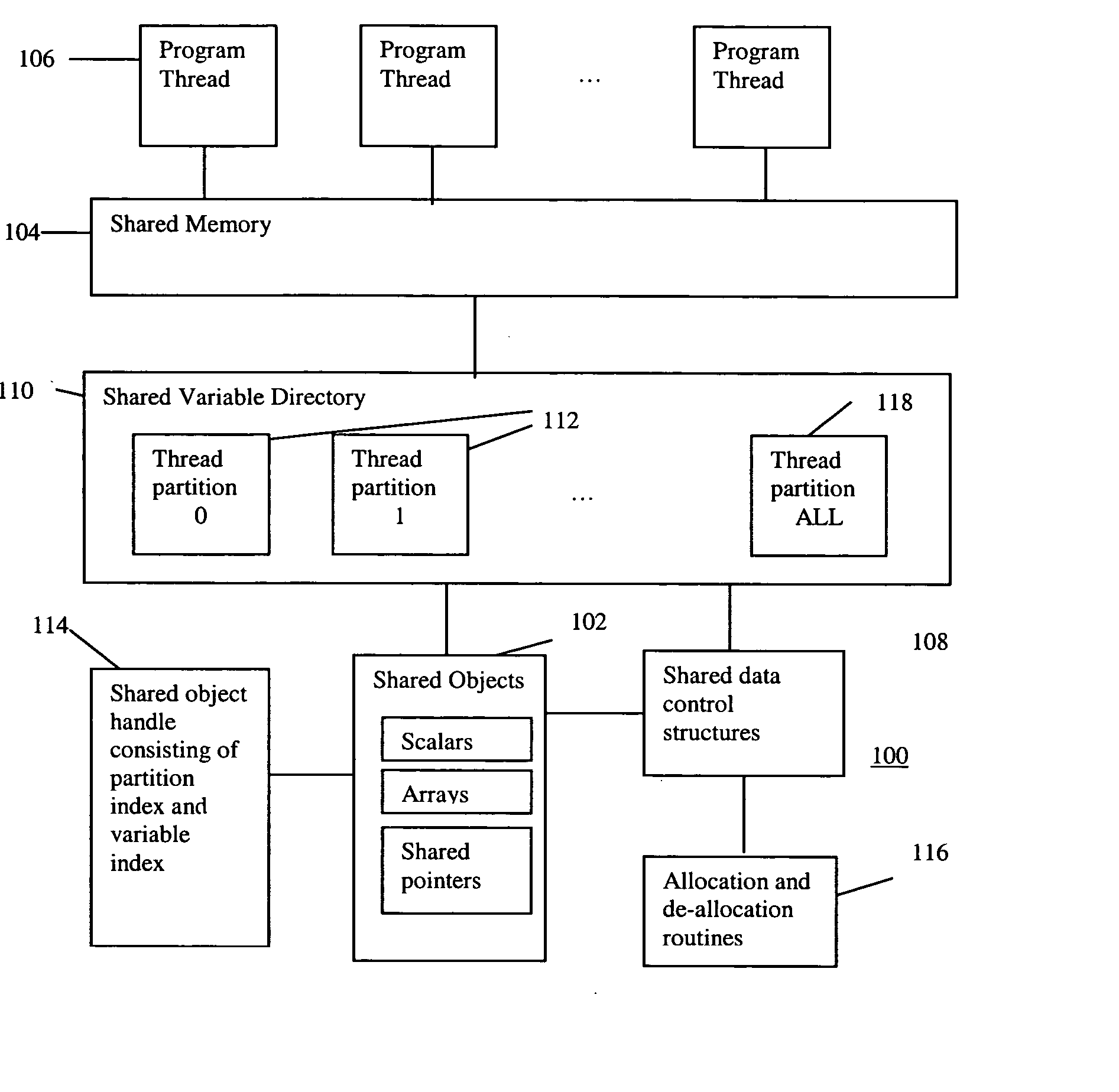

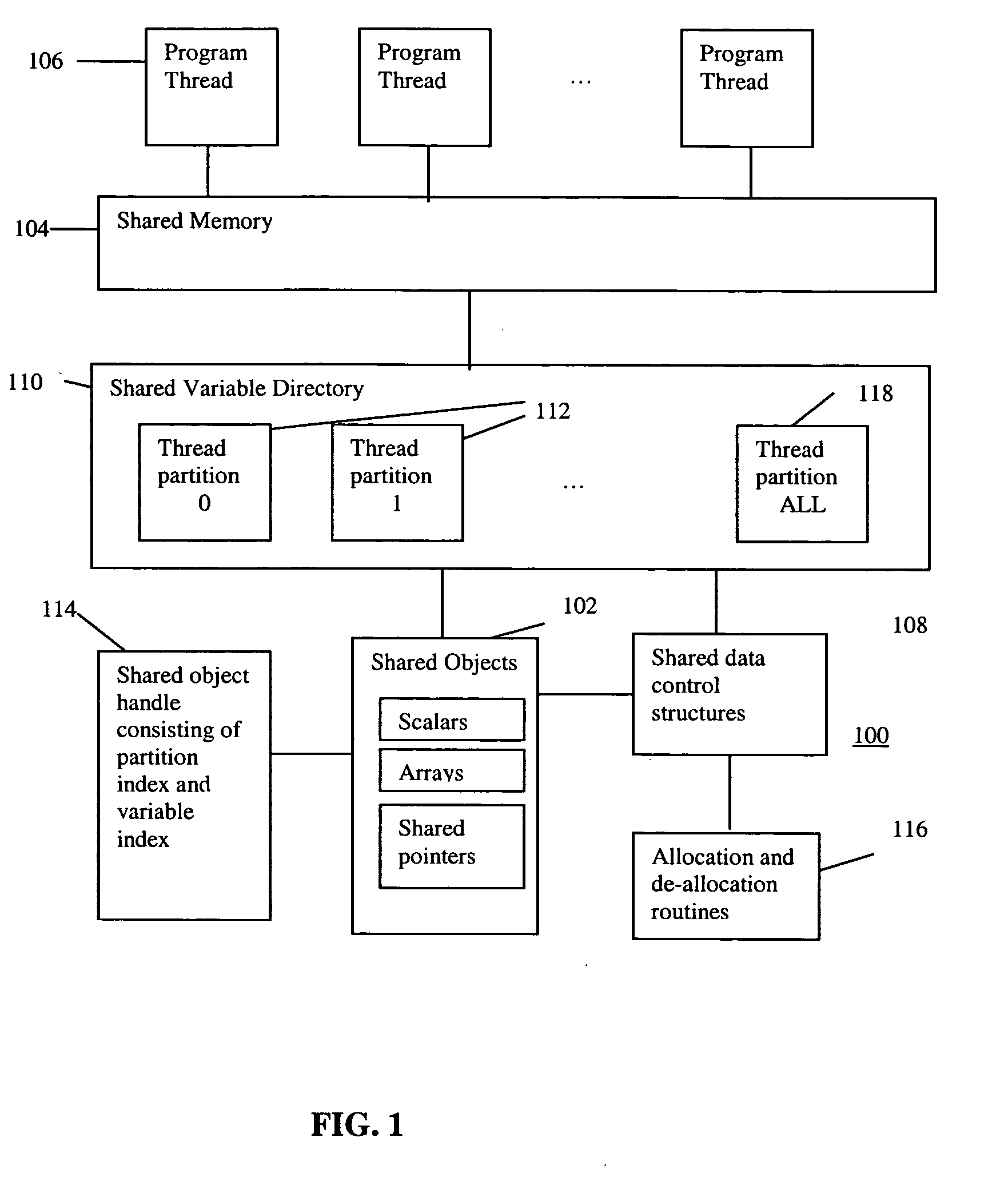

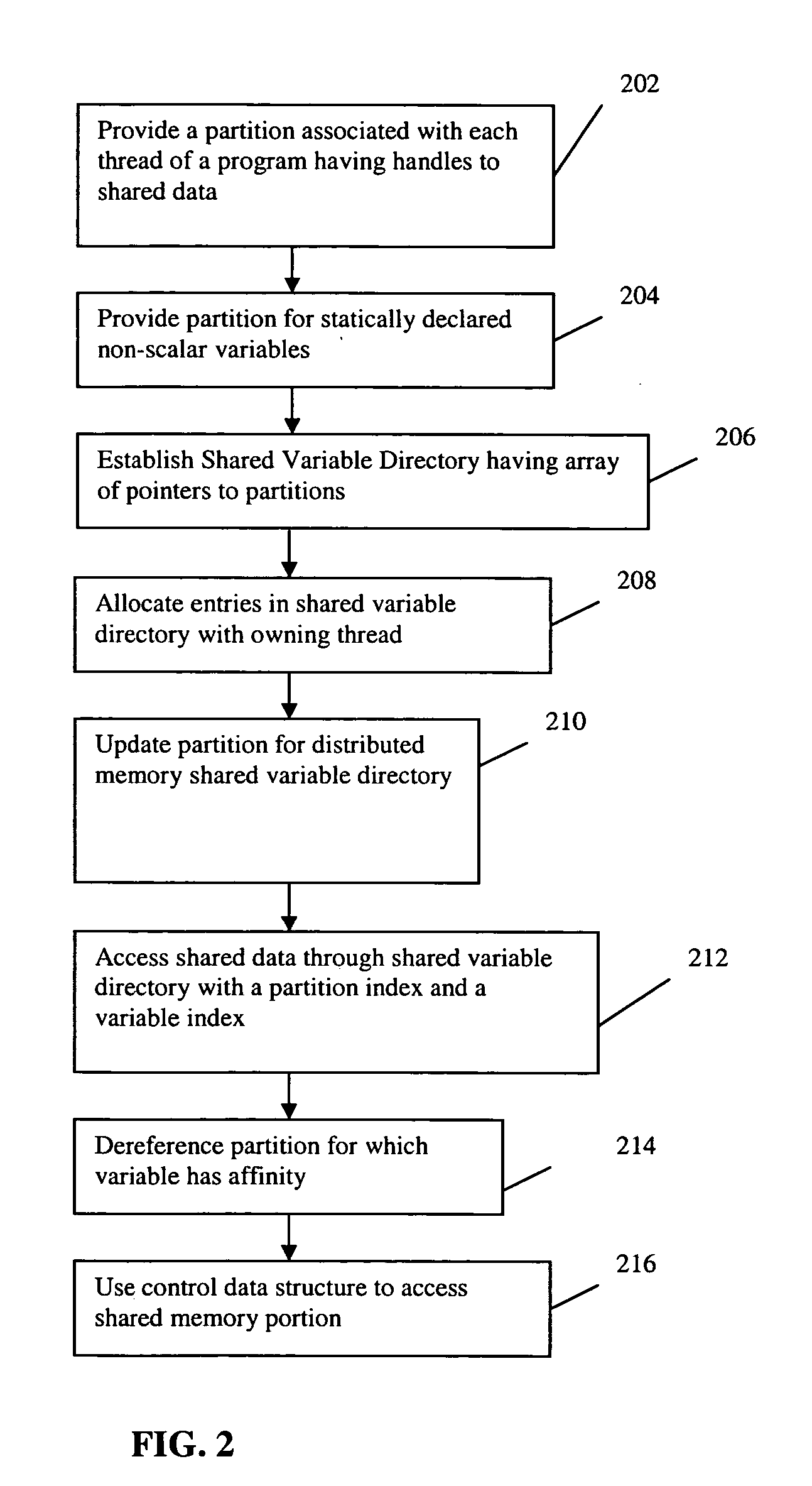

Scalable runtime system for global address space languages on shared and distributed memory machines

Active | US20050149903A1 | Improve scalability; Resource allocation; Specific program execution arrangements; Extensibility; Global address space

An improved scalability runtime system for a global address space language running on a distributed or shared memory machine uses a directory of shared variables having a data structure for tracking shared variable information that is shared by a plurality of program threads. Allocation and de-allocation routines are used to allocate and de-allocate shared variable entries in the directory of shared variables. Different routines can be used to access different types of shared data. A control structure is used to control access to the shared data such that all threads can access the data at any time. Since all threads see the same objects, synchronization issues are eliminated. In addition, the improved efficiency of the data sharing allows the number of program threads to be vastly increased.

Owner:DAEDALUS BLUE LLC

Maintaining time-sorted aggregation records representing aggregations of values from multiple database records using multiple partitions

Inactive | US7149736B2 | Digital data information retrieval; Data processing applications; Program Thread; Data mining

Multiple aggregation groups, which can be multiple partitions in an aggregated data table, are formed. Each group includes multiple aggregation records; each aggregation record includes an aggregation of values contained by a different subset of multiple database records. While an aggregation group is accessed by a single program thread during an aggregation group update transaction, no other threads are allowed to access that group. The aggregation groups are combined into a single table of aggregation records. Each of the multiple database records may correspond to an instance of an organizational activity and include a field having a value indicating the corresponding instance to be in one of several process states. Each aggregation group may further include time-sorted aggregation records, each time-sorted aggregation record containing an aggregation value for instances in one of the several process states during a time period associated with the time-sorted aggregation record. Aggregation records corresponding to instances completed outside of a preselected time window are deleted.

Owner:MICROSOFT TECH LICENSING LLC

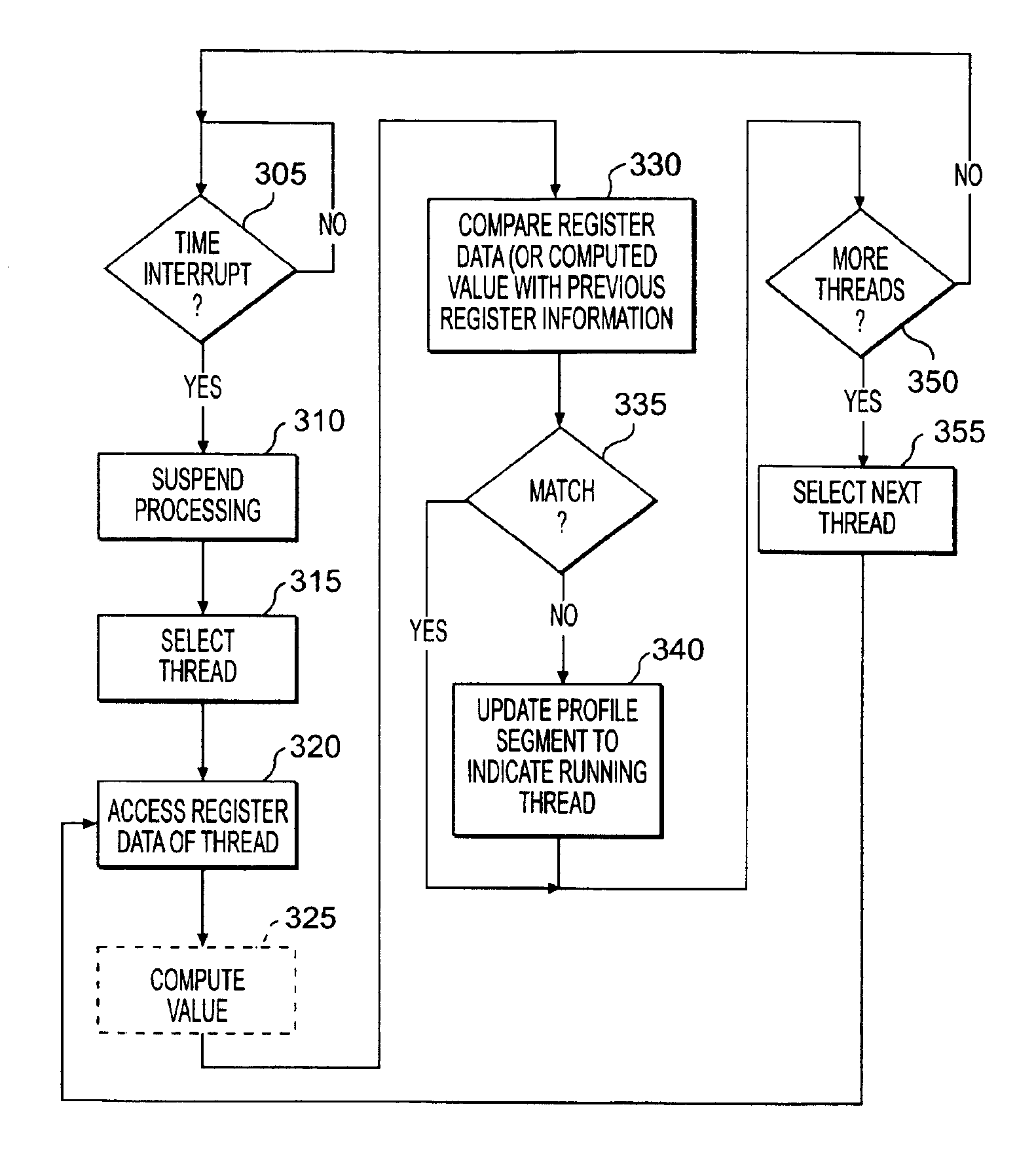

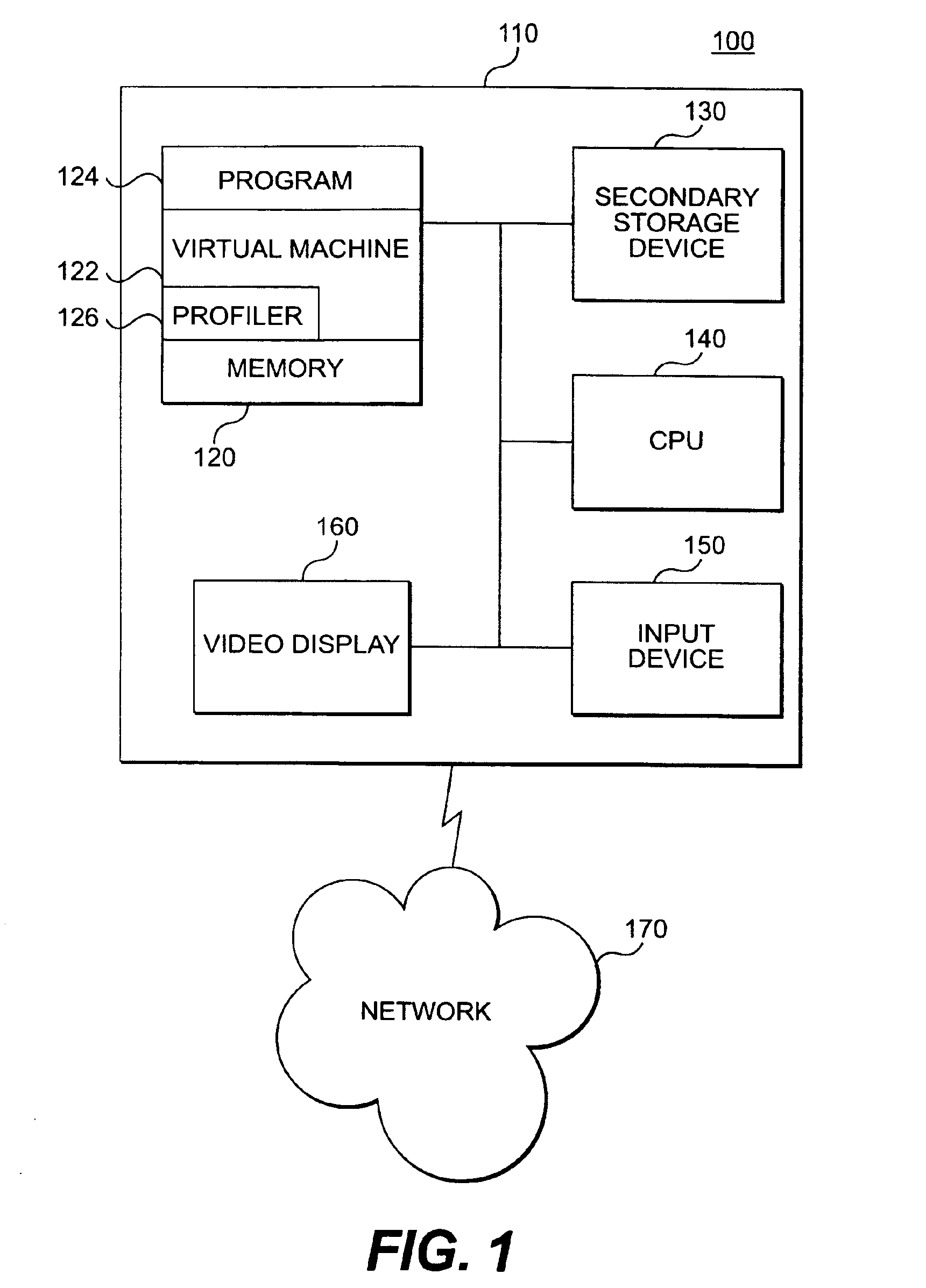

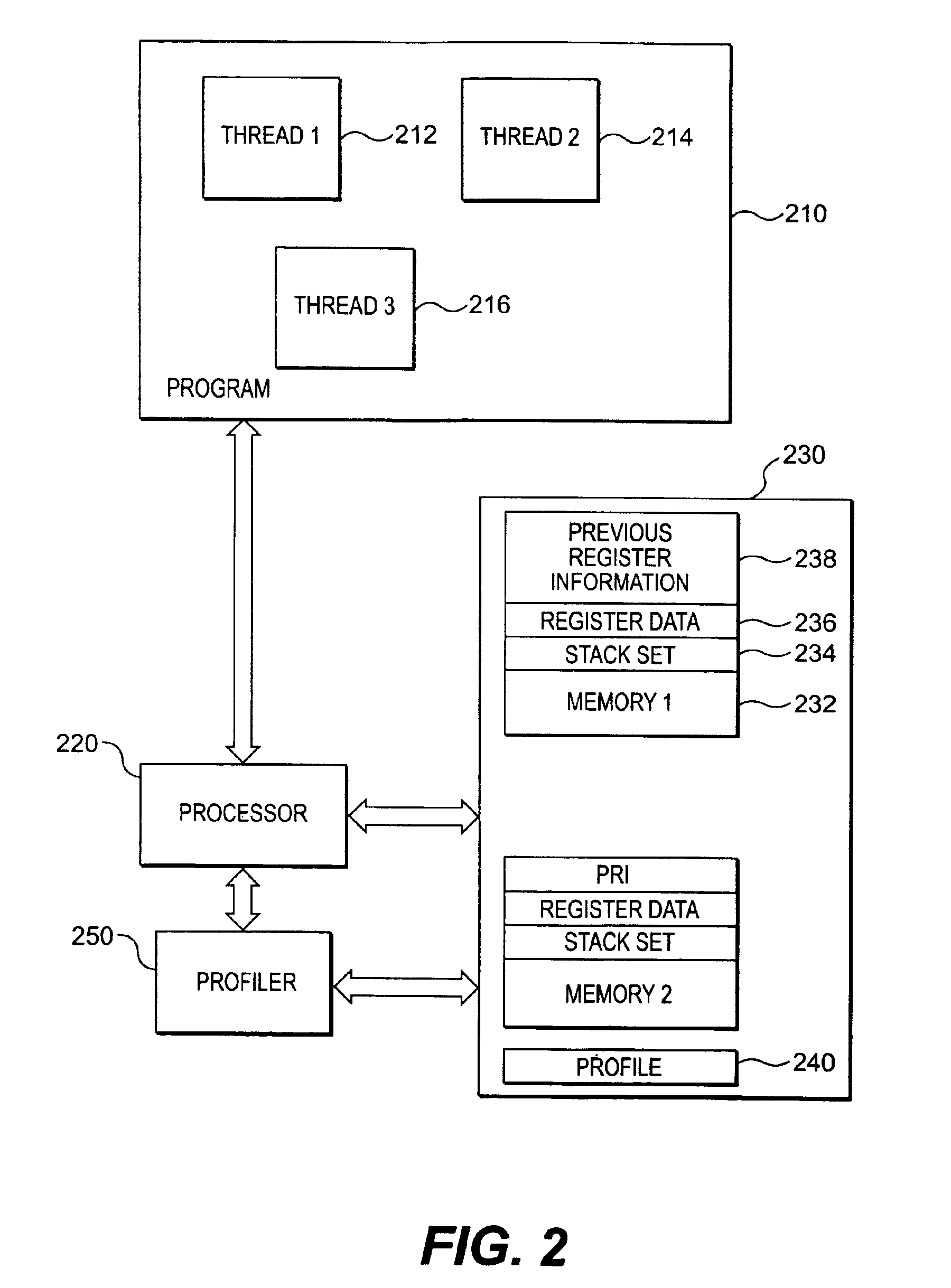

Method, apparatus, and article of manufacture for time profiling multi-threaded programs

Methods, systems, and articles of manufacture consistent with the present invention time profile program threads using data corresponding to the register states of the processor(s) executing the threads. Methods, systems, and articles of manufacture consistent with the present invention determine whether a selected thread of execution of a multi-threaded program is running by suspending execution of the multi-threaded program, retrieving register data corresponding to the selected thread, computing register information based on the register data, comparing the computed register information with stored register information from a previous suspension of the multi-threaded program, and regarding the selected thread as running if the computed register information differs from the stored register information. The last operation, regarding the selected thread as running, may involve updating the previous register information based on the computed register information and/or providing an indication corresponding to a portion of the program containing the selected thread.

Owner:ORACLE INT CORP
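
The "compare register information across suspensions" test can be sketched in Python. Suspending the program and retrieving real register data are platform-specific and not modeled. The register dictionaries below are hypothetical inputs. Hashing the register set into a digest is an assumed way to "compute register information". Treating a thread's first sample as a baseline only is also an assumption.

```python
import hashlib

def register_info(registers):
    """Reduce raw register data to a comparable digest ('register information')."""
    data = ",".join(f"{name}={value}" for name, value in sorted(registers.items()))
    return hashlib.sha256(data.encode()).hexdigest()

class RegisterProfiler:
    """At each suspension, decide which threads have run since the previous one."""

    def __init__(self):
        self.stored = {}      # thread id -> register info from the previous suspension
        self.samples = {}     # thread id -> number of suspensions where it was running

    def sample(self, thread_id, registers):
        """Call while the program is suspended; True if the thread is regarded as running."""
        info = register_info(registers)
        previous = self.stored.get(thread_id)
        running = previous is not None and previous != info
        if running:
            self.samples[thread_id] = self.samples.get(thread_id, 0) + 1
        self.stored[thread_id] = info   # update stored info for the next comparison
        return running

profiler = RegisterProfiler()
print(profiler.sample(1, {"pc": 0x400, "sp": 0x7FF0}))  # False: first sample is a baseline
print(profiler.sample(1, {"pc": 0x408, "sp": 0x7FF0}))  # True: registers changed
print(profiler.sample(1, {"pc": 0x408, "sp": 0x7FF0}))  # False: unchanged, e.g. blocked
```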

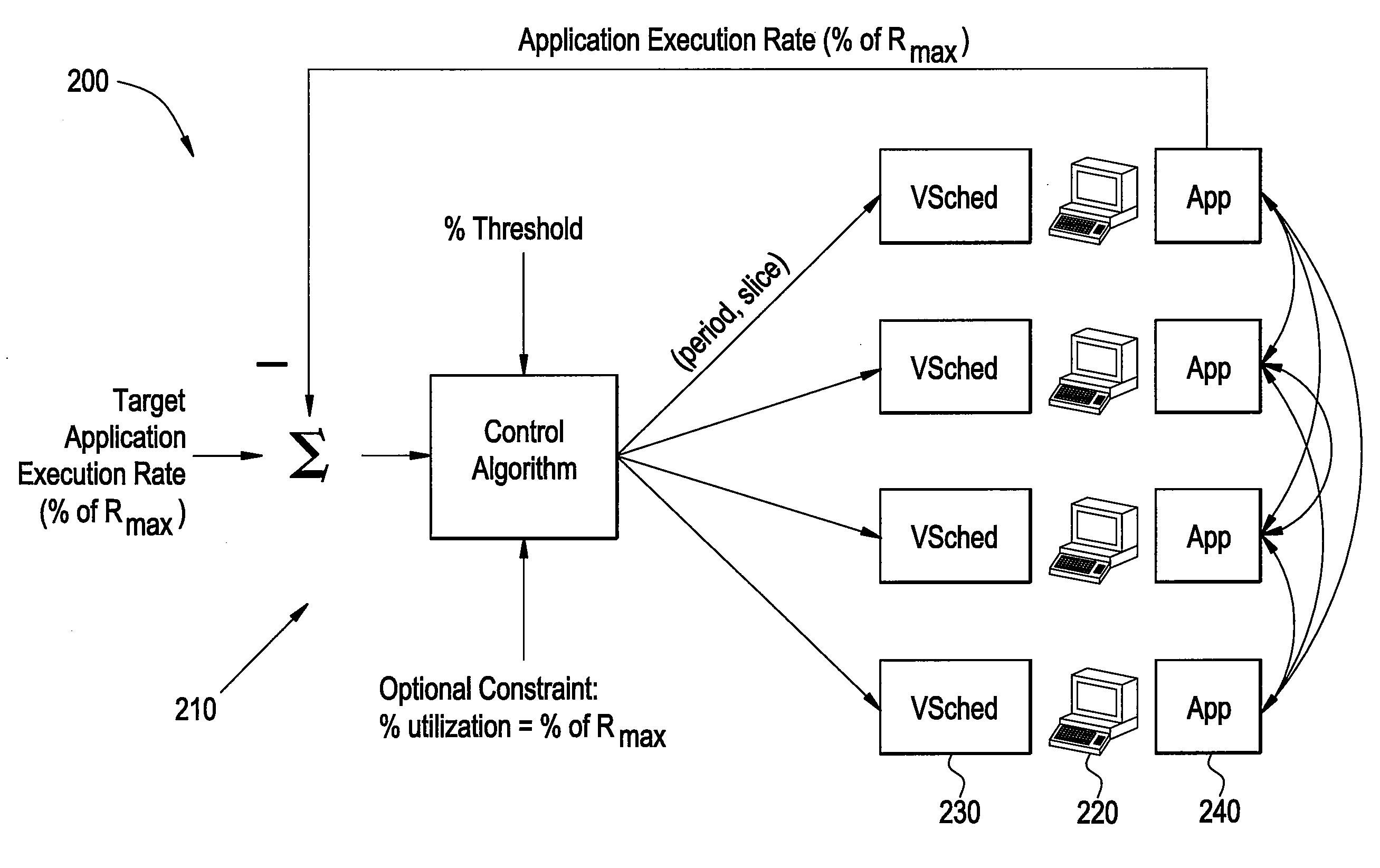

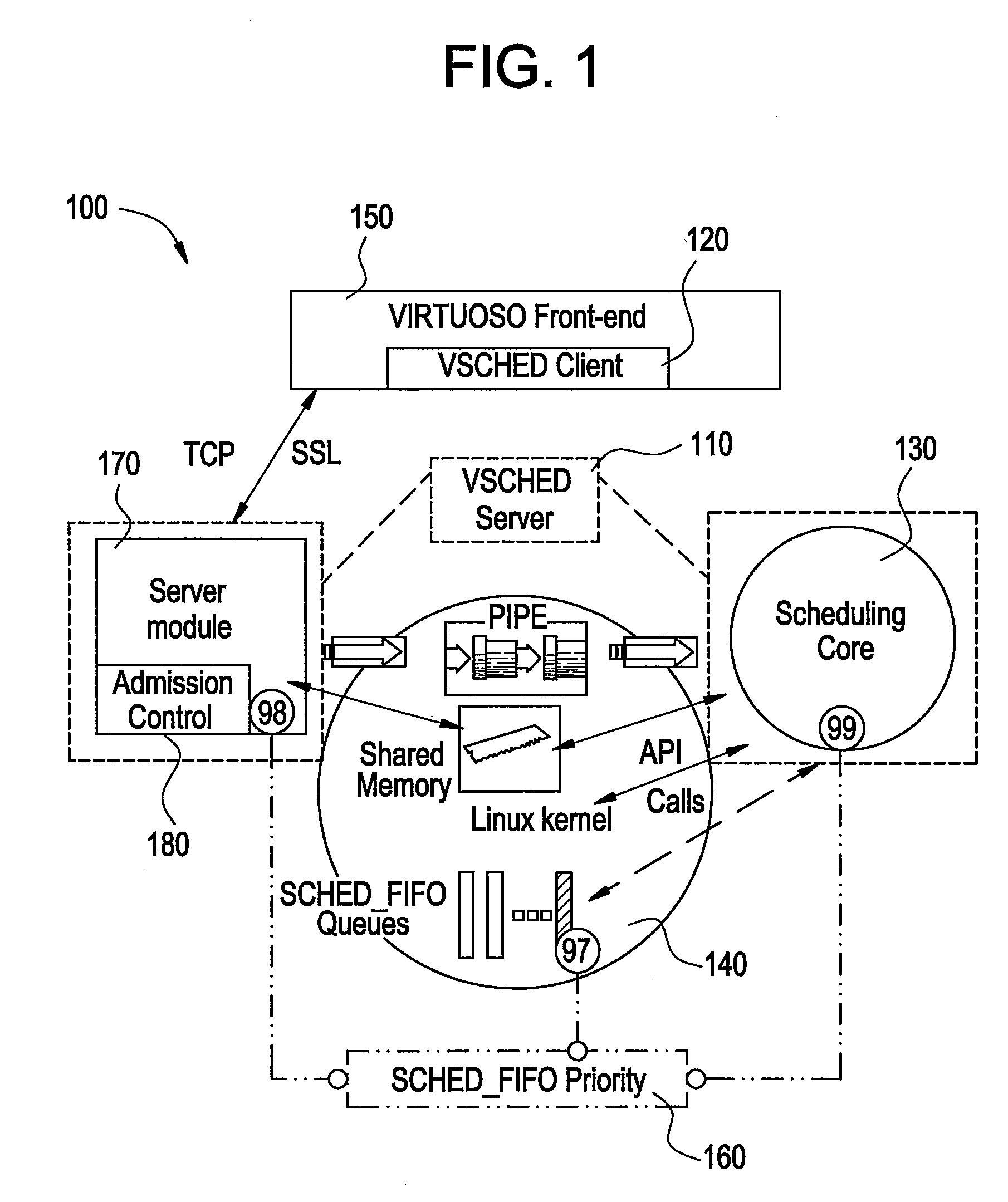

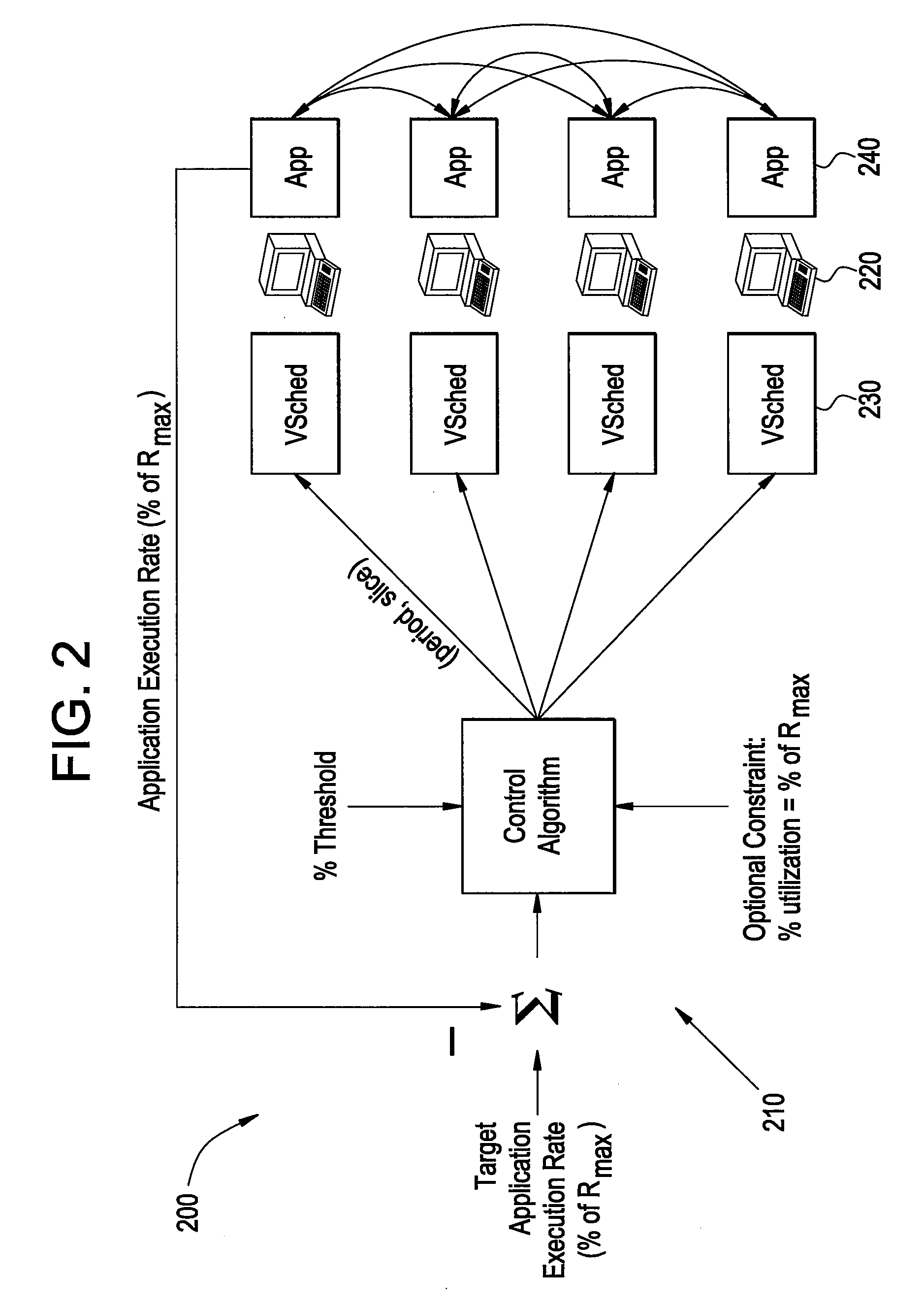

Methods and systems for time-sharing parallel applications with performance isolation and control through performance-targeted feedback-controlled real-time scheduling

Certain embodiments of the present invention provide systems and methods for time-sharing parallel applications with performance isolation and control through feedback-controlled real-time scheduling. Certain embodiments provide a computing system for time-sharing parallel applications. The system includes a controller adapted to determine a scheduling constraint for each thread of execution for an application based at least in part on a target execution rate for the application. The system also includes a local scheduler executing on a node in the computing system. The local scheduler schedules execution of a thread of execution for the application based on the scheduling constraint received from the controller. The local scheduler provides feedback regarding a current execution rate for the application thread to the controller, and the controller modifies the scheduling constraint for the local scheduler based on the feedback.

Owner:NORTHWESTERN UNIV
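
The controller/local-scheduler feedback loop can be sketched as a proportional controller that adjusts a per-period time slice. The control law, the `gain` and clamp values, and the linear "plant" (execution rate proportional to slice) are assumptions for illustration. The patent's actual scheduling constraint may have a different form.

```python
def adjust_slice(slice_ms, target_rate, measured_rate, gain=0.5, min_ms=1.0, max_ms=100.0):
    """Controller step: scale the thread's slice by its relative rate error."""
    error = (target_rate - measured_rate) / target_rate   # > 0 means running too slowly
    new_slice = slice_ms * (1.0 + gain * error)
    return max(min_ms, min(max_ms, new_slice))           # keep the constraint feasible

# Hypothetical plant: the local scheduler reports a rate proportional to the slice.
RATE_PER_MS = 2.0
TARGET_RATE = 50.0

slice_ms = 10.0
for _ in range(50):
    measured = RATE_PER_MS * slice_ms          # feedback from the local scheduler
    slice_ms = adjust_slice(slice_ms, TARGET_RATE, measured)

print(round(slice_ms, 2))  # 25.0
```

With these assumed numbers, the loop settles at the slice that delivers exactly the target rate (50 / 2 = 25 ms). The clamps keep the controller from issuing a constraint the local scheduler cannot honor.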

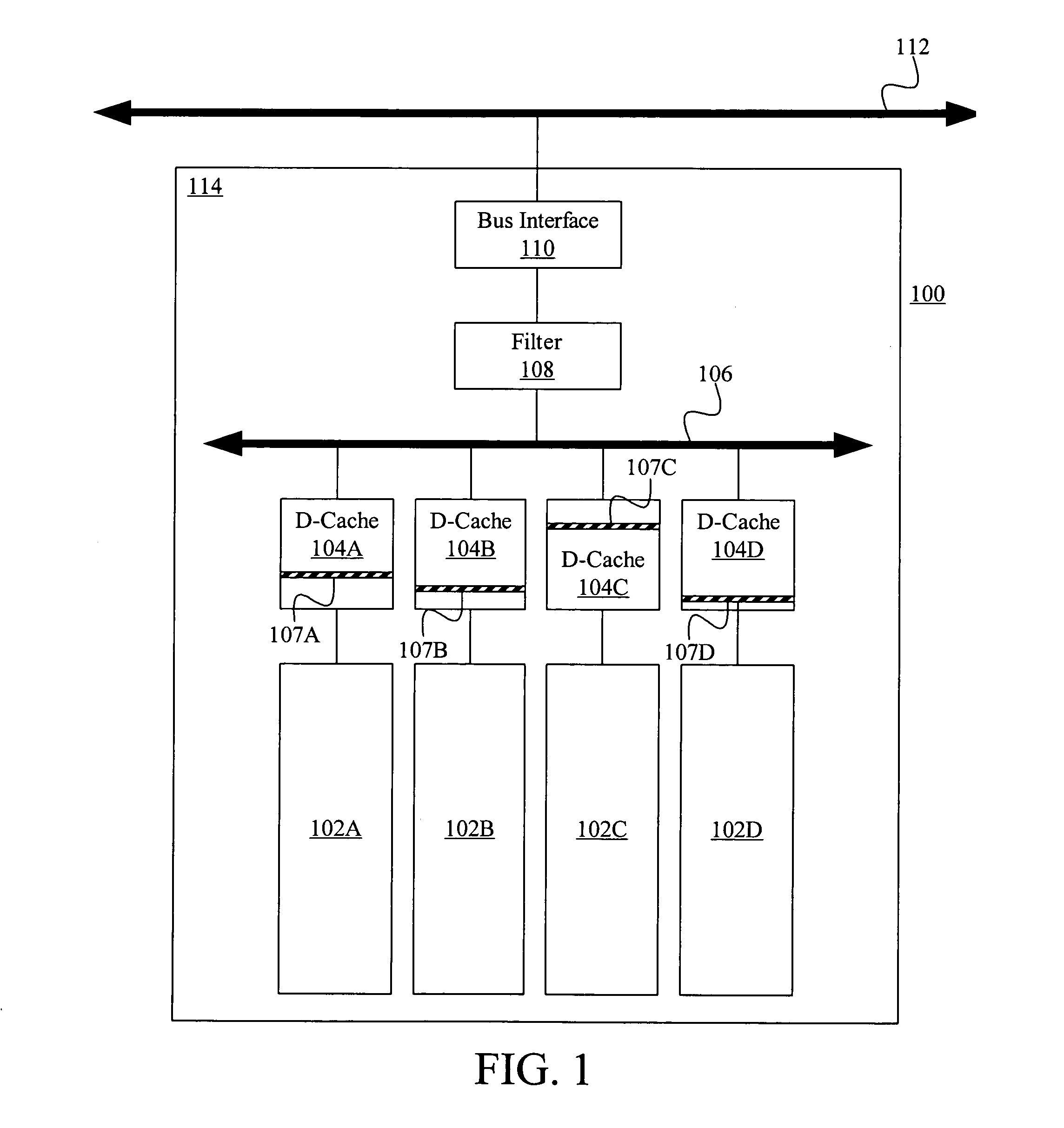

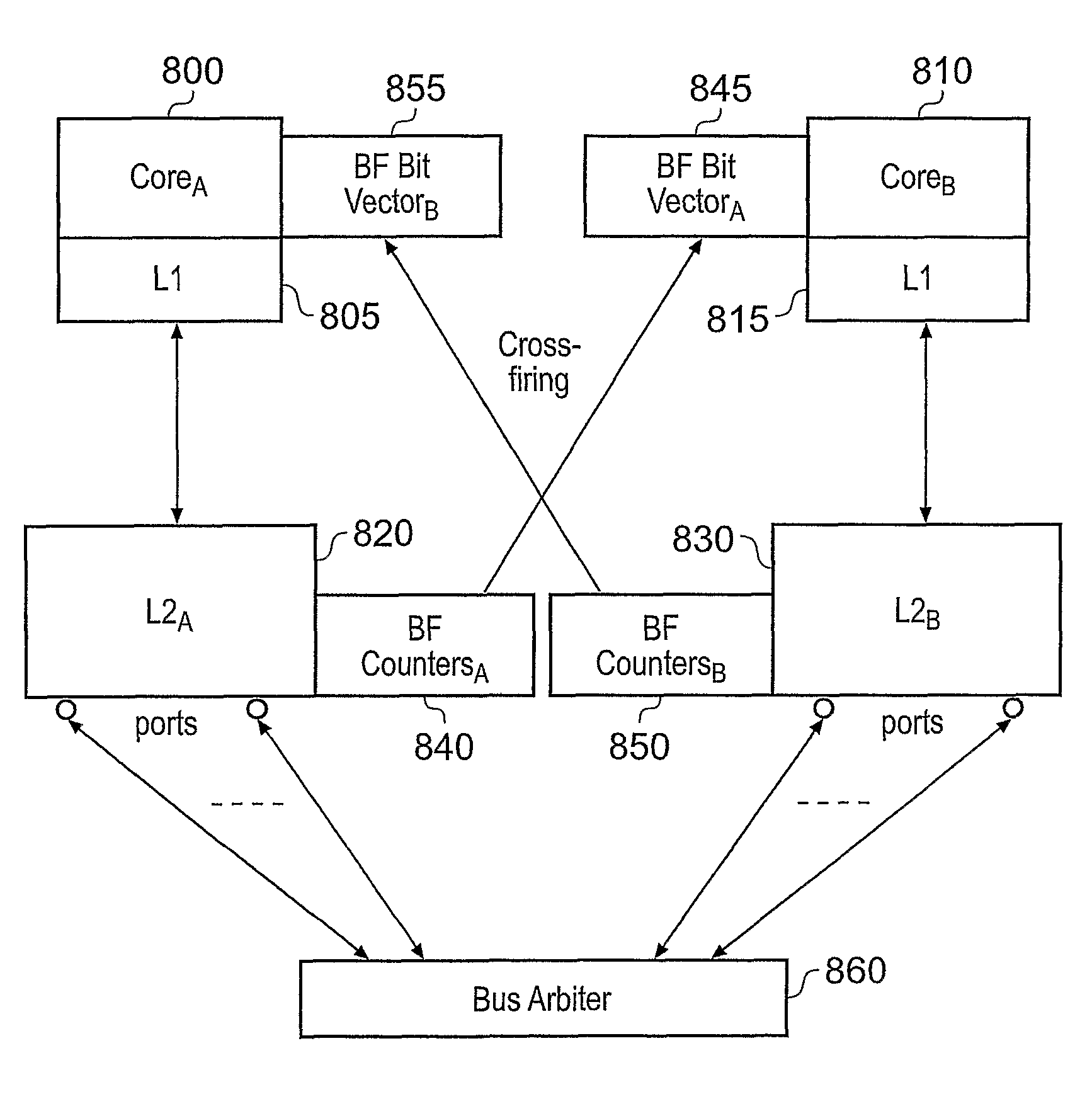

Multiprocessor computing system with multi-mode memory consistency protection

Active | US20090210649A1 | Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Multi processor; Active memory

Disclosed are a method and apparatus for protecting memory consistency in a multiprocessor computing system, relating to program code conversion such as dynamic binary translation. The exemplary multiprocessor computing system provides memory and multiple processors, and a set of controller/translator units TX1, TX2, TX3 arranged to convert respective application programs into program threads T1, T2, etc., which are executed by the processors. Each controller/translator unit sets a first mode where a single thread T1 executes on a single processor P1, orders a second mode for two or more threads T1, T2 that are forced to execute one at a time on a single processor P2 such as by setting affinity with that processor, and orders a third mode to selectively apply active memory consistency protection in relation to accesses to explicit or implicit shared memory while allowing the multiple threads T1, T2, T3, T4 to execute on the multiple processors.

Owner:IBM CORP

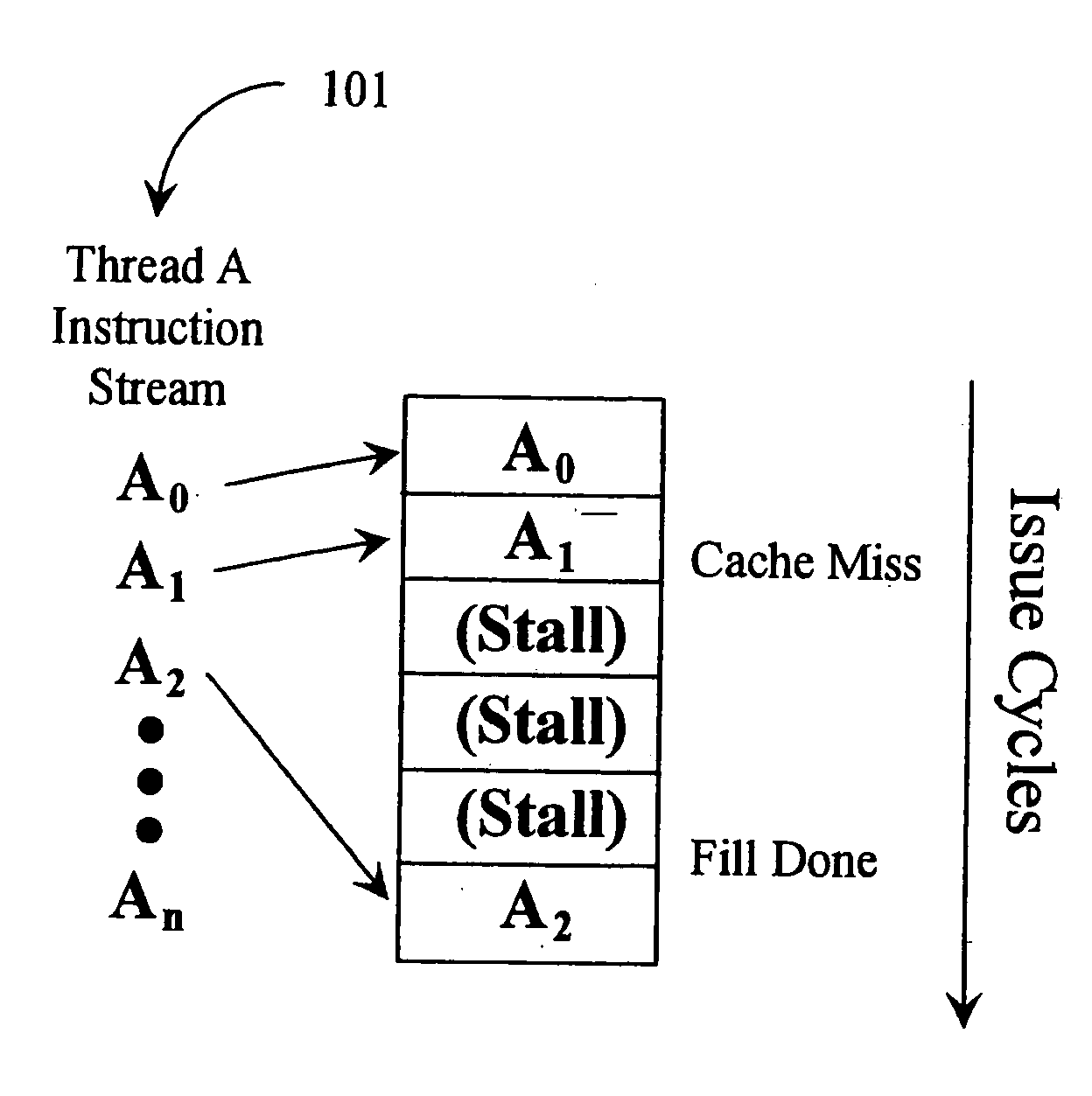

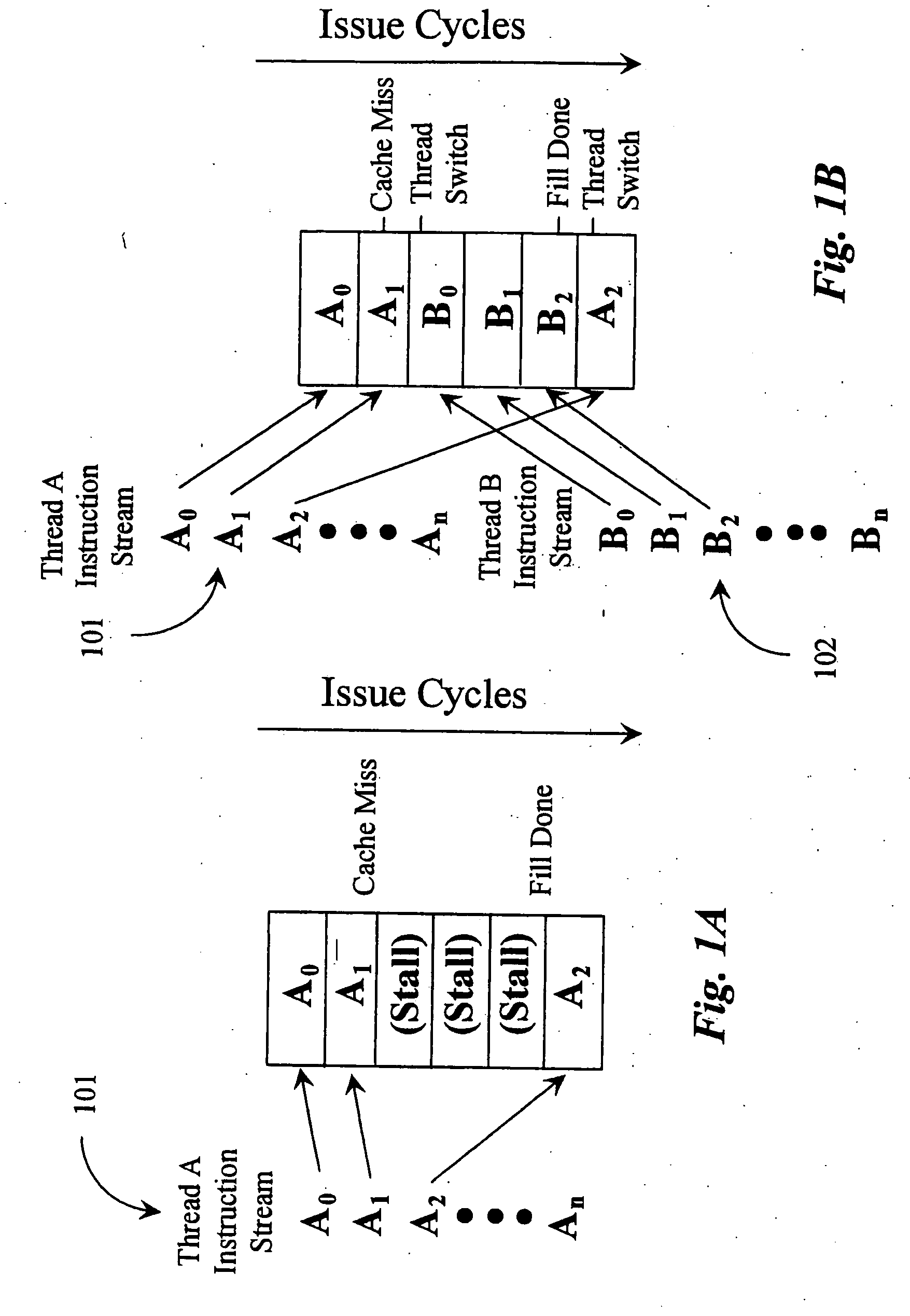

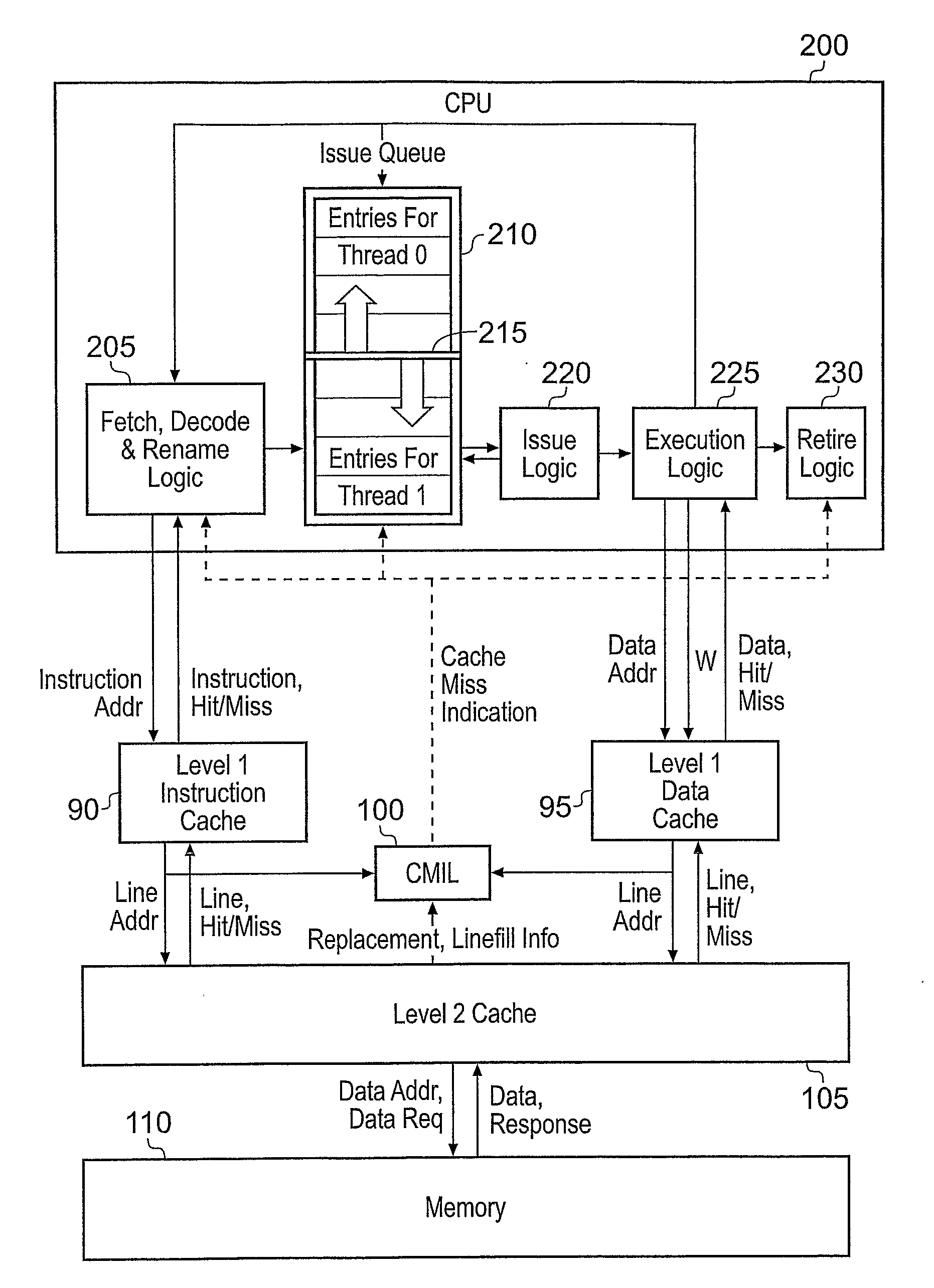

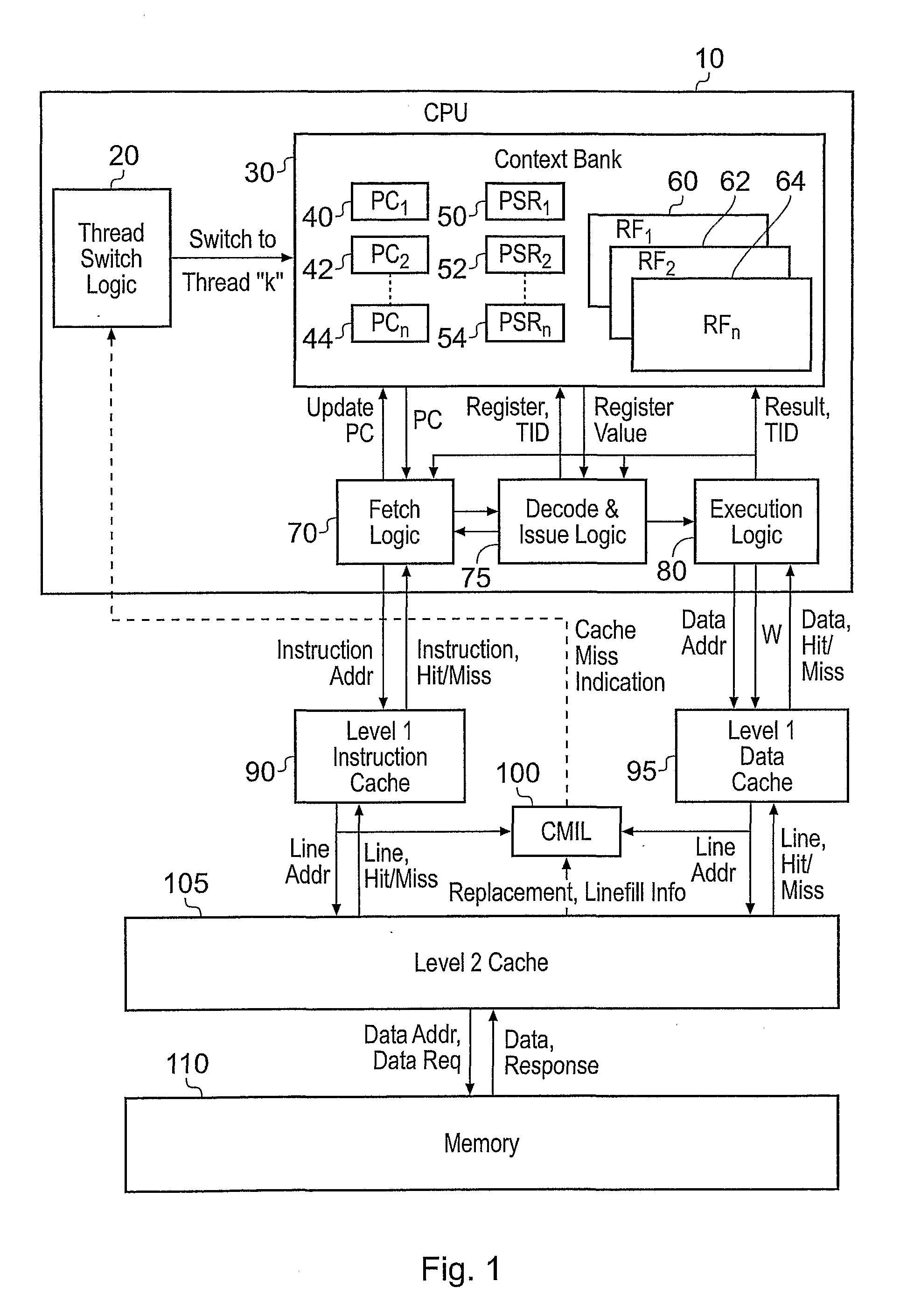

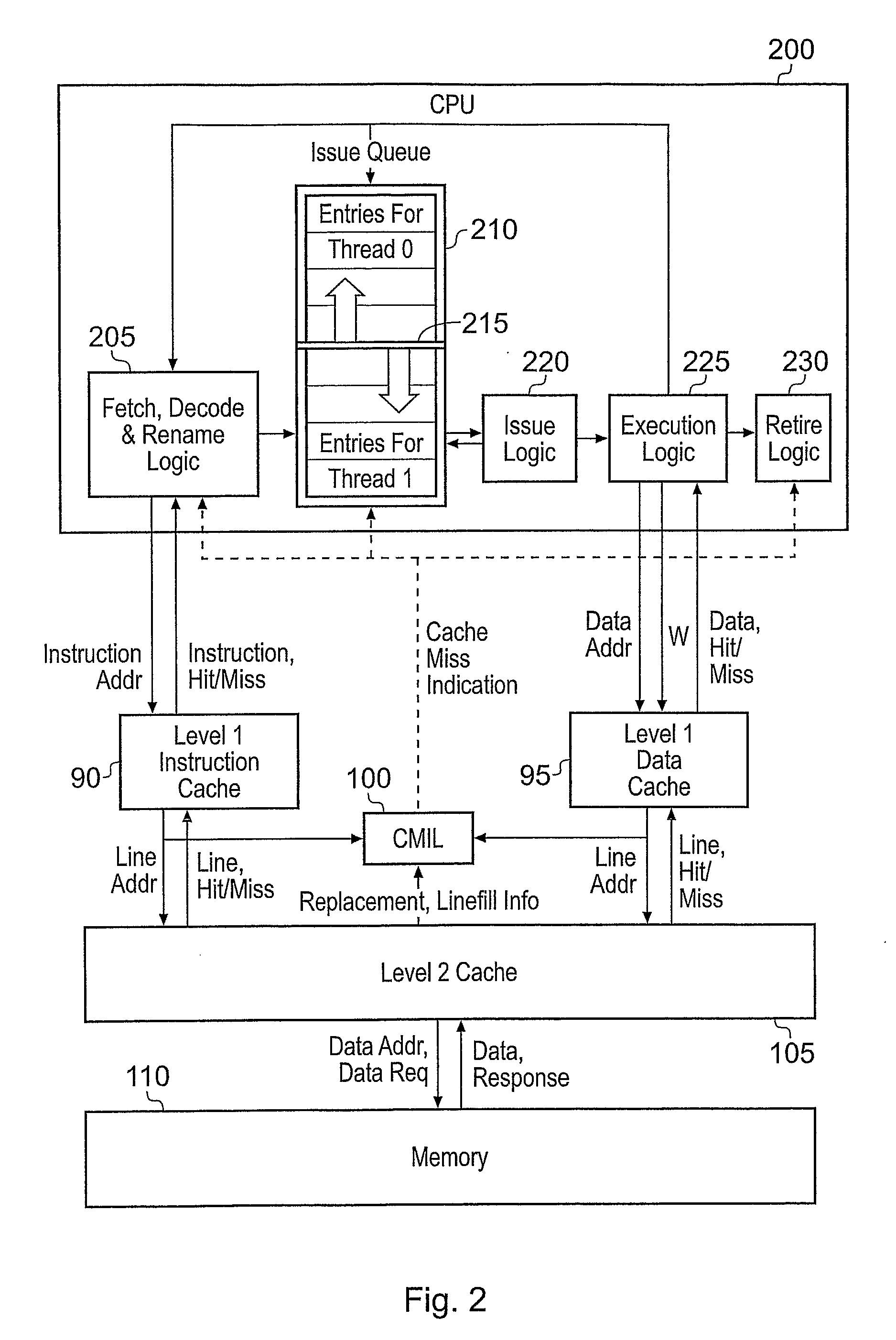

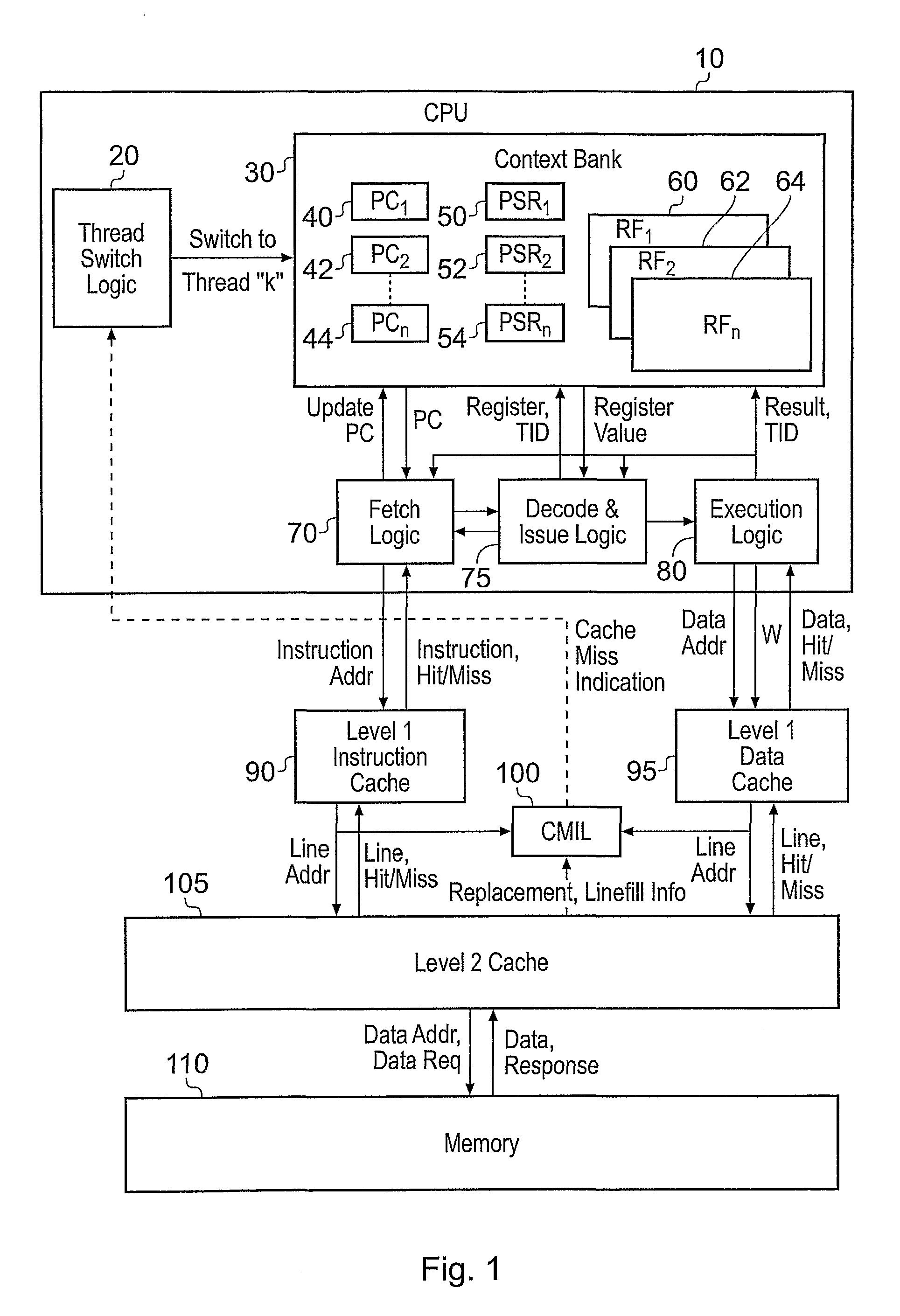

Cache miss detection in a data processing apparatus

Active | US20090222625A1 | Significant performance improvement; Reduce in quantity; Memory addressing/allocation/relocation; Multiprogramming arrangements; Parallel computing; Program Thread

A data processing apparatus and method are provided for detecting cache misses. The data processing apparatus has processing logic for executing a plurality of program threads, and a cache for storing data values for access by the processing logic. When access to a data value is required while executing a first program thread, the processing logic issues an access request specifying an address in memory associated with that data value, and the cache is responsive to the address to perform a lookup procedure to determine whether the data value is stored in the cache. Indication logic is provided which, in response to an address portion of the address, provides an indication as to whether the data value is stored in the cache; this indication is produced before a result of the lookup procedure is available, and the indication logic only issues an indication that the data value is not stored in the cache if that indication is guaranteed to be correct. Control logic is then provided which, if the indication shows that the data value is not stored in the cache, uses that indication to control a process having an effect on a program thread other than the first program thread.

Owner:ARM LTD
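
A "guaranteed-correct miss" indication can be sketched with one counter per hashed bucket of line addresses, similar to a counting Bloom filter. A zero counter proves that no resident line maps to that bucket. A non-zero counter only means "unknown", so the full lookup is still needed. The hashing scheme and the parameters `buckets` and `line_bits` are assumptions. The patent's indication logic may be organized differently, and the control logic that would act on the indication (e.g., switching threads) is not modeled.

```python
class MissIndicator:
    """Early 'definitely a miss' indication from address bits.

    Keeps a counter per hash bucket of the cache-line address. A zero counter
    guarantees that no resident line maps to the bucket, so a miss can be
    reported before the full tag lookup; non-zero means "may hit".
    """

    def __init__(self, buckets=64, line_bits=6):
        self.counters = [0] * buckets
        self.line_bits = line_bits        # 64-byte lines assumed

    def _bucket(self, address):
        return (address >> self.line_bits) % len(self.counters)

    def on_fill(self, address):           # a line is allocated into the cache
        self.counters[self._bucket(address)] += 1

    def on_evict(self, address):          # a line is evicted from the cache
        self.counters[self._bucket(address)] -= 1

    def definitely_miss(self, address):
        return self.counters[self._bucket(address)] == 0

indicator = MissIndicator()
indicator.on_fill(0x1000)
print(indicator.definitely_miss(0x1004))  # False: same line, may hit
print(indicator.definitely_miss(0x2040))  # True: guaranteed miss
print(indicator.definitely_miss(0x2000))  # False: aliases with 0x1000, so unknown
```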

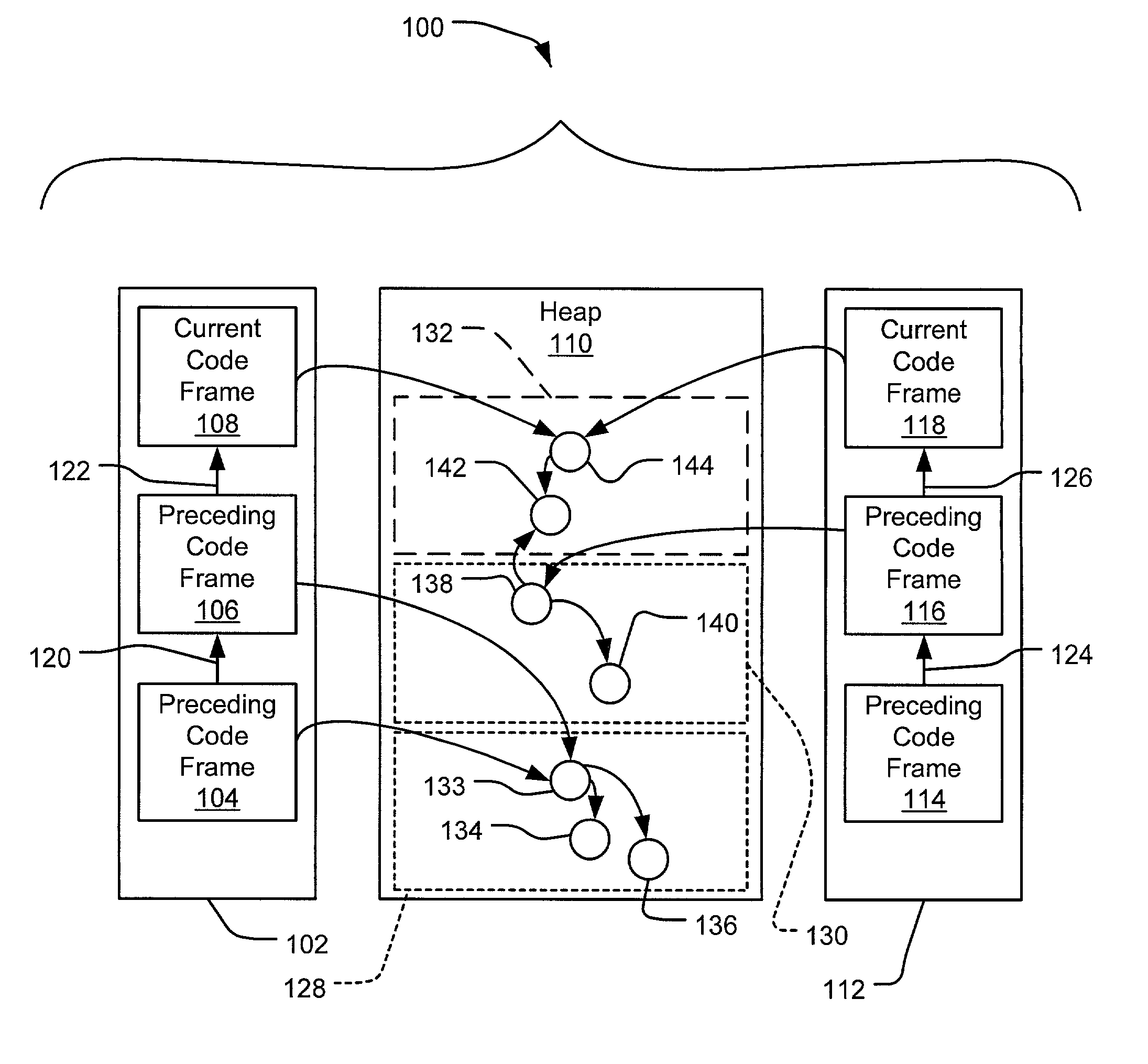

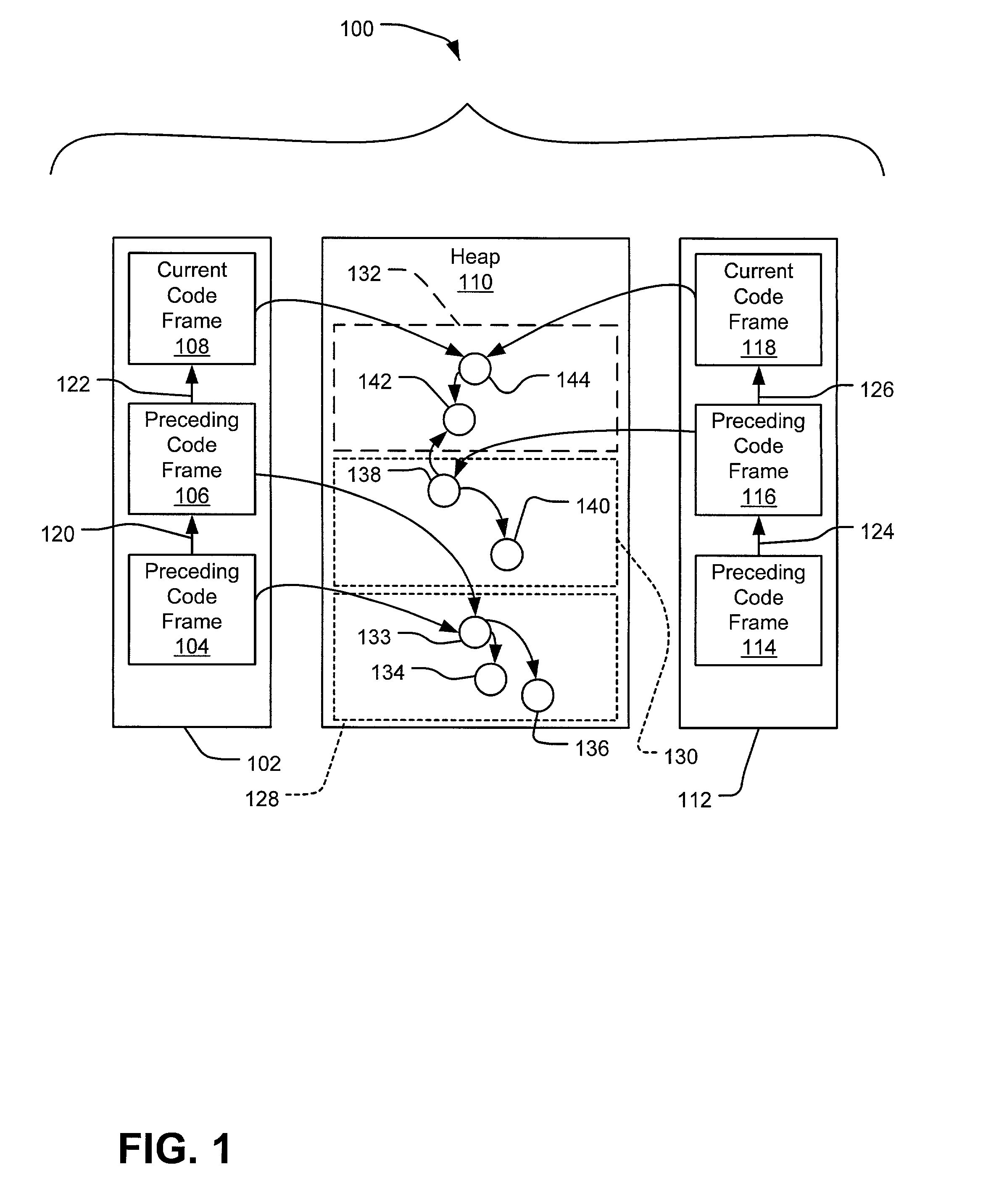

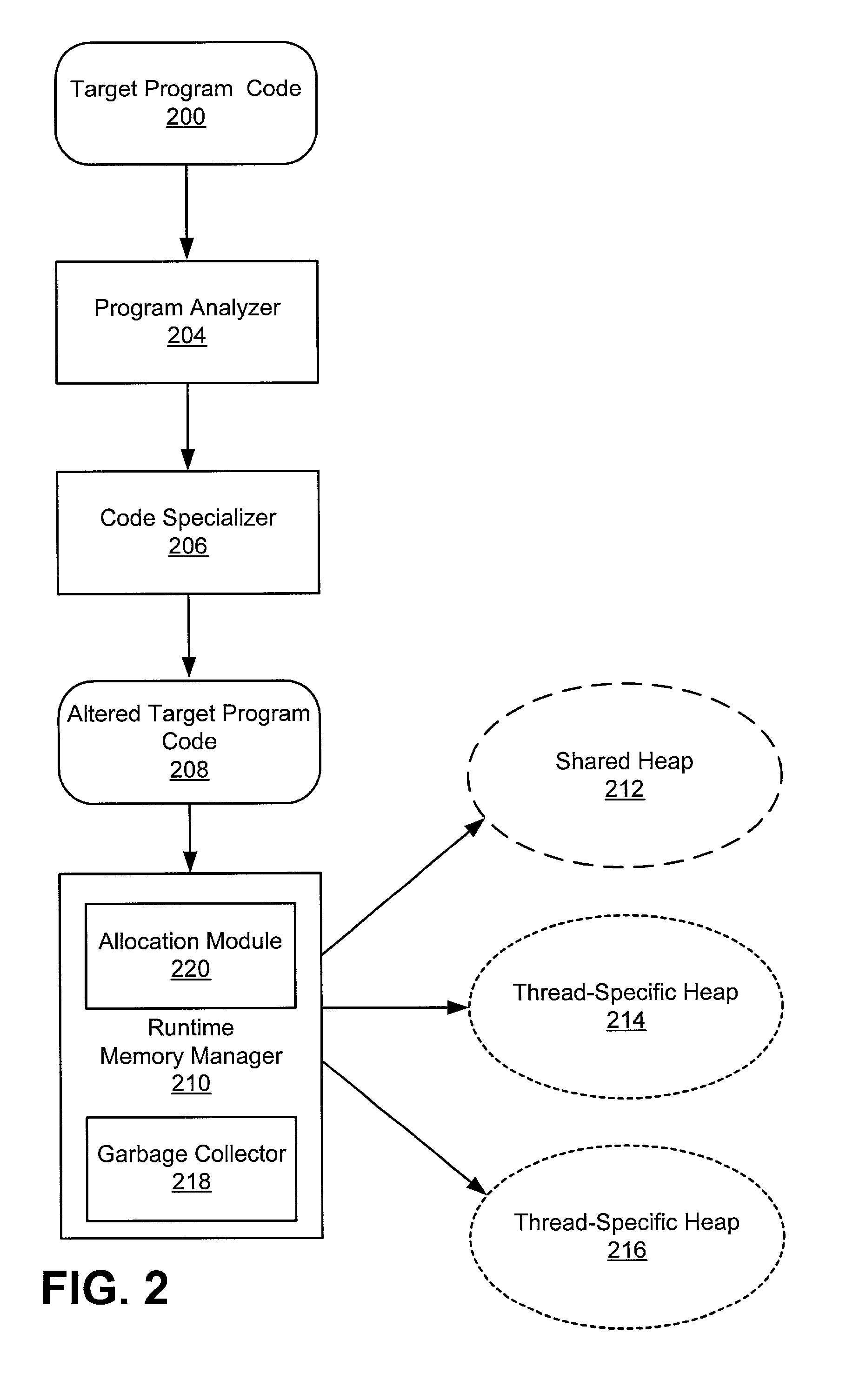

Thread-specific heaps

Inactive | US7111294B2 | Lower latency; Resource allocation; Memory addressing/allocation/relocation; Waste collection; Escape analysis

Thread-specific heaps are employed in multithreaded programs to decrease garbage collection latency in such programs. Program data in a target program is analyzed to identify thread-specific data and shared data. Thread-specific data is identified on the basis that the thread-specific data is determined to be reachable only from a single program thread of the target program. Each program thread can be associated with an individual thread-specific heap. Shared data is identified on the basis that the shared data is potentially reachable from a plurality of program threads of the target program. An exemplary method of identifying such data is referred to as a thread escape analysis. Garbage collection of such heaps may be performed independently or with minimal synchronization. Remembered sets may also be used to increase the independence of collection of individual heaps and to decrease garbage collection latency.

Owner:MICROSOFT TECH LICENSING LLC
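
The thread-specific/shared split can be illustrated with a reachability pass over an object graph. This sketch works on a concrete heap snapshot at run time. The thread escape analysis described above is a program analysis, so this shows only the classification rule (reachable from one thread vs. several), not the patented analysis. The object names and roots are hypothetical.

```python
from collections import deque

def reachable(graph, roots):
    """Objects reachable from `roots` in the object graph (breadth-first)."""
    seen, work = set(roots), deque(roots)
    while work:
        obj = work.popleft()
        for ref in graph.get(obj, ()):
            if ref not in seen:
                seen.add(ref)
                work.append(ref)
    return seen

def classify(graph, thread_roots):
    """Split objects into per-thread heaps and a shared heap."""
    owners = {}
    for thread, roots in thread_roots.items():
        for obj in reachable(graph, roots):
            owners.setdefault(obj, set()).add(thread)
    local = {t: {o for o, ts in owners.items() if ts == {t}} for t in thread_roots}
    shared = {o for o, ts in owners.items() if len(ts) > 1}
    return local, shared

heap = {"a": ["b"], "b": ["s"], "c": ["s"], "s": []}
local, shared = classify(heap, {"T1": ["a"], "T2": ["c"]})
print(local, shared)
```

Objects in `local["T1"]` could be collected without pausing T2, while objects in `shared` need the synchronized collection path.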

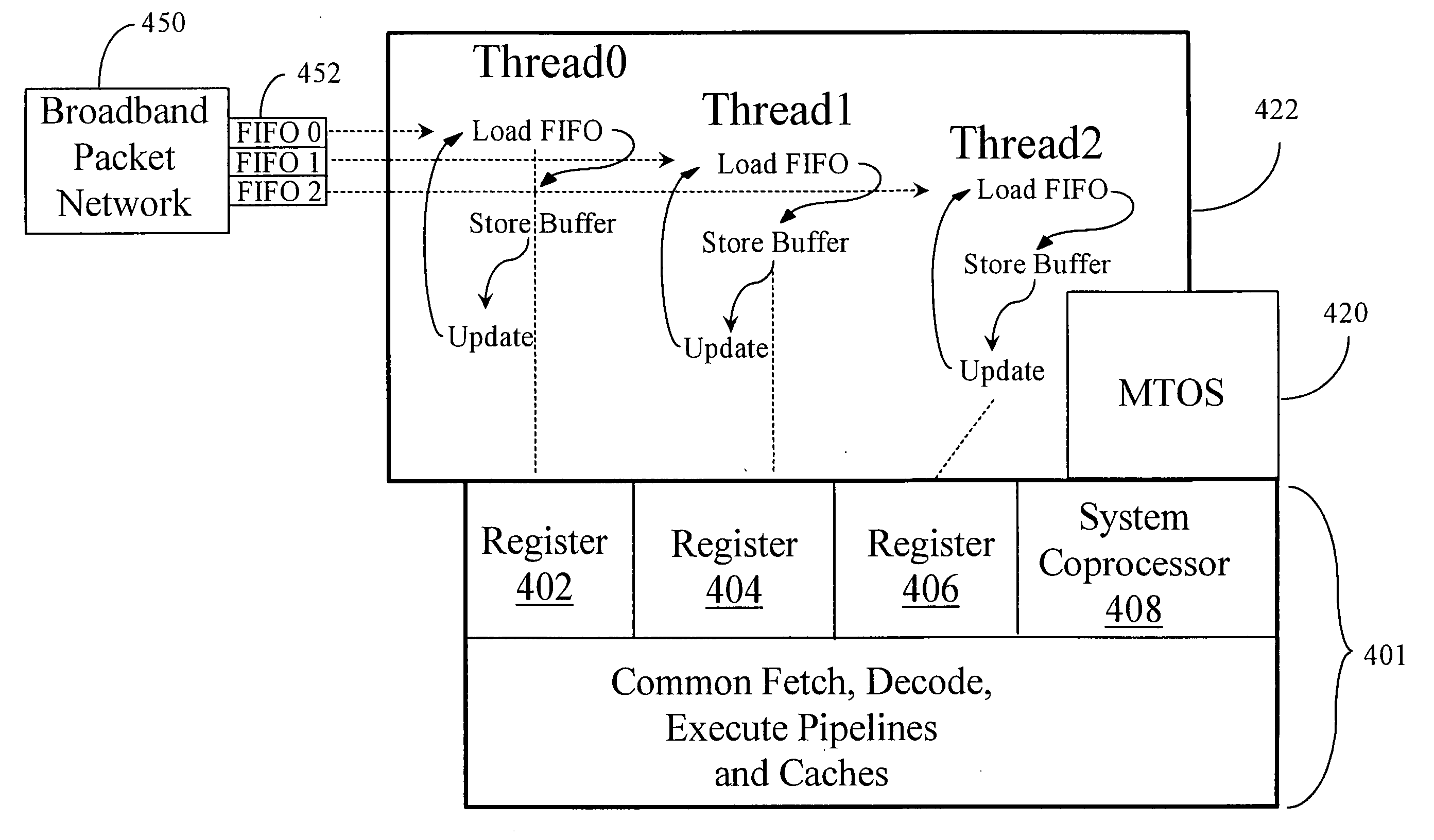

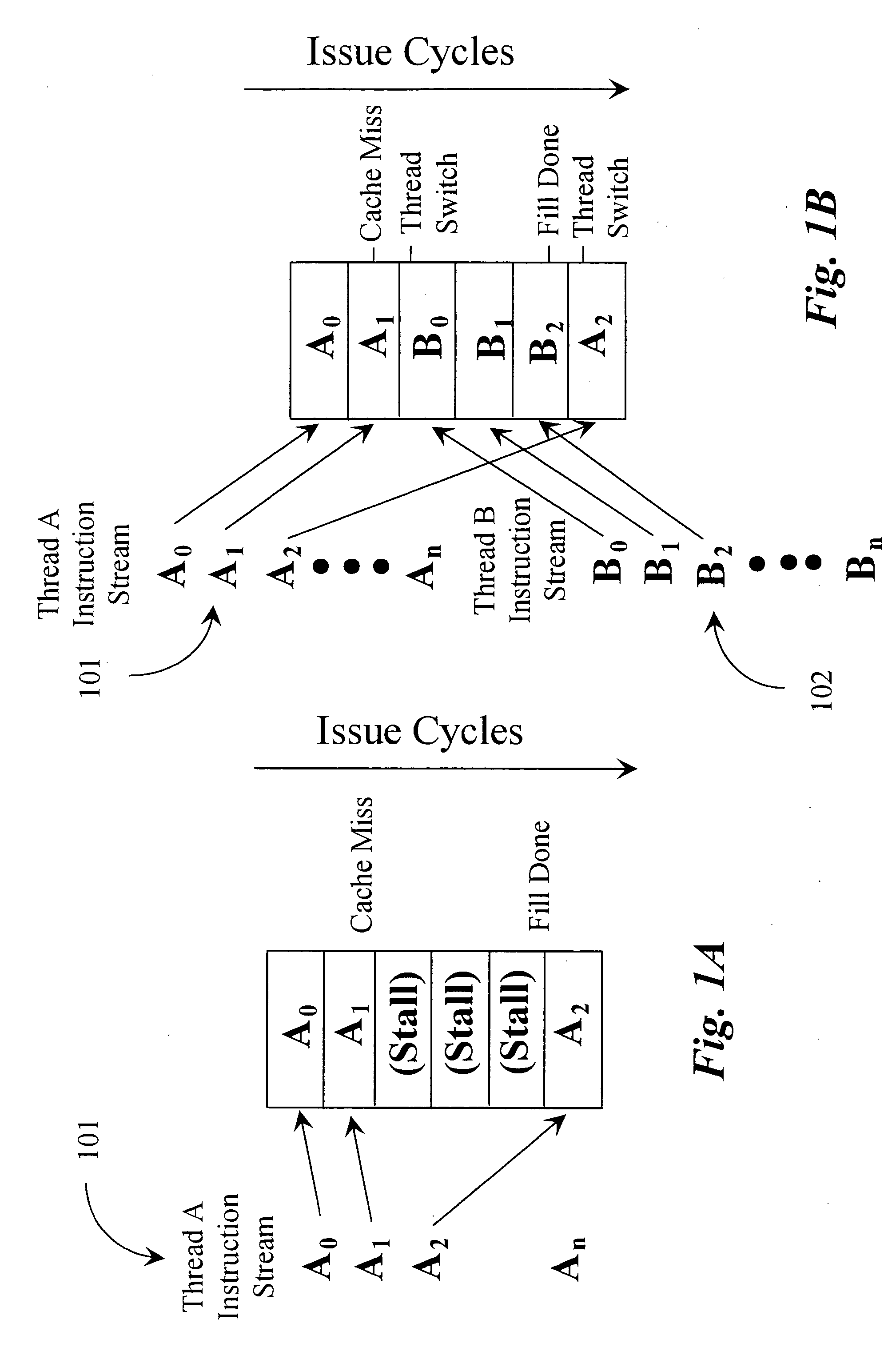

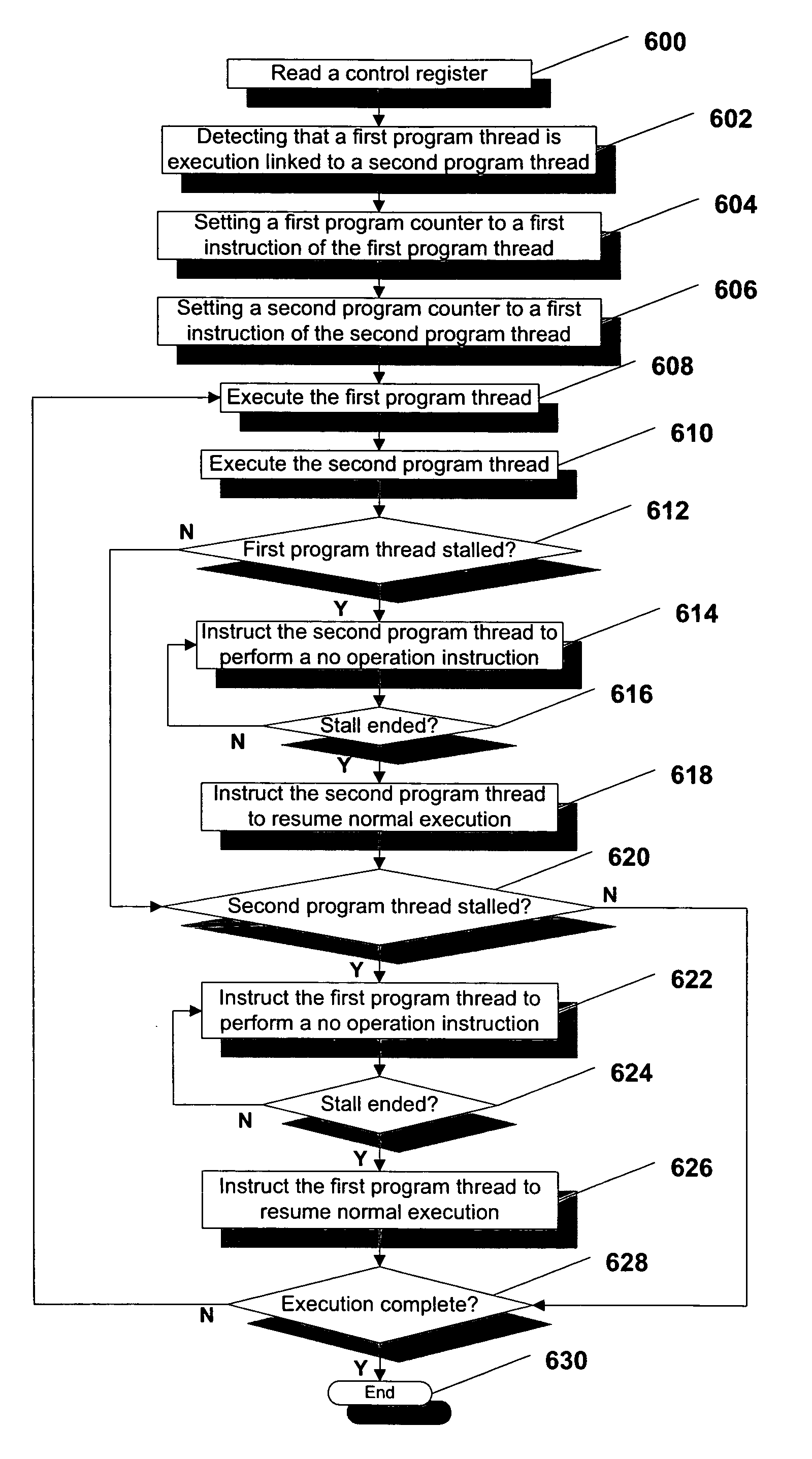

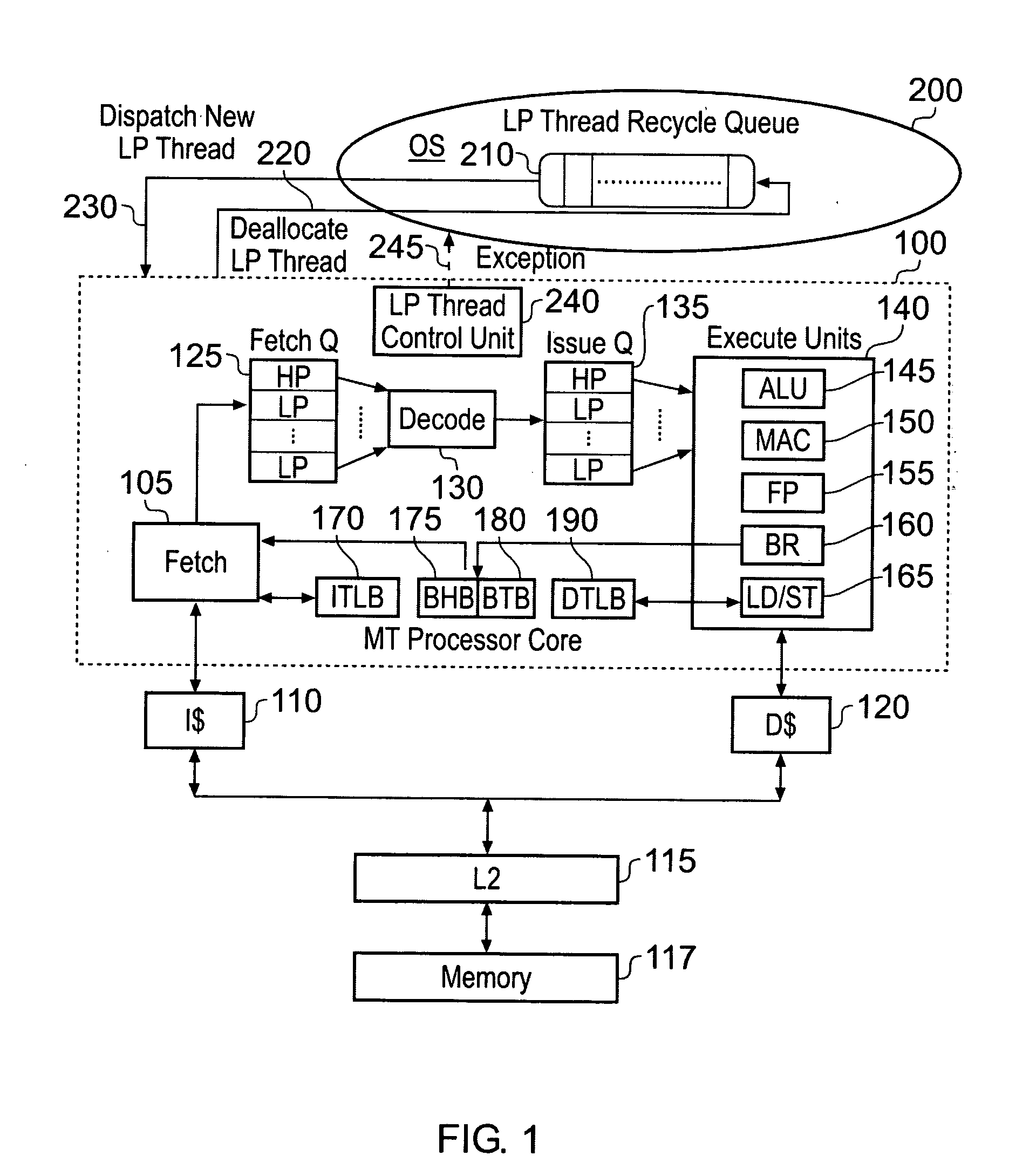

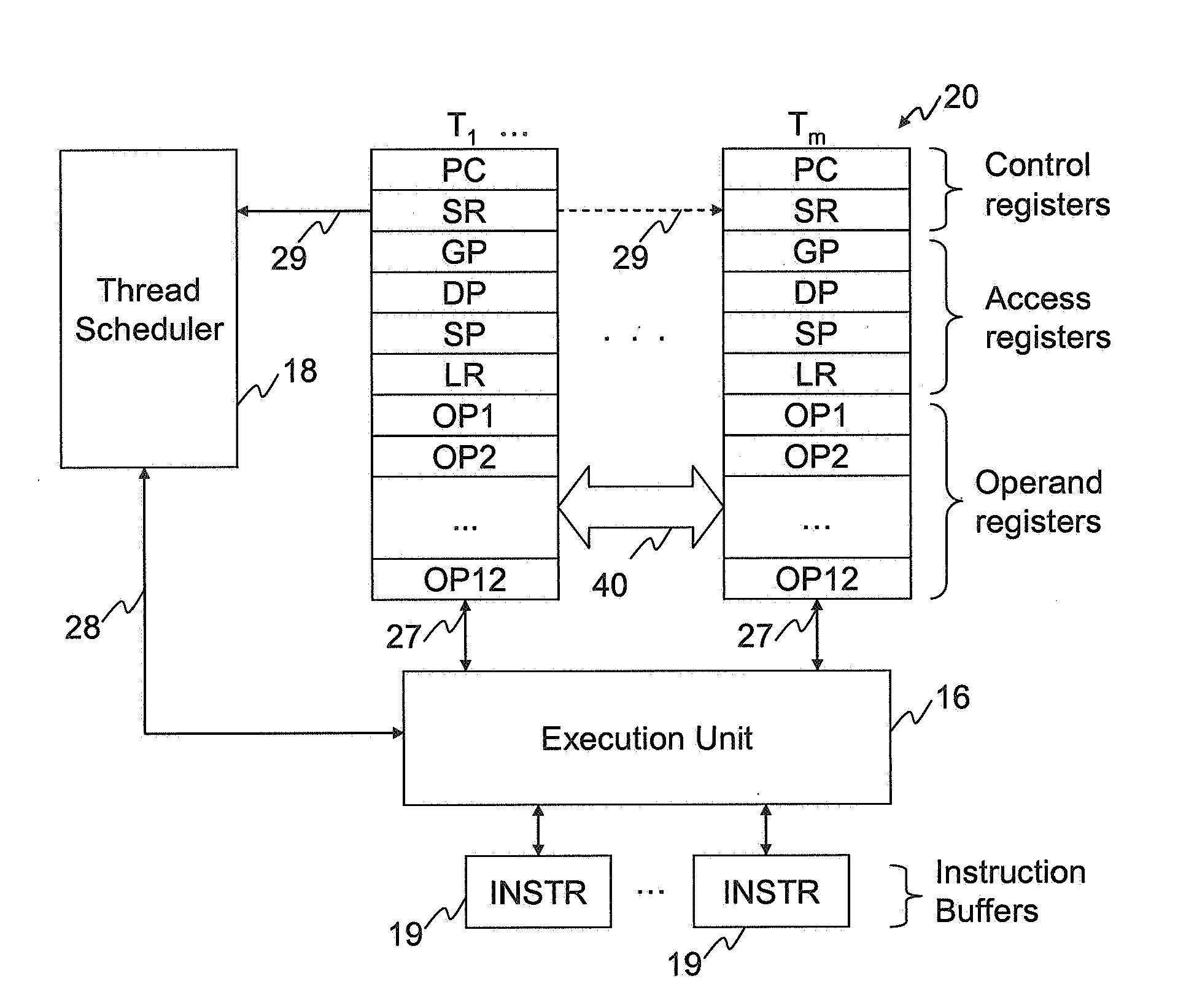

System and method of executing program threads in a multi-threaded processor

Active | US20060242645A1 | Reduces parallel programming complexity; Improve processor performance; Digital computer details; Multiprogramming arrangements; Program Thread; Operating system

A multithreaded processor device is disclosed that includes a first program thread and a second program thread. The second program thread is execution-linked to the first program thread in lockstep. As such, when the first program thread experiences a stall event, the second program thread is instructed to perform a no-operation instruction in order to keep it execution-linked to the first program thread. The second program thread performs a no-operation instruction during each clock cycle that the first program thread is stalled due to the stall event. When the first program thread performs its first successful operation after the stall event, the second program thread restarts normal execution.

Owner:QUALCOMM INC
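
The lockstep behavior can be sketched as a cycle-by-cycle simulation. Thread B mirrors thread A, issuing a no-op in every cycle where A is stalled, so both threads always retire the same number of instructions. The stall schedule is a hypothetical input.

```python
def run_lockstep(stall_cycles, cycles):
    """Simulate two execution-linked threads; thread A stalls in `stall_cycles`."""
    trace = []
    pc_a = pc_b = 0                          # instructions retired by each thread
    for cycle in range(cycles):
        if cycle in stall_cycles:
            trace.append(("stall", "nop"))   # B issues a no-op to stay linked to A
        else:
            trace.append(("op", "op"))       # both threads advance together
            pc_a += 1
            pc_b += 1
    return trace, pc_a, pc_b

trace, pc_a, pc_b = run_lockstep(stall_cycles={2, 3}, cycles=5)
print(trace)
print(pc_a, pc_b)  # 3 3
```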

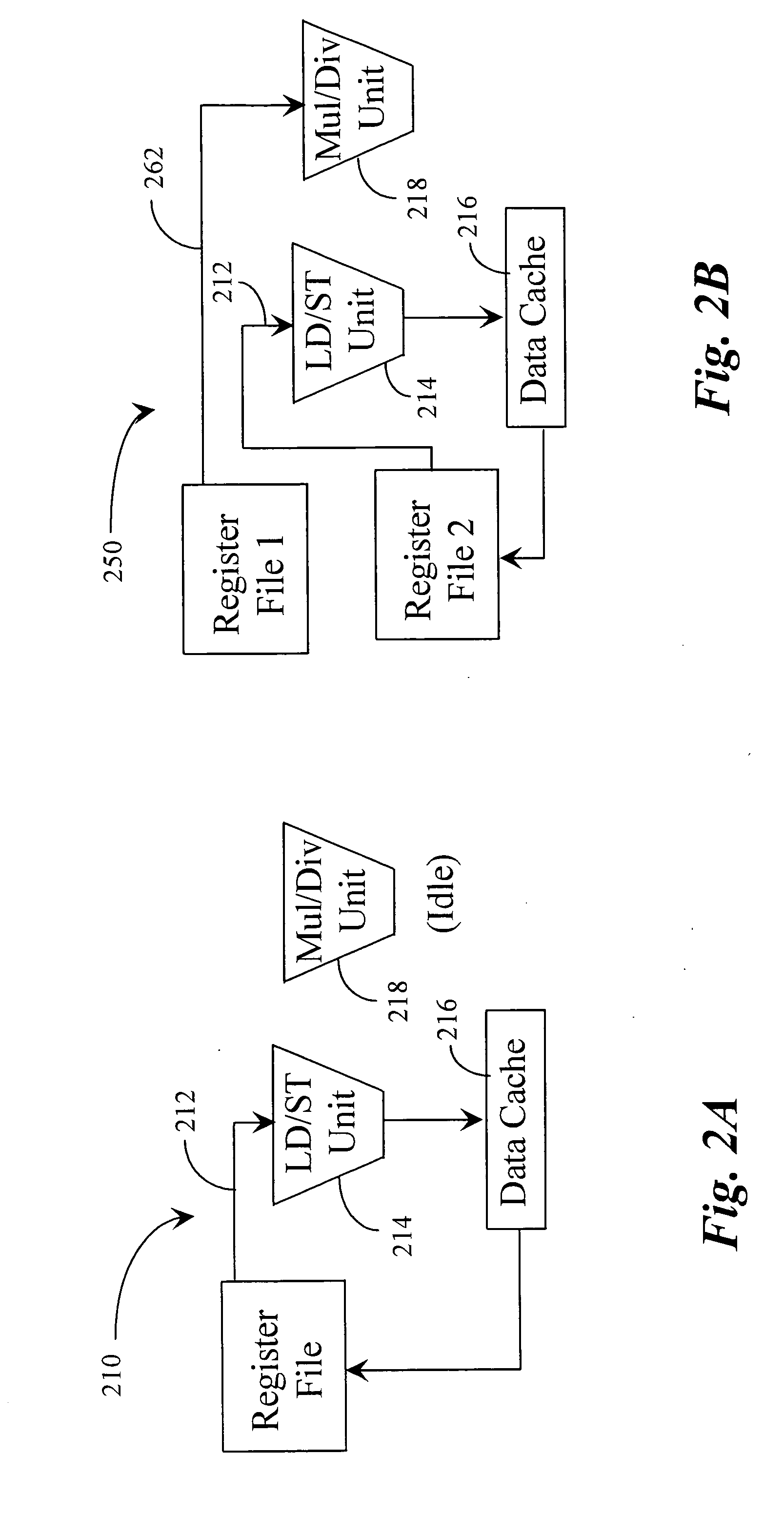

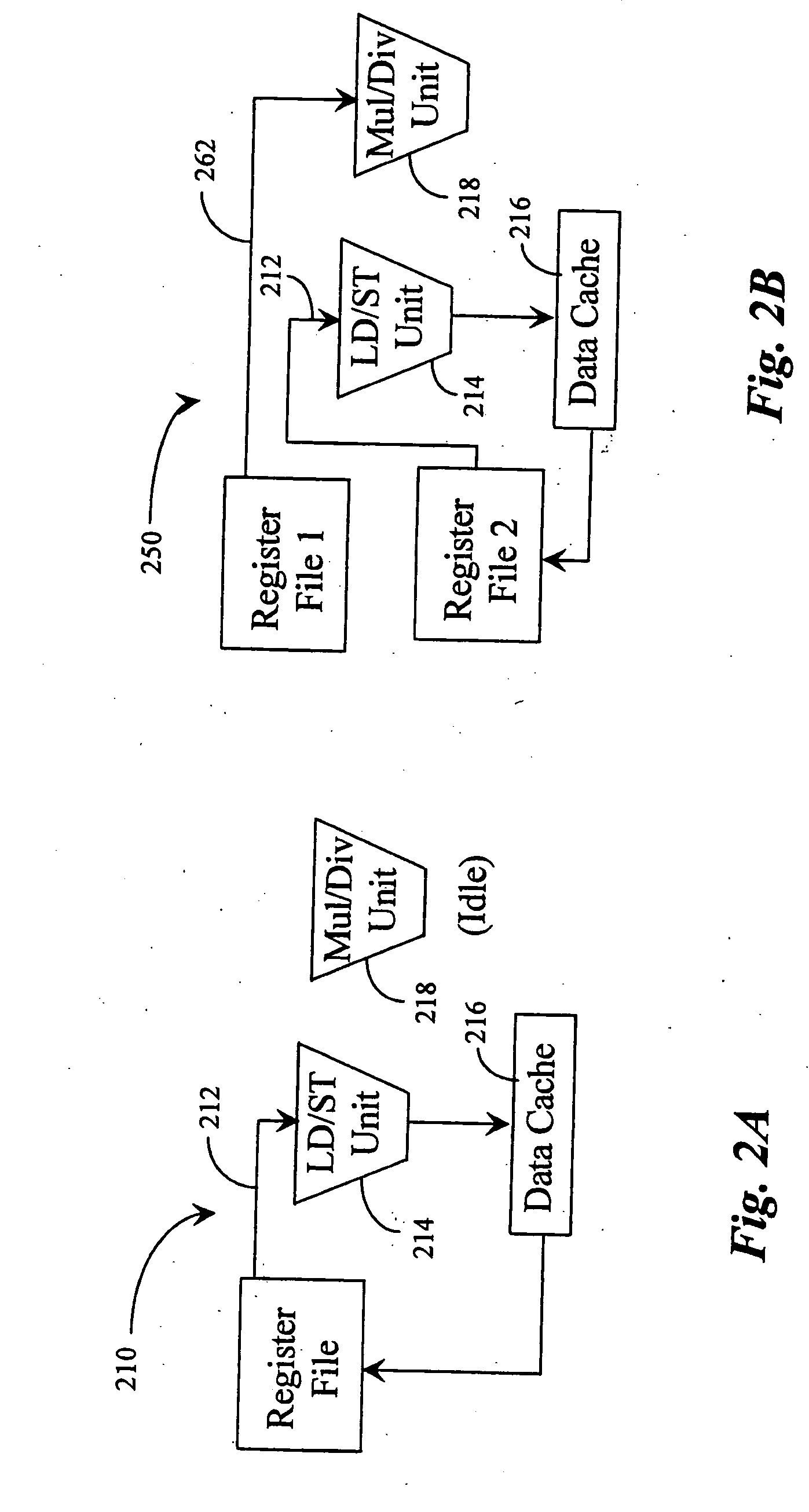

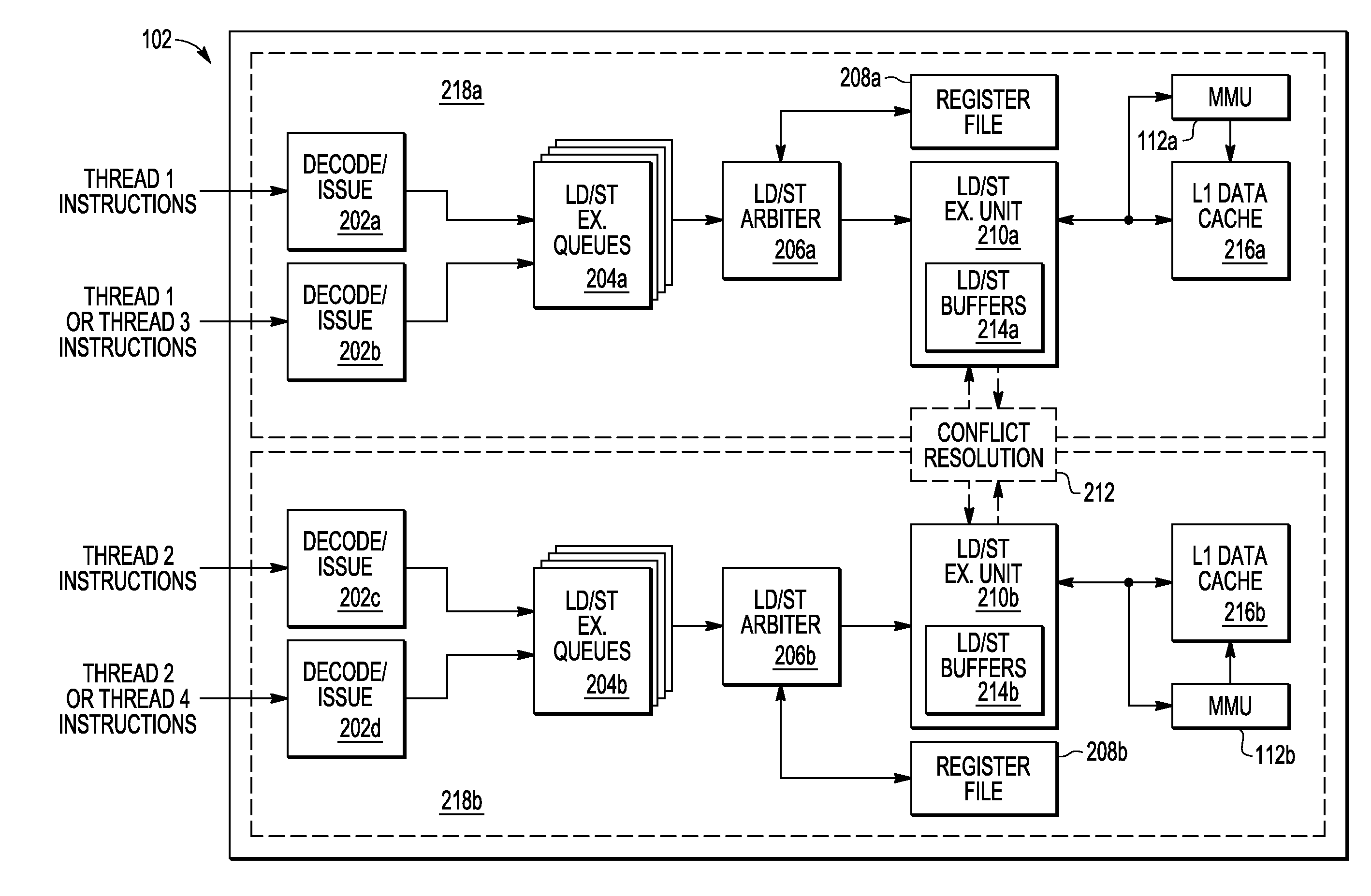

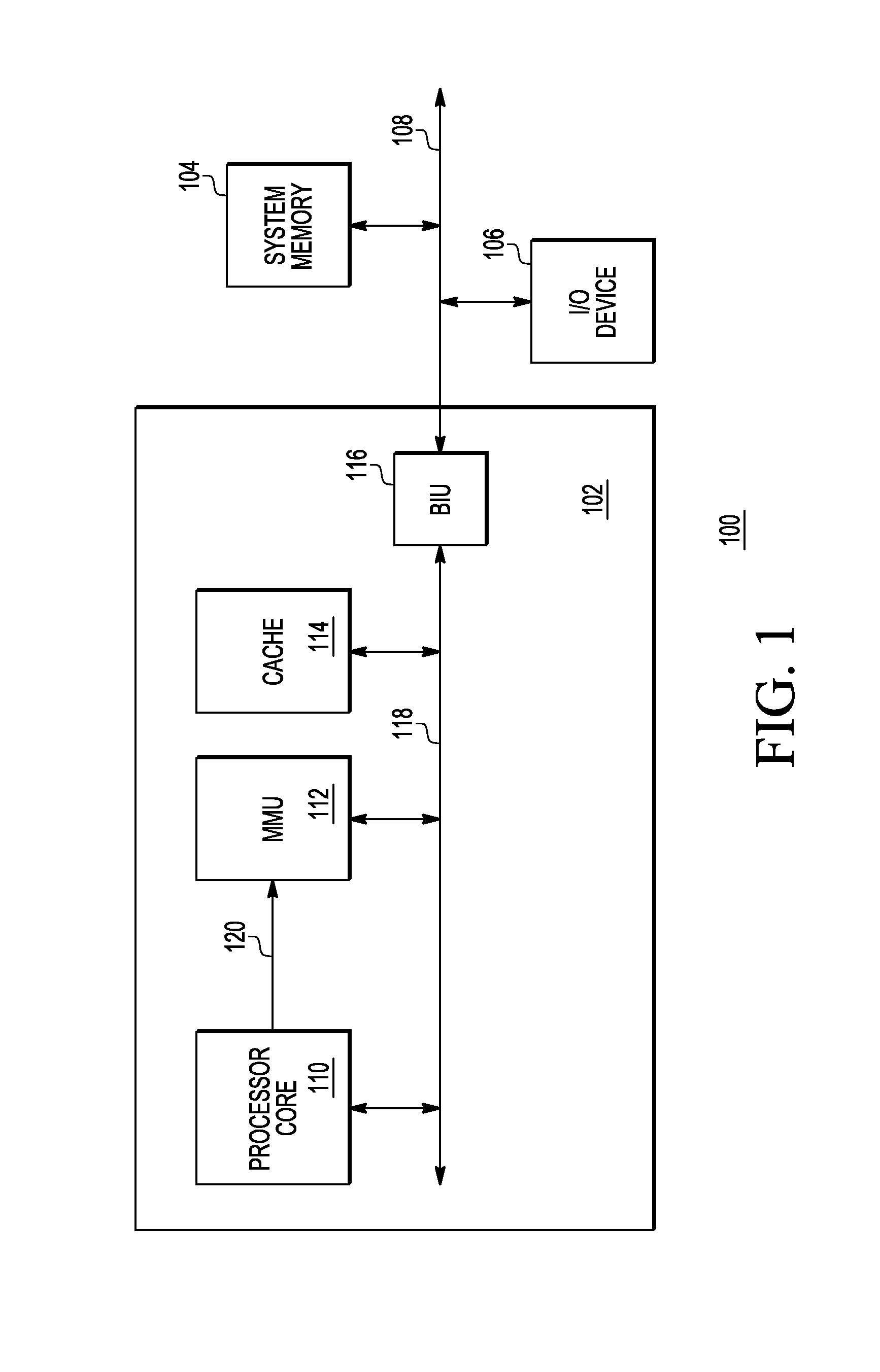

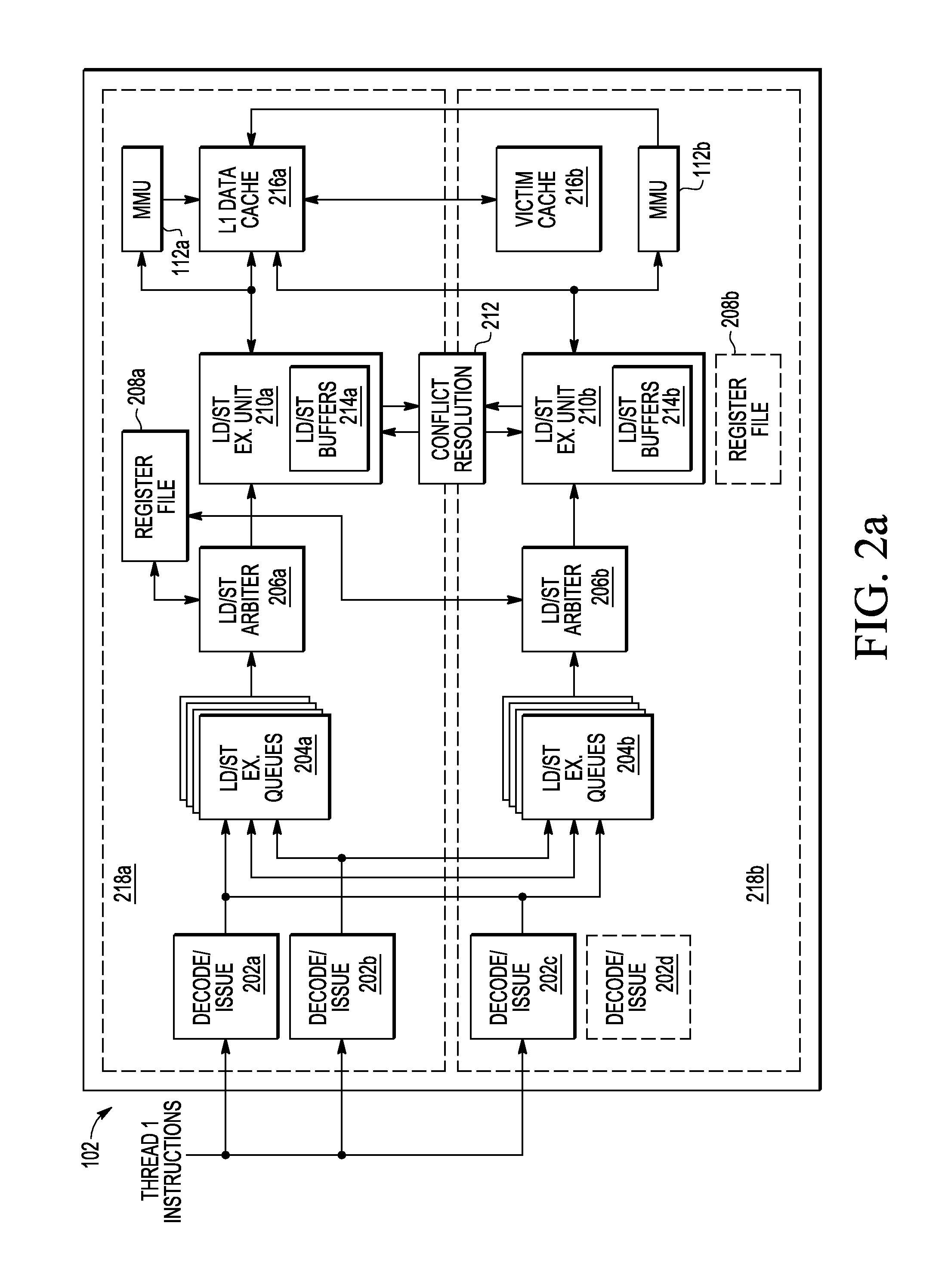

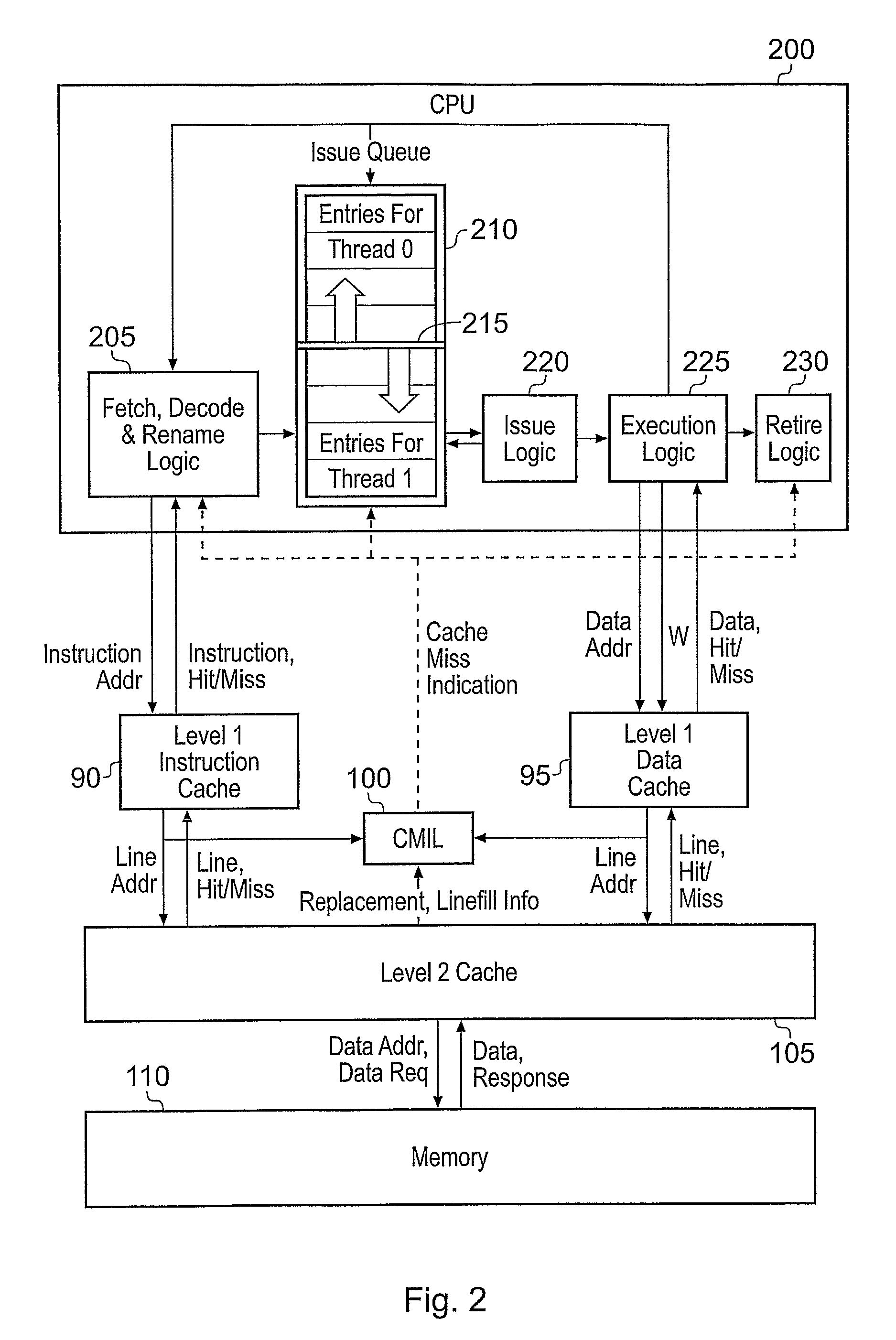

Systems and methods for configuring load/store execution units

Active | US20120221796A1 | Memory addressing/allocation/relocation; Program control; Parallel computing; Computerized system

Systems and methods are disclosed for multi-threading computer systems. In a computer system executing multiple program threads in a processing unit, a first load/store execution unit is configured to handle instructions from a first program thread, and a second load/store execution unit is configured to handle instructions from a second program thread. When the computer system is executing a single program thread, the first and second load/store execution units are reconfigured to handle instructions from the single program thread, and a Level 1 (L1) data cache is reconfigured with a first port to communicate with the first load/store execution unit and a second port to communicate with the second load/store execution unit.

Owner:NXP USA INC

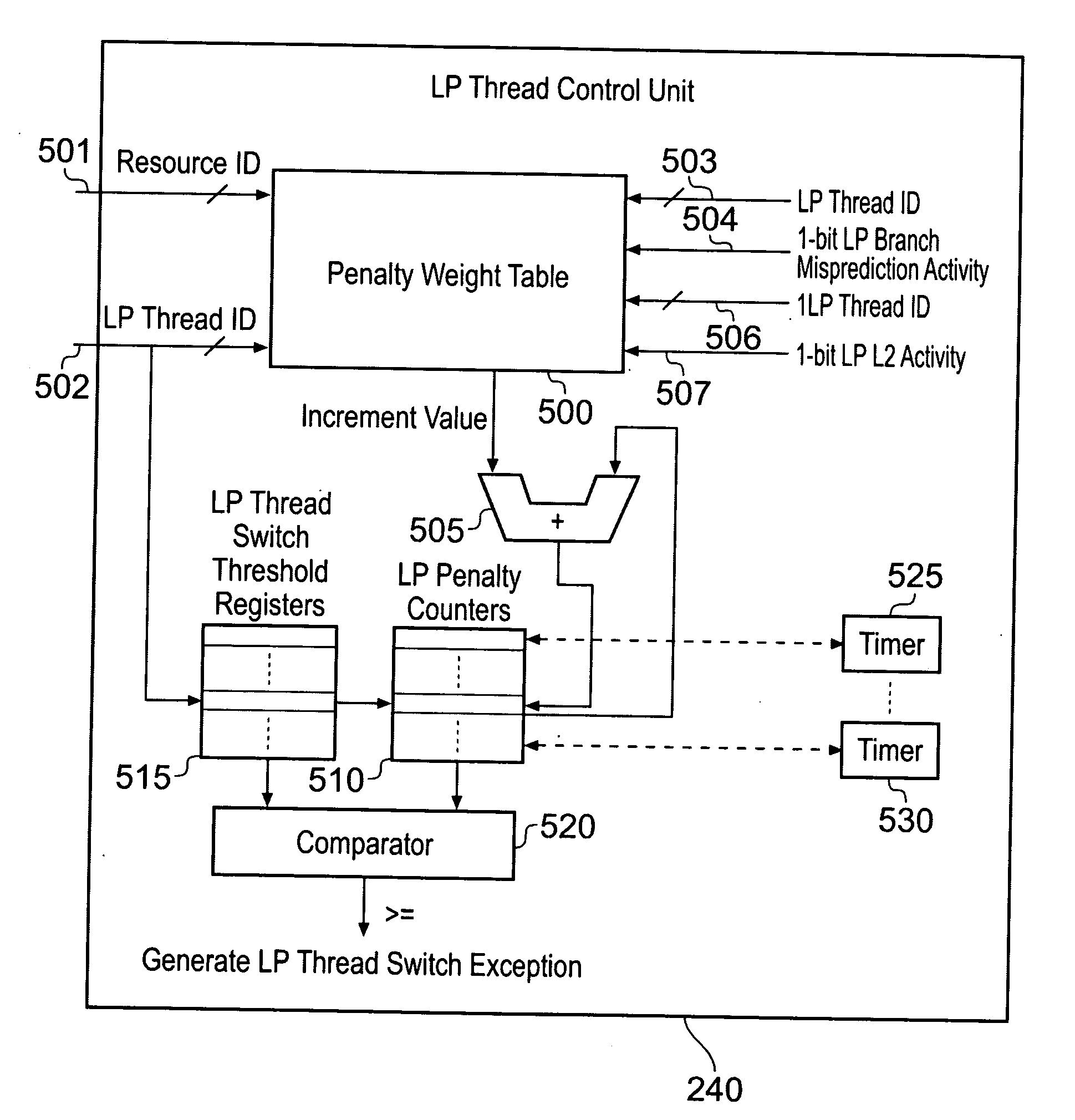

Data processing apparatus and method for managing multiple program threads executed by processing circuitry

Inactive | US20080295105A1 | High interference level; Optimization mechanism; Multiprogramming arrangements; Memory systems; Program Thread; Computer science

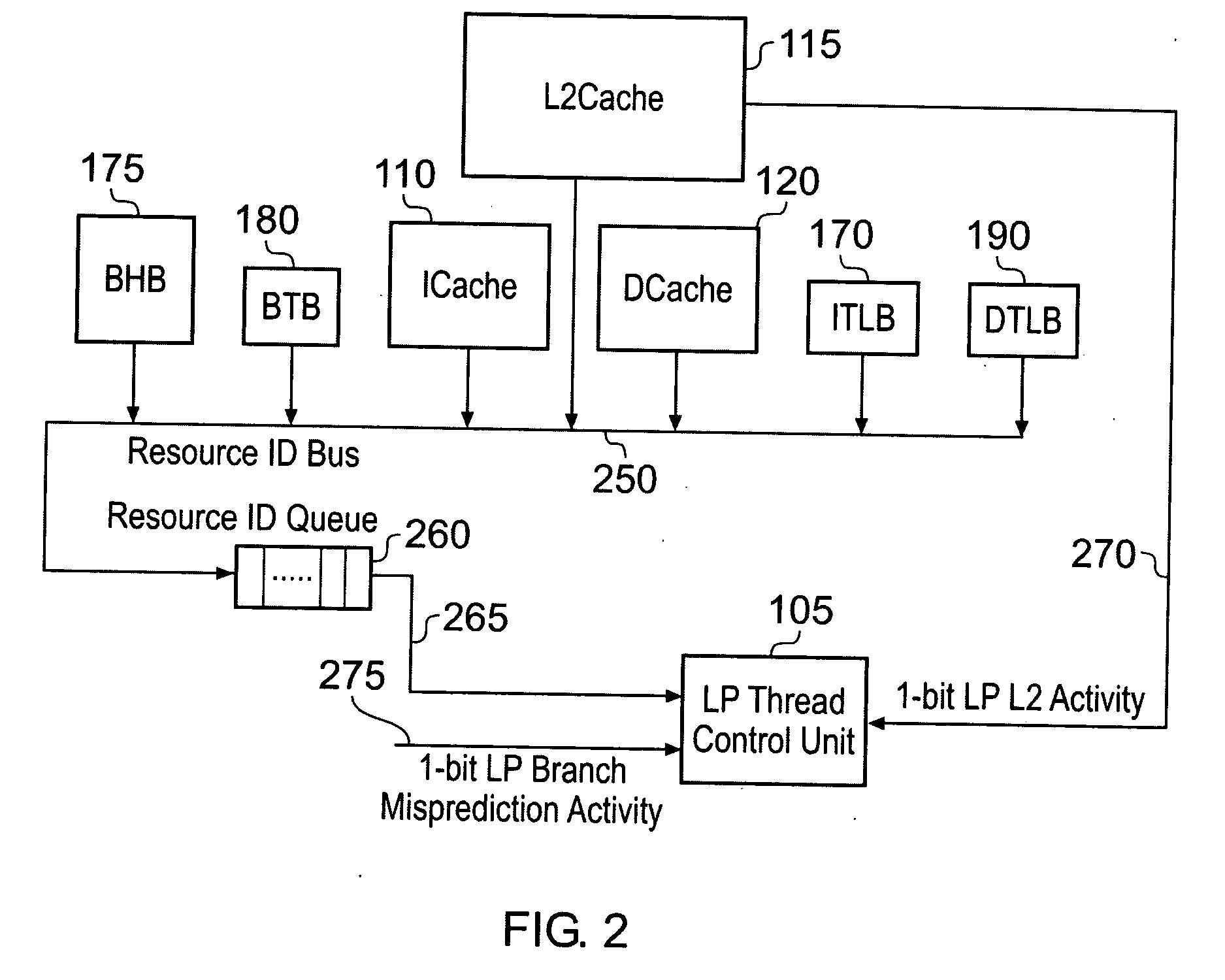

A data processing apparatus and method are provided for managing multiple program threads executed by processing circuitry. The multiple program threads include at least one high priority program thread and at least one lower priority program thread. At least one storage unit is shared between the multiple program threads and has multiple entries for storing information for reference by the processing circuitry when executing the program threads. Thread control circuitry is used to detect a condition indicating an adverse effect caused by a lower priority program thread being executed by the processing circuitry and resulting from sharing of the at least one storage unit between the multiple program threads. On detection of such a condition, the thread control circuitry issues an alert signal, and a scheduler is then responsive to the alert signal to cause execution of the lower priority program thread causing the adverse effect to be temporarily halted, for example by causing that lower priority program thread to be de-allocated and an alternative lower priority program thread allocated in its place. This has been found to provide a particularly efficient mechanism for allowing any high priority program thread to progress as much as possible, whilst at the same time improving the overall processor throughput by seeking to find co-operative lower priority program threads.

Owner:ARM LTD
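
The detect-alert-swap cycle can be sketched in Python. The "adverse effect" is modeled as a count of high-priority entries evicted from the shared storage unit by a low-priority thread, compared against a hypothetical `threshold`. The abstract leaves the exact condition open, so this choice is an assumption. The scheduler response follows the abstract's example: the offending thread is de-allocated and an alternative low-priority thread is allocated in its place.

```python
from collections import deque

class ThreadControl:
    """Flag low-priority threads whose sharing hurts high-priority threads."""

    def __init__(self, threshold, standby_threads):
        self.threshold = threshold              # hypothetical eviction budget
        self.evictions = {}                     # low-priority thread -> count
        self.standby = deque(standby_threads)   # alternative low-priority threads

    def record_eviction(self, evictor, victim_is_high_priority):
        """Count evictions of high-priority entries; return True to raise an alert."""
        if victim_is_high_priority:
            self.evictions[evictor] = self.evictions.get(evictor, 0) + 1
        return self.evictions.get(evictor, 0) >= self.threshold

    def on_alert(self, running, offender):
        """Scheduler response: de-allocate the offender and allocate a standby thread."""
        if not self.standby:
            return running                      # nothing to swap in; leave as is
        slot = running.index(offender)
        running[slot] = self.standby.popleft()
        self.standby.append(offender)           # it may be retried later
        self.evictions.pop(offender, None)      # reset its history
        return running

control = ThreadControl(threshold=2, standby_threads=["L2"])
running = ["H0", "L1"]                          # one high- and one low-priority thread
control.record_eviction("L1", victim_is_high_priority=True)
if control.record_eviction("L1", victim_is_high_priority=True):
    control.on_alert(running, "L1")
print(running)  # ['H0', 'L2']
```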

Apparatus and method for an automatic thread-partition compiler

Inactive | US20050108695A1 | Program initiation/switching; Software engineering; Parallel computing; Term memory

In some embodiments, a method and apparatus for an automatic thread-partition compiler are described. In one embodiment, the method includes transforming a sequential application program into a plurality of application program threads. Once partitioned, the application program threads are executed concurrently as respective threads of a multi-threaded architecture. The performance improvement on the parallel multi-threaded architecture comes from hiding memory access latency by overlapping memory accesses with computations or with other memory accesses. Other embodiments are described and claimed.

Owner:INTEL CORP

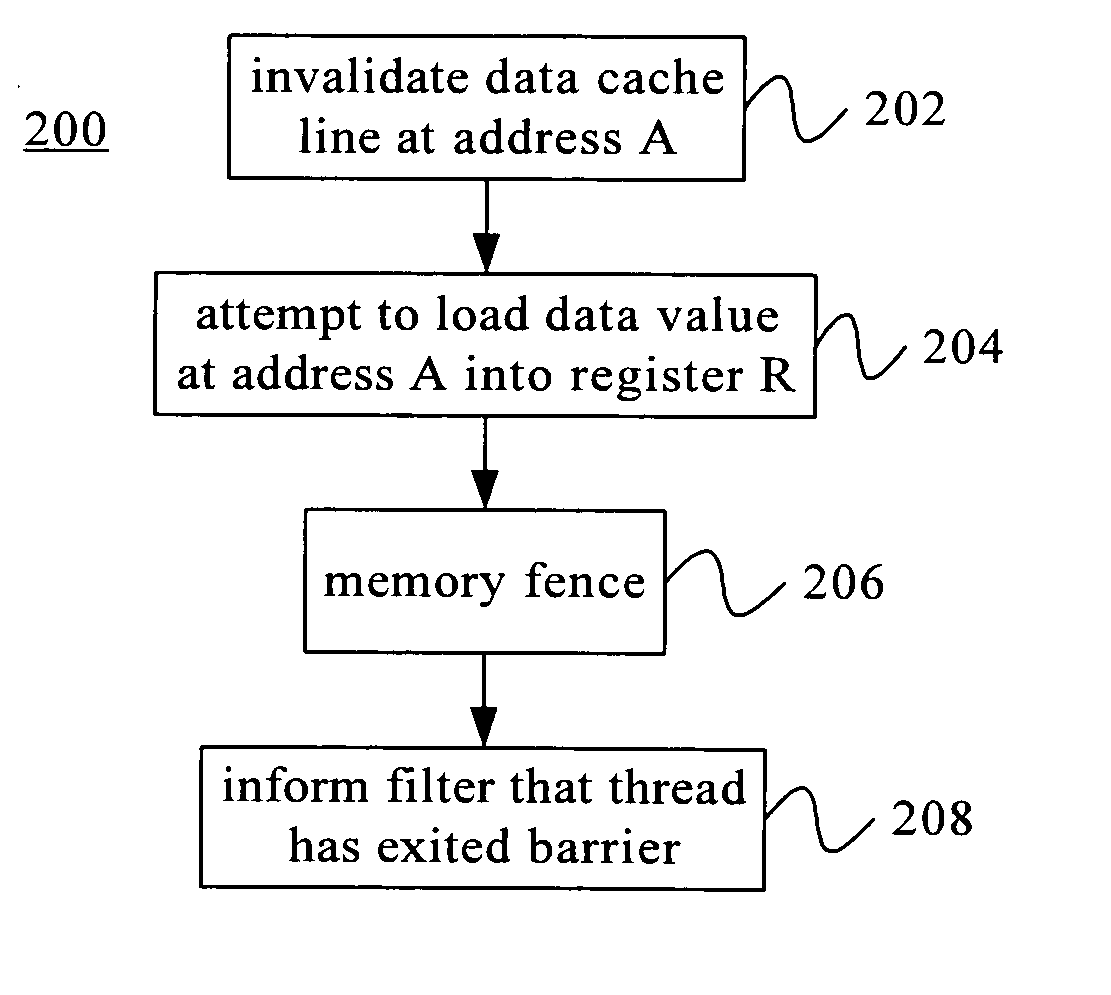

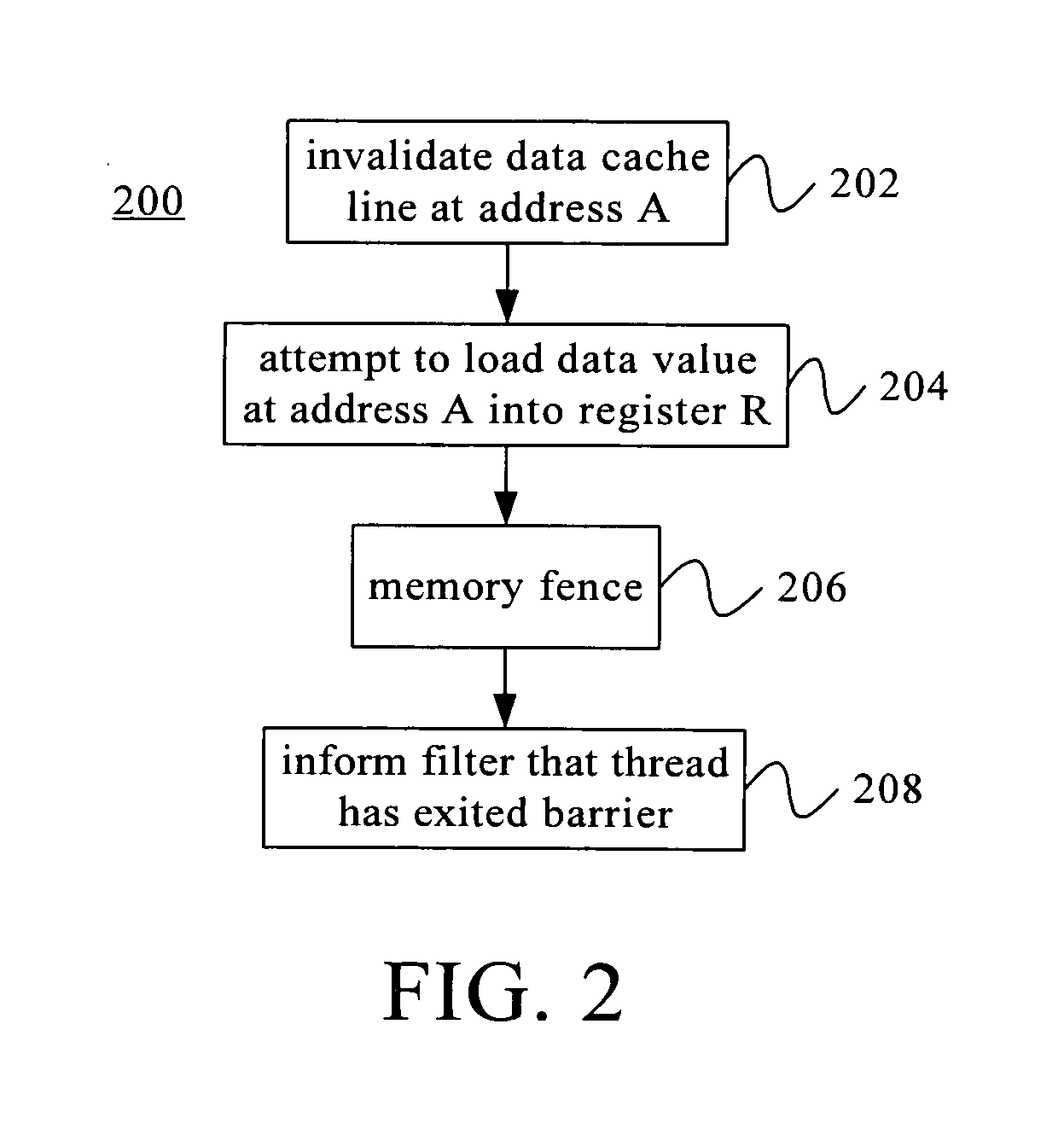

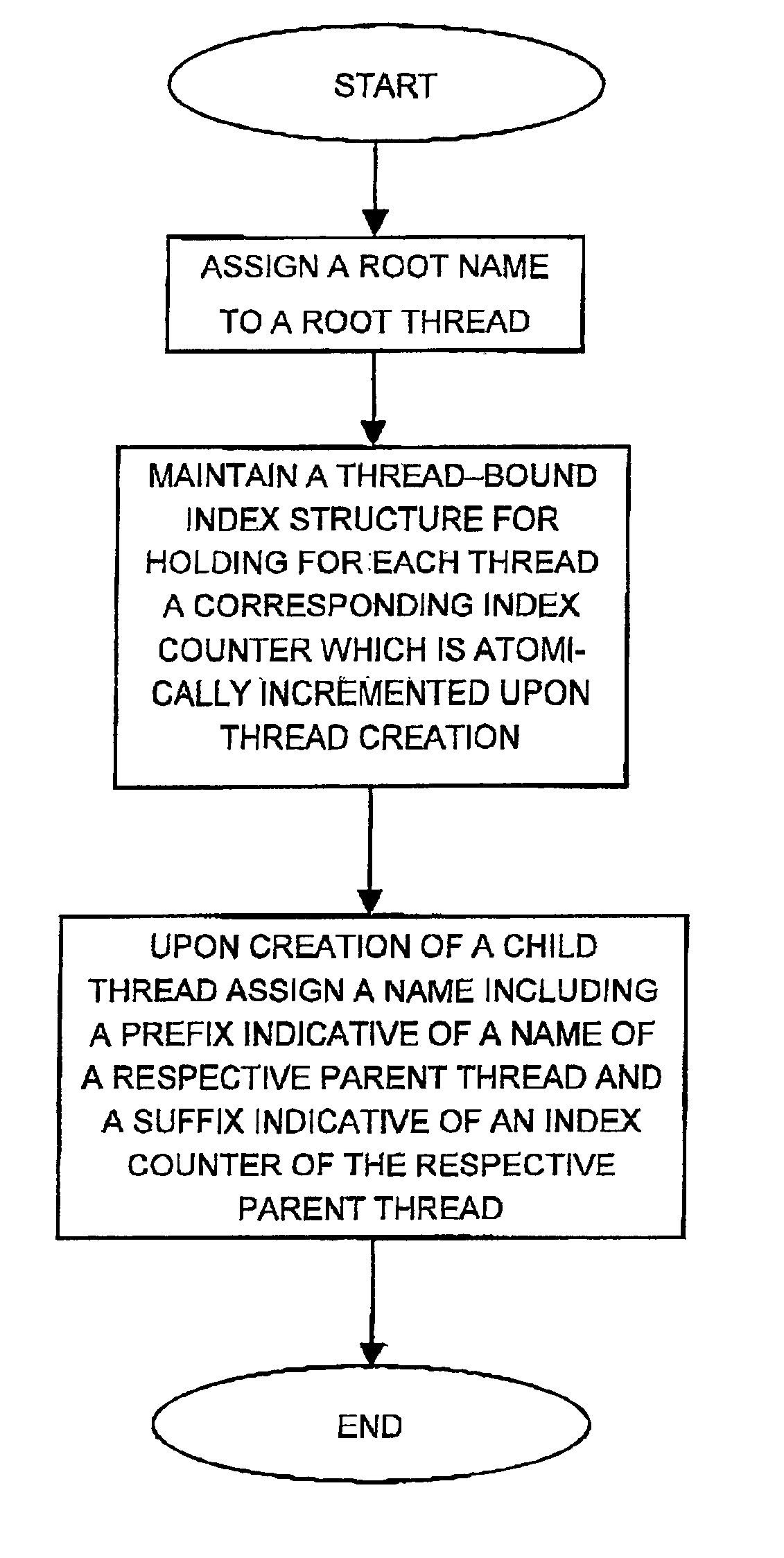

Program thread synchronization

The present invention is a method of and system for program thread synchronization. In accordance with an embodiment of the invention, a method of synchronizing program threads for one or more processors is provided. An address for data is determined for each of a plurality of program threads to be synchronized. For each processor executing one or more of the threads to be synchronized, execution of the thread is halted at a barrier when the thread attempts a data operation on the determined address and that address is unavailable. Execution of the threads is then resumed.

Owner:HEWLETT-PACKARD ENTERPRISE DEV LP +1
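
The barrier semantics can be illustrated with Python's standard `threading.Barrier`. This is a different mechanism from the patented one, which halts threads on an unavailable data address. It shows only the shared guarantee that no thread proceeds past the barrier until all participating threads have reached it.

```python
import threading

N_THREADS = 3
barrier = threading.Barrier(N_THREADS)
log = []
log_lock = threading.Lock()

def worker(tid):
    with log_lock:
        log.append(("before", tid))
    barrier.wait()                   # halt here until all N_THREADS threads arrive
    with log_lock:
        log.append(("after", tid))   # resumed only after the barrier opens

threads = [threading.Thread(target=worker, args=(i,)) for i in range(N_THREADS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print([phase for phase, _ in log])  # ['before', 'before', 'before', 'after', 'after', 'after']
```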

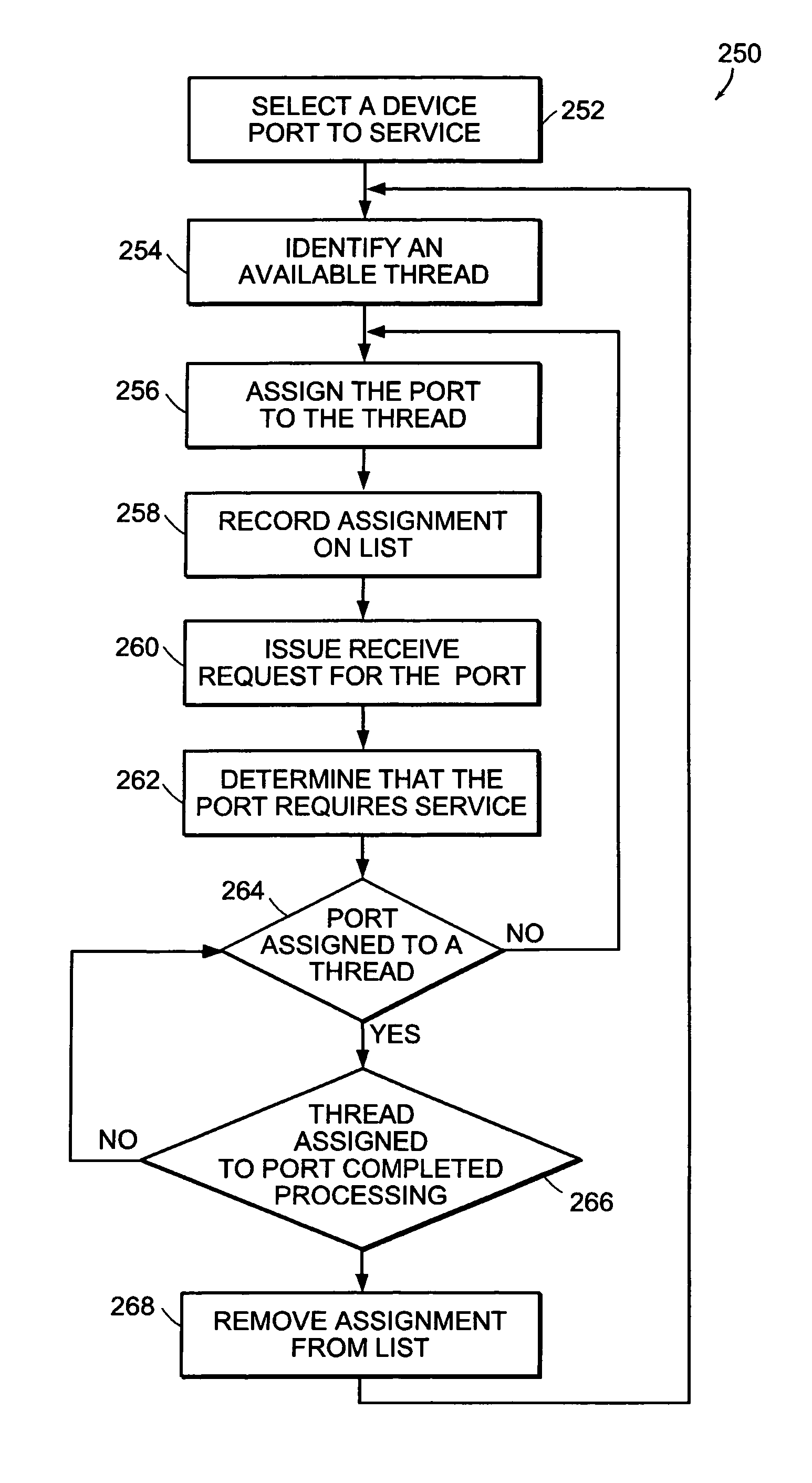

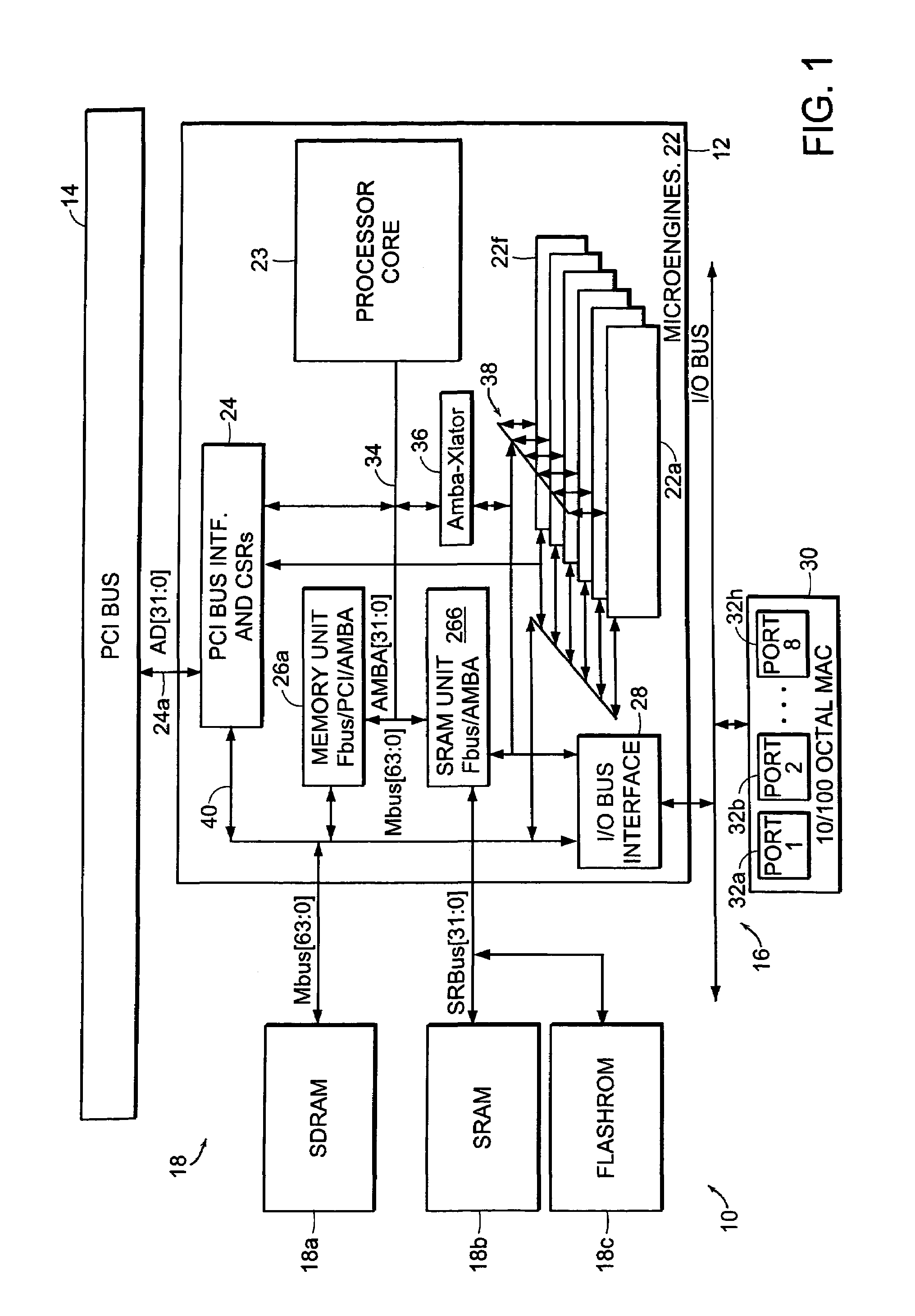

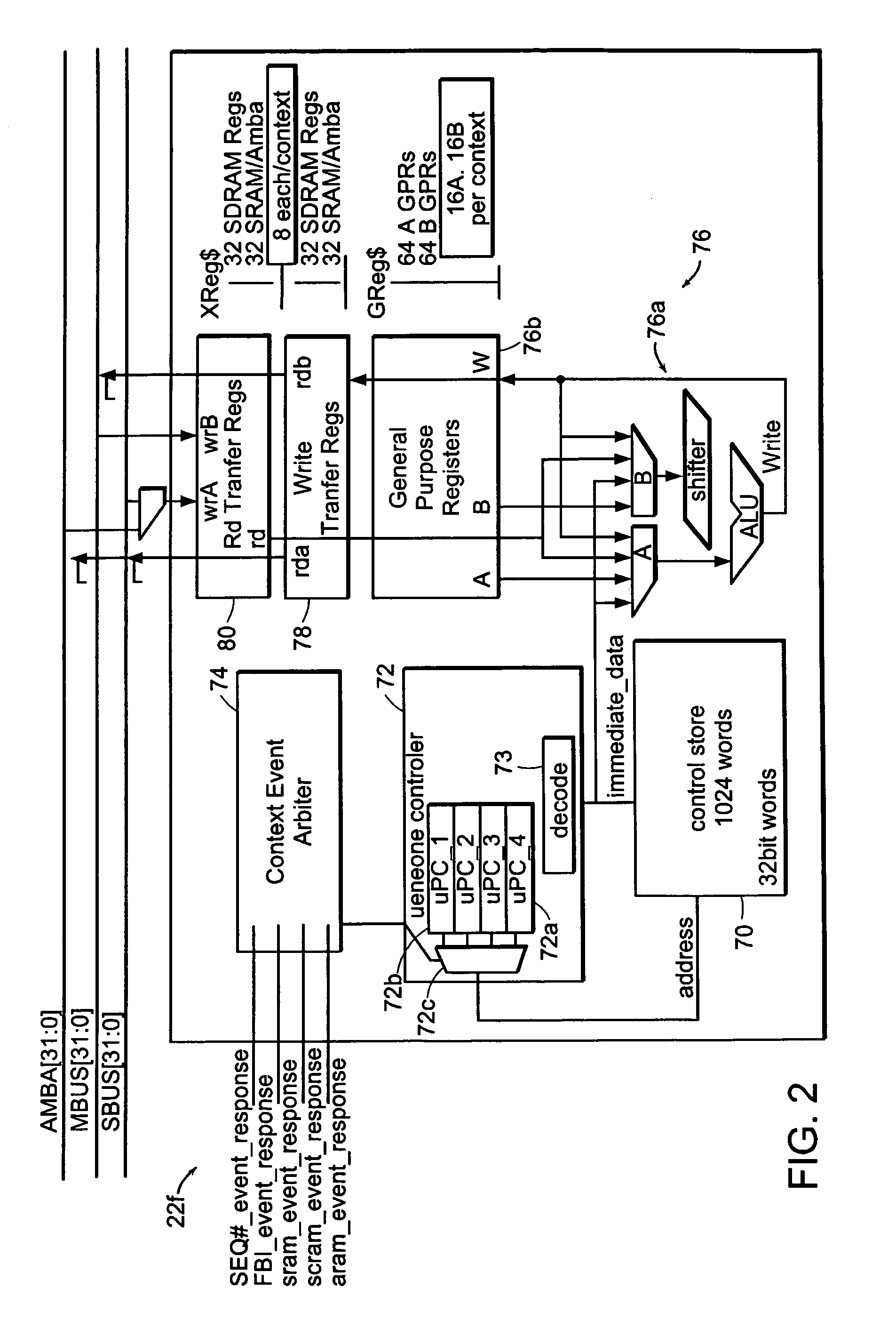

Port blocking technique for maintaining receive packet ordering for a multiple ethernet port switch

Inactive | US6976095B1 | Program initiation/switching; Multiple digital computer combinations; Processing element; Network processor

A network processor that has multiple processing elements, each supporting multiple simultaneous program threads with access to shared resources in an interface. Packet data is received from ports in segments and each segment is assigned to one of the program threads. Ordering of segments within packets, and between packets from the same port, is maintained by a scheduler program thread. The scheduler program thread blocks a new assignment of the previously assigned port to a program thread until the program thread to which the port was previously assigned has indicated that it has completed the processing of the segment from that port.

Owner:INTEL CORP
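
The port-blocking rule can be sketched as a single-threaded model of the scheduler thread. A port that already has a segment in flight, or an earlier segment still waiting, cannot receive a new assignment. This preserves per-port segment order. Worker and port names are hypothetical, and the network processor's hardware threads and shared-resource interface are not modeled.

```python
from collections import deque

class PortScheduler:
    """Assign packet segments to worker threads, one in-flight segment per port."""

    def __init__(self, workers):
        self.idle = deque(workers)
        self.busy_ports = {}          # port -> worker currently processing it
        self.pending = deque()        # (port, segment) awaiting assignment

    def receive(self, port, segment):
        self.pending.append((port, segment))

    def dispatch(self):
        """Assign as many pending segments as possible, preserving per-port order."""
        assigned, blocked, remaining = [], set(), deque()
        while self.pending:
            port, seg = self.pending.popleft()
            if port in self.busy_ports or port in blocked or not self.idle:
                blocked.add(port)     # later segments of this port must wait too
                remaining.append((port, seg))
                continue
            worker = self.idle.popleft()
            self.busy_ports[port] = worker
            assigned.append((worker, port, seg))
        self.pending = remaining
        return assigned

    def done(self, worker, port):
        """Worker signals it has finished its segment; the port is unblocked."""
        del self.busy_ports[port]
        self.idle.append(worker)

sched = PortScheduler(["t0", "t1"])
sched.receive(0, "p0s0")
sched.receive(0, "p0s1")
sched.receive(1, "p1s0")
print(sched.dispatch())  # [('t0', 0, 'p0s0'), ('t1', 1, 'p1s0')]
sched.done("t0", 0)
print(sched.dispatch())  # [('t0', 0, 'p0s1')]
```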

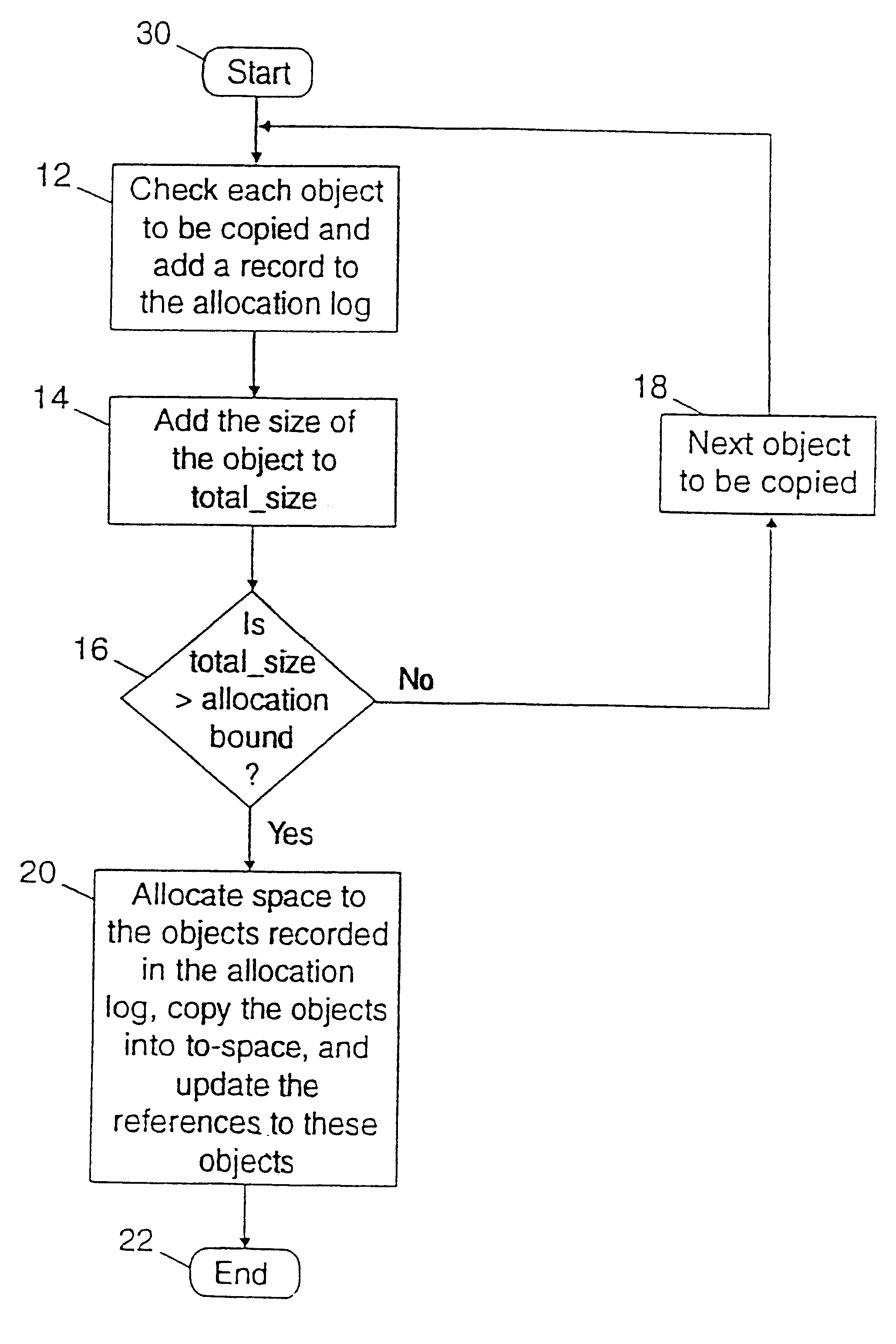

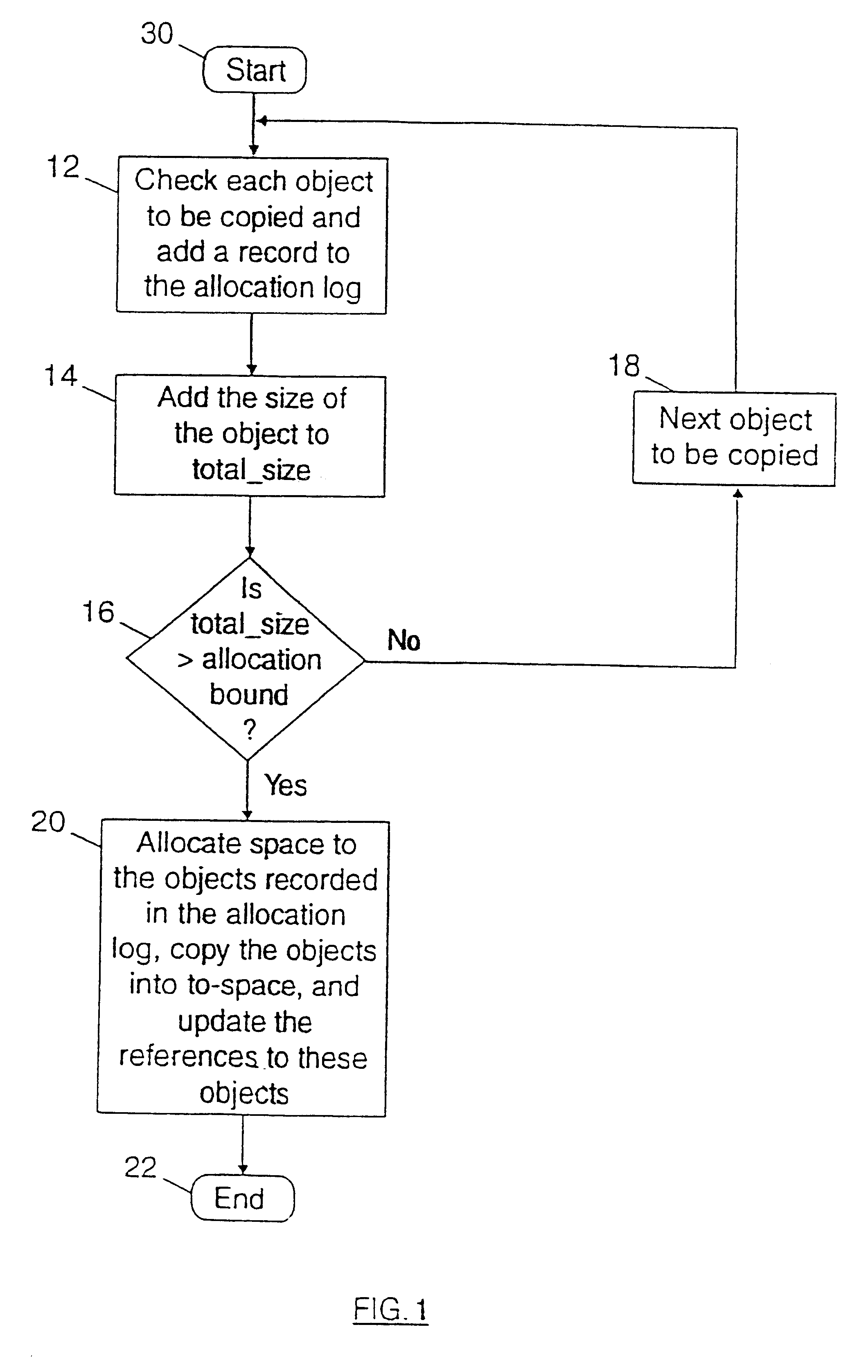

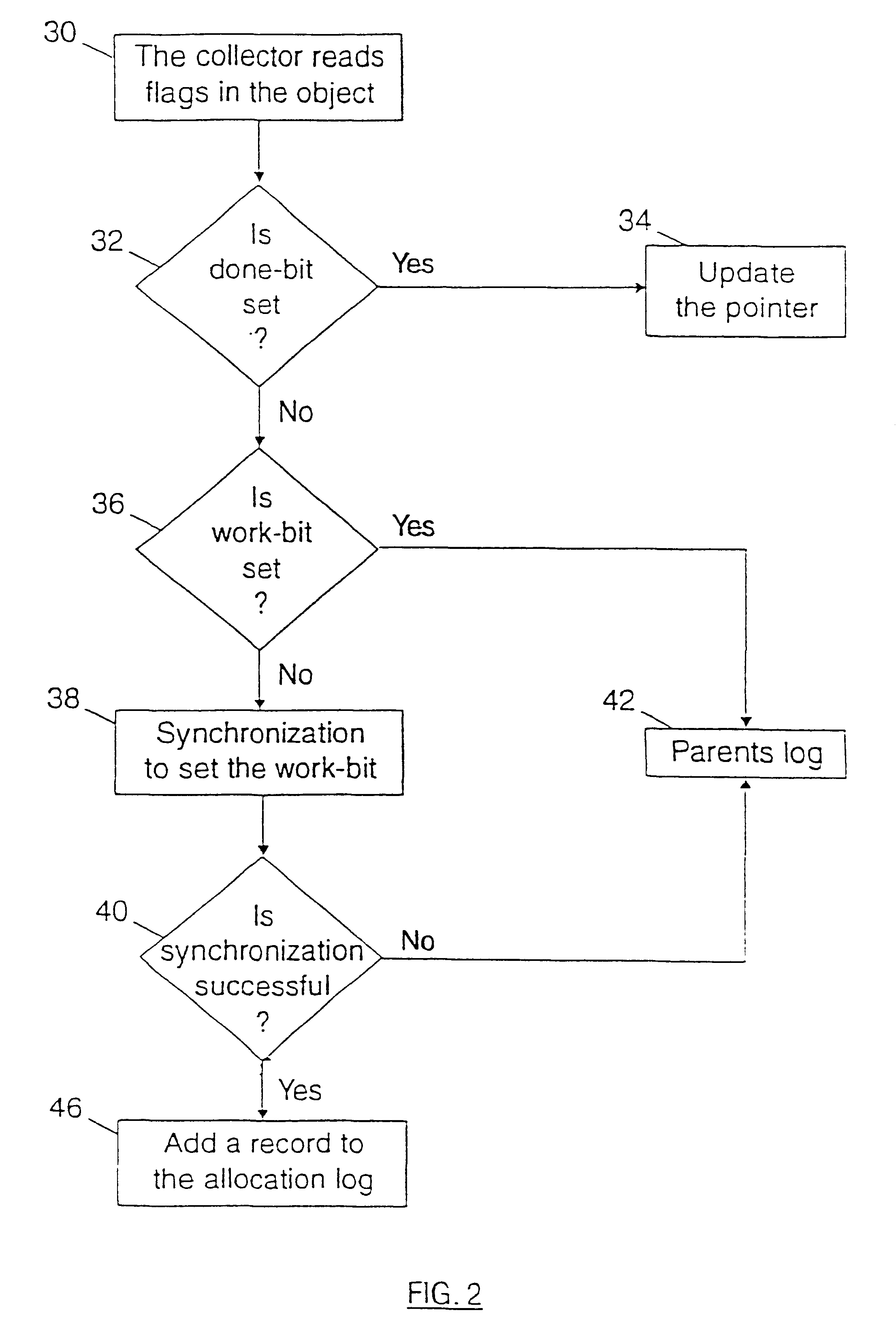

Method of delaying space allocation for parallel copying garbage collection

Inactive | US6427154B1 | Reduce competition; Remove debris; Data processing applications; Digital data processing details; Data processing system; Object copying

The present invention relates to a method of delaying space allocation for parallel copying garbage collection in a data processing system comprising a memory divided into a current area (from-space), used by at least one program thread during current program execution, and a reserve area (to-space), wherein copying garbage collection is run in parallel by several collector threads. The garbage collection consists of stopping the program threads and flipping the roles of the current area and the reserve area before copying the live objects stored in the current area into the reserve area. The method comprises the steps of: checking (12), by one collector thread, the live objects of the current area to be copied into the reserve area, the live objects being referenced by a list of pointers; storing, for each live object, a record in an allocation log, this record including at least the address of the object and its size; adding (14) the object size to total_size, the accumulated size of all checked objects for which a record has been stored in the allocation log; and copying (20) all the checked objects into the reserve area when total_size reaches a predetermined allocation bound.

Owner:LINKEDIN
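
The allocation-log steps can be sketched in Python for one collector thread. `ReserveArea` is a hypothetical bump-pointer allocator shared by all collector threads. The sketch returns (from, to) address pairs instead of copying bytes. Batching means the shared lock is taken once per batch rather than once per object. Objects still in the log when checking ends would need a final `flush()`.

```python
import threading

class ReserveArea:
    """Hypothetical to-space bump allocator shared by all collector threads."""

    def __init__(self, start):
        self.top = start
        self.lock = threading.Lock()

    def allocate(self, nbytes):
        with self.lock:               # the contended step that batching amortizes
            base = self.top
            self.top += nbytes
            return base

class DelayedAllocator:
    """Per-collector allocation log with a predetermined allocation bound."""

    def __init__(self, bound, reserve):
        self.bound = bound
        self.reserve = reserve
        self.log = []                 # allocation log: (address, size) records
        self.total_size = 0

    def check(self, address, size):
        """Record a live object; copy the batch once total_size reaches the bound."""
        self.log.append((address, size))
        self.total_size += size
        return self.flush() if self.total_size >= self.bound else []

    def flush(self):
        base = self.reserve.allocate(self.total_size)   # one allocation per batch
        moves, offset = [], 0
        for address, size in self.log:
            moves.append((address, base + offset))      # (from-space, to-space)
            offset += size
        self.log.clear()
        self.total_size = 0
        return moves

reserve = ReserveArea(start=1000)
collector = DelayedAllocator(bound=64, reserve=reserve)
collector.check(0x10, 16)
collector.check(0x40, 32)
print(collector.check(0x90, 24))  # [(16, 1000), (64, 1016), (144, 1048)]
```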

Cache miss detection in a data processing apparatus

Active | US8099556B2 | Significant performance improvement; Reduce in quantity; Memory addressing/allocation/relocation; Multiprogramming arrangements; Program Thread; Data value

A data processing apparatus and method are provided for detecting cache misses. The data processing apparatus has processing logic for executing a plurality of program threads, and a cache for storing data values for access by the processing logic. When access to a data value is required while executing a first program thread, the processing logic issues an access request specifying an address in memory associated with that data value, and the cache is responsive to the address to perform a lookup procedure to determine whether the data value is stored in the cache. Indication logic is provided which, in response to an address portion of the address, provides an indication as to whether the data value is stored in the cache; this indication is produced before a result of the lookup procedure is available, and the indication logic only issues an indication that the data value is not stored in the cache if that indication is guaranteed to be correct. Control logic is then provided which, if the indication shows that the data value is not stored in the cache, uses that indication to control a process having an effect on a program thread other than the first program thread.

Owner:ARM LTD

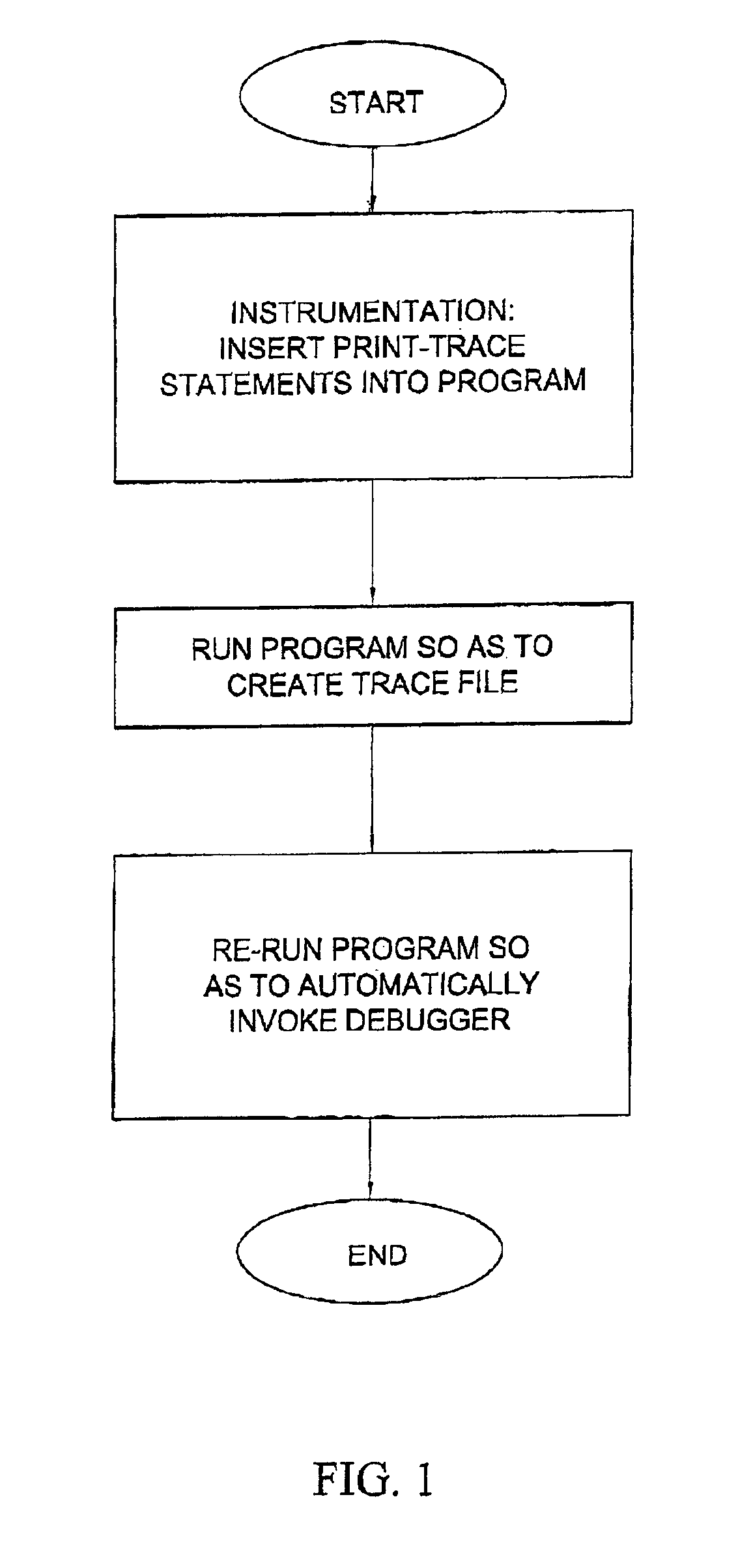

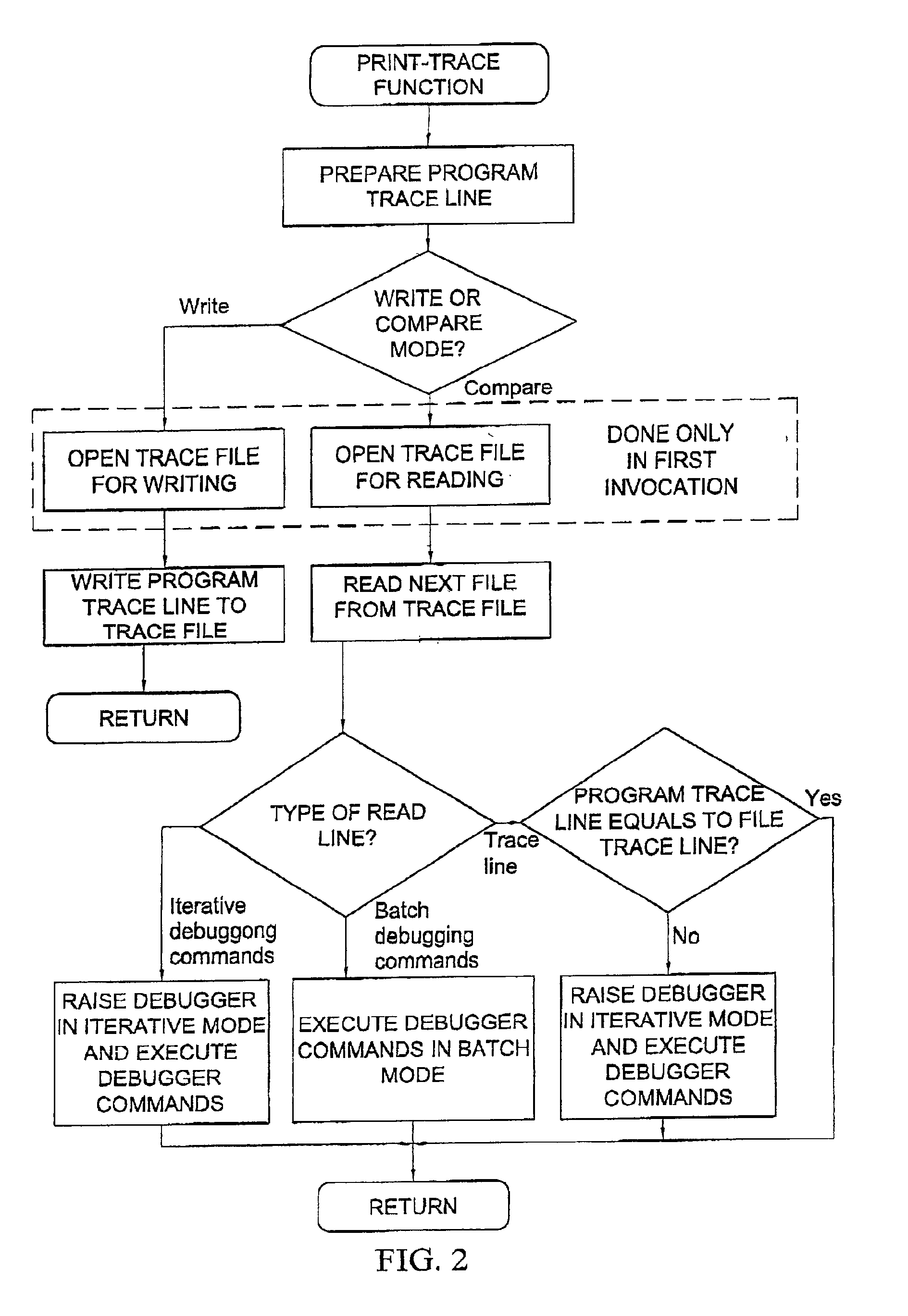

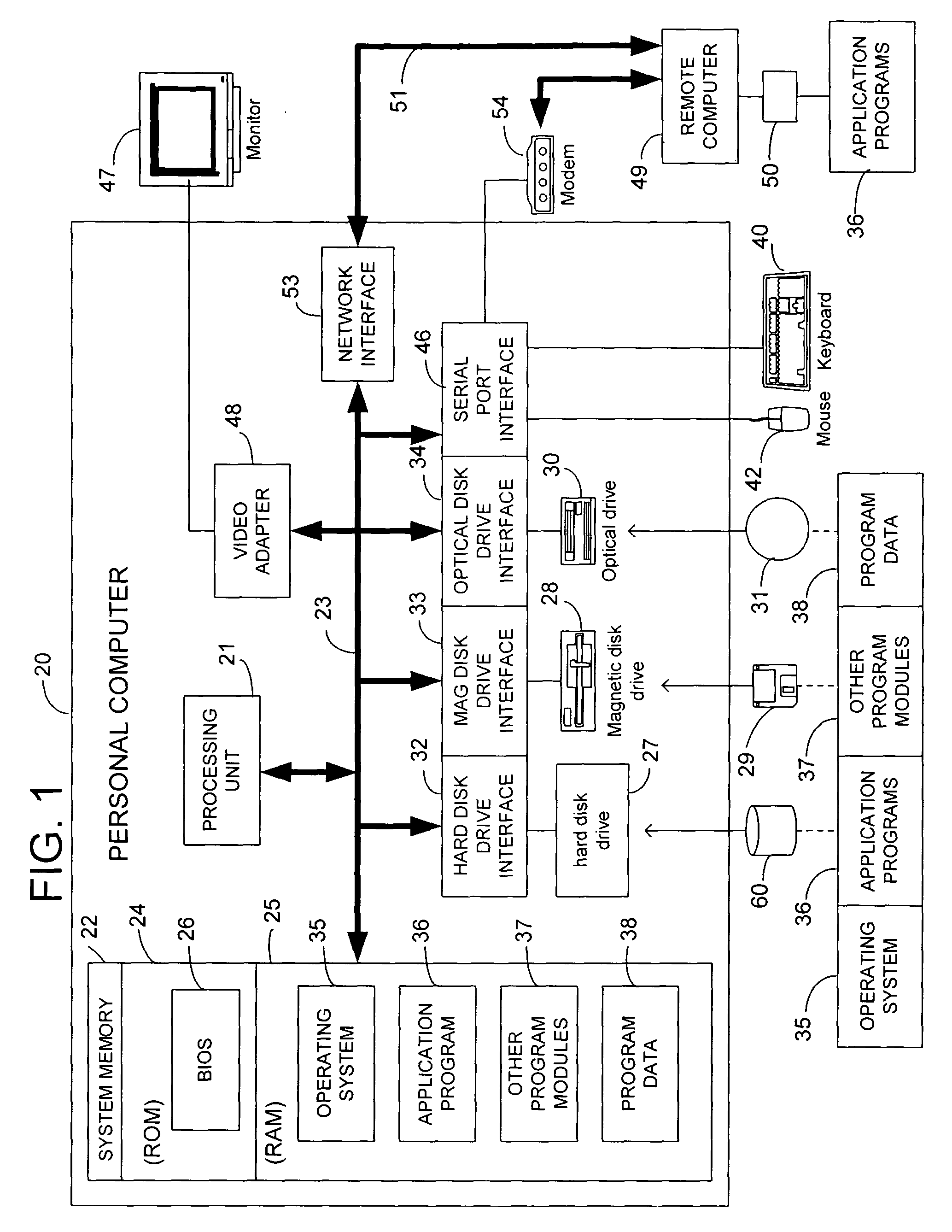

Computer-implemented method and system for automatically invoking a predetermined debugger command at a desired location of a single thread of a program

Inactive | US6978444B1 | Difficult to implement; Compromise integrity; Error detection/correction; Specific program execution arrangements; Trace file; Program Thread

A computer-implemented method and system for automatically invoking a predetermined debugger command at a desired location of a single thread of a program containing at least one thread. At the desired location of the program thread, there is embedded a utility which reads a trace file in which the predetermined debugger command has been previously embedded. Upon re-running the program, the trace file is read and upon reaching the predetermined debugger command, the debugger attaches itself to the running process and executes the process from its current program counter. The debugger is invoked only if there is a discrepancy between successive runs of the program.

Owner:IBM CORP

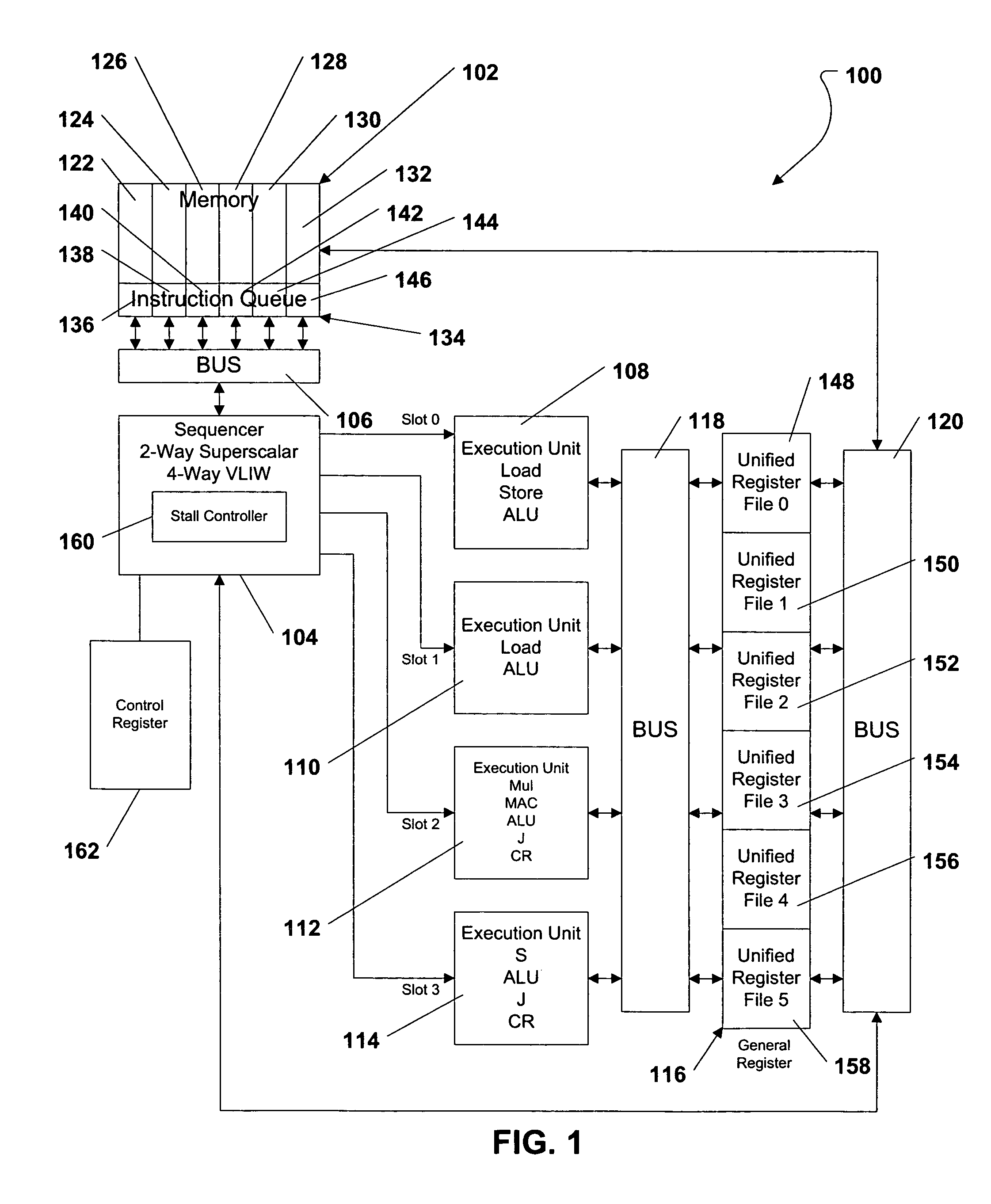

Instruction issue control within a multi-threaded in-order superscalar processor

Active | US20080270749A1 | Performance constrained; Reduce hardware overhead; General purpose stored program computer; Memory systems; Scalar processor; Program Thread

A multi-threaded in-order superscalar processor 2 is described having a fetch stage 8 within which thread interleaving circuitry 36 interleaves instructions taken from different program threads to form an interleaved stream of instructions, which is then decoded and subject to issue. Hint generation circuitry 62 within the fetch stage 8 adds hint data to the threads indicating that parallel issue of an associated instruction is permitted with one or more other instructions.

Owner:ARM LTD

Cycle count replication in a simultaneous and redundantly threaded processor

Inactive | US6854051B2 | Improve performance; Providing fault tolerance; Interprogram communication; Digital computer details; Program Thread; Storage cell

A pipelined, simultaneous and redundantly threaded (“SRT”) processor comprising, among other components, load/store units configured to perform load and store operations to or from data locations such as a data cache and data registers, and a cycle counter configured to keep a running count of processor clock cycles. The processor is configured to detect transient faults during program execution by executing instructions in at least two redundant copies of a program thread, wherein false errors caused by incorrectly replicating cycle count values in the redundant program threads are avoided by implementing a cycle count queue for storing the actual values fetched by read cycle count instructions in the first program thread. The load/store units then access the cycle count queue, and not the cycle counter, to fetch cycle count values in response to read cycle count instructions in the second program thread.

Owner:SONRAI MEMORY LTD
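
The cycle count queue can be sketched in Python. The leading thread reads the real counter and records each value it observes. The trailing thread replays those values from the queue instead of re-reading the counter, which would already have advanced and would produce a false mismatch. The class name and method names are hypothetical.

```python
from collections import deque

class SRTCycleCount:
    """Leading thread reads the real counter; trailing thread replays the values."""

    def __init__(self):
        self.cycle_counter = 0
        self.queue = deque()          # the cycle count queue

    def tick(self, n=1):
        self.cycle_counter += n       # processor clock advances

    def read_leading(self):
        value = self.cycle_counter
        self.queue.append(value)      # record the value actually observed
        return value

    def read_trailing(self):
        return self.queue.popleft()   # replay instead of re-reading the counter

cc = SRTCycleCount()
cc.tick(10)
lead = [cc.read_leading()]
cc.tick(5)
lead.append(cc.read_leading())
cc.tick(7)                            # trailing copy runs later
trail = [cc.read_trailing(), cc.read_trailing()]
print(lead, trail, cc.cycle_counter)  # [10, 15] [10, 15] 22
```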

Multithreaded lock management

Apparatus, systems, and methods may operate to construct a memory barrier to protect a thread-specific use counter by serializing parallel instruction execution. If a reader thread is new and a writer thread is not waiting to access data to be read by the reader thread, the thread-specific use counter is created and associated with a read data structure and a write data structure. The thread-specific use counter may be incremented if a writer thread is not waiting. If the writer thread is waiting to access the data after the thread-specific use counter is created, then the thread-specific use counter is decremented without accessing the data by the reader thread. Otherwise, the data is accessed by the reader thread and then the thread-specific use counter is decremented. Additional apparatus, systems, and methods are disclosed.

Owner:MICRO FOCUS SOFTWARE INC

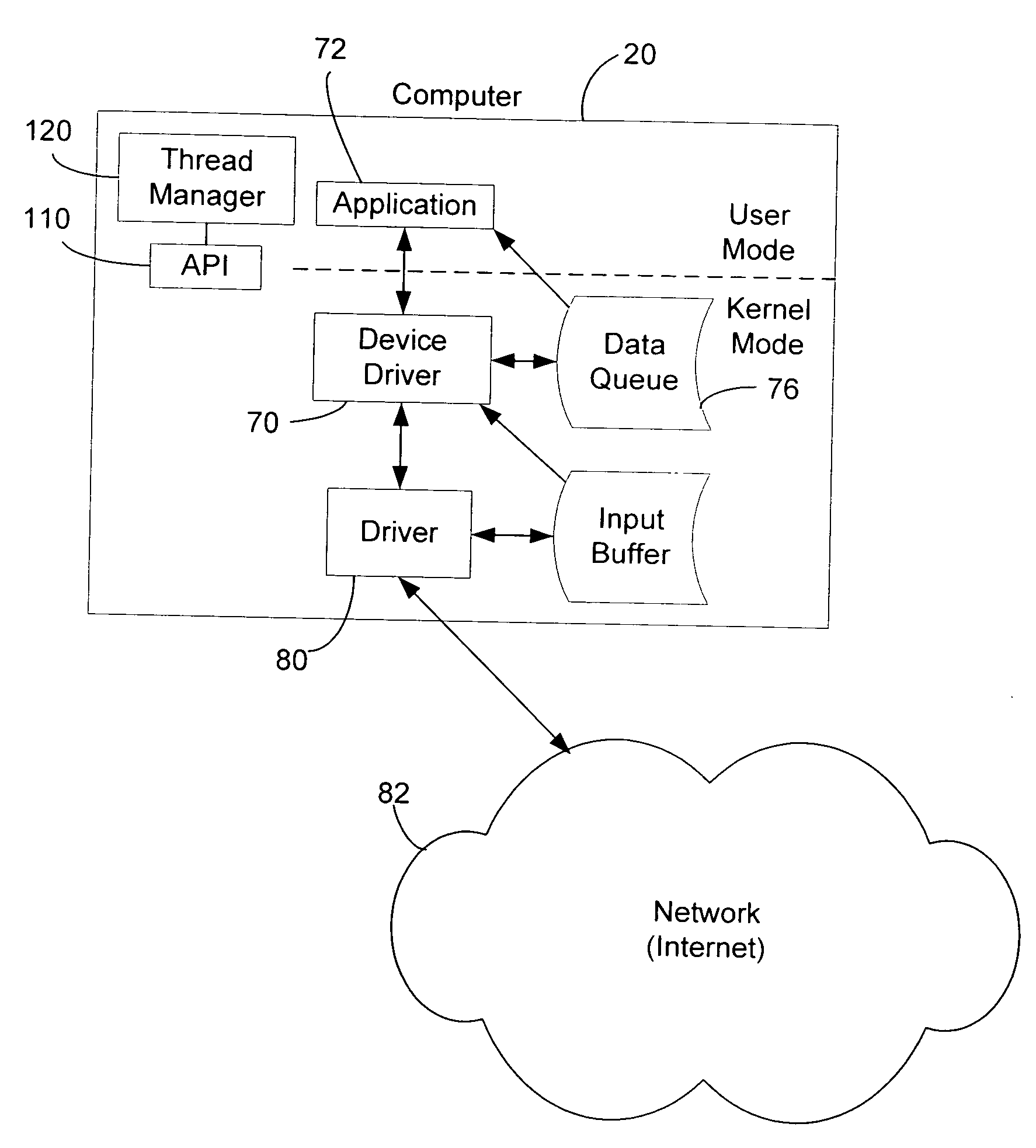

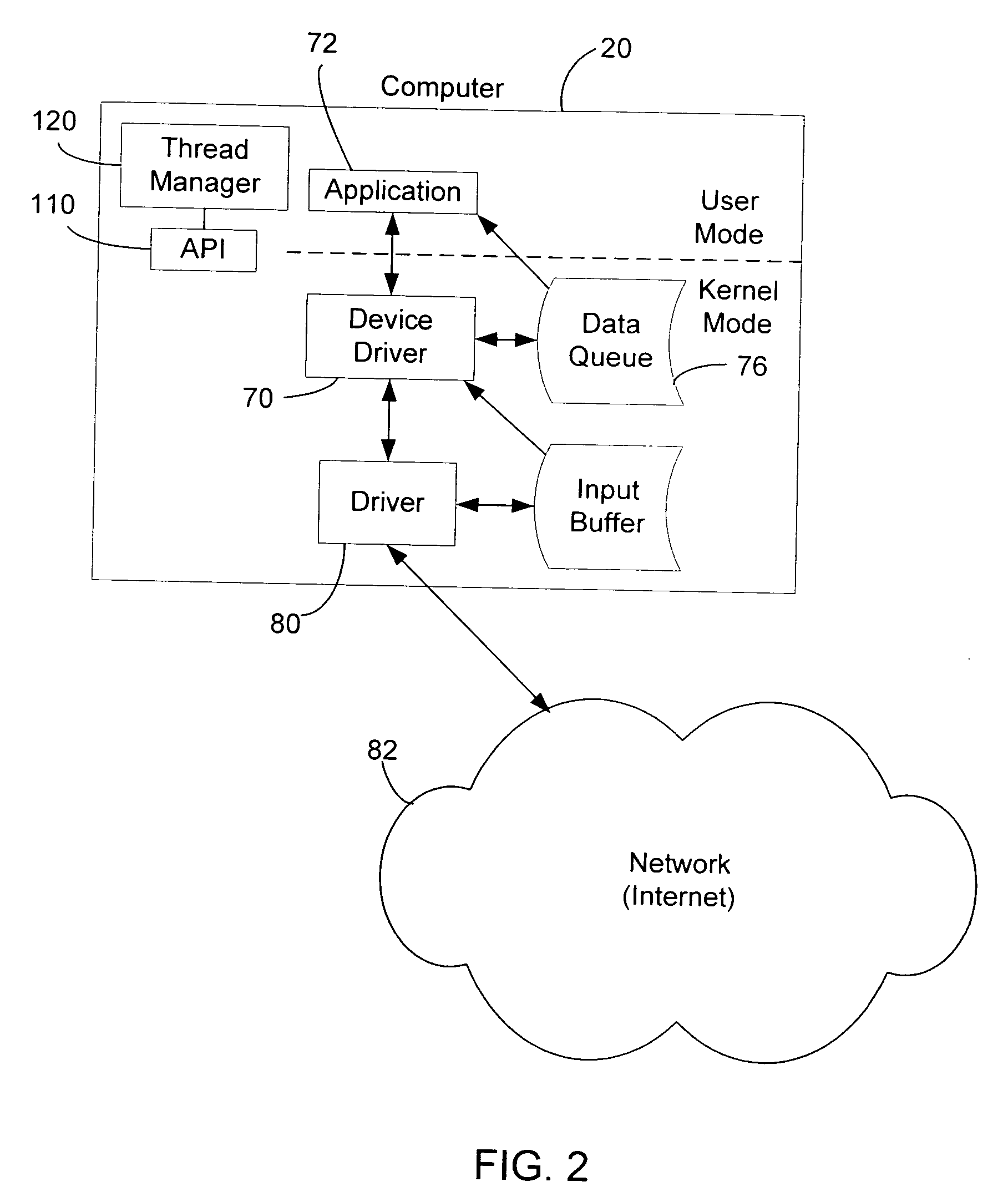

System and method for increasing data throughput using thread scheduling

Inactive | US20050050552A1 | Easy to use; Raise the possibility; Program initiation/switching; Next instruction address formation; Thread scheduling; Application software

A system and method for delivering data from a device driver to an application increases the usage of synchronous processing (fast I/O mode) of data requests from the application by utilizing thread scheduling to enhance the likelihood that the requested data are available for immediate delivery to the application. If the amount of data in a data queue for storing data ready for delivery is low, the thread scheduling of the system is modified to promote the thread of the device driver to give it the opportunity to place more data in the data queue for consumption by the application. The promotion of the thread of the device driver may be done by switching from the application thread to another thread (not necessarily the device driver thread), boosting the device driver's priority, and/or lowering the priority of the application.

Owner:MICROSOFT TECH LICENSING LLC

Synchronisation

Active | US20090013323A1 | Reduce sync delay; Increase synchronisation code density; Multiprogramming arrangements; Memory systems; Parallel computing; Execution unit

The invention provides a processor comprising an execution unit arranged to execute multiple program threads, each thread comprising a sequence of instructions, and a plurality of synchronisers for synchronising threads. Each synchroniser is operable, in response to execution by the execution unit of one or more synchroniser association instructions, to associate with a group of at least two threads. Each synchroniser is also operable, when thus associated, to synchronise the threads of the group by pausing execution of a thread in the group pending a synchronisation point in another thread of that group.

Owner:XMOS

© 2025 PatSnap. All rights reserved.