74 results about "Superscalar" patented technology

A superscalar processor is a CPU that implements a form of parallelism called instruction-level parallelism within a single processor. In contrast to a scalar processor that can execute at most one single instruction per clock cycle, a superscalar processor can execute more than one instruction during a clock cycle by simultaneously dispatching multiple instructions to different execution units on the processor. It therefore allows for more throughput (the number of instructions that can be executed in a unit of time) than would otherwise be possible at a given clock rate. Each execution unit is not a separate processor (or a core if the processor is a multi-core processor), but an execution resource within a single CPU such as an arithmetic logic unit.

Cycle segmented prefix circuits

Inactive | US6609189B1 | Improve performance | Avoid performance | Computation using non-contact making devices | General purpose stored program computer | Extensibility | Scalar processor

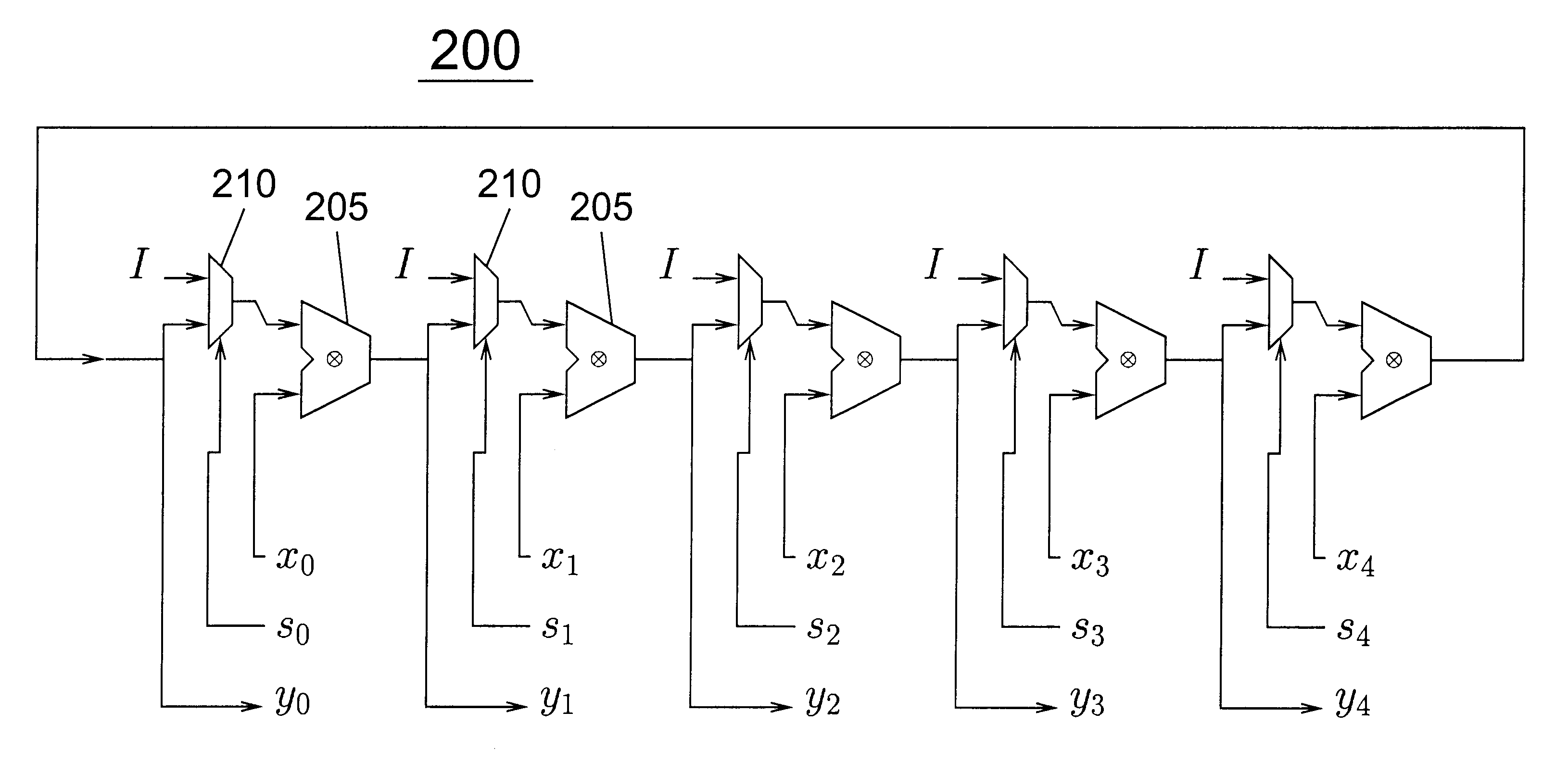

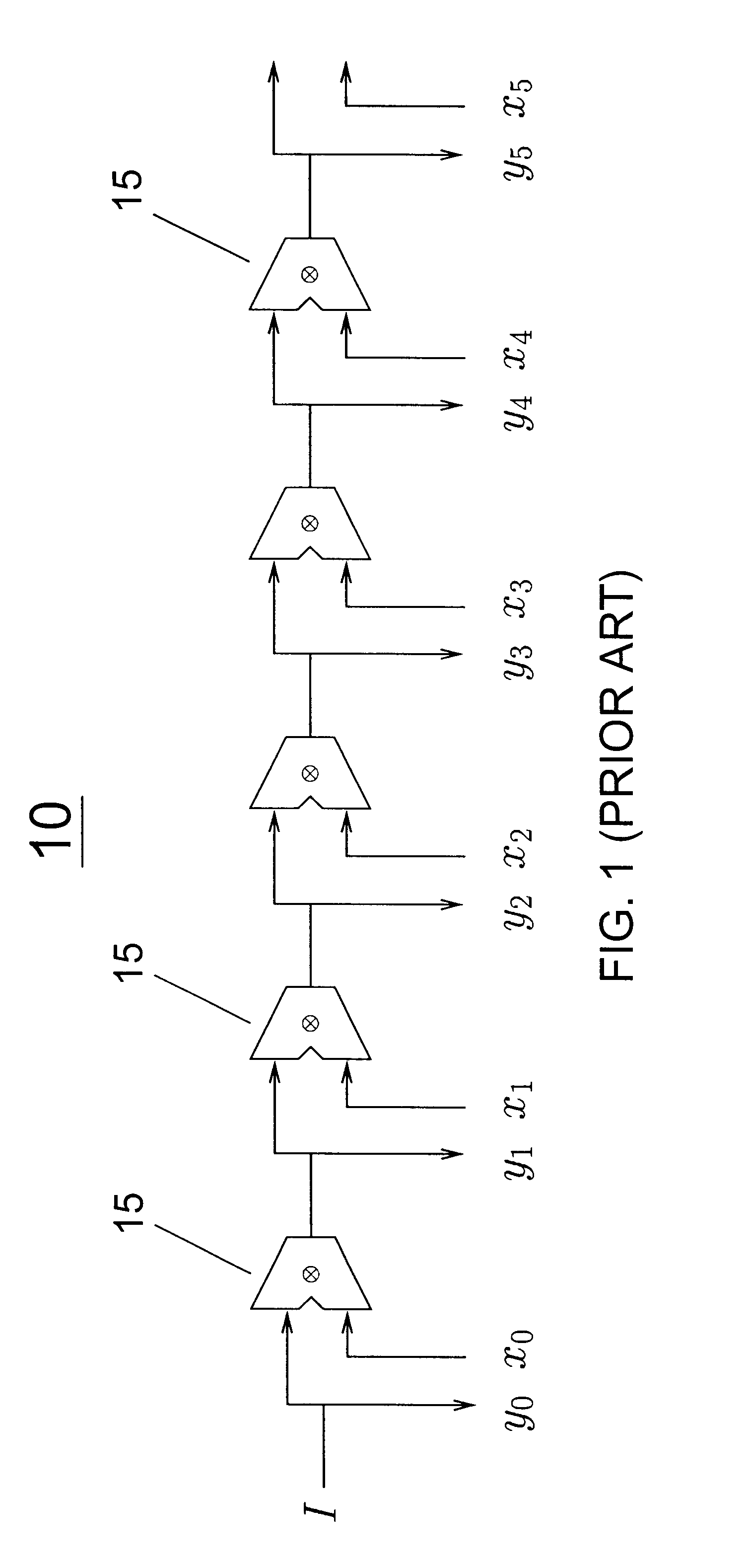

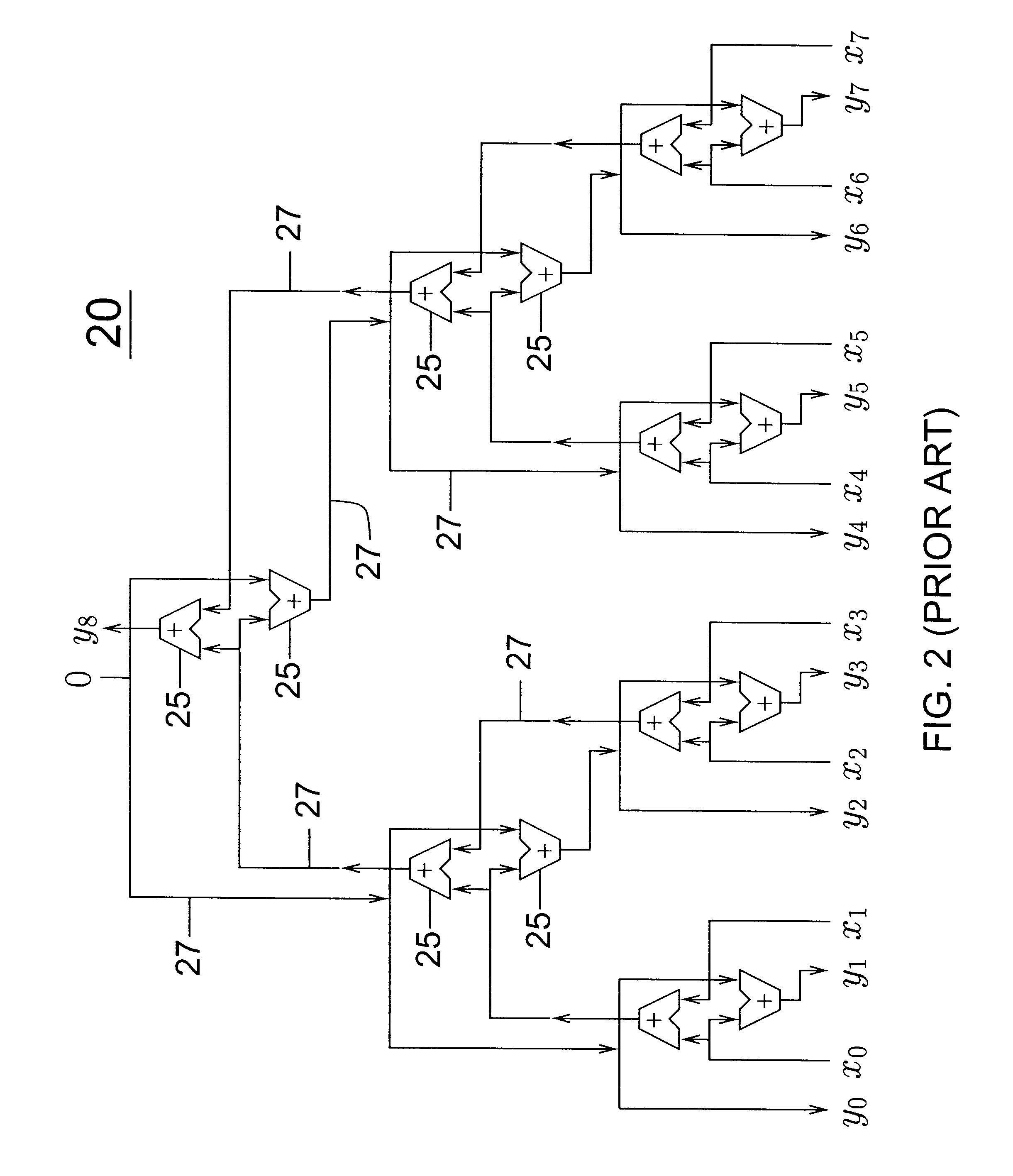

The poor scalability of existing superscalar processors has been of great concern to the computer engineering community. In particular, the critical-path delays of many components in existing implementations grow quadratically with the issue width and the window size. This patent presents a novel way to reimplement these components and reduce their critical-path delay growth. It then describes an entire processor microarchitecture, called the Ultrascalar processor, that has better critical-path delay growth than existing superscalars. Most of our scalable designs are based on a single circuit, a cyclic segmented parallel prefix (cspp). We observe that processor components typically operate on a wrap-around sequence of instructions, computing some associative property of that sequence. For example, to assign an ALU to the oldest requesting instruction, each instruction in the instruction sequence must be told whether any preceding instructions are requesting an ALU. Similarly, to read an argument register, an instruction must somehow communicate with the most recent preceding instruction that wrote that register. A cspp circuit can implement such functions by computing for each instruction within a wrap-around instruction sequence the accumulative result of applying some associative operator to all the preceding instructions. A cspp circuit has a critical path gate delay logarithmic in the length of the instruction sequence. Depending on its associative operation and its layout, a cspp circuit can have a critical path wire delay sublinear in the length of the instruction sequence.

Owner:YALE UNIV
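
The ALU-assignment example in the abstract above can be modelled in software. The following is a minimal sketch, not the patented circuit: it computes the same exclusive wrap-around prefix sequentially, in linear time, whereas the abstract describes a circuit with logarithmic gate delay. The names `cyclic_segmented_prefix` and `grant_oldest_requester` are hypothetical, and the sketch assumes the oldest instruction is marked by a segment-start bit.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def cyclic_segmented_prefix(
    values: List[T],
    segment_start: List[bool],
    op: Callable[[T, T], T],
    identity: T,
) -> List[T]:
    """Sequential model of an exclusive prefix over a wrap-around sequence.

    out[i] combines, with the associative operator `op`, the values of all
    slots from the nearest segment start at or before i (wrapping around)
    up to, but not including, slot i.
    """
    n = len(values)
    if n != len(segment_start) or True not in segment_start:
        raise ValueError("need equal lengths and at least one segment start")
    out = [identity] * n
    first = segment_start.index(True)
    acc = identity
    for k in range(n):
        i = (first + k) % n          # walk the ring starting at a segment start
        if segment_start[i]:
            acc = identity           # nothing precedes the start of a segment
        out[i] = acc
        acc = op(acc, values[i])
    return out


def grant_oldest_requester(requesting: List[bool], oldest: int) -> List[bool]:
    """Grant the ALU to the oldest requesting instruction (hypothetical helper)."""
    starts = [i == oldest for i in range(len(requesting))]
    earlier_request = cyclic_segmented_prefix(
        requesting, starts, lambda a, b: a or b, False
    )
    # An instruction wins only if it requests and no older instruction does.
    return [req and not earlier for req, earlier in zip(requesting, earlier_request)]
```

With requests in slots 0, 2 and 3 and the oldest instruction in slot 2, only slot 2 is granted, because slots 3 and 0 each see an older request once the sequence wraps.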

Fast just-in-time (JIT) scheduler

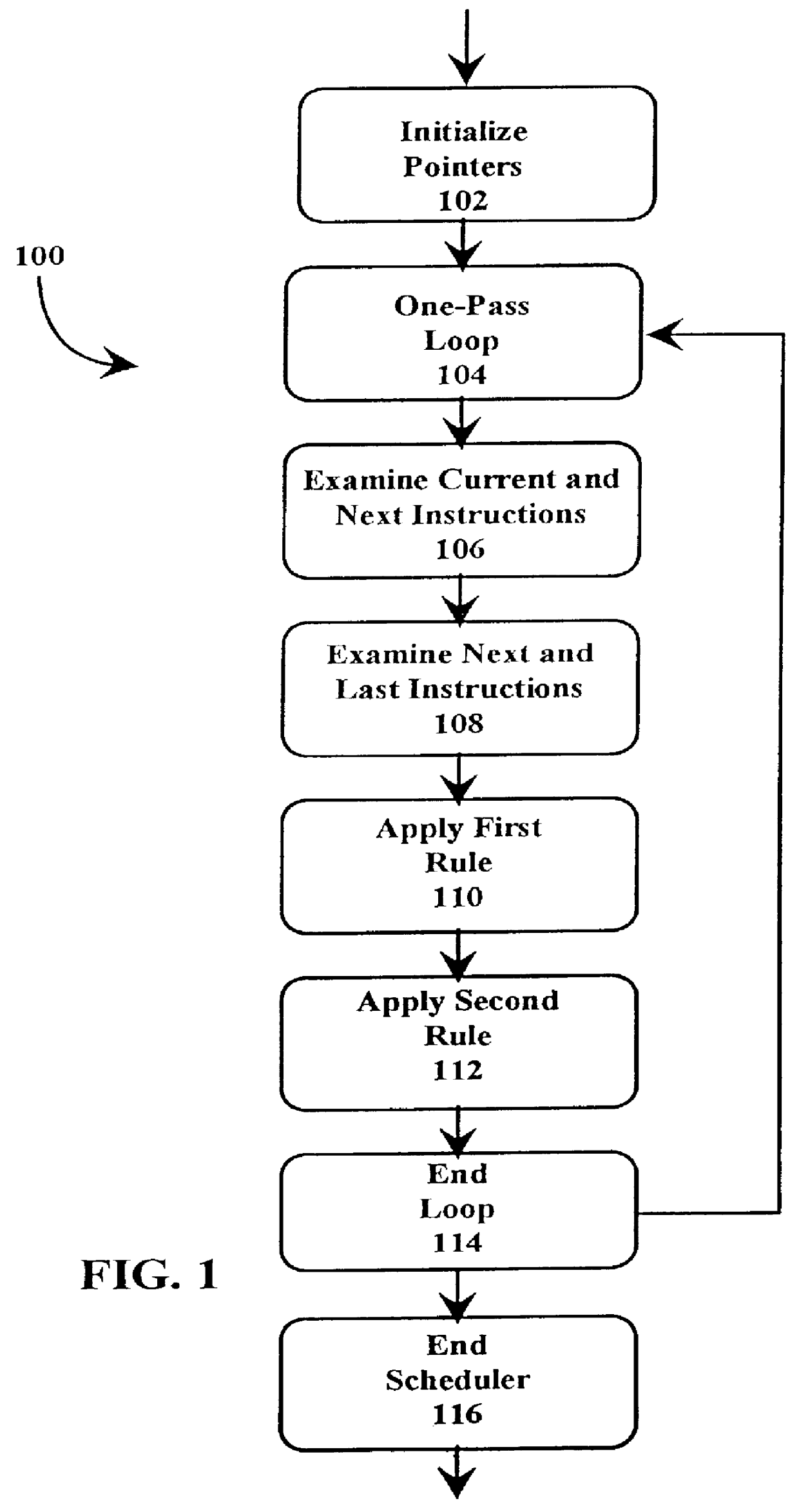

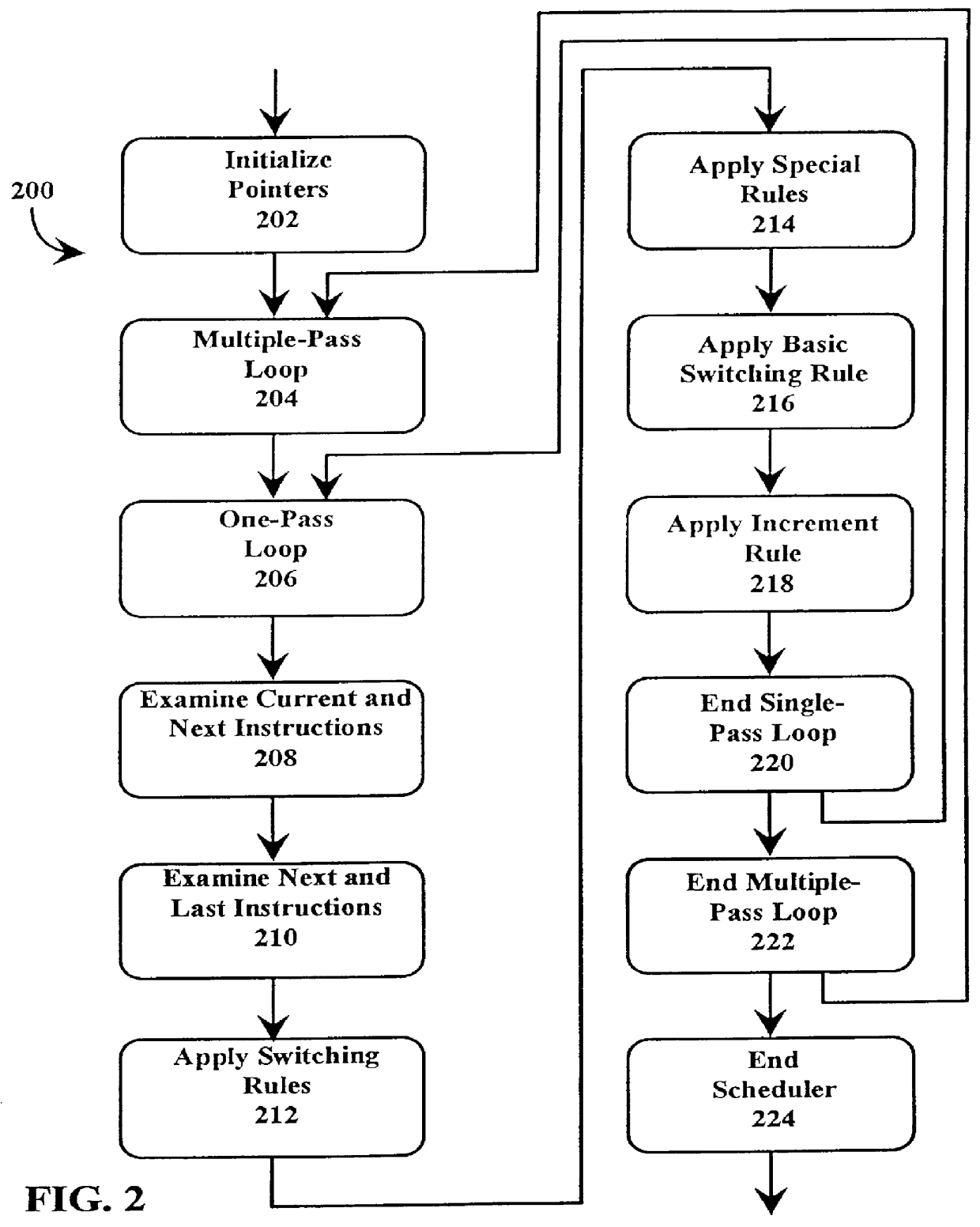

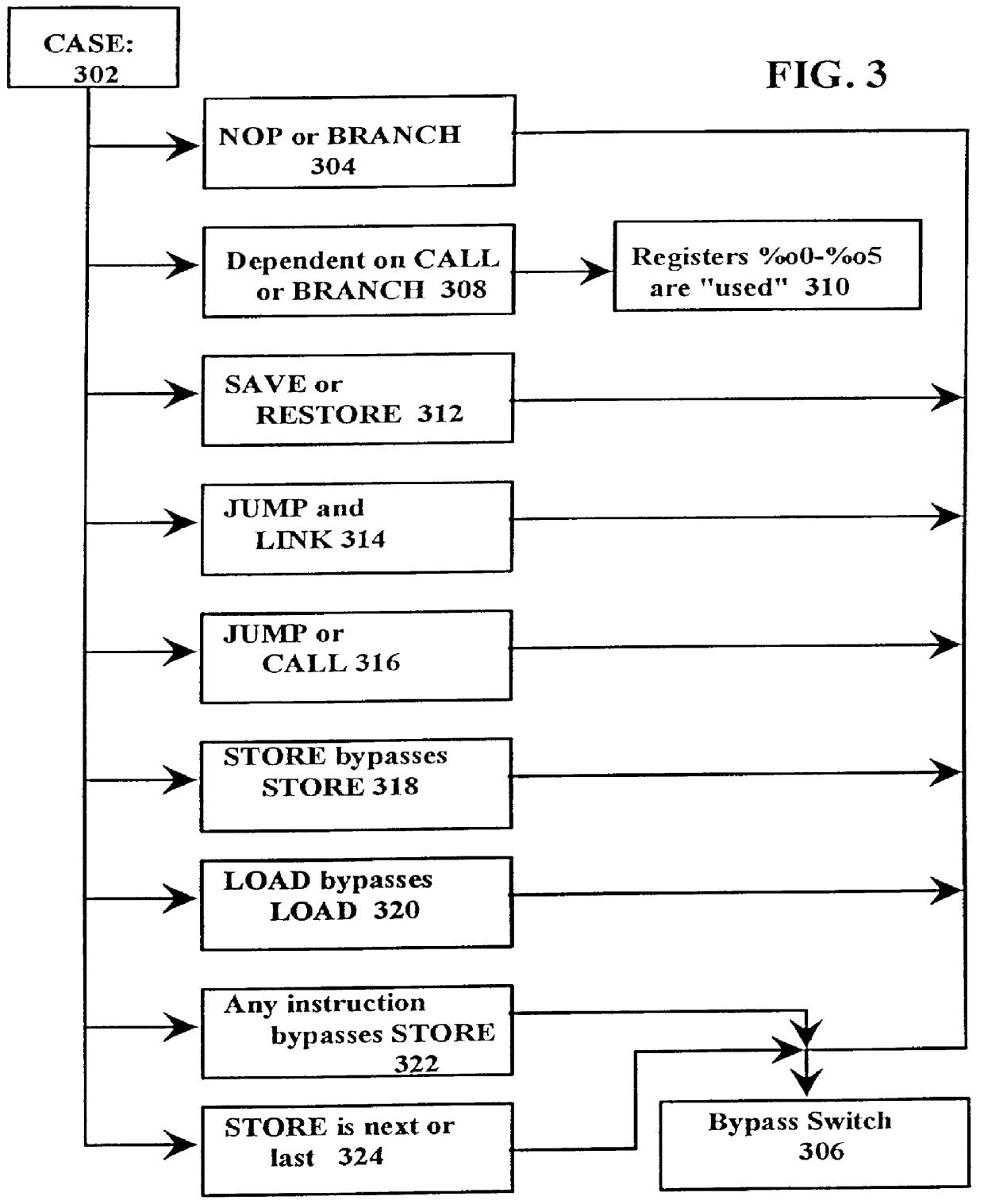

A just-in-time (JIT) compiler typically generates code from bytecodes that have a sequence of assembly instructions forming a "template". It has been discovered that a just-in-time (JIT) compiler generates a small number, approximately 2.3, of assembly instructions per bytecode. It has also been discovered that, within a template, the assembly instructions are almost always dependent on the next assembly instruction. The absence of a dependence between instructions of different templates is exploited to increase the size of issue groups using scheduling. A fast method for scheduling program instructions is useful in just-in-time (JIT) compilers. Scheduling of instructions is generally useful for just-in-time (JIT) compilers that are targeted to in-order superscalar processors because the code generated by the JIT compilers is often sequential in nature. The disclosed fast scheduling method has a complexity, and therefore an execution time, that is proportional to the number of instructions in an instruction block (N complexity), a substantial improvement in comparison to the N² complexity of conventional compiler schedulers. The described fast scheduler advantageously reorders instructions with a single pass, or few passes, through a basic instruction block, while a conventional compiler scheduler such as the DAG scheduler must iterate over a basic instruction block many times. A fast scheduler operates using an analysis of a sliding window of three instructions, applying two rules within the three-instruction window to determine when to reorder instructions. The analysis includes acquiring the opcodes and operands of each instruction in the three-instruction window, and determining register usage and definition of the operands of each instruction with respect to the other instructions within the window. The rules are applied to determine ordering of the instructions within the window.

Owner:ORACLE INT CORP

Processor and method including a cache having confirmation bits for improving address predictable branch instruction target predictions

Inactive | US6457120B1 | Memory addressing/allocation/relocation | Digital computer details | Scalar processor | Superscalar

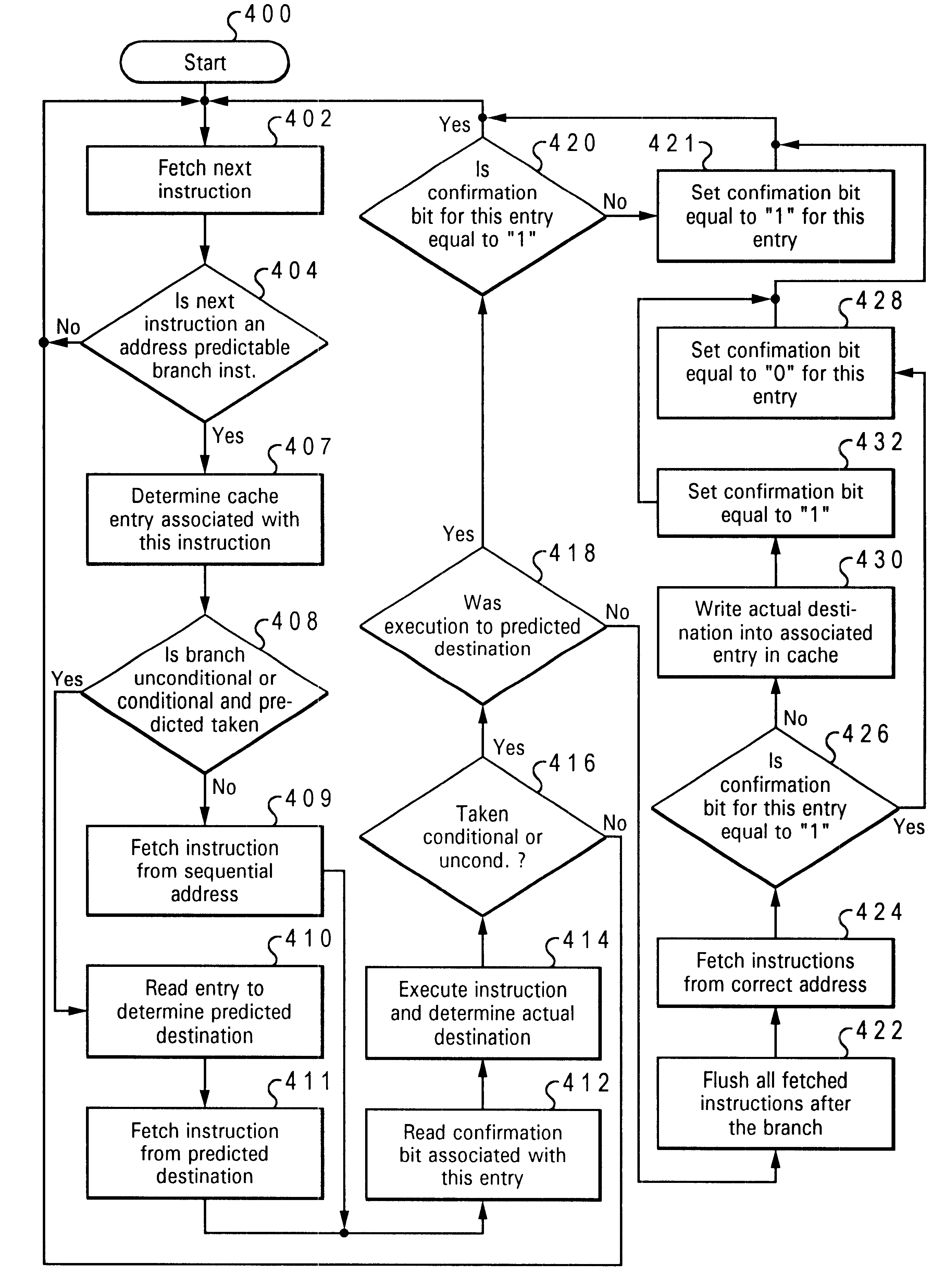

A superscalar processor and method are disclosed for improving the accuracy of predictions of a destination of a branch instruction utilizing a cache. The cache is established including multiple entries. Each of multiple branch instructions is associated with one of the entries of the cache. One of the entries of the cache includes a stored predicted destination for the branch instruction associated with this entry of the cache. The predicted destination is a destination the branch instruction is predicted to branch to upon execution of the branch instruction. The stored predicted destination is updated in the one of the entries of the cache only in response to two consecutive mispredictions of the destination of the branch instruction, wherein the two consecutive mispredictions were made utilizing the one of the entries of the cache.

Owner:IBM CORP
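
The update rule in the abstract above (replace the stored target only after two consecutive mispredictions) can be sketched as a small software model. This is an illustrative sketch under stated assumptions, not the patented hardware: the class and field names are hypothetical, the cache is modelled as a dictionary keyed by branch address rather than an indexed array, and allocating an entry on first sight of a branch is an assumption the abstract does not specify.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class TargetEntry:
    predicted_target: int
    missed_last_time: bool = False  # plays the role of the confirmation state


class ConfirmedTargetCache:
    """Toy model: a stored target changes only after two misses in a row."""

    def __init__(self) -> None:
        self.entries: Dict[int, TargetEntry] = {}

    def predict(self, branch_addr: int) -> Optional[int]:
        entry = self.entries.get(branch_addr)
        return entry.predicted_target if entry else None

    def resolve(self, branch_addr: int, actual_target: int) -> None:
        entry = self.entries.get(branch_addr)
        if entry is None:
            # Assumption: allocate on first execution of the branch.
            self.entries[branch_addr] = TargetEntry(actual_target)
        elif entry.predicted_target == actual_target:
            entry.missed_last_time = False       # correct: reset the streak
        elif entry.missed_last_time:
            entry.predicted_target = actual_target  # second consecutive miss
            entry.missed_last_time = False
        else:
            entry.missed_last_time = True        # first miss: keep the target
```

A single stray target therefore does not evict a target that is usually correct; only a second miss in a row replaces it.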

Processor with demand-driven clock throttling power reduction

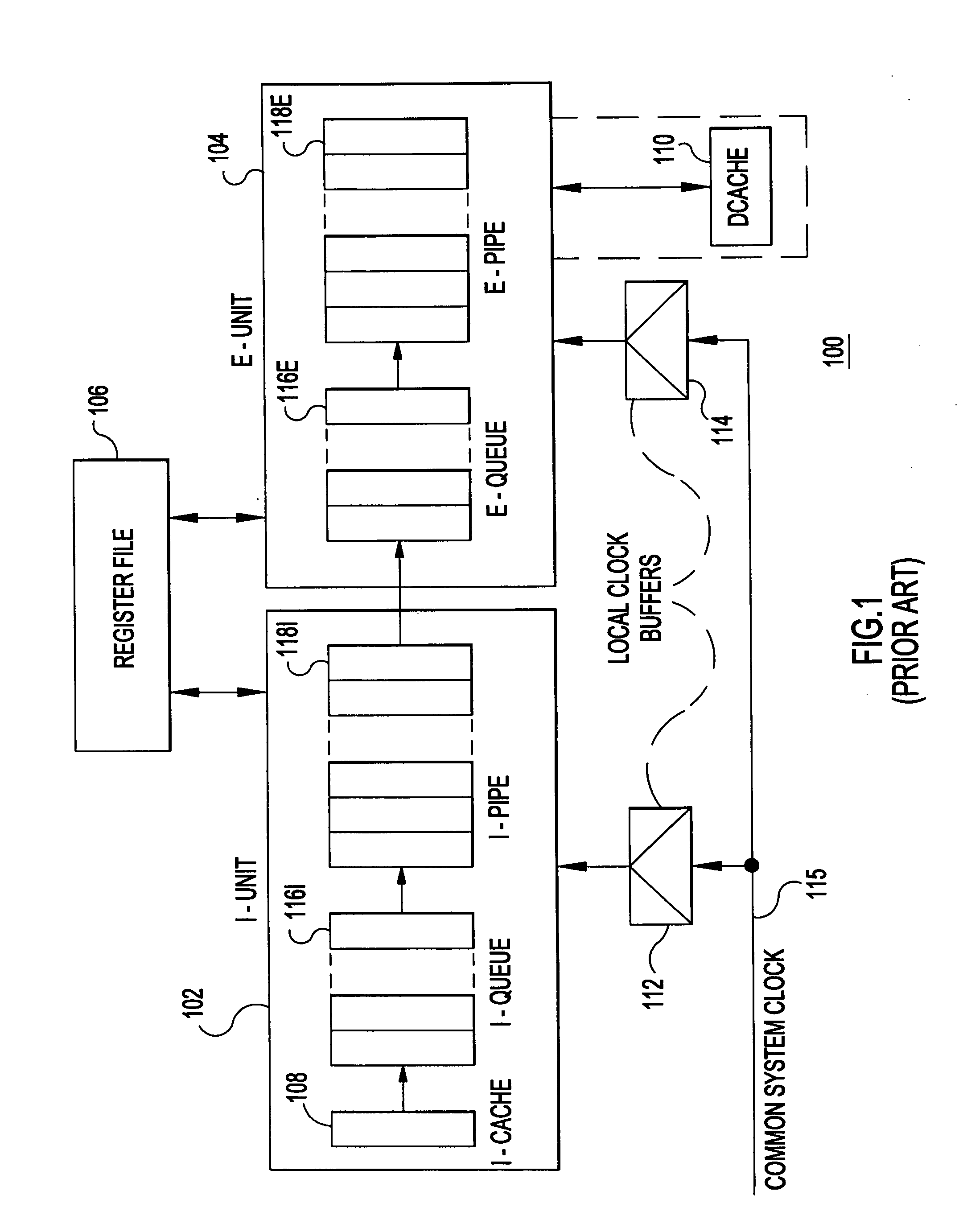

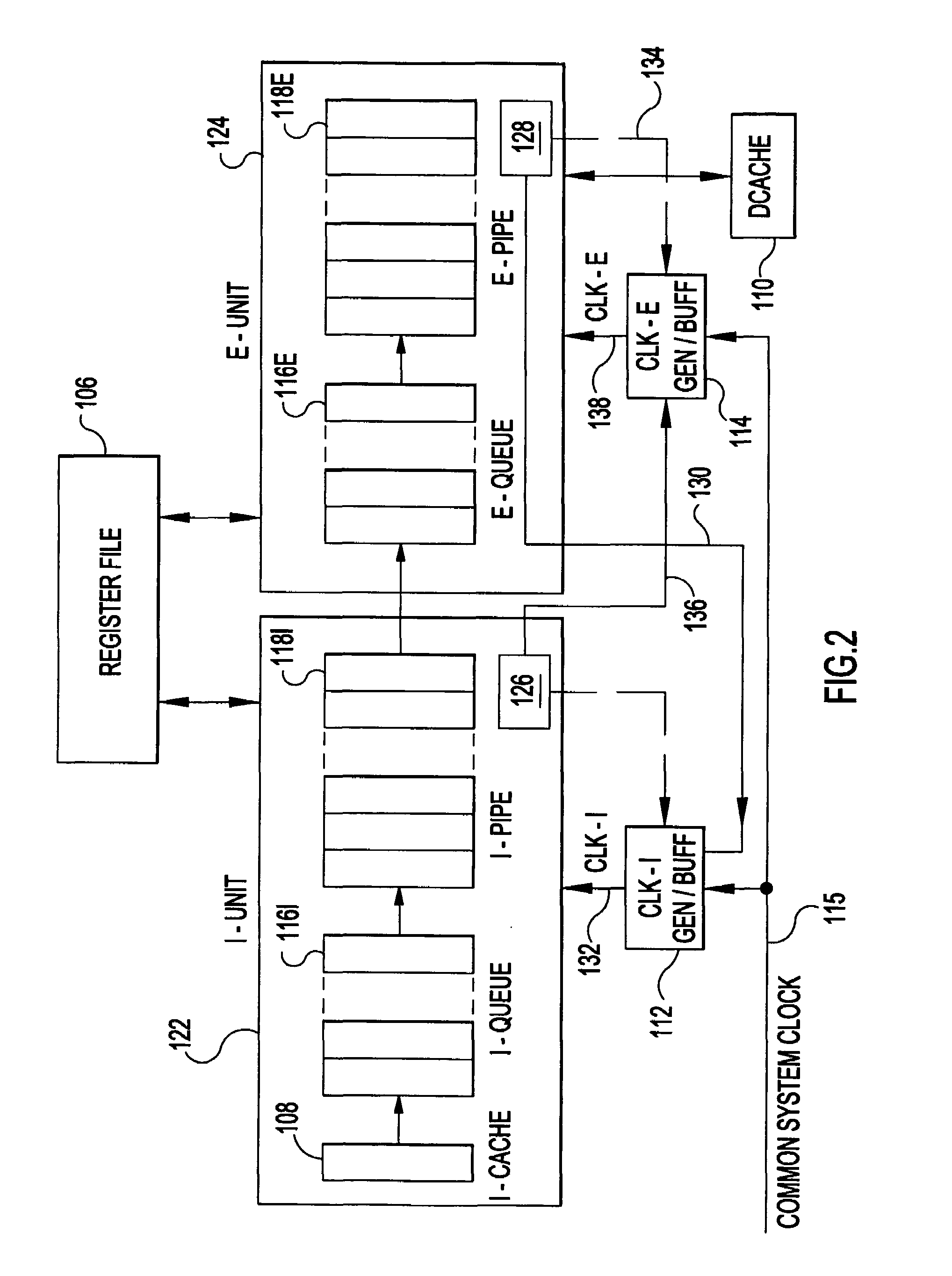

Inactive | US20040044915A1 | Energy efficient ICT | Volume/mass flow measurement | Scalar processor | Processor register

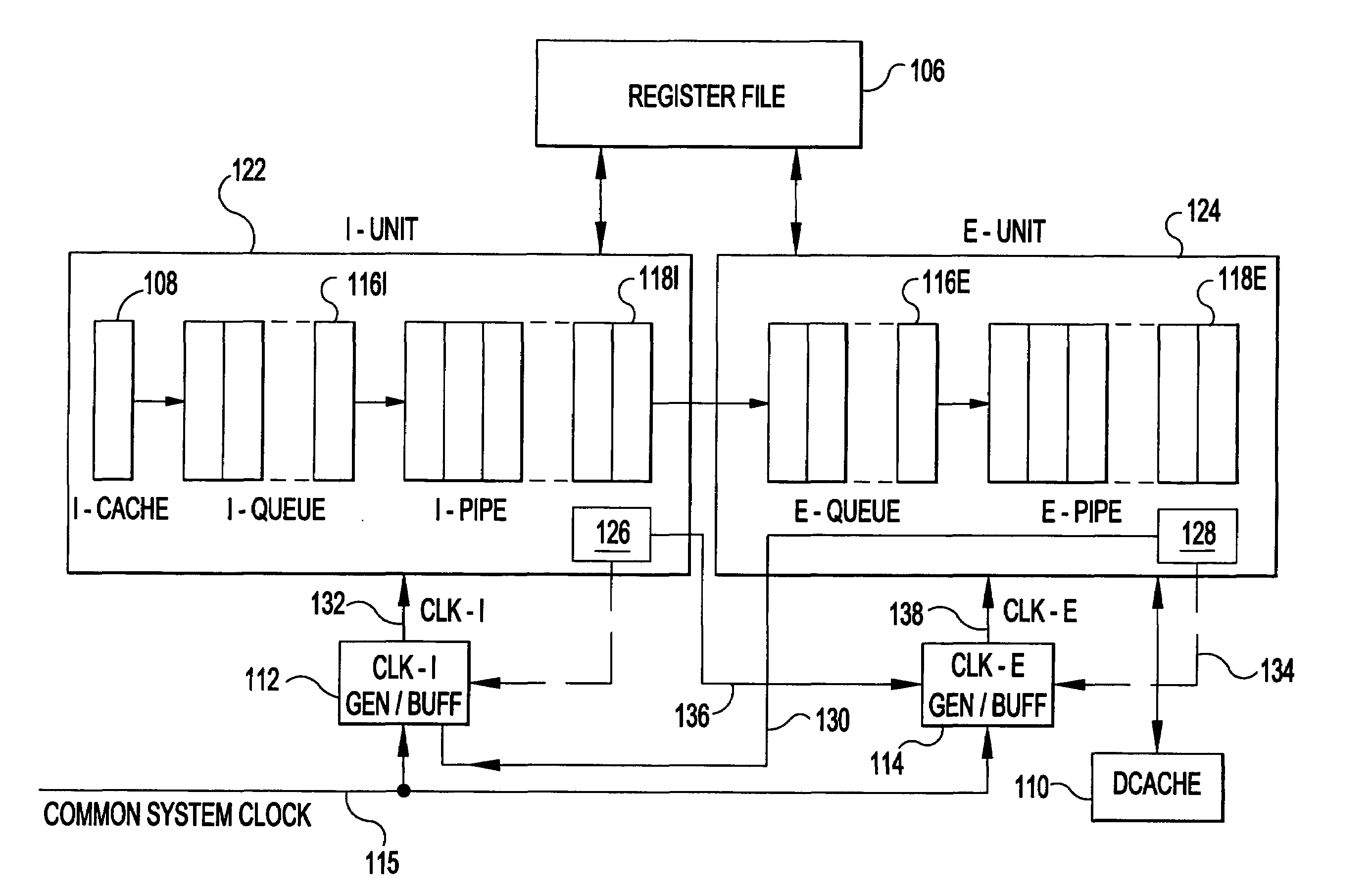

A synchronous integrated circuit such as a scalar processor or superscalar processor. Circuit components or units are clocked by and synchronized to a common system clock. At least two of the clocked units include multiple register stages, e.g., pipeline stages. A local clock generator in each clocked unit combines the common system clock and stall status from one or more other units to adjust register clock frequency up or down.

Owner:IBM CORP

Processor pipeline including partial replay

Inactive | US6076153A | General purpose stored program computer | Concurrent instruction execution | Speculative execution | Scalar processor

The invention, in one embodiment, is a method for committing the results of at least two speculatively executed instructions to an architectural state in a superscalar processor. The method includes determining which of the speculatively executed instructions encountered a problem in execution, and replaying the instruction that encountered the problem in execution while retaining the results of executing the instruction that did not encounter the problem.

Owner:INTEL CORP

Instruction vector-mode processing in multi-lane processor by multiplex switch replicating instruction in one lane to select others along with updated operand address

Active | US7493475B2 | Register arrangements | General purpose stored program computer | Multiplexing | Scalar processor

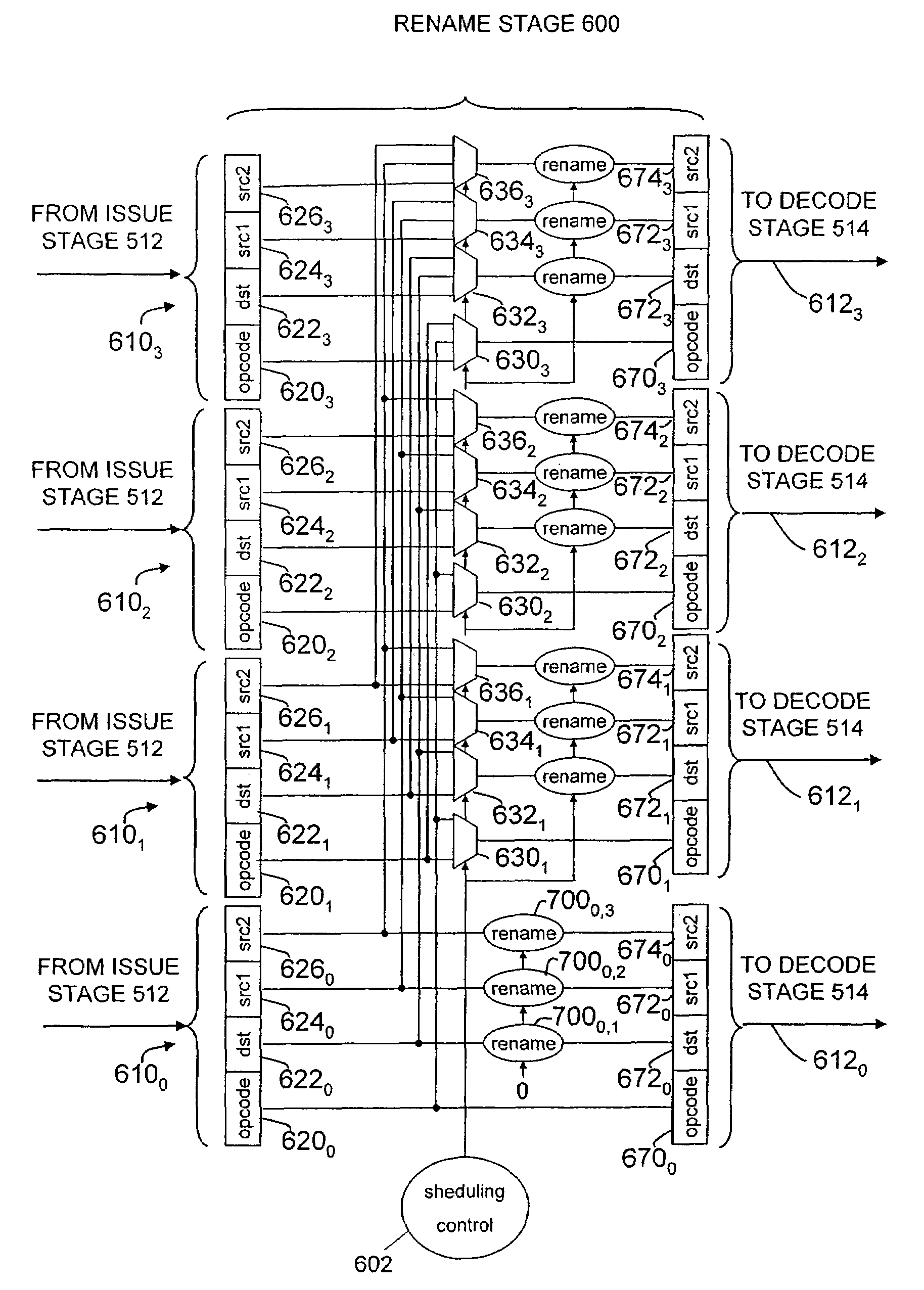

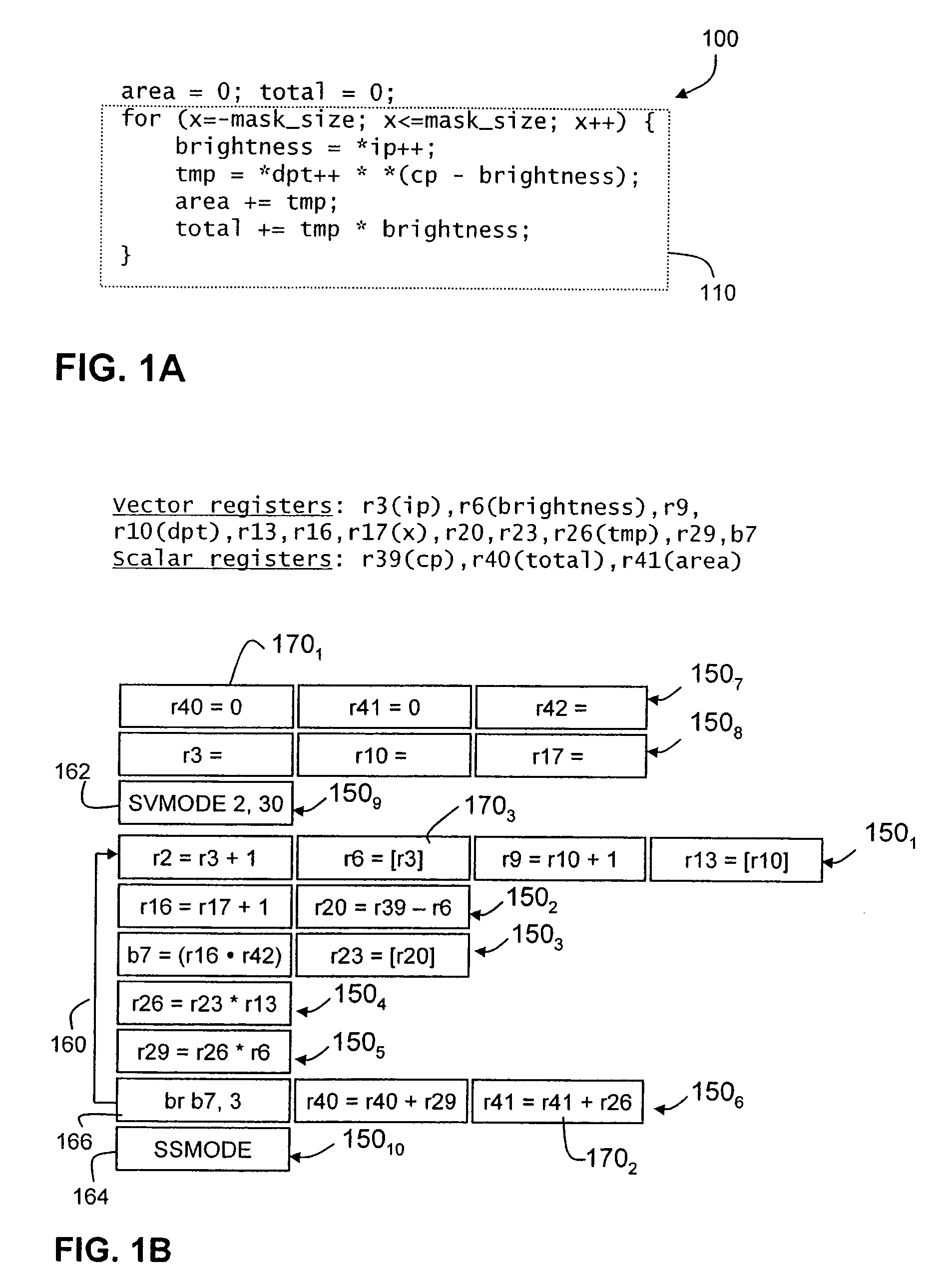

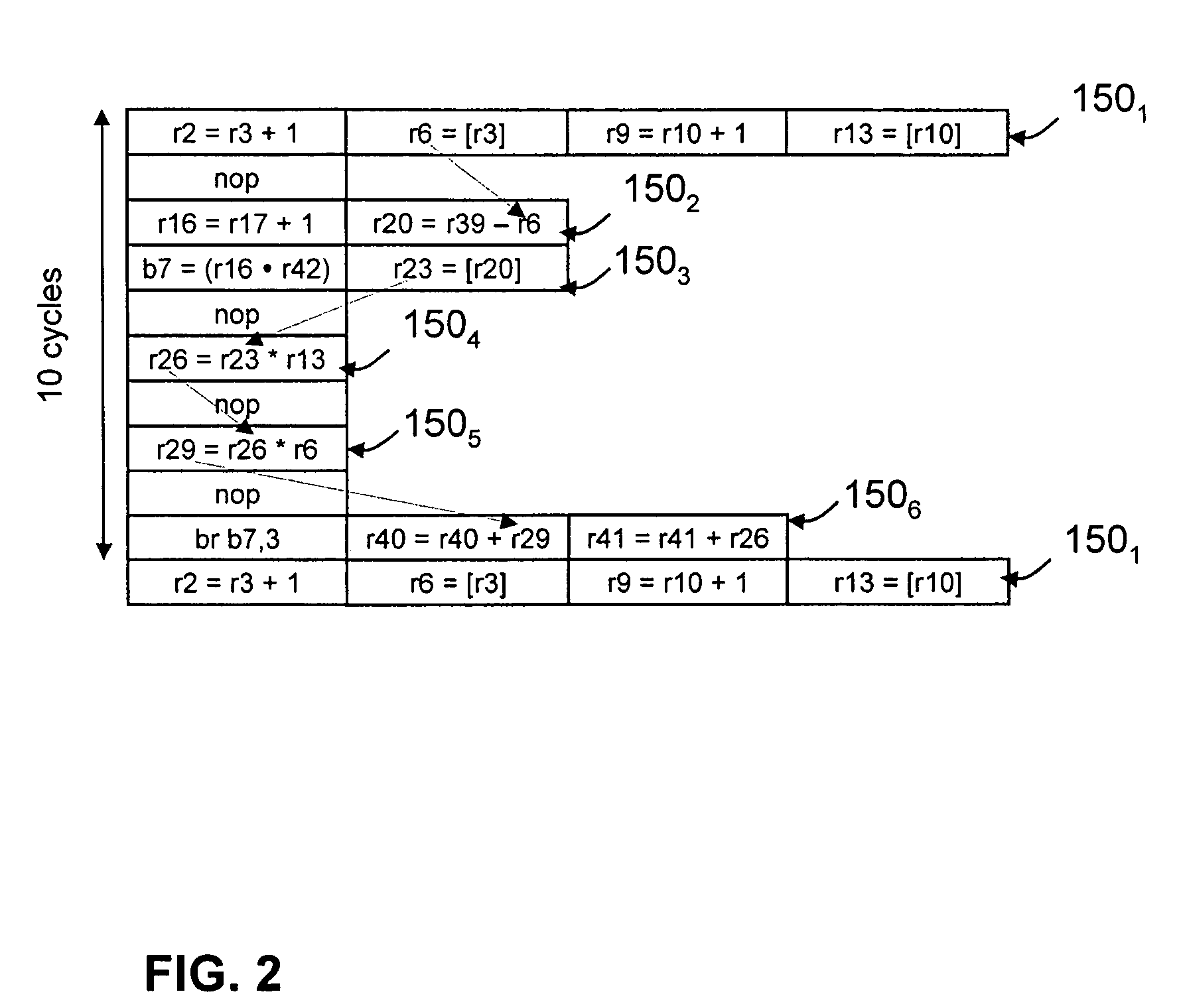

An improved superscalar processor. The processor includes multiple lanes, allowing multiple instructions in a bundle to be executed in parallel. In vector mode, the parallel lanes may be used to execute multiple instances of a bundle, representing multiple iterations of the bundle in a vector run. Scheduling logic determines whether, for each bundle, multiple instances can be executed in parallel. If multiple instances can be executed in parallel, coupling circuitry couples an instance of the bundle from one lane into one or more other lanes. In each lane, register addresses are renamed to ensure proper execution of the bundles in the vector run. Additionally, the processor may include a register bank separate from the architectural register file. Renaming logic can generate addresses to this separate register bank that are longer than used to address architectural registers, allowing longer vectors and more efficient processor operation.

Owner:STMICROELECTRONICS SRL

Processor with demand-driven clock throttling power reduction

Inactive | US7076681B2 | Reduce consumption | No performance loss | Energy efficient ICT | Volume/mass flow measurement | Scalar processor | Processor register

A synchronous integrated circuit such as a scalar processor or superscalar processor. Circuit components or units are clocked by and synchronized to a common system clock. At least two of the clocked units include multiple register stages, e.g., pipeline stages. A local clock generator in each clocked unit combines the common system clock and stall status from one or more other units to adjust register clock frequency up or down.

Owner:INT BUSINESS MASCH CORP

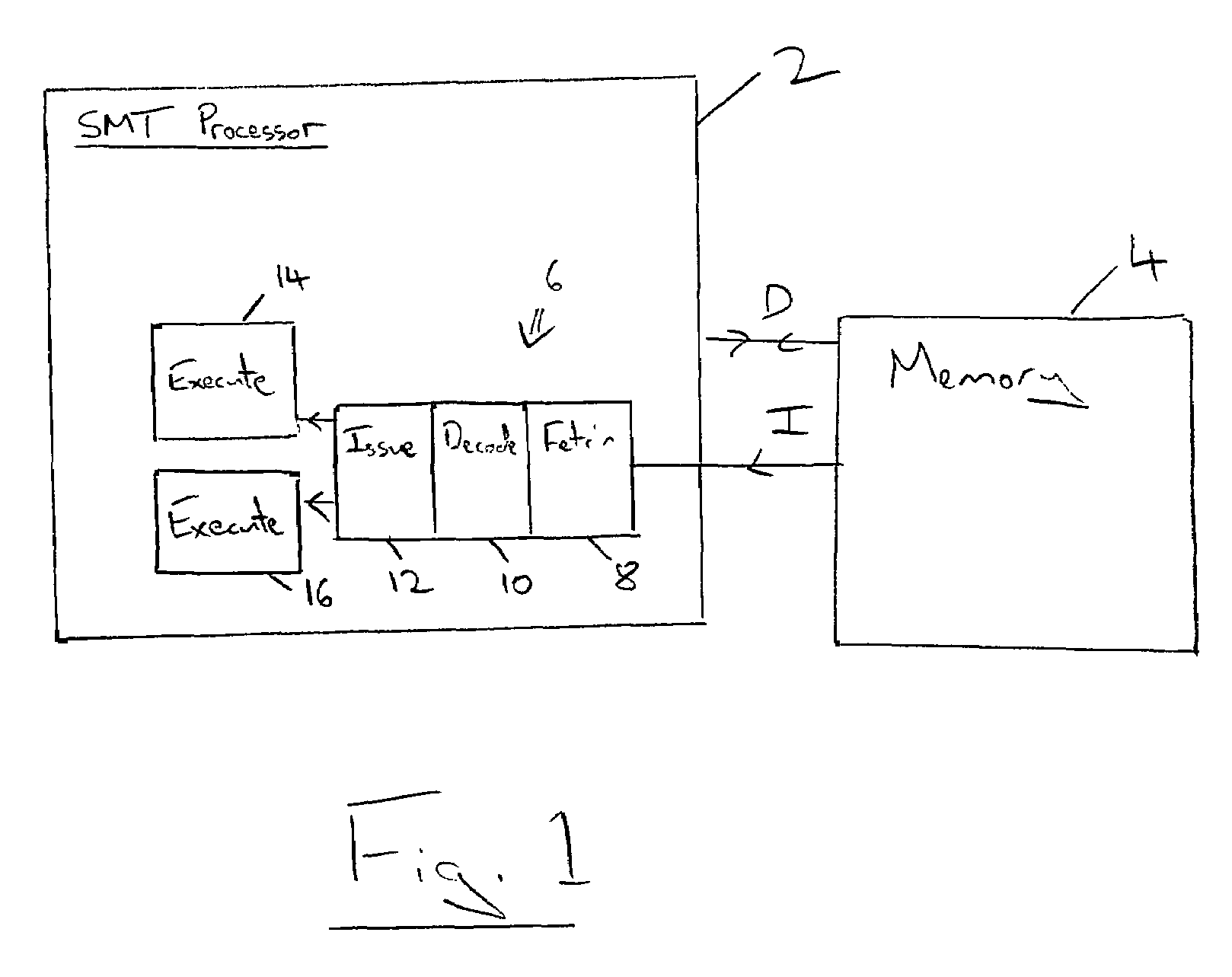

Instruction issue control within a multi-threaded in-order superscalar processor

Active | US20080270749A1 | Performance constrained | Reduce hardware overhead | General purpose stored program computer | Memory systems | Scalar processor | Program Thread

A multi-threaded in-order superscalar processor 2 is described having a fetch stage 8 within which thread interleaving circuitry 36 interleaves instructions taken from different program threads to form an interleaved stream of instructions which is then decoded and subject to issue. Hint generation circuitry 62 within the fetch stage 8 adds hint data to the threads indicating that parallel issue of an associated instruction is permitted with one or more other instructions.

Owner:ARM LTD

Instruction issue control within a multi-threaded in-order superscalar processor

Active | US7707390B2 | Performance constrained | Increase flexibility | General purpose stored program computer | Memory systems | Scalar processor | Program Thread

A multi-threaded in-order superscalar processor 2 is described having a fetch stage 8 within which thread interleaving circuitry 36 interleaves instructions taken from different program threads to form an interleaved stream of instructions which is then decoded and subject to issue. Hint generation circuitry 62 within the fetch stage 8 adds hint data to the threads indicating that parallel issue of an associated instruction is permitted with one or more other instructions.

Owner:ARM LTD

Method for renaming state register and processor using the method

Active | CN101169710A | Reduce pause | Reduce congestion | Concurrent instruction execution | Computer science | FLAGS register

The invention provides a method for renaming a status register in a superscalar processor with a pipeline structure, wherein the status register is a register composed of a plurality of flag bits selected from all flag bits of a flag register. The method comprises: determining whether a microcode will read the status register when the microcode decoded from an instruction reaches a register renaming module of the processor; if the microcode will read the status register, allocating the most recently mapped physical register for the status register; otherwise, not allocating a physical register for the status register; determining whether the microcode will write to the status register; if the microcode will write to the status register, allocating a new physical register with empty status to the status register; and otherwise, not allocating a physical register for the status register.

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI
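
The read/write decision in the abstract above can be sketched as follows. This is a minimal model under stated assumptions: the class name `StatusRegisterRenamer` and its methods are hypothetical, physical register 0 is assumed to hold the initial status value, and freeing physical registers at retirement is not modelled.

```python
from typing import List, Optional, Tuple


class StatusRegisterRenamer:
    """Toy renamer for a single architectural status register."""

    def __init__(self, num_physical: int) -> None:
        self.current = 0                               # latest mapping
        self.free: List[int] = list(range(1, num_physical))

    def rename(
        self, reads_status: bool, writes_status: bool
    ) -> Tuple[Optional[int], Optional[int]]:
        """Return (source_physical, destination_physical) for one operation.

        A reader gets the most recent mapping; a writer gets a fresh
        physical register; an operation that does neither gets nothing.
        """
        # The source must be taken before the mapping is updated, so an
        # operation that both reads and writes sees the old value.
        src = self.current if reads_status else None
        dst = None
        if writes_status:
            if not self.free:
                raise RuntimeError("no free physical register: rename must stall")
            dst = self.free.pop(0)
            self.current = dst
        return src, dst
```

Operations that neither read nor write the status register consume no physical register, which is the source of the reduced stalls the entry lists.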

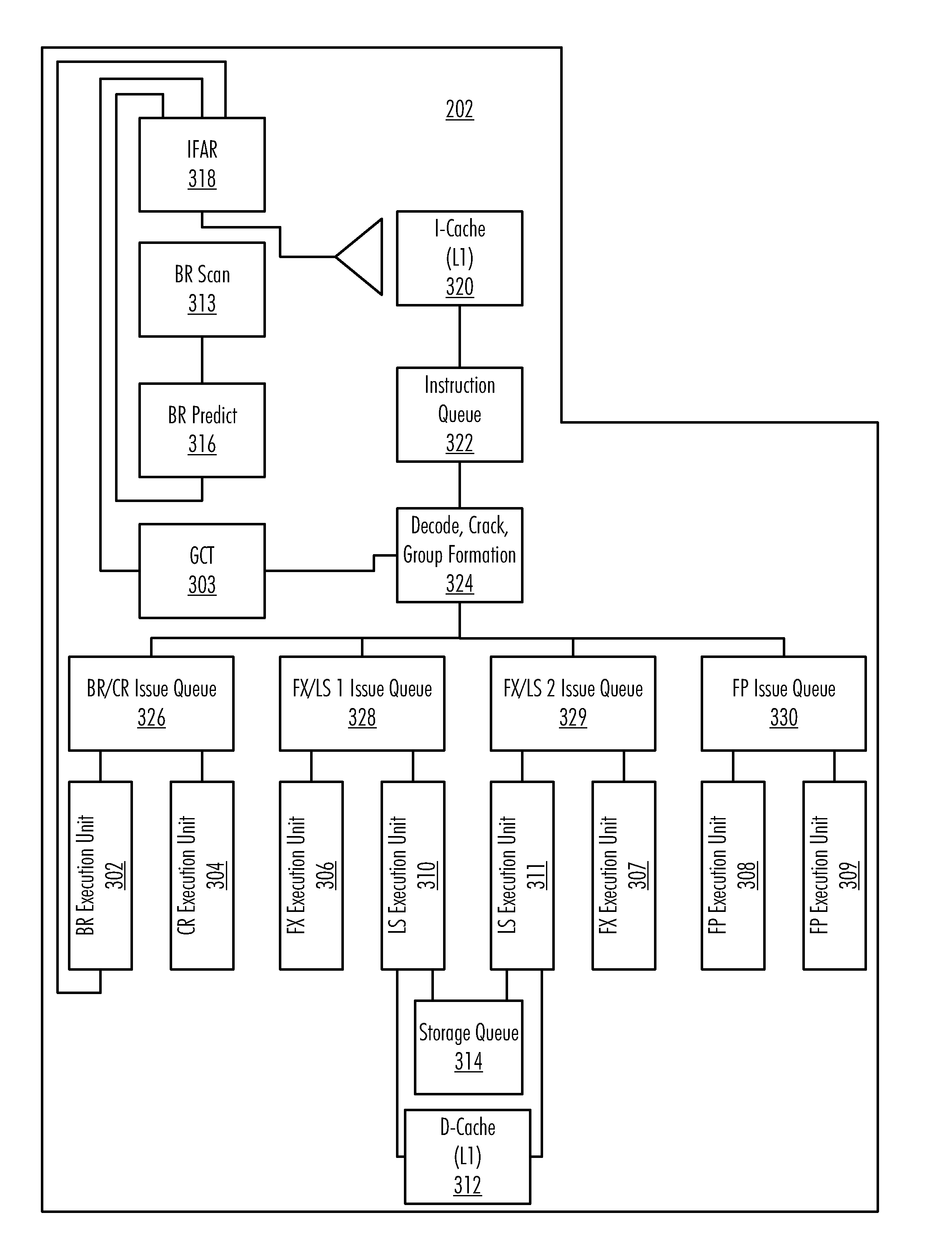

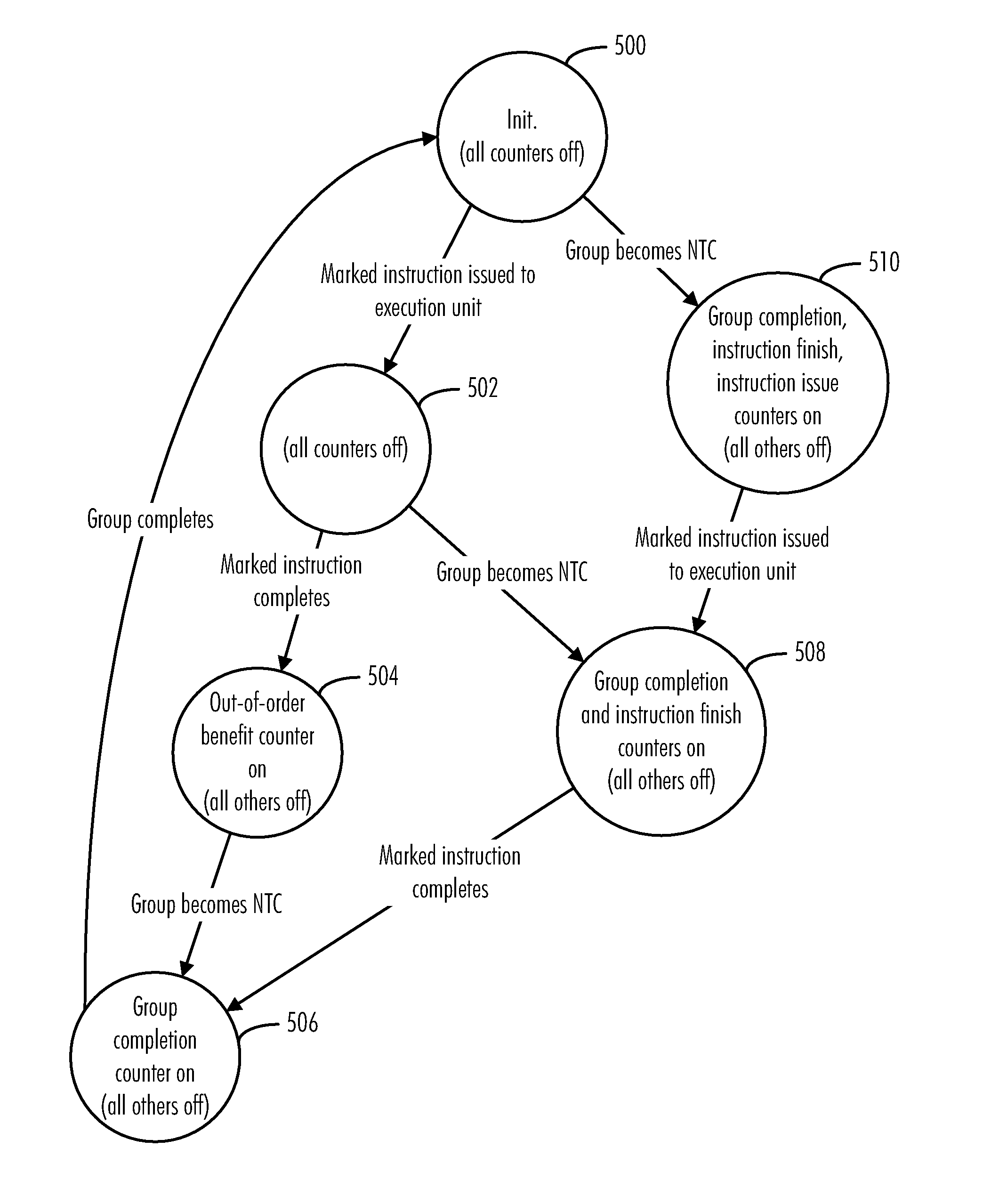

Quantifying Completion Stalls Using Instruction Sampling

Inactive | US20090259830A1 | Error detection/correction | Digital computer details | Data processing system | Scalar processor

A method, computer program product, and data processing system for collecting metrics regarding completion stalls in an out-of-order superscalar processor with branch prediction is disclosed. A preferred embodiment of the present invention selectively samples particular instructions (or classes of instructions). Each selected instruction, as it passes through the processor datapath, is marked (tagged) for monitoring by a performance monitoring unit. The progress of marked instructions is monitored by the performance monitoring unit, and various stall counters are triggered by the progress of the marked instructions and the instruction groups they form a part of. The stall counters count cycles to give an indication of when certain delays associated with particular instructions occur and how serious the delays are.

Owner:IBM CORP

Processing prefix code in instruction queue storing fetched sets of plural instructions in superscalar processor

Inactive | US8402256B2 | Improve performance | Increase power | Instruction analysis | Runtime instruction translation | Instruction unit | Scalar processor

The present invention is directed to realize efficient issue of a superscalar instruction in an instruction set including an instruction with a prefix. A circuit is employed which retrieves an instruction of each instruction code type other than a prefix on the basis of a determination result of decoders for determining an instruction code type, adds the immediately preceding instruction to the retrieved instruction, and outputs the resultant to instruction executing means. When an instruction of a target instruction code type is detected in a plurality of instruction units to be searched, the circuit outputs the detected instruction code and the immediately preceding instruction other than the target instruction code type as prefix code candidates. When an instruction of a target instruction code type cannot be detected at the rear end of the instruction units to be searched, the circuit outputs the instruction at the rear end as a prefix code candidate. When an instruction of a target instruction code type is detected at the head in the instruction code search, the circuit outputs the instruction code at the head.

Owner:RENESAS ELECTRONICS CORP

Superscaler processor and method for efficiently recovering from misaligned data addresses

Inactive | US6289428B1 | Memory addressing/allocation/relocation | Digital computer details | Scalar processor | Data segment

A superscalar processor and method are disclosed for efficiently recovering from misaligned data addresses. The processor includes a memory device partitioned into a plurality of addressable memory units. Each of the plurality of addressable memory units has a width of a first plurality of bytes. A determination is made regarding whether a data address included within a memory access instruction is misaligned. The data address is misaligned if it includes a first data segment located in a first addressable memory unit and a second data segment located in a second addressable memory unit where the first and second data segments are separated by an addressable memory unit boundary. In response to a determination that the data address is misaligned, a first internal instruction is executed which accesses the first memory unit and obtains the first data segment. A second internal instruction is executed which accesses the second memory unit and obtains the second data segment. The first and second data segments are merged together. All of the instructions executed by the processor are constrained by the memory boundary and do not access memory across the memory boundary.

Owner:IBM CORP
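
The split-and-merge recovery described in the abstract above can be illustrated in software. This is a sketch only: `split_access` and `load` are hypothetical names, memory is modelled as a `bytes` object, and the access size is assumed to be no larger than one addressable memory unit, so an access spans at most two units.

```python
from typing import List, Tuple


def split_access(addr: int, size: int, unit_width: int) -> List[Tuple[int, int]]:
    """Split an access into (address, length) pieces that never cross a unit boundary."""
    if not 0 < size <= unit_width:
        raise ValueError("sketch assumes 0 < size <= unit_width")
    first_unit = addr // unit_width
    last_unit = (addr + size - 1) // unit_width
    if first_unit == last_unit:
        return [(addr, size)]                    # aligned enough: one access
    boundary = last_unit * unit_width            # start of the second unit
    return [(addr, boundary - addr), (boundary, addr + size - boundary)]


def load(memory: bytes, addr: int, size: int, unit_width: int = 8) -> bytes:
    """Perform each piece as its own access, then merge the data segments."""
    segments = [memory[a:a + n] for a, n in split_access(addr, size, unit_width)]
    return b"".join(segments)
```

For example, a 4-byte access at address 6 with 8-byte units becomes two 2-byte accesses, at addresses 6 and 8, whose results are concatenated.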

Issue policy control within a multi-threaded in-order superscalar processor

Active | US20080282067A1 | Improve performance | Digital computer details | Memory systems | Scalar processor | Execution unit

A multi-threaded in-order superscalar processor 2 includes an issue stage 12 including issue circuitry 22, 24 for selecting instructions to be issued to execution units 14, 16 in dependence upon a currently selected issue policy. A plurality of different issue policies are provided by associated different policy circuitry 28, 30, 32 and a selection between which of these instances of the policy circuitry 28, 30, 32 is active is made by policy selecting circuitry 34 in dependence upon detected dynamic behaviour of the processor 2.

Owner:ARM LTD

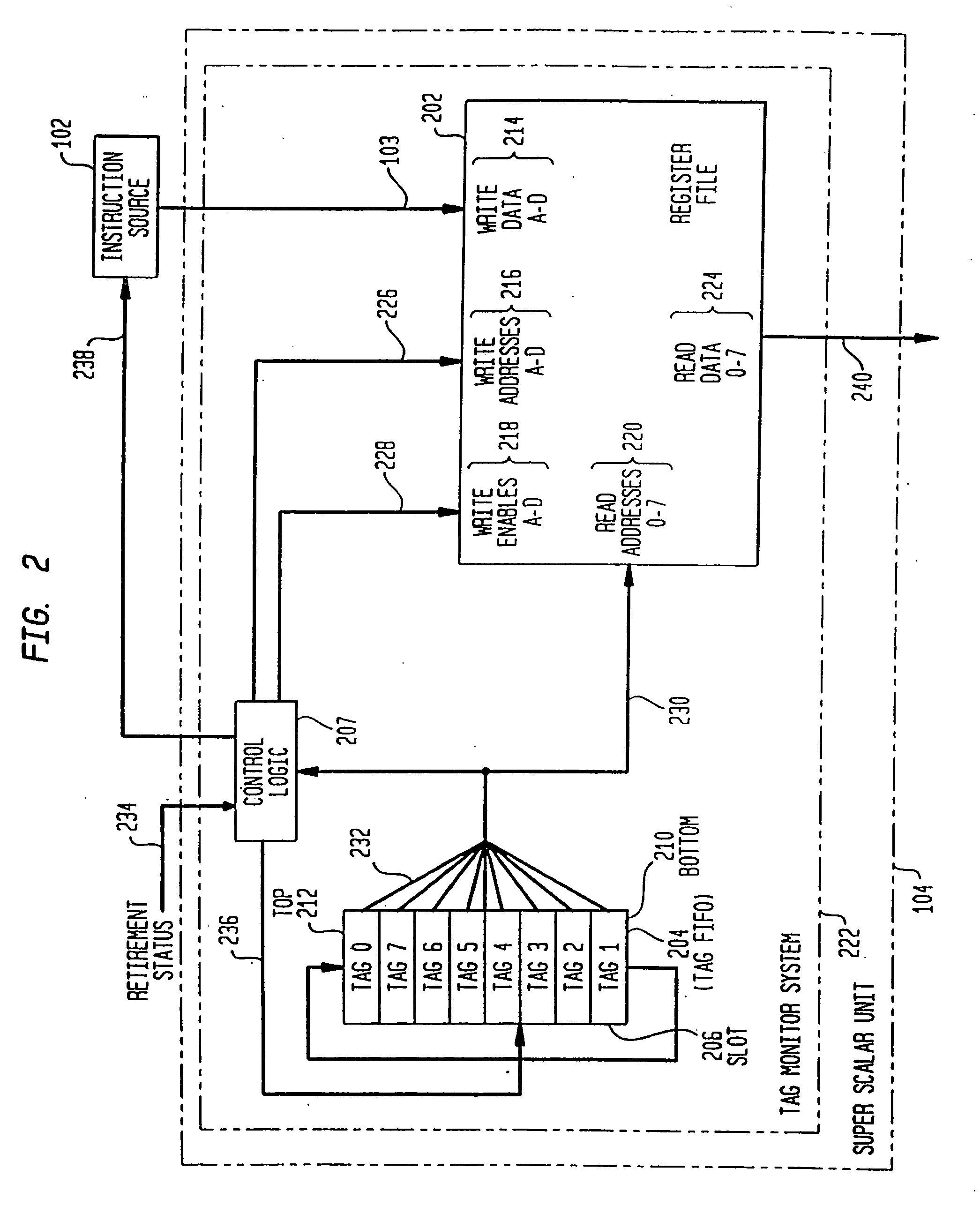

System and method for assigning tags to control instruction processing in a superscalar processor

Inactive | US20060123218A1 | Simple design | Improve operational flexibility | General purpose stored program computer | Concurrent instruction execution | Monitoring system | Procedure sequence

A tag monitoring system for assigning tags to instructions. A source supplies instructions to be executed by a functional unit. A register file stores information required for the execution of each instruction. A queue has a plurality of slots containing tags, which are used for tagging the instructions. The tags are arranged in the queue in an order specified by the program order of their corresponding instructions. A control unit monitors the completion of executed instructions and advances the tags in the queue upon completion of an executed instruction. The register file stores an instruction's information at a location in the register file defined by the tag assigned to that instruction. The register file also contains a plurality of read address enable ports and corresponding read output ports. Each of the slots from the queue is coupled to a corresponding one of the read address enable ports. Thus, the information for each instruction can be read out of the register file in program order.

Owner:SAMSUNG ELECTRONICS CO LTD

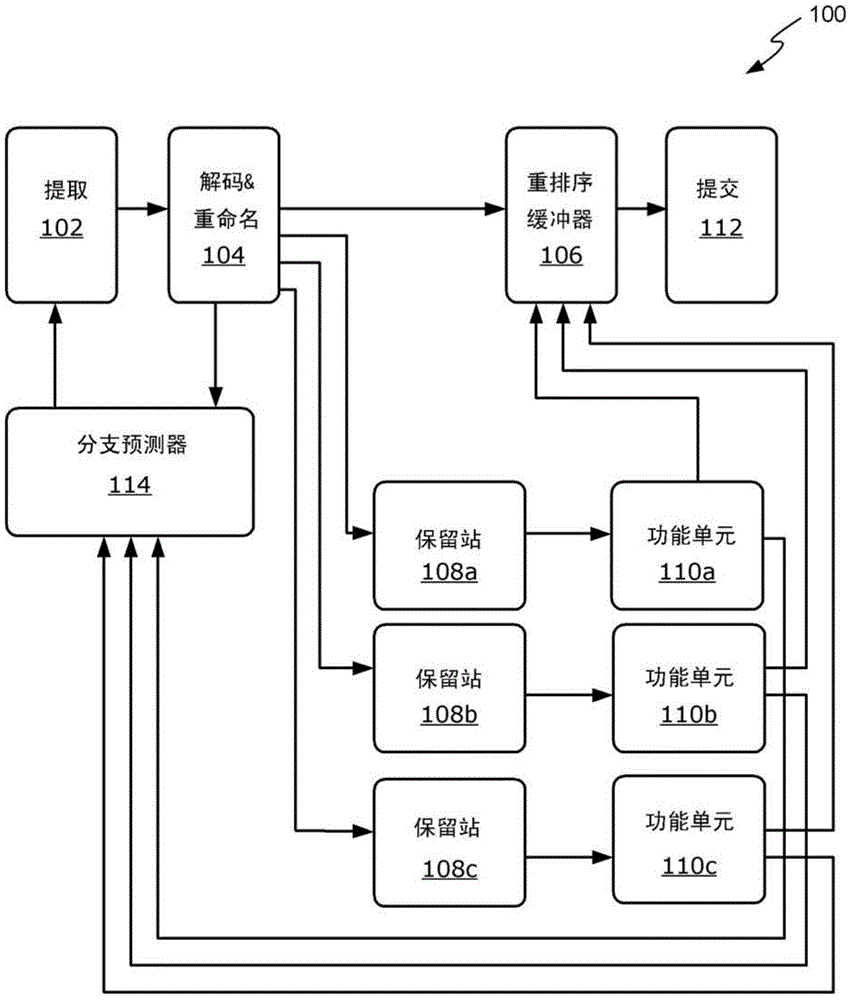

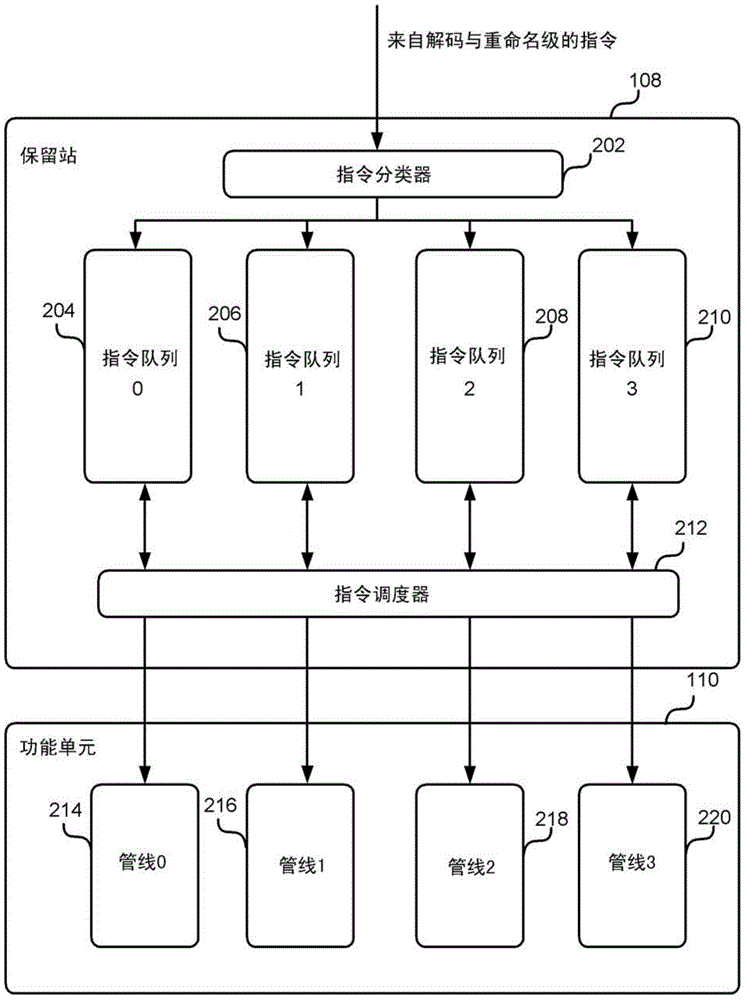

Prioritising instructions according to category of instruction

Active | CN104346223A | Program initiation/switching | Instruction analysis | Reservation station | Multiple category

A method of selecting instructions to issue to a functional unit of an out-of-order superscalar processor, whether single-threaded or multi-threaded. A reservation station classifies each instruction into one of a number of categories based on the type of instruction. Once classified, an instruction is stored in one of several instruction queues corresponding to the category in which it was classified. Instructions are then selected from one or more of the instruction queues (up to a maximum number of instructions for each particular queue) to issue to the functional unit based on a relative priority of the plurality of types of instructions. This allows certain types of instructions (e.g. control transfer instructions, flag setting instructions and/or address generation instructions) to be prioritised over other types of instructions even if they are younger. A functional unit may contain a plurality of pipelines, and there may be several such functional units in a processor.

Owner:MIPS TECH INC
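
The category-based selection in the abstract above can be sketched as a set of per-category queues drained in priority order. This is an illustrative model, not the patented reservation station: the class name, category names and limits are hypothetical, and every queued instruction is assumed to be ready to issue (operand readiness is not modelled).

```python
from collections import deque
from typing import Deque, Dict, List


class CategoryIssueQueues:
    """Toy reservation station with one queue per instruction category."""

    def __init__(self, priority_order: List[str], per_queue_limit: Dict[str, int]) -> None:
        self.priority_order = priority_order      # highest priority first
        self.per_queue_limit = per_queue_limit    # max picks per queue per cycle
        self.queues: Dict[str, Deque[str]] = {c: deque() for c in priority_order}

    def insert(self, instruction: str, category: str) -> None:
        self.queues[category].append(instruction)

    def select(self, issue_width: int) -> List[str]:
        """Pick up to issue_width instructions for one cycle."""
        picked: List[str] = []
        for category in self.priority_order:
            queue = self.queues[category]
            taken = 0
            while (
                queue
                and taken < self.per_queue_limit[category]
                and len(picked) < issue_width
            ):
                picked.append(queue.popleft())    # oldest first within a queue
                taken += 1
        return picked
```

In this model a younger branch in the highest-priority queue issues ahead of an older arithmetic instruction, matching the abstract's point that some categories are prioritised even when younger.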

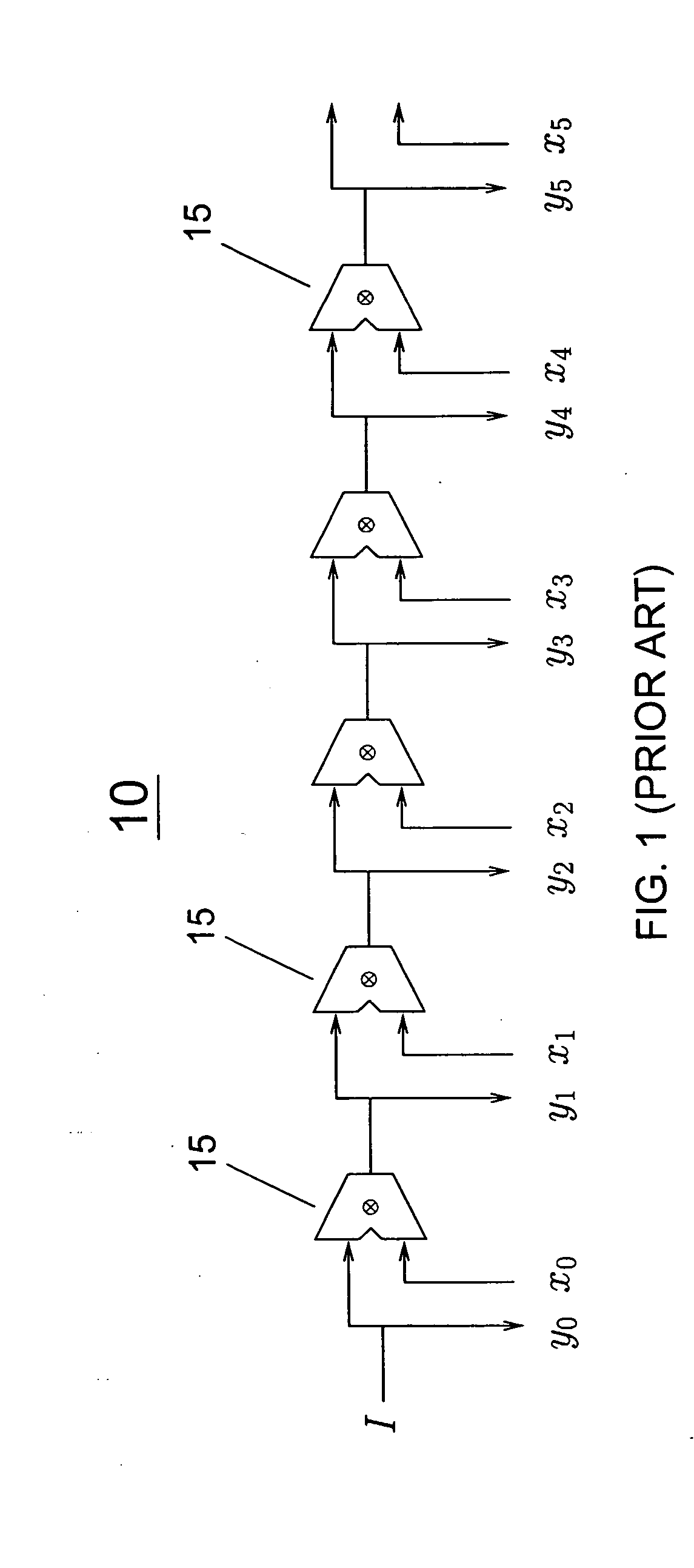

Efficient circuits for out-of-order microprocessors

Inactive | US20060015547A1 | Improve performance | Avoid performance | Digital data processing details | Digital computer details | Extensibility | Scalar processor

The poor scalability of existing superscalar processors has been of great concern to the computer engineering community. In particular, the critical-path delays of many components in existing implementations grow quadratically with the issue width and the window size. This patent presents a novel way to reimplement these components and reduce their critical-path delay growth. It then describes an entire processor microarchitecture, called the Ultrascalar processor, that has better critical-path delay growth than existing superscalars. Most of our scalable designs are based on a single circuit, a cyclic segmented parallel prefix (cspp). We observe that processor components typically operate on a wrap-around sequence of instructions, computing some associative property of that sequence. For example, to assign an ALU to the oldest requesting instruction, each instruction in the instruction sequence must be told whether any preceding instructions are requesting an ALU. Similarly, to read an argument register, an instruction must somehow communicate with the most recent preceding instruction that wrote that register. A cspp circuit can implement such functions by computing for each instruction within a wrap-around instruction sequence the accumulative result of applying some associative operator to all the preceding instructions. A cspp circuit has a critical path gate delay logarithmic in the length of the instruction sequence. Depending on its associative operation and its layout, a cspp circuit can have a critical path wire delay sublinear in the length of the instruction sequence.

Owner:YALE UNIV

Multithreaded multicore uniprocessor and a heterogeneous multiprocessor incorporating the same

Owner:INT BUSINESS MASCH CORP

Multi-Level Dispatch for a Superscalar Processor

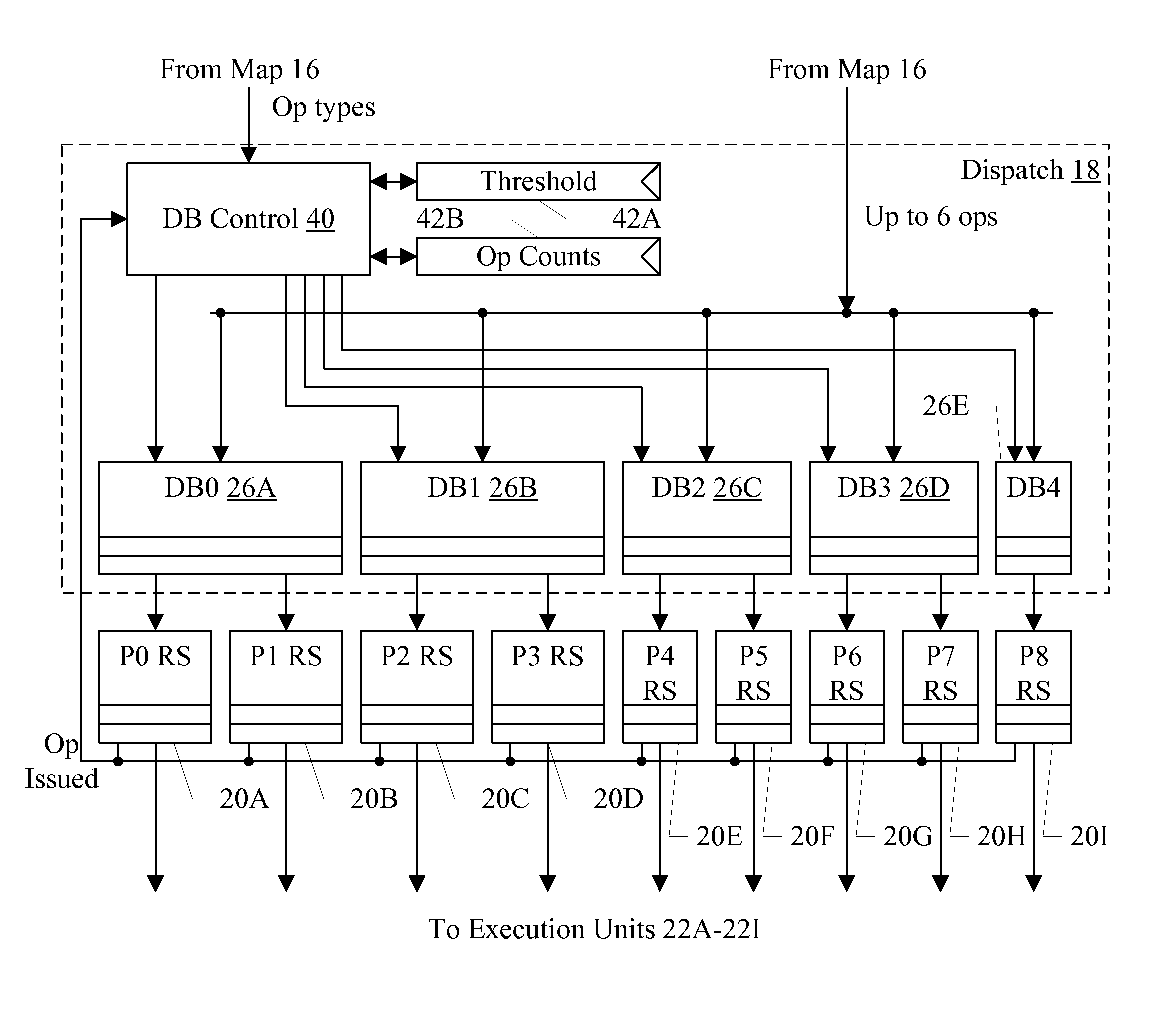

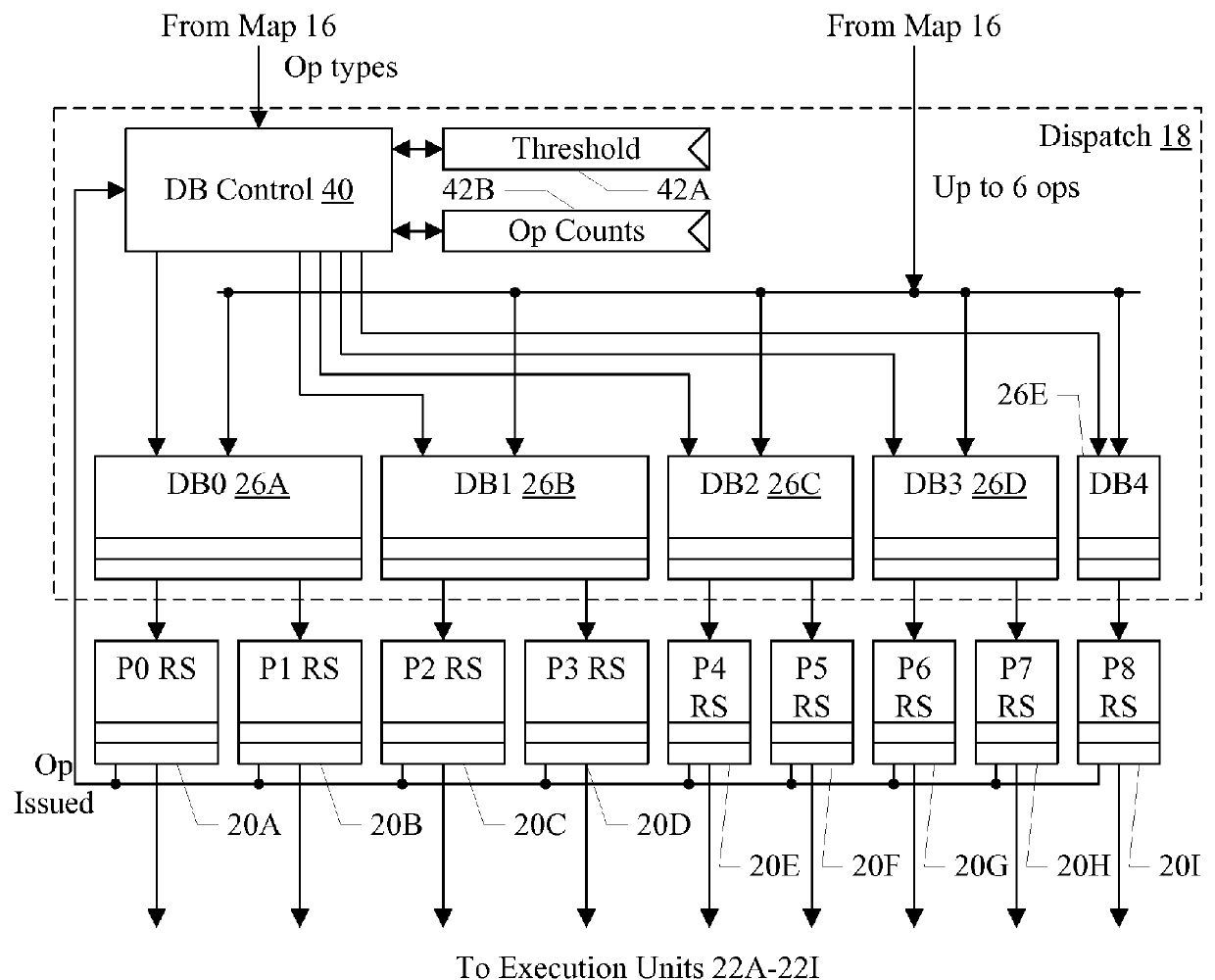

Active | US20140215188A1 | Avoid low frequency operation | Improve performance | Instruction analysis | Digital computer details | Reservation station | Scalar processor

In an embodiment, a processor includes a multi-level dispatch circuit configured to supply operations for execution by multiple parallel execution pipelines. The multi-level dispatch circuit may include multiple dispatch buffers, each of which is coupled to multiple reservation stations. Each reservation station may be coupled to a respective execution pipeline and may be configured to schedule instruction operations (ops) for execution in the respective execution pipeline. The sets of reservation stations coupled to each dispatch buffer may be non-overlapping. Thus, if a given op is to be executed in a given execution pipeline, the op may be sent to the dispatch buffer which is coupled to the reservation station that provides ops to the given execution pipeline.

Owner:APPLE INC
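
The routing implied by the abstract above (execution pipeline, then reservation station, then dispatch buffer) can be sketched as a lookup built from the two levels of coupling. This is a hedged illustration only: `MultiLevelDispatch`, the buffer names and the pipeline names are hypothetical, and buffering, scheduling and timing are not modelled.

```python
from typing import Dict, List, Tuple


class MultiLevelDispatch:
    """Toy routing table: each dispatch buffer feeds a disjoint set of stations."""

    def __init__(
        self,
        buffer_to_stations: Dict[str, List[str]],
        station_to_pipeline: Dict[str, str],
    ) -> None:
        self.route_for_pipeline: Dict[str, Tuple[str, str]] = {}
        seen = set()
        for buffer, stations in buffer_to_stations.items():
            for station in stations:
                if station in seen:
                    # The abstract describes the station sets as non-overlapping.
                    raise ValueError(f"{station} is coupled to two dispatch buffers")
                seen.add(station)
                pipeline = station_to_pipeline[station]
                self.route_for_pipeline[pipeline] = (buffer, station)

    def route(self, pipeline: str) -> Tuple[str, str]:
        """Return the (dispatch buffer, reservation station) serving a pipeline."""
        return self.route_for_pipeline[pipeline]
```

Because the station sets do not overlap, the target pipeline alone determines which dispatch buffer receives the op.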

Multi-level dispatch for a superscalar processor

Active | US9336003B2 | Improve performance | High operating requirements | Program initiation/switching | Instruction analysis | Reservation station | Scalar processor

In an embodiment, a processor includes a multi-level dispatch circuit configured to supply operations for execution by multiple parallel execution pipelines. The multi-level dispatch circuit may include multiple dispatch buffers, each of which is coupled to multiple reservation stations. Each reservation station may be coupled to a respective execution pipeline and may be configured to schedule instruction operations (ops) for execution in the respective execution pipeline. The sets of reservation stations coupled to each dispatch buffer may be non-overlapping. Thus, if a given op is to be executed in a given execution pipeline, the op may be sent to the dispatch buffer which is coupled to the reservation station that provides ops to the given execution pipeline.

Owner:APPLE INC

Quantifying completion stalls using instruction sampling

Inactive | US8234484B2 | Error detection/correction | Digital computer details | Data processing system | Scalar processor

Owner:INT BUSINESS MASCH CORP

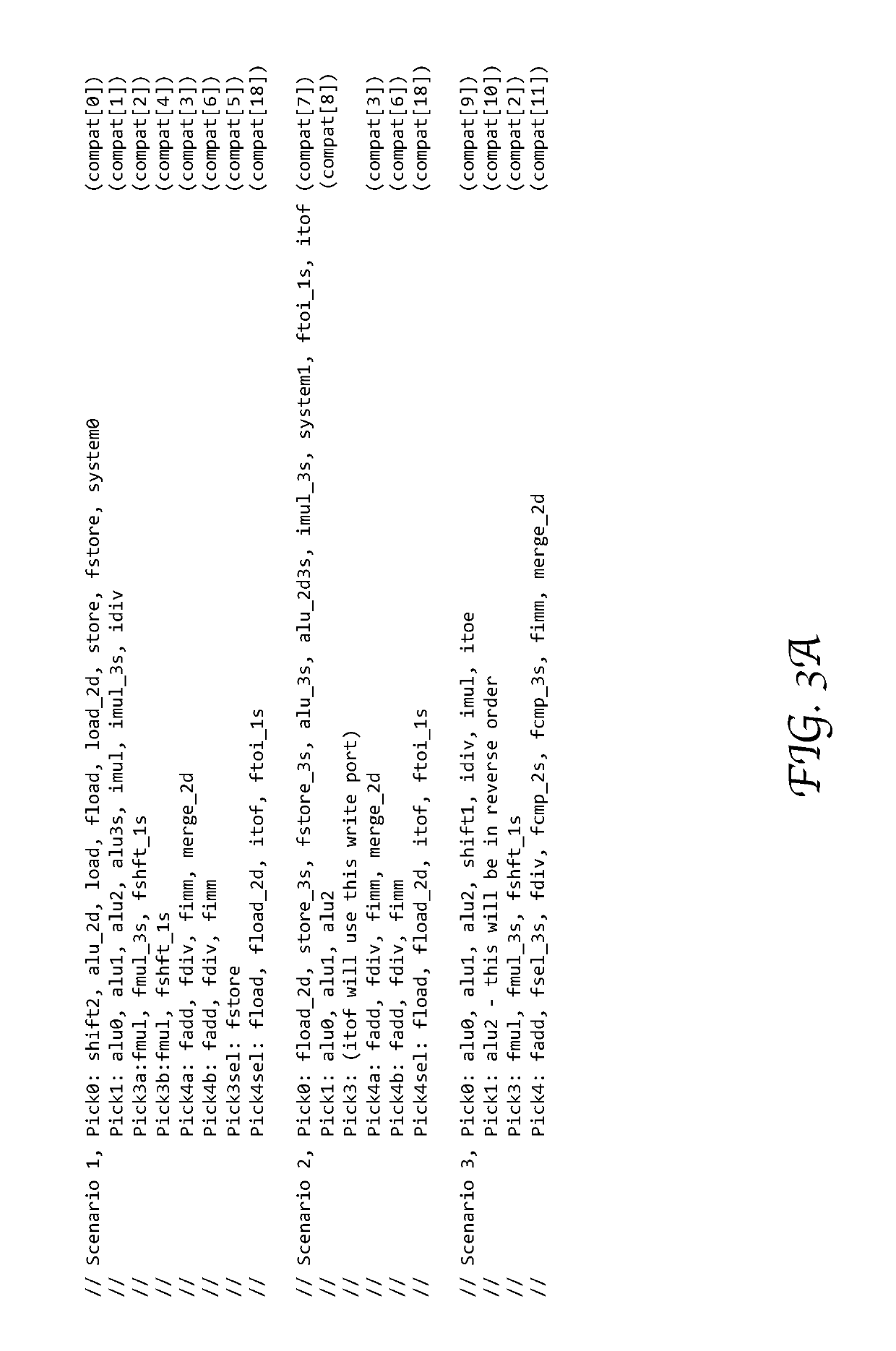

Issuing instructions based on resource conflict constraints in microprocessor

Active | US20190227805A1 | Advantageously ensure compatibility | Substantial processing speed | Operational speed enhancement | Register arrangements | Scalar processor | Scenario based

Systems and methods of selecting a collection of compatible issue-ready instructions for parallel execution by functional units in a superscalar processor in a single clock cycle. All possible instructions (opcodes) to be executed by the functional units are pre-arranged into several scenarios based on potential resource conflicts among the instructions. Each scenario includes multiple groups of predefined instructions. During operation, concurrently for all the groups, an issue-ready instruction is identified with reference to each group based on group-specific selection policies. Further, based on the identified instructions, predefined policies are applied to select one or more scenarios and select among the picks of the selected scenarios. As a result, the output instructions of the selected scenarios are issued for parallel execution by the functional units.

Owner:MARVELL ASIA PTE LTD

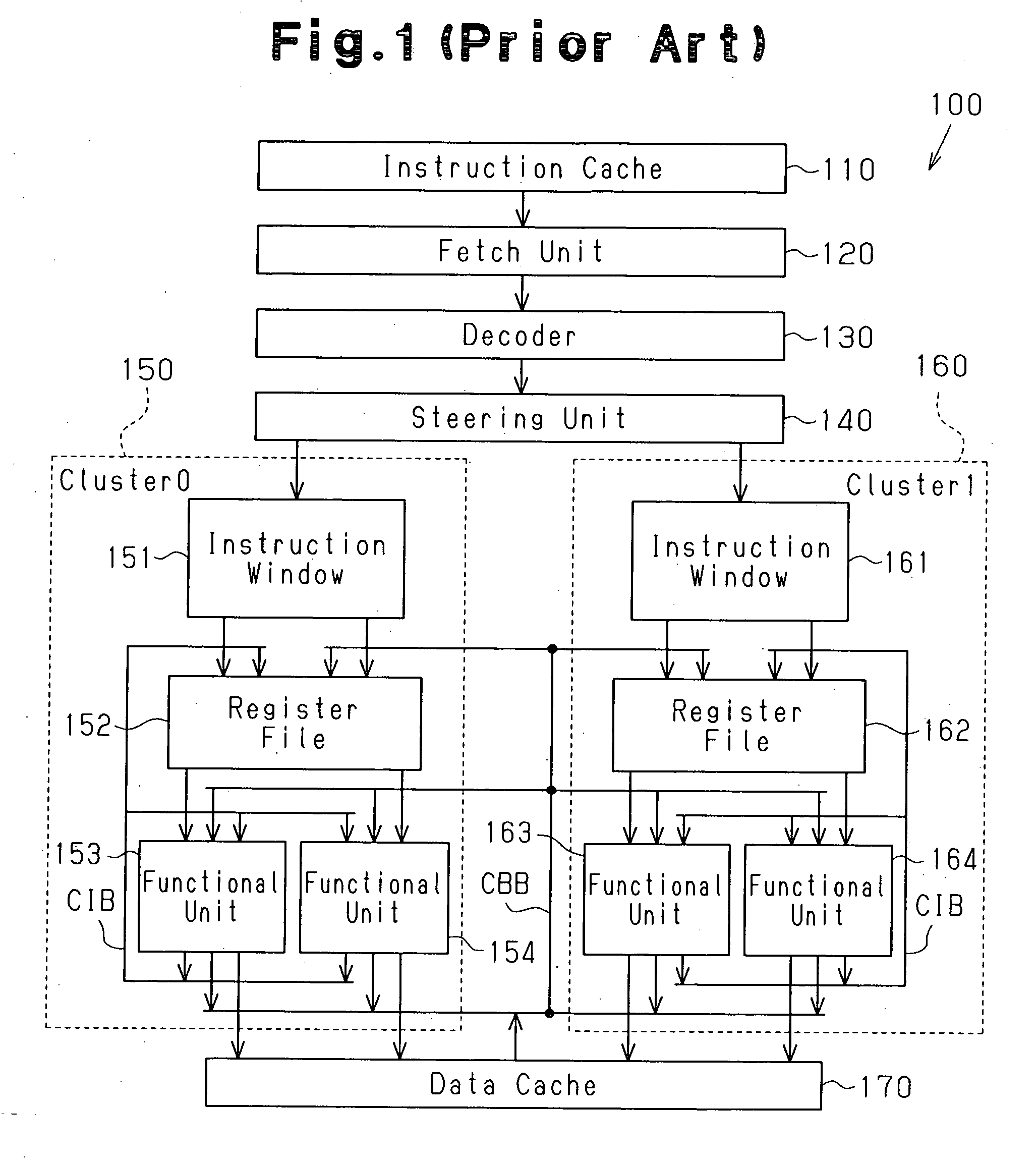

Clustered superscalar processor with communication control between clusters

Inactive | US7373485B2 | Improve performance | Reduce error rate | Register arrangements | General purpose stored program computer | Scalar processor | Processor register

Owner:NAGOYA UNIVERSITY

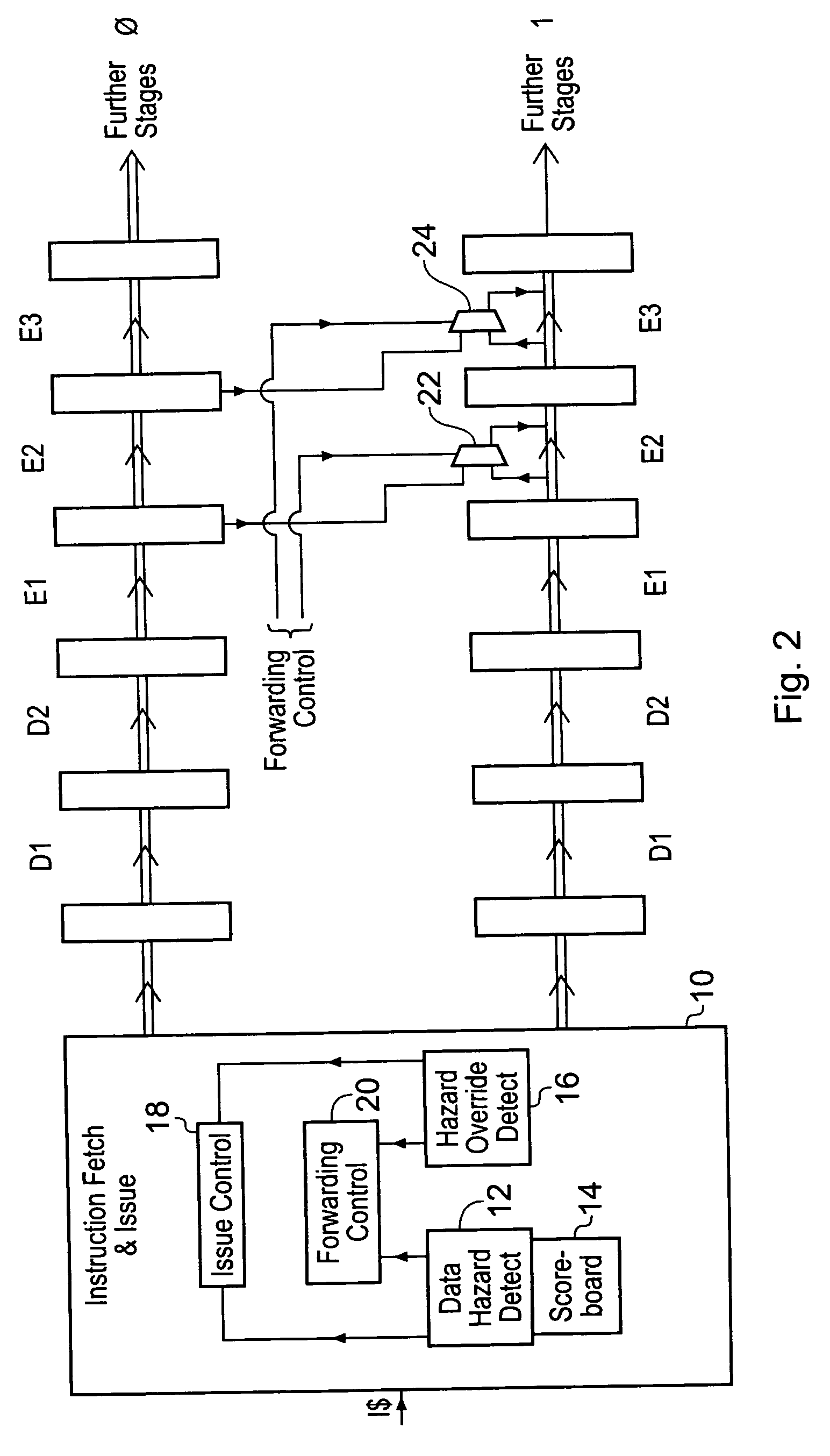

Instruction issue control within a superscalar processor

Active | US20060271768A1 | Increased complexity | Reduce decrease | Digital computer details | Memory systems | Data processing system | Operand

A data processing system including multiple execution pipelines each having multiple execution stages E1, E2, E3 may have instructions issued together in parallel despite a data dependency therebetween if it is detected that the result operand value for the older instruction will be generated in an execution stage prior to an execution stage which requires that result operand value as an input operand value to the younger instruction and accordingly cross-forwarding of the operand value is possible between the execution pipelines to resolve the data dependency.

Owner:ARM LTD
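
The issue condition in the abstract above reduces to a comparison of pipeline stages. The following is a minimal sketch, assuming stages E1 to E3 are numbered 1 to 3 and that cross-forwarding between pipelines is always available; the function name and parameters are hypothetical.

```python
def can_issue_together(
    dependent: bool,
    older_result_stage: int,
    younger_operand_stage: int,
) -> bool:
    """Decide whether two instructions may issue in the same cycle.

    older_result_stage:    stage in which the older instruction produces its result.
    younger_operand_stage: stage in which the younger instruction needs that
                           value as an input operand.
    """
    if not dependent:
        return True  # no data dependency, so nothing to resolve
    # With both instructions moving down their pipelines in lock step, the
    # value can be cross-forwarded only if it exists before it is needed.
    return older_result_stage < younger_operand_stage
```

For instance, a result produced in E1 can feed a dependent instruction that consumes it in E2, so the pair may issue together; a result produced in E3 cannot feed a consumer that needs it in E1.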

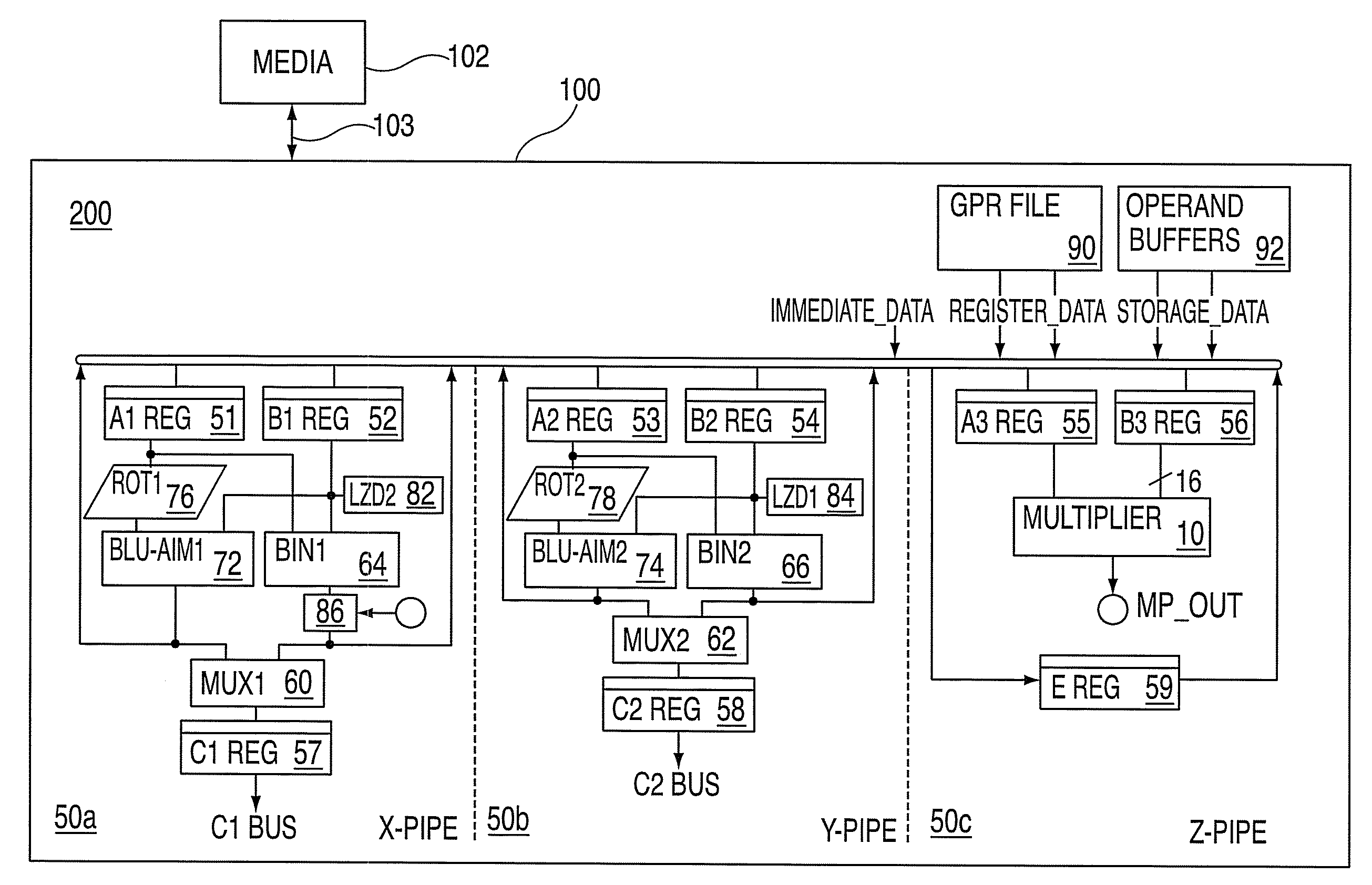

Modular binary multiplier for signed and unsigned operands of variable widths

Inactive | US7853635B2 | Computation using non-contact making devices | Specific program execution arrangements | Binary multiplier | Scalar processor

A system for binary multiplication in a superscalar processor includes a first pipeline, an execution unit, and a first multiplexer; a first rotator in communication with one register of the first pipeline and the execution unit; and a leading zero detection register in communication with the execution unit and another register of the first pipeline; a second pipeline, a second execution unit, and a second multiplexer; a rotator in communication with one register of the second pipeline and the second execution unit; and a leading zero detection register in communication with the second execution unit and another register of the first pipeline; and a third pipeline, a binary multiplier in communication with a pair of registers of the third pipeline; a general register; an operand buffer for obtaining first and second operands; and a bus for communication between the pipelines, the general register and the operand buffer.

Owner:IBM CORP

Configuration steering for a reconfigurable superscalar processor

Inactive | US20060259749A1 | Digital computer details | Specific program execution arrangements | Configuration selection | Execution unit

A reconfigurable processor including a plurality of reconfigurable execution units, a memory, an instruction queue, a configuration selection unit, and a configuration loader. The memory stores a plurality of steering vector processing hardware configurations for configuring the reconfigurable execution units. The instruction queue stores a plurality of instructions to be executed by at least one of the reconfigurable execution units. The configuration selection unit analyzes the instructions stored in the instruction queue and chooses one of the steering vector processing hardware configurations. The configuration loader determines whether one of the reconfigurable slots is available and reconfigures at least one of the reconfigurable slots with at least a part of the chosen steering vector processing hardware configuration responsive to at least one of the reconfigurable slots being available.

Owner:THE BOARD OF RGT UNIV OF OKLAHOMA

Multithreaded multicore uniprocessor and a heterogeneous multiprocessor incorporating the same

Inactive | US20080046684A1 | Reduce the required power | General purpose stored program computer | Program control | Scalar processor | Combined use

A uniprocessor that can run multiple threads (programs) simultaneously is achieved by use of a plurality of low-frequency minicore processors, each minicore for receiving a respective thread from a high-frequency cache and processing the thread. A superscalar processor may be used in conjunction with the uniprocessor to process threads requiring high throughput.

Owner:IBM CORP

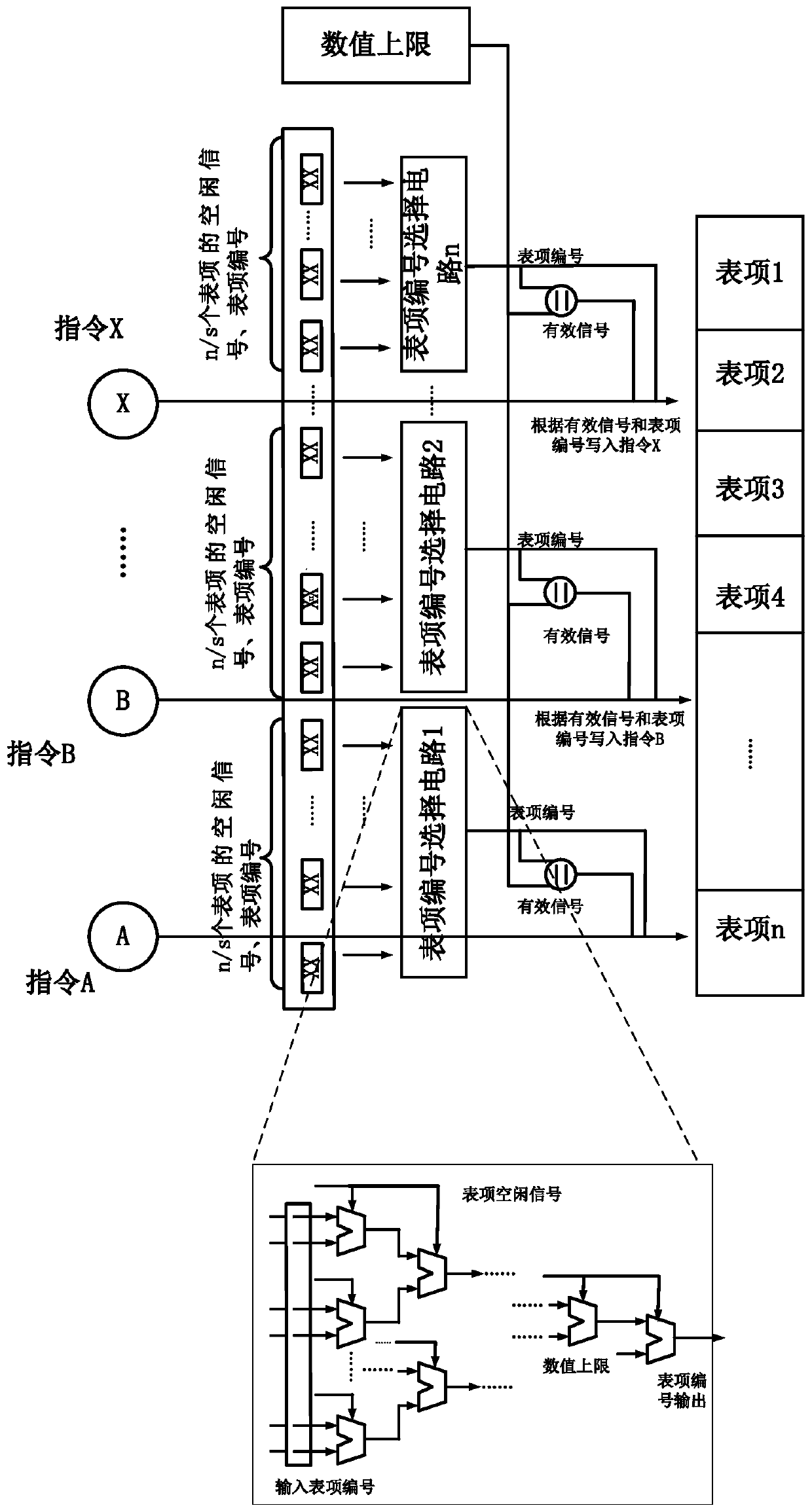

Multi-instruction out-of-order transmitting method based on instruction withering and processor

Active | CN111538534A | Solve the problem of not being able to increase the number of entries in the launch queue | Solve the problem of increasing latency | Concurrent instruction execution | Energy efficient computing | Engineering | Low delay

The invention discloses a multi-instruction out-of-order transmitting method based on instruction withering and a processor, and belongs to the field of processor design. According to the invention, a redundant arbitration structure in a traditional transmitting architecture is abandoned, an instruction withering circuit is added, and an instruction age array is adopted to represent the storage time of instructions in a CPU. In addition, an awakening state bit is added, and the instructions exceeding the withering threshold value are stored in a settling pond so that a CPU can directly transmit the instructions. Circuit structures such as an instruction request circuit, an instruction distribution circuit and an awakening circuit are improved, and the time sequence of a key path in the processor for multi-instruction transmission is effectively improved. When an instruction is awakened, delayed awakening is performed on an instruction with a short execution period, and the instruction with a long execution period is awakened in advance so as to ensure that the instructions can be executed back to back. The requirements of high power consumption ratio, low delay and high IPC in a modern superscalar out-of-order processor are met, and the problems that in the prior art, the number of items of a launch queue table of a processor cannot be increased day by day, and delay is also increased day by day are solved.

Owner:JIANGNAN UNIV

Clustered superscalar processor and communication control method between clusters in clustered superscalar processor

Inactive | US20060095736A1 | Reduce error rate | Improve performance | Register arrangements | General purpose stored program computer | Scalar processor | Processor register

A clustered superscalar processor for reducing the miss rate of a register cache and reducing the possibility of miss penalties. The processor checks before storing an instruction in an instruction window whether there is a data dependency relationship between the instruction that will be stored in the instruction window and a previous instruction stored in the instruction window. When there is a data dependency relationship, the execution result of the previous instruction of one cluster is communicated to a register cache of another cluster that executes the instruction having a data dependency relationship with the previous instruction.

Owner:NAGOYA UNIVERSITY

Neural network accelerator compiling method and device

Pending | CN113554161A | Rapid deployment | Fix loading issues | Physical realisation | Network structure | Circular buffer

The invention provides a neural network accelerator compiling method and device. The method comprises the steps of: generating a dependency relationship between preset instruction types and a plurality of neural network compiler instruction queues based on neural network structure information and the preset instruction types, wherein each neural network compiler instruction queue is a queue composed of neural network compiler instructions of the same preset instruction type; determining, according to the dependency relationship, a parallel operation strategy between the neural network compiler instruction queues; and generating an acceleration instruction of the neural network accelerator according to the parallel operation strategy. According to the method and device, flexible dynamic adjustment technologies such as the circular buffer and superscalar execution are fused in the accelerator special for the neural network, so that the problems of neural network parameter loading and module utilization rate can be effectively solved, and the neural network can be deployed at the edge end more quickly.

Owner:TSINGHUA UNIV

© 2025 PatSnap. All rights reserved.