280 results for "Instruction distribution" patented technology

Method and apparatus for rasterizer interpolation

Active | US7061495B1 | Time spent, Fine level of precision, Processor architectures/configuration, Filling planar surface with attributes, Computational science, Graphics

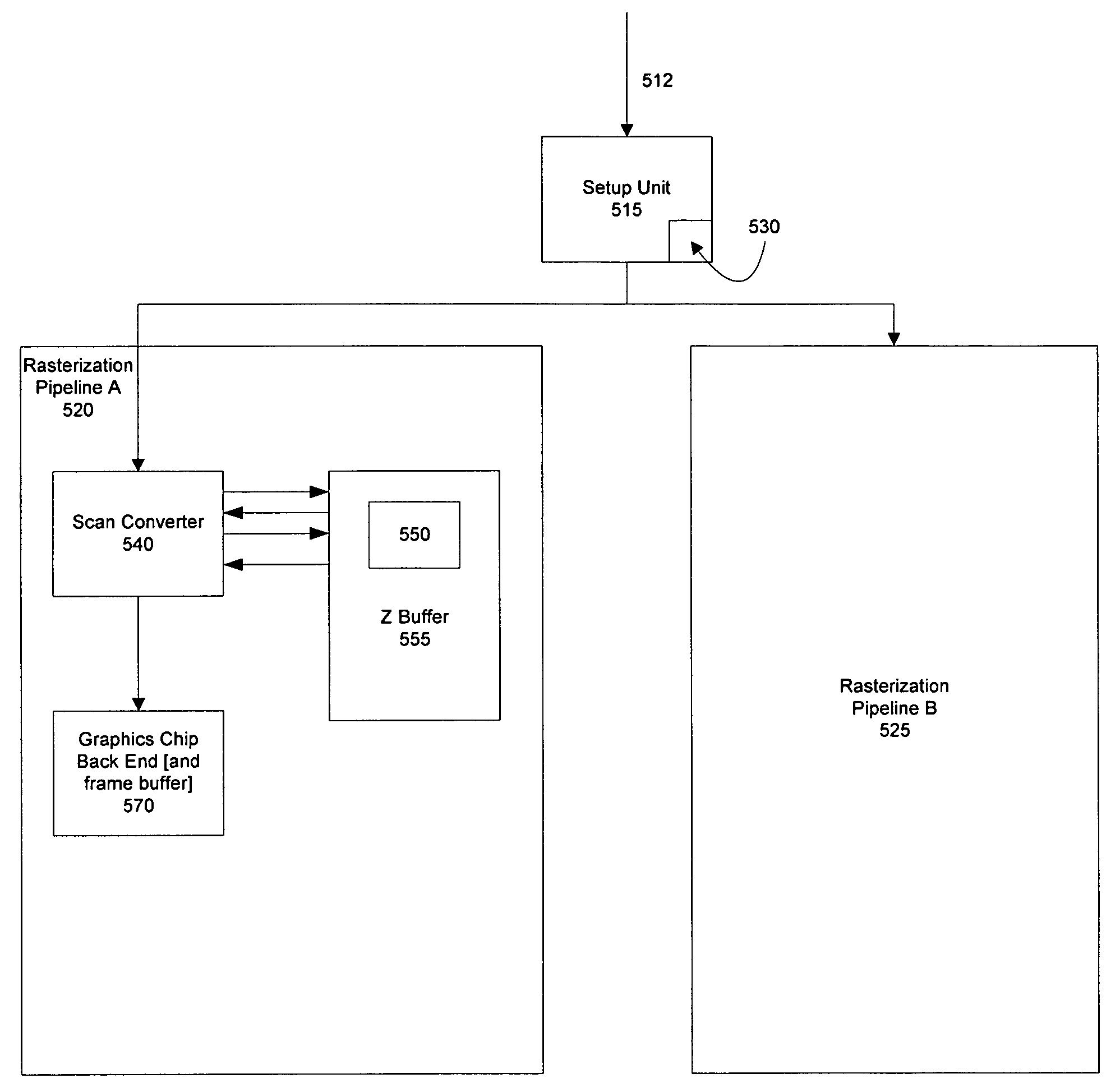

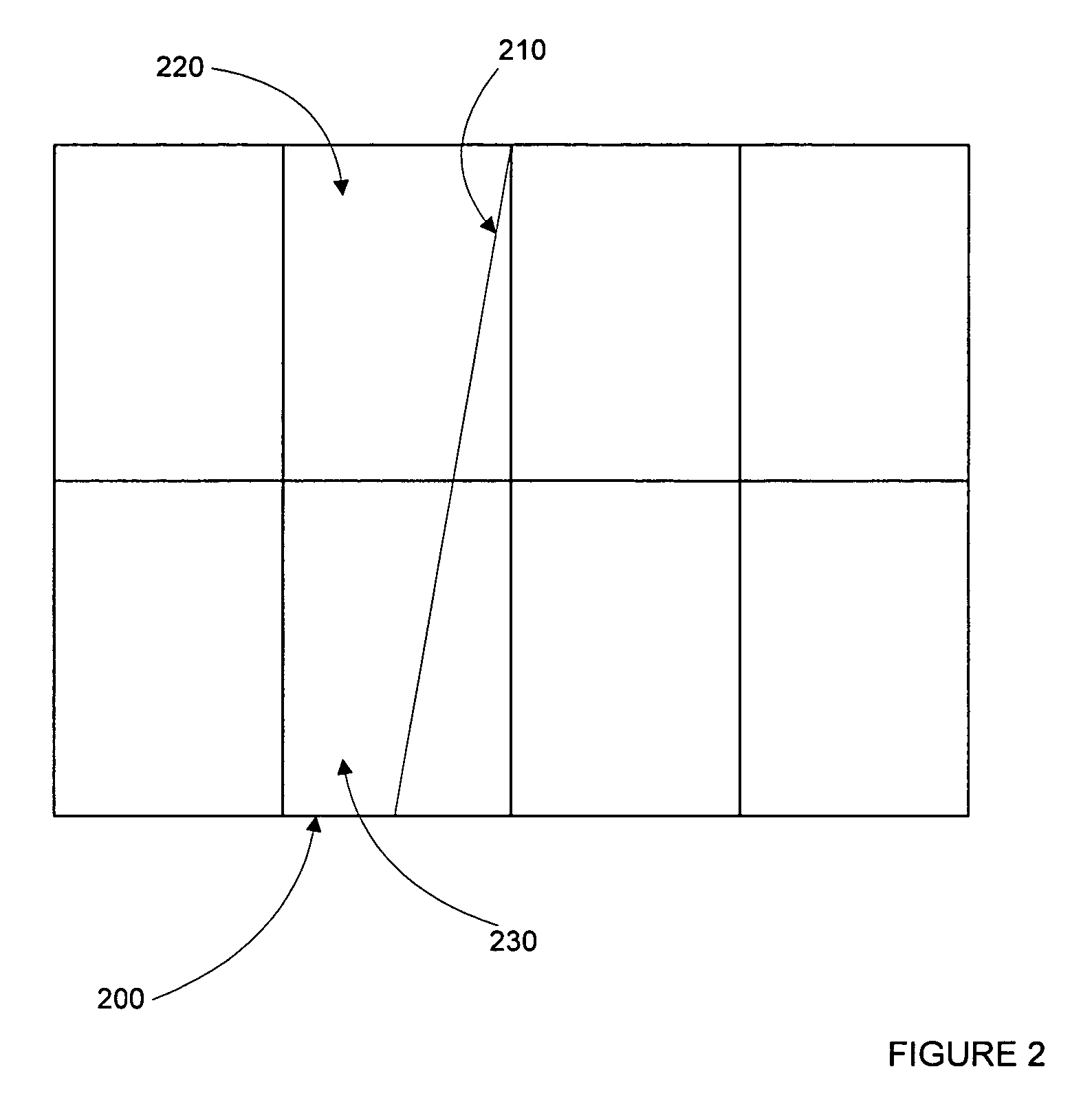

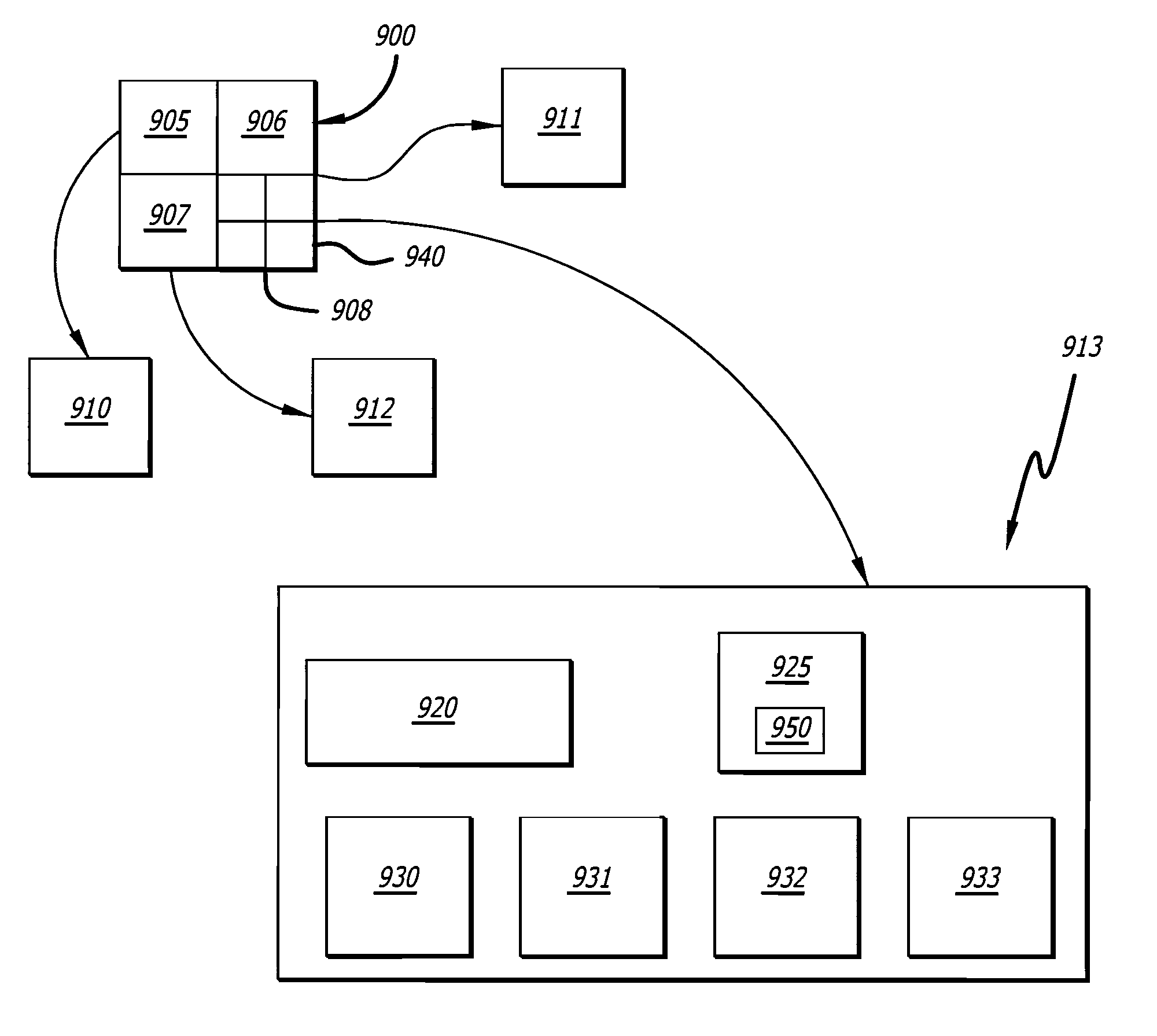

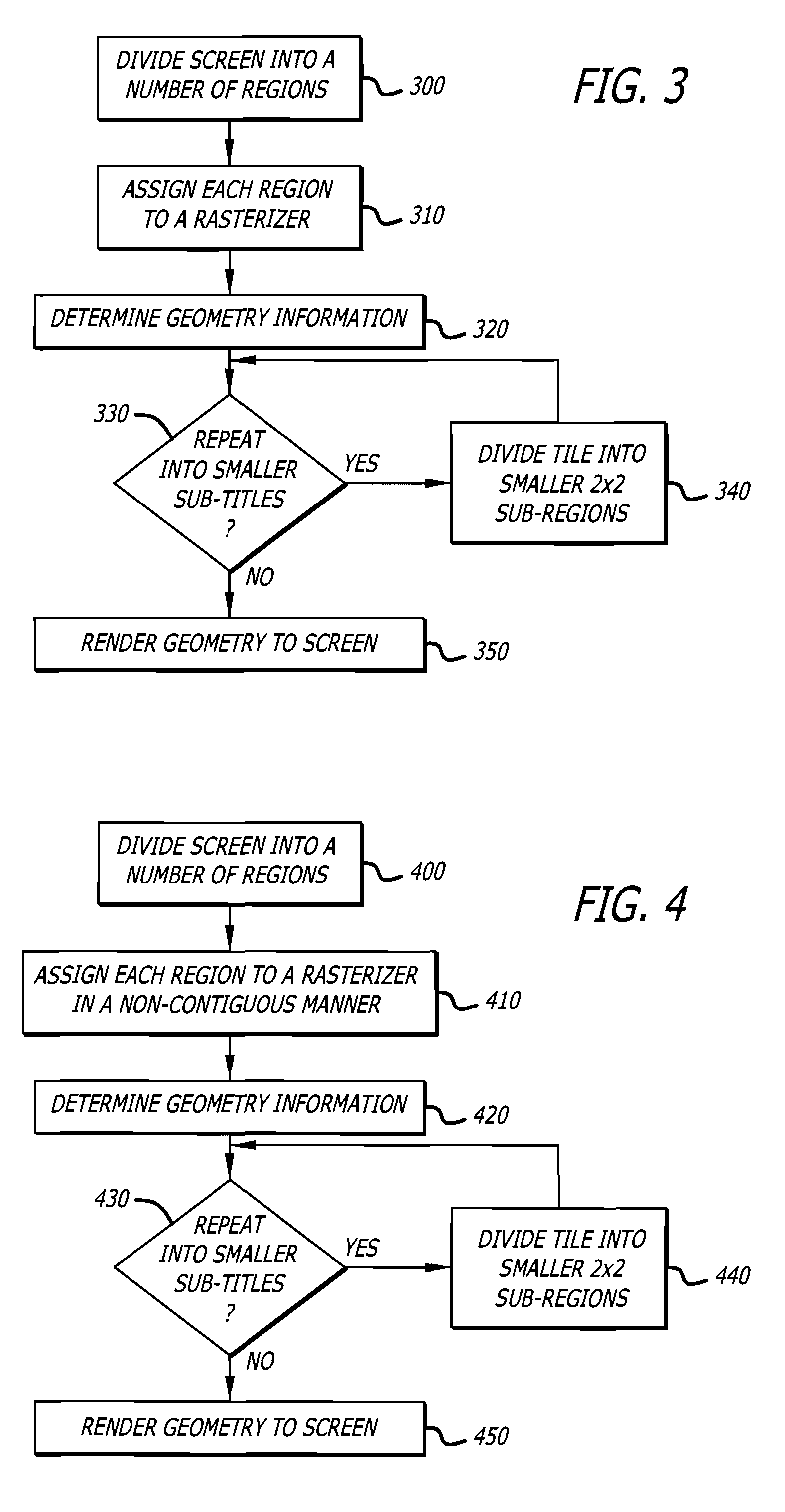

The present invention relates to a rasterizer interpolator. In one embodiment, a setup unit distributes graphics primitive instructions to multiple parallel rasterizers. To increase efficiency, the setup unit calculates the polygon data and checks it against one or more tiles before distribution. The output screen is divided into a number of regions, and several assignment configurations are possible for various numbers of rasterizer pipelines. For instance, the screen is subdivided into four regions, and each of four rasterizers is granted ownership of one quarter of the screen. Prior-art implementations spend time processing empty tiles; the present invention reduces the number of empty tiles processed by means of coarse-grain tiling. This process consists of a series of iterations performed in parallel: each region undergoes an iterative calculation/tiling process in which the coverage of the primitive is determined at successively finer levels of detail.

Owner:ATI TECH INC
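
A minimal Python sketch of the coarse-grain tiling idea follows. It makes several assumptions that the abstract does not state. The screen is a square with a power-of-two size. Triangles are given in counter-clockwise order. The coverage test is a conservative edge-function rejection, because the abstract does not say which test is used. The four regions are processed one after another, although the patent performs the iterations in parallel. All function names are hypothetical.

```python
def edge(ax, ay, bx, by, px, py):
    """Signed area test: positive when (px, py) lies left of the edge a->b."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def tile_may_overlap(tri, x0, y0, x1, y1):
    """Conservative test (assumes a counter-clockwise triangle): returns False
    only if a single edge has all four tile corners strictly outside it."""
    corners = [(x0, y0), (x1, y0), (x0, y1), (x1, y1)]
    for i in range(3):
        (ax, ay), (bx, by) = tri[i], tri[(i + 1) % 3]
        if all(edge(ax, ay, bx, by, px, py) < 0 for px, py in corners):
            return False
    return True


def coarse_tiles(tri, x0, y0, size, min_size):
    """Recursively subdivide a square region, discarding empty sub-tiles."""
    if not tile_may_overlap(tri, x0, y0, x0 + size, y0 + size):
        return []
    if size <= min_size:
        return [(x0, y0, size)]
    half = size // 2
    tiles = []
    for dy in (0, half):
        for dx in (0, half):
            tiles += coarse_tiles(tri, x0 + dx, y0 + dy, half, min_size)
    return tiles


def distribute(tri, screen_size, min_size):
    """Give each of four rasterizers one screen quadrant (id = 2 * row + col)."""
    half = screen_size // 2
    work = {}
    for row in (0, 1):
        for col in (0, 1):
            work[2 * row + col] = coarse_tiles(tri, col * half, row * half, half, min_size)
    return work
```

For a small triangle in the top-left quadrant, only rasterizer 0 receives tiles. Inside that quadrant, the sub-tile that the triangle does not reach is dropped before any fine rasterization happens.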

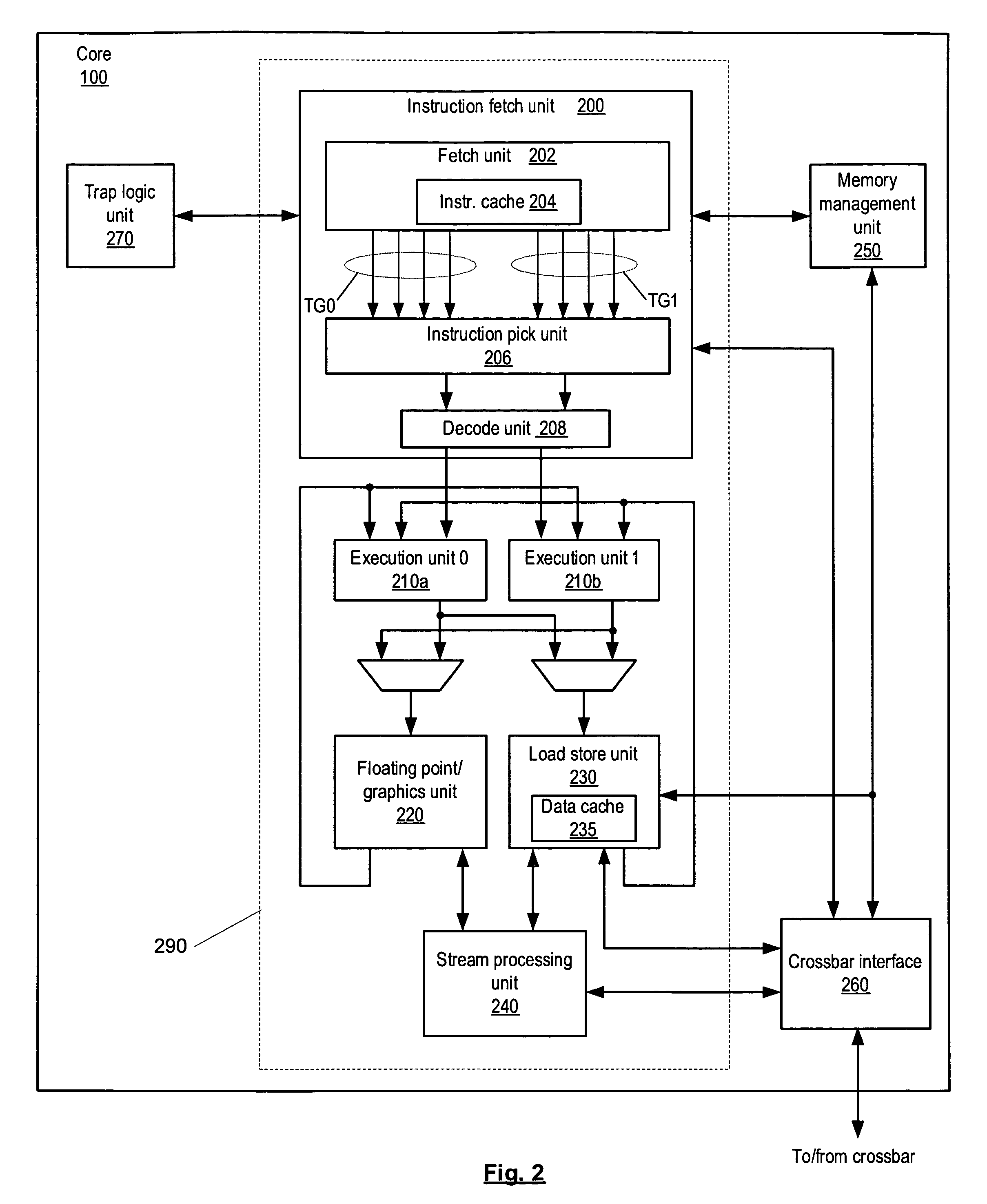

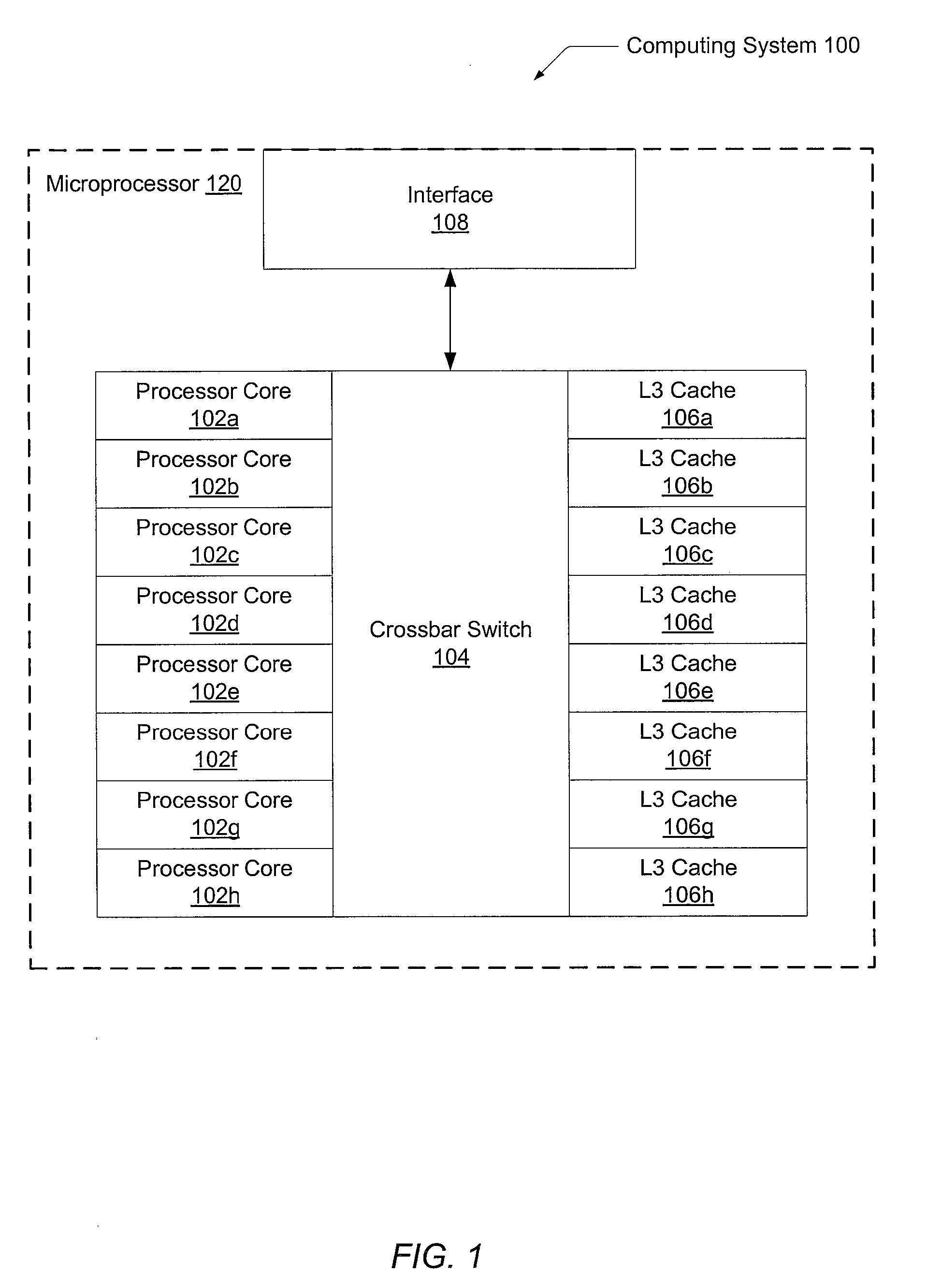

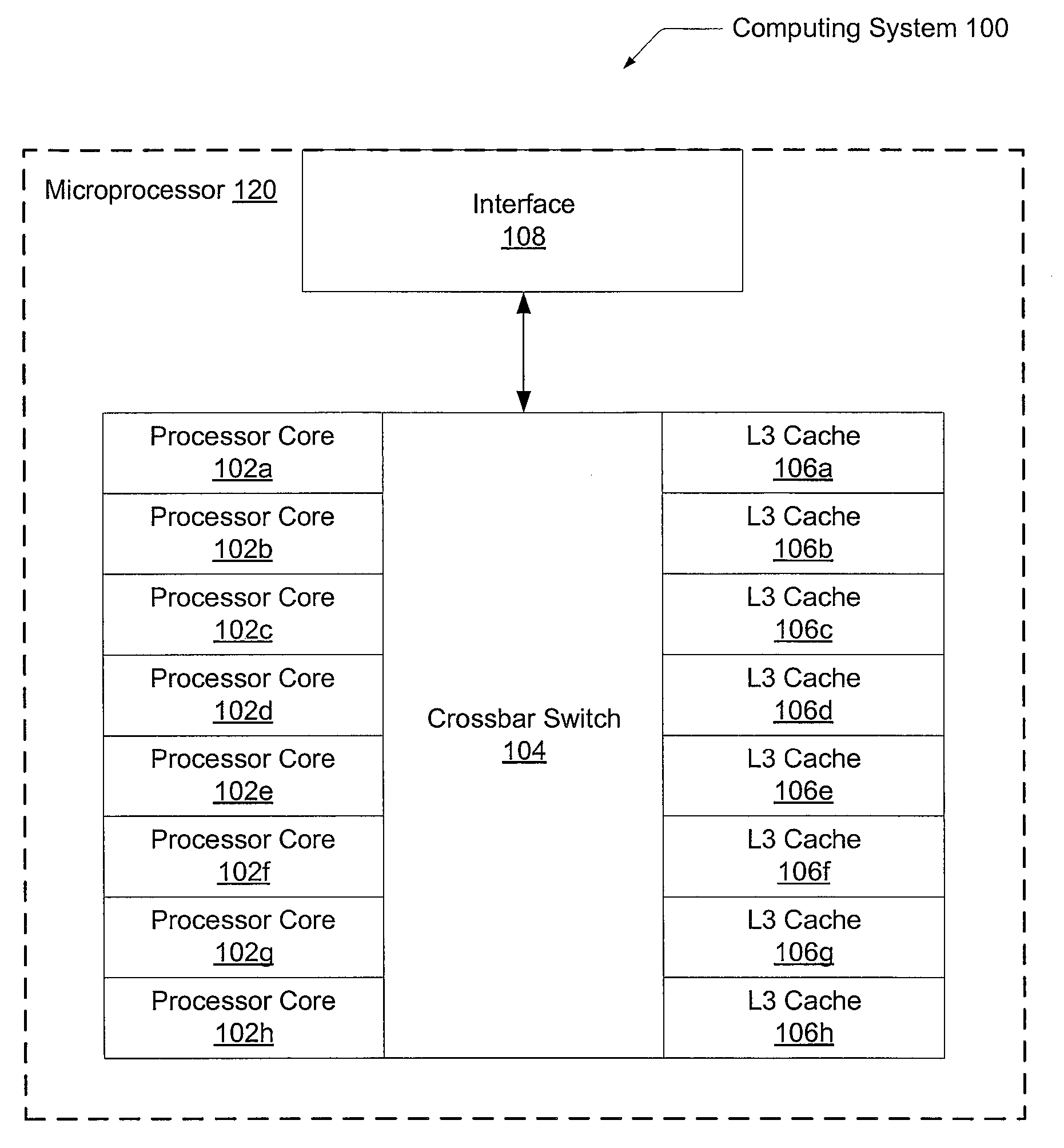

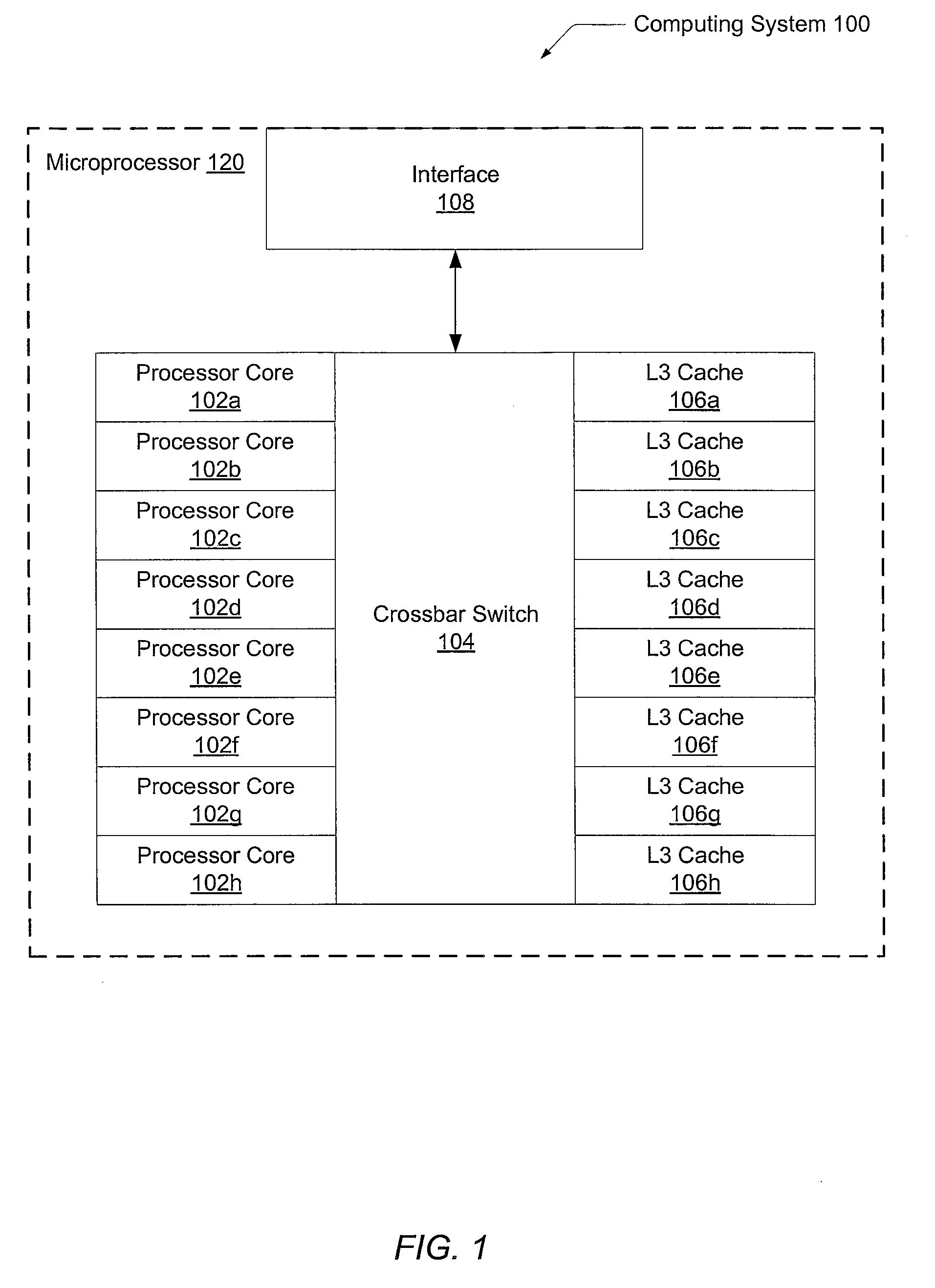

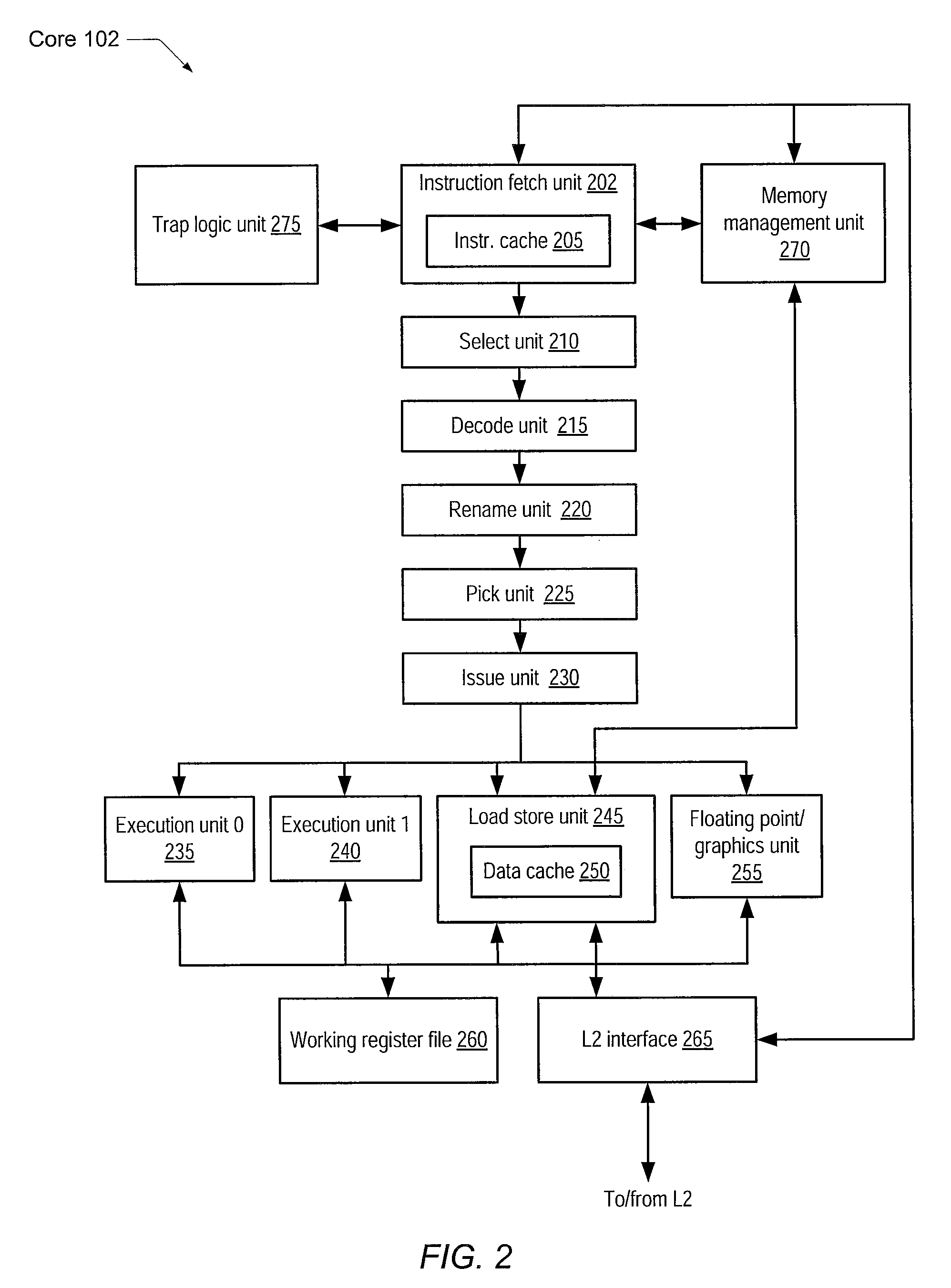

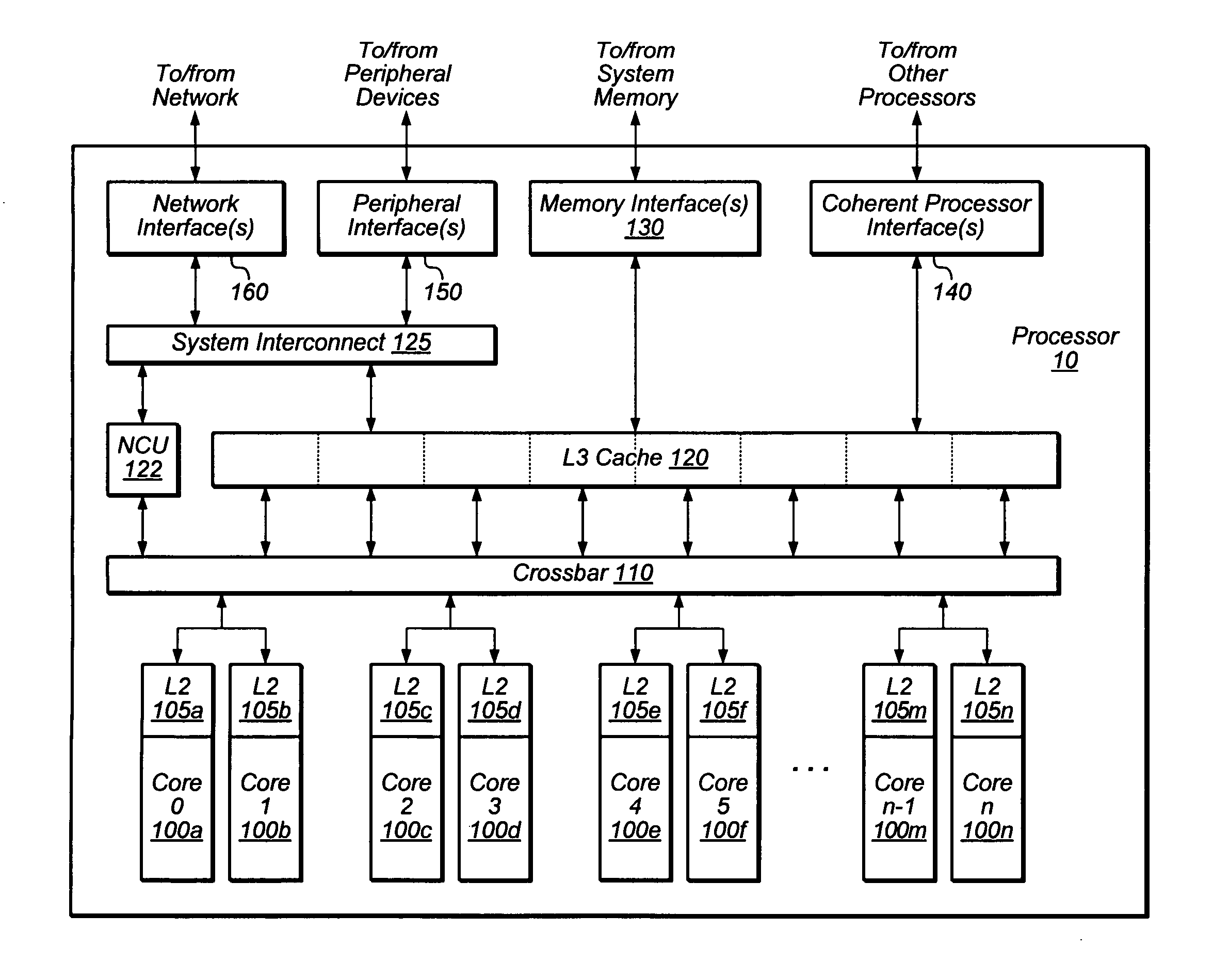

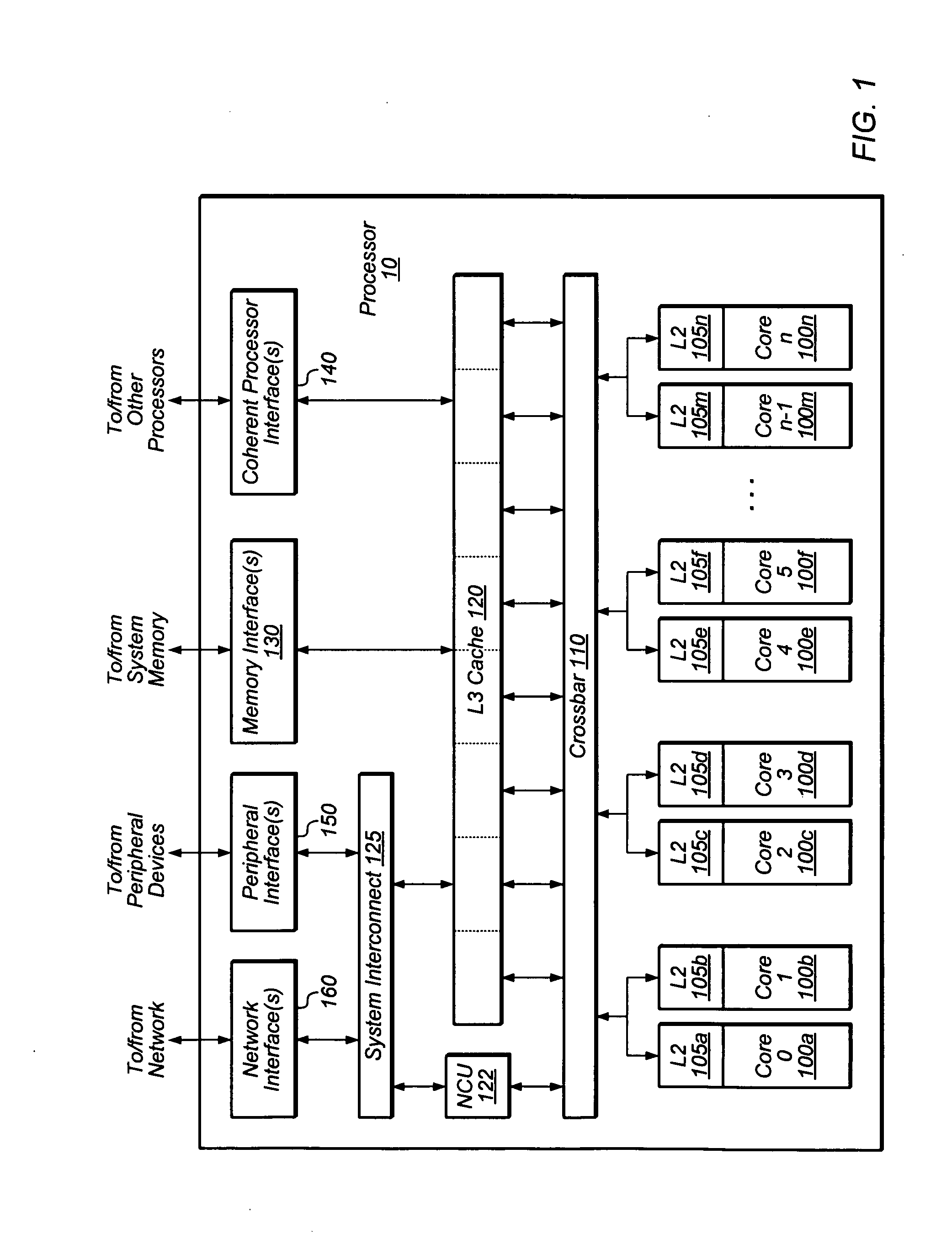

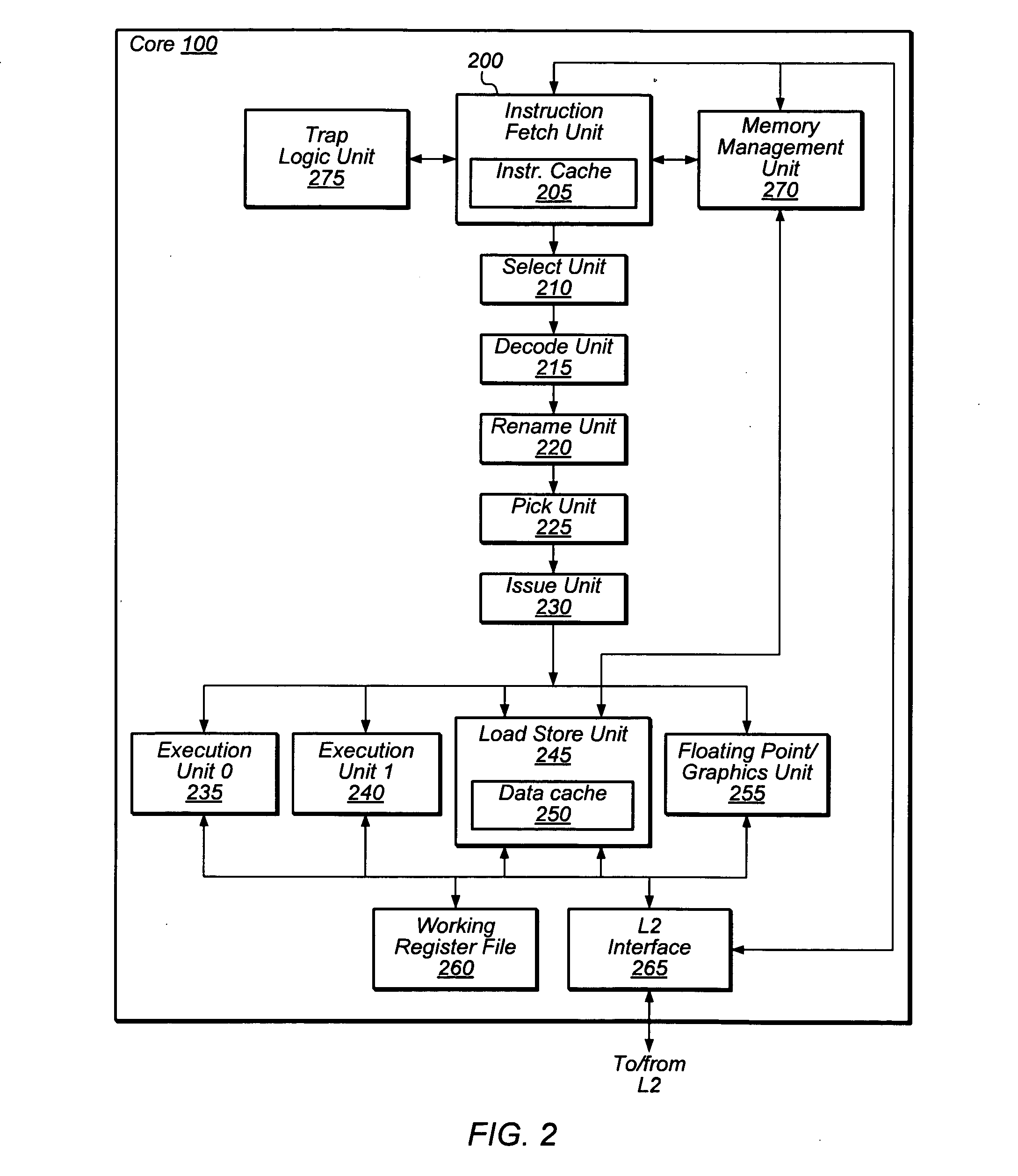

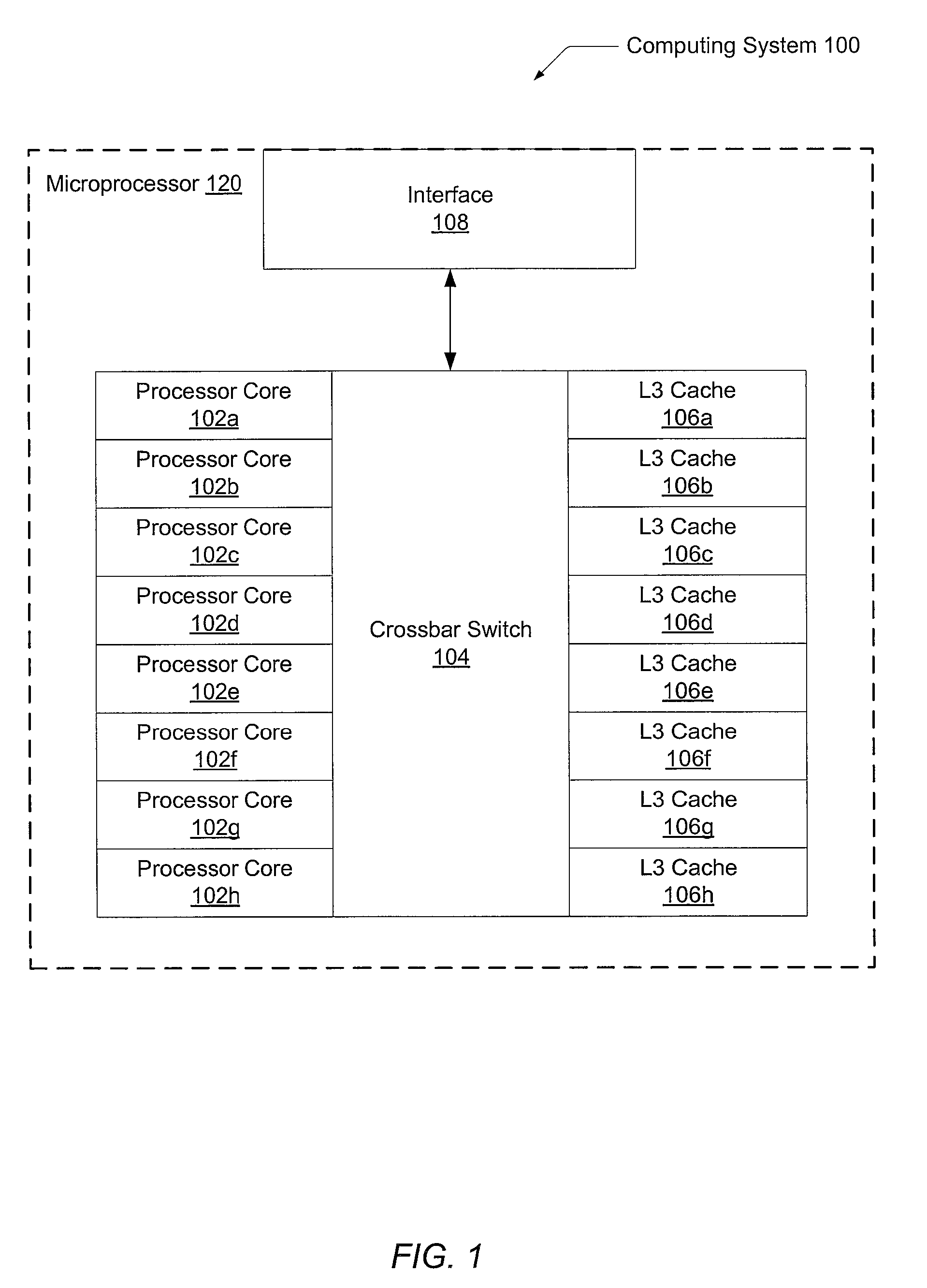

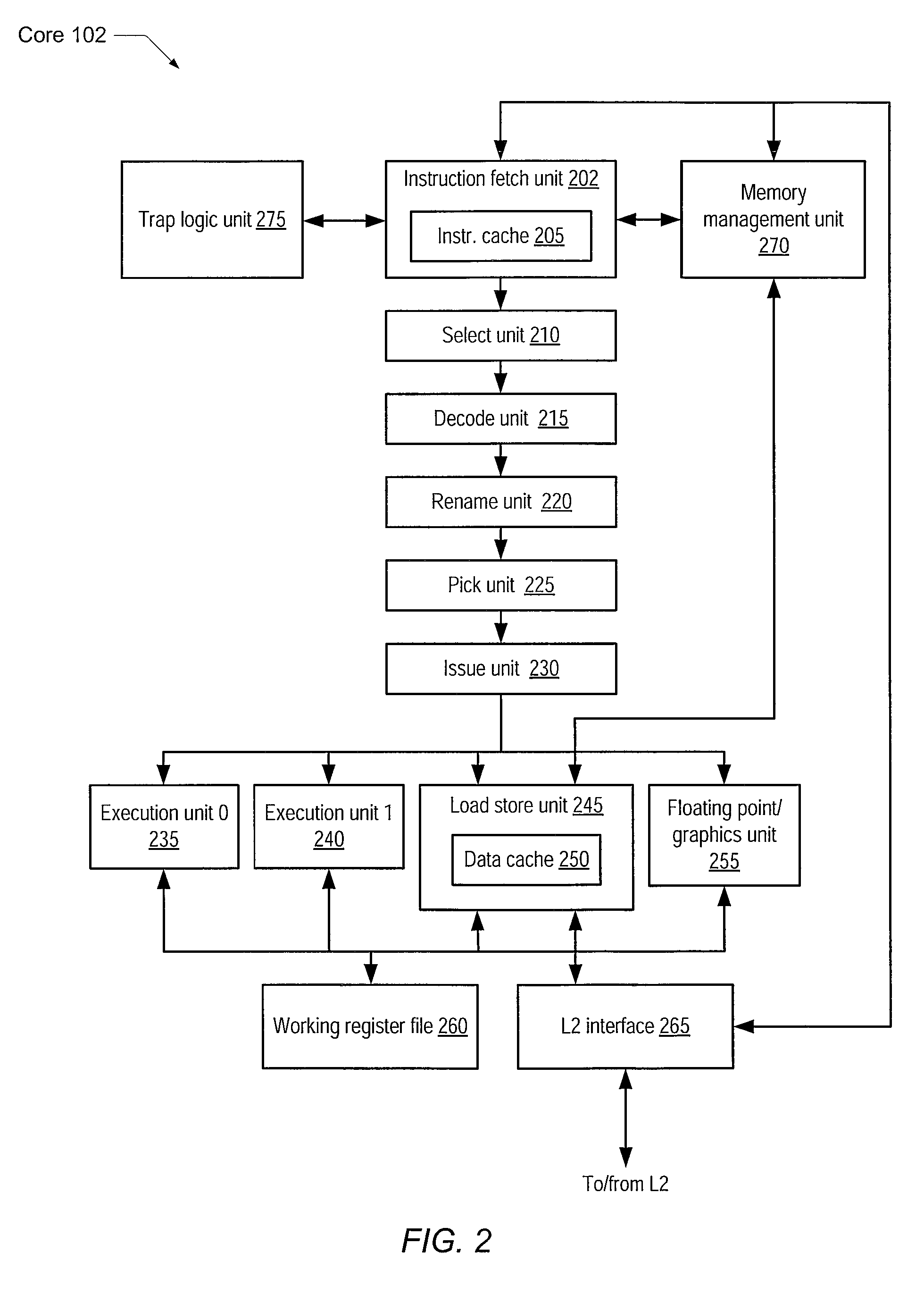

Precise error handling in a fine grain multithreaded multicore processor

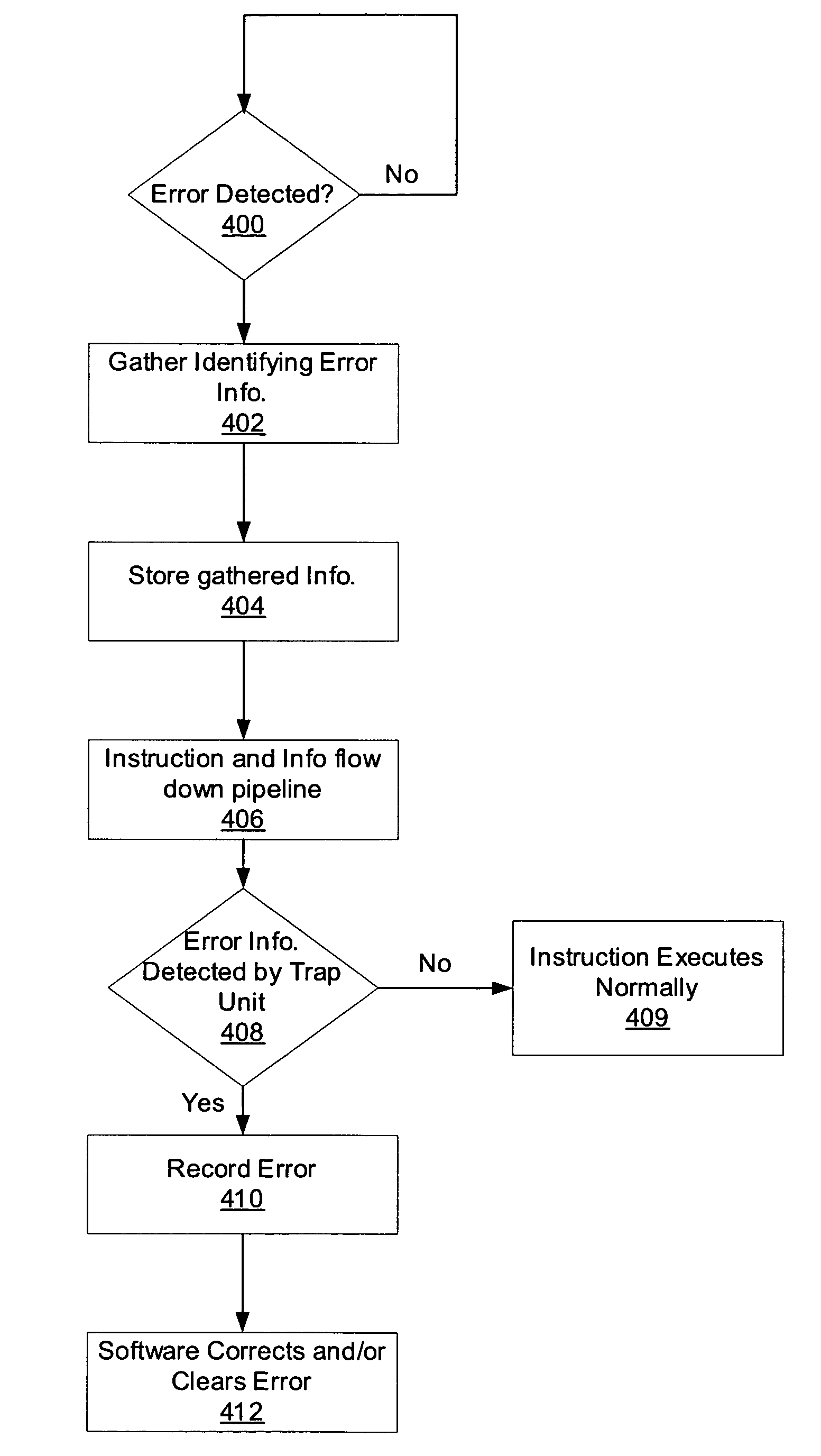

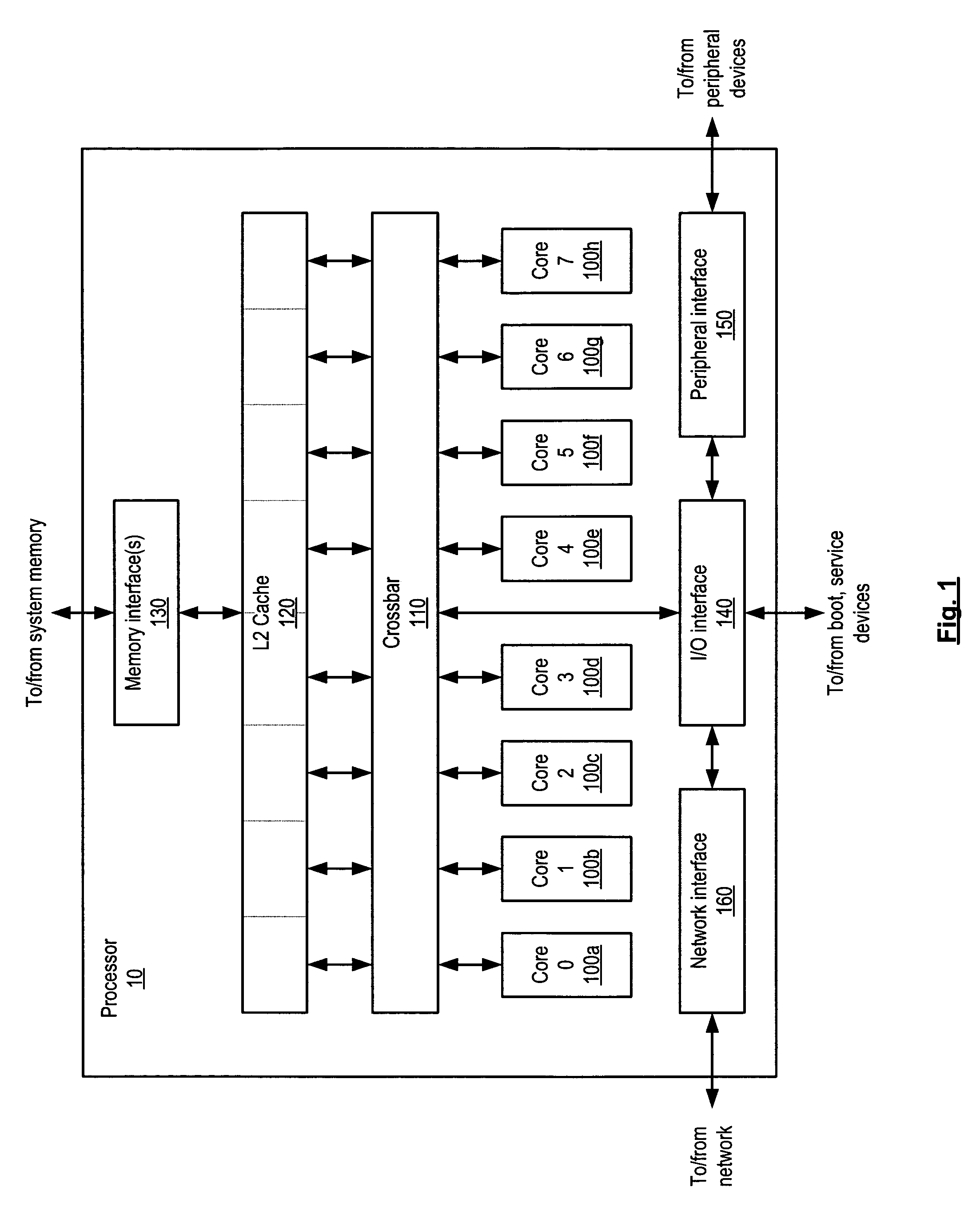

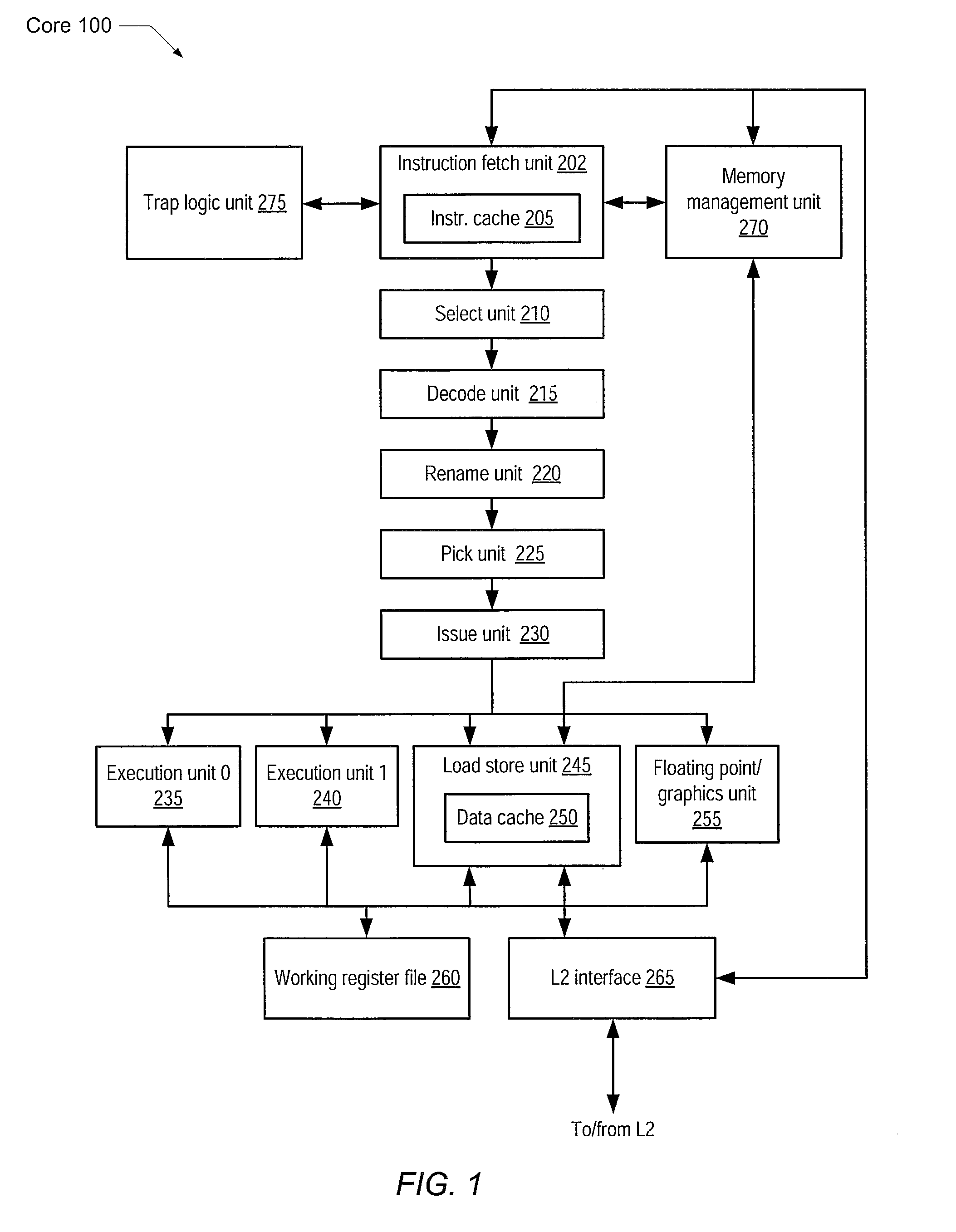

A method and mechanism for error recovery in a processor. A multithreaded processor is configured to use software to handle hardware-detected machine errors. Rather than correcting and clearing detected errors, the hardware is configured to report them precisely. Both program-related exceptions and hardware errors are detected and, without being corrected by the hardware, flow down the pipeline to a trap unit, where they are prioritized and handled by software. As each instruction moves through the pipeline, the processor assigns it a thread ID and error information. The trap unit records the error, using the instruction's thread ID and the pipelined error information to determine which ESR receives the information and what to store in that ESR. A trap-handling routine is then initiated to facilitate error recovery.

Owner:ORACLE INT CORP

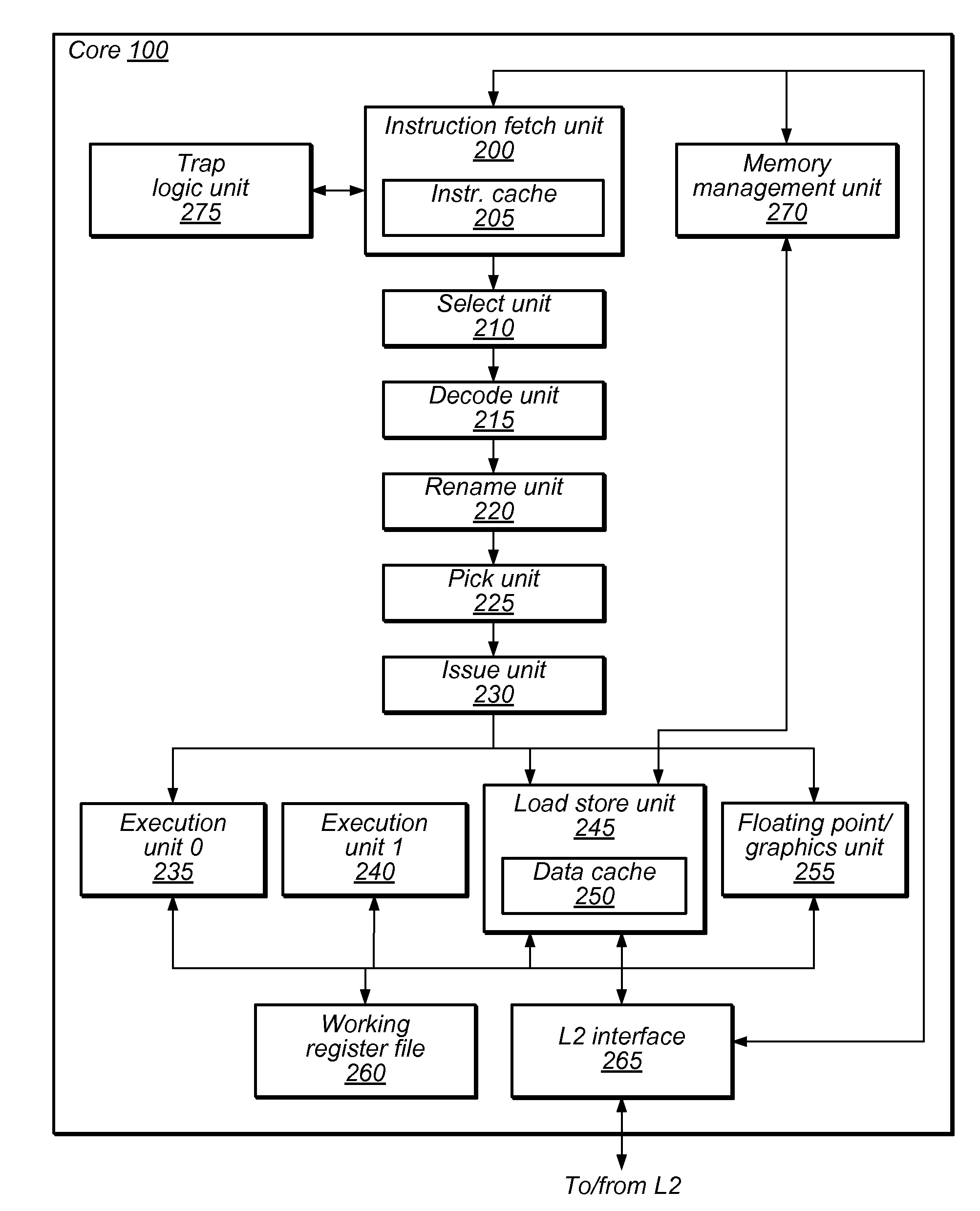

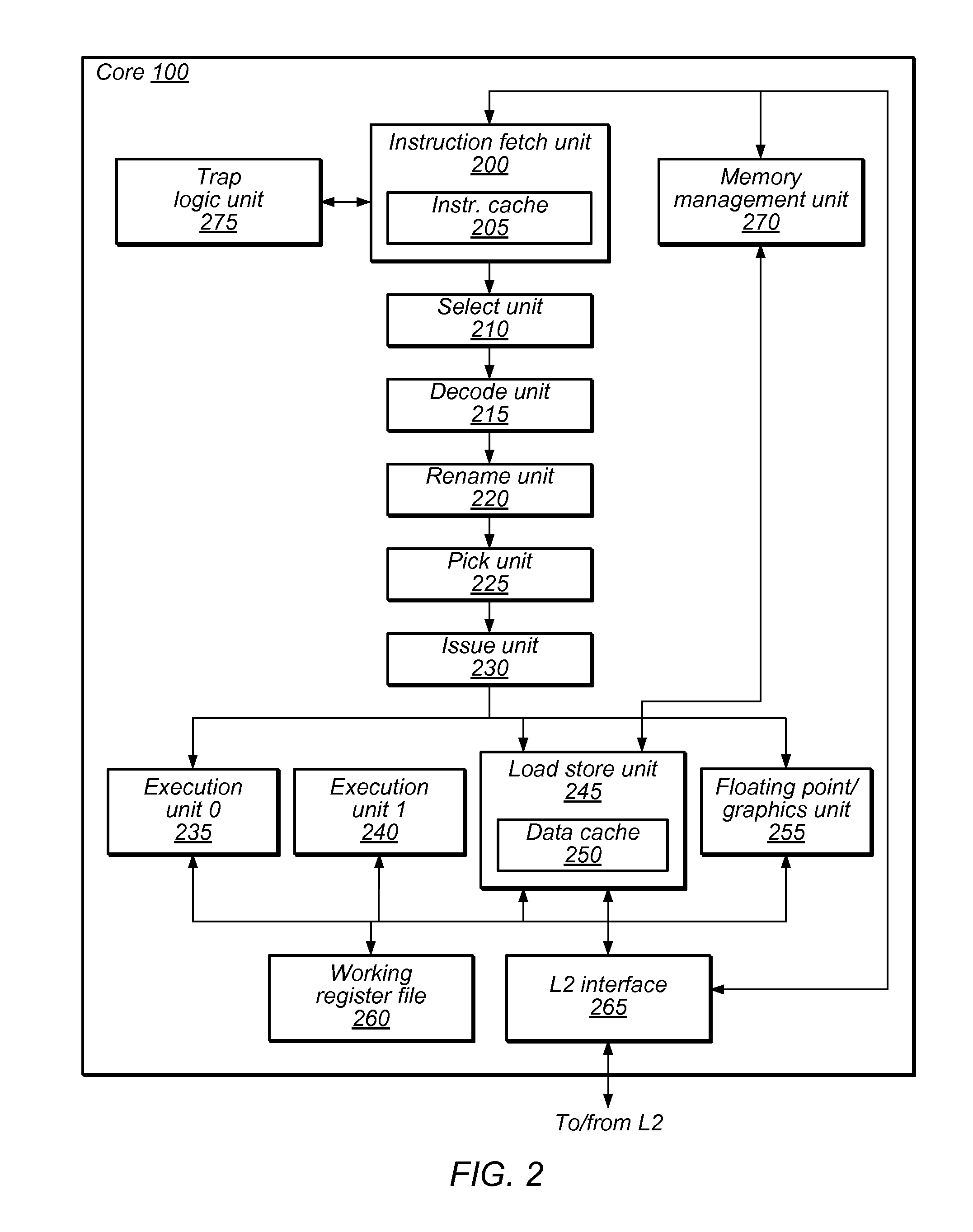

System and Method for Balancing Instruction Loads Between Multiple Execution Units Using Assignment History

Active | US20100325394A1 | Eliminate the effects of, Digital computer details, Multiprogramming arrangements, Instruction distribution, Parallel computing

A system and method for balancing instruction loads between multiple execution units are disclosed. One or more execution units may be represented by a slot configured to accept instructions on behalf of the execution unit(s). A decode unit may assign instructions to a particular slot for subsequent scheduling for execution. Slot assignments may be made based on an instruction's type and / or on a history of previous slot assignments. A cumulative slot assignment history may be maintained in a bias counter, the value of which reflects the bias of previous slot assignments. Slot assignments may be determined based on the value of the bias counter, in order to balance the instruction load across all slots, and all execution units. The bias counter may reflect slot assignments made only within a desired historical window. A separate data structure may store data reflecting the actual slot assignments made during the desired historical window.

Owner:SUN MICROSYSTEMS INC
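
The bias-counter scheme can be sketched in Python as follows. This assumes exactly two slots, three instruction kinds ("slot0", "slot1", and "either"), and a tie-break toward slot 0. All of these are illustrative choices and do not come from the patent. The deque plays the role of the separate data structure that records the actual assignments in the historical window.

```python
from collections import deque


class SlotBalancer:
    """Two-slot assignment with a windowed bias counter (hypothetical model)."""

    def __init__(self, window):
        self.window = window
        self.history = deque()  # actual slot assignments inside the window
        self.bias = 0           # (# slot-0 assignments) - (# slot-1 assignments)

    def assign(self, kind):
        if kind == "slot0":
            slot = 0
        elif kind == "slot1":
            slot = 1
        else:                   # "either": lean against the current bias
            slot = 1 if self.bias > 0 else 0
        self.history.append(slot)
        self.bias += 1 if slot == 0 else -1
        if len(self.history) > self.window:
            expired = self.history.popleft()  # drop the assignment leaving the window
            self.bias -= 1 if expired == 0 else -1
        return slot
```

After two slot-0-only instructions, the next flexible instructions are steered to slot 1 until the counter returns to zero.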

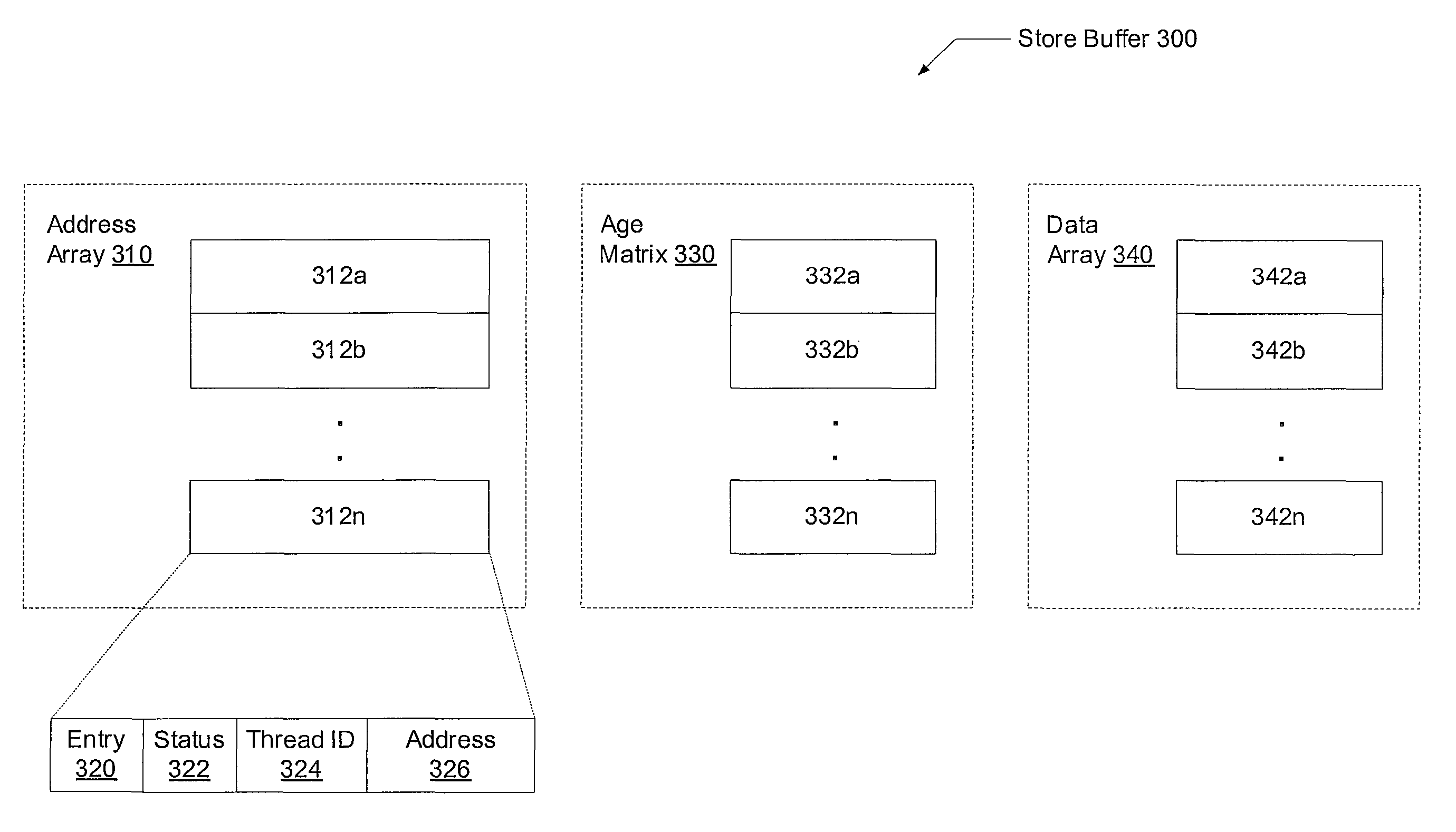

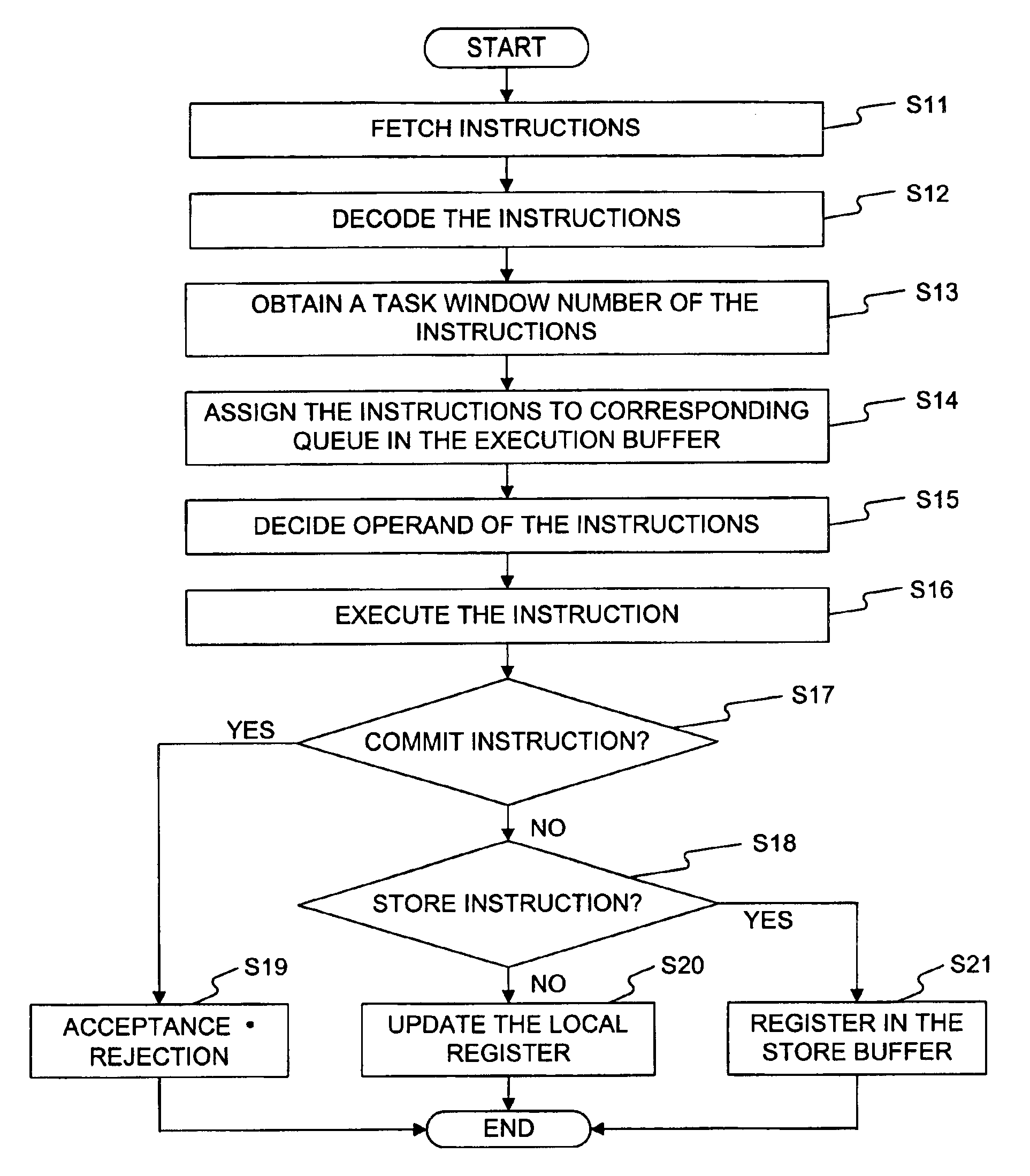

Load/store ordering in a threaded out-of-order processor

Active | US20100293347A1 | Memory addressing/allocation/relocation, Program control, Array data structure, Load instruction

Systems and methods for efficient load-store ordering. A processor comprises a store buffer that includes an array. The store buffer dynamically allocates any entry of the array for an out-of-order (o-o-o) issued store instruction, independent of the instruction's thread. Circuitry within the store buffer determines a first set of array entries holding store instructions that are older in program order than a particular load instruction and that have the same thread identifier and address as the load instruction. From the first set, the logic locates a single final match entry, corresponding to the youngest store instruction of the first set, which may be used for read-after-write (RAW) hazard detection.

Owner:ORACLE INT CORP
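
The following Python sketch models the two-step match. It assumes that each entry carries a program-order sequence number and that addresses are compared exactly. Real hardware would typically compare age and partial or overlapping addresses differently. The `StoreEntry` fields and the function name are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StoreEntry:
    seq: int   # hypothetical program-order sequence number
    tid: int   # thread identifier
    addr: int
    data: int


def youngest_older_match(buffer: List[Optional[StoreEntry]], tid: int,
                         addr: int, load_seq: int) -> Optional[StoreEntry]:
    # First set: older stores with the same thread ID and address as the load.
    first_set = [e for e in buffer
                 if e is not None and e.tid == tid and e.addr == addr and e.seq < load_seq]
    # Final match: the youngest store in that set (largest sequence number).
    return max(first_set, key=lambda e: e.seq, default=None)
```

Because entries may be allocated anywhere in the array regardless of thread, the search scans every slot and does not rely on array position for age.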

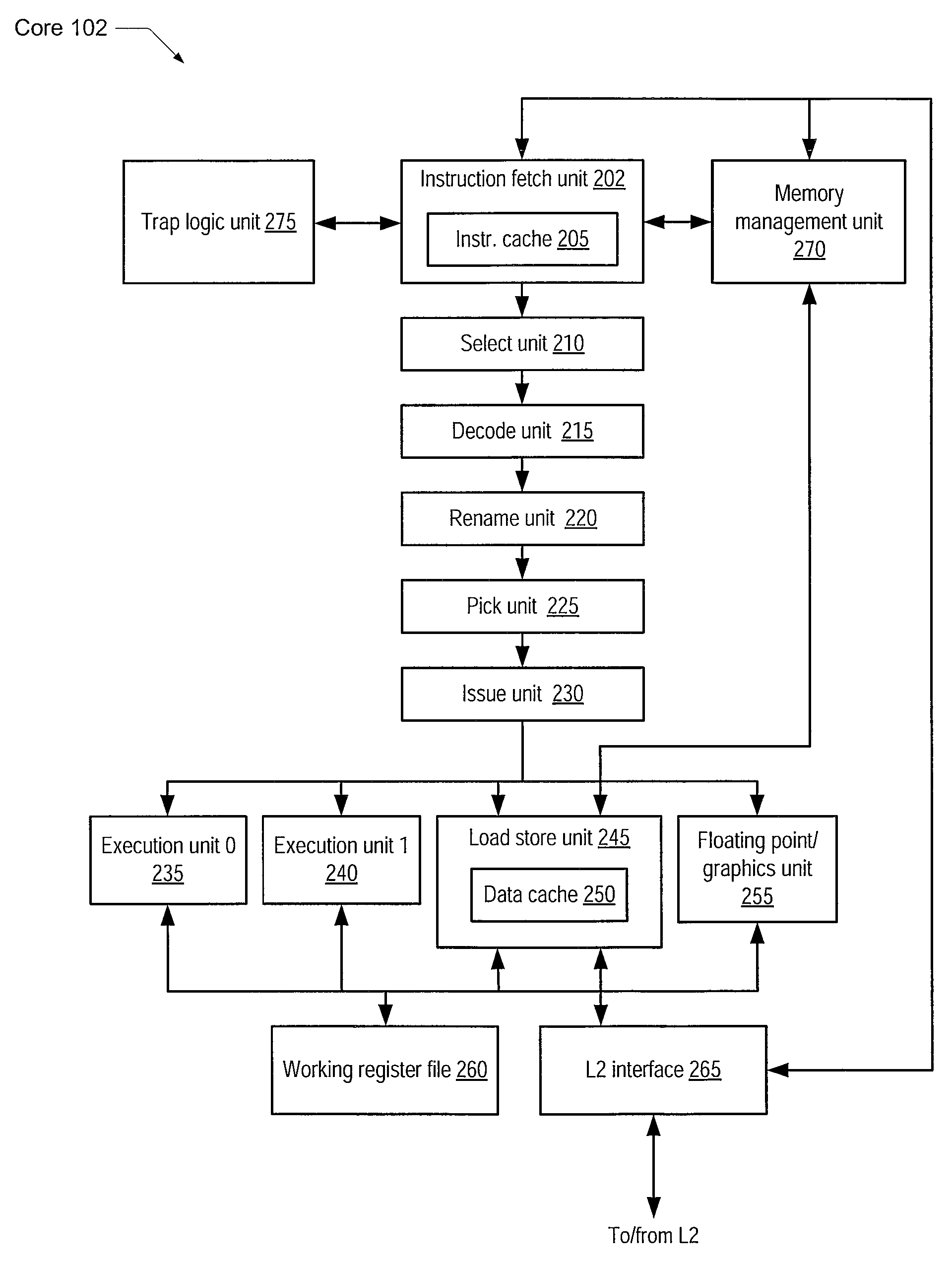

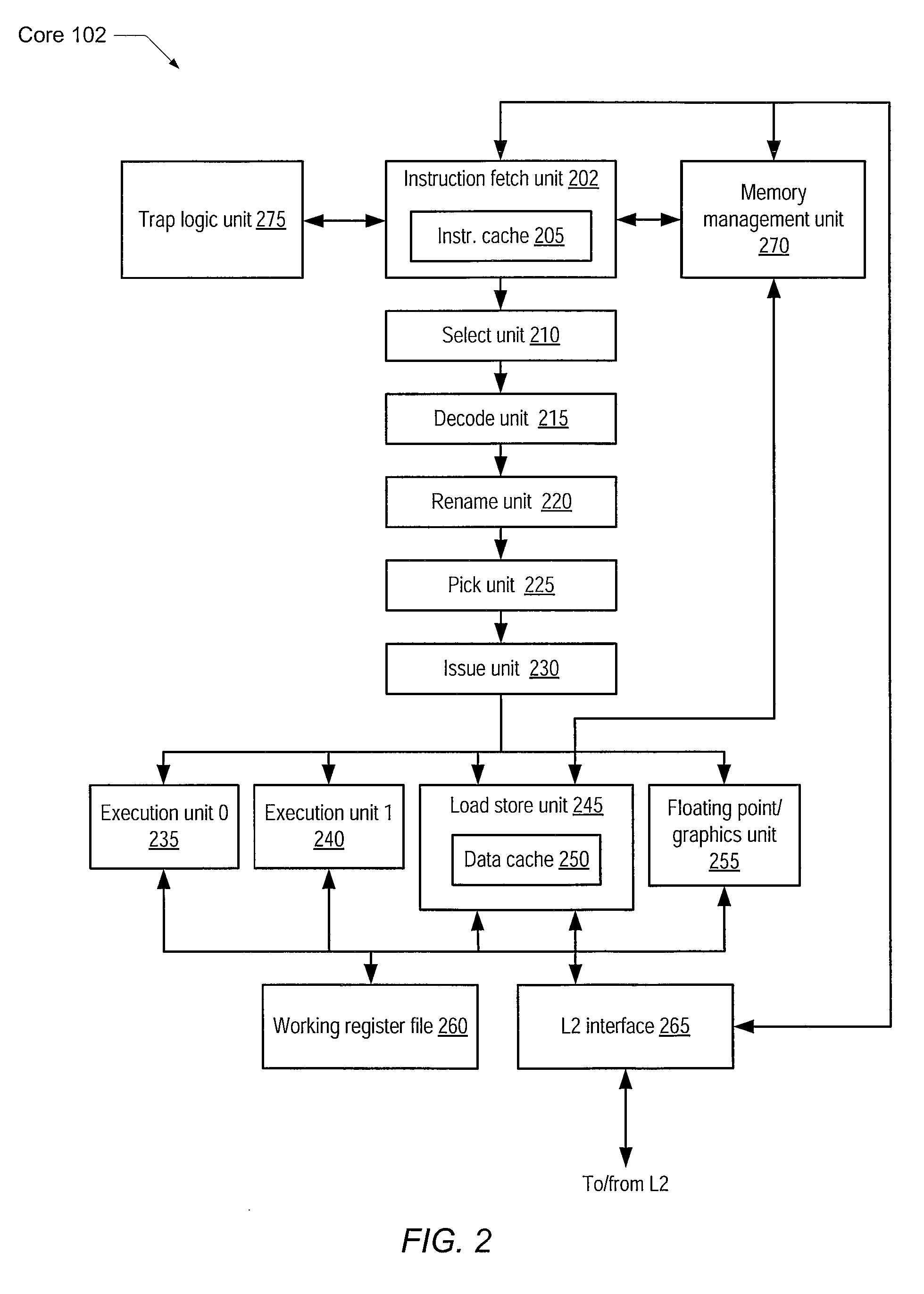

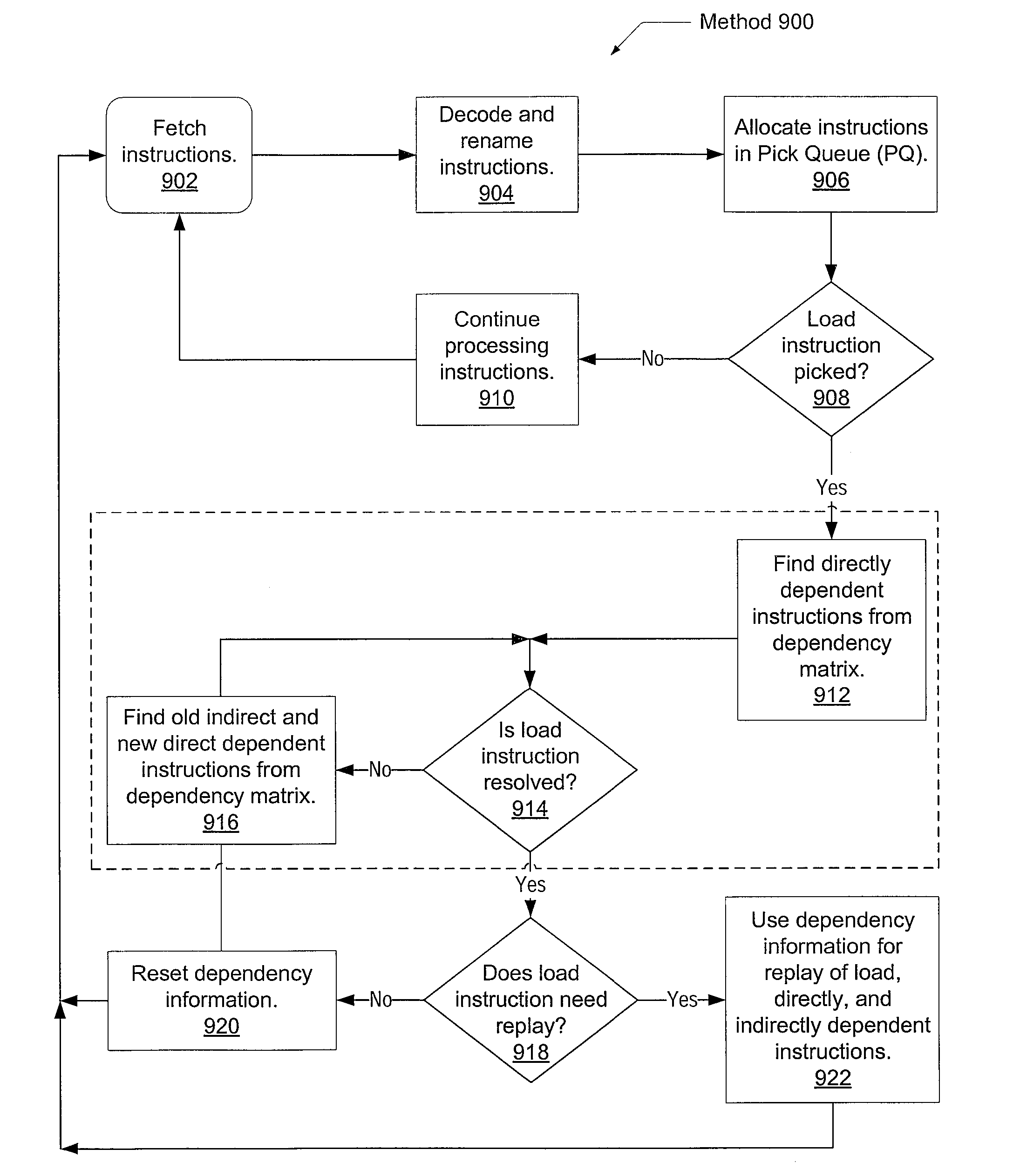

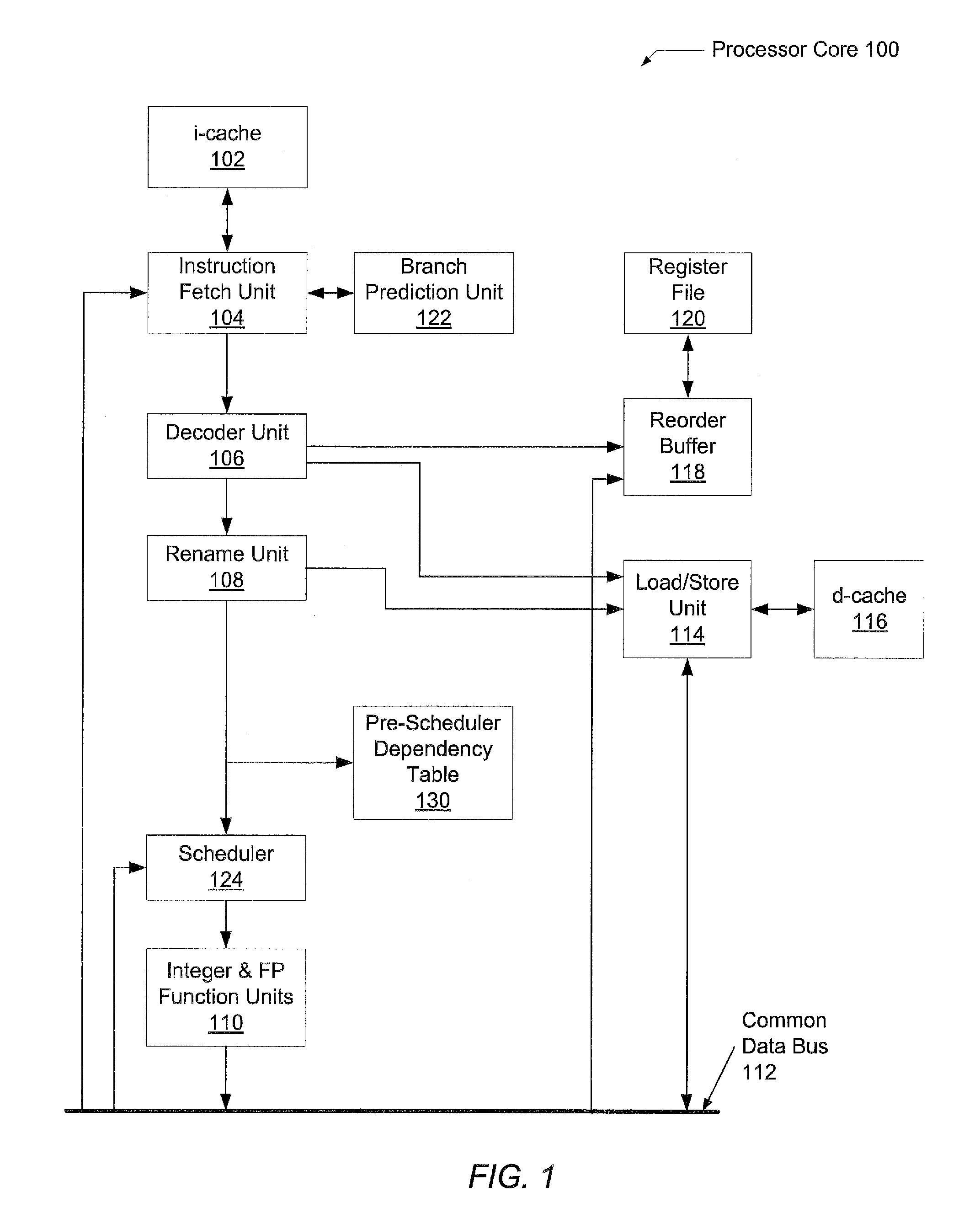

Dependency matrix for the determination of load dependencies

Active | US20100332806A1 | Efficient identification, Digital computer details, Memory systems, Parallel computing, Instruction distribution

Systems and methods for identification of dependent instructions on speculative load operations in a processor. A processor allocates entries of a unified pick queue for decoded and renamed instructions. Each entry of a corresponding dependency matrix is configured to store a dependency bit for each other instruction in the pick queue. The processor speculates that loads will hit in the data cache, hit in the TLB and not have a read after write (RAW) hazard. For each unresolved load, the pick queue tracks dependent instructions via dependency vectors based upon the dependency matrix. If a load speculation is found to be incorrect, dependent instructions in the pick queue are reset to allow for subsequent picking, and dependent instructions in flight are canceled. On completion of a load miss, dependent operations are re-issued. On resolution of a TLB miss or RAW hazard, the original load is replayed and dependent operations are issued again from the pick queue.

Owner:SUN MICROSYSTEMS INC
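
A small Python model of a pick-queue dependency matrix is shown below. Row `i` holds one bit per other entry, and reading a column gives the dependency vector of a load. The abstract does not say whether dependents are reset transitively, so following the chain here is an assumption. The class and method names are hypothetical.

```python
class PickQueue:
    def __init__(self, size):
        self.size = size
        # dep[i][j] is True when the instruction in entry i depends on entry j.
        self.dep = [[False] * size for _ in range(size)]

    def allocate(self, entry, producer_entries):
        self.dep[entry] = [False] * self.size
        for p in producer_entries:
            self.dep[entry][p] = True

    def direct_dependents(self, entry):
        # Reading column `entry` of the matrix yields its dependency vector.
        return {i for i in range(self.size) if self.dep[i][entry]}

    def dependents_to_reset(self, load_entry):
        # Assumption: follow dependency vectors transitively from a mis-speculated load.
        pending, found = [load_entry], set()
        while pending:
            for d in self.direct_dependents(pending.pop()):
                if d not in found:
                    found.add(d)
                    pending.append(d)
        return found
```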

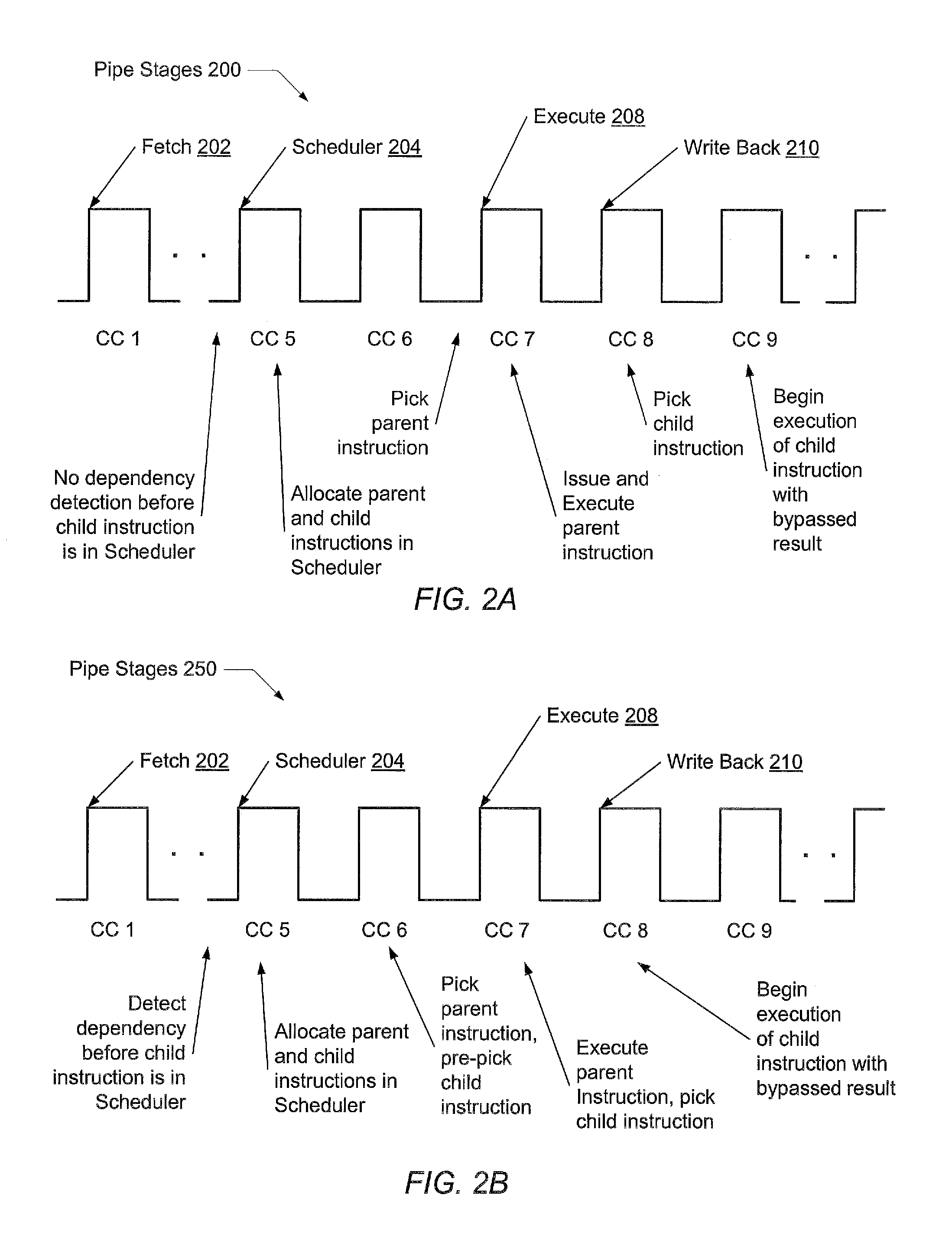

Paired execution scheduling of dependent micro-operations

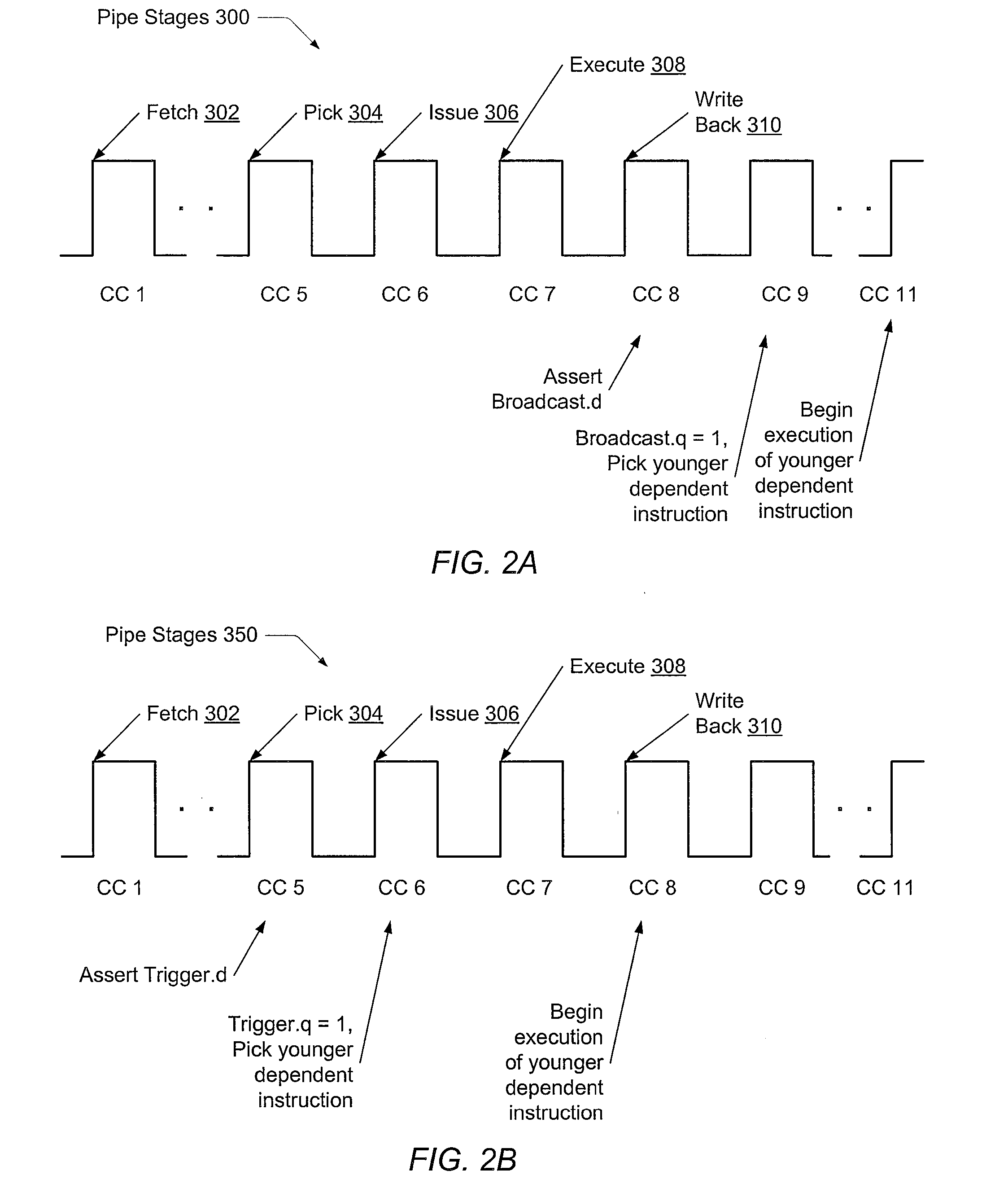

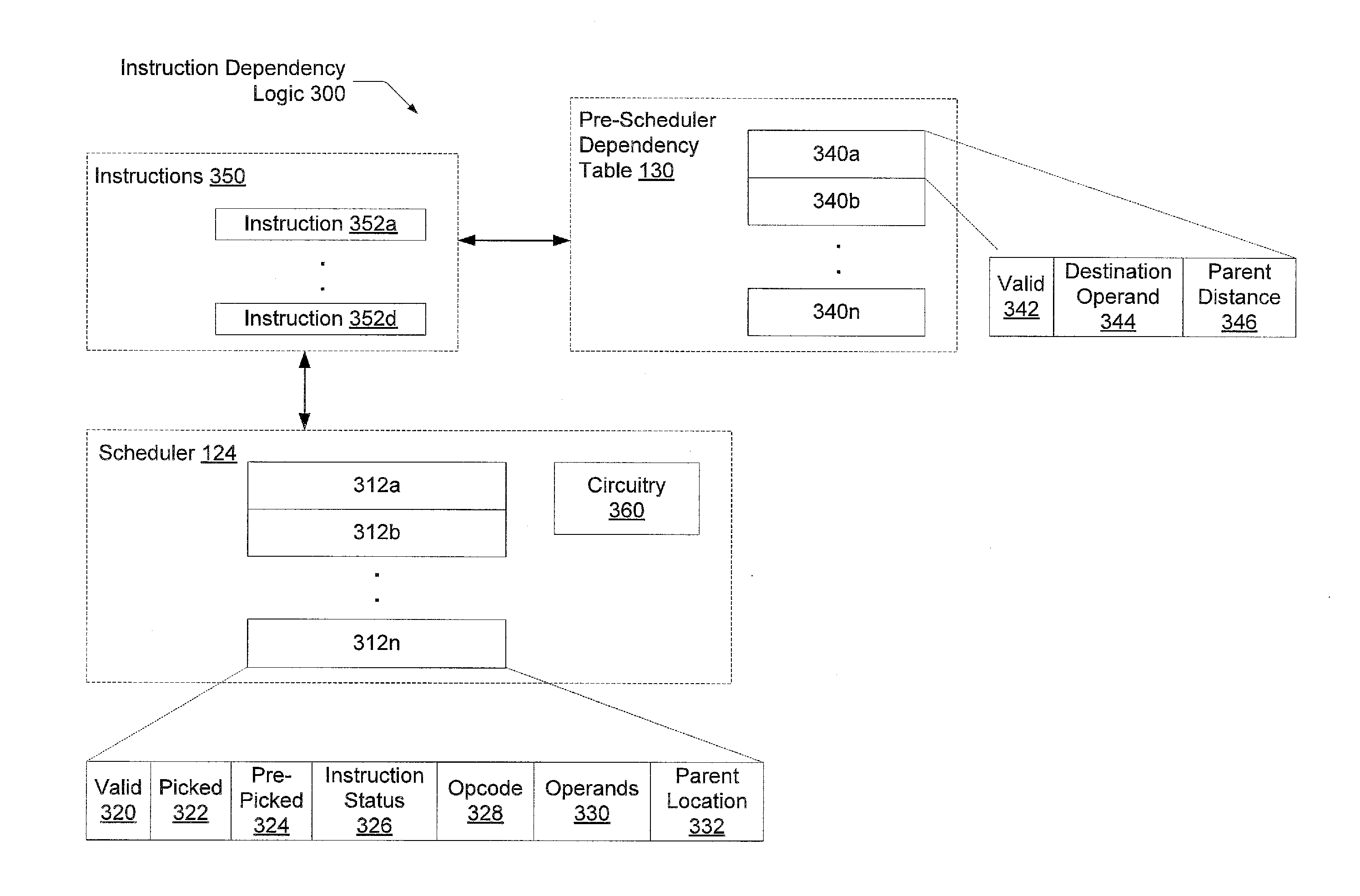

Inactive | US20120023314A1 | Lower latency, Simple logic, Digital computer details, Concurrent instruction execution, Micro-operation, Data dependence

A method and mechanism for reducing the latency of a multi-cycle scheduler within a processor. A processor comprises a front-end pipeline that determines data dependencies between instructions before the scheduling pipe stage. For each data dependency, a distance value is determined from the number of instructions separating a younger dependent instruction from the corresponding older (in program order) instruction. When the younger dependent instruction is allocated an entry in the multi-cycle scheduler, this distance value may be used to locate the entry storing the older instruction. When the older instruction is picked for issue, the younger dependent instruction is marked as pre-picked. In the immediately following clock cycle, the younger dependent instruction may be picked for issue, thereby reducing the latency of the multi-cycle scheduler.

Owner:ADVANCED MICRO DEVICES INC
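
The distance computation and the pre-pick rule can be sketched in Python as follows. Instructions are modeled as `(dest_reg, [src_regs])` tuples. Sources with no producer in the window are assumed to be ready. An instruction is treated as pre-pickable only when every in-window producer is the instruction just picked. The abstract does not specify these details, so they are assumptions, and the function names are hypothetical.

```python
def dependency_distances(instrs):
    """instrs: list of (dest_reg, [src_regs]) in program order.
    Returns, per instruction, the distance back to each source's producer."""
    last_writer = {}
    distances = []
    for i, (dest, srcs) in enumerate(instrs):
        distances.append([i - last_writer[s] for s in srcs if s in last_writer])
        if dest is not None:
            last_writer[dest] = i
    return distances


def prepicked_after(picked, distances):
    """Younger instructions whose every in-window producer is `picked`."""
    return {i for i, ds in enumerate(distances)
            if ds and all(i - d == picked for d in ds)}
```

Here instruction 2 is not pre-picked when instruction 0 issues, because it also waits on instruction 1.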

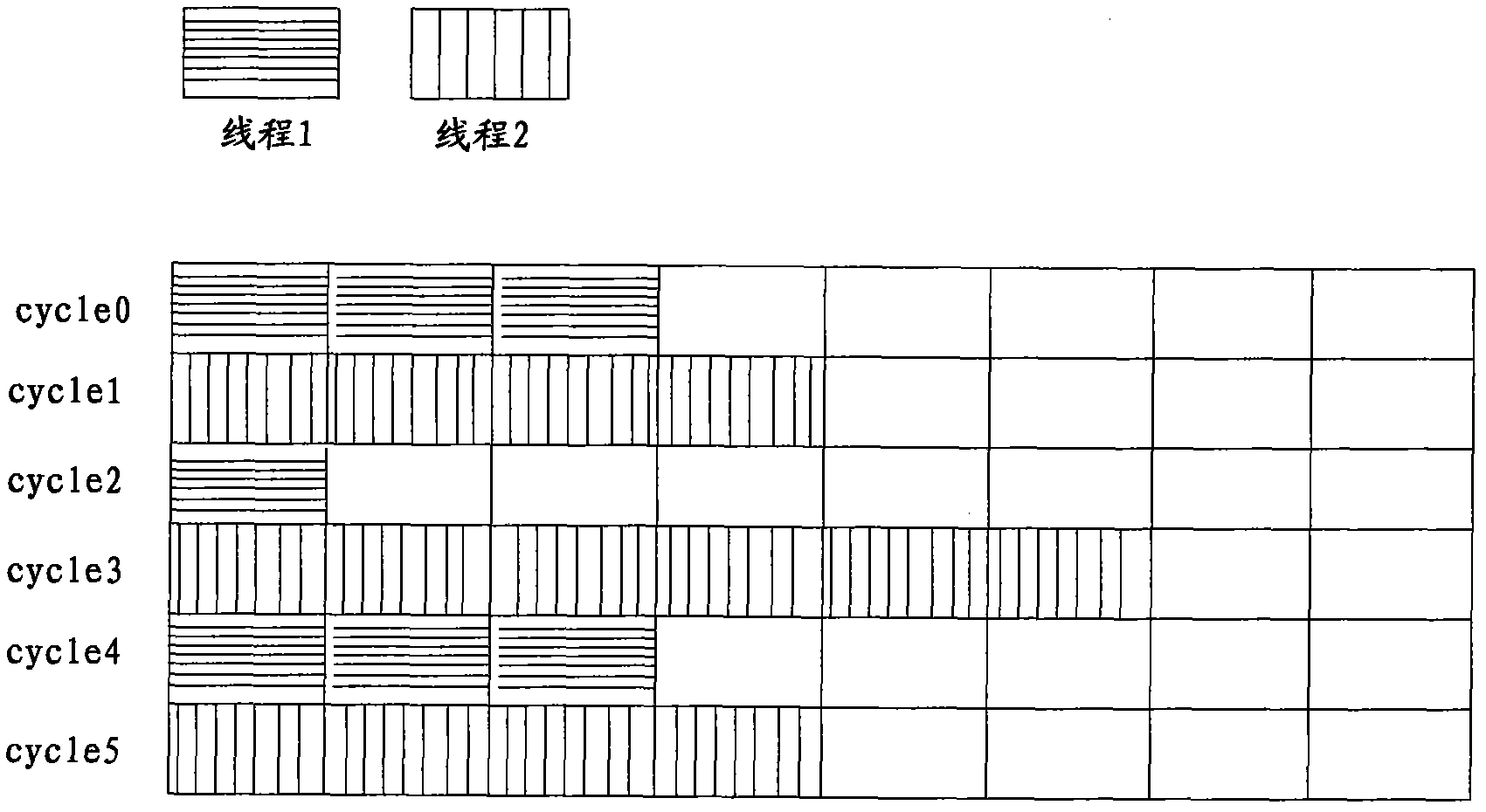

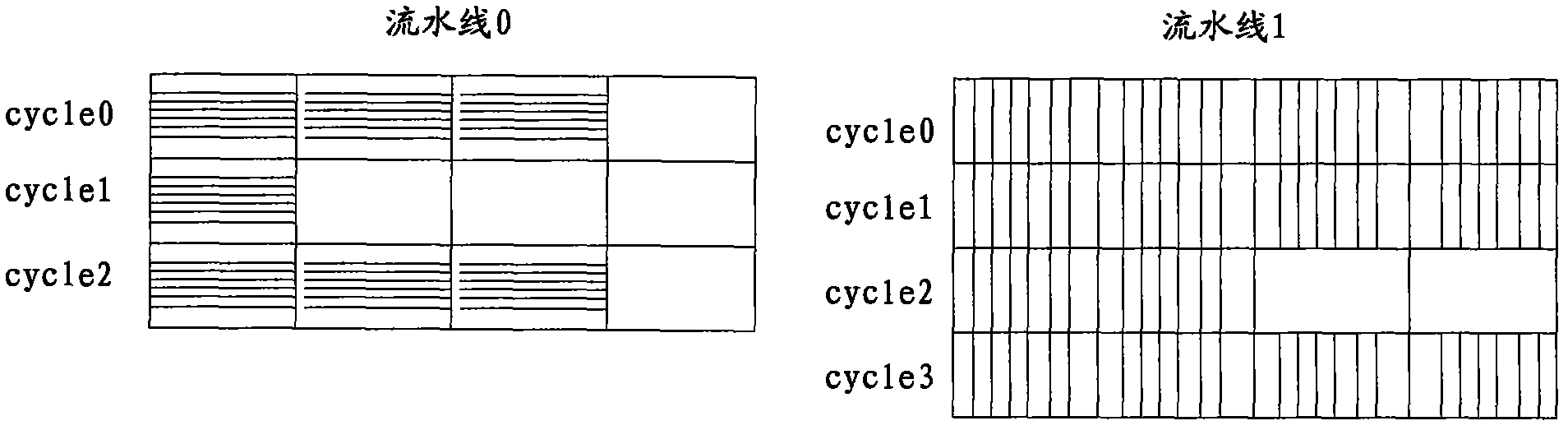

Very long instruction word processor structure supporting simultaneous multithreading

Active | CN102004719A | Improve access efficiency, Fast execution, Concurrent instruction execution, Architecture with multiple processing units, Processor register, Control register

The invention provides a very long instruction word (VLIW) processor structure supporting simultaneous multithreading. It comprises at least two parallel instruction processing pipeline structures, each comprising an instruction fetch module, an instruction distribution module, an instruction execution module, a general register file, a floating-point register file, and a control register file. The instruction fetch module obtains instruction information. The instruction distribution module receives and distributes the instruction information obtained by the fetch module. The instruction execution module comprises execution units A, D, M, and F, which execute the instructions. The general register file stores the execution results of units A, M, and D, and the floating-point register file stores the execution results of units D and F. With this structure, processor resources are used more fully, thread access efficiency is improved, and processing speed is increased.

Owner:TSINGHUA UNIV

Method and apparatus for rasterizer interpolation

Inactive | US20060170690A1 | Timely processing, Improve the level of, Drawing from basic elements, Cathode-ray tube indicators, Computational science, Graphics

The present invention relates to a rasterizer interpolator. In one embodiment, a setup unit distributes graphics primitive instructions to multiple parallel rasterizers. To increase efficiency, the setup unit calculates the polygon data and checks it against one or more tiles before distribution. The output screen is divided into a number of regions, and several assignment configurations are possible for various numbers of rasterizer pipelines. For instance, the screen is subdivided into four regions, and each of four rasterizers is granted ownership of one quarter of the screen. Prior-art implementations spend time processing empty tiles; the present invention reduces the number of empty tiles processed by means of coarse-grain tiling. This process consists of a series of iterations performed in parallel: each region undergoes an iterative calculation/tiling process in which the coverage of the primitive is determined at successively finer levels of detail.

Owner:ATI TECH INC

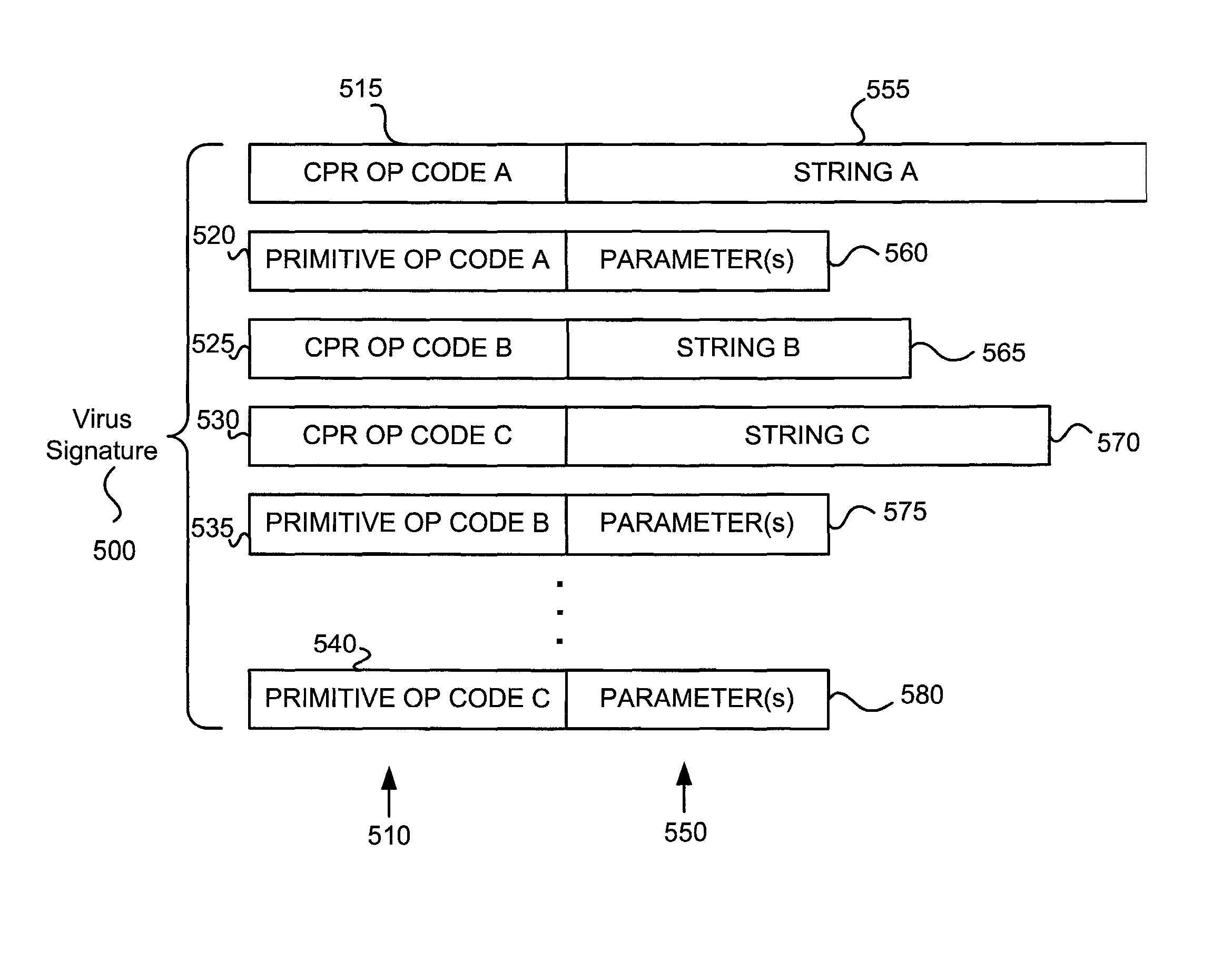

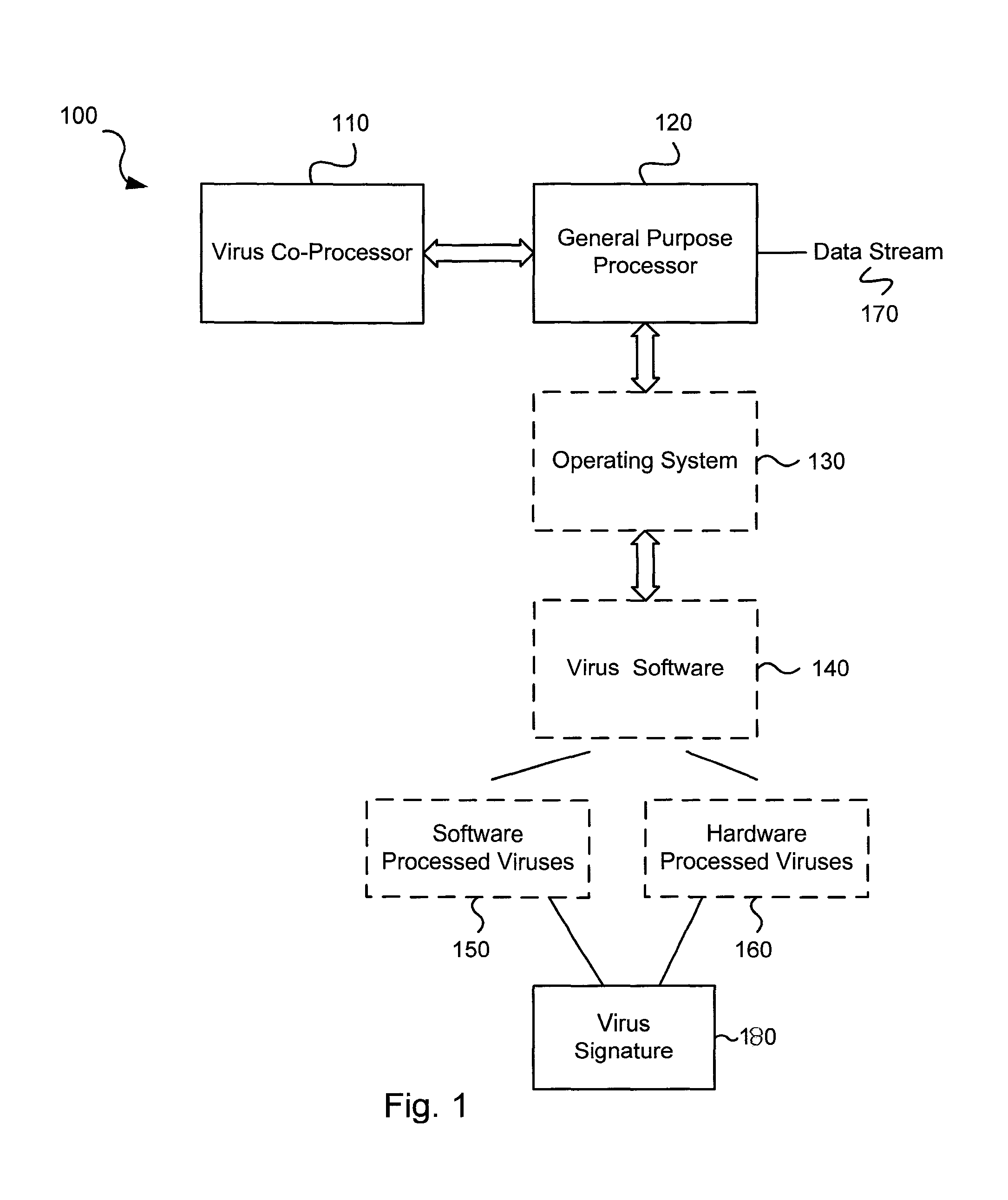

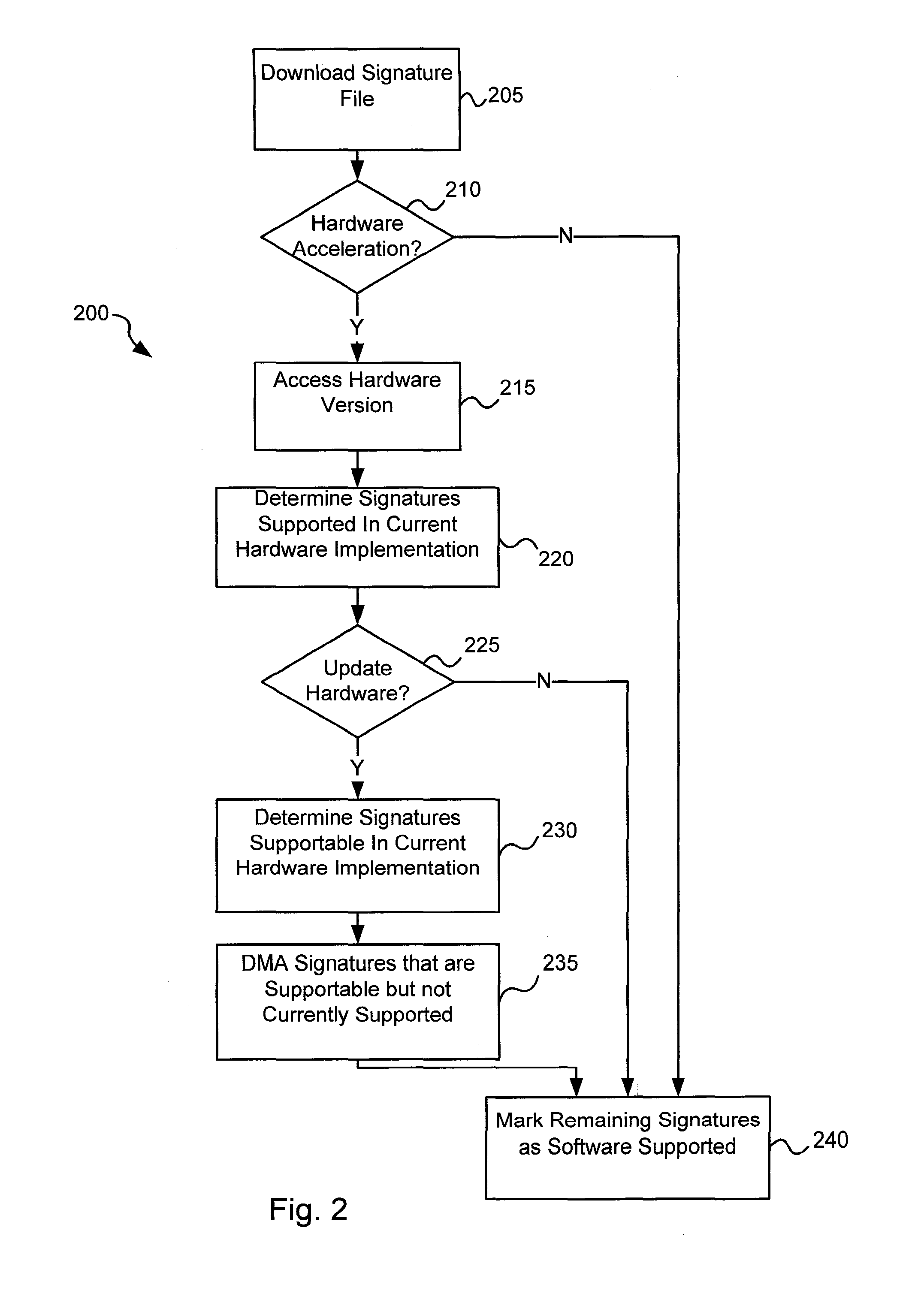

Operation of a dual instruction pipe virus co-processor

Owner:FORTINET

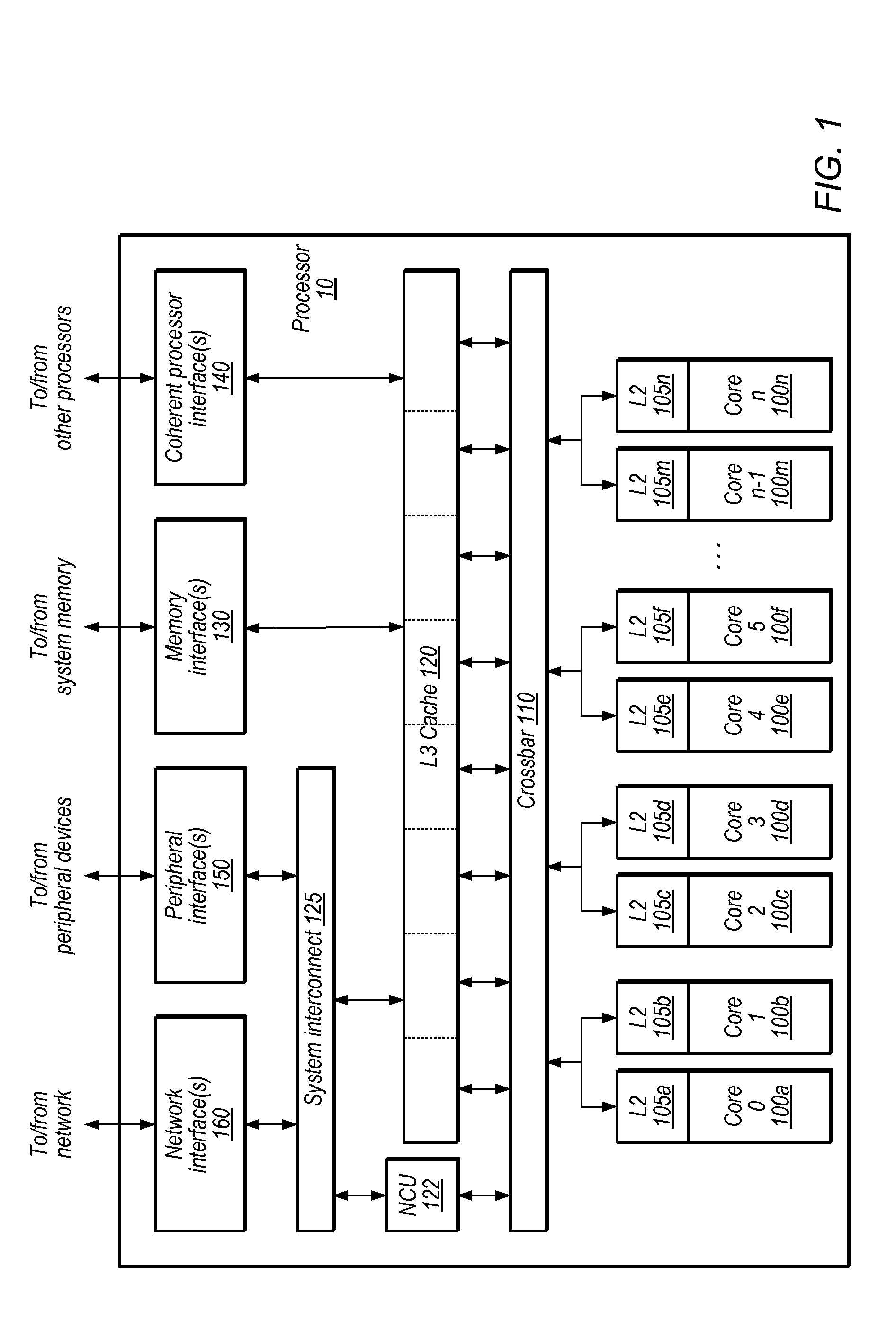

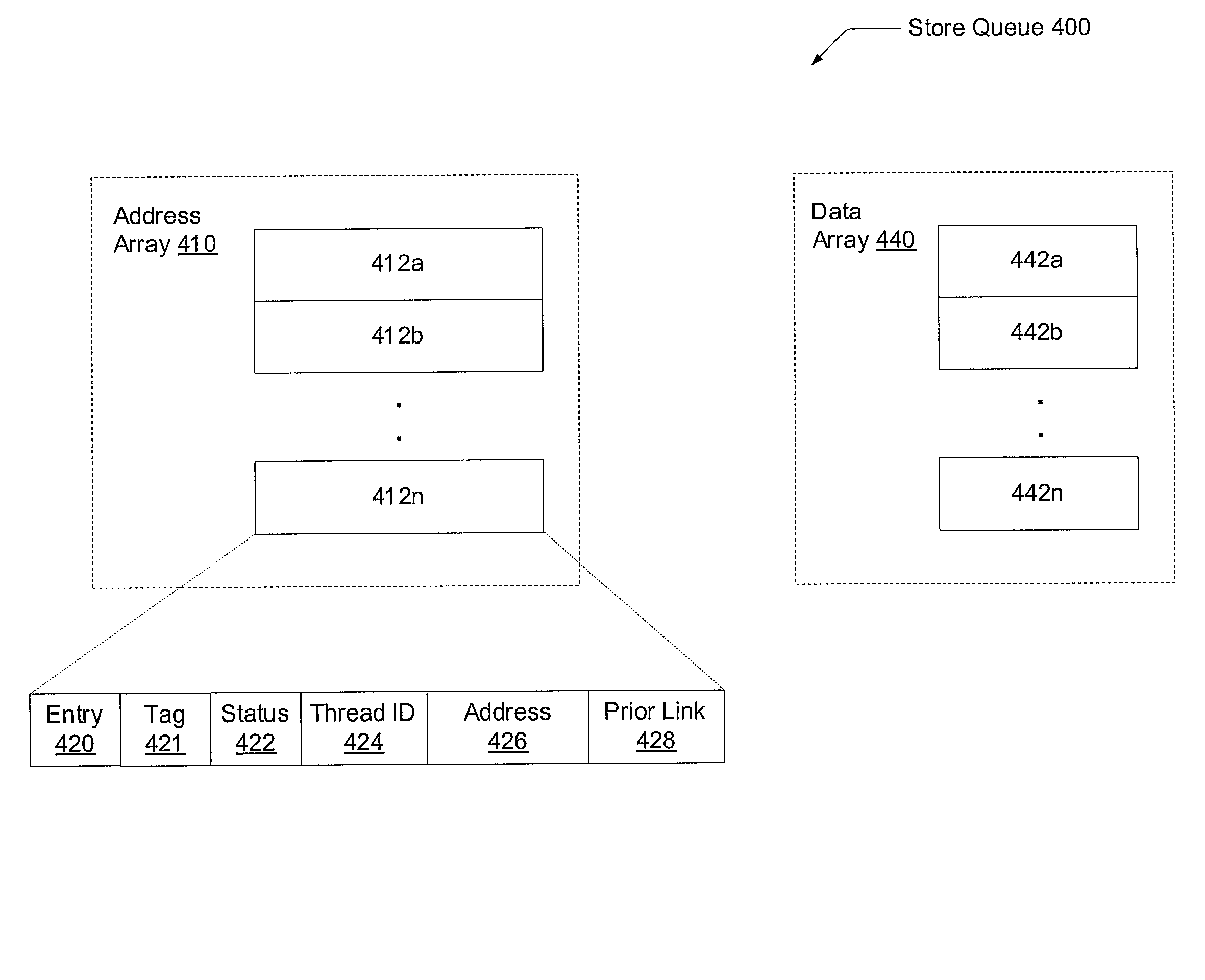

Dynamically allocated store queue for a multithreaded processor

Active | US20100299508A1 | Digital computer details, Concurrent instruction execution, Data shipping, Instruction distribution

Systems and methods for storing writes to memory that belong to multiple threads. A processor comprises a store queue that dynamically allocates an entry for each committed store instruction, and entries of the array may be allocated out of program order. For a given thread, the store queue conveys store data to memory in program order. The queue is further configured to identify the entry that holds the oldest committed store instruction of a given thread and to determine the next entry, which holds the next committed store instruction of that thread in program order; this next entry includes data identifying the oldest entry. The queue marks an entry as unfilled once its store data has been successfully conveyed to memory.

Owner:ORACLE INT CORP
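
The backward-linked store queue can be sketched in Python as follows. Each newly committed store records the index of the same thread's previous committed store. After the oldest store drains, the queue searches for the entry that points at the freed index. The dictionary layout and method names are hypothetical, and a hardware design would use an associative search rather than a Python scan.

```python
class StoreQueue:
    def __init__(self, size):
        self.entries = [None] * size   # None marks an unfilled entry
        self.oldest = {}               # thread id -> index of oldest committed store
        self.youngest = {}             # thread id -> index of youngest committed store

    def commit(self, tid, addr, data):
        idx = self.entries.index(None)  # any free entry; raises ValueError when full
        self.entries[idx] = {"tid": tid, "addr": addr, "data": data,
                             "prev": self.youngest.get(tid)}  # identifies the older entry
        self.oldest.setdefault(tid, idx)
        self.youngest[tid] = idx
        return idx

    def drain_oldest(self, tid, memory):
        idx = self.oldest[tid]
        entry = self.entries[idx]
        memory.append((entry["addr"], entry["data"]))  # convey data in program order
        self.entries[idx] = None                        # mark the entry unfilled
        nxt = next((i for i, e in enumerate(self.entries)
                    if e is not None and e["tid"] == tid and e["prev"] == idx), None)
        if nxt is None:
            del self.oldest[tid], self.youngest[tid]
        else:
            self.oldest[tid] = nxt
        return idx
```

In the test below, a later store of thread 0 reuses entry 0 even though an older store of the same thread sits in entry 2, yet memory still sees the writes in program order.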

Dynamic tag allocation in a multithreaded out-of-order processor

Active | US20100333098A1 | Memory addressing/allocation/relocation, Multiprogramming arrangements, Instruction distribution, Procedure sequence

Various techniques for dynamically allocating instruction tags and using those tags are disclosed. These techniques may apply to processors that support out-of-order execution and to architectures that support multiple threads. A group of instructions may be assigned a tag value from a pool of available tag values. A tag value may be usable to determine the program order of a group of instructions relative to other instructions in a thread. After the group of instructions has been (or is about to be) committed, the tag value may be freed so that it can be reused for a second group of instructions. Tag values are dynamically allocated between threads; accordingly, no particular tag value or range of tag values is dedicated to a particular thread.

Owner:ORACLE INT CORP
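
A minimal Python sketch of a shared tag pool is shown below. The abstract does not explain how program order is recovered from a reused tag value. In this sketch, order comes from each thread's queue of in-flight tags, which is an assumption. The class and method names are hypothetical.

```python
from collections import deque


class TagAllocator:
    def __init__(self, num_tags):
        self.free = deque(range(num_tags))  # one pool shared by all threads
        self.in_flight = {}                 # thread id -> tags, oldest group first

    def allocate(self, tid):
        if not self.free:
            return None                     # no tag available: the group must wait
        tag = self.free.popleft()
        self.in_flight.setdefault(tid, deque()).append(tag)
        return tag

    def is_older(self, tid, tag_a, tag_b):
        order = list(self.in_flight[tid])
        return order.index(tag_a) < order.index(tag_b)

    def commit_oldest(self, tid):
        tag = self.in_flight[tid].popleft()
        self.free.append(tag)               # a freed tag may go to any thread
        return tag
```

Tag 0 is first used by thread 0 and, after that group commits, is handed to thread 1. No tag range is reserved per thread.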

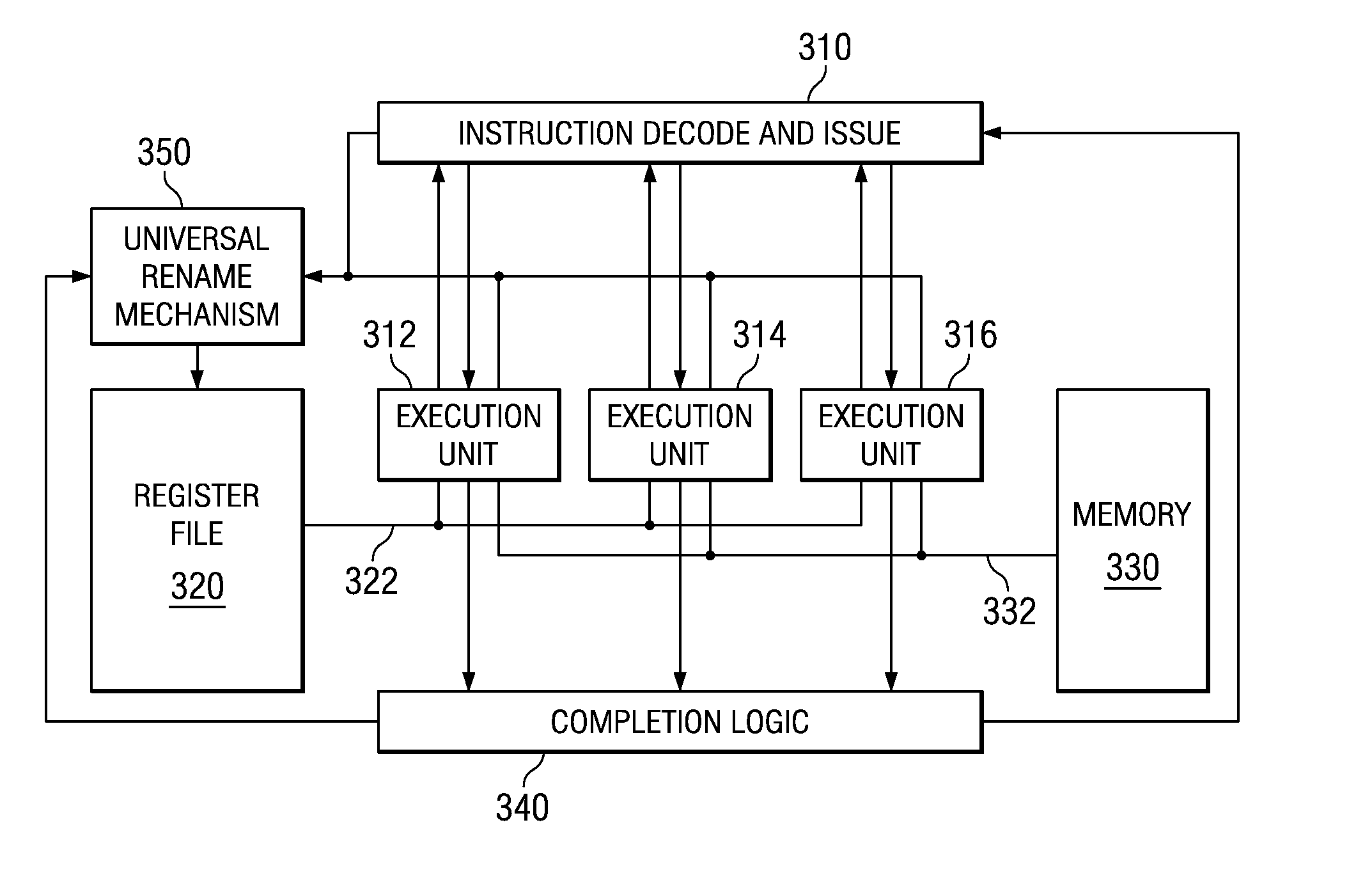

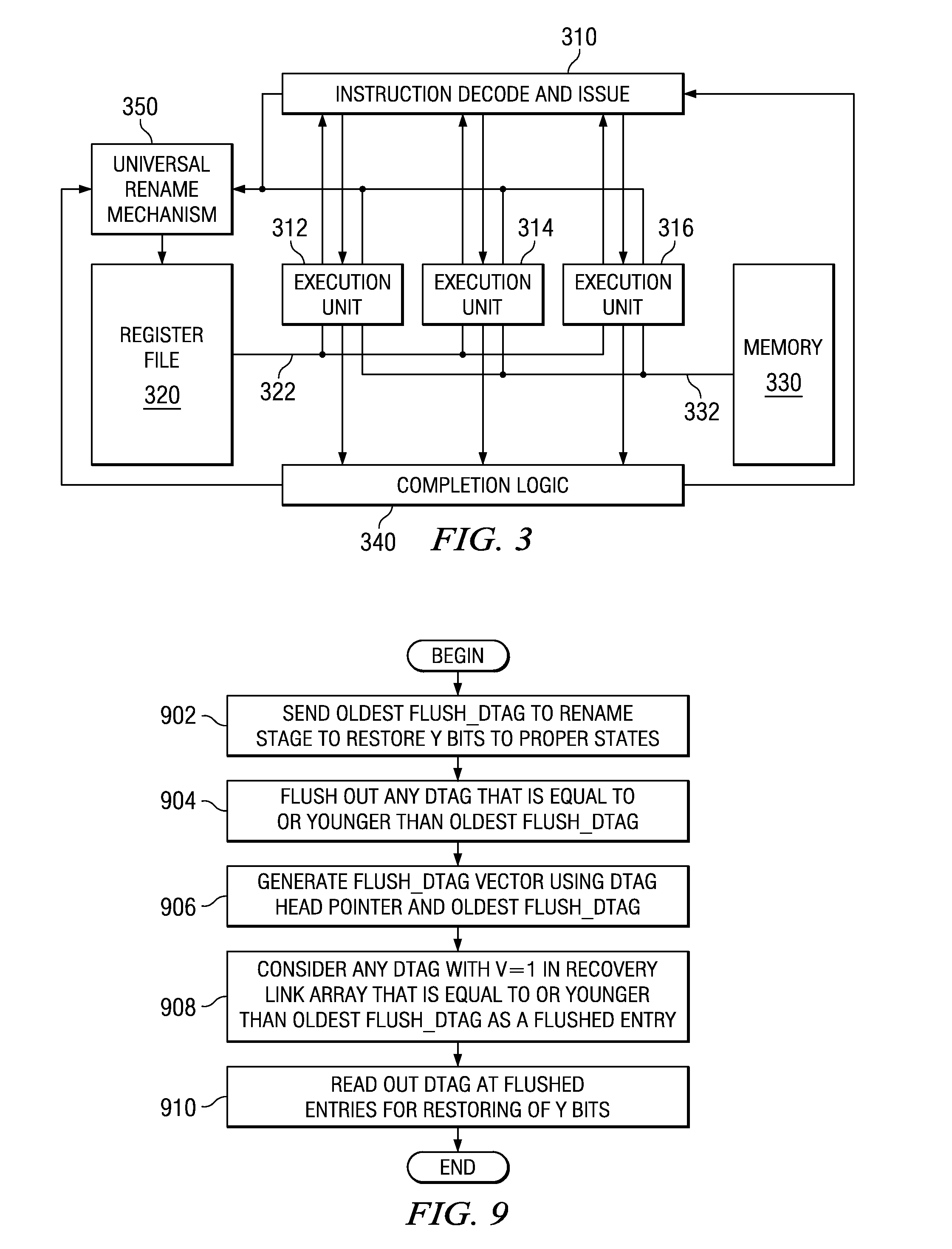

Universal Register Rename Mechanism for Targets of Different Instruction Types in a Microprocessor

Inactive | US20080263321A1 | Increase power, General purpose stored program computer, Program control, General purpose, Parallel computing

A unified register rename mechanism for targets of different instruction types is provided in a microprocessor. The universal rename mechanism renames the destinations of different instruction types using a single rename structure. Thus, an instruction that updates a floating-point register (FPR) can be renamed alongside an instruction that updates a general-purpose register (GPR) or a vector multimedia extension (VMX) register (VR) using the same rename structure, because the number of architected states for GPR is the same as for FPR and VR. Each destination tag (DTAG) is assigned to one destination: a floating-point instruction may be assigned one DTAG, a fixed-point instruction the next DTAG, and so forth. With a universal rename mechanism, significant silicon area and power can be saved by having only one rename structure for all instruction types.

Owner:IBM CORP
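
A unified rename structure can be sketched in Python as follows. One DTAG free list and one mapping are keyed by register class and architected register. This is a hypothetical model: it omits freeing the previous mapping at completion or flush, and the class name and the "GPR"/"FPR"/"VR" strings are illustrative only.

```python
from collections import deque


class UnifiedRenamer:
    """One DTAG pool and one map shared by GPR, FPR, and VR destinations."""

    def __init__(self, num_dtags):
        self.free = deque(range(num_dtags))
        self.mapping = {}   # (register class, architected register) -> DTAG

    def rename_dest(self, reg_class, arch_reg):
        if not self.free:
            return None     # out of DTAGs: dispatch would stall
        dtag = self.free.popleft()
        self.mapping[(reg_class, arch_reg)] = dtag
        return dtag

    def lookup(self, reg_class, arch_reg):
        return self.mapping.get((reg_class, arch_reg))
```

Consecutive destinations of different types simply take consecutive DTAGs from the same pool.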

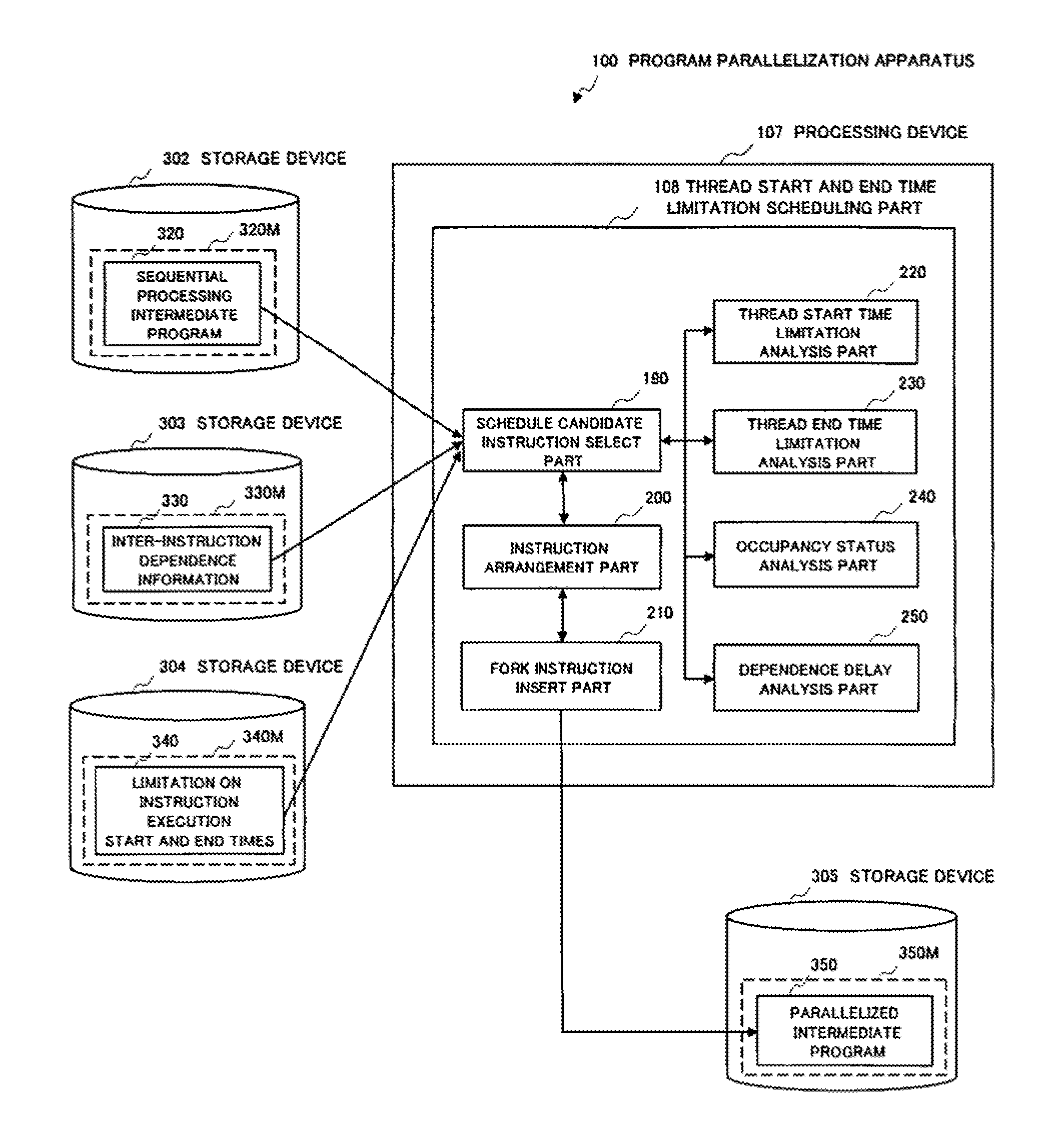

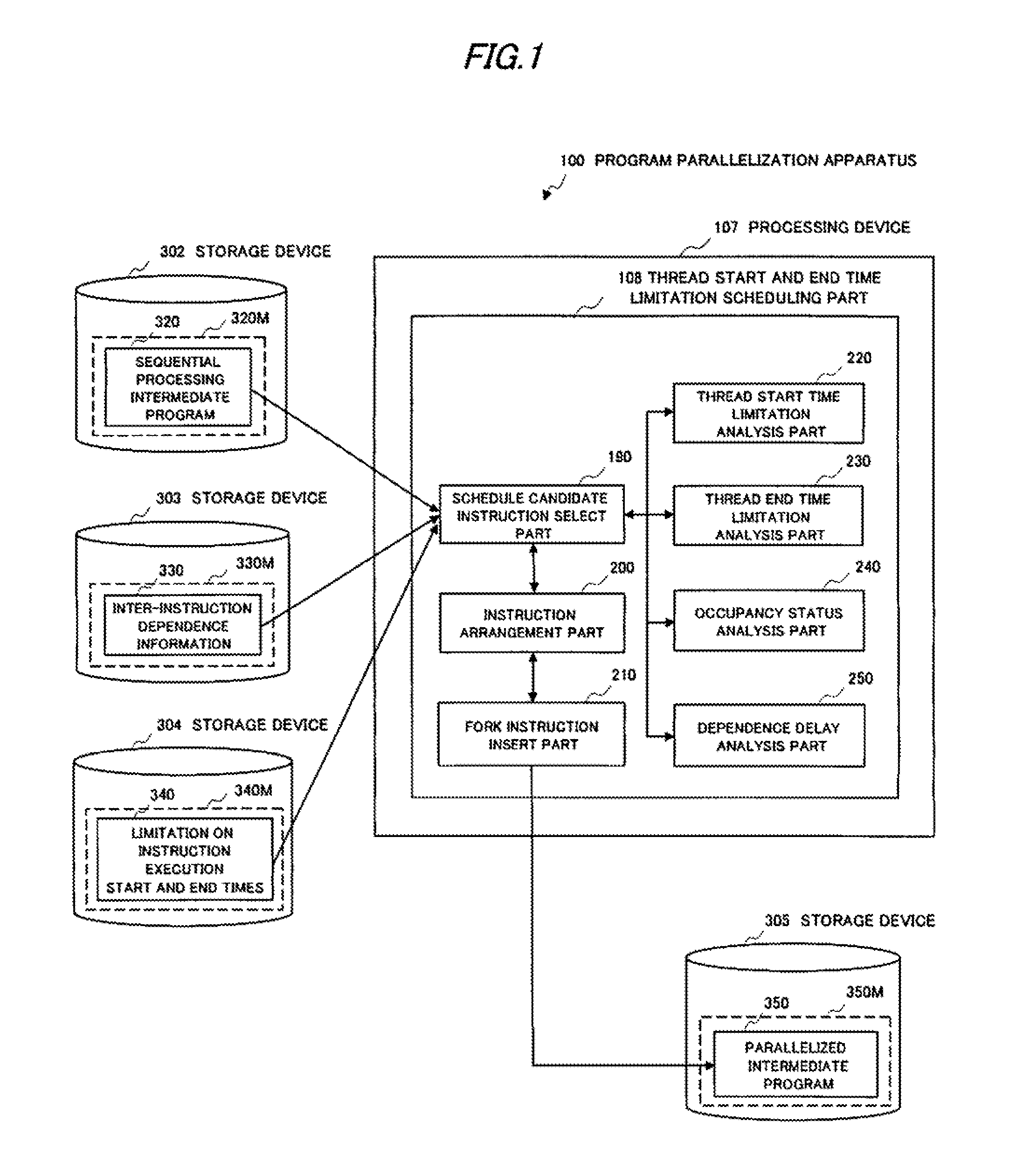

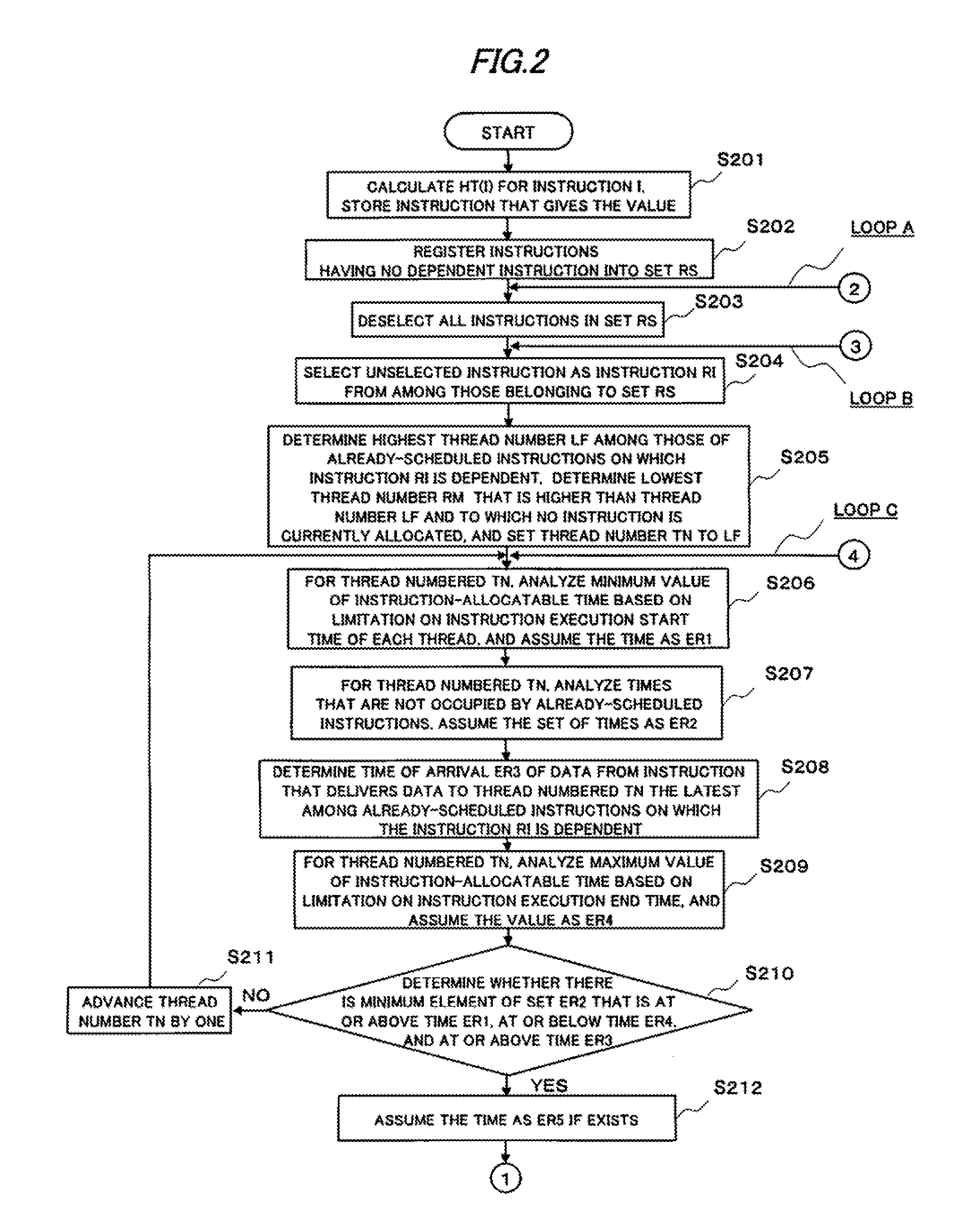

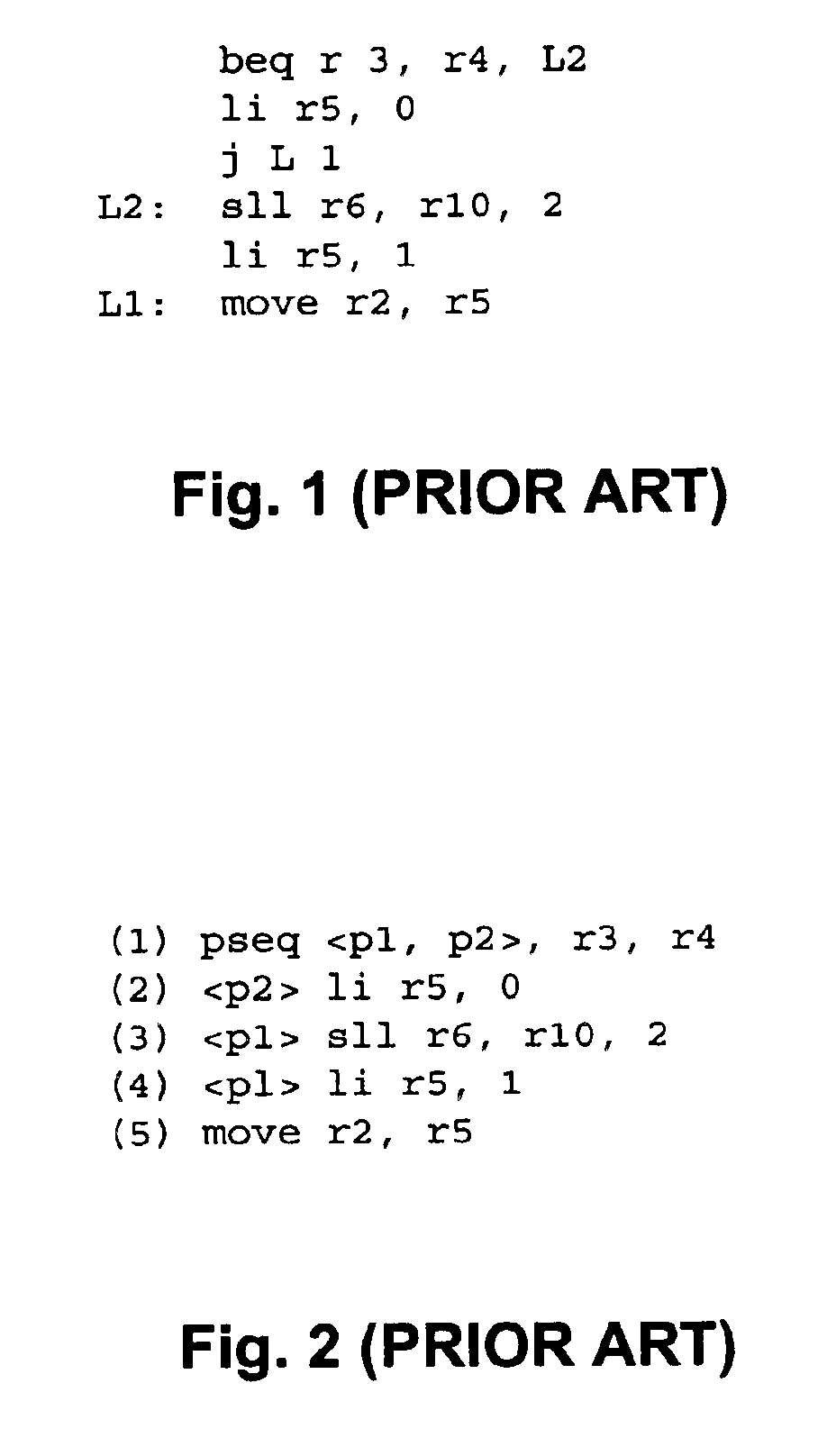

Program parallelization apparatus, program parallelization method, and program parallelization program

Inactive | US20110067015A1 | Reduce idle time, Short execution time, Software engineering, Program control, Start time, Parallel computing

A program parallelization apparatus that generates a parallelized program with a shorter parallel execution time is provided. The apparatus takes a sequential intermediate program as input and outputs a parallelized intermediate program. In the apparatus, a thread start time limitation analysis part analyzes the time at which an instruction can be allocated, based on a limit on the instruction execution start time of each thread. A thread end time limitation analysis part analyzes the allocatable time based on a limit on the instruction execution end time of each thread. An occupancy status analysis part analyzes the time not occupied by already-scheduled instructions. A dependence delay analysis part analyzes the allocatable time based on delays arising from dependences between instructions. A schedule candidate instruction select part selects the next instruction to schedule. An instruction arrangement part allocates a processor and an execution time to that instruction.

Owner:NEC CORP

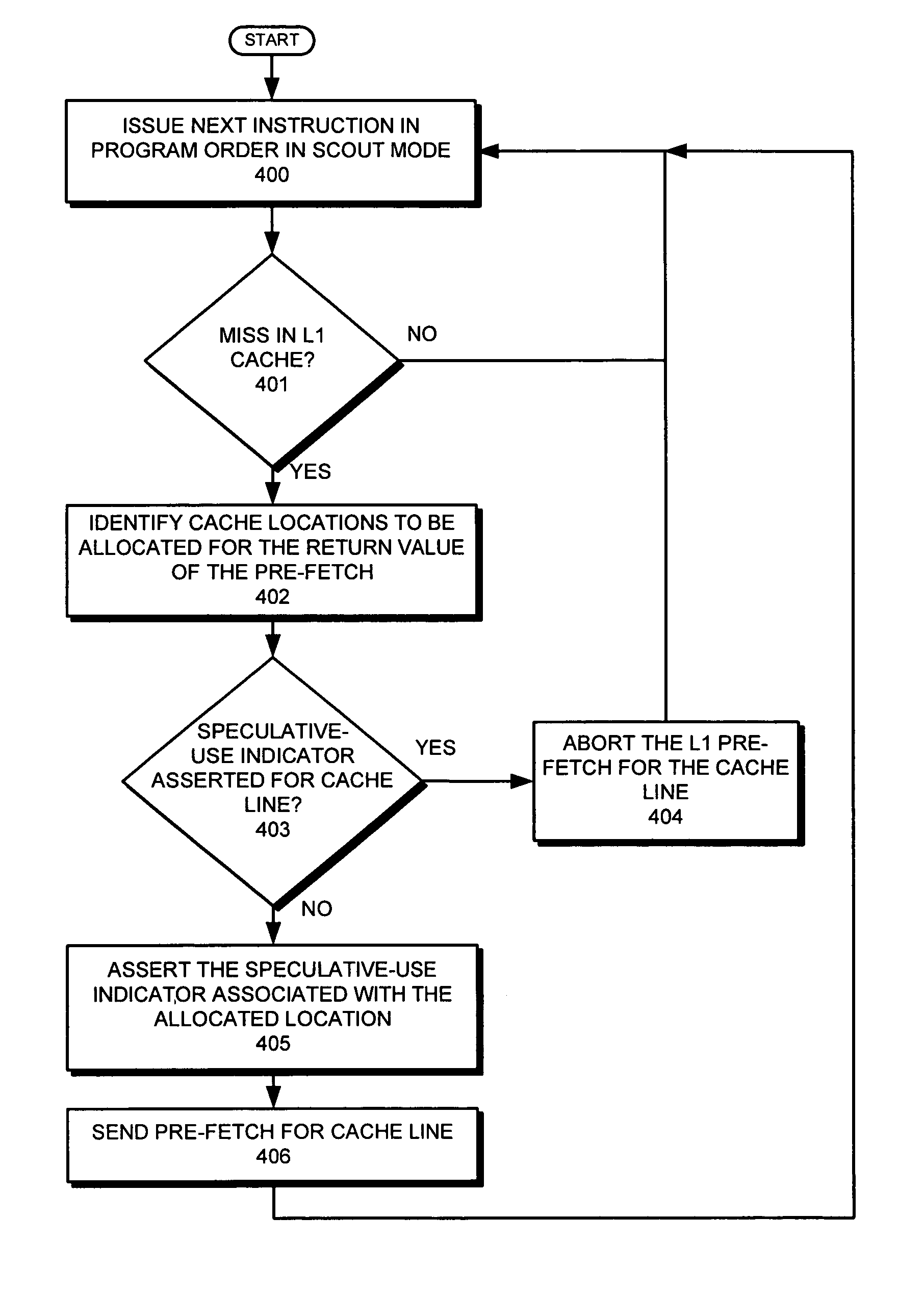

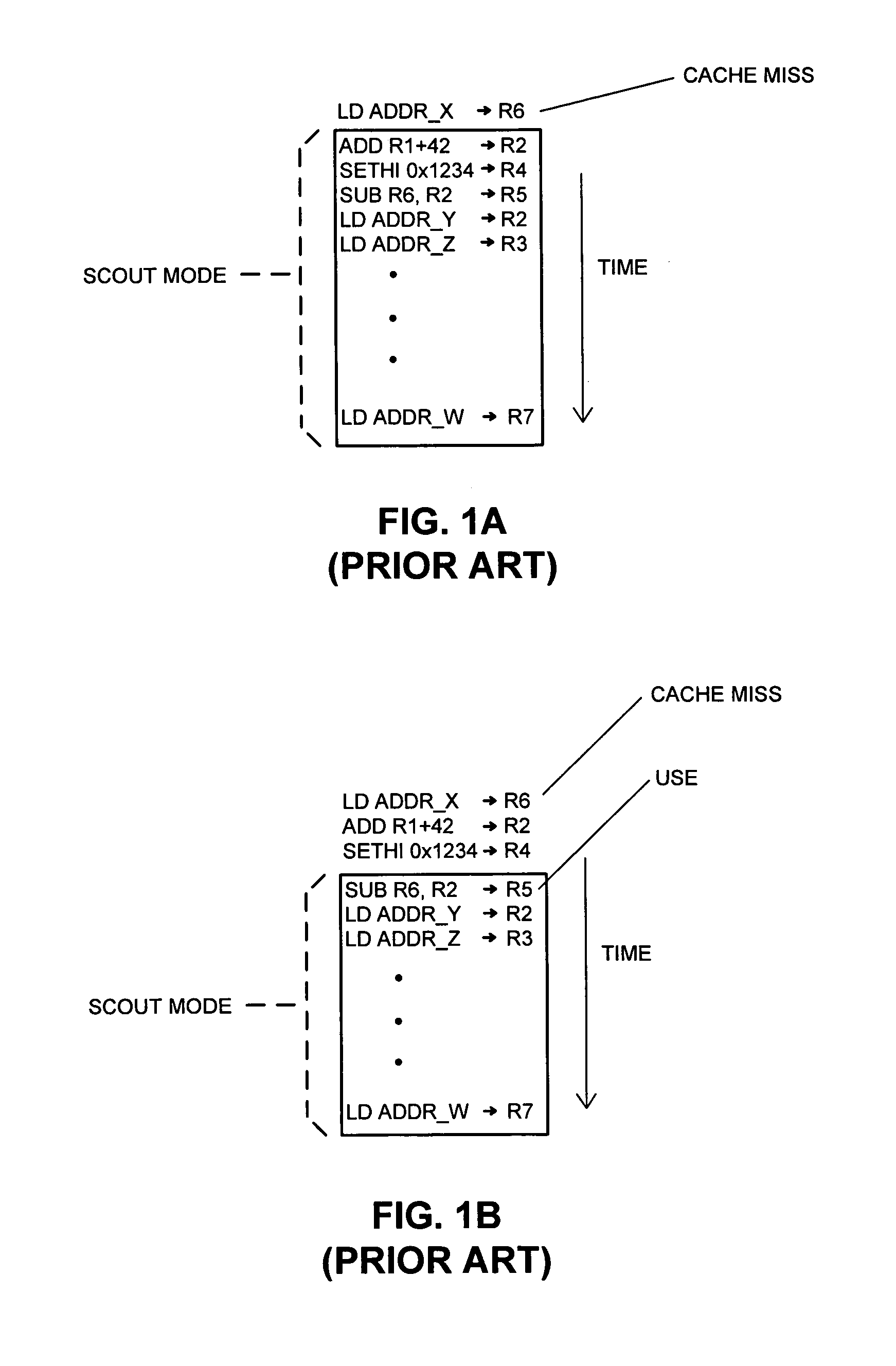

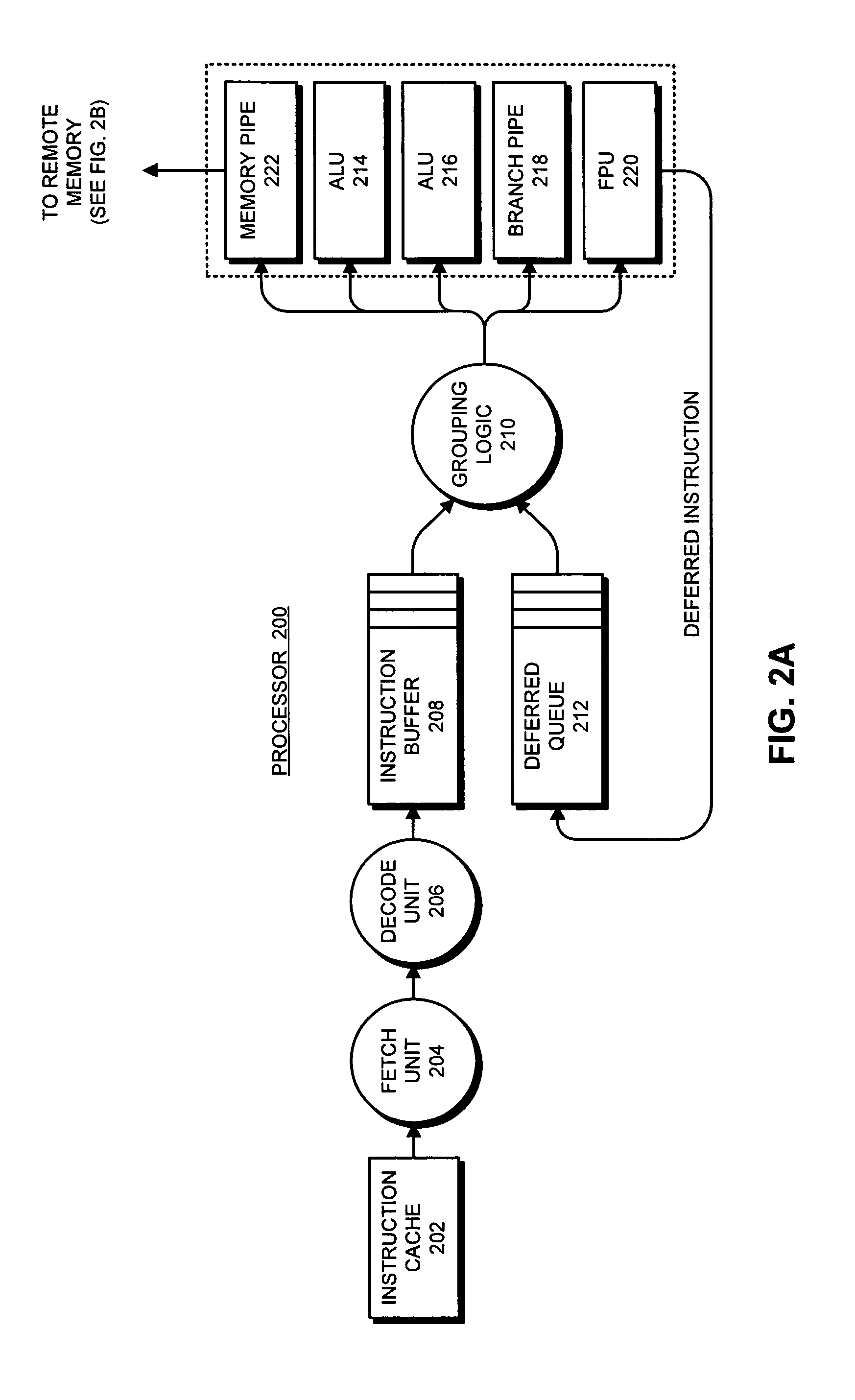

Allocating processor resources during speculative execution using a temporal ordering policy

Active | US7774531B1 | Memory architecture accessing/allocation, Program control, Speculative execution, Instruction distribution

One embodiment provides a system that uses a temporal ordering policy to allocate limited processor resources. The system starts by executing instructions for a program in a normal-execution mode. Upon encountering a condition that causes the processor to enter a speculative-execution mode, the processor performs a checkpoint and begins executing instructions in the speculative-execution mode. When an instruction executed in the speculative-execution mode requires the allocation of an instance of a limited processor resource, the processor checks the speculative-use indicator associated with each instance of that resource. If the speculative-use indicators are asserted for all instances that are available to be allocated for the instruction, the processor aborts the instruction. If instead the speculative-use indicator is deasserted for an available instance, the processor asserts the indicator for that instance and executes the instruction.

Owner:ORACLE INT CORP
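
The following Python sketch models the speculative-use indicator policy. The `available` argument lists the instances that could serve the instruction, and returning `None` stands for aborting it. The abstract does not say when the indicators are cleared, so clearing them all when speculation ends is an assumption. Names are hypothetical.

```python
class LimitedResource:
    def __init__(self, instances):
        self.speculative_use = [False] * instances

    def allocate_speculative(self, available):
        """Return the chosen instance index, or None if the instruction aborts."""
        for i in available:
            if not self.speculative_use[i]:
                self.speculative_use[i] = True   # assert the indicator, then execute
                return i
        return None                              # all available indicators asserted: abort

    def end_speculation(self):
        # Assumption: indicators are cleared when the checkpoint is committed or restored.
        self.speculative_use = [False] * len(self.speculative_use)
```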

Optimized allocation of multi-pipeline executable and specific pipeline executable instructions to execution pipelines based on criteria

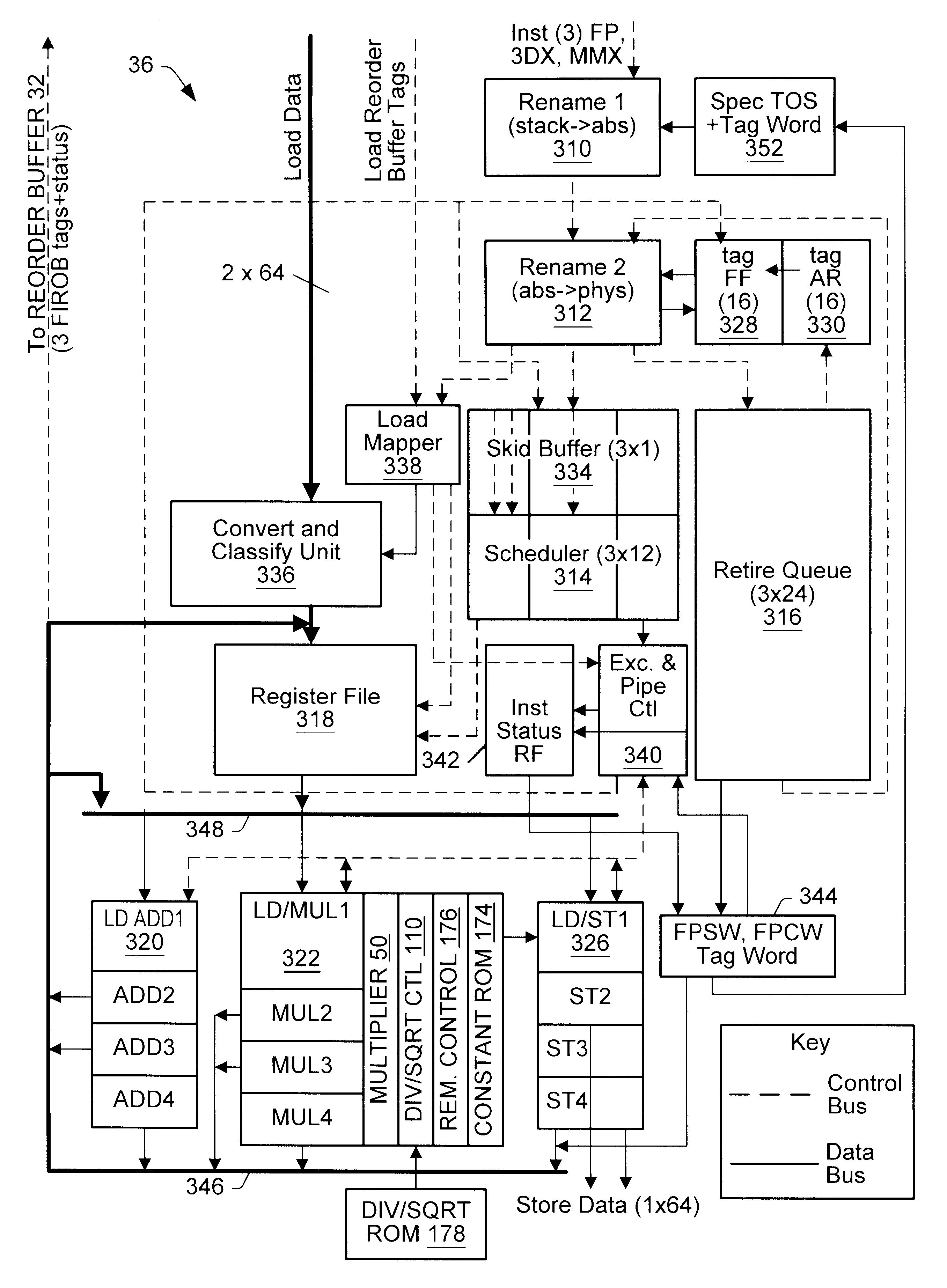

Inactive | US6370637B1 | Digital computer details, Multiprogramming arrangements, Floating-point unit, Parallel computing

A microprocessor with a floating point unit configured to efficiently allocate multi-pipeline executable instructions is disclosed. Multi-pipeline executable instructions are instructions that are not forced to execute in a particular type of execution pipe. For example, junk ops are multi-pipeline executable. A junk op is an instruction that is executed at an early stage of the floating point unit's pipeline (e.g., during register rename), but still passes through an execution pipeline for exception checking. Junk ops are not limited to a particular execution pipeline, but instead may pass through any of the microprocessor's execution pipelines in the floating point unit. Multi-pipeline executable instructions are allocated on a per-clock cycle basis using a number of different criteria. For example, the allocation may vary depending upon the number of multi-pipeline executable instructions received by the floating point unit in a single clock cycle.

Owner:GLOBALFOUNDRIES INC
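
One possible per-cycle allocation criterion can be sketched in Python as follows. Pipe-specific operations are placed first, and each multi-pipeline executable operation (such as a junk op) then goes to the least-loaded pipe in that cycle, with ties broken toward the lowest pipe number. The abstract does not describe the actual criteria, so this policy and the function name are hypothetical.

```python
def allocate_cycle(instrs, num_pipes):
    """instrs: (name, pipe) pairs received in one clock; pipe is an int for
    pipe-specific ops or None for multi-pipeline executable ops."""
    load = [0] * num_pipes
    plan = {}
    for name, pipe in instrs:          # pipe-specific ops claim their pipes first
        if pipe is not None:
            plan[name] = pipe
            load[pipe] += 1
    for name, pipe in instrs:          # flexible ops fill the least-loaded pipes
        if pipe is None:
            target = min(range(num_pipes), key=lambda p: load[p])
            plan[name] = target
            load[target] += 1
    return plan
```

Because the plan depends on how many flexible operations arrive in the same clock, the allocation changes with the mix of instructions received, as the abstract describes.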

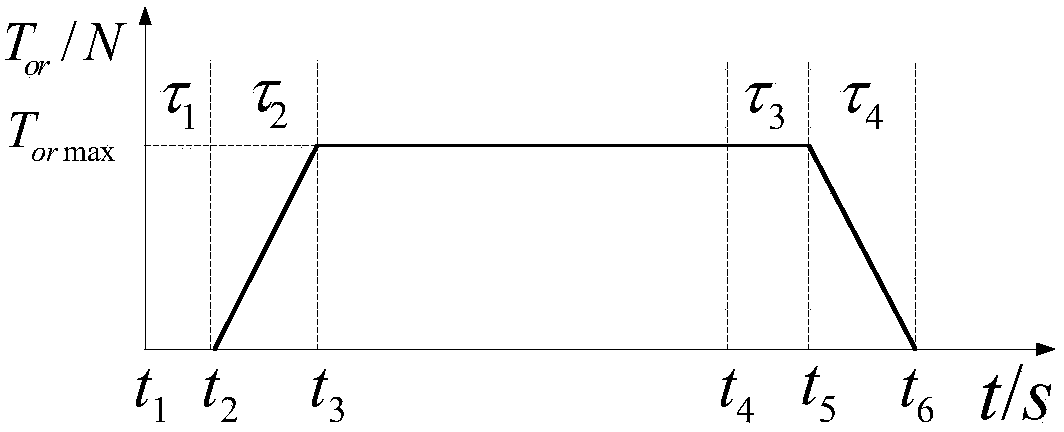

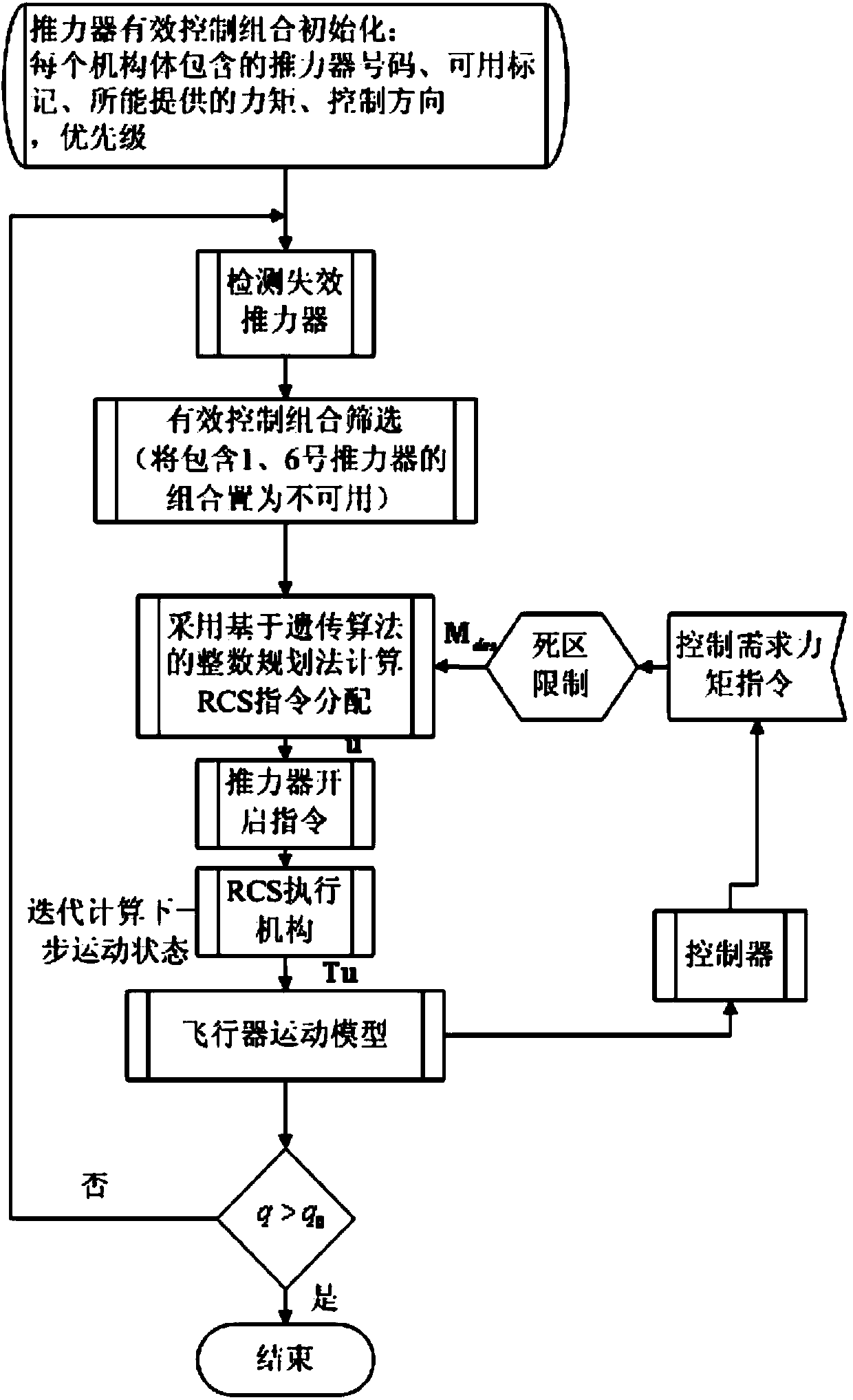

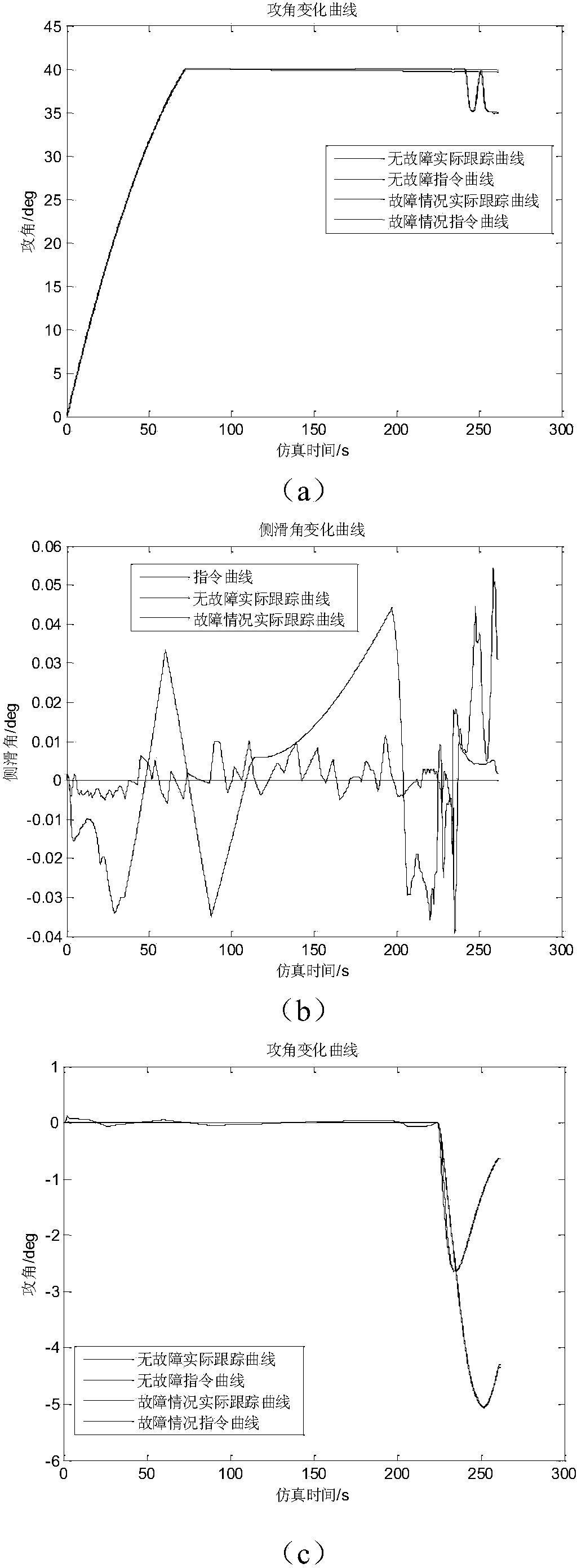

Re-entry attitude control method of reusable launch vehicle

Active | CN104635741A | Implement failure reconstruction, Reduce complexity, Attitude control, Re-entry

The invention discloses a re-entry attitude control method for a reusable launch vehicle (RLV). It addresses the technical problem that existing methods distribute RCS (Reaction Control System) instructions inefficiently. The method comprises the following steps. First, all thruster combinations for every control direction of the RCS are designed. Next, an integer linear programming method distributes instructions in real time within the combination determined by the required moment direction. Finally, the integer programming problem is solved with a genetic algorithm. RCS control instruction distribution is thereby converted into a dimension-reduced integer linear programming problem. Compared with the background art, the method takes into account the requirements of RLV re-entry attitude control, including thruster amplitudes and RCS fuel consumption. It also does not require an offline-designed optimal table when thrusters fail, which reduces complexity. The dimension reduction improves the real-time efficiency of RCS instruction distribution, and RCS fault reconstruction is achieved with minimum RCS energy consumption and minimum instruction moment tracking error.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

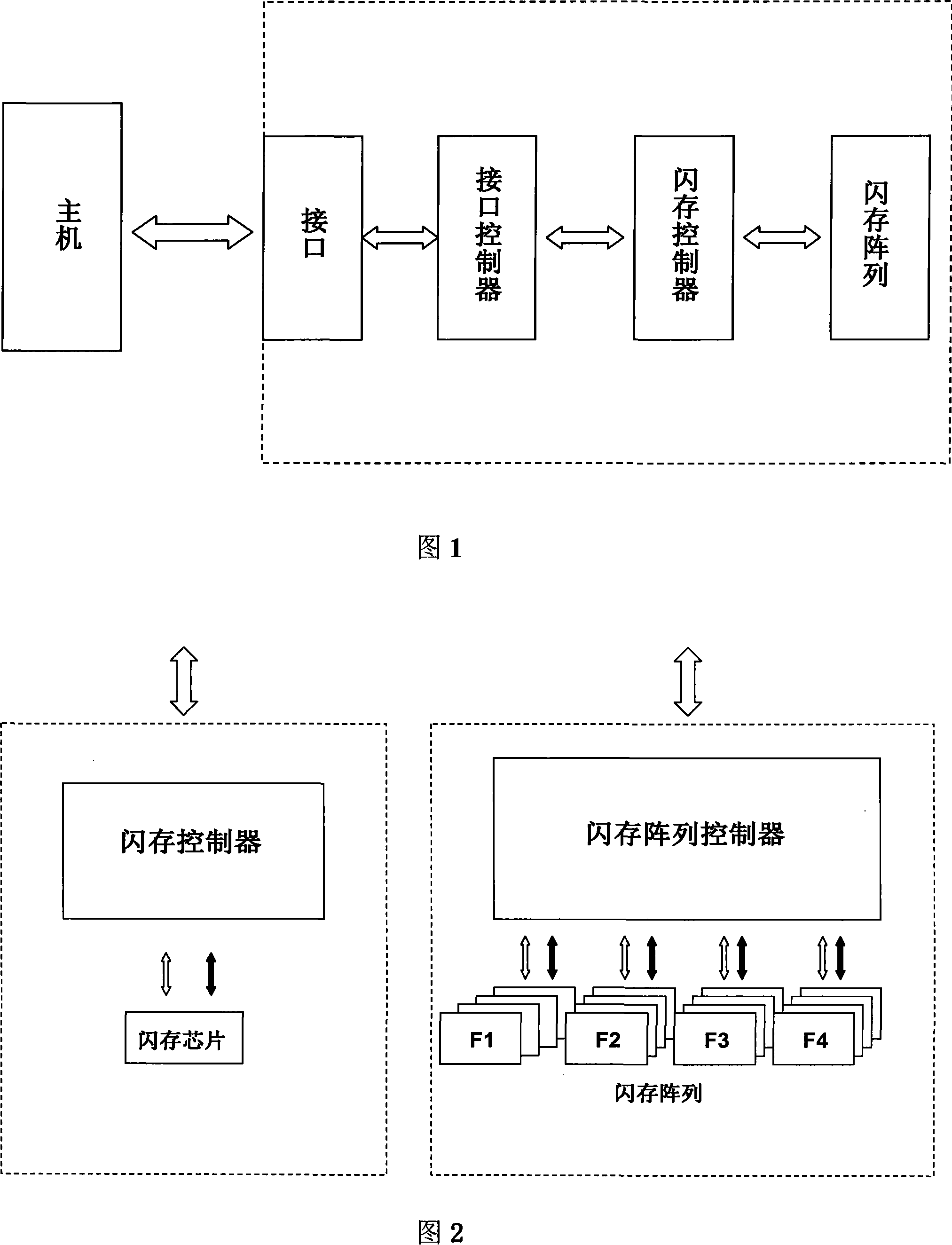

Flash controller

Active | CN101046725A | Solve bottlenecks, Improve read and write speed, Input/output to record carriers, Control signal, Flash memory controller

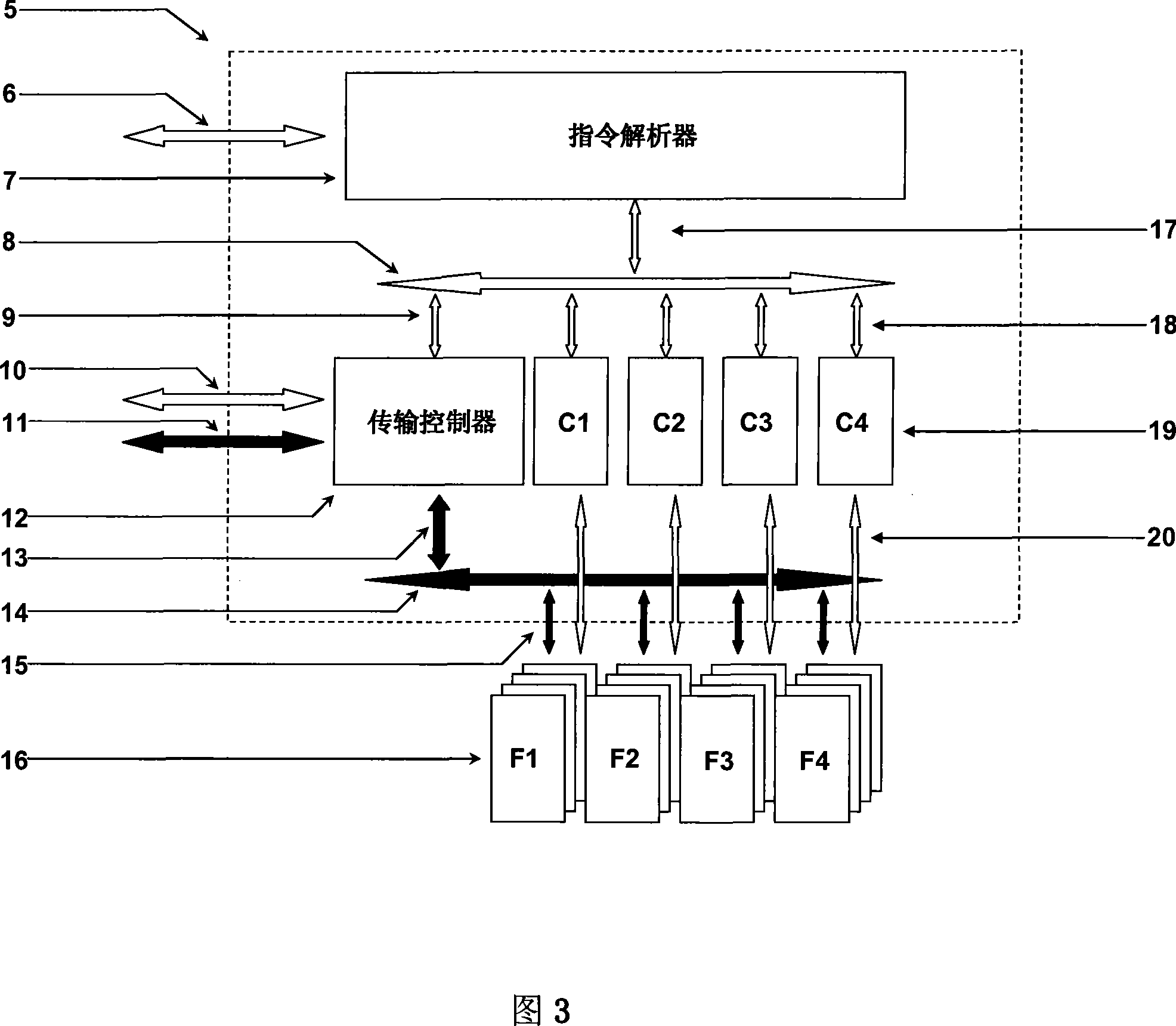

The present invention discloses a flash storage controller comprising an instruction resolver, a transmission controller, and several on-chip flash storage control units. The invention also describes the specific function of each of these parts, the working principle of the controller, and its concrete operating method. Flash memory built with this controller can achieve much higher read/write speeds.

Owner:MEMORIGHT (WUHAN) CO LTD

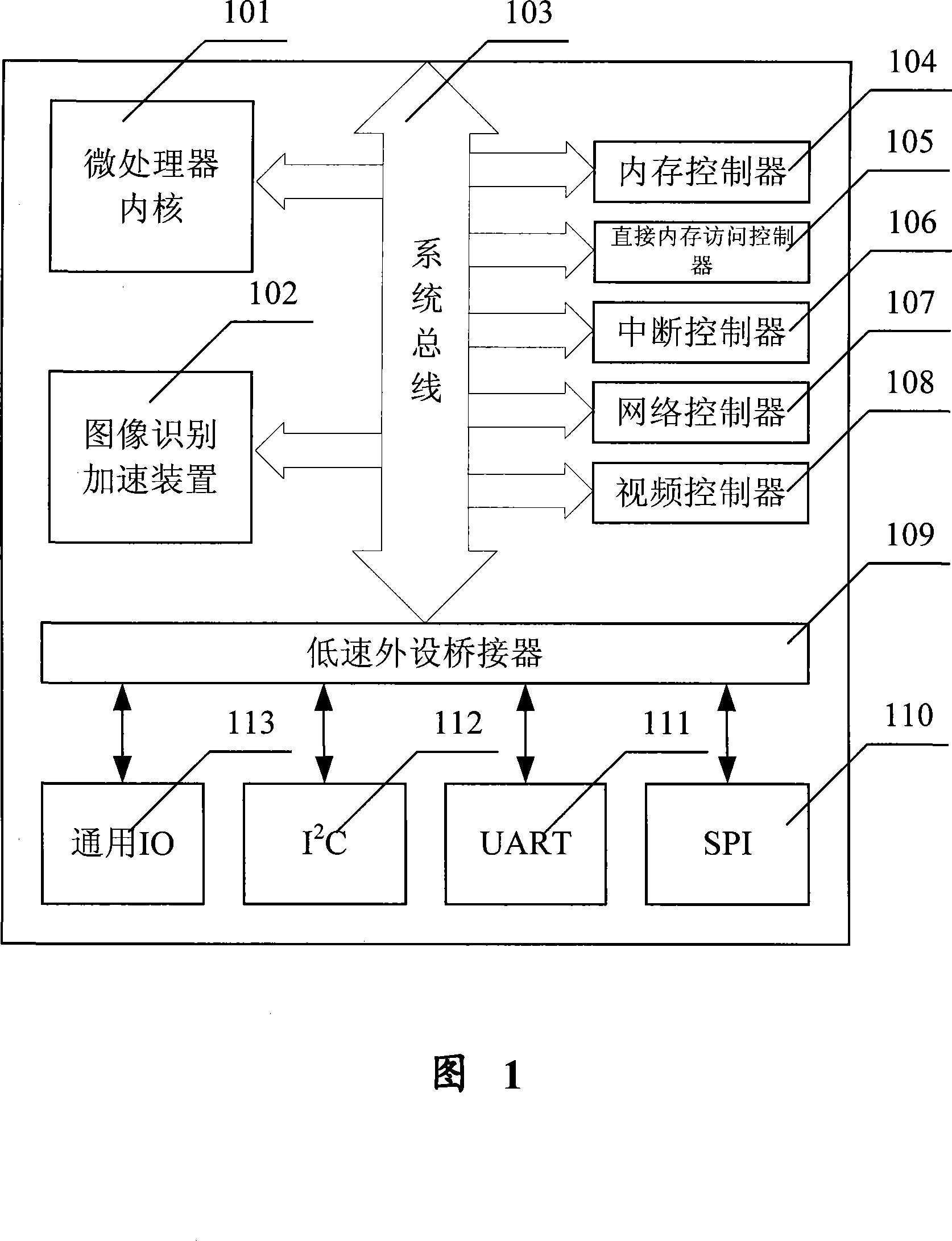

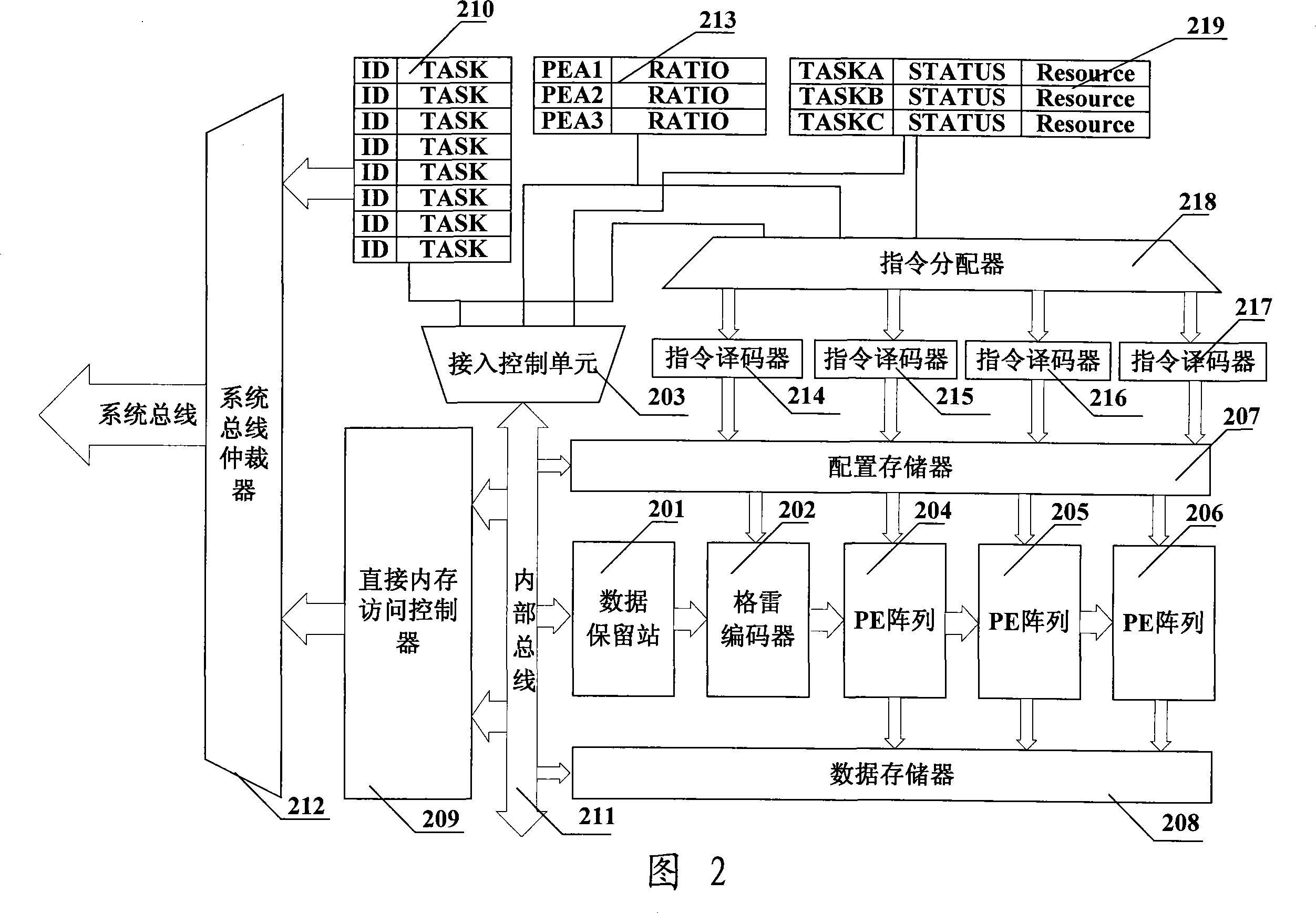

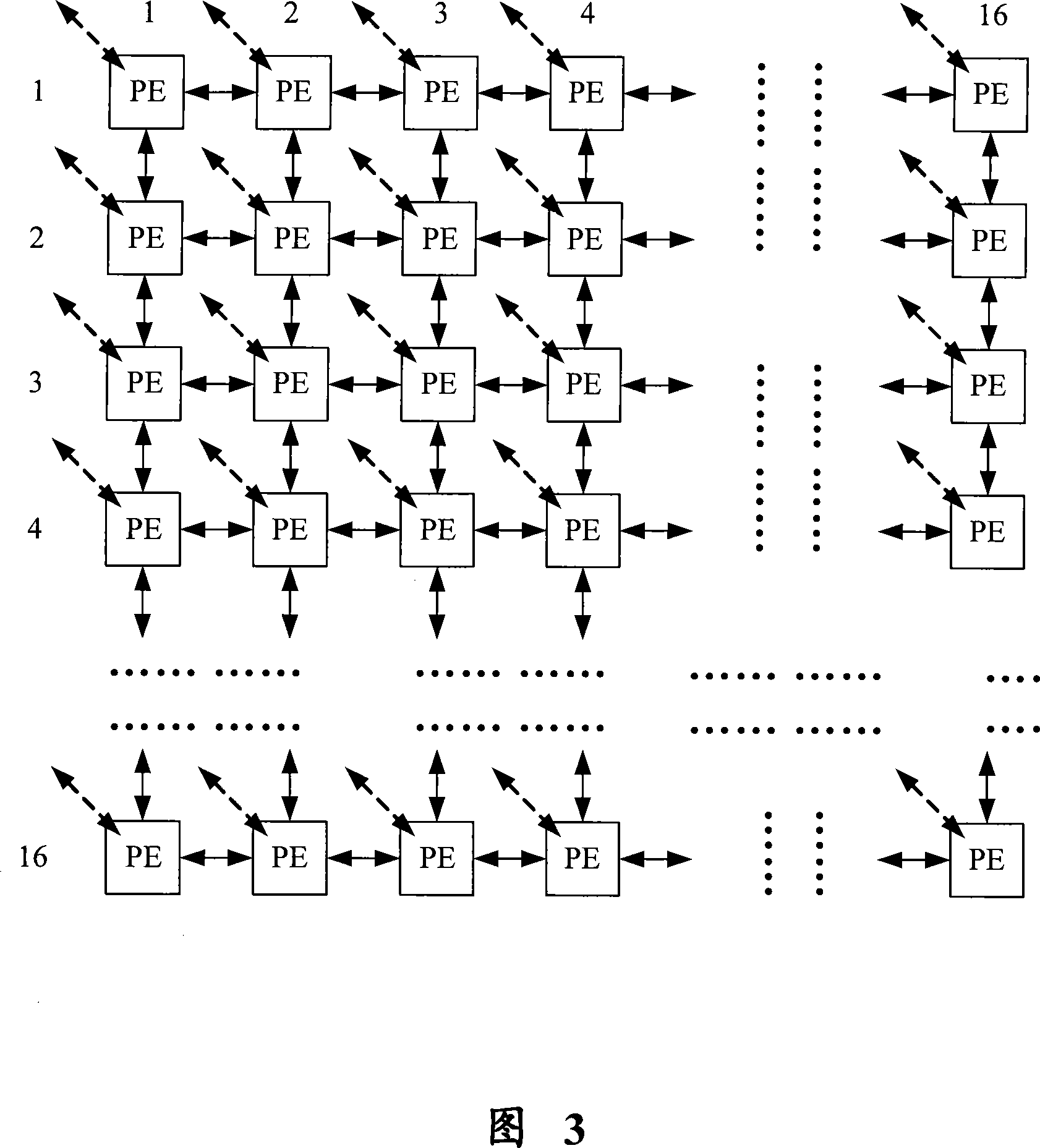

Image recognition accelerator and MPU chip possessing image recognition accelerator

Inactive | CN101236601A | High peak computing power, Flexible configuration, Character and pattern recognition, Concurrent instruction execution, Pattern recognition, Direct memory access

The invention provides an image recognition accelerator that mainly comprises a system bus arbiter, an internal bus, an access control unit, a command distribution device, a direct memory access (DMA) controller, a system task array, a resource statistics meter, a running-task reservation station, a configuration memory, a plurality of command encoder units, a data memory, a plurality of processing unit arrays, a data reservation station, and a Gray encoder. Compared with prior dedicated image recognition accelerator chips, it offers high performance, low cost, and flexible application.

Owner:马磊 (Ma Lei)

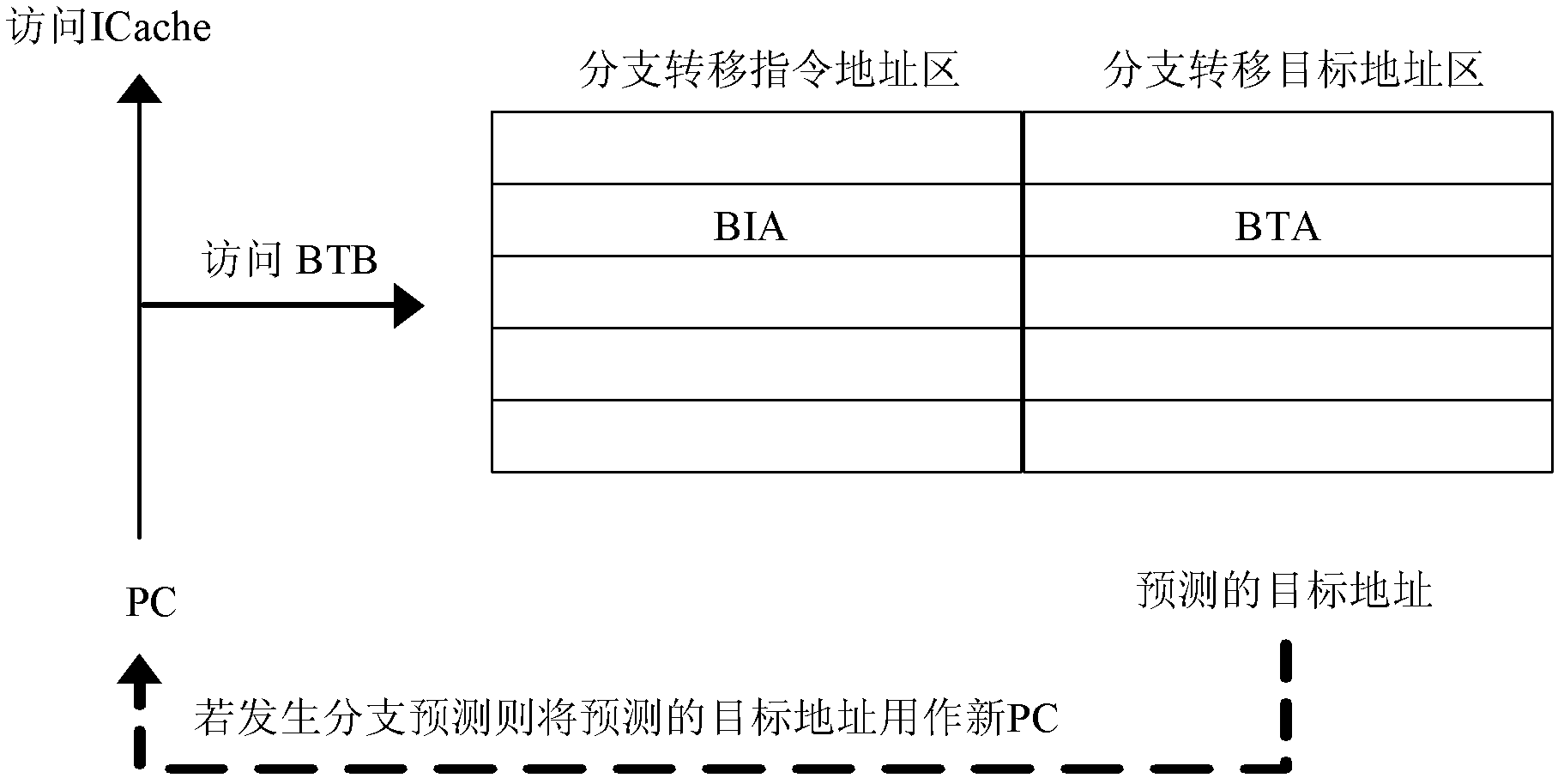

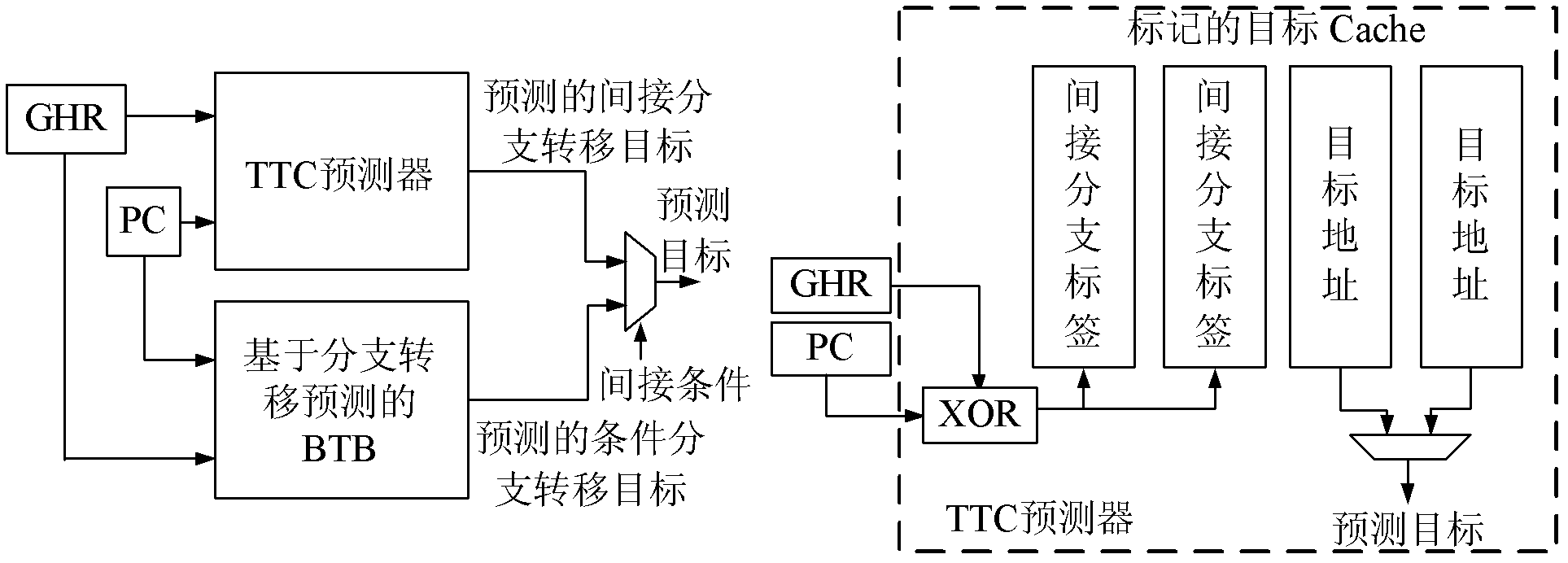

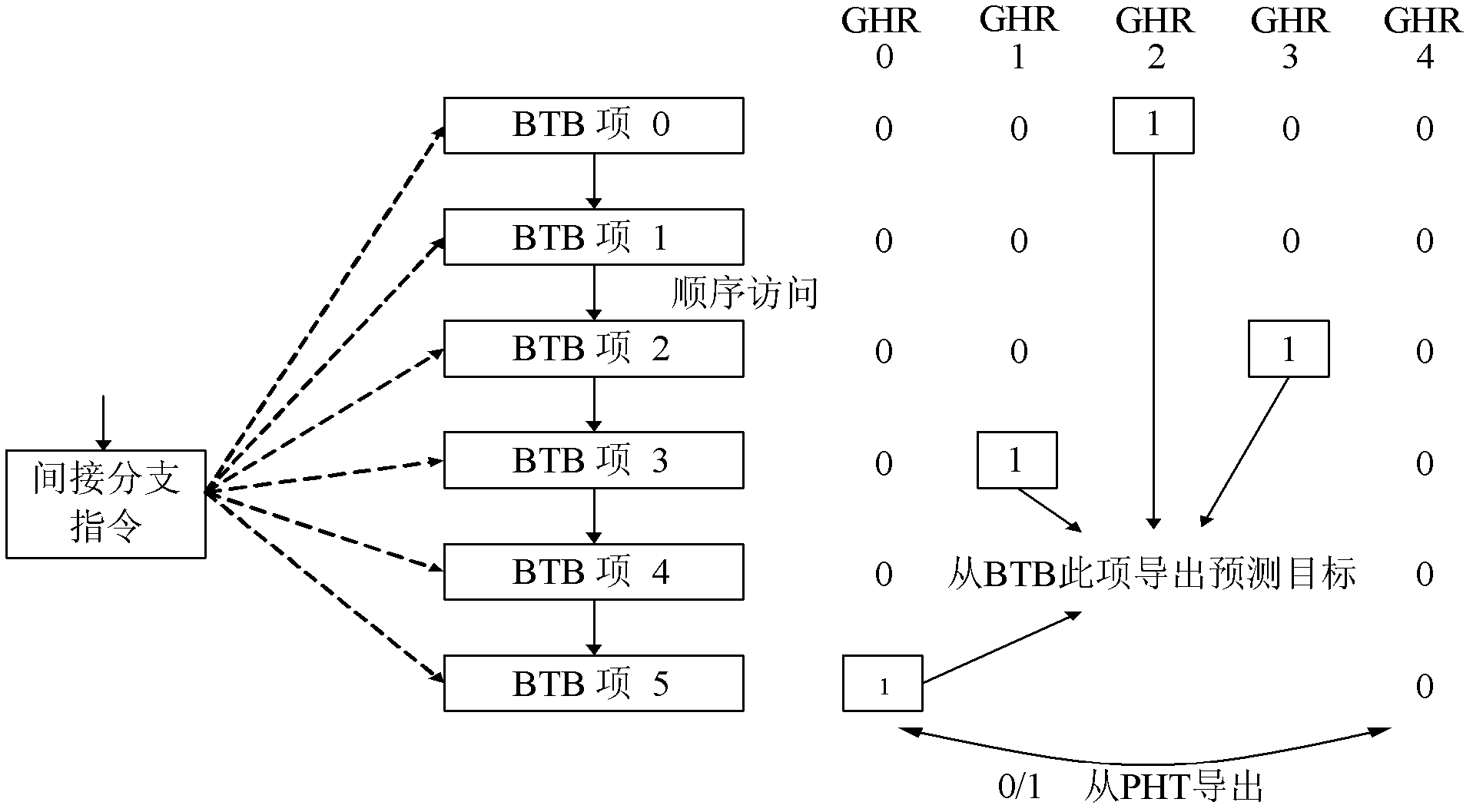

Device and method for realizing indirect branch and prediction among modern processors

Active | CN102306094A | Avoid mistakes, Improve forecast accuracy, Concurrent instruction execution, Mass storage, Parallel computing

The invention discloses a device and method for indirect branch prediction in modern processors. The device comprises three parts. A branch direction predictor generates a target pointer from the indirect branch direction predicted by several sub-predictors. A target address mapper maps that target pointer to a virtual address used to index the branch target buffer. A branch target buffer allocates a target address entry and an allocation entry to each indirect branch instruction. The target address entries are indexed by the virtual address. The allocation entries record how each indirect branch instruction uses its target address entries and are indexed by the program counter value. Without requiring large-capacity storage, the design improves both prediction accuracy and processor energy efficiency, at a time cost similar to that of existing indirect branch prediction techniques.

Owner:BEIJING PKUNITY MICROSYST TECH

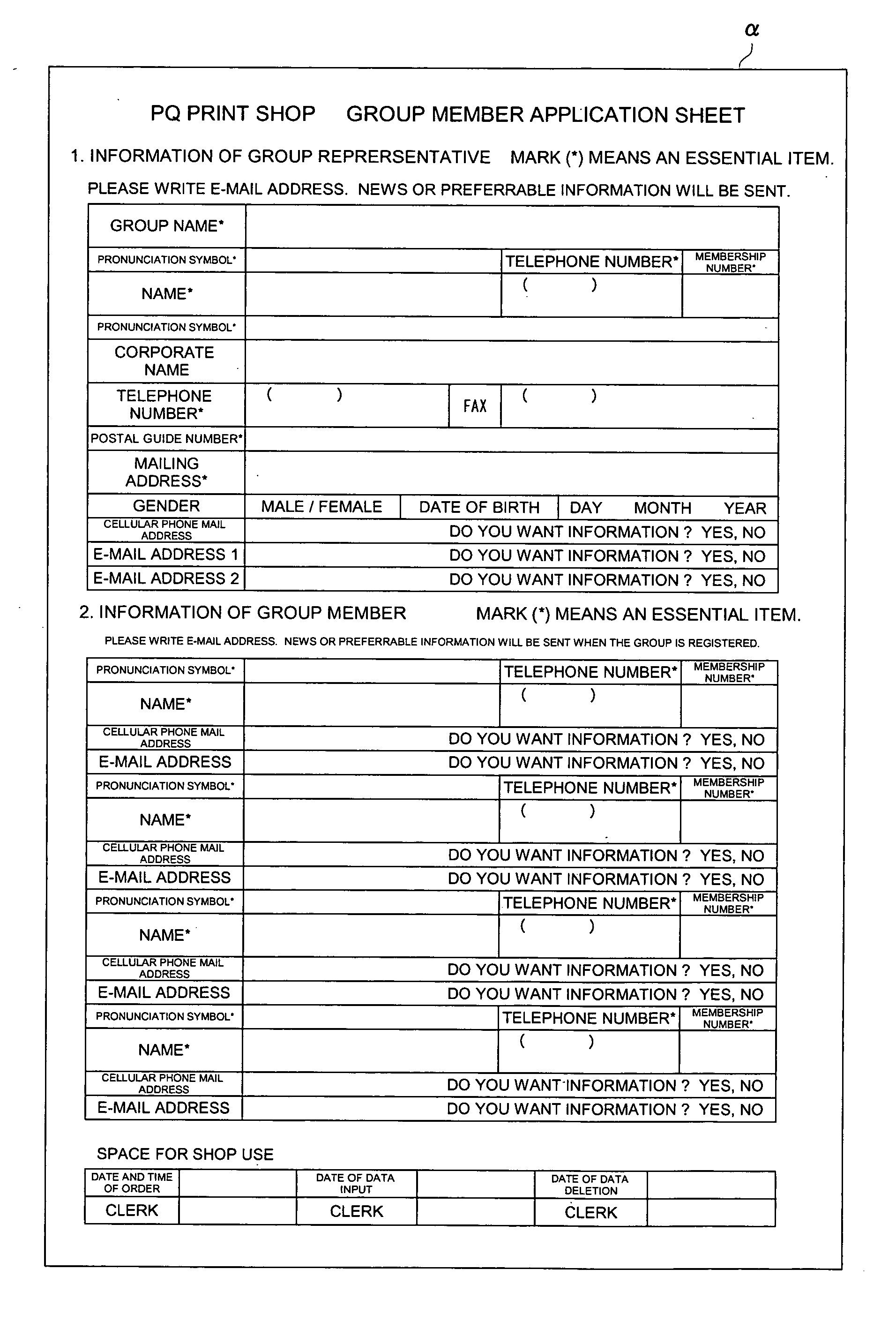

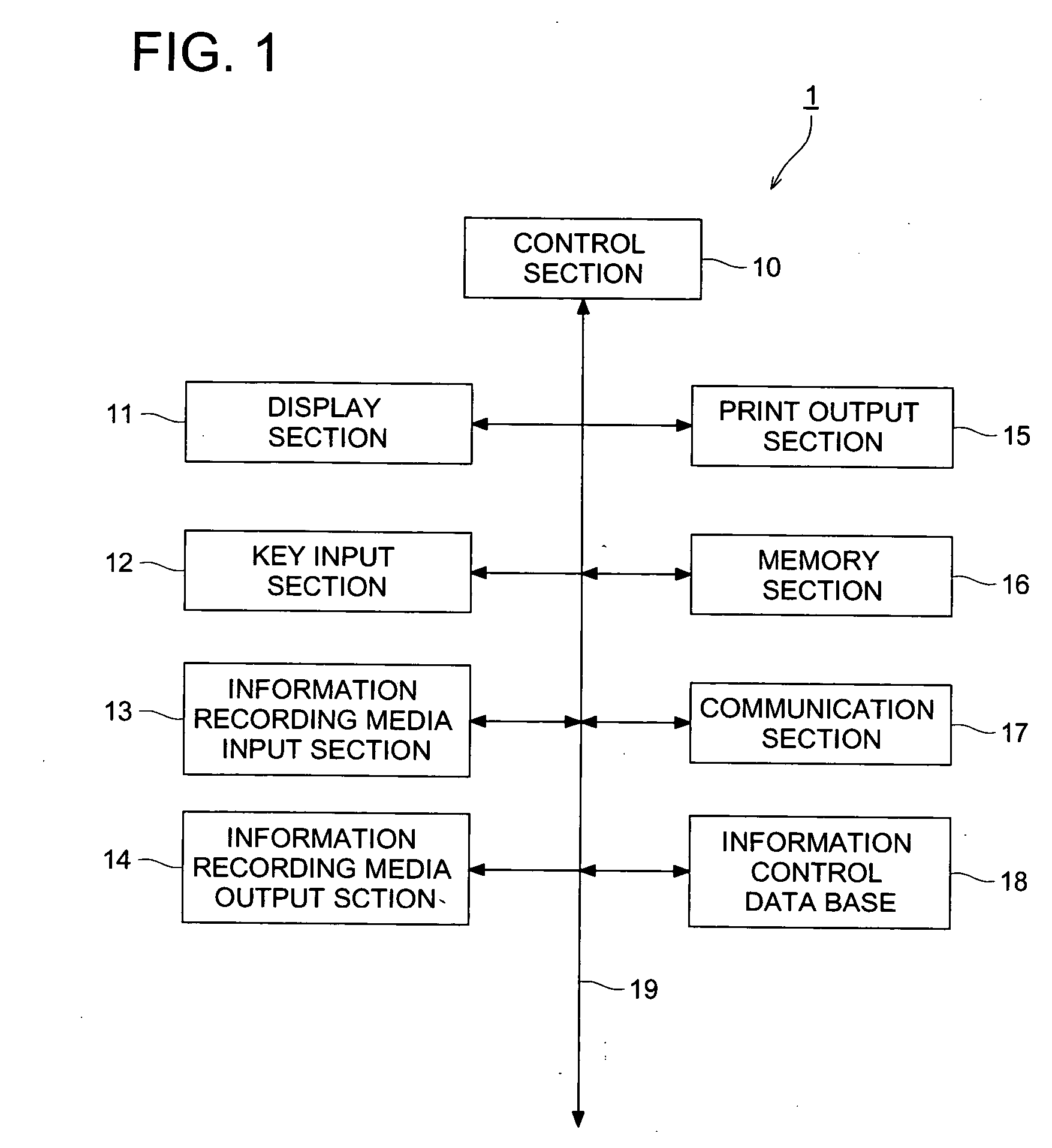

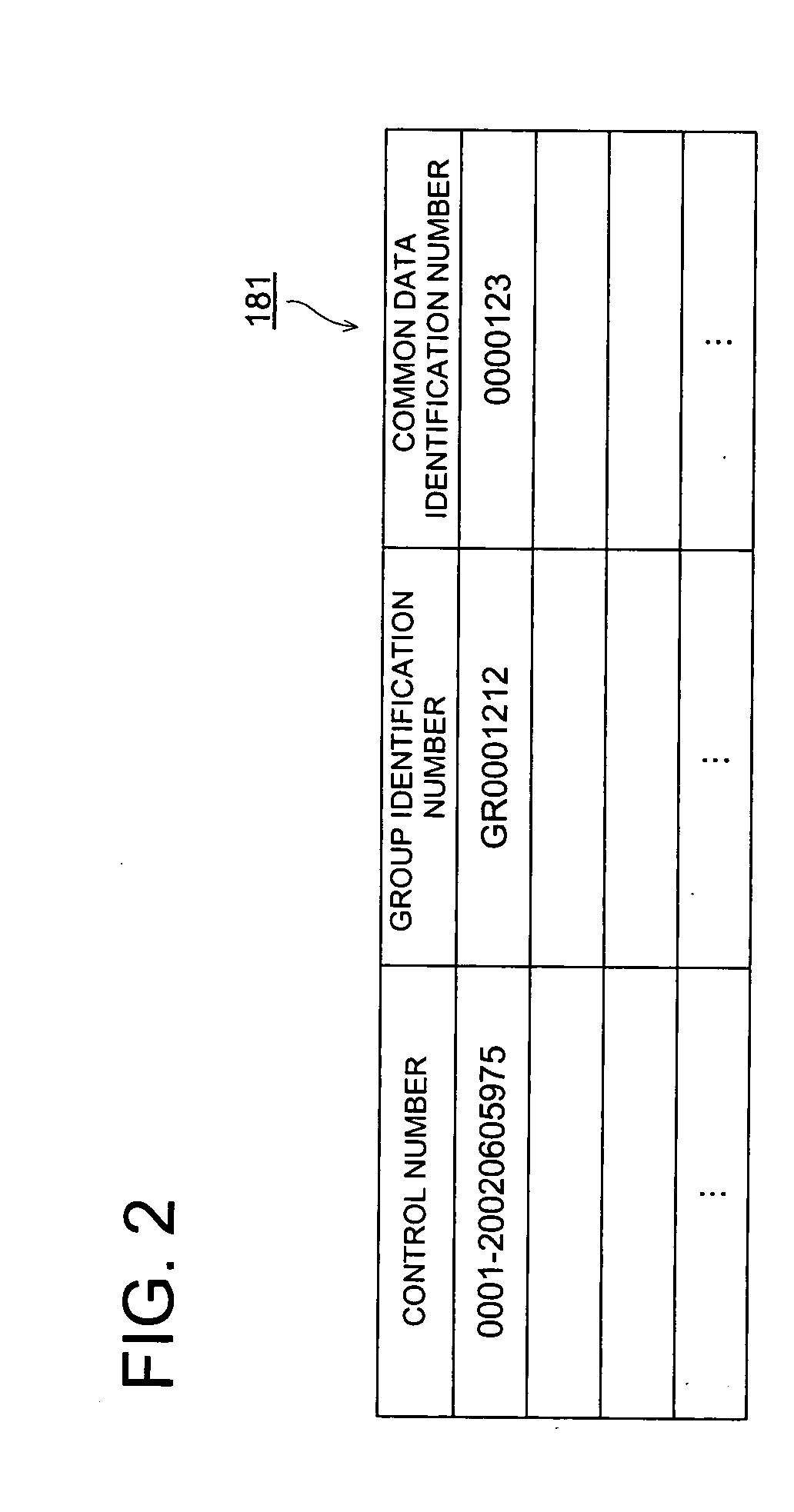

Print order slip issuing device and print generation system

Inactive | US20050237578A1 | Promote efficient ordering, Digital data processing details, Pictorial communication, Order form, Instruction distribution

A print ordering sheet for group members, used through a shop when a group member places a print order for photographed images stored on an information recording medium shared by the group. The sheet includes a displaying section for displaying a visual list of all prints produced from the photographed images, a print order assigning section for each printed image on the list, a control information displaying section for displaying control information relevant to the group, an identification writing section for writing the group member's identification information, and an address displaying section for displaying the shop's contact address.

Owner:KONICA MINOLTA INC

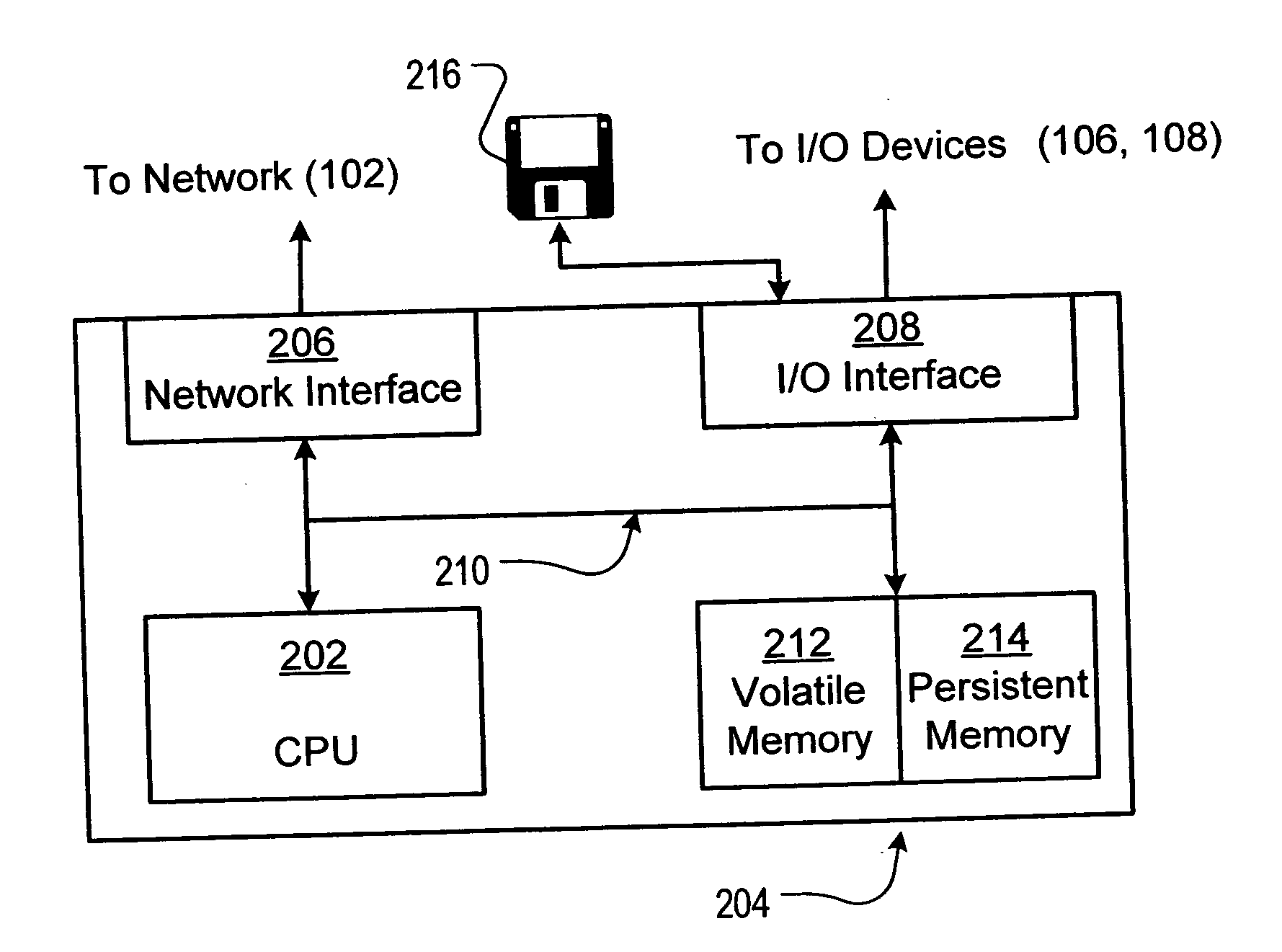

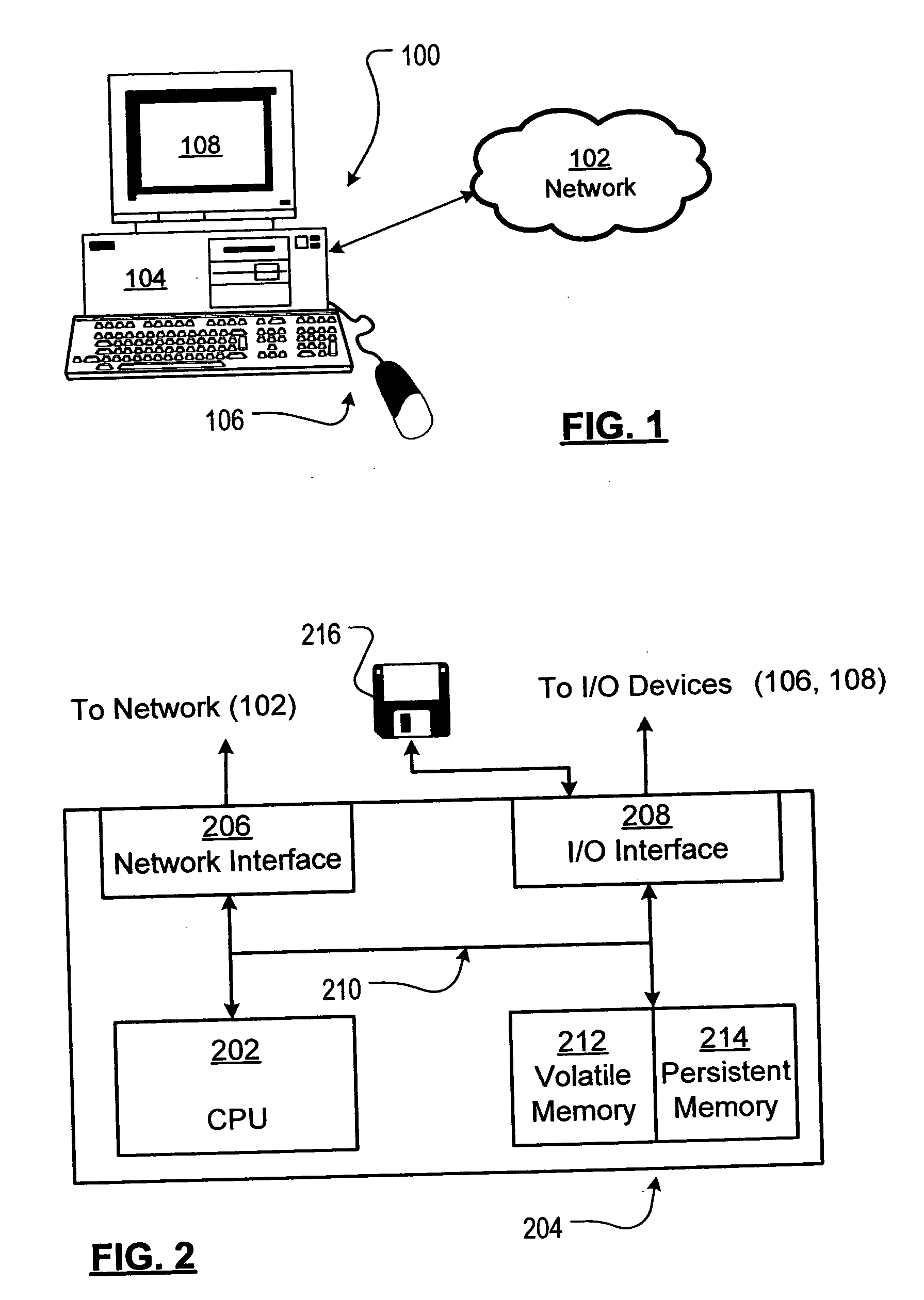

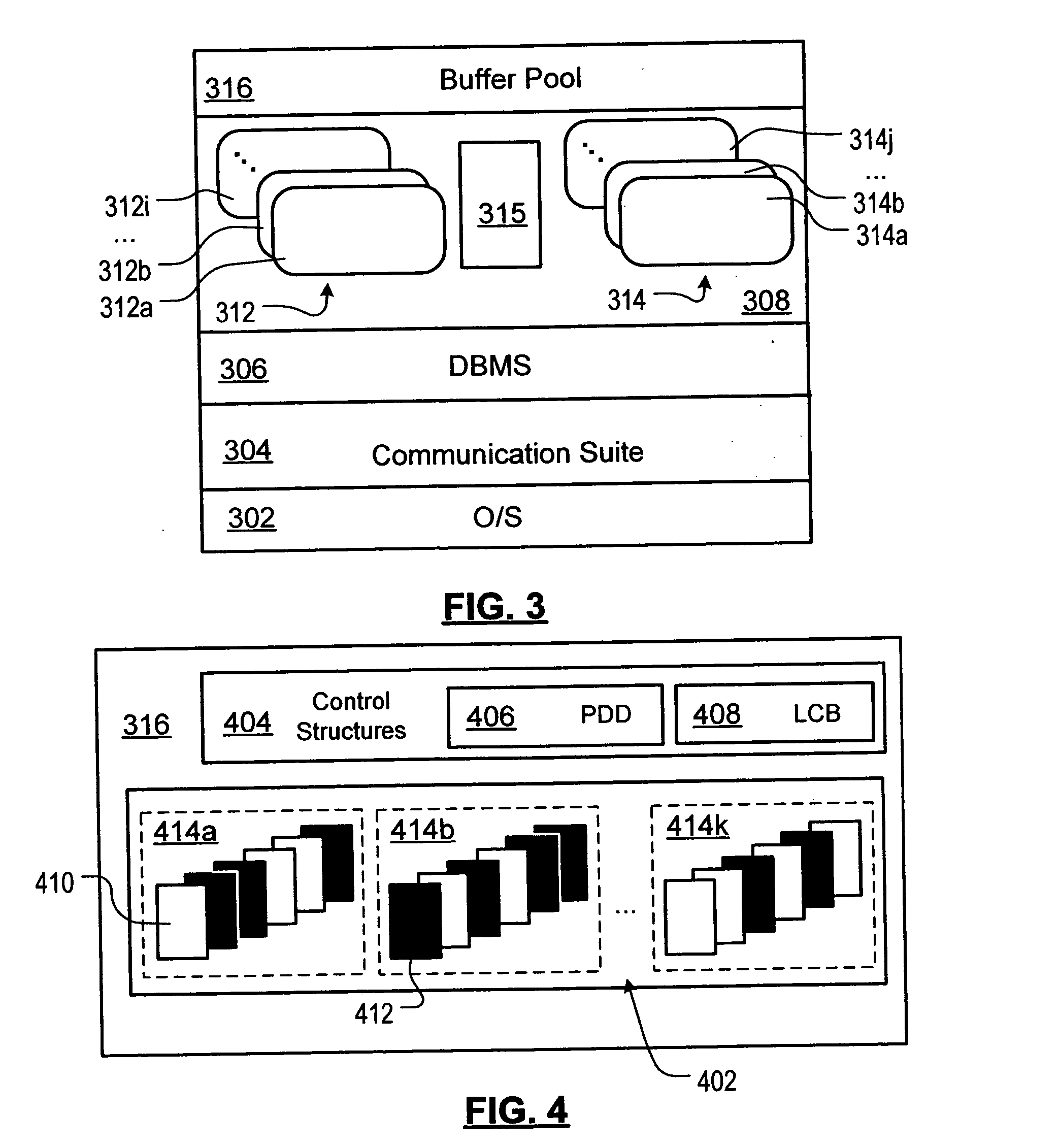

System and method for starting a buffer pool

Inactive | US20050050443A1 | Special service provision for substation, Special data processing applications, Operating system, Instruction distribution

For an information retrieval system coupled to a buffer pool that keeps a plurality of pages of recently accessed information for later re-access, a technique for starting the buffer pool is provided. The technique allows the buffer pool to start more quickly by deferring the allocation of page-storing portions, for example until they are needed. The buffer pool is made available for storing pages while allocation of its page-storing portion is deferred, and that portion is allocated in response to a demand to store pages in the buffer pool. The technique may also be used to restart a buffer pool with pages held in a memory coupled to the information retrieval system, where those pages were stored when the buffer pool was shut down. Further, buffer pool readers or prefetchers may be configured to read pages for storage in the buffer pool and to allocate its page-storing portions in response to instructions to read particular pages.

Owner:IBM CORP
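
Deferred allocation can be sketched in Python as follows. The pool accepts requests immediately, and its page-storing portion is created only when the first page is stored. Allocating the whole portion at once and omitting page replacement are simplifications. The class name, slot layout, and error message are hypothetical.

```python
class BufferPool:
    """Buffer pool whose page-storing portion is allocated on first demand."""

    def __init__(self, num_slots):
        self.num_slots = num_slots
        self.slots = None      # page-storing portion: deferred until needed
        self.page_table = {}   # page id -> slot index (usable immediately)

    def store_page(self, page_id, contents):
        if self.slots is None:                 # first demand to store a page
            self.slots = [None] * self.num_slots
        if page_id not in self.page_table:
            if len(self.page_table) == self.num_slots:
                raise MemoryError("pool full; page replacement is out of scope here")
            self.page_table[page_id] = len(self.page_table)
        self.slots[self.page_table[page_id]] = contents

    def get_page(self, page_id):
        slot = self.page_table.get(page_id)
        return None if slot is None else self.slots[slot]
```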

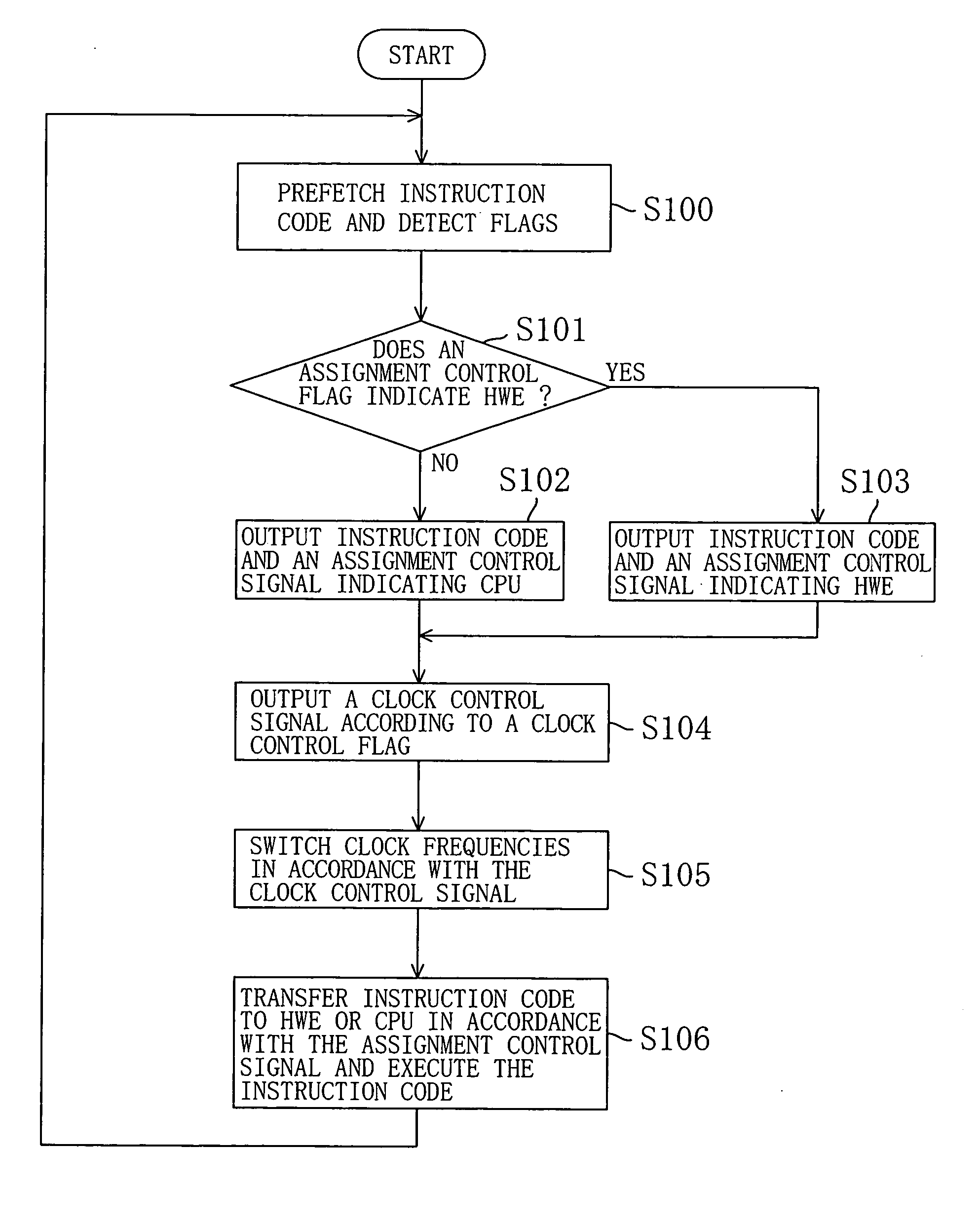

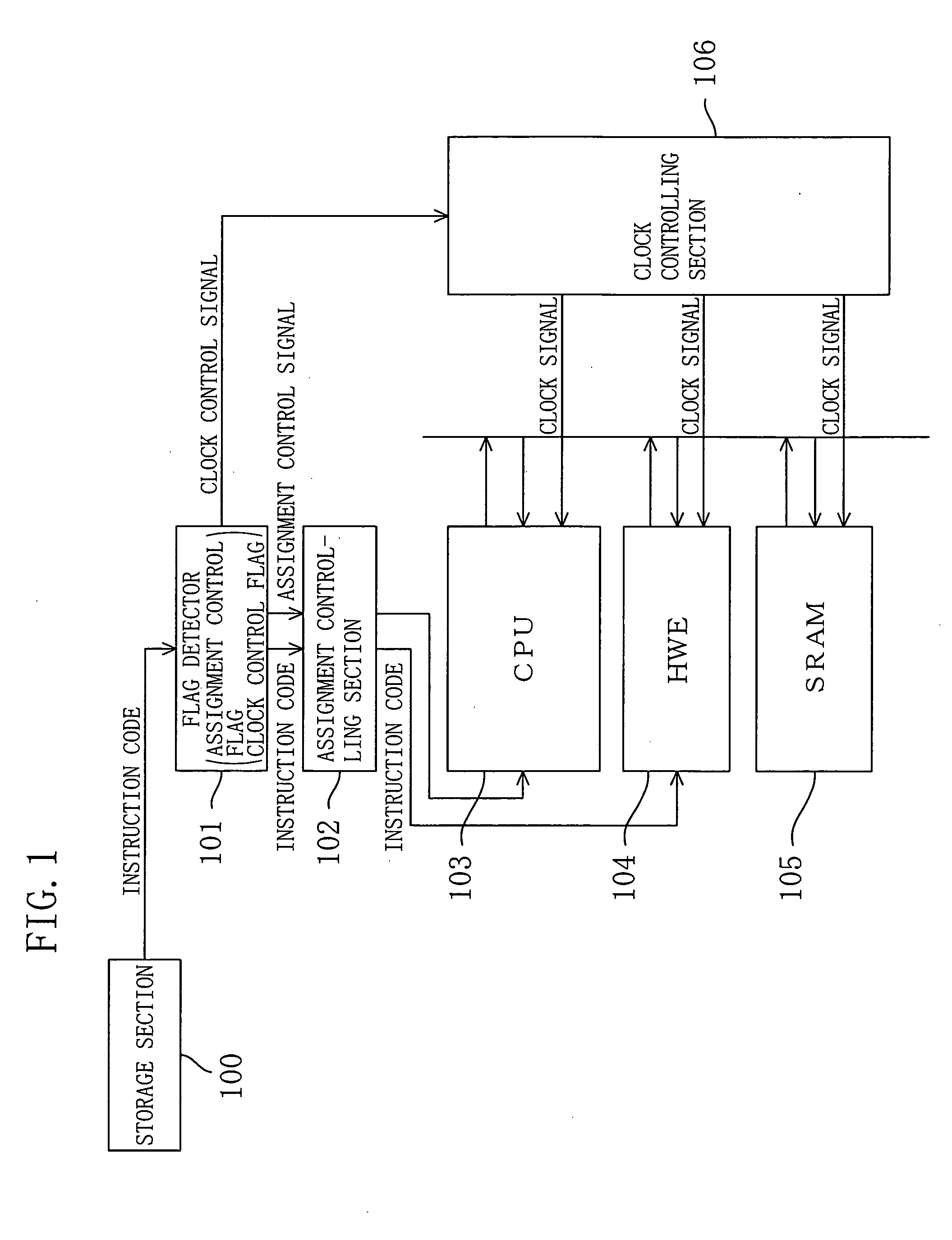

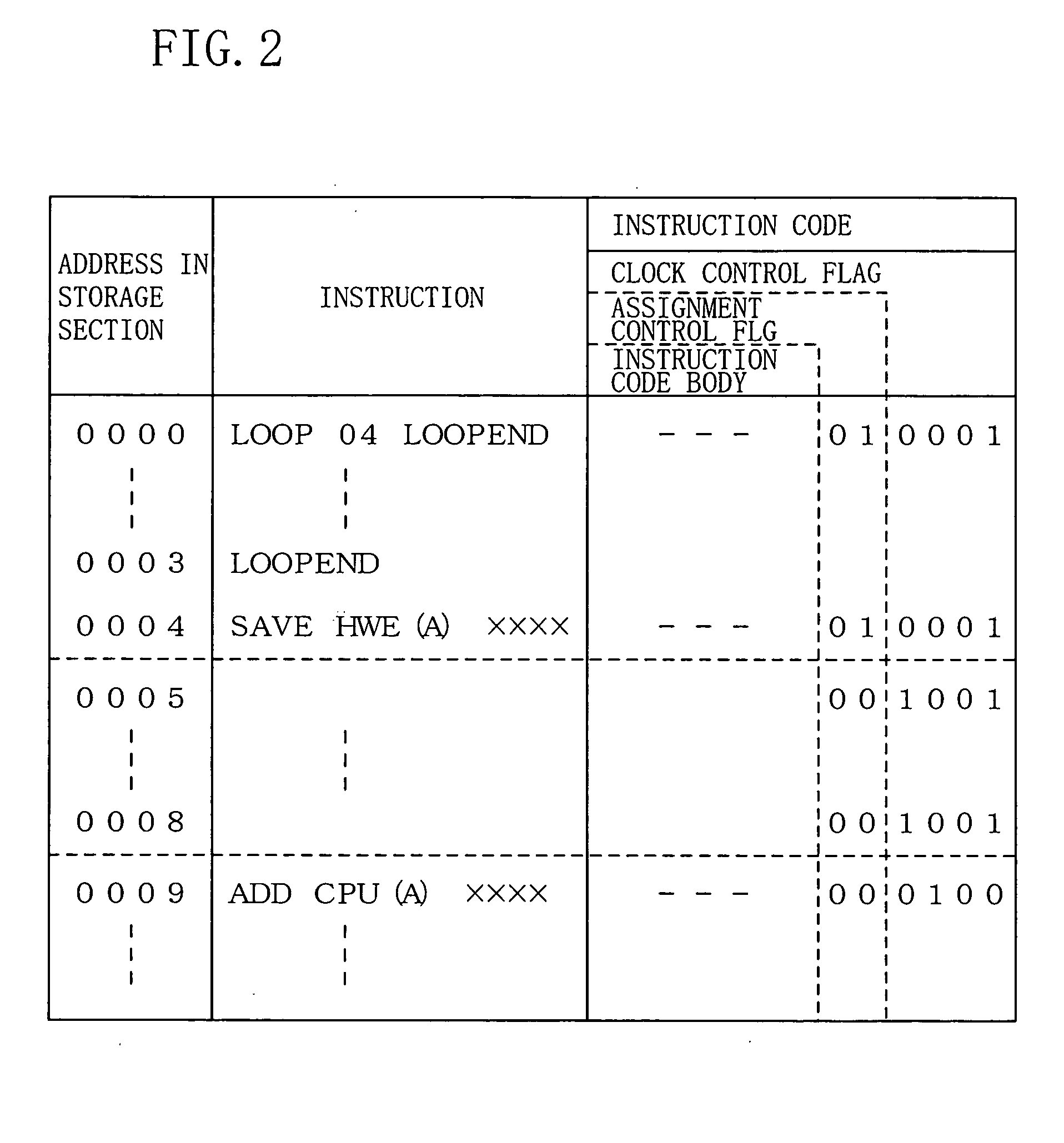

Processor system, instruction sequence optimization device, and instruction sequence optimization program

Inactive | US20050283629A1 | Prevents increase of execution period, Large degradation of parallelism, Energy efficient ICT, Volume/mass flow measurement, Distribution control, Clock rate

To reduce power consumption of a processor system including a plurality of processors without degradation of the processing ability, a flag detecting section detects an assignment control flag and a clock control flag added to instruction code. An instruction assignment controlling section outputs the instruction code to a CPU or an HWE based on the detection to have the instruction code executed. A clock controlling section supplies a clock signal having a frequency lower than the maximum clock frequency to one of the CPU and the HWE in which a waiting time arises when the CPU and the HWE operate at the maximum clock frequencies, thus reducing power consumption.

Owner:SOCIONEXT INC

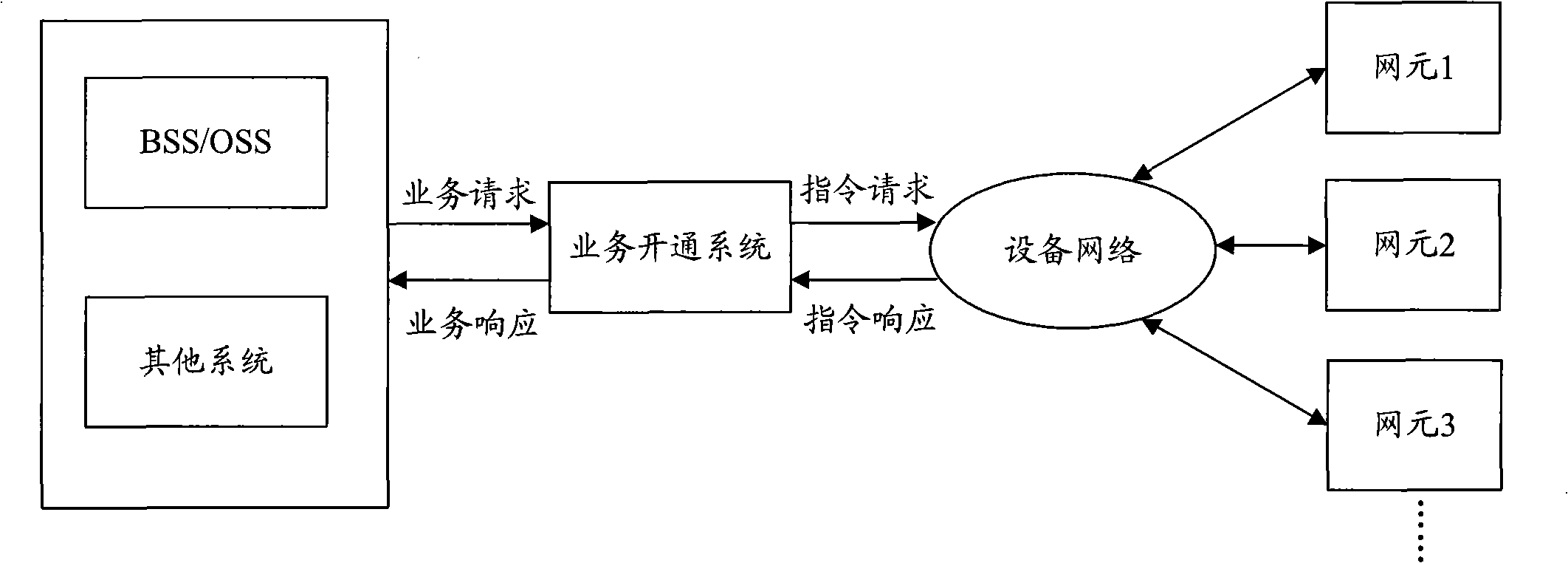

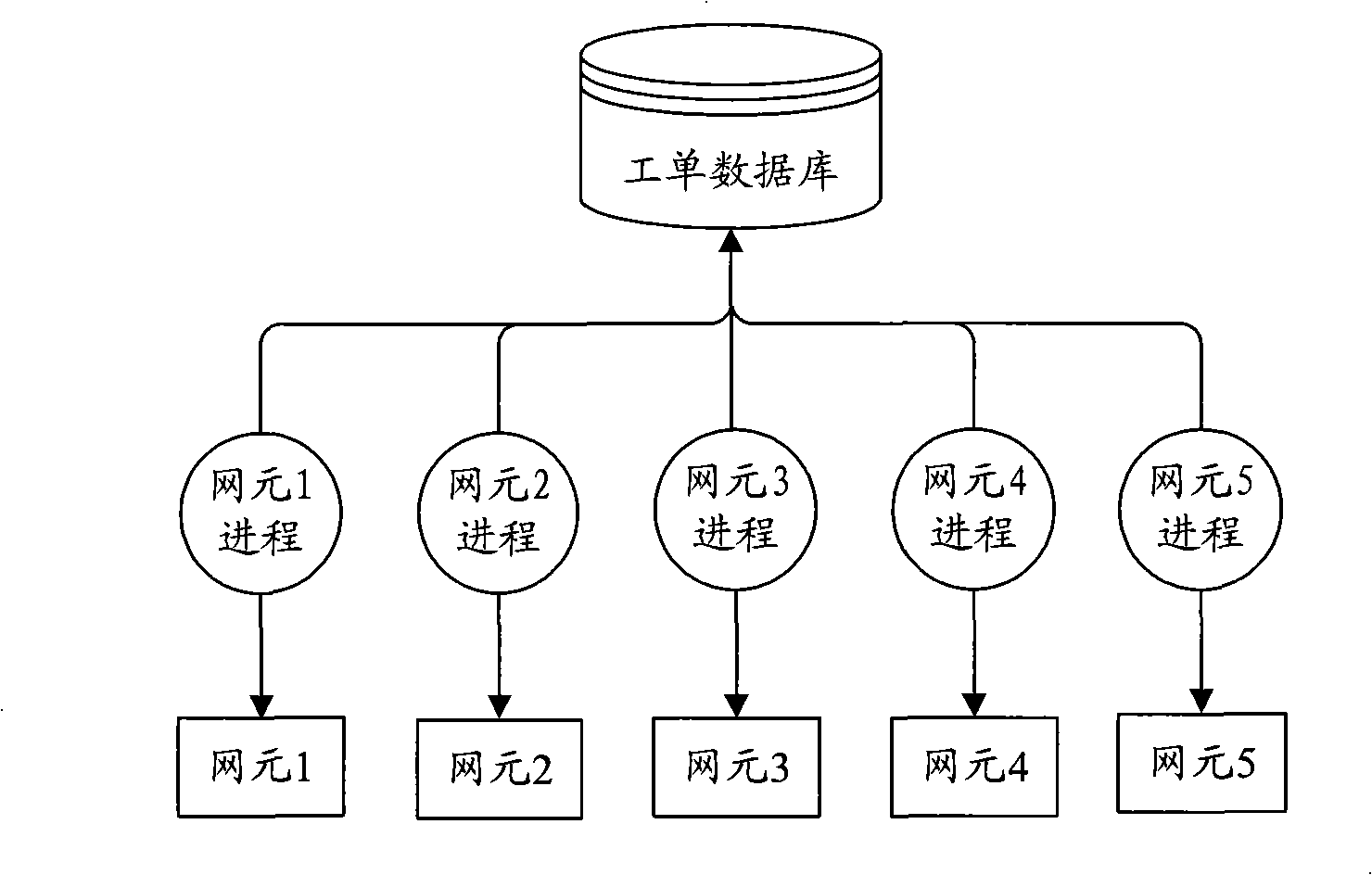

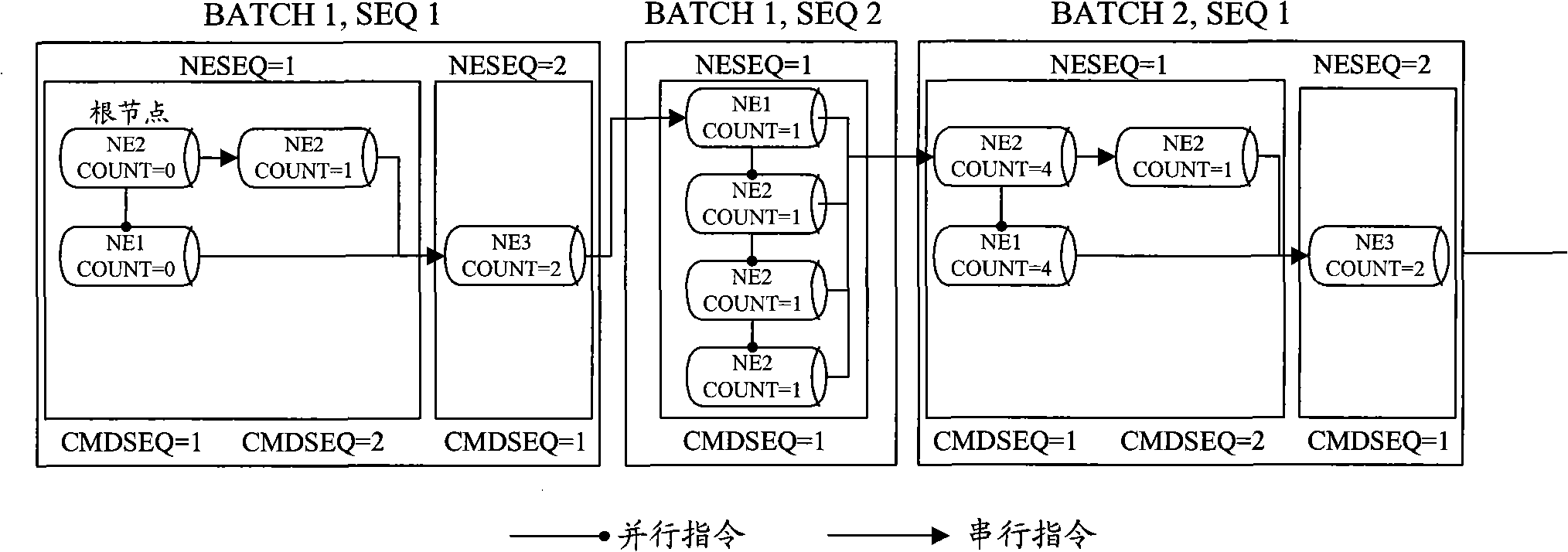

Execution method, apparatus and system for service open command

Active | CN101360309A | Reduce resource usage, Easy to handle, Supervisory/monitoring/testing arrangements, Radio/inductive link selection arrangements, Instruction memory, Instruction distribution

The invention applies to the communications field and relates in particular to a method and system for executing service activation instructions. The method comprises the following steps: uniformly obtaining outgoing instructions; inserting instructions with the same ownership attributes into the corresponding instruction memory queue according to the service-logic relationships among the outgoing instructions; allocating the instructions in the queue that are to be executed by the current network element to the corresponding network element connection; and uniformly writing back the execution results from the network element connections. In embodiments of the invention, the outgoing instructions in the work-order database are obtained uniformly and sent quickly, through the instruction memory queue, to the corresponding network element connection for execution. Their execution results are then written back to the work-order database. This reduces the resources occupied by work orders and improves instruction processing efficiency.

Owner:HUAWEI TECH CO LTD

Central processing apparatus and a compile method

Inactive | US6871343B1 | Efficient execution, Register arrangements, Digital computer details, Instruction sequence, Instruction distribution

Owner:KK TOSHIBA

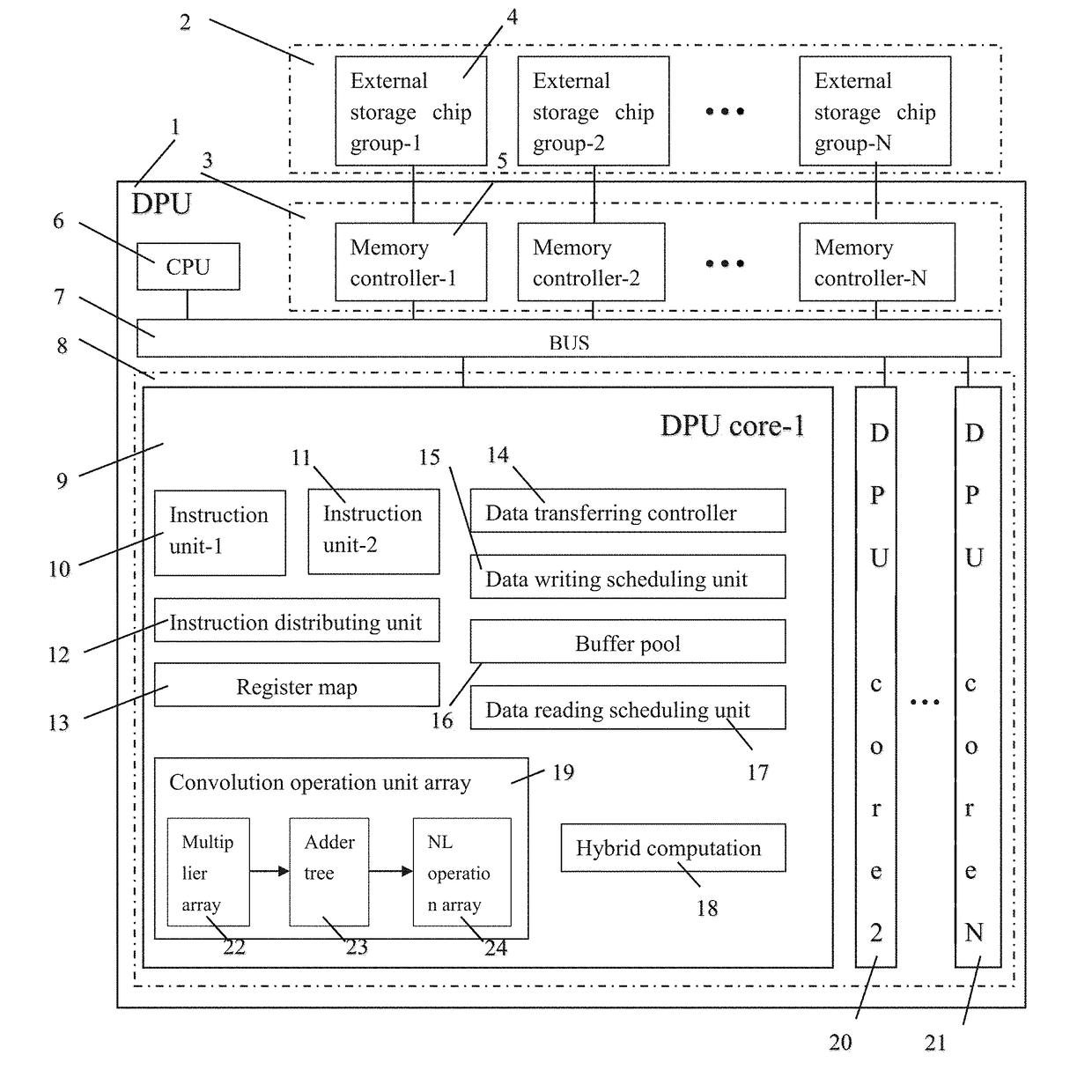

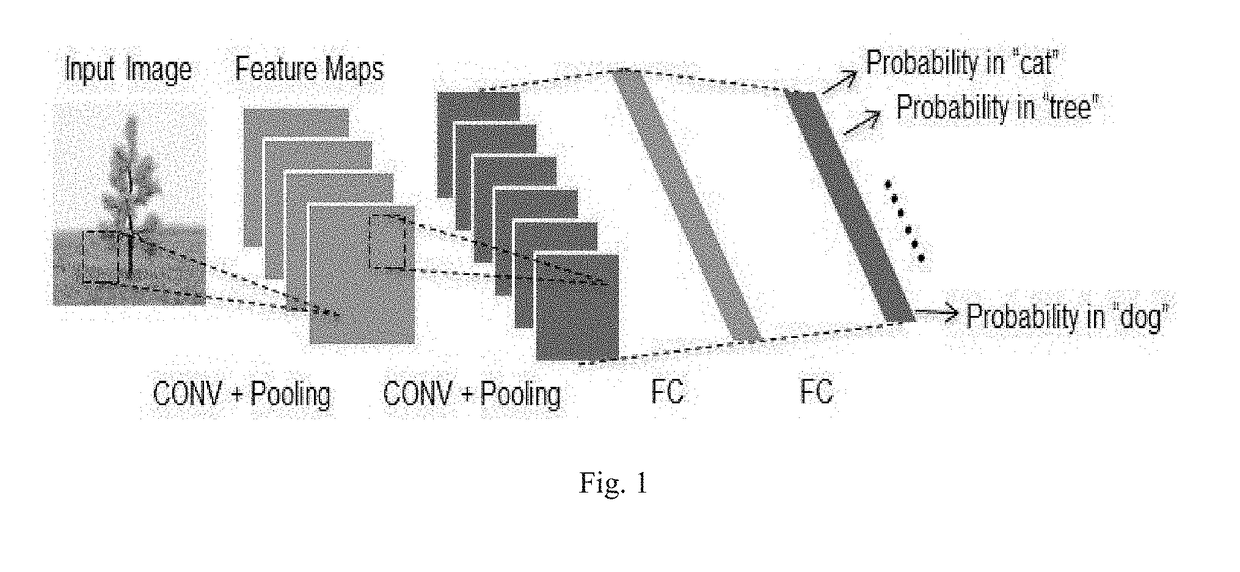

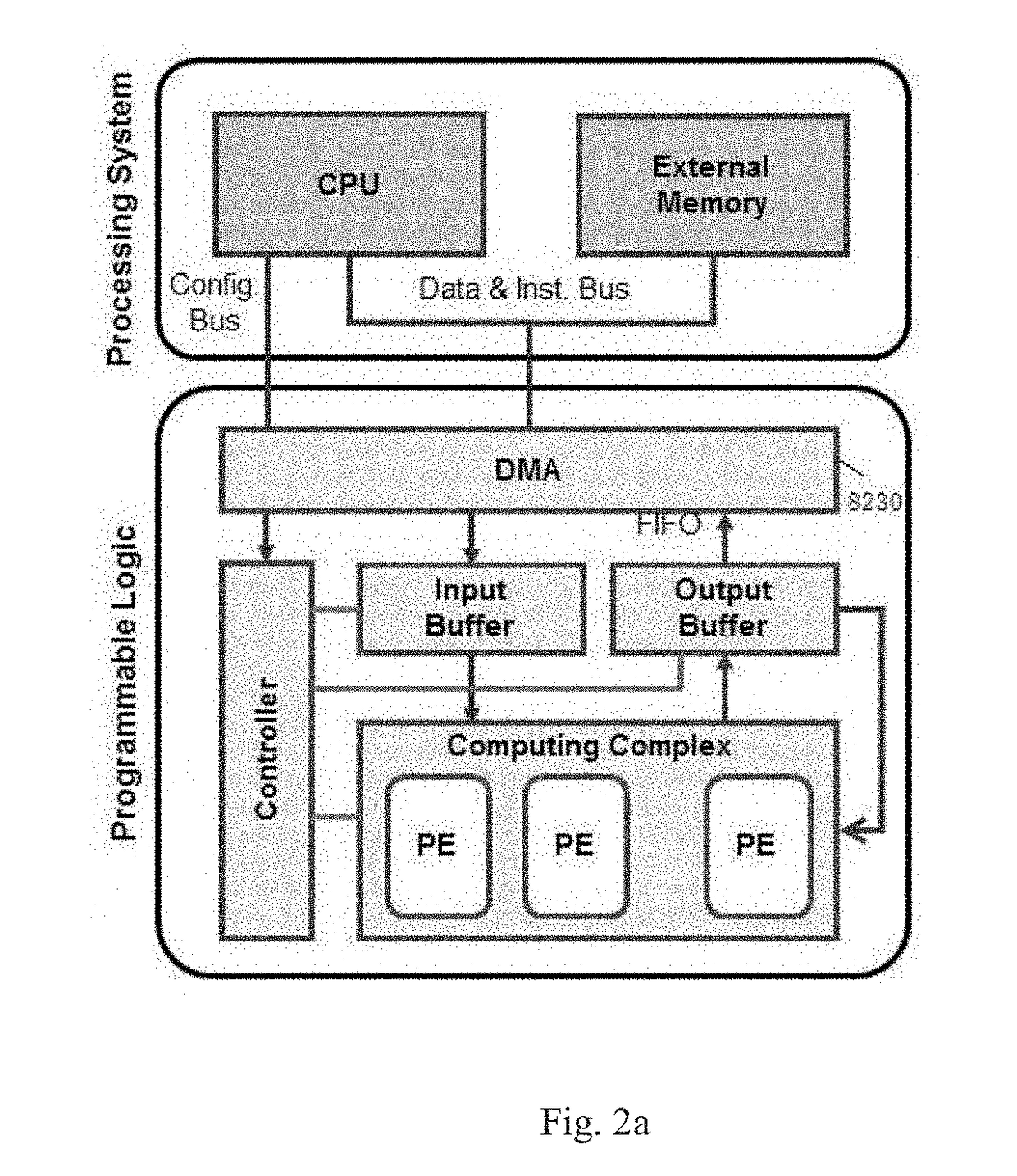

Device for implementing artificial neural network with multiple instruction units

Active | US20180307974A1 | Digital data processing details, Neural architectures, Instruction unit, Nerve network

The present disclosure relates to a processor for implementing artificial neural networks, for example, convolutional neural networks. The processor includes a memory controller group, an on-chip bus and a processor core, wherein the processor core further includes a register map, a first instruction unit, a second instruction unit, an instruction distributing unit, a data transferring controller, a buffer module and a computation module. The processor of the present disclosure may be used for implementing various neural networks with increased computation efficiency.

Owner:XILINX INC

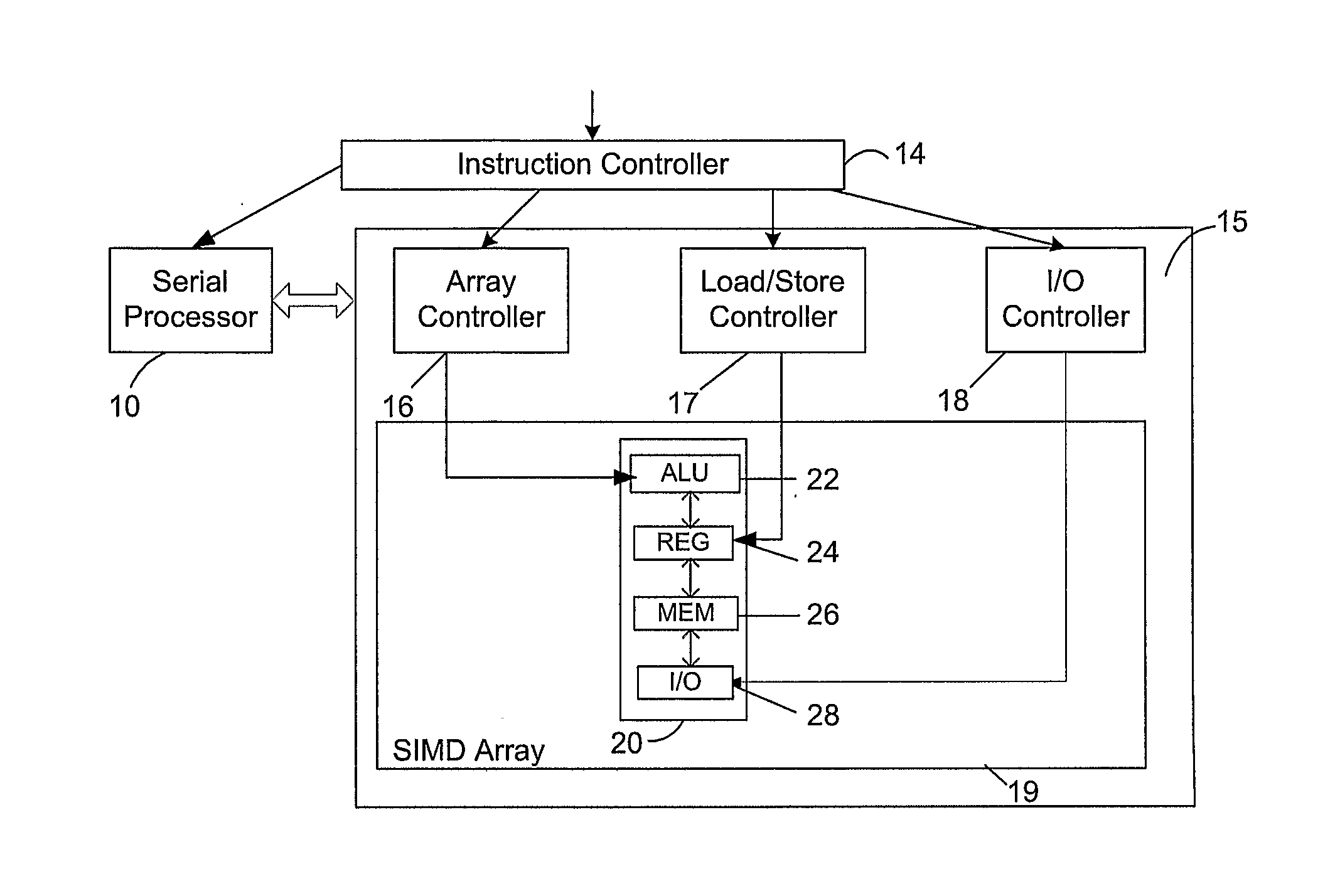

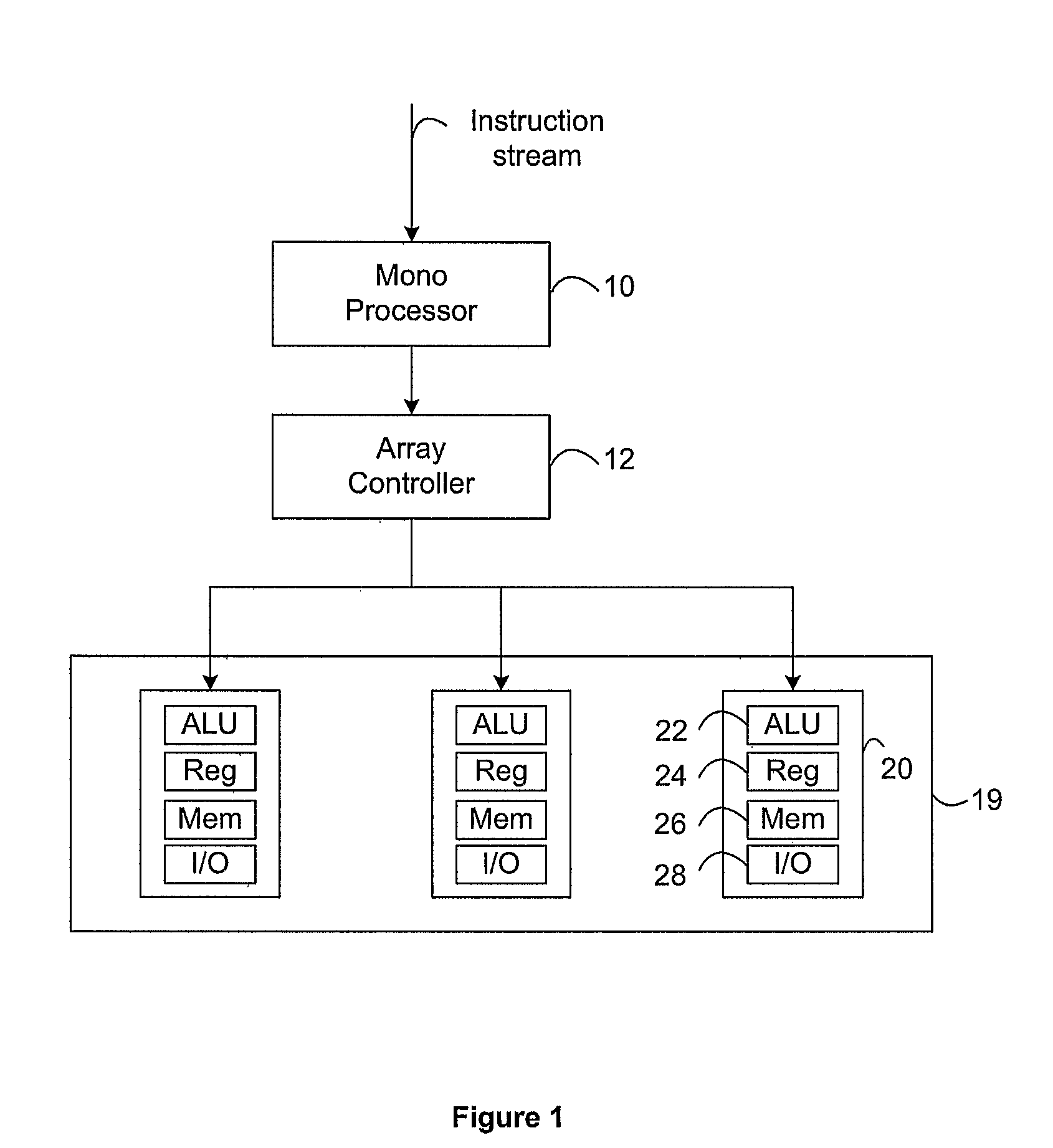

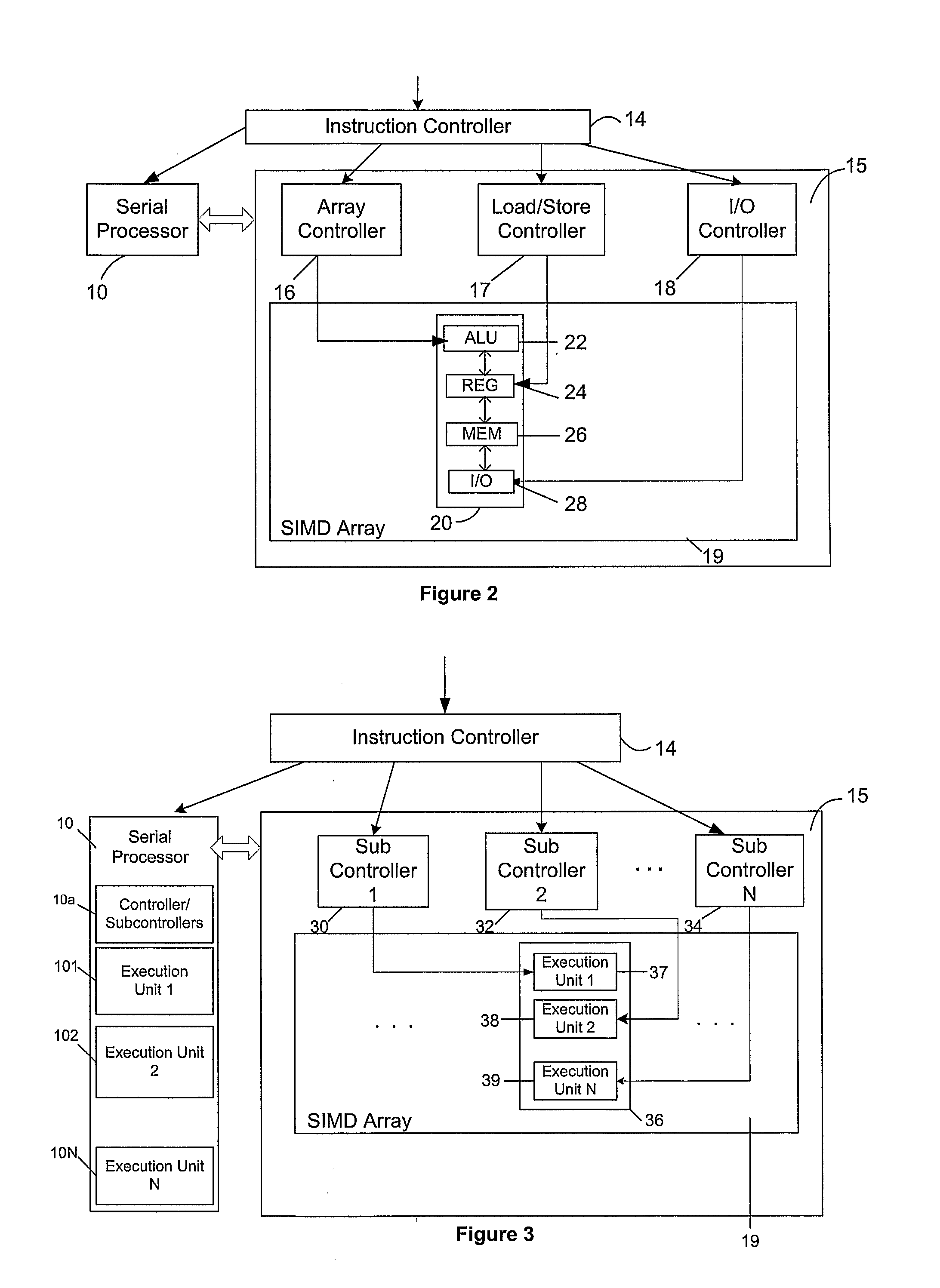

Microprocessor Architectures

Inactive | US20080209164A1 | Single instruction multiple data multiprocessors, Program control using wired connections, Execution unit, Processing element

A microprocessor architecture comprises three parts. The first is a plurality of processing elements arranged in a single instruction, multiple data (SIMD) array, where each processing element includes a plurality of execution units, each operable to process instructions of a particular type. The second is a serial processor, which also includes a plurality of execution units, each operable to process instructions of a particular type. The third is an instruction controller operable to receive a plurality of instructions and to distribute them to the execution units according to their instruction types. The execution units of the serial processor can process their respective instructions in parallel.

Owner:RAMBUS INC

Dynamically allocated store queue for a multithreaded processor

Active | US8006075B2 | Digital computer details, Specific program execution arrangements, Data shipping, Instruction distribution

Owner:ORACLE INT CORP

Unmanned aerial vehicle-based abnormal traffic identification method

Inactive | CN105788269A | Efficient identification, Accurate identification, Detection of traffic movement, Time condition, Control system

The invention relates to an unmanned aerial vehicle (UAV)-based method for identifying abnormal traffic. The method includes the following steps: a UAV acquires traffic image information within its cruise range and outputs it to a server; the traffic image information is processed; the processed image information is compared with preset normal-state traffic image information; and the method determines whether an anomaly exists on the current road section. With this method, the UAV photographs real-time traffic and the pictures are processed automatically. Picture information worth distributing is then selected and uploaded to a traffic control system. Based on the floating car method and an intensity projection method, the UAV is used to measure the traffic volume on the road. The traffic control system can perform operations such as traffic state detection, traffic safety early warning, and traffic guidance according to the real-time traffic conditions and traffic flow shown in the pictures. It can also receive and process pictures and distribute instructions through a cloud service platform.

Owner:CHINA MERCHANTS CHONGQING COMM RES & DESIGN INST

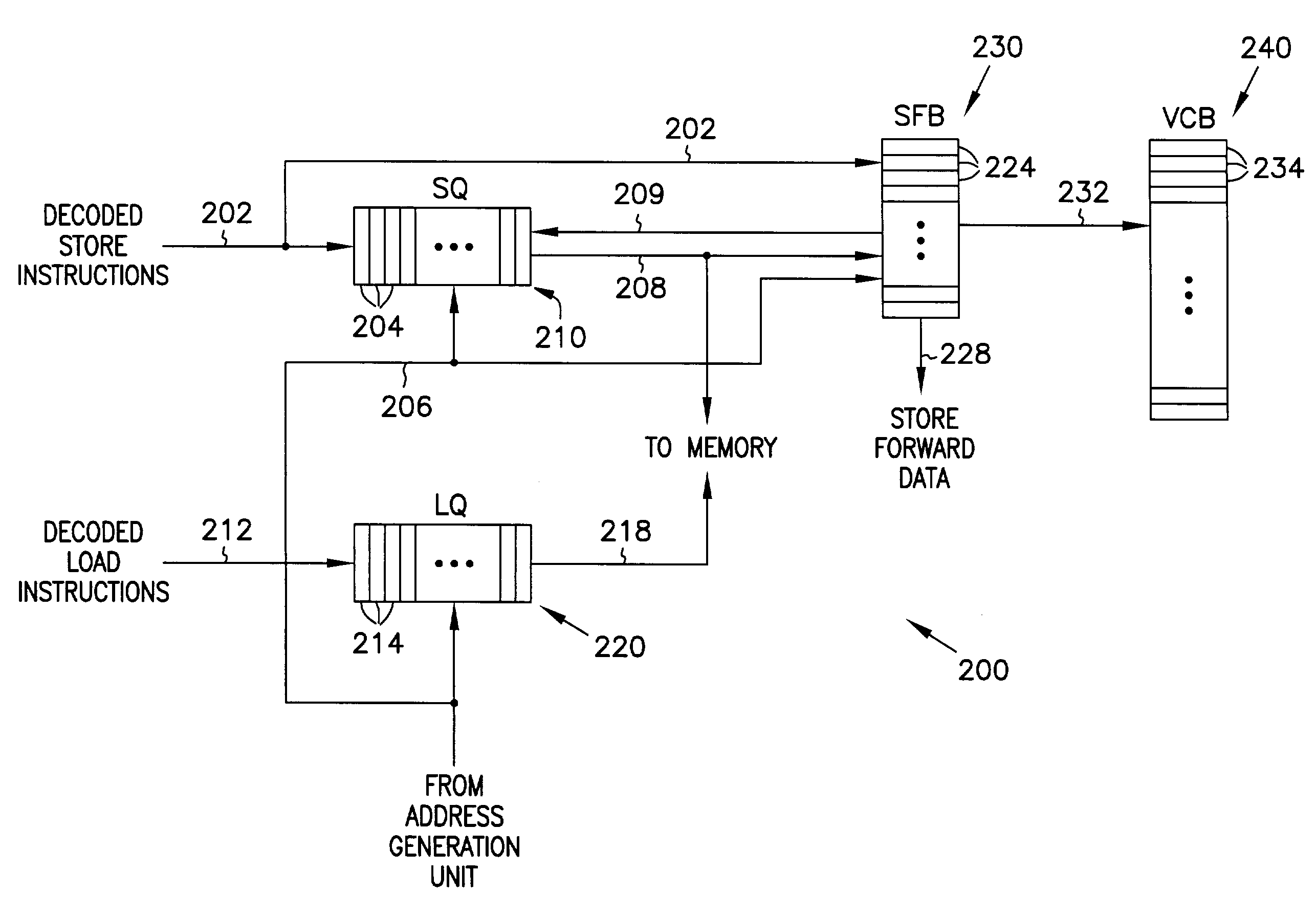

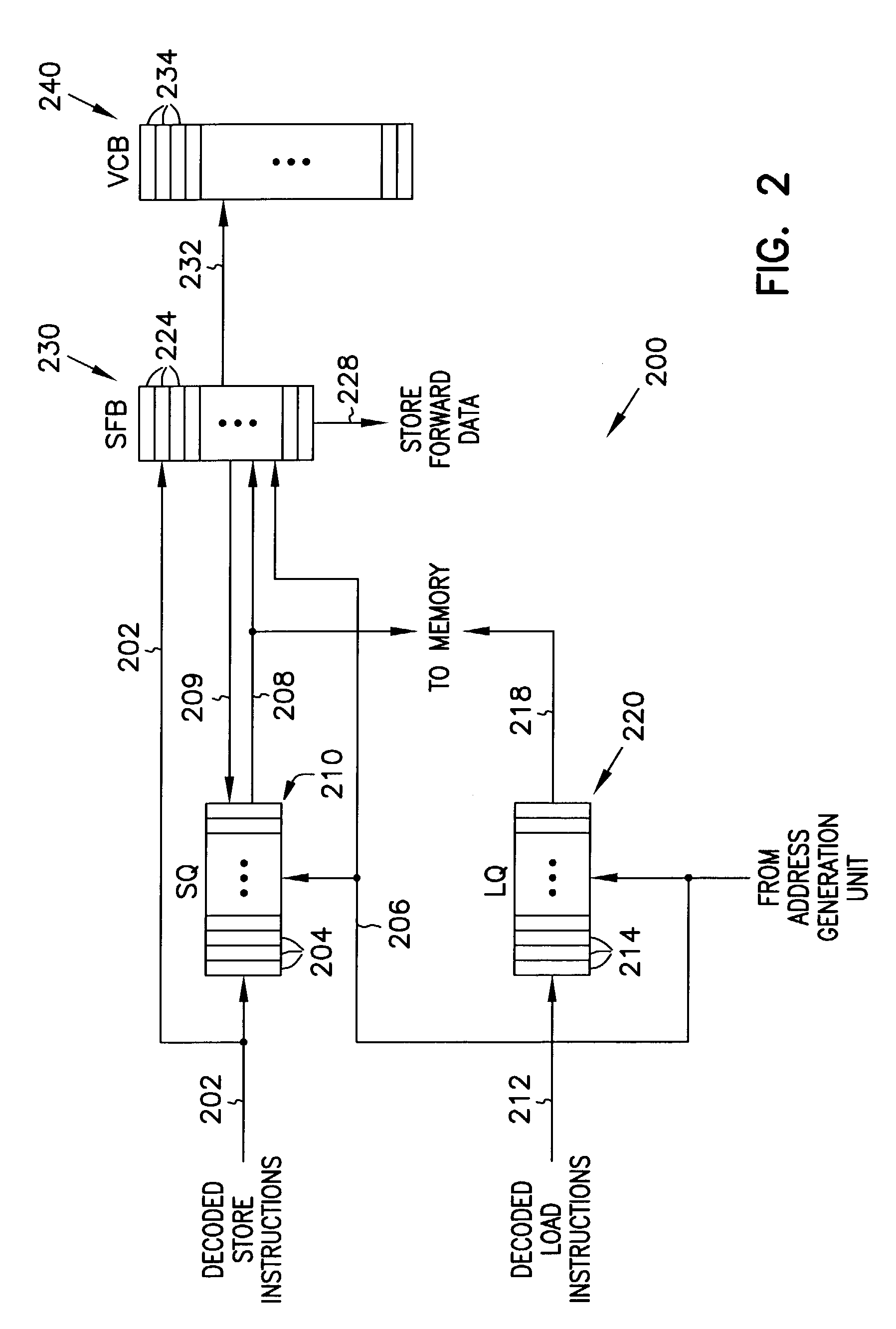

Memory disambiguation for large instruction windows

Inactive | US7418552B2 | Digital computer details, Concurrent instruction execution, Load instruction, Instruction window

A memory disambiguation apparatus includes a store queue, a store forwarding buffer, and a version count buffer. The store queue includes an entry for each store instruction in the instruction window of a processor. Some store queue entries include resolved store addresses, and some do not. The store forwarding buffer is a set-associative buffer that has entries allocated for store instructions as store addresses are resolved. Each entry in the store forwarding buffer is allocated into a set determined in part by a subset of the store address. When the set in the store forwarding buffer is full, an older entry in the set is discarded in favor of the newly allocated entry. A version count buffer including an array of overflow indicators is maintained to track overflow occurrences. As load addresses are resolved for load instructions in the instruction window, the set-associative store forwarding buffer can be searched to provide memory disambiguation.

Owner:INTEL CORP
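
The set-associative store forwarding buffer can be sketched in Python as follows. The set index comes from address bits, and a full set evicts its earliest-allocated entry and bumps a per-set overflow count. The abstract does not say how the version count buffer is consulted. Here, a load that misses in a set that has overflowed is conservatively flagged as a possible conflict, which is an assumption. The set-index function, status strings, and names are hypothetical.

```python
class StoreForwardingBuffer:
    """Set-associative store forwarding buffer plus per-set overflow counters."""

    def __init__(self, num_sets, ways):
        self.num_sets, self.ways = num_sets, ways
        self.sets = [[] for _ in range(num_sets)]  # entries (seq, addr, data), in allocation order
        self.overflow = [0] * num_sets             # stand-in for the version count buffer

    def _set_index(self, addr):
        return (addr >> 3) % self.num_sets         # assumption: 8-byte granules select the set

    def store_resolved(self, seq, addr, data):
        s = self._set_index(addr)
        if len(self.sets[s]) == self.ways:
            self.sets[s].pop(0)                    # discard the earliest-allocated entry
            self.overflow[s] += 1                  # remember that this set lost information
        self.sets[s].append((seq, addr, data))

    def load_resolved(self, seq, addr):
        s = self._set_index(addr)
        older = [e for e in self.sets[s] if e[1] == addr and e[0] < seq]
        if older:
            return ("forward", max(older)[2])      # youngest older store supplies the data
        if self.overflow[s]:
            return ("maybe_conflict", None)        # a discarded store might have matched
        return ("memory", None)                    # no older store to this address
```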

© 2025 PatSnap. All rights reserved.