165 patent results for "Simultaneous multithreading"

Simultaneous multithreading (SMT) is a technique for improving the overall efficiency of superscalar CPUs with hardware multithreading. SMT permits multiple independent threads of execution to better utilize the resources provided by modern processor architectures.

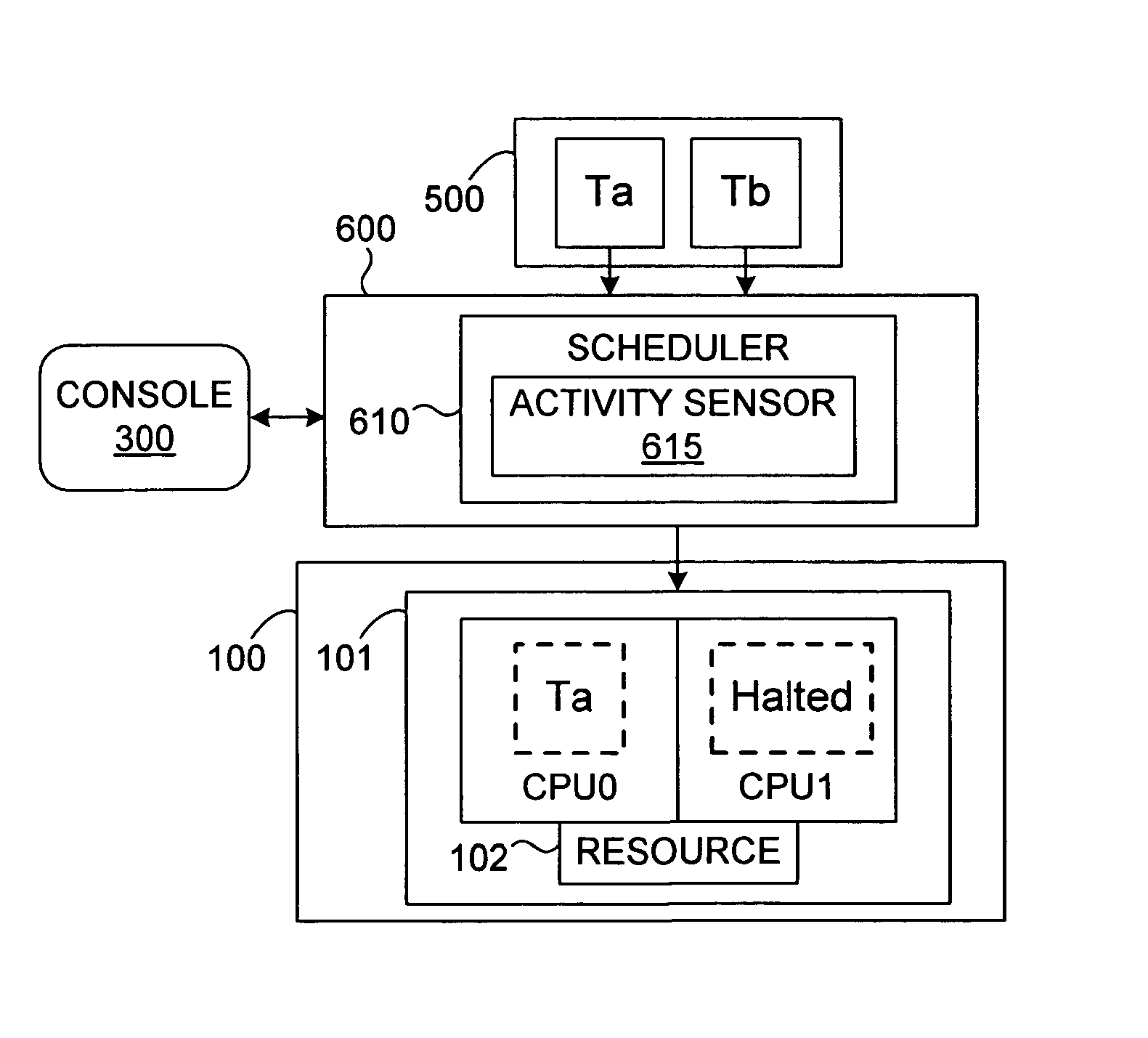

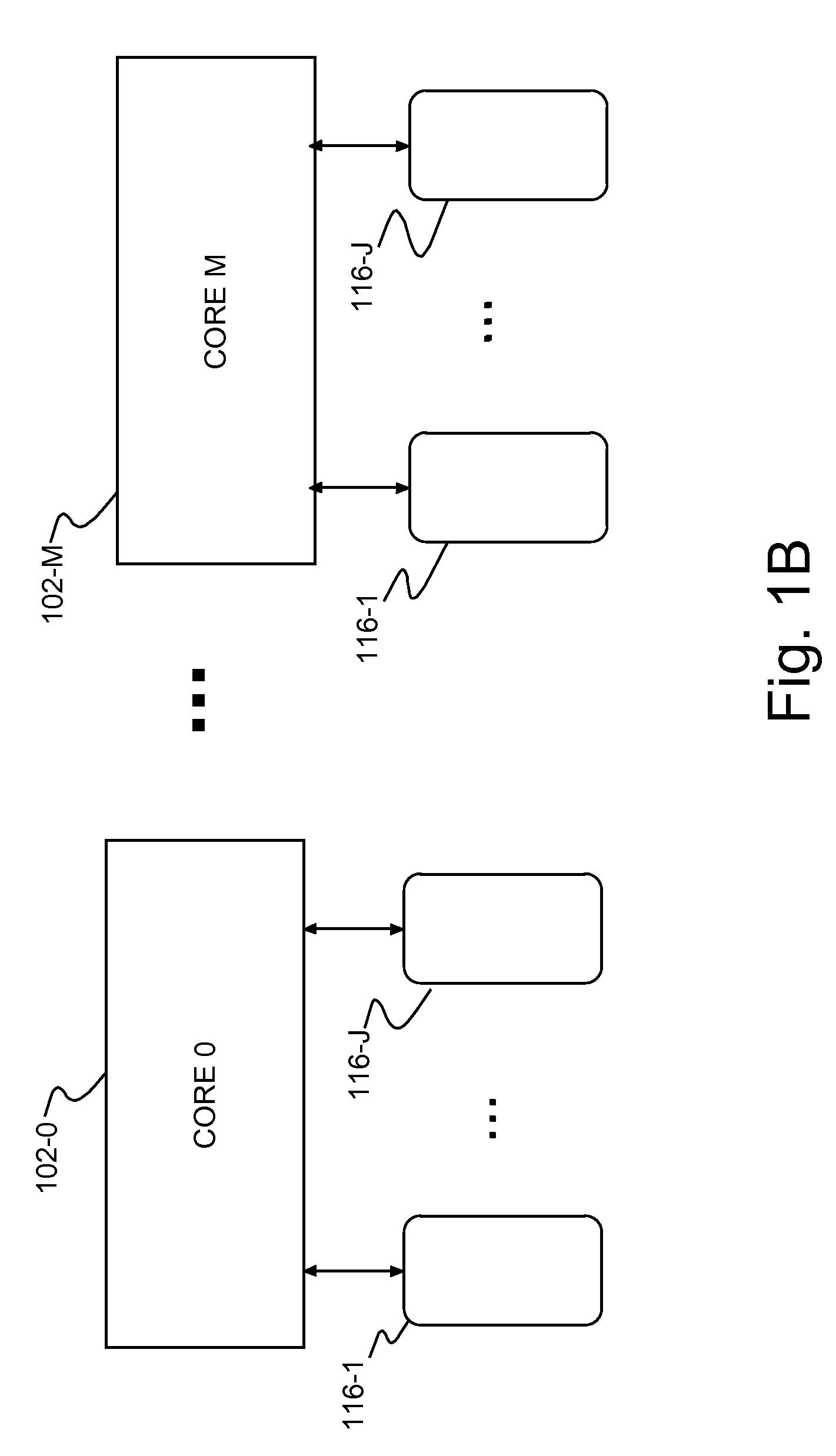

Mechanism for scheduling execution of threads for fair resource allocation in a multi-threaded and/or multi-core processing system

Status: Active | Publication: US7707578B1 | Tags: Digital computer details; Multiprogramming arrangements; Thread scheduling; Shared resource

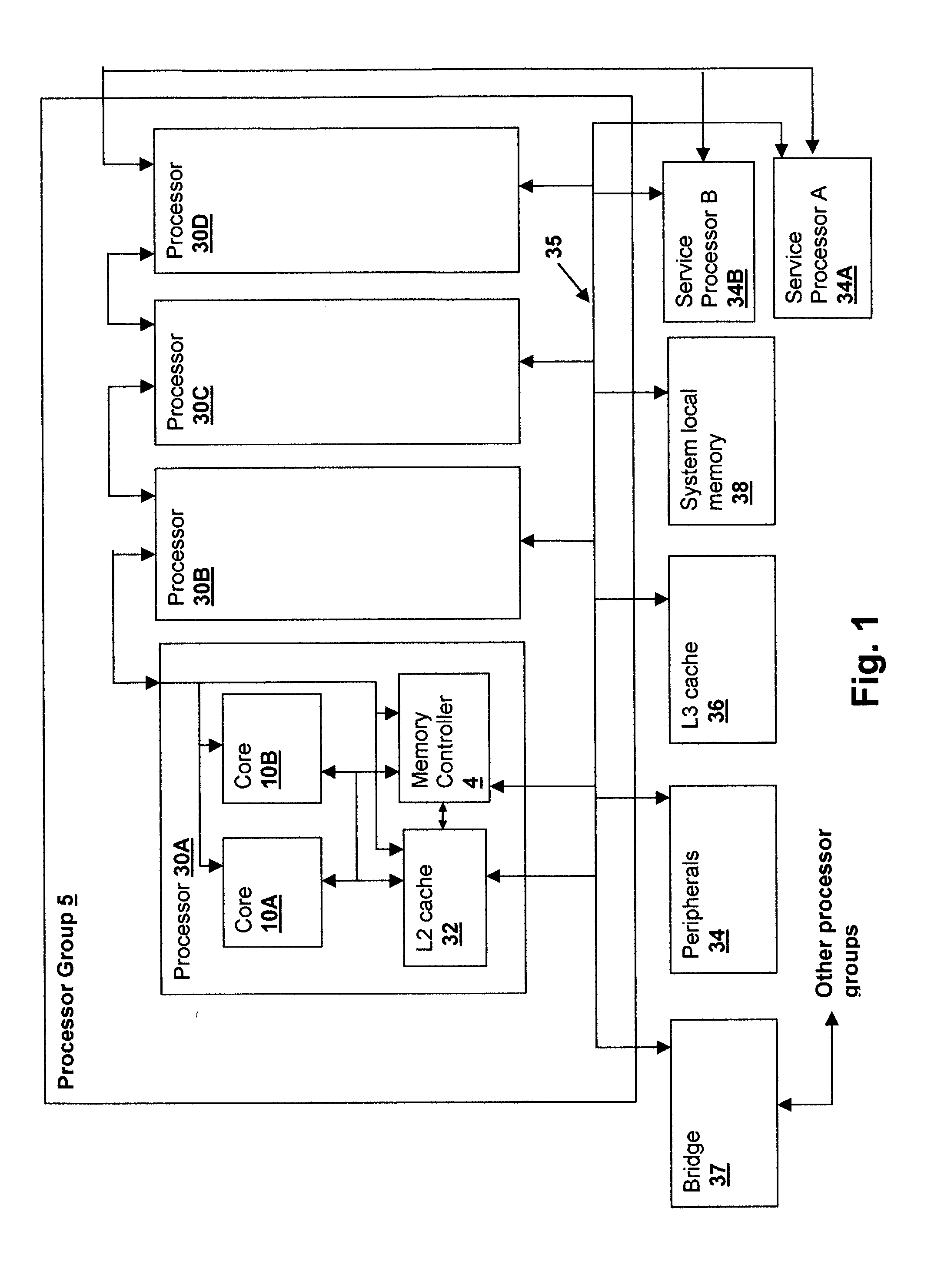

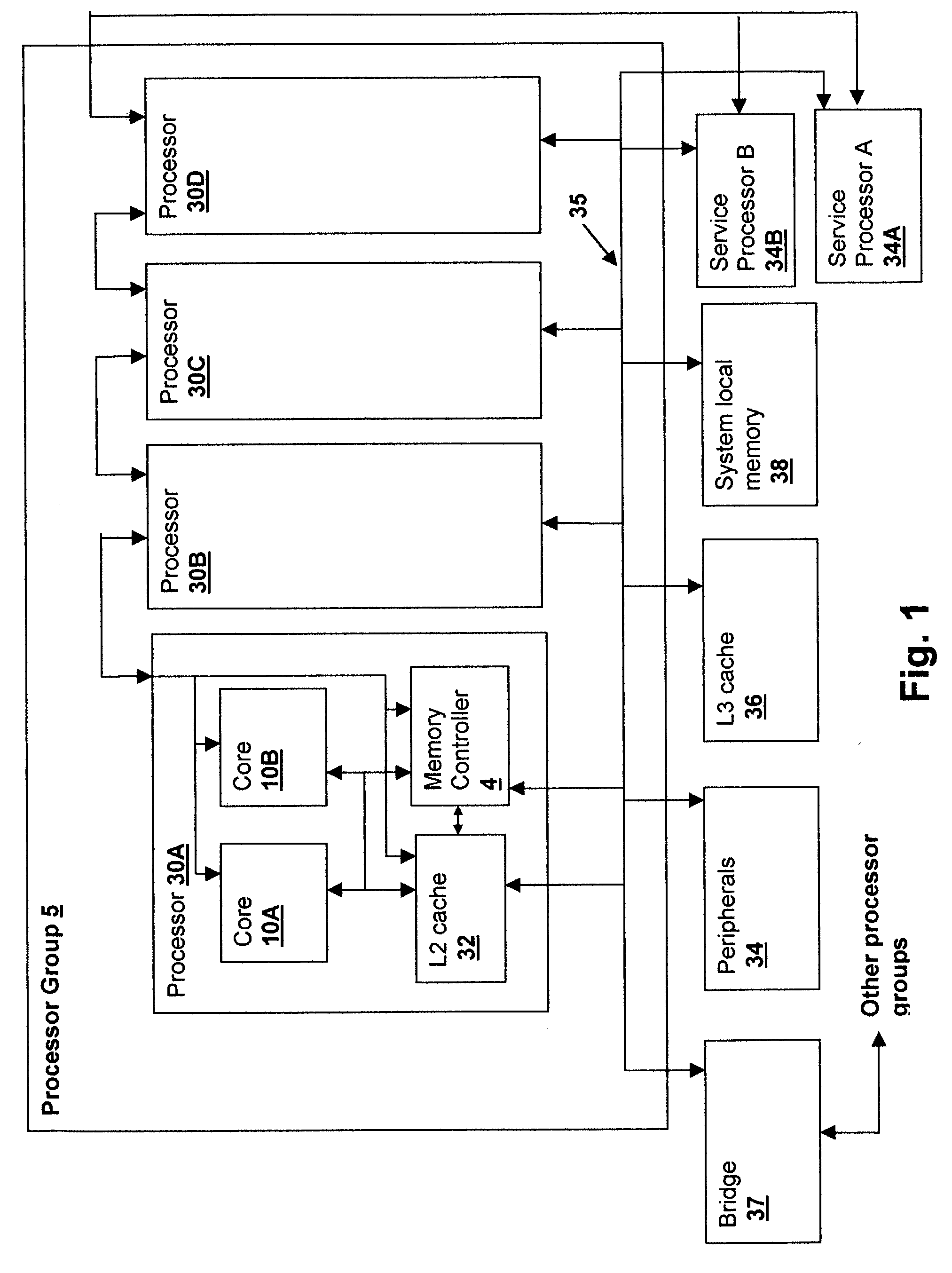

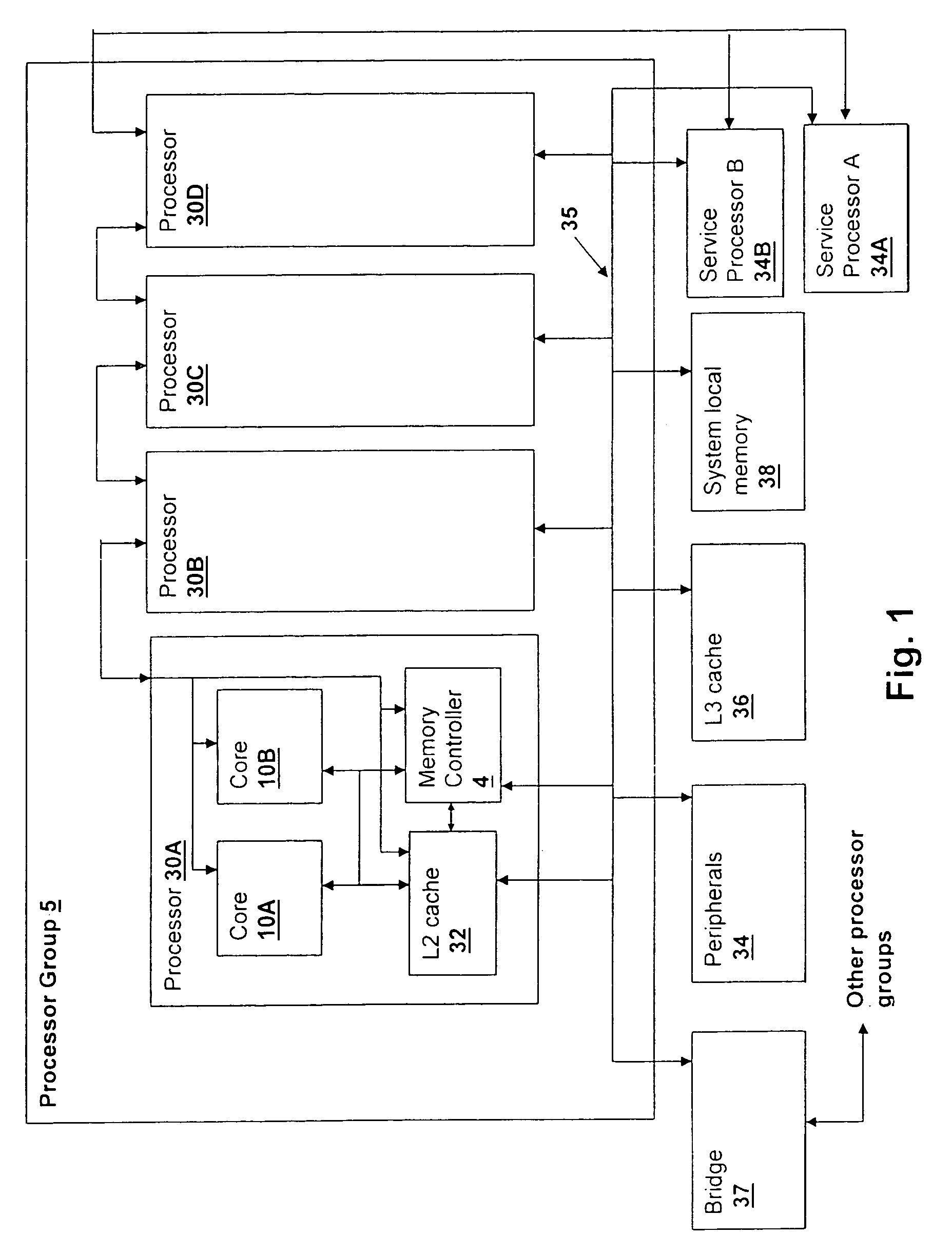

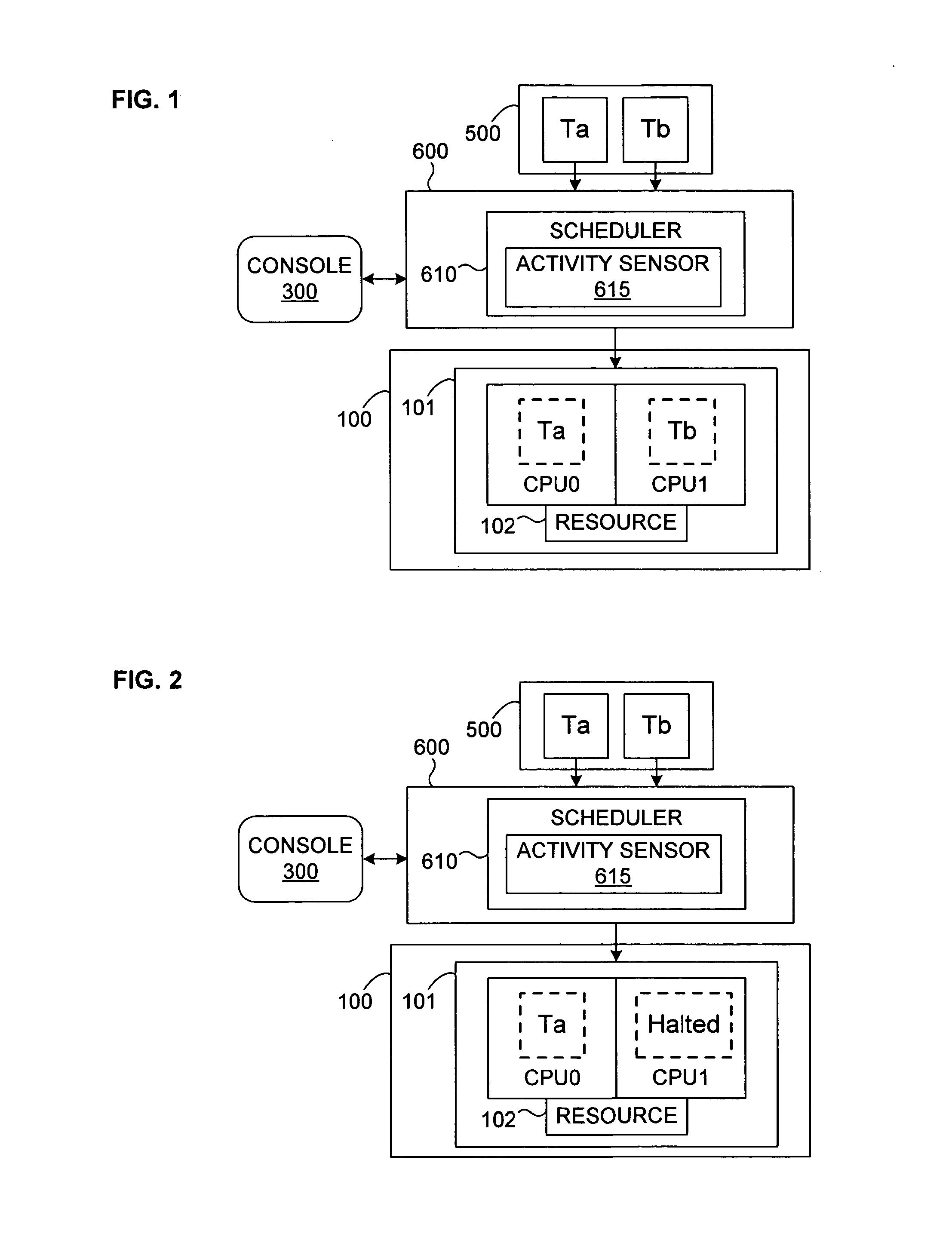

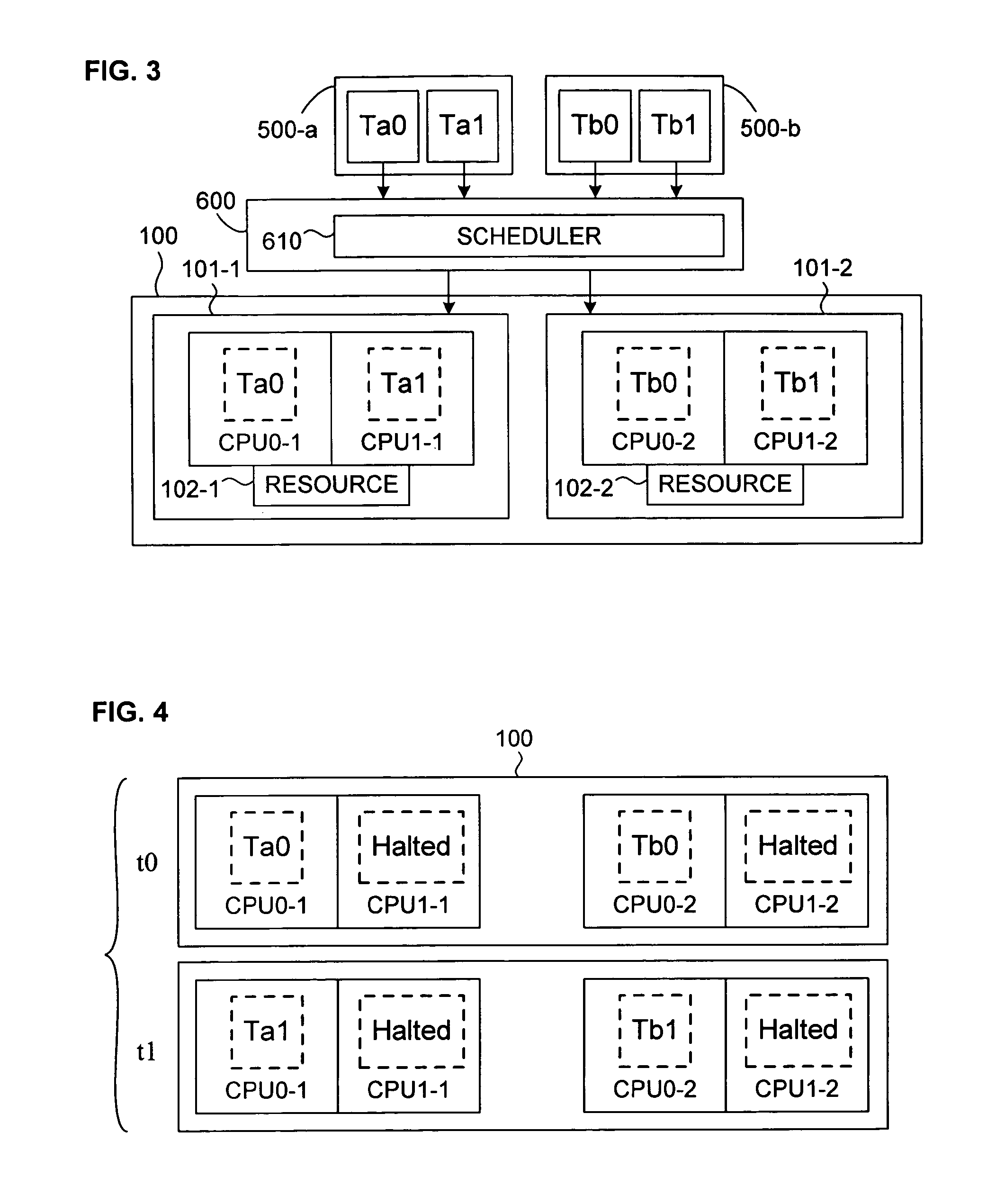

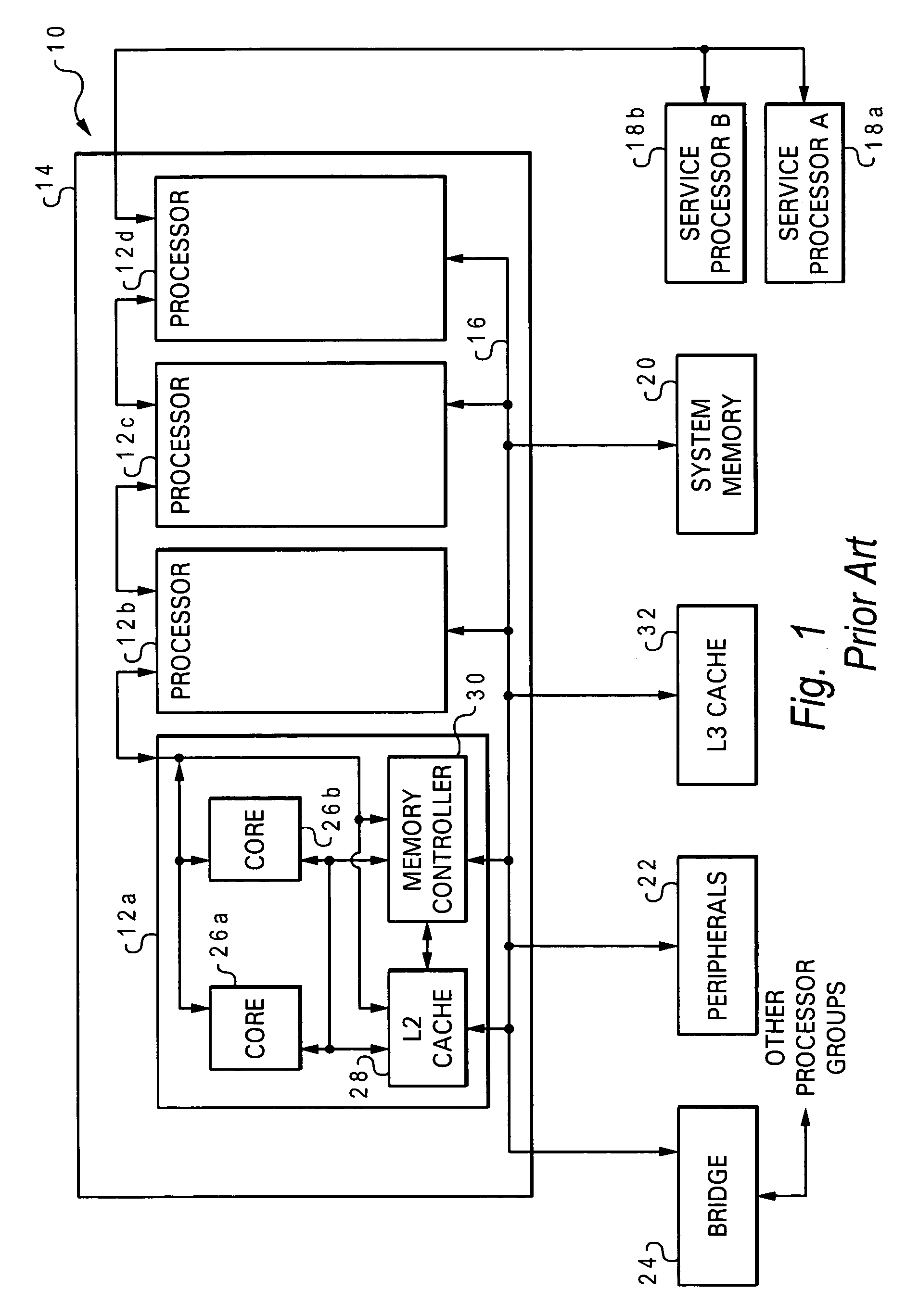

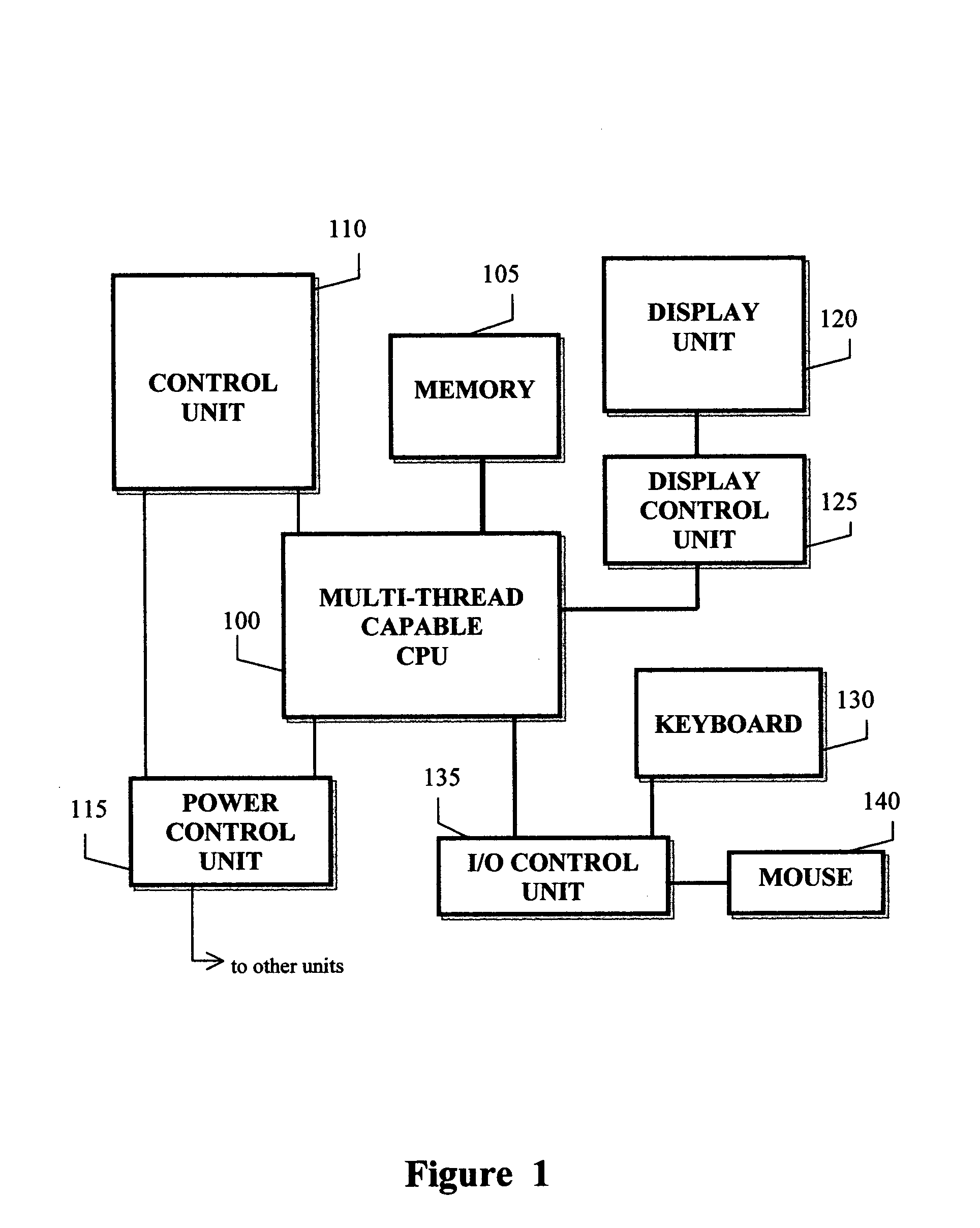

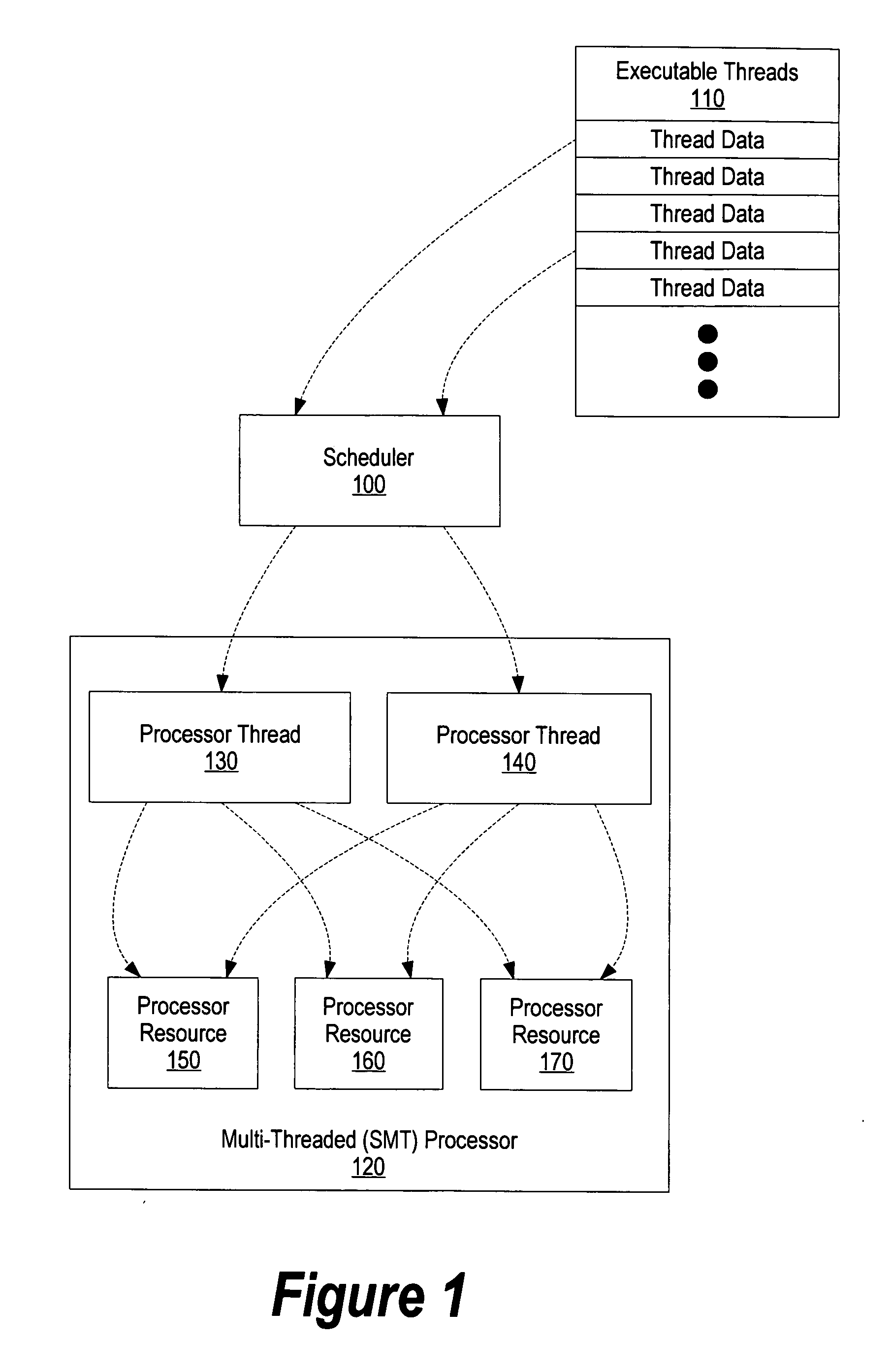

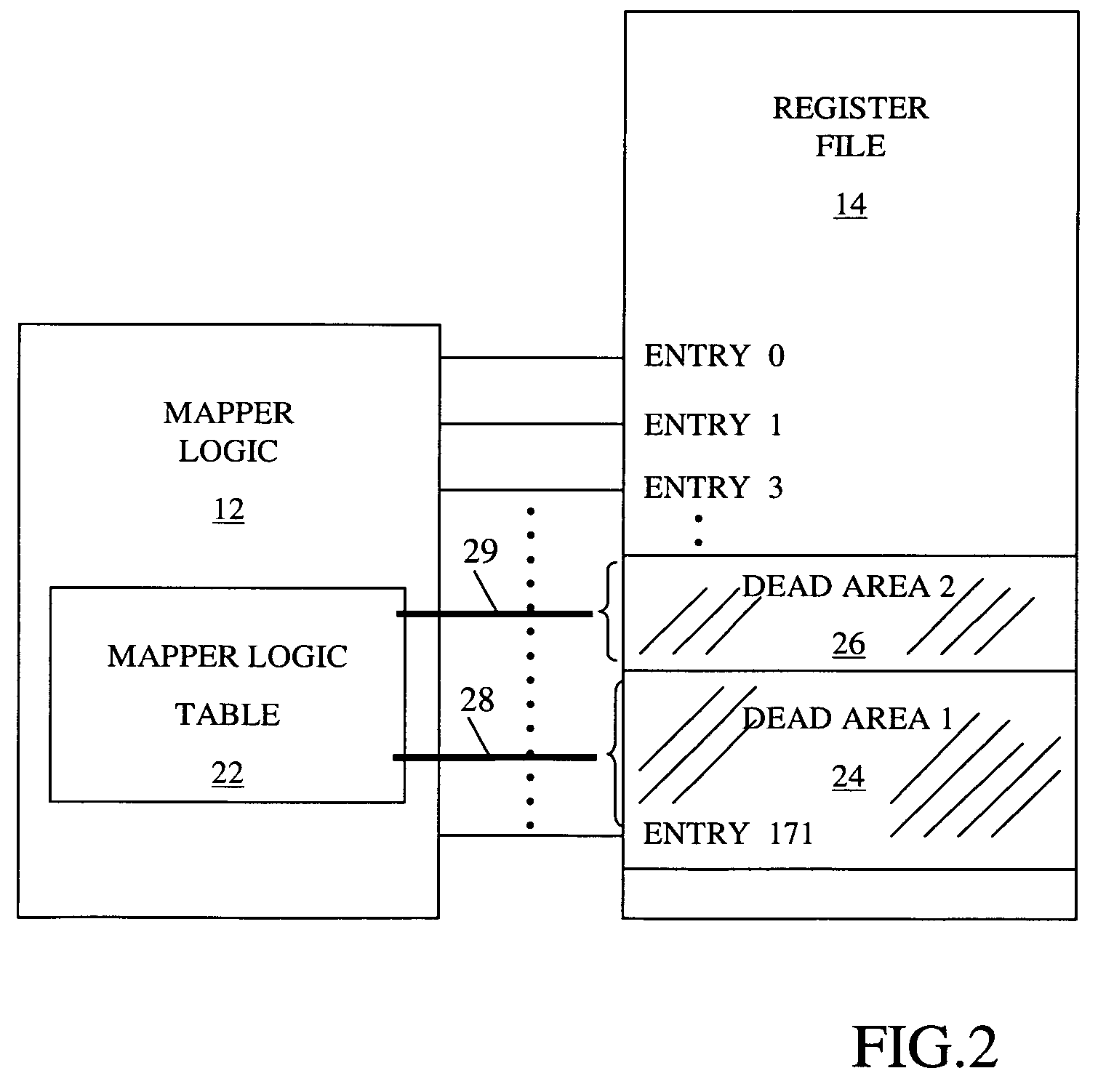

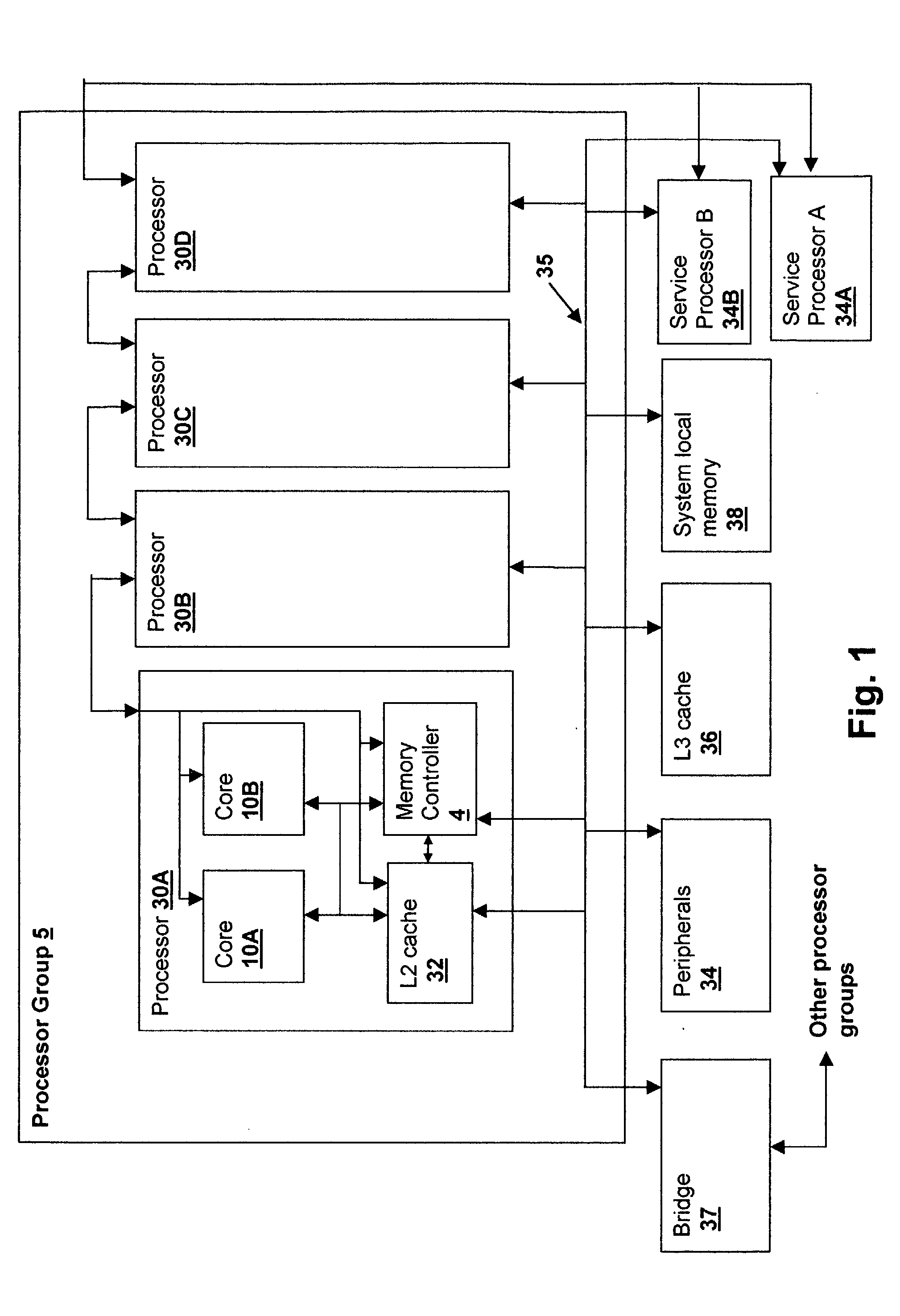

A thread scheduling mechanism is provided that flexibly enforces performance isolation of multiple threads to alleviate the effect of anti-cooperative execution behavior with respect to a shared resource, for example, hoarding a cache or pipeline, using the hardware capabilities of simultaneous multi-threaded (SMT) or multi-core processors. Given a plurality of threads running on at least two processors in at least one functional processor group, the occurrence of a rescheduling condition indicating anti-cooperative execution behavior is sensed, and, if present, at least one of the threads is rescheduled such that the first and second threads no longer execute in the same functional processor group at the same time.

Owner:VMWARE INC
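
The following is a minimal Python sketch of the rescheduling idea in this abstract. It assumes a hypothetical per-thread `pressure` metric (for example, cache misses per interval) and uses a fixed threshold as the rescheduling condition. The actual sensing mechanism and policy of US7707578B1 are not described here, so treat this as an illustration only.

```python
from dataclasses import dataclass


@dataclass
class Thread:
    name: str
    group: int        # functional processor group, e.g. one SMT core or shared-cache domain
    pressure: float   # hypothetical metric, e.g. cache misses per interval


def rebalance(threads, num_groups, threshold):
    """Move a thread whose pressure exceeds `threshold` away from its co-runners."""
    for t in threads:
        if t.pressure <= threshold:
            continue  # no anti-cooperative behavior sensed for this thread
        if not any(o is not t and o.group == t.group for o in threads):
            continue  # already running alone in its group
        occupancy = {g: 0 for g in range(num_groups)}
        for o in threads:
            occupancy[o.group] += 1
        others = [g for g in range(num_groups) if g != t.group]
        if others:
            # Reschedule onto the least-occupied other group.
            t.group = min(others, key=lambda g: occupancy[g])
    return threads
```

For example, suppose threads `a` (pressure 0.9) and `b` share group 0 and group 2 is idle. Then `rebalance` moves `a` to group 2, so the two threads no longer execute in the same group.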

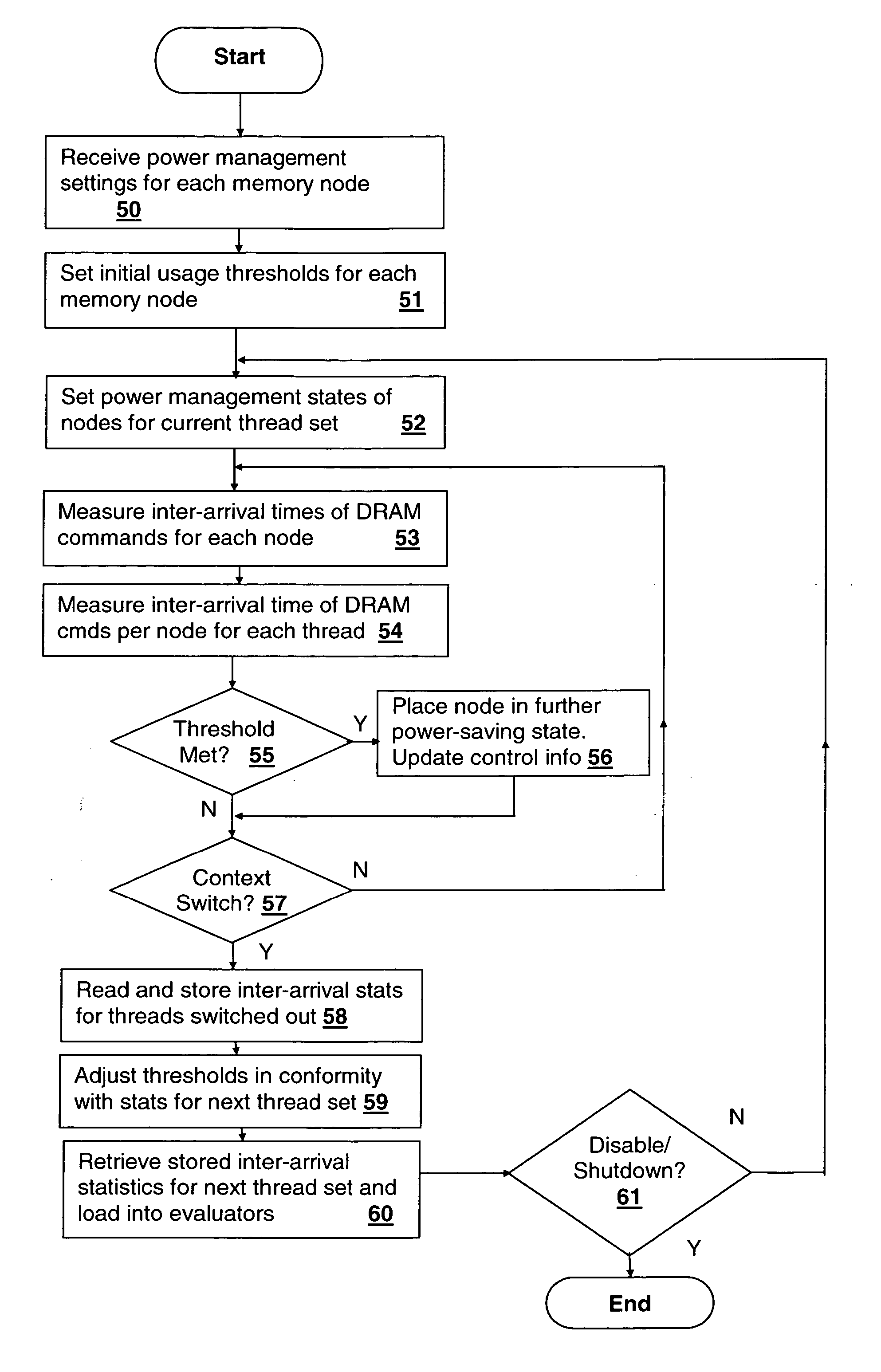

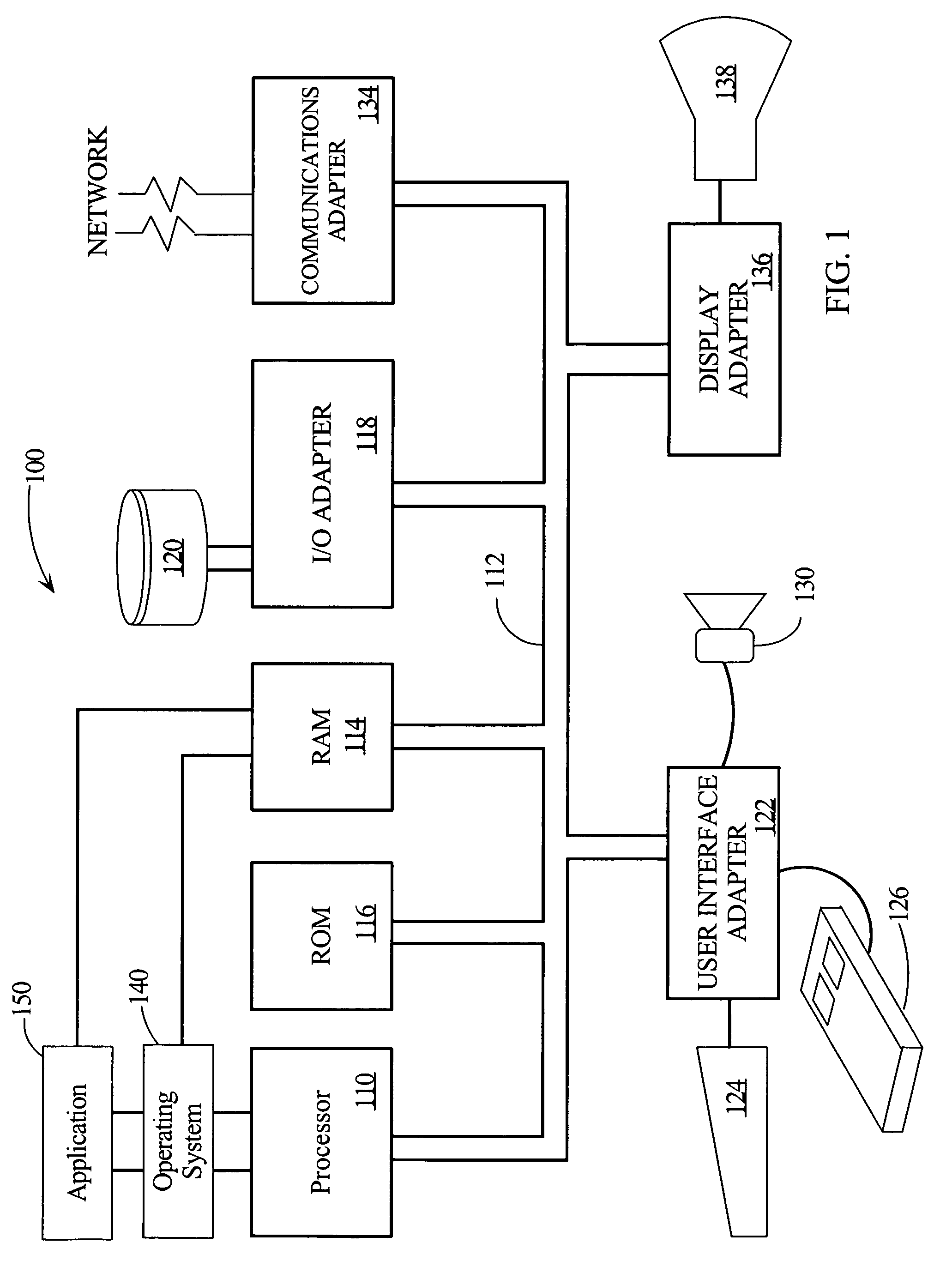

Method and system for energy management in a simultaneous multi-threaded (SMT) processing system including per-thread device usage monitoring

Status: Inactive | Publication: US20050138442A1 | Tags: Lower latency; Reduce power consumption; Energy efficient ICT; Volume/mass flow measurement; Decision control; Operational system

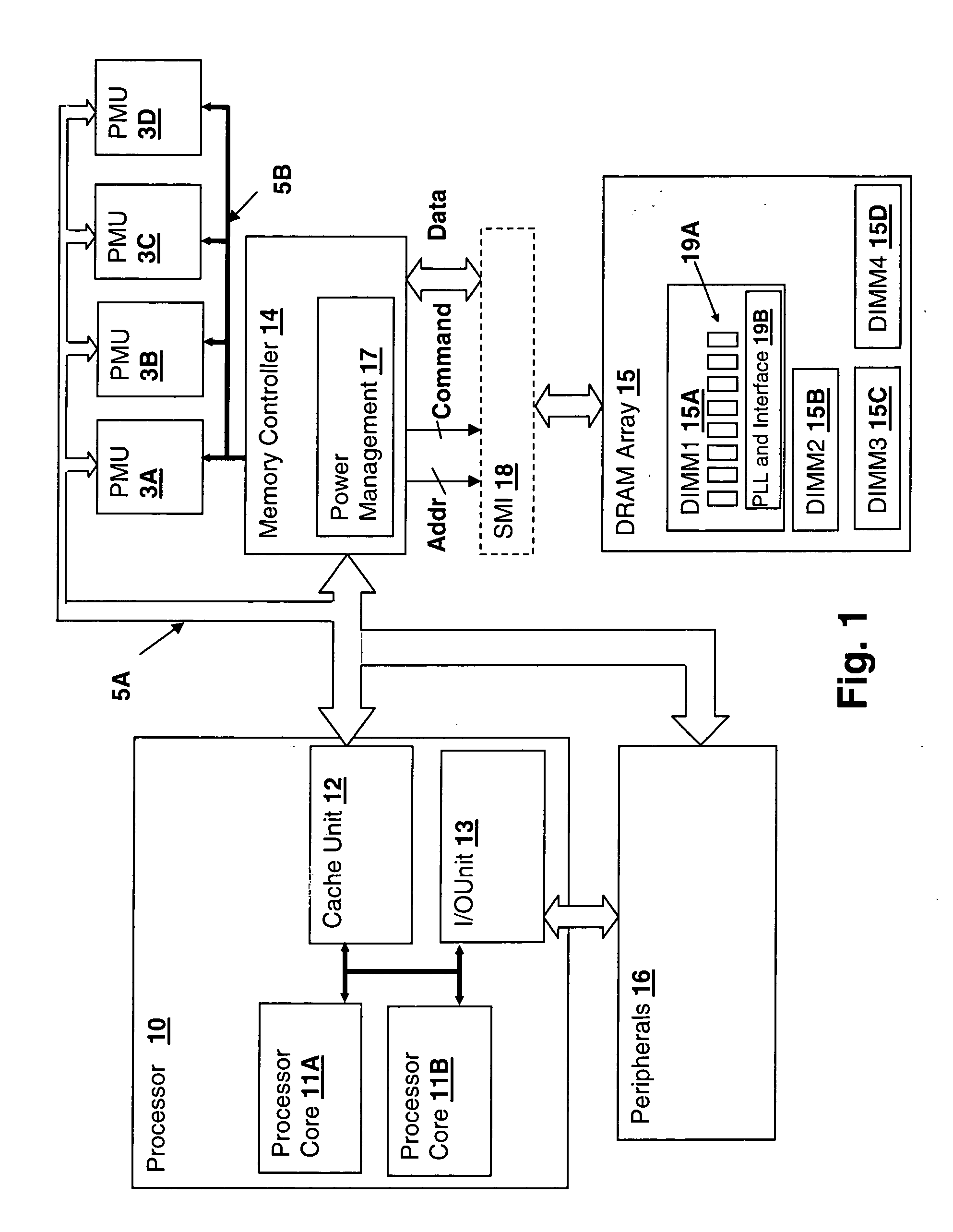

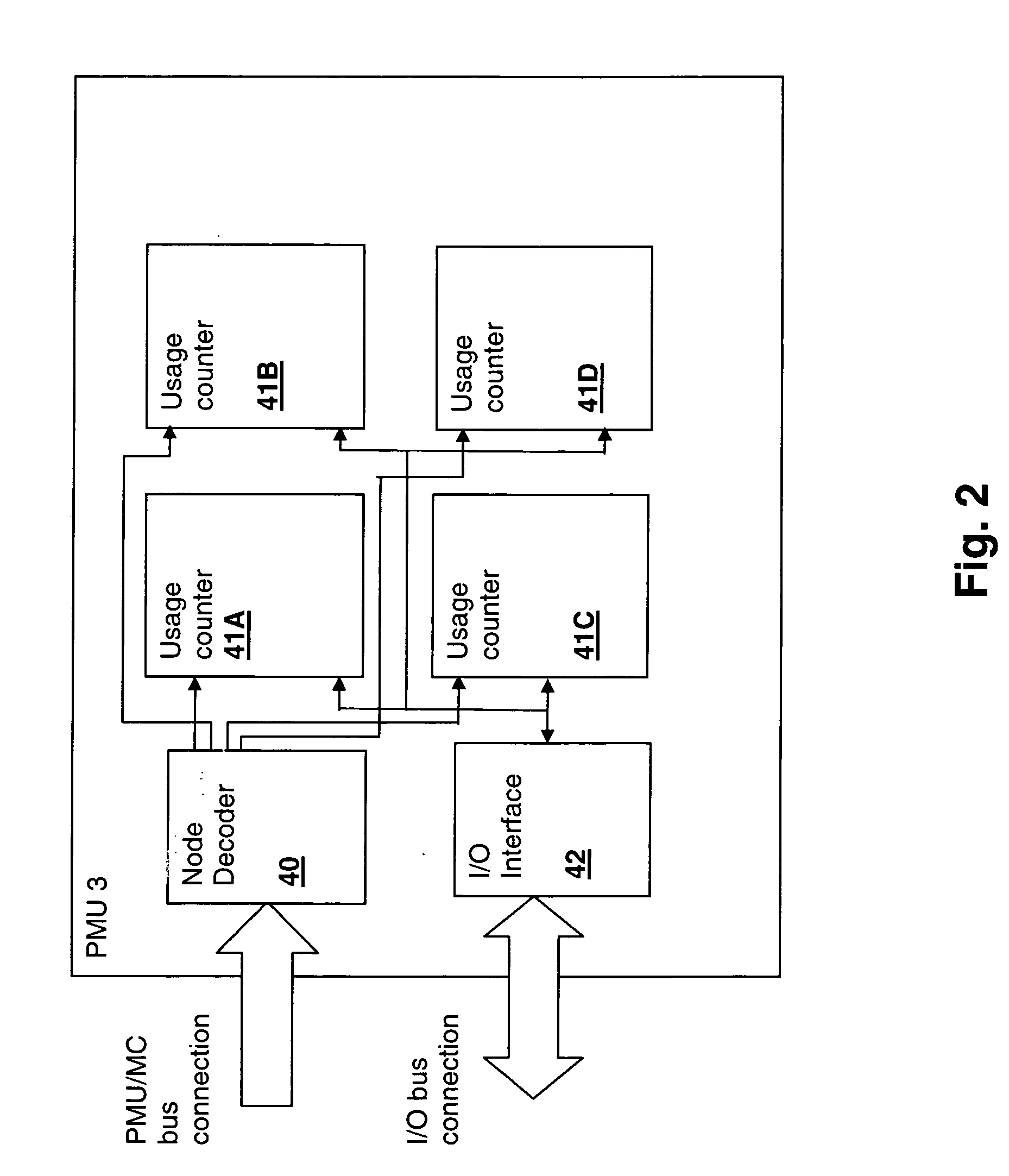

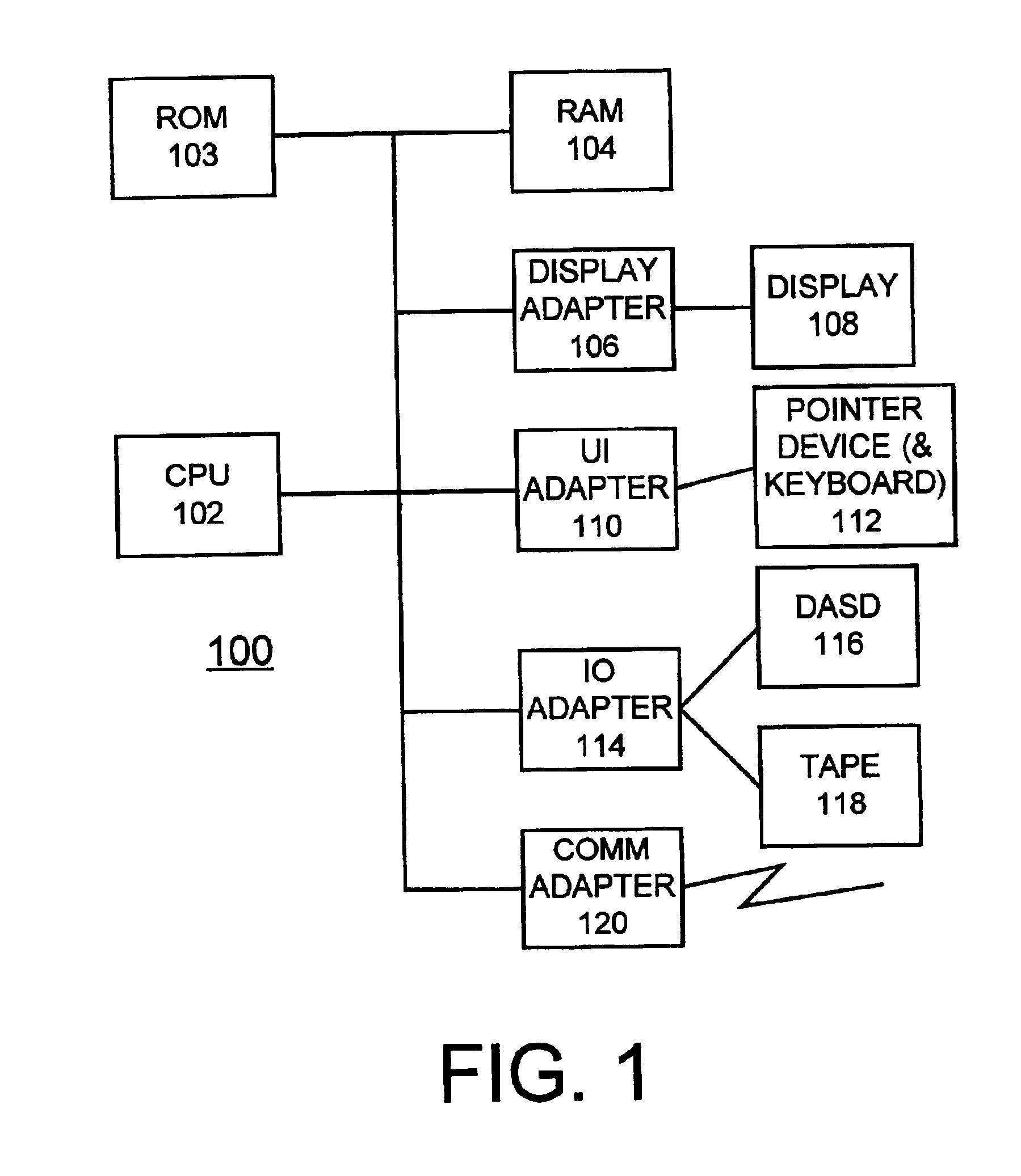

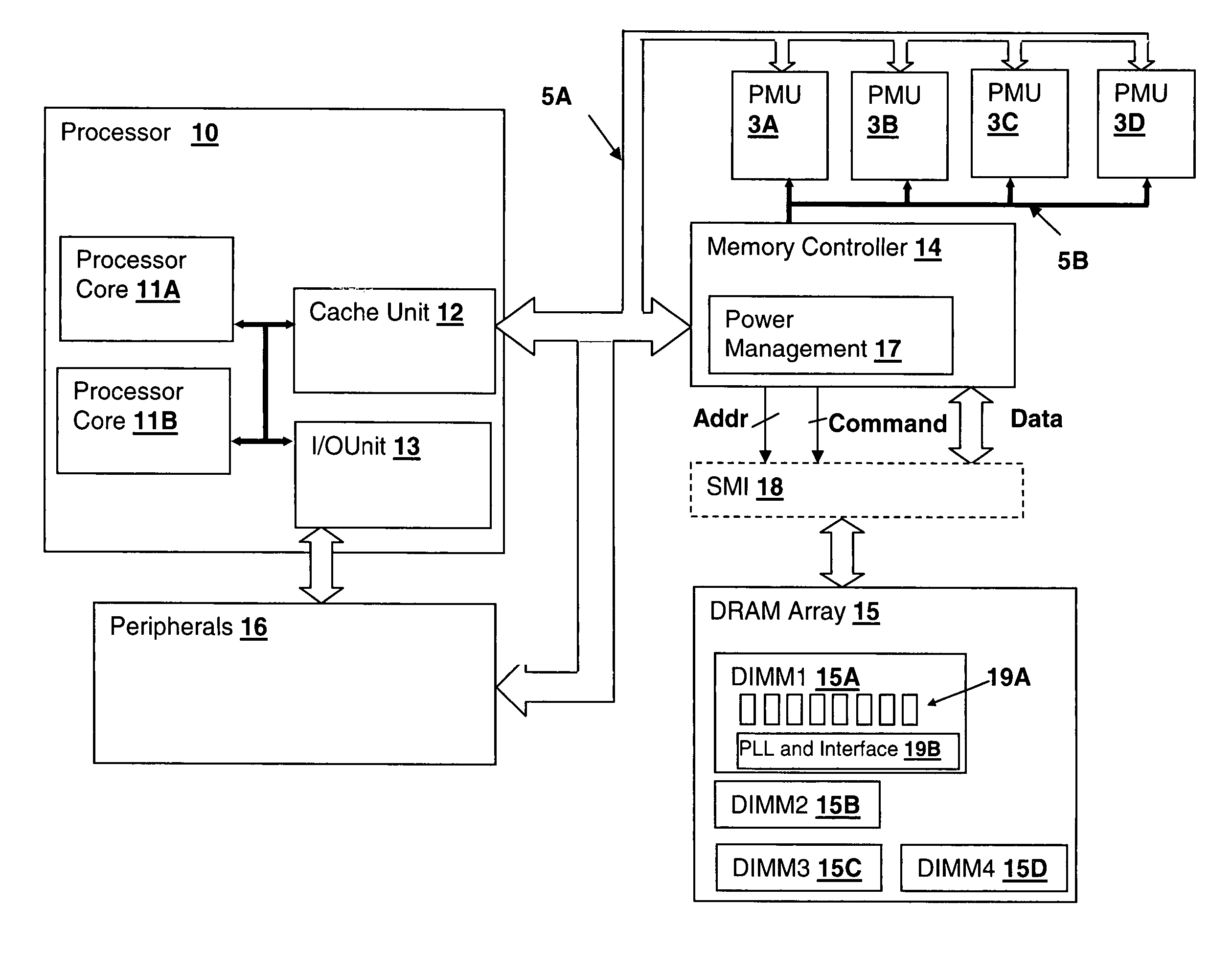

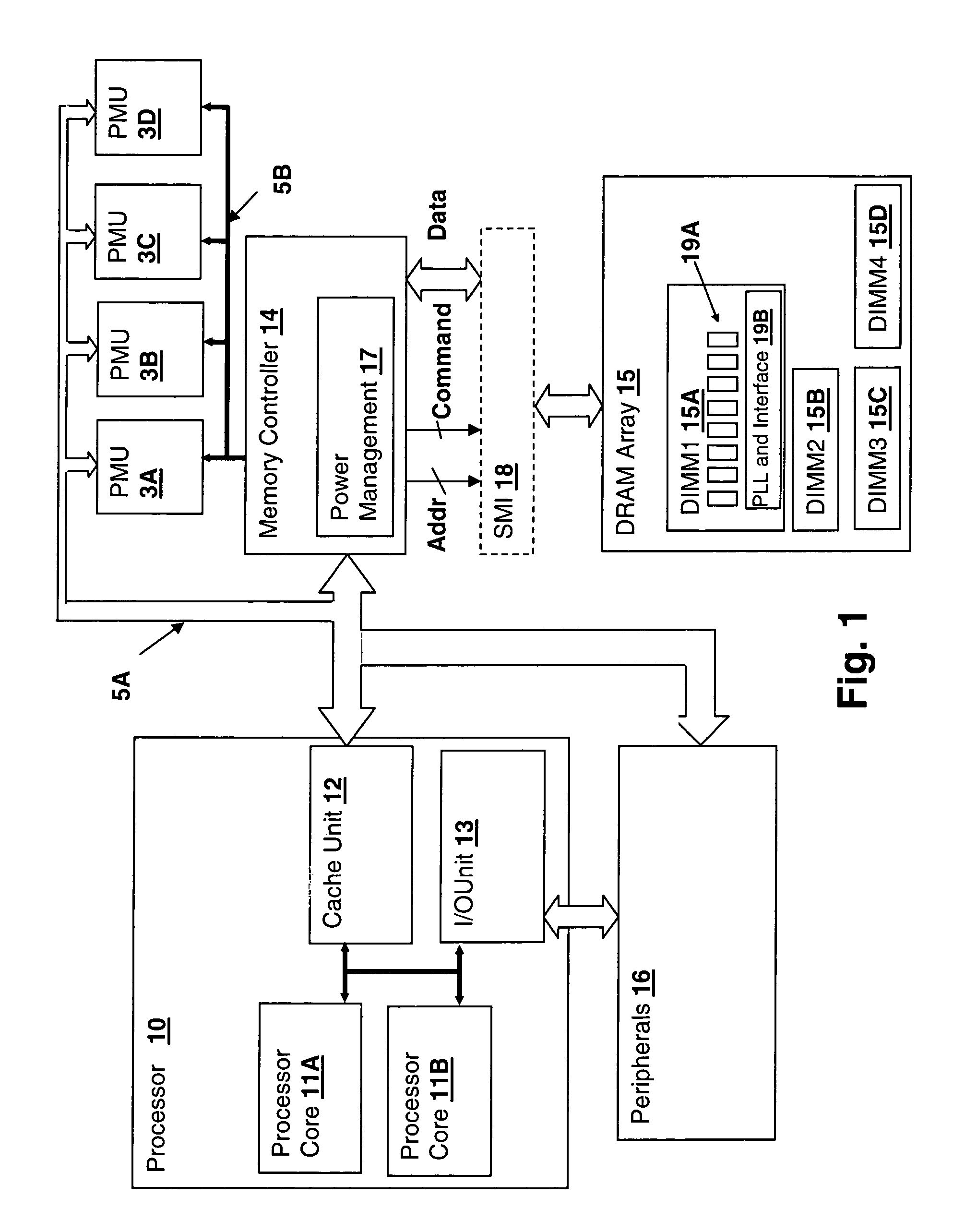

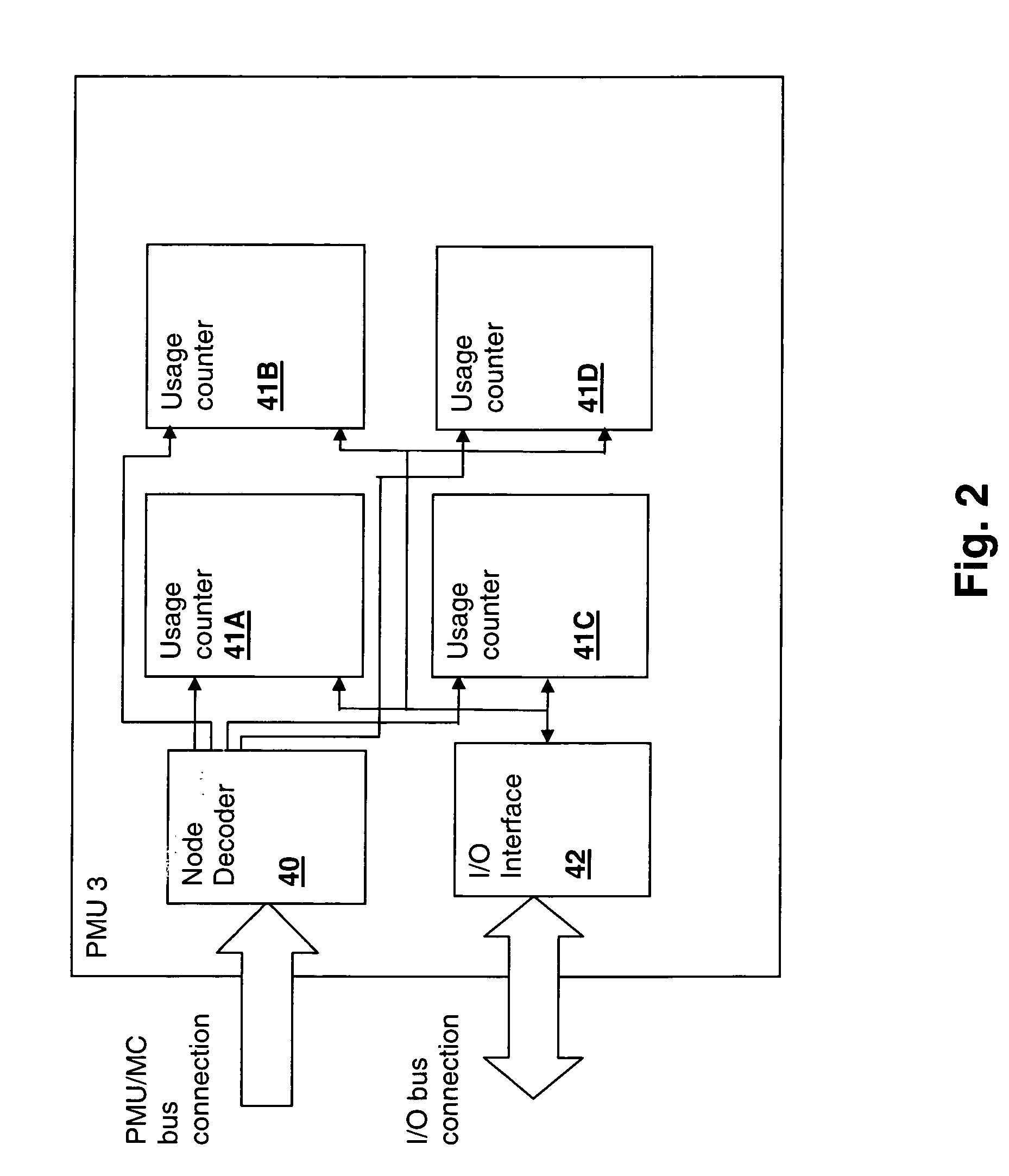

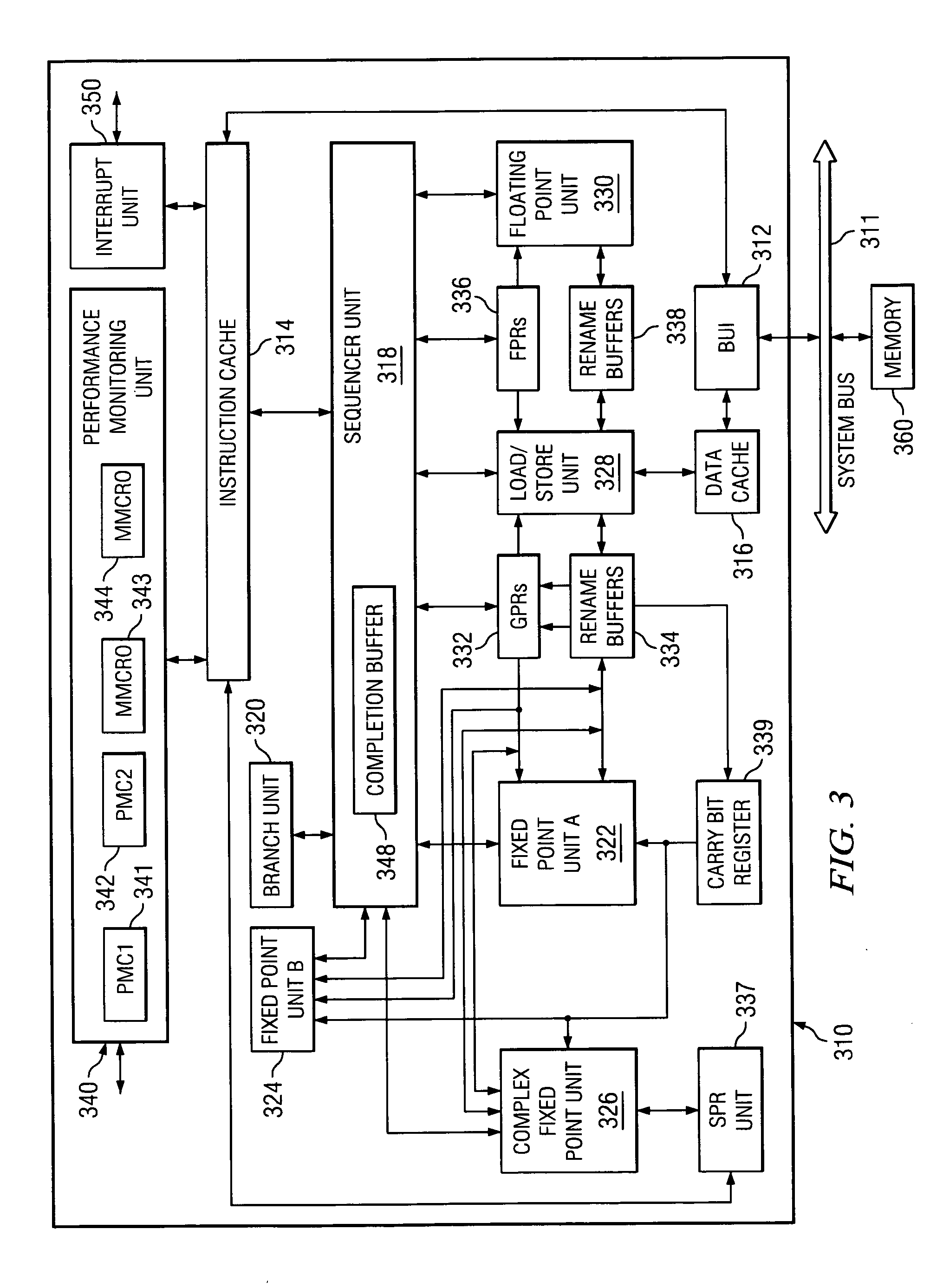

A method and system for energy management in a simultaneous multi-threaded (SMT) processing system including per-thread device usage monitoring provides control of energy usage that accommodates thread parallelism. Per-device usage information is measured and stored on a per-thread basis, so that upon a context switch, the previous usage evaluation state can be restored. The per-thread usage information is used to adjust the thresholds of device energy management decision control logic, so that energy use can be managed with consideration as to which threads will be running in a given execution slice. A device controller can then provide for per-thread control of attached device power management states without intervention by the processor and without losing the historical evaluation state when a process is switched out. The device controller may be a memory controller and the controlled devices memory modules or banks within modules if individual banks can be power-managed. Local thresholds provide the decision-making mechanism for each controlled device and are adjusted by the operating system in conformity with the measured usage level for threads executing within the processing system. The per-thread usage information may be obtained from a performance monitoring unit that is located within or external to the device controller and the usage monitoring state is then retrieved and replaced by the operating system at each context switch.

Owner:IBM CORP
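
This sketch shows per-thread usage monitoring across context switches. It makes three assumptions: a single memory controller, a hypothetical idle-threshold power-down policy, and a made-up scaling rule (`base * (1 + usage / 100)`) for how the operating system adjusts the local threshold. The abstract does not specify the threshold formula.

```python
class MemoryController:
    """Toy device controller with a per-bank power-down idle threshold."""

    def __init__(self, base_threshold):
        self.base_threshold = base_threshold   # idle cycles before power-down (hypothetical)
        self.threshold = base_threshold
        self.usage = 0                         # accesses counted for the running thread

    def record_access(self, n=1):
        self.usage += n


class Scheduler:
    """OS side: saves and restores per-thread usage state at each context switch."""

    def __init__(self, ctrl):
        self.ctrl = ctrl
        self.saved_usage = {}

    def context_switch(self, old_tid, new_tid):
        self.saved_usage[old_tid] = self.ctrl.usage          # save outgoing thread's history
        self.ctrl.usage = self.saved_usage.get(new_tid, 0)   # restore incoming thread's history
        # Heavier users get a longer idle threshold, so the bank powers down less eagerly.
        self.ctrl.threshold = self.ctrl.base_threshold * (1 + self.ctrl.usage / 100)
```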

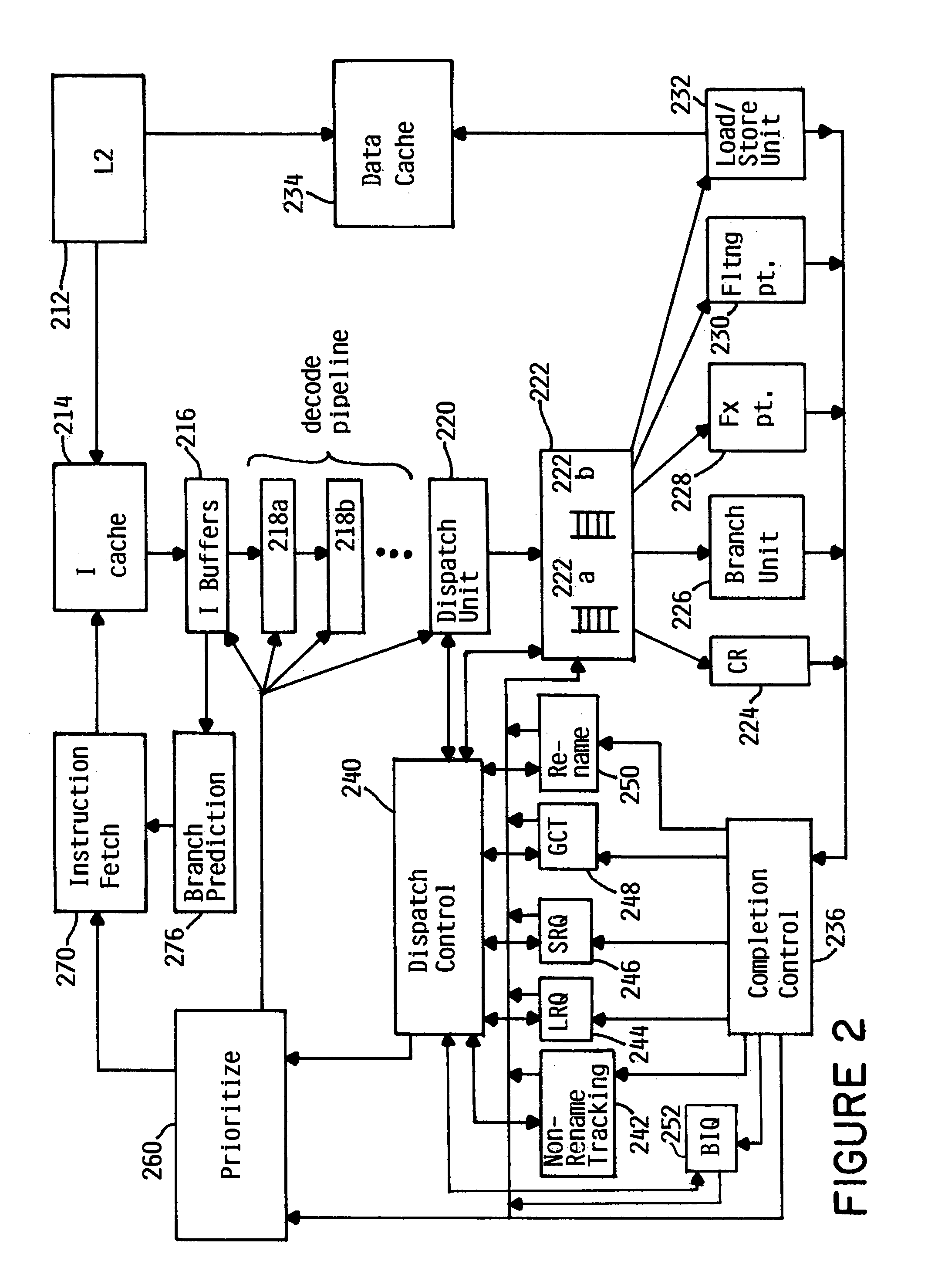

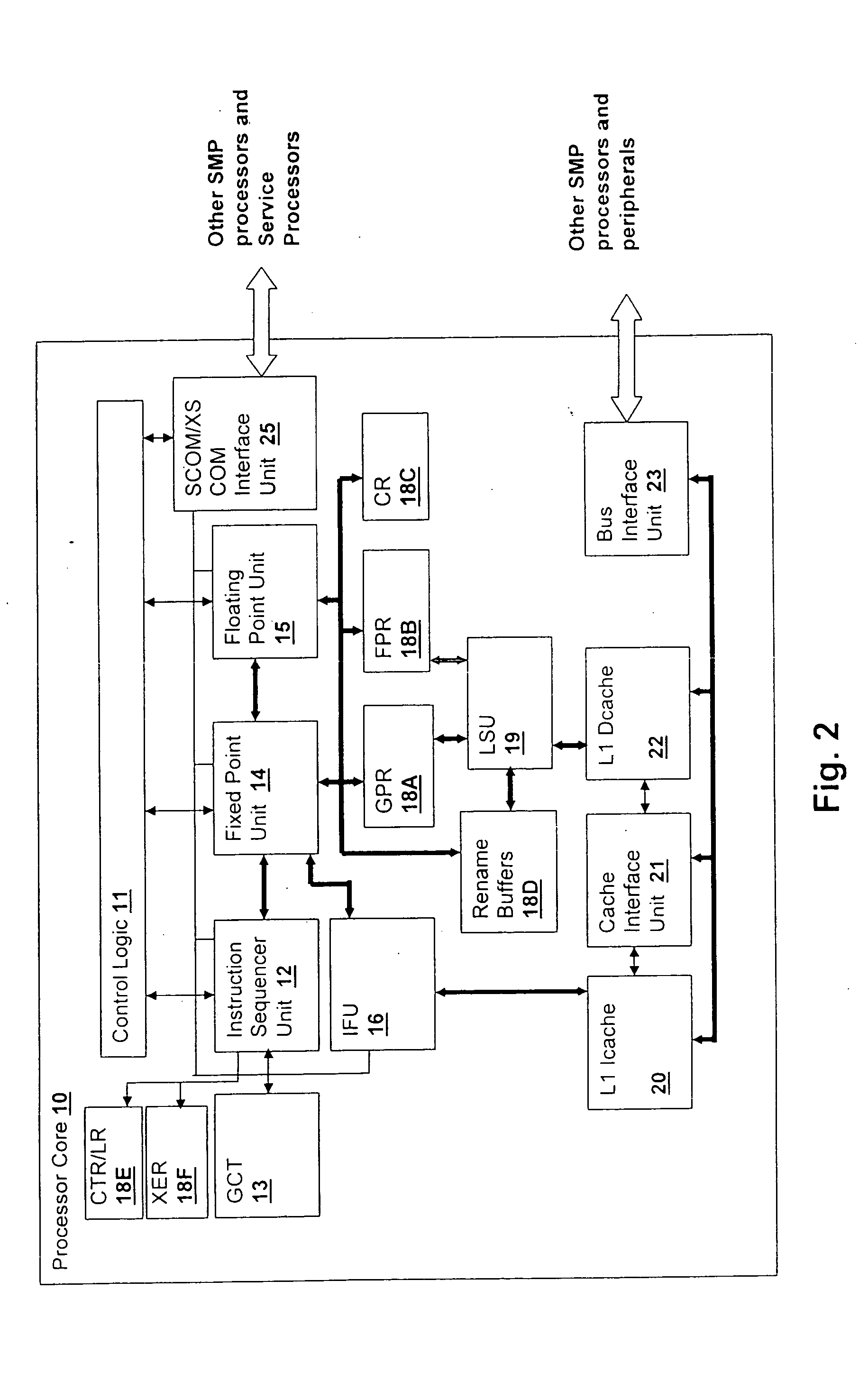

Method and logical apparatus for rename register reallocation in a simultaneous multi-threaded (SMT) processor

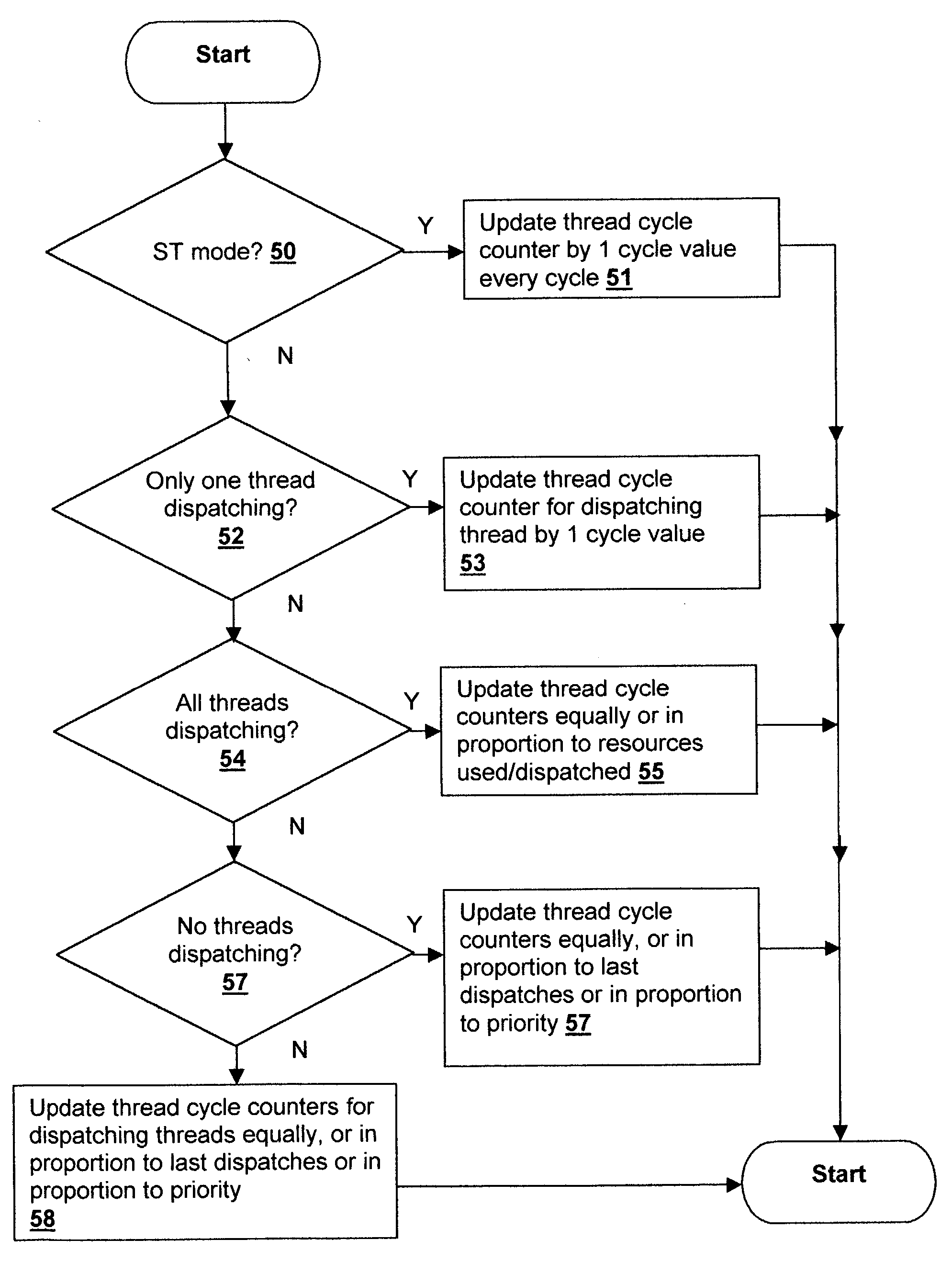

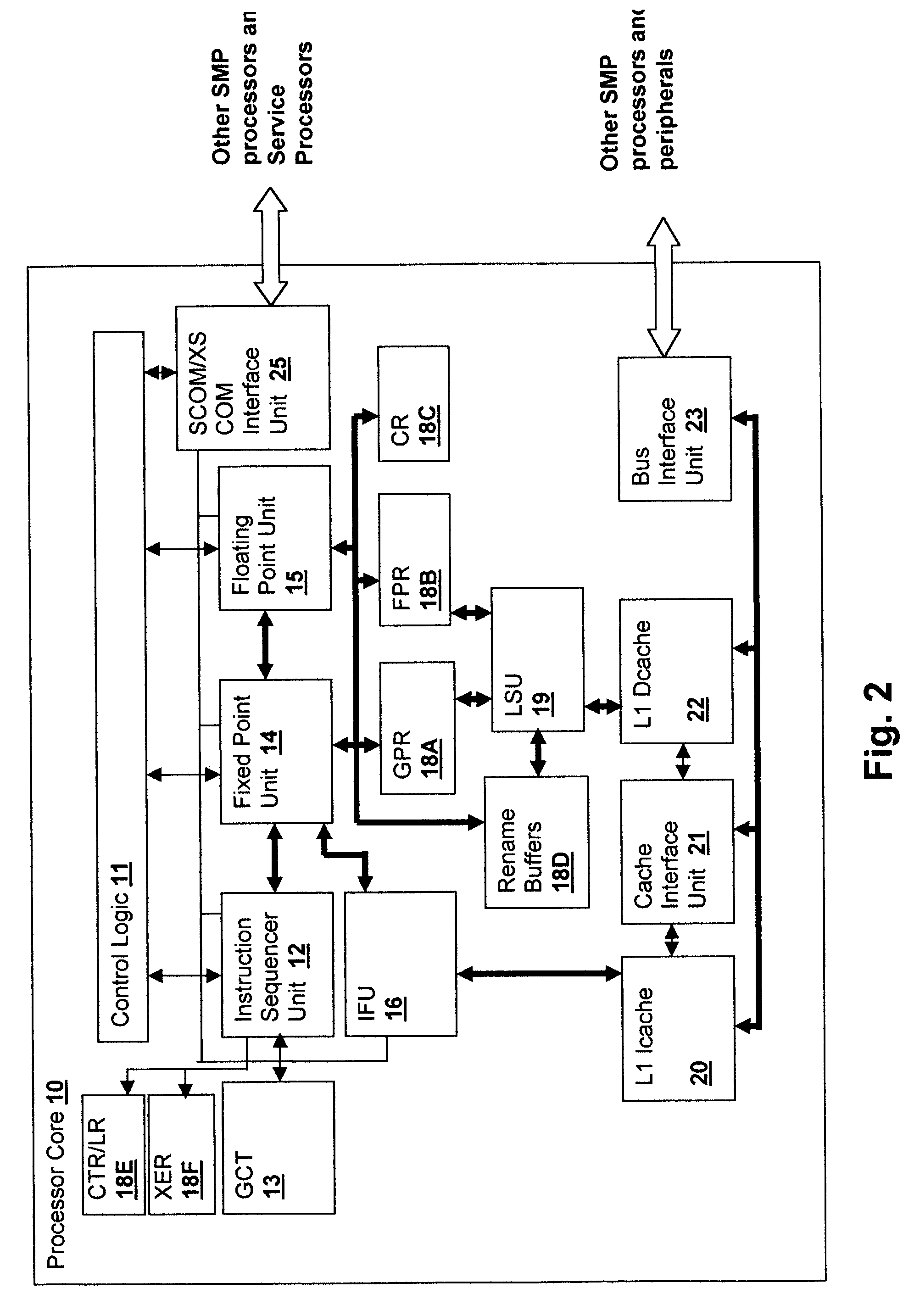

Status: Inactive | Publication: US20040216120A1 | Tags: Digital computer details; Multiprogramming arrangements; Processor register; Control logic

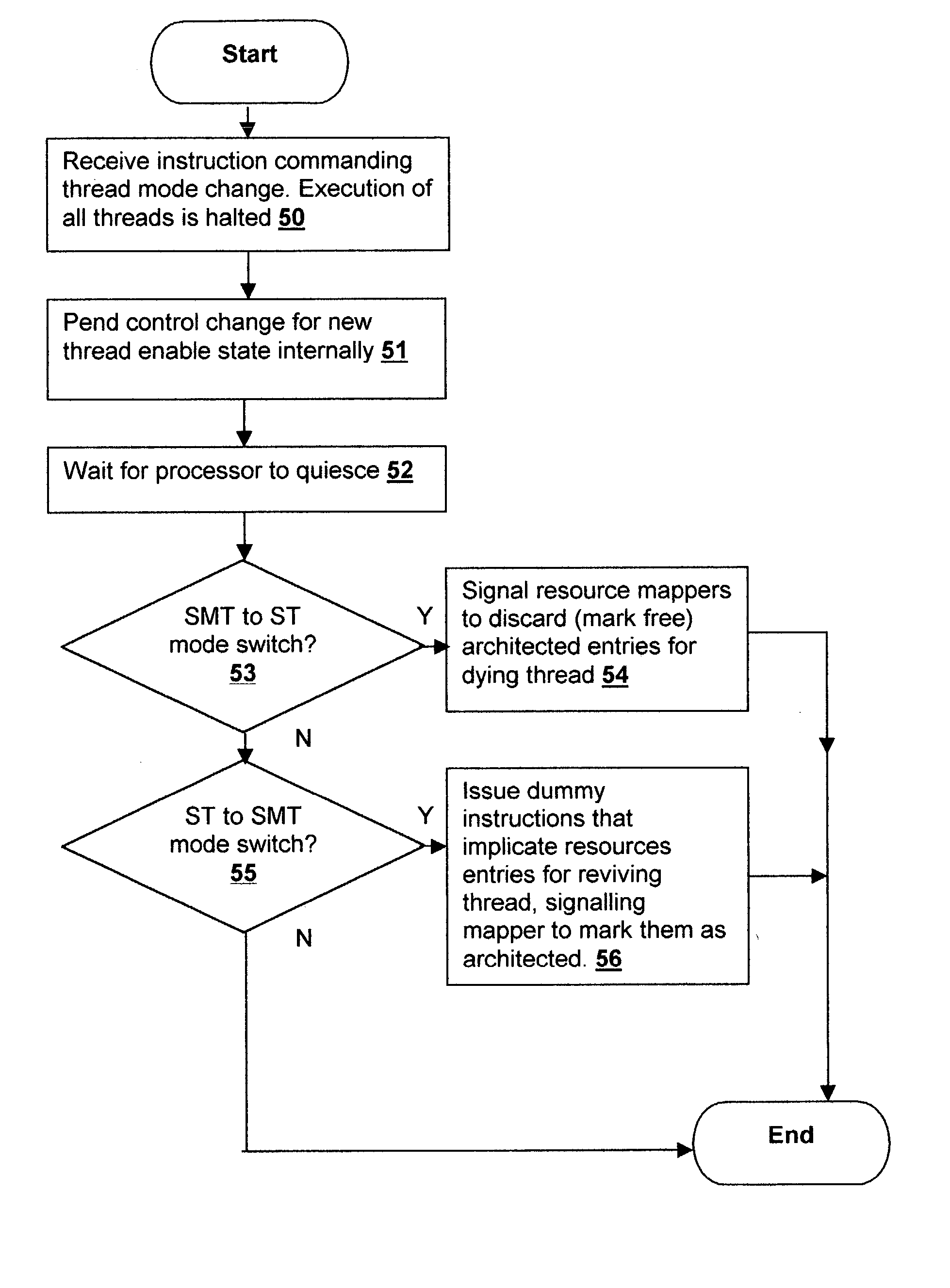

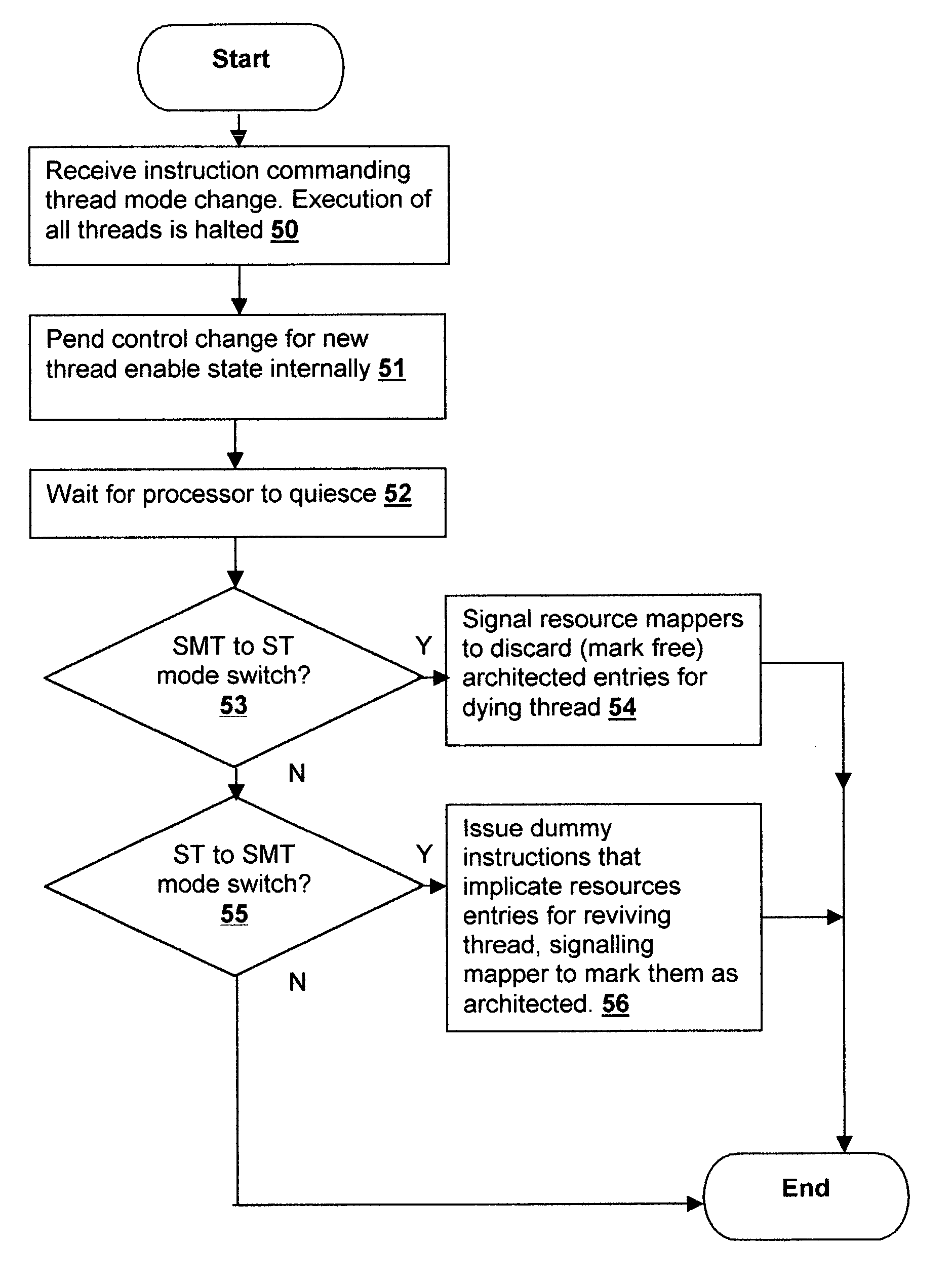

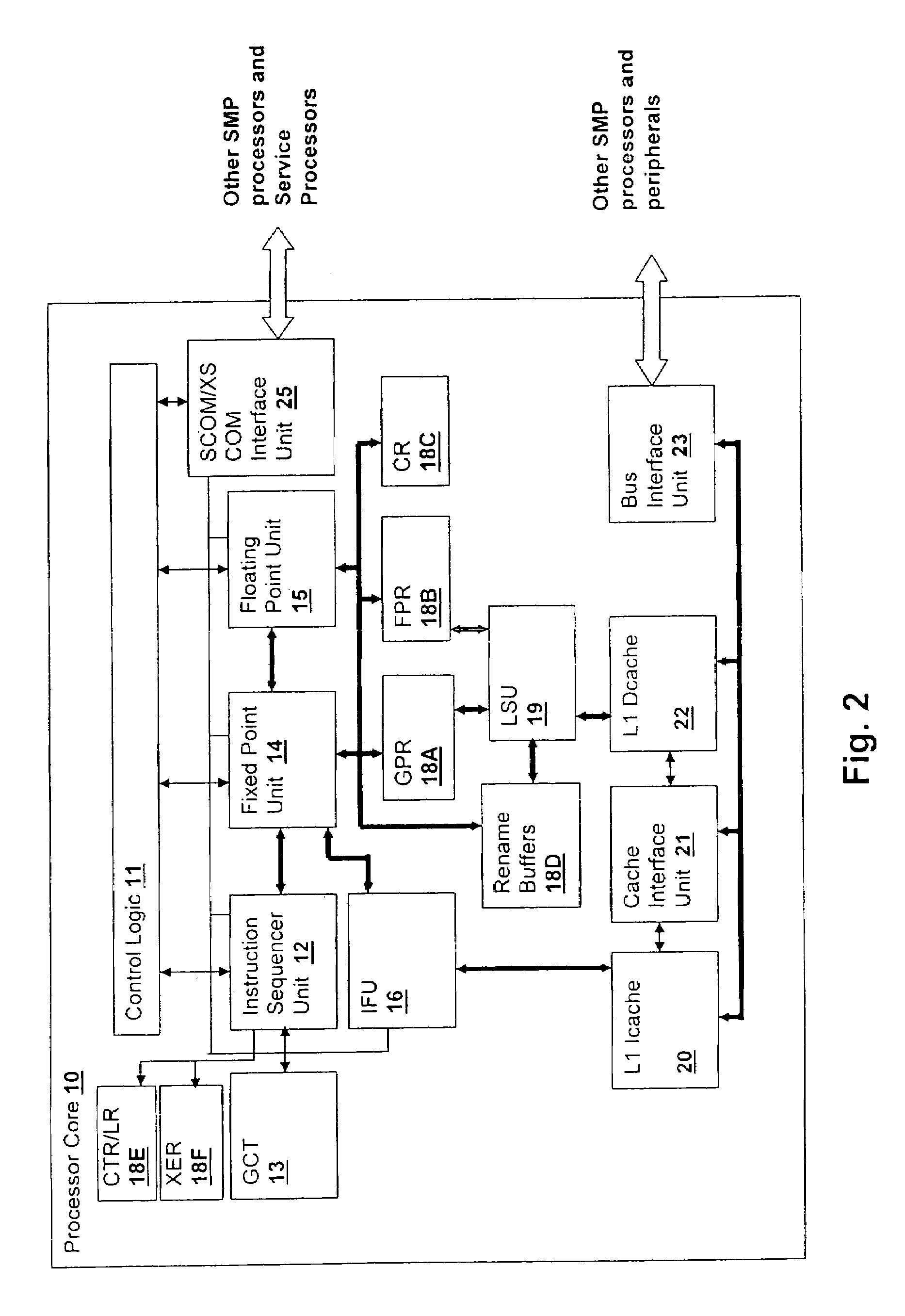

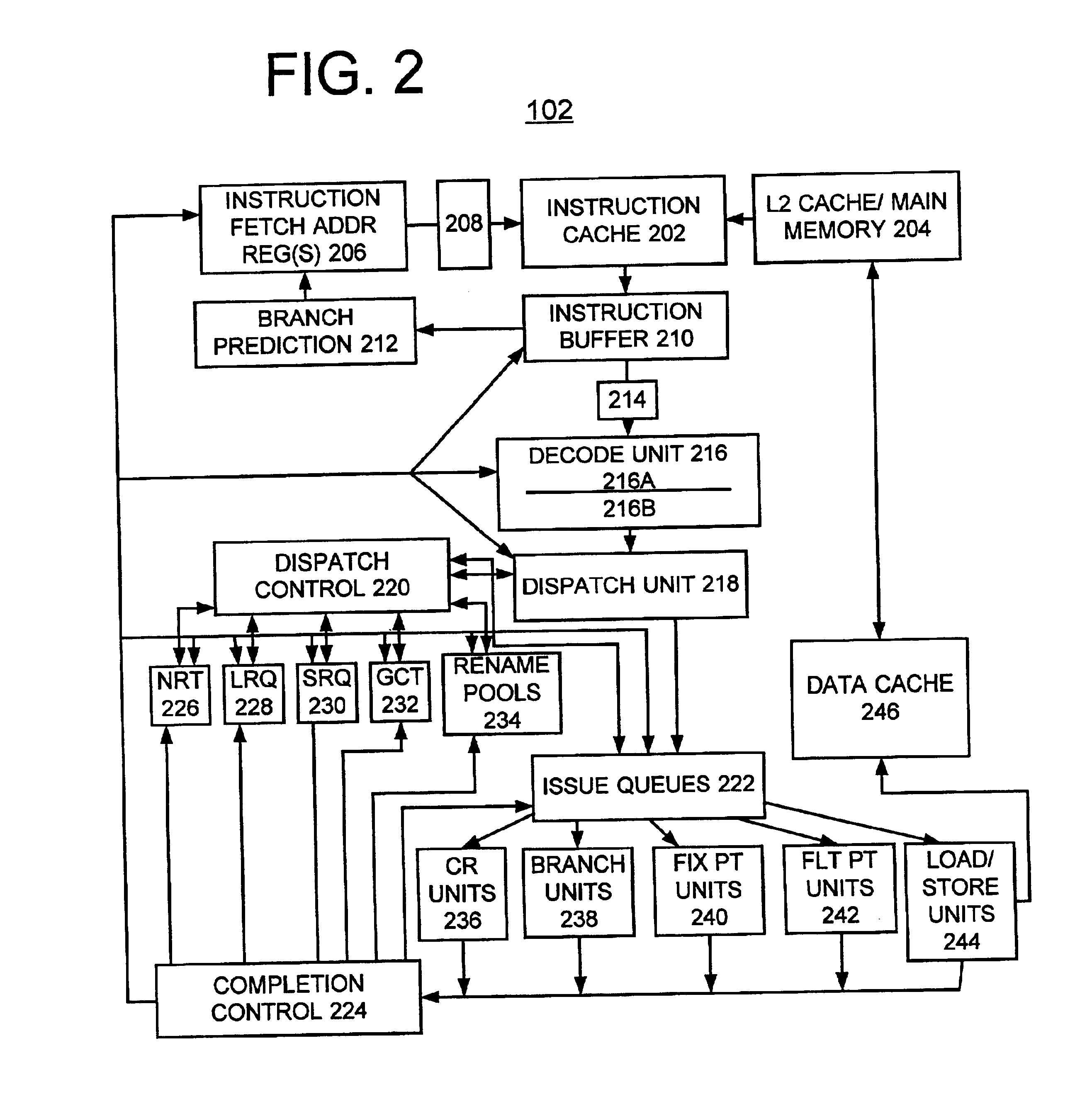

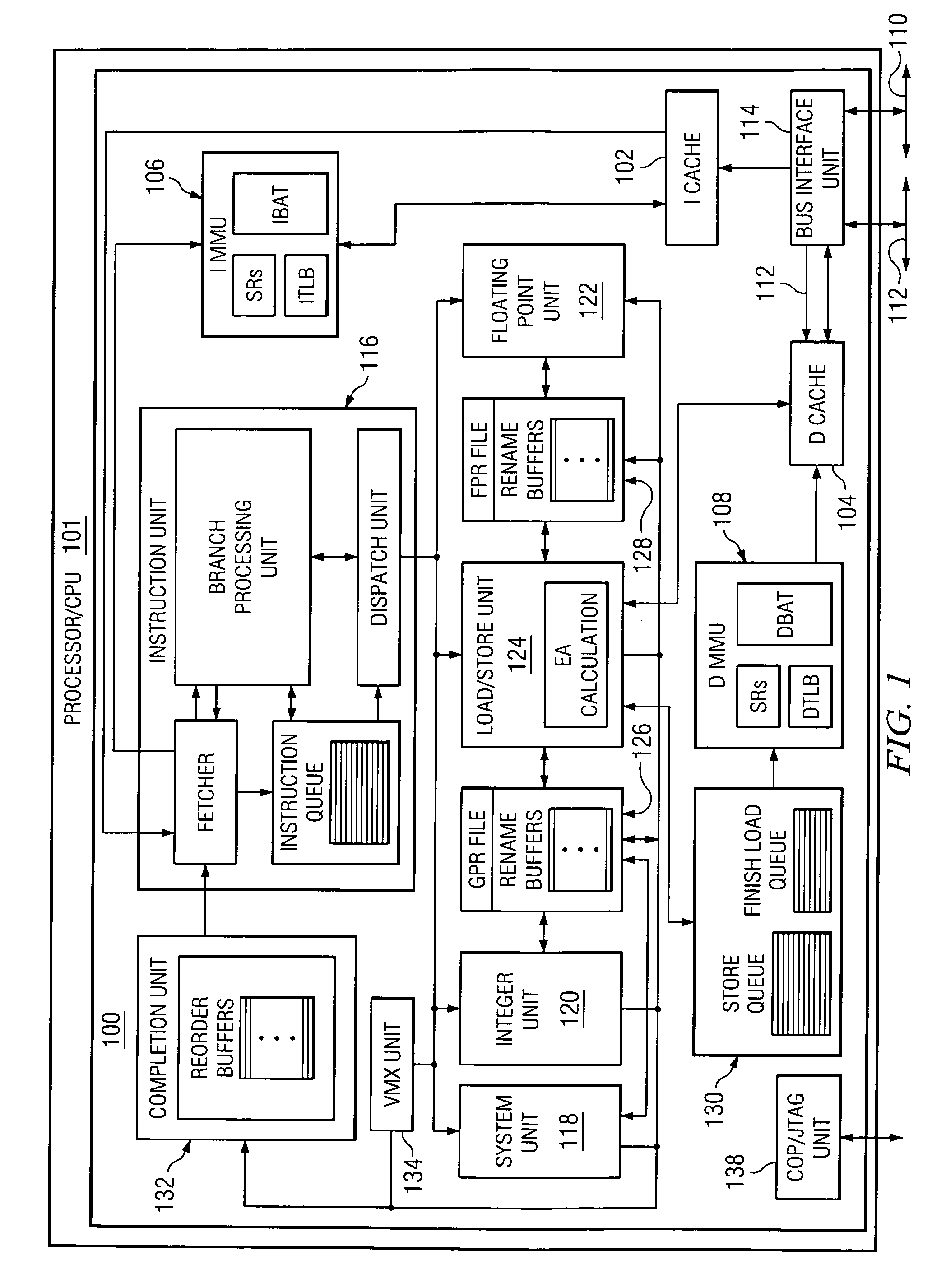

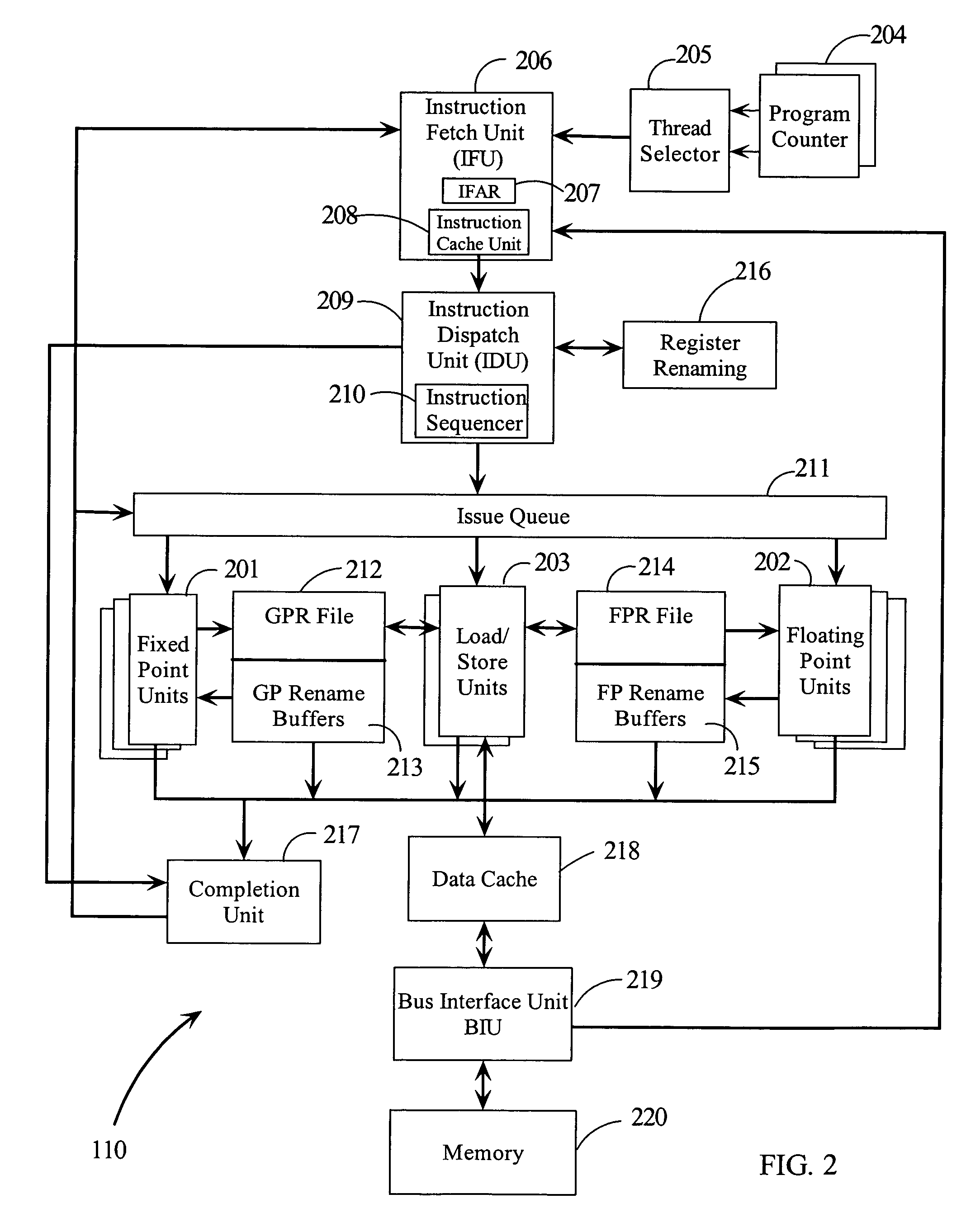

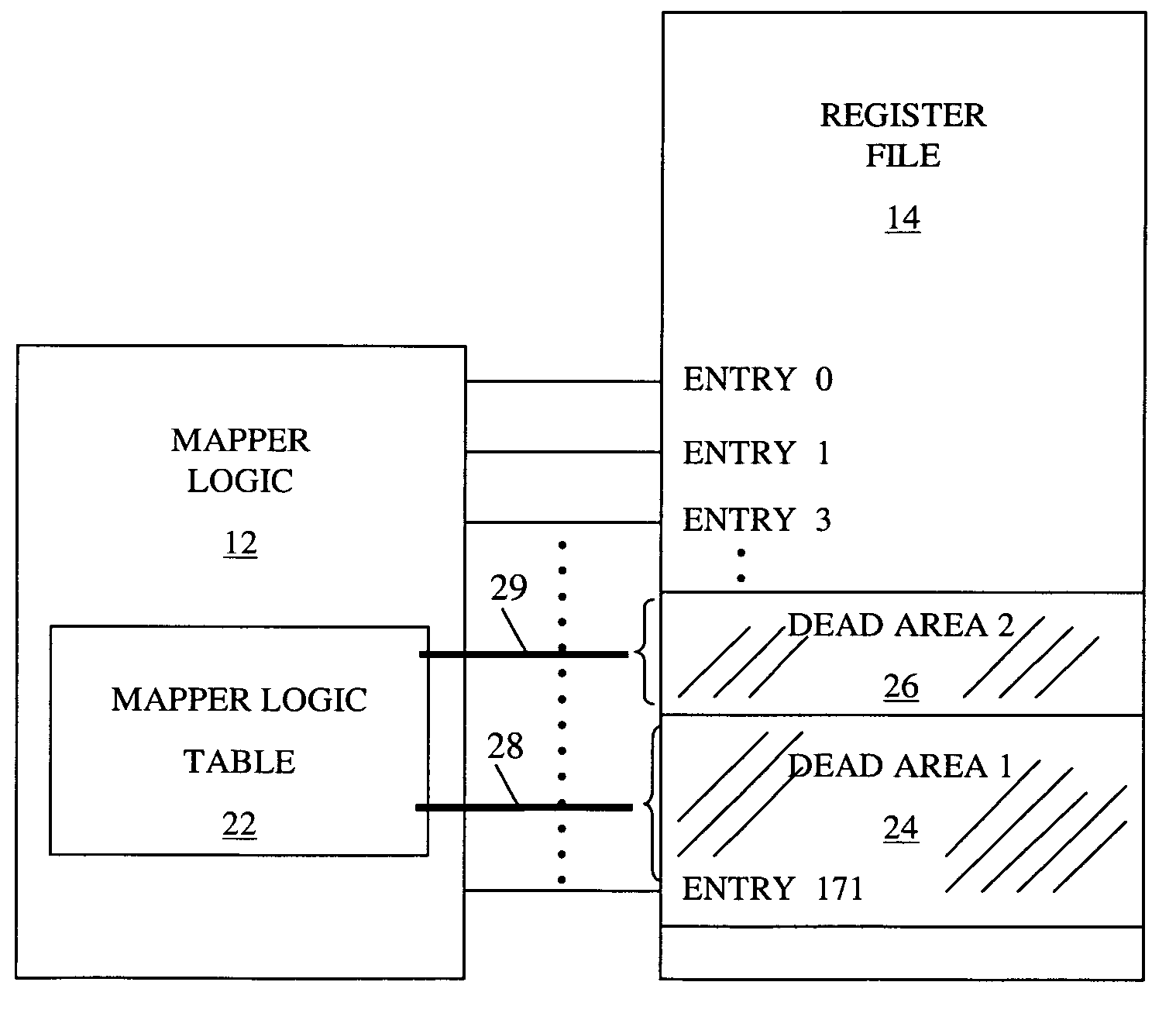

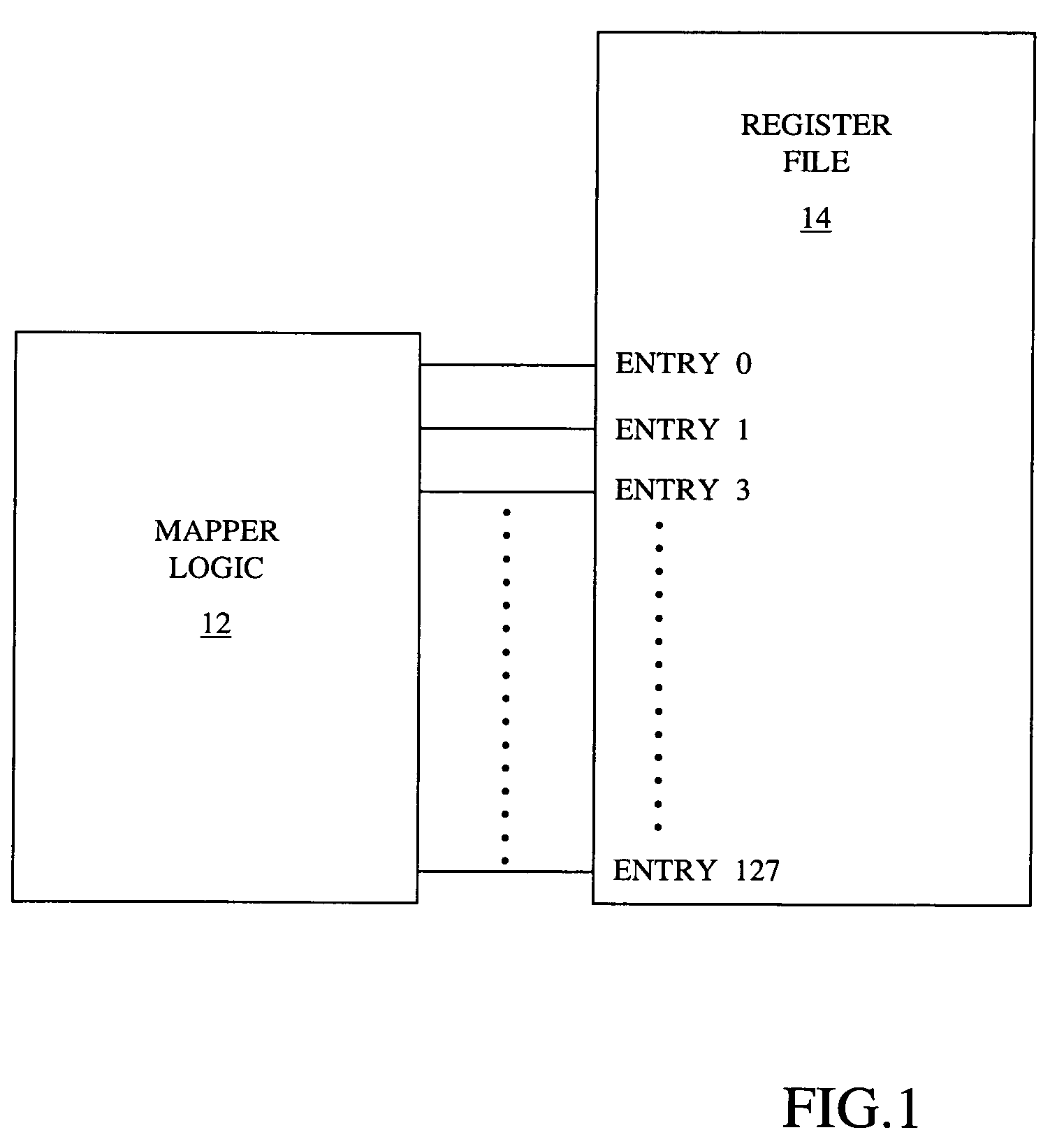

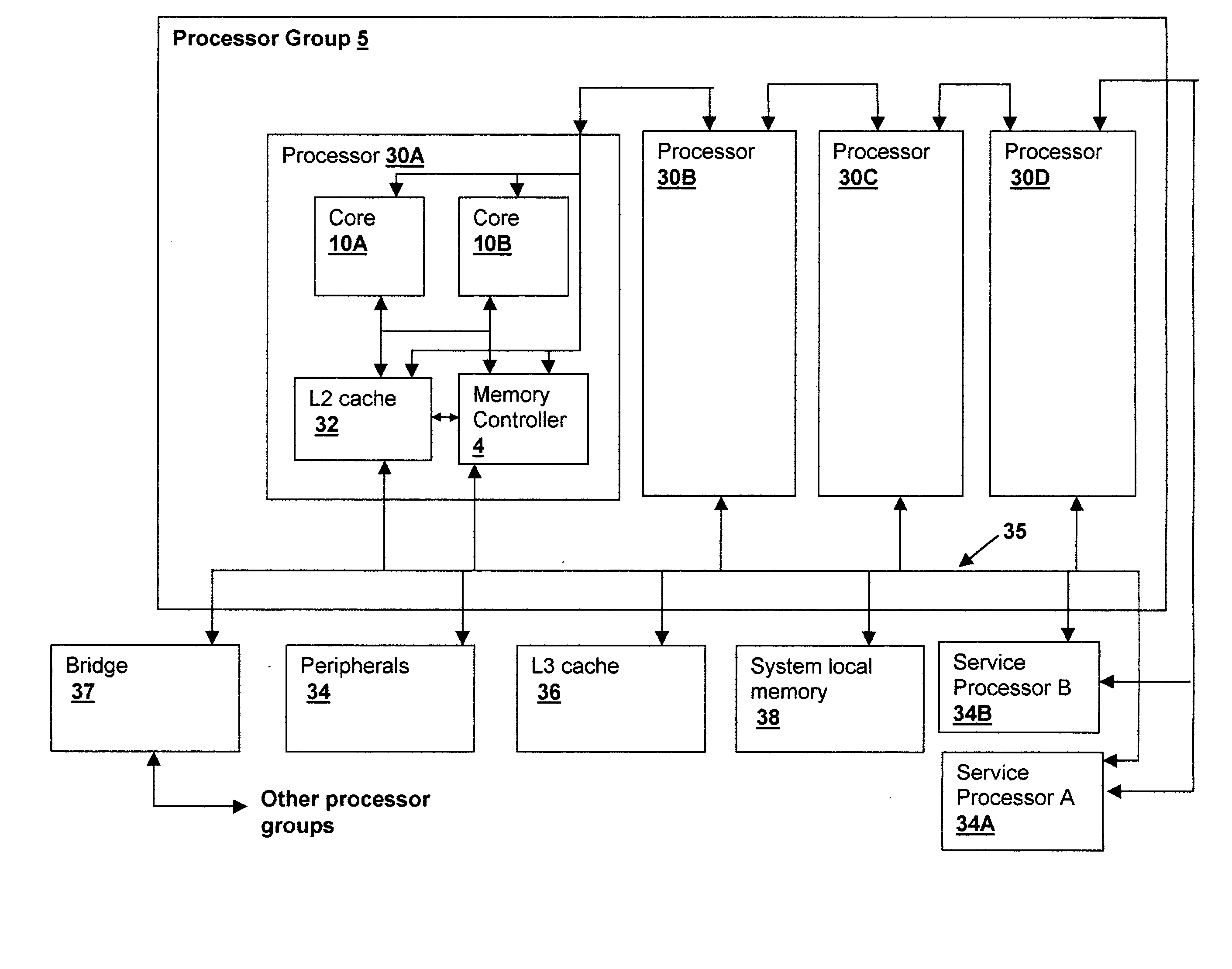

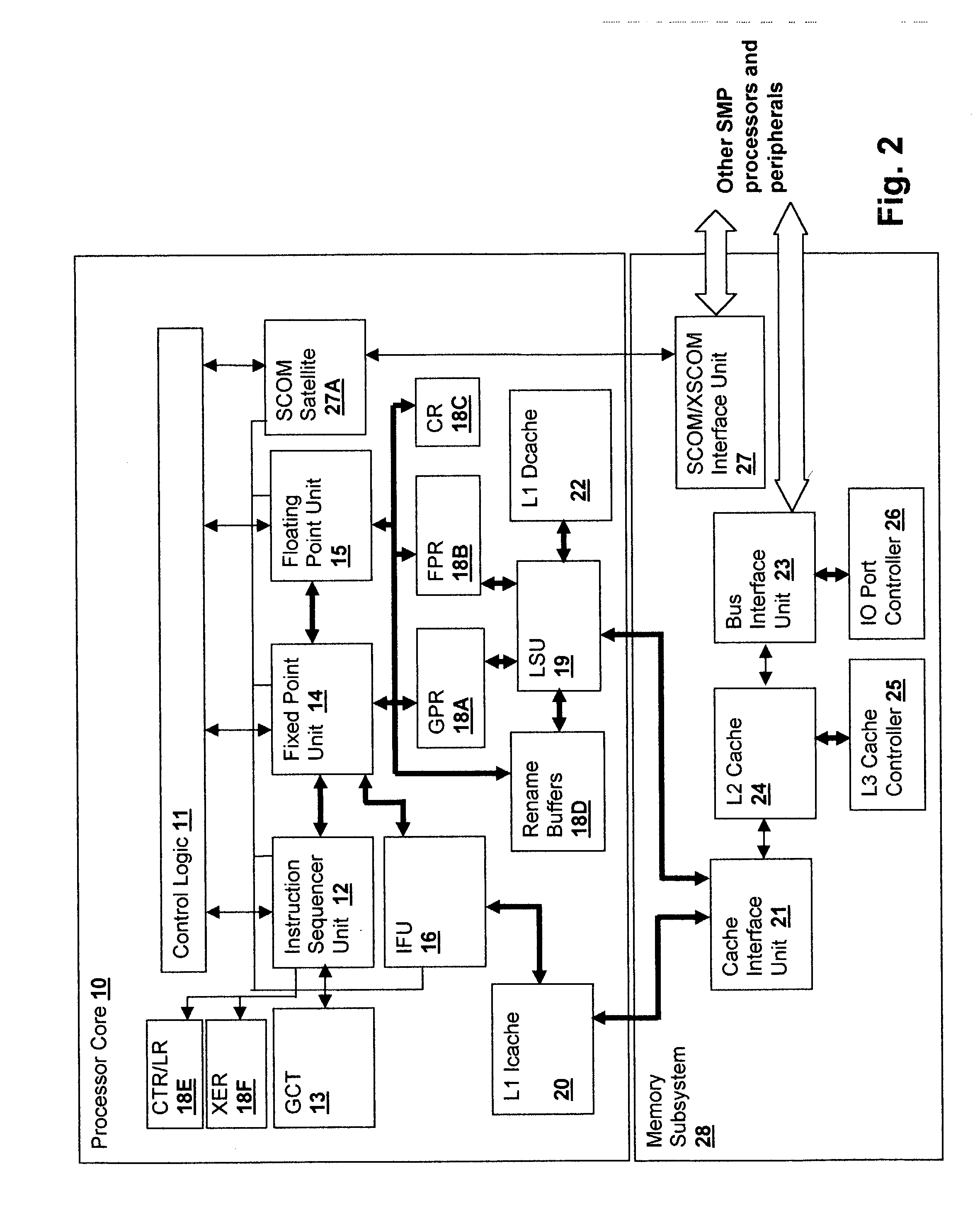

A method and logical apparatus for rename register reallocation in a simultaneous multi-threaded (SMT) processor provides a mechanism for redistributing rename (mapped) resources between one thread during single-threaded (ST) execution and multiple threads during multi-threaded execution. The processor receives an instruction specifying a transition from a single-threaded to a multi-threaded mode or vice-versa and halts execution of all threads executing on the processor. The internal control logic then signals the resources to reallocate the resources. Rename resources are reallocated by directing an action at the rename mapper. Specifically, on a switch from SMT to ST mode, the mapper is directed to drop entries for the dying thread, but on a switch from ST to SMT mode, "dummy" instruction group dispatch indications are sent to the mapper that indicate use of all registers, so that mapper entries are deleted from the pool of entries used by the previously-executing "surviving" thread to make room for rename entries needed by another "reviving" thread that is being started for further execution in SMT mode.

Owner:GOOGLE LLC
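
Below is a simplified model of the rename-mapper actions described above. It assumes all rename entries live in one shared free pool. Under that assumption, the dummy dispatch for a reviving thread simply claims one entry per architected register from the pool, which shrinks what remains for the surviving thread. How the real mapper selects entries to reclaim is not modeled.

```python
class RenameMapper:
    """Shared pool of rename (mapped) entries, each owned by one hardware thread."""

    def __init__(self, num_entries):
        self.free = list(range(num_entries))
        self.owner = {}                          # entry -> thread id

    def allocate(self, tid):
        entry = self.free.pop(0)                 # an IndexError here would mean "stall dispatch"
        self.owner[entry] = tid
        return entry

    def drop_thread(self, tid):
        """SMT -> ST: return every entry held by the dying thread to the pool."""
        for entry in [e for e, t in self.owner.items() if t == tid]:
            del self.owner[entry]
            self.free.append(entry)

    def dummy_dispatch(self, tid, num_arch_regs):
        """ST -> SMT: a 'dummy' group claims one entry per architected register of `tid`."""
        return [self.allocate(tid) for _ in range(num_arch_regs)]
```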

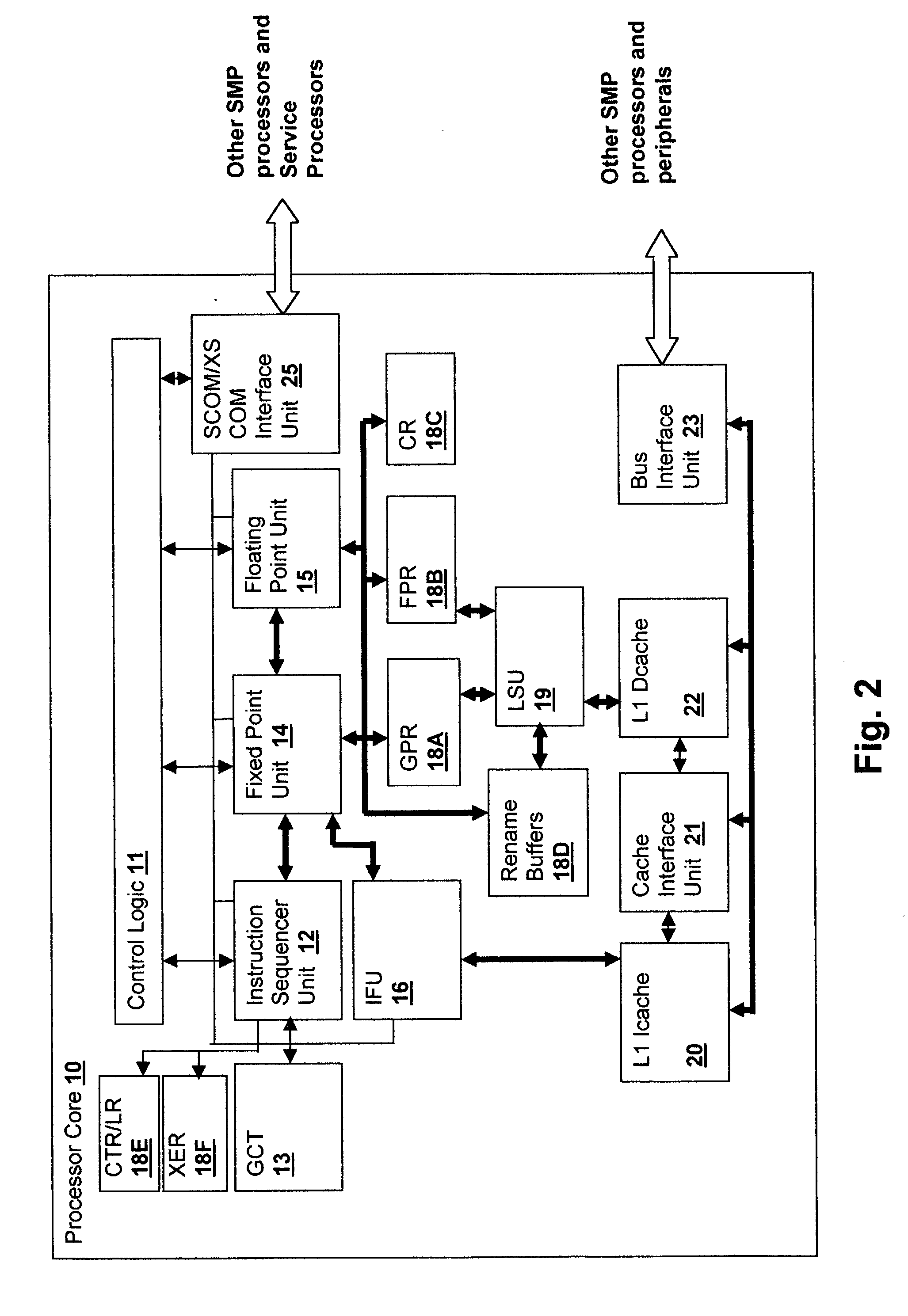

Accounting method and logic for determining per-thread processor resource utilization in a simultaneous multi-threaded (SMT) processor

Status: Inactive | Publication: US20040216113A1 | Tags: Resource allocation; Program control using stored programs; Resource utilization; Scheduling instructions

An accounting method and logic for determining per-thread processor resource utilization in a simultaneous multi-threaded (SMT) processor provides a mechanism for accounting for processor resource usage by programs and threads within programs. Relative resource use is determined by detecting instruction dispatches for multiple threads active within the processor, which may include idle threads that are still occupying processor resources. If instructions are dispatched for all threads or no threads, the processor cycle is accounted equally to all threads. Alternatively, if no threads are in a dispatch state, the accounting may be made using a prior state, or in conformity with ratios of the threads' priority levels. If only one thread is dispatching, that thread is accounted the entire processor cycle. If multiple threads are dispatching, but fewer than all threads are dispatching (in processors supporting more than two threads), the processor cycle is billed evenly across the dispatching threads. Multiple dispatches may be detected for the threads and a fractional resource usage determined for each thread, and the counters may be updated in accordance with their fractional usage.

Owner:IBM CORP
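
The accounting rules in this abstract map directly onto a per-cycle function. This sketch uses `Fraction` so that shares stay exact. For cycles with no dispatch it implements the "prior state" fallback and omits the priority-ratio alternative. `account_cycle` and its parameters are hypothetical names.

```python
from fractions import Fraction


def account_cycle(dispatching, threads, counters, prev_shares=None):
    """Charge one processor cycle to `threads` based on which of them dispatched."""
    active = [t for t in threads if t in dispatching]
    if len(active) == len(threads):
        shares = {t: Fraction(1, len(threads)) for t in threads}   # all dispatch: split equally
    elif not active:
        # Nobody dispatched: reuse the previous split if given, otherwise split equally.
        shares = prev_shares or {t: Fraction(1, len(threads)) for t in threads}
    else:
        shares = {t: Fraction(1, len(active)) for t in active}      # bill only the dispatchers
    for t, share in shares.items():
        counters[t] = counters.get(t, 0) + share
    return shares
```

A single dispatching thread falls into the last branch and is charged the whole cycle.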

Method and logical apparatus for rename register reallocation in a simultaneous multi-threaded (SMT) processor

Status: Inactive | Publication: US7290261B2 | Tags: Digital computer details; Multiprogramming arrangements; Processor register; Control logic

A circuit and method provide rename register reallocation for simultaneous multi-threaded (SMT) processors that redistributes rename (mapped) resources between one thread during single-threaded (ST) execution and multiple threads during multi-threaded execution. The processor receives an instruction specifying a transition from a single-threaded to a multi-threaded mode or vice-versa and halts execution of all threads executing on the processor. The internal control logic then signals the resources to reallocate the resources. Rename resources are reallocated by directing an action at the rename mapper. When switching from SMT to ST mode, the mapper is directed to drop entries for the dying thread, but on a switch from ST to SMT mode, “dummy” instruction group dispatch indications are sent to the mapper that indicate use of all architected registers for each thread.

Owner:GOOGLE LLC

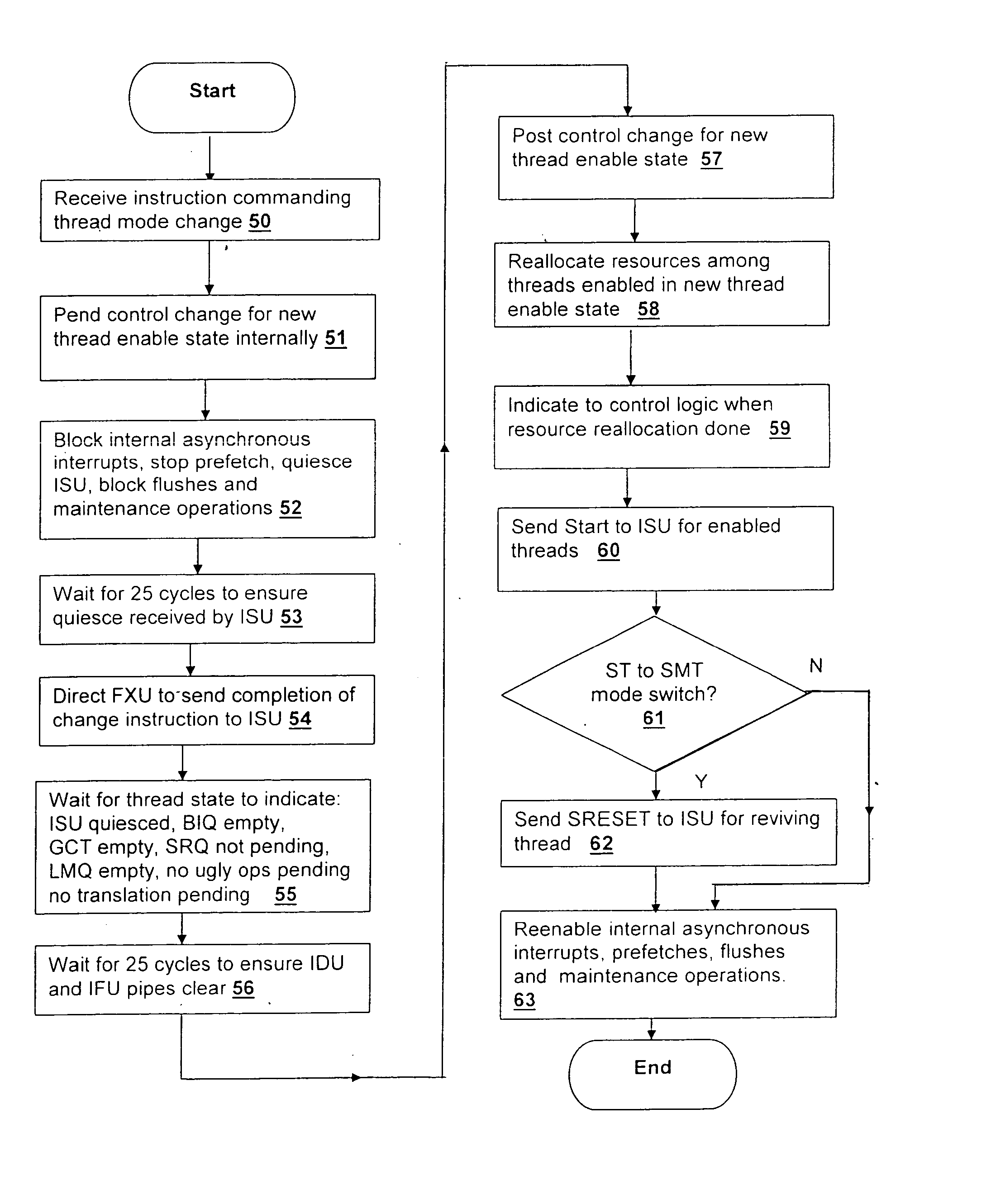

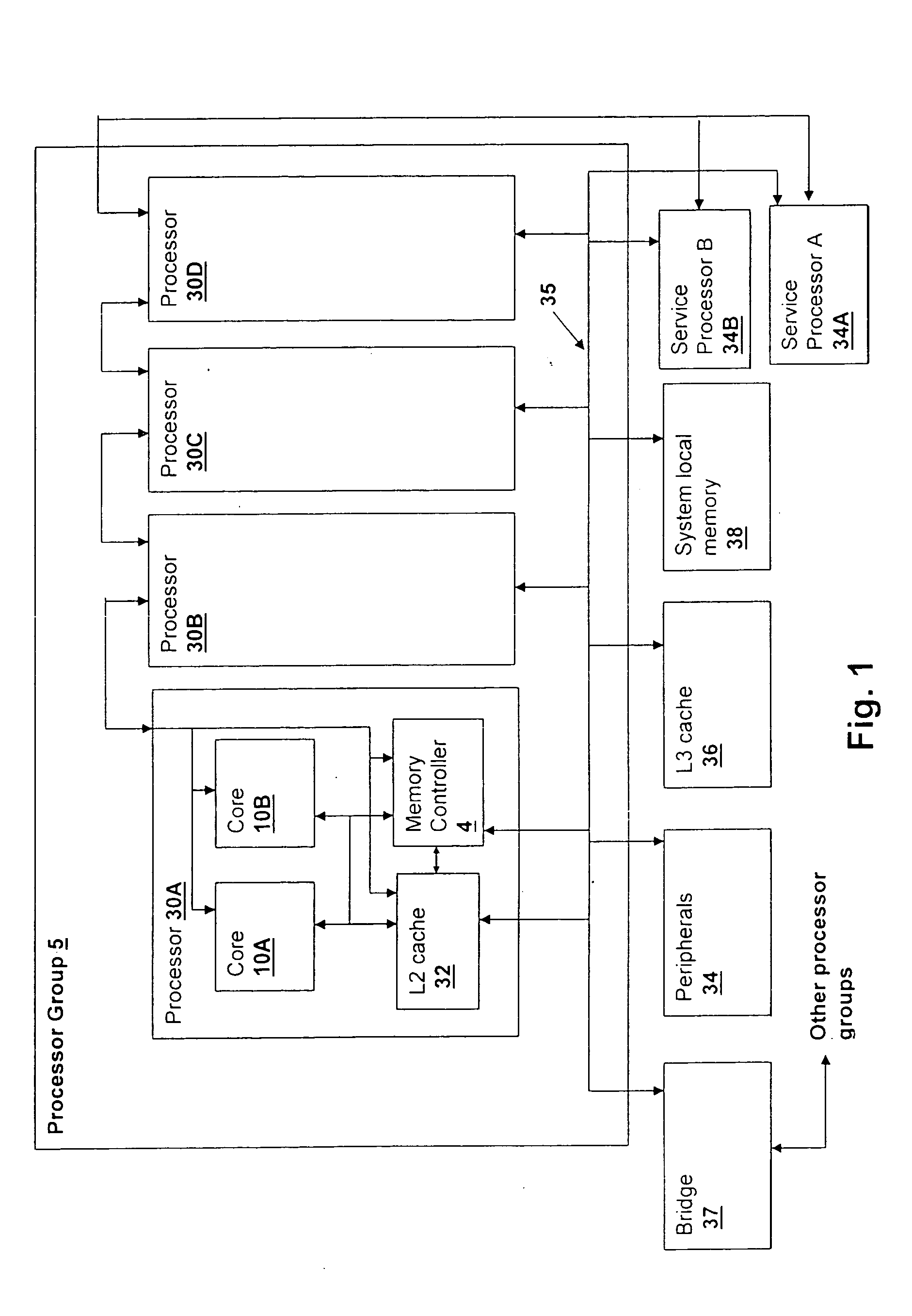

Method and logical apparatus for switching between single-threaded and multi-threaded execution states in a simultaneous multi-threaded (SMT) processor

Status: Inactive | Publication: US7155600B2 | Tags: Program initiation/switching; General purpose stored program computer; Post processor; Control logic

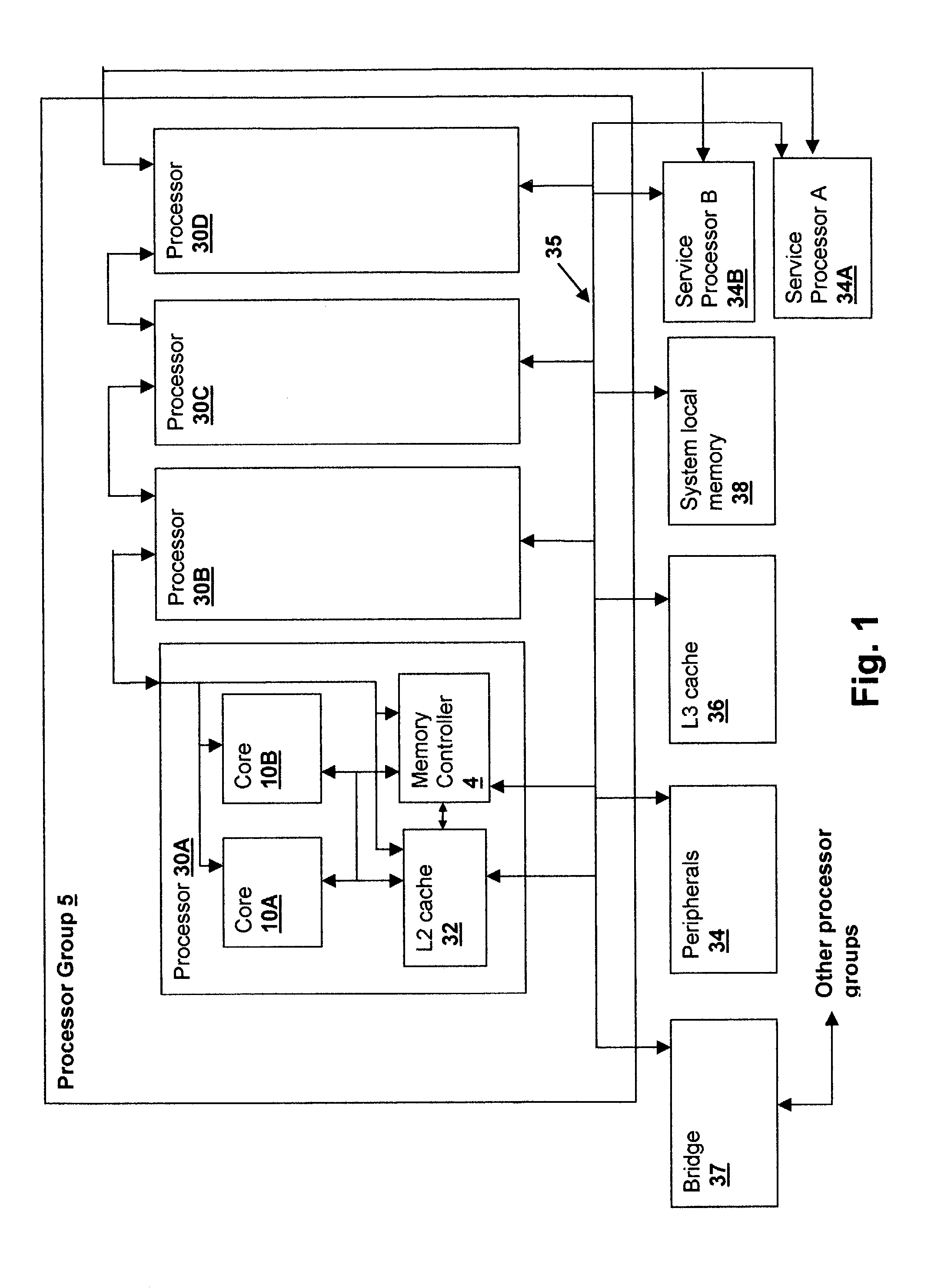

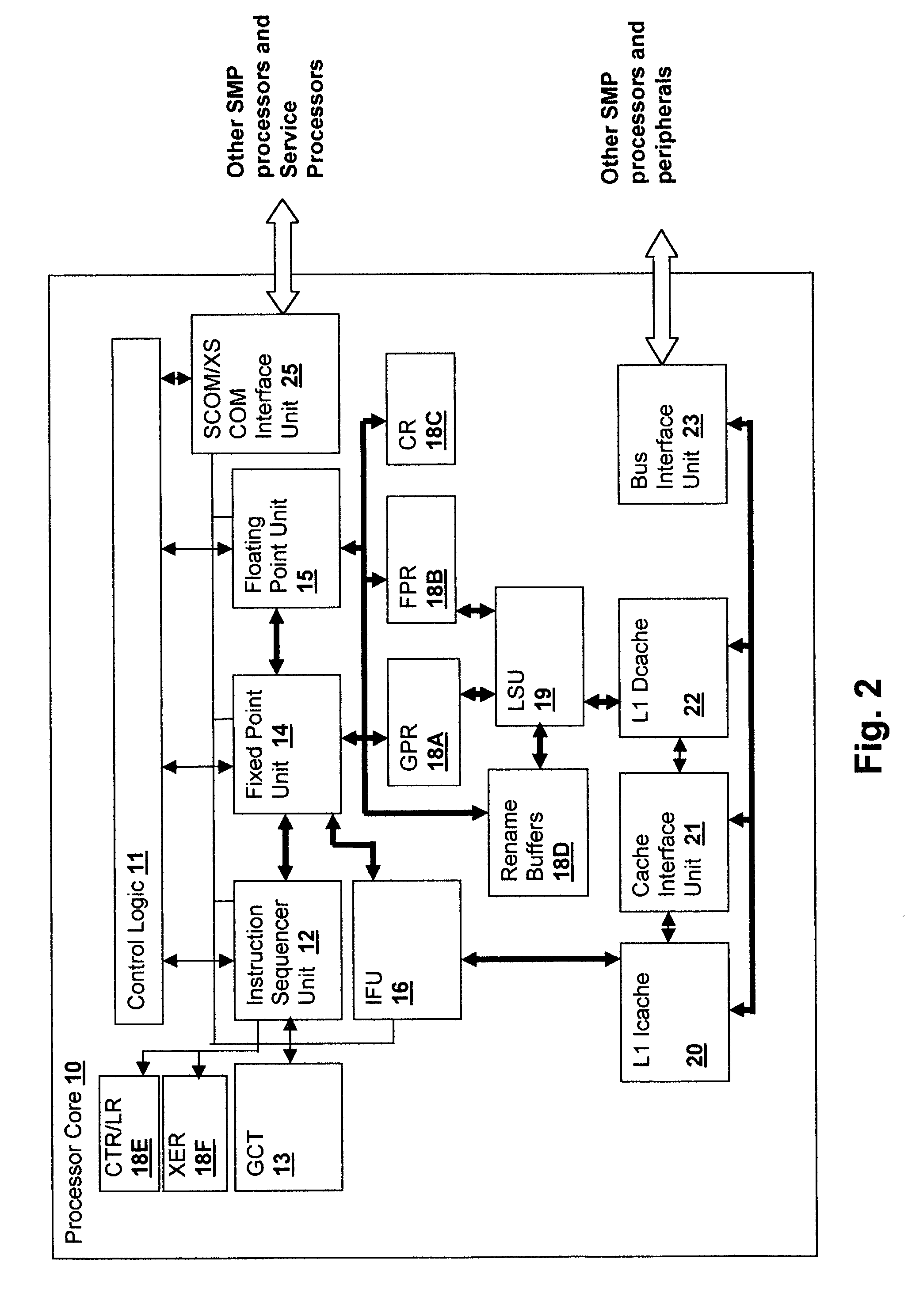

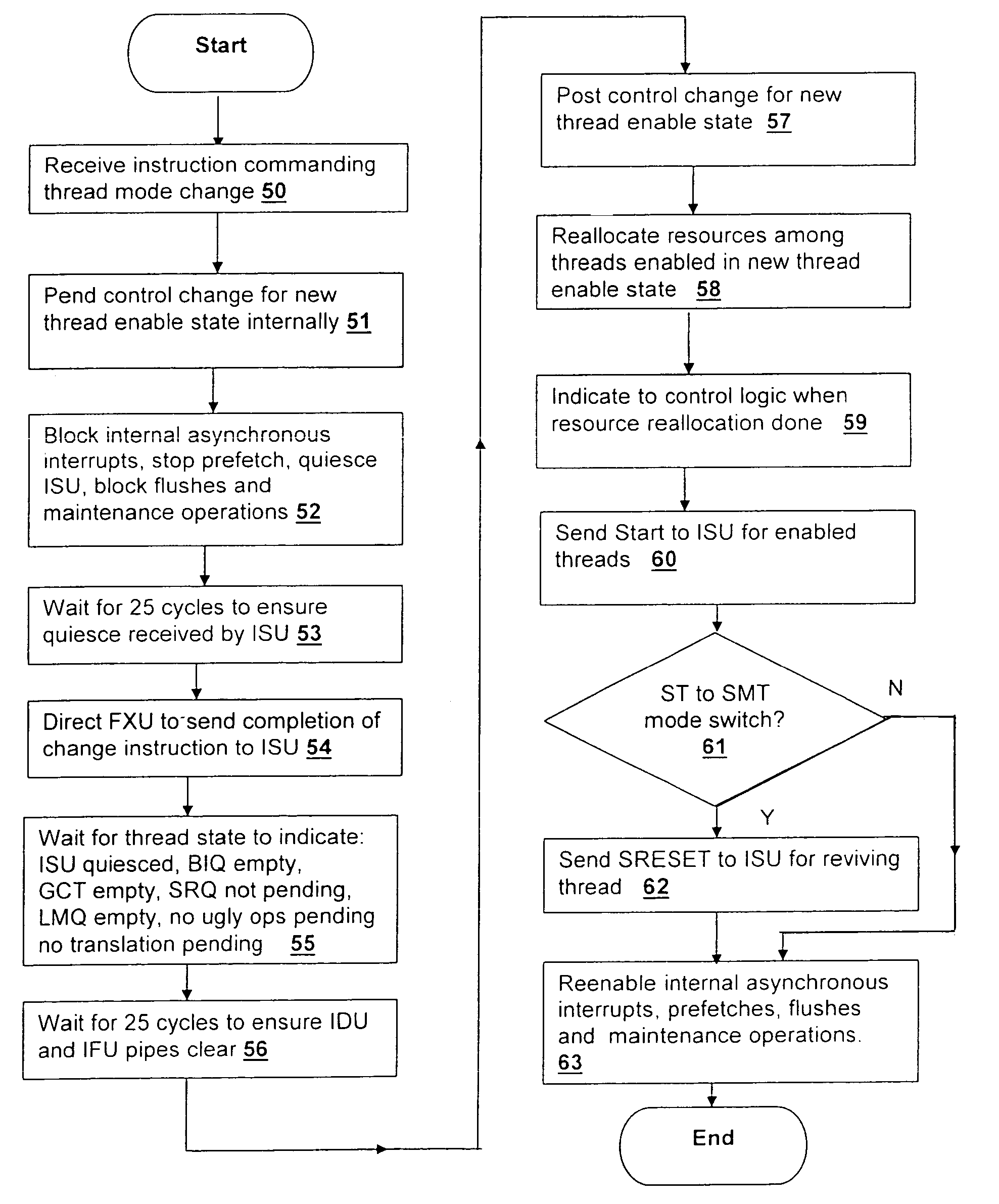

A method and logical apparatus for switching between single-threaded and multi-threaded execution states within a simultaneous multi-threaded (SMT) processor provides a mechanism for switching between single-threaded and multi-threaded execution. The processor receives an instruction specifying a transition from a single-threaded to a multi-threaded mode or vice-versa and halts execution of all threads executing on the processor. Internal control logic controls a sequence of events that ends instruction prefetching, dispatch of new instructions, interrupt processing and maintenance operations and waits for operation of the processor to complete for instructions that are in process. Then, the logic determines one or more threads to start in conformity with a thread enable state specifying the enable state of multiple threads and reallocates various resources, dividing them between threads if multiple threads are specified for further execution (multi-threaded mode) or allocating substantially all of the resources to a single thread if further execution is specified as single-threaded mode. The processor then starts execution of the remaining enabled threads.

Owner:INT BUSINESS MASCH CORP
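
This sketch covers the resource-division step that follows the quiesce sequence. The resource names and sizes are hypothetical. It also assumes any remainder goes to the lowest-numbered enabled thread; the abstract only says resources are divided between threads, or substantially all given to one thread.

```python
def reallocate(enable_mask, resources):
    """Split each resource among the enabled threads after the core has been quiesced.

    enable_mask: one bool per hardware thread (the thread enable state).
    resources:   resource name -> total entries (hypothetical sizes).
    """
    # Assumed to run only after prefetch, dispatch, interrupts and maintenance operations
    # have been stopped and in-flight instructions have drained.
    enabled = [tid for tid, on in enumerate(enable_mask) if on]
    if not enabled:
        raise ValueError("at least one thread must stay enabled")
    shares = {tid: {} for tid in enabled}
    for name, total in resources.items():
        base, extra = divmod(total, len(enabled))
        for i, tid in enumerate(enabled):
            # A single enabled thread (ST mode) receives everything.
            shares[tid][name] = base + (1 if i < extra else 0)
    return shares
```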

Method for implementing a variable-partitioned queue for simultaneous multithreaded processors

Status: Inactive | Publication: US6931639B1 | Tags: Overcome disadvantages; Digital computer details; Multiprogramming arrangements; Parallel computing; Simultaneous multithreading

A method and apparatus are provided for implementing a variable-partitioned queue for simultaneous multithreaded processors. A value is stored in a partition register. A queue structure is divided between a plurality of threads responsive to the stored value in the partition register. When a changed value is received for storing in the partition register, the queue structure is divided between the plurality of threads responsive to the changed value.

Owner:INTEL CORP
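
Here is a minimal sketch of a queue divided between two threads by a partition register. It assumes exactly two threads, and that the register is rewritten only when the affected entries can be drained. Per-thread occupancy is tracked with `collections.deque`; physical slot positions are not modeled.

```python
from collections import deque


class PartitionedQueue:
    """A queue of `size` slots split between two threads by a partition register."""

    def __init__(self, size, partition):
        self.size = size
        self.queues = {0: deque(), 1: deque()}
        self.write_partition(partition)

    def write_partition(self, value):
        # Thread 0 gets `value` slots, thread 1 the rest.
        if not 0 <= value <= self.size:
            raise ValueError("partition value out of range")
        self.partition = value
        self.capacity = {0: value, 1: self.size - value}

    def push(self, tid, item):
        if len(self.queues[tid]) >= self.capacity[tid]:
            return False                       # this thread's share is full
        self.queues[tid].append(item)
        return True

    def pop(self, tid):
        return self.queues[tid].popleft() if self.queues[tid] else None
```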

Method for simultaneous multi-slice magnetic resonance imaging

Status: Active | Publication: US20110254548A1 | Tags: Reliable separation; Large possible separation; Measurements using NMR imaging systems; Electric/magnetic detection; Magnetic gradient; Multi slice

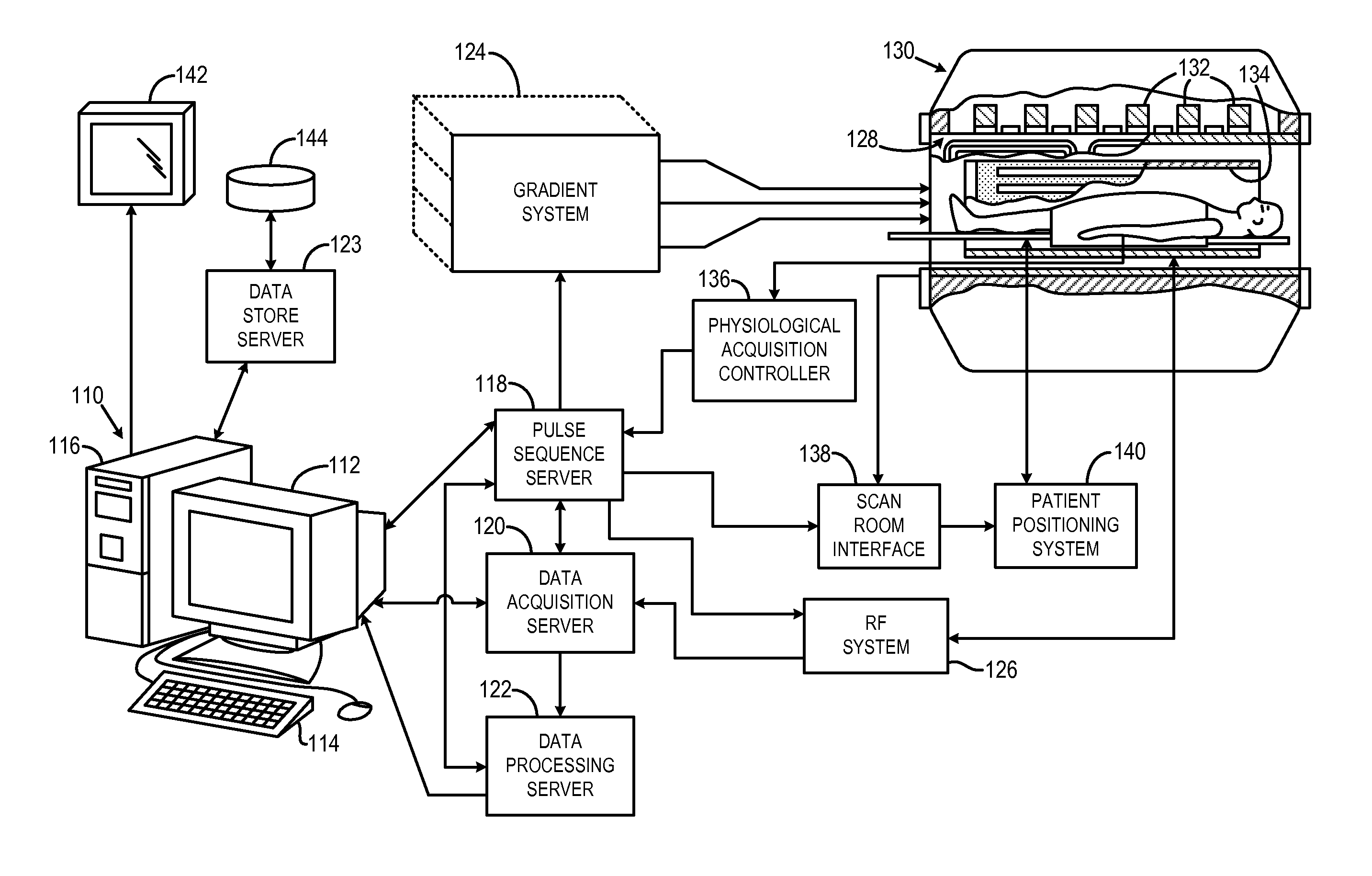

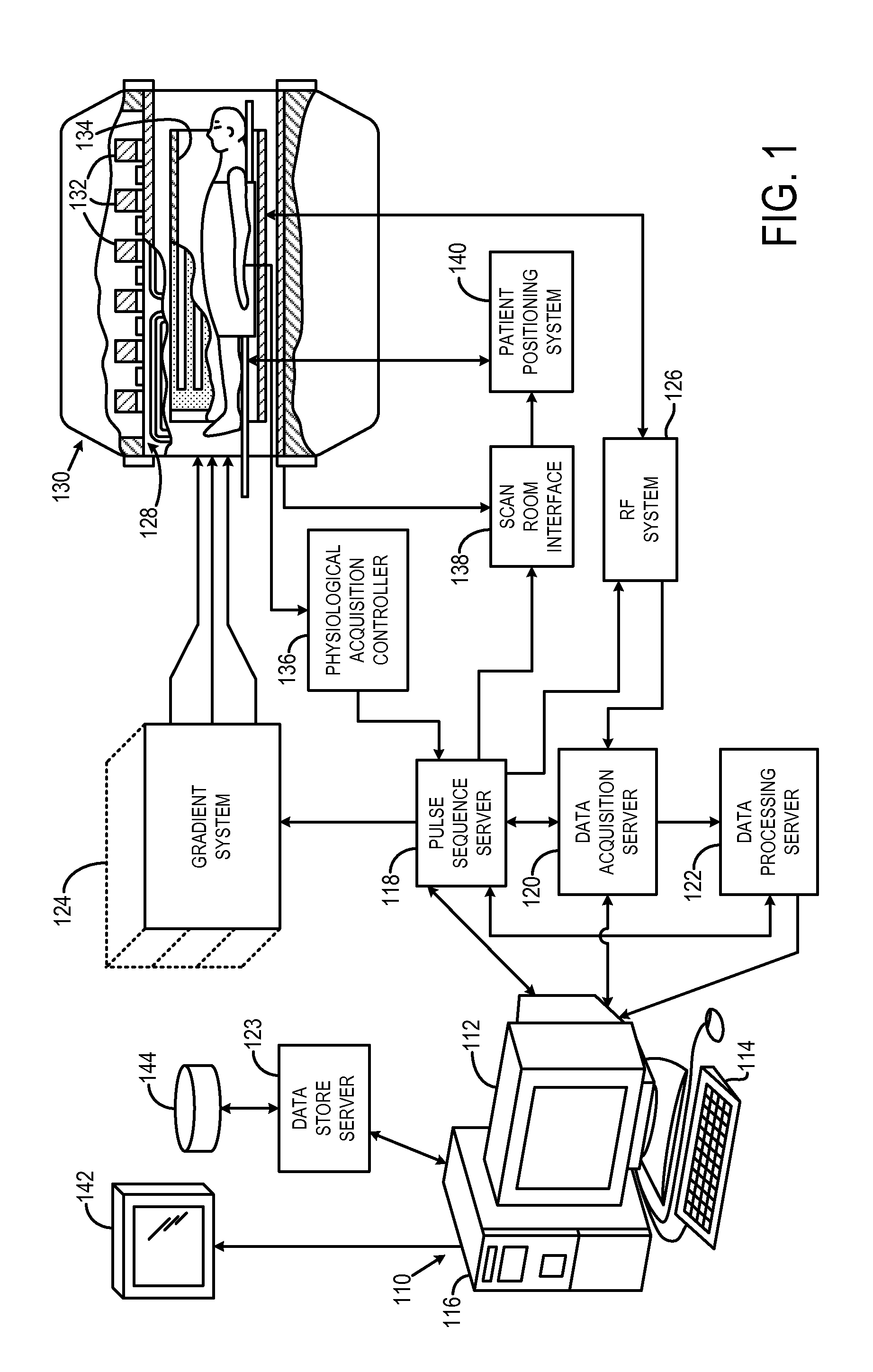

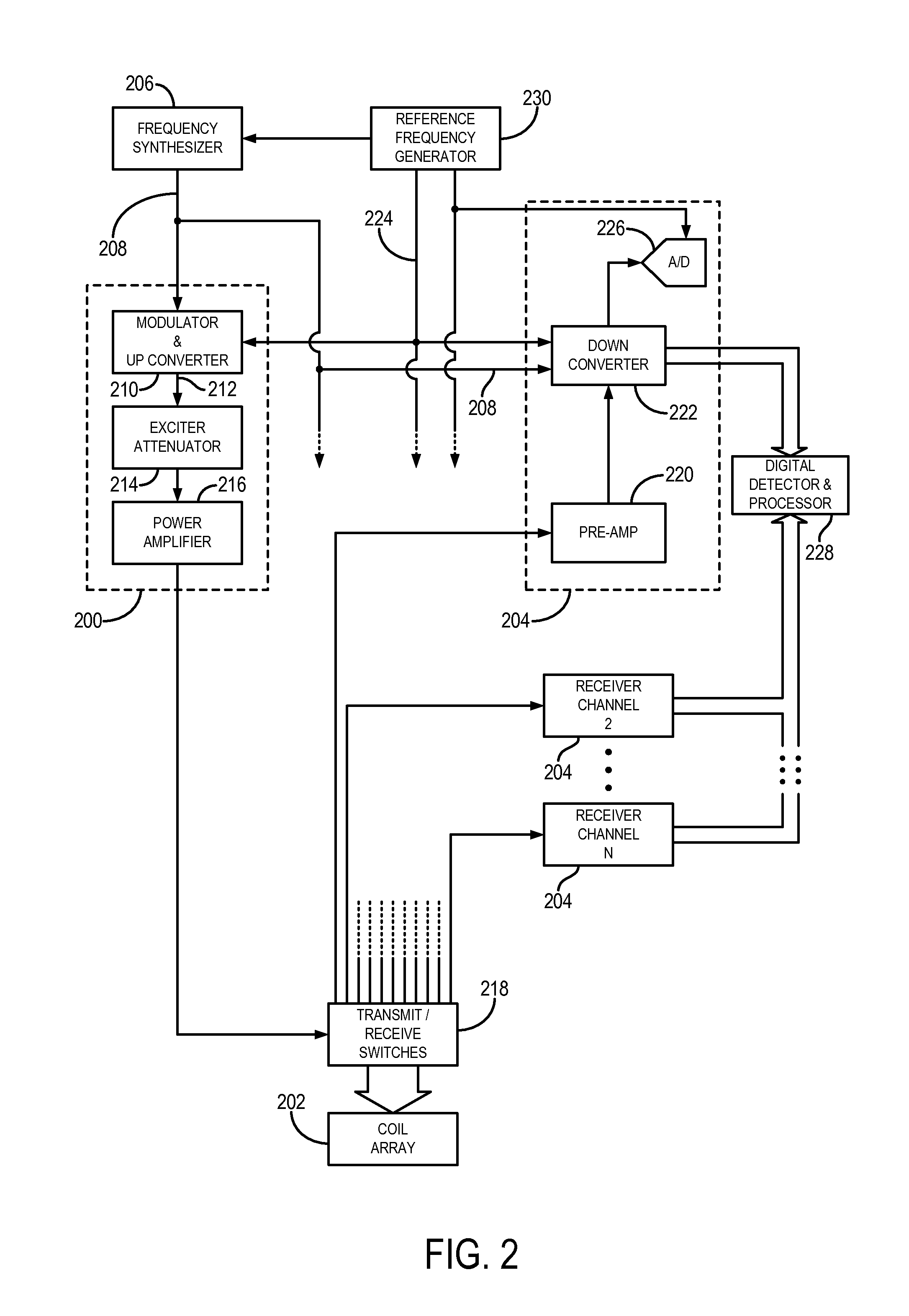

A method for multi-slice magnetic resonance imaging, in which image data is acquired simultaneously from multiple slice locations using a radio frequency coil array, is provided. By way of example, a modified EPI pulse sequence is provided, and includes a series of magnetic gradient field “blips” that are applied along a slice-encoding direction contemporaneously with phase-encoding blips common to EPI sequences. The slice-encoding blips are designed such that phase accruals along the phase-encoding direction are substantially mitigated, while providing that signal information for each sequentially adjacent slice location is cumulatively shifted by a percentage of the imaging FOV. This percentage FOV shift in the image domain provides for more reliable separation of the aliased signal information using parallel image reconstruction methods such as SENSE. In addition, the mitigation of phase accruals in the phase-encoding direction provides for the substantial suppression of pixel tilt and blurring in the reconstructed images.

Owner:THE GENERAL HOSPITAL CORP
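
The "percentage FOV shift" rests on the Fourier shift theorem: a linear phase ramp across phase-encoding lines circularly shifts the reconstructed image. This pure-Python sketch demonstrates only that relationship, on a 1-D profile with a naive DFT. It does not model the gradient blips, the coil array, or SENSE reconstruction, and it assumes the shift is a whole number of pixels.

```python
import cmath


def dft(x):
    n = len(x)
    return [sum(x[m] * cmath.exp(-2j * cmath.pi * k * m / n) for m in range(n)) for k in range(n)]


def idft(spectrum):
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * m / n) for k in range(n)) / n
            for m in range(n)]


def shift_by_fov_fraction(profile, fraction):
    """Shift a 1-D image profile by `fraction` of the FOV via a linear phase ramp in k-space."""
    n = len(profile)
    s = round(fraction * n)                     # shift in pixels; assumes fraction * n is whole
    kspace = dft(profile)
    # Each phase-encoding line k picks up an extra phase of -2*pi*k*s/n.
    ramped = [v * cmath.exp(-2j * cmath.pi * k * s / n) for k, v in enumerate(kspace)]
    return [v.real for v in idft(ramped)]


def collapsed_profile(slices, fraction):
    """Sum of simultaneously excited slices, slice i shifted by i * fraction of the FOV."""
    shifted = [shift_by_fov_fraction(p, i * fraction) for i, p in enumerate(slices)]
    return [sum(vals) for vals in zip(*shifted)]
```

With two slices and a FOV/2 shift, `collapsed_profile` places the second slice's signal half a field of view away from the first. The abstract credits this kind of separation with more reliable unaliasing.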

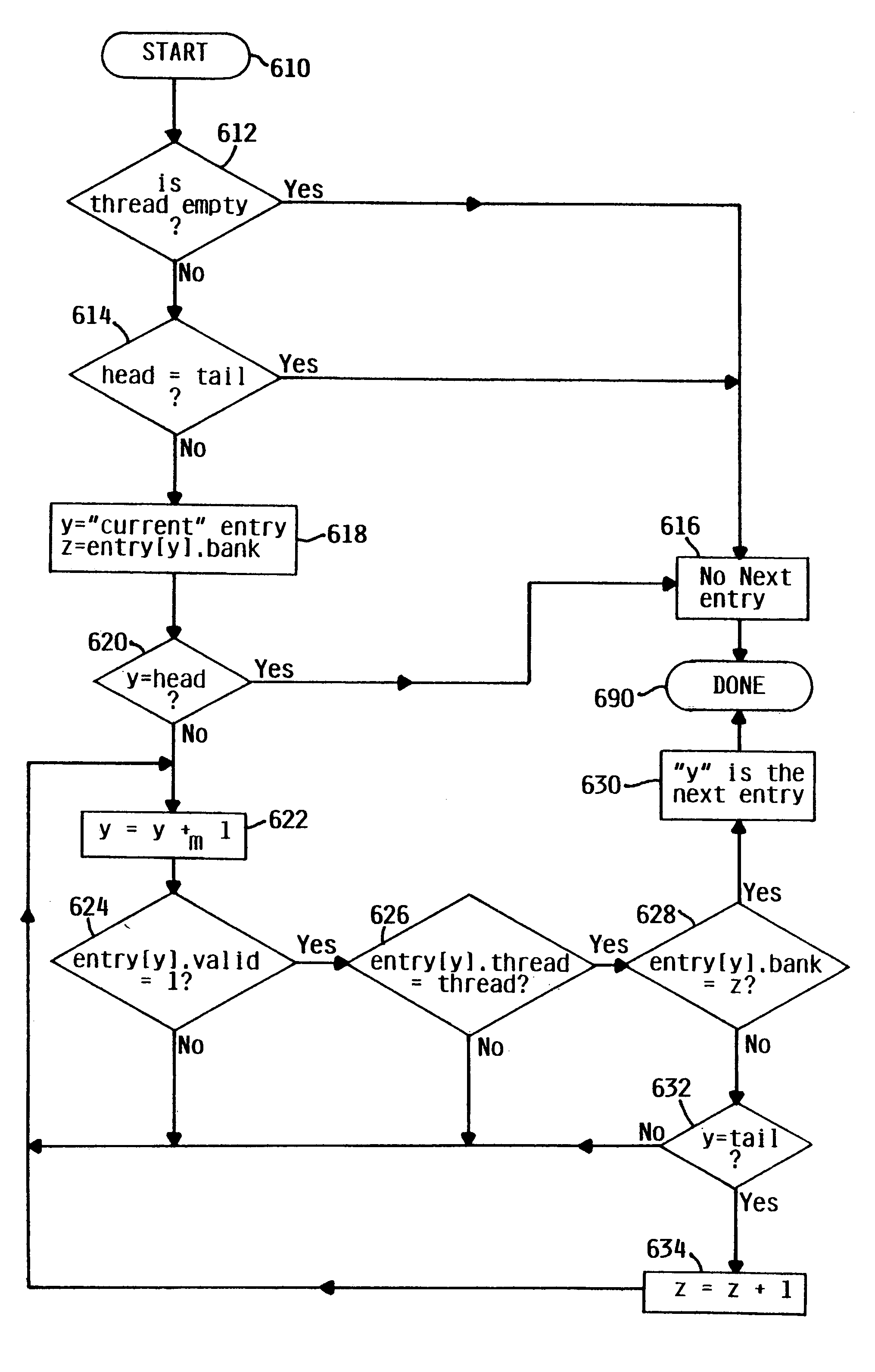

Shared resource queue for simultaneous multithreading processing wherein entries allocated to different threads are capable of being interspersed among each other and a head pointer for one thread is capable of wrapping around its own tail in order to access a free entry

Status: Inactive | Publication: US6988186B2 | Tags: Improve efficiency; Digital computer details; Concurrent instruction execution; Shared resource; Distributed computing

A queue, such as a first-in first-out queue, is incorporated into a processing device, such as a multithreaded pipeline processor. The queue may store the resources of more than one thread in the processing device such that the entries of one thread may be interspersed among the entries of another thread. The entries of each thread may be identified by a thread identification, a valid marker to indicate if the resources within the entry are valid, and a bank number. For a particular thread, the bank number tracks the number of times a head pointer pertaining to the first entry has passed a tail pointer. In this fashion, empty entries may be used and the resources may be efficiently allocated. In a preferred embodiment, the shared resource queue may be implemented into an in-order multithreaded pipelined processor as a queue storing resources to be dispatched for execution of instructions. The shared resource queue may also be implemented into a branch information queue or into any queue where more than one thread may require dynamic registers.

Owner:IBM CORP
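
One way to read this abstract in code is as follows. Entries from different threads share one array, and each entry carries a thread ID, a valid bit and a bank number. A thread's allocation pointer skips entries held by other threads. Here the bank number records how many times the thread's pointer had wrapped when the entry was allocated, so the smallest (bank, index) pair is that thread's oldest entry. This is an interpretation for illustration, not the patented circuit; a hardware bank field would be narrow and compared modulo its width.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    valid: bool = False
    tid: int = -1
    bank: int = 0          # wrap count of this thread's pointer when the entry was allocated
    payload: object = None


class SharedQueue:
    def __init__(self, size):
        self.entries = [Entry() for _ in range(size)]
        self.ptr = {}      # per-thread allocation pointer
        self.bank = {}     # per-thread wrap count

    def _advance(self, pos, bank):
        pos += 1
        return (0, bank + 1) if pos == len(self.entries) else (pos, bank)

    def push(self, tid, payload):
        pos, bank = self.ptr.get(tid, 0), self.bank.get(tid, 0)
        for _ in range(len(self.entries)):
            if not self.entries[pos].valid:
                self.entries[pos] = Entry(True, tid, bank, payload)
                self.ptr[tid], self.bank[tid] = self._advance(pos, bank)
                return True
            pos, bank = self._advance(pos, bank)   # skip entries held by any thread
        return False                                # no free entry anywhere

    def pop(self, tid):
        """Remove this thread's oldest entry: smallest (bank, index) among its valid entries."""
        mine = [(e.bank, i) for i, e in enumerate(self.entries) if e.valid and e.tid == tid]
        if not mine:
            return None
        _, i = min(mine)
        payload = self.entries[i].payload
        self.entries[i] = Entry()
        return payload
```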

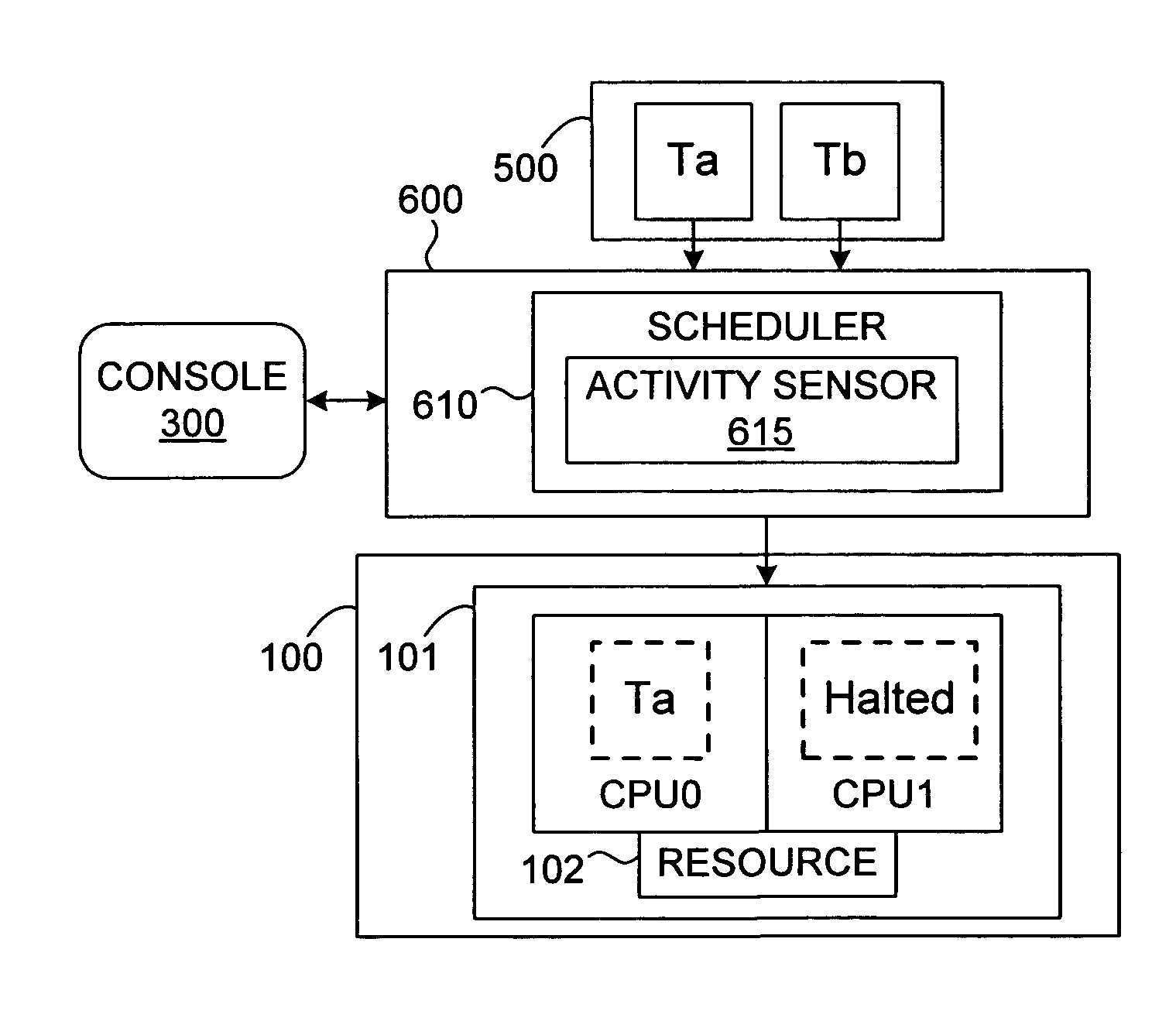

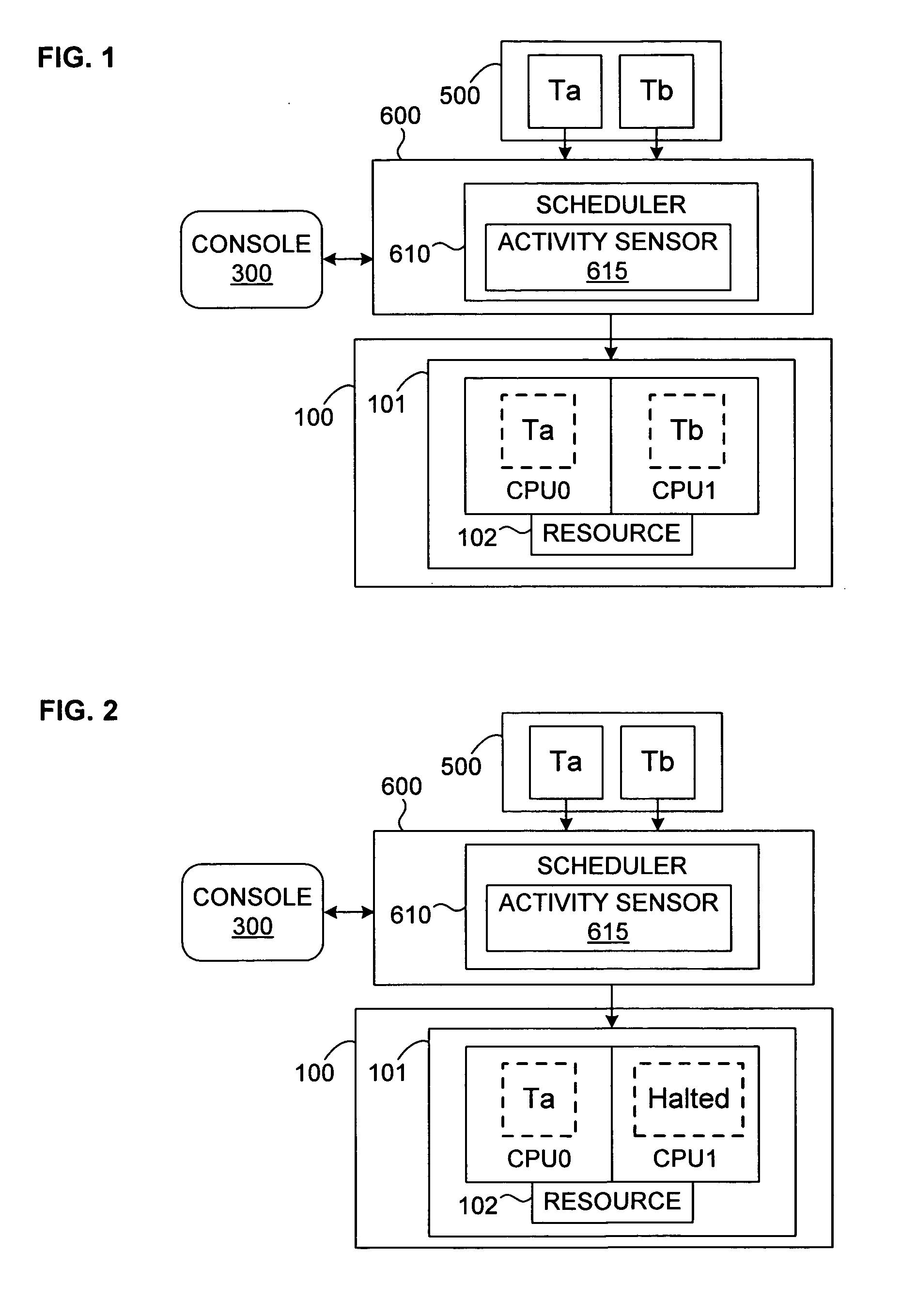

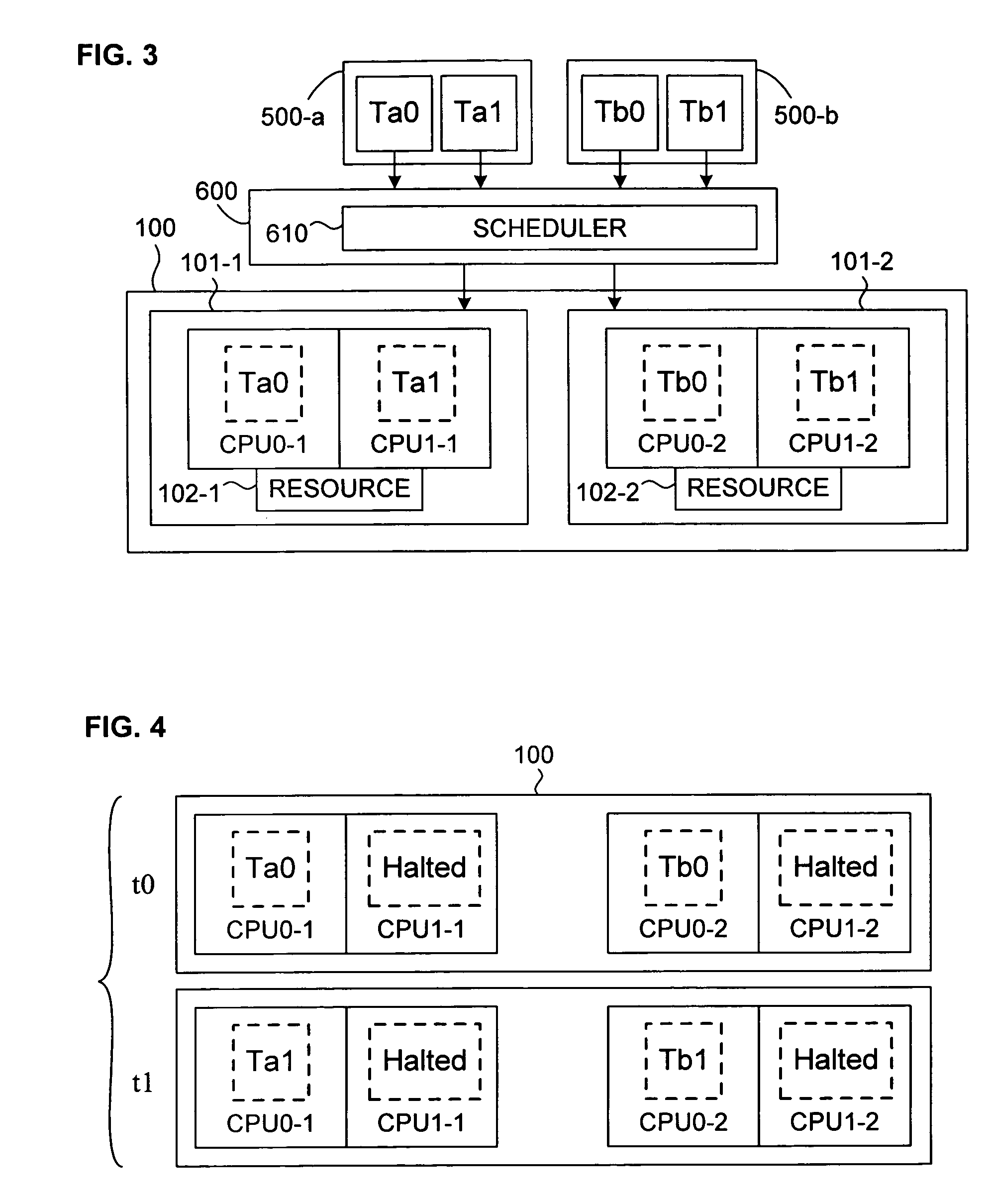

Mechanism for Scheduling Execution of Threads for Fair Resource Allocation in a Multi-Threaded and/or Multi-Core Processing System

Status: Inactive | Publication: US20100205602A1 | Tags: Memory addressing/allocation/relocation; Digital computer details; Thread scheduling; Shared resource

A thread scheduling mechanism is provided that flexibly enforces performance isolation of multiple threads to alleviate the effect of anti-cooperative execution behavior with respect to a shared resource, for example, hoarding a cache or pipeline, using the hardware capabilities of simultaneous multi-threaded (SMT) or multi-core processors. Given a plurality of threads running on at least two processors in at least one functional processor group, the occurrence of a rescheduling condition indicating anti-cooperative execution behavior is sensed, and, if present, at least one of the threads is rescheduled such that the first and second threads no longer execute in the same functional processor group at the same time.

Owner:VMWARE INC

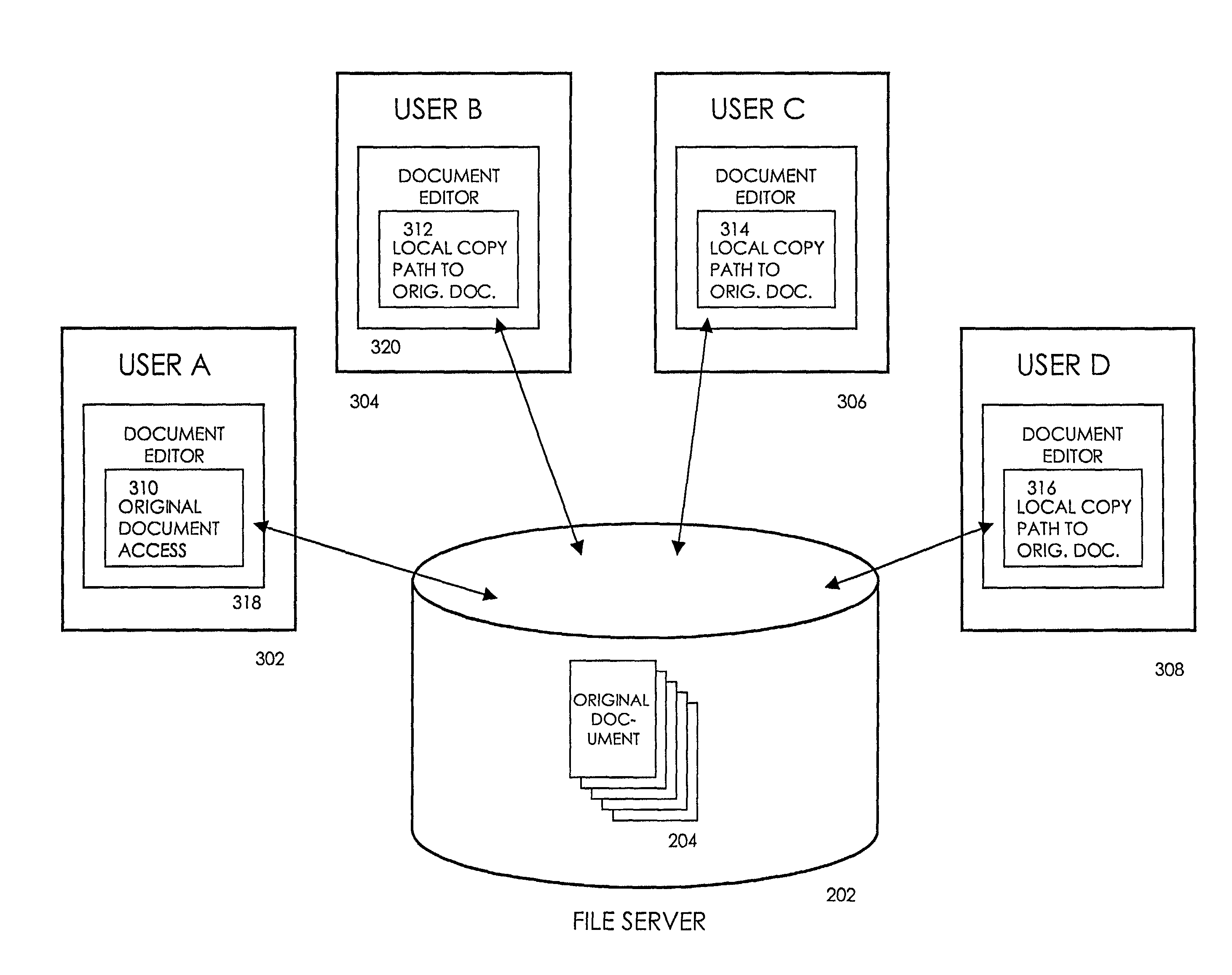

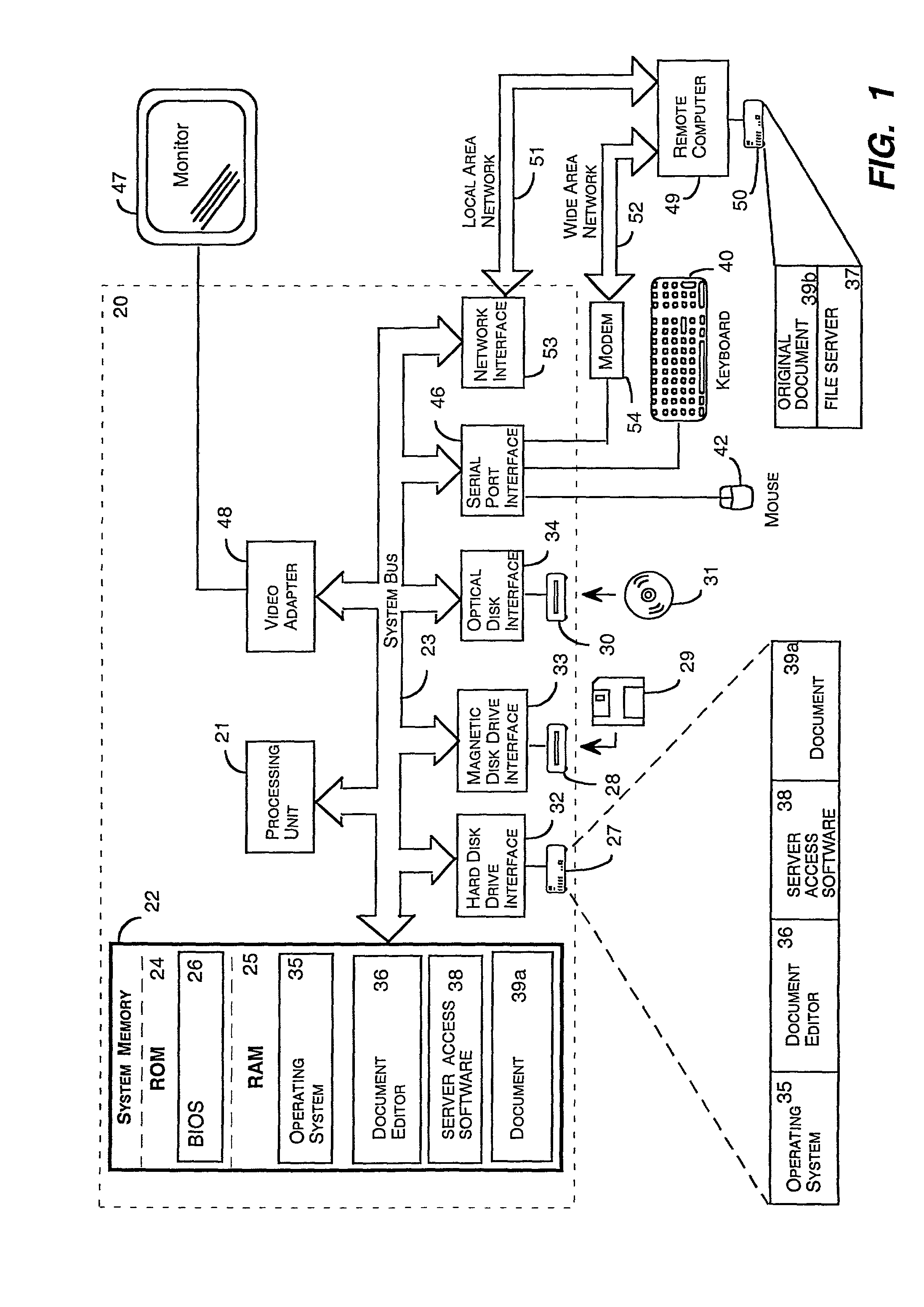

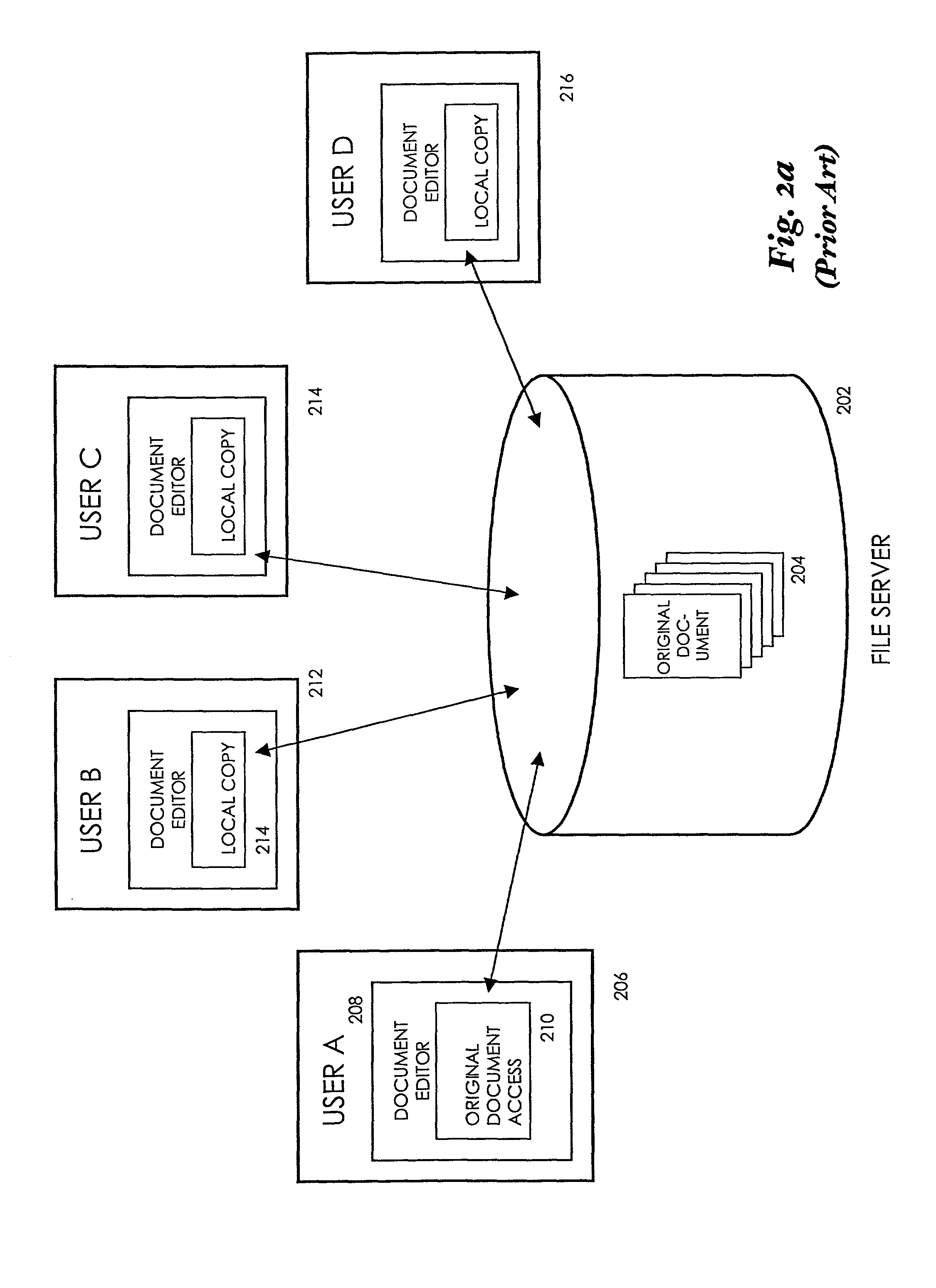

System and method for enabling simultaneous multi-user electronic document editing

Status: Active | Publication: US8196029B1 | Tags: Simple and elegant; Natural language data processing; Special data processing applications; Electronic document; Documentation procedure

A document management system and method are provided to support simultaneous multi-user editing of a single document. The system and method do not require the use of a new file format or the use of a central document repository to limit user access to the document. When a user attempts to open the document and a determination is made that the document is in use, then an alert is presented to the user that informs the user that the document is locked for editing. The user may select to receive a notification when the original document is no longer in use. If the user selects to make a local copy and subsequently merge the changes, the local copy will be made and the path of the original document will be stored so that the original document location can be determined at the time that the changes are merged. When the original document becomes available, the user's changes can be merged into the original document. The original document is located using the original path that was stored when the local copy was created. Of course, any time that a merge is attempted, and a conflict exists (i.e., the changes in the local document are inconsistent with the changes made to the original document), an alert can be generated to inform the user of the conflict and the user can be prompted to reconcile the conflict to complete the merge.

Owner:MICROSOFT TECH LICENSING LLC
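
This sketch walks through the lock, local-copy and merge flow. It assumes documents are lists of lines edited in place, so the base, current and local versions align line by line. Conflicts are detected with a simple three-way rule. The class and function names are hypothetical and unrelated to any Microsoft API.

```python
class DocumentStore:
    def __init__(self, docs):
        self.docs = docs          # path -> list of lines
        self.locked = set()       # paths currently open for editing


def open_document(store, path):
    """Lock the document, or hand back a local copy that remembers the original path."""
    if path not in store.locked:
        store.locked.add(path)
        return None               # caller edits the original directly
    lines = list(store.docs[path])
    return {"original_path": path, "base": lines, "lines": list(lines)}


def merge_local_copy(store, copy):
    """Merge a local copy once the original is free; return (merged_lines, conflict_indexes)."""
    path = copy["original_path"]  # located via the path stored when the copy was made
    if path in store.locked:
        return None, []           # original still in use; retry after notification
    merged, conflicts = [], []
    # Assumption: edits replace lines in place, so all three versions align by index.
    for i, (base, current, local) in enumerate(zip(copy["base"], store.docs[path], copy["lines"])):
        if local == base or local == current:
            merged.append(current)            # no local change, or the same change on both sides
        elif current == base:
            merged.append(local)              # only the local copy changed this line
        else:
            merged.append(current)            # both changed it differently: a conflict
            conflicts.append(i)
    if not conflicts:
        store.docs[path] = merged             # otherwise the user must reconcile first
    return merged, conflicts
```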

Method and logical apparatus for managing thread execution in a simultaneous multi-threaded (SMT) processor

Status: Inactive | Publication: US20040215932A1 | Tags: Program initiation/switching; General purpose stored program computer; Simultaneous multithreading; Instruction prefetch

A method and logical apparatus for managing thread execution within a simultaneous multi-threaded (SMT) processor provides a mechanism for switching between single-threaded and multi-threaded execution. The processor receives an instruction specifying a transition from a single-threaded to a multi-threaded mode or vice-versa and halts execution of all threads executing on the processor. Internal control logic controls a sequence of events that ends instruction prefetching, dispatch of new instructions, interrupt processing and maintenance operations and waits for operation of the processor to complete for instructions that are in process. Then, the logic determines one or more threads to start in conformity with a thread enable state specifying the enable state of multiple threads and reallocates various resources, dividing them between threads if multiple threads are specified for further execution (multi-threaded mode) or allocating substantially all of the resources to a single thread if further execution is specified as single-threaded mode. The processor then starts execution of the remaining enabled threads.

Owner:IBM CORP

Method and system for energy management in a simultaneous multi-threaded (SMT) processing system including per-thread device usage monitoring

Status: Inactive | Publication: US7197652B2 | Tags: Lower latency; Reduce power consumption; Energy efficient ICT; Volume/mass flow measurement; Decision control; Operational system

A method and system for energy management in a simultaneous multi-threaded (SMT) processing system including per-thread device usage monitoring provides control of energy usage that accommodates thread parallelism. Per-device usage information is measured and stored on a per-thread basis, so that upon a context switch, the previous usage evaluation state can be restored. The per-thread usage information is used to adjust the thresholds of device energy management decision control logic, so that energy use can be managed with consideration as to which threads will be running in a given execution slice. A device controller can then provide for per-thread control of attached device power management states without intervention by the processor and without losing the historical evaluation state when a process is switched out. The device controller may be a memory controller and the controlled devices memory modules or banks within modules if individual banks can be power-managed. Local thresholds provide the decision-making mechanism for each controlled device and are adjusted by the operating system in conformity with the measured usage level for threads executing within the processing system. The per-thread usage information may be obtained from a performance monitoring unit that is located within or external to the device controller and the usage monitoring state is then retrieved and replaced by the operating system at each context switch.

Owner:INT BUSINESS MASCH CORP

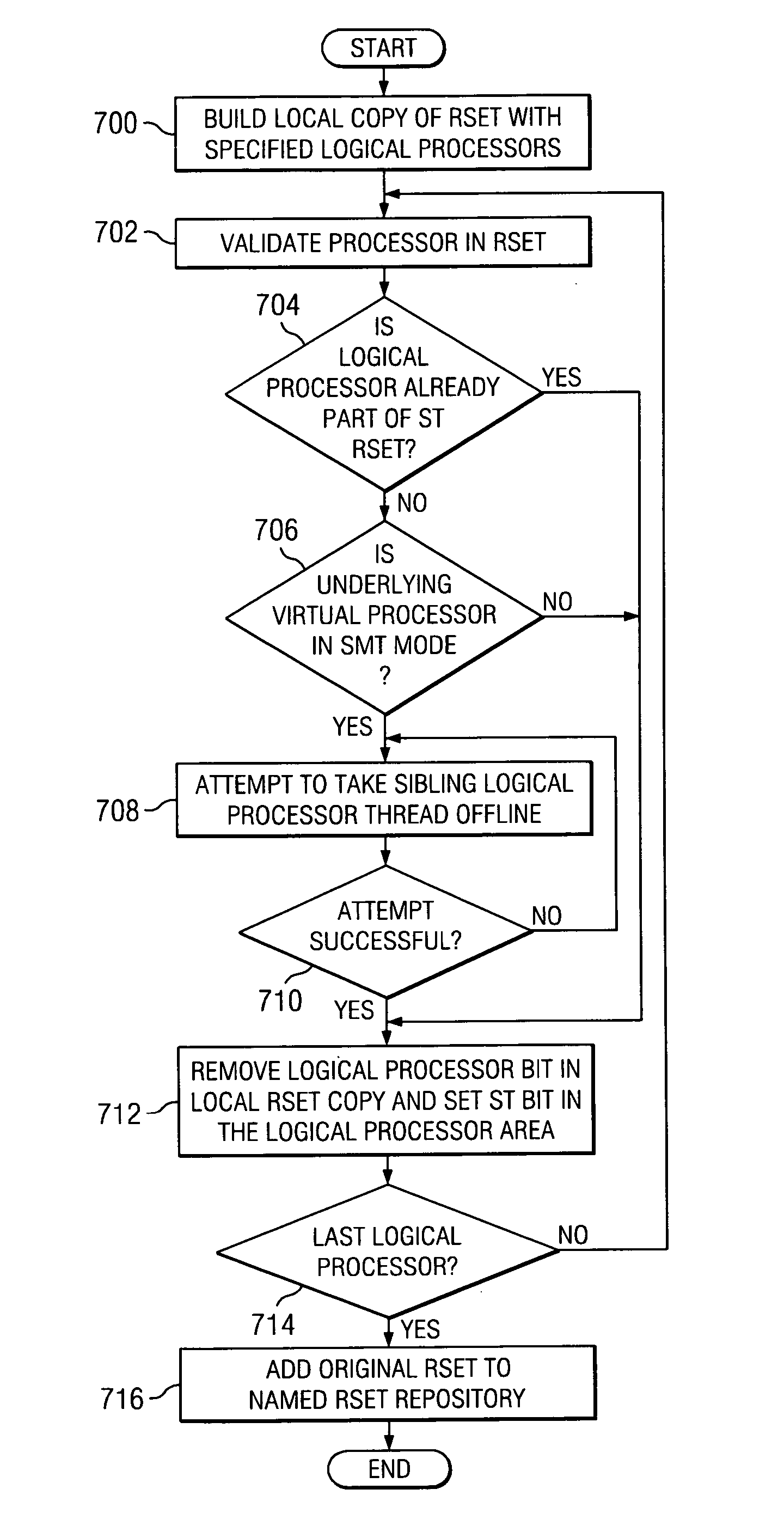

Job level control of simultaneous multi-threading functionality in a processor

Status: Inactive | Publication: US20060242389A1 | Tags: Digital computer details; Specific program execution arrangements; Data processing system; Computer architecture

Using resource sets for job-level control of the simultaneous multi-threading capability (SMT) of a processor in a data processing system. A resource set defined with respect to the processor is adapted to control whether the simultaneous multi-threading capability is enabled.

Owner:IBM CORP

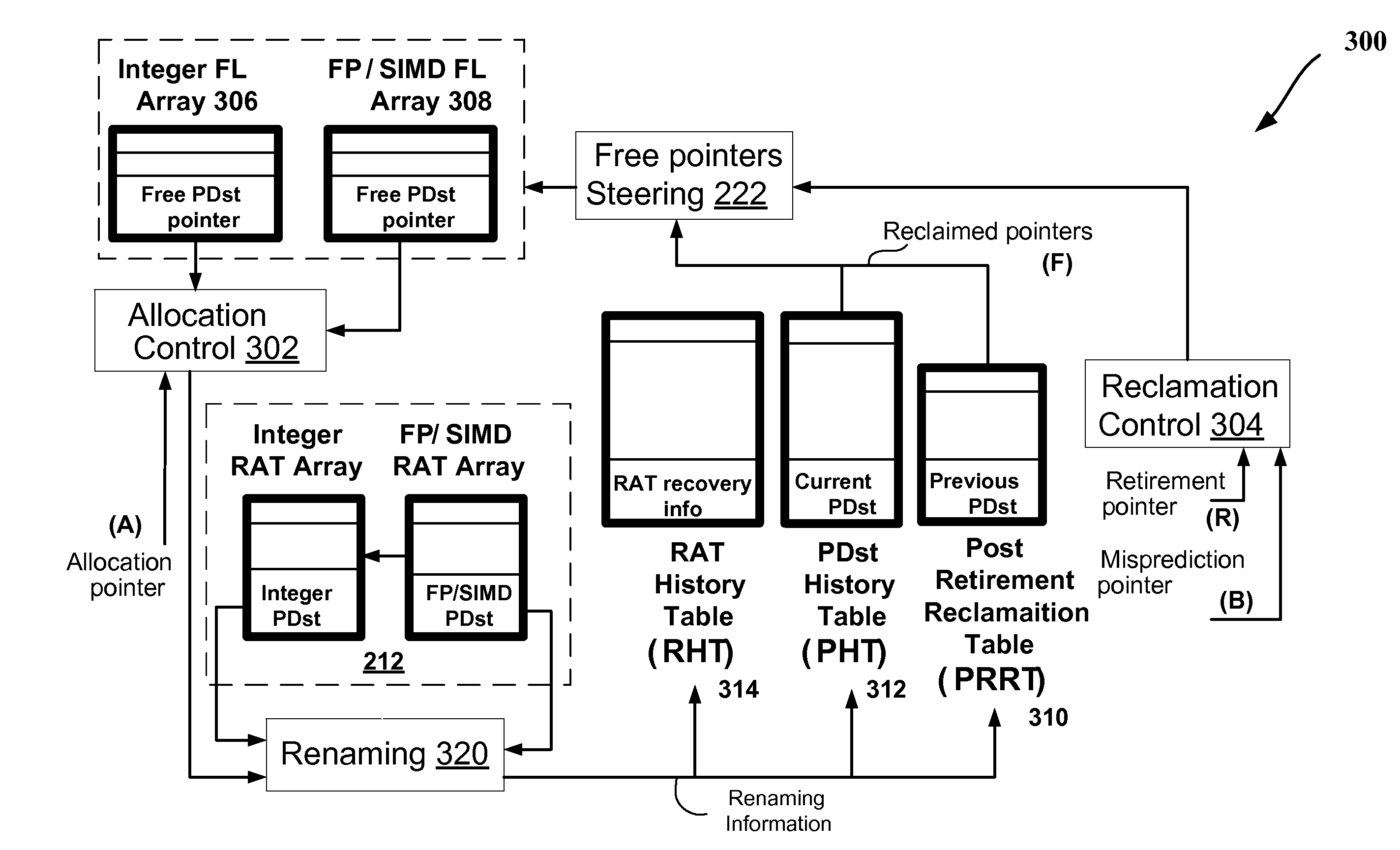

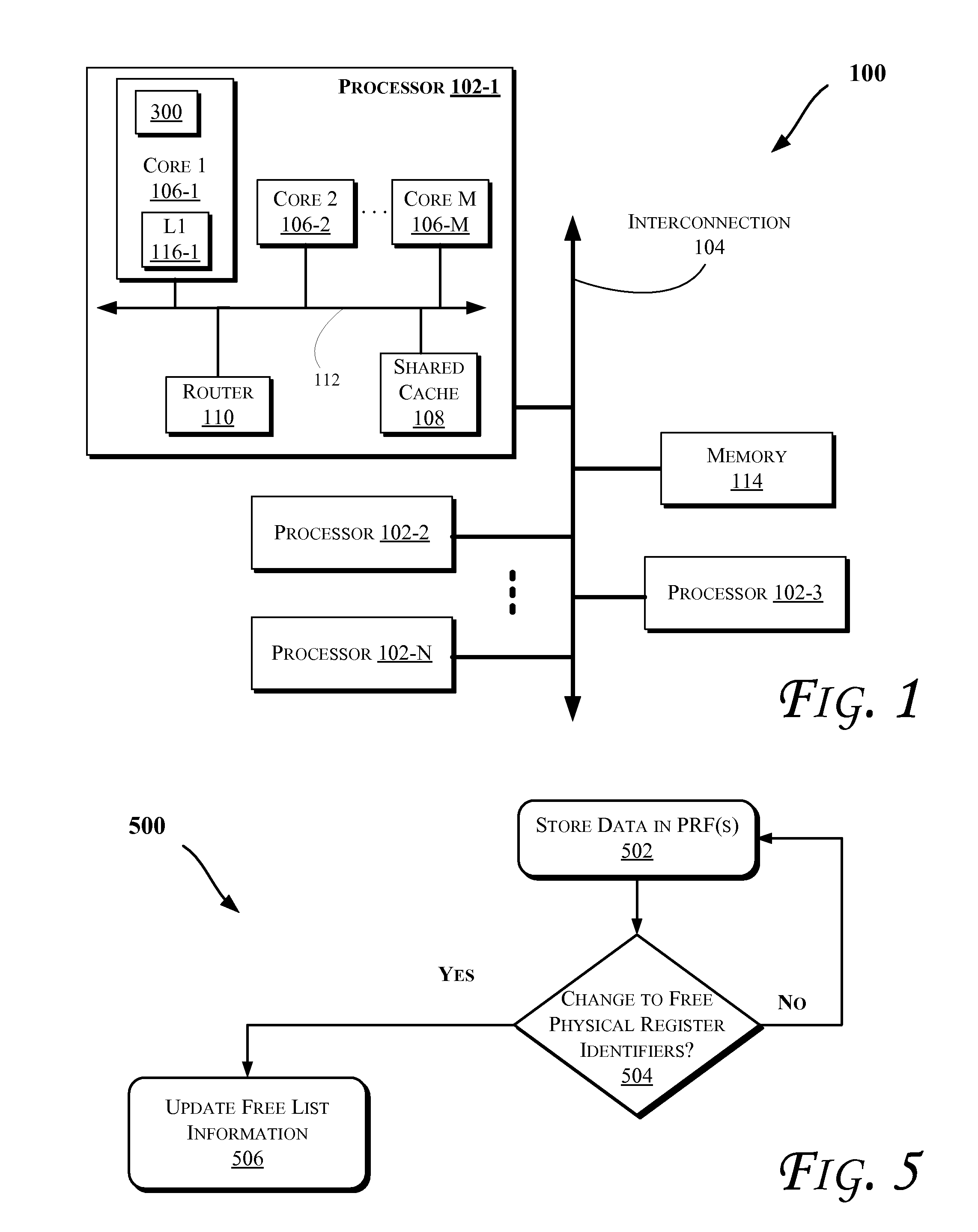

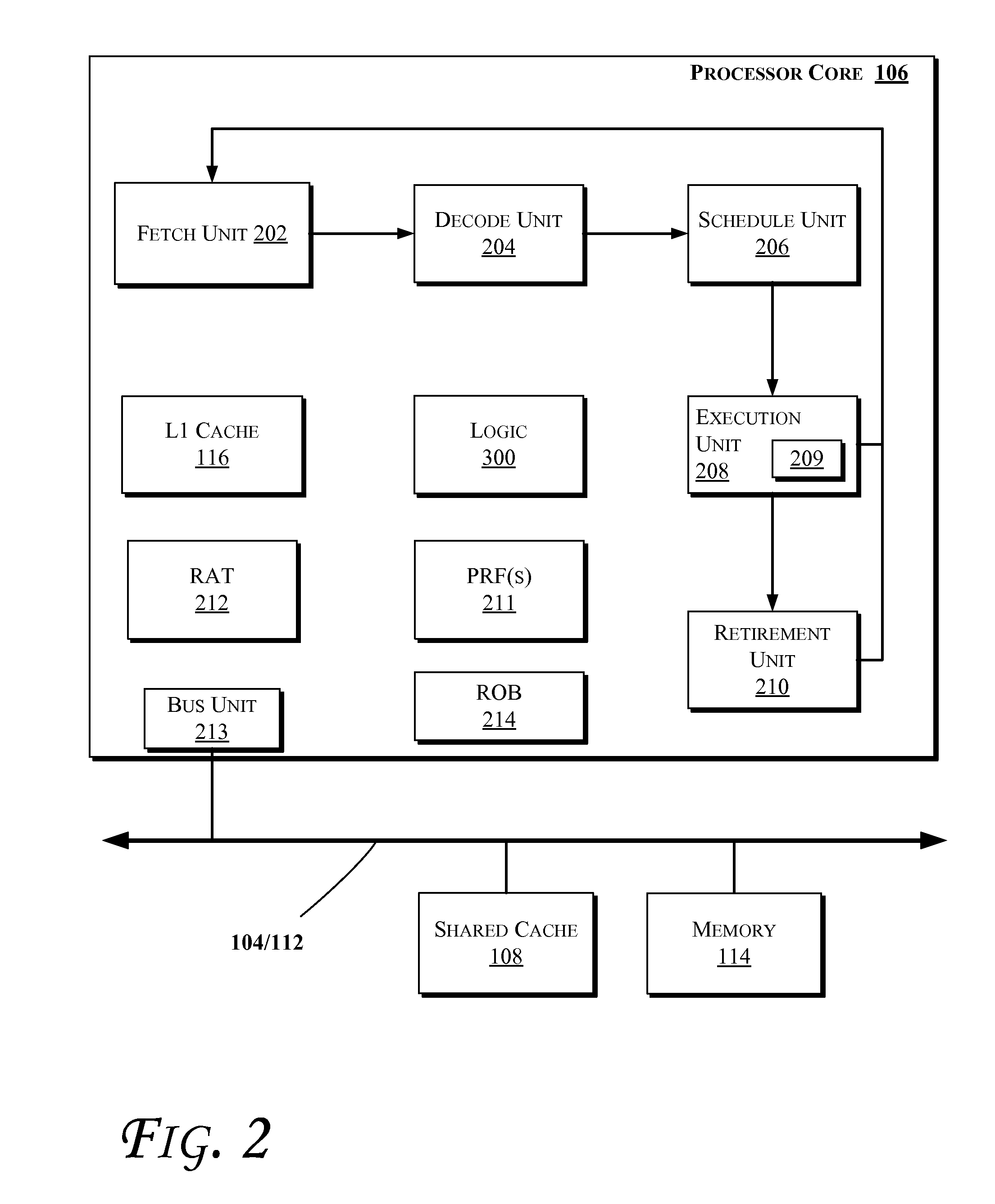

Mechanisms to handle free physical register identifiers for SMT out-of-order processors

Status: Inactive | Publication: US20090327661A1 | Tags: Register arrangements; Digital computer details; Processor register; Simultaneous multithreading

Methods and apparatus relating to mechanisms to handle free physical register identifiers for SMT (Simultaneous Multi-Threading) out-of-order processors are described. In some embodiments, a physical register file stores both speculative data and architectural data corresponding to a plurality of registers. A free list logic may maintain free physical register identifiers corresponding to the plurality of registers. An instruction may read the architectural data from the physical register file at dispatch. Other embodiments are also described and claimed.

Owner:INTEL CORP
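
For context, the textbook scheme for a merged physical register file keeps a free list of physical register IDs and a per-thread alias table. This sketch shows that generic scheme shared by two SMT threads. It is not the specific free-list logic claimed in US20090327661A1.

```python
from collections import deque


class FreeList:
    """Free physical register identifiers for a register file shared by all SMT threads."""

    def __init__(self, num_phys):
        self.ids = deque(range(num_phys))

    def alloc(self):
        if not self.ids:
            raise RuntimeError("no free physical register: rename must stall")
        return self.ids.popleft()

    def release(self, pid):
        self.ids.append(pid)


class ThreadRAT:
    """Per-thread register alias table: architectural register -> physical register id."""

    def __init__(self, free_list, num_arch):
        self.free = free_list
        self.map = [free_list.alloc() for _ in range(num_arch)]   # initial architectural state

    def rename_dest(self, arch):
        old = self.map[arch]
        self.map[arch] = self.free.alloc()   # new speculative home for the result
        return self.map[arch], old           # `old` can be freed once this instruction retires

    def retire(self, old):
        self.free.release(old)
```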

Thread priority method, apparatus, and computer program product for ensuring processing fairness in simultaneous multi-threading microprocessors

Status: Inactive | Publication: US20060184946A1 | Tags: Multiprogramming arrangements; Memory systems; Data processing system; Simultaneous multithreading

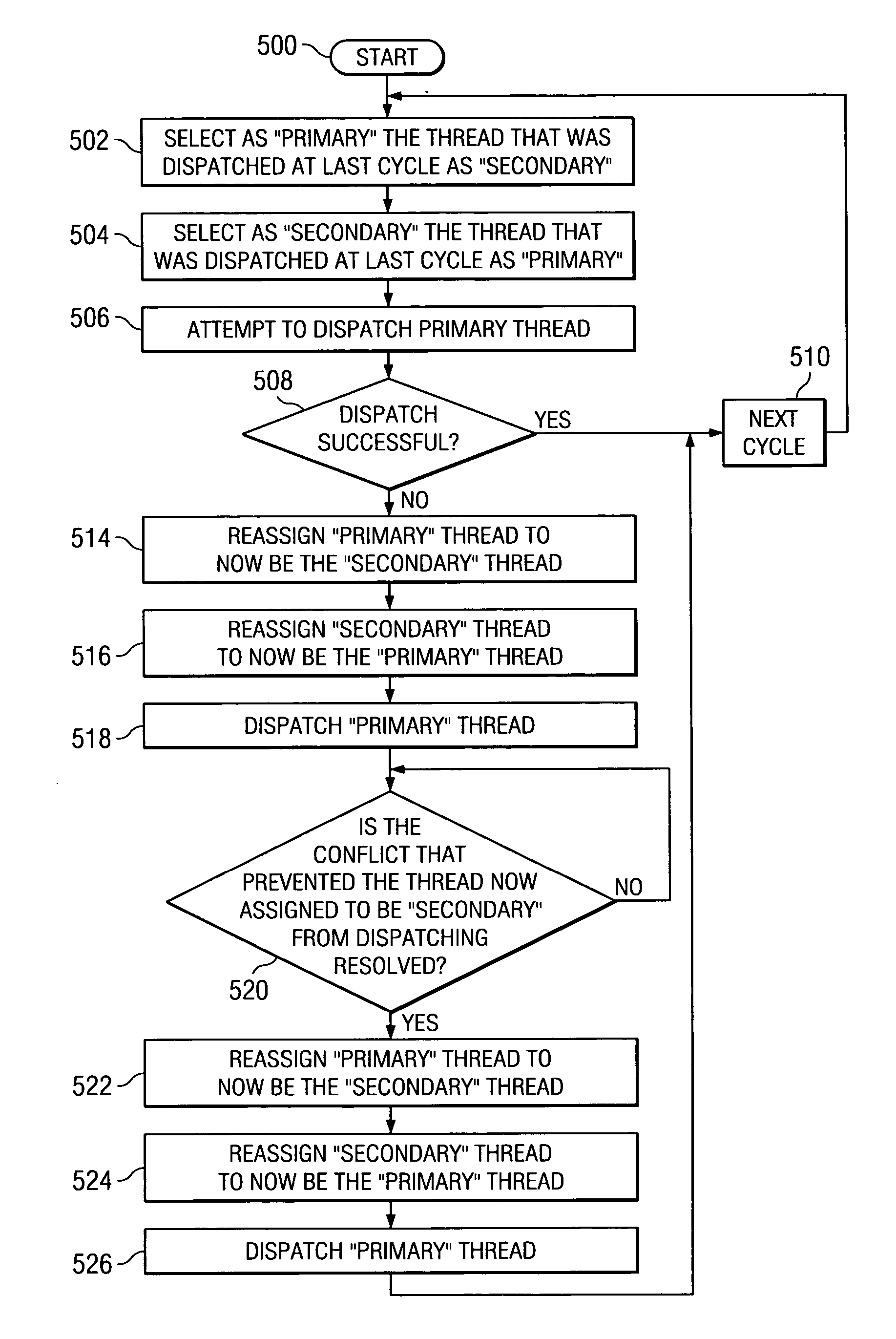

A method, apparatus, and computer program product are disclosed in a data processing system for ensuring processing fairness in simultaneous multi-threading (SMT) microprocessors that concurrently execute multiple threads during each clock cycle. A clock cycle priority is assigned to a first thread and to a second thread during a standard selection state that lasts for an expected number of clock cycles. The clock cycle priority is assigned according to a standard selection definition during the standard selection state by selecting the first thread to be a primary thread and the second thread to be a secondary thread during the standard selection state. If a condition exists that requires overriding the standard selection definition, an override state is executed during which the standard selection definition is overridden by selecting the second thread to be the primary thread and the first thread to be the secondary thread. The override state is forced to be executed for an override period of time which equals the expected number of clock cycles plus a forced number of clock cycles. The forced number of clock cycles is granted to the first thread in response to the first thread again becoming the primary thread.

Owner:IBM CORP
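
This sketch models the cycle-priority bookkeeping. It assumes two threads, a hypothetical cycle at which the override condition is detected, and that the standard state simply ends at that point. The function only lists which thread is primary on each clock cycle.

```python
def primary_sequence(expected, forced, override_after):
    """Primary thread per clock cycle across one standard -> override -> standard round.

    expected:       expected length (cycles) of a standard selection state
    forced:         extra cycles forced onto the override state
    override_after: cycle at which a hypothetical override condition is detected
    """
    seq = [0] * override_after                 # standard state: thread 0 is primary
    seq += [1] * (expected + forced)           # override state: thread 1 primary, for longer
    seq += [0] * (expected + forced)           # thread 0 primary again, granted the forced cycles
    return seq
```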

Instruction group formation and mechanism for SMT dispatch

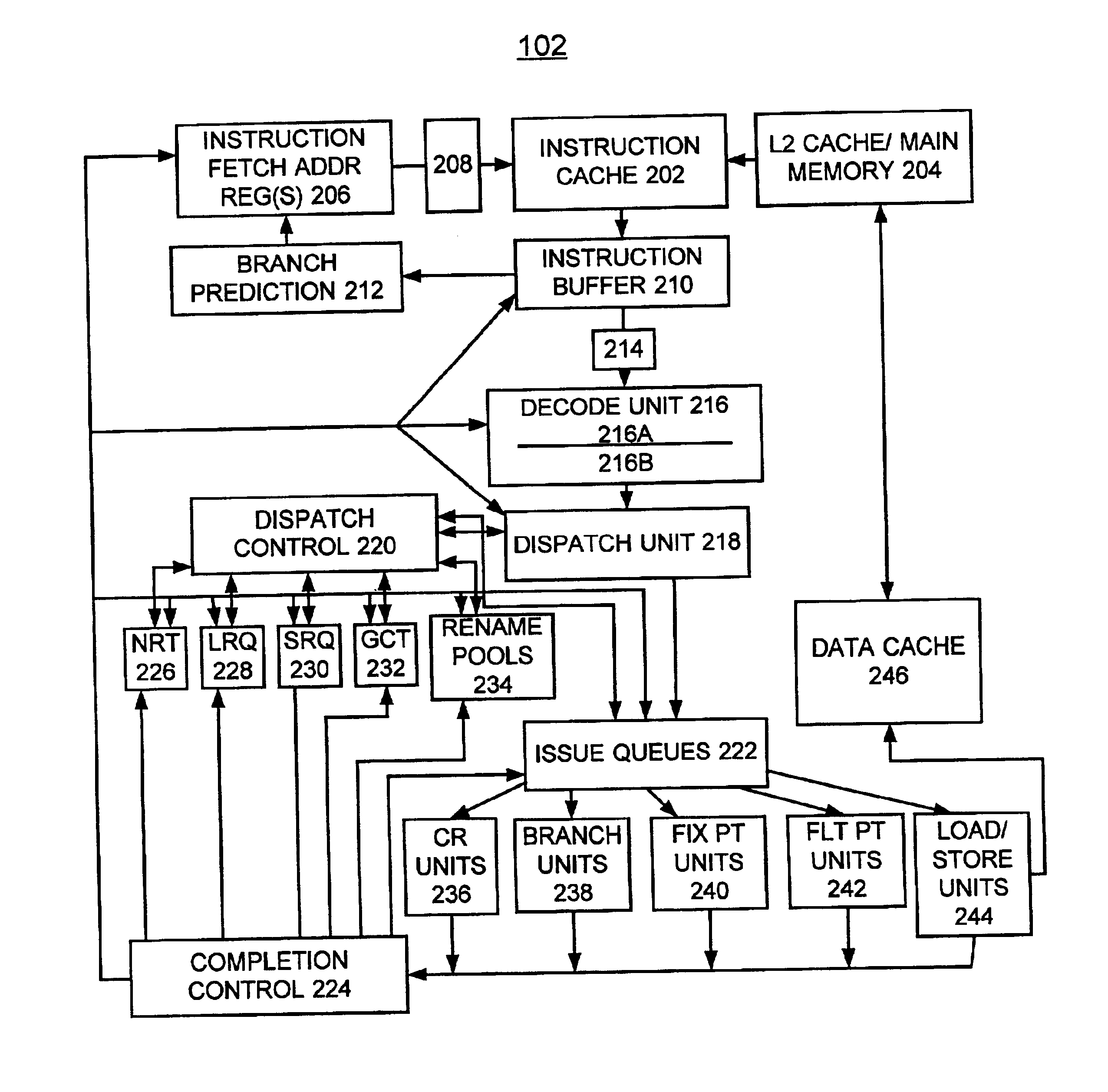

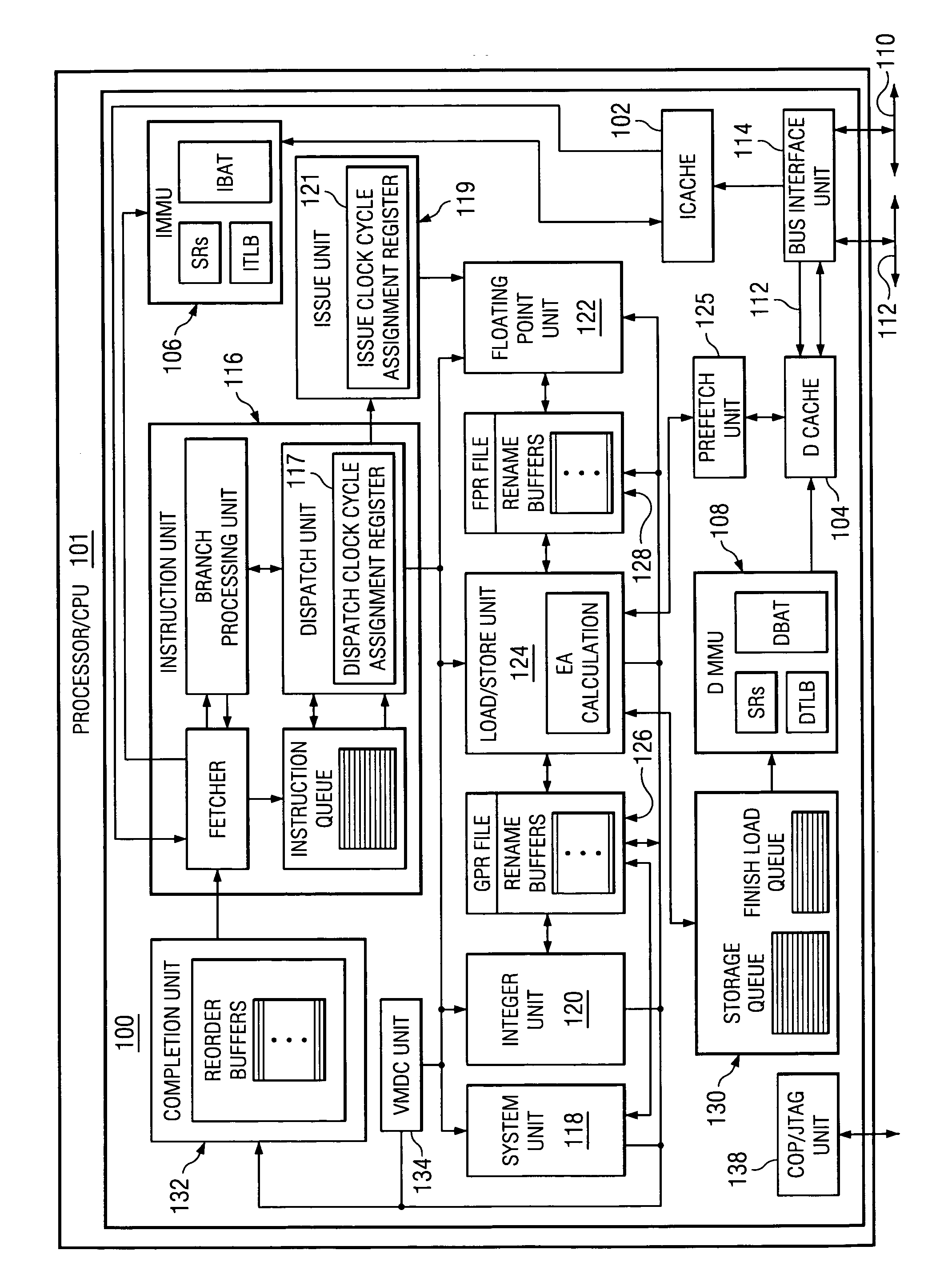

Status: Inactive | Publication: US20060101241A1 | Tags: Increase dispatching bandwidth; Easy construction; Instruction analysis; Digital computer details; Hardware thread; Program instruction

A more efficient method of handling instructions in a computer processor, by associating resource fields with respective program instructions wherein the resource fields indicate which of the processor hardware resources are required to carry out the program instructions, calculating resource requirements for merging two or more program instructions based on their resource fields, and determining resource availability for simultaneously executing the merged program instructions based on the calculated resource requirements. Resource vectors indicative of the required resource may be encoded into the resource fields, and the resource fields decoded at a later stage to derive the resource vectors. The resource fields can be stored in the instruction cache associated with the respective program instructions. The processor may operate in a simultaneous multithreading mode with different program instructions being part of different hardware threads. When the resource availability equals or exceeds the resource requirements for a group of instructions, those instructions can be dispatched simultaneously to the hardware resources. A start bit may be inserted in one of the program instructions to define the instruction group. The hardware resources may in particular be execution units such as a fixed-point unit, a load/store unit, a floating-point unit, or a branch processing unit.

Owner:IBM CORP
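
This sketch illustrates dispatch-group formation with resource vectors. It assumes each instruction's resource field decodes to a count per execution unit, and that groups are formed greedily in program order. The unit names follow the abstract; the vectors and availability figures are made up.

```python
UNITS = ("FXU", "LSU", "FPU", "BRU")   # fixed-point, load/store, floating-point, branch


def merge_requirements(vectors):
    """Element-wise sum of per-instruction resource vectors (ordered as in UNITS)."""
    return tuple(map(sum, zip(*vectors)))


def fits(vectors, available):
    return all(need <= have for need, have in zip(merge_requirements(vectors), available))


def form_groups(instrs, available):
    """Greedily grow dispatch groups; a new group (start bit set) begins when resources run out."""
    groups, current = [], []
    for name, vec in instrs:
        if current and not fits([v for _, v in current] + [vec], available):
            groups.append([n for n, _ in current])
            current = []
        current.append((name, vec))
    if current:
        groups.append([n for n, _ in current])
    return groups
```

Instructions from different hardware threads (the `t0.`/`t1.` prefixes in a usage example) can share a group whenever the merged vector fits.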

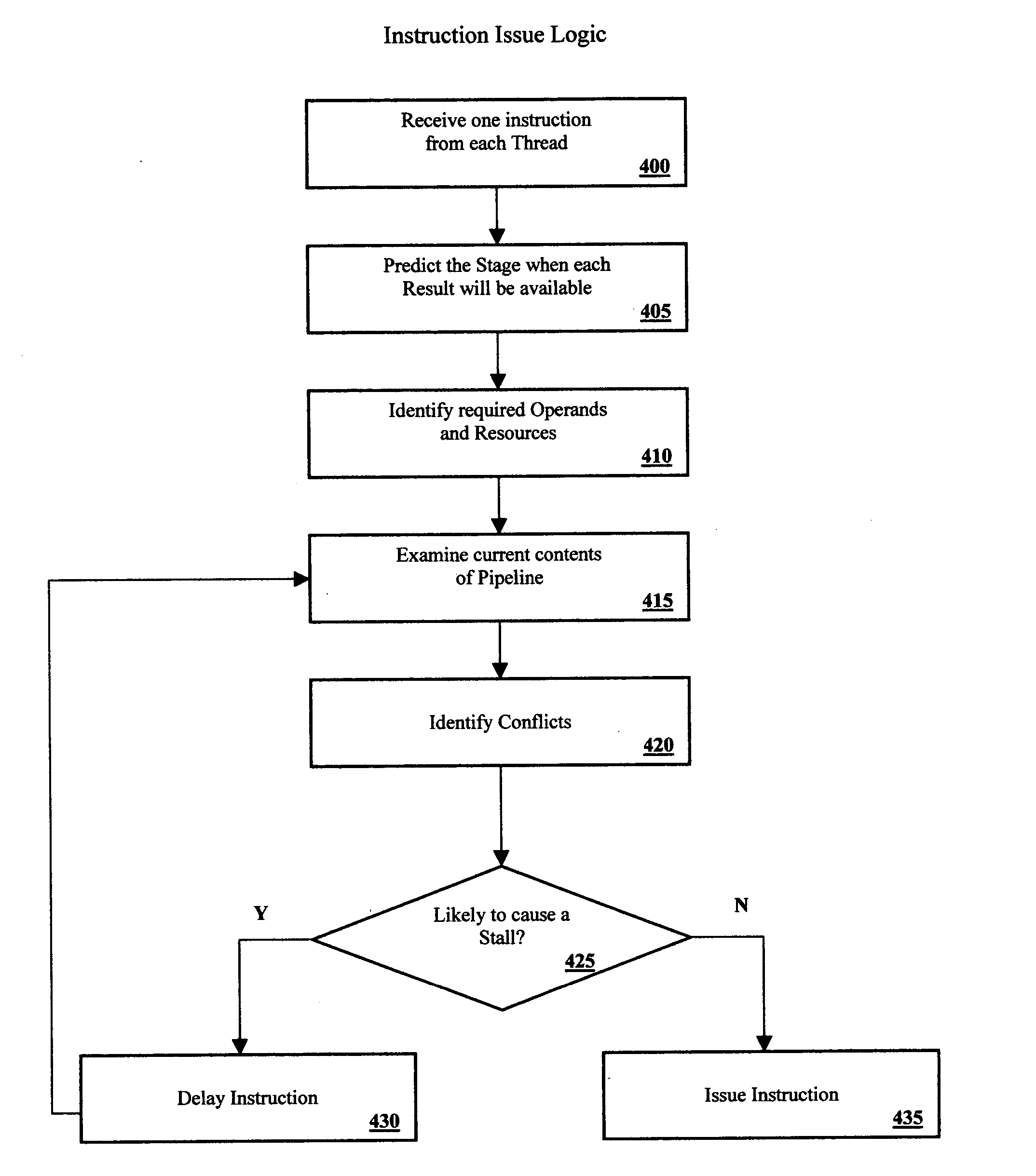

Speculative instruction issue in a simultaneously multithreaded processor

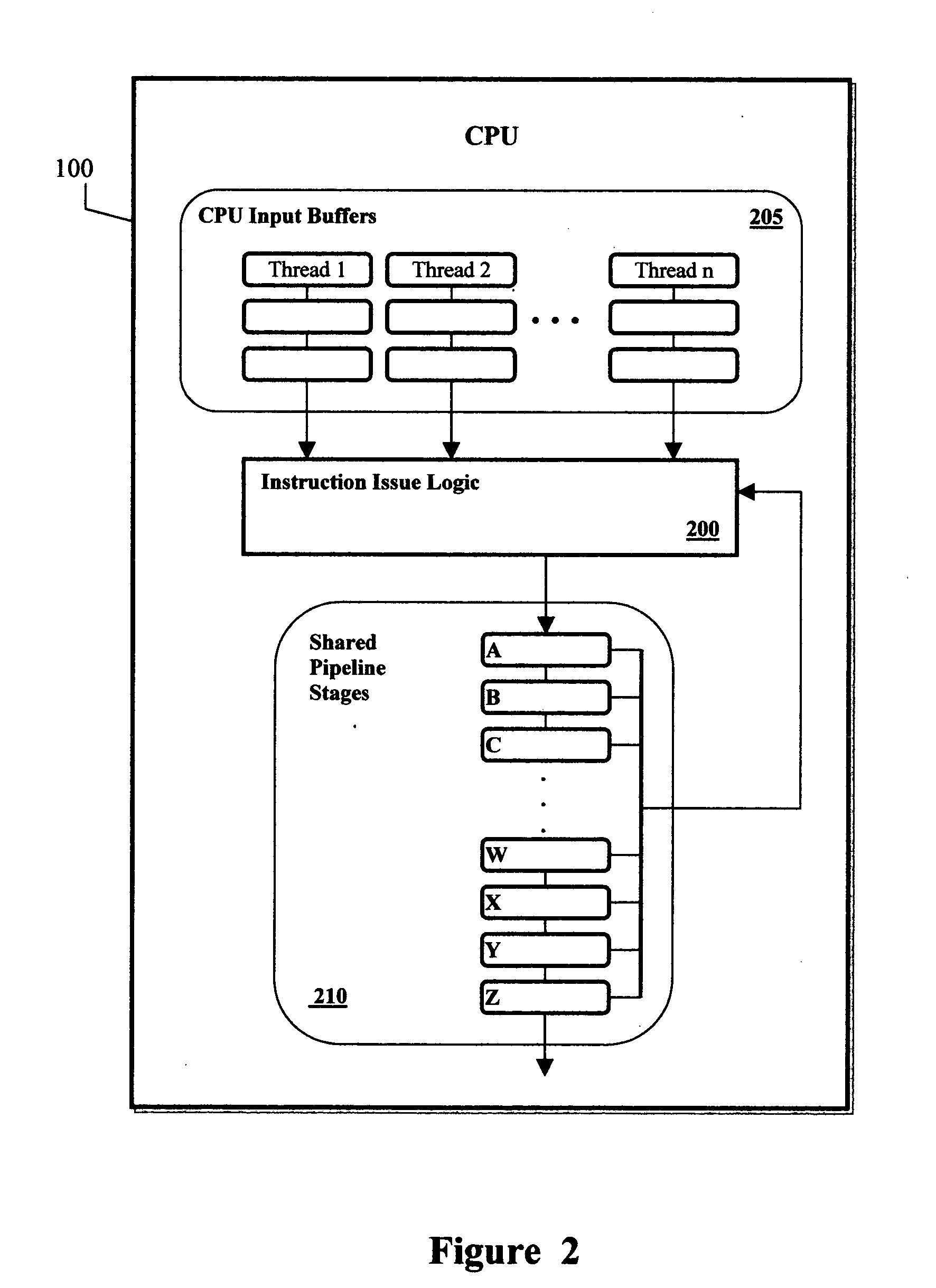

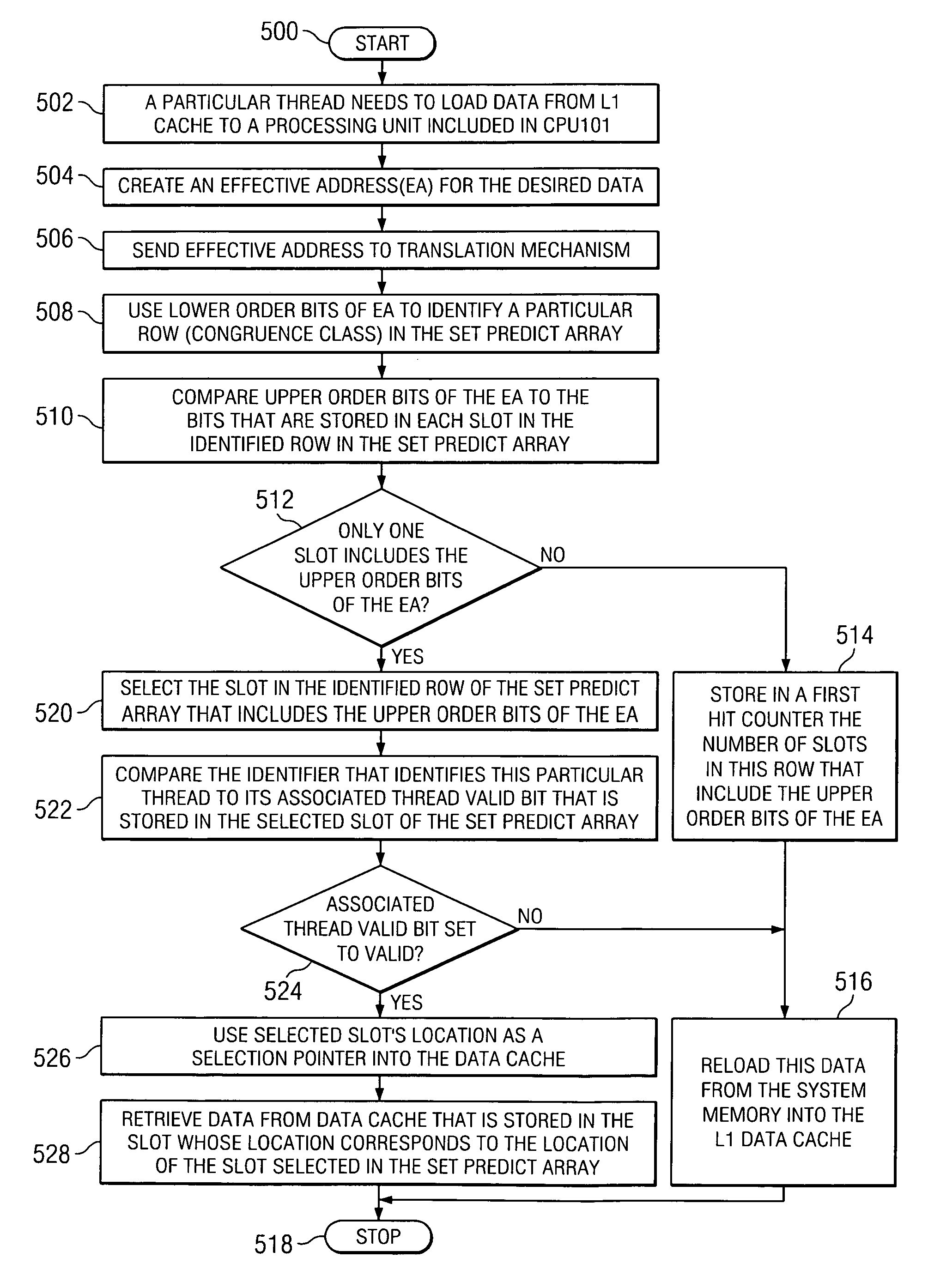

Status: Inactive | Publication: US20050060518A1 | Tags: Improve performance; Digital computer details; Concurrent instruction execution; Operand; Issue logic

A method for optimizing throughput in a microprocessor that is capable of processing multiple threads of instructions simultaneously. Instruction issue logic is provided between the input buffers and the pipeline of the microprocessor. The instruction issue logic speculatively issues instructions from a given thread based on the probability that the required operands will be available when the instruction reaches the stage in the pipeline where they are required. Issue of an instruction is blocked if the current pipeline conditions indicate that there is a significant probability that the instruction will need to stall in a shared resource to wait for operands. Once the probability that the instruction will stall is below a certain threshold, based on current pipeline conditions, the instruction is allowed to issue.

Owner:IBM CORP
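
Below is a toy version of the issue decision built on a hypothetical probability model. Each source operand produced by an in-flight load is assumed to arrive on time with independent probability equal to the recent hit rate. The abstract does not say how the probability is estimated.

```python
def stall_probability(pending_sources, hit_rate):
    """P(at least one source operand is late).

    pending_sources: one bool per source operand, True if it comes from an in-flight load.
    hit_rate: recent cache hit rate, used as the chance each such load returns in time
              (a hypothetical, independence-assuming model of "current pipeline conditions").
    """
    p_on_time = 1.0
    for pending in pending_sources:
        if pending:
            p_on_time *= hit_rate
    return 1.0 - p_on_time


def may_issue(pending_sources, hit_rate, threshold):
    # Issue speculatively only when the estimated stall probability is below the threshold.
    return stall_probability(pending_sources, hit_rate) < threshold
```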

Method, apparatus, and computer program product for sharing data in a cache among threads in an SMT processor

A method, apparatus, and computer program product are disclosed in a data processing system for sharing data in a cache among multiple threads in a simultaneous multi-threaded (SMT) processor. The SMT processor executes multiple threads concurrently during each clock cycle. The cache is dynamically allocated for use among the multiple threads. Portions of the cache are capable of being designated to store private data that is used exclusively by only a first one of the threads. The portions of the cache are capable of being designated to store shared data that can be used by any one of the multiple threads. The size of the portions can be changed dynamically during execution of the threads.

Owner:GOOGLE LLC
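
This sketch models dynamically sized private and shared portions as ways of a set-associative cache. The way-based split is an assumption; the abstract does not say how portions are designated.

```python
class PartitionedCache:
    """Ways of a set-associative cache split into per-thread private portions and a shared rest."""

    def __init__(self, ways, threads):
        self.ways = ways
        self.private = {t: 0 for t in threads}   # private ways reserved for each thread

    def resize(self, private_ways):
        """Change the portion sizes while threads keep running."""
        if sum(private_ways.values()) > self.ways:
            raise ValueError("private portions exceed the cache's associativity")
        self.private = dict(private_ways)

    @property
    def shared(self):
        return self.ways - sum(self.private.values())

    def ways_usable_by(self, tid):
        # A thread may place data in its own private ways or in the shared ways.
        return self.private.get(tid, 0) + self.shared
```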

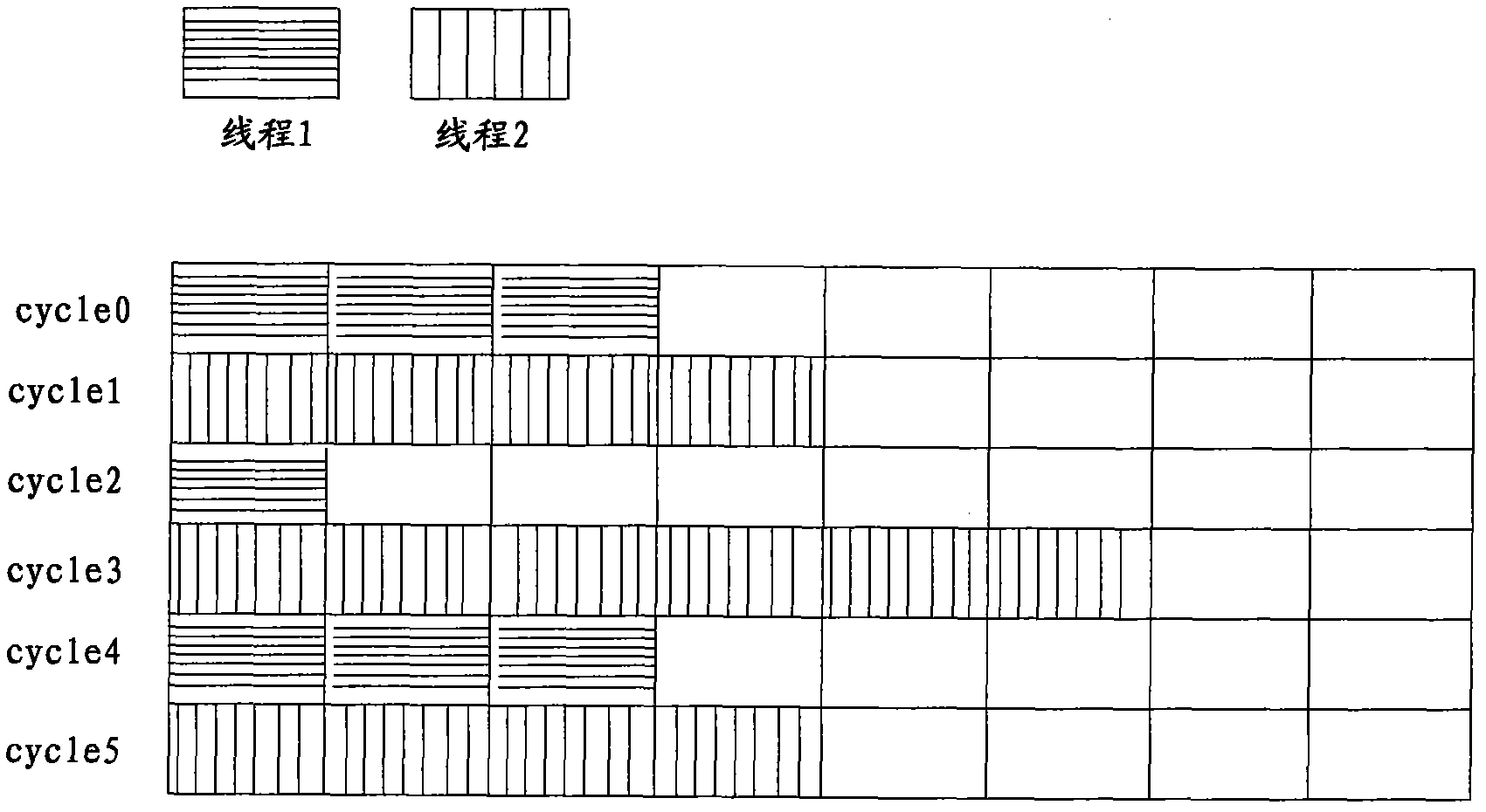

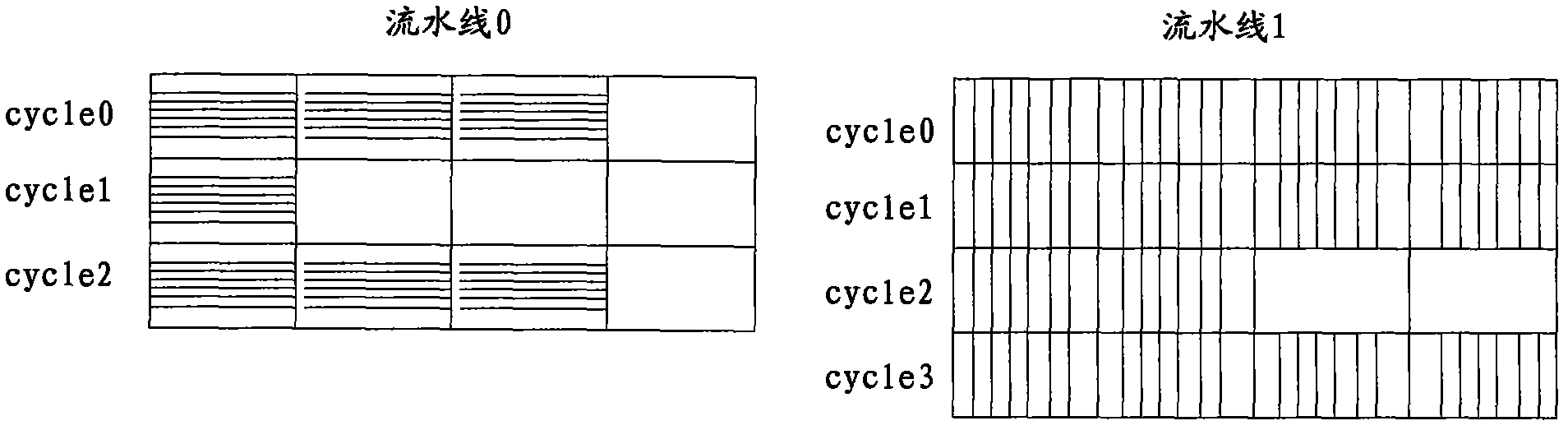

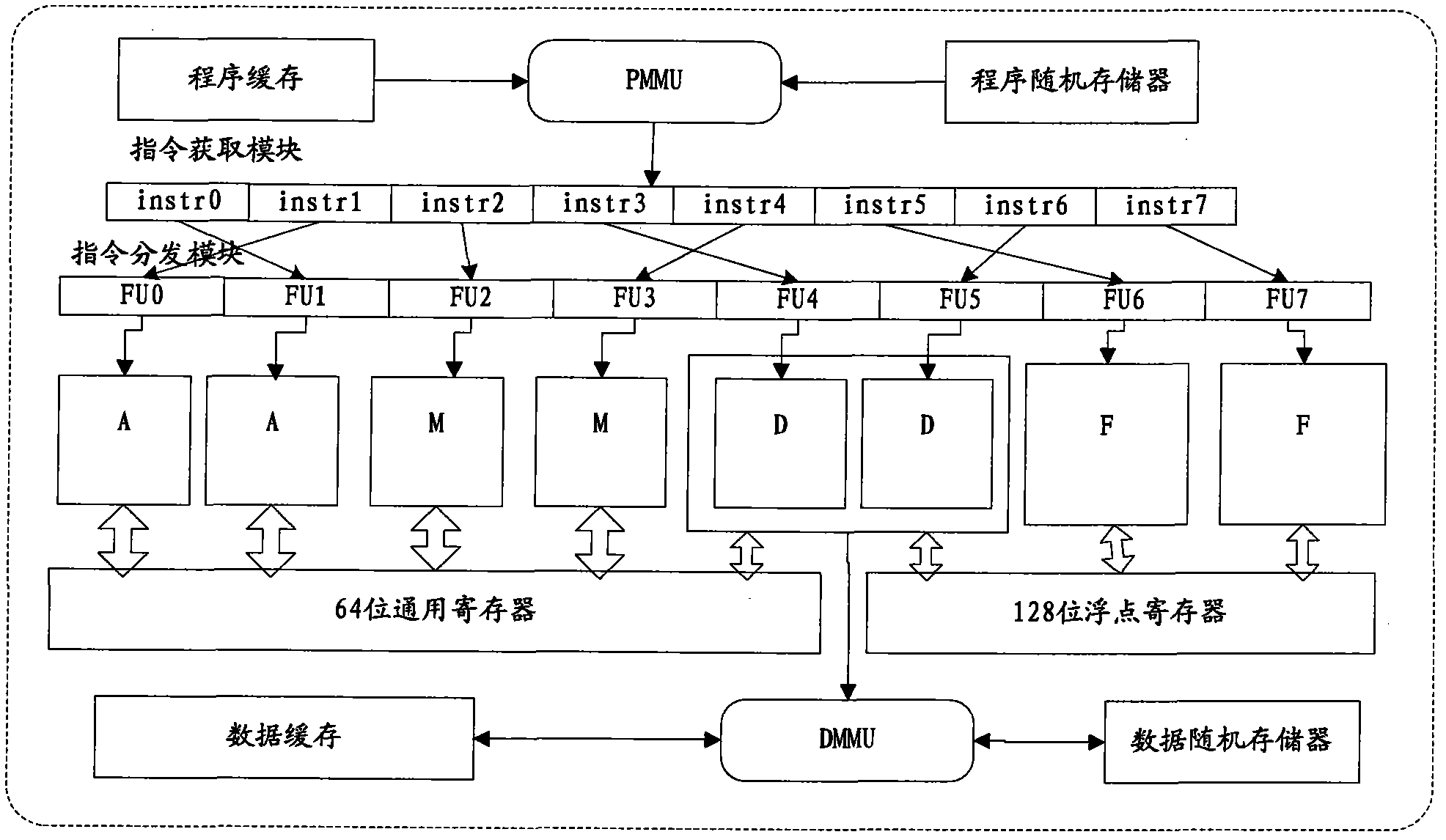

Very long instruction word processor structure supporting simultaneous multithreading

Status: Active | Publication: CN102004719A | Tags: Improve access efficiency; Fast execution; Concurrent instruction execution; Architecture with multiple processing units; Processor register; Control register

The invention provides a very long instruction word (VLIW) processor structure supporting simultaneous multithreading. It comprises at least two parallel instruction processing pipelines, each with an instruction fetch module, an instruction dispatch module and an instruction execution module, together with a general register file, a floating-point register file and a control register file. The fetch module obtains instruction information; the dispatch module receives and distributes the instruction information obtained by the fetch module; and the execution module comprises execution units A, D, M and F, which execute the instructions. The general register file stores the results of execution units A, M and D, and the floating-point register file stores the results of execution units D and F. With this structure, processor resources are used more fully, thread access efficiency is improved, and processing speed increases.

Owner:TSINGHUA UNIV

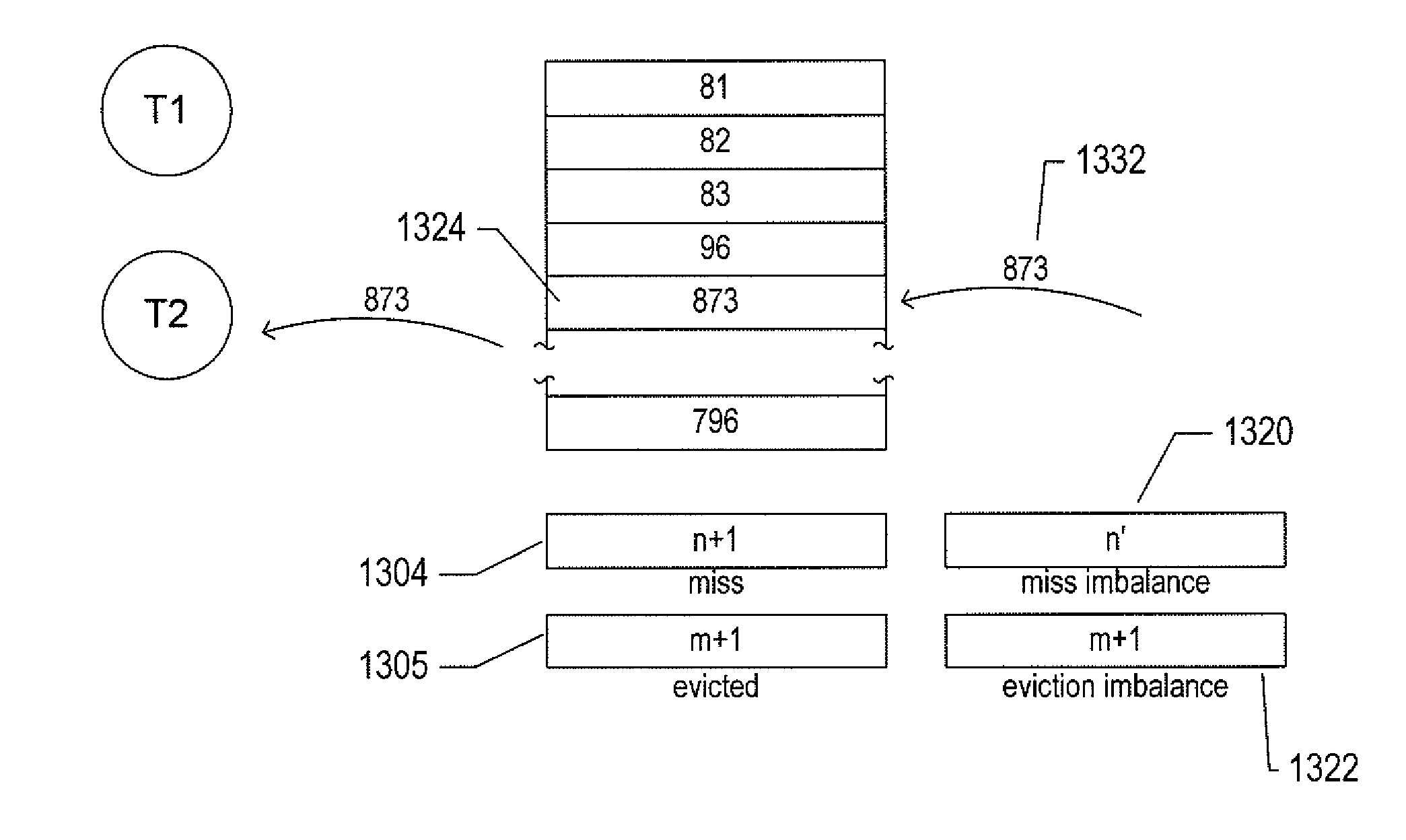

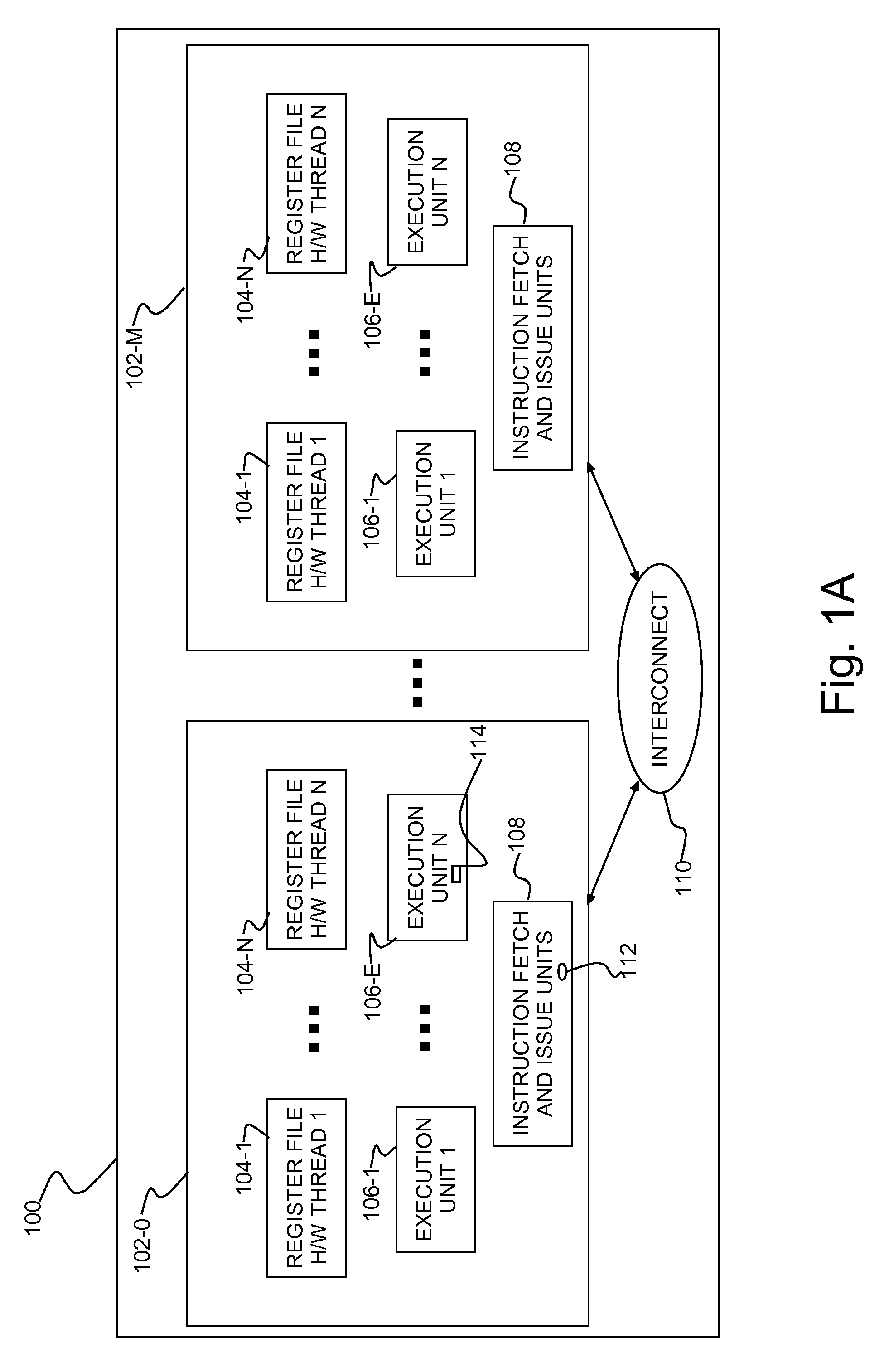

Performance-imbalance-monitoring processor features

The current application is directed to architected hardware support within computer processors for detecting and monitoring various types of potential performance imbalances with respect to simultaneously executing hardware threads in simultaneous multi-threading ("SMT") processors and SMT-processor cores. The architected hardware support may include various types of performance-imbalance-monitoring registers that accumulate indications of performance imbalances and that can be used by performance-monitoring software and by human analysts to detect performance-degrading conflicts between simultaneously executing hardware threads. Such conflicts can be ameliorated by changing the scheduling of virtual machines, tasks, and other computational entities, by redesigning and re-implementing all or portions of performance-limited and performance-degrading applications, by altering resource-allocation strategies, and by other means. In addition, performance imbalance detection and monitoring can be used to provide accurate, computational-throughput-based accounting in cloud-computing environments.

Owner:VMWARE INC
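
This sketch suggests what performance-imbalance registers might accumulate. It assumes two hardware threads and two hypothetical counters, sampled once per cycle from per-thread retirement counts. The abstract does not define the actual registers.

```python
class ImbalanceMonitor:
    """Hypothetical imbalance registers for one core running two SMT threads."""

    def __init__(self):
        self.abs_diff = 0          # accumulated |retired0 - retired1| over sampled cycles
        self.starved = [0, 0]      # cycles a thread retired nothing while its sibling did

    def sample(self, retired0, retired1):
        """Record one cycle's retirement counts for thread 0 and thread 1."""
        self.abs_diff += abs(retired0 - retired1)
        if retired0 == 0 and retired1 > 0:
            self.starved[0] += 1
        elif retired1 == 0 and retired0 > 0:
            self.starved[1] += 1

    def imbalance_ratio(self, cycles):
        """Average per-cycle imbalance, e.g. for software deciding whether to re-pair threads."""
        return self.abs_diff / cycles if cycles else 0.0
```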

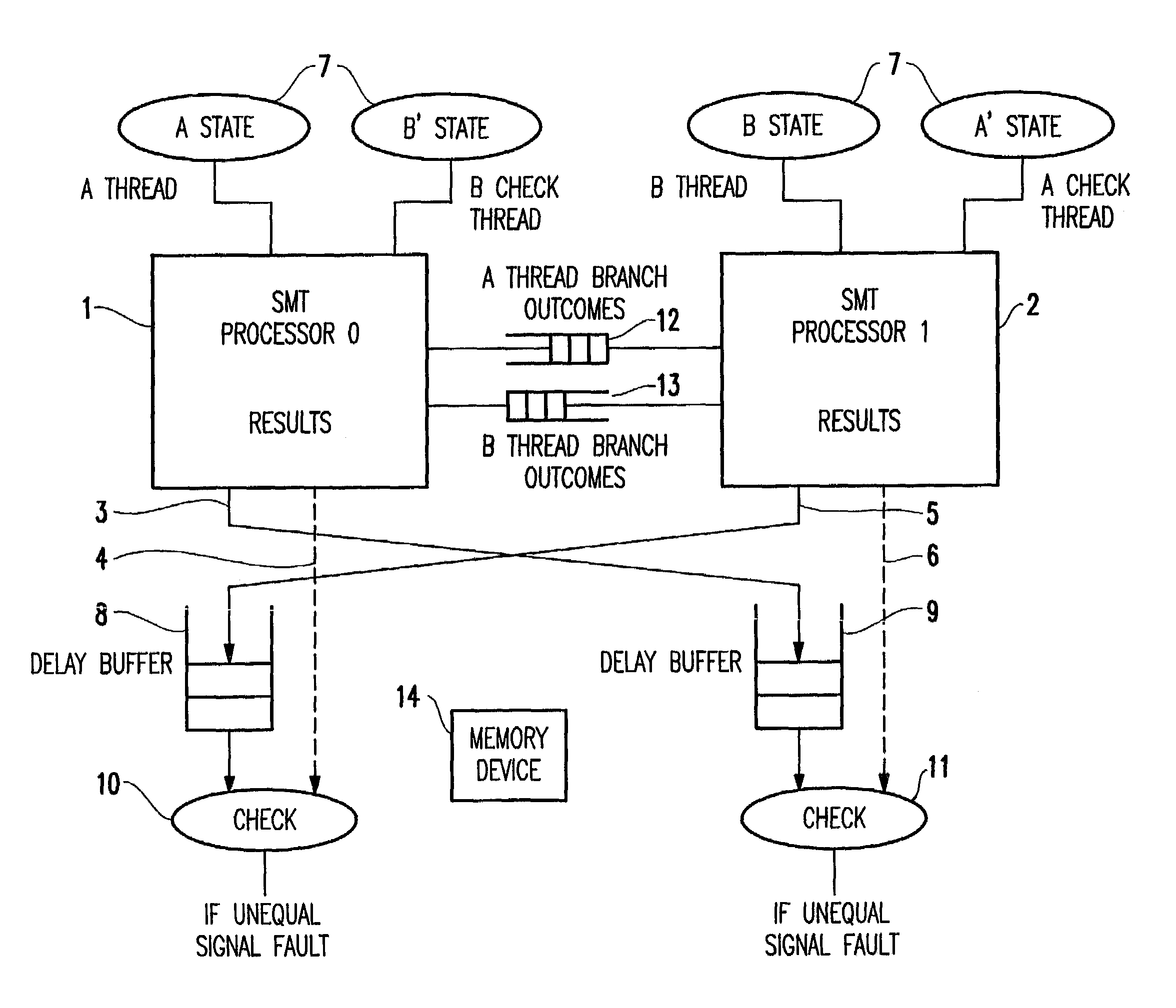

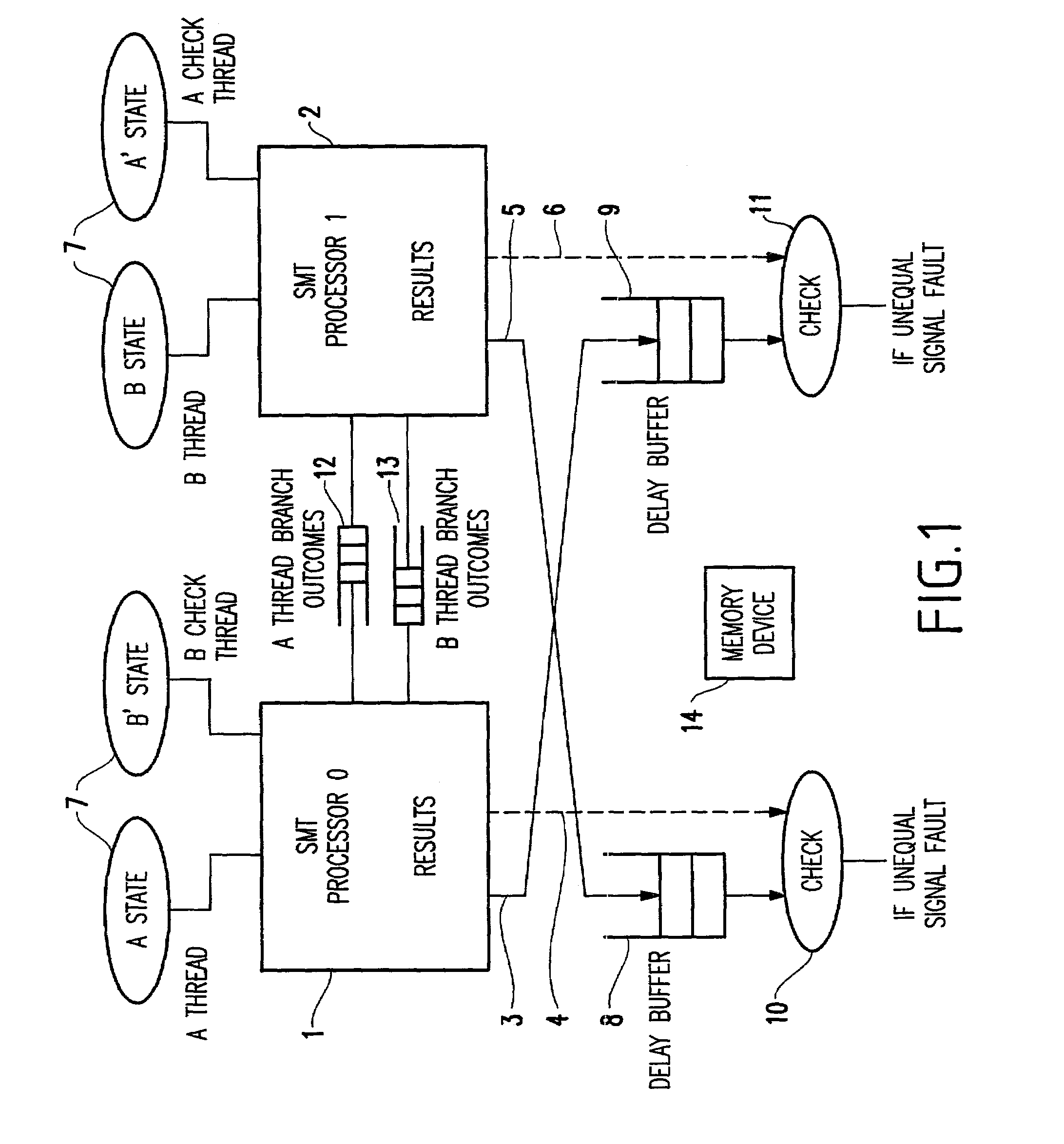

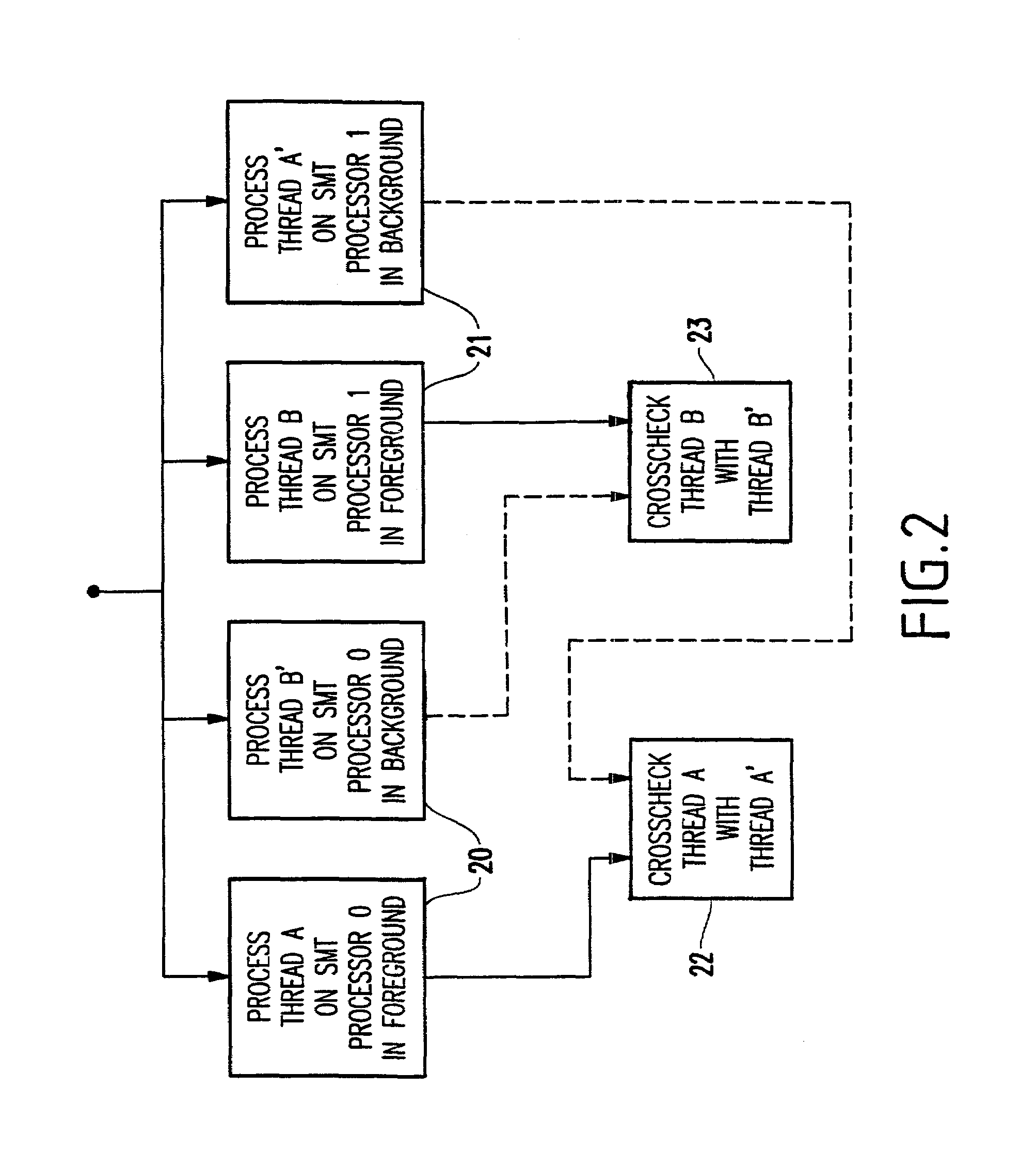

Method and apparatus for fault-tolerance via dual thread crosschecking

Status: Inactive | Publication: US7017073B2 | Tags: Little performance loss; Improve reliability; Hardware monitoring; Fault tolerance; Parallel computing

A method (and structure) of concurrent fault crosschecking in a computer having a plurality of simultaneous multithreading (SMT) processors, each SMT processor simultaneously processing a plurality of threads, includes processing a first foreground thread and a first background thread on a first SMT processor and processing a second foreground thread and a second background thread on a second SMT processor. The first background thread executes a check on the second foreground thread and the second background thread executes a check on the first foreground thread, thereby achieving a crosschecking of the execution of the threads on the processors.

Owner:INTEL CORP
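
This sketch shows the crosschecking arrangement: each processor's background thread re-executes the other processor's foreground work, and the results are compared. Execution is sequential here. `faulty` is a test hook that flips a bit to model a transient error. Neither the sequential execution nor the hook is part of the described method.

```python
def run_crosschecked(task1, data1, task2, data2, faulty=None):
    """Two SMT processors, each running one foreground and one background thread.

    Processor 1: foreground runs task1; background re-executes processor 2's task2.
    Processor 2: foreground runs task2; background re-executes processor 1's task1.
    `faulty` ("p1" or "p2") flips the low bit of that processor's foreground result.
    """
    fg1, fg2 = task1(data1), task2(data2)
    if faulty == "p1":
        fg1 ^= 1
    elif faulty == "p2":
        fg2 ^= 1
    bg1 = task2(data2)   # background thread on processor 1 checks processor 2
    bg2 = task1(data1)   # background thread on processor 2 checks processor 1
    errors = []
    if fg1 != bg2:
        errors.append("p1")
    if fg2 != bg1:
        errors.append("p2")
    return fg1, fg2, errors
```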

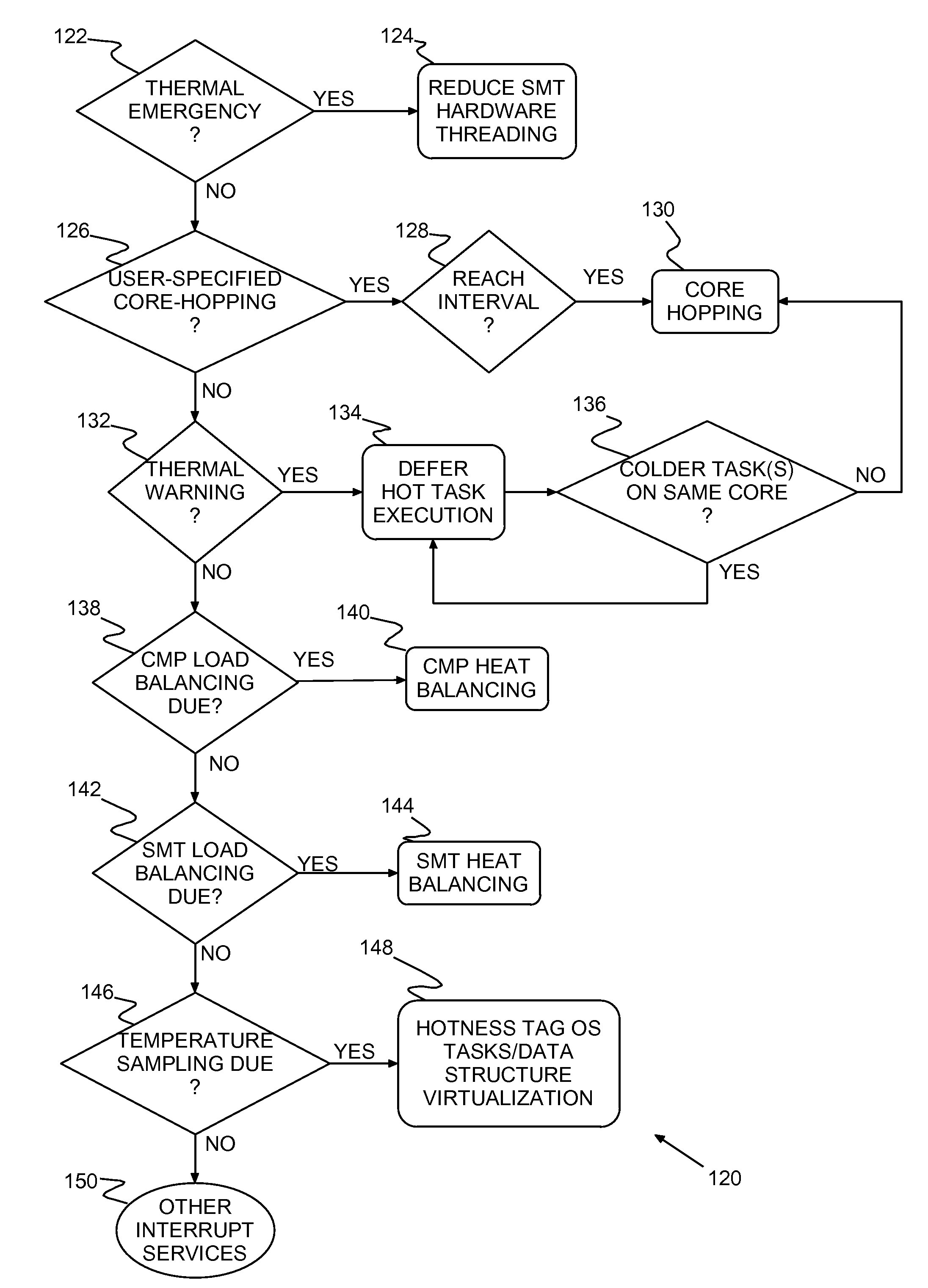

Method of virtualization and OS-level thermal management and multithreaded processor with virtualization and OS-level thermal management

Status: Inactive | Publication: US7886172B2 | Tags: Improve processor performance; Minimize SMT processor performance loss; Energy efficient ICT; Volume/mass flow measurement; Virtualization; Monitoring temperature

A program product and method of managing task execution on an integrated circuit chip such as a chip-level multiprocessor (CMP) with Simultaneous MultiThreading (SMT). Multiple chip operating units or cores have chip sensors (temperature sensors or counters) for monitoring temperature in units. Task execution is monitored for hot tasks and especially for hotspots. Task execution is balanced, thermally, to minimize hot spots. Thermal balancing may include Simultaneous MultiThreading (SMT) heat balancing, chip-level multiprocessors (CMP) heat balancing, deferring execution of identified hot tasks, migrating identified hot tasks from a current core to a colder core, User-specified Core-hopping, and SMT hardware threading.

Owner:INT BUSINESS MASCH CORP
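
This sketch covers one balancing step: migrating an identified hot task from the hottest core to the coldest. It assumes a hypothetical per-task heat estimate and a fixed temperature-gap threshold. The other techniques listed (deferral, SMT heat balancing, core-hopping) are not shown.

```python
def migrate_hottest(cores, threshold):
    """Move the hottest task from the hottest core to the coldest one if they differ enough.

    cores: core id -> {"temp": degrees C, "tasks": [(task name, heat estimate), ...]}
    (a hypothetical model; real sensor readings and heat estimates are platform-specific).
    """
    hot = max(cores, key=lambda c: cores[c]["temp"])
    cold = min(cores, key=lambda c: cores[c]["temp"])
    if cores[hot]["temp"] - cores[cold]["temp"] <= threshold or not cores[hot]["tasks"]:
        return None                                   # balanced enough: leave tasks in place
    task = max(cores[hot]["tasks"], key=lambda t: t[1])
    cores[hot]["tasks"].remove(task)
    cores[cold]["tasks"].append(task)
    return task[0], hot, cold
```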

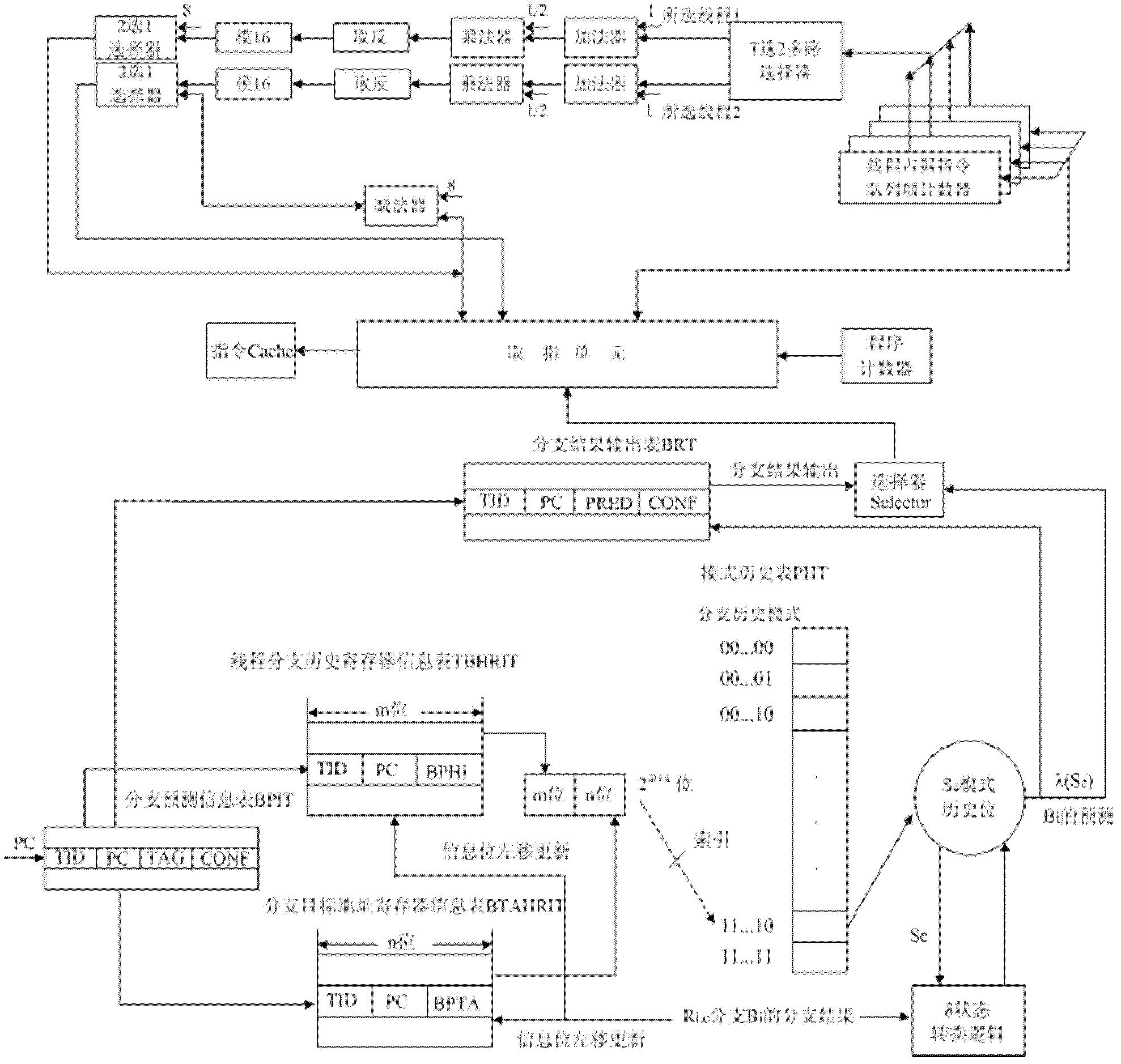

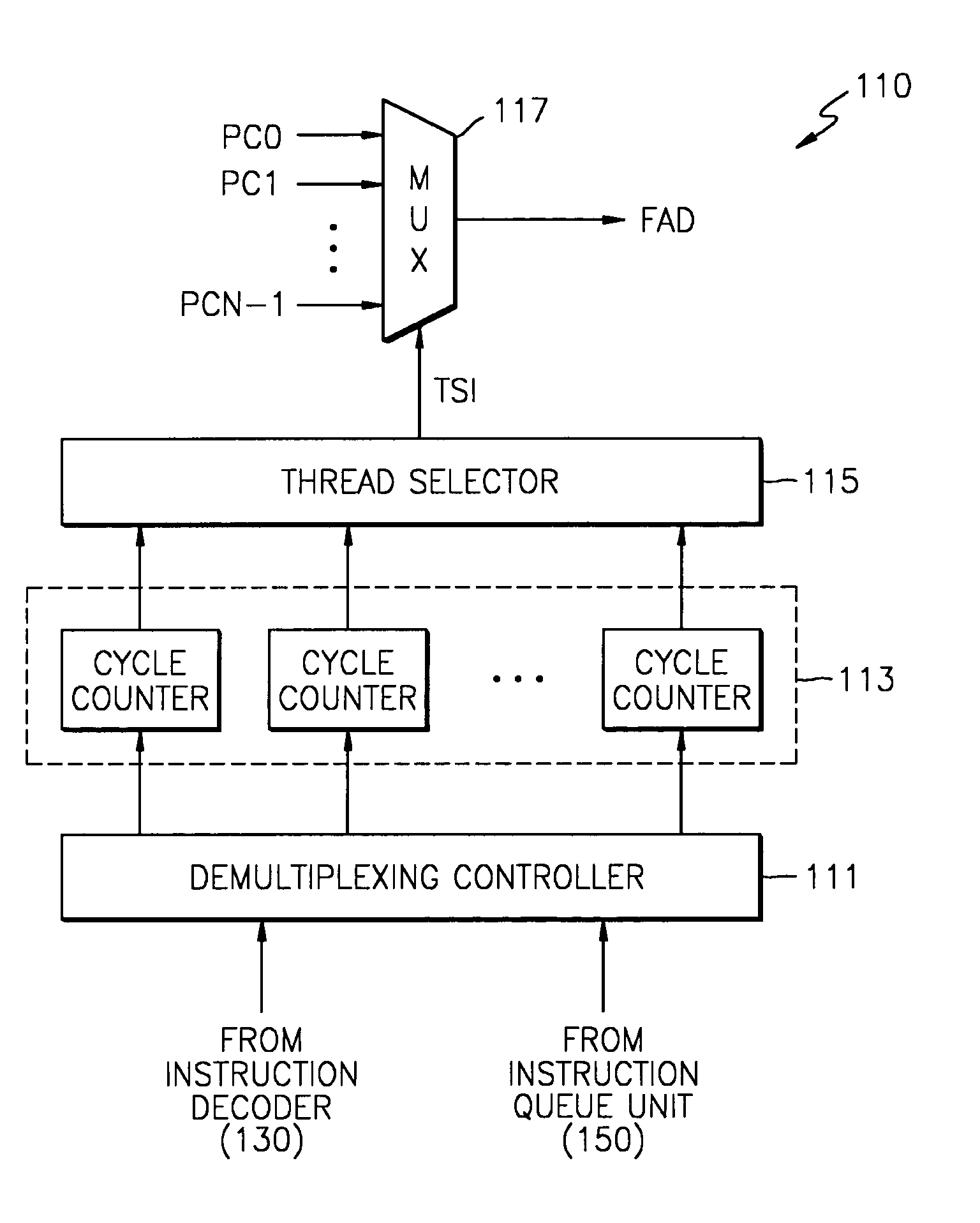

Instruction acquisition control method based on simultaneous multithreading

Status: Inactive | Publication: CN102566974A | Tags: Improve superiority; Advancing Predictive Execution Speed; Concurrent instruction execution; Memory systems; Failure rate; Pattern matching

The invention provides an instruction acquisition (fetch) control method based on simultaneous multithreading. In each processor clock cycle, the instruction fetch unit reads instructions according to the program counter (PC). It first selects the two highest-priority threads as fetch threads and then computes the number of instructions each fetch thread actually needs before reading them. Based on each thread's IPC (instructions per cycle) and cache miss rate, a dual-priority resource allocation mechanism computes the system resources each thread requires in the fetch stage and allocates them dynamically. A thread branch history branch predictor (TBHBP) works alongside the fetch unit: it concatenates the global history with the local history read for a branch instruction Bi to obtain a pattern-type match position Sc. Sc serves as the index into a second-level pattern history table (PHT), and the computed result is written to a branch result table (BRT). When branch instruction Bi executes again, a selector checks whether its CONF field is greater than or equal to 2; if so, the recorded branch result is output directly. Finally, the fetched instructions are placed in the instruction cache, completing instruction fetch control.

Owner:HARBIN ENG UNIV
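Two pieces of the abstract lend themselves to a sketch: choosing the two highest-priority threads to fetch from each cycle, and a branch result table that reuses a recorded outcome once its CONF field reaches 2. The priority formula (IPC scaled by cache hit rate), the 2-bit confidence counter, and every name below are assumptions for illustration; the abstract does not give the actual formula or table layout, and the PHT indexing step is omitted.

```python
from dataclasses import dataclass


@dataclass
class ThreadStats:
    tid: int
    ipc: float        # instructions per cycle observed recently
    miss_rate: float  # cache miss rate, 0.0..1.0


def priority(t: ThreadStats) -> float:
    # Hypothetical scoring: favour high IPC, penalise cache misses.
    return t.ipc * (1.0 - t.miss_rate)


def pick_fetch_threads(threads, n=2):
    """Return the ids of the n highest-priority threads for this cycle's fetch."""
    ranked = sorted(threads, key=priority, reverse=True)
    return [t.tid for t in ranked[:n]]


class BranchResultTable:
    """BRT sketch: output a recorded outcome once its CONF field is >= 2."""

    CONF_MAX = 3  # assumed 2-bit saturating confidence counter

    def __init__(self):
        self.entries = {}  # branch PC -> [last_outcome, conf]

    def lookup(self, pc):
        entry = self.entries.get(pc)
        if entry is not None and entry[1] >= 2:
            return entry[0]  # confident: output the recorded result directly
        return None          # not confident: defer to the PHT-based prediction

    def update(self, pc, taken):
        entry = self.entries.setdefault(pc, [taken, 0])
        if entry[0] == taken:
            entry[1] = min(entry[1] + 1, self.CONF_MAX)
        else:
            entry[0], entry[1] = taken, 0  # outcome changed: reset confidence


threads = [ThreadStats(0, 1.2, 0.1), ThreadStats(1, 2.0, 0.5), ThreadStats(2, 0.8, 0.0)]
print(pick_fetch_threads(threads))  # [0, 1]
```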

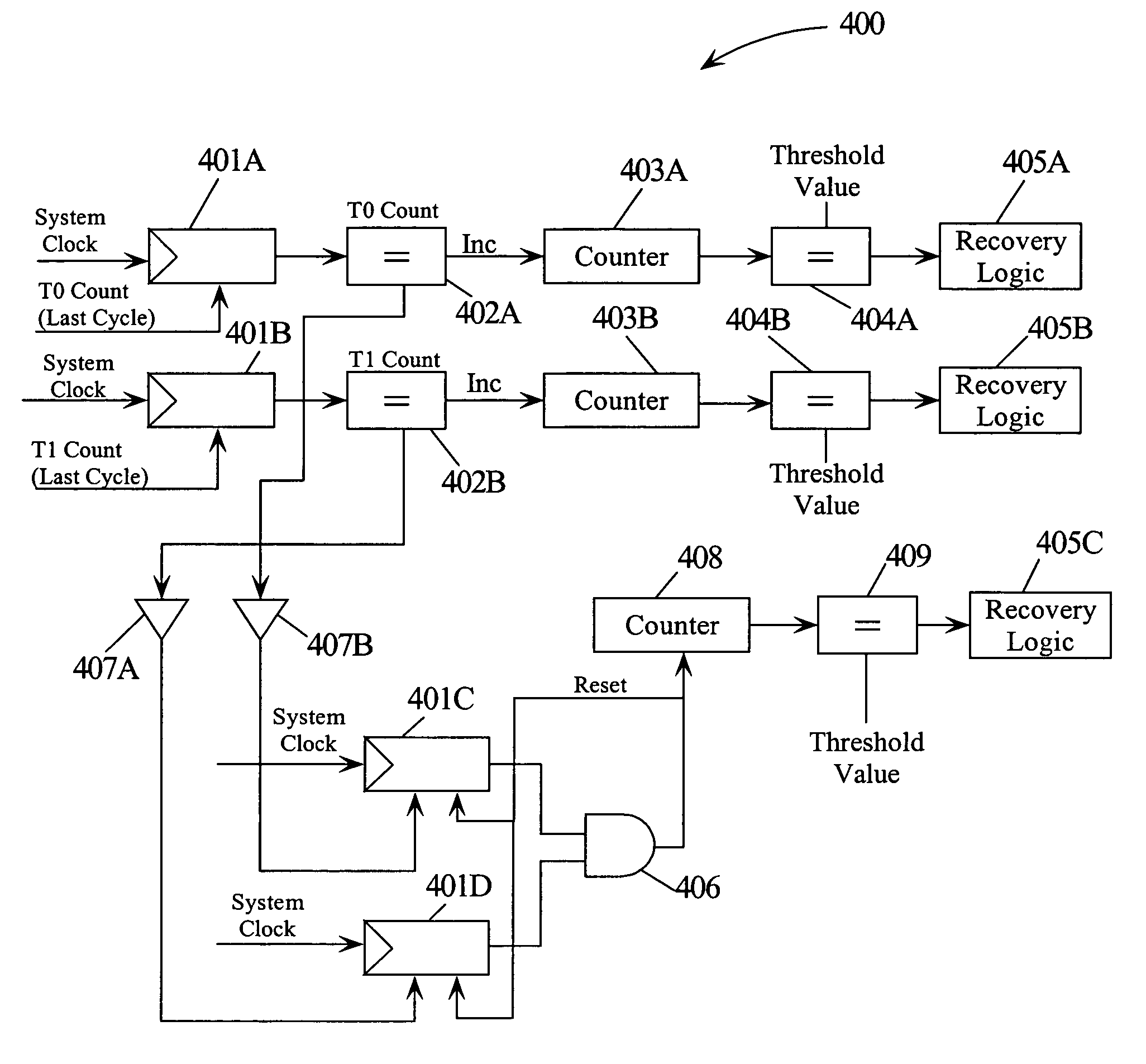

Mechanism for effectively handling livelocks in a simultaneous multithreading processor

Inactive | US7000047B2 | Preventing execution | Runtime instruction translation | Unauthorized memory use protection | Operating system | Simultaneous multithreading

A method and multithreaded processor for handling livelocks in a simultaneous multithreaded processor. The number of instructions for a thread in a queue may be counted. A counter in the queue may be incremented if the number of instructions for the thread in the queue in the previous clock cycle equals the number in the current clock cycle. If the counter's value equals a threshold value, a livelock condition may be detected, and a recovery action may then be activated to handle it. The recovery action may include blocking execution of the instructions of the thread causing the livelock condition, thereby ensuring that the locked thread makes forward progress.

Owner:IBM CORP
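The counting scheme above can be sketched as a per-thread detector that is ticked once per clock cycle. Assumptions, none of which come from the abstract: the counter resets whenever the queue count changes, the threshold value is arbitrary, and the offending thread is simply passed in to the hypothetical `recover` helper.

```python
class LivelockDetector:
    """Per-thread livelock detector for one instruction queue (sketch)."""

    def __init__(self, tid, threshold=64):
        self.tid = tid
        self.threshold = threshold  # assumed value; the abstract gives none
        self.prev_count = None
        self.counter = 0

    def tick(self, count_in_queue):
        """Call once per clock cycle; returns True on the cycle a livelock is detected."""
        if count_in_queue == self.prev_count:
            self.counter += 1  # no change since last cycle: no forward progress
        else:
            self.counter = 0   # assumption: any change counts as progress
        self.prev_count = count_in_queue
        return self.counter == self.threshold


def recover(blocked_threads, offending_tid):
    """Recovery action: stop executing the offending thread's instructions."""
    blocked_threads.add(offending_tid)


blocked = set()
det = LivelockDetector(tid=0, threshold=3)
for cycle, count in enumerate([5, 5, 5, 5, 5]):
    if det.tick(count):
        recover(blocked, offending_tid=1)  # hypothetical: thread 1 is starving thread 0
        print(f"livelock on thread {det.tid} at cycle {cycle}")  # cycle 3
```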

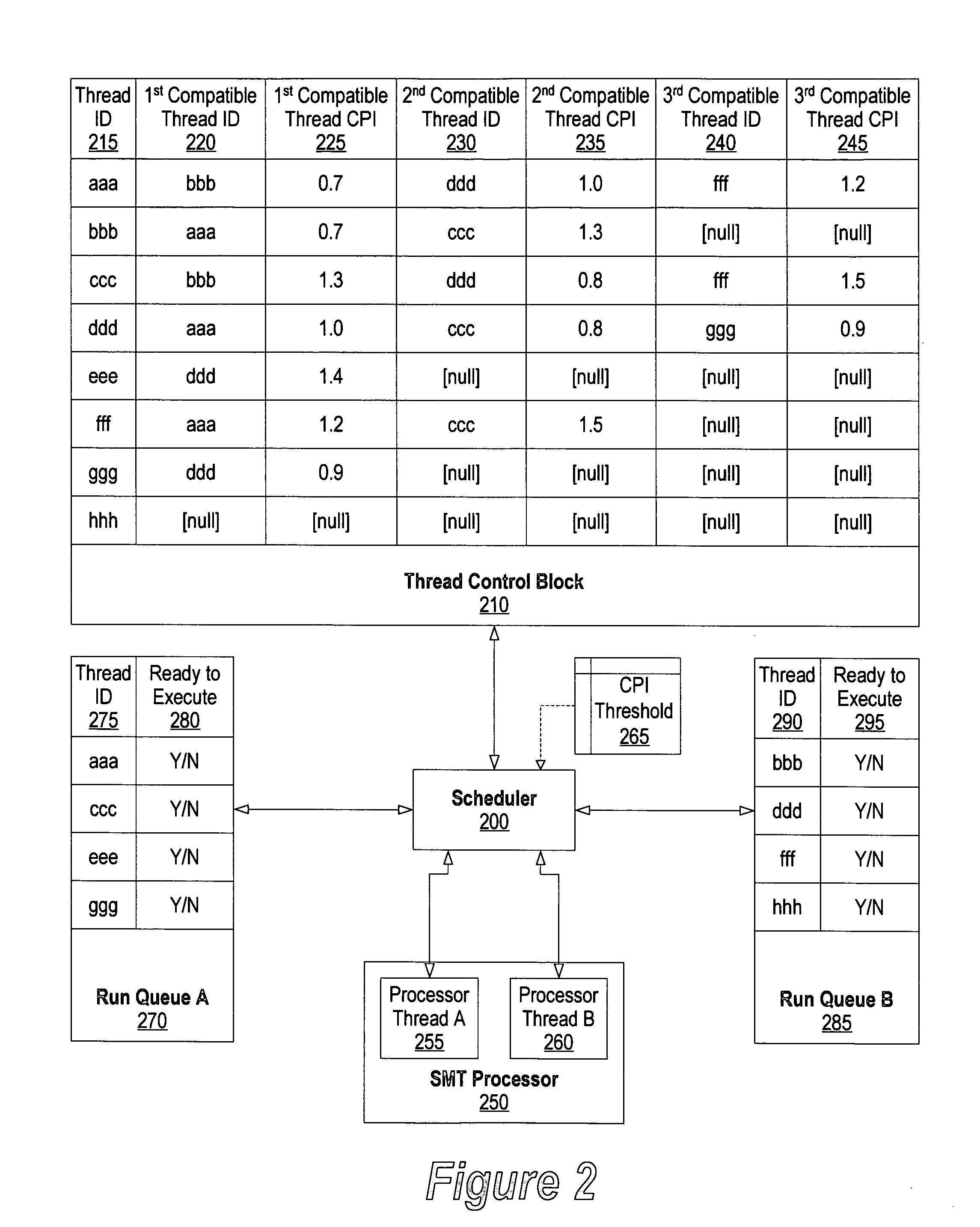

System and method for CPI scheduling on SMT processors

Active | US20050086660A1 | Improve performance | Digital computer details | Multiprogramming arrangements | Thread scheduling | Parallel computing

A system and method for identifying compatible threads in a Simultaneous Multithreading (SMT) processor environment by calculating a performance metric, such as cycles per instruction (CPI), that results when two threads run together on the SMT processor. The CPI achieved while both threads were executing on the SMT processor is determined. If that CPI is better than (that is, lower than) the compatibility threshold, information indicating that the two threads are compatible is recorded. When a thread is about to complete, the scheduler looks at the run queue to which the completing thread belongs in order to dispatch another thread. The scheduler identifies a thread that is (1) compatible with the thread still running on the SMT processor (i.e., the thread that is not about to complete) and (2) ready to execute. The CPI data is continually updated so that compatible threads continue to be identified.

Owner:META PLATFORMS INC
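A minimal sketch of the compatibility table and dispatch decision described above. The `CpiScheduler` class, the 1.5 CPI threshold, and the fallback to the first ready thread when no compatible one exists are hypothetical choices, not taken from the patent.

```python
class CpiScheduler:
    """Records which thread pairs co-run well on an SMT core (sketch)."""

    def __init__(self, cpi_threshold=1.5):
        self.cpi_threshold = cpi_threshold  # hypothetical compatibility threshold
        self.compatible = set()             # unordered pairs stored as frozensets

    def record(self, a, b, measured_cpi):
        """Update compatibility from the CPI measured while a and b ran together."""
        pair = frozenset((a, b))
        if measured_cpi < self.cpi_threshold:  # lower CPI is better
            self.compatible.add(pair)
        else:
            self.compatible.discard(pair)      # data is continually refreshed

    def pick_next(self, still_running, run_queue, ready):
        """Choose a ready thread from run_queue to pair with still_running."""
        for tid in run_queue:
            if tid in ready and frozenset((tid, still_running)) in self.compatible:
                return tid
        for tid in run_queue:  # assumption: fall back to the first ready thread
            if tid in ready:
                return tid
        return None


s = CpiScheduler()
s.record("A", "B", 1.2)
s.record("A", "C", 2.0)
print(s.pick_next("A", ["C", "B"], {"B", "C"}))  # B
```

Storing pairs as `frozenset` makes the relation symmetric, so the order in which two threads were measured does not matter.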

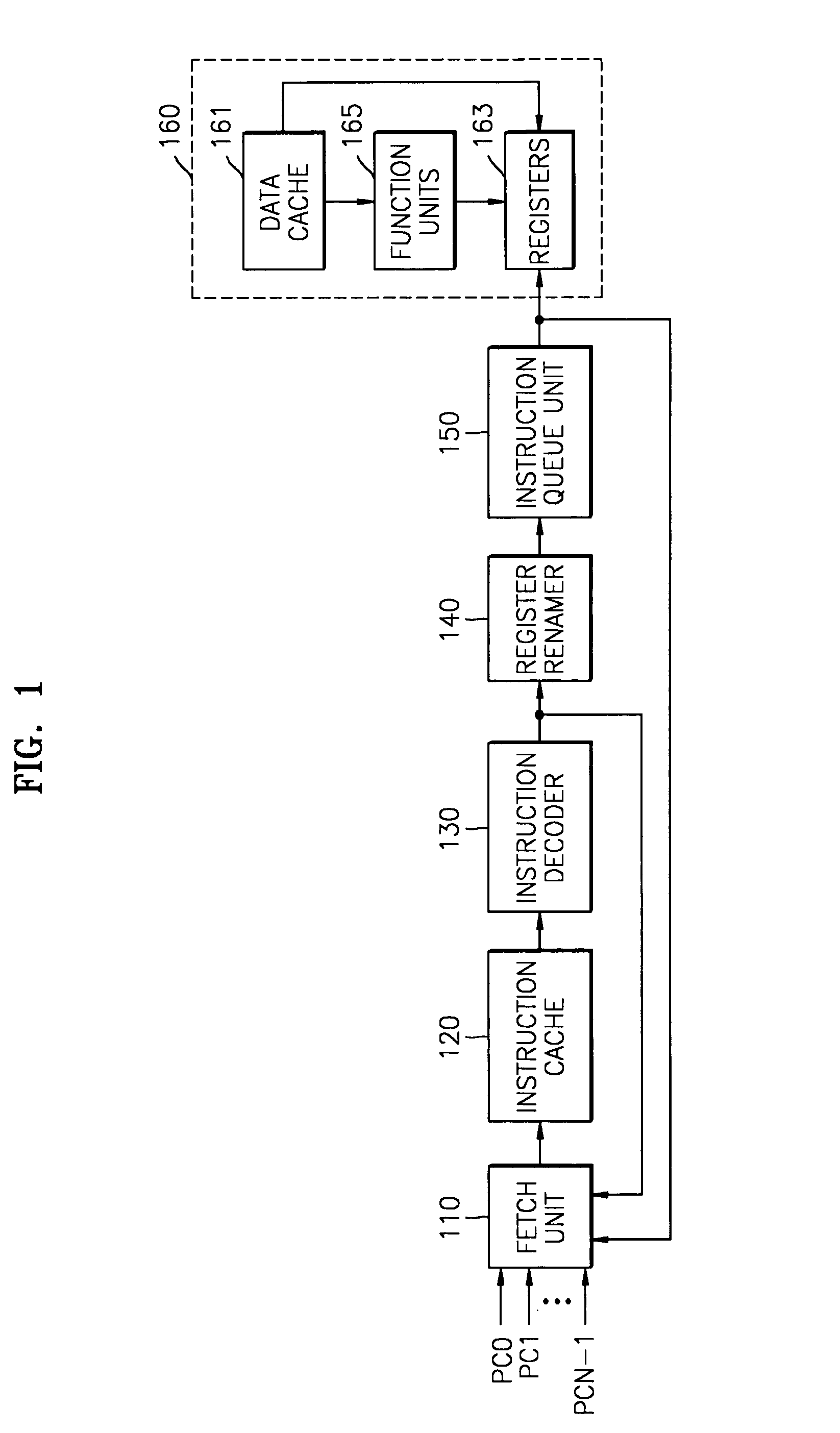

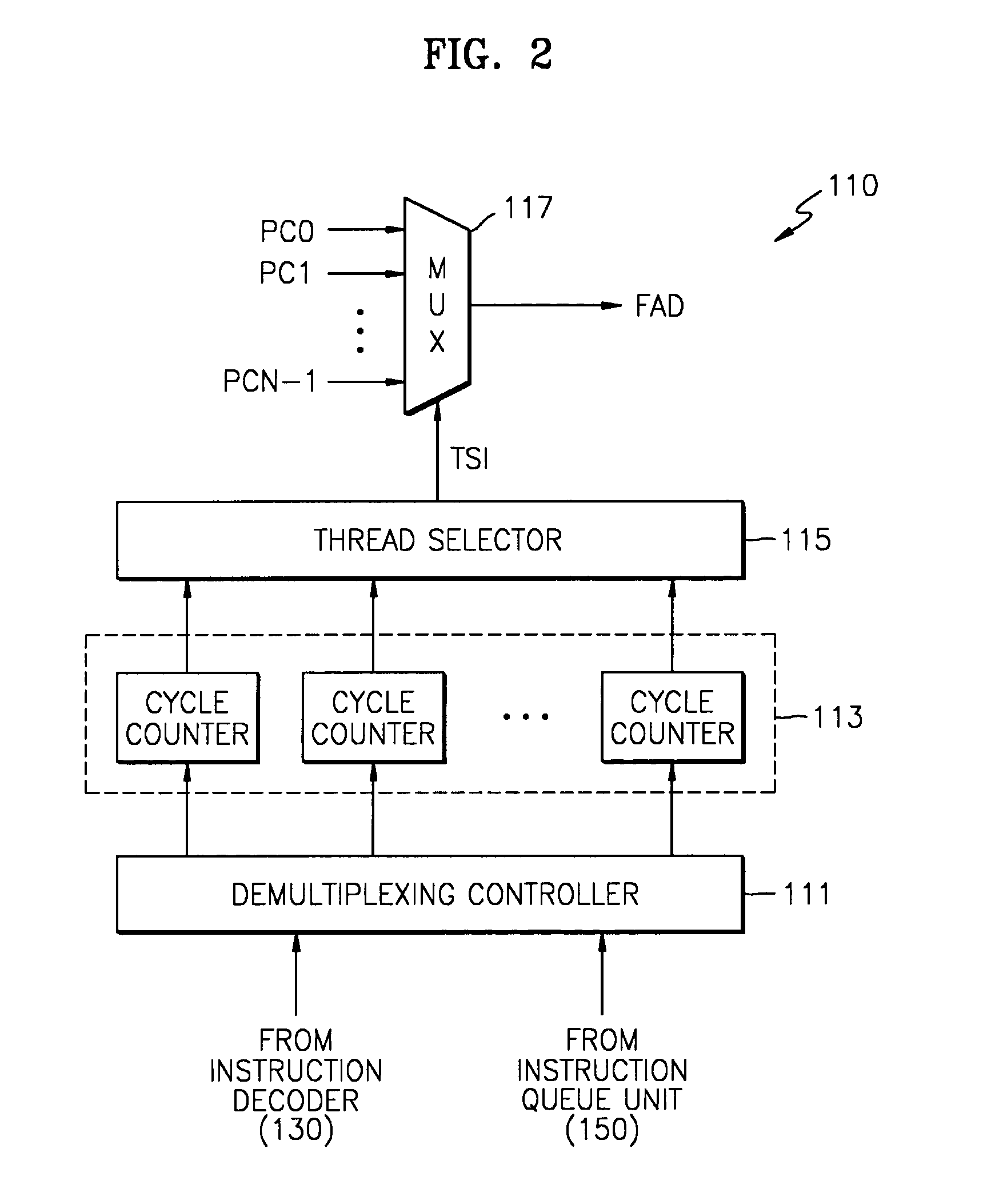

Thread selection for fetching instructions for pipeline multi-threaded processor

Active | US7269712B2 | Digital computer details | Multiprogramming arrangements | Parallel computing | Simultaneous multithreading

A simultaneous multithreading processor determines the processing time each thread occupies in the processor's processing pipeline. Based on these processing times, a fetch unit in the pipeline determines the thread from which to fetch the next instruction.

Owner:SAMSUNG ELECTRONICS CO LTD
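The abstract does not say how the processing times map to a choice. The sketch below assumes a common policy: fetch from the thread currently occupying the least pipeline time, with ties broken by the lowest thread id. `FetchSelector` and its methods are hypothetical names.

```python
from collections import defaultdict


class FetchSelector:
    """Tracks per-thread pipeline occupancy and picks the next fetch thread (sketch)."""

    def __init__(self):
        self.occupancy = defaultdict(int)  # thread id -> pipeline cycles accumulated

    def charge(self, tid, cycles):
        """Account cycles that thread tid spent occupying the pipeline."""
        self.occupancy[tid] += cycles

    def next_thread(self, threads):
        """Assumed policy: least occupancy wins; ties go to the lowest id."""
        return min(threads, key=lambda t: (self.occupancy[t], t))


fs = FetchSelector()
fs.charge(0, 30)
fs.charge(1, 12)
fs.charge(2, 12)
print(fs.next_thread([0, 1, 2]))  # 1
```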

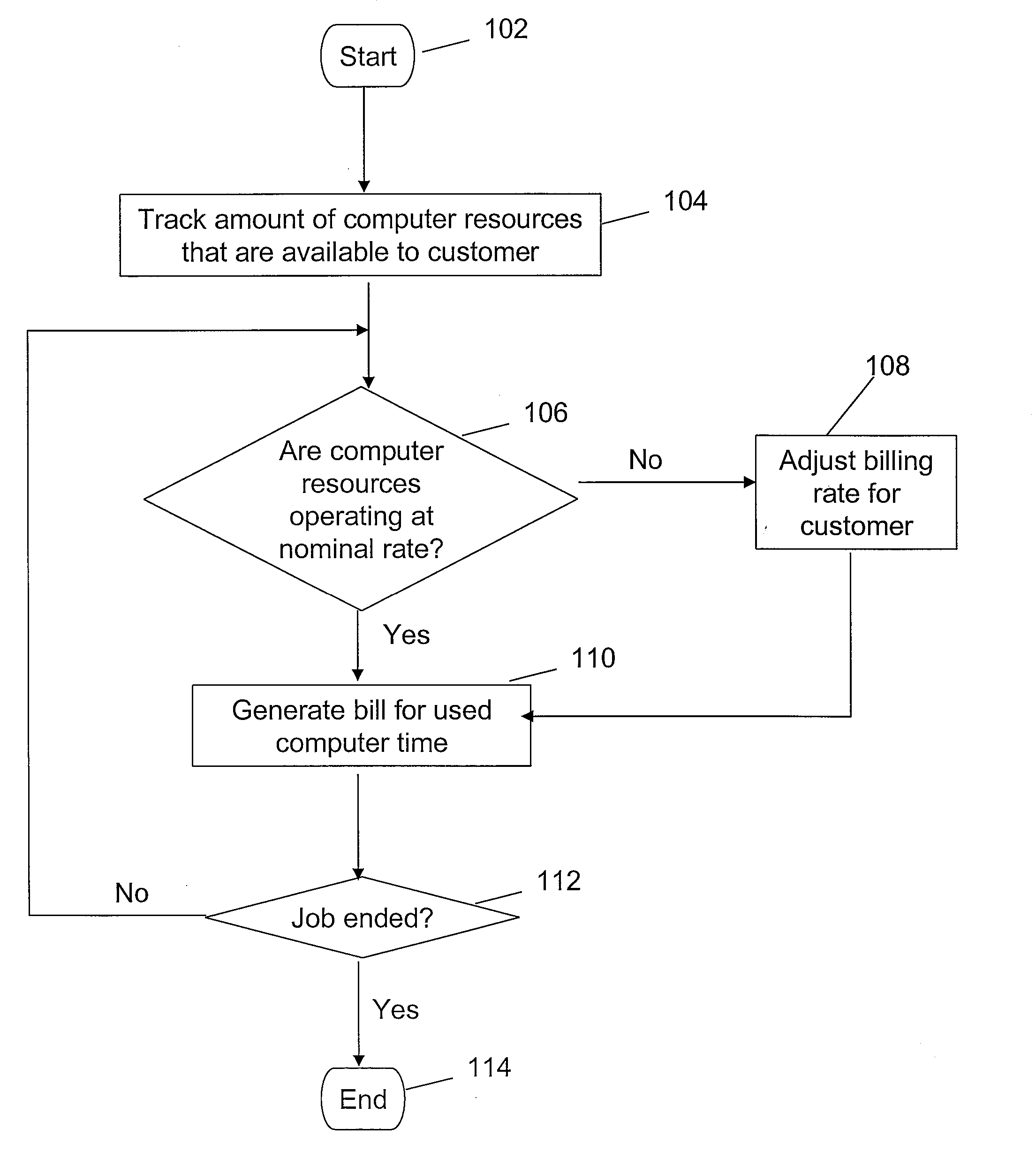

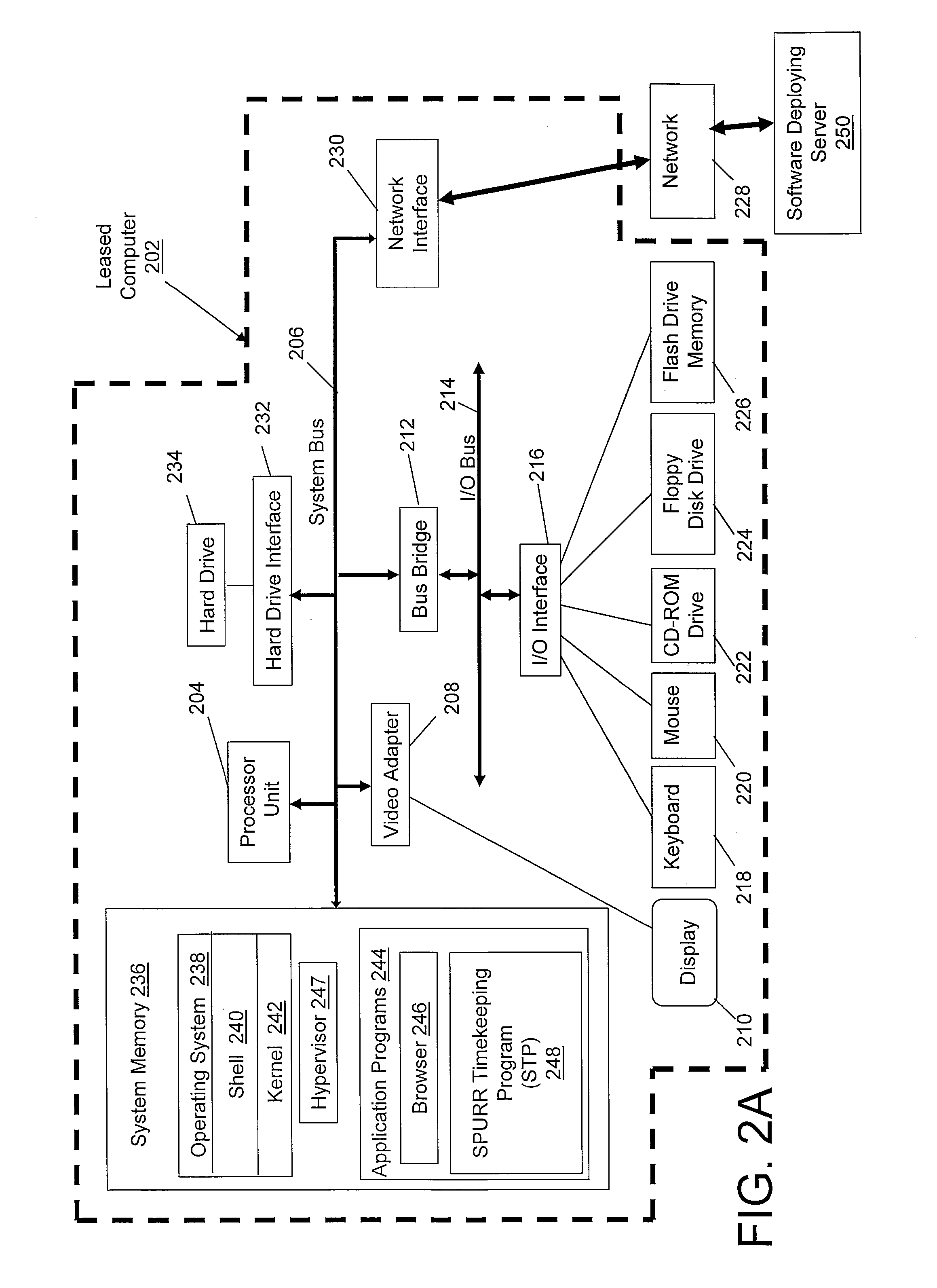

Method and apparatus for frequency independent processor utilization recording register in a simultaneously multi-threaded processor

Inactive | US20080086395A1 | Accurate charges | Complete banking machines | Telephonic communication | Computer resources | Computer usage

The present invention thus provides a method, system, and computer-usable medium for charging a customer equitably for computer usage time. In a preferred embodiment, the method includes the steps of: tracking the amount of computer resources in a Simultaneous Multithreading (SMT) computer that are available to a customer for a specified period of time; determining whether those computer resources are operating at a nominal rate; and, in response to determining that they are operating at a non-nominal rate, adjusting the customer's billing charge so that it reflects that the resources available to the customer in the SMT computer were not operating at the nominal rate during the specified period of time.

Owner:IBM CORP
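A worked sketch of the billing adjustment: each billing interval is charged in proportion to how fast the customer's resources actually ran relative to nominal. Linear scaling by measured frequency over nominal frequency is an assumption made for illustration; the abstract only says the charge is adjusted, not how. `Interval` and `adjusted_charge` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Interval:
    hours: float
    freq_ghz: float  # measured operating frequency during the interval


def adjusted_charge(intervals, nominal_ghz, rate_per_hour):
    """Sum the charge, scaling each interval by actual/nominal frequency (assumed policy)."""
    total = 0.0
    for iv in intervals:
        scale = iv.freq_ghz / nominal_ghz  # 1.0 when running at the nominal rate
        total += rate_per_hour * iv.hours * scale
    return total


# 2 h at nominal 4.0 GHz plus 1 h throttled to 2.0 GHz, at 10 units per hour:
# 2 * 10 * 1.0 + 1 * 10 * 0.5 = 25.0
bill = adjusted_charge([Interval(2.0, 4.0), Interval(1.0, 2.0)], nominal_ghz=4.0, rate_per_hour=10.0)
print(bill)  # 25.0
```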

Method to reduce power consumption of a register file with multi SMT support

Inactive | US8046566B2 | Reduce in quantity | Resource allocation | Digital computer details | Processor register | Control register

A method for reducing the power consumption of a register file in a microprocessor supporting simultaneous multithreading (SMT) is disclosed. Mapping logic and associated table entries monitor the total number of processing threads currently executing in the processor and signal control logic to disable specific register file entries: those not required by currently executing or pending instruction threads, and those that do not meet a minimum access threshold as tracked by a least recently used (LRU) algorithm. Register file utilization is controlled so that an address range selected for deactivation is not assigned to pending or future instruction threads. One or more power-saving techniques are then applied to the disabled register file entries to reduce overall power dissipation in the system.

Owner:INT BUSINESS MASCH CORP
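The entry-selection logic can be sketched as below. Everything here is an illustrative assumption: the `RegFileGating` class, the per-entry access counts kept in LRU order with `OrderedDict`, the `min_accesses` threshold, and the `max_cold` cap on how many LRU entries are gated at once. The sketch only chooses entries and refuses to map disabled ones; it does not model how live values in a gated entry would be preserved.

```python
from collections import OrderedDict


class RegFileGating:
    """Chooses register-file entries to power down in an SMT core (sketch)."""

    def __init__(self, num_entries, min_accesses):
        self.num_entries = num_entries
        self.min_accesses = min_accesses  # hypothetical per-window access threshold
        self.owner = {}                   # entry -> owning thread id
        self.lru = OrderedDict()          # entry -> access count; first key is least recently used
        self.disabled = set()

    def map_entry(self, entry, tid):
        if entry in self.disabled:  # a range selected for deactivation is never assigned
            raise ValueError(f"entry {entry} is disabled and cannot be assigned")
        self.owner[entry] = tid

    def access(self, entry):
        # pop and re-insert so the entry moves to the most-recently-used end
        self.lru[entry] = self.lru.pop(entry, 0) + 1

    def select_and_disable(self, live_threads, max_cold=1):
        """Disable entries unneeded by live threads, then up to max_cold cold LRU entries."""
        chosen = {e for e in range(self.num_entries) if self.owner.get(e) not in live_threads}
        cold = 0
        for e, count in self.lru.items():  # walk from least to most recently used
            if cold >= max_cold:
                break
            if e not in chosen and count < self.min_accesses:
                chosen.add(e)
                cold += 1
        self.disabled |= chosen
        return sorted(chosen)


g = RegFileGating(6, min_accesses=2)
for entry, tid in [(0, "T0"), (1, "T0"), (2, "T1"), (3, "T1")]:
    g.map_entry(entry, tid)
g.access(2)
for _ in range(3):
    g.access(0)
g.access(3)
print(g.select_and_disable({"T0", "T1"}))  # [2, 4, 5]
```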

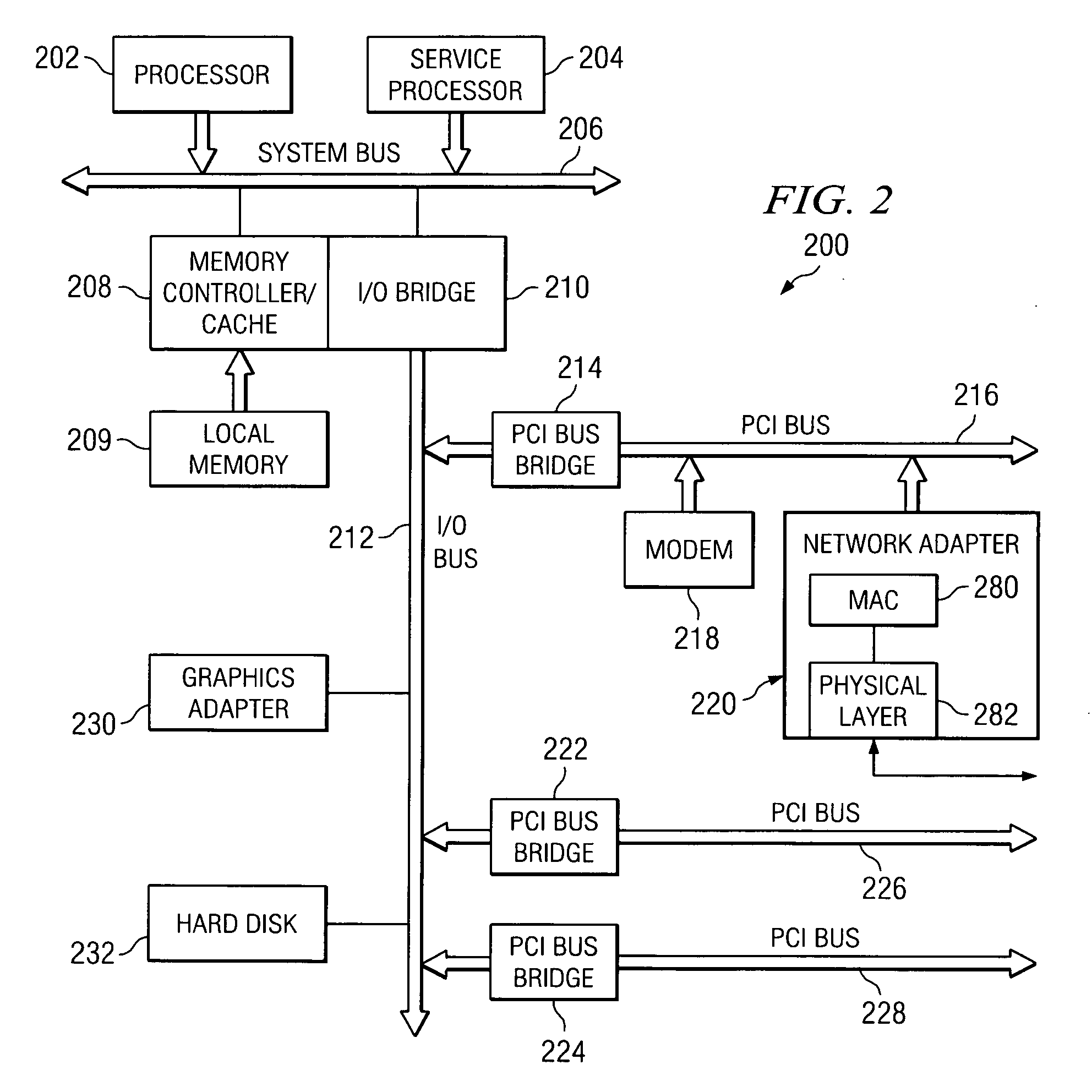

Method and apparatus for sending thread-execution-state-sensitive supervisory commands to a simultaneous multi-threaded (SMT) processor

A method and apparatus for sending thread-execution-state-sensitive supervisory commands to a simultaneous multi-threaded processor provides a mechanism for sending supervisory commands that must be executed only for live threads. The commands may be sent from a service processor or another primary processor in the system, or may be supplied by the processor itself under supervisory software control. Since the execution state of one or more threads may change dynamically within a processor core, an external processor cannot know the thread execution state at the time the command operates. The method and apparatus provide a command set and logic that support selective execution of particular commands directed at "alive" threads (or threads in some other determinable execution state), so that the command is performed only on resources and/or execution units according to the actual state of thread execution when the command operates within the processor. The command set and logic further support commands targeting one or all executing threads, for commands that are not sensitive to thread execution state.

Owner:META PLATFORMS INC
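The key idea above is that thread state is evaluated when the command runs inside the core, not when the sender issued it. A minimal sketch, assuming a two-state model (`ALIVE`/`STOPPED`) and a hypothetical `Command` record; the real command set and state encoding are not given in the abstract.

```python
from dataclasses import dataclass
from enum import Enum


class ThreadState(Enum):
    ALIVE = "alive"
    STOPPED = "stopped"


@dataclass
class Command:
    name: str
    state_sensitive: bool
    required_state: ThreadState = ThreadState.ALIVE
    target: object = "all"  # "all" or a single thread id


def threads_affected(cmd, thread_states):
    """Return the thread ids cmd operates on.

    thread_states is sampled at the moment the command executes inside
    the core, so the sender never needs to know the threads' states.
    """
    tids = sorted(thread_states) if cmd.target == "all" else [cmd.target]
    if cmd.state_sensitive:
        tids = [t for t in tids if thread_states.get(t) is cmd.required_state]
    return tids


states = {0: ThreadState.ALIVE, 1: ThreadState.STOPPED, 2: ThreadState.ALIVE}
print(threads_affected(Command("flush", state_sensitive=True), states))   # [0, 2]
print(threads_affected(Command("reset", state_sensitive=False), states))  # [0, 1, 2]
```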

© 2025 PatSnap. All rights reserved.