150 results about "Reservation station" patented technology

A unified reservation station, also known as a unified scheduler, is a decentralized feature of a CPU's microarchitecture that allows for register renaming and is used by the Tomasulo algorithm for dynamic instruction scheduling.

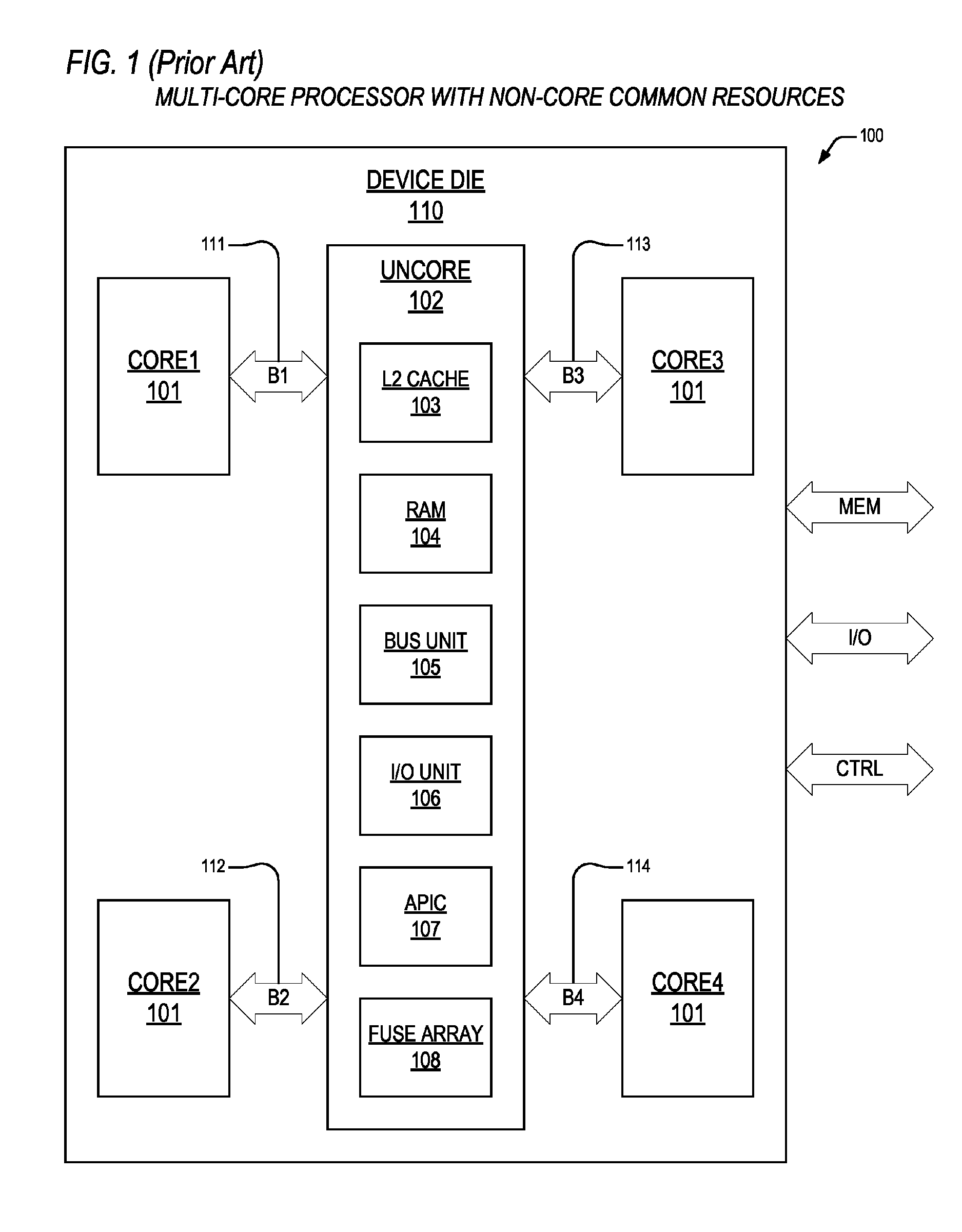

Voltage droop mitigation through instruction issue throttling

Active | US20090300329A1 | Reduce voltage drop | Volume/mass flow measurement | Digital computer details | Capacitance | Hash function

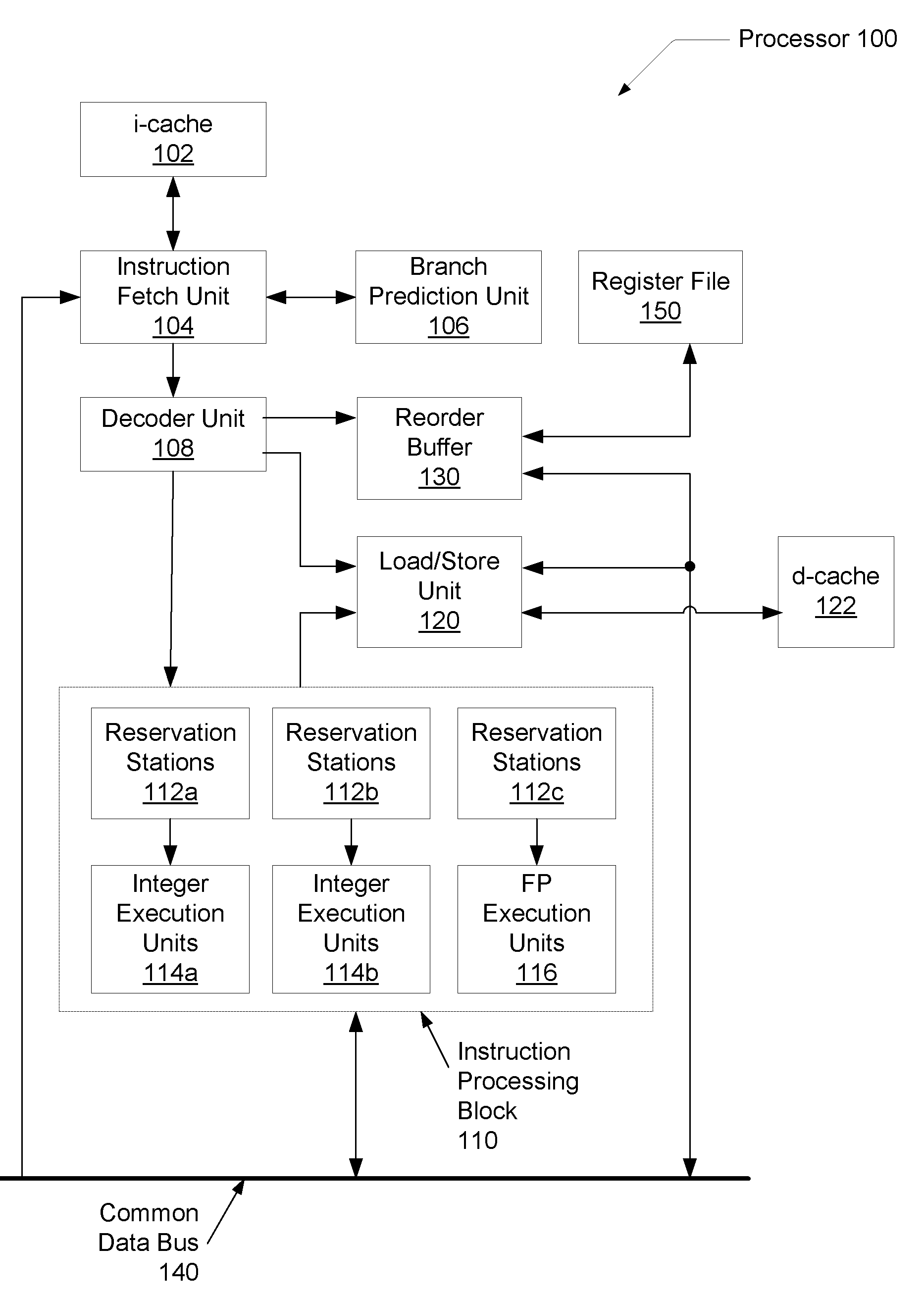

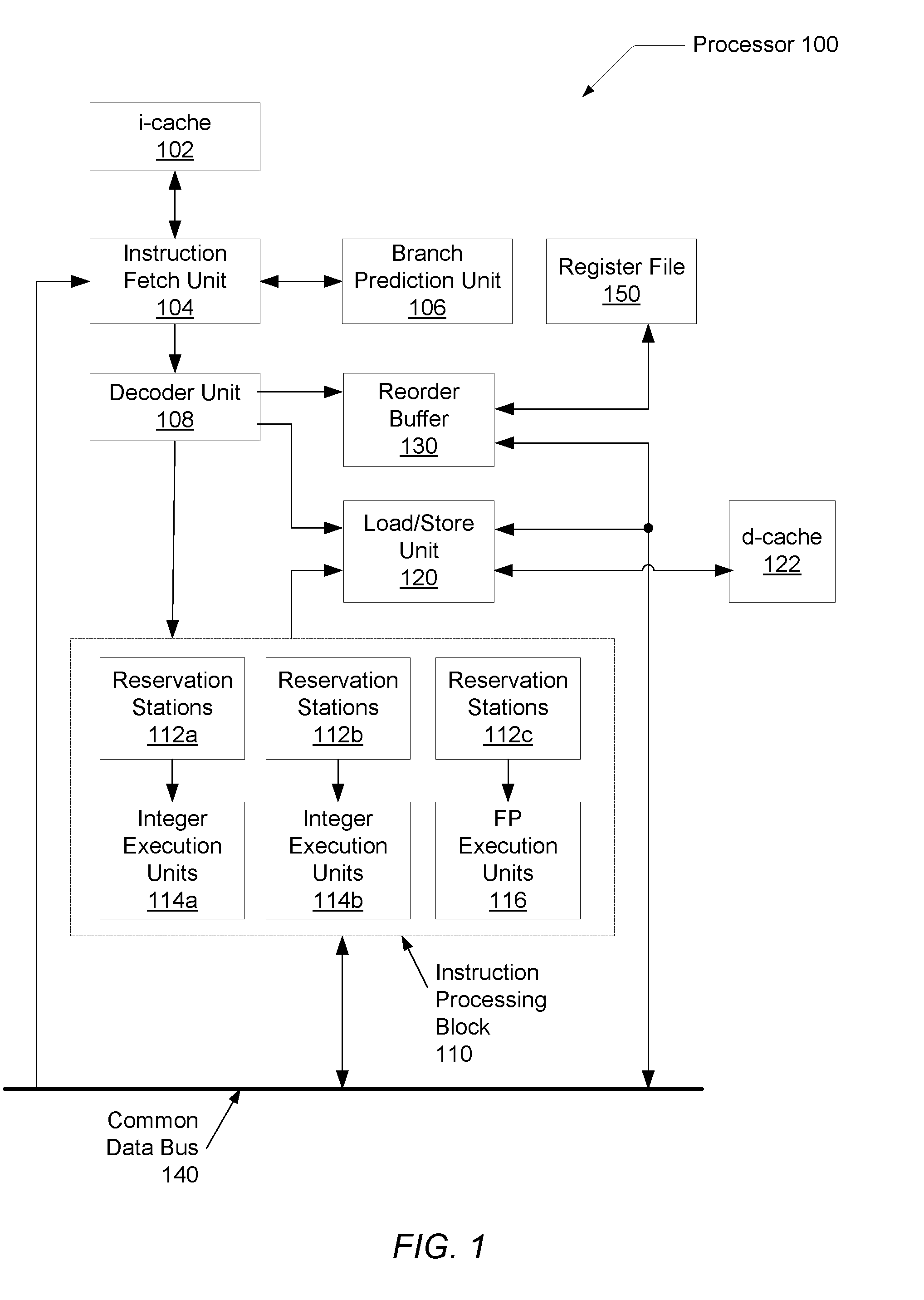

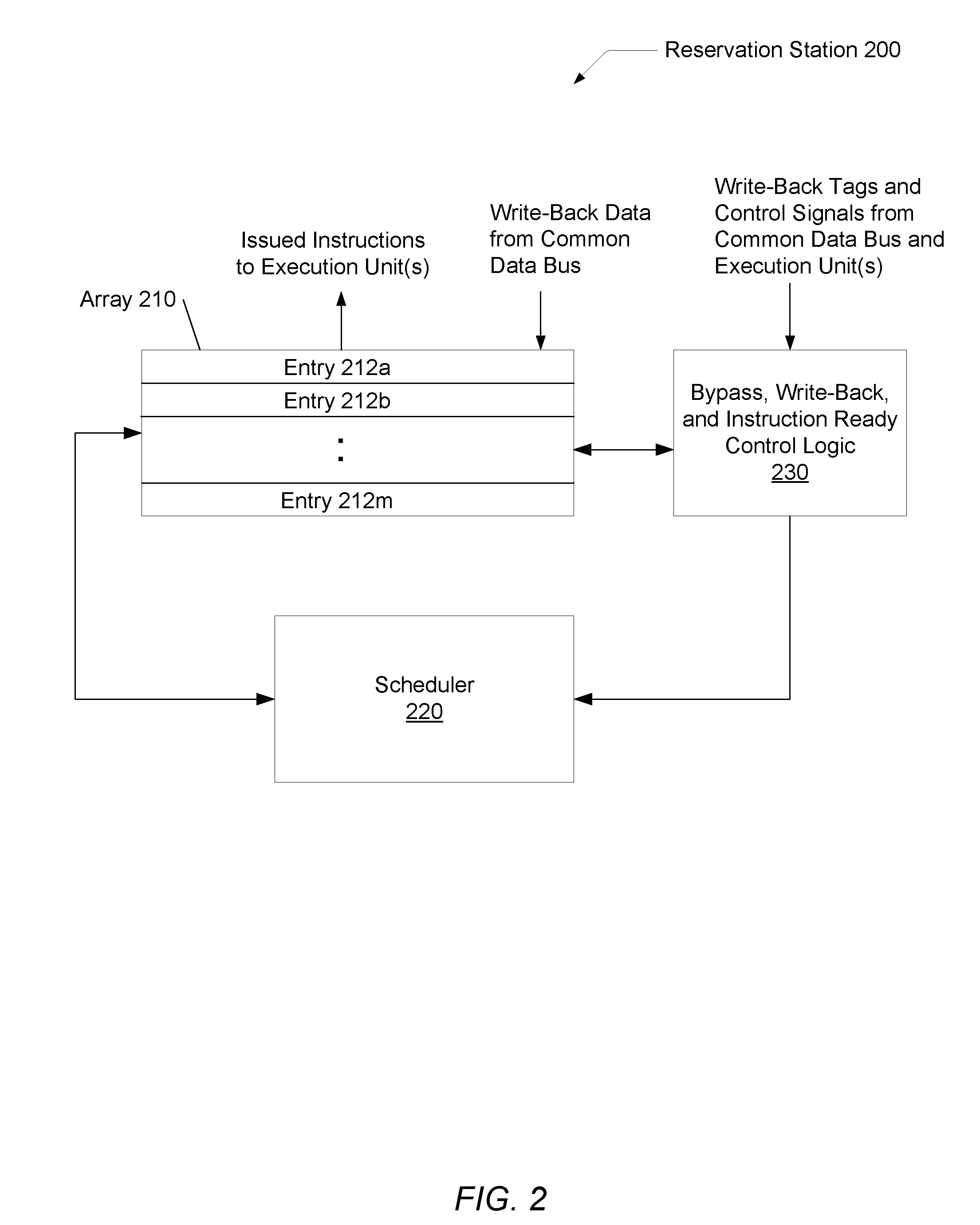

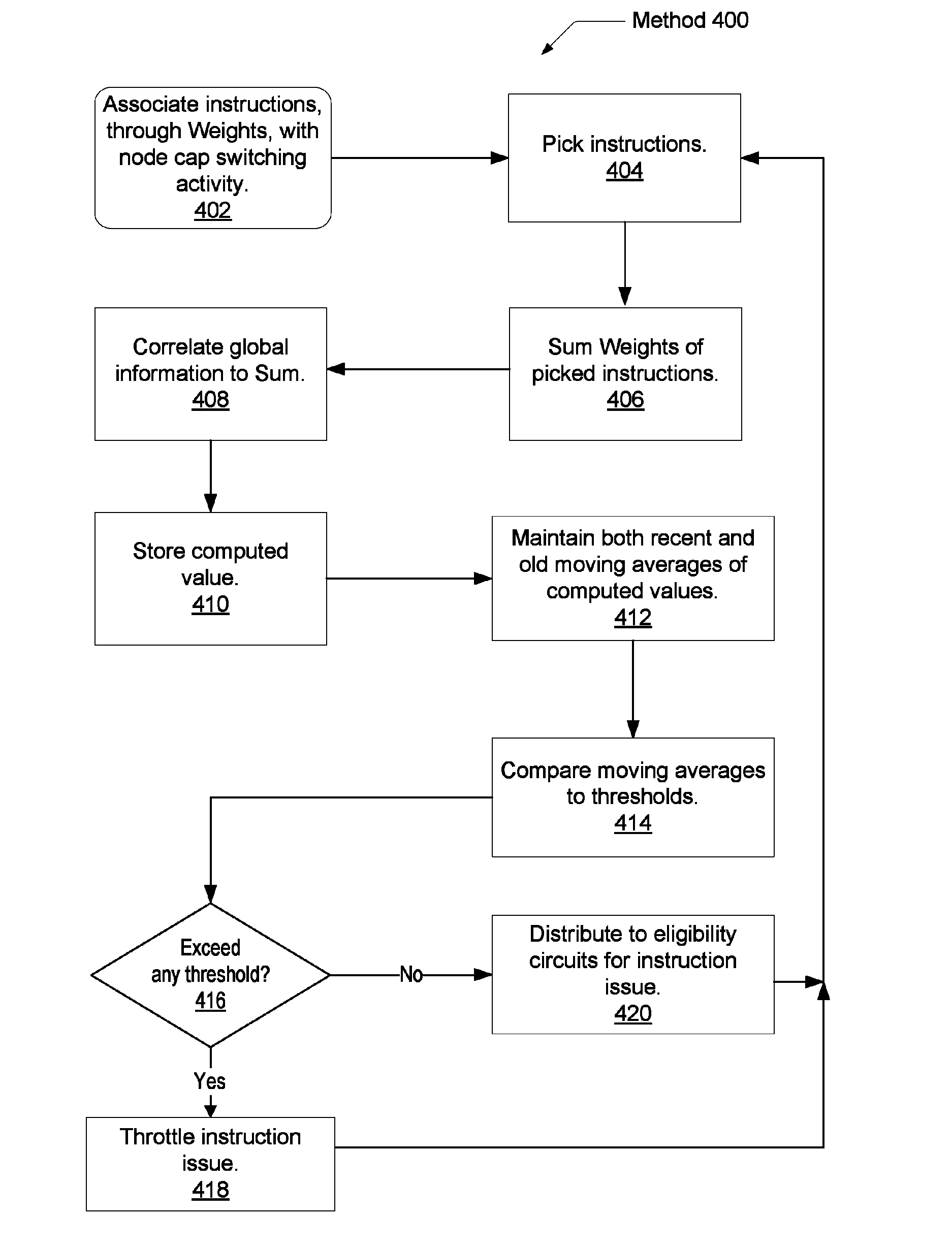

A system and method for providing digital real-time voltage droop detection and subsequent voltage droop reduction. A scheduler within a reservation station may store a weight value for each instruction, corresponding to the node capacitance switching activity for that instruction as derived from pre-silicon power modeling analysis. For instructions picked with available source data, the corresponding weight values are summed to produce a local current consumption value, and this value is summed with any existing global current consumption values from the corresponding schedulers of other processor cores, yielding an activity event. The activity event is stored. Hashing functions within the scheduler are used to determine both a recent and an old activity average from the calculated activity event and stored older activity events. Instruction issue throttling occurs if either the difference between the old activity average and the recent activity average exceeds a first threshold or the recent activity average exceeds a second threshold.

Owner:ADVANCED MICRO DEVICES INC
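
The throttling decision described in this abstract can be sketched in a few lines. This is only an illustration under stated assumptions: the abstract says hashing functions produce the two averages, whereas the sketch below swaps in plain moving averages over a short and a long window, and the class name `DroopThrottle`, the window lengths, and both threshold values are hypothetical.

```python
from collections import deque


class DroopThrottle:
    """Toy model of activity-based issue throttling (all parameters are assumptions)."""

    def __init__(self, recent_len=4, old_len=16, delta_threshold=8.0, abs_threshold=40.0):
        self.recent_len = recent_len
        self.history = deque(maxlen=old_len)    # stored activity events, newest last
        self.delta_threshold = delta_threshold  # stands in for the "first threshold"
        self.abs_threshold = abs_threshold      # stands in for the "second threshold"

    def step(self, picked_weights, global_activity=0):
        """Record one cycle's activity event; return True if issue should be throttled."""
        # Local current consumption = sum of weights of the picked instructions,
        # plus whatever the other cores' schedulers reported.
        event = sum(picked_weights) + global_activity
        self.history.append(event)

        events = list(self.history)
        recent = events[-self.recent_len:]
        recent_avg = sum(recent) / len(recent)  # short-window average
        old_avg = sum(events) / len(events)     # long-window average (assumed stand-in)

        swing = abs(recent_avg - old_avg)
        return swing > self.delta_threshold or recent_avg > self.abs_threshold


throttle = DroopThrottle(recent_len=2, old_len=8, delta_threshold=5.0, abs_threshold=100.0)
for _ in range(6):
    throttle.step([2, 2])               # steady, low activity
print(throttle.step([30, 30]))          # sudden burst of heavy instructions
```

A burst after a quiet period trips the first condition even though the absolute level is still below the second threshold, which is the case a rapid current swing (and hence a droop) would correspond to.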

Data address prediction structure and a method for operating the same

Inactive | US6604190B1 | Digital computer details | Concurrent instruction execution | Reservation station | Implicit memory

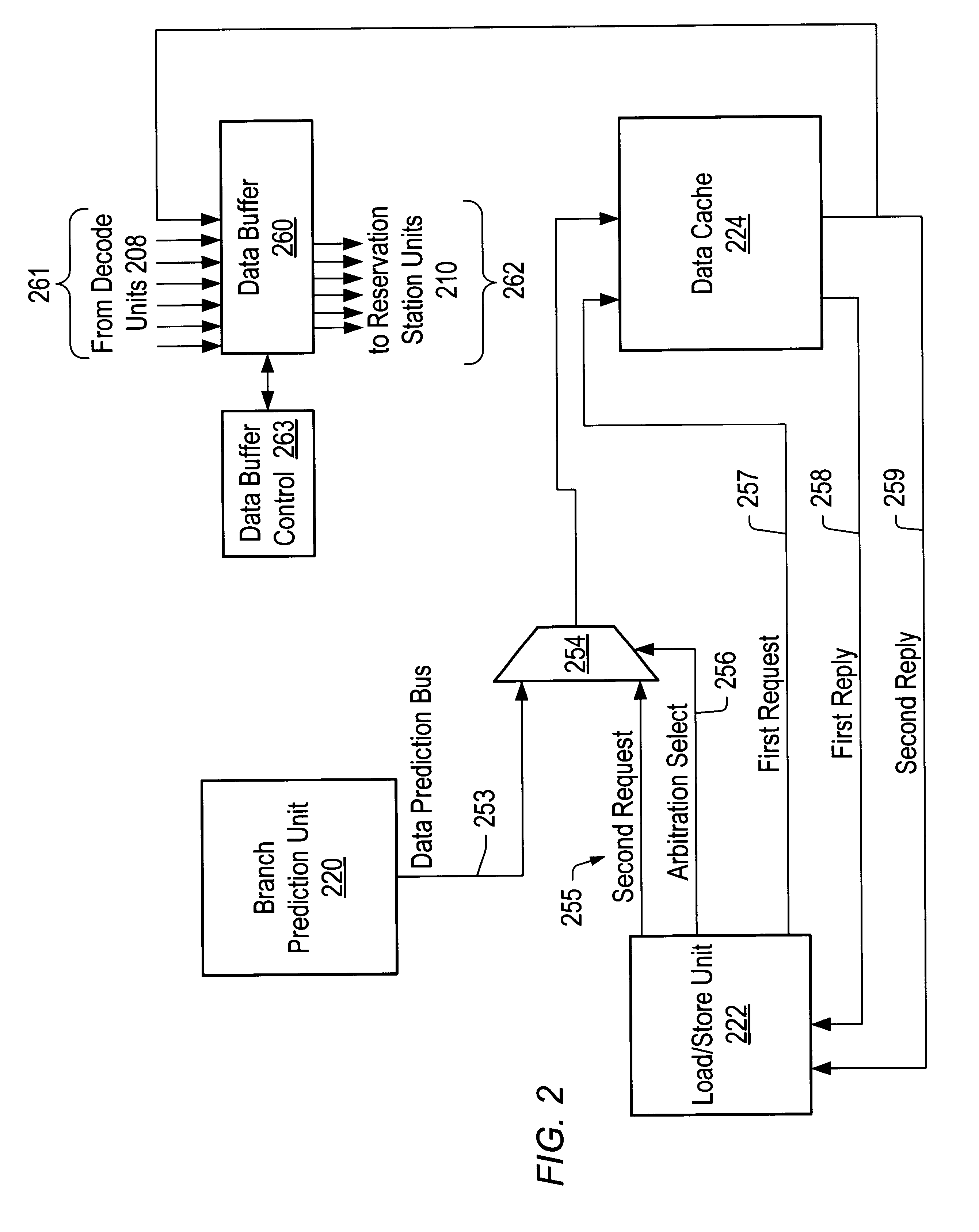

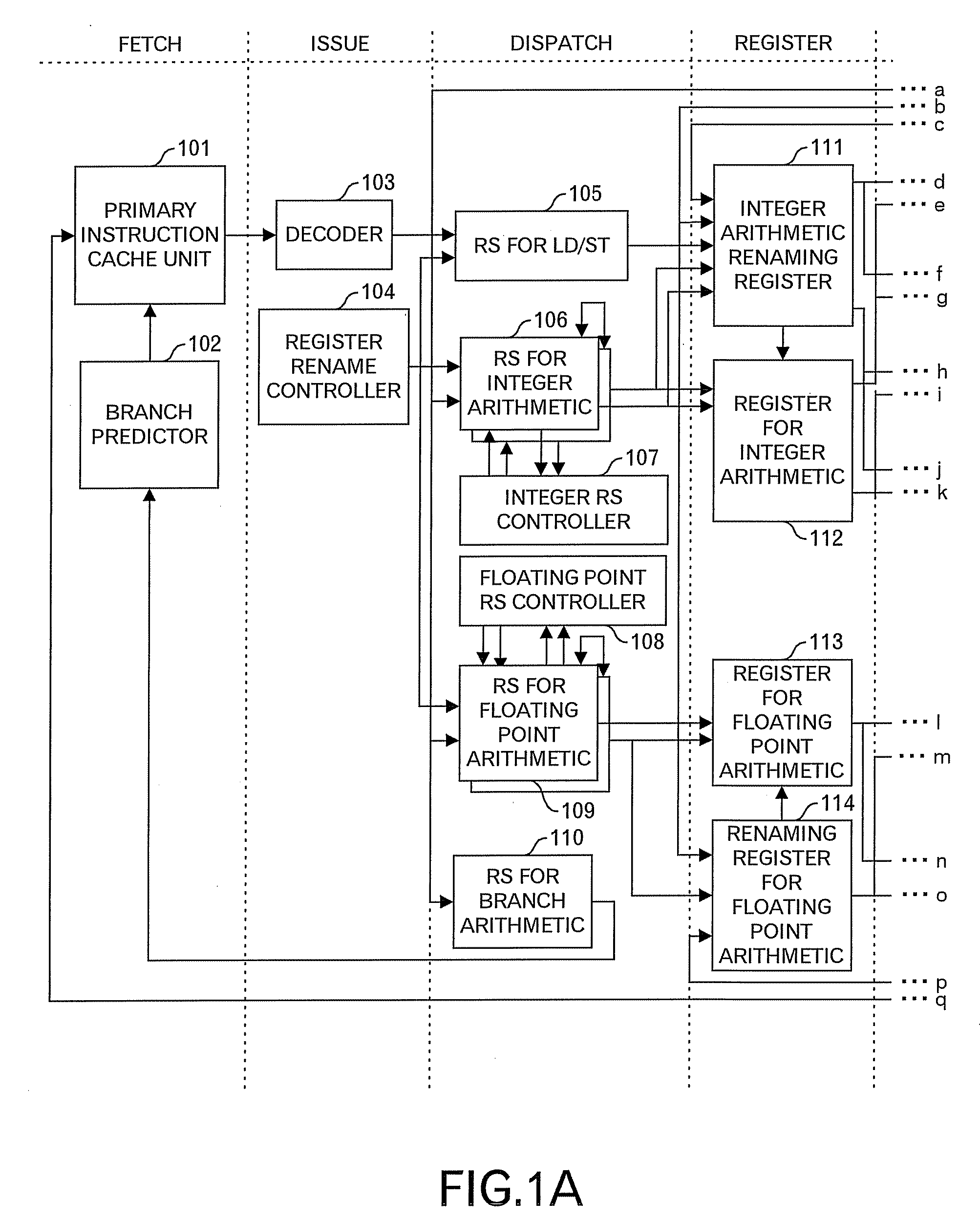

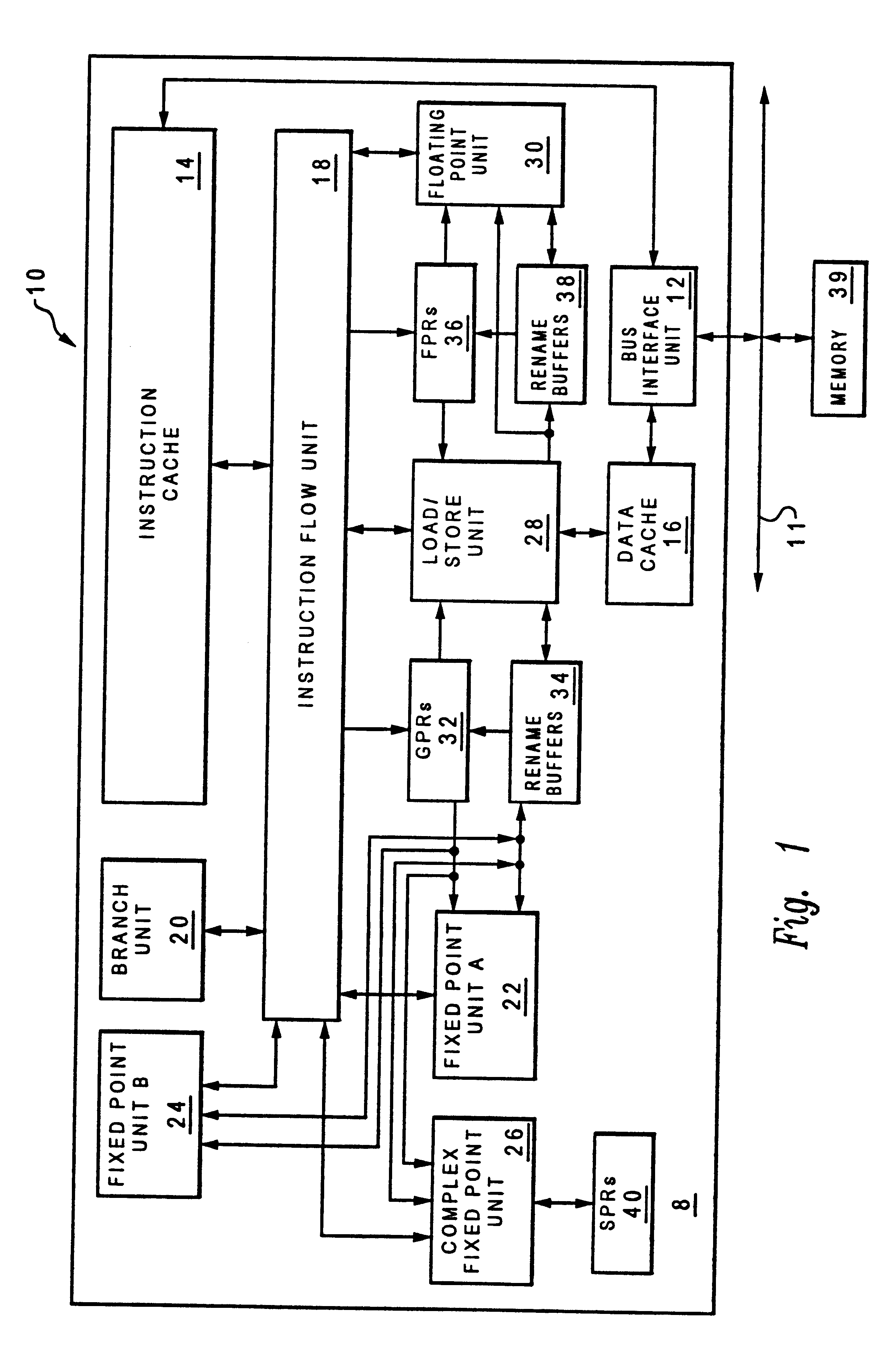

A data address prediction structure for a superscalar microprocessor is provided. The data address prediction structure predicts a data address that a group of instructions is going to access while that group of instructions is being fetched from the instruction cache. The data bytes associated with the predicted address are placed in a relatively small, fast buffer. The decode stages of instruction processing pipelines in the microprocessor access the buffer with addresses generated from the instructions, and if the associated data bytes are found in the buffer they are conveyed to the reservation station associated with the requesting decode stage. Therefore, the implicit memory read associated with an instruction is performed prior to the instruction arriving in a functional unit. The functional unit is occupied by the instruction for a fewer number of clock cycles, since it need not perform the implicit memory operation. Instead, the functional unit performs the explicit operation indicated by the instruction.

Owner:GLOBALFOUNDRIES US INC

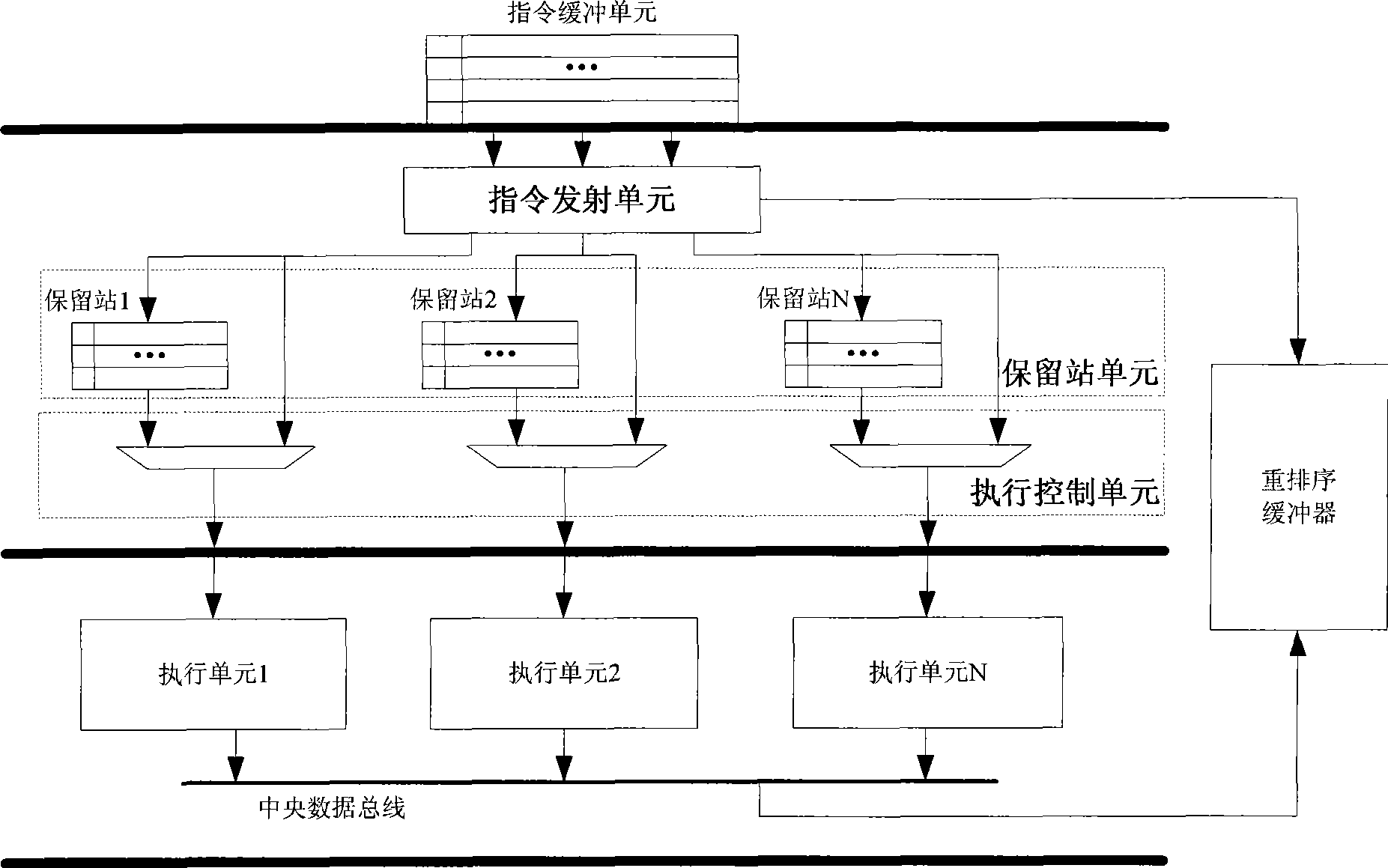

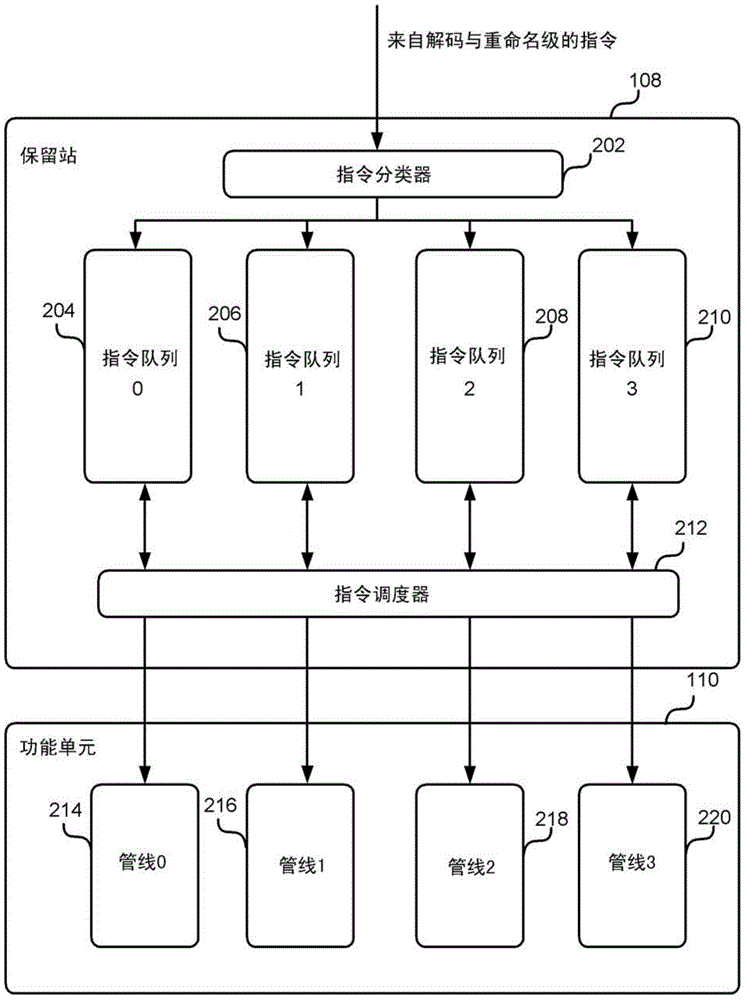

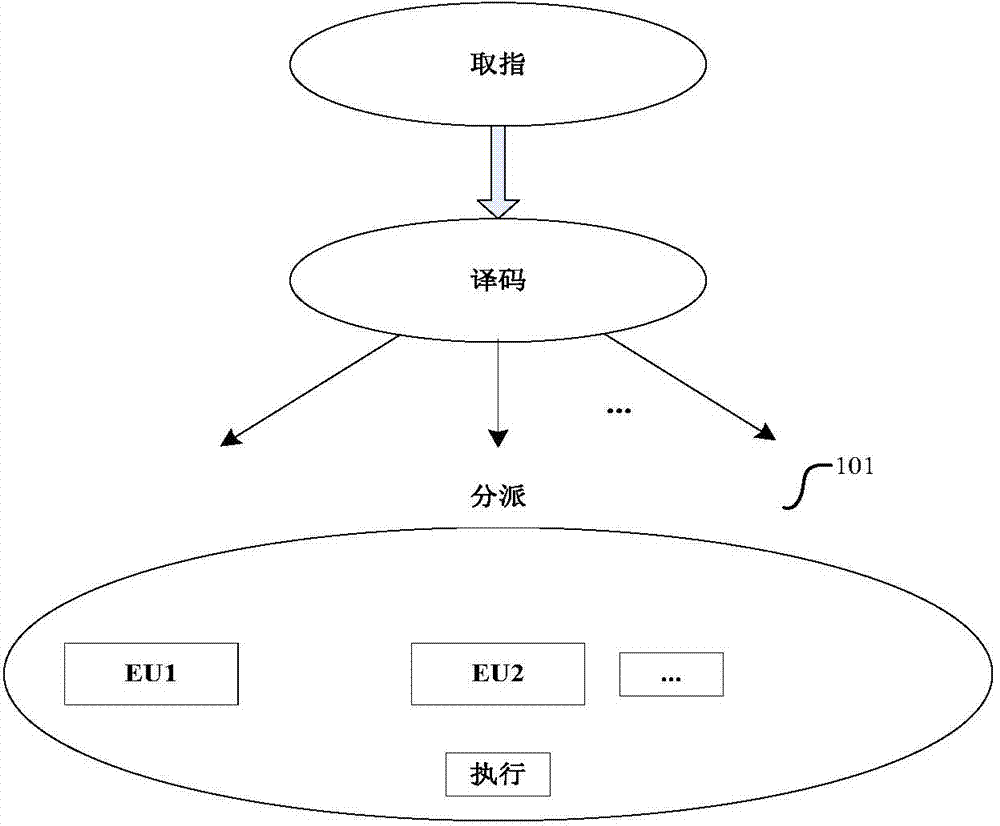

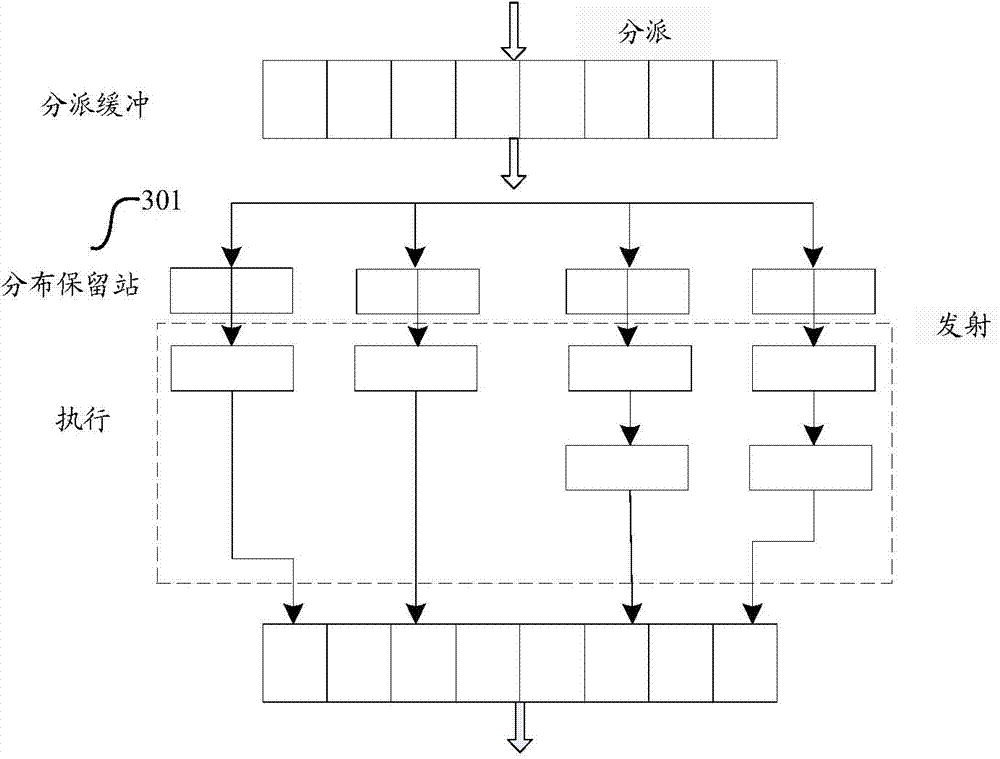

Device and method for instruction scheduling

Active | CN101710272A | Improve efficiency | Improve performance | Concurrent instruction execution | Reservation station | Scheduling instructions

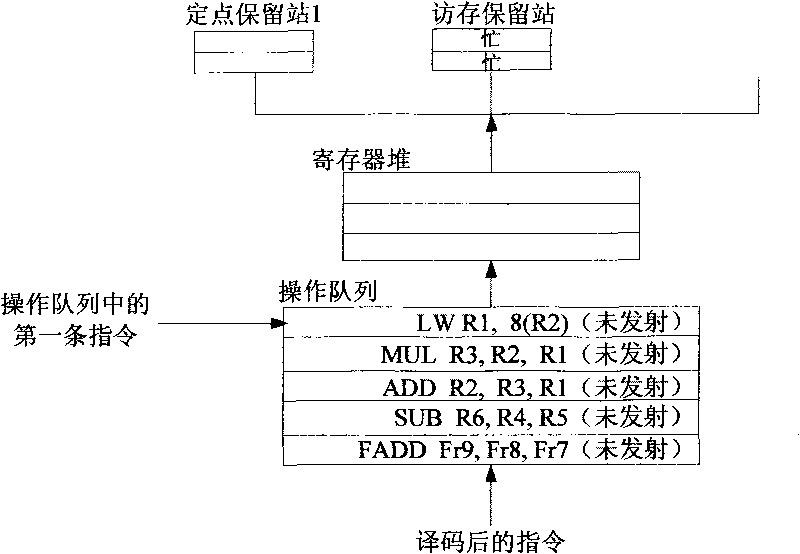

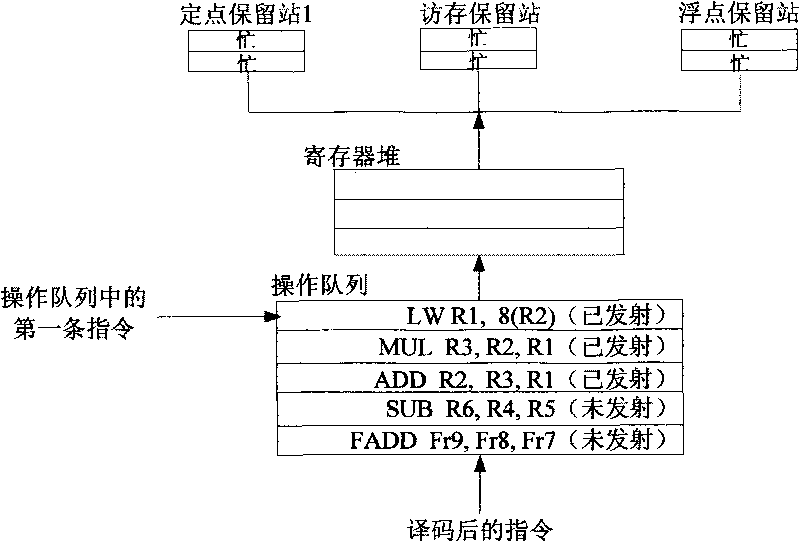

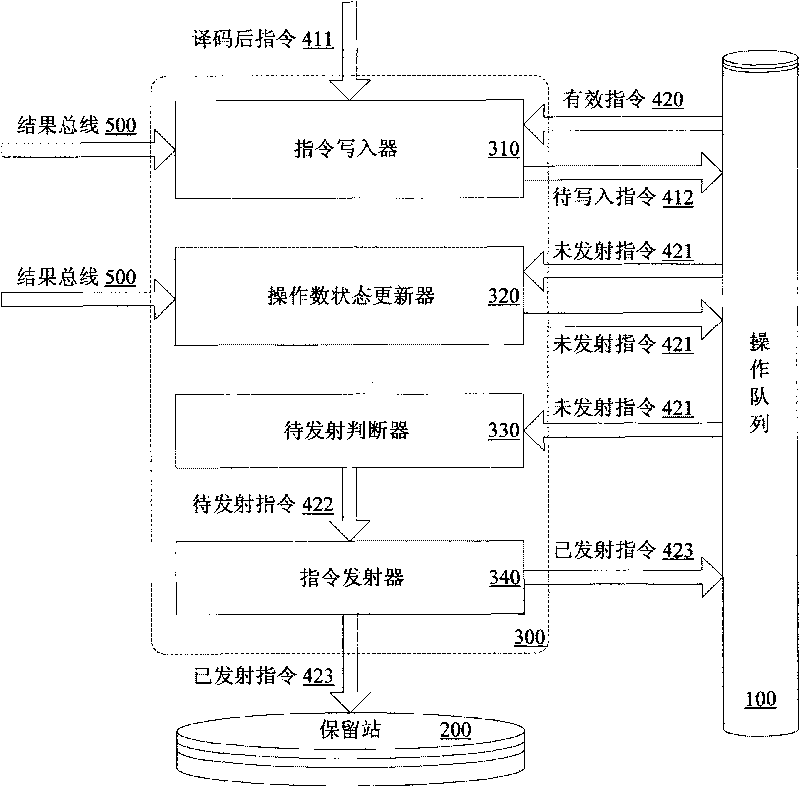

The invention provides a device and a method for dynamically scheduling instructions transmitted from an operation queue to a reservation station in a microprocessor. The method comprises the following: a step of writing instructions, which is to set and then write the operand states of the decoded instructions on the basis of data correlation between the decoded instructions to be written into the operation queue and effective instructions in the operation queue, as well as instruction execution results which have been written back and are being written; a step of updating the operand states, which is to update the operand state of each instruction not transmitted on the basis of the data correlation between each instruction not transmitted and the instructions being written back of instruction execution results; a step of judging to-be-transmitted instructions, which is to judge whether the to-be-transmitted instructions with all operands ready exist on the basis of the operand state of each instruction not transmitted; and a step of transmitting instructions, which is to transmit the judged to-be-transmitted instructions to the reservation station when the reservation station has vacancies. Pipeline efficiency can be effectively improved by transmitting the instructions with the operands ready to the reservation station on the basis of the data correlation between the instructions.

Owner:LOONGSON TECH CORP
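
The four steps in this abstract (write, update operand states, judge, transmit) can be sketched as a small model. It is a simplification under stated assumptions: each register has at most one in-flight producer, the reservation station is just a bounded list, and the names `OperationQueue`, `QueuedOp`, `write`, `writeback`, and `transmit` are hypothetical rather than taken from the patent.

```python
from dataclasses import dataclass


@dataclass
class QueuedOp:
    name: str
    dest: str
    waiting_on: set  # source registers whose producers have not written back yet


class OperationQueue:
    def __init__(self, rs_capacity):
        self.entries = []            # instructions not yet transmitted, in program order
        self.pending_dests = set()   # registers with an in-flight producer
        self.rs = []                 # stand-in for the reservation station
        self.rs_capacity = rs_capacity

    def write(self, name, dest, sources):
        """Step 1: set operand states from dependencies on in-flight results."""
        waiting = {s for s in sources if s in self.pending_dests}
        self.entries.append(QueuedOp(name, dest, waiting))
        self.pending_dests.add(dest)

    def writeback(self, reg):
        """Step 2: a result is written back, so update waiting operand states."""
        self.pending_dests.discard(reg)
        for op in self.entries:
            op.waiting_on.discard(reg)

    def transmit(self):
        """Steps 3 and 4: send operand-ready instructions while the RS has vacancies."""
        sent = []
        for op in list(self.entries):
            if len(self.rs) >= self.rs_capacity:
                break
            if not op.waiting_on:
                self.entries.remove(op)
                self.rs.append(op.name)
                sent.append(op.name)
        return sent


q = OperationQueue(rs_capacity=2)
q.write("load", "r1", ["r0"])
q.write("add", "r2", ["r1", "r3"])   # depends on the load
q.write("mul", "r4", ["r5"])
print(q.transmit())                  # the add is skipped until r1 is written back
```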

Out-of-order execution control device of built-in processor

Inactive | CN101477454A | Improve performance | Simplify Bypass Logic | Concurrent instruction execution | Reservation station | Processor register

The invention relates to a device for an embedded processor that controls out-of-order execution. The device comprises a transmit unit, a reservation station register unit and an execution control unit. The transmit unit stores a decoded instruction in a pipeline register and sends one instruction per clock cycle. The reservation station register unit temporarily stores an instruction that stalls because of a read/write data dependency conflict, and monitors the bypass network for its operands. The execution control unit monitors the working condition of each execution unit in real time, and dynamically dispatches either an instruction held in the reservation station register unit or the currently transmitted instruction according to the information returned by each execution unit. The invention has the advantages of simple design, easy realization and a marked improvement in embedded processor performance.

Owner:ZHEJIANG UNIV

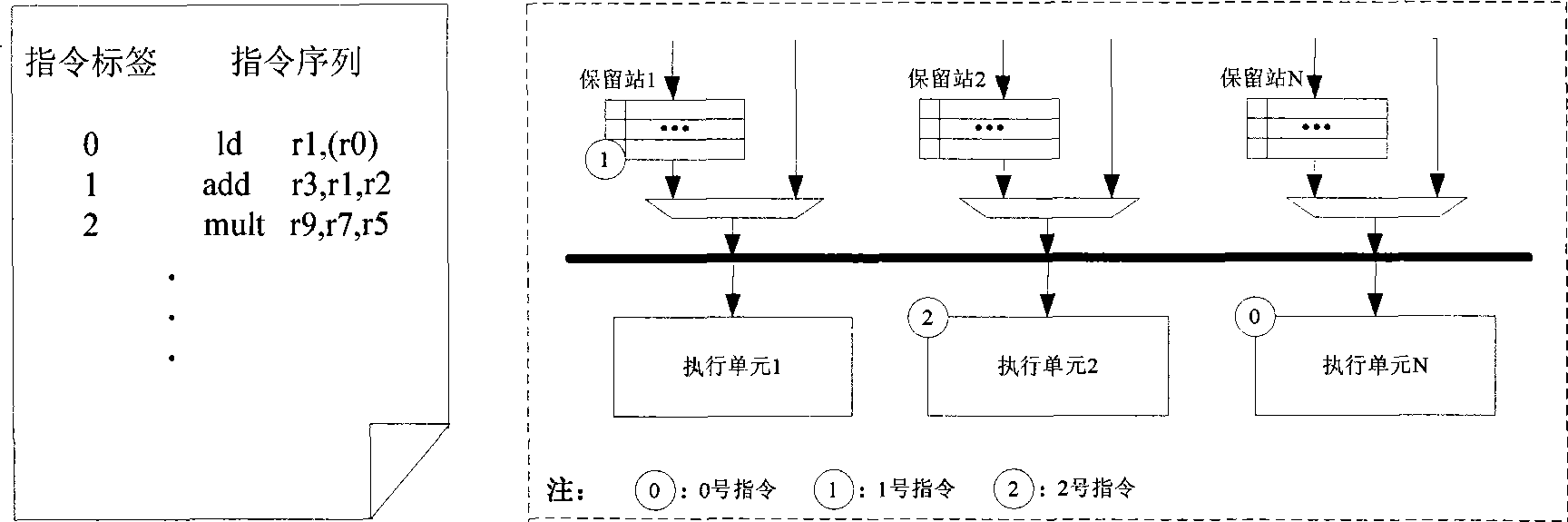

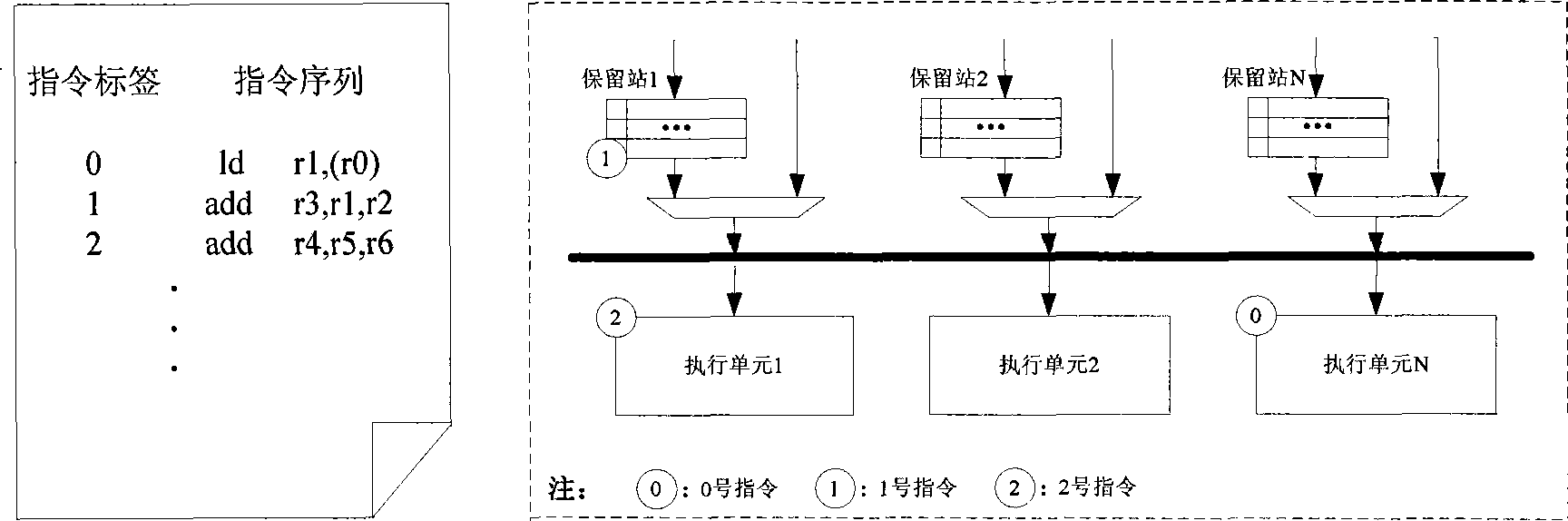

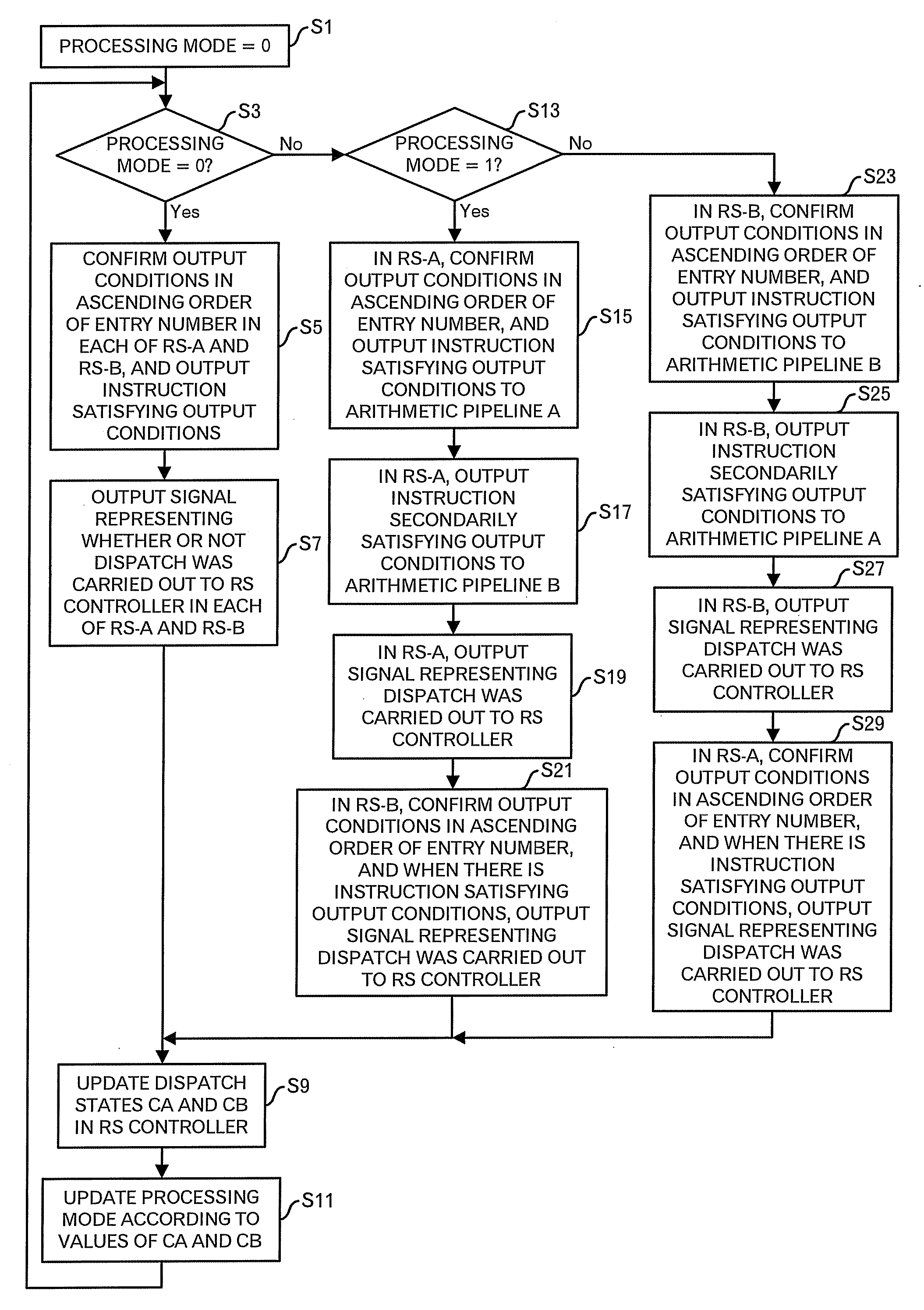

Processor device

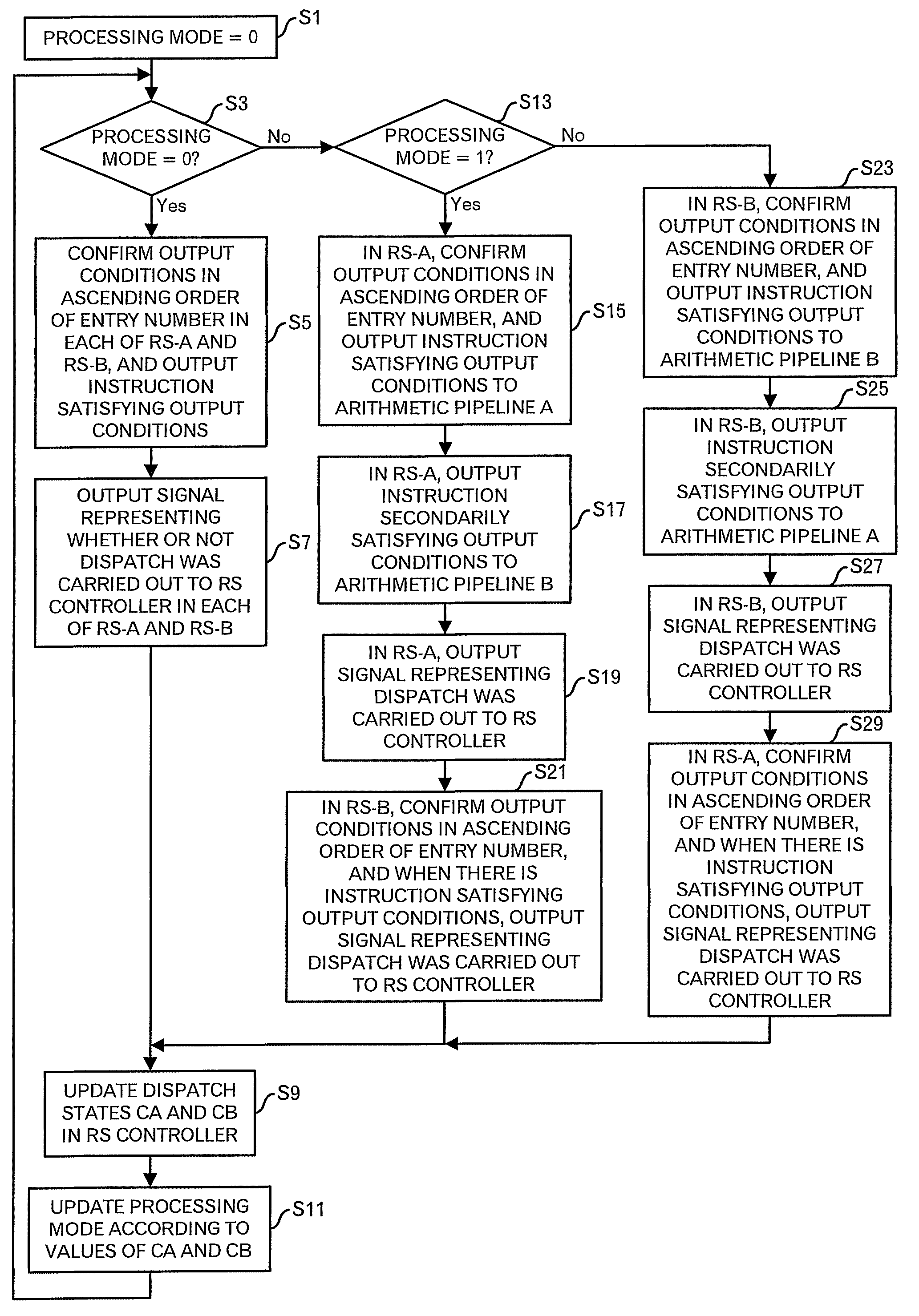

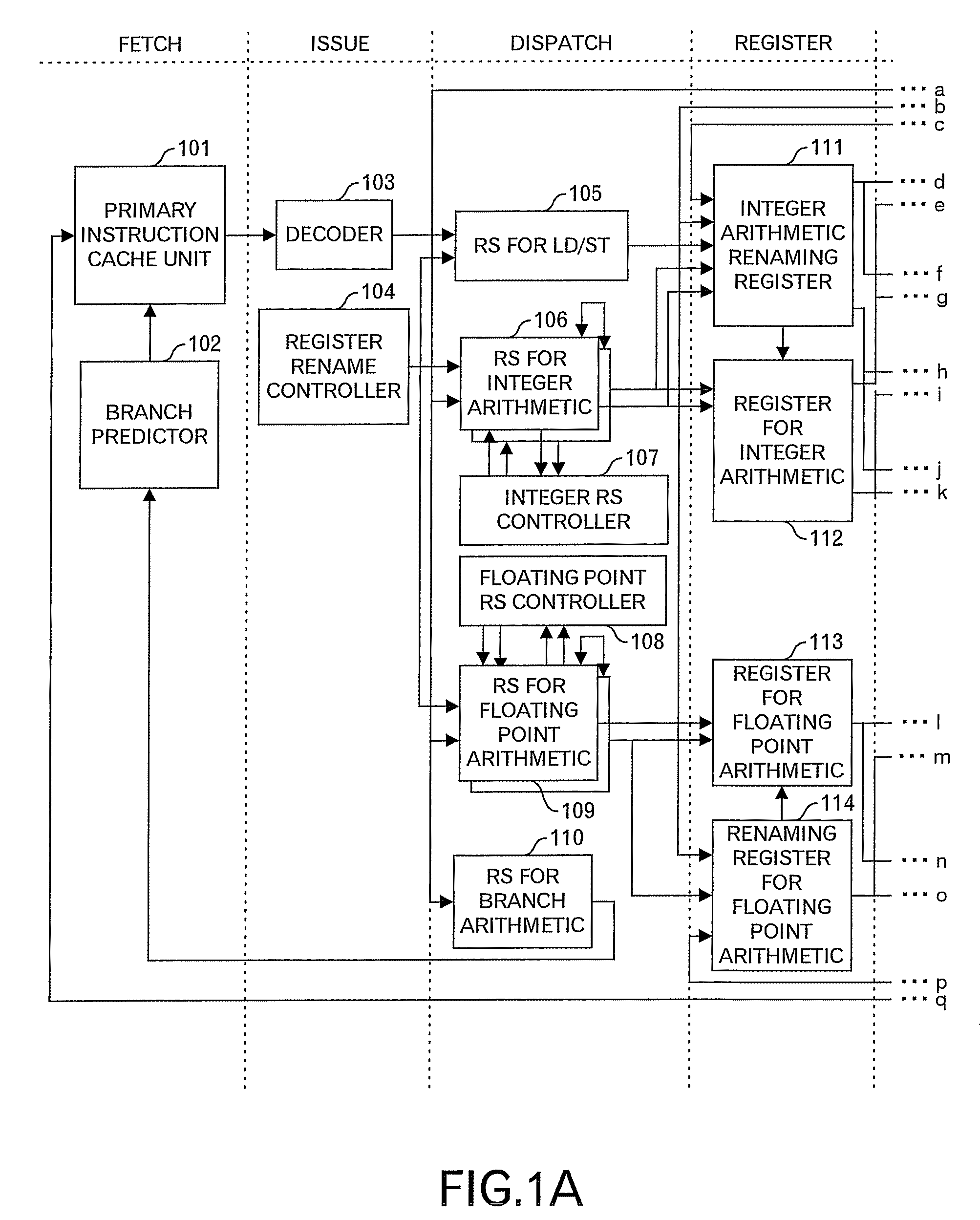

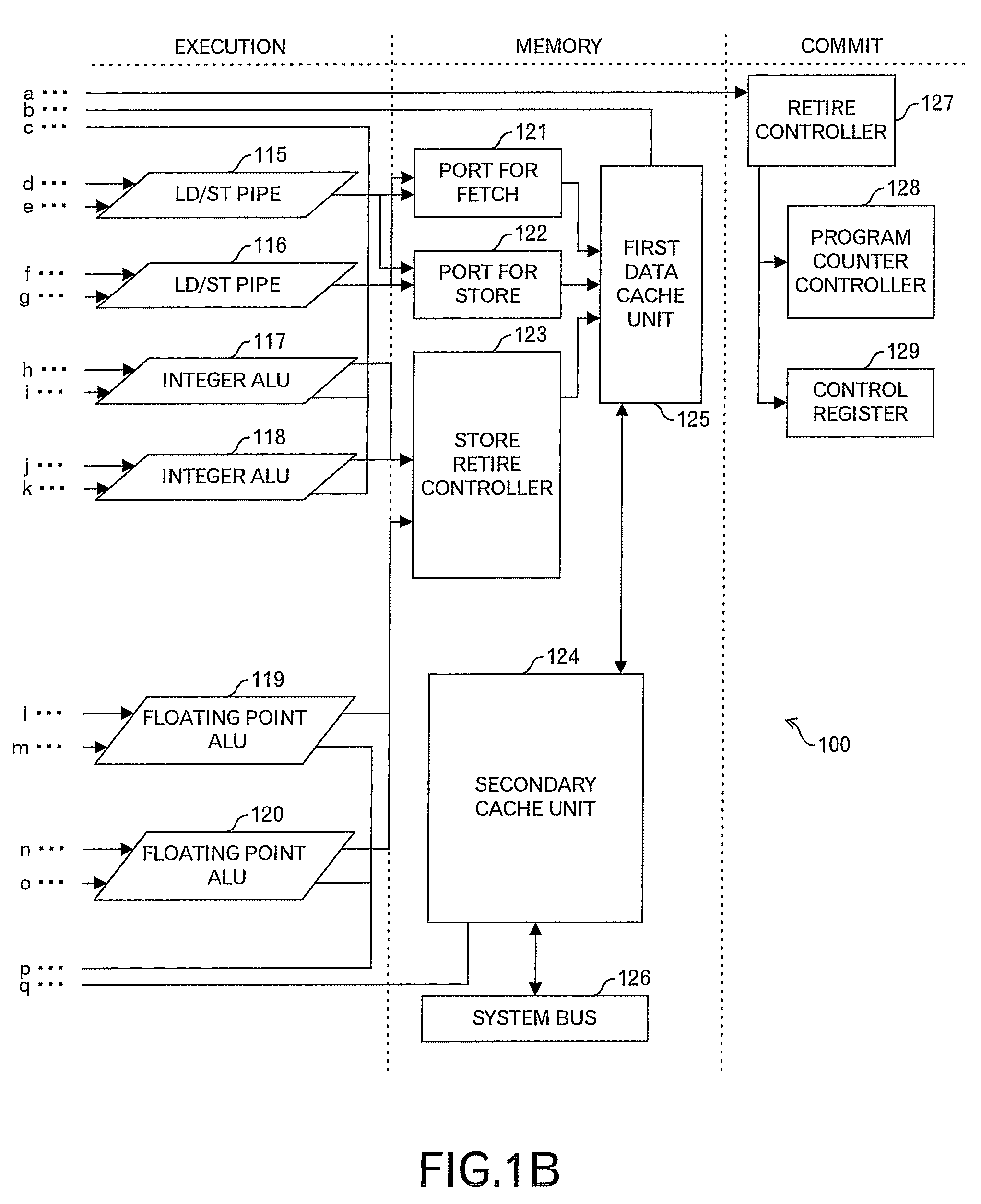

Inactive | US20080040589A1 | Efficient use of | Increased complexity | Digital computer details | Specific program execution arrangements | Reservation station

A processor device having a reservation station (RS) is concerned. In case the processor device has plural RS, the RS is associated with an arithmetic pipeline, and two RS make a pair. When one RS of the pair cannot dispatch an instruction to an associated arithmetic pipeline, the other RS dispatches the instruction to that arithmetic pipeline, or delivers its held instruction to the one RS. In case one RS is equipped, plural entries in the RS are divided into groups, and by dynamically changing this grouping according to the dispatch frequency of the instruction to the arithmetic pipelines or the held state of the instructions, the arithmetic pipelines are efficiently utilized. Incidentally, depending on the grouping of the plural entries in the RS, a configuration as if the plural RS were allocated to each arithmetic pipeline may be realized.

Owner:FUJITSU LTD

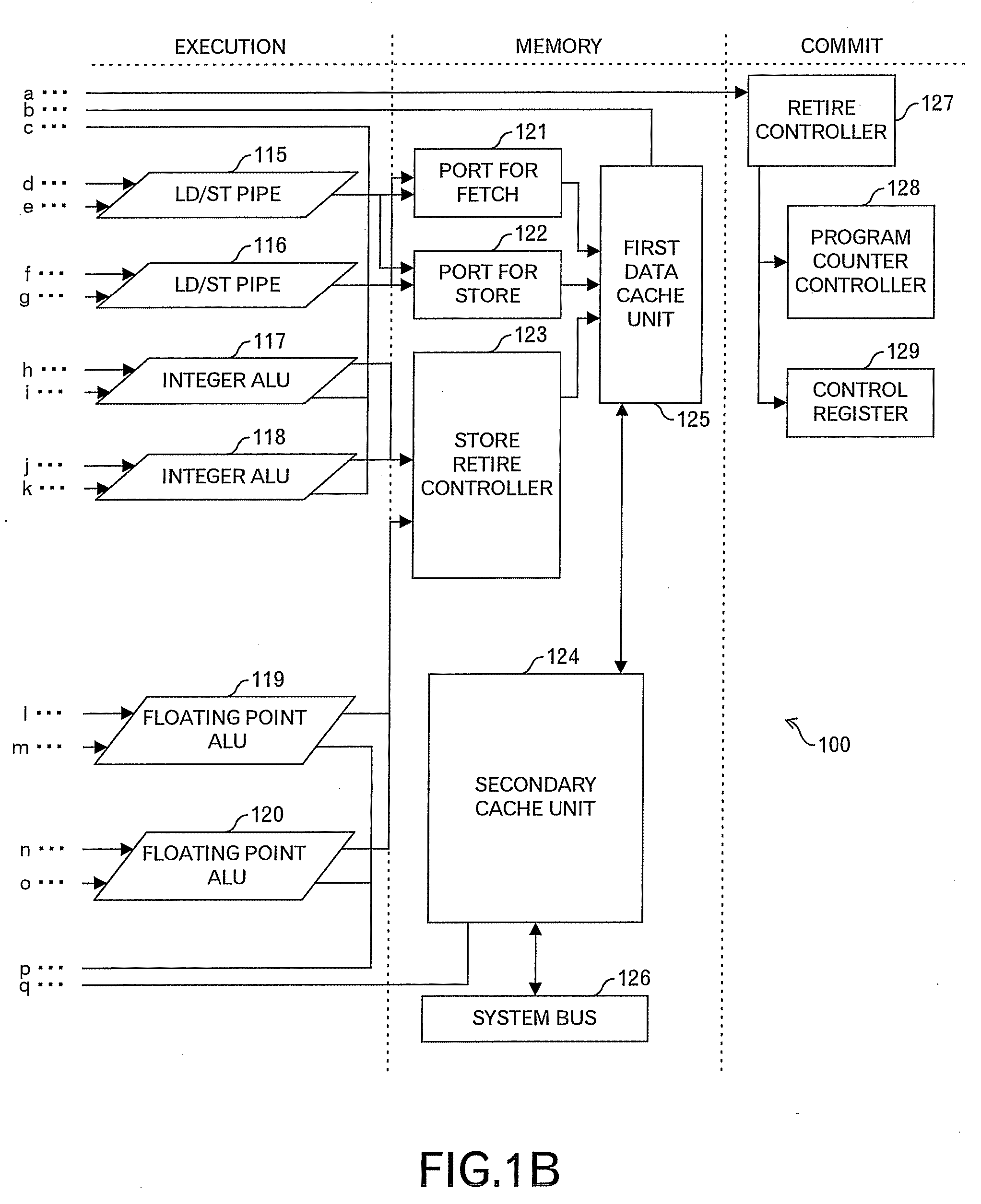

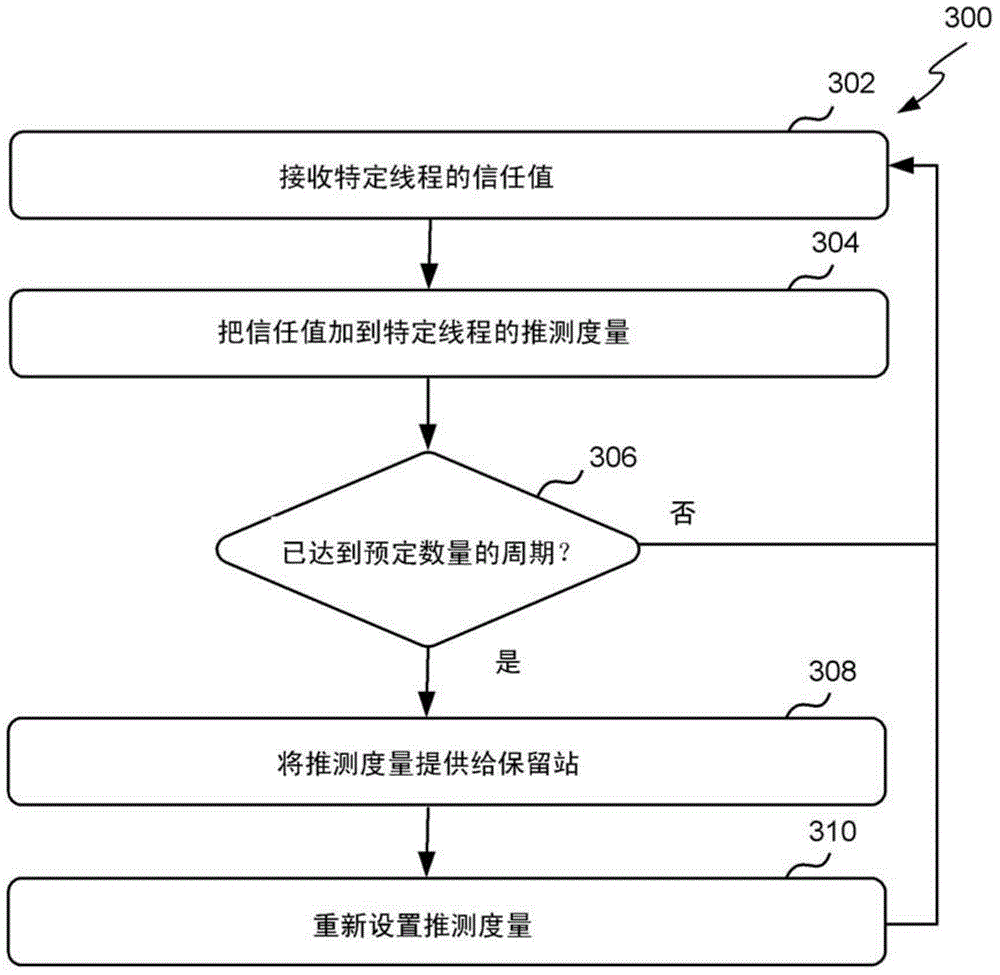

Allocating threads to resources using speculation metrics

Active | CN103942033A | Analogue/digital conversion | Resource allocation | Reservation station | Resource allocation

The invention describes allocating threads to resources using speculation metrics. A method, a reservation station and a processor for allocating resources to a plurality of threads based on the extent to which the instructions associated with each of the threads are speculative. The method comprises receiving a speculation metric for each thread at the reservation station. Each speculation metric represents the extent to which the instructions associated with a particular thread are speculative. The more speculative an instruction, the more likely the instruction has been incorrectly predicted by a branch predictor. The reservation station allocates functional unit resources (e.g. pipelines) to the threads based on the speculation metrics, and selects a number of instructions from one or more of the threads based on the allocation. The selected instructions are then issued to the functional unit resources.

Owner:MIPS TECH INC
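
One way to picture allocation by speculation metric is to give each thread a share of the pipelines in proportion to how non-speculative it is. The abstract does not specify the allocation formula, so the sketch below is an assumption throughout: metrics are taken to lie in [0, 1], the share is proportional to `1 - metric`, and rounding uses a largest-remainder rule. The function name `allocate_pipelines` is hypothetical.

```python
def allocate_pipelines(metrics, n_pipelines):
    """Split n_pipelines among threads; less speculative threads get more.

    metrics maps thread id -> speculation metric in [0, 1] (assumed scale).
    """
    confidence = {t: 1.0 - m for t, m in metrics.items()}
    total = sum(confidence.values())
    if total == 0:
        # Every thread is maximally speculative: fall back to equal weights.
        confidence = {t: 1.0 for t in metrics}
        total = float(len(metrics))

    quotas = {t: n_pipelines * c / total for t, c in confidence.items()}
    alloc = {t: int(q) for t, q in quotas.items()}  # whole pipelines first

    # Hand out what is left to the threads with the largest fractional remainder.
    leftover = n_pipelines - sum(alloc.values())
    by_remainder = sorted(quotas, key=lambda t: quotas[t] - alloc[t], reverse=True)
    for t in by_remainder[:leftover]:
        alloc[t] += 1
    return alloc


print(allocate_pipelines({"t0": 0.25, "t1": 0.75}, 4))
```

The reservation station would then select up to `alloc[t]` instructions from each thread per cycle; that selection step is omitted here.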

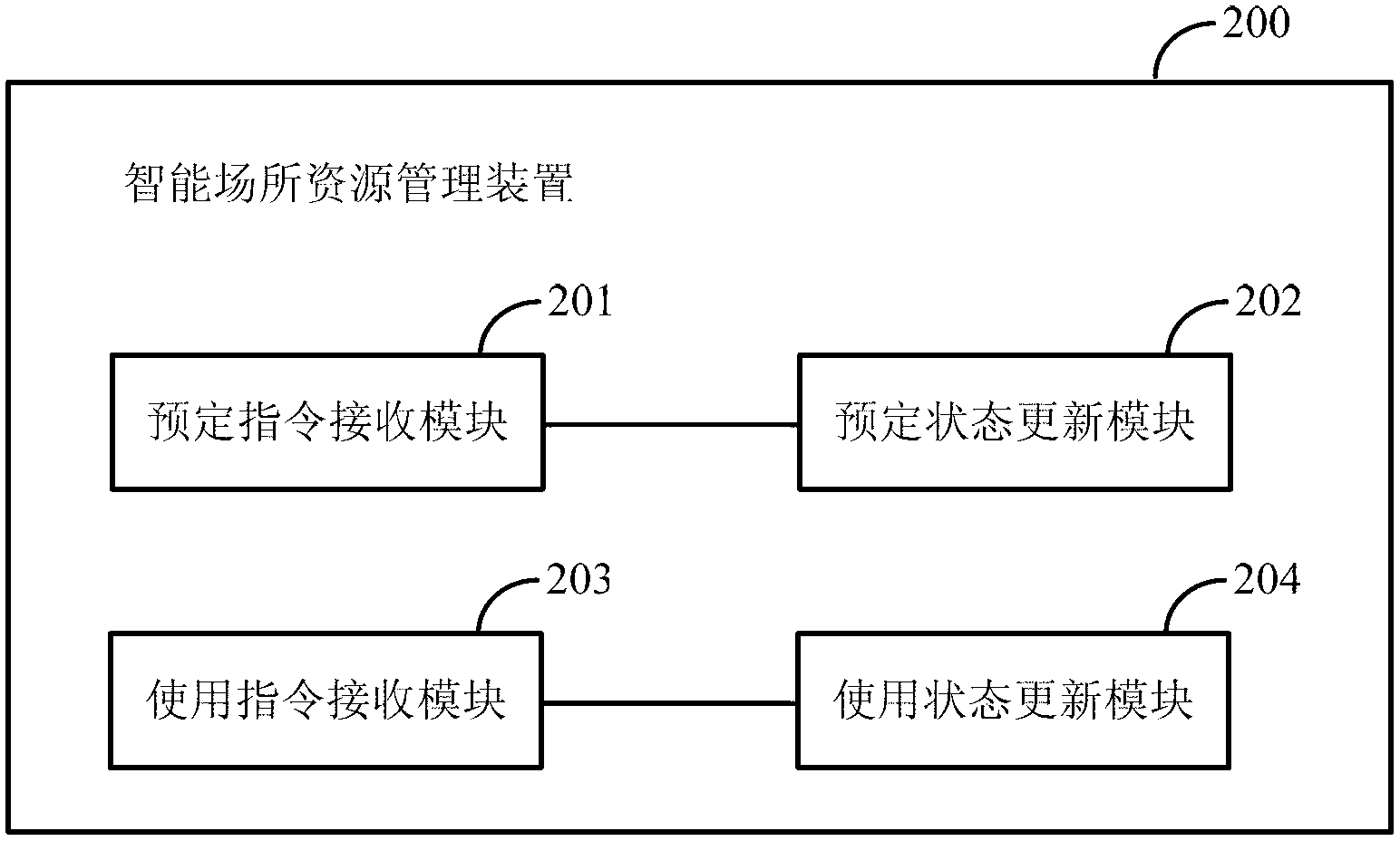

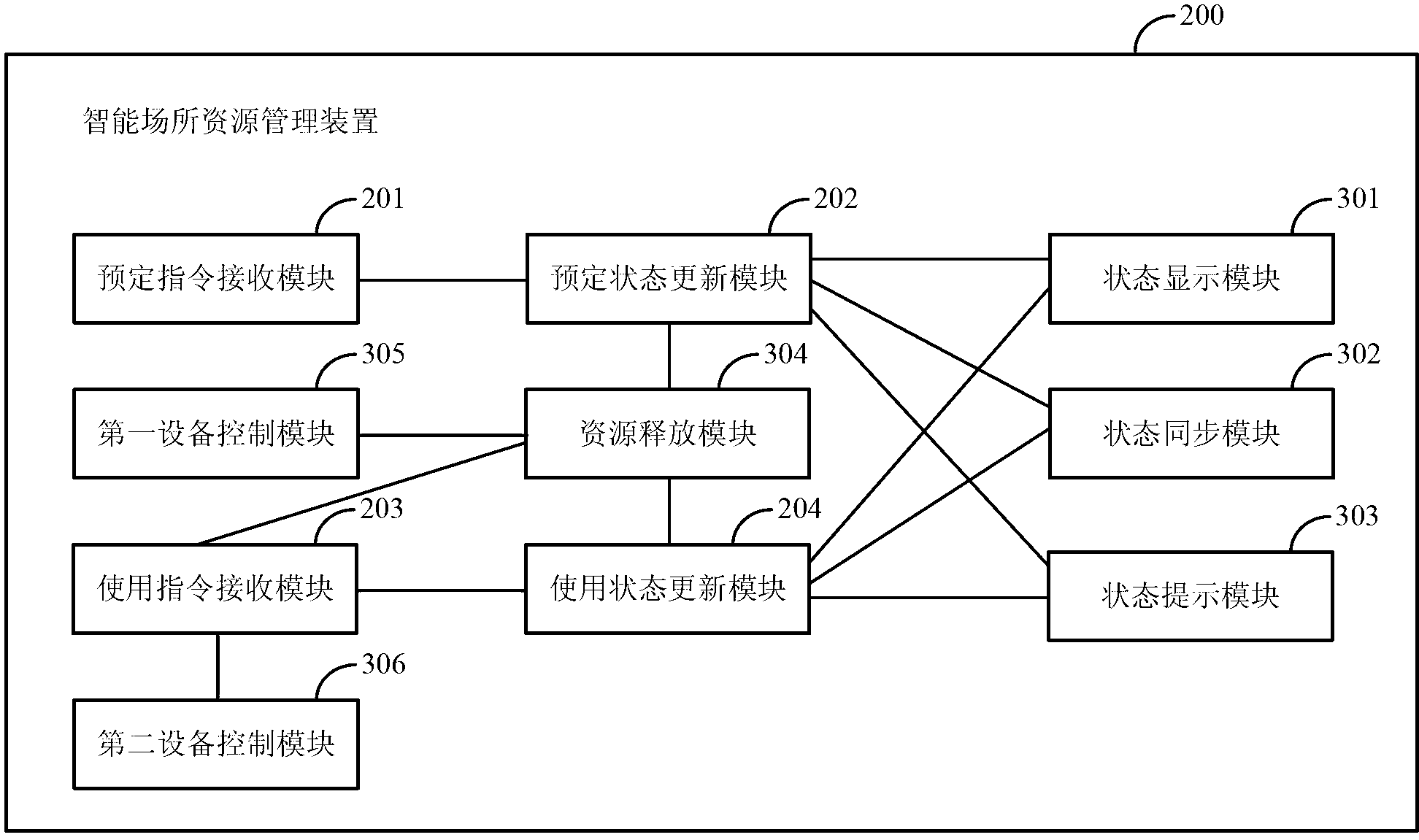

Intelligent place resource managing method and device

The invention discloses an intelligent place resource managing method and device. The method comprises receiving place resource reserving or reservation canceling operation orders submitted by a user, updating place resource reservation states according to the place resource reserving or reservation canceling operation orders, receiving sign-in or sign-off operation orders submitted by the user in a place resource site, and updating place resource using states according to the sign-in or sign-off operation orders. The device comprises a reservation order receiving module, a reservation station updating module, a using order receiving module and a using state updating module, wherein the reservation order receiving module is used for receiving the place resource reserving or reservation canceling operation orders, the reservation station updating module is used for updating the place resource reservation states according to the place resource reserving or reservation canceling operation orders, the using order receiving module is used for receiving the sign-in or sign-off operation orders submitted by the user in the place resource site, and the using state updating module is used for updating the place resource using states according to the sign-in or sign-off operation orders. The intelligent place resource managing method and device can accurately obtain reservation and using situations of place resources in real time.

Owner:北京诺亚星云科技有限责任公司

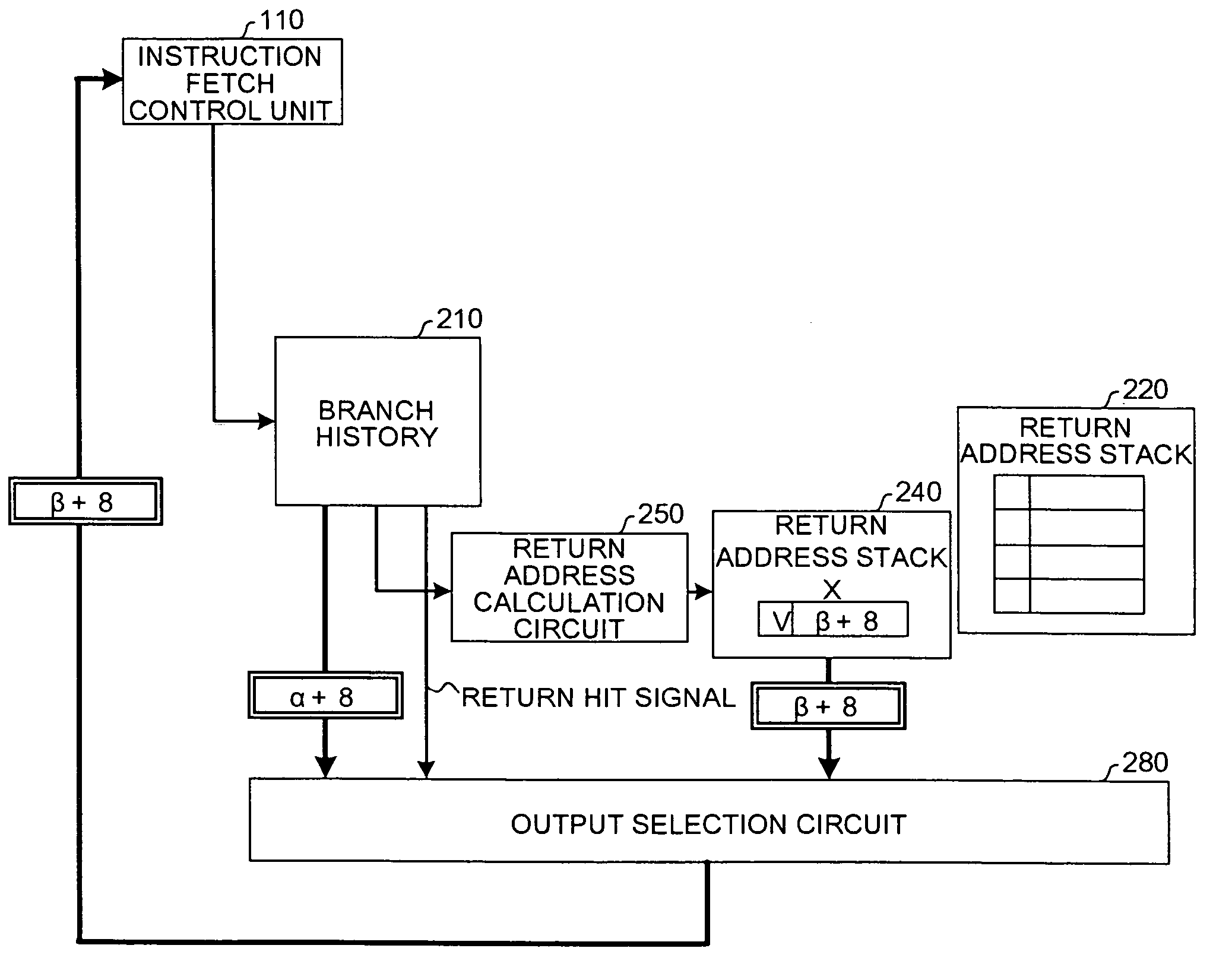

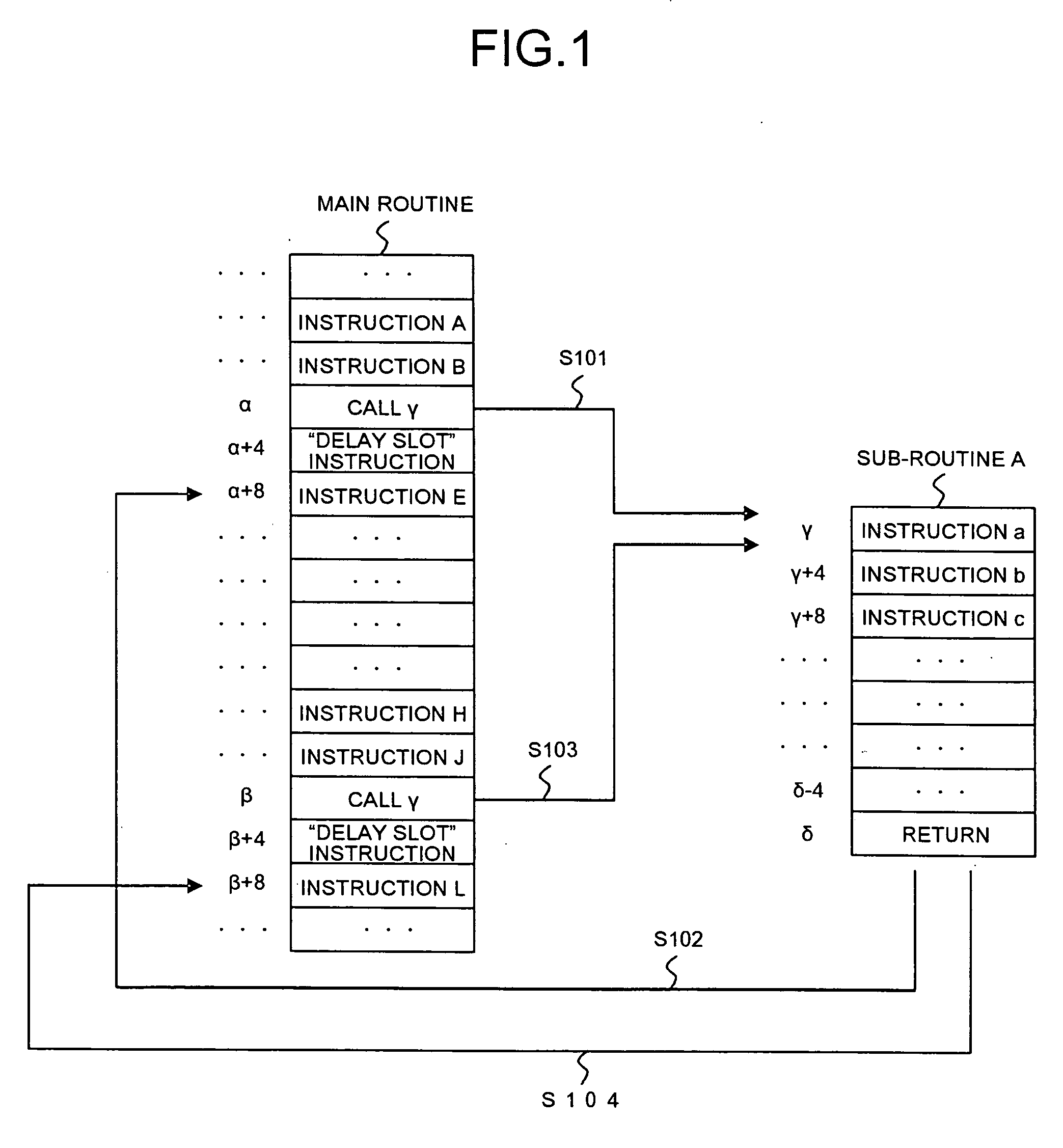

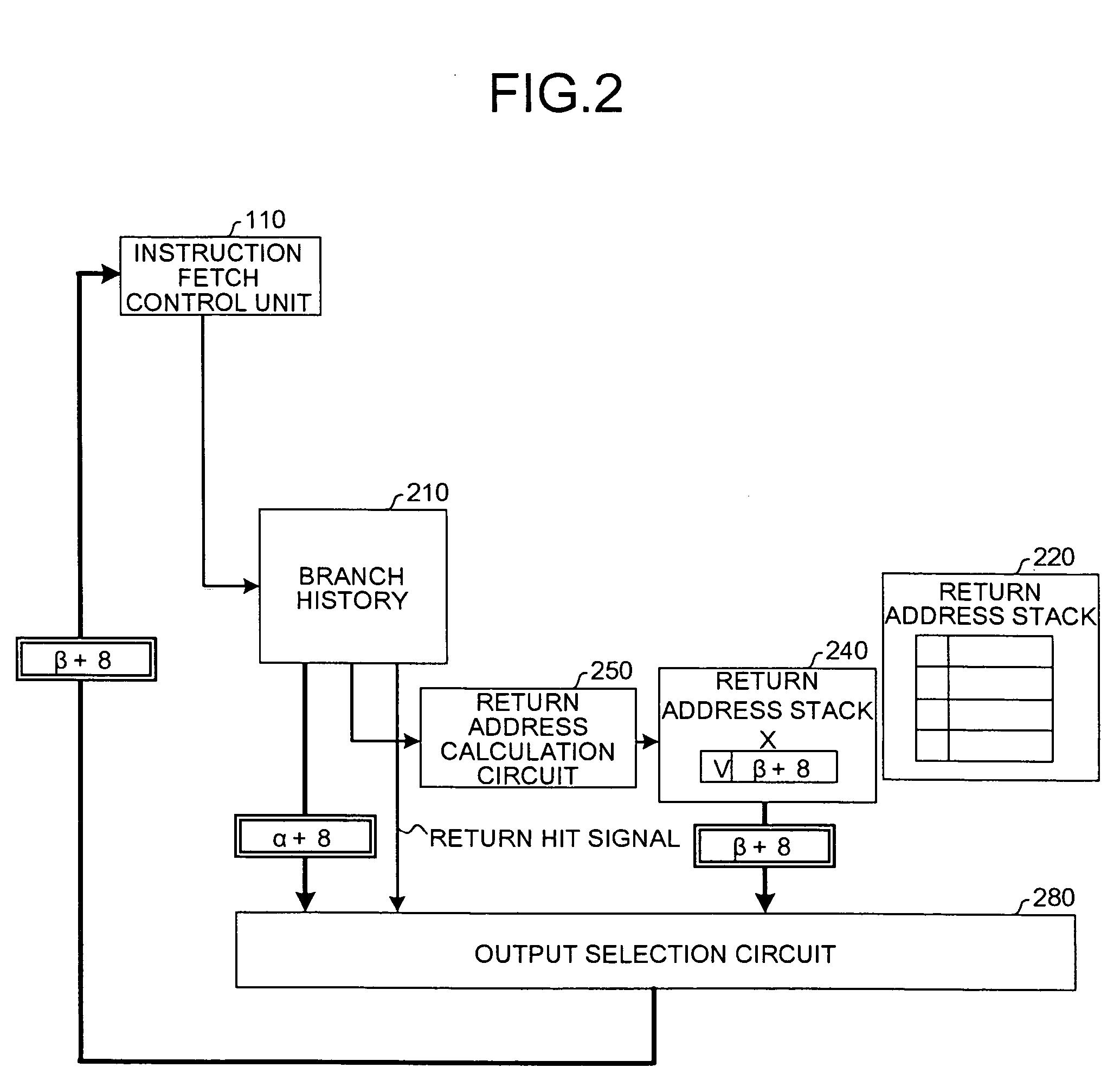

Branch predicting apparatus and branch predicting method

Inactive | US20060026410A1 | Digital computer details | Specific program execution arrangements | Reservation station | Return address stack

A return address in response to a return instruction corresponding to a call instruction is stored in a return address stack when a branch history detects presence of the call instruction. When the branch history detects the presence of the return instruction before a branch reservation station completes executing the call instruction, the return address in response to the return instruction is not stored in the return address stack. If so, an output selection circuit predicts a correct return target using information stored in the return address stack.

Owner:FUJITSU LTD

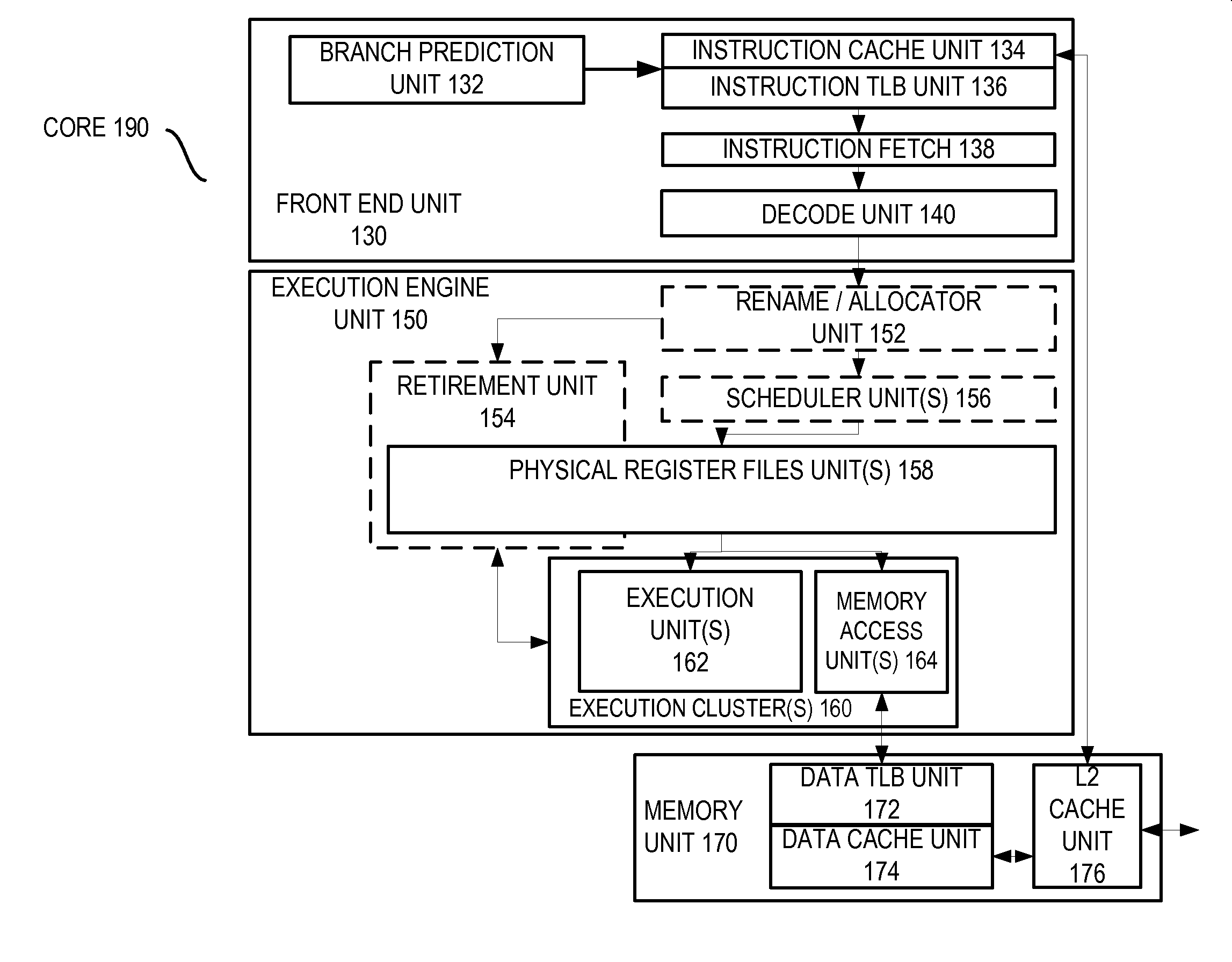

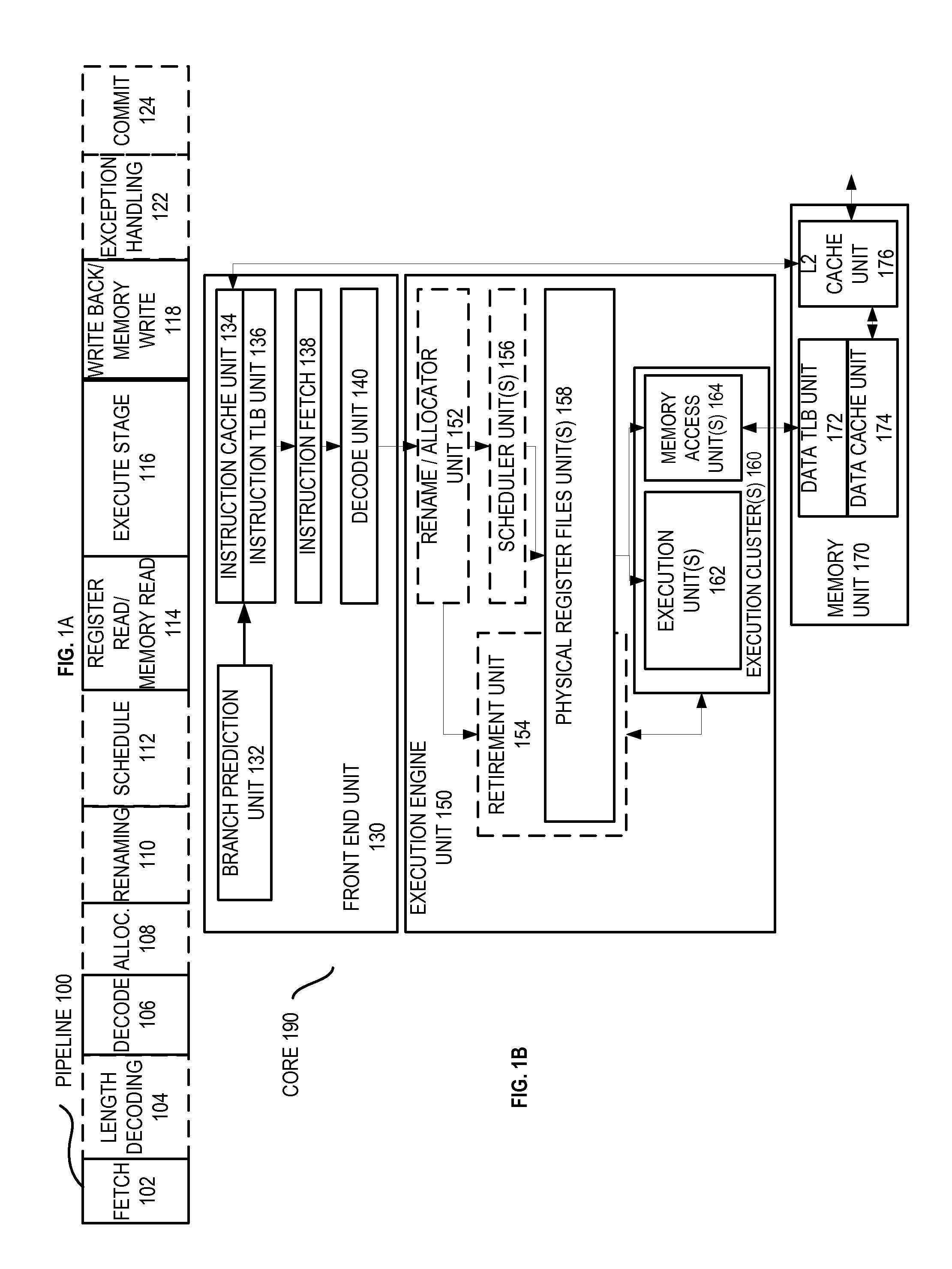

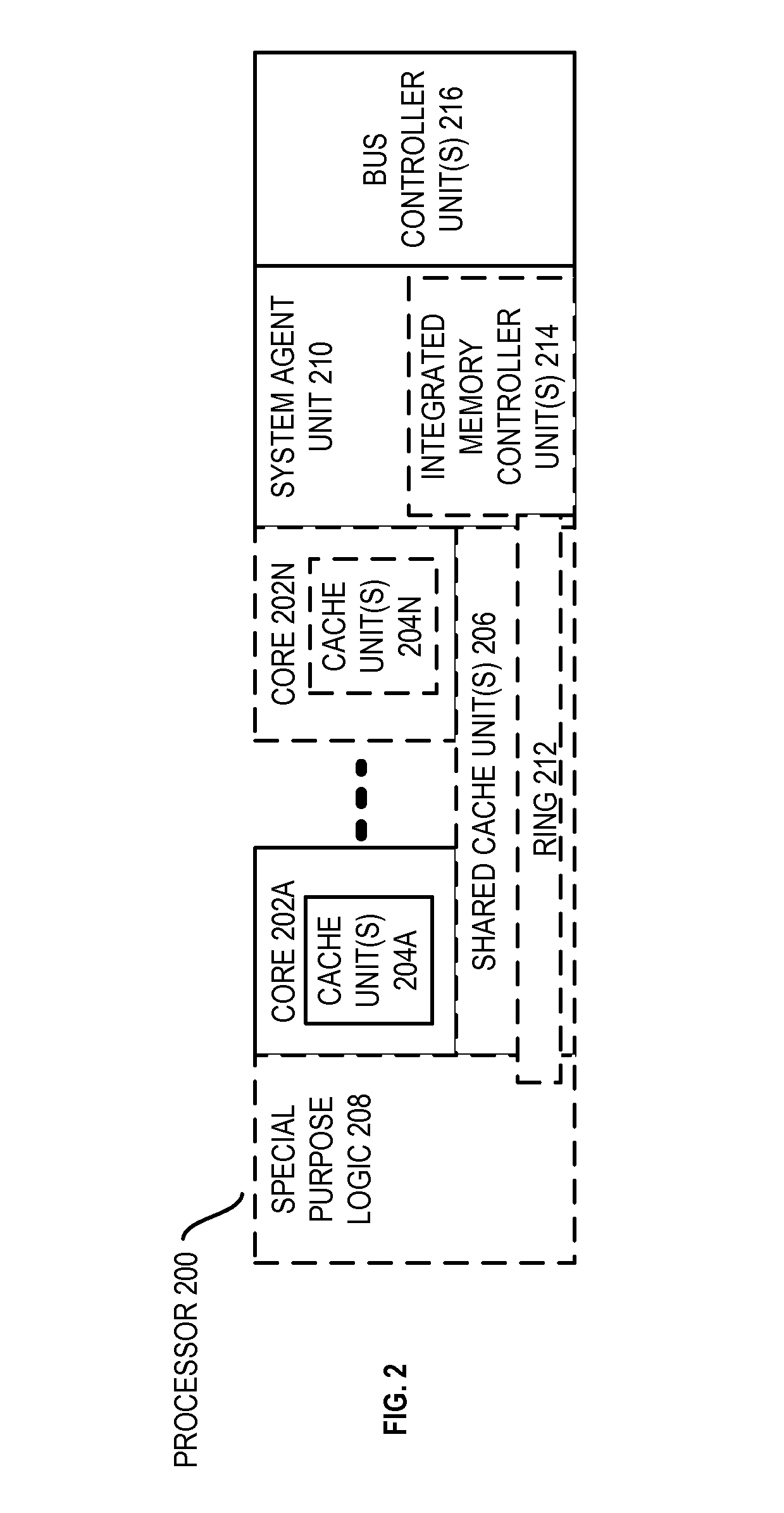

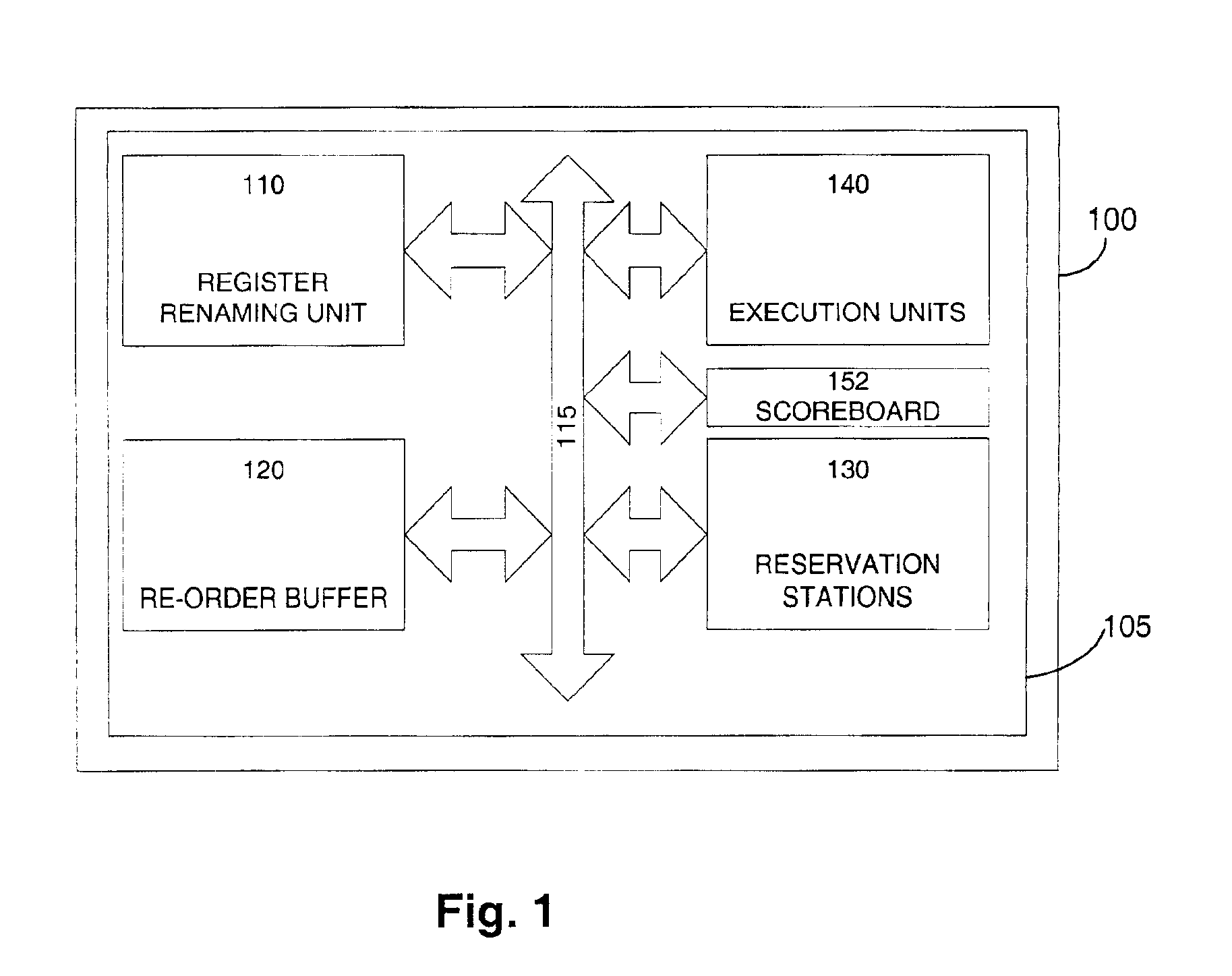

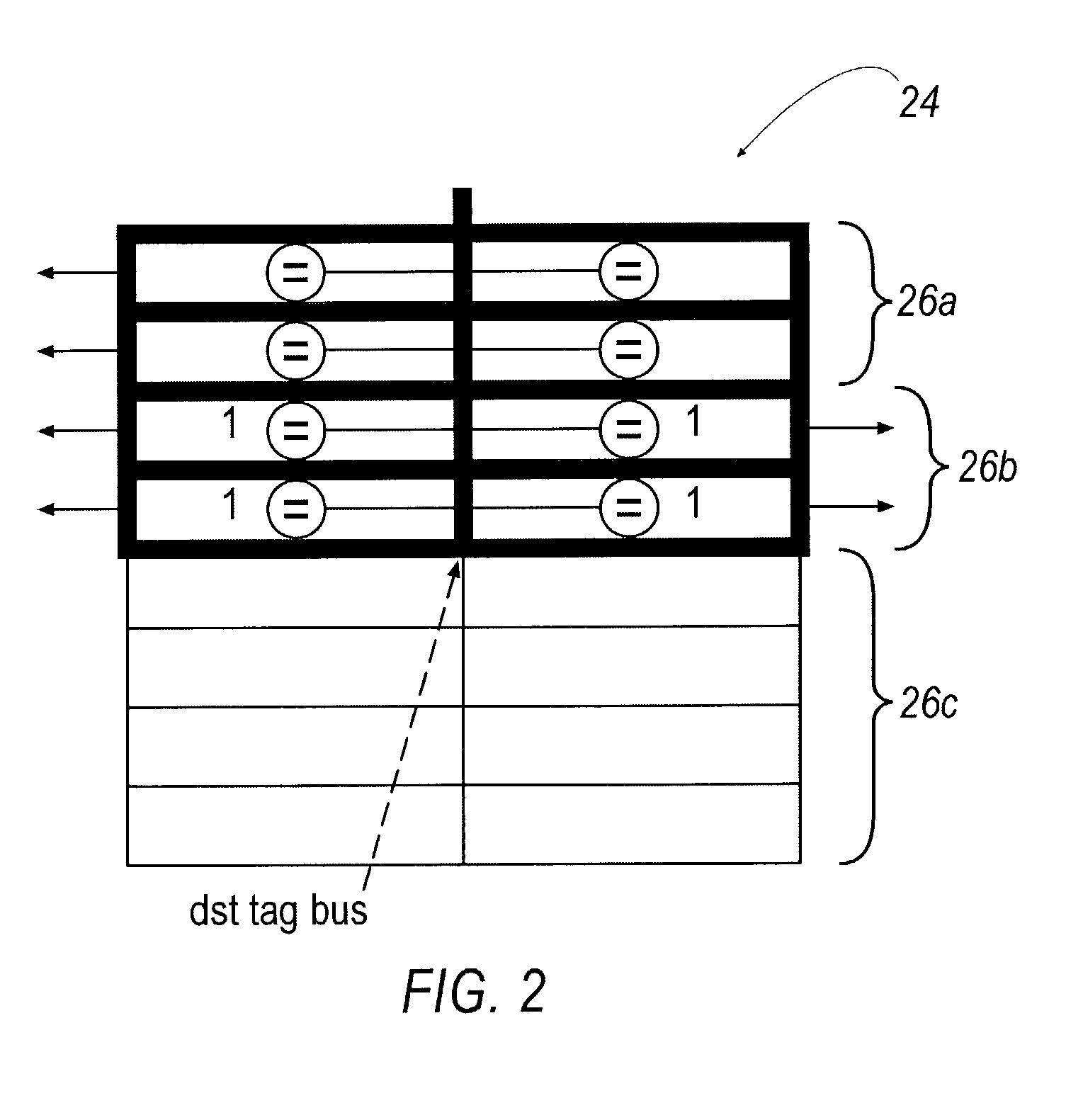

Method and apparatus for implementing dynamic portbinding within a reservation station

A processor and method are described for scheduling operations for execution within a reservation station. For example, a method in accordance with one embodiment of the invention includes the operations of: classifying a plurality of operations based on the execution ports usable to execute those operations; allocating the plurality of operations into groups within a reservation station based on the classification, wherein each group is serviced by one or more execution ports corresponding to the classification, and wherein two or more entries within a group share a common read port and a common write port; dynamically scheduling two or more operations in a group for concurrent execution based on the ports capable of executing those operations and a relative age of the operations.

Owner:INTEL CORP

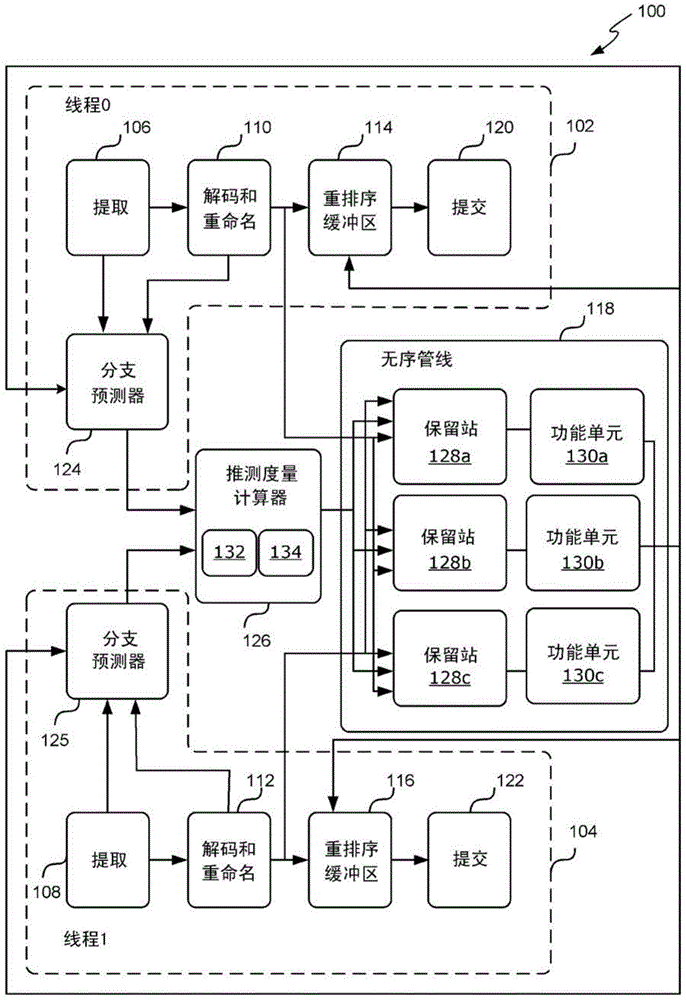

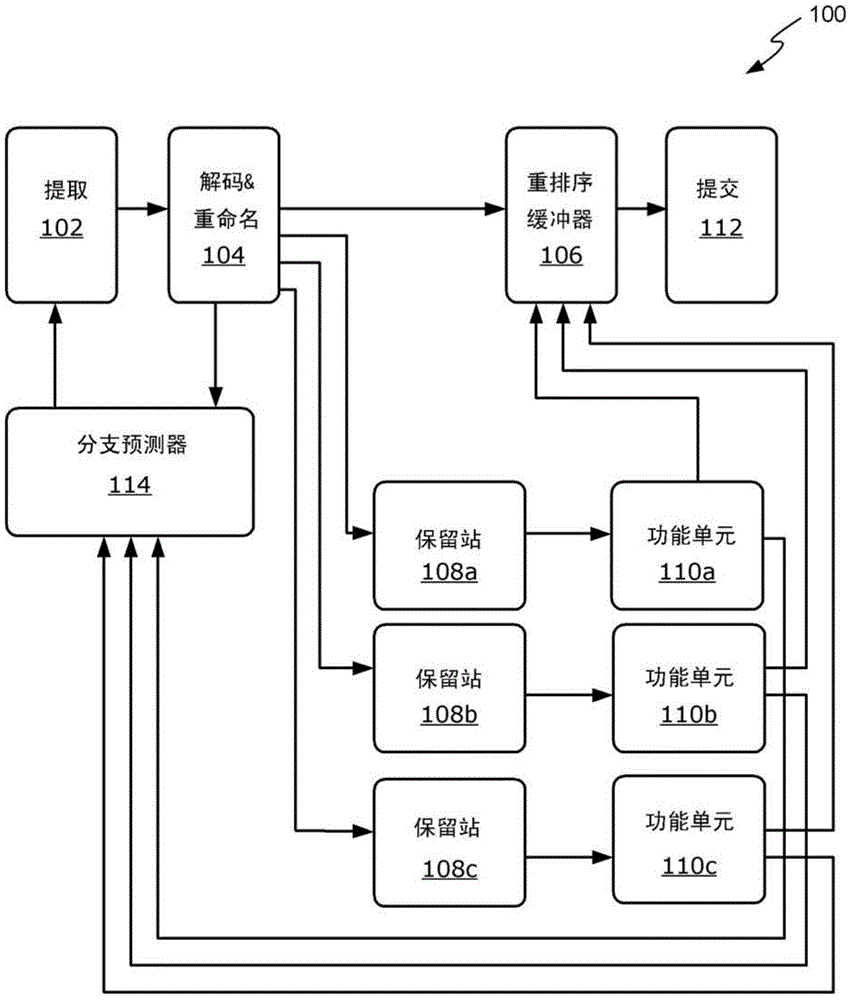

Prioritising instructions according to category of instruction

Active | CN104346223A | Program initiation/switching | Instruction analysis | Reservation station | Multiple category

A method of selecting instructions to issue to a functional unit of an out-of-order superscalar processor (single-threaded or multi-threaded). A reservation station classifies each instruction into one of a number of categories based on the type of instruction. Once classified, an instruction is stored in one of several instruction queues corresponding to the category in which it was classified. Instructions are then selected from one or more of the instruction queues (up to a maximum number of instructions for each particular queue) to issue to the functional unit based on a relative priority of the plurality of types of instructions. This allows certain types of instructions (e.g. control transfer instructions, flag setting instructions and/or address generation instructions) to be prioritised over other types of instructions even if they are younger. A functional unit may contain a plurality of pipelines, and there may be several such functional units in a processor.

Owner:MIPS TECH INC
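
The category-based selection above can be sketched as one queue per category, scanned in priority order with a per-queue cap. The category names, their order in `PRIORITY`, the caps, and the class name `CategoryIssuer` are all assumptions made for illustration; the abstract only gives control transfer, flag setting, and address generation as examples of prioritised types.

```python
from collections import deque

# Assumed priority order, highest first.
PRIORITY = ["control", "flag", "address", "general"]


class CategoryIssuer:
    def __init__(self, max_per_queue, issue_width):
        self.queues = {c: deque() for c in PRIORITY}
        self.max_per_queue = max_per_queue  # category -> max picks per cycle
        self.issue_width = issue_width      # total picks per cycle

    def insert(self, instr, category):
        """Store a classified instruction in the queue for its category."""
        self.queues[category].append(instr)

    def select(self):
        """Pick instructions for one cycle, highest-priority category first."""
        picked = []
        for category in PRIORITY:
            queue = self.queues[category]
            taken = 0
            while (queue and taken < self.max_per_queue[category]
                   and len(picked) < self.issue_width):
                picked.append(queue.popleft())  # oldest within the category
                taken += 1
        return picked


issuer = CategoryIssuer({"control": 1, "flag": 1, "address": 2, "general": 2}, issue_width=3)
issuer.insert("add1", "general")
issuer.insert("add2", "general")
issuer.insert("br", "control")      # younger than both adds
issuer.insert("lea", "address")
print(issuer.select())              # the branch goes first despite being younger
```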

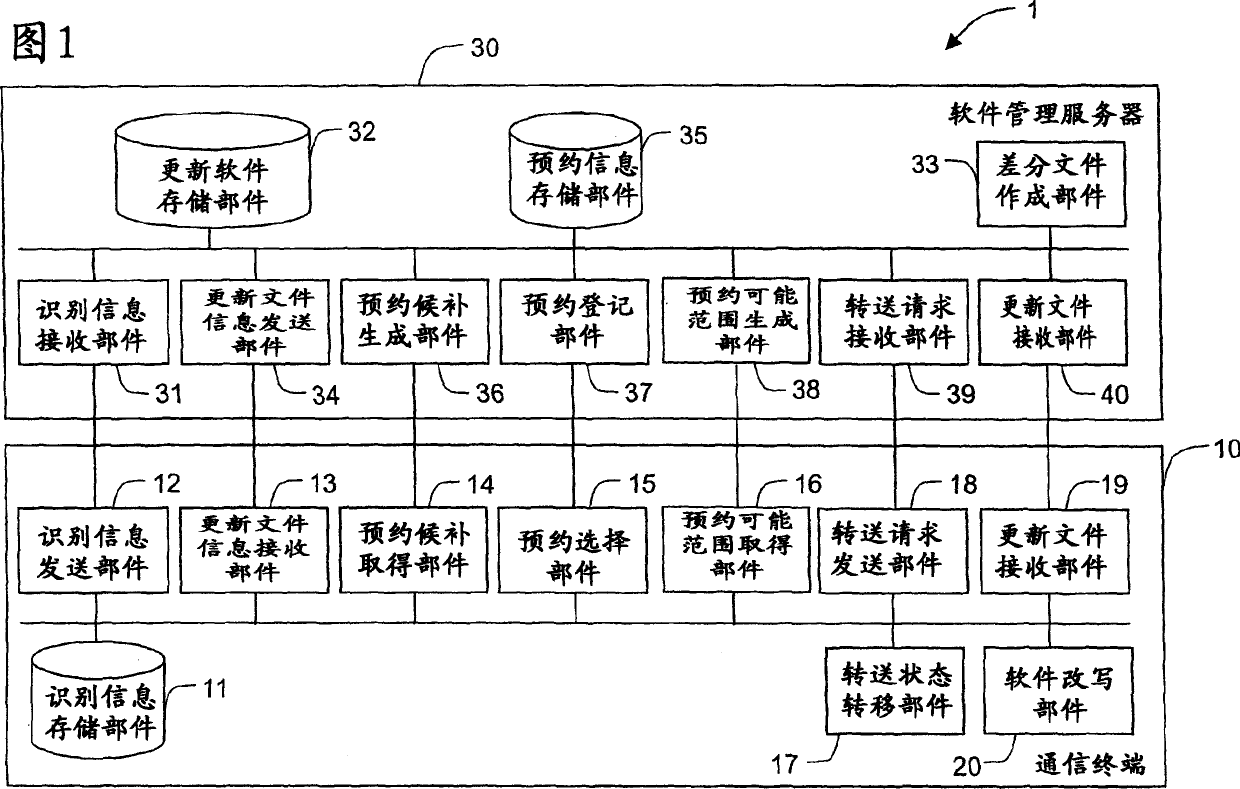

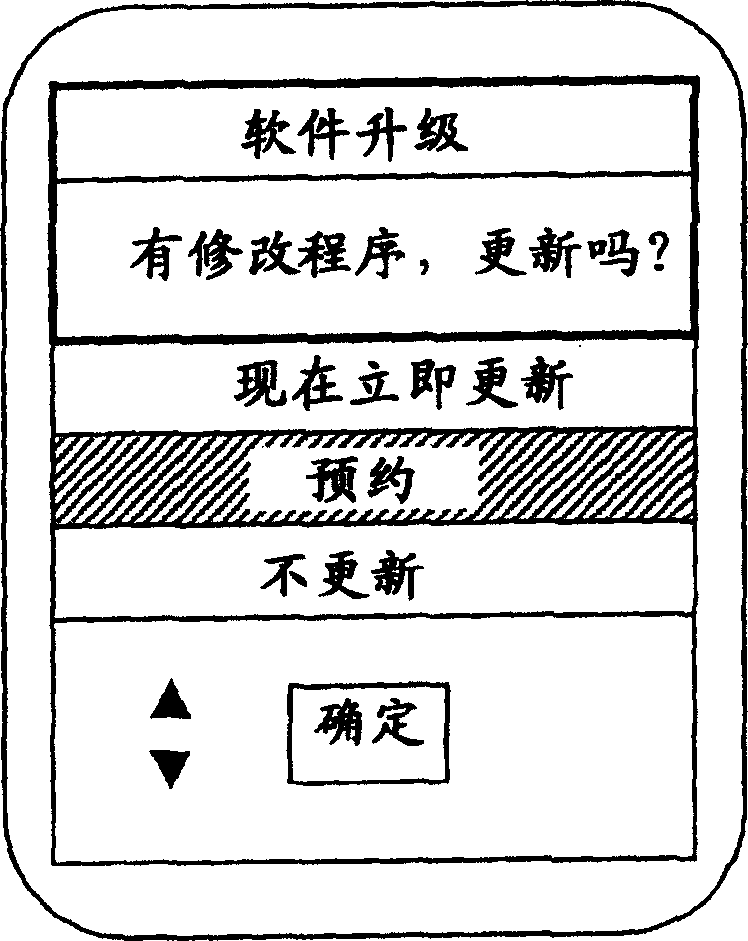

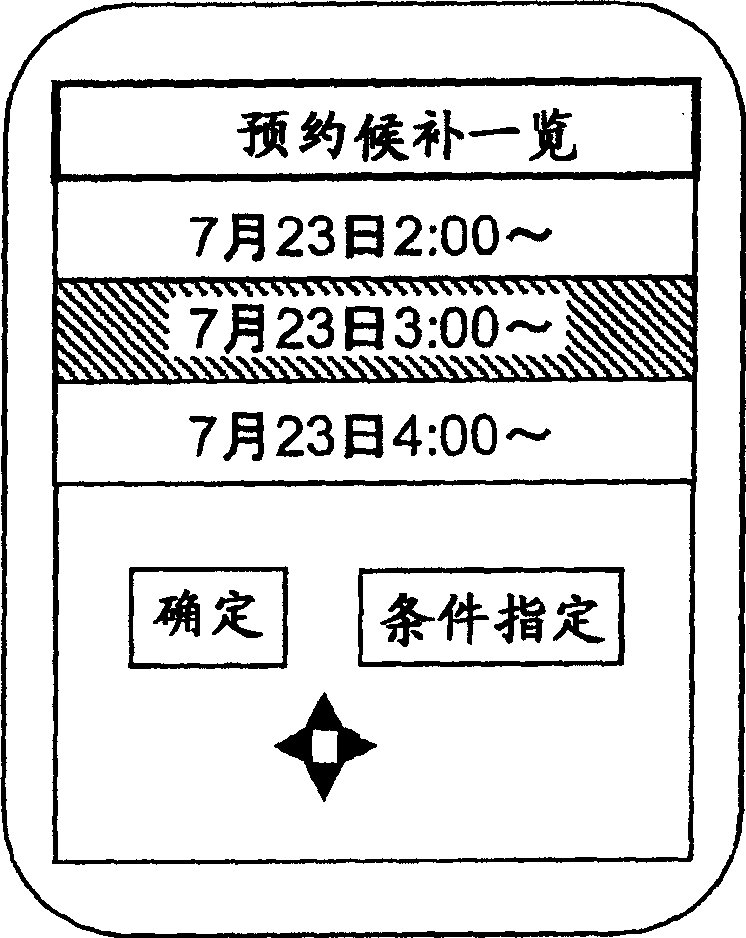

Download system, communication terminal, server, and download method

The present invention provides a downloading system that can appropriately distribute the load on servers and transmission paths, and further improve the convenience related to data downloading. A reservation candidate obtaining means (14) of a communication terminal (10) obtains reservation candidate information including time information on time slots in which candidates for download reservations for update files can be assigned from a software management server (30). A communication terminal (10) selects a reserved time slot from time slots corresponding to time information included in reservation candidate information, and a software management server (30) registers a reservation for the reserved time slot. In this way, since the reserved time zone can be selected from candidates for the time zone sent from the software management server (30), convenience is improved. In addition, because the candidates for the time zone are obtained based on the schedule set so that the load on the software management server (30) and the load on the transmission path are distributed, the load on the software management server (30) and the load on the transmission path can be appropriately distributed.

Owner:NTT DOCOMO INC

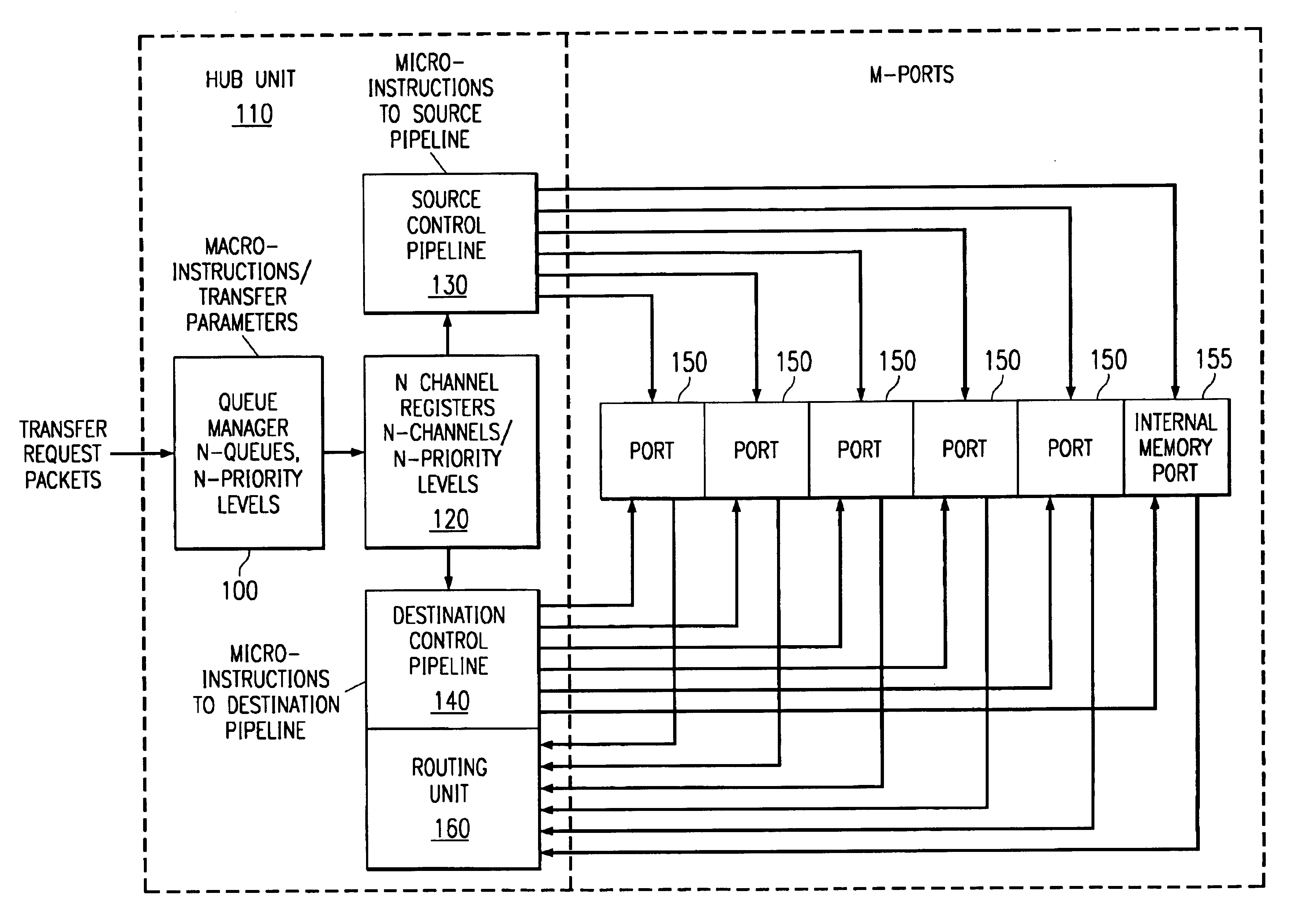

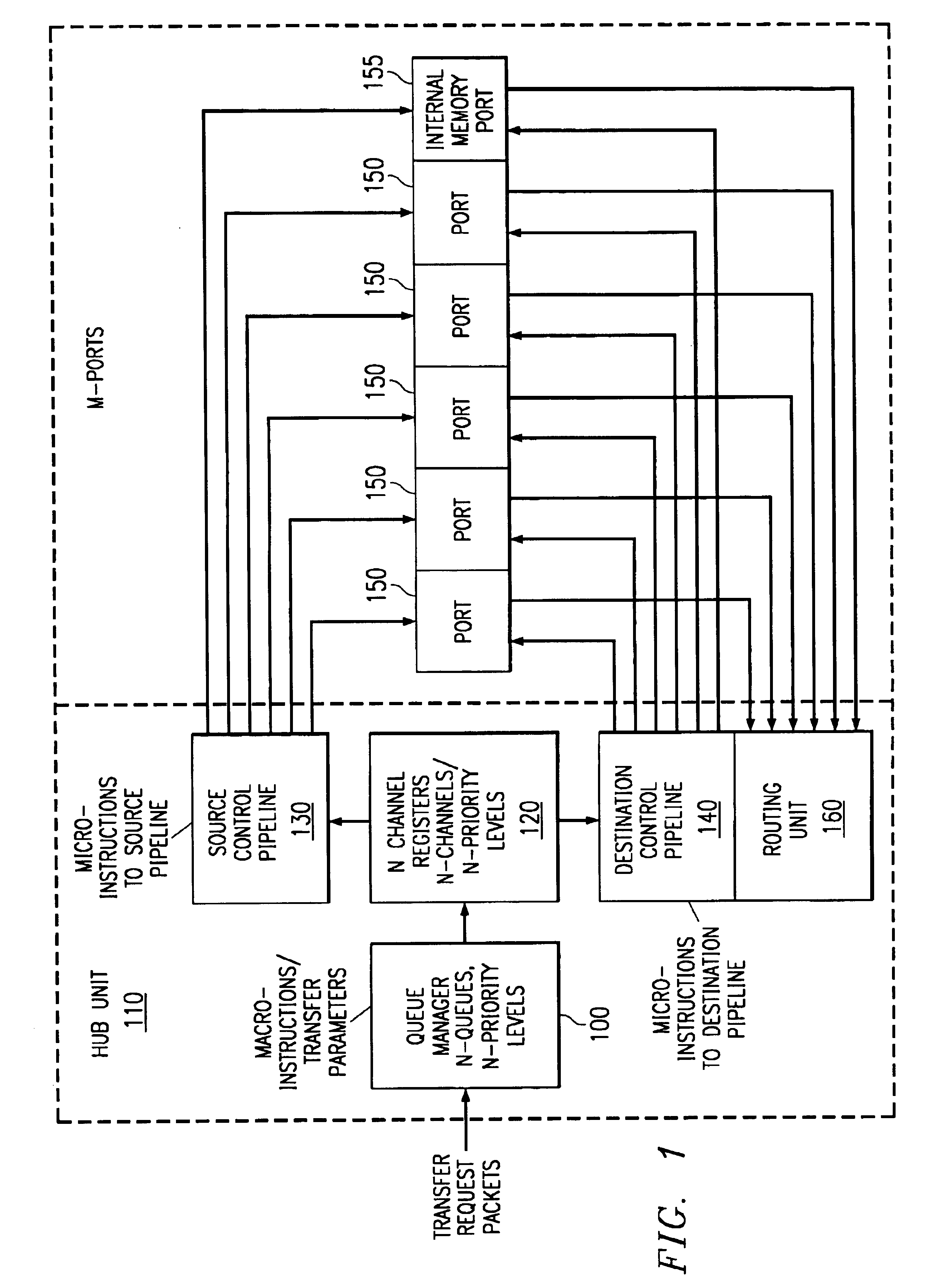

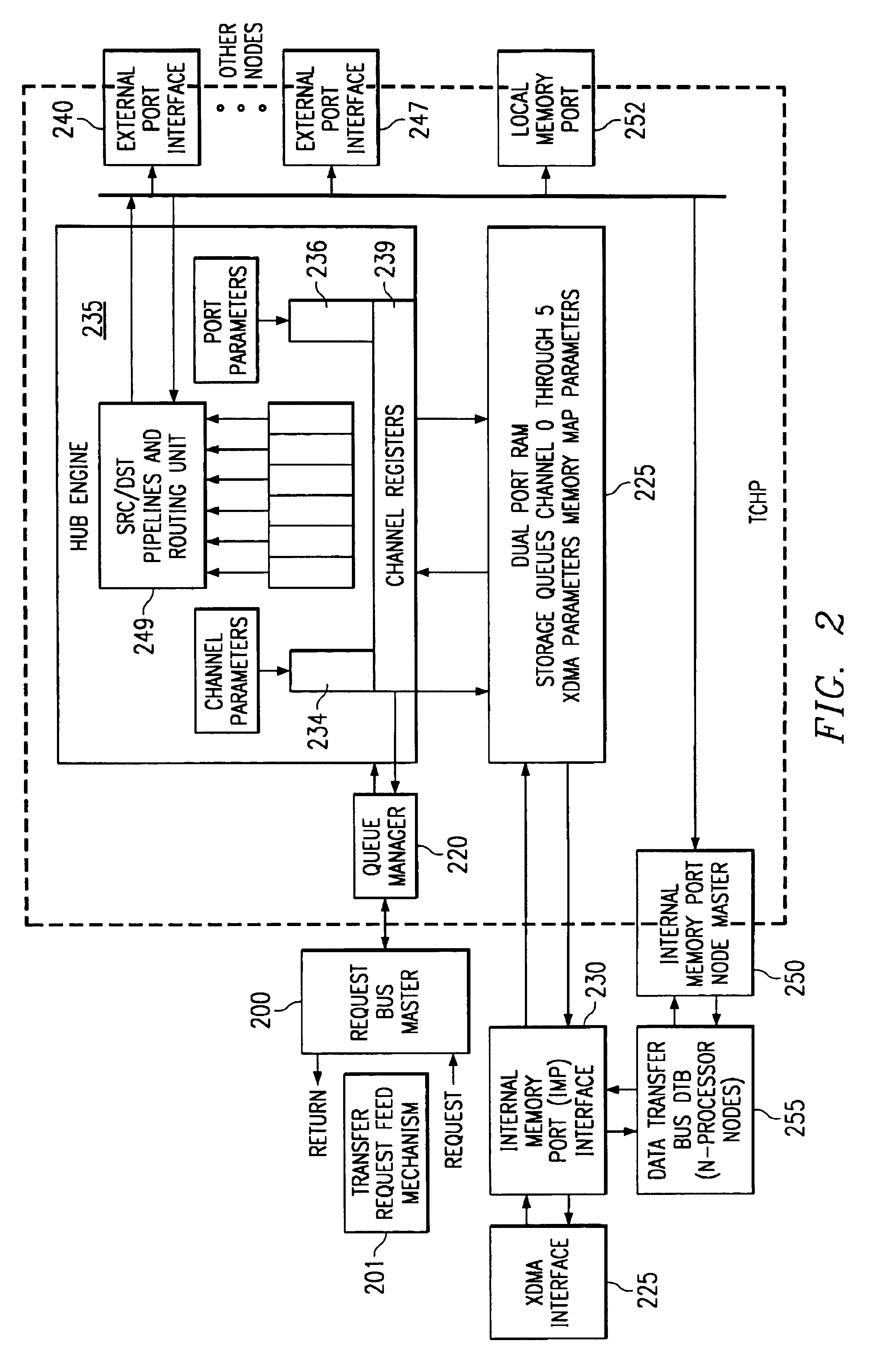

Using write request queue to prevent bottlenecking on slow ports in transfer controller with hub and ports architecture

Inactive | US6904474B1 | Avoid bottlenecks | Multiple digital computer combinations | Transmission | Reservation station | Data shipping

A data transfer technique between a source port and a destination port of a transfer controller with plural ports. In response to a data transfer request (401), the transfer controller queries the destination port to determine if it can receive data of a predetermined size (402). If the destination port is not capable of receiving data, the transfer controller waits until said destination port is capable of receiving data (412). If the destination port is capable of receiving data, the destination port allocates a write reservation station to the data (403). Then the transfer controller reads data of the predetermined size from the source port (404) and transfers this read data to the destination port (405). The destination port forwards this data to an attached application unit, which may be memory or a peripheral, and then disallocates the write reservation station freeing space for further data transfer (406). This write driven process permits the transfer controller hub to service other data transfers from a fast source without being blocked by a slow destination.

Owner:TEXAS INSTR INC
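
The write-driven handshake in this abstract boils down to "reserve at the destination before reading from the source". The sketch below models that ordering only; the class `DestinationPort`, the `drain` method that stands in for forwarding data to the attached application unit, and the list used as a source are hypothetical.

```python
class DestinationPort:
    def __init__(self, n_write_stations):
        self.free_stations = n_write_stations
        self.buffer = []      # data held in allocated write reservation stations
        self.delivered = []   # data forwarded to the attached memory or peripheral

    def can_receive(self):
        return self.free_stations > 0

    def allocate(self):
        self.free_stations -= 1

    def accept(self, data):
        self.buffer.append(data)

    def drain(self):
        """Forward one buffered burst and release its write reservation station."""
        if self.buffer:
            self.delivered.append(self.buffer.pop(0))
            self.free_stations += 1


def transfer(source, dest):
    """Try to move one burst; return False if the destination cannot take it yet."""
    if not source or not dest.can_receive():
        return False          # nothing was read, so the hub can service other transfers
    dest.allocate()           # reserve space at the destination first
    data = source.pop(0)      # only then read from the source
    dest.accept(data)
    return True


src = [b"burst0", b"burst1"]
slow_port = DestinationPort(n_write_stations=1)
print(transfer(src, slow_port), transfer(src, slow_port))  # second attempt must wait
```

Because the source is not read until a station is reserved, a slow destination never leaves data stranded inside the hub.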

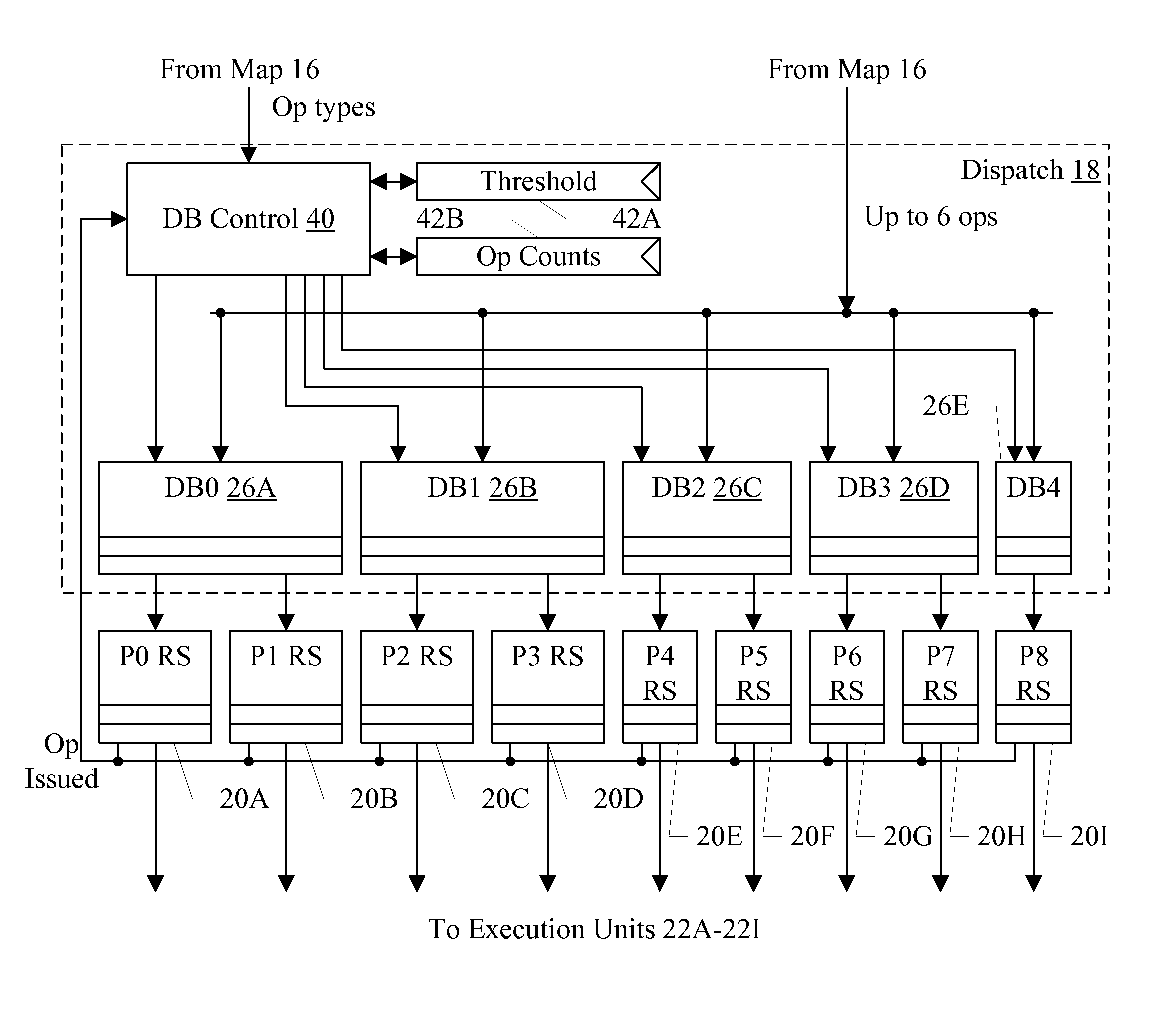

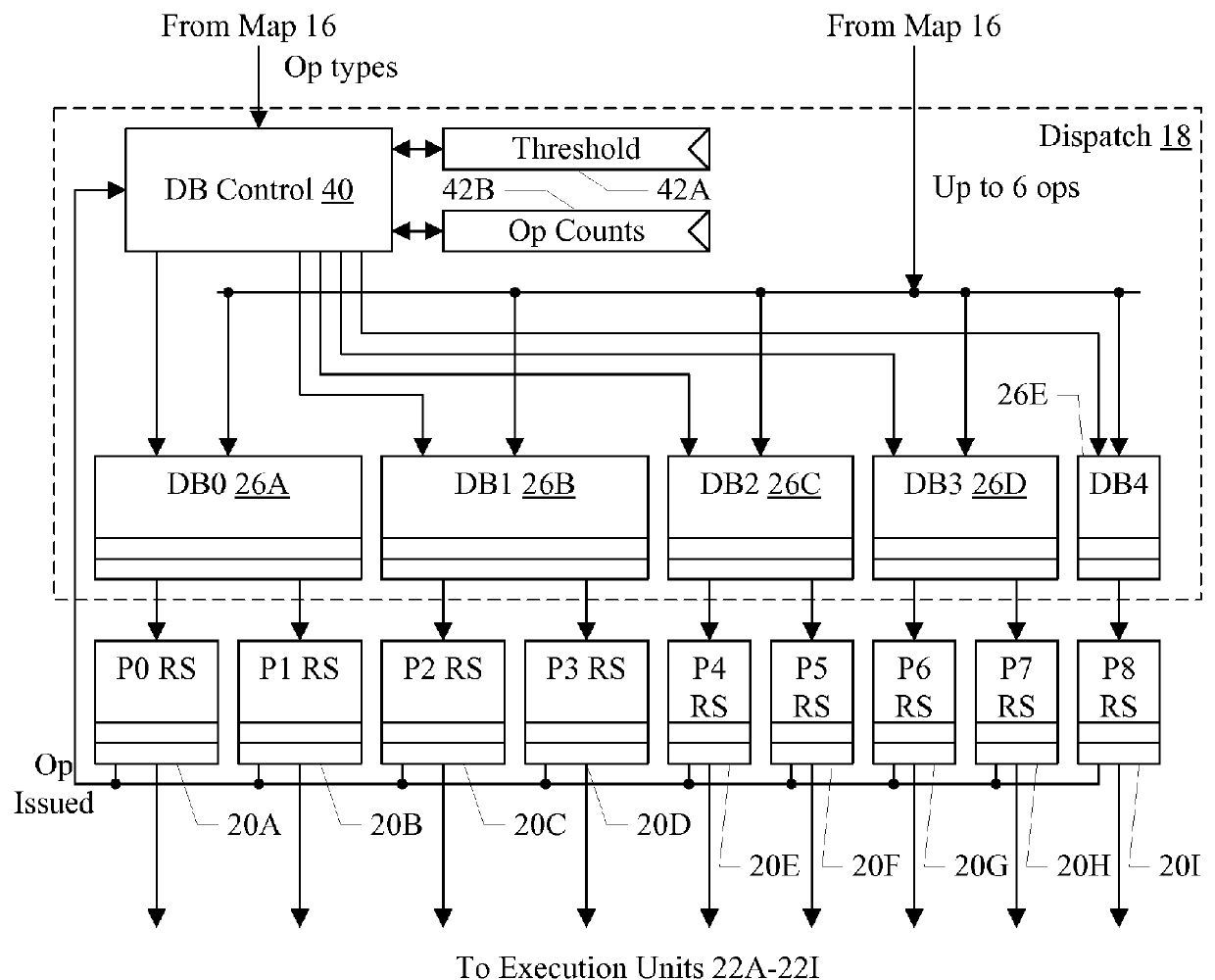

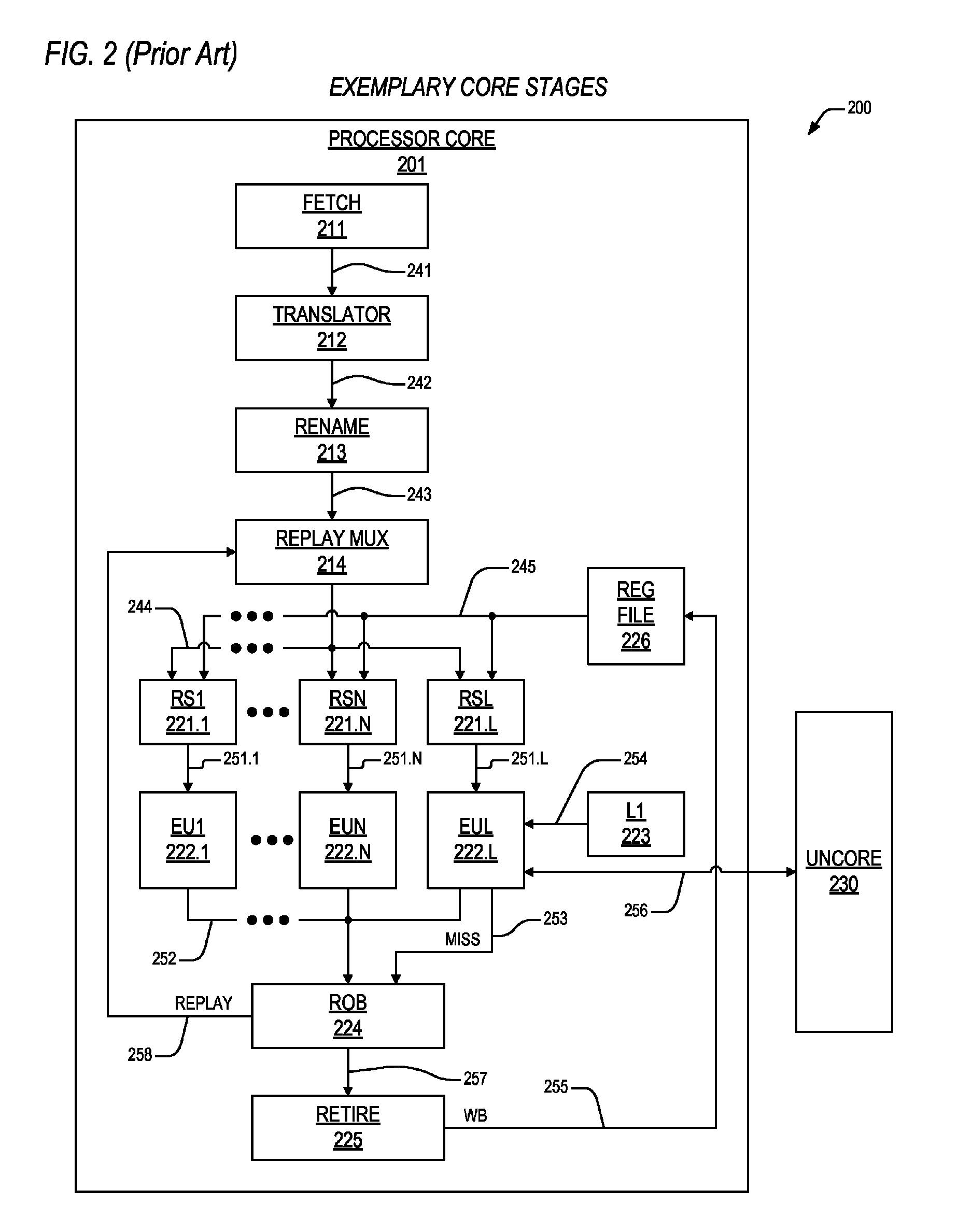

Multi-Level Dispatch for a Superscalar Processor

Active | US20140215188A1 | Avoid low frequency operation | Improve performance | Instruction analysis | Digital computer details | Reservation station | Scalar processor

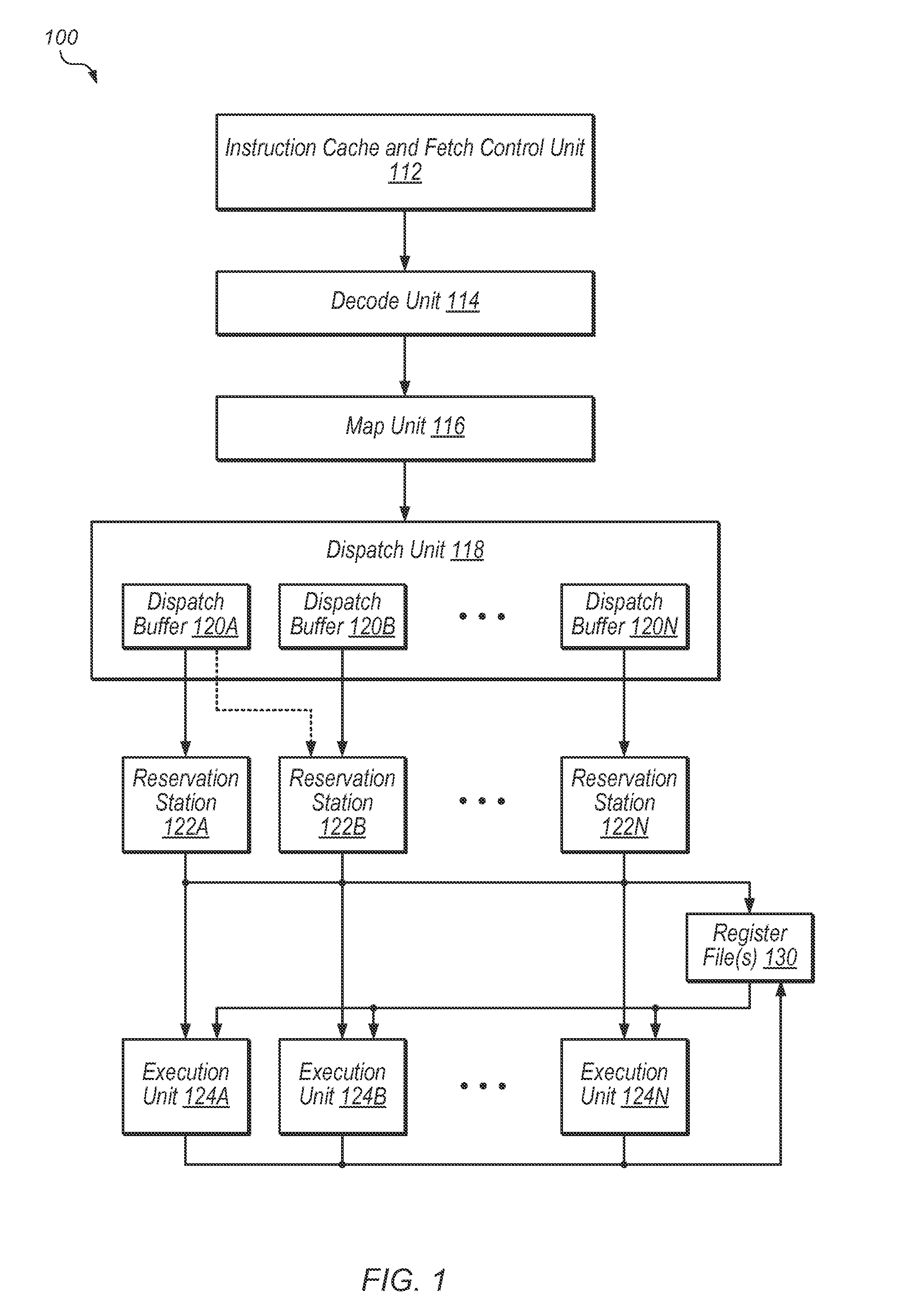

In an embodiment, a processor includes a multi-level dispatch circuit configured to supply operations for execution by multiple parallel execution pipelines. The multi-level dispatch circuit may include multiple dispatch buffers, each of which is coupled to multiple reservation stations. Each reservation station may be coupled to a respective execution pipeline and may be configured to schedule instruction operations (ops) for execution in the respective execution pipeline. The sets of reservation stations coupled to each dispatch buffer may be non-overlapping. Thus, if a given op is to be executed in a given execution pipeline, the op may be sent to the dispatch buffer which is coupled to the reservation station that provides ops to the given execution pipeline.

Owner:APPLE INC

Multi-level dispatch for a superscalar processor

Active | US9336003B2 | Improve performance | High operating requirements | Program initiation/switching | Instruction analysis | Reservation station | Scalar processor

In an embodiment, a processor includes a multi-level dispatch circuit configured to supply operations for execution by multiple parallel execution pipelines. The multi-level dispatch circuit may include multiple dispatch buffers, each of which is coupled to multiple reservation stations. Each reservation station may be coupled to a respective execution pipeline and may be configured to schedule instruction operations (ops) for execution in the respective execution pipeline. The sets of reservation stations coupled to each dispatch buffer may be non-overlapping. Thus, if a given op is to be executed in a given execution pipeline, the op may be sent to the dispatch buffer which is coupled to the reservation station that provides ops to the given execution pipeline.

Owner:APPLE INC

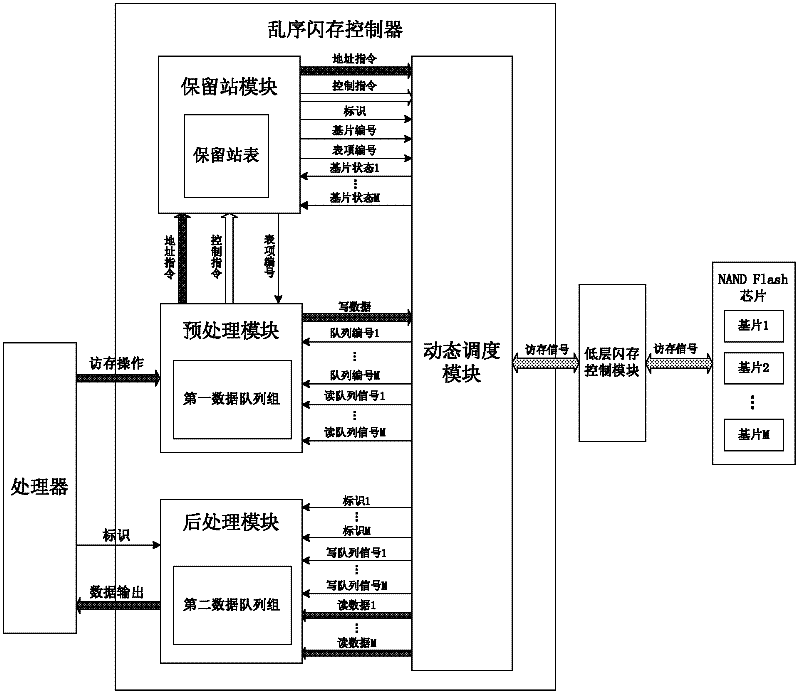

NAND flash memory controller supporting operation out-of-order execution

Active | CN102567246A | Improve throughput | Implement "out-of-order" execution | Electric digital data processing | Memory chip | Reservation station

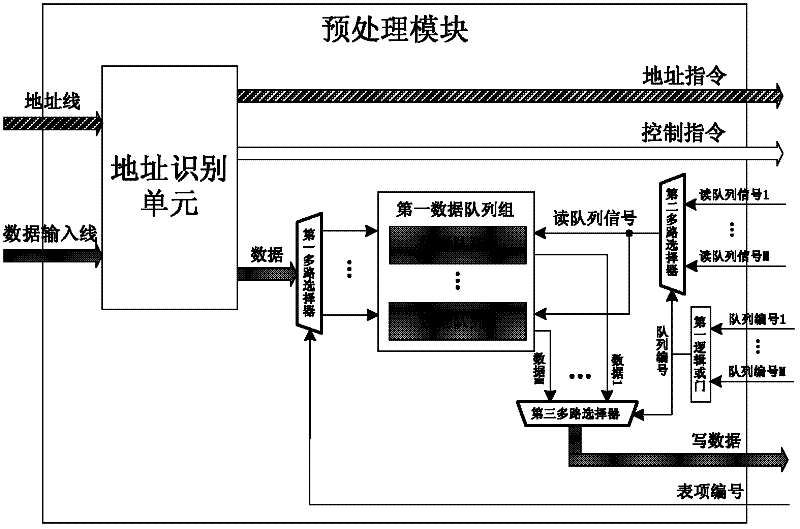

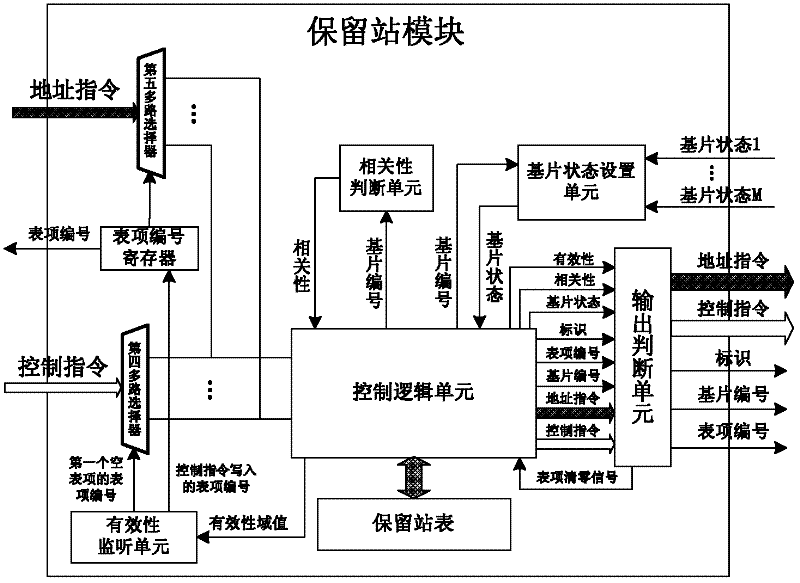

The invention discloses a NAND flash memory controller supporting operation out-of-order execution, and aims to improve memory access data throughput. The controller consists of a preprocessing module, a reservation station module, a dynamic scheduling module and a postprocessing module, wherein the preprocessing module consists of an address recognition unit, three multiplexers, a first data queue group and a first logic OR gate; the reservation station module consists of two multiplexers, a table entry number register, a validity monitoring unit, a relevance judgment unit, a substrate state setting unit, an output judgment unit, a control logic unit and a reservation station table; the dynamic scheduling module consists of five multiplexers, M state machines, an arbiter and a second logic OR gate; and the postprocessing module consists of a comparator, a second data queue group and a multiplexer. By the controller, the memory access operation of different substrates of a memory chip can be subjected to parallel execution, the working parallelism of the substrates is improved, and the memory access data throughput is effectively improved.

Owner:NAT UNIV OF DEFENSE TECH

Method and apparatus for processing a predicated instruction using limited predicate slip

Inactive | US6883089B2 | Digital computer details | Concurrent instruction execution | Reservation station | Execution unit

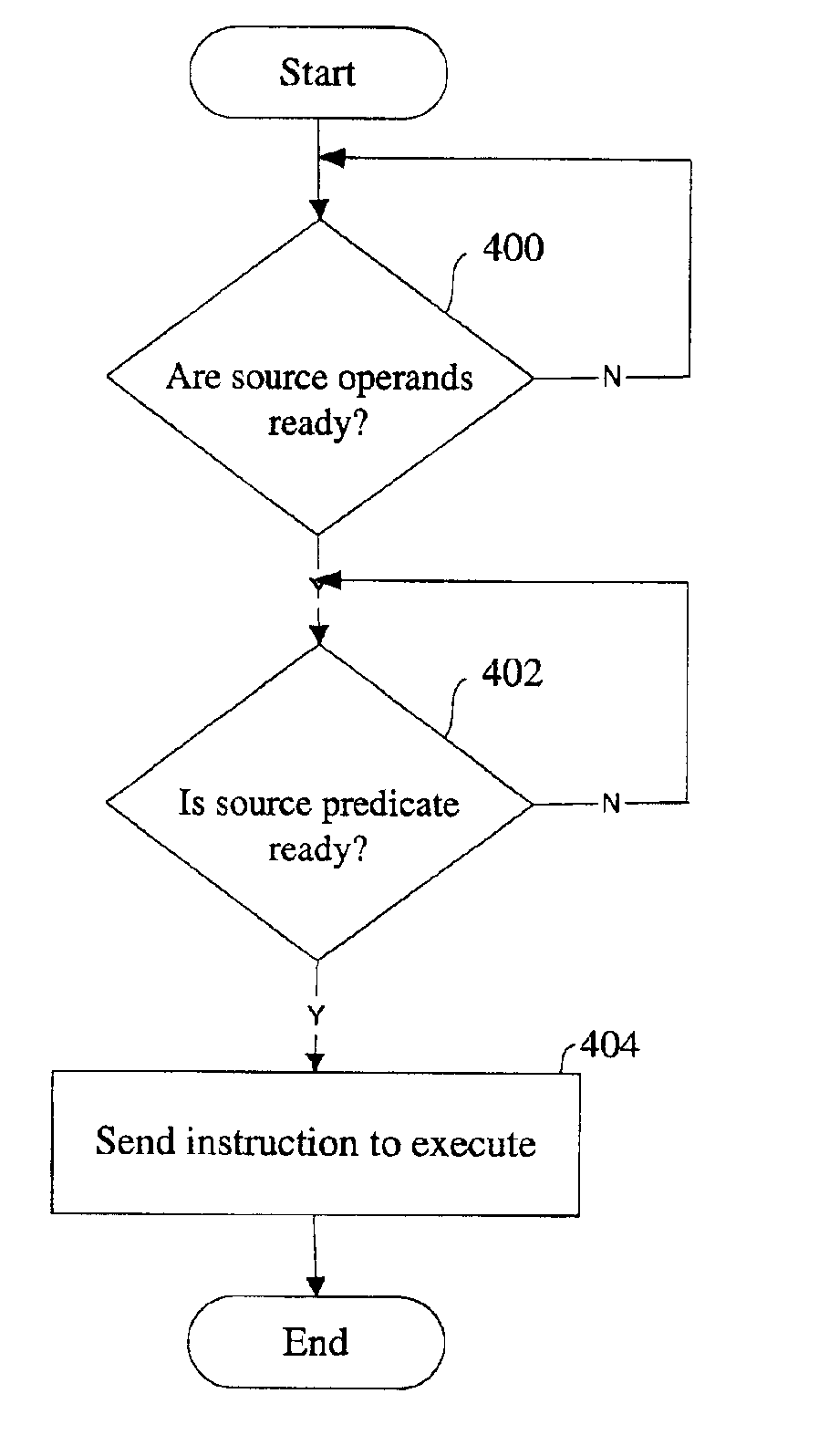

A system and method of processing a predicated instruction is disclosed. A consumer instruction and a predicated instruction are received in a reservation station of an out-of-order processor. The consumer instruction depends on a result of the predicated instruction. The predicated instruction is dispatched to an execution unit for execution. The executed predicated instruction is stored in a re-order buffer.

Owner:INTEL CORP

Adaptive allocation of reservation station entries to an instruction set with variable operands in a microprocessor

Inactive | US7979677B2 | Reduce area | Reduce power consumption | Instruction analysis | Digital computer details | General purpose | Reservation station

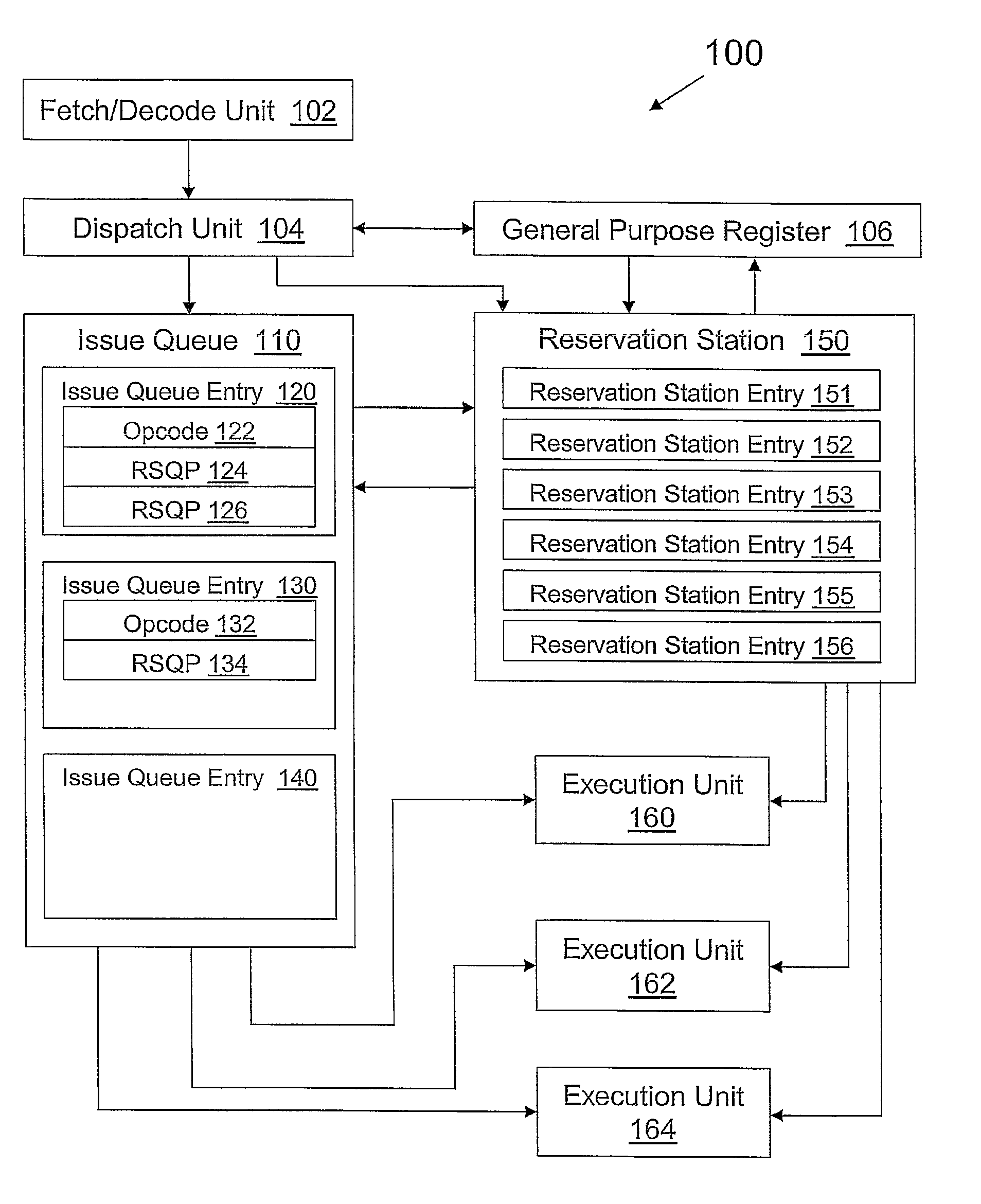

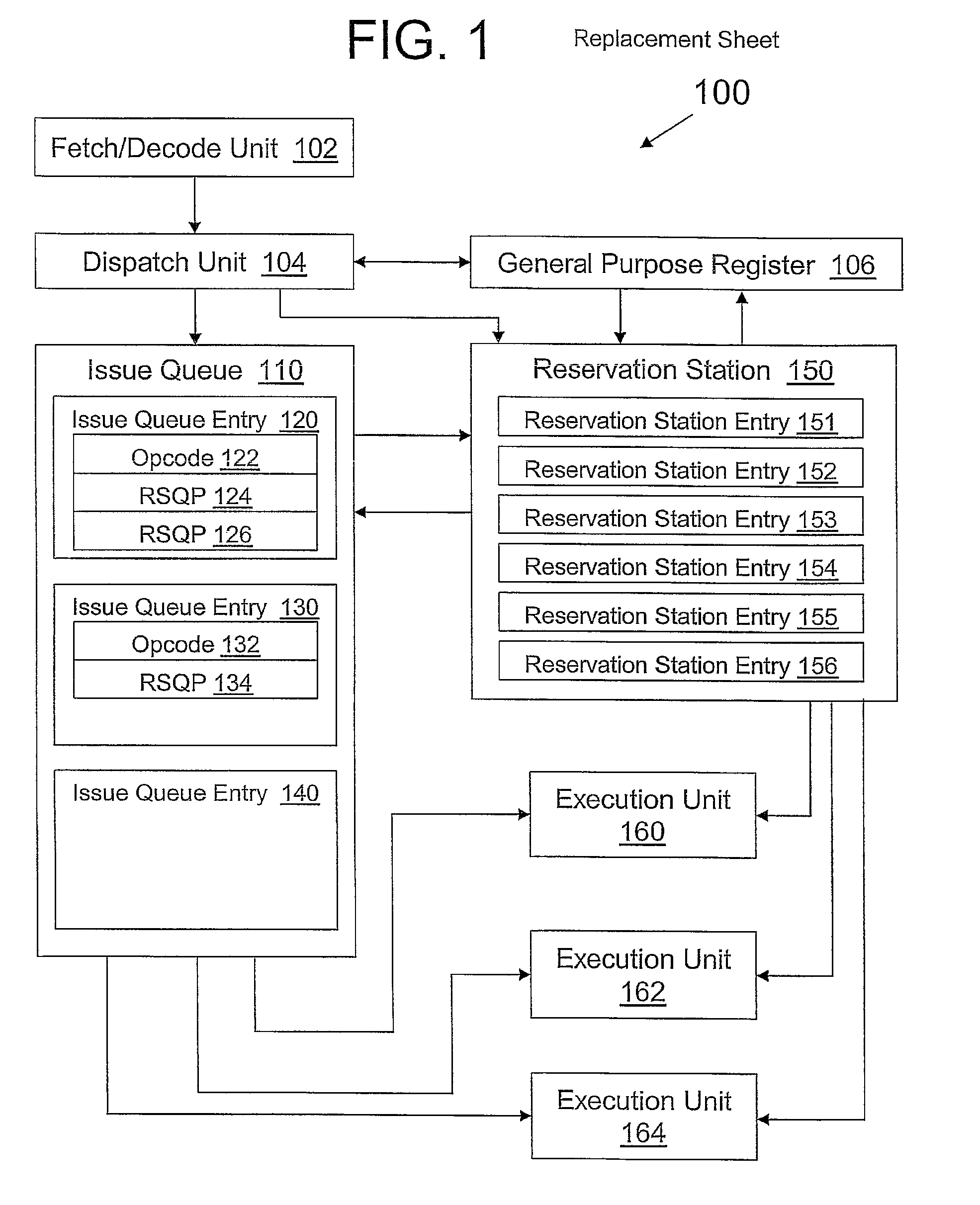

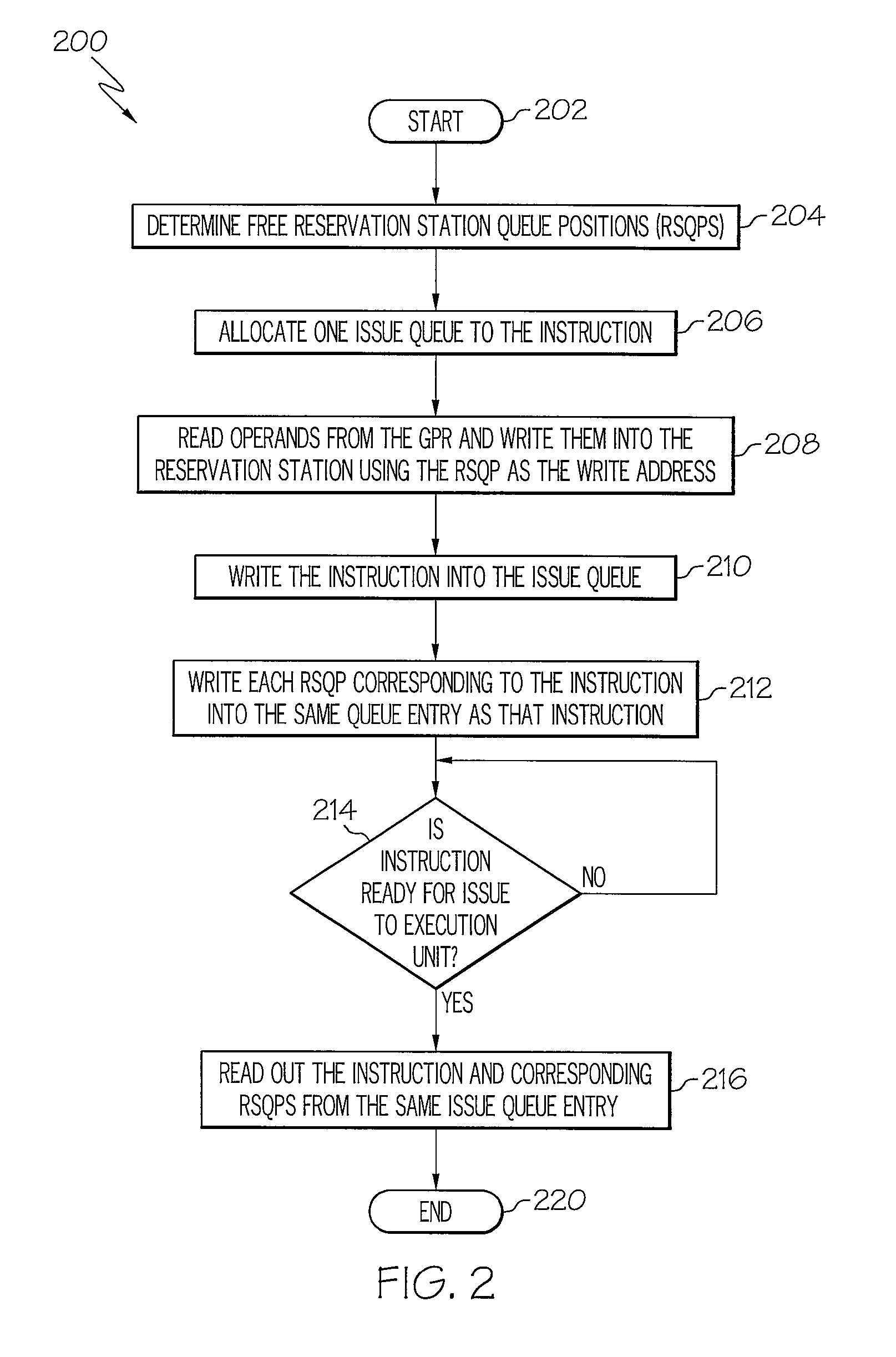

A method and device for adaptively allocating reservation station entries to an instruction set with variable operands in a microprocessor. The device includes logic for determining free reservation station queue positions in a reservation station. The device allocates an issue queue to an instruction and writes the instruction into the issue queue as an issue queue entry. The device reads an operand corresponding to the instruction from a general purpose register and writes the operand into a reservation station using one of the free reservation station queue positions as a write address. The device writes each reservation station queue position corresponding to the instruction into the issue queue entry. When the instruction is ready for issue to an execution unit, the device reads out the instruction from the issue queue entry, along with the reservation station queue positions, to the execution unit.

Owner:INT BUSINESS MACHINES CORP

Processor device for out-of-order processing having reservation stations utilizing multiplexed arithmetic pipelines

Inactive | US7984271B2 | Efficient use of | Increased complexity | Digital computer details | Specific program execution arrangements | Multiplexing | Reservation station

A processor device having a reservation station (RS) is concerned. In case the processor device has plural RS, the RS is associated with an arithmetic pipeline, and two RS make a pair. When one RS of the pair cannot dispatch an instruction to an associated arithmetic pipeline, the other RS dispatches the instruction to that arithmetic pipeline, or delivers its held instruction to the one RS. In case one RS is equipped, plural entries in the RS are divided into groups, and by dynamically changing this grouping according to the dispatch frequency of the instruction to the arithmetic pipelines or the held state of the instructions, the arithmetic pipelines are efficiently utilized. Incidentally, depending on the grouping of the plural entries in the RS, a configuration as if the plural RS were allocated to each arithmetic pipeline may be realized.

Owner:FUJITSU LTD

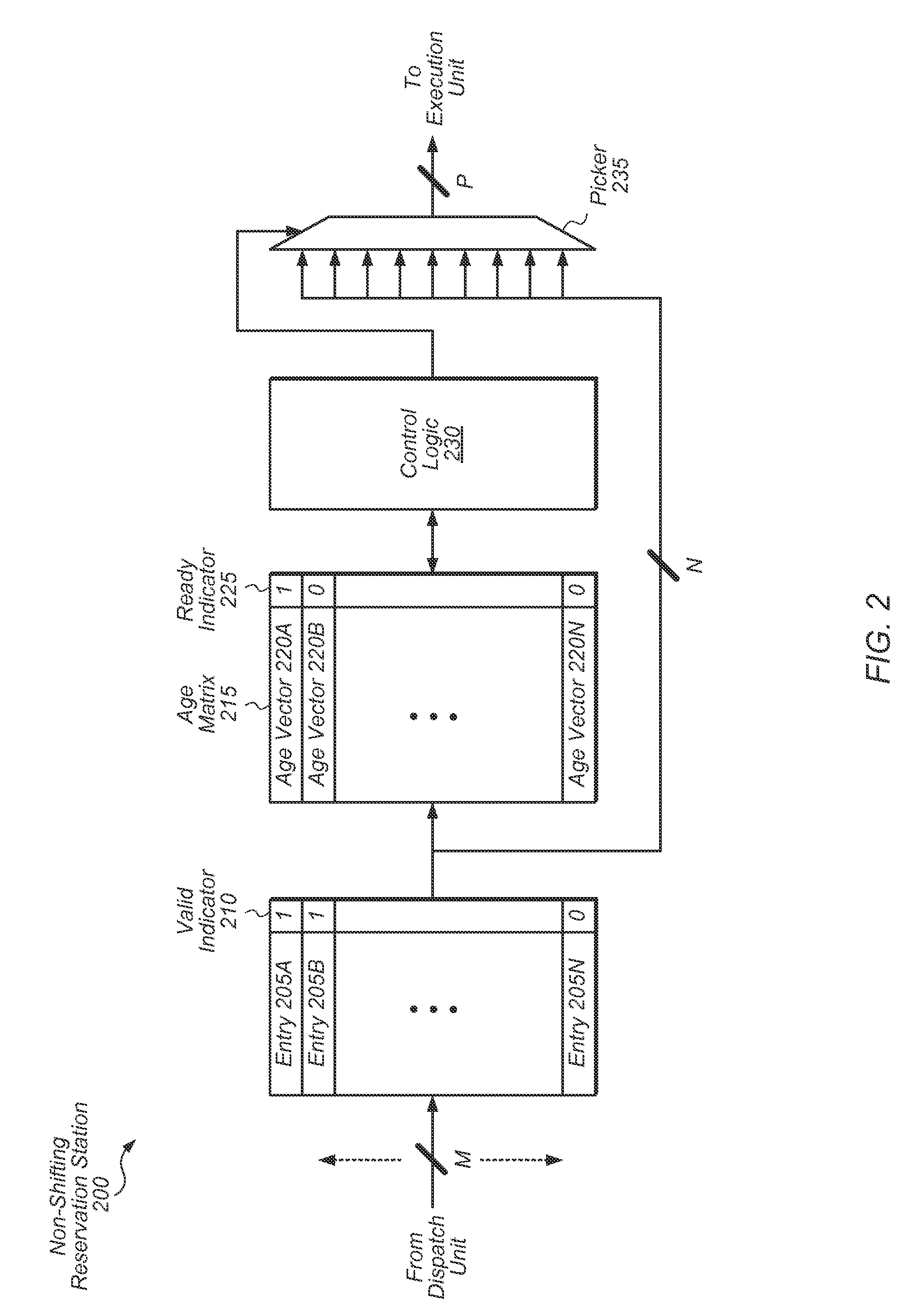

Non-shifting reservation station

Systems, apparatuses, and methods for implementing a non-shifting reservation station. A dispatch unit may write an operation into any entry of a reservation station. The reservation station may include an age matrix for determining the relative ages of the operations stored in the entries of the reservation station. The reservation station may include selection logic which is configured to pick the oldest ready operation from the reservation station based on the values stored in the age matrix. The selection logic may utilize control logic to mask off columns of an age matrix corresponding to non-ready operation so as to determine which operation is the oldest ready operation in the reservation station. Also, the reservation station may be configured to dequeue operations early when these operations do not have load dependency.

Owner:APPLE INC
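
An age matrix lets the picker find the oldest ready operation without keeping entries physically sorted. The sketch below is a minimal software model, assuming a square boolean matrix where `younger[i][j]` means entry `i` is younger than entry `j`; the class name `AgeMatrixRS` and its methods are hypothetical, and the early-dequeue behaviour mentioned in the abstract is not modelled.

```python
class AgeMatrixRS:
    def __init__(self, n_entries):
        self.n = n_entries
        self.valid = [False] * n_entries
        self.ready = [False] * n_entries
        self.ops = [None] * n_entries
        # younger[i][j] is True when entry i is younger than entry j.
        self.younger = [[False] * n_entries for _ in range(n_entries)]

    def dispatch(self, op, ready=False):
        """Write op into any free entry (no shifting); raises ValueError if full."""
        i = self.valid.index(False)
        for j in range(self.n):
            self.younger[i][j] = self.valid[j]  # new op is younger than all valid ones
            self.younger[j][i] = False          # nobody is younger than the new op yet
        self.valid[i], self.ready[i], self.ops[i] = True, ready, op
        return i

    def mark_ready(self, i):
        self.ready[i] = True

    def pick_oldest_ready(self):
        """Return the oldest ready op and free its entry, or None if none is ready."""
        for i in range(self.n):
            if not (self.valid[i] and self.ready[i]):
                continue
            # Mask off columns of non-ready entries: only ready, valid entries count.
            has_older_ready = any(
                self.younger[i][j] and self.valid[j] and self.ready[j]
                for j in range(self.n)
            )
            if not has_older_ready:
                self.valid[i] = False
                for j in range(self.n):
                    self.younger[j][i] = False  # clear column i on dequeue
                    self.younger[i][j] = False
                return self.ops[i]
        return None


rs = AgeMatrixRS(3)
a = rs.dispatch("A")                 # oldest, but not ready
rs.dispatch("B", ready=True)
rs.dispatch("C", ready=True)
print(rs.pick_oldest_ready())        # B: the oldest among the ready operations
```

Note that a later operation can land in a lower-numbered entry than an older one; the matrix, not the position, carries the age.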

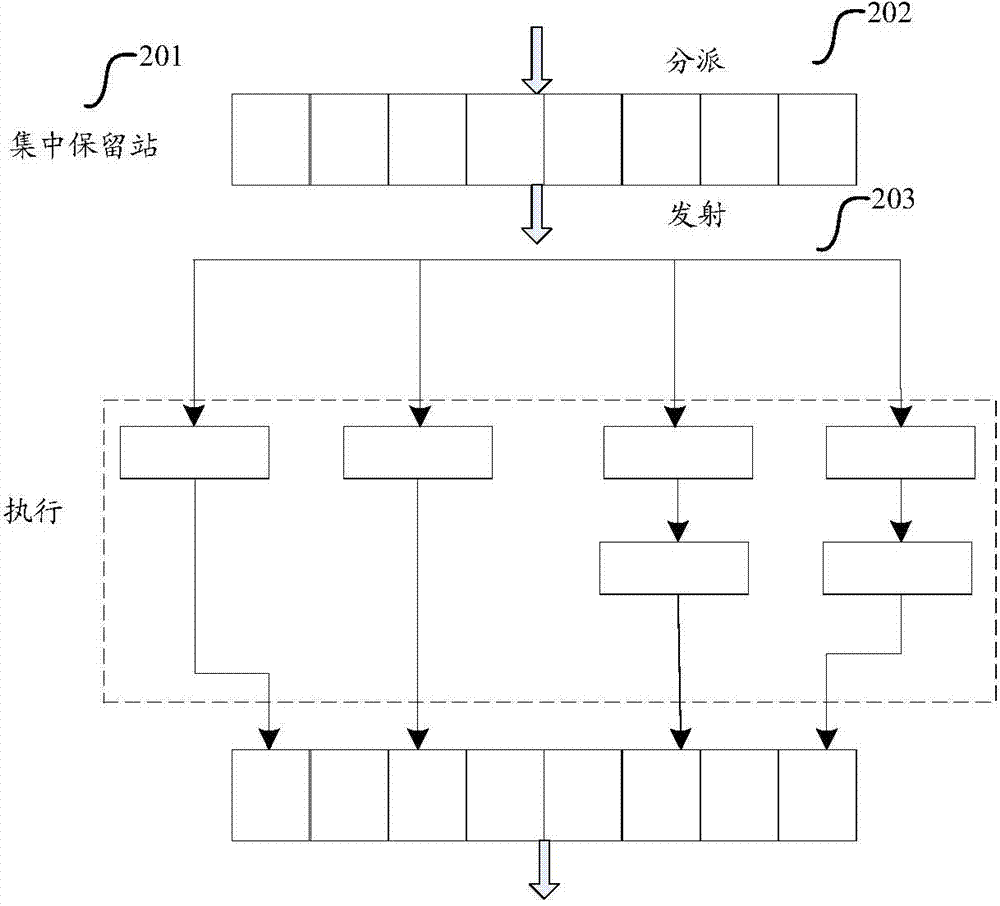

Superscale pipeline reservation station processing instruction method and device

Inactive | CN104714780A | Improve execution segment performance | Improve performance | Concurrent instruction execution | Processing Instruction | Reservation station

The invention relates to the technical field of microprocessors and provides a superscale pipeline reservation station processing instruction method and device. The method comprises the steps of: determining a judgment rule for correlation instructions and non-correlation instructions; obtaining each instruction written into an operation queue in multiple pipelines; judging the correlation among the instructions according to the judgment rule, and recognizing each instruction as a correlation instruction or a non-correlation instruction; and caching the correlation instructions into a first reservation station according to the order in which they were written into the operation queue, and caching the non-correlation instructions into a second reservation station. The correlation instructions are the instructions in the operation queue whose calculation results or operation parameters must be used in a particular order, the non-correlation instructions are all other instructions in the operation queue, and the first reservation station and the second reservation station are different reservation stations. The method and device improve the parallel processing performance of the pipeline execution stage.

Owner:SHENZHEN STATE MICROELECTRONICS CO LTD

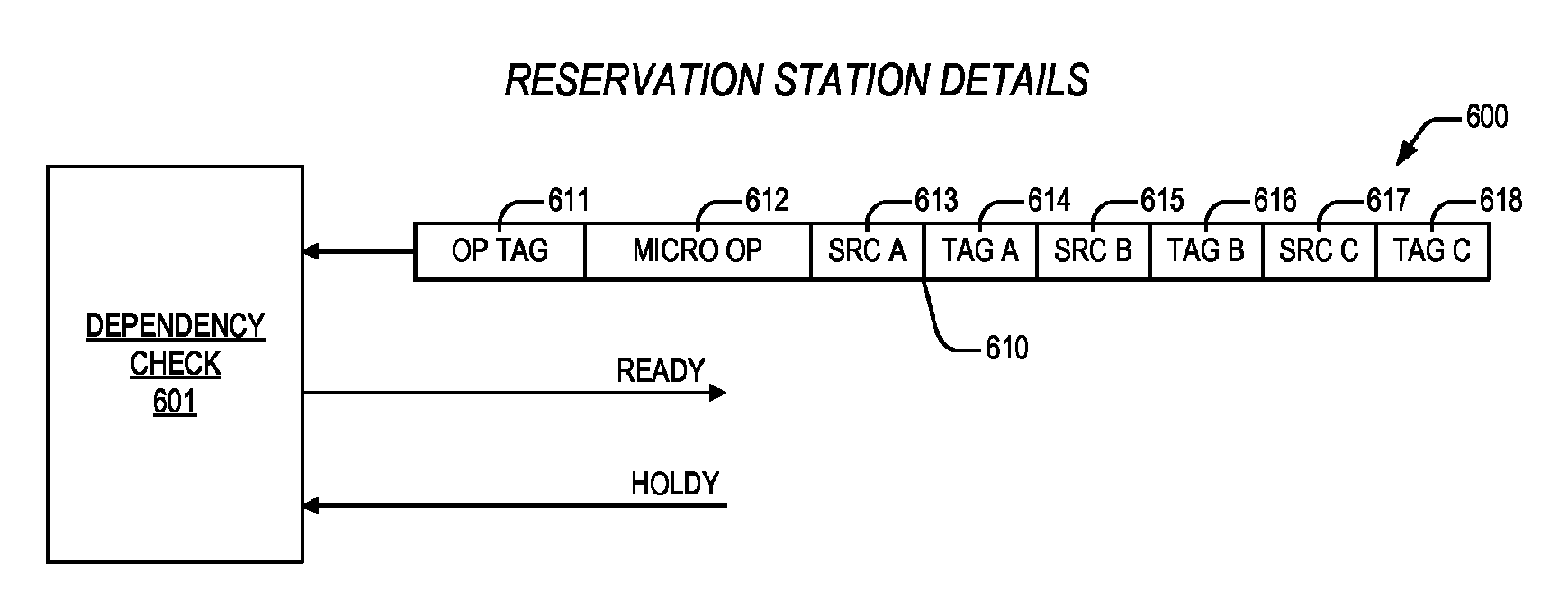

Apparatus and method for programmable load replay preclusion

Active | US20160350123A1 | Reducing replay | Concurrent instruction execution | Load instruction | Reservation station

An apparatus including first and second reservation stations. The first reservation station dispatches a load micro instruction, and indicates on a hold bus if the load micro instruction is a specified load micro instruction directed to retrieve an operand from a prescribed resource other than on-core cache memory. The second reservation station is coupled to the hold bus, and dispatches one or more younger micro instructions therein that depend on the load micro instruction for execution after a number of clock cycles following dispatch of the first load micro instruction, and if it is indicated on the hold bus that the load micro instruction is the specified load micro instruction, the second reservation station is configured to stall dispatch of the one or more younger micro instructions until the load micro instruction has retrieved the operand. The plurality of non-core resources includes a random access memory, programmed via a Joint Test Action Group interface with the plurality of specified load instructions corresponding to the out-of-order processor which, upon initialization, accesses the random access memory to determine said plurality of specified load instructions.

Owner:VIA ALLIANCE SEMICON CO LTD

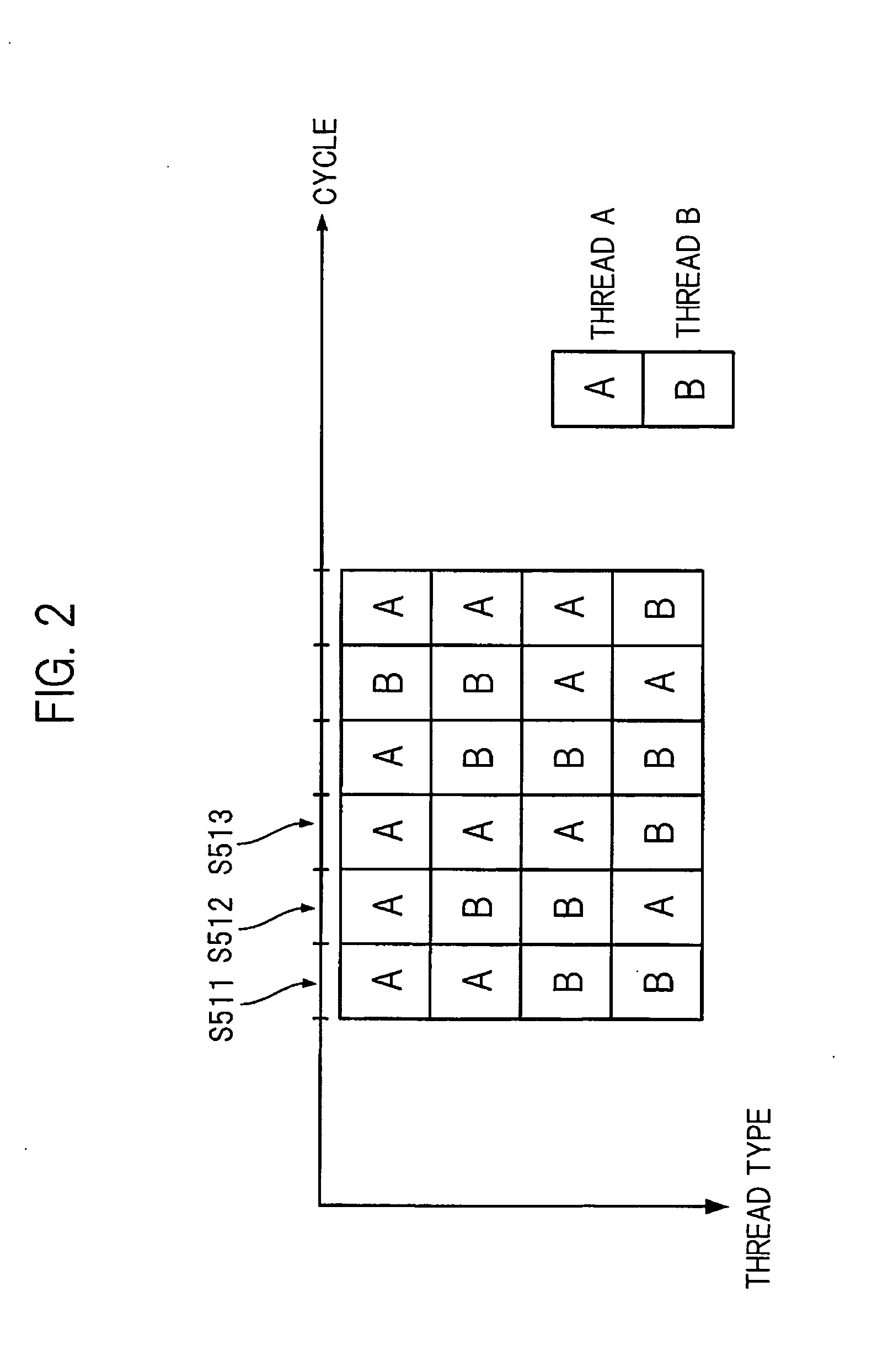

Providing quality of service via thread priority in a hyper-threaded microprocessor

Inactive | CN101369224A | Program initiation/switching | Concurrent instruction execution | Quality of service | Reservation station

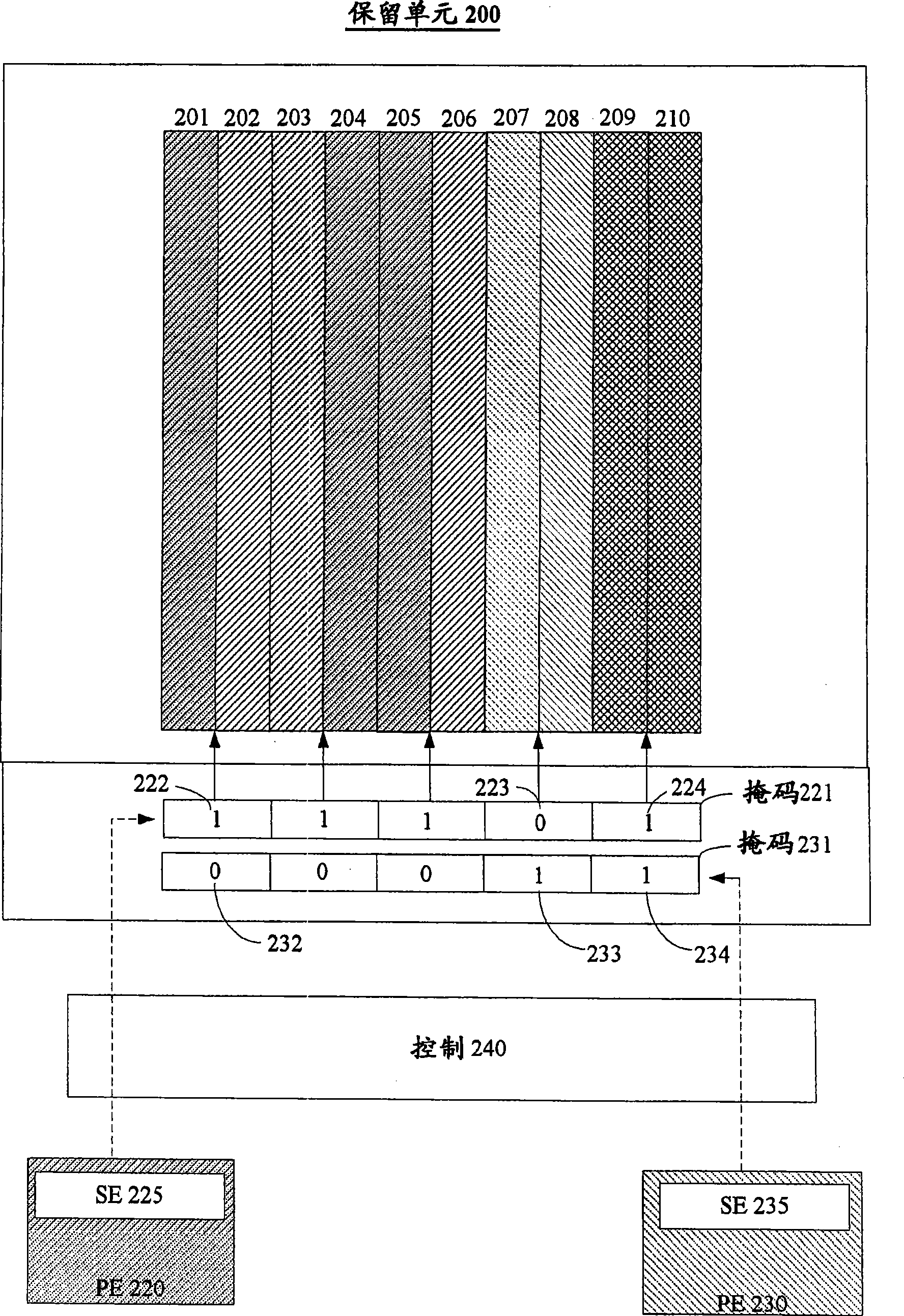

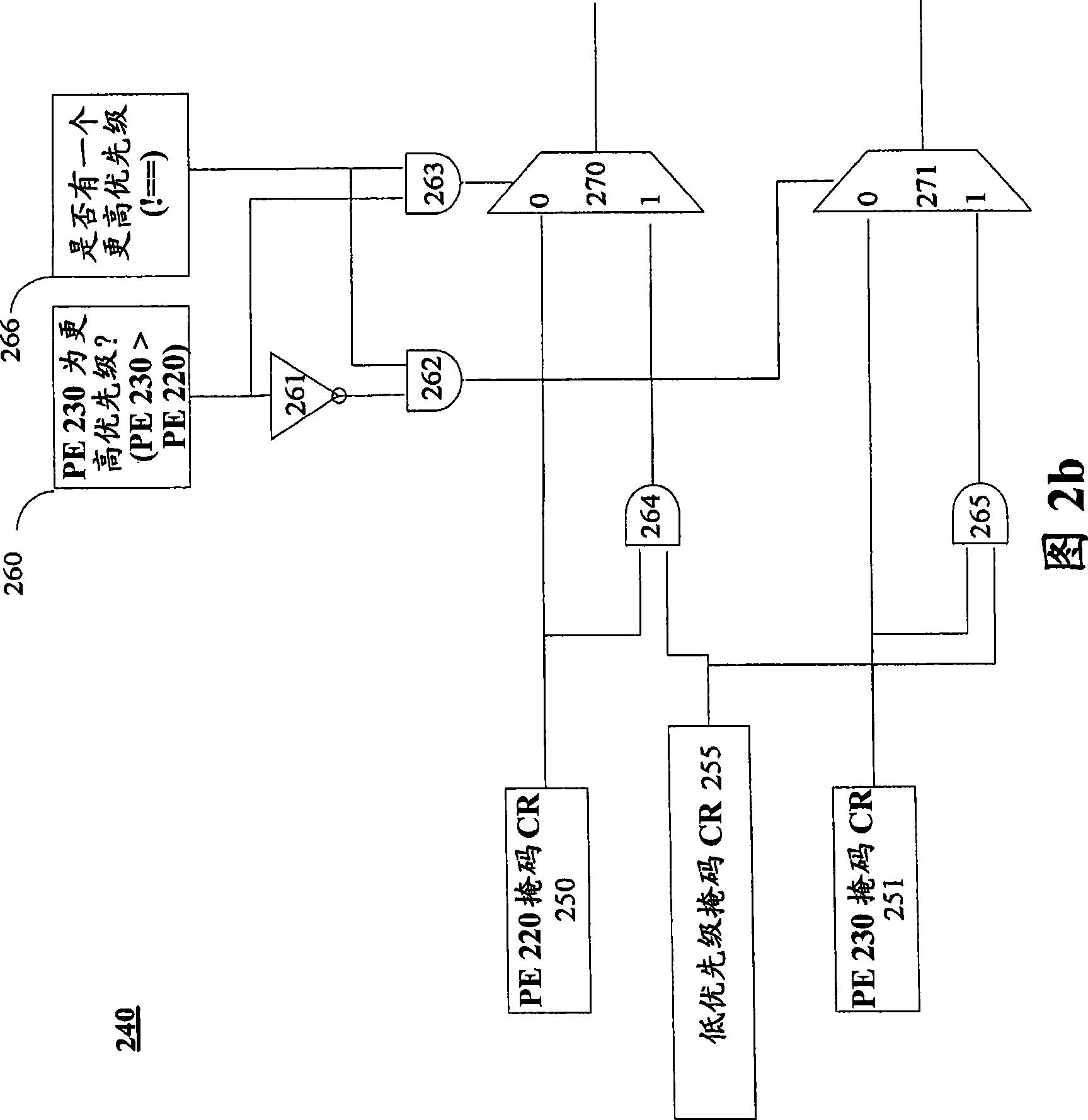

A method and an apparatus for providing quality of service in a multi-processing element environment based on priority is herein described. Consumption of resources, such as a reservation station and a pipeline, are biased towards a higher priority processing element. In a reservation station, mask elements are set to provide access for higher priority processing elements to more reservation entries. In a pipeline, bias logic provides a ratio of preference for selection of a high priority processing element.

Owner:INTEL CORP
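
The mask-based biasing of reservation station entries can be illustrated with one boolean mask per processing element. This is a sketch under assumptions: the mask layout, the thread labels `"hi"` and `"lo"`, and the class name `MaskedRS` are hypothetical, and the pipeline bias logic from the abstract is left out.

```python
class MaskedRS:
    def __init__(self, n_entries, masks):
        self.entries = [None] * n_entries
        self.masks = masks  # thread -> list of bools, True = entry may be used

    def allocate(self, thread, op):
        """Place op in the first free entry the thread's mask allows, else None."""
        for i, slot in enumerate(self.entries):
            if slot is None and self.masks[thread][i]:
                self.entries[i] = (thread, op)
                return i
        return None


# The high-priority thread may use all four entries, the low-priority one only two.
rs = MaskedRS(4, {"hi": [True, True, True, True],
                  "lo": [True, True, False, False]})
print([rs.allocate("lo", f"op{k}") for k in range(3)])  # third request is refused
print([rs.allocate("hi", f"op{k}") for k in range(2)])  # reserved entries still free
```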

Dynamic pipe staging adder

Inactive | US6560695B1 | Improve performance | Digital computer details | Concurrent instruction execution | Processing Instruction | Reservation station

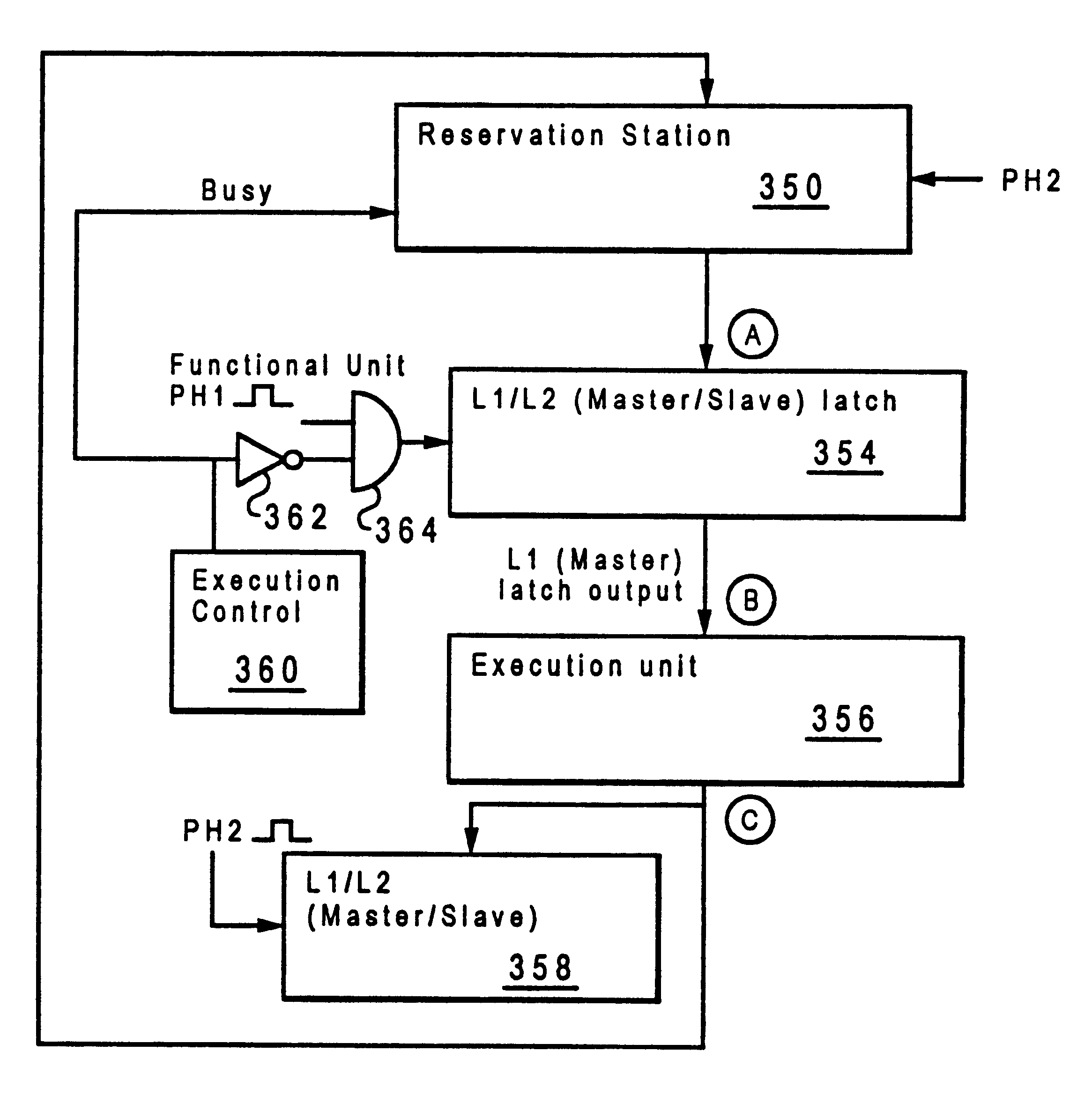

The present invention provides a method and apparatus for processing instructions in which the time allowed for the execution of an instruction is dynamically allocated. The allocation of time for execution of an instruction occurs after the instruction is sent to the execution unit. The execution unit determines whether it can complete the instruction during the current processor cycle. In response to an inability to complete the instruction within the current processor cycle, the execution unit issues a busy signal to the reservation station. The reservation station continues to hold the next instruction until the execution unit is capable of processing it.

Owner:IBM CORP

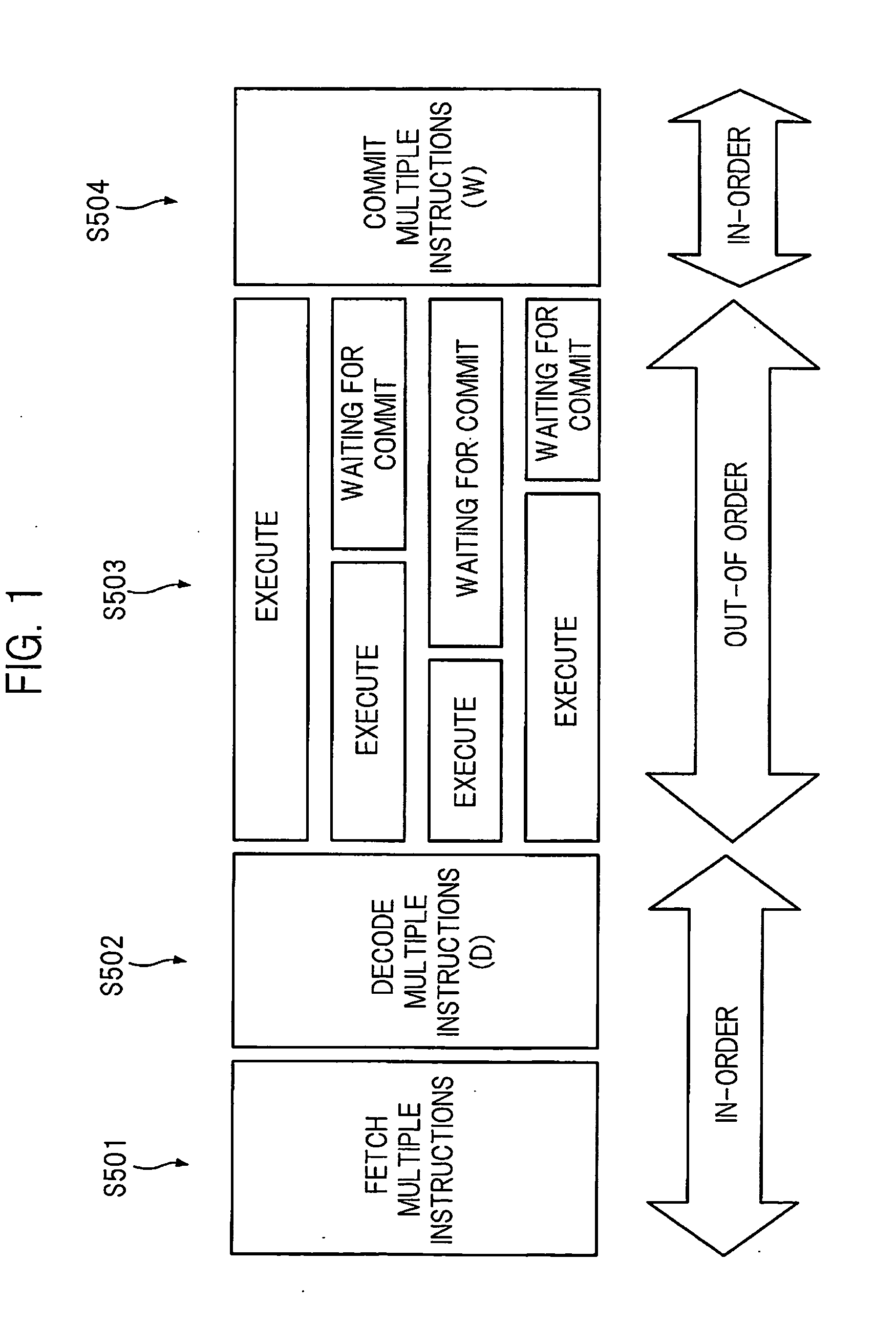

MANAGING DATAFLOW EXECUTION OF LOOP INSTRUCTIONS BY OUT-OF-ORDER PROCESSORS (OOPs), AND RELATED CIRCUITS, METHODS, AND COMPUTER-READABLE MEDIA

Inactive | US20160019061A1 | Instruction analysis | Digital computer details | Reservation station | Computer program

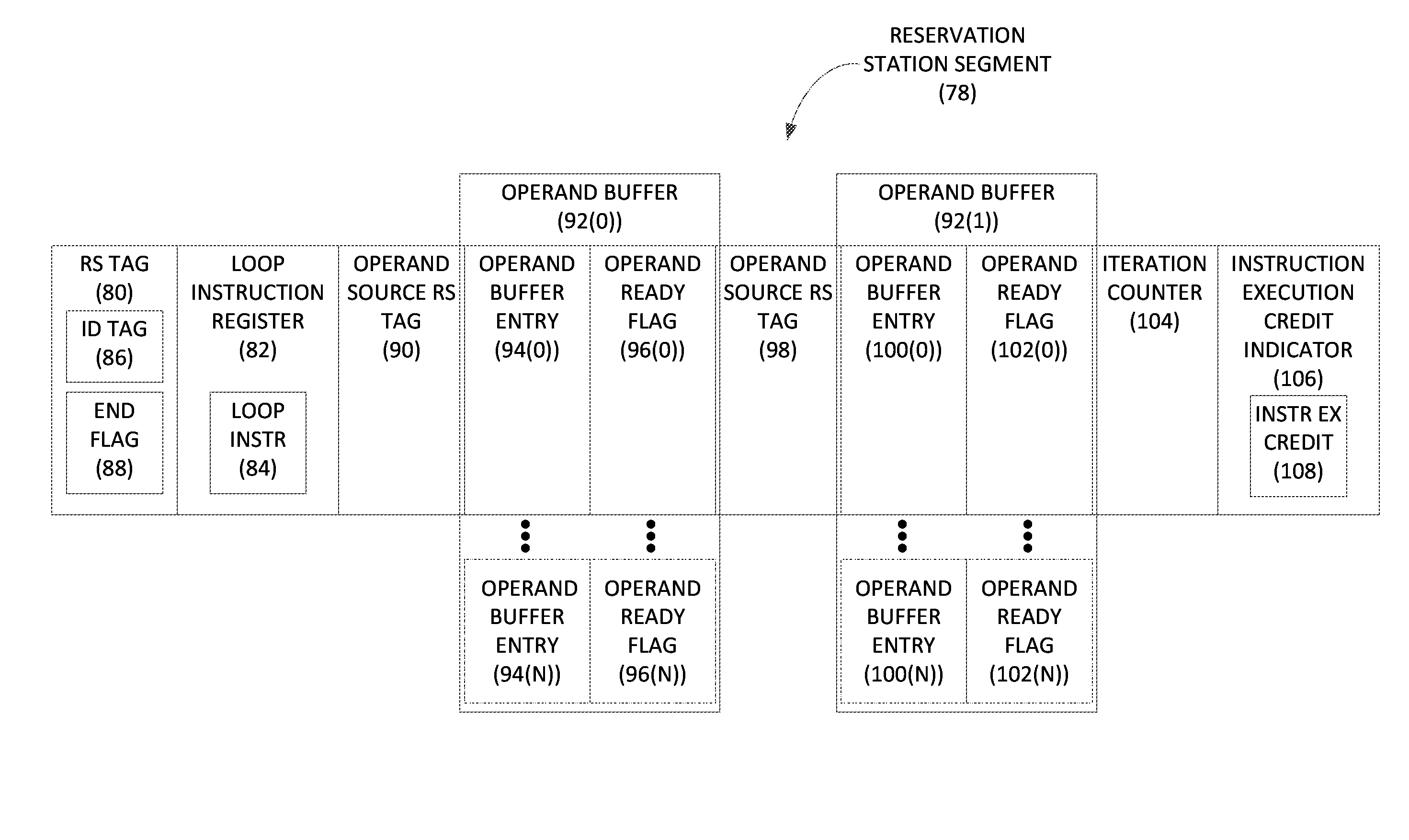

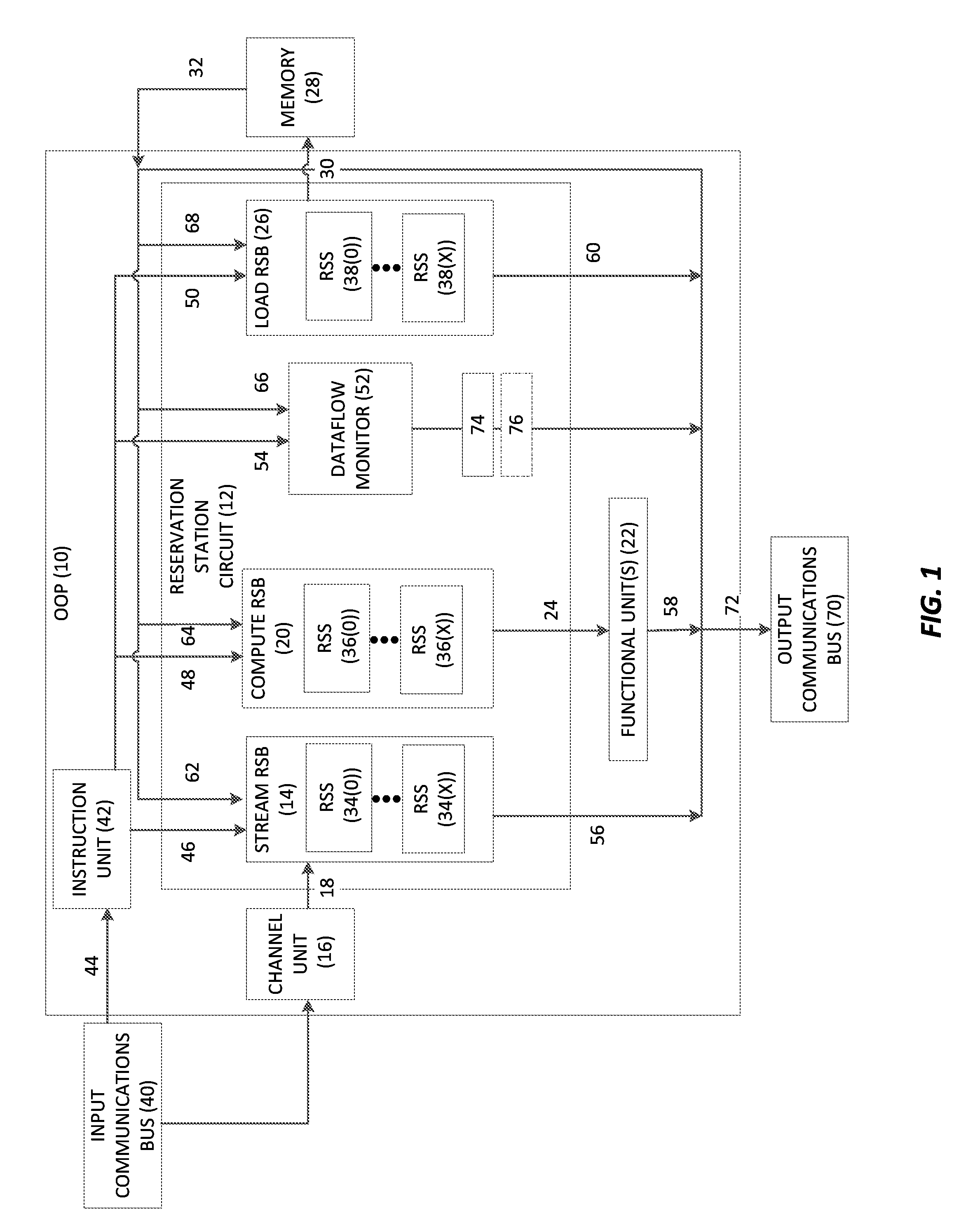

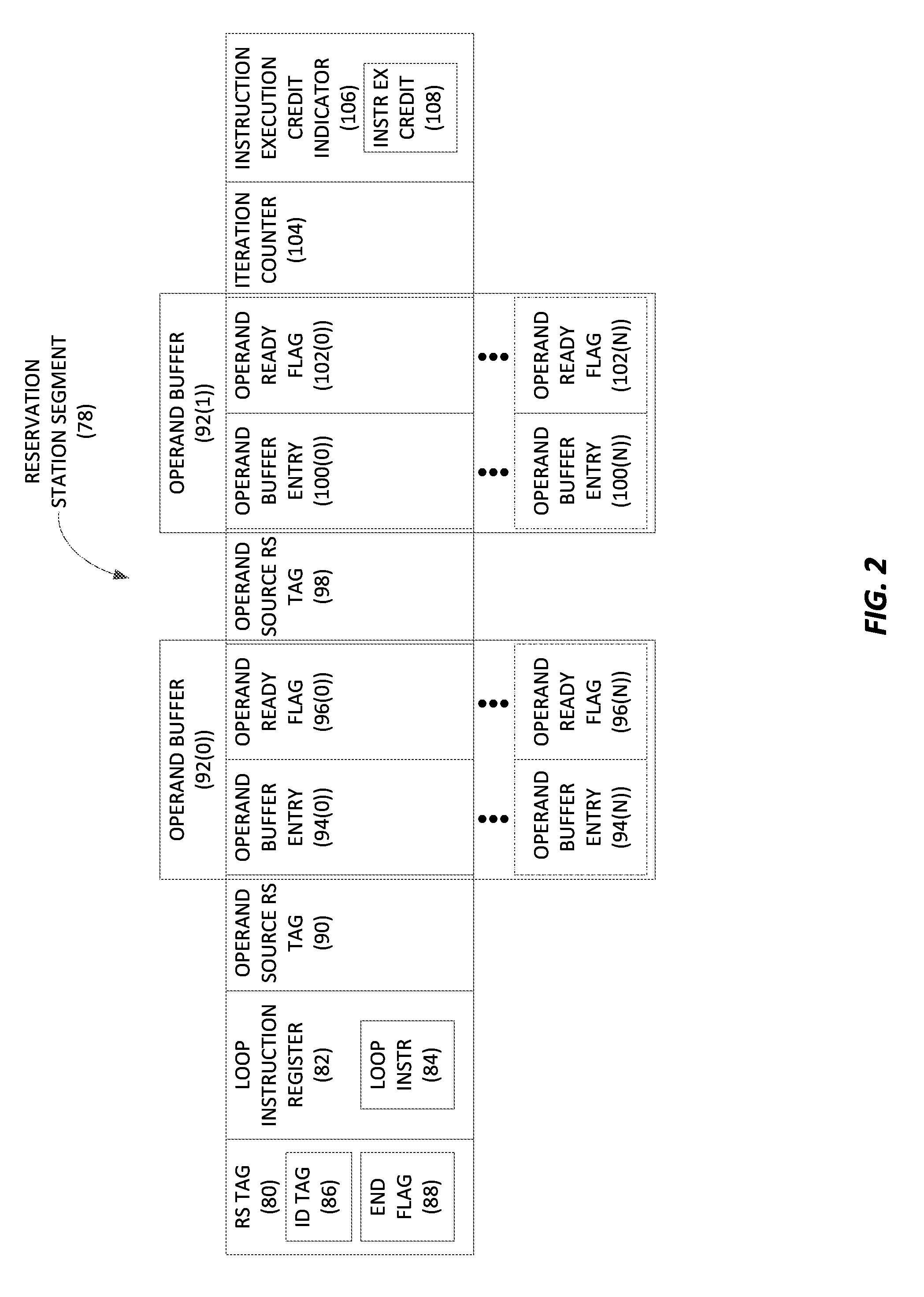

Managing dataflow execution of loop instructions by out-of-order processors (OOPs), and related circuits, methods, and computer-readable media are disclosed. In one aspect, a reservation station circuit is provided. The reservation station circuit includes multiple reservation station segments, each storing a loop instruction of a loop of a computer program. Each reservation station segment also stores an instruction execution credit indicating whether the corresponding loop instruction may be provided for dataflow execution. The reservation station circuit further includes a dataflow monitor that distributes an initial instruction execution credit to each reservation station segment. As each loop iteration is executed, each reservation station segment determines whether the instruction execution credit indicates that the loop instruction for the reservation station segment may be provided for dataflow execution. If so, the reservation station segment provides the loop instruction for dataflow execution, and adjusts the instruction execution credit for the reservation station segment.

Owner:QUALCOMM INC
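
The credit mechanism in this abstract can be pictured as a counter per reservation station segment. The sketch below assumes the simplest possible policy, where a credit is a non-negative integer, one credit is spent per issue, and a segment with zero credit holds its instruction; the names `LoopSegment` and `DataflowMonitor` are hypothetical and the real credit semantics may differ.

```python
class LoopSegment:
    def __init__(self, instr):
        self.instr = instr
        self.credit = 0

    def try_issue(self):
        """Provide the loop instruction if the credit allows it, then adjust the credit."""
        if self.credit > 0:
            self.credit -= 1
            return self.instr
        return None


class DataflowMonitor:
    def __init__(self, segments):
        self.segments = segments

    def distribute(self, initial_credit):
        """Give every segment its initial instruction execution credit."""
        for seg in self.segments:
            seg.credit = initial_credit

    def run_iteration(self):
        """One loop iteration: collect the instructions the segments provide."""
        issued = (seg.try_issue() for seg in self.segments)
        return [instr for instr in issued if instr is not None]


monitor = DataflowMonitor([LoopSegment("load"), LoopSegment("add")])
monitor.distribute(2)
print([monitor.run_iteration() for _ in range(3)])  # third iteration has no credit left
```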

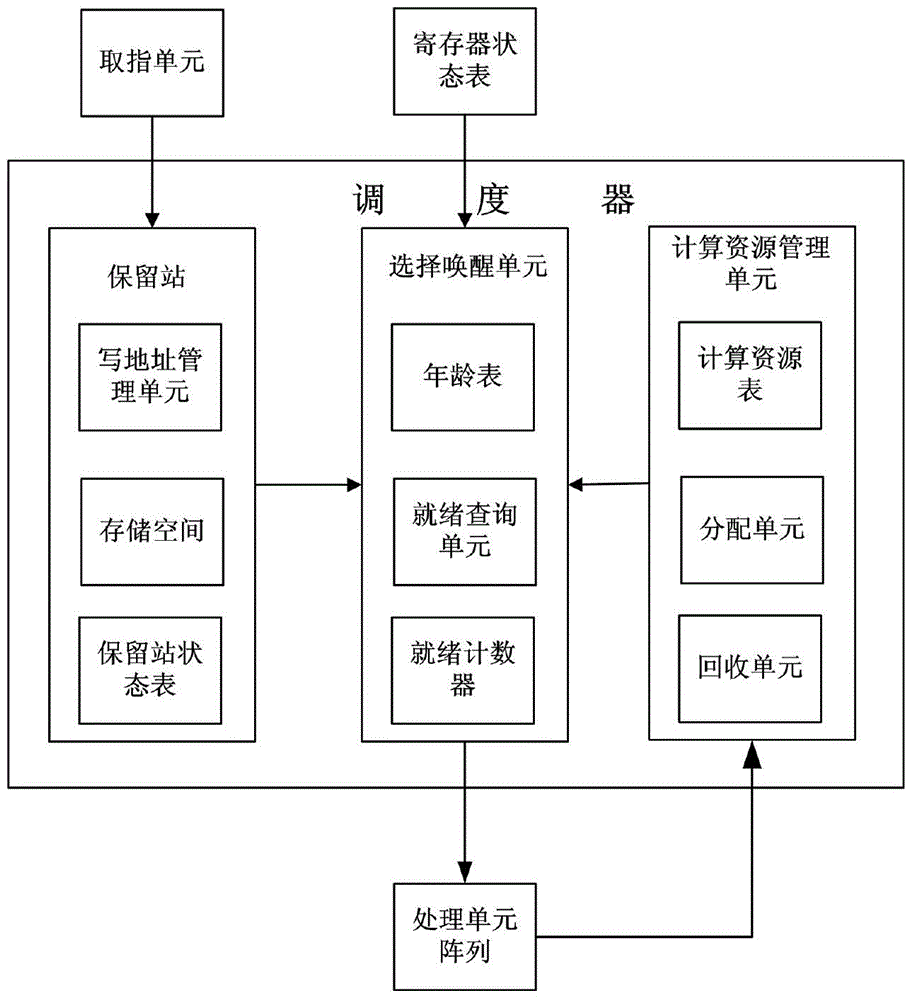

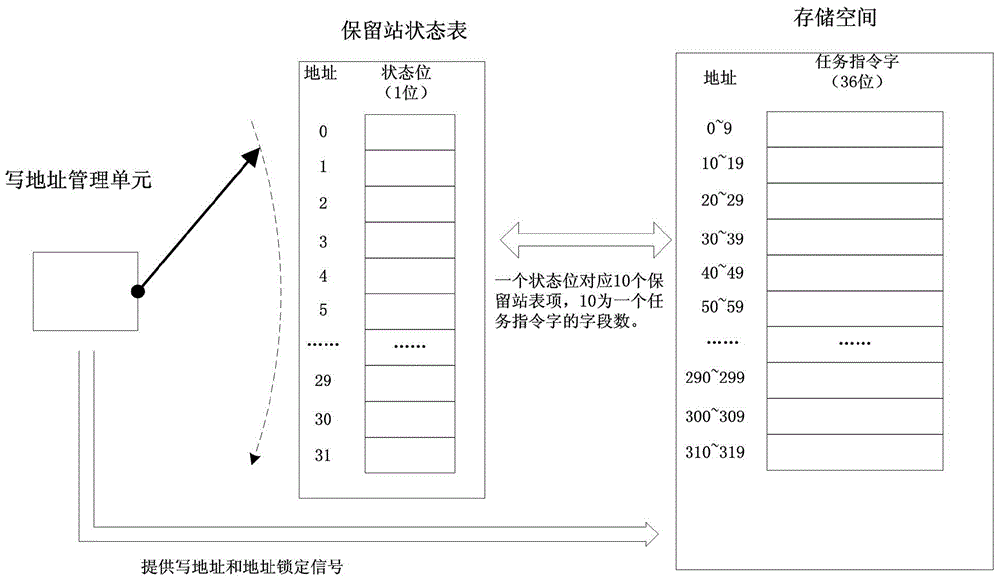

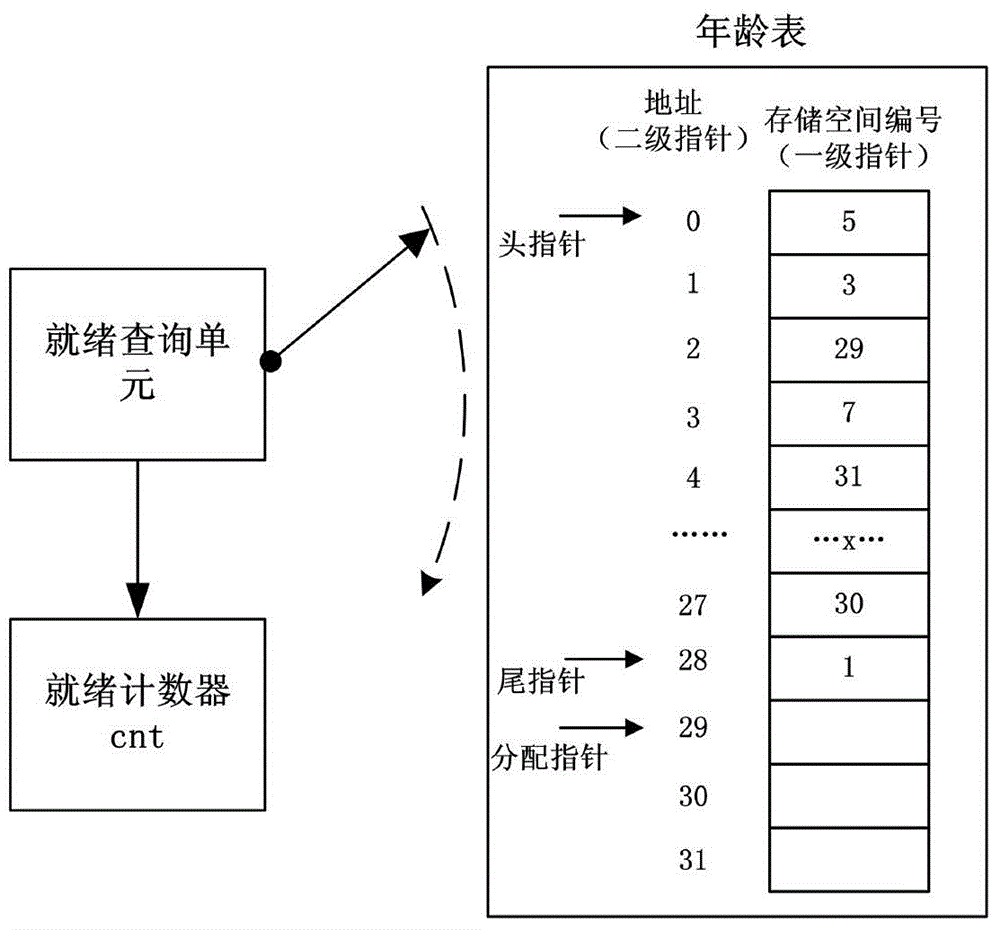

Task-level out-of-order multi-issue scheduler and scheduling method thereof

Active | CN104932945A | Improve utilization efficiency | Good computing power | Resource allocation | Reservation station | Management unit

The invention discloses a task-level out-of-order multi-issue scheduler and a scheduling method thereof. The scheduler is characterized by comprising a reservation station, an option awakening unit and a calculation resource management unit, wherein the reservation station comprises a write address management unit, a storage space and a reservation station state table; the option awakening unit comprises a chronological table, a ready inquiry unit and a ready counter; and the calculation resource management unit comprises a calculation resource table, an allocation unit and a recovery unit. The throughput and resource utilization efficiency of the scheduler can be increased, so that the task instruction issue efficiency is increased, and the system performance is improved.

Owner:HEFEI UNIV OF TECH

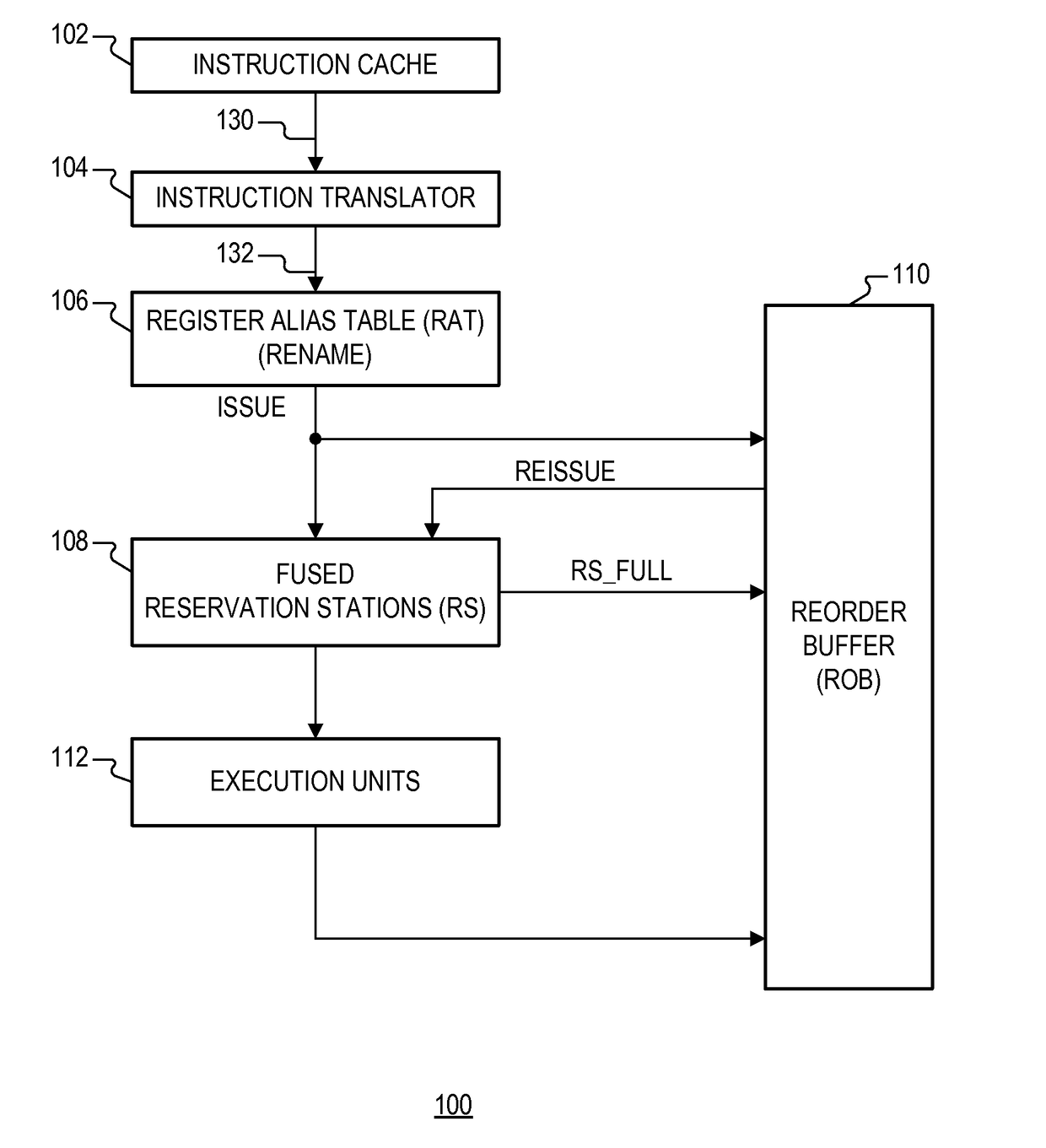

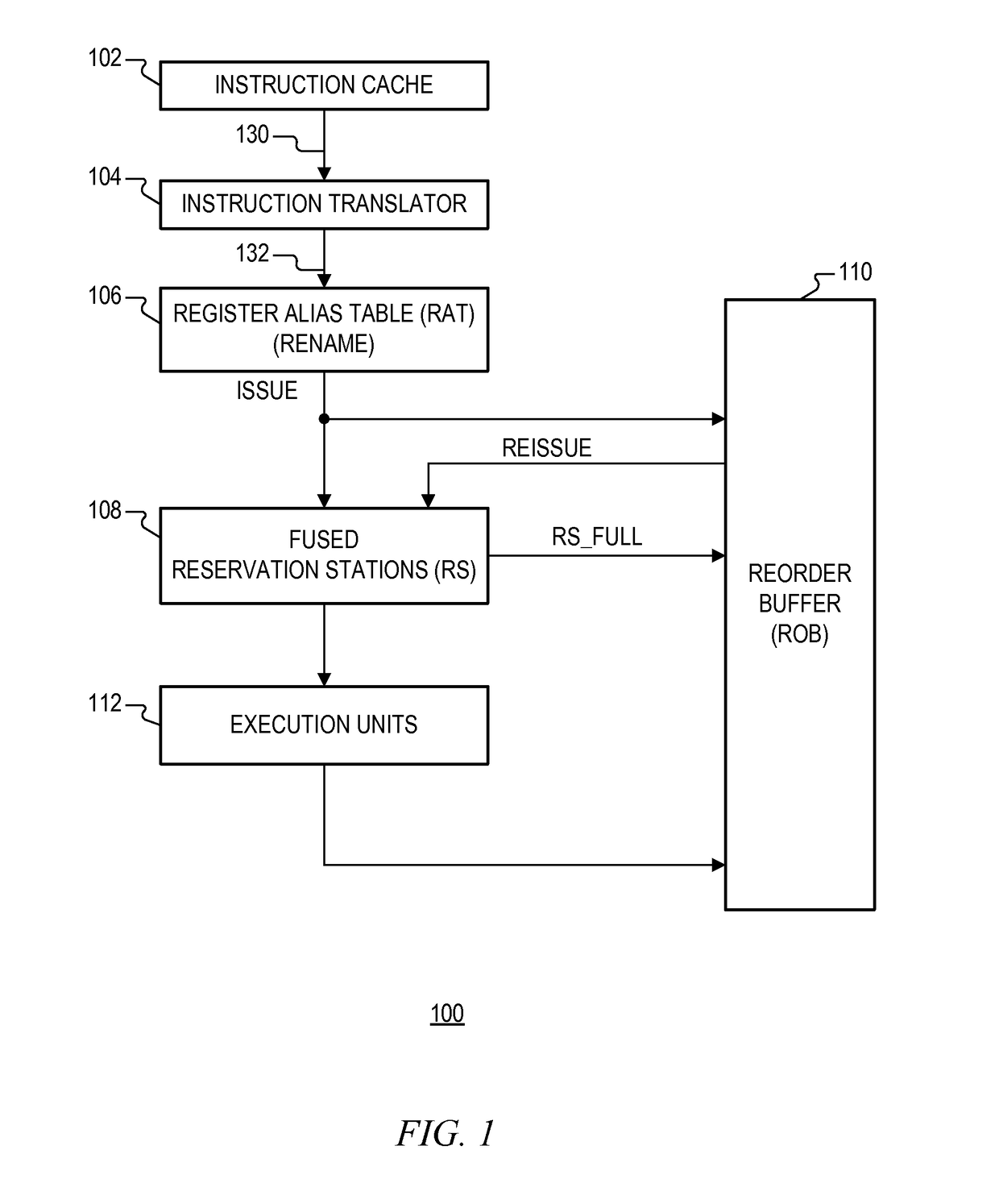

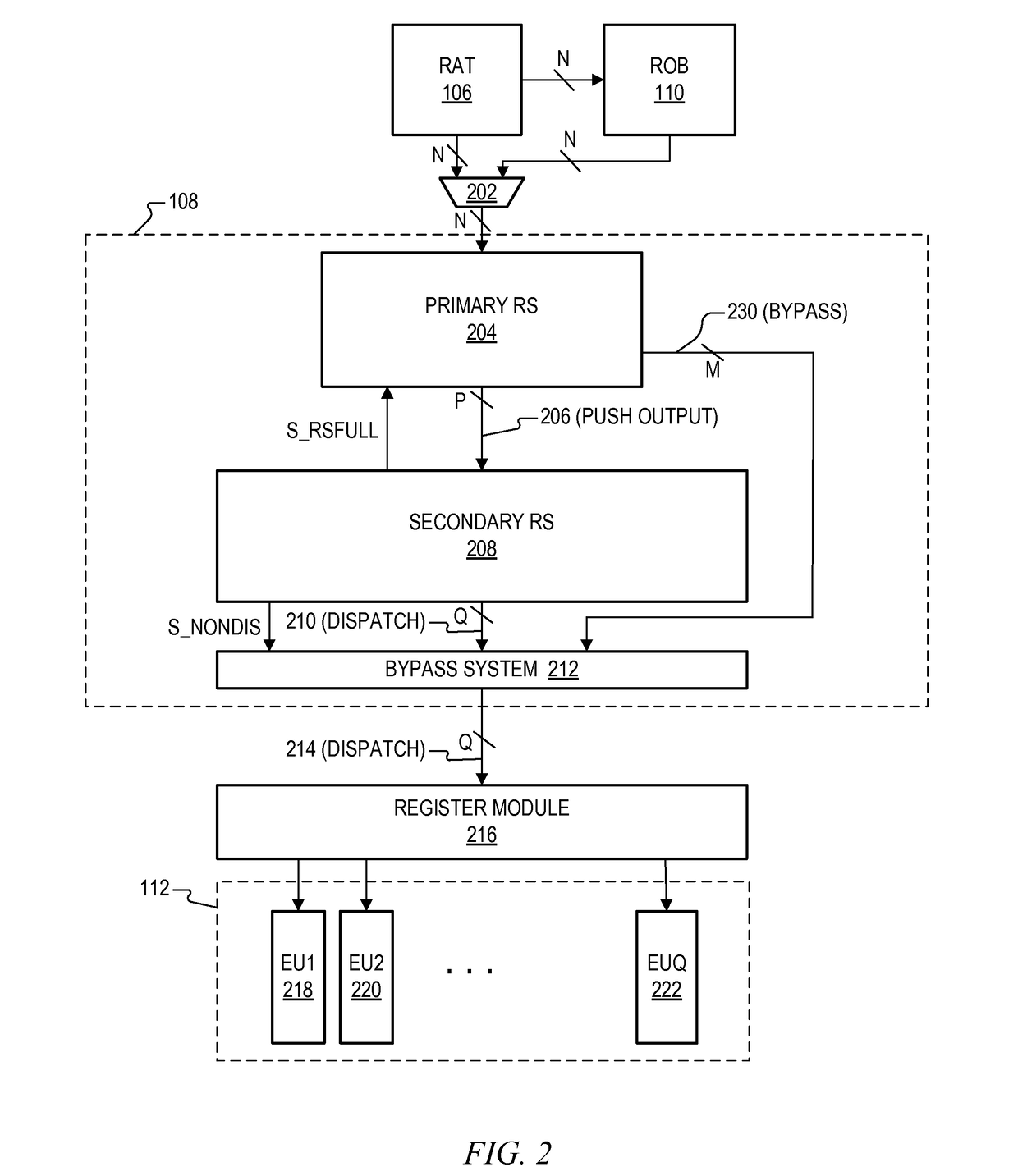

Microprocessor with fused reservation stations structure

Active · US20170090934A1 · Register arrangements · Concurrent instruction execution · Reservation station · Computer science

A microprocessor with a fused reservation stations (RS) structure including a primary RS, a secondary RS, and a bypass system. The primary RS has an input for receiving issued instructions, has a push output for pushing the issued instructions to the secondary RS, and has at least one bypass output for dispatching issued instructions that are ready for dispatch. The secondary RS has an input coupled to the push output of the primary RS and has at least one dispatch output. The bypass system selects between the bypass output of the primary RS and at least one dispatch output of the secondary RS for dispatching selected issued instructions. The primary and secondary RS may each be selected from different RS structure types. A unify RS provides a suitable primary RS, and the secondary RS may include multiple queues. The bypass output enables direct dispatch from the primary RS.

Owner:VIA ALLIANCE SEMICON CO LTD

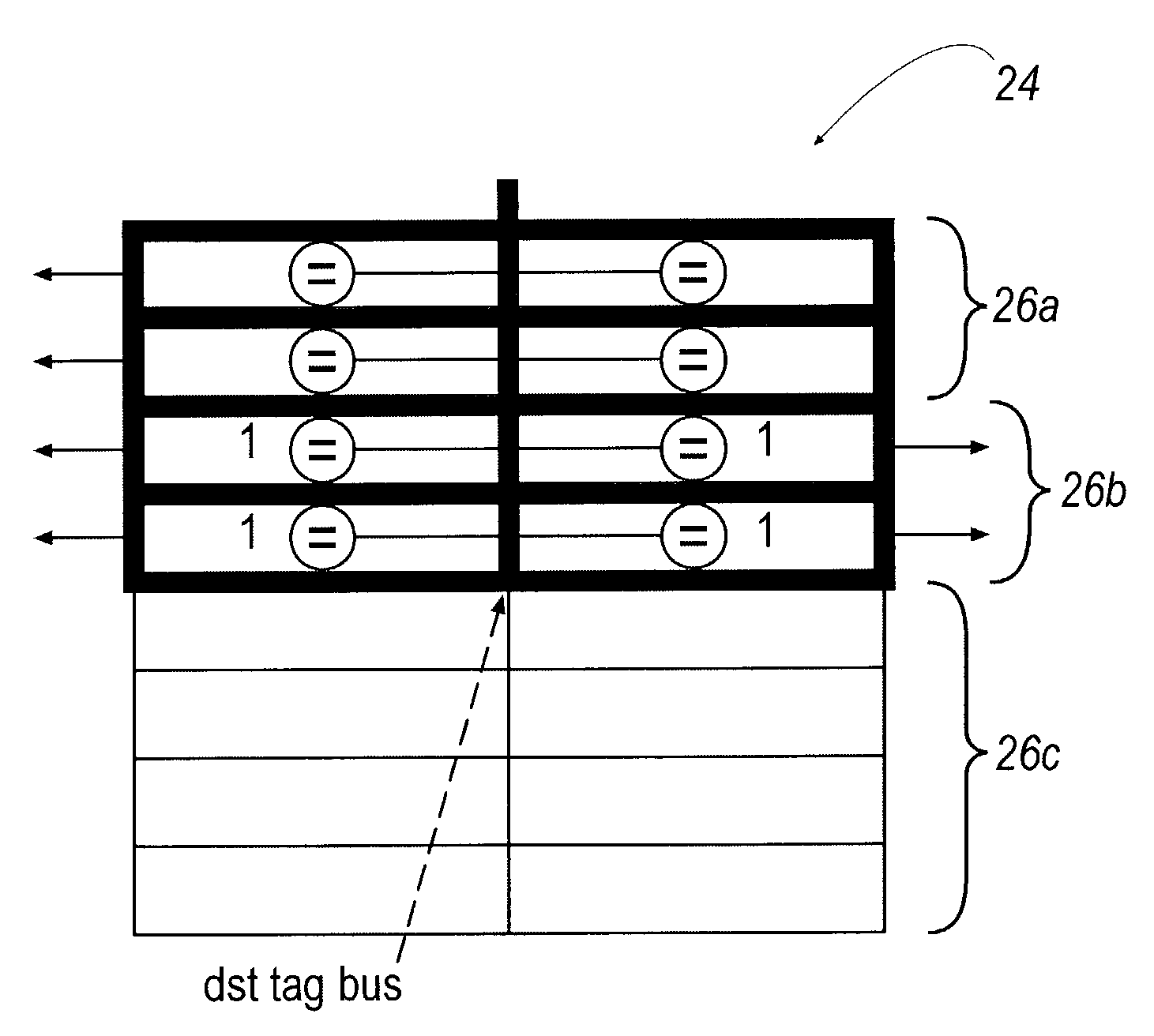

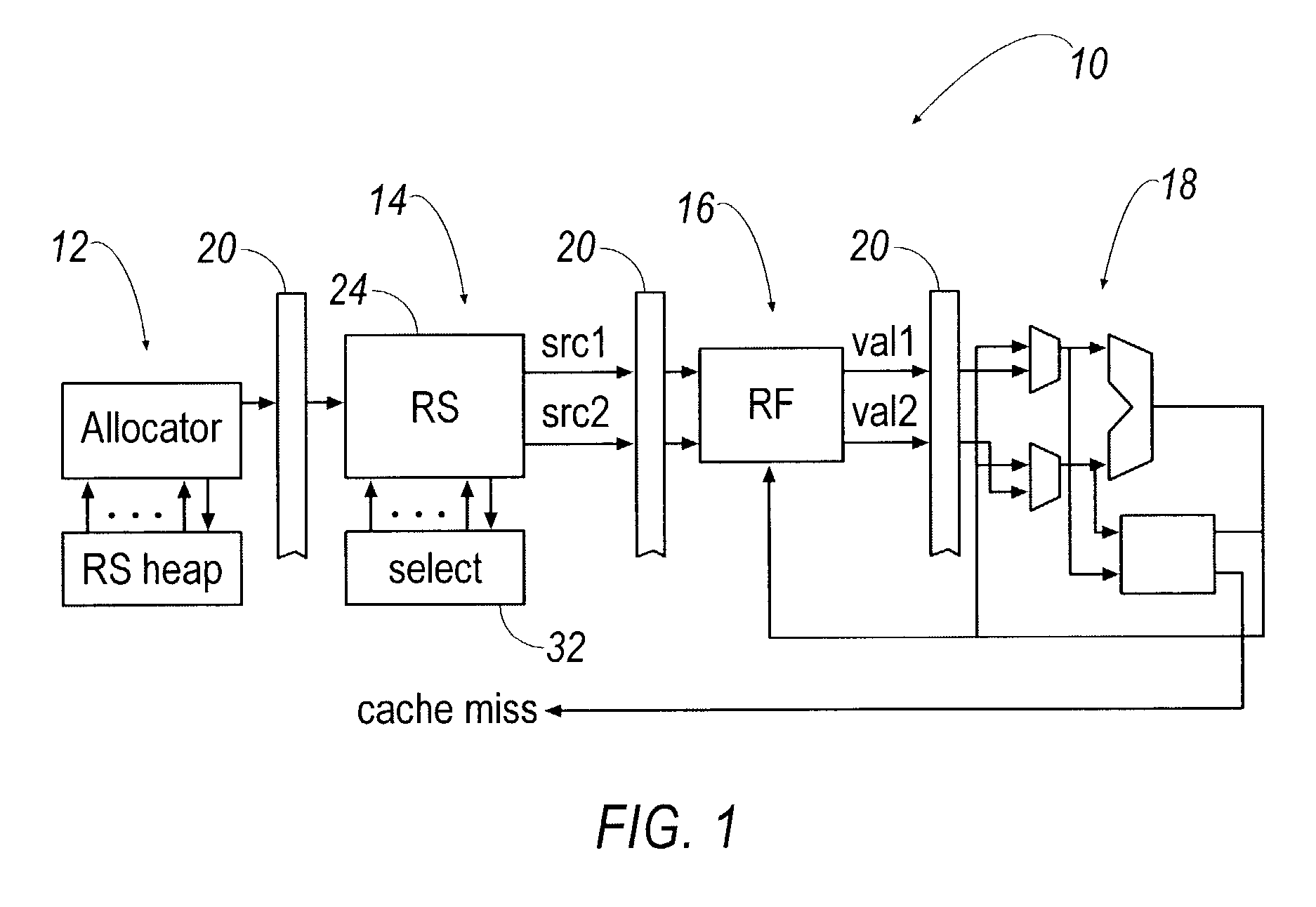
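The primary/secondary arrangement in the abstract above can be sketched as a small cycle-level model. This is an illustrative sketch only: the single dispatch port, the one-entry primary RS, and the policy of preferring a ready instruction in the secondary RS over the bypass path are all assumptions, and `FusedRS` and `step` are hypothetical names.

```python
from collections import deque


class FusedRS:
    """Toy model: a one-entry primary RS feeding a queue-based secondary RS."""

    def __init__(self):
        self.secondary = deque()  # older instructions waiting for operands

    def step(self, issued=None, ready=frozenset()):
        """Advance one cycle and return (name, path) for the dispatched
        instruction, or None if nothing was dispatched.

        issued: name of the instruction entering the primary RS this cycle.
        ready:  names of instructions whose operands are available.
        """
        # Secondary dispatch output: the oldest ready instruction, if any.
        name = next((n for n in self.secondary if n in ready), None)
        if name is not None:
            self.secondary.remove(name)
            picked = (name, "secondary")
        elif issued is not None and issued in ready:
            # Bypass output: dispatch directly from the primary RS.
            picked, issued = (issued, "bypass"), None
        else:
            picked = None
        # Push output: anything left in the primary RS moves to the secondary RS.
        if issued is not None:
            self.secondary.append(issued)
        return picked


rs = FusedRS()
first = rs.step("a", ready={"a"})        # ("a", "bypass")
second = rs.step("b")                    # None, "b" is pushed to the secondary RS
third = rs.step("c", ready={"b", "c"})   # ("b", "secondary"), "c" is pushed
fourth = rs.step(ready={"c"})            # ("c", "secondary")
```

The selection policy between the two outputs is the part most likely to differ in a real design; the abstract only states that a bypass system selects between them.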

Technique for reduced-tag dynamic scheduling and reduced-tag prediction

Active · US7398375B2 · General purpose stored program computer · Concurrent instruction execution · Reservation station · Operand

The present invention provides a dynamic scheduling scheme that uses reservation stations, at least one of which stores an instruction with at least two operands. An allocator portion determines that the instruction, entering the pipeline, has one ready operand and one not-ready operand, and accordingly places it in a station having only one comparator. The one comparator then compares the not-ready operand with tags broadcast on a result tag bus to determine when the not-ready operand becomes ready. Once the operand is ready, execution is requested from the corresponding functional unit.

Owner:RGT UNIV OF MICHIGAN

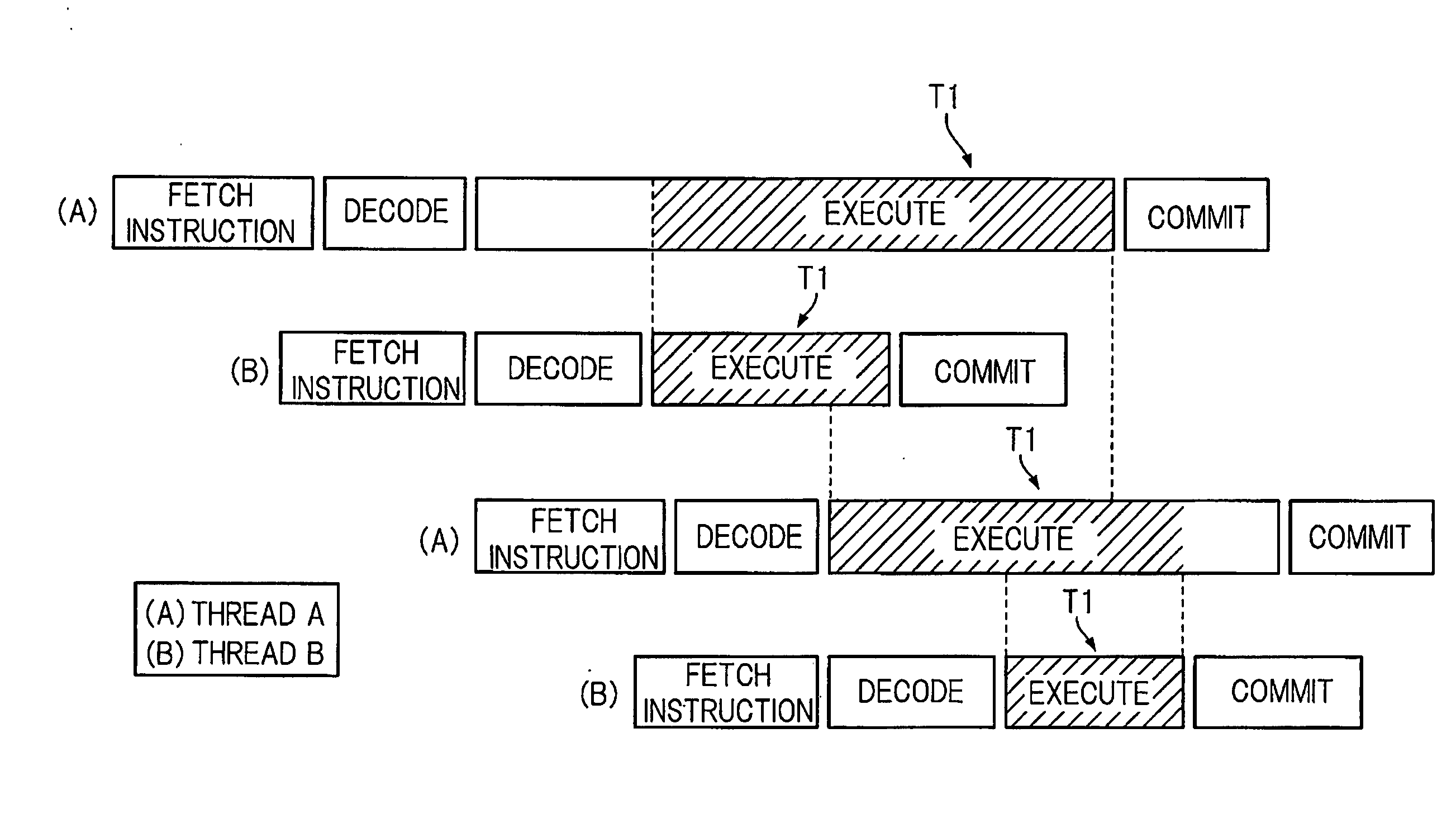
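The allocation rule in the abstract above can be illustrated with a short model. It is a sketch under assumptions rather than the patented design: operand tags are modelled as strings, each station watches at most as many tags as it has comparators, and the allocator picks the free station with the fewest comparators that still suffices. `Station` and `allocate` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Station:
    comparators: int                            # tag comparators in this station
    op: Optional[str] = None                    # instruction held, if any
    waiting: set = field(default_factory=set)   # operand tags still not ready

    @property
    def free(self):
        return self.op is None

    @property
    def ready(self):
        """True once every watched operand has been seen on the tag bus."""
        return self.op is not None and not self.waiting

    def snoop(self, tag):
        """Compare a broadcast result tag against the watched operand tags."""
        self.waiting.discard(tag)


def allocate(stations, op, src_tags, ready_tags):
    """Place op in the smallest free station that can watch its pending tags."""
    pending = {t for t in src_tags if t not in ready_tags}
    fits = [s for s in stations if s.free and s.comparators >= len(pending)]
    if not fits:
        return None  # no suitable station: the instruction would have to stall
    station = min(fits, key=lambda s: s.comparators)
    station.op, station.waiting = op, pending
    return station


stations = [Station(2), Station(1), Station(0)]
st = allocate(stations, "add r3, r1, r2", ["r1", "r2"], ready_tags={"r1"})
# One operand is pending, so the one-comparator station is chosen.
st.snoop("r5")   # unrelated tag: still waiting on r2
st.snoop("r2")   # matching tag: st.ready is now True
```

Keeping the two-comparator station free for instructions with two pending operands is the point of the scheme: most instructions can use cheaper stations.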

Instruction processing apparatus

Inactive · US20100106945A1 · Efficient processing · Sure avoidance of a trouble · Digital computer details · Concurrent instruction execution · Reservation station · Instruction buffer

The present invention includes a decode section for simultaneously holding a plurality of instructions in one thread at a time and for decoding the held instructions; an execution pipeline capable of simultaneously executing each processing represented by the respective instructions belonging to different threads and decoded by the decode section; a reservation station for receiving the instructions decoded by the decode section and holding the instructions, if the decoded instructions are of sync attribute, until executable conditions are ready and thereafter dispatching the decoded instructions to the execution pipeline; a pre-decode section for confirming by a simple decoding, prior to decoding by the decode section, whether or not the instructions are of sync attribute; and an instruction buffer for suspending issuance to the decode section and holding the instructions subsequent to an instruction of sync attribute.

Owner:FUJITSU LTD

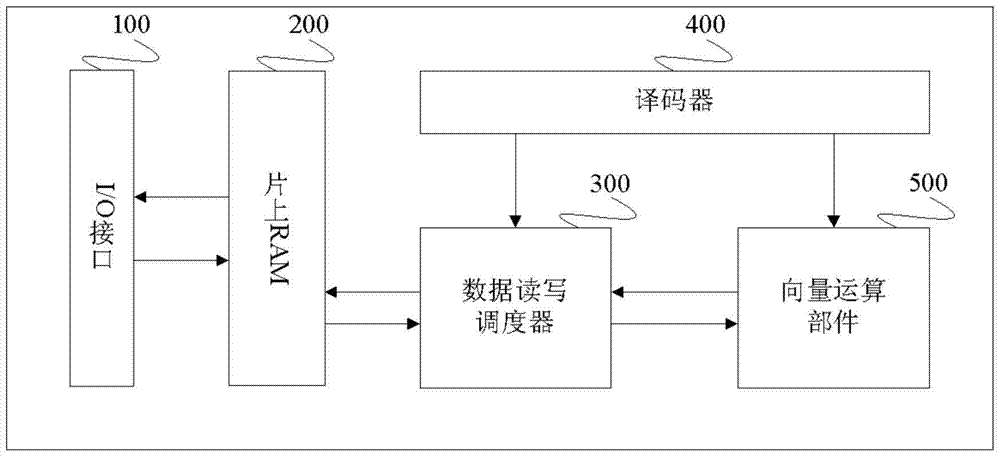

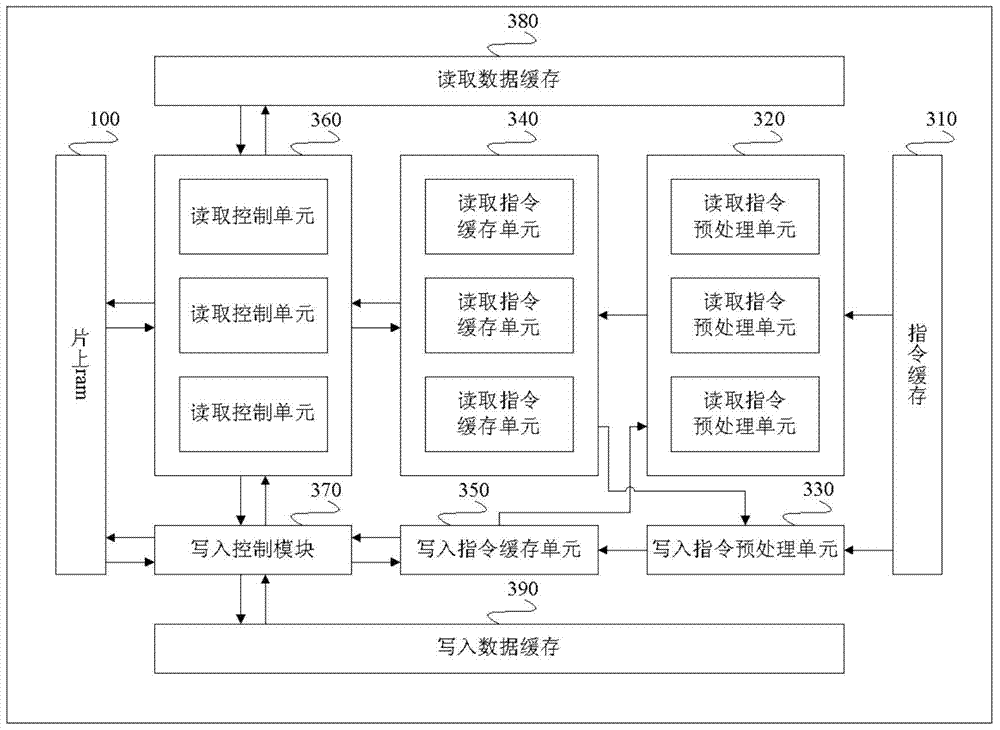

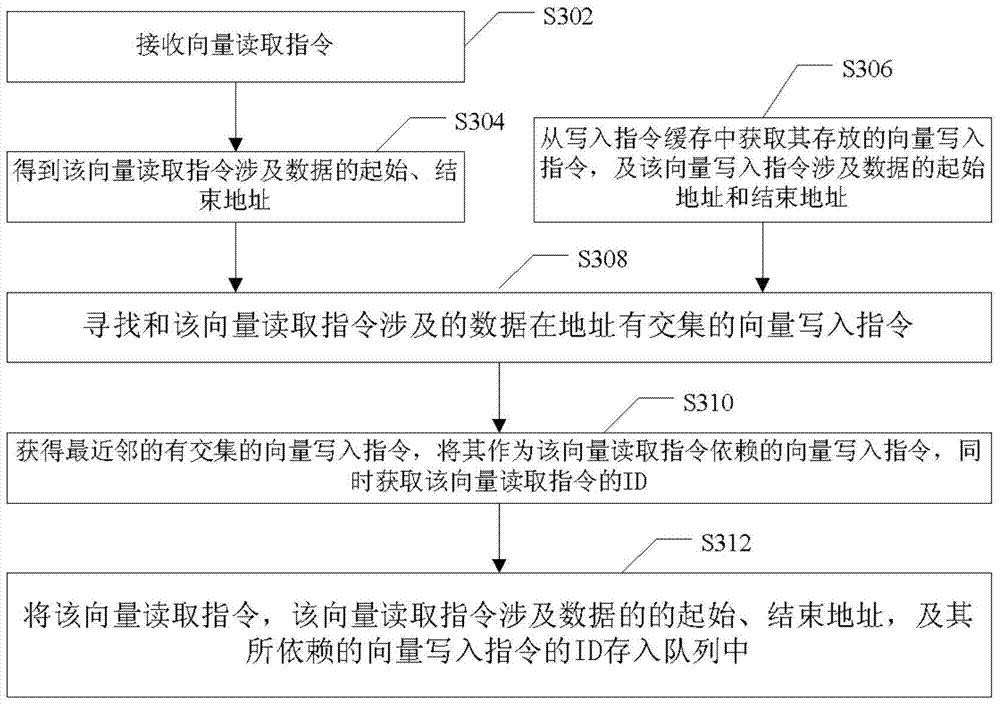

Data read-write scheduler and reservation station used for vector operation

Active · CN106991073A · Resolving read-after-write conflicts · Improve utilization efficiency · Program control · Architecture with single central processing unit · Reservation station · Instruction buffer

The invention provides a data read-write scheduler and a reservation station used for vector operation. The data read-write scheduler contains a read instruction buffer module and a write instruction buffer module, which check each other for conflicting instructions. When a conflict exists, execution of the instruction is paused, and the instruction is re-executed once the conflict has cleared. This resolves write-after-read and read-after-write conflicts between instructions and guarantees that correct data is provided to the vector operation part. The data read-write scheduler therefore has good prospects for adoption and application.

Owner:CAMBRICON TECH CO LTD
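The mutual conflict check between the read and write buffers described above can be sketched in software. This is a minimal sketch under assumptions, not the patented hardware: instructions are modelled as half-open address ranges with a program-order sequence number, an instruction is paused while an older instruction in the other buffer overlaps it, and write-after-write ordering is not modelled. `ReadWriteScheduler`, `submit`, and `step` are hypothetical names.

```python
def overlaps(a, b):
    """True if half-open address ranges a=(start, end) and b=(start, end) overlap."""
    return a[0] < b[1] and b[0] < a[1]


class ReadWriteScheduler:
    def __init__(self):
        self.reads = []    # pending read instructions
        self.writes = []   # pending write instructions
        self._seq = 0      # program-order counter

    def submit(self, kind, start, end):
        """Queue a 'read' or 'write' covering addresses [start, end)."""
        entry = (self._seq, kind, (start, end))
        self._seq += 1
        (self.reads if kind == "read" else self.writes).append(entry)

    @staticmethod
    def _blocked(entry, other_buffer):
        """An instruction is paused while an older, overlapping instruction
        is still pending in the other buffer."""
        seq, _, rng = entry
        return any(o_seq < seq and overlaps(o_rng, rng)
                   for o_seq, _, o_rng in other_buffer)

    def step(self):
        """Execute every conflict-free instruction; the rest stay paused."""
        run = [r for r in self.reads if not self._blocked(r, self.writes)]
        run += [w for w in self.writes if not self._blocked(w, self.reads)]
        self.reads = [r for r in self.reads if r not in run]
        self.writes = [w for w in self.writes if w not in run]
        return sorted((seq, kind) for seq, kind, _ in run)


sched = ReadWriteScheduler()
sched.submit("write", 0, 8)    # seq 0
sched.submit("read", 4, 12)    # seq 1: read-after-write conflict with seq 0
sched.submit("write", 8, 16)   # seq 2: write-after-read conflict with seq 1
order = [sched.step() for _ in range(3)]
# order == [[(0, "write")], [(1, "read")], [(2, "write")]]
```

Each paused instruction is retried on the next `step` call, which stands in for the abstract's re-execution once the conflict has cleared.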


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com