5758 results about "Machine execution arrangements" patented technology

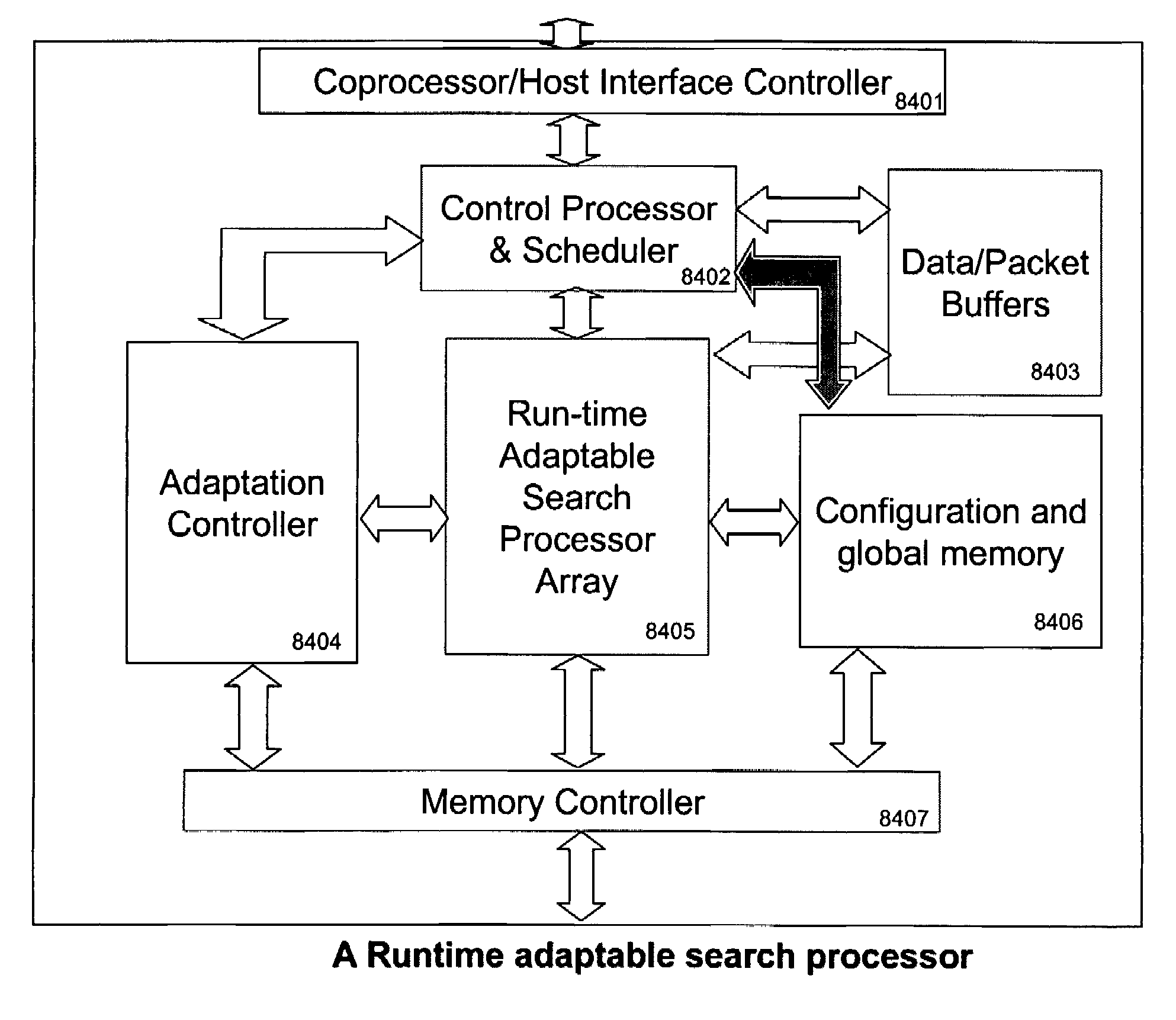

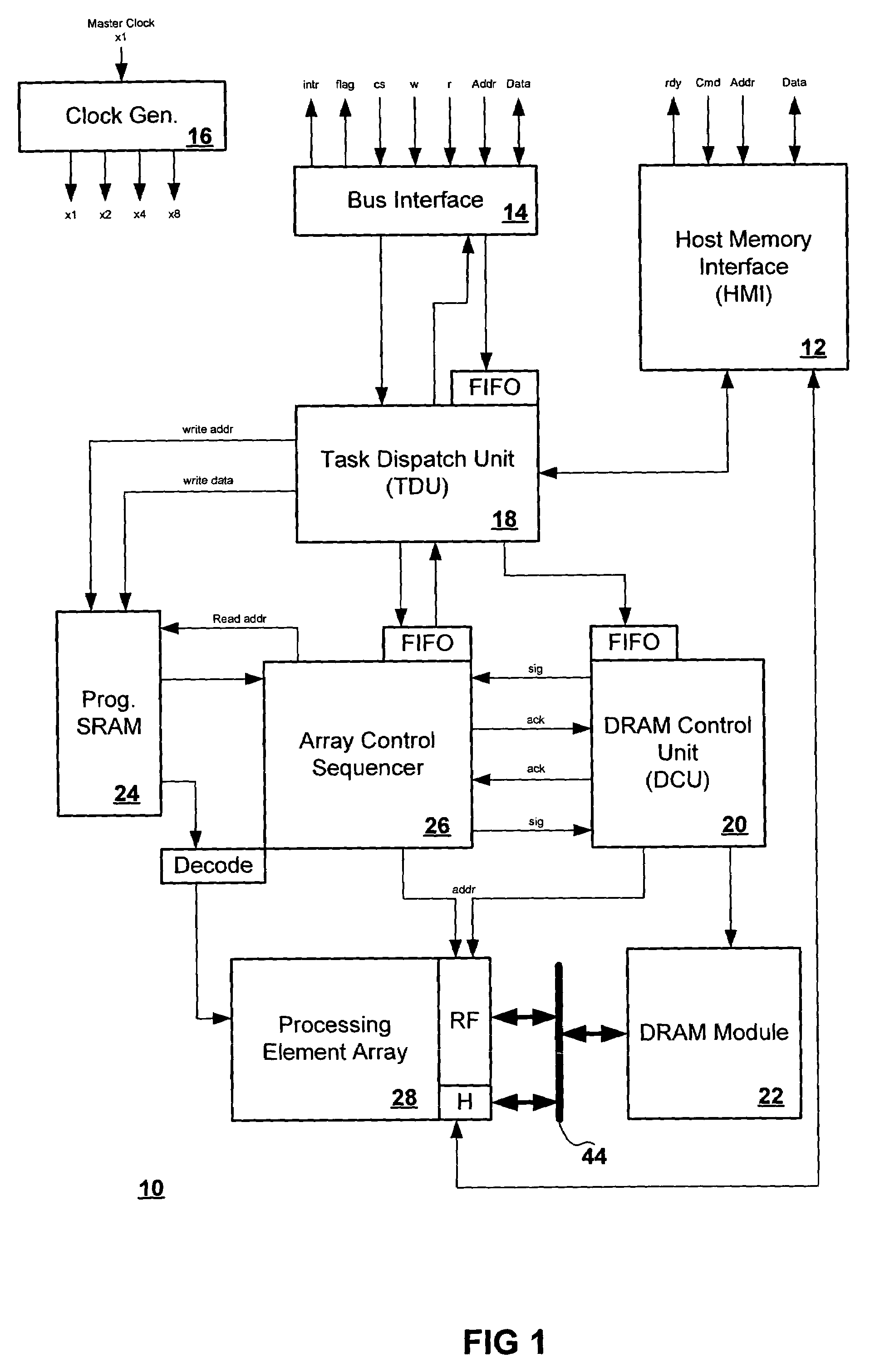

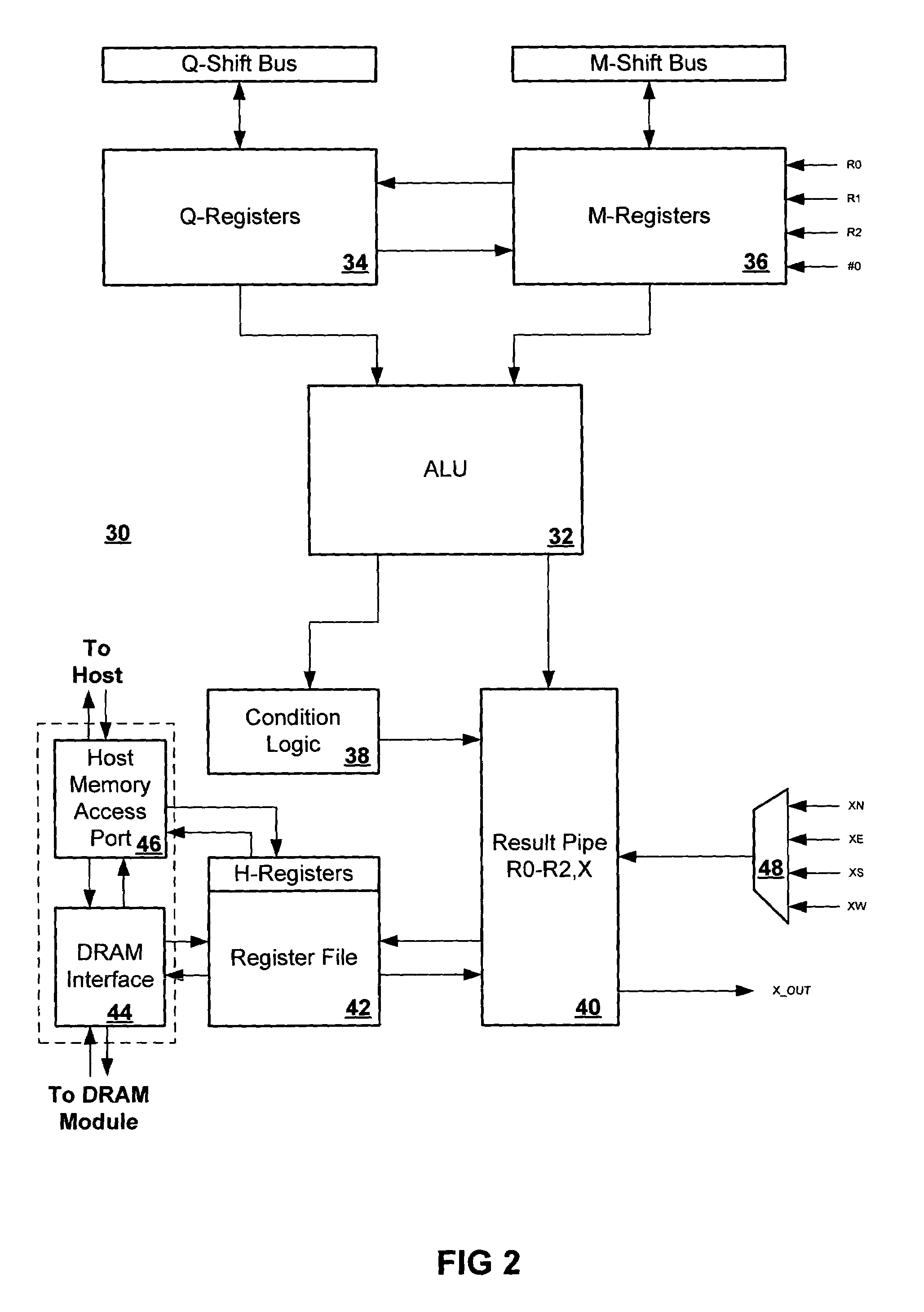

Runtime adaptable search processor

Active | US7685254B2 | Improve application performance | Large capacity | Web data indexing | Memory addressing/allocation/relocation | Packet scheduling | Schema for Object-Oriented XML

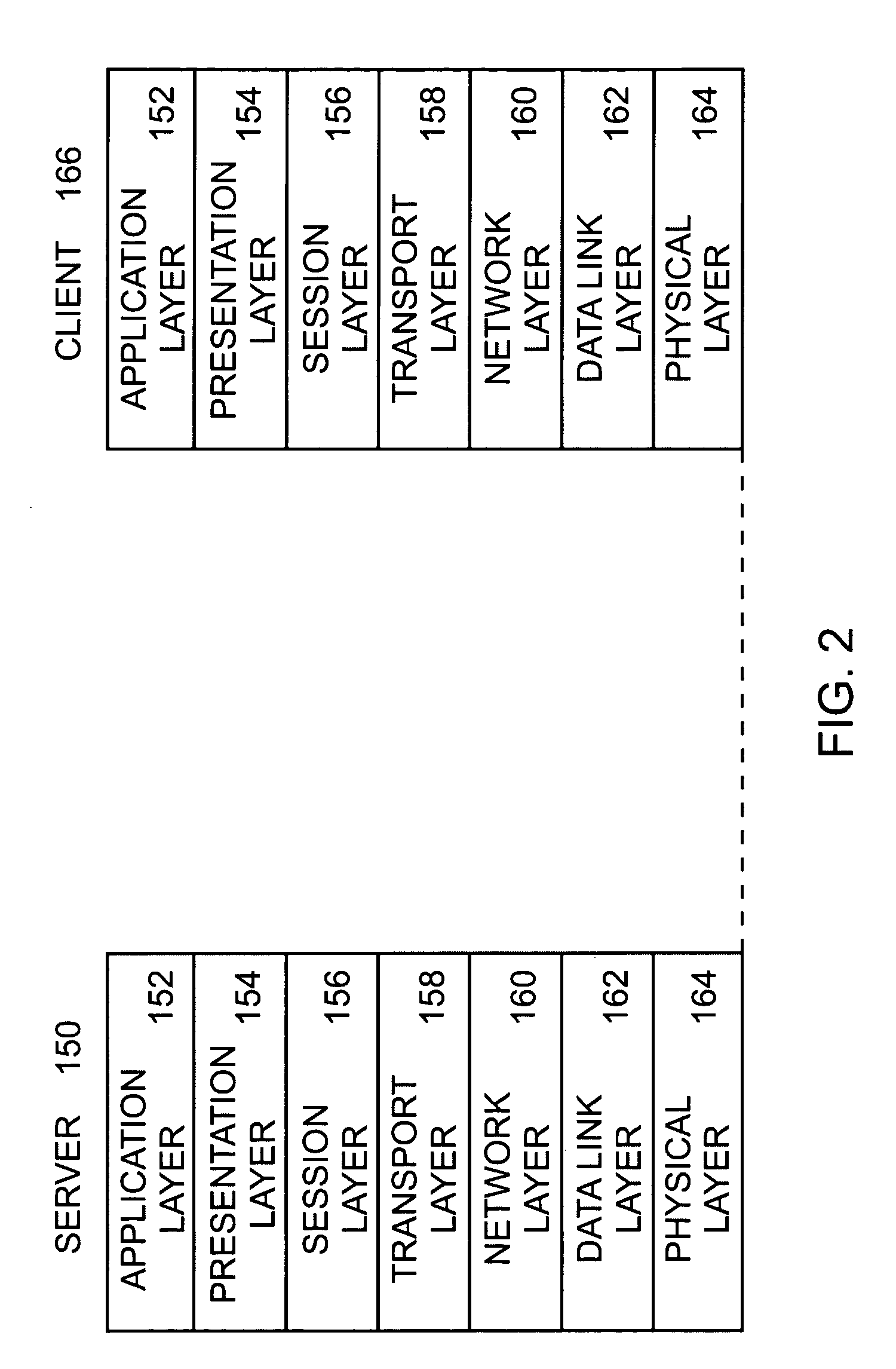

A runtime adaptable search processor is disclosed. The search processor provides high-speed content search capability to meet the performance needs of network line rates growing to 1 Gbps, 10 Gbps and higher. The search processor provides a unique combination of NFA- and DFA-based search engines that can process incoming data in parallel to perform the search against the specific rules programmed in the search engines. The processor architecture also provides capabilities to transport and process Internet Protocol (IP) packets from Layer 2 through the transport protocol layer and may also provide packet inspection through Layer 7. Further, a runtime adaptable processor is coupled to the protocol processing hardware and may be dynamically adapted to perform hardware tasks as per the needs of the network traffic being sent or received and/or the policies programmed or services or applications being supported. A set of engines may perform pass-through packet classification, policy processing and/or security processing, enabling packet streaming through the architecture at nearly the full line rate. A high-performance content search and rules processing security processor is disclosed which may be used for application layer and network layer security. A scheduler schedules packets to packet processors for processing. An internal memory or local session database cache stores a session information database for a certain number of active sessions. The session information that is not in the internal memory is stored to and retrieved from an additional memory. An application running on an initiator or target can in certain instantiations register a region of memory, which is made available to its peer(s) for access directly without substantial host intervention through RDMA data transfer.
A security system is also disclosed that enables a new way of implementing security capabilities inside enterprise networks in a distributed manner using protocol processing hardware with appropriate security features.

Owner:MEMORY ACCESS TECH LLC

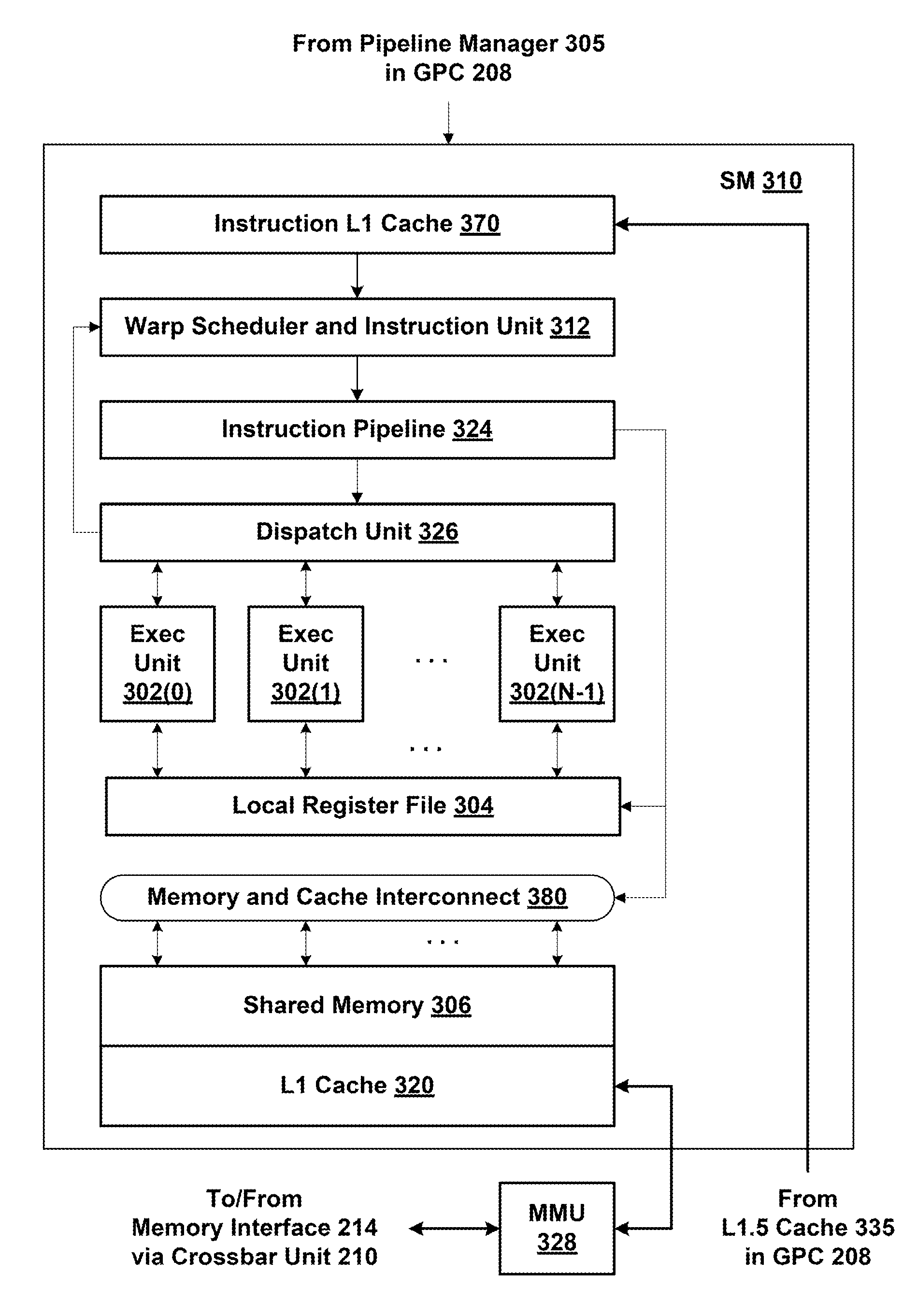

Speculative execution and rollback

Active | US20130117541A1 | Resolve dependencies | Digital computer details | Memory systems | Speculative execution | Execution unit

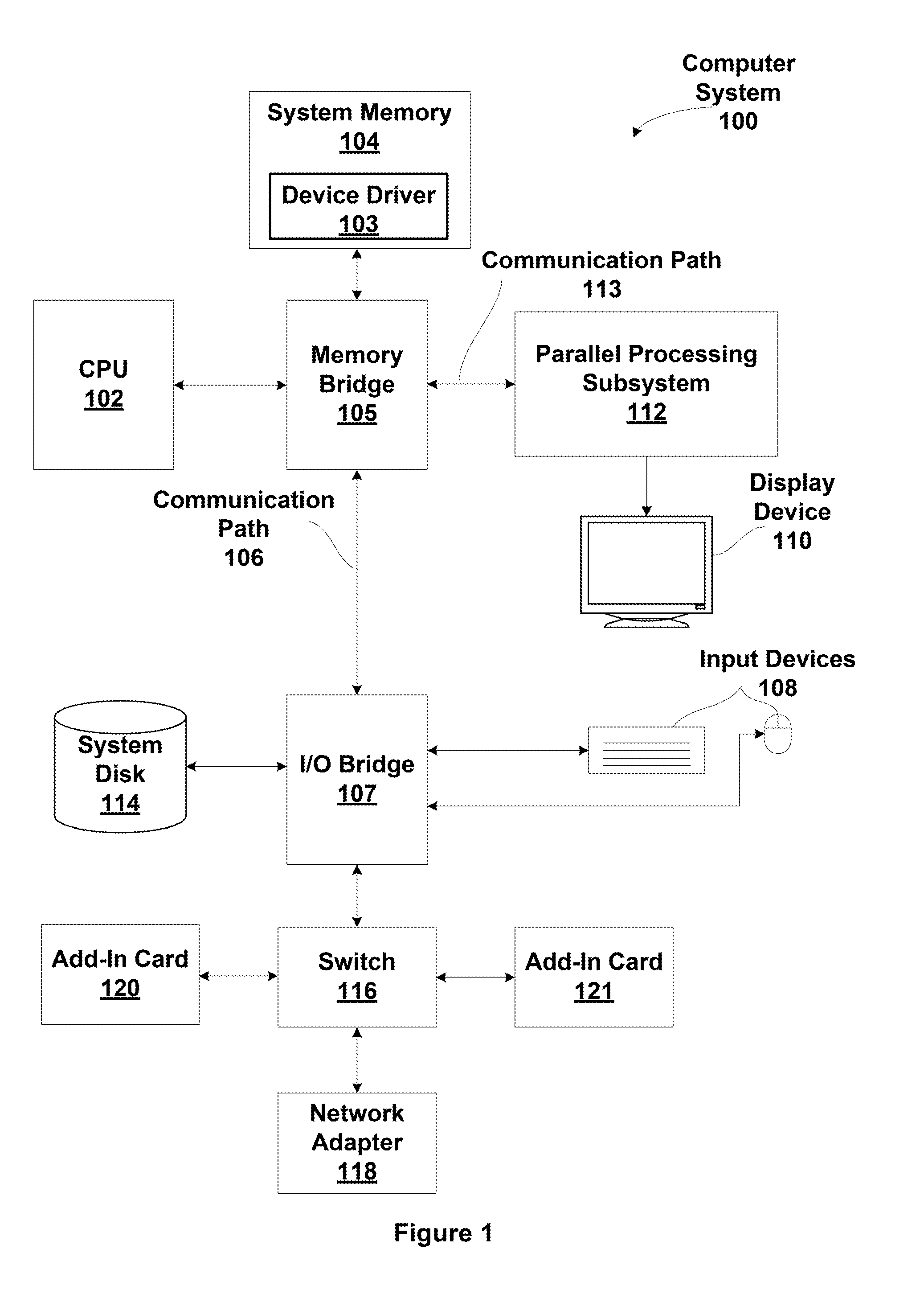

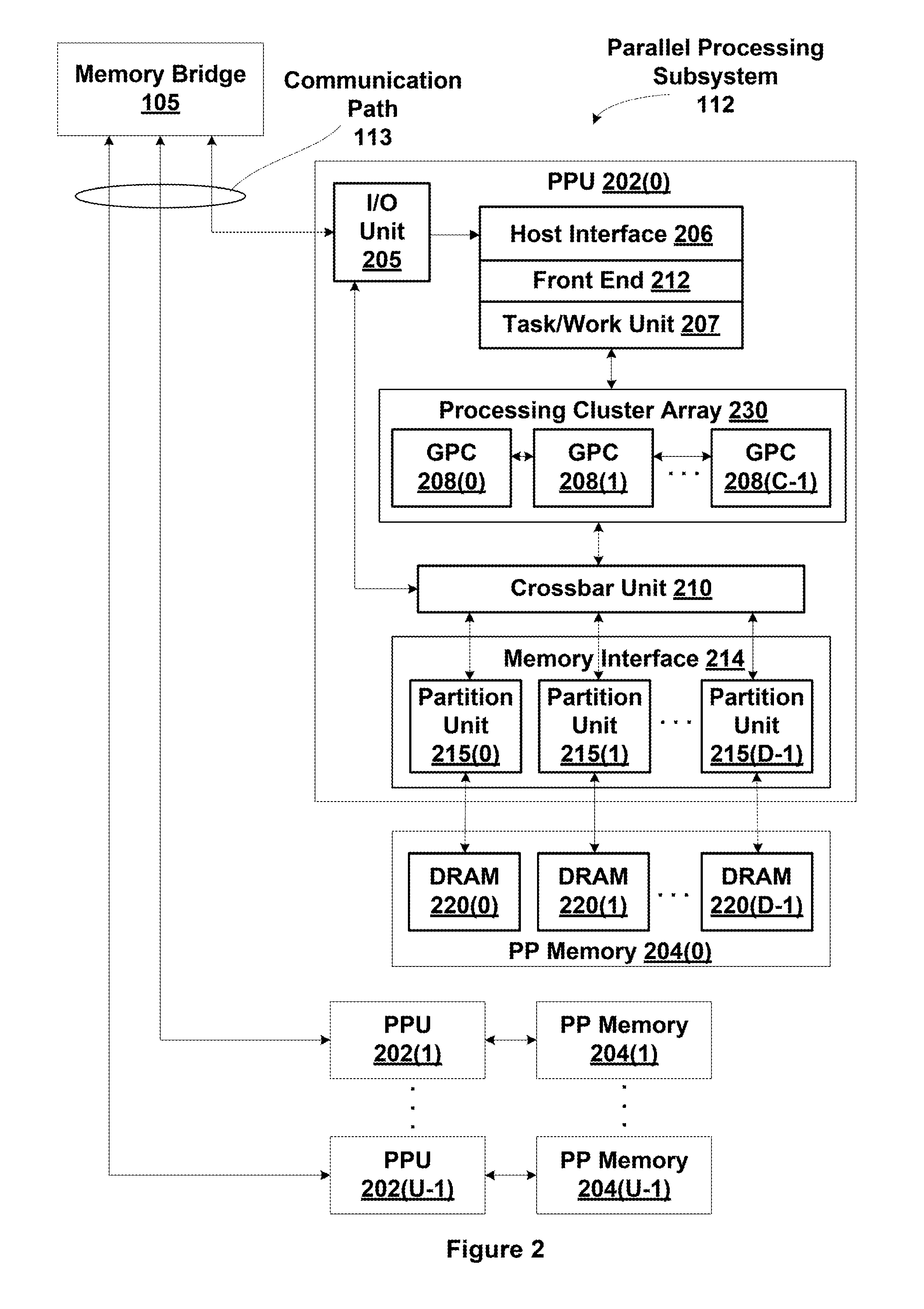

One embodiment of the present invention sets forth a technique for speculatively issuing instructions to allow a processing pipeline to continue to process some instructions during rollback of other instructions. A scheduler circuit issues instructions for execution assuming that, several cycles later, when the instructions reach multithreaded execution units, dependencies between the instructions will be resolved, resources will be available, operand data will be available, and other conditions will not prevent execution of the instructions. When a rollback condition exists at the point of execution for an instruction for a particular thread group, the instruction is not dispatched to the multithreaded execution units. However, other instructions issued by the scheduler circuit for execution by different thread groups, and for which a rollback condition does not exist, are executed by the multithreaded execution units. The instruction incurring the rollback condition is reissued after the rollback condition no longer exists.

Owner:NVIDIA CORP
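
The issue-then-rollback behavior described in this abstract can be illustrated with a small software model. This is only a sketch under stated assumptions: the round-robin choice of thread group, the `simulate` function, and the `rollback` callback are hypothetical and are not taken from the patent, which describes hardware.

```python
from collections import deque

def simulate(per_group, rollback, max_cycles=32):
    """Toy model of speculative issue with per-thread-group rollback.

    per_group: dict mapping a thread group name to its instruction list.
    rollback:  hypothetical callback (group, instr, cycle) -> bool that is
               True while a rollback condition exists for that instruction.
    Returns a trace of (cycle, group, instr, outcome) tuples.
    """
    queues = {group: deque(instrs) for group, instrs in per_group.items()}
    order = list(queues)
    trace = []
    for cycle in range(max_cycles):
        if not any(queues.values()):
            break  # every instruction has executed
        # Assumption: one group reaches the execution units per cycle.
        group = order[cycle % len(order)]
        queue = queues[group]
        if not queue:
            continue
        instr = queue[0]
        if rollback(group, instr, cycle):
            # Not dispatched; it stays queued and is reissued later.
            trace.append((cycle, group, instr, "rollback"))
        else:
            queue.popleft()
            trace.append((cycle, group, instr, "executed"))
    return trace

trace = simulate({"A": ["a0", "a1"], "B": ["b0"]},
                 rollback=lambda group, instr, cycle: group == "A" and cycle < 2)
```

In this run, group B's instruction executes while group A's first instruction is rolled back and later reissued.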

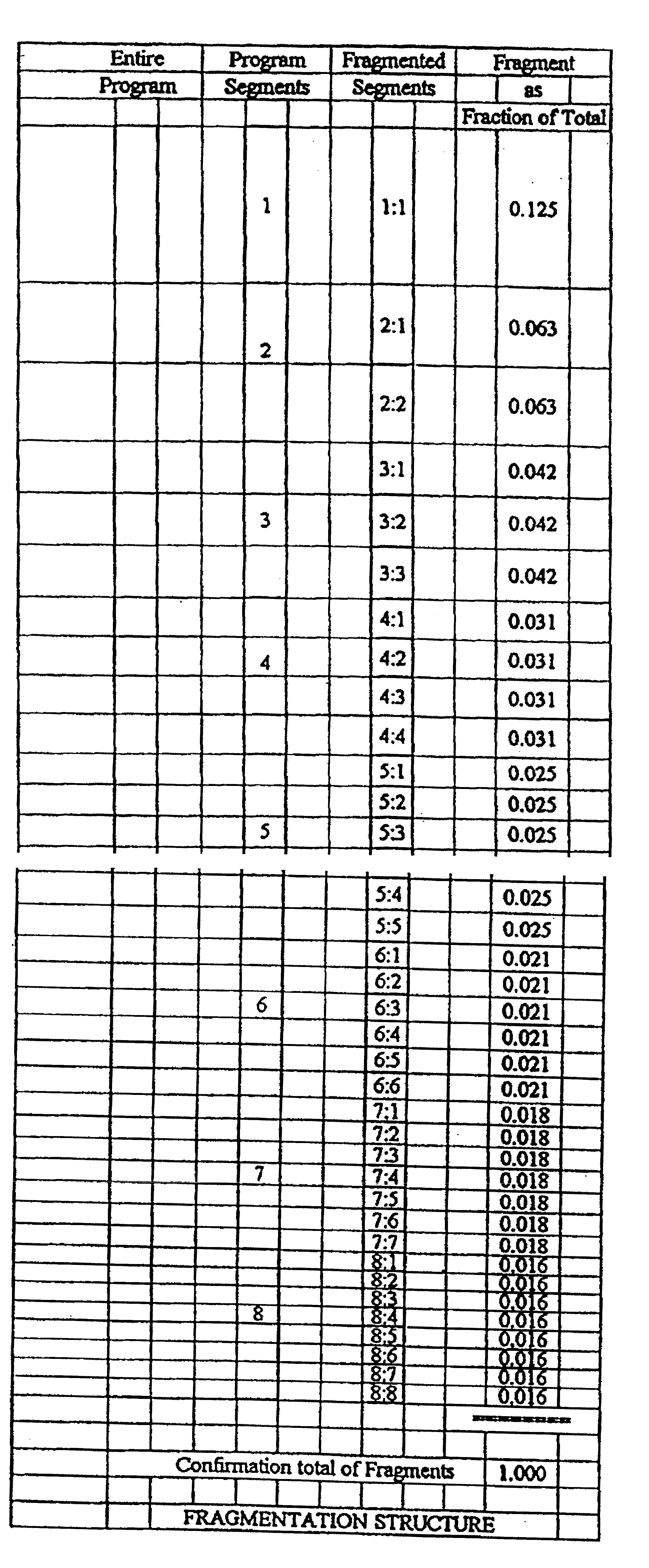

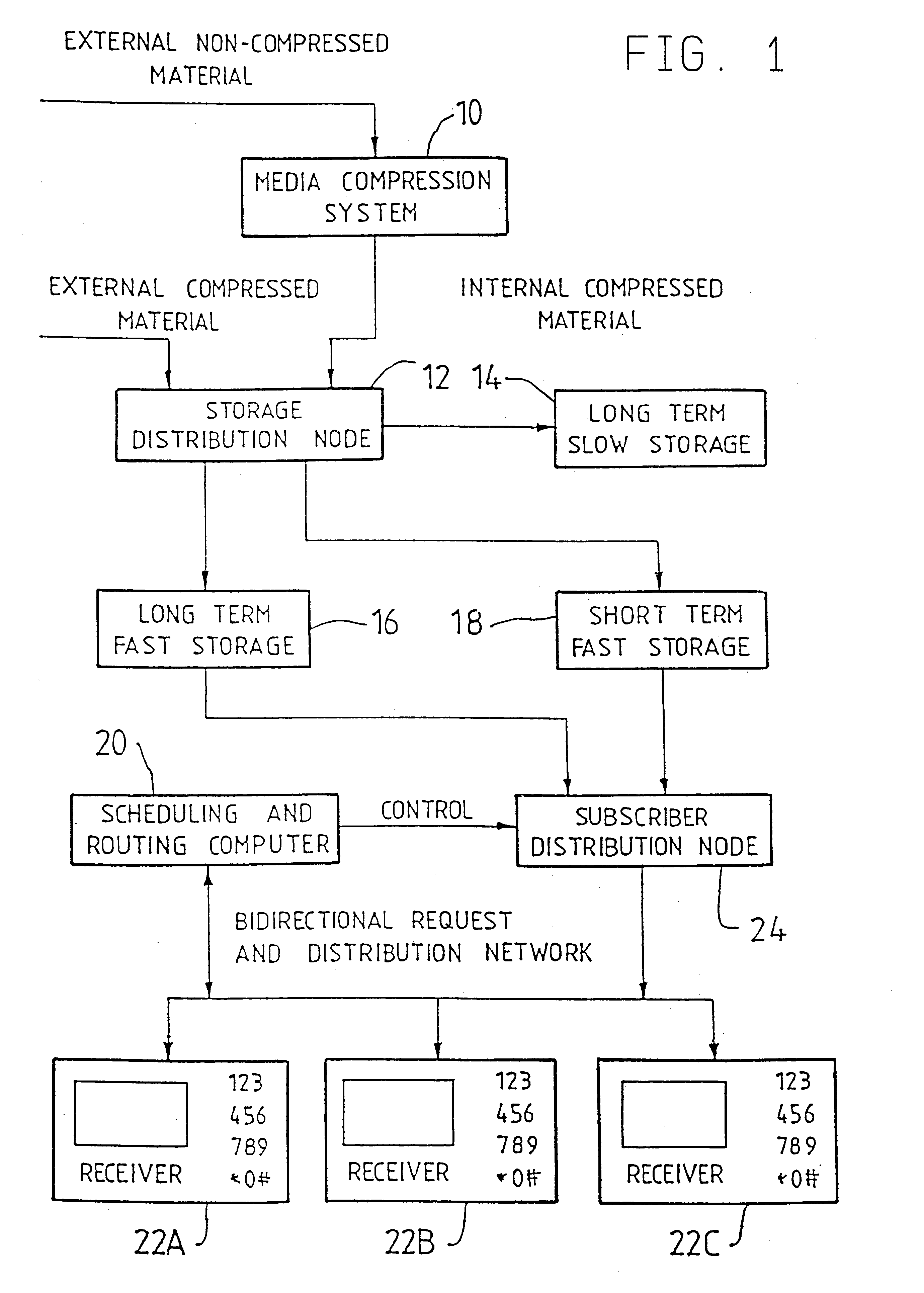

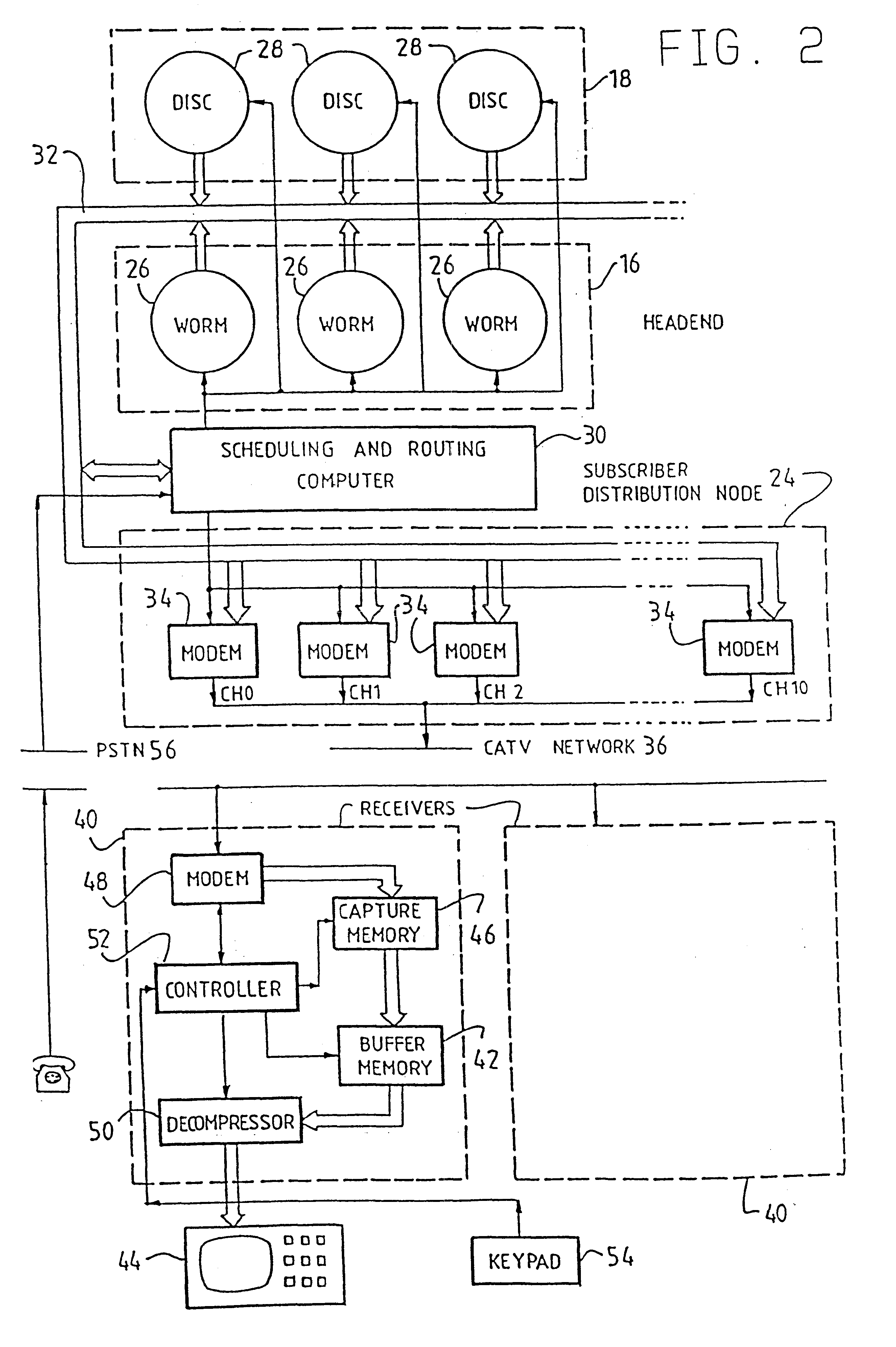

Method and system of program transmission optimization using a redundant transmission sequence

A system and method of optimizing transmission of a program to multiple users over a distribution system, with particular application to video-on-demand for a CATV network. The system includes, at a head end of the CATV network, a scheduling and routing computer for dividing the video program stored in long term fast storage or short term fast storage into a plurality of program segments, and a subscriber distribution node for transmitting the program segments in a redundant sequence in accordance with a scheduling algorithm. At a receiver of the CATV network there is provided a buffer memory for storing the transmitted video program segments for subsequent playback whereby, in use, the scheduling algorithm can ensure that a user's receiver will receive all of the program segments in a manner that will enable continuous playback in real time of the program. Under the control of a controller, the receiver distinguishes received program segments by a segment identifier so that redundant segments captured in a capture memory are then stored in the buffer memory, from which the segments can be retrieved and decompressed in a data compressor for immediate or subsequent viewing. In one embodiment, the method of this invention includes dividing at least some segments into fragments, and transmitting one fragment of each segment during a playback interval of a duration, for example, equal to a playback time of a segment.

Owner:DETA TECH DEV

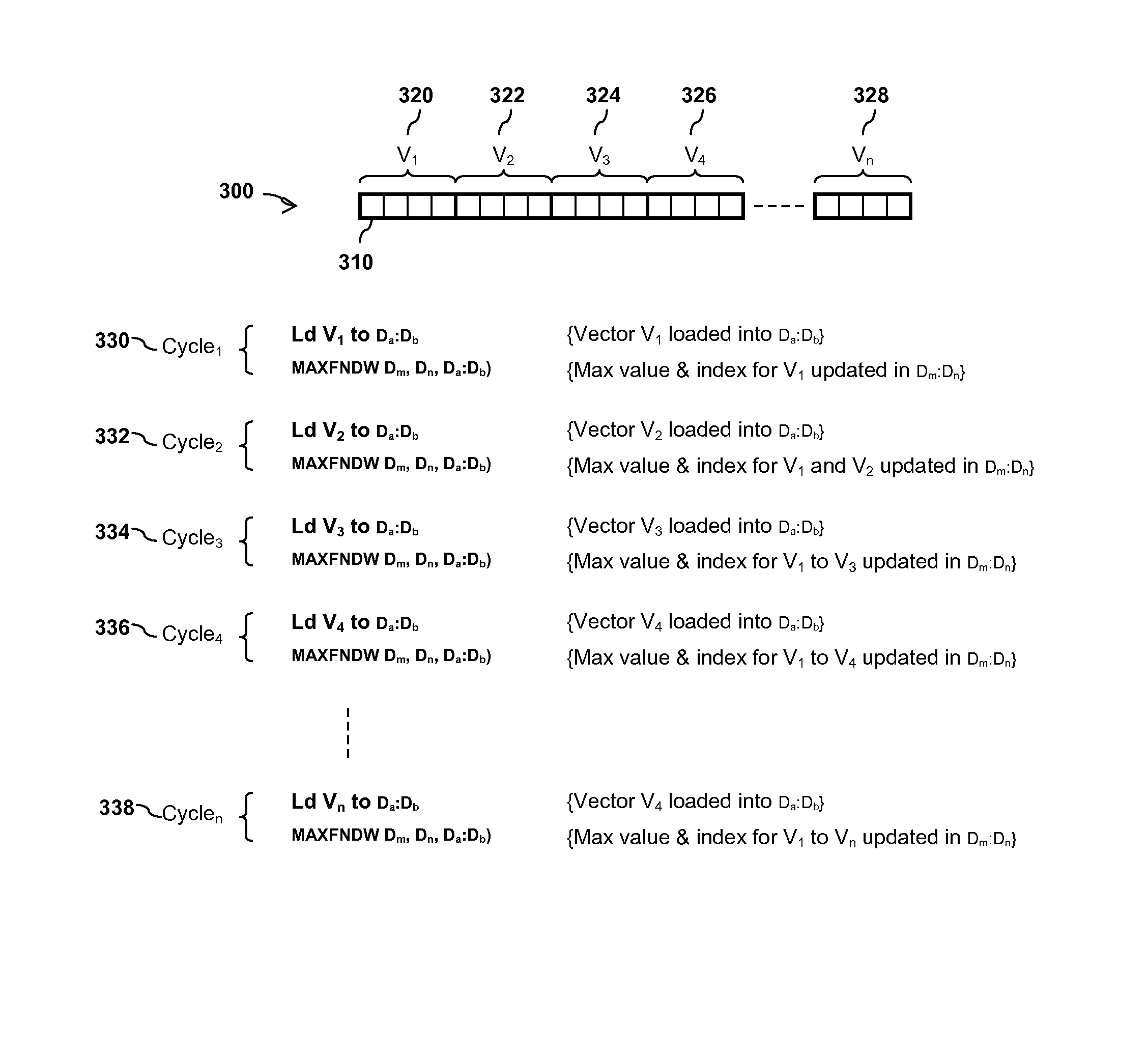

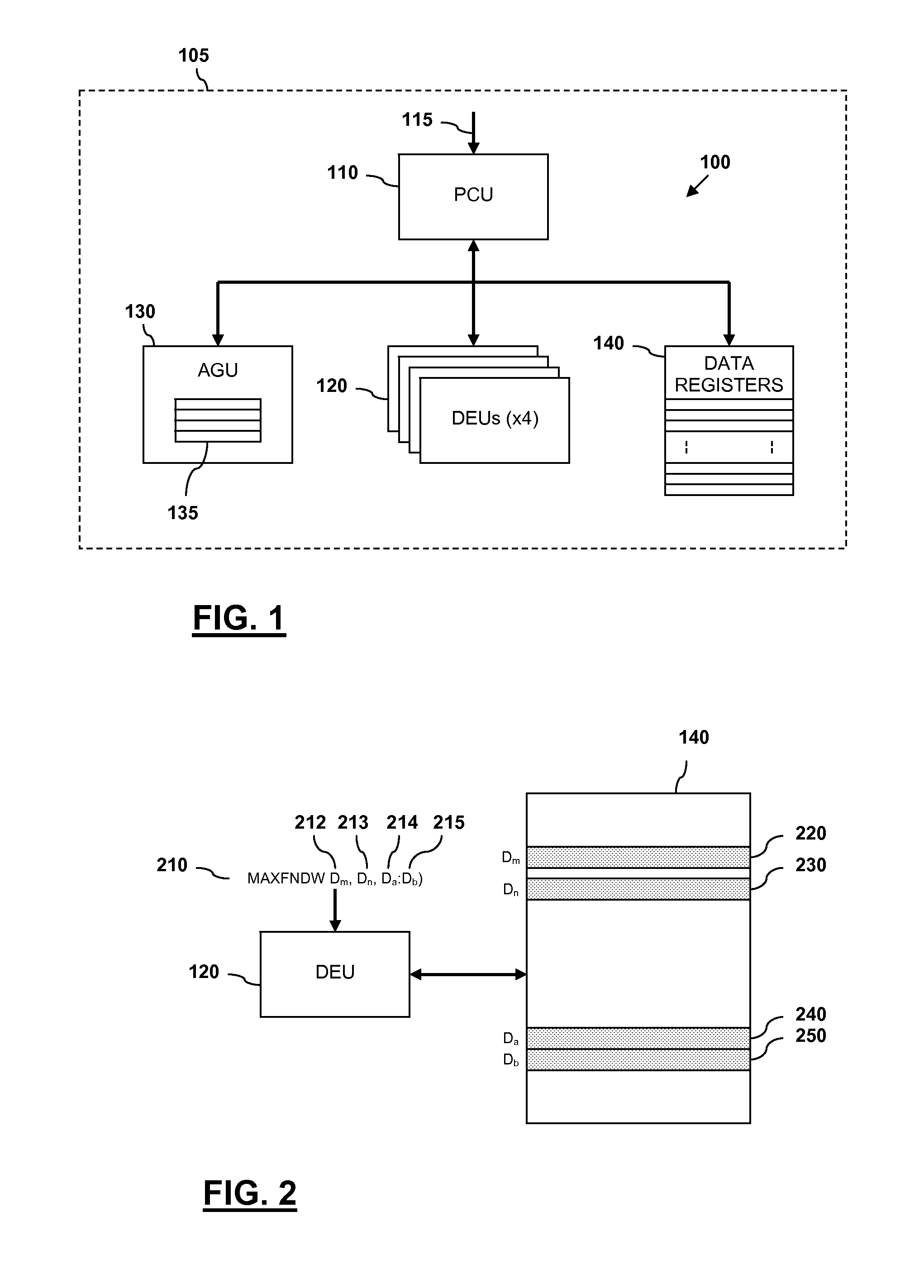

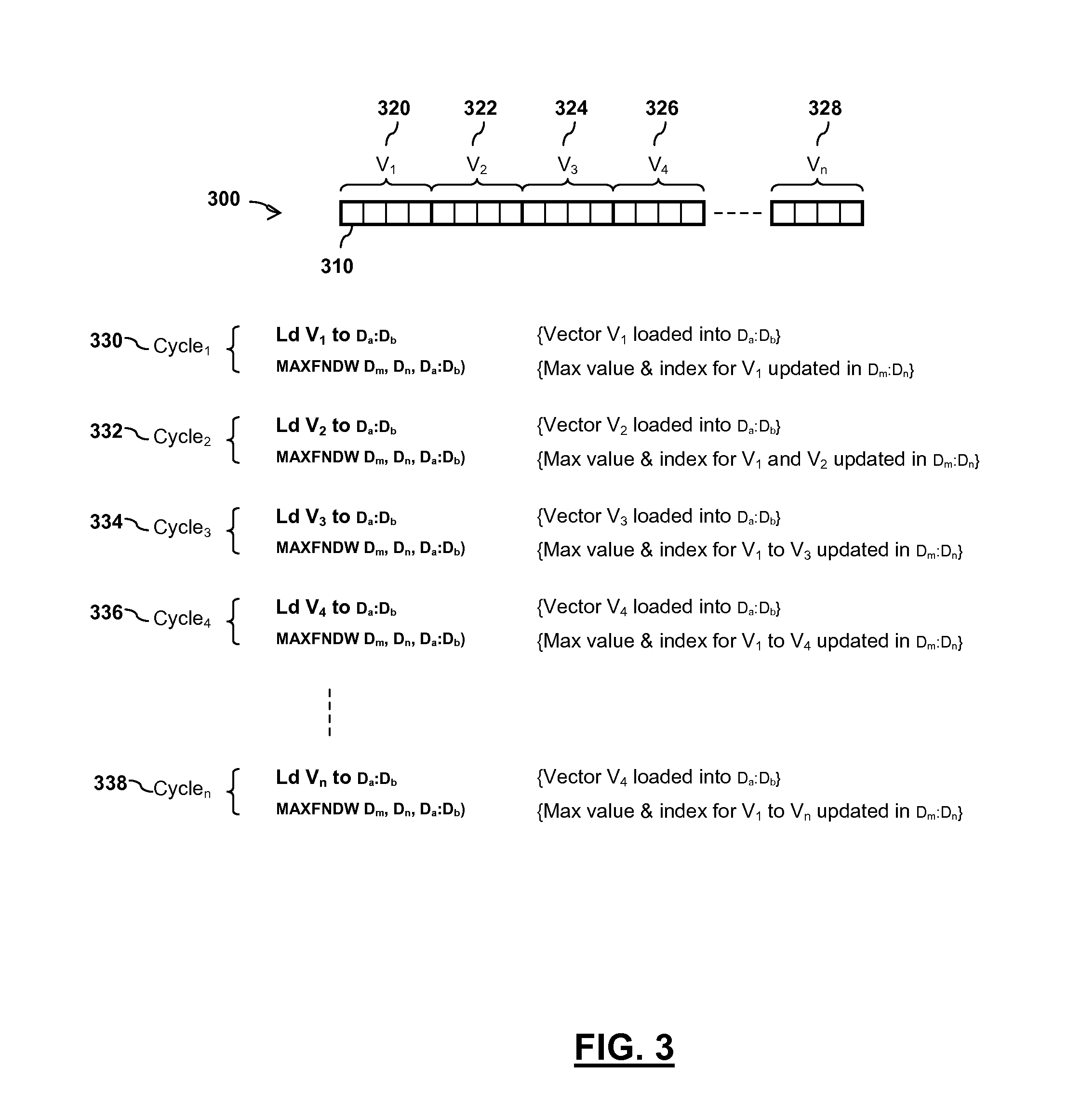

Integrated circuit device and method for determining an index of an extreme value within an array of values

Inactive | US9165023B2 | Digital data information retrieval | Digital data processing details | Processor register | Computer module

An integrated circuit device comprises at least one digital signal processor, DSP, module, the at least one DSP module comprising a plurality of data registers and at least one data execution unit, DEU, module arranged to execute operations on data stored within the data registers. The at least one DEU module is arranged to, in response to receiving an extreme value index instruction, compare a previous extreme value located within a first data register set of the DSP module with at least one input vector data value located within a second data register set of the DSP module, and determine an extreme value thereof. The at least one DEU module is further arranged to, if the determined extreme value comprises an input vector data value located within the second data register set, store the determined extreme value in the first data register set, determine an index value for the determined extreme value, and store the determined index value in the first data register set.

Owner:NXP USA INC
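
The compare-and-update step described in this abstract can be sketched in software as a running maximum with its index. The function names, the chunk width of 4, and the choice of "maximum" as the extreme value are assumptions for illustration; the patent describes a hardware instruction operating on register sets.

```python
def extreme_index_step(prev_value, prev_index, vector, base_index):
    """Model one 'extreme value index' step (maximum variant).

    Compares the previous extreme value with each input vector value and
    updates the stored value and index only if an input value is larger.
    """
    best_value, best_index = prev_value, prev_index
    for offset, value in enumerate(vector):
        if value > best_value:
            best_value, best_index = value, base_index + offset
    return best_value, best_index

def argmax_by_chunks(values, width=4):
    """Apply the step to successive chunks, as repeated instructions would."""
    best_value, best_index = float("-inf"), -1
    for base in range(0, len(values), width):
        best_value, best_index = extreme_index_step(
            best_value, best_index, values[base:base + width], base)
    return best_value, best_index
```

For example, `argmax_by_chunks([3, 9, 2, 7, 9, 11, 0])` returns `(11, 5)`. Because the comparison is strict, the earliest index wins on ties.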

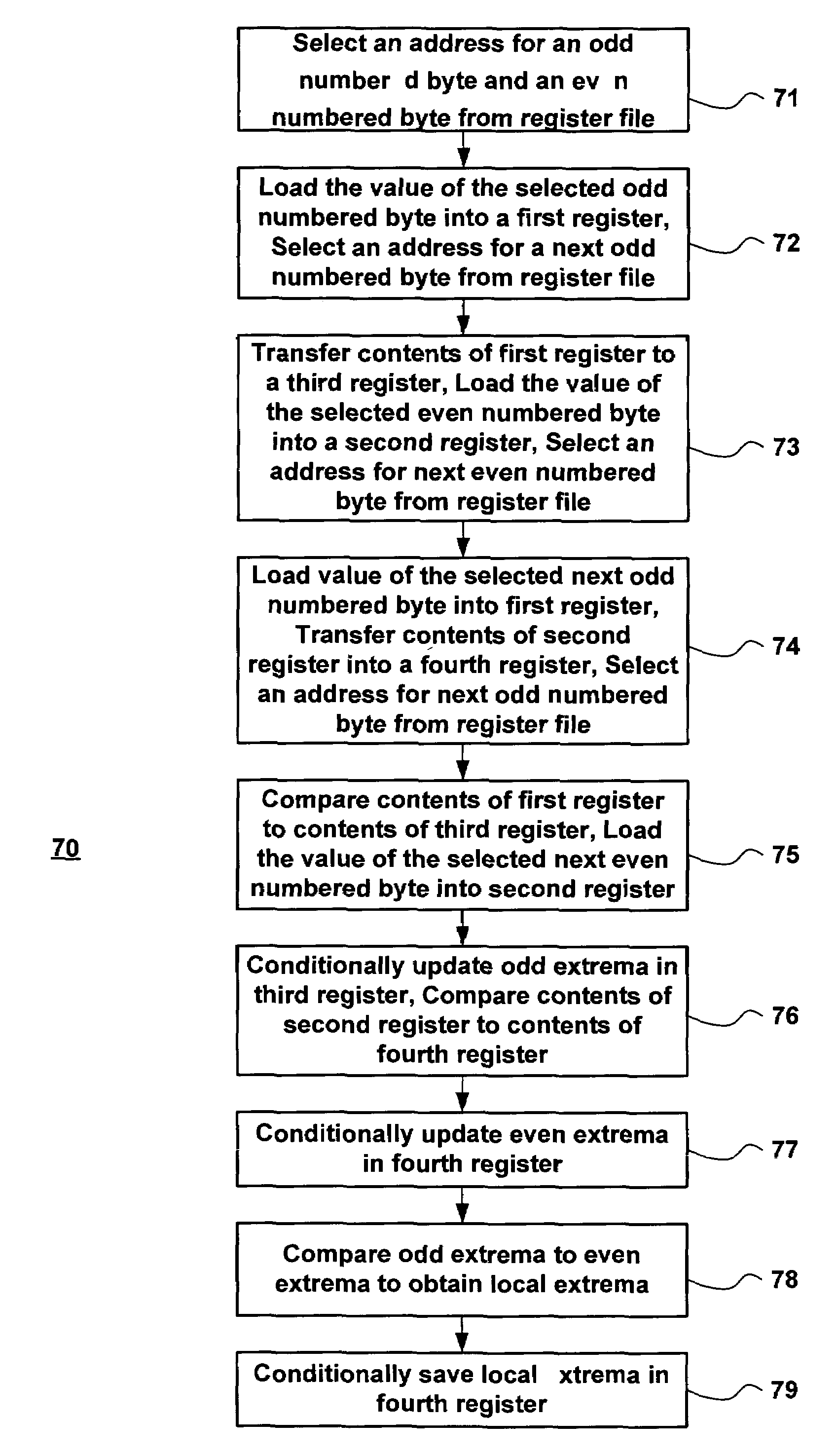

Method for finding local extrema of a set of values for a parallel processing element

Active | US7454451B2 | Digital data processing details | Digital computer details | Theoretical computer science | Processing element

A method for finding a local extremum for a single processing element having a set of values associated therewith includes separating the set of values into an odd set of values and an even set of values, determining a first extremum from the odd set of values, determining a second extremum from the even set of values, and determining the local extremum from the first extremum and the second extremum. The first extremum is found by comparing each odd-numbered value in the set to each other odd-numbered value in the set, and the second extremum is found by comparing each even-numbered value in the set to each other even-numbered value in the set.

Owner:MICRON TECH INC
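
A minimal sketch of the odd/even split described above, for the maximum case. The function name is hypothetical, values are numbered from 1, and Python's built-in `max` stands in for the pairwise comparisons the abstract describes.

```python
def local_max_odd_even(values):
    """Find the maximum by splitting into odd- and even-numbered values."""
    if not values:
        raise ValueError("values must not be empty")
    odd_set = values[0::2]    # 1st, 3rd, 5th, ... values
    even_set = values[1::2]   # 2nd, 4th, 6th, ... values
    first_extremum = max(odd_set)
    if not even_set:          # a single value has no even-numbered partner
        return first_extremum
    second_extremum = max(even_set)
    # The local extremum is the better of the two partial results.
    return max(first_extremum, second_extremum)
```

For example, `local_max_odd_even([4, 8, 1, 6, 9])` returns `9`.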

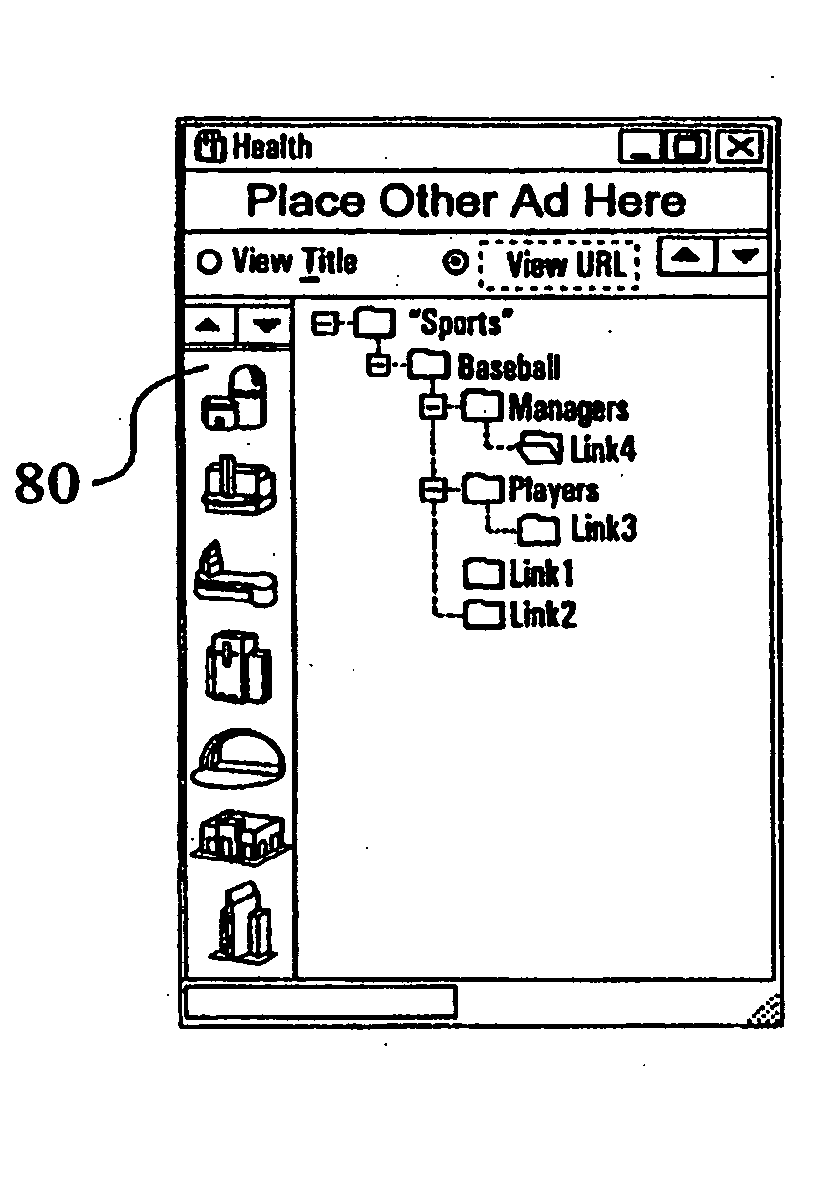

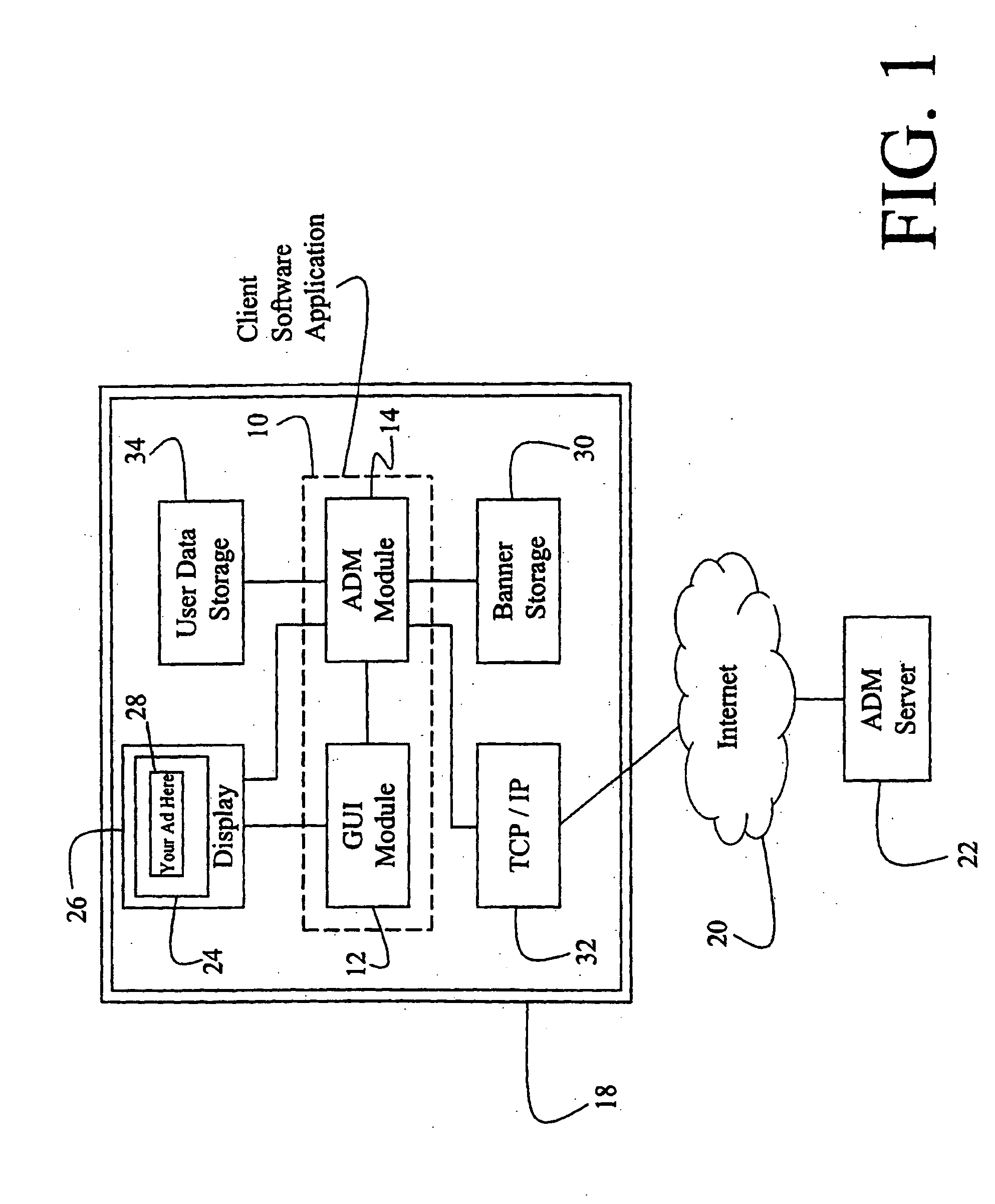

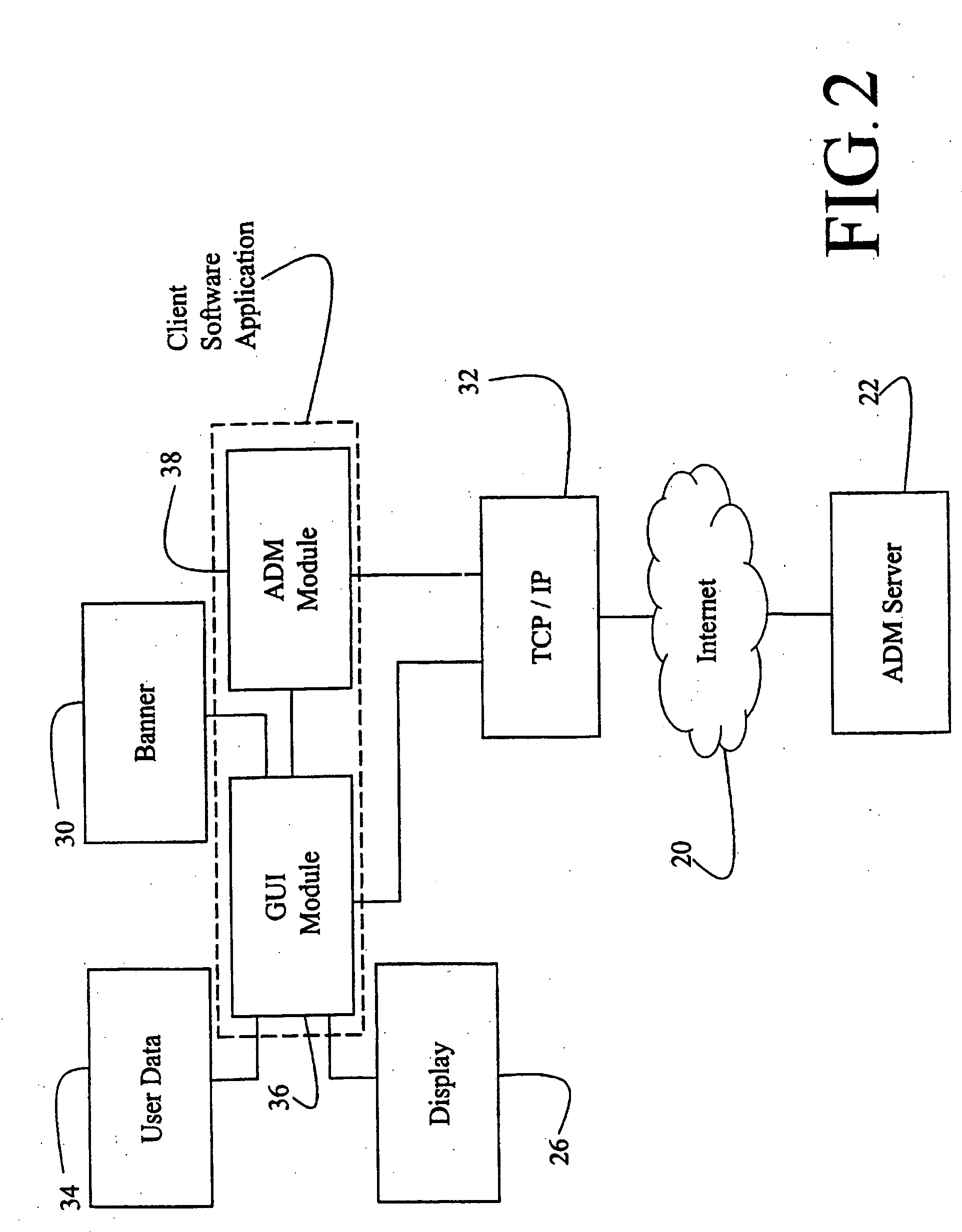

Computer interface method and apparatus with portable network organization system and targeted advertising

Inactive | US20050005242A1 | Permit targeting | Cathode-ray tube indicators | Program loading/initiating | Personalization | Software distribution

A method and apparatus for providing an automatically upgradeable software application includes targeted advertising based upon demographics and user interaction with the computer. The software application includes a display region used for banner advertising that is downloaded over a network such as the Internet. The software application is accessible from a server via the network and demographic information on the user is acquired by the server and used for determining what advertising will be sent to the user. The software application further targets the advertisements in response to normal user interaction with the computer. Data associated with each advertisement is used by the software application in determining when a particular advertisement is to be displayed. This includes the specification of certain programs that the user may have so that, when the user runs the program (e.g., a spreadsheet program), a relevant advertisement will be displayed (e.g., an advertisement for a stock brokerage). This provides two-tiered, real-time targeting of advertising—both demographically and reactively. The software application includes programming that accesses the server to determine if one or more components of the application need upgrading. If so, the components can be downloaded and installed without further action by the user. A distribution tool is provided for software distribution and upgrading over the network. Also provided is a user profile that is accessible to any computer on the network. Furthermore, multiple users of the same computer can possess Internet web resources and files that are personalized, maintained and organized.

Owner:BETECH

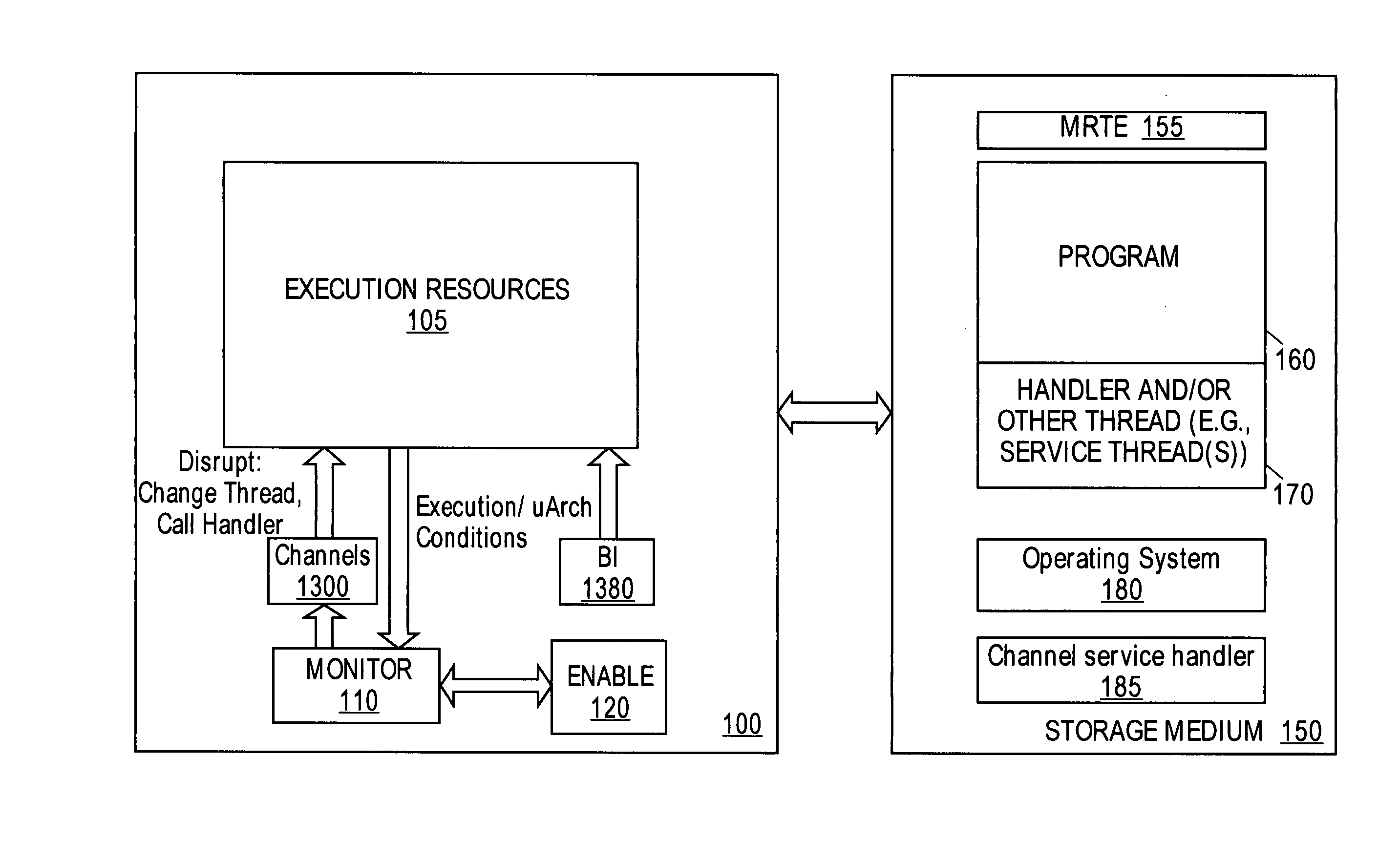

System to profile and optimize user software in a managed run-time environment

Inactive | US20070214342A1 | Error detection/correction | General purpose stored program computer | Parallel computing | Running time

Method, apparatus, and system for monitoring performance within a processing resource, which may be used to modify user-level software. Some embodiments of the invention pertain to an architecture to allow a user to improve software running on a processing resource on a per-thread basis in real-time and without incurring significant processing overhead.

Owner:INTEL CORP

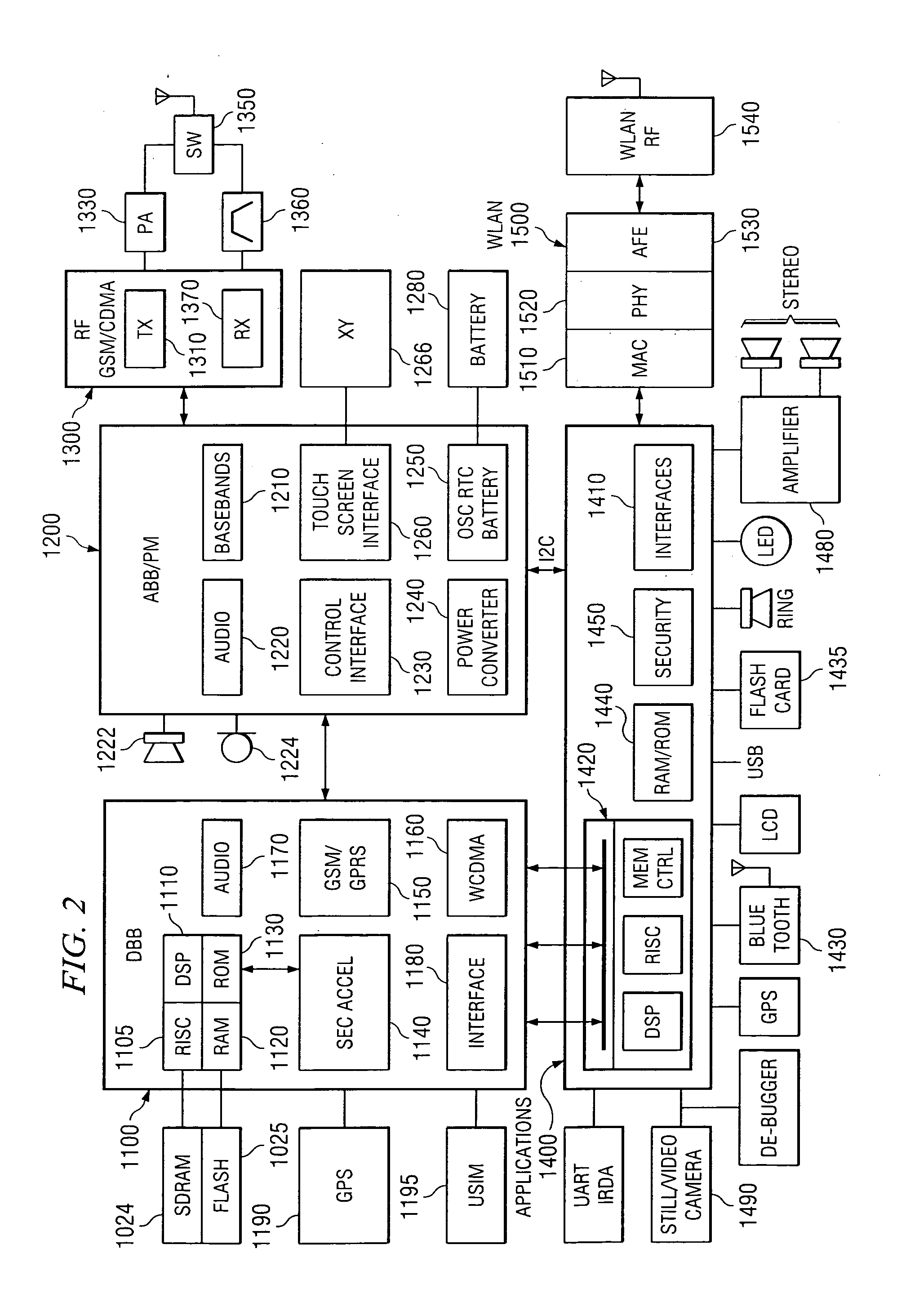

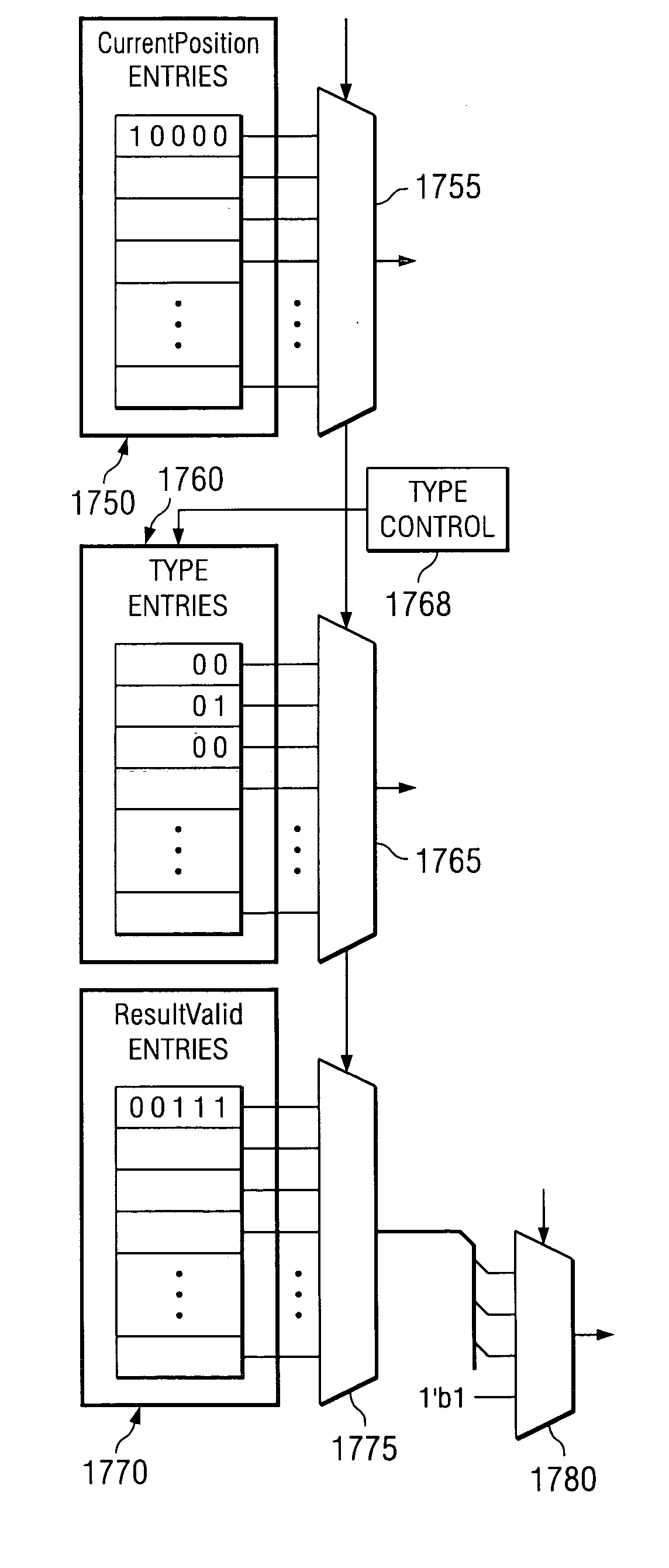

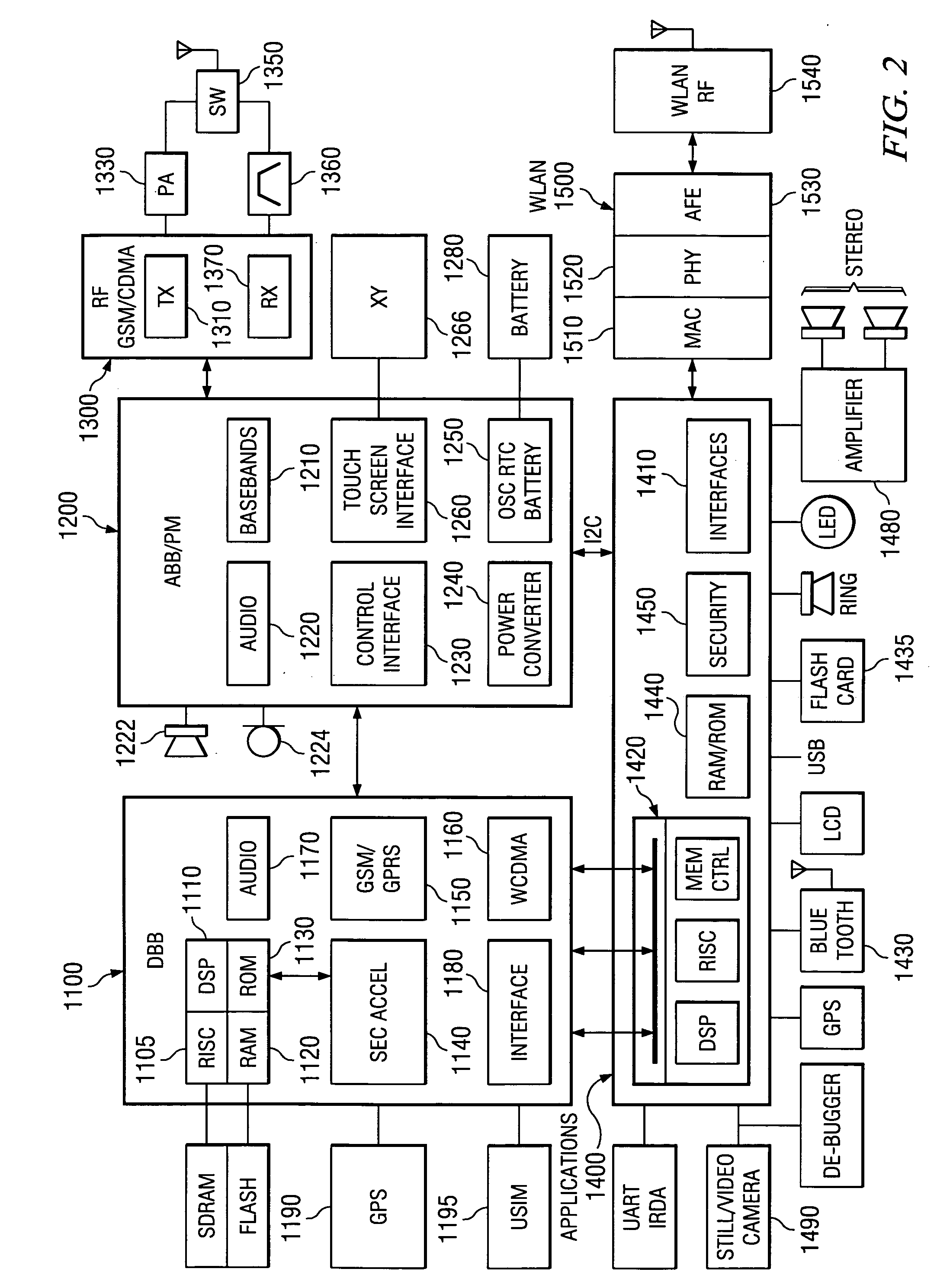

Multi-threading processors, integrated circuit devices, systems, and processes of operation and manufacture

Active | US20070204137A1 | Avoid issuing | Digital data processing details | General purpose stored program computer | Line tubing | Coupling

A multi-threaded microprocessor (1105) for processing instructions in threads. The microprocessor (1105) includes first and second decode pipelines (1730.0, 1730.1), first and second execute pipelines (1740, 1750), and coupling circuitry (1916) operable in a first mode to couple first and second threads from the first and second decode pipelines (1730.0, 1730.1) to the first and second execute pipelines (1740, 1750) respectively, and the coupling circuitry (1916) operable in a second mode to couple the first thread to both the first and second execute pipelines (1740, 1750). Various processes of manufacture, articles of manufacture, processes and methods of operation, circuits, devices, and systems are disclosed.

Owner:TEXAS INSTR INC

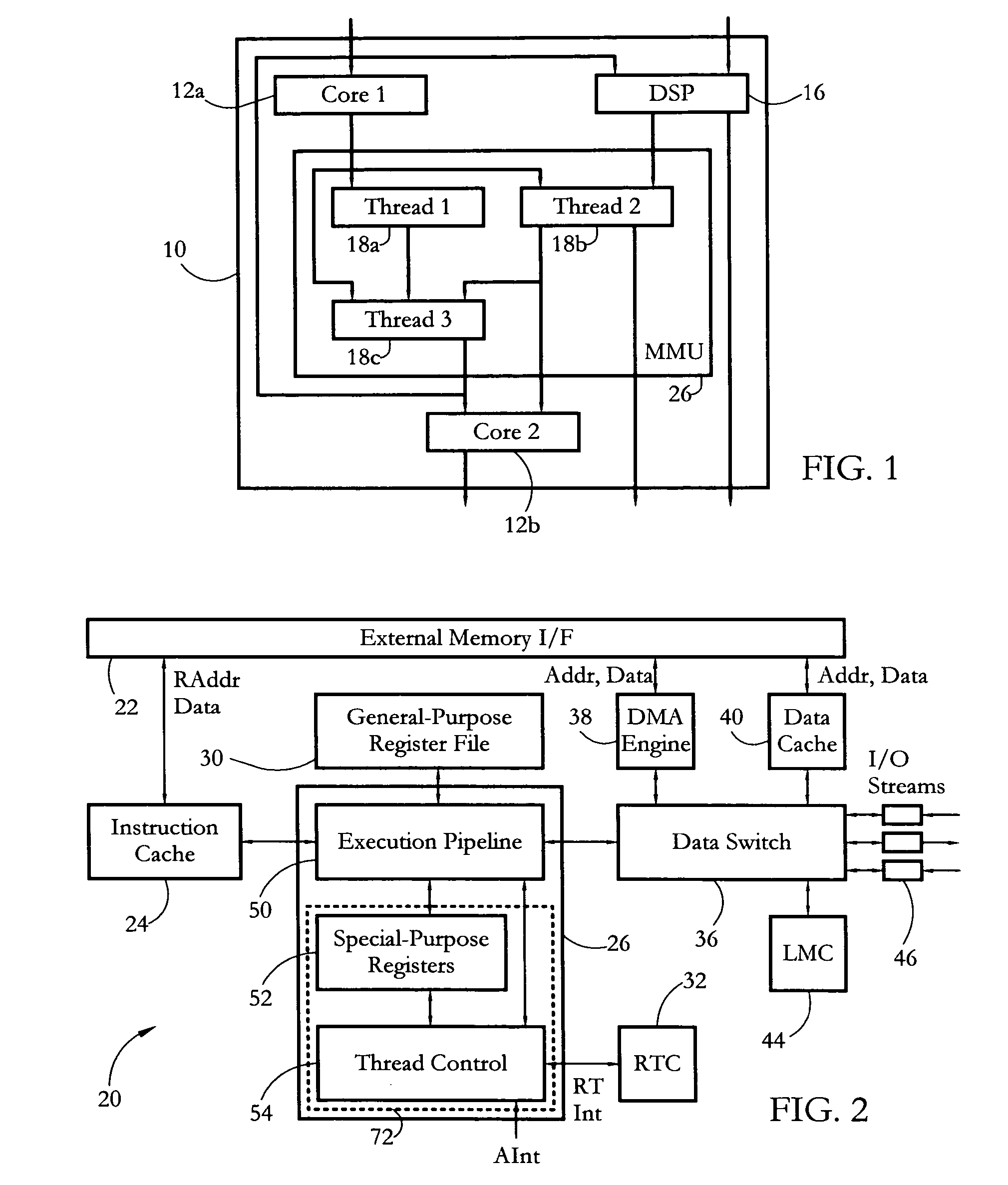

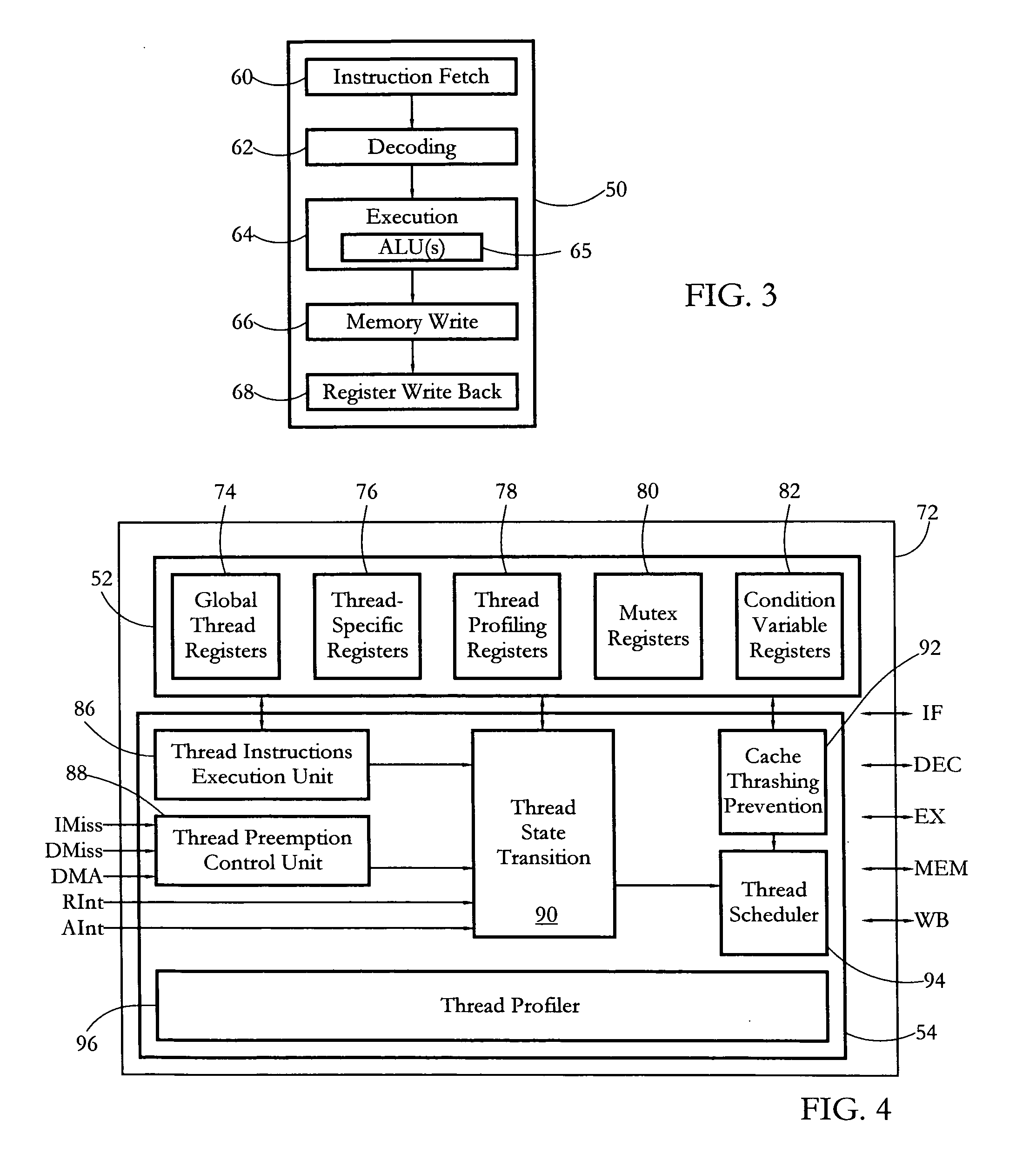

Hardware multithreading systems and methods

According to some embodiments, a multithreaded microcontroller includes a thread control unit comprising thread control hardware (logic) configured to perform a number of multithreading system calls essentially in real time, e.g. in one or a few clock cycles. System calls can include mutex lock, wait condition, and signal instructions. The thread controller includes a number of thread state, mutex, and condition variable registers used for executing the multithreading system calls. Threads can transition between several states including free, run, ready and wait. The wait state includes interrupt, condition, mutex, I-cache, and memory substates. A thread state transition controller controls thread states, while a thread instructions execution unit executes multithreading system calls and manages thread priorities to avoid priority inversion. A thread scheduler schedules threads according to their priorities. A hardware thread profiler including global, run and wait profiler registers is used to monitor thread performance to facilitate software development.

Owner:GEO SEMICONDUCTOR INC

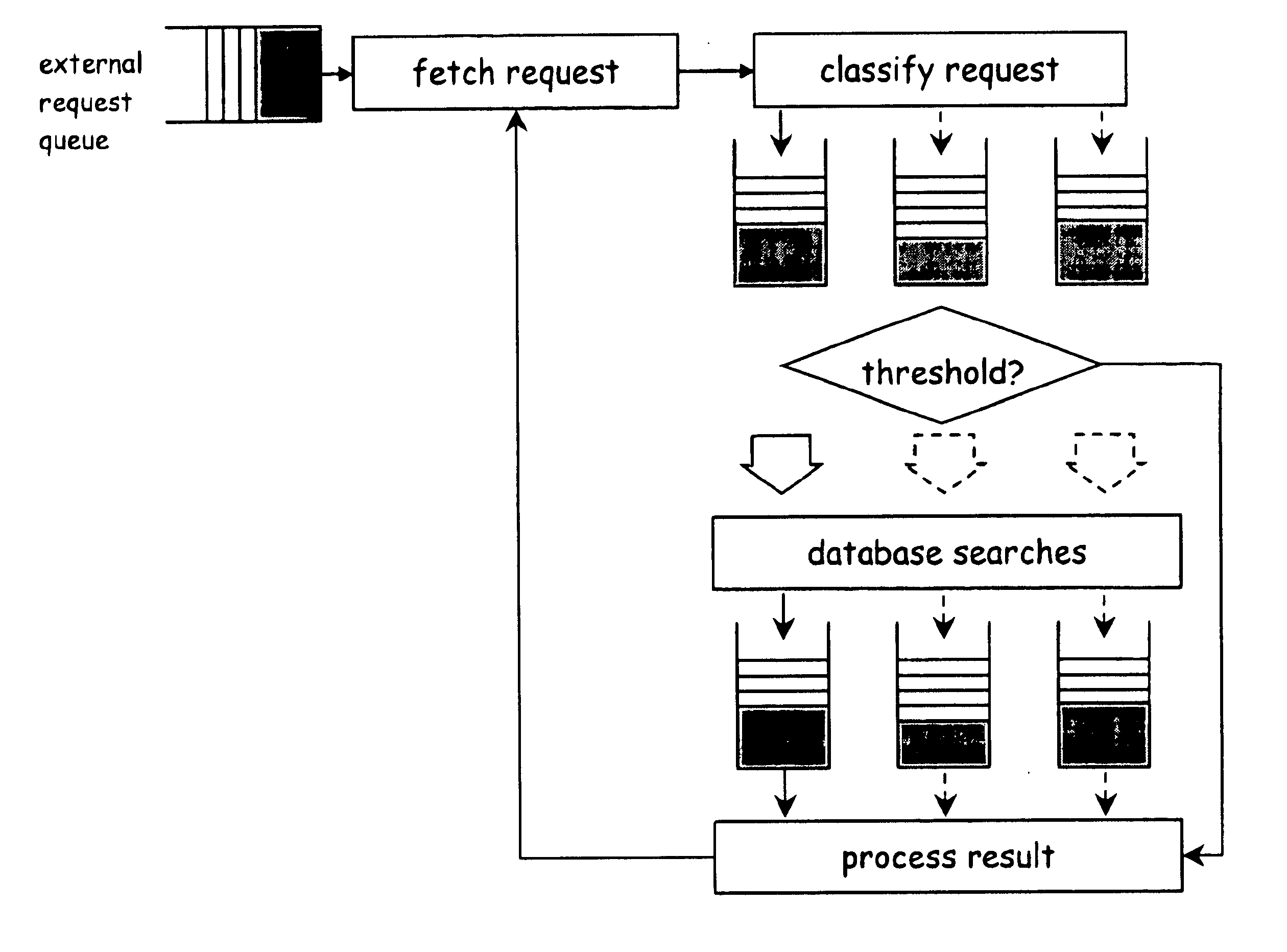

Method and apparatus for prefetching recursive data structures

Inactive | US6848029B2 | Improve cache hit ratio | Potential throughput of the computer system | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Application software | Cache hit rate

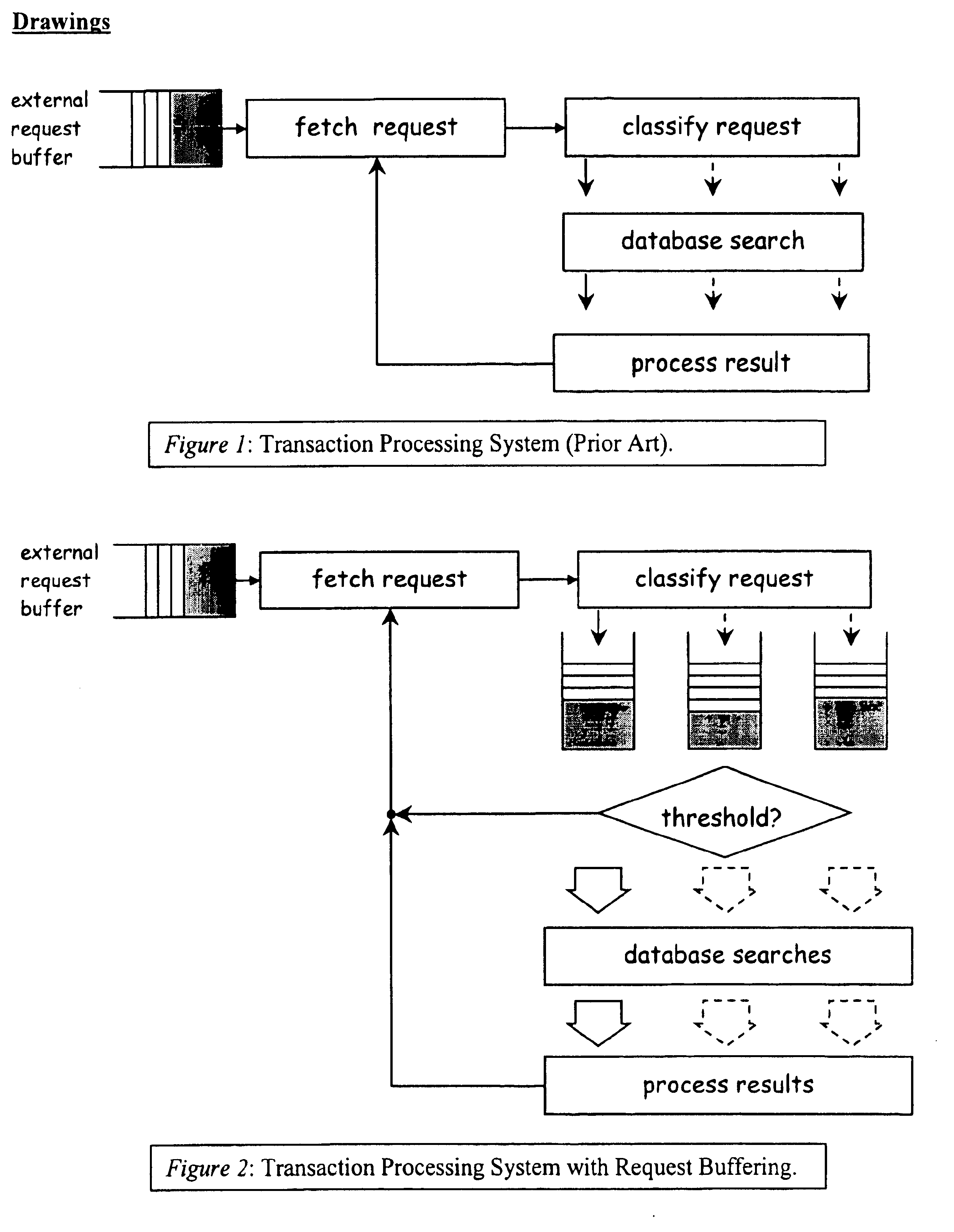

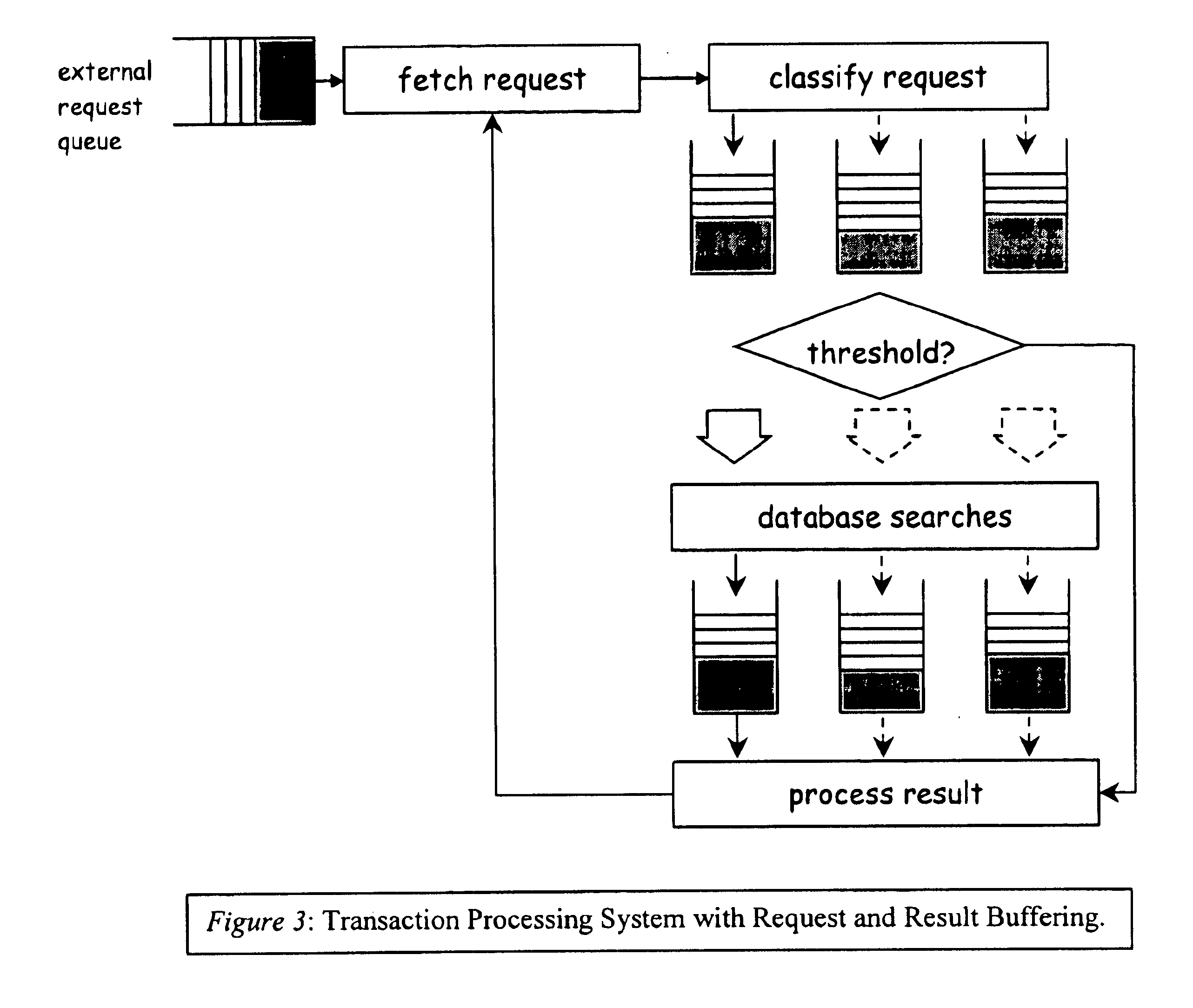

Computer systems are typically designed with multiple levels of memory hierarchy. Prefetching has been employed to overcome the latency of fetching data or instructions from or to memory. Prefetching works well for data structures with regular memory access patterns, but less so for data structures such as trees, hash tables, and other structures in which the datum that will be used is not known a priori. The present invention significantly increases the cache hit rates of many important data structure traversals, and thereby the potential throughput of the computer system and application in which it is employed. The invention is applicable to those data structure accesses in which the traversal path is dynamically determined. The invention does this by aggregating traversal requests and then pipelining the traversal of aggregated requests on the data structure. Once enough traversal requests have been accumulated so that most of the memory latency can be hidden by prefetching the accumulated requests, the data structure is traversed by performing software pipelining on some or all of the accumulated requests. As requests are completed and retired from the set of requests that are being traversed, additional accumulated requests are added to that set. This process is repeated until either an upper threshold of processed requests or a lower threshold of residual accumulated requests has been reached. At that point, the traversal results may be processed.

Owner:DIGITAL CACHE LLC +1
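
The aggregate-then-pipeline idea in this abstract can be sketched on a binary search tree. This is an illustrative model only: the `Node` class, `batched_lookup`, and the `in_flight` parameter are hypothetical, Python cannot issue hardware prefetches (a comment marks where one would go), and the upper and lower thresholds mentioned in the abstract are omitted.

```python
from collections import deque

class Node:
    def __init__(self, key, left=None, right=None):
        self.key, self.left, self.right = key, left, right

def batched_lookup(root, keys, in_flight=4):
    """Interleave many lookups, advancing each by one node per round."""
    pending = deque(keys)   # accumulated traversal requests
    active = []             # requests currently being traversed
    results = {}
    while pending or active:
        # Refill the active set as earlier requests retire.
        while pending and len(active) < in_flight:
            active.append((pending.popleft(), root))
        still_active = []
        for key, node in active:
            if node is None:
                results[key] = False          # fell off the tree: retire
            elif node.key == key:
                results[key] = True           # found: retire
            else:
                child = node.left if key < node.key else node.right
                # A native implementation would prefetch `child` here and
                # visit it only after working on the other requests.
                still_active.append((key, child))
        active = still_active
    return results

root = Node(8, Node(3, Node(1), Node(6)), Node(10, None, Node(14)))
found = batched_lookup(root, [6, 7, 14, 1, 2], in_flight=2)
```

Interleaving gives each outstanding memory access time to complete while the other requests make progress, which is the latency-hiding effect the abstract describes.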

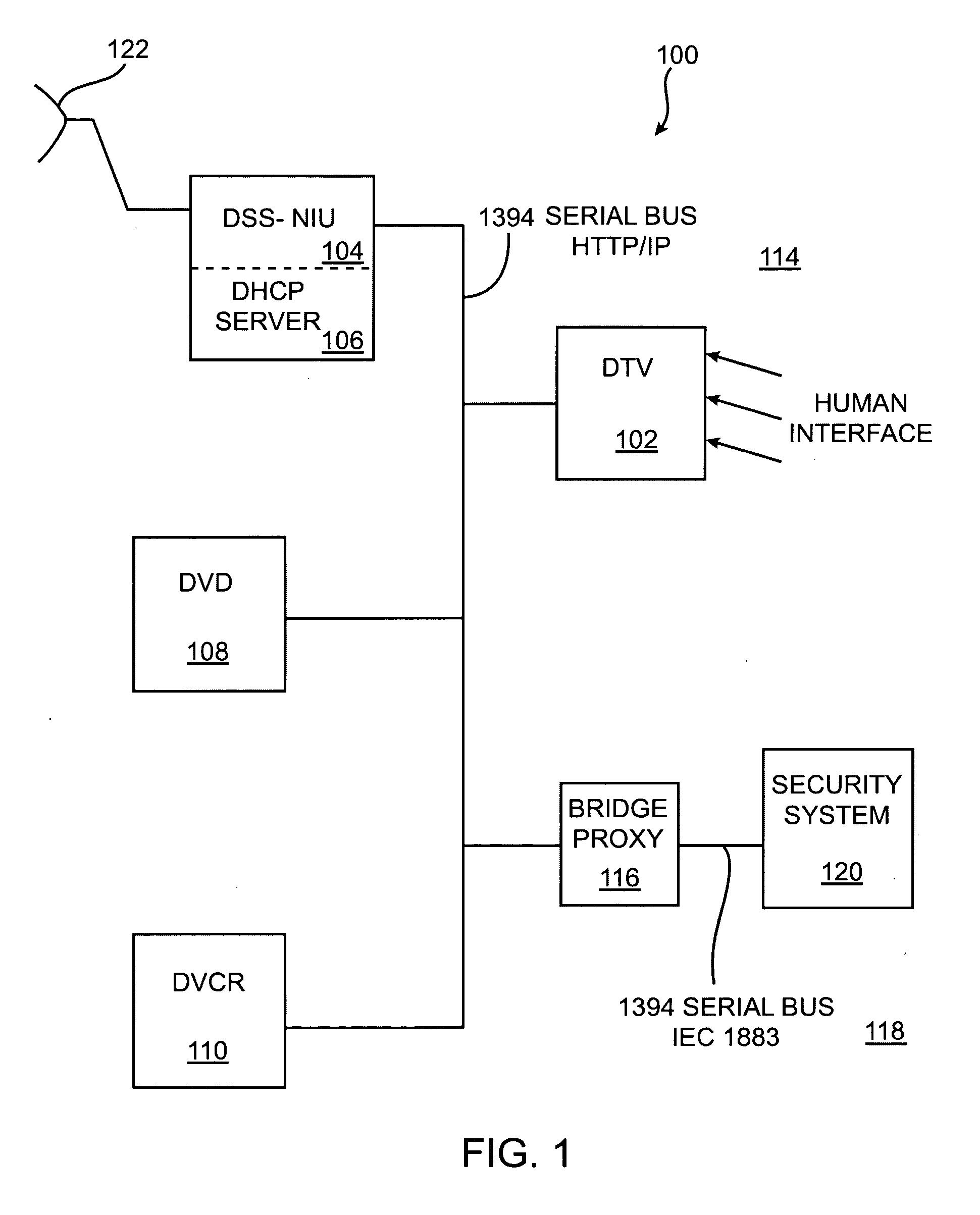

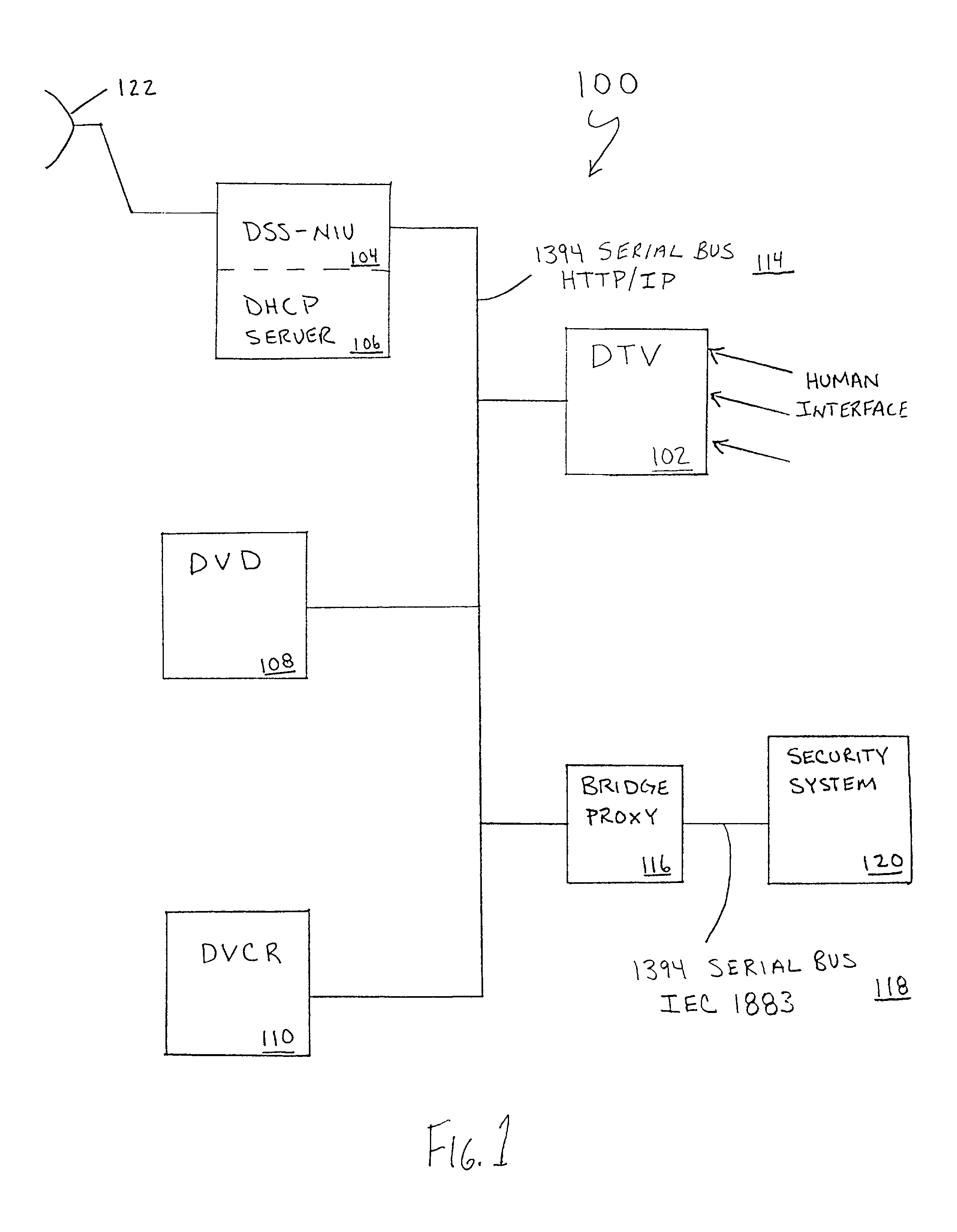

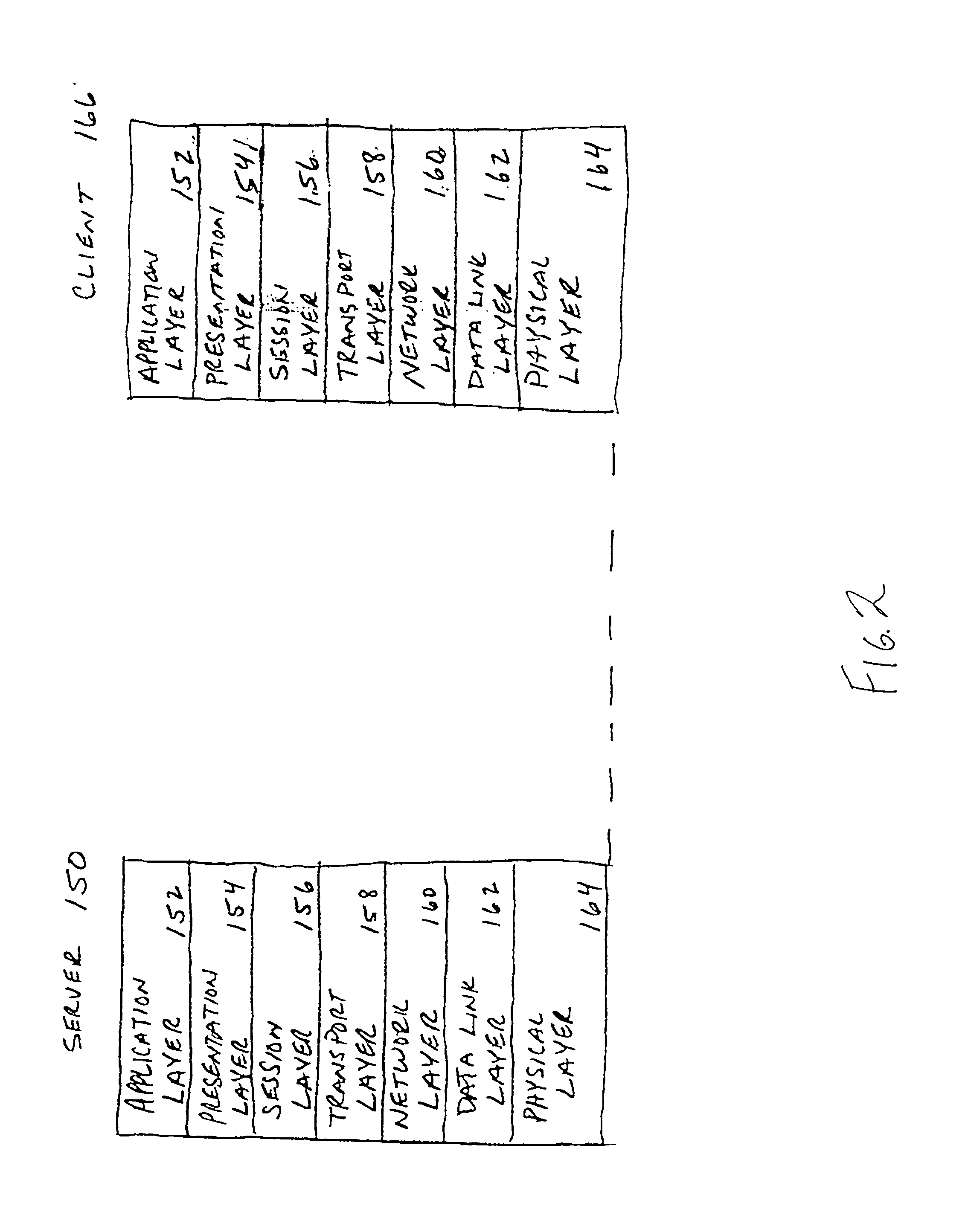

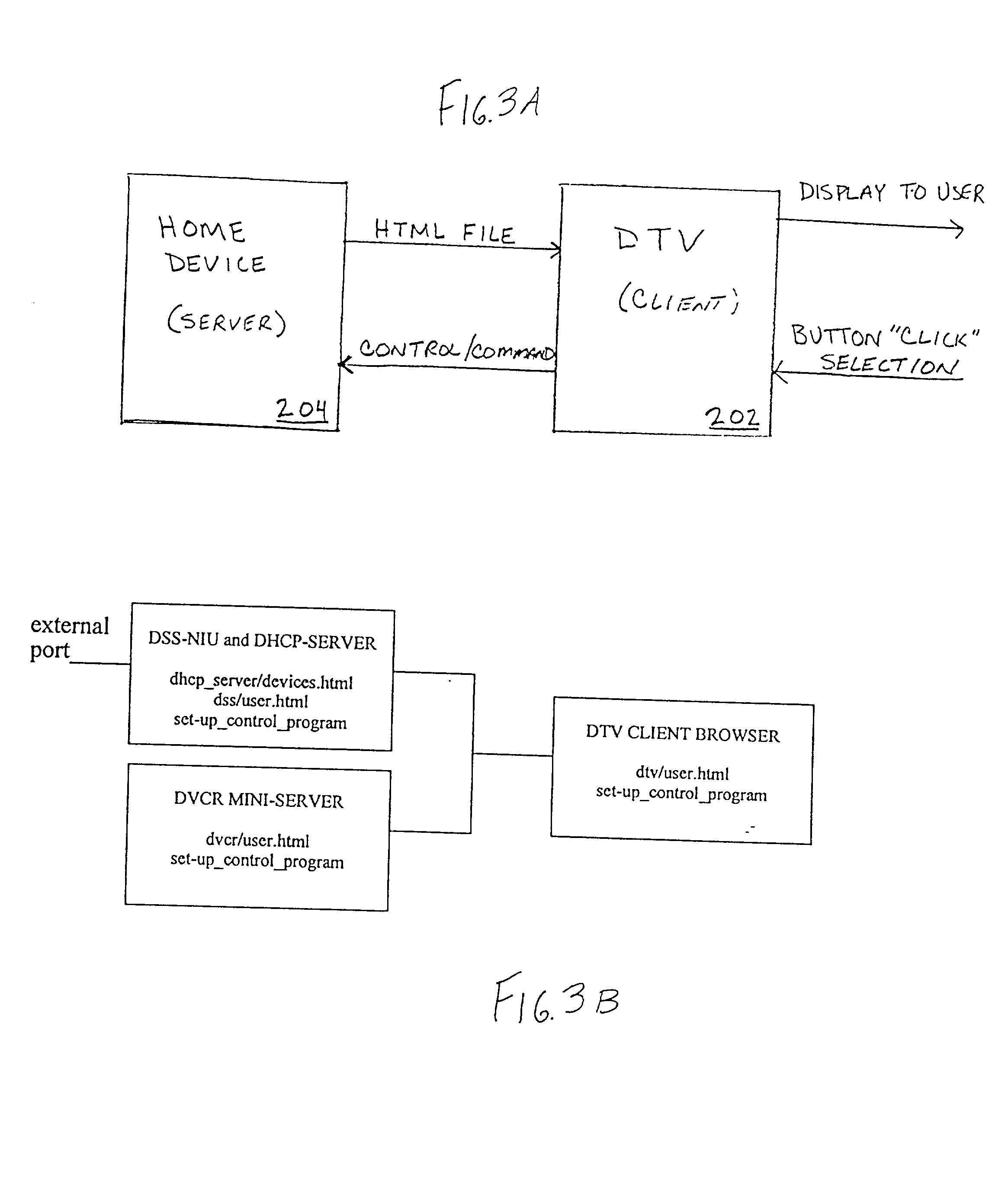

Method and apparatus for a home network auto-tree builder

Inactive | US20050010866A1 | Special service provision for substation | Television system details | Computer network | Command and control

A method and system is provided for detecting, commanding and controlling diverse home devices currently connected to a home network. An interface is provided for accessing the home devices that are currently connected to a home network. According to the method, a device link file is generated, wherein the device link file identifies home devices that are currently connected to the home network. A device link page is created, wherein the device link page contains a device button that is associated with each home device that is identified in the device link file. A hyper-text link is associated with each device button, wherein the hyper-text link provides a link to an HTML page that is contained on the home device that is associated with the device button, and the device link page is displayed on a browser based home device.

Owner:SAMSUNG ELECTRONICS CO LTD

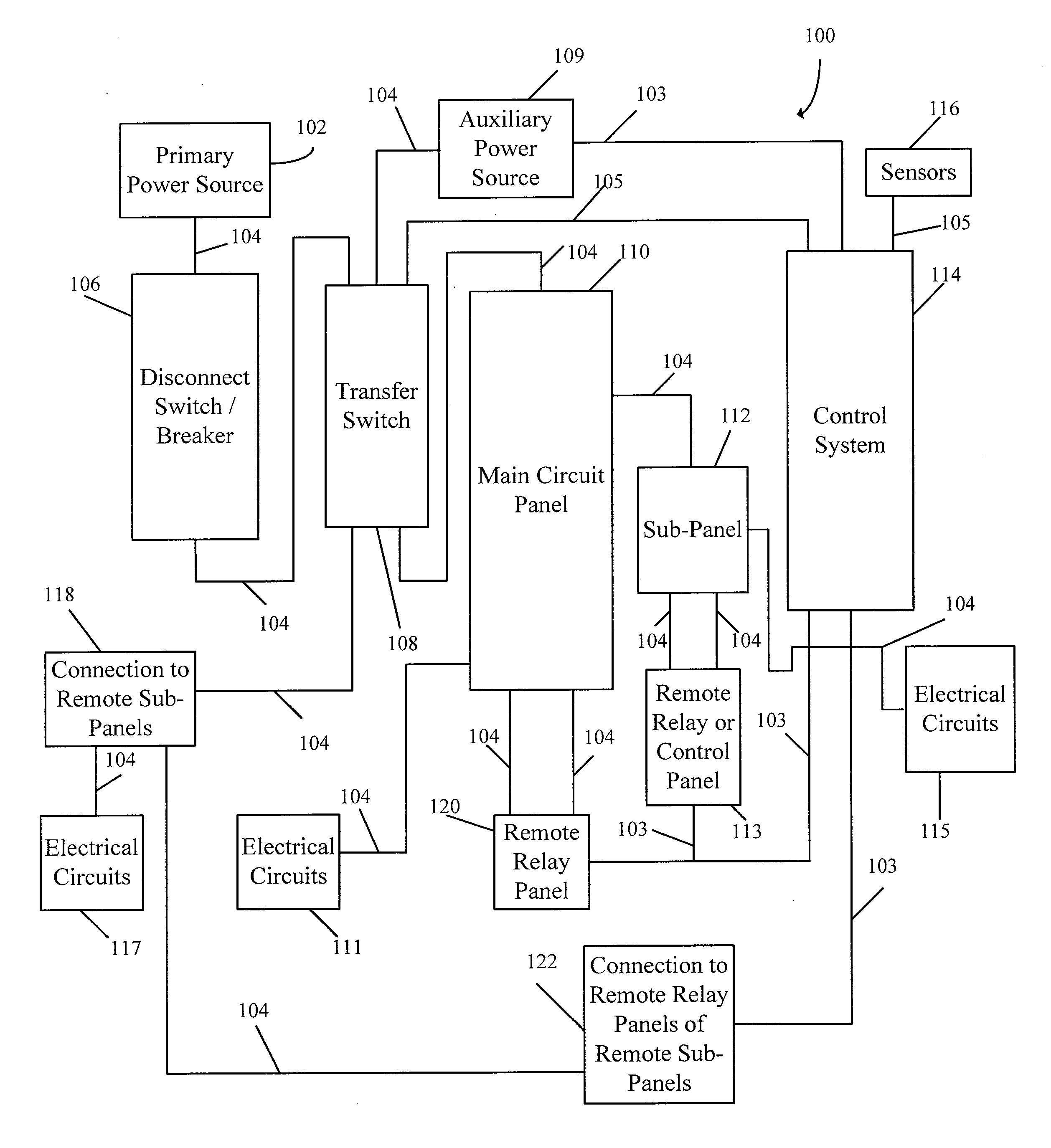

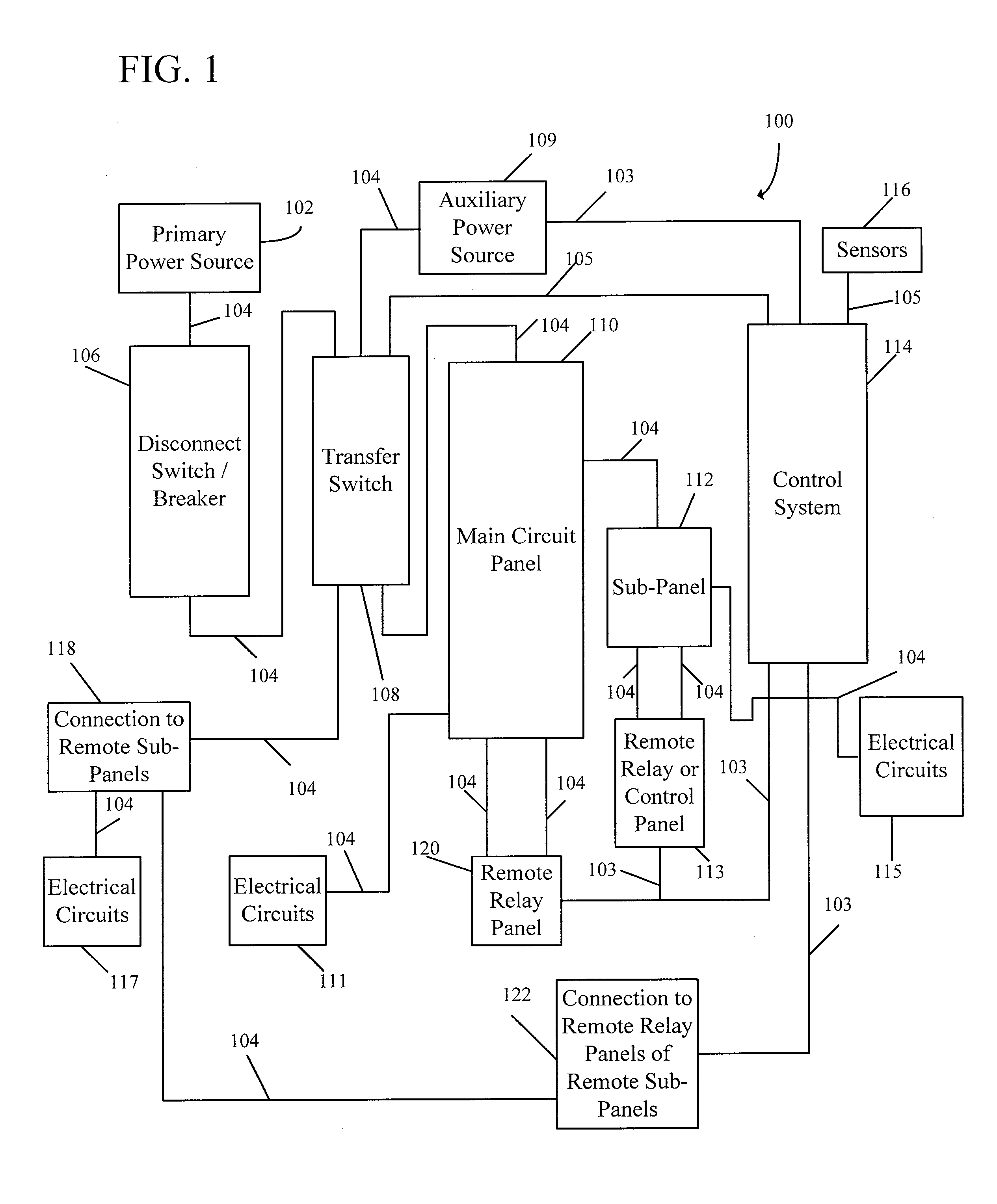

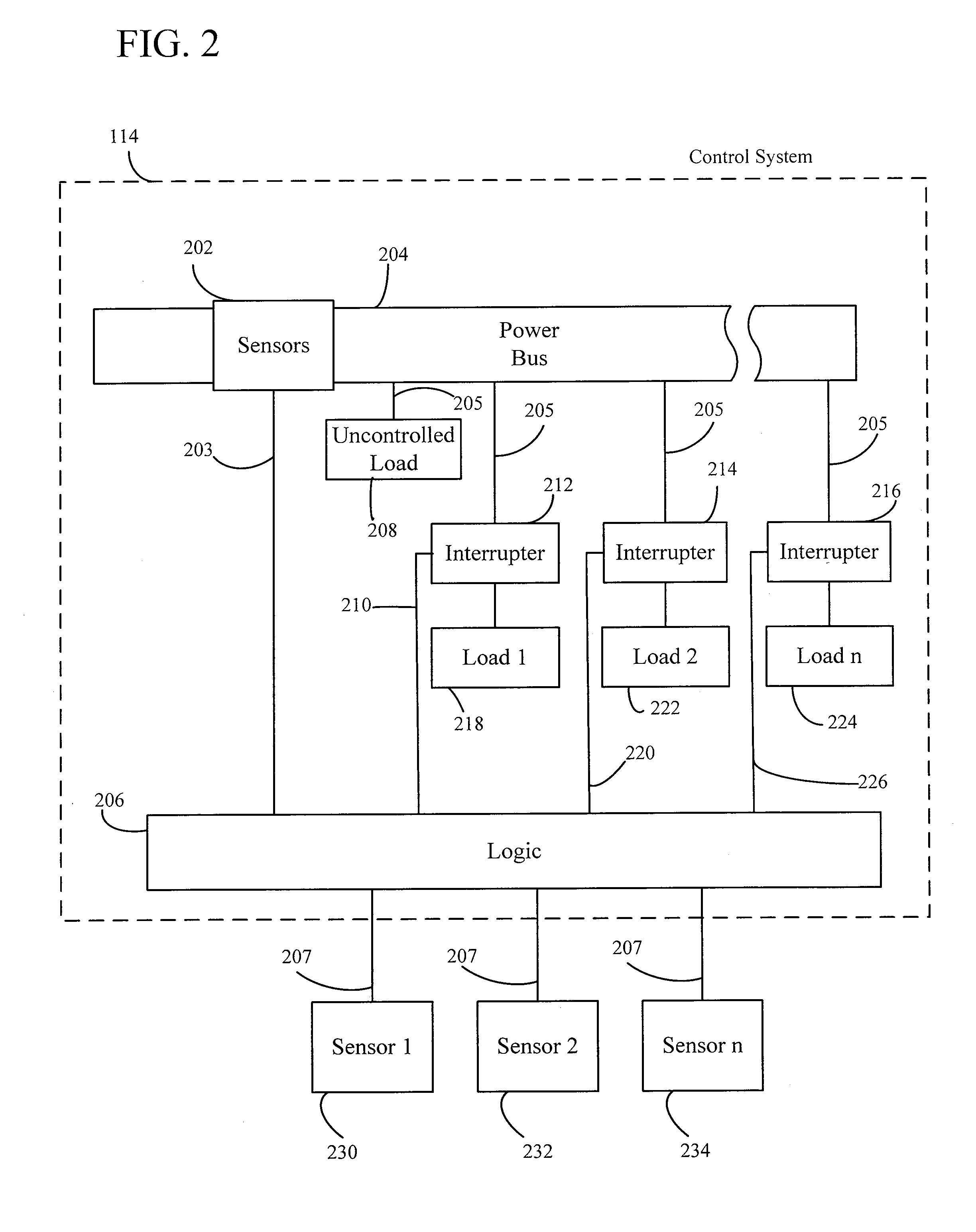

Energy management system for auxiliary power source

Inactive | US20100019574A1 | Effective monitoring | Effective balance | Batteries circuit arrangements | Load balancing in dc network | Control system | Auxiliary power unit

A control system is provided for controlling a load powered by an auxiliary power source during an interruption in the utility power source and/or during a power failure. The control system of the present invention provides power to essential loads in a dwelling as predetermined by a user and/or per the user's real-time instructions as the needs of the user may change. Additionally, the control system of the present invention automatically controls non-essential loads in order to maintain the auxiliary power load below the maximum threshold. Furthermore, the control system of the present invention allows the user to manually override all the controlled loads in an emergency or when the needs of the user change. Additionally, the control system of the present invention allows outside triggers to change the priority of the loads in real-time and can automatically change the priority due to predetermined tasks already running.

Owner:FJI HLDG
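
One way to picture the load control described above is a priority-ordered admission of non-essential loads under a power cap. The tuple layout, the convention that a lower priority number is more important, and the wattage figures are all assumptions made for this sketch.

```python
def select_loads(loads, max_watts):
    """Choose which loads to power from an auxiliary source.

    loads: list of (name, watts, priority, essential) tuples.
    Essential loads are always powered; non-essential loads are added in
    priority order only while the total stays at or below max_watts.
    """
    essential = [load for load in loads if load[3]]
    optional = sorted((load for load in loads if not load[3]),
                      key=lambda load: load[2])
    powered = [load[0] for load in essential]
    total = sum(load[1] for load in essential)
    for name, watts, _priority, _essential in optional:
        if total + watts <= max_watts:
            powered.append(name)
            total += watts
    return powered, total

loads = [("fridge", 700, 0, True), ("furnace", 600, 0, True),
         ("tv", 200, 2, False), ("dryer", 3000, 1, False),
         ("lights", 150, 1, False)]
powered, total = select_loads(loads, max_watts=2000)
```

Re-running `select_loads` after a priority change models the real-time reprioritization the abstract mentions.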

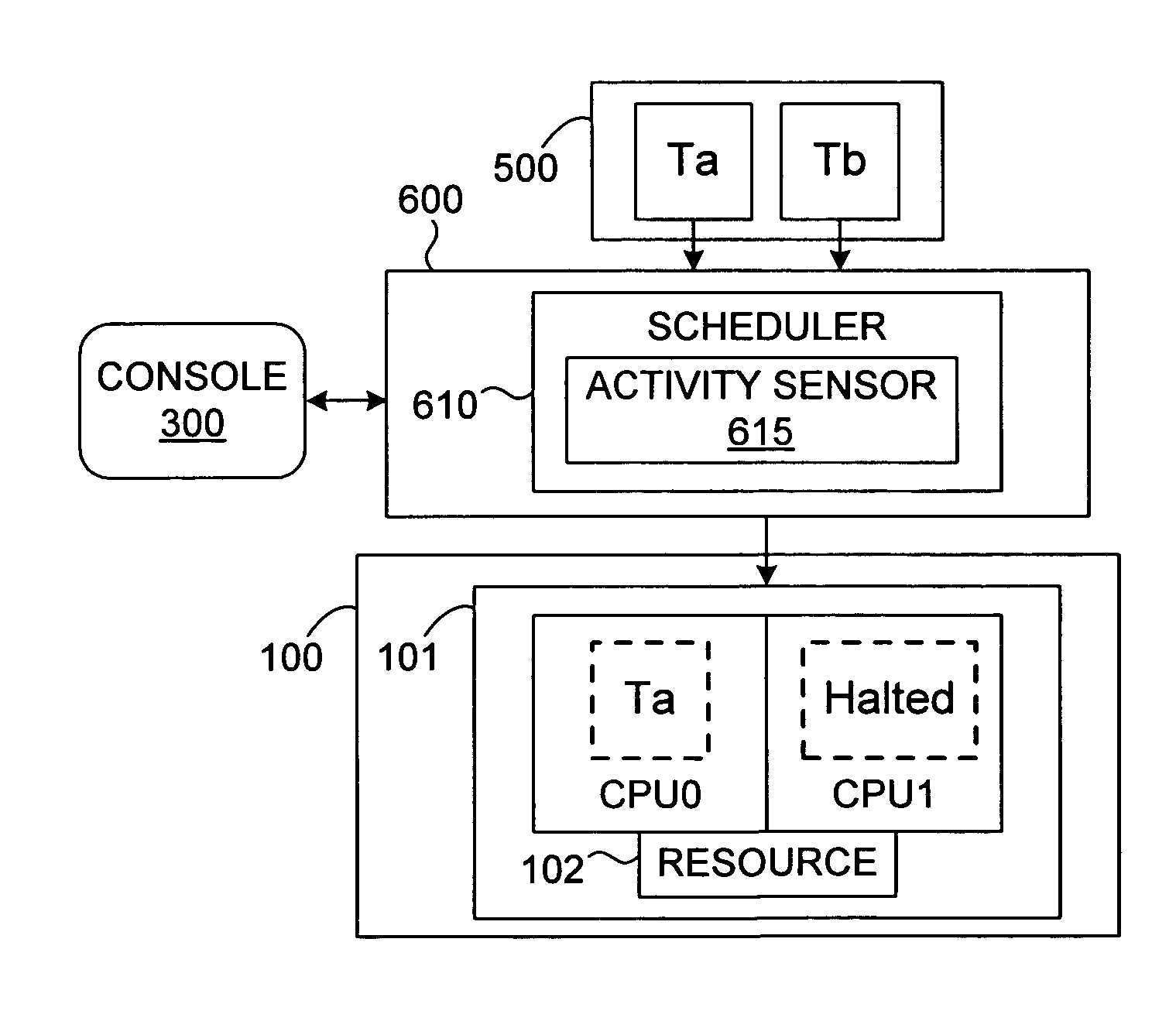

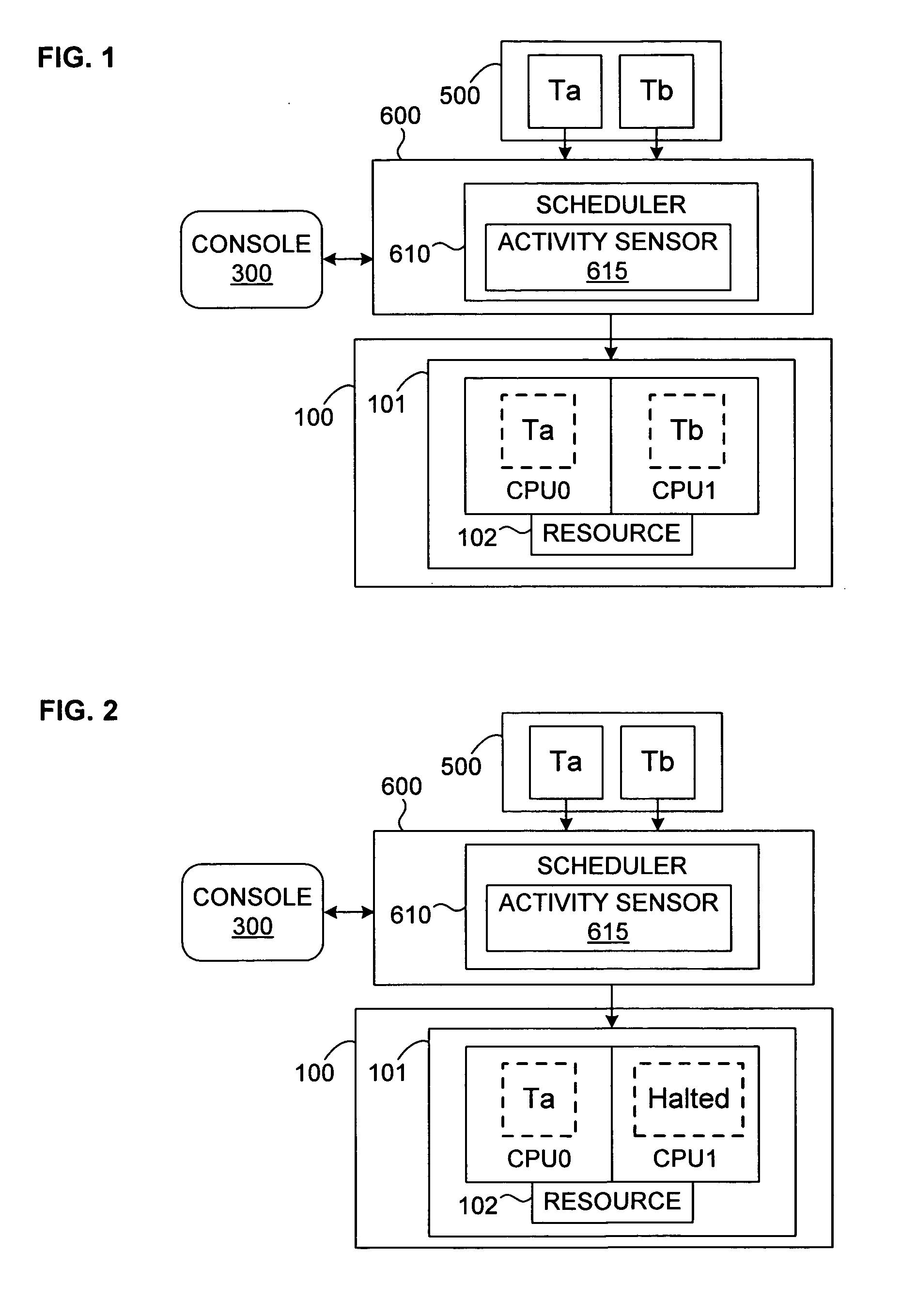

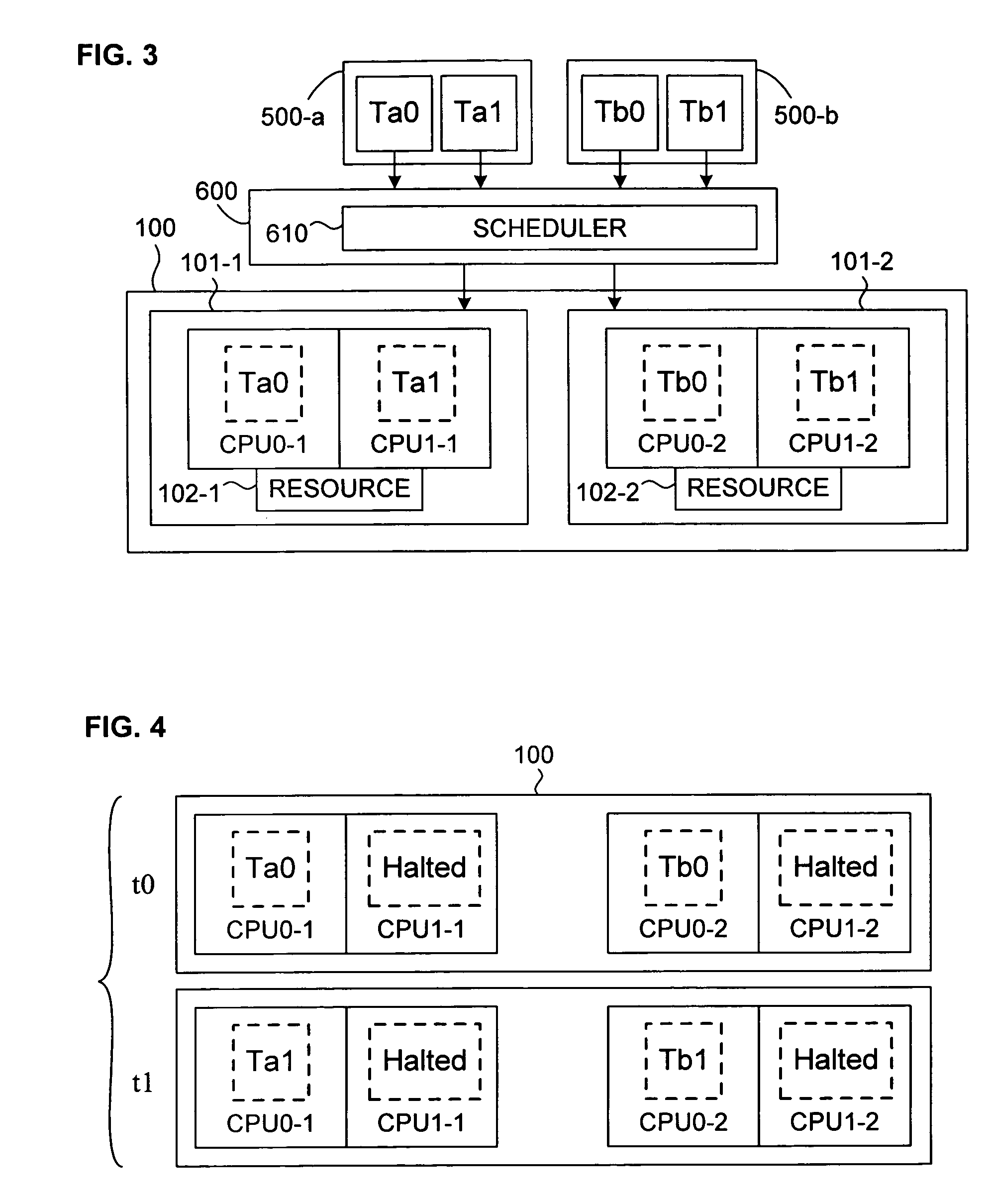

Mechanism for scheduling execution of threads for fair resource allocation in a multi-threaded and/or multi-core processing system

Active | US7707578B1 | Digital computer details | Multiprogramming arrangements | Thread scheduling | Shared resource

A thread scheduling mechanism is provided that flexibly enforces performance isolation of multiple threads to alleviate the effect of anti-cooperative execution behavior with respect to a shared resource, for example, hoarding a cache or pipeline, using the hardware capabilities of simultaneous multi-threaded (SMT) or multi-core processors. Given a plurality of threads running on at least two processors in at least one functional processor group, the occurrence of a rescheduling condition indicating anti-cooperative execution behavior is sensed, and, if present, at least one of the threads is rescheduled such that the first and second threads no longer execute in the same functional processor group at the same time.

Owner:VMWARE INC

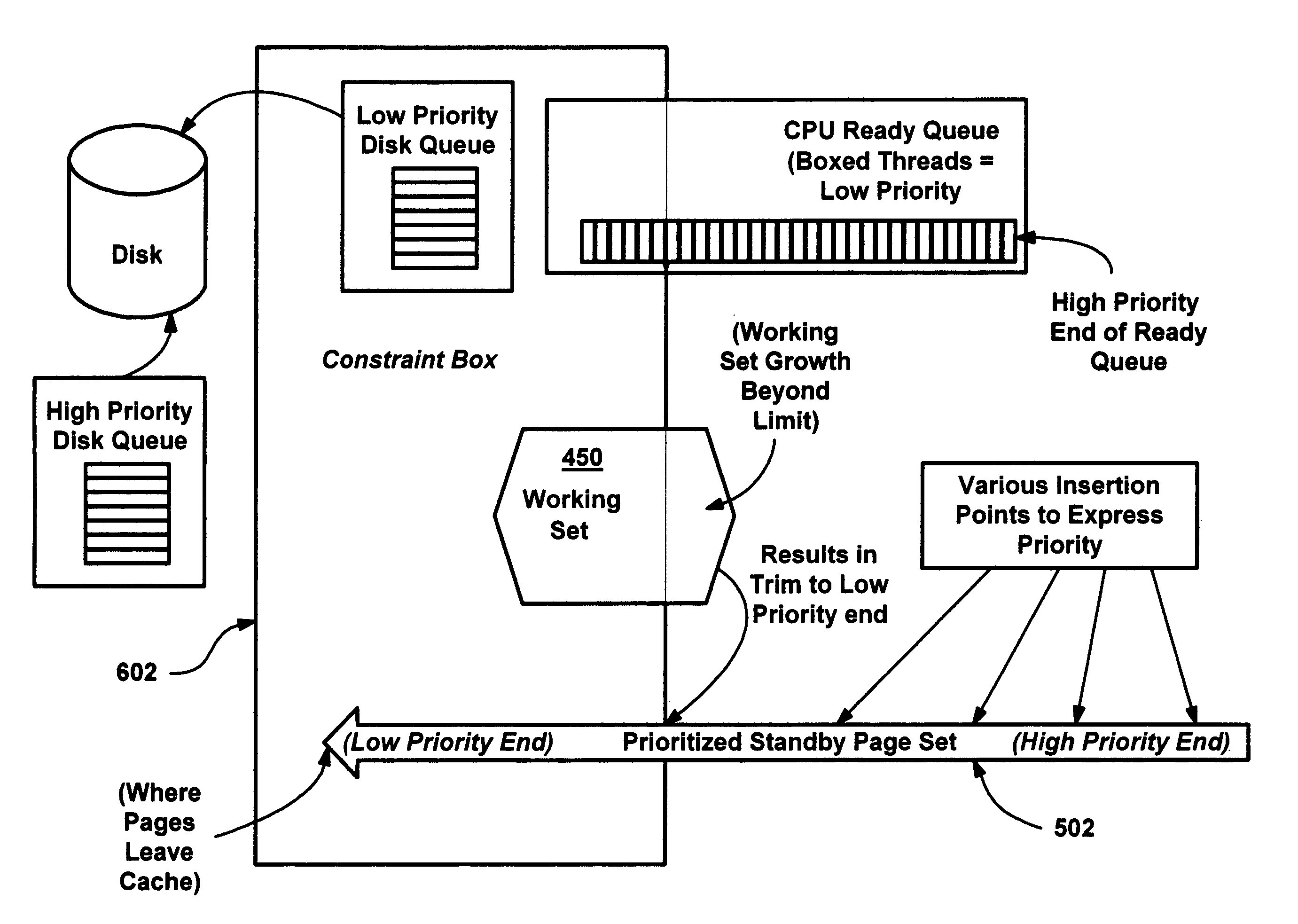

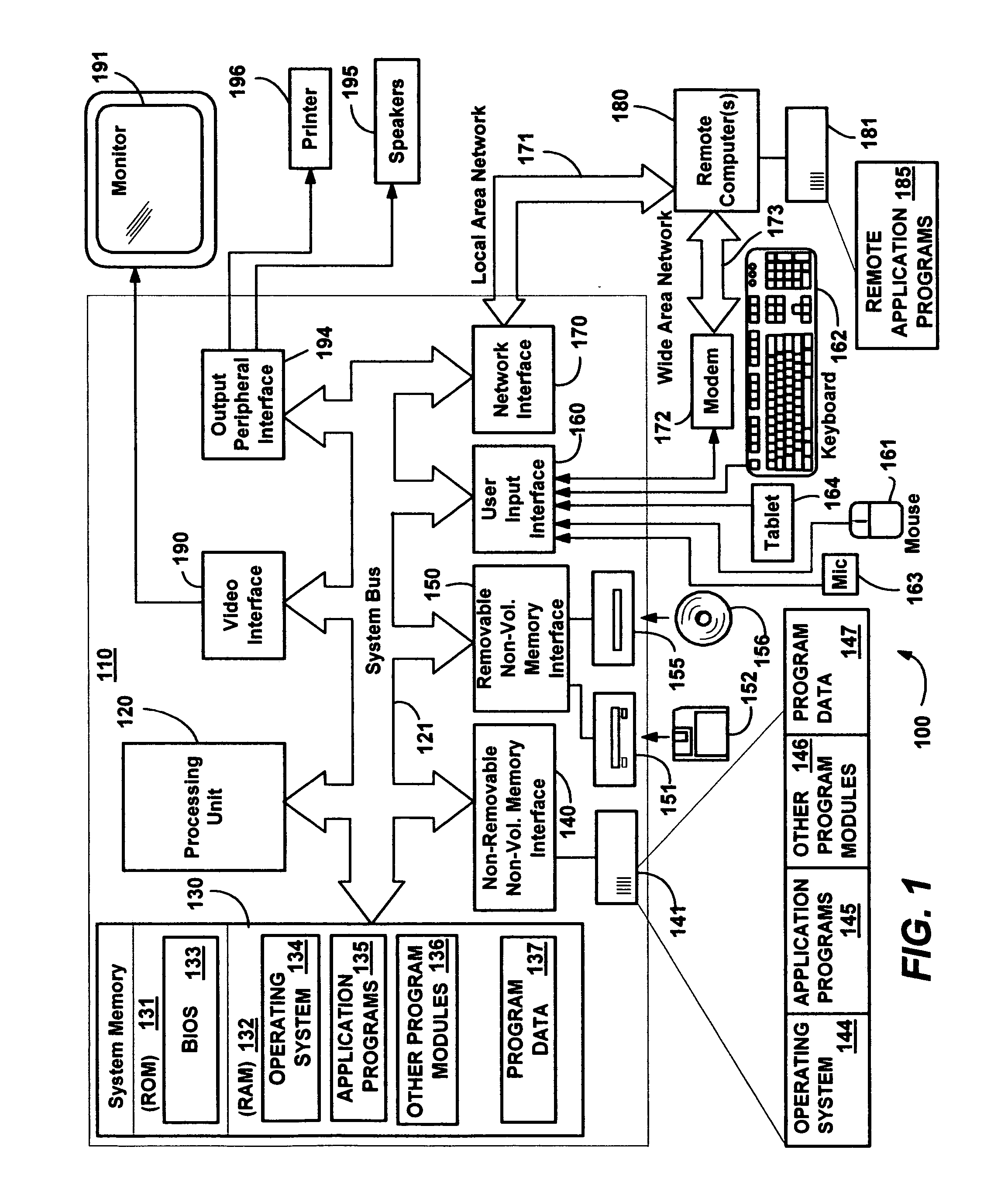

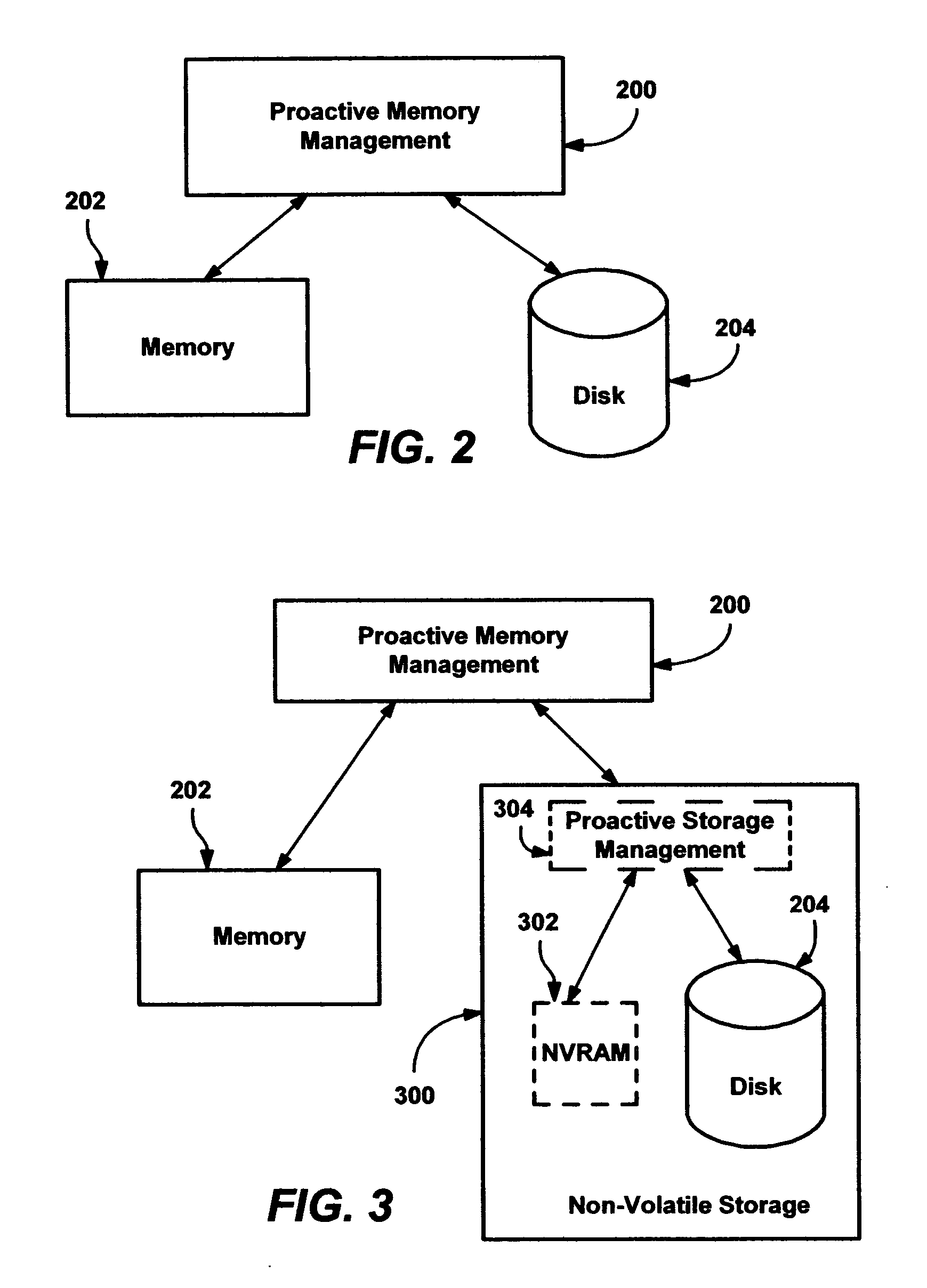

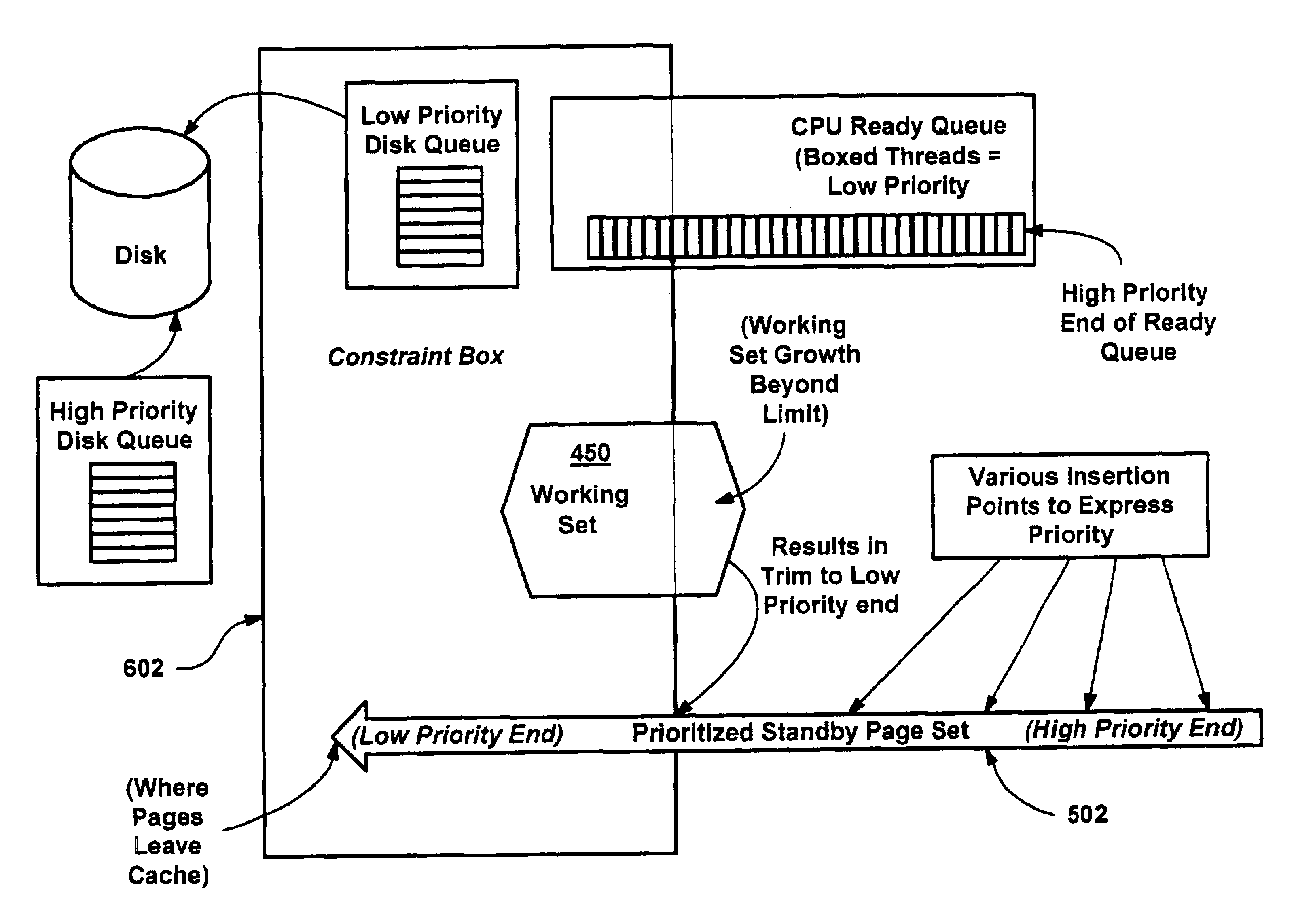

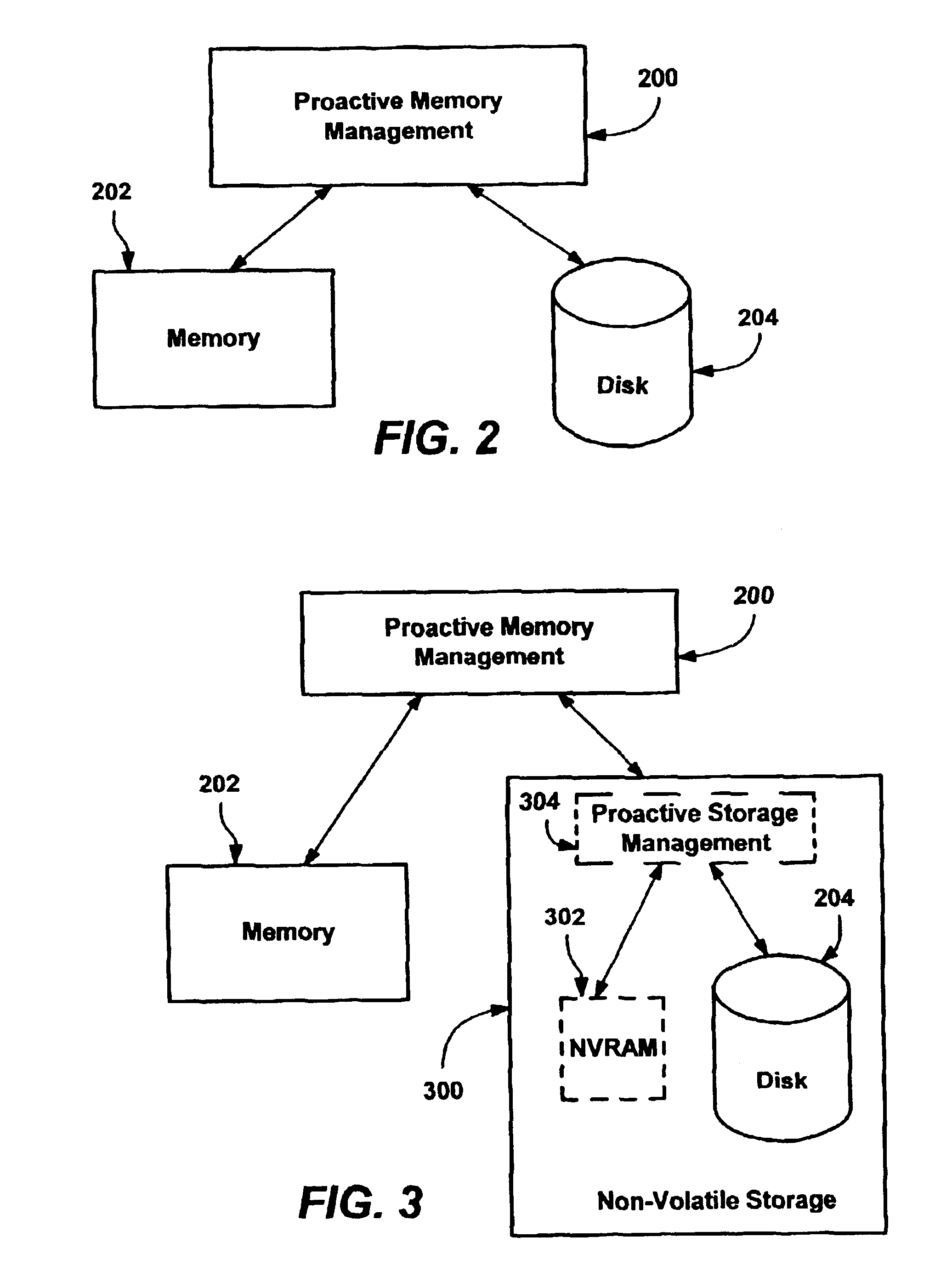

Methods and mechanisms for proactive memory management

Inactive | US20050228964A1 | Reduces or eliminates on-demand disk transfer operations | Reduces or eliminates I/O bottlenecks | Input/output to record carriers | Fault response | Self-tuning | Term memory

A proactive, resilient and self-tuning memory management system and method that result in actual and perceived performance improvements in memory management, by loading and maintaining data that is likely to be needed into memory, before the data is actually needed. The system includes mechanisms directed towards historical memory usage monitoring, memory usage analysis, refreshing memory with highly-valued (e.g., highly utilized) pages, I/O pre-fetching efficiency, and aggressive disk management. Based on the memory usage information, pages are prioritized with relative values, and mechanisms work to pre-fetch and/or maintain the more valuable pages in memory. Pages are pre-fetched and maintained in a prioritized standby page set that includes a number of subsets, by which more valuable pages remain in memory over less valuable pages. Valuable data that is paged out may be automatically brought back, in a resilient manner. Benefits include significantly reducing or even eliminating disk I/O due to memory page faults.

Owner:MICROSOFT TECH LICENSING LLC
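
The value-based prioritization in this abstract can be sketched as ranking pages by historical usage and pre-fetching the valuable ones that are not yet resident. The function name and the use of a plain access count as the page "value" are assumptions; the abstract does not specify the scoring.

```python
from collections import Counter

def plan_standby(access_log, capacity, resident=()):
    """Rank pages by observed usage and plan what to keep and pre-fetch.

    access_log: iterable of page identifiers observed over time.
    capacity:   number of pages the standby set can hold.
    resident:   pages already in memory.
    """
    usage = Counter(access_log)
    # Most-used pages first; ties broken by page id for determinism.
    ranked = sorted(usage, key=lambda page: (-usage[page], page))
    keep = ranked[:capacity]
    resident_set = set(resident)
    prefetch = [page for page in keep if page not in resident_set]
    return keep, prefetch

keep, prefetch = plan_standby(["a", "b", "a", "c", "a", "b", "d"],
                              capacity=2, resident=["b", "d"])
```

Here pages `a` and `b` are kept as the most valuable, and only `a` needs to be pre-fetched because `b` is already resident.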

Methods and mechanisms for proactive memory management

Inactive | US6910106B2 | Raise priority | Increase profit | Input/output to record carriers | Fault response | Self-tuning | Paging

A proactive, resilient and self-tuning memory management system and method that result in actual and perceived performance improvements in memory management, by loading and maintaining data that is likely to be needed into memory, before the data is actually needed. The system includes mechanisms directed towards historical memory usage monitoring, memory usage analysis, refreshing memory with highly-valued (e.g., highly utilized) pages, I/O pre-fetching efficiency, and aggressive disk management. Based on the memory usage information, pages are prioritized with relative values, and mechanisms work to pre-fetch and/or maintain the more valuable pages in memory. Pages are pre-fetched and maintained in a prioritized standby page set that includes a number of subsets, by which more valuable pages remain in memory over less valuable pages. Valuable data that is paged out may be automatically brought back, in a resilient manner. Benefits include significantly reducing or even eliminating disk I/O due to memory page faults.

Owner:MICROSOFT TECH LICENSING LLC

Method and apparatus for a home network auto-tree builder

Inactive | US20010011284A1 | Special service provision for substation | Television system details | Computer network | Command and control

A method and system is provided for detecting, commanding and controlling diverse home devices currently connected to a home network. An interface is provided for accessing the home devices that are currently connected to a home network. According to the method, a device link file is generated, wherein the device link file identifies home devices that are currently connected to the home network. A device link page is created, wherein the device link page contains a device button that is associated with each home device that is identified in the device link file. A hyper-text link is associated with each device button, wherein the hyper-text link provides a link to an HTML page that is contained on the home device that is associated with the device button, and the device link page is displayed on a browser based home device.

Owner:SAMSUNG ELECTRONICS CO LTD

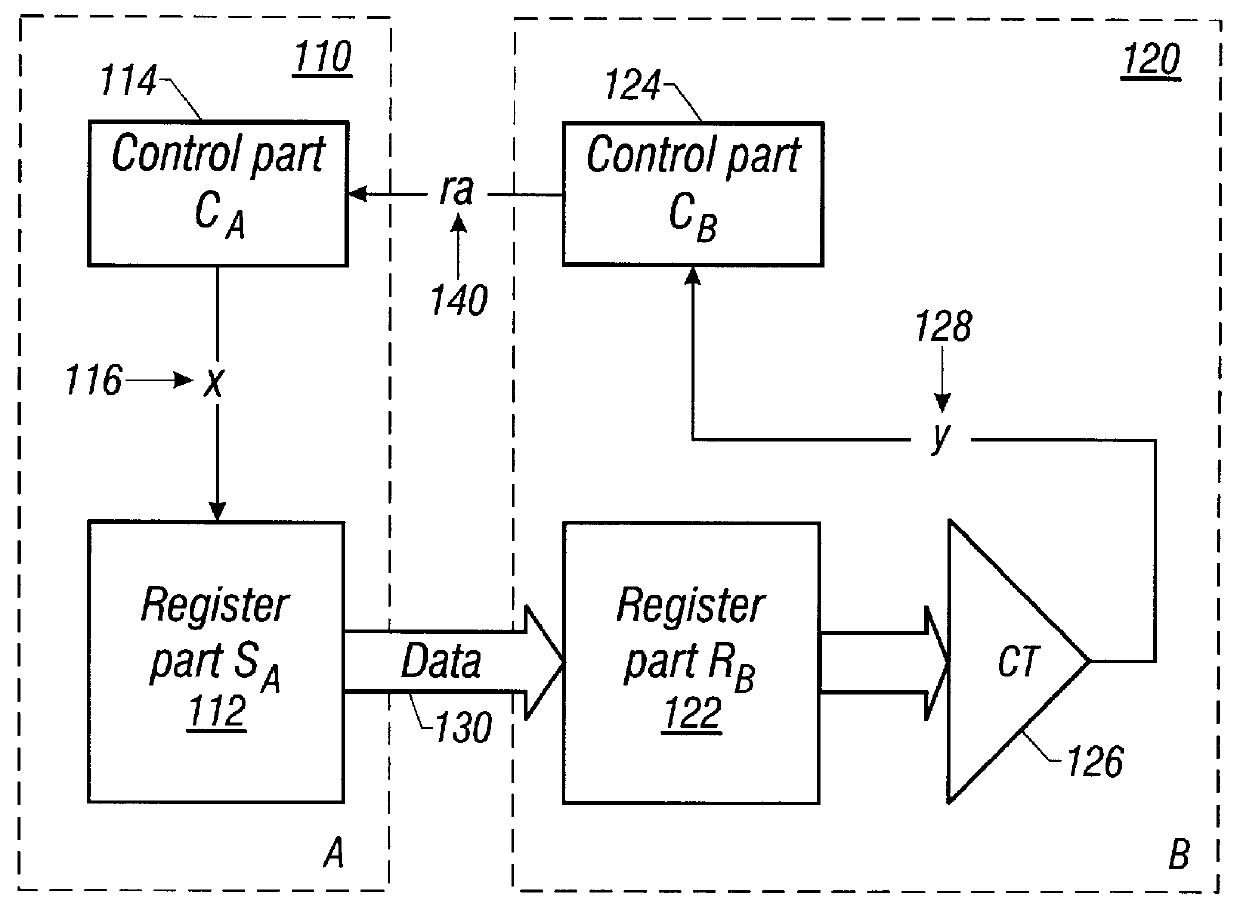

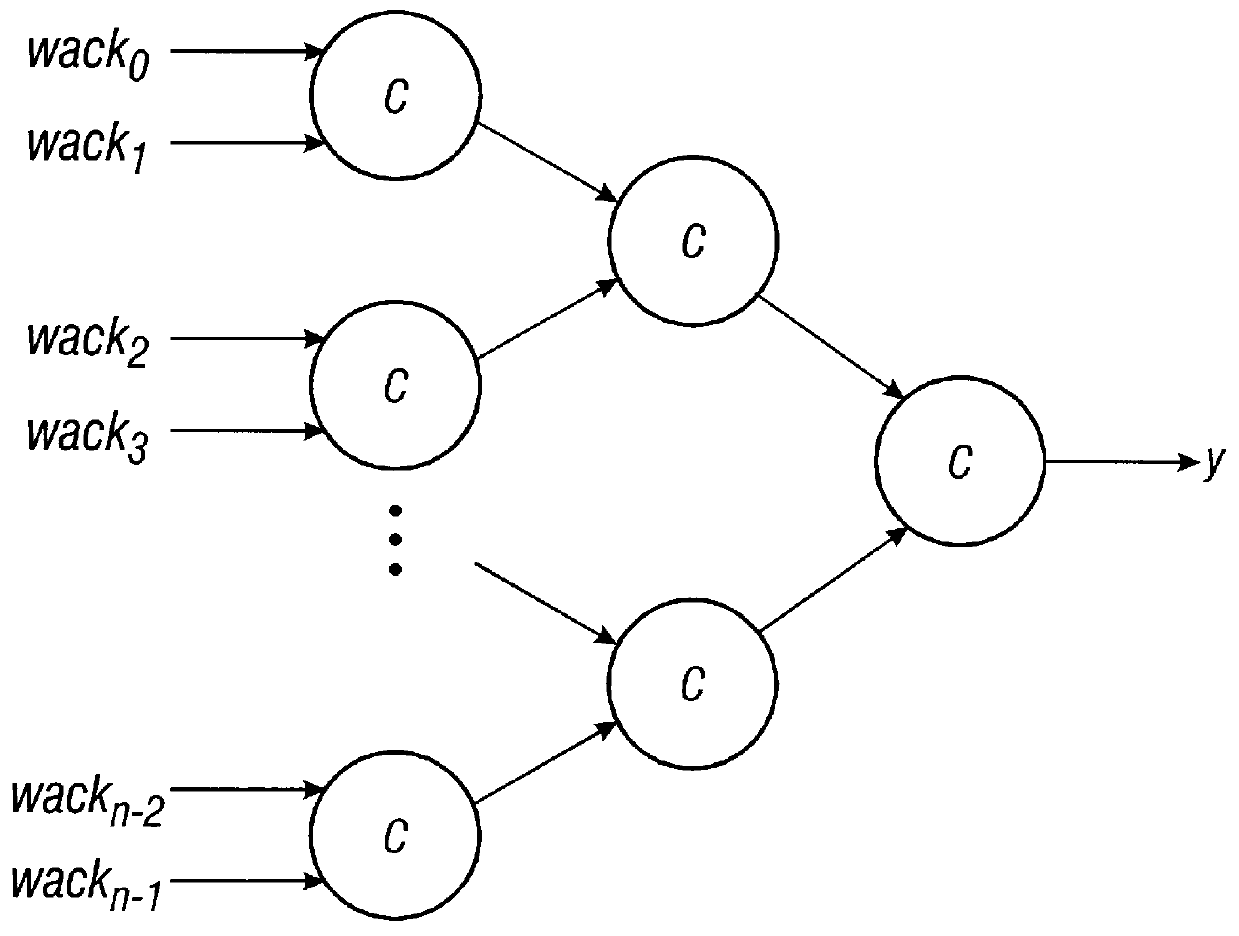

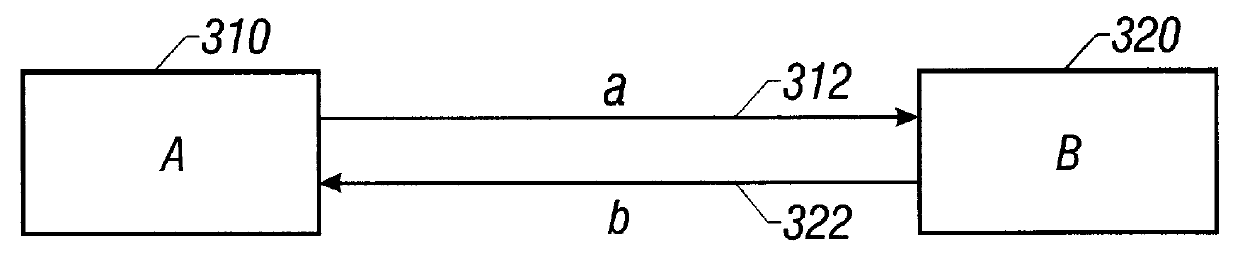

Pipelined completion for asynchronous communication

Inactive | US6038656A | Improve throughput | Lower latency | Architecture with single central processing unit | Memory systems | Asynchronous circuit | Asynchronous communication

Owner:CALIFORNIA INST OF TECH

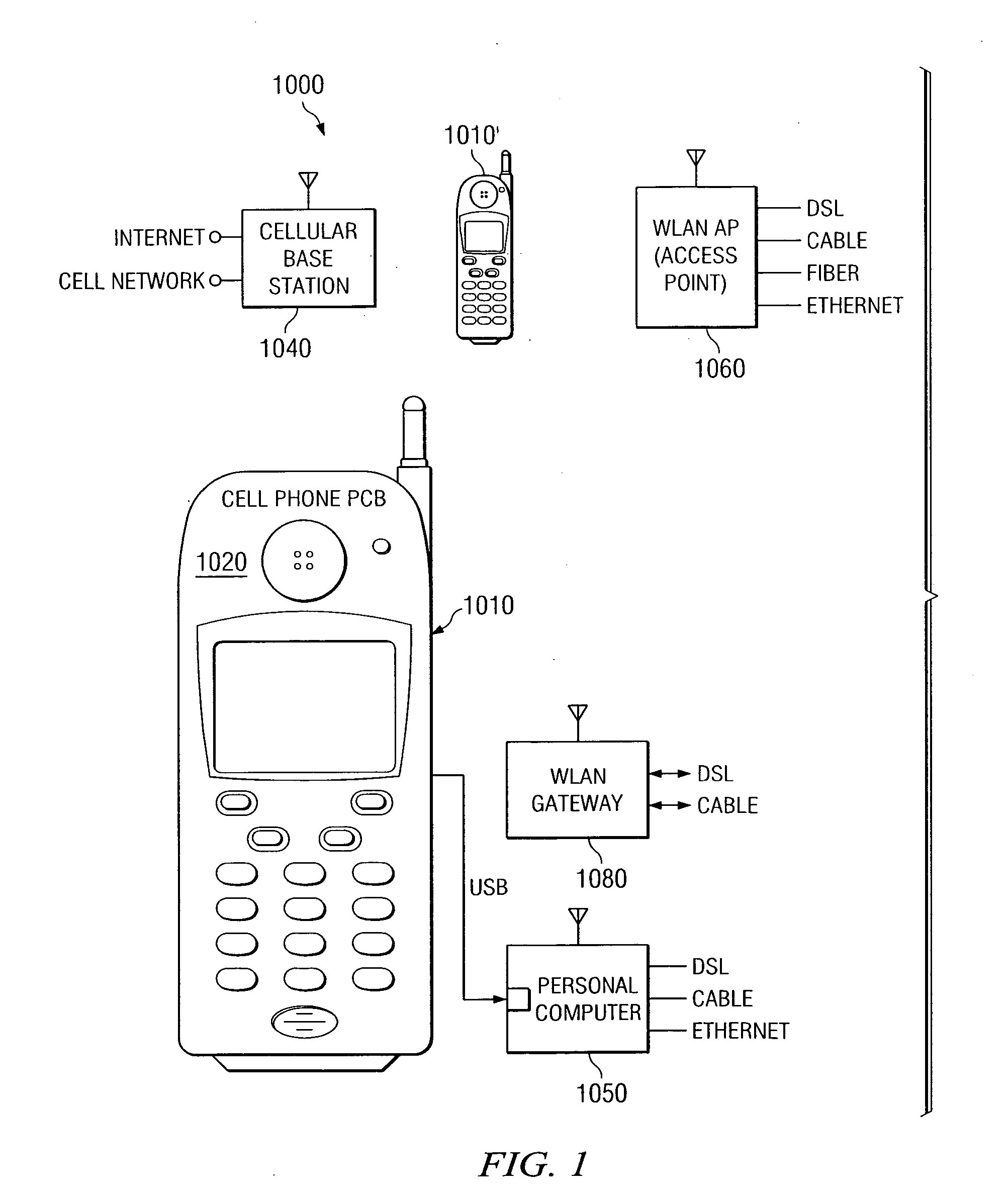

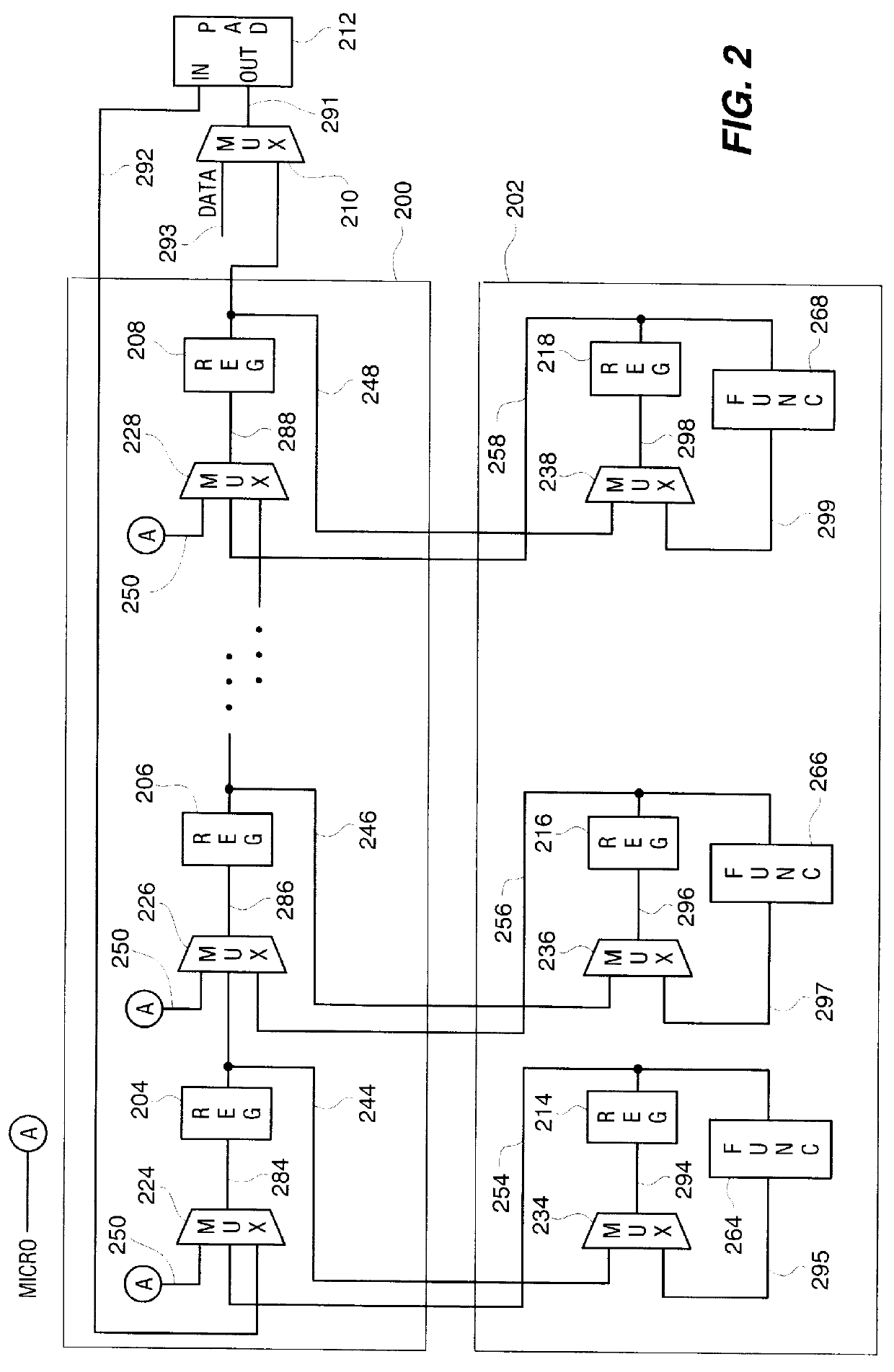

Processes, circuits, devices, and systems for scoreboard and other processor improvements

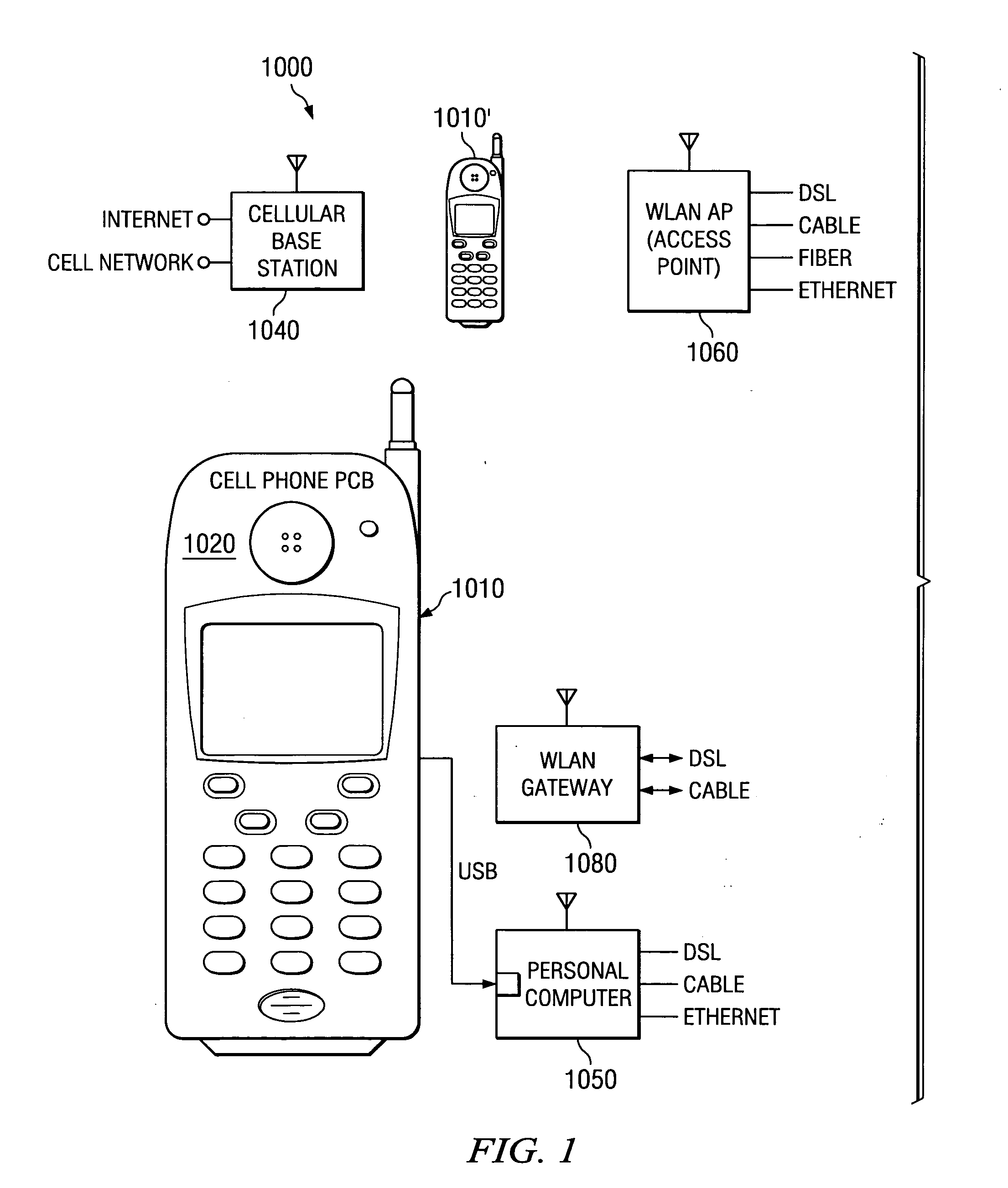

A method of instruction issue (3200) in a microprocessor (1100, 1400, or 1500) with execution pipestages (E1, E2, etc.) and that executes a producer instruction Ip and issues a candidate instruction I0 (3245) having a source operand dependency on a destination operand of instruction Ip. The method includes issuing the candidate instruction I0 as a function (1720, 1950, 1958, 3235) of a pipestage EN(I0) of first need by the candidate instruction for the source operand, a pipestage EA(Ip) of first availability of the destination operand from the producer instruction, and the one execution pipestage E(Ip) currently associated with the producer instruction. A method of data forwarding (3300) in a microprocessor (1100, 1400, or 1500) having a pipeline (1640) having pipestages (E1, E2, etc.), wherein the method includes scoreboarding information E(Ip) (1710, 2220) to represent a changing pipestage position for data from a producer instruction Ip, and selectively forwarding (2310, 3360) the data from the pipestage having the represented pipestage position E(Ip), based on the information (1710), to a receiving pipestage (1682, E1) for a dependent instruction. Wireless communications devices (1010, 1010′, 1040, 1050, 1060, 1080), systems, circuits, devices, scoreboards (1700.N), processes and methods of operation, processes and articles of manufacture (FIGS. 13-16), are also disclosed.

Owner:TEXAS INSTR INC

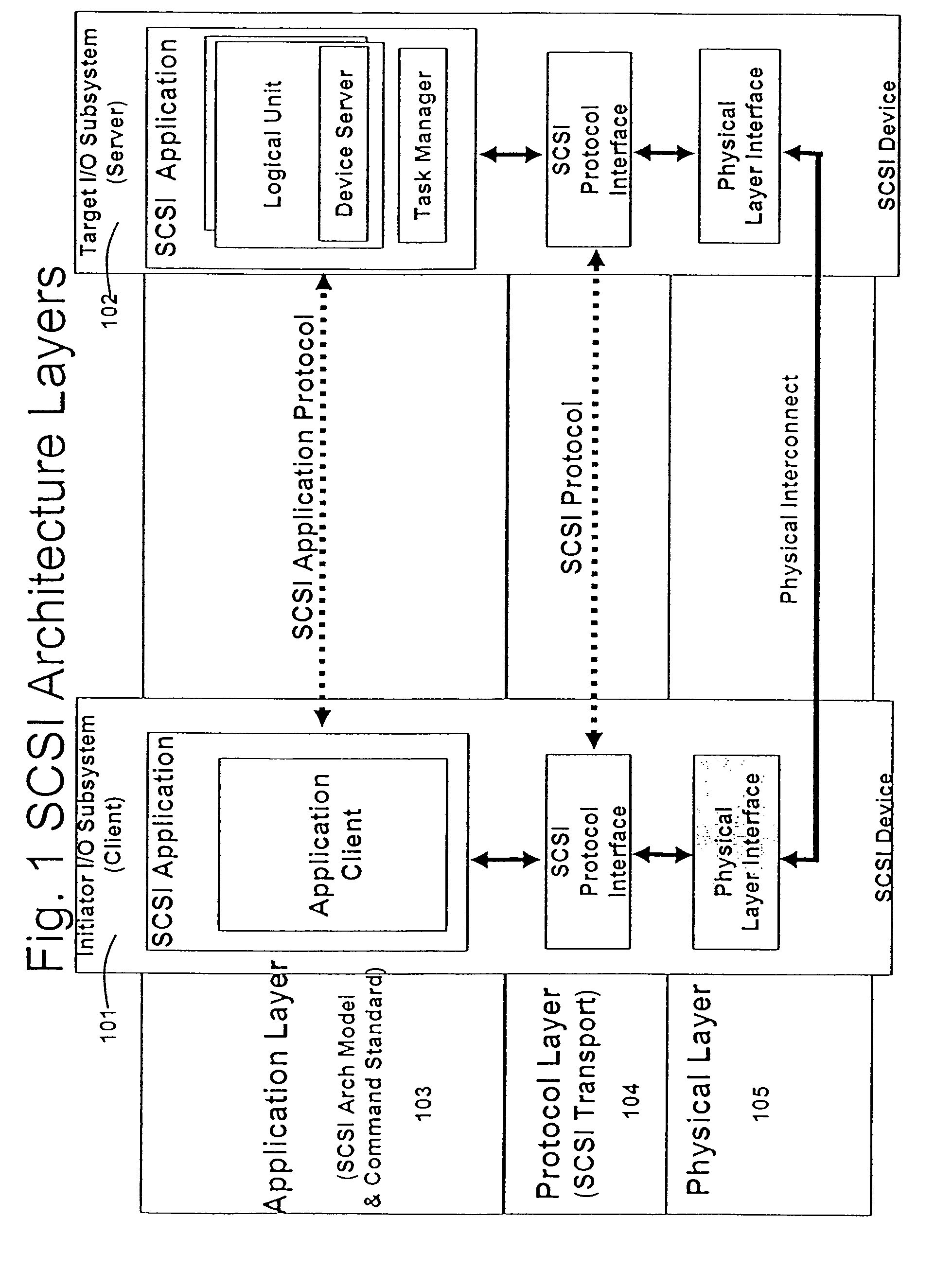

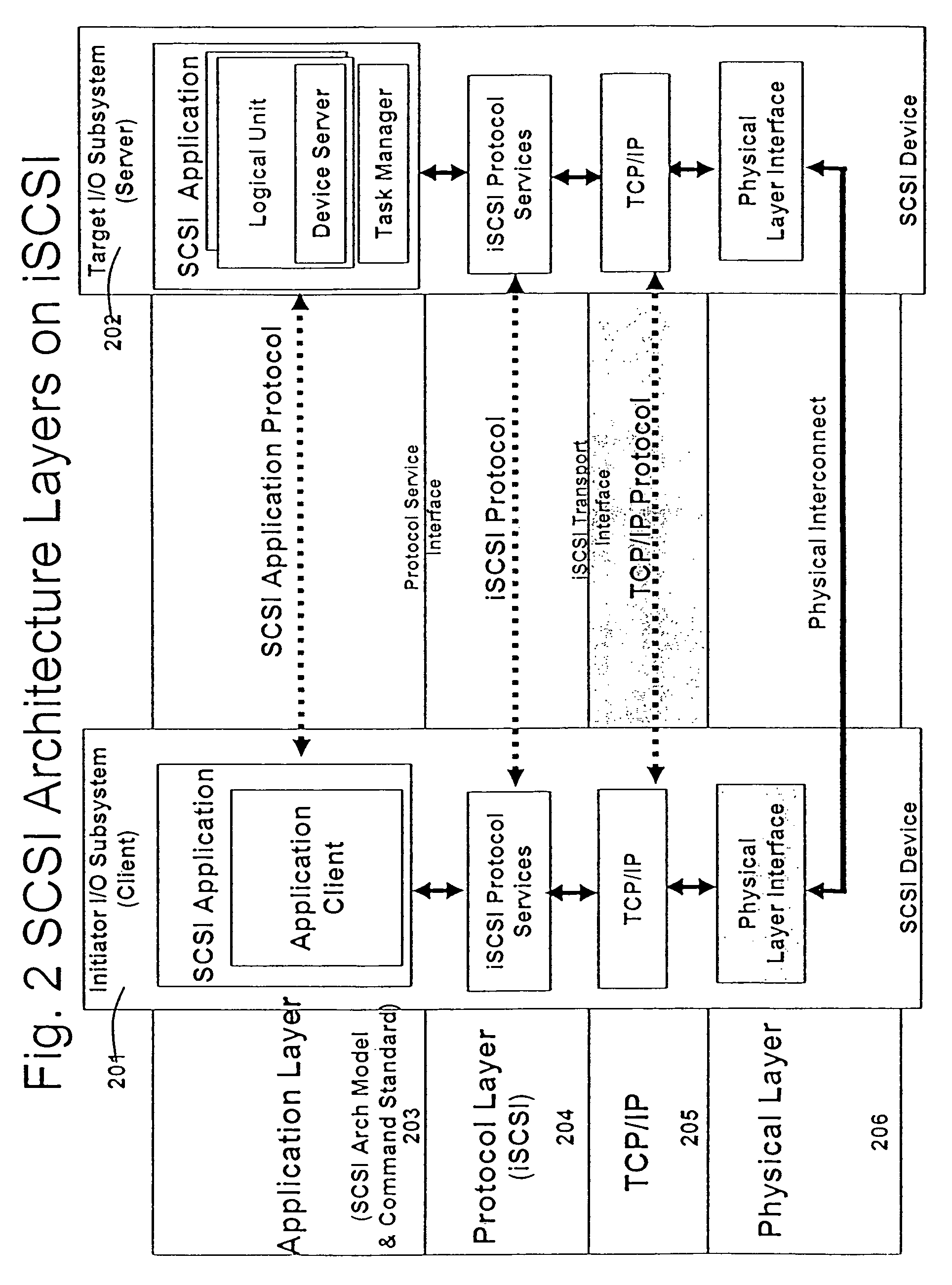

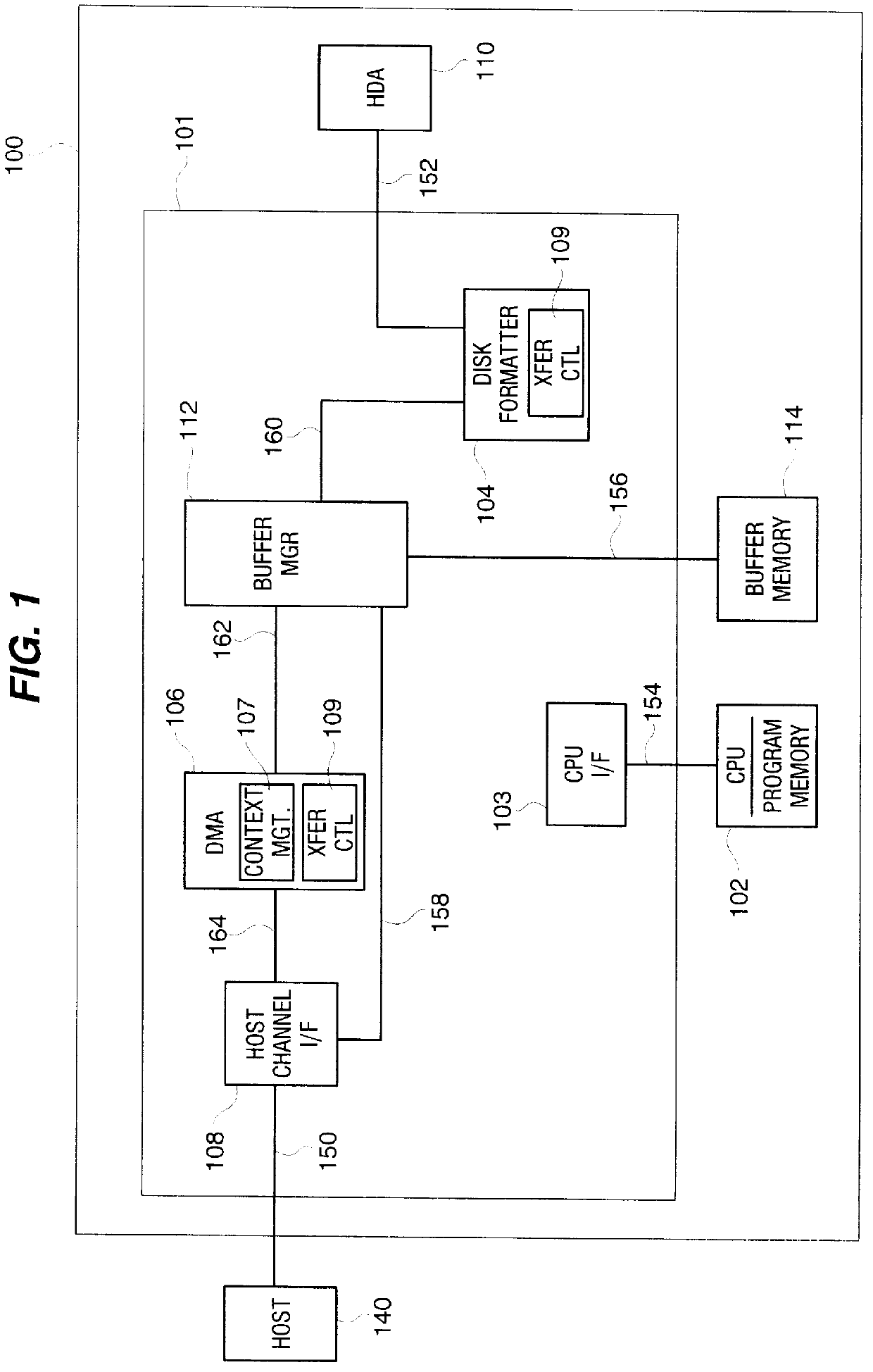

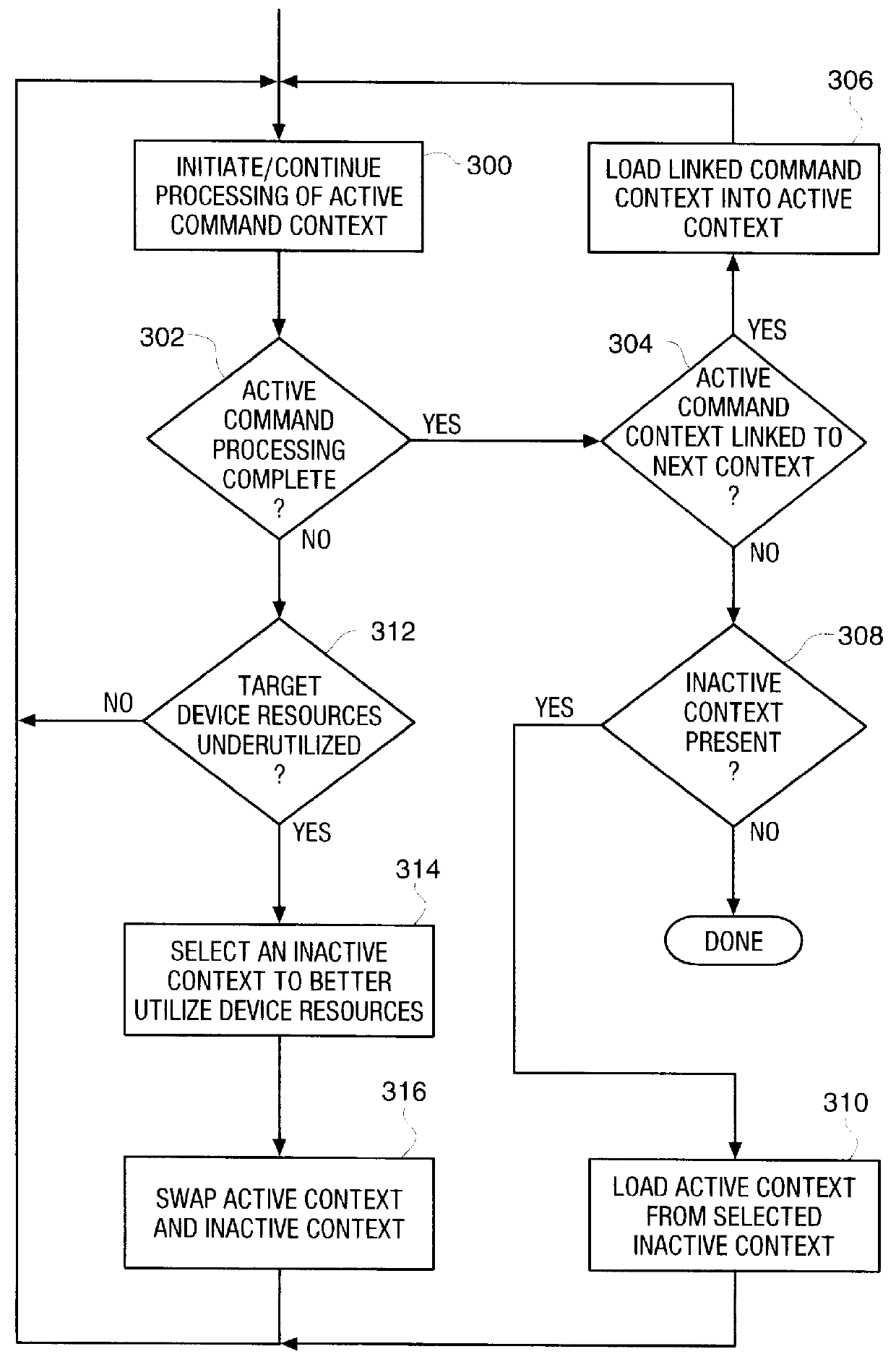

Method and structure for switching multiple contexts in storage subsystem target device

Inactive | US6081849A | Improve overall utilization | Lower latency | Input/output to record carriers | Memory systems | Multiple context | SCSI

A storage target device controller (such as an embedded controller in a SCSI disk drive) processes multiple commands concurrently in accordance with the methods and structures of the present invention. Each command is stored within its own context within the target device controller to retain all unique parameters required for the processing of each command. Processing of multiple commands permits switching of command contexts within the target device to improve utilization of resources associated with the target device. For example, when a first, active, command context is prevented from further processing due to the status of the disk channel, an inactive command context may be swapped with the active command context to better utilize the host channel communication bandwidth. Similarly, a first active command context may be configured to automatically switch to a linked command context upon completion of processing to further ease management of multiple contexts. In a preferred embodiment of the present invention, a first set of registers contains the active context while a second set of registers contains an inactive command context. The sets of registers are configured in such a way that the active and inactive contexts may be rapidly switched with no intervention by the microprocessor. The inactive register set may be read or written directly by the microprocessor, or may be automatically loaded from or stored to a buffer memory in the target device by shifting a predetermined context structure into the inactive register set through an interface pad with the buffer memory.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

Virtual supercomputer

Active | US7774191B2 | Improve efficiency | Finance | Resource allocation | Information processing | Operational system

The virtual supercomputer is an apparatus, system and method for generating information processing solutions to complex and/or high-demand/high-performance computing problems, without the need for costly, dedicated hardware supercomputers, and in a manner far more efficient than simple grid or multiprocessor network approaches. The virtual supercomputer consists of a reconfigurable virtual hardware processor, an associated operating system, and a set of operations and procedures that allow the architecture of the system to be easily tailored and adapted to specific problems or classes of problems in a way that such tailored solutions will perform on a variety of hardware architectures, while retaining the benefits of a tailored solution that is designed to exploit the specific and often changing information processing features and demands of the problem at hand.

Owner:VERISCAPE

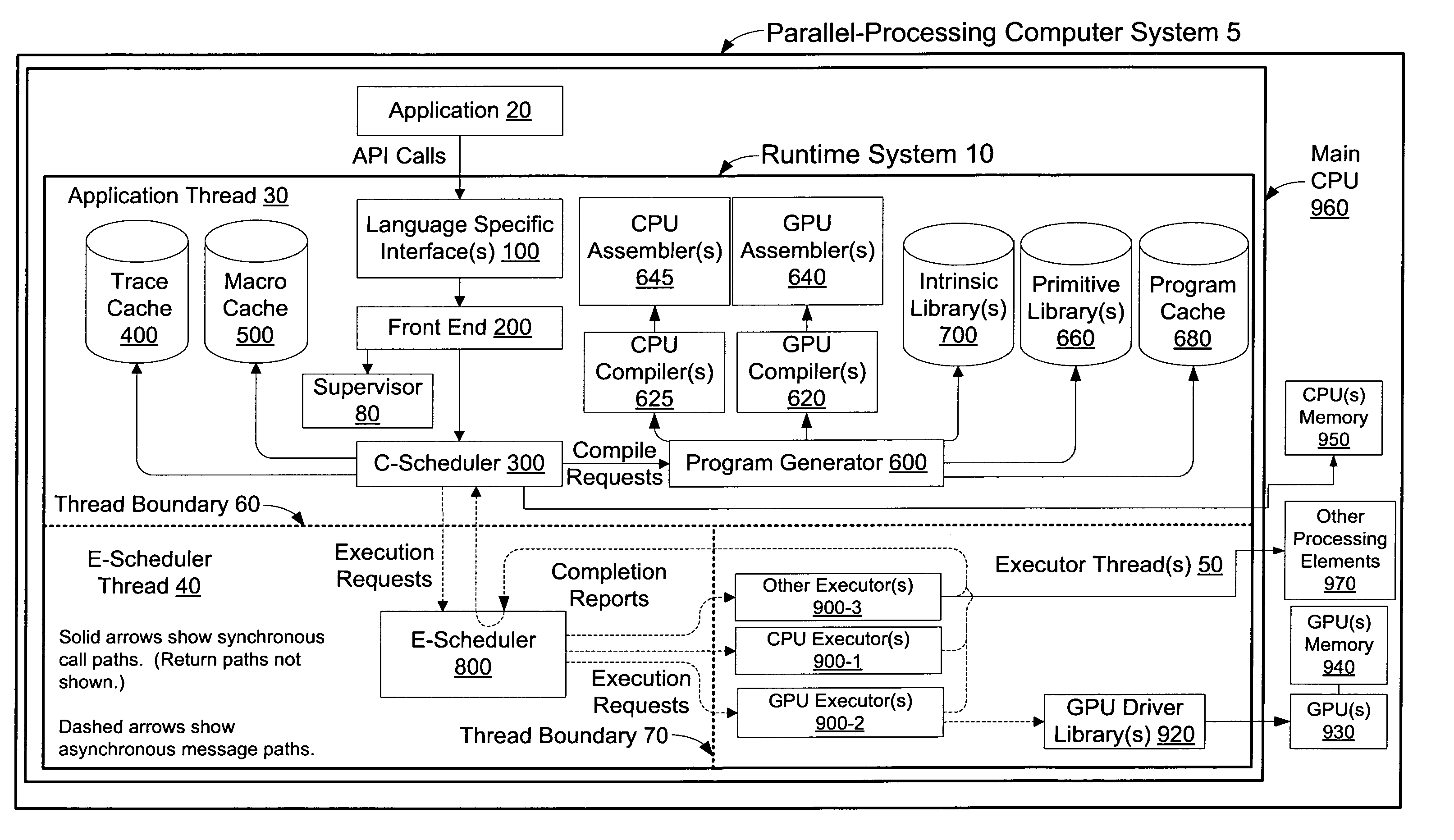

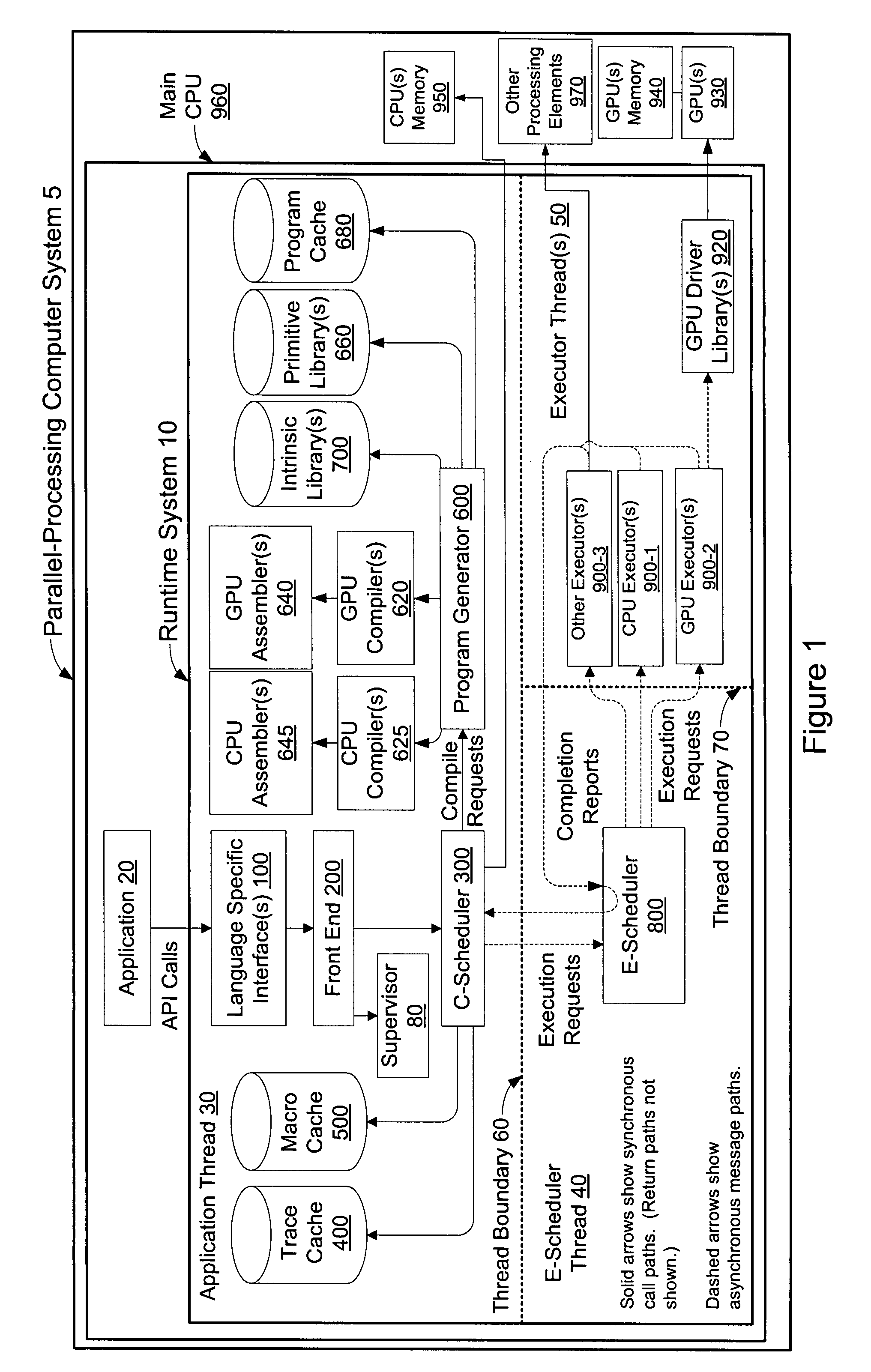

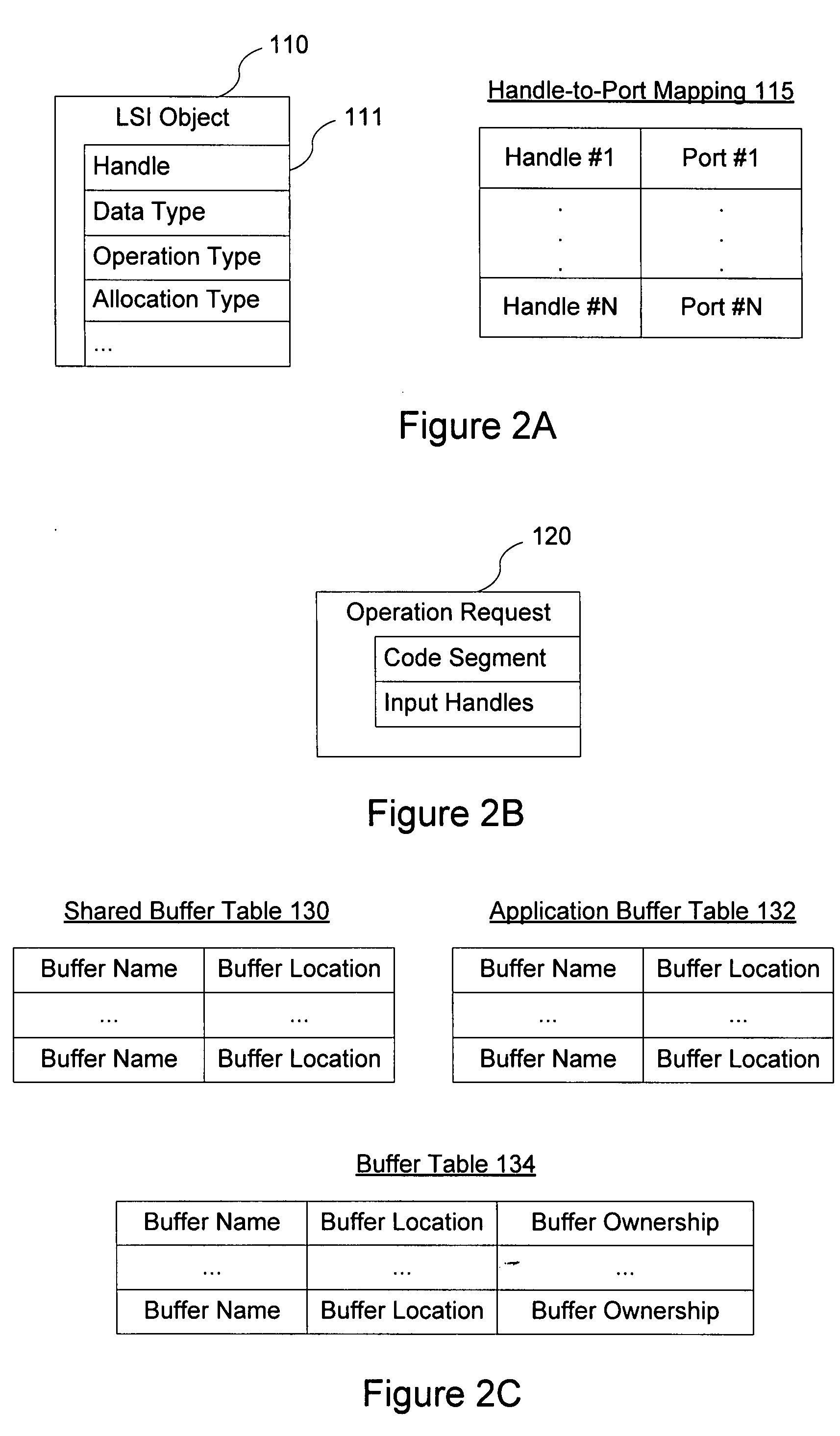

Systems and methods for dynamically choosing a processing element for a compute kernel

Active | US20070294512A1 | Error detection/correction | Software engineering | Performance computing | Processing element

A runtime system implemented in accordance with the present invention provides an application platform for parallel-processing computer systems. Such a runtime system enables users to leverage the computational power of parallel-processing computer systems to accelerate/optimize numeric and array-intensive computations in their application programs. This enables greatly increased performance of high-performance computing (HPC) applications.

Owner:GOOGLE LLC

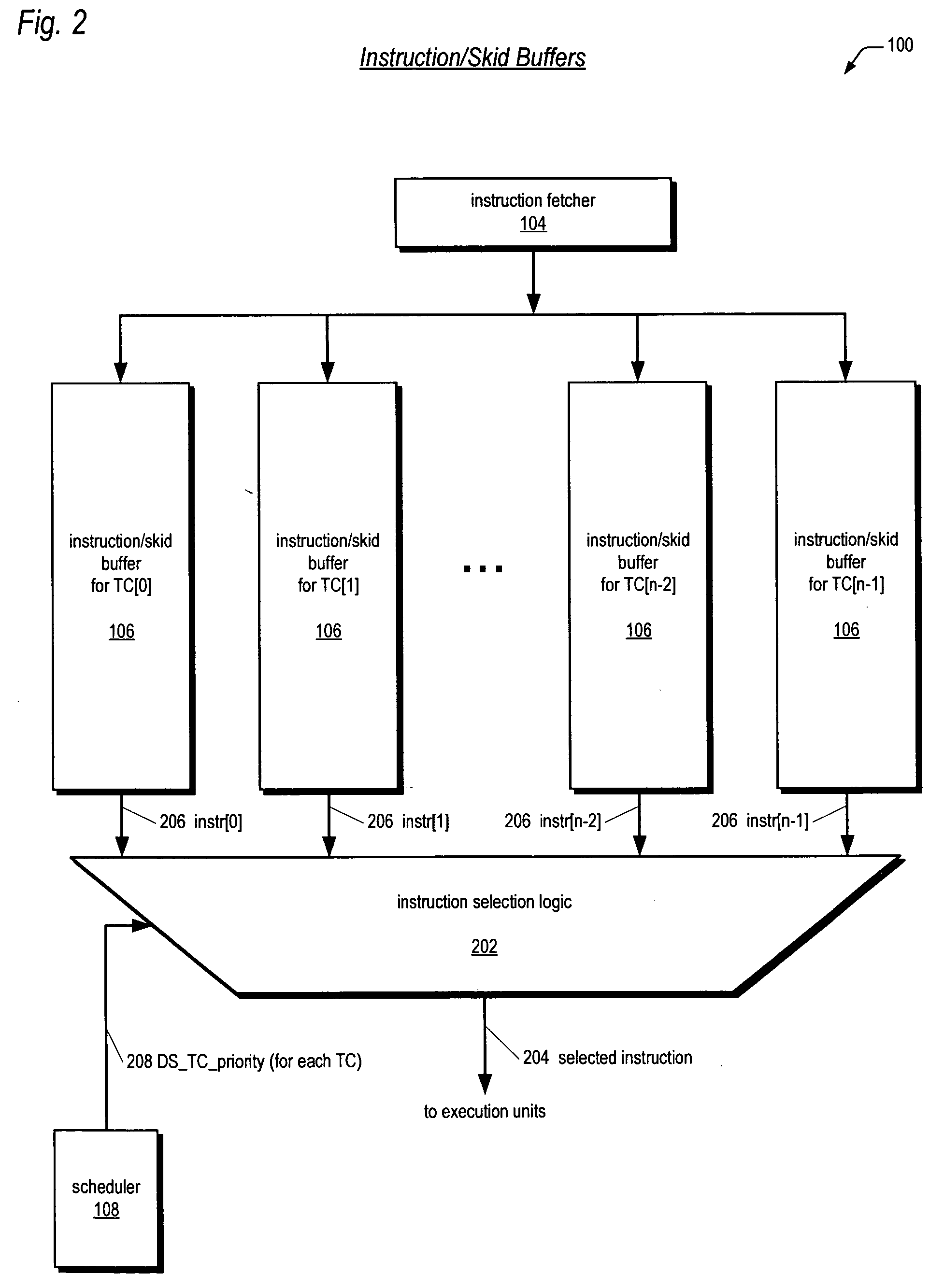

Multithreading instruction scheduler employing thread group priorities

A multithreading processor with an efficient and fair thread scheduler is disclosed. The scheduler enables threads to be grouped and a priority assigned to each group of threads. Round-robin order is maintained for each group. Consequently, the group priorities may be changed relatively frequently in order to obtain the benefits of not starving threads that require relatively low bandwidth and of interleaving instruction dispatch of multiple independent threads to enjoy pipeline efficiencies, and as long as the group populations are changed relatively infrequently, round-robin order fairness is provided within the groups in case multiple threads in a group have issuable instructions.

Owner:ARM FINANCE OVERSEAS LTD
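
The scheduling policy in this abstract (a priority per thread group, round-robin order within each group) can be modeled in a few lines. The class and method names are hypothetical, and a higher number is assumed to mean higher priority; the patent describes a hardware scheduler, not this code.

```python
class GroupScheduler:
    """Pick the next thread: highest-priority group first, round-robin inside."""

    def __init__(self, groups):
        # groups: dict mapping group name -> list of thread ids
        self.groups = {name: list(threads) for name, threads in groups.items()}
        self.priority = {name: 0 for name in groups}
        self.next_pos = {name: 0 for name in groups}  # round-robin pointers

    def set_priority(self, group, value):
        # Changing priority leaves the group's round-robin pointer untouched.
        self.priority[group] = value

    def pick(self, issuable):
        """Return a thread id with an issuable instruction, or None."""
        for group in sorted(self.groups, key=lambda g: -self.priority[g]):
            threads = self.groups[group]
            start = self.next_pos[group]
            for step in range(len(threads)):
                idx = (start + step) % len(threads)
                if threads[idx] in issuable:
                    self.next_pos[group] = (idx + 1) % len(threads)
                    return threads[idx]
        return None

sched = GroupScheduler({"hi": [0, 1], "lo": [2, 3]})
sched.set_priority("hi", 2)
sched.set_priority("lo", 1)
picks = [sched.pick({0, 1, 2}) for _ in range(3)]
```

Because the round-robin pointer is stored per group, group priorities can change often without disturbing fairness inside a group, which matches the property the abstract emphasizes.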

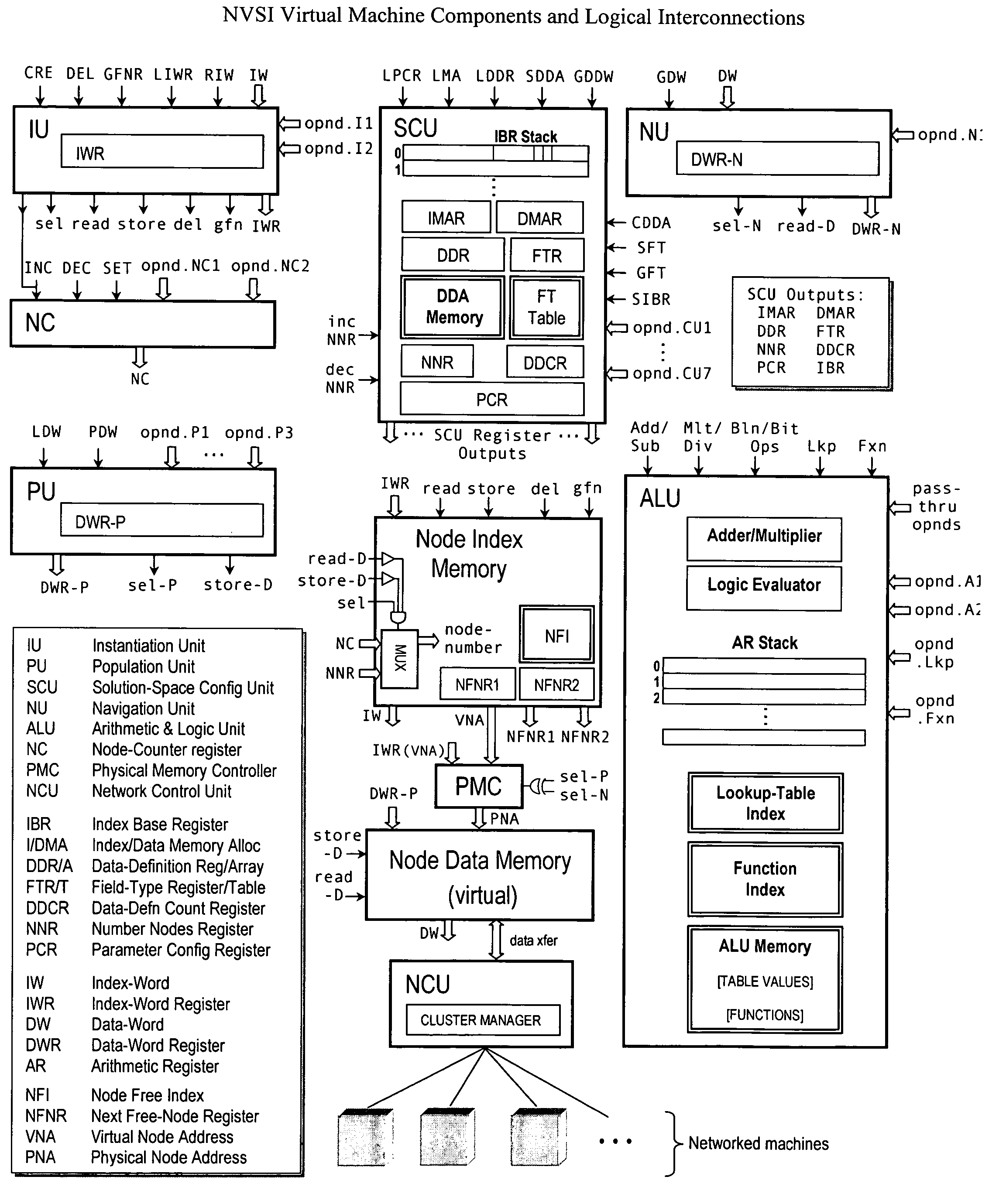

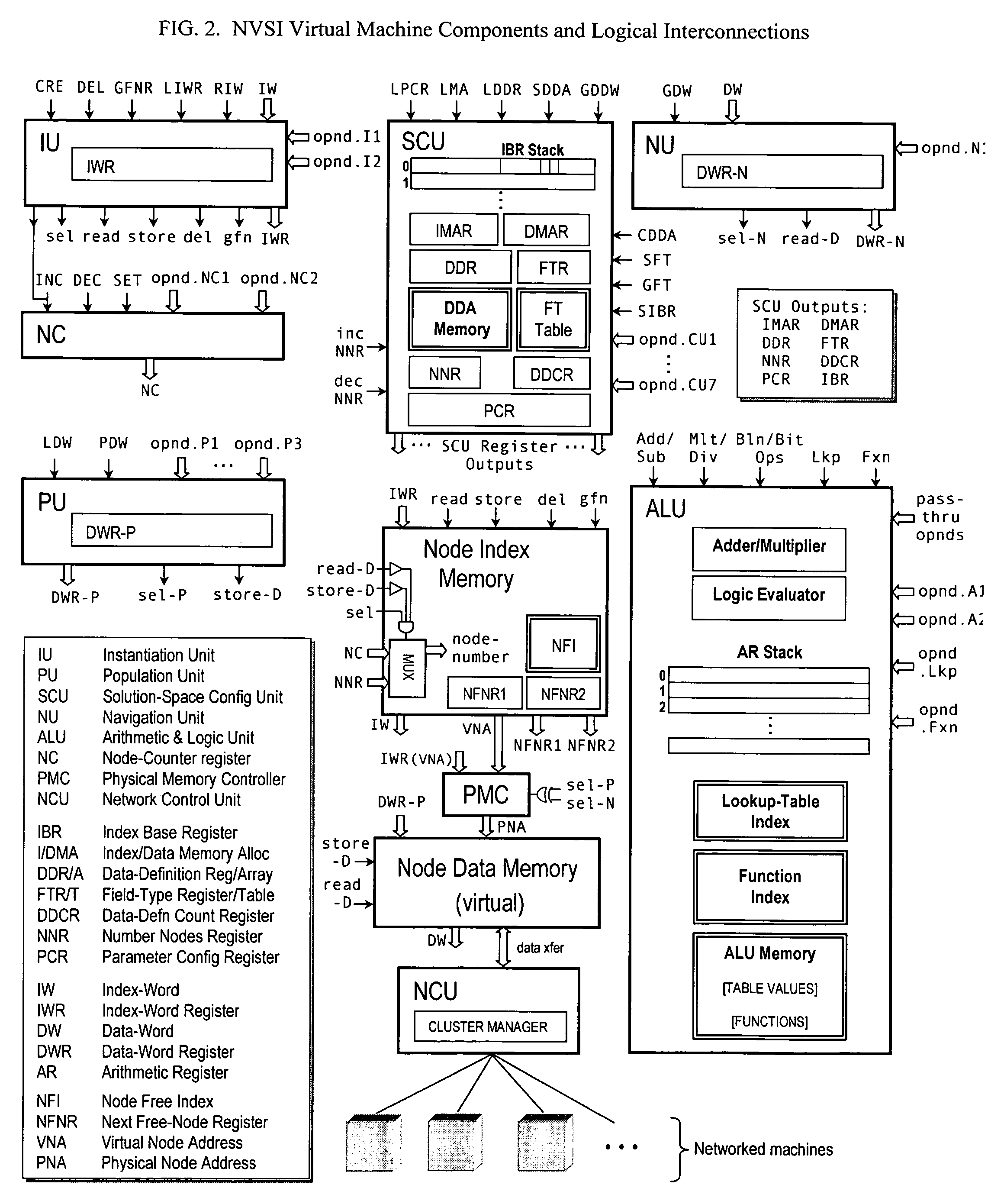

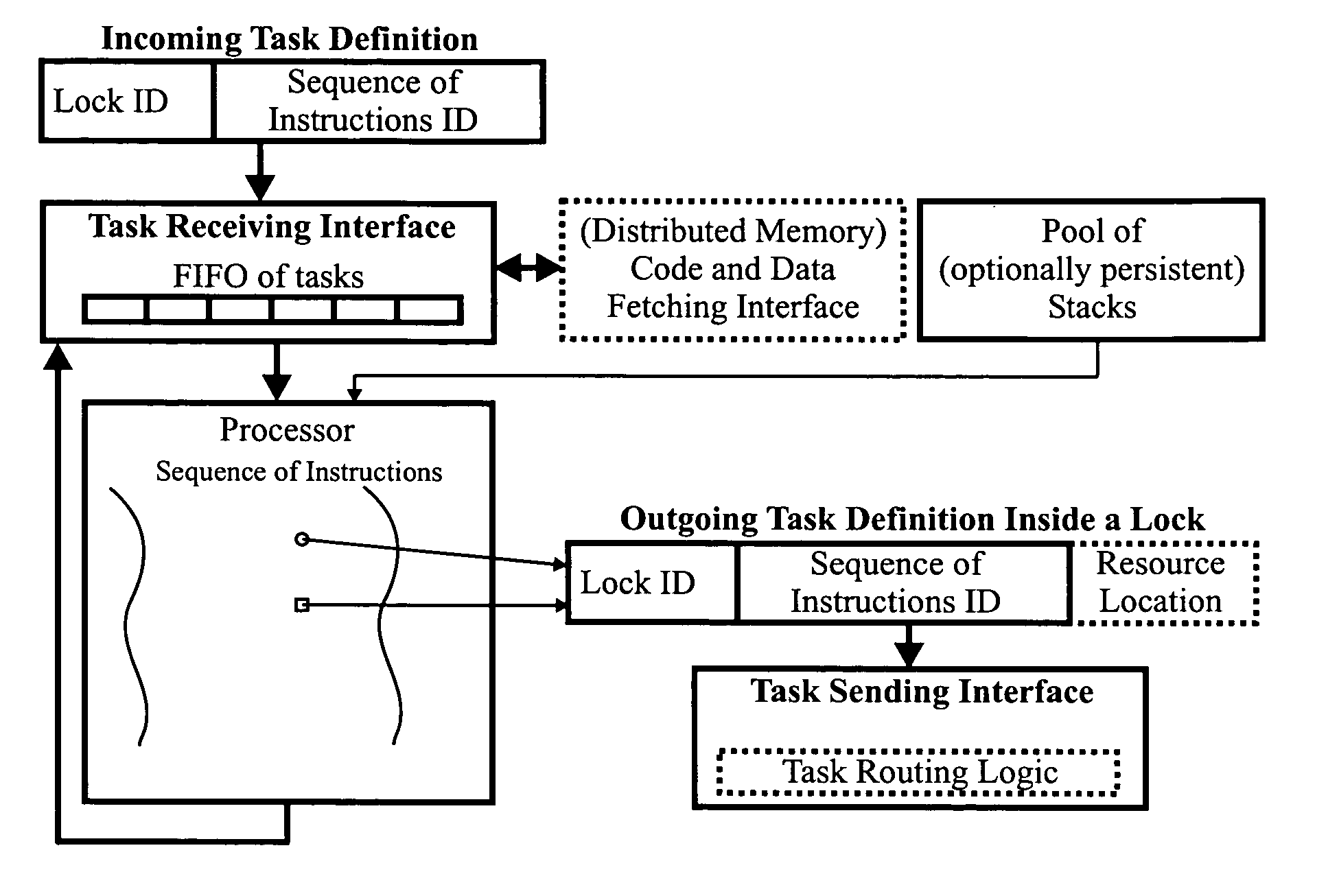

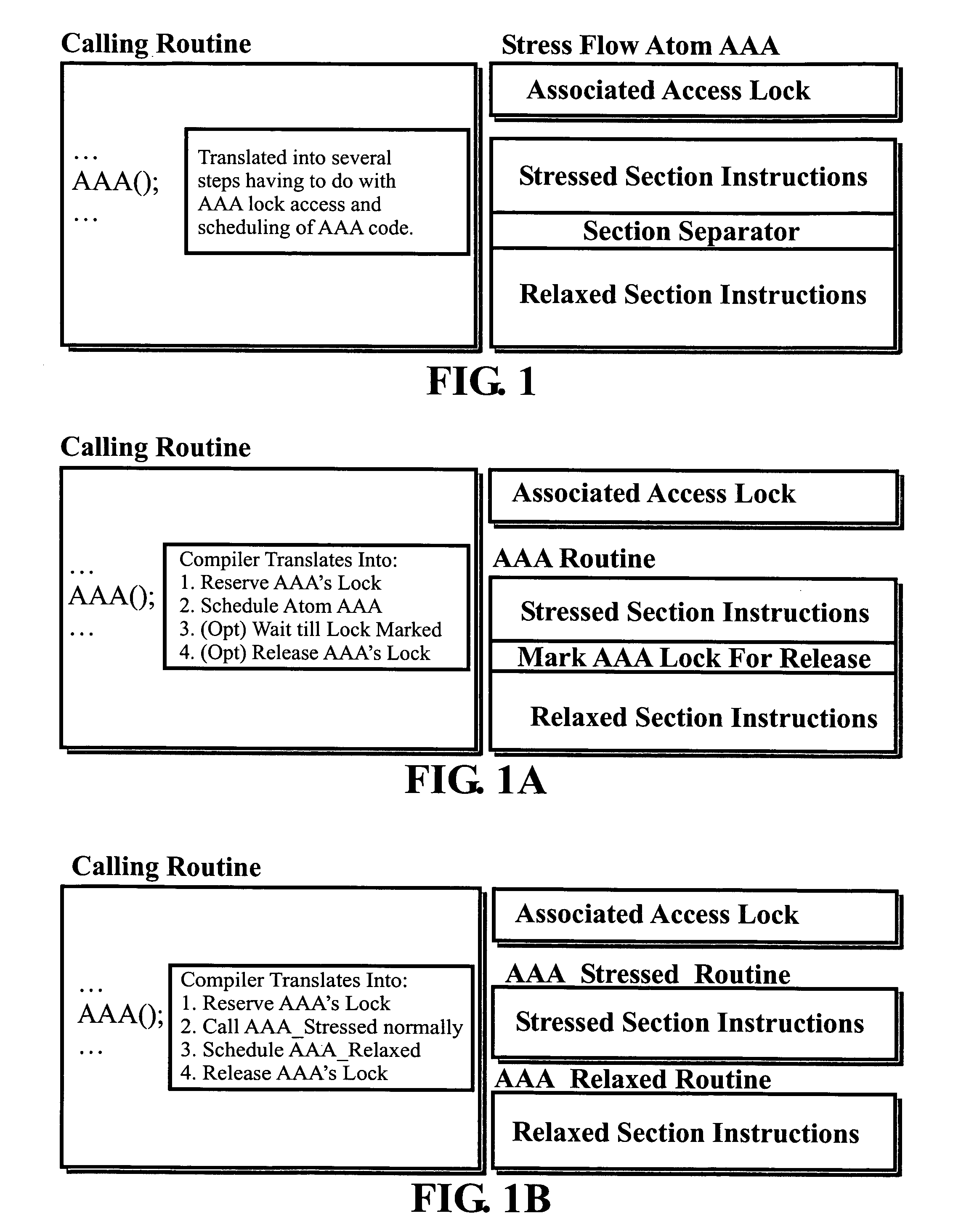

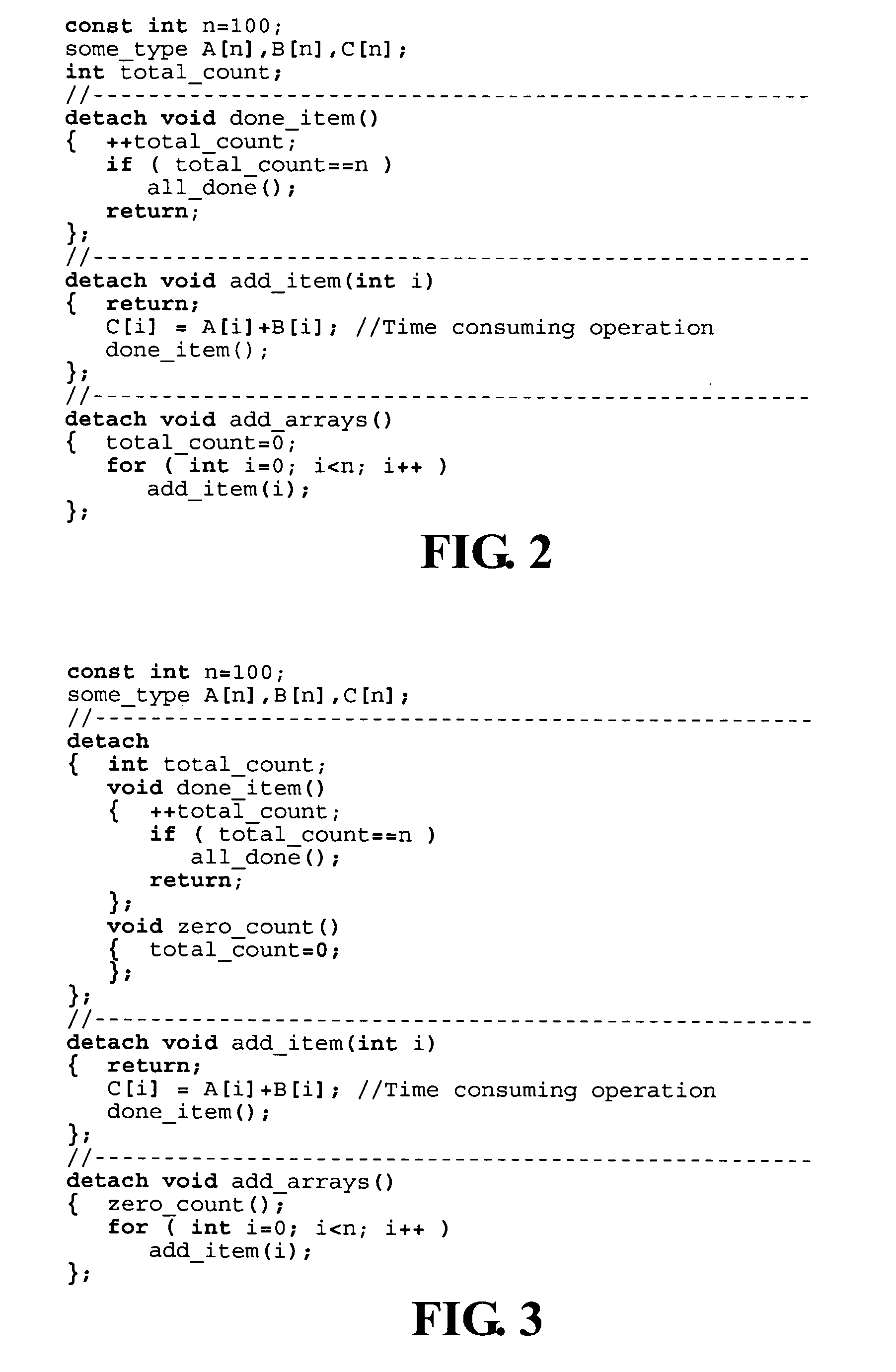

Object-oriented, parallel language, method of programming and multi-processor computer

This invention relates to architecture and synchronization of multi-processor computing hardware. It establishes a new method of programming, process synchronization, and of computer construction, named stress-flow by the inventor, allowing benefits of both opposing legacy concepts of programming (namely of both data-flow and control flow) within one cohesive, powerful, object-oriented scheme. This invention also relates to construction of object-oriented, parallel computer languages, script and visual, together with compiler construction and method to write programs to be executed in fully parallel (or multi-processor) architectures, virtually parallel, and single-processor multitasking computer systems.

Owner:JANCZEWSKA NATALIA URSZULA +2

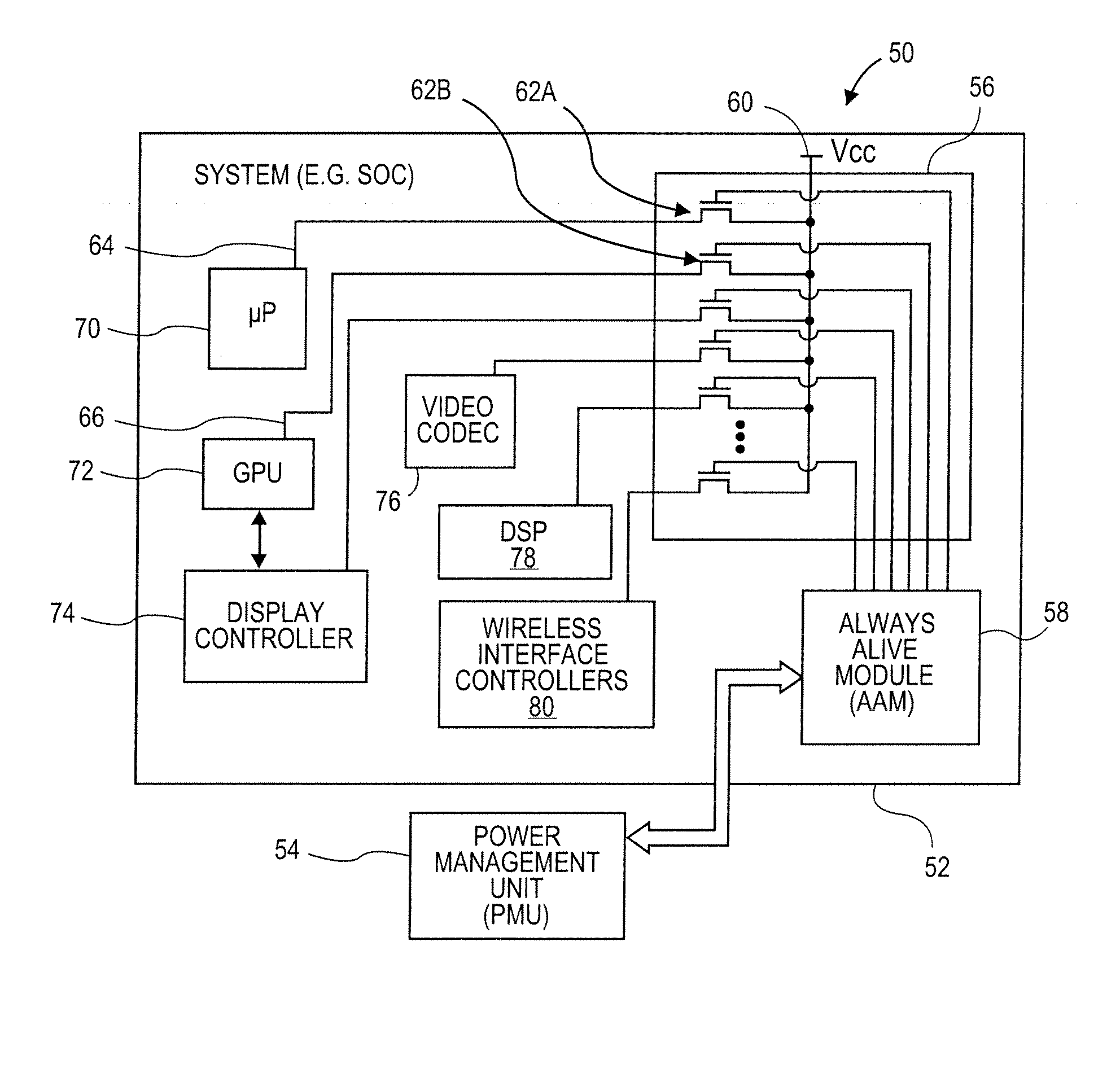

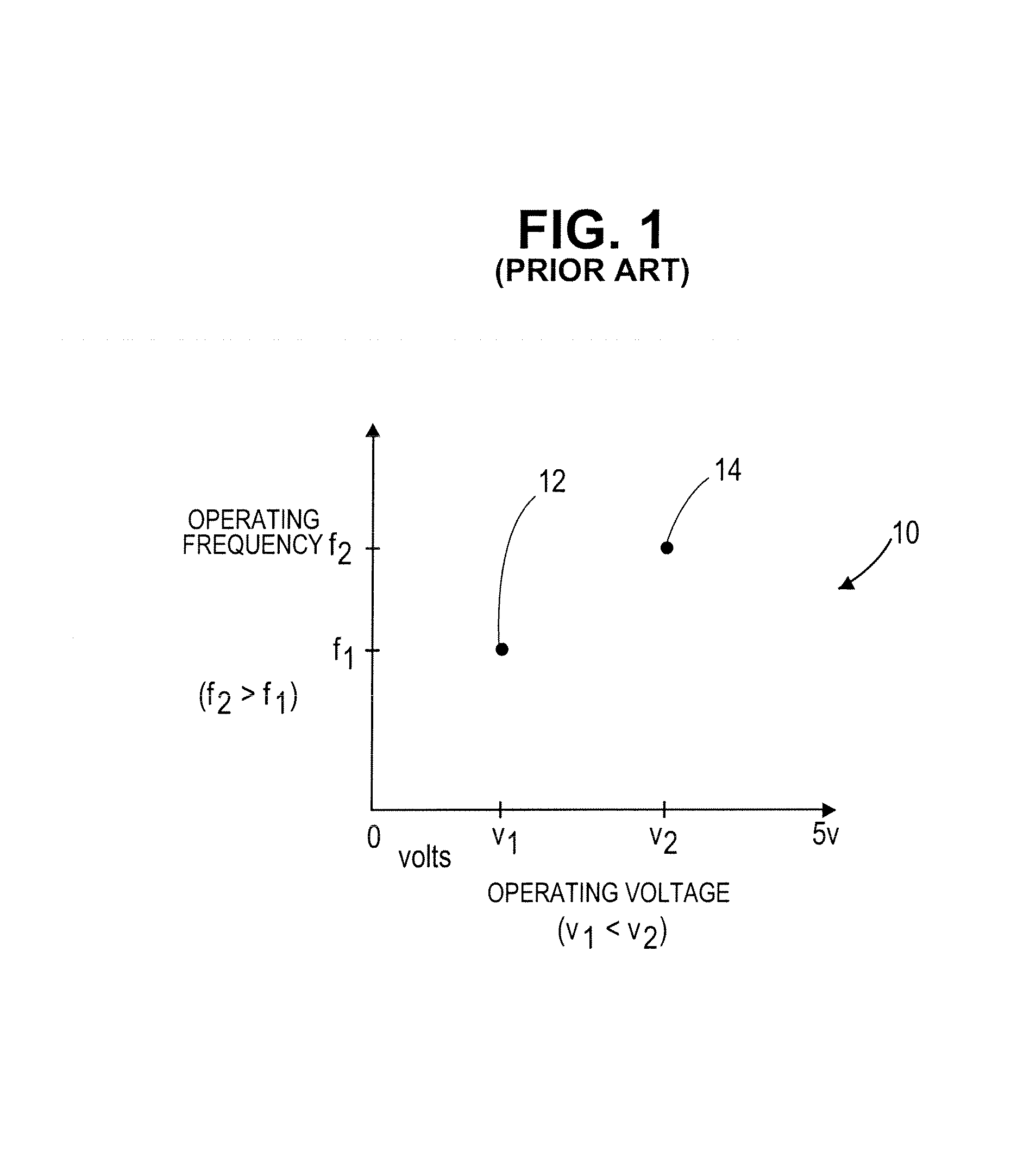

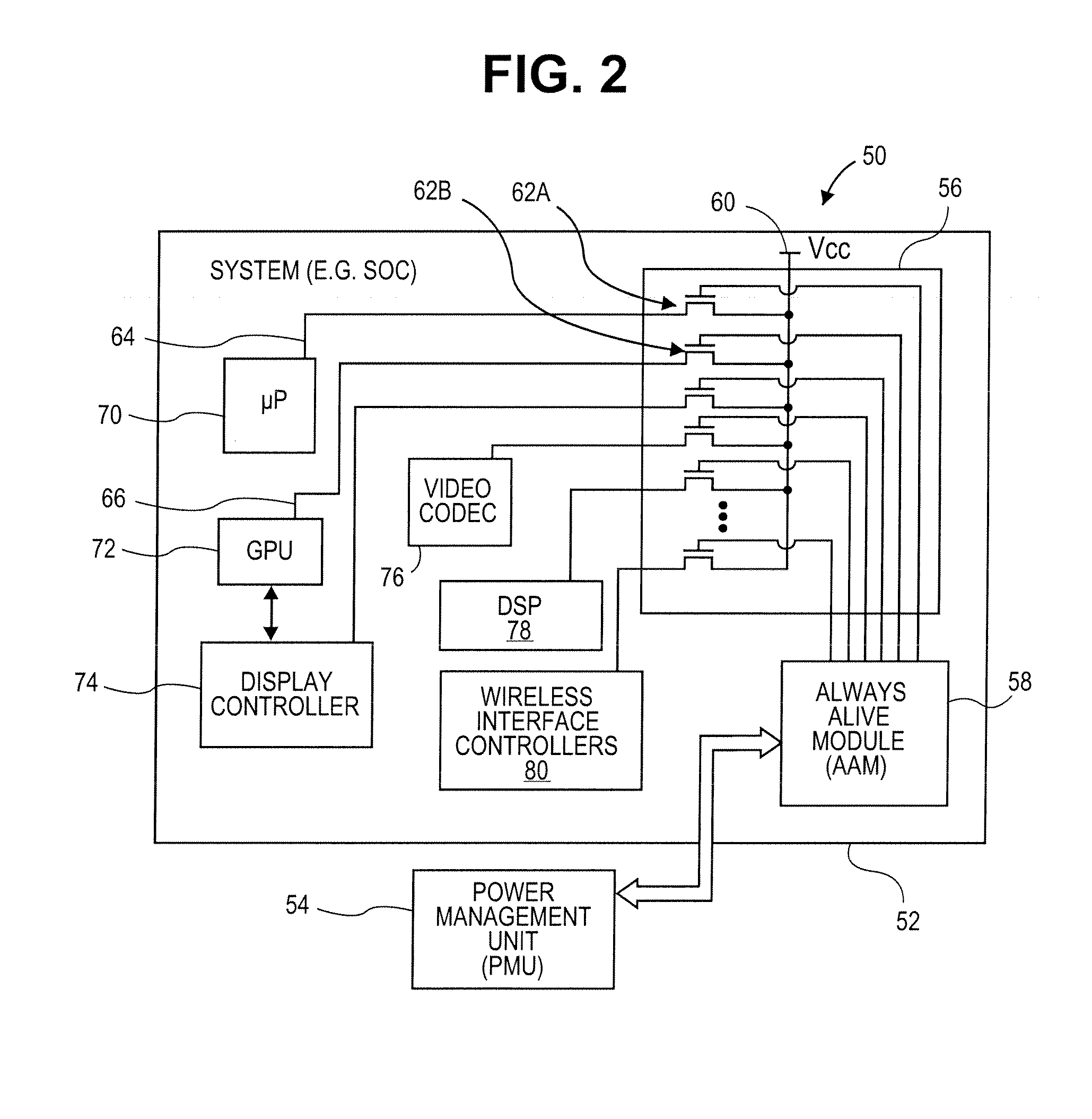

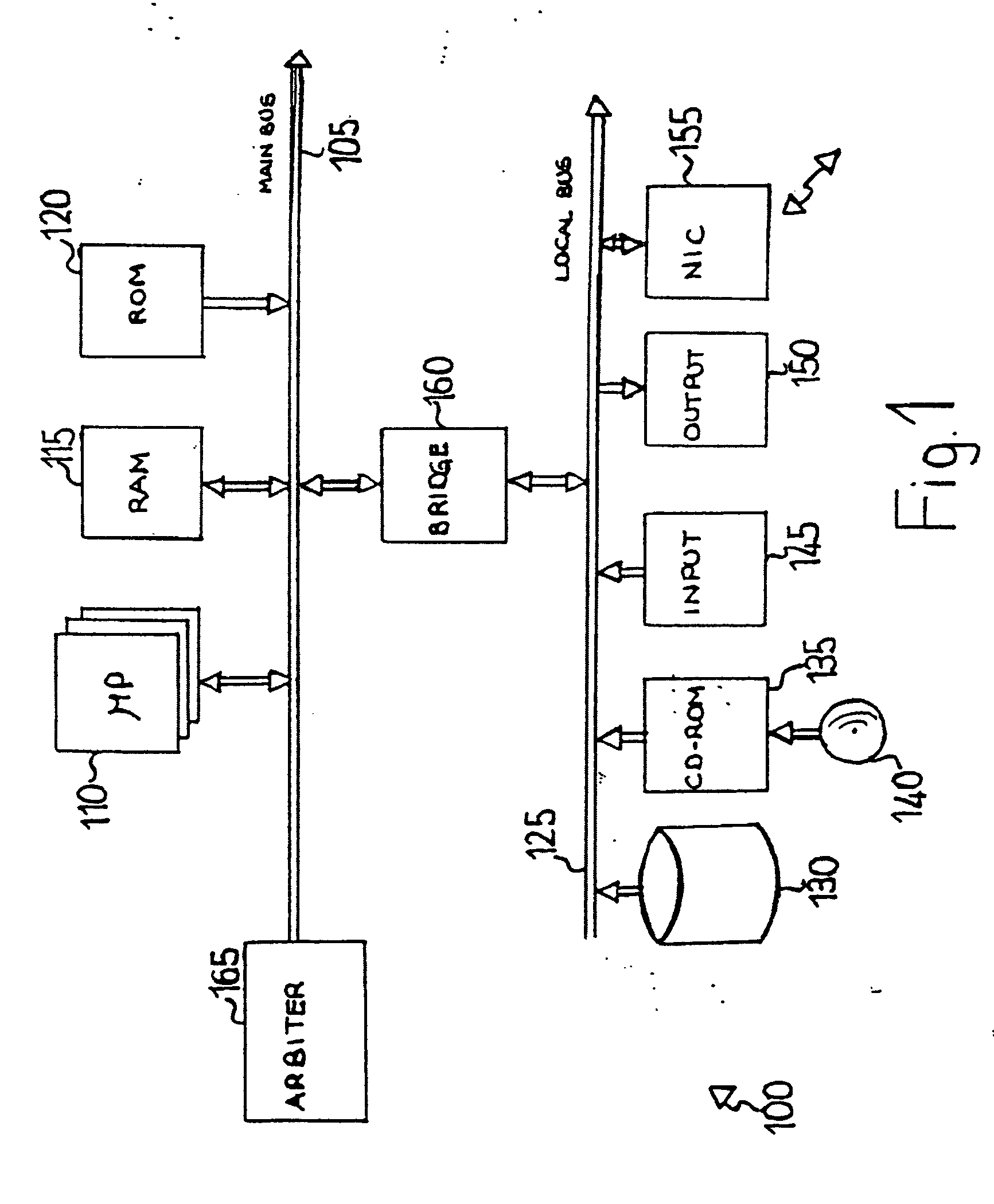

Methods and Systems for Power Management in a Data Processing System

Active | US20080168285A1 | Lower latency | Reduce total power | Energy efficient ICT | Volume/mass flow measurement | Data processing system | General purpose

Methods and systems for managing power consumption in data processing systems are described. In one embodiment, a data processing system includes a general purpose processing unit, a graphics processing unit (GPU), at least one peripheral interface controller, at least one bus coupled to the general purpose processing unit, and a power controller coupled to at least the general purpose processing unit and the GPU. The power controller is configured to turn power off for the general purpose processing unit in response to a first state of an instruction queue of the general purpose processing unit and is configured to turn power off for the GPU in response to a second state of an instruction queue of the GPU. The first state and the second state represent an instruction queue having either no instructions or instructions for only future events or actions.

Owner:APPLE INC
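
The power-off condition described above (an instruction queue that is empty or holds only work for future events) reduces to a simple predicate. The representation of a queue as a list of scheduled times, with `None` meaning "ready now", is an assumption made for this sketch.

```python
def should_power_off(queue, now):
    """True if the queue is empty or every entry is scheduled for the future.

    queue: list of scheduled times; None marks an instruction ready to run.
    """
    return all(when is not None and when > now for when in queue)

def power_states(cpu_queue, gpu_queue, now):
    """Decide independently whether each processing unit stays powered."""
    return {
        "cpu_on": not should_power_off(cpu_queue, now),
        "gpu_on": not should_power_off(gpu_queue, now),
    }

states = power_states(cpu_queue=[15, None], gpu_queue=[20, 30], now=10)
```

In this example the CPU stays on because one instruction is ready now, while the GPU can be powered off until its first future event.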

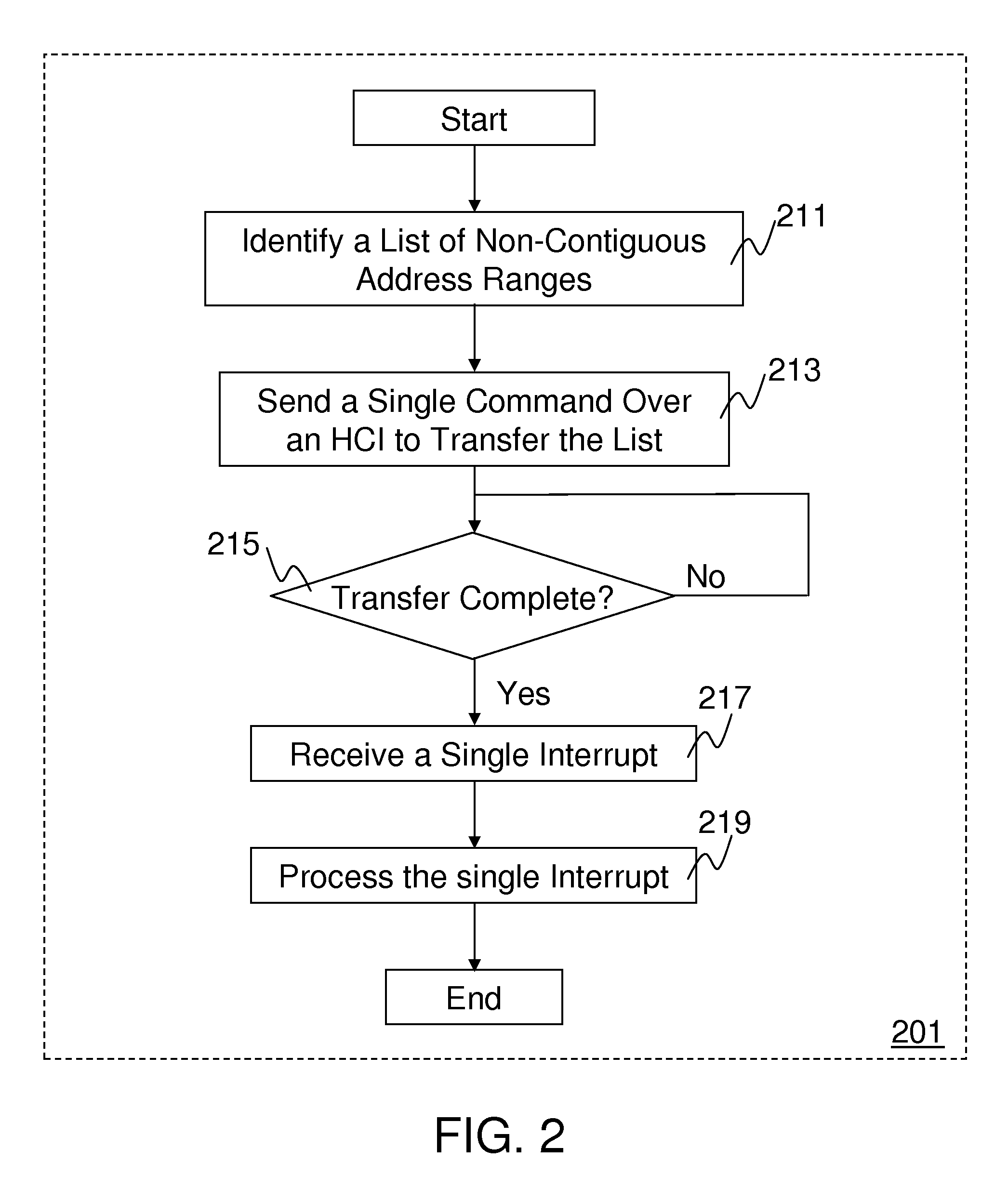

Method and system for queuing transfers of multiple non-contiguous address ranges with a single command

Active | US20100161936A1 | Input/output to record carriers | Program control using stored programs | Host controller interface | Software

Methods and systems for queuing transfers of multiple non-contiguous address ranges within a single command are disclosed. Embodiments of systems include system processors, memory to store data and executable software, and storage devices to receive transfer commands stored in system memory. A host controller interface driver is executed by one or more system processors and collects multiple non-contiguous address ranges from storage-device transfer requests and records starting addresses and quantities of data to transfer for each non-contiguous range in a tagged command list. It records the number of address ranges in the tagged command list, and a tagged-transfer opcode in a command, and stores the command and the tagged command list in a command table for the storage device. It records a base address for the command table in memory and an offset for the tagged command list into a command header, which is stored in a command queue.

Owner:INTEL CORP
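
The data layout described in this abstract can be pictured with a few record types. Everything named here (`AddressRange`, `TaggedCommand`, `CommandHeader`, the opcode string `"TAGGED_TRANSFER"`, and the example addresses) is hypothetical; the abstract does not give field names, encodings, or sizes.

```python
from dataclasses import dataclass

@dataclass
class AddressRange:
    start: int      # starting address of one range
    length: int     # quantity of data to transfer

@dataclass
class TaggedCommand:
    opcode: str     # tagged-transfer opcode (placeholder value)
    range_count: int
    ranges: list    # the tagged command list

@dataclass
class CommandHeader:
    table_base: int   # base address of the command table in memory
    list_offset: int  # offset of the tagged command list in that table

def build_tagged_command(requests, opcode="TAGGED_TRANSFER"):
    """Collect several (start, length) requests into a single command."""
    ranges = [AddressRange(start, length) for start, length in requests]
    return TaggedCommand(opcode=opcode, range_count=len(ranges), ranges=ranges)

command = build_tagged_command([(0x1000, 8), (0x4000, 16), (0x9000, 4)])
header = CommandHeader(table_base=0x80000, list_offset=0x40)
command_queue = [header]
```

The point of the structure is that one queued command header leads to a list covering all three ranges, instead of three separate commands.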

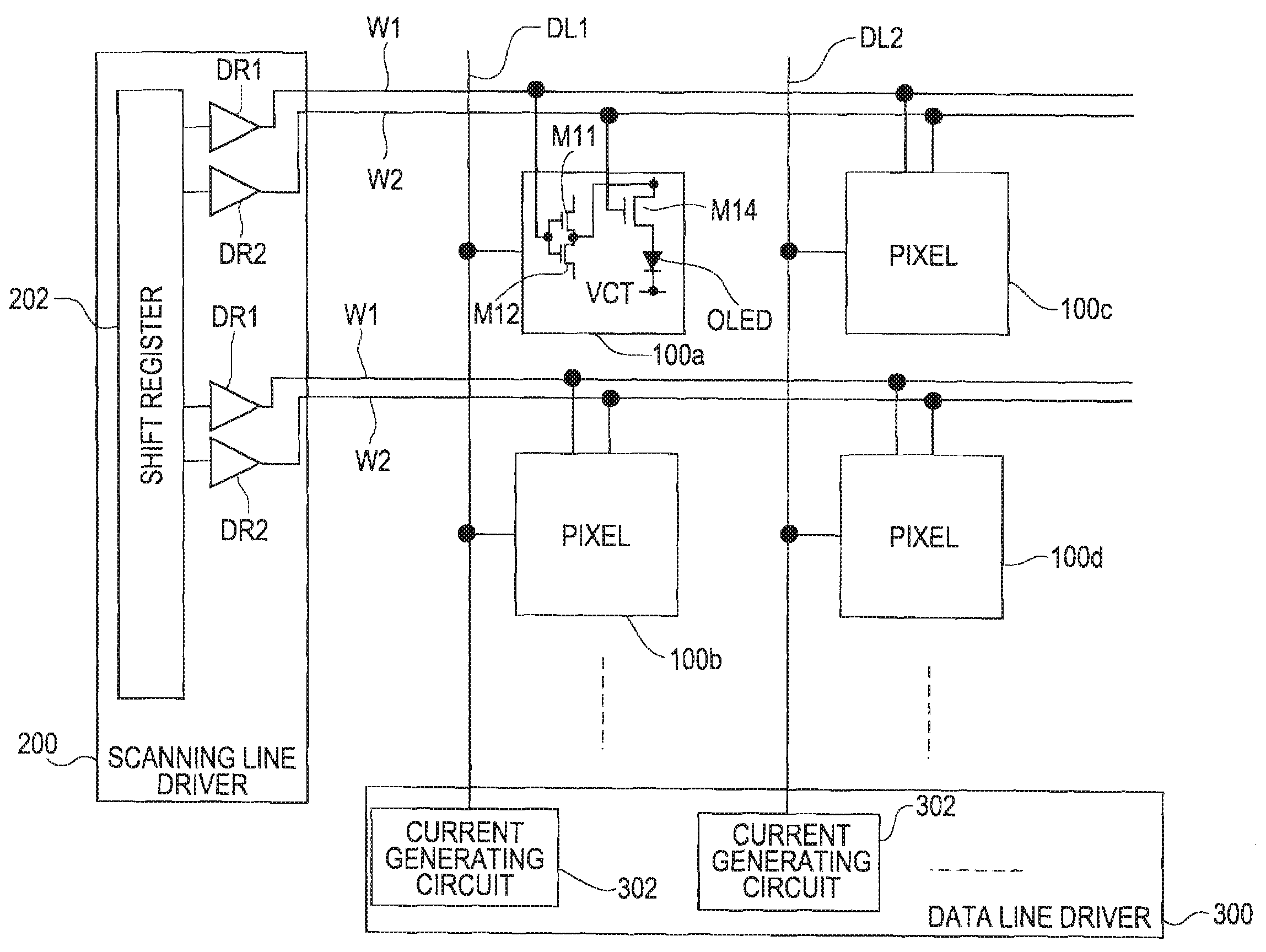

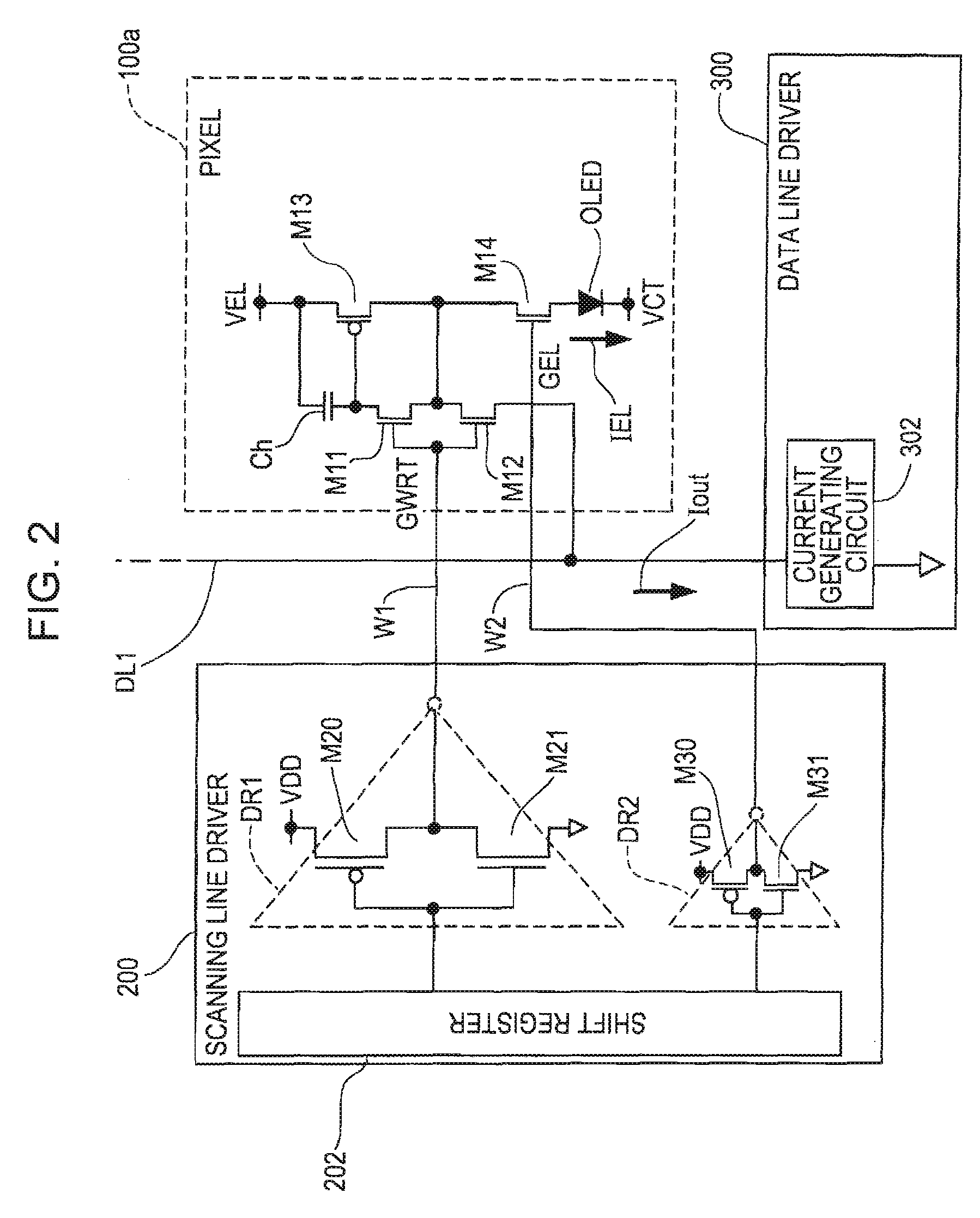

Active-matrix-type light-emitting device, electronic apparatus, and pixel driving method for active-matrix-type light-emitting device

Active | US20080036706A1 | Suppress increase in black level | Total current drop | Electrical apparatus | Static indicating devices | Capacitance | Active matrix

An active-matrix-type light-emitting device includes: a pixel circuit including a light-emitting element, a driving transistor that drives the light-emitting element, a holding capacitor whose one end is connected to the driving transistor and which stores electric charges corresponding to written data, at least a control transistor that controls an operation associated with writing of data into the holding capacitor, and an emission control transistor; a first scanning line for controlling ON / OFF of the control transistor and a second scanning line for controlling ON / OFF of the emission control transistor; a data line through which the written data is transmitted to the pixel circuit; and a scanning line driving circuit which drives the first and second scanning lines and in which a current drive capability associated with the second scanning line is set to be lower than a current drive capability associated with the first scanning line.

Owner:ELEMENT CAPITAL COMMERCIAL CO PTE LTD

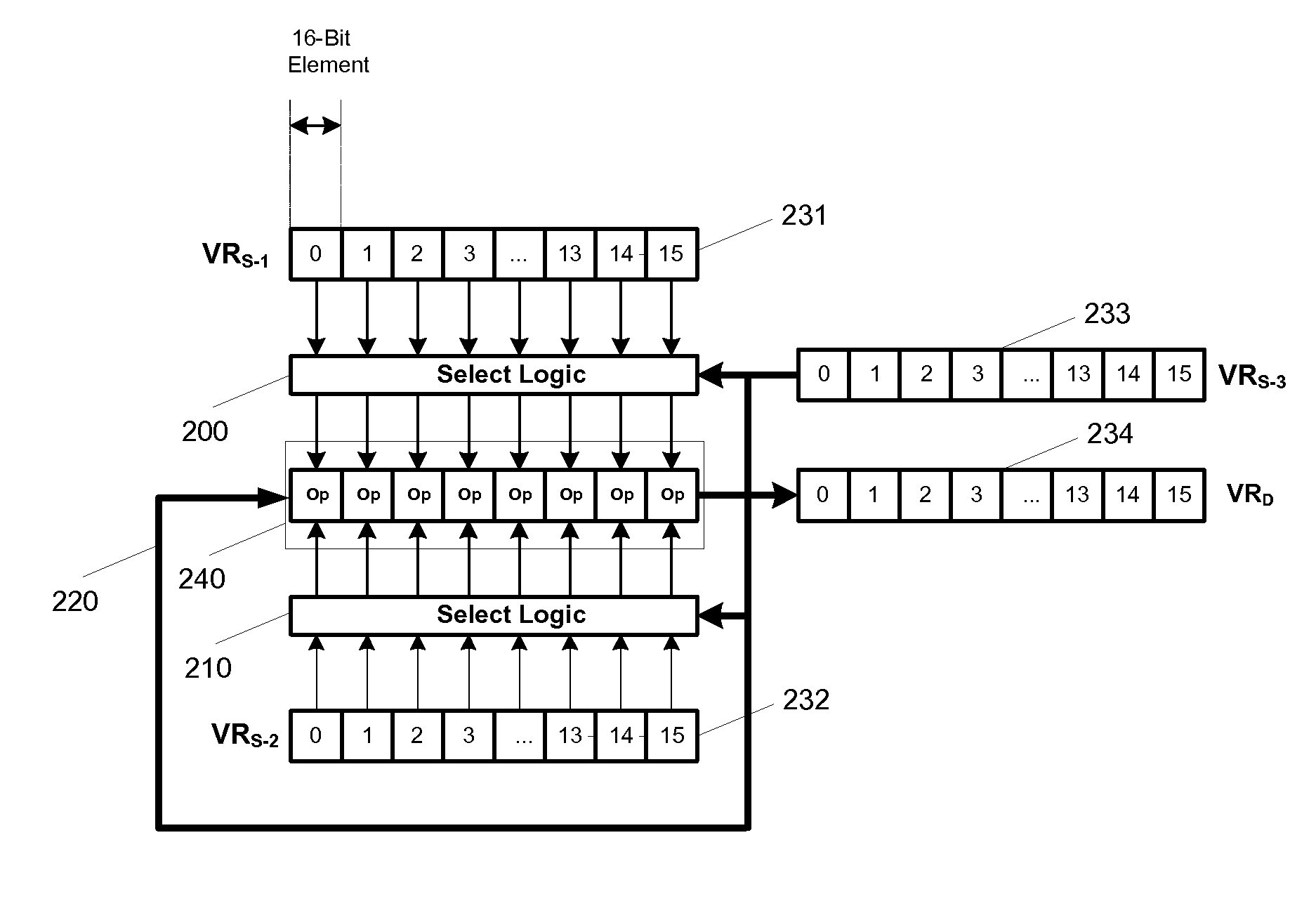

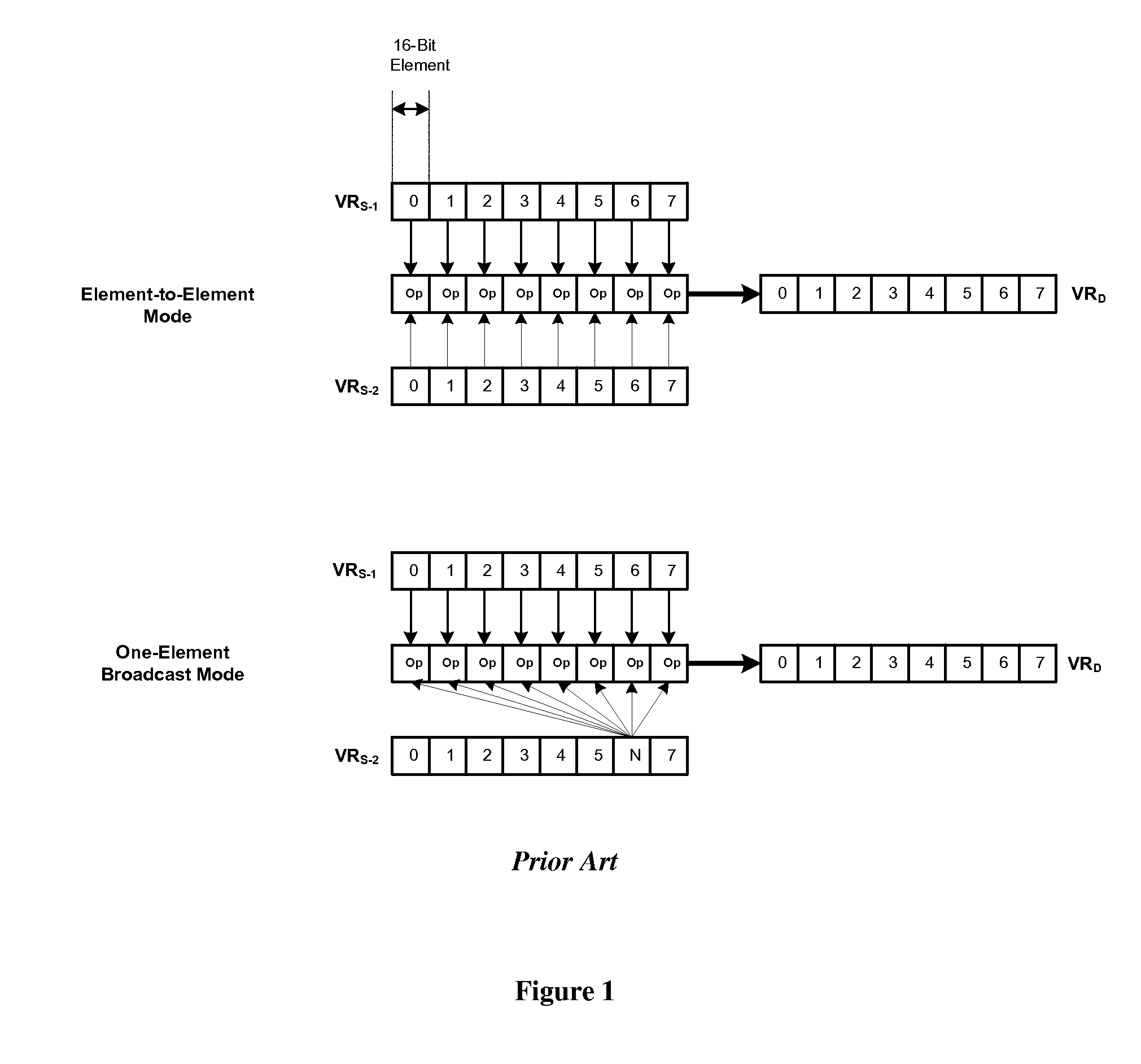

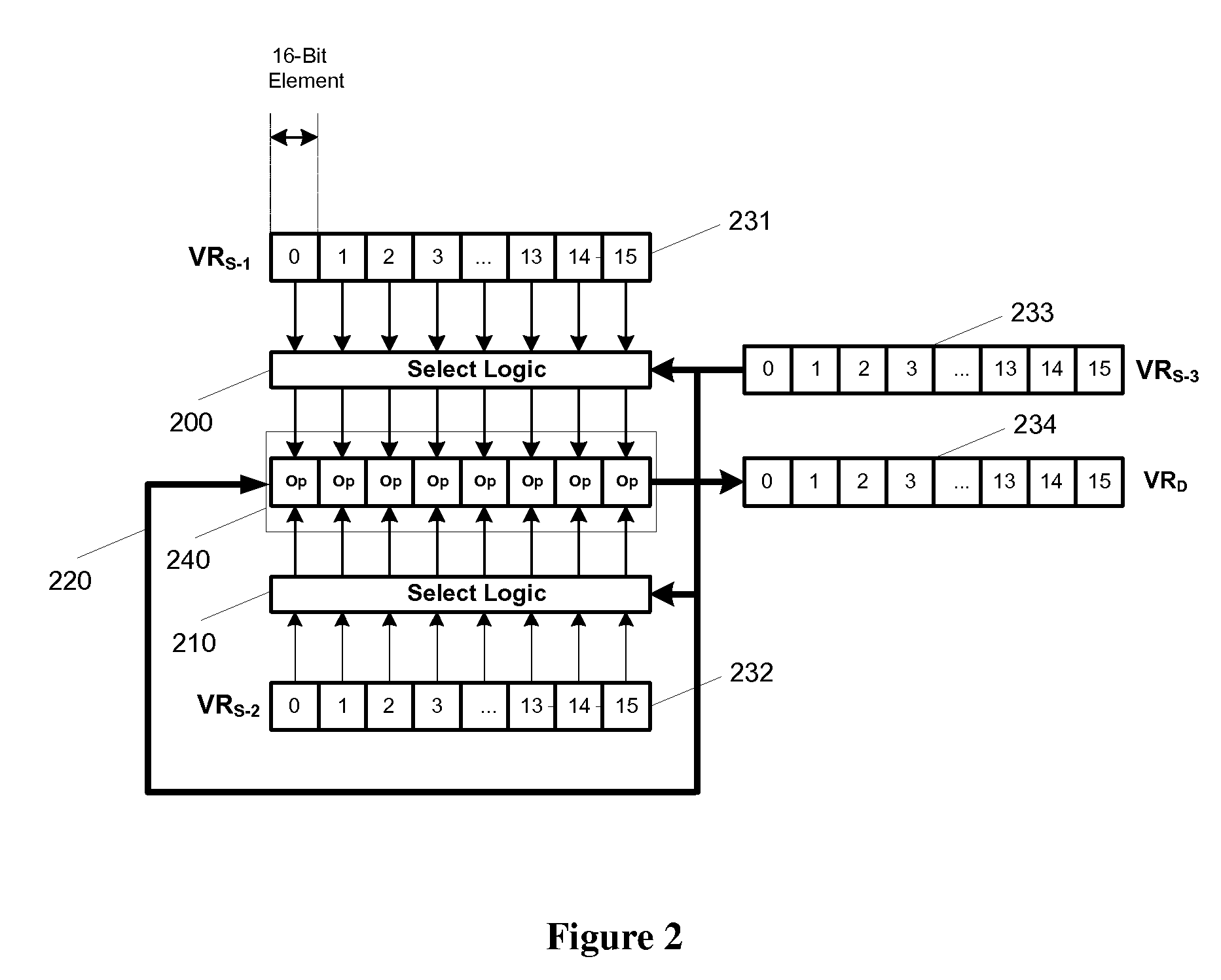

Flexible vector modes of operation for SIMD processor

Inactive | US20100274988A1 | General purpose stored program computer | Machine execution arrangements | Computer architecture | Vector element

In addition to the usual modes of SIMD processor operation, where corresponding elements of two source vector registers are used as input pairs to be operated upon by the execution unit, or where one element of a source vector register is broadcast for use across the elements of another source vector register, the new system provides several other modes of operation for the elements of one or two source vector registers. Improving upon the time-costly moving of elements for an operation such as DCT, the present invention defines a more general set of modes of vector operations. In one embodiment, these new modes of operation use a third vector register to define how each element of one or both source vector registers is mapped, in order to pair these mapped elements as inputs to a vector execution unit. Furthermore, the decision to write an individual vector element result to a destination vector register, for each individual element produced by the vector execution unit, may be selectively disabled, enabled, or made to depend upon a selectable condition flag or a mask bit.

Owner:MIMAR TIBET
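The mapped mode described above can be pictured with a small software model. This is only a sketch: the function `mapped_vector_op`, the encoding of the third (control) register as index pairs, and the use of a plain mask list are assumptions made for illustration, not the encoding defined in the patent.

```python
from typing import Callable, List, Sequence, Tuple


def mapped_vector_op(
    op: Callable[[int, int], int],
    src_a: Sequence[int],
    src_b: Sequence[int],
    control: Sequence[Tuple[int, int]],
    dest: Sequence[int],
    mask: Sequence[int],
) -> List[int]:
    """Pair src_a[ia] with src_b[ib] per lane, writing only where mask is set."""
    result = list(dest)
    for lane, (ia, ib) in enumerate(control):
        if mask[lane]:  # the per-element write can be disabled
            result[lane] = op(src_a[ia], src_b[ib])
    return result


a = [1, 2, 3, 4]
b = [10, 20, 30, 40]
add = lambda x, y: x + y

# Arbitrary mapping: lane i pairs a[i] with b reversed; lane 1 is masked off.
reverse_b = [(0, 3), (1, 2), (2, 1), (3, 0)]
print(mapped_vector_op(add, a, b, reverse_b, [0, 0, 0, 0], [1, 0, 1, 1]))

# The usual broadcast mode is a special case of the same mechanism.
broadcast_b0 = [(i, 0) for i in range(4)]
print(mapped_vector_op(add, a, b, broadcast_b0, [0, 0, 0, 0], [1, 1, 1, 1]))
```

Expressing the element-wise and broadcast modes as particular control vectors shows why a mapping register removes the need for separate element-shuffling steps before an operation.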

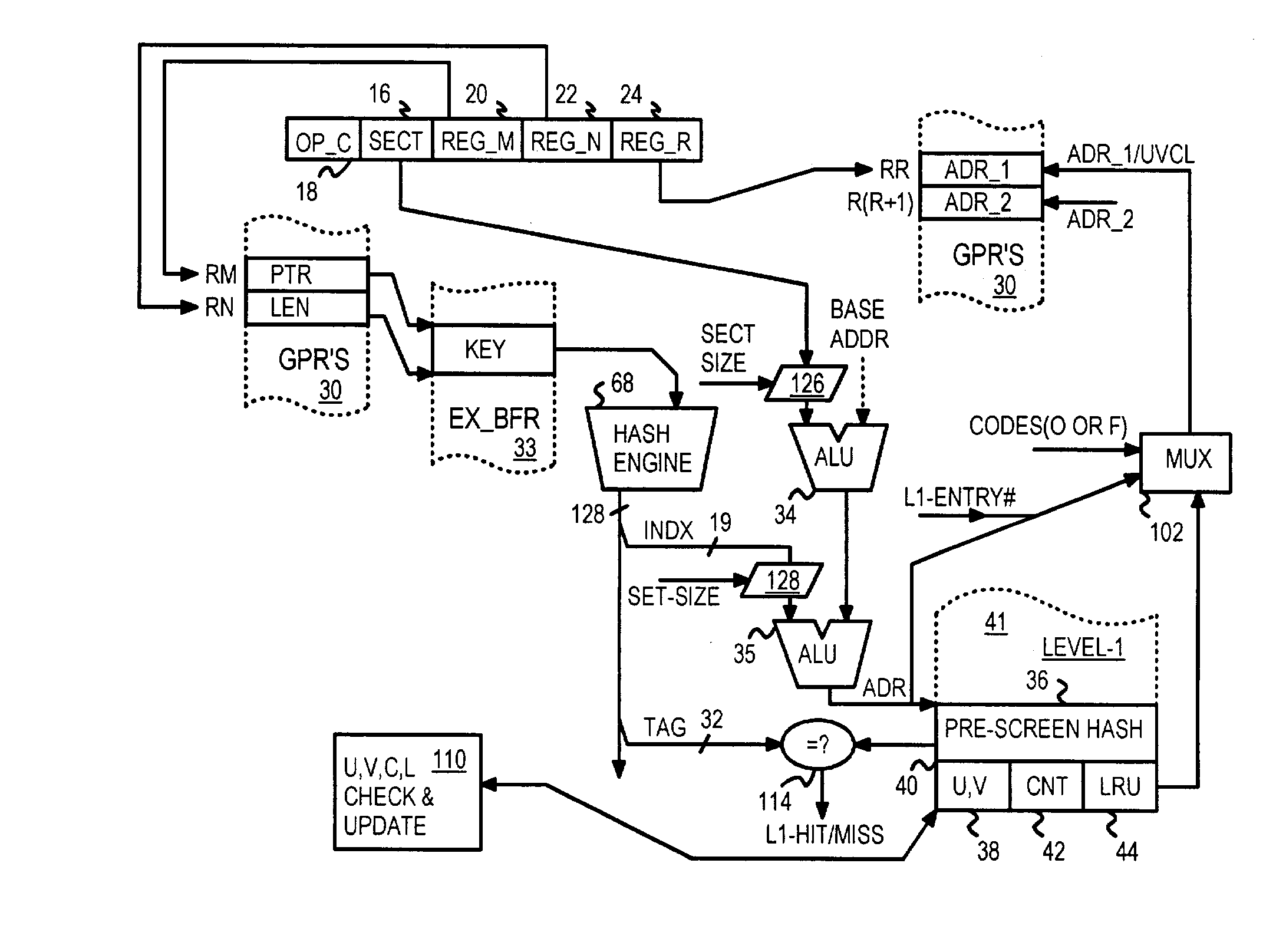

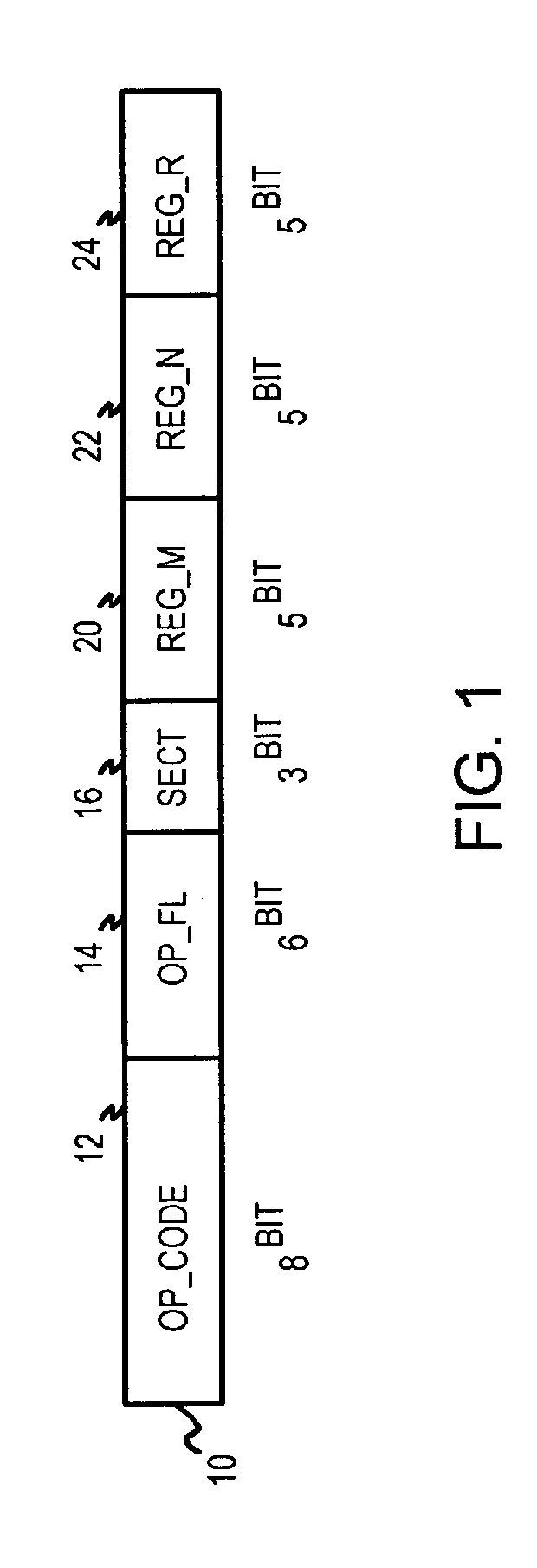

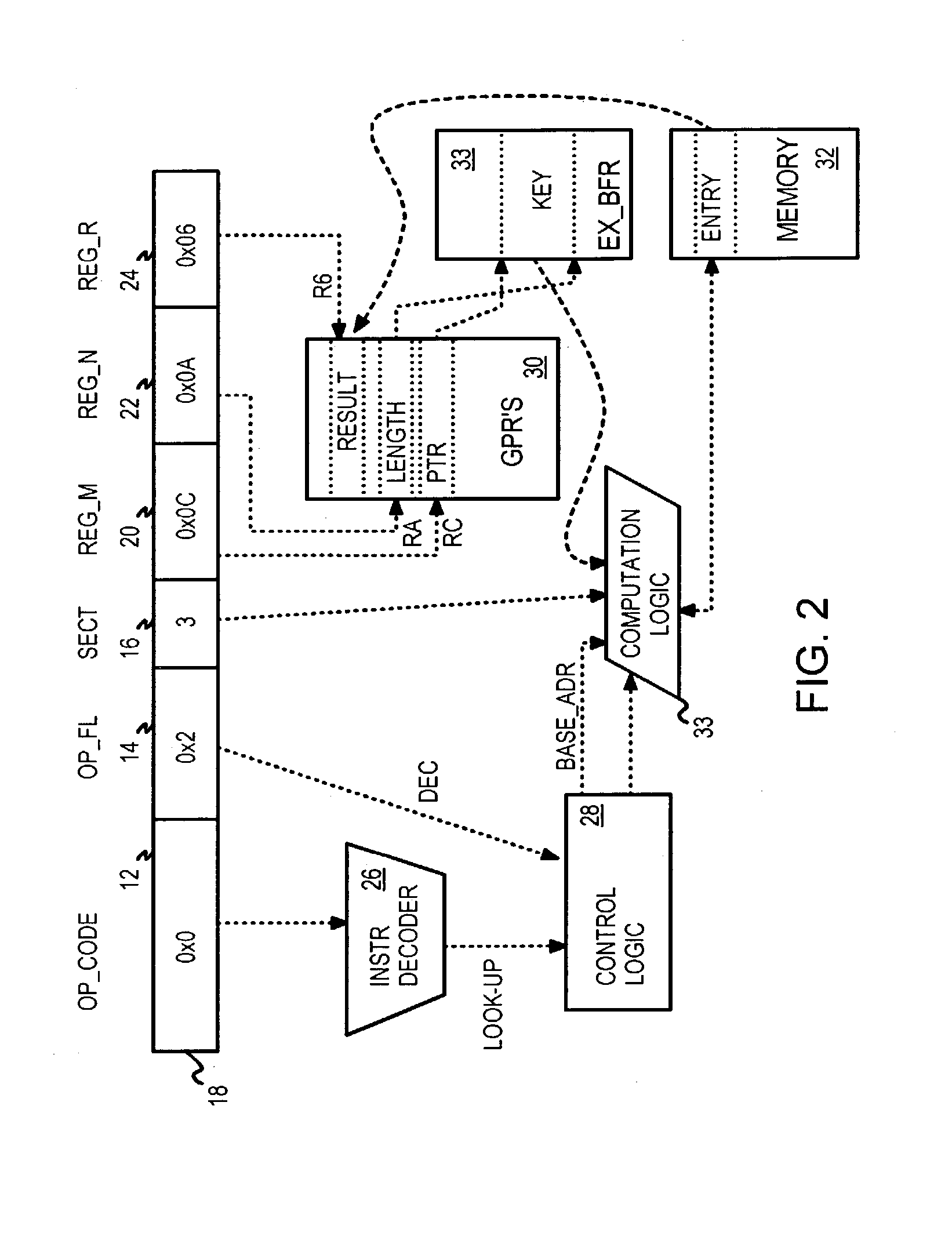

Native lookup instruction for file-access processor searching a three-level lookup cache for variable-length keys

Inactive | US7093099B2 | Memory addressing/allocation/relocation | Digital computer details | Three level | General purpose

A processor natively executes lookup instructions. The lookup instruction is decoded to determine which general-purpose register (GPR) contains a pointer to a lookup key in a buffer. A variable-length key is read from the buffer and hashed to generate an index into a first-level cache and a hashed tag. An address of a bucket of entries for the index is generated and tags from these entries are read and compared to the hashed tag. When an entry matches the hashed tag, a second-level entry is read. A stored key from the second-level entry is compared to the input key to determine a match. The addresses of the matching second-level and first-level entries are written to GPRs specified by operands decoded from the lookup instruction. When the key or entry data is long, the second-level entry also contains a pointer to a key extension or data extension in a third-level cache.

Owner:RPX CORP
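The lookup flow in the abstract above (hash the key, compare tags in a first-level bucket, then confirm against the stored key in a second-level entry) can be sketched in software. The class `LookupCache`, the helper `hash_key`, the use of SHA-256, and the bucket count are assumptions for illustration. The third-level extension cache is left out.

```python
import hashlib
from typing import List, Optional, Tuple


def hash_key(key: bytes, num_buckets: int) -> Tuple[int, int]:
    """Hash a variable-length key into a bucket index and a short tag."""
    digest = hashlib.sha256(key).digest()  # assumption: any stable hash would do
    index = int.from_bytes(digest[:4], "big") % num_buckets
    tag = int.from_bytes(digest[4:6], "big")
    return index, tag


class LookupCache:
    def __init__(self, num_buckets: int = 16) -> None:
        self.num_buckets = num_buckets
        # First level: one bucket per index, holding (tag, second-level address).
        self.first_level: List[List[Tuple[int, int]]] = [[] for _ in range(num_buckets)]
        # Second level: entries holding the full stored key and its data.
        self.second_level: List[Tuple[bytes, object]] = []

    def insert(self, key: bytes, value: object) -> None:
        index, tag = hash_key(key, self.num_buckets)
        self.second_level.append((key, value))
        self.first_level[index].append((tag, len(self.second_level) - 1))

    def lookup(self, key: bytes) -> Optional[Tuple[Tuple[int, int], int, object]]:
        """Return (first-level address, second-level address, value) or None."""
        index, tag = hash_key(key, self.num_buckets)
        for slot, (entry_tag, l2_addr) in enumerate(self.first_level[index]):
            if entry_tag != tag:
                continue  # cheap tag comparison filters most non-matches
            stored_key, value = self.second_level[l2_addr]
            if stored_key == key:  # the full key comparison confirms the hit
                return (index, slot), l2_addr, value
        return None


cache = LookupCache()
cache.insert(b"/home/user/file.txt", "inode-17")
print(cache.lookup(b"/home/user/file.txt"))
print(cache.lookup(b"/missing"))
```

Returning both addresses mirrors the abstract, where the matching first-level and second-level entry addresses are written back to registers.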

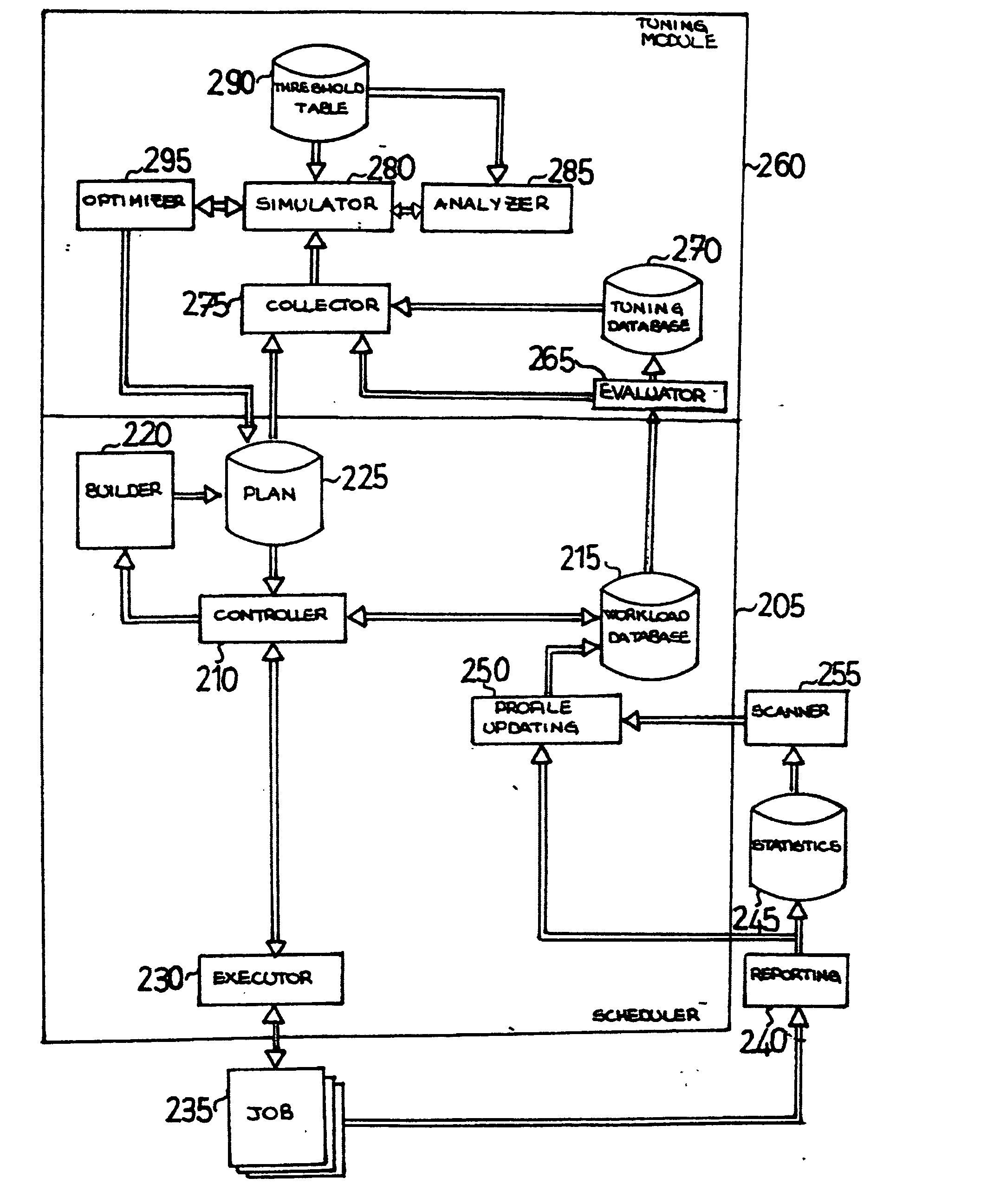

Workload scheduler with cumulative weighting indexes

Inactive | US20050132167A1 | Efficient of definition | Reduce consumption | Digital computer details | Forecasting | Workload | Data mining

A workload scheduler supporting the definition of a cumulative weighting index is proposed. The scheduler maintains (384-386) a profile for each job; the profile (built using statistics of previous executions of the job) defines an estimated usage of different resources of the system by the job. A tuning module imports (304) the attributes of the jobs from the profile. The attributes of each job are rated (306-307) according to an estimated duration of the job. The rated attributes so obtained are then combined (308-320), in order to define a single cumulative index for each job and a single cumulative index for each application (for example, weighting the rated attributes according to corresponding correction factors). In this way, the cumulative indexes allow an immediate comparison (324-356) of the impact of the different jobs / applications of a plan on the whole performance of the system.

Owner:IBM CORP
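One way to read the rating and combining steps above is as a weighted sum. The sketch below assumes that rating means multiplying each estimated usage by the estimated duration, and that an application index is the sum of its job indexes. Both formulas, the correction factor values, and all names are hypothetical, since the abstract does not give them.

```python
from typing import Dict, List

# Hypothetical correction factors, one per resource attribute.
CORRECTION_FACTORS: Dict[str, float] = {"cpu": 2.0, "memory": 1.0}


def rate_attributes(profile: Dict[str, float], duration: float) -> Dict[str, float]:
    """Rate each estimated resource usage by the job's estimated duration."""
    return {name: usage * duration for name, usage in profile.items()}


def cumulative_index(profile: Dict[str, float], duration: float) -> float:
    """Combine the rated attributes into a single index for one job."""
    rated = rate_attributes(profile, duration)
    return sum(CORRECTION_FACTORS[name] * value for name, value in rated.items())


def application_index(jobs: List[dict]) -> float:
    """Assumption: an application's index is the sum of its jobs' indexes."""
    return sum(cumulative_index(job["profile"], job["duration"]) for job in jobs)


jobs = [
    {"name": "backup", "profile": {"cpu": 0.5, "memory": 0.2}, "duration": 10.0},
    {"name": "report", "profile": {"cpu": 0.1, "memory": 0.4}, "duration": 5.0},
]
for job in jobs:
    print(job["name"], cumulative_index(job["profile"], job["duration"]))
print("application", application_index(jobs))
```

With a single number per job, the jobs of a plan can be ranked directly by their estimated impact on the system.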

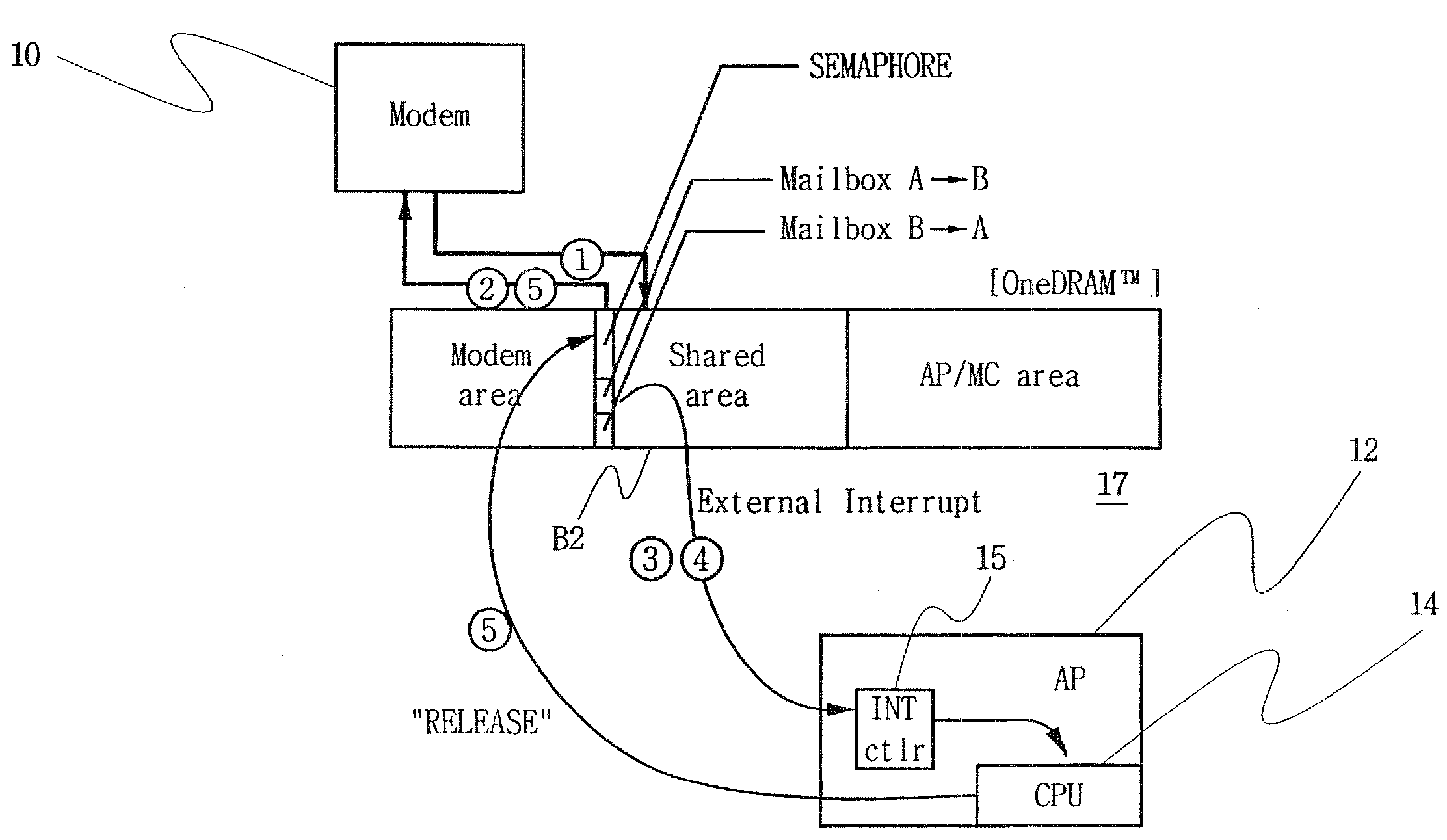

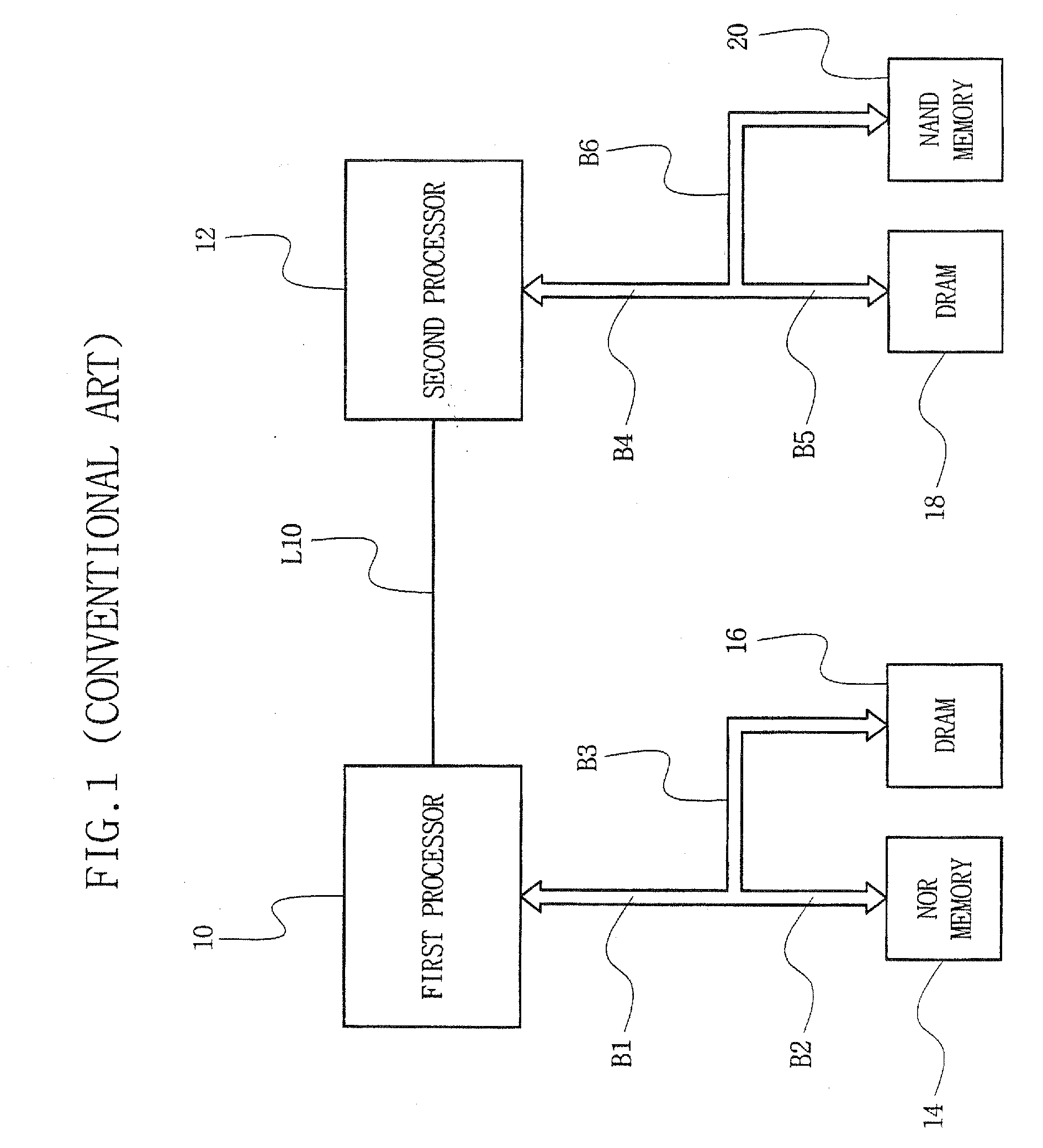

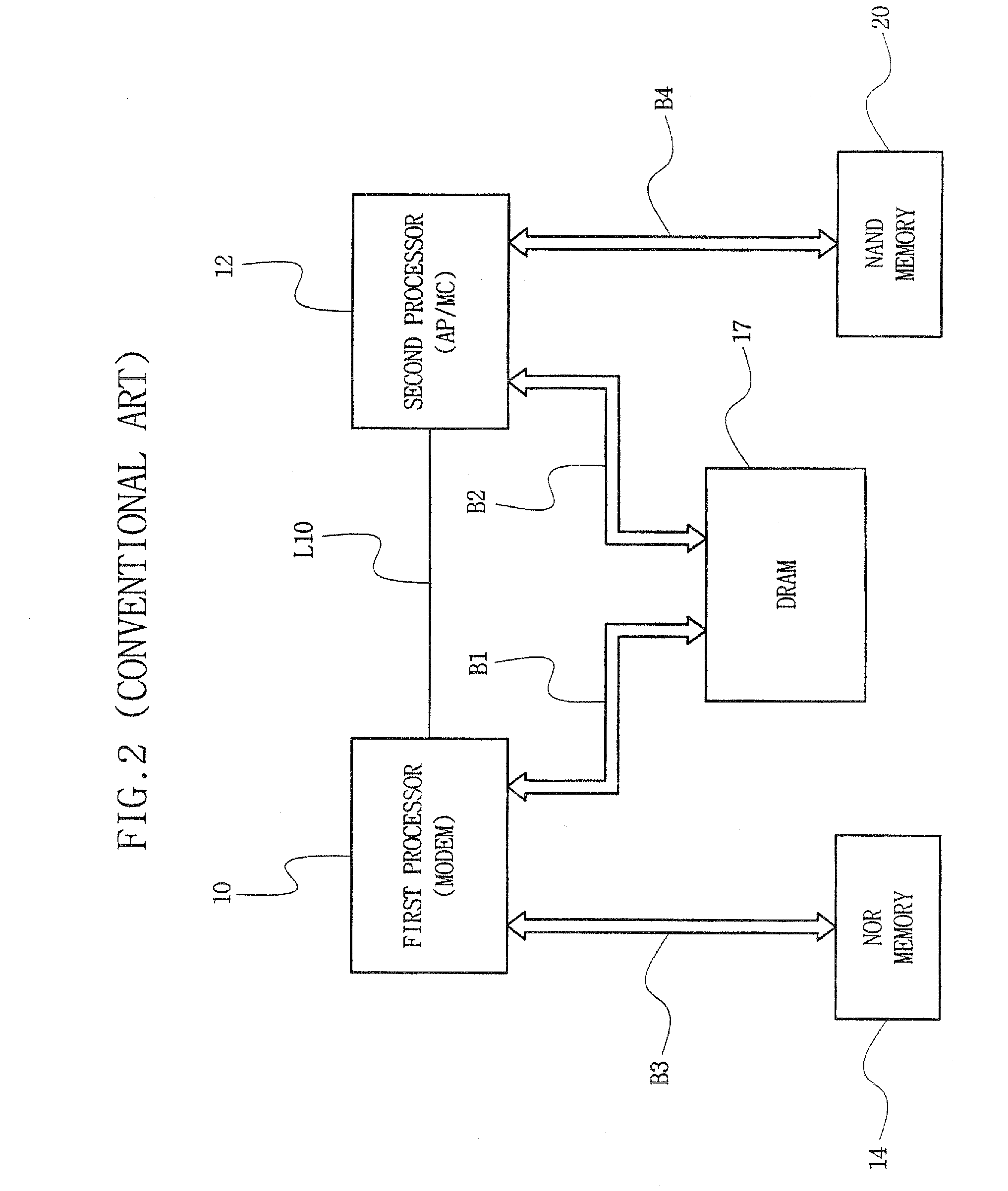

Multipath accessible semiconductor memory device with host interface between processors

Active | US20080077937A1 | Low cost | Increase speed | General purpose stored program computer | Digital storage | Multi processor | Data access

A multipath accessible semiconductor memory device provides an interface function between processors. The memory device may include a memory cell array having a shared memory area operationally coupled to two or more ports that are independently accessible by two or more processors, an access path forming unit to form a data access path between one of the ports and the shared memory area in response to external signals applied by the processors, and an interface unit having a semaphore area and mailbox areas accessible in the shared memory area by the two or more processors to provide an interface function for communication between the two or more processors.

Owner:SAMSUNG ELECTRONICS CO LTD
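The semaphore and mailbox roles described above can be modeled in a few lines. This is a software analogy only: the class `SharedMemoryDevice` and its methods are hypothetical, a single semaphore guards the whole shared area, and port arbitration and signal timing in the actual device are not represented.

```python
from typing import Dict, Optional, Sequence


class SharedMemoryDevice:
    def __init__(self, size: int, processors: Sequence[str]) -> None:
        self.shared = bytearray(size)              # shared memory area
        self.semaphore_owner: Optional[str] = None  # semaphore area
        self.mailboxes: Dict[str, Optional[str]] = {p: None for p in processors}

    def acquire(self, processor: str) -> bool:
        """Take the semaphore if it is free; report whether the caller holds it."""
        if self.semaphore_owner is None:
            self.semaphore_owner = processor
        return self.semaphore_owner == processor

    def write(self, processor: str, offset: int, data: bytes) -> None:
        if self.semaphore_owner != processor:
            raise PermissionError(f"{processor} does not hold the semaphore")
        self.shared[offset:offset + len(data)] = data

    def read(self, processor: str, offset: int, length: int) -> bytes:
        if self.semaphore_owner != processor:
            raise PermissionError(f"{processor} does not hold the semaphore")
        return bytes(self.shared[offset:offset + length])

    def release(self, processor: str, notify: Optional[str] = None,
                message: Optional[str] = None) -> None:
        """Give up the semaphore and optionally post a message to a peer's mailbox."""
        if self.semaphore_owner != processor:
            raise PermissionError(f"{processor} does not hold the semaphore")
        self.semaphore_owner = None
        if notify is not None:
            self.mailboxes[notify] = message


mem = SharedMemoryDevice(64, ["modem", "app"])
mem.acquire("modem")
mem.write("modem", 0, b"hello")
mem.release("modem", notify="app", message="data ready at offset 0")
if mem.acquire("app"):
    print(mem.mailboxes["app"], mem.read("app", 0, 5))
```

The mailbox carries the notification while the semaphore decides which processor may touch the shared area, so the two processors can exchange data without a separate host interface.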
