
4037 results about "Architecture with single central processing unit" patented technology


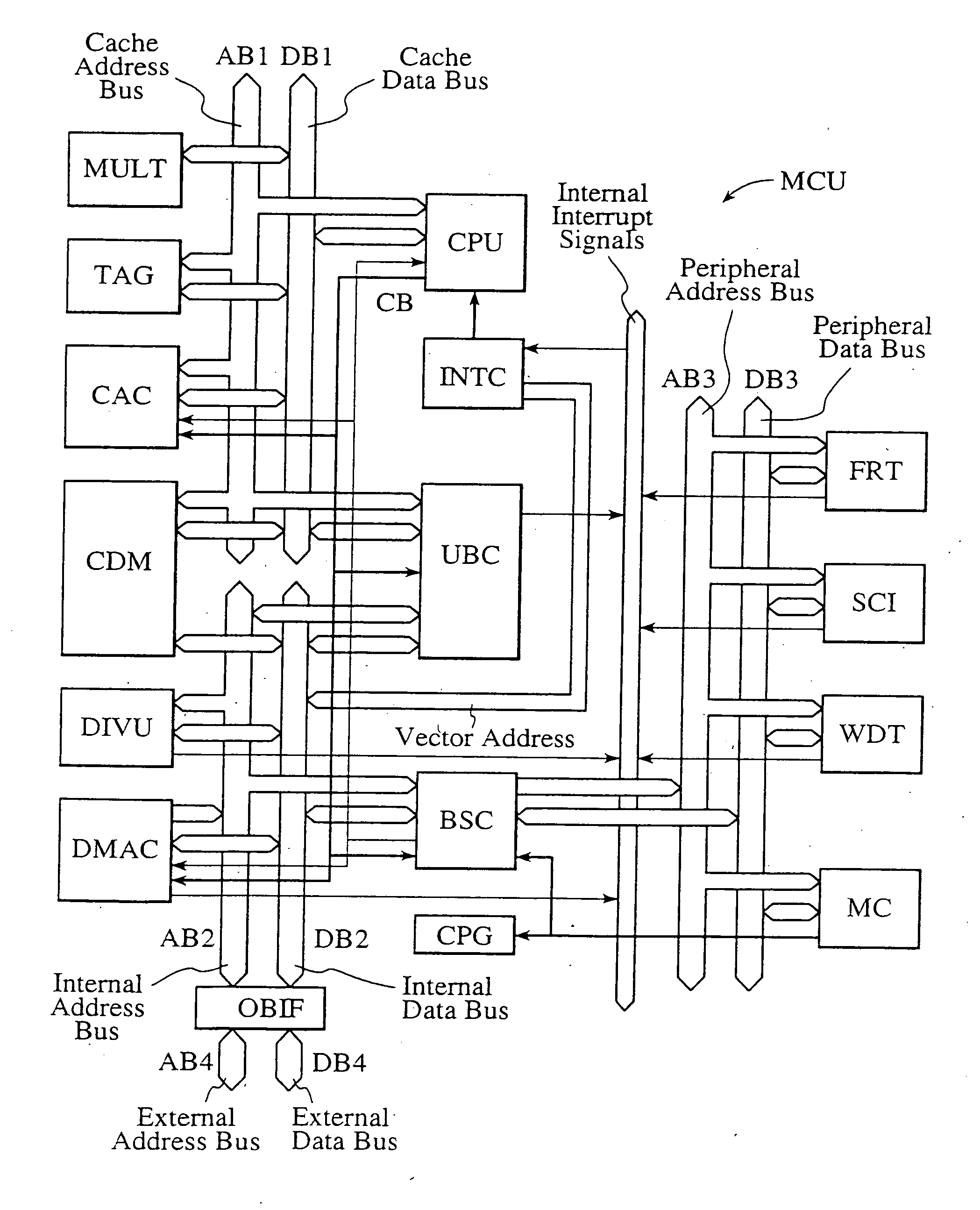

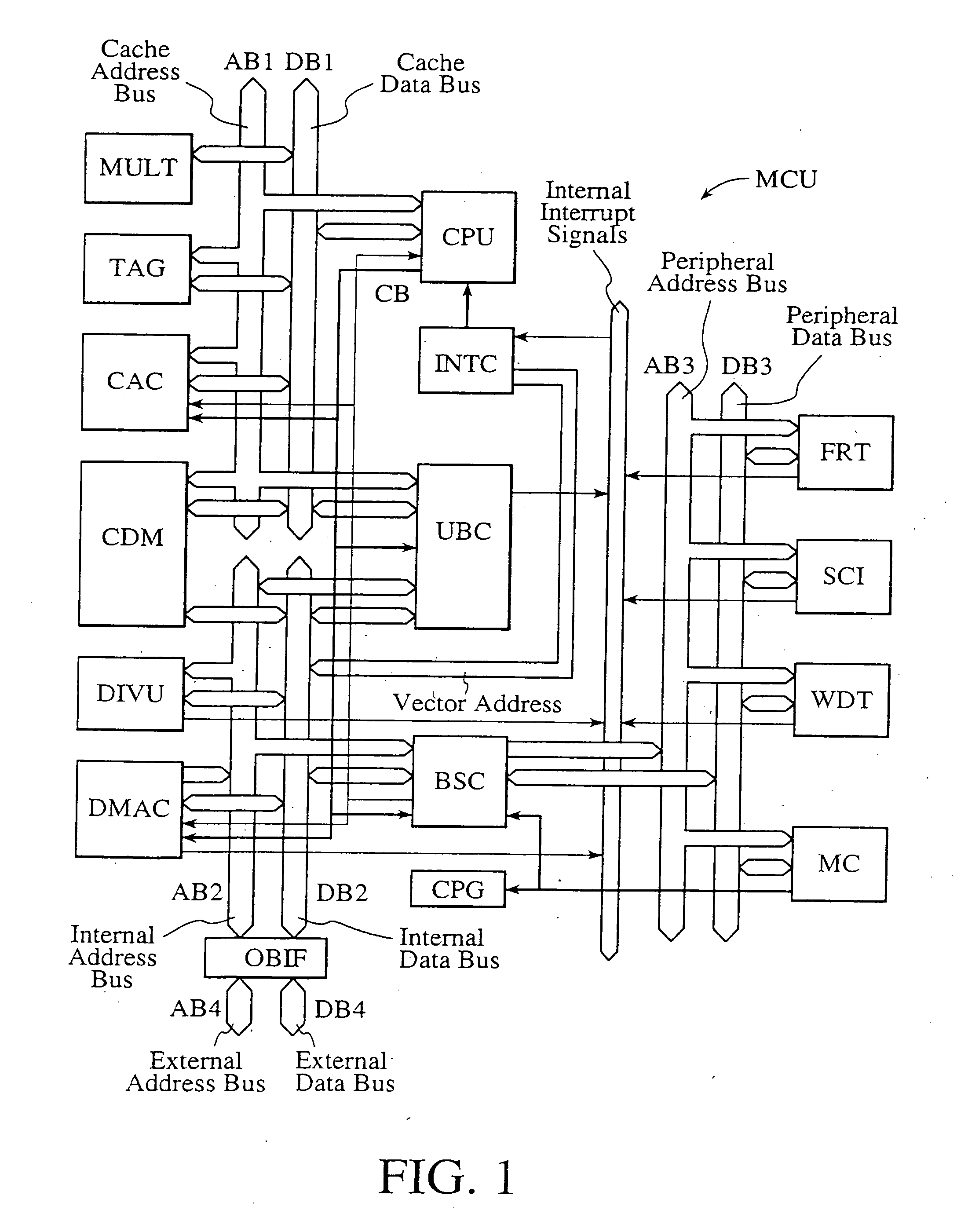

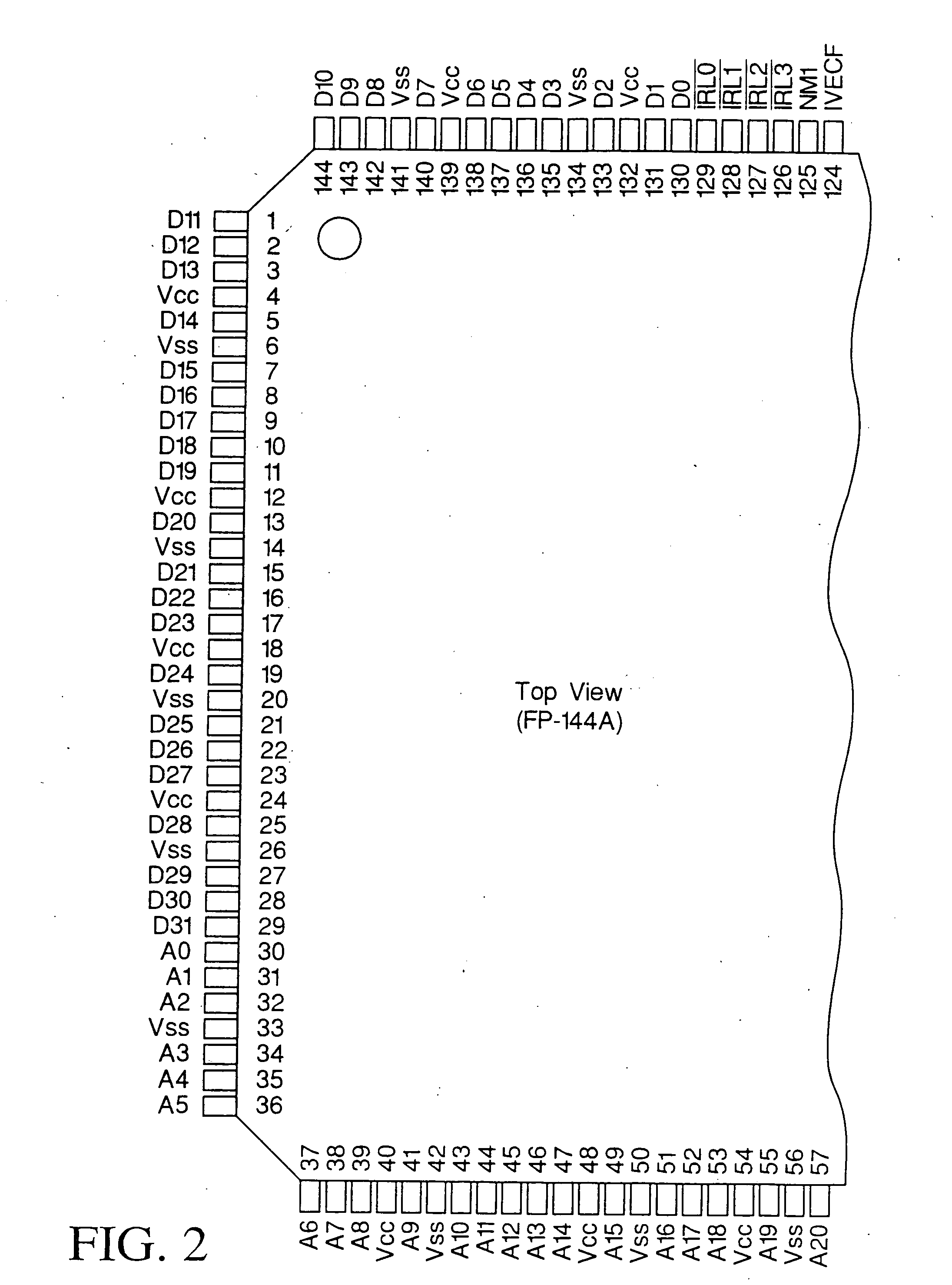

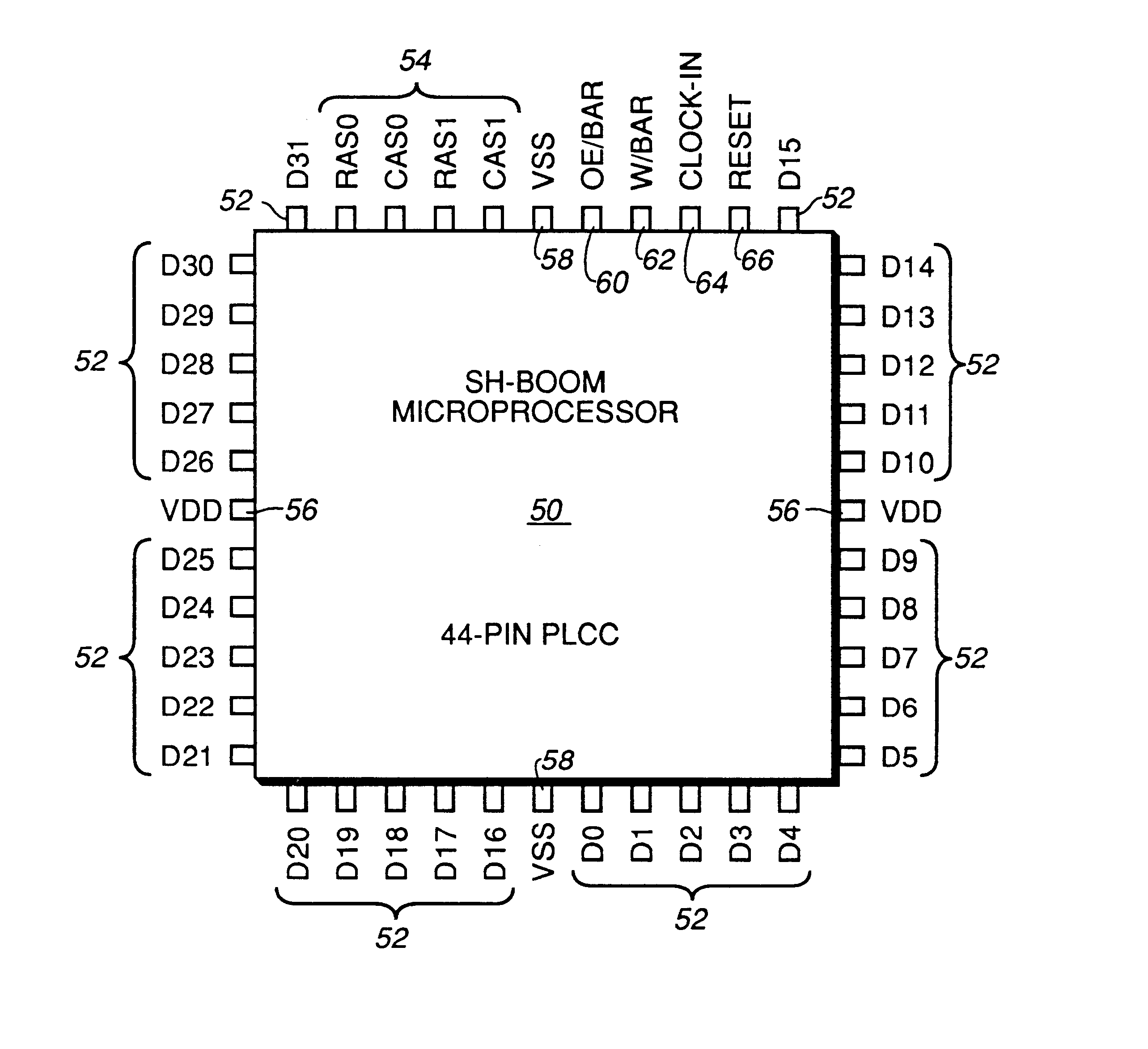

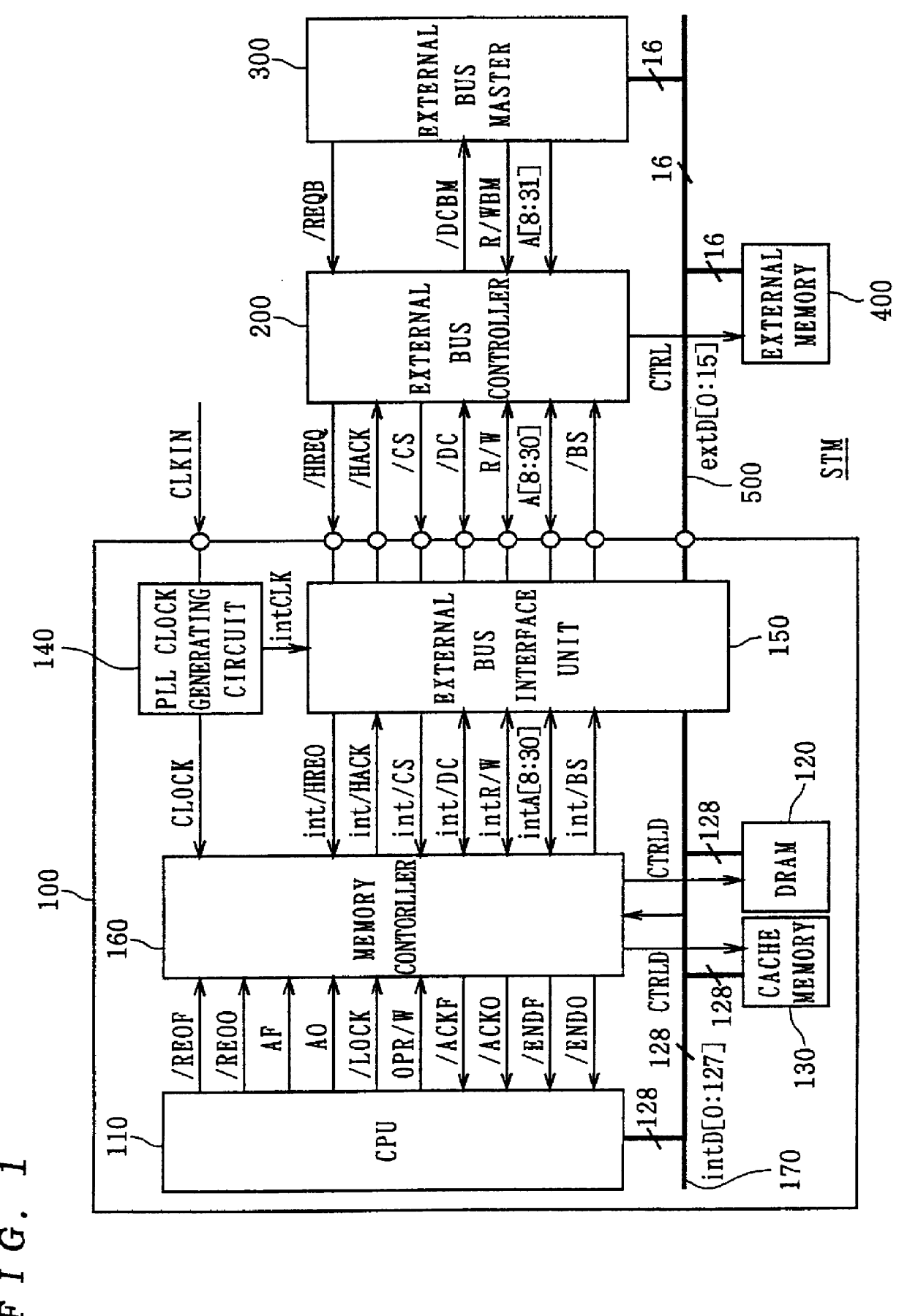

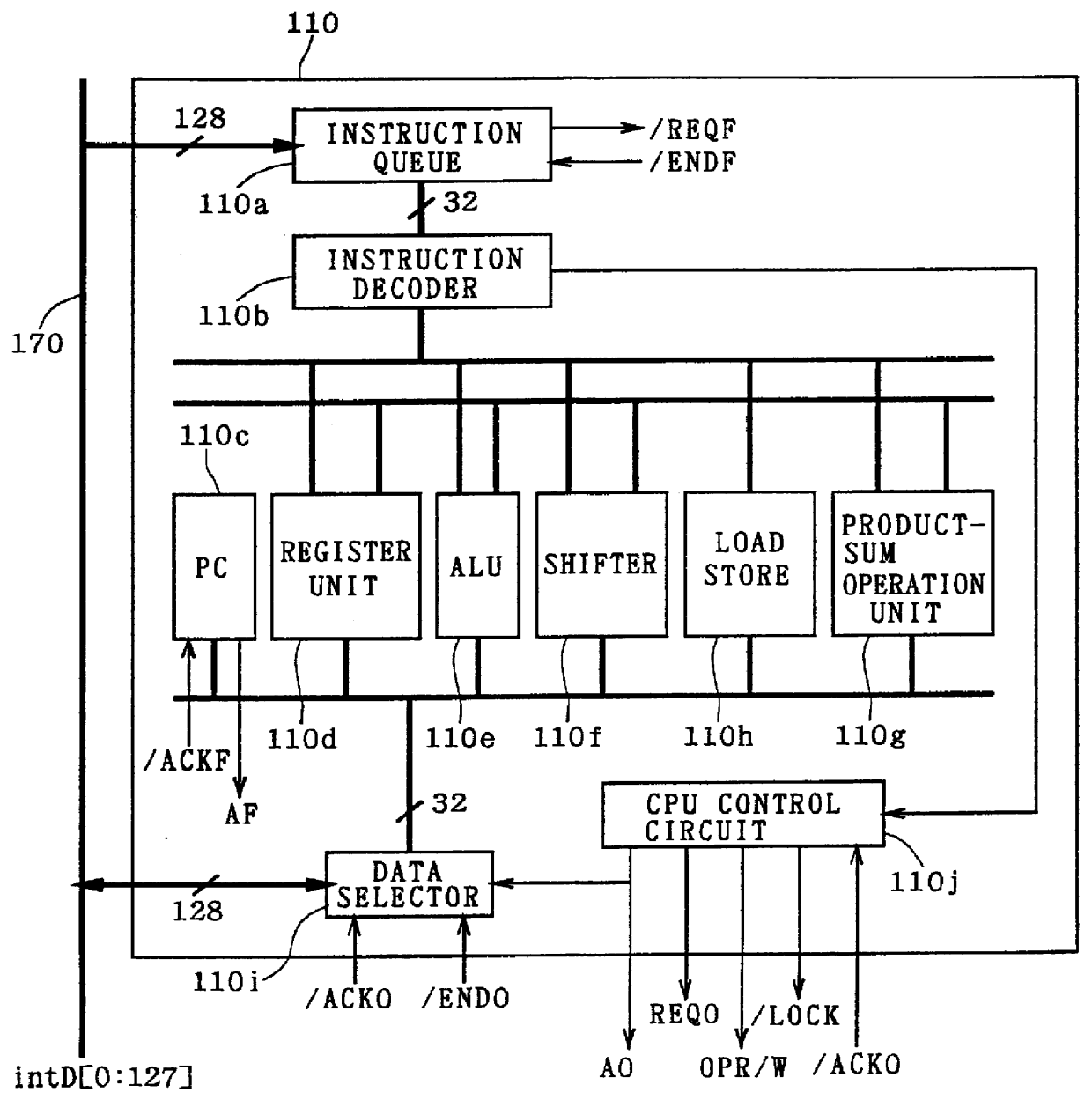

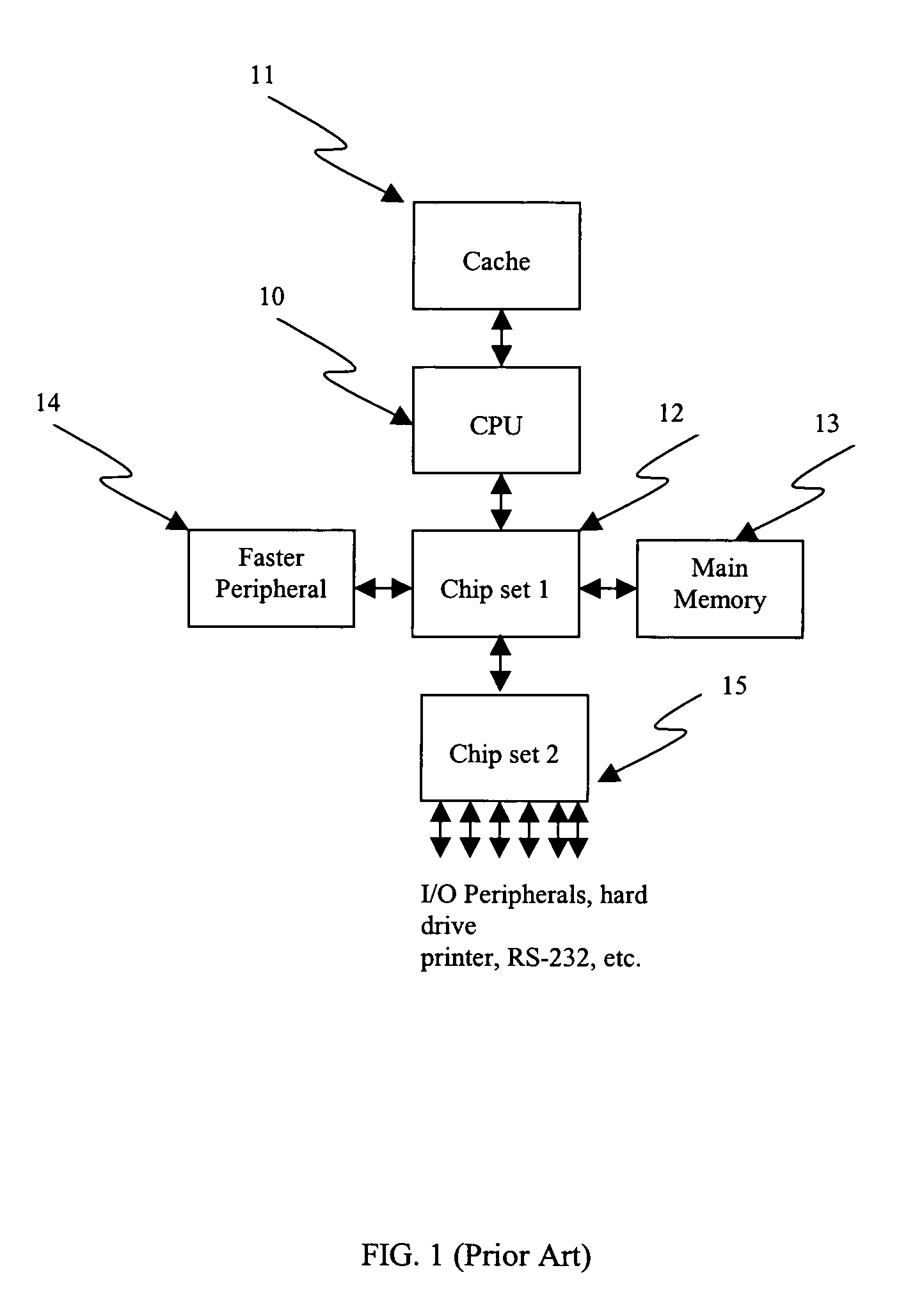

Single-chip microcomputer

Inactive; US20120023281A1; Multiple functions; Performance multiple; Power management; Energy efficient ICT; Microcontroller; Microcomputer

A single-chip microcomputer comprising: a first bus having a central processing unit and a cache memory connected therewith; a second bus having a dynamic memory access control circuit and an external bus interface connected therewith; a break controller for connecting the first bus and the second bus selectively; a third bus having a peripheral module connected therewith and having a lower-speed bus cycle than the bus cycles of the first and second buses; and a bus state controller for effecting a data transfer and a synchronization between the second bus and the third bus. The single-chip microcomputer has the three divided internal buses to reduce the load capacity upon the signal transmission paths so that the signal transmission can be accomplished at a high speed. Moreover, the peripheral module, which does not require a high operation speed, is isolated so that the power dissipation can be reduced.

Owner:KAWASAKI SHUMPEI +8

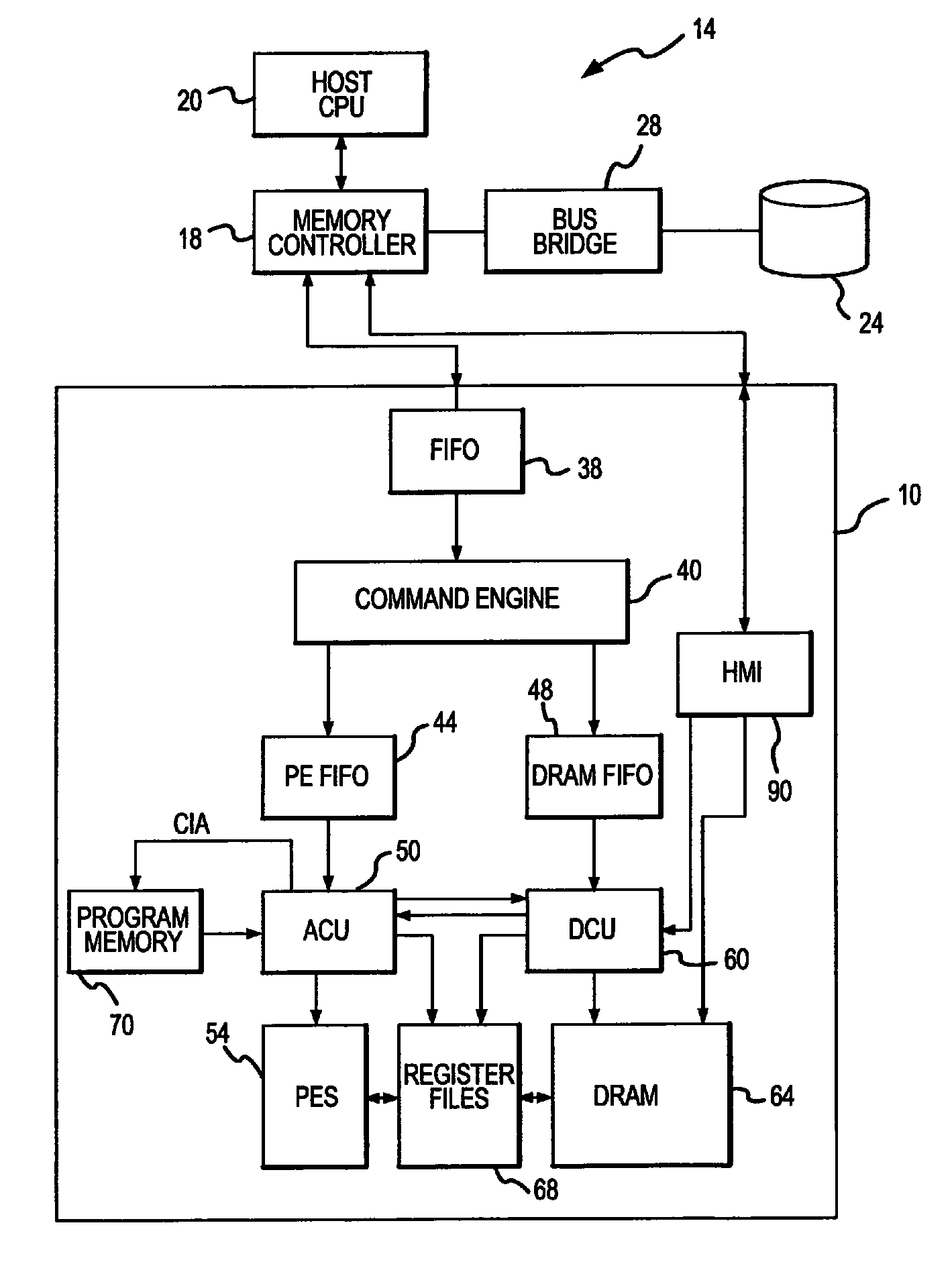

Active memory data compression system and method

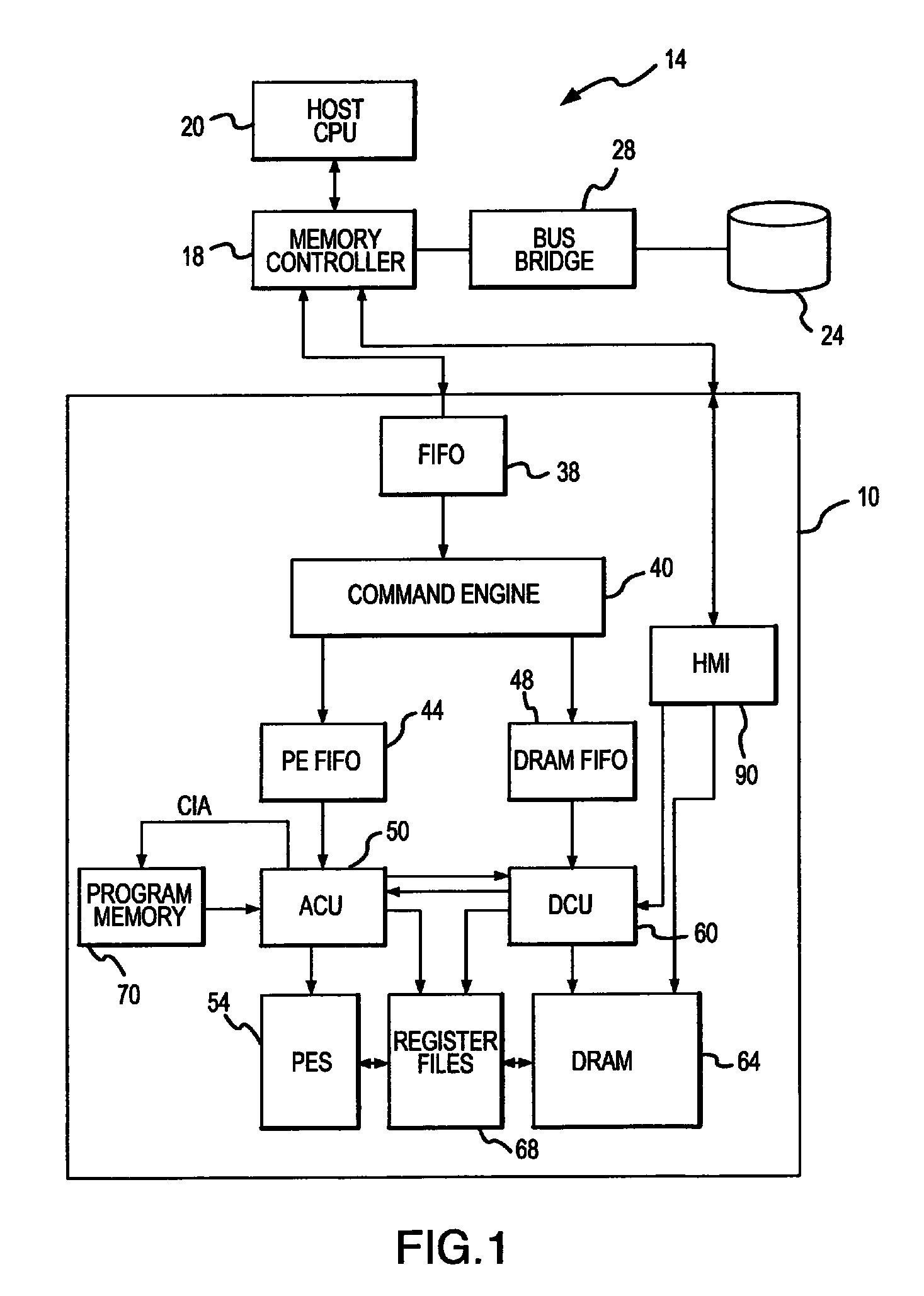

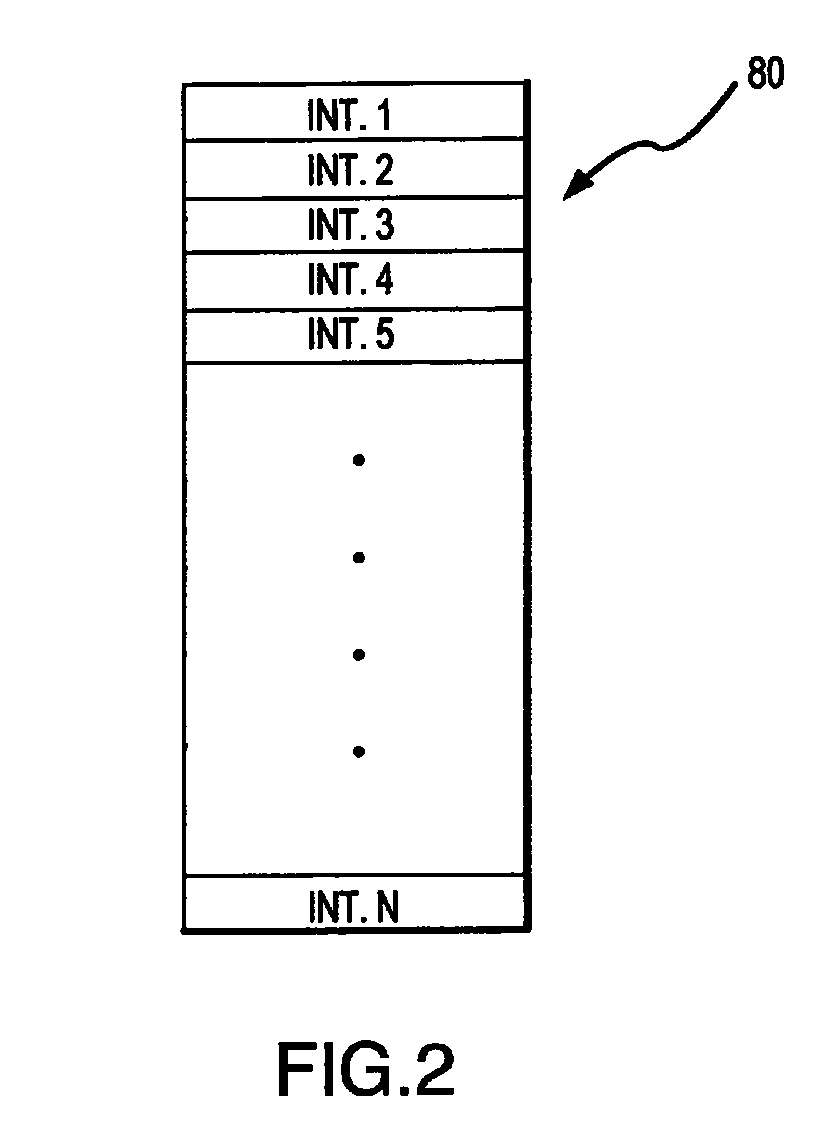

Active; US9015390B2; Concurrent instruction execution; Architecture with single central processing unit; Mass storage; Data compression

An integrated circuit active memory device receives task commands from a component in a host computer system that may include the active memory device. The host system includes a memory controller coupling the active memory device to a host CPU and a mass storage device. The active memory device includes a command engine issuing instructions responsive to the task commands to either an array control unit or a DRAM control unit. The instructions provided to the DRAM control unit cause data to be written to or read from a DRAM and coupled to or from either the processing elements or a host / memory interface. The processing elements execute instructions provided by the array control unit to decompress data written to the DRAM through the host / memory interface and compress data read from the DRAM through the host / memory interface.

Owner:MICRON TECH INC

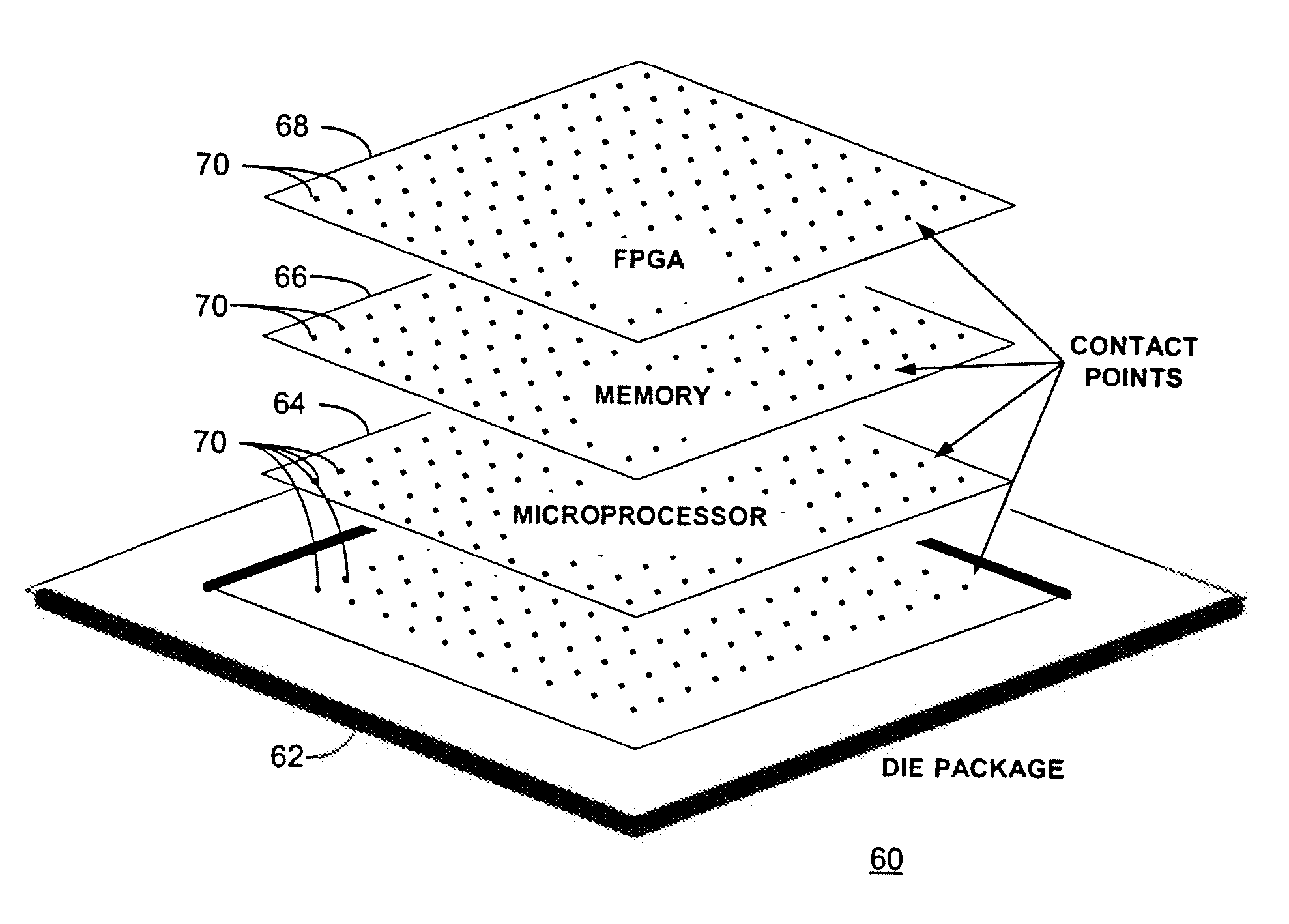

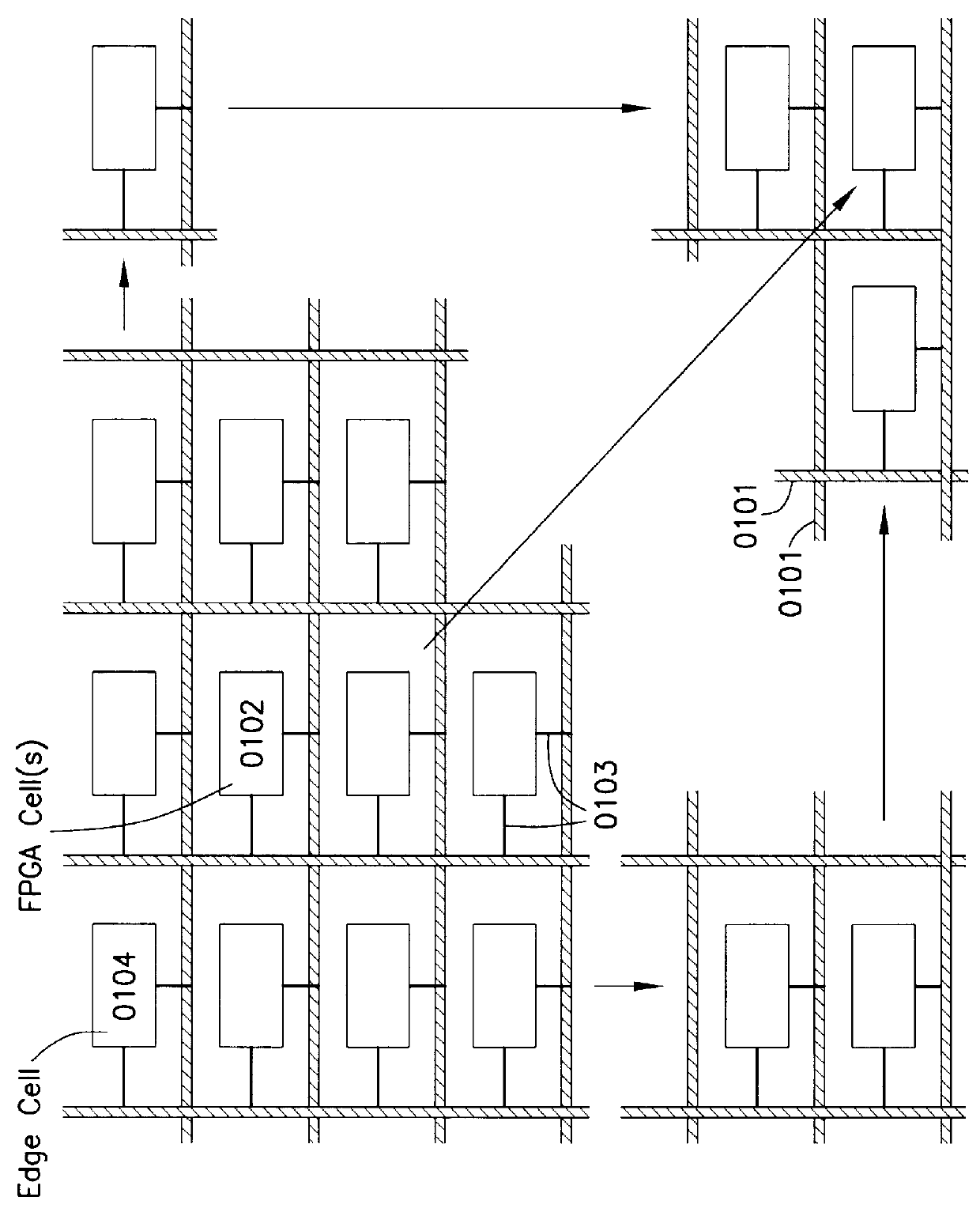

Reconfigurable processor module comprising hybrid stacked integrated circuit die elements

Inactive; US20060195729A1; Energy efficient ICT; Error detection/correction; Engineering; Field-programmable gate array

A reconfigurable processor module comprising hybrid stacked integrated circuit (“IC”) die elements. In a particular embodiment disclosed herein, a processor module with reconfigurable capability may be constructed by stacking one or more thinned microprocessor, memory and / or field programmable gate array (“FPGA”) die elements and interconnecting the same utilizing contacts that traverse the thickness of the die. The processor module disclosed allows for a significant acceleration in the sharing of data between the microprocessor and the FPGA element while advantageously increasing final assembly yield and concomitantly reducing final assembly cost.

Owner:ARBOR GLOBAL STRATEGIES LLC

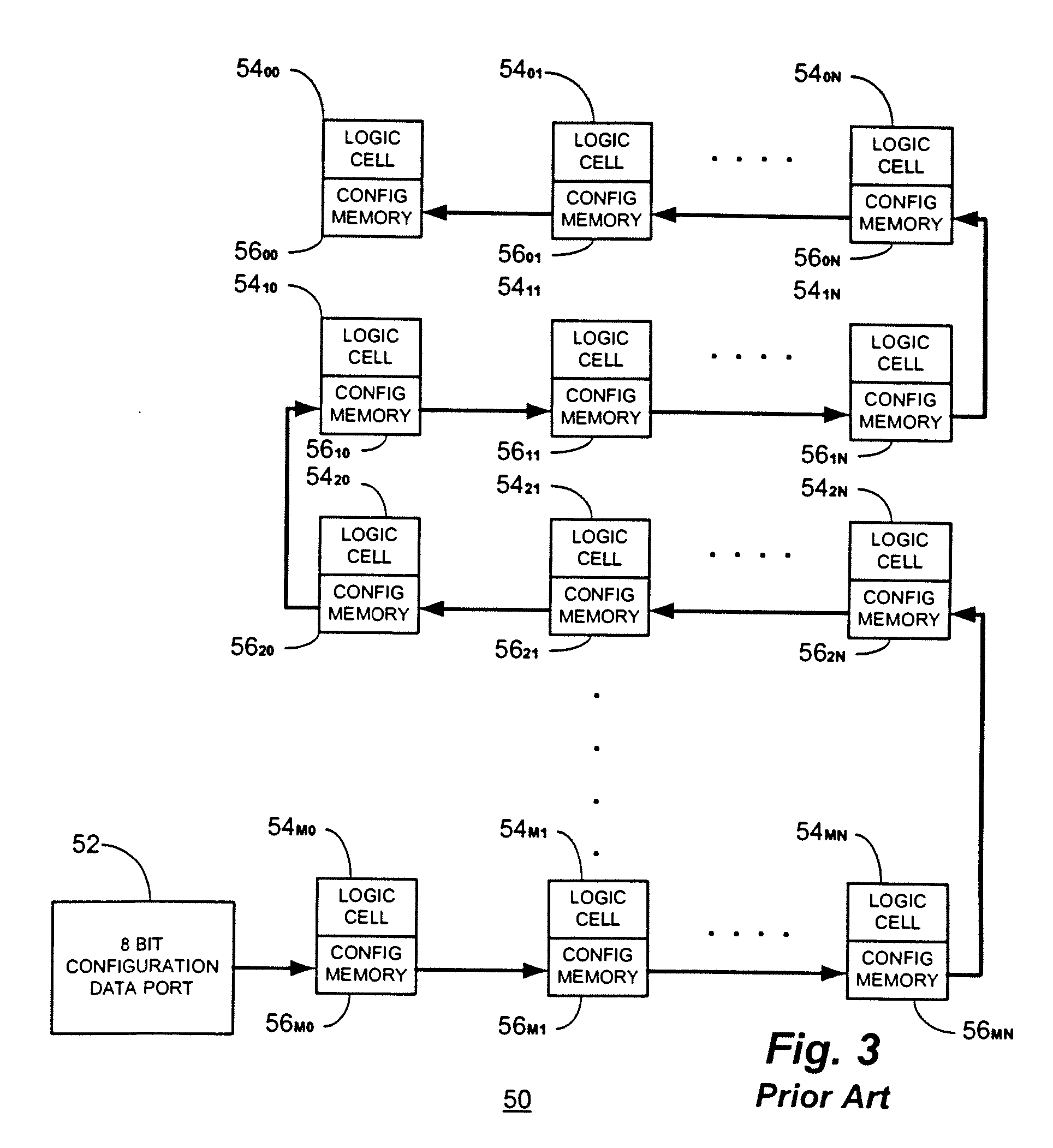

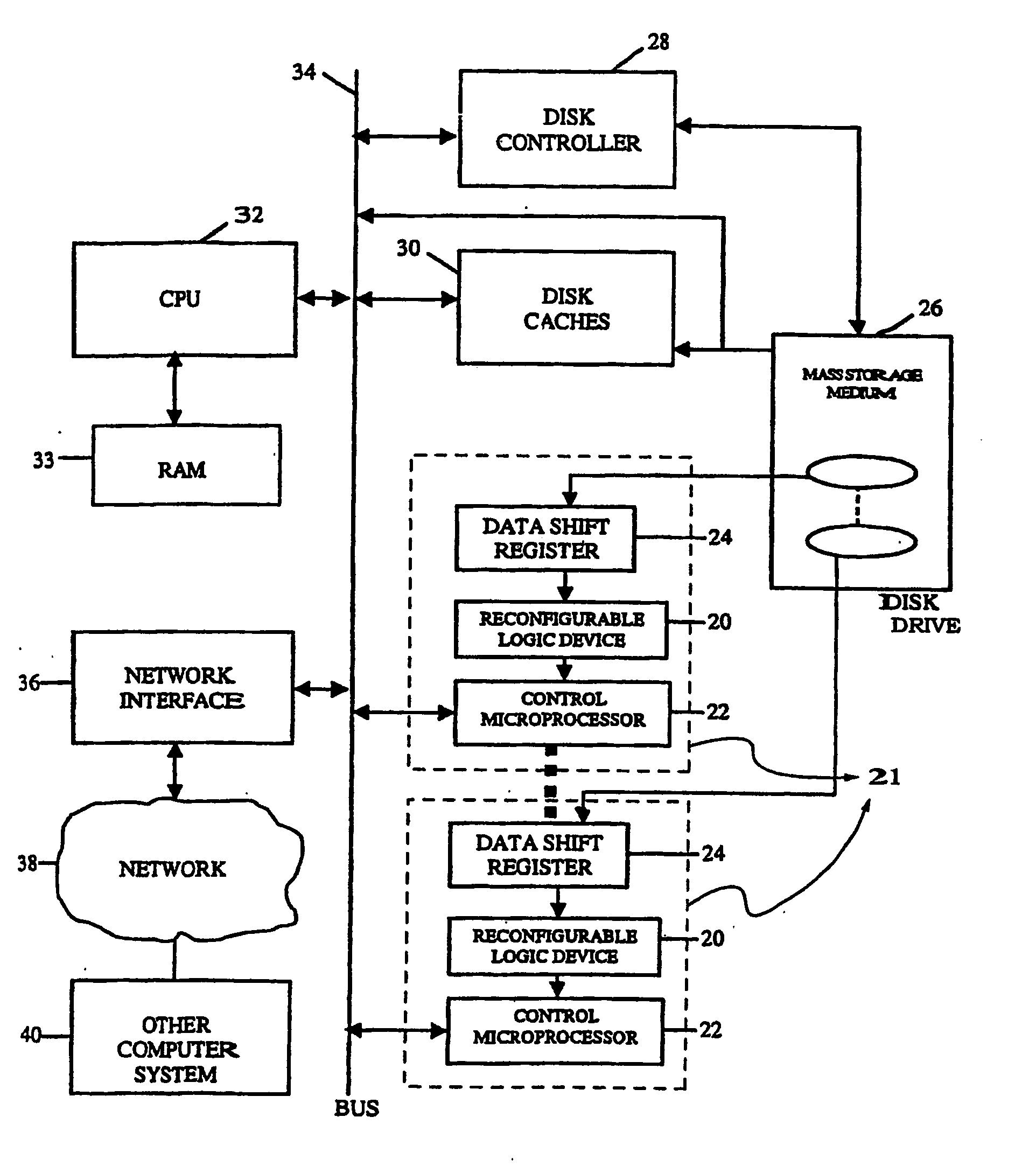

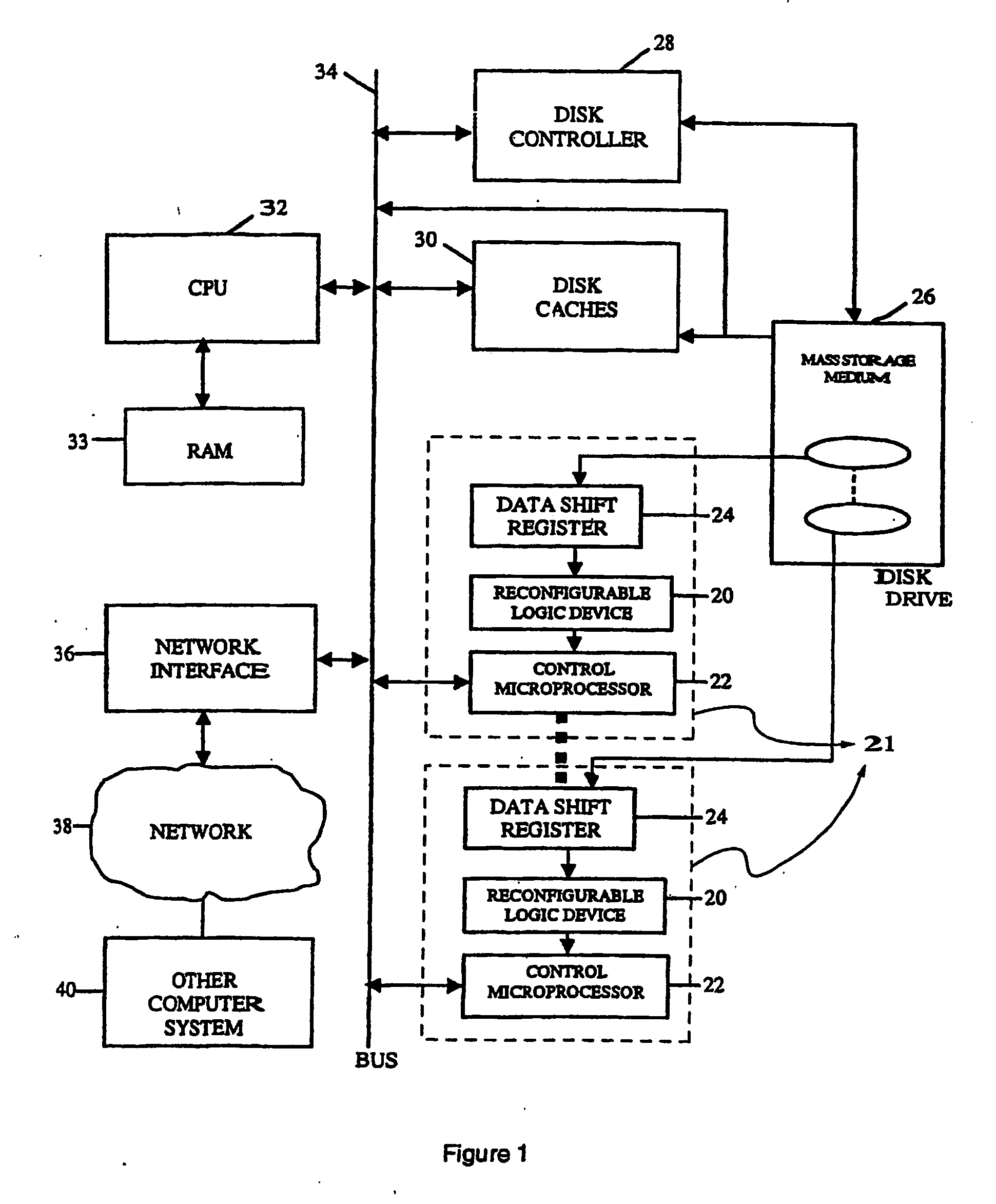

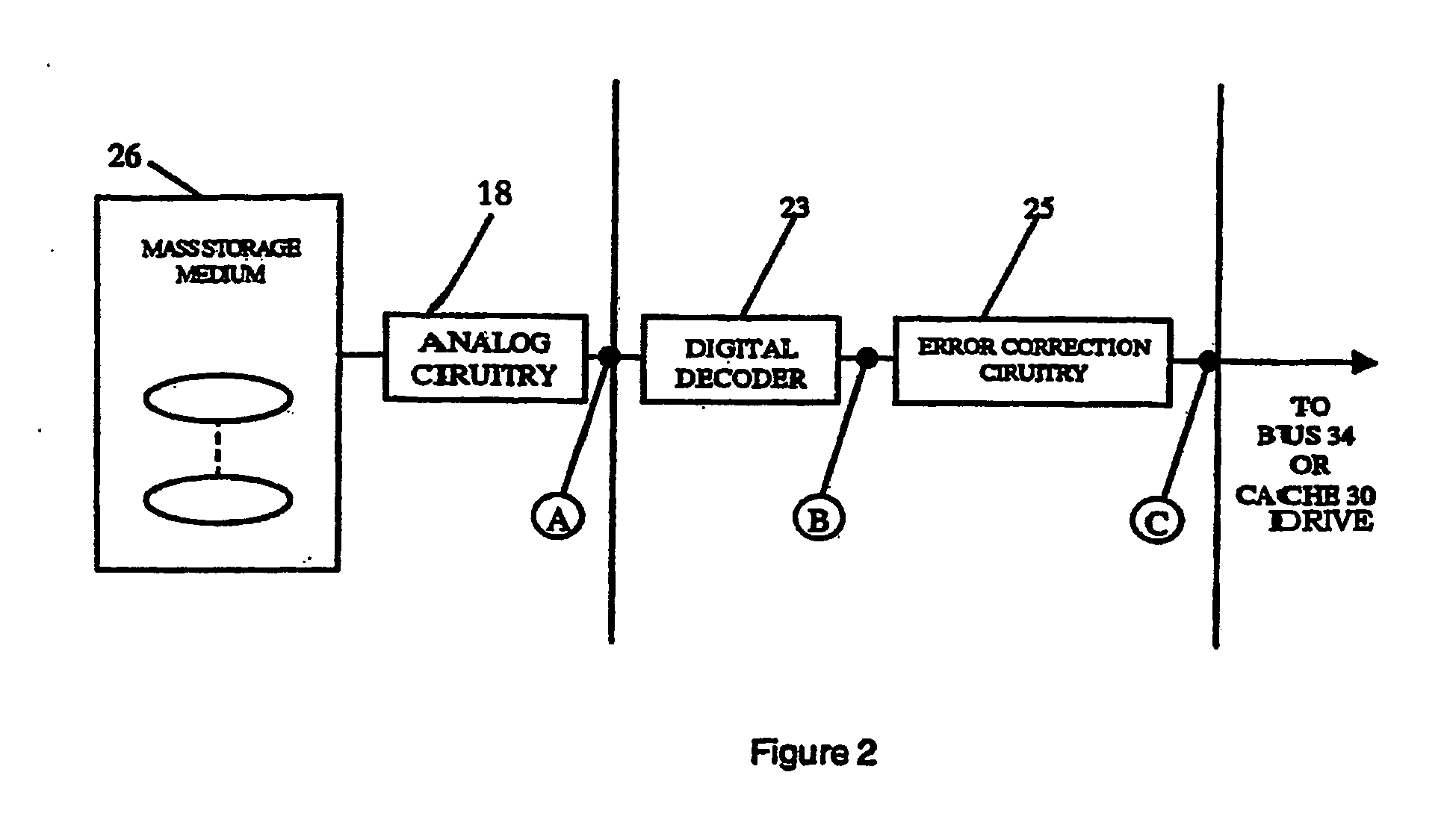

Intelligent data storage and processing using fpga devices

Inactive; US20070277036A1; Improve data security; Increase speed; Input/output to record carriers; Digital data information retrieval; Magnetic storage; Computerized system

A data storage and retrieval device and method is disclosed. The device includes at least one magnetic storage medium configured to store target data and at least one re-configurable logic device comprising an FPGA coupled to the at least one magnetic storage medium and configured to read a continuous stream of target data therefrom, having been configured with a template or as otherwise desired to fit the type of search and data being searched. The re-configurable logic device is configured to receive at least one search inquiry in the form of a data key and to determine a match between the data key and the target data as it is being read from the at least one magnetic storage medium. This device and method can perform a variety of searches on the target data including without limitation exact and approximate match searches, sequence match searches, image match searches and data reduction searches. This device and method may be provided as part of a stand-alone computer system, embodied in a network attached storage device, or can otherwise be provided as part of a computer LAN or WAN. In addition to performing search and data reduction operations, this device may also be used to perform a variety of other processing operations including encryption, decryption, compression, decompression, and combinations thereof.

Owner:IP RESERVOIR

Reconfigurable integrated circuit

Inactive; US20070143577A1; Energy efficient ICT; Architecture with single central processing unit; Non real time; Resource block

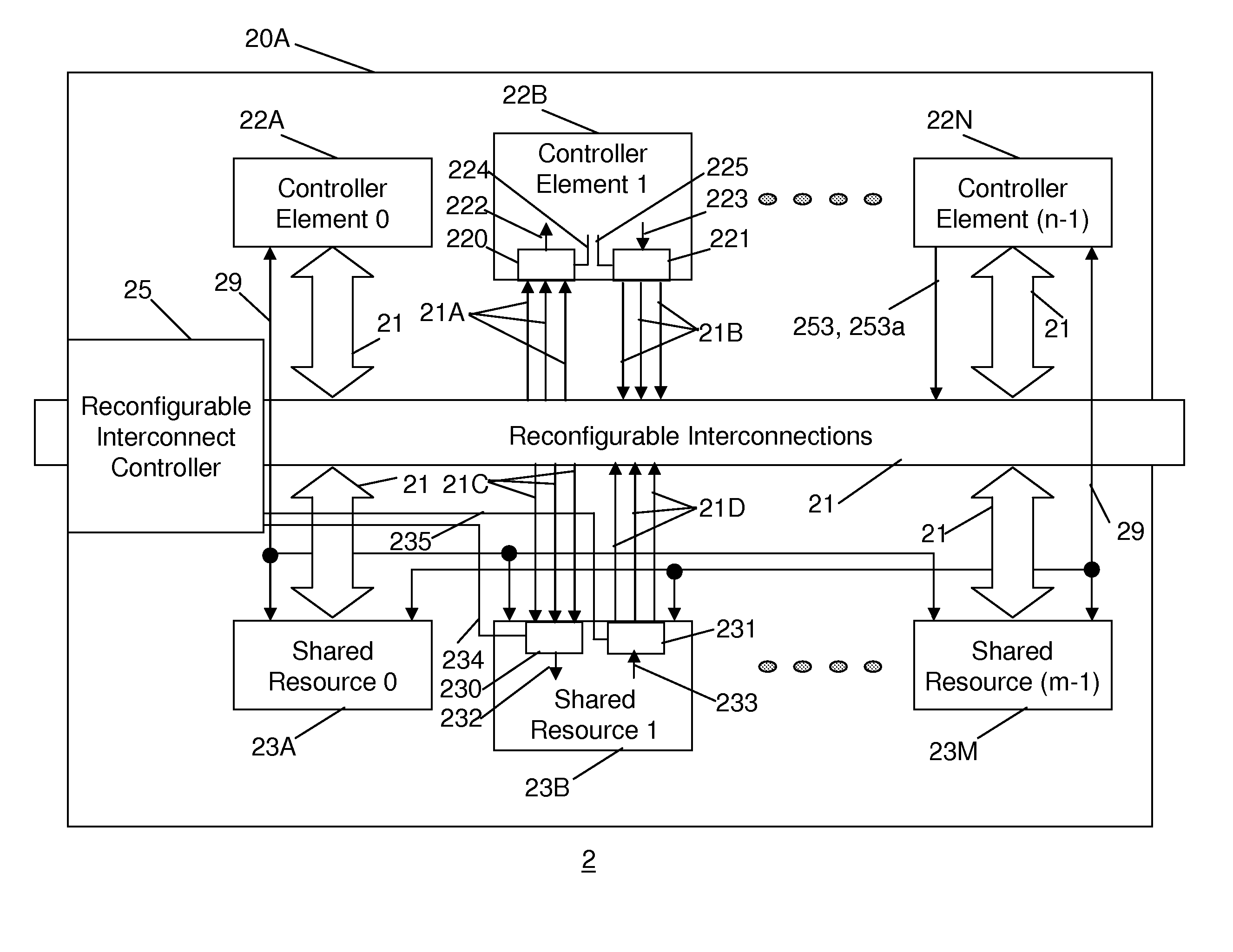

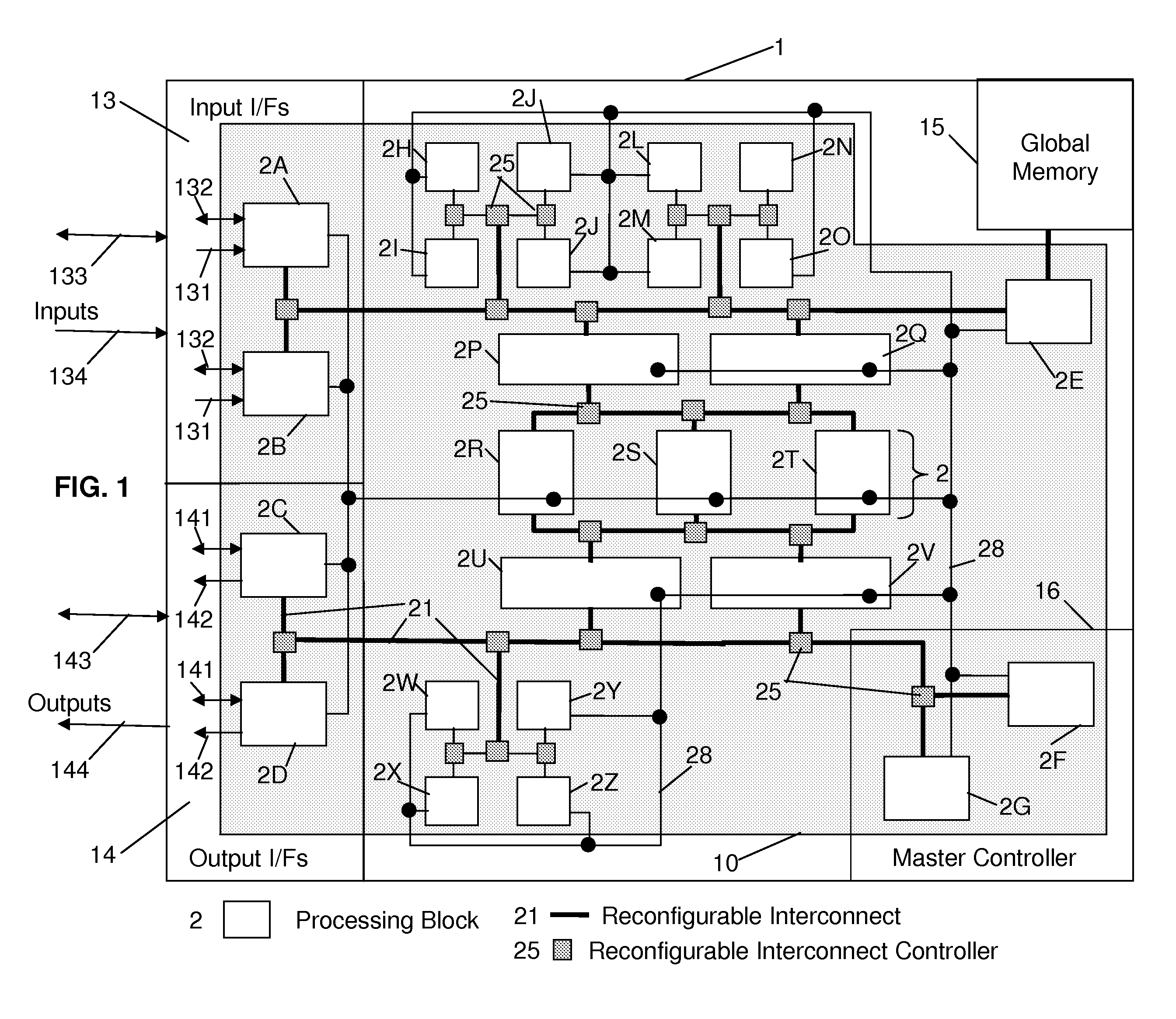

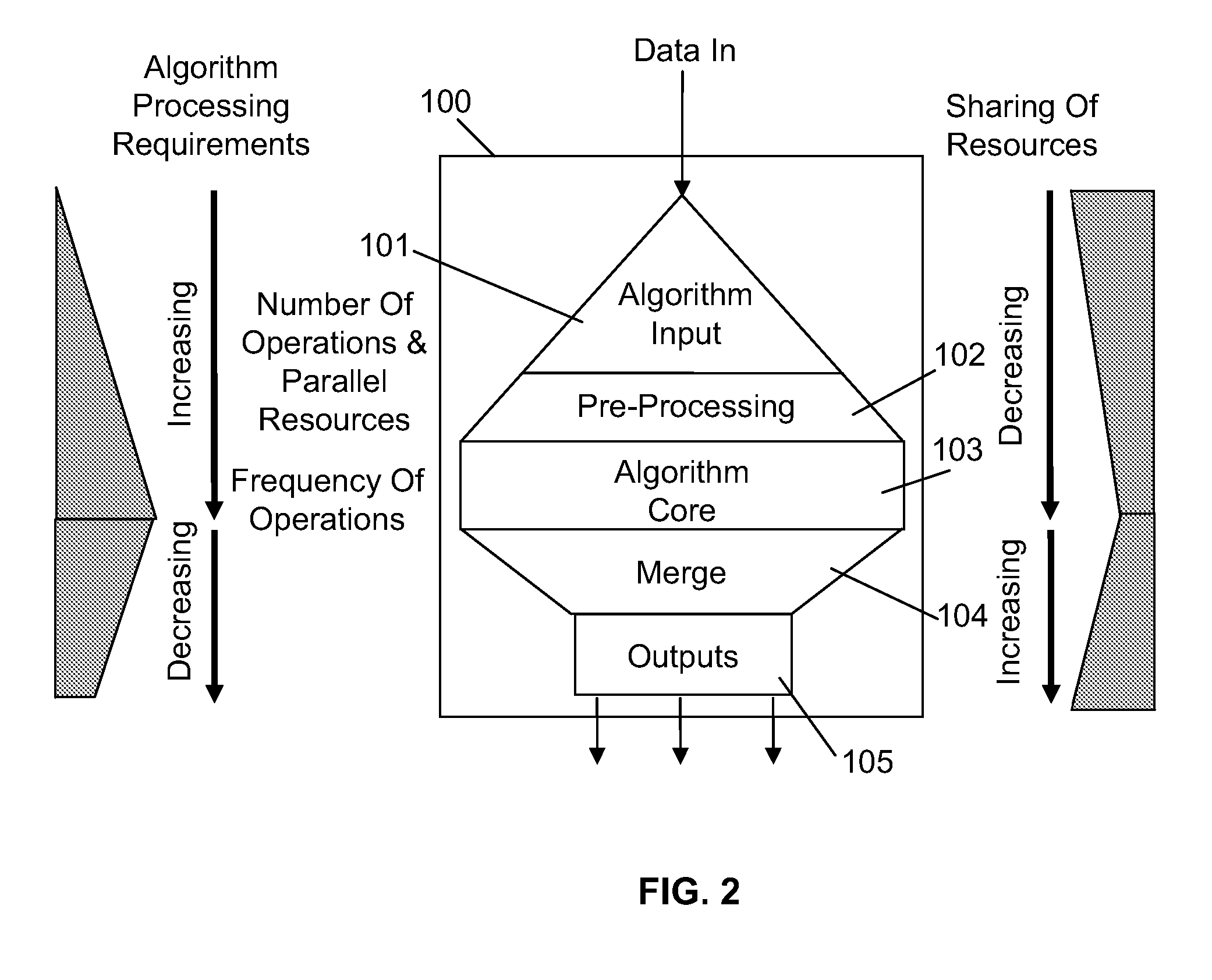

A reconfigurable integrated circuit is provided wherein the available hardware resources can be optimised for a particular application. Dynamically reconfiguring (in both real-time and non real-time) the available resources and sharing a plurality of processing elements with a plurality of controller elements achieve this. In a preferred embodiment the integrated circuit includes a plurality of processing blocks, which interface to a reconfigurable interconnection means. A processing block has two forms, namely a shared resource block and a dedicated resource block. Each processing block consists of one or a plurality of controller elements and a plurality of processing elements. The controller element and processing element generally comprise diverse rigid coarse and fine grained circuits and are interconnected through dedicated and reconfigurable interconnect. The processing blocks can be configured as a hierarchy of blocks and or fractal architecture.

Owner:AKYA HLDG

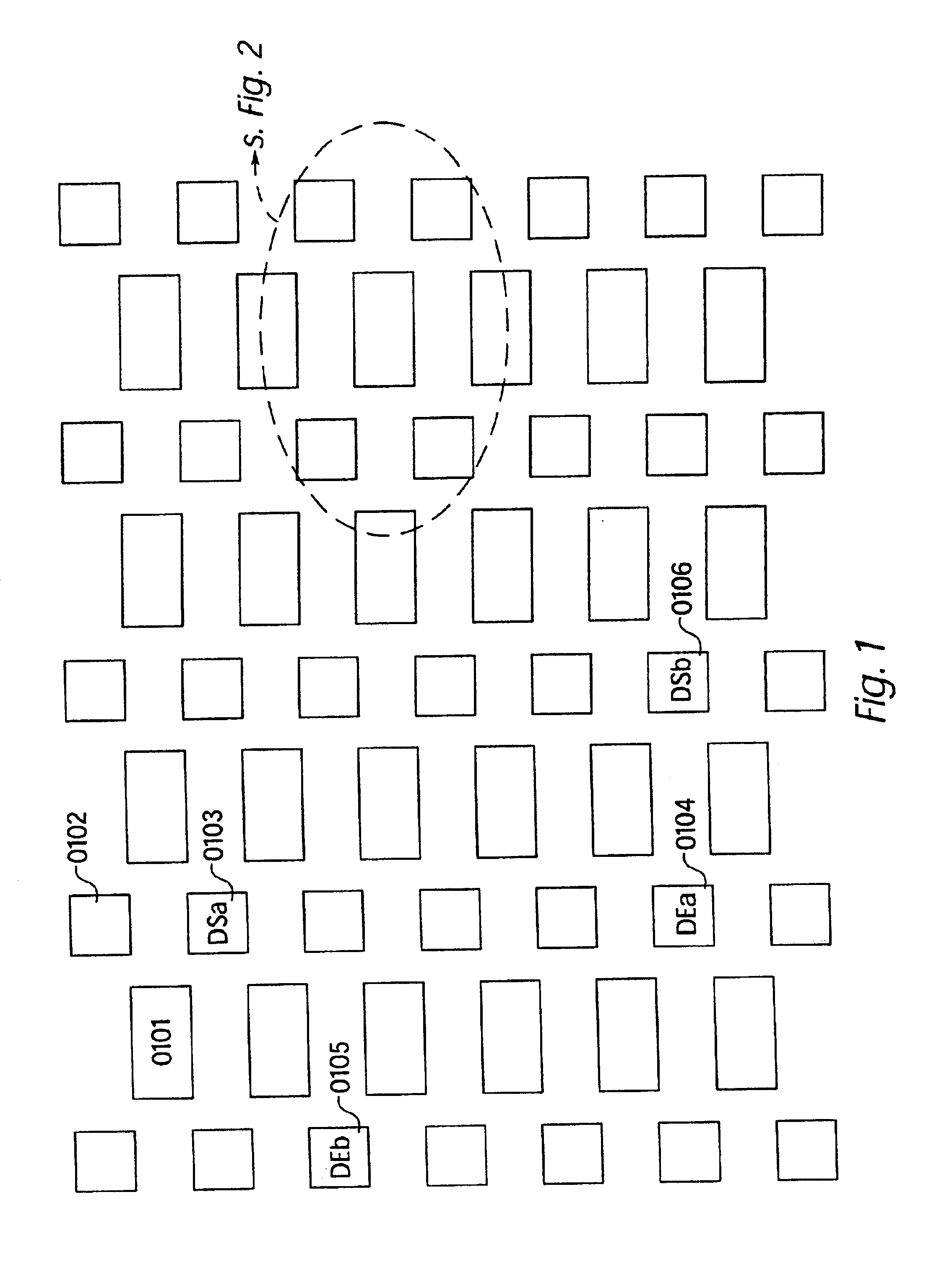

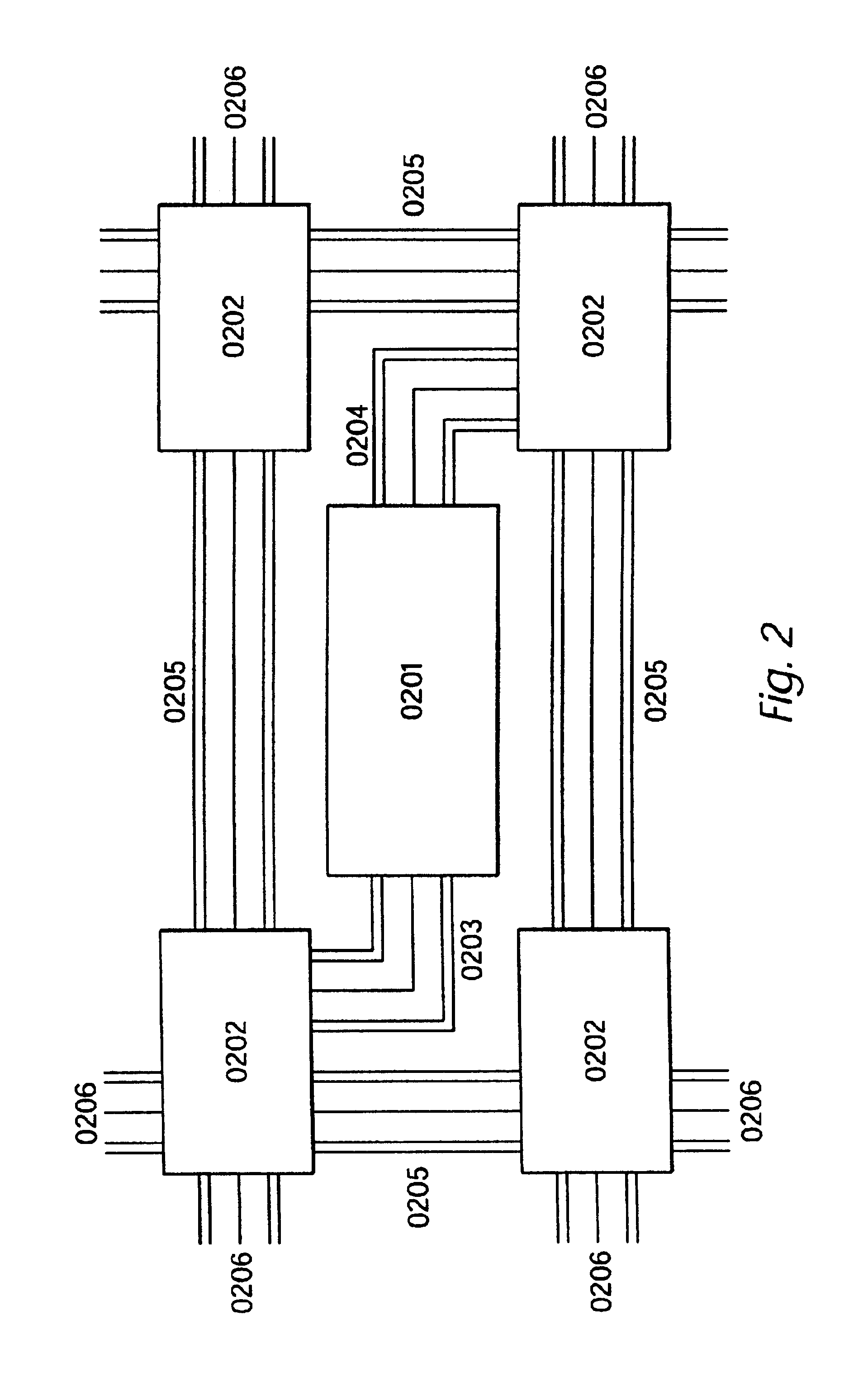

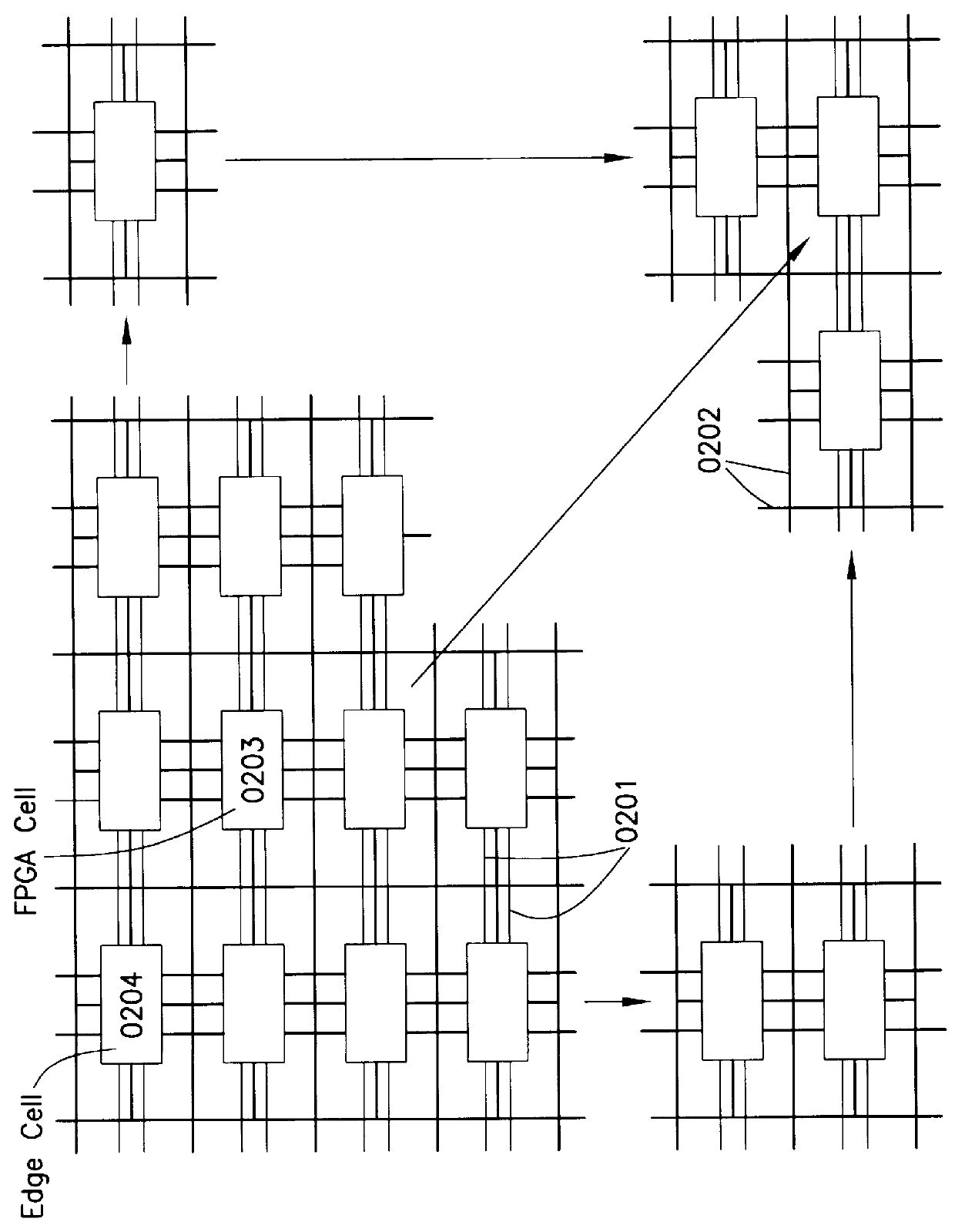

Internal bus system for DFPs and units with two- or multi-dimensional programmable cell architectures, for managing large volumes of data with a high interconnection complexity

Inactive; US7010667B2; Multiple digital computer combinations; Architecture with single central processing unit; Network packet; Interconnection

An internal bus system for DFPs and units with two- or multi-dimensional programmable cell architectures, for managing large volumes of data with a high interconnection complexity. The bus system can transmit data between a plurality of function blocks, where multiple data packets can be on the bus at the same time. The bus system automatically recognizes the correct connection for various types of data or data transmitters and sets it up.

Owner:PACT XPP TECH

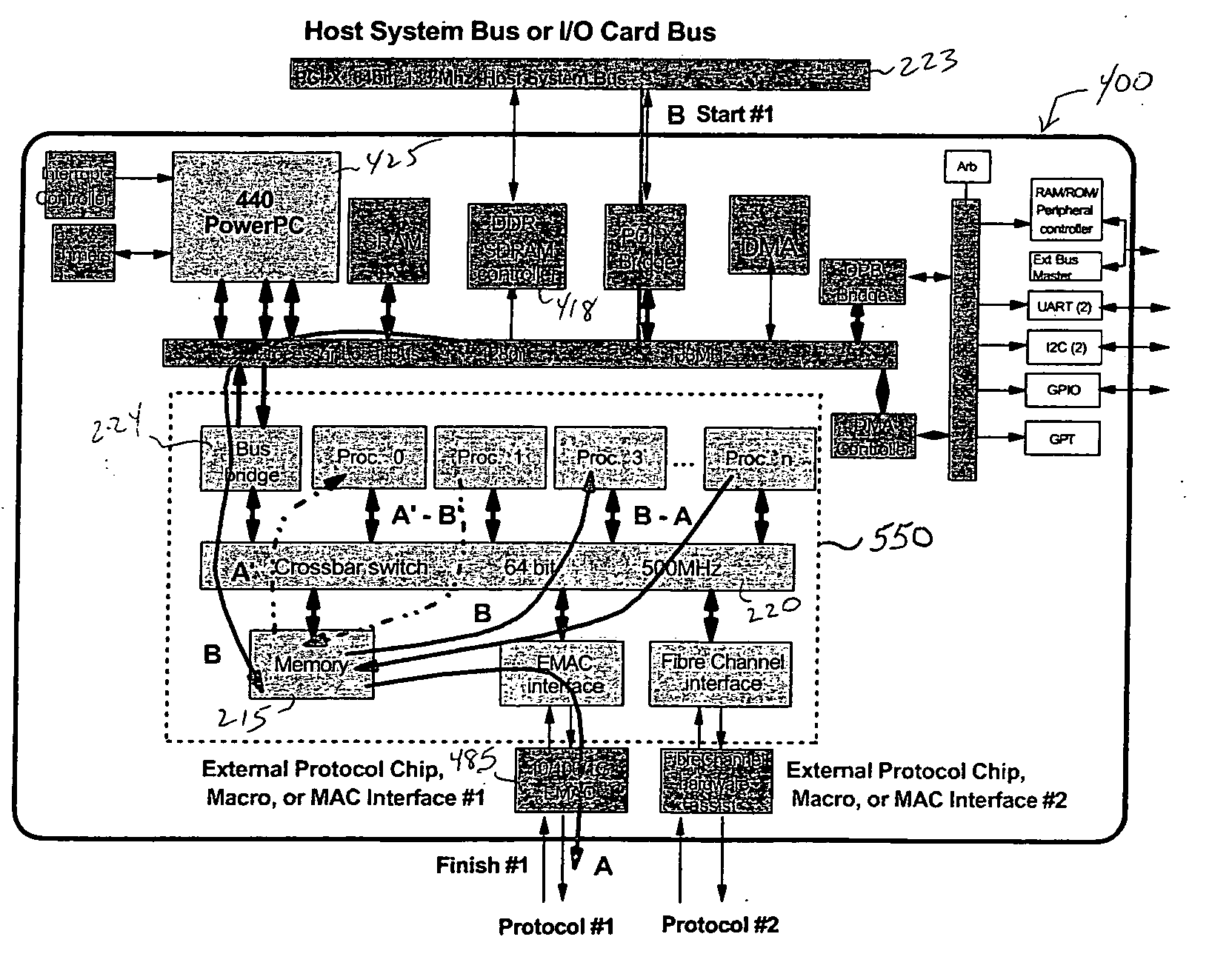

Single chip protocol converter

Active; US20050021874A1; Increase the number of; High bandwidth; Concurrent instruction execution; Multiple digital computer combinations; Single chip; Multiprocessing

A single chip protocol converter integrated circuit (IC) capable of receiving packets generated according to a first protocol type and processing said packets to implement protocol conversion and generating converted packets of a second protocol type for output thereof, the process of protocol conversion being performed entirely within the single integrated circuit chip. The single chip protocol converter can be further implemented as a macro core in a system-on-chip (SoC) implementation, wherein the process of protocol conversion is contained within a SoC protocol conversion macro core without requiring the processing resources of a host system. Packet conversion may additionally entail converting packets generated according to a first protocol version level and processing said packets to implement protocol conversion for generating converted packets according to a second protocol version level, but within the same protocol family type. The single chip protocol converter integrated circuit and SoC protocol conversion macro implementation include multiprocessing capability including processor devices that are configurable to adapt and modify the operating functionality of the chip.

Owner:MICROSOFT TECH LICENSING LLC

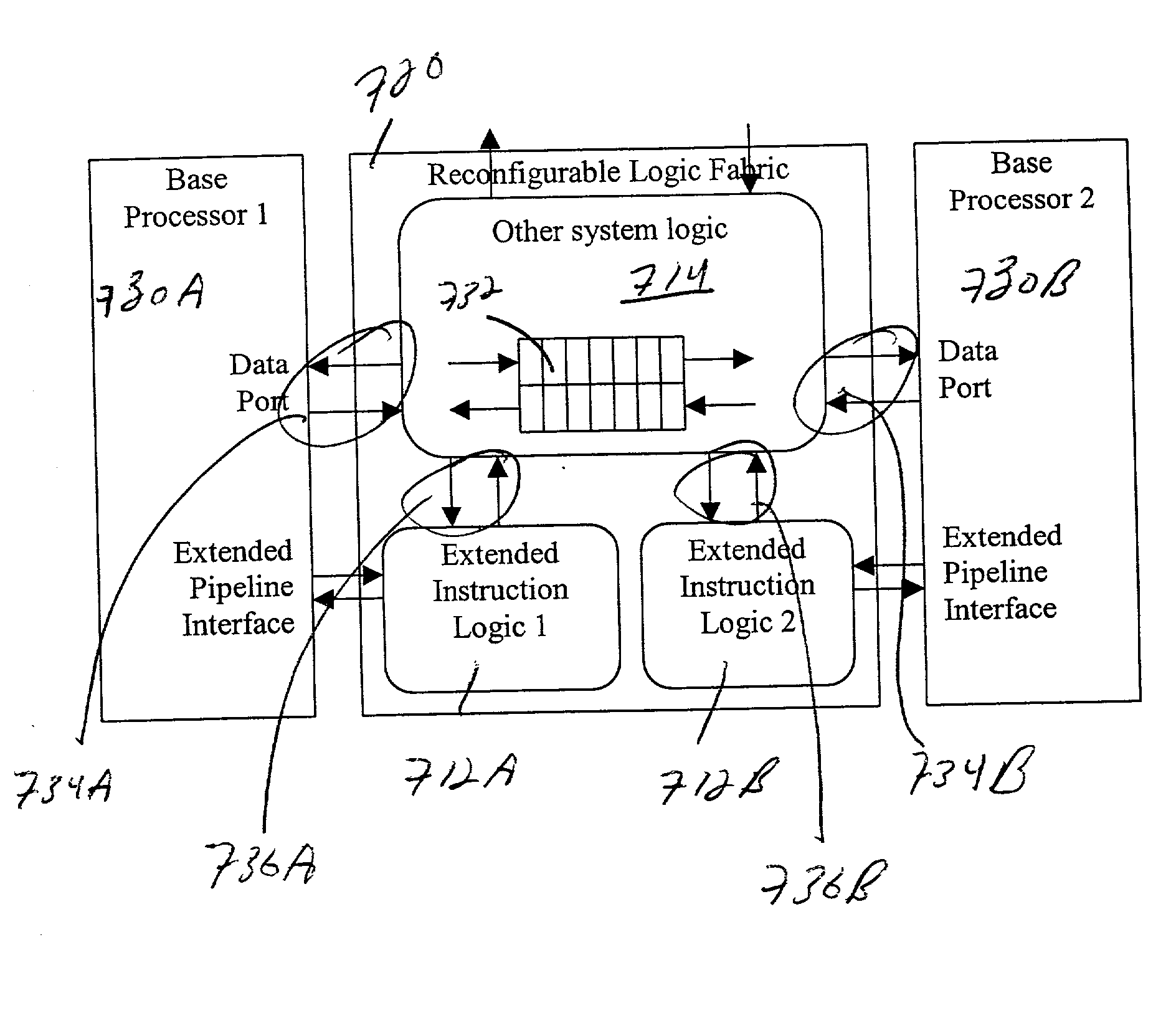

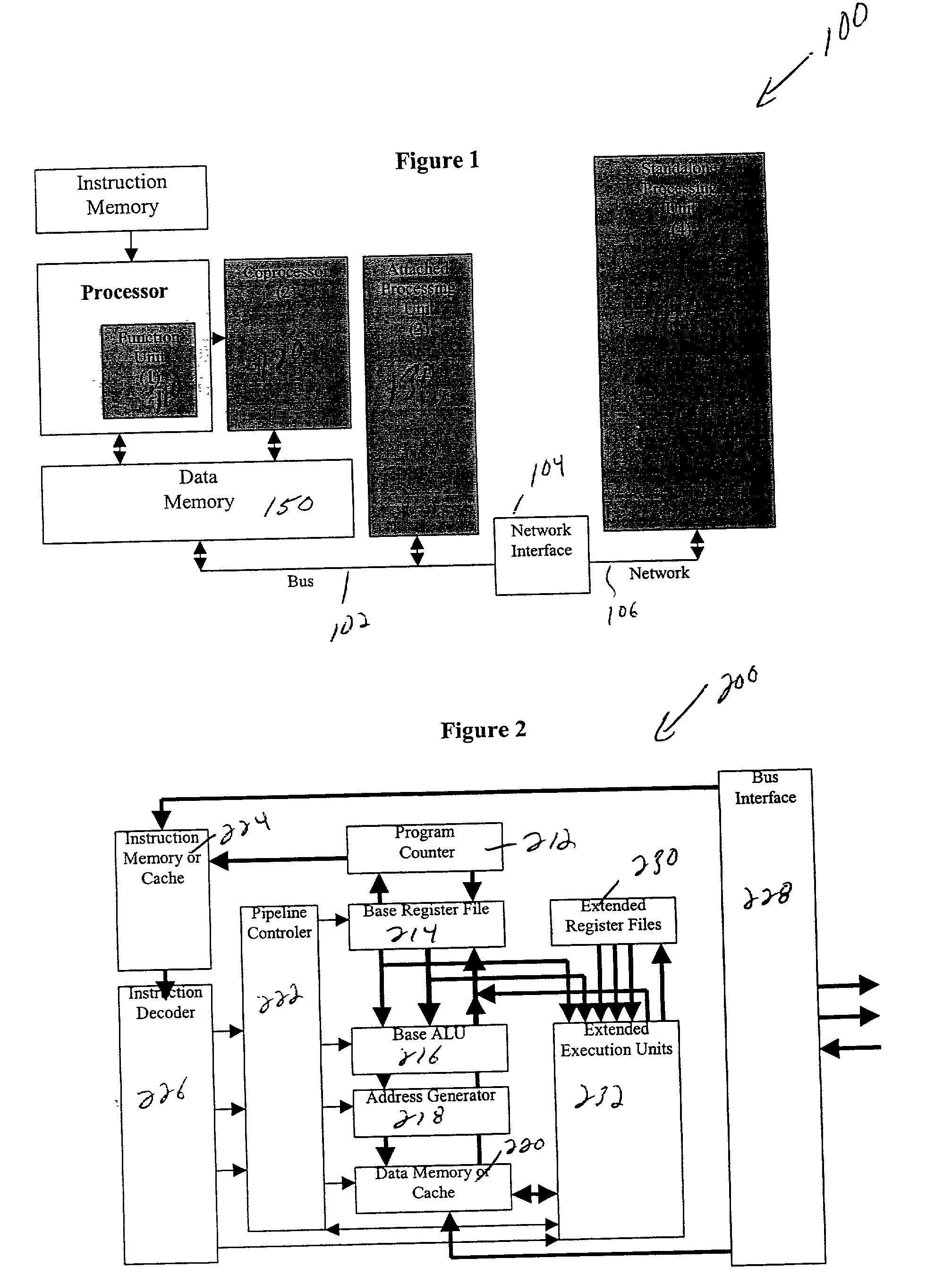

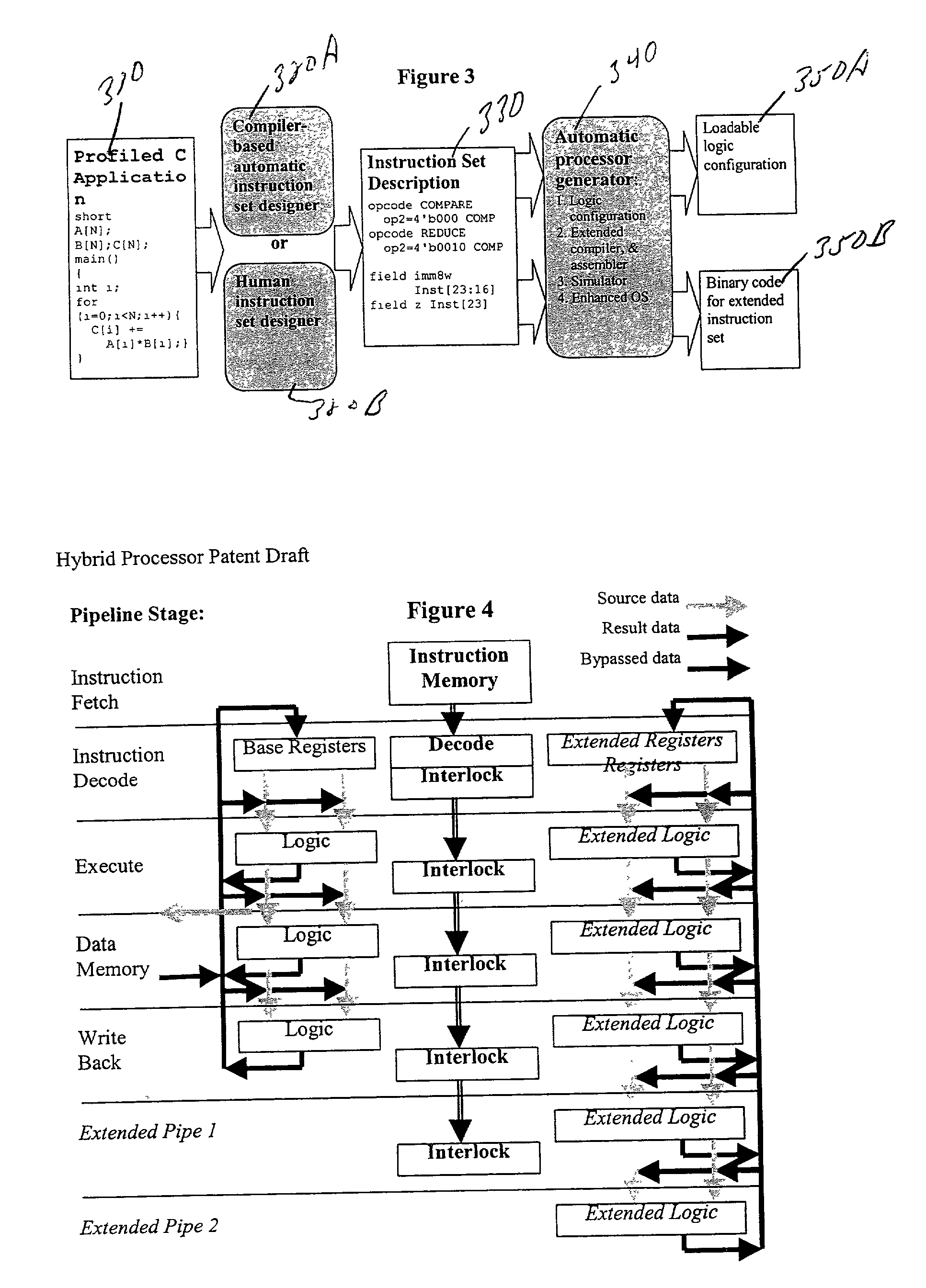

High-performance hybrid processor with configurable execution units

Inactive; US20050166038A1; High bandwidth; Flexibility; Instruction analysis; Concurrent instruction execution; Latency (engineering)

A new general method for building hybrid processors achieves higher performance in applications by allowing more powerful, tightly-coupled instruction set extensions to be implemented in reconfigurable logic. New instruction set configurations can be discovered and designed by automatic and semi-automatic methods. Improved reconfigurable execution units support deep pipelining, the addition of registers and register files, compound instructions with many source and destination registers, and wide data paths. New interface methods allow lower latency, higher bandwidth connections between hybrid processors and other logic.

Owner:TENSILICA

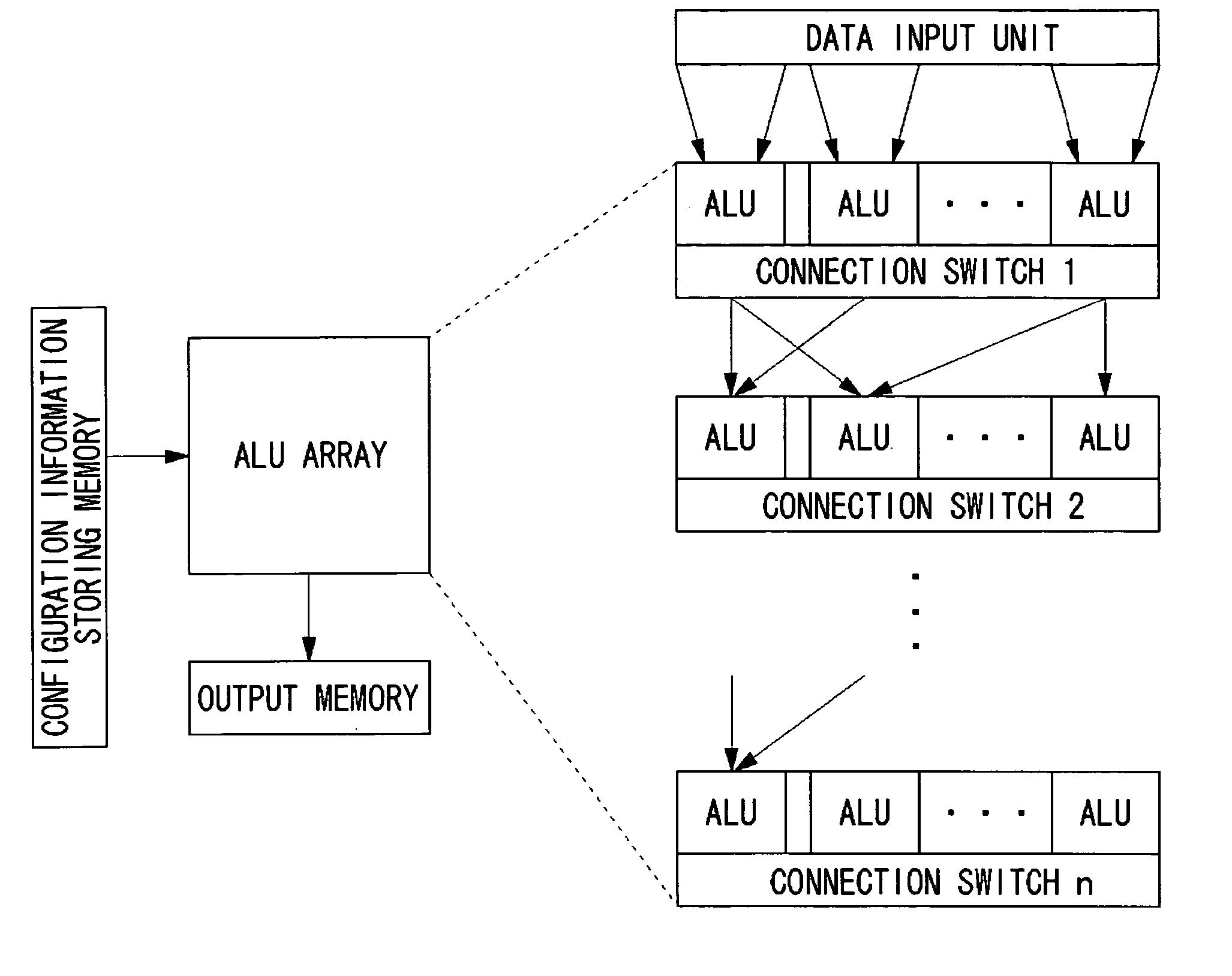

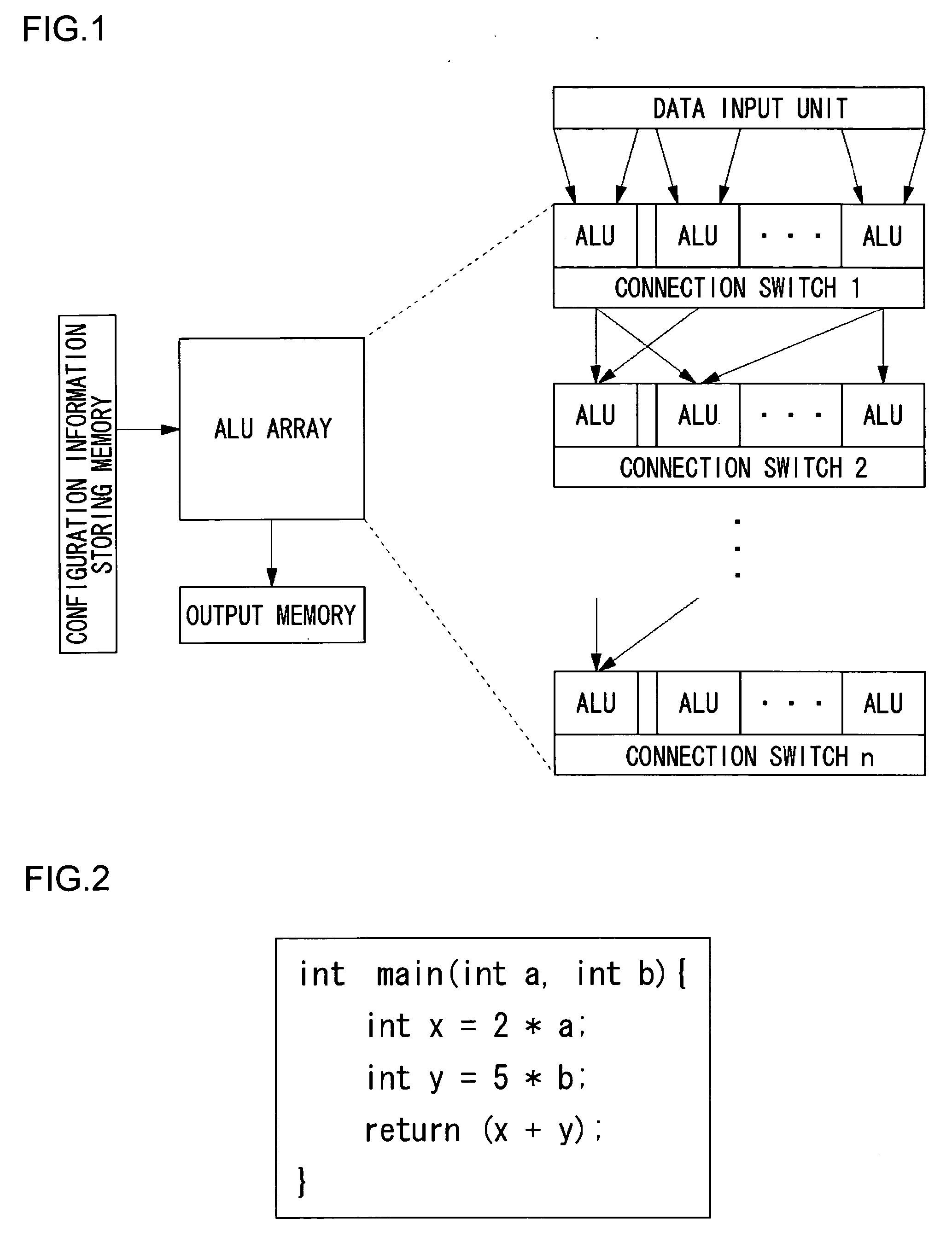

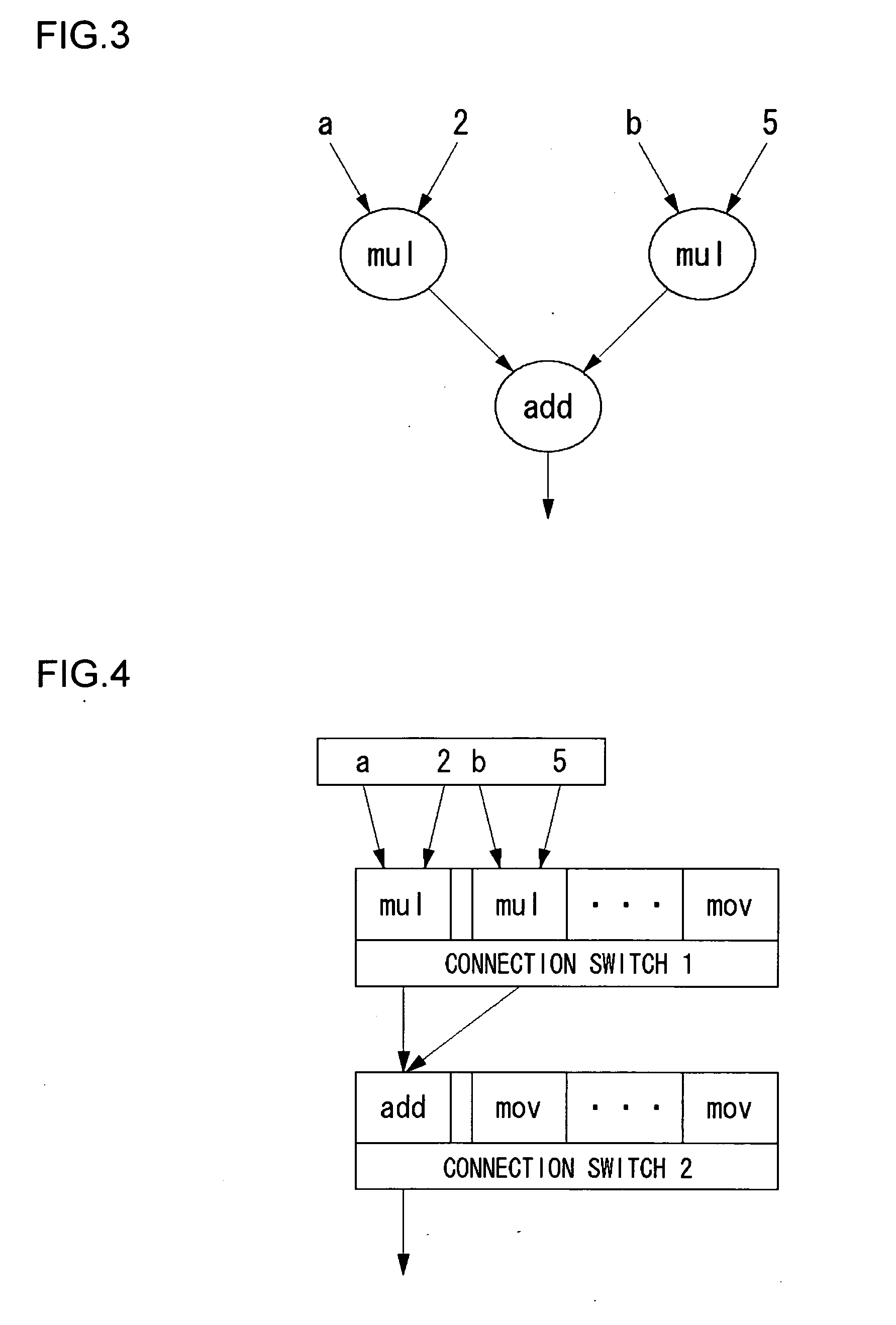

Reconfigurable circuit, processor having reconfigurable circuit, method of determining functions of logic circuits in reconfigurable circuit, method of generating circuit, and circuit

Inactive; US20050134308A1; Reduce in quantity; Reduce switching; Energy efficient ICT; Semiconductor/solid-state device manufacturing; Logical circuit

Owner:SANYO ELECTRIC CO LTD

Data processing method and device

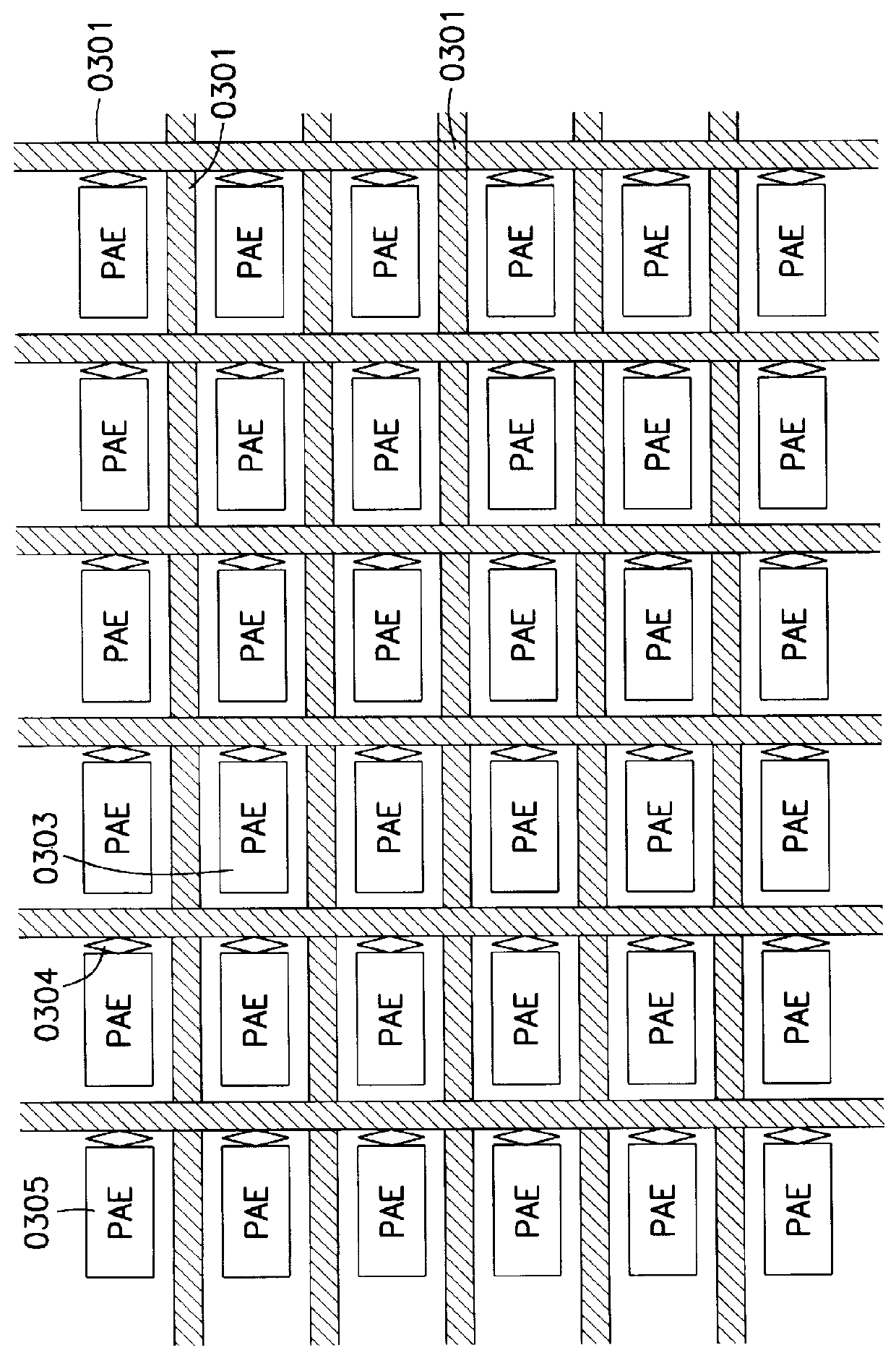

Active; US20070050603A1; Improve data throughput; Delay problem; Memory addressing/allocation/relocation; Concurrent instruction execution; Parallel computing; Data processing

In a data-processing method, first result data may be obtained using a plurality of configurable coarse-granular elements, the first result data may be written into a memory that includes spatially separate first and second memory areas and that is connected via a bus to the plurality of configurable coarse-granular elements, the first result data may be subsequently read out from the memory, and the first result data may be subsequently processed using the plurality of configurable coarse-granular elements. In a first configuration, the first memory area may be configured as a write memory, and the second memory area may be configured as a read memory. Subsequent to writing to and reading from the memory in accordance with the first configuration, the first memory area may be configured as a read memory, and the second memory area may be configured as a write memory.

Owner:SCIENTIA SOL MENTIS AG
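
The alternating write/read roles of the two memory areas in the abstract above can be illustrated with a minimal Python sketch. It is not the patented implementation: the `PingPongMemory` class, the `run_two_passes` helper, and the two lambda stages are hypothetical names chosen for illustration, and plain lists stand in for the memory areas and the configurable coarse-granular elements.

```python
class PingPongMemory:
    """Two separate memory areas whose write/read roles swap between phases."""

    def __init__(self):
        self.areas = [[], []]   # first and second memory areas
        self.write_idx = 0      # first configuration: area 0 is the write memory

    @property
    def read_idx(self):
        # The area that is not being written is the read memory.
        return 1 - self.write_idx

    def write(self, values):
        self.areas[self.write_idx] = list(values)

    def read(self):
        return list(self.areas[self.read_idx])

    def swap(self):
        """Reconfigure: the write area becomes the read area and vice versa."""
        self.write_idx = self.read_idx


def run_two_passes(inputs, first_pass, second_pass):
    mem = PingPongMemory()
    # First result data is produced and written into the current write area.
    mem.write(first_pass(x) for x in inputs)
    # Roles are exchanged, so the data just written becomes readable.
    mem.swap()
    # The first result data is read back and processed further.
    return [second_pass(x) for x in mem.read()]


result = run_two_passes([1, 2, 3], lambda x: x * 2, lambda x: x + 1)
```

Here `result` holds the second stage applied to the values the first stage wrote, which shows that the data only becomes readable after the roles are swapped.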

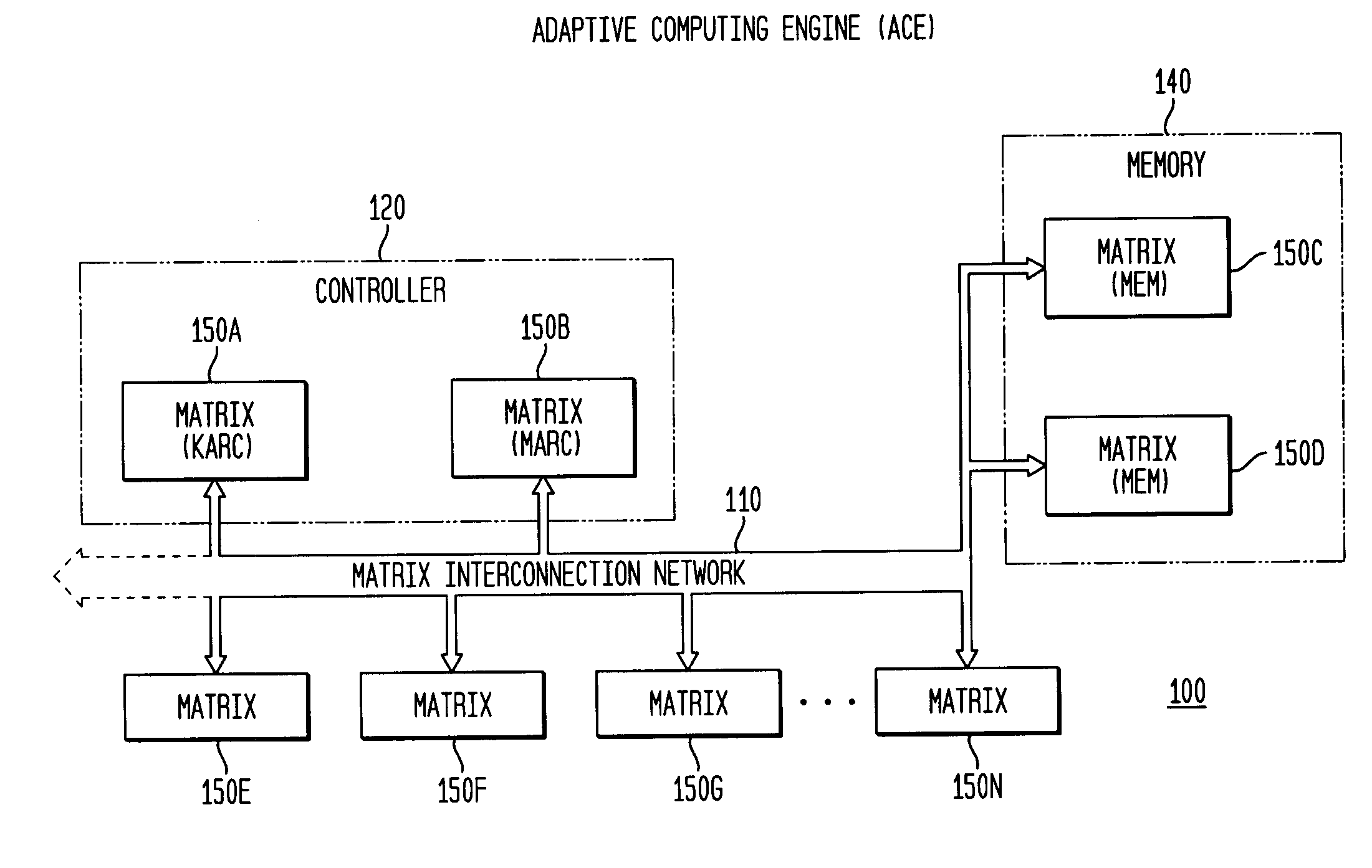

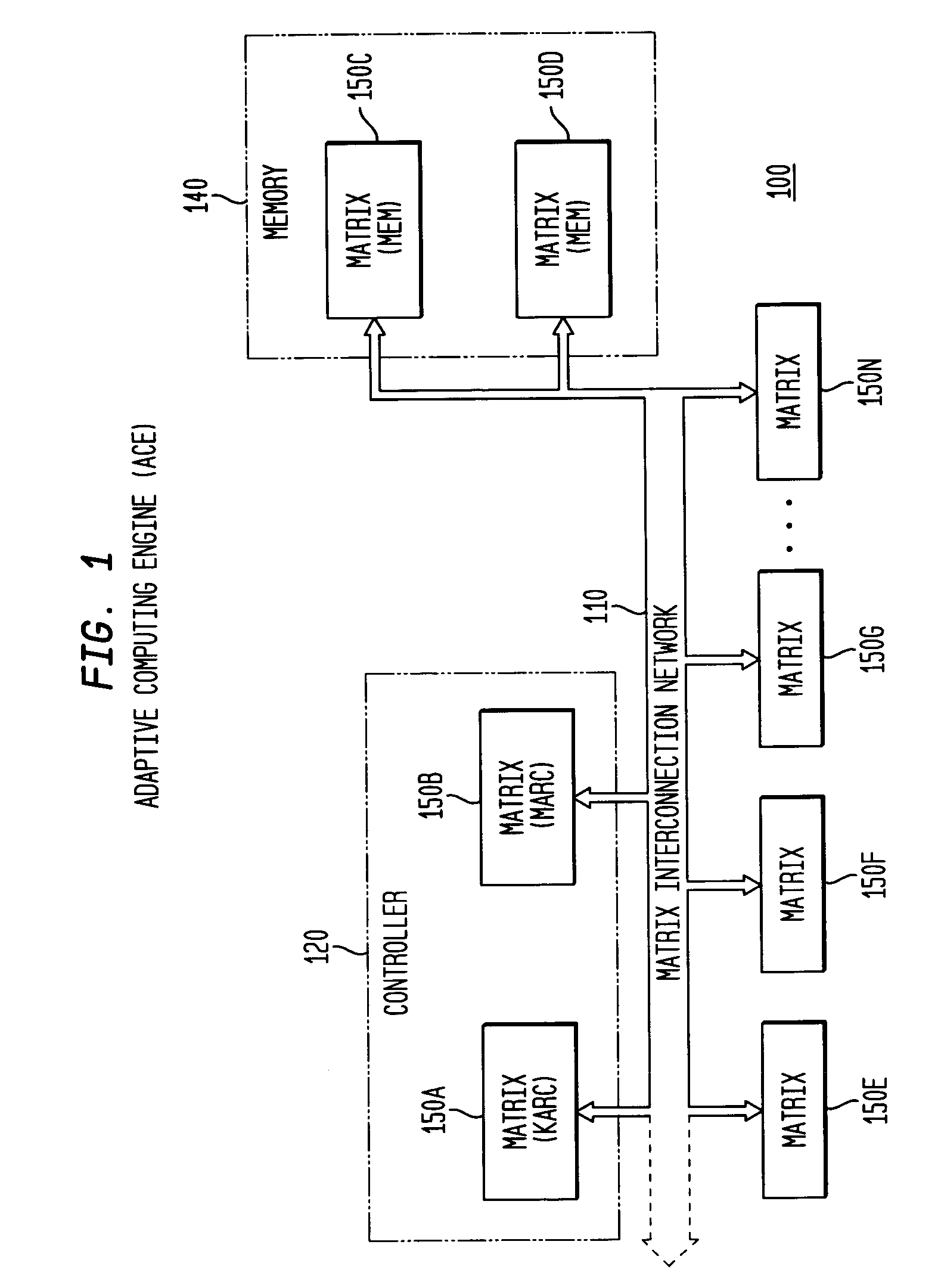

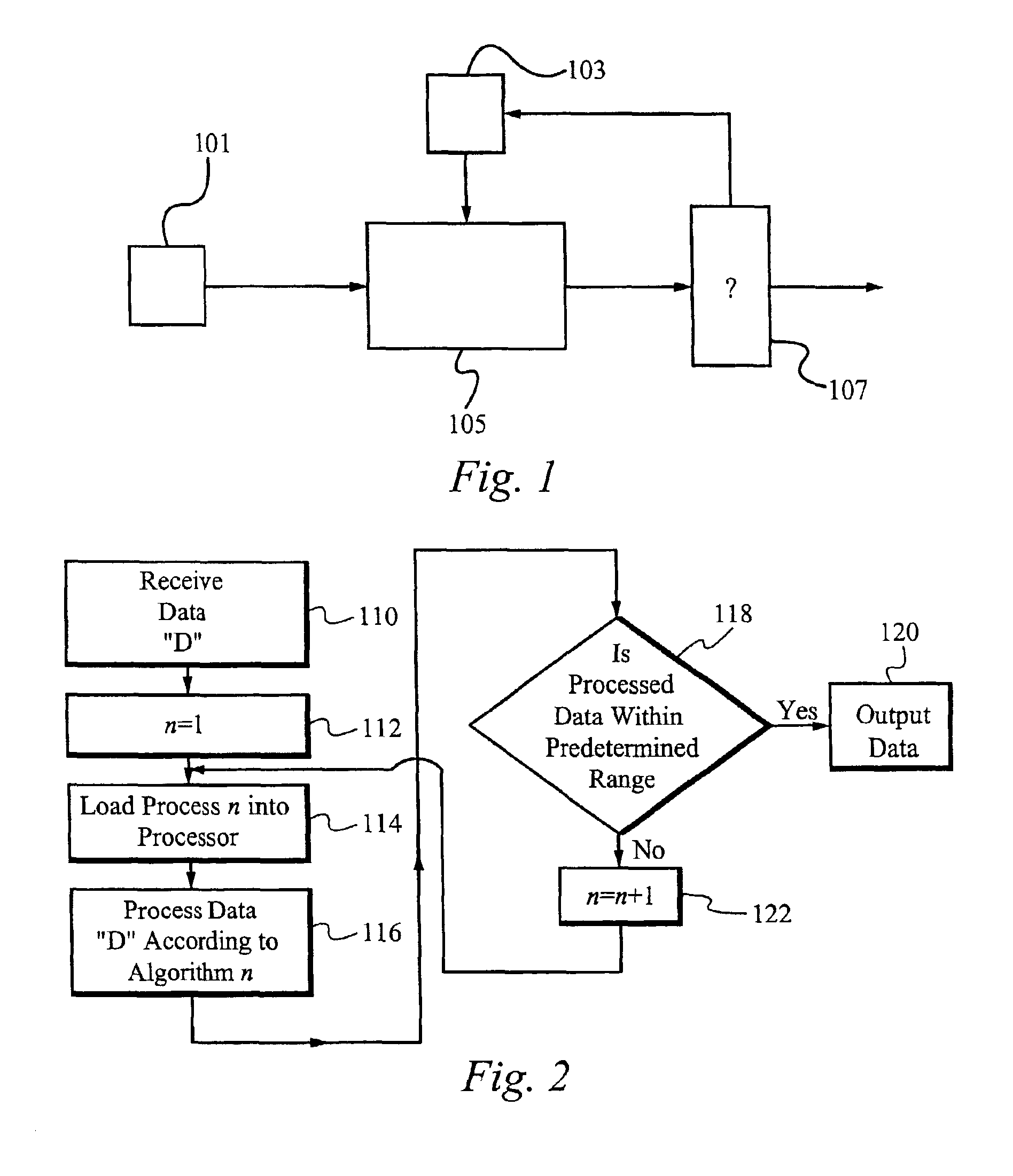

System, method and software for static and dynamic programming and configuration of an adaptive computing architecture

Active; US20050044344A1; Architecture with single central processing unit; Special data processing applications; Interconnection; Systems approaches

The present invention provides a system, method and software for programming and configuring an adaptive computing architecture or device. The invention utilizes program constructs which correspond to and map directly to the adaptive hardware having a plurality of reconfigurable nodes coupled through a reconfigurable matrix interconnection network. A first program construct corresponds to a selected node. A second program construct corresponds to an executable task of the selected node and includes one or more firing conditions capable of determining the commencement of the executable task of the selected node. A third program construct corresponds to at least one input port coupling the selected node to the matrix interconnect network for input data to be consumed by the executable task. A fourth program construct corresponds to at least one output port coupling the selected node to the matrix interconnect network for output data to be produced by the executable task.

Owner:CORNAMI INC
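
The four program constructs in the abstract above (node, task with firing conditions, input port, output port) can be pictured with a small Python sketch. Everything here is an assumption made for illustration: the `Node` class, the `firing_threshold` parameter, and the use of "enough operands are queued" as the only firing condition are hypothetical and are not taken from the patent.

```python
from collections import deque


class Node:
    """A node with one task, one input port, and one output port."""

    def __init__(self, task, firing_threshold):
        self.task = task                          # executable task of the node
        self.firing_threshold = firing_threshold  # operands needed before firing
        self.input_port = deque()                 # data to be consumed by the task
        self.output_port = deque()                # data produced by the task

    def ready(self):
        # Assumed firing condition: enough input data has arrived.
        return len(self.input_port) >= self.firing_threshold

    def step(self):
        """Run the task once if the firing condition holds; report whether it ran."""
        if not self.ready():
            return False
        operands = [self.input_port.popleft() for _ in range(self.firing_threshold)]
        self.output_port.append(self.task(*operands))
        return True


adder = Node(task=lambda a, b: a + b, firing_threshold=2)
adder.input_port.append(4)
fired_early = adder.step()   # only one operand queued, so the task does not start
adder.input_port.append(5)
fired = adder.step()         # both operands present, so the task runs
```

The task only commences once its firing condition is satisfied, which is the behaviour the second program construct is described as controlling.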

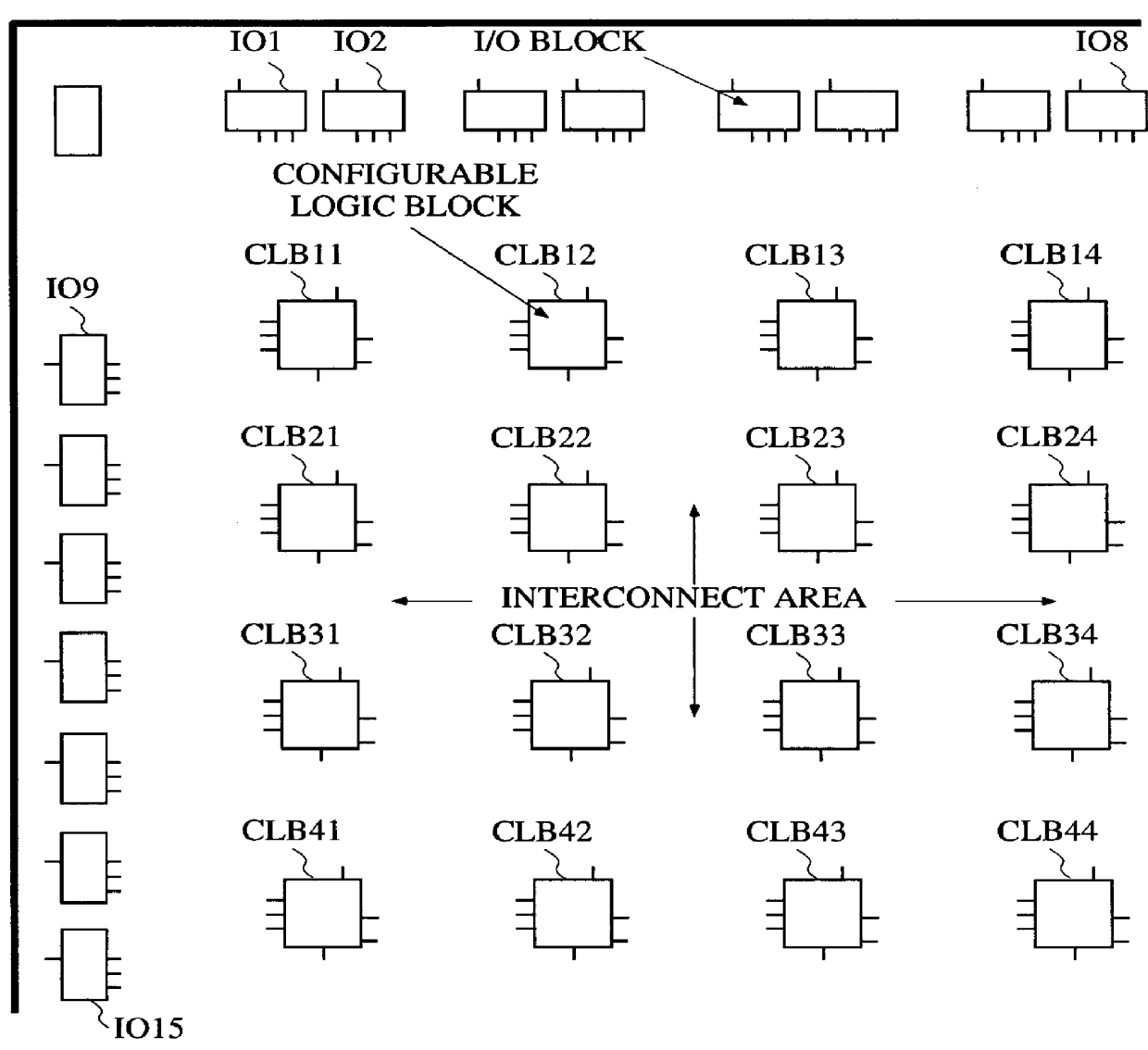

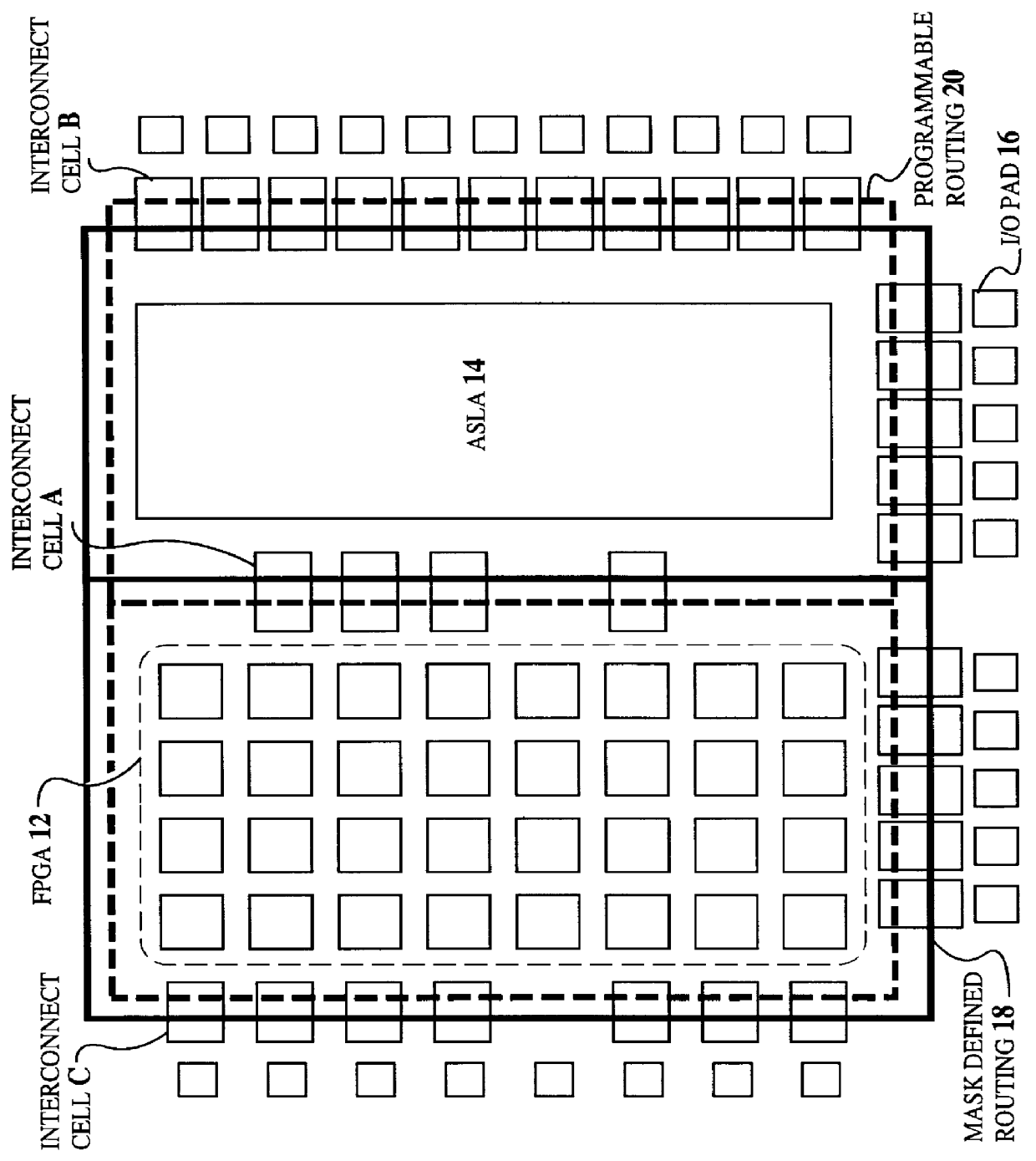

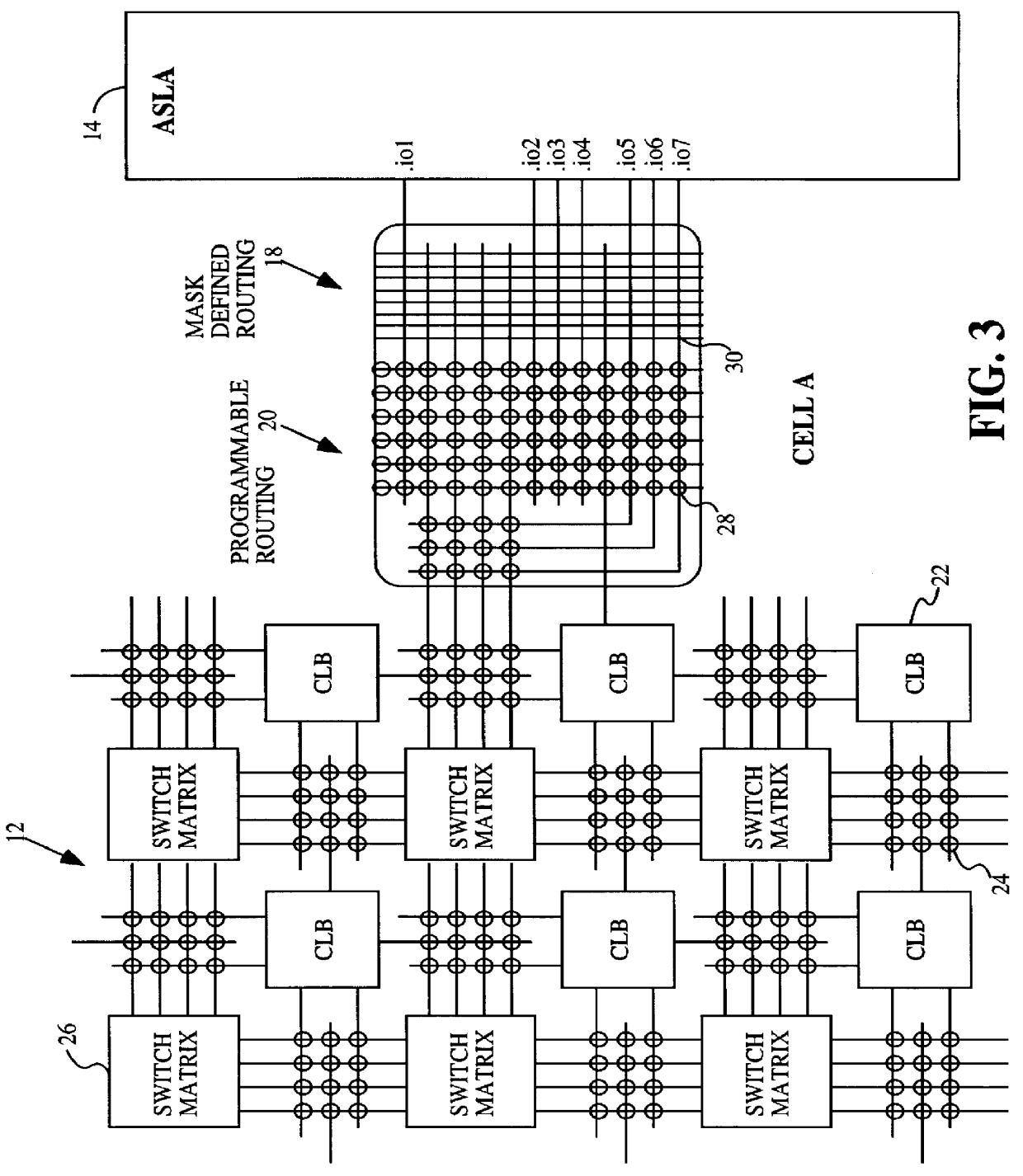

Integrated circuit with field programmable and application specific logic areas

Inactive; US6094065A; Reconfigurability; Low cost; Solid-state devices; Architecture with single central processing unit; Integrated circuit; Input/output

A heterogeneous integrated circuit device comprising a field programmable gate array (FPGA) programmably connected to a mask-defined application specific logic area (ASLA) on an integrated circuit thus providing a flexible low cost alternative to a homogeneous device of one type or the other. By integrating both on a single monolithic IC, the user benefits from both low cost and flexibility. Routing of signals between gate arrays and between the gate arrays and input / output (I / O) circuits is also implemented as a combination of mask-defined and programmably-configured interconnections.

Owner:XILINX INC

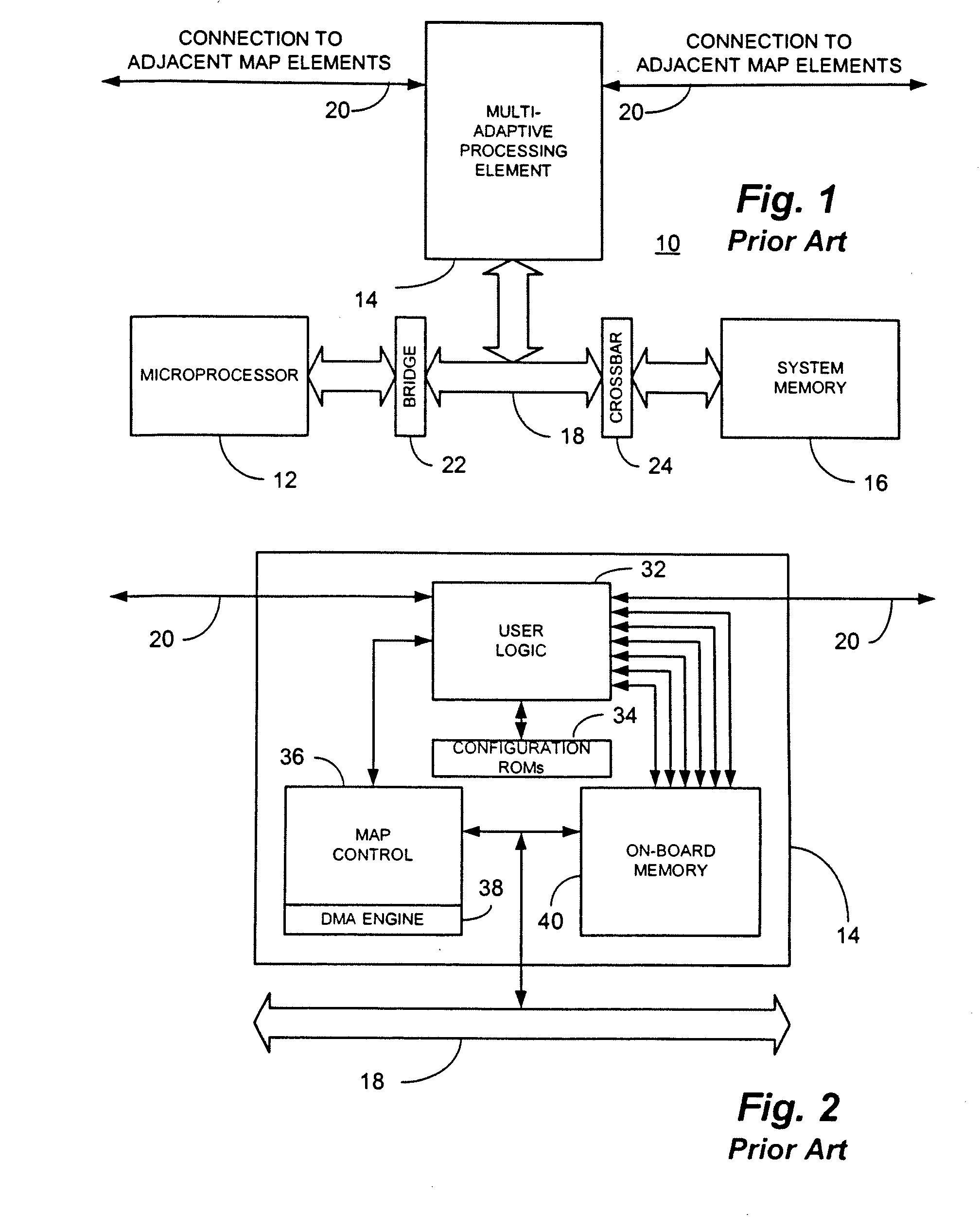

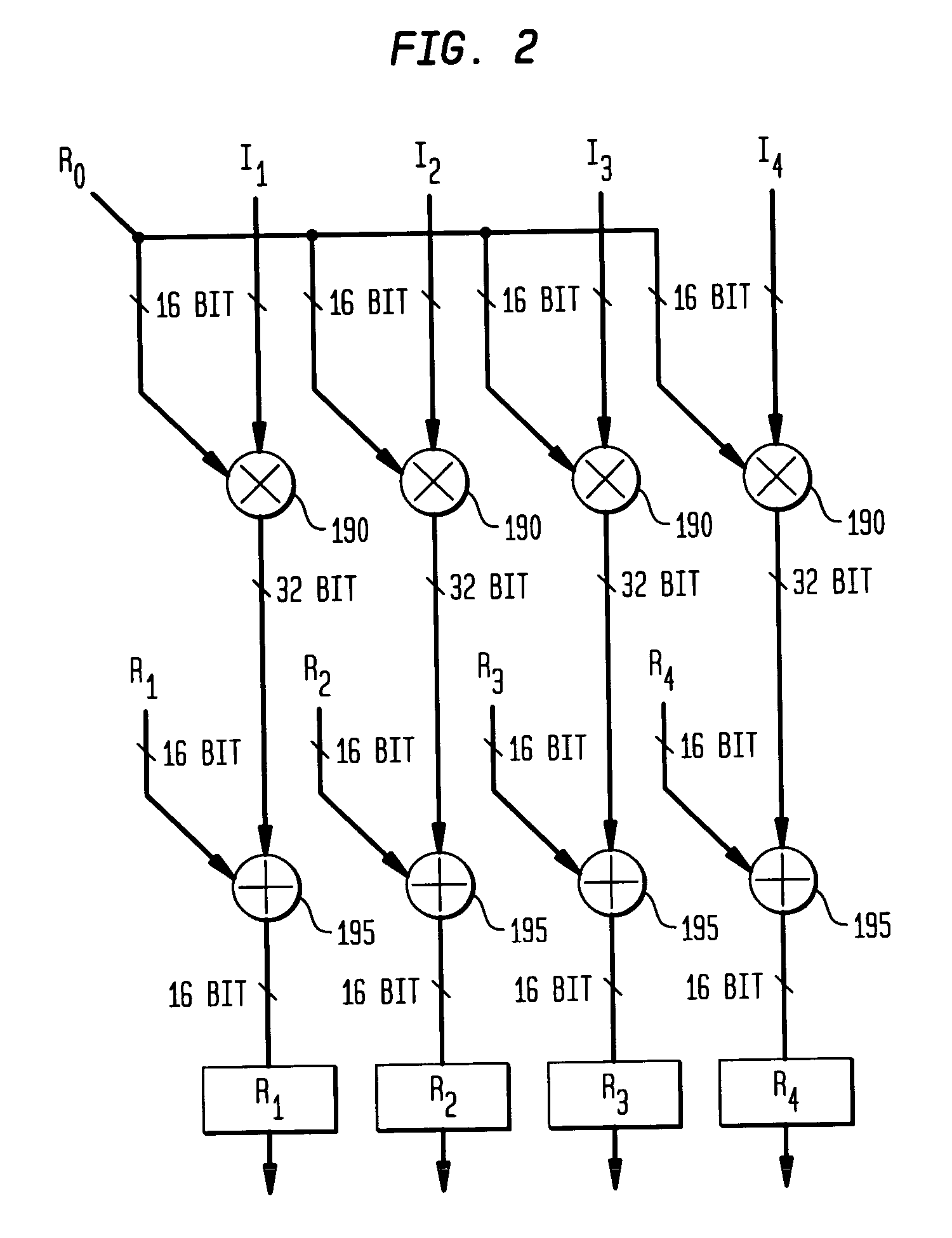

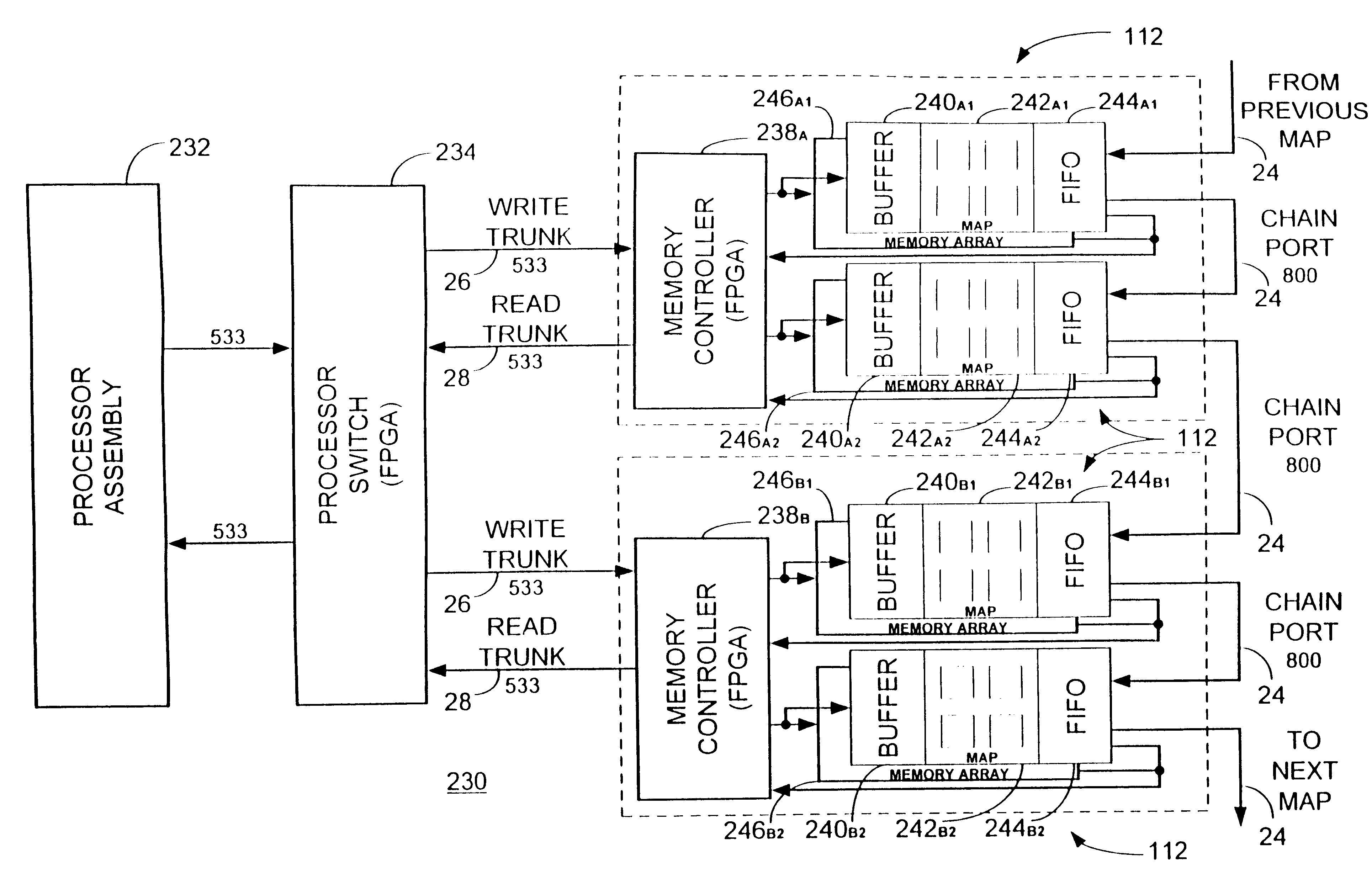

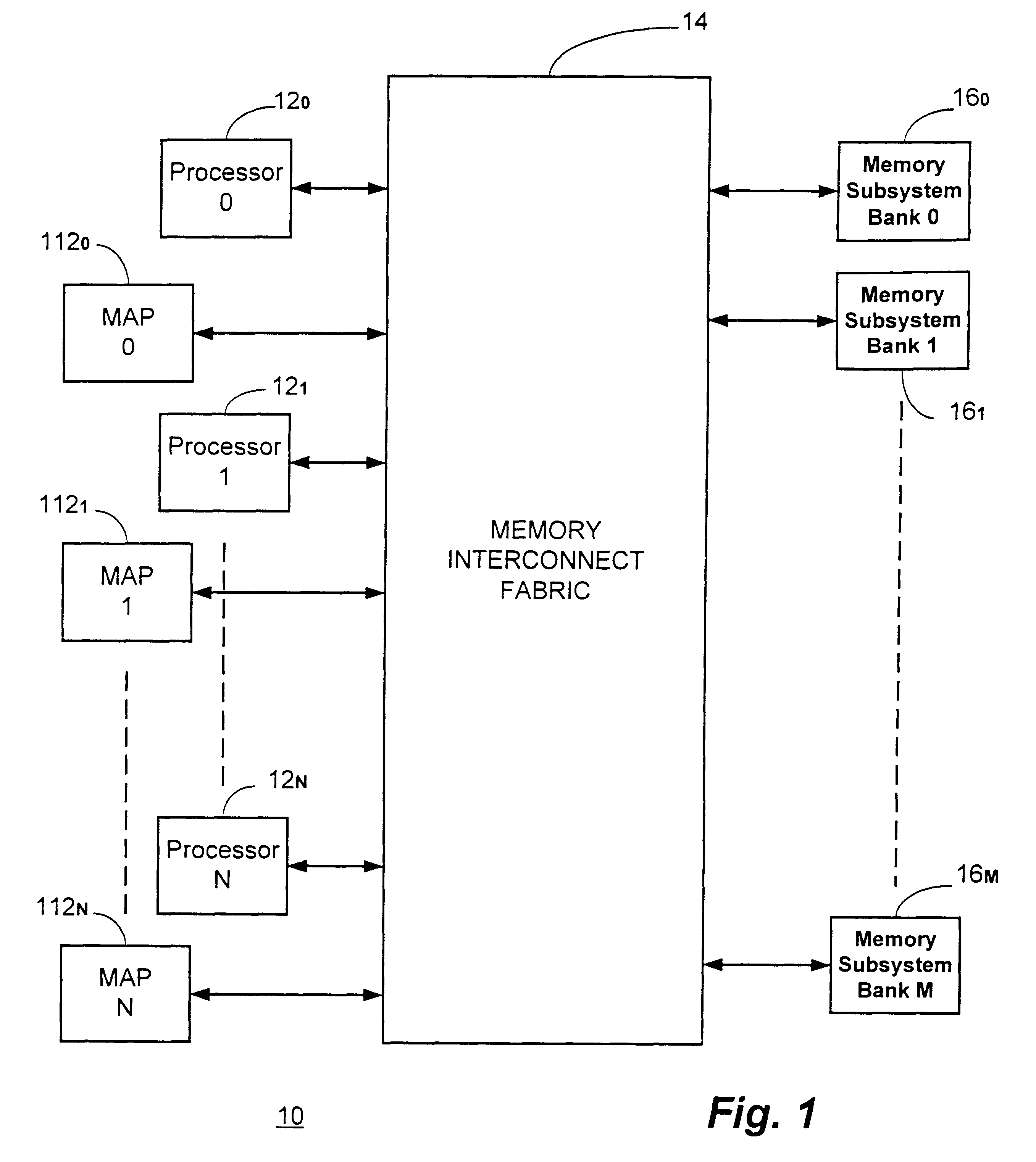

Multiprocessor with each processor element accessing operands in loaded input buffer and forwarding results to FIFO output buffer

Inactive; US6339819B1; Perform operation; Potential utility; Single instruction multiple data multiprocessors; Memory addressing/allocation/relocation; Processor element; Multi processor

An enhanced memory algorithmic processor ("MAP") architecture for multiprocessor computer systems comprises an assembly that may comprise, for example, field programmable gate arrays ("FPGAs") functioning as the memory algorithmic processors. The MAP elements may further include an operand storage, intelligent address generation, on board function libraries, result storage and multiple input / output ("I / O") ports. The MAP elements are intended to augment, not necessarily replace, the high performance microprocessors in the system and, in a particular embodiment of the present invention, they may be connected through the memory subsystem of the computer system resulting in it being very tightly coupled to the system as well as being globally accessible from any processor in a multiprocessor computer system.

Owner:SRC COMP

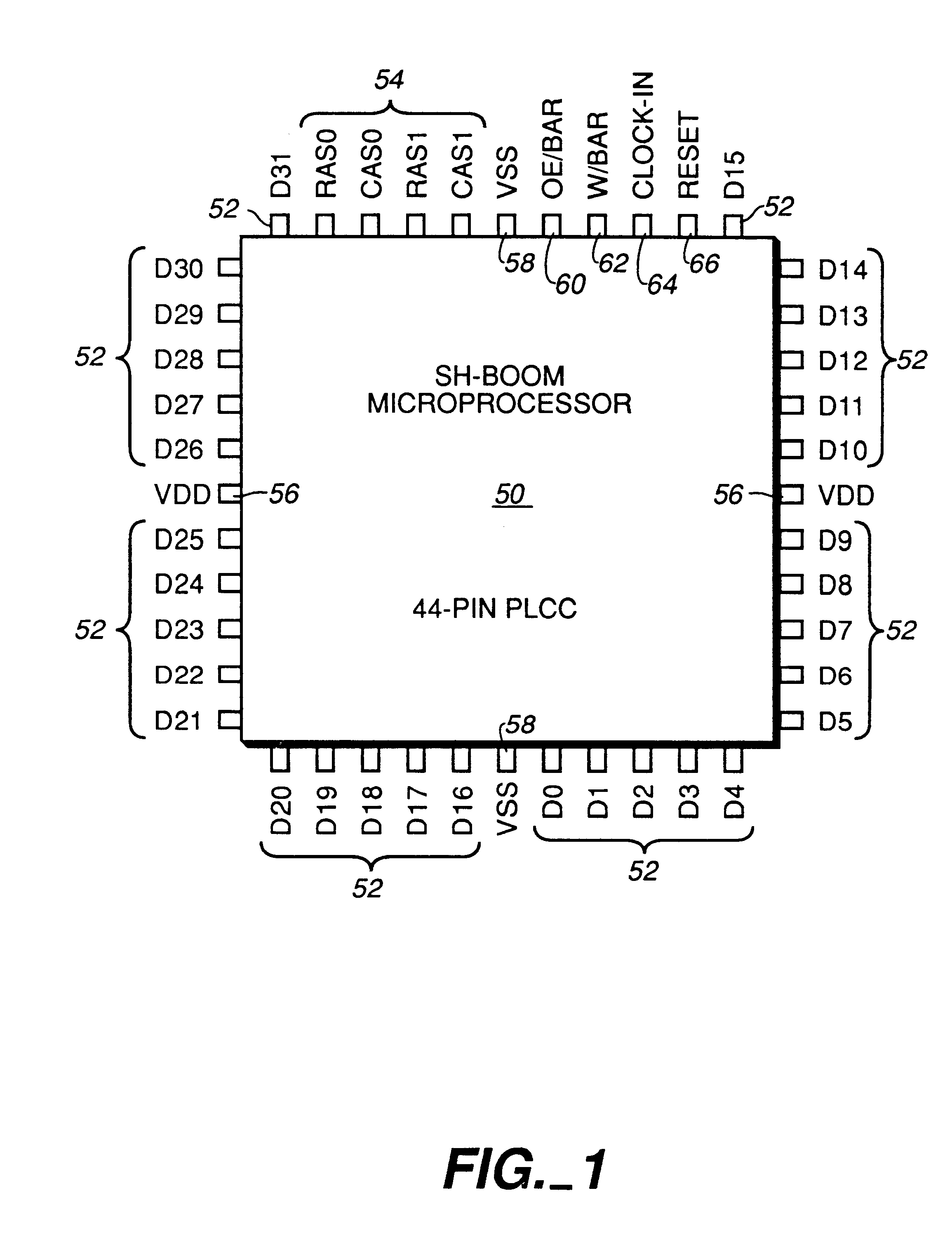

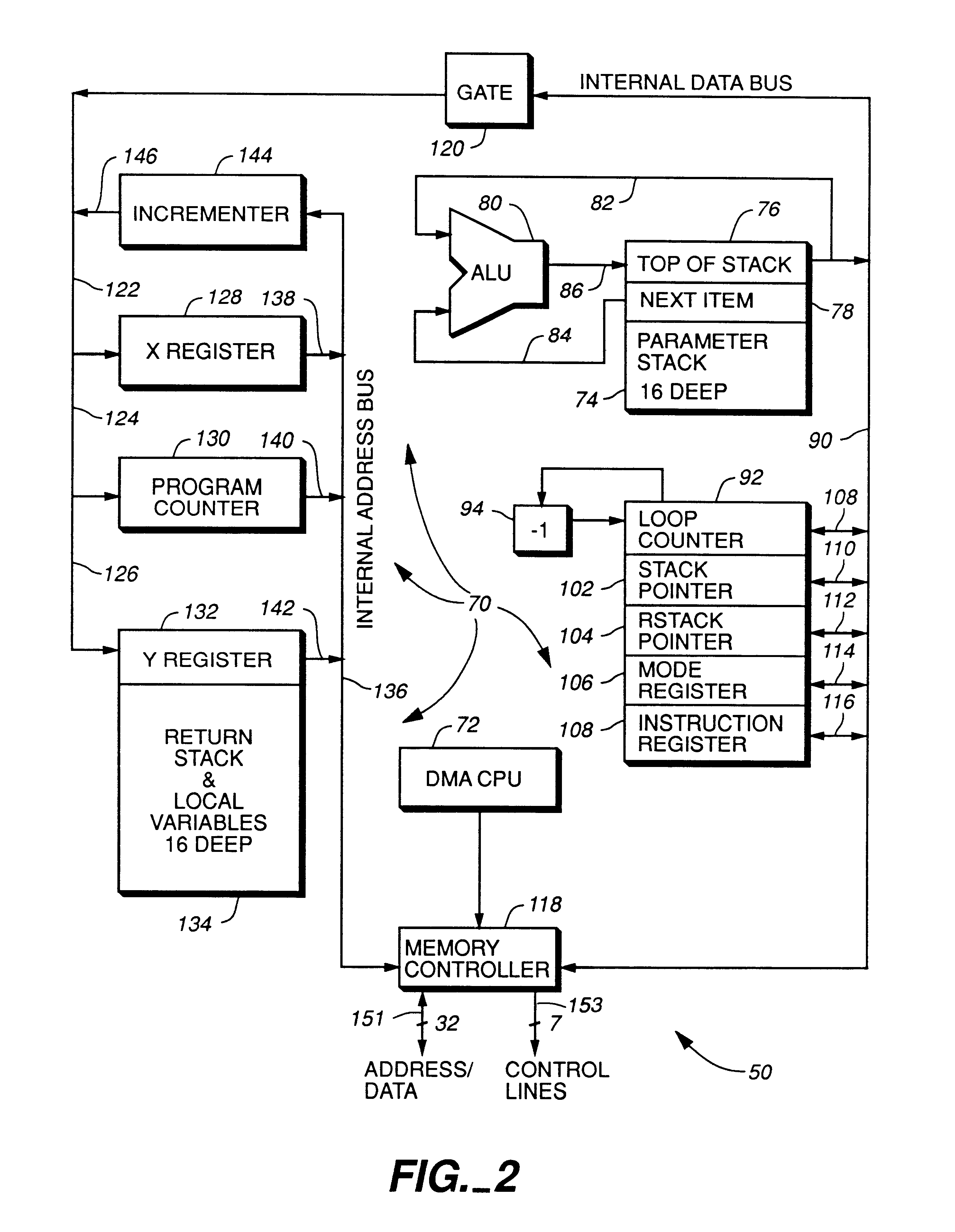

High performance microprocessor having variable speed system clock

Inactive; US6598148B1; Improve performance; Without sacrificing microprocessor speed; Random number generators; Instruction analysis; Computer architecture; Static random-access memory

A microprocessor integrated circuit including a processing unit disposed upon an integrated circuit substrate is disclosed herein. The processing unit is designed to operate in accordance with a predefined sequence of program instructions stored within an instruction register. A memory, capable of storing information provided by the processing unit and occupying a larger area of the integrated circuit substrate than the processing unit, is also provided within the microprocessor integrated circuit. The memory may be implemented using, for example, dynamic or static random-access memory. A variable output frequency system clock, such as generated by a ring oscillator, is also disposed on the integrated circuit substrate.

Owner:MOORE CHARLES H TTE UTD 03 21 2006 THE EQUINOX TRUST

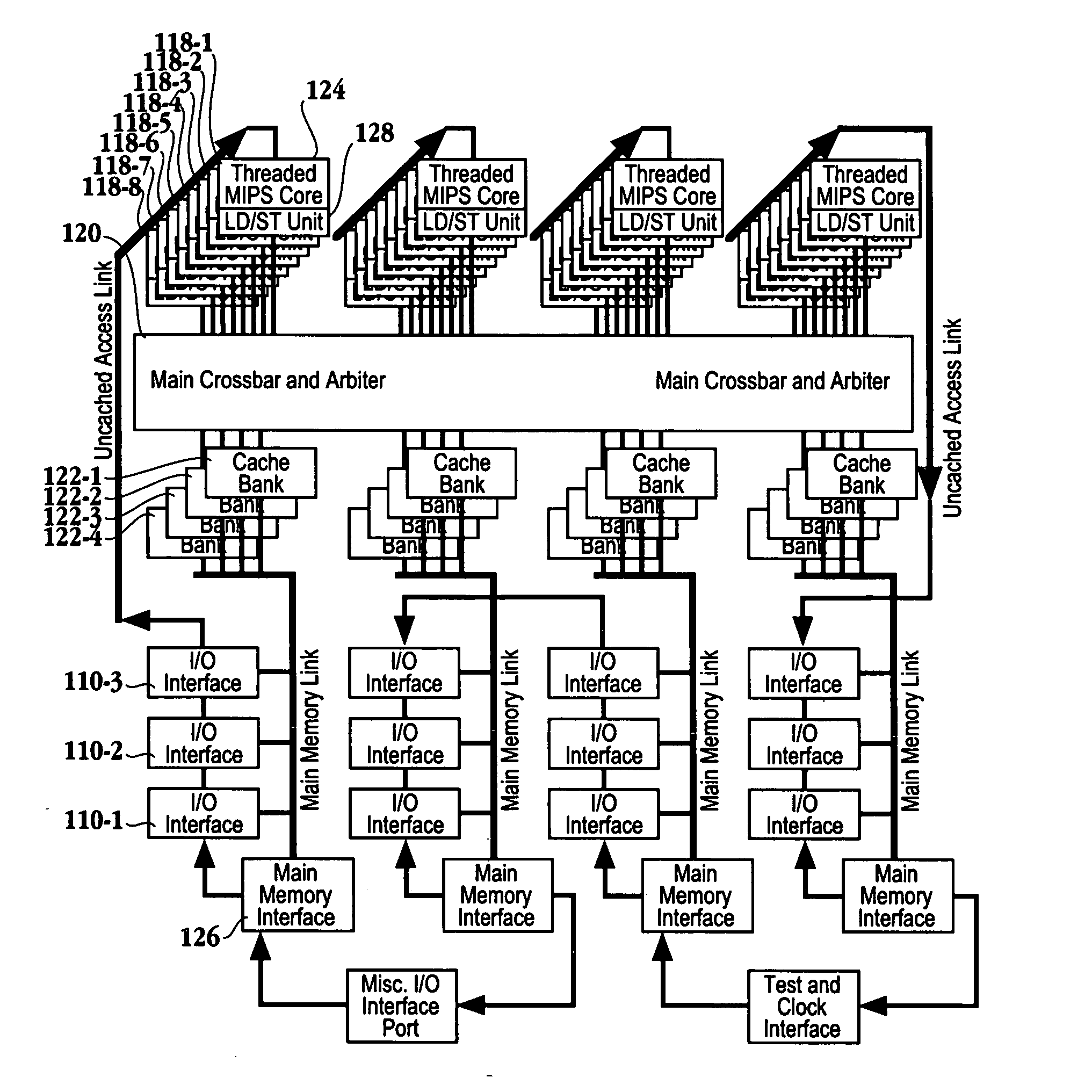

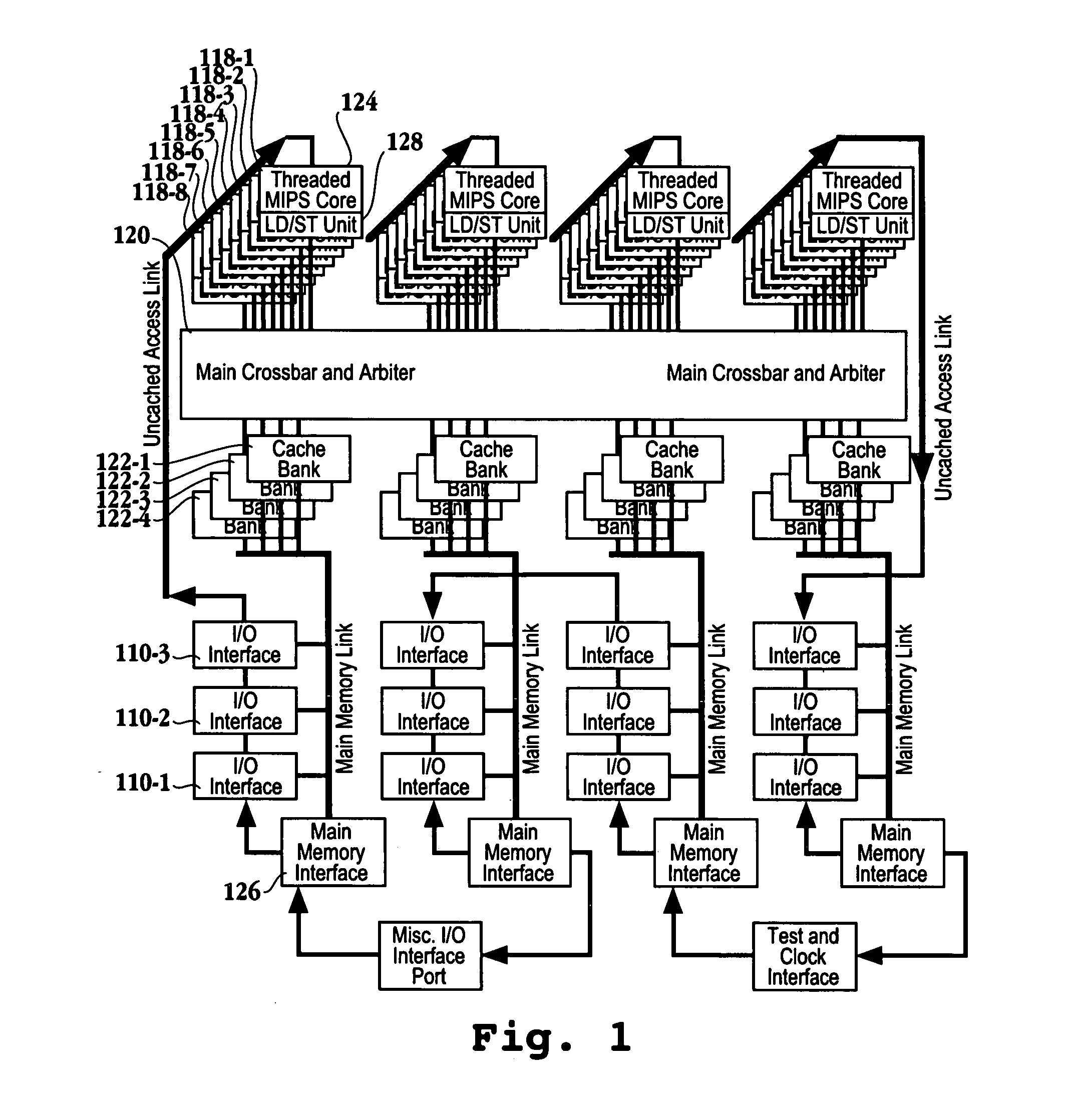

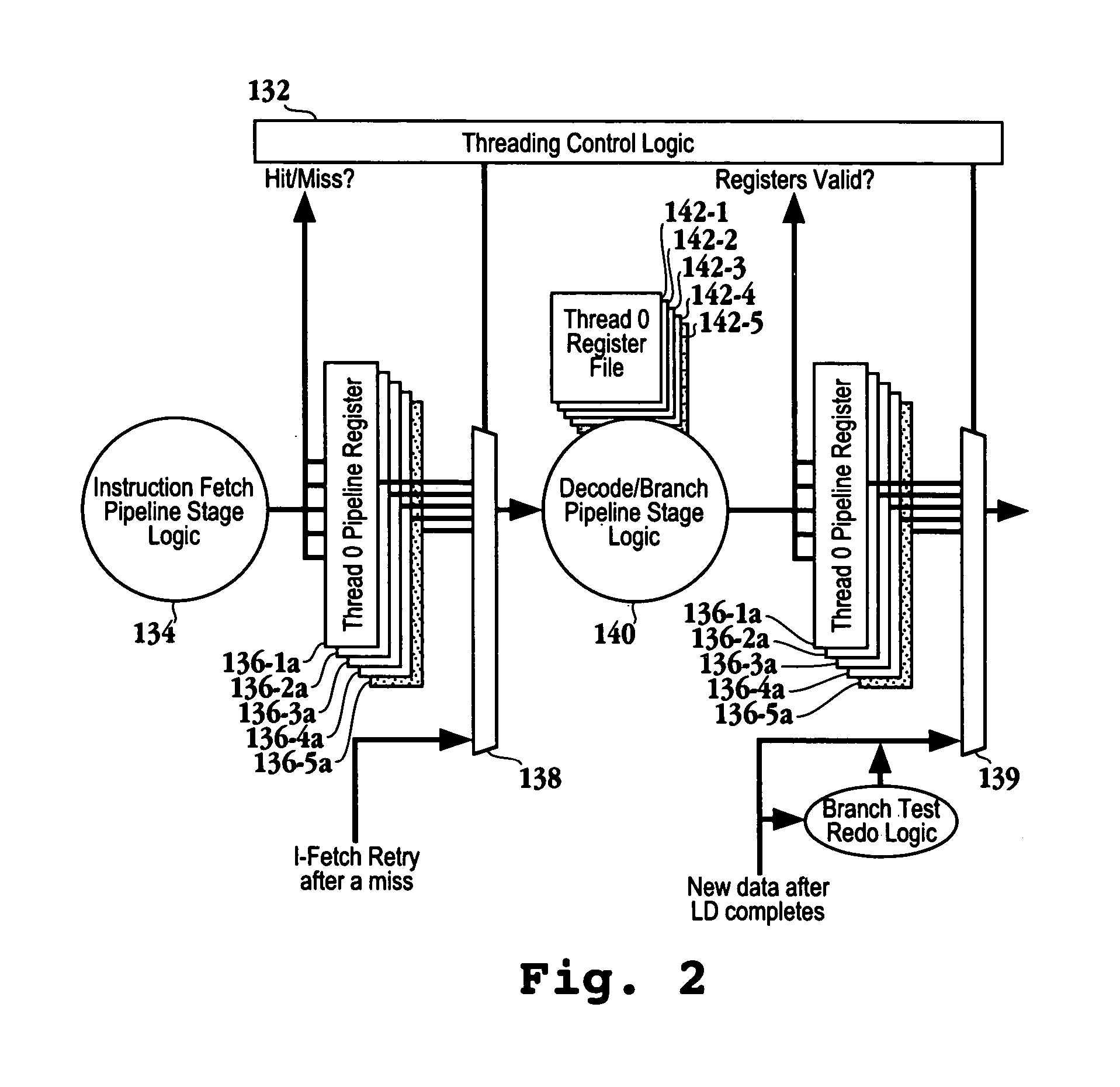

Multi-core multi-thread processor

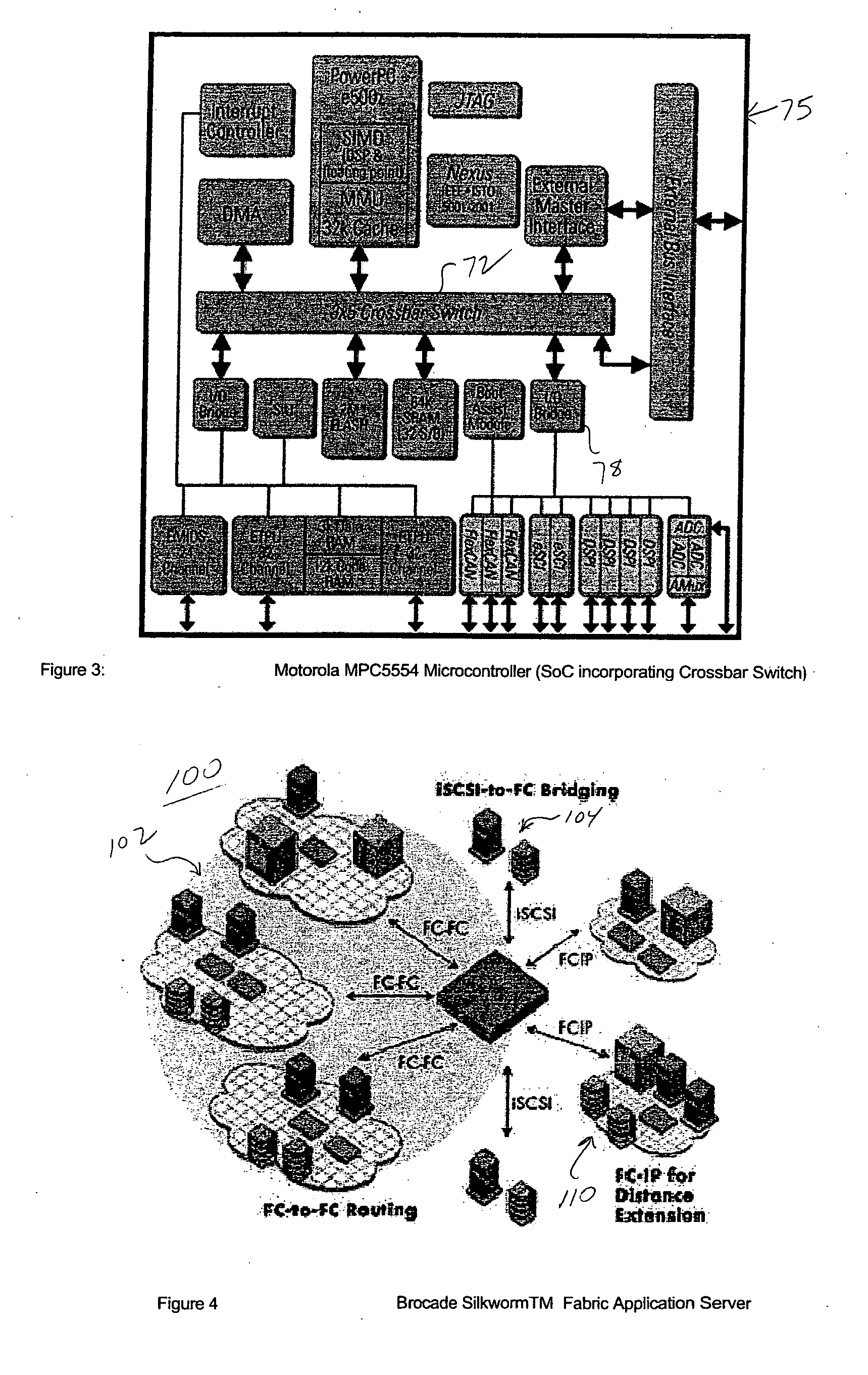

Active; US20050044319A1; Easy access; Register arrangements; Memory addressing/allocation/relocation; Memory interface; Parallel computing

A processor is provided. The processor includes at least two cores. The at least two cores have a first level cache memory and are multi-threaded. A crossbar is included. A plurality of cache bank memories in communication with the at least two cores through the crossbar is provided. Each of the plurality of cache bank memories communicates with a main memory interface. A plurality of input / output interface modules in communication with the main memory interface and providing a link to the at least two cores are included. The link bypasses the plurality of cache bank memories and the crossbar. Threading hardware configured to enable the at least two cores to switch from a first thread to a second thread in a manner hiding delays caused by cache accesses is included. A server and a method for determining when to switch threads in a multi-core multi-thread environment are included.

Owner:ORACLE INT CORP

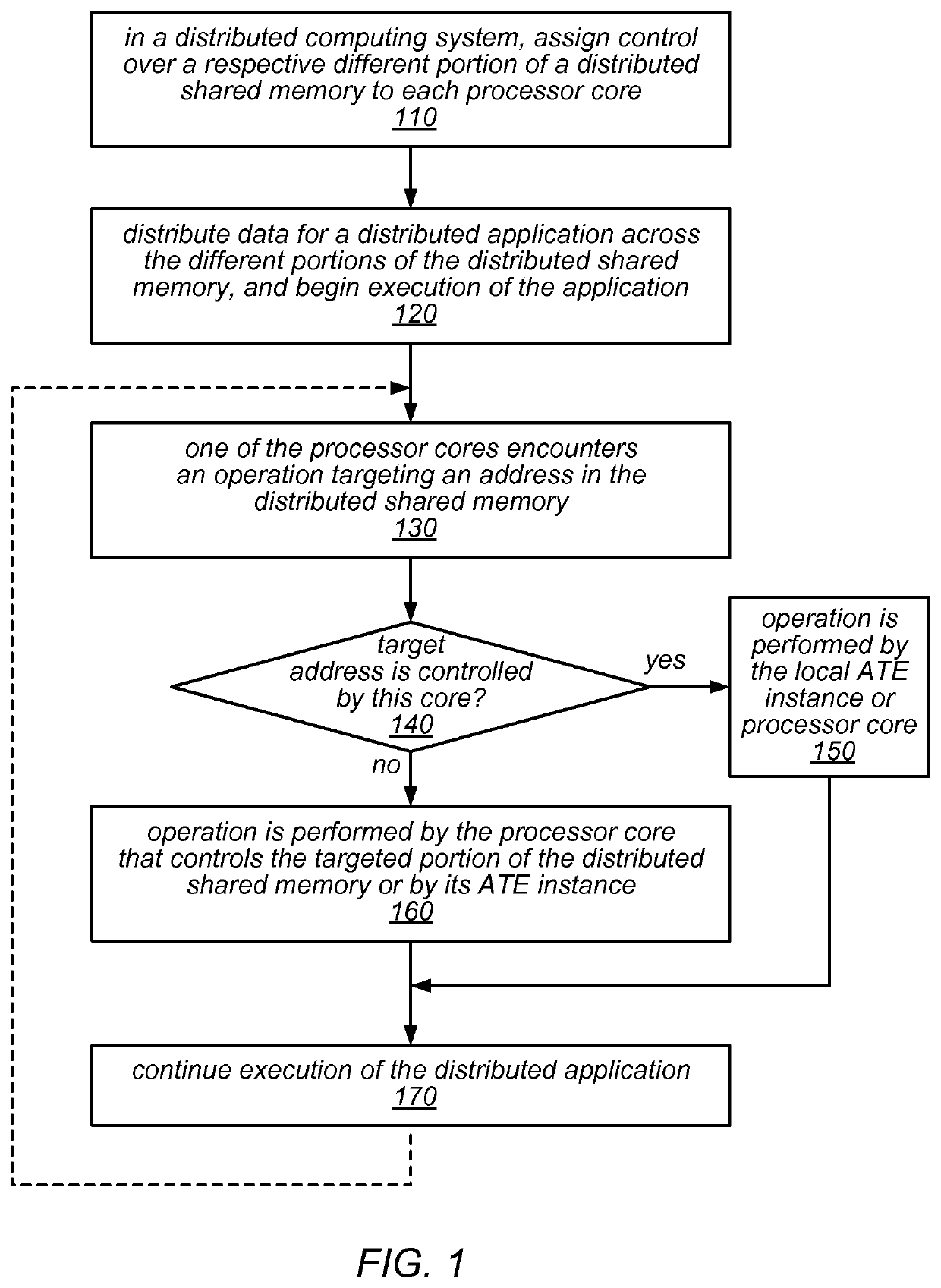

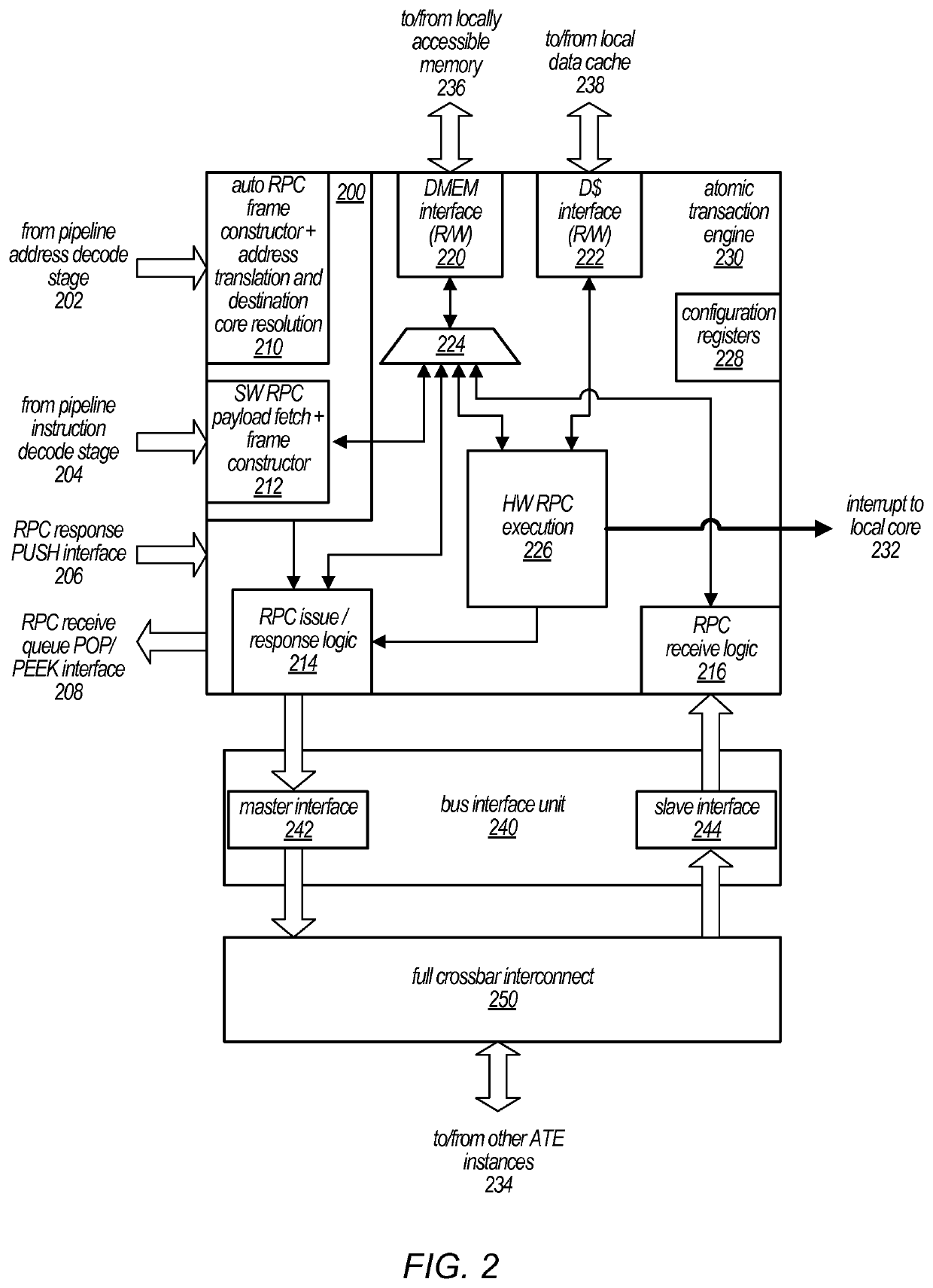

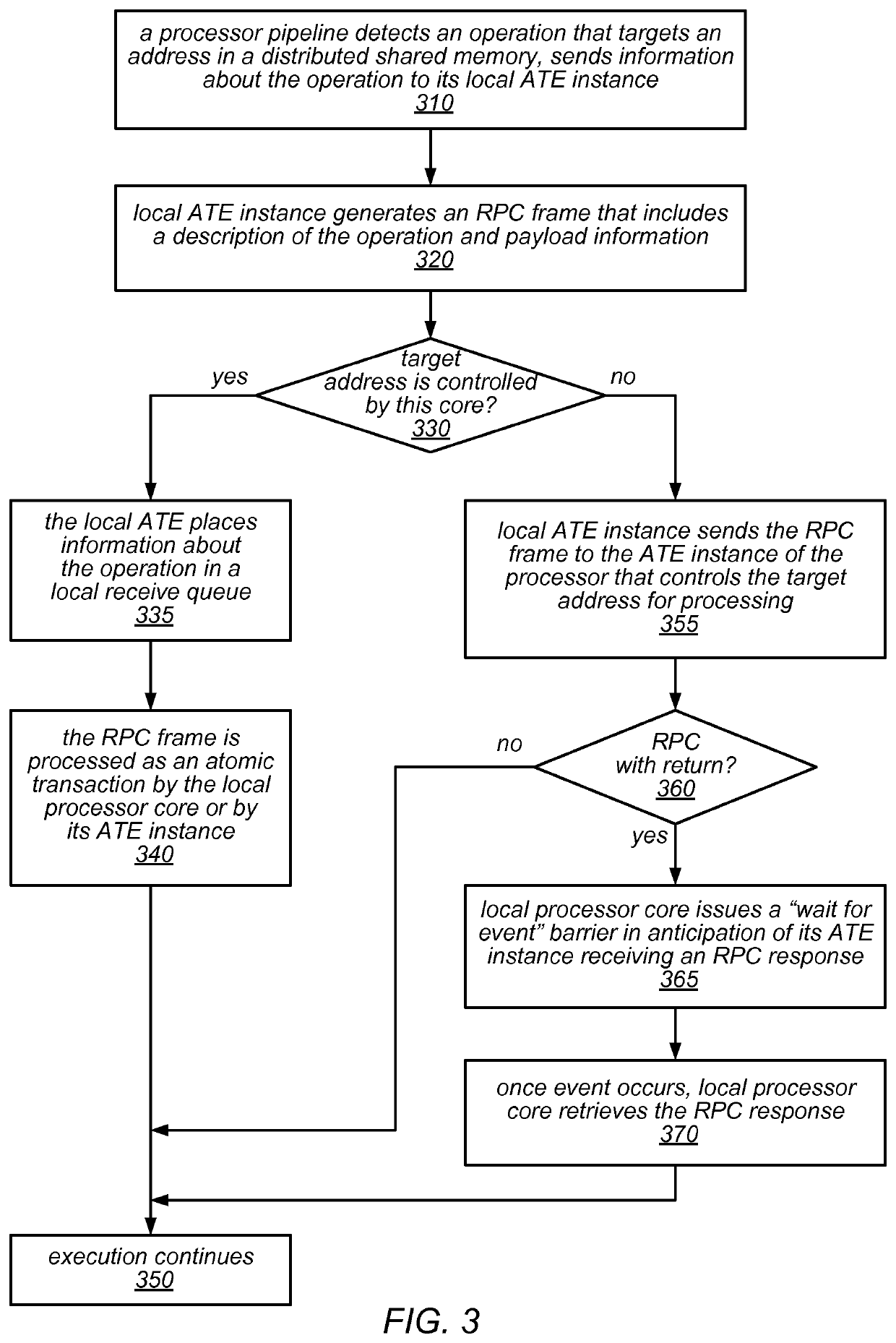

Distributed shared memory using interconnected atomic transaction engines at respective memory interfaces

Active; US10732865B2; Light weight; Less complex; Input/output to record carriers; Program synchronisation; Computer architecture; Memory interface

A hardware-assisted Distributed Memory System may include software configurable shared memory regions in the local memory of each of multiple processor cores. Accesses to these shared memory regions may be made through a network of on-chip atomic transaction engine (ATE) instances, one per core, over a private interconnect matrix that connects them together. For example, each ATE instance may issue Remote Procedure Calls (RPCs), with or without responses, to an ATE instance associated with a remote processor core in order to perform operations that target memory locations controlled by the remote processor core. Each ATE instance may process RPCs (atomically) that are received from other ATE instances or that are generated locally. For some operation types, an ATE instance may execute the operations identified in the RPCs itself using dedicated hardware. For other operation types, the ATE instance may interrupt its local processor core to perform the operations.

Owner:ORACLE INT CORP

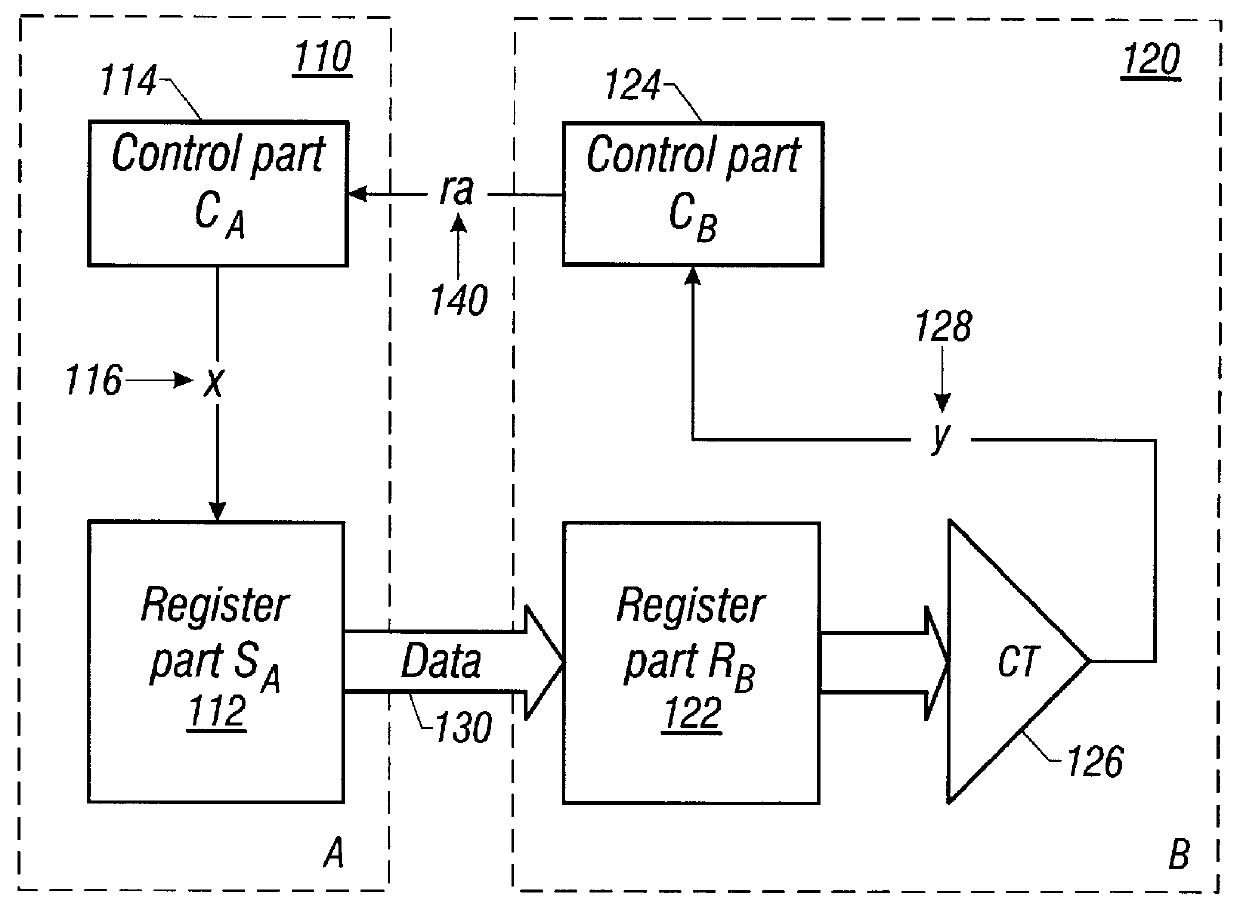

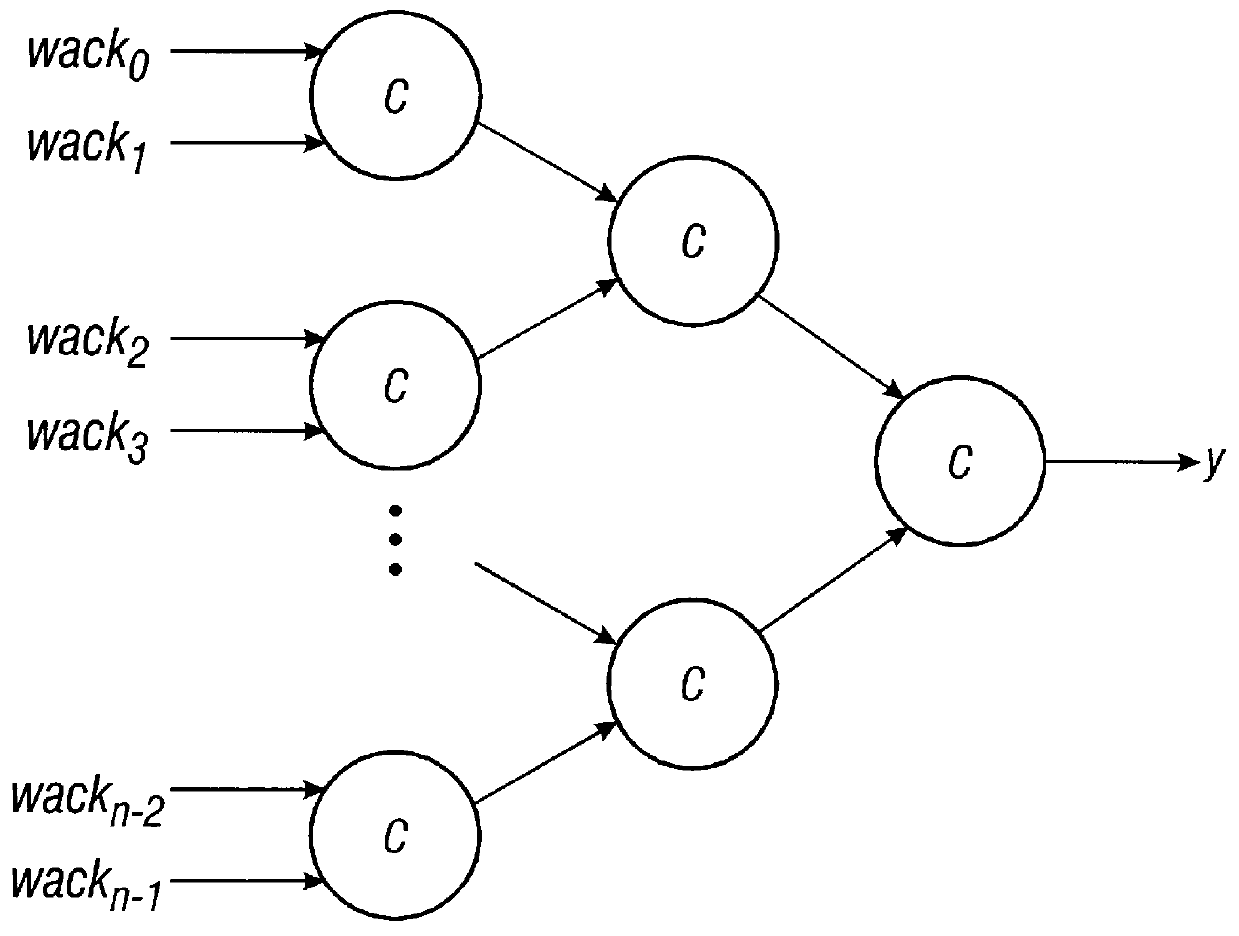

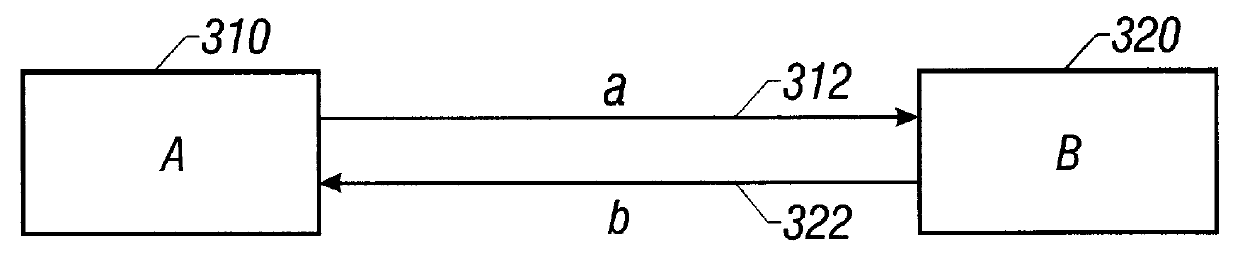

Pipelined completion for asynchronous communication

Inactive; US6038656A; Improve throughput; Lower latency; Architecture with single central processing unit; Memory systems; Asynchronous circuit; Asynchronous communication

Owner:CALIFORNIA INST OF TECH

I/O and memory bus system for DFPs and units with two- or multi-dimensional programmable cell architectures

Inactive; US6119181A; Digital storage; Architecture with single central processing unit; Computer architecture; Memory bus

A uniform bus system is provided which operates without any special consideration by a programmer. Memories and peripheral may be connected to this bus system without any special measures. Likewise, units may be cascaded with the help of the bus system. The bus system combines a number of internal lines, and leads them as a bundle to terminals. The bus system control is predefined and does not require any influence by the programmer. Any number of memories, peripherals or other units can be connected to the bus system.

Owner:SCIENTIA SOL MENTIS AG

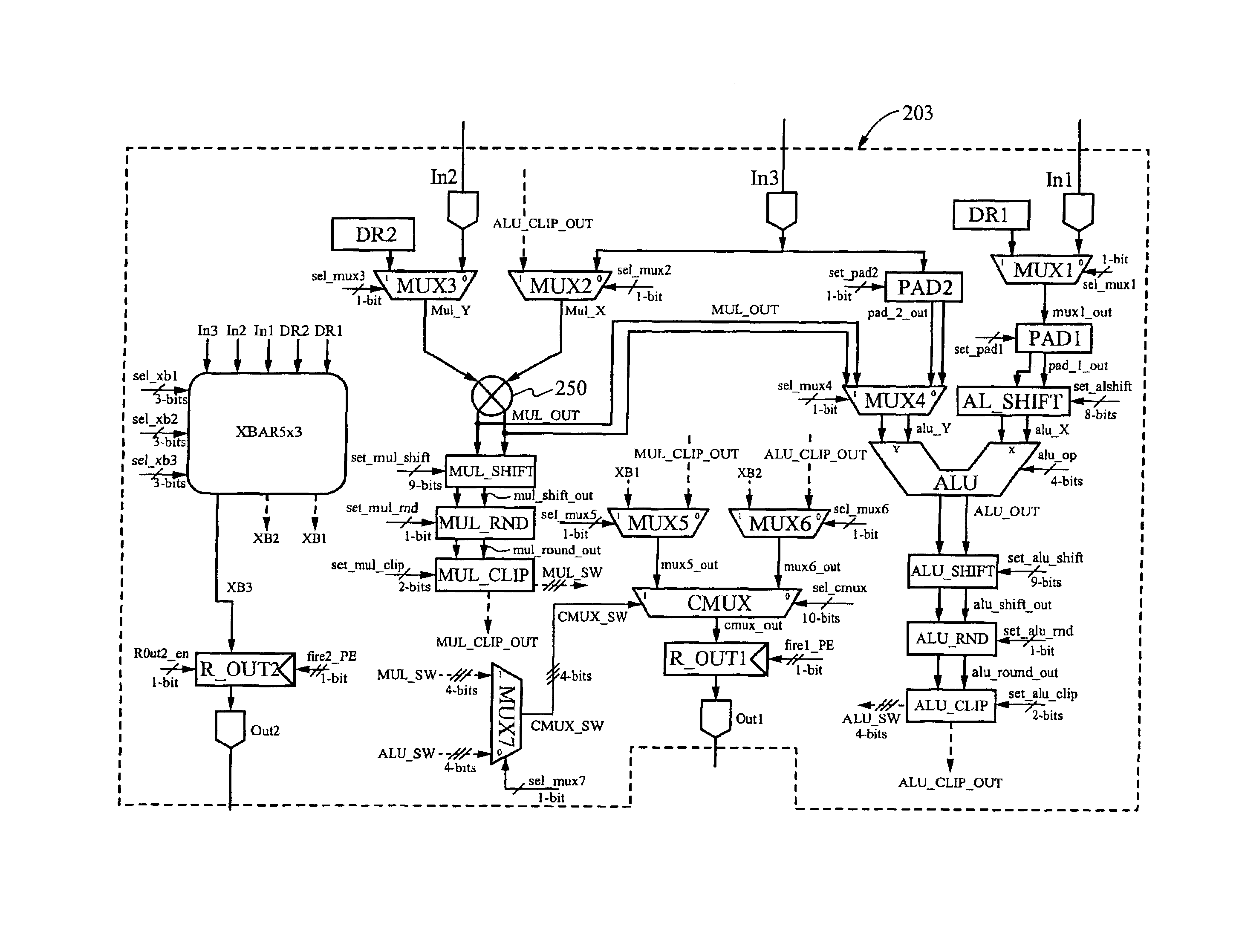

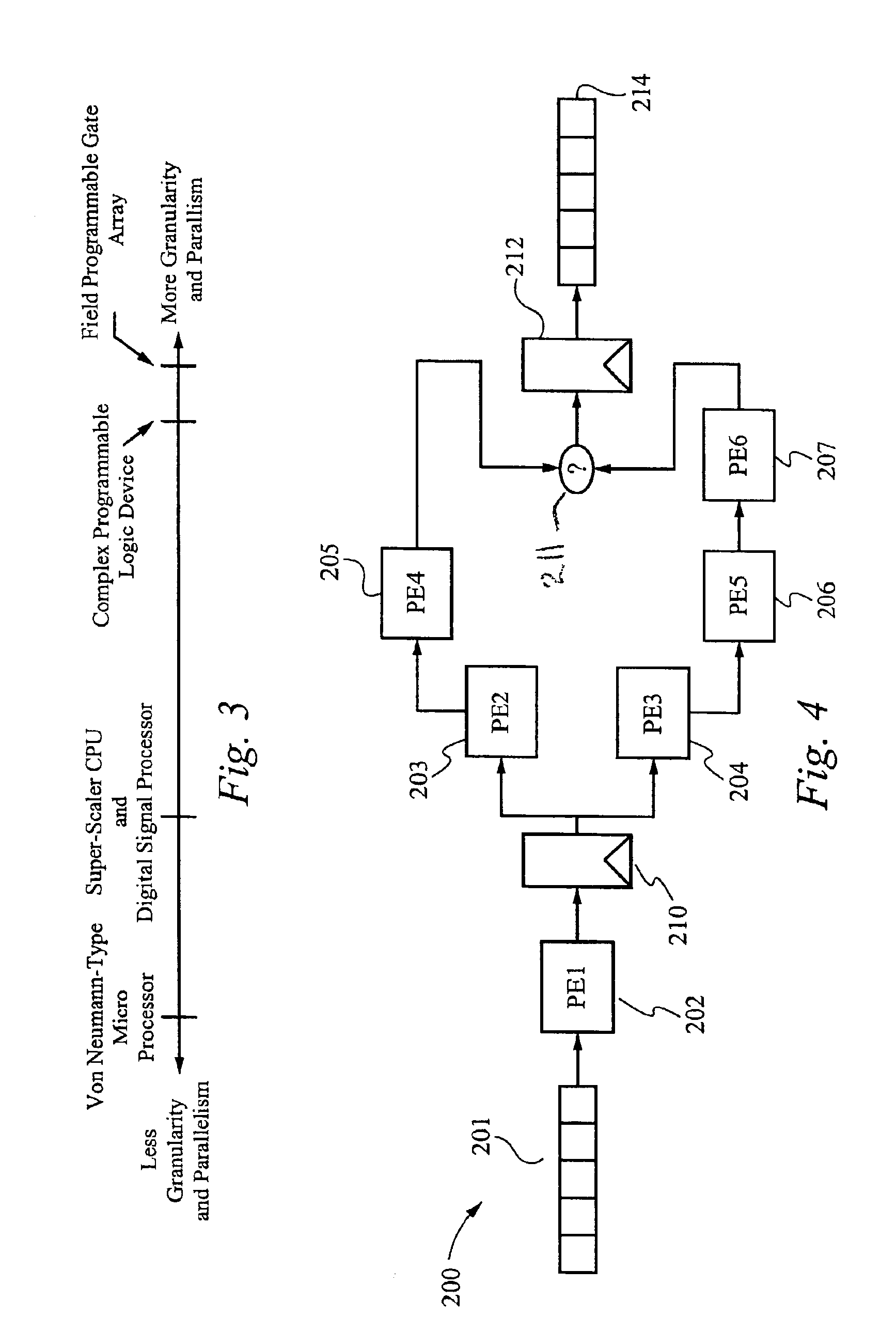

Reconfigurable data path processor

Inactive; US6883084B1; Eliminates branching; Cycle simple; Energy efficient ICT; Conditional code generation; Multiplexer; Processing element

A reconfigurable data path processor comprises a plurality of independent processing elements. Each of the processing elements advantageously comprises an identical architecture. Each processing element comprises a plurality of data processing means for generating a potential output. Each processor is also capable of through-putting an input as a potential output with little or no processing. Each processing element comprises a conditional multiplexer having a first conditional multiplexer input, a second conditional multiplexer input and a conditional multiplexer output. A first potential output value is transmitted to the first conditional multiplexer input, and a second potential output value is transmitted to the second conditional multiplexer input. The conditional multiplexer couples either the first conditional multiplexer input or the second conditional multiplexer input to the conditional multiplexer output, according to an output control command. The output control command is generated by processing a set of arithmetic status bits through a logical mask. The conditional multiplexer output is coupled to a first processing element output. A first set of arithmetic status bits is generated according to the processing of the first processable value. A second set of arithmetic status bits may be generated from a second processing operation. The selection of the arithmetic status bits is performed by an arithmetic status bit multiplexer, which selects the desired set of arithmetic status bits from among the first and second sets of arithmetic status bits. The conditional multiplexer evaluates the selected arithmetic status bits according to a logical mask defining an algorithm for evaluating the arithmetic status bits.

Owner:STC UNM +1
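
The idea of choosing between two potential outputs by passing arithmetic status bits through a logical mask can be sketched in a few lines of Python. This is an illustrative model only: the `ZERO` and `NEGATIVE` flag encodings and the functions `status_bits`, `conditional_mux`, and `select_max` are hypothetical names, and the bit layout is an assumption rather than anything stated in the patent.

```python
# Assumed encoding of two arithmetic status bits.
ZERO = 0b01
NEGATIVE = 0b10


def status_bits(value):
    """Derive status bits from the result of an arithmetic operation."""
    bits = 0
    if value == 0:
        bits |= ZERO
    if value < 0:
        bits |= NEGATIVE
    return bits


def conditional_mux(first, second, status, mask):
    """Couple the second input to the output if any masked status bit is set,
    otherwise couple the first input to the output."""
    return second if (status & mask) else first


def select_max(a, b):
    # Both candidates are computed up front; the status bits of (a - b),
    # filtered by the mask, decide which one reaches the output.
    status = status_bits(a - b)
    return conditional_mux(a, b, status, NEGATIVE)
```

For example, `select_max(3, 7)` subtracts to a negative value, so the `NEGATIVE` bit passes the mask and the second input is selected. Changing only the mask changes which condition drives the selection, without altering the data path.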

System and method for parallel execution of data generation tasks

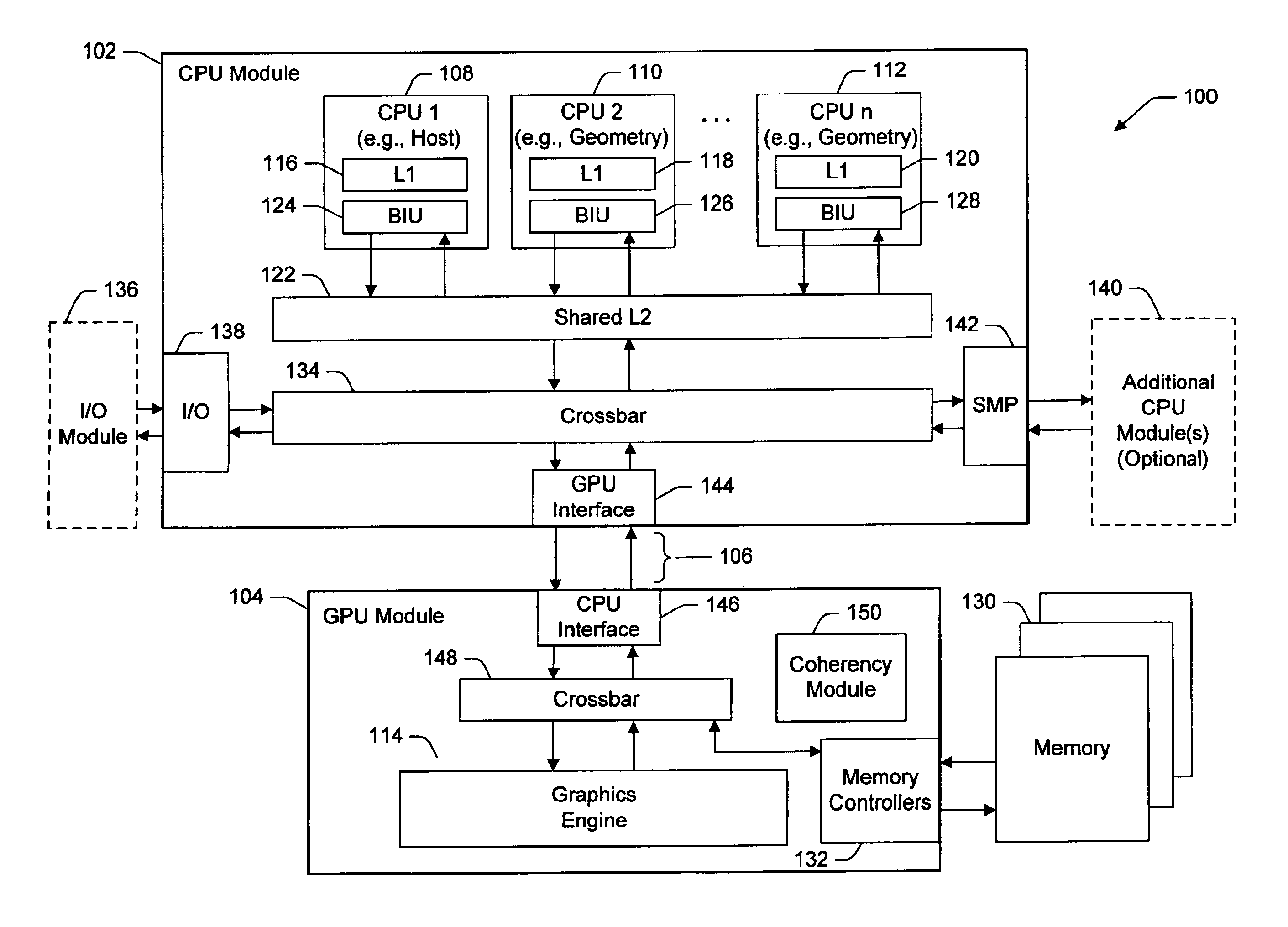

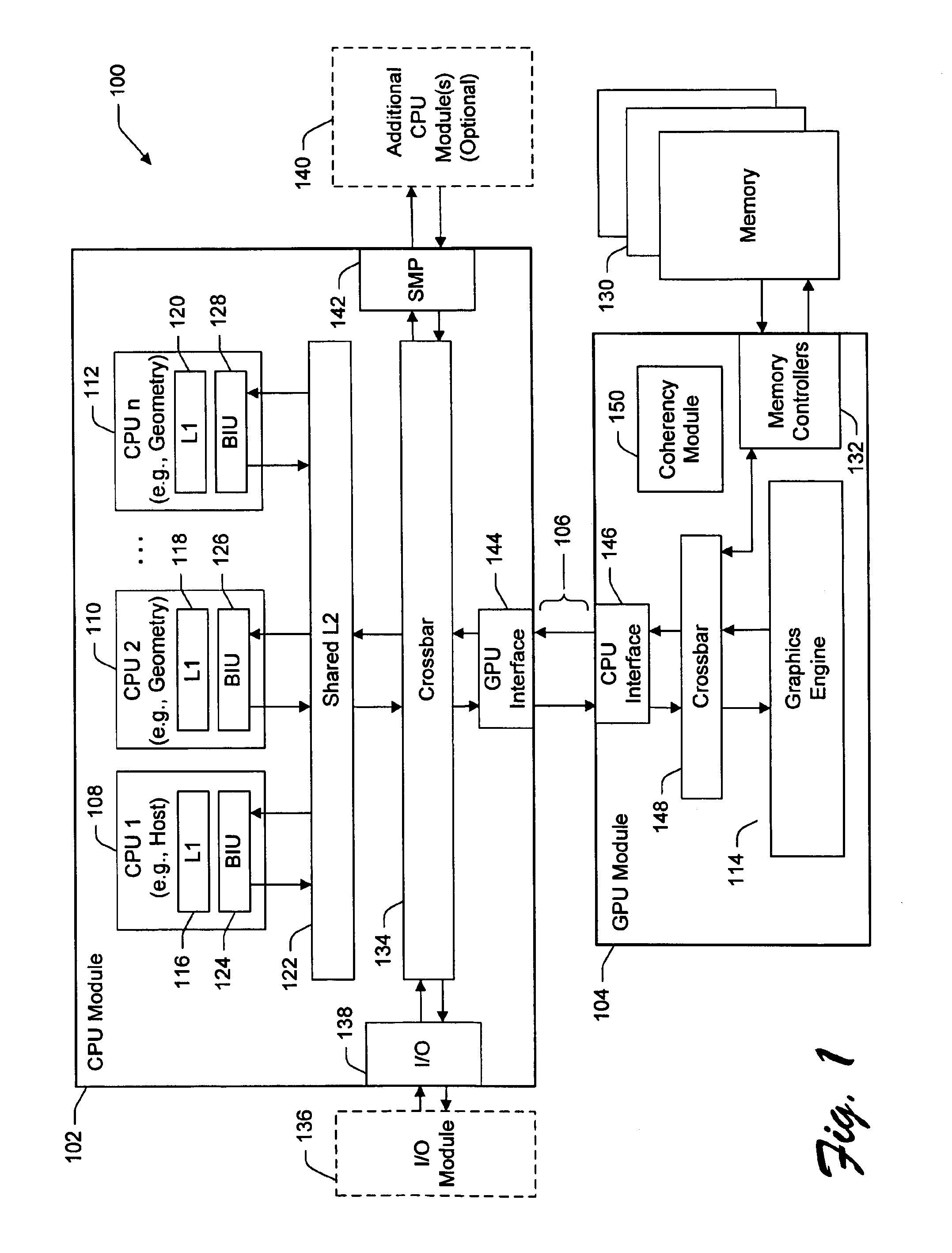

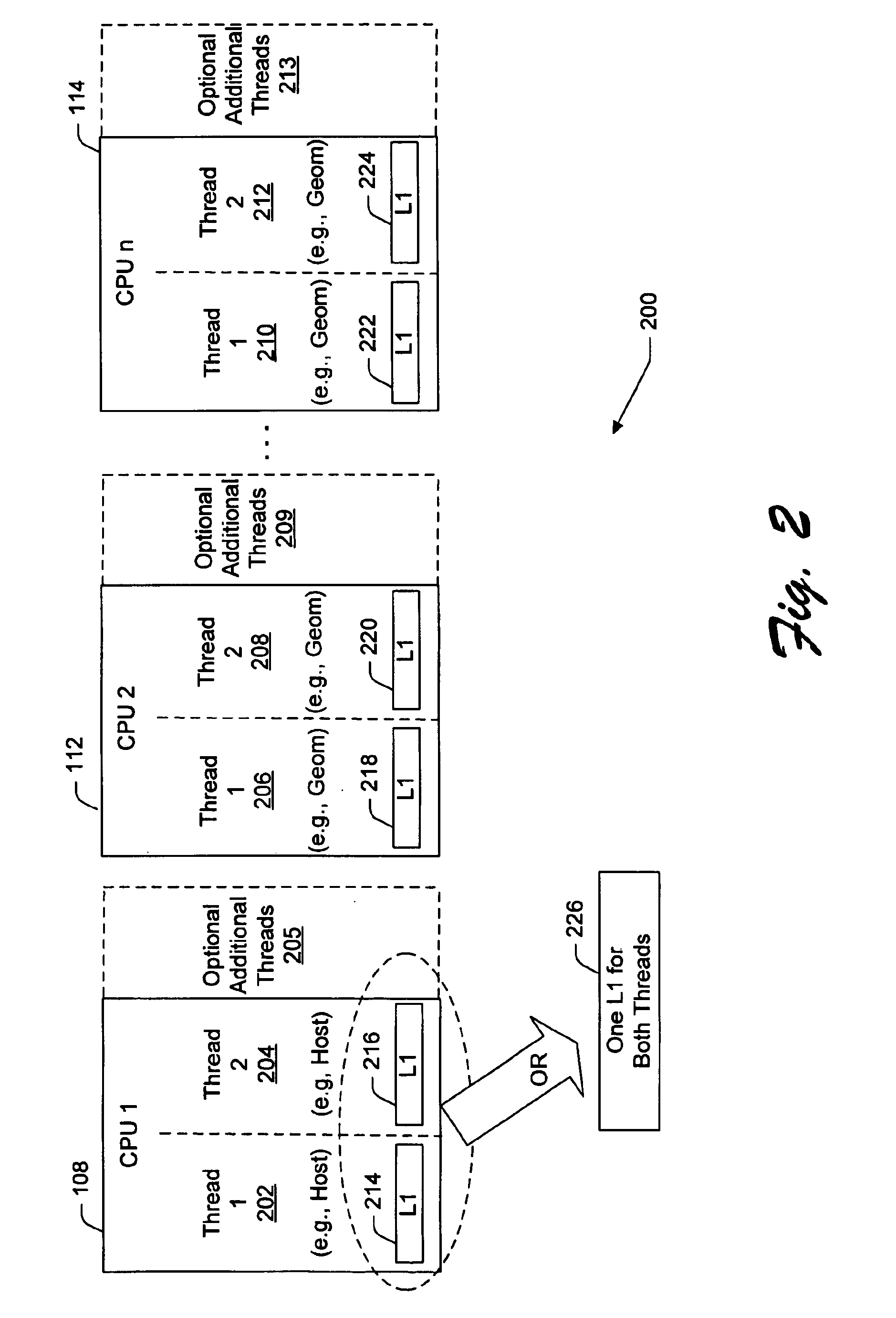

Inactive; US6862027B2; Reduce storage requirements; Reduces deleterious bandwidth restriction; Resource allocation; Memory addressing/allocation/relocation; Graphics; Parallel computing

A CPU module includes a host element configured to perform a high-level host-related task, and one or more data-generating processing elements configured to perform a data-generating task associated with the high-level host-related task. Each data-generating processing element includes logic configured to receive input data, and logic configured to process the input data to produce output data. The amount of output data is greater than an amount of input data, and the ratio of the amount of input data to the amount of output data defines a decompression ratio. In one implementation, the high-level host-related task performed by the host element pertains to a high-level graphics processing task, and the data-generating task pertains to the generation of geometry data (such as triangle vertices) for use within the high-level graphics processing task. The CPU module can transfer the output data to a GPU module via at least one locked set of a cache memory. The GPU retrieves the output data from the locked set, and periodically forwards a tail pointer to a cacheable location within the data-generating elements that informs the data-generating elements of its progress in retrieving the output data.

Owner:MICROSOFT TECH LICENSING LLC
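
The tail-pointer handshake described in the abstract above, where the consumer periodically tells the producer how far it has read, can be modelled with a small ring buffer in Python. This is a sketch under stated assumptions: the `LockedSetBuffer` class and its method names are hypothetical, a Python list stands in for the locked cache set, and the producer is assumed to see only the last reported tail rather than the consumer's live position.

```python
class LockedSetBuffer:
    """Fixed-size ring buffer shared by a producer and a consumer."""

    def __init__(self, capacity):
        self.slots = [None] * capacity
        self.head = 0            # count of items written by the producer
        self.tail = 0            # count of items actually read by the consumer
        self.reported_tail = 0   # last tail value forwarded to the producer

    def free_slots(self):
        # The producer relies only on the reported tail, not the live one.
        return len(self.slots) - (self.head - self.reported_tail)

    def produce(self, item):
        """Write one item; return False if the producer believes the buffer is full."""
        if self.free_slots() == 0:
            return False
        self.slots[self.head % len(self.slots)] = item
        self.head += 1
        return True

    def consume(self):
        """Read one item, or return None if nothing is pending."""
        if self.tail == self.head:
            return None
        item = self.slots[self.tail % len(self.slots)]
        self.tail += 1
        return item

    def report_tail(self):
        """Consumer forwards its progress so the producer can reuse slots."""
        self.reported_tail = self.tail


buf = LockedSetBuffer(capacity=2)
wrote = [buf.produce("v0"), buf.produce("v1"), buf.produce("v2")]
first_item = buf.consume()
before_report = buf.produce("v2")   # slot is free, but the producer does not know yet
buf.report_tail()
after_report = buf.produce("v2")    # progress reported, so the slot can be reused
```

The producer stalls until the tail pointer is forwarded, which is why the abstract has the consumer report its progress periodically.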

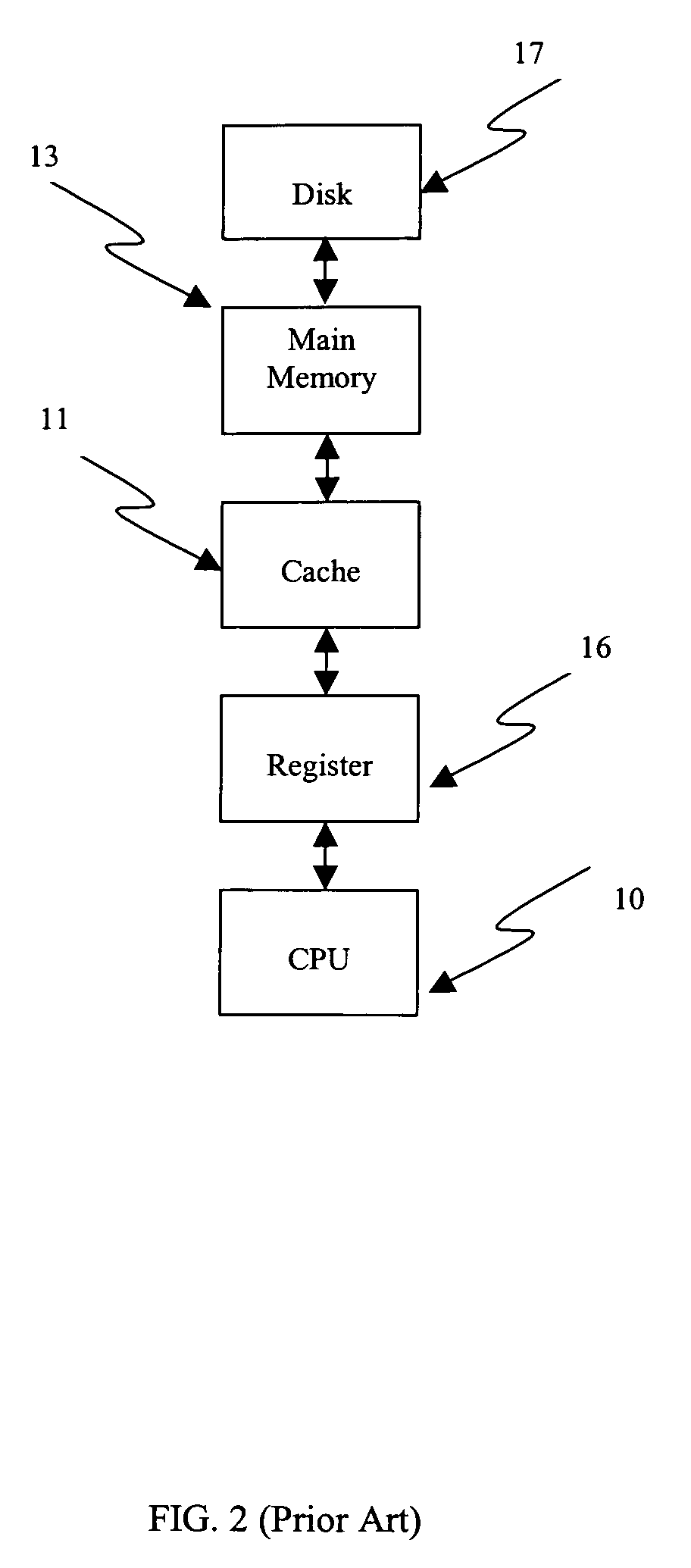

Single-chip microcomputer using adjustable timing to fetch data from an external memory

Inactive; US6125431A; Increase cost; Easy to operate; Memory addressing/allocation/relocation; Program control; Microcontroller; Internal memory

It is an object of the present invention to provide a one-chip microcomputer which permits the access time for an external memory to be equal to that for an internal memory. The one-chip microcomputer 10 includes an internal ROM 11, control circuit 12, output terminals 13, input terminals 14, control circuit 15, selector 16, instruction register 17, delay circuit 18, and fetch control signal select gate 19. For selection of the external ROM 30, a control arrangement 20 and a delay circuit 18 are employed in one embodiment to adjust the time at which ROM data is fetched by the instruction register 17, based on the delay time for accessing the external ROM 30.

Owner:LAPIS SEMICON CO LTD

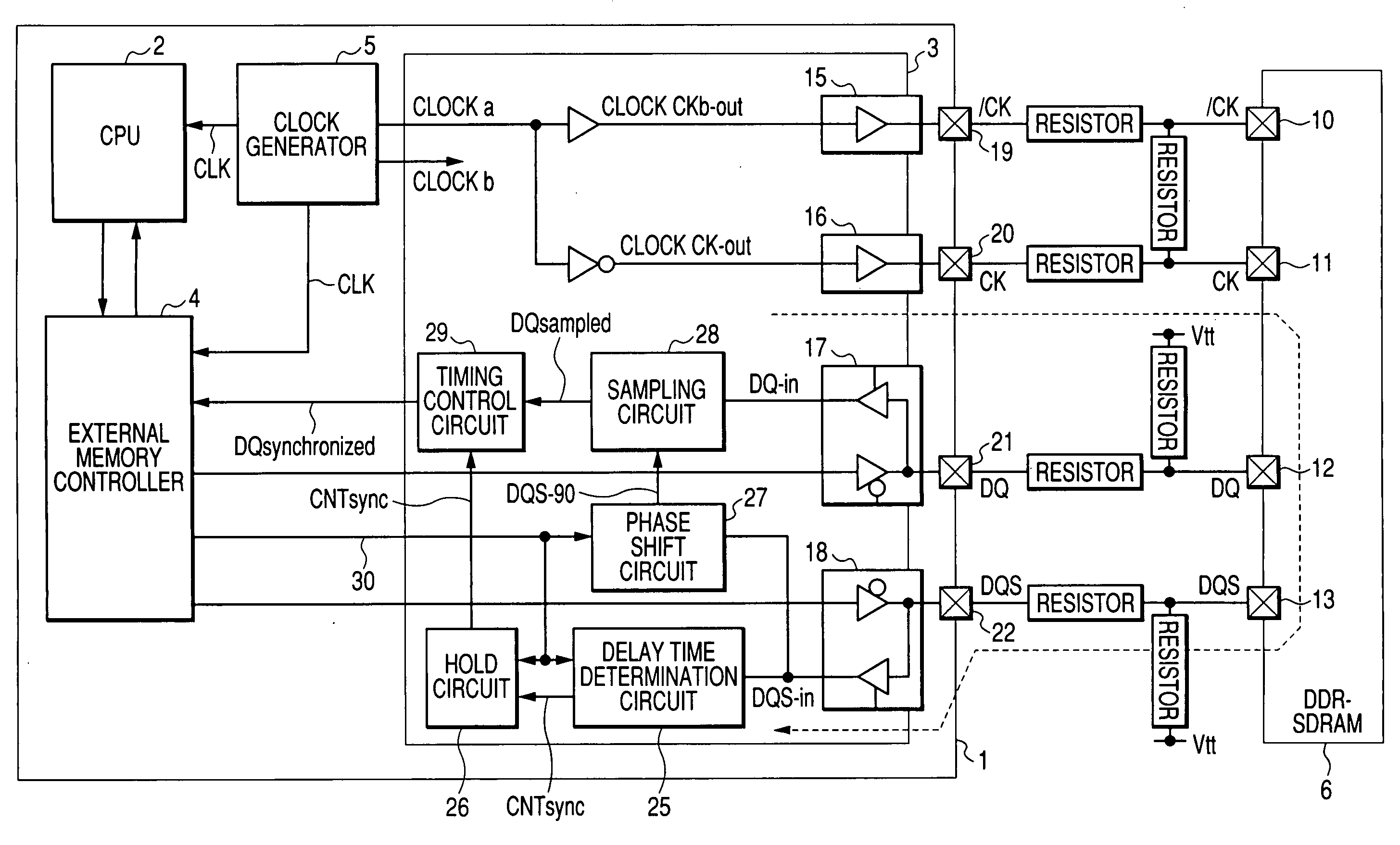

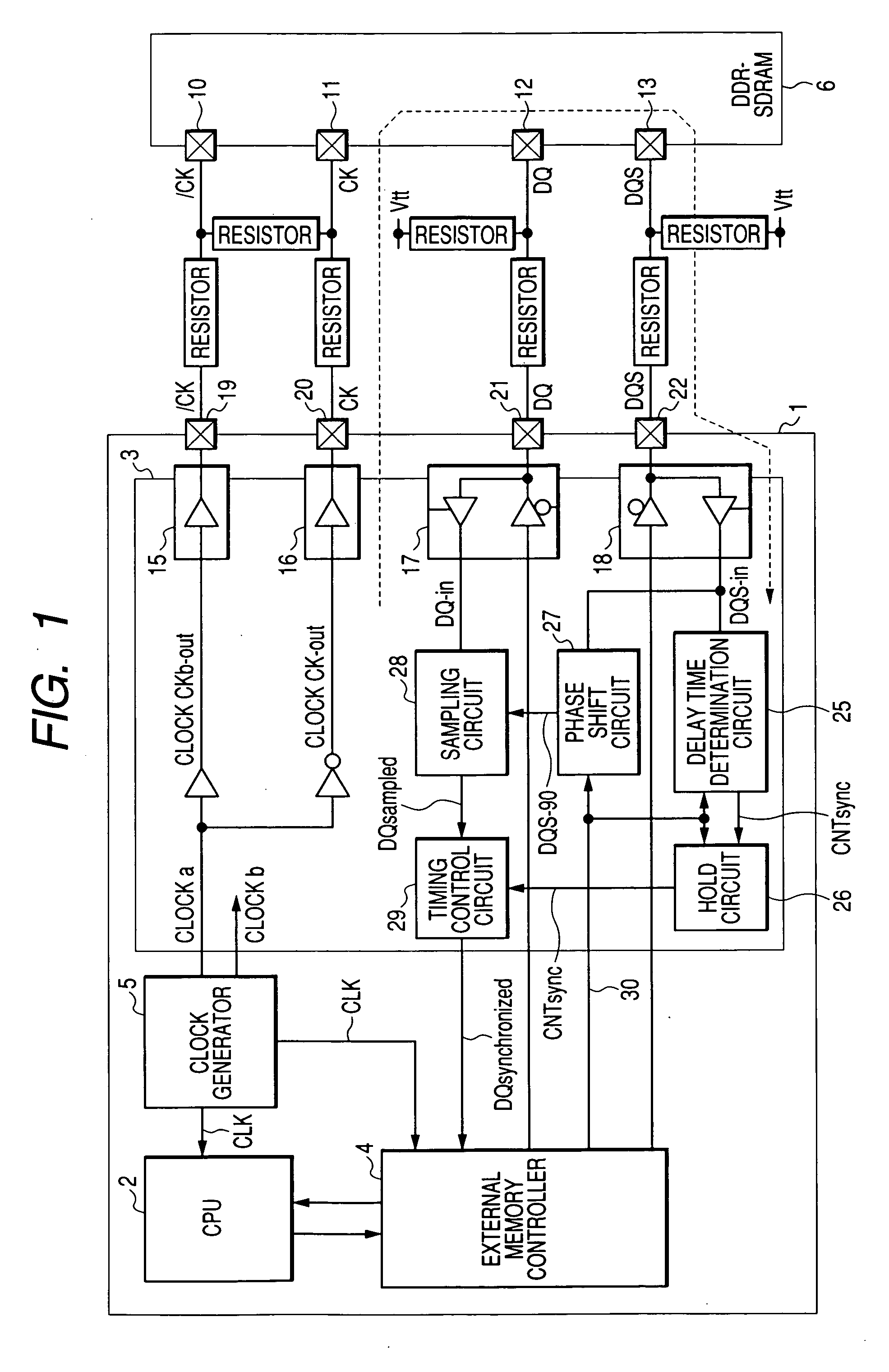

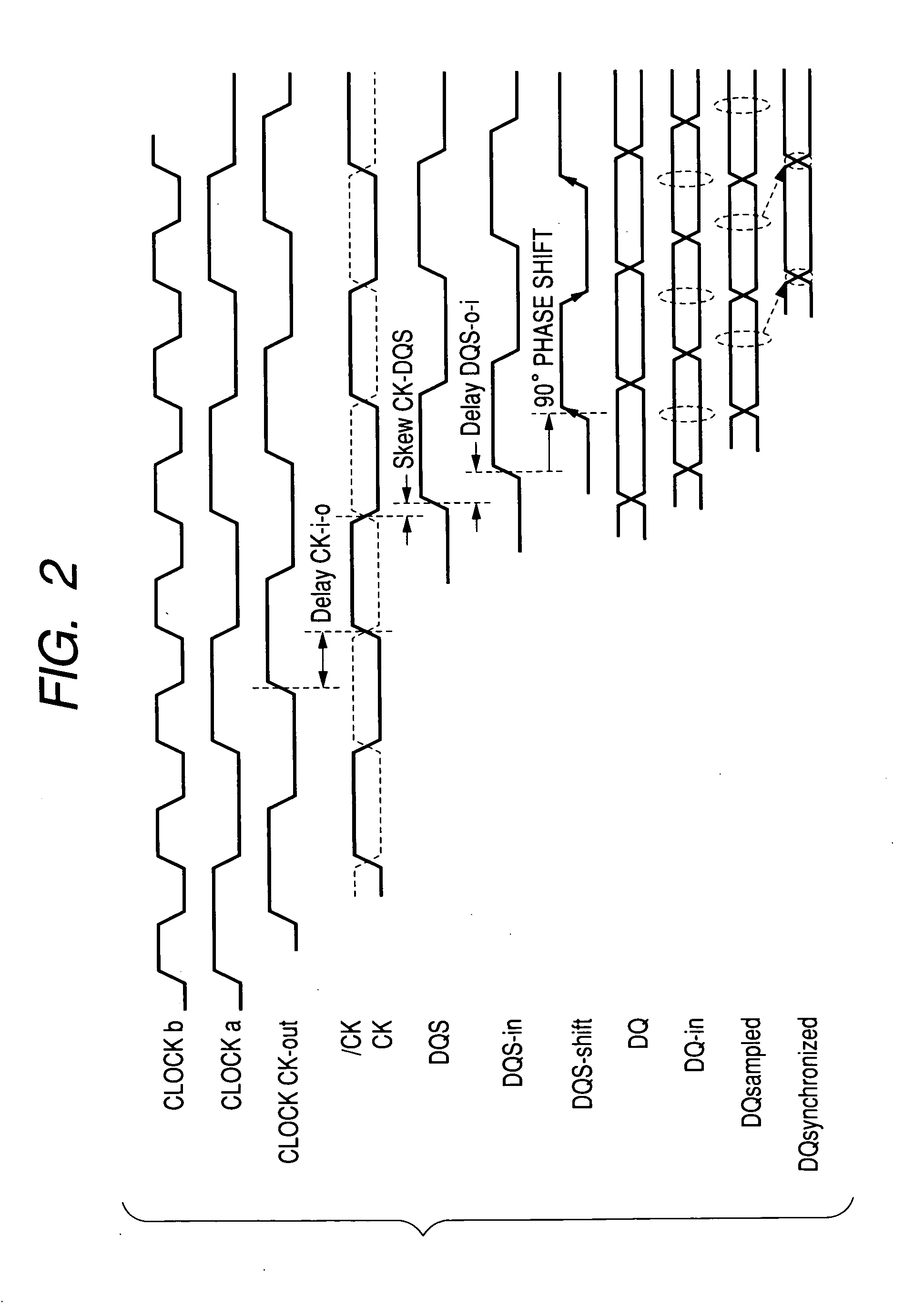

Semiconductor integrated circuit

Active; US20050047192A1; Improve accuracy; Guaranteed uptime; Digital storage; Generating/distributing signals; Memory interface; Computer science

Owner:RENESAS ELECTRONICS CORP

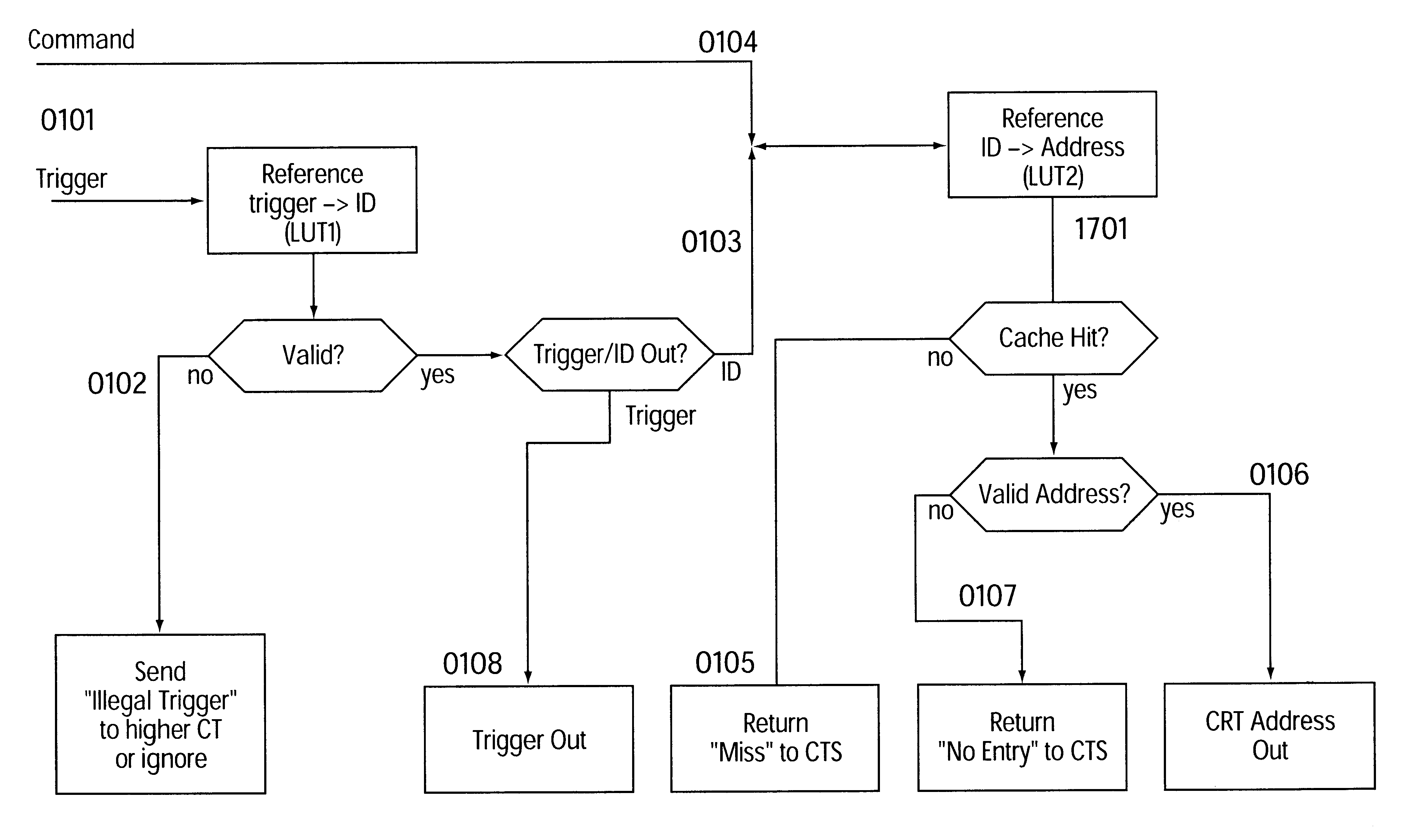

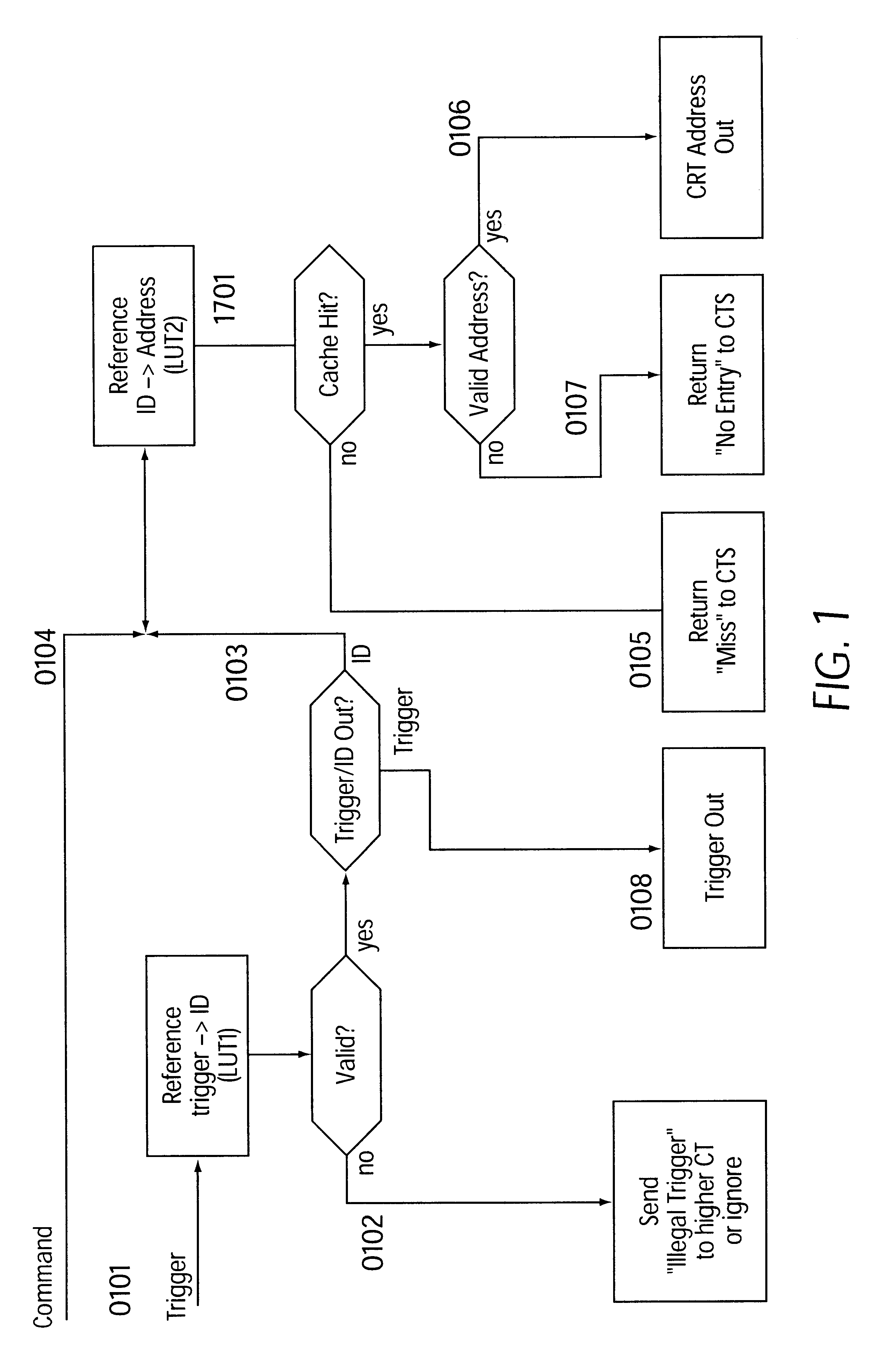

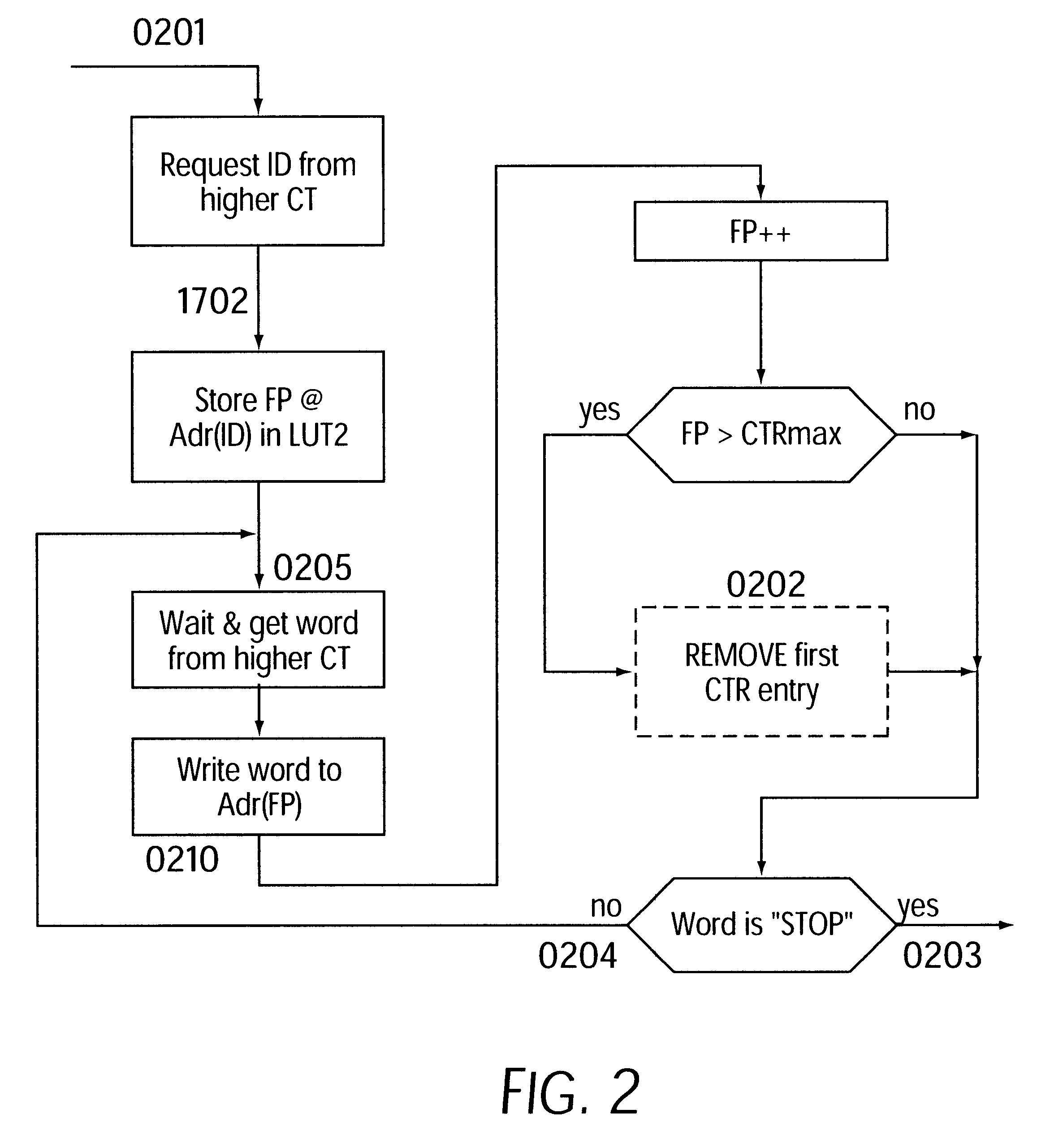

Method for hierarchical caching of configuration data having dataflow processors and modules having two- or multidimensional programmable cell structure (FPGAs, DPGAs, etc.)

Inactive; US6480937B1; Improve performance; Easy transfer; Memory addressing/allocation/relocation; Program control; Data stream processing; Computer module

A method of caching commands in microprocessors having a plurality of arithmetic units and in modules having a two- or multidimensional cell arrangement is provided. The method includes combining a plurality of cells and arithmetic units to form a plurality of groups, assigning a cache unit to a group, and connecting the cache unit to a higher level unit via a tree structure. The cache unit may send requests for required commands to the higher level cache unit, which may return a command sequence including the required command, if the higher level cache unit holds the first command sequence including the required command in the higher level cache unit's local memory.

Owner:SCIENTIA SOL MENTIS AG +1
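
The tree-structured lookup in the abstract above, where a cache unit that lacks a command asks its higher-level unit for a sequence containing it, can be sketched as follows. The `CacheUnit` class, the `forwarded` counter, and the command names are hypothetical and only illustrate the request path; they are not taken from the patent.

```python
class CacheUnit:
    """A cache unit in a tree; misses are forwarded to the higher-level unit."""

    def __init__(self, parent=None, sequences=()):
        self.parent = parent
        self.sequences = [tuple(s) for s in sequences]  # local command sequences
        self.forwarded = 0                              # requests sent upward

    def fetch(self, command):
        """Return a command sequence that includes the required command."""
        for seq in self.sequences:
            if command in seq:
                return seq
        if self.parent is None:
            raise LookupError(f"command {command!r} not found at the root")
        # Local miss: ask the higher-level cache unit and keep a local copy.
        self.forwarded += 1
        seq = self.parent.fetch(command)
        self.sequences.append(seq)
        return seq


root = CacheUnit(sequences=[("load", "add", "store"), ("mul", "shift")])
mid = CacheUnit(parent=root)
leaf_a = CacheUnit(parent=mid)
leaf_b = CacheUnit(parent=mid)

first = leaf_a.fetch("add")    # misses in leaf_a and mid, served by root
second = leaf_b.fetch("load")  # misses in leaf_b, served by mid's local copy
```

Because `mid` keeps the sequence after the first request, the second leaf's request stops one level up instead of travelling to the root.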

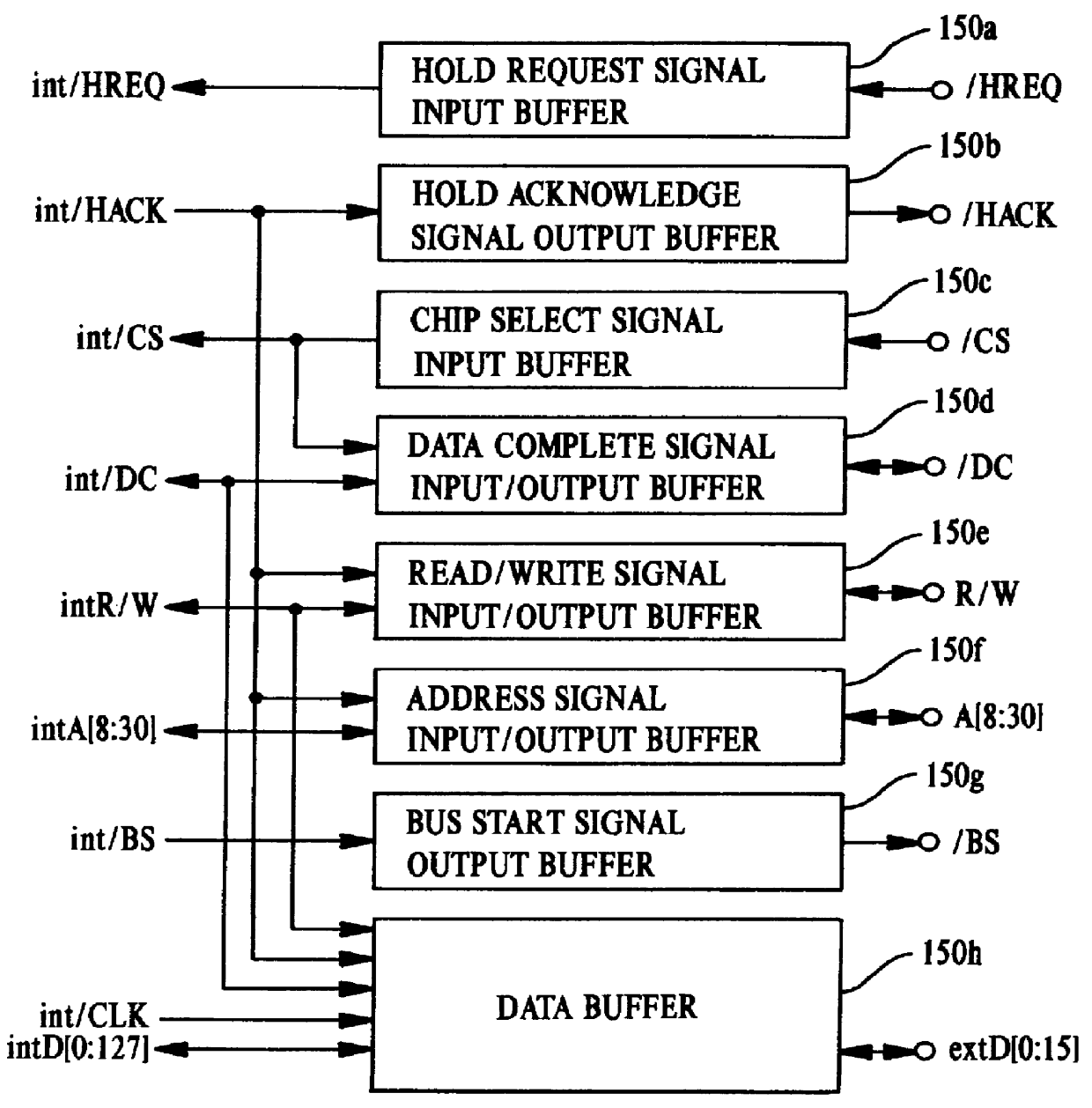

Computer system and semiconductor device on one chip including a memory and central processing unit for making interlock access to the memory

Inactive; US6101584A; Unauthorized memory use protection; Multiple digital computer combinations; Chip select; Computerized system

A central processing unit (CPU) having a built-in dynamic random-access memory (DRAM) with exclusive access to the DRAM when the CPU performs an interlock access to the DRAM. A memory controller prevents the DRAM from being externally accessed while the CPU is performing the interlock access. When the memory controller receives an external request for accessing the DRAM during a time when the CPU is performing an interlock access to the DRAM, the memory controller outputs a response signal indicating that external access to the DRAM is excluded or inhibited. The request signal can be a hold request signal for requesting a bus right or can be a chip select signal. The response signal can be a hold acknowledge signal or a data complete signal. The memory controller can be switched to and from first and second lock modes, where hold request and hold acknowledge signals are used during the first lock mode and chip select and data complete signals are used in the second lock mode.

Owner:MITSUBISHI ELECTRIC CORP
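
The exclusion of external accesses during an interlock access, as described in the abstract above, can be modelled in a few lines of Python. This is a behavioural sketch only: the `MemoryController` class, its method names, and the `(granted, value)` return convention are assumptions for illustration and do not model the hold request, hold acknowledge, chip select, or data complete signals themselves.

```python
class MemoryController:
    """Guards a built-in DRAM against external access during CPU interlock."""

    def __init__(self, size):
        self.dram = [0] * size
        self.interlocked = False

    def cpu_interlock_begin(self):
        self.interlocked = True

    def cpu_interlock_end(self):
        self.interlocked = False

    def external_read(self, addr):
        """Return (granted, value); access is refused while the CPU holds the interlock."""
        if self.interlocked:
            return (False, None)
        return (True, self.dram[addr])

    def cpu_test_and_set(self, addr):
        """Read-modify-write performed as one interlock access."""
        self.cpu_interlock_begin()
        try:
            old = self.dram[addr]
            self.dram[addr] = 1
            return old
        finally:
            self.cpu_interlock_end()


mc = MemoryController(size=4)
mc.cpu_interlock_begin()
during = mc.external_read(0)   # refused: the interlock access is in progress
mc.cpu_interlock_end()
old_value = mc.cpu_test_and_set(0)
after = mc.external_read(0)    # granted once the interlock is released
```

The refused response plays the role of the response signal that tells the external requester its access is excluded while the interlock is held.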

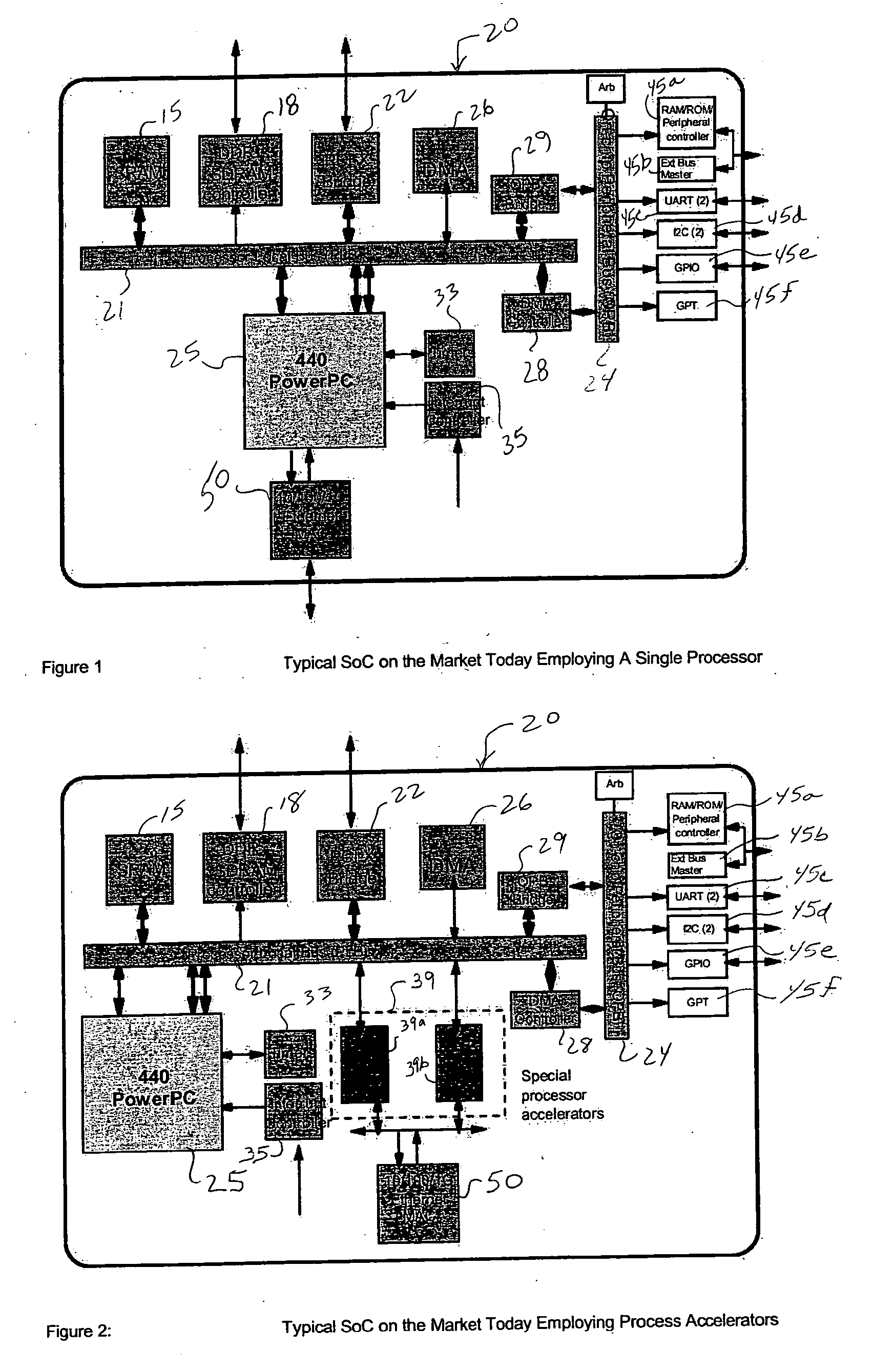

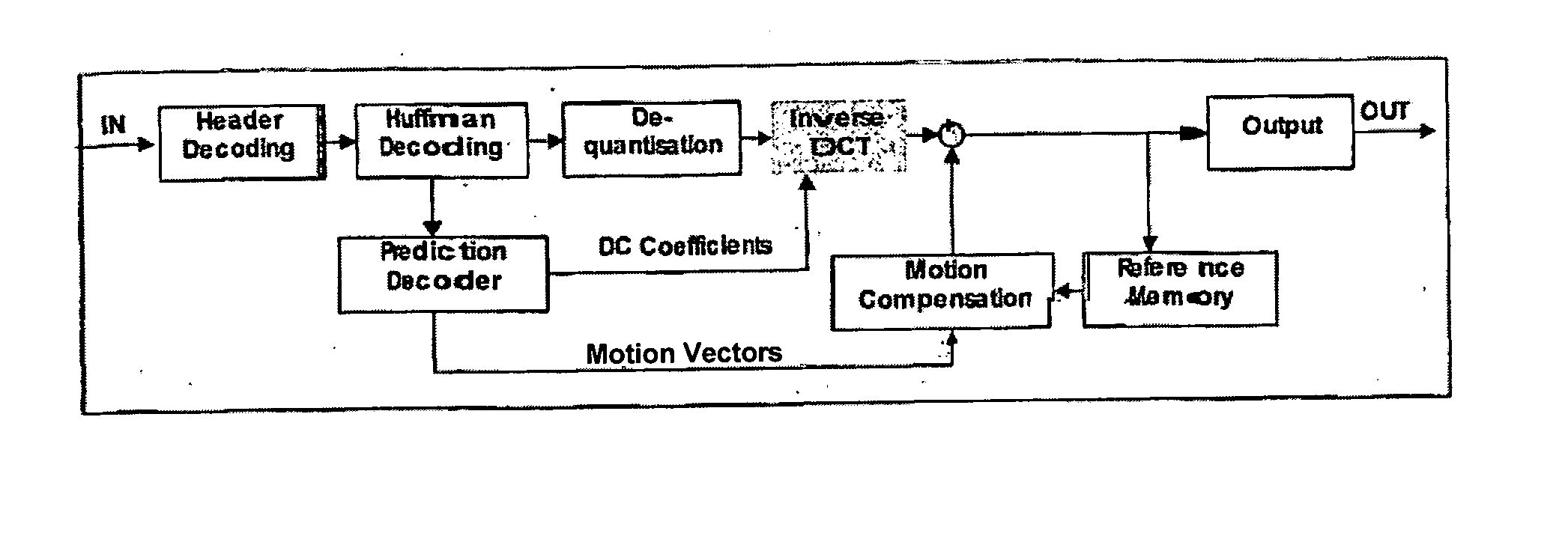

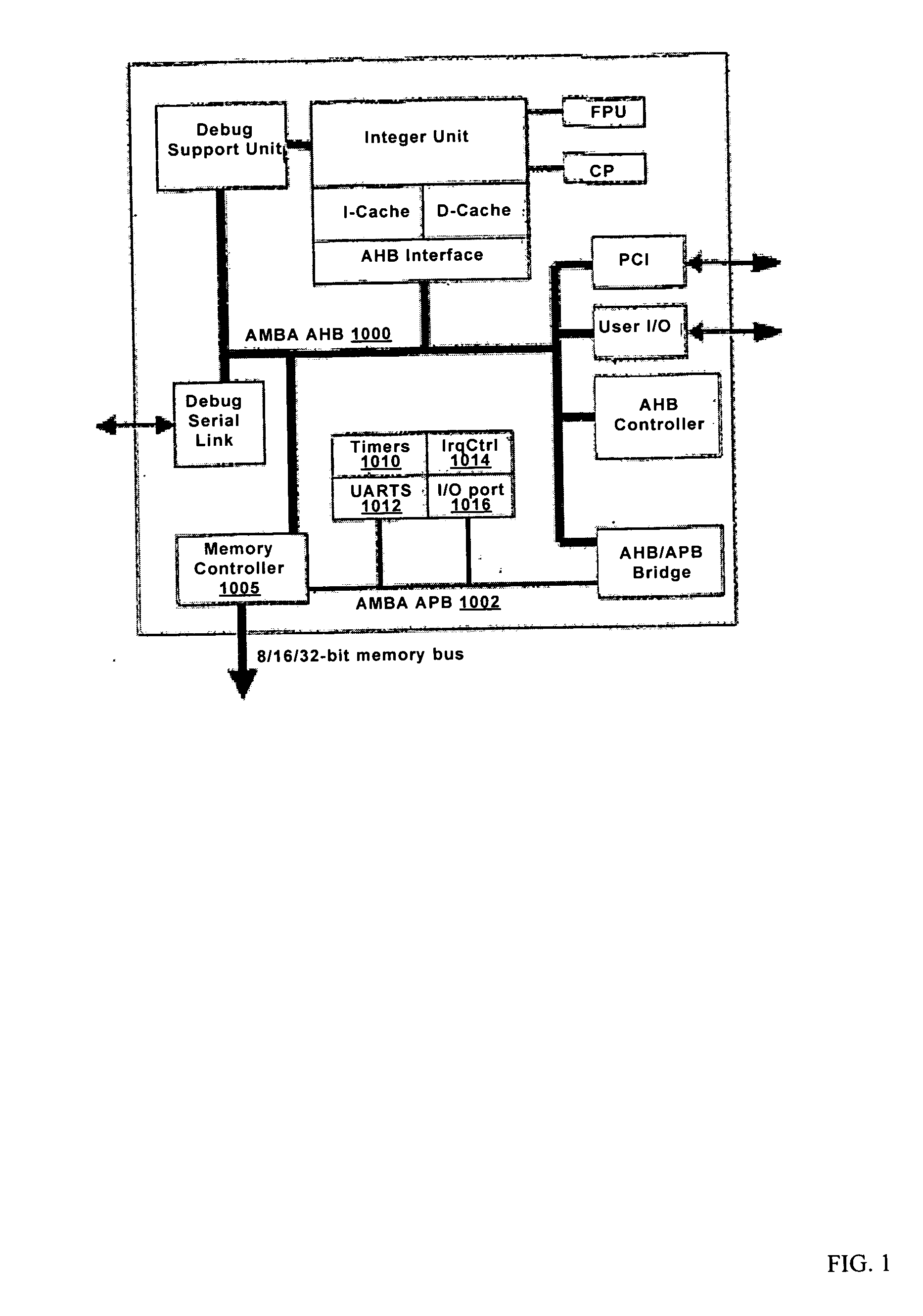

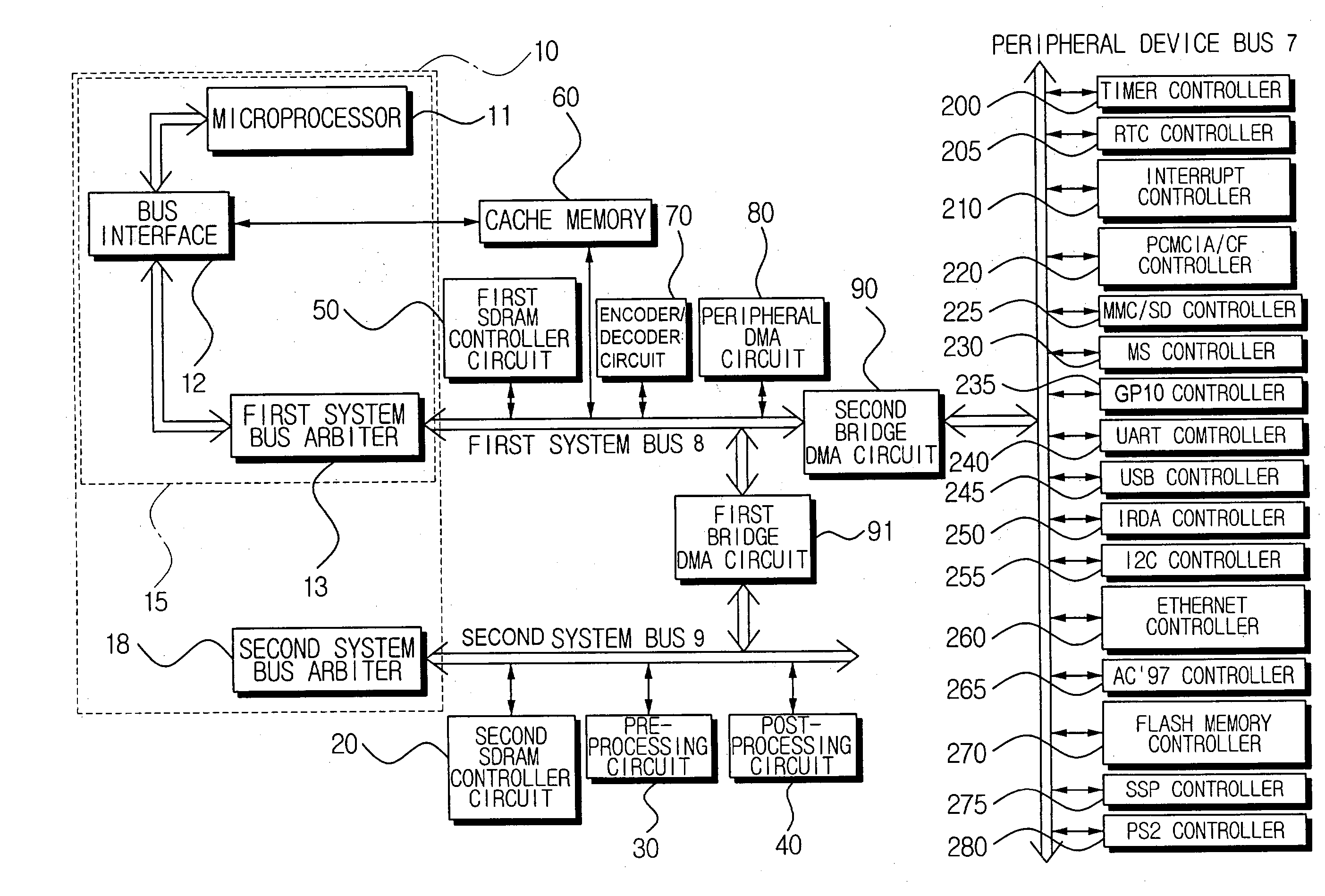

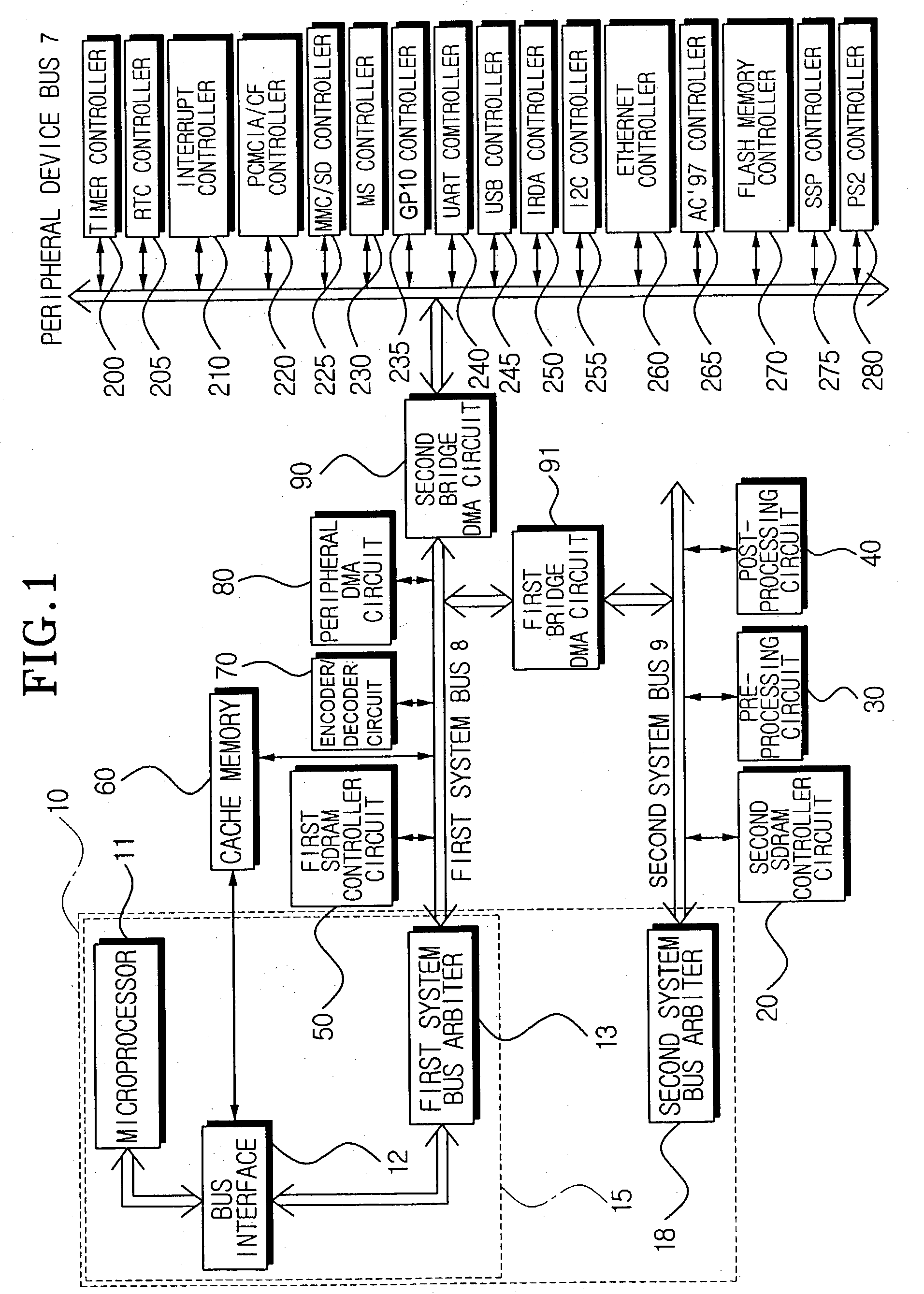

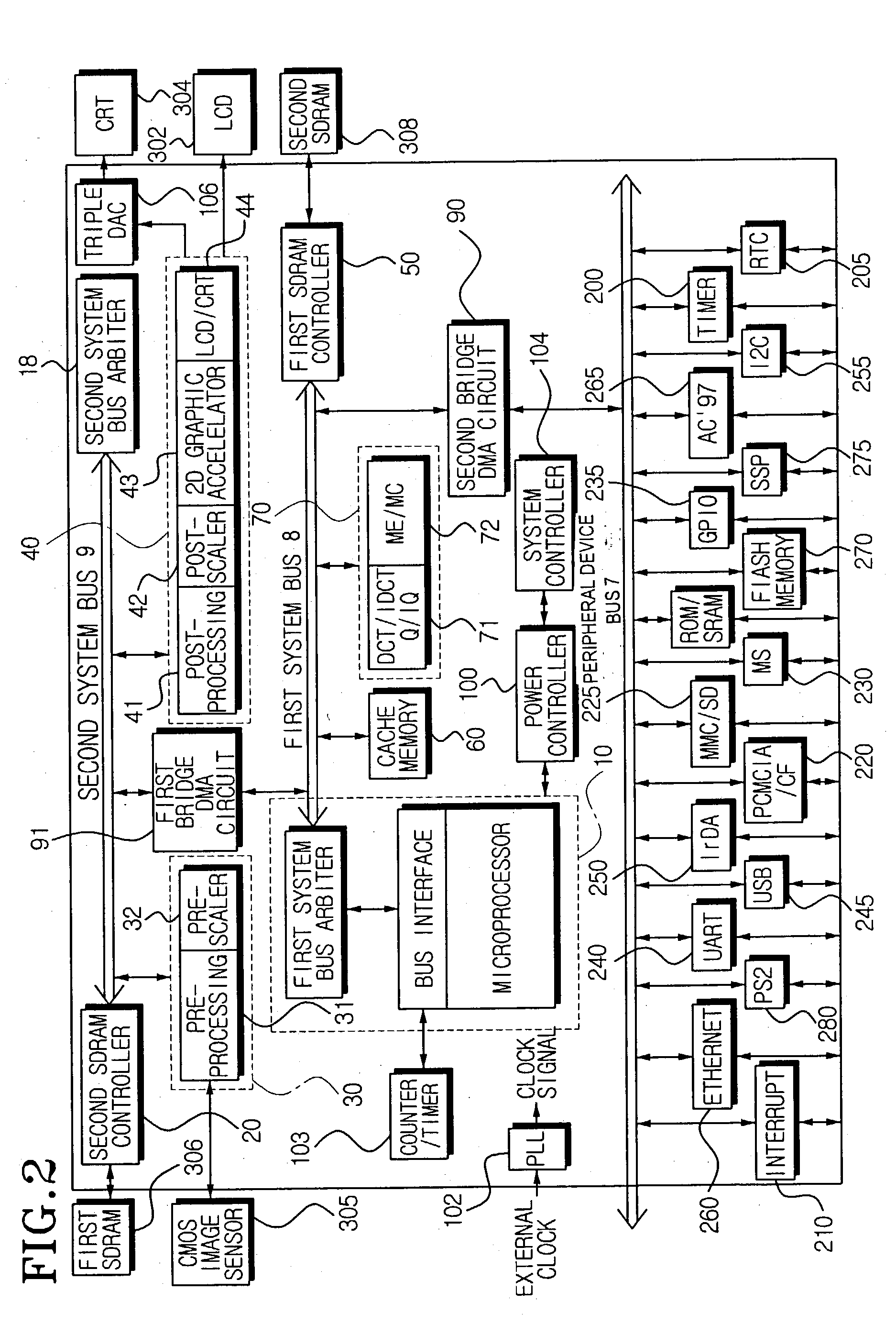

System on chip processor for multimedia devices

Inactive; US7171050B2; Advantage in performance and power consumption; Improve processing speed; Energy efficient ICT; Character and pattern recognition; Encoder decoder; Computer science

A system on chip processor for a multimedia device includes: a pre-processing circuit to convert an external image signal into a compressed input signal for compressing; an encoder / decoder circuit to generate compressed data by compressing the compressed input signal and outputting a coded image signal by decompressing the compressed data; a post-processing circuit to convert the coded image signal into a signal that can be used by an image displaying device; a first system bus connected with the pre-processing circuit and the post-processing circuit; a second system bus connected with the encoder / decoder circuit; a first bridge DMA circuit to mutually transmit data between the first system bus and the second system bus; and a controller to control the operation of the circuits.

Owner:SAMSUNG ELECTRONICS CO LTD

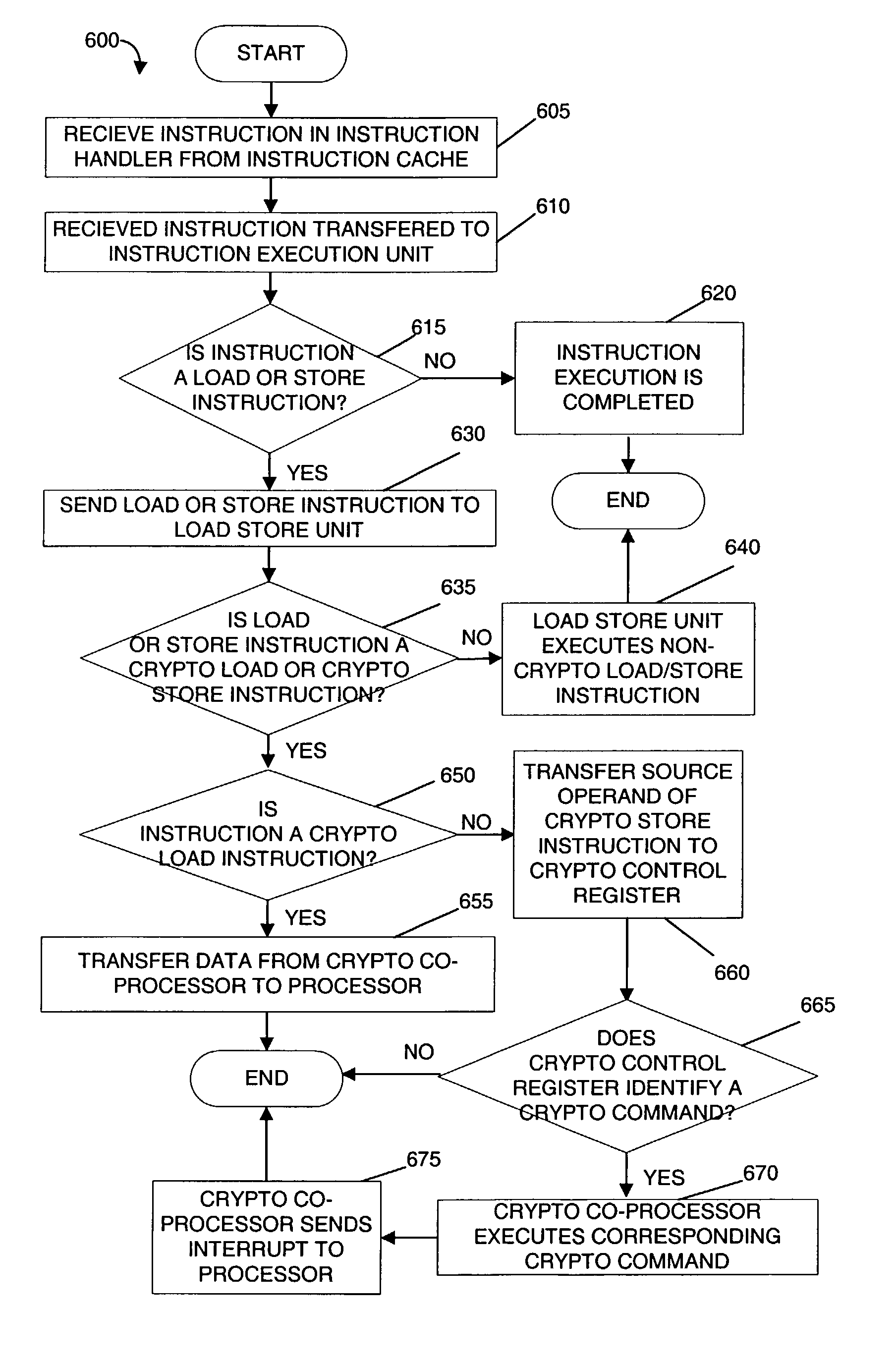

Stream processor with cryptographic co-processor

Inactive; US20030084309A1; Energy efficient ICT; Static indicating devices; Processing core; Direct memory access

A microprocessor includes a first processing core, a first cryptographic co-processor and an integer multiplier unit that is coupled to the first processing core and the first cryptographic co-processor. The first processing core includes an instruction decode unit, an instruction execution unit, and a load / store unit. The first cryptographic co-processor is located on a first die with the first processing core. The first cryptographic co-processor includes a cryptographic control register, a direct memory access engine that is coupled to the load / store unit in the first processing core, and a cryptographic memory.

Owner:SUN MICROSYSTEMS INC

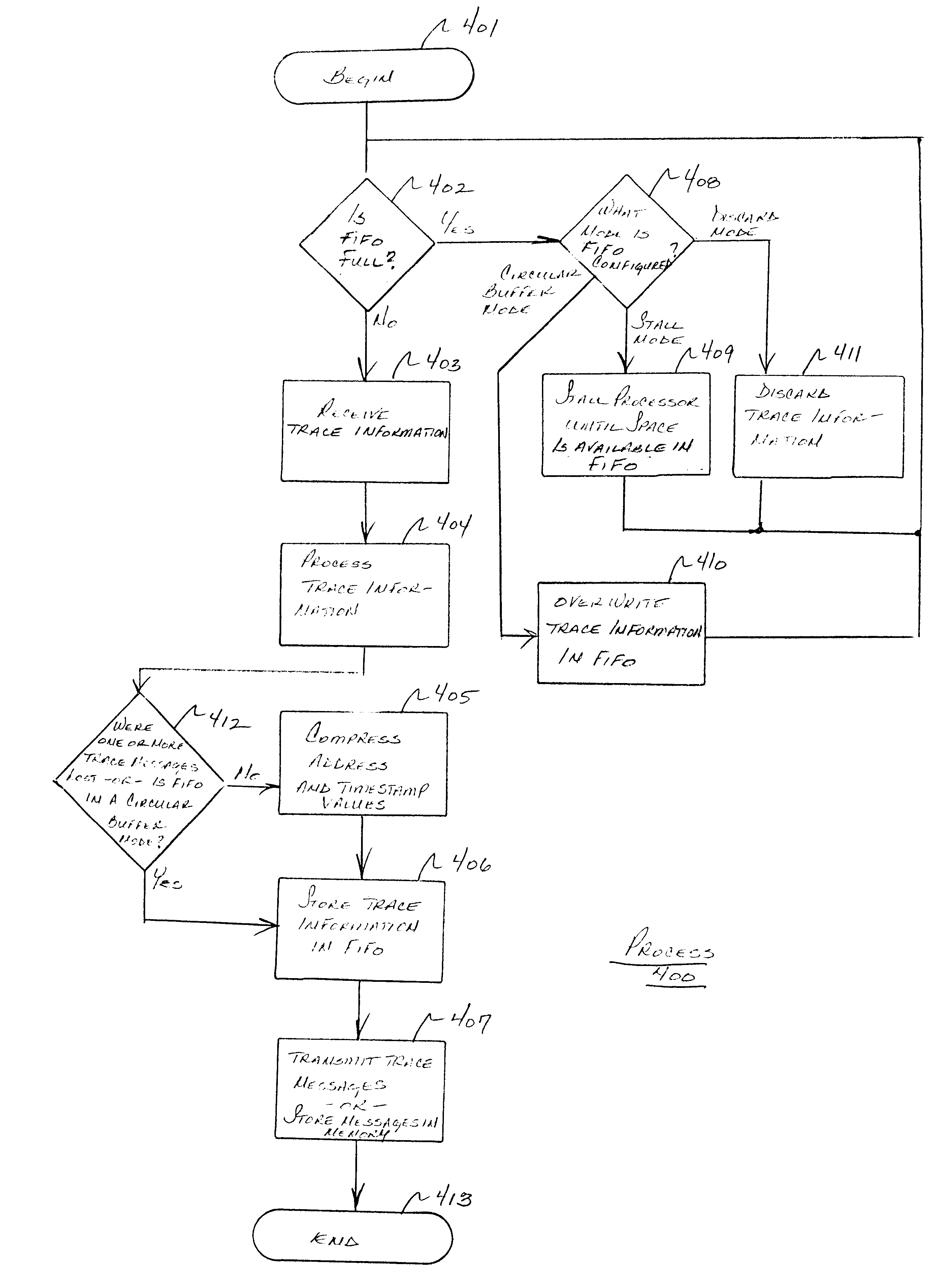

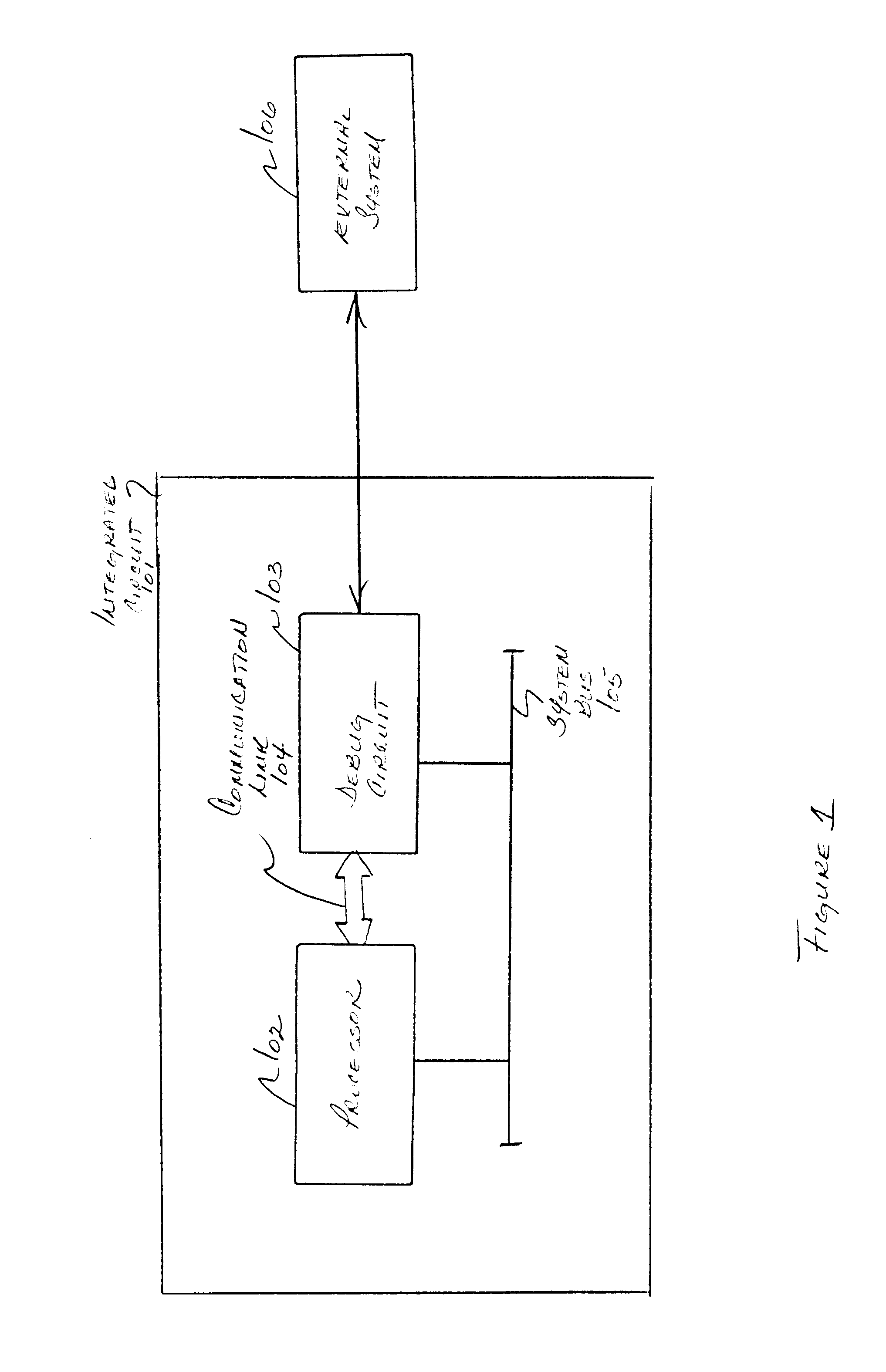

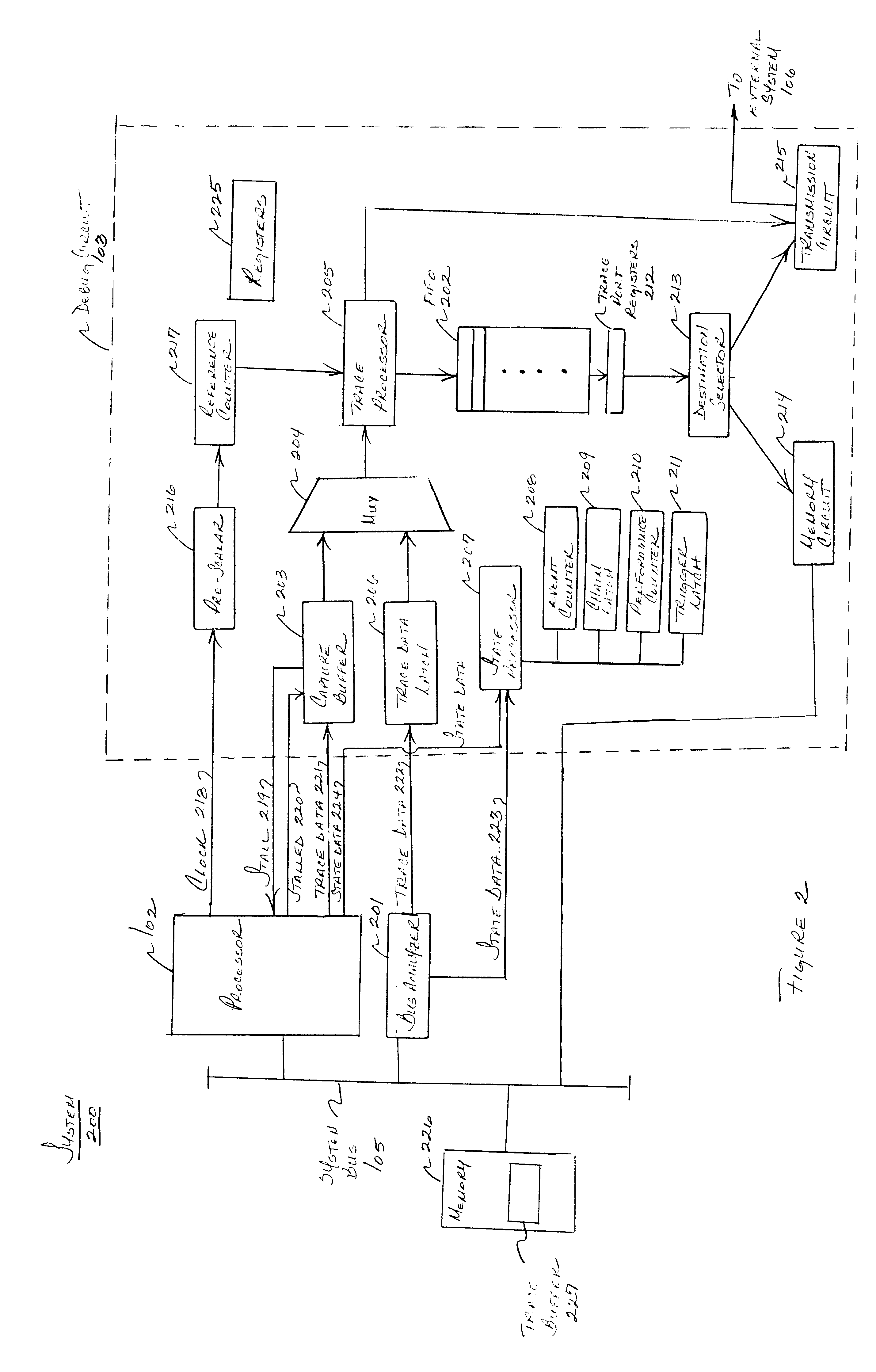

Circuit for processing trace information

Inactive; US6684348B1; Maximizes on-chip trace storage; Eliminating duplicate information; Software testing/debugging; Architecture with single central processing unit; Memory map; Trace buffer

A system for performing non-intrusive trace is provided which receives trace information from one or more processors. The trace system may be configured by a user to operate in various modes for flexibly storing or transmitting the trace information. The trace system includes a FIFO which is memory-mapped and is capable of being accessed without affecting processor performance. In one aspect, the trace system includes a trace buffer which receives trace information at an internal clock speed of the processor. In another embodiment, a compression protocol is provided for compressing trace messages on-chip prior to transmitting the messages to an external system or storing the messages in memory.

Owner:RENESAS ELECTRONICS CORP

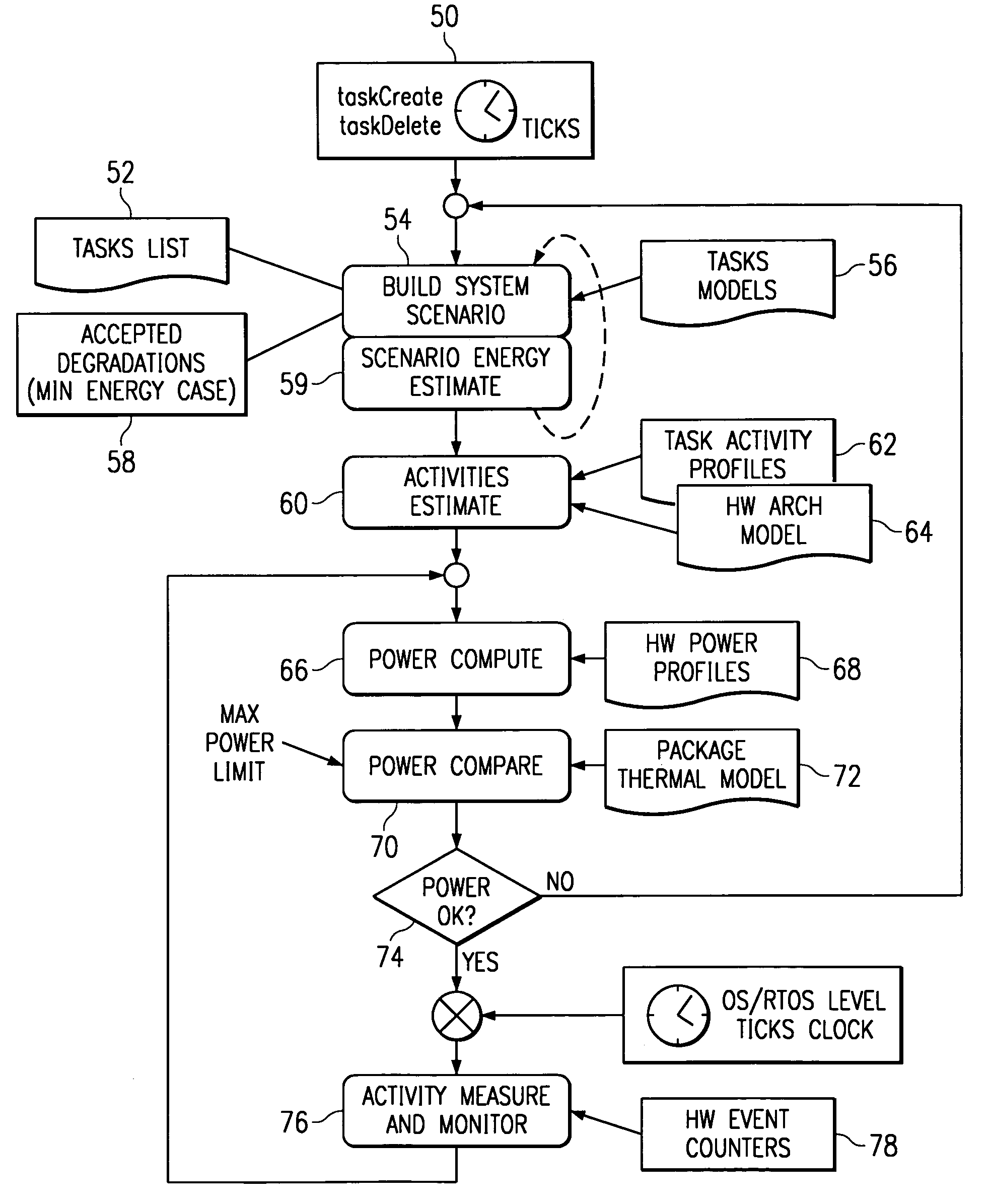

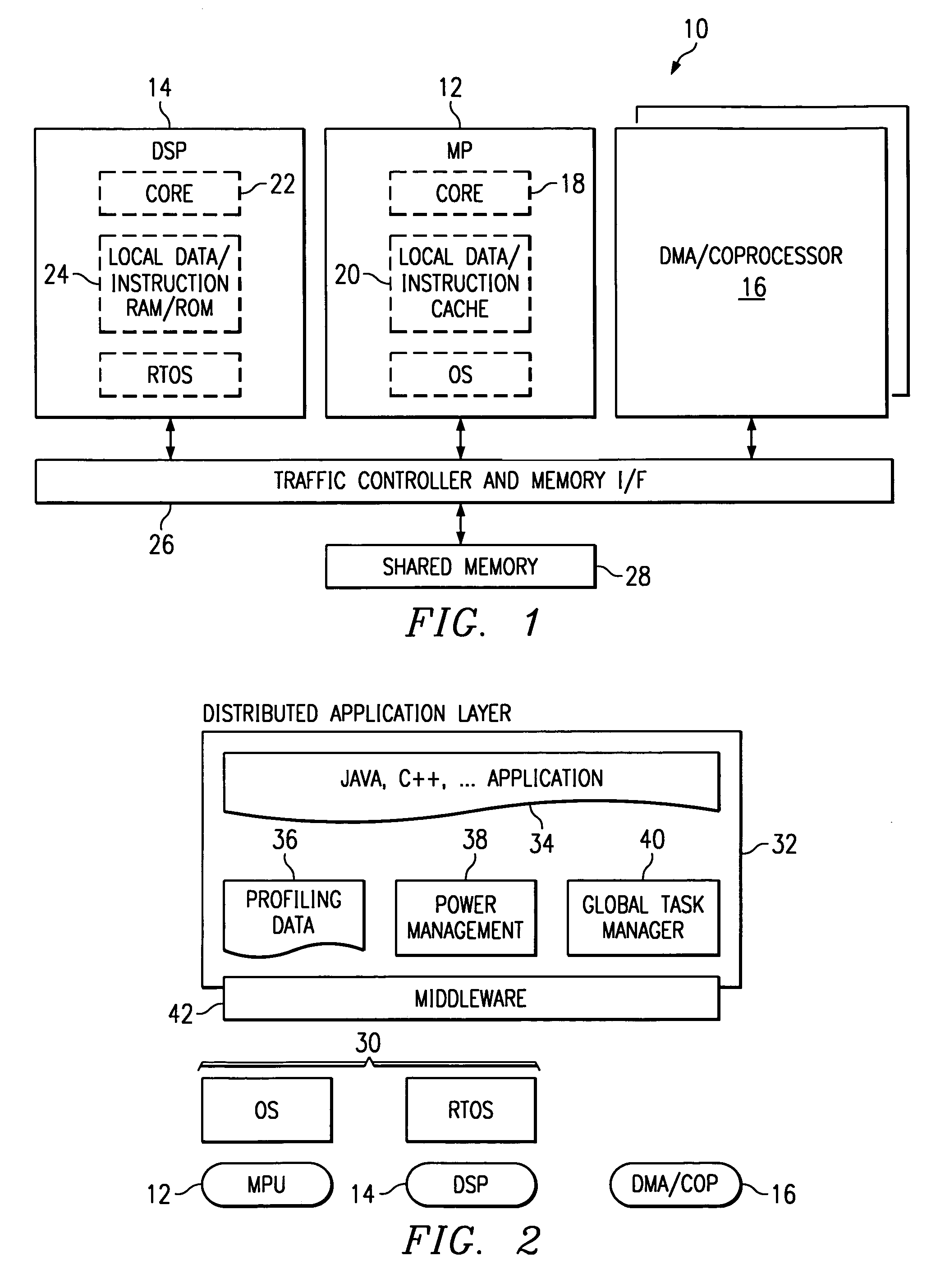

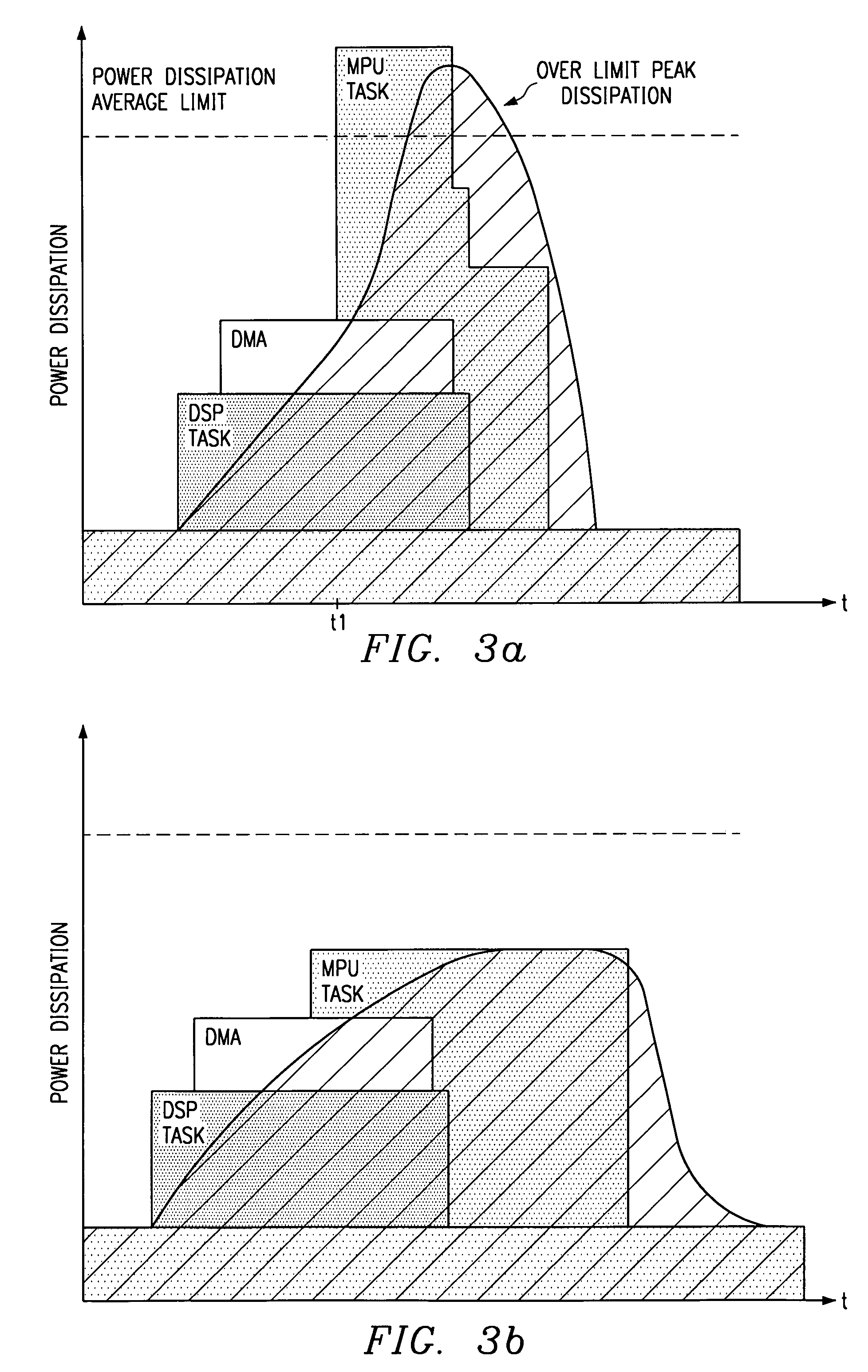

System and method for executing tasks according to a selected scenario in response to probabilistic power consumption information of each scenario

A distributed processing system (10) includes a plurality of processing modules, such as MPUs (12), DSPs (14), and coprocessors / DMA channels (16). Power management software (38) in conjunction with profiles (36) for the various processing modules and the tasks to be executed are used to build scenarios which meet predetermined power objectives, such as providing maximum operation within package thermal constraints or using minimum energy. Actual activities associated with the tasks are monitored during operation to ensure compatibility with the objectives. The allocation of tasks may be changed dynamically to accommodate changes in environmental conditions and changes in the task list.

Owner:TEXAS INSTR INC

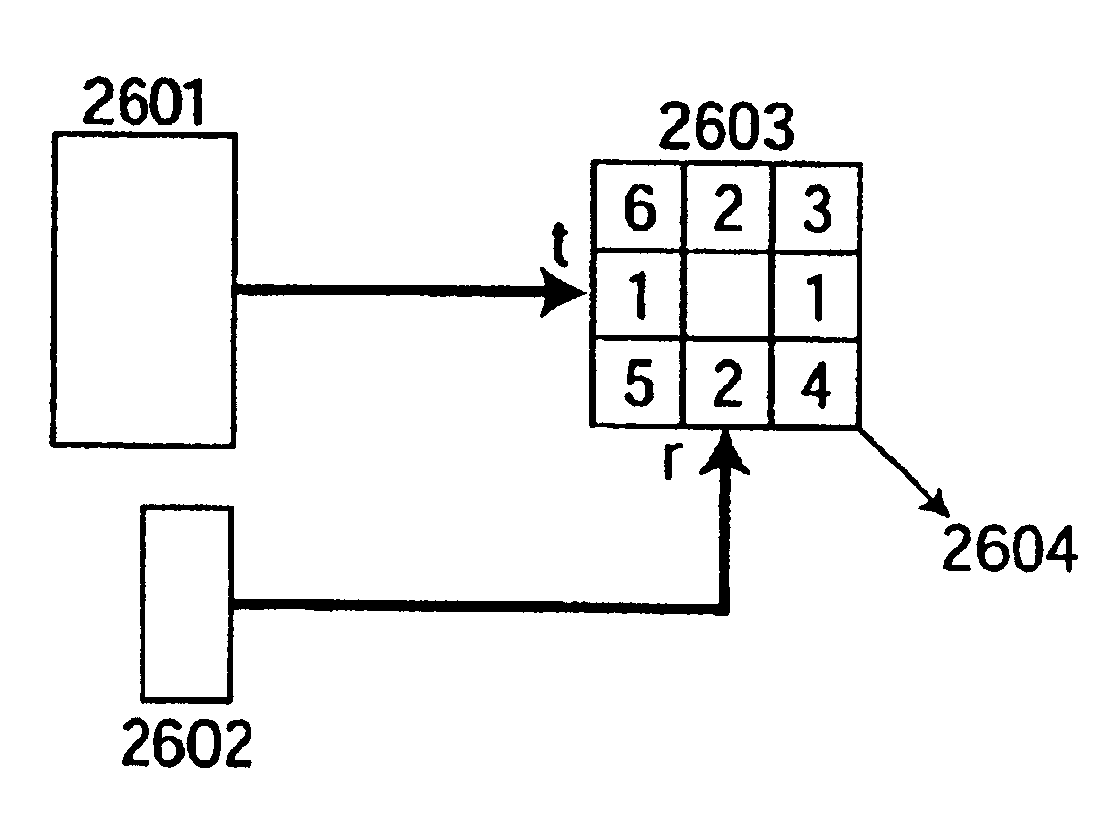

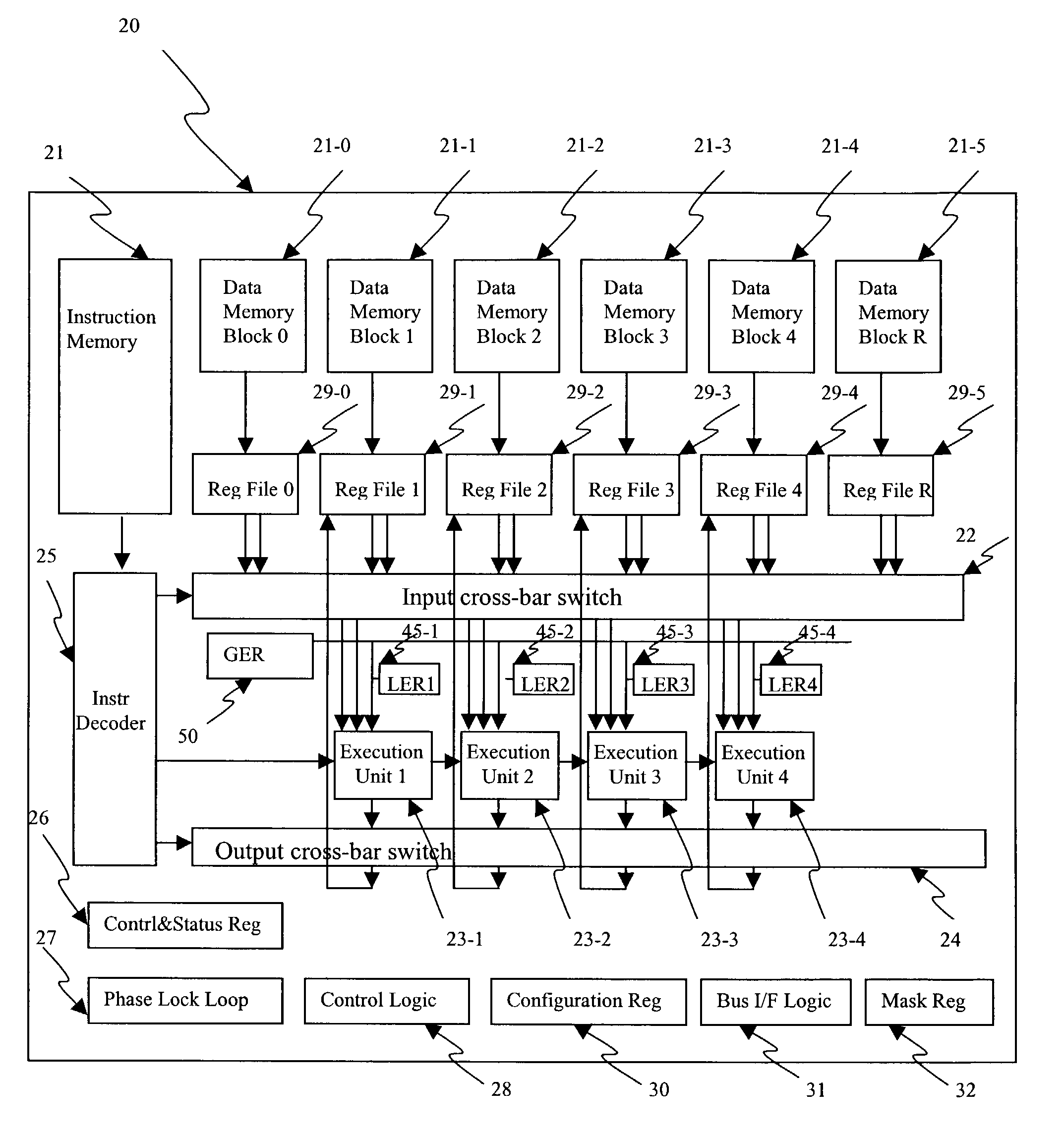

Algorithm mapping, specialized instructions and architecture features for smart memory computing

Inactive; US6970988B1; Improve performance; Low cost; Multiplex system selection arrangements; Program control; Smart memory; Execution unit

A smart memory computing system that uses smart memory for massive data storage as well as for massive parallel execution is disclosed. The data stored in the smart memory can be accessed just like the conventional main memory, but the smart memory also has many execution units to process data in situ. The smart memory computing system offers improved performance and reduced costs for those programs having massive data-level parallelism. This smart memory computing system is able to take advantage of data-level parallelism to improve execution speed by, for example, use of inventive aspects such as algorithm mapping, compiler techniques, architecture features, and specialized instruction sets.

Owner:STRIPE INC

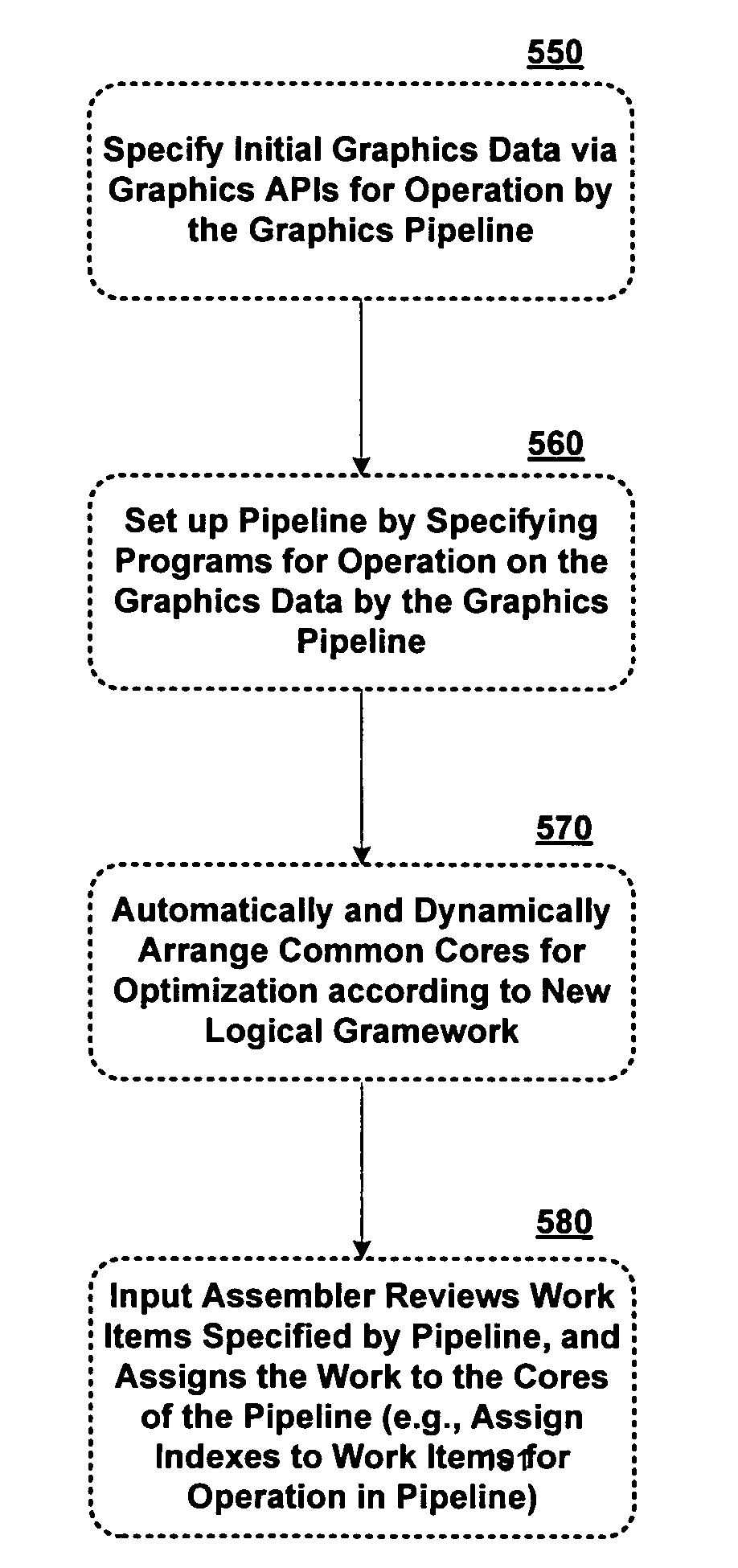

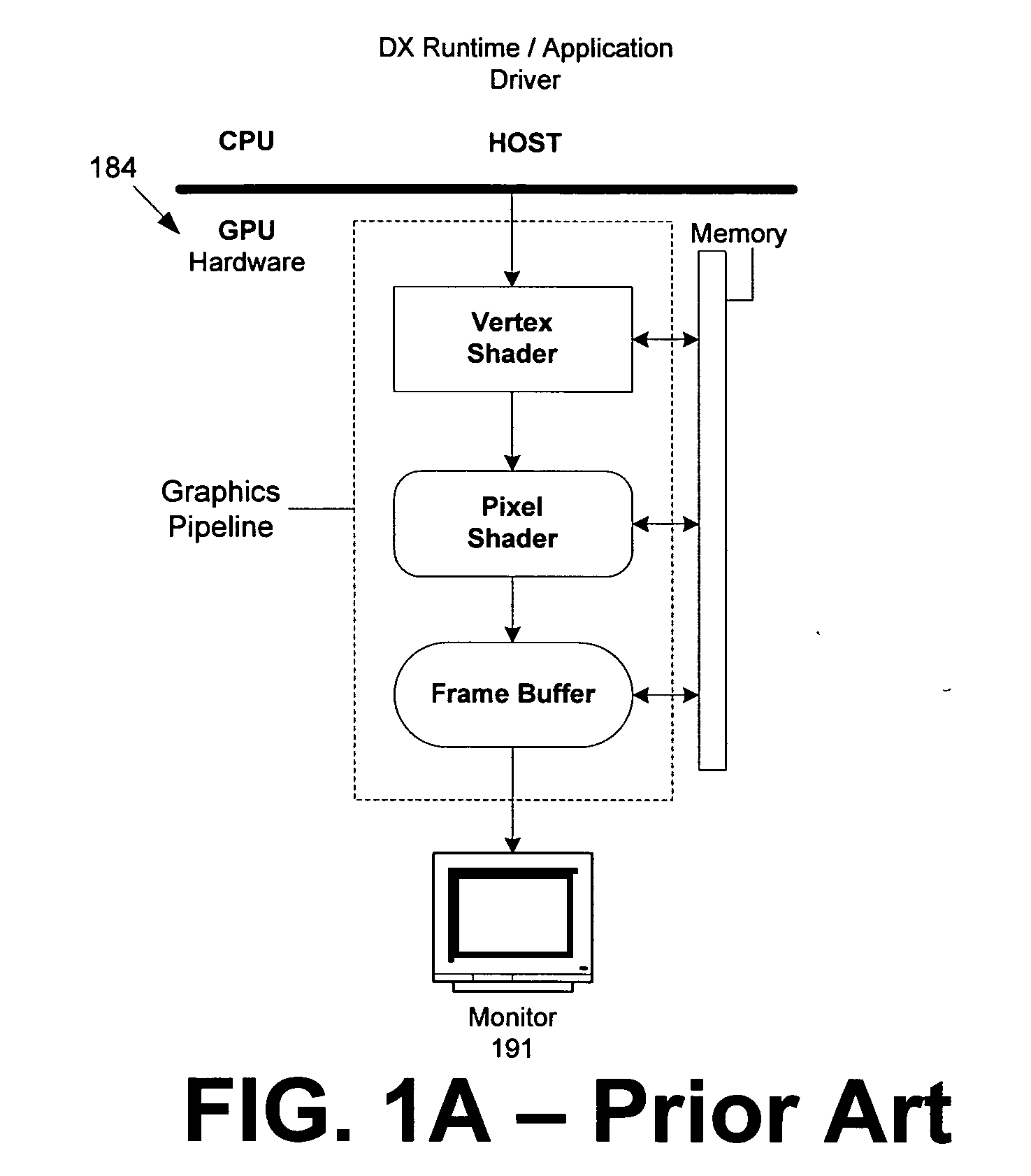

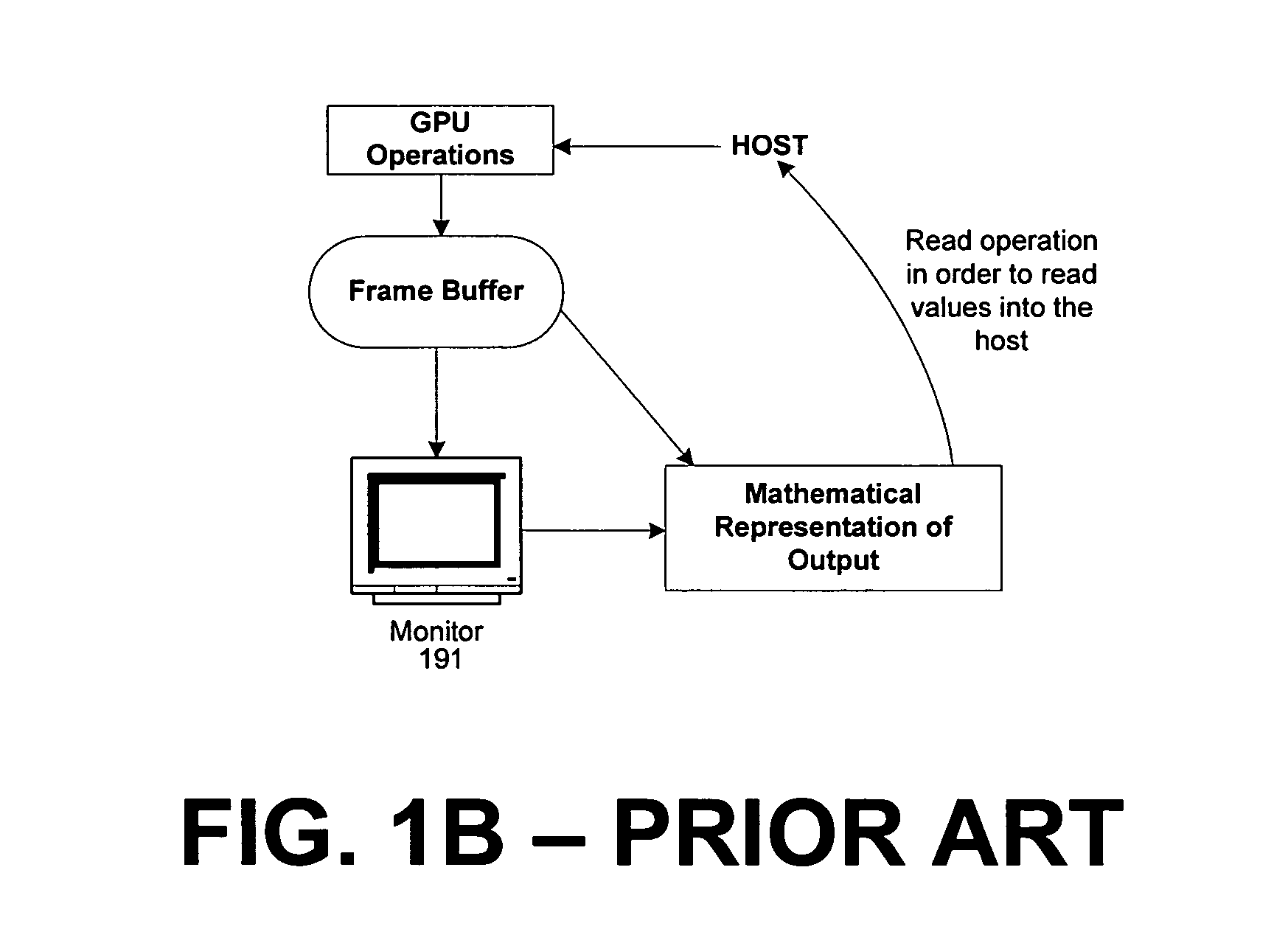

Systems and methods for providing an enhanced graphics pipeline

An enhanced graphics pipeline is provided that enables common core hardware to perform as different components of the graphics pipeline, programmability of primitives including lines and triangles by a component in the pipeline, and a stream output before or simultaneously with the rendering of a graphical display with the data in the pipeline. The programmer does not have to optimize the code, as the common core will balance the load of functions necessary and dynamically allocate those instructions on the common core hardware. The programmer may program primitives using algorithms to simplify all vertex calculations by substituting with topology made with lines and triangles. The programmer takes the calculated output data and can read it before or while it is being rendered. Thus, a programmer has greater flexibility in programming. By using the enhanced graphics pipeline, the programmer can optimize the usage of the hardware in the pipeline, program vertex, line or triangle topologies altogether rather than each vertex alone, and read any calculated data from memory where the pipeline can output the calculated information.

Owner:MICROSOFT TECH LICENSING LLC


© 2025 PatSnap. All rights reserved.