405 results for "Space allocation" patented technology

Space allocation is the process of experimenting with placements of your organizational units (business unit, division, department, or functional group) within your company's current or future space.

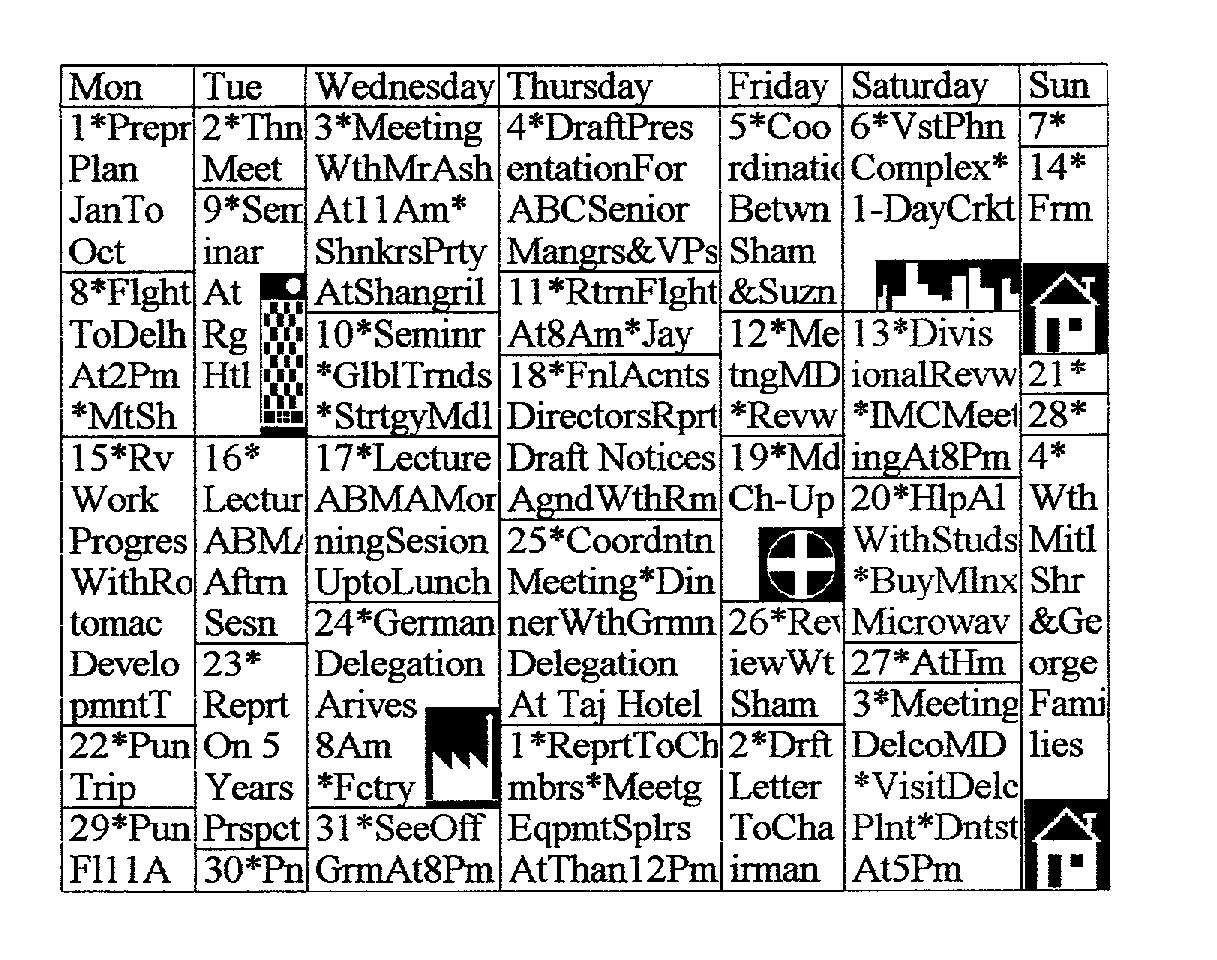

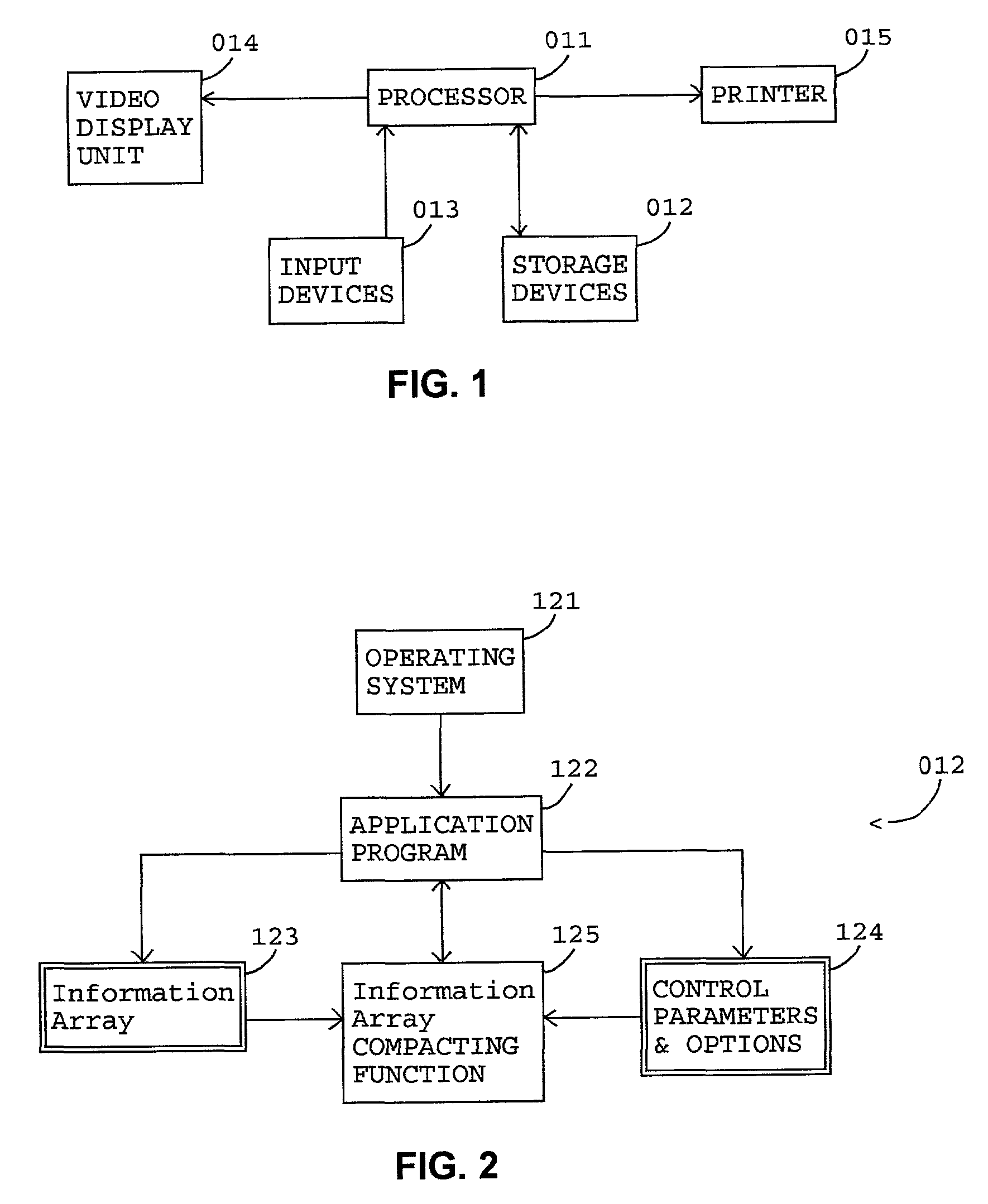

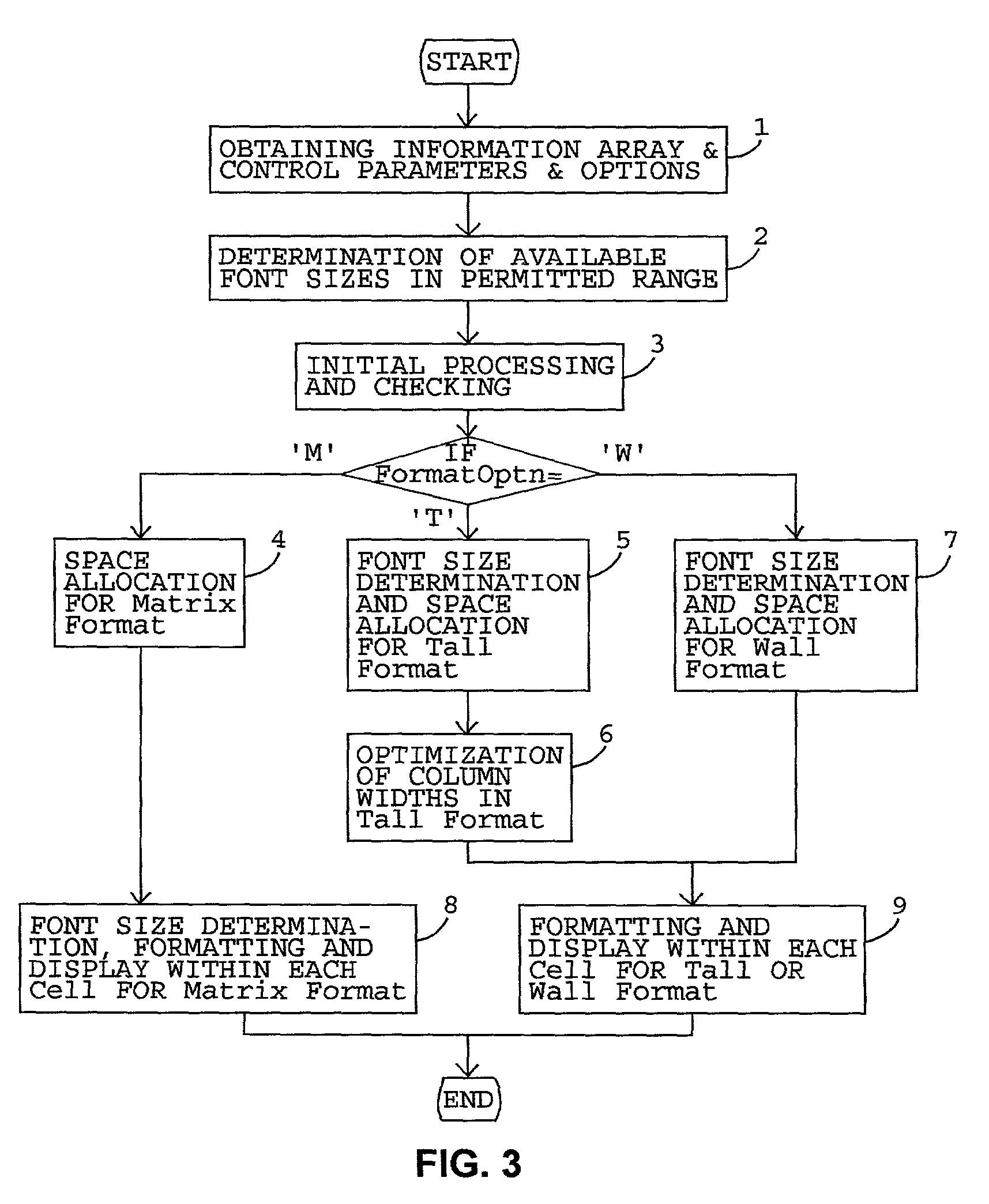

Compacting an information array display to cope with two dimensional display space constraint

Active · US8001465B2 · Tags: Maldistribution of minimized; Space minimization; Text processing; Special data processing applications; Array data structure; Combined use

This invention relates to computer-implemented methods for fitting the elements of an information array within the physical constraints of a predetermined two-dimensional display space. The invention seeks to minimize the maldistribution and wastage of space inherent in matrix-format display by allocating space based on moderated display-space requirement values of the larger elements. A measure of how lopsidedly the larger elements are distributed across columns and across rows is used when allocating column widths and row heights. If the display space is inadequate for displaying the array elements in matrix format, the elements are displayed in Tall/Wall format, in which the row alignment (Tall) or the column alignment (Wall) of cells is not maintained. The information array elements may include text, images, or both. Methods such as font size reduction, text abbreviation, and image size reduction are used in combination with the space allocation methods to fit the array elements into their corresponding cells in the display space.

Owner:ARDEN INNOVATIONS LLC
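
A minimal Python sketch of the moderation idea, assuming one particular rule that the abstract does not specify: when the natural column widths do not fit, each column's requirement is its widest cell capped at `cap_factor` times the median cell width, and the capped requirements are scaled to the available width. The function name and `cap_factor` are hypothetical, and the lopsidedness measure and the Tall/Wall fallback are not modeled.

```python
from statistics import median


def allocate_column_widths(cell_widths, total_width, cap_factor=2.0):
    """Return one width per column for a table that must fit total_width.

    cell_widths: list of rows, each a list of natural cell widths.
    """
    columns = list(zip(*cell_widths))
    natural = [max(col) for col in columns]
    if sum(natural) <= total_width:
        return natural  # everything fits; no moderation needed
    # Moderate large elements: cap each column's requirement so that one
    # very wide cell cannot claim most of the display (assumed rule).
    cap = cap_factor * median(w for row in cell_widths for w in row)
    requirements = [min(n, cap) for n in natural]
    # Scale the moderated requirements to exactly fill the display width.
    scale = total_width / sum(requirements)
    return [r * scale for r in requirements]
```

For example, `allocate_column_widths([[10, 40, 5], [12, 200, 6]], 100)` caps the 200-unit cell at 22 (twice the median of 11) and returns `[30.0, 55.0, 15.0]`, instead of letting the one wide element take most of the row.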

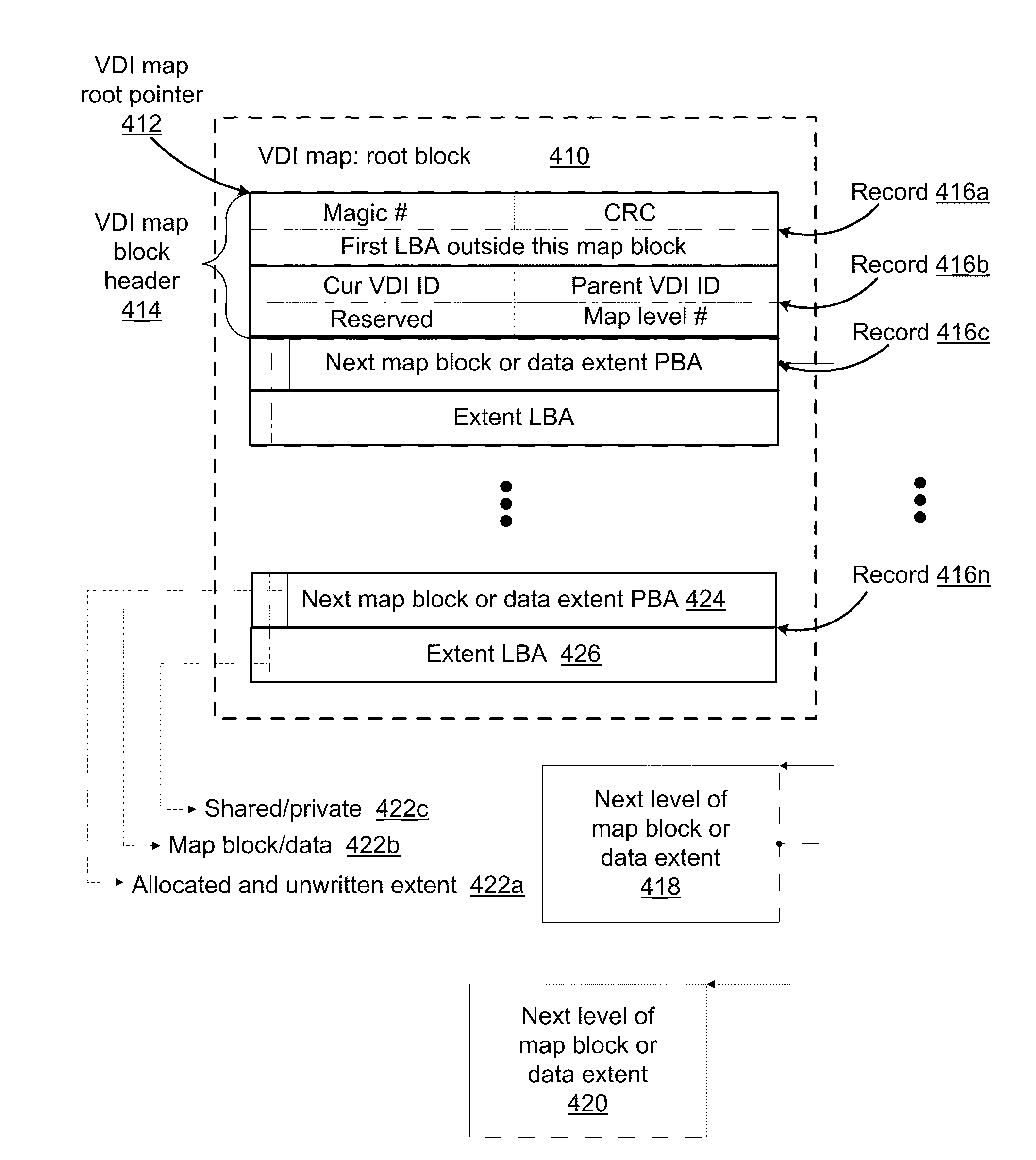

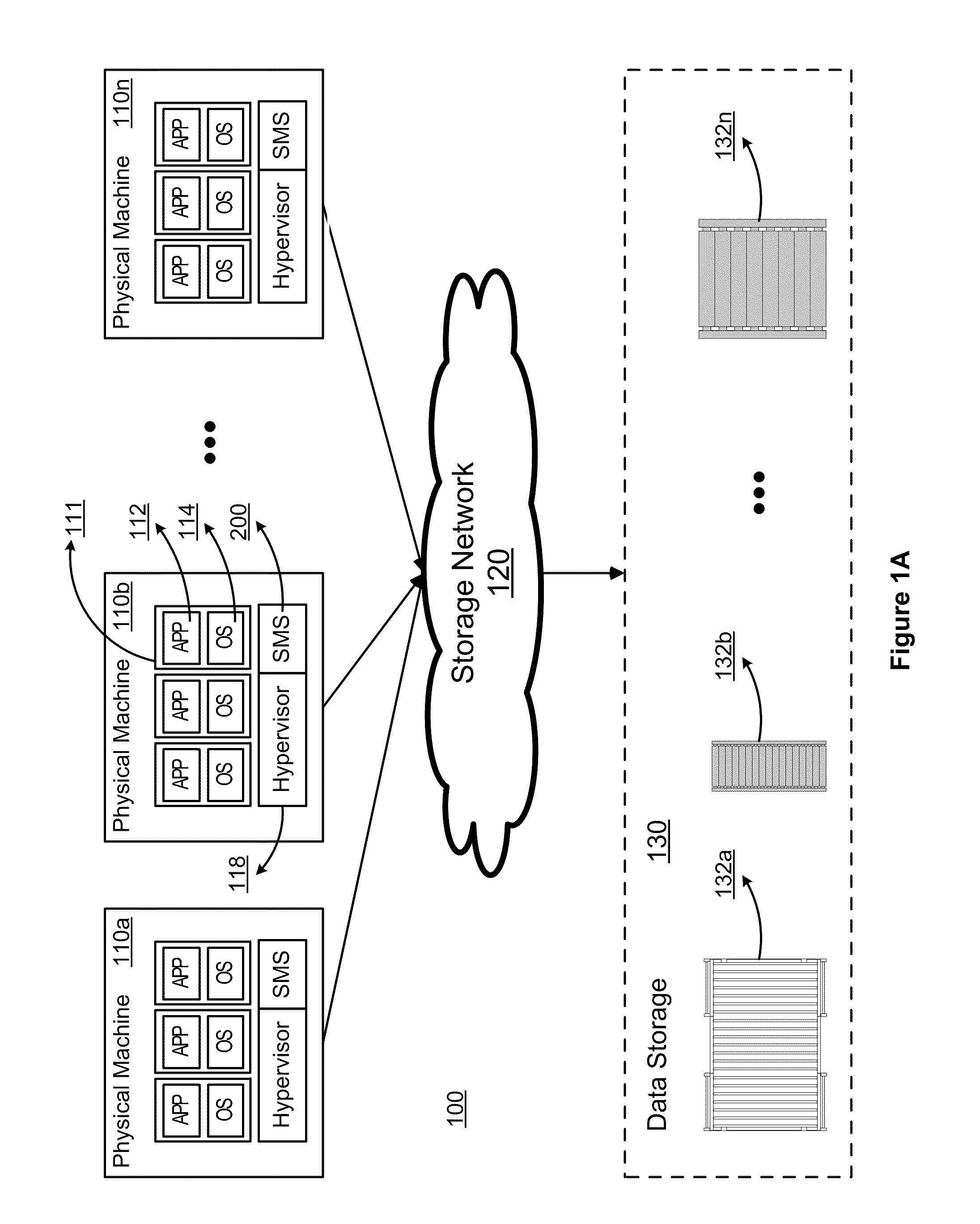

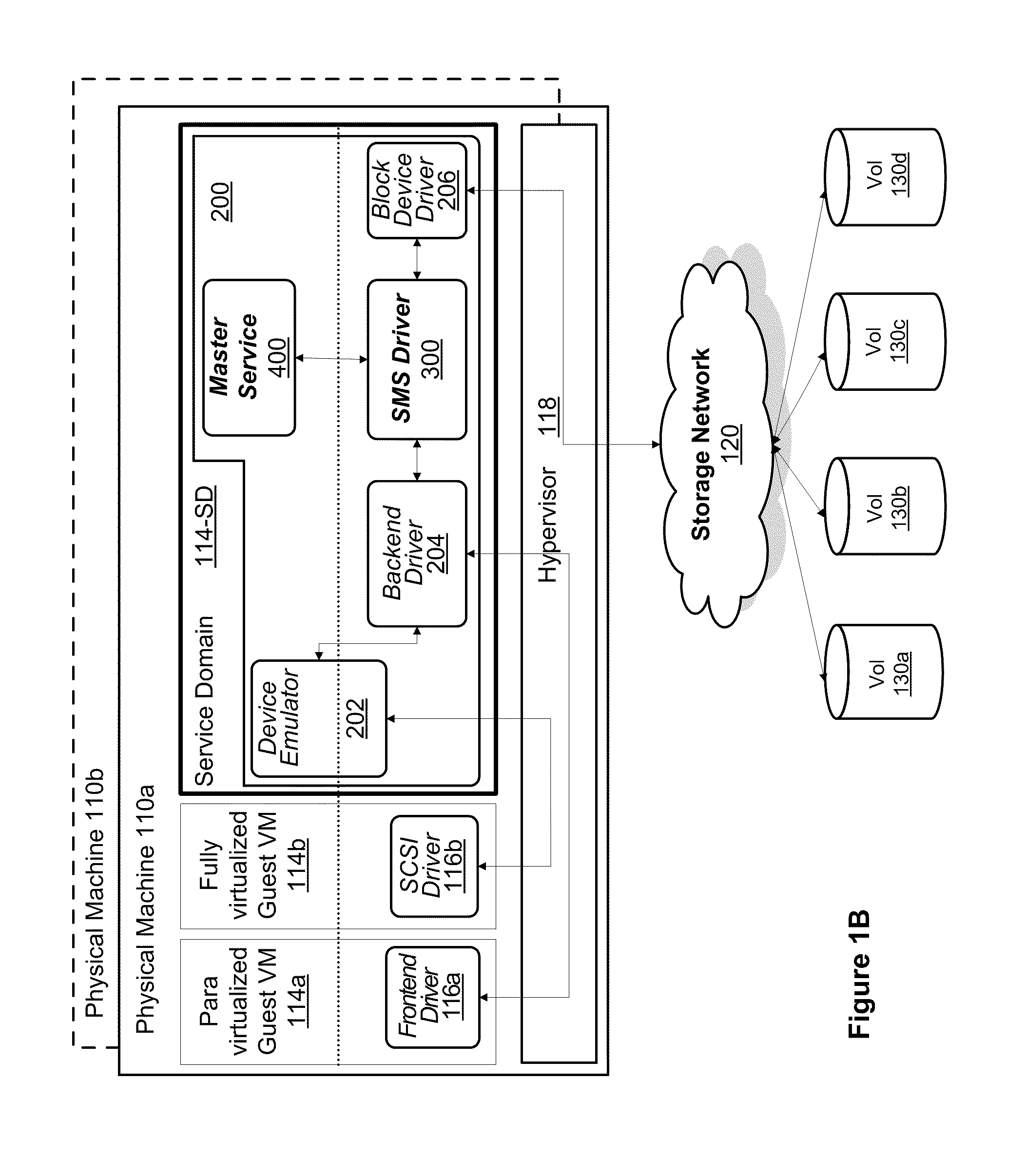

Storage management system for virtual machines

Active · US20100153617A1 · Tags: Overcome limitations; Memory addressing/allocation/relocation; Computer security arrangements; Virtualization; Data center

A computer system (and method) for providing a storage management solution that enables server virtualization in data centers is disclosed. The system comprises a plurality of storage devices for storing data and a plurality of storage management drivers configured to provide an abstraction of the storage devices to one or more virtual machines of the data center. A storage management driver is configured to represent a live disk, or a snapshot of a live disk, in a virtual disk image to the virtual machine associated with the driver. The driver is further configured to translate a logical address for a data block to one or more physical addresses of the data block through the virtual disk image. The system further comprises a master server configured to manage the abstraction of the storage devices and to allocate storage space to one or more virtual disk images.

Owner:VMWARE INC
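
The logical-to-physical translation described above can be pictured with a small Python model. `MasterServer`, `VirtualDiskImage`, the lazy allocation on first access, and the interleaved free list are all assumptions made for illustration; copy-on-write behavior for snapshots is not modeled.

```python
class MasterServer:
    """Hands out free (device, block) pairs, interleaved across devices."""

    def __init__(self, devices, blocks_per_device):
        self.free = [(dev, blk) for blk in range(blocks_per_device) for dev in devices]

    def allocate(self, count):
        if count > len(self.free):
            raise RuntimeError("out of storage space")
        taken, self.free = self.free[:count], self.free[count:]
        return taken


class VirtualDiskImage:
    """Maps each logical block to one or more physical addresses."""

    def __init__(self, master, replicas=1):
        self.master = master
        self.replicas = replicas
        self.mapping = {}

    def translate(self, logical_block):
        # Physical space is allocated lazily, on first access to a block.
        if logical_block not in self.mapping:
            self.mapping[logical_block] = self.master.allocate(self.replicas)
        return self.mapping[logical_block]

    def snapshot(self):
        # Point-in-time copy of the mapping table (physical blocks are shared).
        snap = VirtualDiskImage(self.master, self.replicas)
        snap.mapping = dict(self.mapping)
        return snap
```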

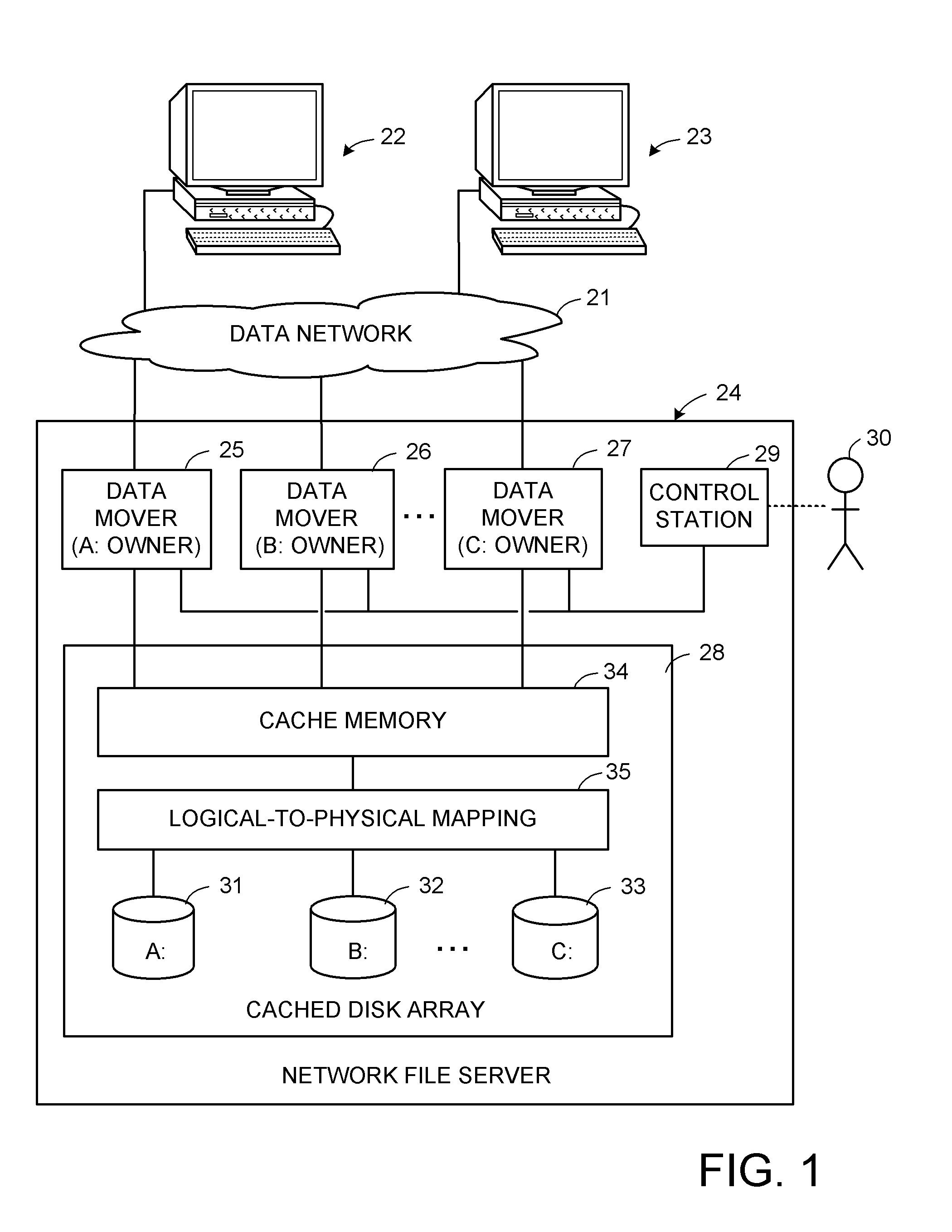

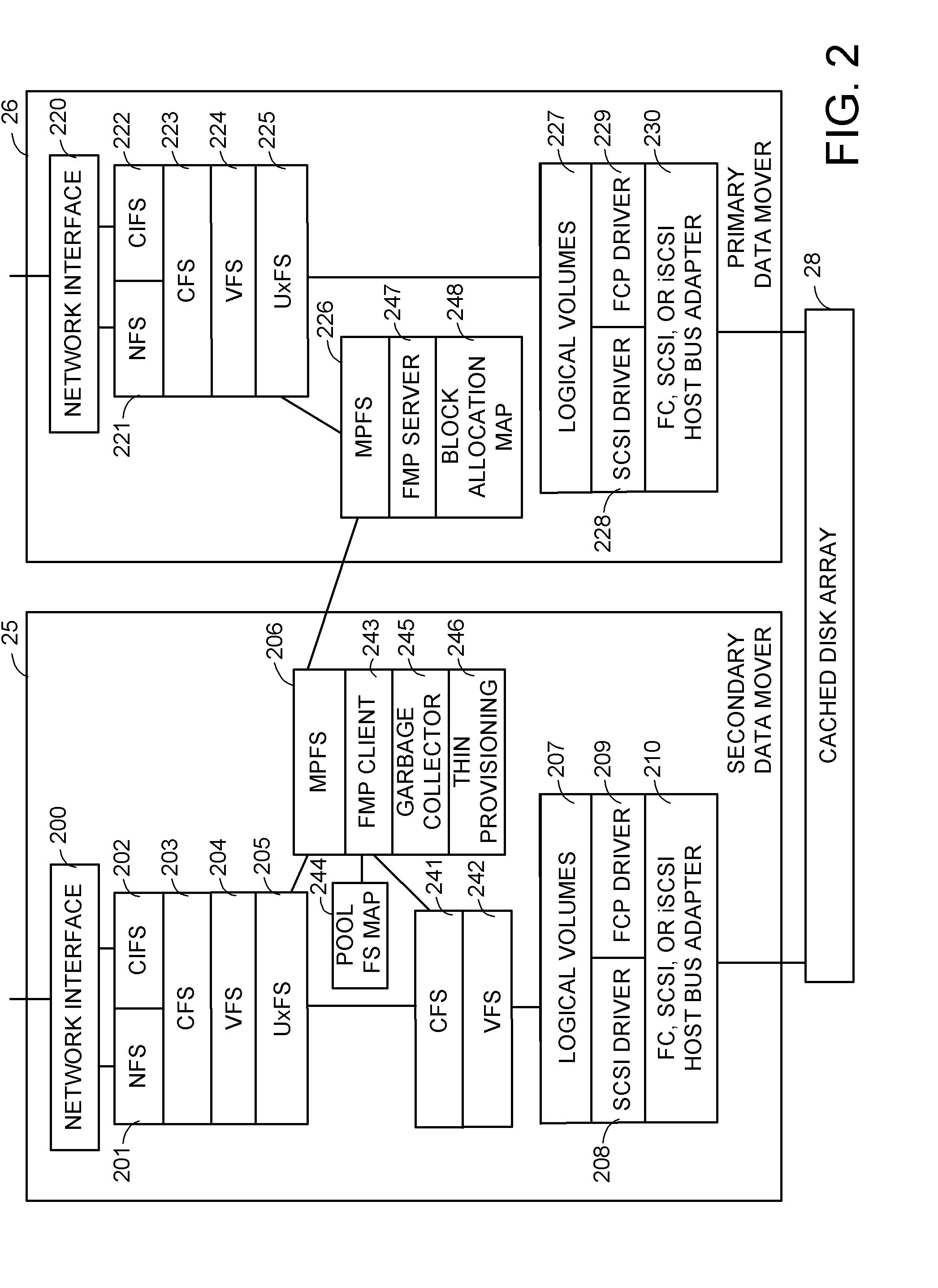

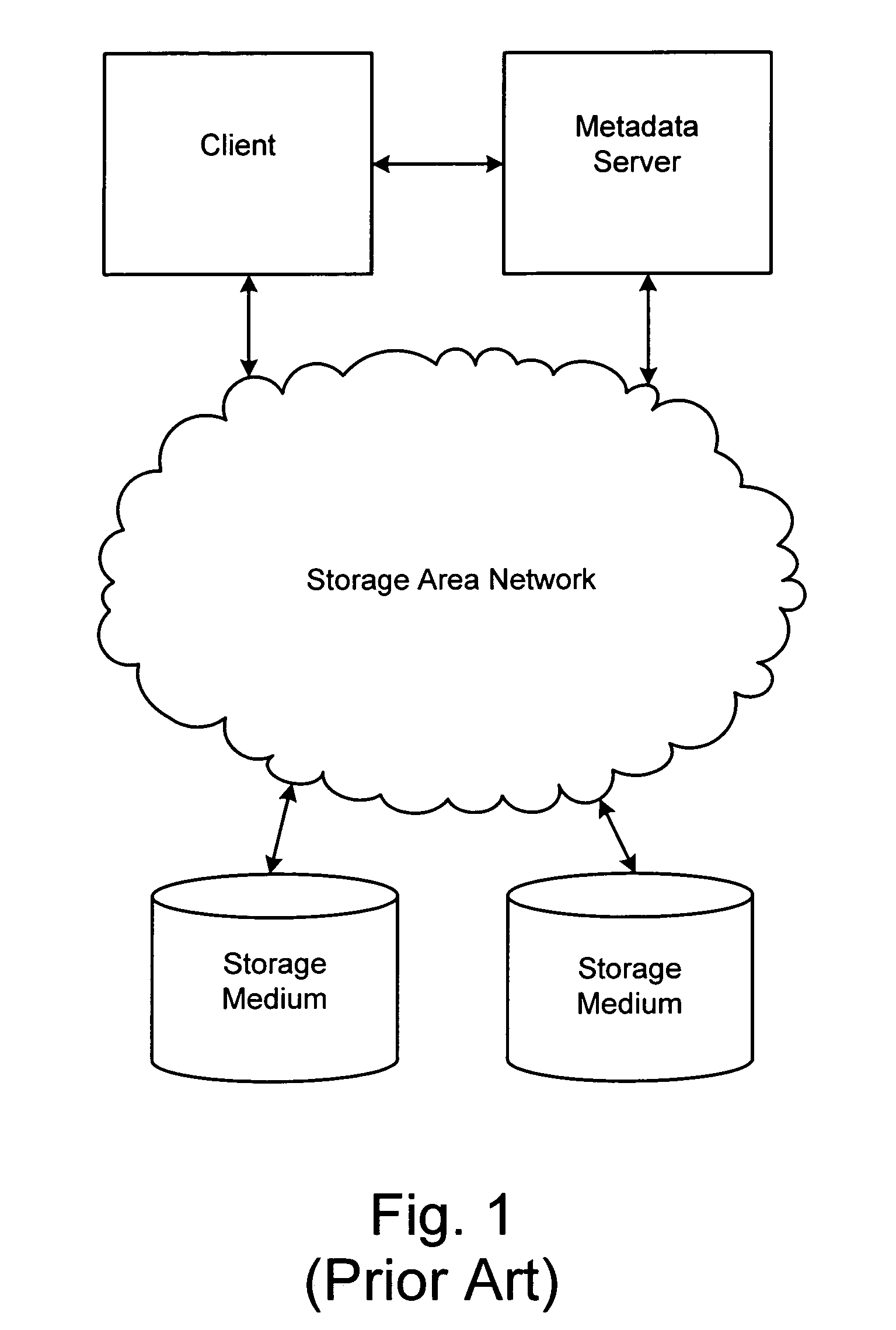

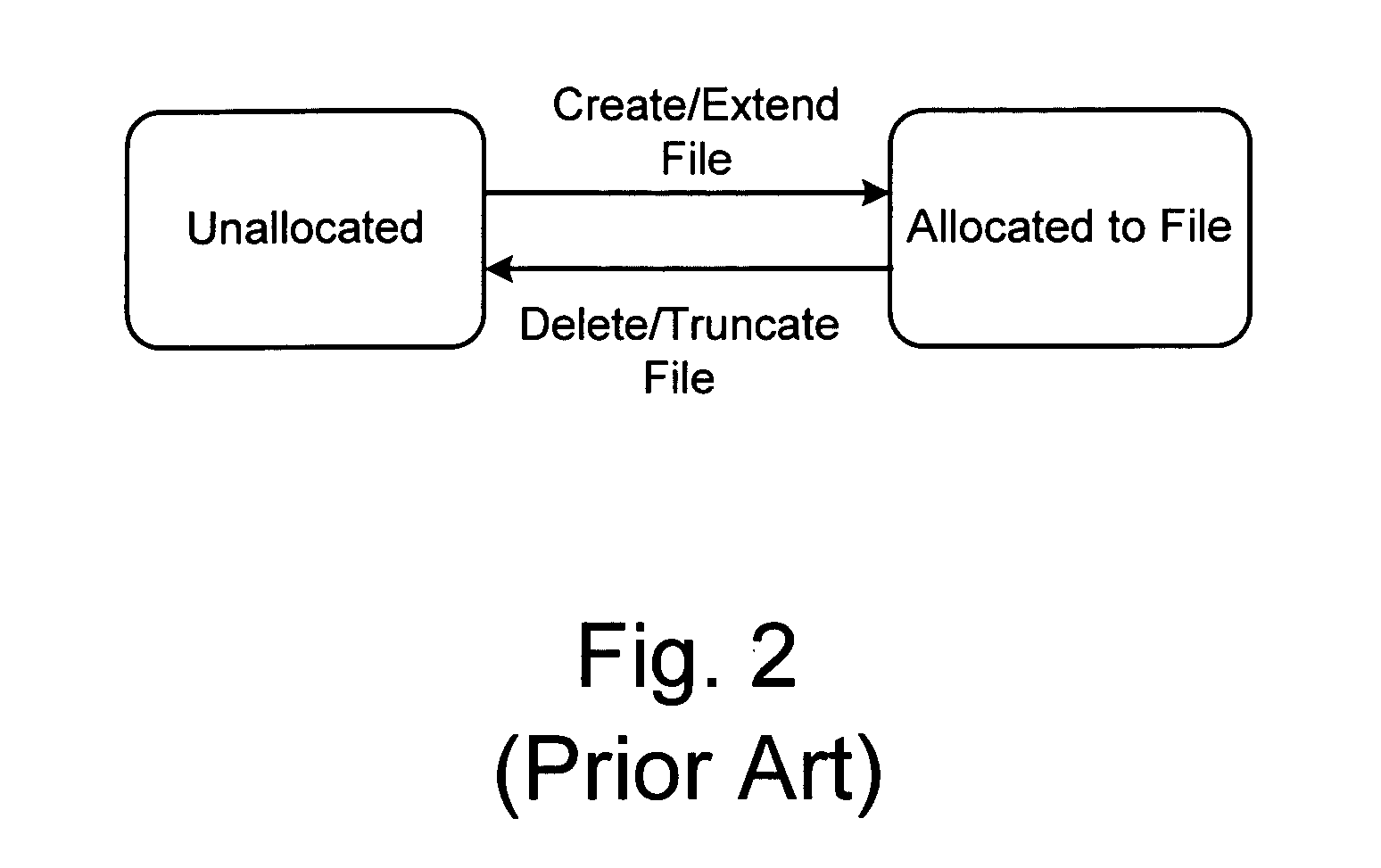

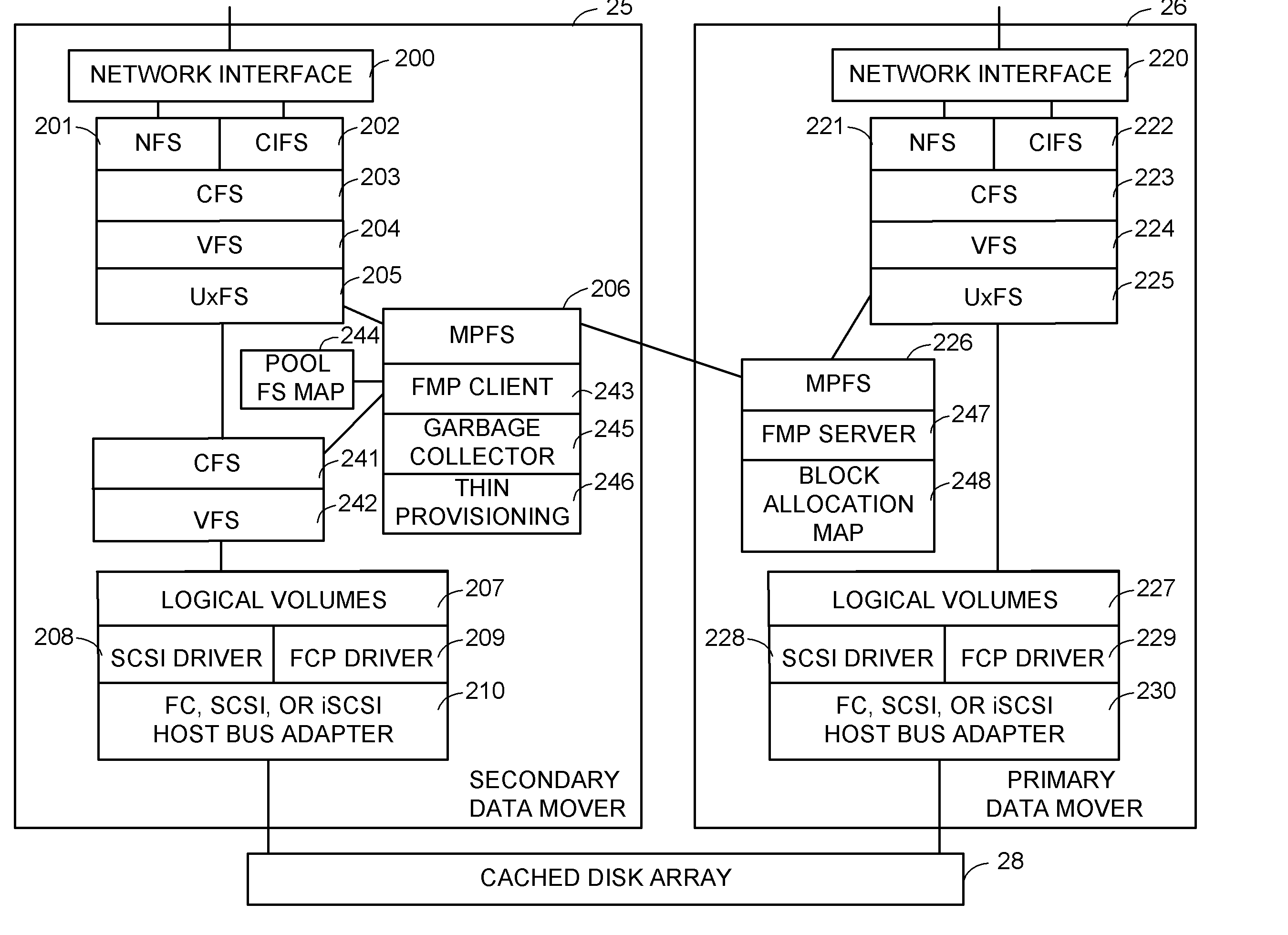

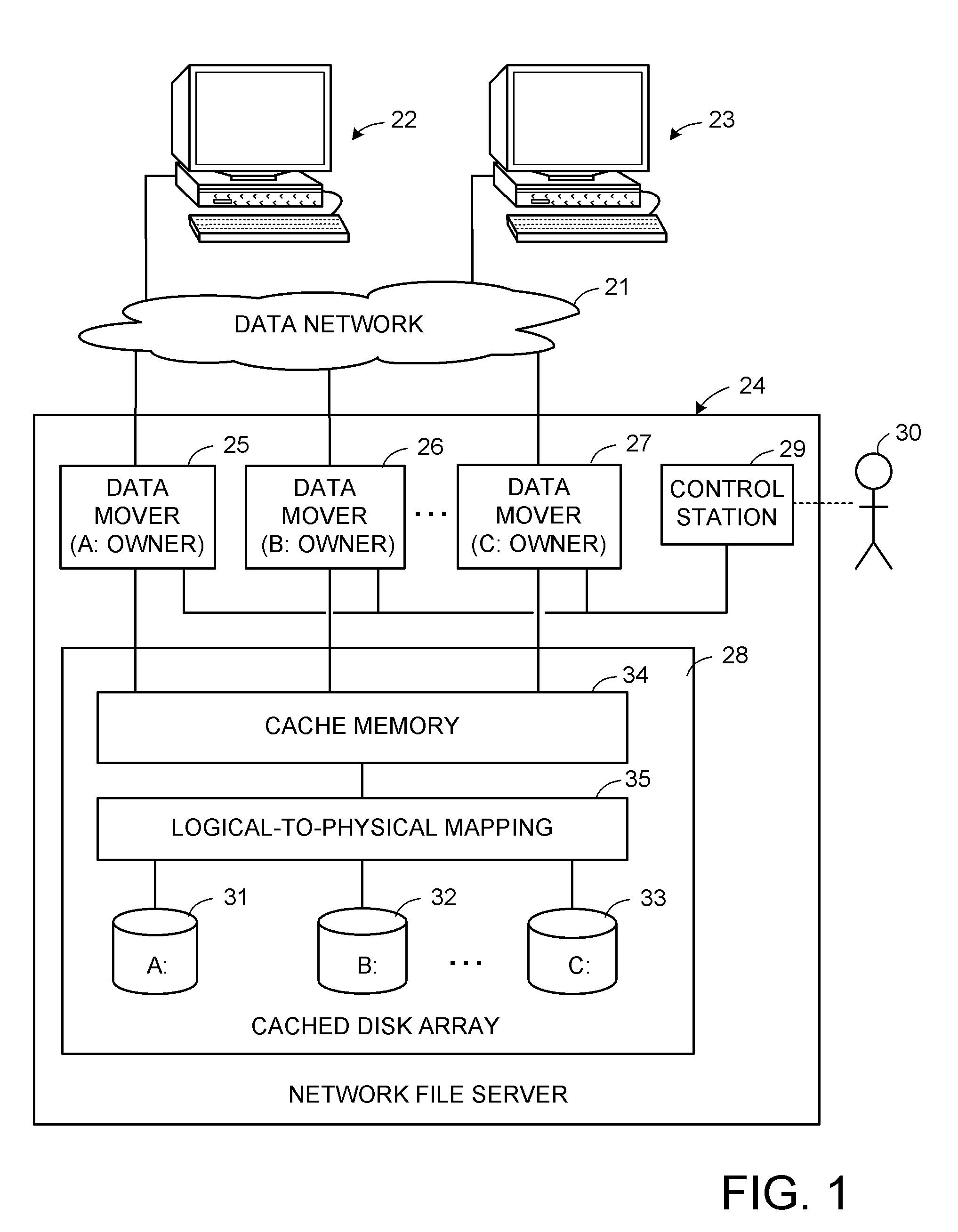

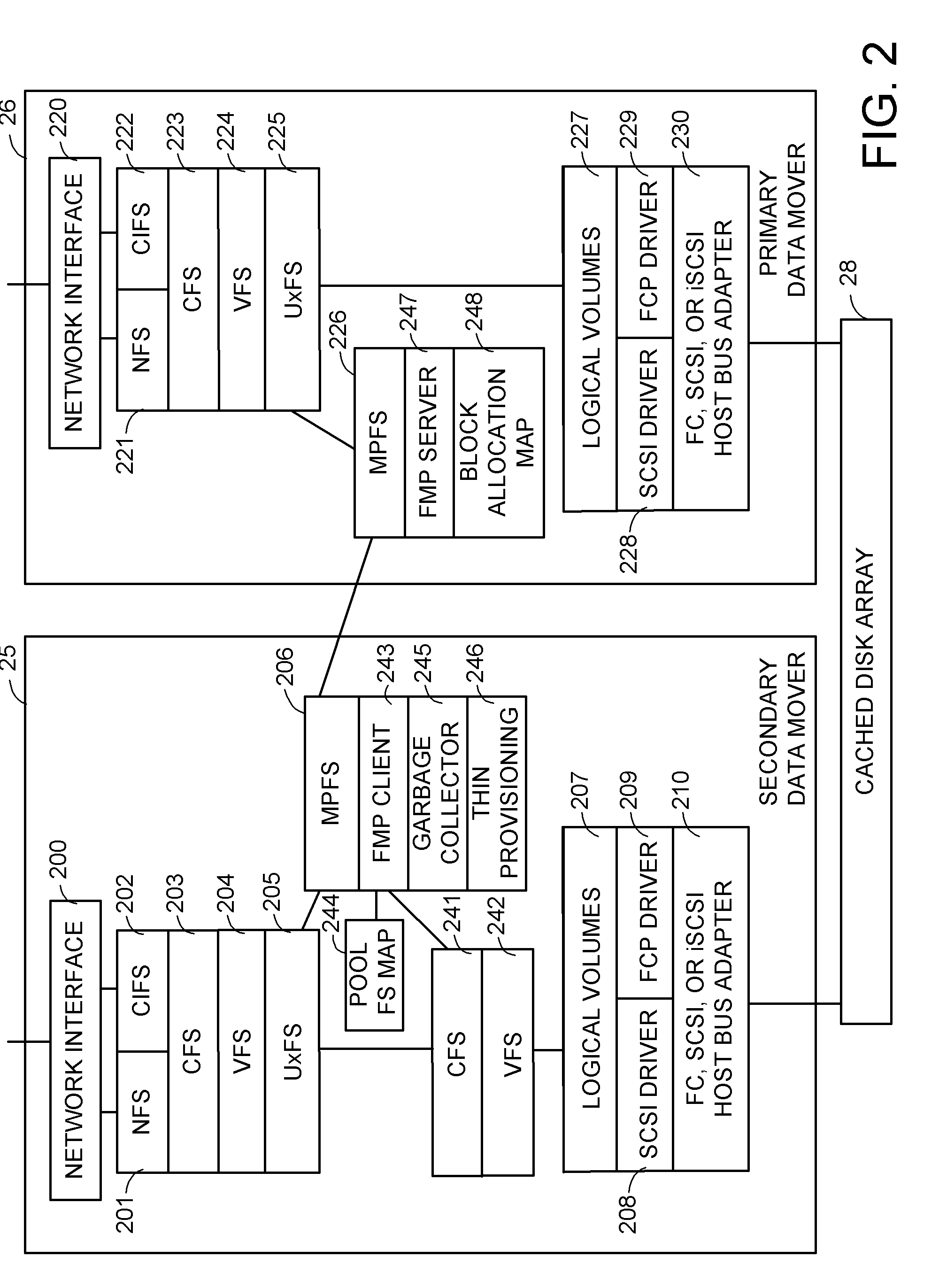

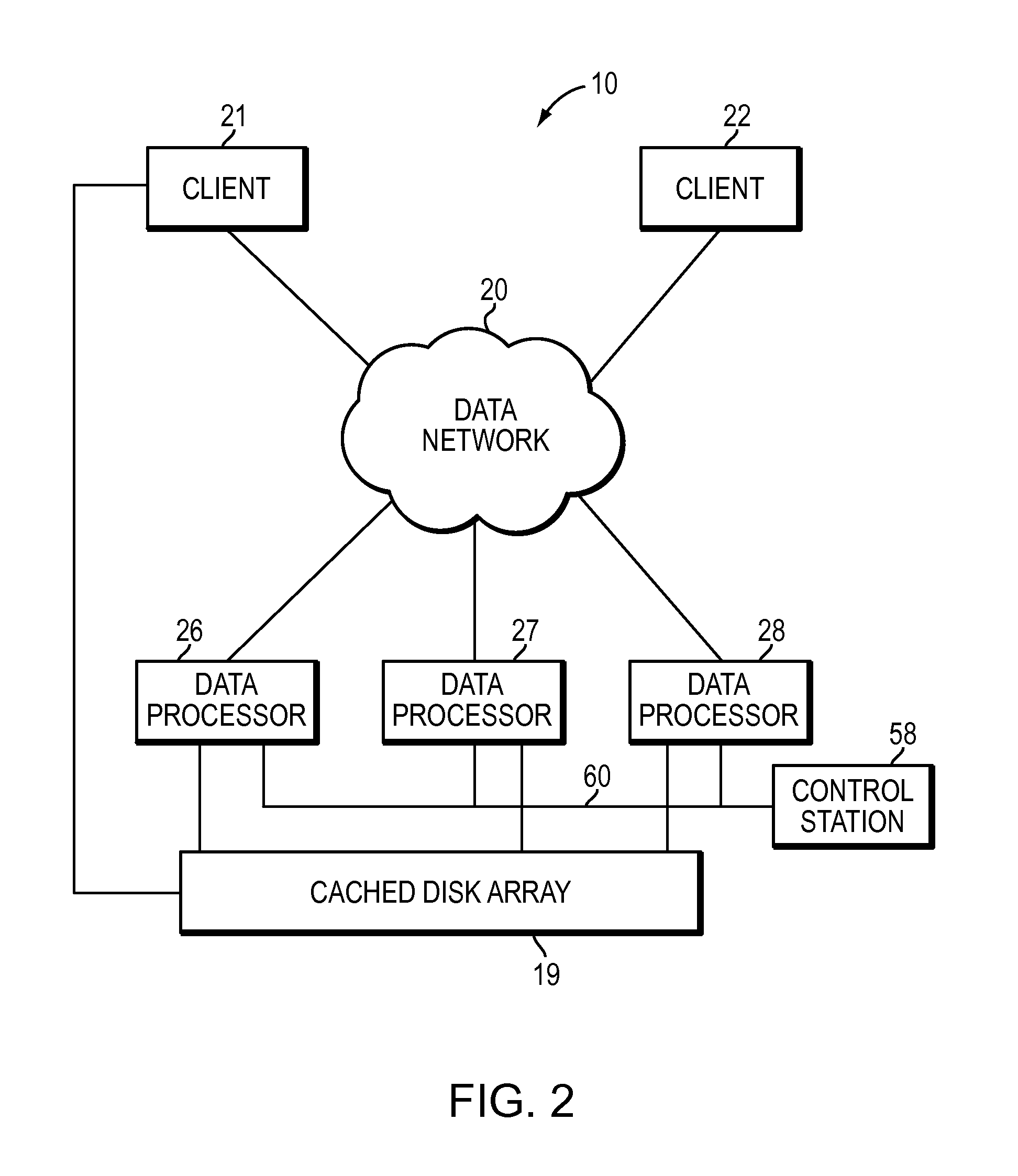

Storage array virtualization using a storage block mapping protocol client and server

A cached disk array includes a disk storage array, a global cache memory, disk directors coupling the cache memory to the disk storage array, and front-end directors for linking host computers to the cache memory. The front-end directors service storage access requests from the host computers, and the disk directors stage requested data from the disk storage array to the cache memory and write new data to the disk storage. At least one of the front-end directors or disk directors is programmed for block resolution of virtual logical units of the disk storage, and for obtaining, from a storage allocation server, space allocation and mapping information for pre-allocated blocks of the disk storage, and for returning to the storage allocation server requests to commit the pre-allocated blocks of storage once data is first written to the pre-allocated blocks of storage.

Owner:EMC IP HLDG CO LLC
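
A hypothetical Python model of the pre-allocate/commit exchange between a director and the storage allocation server. The class names, the batch size, and the in-memory free list are illustrative assumptions, not the patented protocol.

```python
class StorageAllocationServer:
    """Pre-allocates blocks on request and commits them after first write."""

    def __init__(self, total_blocks):
        self.free = list(range(total_blocks))
        self.pre_allocated = set()
        self.committed = set()

    def pre_allocate(self, count):
        blocks, self.free = self.free[:count], self.free[count:]
        self.pre_allocated.update(blocks)
        return blocks

    def commit(self, block):
        # Requested by a director once data is first written to the block.
        self.pre_allocated.remove(block)
        self.committed.add(block)


class Director:
    """Resolves (virtual LUN, block) to physical blocks using pre-allocations."""

    def __init__(self, server, batch=4):
        self.server = server
        self.batch = batch
        self.spare = []    # pre-allocated blocks not yet written
        self.mapping = {}  # (lun, virtual block) -> physical block

    def write(self, lun, vblock):
        key = (lun, vblock)
        if key not in self.mapping:
            if not self.spare:
                self.spare = self.server.pre_allocate(self.batch)
                if not self.spare:
                    raise RuntimeError("no free blocks")
            physical = self.spare.pop(0)
            self.mapping[key] = physical
            self.server.commit(physical)  # first write: commit the block
        return self.mapping[key]
```

Because blocks arrive in batches, most first writes need only a commit message rather than a separate allocation round trip.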

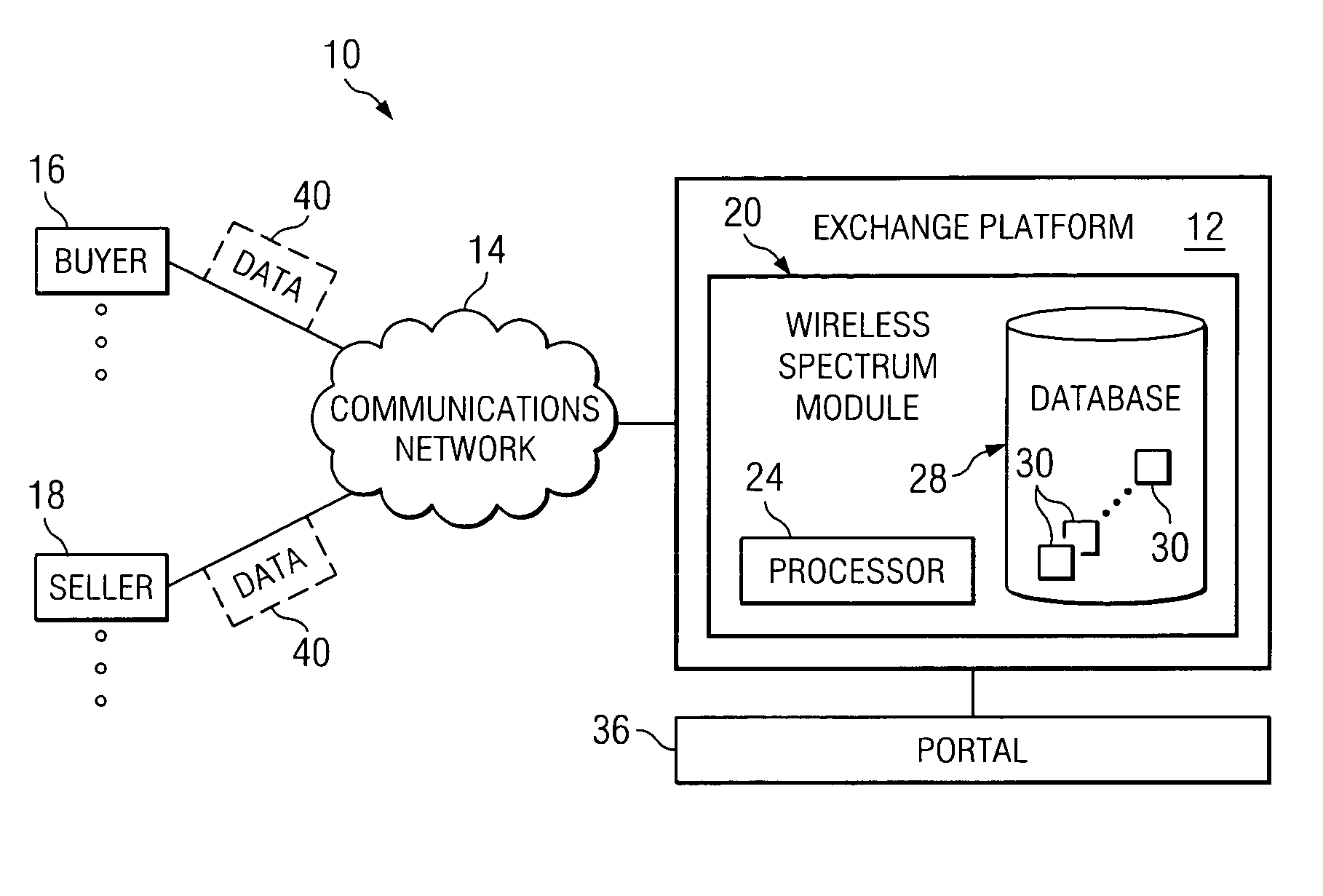

System and method for trading wireless spectrum rights

Inactive · US20060031082A1 · Tags: Improve liquidity; Enhanced asset description; Payment architecture; Buying/selling/leasing transactions; Frequency spectrum; Tower

A method for facilitating a transaction involving spectrum assets, towers, or tower space allocations is provided that includes storing information associated with at least one wireless spectrum asset in a database and communicating with an end user that provides data. The database can be accessed by the end user in order to identify the wireless spectrum asset.

Owner:CFPH LLC

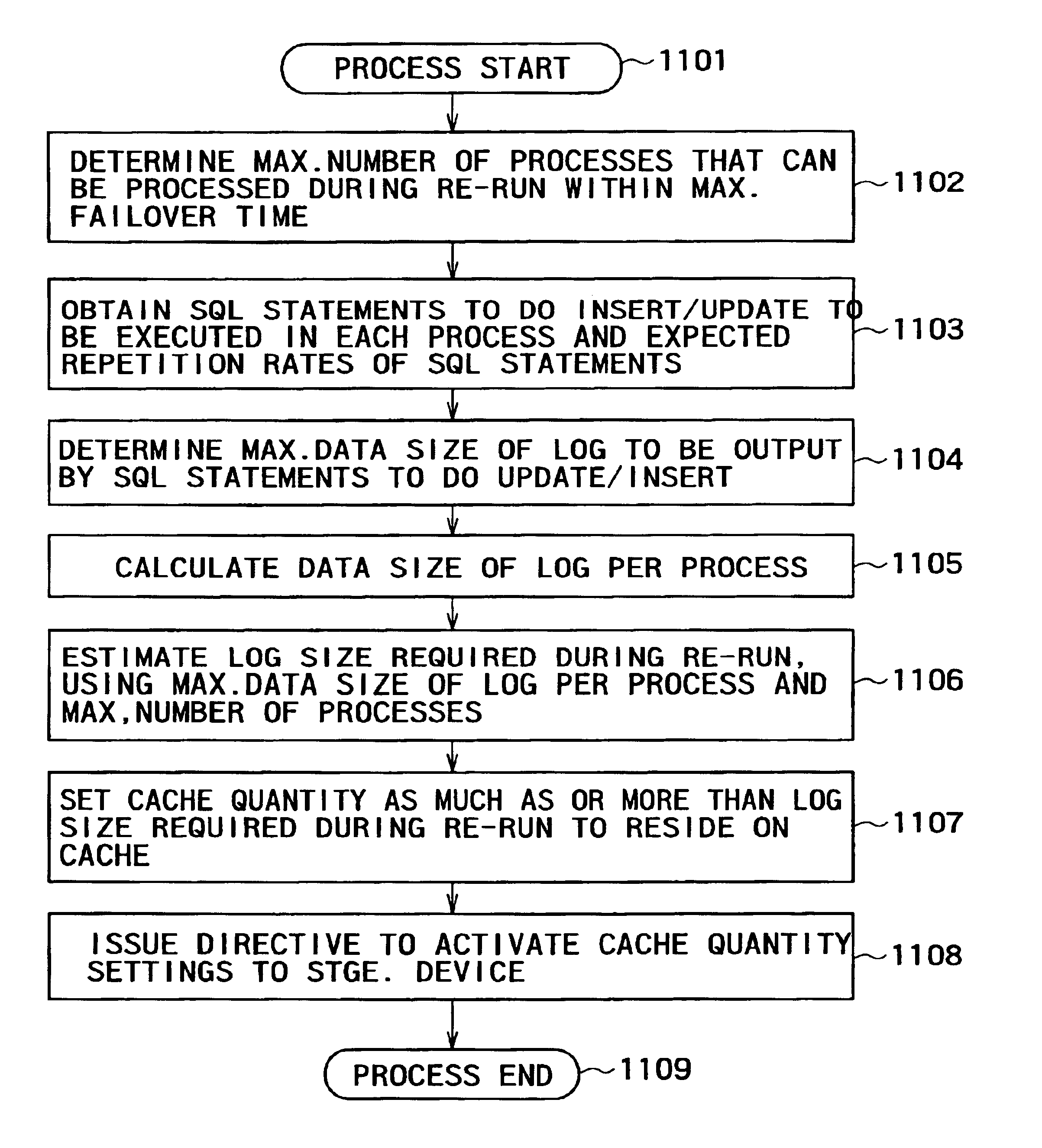

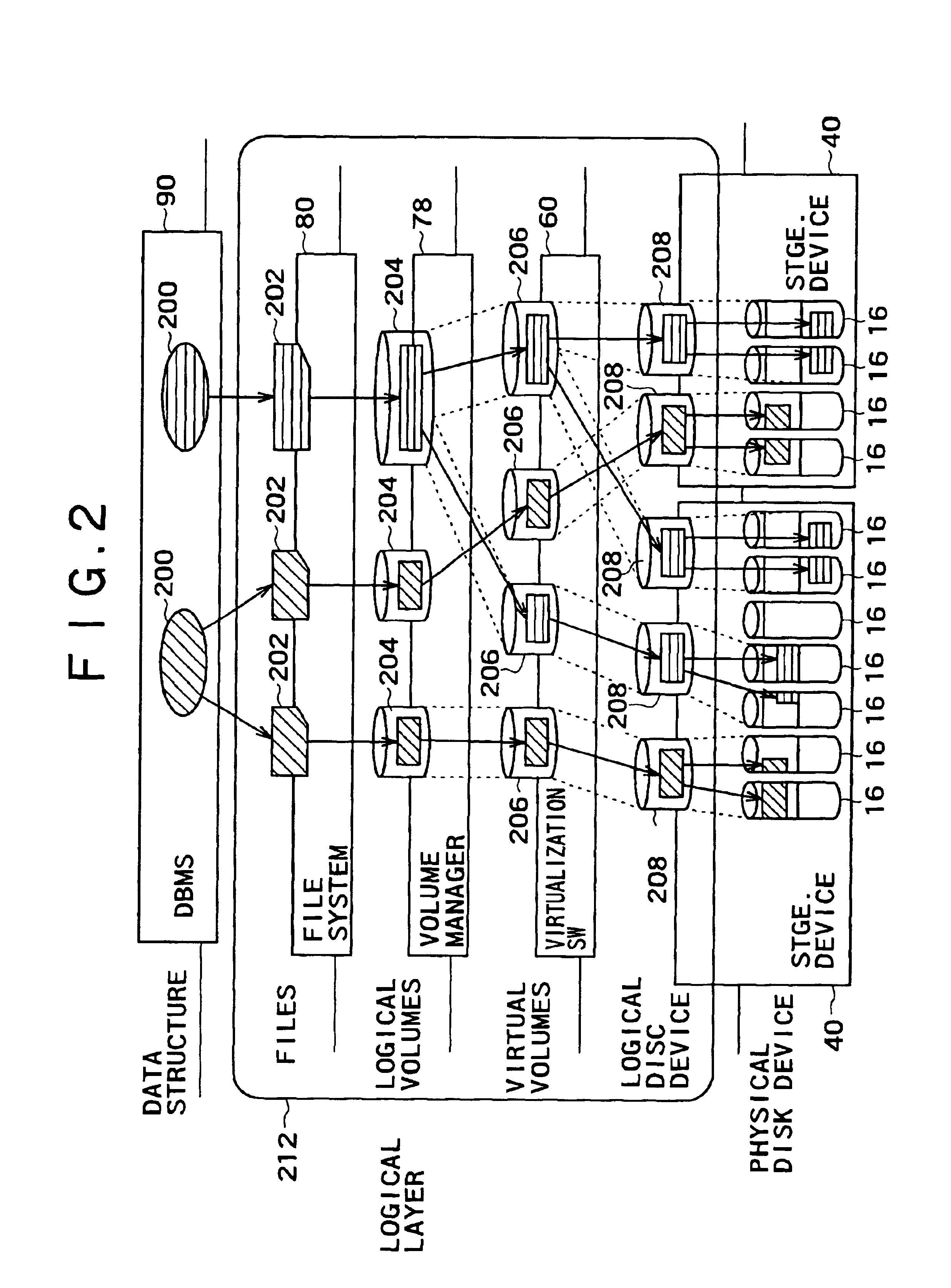

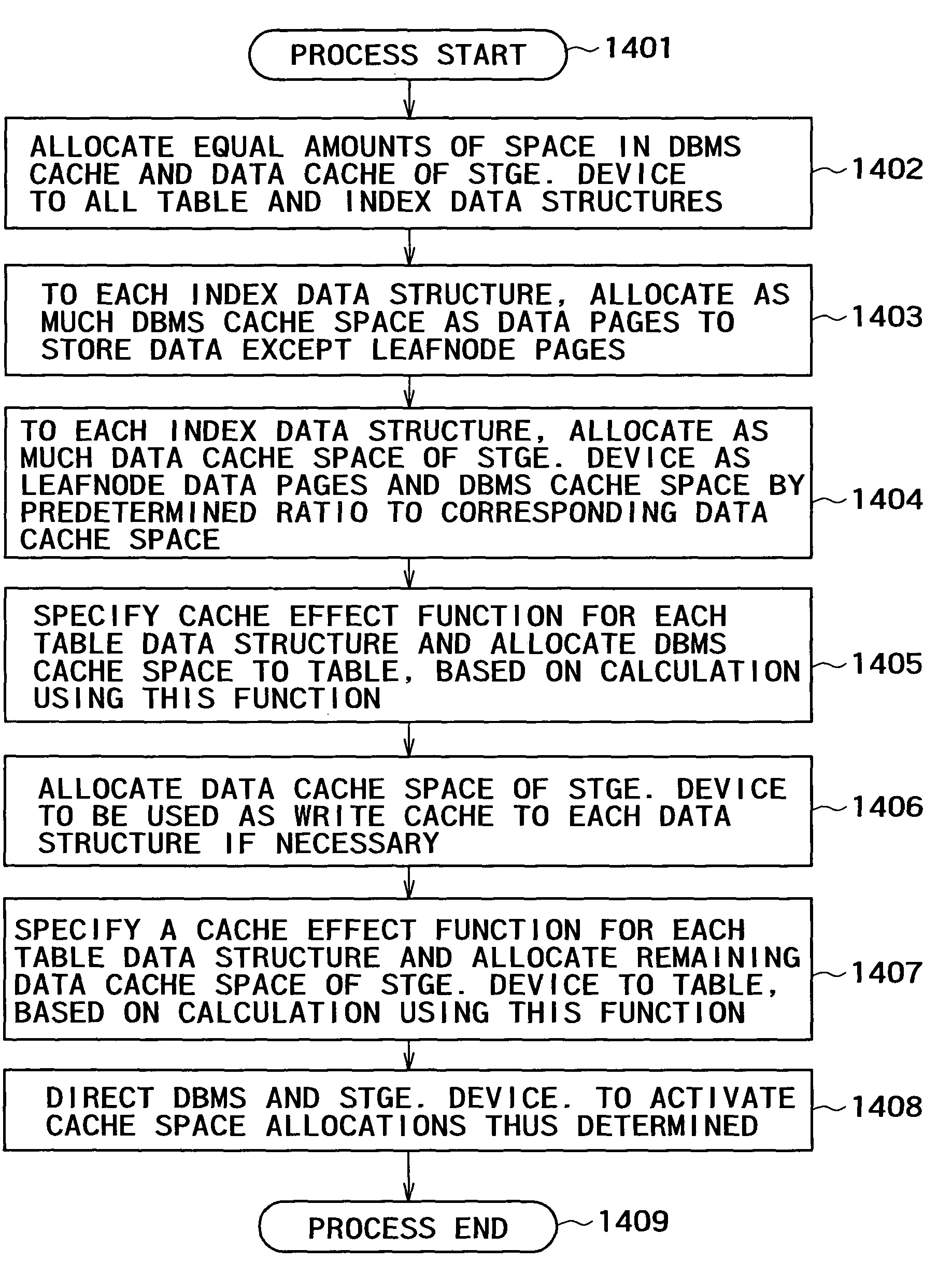

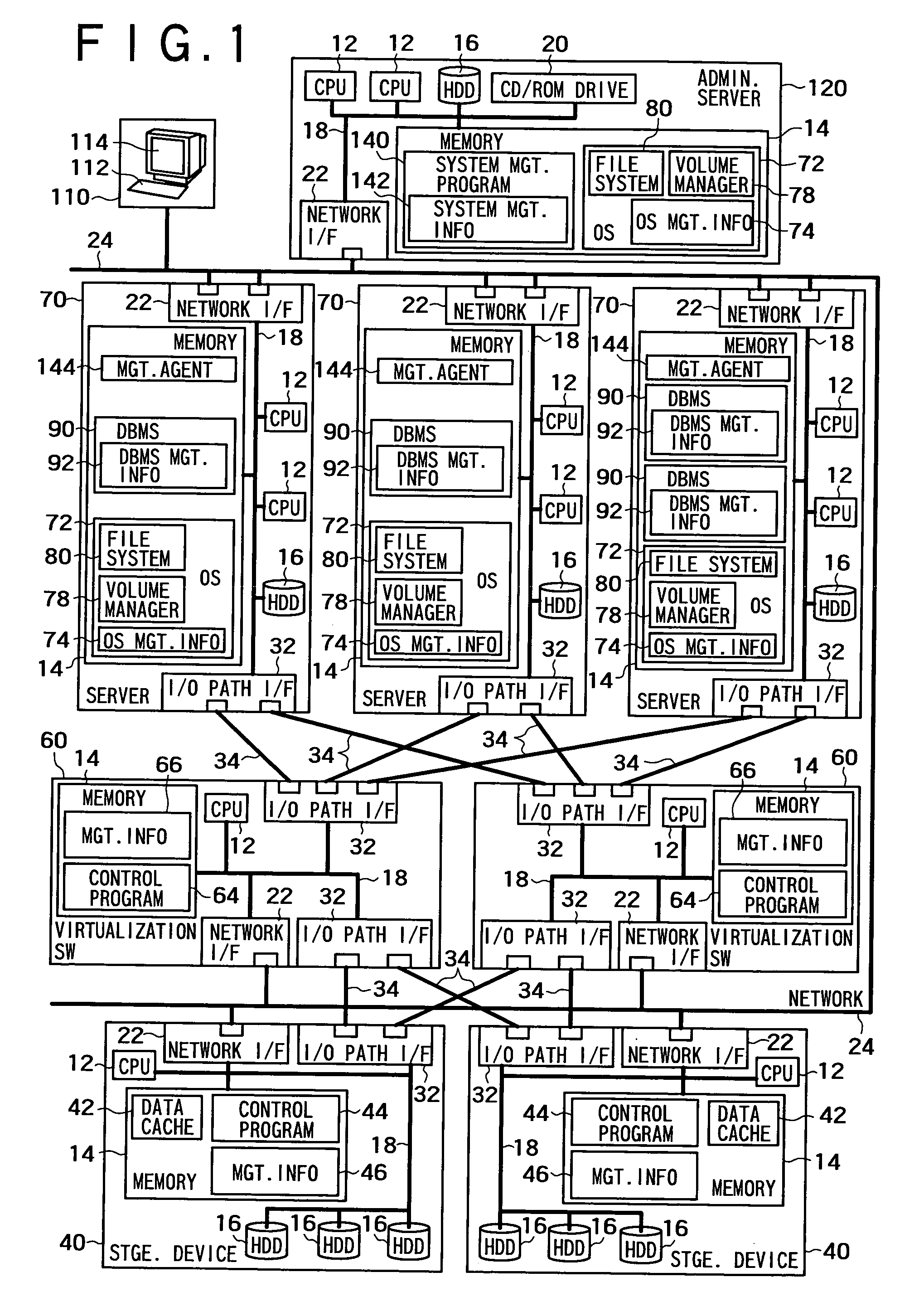

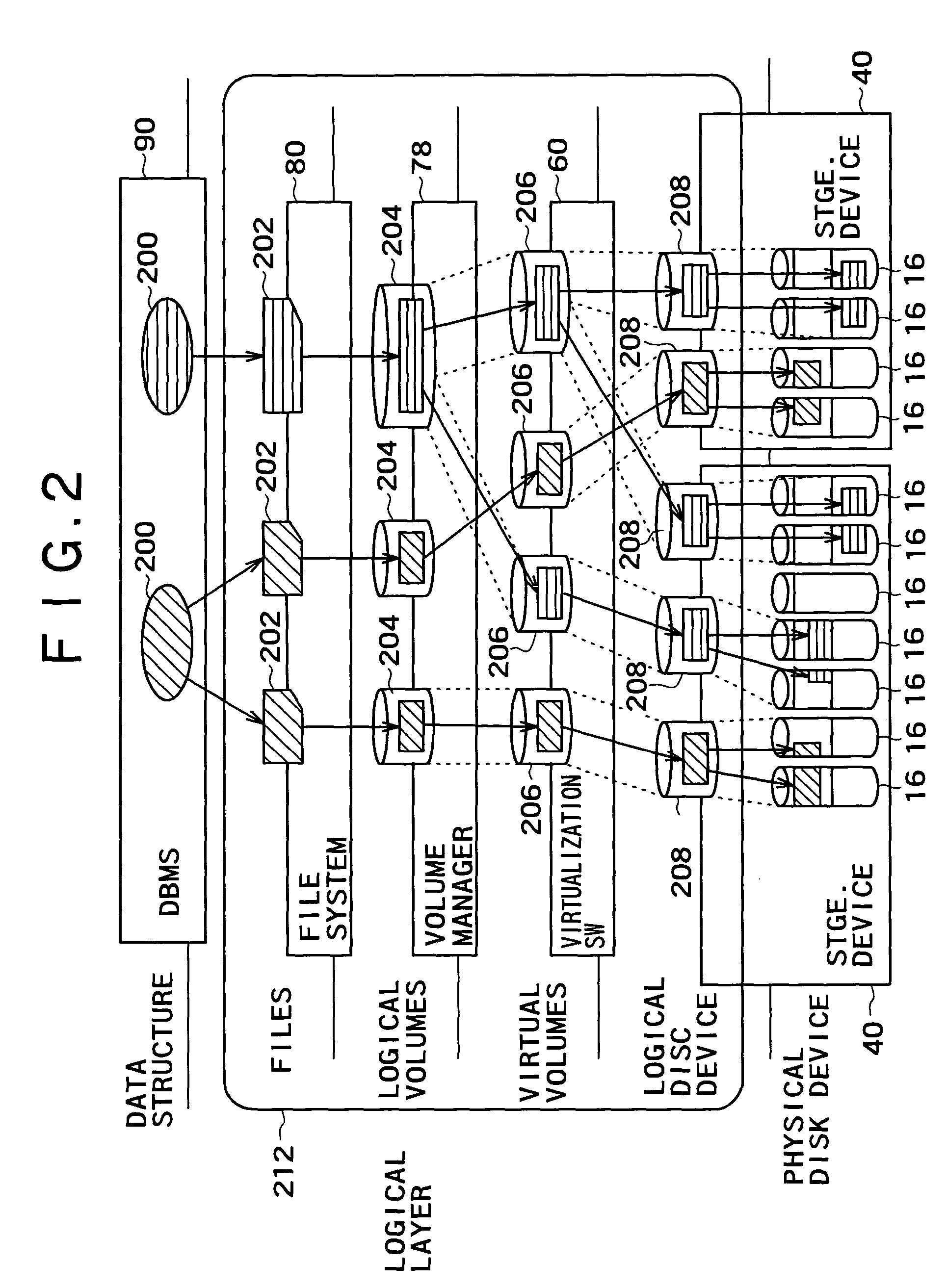

Cache management method for storage device

Inactive · US6944711B2 · Tags: Reduce performance management cost; Good effect; Memory architecture accessing/allocation; Data processing applications; Data set; Computerized system

A cache management method disclosed herein enables optimal cache space settings to be provided on a storage device in a computer system where database management systems (DBMSs) run. Through the disclosed method, the cache space partitions to be used for each data set are set based on information about the processes to be executed by the DBMSs, which is given as design information. For example, based on the estimated rerun time of processes required after abnormal DBMS termination, cache space is adjusted to serve the needs of the logs output by the DBMS. In another example, initial cache space allocations for table and index data are optimized based on process types and the approximate access characteristics of the data. In yet another example, by combining the results of pre-analysis of processes with cache operating statistics, the change in process execution time resulting from cache space tuning is estimated and the cache effect is enhanced.

Owner:HITACHI LTD

Cache management method for storage device

Inactive · US20040193803A1 · Tags: Good effect; Memory architecture accessing/allocation; Data processing applications; Data set; Computerized system

A cache management method disclosed herein enables optimal cache space settings to be provided on a storage device in a computer system where database management systems (DBMSs) run. Through the disclosed method, the cache space partitions to be used for each data set are set based on information about the processes to be executed by the DBMSs, which is given as design information. For example, based on the estimated rerun time of processes required after abnormal DBMS termination, cache space is adjusted to serve the needs of the logs output by the DBMS. In another example, initial cache space allocations for table and index data are optimized based on process types and the approximate access characteristics of the data. In yet another example, by combining the results of pre-analysis of processes with cache operating statistics, the change in process execution time resulting from cache space tuning is estimated and the cache effect is enhanced.

Owner:HITACHI LTD
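
As a rough illustration of per-data-set cache partitioning, the sketch below reserves a fixed log partition and splits the rest in proportion to expected access weights. The function, its parameters, and the proportional rule are assumptions; the patent derives the sizes from DBMS design information and statistics that are not modeled here.

```python
def partition_cache(total_pages, log_pages, access_weights):
    """Split a cache into a log partition plus one partition per data set.

    access_weights maps data set name -> integer relative access weight.
    Pages left over after integer division go to the heaviest data sets.
    """
    remaining = total_pages - log_pages
    if remaining < 0:
        raise ValueError("log reservation exceeds cache size")
    total_weight = sum(access_weights.values())
    shares = {name: remaining * w // total_weight
              for name, w in access_weights.items()}
    leftover = remaining - sum(shares.values())
    heaviest_first = sorted(access_weights, key=access_weights.get, reverse=True)
    for name in heaviest_first[:leftover]:
        shares[name] += 1
    shares["log"] = log_pages
    return shares
```

For example, `partition_cache(100, 20, {"table": 3, "index": 1})` gives 60 pages to table data, 20 to index data, and 20 to the log.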

Data storage structure of Flash memory and data manipulation mode thereof

Active · CN102081577A · Tags: Extended service life; Power-down resistant; Memory addressing/allocation/relocation; Redundant data error correction; Electricity; Data operations

The invention relates to the field of Flash memory, in particular to a data storage structure for Flash memory. The storage unit of each page comprises a data storage space for storing data and a spare space, which is divided into the following areas: a file name recording area, a page name recording area, a page storage state recording area, a page state recording area, a block erase counter recording area, and a data check code recording area. The data manipulation mode for this storage structure comprises a system initialization step and a data manipulation step; the data manipulation step comprises storage space allocation and release, power-loss protection, wear leveling, bad block handling, and data checking. The invention optimizes the Flash data storage structure and its data manipulation control mode, balances wear across the Flash memory during read and write operations, provides power-loss protection, and includes an ECC (Error Correction Code) processing mechanism, thereby overcoming shortcomings of existing Flash storage schemes.

Owner:XIAMEN YAXON NETWORKS CO LTD
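
One common way to realize wear leveling with a per-block erase counter is to allocate the free, non-bad block with the lowest count. The Python sketch below assumes that policy (the abstract does not state the exact rule) and keeps only the bad-block flag and the erase counter from the spare-area fields; `BlockInfo`, `allocate_block`, and `release_block` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class BlockInfo:
    erase_count: int   # from the block erase counter recording area
    bad: bool = False  # set by bad block handling
    free: bool = True


def allocate_block(blocks):
    """Return the index of the free, non-bad block with the fewest erases."""
    candidates = [i for i, b in enumerate(blocks) if b.free and not b.bad]
    if not candidates:
        raise RuntimeError("no free blocks")
    best = min(candidates, key=lambda i: blocks[i].erase_count)
    blocks[best].free = False
    return best


def release_block(blocks, index):
    # Freeing a block means erasing it, so its erase counter goes up.
    blocks[index].erase_count += 1
    blocks[index].free = True
```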

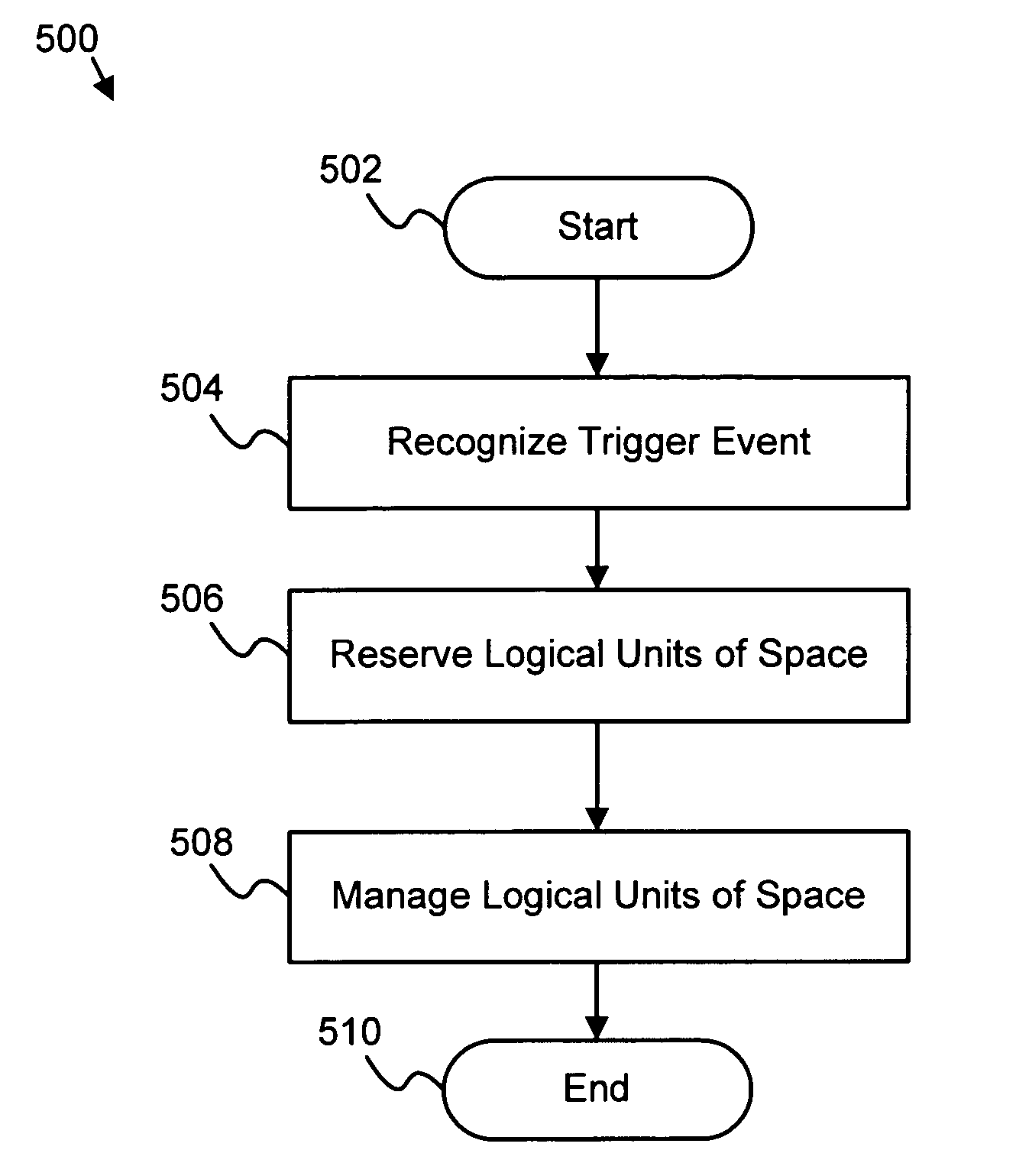

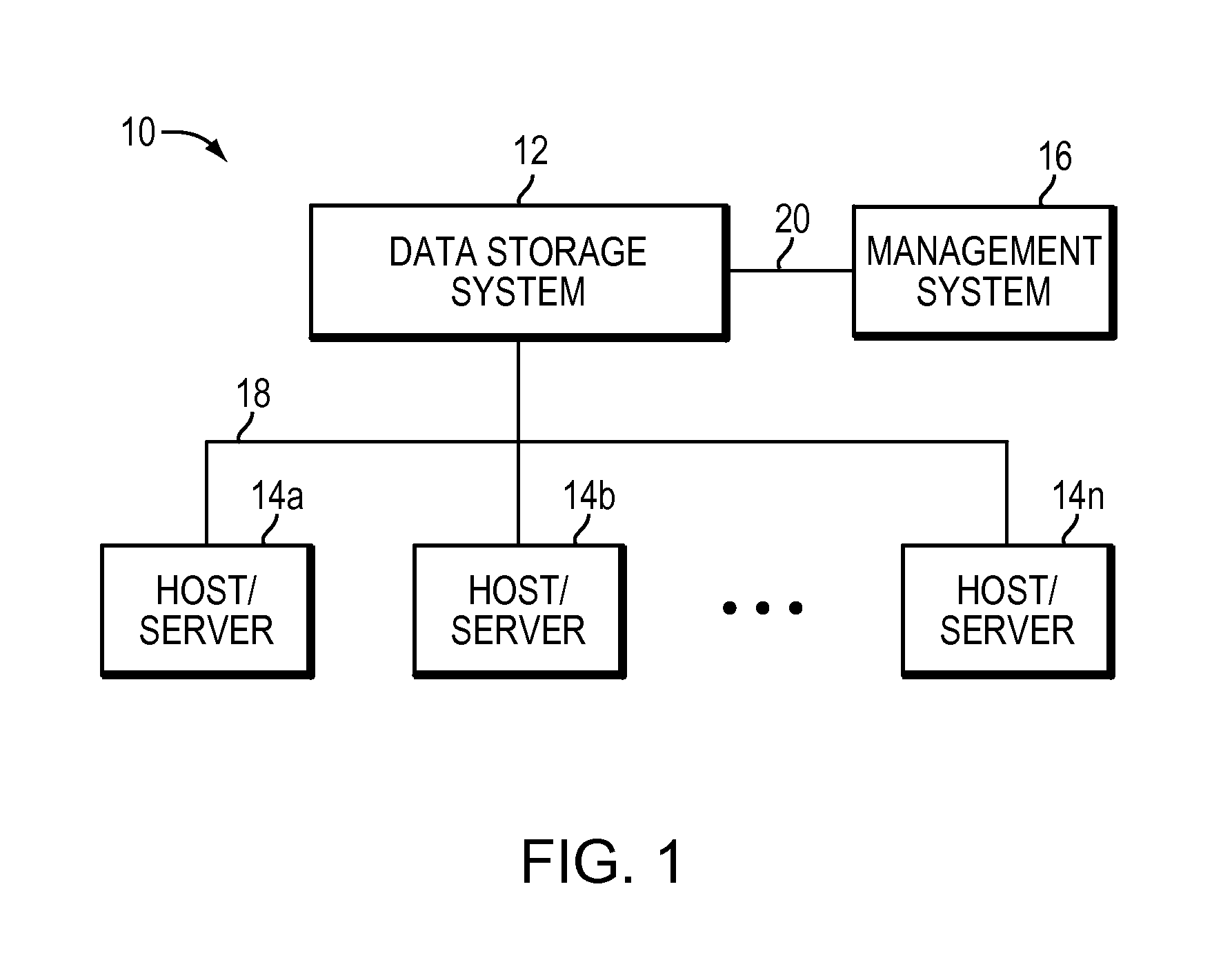

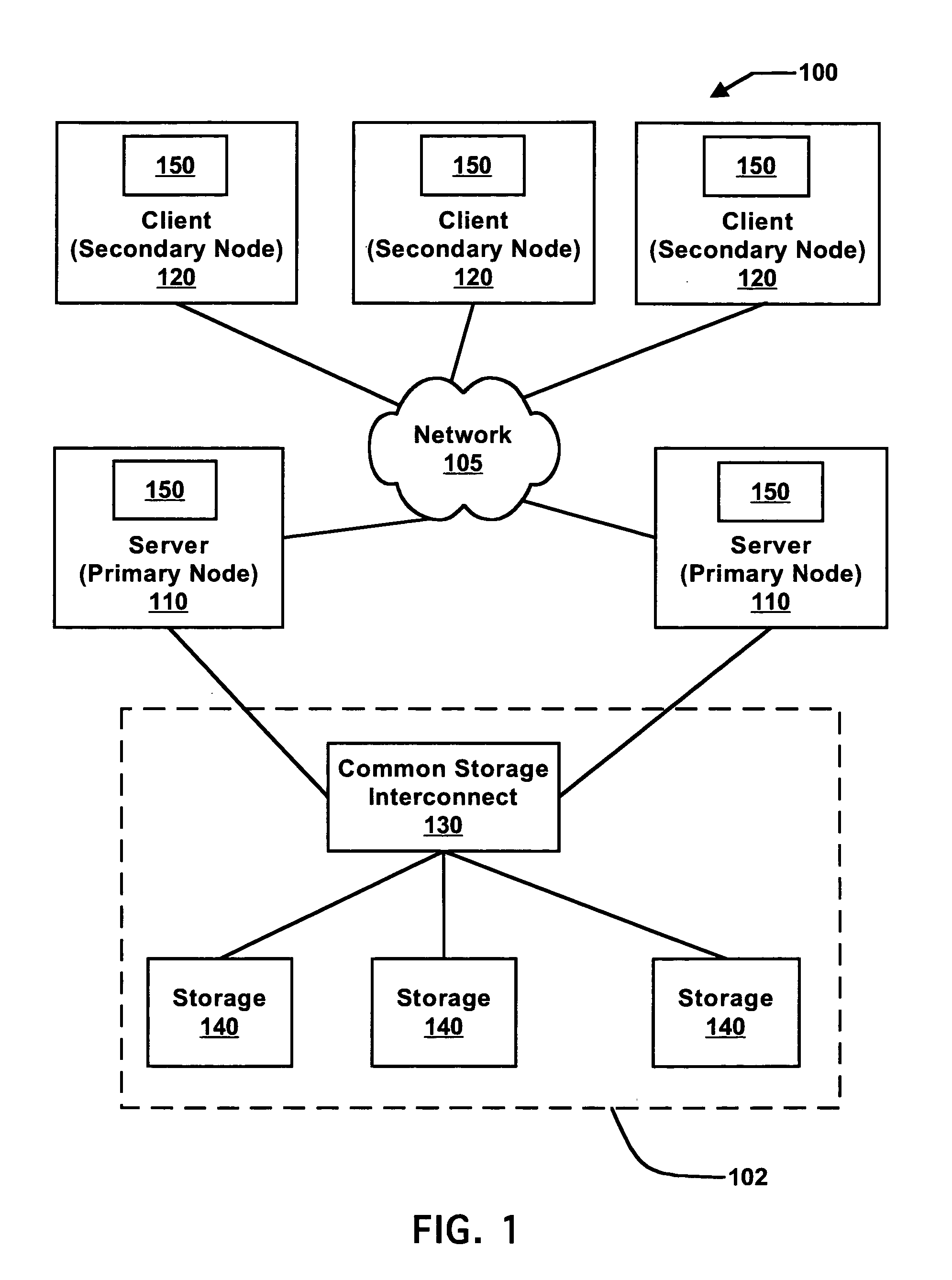

Apparatus, system, and method for managing storage space allocation

Inactive · US20060129778A1 · Tags: Multiple digital computer combinations; Transmission; Storage area network; File system

An apparatus, system, and method are disclosed for managing storage space allocation. The apparatus includes a recognizing module, a reserving module, and a managing module. The recognizing module recognizes a trigger event at a client of the data storage system. The reserving module reserves logical units of space for data storage. The managing module manages the logical units of space at the client. Such an arrangement provides for distributed management of storage space allocation within a storage area network (SAN). Letting the client manage the logical units of space in this manner may reduce the number of required metadata transactions between the client and a metadata server and may increase the performance of the SAN file system. Reducing metadata transactions lowers network overhead and increases data throughput.

Owner:IBM CORP
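
The benefit of client-side management can be seen by counting metadata round trips. In this hypothetical Python model, a client reserves logical units from a `MetadataServer` in batches and hands them out locally, contacting the server only when its reservation runs out (standing in for the trigger event); all names and the batch policy are assumptions.

```python
class MetadataServer:
    def __init__(self):
        self.next_unit = 0
        self.transactions = 0  # number of client round trips served

    def reserve(self, count):
        self.transactions += 1
        start = self.next_unit
        self.next_unit += count
        return list(range(start, start + count))


class Client:
    """Reserves logical units in batches and hands them out locally."""

    def __init__(self, server, batch_size):
        self.server = server
        self.batch_size = batch_size
        self.reserved = []

    def allocate(self):
        # Trigger event: the local reservation is exhausted.
        if not self.reserved:
            self.reserved = self.server.reserve(self.batch_size)
        return self.reserved.pop(0)
```

With a batch size of 8, twenty allocations cost three metadata transactions instead of twenty.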

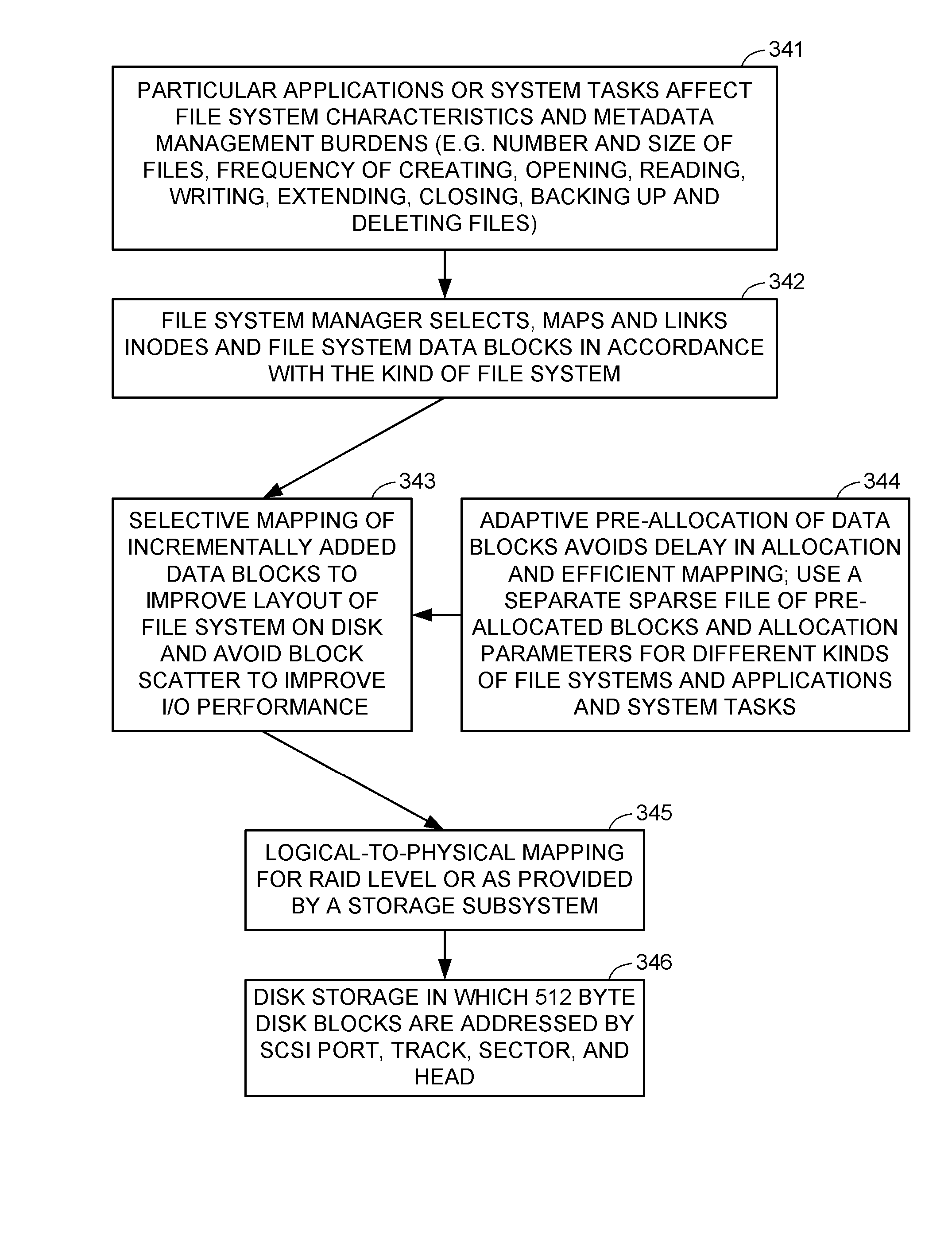

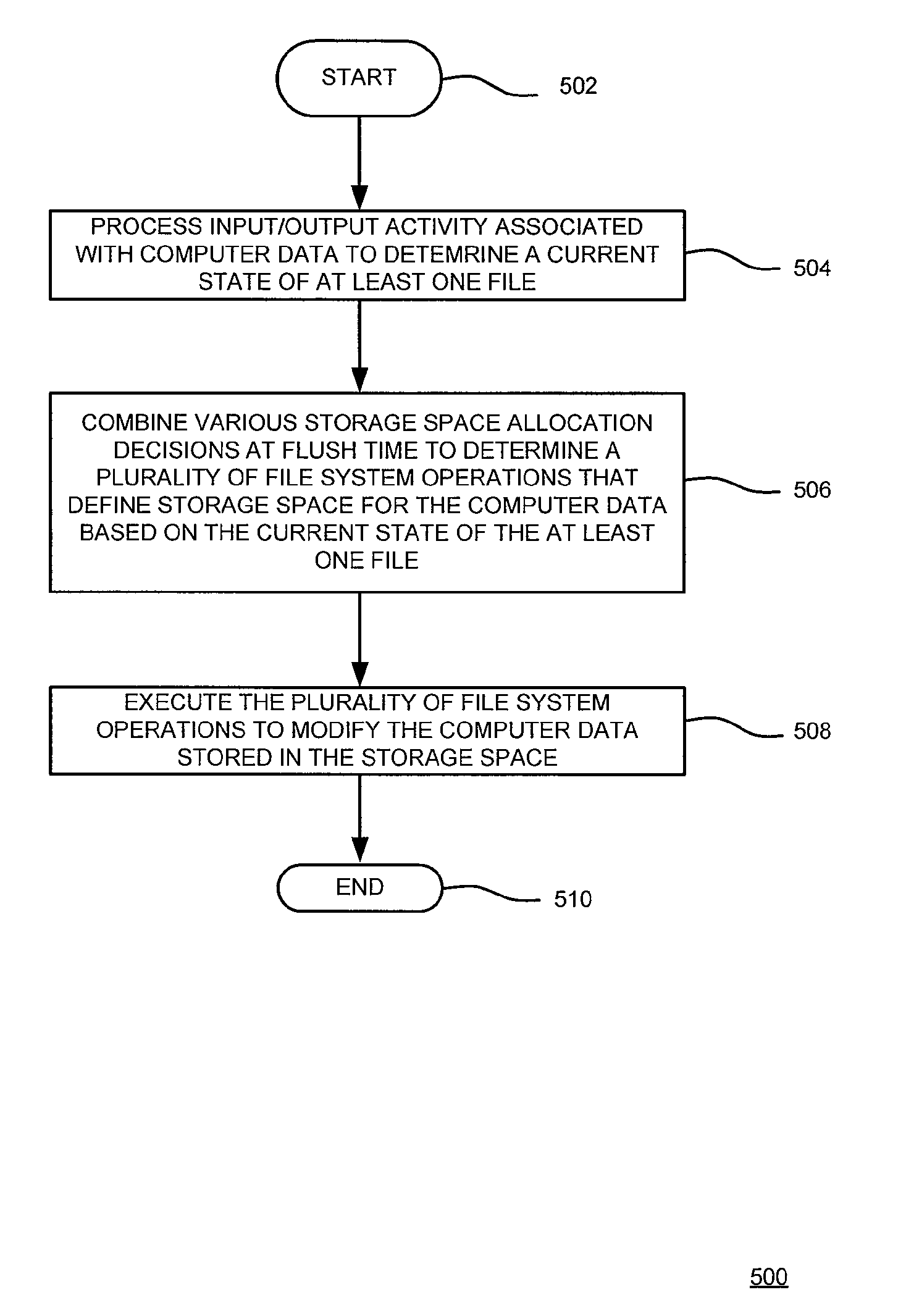

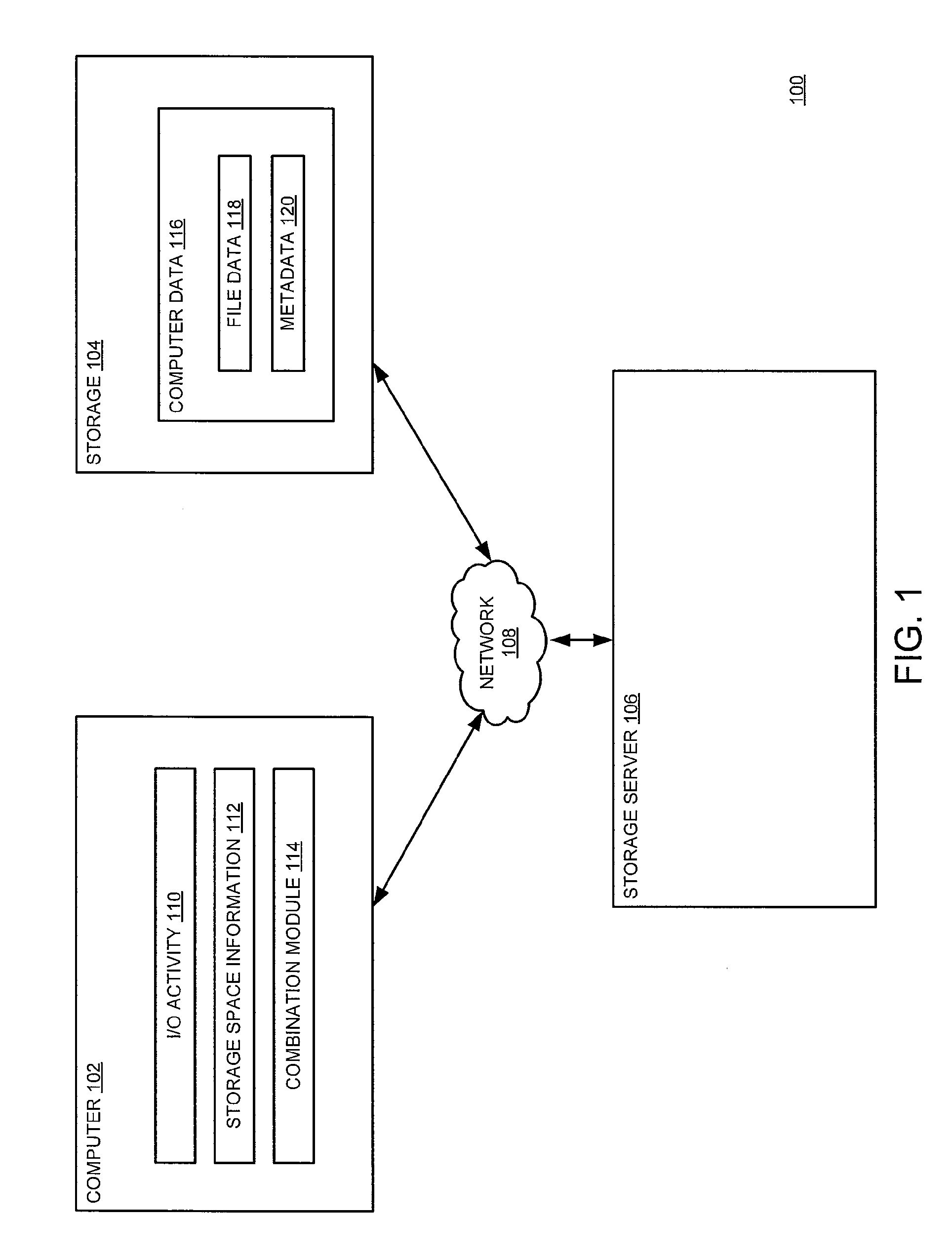

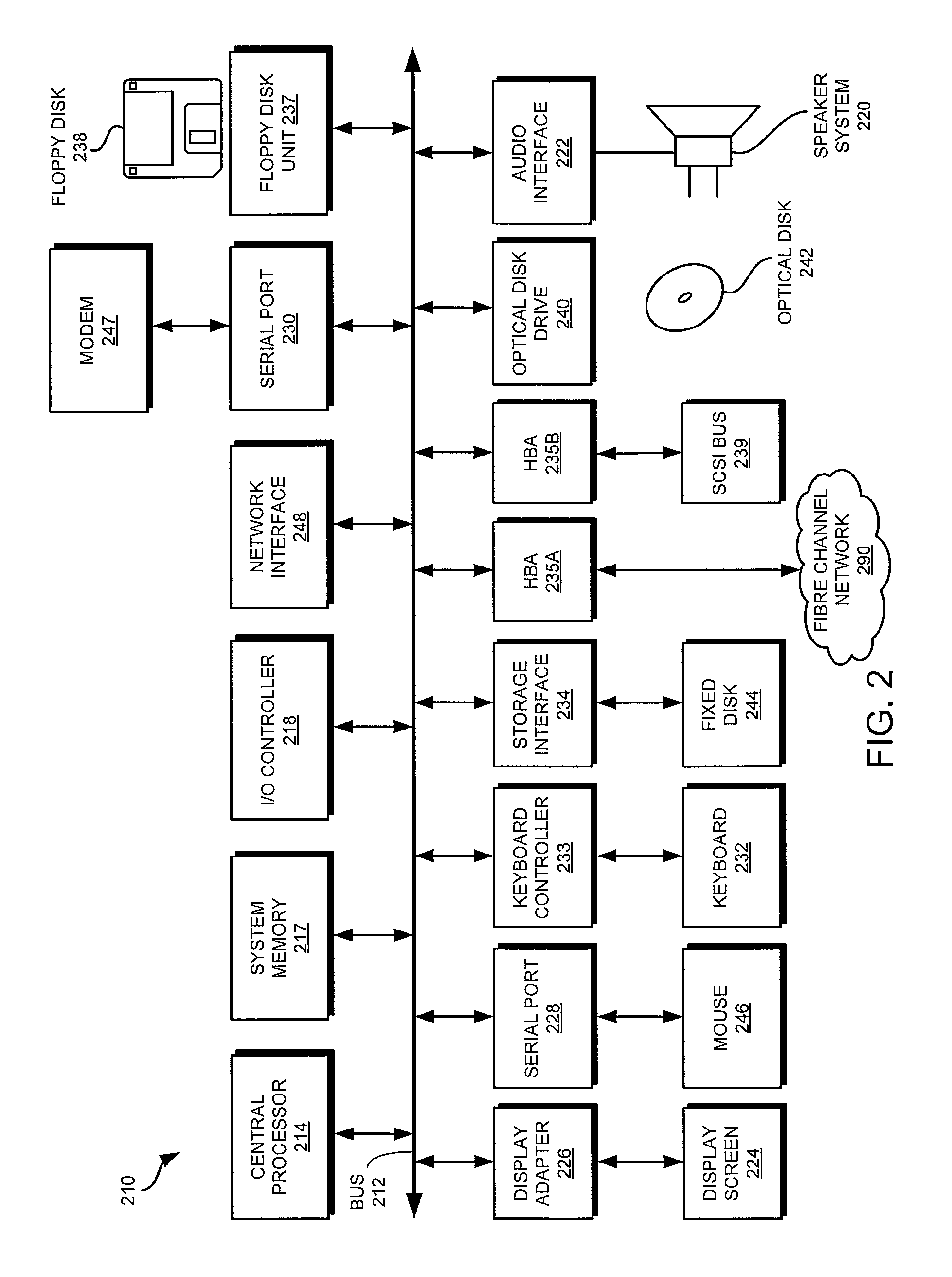

Method and apparatus for optimizing storage space allocation for computer data

Active · US8392479B1 · Tags: Optimize layout; File system administration; Special data processing applications; Distributed File System; File system

A method and apparatus for optimizing storage space allocations, using at least one processor, for computer data in distributed file systems is described. In one embodiment, the method includes processing input/output activity associated with computer data to determine the current state of at least one file in a distributed file system; at flush time, combining various storage space allocation decisions applied over at least one network protocol to determine, based on the current state of the at least one file, a plurality of file system operations that define storage space for the computer data; and executing the plurality of file system operations on the computer data stored in the storage space.

Owner:VERITAS TECH
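
One way to picture combining allocation decisions at flush time is to merge the byte ranges written since the last flush into as few extents as possible before allocating. The Python function below is a hypothetical sketch of that coalescing step only; it does not model network protocols or the file-state analysis.

```python
def coalesce_allocations(pending_writes):
    """Merge (offset, length) writes buffered since the last flush into extents.

    Overlapping or adjacent ranges become one extent, so the file system
    issues one allocation per extent instead of one per write.
    """
    extents = []
    for offset, length in sorted(pending_writes):
        end = offset + length
        if extents and offset <= extents[-1][1]:
            # Overlaps or touches the previous extent: extend it.
            extents[-1][1] = max(extents[-1][1], end)
        else:
            extents.append([offset, end])
    return [(start, end - start) for start, end in extents]
```

For instance, 4 KiB writes at offsets 8192, 0, and 4096 collapse into a single 12288-byte extent.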

Rebuilding redundant disk arrays using distributed hot spare space

Inactive · US6976187B2 · Tags: Addressing slow performance; Error prevention; Redundant data error correction; Disk array; Computer science

A method and system that distributes hot spare space across multiple disk drives that also store data and redundant data in a fully active redundant array of independent disks, so that the array can be automatically rebuilt to an array with the same level of redundancy using fewer disk drives. The method configures the array with D disk drives of B physical blocks each. N user data and redundant data blocks are allocated to each disk drive, and F free blocks are allocated to each disk drive as hot spare space, where N + F ≤ B and (D − M) × F ≥ N. Thus, after M disk drive failures, the data and redundant blocks of a failed disk drive can be rebuilt in the free blocks of the remaining disk drives.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE
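
The two inequalities can be checked mechanically. The Python helpers below encode the conditions exactly as the abstract states them (parameter names mirror the abstract's symbols; the helper names are hypothetical), plus the smallest F that satisfies the second condition, ceil(N / (D − M)).

```python
def can_rebuild(D, B, N, F, M):
    """Check the two conditions stated in the abstract.

    D drives of B blocks; N data/redundant blocks and F hot-spare blocks per
    drive; M failed drives.
    """
    return N + F <= B and (D - M) * F >= N


def min_spare_blocks(D, N, M):
    """Smallest F with (D - M) * F >= N, i.e. ceil(N / (D - M))."""
    if D <= M:
        raise ValueError("need at least one surviving drive")
    return -(-N // (D - M))
```

With D = 5 drives, B = 1000 blocks, N = 800, and M = 1, at least 200 spare blocks per drive are needed, and 800 + 200 = 1000 still fits on each drive.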

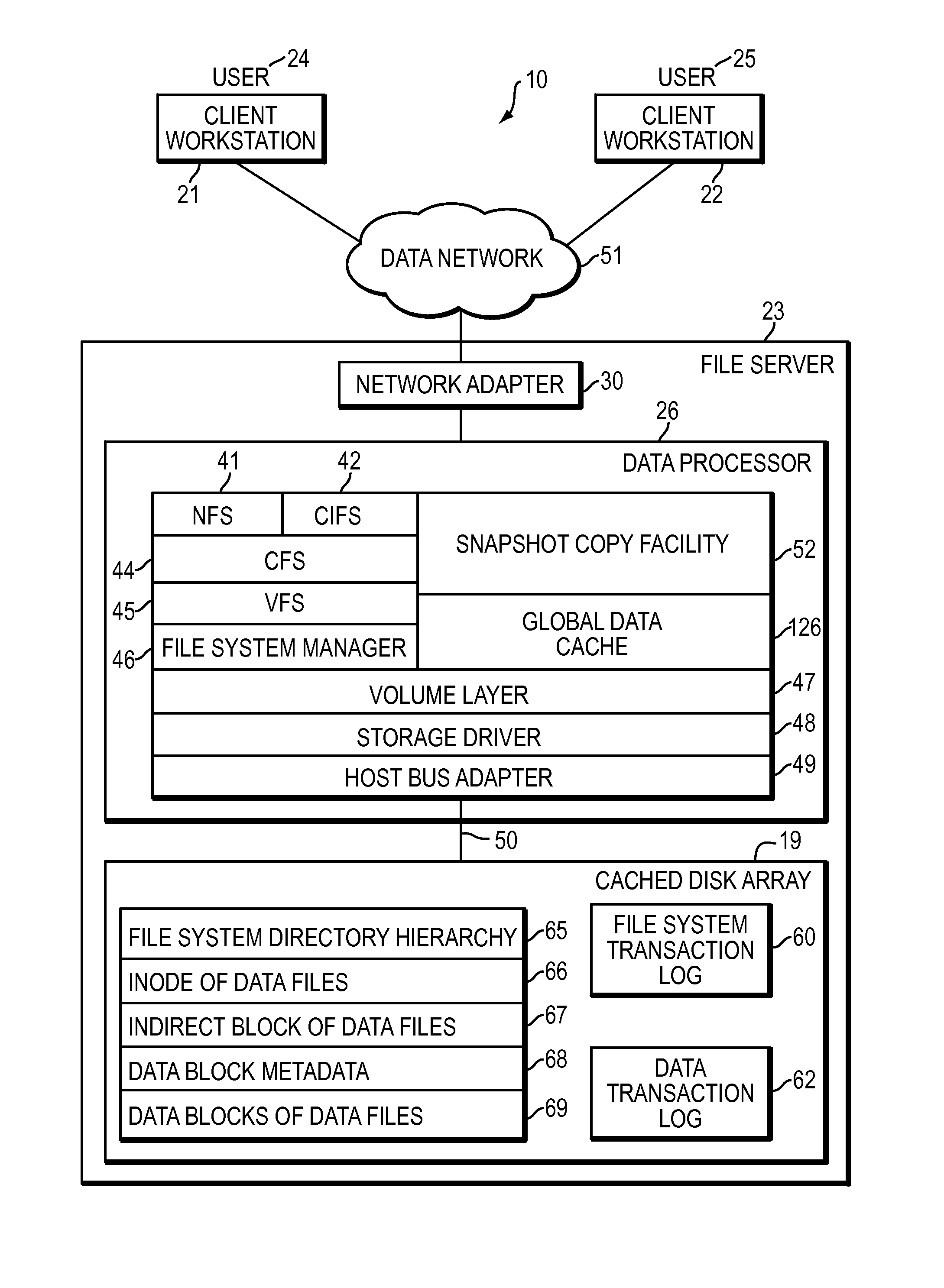

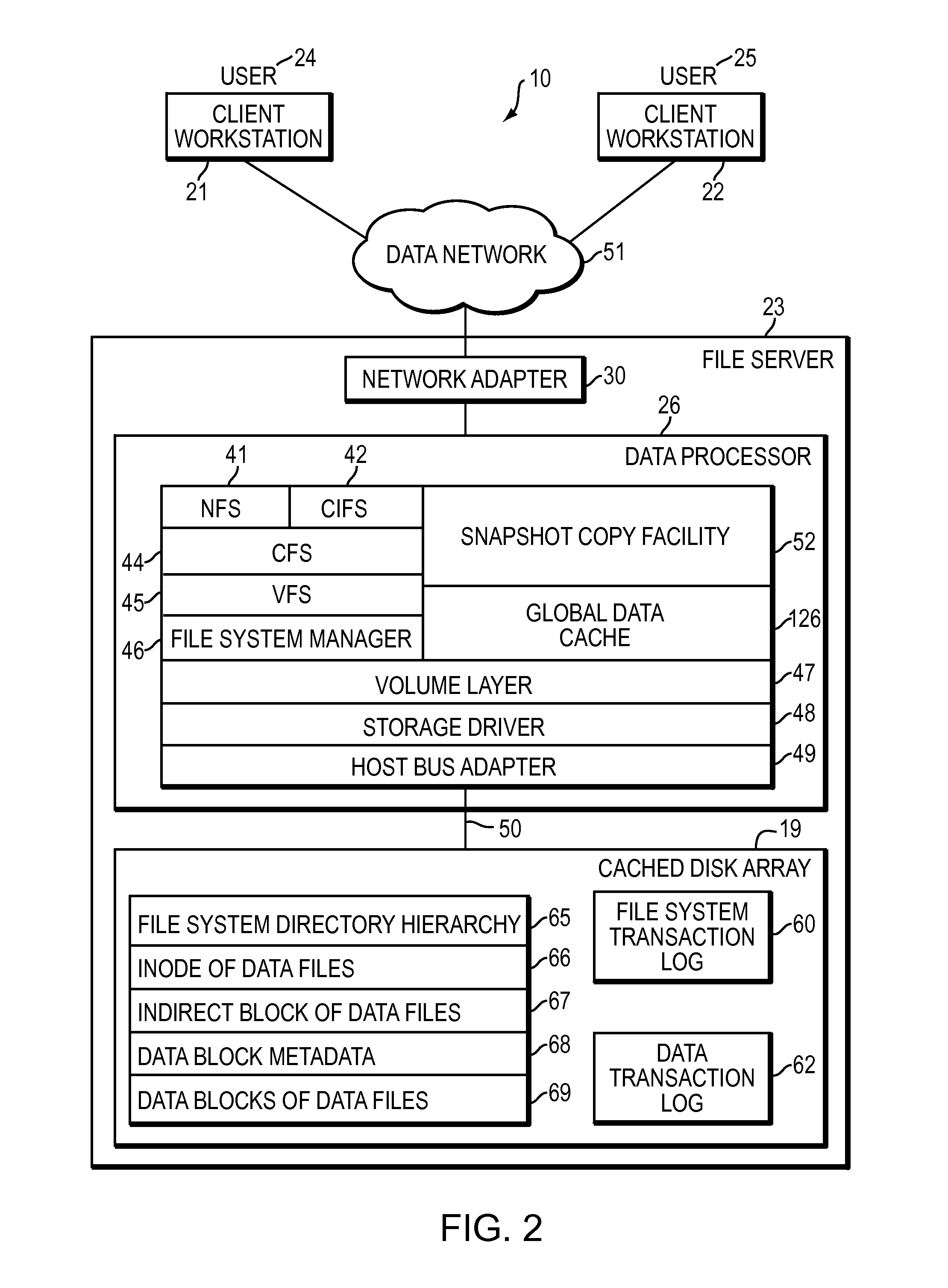

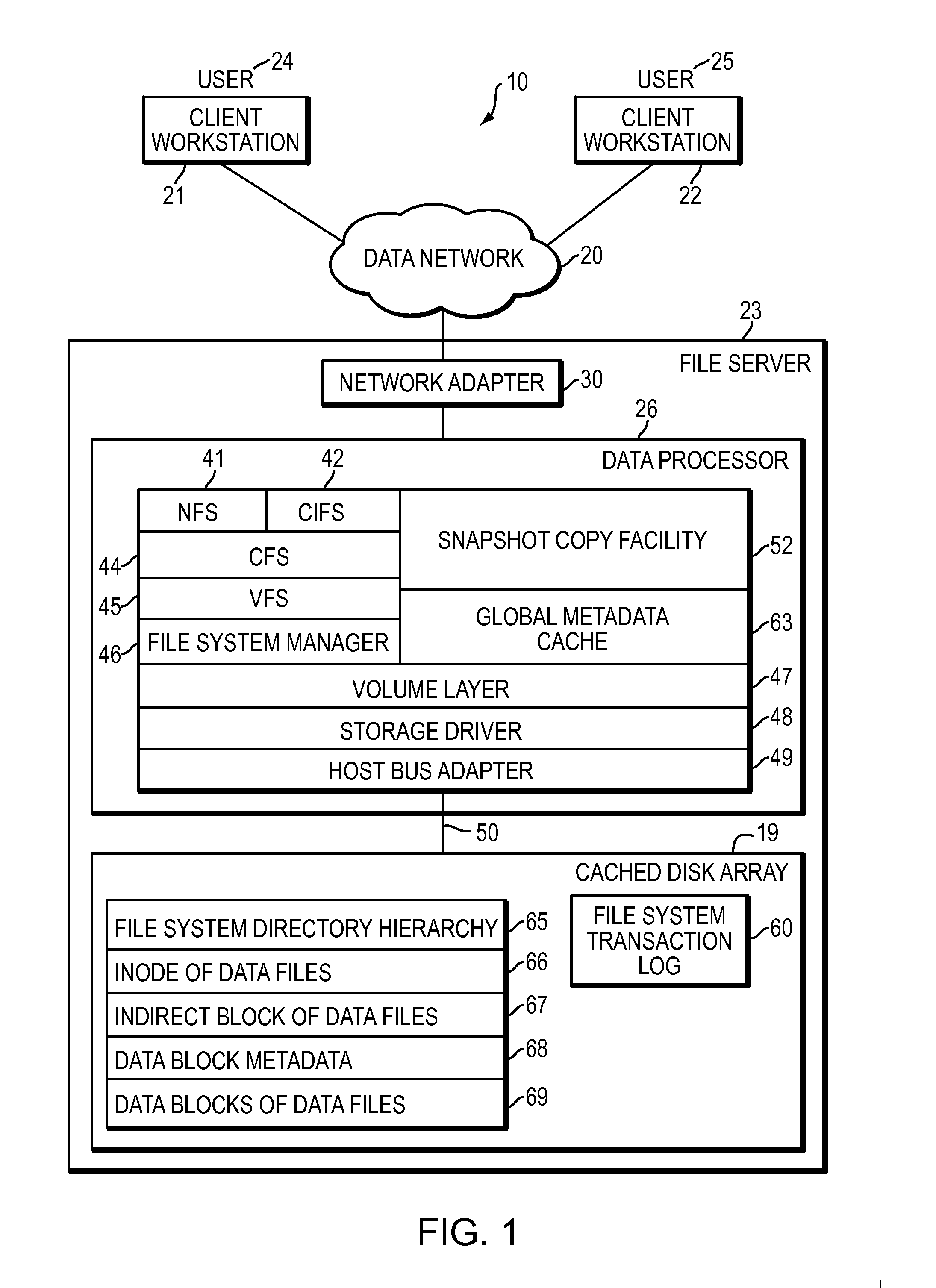

Managing global data caches for file system

Active · US9135123B1 · Tags: Digital data information retrieval; Redundant operation error correction; File system; Database

A method is used in managing global data caches for file systems. Space is allocated in a volatile memory of a data storage system to a global data cache that is configured to store a set of data objects for a plurality of different file systems. The set of data objects is accessed by the plurality of different file systems. Contents of a file of a file system are stored in a data object in the global data cache upon receiving a write I/O request for the file. A copy of the data object and information for the data object are stored in a persistent journal that is stored in a non-volatile memory of the data storage system. Contents of the file are updated on a storage device based on the data object stored in the global data cache and information stored in the persistent journal.

Owner:EMC IP HLDG CO LLC
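
A simplified Python model of the write path: data objects from several file systems share one cache keyed by file system and file, each write is also journaled, and storage is updated either on flush or by replaying the journal after volatile memory is lost. The class, the list-based journal, and the `recover` step are assumptions made for illustration.

```python
class GlobalDataCache:
    """Shared cache for several file systems, backed by a journal."""

    def __init__(self):
        self.cache = {}    # (fs_id, file_id) -> data object (volatile memory)
        self.journal = []  # stand-in for the persistent, non-volatile journal
        self.storage = {}  # stand-in for the backing storage device

    def write(self, fs_id, file_id, data):
        key = (fs_id, file_id)
        self.cache[key] = data
        # Journal a copy plus descriptive info so the write survives a crash.
        self.journal.append({"key": key, "data": data, "length": len(data)})

    def flush(self):
        # Update storage from the cached objects, then trim the journal.
        for entry in self.journal:
            key = entry["key"]
            self.storage[key] = self.cache[key]
        self.journal.clear()

    def recover(self):
        # After volatile memory is lost, replay the journal into storage.
        self.cache.clear()
        for entry in self.journal:
            self.storage[entry["key"]] = entry["data"]
        self.journal.clear()
```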

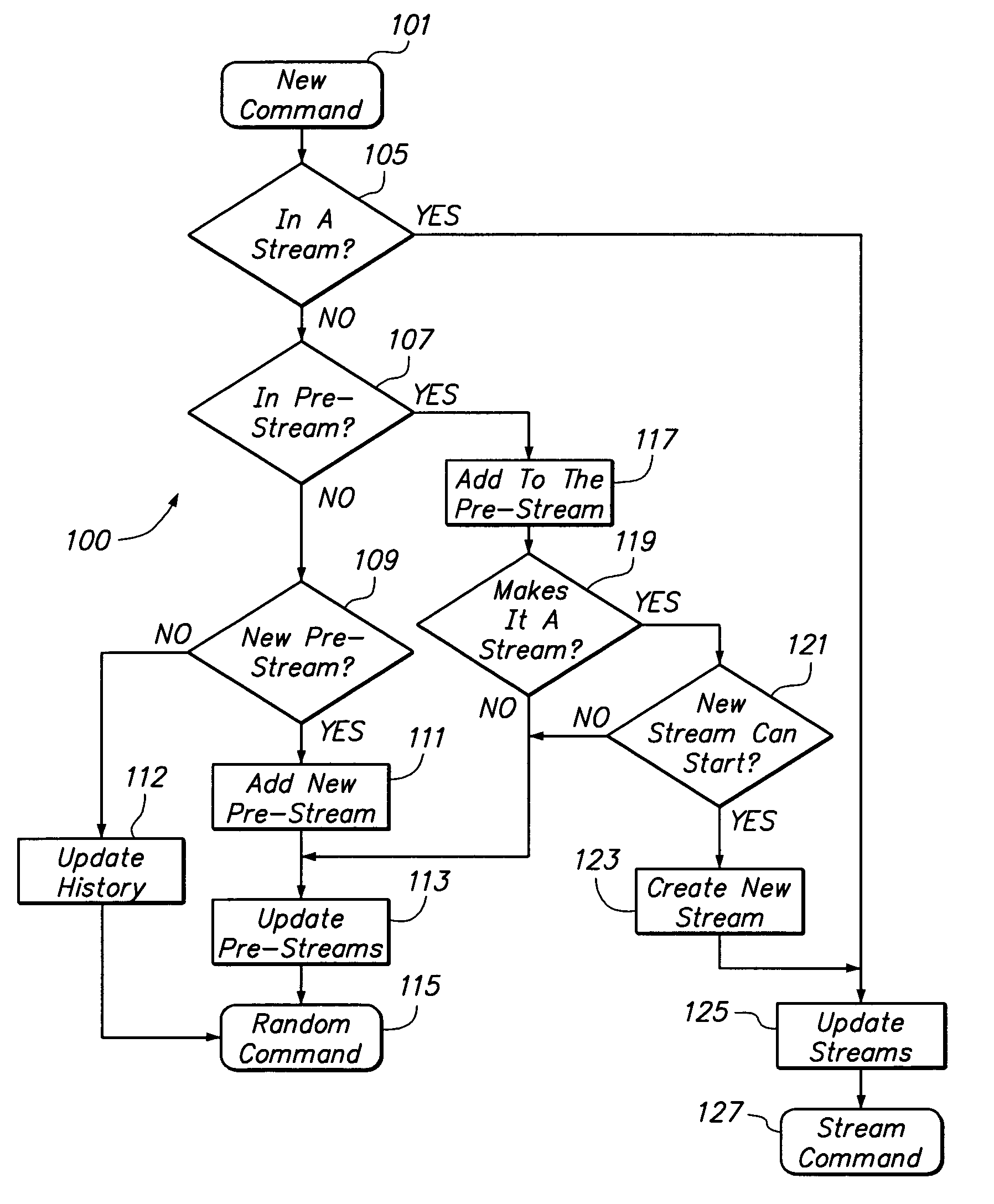

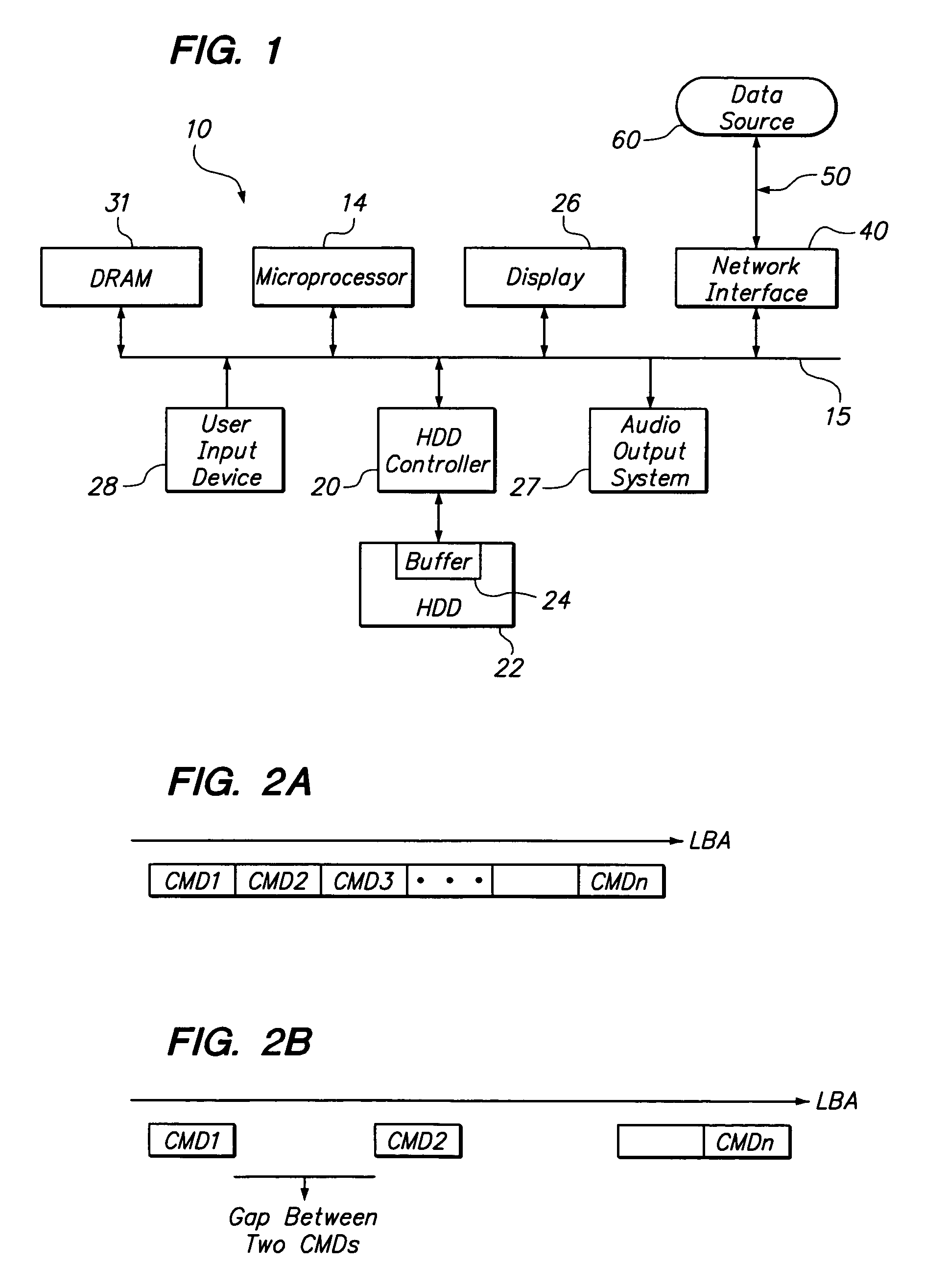

Method and apparatus for detection and management of data streams

Active · US6986019B1 · Tags: Minimizes transducer move; Doubling number; Memory addressing/allocation/relocation; Micro-instruction address formation; Data stream; Resource utilization

A system for the detection and support of data streams is disclosed. The system determines whether new commands comprise a data stream. If a new data stream is detected, the system next determines whether adequate resources are available to launch the new data stream. If the system determines that the data stream can be launched, system resources, particularly cache memory space, are assigned to the data stream to provide the data stream with the necessary amount of data throughput needed to support the data stream efficiently. The data stream's throughput is the amount of data that the stream requires per unit time. The system monitors all supported data streams to determine when a particular data stream has terminated, at which time resources dedicated to the data stream are released and become available to support other data streams. The cache for each supported data stream is maintained at as full a level as possible, with the cache for the “least full” data stream given priority for refresh. Allocating resources by throughput allows for more efficient resource utilization.

Owner:MAXTOR
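
The admission-and-refresh policy can be sketched as follows. Sizing each stream's cache as throughput × `lead_time` is a hypothetical rule chosen for illustration; the abstract says only that resources are assigned according to throughput and that the least-full stream is refreshed first.

```python
class StreamManager:
    """Admits data streams by throughput and prioritizes refills."""

    def __init__(self, cache_capacity):
        self.free_cache = cache_capacity
        self.streams = {}  # stream id -> {"size": cache assigned, "fill": data buffered}

    def launch(self, stream_id, throughput, lead_time):
        if throughput <= 0 or lead_time <= 0:
            raise ValueError("throughput and lead_time must be positive")
        needed = throughput * lead_time  # hypothetical sizing rule
        if needed > self.free_cache:
            return False  # not enough cache to support another stream
        self.free_cache -= needed
        self.streams[stream_id] = {"size": needed, "fill": 0}
        return True

    def terminate(self, stream_id):
        # Release the terminated stream's cache for other streams.
        self.free_cache += self.streams.pop(stream_id)["size"]

    def next_to_refresh(self):
        # The stream whose cache is least full (as a fraction) goes first.
        return min(self.streams,
                   key=lambda s: self.streams[s]["fill"] / self.streams[s]["size"])
```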

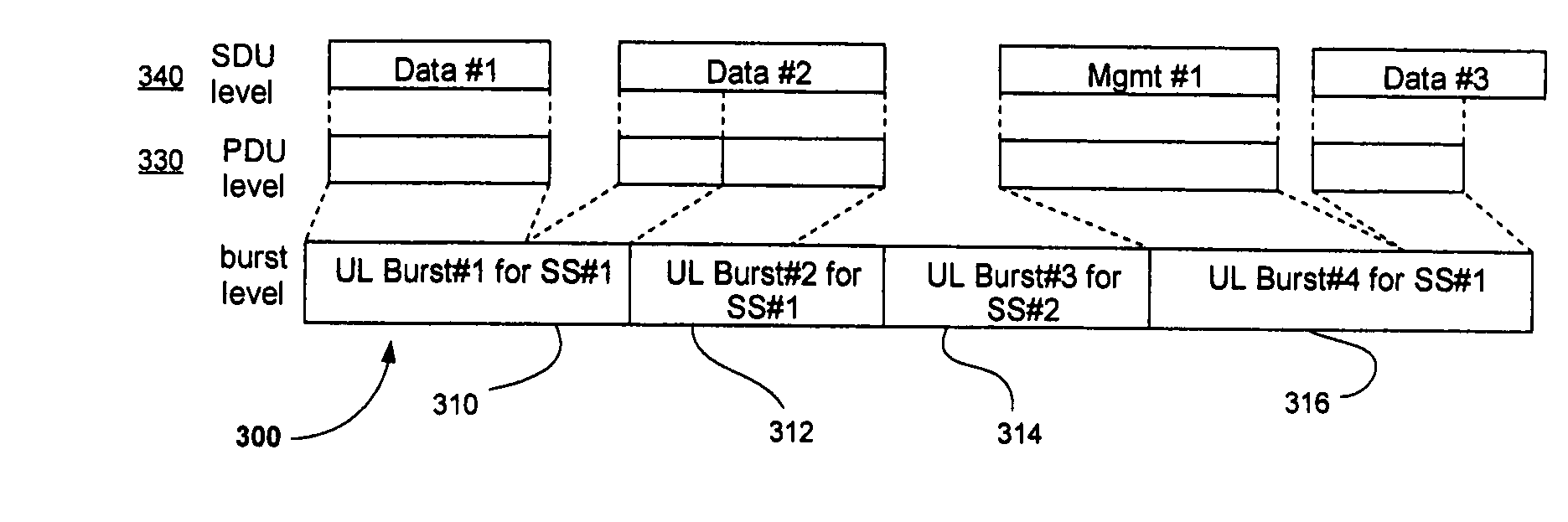

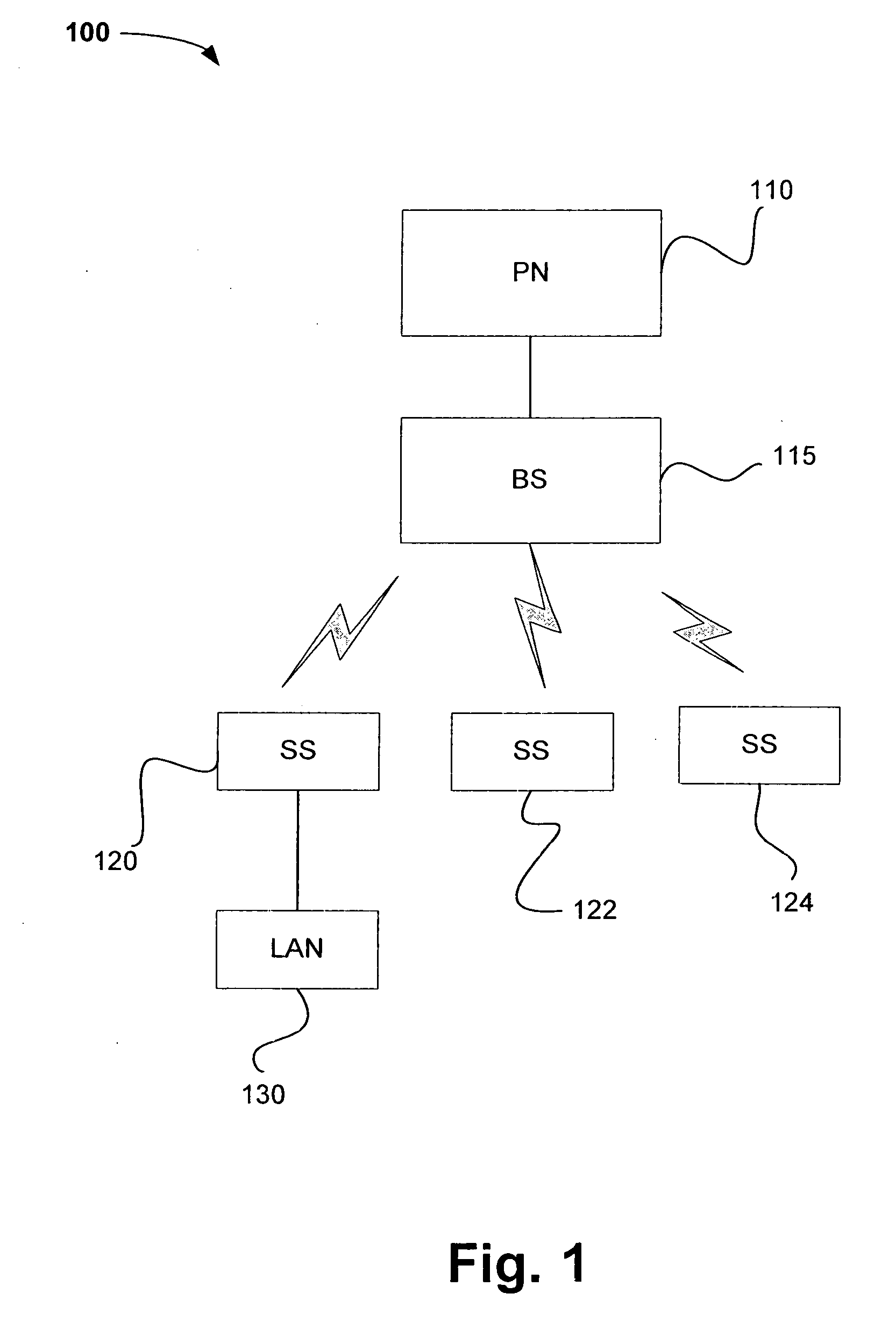

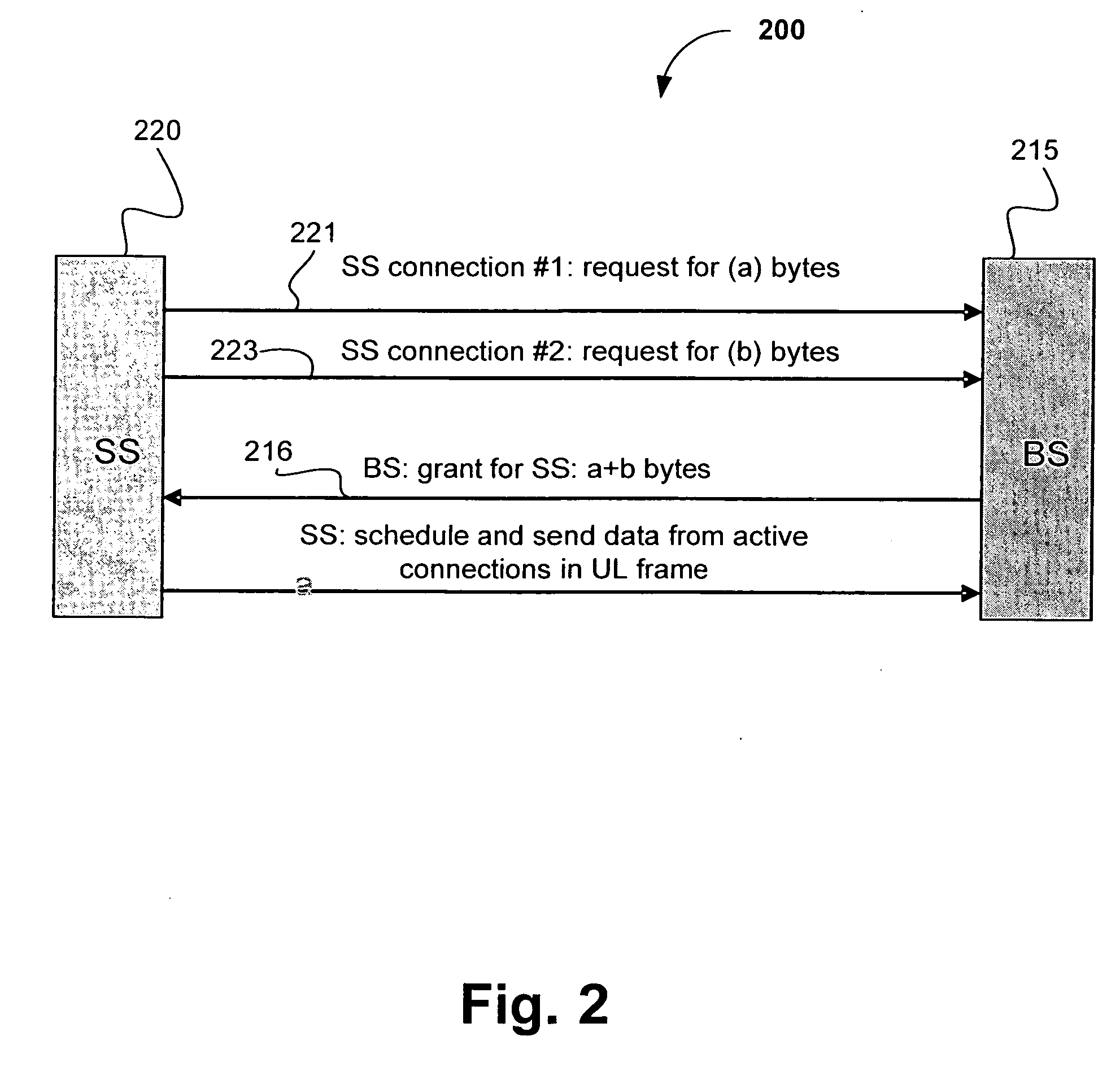

Uplink scheduling in wireless networks

Inactive · US20070047553A1 · Tags: Network traffic/resource management; Data switching by path configuration; Uplink scheduling; Media access control

A medium access control (MAC) scheduler is disclosed for scheduling uplink (UL) traffic by a subscriber station having multiple active service connections. The scheduler may include two types of queues: a first type, with one queue for each unsolicited grant service (UGS) connection, and a second type, with a queue for each of the other, non-UGS connections. Upon receipt of an overall bandwidth grant from a base station, data in the first type of queues may be sent first, and data in the second type of queues is then sent if sufficient burst space remains in the granted UL frame. The second type of queues may be assigned weight values, and thus scheduled, depending on the type of connection. When the second type of queues is served, an initial burst space allocation may be reserved for bandwidth requests to the base station. Additional embodiments and variations are also disclosed.

Owner:INTEL CORP
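
A hypothetical Python sketch of one scheduling round, with byte counts standing in for burst space: UGS queues first, then an initial reservation for bandwidth requests, then a single weighted pass over the non-UGS queues. The function signature and the integer weighted split are assumptions, not Intel's scheduler.

```python
def schedule_uplink(grant, ugs_queues, other_queues, weights, request_reserve):
    """Split one uplink grant (in bytes) between UGS and non-UGS queues.

    ugs_queues / other_queues: connection id -> bytes waiting.
    weights: connection id -> relative weight for non-UGS connections.
    request_reserve: bytes held back first for bandwidth requests.
    Returns (bytes sent per connection, bytes reserved, bytes unused).
    """
    sent = {}
    remaining = grant
    # 1. UGS connections are always served first.
    for conn, backlog in ugs_queues.items():
        sent[conn] = min(backlog, remaining)
        remaining -= sent[conn]
    # 2. Reserve initial burst space for bandwidth requests.
    reserved = min(request_reserve, remaining)
    remaining -= reserved
    # 3. Share what is left among non-UGS queues by weight (one pass).
    total_weight = sum(weights[c] for c in other_queues) or 1
    pool = remaining
    for conn, backlog in other_queues.items():
        share = pool * weights[conn] // total_weight
        sent[conn] = min(backlog, share)
        remaining -= sent[conn]
    return sent, reserved, remaining
```

For a 1000-byte grant with a 200-byte UGS backlog, a 100-byte request reservation, and weights of 3:1, the two non-UGS queues receive 525 and 175 bytes.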

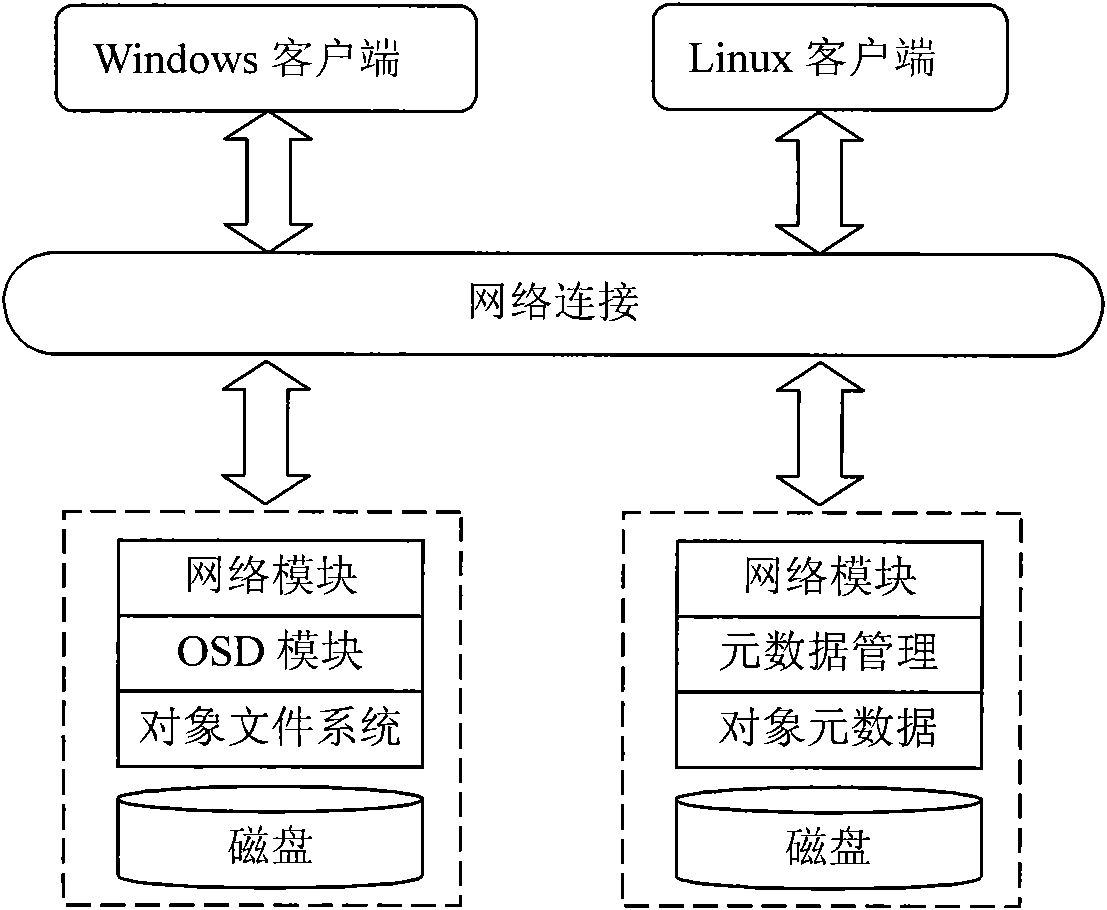

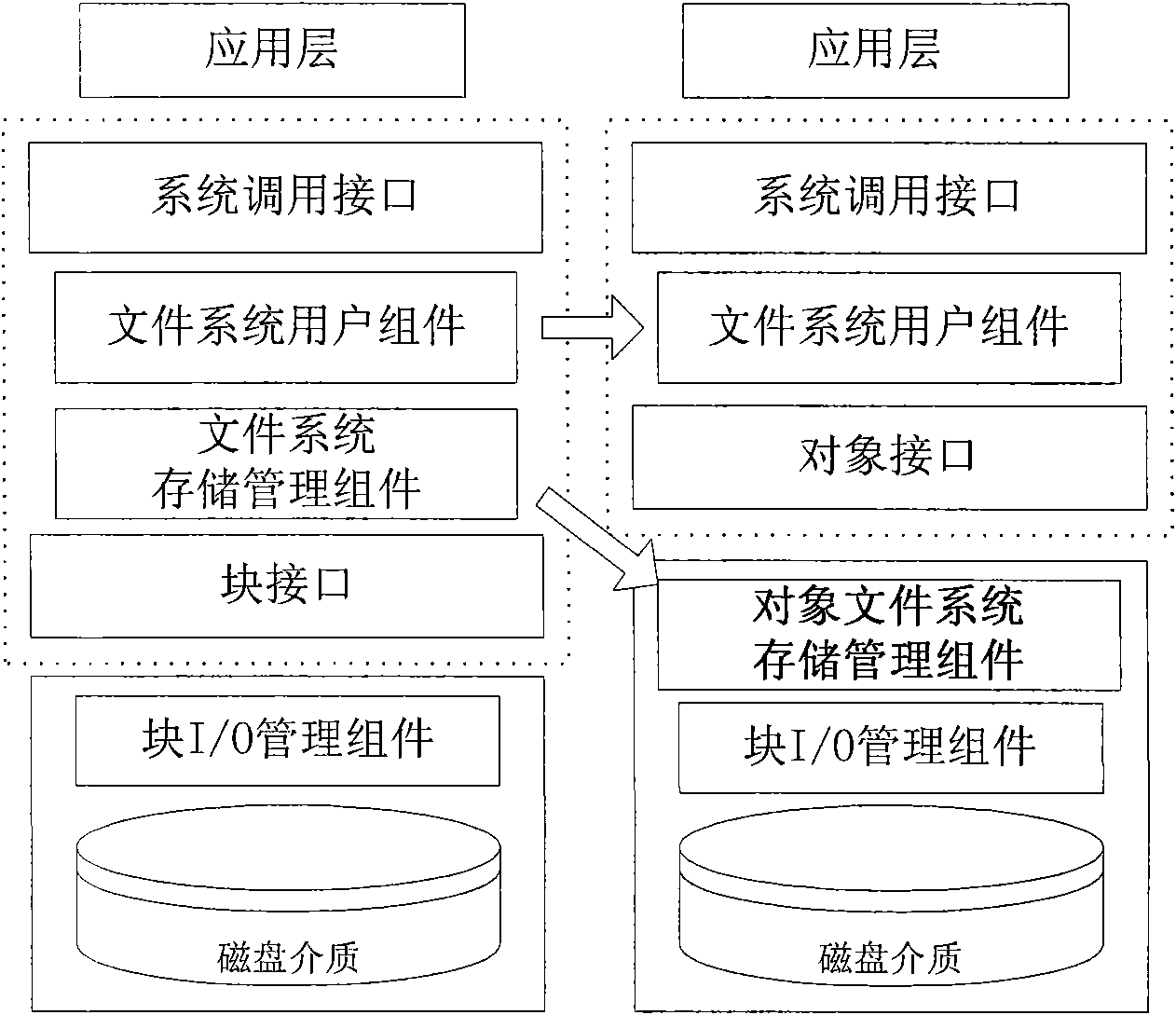

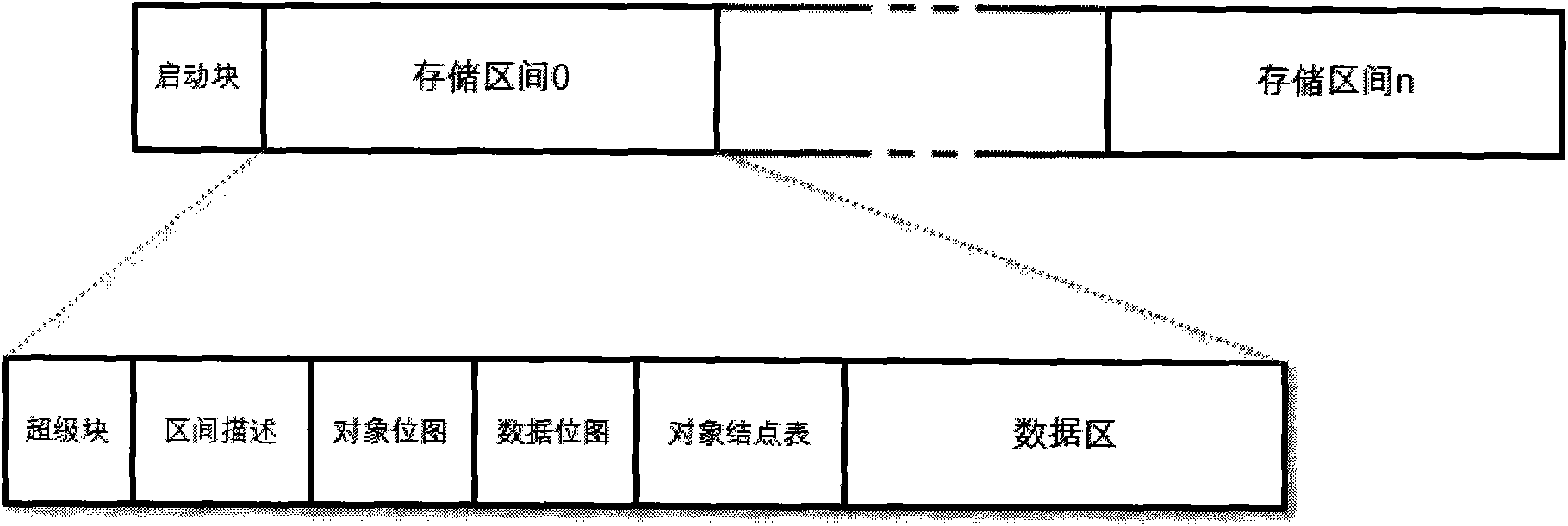

Object file organization method based on object storage device

Inactive · CN101556557A · Tags: Efficiency; Improve reliability; Memory addressing/allocation/relocation; Special data processing applications; Object based; File allocation

The invention relates to an object file organization method based on an object storage device, comprising the following steps: establishing the layout of the object file system on disk and loading information such as the object descriptions and the object bitmap into memory; before allocating space, having the space allocator detect the size of the object file; if the size of the object file can be obtained, using a pre-allocation method to allocate space for the object file on disk; if the size cannot be obtained, first writing part of the object file's data into a buffer; when the buffer is full or the client needs to release the cached data, allocating space on disk for the buffered data and writing the data to disk; and when the data in the buffer is much larger than the size of a logical data sub-block, having the space allocator allocate storage space for the data. By allocating multiple data blocks contiguously, the invention reduces the time spent searching for and allocating free blocks and overcomes limitations of object file system disk space allocation in current distributed file systems.

Owner:ZHEJIANG UNIV
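
The two allocation paths (pre-allocation when the size is known, buffering otherwise) can be sketched in Python. Treating free space as a single contiguous run, the class name, and the `buffer_limit` trigger are simplifying assumptions; the sketch records extents but does not write data to disk.

```python
class SpaceAllocator:
    """Pre-allocates when an object's size is known; otherwise buffers first."""

    def __init__(self, total_blocks, block_size, buffer_limit):
        self.total_blocks = total_blocks
        self.block_size = block_size
        self.buffer_limit = buffer_limit
        self.next_free = 0  # simplification: free space is one contiguous run
        self.buffers = {}   # object id -> bytes not yet placed on disk
        self.extents = {}   # object id -> list of (first block, block count)

    def _allocate_contiguous(self, obj, nbytes):
        count = -(-nbytes // self.block_size)  # round up to whole blocks
        if self.next_free + count > self.total_blocks:
            raise RuntimeError("disk full")
        self.extents.setdefault(obj, []).append((self.next_free, count))
        self.next_free += count

    def create(self, obj, size=None):
        if size is not None:
            self._allocate_contiguous(obj, size)  # size known: pre-allocate
        else:
            self.buffers[obj] = bytearray()       # size unknown: buffer data

    def write(self, obj, data, client_release=False):
        buf = self.buffers.get(obj)
        if buf is None:
            return  # pre-allocated object: data lands in its existing extent
        buf.extend(data)
        # Allocate when the buffer fills or the client releases cached data.
        if buf and (len(buf) >= self.buffer_limit or client_release):
            self._allocate_contiguous(obj, len(buf))
            buf.clear()
```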

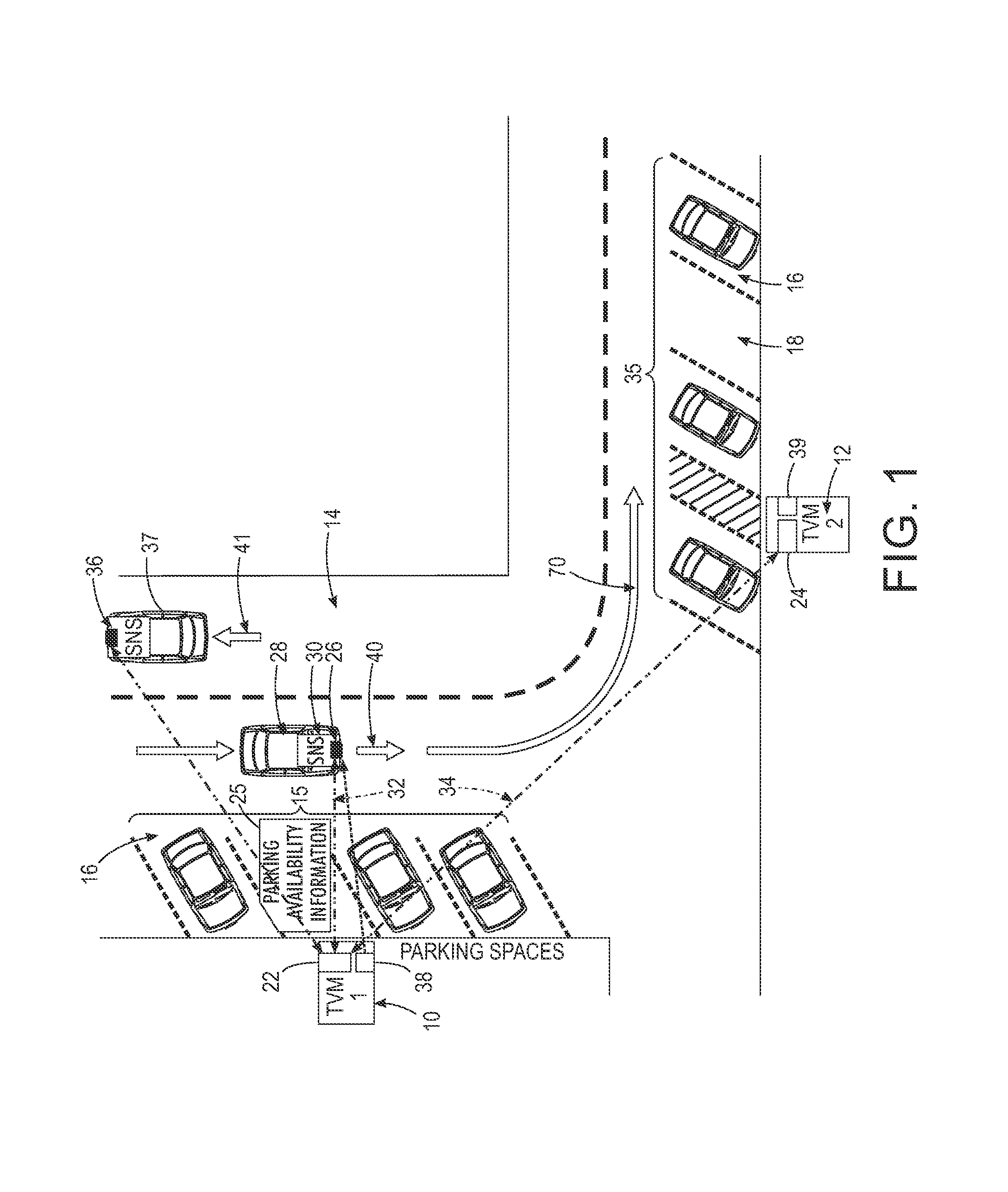

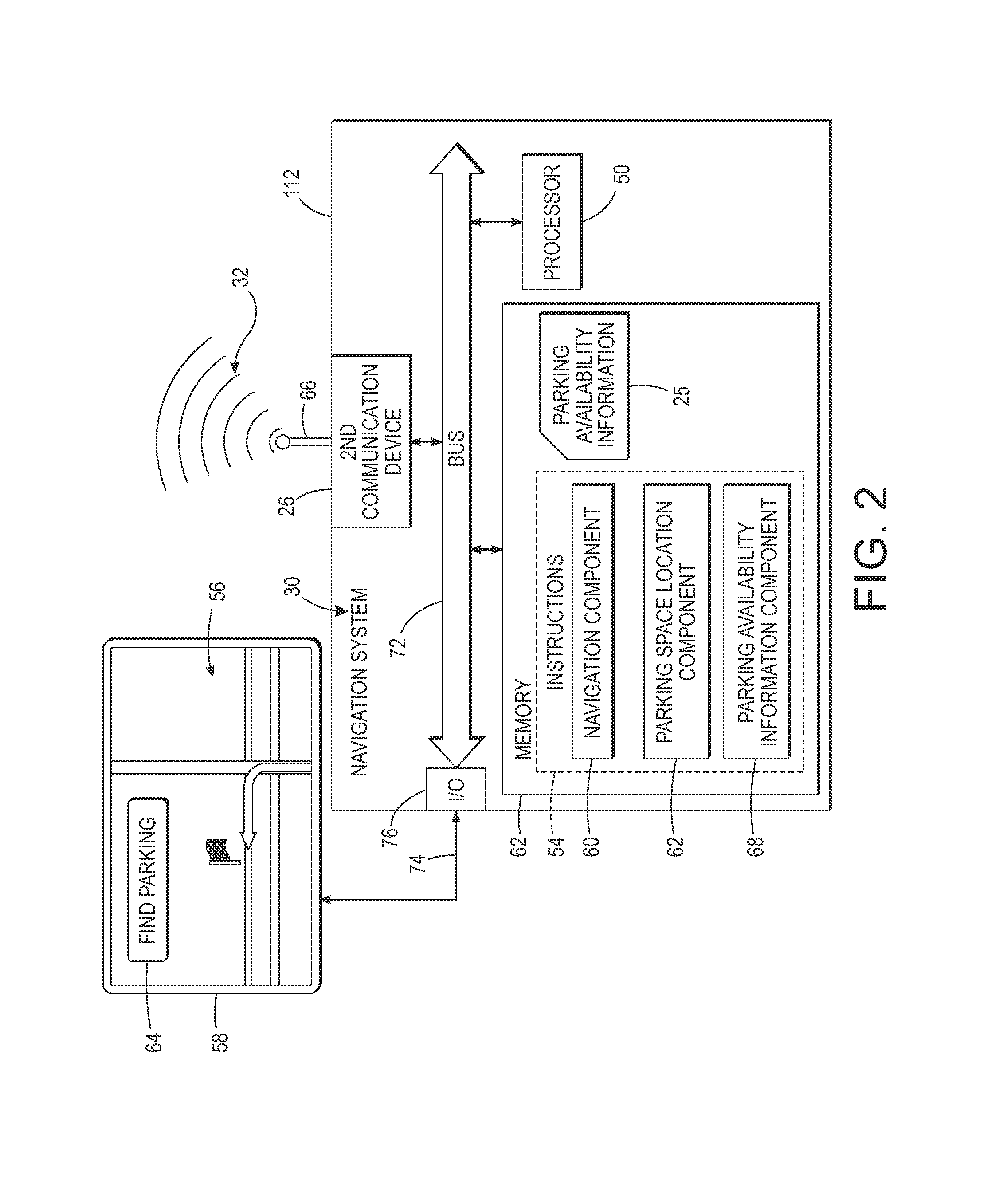

Route computation for navigation system using data exchanged with ticket vending machines

Active · US20140350853A1 · Tags: Instruments for road network navigation; Indication of parking free spaces; Driver/operator; Parking space

A method for assisting a driver to locate a parking space includes identifying a user-selected destination with a navigation system of a first vehicle. While the driver of the first vehicle is being guided by the navigation system towards the selected destination, parking availability information is acquired from a first space allocation device (e.g., a ticket vending machine) associated with a first parking zone. The parking availability information includes parking availability information for at least one second parking zone associated with a respective second space allocation device, remote from the first space allocation device. The parking availability information for the second parking zone(s) is communicated to the first space allocation device by a respective second navigation system of at least one second vehicle. A parking zone is selected based on the user-selected destination and the parking availability information acquired from the first space allocation device.

Owner:CONDUENT BUSINESS SERVICES LLC

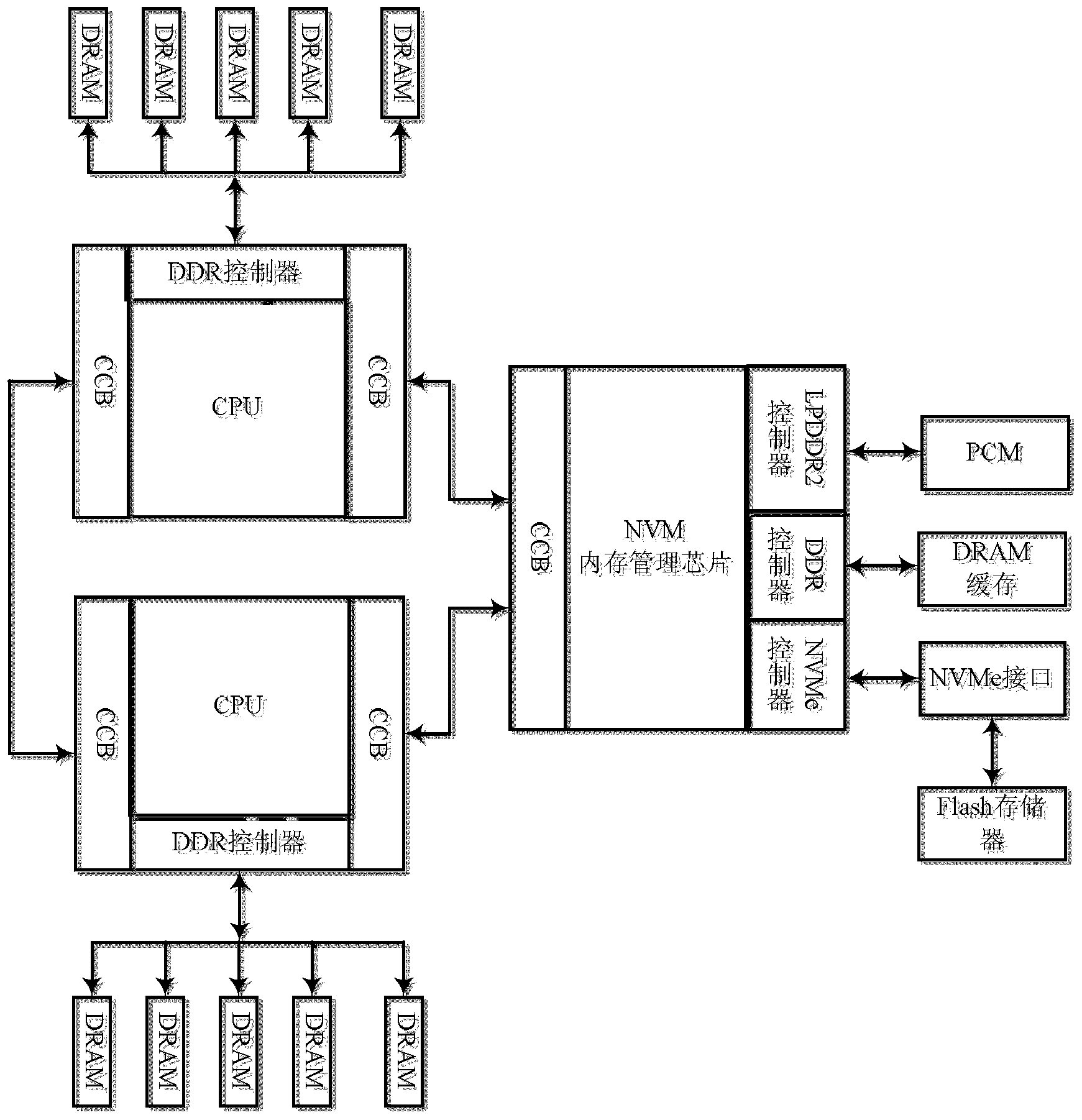

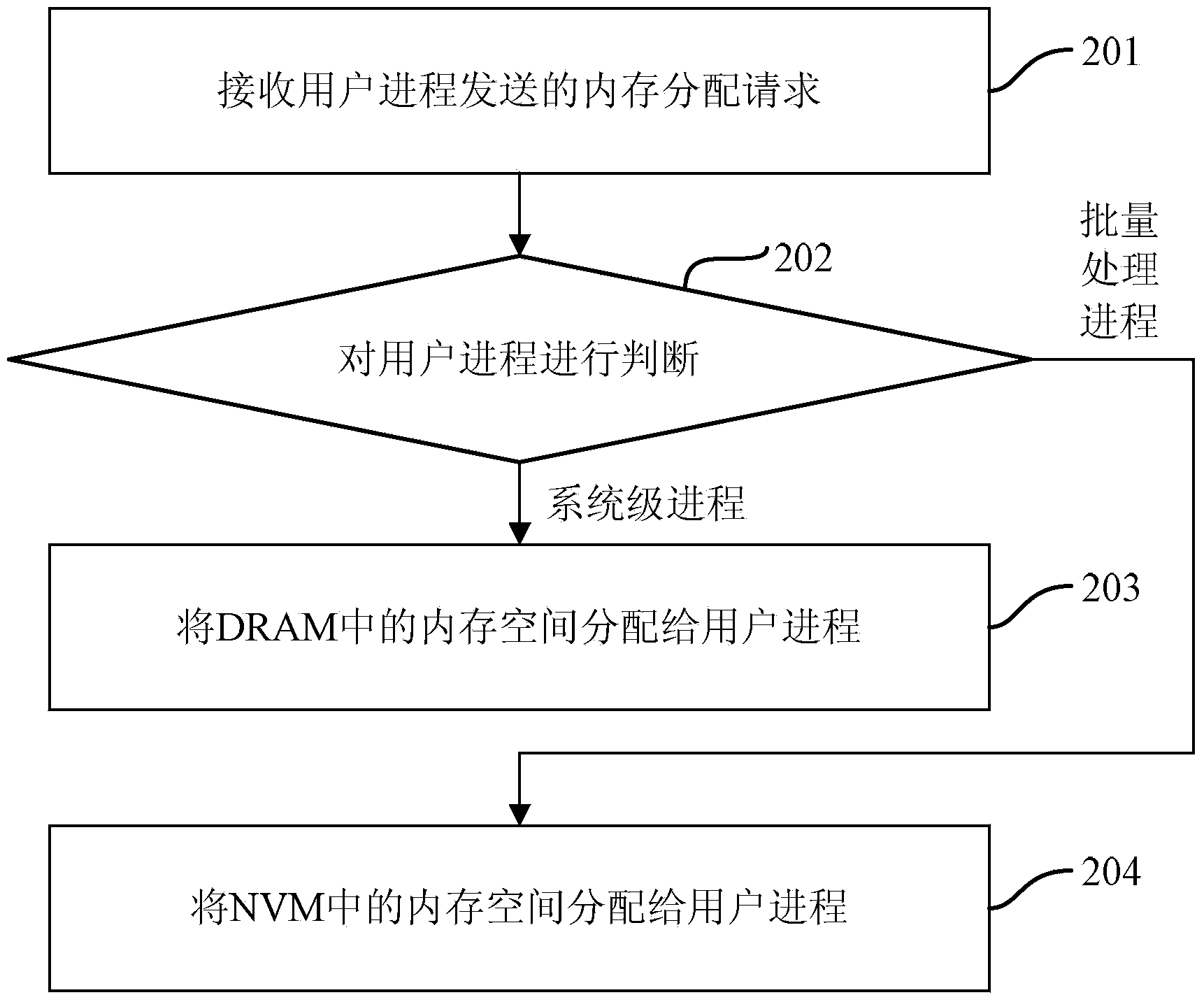

Method and device for managing heterogeneous hybrid memory

Active · CN104239225A · Tags: Fast data processing; Impact of reduced data processing speed; Memory addressing/allocation/relocation; Data processing system; Batch processing

The invention discloses a method and a device for managing a heterogeneous hybrid memory comprising an NVM (Non-Volatile Memory) and a DRAM (Dynamic Random Access Memory). The method comprises the following steps: receiving a memory allocation request sent by a user process and determining the type of the user process; if the user process is a system-level process, allocating DRAM memory space to the user process; and if the user process is a batch processing process, allocating NVM memory space to the user process. With this memory management mechanism, system-level processes perform data processing in the DRAM, which has a relatively high access rate, while batch processing processes perform data processing in the NVM. This reduces the influence of the access speed difference between the DRAM and the NVM on data processing speed and improves both the data processing speed of user processes and the overall performance of the system.

Owner:INSPUR BEIJING ELECTRONICS INFORMATION IND

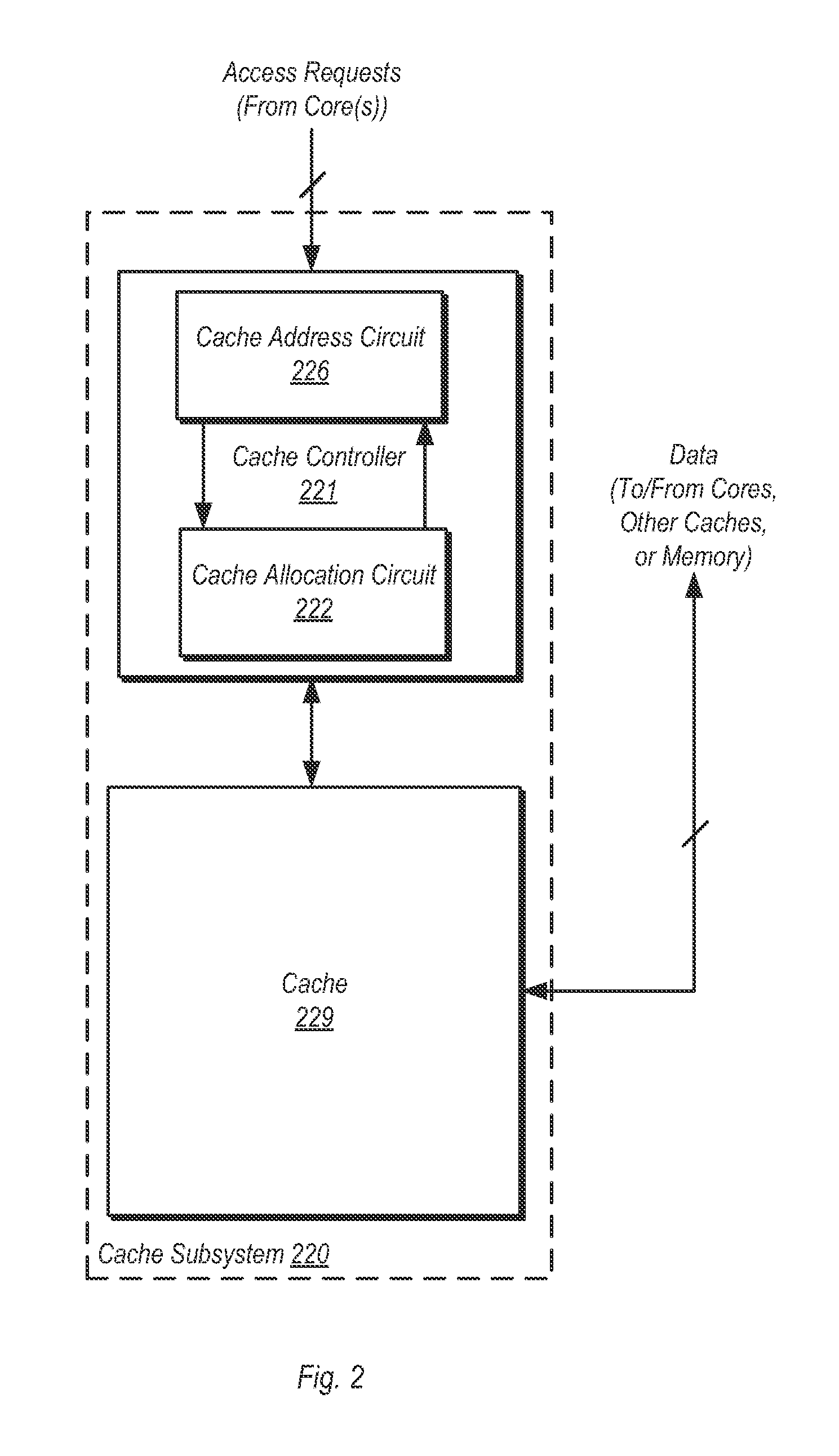

Dynamic Multithreaded Cache Allocation

Active · US20140040556A1 · Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Parallel computing; Space allocation

Owner:ADVANCED MICRO DEVICES INC

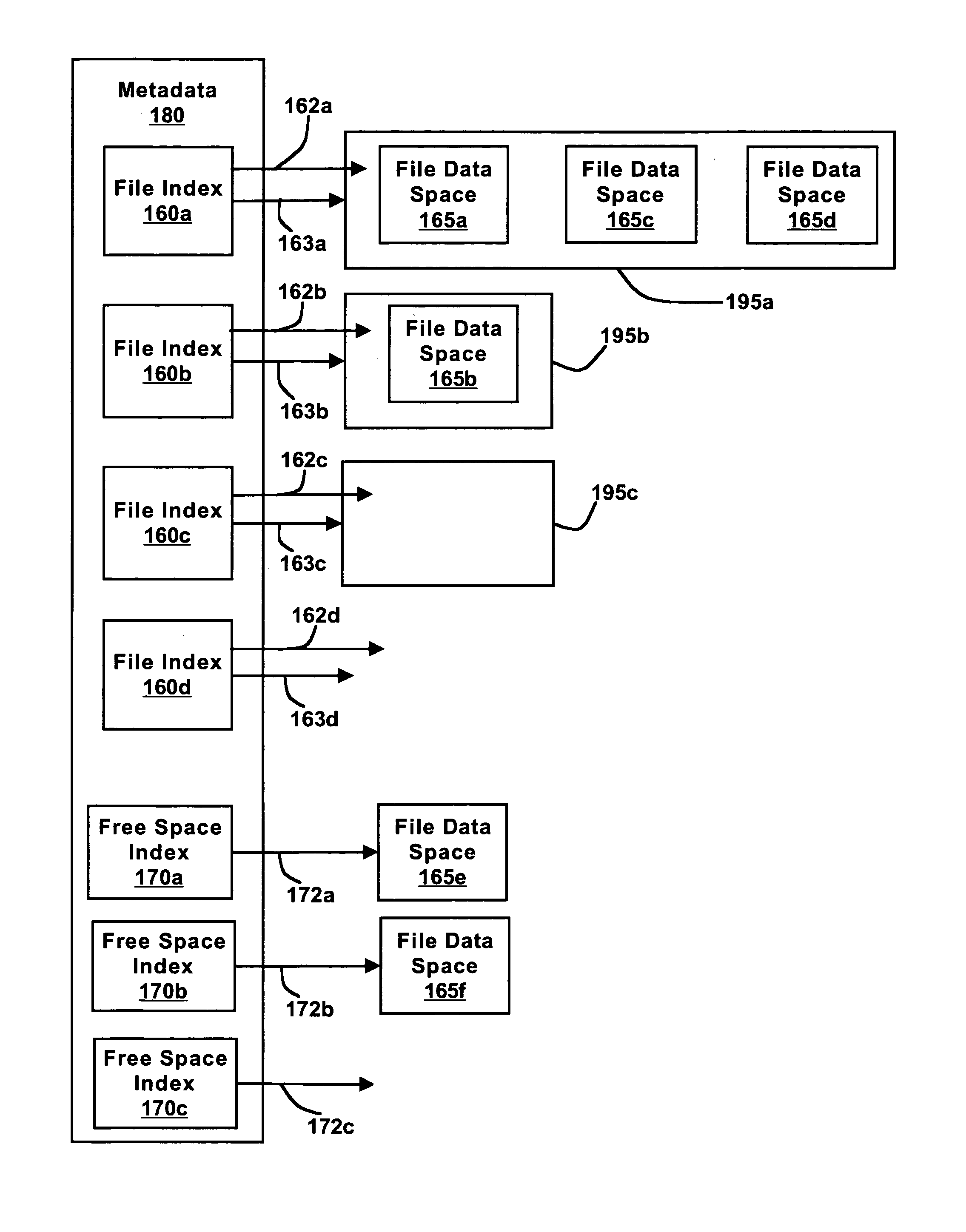

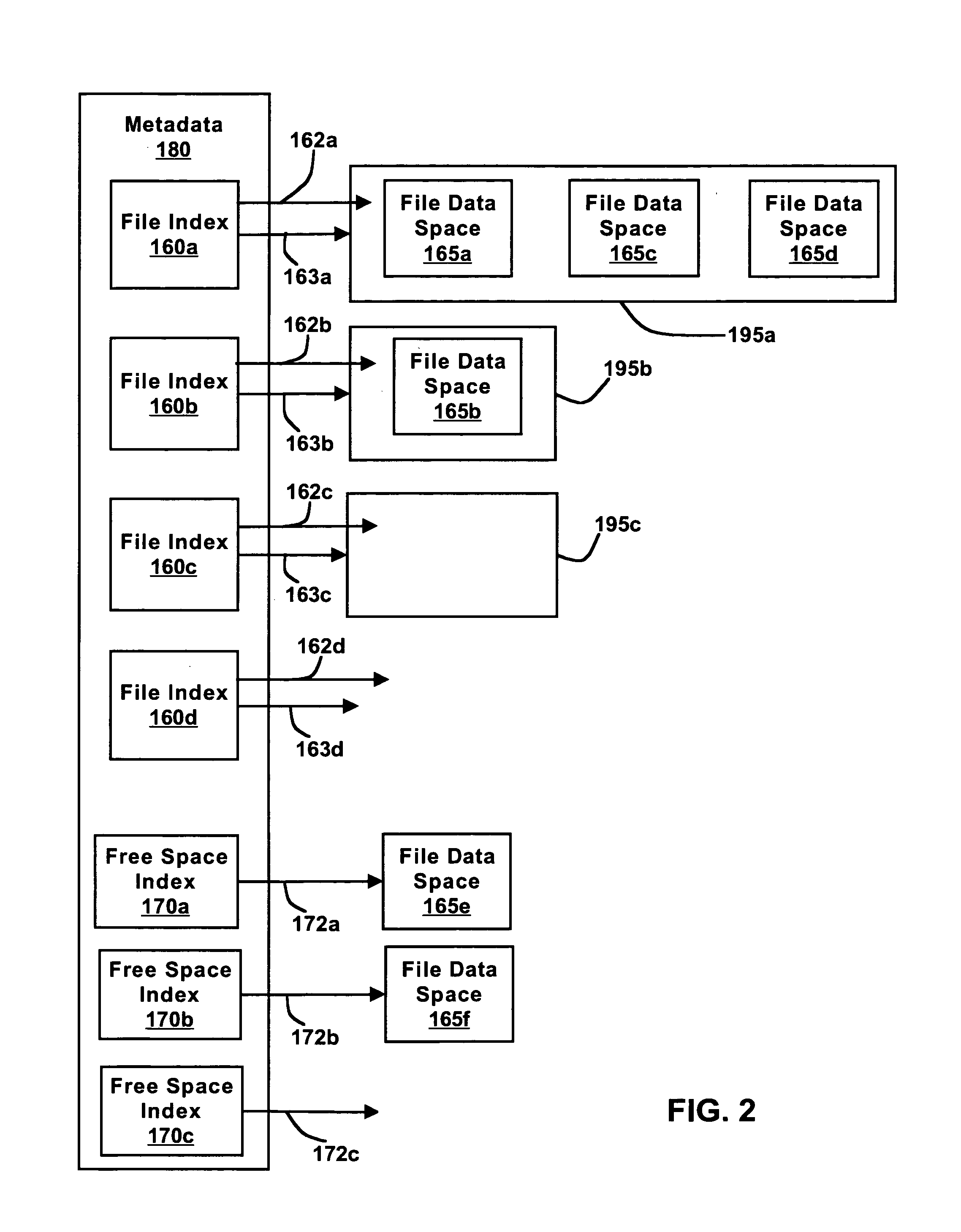

File system and methods for performing file create and open operations with efficient storage allocation

A client computer system of a cluster may send a request to create a file in a cluster file system. A server may create the file in response to the request and allocate space in storage to the file. If a request to write to the file is received within a predetermined amount of time, the write may complete without requiring additional operations to allocate space to the file. If a write to the file is not received within the predetermined amount of time, the space allocated to the file when it was created may be de-allocated. The file system may additionally or alternatively perform a method for opening a file while delaying an associated truncation of the space allocated to the file. If a request to write to the file is received within a predetermined amount of time, the write may be performed in the space already allocated to the file.

Owner:SYMANTEC OPERATING CORP
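
A hypothetical Python model of allocate-on-create with delayed reclamation. Times are passed in explicitly, and the periodic `reclaim_expired` sweep is an assumed mechanism for de-allocating space from files that were not written within the grace period.

```python
class ClusterFileServer:
    """Allocates space at create time and reclaims it if no write follows."""

    def __init__(self, grace_seconds, prealloc_blocks):
        self.grace = grace_seconds
        self.prealloc = prealloc_blocks
        self.files = {}  # name -> {"blocks": n, "created": t, "written": bool}
        self.extra_allocations = 0  # writes that had to allocate again

    def create(self, name, now):
        # Allocate space immediately, before any write arrives.
        self.files[name] = {"blocks": self.prealloc, "created": now, "written": False}

    def write(self, name):
        f = self.files[name]
        if f["blocks"] == 0:
            # Space was already reclaimed: this write pays for an allocation.
            f["blocks"] = self.prealloc
            self.extra_allocations += 1
        f["written"] = True
        return f["blocks"]

    def reclaim_expired(self, now):
        # Periodic sweep: de-allocate space of files never written in time.
        for f in self.files.values():
            if not f["written"] and f["blocks"] and now - f["created"] >= self.grace:
                f["blocks"] = 0
```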

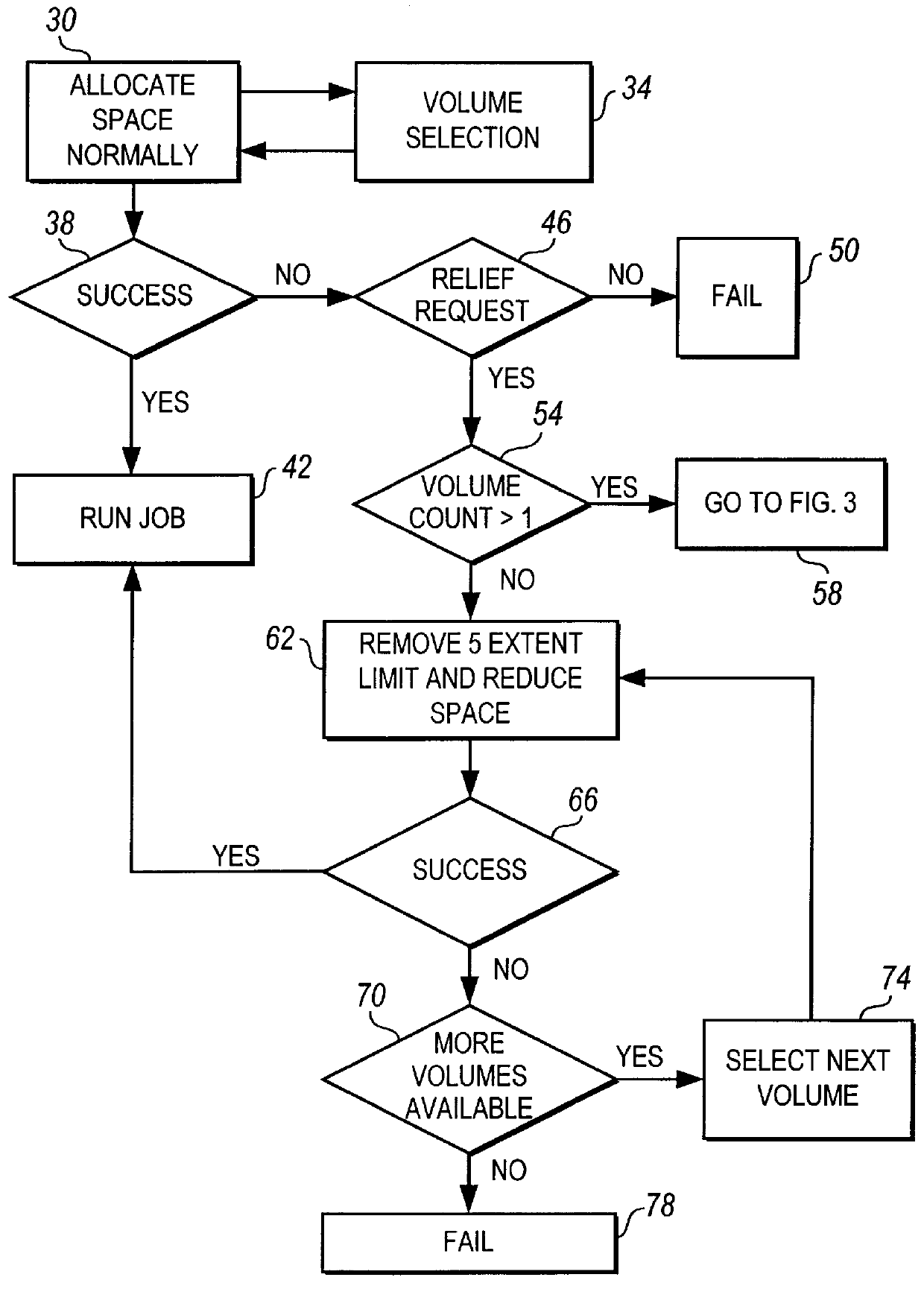

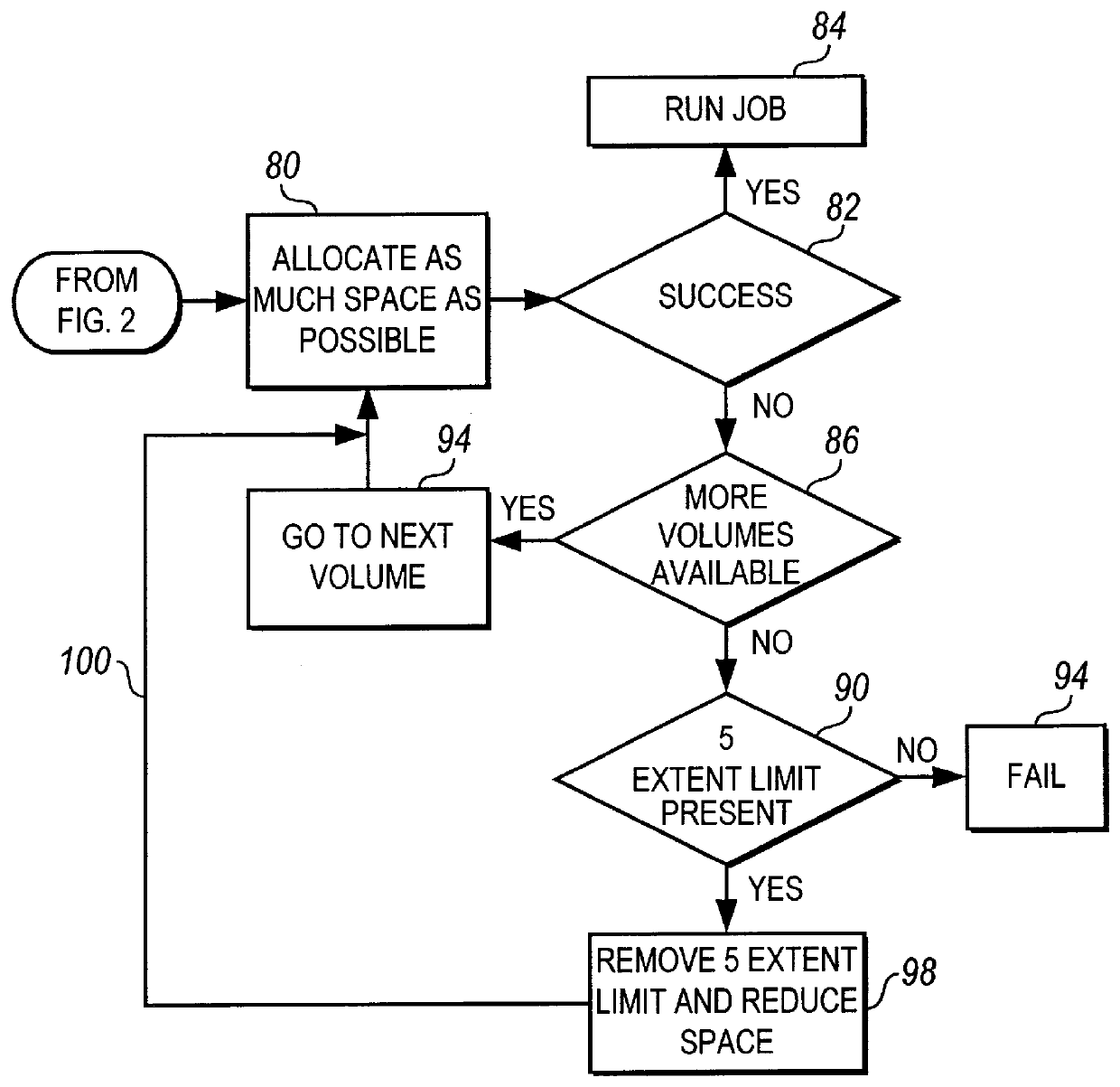

Method and apparatus for reducing space allocation failures in storage management systems

Inactive · US6088764A · Tags: Reducing space allocation error; Reduce spacing; Input/output to record carriers; Memory addressing/allocation/relocation; Direct-access storage device; Storage management

A method and apparatus for reducing space allocation failures in a computer system that utilizes direct access storage devices to store data. The method comprises the steps of determining whether authorization has been given to attempt to allocate an initial space request over more than one volume and, if so, attempting to allocate space on a plurality of volumes. If the initial space request cannot be allocated on a plurality of volumes, the initial space request is reduced by a preset percentage, the five-extent limit is removed, and an attempt is made to allocate the reduced space request on the plurality of volumes with the five-extent limit removed. Alternatively, if authorization has not been given to attempt to allocate the initial space request over more than one volume, the initial space request is reduced by a preset percentage, the five-extent limit is removed, and an attempt is made to allocate the reduced space request on a single volume.

Owner:WESTERN DIGITAL TECH INC
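
The retry flow can be sketched with volumes modeled as lists of free extent sizes. The helper names, the largest-extents-first rule, using only the first volume when multi-volume allocation is not authorized, and applying the extent limit per volume are all assumptions; real DASD allocation involves far more state.

```python
def usable(volumes, extent_limit):
    """Space reachable using at most extent_limit free extents per volume,
    largest first; extent_limit=None lifts the limit."""
    total = 0
    for free_extents in volumes:
        total += sum(sorted(free_extents, reverse=True)[:extent_limit])
    return total


def allocate_with_fallback(size, volumes, multi_volume_ok, reduce_pct):
    """Return the granted size, or None if allocation still fails."""
    # Without authorization, only one (here: the first) volume may be used.
    candidates = volumes if multi_volume_ok else volumes[:1]
    # First attempt: the full request under the usual five-extent limit.
    if usable(candidates, 5) >= size:
        return size
    # Retry: reduce the request by a preset percentage and drop the limit.
    reduced = size * (100 - reduce_pct) // 100
    if usable(candidates, None) >= reduced:
        return reduced
    return None
```

For example, with one volume of eight 10-unit extents, a 70-unit single-volume request fails under the five-extent limit (only 50 units reachable) but succeeds as a 56-unit request after a 20% reduction.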

Site-Based Namespace Allocation

Owner:PURE STORAGE

Storage array virtualization using a storage block mapping protocol client and server

A cached disk array includes a disk storage array, a global cache memory, disk directors coupling the cache memory to the disk storage array, and front-end directors for linking host computers to the cache memory. The front-end directors service storage access requests from the host computers, and the disk directors stage requested data from the disk storage array to the cache memory and write new data to the disk storage. At least one of the front-end directors or disk directors is programmed for block resolution of virtual logical units of the disk storage, and for obtaining, from a storage allocation server, space allocation and mapping information for pre-allocated blocks of the disk storage, and for returning to the storage allocation server requests to commit the pre-allocated blocks of storage once data is first written to the pre-allocated blocks of storage.

Owner:EMC IP HLDG CO LLC

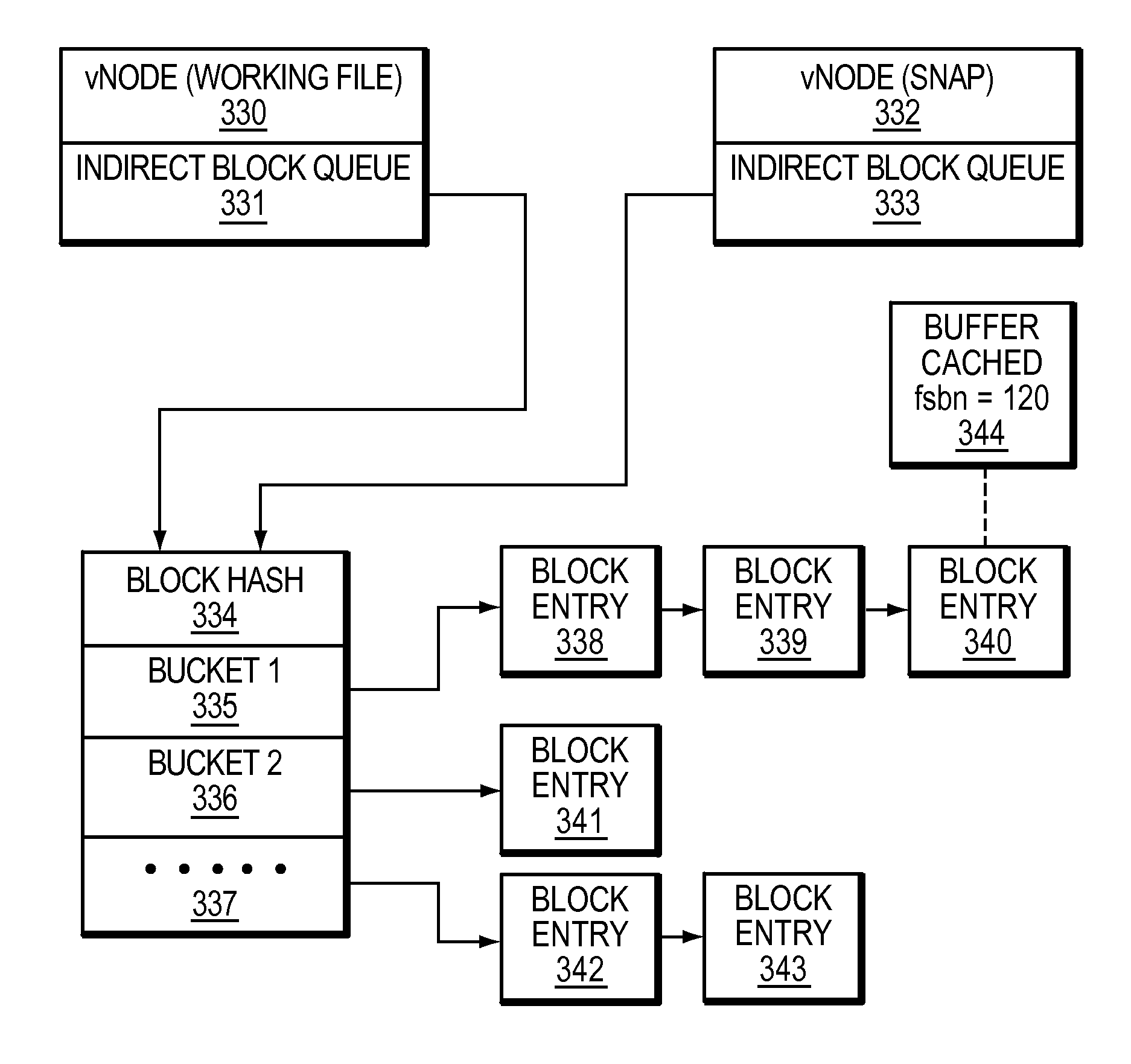

Managing global metadata caches in data storage systems

Active · US8661068B1 · Tags: Digital data information retrieval; Special data processing applications; File system; Hybrid storage system

A method is used in managing global metadata caches in data storage systems. Space is allocated in a memory of a data storage system to a global metadata cache which is configured to store metadata objects for a plurality of different file systems responsive to file system access requests from the plurality of different file systems. A metadata object associated with a file of a file system of the plurality of different file systems is stored in the global metadata cache. The metadata object is accessed by a plurality of different versions of the file.

Owner:EMC IP HLDG CO LLC

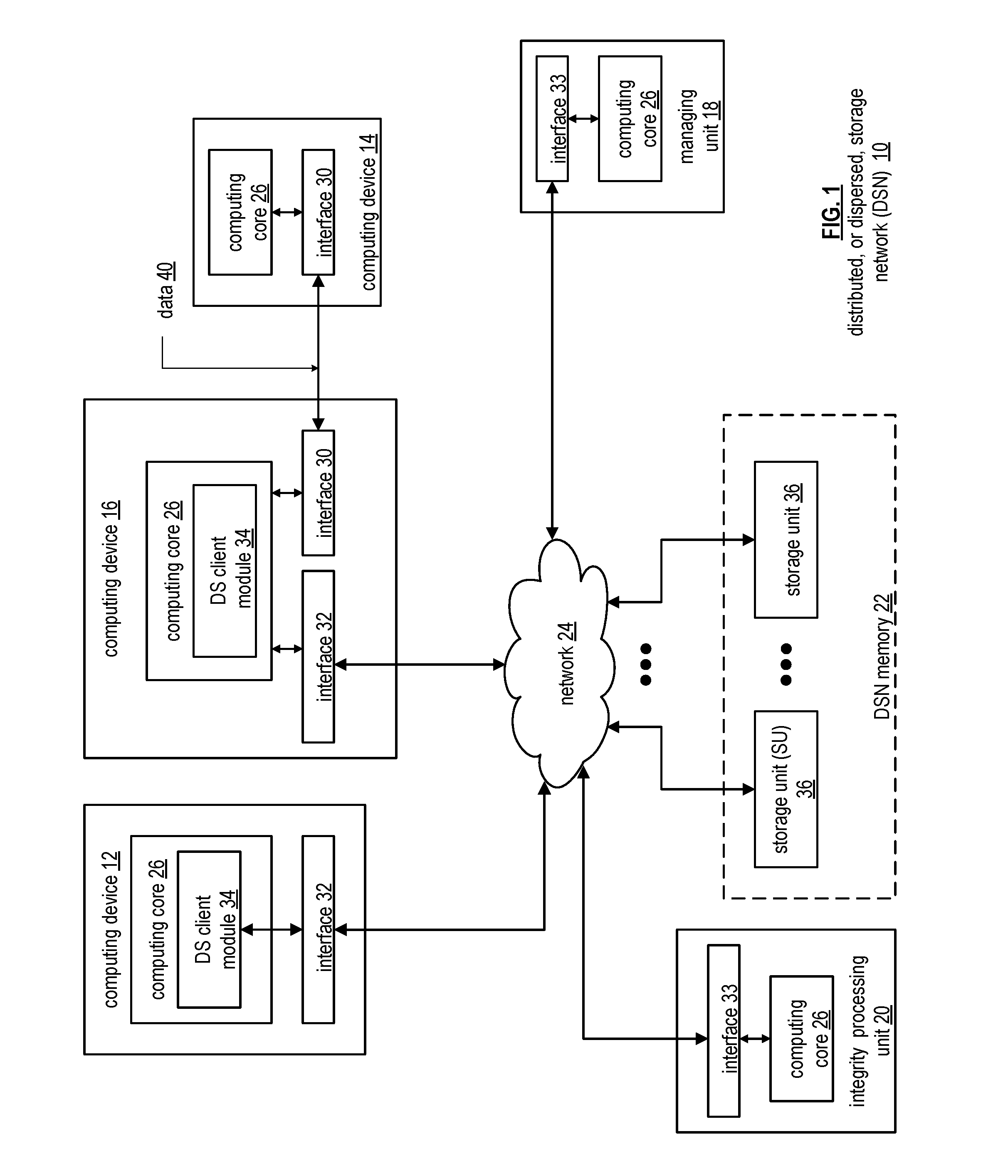

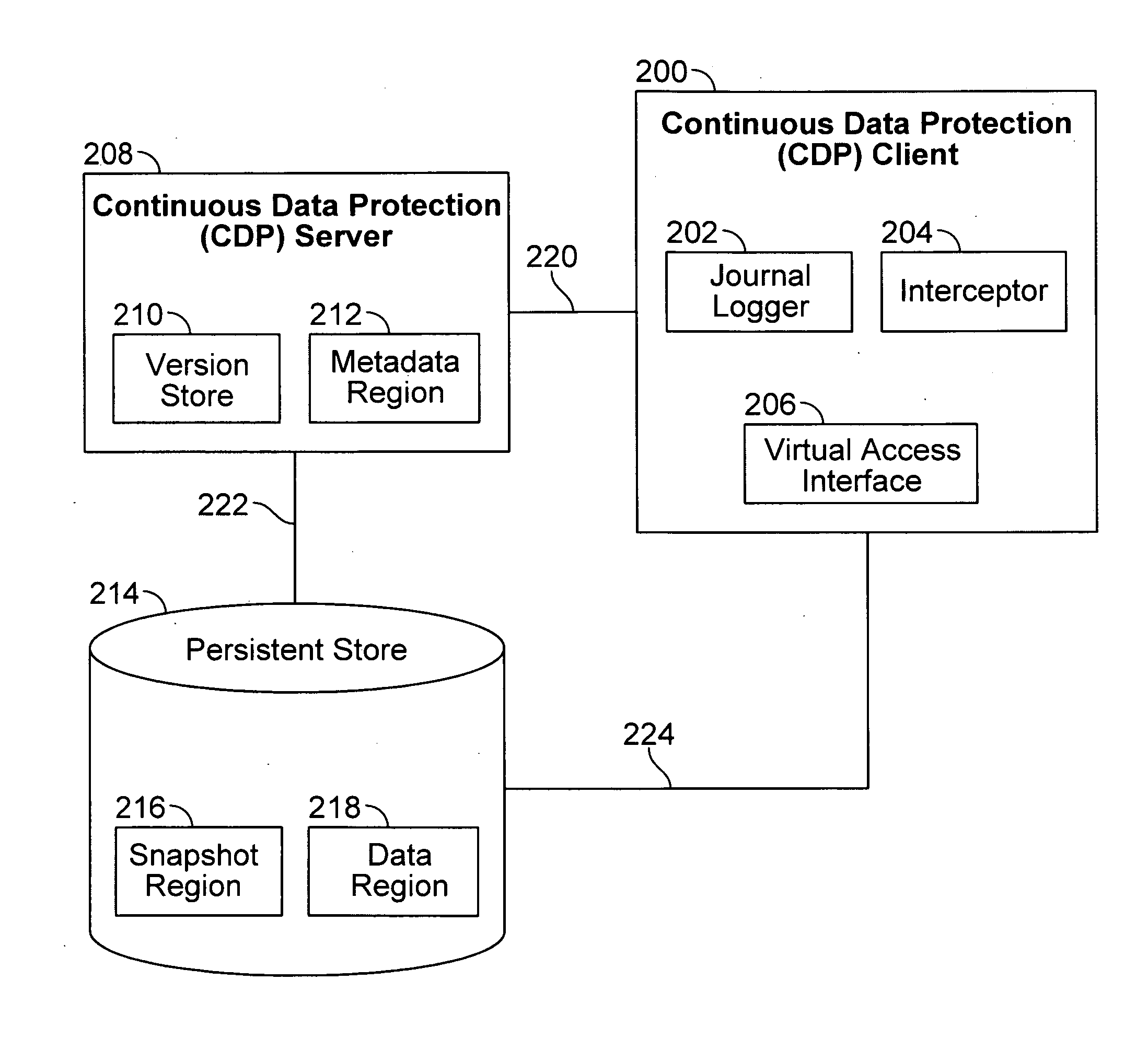

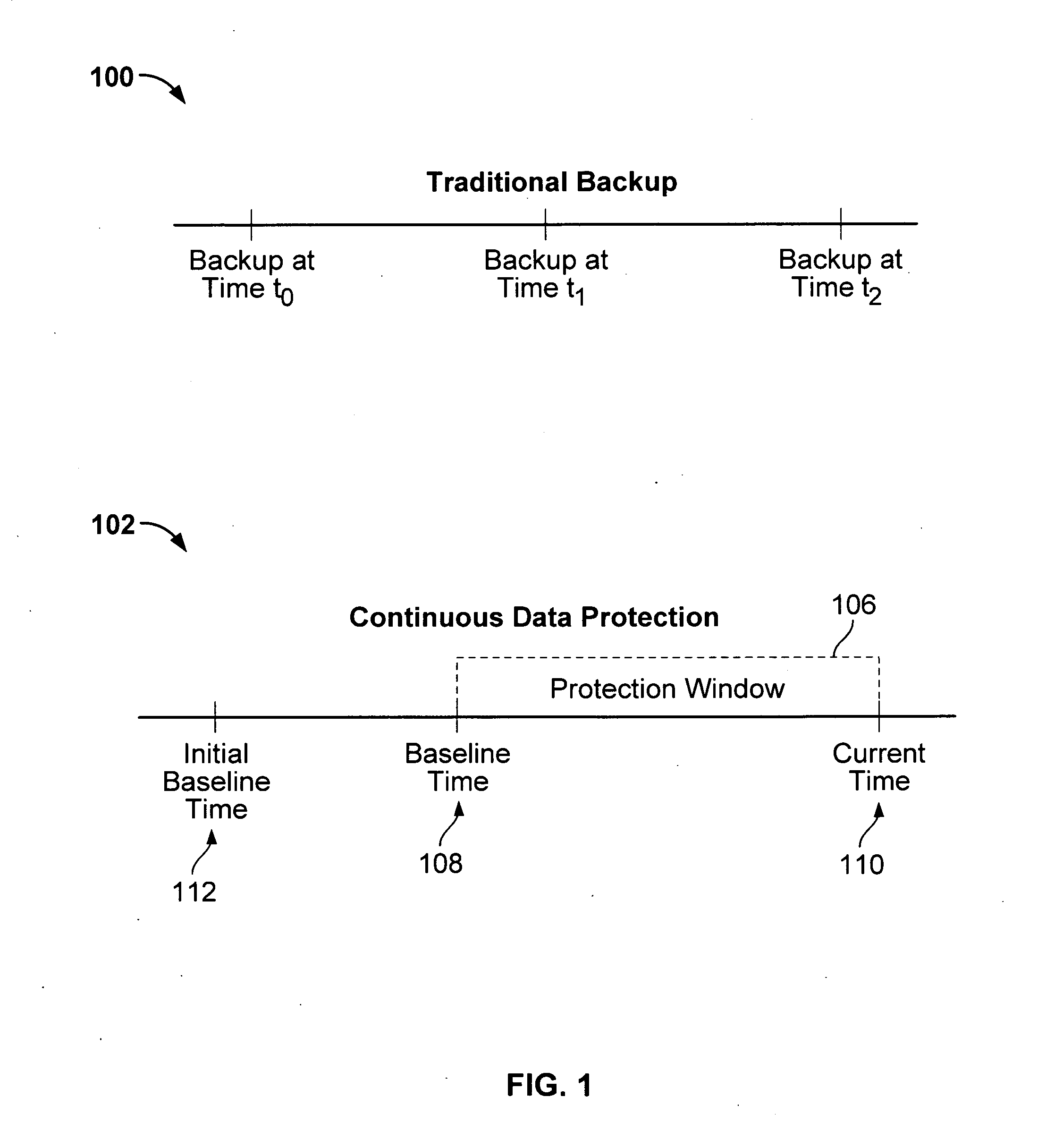

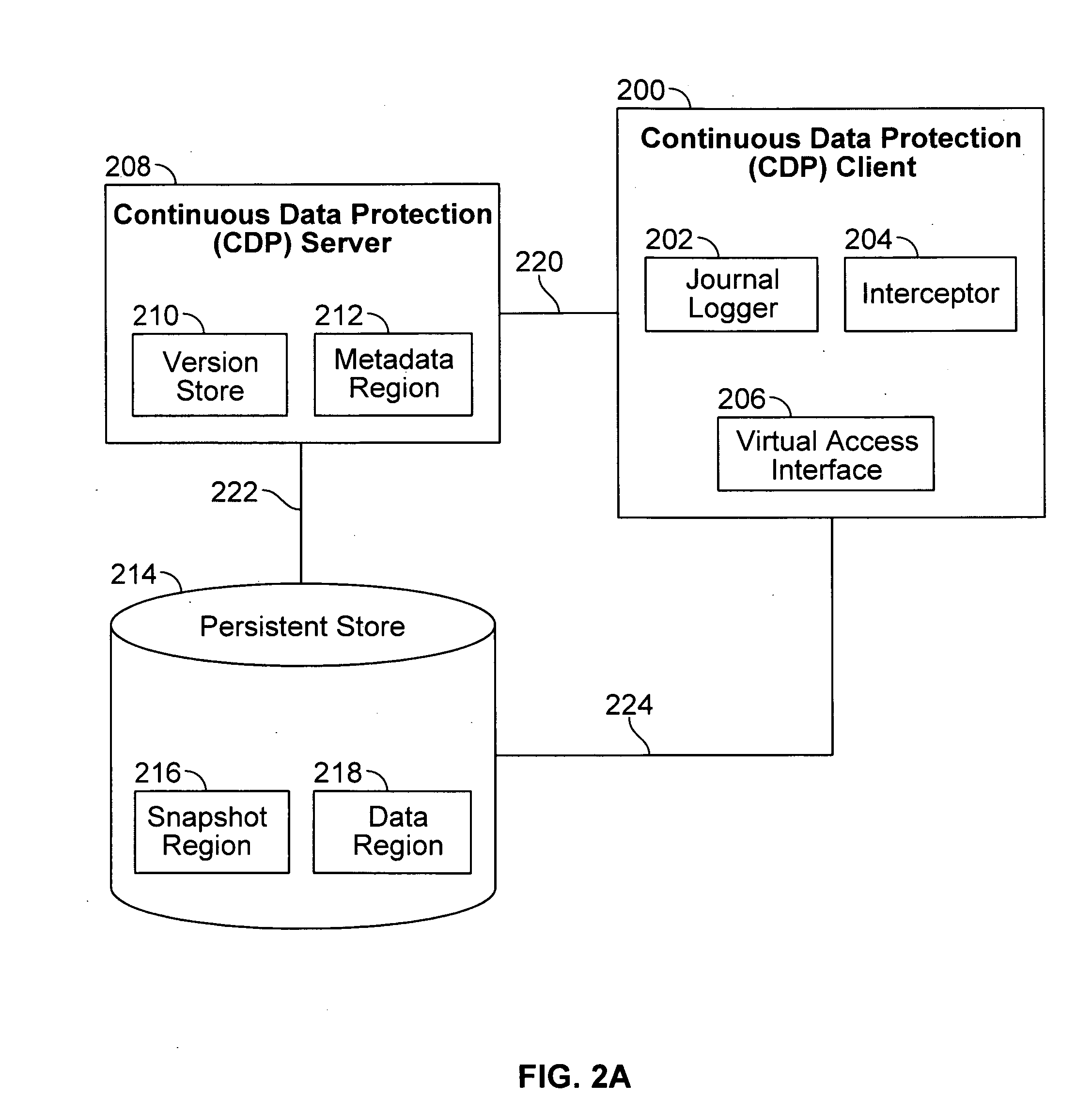

Linear space allocation mechanisms in data space

Active · US20100077173A1 · Tags: Error detection/correction; Memory addressing/allocation/relocation; Data set; Data space

An indication to allocate storage is received, where the storage is to be used to store previous-version data associated with a protected data set. One or more storage groups are allocated, each at least a prescribed allocation group size and comprising a set of physically contiguous storage locations.

Owner:EMC IP HLDG CO LLC
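
A first-fit search over a free-block map is one straightforward way to find a physically contiguous group of the prescribed size. The function and the boolean free map are hypothetical; the abstract does not say how candidate groups are located.

```python
def allocate_group(free_map, min_group):
    """First-fit search for min_group contiguous free blocks.

    free_map: list of booleans, True where the block is free. Marks the
    blocks used and returns (start, length), or None if no run is long enough.
    """
    run_start, run_len = 0, 0
    for i, is_free in enumerate(free_map):
        if is_free:
            if run_len == 0:
                run_start = i
            run_len += 1
            if run_len == min_group:
                for j in range(run_start, run_start + min_group):
                    free_map[j] = False
                return run_start, min_group
        else:
            run_len = 0  # a used block breaks the contiguous run
    return None
```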

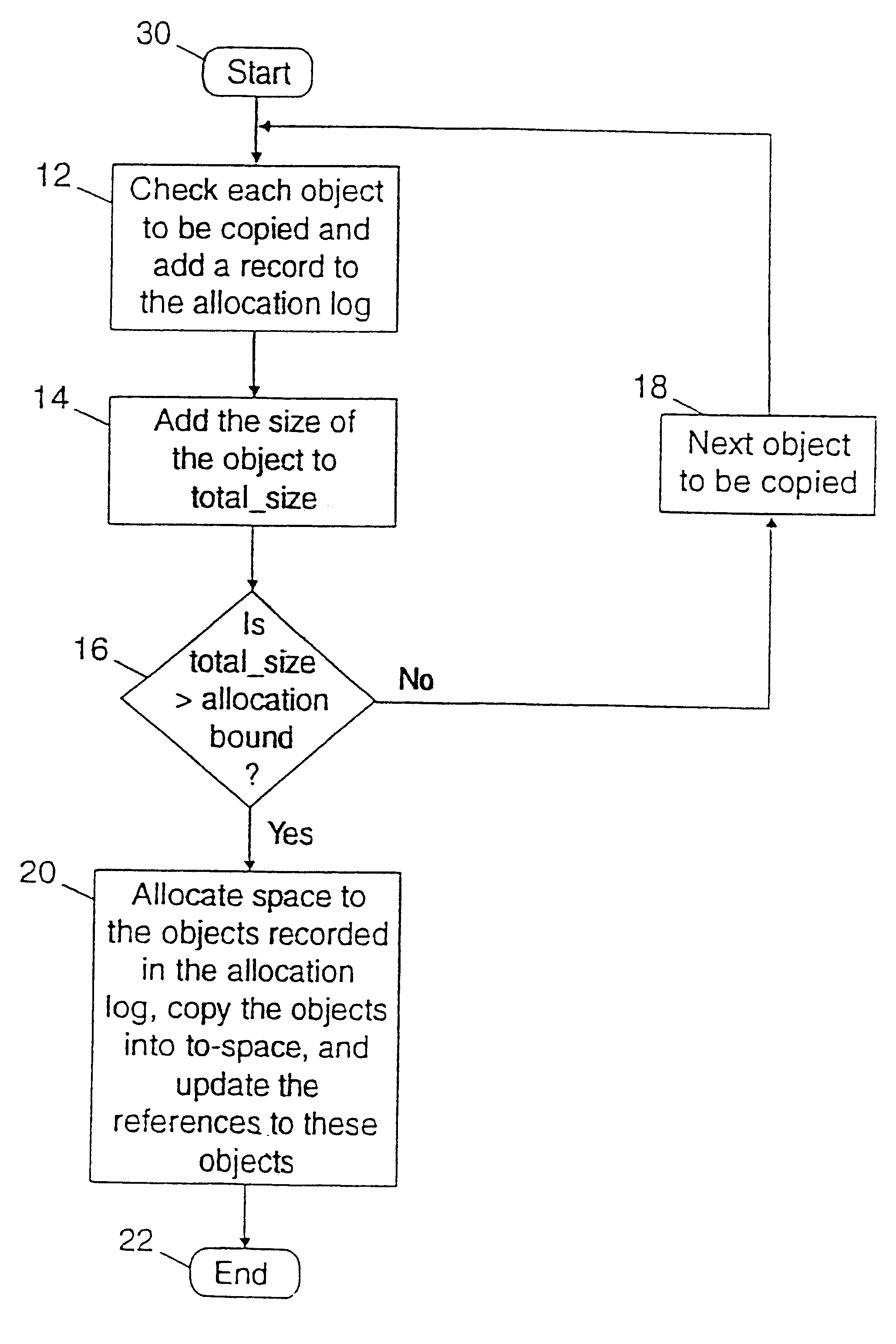

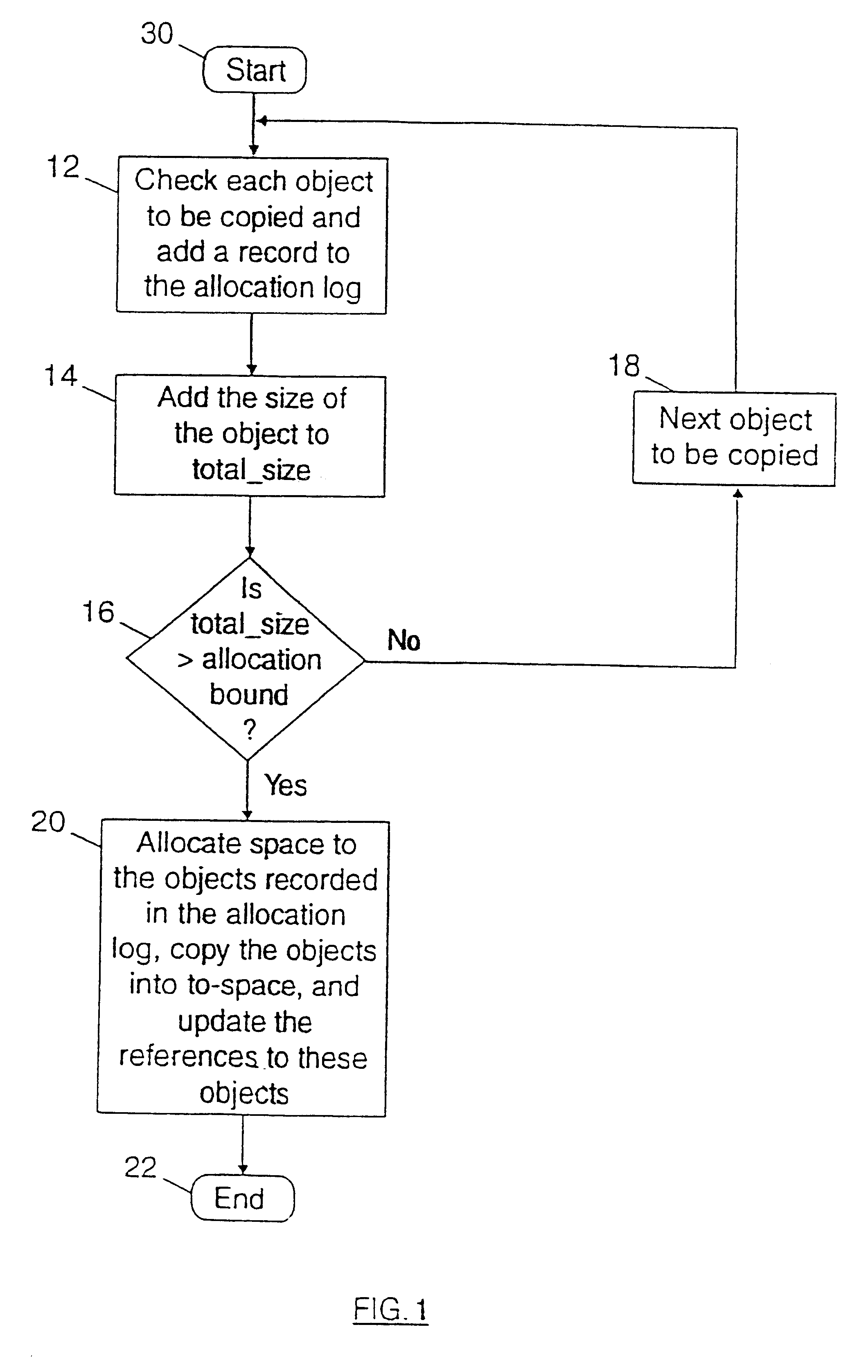

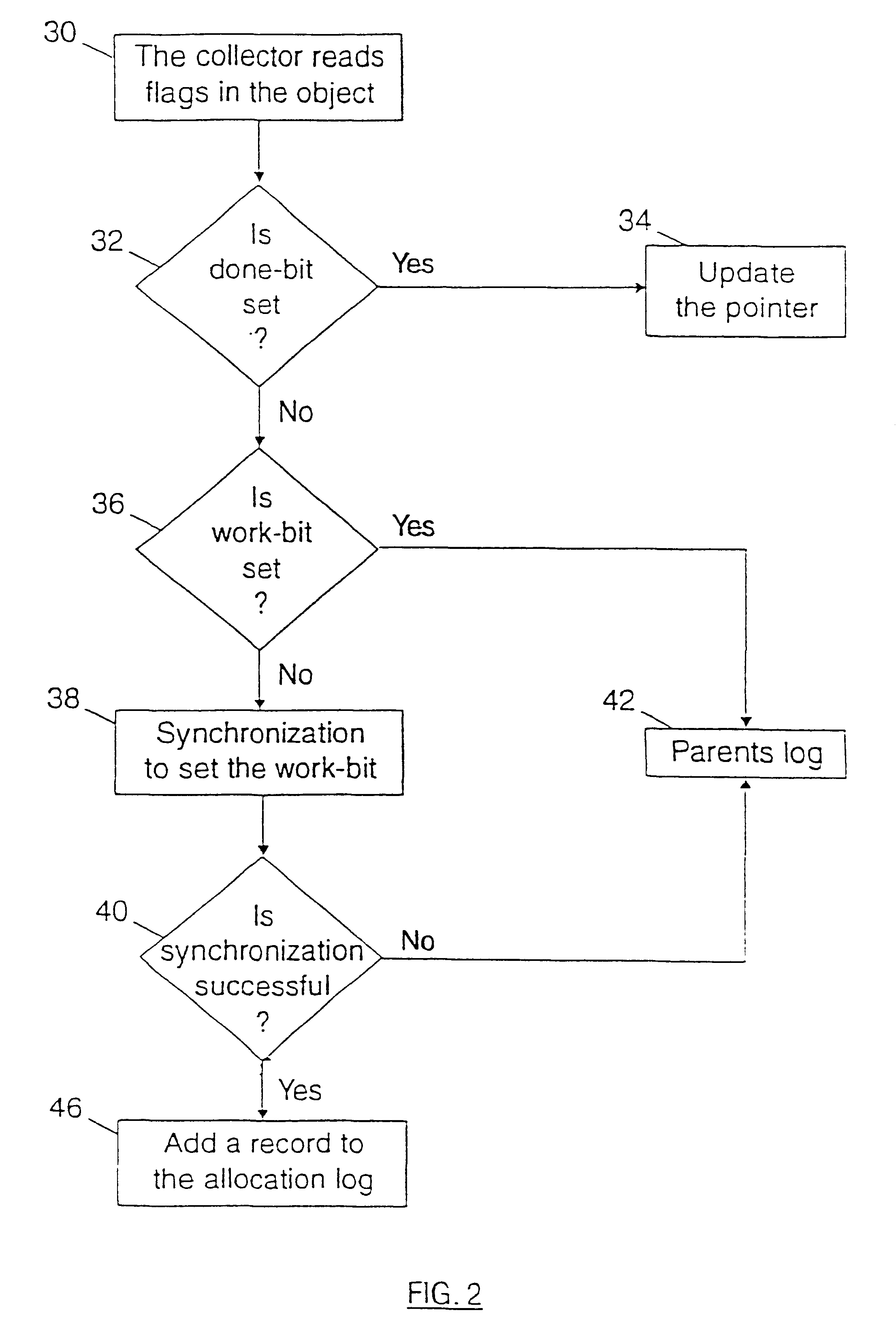

Method of delaying space allocation for parallel copying garbage collection

Inactive · US6427154B1 · Tags: Reduce competition; Remove debris; Data processing applications; Digital data processing details; Data processing system; Object copying

The present invention relates to a method of delaying space allocation for parallel copying garbage collection in a data processing system comprising a memory divided into a current area (from-space), used by at least one program thread during current program execution, and a reserved area (to-space). The copying garbage collection is run in parallel by several collector threads; it consists of stopping the program threads and flipping the roles of the current area and the reserved area before copying the live objects stored in the current area into the reserved area. The method comprises the steps of: checking (12), by one collector thread, the live objects of the current area to be copied into the reserved area, the live objects being referenced by a list of pointers; storing, for each live object, a record in an allocation log that includes at least the object's address and size; adding (14) the object size to total_size, the accumulated size of all checked objects for which a record has been stored in the allocation log; and copying (20) all the checked objects into the reserved area when the value of total_size reaches a predetermined allocation bound.

Owner:LINKEDIN
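
The allocation-log idea can be illustrated with a single-threaded Python sketch of one collector thread: live objects are recorded with their address and size, and the logged objects are copied as one batch whenever `total_size` reaches the allocation bound. The function name and the list-based to-space are assumptions; in a parallel collector the benefit is that each batch needs only one contended allocation in to-space.

```python
def copy_with_allocation_log(live_objects, allocation_bound):
    """Copy live objects to to-space in batches bounded by allocation_bound.

    live_objects: (address, size) pairs reached from the pointer list.
    Returns (to_space, batches): to_space lists (new_offset, address, size)
    and batches counts how many grouped allocations were made.
    """
    to_space = []
    allocation_log = []
    total_size = 0
    offset = 0   # next free offset in the reserved area (to-space)
    batches = 0

    def flush():
        nonlocal total_size, offset, batches
        if not allocation_log:
            return
        # One allocation covers every logged object in this batch.
        batches += 1
        for address, size in allocation_log:
            to_space.append((offset, address, size))
            offset += size
        allocation_log.clear()
        total_size = 0

    for address, size in live_objects:
        allocation_log.append((address, size))  # record address and size
        total_size += size
        if total_size >= allocation_bound:       # bound reached: copy batch
            flush()
    flush()  # copy any objects still in the log
    return to_space, batches
```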

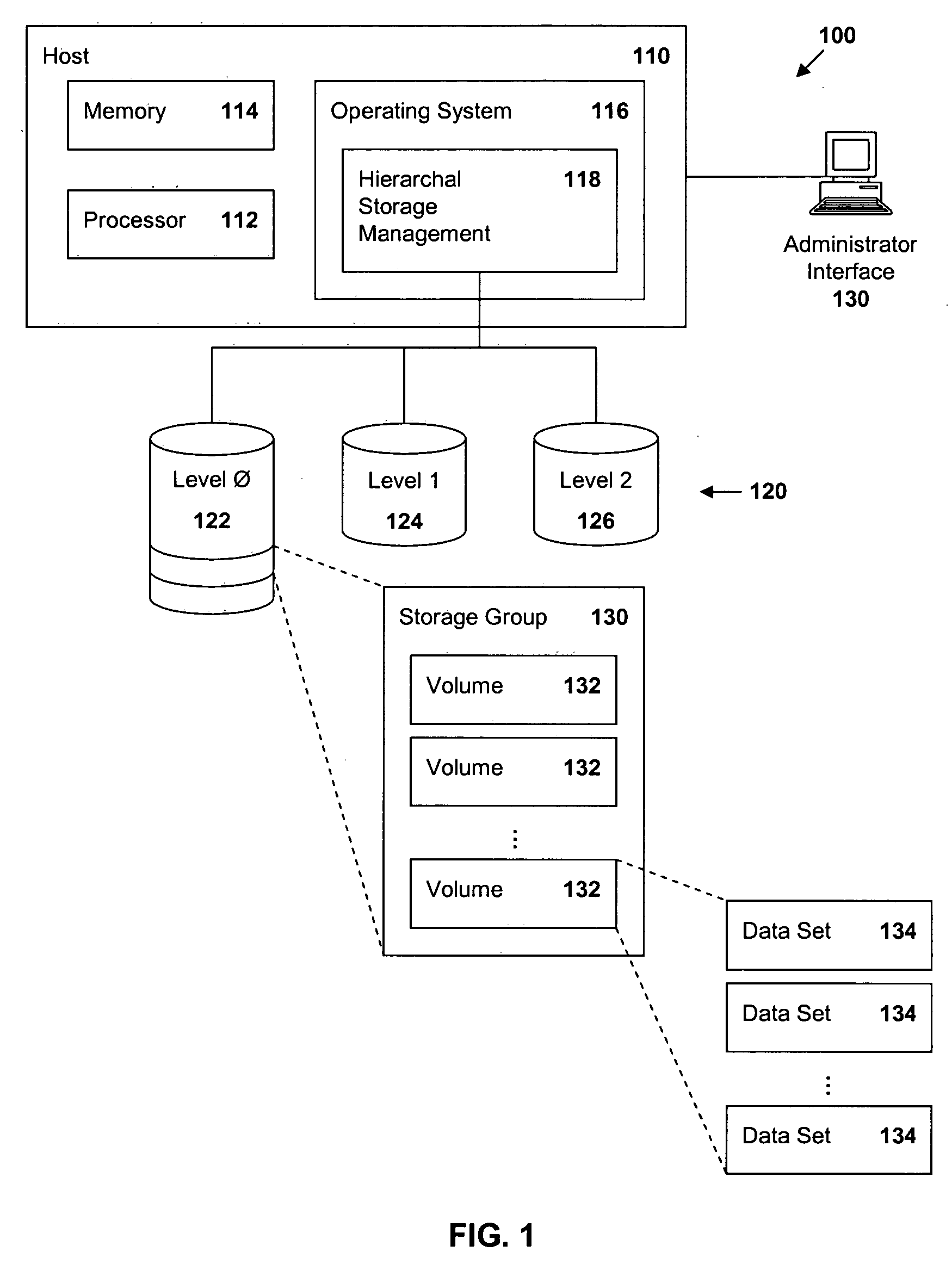

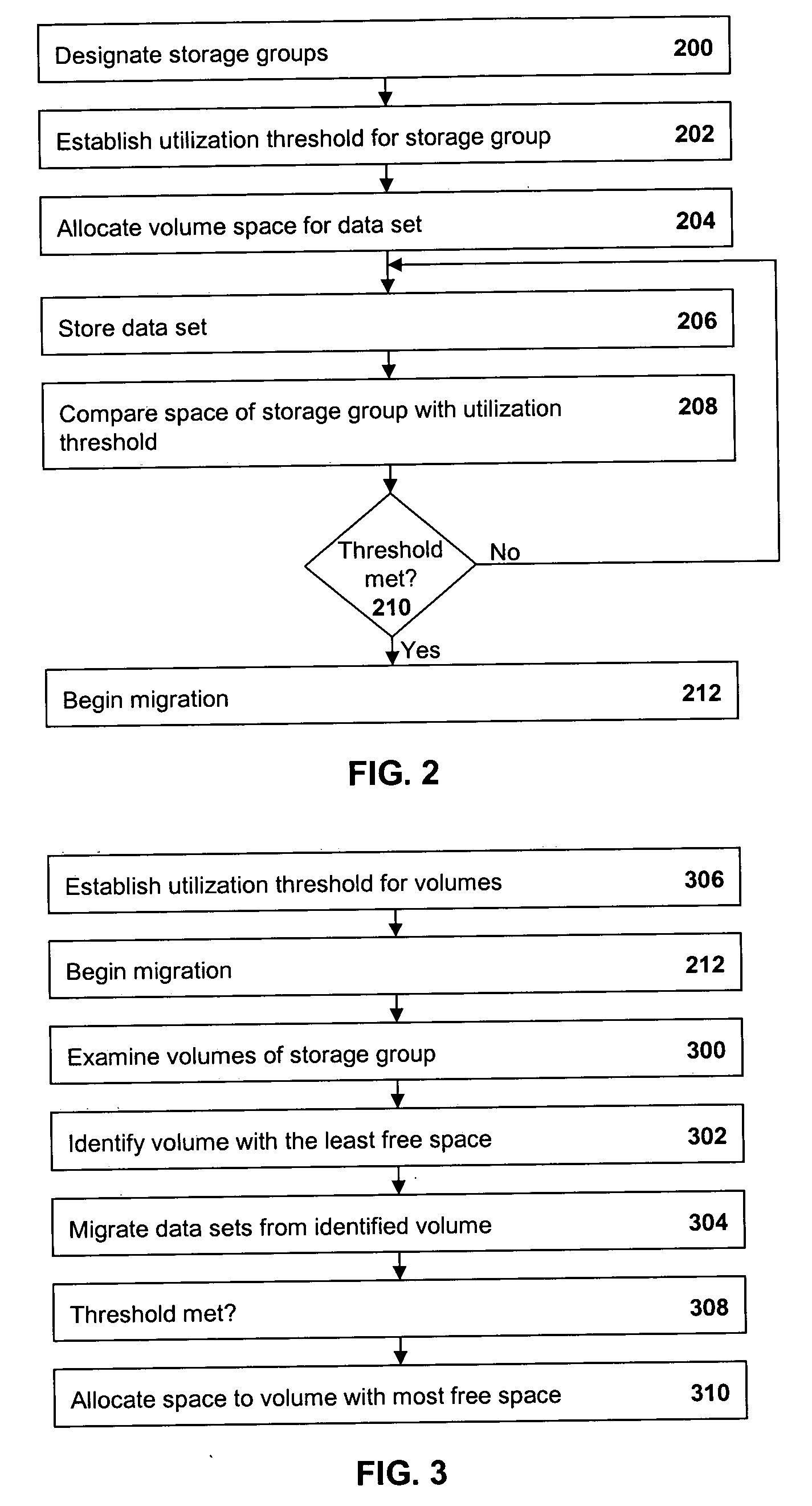

Data migration with reduced contention and increased speed

Inactive · US20060155950A1 · Tags: Contention; Reduce contention; Memory systems; Input/output processes for data processing; Data set; Space allocation

Methods and apparatus are provided for managing data in a hierarchical storage subsystem. A plurality of volumes is designated as a storage group for Level 0 storage; a high threshold is established for the storage group; space is allocated for a data set on a volume of the storage group, and the data set is stored to the volume; the high threshold is compared with the total amount of space consumed by all data sets stored on volumes in the storage group; and data sets are migrated from the storage group to Level 1 storage if the high threshold is less than or equal to that total. Optionally, high thresholds are assigned to each storage group, and when the space used in a storage group reaches or exceeds its high threshold, migration of data begins from volumes in the storage group, starting with the volume having the least free space. Thus, contention between migration and space allocation is reduced. Also optionally, when a volume is selected for migration, a flag is set that prevents space on the volume from being allocated to new data sets. Upon completion of the migration, the flag is cleared and allocation is allowed again. Thus, contention between migration and space allocation is avoided.

Owner:IBM CORP
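
A hypothetical Python sketch of threshold-driven migration: when usage in the storage group is at or above the high threshold, data sets are moved to a Level 1 dict, starting with the volume that has the least free space, and the volume is flagged while it is being drained. Stopping once usage drops below the high threshold is an assumption; the abstract does not state a stop condition.

```python
def free_space(vol):
    return vol["capacity"] - sum(vol["data"].values())


def migrate_if_needed(group, level1):
    """Migrate data sets to level1 while usage is at or above group["high"].

    group: {"high": int, "volumes": [{"capacity": int, "data": {name: size},
    "migrating": bool}, ...]}. Returns the names of migrated data sets.
    """
    used = sum(sum(v["data"].values()) for v in group["volumes"])
    moved = []
    # Start with the volume that has the least free space.
    for vol in sorted(group["volumes"], key=free_space):
        if used < group["high"]:
            break
        vol["migrating"] = True  # flag: no new allocations on this volume
        for name in list(vol["data"]):
            if used < group["high"]:
                break
            size = vol["data"].pop(name)
            level1[name] = size
            used -= size
            moved.append(name)
        vol["migrating"] = False  # migration done: allocation allowed again
    return moved
```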

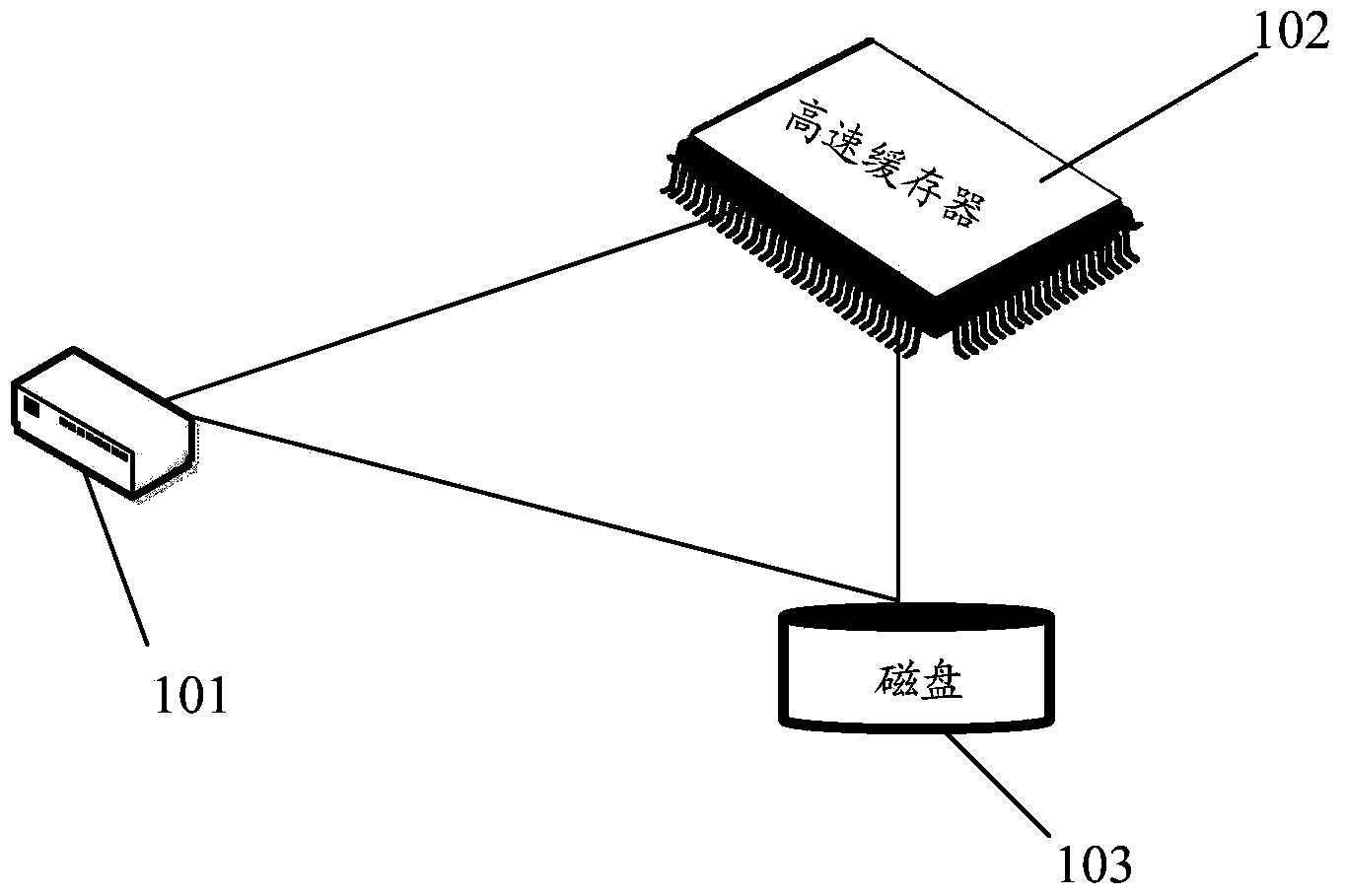

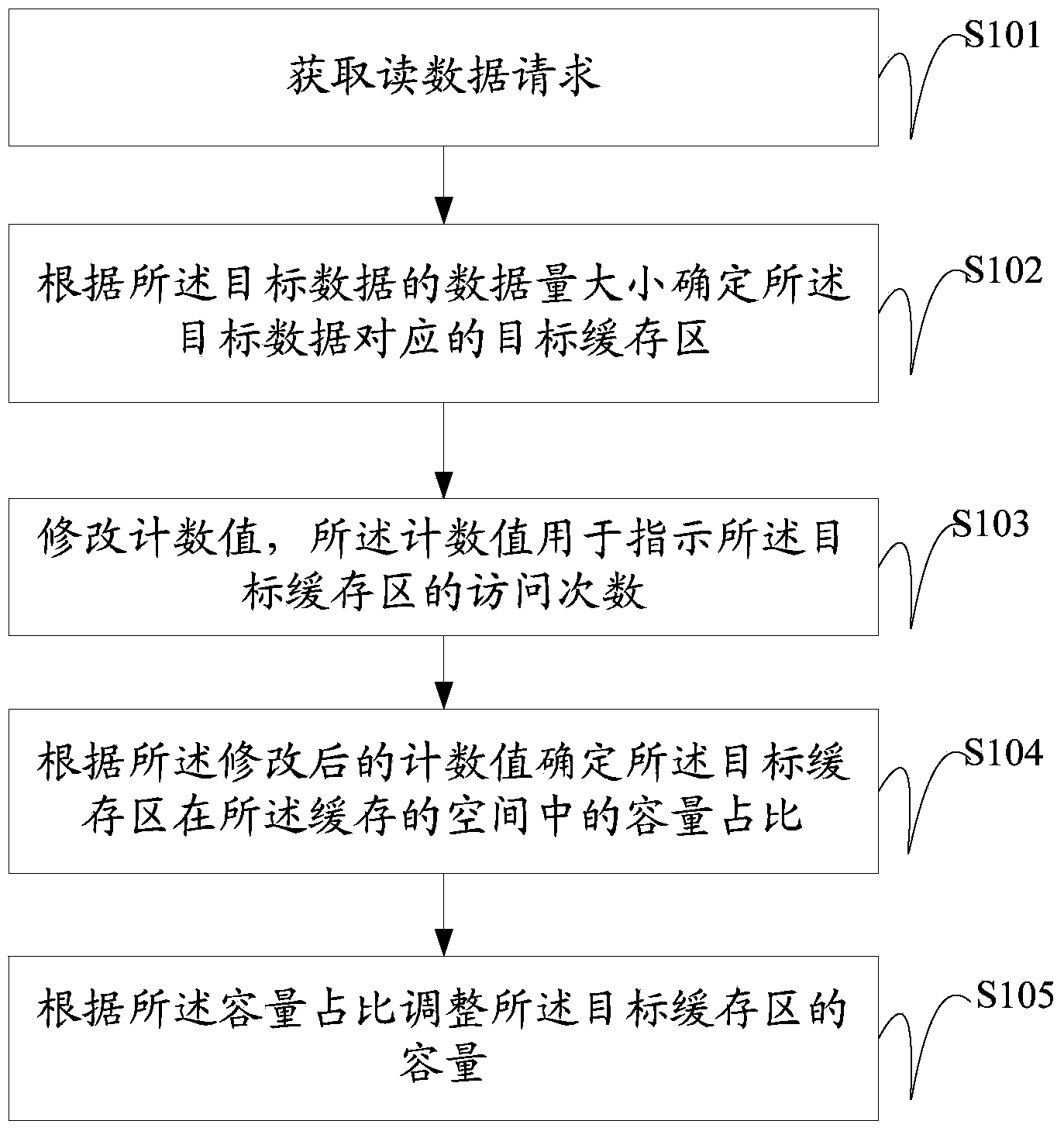

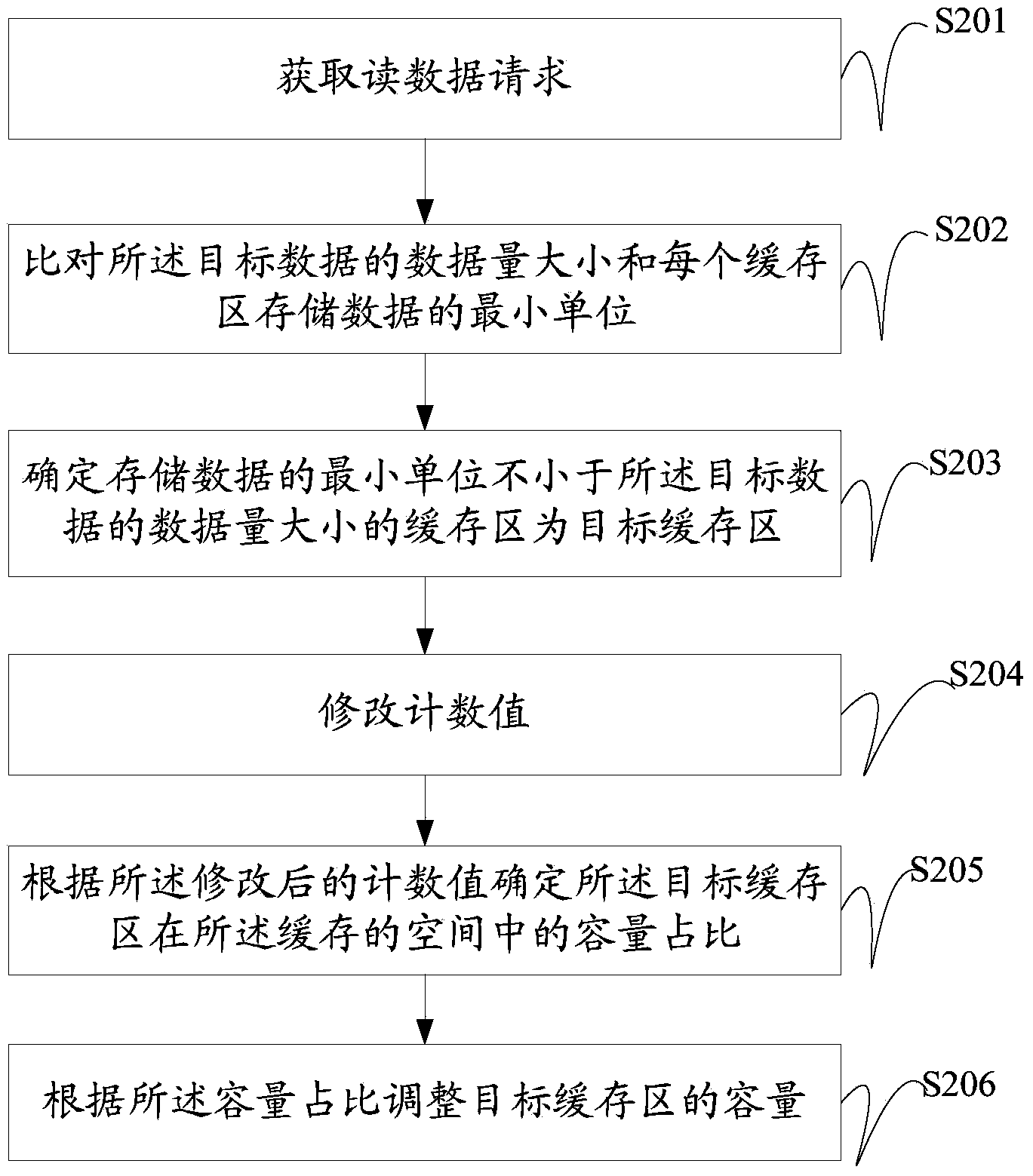

Cache space distribution method and device

Inactive · CN103778071A · Tags: Improve overall utilization; Solve the problem of low space utilization; Memory addressing/allocation/relocation; Access time; Distribution method

The embodiments of the invention provide a cache space distribution method and device. The method is applied to storage equipment that includes a cache whose space is divided into multiple cache areas, each with a different minimum unit of stored data. The method comprises: acquiring a data read request that carries the data size of the target data; determining, according to that data size, the target cache area for the target data, where the data size of the target data is smaller than or equal to the minimum storage unit of the target cache area; updating a counter value that indicates the number of accesses to the target cache area; determining, according to the updated counter value, the capacity ratio of the target cache area within the cache space; and adjusting the capacity of the target cache area according to that ratio. The method addresses the low cache space utilization of existing cache space distribution methods, which cannot precisely match service demands.

Owner:HUAWEI TECH CO LTD
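
The area selection and capacity adjustment steps can be sketched as below. `TieredCache` is a hypothetical name, and the even initial split, the integer proportional rebalance, and calling `rebalance` separately rather than on every read are assumptions.

```python
class TieredCache:
    """Cache divided into areas that differ in minimum storage unit."""

    def __init__(self, total_capacity, unit_sizes):
        self.total = total_capacity
        self.units = sorted(unit_sizes)
        self.counters = {u: 0 for u in self.units}
        # Assumed starting point: an even split across the areas.
        self.capacity = {u: total_capacity // len(self.units) for u in self.units}

    def target_area(self, data_size):
        # Smallest area whose minimum unit is >= the requested data size.
        for unit in self.units:
            if data_size <= unit:
                return unit
        raise ValueError("request larger than the largest unit")

    def on_read(self, data_size):
        area = self.target_area(data_size)
        self.counters[area] += 1  # access counter for the target area
        return area

    def rebalance(self):
        # Each area's capacity ratio follows its share of recorded accesses.
        hits = sum(self.counters.values())
        if hits == 0:
            return
        for unit in self.units:
            self.capacity[unit] = self.total * self.counters[unit] // hits
```

For example, with areas of 4, 16, and 64 units and reads of sizes 3, 10, 12, and 16, the counters end at 1, 3, and 0, so a 1024-unit cache is rebalanced to 256, 768, and 0.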

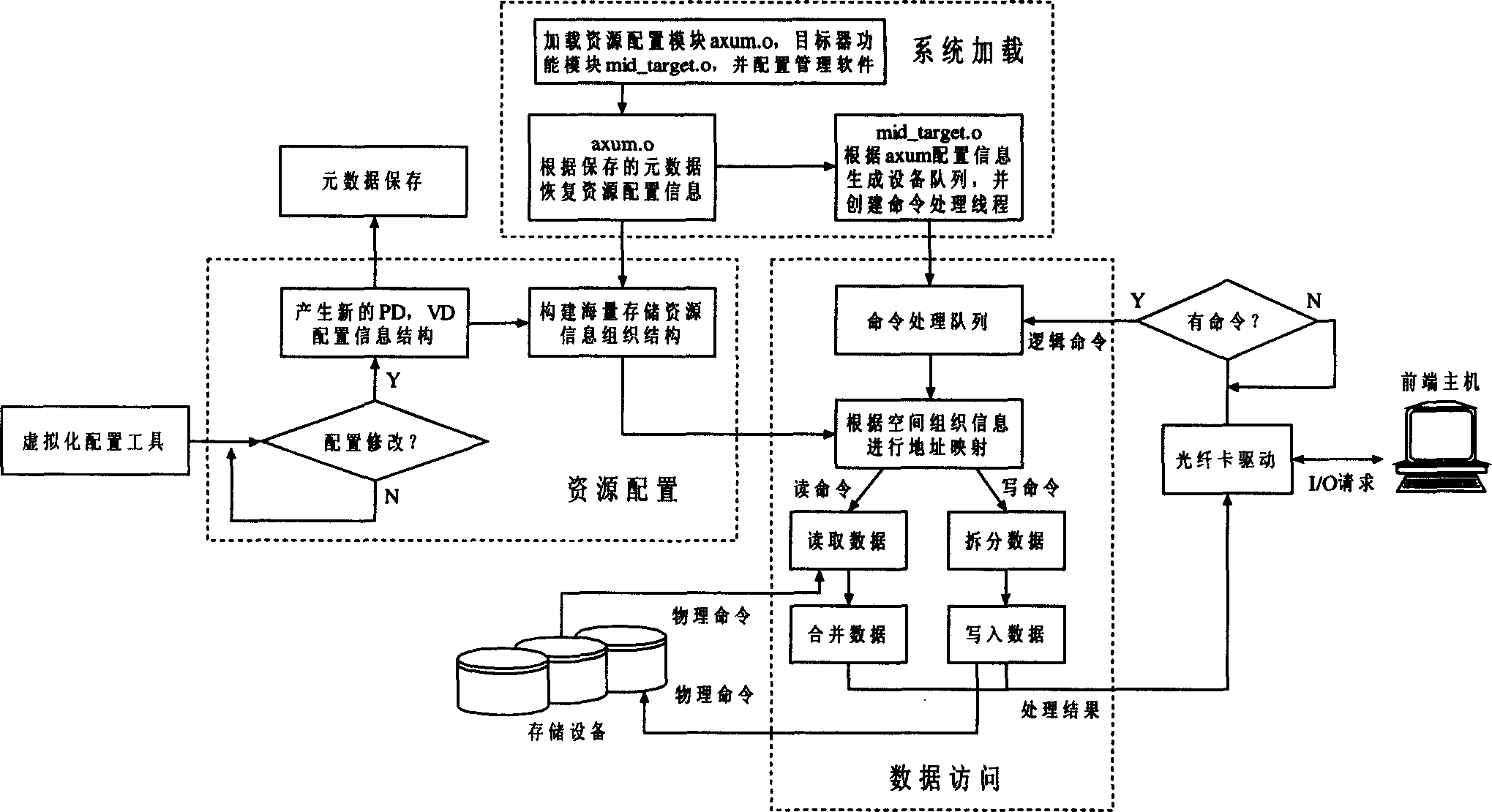

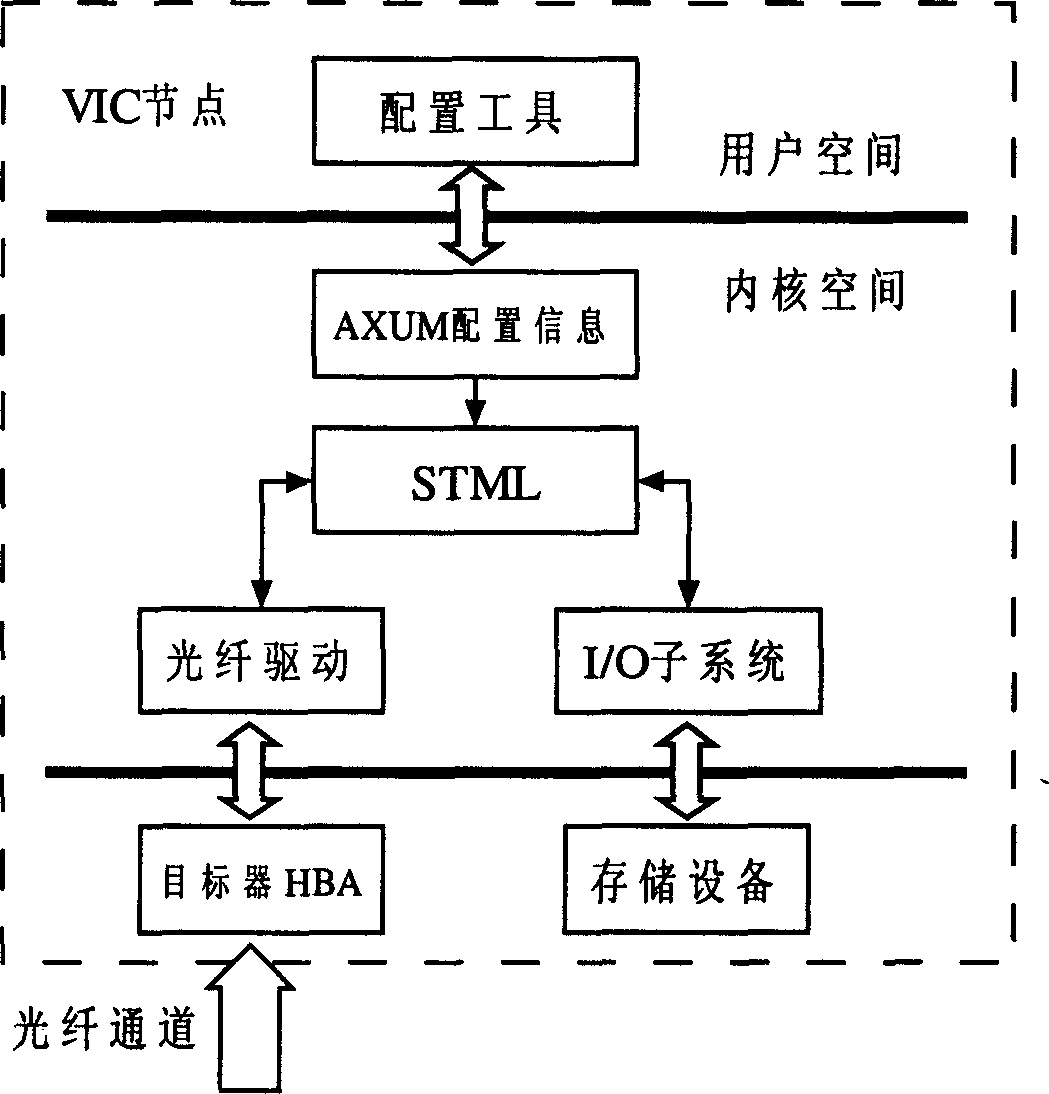

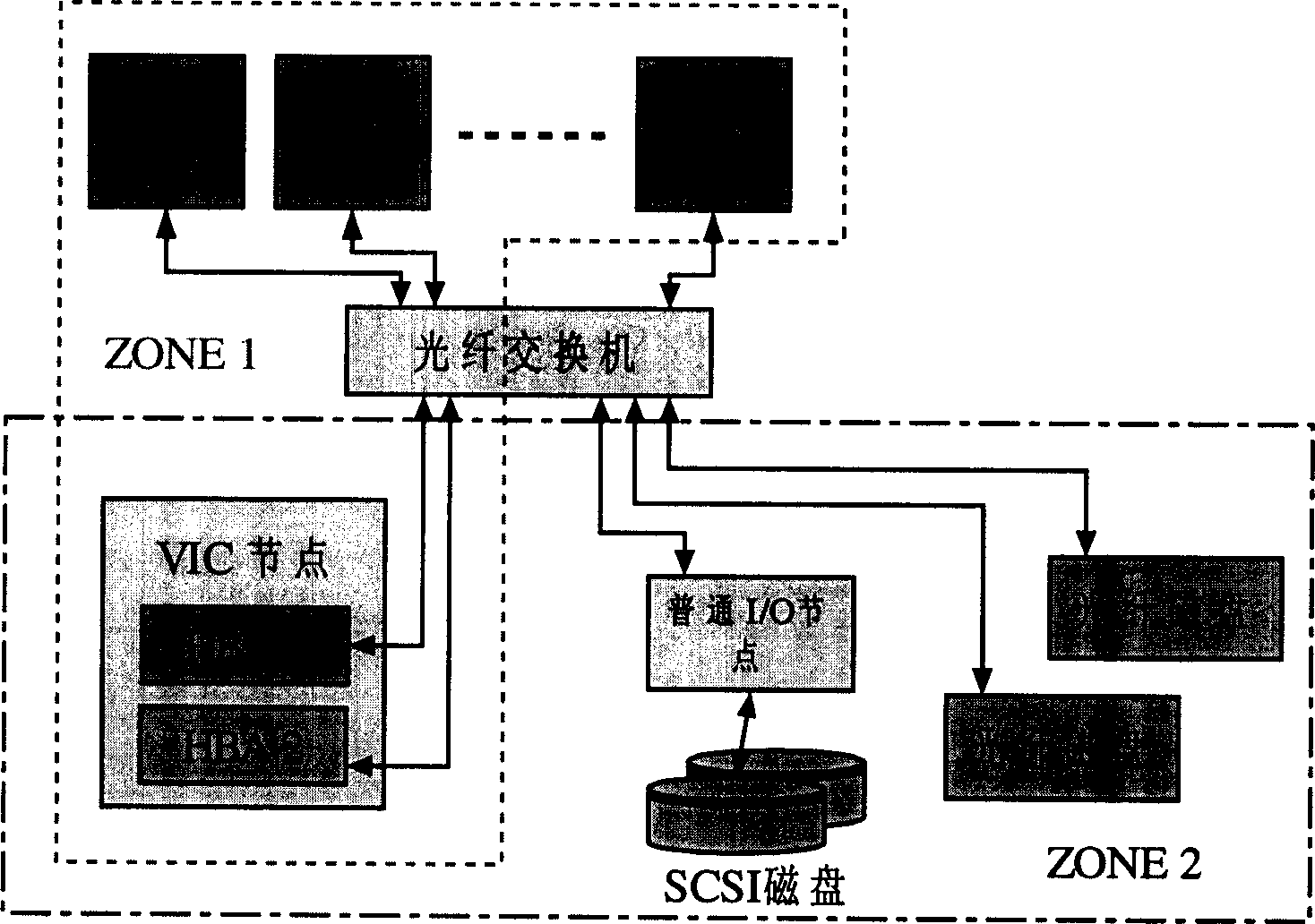

Large scale resource memory managing method based on network under SAN environment

Inactive · CN1645342A · Tags: Easy to manage; Small space requirement; Metering/charging/billing arrangements; Memory addressing/allocation/relocation; Virtualization; SCSI

A method for managing large-scale storage resources in which a node machine with a dedicated processor maintains a set of storage resource configuration information to manage the resources of the different storage devices in a storage network, and provides virtual storage services to front-end hosts by performing command analysis in an interface middle layer of the SCSI (Small Computer System Interface) stack. Using its information table and a software target, the node machine converts virtual accesses into accesses to physical devices.

Owner:TSINGHUA UNIV

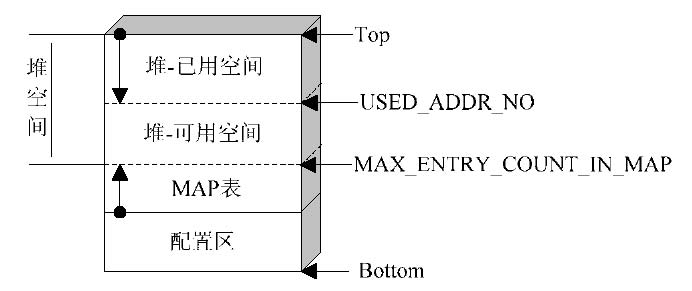

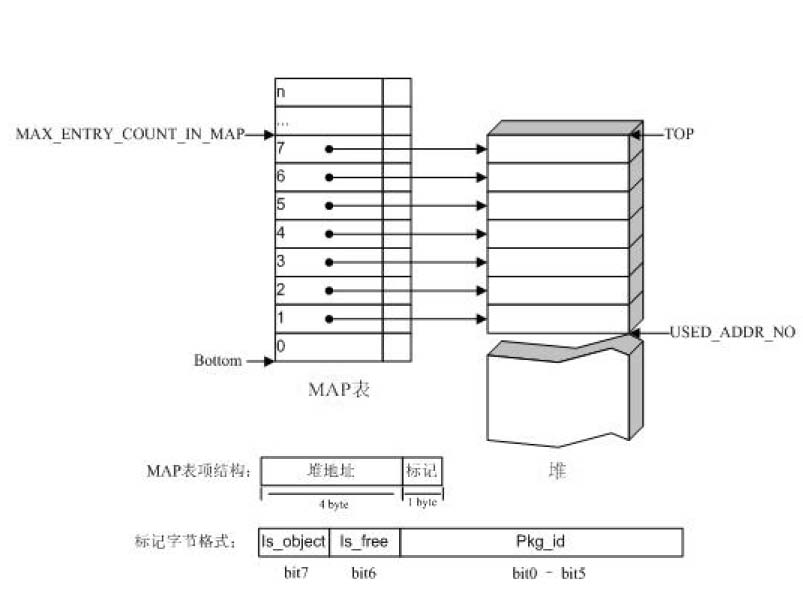

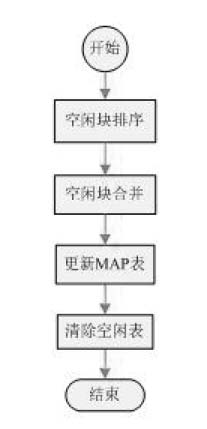

Java card system and space distribution processing method thereof

Active · CN102521145A · Tags: Improve access speed; Realize distribution; Memory addressing/allocation/relocation; Fragment processing; Electricity

The invention provides a Java card system that includes an electrically erasable programmable read-only memory (EEPROM) space. The invention further provides a memory space distribution processing method for the Java card system, which includes a space distribution method, a garbage collection method, and a heap fragment processing method. The Java card system and its space distribution processing method allocate system space efficiently and place the application programs supplied by providers in contiguous EEPROM, increasing the access speed of application entities. The garbage collection method efficiently reclaims the space occupied by garbage in the Java card system, so that the card's limited memory is used reasonably and sufficient space is available for downloading application programs.

Owner:EASTCOMPEACE TECH

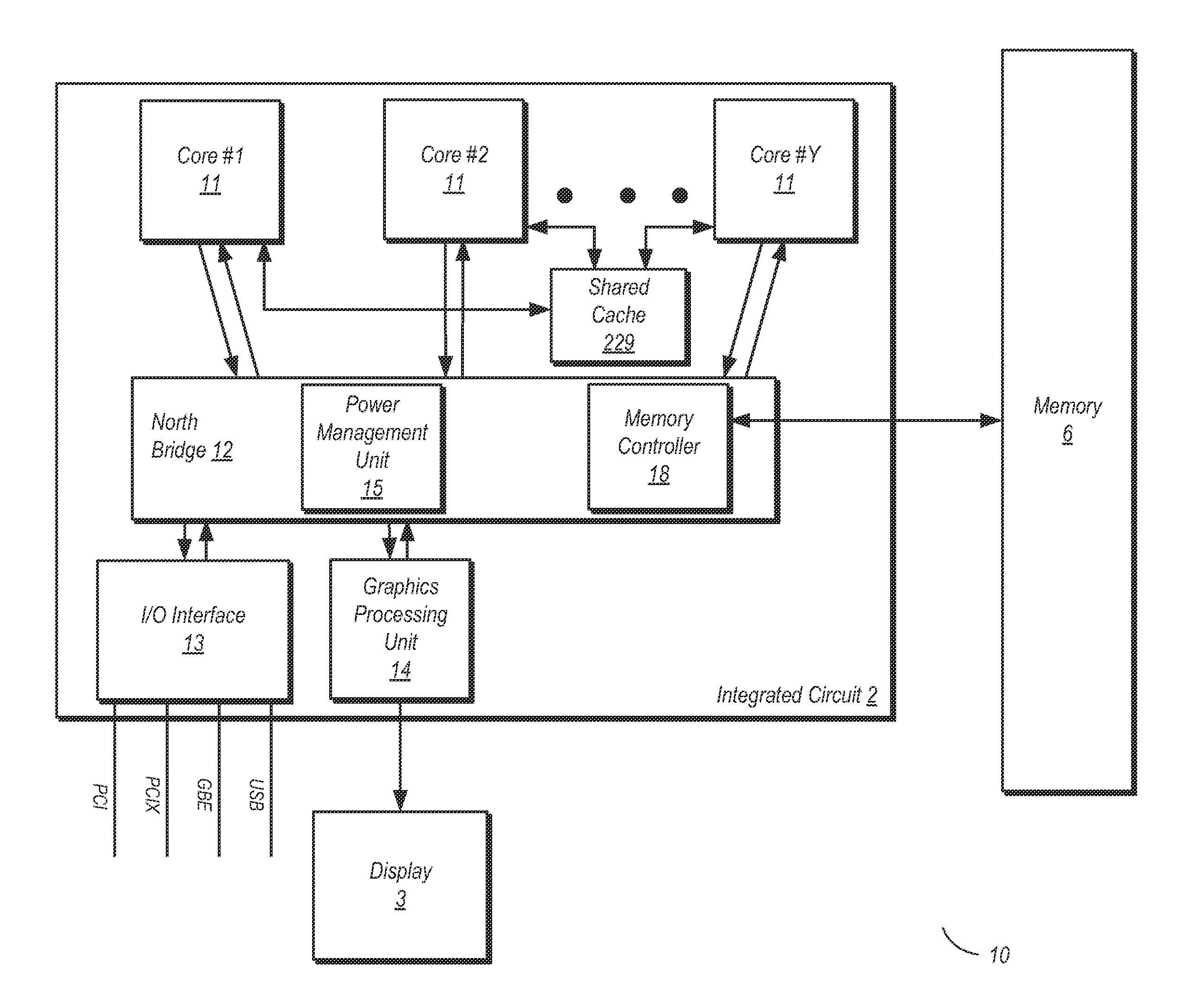

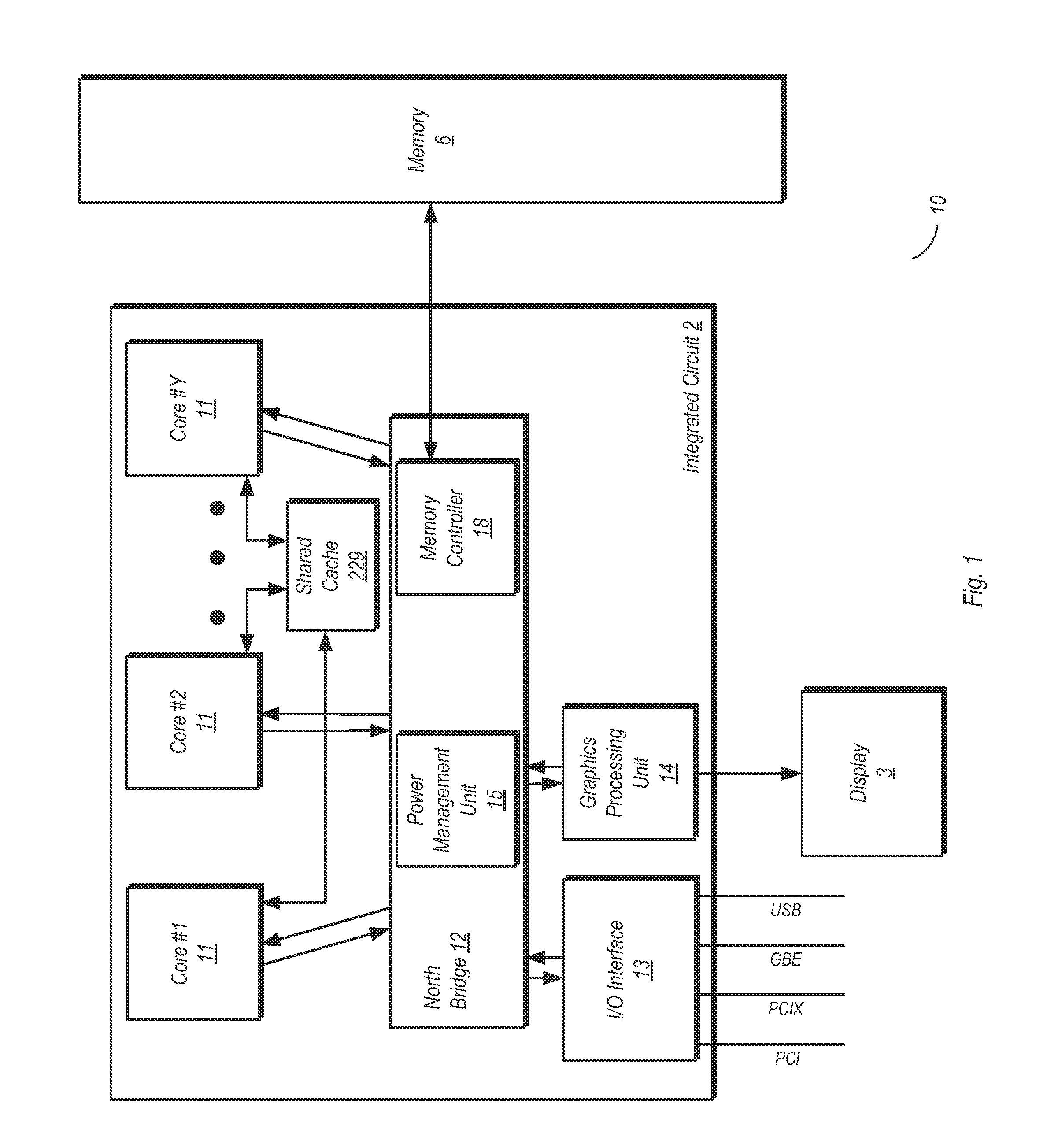

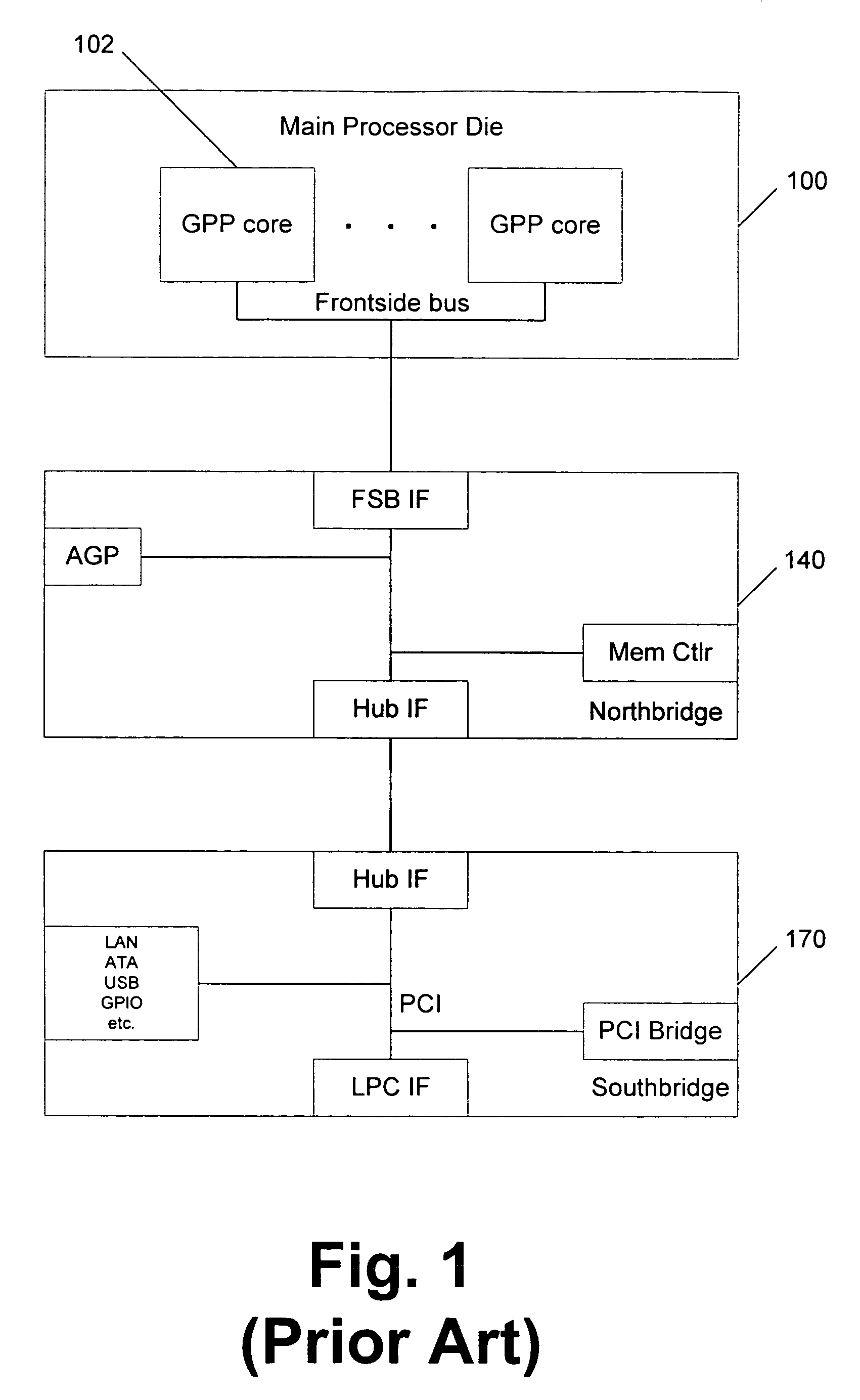

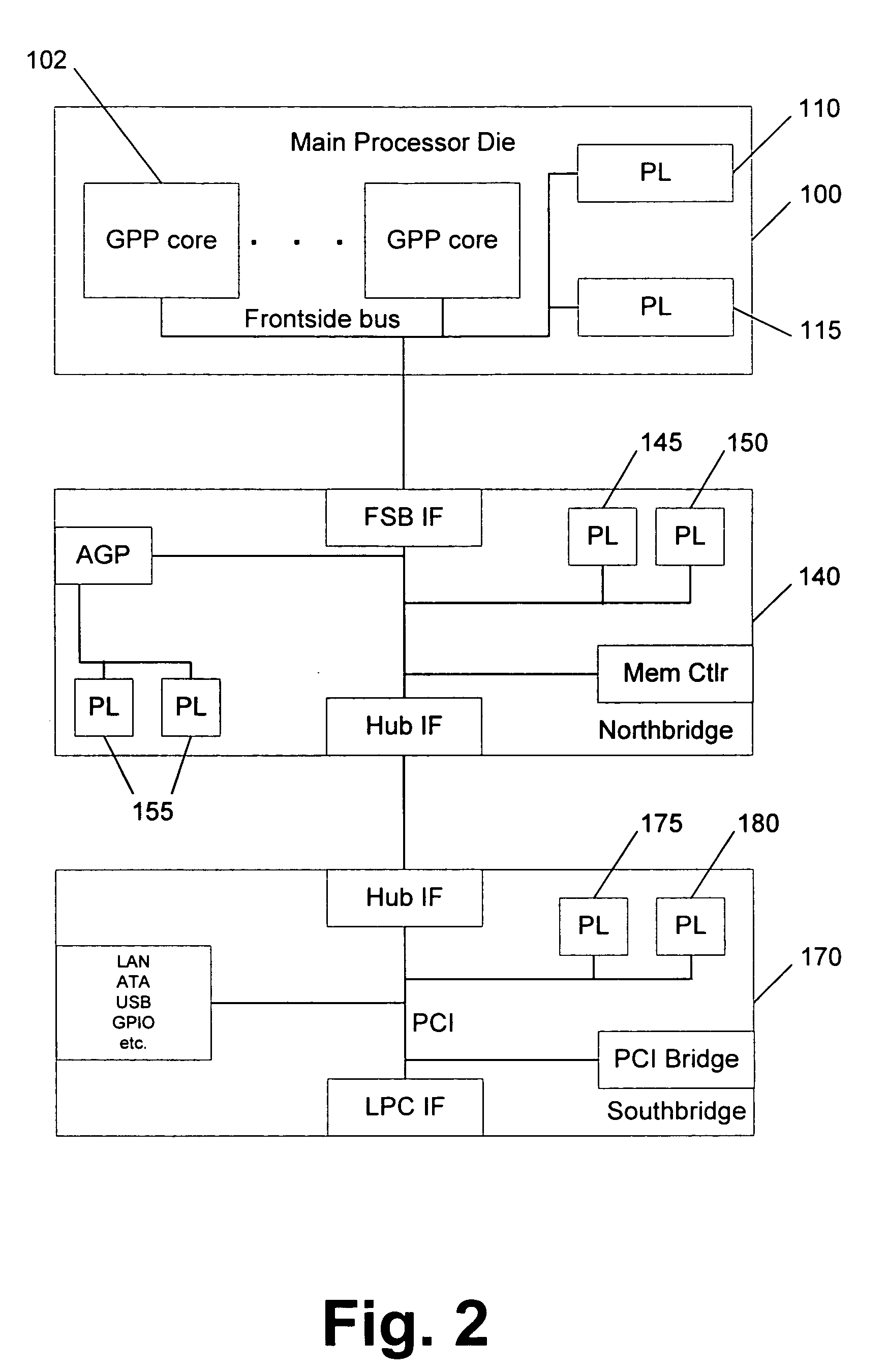

Integrating programmable logic into personal computer (PC) architecture

Inactive · US20060242611A1 · Tags: Improve abilities; Extends the capability of the processor core; Energy efficient ICT; Digital computer details; General purpose; Coprocessor

A portion of chip die real estate is allocated to blocks of programmable logic (PL) fabric. These blocks can be used to load special purpose processors which operate in concert with the general purpose processors (GPPs). These processors, implemented in PL, may integrate with a PC system architecture. Blocks of PL are integrated with fixed blocks of logic interfaces connecting, for example, to a system's front side bus. This facilitates configuration of the PL as coprocessors or other devices that may operate as peers to GPPs in the system. Moreover, blocks of PL may be integrated with fixed logic interfaces to existing IO buses within a system architecture. This facilitates configuration of the PL as soft devices, which may appear to the system as physical devices connected to the system. These soft devices can be handled like physical devices connected to the same or similar IO buses.

Owner:MICROSOFT TECH LICENSING LLC

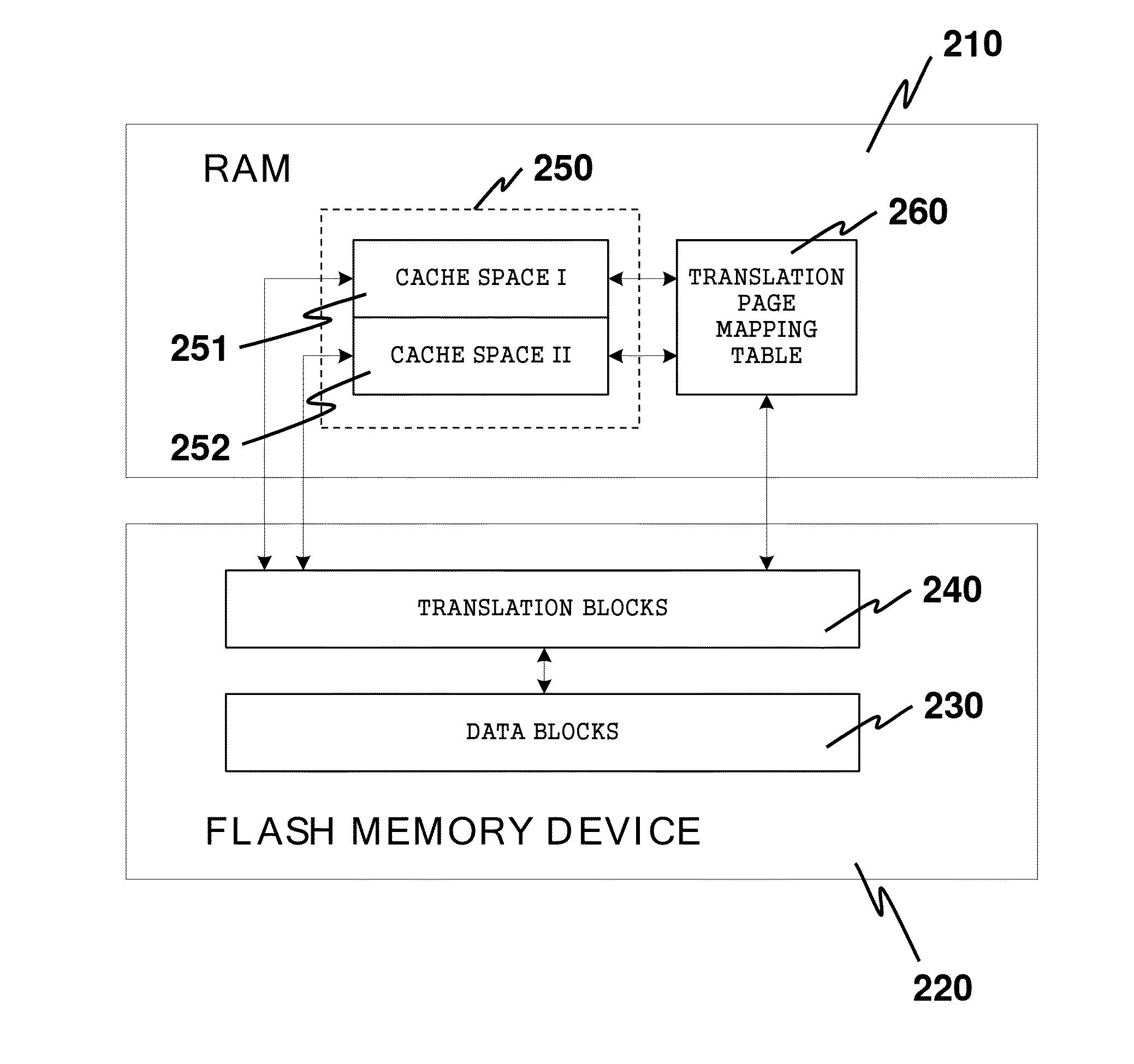

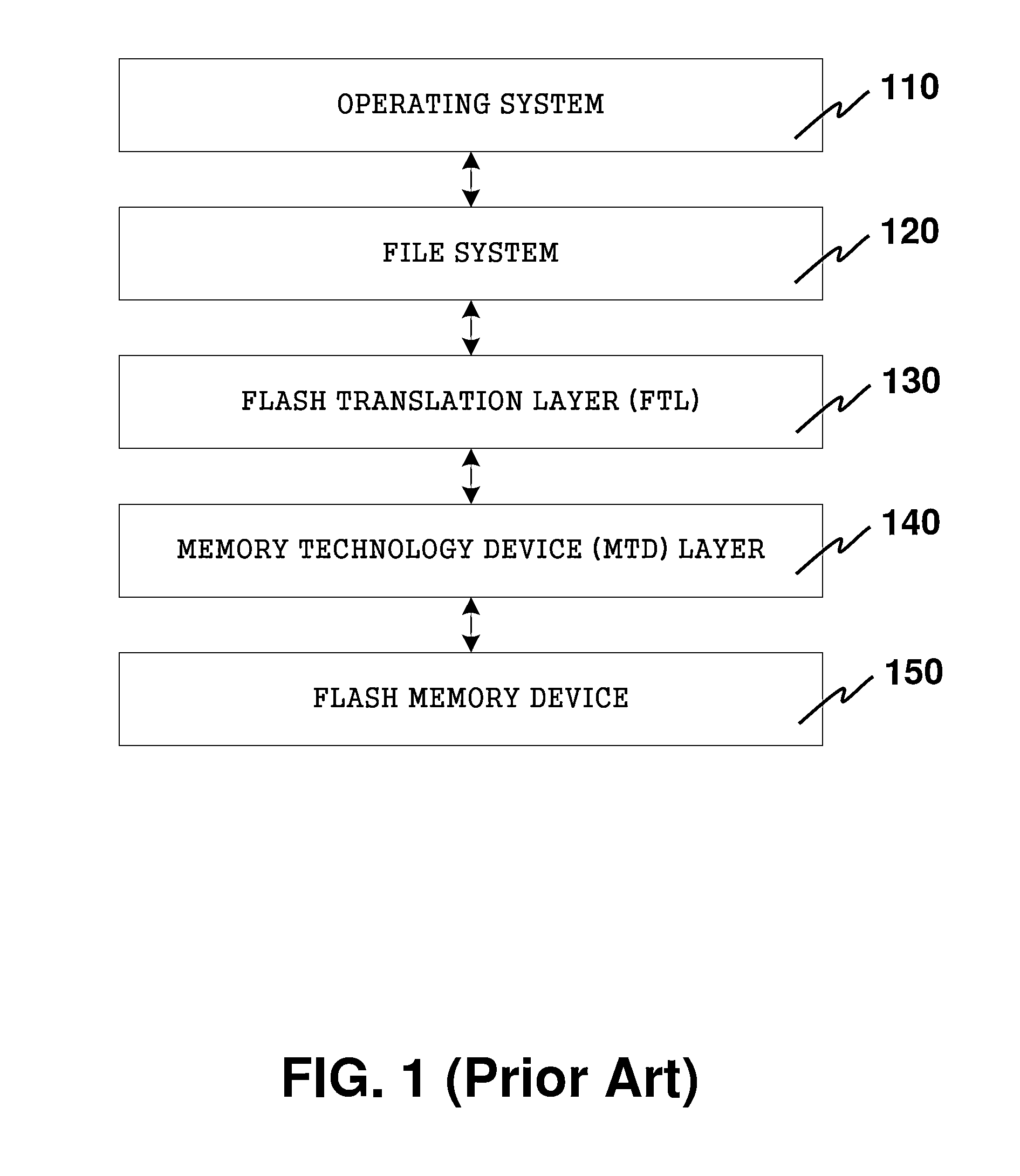

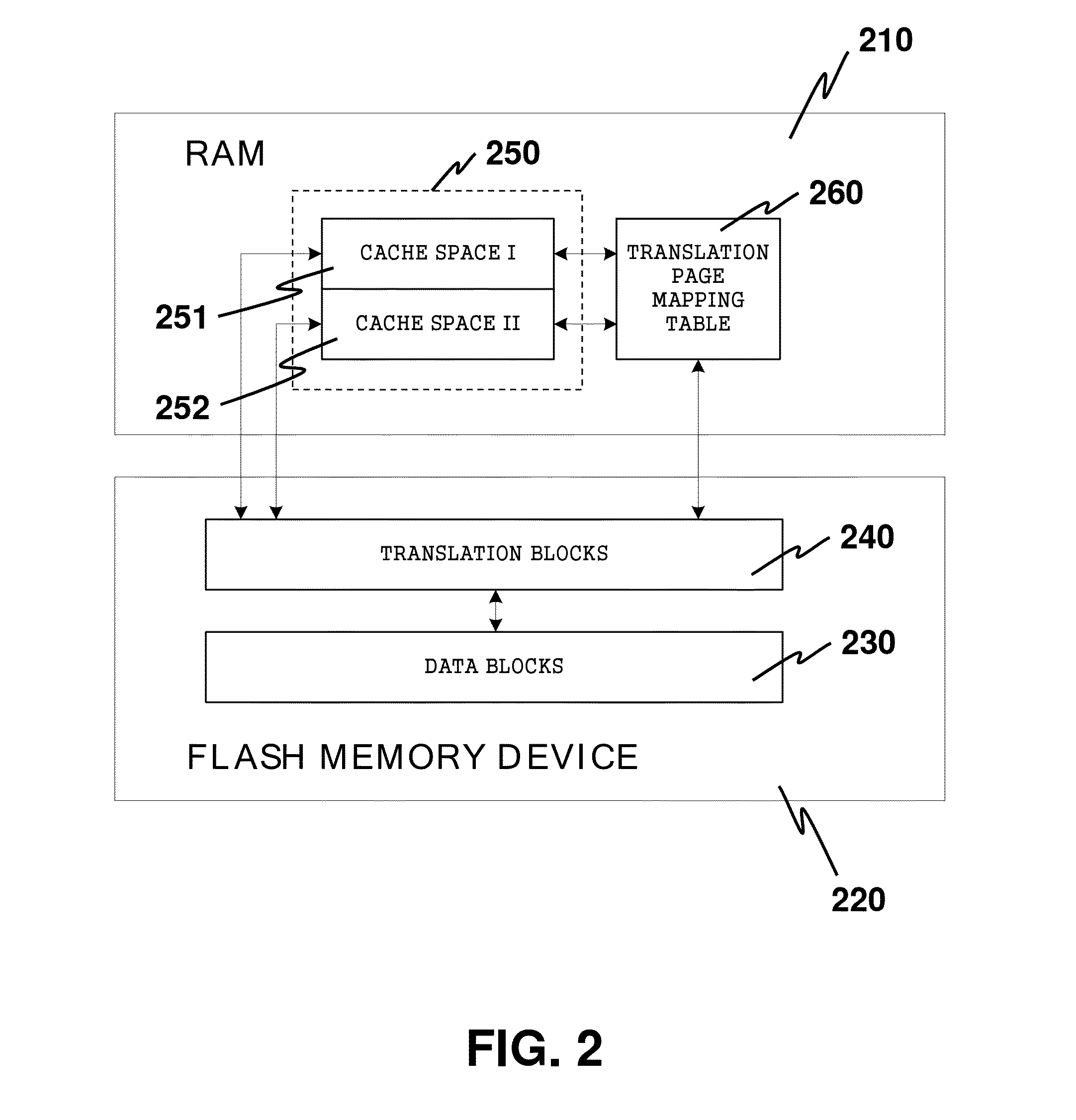

Effective Caching for Demand-based Flash Translation Layers in Large-Scale Flash Memory Storage Systems

Inactive · US20140304453A1 · Tags: Memory architecture accessing/allocation; Memory addressing/allocation/relocation; Random access memory; Address mapping

Owner:THE HONG KONG POLYTECHNIC UNIV

© 2025 PatSnap. All rights reserved.