Convolutional neural network parallel processing method based on a large-scale high-performance cluster

A convolutional neural network parallel processing technology, applied in the field of convolutional neural network parallel processing. It addresses problems such as limits on network model size and dependence on the configuration of a single server, and it improves computing efficiency and widens the scope of application.

Detailed Description of the Embodiments

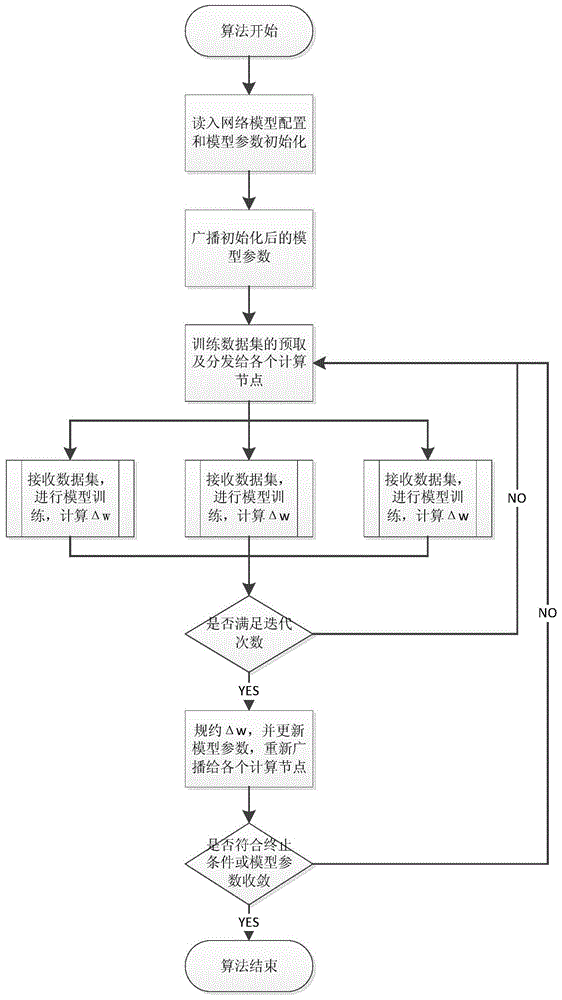

[0033] The present invention will be further described in detail below in conjunction with the accompanying drawings and specific embodiments.

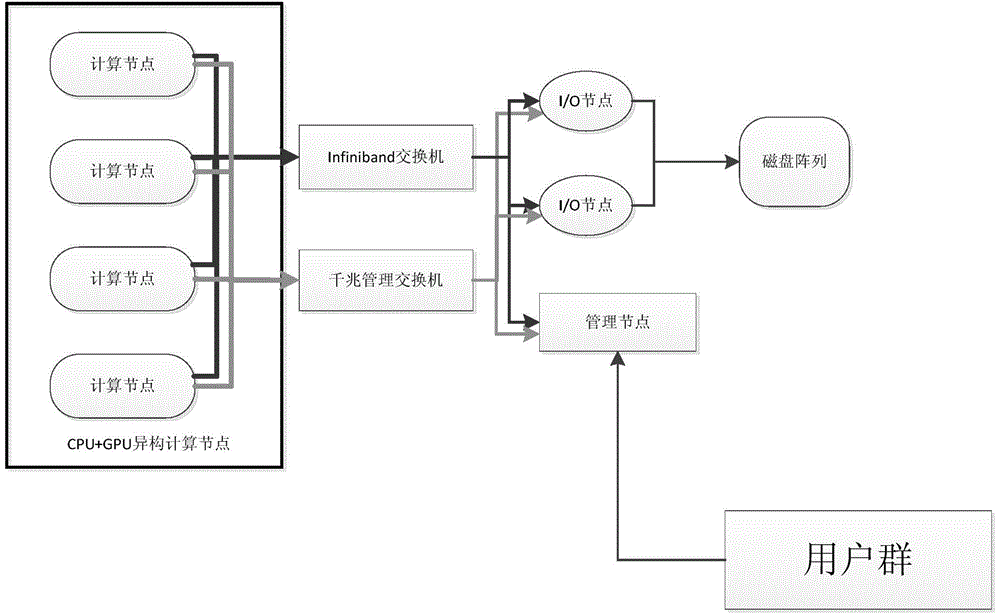

[0034] When the present invention is applied in practice, a large-scale high-performance cluster environment must first be constructed. This environment consists of a software environment and a hardware environment. The hardware is a group of 1 to N independent computers (nodes) with identical configurations. The nodes are connected through a high-performance interconnection network. Each node can be used on its own as a single computing resource by interactive users, and the nodes can also work together and appear as a single, centralized computing resource for parallel computing tasks.
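The dual role of the nodes described above (each usable on its own, or all pooled as one resource for parallel tasks) can be illustrated with a minimal sketch. It assumes a homogeneous cluster and uses hypothetical names (`NodeSpec`, `Cluster`, `aggregate`, `partition`) and hypothetical hardware fields that do not come from the patent. It enforces the "same configuration" requirement, reports the pooled capacity, and splits a list of work items into contiguous blocks, one per node.

```python
from dataclasses import dataclass
from typing import List, Sequence, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class NodeSpec:
    """Hardware configuration of one compute node (hypothetical fields)."""
    cpu_cores: int
    gpus: int
    memory_gb: int


@dataclass
class Cluster:
    """A homogeneous cluster of 1..N nodes that share one configuration."""
    nodes: List[NodeSpec]

    def __post_init__(self) -> None:
        if not self.nodes:
            raise ValueError("a cluster needs at least one node")
        # All nodes must have the same configuration.
        if any(node != self.nodes[0] for node in self.nodes):
            raise ValueError("all nodes must have the same configuration")

    def aggregate(self) -> NodeSpec:
        """View the whole cluster as one centralized resource by summing capacity."""
        return NodeSpec(
            cpu_cores=sum(n.cpu_cores for n in self.nodes),
            gpus=sum(n.gpus for n in self.nodes),
            memory_gb=sum(n.memory_gb for n in self.nodes),
        )

    def partition(self, items: Sequence[T]) -> List[List[T]]:
        """Split work items into contiguous blocks, one per node.

        Block sizes differ by at most one item; lower-ranked nodes
        receive the leftover items.
        """
        n = len(self.nodes)
        base, extra = divmod(len(items), n)
        blocks: List[List[T]] = []
        start = 0
        for rank in range(n):
            size = base + (1 if rank < extra else 0)
            blocks.append(list(items[start:start + size]))
            start += size
        return blocks


if __name__ == "__main__":
    spec = NodeSpec(cpu_cores=32, gpus=4, memory_gb=256)  # hypothetical node
    cluster = Cluster([spec] * 4)
    print(cluster.aggregate())  # NodeSpec(cpu_cores=128, gpus=16, memory_gb=1024)
    print([len(b) for b in cluster.partition(range(10))])  # [3, 3, 2, 2]
```

Summing capacities is a simplification: a real cluster only behaves like one resource when the scheduler and interconnect let a parallel task use all nodes at once. The block split is one common choice; a production system might instead weight blocks by measured node throughput.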

[0035] High-performance cluster tasks are mainly scientific computing workloads, so the demands on hardware computing capability are relatively high. In addition to choosing a CPU with a higher clock frequency and more cores, the GP...
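Why clock frequency and core count both matter can be seen from the standard estimate of theoretical peak throughput, which multiplies node count, cores per node, clock frequency, and floating-point operations per core per cycle. The sketch below assumes a homogeneous CPU-only cluster; the function name `peak_gflops` and all example figures are hypothetical, and the FLOPs-per-cycle value depends on the processor's vector and FMA units.

```python
def peak_gflops(nodes: int, cores_per_node: int, clock_ghz: float,
                flops_per_cycle: int) -> float:
    """Theoretical peak CPU throughput of a homogeneous cluster, in GFLOP/s.

    R_peak = nodes * cores_per_node * clock_ghz * flops_per_cycle
    This is an upper bound; sustained throughput is lower in practice.
    """
    if min(nodes, cores_per_node, flops_per_cycle) <= 0 or clock_ghz <= 0:
        raise ValueError("all arguments must be positive")
    return nodes * cores_per_node * clock_ghz * flops_per_cycle


if __name__ == "__main__":
    # Hypothetical: 4 nodes, 32 cores each, 2.5 GHz, 32 double-precision
    # FLOPs per core per cycle (e.g. two 512-bit FMA units).
    print(peak_gflops(4, 32, 2.5, 32))  # 10240.0
```

Because every factor enters the product linearly, doubling either the core count or the clock frequency doubles the theoretical peak, which is the reasoning behind choosing CPUs with higher frequency and more cores.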
