Neural network processor based on data compression, design method and chip

A data compression and neural network technology, applied in the field of biological neural network models and their physical realization. It addresses problems such as the need to accelerate calculation, and improves both calculation speed and operating energy efficiency.



Embodiment Construction

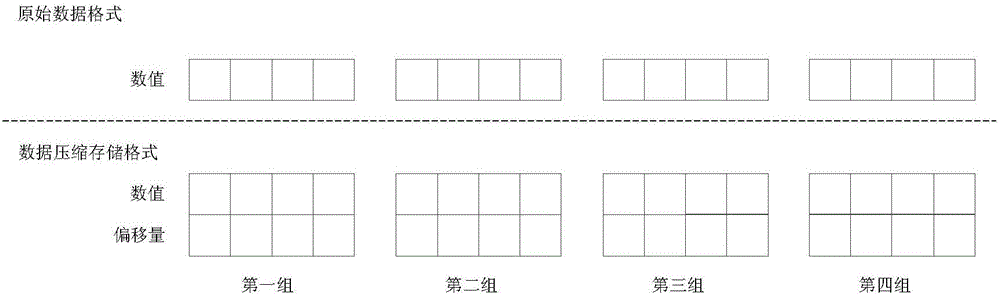

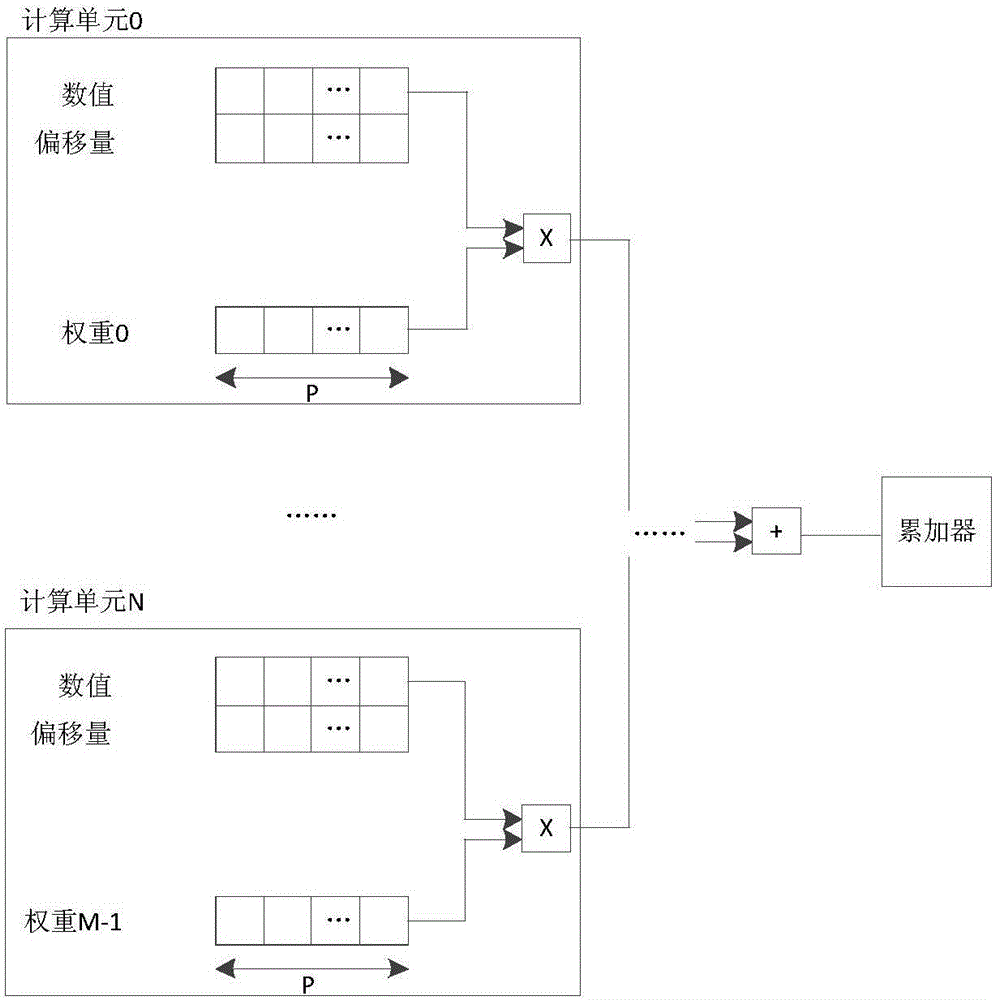

[0036] In researching neural network processors, the inventor found that neural network calculations involve a large number of data elements with a value of 0. In operations such as multiplication and addition, these elements have no numerical effect on the result. Yet when the neural network processor handles them, they occupy a large amount of on-chip storage space, consume redundant transmission resources, and increase running time, which makes it difficult to meet the processor's performance requirements.
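The observation above can be illustrated with a minimal sketch, assuming a plain software dot product rather than any circuit described in the patent: skipping zero-valued operands leaves the multiply-accumulate result unchanged while reducing the number of operations performed. The function names `dense_dot` and `zero_skipping_dot` are hypothetical.

```python
from typing import Sequence, Tuple


def dense_dot(weights: Sequence[float], activations: Sequence[float]) -> Tuple[float, int]:
    """Multiply-accumulate over every element pair; returns (result, MAC count)."""
    acc = 0.0
    macs = 0
    for w, a in zip(weights, activations):
        acc += w * a
        macs += 1
    return acc, macs


def zero_skipping_dot(weights: Sequence[float], activations: Sequence[float]) -> Tuple[float, int]:
    """Skip any pair where either operand is 0, since w * a == 0 adds nothing to acc."""
    acc = 0.0
    macs = 0
    for w, a in zip(weights, activations):
        if w == 0 or a == 0:
            continue  # this product would be 0, so it cannot change the sum
        acc += w * a
        macs += 1
    return acc, macs


# Illustrative data only (not from the patent).
weights = [0.5, 0.0, -1.0, 0.0, 2.0, 0.0]
activations = [1.0, 3.0, 0.0, 4.0, 0.5, 0.0]

print(dense_dot(weights, activations))          # (1.5, 6)
print(zero_skipping_dot(weights, activations))  # (1.5, 2)
```

Both calls return the same sum, but the zero-skipping version performs 2 multiply-accumulates instead of 6.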

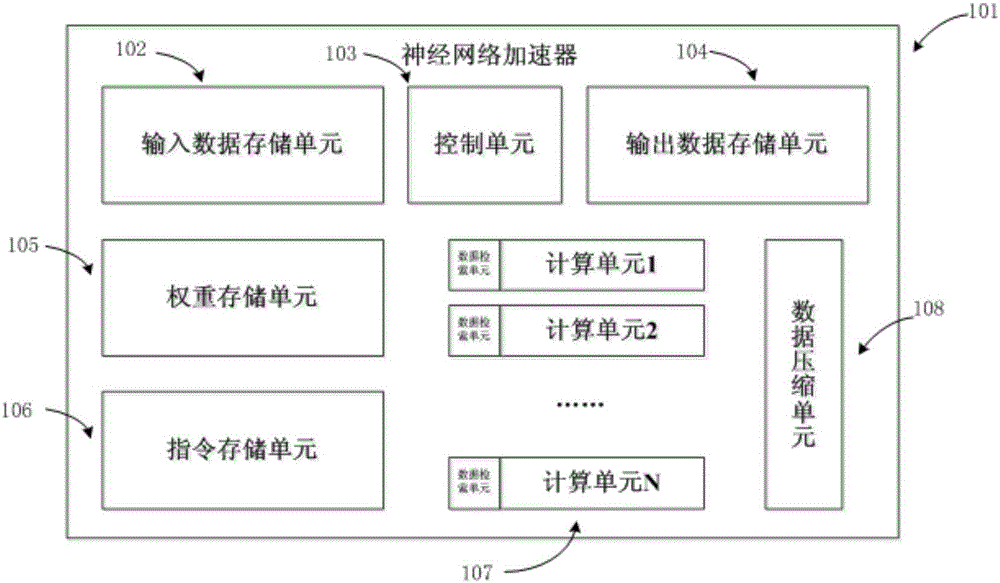

[0037] After analyzing the computational structure of existing neural network processors, the inventor found that compressing the data elements of a neural network can accelerate computation and reduce energy consumption. The prior art provides the basic framework of a neural network accelerator. The present invention proposes a data comp...
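Because the description of the invention's compression scheme is truncated here, the following is only a sketch of one common approach, assumed rather than taken from the patent: each vector is stored as (index, value) pairs for its non-zero elements, and the dot product is computed directly on the compressed form by matching indices. The format and the names `compress`, `decompress`, and `compressed_dot` are hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical compressed format (an assumption, not the patent's format):
# (original length, sorted list of (index, value) pairs for non-zero elements).
Compressed = Tuple[int, List[Tuple[int, float]]]


def compress(data: Sequence[float]) -> Compressed:
    """Keep only non-zero elements, remembering their positions."""
    pairs = [(i, v) for i, v in enumerate(data) if v != 0]
    return len(data), pairs


def decompress(c: Compressed) -> List[float]:
    """Rebuild the dense vector by filling non-stored positions with 0."""
    length, pairs = c
    out = [0.0] * length
    for i, v in pairs:
        out[i] = v
    return out


def compressed_dot(a: Compressed, b: Compressed) -> Tuple[float, int]:
    """Dot product on compressed vectors; only indices present in both are multiplied."""
    if a[0] != b[0]:
        raise ValueError("vectors must have the same original length")
    pa, pb = a[1], b[1]
    i = j = 0
    acc = 0.0
    macs = 0
    # Walk both sorted index lists in step, like a merge.
    while i < len(pa) and j < len(pb):
        ia, va = pa[i]
        ib, vb = pb[j]
        if ia == ib:
            acc += va * vb
            macs += 1
            i += 1
            j += 1
        elif ia < ib:
            i += 1
        else:
            j += 1
    return acc, macs


# Illustrative data only (not from the patent).
weights = [0.5, 0.0, -1.0, 0.0, 2.0, 0.0]
activations = [1.0, 3.0, 0.0, 4.0, 0.5, 0.0]

cw = compress(weights)      # (6, [(0, 0.5), (2, -1.0), (4, 2.0)])
ca = compress(activations)  # (6, [(0, 1.0), (1, 3.0), (3, 4.0), (4, 0.5)])
print(compressed_dot(cw, ca))  # (1.5, 2)
```

In this example the two 6-element vectors are stored as 3 and 4 pairs respectively, and only the 2 positions where both operands are non-zero are multiplied. Each stored pair carries an index as well as a value, so a format like this saves storage only when enough elements are zero.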


© 2025 PatSnap. All rights reserved.