Human body posture recognition method based on deep convolutional neural network

A human body posture estimation system based on a deep convolutional neural network, applied in the field of human pose estimation. It addresses two problems of earlier approaches: reliance on hand-designed image features and the insufficient accuracy of pose estimation based on spatial models. The stated effects are reduced space and time overhead, avoidance of those limitations, and high accuracy.

Embodiment Construction

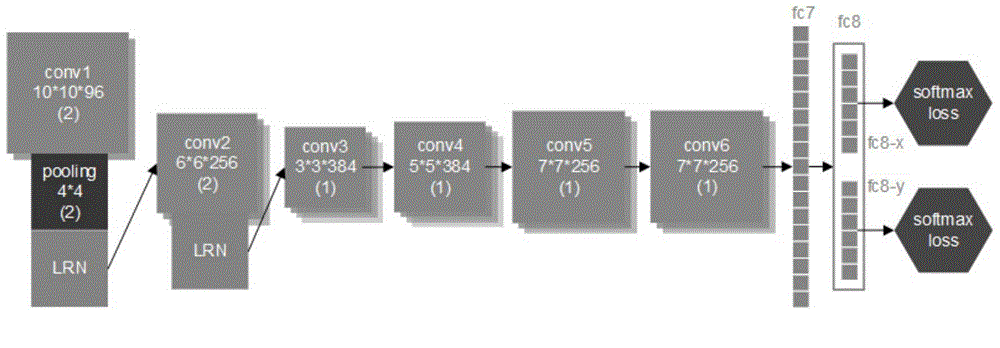

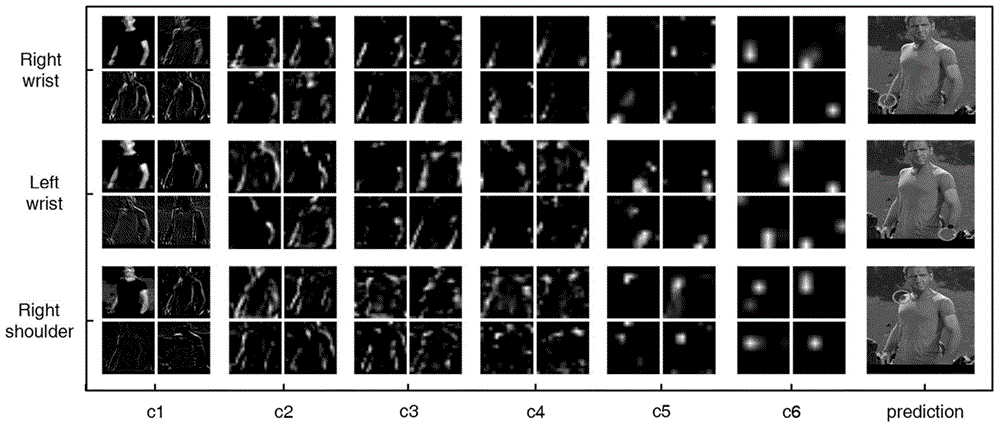

[0033] To solve the above problems, the human body posture estimation system based on a deep convolutional neural network proposed by the present invention is implemented in the following steps:

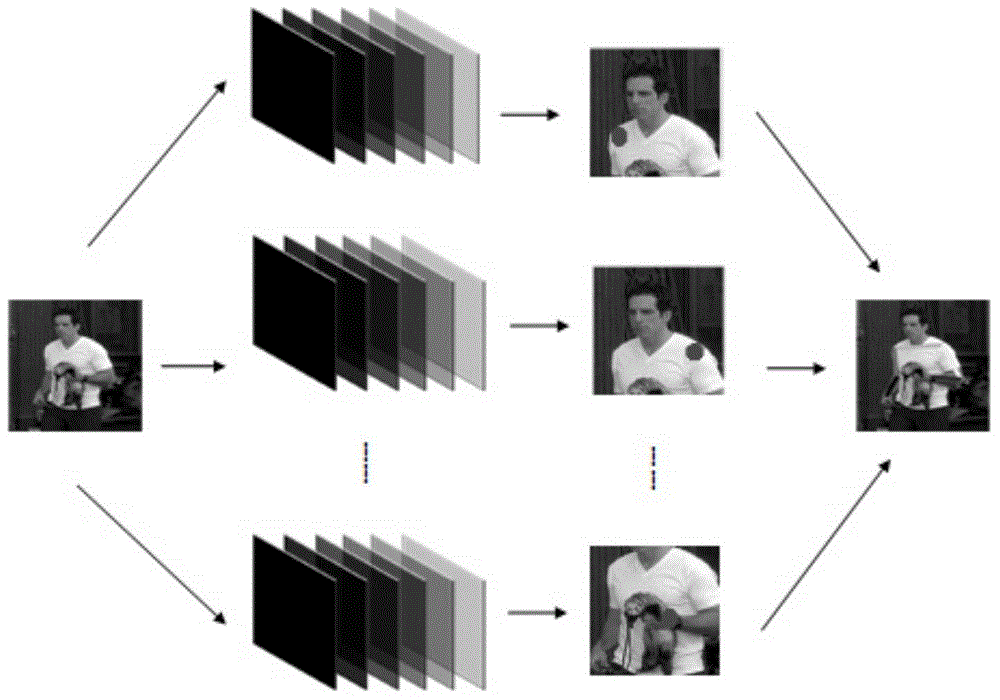

[0034] Step 1: Preprocessing. Data augmentation plays a crucial role in training deep convolutional neural networks. In the model training stage, the present invention, targeting the pose estimation problem, augments the training samples through rotation, translation, scale transformation, etc., forcing the model to learn features that are robust to rotation, translation, and scale changes. At the same time, these operations provide a large number of synthetic samples for model training. In the model running stage, it is only necessary to scale the input image to fit the input layer size of the deep convolutional neural network, and record the corresponding relationship between the pix...
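The preprocessing in Step 1 can be illustrated with a minimal NumPy sketch. It assumes the augmentation is a single random affine transform (rotation and scaling about the image center, followed by a translation) applied jointly to the image and its joint keypoints, so that the labels stay aligned with the pixels. The parameter ranges, the nearest-neighbour resampling, and all function names are hypothetical choices for illustration, not taken from the patent. The last two helpers sketch the running stage, assuming the recorded pixel correspondence is simply a per-axis scale factor used to map predicted joints back to the original image.

```python
import numpy as np

def make_affine(angle_deg, scale, tx, ty, center):
    """2x3 matrix: rotate by angle_deg and scale about `center`, then shift by (tx, ty)."""
    a = np.deg2rad(angle_deg)
    c, s = np.cos(a) * scale, np.sin(a) * scale
    cx, cy = center
    # x' = c*(x-cx) - s*(y-cy) + cx + tx ;  y' = s*(x-cx) + c*(y-cy) + cy + ty
    return np.array([[c, -s, cx - c * cx + s * cy + tx],
                     [s,  c, cy - s * cx - c * cy + ty]])

def transform_keypoints(kps, M):
    """Apply the same 2x3 affine matrix to an (N, 2) array of (x, y) joints."""
    kps = np.asarray(kps, dtype=float)
    return kps @ M[:, :2].T + M[:, 2]

def warp_image(img, M, out_hw):
    """Nearest-neighbour affine warp: each output pixel samples the source via M^-1."""
    h_out, w_out = out_hw
    inv = np.linalg.inv(np.vstack([M, [0.0, 0.0, 1.0]]))
    ys, xs = np.mgrid[0:h_out, 0:w_out]
    src = inv @ np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    sx, sy = np.rint(src[0]).astype(int), np.rint(src[1]).astype(int)
    valid = (sx >= 0) & (sx < img.shape[1]) & (sy >= 0) & (sy < img.shape[0])
    # Pixels that fall outside the source image are left as zero (black border).
    flat = np.zeros((h_out * w_out,) + img.shape[2:], dtype=img.dtype)
    flat[valid] = img[sy[valid], sx[valid]]
    return flat.reshape((h_out, w_out) + img.shape[2:])

def random_augment(img, kps, rng, max_angle=30.0, scale_range=(0.75, 1.25), max_shift=0.1):
    """Training stage: one random rotation/scale/translation applied to image and joints."""
    h, w = img.shape[:2]
    angle = rng.uniform(-max_angle, max_angle)
    scale = rng.uniform(*scale_range)
    tx = rng.uniform(-max_shift, max_shift) * w
    ty = rng.uniform(-max_shift, max_shift) * h
    M = make_affine(angle, scale, tx, ty, center=((w - 1) / 2, (h - 1) / 2))
    return warp_image(img, M, (h, w)), transform_keypoints(kps, M)

def resize_for_network(img, net_hw):
    """Running stage: scale the input to the network input size, keeping the scale factors."""
    h, w = img.shape[:2]
    net_h, net_w = net_hw
    sx, sy = net_w / w, net_h / h
    M = np.array([[sx, 0.0, 0.0], [0.0, sy, 0.0]])
    return warp_image(img, M, net_hw), (sx, sy)

def map_back(kps_net, scale):
    """Map joints predicted in network-input coordinates back to the original image."""
    sx, sy = scale
    return np.asarray(kps_net, dtype=float) / np.array([sx, sy])
```

For example, `random_augment(img, kps, np.random.default_rng(0))` returns a perturbed copy of the image together with correspondingly moved joints. Using one matrix for both image and keypoints is the design point: the warp resamples pixels through the inverse matrix, while the joints are moved through the forward matrix, so the two stay consistent.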
