Hardware acceleration method for convolutional neural networks and AXI bus IP core thereof

A convolutional neural network hardware acceleration technology, applied in the field of hardware acceleration of convolutional neural networks. It addresses problems such as the inability to modify the design and the large amounts of funding and human resources required.


Embodiment Construction

[0069] As shown in Figure 1, the steps of the convolutional neural network hardware acceleration method of this embodiment include:

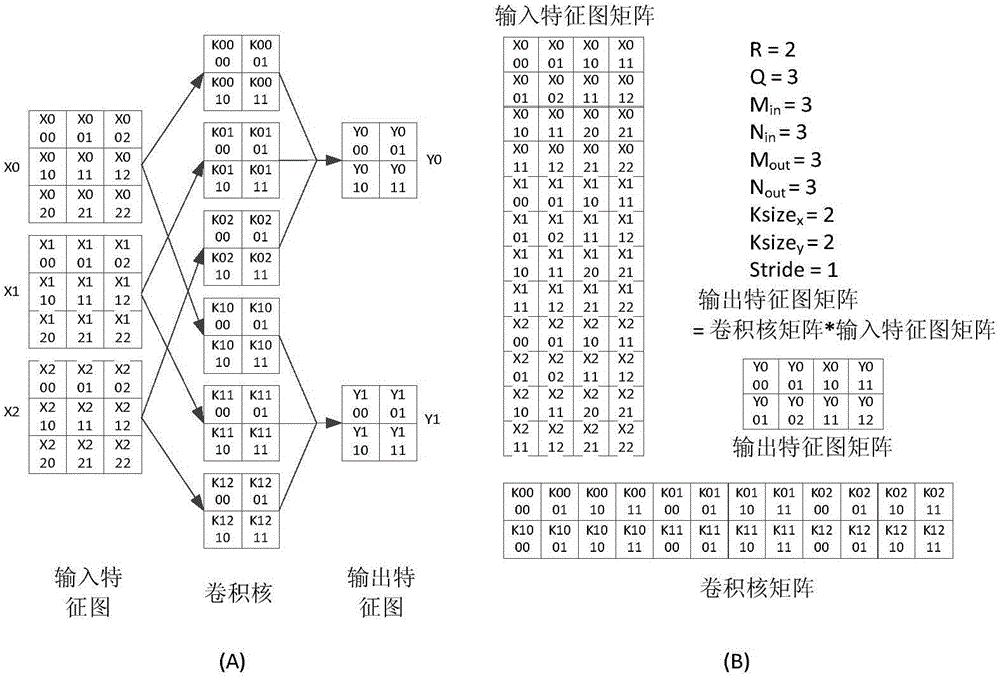

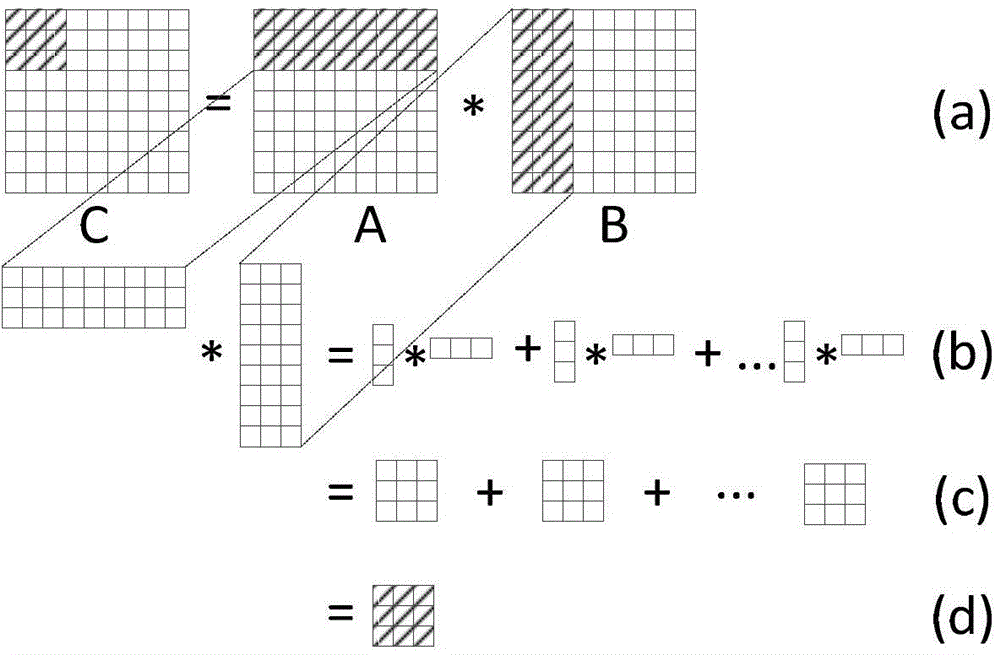

[0070] 1) Arrange the input feature map of the convolution operation in advance to form a matrix A, and arrange the convolution kernels corresponding to the output feature maps of the convolution operation to form a matrix B. This converts the convolution operation of a convolutional layer of the convolutional neural network into the multiplication of matrix A (m rows, K columns) by matrix B (K rows, n columns);

[0071] 2) Divide the result matrix C of the matrix multiplication into m rows and n columns of matrix sub-blocks;

[0072] 3) Start the matrix multiplier connected to the main processor to compute all matrix sub-blocks. When computing a matrix sub-block, the matrix multiplier generates data requests in the form of matrix coordinates (Bx, By) in a data-driven manner, and the matrix coordinates (Bx, By) ar...
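Step 1) above resembles the well-known "im2col" lowering of convolution to matrix multiplication. The following is a minimal pure-Python sketch of that idea. It relies on assumptions the text does not state:

- stride 1 and no padding;
- input feature maps indexed `x[c][i][j]` and kernels indexed `w[o][c][u][v]`;
- a `(c, u, v)` ordering for the K dimension.

The helper names `im2col`, `kernels_to_matrix` and `direct_conv` are hypothetical. In this sketch, each row of A is the receptive field of one output pixel (so `m = Ho * Wo`). Each column of B is one flattened kernel (so `n` is the number of output feature maps), and `K = C * kh * kw`.

```python
from typing import List

Matrix = List[List[int]]

def im2col(x: List[Matrix], kh: int, kw: int) -> Matrix:
    """Arrange input feature maps x[c][i][j] into A (m = Ho*Wo rows, K = C*kh*kw columns).
    Assumes stride 1 and no padding."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    Ho, Wo = H - kh + 1, W - kw + 1
    # Row (i*Wo + j) of A holds the receptive field of output pixel (i, j), ordered (c, u, v).
    return [[x[c][i + u][j + v] for c in range(C) for u in range(kh) for v in range(kw)]
            for i in range(Ho) for j in range(Wo)]

def kernels_to_matrix(w: List[List[Matrix]]) -> Matrix:
    """Arrange kernels w[o][c][u][v] into B (K rows, one column per output feature map),
    using the same (c, u, v) order as the columns of A."""
    N, C, kh, kw = len(w), len(w[0]), len(w[0][0]), len(w[0][0][0])
    return [[w[o][c][u][v] for o in range(N)]
            for c in range(C) for u in range(kh) for v in range(kw)]

def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain (m x K) by (K x n) product."""
    K, n = len(B), len(B[0])
    return [[sum(row[k] * B[k][col] for k in range(K)) for col in range(n)] for row in A]

def direct_conv(x: List[Matrix], w: List[List[Matrix]]) -> List[Matrix]:
    """Reference: out[o][i][j] = sum over (c, u, v) of x[c][i+u][j+v] * w[o][c][u][v]."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    N, kh, kw = len(w), len(w[0][0]), len(w[0][0][0])
    Ho, Wo = H - kh + 1, W - kw + 1
    return [[[sum(x[c][i + u][j + v] * w[o][c][u][v]
                  for c in range(C) for u in range(kh) for v in range(kw))
              for j in range(Wo)] for i in range(Ho)] for o in range(N)]

# 2 input channels of 4x4, 3 output feature maps with 3x3 kernels -> m = 4, K = 18, n = 3
x = [[[c * 16 + i * 4 + j for j in range(4)] for i in range(4)] for c in range(2)]
w = [[[[o + c - u + v for v in range(3)] for u in range(3)] for c in range(2)] for o in range(3)]
A = im2col(x, 3, 3)
B = kernels_to_matrix(w)
C_mat = matmul(A, B)   # m x n; column o is output feature map o, flattened row-major
ref = direct_conv(x, w)
```

In this example A is 4 x 18 and B is 18 x 3. Each column of the product, read in row-major order, reproduces one output feature map of the direct convolution.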
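Step 2) does not say how the sub-blocks of C are sized. The sketch below assumes fixed tile sizes `tile_m` x `tile_n` (hypothetical parameters), with smaller blocks at the right and bottom edges. It illustrates why such a partition is useful: each sub-block of C depends only on a row strip of A and a column strip of B. The sub-blocks can therefore be computed independently and written back in place. The helper names are hypothetical.

```python
from typing import Iterator, List, Tuple

Matrix = List[List[int]]

def matmul(A: Matrix, B: Matrix) -> Matrix:
    """Plain reference product."""
    K, n = len(B), len(B[0])
    return [[sum(row[k] * B[k][col] for k in range(K)) for col in range(n)] for row in A]

def block_indices(m: int, n: int, tile_m: int, tile_n: int) -> Iterator[Tuple[int, int]]:
    """Enumerate the sub-blocks of an m x n result in row-major order."""
    for bi in range(-(-m // tile_m)):      # ceil(m / tile_m) block rows
        for bj in range(-(-n // tile_n)):  # ceil(n / tile_n) block columns
            yield bi, bj

def compute_sub_block(A: Matrix, B: Matrix, bi: int, bj: int, tile_m: int, tile_n: int) -> Matrix:
    """A sub-block needs only a row strip of A and a column strip of B."""
    a_strip = A[bi * tile_m:(bi + 1) * tile_m]
    b_strip = [row[bj * tile_n:(bj + 1) * tile_n] for row in B]
    return matmul(a_strip, b_strip)

def tiled_matmul(A: Matrix, B: Matrix, tile_m: int, tile_n: int) -> Matrix:
    m, n = len(A), len(B[0])
    C = [[0] * n for _ in range(m)]
    for bi, bj in block_indices(m, n, tile_m, tile_n):
        block = compute_sub_block(A, B, bi, bj, tile_m, tile_n)
        # Write the finished sub-block back to its position in C.
        for dr, row in enumerate(block):
            for dc, val in enumerate(row):
                C[bi * tile_m + dr][bj * tile_n + dc] = val
    return C

A = [[r * 4 + k for k in range(4)] for r in range(5)]  # m = 5, K = 4
B = [[k - c for c in range(3)] for k in range(4)]      # K = 4, n = 3
C_tiled = tiled_matmul(A, B, 2, 2)                     # 3 x 2 grid of sub-blocks
```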
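The description of the (Bx, By) requests in step 3) is truncated in this excerpt. The following is therefore only a software model under stated assumptions, not the patented hardware. It assumes that:

- (Bx, By) are the coordinates of a sub-block of C;
- a hypothetical `supply` function stands in for the memory side and returns the matching row strip of A and column strip of B;
- a sub-block is computed only after its operands have been delivered.

`run_data_driven` and `max_outstanding` (a cap on requests in flight) are also hypothetical, and data delivery is modelled synchronously.

```python
from collections import deque
from typing import List, Tuple

Matrix = List[List[int]]

def supply(A: Matrix, B: Matrix, bx: int, by: int, tile_m: int, tile_n: int) -> Tuple[Matrix, Matrix]:
    """Hypothetical memory side: answers a (Bx, By) request with the A row strip and B column strip."""
    return A[bx * tile_m:(bx + 1) * tile_m], [row[by * tile_n:(by + 1) * tile_n] for row in B]

def run_data_driven(A: Matrix, B: Matrix, tile_m: int, tile_n: int, max_outstanding: int = 2):
    """Model: issue (Bx, By) coordinate requests, compute each sub-block when its data arrives."""
    m, K, n = len(A), len(B), len(B[0])
    requests = deque((bx, by) for bx in range(-(-m // tile_m)) for by in range(-(-n // tile_n)))
    in_flight = deque()  # issued requests whose operand data is modelled as in transit
    issued: List[Tuple[int, int]] = []
    C = [[0] * n for _ in range(m)]
    while requests or in_flight:
        # Request stage: keep up to max_outstanding coordinate requests in flight.
        while requests and len(in_flight) < max_outstanding:
            bx, by = requests.popleft()
            issued.append((bx, by))
            in_flight.append((bx, by, supply(A, B, bx, by, tile_m, tile_n)))
        # Compute stage: fires only for a sub-block whose operand strips have arrived.
        bx, by, (a_strip, b_strip) = in_flight.popleft()
        for dr, a_row in enumerate(a_strip):
            for dc in range(len(b_strip[0])):
                C[bx * tile_m + dr][by * tile_n + dc] = sum(a_row[k] * b_strip[k][dc] for k in range(K))
    return C, issued

A = [[r + k for k in range(3)] for r in range(4)]      # m = 4, K = 3
B = [[k * c + 1 for c in range(5)] for k in range(3)]  # K = 3, n = 5
C_dd, issued = run_data_driven(A, B, tile_m=2, tile_n=2)
```

Keeping more than one request outstanding is one way such a design could overlap data transfer with computation. This model only records the order in which requests are issued.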
