2433 results about "Long short term memory" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

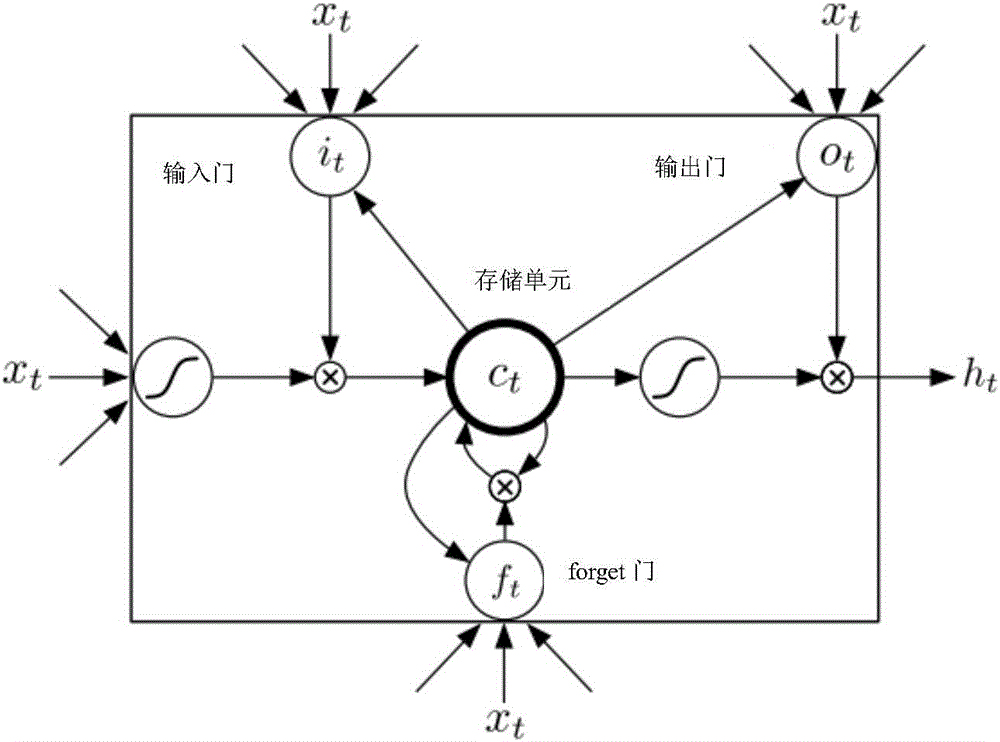

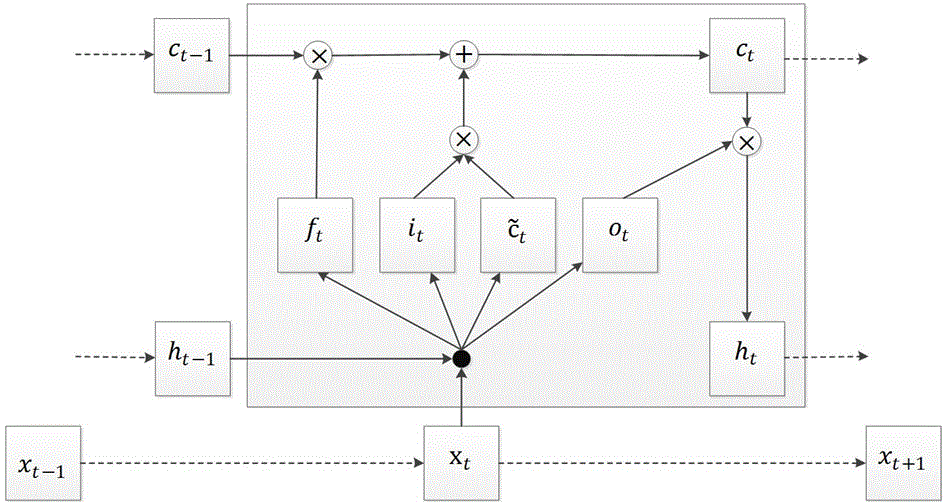

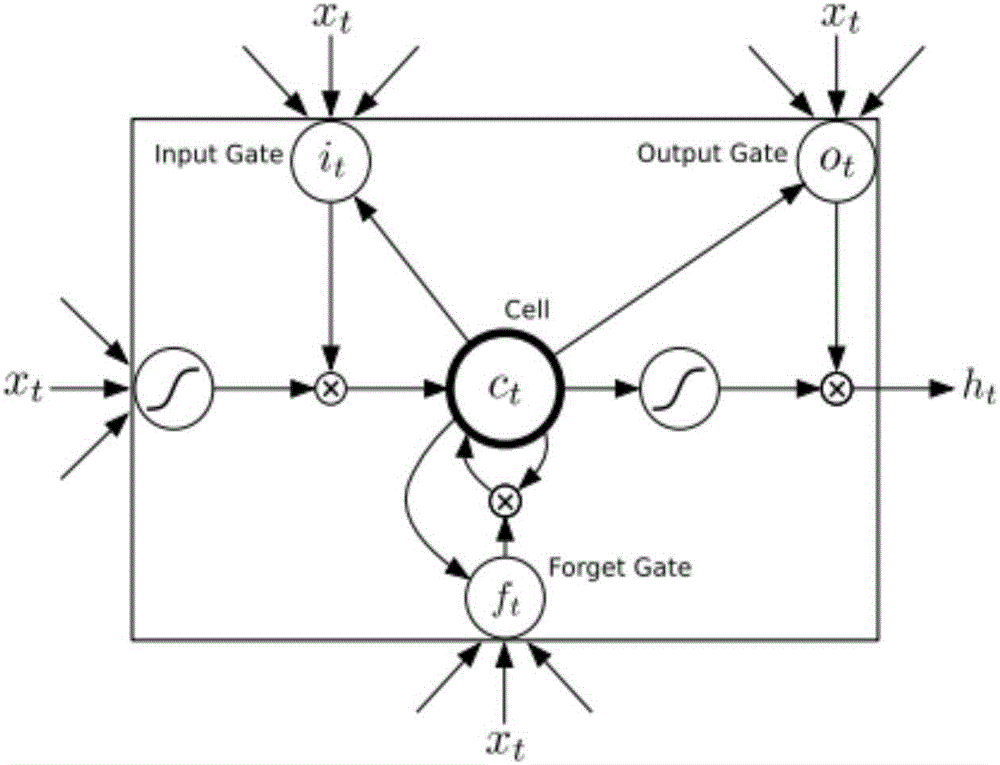

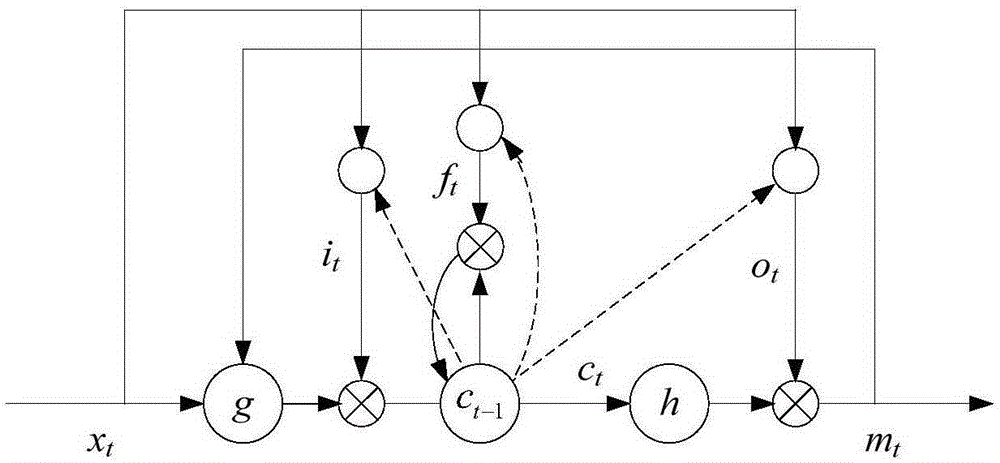

Long short-term memory (LSTM) is an artificial recurrent neural network (RNN) architecture used in the field of deep learning. Unlike standard feedforward neural networks, LSTM has feedback connections. It can process not only single data points (such as images) but also entire sequences of data (such as speech or video). For example, LSTM is applicable to tasks such as unsegmented, connected handwriting recognition and speech recognition. Bloomberg Businessweek wrote: "These powers make LSTM arguably the most commercial AI achievement, used for everything from predicting diseases to composing music."
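
To make the feedback connection concrete, here is a minimal plain-Python sketch of one step of the standard LSTM cell with a forget gate. The parameter layout (a dictionary mapping each gate name to a weight matrix that acts on the concatenation of the previous hidden state and the current input, plus a bias vector) is an assumption chosen for readability, not any particular library's API.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
# Assumed layout: gate name -> (W, b), where W has shape (hidden, hidden + input).
Params = Dict[str, Tuple[Matrix, Vector]]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector, params: Params) -> Tuple[Vector, Vector]:
    """One LSTM time step: returns the new hidden state h and cell state c."""
    z = h_prev + x  # concatenate [h_{t-1}; x_t]

    def gate(name: str, act) -> Vector:
        w, b = params[name]
        return [act(s + bias) for s, bias in zip(matvec(w, z), b)]

    f = gate("forget", sigmoid)       # how much of the old cell state to keep
    i = gate("input", sigmoid)        # how much of the candidate to write
    o = gate("output", sigmoid)       # how much of the cell state to expose
    g = gate("candidate", math.tanh)  # candidate cell contents
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h, c


def run_lstm(xs: List[Vector], hidden: int, params: Params) -> Vector:
    """Feed a whole sequence through the cell; the state carries over between steps."""
    h, c = [0.0] * hidden, [0.0] * hidden
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return h


zero = ([[0.0, 0.0]], [0.0])  # hidden size 1, input size 1, all-zero weights
params = {g: zero for g in ("forget", "input", "output", "candidate")}
print(run_lstm([[1.0], [2.0]], hidden=1, params=params))  # [0.0]
```

The `h` and `c` returned at each step are fed back in at the next one; that loop is the feedback connection that distinguishes LSTM from a feedforward network.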

Attention CNNs and CCR-based text sentiment analysis method

Active | CN107092596A | High precision, Improve classification accuracy, Semantic analysis, Neural architectures, Feature extraction, Ambiguity

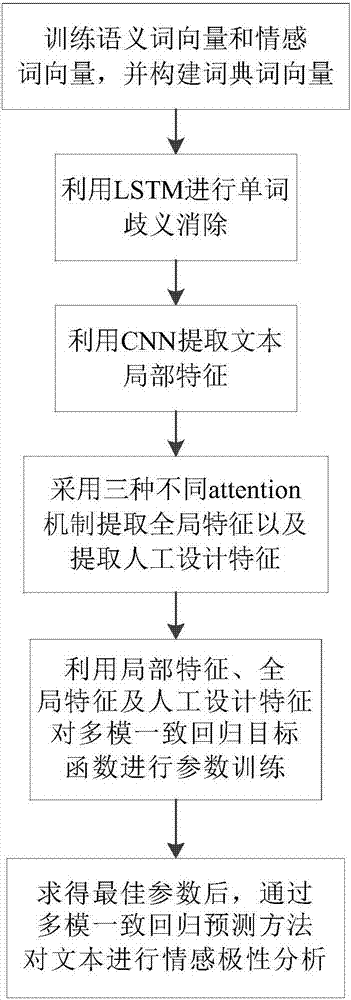

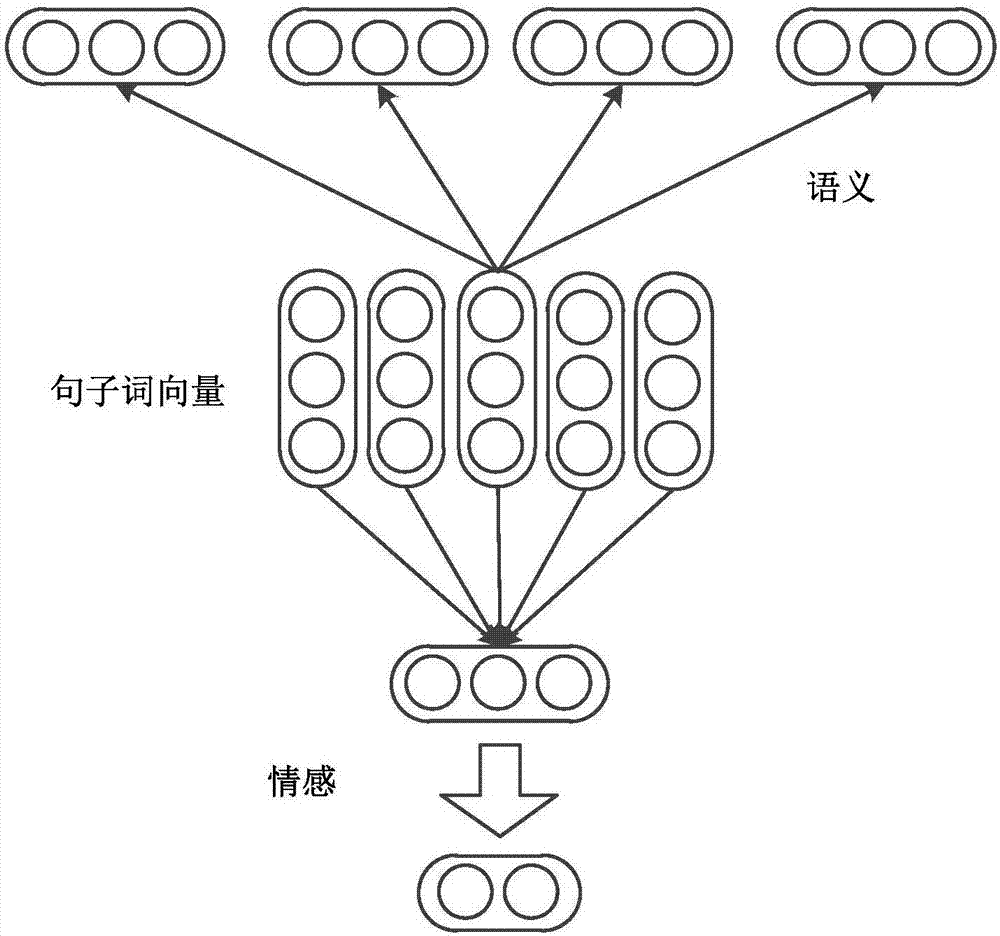

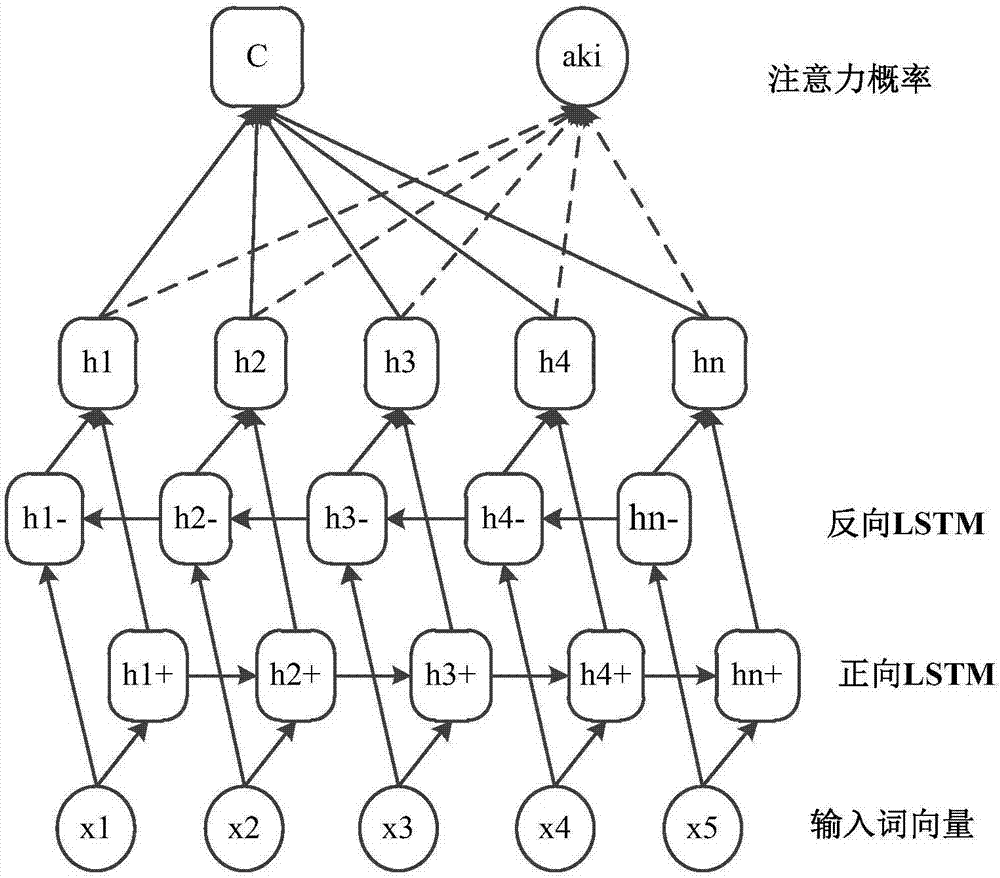

The invention discloses a text sentiment analysis method based on attention CNNs and CCR, in the field of natural language processing. The method comprises the following steps: (1) training semantic word vectors and sentiment word vectors on the original text data, and building dictionary word vectors from a collected sentiment dictionary; (2) capturing the contextual semantics of words with a long short-term memory (LSTM) network to eliminate ambiguity; (3) extracting local features of the text with a convolutional neural network that uses convolution kernels of different filter lengths; (4) extracting global features with three different attention mechanisms; (5) performing hand-crafted feature extraction on the original text data; (6) training a multimodal uniform regression objective function on the local, global, and hand-crafted features; and (7) predicting sentiment polarity with a multimodal uniform regression prediction method. Compared with methods that use a single word vector or extract only local text features, the method further improves sentiment classification precision.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
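
Step 3 of the method above extracts local features with convolution kernels of different filter lengths. A minimal plain-Python sketch of that idea follows, assuming "valid" convolution over a sequence of word vectors followed by max-over-time pooling (a common text-CNN choice; the abstract does not specify the pooling). The kernel values are illustrative, not learned.

```python
from typing import List

Vector = List[float]


def conv_feature_map(tokens: List[Vector], kernel: List[Vector]) -> List[float]:
    """Slide a kernel spanning len(kernel) consecutive word vectors over the text."""
    width = len(kernel)
    if width > len(tokens):
        raise ValueError("kernel is wider than the sequence")
    feature_map = []
    for start in range(len(tokens) - width + 1):
        window = tokens[start:start + width]
        # Dot product between the kernel and the window, summed over all positions.
        feature_map.append(sum(k * x for kv, xv in zip(kernel, window) for k, x in zip(kv, xv)))
    return feature_map


def local_features(tokens: List[Vector], kernels: List[List[Vector]]) -> List[float]:
    """Max-over-time pooling: one feature per kernel, regardless of text length."""
    return [max(conv_feature_map(tokens, kernel)) for kernel in kernels]


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]  # 4 words, 2-dim vectors
kernels = [
    [[1.0, 1.0]],                          # width 1
    [[1.0, 0.0], [0.0, 1.0]],              # width 2
    [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]],  # width 3
]
print(local_features(tokens, kernels))  # [2.0, 2.0, 4.0]
```

Each kernel width looks at a different n-gram span, and pooling turns each feature map into a single number, so texts of any length yield a fixed-size local feature vector.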

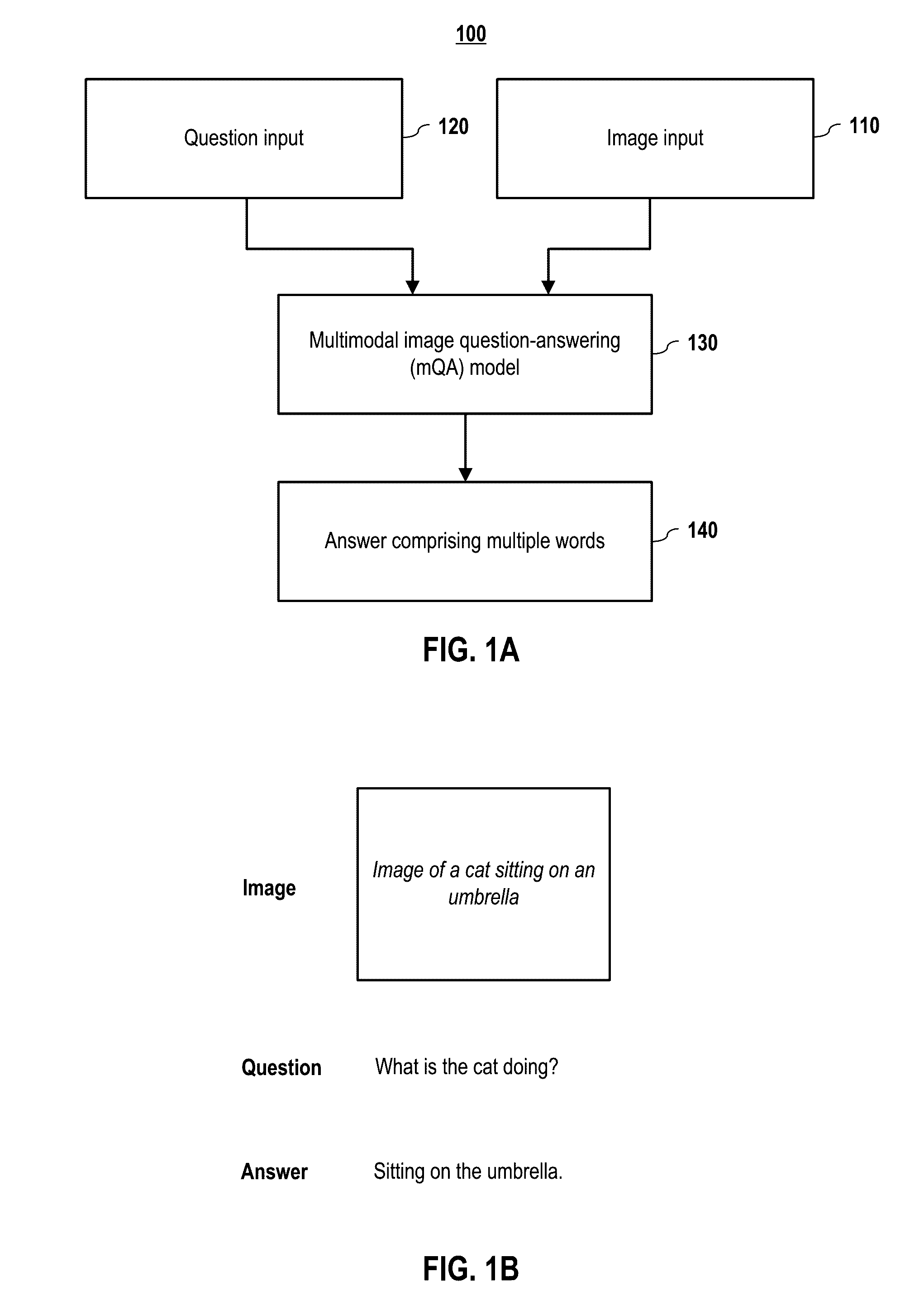

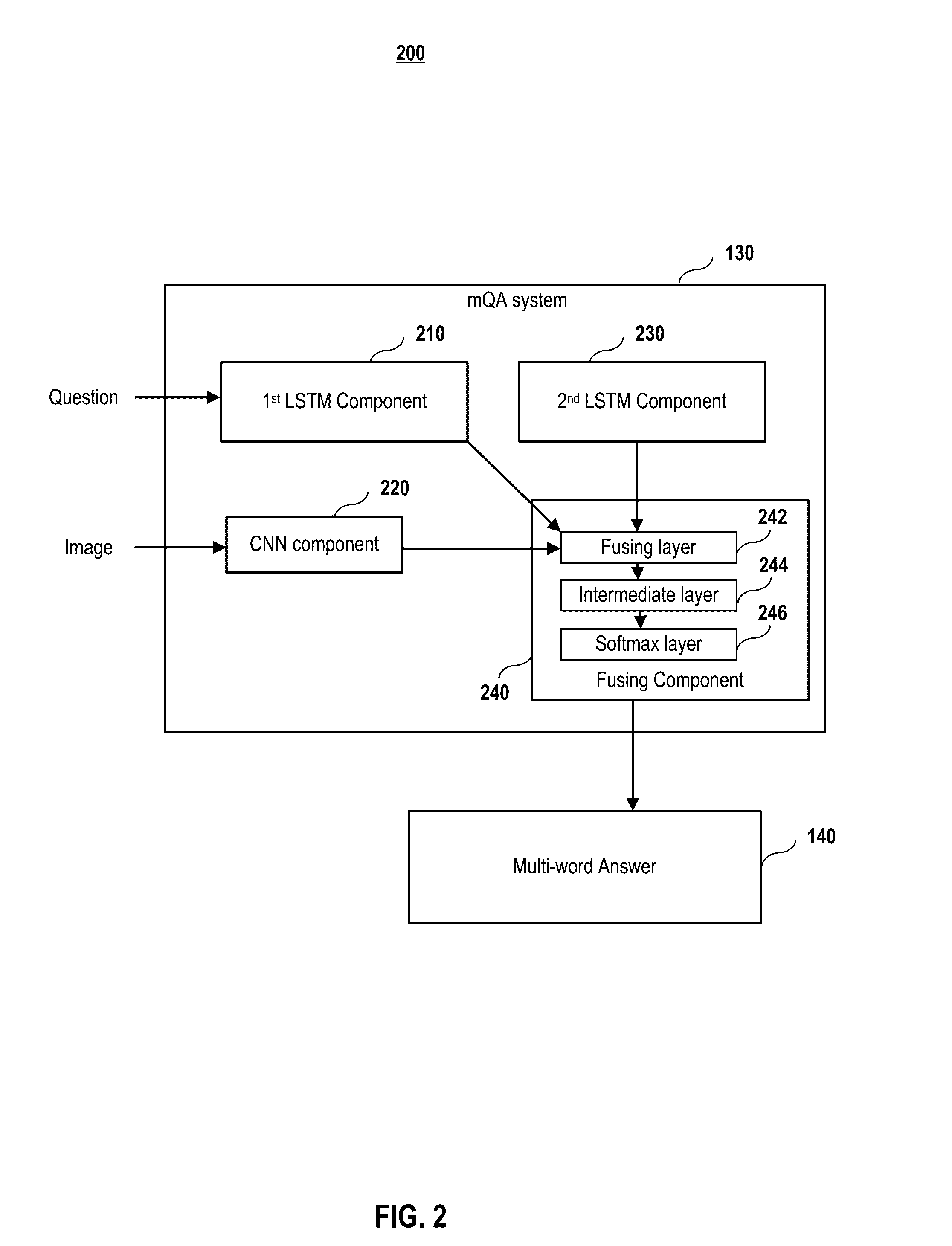

Multilingual image question answering

Active | US20160342895A1 | Natural language translation, Knowledge representation, Data mining, Long short term memory

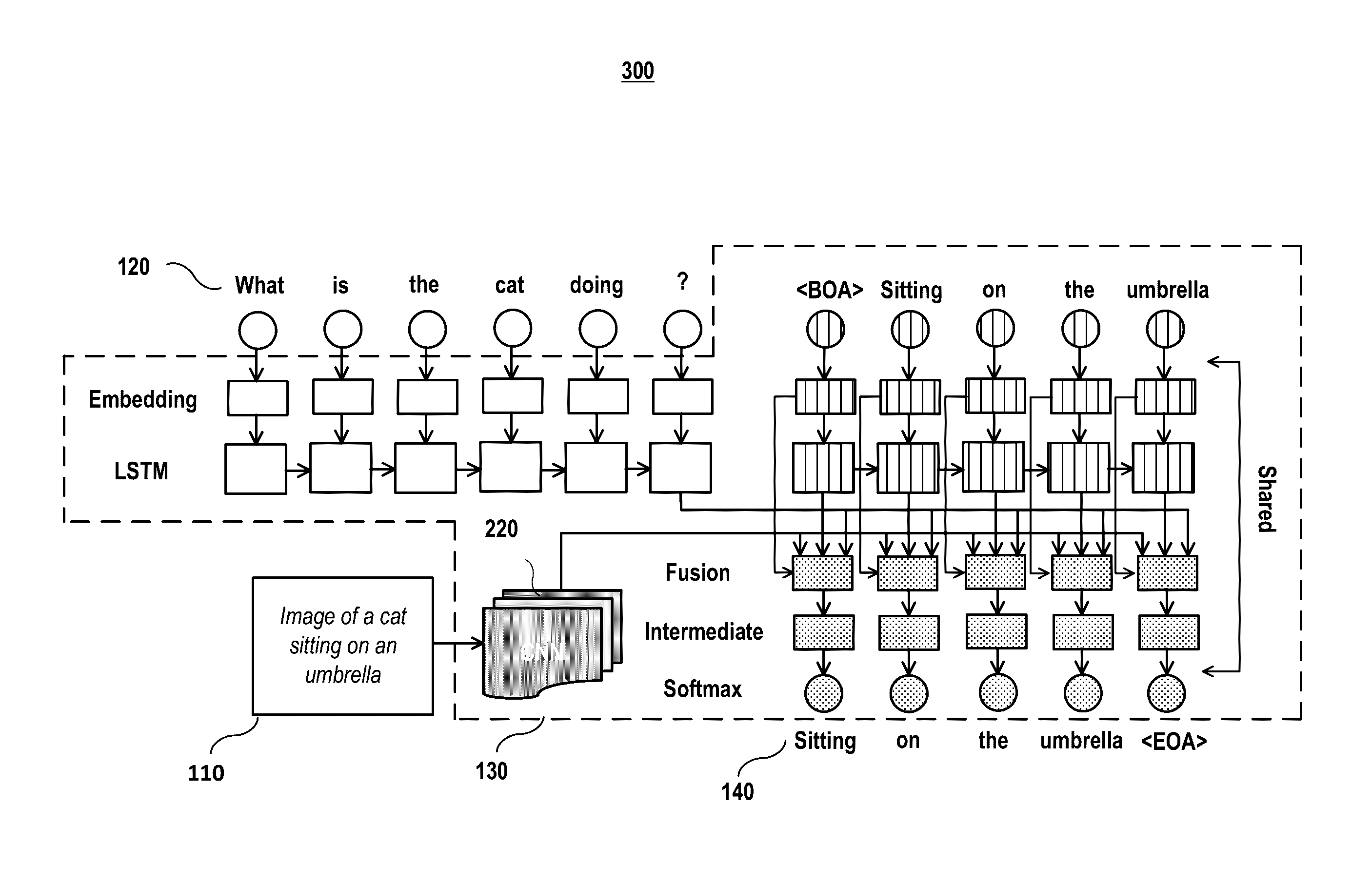

Embodiments of a multimodal question answering (mQA) system are presented to answer a question about the content of an image. In embodiments, the model comprises four components: a Long Short-Term Memory (LSTM) component to extract the question representation; a Convolutional Neural Network (CNN) component to extract the visual representation; an LSTM component for storing the linguistic context in an answer, and a fusing component to combine the information from the first three components and generate the answer. A Freestyle Multilingual Image Question Answering (FM-IQA) dataset was constructed to train and evaluate embodiments of the mQA model. The quality of the generated answers of the mQA model on this dataset is evaluated by human judges through a Turing Test.

Owner:BAIDU USA LLC

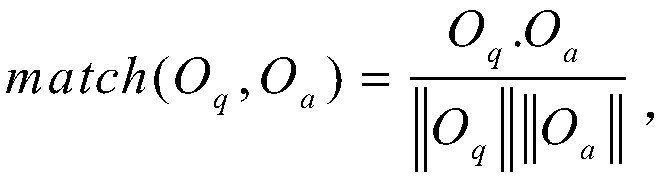

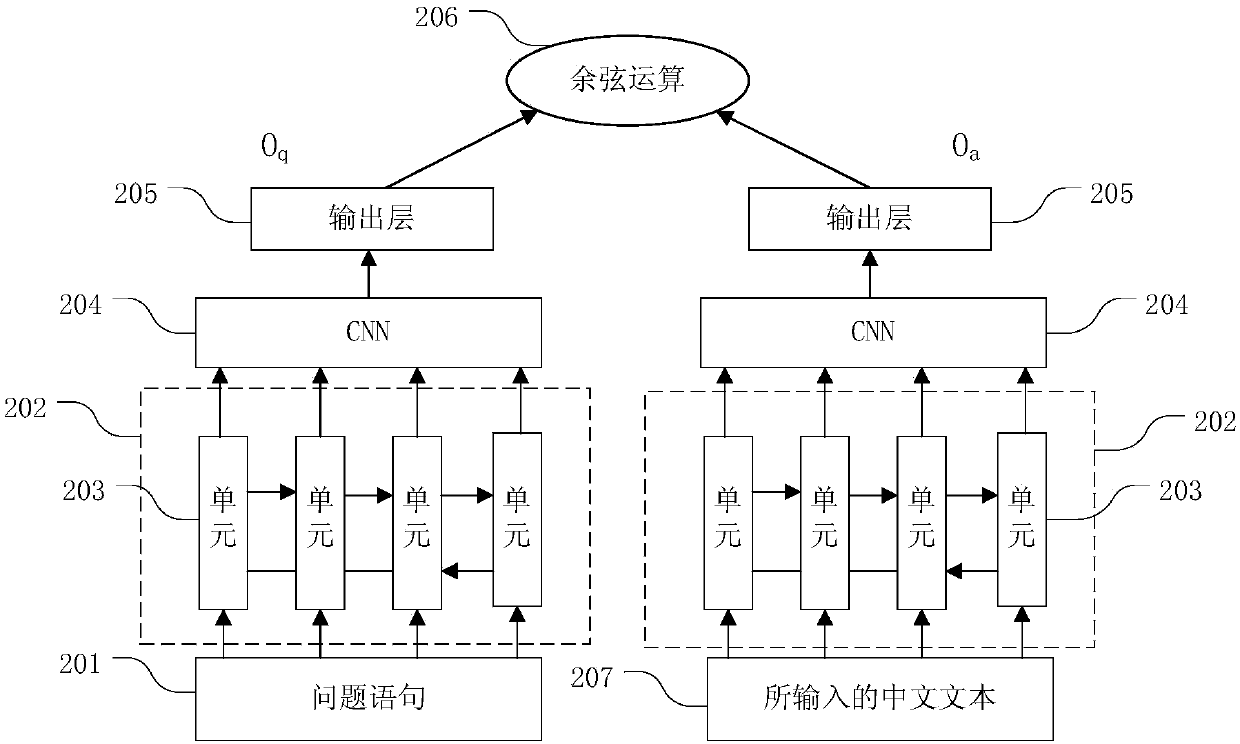

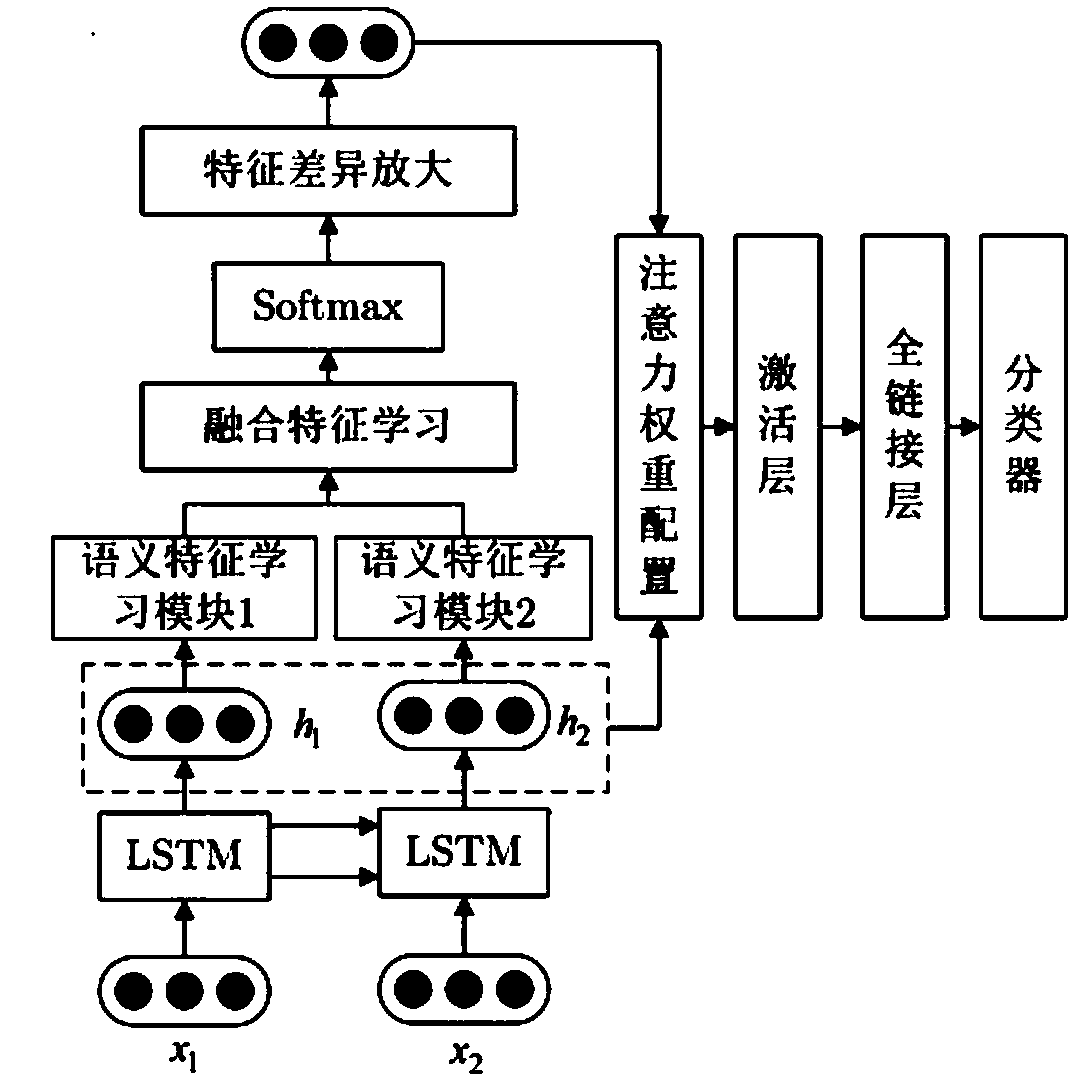

Deep learning-based question and answer matching method

Active | CN107562792A | Improve efficiency, Increase flexibility, Neural architectures, Special data processing applications, The Internet, Online forum

The invention relates to a deep learning-based question and answer matching method. The method comprises the following steps: 1) fully learning the word order and local sentence features of a question text and an answer text with two underlying deep neural networks, a long short-term memory (LSTM) network and a convolutional neural network (CNN); and 2) selecting the keyword with the best semantic match using attention mechanism (AM)-based pooling. Compared with existing methods, it requires little feature engineering, performs well across domains, and achieves relatively high accuracy, and it can be applied effectively to commercial intelligent customer service robots, autonomous driving, internet healthcare, online forums, and community question answering.

Owner:TONGJI UNIV
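
The question and answer matching method above selects the answer keyword that best matches the question through attention-based pooling. The sketch below is one plausible reading, assuming the LSTM/CNN encoders have already produced a question vector and one vector per answer word; the dot-product scoring, the softmax, and the toy vectors are all assumptions.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def best_matching_keyword(question: Vector, words: List[str],
                          word_vecs: List[Vector]) -> Tuple[str, List[float]]:
    """Score each answer word against the question and pick the highest-weighted one."""
    scores = [sum(q * w for q, w in zip(question, vec)) for vec in word_vecs]
    weights = softmax(scores)
    best = max(range(len(words)), key=weights.__getitem__)
    return words[best], weights


word, weights = best_matching_keyword(
    [1.0, 0.0],
    ["refund", "policy", "today"],
    [[2.0, 0.0], [1.0, 1.0], [0.0, 1.0]],
)
print(word)  # refund
```

The attention weights double as an explanation: they show how strongly each answer word was aligned with the question.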

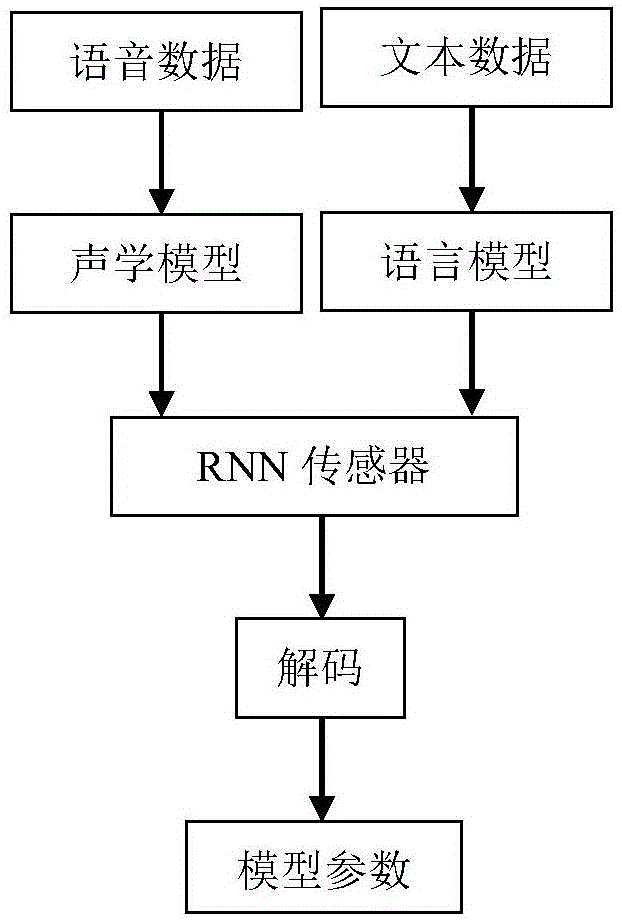

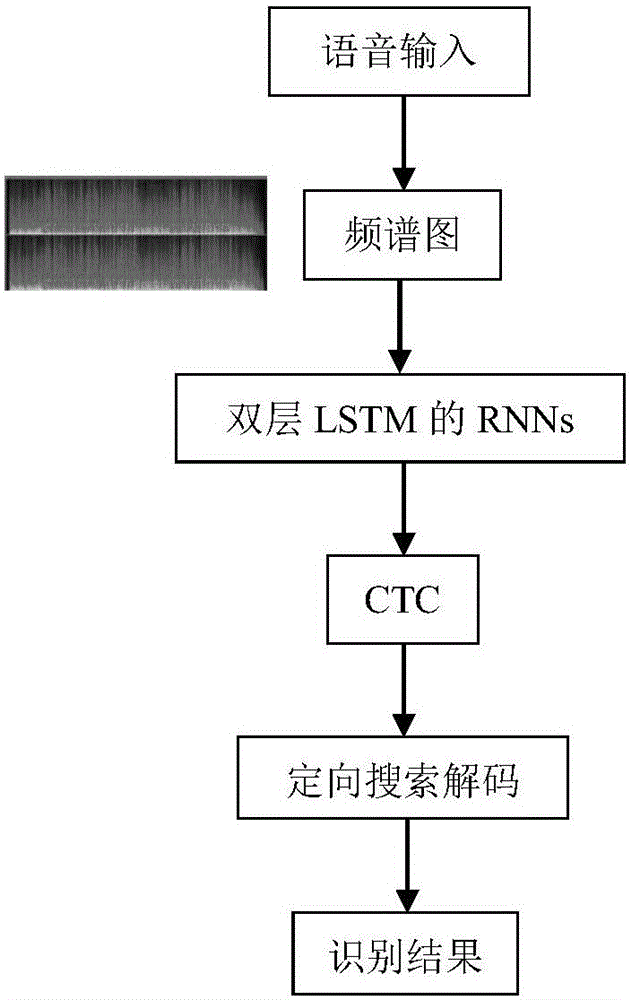

Speech recognition method using a long short-term memory recurrent neural network

The invention discloses a speech recognition method using a long short-term memory (LSTM) recurrent neural network. The method comprises a training process and a recognition process. Training comprises feeding in speech data and text data to generate a jointly trained acoustic and language model, and decoding with an RNN transducer to form the model parameters. Recognition comprises converting the speech input into a spectrogram by Fourier transform, performing directional search decoding with the LSTM recurrent neural network, and finally generating the recognition result. The method uses recurrent neural networks (RNNs) trained end to end with connectionist temporal classification (CTC). The LSTM units, combined with multi-level representations, prove effective in deep networks. A single end-to-end neural network maps speech features (the input) directly to a character string (the output), and the network can be trained directly on an objective function that is a proxy for the word error rate (WER), which avoids the wasted effort of optimizing separate objective functions.

Owner:SHENZHEN WEITESHI TECH
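
CTC (connectionist temporal classification) lets the network emit one label per audio frame, including a special blank. A common way to turn those frame-level outputs into a character string is greedy (best-path) decoding: take the most likely label in each frame, merge repeats, then drop blanks. This is a simpler stand-in for the search-based decoding the speech recognition abstract describes; the blank symbol `_` and the toy probabilities are assumptions.

```python
from typing import List, Sequence

BLANK = "_"  # assumed blank label


def collapse_ctc_path(path: Sequence[str]) -> str:
    """Merge consecutive repeats, then remove blanks (the CTC many-to-one mapping)."""
    out = []
    prev = None
    for sym in path:
        if sym != prev and sym != BLANK:
            out.append(sym)
        prev = sym
    return "".join(out)


def greedy_ctc_decode(frame_probs: List[List[float]], alphabet: List[str]) -> str:
    """Pick the most probable label in every frame, then collapse the path."""
    best_path = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    return collapse_ctc_path(best_path)


print(collapse_ctc_path("hh_e_ll_llo"))  # hello
```

The blank between the two runs of `l` is what allows CTC to output a double letter; without it, `ll` would collapse to a single `l`.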

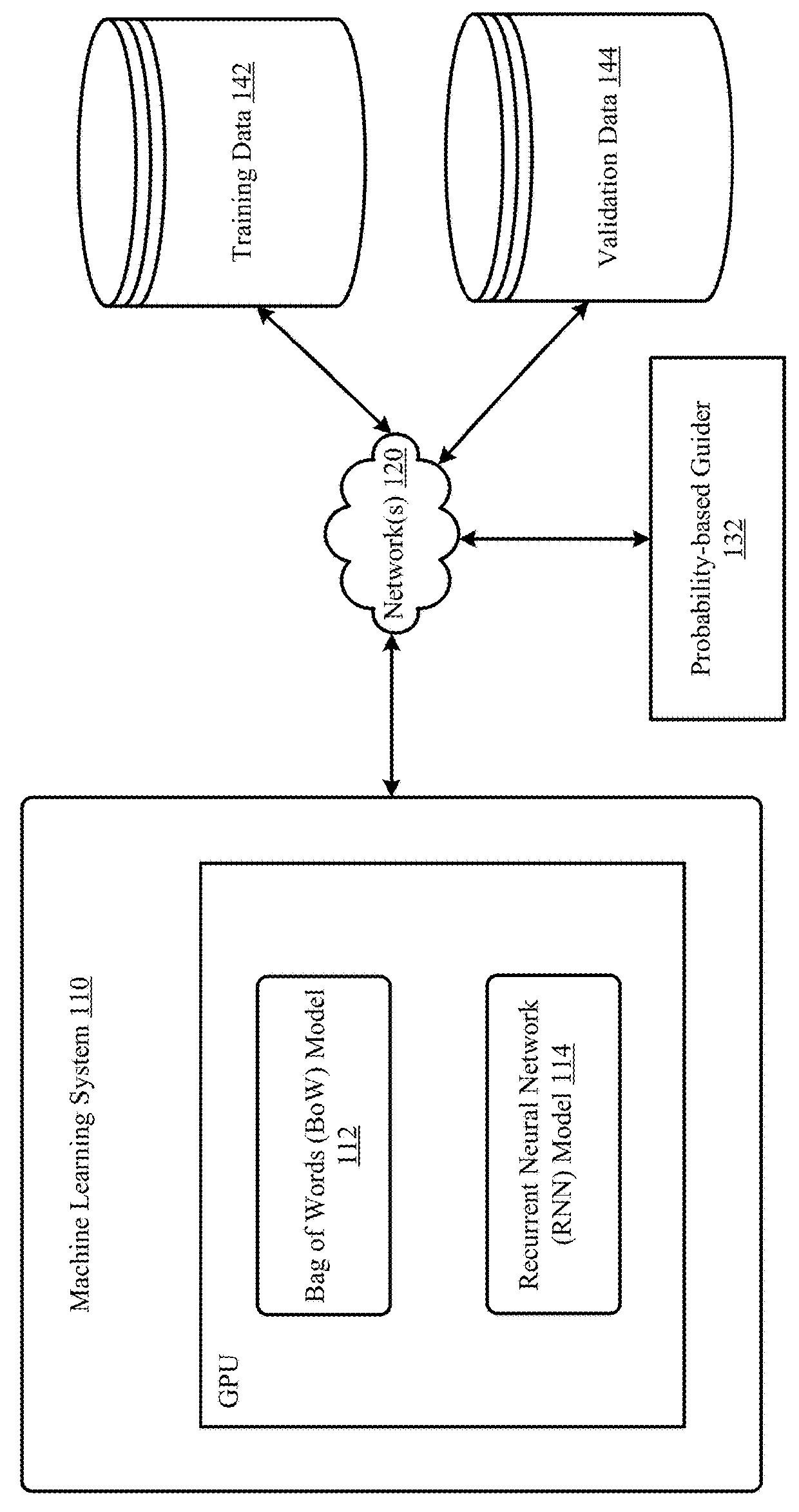

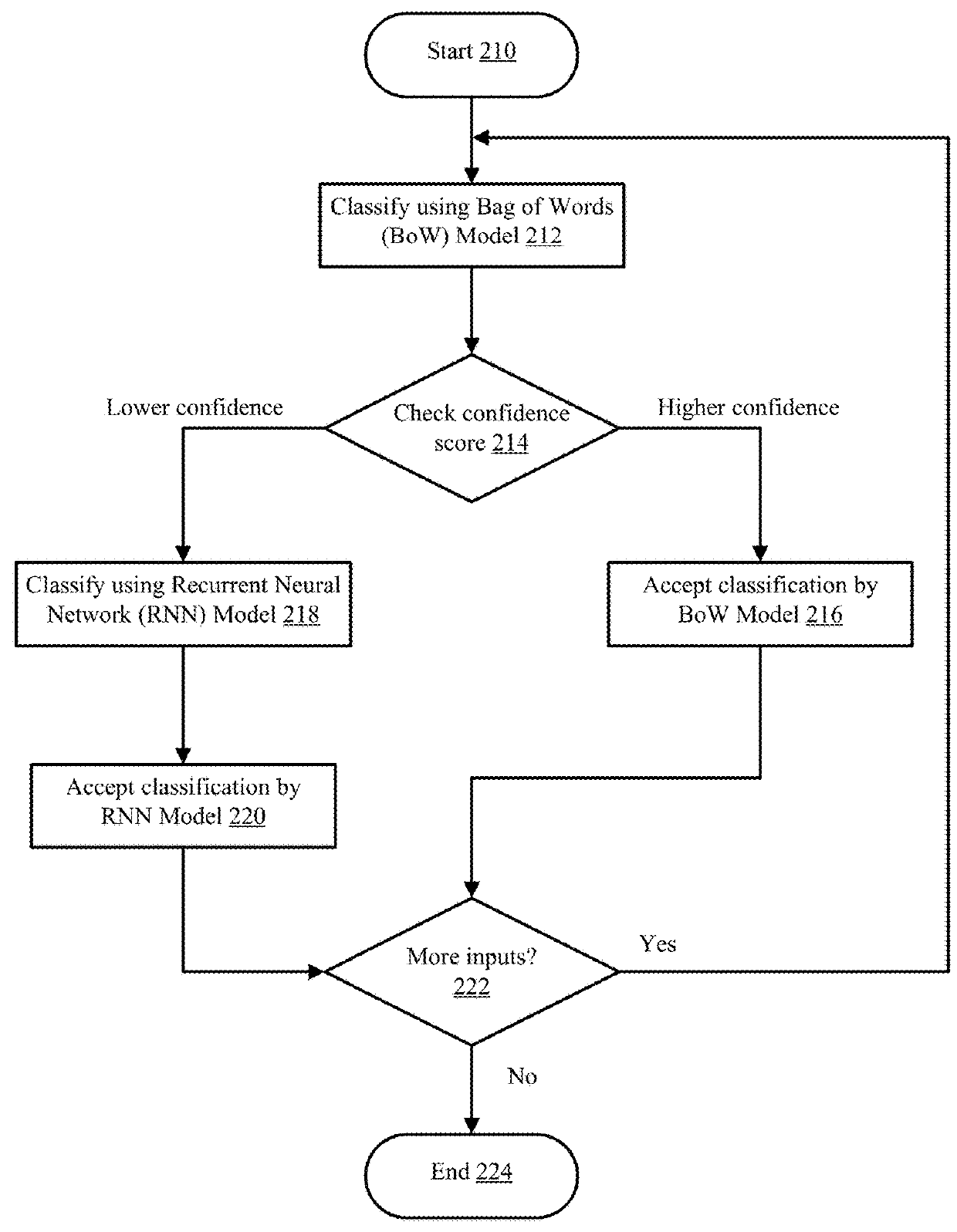

Probability-Based Guider

Active | US20180268287A1 | Semantic analysis, General purpose stored program computer, Short-term memory, Decision networks

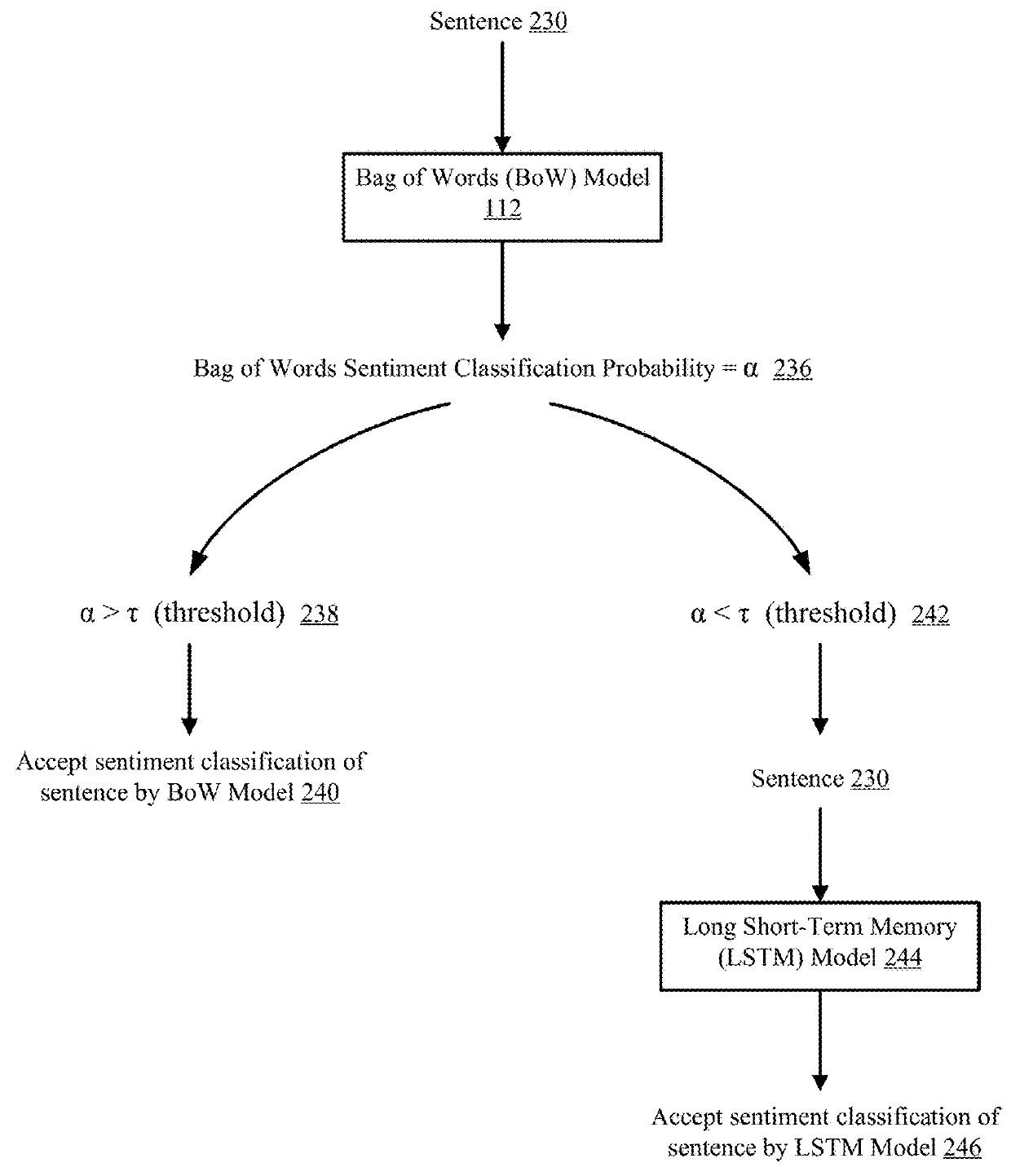

The technology disclosed proposes using a combination of a computationally cheap, less accurate bag-of-words (BoW) model and a computationally expensive, more accurate long short-term memory (LSTM) model to perform natural language processing tasks such as sentiment analysis. Using the cheap, less accurate BoW model is referred to herein as “skimming”, and using the expensive, more accurate LSTM model is referred to herein as “reading”. The technology disclosed presents a probability-based guider (PBG) that combines the BoW model and the LSTM model. PBG uses a probability thresholding strategy to determine, based on the results of the BoW model, whether to invoke the LSTM model to reliably classify a sentence as positive or negative. The technology disclosed also presents a deep neural network-based decision network (DDN) that is trained to learn the relationship between the BoW model and the LSTM model and to invoke only one of the two models.

Owner:SALESFORCE COM INC
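
The probability thresholding strategy of the probability-based guider can be sketched as a small routing function. Both models are stand-in callables that return the probability that a sentence is positive, and the 0.9 threshold is an illustrative assumption; the abstract does not state a value.

```python
from typing import Callable, Tuple

Model = Callable[[str], float]  # returns P(positive | sentence)


def label(p_positive: float) -> str:
    return "positive" if p_positive >= 0.5 else "negative"


def probability_based_guider(sentence: str, bow_model: Model, lstm_model: Model,
                             threshold: float = 0.9) -> Tuple[str, str]:
    """Skim with the cheap BoW model; read with the LSTM only when BoW is unsure."""
    p = bow_model(sentence)
    confidence = max(p, 1.0 - p)  # probability of the predicted class
    if confidence >= threshold:
        return label(p), "bow"
    return label(lstm_model(sentence)), "lstm"


# Hypothetical stand-ins: the BoW model is confident only about obvious sentences.
def toy_bow(sentence: str) -> float:
    return 0.97 if "great" in sentence else 0.55


def toy_lstm(sentence: str) -> float:
    return 0.2


print(probability_based_guider("a great film", toy_bow, toy_lstm))      # ('positive', 'bow')
print(probability_based_guider("not what I hoped", toy_bow, toy_lstm))  # ('negative', 'lstm')
```

Raising the threshold sends more sentences to the expensive LSTM (more accuracy, more compute); lowering it lets the BoW model answer more often.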

Bidirectional long short-term memory unit-based behavior identification method for video

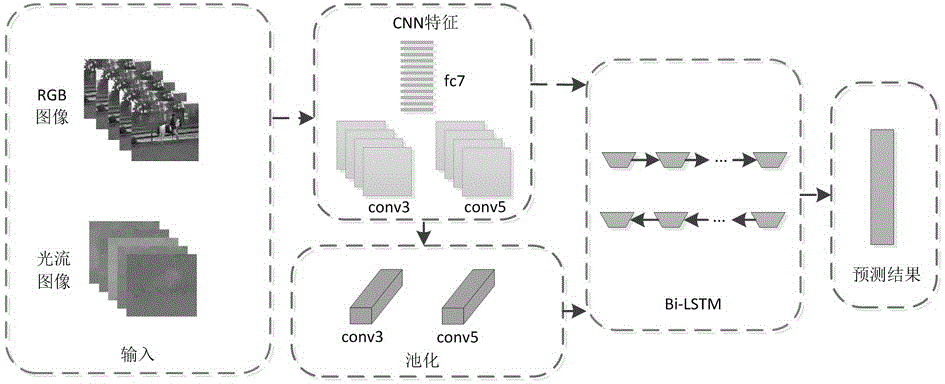

Inactive | CN106845351A | Improve accuracy, Guaranteed accuracy, Character and pattern recognition, Neural architectures, Time domain, Temporal information

Owner:SUZHOU UNIV

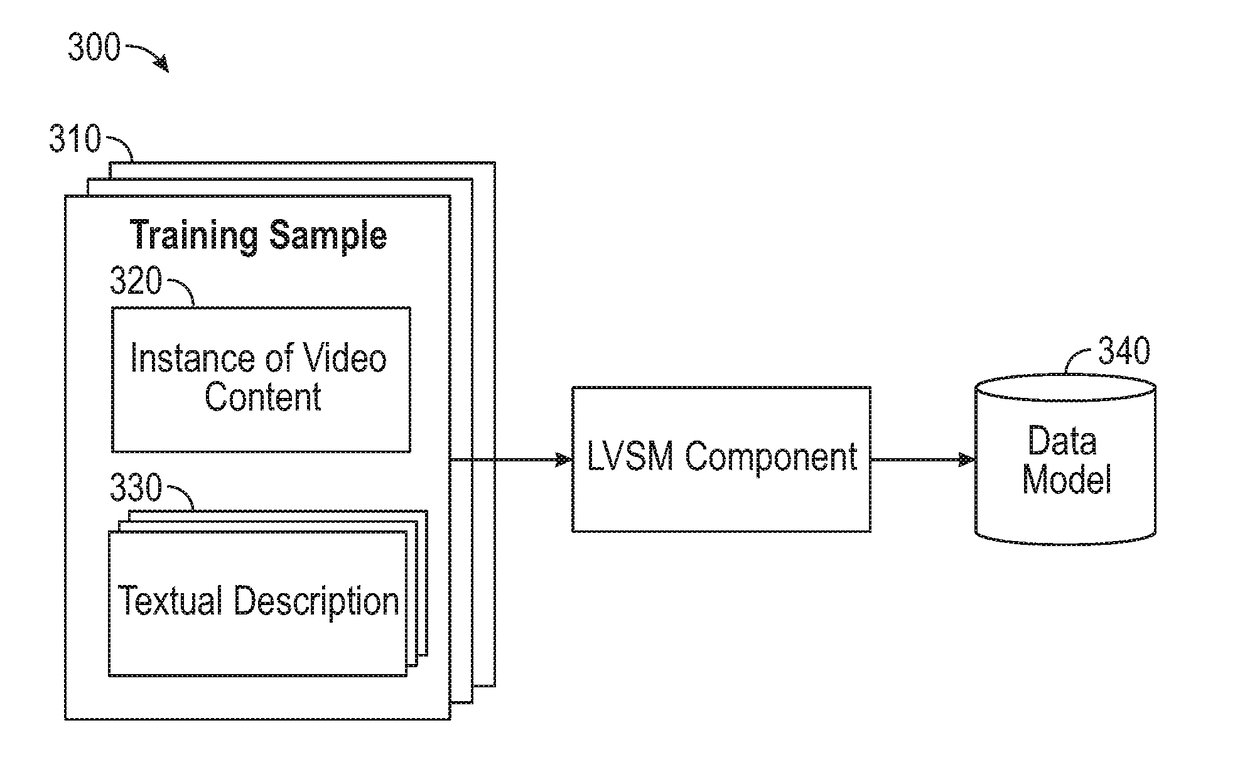

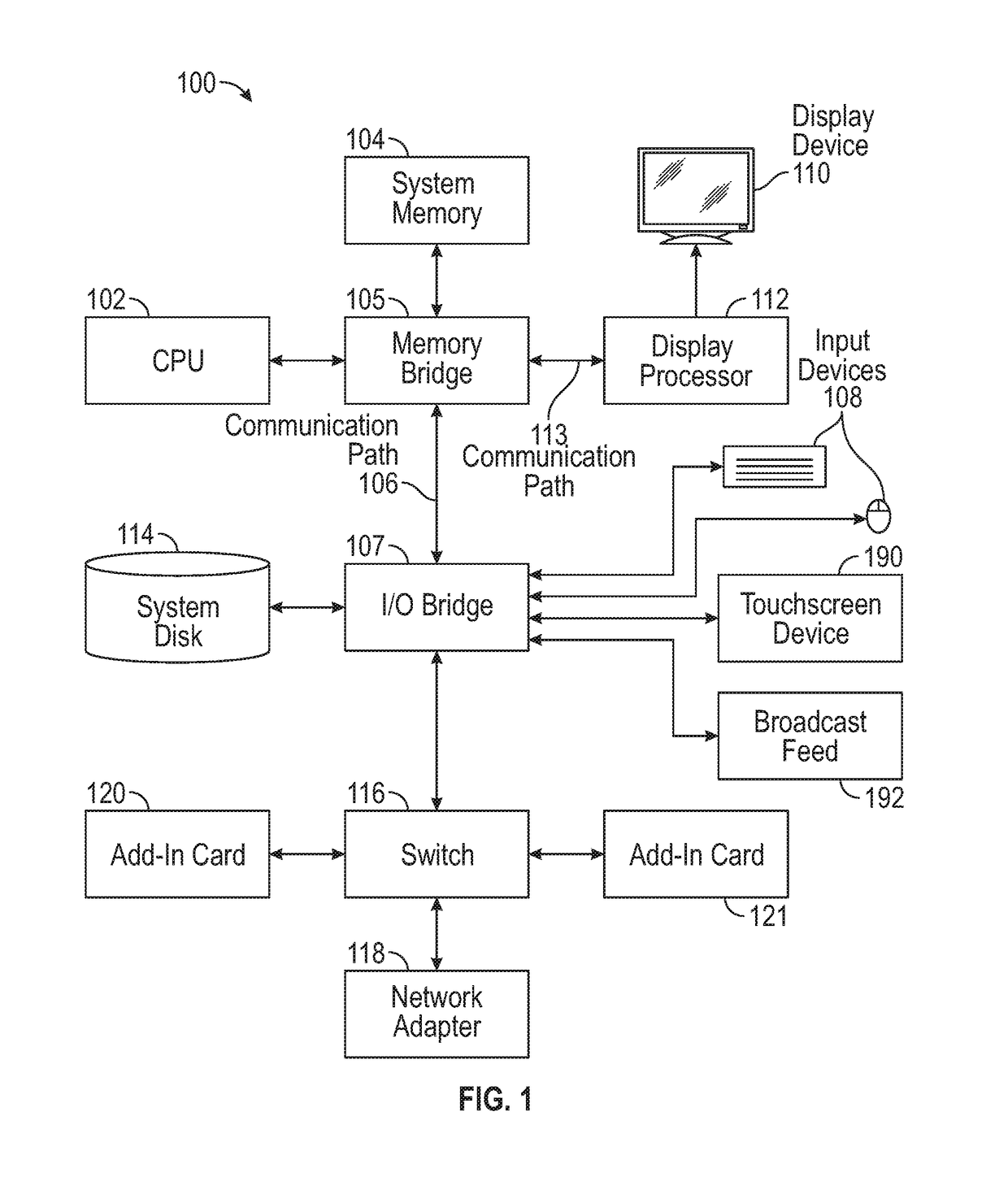

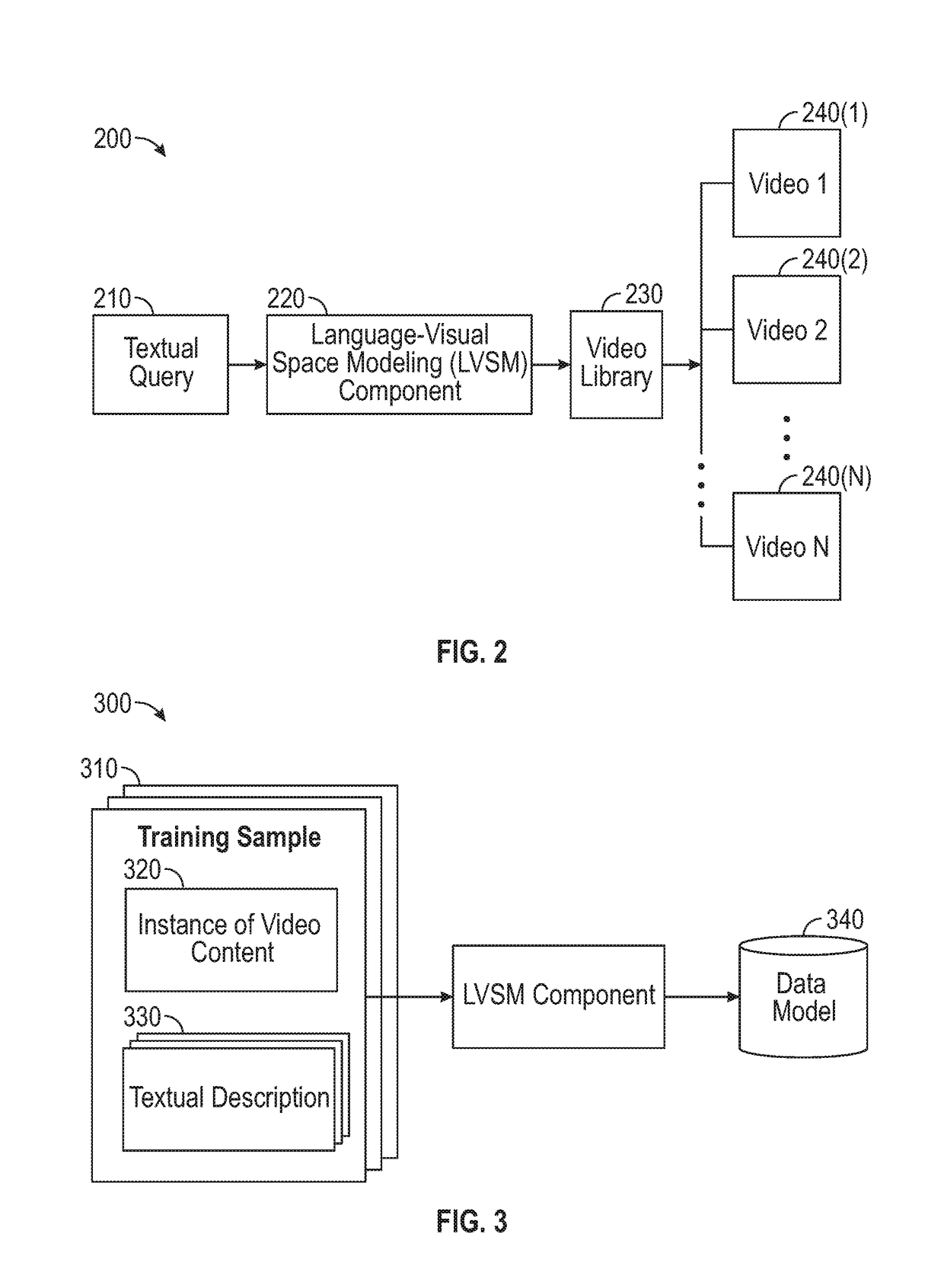

Joint heterogeneous language-vision embeddings for video tagging and search

Active | US20170357720A1 | Digital data information retrieval, Character and pattern recognition, Visual space, Pattern recognition

Systems, methods and articles of manufacture for modeling a joint language-visual space. A textual query to be evaluated relative to a video library is received from a requesting entity. The video library contains a plurality of instances of video content. One or more instances of video content from the video library that correspond to the textual query are determined, by analyzing the textual query using a data model that includes a soft-attention neural network module that is jointly trained with a language Long Short-term Memory (LSTM) neural network module and a video LSTM neural network module. At least an indication of the one or more instances of video content is returned to the requesting entity.

Owner:DISNEY ENTERPRISES INC

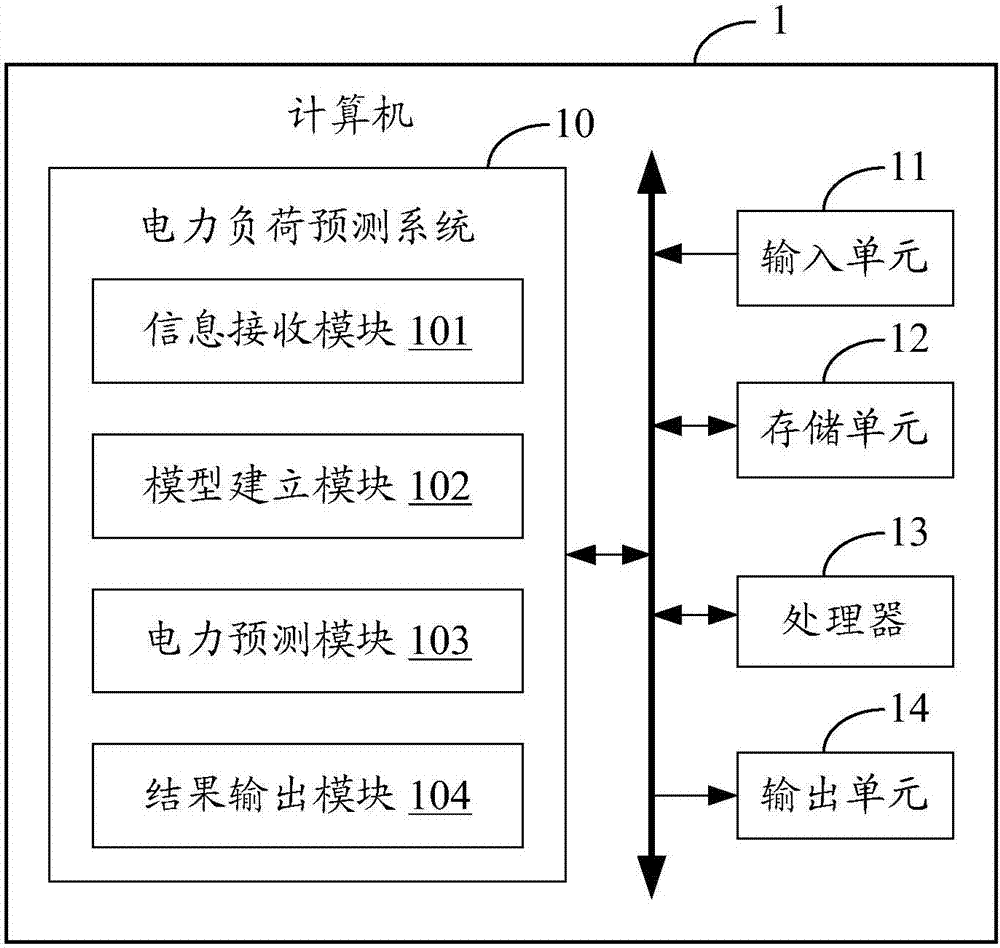

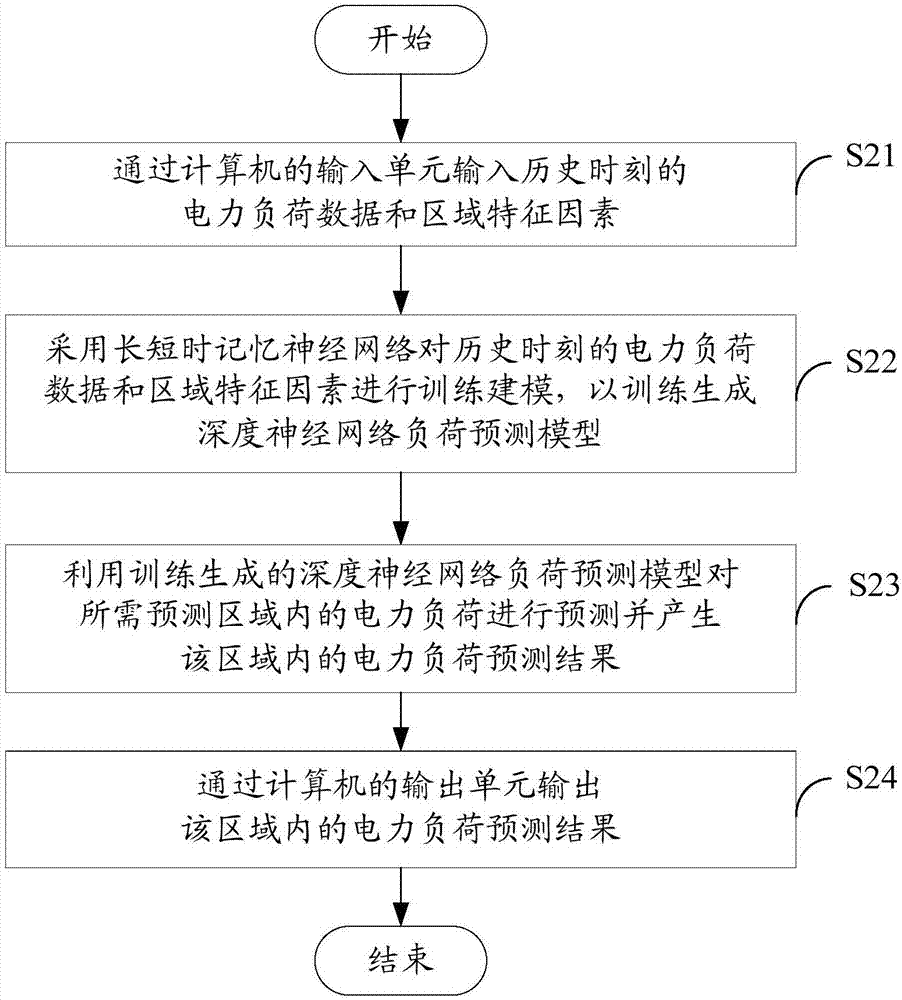

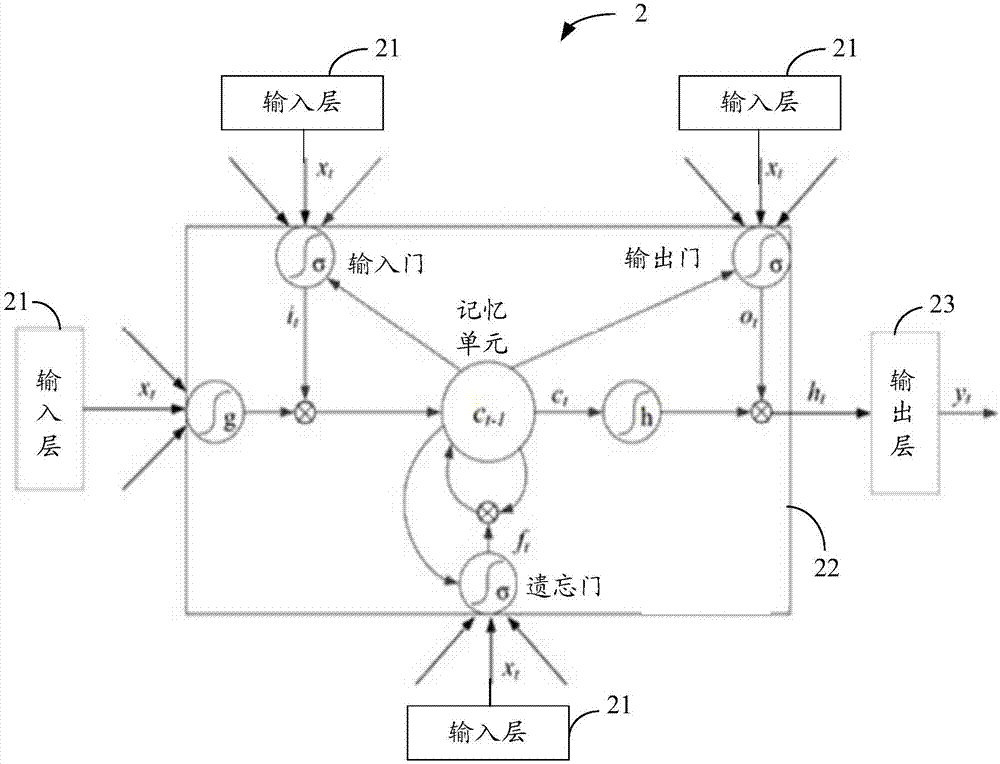

Power load forecasting method based on long short term memory neuron network

Inactive | CN106960252A | Improve predictive performance, Prediction is accurate, Forecasting, Neuron network, Load forecasting

The invention discloses a power load forecasting method based on a long short-term memory (LSTM) neural network. The method comprises: inputting historical power load data and region feature factors through an input unit; training and modeling the historical power load data and region feature factors with an LSTM network to generate a deep neural network load forecasting model, which is a single-layer or double-layer multi-task deep neural network model for power supply load forecasting; forecasting the power load of the target area with this model and generating a forecast result for the area; and outputting the forecast result through an output unit. By building a multi-task learning power load forecasting model on an LSTM network, the invention can accurately forecast power consumption loads in multiple areas and improves the forecasting effect.

Owner:X TRIP INFORMATION TECH CO LTD

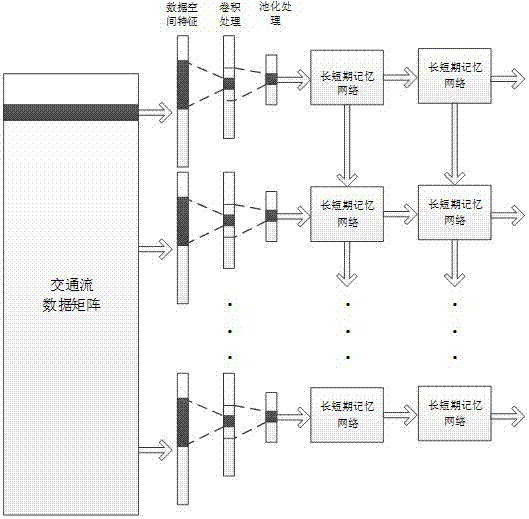

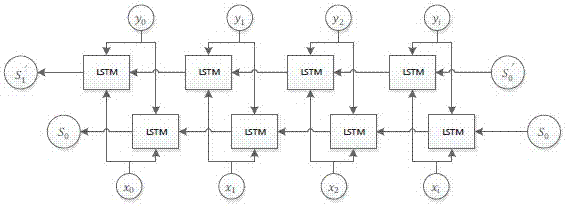

Deep learning-based short-term traffic flow prediction method

Active | CN107230351A | Improve accuracy, Overcoming feature, Detection of traffic movement, Forecasting, Short-term memory, Study methods

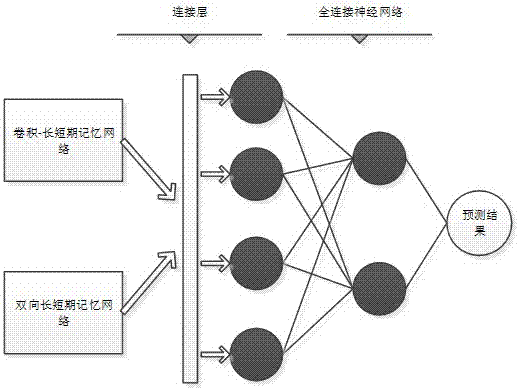

The present invention discloses a deep learning-based short-term traffic flow prediction method. It simultaneously considers three influences on the traffic flow rate at a prediction point: changes in the traffic flow rate at neighboring points, the temporal characteristics of the prediction point, and its periodic characteristics. In the method, a convolutional neural network and a long short-term memory (LSTM) recurrent neural network are combined to construct a Conv-LSTM deep neural network model; a bidirectional LSTM model analyzes the historical traffic flow data of the point to extract its periodic characteristics; and the traffic flow trend and periodic characteristics obtained through this analysis are fused to predict the traffic flow. The method overcomes the inability of existing methods to make full use of temporal and spatial characteristics: it fully extracts the spatiotemporal characteristics of the traffic flow and fuses them with the periodic characteristics of the traffic flow data, thereby improving the accuracy of short-term traffic flow prediction.

Owner:FUZHOU UNIV

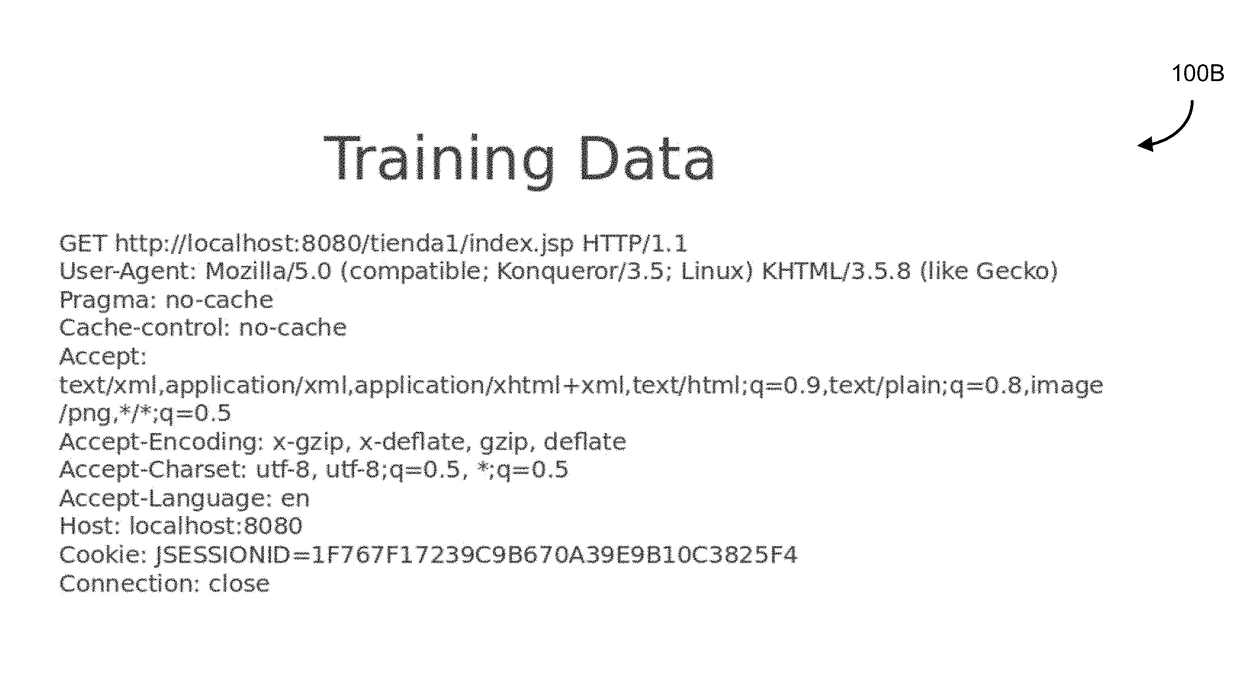

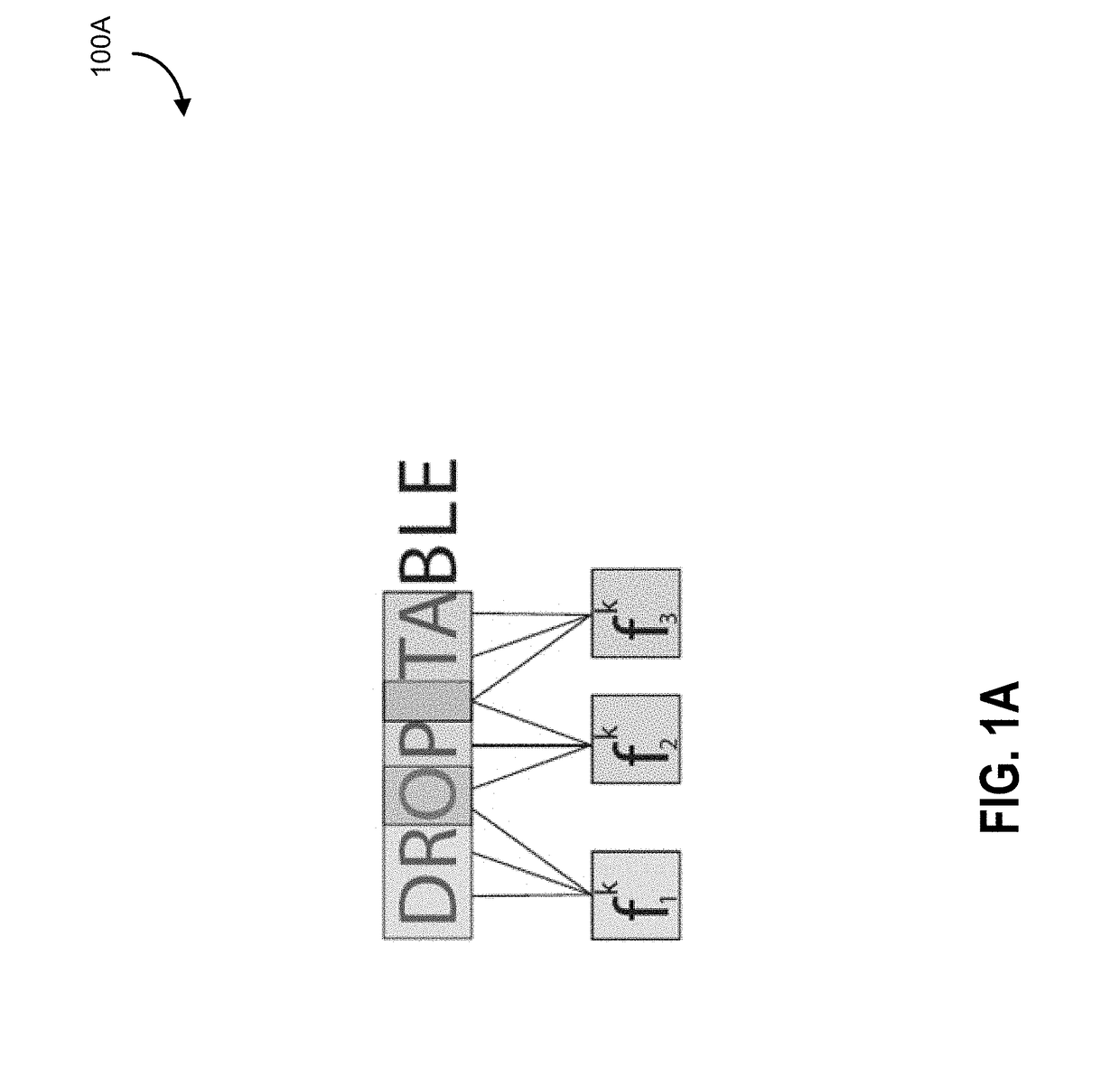

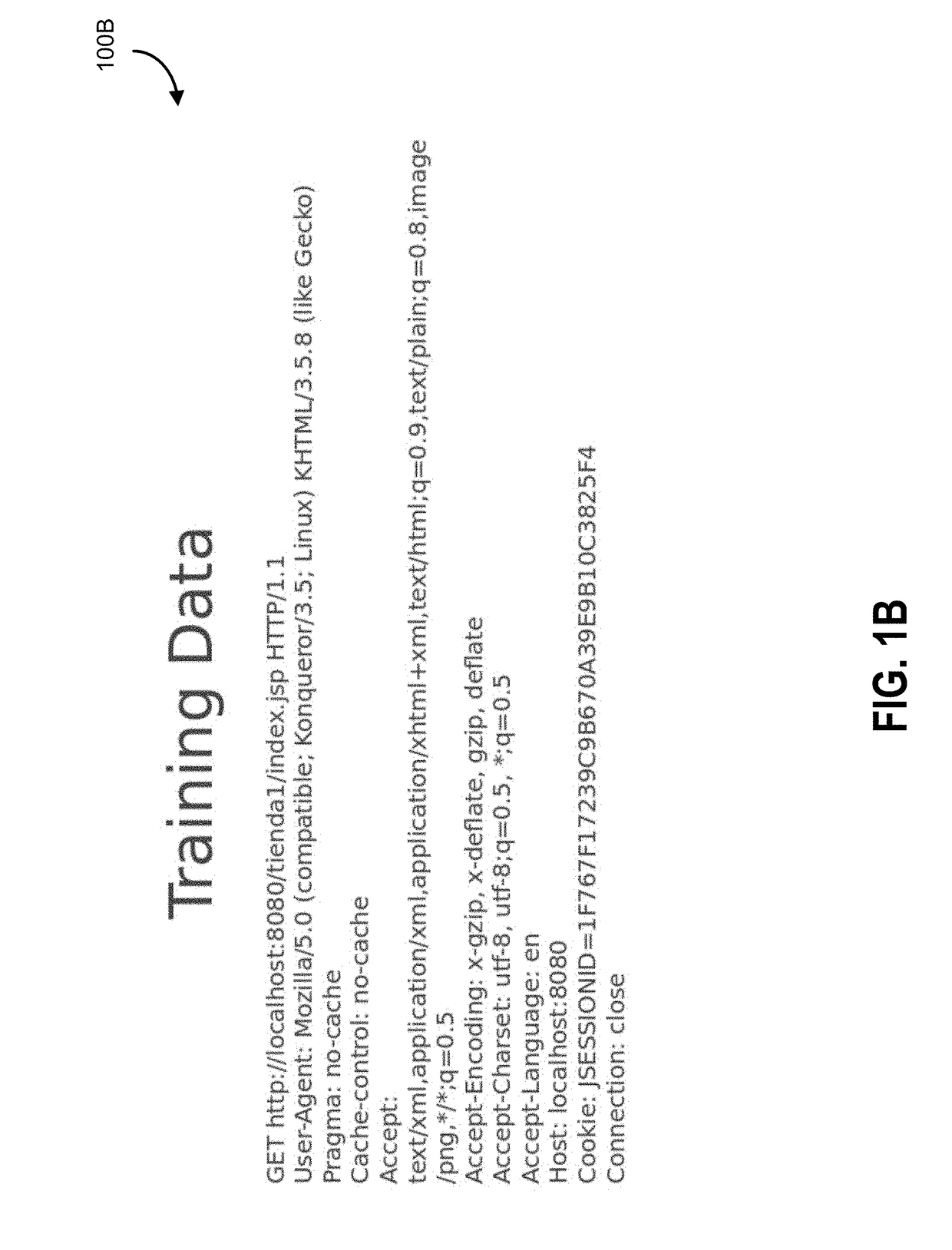

Systems and methods for malicious code detection

Active | US20180285740A1 | Reduce data size, Platform integrity maintenance, Neural architectures, Neural network system, Data mining

There is provided a neural network system for detection of malicious code, the neural network system comprising: an input receiver configured for receiving input text from one or more code input sources; a convolutional neural network unit including one or more convolutional layers, the convolutional unit configured for receiving the input text and processing the input text through the one or more convolutional layers; a recurrent neural network unit including one or more long short term memory layers, the recurrent neural network unit configured to process the output from the convolutional neural network unit to perform pattern recognition; and a classification unit including one or more classification layers, the classification unit configured to receive output data from the recurrent neural network unit to perform a determination of whether the input text or portions of the input text are malicious code or benign code.

Owner:ROYAL BANK OF CANADA

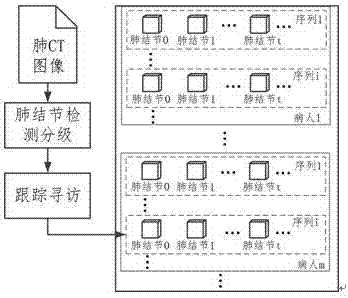

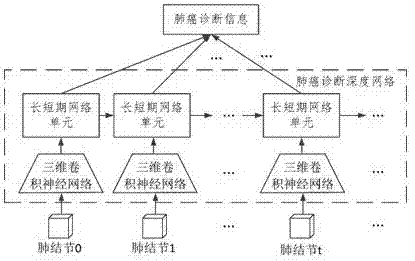

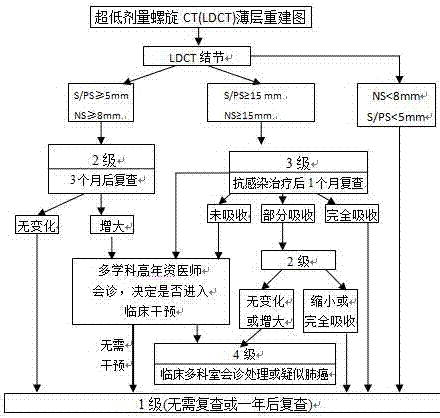

Method and system for grading and managing detection of pulmonary nodules based on deep learning

Active | CN107103187A | Automatic nodule grading management, Automatic diagnosis, Character and pattern recognition, Medical automated diagnosis, Medicine, Low-Dose Spiral CT

The invention discloses a deep learning-based method for grading and managing the detection of pulmonary nodules. The method comprises the following steps: S100, collecting chest ultra-low-dose spiral CT thin-slice images, outlining the lung region in each CT image, and labeling all pulmonary nodules in the lung region; S200, training a lung region segmentation network, a suspected pulmonary nodule detection network, and a pulmonary nodule screening and grading network; S300, obtaining the pulmonary nodule time series of all patients in the image set and the grading labels corresponding to those sequences to construct a pulmonary nodule management database; and S400, training a lung cancer diagnosis network based on a three-dimensional convolutional neural network and a long short-term memory network. Because the lung region segmentation, suspected nodule detection, nodule screening and grading, and lung cancer diagnosis networks are all trained with deep learning, pulmonary nodules are detected accurately, and, combined with subsequent follow-up, more accurate diagnostic information and clinical strategies are obtained.

Owner:SICHUAN CANCER HOSPITAL +1

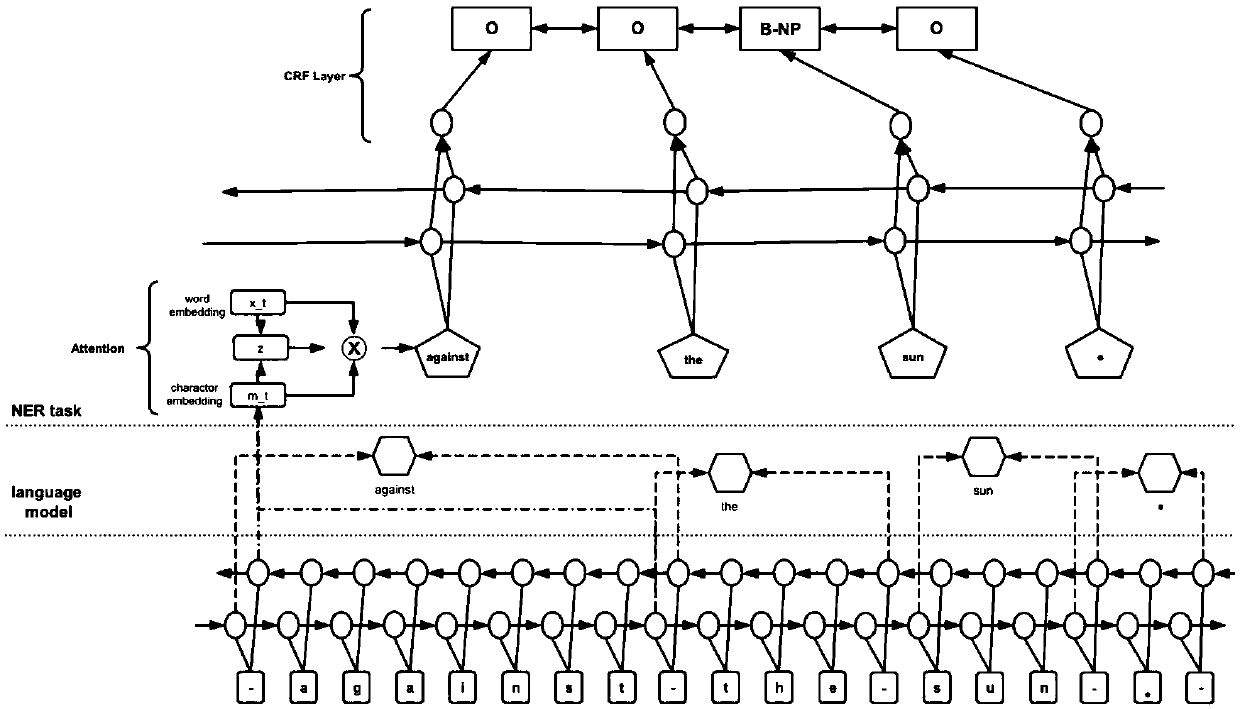

Named entity recognition method combining an attention mechanism and multi-target cooperative training

Active | CN108628823A | Improve accuracy, Effective training, Natural language data processing, Neural architectures, Conditional random field, Named-entity recognition

The invention provides a named entity recognition method that combines an attention mechanism with multi-target cooperative training. The method comprises the following steps: (1) preprocessing the training data and obtaining the character vector representation of each sentence through character-level mapping; (2) inputting the character vector representation obtained in (1) into a bidirectional LSTM (long short-term memory) network to obtain a character-based vector representation of each word; (3) obtaining the word vector representation of each sentence through word-level mapping; (4) combining, through the attention mechanism, the word vector representation obtained in (3) with the character vector representation obtained in (1), and feeding the result into the bidirectional LSTM network to obtain the semantic feature vector of the sentence; and (5) using a conditional random field to annotate each word, based on the semantic feature vector obtained in (4), with an entity label, and decoding to obtain the entity tags.

Owner:SUN YAT SEN UNIV
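
Step (5) of the named entity recognition method decodes entity tags with a conditional random field. The decoding half of a linear-chain CRF is the Viterbi algorithm, sketched below. The emission scores (which would come from the BiLSTM) and the transition scores are made-up numbers, and training the CRF is not shown.

```python
from typing import List


def viterbi_decode(emissions: List[List[float]], transitions: List[List[float]],
                   tags: List[str]) -> List[str]:
    """Highest-scoring tag sequence for a linear-chain CRF.

    emissions[t][j]: score of tag j at position t (e.g. from a BiLSTM).
    transitions[i][j]: score of moving from tag i to tag j.
    """
    n_tags = len(tags)
    scores = list(emissions[0])
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_scores, pointers = [], []
        for j in range(n_tags):
            # Best previous tag from which to arrive at tag j.
            best_i = max(range(n_tags), key=lambda i: scores[i] + transitions[i][j])
            new_scores.append(scores[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        scores = new_scores
        backpointers.append(pointers)
    # Trace back from the best final tag.
    path = [max(range(n_tags), key=scores.__getitem__)]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return [tags[i] for i in path]


tags = ["O", "B-PER", "I-PER"]
transitions = [[0.0, 0.0, -10.0],  # O -> I-PER is heavily penalized
               [0.0, 0.0, 1.0],    # B-PER -> I-PER is encouraged
               [0.0, 0.0, 0.0]]
emissions = [[0.0, 2.0, 0.0], [0.0, 0.0, 2.0], [2.0, 0.0, 0.0]]
print(viterbi_decode(emissions, transitions, tags))  # ['B-PER', 'I-PER', 'O']
```

The transition matrix is what a CRF adds over per-word classification: it scores whole label sequences, so invalid patterns such as `O` followed by `I-PER` are discouraged.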

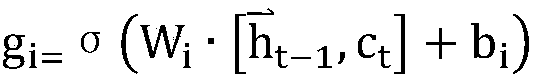

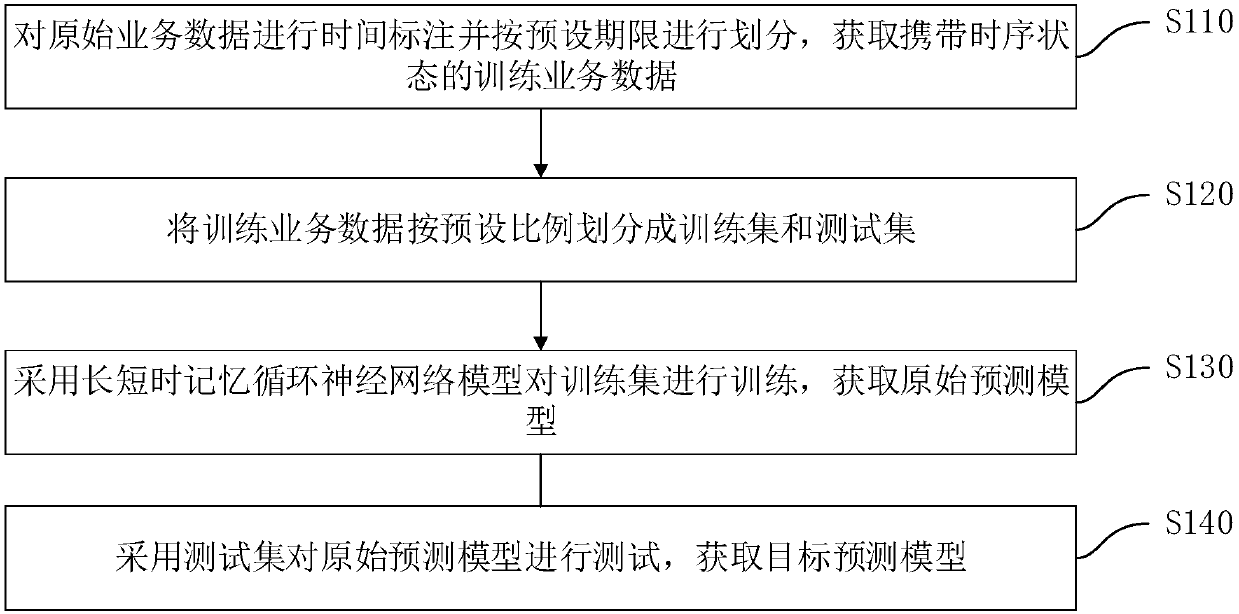

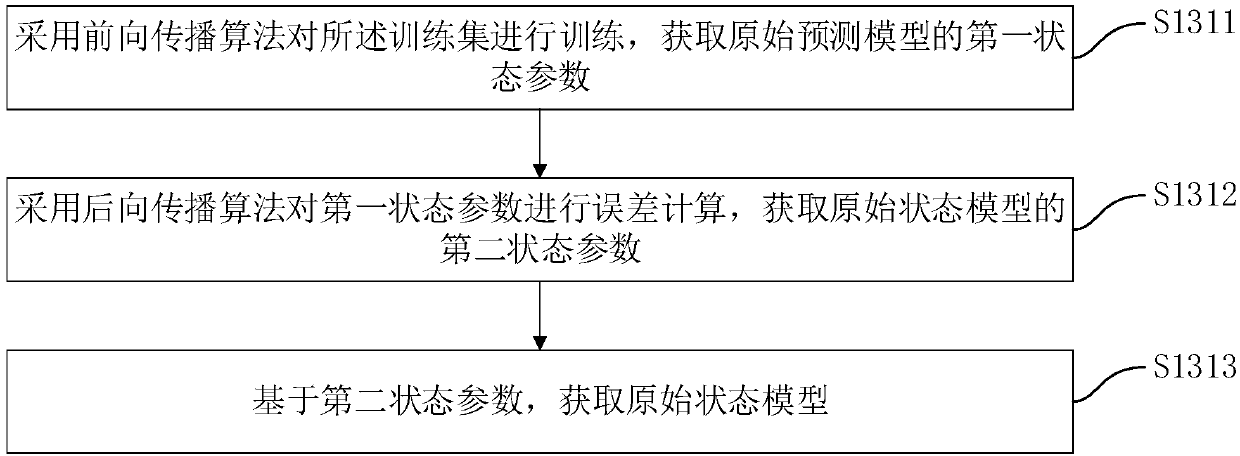

Prediction model training method, data monitoring method and device, equipment and medium

Inactive | CN107730087A | Improve accuracy, Improve efficiency, Finance, Character and pattern recognition, Network model, Test set

The invention discloses a prediction model training method, a data monitoring method and device, equipment, and a medium. The prediction model training method comprises the following steps: time-stamping the original service data and dividing the data according to a preset deadline to obtain training service data carrying a time-series state; dividing the training service data into a training set and a test set according to a preset ratio; training a long short-term memory recurrent neural network model on the training set to obtain an original prediction model; and testing the original prediction model on the test set to obtain a target prediction model. The method is well suited to time-series data and predicts with high accuracy.

Owner:PING AN TECH (SHENZHEN) CO LTD
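
The data preparation steps of the prediction model training method (time-stamp, cut at a preset deadline, split by a preset ratio) might look like the sketch below. Keeping the split chronological, so that the test set lies after the training set in time, is an assumption; the abstract only says "according to a preset ratio". The record format and the 0.8 ratio are illustrative.

```python
from datetime import datetime
from typing import List, Tuple

Record = Tuple[datetime, float]  # (timestamp, service metric): assumed format


def split_by_deadline(records: List[Record], deadline: datetime,
                      train_ratio: float = 0.8) -> Tuple[List[Record], List[Record]]:
    """Keep records up to the deadline, order them in time, and split by ratio."""
    kept = sorted((r for r in records if r[0] <= deadline), key=lambda r: r[0])
    cut = int(len(kept) * train_ratio)
    return kept[:cut], kept[cut:]


records = [(datetime(2018, 1, day), float(day)) for day in range(1, 7)]
train, test = split_by_deadline(records, deadline=datetime(2018, 1, 5))
print(len(train), len(test))  # 4 1
```

A chronological split avoids training the LSTM on data from after the period it is later tested on, which would overstate its accuracy.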

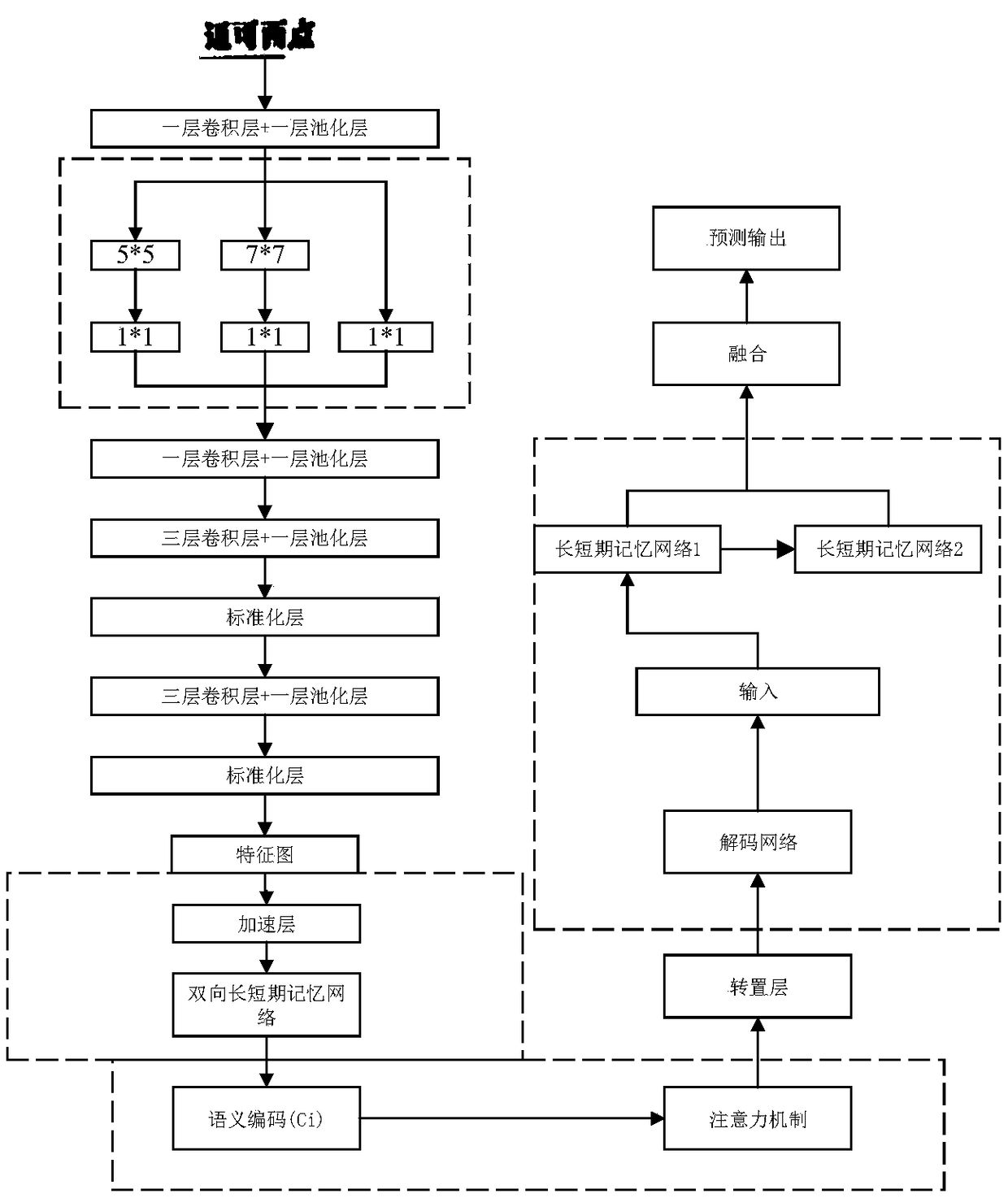

Character recognition system and method based on combination of neural network and attention mechanism

Active | CN109389091A | Solve long-term dependence, Improve adaptability, Character and pattern recognition, Neural architectures, Nerve network, Short-term memory

The invention claims a character recognition system and method based on the combination of a neural network and an attention mechanism. The system comprises a convolutional neural network feature extraction module, which extracts spatial features of character images. The spatial features extracted by the convolutional neural network are input into a bidirectional long short-term memory network module, which extracts the sequence features of the characters. The extracted feature vectors are semantically encoded, and the attention mechanism then assigns attention weights to the feature vectors so that attention is focused on the feature vectors with higher weights. In the decoding part of the model, the features extracted by attention and the prediction from the previous time step are used as the inputs of a nested long short-term memory network. The purpose of using the long short-term memory network is to preserve the temporal characteristics of the feature vectors and to let the model's attention points change over time. The invention can detect text regions in natural scenes more accurately and performs well on small text and on text with a small tilt angle.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
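
The character recognition system's attention step assigns weights to the encoded feature vectors and focuses on those with higher weights. A minimal sketch of that weighting follows, assuming dot-product scores between a query (for example, the decoder's previous state) and each encoder feature vector; the abstract does not give the scoring function.

```python
import math
from typing import List, Tuple

Vector = List[float]


def attend(query: Vector, features: List[Vector]) -> Tuple[List[float], Vector]:
    """Return softmax attention weights and the weighted sum (context vector)."""
    scores = [sum(q * f for q, f in zip(query, feat)) for feat in features]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(features[0])
    context = [sum(w * feat[d] for w, feat in zip(weights, features)) for d in range(dim)]
    return weights, context


weights, context = attend([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
print(weights, context)  # [0.5, 0.5] [0.5, 0.5]
```

With an uninformative query the weights are uniform; as the query aligns with one feature vector, its weight approaches 1 and the context vector approaches that feature. The context vector, together with the previous prediction, is what would feed the decoding LSTM.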

Multi-task named entity recognition and adversarial training method for the medical field

Inactive | CN108229582A | Entity Recognition Facilitation, Improve accuracy, Character and pattern recognition, Neural architectures, Conditional random field, Data set

The invention discloses a multi-task named entity recognition and adversarial training method for the medical field. The method includes the following steps: (1) collecting and processing data sets so that each row consists of a word and a label; (2) using a convolutional neural network to encode character-level information of each word to obtain character vectors, and then concatenating them with word vectors to form input feature vectors; (3) constructing a shared layer that uses a bidirectional long short-term memory network to model the input feature vectors of each word in a sentence and learn features common to all tasks; (4) constructing a task layer that uses a bidirectional long short-term memory network to model the input feature vectors together with the output of (3) and learn the private features of each task; (5) using conditional random fields to decode labels from the outputs of (3) and (4); and (6) using the information of the shared layer to train an adversarial network that reduces the private features mixed into the shared layer. The method performs multi-task learning on data sets from multiple disease domains and introduces adversarial training to make the features of the shared layer and the task layers more independent, so that multiple named entity recognition tasks in a specific domain can be trained simultaneously, quickly, and efficiently.

Owner:ZHEJIANG UNIV

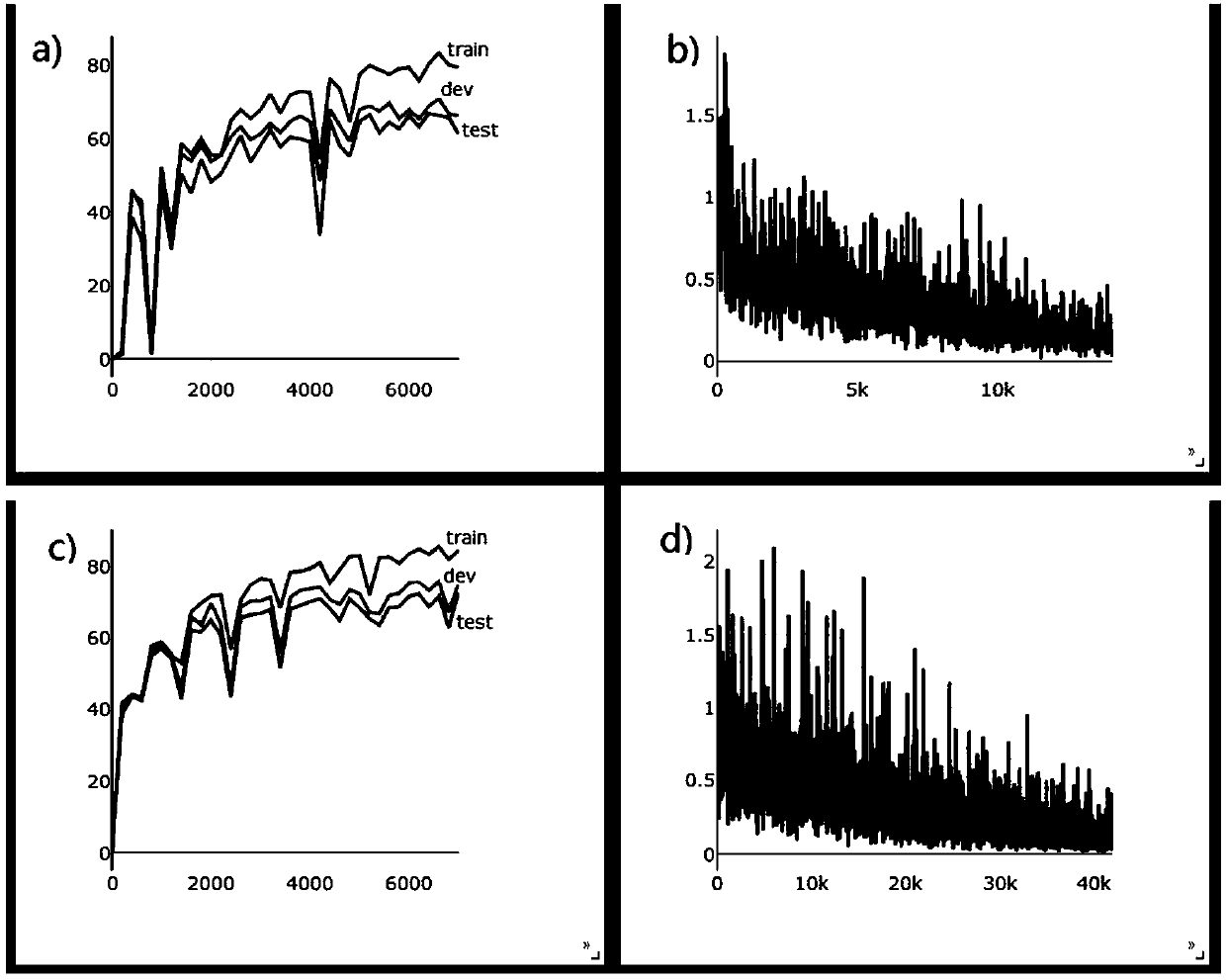

LSTM-RNN model-based air pollutant concentration forecast method

Active | CN106599520A | Improve perception, Take advantage of, Forecasting, Design optimisation/simulation, Test sample, Model parameters

The invention relates to an air pollutant concentration forecasting method based on an LSTM (long short-term memory)-RNN model. The method comprises the following steps: monitoring and collecting air pollutant concentration data over a relatively long period; preprocessing the historical data to construct training, validation, and test samples for the LSTM-RNN model to be trained; obtaining a pre-trained LSTM-RNN model from the training samples, then fine-tuning it with the constructed validation and test samples to obtain the LSTM-RNN model parameters, further correcting the parameters to improve model precision, and taking the corrected LSTM-RNN model as the air pollutant concentration forecasting model; and finally feeding the preprocessed air pollutant concentration data of a target city over a relatively long period into the LSTM-RNN model, whose output is a forecast of the air pollutant concentration at the current moment or at a future moment.

Owner:UNIV OF SCI & TECH OF CHINA
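
Constructing training, validation, and test samples from a pollutant time series usually means cutting the series into (history, target) pairs. A minimal sketch follows, assuming a fixed look-back window and a forecast horizon counted in time steps; both are hypothetical parameters, not taken from the patent, and the readings are toy values.

```python
from typing import List, Tuple


def make_windows(series: List[float], history: int,
                 horizon: int = 1) -> Tuple[List[List[float]], List[float]]:
    """Pair each run of `history` readings with the reading `horizon` steps later."""
    if history < 1 or horizon < 1:
        raise ValueError("history and horizon must be positive")
    inputs, targets = [], []
    for start in range(len(series) - history - horizon + 1):
        inputs.append(series[start:start + history])
        targets.append(series[start + history + horizon - 1])
    return inputs, targets


pm25 = [35.0, 40.0, 52.0, 61.0, 58.0, 47.0]  # toy hourly readings
X, y = make_windows(pm25, history=3)
print(X[0], y[0])  # [35.0, 40.0, 52.0] 61.0
```

The resulting pairs would then be divided in time order into training, validation, and test sets before the LSTM-RNN is trained and fine-tuned.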

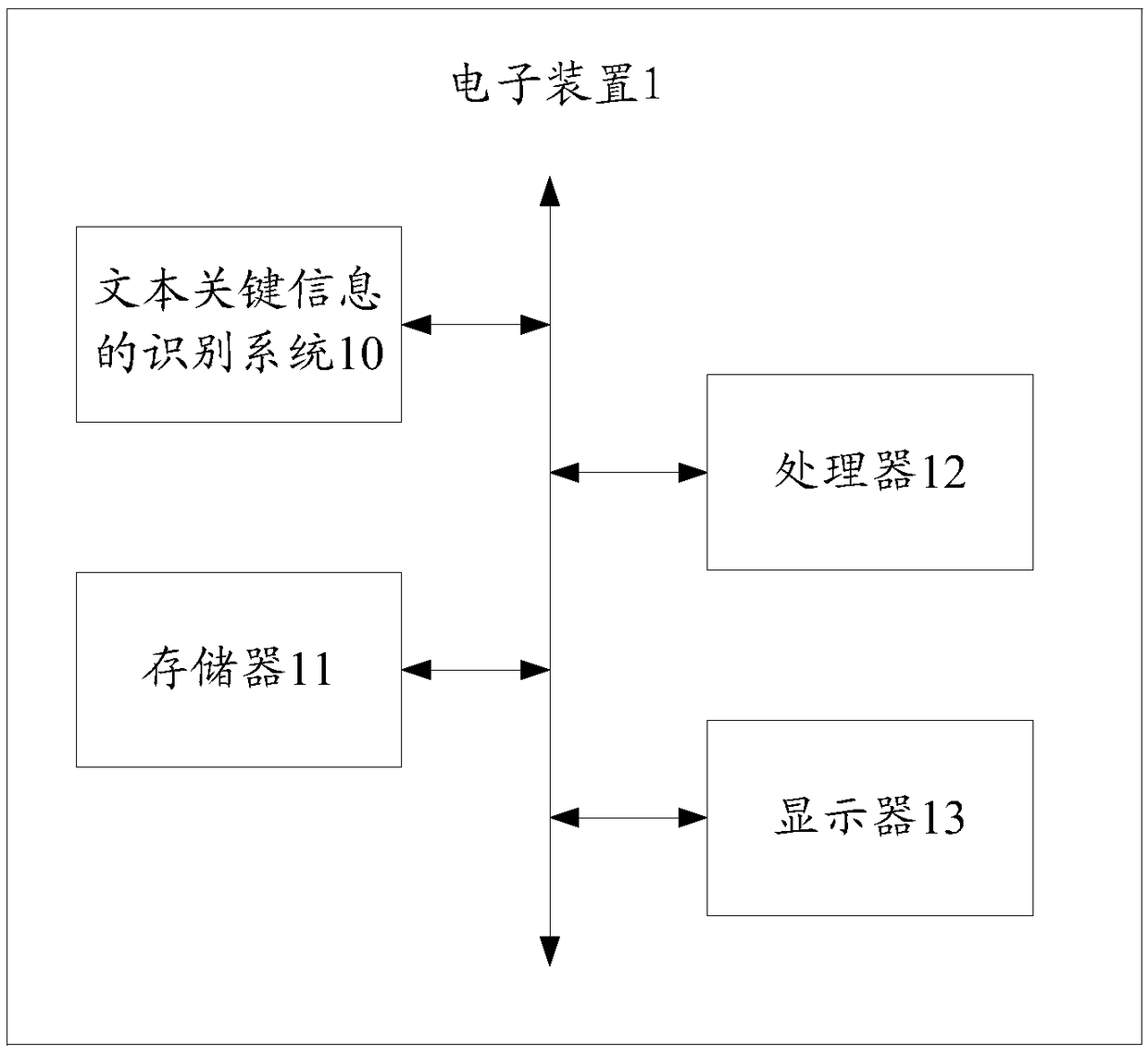

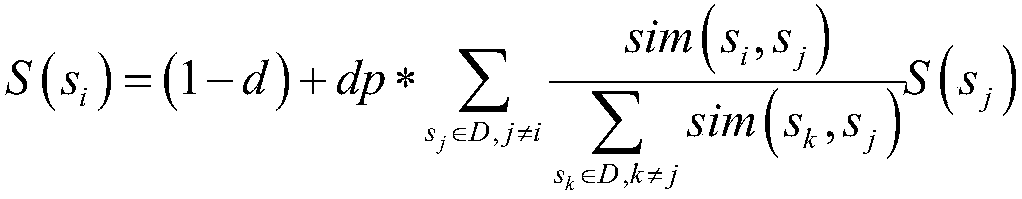

Text key information identification method, electronic apparatus and readable storage medium

Inactive | CN108664473A | Accurate acquisition, Character and pattern recognition, Natural language data processing, Short-term memory, Network model

The invention relates to a text key information identification method, an electronic apparatus, and a readable storage medium. The method comprises the following steps: after a to-be-identified text is received, segmenting it with a predetermined word segmentation model to obtain its segmented words, where the predetermined word segmentation model is a long short-term memory recurrent neural network model trained on a preset number of sample sentences labeled by a sequence labeling method; computing a score for each segmented word from its word frequency, position, and word span in the text according to a preset scoring formula; sorting the segmented words in descending order of score and extracting the top-ranked words as keywords; and obtaining the key information of the text from the extracted keywords. This enables a user to obtain the key information in the text quickly and accurately.

Owner:PING AN TECH (SHENZHEN) CO LTD
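
The key information abstract does not give its preset scoring formula. The sketch below uses a hypothetical formula that adds equal-weight terms for normalized word frequency, earliness of first occurrence, and word span (distance between first and last occurrence), then ranks words by score. It assumes the text has already been segmented, for example by the LSTM segmentation model, into a list of words.

```python
from collections import Counter
from typing import Dict, List


def keyword_scores(words: List[str]) -> Dict[str, float]:
    """Hypothetical scoring: frequency + earliness + span, each normalized to [0, 1]."""
    n = len(words)
    freq = Counter(words)
    first: Dict[str, int] = {}
    last: Dict[str, int] = {}
    for idx, word in enumerate(words):
        first.setdefault(word, idx)
        last[word] = idx
    scores = {}
    for word, count in freq.items():
        frequency = count / n
        position = 1.0 - first[word] / n            # earlier words score higher
        span = (last[word] - first[word] + 1) / n   # words spread across the text
        scores[word] = frequency + position + span  # equal weights (assumption)
    return scores


def top_keywords(words: List[str], k: int) -> List[str]:
    """Sort by descending score (ties broken alphabetically) and keep the top k."""
    scores = keyword_scores(words)
    return sorted(scores, key=lambda w: (-scores[w], w))[:k]


words = ["loan", "rate", "loan", "bank", "loan"]  # already segmented text
print(top_keywords(words, 2))  # ['loan', 'rate']
```

In practice the three terms would be weighted rather than simply added; the equal weights here only show how frequency, position, and span combine into one ranking.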

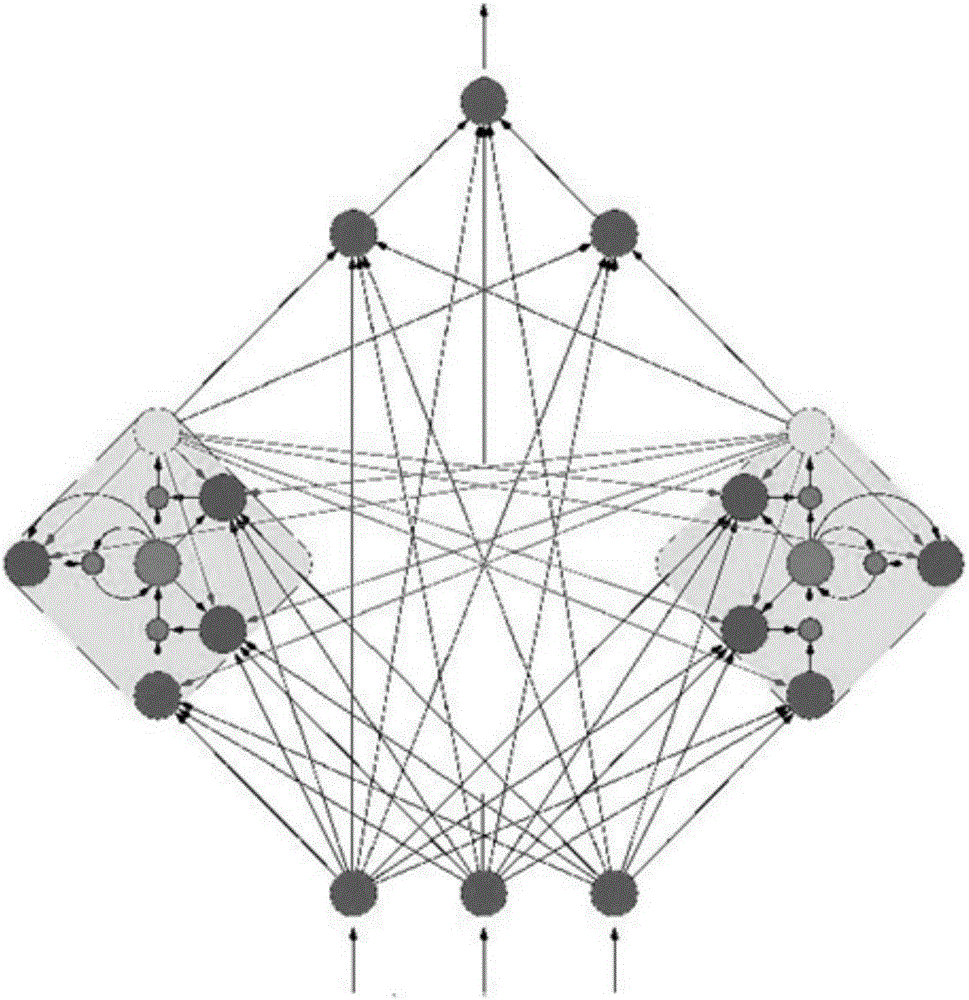

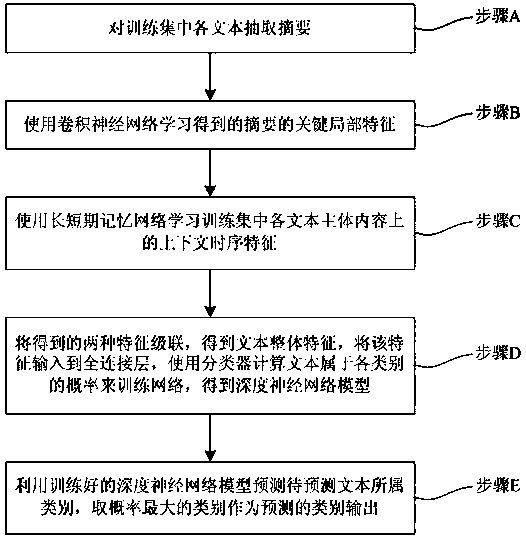

Hybrid neural network text classification method capable of blending abstract with main characteristics

Active | CN108595632A | Improve understanding, Improve classification accuracy, Character and pattern recognition, Neural architectures, Text categorization, High probability

The invention relates to a hybrid neural network text classification method that blends an abstract with the main characteristics of a text. The method comprises the following steps: step A: extracting an abstract from each text in a training set; step B: using a convolutional neural network to learn key local features of the abstract obtained in step A; step C: using a long short-term memory network to learn contextual time-series features from the main content of each text in the training set; step D: concatenating the two types of features obtained in steps B and C to obtain the overall features of each text, feeding the overall features of each text in the training set into a fully connected layer, using a classifier to compute the probability that each text belongs to each category in order to train the network, and obtaining a deep neural network model; and step E: using the trained deep neural network model to predict the category of a text and outputting the category with the highest probability as the predicted category. The method helps improve the accuracy of deep neural network-based text classification.

Owner:FUZHOU UNIV
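
Steps D and E of the hybrid text classification method can be sketched as concatenating the two feature vectors, applying one fully connected layer, turning the outputs into class probabilities with softmax, and taking the most probable class. The weights, labels, and feature values below are illustrative assumptions; a trained model would supply them.

```python
import math
from typing import List, Tuple

Vector = List[float]


def classify(abstract_feats: Vector, body_feats: Vector, weights: List[Vector],
             biases: Vector, labels: List[str]) -> Tuple[str, Vector]:
    x = abstract_feats + body_feats  # step D: concatenate CNN and LSTM features
    logits = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    probs = [e / total for e in exps]  # softmax over categories
    best = max(range(len(labels)), key=probs.__getitem__)  # step E: argmax
    return labels[best], probs


label, probs = classify([1.0, 0.0], [0.0, 1.0],
                        weights=[[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 1.0]],
                        biases=[0.0, 0.5], labels=["finance", "sports"])
print(label)  # sports
```

Concatenation keeps the abstract's local features and the body's sequential features side by side, so the fully connected layer can learn how much to rely on each.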

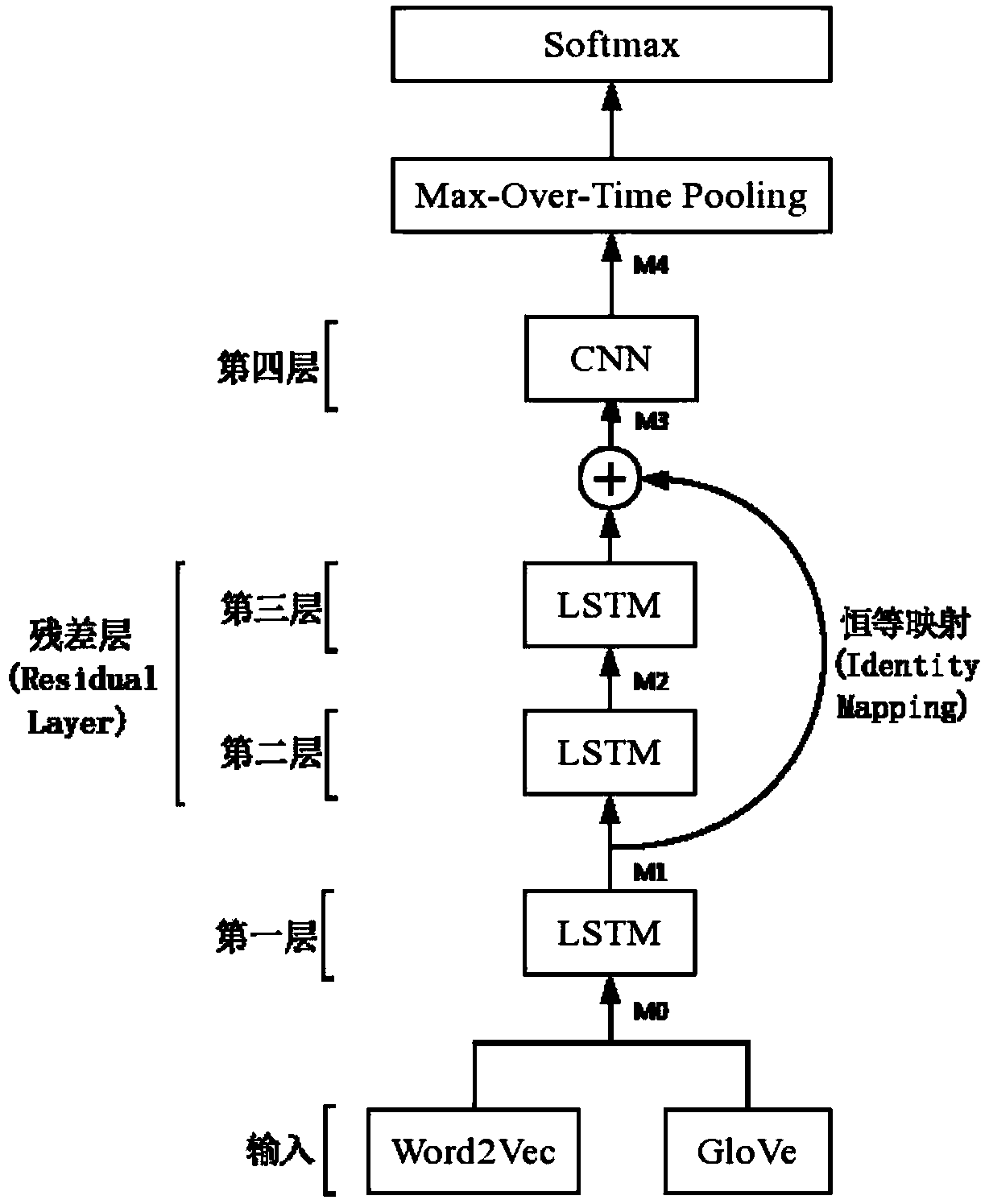

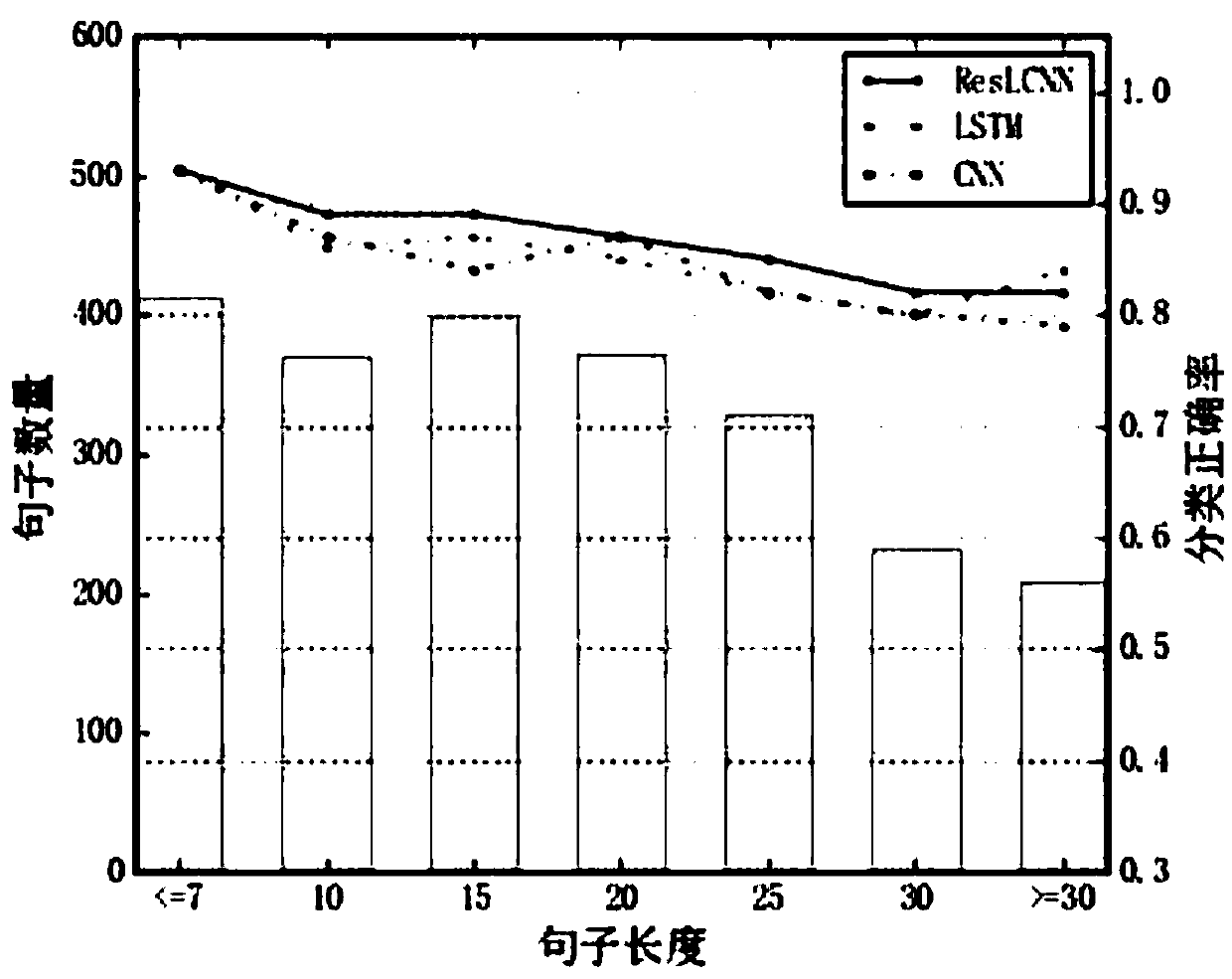

ResLCNN model-based short text classification method

Inactive | CN107562784A | Improve classification effect, Neural architectures, Special data processing applications, Text mining, Text categorization

The invention discloses a ResLCNN model-based short text classification method. It relates to the technical fields of text mining and deep learning, and in particular to a deep learning model for short text classification. The method combines the characteristics of a long short-term memory network and a convolutional neural network to build a ResLCNN deep text classification model for short text classification. The model comprises three long short-term memory network layers and one convolutional neural network layer. Drawing on residual network theory, an identity mapping is added between the first long short-term memory layer and the convolutional layer to form a residual connection, which alleviates the vanishing gradient problem in deep models. The model effectively combines the long short-term memory network's ability to capture long-distance dependencies in text sequence data with the convolutional neural network's ability to extract local sentence features through convolution, thereby improving short text classification.

Owner:TONGJI UNIV
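
In the ResLCNN model, the identity mapping adds the first LSTM layer's output directly to the input of the convolutional layer, giving the signal (and its gradient) a shortcut around the second and third LSTM layers. A minimal sketch follows, with the layers as hypothetical placeholder functions on single vectors; real layers would operate on whole sequences and must preserve the dimension for the shortcut to apply.

```python
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]


def reslcnn_forward(x: Vector, lstm1: Layer, lstm2: Layer, lstm3: Layer,
                    conv: Callable[[Vector], float]) -> float:
    """Three stacked 'LSTM' layers with an identity shortcut from layer 1 to the conv layer."""
    h1 = lstm1(x)
    h3 = lstm3(lstm2(h1))
    if len(h3) != len(h1):
        raise ValueError("shortcut requires matching dimensions")
    residual = [a + b for a, b in zip(h3, h1)]  # identity mapping: F(h1) + h1
    return conv(residual)


out = reslcnn_forward(
    [1.0, 2.0],
    lstm1=lambda v: [a + 1.0 for a in v],
    lstm2=lambda v: [2.0 * a for a in v],
    lstm3=lambda v: [a - 1.0 for a in v],
    conv=sum,  # stand-in for the convolutional layer
)
print(out)  # 13.0
```

Because the block computes F(h1) + h1, its derivative with respect to h1 is F'(h1) plus the identity, so gradients still reach the first layer even when F' is small. This is the sense in which the shortcut relieves vanishing gradients.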

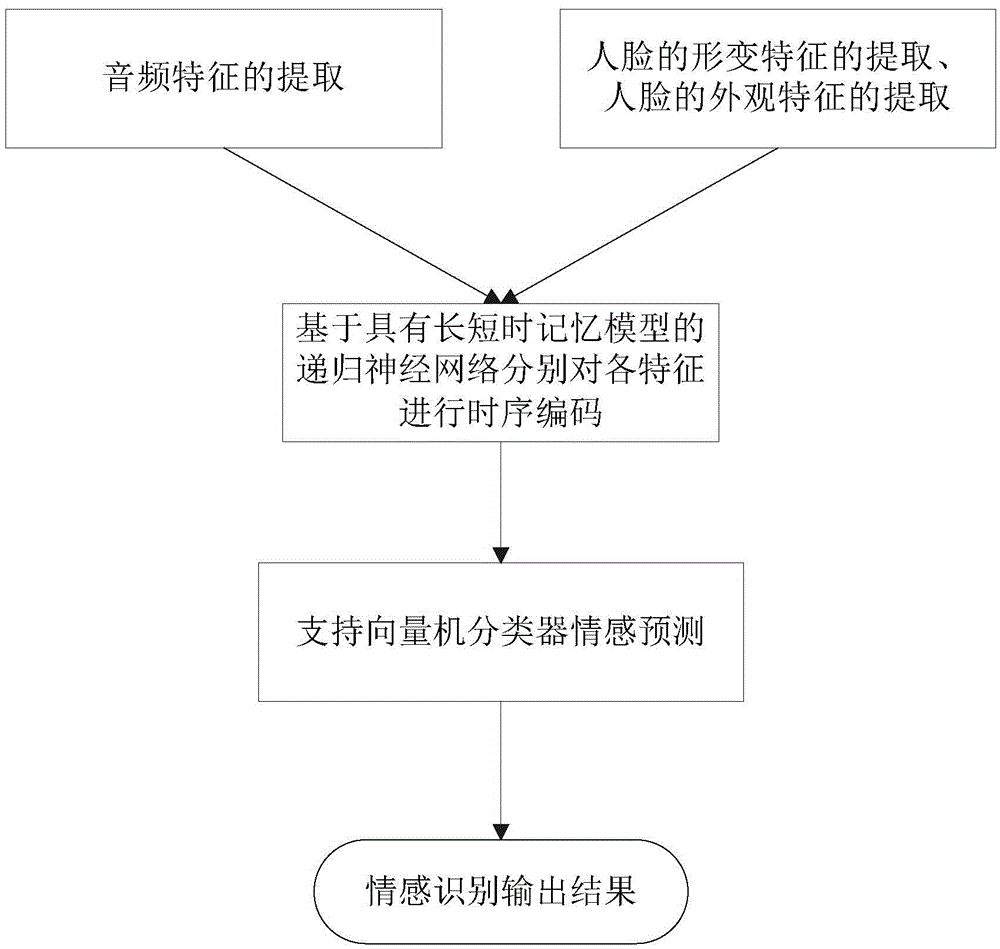

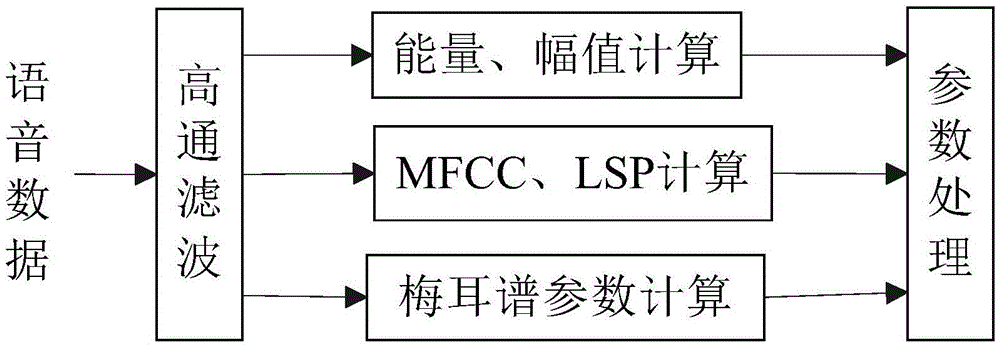

Recurrent neural network-based discrete emotion recognition method

Active | CN105469065A | Modeling implementation, Realize precise identification, Character and pattern recognition, Discrete emotions, Support vector machine classifier

The invention provides a recurrent neural network-based discrete emotion recognition method. The method comprises the following steps: 1, performing face detection and tracking on the image signals in a video; after the face regions are obtained, extracting facial key points as the deformation features of the faces, cropping the face regions, normalizing them to a uniform size, and extracting the appearance features of the faces; 2, windowing the audio signals in the video, segmenting them into audio sequence units, and extracting audio features; 3, sequentially encoding each of the three resulting features with a recurrent neural network with long short-term memory to obtain fixed-length emotion representation vectors, and concatenating the vectors to obtain the final emotion expression features; and 4, predicting the emotion category from the final emotion expression features obtained in step 3 with a support vector machine classifier. The method makes full use of the dynamic information in the process of expressing emotion, so that the emotions of participants in the video are recognized precisely.

Owner:北京中科欧科科技有限公司
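
Step 2 of the emotion recognition method windows the audio before segmenting it into sequence units. A typical way to do this, assumed here because the abstract names no window type or sizes, is to cut the signal into overlapping fixed-length frames and multiply each frame by a Hamming window:

```python
import math
from typing import List


def frame_signal(samples: List[float], frame_len: int, hop: int) -> List[List[float]]:
    """Cut the signal into overlapping frames and apply a Hamming window to each."""
    if frame_len < 2 or hop < 1:
        raise ValueError("frame_len must be >= 2 and hop >= 1")
    window = [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1)) for n in range(frame_len)]
    frames = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        chunk = samples[start:start + frame_len]
        frames.append([s * w for s, w in zip(chunk, window)])
    return frames


# Real systems often use e.g. 25 ms frames with a 10 ms hop; tiny sizes here for illustration.
frames = frame_signal([1.0] * 10, frame_len=4, hop=2)
print(len(frames))  # 4
```

Tapering each frame toward its edges reduces spectral leakage when audio features are later computed frame by frame.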

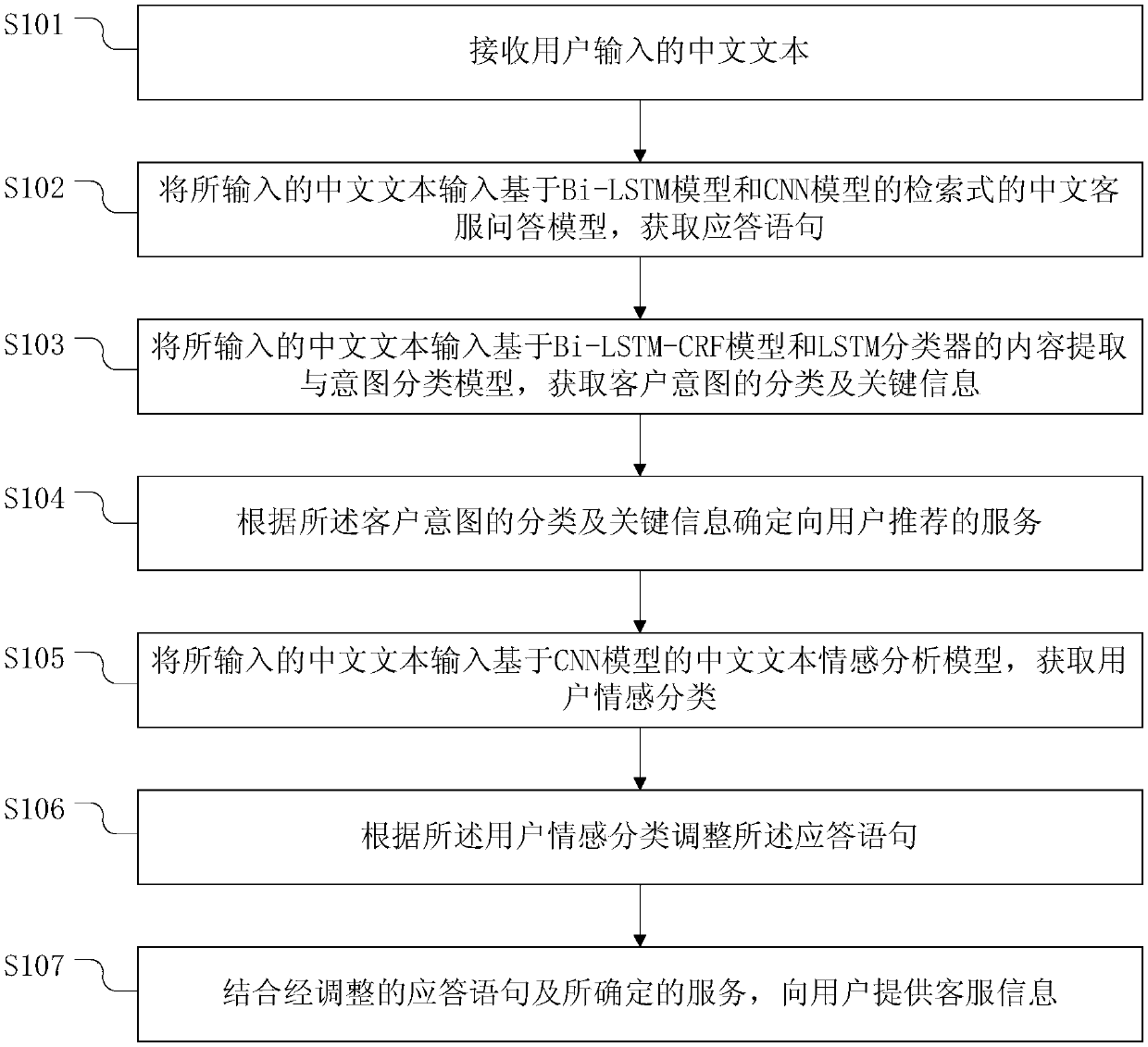

Customer service information providing method and device, electronic equipment and storage medium

Active | CN107679234A | Soothe emotions, Improve accuracy, Customer relationship, Semantic analysis, Conditional random field, Text entry

The invention provides a customer service information providing method and device, electronic equipment, and a storage medium. The method comprises the following steps: receiving Chinese text input by a user; inputting the text into a Chinese customer service question-answering model based on a Bi-LSTM (bidirectional long short-term memory) model and a CNN (convolutional neural network) model to obtain an answer sentence; inputting the text into a content extraction and intention classification model based on a Bi-LSTM-CRF (conditional random field) model and an LSTM classifier to obtain the customer intention classification and key information; determining the service to recommend to the user according to the customer intention classification and key information; inputting the text into a Chinese text emotion analysis model based on the CNN model to obtain the user's emotion classification; adjusting the answer sentence according to the user's emotion classification; and providing customer service information to the user by combining the adjusted answer sentence with the determined service. The method and device optimize these models to achieve automatic customer service answering.

Owner:上海携程国际旅行社有限公司

Text sentiment analysis method and device, storage medium and computer equipment

Active | CN107609009A | Efficient analysis, Extract local features, Neural architectures, Special data processing applications, Feature vector, Support vector machine

The invention relates to a text sentiment analysis method and device, a storage medium, and computer equipment. A sentence vector is obtained for each sentence in a test text by concatenating the word vectors of the words in the sentence. The sentence vector is input separately into two preset convolutional neural networks and one bidirectional long short-term memory neural network model to obtain three sentence feature vectors. The three sentence feature vectors are concatenated and classified with an SVM (support vector machine) classifier to obtain the sentiment classification result of the sentence, and the emotional tendency of the test text is obtained from the sentiment classification results of its sentences. The method combines the convolutional neural networks' ability to extract local features effectively with the bidirectional long short-term memory network's ability to analyze time-series features, so that sentiment analysis of the test text determines emotional tendency with greater robustness and generalization ability and with higher efficiency.

Owner:PEKING UNIV SHENZHEN GRADUATE SCHOOL +2
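
The text sentiment analysis method concatenates three sentence feature vectors and classifies them with an SVM. At inference time a trained linear SVM reduces to the sign of a weighted sum; the sketch below shows that, plus a majority vote over sentences to obtain the text's emotional tendency. The weights are made up, and the majority-vote rule is an assumption, since the abstract does not say how sentence results are combined.

```python
from typing import List

Vector = List[float]


def svm_sentence_label(feature_vectors: List[Vector], w: Vector, b: float) -> int:
    """Linear SVM decision on the concatenated CNN/CNN/BiLSTM features: +1 or -1."""
    x = [v for vec in feature_vectors for v in vec]  # concatenate the three vectors
    if len(x) != len(w):
        raise ValueError("weight vector does not match concatenated features")
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if score >= 0 else -1


def text_tendency(sentence_labels: List[int]) -> str:
    """Majority vote over sentence-level labels (an assumed aggregation rule)."""
    total = sum(sentence_labels)
    if total > 0:
        return "positive"
    if total < 0:
        return "negative"
    return "neutral"


print(svm_sentence_label([[1.0], [0.0], [2.0]], w=[1.0, 1.0, -1.0], b=0.5))  # -1
print(text_tendency([1, 1, -1]))  # positive
```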

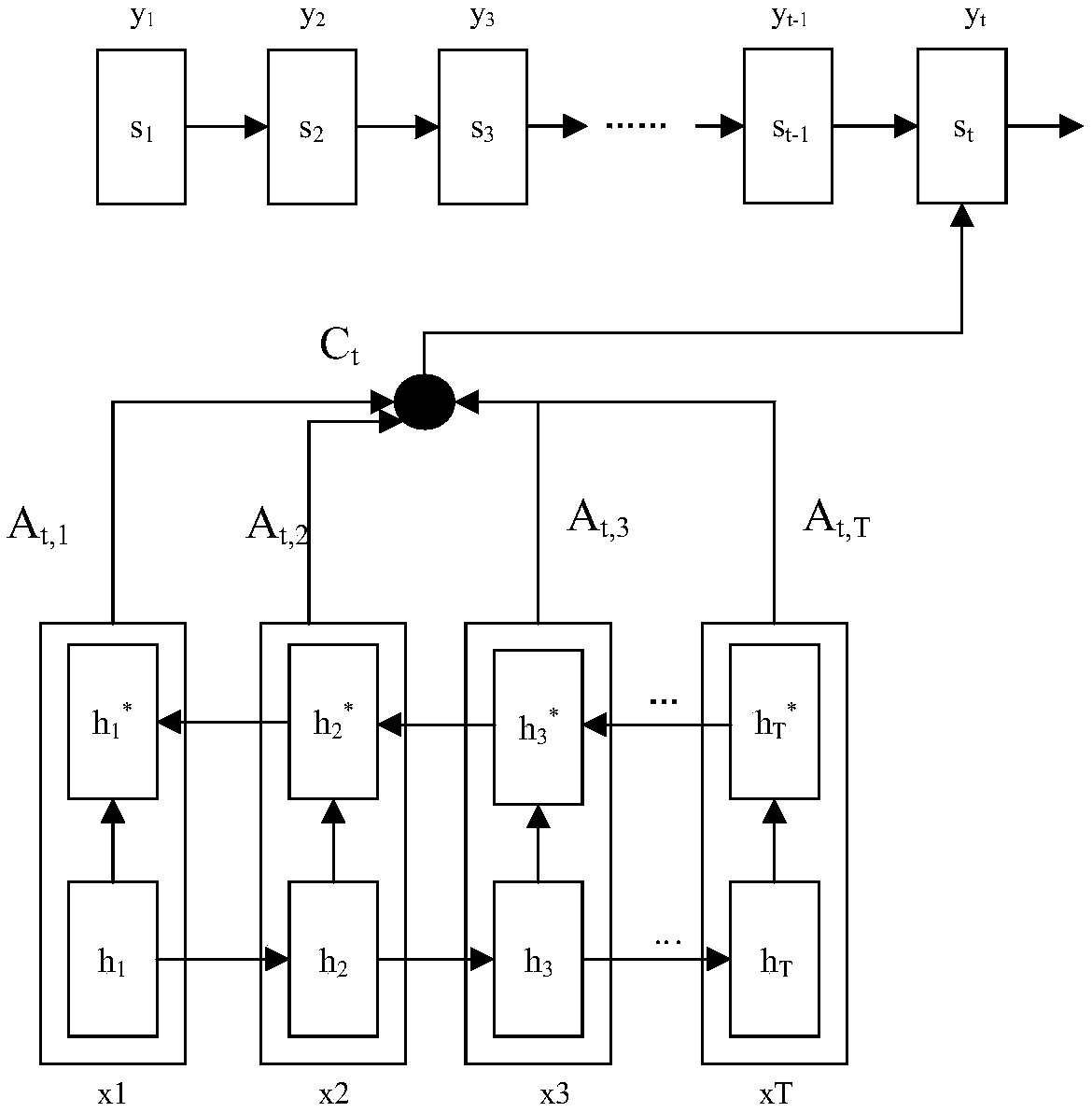

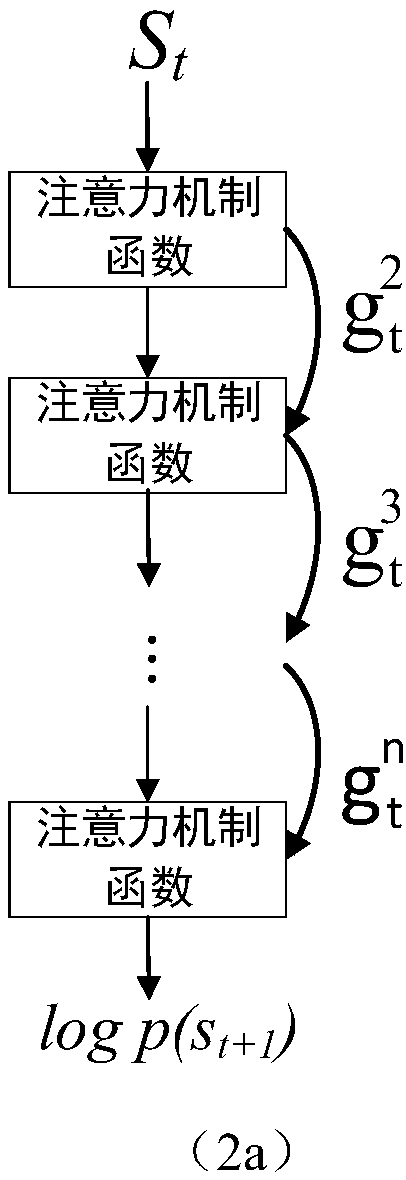

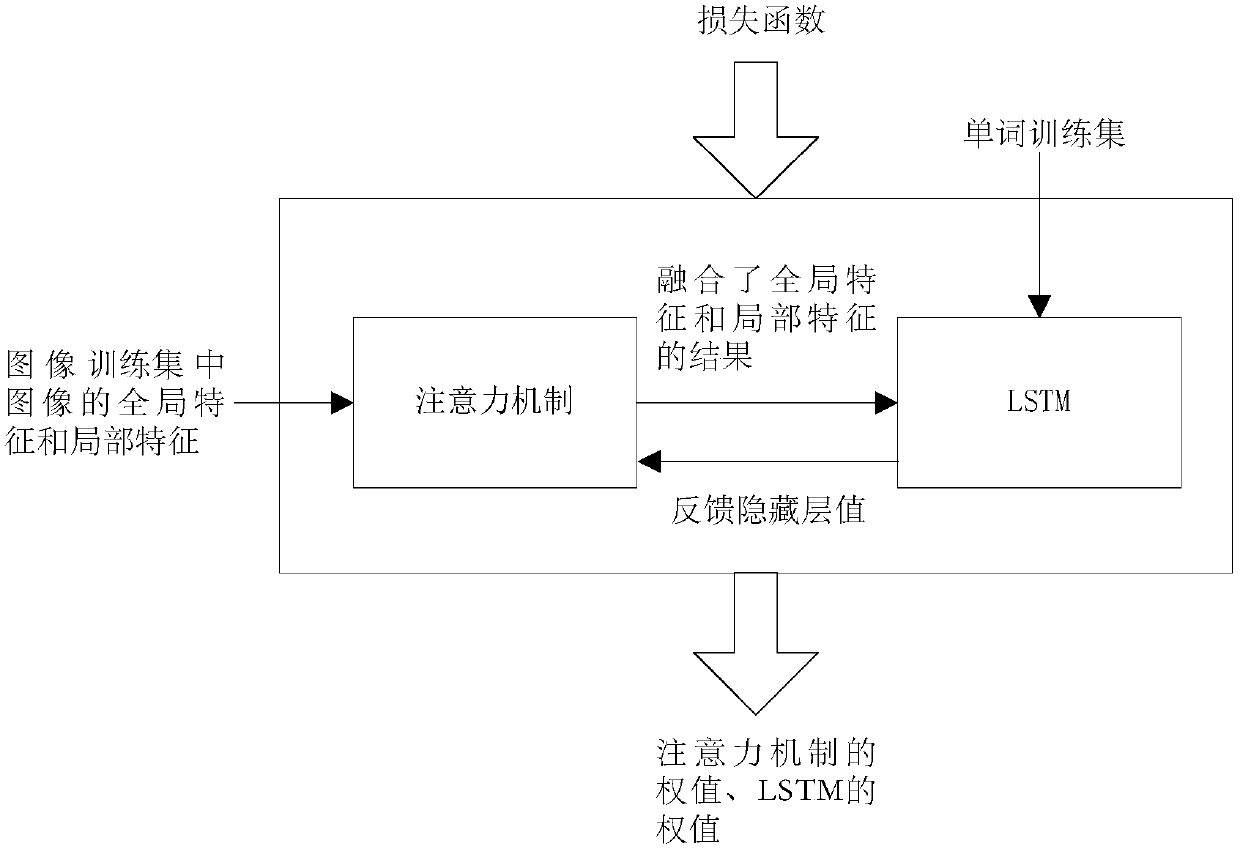

Deep attention mechanism-based image description generation method

Active | CN108052512A | Improve accuracy, Efficient extraction, Natural language translation, Character and pattern recognition, Algorithm, Long short term memory

The invention relates to a deep attention mechanism-based image description generation method. The method comprises a deep long short-term memory network model building step, in which an attention mechanism function is added between the units of a long short-term memory network model, and the network with this attention function is trained with the features of training images extracted by a convolutional neural network and their description information to obtain a deep long short-term memory network model; and an image description generation step, in which descriptions of the images to be described are generated by passing them through the convolutional neural network model and the deep long short-term memory network model in sequence. Compared with the prior art, the method offers effective information extraction, strong deep expressive capability, and accurate descriptions.

Owner:TONGJI UNIV

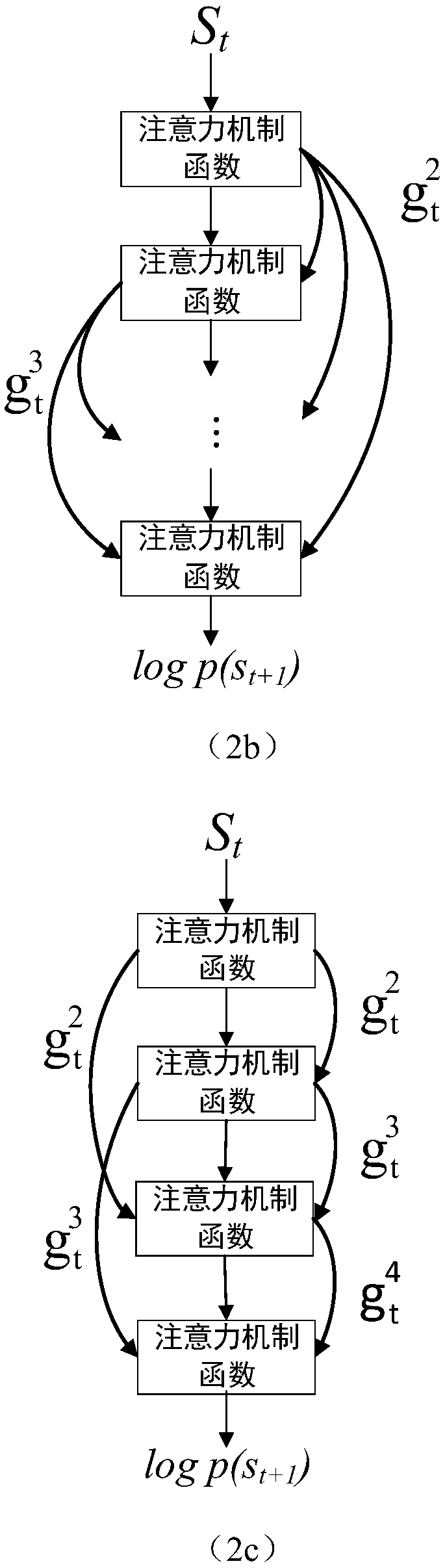

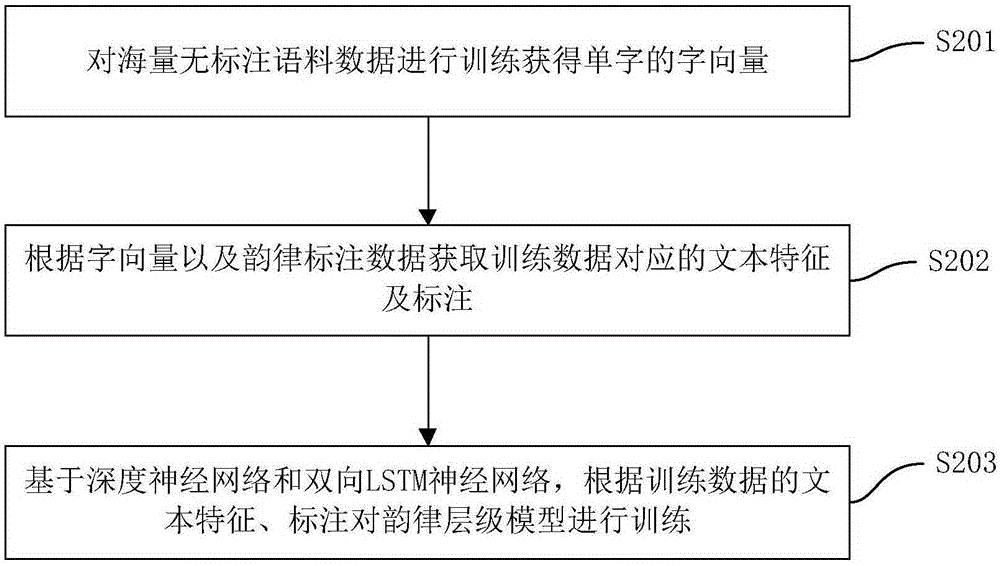

Prosodic hierarchy model training method, text-to-speech method and text-to-speech device

Active · CN105244020A · Improve performance · Few influencing factors · Speech synthesis · Natural language processing · Granularity

The invention discloses a prosodic hierarchy model training method for text-to-speech, together with a text-to-speech method and a text-to-speech device that use the prosodic hierarchy model. The training method includes the following steps. First, a character vector is obtained for each individual character by training on massive untagged corpus data. Next, textual features and tags for the training data are derived from the character vectors and prosodic tagging data. Finally, the prosodic hierarchy model is trained on these textual features and tags using a deep neural network and a bidirectional LSTM (long short-term memory) neural network. The method uses a character-granularity dictionary instead of a traditional word-granularity dictionary, which effectively reduces the number of dictionary entries. This lowers the computing and memory requirements of the model and its resource files, keeping the prosodic prediction model usable on embedded intelligent devices while improving its performance.
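The dictionary-size argument can be illustrated with a toy corpus. The sketch below is not the patented training method. It assumes pre-segmented Chinese sentences and simply counts distinct words versus distinct characters, and the corpus is invented for illustration. For large Chinese corpora the character inventory is far smaller than the word inventory, because words are composed from a limited set of characters.

```python
from typing import List, Sequence, Set


def word_vocab(sentences: Sequence[List[str]]) -> Set[str]:
    """Distinct entries of a word-granularity dictionary."""
    return {word for sentence in sentences for word in sentence}


def char_vocab(sentences: Sequence[List[str]]) -> Set[str]:
    """Distinct entries of a character-granularity dictionary."""
    return {ch for sentence in sentences for word in sentence for ch in word}


# Toy, pre-segmented corpus invented for illustration.
corpus = [["中国", "人民"], ["国人", "民国"], ["人民", "中国人"]]
print(len(word_vocab(corpus)), len(char_vocab(corpus)))  # prints: 5 4
```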

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

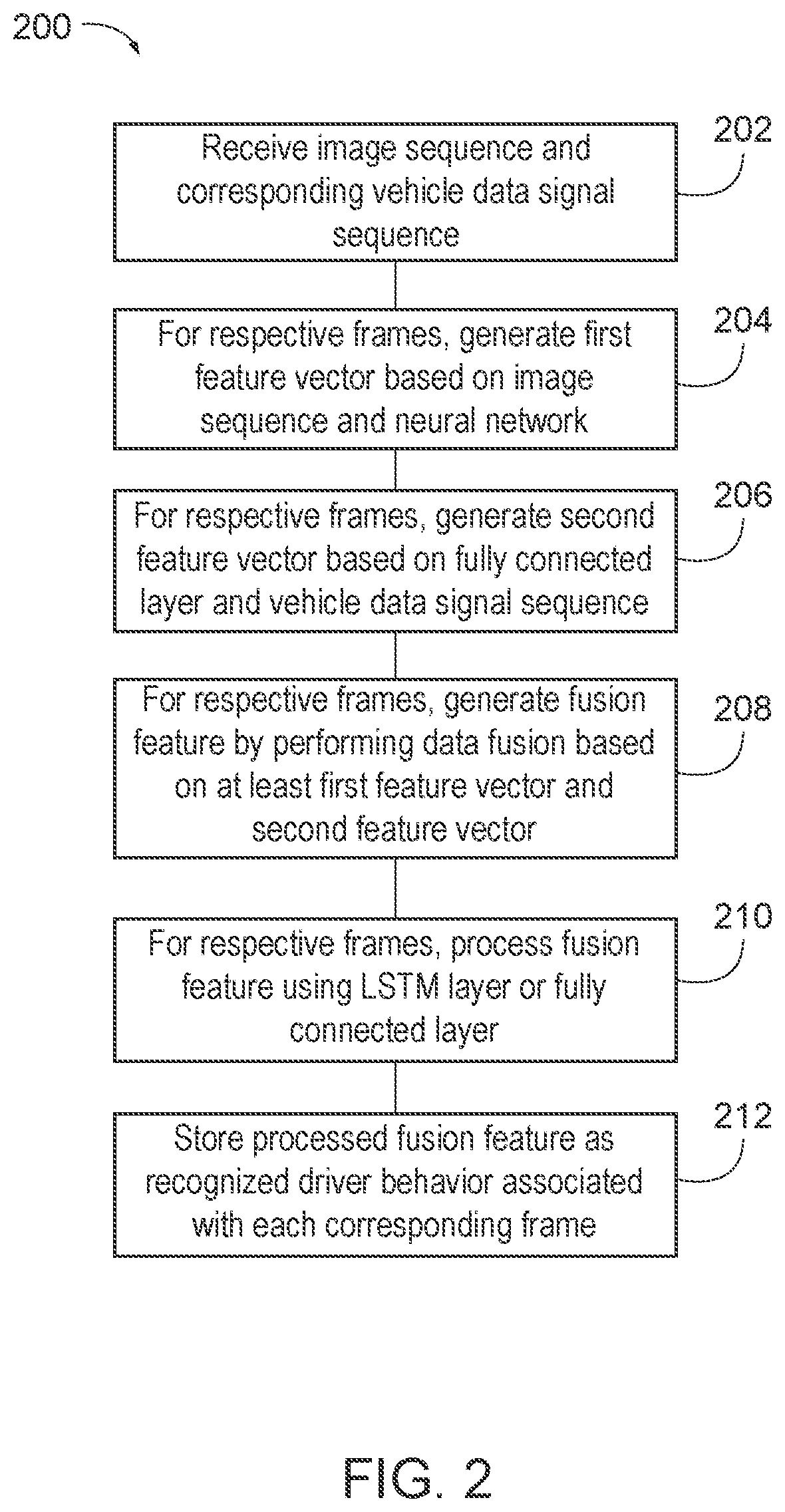

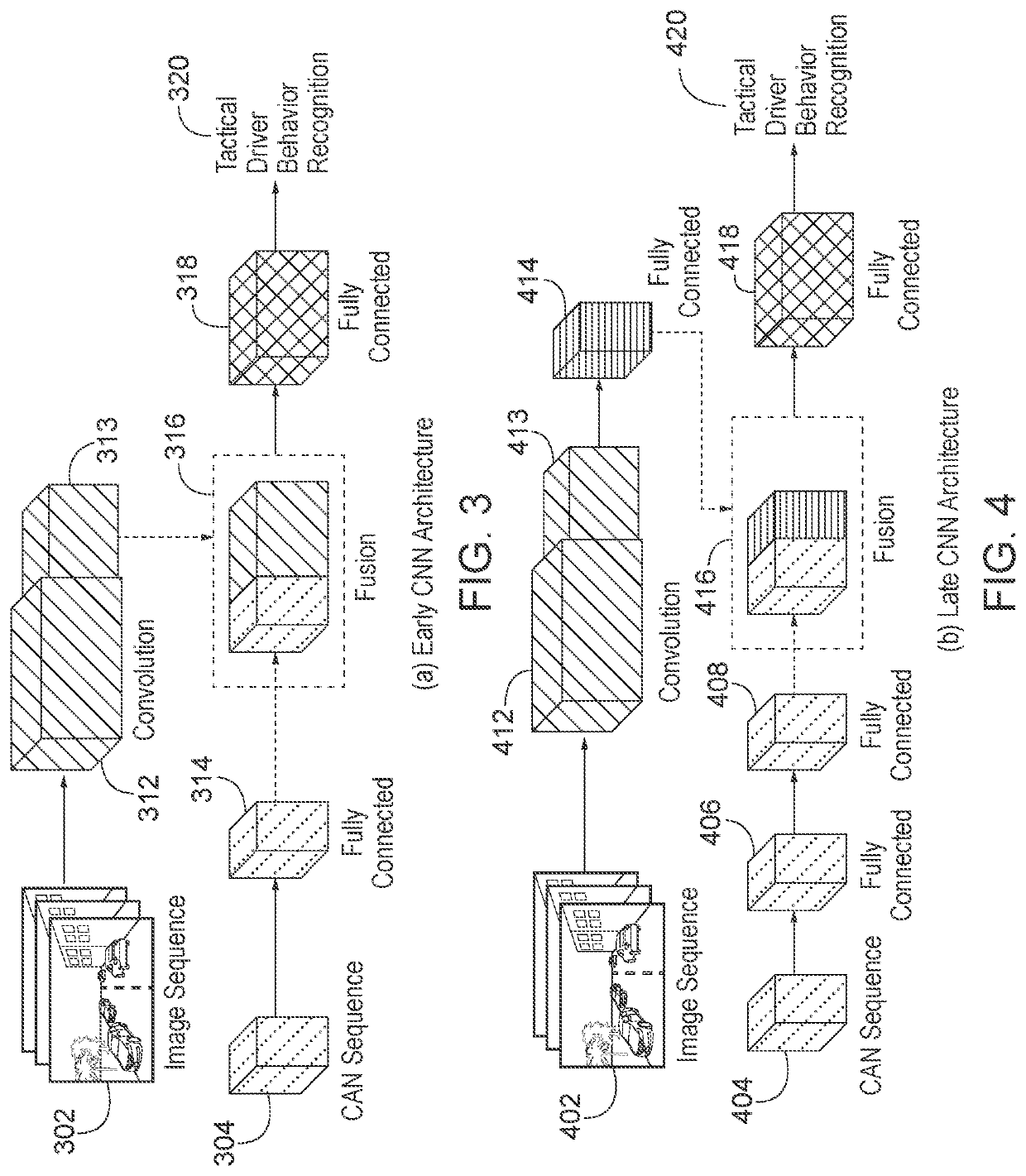

Scene classification prediction

Systems and techniques for scene classification and prediction are provided herein. A first series of image frames of an environment may be captured from a moving vehicle. Traffic participants within the environment may be identified and masked using a first convolutional neural network (CNN). Temporal classification may then be performed with a scene classification model built from CNNs and a long short-term memory (LSTM) network, producing a series of image frames associated with temporal predictions. Additionally, scene classification may be performed using global average pooling. Feature vectors may be generated from different series of image frames, and a fusion feature vector may be obtained by fusing a first feature vector, a second feature vector, a third feature vector, and so on. In this way, a behavior predictor may generate a predicted driver behavior from the fusion feature vector.
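Global average pooling and feature fusion can be sketched in a few lines. In this sketch, a CNN feature map is represented as nested lists indexed `[channel][height][width]`, and fusion is assumed to be plain concatenation. The abstract only refers to "data fusion", so the fusion operator and the helper names are assumptions.

```python
from typing import List, Sequence

# Feature map laid out as [channel][height][width].
FeatureMap = Sequence[Sequence[Sequence[float]]]


def global_average_pool(fmap: FeatureMap) -> List[float]:
    """Average each channel over all spatial positions, giving one value per channel."""
    pooled = []
    for channel in fmap:
        values = [v for row in channel for v in row]
        pooled.append(sum(values) / len(values))
    return pooled


def fuse(*feature_vectors: Sequence[float]) -> List[float]:
    """Assumed fusion operator: concatenate the feature vectors in order."""
    fused: List[float] = []
    for vec in feature_vectors:
        fused.extend(vec)
    return fused
```

Pooling removes the spatial dimensions, so feature vectors from different frame series have a fixed length and can be concatenated regardless of image size. Weighted sums or learned fusion layers are common alternatives to concatenation.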

Owner:HONDA MOTOR CO LTD

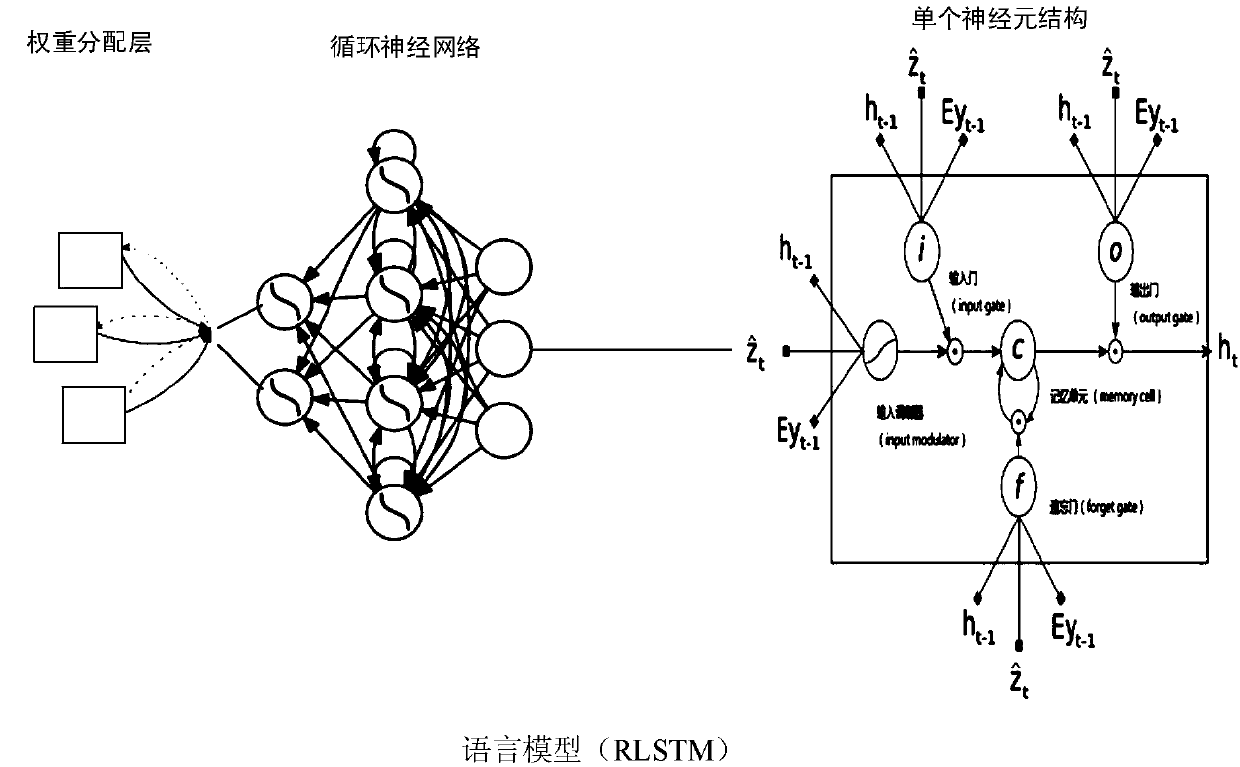

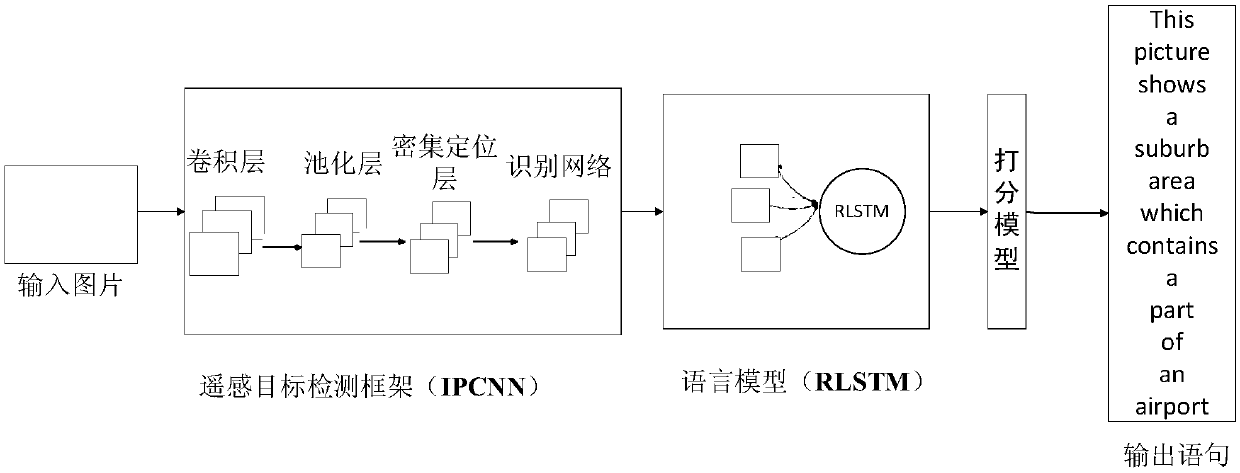

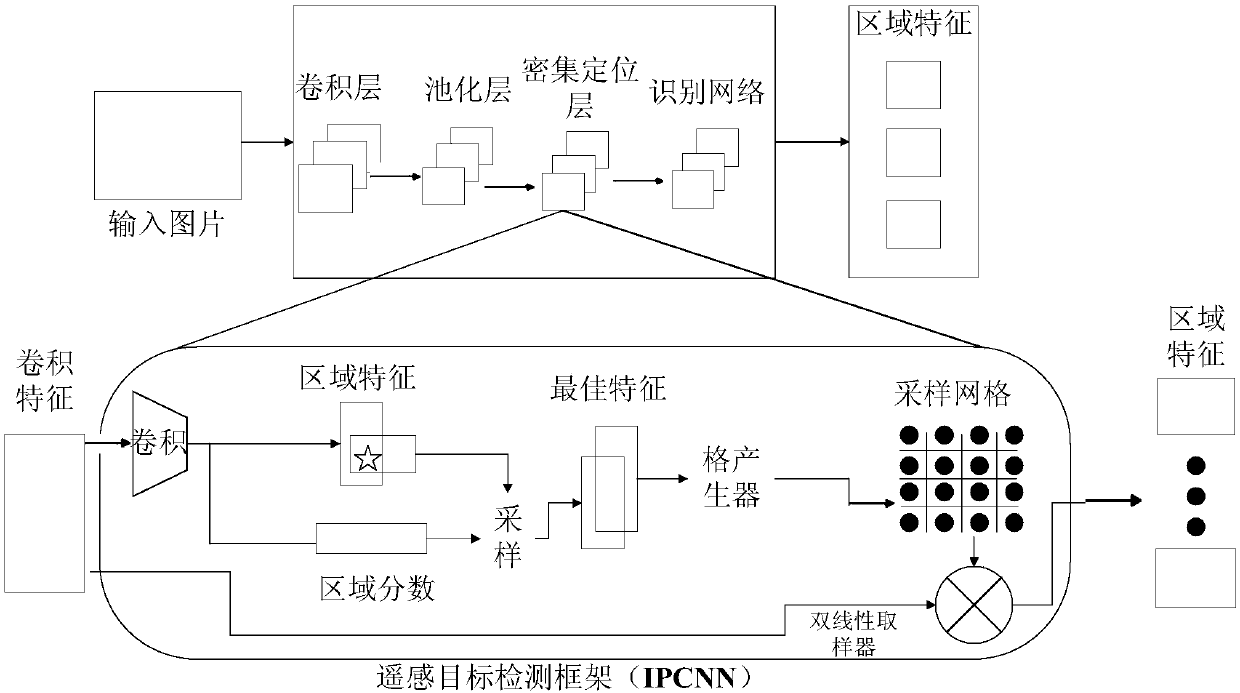

Remote sensing image natural language generation method based on attention mechanism and deep learning

Inactive · CN107766894A · Reduce overfitting · High output · Scene recognition · Neural architectures · Short-term memory · Computer science

The invention relates to a remote sensing image natural language generation method based on an attention mechanism and deep learning. The method comprises five steps. First, a remote sensing image and its corresponding natural language description are preprocessed. Second, the denoised remote sensing image is input into an intensive positioning convolutional neural network (IPCNN). Third, the region blocks obtained in the second step are input into a reassignment long short-term memory (RLSTM) network. A weight assignment layer of the RLSTM network computes the weight of each region with a multilayer network function, and a deep output layer of the RLSTM network produces the complete natural language description. Fourth, the natural language description generated in the third step is input into a remote sensing image language description scoring model to obtain a score for each sentence. Fifth, the target position, category label, and natural language description score are stored in a database for later retrieval.

Owner:JILIN UNIV

Method and device for speech recognition by use of LSTM recurrent neural network model

Active · CN105513591A · Improve accuracy · Solve the "after-tail effect" · Speech recognition · Pattern recognition · Hidden layer

The invention discloses a method and a device for speech recognition using a long short-term memory (LSTM) recurrent neural network model. The method comprises the following steps: receiving speech input data at time t; selecting LSTM hidden layer states from times t-1 through t-n, where n is a positive integer; and generating the LSTM output at time t from at least one of the selected LSTM hidden layer states, the input data at time t, and the LSTM recurrent neural network model. The method and device effectively mitigate the "tail effect" of deep neural networks and improve speech recognition accuracy.
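A single step of this idea can be sketched with a standard LSTM cell whose recurrent input is built from several earlier hidden states. The abstract does not say how the selected states h(t-1) through h(t-n) are combined. This sketch assumes an element-wise mean and reuses the cell state from time t-1. The parameter layout (`W`, `U`, `b` per gate `i`, `f`, `o`, `g`) and the function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]
# Hypothetical layout: gate name -> (W: hidden x input, U: hidden x hidden, b: hidden).
GateParams = Dict[str, Tuple[Matrix, Matrix, Vector]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lstm_step_multi_history(
    x_t: Sequence[float],
    past_h: Sequence[Sequence[float]],
    c_prev: Sequence[float],
    params: GateParams,
) -> Tuple[Vector, Vector]:
    """One LSTM step using selected hidden states h(t-1)..h(t-n), most recent first."""
    if not past_h:
        raise ValueError("select at least one earlier hidden state (n >= 1)")
    dim = len(past_h[0])
    # Assumption: combine the selected hidden states by element-wise mean.
    h_mix = [sum(h[d] for h in past_h) / len(past_h) for d in range(dim)]

    # Pre-activations W x_t + U h_mix + b for each gate.
    pre = {
        gate: [wx + uh + bb for wx, uh, bb in zip(matvec(W, x_t), matvec(U, h_mix), b)]
        for gate, (W, U, b) in params.items()
    }
    i = [sigmoid(z) for z in pre["i"]]    # input gate
    f = [sigmoid(z) for z in pre["f"]]    # forget gate
    o = [sigmoid(z) for z in pre["o"]]    # output gate
    g = [math.tanh(z) for z in pre["g"]]  # candidate cell values

    c_t = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c_prev, i, g)]
    h_t = [ov * math.tanh(cv) for ov, cv in zip(o, c_t)]
    return h_t, c_t
```

With n = 1 this reduces to an ordinary LSTM step. Larger n lets older hidden states influence the output directly rather than only through the cell state.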

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD

Method and system for generating natural languages for describing image contents

Active · CN107918782A · Character and pattern recognition · Natural language data processing · Conditional probability · Machine learning

The invention provides a method for training a model that generates natural language describing image contents, and a method for generating such descriptions using the model. The training method comprises the following steps: A1) using the global features and local features of images in an image training set as inputs to an attention mechanism, to obtain a fusion result that contains both the global and the local features; and A2) using the fusion result and a word training set as inputs to a long short-term memory network, and training the attention mechanism and the long short-term memory network with a loss function to obtain the weights of both. The loss function is a function of the conditional probability of the i-th word of a natural sentence describing the image content, given the known image and the preceding word or words of that sentence, for i = 1, ..., i_max.
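The loss described in step A2 matches the usual negative log-likelihood of a reference sentence: L = -sum over i = 1, ..., i_max of log p(w_i | image, w_1, ..., w_(i-1)). The sketch below computes only this loss. It assumes the attention-LSTM decoder has already produced one probability distribution per word position (teacher forcing). The function name and input layout are illustrative.

```python
import math
from typing import Sequence


def caption_nll(step_probs: Sequence[Sequence[float]], target_ids: Sequence[int]) -> float:
    """Negative log-likelihood of a reference sentence.

    step_probs[i] is the model's distribution over the vocabulary for word i,
    already conditioned on the image and the preceding reference words.
    target_ids[i] is the vocabulary index of the i-th reference word.
    """
    if len(step_probs) != len(target_ids):
        raise ValueError("one distribution per target word is required")
    return -sum(math.log(dist[w]) for dist, w in zip(step_probs, target_ids))
```

Minimizing this sum over the training set maximizes the conditional probability of each reference word. Gradient-based training then updates the attention and LSTM weights together.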

Owner:INST OF COMPUTING TECH CHINESE ACAD OF SCI

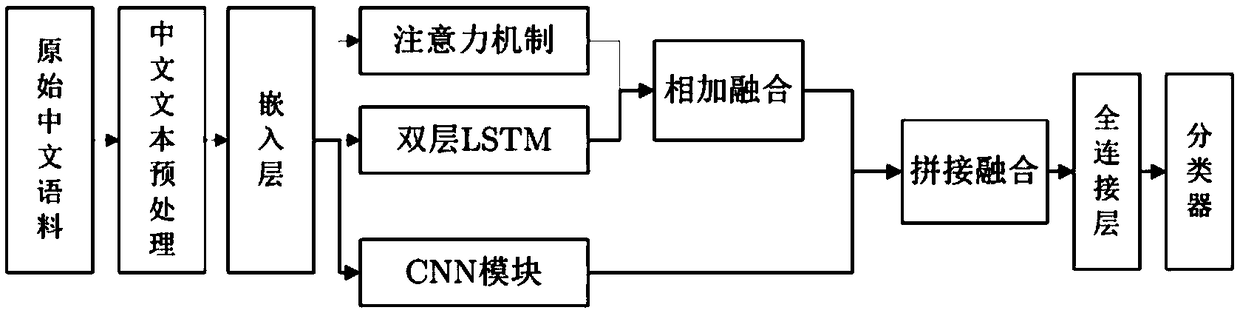

Chinese text classification method based on attention mechanism and feature enhancement fusion

Inactive · CN108717439A · Easy to identify · Increase diversity · Character and pattern recognition · Natural language data processing · Text categorization · Classification methods

The present invention provides a Chinese text classification method based on an attention mechanism and feature enhancement fusion, and belongs to the technical field of data mining. The method proposes two models: a feature-enhancement-fusion Chinese text classification model built on an attention mechanism, a long short-term memory (LSTM) network, and a convolutional neural network (CNN); and a feature-difference-enhanced attention algorithm model. In the fusion model, double-layer LSTM and CNN modules successively enhance and fuse the text features extracted by the attention mechanism. This progressively enriches the extracted text features and makes them more comprehensive and detailed, improving the model's ability to recognize Chinese text features.

Owner:HARBIN UNIV OF SCI & TECH

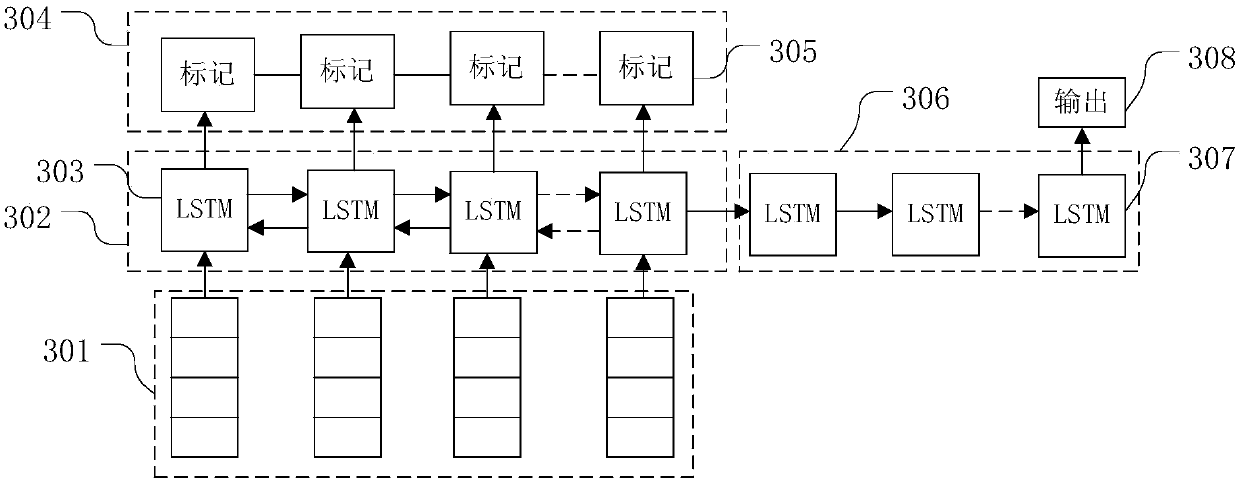

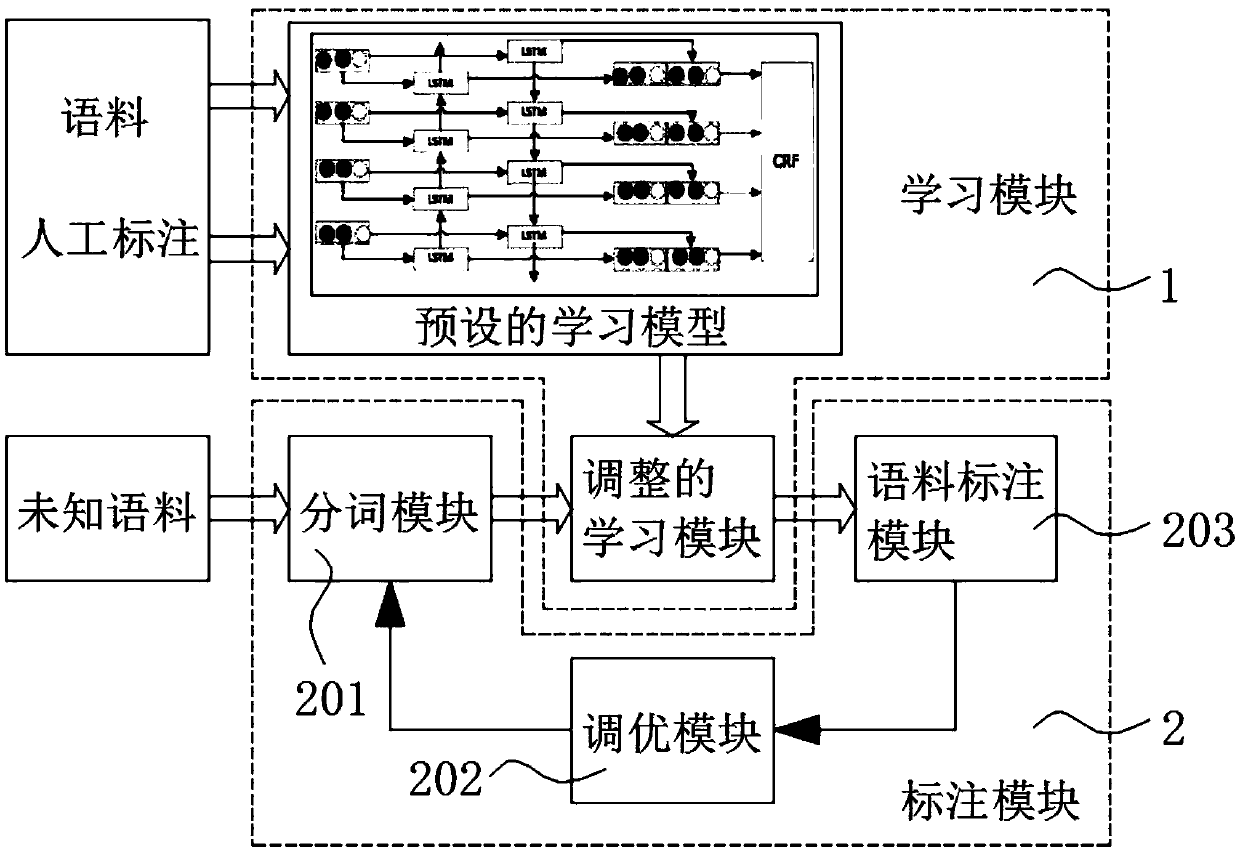

Text sequence annotating system and method based on Bi-LSTM (Bidirectional Long Short-Term Memory) and CRF (Conditional Random Field)

Active · CN107622050A · Improve efficiency · Reduce labor labeling costs · Neural architectures · Special data processing applications · Part of speech · Conditional random field

The invention discloses a text sequence annotation system and method based on a Bi-LSTM (Bidirectional Long Short-Term Memory) network and a CRF (Conditional Random Field). The system comprises a learning module and an annotation module. The annotation module comprises a word segmentation module, a corpus annotation module, and an adjustment and optimization module, and the corpus annotation module in turn comprises a part-of-speech annotation module and an entity recognition module. The method comprises the following steps:

- preprocessing an obtained corpus;
- inputting the preprocessed corpus into a preset learning model;
- adjusting and saving the parameters of the learning model;
- adding a corresponding prediction tag to the corpus according to the sequence classification result output by the learning model;
- performing word segmentation on an unknown corpus;
- initially annotating the segmented unknown corpus with the adjusted learning model;
- adjusting and optimizing the initially annotated unknown corpus;
- finally annotating the adjusted and optimized corpus.

With this system and method, a user can adjust the word library as required, and human-computer interactive adjustment is supported. Annotation is automatic within the same domain and semi-automatic across different domains, which increases efficiency and lowers annotation cost.
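In a Bi-LSTM + CRF tagger, the Bi-LSTM typically supplies per-token emission scores and the CRF adds tag-transition scores. The best tag sequence is then found by Viterbi decoding. The sketch below shows only that decoding step, using plain Python lists. The score values, tag indices, and function name are illustrative and not taken from the patent.

```python
from typing import List, Sequence


def viterbi_decode(
    emissions: Sequence[Sequence[float]],
    transitions: Sequence[Sequence[float]],
) -> List[int]:
    """Return the highest-scoring tag sequence for a linear-chain CRF.

    emissions[t][j]: score of tag j at position t (e.g. produced by a Bi-LSTM).
    transitions[i][j]: score of moving from tag i to tag j.
    """
    num_tags = len(transitions)
    score = list(emissions[0])  # best path score ending in each tag at position 0
    backpointers: List[List[int]] = []
    for t in range(1, len(emissions)):
        new_score: List[float] = []
        pointers: List[int] = []
        for j in range(num_tags):
            # Best previous tag i for reaching tag j at position t.
            best_i = max(range(num_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        score = new_score
        backpointers.append(pointers)
    # Trace the best path backwards from the best final tag.
    path = [max(range(num_tags), key=lambda j: score[j])]
    for pointers in reversed(backpointers):
        path.append(pointers[path[-1]])
    path.reverse()
    return path
```

With zero transition scores, the result is simply the per-token argmax of the emissions. Large negative scores on tag switches make the decoder prefer consistent label runs even when individual emissions disagree.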

Owner:Wuhan Fenghuo Putian Information Technology Co., Ltd. (武汉烽火普天信息技术有限公司)

© 2025 PatSnap. All rights reserved.