152 results for "Word representation" patented technology

A representation term is a word, or a combination of words, that semantically represents the data type of a data element. A representation term is commonly referred to as a class word by those familiar with data dictionaries.

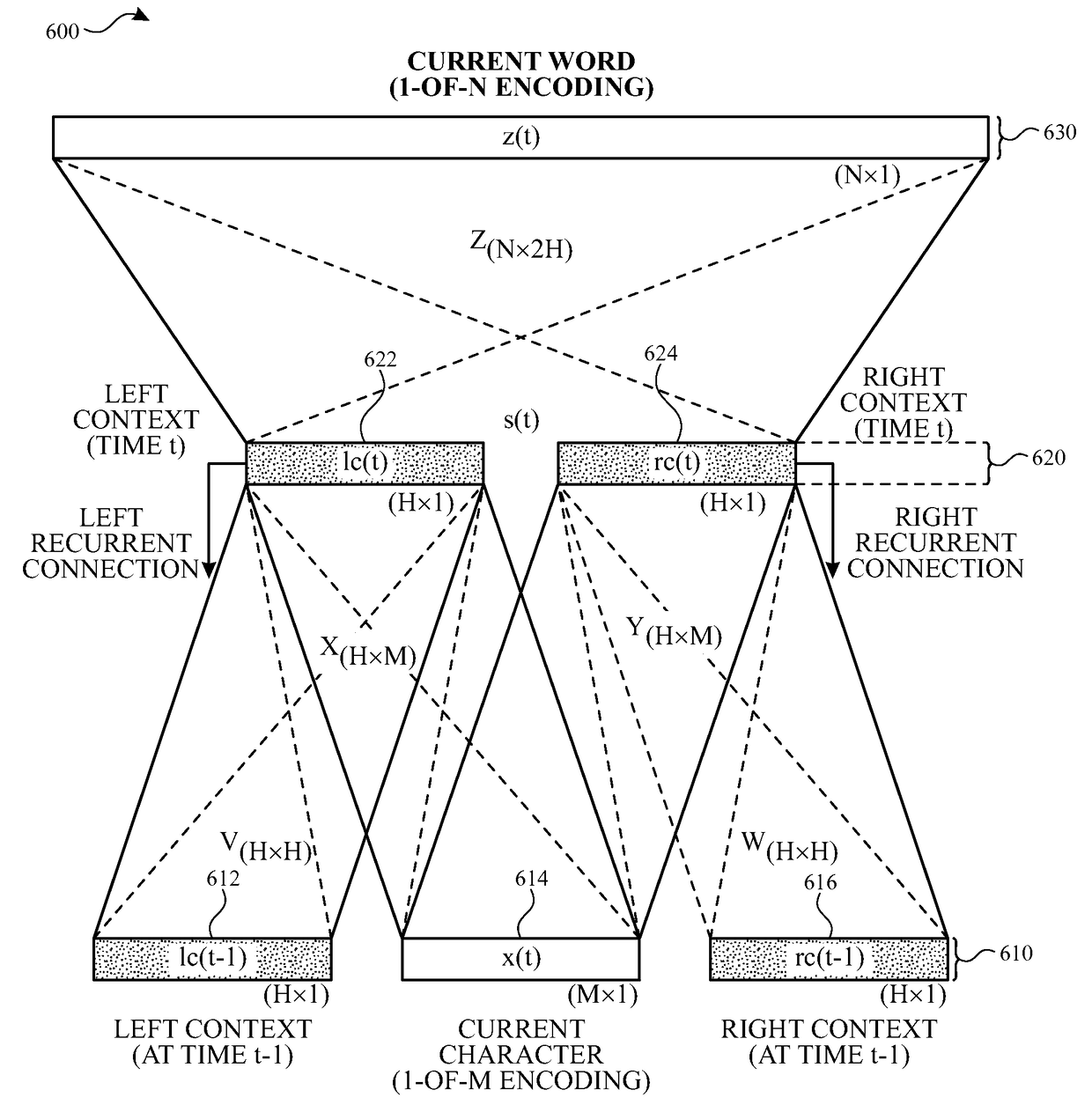

Unified language modeling framework for word prediction, auto-completion and auto-correction

Active · US20170091168A1 · Natural language data processing, Knowledge representation, Programming language, Autocorrection

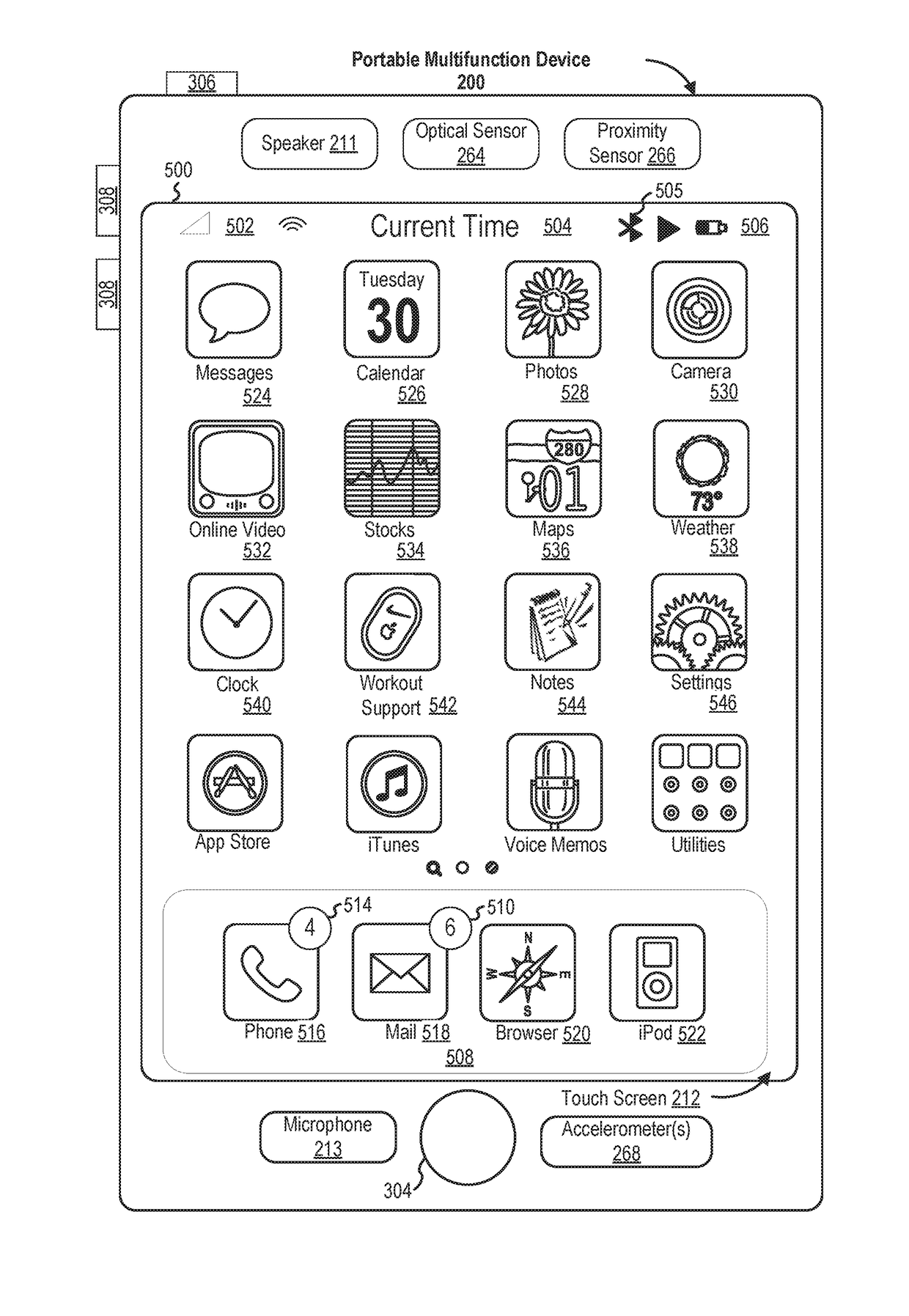

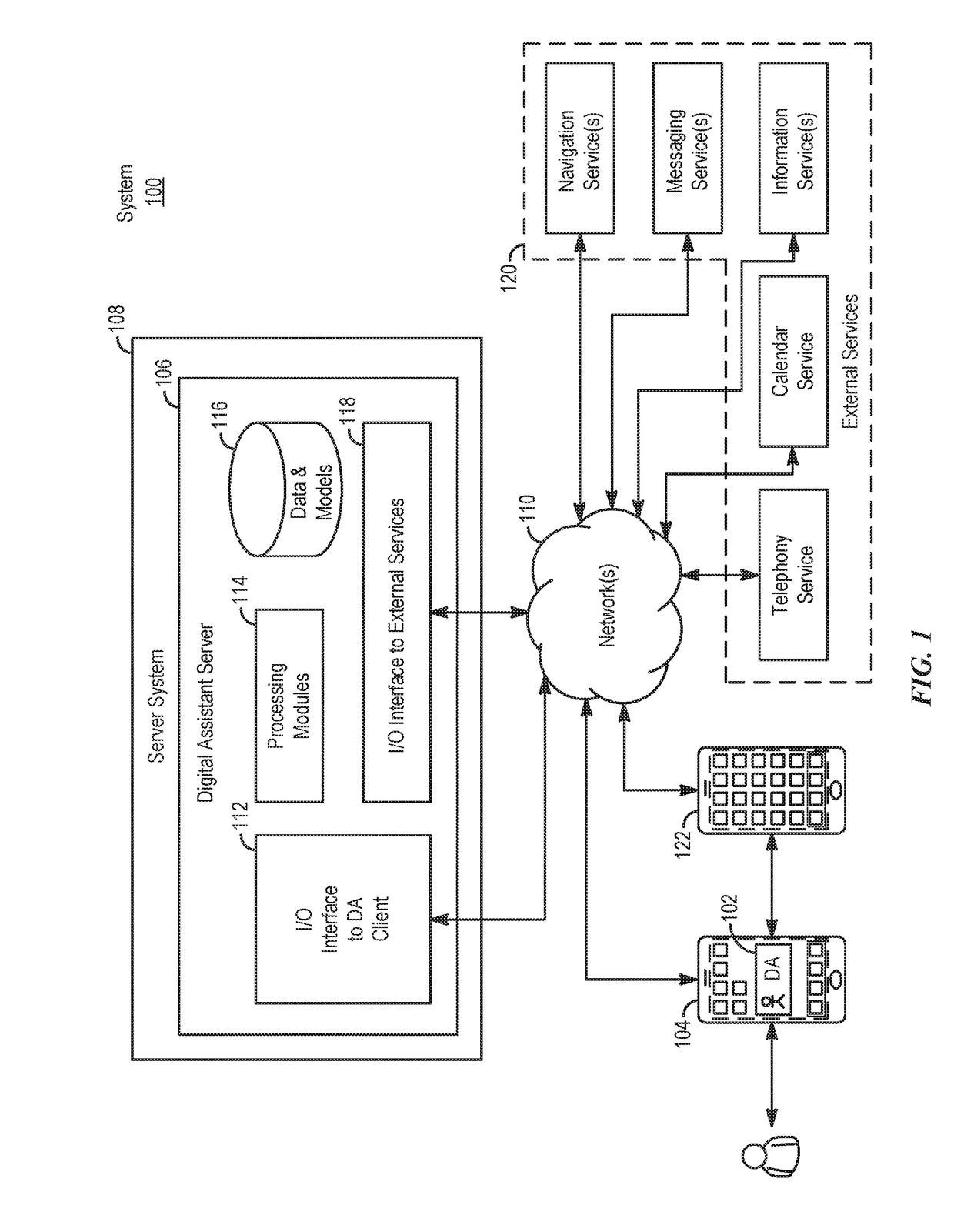

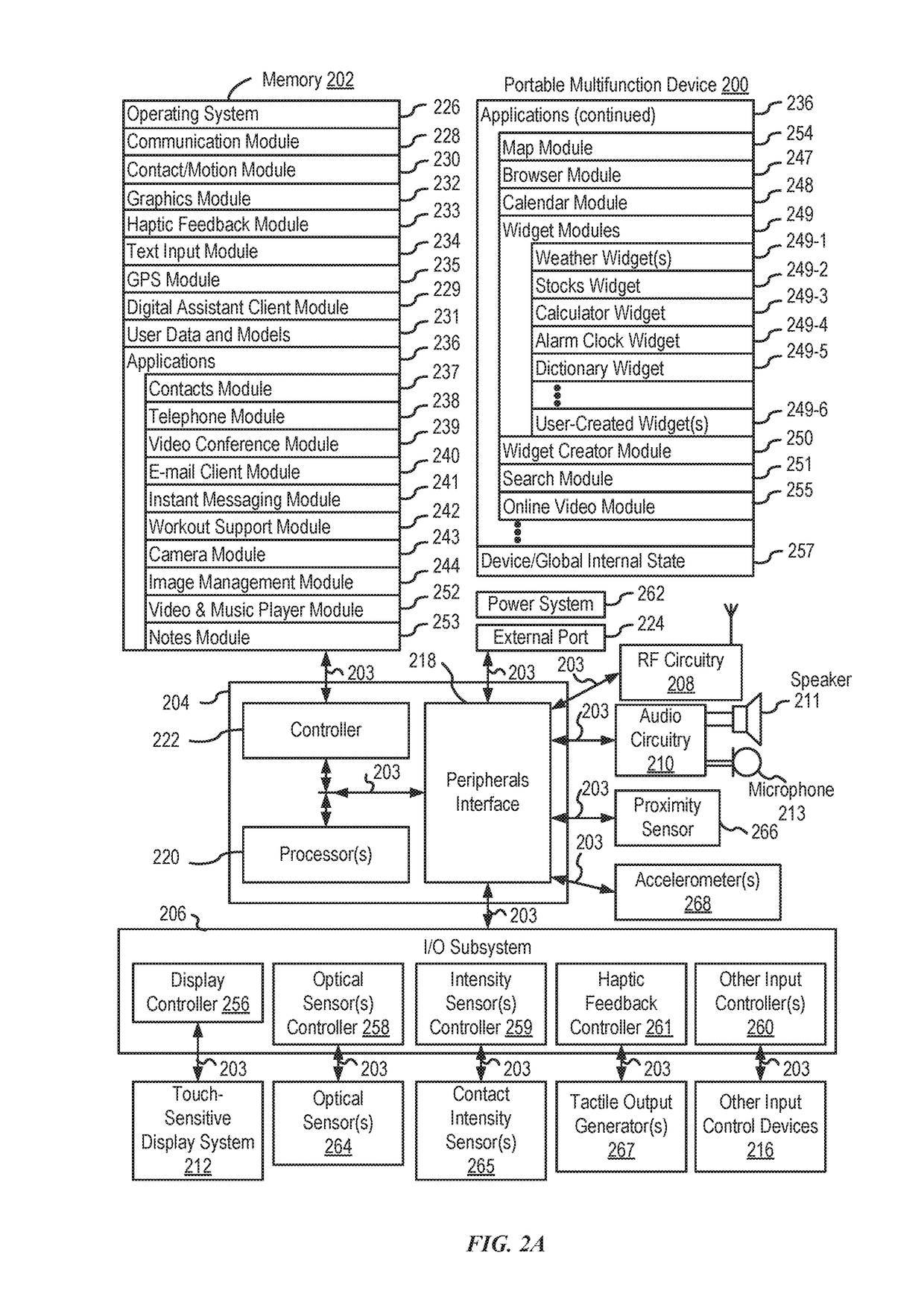

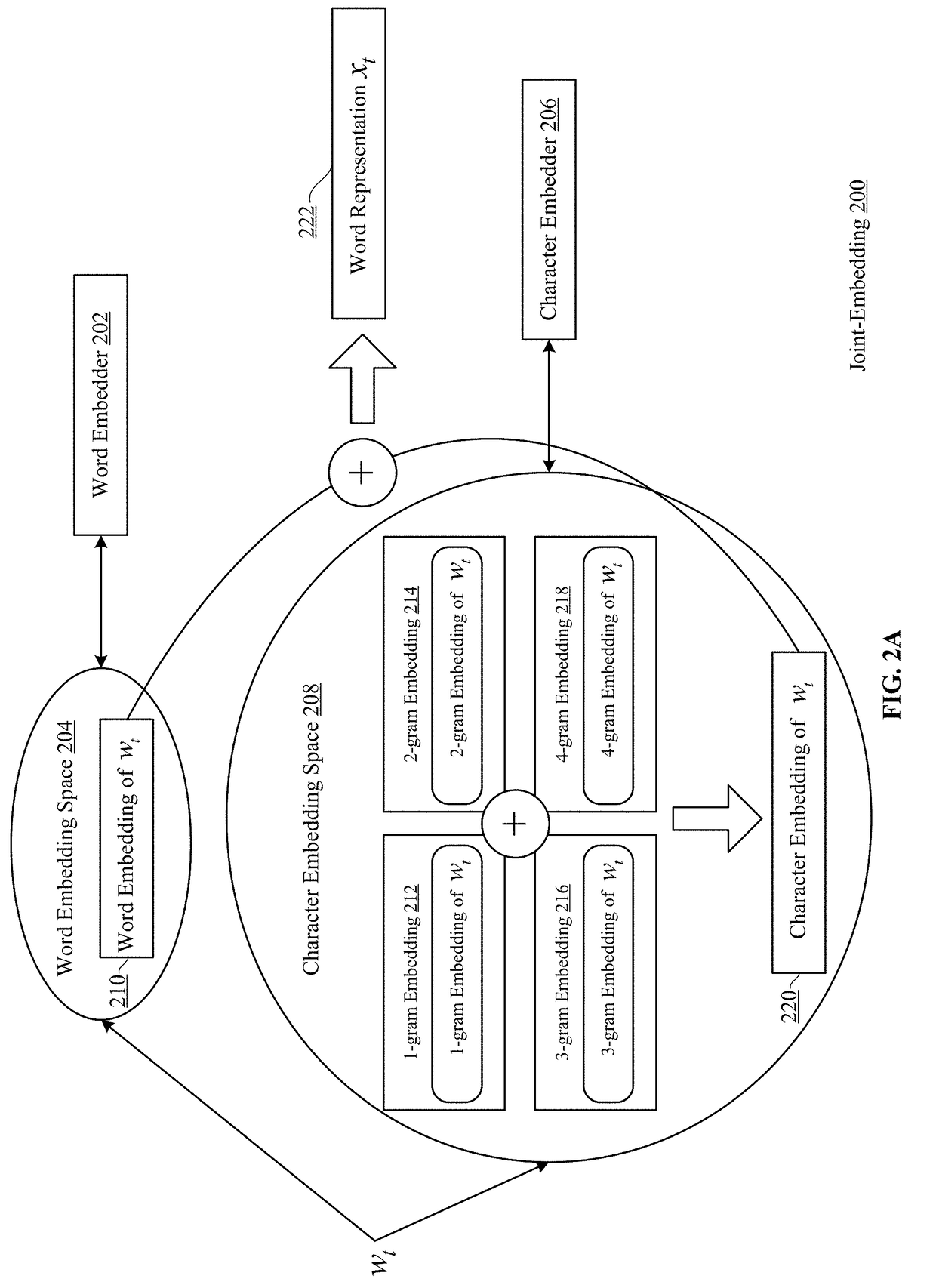

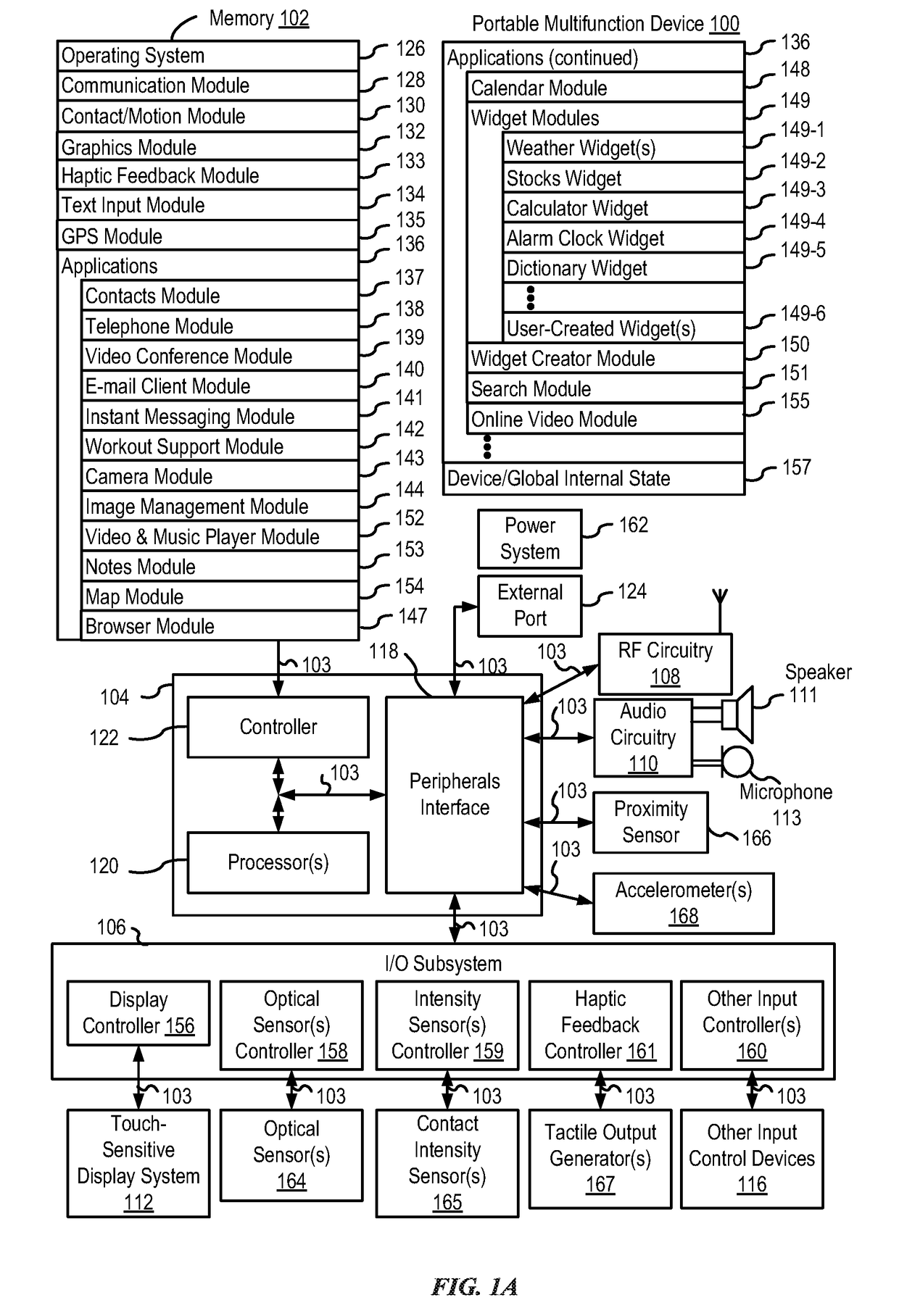

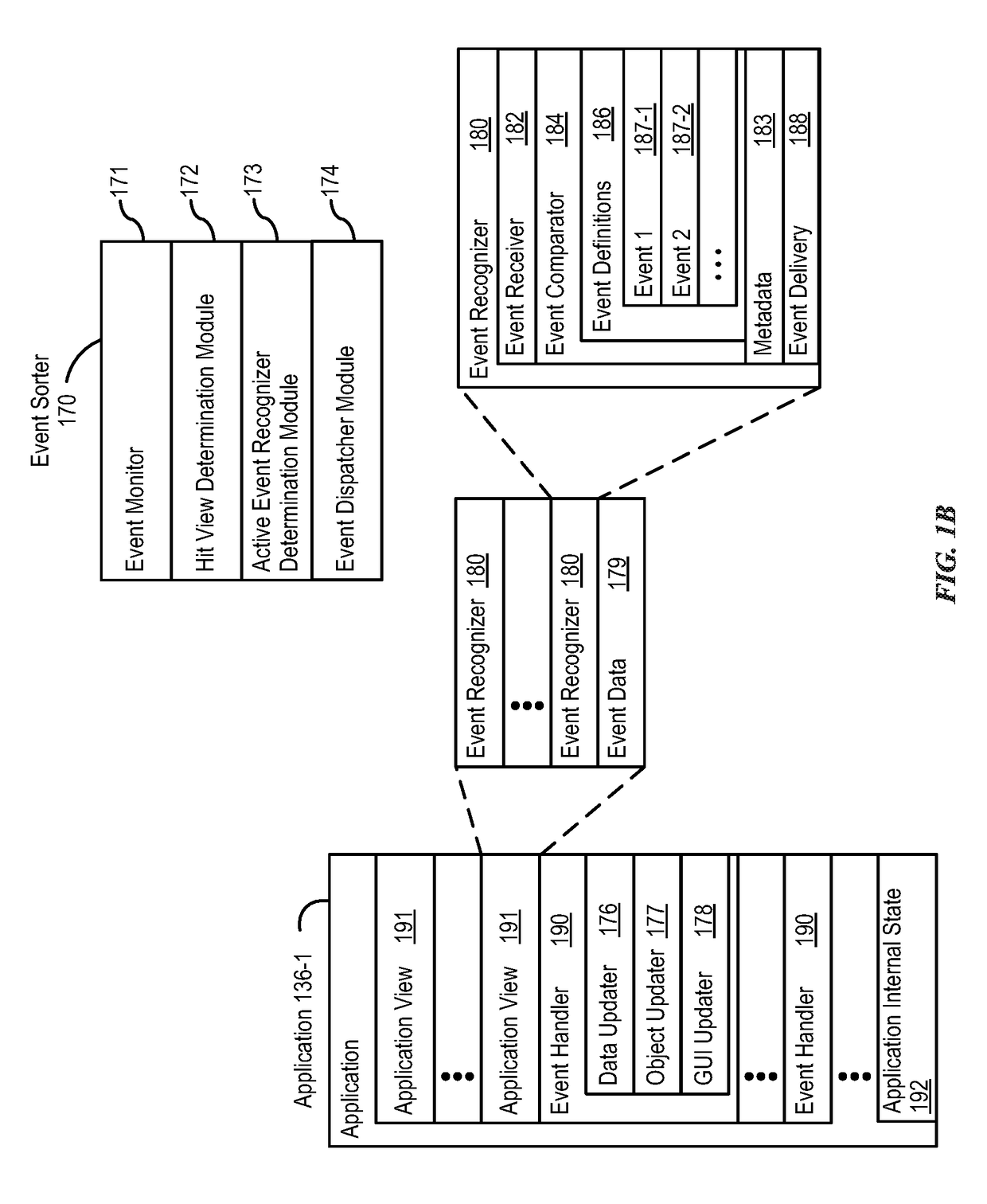

Systems and processes for unified language modeling are provided. In accordance with one example, a method includes, at an electronic device with one or more processors and memory, receiving a character of a sequence of characters and determining a current character context based on the received character of the sequence of characters and a previous character context. The method further includes determining a current word representation based on the current character context and determining a current word context based on the current word representation and a previous word context. The method further includes determining a next word representation based on the current word context and providing the next word representation.

Owner:APPLE INC

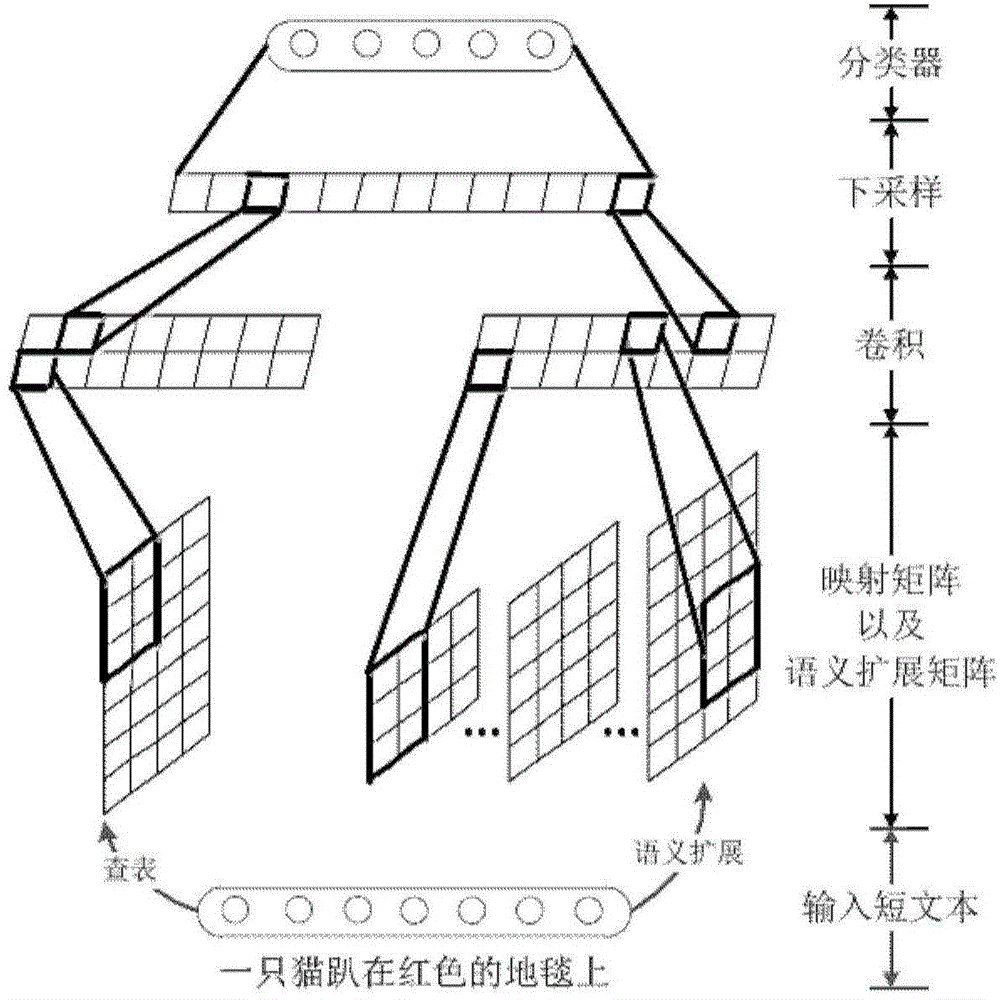

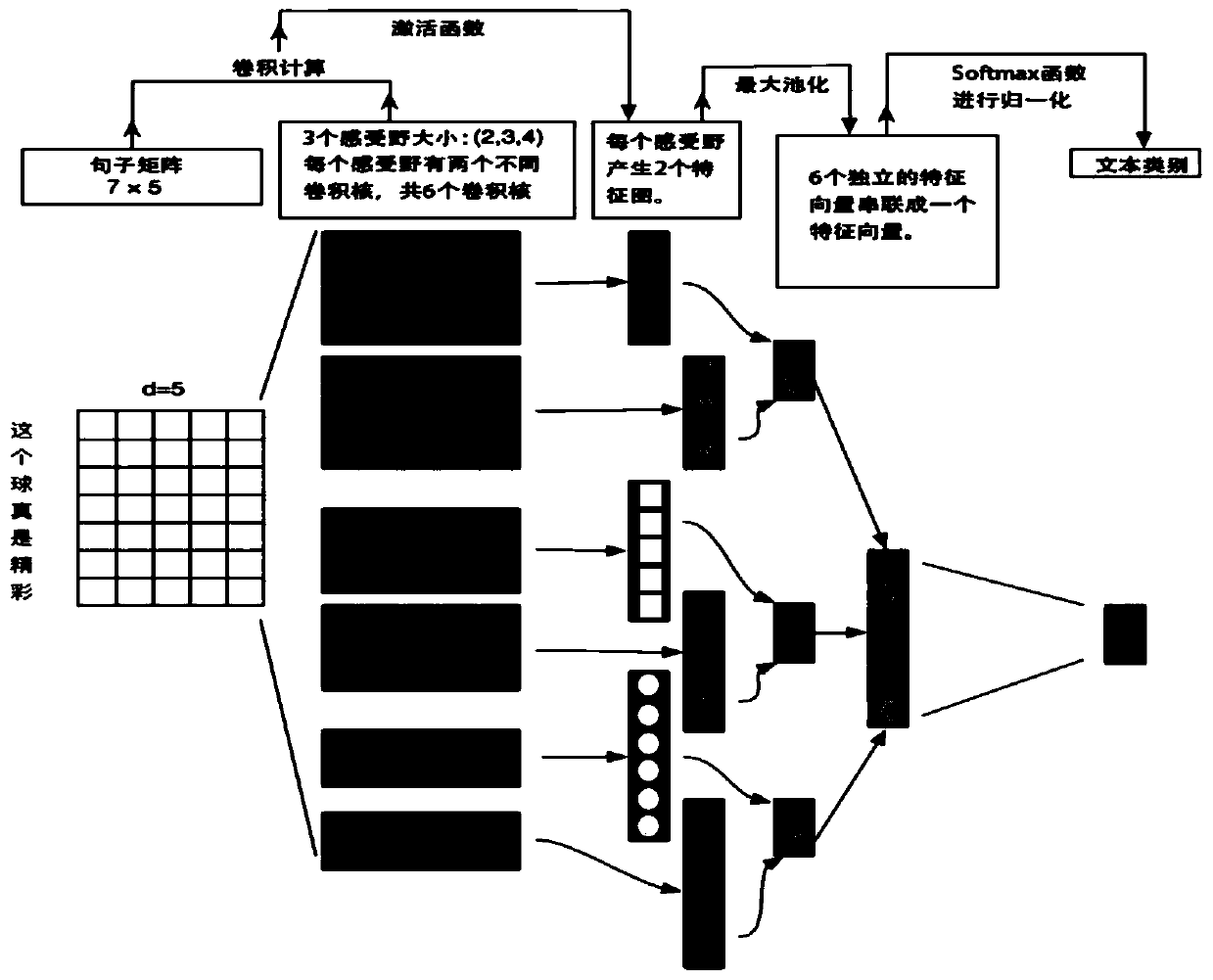

Short text classification method based on convolutional neural network

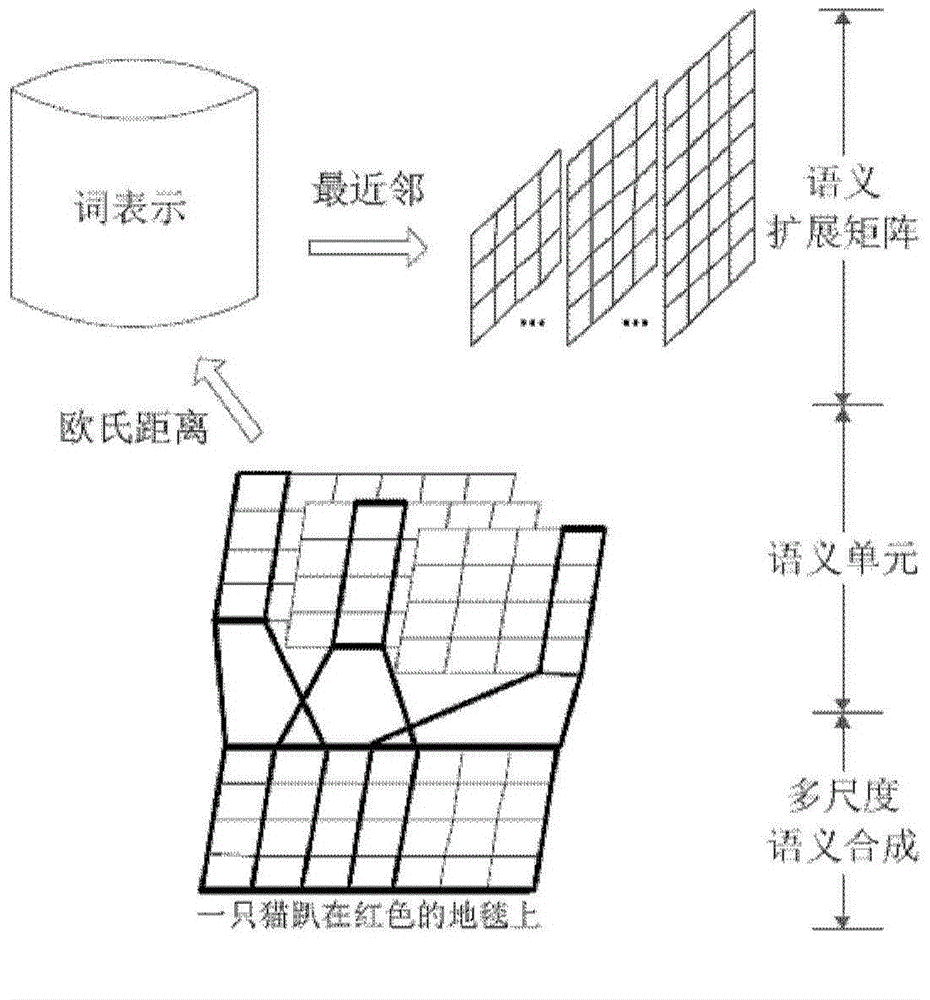

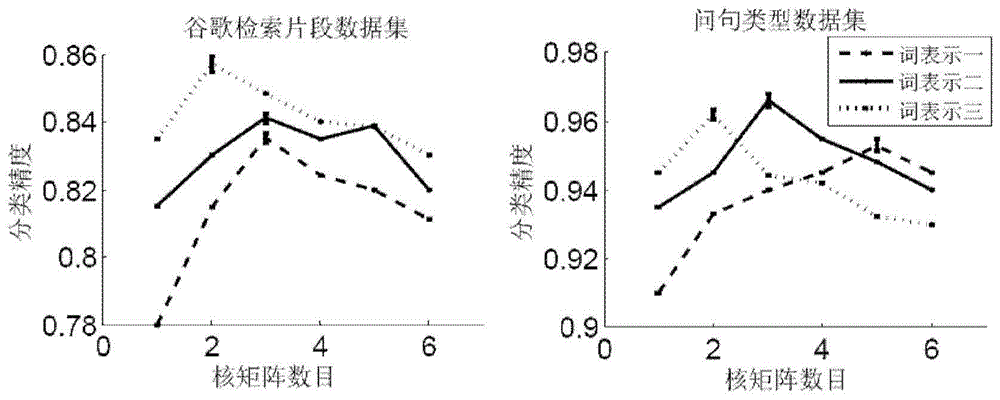

Active · CN104834747A · Improve semantic sensitivity issues, Improve classification performance, Input/output for user-computer interaction, Biological neural network models, Near neighbor, Classification methods

The invention discloses a short text classification method based on a convolutional neural network. The network comprises five layers. On the first layer, multi-scale candidate semantic units in a short text are obtained. On the second layer, the Euclidean distances between each candidate semantic unit and all word representation vectors in a vector space are calculated, the nearest-neighbor word representations are found, and all nearest-neighbor word representations meeting a preset Euclidean distance threshold are selected to construct a semantic expansion matrix. On the third layer, multiple kernel matrices of different widths and weight values perform two-dimensional convolution over the mapping matrix and the semantic expansion matrix of the short text, extracting local convolution features and generating a multi-layer local convolution feature matrix. On the fourth layer, the multi-layer local convolution feature matrix is down-sampled to obtain a multi-layer global feature matrix, a nonlinear hyperbolic tangent transformation is applied to it, and the result is converted into a fixed-length semantic feature vector. On the fifth layer, the semantic feature vector is fed to a classifier to predict the category of the short text.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI
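The second-layer step above (keeping every vocabulary vector within a Euclidean-distance threshold of a candidate semantic unit) can be sketched in plain Python as follows. This is a minimal illustration, not the patent's implementation: the toy 2-D vectors, the threshold and the function names are assumptions, and a real system would search a large pre-trained embedding table, usually with an approximate nearest-neighbor index.

```python
import math

def euclidean(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def nearest_within(candidate, vocab_vectors, threshold):
    """Return (word, distance) pairs within `threshold` of `candidate`, nearest first."""
    hits = [(word, euclidean(candidate, vec)) for word, vec in vocab_vectors.items()]
    hits = [(word, d) for word, d in hits if d <= threshold]
    return sorted(hits, key=lambda pair: pair[1])

def expansion_matrix(candidate, vocab_vectors, threshold):
    """Stack the selected neighbor vectors row by row (the semantic expansion matrix)."""
    return [vocab_vectors[word] for word, _ in nearest_within(candidate, vocab_vectors, threshold)]

# Hypothetical 2-D word vectors.
vocab = {"car": [1.0, 0.0], "auto": [0.9, 0.1], "banana": [-1.0, 1.0]}
print(expansion_matrix([1.0, 0.0], vocab, threshold=0.5))  # [[1.0, 0.0], [0.9, 0.1]]
```

Raising or lowering the threshold trades expansion breadth against the risk of pulling in unrelated words.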

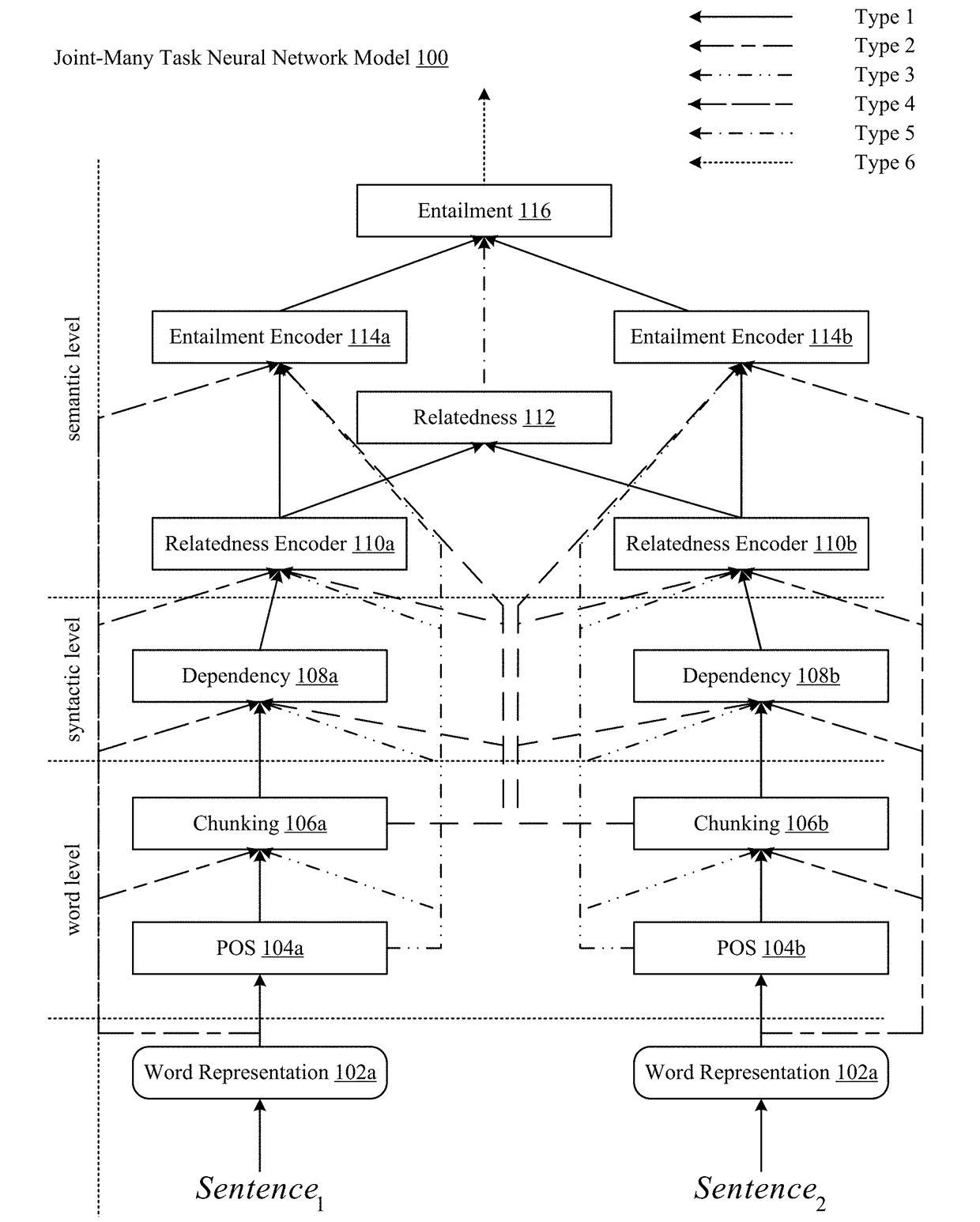

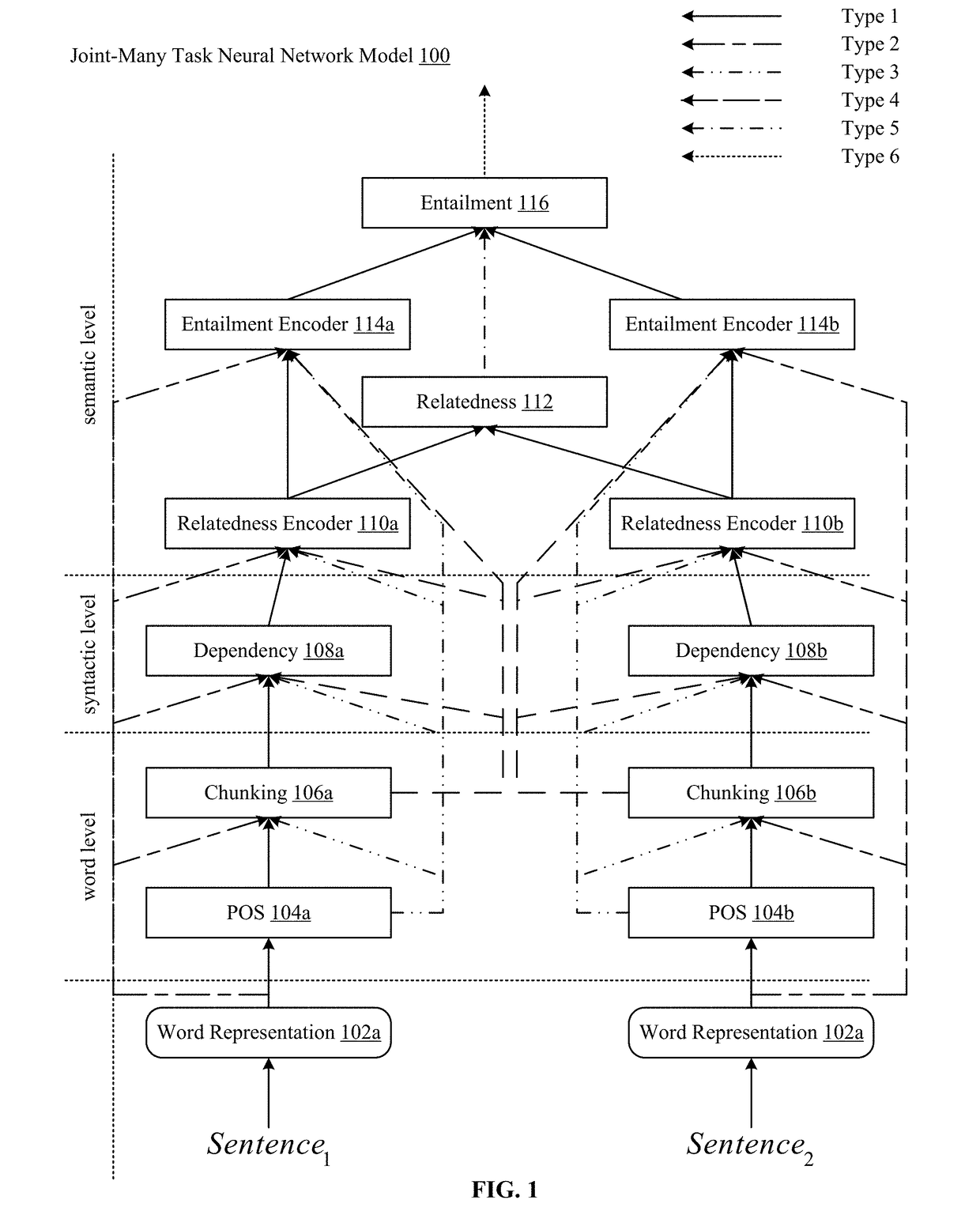

Deep Neural Network Model for Processing Data Through Multiple Linguistic Task Hierarchies

The technology disclosed provides a so-called “joint many-task neural network model” to solve a variety of increasingly complex natural language processing (NLP) tasks using growing depth of layers in a single end-to-end model. The model is successively trained by considering linguistic hierarchies, directly connecting word representations to all model layers, explicitly using predictions in lower tasks, and applying a so-called “successive regularization” technique to prevent catastrophic forgetting. Three examples of lower-level model layers are the part-of-speech (POS) tagging, chunking and dependency parsing layers. Two examples of higher-level model layers are the semantic relatedness and textual entailment layers. The model achieves state-of-the-art results on chunking, dependency parsing, semantic relatedness and textual entailment.

Owner:SALESFORCE COM INC

Unified framework for text conversion and prediction

Active · US20180322112A1 · Improve accuracy, Improve robustness, Natural language translation, Special data processing applications, Word representation, Speech recognition

The present disclosure generally relates to integrated text conversion and prediction. In an example process, a current character input of a first writing system is received. A first current character context in the first writing system is determined based on the current character input and a first previous character context in the first writing system. A second current character context in a second writing system is determined based on the first current character context, a second previous character context in the second writing system, and a character representation in the second writing system. A current word context in the second writing system is determined based on the second current character context, a previous word context in the second writing system, and a word representation in the second writing system. Based on the current word context, a probability distribution over a word inventory in the second writing system is determined.

Owner:APPLE INC

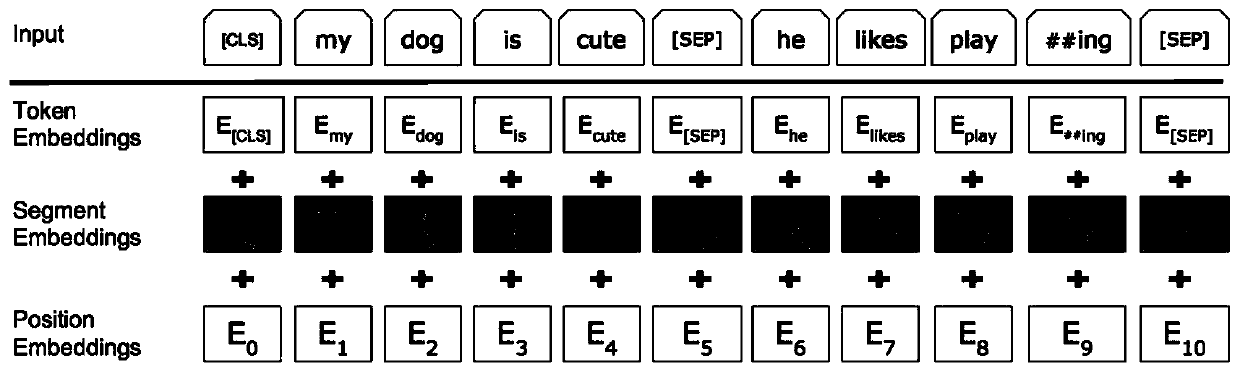

A text classification method and device based on transfer learning

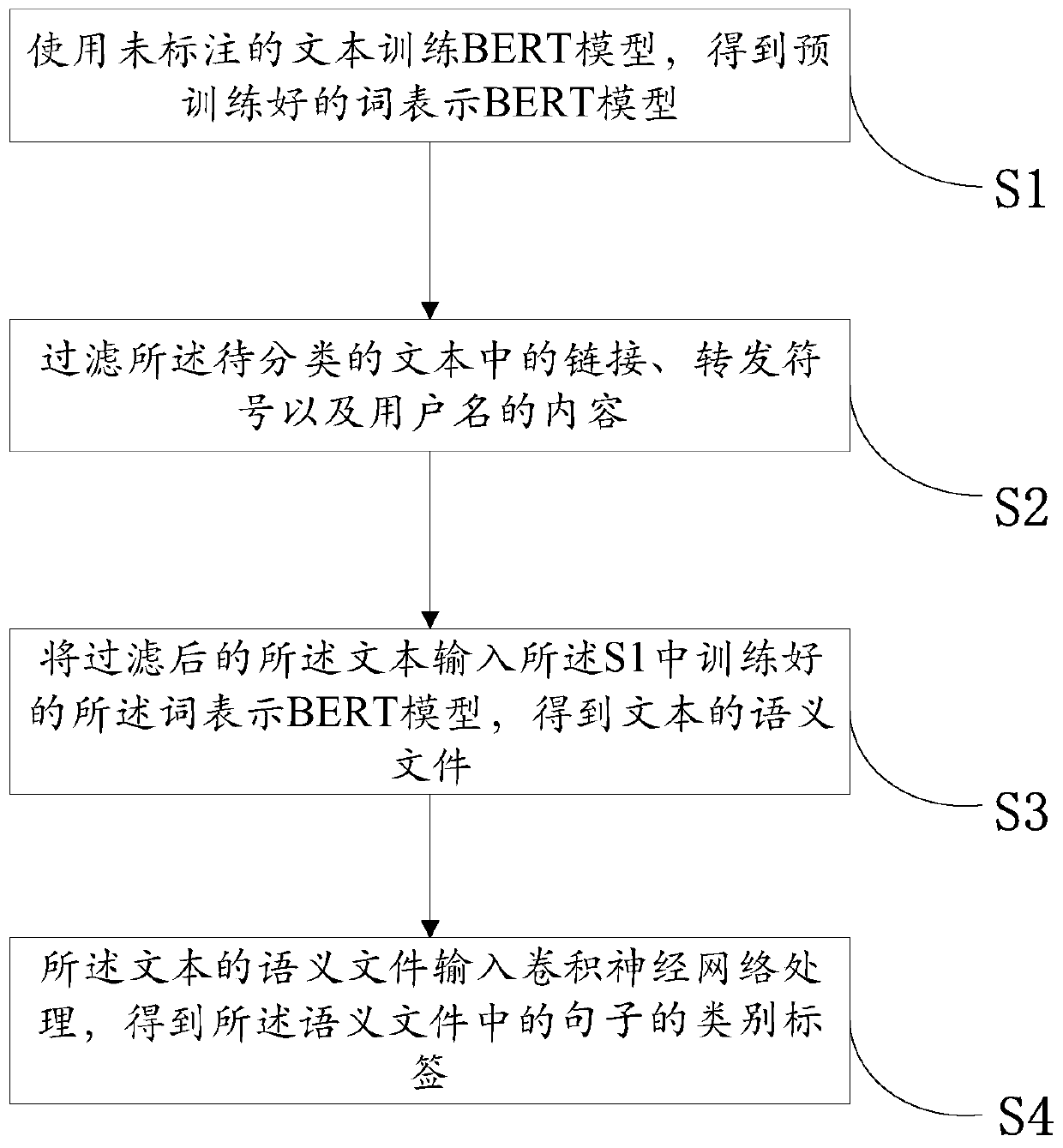

Inactive · CN109710770A · Versatility, Avoid workload, Character and pattern recognition, Text database clustering/classification, Text categorization, Classification methods

The invention provides a text classification method and device based on transfer learning. The method comprises the steps: S1, training a BERT model on unlabeled text to obtain a pre-trained BERT word representation model; S2, filtering links, forwarding symbols and user name content out of the text to be classified; S3, inputting the filtered text into the BERT word representation model trained in step S1 to obtain a semantic file of the text; and S4, inputting the semantic file of the text into a convolutional neural network to obtain the category label of the sentence in the semantic file. The method applies transfer learning to text classification and provides a BERT word representation model trained on large-scale unlabeled corpora. This word representation model is general-purpose, does not depend on a specific text domain, and can also be used for other tasks such as entity extraction and sentiment analysis.

Owner:Beijing Peony Electronic Group Co., Ltd. Digital Technology Center (北京牡丹电子集团有限责任公司数字科技中心)
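Step S2 above can be sketched with regular expressions. What counts as a link, a forwarding symbol or a user name is an assumption here, since the abstract gives no rules: http(s) and `www.` URLs, Weibo-style `//@name:` repost markers plus a bare `RT`, and `@name` mentions.

```python
import re

# Illustrative patterns only; the patent does not specify its filtering rules.
URL_RE = re.compile(r"(?:https?://|www\.)\S+")        # links
FORWARD_RE = re.compile(r"//@[^:\s]+:?|\bRT\b:?")     # "//@name:" repost markers and "RT"
MENTION_RE = re.compile(r"@[\w-]+:?")                 # @user names (\w is Unicode-aware)
SPACE_RE = re.compile(r"\s+")

def clean_text(text):
    """Strip links, forwarding markers and user names, then normalize spaces."""
    # Forwarding markers go before mentions so "//@bob:" is removed as a whole.
    for pattern in (URL_RE, FORWARD_RE, MENTION_RE):
        text = pattern.sub(" ", text)
    return SPACE_RE.sub(" ", text).strip()

print(clean_text("RT @alice: great paper https://t.co/abc //@bob: agreed"))
# great paper agreed
```

A production filter would also need to protect e-mail addresses, which the mention pattern would partly strip.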

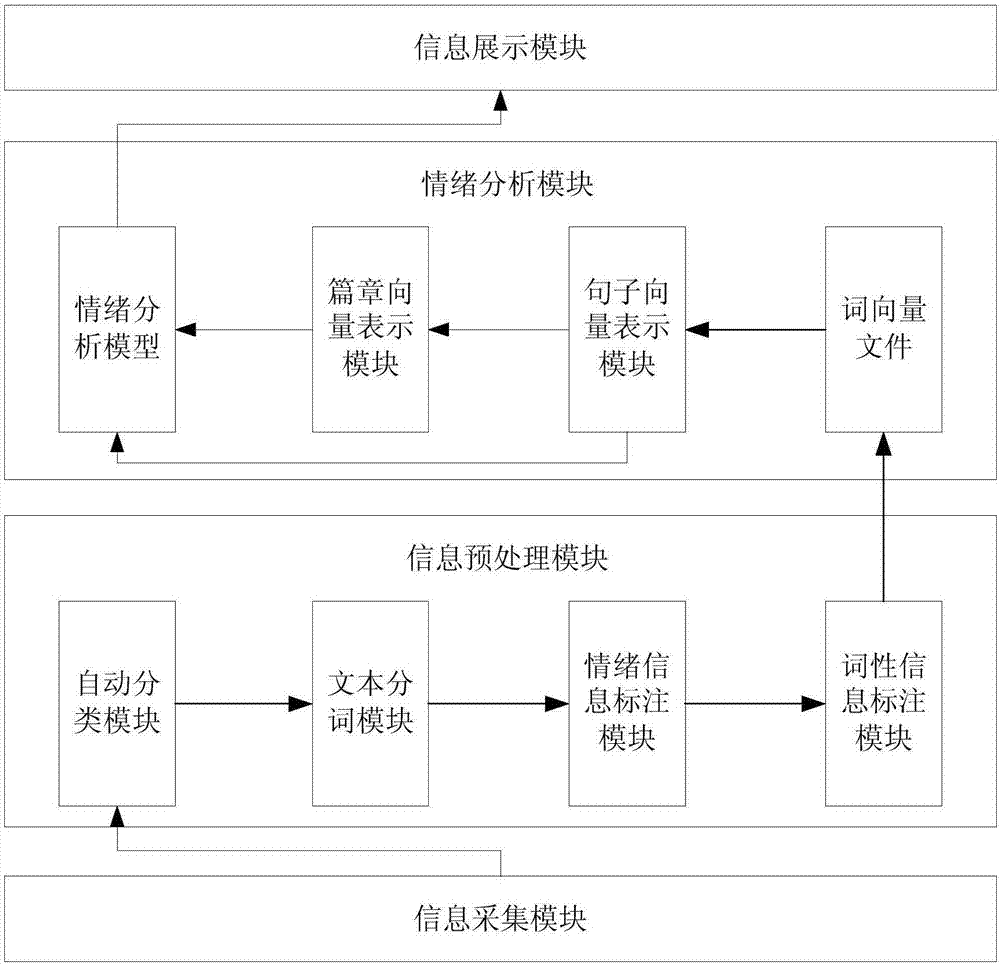

Text emotion analysis system based on deep learning

Active · CN106919673A · Improve attributes, Improve accuracy, Semantic analysis, Special data processing applications, Opinion analysis, Word representation

The invention discloses a text emotion analysis system based on deep learning. The system comprises an information collection module, an information pre-processing module, an emotion analysis module and an information display module. The information collection module collects comment information from Internet resource websites; the information pre-processing module classifies, segments, part-of-speech tags and emotion-tags the collected comments and stores them; the emotion analysis module transforms the processed comments into phrase vectors using a word representation model, a sentence model and a paragraph-and-chapter model, and inputs the phrase vectors into an emotion classification model for emotion analysis; and the information display module presents the emotion analysis results visually. The system can analyze the emotional orientation of comment information, present the results to users visually, and further provide public opinion analysis results or early warnings to related departments such as enterprises or governments.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

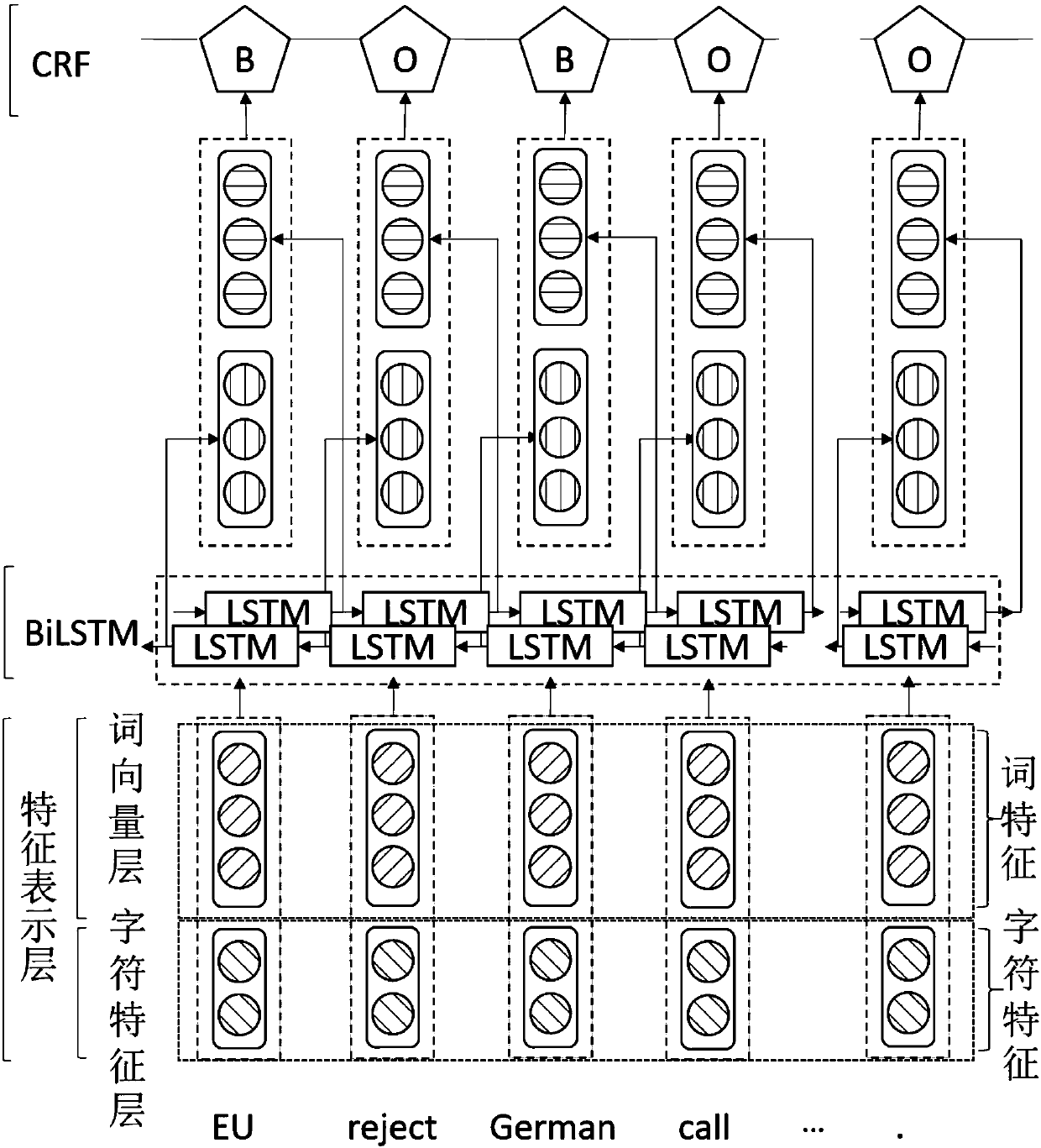

Fine-grained word representation model-based sequence labeling model

Active · CN108460013A · Boundary Judgment Improvement, Improve entity recognition, Semantic analysis, Character and pattern recognition, Data set, Algorithm

The invention provides a sequence labeling model based on a fine-grained word representation model, used for sequence labeling tasks in the fields of computer applications and natural language processing. The model consists of three main parts: a feature representation layer, a BiLSTM layer and a CRF layer. For the sequence labeling task, an attention-based character-level word representation model, Finger, is first proposed to fuse the morphological and character information of words; Finger and a BiLSTM-CRF model then perform the sequence labeling task jointly. The method achieves an F1 score of 91.09% on the CoNLL 2003 dataset, end to end and without any feature engineering. Experiments show that the Finger model markedly improves the recall of the sequence labeling system and thus its recognition capability.

Owner:DALIAN UNIV OF TECH
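The CRF layer of a BiLSTM-CRF tagger is usually decoded with the Viterbi algorithm, which picks the tag sequence that maximizes the sum of emission and transition scores. The sketch below is a generic version of that decoder, not the Finger model itself; the tag set and all scores are made up to show how a transition penalty rules out an `O` followed by `I-PER`.

```python
def viterbi(emissions, transitions, tags):
    """Return the highest-scoring tag sequence.

    emissions[t][j]   -- score of tag j at position t (e.g. BiLSTM outputs)
    transitions[i][j] -- score for moving from tag i to tag j
    """
    n_tags = len(tags)
    score = list(emissions[0])
    backpointers = []
    for t in range(1, len(emissions)):
        new_score, pointers = [], []
        for j in range(n_tags):
            # Best previous tag for ending at tag j at position t.
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + emissions[t][j])
            pointers.append(best_i)
        score = new_score
        backpointers.append(pointers)
    # Trace back from the best final tag.
    best = max(range(n_tags), key=lambda j: score[j])
    path = [best]
    for pointers in reversed(backpointers):
        best = pointers[best]
        path.append(best)
    return [tags[i] for i in reversed(path)]

tags = ["O", "B-PER", "I-PER"]
emissions = [[0.6, 0.5, 0.0], [0.0, 0.0, 1.0]]
no_constraint = [[0.0] * 3 for _ in range(3)]
constrained = [[0.0, 0.0, -100.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # penalize O -> I-PER
print(viterbi(emissions, no_constraint, tags))  # ['O', 'I-PER']
print(viterbi(emissions, constrained, tags))    # ['B-PER', 'I-PER']
```

In a trained CRF the transition matrix is learned, so such constraints emerge from data rather than being hand-set.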

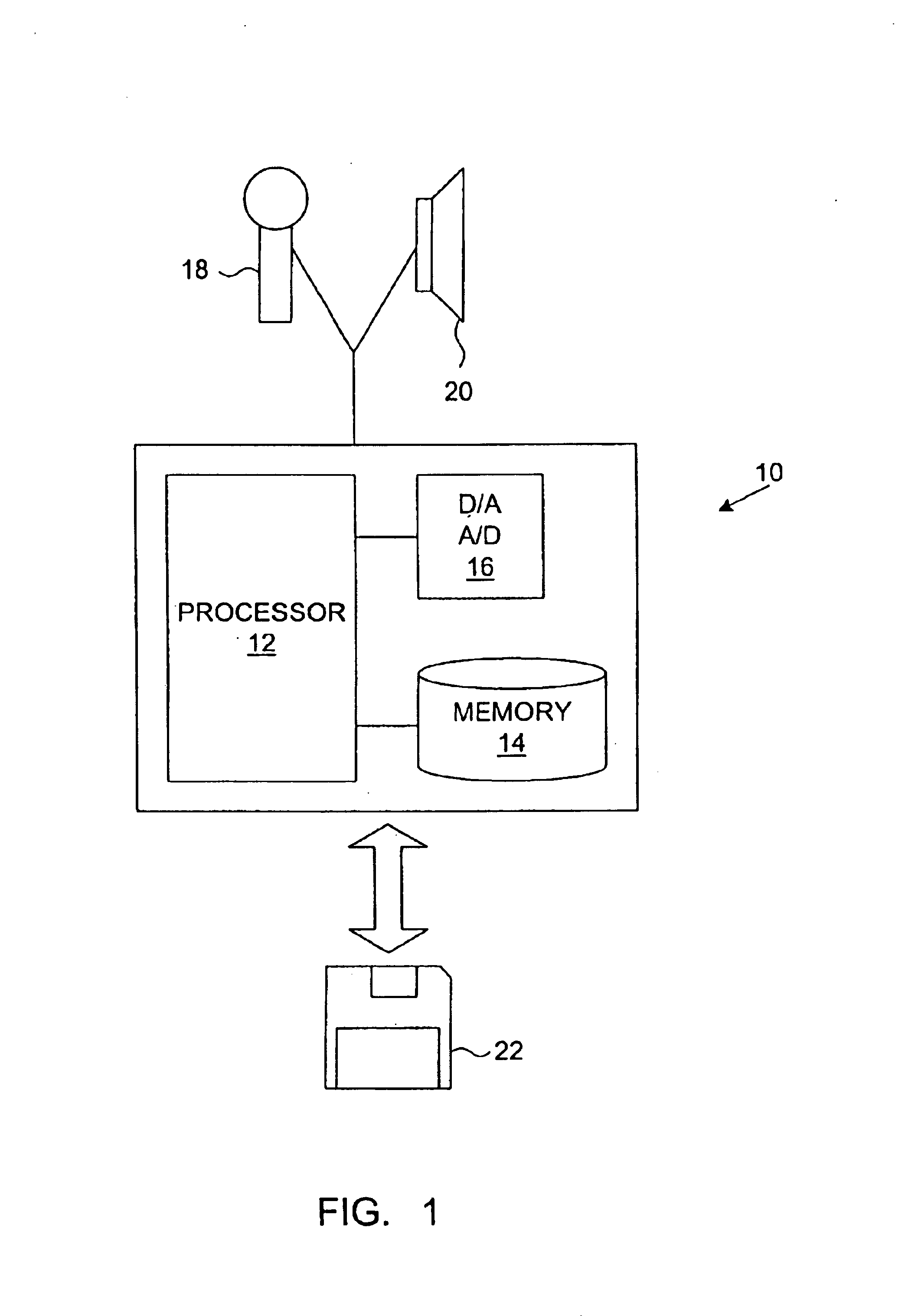

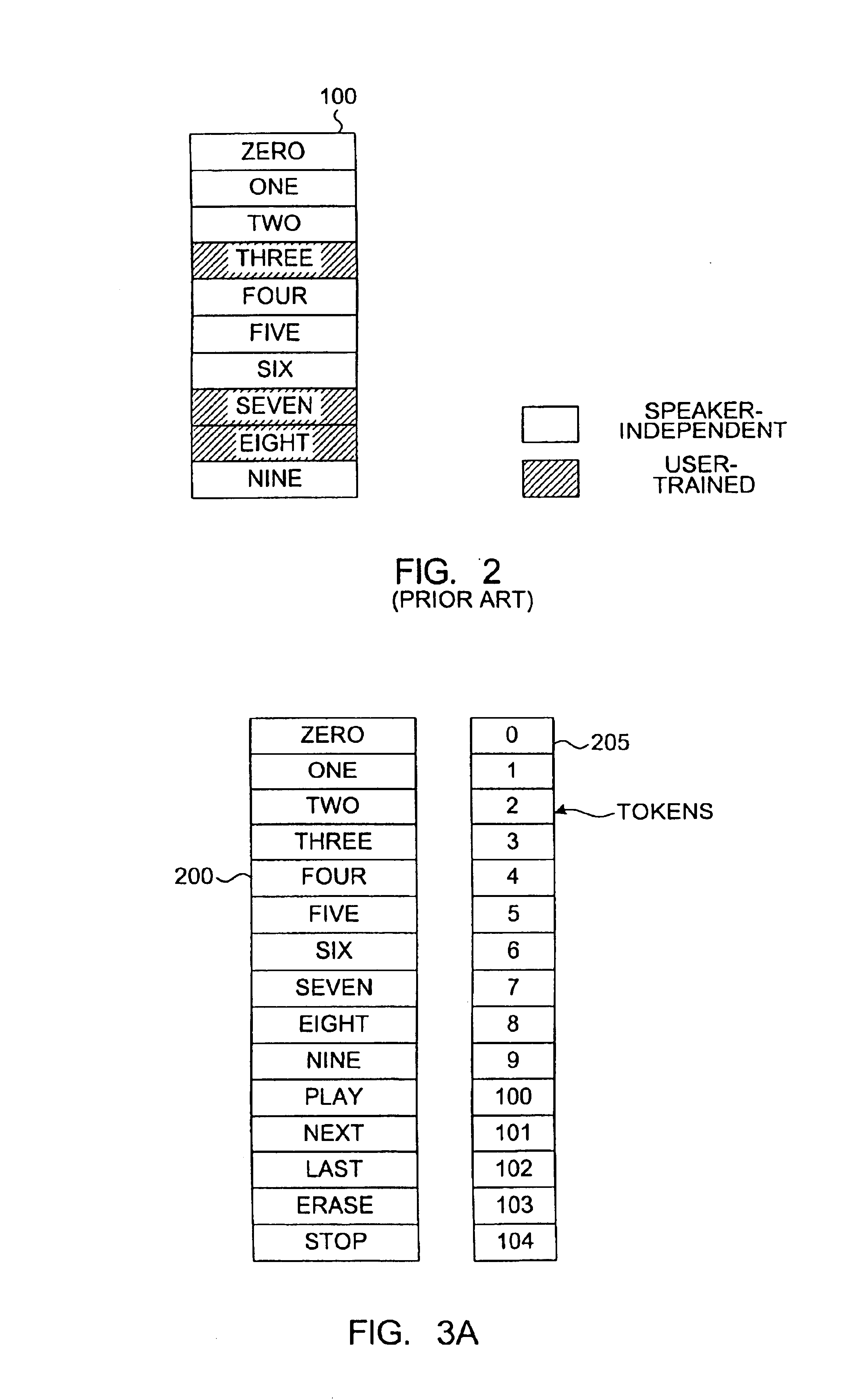

Speech recognition system and method permitting user customization

Inactive · US6873951B1 · Easy to identify, Automatic call-answering/message-recording/conversation-recording, Speech recognition, Word representation, Speech identification

A system and method for speech recognition includes a speaker-independent set of stored word representations derived from speech of many users deemed to be typical speakers and for use by all users, and may further include speaker-dependent sets of stored word representations specific to each user. The speaker-dependent sets may be used to store custom commands, so that a user may replace default commands to customize and simplify use of the system. Utterances from a user which match stored words in either set according to the ordering rules are reported as words.

Owner:RPX CLEARINGHOUSE
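One way to picture the two stored sets described above: each stored word representation is a feature template, an utterance is matched to the nearest template, and an assumed ordering rule consults the user's speaker-dependent set before the shared speaker-independent set, so custom commands take precedence. The feature vectors, the distance measure and the `max_distance` cut-off are hypothetical; the abstract does not state them.

```python
import math

def nearest(features, templates, max_distance):
    """Closest stored word within max_distance of the utterance features, else None."""
    best_word, best_dist = None, max_distance
    for word, template in templates.items():
        dist = math.dist(features, template)
        if dist <= best_dist:
            best_word, best_dist = word, dist
    return best_word

def recognize(features, user_templates, default_templates, max_distance=1.0):
    """Assumed ordering rule: the speaker-dependent set is searched first."""
    word = nearest(features, user_templates, max_distance)
    if word is None:
        word = nearest(features, default_templates, max_distance)
    return word

# Hypothetical 2-D "word representations".
defaults = {"call": [0.0, 0.0], "hang up": [5.0, 5.0]}  # speaker-independent set
alice = {"dial home": [0.2, 0.1]}                       # one user's custom command
print(recognize([0.1, 0.1], alice, defaults))  # dial home (overrides "call")
print(recognize([5.1, 4.9], alice, defaults))  # hang up
```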

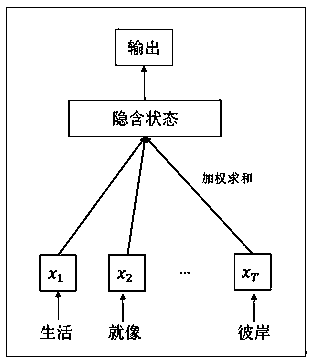

Paper title generation method capable of utilizing distributed semantic information

Active · CN106383817A · The title is informative, Conforms to semantic rules, Semantic analysis, Special data processing applications, Word representation, Data mining

The invention relates to a paper title generation method that uses distributed semantic information, in the field of natural language processing. The method comprises: first, using the TextRank algorithm to obtain the top k keywords of a paper abstract, training a GloVe (Global Vectors for Word Representation) model to obtain word vectors, and initializing the extracted keywords with those vectors; then generating the title with a recurrent neural network based on long short-term memory (LSTM) units; and finally constructing the title. A deep learning method is used to mine the deep semantic information of the title, and the generated title is highly readable and conforms to the semantic rules of titles.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
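TextRank, used above to pick the top k keywords of an abstract, ranks words by running PageRank over a word co-occurrence graph. The sketch below is a bare-bones version over an already tokenized abstract; the window size, damping factor, iteration count and the absence of stop-word or part-of-speech filtering are simplifying assumptions, not details from the patent.

```python
from collections import defaultdict

def textrank_keywords(tokens, k, window=2, damping=0.85, iterations=50):
    """Return the top-k words by TextRank score over a co-occurrence graph."""
    # Link distinct words that fall inside the same sliding window of `window` tokens.
    neighbors = defaultdict(set)
    for i, word in enumerate(tokens):
        for j in range(i + 1, min(i + window, len(tokens))):
            if tokens[j] != word:
                neighbors[word].add(tokens[j])
                neighbors[tokens[j]].add(word)
    # PageRank iteration: each word shares its score equally among its neighbors.
    scores = {w: 1.0 for w in neighbors}
    for _ in range(iterations):
        scores = {
            w: (1 - damping) + damping * sum(scores[n] / len(neighbors[n]) for n in neighbors[w])
            for w in neighbors
        }
    # Highest score first; ties broken alphabetically for a stable result.
    return sorted(scores, key=lambda w: (-scores[w], w))[:k]

tokens = "neural network model network training network graph".split()
print(textrank_keywords(tokens, 3))  # ['network', 'graph', 'model']
```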

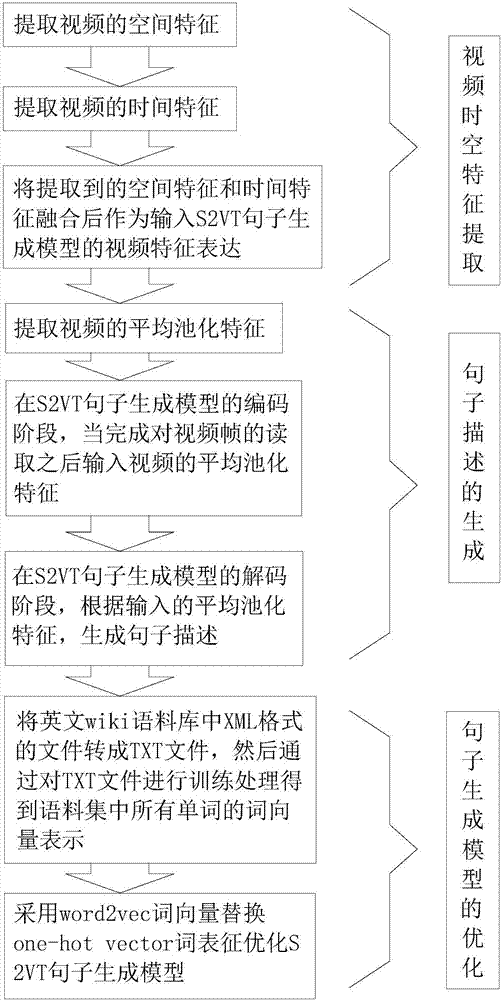

Video description method based on multi-feature fusion

Active · CN107256221A · Easy extraction, Robust spatio-temporal features, Text processing, Character and pattern recognition, Feature extraction, One-hot

The invention discloses a video description method based on multi-feature fusion, characterized in that (1) deep spatio-temporal features of a video are extracted by fusing conventional CNN features with SIFT flow features; (2) an S2VT sentence generation model, with average-pooled features added as global video features, generates a sentence description from the deep spatio-temporal features extracted in step (1); and (3) word2vec word vectors replace one-hot word representations to optimize the sentence generation model of step (2). The advantages are that multi-feature fusion extracts more robust spatio-temporal features; adding the average-pooled features to the sentence generation model establishes more relations between visual information and words; and replacing one-hot word representations with word2vec vectors establishes more relations between words, effectively improving video description performance.

Owner:SUZHOU UNIV
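The reason for replacing one-hot vectors in step (3) can be shown numerically: any two distinct one-hot vectors are orthogonal, so their cosine similarity is always 0 and the representation carries no notion of related words, whereas dense vectors can place related words close together. The dense vectors below are hand-made for illustration, not real word2vec output.

```python
import math

def one_hot(word, vocab):
    """1.0 at the word's index, 0.0 everywhere else."""
    return [1.0 if w == word else 0.0 for w in vocab]

def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

vocab = ["man", "person", "car"]
# Hand-made dense vectors standing in for learned word2vec embeddings.
dense = {"man": [0.9, 0.1], "person": [0.8, 0.3], "car": [-0.2, 0.9]}

print(cosine(one_hot("man", vocab), one_hot("person", vocab)))  # 0.0
print(round(cosine(dense["man"], dense["person"]), 2))          # 0.97
```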

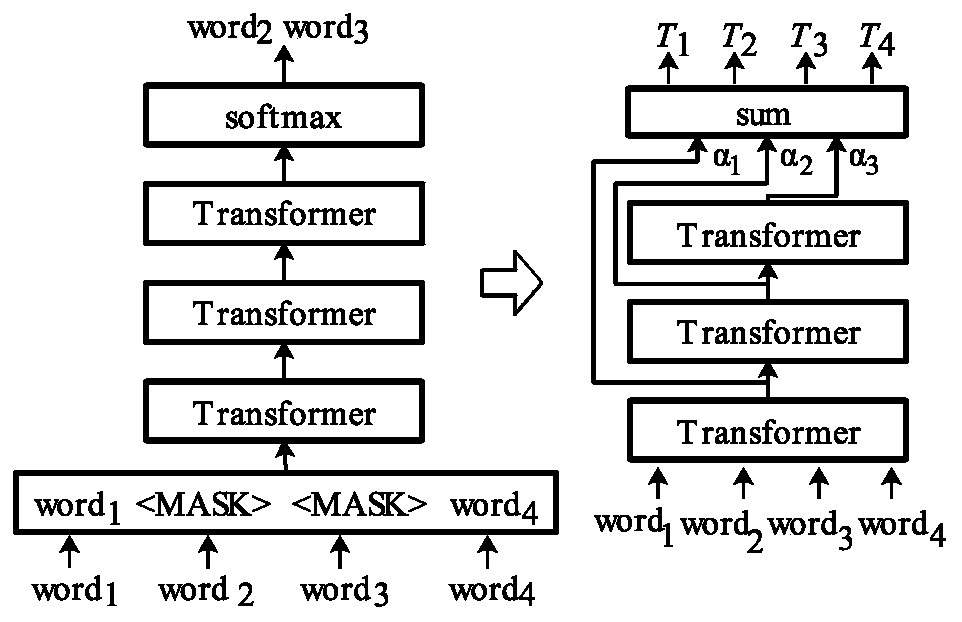

Deep dynamic context word representation model and method and computer

Active · CN110222349A · Increase concept, Solve the problem of polysemy, Natural language translation, Special data processing applications, Data set, Transformer

The invention belongs to the technical field of computer word representation and discloses a deep dynamic context word representation model and method. The model is a masked language model built by stacking multi-layer bidirectional Transformer encoders with a layer attention mechanism. Each layer of this multi-layer neural network captures the context information of each word in the input sentence from a different angle; the layer attention mechanism then assigns a weight to each layer, and the per-layer word representations are combined according to these weights to form the contextual representation of each word. On public datasets for three tasks, logical inference (MultiNLI), named entity recognition (CoNLL 2003) and reading comprehension (SQuAD), word representations generated by the model improved results by 2.0%, 0.47% and 2.96% respectively over an existing model.

Owner:Chengdu Jizhi Life Technology Co., Ltd. (成都集致生活科技有限公司)
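The layer attention step above can be illustrated as a softmax-weighted sum of one word's vectors from each encoder layer, similar to the scalar mix used in ELMo. This is an assumed formulation: the abstract does not say whether the weights are global scalars (as here) or computed per word, and the optional `gamma` scale is likewise an assumption.

```python
import math

def softmax(xs):
    """Softmax with max-subtraction for numerical stability."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def layer_attention(layer_outputs, layer_logits, gamma=1.0):
    """Mix one word's vectors from several encoder layers.

    layer_outputs[l] -- the word's vector at layer l
    layer_logits[l]  -- a learned scalar score for layer l (assumed global, not per word)
    """
    weights = softmax(layer_logits)
    dim = len(layer_outputs[0])
    return [gamma * sum(w * layer[d] for w, layer in zip(weights, layer_outputs))
            for d in range(dim)]

# Two layers, 2-D vectors; equal logits give the plain average.
print(layer_attention([[1.0, 2.0], [3.0, 4.0]], [0.0, 0.0]))  # [2.0, 3.0]
```

Training would push the logits toward the layers most useful for the downstream task.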

A method of generating a word vector from a multi-task model

Active · CN109325231A · Enhance the amount of word vector information, Good service, Internal combustion piston engines, Natural language data processing, Part of speech, Word representation

The present invention relates to the technical field of natural language processing in computing and, more particularly, to a method of generating word vectors from a multi-task model. The method integrates the information of unsupervised, classification, part-of-speech tagging and other task models to enrich the information contained in the generated word vectors; at the same time, it uses efficient and sufficiently good models for the multi-task integration, so that it can be applied to large datasets. The unsupervised task is trained with the GloVe (Global Vectors for Word Representation) model to obtain information related to the language model; the classification task is trained with the fastText model to obtain the category information in the text; and the part-of-speech task is trained with a logistic regression model to obtain part-of-speech information. The method can quickly produce high-quality, semantically rich word vectors on large-scale datasets for use in natural language processing tasks.

Owner:SUN YAT SEN UNIV

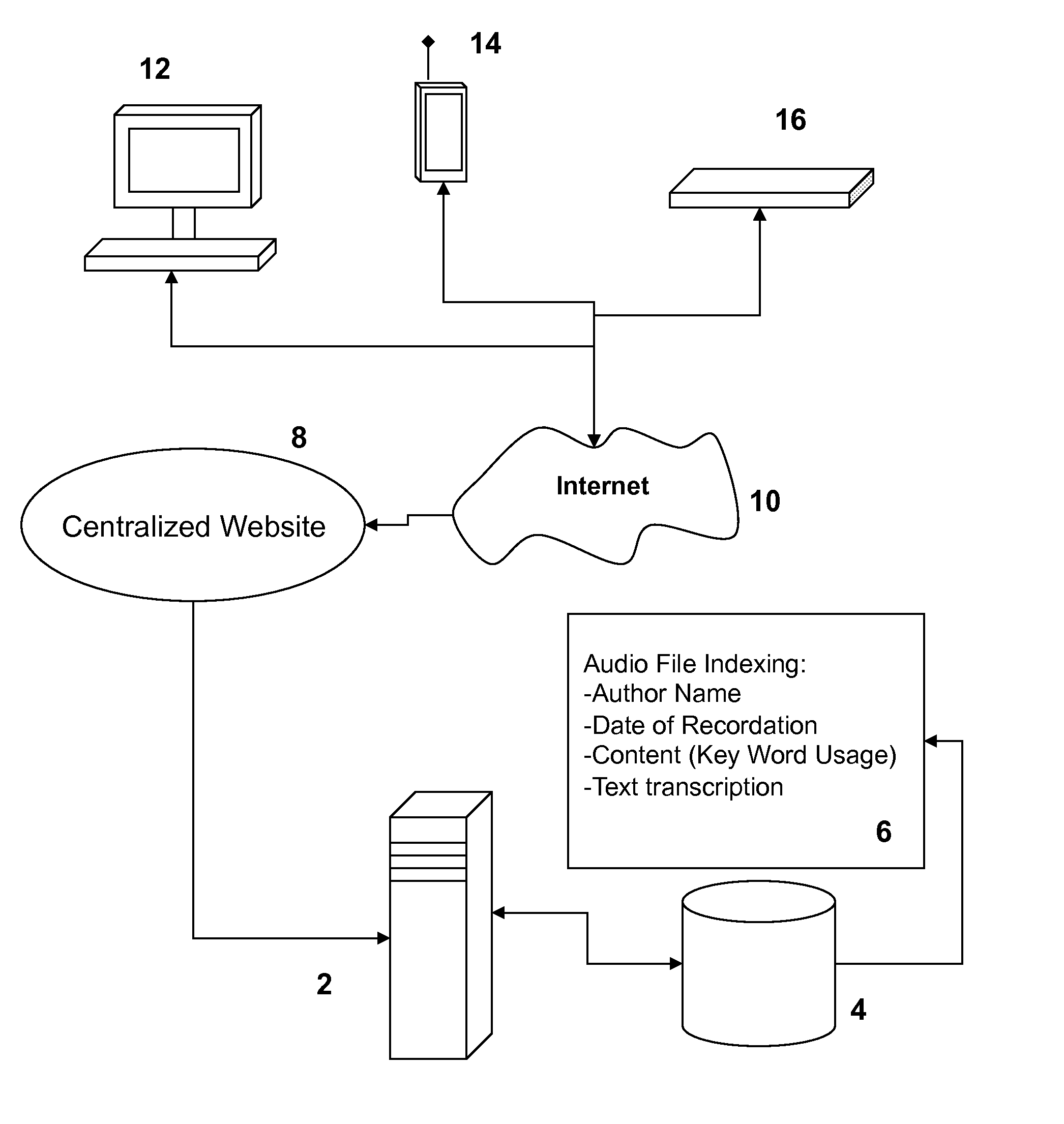

Systems and methods for identifying concepts and keywords from spoken words in text, audio, and video content

Inactive · US20130311181A1 · High score, Low score, Speech recognition, Special data processing applications, Spoken language, Speech sound

Systems for identifying, summarizing, and communicating topics and keywords included within an input file are disclosed. The systems include a server that receives one or more input files from an external source; conducts a speech-to-text transcription (when the input file is an audio or video file); and applies an algorithm to the text in order to analyze its content. The algorithm calculates a total score for each word in the text using a variety of metrics, including: the length of each word relative to the mean word length, the frequency of letter groups used within each word, the frequency of repetition of each word and word sequence, the part of speech represented by each word, and membership of each word in a custom set of words. The systems can further generate a graphical representation of each input file that distinguishes the parts exhibiting a higher total score from those that do not. In addition, the systems allow users to publish commentary on these graphical representations through an email interface.

Owner:VOICEBASE
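A toy version of the per-word total score described above, using three of the listed metrics: length relative to the mean word length, repetition frequency, and membership in a custom word set. The weights and the exact form of each term are hypothetical; letter-group frequency, word-sequence repetition and part of speech are omitted for brevity.

```python
from collections import Counter

def score_words(words, custom_set, w_len=1.0, w_freq=1.0, w_custom=2.0):
    """Total score per distinct word; the weights are hypothetical."""
    mean_len = sum(len(w) for w in words) / len(words)
    counts = Counter(words)
    scores = {}
    for word, count in counts.items():
        length_score = len(word) / mean_len       # > 1 for longer-than-average words
        freq_score = count / len(words)           # share of all tokens
        custom_score = 1.0 if word in custom_set else 0.0
        scores[word] = w_len * length_score + w_freq * freq_score + w_custom * custom_score
    return scores

words = "the quarterly revenue the revenue forecast".split()
scores = score_words(words, custom_set={"forecast"})
print(sorted(scores, key=scores.get, reverse=True))
# ['forecast', 'quarterly', 'revenue', 'the']
```

The custom-set term dominates here by design; tuning the weights shifts how much domain vocabulary outranks merely long or frequent words.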

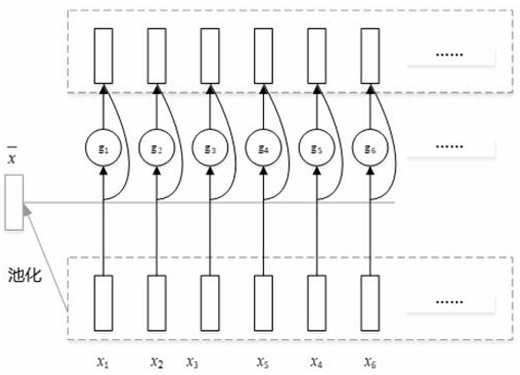

A social network short text recommendation method based on a word meaning topic model

Pending · CN109766431A · Improve accuracy, Digital data information retrieval, Special data processing applications, Estimation methods, Enriched text

The invention relates to a social network short text recommendation method based on a word meaning topic model, comprising the following steps: incorporating word representation learning, based on a context attention mechanism over word meanings and synonym information, into social network short text recommendation to enrich the word-level features of a text; incorporating Dirichlet multinomial mixture short text topic modeling based on word meaning representations to enrich text-level features; modeling the user's latent interest and preference, which evolve over time, by combining social network user relationships, the short text topic features of user-related texts based on word meaning representations, and the latent relationship features between users and texts; and predicting the user's latent preference for each text through a parameter estimation method, then recommending the texts with the highest preference to the user. By incorporating word meaning information into short text topic modeling and the social network short text recommendation task, the method improves recommendation accuracy.

Owner:TONGJI UNIV

Methods and systems of operating computerized neural networks for modelling CSR-customer relationships

In one aspect, a computerized method for operating computerized neural networks for modelling CSR-customer relationships includes the step of receiving a user query, which comprises a set of digital text entered by a customer into an online CSR system. The method filters out unnecessary content from the user query, splits the filtered query sentence by sentence, feeds the tokenized user query into a contextualized word representation model, and generates a set of context-aware feature vectors from that model. With the set of context-aware feature vectors, the method implements a decision-making function to generate an identified customer query. The method also receives an agent response, which is a response to the user query and comprises a set of digital text from an agent. With an LSTM network, the method generates a user query tensor vector and an agent query tensor vector, and concatenates the two to produce a single tensor that can be processed by a neural network.

Owner:MOHANTY PRIYADARSHINI +1

Parsimonious continuous-space phrase representations for natural language processing

Systems and processes for natural language processing are provided. In accordance with one example, a method includes, at a first electronic device with one or more processors and memory, receiving a plurality of words, mapping each of the plurality of words to a word representation, and associating the mapped words to provide a plurality of phrases. In some examples, each of the plurality of phrases has a representation of a first type. The method further includes encoding each of the plurality of phrases to provide a respective plurality of encoded phrases. In some examples, each of the plurality of encoded phrases has a representation of a second type different than the first type. The method further includes determining a value of each of the plurality of encoded phrases and identifying one or more phrases of the plurality of encoded phrases having a value exceeding a threshold.

Owner:APPLE INC

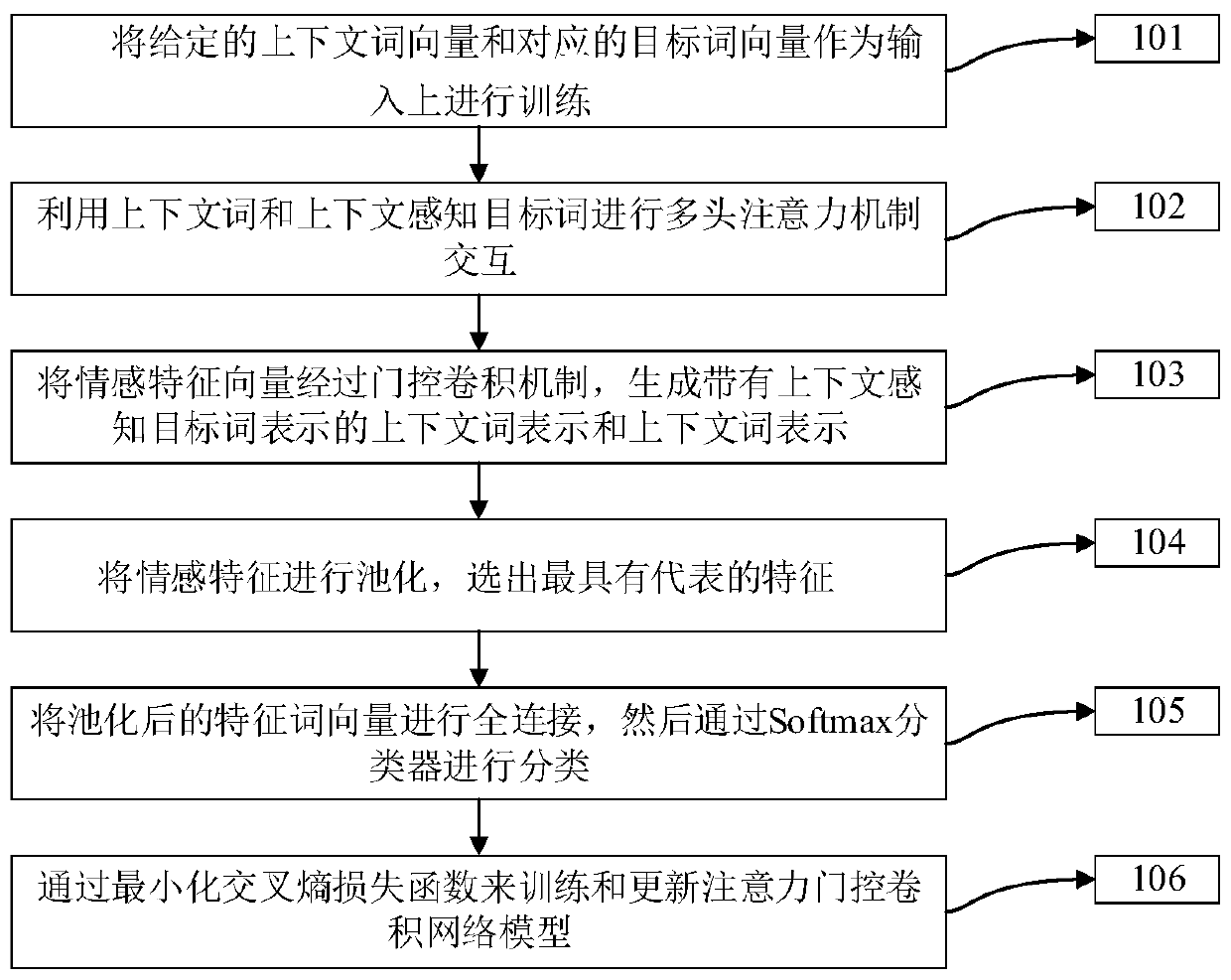

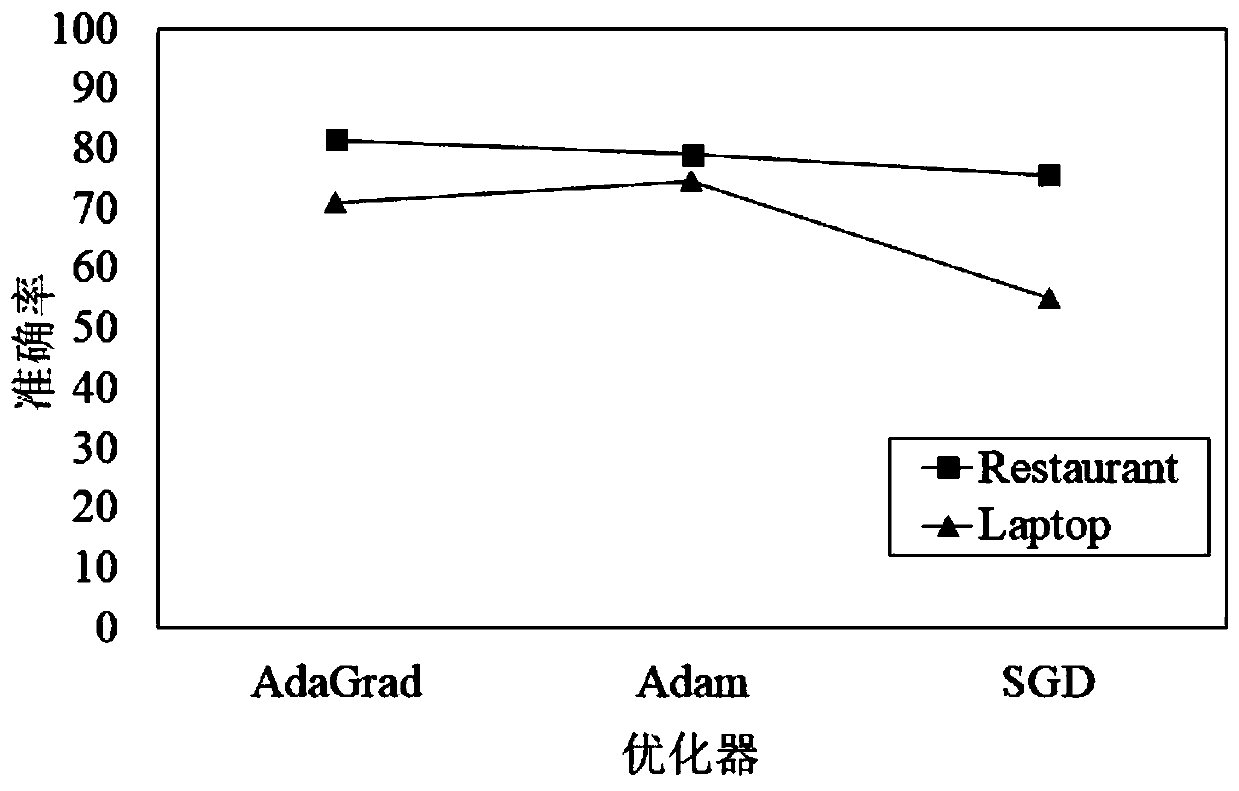

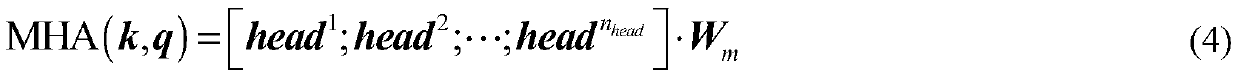

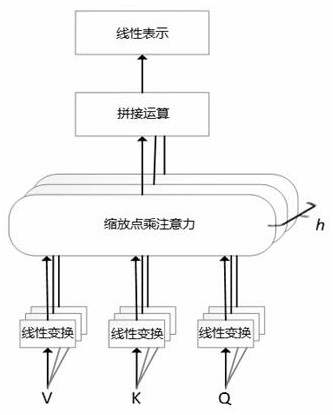

Target emotion analysis method and system based on attention gated convolutional network

Active · CN110390017A · Improve accuracy, Shorten convergence time, Character and pattern recognition, Neural architectures, Algorithm, Network model

The invention discloses a target sentiment analysis method and system based on an attention gated convolutional network. The method comprises: step 1, taking a given context word vector and the corresponding target word vector as training inputs; step 2, performing multi-head attention interaction using the context words and the context-aware target words; step 3, passing the sentiment feature vectors c_intra and t_inter generated by the two channels through a gated convolution mechanism to generate a context word representation a_i and a context-aware target word representation u_i; step 4, pooling the sentiment feature o_i to select the most representative features; step 5, applying a fully connected layer to the pooled feature vectors and classifying with a Softmax classifier; and step 6, training and updating the attention gated convolutional network model by minimizing the cross-entropy loss function. The method effectively improves accuracy, shortens convergence time and is more practical.

Owner:CIVIL AVIATION UNIV OF CHINA
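The gating in step 3 and the pooling in step 4 can be sketched with a gated tanh-ReLU unit, a common form of gated convolution for aspect-level sentiment: one convolution proposes sentiment features, a second one shifted by a target-dependent term decides how much of each passes through, and max pooling keeps the strongest response. Using this particular gate, a single filter, and a scalar `target_bias` standing in for the target word representation are all assumptions, not details from the patent.

```python
import math

def conv1d(seq, kernel, bias):
    """Valid 1-D convolution over a sequence of vectors, one output per window.

    kernel[k][d] weights input dimension d at window offset k.
    """
    width = len(kernel)
    out = []
    for start in range(len(seq) - width + 1):
        s = bias
        for k in range(width):
            s += sum(w * x for w, x in zip(kernel[k], seq[start + k]))
        out.append(s)
    return out

def gated_conv_feature(seq, kernel_a, kernel_b, target_bias):
    """One filter: tanh(sentiment conv) * ReLU(gate conv + target term), then max pooling."""
    a = conv1d(seq, kernel_a, 0.0)
    b = conv1d(seq, kernel_b, target_bias)
    gated = [math.tanh(x) * max(0.0, y) for x, y in zip(a, b)]
    return max(gated)

seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]       # three toy word vectors
kernel_a, kernel_b = [[1.0, 0.0]], [[0.0, 1.0]]  # width-1 kernels for readability
print(gated_conv_feature(seq, kernel_a, kernel_b, 0.0))   # tanh(1), about 0.7616
print(gated_conv_feature(seq, kernel_a, kernel_b, -5.0))  # 0.0: the gate is closed
```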

Method and device for determining association relationship between entities, equipment and storage medium

Pending · CN112507715A · Easy to understand, Semantic analysis, Character and pattern recognition, Engineering, Word representation

The invention discloses a method and device for determining an association relationship between entities, as well as equipment and a storage medium, applied in the technical fields of natural language processing, knowledge graphs and deep learning. In the specific implementation, the method comprises: obtaining target association information, which comprises a first word representing a first entity, a second word representing a second entity and a third word representing a target association relationship; determining a first semantic feature according to the target association relationship and the text segments in a preset text segment library that are similar to the target association information; determining a description text for the target association information according to that information and a preset knowledge graph; determining a second semantic feature according to the target association information and the description text; and, according to the first and second semantic features, determining a confidence score for the target association relationship between the first entity and the second entity.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

Entity question answering method and device based on neural network and terminal

Inactive · CN108959556A · High precision, Improve timeliness, Semantic analysis, Neural architectures, Nerve network, Semantic representation

The invention provides an entity question answering method, device and terminal based on a neural network. The method comprises: converting the words contained in a question and a candidate document into word vectors to generate a question word vector sequence and a candidate document word vector sequence; inputting both sequences into a long short-term memory (LSTM) network model, which outputs the word encoding sequences of the question and the candidate document; matching the word encoding sequence of the question against that of the candidate document to generate a candidate document representation based on the matching information, the representation comprising a plurality of word representations; and selecting start and end words from all the word representations and generating the entity answer from them. The method reduces explicit computation and cumulative error, makes effective use of the semantic representations of questions and documents, and improves the accuracy of locating entity answers.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD
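Selecting start and end words, the final step above, is commonly done by scoring every valid span (start not after end) and keeping the best one. The sketch below adds start and end scores, which is equivalent to multiplying probabilities if the scores are log-probabilities; the tokens, scores and optional `max_len` limit are made-up assumptions.

```python
def best_span(start_scores, end_scores, max_len=None):
    """(start, end) with start <= end maximizing start_scores[start] + end_scores[end]."""
    n = len(start_scores)
    best, best_score = None, float("-inf")
    for i in range(n):
        last = n if max_len is None else min(n, i + max_len)
        for j in range(i, last):
            score = start_scores[i] + end_scores[j]
            if score > best_score:
                best, best_score = (i, j), score
    return best

tokens = ["born", "in", "New", "York", "City"]
start = [0.1, 0.0, 2.0, 0.3, 0.1]
end = [2.5, 0.2, 0.4, 1.9, 0.5]  # the end argmax alone (index 0) precedes the start argmax
i, j = best_span(start, end)
print(" ".join(tokens[i:j + 1]))  # New York
```

Taking the two argmaxes independently would yield start 2 and end 0, an invalid span; the joint search avoids that.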

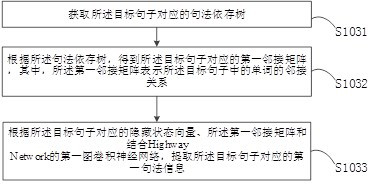

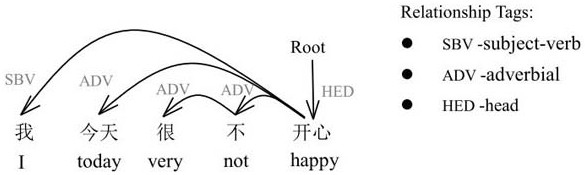

Text attribute word sentiment classification method based on deep learning network

Active · CN112417157A · Close relationship, Improve accuracy, Natural language data processing, Neural architectures, Sentence pair, Network model

The invention provides a text attribute word sentiment classification method based on a deep learning network. The method comprises: obtaining the word vectors of a target sentence in a text; inputting the word vectors into a hidden information extraction network model to obtain hidden state vectors; extracting first syntax information from the syntax dependency tree of the target sentence based on the hidden state vectors and a first syntax extraction neural network; extracting second syntax information from the local syntax dependency tree of the target sentence based on the hidden state vectors and a second syntax extraction neural network; denoising the first and second syntax information to obtain a context representation and an attribute word representation; average-pooling the context representation and the attribute word representation and concatenating the results to obtain the feature representation of the target sentence; and inputting the feature representation into an emotion classification function to obtain the emotion classification result. Compared with the prior art, the method fully considers the relationships between the target sentence and the syntax information and between the attribute words and the syntax information, improving the accuracy of sentiment classification.

Owner:SOUTH CHINA NORMAL UNIVERSITY

Burmese emotion analysis method and device based on transfer learning

Inactive · CN110334187A · Sentiment analysis works, Addresses lack of performance causing poor performance, Semantic analysis, Text database querying, Pattern recognition, Modularity

The invention relates to a Burmese emotion analysis method and device based on transfer learning, in the technical field of natural language processing. The method comprises: first, building cross-lingual word representations for Burmese vocabulary, mapping Burmese text into the English text semantic space; pre-training an English emotion classification model based on a CNN and an attention mechanism network; learning cross-lingual emotion features by sharing the neural network layer parameters of the English emotion classification model and transferring them into the Burmese emotion classification model; and finally optimizing the model with labeled Burmese data to realize Burmese emotion classification. The device achieves effective emotion analysis of Burmese sentences and addresses the poor performance caused by the scarcity of labeled Burmese emotion data.

Owner:KUNMING UNIV OF SCI & TECH

Visual language task processing system, training method and device, equipment and medium

Pending · CN113792112A · Improve accuracy, Video data clustering/classification, Metadata video data retrieval, Pattern recognition, Engineering

The invention provides a visual language task processing system, a visual language task processing method and device, equipment and a storage medium, and relates to the technical field of artificial intelligence. The system comprises a target encoder, a text encoder and a text decoder, with the target encoder and the text encoder each connected to the text decoder. The target encoder takes a predetermined image as input, encodes it into a target representation sequence and outputs that sequence; the text encoder takes a text description as input, encodes it into a word representation sequence and outputs that sequence; and the text decoder takes the target representation sequence and the word representation sequence as input, decodes them into a multi-modal representation sequence and outputs it for use in processing the visual language task. The system can improve the accuracy of visual language task processing to a certain extent.

Owner:BEIJING JINGDONG SHANGKE INFORMATION TECH CO LTD +1

Resume data information analysis and matching method and device, electronic equipment and medium

Pending · CN111428488A · Fast and accurate match, Improve analytical ability, Character and pattern recognition, Natural language data processing, Algorithm, Engineering

The invention provides a resume data information analysis and matching method and device, electronic equipment and a medium. The method preprocesses retrieved resumes: it obtains a resume to be parsed and builds a word segmentation directed acyclic graph from a pre-constructed word segmentation dictionary to segment the resume, so that the segmentation result is obtained quickly; it then obtains the resume text, constructs a co-occurrence matrix from it, determines the keywords of the resume text based on the co-occurrence matrix, obtains the character sequence of each keyword, and processes that character sequence with a word representation model to obtain its word representation. The word representation is input into a resume label analysis model to obtain a resume label sequence, and the similarity between each label in the sequence and the labels of each post is calculated to determine which resumes match each post, so that posts and resumes can be matched quickly, accurately and intelligently.

Owner:PING AN TECH (SHENZHEN) CO LTD
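The dictionary-driven segmentation step can be illustrated with a small segmenter. This is a sketch, not the patented implementation. It assumes that the dictionary stores word frequencies and that the best path through the directed acyclic graph is the one with the highest total log-probability. That choice is similar to the approach used by the jieba Chinese segmenter. The toy dictionary `FREQ` and the functions `build_dag` and `segment` are hypothetical, and Latin letters stand in for Chinese characters.

```python
import math
from typing import Dict, List

# Toy frequency dictionary (hypothetical); Latin letters stand in for Chinese characters
FREQ: Dict[str, int] = {"python": 50, "py": 5, "thon": 2, "developer": 40, "develop": 30, "er": 3}


def build_dag(text: str, freq: Dict[str, int]) -> Dict[int, List[int]]:
    """Map each start index i to every end index j such that text[i:j+1] is a
    dictionary word; fall back to the single character when nothing matches."""
    dag = {}
    for i in range(len(text)):
        ends = [j for j in range(i, len(text)) if text[i:j + 1] in freq]
        dag[i] = ends or [i]
    return dag


def segment(text: str, freq: Dict[str, int]) -> List[str]:
    """Pick the DAG path with the highest total log-probability
    (pieces missing from the dictionary are given frequency 1)."""
    dag = build_dag(text, freq)
    log_total = math.log(sum(freq.values()))
    n = len(text)
    best = [0.0] * (n + 1)  # best[i]: best log-probability of segmenting text[i:]
    choice = [0] * n        # choice[i]: end index of the first word in that segmentation
    for i in range(n - 1, -1, -1):
        best[i], choice[i] = max(
            (math.log(freq.get(text[i:j + 1], 1)) - log_total + best[j + 1], j)
            for j in dag[i]
        )
    words, i = [], 0
    while i < n:
        words.append(text[i:choice[i] + 1])
        i = choice[i] + 1
    return words
```

On the toy input `pythondeveloper`, the path `python` + `developer` wins over splits such as `py` + `thon` + ... because fewer, more frequent words yield a higher summed log-probability.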

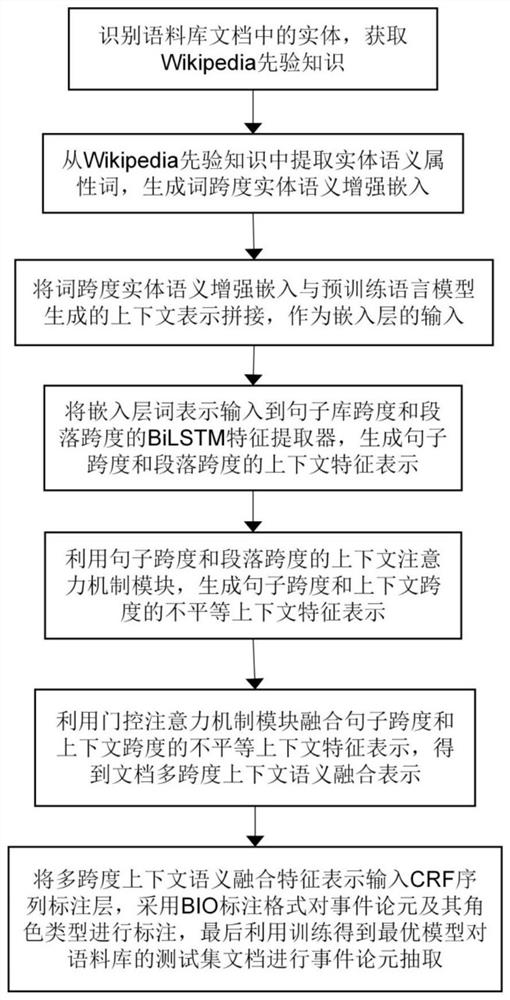

Document-level event argument extraction method based on sequence labeling

Pending · CN113591483A · Solve the problem of fragmentation · Good effect · Semantic analysis · Neural architectures · Word representation · Semantic feature

The invention claims a document-level event argument extraction method based on sequence labeling, which comprises the following steps. First, Wikipedia prior knowledge related to the entities in the corpus is obtained and used to generate a semantically enhanced embedding for each word-span entity. This entity-enhanced embedding is concatenated with the context representation produced by a pre-trained language model to form the word-vector input of the embedding layer. The word representation is fed into a multi-span bidirectional recurrent neural network to obtain a multi-span context feature representation of each word. That representation is fed into a context attention module and a gated attention module to obtain a context-semantic fusion feature representation of each word. Finally, event arguments are extracted from the output feature representation by sequence labeling, and the best model obtained in training is used to extract event arguments from unseen documents. By integrating prior knowledge with multi-span contextual semantic feature representations, the method improves document-level event argument extraction.

Owner:CHONGQING UNIV OF POSTS & TELECOMM
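The final step turns per-word labels into event arguments. The abstract names sequence labeling but not the tag set, so the following sketch assumes a BIO scheme: `B-Role` opens an argument, `I-Role` continues it and `O` marks other words. It shows only the decoding of labels into argument spans, not the tagging model. `bio_to_spans` is a hypothetical name, and any role names are illustrative.

```python
from typing import List, Optional, Tuple


def bio_to_spans(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Collect (role, argument text) pairs from BIO tags.

    A B- tag, an O tag, or an I- tag whose role differs from the open span
    closes the current span; a stray I- tag starts a new span (lenient decoding).
    """
    spans: List[Tuple[str, str]] = []
    role: Optional[str] = None
    start = 0
    for i, tag in enumerate(tags + ["O"]):  # trailing "O" closes the last span
        if tag.startswith("I-") and tag[2:] == role:
            continue  # same argument continues
        if role is not None:
            spans.append((role, " ".join(tokens[start:i])))
        role, start = (None, i) if tag == "O" else (tag[2:], i)
    return spans
```

The lenient treatment of a stray `I-` tag is a design choice. A stricter decoder could drop such tags instead.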

Text multi-label classification method and system based on attention mechanism and GCN

Pending · CN112711953A · Solve technical problems of classification accuracy · Improve feature extraction · Semantic analysis · Neural architectures · Feature extraction · Multi-label classification

The invention discloses a text multi-label classification method based on an attention mechanism and a GCN. The method comprises the following steps. First, a text to be classified is obtained and preprocessed, and the preprocessed text is converted into a multi-dimensional vector using pre-trained GloVe word vectors. Then, the multi-dimensional vector is input into a pre-trained classification model to obtain the classification result of the text. The method uses an attention mechanism to model the semantic correlations among texts, words and labels. On one hand, text and label information are aggregated into a new word representation of the text, so that text features are extracted more fully. On the other hand, text and word information are aggregated into a new label representation, and a graph neural network models the correlations among labels. Multi-label text classification is thereby improved from both directions.

Owner:HUNAN UNIV
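On the label side, one common way to model label correlations with a graph neural network is a graph convolutional layer of the form ReLU(D^-1/2 (A + I) D^-1/2 H W), following Kipf and Welling. The abstract gives neither the layer formula nor how the label graph is built. This sketch therefore assumes a given label adjacency matrix `adj` (for example, derived from label co-occurrence), label features `feats` and a weight matrix `weight`. All of these names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def gcn_layer(adj: Matrix, feats: Matrix, weight: Matrix) -> Matrix:
    """One GCN layer over the label graph: ReLU(D^-1/2 (A + I) D^-1/2 . H . W)."""
    n = len(adj)
    # Add self-loops so each label keeps its own features
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    # Symmetric degree normalisation
    norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(norm, feats), weight)
    return [[max(0.0, x) for x in row] for row in out]
```

Two connected labels end up with smoothed, similar features, while an isolated label keeps its own features apart from the ReLU.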

Remote supervision relationship extraction method with entity perception based on PCNN model

Active · CN111859912A · Capture long-term dependencies · Easy to integrate · Semantic analysis · Neural architectures · Relation classification · Semantic representation

The invention provides an entity-aware remote supervision (distant supervision) relation extraction method based on a PCNN model. The method specifically comprises the following steps. A multi-head self-attention mechanism combines word embeddings with head-entity embeddings, tail-entity embeddings and relative position embeddings to generate an enhanced, entity-aware semantic representation of each word, which captures the semantic dependence between each word and the entity pair. A global gate is then introduced, which combines the entity-aware enhanced representation of each word in the input sentence with the average of the enhanced word representations to form the final word representation fed into the PCNN. In addition, to identify the key sentence segment in which the most important relation classification information appears, a second gate mechanism assigns a different weight to each sentence segment, which highlights the role of key sentence segments in the PCNN. Experiments show that the method improves the prediction of remotely supervised relations in sentences.

Owner:海乂知信息科技(南京)有限公司
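The piecewise max pooling that gives PCNN its name can be sketched as follows. The convolution outputs are split into three segments at the two entity positions, and each channel is max-pooled within each segment. The segment boundaries follow a common PCNN convention, in which each entity closes the segment it ends. The abstract's second gate assigns a weight to each segment, but the way it computes those weights is not stated, so here they are passed in by hand as `seg_weights`. The function and parameter names are hypothetical.

```python
from typing import List, Sequence


def piecewise_max_pool(conv_out: List[List[float]], e1: int, e2: int,
                       seg_weights: Sequence[float] = (1.0, 1.0, 1.0)) -> List[float]:
    """conv_out[t][c] is channel c of the convolution output at token t.

    Segments are [0, p1], (p1, p2] and (p2, end), where p1 < p2 are the entity
    positions. Each channel is max-pooled per segment, scaled by that segment's
    weight, and the results are concatenated segment by segment.
    """
    p1, p2 = sorted((e1, e2))
    n = len(conv_out)
    segments = [range(0, p1 + 1), range(p1 + 1, p2 + 1), range(p2 + 1, n)]
    channels = len(conv_out[0])
    pooled = []
    for seg, w in zip(segments, seg_weights):
        for c in range(channels):
            vals = [conv_out[t][c] for t in seg]
            # An empty segment (e.g. tail entity at the last token) contributes 0.0
            pooled.append(w * max(vals) if vals else 0.0)
    return pooled
```

The output always has 3 × channels values, whatever the sentence length. This fixed size is what lets PCNN feed variable-length sentences into a fixed-size classifier.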

Method and system for analyzing potential theme phrases of text data

Active · CN110134951A · Strong semantic expression ability · Strong consistency · Natural language data processing · Special data processing applications · Collocation · Data set

Owner:HUAIYIN INSTITUTE OF TECHNOLOGY

Content title generation method, apparatus and device based on neural network, and computer readable storage medium

Active · CN112104919A · Large amount of playback · High playback completion · Video data browsing/visualisation · Character and pattern recognition · Encoder decoder · Algorithm

The invention discloses a content title generation method based on a neural network comprising an encoder-decoder network. The method comprises the following steps. First, a separate vector representation is generated for each of at least two types of information about the content. Each type's vector representation is then processed by its own encoder. Next, a decoder processes the encoders' outputs to generate one or more title words for the content. The decoder calculates the attention weight of each word in the text and, from it, the copy probability of that word, in order to decide whether to copy a word from the text or to generate a title word from the word representations that the encoder produces from the text's vector representation. Finally, the content title is generated from the one or more title words. The invention further discloses a corresponding apparatus, among other items.

Owner:TENCENT TECH (SHENZHEN) CO LTD
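The copy-or-generate decision resembles the pointer-generator mixture of See et al. (2017), which is used here as a stand-in because the abstract does not give a formula. In this mixture, the decoder's attention weights over the source text are added to a generation distribution, and a generation probability `p_gen` weights the two parts. The sketch assumes that `p_vocab`, `attention` and `p_gen` have already been produced by the decoder. The function name is hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def final_distribution(p_vocab: Dict[str, float], attention: List[float],
                       source_tokens: List[str], p_gen: float) -> Dict[str, float]:
    """Pointer-generator style mixture:
    P(w) = p_gen * P_vocab(w) + (1 - p_gen) * (sum of attention on source positions holding w).
    """
    dist: Dict[str, float] = defaultdict(float)
    for word, p in p_vocab.items():
        dist[word] += p_gen * p              # "generate" part
    for weight, token in zip(attention, source_tokens):
        dist[token] += (1.0 - p_gen) * weight  # "copy" part
    return dict(dist)
```

Because copying adds probability mass to whatever token appears in the source, a word missing from `p_vocab` (such as a name in the text) can still be chosen as a title word.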

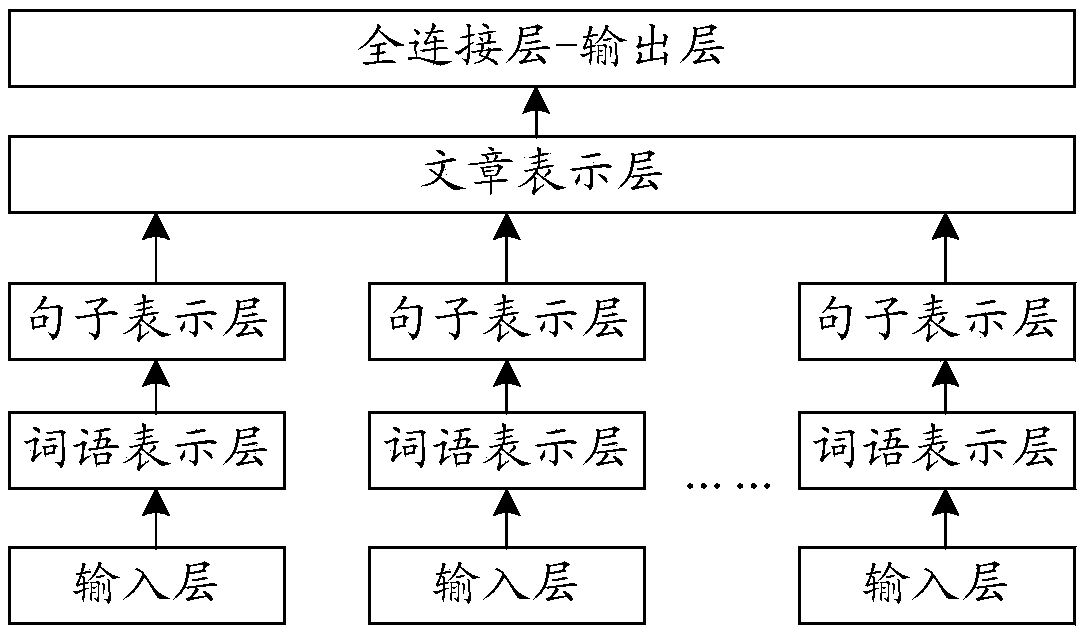

Text classification method and device

Pending · CN111125354A · Improve classification efficiency · Improve classification accuracy · Semantic analysis · Text database clustering/classification · Text categorization · Classification methods

The invention discloses a text classification method and device. The method comprises the following steps. First, a text is preprocessed to obtain a text to be classified. That text is input into a target deep classification model, which is obtained by training on samples labeled with viewpoint categories. The model vectorizes the text to be classified and determines its word representation vector, sentence representation vector and article representation vector. The text is then classified based on these three vectors, yielding a classification result that includes at least a viewpoint category. This solves the technical problem that conventional text classification schemes classify poorly and therefore cannot accurately determine the actual viewpoint category of a text.

Owner:BEIJING GRIDSUM TECH CO LTD
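The word, sentence and article vectors form a hierarchy. The following is a minimal sketch that assumes plain mean pooling at each level and a toy embedding lookup. The patented model learns these representations and may use attention or another pooling method instead. `hierarchical_representations` is a hypothetical name, unknown words map to zero vectors, and sentences are assumed to be non-empty.

```python
from typing import Dict, List, Tuple

Vector = List[float]


def mean(vectors: List[Vector]) -> Vector:
    # Element-wise average of equally sized vectors
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def hierarchical_representations(article: List[List[str]], embeddings: Dict[str, Vector],
                                 dim: int) -> Tuple[List[List[Vector]], List[Vector], Vector]:
    """Word vectors come from a lookup table; each sentence vector is the mean of
    its word vectors, and the article vector is the mean of its sentence vectors."""
    zero = [0.0] * dim
    word_vecs = [[embeddings.get(w, zero) for w in sentence] for sentence in article]
    sentence_vecs = [mean(ws) for ws in word_vecs]
    article_vec = mean(sentence_vecs)
    return word_vecs, sentence_vecs, article_vec
```

A classifier for the viewpoint category could then take any combination of the three levels as input. The abstract does not say how they are combined.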

Implicit discourse relationship analysis method and system

Active · CN111651974A · Achieve integration · Easy to identify · Semantic analysis · Character and pattern recognition · Algorithm · Word list

The invention provides an implicit discourse relation analysis method based on a semantic-syntactic dual-path graph convolutional network, and belongs to the technical field of natural language processing applications. The method comprises the following steps. First, word segmentation is performed on the corpus, a vocabulary is constructed and other preprocessing is carried out, and the word vectors are then initialized with GloVe. The two initialized arguments are each fed into a Bi-LSTM encoder to obtain word representations fused with contextual information. The Bi-LSTM output is then used to initialize the graph node representations of the dual-path graph convolutional network. This network comprises two GCNs, which extract features from the interaction structure graph and the syntactic structure graph respectively, and the features obtained by the two paths are concatenated for classification. Finally, the model parameters are updated to convergence with the Adam algorithm, and the parameters with the best performance are used to complete the implicit discourse relation analysis.

Owner:BEIJING INSTITUTE OF TECHNOLOGY
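The dual-path idea can be sketched as two graph-convolution passes over the same node features, one pass per graph, with the two results concatenated for each node. This is a simplified illustration, not the patented network. The Bi-LSTM output is replaced by given numbers, and each pass is a single untrained averaging step over row-normalised A + I, with no weight matrix or activation. Classification and Adam training are omitted. The function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def propagate(adj: Matrix, feats: Matrix) -> Matrix:
    """One untrained graph-convolution step: each node averages its own features
    with its neighbours' (row-normalised A + I); weights and activation are omitted."""
    n = len(adj)
    out = []
    for i in range(n):
        neighbours = [j for j in range(n) if j == i or adj[i][j]]
        out.append([sum(feats[j][k] for j in neighbours) / len(neighbours)
                    for k in range(len(feats[0]))])
    return out


def dual_path_features(interaction_adj: Matrix, syntax_adj: Matrix, feats: Matrix) -> Matrix:
    """Run one path per graph on the same (Bi-LSTM-initialised) node features
    and concatenate the two results node by node."""
    a = propagate(interaction_adj, feats)
    b = propagate(syntax_adj, feats)
    return [ra + rb for ra, rb in zip(a, b)]
```

Concatenation keeps the two views separate, so a downstream classifier can weigh interaction and syntactic evidence independently.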


© 2025 PatSnap. All rights reserved.