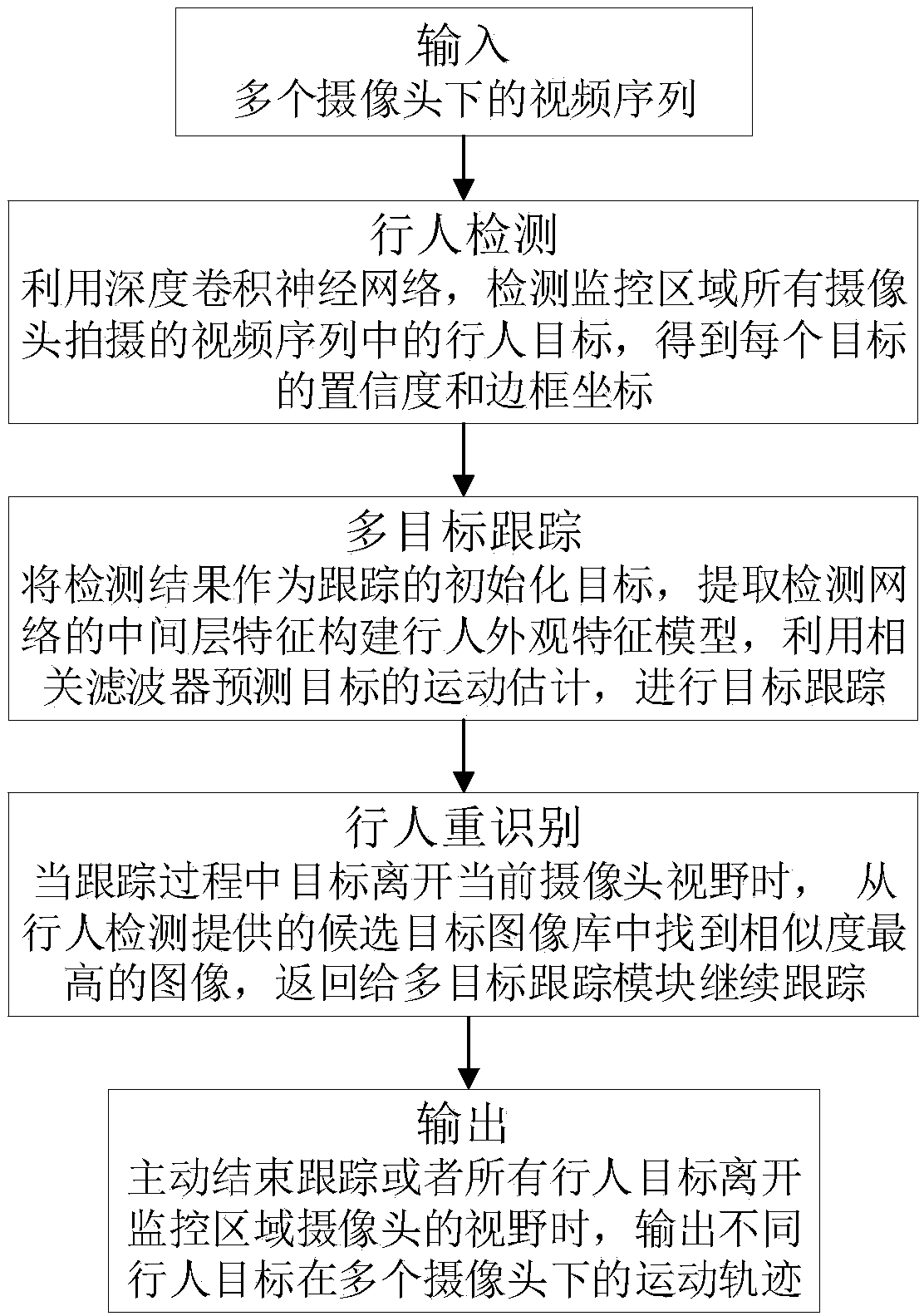

Cross-camera pedestrian detection and tracking method based on deep learning

A pedestrian detection and cross-camera tracking technology, applied in the fields of computer vision and video analysis, that addresses problems such as changes in viewing angle, scale, and illumination, and achieves increased speed, real-time monitoring capability, and improved tracking accuracy.

AI Technical Summary

Problems solved by technology

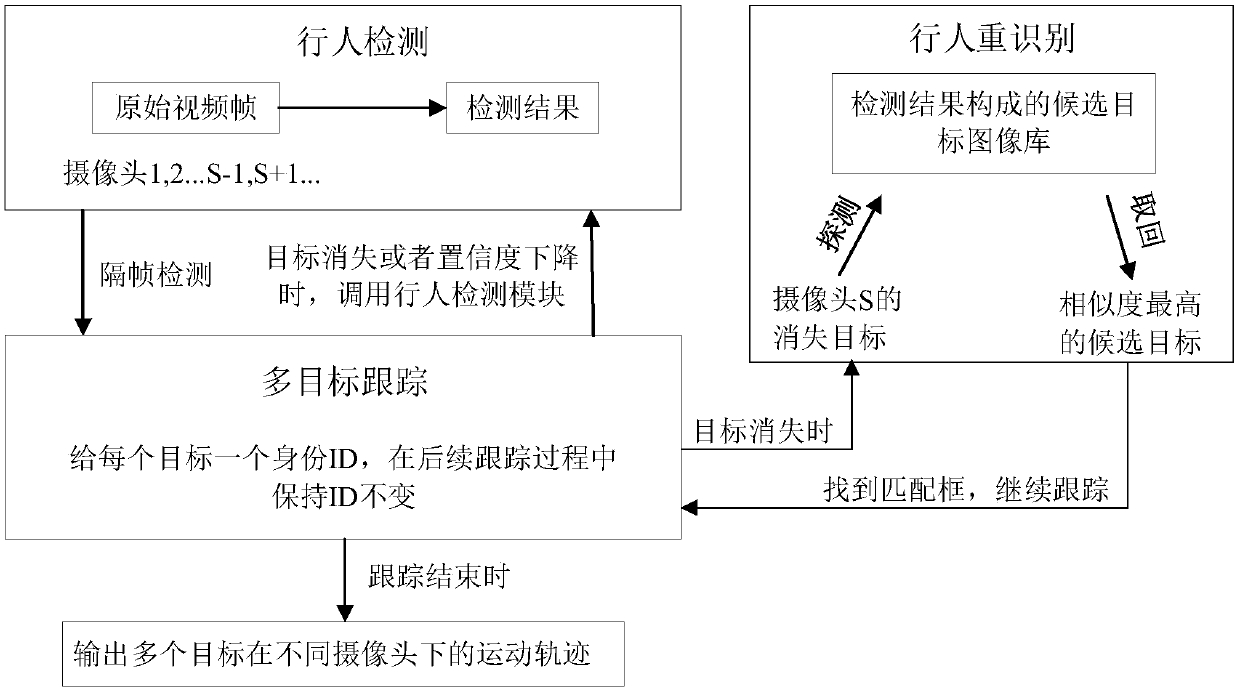

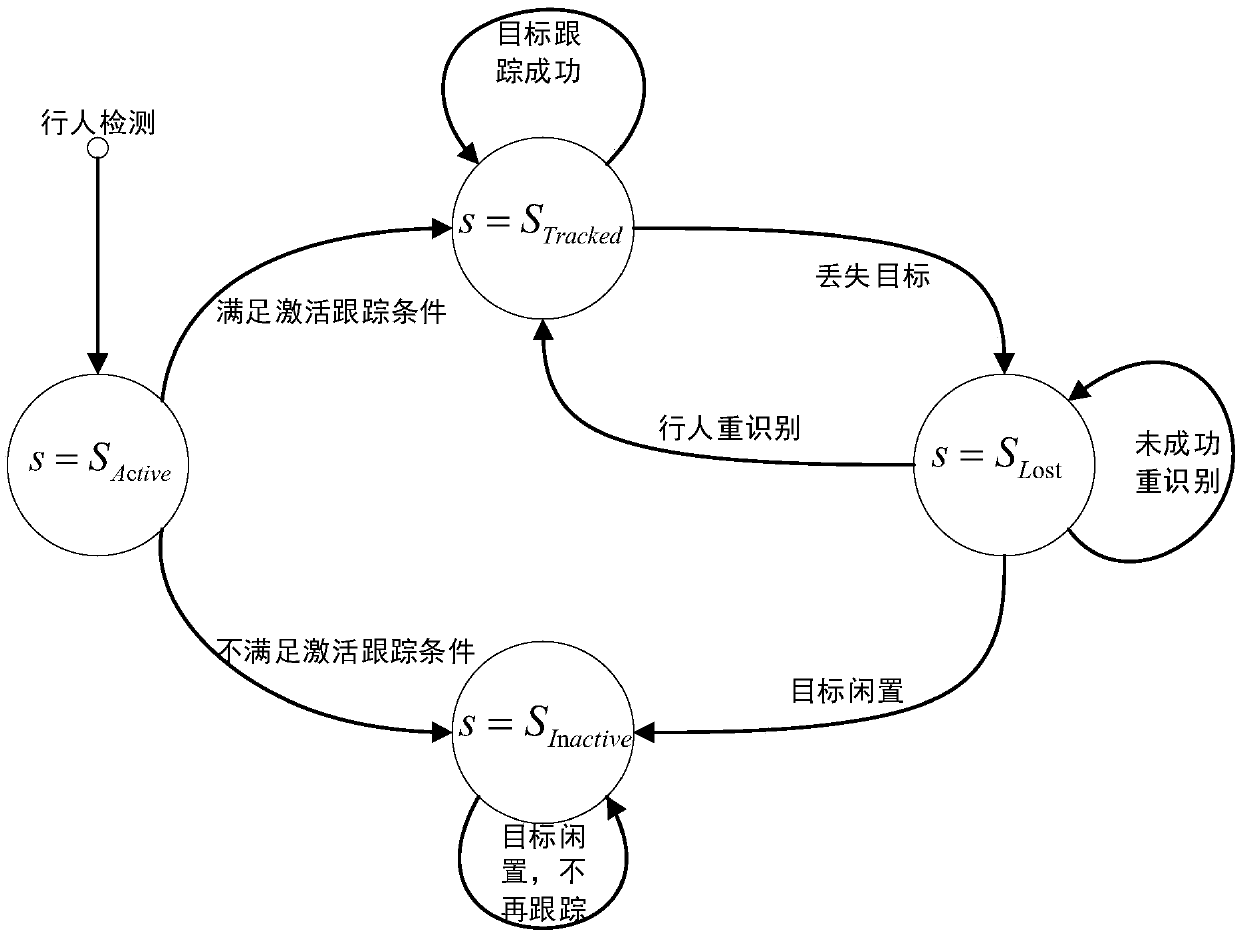

Method used

Embodiment

[0092] The embodiment specifically comprises the following steps:

[0093] Step S41: assume that, for a disappeared target, N-1 candidate images are obtained through pedestrian detection. The input of the pedestrian re-identification module is then one image of the disappeared target passed in by the target tracking module and N-1 candidate images passed in by the pedestrian detection module. Each image first passes through the first (low-level) layer of the pedestrian detection network to obtain the shallow feature map. A saliency detection algorithm then extracts the salient region of the target to remove redundant background information, and the result is sent to the deeper convolutional layers; the output of the fifth (high-level) layer gives the deep feature map. To fuse the shallow feature map and the deep feature map, the deep feature map can be upsampled to the same size as the shallow feature map, and the two are then concatenated, so the number of channels is ...
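The fusion described in step S41 (upsample the deep feature map to the spatial size of the shallow one, then concatenate the two along the channel axis) can be illustrated with a minimal sketch. The sketch makes several assumptions that do not come from the patent text: nearest-neighbour interpolation is used because the source does not name an upsampling method, feature maps are plain nested lists in `[channel][row][col]` layout, and the function names `upsample_nearest` and `fuse` and the toy shapes are hypothetical.

```python
from typing import List

# Assumed layout: feature_map[channel][row][col]
FeatureMap = List[List[List[float]]]


def upsample_nearest(fmap: FeatureMap, out_h: int, out_w: int) -> FeatureMap:
    """Resize every channel to (out_h, out_w) with nearest-neighbour sampling.

    Nearest-neighbour is an assumption; the source does not specify the method.
    """
    in_h, in_w = len(fmap[0]), len(fmap[0][0])
    return [
        [
            [channel[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
            for r in range(out_h)
        ]
        for channel in fmap
    ]


def fuse(shallow: FeatureMap, deep: FeatureMap) -> FeatureMap:
    """Upsample `deep` to the spatial size of `shallow`, then stack channels."""
    out_h, out_w = len(shallow[0]), len(shallow[0][0])
    # Concatenating the channel lists is the pure-Python analogue of a
    # channel-axis concatenation in a tensor library.
    return shallow + upsample_nearest(deep, out_h, out_w)


# Toy shapes (hypothetical): 2 shallow channels at 4x4, 3 deep channels at 2x2.
shallow = [[[float(ch)] * 4 for _ in range(4)] for ch in range(2)]
deep = [
    [[10.0 * ch + 2 * r + c for c in range(2)] for r in range(2)]
    for ch in range(3)
]

fused = fuse(shallow, deep)
print(len(fused), len(fused[0]), len(fused[0][0]))  # channels, height, width
```

With these toy inputs the fused map keeps the 4x4 spatial size of the shallow map and has 2 + 3 = 5 channels, since concatenation adds the channel counts of its inputs. The channel counts of the actual network are not given in the visible text, so the numbers here are purely illustrative.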
