39 results for the patented technology "Enhanced feature information"

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

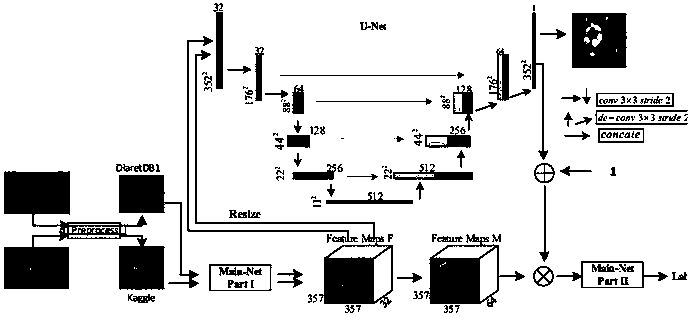

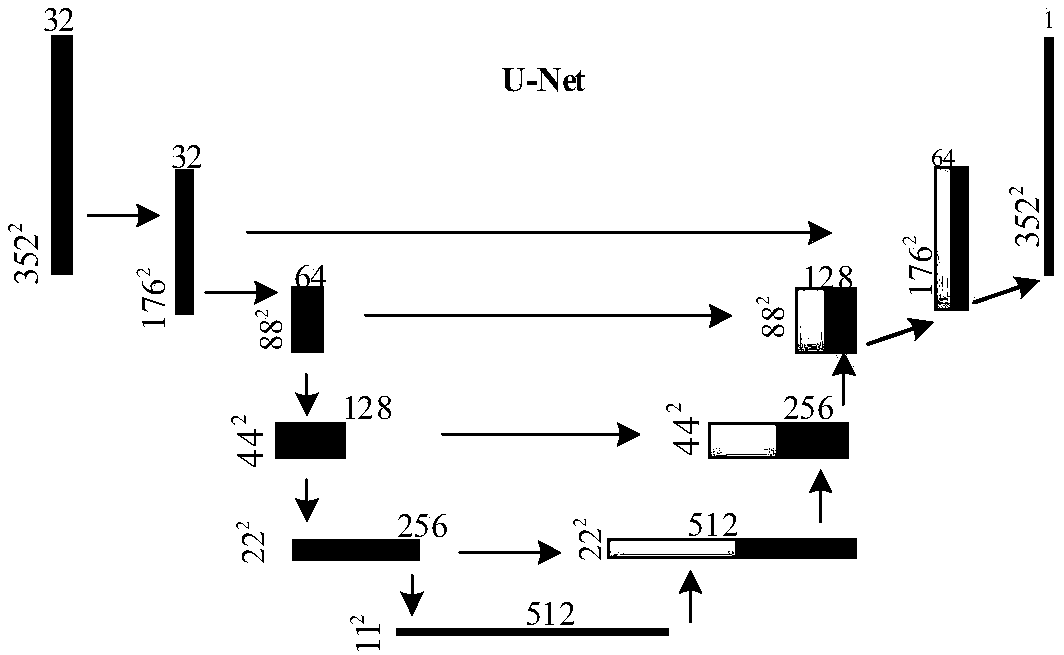

Attention mechanism-based deep learning diabetic retinopathy classification method

Active | CN108021916A | Enhanced feature information | Improving Lesion Diagnosis Results | Image enhancement | Image analysis | Neural network | Diabetic retinopathy

The invention discloses an attention mechanism-based deep learning method for classifying diabetic retinopathy, comprising the following steps: a series of fundus images are selected as original data samples and subjected to normalization preprocessing; the preprocessed samples are cropped and divided into a training set and a test set; a main neural network undergoes parameter initialization and fine-tuning, and the training-set images are input into the main neural network for training to generate a feature map; the parameters of the main neural network are then fixed, the training-set images are used to train an attention network, and the lesion candidate region maps it outputs are normalized to obtain an attention map; the attention map is multiplied by the feature map to apply the attention mechanism, the result is input into the main neural network, training continues on the training set, and finally a diabetic retinopathy grading model is obtained. By introducing the attention mechanism and training it on a diabetic retinopathy lesion region data set, the method enhances the feature information of retinopathy regions while retaining the original network features.

Owner:NANJING UNIV OF AERONAUTICS & ASTRONAUTICS
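
The attention step described above (normalize the attention network's lesion map, then multiply it into the feature map) can be sketched in plain Python. This is a minimal illustration, not the patented implementation: min-max normalization to [0, 1] is an assumption, since the abstract only says the maps are "normalized", and both networks are omitted.

```python
def normalize_map(m):
    """Min-max normalize a 2-D map (list of rows) to the range [0, 1]."""
    flat = [v for row in m for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # Assumption: a constant map expresses no spatial preference, so weight every pixel equally.
        return [[1.0 for _ in row] for row in m]
    return [[(v - lo) / (hi - lo) for v in row] for row in m]


def apply_attention(feature_maps, lesion_map):
    """Multiply every channel of a C x H x W feature map by the normalized H x W attention map."""
    attention = normalize_map(lesion_map)
    return [
        [[f * a for f, a in zip(f_row, a_row)] for f_row, a_row in zip(channel, attention)]
        for channel in feature_maps
    ]


features = [[[1.0, 2.0], [3.0, 4.0]]]  # one channel, 2 x 2
lesion = [[0.0, 5.0], [10.0, 10.0]]    # raw attention-network output (illustrative values)
print(apply_attention(features, lesion))  # [[[0.0, 1.0], [3.0, 4.0]]]
```

Low-scoring background pixels are suppressed while lesion pixels keep their original feature values, which matches the stated goal of enhancing lesion features without discarding the original ones.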

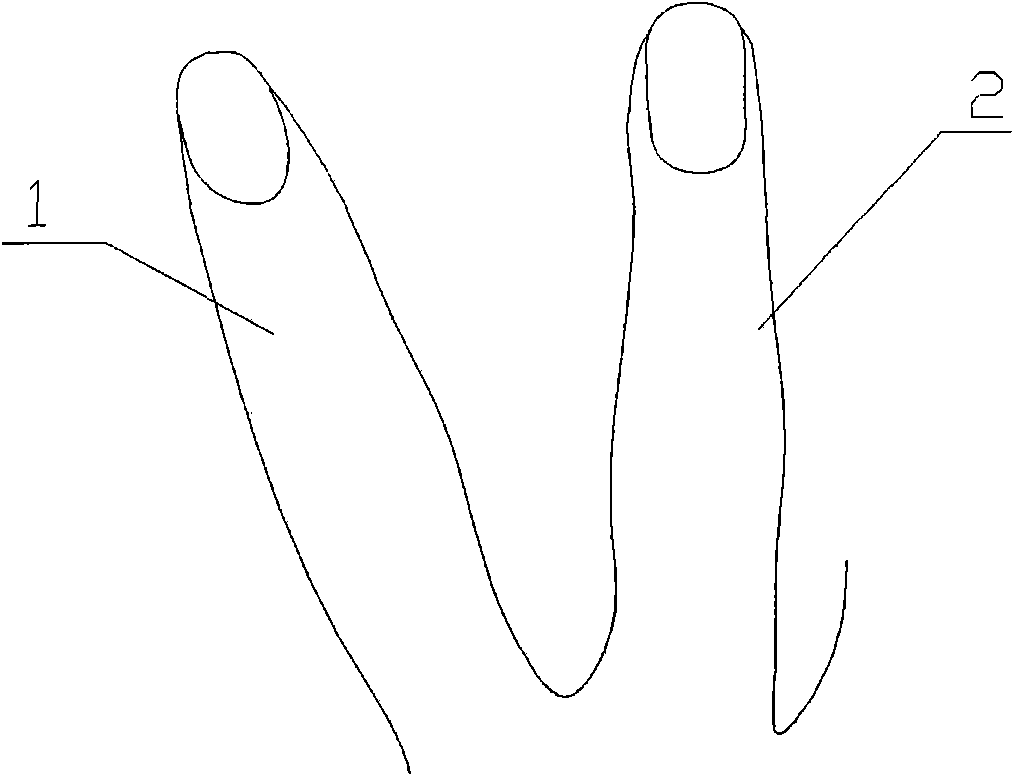

Vein feature extraction method and method for carrying out identity authentication by utilizing double finger veins and finger-shape features

Inactive | CN102043961A | Low selectivity | Reduce distortion | Character and pattern recognition | Vein | Rectangular coordinates

The invention relates to a method for identity authentication using the vein features and finger-shape features of two fingers, comprising the following steps: first, registering and storing the vein features and finger-shape feature information of two fingers of a user; then collecting, extracting, and authenticating the vein features and finger-shape feature information of two fingers of the person to be authenticated, comparing the extracted features with the stored features, and performing decision-level fusion. During collection, images of the hand shape and finger veins are captured, a rectangular coordinate system is established with the intersection point between the two fingers as its origin, and a region of interest (ROI) is determined; finally, the vein features and finger-shape features are extracted separately, compared with the stored information, and fused at the decision level. In the invention, both vein and finger-shape information are extracted from a single image and then fused; introducing a coordinate system whose origin is the intersection point of the two fingers improves positioning precision and makes the system convenient to use, and the dual-feature fusion effectively improves system performance.

Owner:BEIJING JIAOTONG UNIV
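
Two steps from the abstract above can be illustrated with a small sketch: re-expressing pixel coordinates in a frame whose origin is the inter-finger intersection point, and fusing the vein and finger-shape match decisions. The similarity scores, thresholds, and the AND/OR fusion rules are assumptions for illustration; the abstract does not state which decision-level rule is used.

```python
def to_finger_frame(points, origin):
    """Translate (x, y) pixel coordinates so the inter-finger intersection point becomes (0, 0)."""
    ox, oy = origin
    return [(x - ox, y - oy) for x, y in points]


def fuse_decisions(vein_score, shape_score, vein_threshold, shape_threshold, rule="and"):
    """Decision-level fusion: each matcher votes accept/reject, then the votes are combined.

    Scores are assumed to be similarities (higher means closer to the enrolled template).
    """
    vein_ok = vein_score >= vein_threshold
    shape_ok = shape_score >= shape_threshold
    if rule == "and":  # stricter: both modalities must accept
        return vein_ok and shape_ok
    if rule == "or":   # more lenient: either modality may accept
        return vein_ok or shape_ok
    raise ValueError(f"unknown fusion rule: {rule!r}")


print(to_finger_frame([(120, 80), (100, 60)], origin=(100, 60)))  # [(20, 20), (0, 0)]
print(fuse_decisions(0.91, 0.42, 0.85, 0.50, rule="and"))  # False
print(fuse_decisions(0.91, 0.42, 0.85, 0.50, rule="or"))   # True
```

Anchoring the coordinates to a hand landmark rather than to the image corner makes the ROI position insensitive to where the hand lands in the camera frame.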

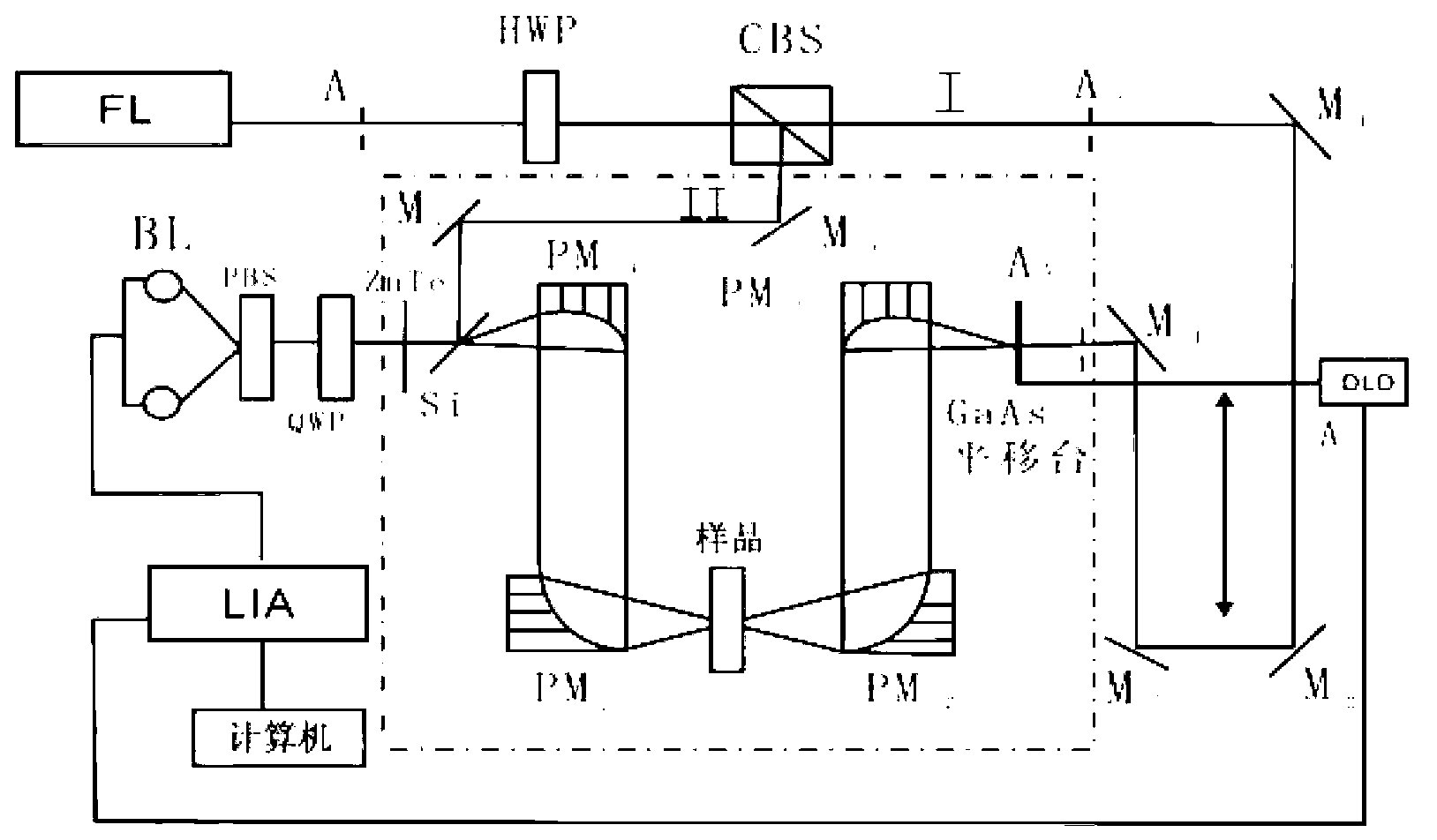

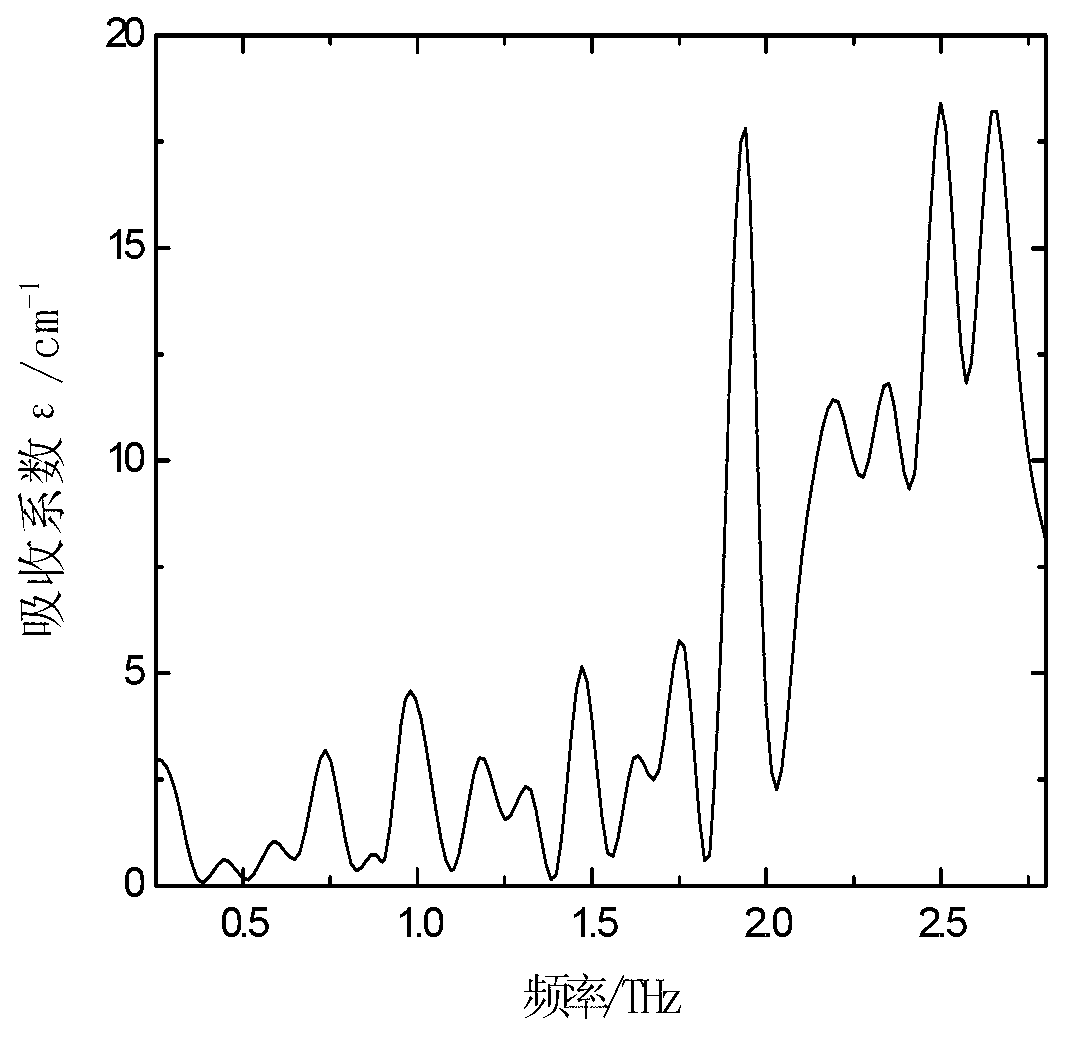

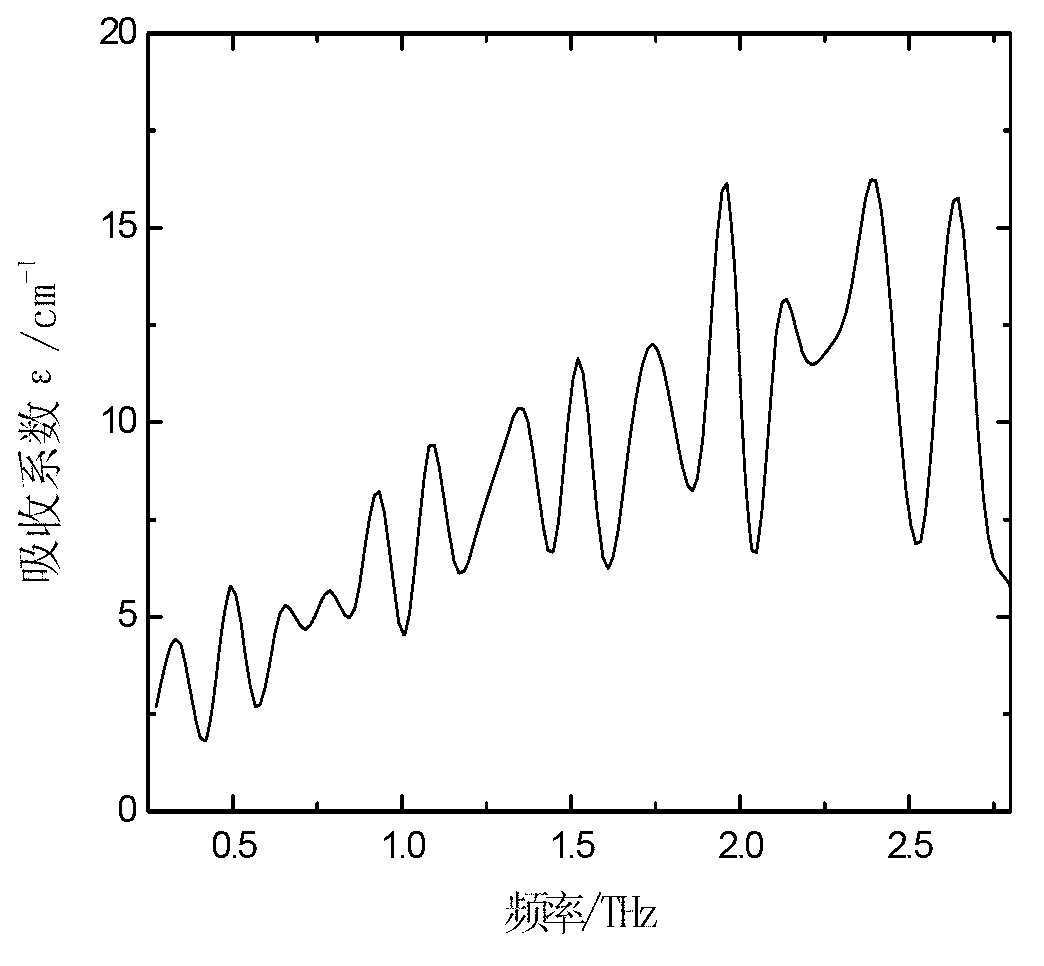

Method for identifying bamboo hemp fiber by using terahertz time-domain spectroscopy technique

Inactive | CN103308473A | Accurate identification | Small amount of sample | Color/spectral properties measurements | Circular disc | Refractive index

The invention relates to the field of textile materials and provides a method for identifying bamboo hemp fiber using terahertz time-domain spectroscopy. The method comprises the following steps: converting each known fiber into powder, mixing it with polyethylene powder, and pressing the mixture into a disc to serve as a reference sample; testing each reference sample with a transmission-type terahertz time-domain spectroscopy device to obtain its terahertz pulse time-domain waveform; calculating the absorption coefficient and refractive index of each reference sample and plotting the absorption spectrum and refraction spectrum of each fiber; obtaining the absorption coefficient and refractive index of the fiber to be identified; and determining its type from its correspondence with the absorption and refraction spectra of the reference samples. The method requires only a small amount of sample, sample preparation is simple and convenient, the test time is short, and no chemical pollutants are produced during testing; the absorption and refraction spectra of a sample in the terahertz band are obtained simultaneously in a single test, which increases the feature information available for each sample and improves the reliability of the test result.

Owner:ZHEJIANG SCI-TECH UNIV
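
The optical constants mentioned above are commonly extracted with the standard thick-sample, normal-incidence approximation: with amplitude ratio ρ(ω) = |E_sam/E_ref|, phase delay φ(ω) of the sample relative to the reference, and sample thickness d, n(ω) = 1 + cφ(ω)/(ωd) and α(ω) = (2/d) ln[4n(ω) / (ρ(ω)(n(ω) + 1)²)]. The sketch below applies these formulas at one frequency and then identifies an unknown fiber by nearest match against reference spectra. It assumes these textbook formulas and a weighted least-squares matching rule; the abstract specifies neither, and the contribution of the polyethylene matrix is ignored.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def optical_constants(freq_hz, phase_diff_rad, amp_ratio, thickness_m):
    """Return (refractive index, absorption coefficient in 1/cm) at one frequency.

    Thick-sample, normal-incidence approximation (an assumption, see text):
    phase_diff_rad is the unwrapped phase delay of the sample relative to the reference,
    amp_ratio is |E_sample / E_reference| at that frequency.
    """
    omega = 2 * math.pi * freq_hz
    n = 1 + C * phase_diff_rad / (omega * thickness_m)
    alpha_per_m = (2 / thickness_m) * math.log(4 * n / (amp_ratio * (n + 1) ** 2))
    return n, alpha_per_m / 100.0  # convert 1/m to 1/cm


def identify(sample, references, alpha_weight=0.01):
    """Pick the reference whose (n, alpha) spectrum is closest in weighted least squares.

    sample and each reference are lists of (n, alpha_per_cm) on the same frequency grid;
    alpha_weight (hypothetical) rescales alpha so it does not swamp the refractive index.
    """
    def distance(spec_a, spec_b):
        return sum((n1 - n2) ** 2 + alpha_weight * (a1 - a2) ** 2
                   for (n1, a1), (n2, a2) in zip(spec_a, spec_b))
    return min(references, key=lambda name: distance(sample, references[name]))


# Round trip: the phase delay a 1 mm sample with n = 1.5 would produce at 1 THz.
f, d = 1e12, 1e-3
phase = (1.5 - 1) * 2 * math.pi * f * d / C
n, alpha = optical_constants(f, phase, 4 * 1.5 / 2.5 ** 2, d)  # amp_ratio = Fresnel loss only
print(round(n, 6))  # 1.5

# Hypothetical reference spectra at two frequencies, not measured data.
refs = {"reference_A": [(1.50, 10.0), (1.52, 14.0)], "reference_B": [(1.62, 25.0), (1.65, 31.0)]}
print(identify([(1.51, 11.0), (1.53, 15.0)], refs))  # reference_A
```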

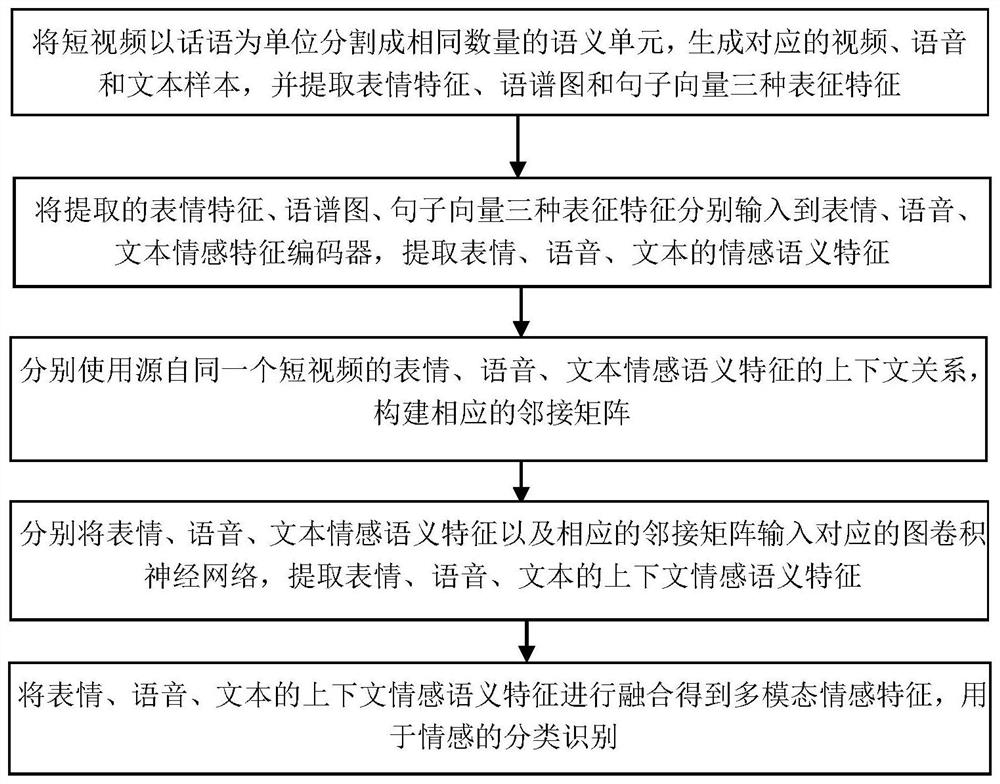

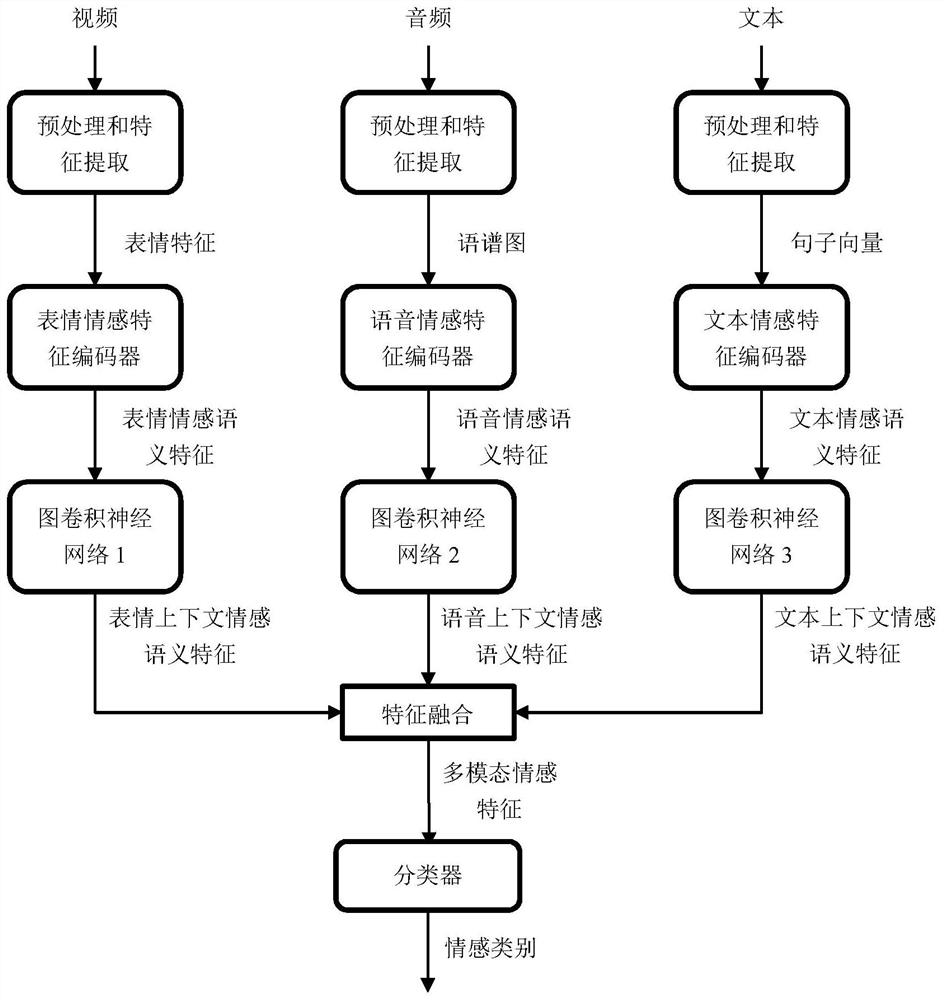

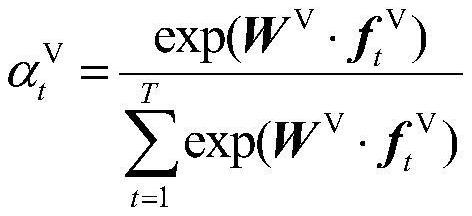

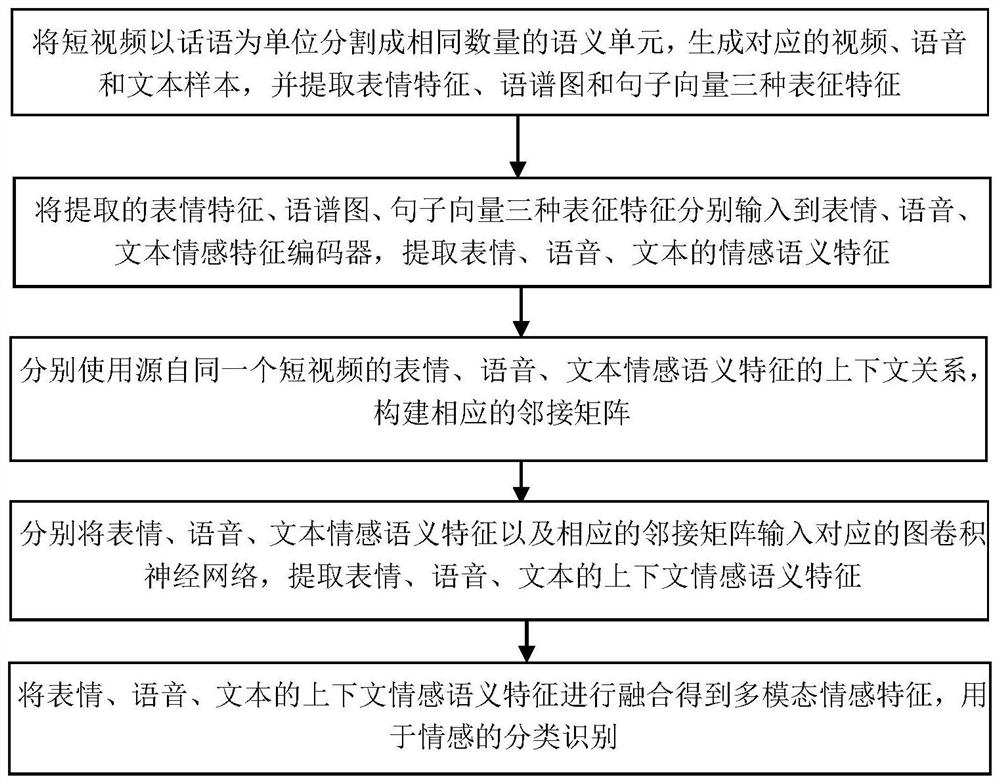

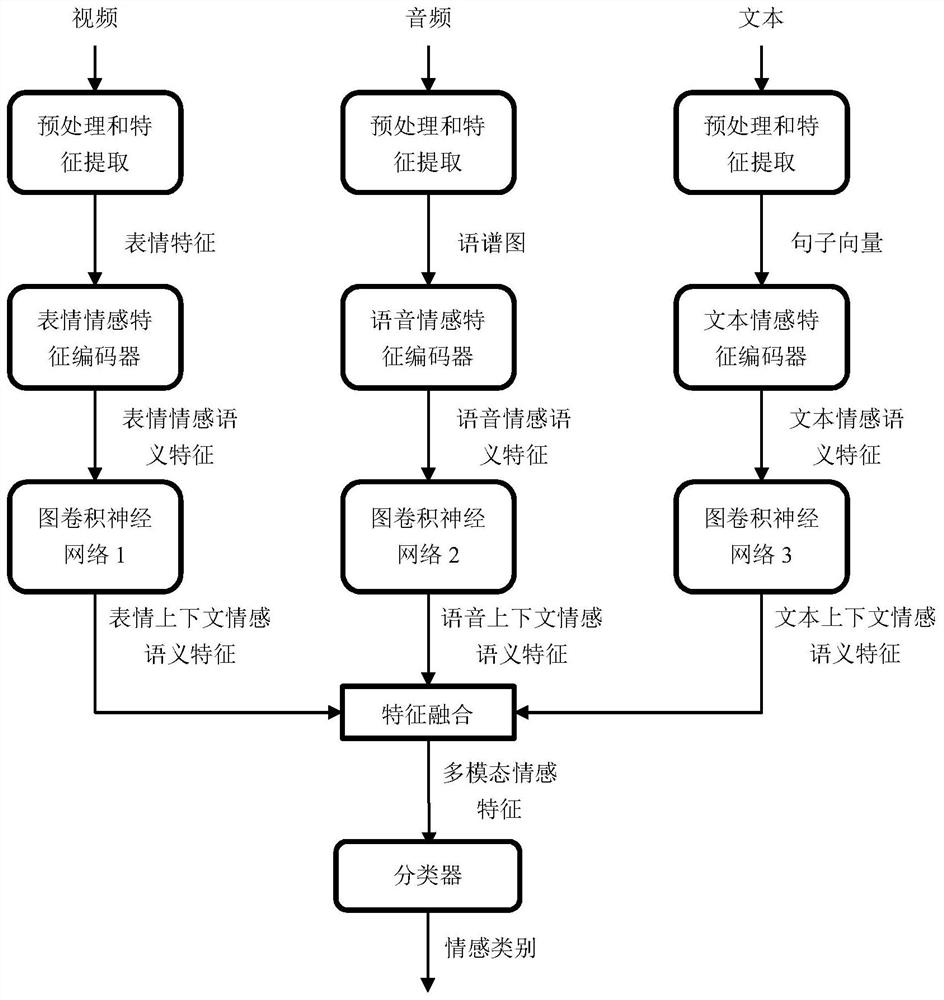

Sentiment classification method and system based on multi-modal context semantic features

Active | CN112818861A | Rich Modal Information | Fully acquire emotional semantic features | Character and pattern recognition | Neural architectures | Emotion classification | Semantic feature

The invention discloses a sentiment classification method and system based on multi-modal context semantic features. The method comprises the following steps: segmenting a short video into semantic units, one per utterance, so that the same number of video, voice, and text samples is generated, and extracting three representation features from them, namely an expression feature, a spectrogram, and a sentence vector; inputting these three features into expression, voice, and text emotion feature encoders respectively to extract the corresponding emotion semantic features; constructing adjacency matrices from the context relationships among the emotion semantic features of the expressions, voices, and texts; and inputting the expression, voice, and text emotion semantic features together with their adjacency matrices into corresponding graph convolutional neural networks to extract context emotion semantic features, which are fused into multi-modal emotion features for emotion classification and recognition. By using graph convolutional neural networks, the method makes better use of the context relationships among emotion semantic features and effectively improves the accuracy of emotion classification.

Owner:NANJING UNIV OF POSTS & TELECOMM
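
The graph step above can be sketched with a window-based context adjacency matrix and one graph-convolution layer in the common normalized form H' = ReLU(D^(-1/2) (A + I) D^(-1/2) H W). The window rule, this normalization, and fusion by concatenation are assumptions; the abstract only says that adjacency matrices are built from context relationships and that the per-modality outputs are fused.

```python
import math


def context_adjacency(num_utterances, window=1):
    """Connect each utterance to neighbours at most `window` positions away (assumed rule)."""
    return [[1.0 if i != j and abs(i - j) <= window else 0.0
             for j in range(num_utterances)]
            for i in range(num_utterances)]


def matmul(a, b):
    """Plain-Python matrix product of nested lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def gcn_layer(adj, h, w):
    """One graph-convolution layer: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    a_norm = [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]
    out = matmul(matmul(a_norm, h), w)
    return [[max(0.0, v) for v in row] for row in out]


def fuse_modalities(*per_modality):
    """Concatenate the per-utterance context features of each modality (assumed fusion)."""
    return [sum(rows, []) for rows in zip(*per_modality)]


adj = context_adjacency(3)  # three utterances in one video
text = gcn_layer(adj, [[1.0], [1.0], [1.0]], [[1.0]])
voice = gcn_layer(adj, [[0.5], [0.0], [2.0]], [[1.0]])
print(fuse_modalities(text, voice))
```

Each utterance's output mixes in its neighbours' features, which is how the graph convolution injects conversational context before the modalities are combined.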

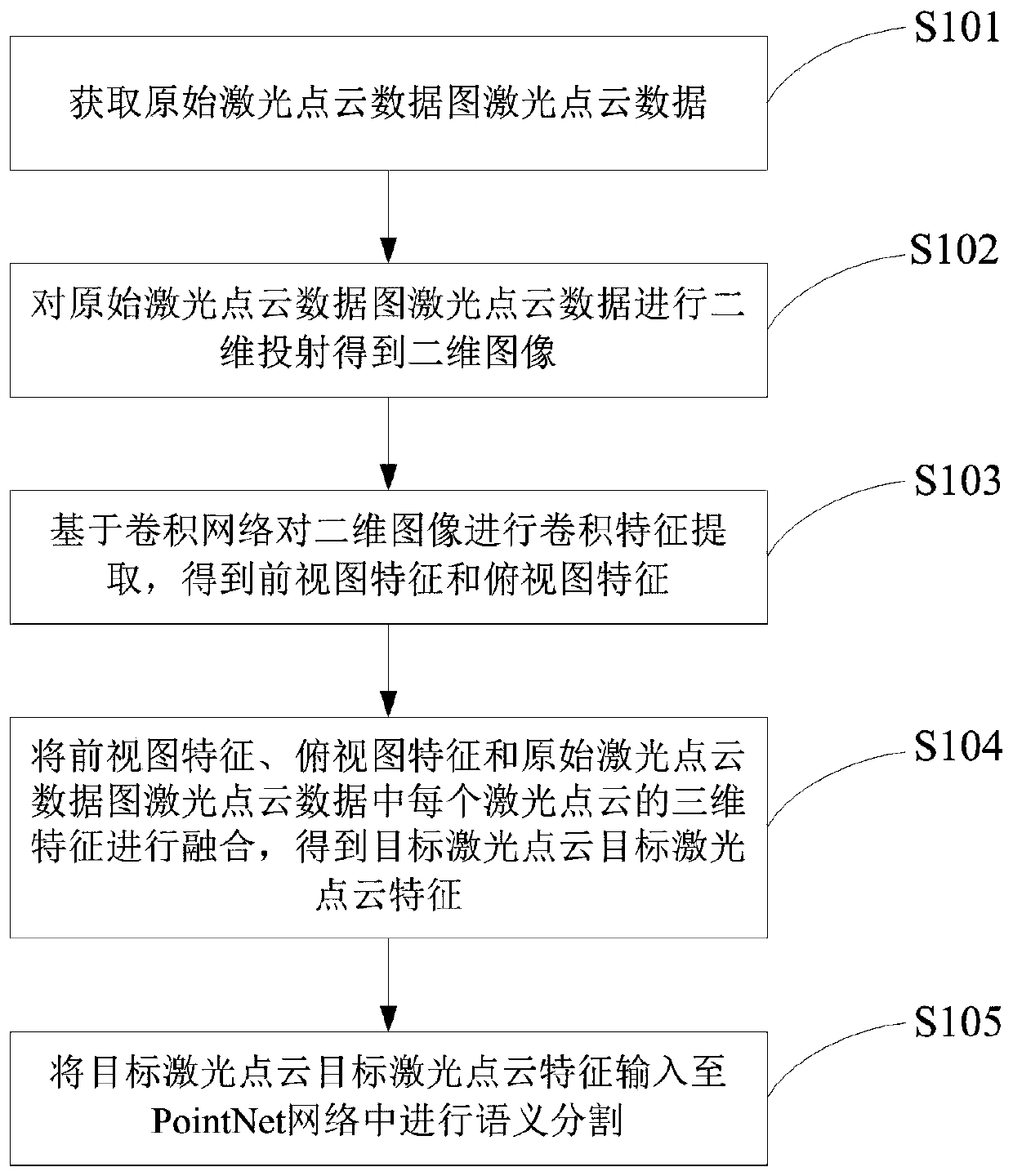

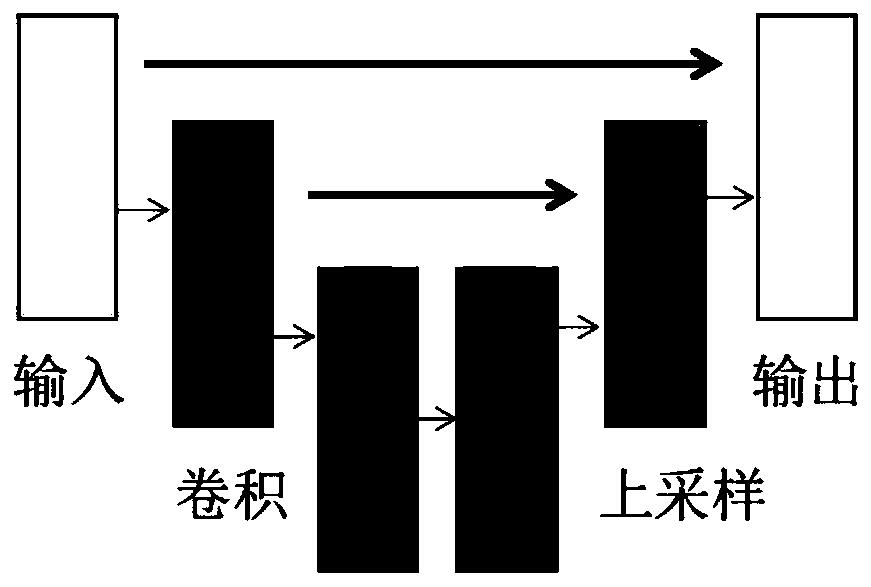

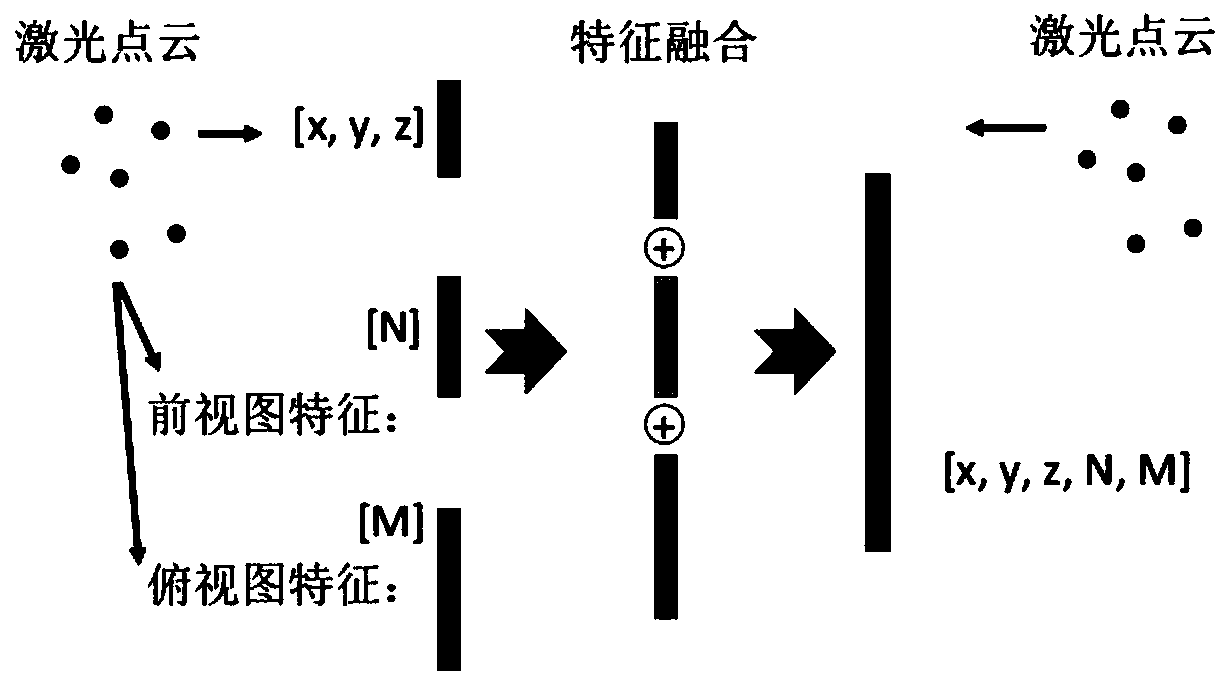

Laser point cloud semantic segmentation method and device

Active | CN111476242A | Enhanced feature information | Improve deep learning capabilities | Image enhancement | Image analysis | Feature extraction | Point cloud

The invention discloses a laser point cloud semantic segmentation method and device. The method comprises the following steps: projecting the acquired original laser point cloud data into two dimensions to obtain a two-dimensional image; performing convolutional feature extraction on the two-dimensional image with a convolutional network to obtain a front-view feature and a top-view feature; and fusing the front-view feature, the top-view feature, and the three-dimensional feature of the points in the original laser point cloud data to obtain a target laser point cloud feature, which is input into a PointNet network for semantic segmentation. The N-channel front-view feature and the M-channel top-view feature obtained after convolutional feature extraction enlarge the receptive field of each pixel, and fusing them with the three-dimensional feature of each laser point in the original data enriches the feature information of each individual point.

Owner:BEIJING JINGWEI HIRAIN TECH CO INC
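
The two-dimensional projections mentioned above can be sketched as a spherical projection that turns the point cloud into a front-view range image, plus a top-view (bird's-eye) grid. The image size, vertical field of view, cell size, and the use of range and maximum height as pixel values are illustrative assumptions; the abstract does not give them.

```python
import math


def front_view(points, height=64, width=512, fov_up_deg=3.0, fov_down_deg=-25.0):
    """Spherical projection of (x, y, z) points into a range image stored as {(row, col): range}."""
    fov_down = math.radians(fov_down_deg)
    fov = math.radians(fov_up_deg) - fov_down
    image = {}
    for x, y, z in points:
        r = math.sqrt(x * x + y * y + z * z)
        if r == 0.0:
            continue  # a point at the sensor origin has no direction
        yaw, pitch = math.atan2(y, x), math.asin(z / r)
        col = min(width - 1, max(0, int(0.5 * (1.0 - yaw / math.pi) * width)))
        row = min(height - 1, max(0, int((1.0 - (pitch - fov_down) / fov) * height)))
        # Keep the nearest point when several points fall into the same pixel.
        if (row, col) not in image or r < image[(row, col)]:
            image[(row, col)] = r
    return image


def top_view(points, cell=0.5, extent=50.0):
    """Bird's-eye grid over [-extent, extent)^2 storing the maximum height z per cell."""
    grid = {}
    for x, y, z in points:
        if abs(x) >= extent or abs(y) >= extent:
            continue  # outside the mapped area
        key = (int((y + extent) / cell), int((x + extent) / cell))
        grid[key] = max(grid.get(key, z), z)
    return grid


print(front_view([(10.0, 0.0, 0.0)]))  # {(6, 256): 10.0}
print(top_view([(10.0, 0.0, 1.5), (10.1, 0.2, 2.0)]))  # {(100, 120): 2.0}
```

Both views are dense 2-D grids, so ordinary convolutions can run on them before their features are gathered back to the individual points for PointNet.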

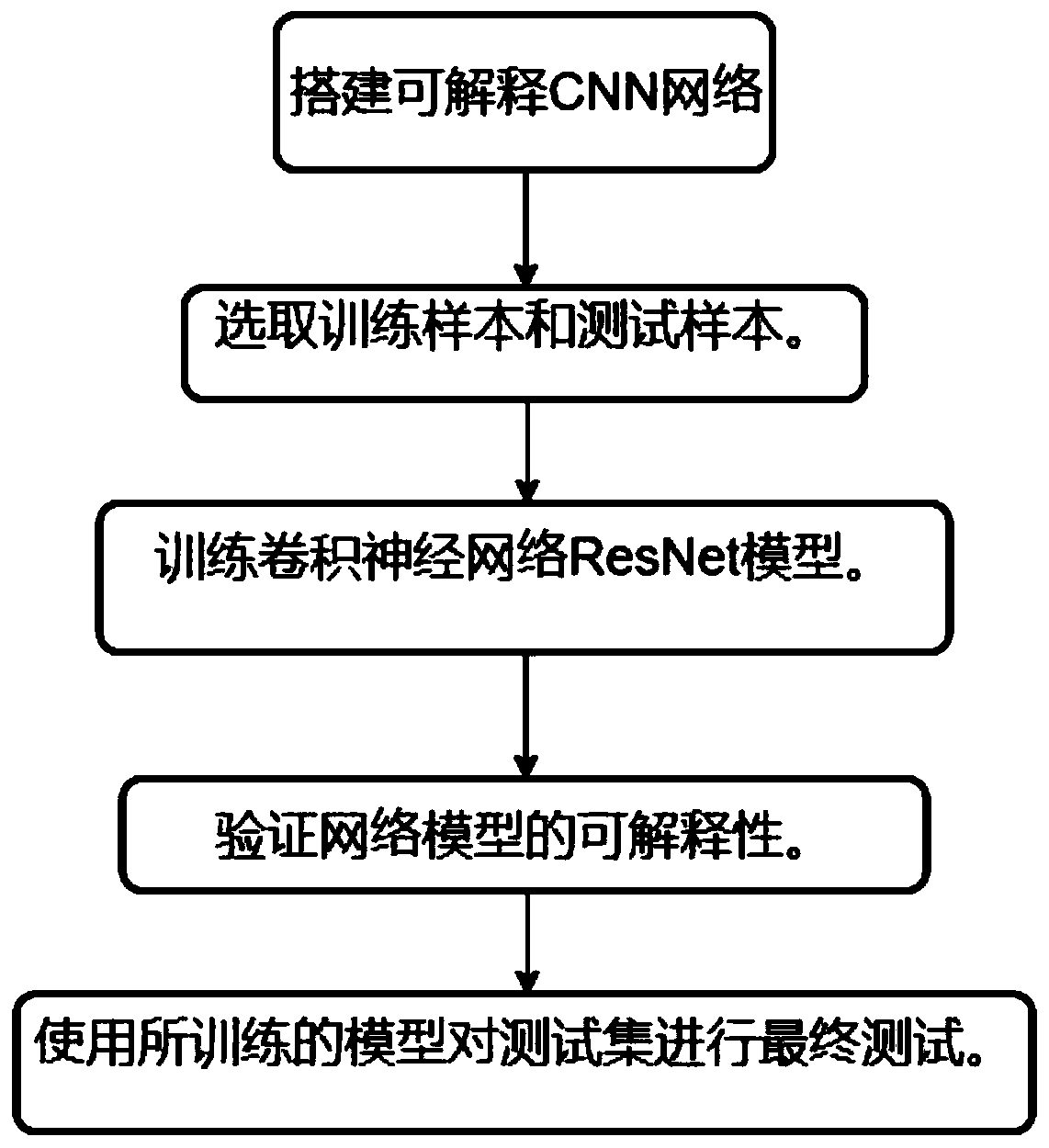

Interpretable CNN image classification model-based optical remote sensing image classification method

Pending | CN111339935A | Short time | Improve classification accuracy | Scene recognition | Neural architectures | Network model | Bioinformatics

The invention discloses an optical remote sensing image classification method based on an interpretable CNN image classification model. An interpretable CNN model based on ResNet is built, consisting of 51 basic convolutional layers arranged in six groups, three fully connected layers, and one Softmax layer. The method comprises the following steps: performing down-sampling with ResNet to obtain a feature map containing context information; and then modifying the ResNet model to make it interpretable, yielding a new interpretable CNN network based on ResNet. The ResNet model extracts features through multiple groups of convolution-pooling layers with residual modules and finally feeds them into the fully connected layers to classify the images. The method enhances the interpretability of existing deep learning models and further improves model performance.

Owner:XIDIAN UNIV

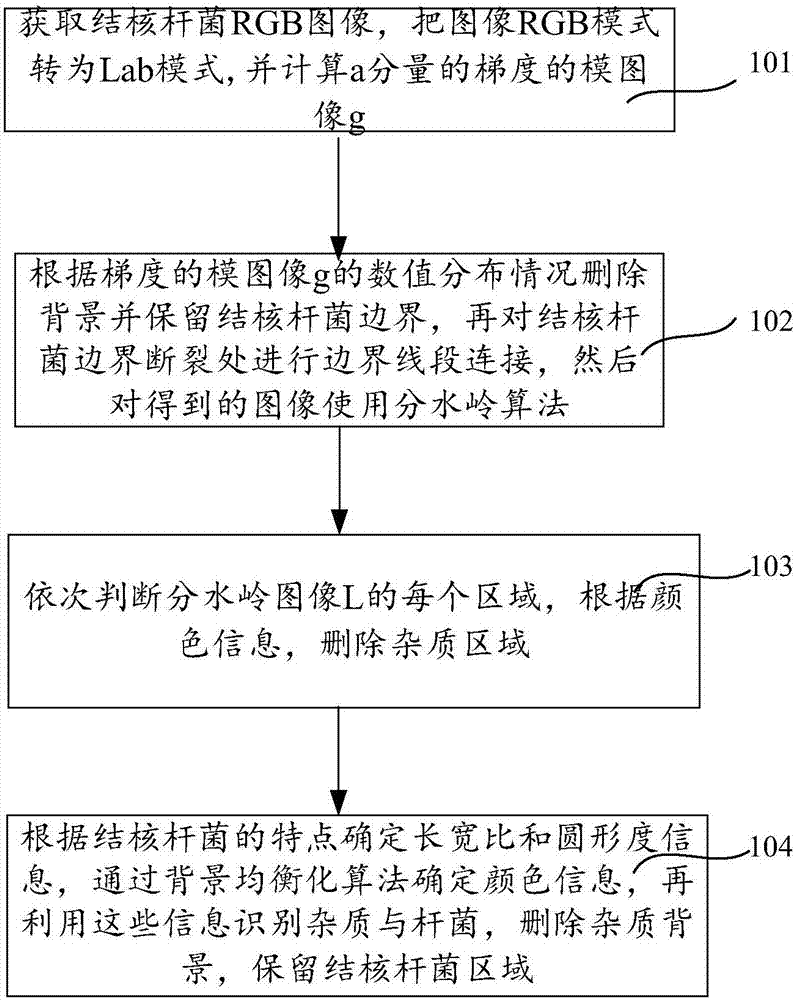

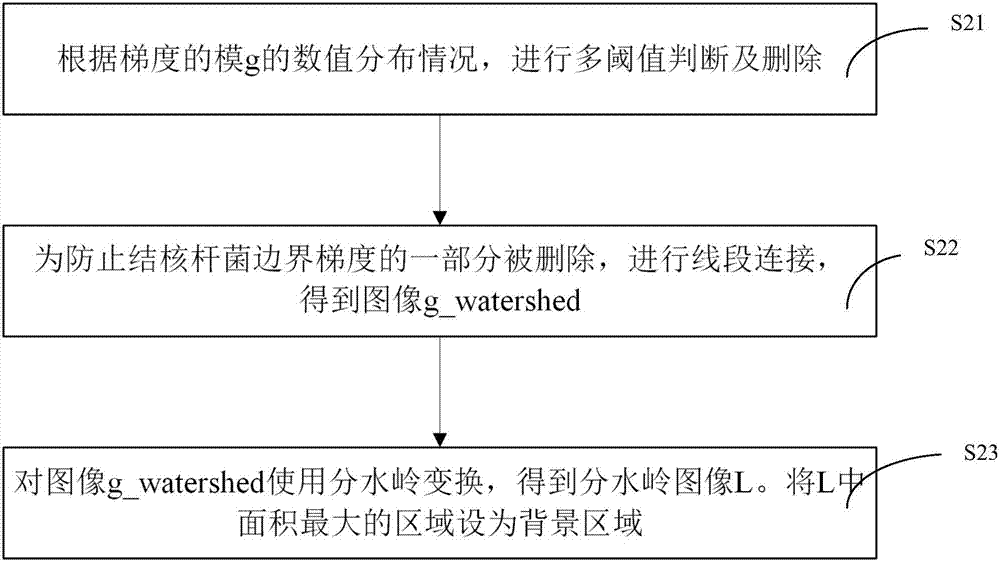

Mycobacterium tuberculosis image segmentation and identification method and apparatus based on an optimized watershed

Active | CN106960424A | Enhanced feature information | Highlight red features | Image enhancement | Image analysis | Pattern recognition | RGB image

The invention discloses a Mycobacterium tuberculosis image segmentation and identification method and apparatus based on an optimized watershed. The method comprises the following steps: acquiring an RGB image of Mycobacterium tuberculosis, converting it from RGB to Lab color space, and calculating the gradient magnitude image g of the a component; according to the value distribution of g, deleting the background while retaining the bacterial boundaries, connecting boundary line segments where the boundaries are broken, and applying the watershed algorithm to the resulting image to obtain a watershed image L; and examining each region of L in turn, deleting impurity regions and retaining the bacteria according to color information and bacterial shape information. The invention effectively improves the precision and speed of Mycobacterium tuberculosis classification and identification under microscopic imaging.

Owner:上海澜澈生物科技有限公司
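
The first two steps above (RGB to Lab conversion and the gradient magnitude image g of the a component) can be sketched in plain Python. The sketch assumes sRGB input with a D65 white point and uses a Sobel operator for the gradient; the abstract specifies neither, and the background removal, watershed, and region screening steps are not shown.

```python
import math

_XN, _YN = 0.95047, 1.0  # D65 reference white (X and Y; Z is not needed for a*)


def _linear(c):
    """Undo the sRGB gamma for one channel given in 0..255."""
    c /= 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    """CIE Lab companding function."""
    d = 6 / 29
    return t ** (1 / 3) if t > d ** 3 else t / (3 * d * d) + 4 / 29


def a_star(rgb):
    """a* (green-red axis) of an sRGB pixel; red-stained bacilli give large positive values."""
    r, g, b = (_linear(v) for v in rgb)
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    return 500.0 * (_f(x / _XN) - _f(y / _YN))


def gradient_magnitude(img):
    """Sobel gradient magnitude of a 2-D list; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    g = [[0.0] * w for _ in range(h)]
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            gx = (img[i - 1][j + 1] + 2 * img[i][j + 1] + img[i + 1][j + 1]
                  - img[i - 1][j - 1] - 2 * img[i][j - 1] - img[i + 1][j - 1])
            gy = (img[i + 1][j - 1] + 2 * img[i + 1][j] + img[i + 1][j + 1]
                  - img[i - 1][j - 1] - 2 * img[i - 1][j] - img[i - 1][j + 1])
            g[i][j] = math.hypot(gx, gy)
    return g


image = [[(200, 200, 200), (200, 200, 200), (220, 40, 60)]] * 3  # grey background, red edge
a_channel = [[a_star(p) for p in row] for row in image]
print(round(gradient_magnitude(a_channel)[1][1], 1))
```

Working on a* rather than brightness makes the gradient respond mainly to red-versus-neutral transitions, which is why the bacterial boundaries stand out against a grey background.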

Dynamic video tactile feature extraction and rendering method

Active | CN111291677A | Real-time tactile experience | Friendly | Image enhancement | Image analysis | Saliency map | Space time domain

The invention relates to a dynamic video tactile feature extraction and rendering method in the field of virtual reality and human-computer interaction. The method comprises the steps of decompressing a received video, preprocessing it, segmenting it into shots based on inter-frame color histogram features, extracting from every frame of each shot a saliency map that fuses spatial and temporal tactile saliency features, and performing pixel-level tactile rendering according to the saliency maps of the video frames. By extracting video frame saliency features that fuse spatio-temporal characteristics, the method divides the video content into salient and non-salient regions, and applies pixel-level tactile stimulation to each video frame through a one-to-one mapping between visual channels and tactile channels. Real-time tactile feedback is generated through a terminal, enriching the user's experience of watching the video. The method can be widely applied to video education, multimedia entertainment, and human-computer interaction.

Owner:JILIN UNIV
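
Shot segmentation by inter-frame color histograms, as described above, can be sketched as follows. The histogram size, the half-L1 distance, and the 0.5 threshold are assumptions for illustration; the saliency extraction and tactile rendering steps are not shown.

```python
def color_histogram(frame, bins=4):
    """Normalized joint RGB histogram of a frame given as a list of (r, g, b) pixels in 0..255."""
    step = 256 // bins
    hist = [0.0] * (bins ** 3)
    for r, g, b in frame:
        # Clamp the bin index in case `bins` does not divide 256 evenly.
        ri, gi, bi = (min(bins - 1, v // step) for v in (r, g, b))
        hist[(ri * bins + gi) * bins + bi] += 1.0
    return [count / len(frame) for count in hist]


def shot_boundaries(frames, threshold=0.5, bins=4):
    """Indices k where a new shot starts (histogram distance between frames k-1 and k > threshold)."""
    hists = [color_histogram(f, bins) for f in frames]
    cuts = []
    for k in range(1, len(hists)):
        # Half the L1 distance lies in [0, 1] for normalized histograms.
        diff = 0.5 * sum(abs(a - b) for a, b in zip(hists[k - 1], hists[k]))
        if diff > threshold:
            cuts.append(k)
    return cuts


def split_into_shots(frames, **kwargs):
    """Group consecutive frame indices into shots using the detected boundaries."""
    starts = [0] + shot_boundaries(frames, **kwargs)
    ends = starts[1:] + [len(frames)]
    return [list(range(s, e)) for s, e in zip(starts, ends)]


red = [(250, 10, 10)] * 16
blue = [(10, 10, 250)] * 16
print(split_into_shots([red, red, blue, blue, blue]))  # [[0, 1], [2, 3, 4]]
```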

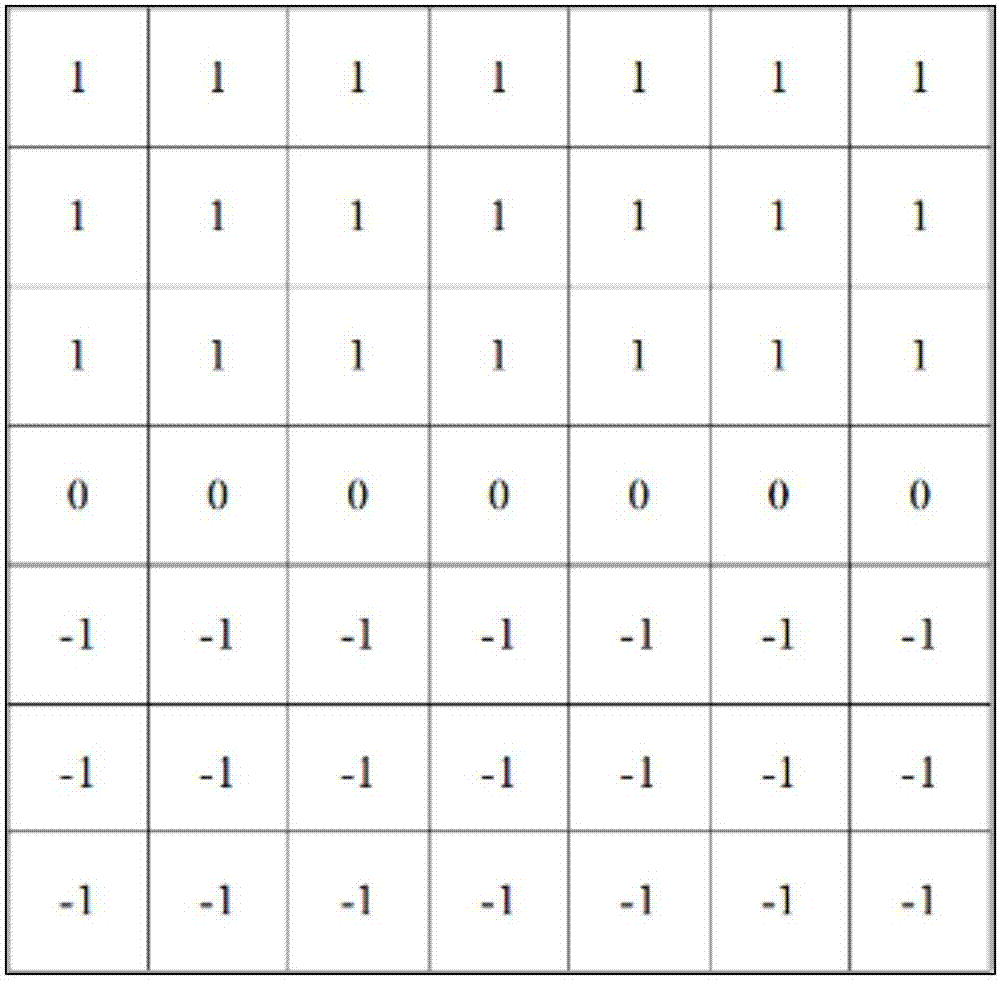

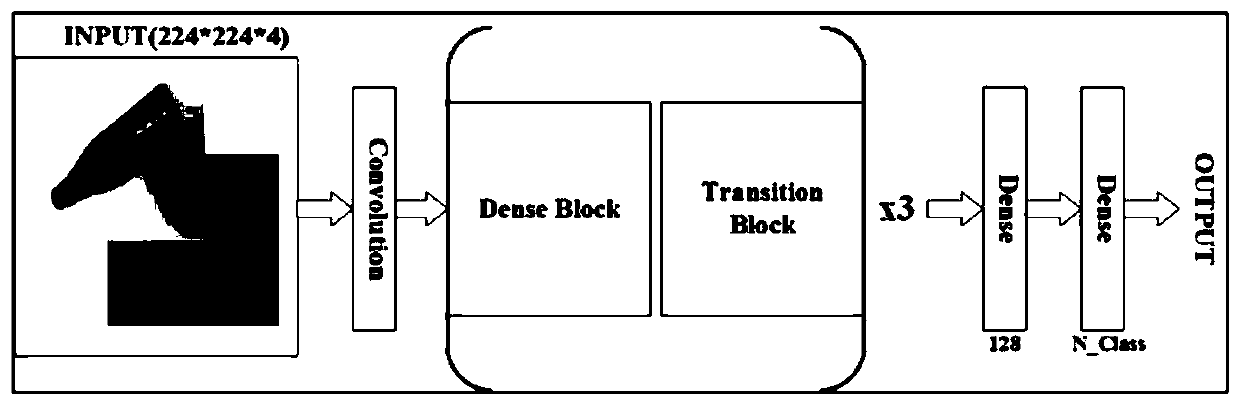

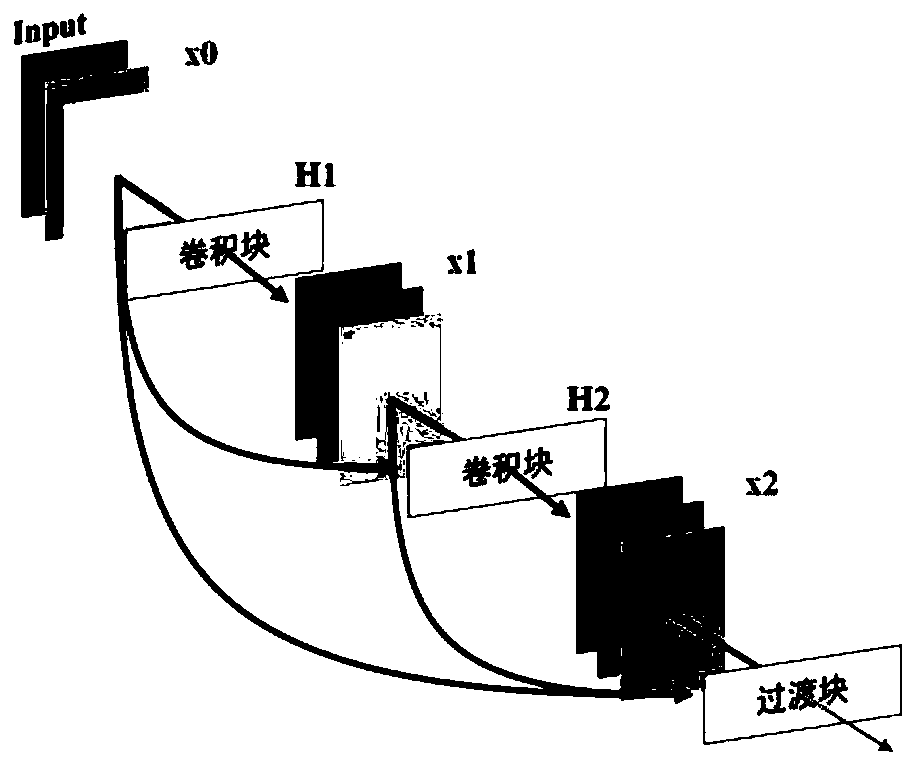

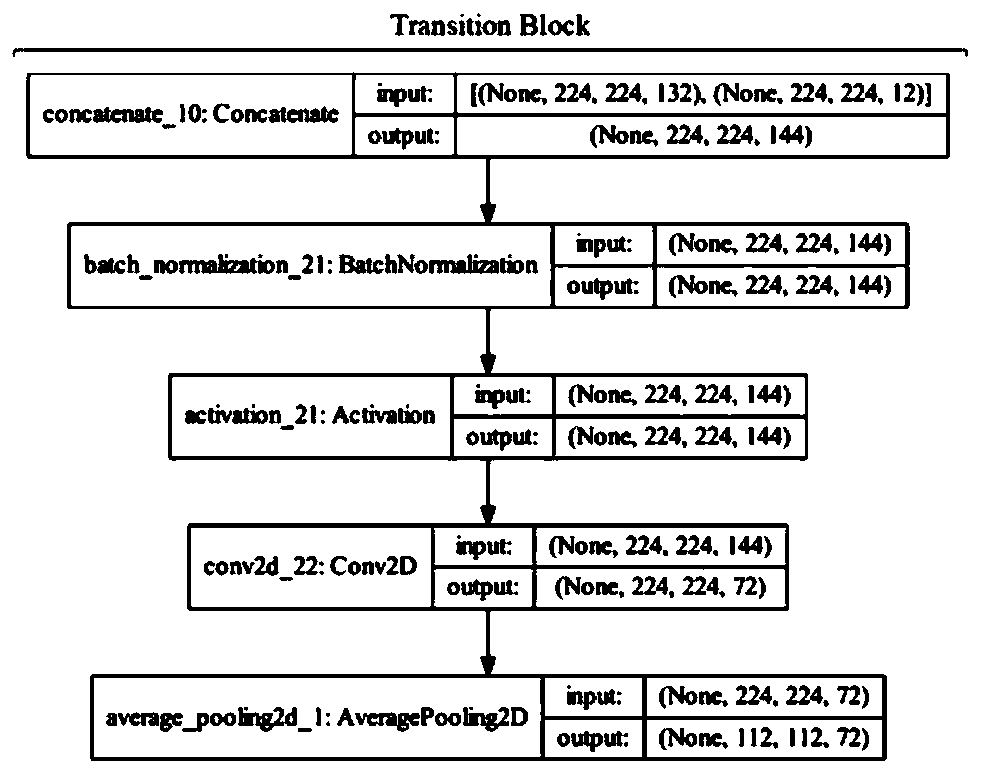

Low-overhead household garbage classification method based on dense convolutional network

Pending | CN111553426A | Guaranteed volume | Mitigate Vanishing Gradients | Geometric image transformation | Character and pattern recognition | Color image | Data set

The invention discloses a low-overhead household garbage classification method based on a dense convolutional network, comprising the steps of: (1) preprocessing the data; (2) building the dense convolutional network; (3) dividing the data set into a training set, a validation set, and a test set; and (4) selecting a suitable optimizer and loss function for training, setting the hyper-parameters, and using accuracy as the evaluation metric. In preprocessing, the three-channel color image and its edge detection image are fused into a single matrix that serves as the model input, enhancing the feature information; a dense convolutional network structure is constructed, a Dropout layer is added, and adaptive learning-rate adjustment and hyper-parameter tuning are used. The resulting model has sufficient feature extraction capability; the feature maps of the model are used as inputs to subsequent layers, which alleviates the vanishing-gradient problem of deep networks; and a good balance between low overhead and high accuracy is achieved, with an accuracy of 90.8% and a model file size of 5.08 M.

Owner:HOHAI UNIV
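
The input fusion described above (combining a three-channel color image with its edge detection image) can be sketched as building an H x W x 4 input. The BT.601 luma weights, the simple forward-difference edge detector, and stacking the edge map as a fourth channel are assumptions; the abstract says only that the two are combined by matrix fusion.

```python
def to_gray(rgb):
    """ITU-R BT.601 luma of an H x W image of (r, g, b) tuples."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for r, g, b in row] for row in rgb]


def edge_map(gray):
    """Edge strength |dI/dx| + |dI/dy| from forward differences (0 beyond the last row/column)."""
    h, w = len(gray), len(gray[0])
    edges = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            dx = gray[i][j + 1] - gray[i][j] if j + 1 < w else 0.0
            dy = gray[i + 1][j] - gray[i][j] if i + 1 < h else 0.0
            edges[i][j] = abs(dx) + abs(dy)
    return edges


def fuse_rgb_edge(rgb):
    """Append the edge map as a fourth channel: each pixel becomes (r, g, b, edge)."""
    edges = edge_map(to_gray(rgb))
    return [[(r, g, b, e) for (r, g, b), e in zip(row, e_row)] for row, e_row in zip(rgb, edges)]


image = [[(0, 0, 0), (255, 255, 255)],
         [(0, 0, 0), (255, 255, 255)]]
for row in fuse_rgb_edge(image):
    print(row)
```

The first convolution layer of the network would then simply take four input channels instead of three.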

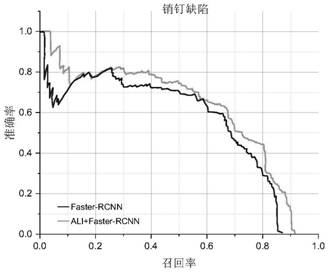

Distribution line pin defect detection method based on improved ALI and Faster-RCNN

Pending | CN114049305A | Avoid Probability Calculations | Enhance local texture | Image enhancement | Image analysis | Machine learning | Engineering

A distribution line pin defect detection method based on improved ALI and Faster-RCNN comprises the following steps: collecting pin defect images of a distribution line and manually annotating them to construct a training sample set; building two network structures, the first being an adversarial learning inference network composed of an inference network, a generative network, and a discriminator, and the second being a Faster-RCNN network; training the detection models on the training samples for a specified number of steps; and inputting an image to be detected into the trained adversarial learning inference network, which outputs a high-quality reconstructed pin image, and finally identifying defects with the trained Faster-RCNN network. The method enhances detail information such as the local textures and edges of distribution line pin images to improve image quality, and extracts accurate features in combination with a target detection algorithm to realize intelligent detection of pin defects.

Owner:CHINA THREE GORGES UNIV

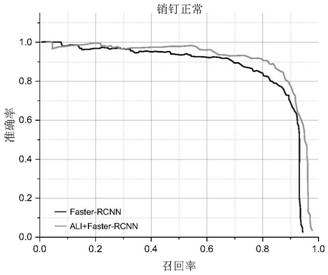

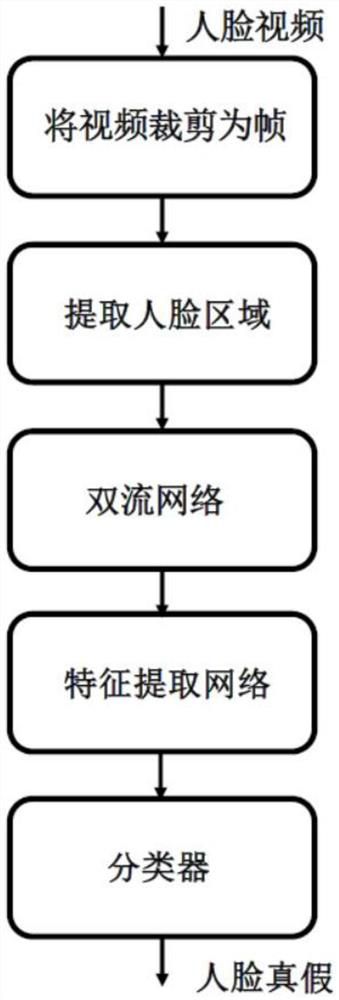

Two-stream face forgery detection method based on Swin Transformer

Pending | CN114694220A | Improve generalization ability | Enhanced feature information | Character and pattern recognition | Neural architectures | Data set | Feature extraction

The invention relates to a two-stream face forgery detection method based on Swin Transformer, which uses deep learning to detect forged face images. A deep learning network model is built as a whole and divided into three parts: a two-stream network, a feature extraction network, and a classifier. Because all publicly available face forgery data sets currently consist of videos, the videos are first clipped into frames using OpenCV. Since each frame contains a large amount of background information, the face region is then cropped out with a face localization algorithm. The resulting face region images are input into the two-stream network and the feature extraction network to extract and learn features, and the learned features are finally input into the classifier to determine whether the face image is real or fake. The method addresses a limitation of existing face forgery detection schemes, namely their weak generalization ability, and the two-stream framework also improves the model's robustness to compression, making the method better suited to the quality of face videos commonly encountered in daily life.

Owner:SHANGHAI UNIV

Handheld three-dimensional scanner-based spatial registration method for surgical navigation

Inactive | CN108175500A | Avoid registration traps | Reduce operational complexity | Surgical navigation systems | Point cloud | Medical equipment

The invention belongs to the field of medical equipment, and particularly relates to a spatial registration method for surgical navigation based on a handheld three-dimensional scanner. The method first uses facial feature point matching to preliminarily register the spatial point cloud of the subject, acquired by the handheld three-dimensional scanner, with the image-space point cloud obtained from medical images; starting from this preliminary result, an iterative point cloud registration method then completes the registration between the subject's physical space and the image space for surgical navigation. Implementation results show that the method registers quickly with stable precision, meets the requirements of surgical navigation systems, and improves their registration accuracy.

Owner:FUDAN UNIV
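
The fine registration stage above uses iterative point cloud registration, of which iterative closest point (ICP) is the classic form. The sketch below is a 2-D ICP for brevity: it alternates nearest-neighbour matching with a closed-form least-squares rigid fit. Real surgical registration works in 3-D (typically with an SVD-based fit) and starts from the preliminary facial-feature alignment, which is assumed here to be already applied; the patent does not say which ICP variant it uses.

```python
import math


def best_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src[i] onto dst[i]."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def transform(points, theta, tx, ty):
    """Rotate by theta, then translate by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def icp_2d(src, dst, iterations=20, tol=1e-12):
    """Refine the alignment of src onto dst; returns the moved src points."""
    current = list(src)
    for _ in range(iterations):
        # Pair every current point with its nearest neighbour in the target cloud.
        matched = [min(dst, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2) for p in current]
        theta, tx, ty = best_rigid_2d(current, matched)
        current = transform(current, theta, tx, ty)
        if abs(theta) + abs(tx) + abs(ty) < tol:
            break  # the update has become negligible
    return current


target = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0), (2.0, 3.0)]
scanned = [(x + 0.1, y - 0.05) for x, y in target]  # residual error after coarse pre-alignment
aligned = icp_2d(scanned, target)
print(max(math.dist(a, b) for a, b in zip(aligned, target)))
```

ICP only converges to the right answer when the starting pose is close, which is why the method performs the feature-point pre-registration first.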

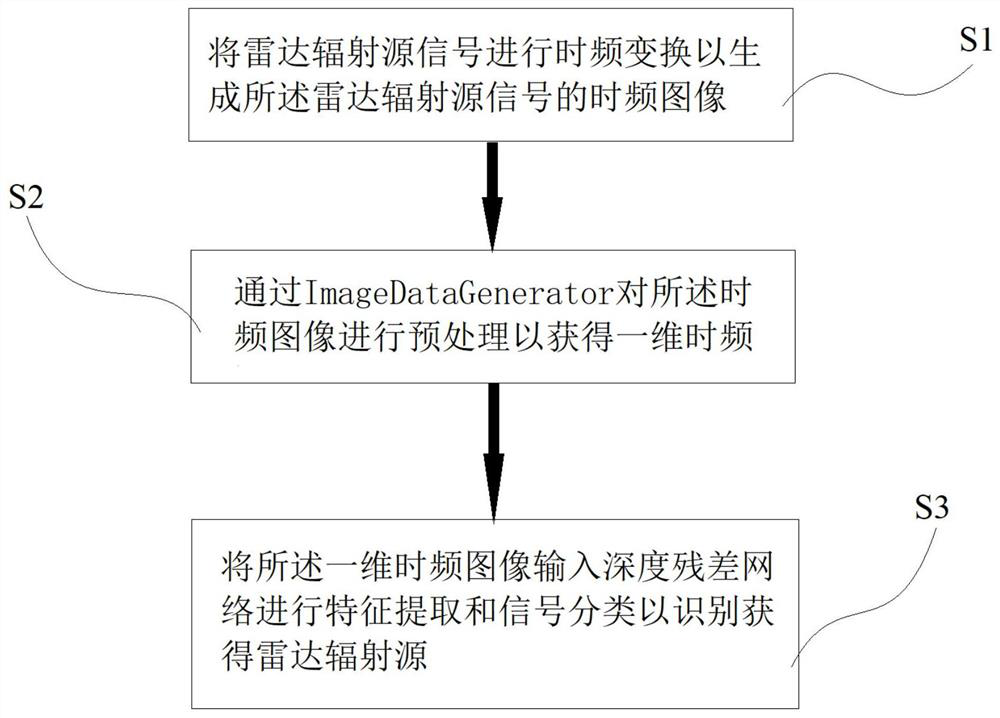

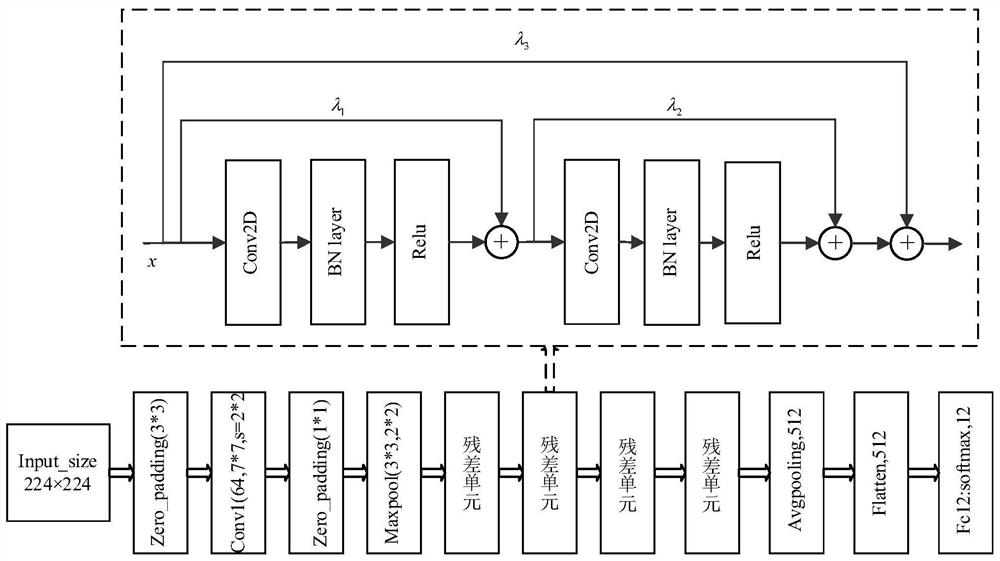

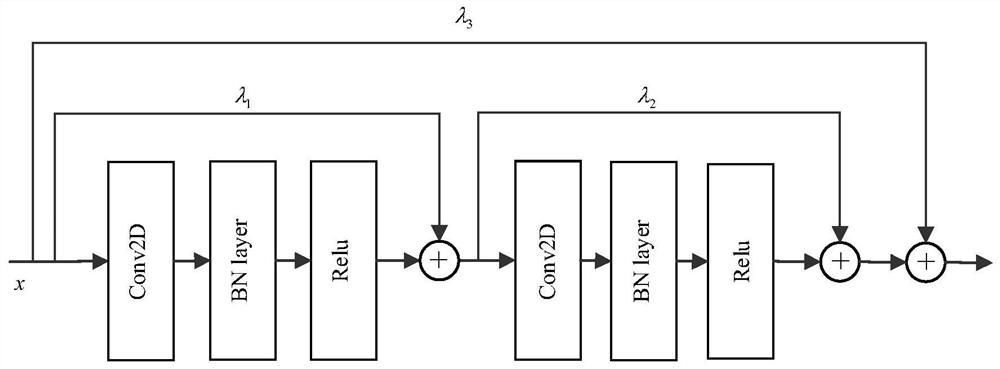

Radar radiation source identification method based on multistage jumper residual network

Pending | CN112906591A | Avoid vanishing gradients | Avoid problems such as gradient explosion | Character and pattern recognition | Neural architectures | Imaging processing | Signal classification

The invention discloses a radar radiation source identification method based on a multistage jumper residual network, belonging to the field of image processing. The method comprises the following steps: performing time-frequency transformation on a radar radiation source signal to generate its time-frequency image; preprocessing the time-frequency image with an ImageDataGenerator to obtain a one-dimensional time-frequency image; and inputting the one-dimensional time-frequency image into a deep residual network for feature extraction and signal classification, thereby identifying the radar radiation source. In the deep residual network, eight sequentially connected residual blocks are joined by jumpers to form four residual units, so that the resulting network, with 18 convolutional layers in total, can extract deep information from the signal's time-frequency image while avoiding problems such as vanishing and exploding gradients.

Owner:中国人民解放军海军航空大学航空作战勤务学院
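
The time-frequency transformation step above is often done with a short-time Fourier transform (STFT); the abstract does not name the transform, so the STFT, Hann window, frame length, and hop size below are assumptions. The sketch computes a magnitude spectrogram with a naive DFT so it needs only the standard library; the ImageDataGenerator preprocessing and the residual network are not shown.

```python
import cmath
import math


def stft_magnitude(signal, frame_len=16, hop=8):
    """Magnitude spectrogram: one row per frame, columns are frequency bins 0..frame_len//2."""
    # Periodic Hann window reduces spectral leakage at the frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_len) for n in range(frame_len)]
    rows = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        seg = [signal[start + n] * window[n] for n in range(frame_len)]
        rows.append([
            abs(sum(seg[n] * cmath.exp(-2j * math.pi * k * n / frame_len) for n in range(frame_len)))
            for k in range(frame_len // 2 + 1)
        ])
    return rows


# A tone exactly on bin 4 of a 16-point frame; a real input would be a sampled radar pulse.
tone = [math.cos(2 * math.pi * 4 * n / 16) for n in range(64)]
spec = stft_magnitude(tone)
print(len(spec), [max(range(len(r)), key=r.__getitem__) for r in spec])  # 7 [4, 4, 4, 4, 4, 4, 4]
```

Stacking the rows gives the 2-D time-frequency image that a convolutional network can then classify; a chirped radar pulse would show its peak bin drifting from row to row.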

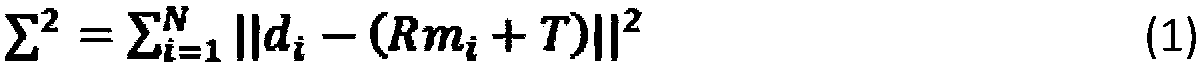

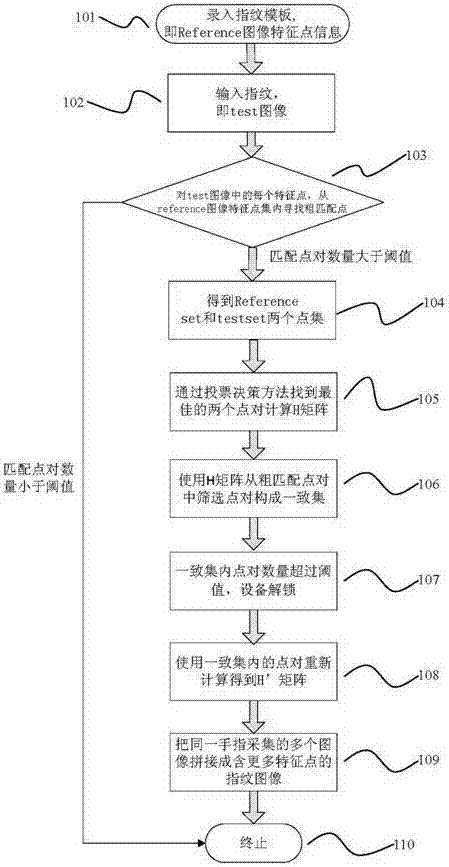

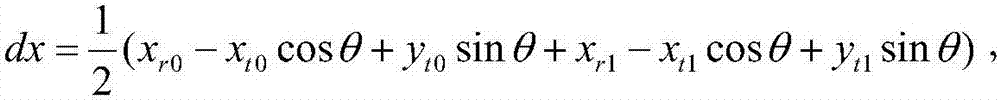

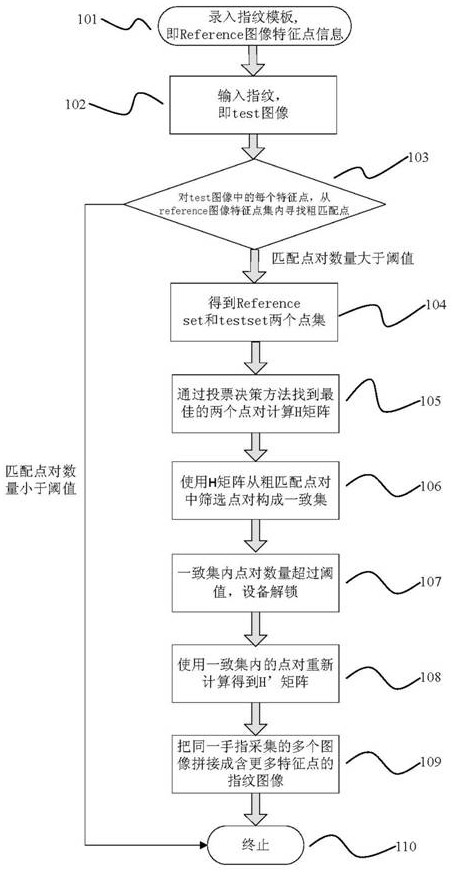

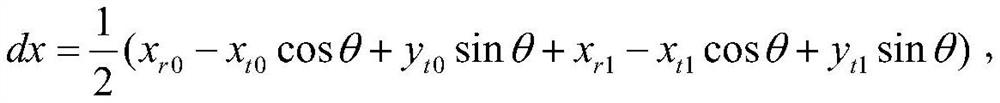

Image-identification random sample consensus (RANSAC) algorithm based on voting decision and least square method

Active | CN107220580A | High speed | Optimal Alignment Method | Matching and classification | Algorithm | Reference image

An image-identification random sample consensus (RANSAC) algorithm based on a voting decision and the least squares method is disclosed. A voting decision is introduced to find the point pairs most likely to be correct matches, and the least squares principle is used to calculate the rotation-translation transformation matrix. The calculated matrix is accurate and stable, the calculation is fast, and the result does not fluctuate randomly from one calculation to the next. The invention optimizes the image comparison method and supports the subsequent learning function of the data processing chip, meaning that the characteristic information of the reference image is continuously enriched; the false rejection rate is effectively reduced and the data processing chip becomes more intelligent. By continuously updating the rotation-translation transformation matrix, image stitching can be realized efficiently and accurately.

Owner:FOCALTECH ELECTRONICS
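
One way to realize the voting decision and least-squares fit described above is sketched below: every pair of matched points votes for the rotation angle it implies, points that agree with the winning angle bin are kept as inliers, and a closed-form least-squares fit then gives the rotation and translation. The angle-histogram voting scheme, the 5-degree bin width, and the majority support rule are assumptions; the abstract does not describe how the votes are cast.

```python
import math
from collections import Counter
from itertools import combinations


def pair_rotation(p1, p2, q1, q2):
    """Rotation (radians, in [0, 2*pi)) that turns direction p1->p2 into direction q1->q2."""
    a = math.atan2(p2[1] - p1[1], p2[0] - p1[0])
    b = math.atan2(q2[1] - q1[1], q2[0] - q1[0])
    return (b - a) % (2 * math.pi)


def vote_inliers(src, dst, bin_deg=5.0):
    """Keep matches that share the most-voted rotation bin with at least half of the other points."""
    n = len(src)
    bins = {(i, j): int(math.degrees(pair_rotation(src[i], src[j], dst[i], dst[j])) // bin_deg)
            for i, j in combinations(range(n), 2)}
    winner = Counter(bins.values()).most_common(1)[0][0]
    support = Counter()
    for (i, j), b in bins.items():
        if b == winner:
            support[i] += 1
            support[j] += 1
    return [i for i in range(n) if support[i] >= (n - 1) / 2]


def least_squares_rigid(src, dst):
    """Closed-form least-squares rotation angle and translation (tx, ty) mapping src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - dx, qy - dy
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


angle = math.radians(32)
src = [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0), (5.0, 5.0), (2.0, 1.0)]
dst = [(math.cos(angle) * x - math.sin(angle) * y + 2.0,
        math.sin(angle) * x + math.cos(angle) * y - 1.0) for x, y in src]
dst[4] = (-10.0, 7.0)  # a wrong match (outlier)
inliers = vote_inliers(src, dst)
theta, tx, ty = least_squares_rigid([src[i] for i in inliers], [dst[i] for i in inliers])
print(inliers, round(math.degrees(theta), 6), round(tx, 6), round(ty, 6))  # [0, 1, 2, 3] 32.0 2.0 -1.0
```

Because the fit is deterministic once the inliers are chosen, repeated runs give identical matrices, consistent with the abstract's claim that results do not fluctuate randomly.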

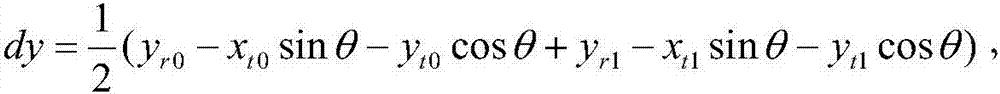

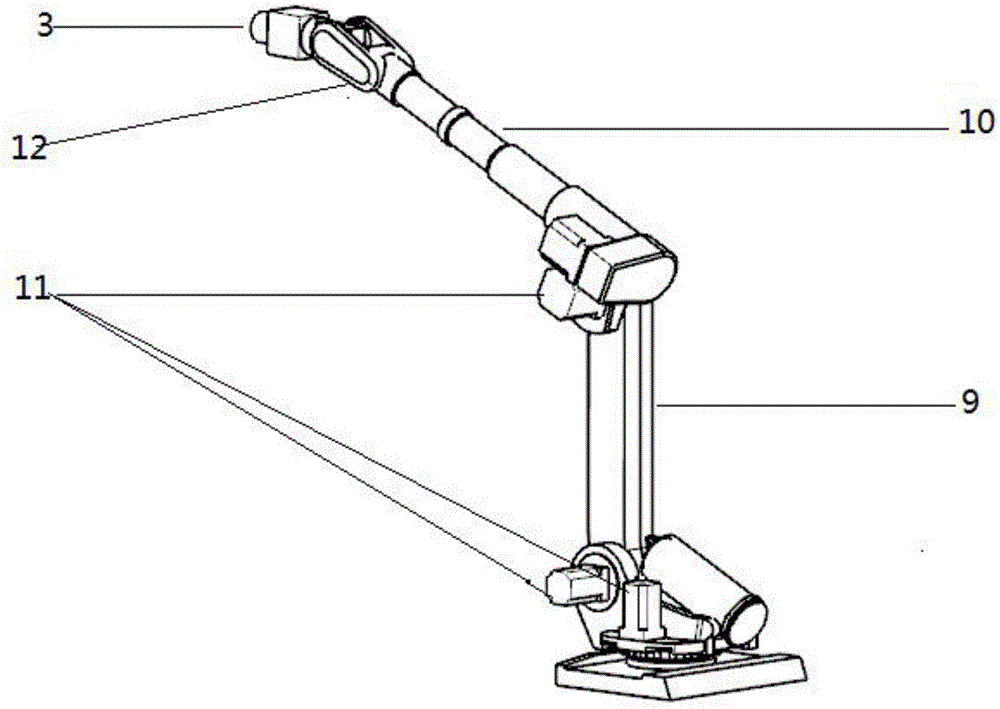

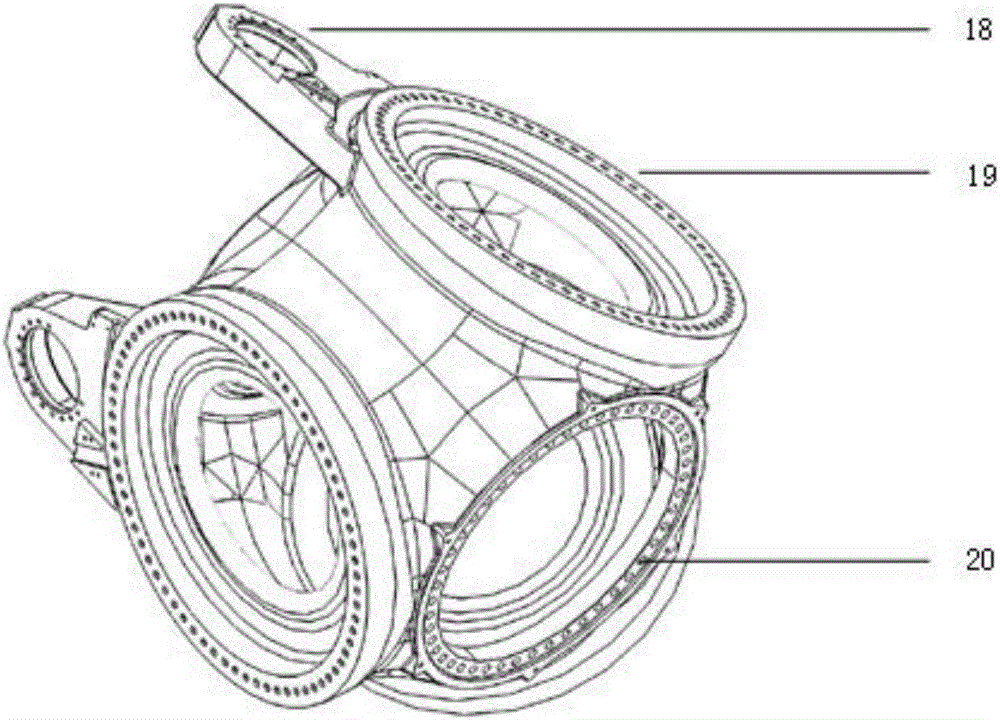

System and method for rapidly measuring characteristic parameter of hub of wind power generation device

Inactive | CN106556343A | Easy access | High degree of automation | Using optical means | Three dimensional measurement | Multi degree of freedom

The invention discloses a system and method for rapidly measuring the characteristic parameter of the hub of a wind power generation device. The system is composed of an annular track, a multi-degree-of-freedom mechanical arm, an optical measuring head and a projection light source. The measured hub is arranged in the central area of the annular track. The multi-degree-of-freedom mechanical arm and the projection light source are mounted on the annular track through a base. The optical measuring head is mounted at the end of the multi-degree-of-freedom mechanical arm. The mechanical arm can perform circular motion around the hub on the track through the base. The system is based on the active three-dimensional measurement technology, performs optical measurement by means of the combination of the projection light source and the measuring head, may rapidly acquire the characteristic parameter of hub morphology in combination with the multi-degree-of-freedom motion of the mechanical arm, and has a high degree of automation and high measurement efficiency.

Owner:XIDIAN UNIV

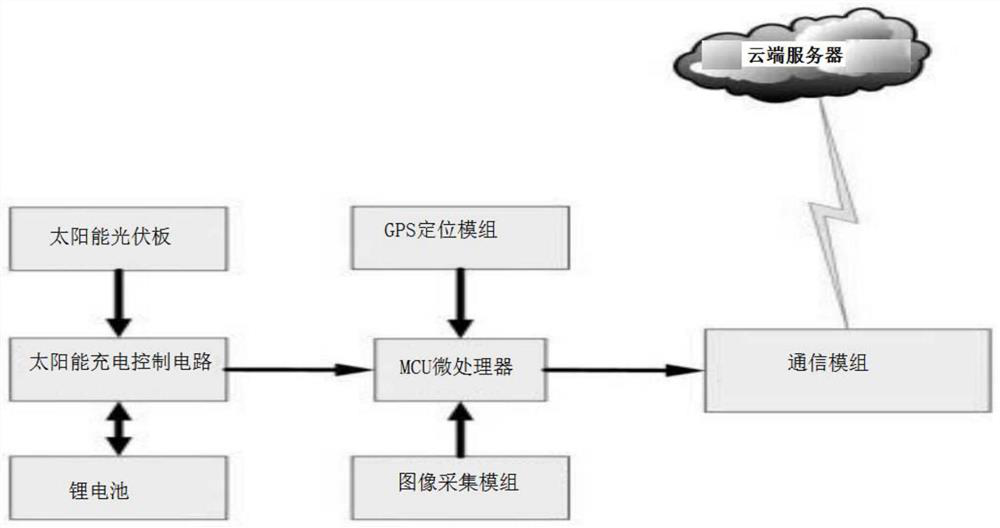

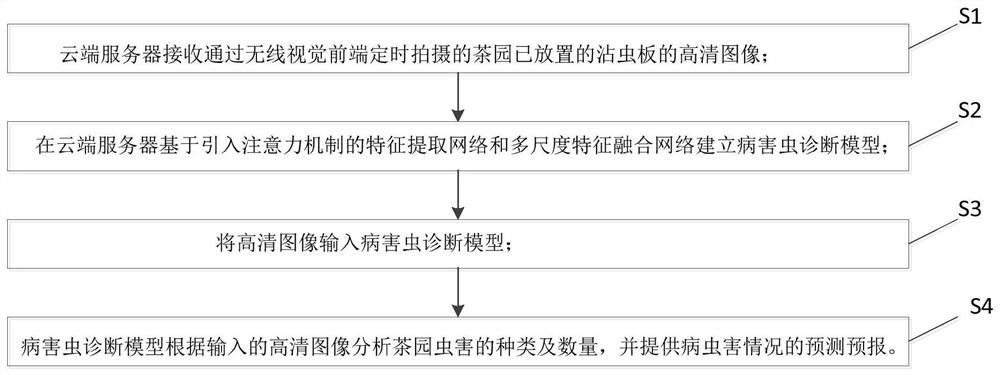

Artificial intelligence-based pest control system

Pending | CN112841154A | High degree of automation | Strong real-time | Renewable energy machines | Closed circuit television systems | Insect pest | Biology

The invention discloses an artificial intelligence-based pest control system comprising a wireless visual front end, a communication module, and a cloud server. The wireless visual front end comprises a solar photovoltaic panel, a solar charging control circuit, a lithium battery, an MCU, a GPS positioning module, and an image acquisition module. The image acquisition module periodically captures high-definition images of a sticky pest trap board placed in a tea garden and sends them to the MCU; the MCU forwards the images to the cloud server through the communication module; and the cloud server analyzes the types and numbers of pests in the tea garden using artificial intelligence and provides predictions and forecasts of pest conditions. Throughout this process, the MCU controls the solar charging control circuit so that the solar photovoltaic panel charges the lithium battery and the battery discharges as needed. The system offers a high degree of automation, strong real-time performance, and accurate prevention and control.

Owner:CHANGSHA XIANGFENG TEA MACHINERY MFG +1

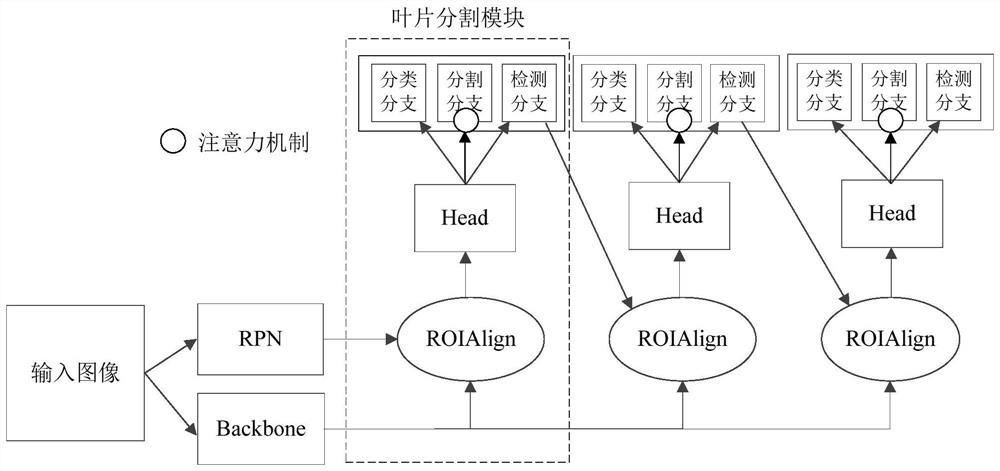

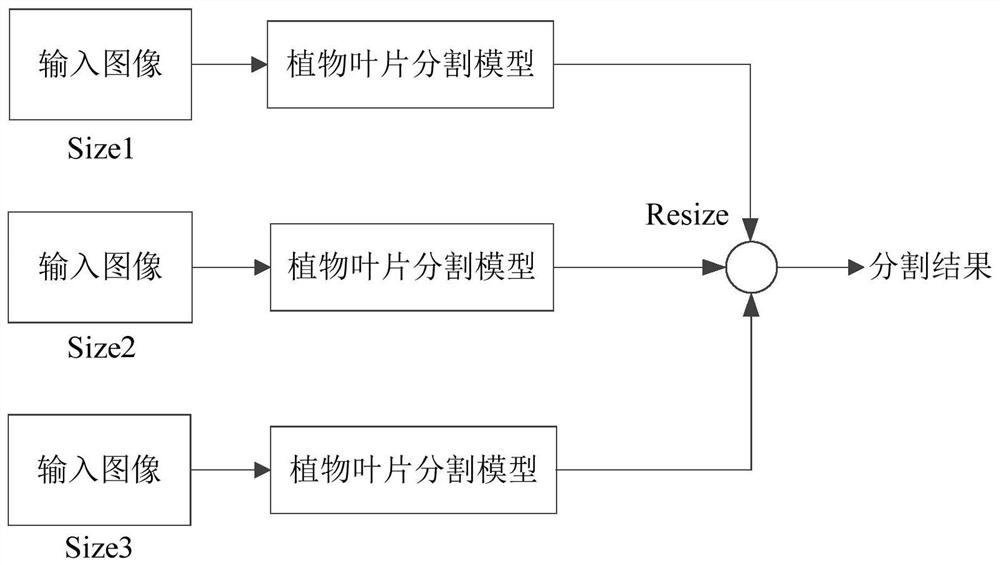

Plant leaf segmentation method

Pending | CN113658206A | Reduce the possibility | Prevent false detection | Image enhancement | Image analysis | Sample graph | Data set

The invention discloses a plant leaf segmentation method in the field of image processing. A sample data set is constructed and its images are input into a convolutional neural network comprising a Backbone network, an RPN network, and a plurality of cascaded leaf segmentation modules; each leaf segmentation module comprises a ROIAlign network and a Head network, and each Head network comprises a classification branch, a segmentation branch, and a detection branch. A plant leaf segmentation model is obtained by training this convolutional neural network on the sample data set; an image to be segmented is then input into the model to obtain its leaf segmentation result, optionally using a multi-scale segmentation strategy. The method can effectively segment occluded leaves, leaves with unclear edges, and small-scale leaves, and promotes the application of deep learning to plant leaf segmentation.

Owner:JIANGNAN UNIV +1

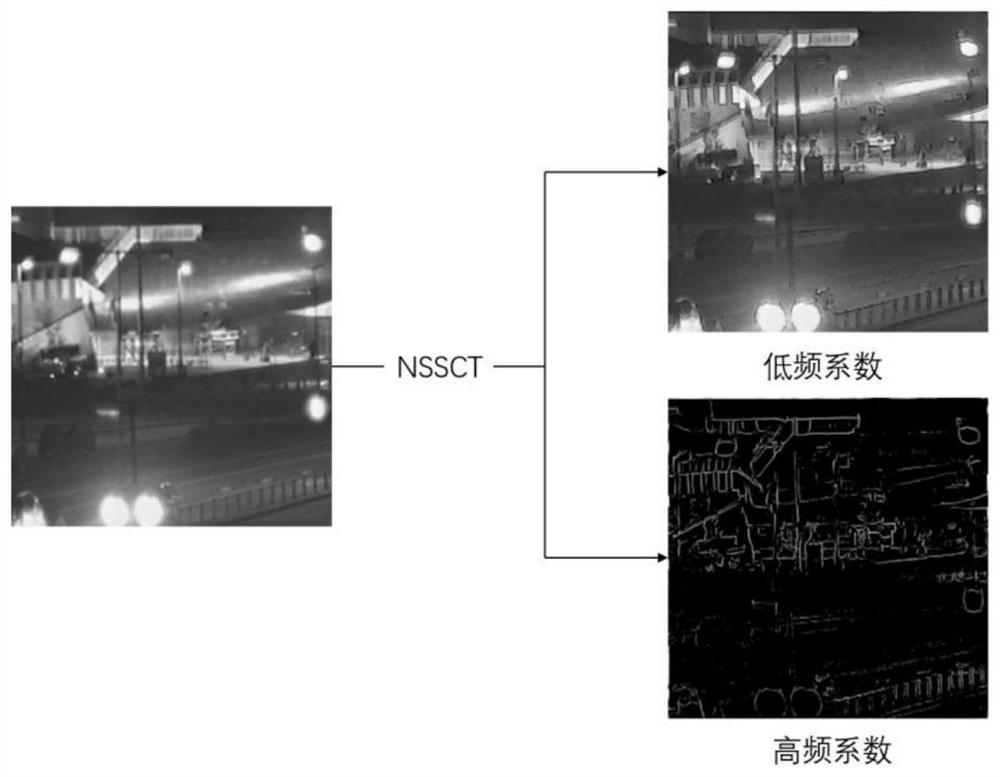

Low-illumination image target detection method based on image fusion and target detection network

Pending | CN112487947A | Improve detection accuracy | Easy extraction | Character and pattern recognition | Neural architectures | Feature extraction | Illuminance

The invention relates to a low-illumination image target detection method based on image fusion and a target detection network. The method combines a non-subsampled shearlet transform image fusion algorithm with an improved YOLOV4 target detection network and comprises the following steps: 1) fusing infrared and low-illumination visible light images so that their information complements each other, highlighting texture information while improving contour definition; 2) sending the fused image to the improved YOLOV4 network for detection and outputting target information under low-illumination conditions; and 3) to improve the feature extraction capability of YOLOV4, replacing the residual blocks in its backbone network with densely connected blocks, which, compared with residual blocks, improve the network's feature expression and feature extraction capability. Experiments show that the method improves target detection under low-illumination conditions.

Owner:NORTHWESTERN POLYTECHNICAL UNIV

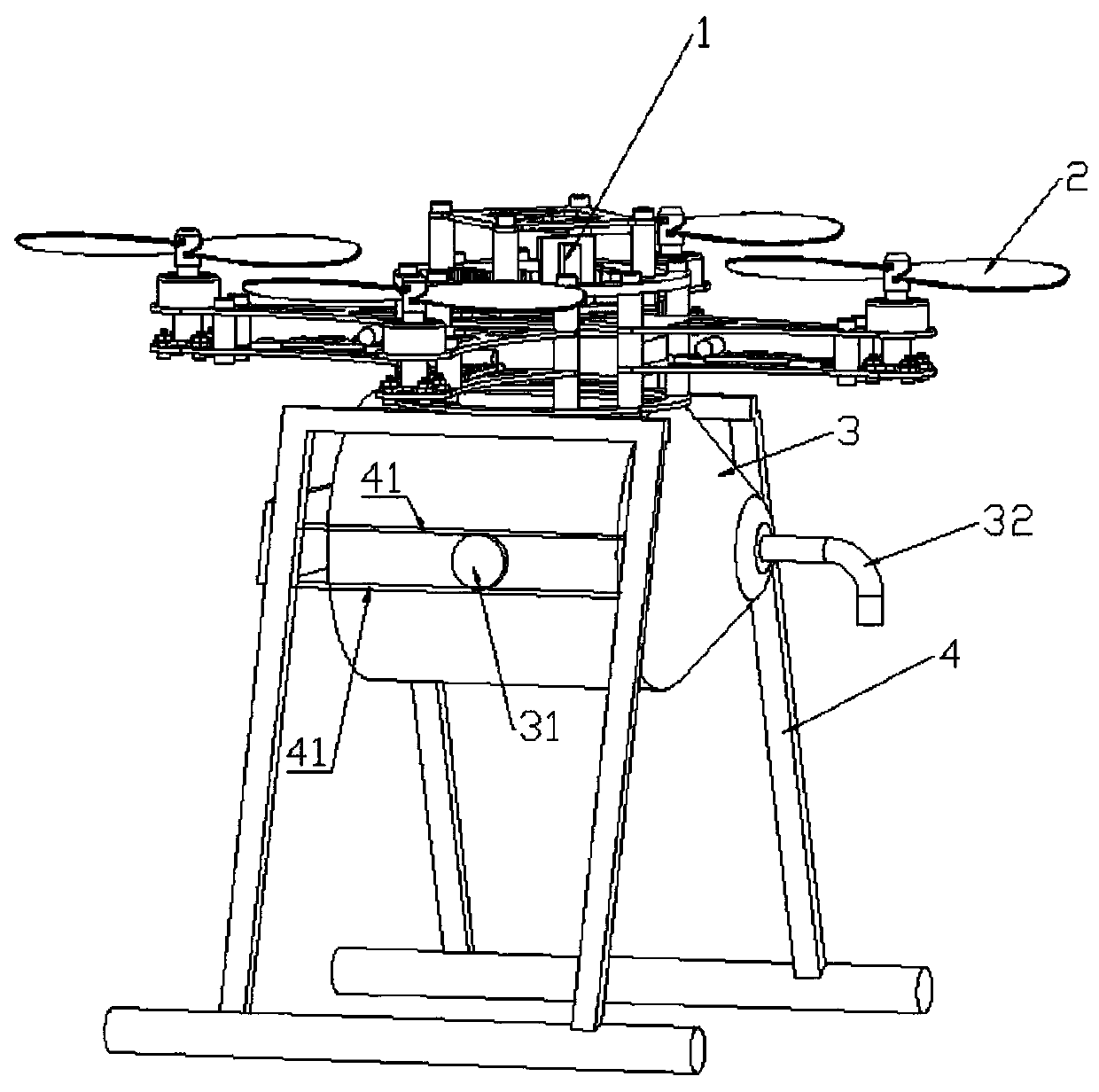

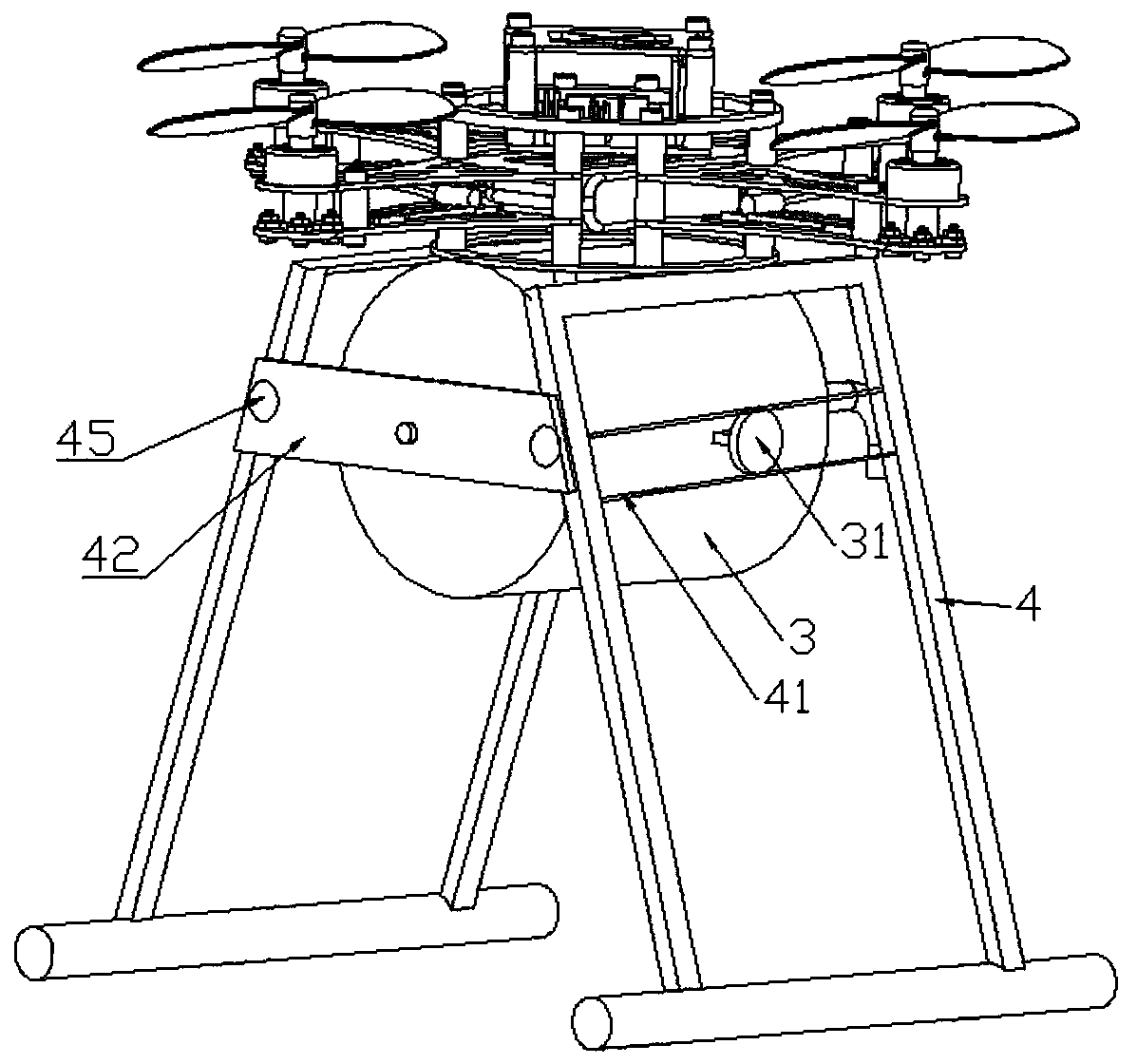

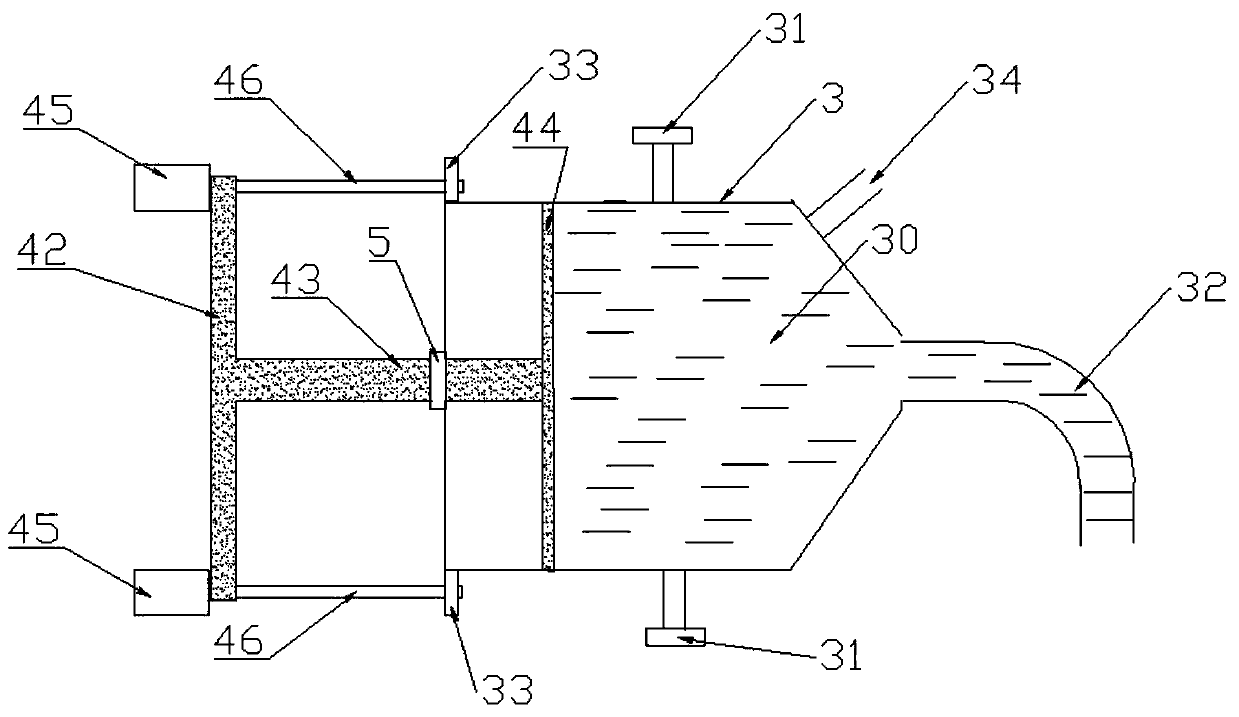

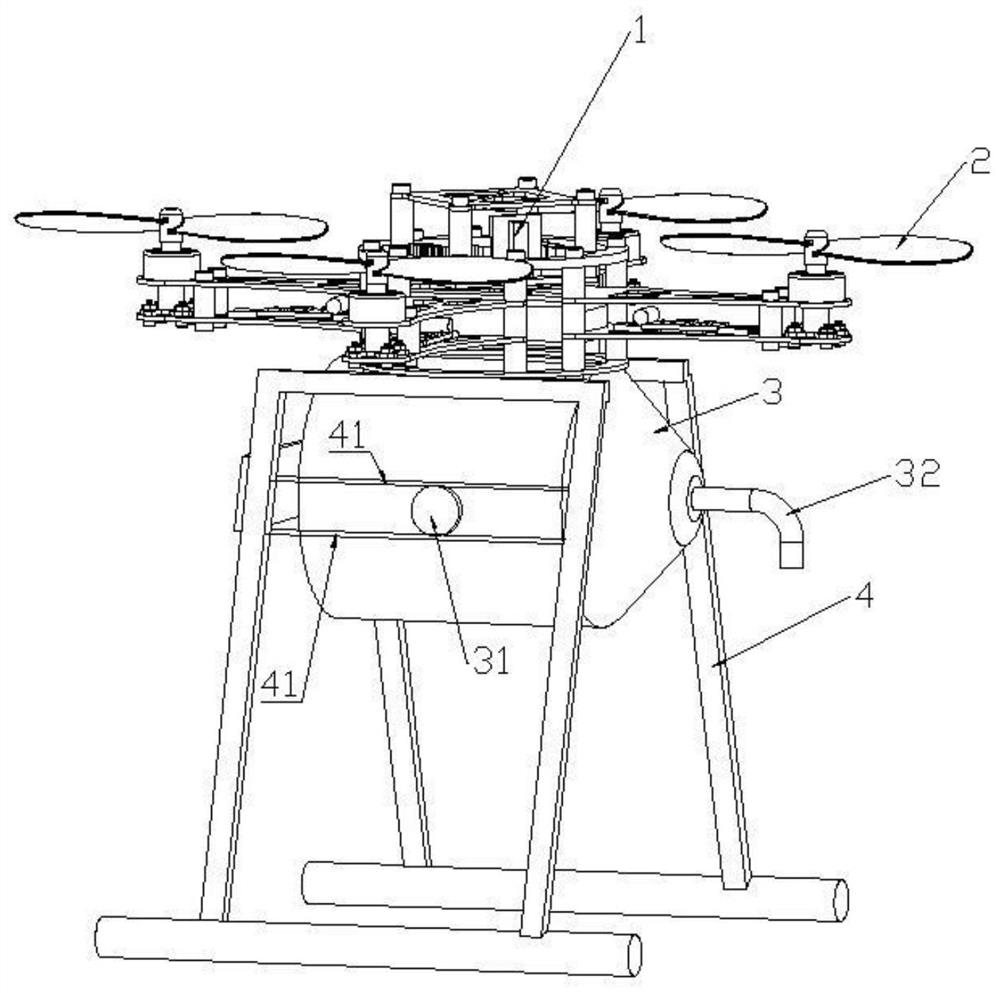

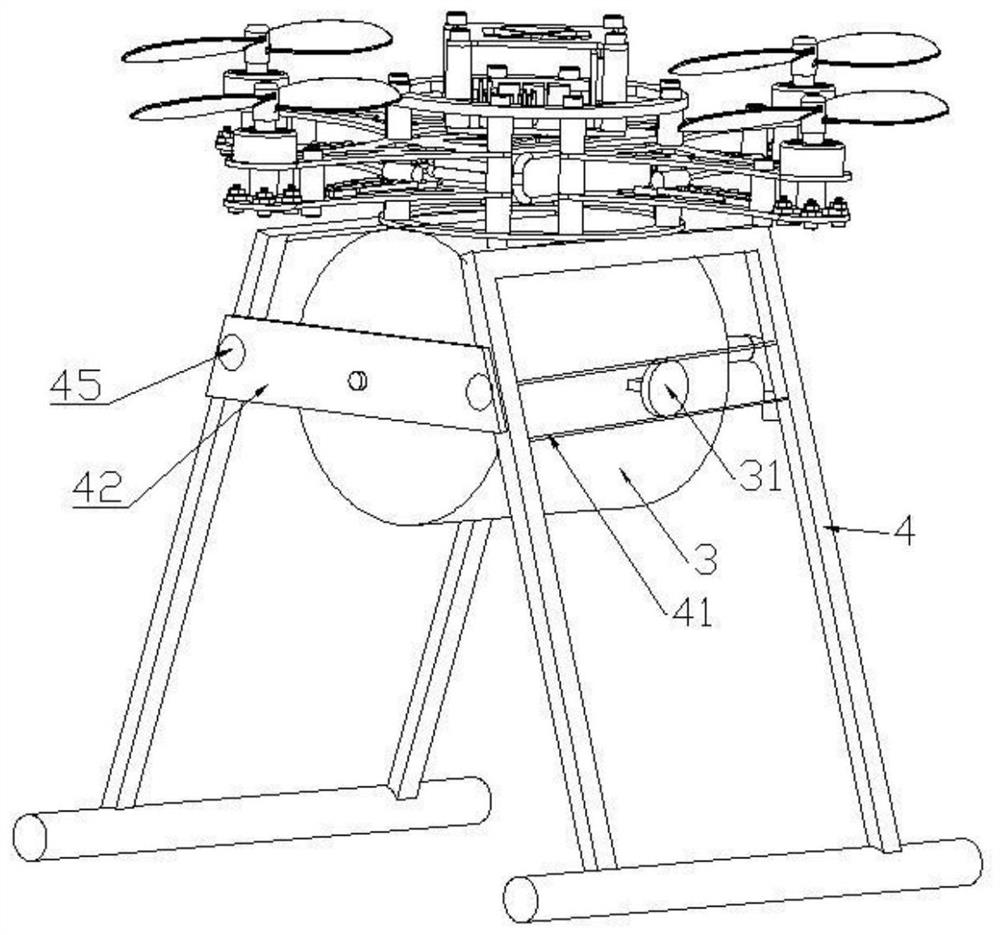

Unmanned aerial vehicle pest detection method

Active | CN111079546A | Improve fidelity | Improve accuracy | Character and pattern recognition | Neural architectures | Agricultural science | Algorithm

The invention discloses an unmanned aerial vehicle pest detection method in the field of agricultural unmanned aerial vehicles. The method comprises: performing target detection on a current frame image with a first convolutional neural network to obtain a current-frame target area; performing pooling on the composite current frame image at least three times to obtain fifth output data; performing convolution on the fifth output data at least twice to obtain sixth output data; performing pooling and convolution on the sixth output data to obtain seventh output data; fusing the sixth and seventh output data to obtain eighth output data; performing pooling and convolution on the eighth output data at least twice to obtain ninth output data; classifying the ninth output data to obtain the composite target area; and, if the distance between the current-frame target area and the composite target area is smaller than a set threshold, determining the area where the pests are located.

Owner:CHONGQING NORMAL UNIVERSITY
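
The final check above (accept the pest area only when the single-frame detection and the composite detection are close) can be sketched with axis-aligned boxes. Measuring the distance between box centres and reporting the union of the two boxes are assumptions; the abstract defines neither the distance nor the reported area.

```python
import math


def box_center(box):
    """Centre of an axis-aligned box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2


def confirm_pest_area(frame_box, composite_box, threshold):
    """Return the pest area if the two detections agree (centre distance < threshold), else None."""
    if math.dist(box_center(frame_box), box_center(composite_box)) >= threshold:
        return None  # the detectors disagree; treat the detection as unconfirmed
    # Assumption: report the union of both boxes as the pest area.
    return (min(frame_box[0], composite_box[0]), min(frame_box[1], composite_box[1]),
            max(frame_box[2], composite_box[2]), max(frame_box[3], composite_box[3]))


print(confirm_pest_area((0, 0, 10, 10), (2, 2, 12, 12), threshold=5.0))    # (0, 0, 12, 12)
print(confirm_pest_area((0, 0, 10, 10), (50, 50, 60, 60), threshold=5.0))  # None
```

Requiring two independent detectors to agree trades a little recall for fewer false alarms from single-frame noise.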

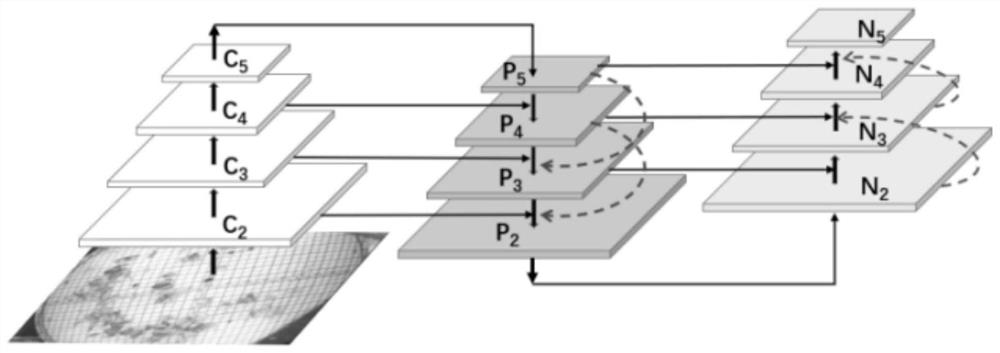

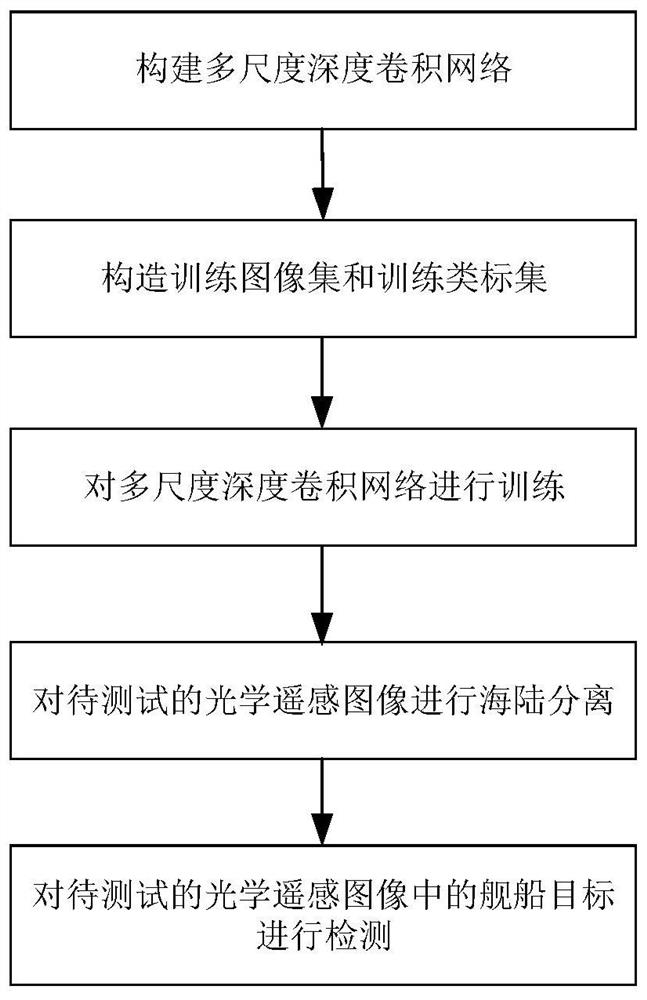

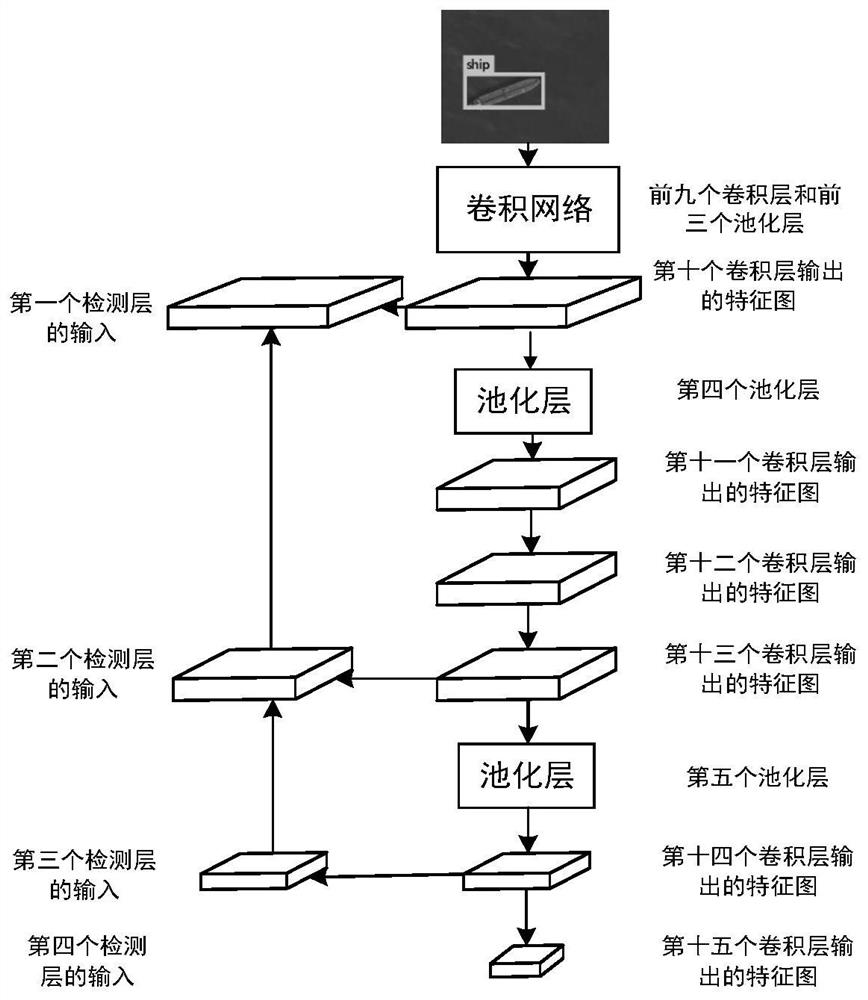

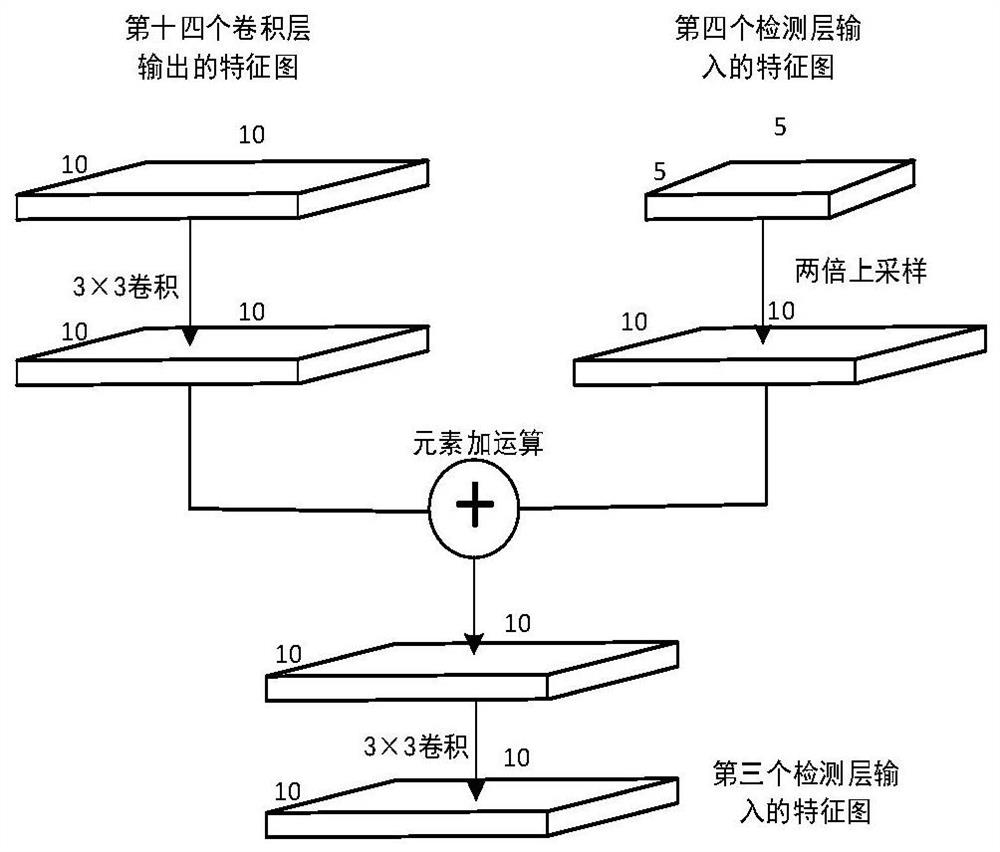

Ship detection method for optical remote sensing images based on feature fusion convolutional network

Active | CN108921066B | Enhanced feature information | Improve detection accuracy | Scene recognition | Image resolution | Engineering

The invention discloses a ship detection method for optical remote sensing images based on a feature fusion convolutional network, which mainly addresses the low detection accuracy and slow detection speed for small and medium-sized ships in the prior art. The steps are: (1) constructing the feature fusion convolutional network; (2) constructing a training image set and a training class label set; (3) training the feature fusion convolutional network; (4) performing sea-land separation on the optical remote sensing image to be tested; and (5) detecting ships in the optical remote sensing image to be tested. Fusing feature maps of different resolutions increases the feature information available for small ships, and detecting ships on multiple feature maps of different resolutions improves the detection accuracy for small ships; sea-land separation based on the gray-level and gradient information of the optical remote sensing image improves the speed of ship detection. The method can be applied to ship recognition and detection in optical remote sensing images.

Owner:XIDIAN UNIV
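
Two ideas from the abstract above can be sketched in a few lines: fusing a coarse, low-resolution feature map into a finer one, and a sea-land mask built from gray-level and gradient information. Nearest-neighbour upsampling with element-wise addition (as in feature pyramid networks) and the "dark and smooth means sea" threshold rule are assumptions; the abstract states only that feature maps of different resolutions are fused and that gray and gradient information are used.

```python
def upsample_nearest(fmap, factor=2):
    """Repeat every value `factor` times along both axes."""
    return [[v for v in row for _ in range(factor)] for row in fmap for _ in range(factor)]


def fuse_feature_maps(fine, coarse):
    """Upsample the coarse map to the fine map's size and add the two element-wise."""
    factor = len(fine) // len(coarse)
    up = upsample_nearest(coarse, factor)
    return [[a + b for a, b in zip(f_row, u_row)] for f_row, u_row in zip(fine, up)]


def sea_mask(gray, gradient, gray_max, gradient_max):
    """True where a pixel is dark and smooth enough to be treated as sea (hypothetical rule)."""
    return [[g <= gray_max and d <= gradient_max for g, d in zip(g_row, d_row)]
            for g_row, d_row in zip(gray, gradient)]


fine = [[0, 0, 0, 0], [1, 1, 1, 1]]
coarse = [[1, 2]]
print(fuse_feature_maps(fine, coarse))  # [[1, 1, 2, 2], [2, 2, 3, 3]]
print(sea_mask([[12, 180]], [[0.5, 0.5]], gray_max=60, gradient_max=5.0))  # [[True, False]]
```

The fused map keeps the fine map's spatial detail, which small ships need, while inheriting the stronger semantics of the coarse map.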

A sentiment classification method and system based on multimodal contextual semantic features

Active | CN112818861B | Rich Modal Information | Fully acquire emotional semantic features | Character and pattern recognition | Neural architectures | Emotion classification | Semantic feature

Owner:NANJING UNIV OF POSTS & TELECOMM

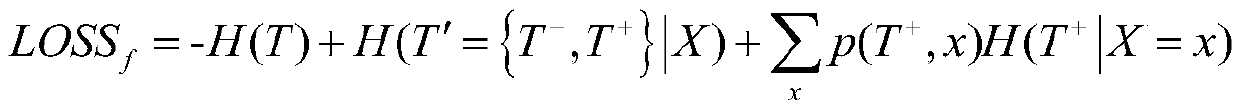

Method and device for determining optimal verbal skill sequence and storage medium

Pending | CN114077656A | Solve the technical problems of low prediction accuracy | Solve technical problems with low precision | Digital data information retrieval | Forecasting | Theoretical computer science | Artificial intelligence

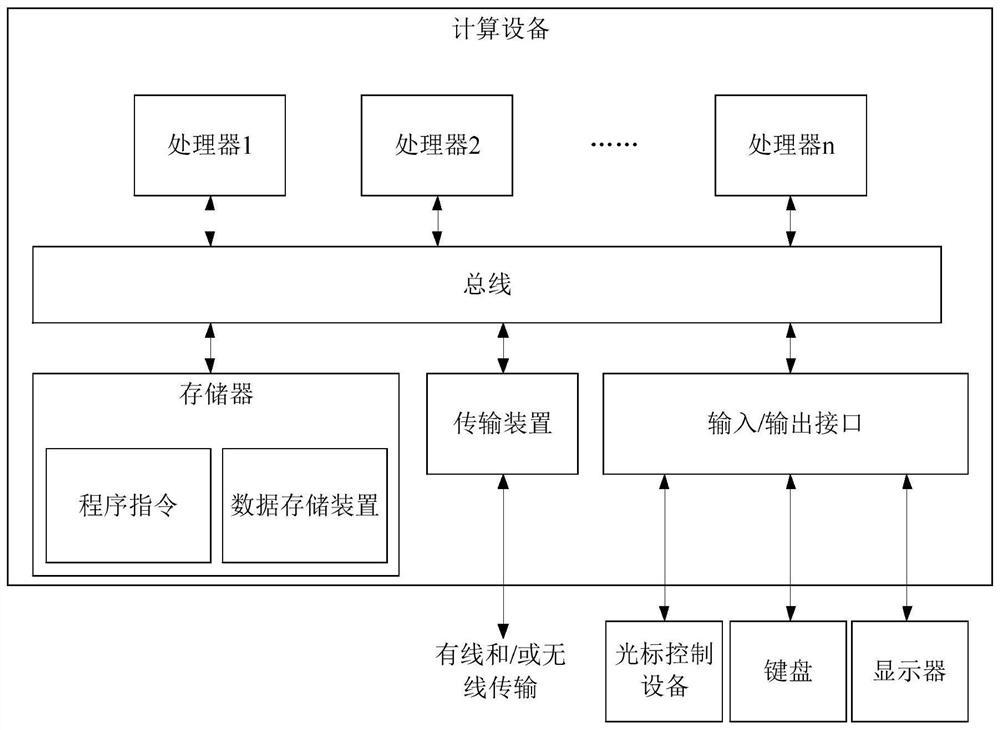

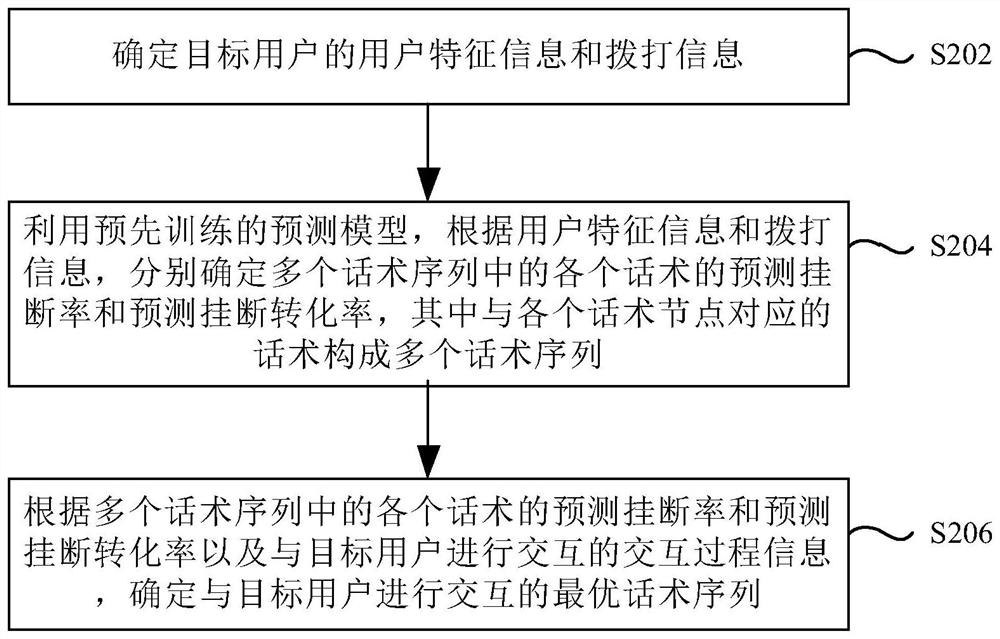

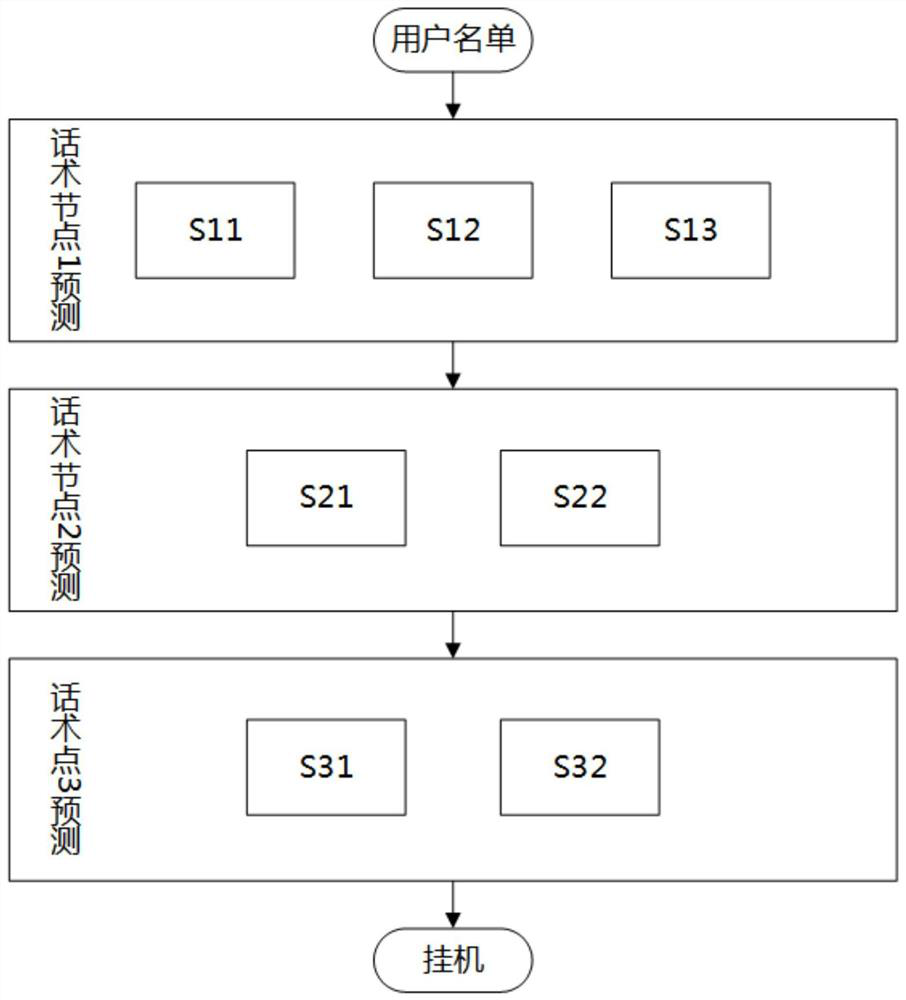

The invention discloses a method and device for determining an optimal verbal skill sequence, and a storage medium. The method comprises the following steps: determining user feature information and dialing information of a target user; using a pre-trained prediction model, based on the user feature information and the dialing information, determining the predicted hang-up rate and predicted hang-up conversion rate of each verbal skill in a plurality of verbal skill sequences, together with interaction process information for the interaction with the target user, wherein the interaction process information comprises the current verbal skill sequence, a verbal skill subsequence, and the subsequent verbal skill sequence used in interacting with the target user; forming the plurality of verbal skill sequences from the verbal skills corresponding to each verbal skill node; and determining the optimal verbal skill sequence for interacting with the target user according to the predicted hang-up rate and predicted hang-up conversion rate of each verbal skill in the plurality of verbal skill sequences.

Owner:北京有限元科技有限公司 (Beijing Finite Element Technology Co., Ltd.)
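
The abstract does not state how the two predicted rates are combined to pick the optimal sequence. A minimal sketch, assuming a simple step-by-step model in which, at each verbal skill, the user hangs up with the predicted hang-up rate, converts with the predicted conversion rate, or otherwise stays on the line, would rank candidate sequences by their overall conversion probability. The scoring rule and the names `conversion_probability` and `best_sequence` are hypothetical.

```python
from typing import Dict, List, Tuple

# (predicted hang-up rate, predicted conversion rate) for one verbal skill (assumed format)
Step = Tuple[float, float]


def conversion_probability(steps: List[Step]) -> float:
    """Probability that the user converts somewhere along the sequence.

    Assumed model: at each step the user converts, hangs up, or continues.
    """
    reach, total = 1.0, 0.0
    for hang_up, convert in steps:
        if hang_up < 0 or convert < 0 or hang_up + convert > 1:
            raise ValueError("rates must be non-negative and sum to at most 1")
        total += reach * convert          # user is still on the line and converts here
        reach *= 1.0 - hang_up - convert  # user neither hung up nor converted
    return total


def best_sequence(candidates: Dict[str, List[Step]]) -> str:
    """Name of the candidate sequence with the highest conversion probability."""
    return max(candidates, key=lambda name: conversion_probability(candidates[name]))
```

For example, a sequence with an early high hang-up rate can lose to one with more modest per-step conversion if it keeps more users on the line.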

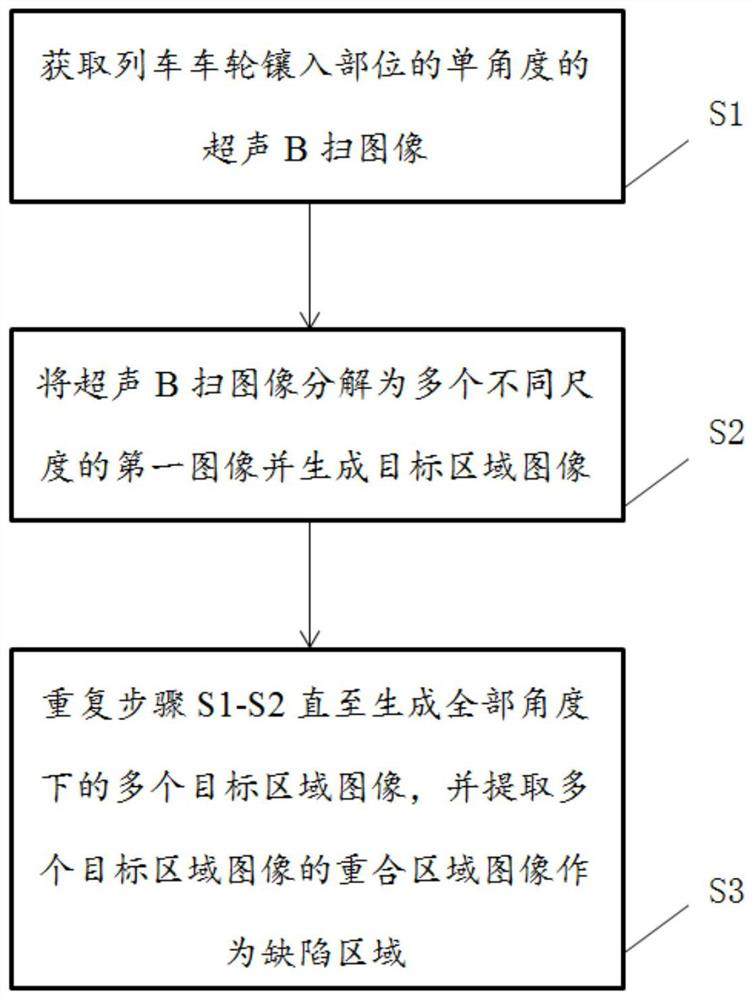

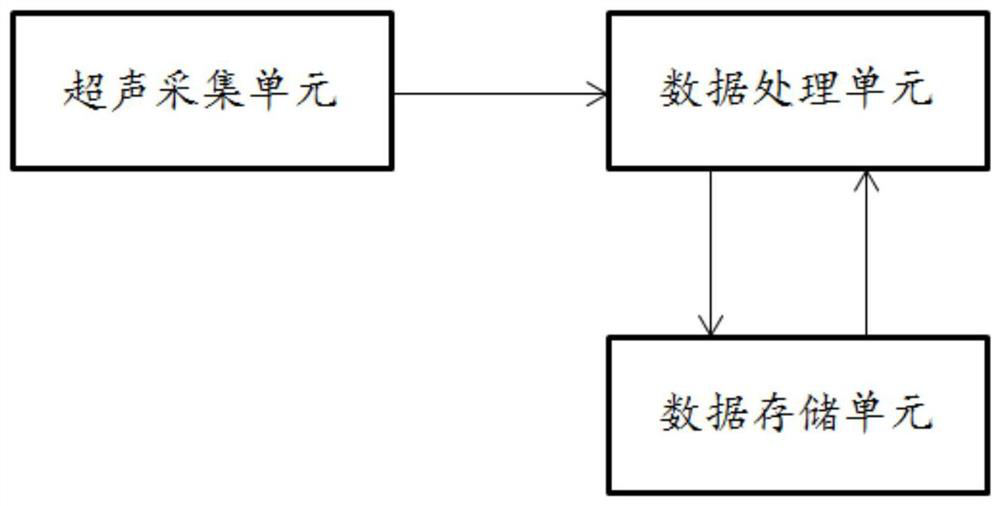

Ultrasonic damage judging method and system for embedded part of train axle

Pending | CN112700438A | Enhanced feature information | Improve accuracy | Image enhancement | Image analysis | Pattern recognition | Engineering

The invention discloses an ultrasonic damage judging method and system for the embedded part of a train axle. The method comprises the following steps: (S1) acquiring a single-angle ultrasonic B-scan image of the embedded part of a train wheel; (S2) decomposing the ultrasonic B-scan image into a plurality of first images at different scales, calculating the saliency value of each pixel block of the first images, and generating a target area image; and repeating steps S1 and S2 until target area images have been generated for all angles, then extracting the overlapping area of these target area images as the defect area. By collecting ultrasonic B-scan images of the wheel embedded part from multiple angles, the method increases the feature information available for distinguishing wheel set noise from defects. Performing saliency detection on a Gaussian pyramid decomposition of the B-scan images further enhances the target defect features and weakens background noise, which improves detection accuracy. In addition, an accurate defect area is extracted and a detection result is generated, which addresses the low reliability of the detection results of existing ultrasonic damage judgment methods for the axle embedded part.

Owner:CHENGDU TIEAN SCI & TECH
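
The multi-scale saliency and multi-angle overlap steps can be sketched as follows. This is a minimal illustration under several assumptions not stated in the abstract: the Gaussian kernel is approximated by a separable [1, 2, 1] / 4 binomial filter, saliency is each pyramid pixel's absolute contrast against its level mean (a pixel at level k represents a 2^k x 2^k block of the original), a pixel is a target when its averaged saliency exceeds `ratio` times the mean, and the B-scans from different angles are already registered to a common pixel grid. All function names are hypothetical.

```python
from typing import List

Image = List[List[float]]


def _px(img: Image, r: int, c: int) -> float:
    """Pixel lookup with edge replication at the borders."""
    return img[min(max(r, 0), len(img) - 1)][min(max(c, 0), len(img[0]) - 1)]


def blur_downsample(img: Image) -> Image:
    """Separable [1, 2, 1] / 4 binomial blur (a small Gaussian), then 2x decimation."""
    h, w = len(img), len(img[0])
    horiz = [[(_px(img, r, c - 1) + 2 * _px(img, r, c) + _px(img, r, c + 1)) / 4
              for c in range(w)] for r in range(h)]
    both = [[(_px(horiz, r - 1, c) + 2 * _px(horiz, r, c) + _px(horiz, r + 1, c)) / 4
             for c in range(w)] for r in range(h)]
    return [row[::2] for row in both[::2]]


def saliency_map(img: Image, levels: int = 3) -> Image:
    """Average over pyramid levels of |value - level mean|, at full resolution."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    level = img
    for k in range(levels):
        lh, lw = len(level), len(level[0])
        mean = sum(map(sum, level)) / (lh * lw)
        for r in range(h):
            for c in range(w):
                # Nearest-neighbour lookup: pixel (r, c) lies in block (r >> k, c >> k).
                v = level[min(r >> k, lh - 1)][min(c >> k, lw - 1)]
                out[r][c] += abs(v - mean) / levels
        if k + 1 < levels:
            level = blur_downsample(level)
    return out


def target_mask(img: Image, ratio: float = 2.0, levels: int = 3) -> List[List[bool]]:
    """Mark pixels whose saliency exceeds `ratio` times the mean saliency."""
    sal = saliency_map(img, levels)
    mean = sum(map(sum, sal)) / (len(sal) * len(sal[0]))
    return [[v > ratio * mean for v in row] for row in sal]


def defect_region(scans: List[Image], ratio: float = 2.0, levels: int = 3) -> List[List[bool]]:
    """Overlap (logical AND) of the target masks from every scan angle."""
    masks = [target_mask(s, ratio, levels) for s in scans]
    return [[all(m[r][c] for m in masks) for c in range(len(masks[0][0]))]
            for r in range(len(masks[0]))]
```

In this sketch a bright spot that appears at only one probe angle (noise) is salient in that angle's mask but drops out of the intersection, while a defect visible from every angle survives.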

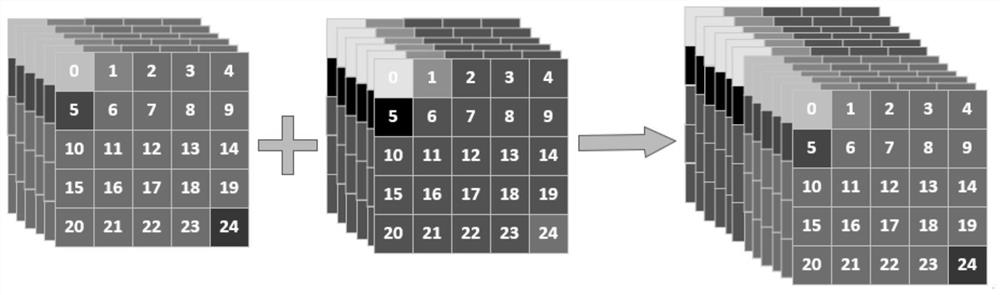

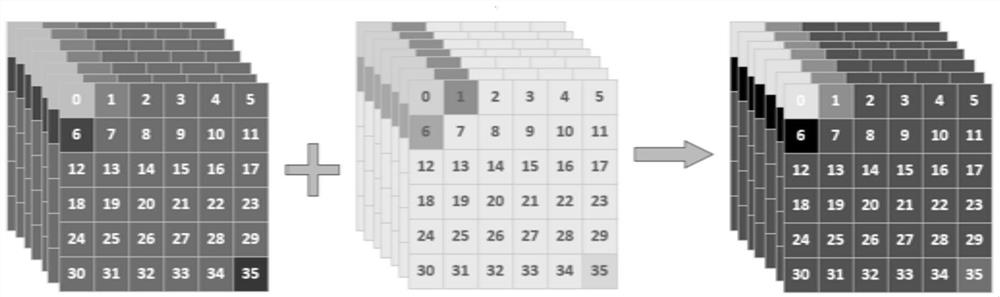

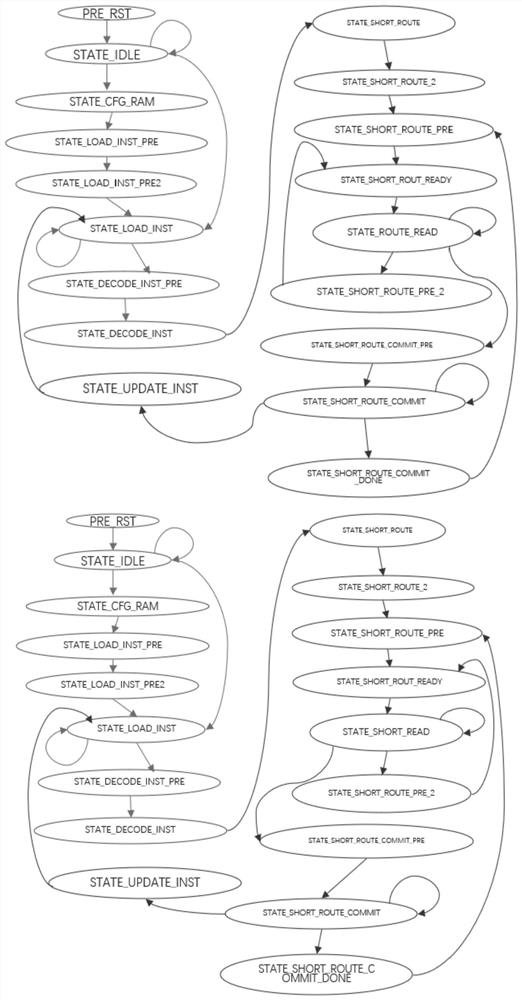

Vector fusion calculation method and device applied to neural network data processing

Pending | CN114595805A | Subsequent processing accuracy improvement | Enhanced feature information | Neural architectures | Energy efficient computing | Machine learning | Control mode

Owner:SHENZHEN INST OF ADVANCED TECH CHINESE ACAD OF SCI

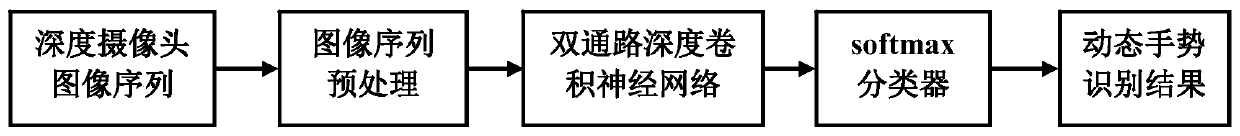

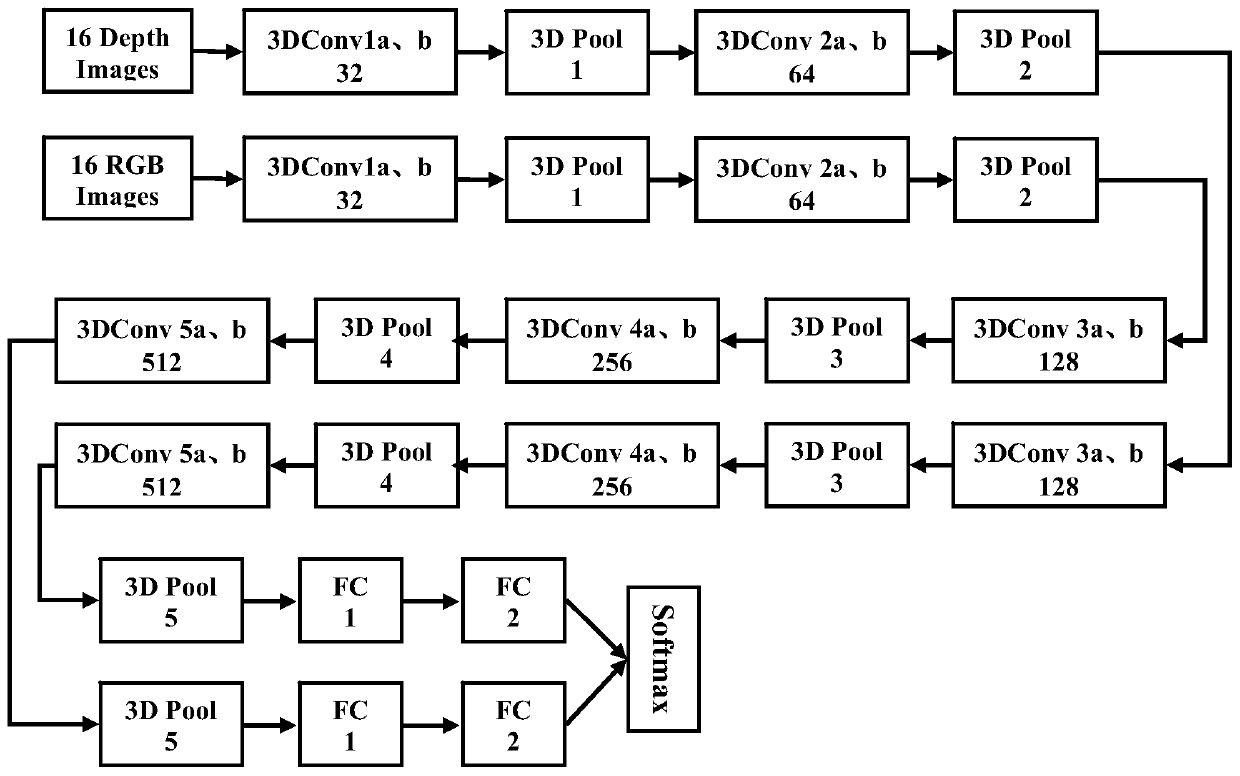

Dynamic gesture recognition method based on a dual-channel deep convolutional neural network

Active | CN107808131B | Meet the requirements of quantity consistency | Eliminates differences in different operating time periods | Image analysis | Character and pattern recognition | Time domain | Color image

The invention discloses a dynamic gesture recognition method based on a dual-channel deep convolutional neural network. First, a depth image sequence and a color image sequence of the dynamic gesture are captured with a depth camera and preprocessed to obtain a depth foreground image sequence and a color foreground image sequence. A dual-channel deep convolutional neural network is then designed: the depth foreground image sequence and the color foreground image sequence are input into its two channels, which extract the temporal and spatial features of the dynamic gesture in depth space and in color space; these features are fused and input to a softmax classifier, and the final gesture recognition result is obtained from the classifier's output. By extracting and fusing features from both the color space and the depth space of dynamic gestures, the dual-channel model greatly improves the recognition rate of dynamic gestures.

Owner:SOUTH CHINA UNIV OF TECH
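
The fusion-and-classify stage of the dual-channel design can be sketched as follows, assuming late fusion by concatenating the two channels' feature vectors followed by a single linear layer and a softmax. The CNN feature extractors themselves are omitted, and the weights and the names `classify_gesture` and `softmax` are hypothetical placeholders, not the patent's trained model.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_gesture(depth_feat: Sequence[float], color_feat: Sequence[float],
                     weights: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """Concatenate depth- and color-channel features, apply a linear layer, then softmax."""
    fused = list(depth_feat) + list(color_feat)
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("each weight row must match the fused feature length")
    logits = [sum(w * x for w, x in zip(row, fused)) + b for row, b in zip(weights, bias)]
    return softmax(logits)
```

The predicted gesture is the index of the largest probability; the softmax only rescales the logits, so it does not change which class wins.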

A method for detecting pests with a drone

Active | CN111079546B | Improve fidelity | Improve accuracy | Character and pattern recognition | Neural architectures | Drone | Algorithm

The invention discloses a method for detecting pests with an unmanned aerial vehicle, belonging to the field of agricultural unmanned aerial vehicles. The current frame image is pooled at least three times to obtain the fifth output data; the fifth output data is convolved at least twice to obtain the sixth output data; the sixth output data undergoes one pooling and one convolution to obtain the seventh output data; the sixth and seventh output data are fused to obtain the eighth output data; the eighth output data undergoes at least two poolings and at least two convolutions to obtain the ninth output data; and the ninth output data is classified to obtain the composite target area. If the distance between the target area of the current frame and the composite target area is less than a set threshold, the area where the pest is located is obtained.

Owner:CHONGQING NORMAL UNIVERSITY
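
The abstract does not define the area format or the distance measure used in the final check. A minimal sketch, assuming axis-aligned boxes `(x_min, y_min, x_max, y_max)` and the Euclidean distance between box centres, and returning the current-frame box as the pest area when the check passes, might look like this. The names `box_center` and `confirm_pest_area` are hypothetical.

```python
import math
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max), assumed format


def box_center(box: Box) -> Tuple[float, float]:
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2


def confirm_pest_area(frame_box: Box, composite_box: Box, threshold: float) -> Optional[Box]:
    """Accept the current-frame target area if its centre lies within `threshold`
    of the composite target area's centre; otherwise report no pest area."""
    (ax, ay), (bx, by) = box_center(frame_box), box_center(composite_box)
    if math.hypot(ax - bx, ay - by) < threshold:
        return frame_box
    return None
```

Comparing centres keeps the check cheap; an overlap measure such as intersection-over-union would be a reasonable alternative if box sizes vary a lot between frames.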

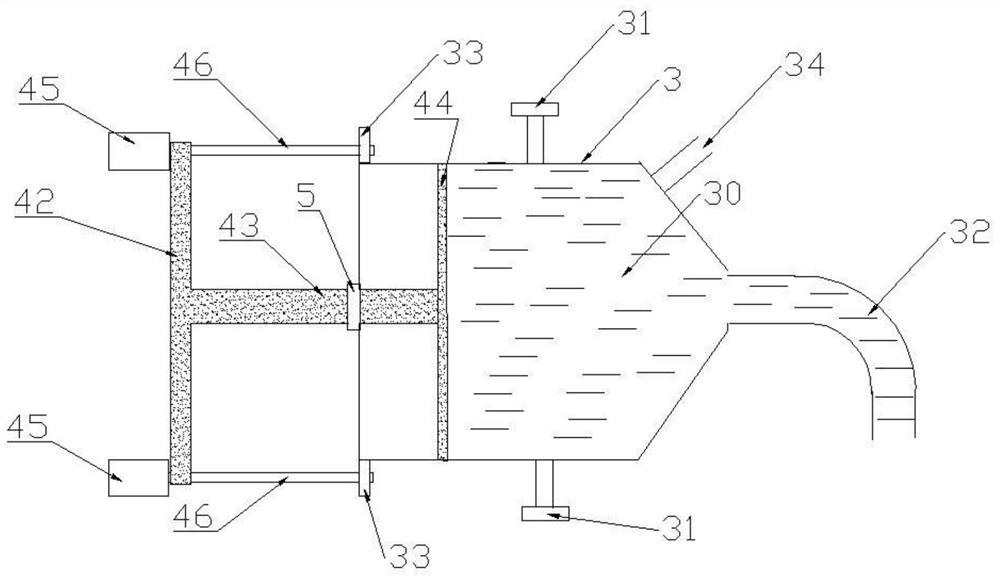

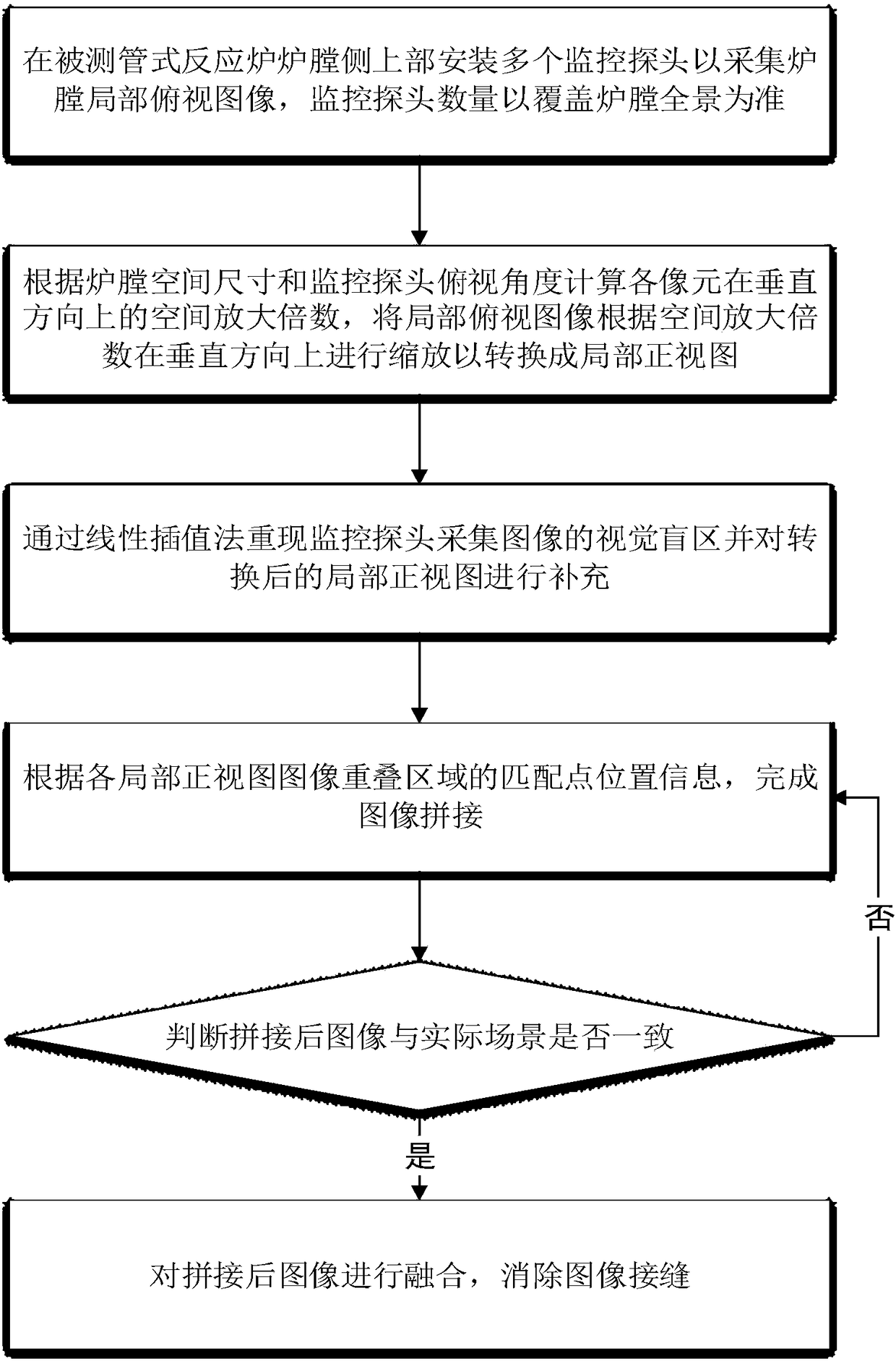

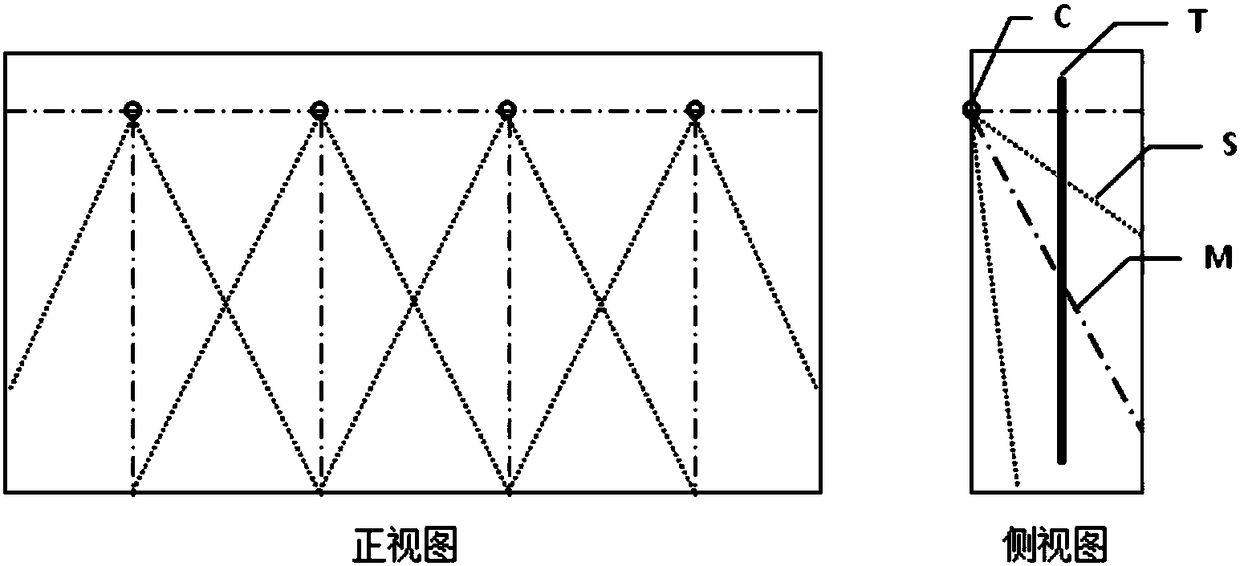

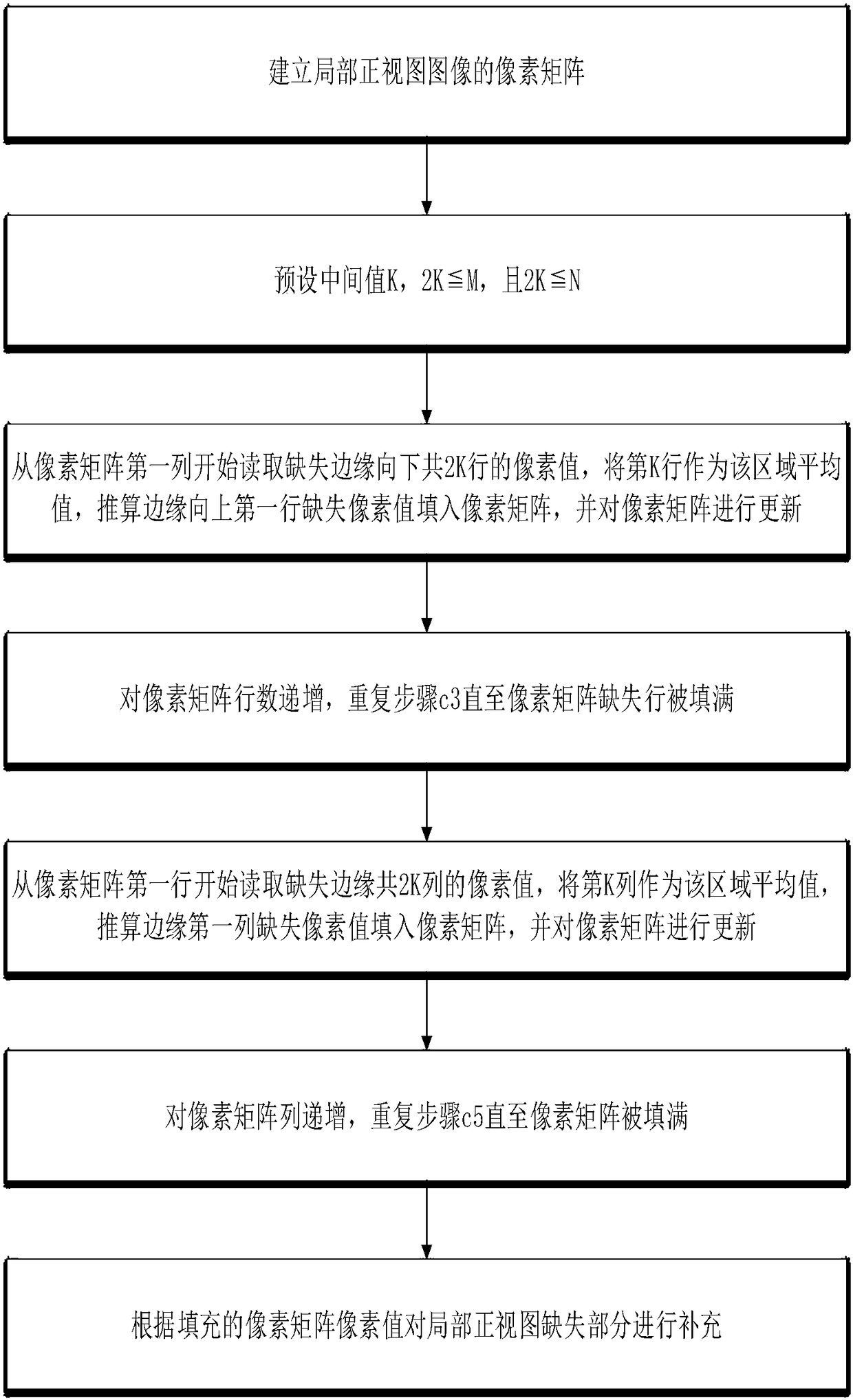

A method for panoramic imaging of the hearth of a tubular reaction furnace

Active | CN105828034B | Improve visual effects | Enhanced feature information | Image enhancement | Geometric image transformation | Time information | Image fusion

The present invention discloses a panoramic imaging method for the hearth of a tubular reaction furnace, comprising steps such as image preprocessing, view angle transformation, reproduction of image blind areas, image stitching, image fusion, and temperature color coding. A top-view panoramic image of the hearth is obtained from a plurality of installed monitoring probes, and each local top-view image of the hearth is rapidly transformed into a front-view image using the spatial dimensions of the hearth and the spatial solid angles of the probe pixels; the result visually reflects the real conditions inside the hearth and gives a good visual effect. The method extracts a panoramic image of the tubular reaction furnace hearth effectively and rapidly while retaining as much real-time information about the furnace tubes as possible. Infrared radiation information can be extracted from the panoramic image, so that the surface temperature of the furnace tube walls can be calculated accurately and the hearth temperature and its trend can be displayed panoramically, laying the foundation for panoramic temperature field detection in tubular reaction furnaces.

Owner:安徽淮光智能科技有限公司 (Anhui Huaiguang Intelligent Technology Co., Ltd.)
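
The temperature color coding step is not specified in the abstract. As a minimal sketch, assuming a linear blue-to-green-to-red ramp between a chosen minimum and maximum temperature, with out-of-range values clamped, a temperature map could be color coded as follows. The color scale and the names `temperature_to_rgb` and `colorize` are hypothetical.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]


def temperature_to_rgb(t: float, t_min: float, t_max: float) -> RGB:
    """Map a temperature to a blue -> green -> red color; values outside the range are clamped."""
    if t_max <= t_min:
        raise ValueError("t_max must exceed t_min")
    x = min(max((t - t_min) / (t_max - t_min), 0.0), 1.0)
    if x < 0.5:
        k = x / 0.5  # 0 at t_min (blue), 1 at the midpoint (green)
        return (0, round(255 * k), round(255 * (1 - k)))
    k = (x - 0.5) / 0.5  # 0 at the midpoint (green), 1 at t_max (red)
    return (round(255 * k), round(255 * (1 - k)), 0)


def colorize(temps: List[List[float]], t_min: float, t_max: float) -> List[List[RGB]]:
    """Apply the color ramp to every pixel of a 2-D temperature map."""
    return [[temperature_to_rgb(t, t_min, t_max) for t in row] for row in temps]
```

Fixing `t_min` and `t_max` across frames, rather than rescaling each frame to its own range, keeps colors comparable over time, which matters when the display is meant to show a temperature trend.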

Random sampling consensus algorithm for image recognition based on voting decision and least squares

Active | CN107220580B | High speed | Optimal Alignment Method | Matching and classification | Pattern recognition | Algorithm

A random sampling consensus algorithm for image recognition based on voting decision and the least squares method. A voting decision is introduced to find the point pairs most likely to be correct, and the rotation-translation transformation matrix is calculated by the principle of least squares. The matrix calculated by the invention is accurate, fast, and very stable: the result does not fluctuate randomly from one calculation to the next. The invention not only optimizes the image comparison method but also lets the data processing chip keep adding to the feature information of the reference image during subsequent learning, which effectively lowers the false rejection rate and makes the chip more intelligent. Through the continuously updated rotation-translation matrix, the invention also performs image stitching more efficiently and accurately.

Owner:FOCALTECH ELECTRONICS LTD
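
The abstract does not describe how votes are cast. The sketch below assumes that every pair of candidate correspondences votes for the rotation angle it implies, with votes grouped into 5-degree bins. Correspondences that take part in enough winning-bin votes are kept, and a closed-form 2-D least-squares fit then gives the rotation and translation. Because every pair is examined, the result is deterministic rather than randomly sampled. The bin width, the inlier rule, and the names `vote_inliers`, `fit_rigid`, and `estimate_transform` are hypothetical.

```python
import math
from collections import Counter, defaultdict
from typing import List, Tuple

Point = Tuple[float, float]


def fit_rigid(src: List[Point], dst: List[Point]) -> Tuple[float, Point]:
    """Least-squares rotation angle (radians) and translation mapping src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(q[0] for q in dst) / n, sum(q[1] for q in dst) / n
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        ax, ay, bx, by = px - sx, py - sy, qx - dx, qy - dy
        num += ax * by - ay * bx  # cross terms, proportional to sin(theta)
        den += ax * bx + ay * by  # dot terms, proportional to cos(theta)
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # The translation moves the rotated source centroid onto the target centroid.
    return theta, (dx - (c * sx - s * sy), dy - (s * sx + c * sy))


def vote_inliers(src: List[Point], dst: List[Point], bin_deg: float = 5.0) -> List[int]:
    """Each pair of correspondences votes for the rotation angle it implies.

    Correspondences with at least half the winning-bin support of the
    best-supported correspondence are kept as inliers.
    """
    votes = defaultdict(list)
    n = len(src)
    for i in range(n):
        for j in range(i + 1, n):
            a = math.atan2(src[j][1] - src[i][1], src[j][0] - src[i][0])
            b = math.atan2(dst[j][1] - dst[i][1], dst[j][0] - dst[i][0])
            angle = math.degrees(b - a) % 360.0
            votes[int(angle // bin_deg)].append((i, j))
    winner = max(votes.values(), key=len)
    support = Counter(k for pair in winner for k in pair)
    top = max(support.values())
    return [k for k in range(n) if 2 * support[k] >= top]


def estimate_transform(src: List[Point], dst: List[Point]) -> Tuple[float, Point]:
    """Vote out inconsistent point pairs, then fit rotation + translation by least squares."""
    keep = vote_inliers(src, dst)
    return fit_rigid([src[k] for k in keep], [dst[k] for k in keep])
```

The least-squares angle comes from the summed cross and dot products of the centred point sets. For an exact rotation these are proportional to sin θ and cos θ, so `atan2` recovers θ directly without an iterative solver.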

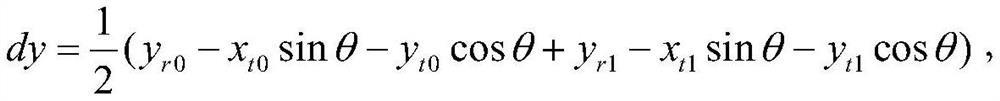

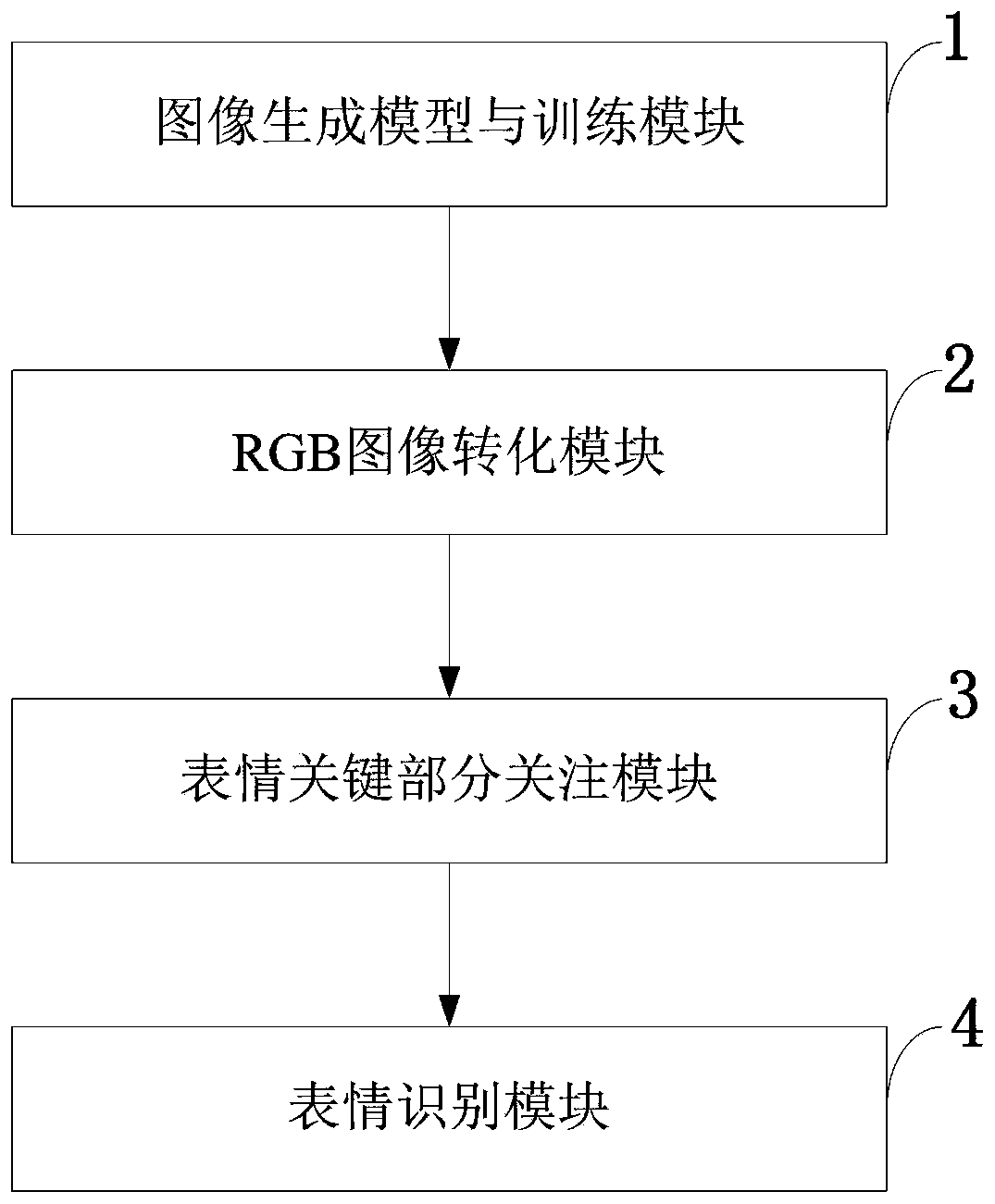

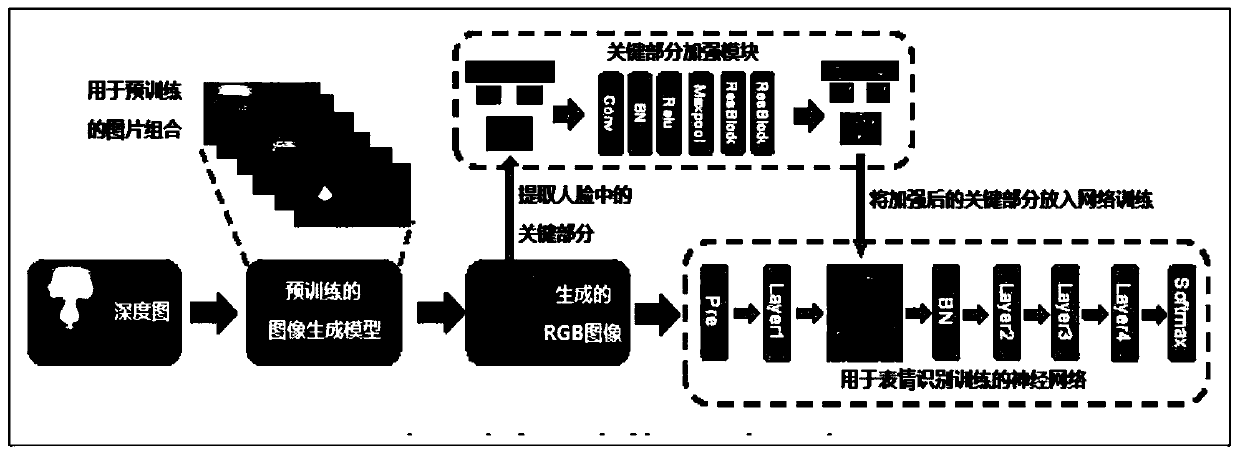

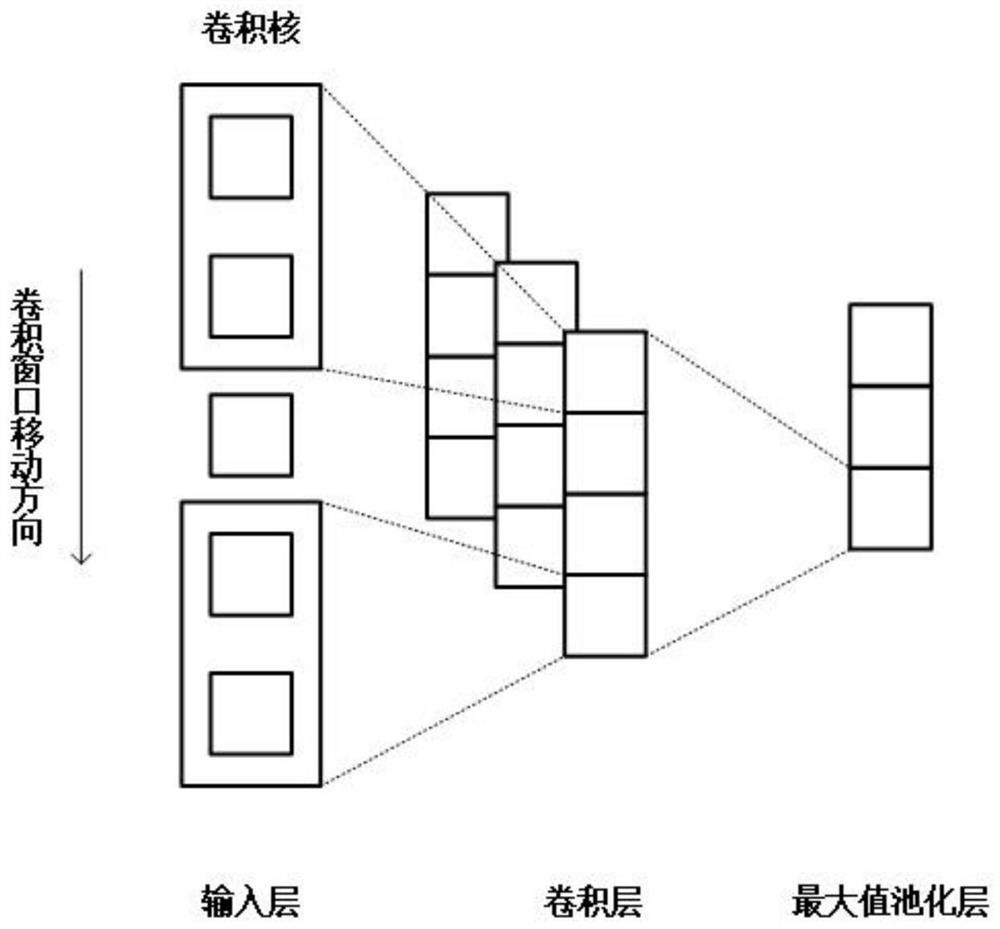

Facial expression recognition method and system, storage medium, computer program and terminal

Active | CN111582067A | Enhanced feature information | Improve recognition accuracy | Neural architectures | Acquiring/recognising facial features | Visual technology | RGB image

The invention belongs to the technical field of computer vision and discloses a facial expression recognition method and system, a storage medium, a computer program, and a terminal. The method comprises the following steps: pre-training an image generation model on pairs of depth images and RGB images, so that the trained model converts an input depth image into an RGB image in the style of the RGB images used for training; generating the eyebrow, eye, and mouth regions of the expressions in the RGB images; and training a convolutional neural network that takes the eyebrows, eyes, and mouth into account and using it to recognize expressions. The invention enhances the feature information of the eyes, eyebrows, and mouth, giving higher recognition accuracy. The image generation model performs well: it retains important information about the expressions and unifies the form of the RGB images used for expression recognition, which further improves recognition accuracy. The method also performs better when only the single-channel depth map is used for recognition.

Owner:SOUTHWEST UNIVERSITY

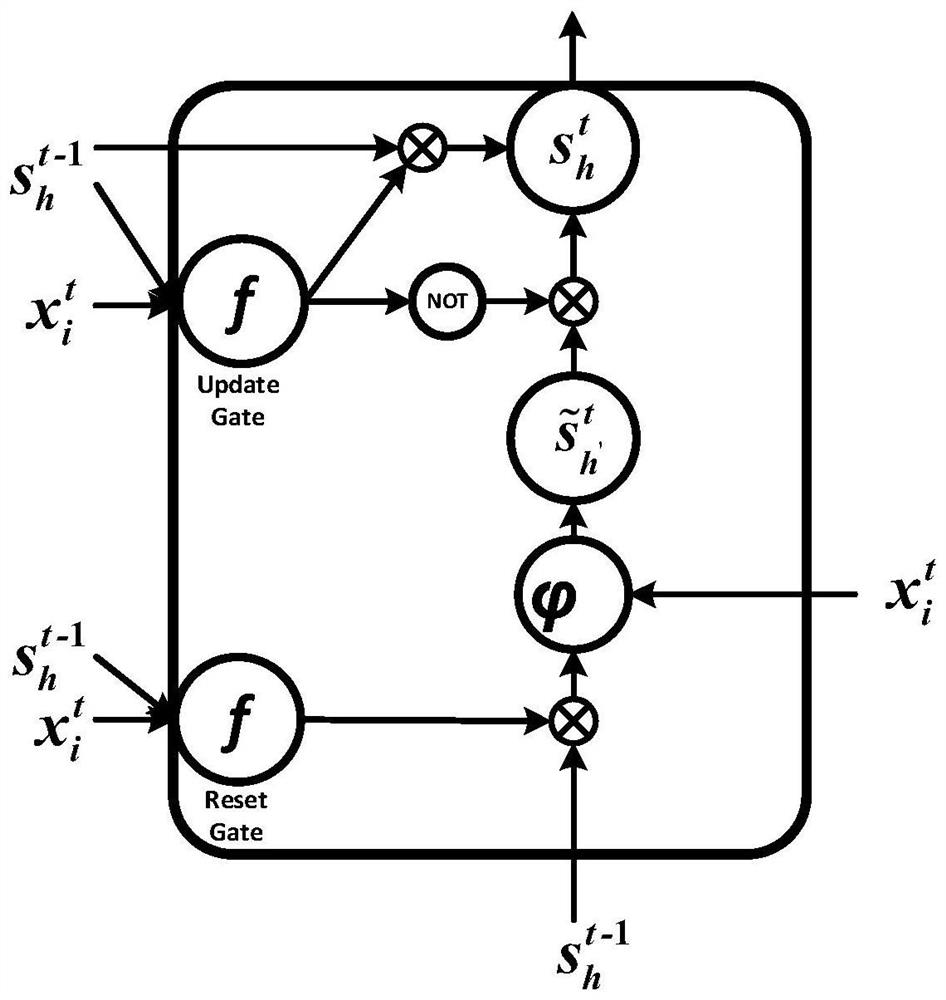

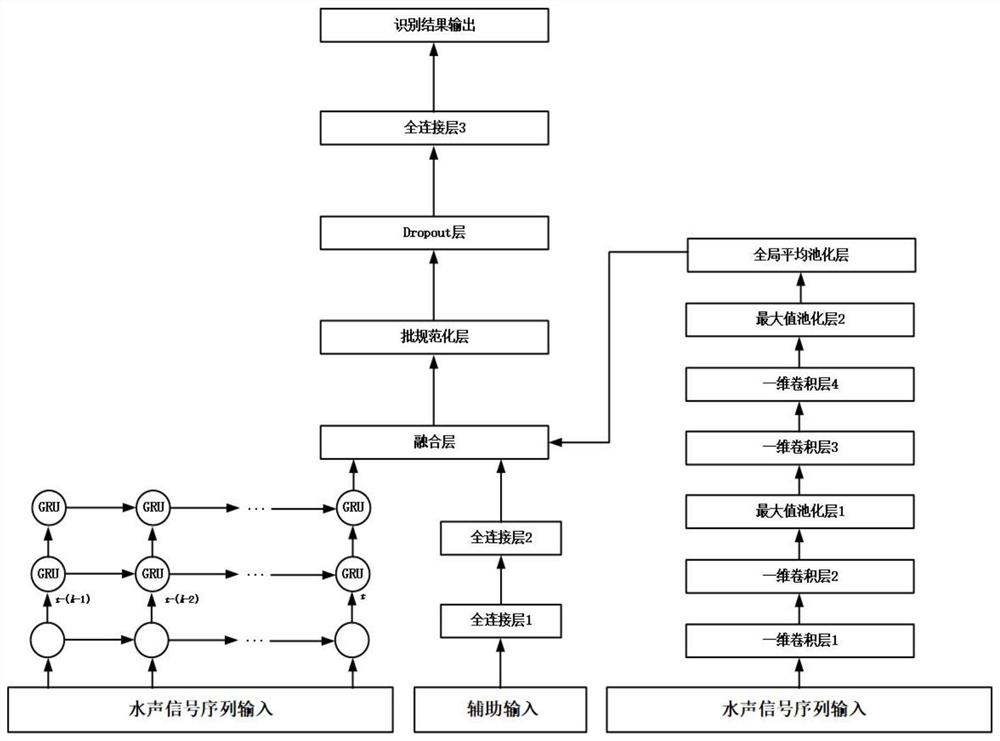

An underwater target recognition method based on the fusion of GRU and one-dimensional CNN neural network

Active | CN110807365B | Solve the problem that the time feature cannot be extracted | Enhanced feature information | Character and pattern recognition | Neural architectures | Hydrophone | Feature vector

The invention discloses an underwater target recognition method based on the fusion of a GRU and a one-dimensional CNN, belonging to the field of underwater acoustic target recognition. Traditional neural networks cannot extract the temporal characteristics of underwater acoustic signals; the method solves this with a GRU-based recurrent neural network structure, while a one-dimensional convolutional neural network extracts the time-domain waveform features of the signal. The feature vectors extracted by the fused GRU and one-dimensional CNN structures enrich the feature information fed to the classifier. Auxiliary inputs that affect recognition accuracy, including distance, hydrophone depth, and channel depth, are also added, and dropout and batch normalization layers are used to avoid overfitting and improve the recognition accuracy of underwater acoustic targets.

Owner:ZHEJIANG UNIV
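
The feature-fusion idea can be illustrated with a deliberately tiny sketch. It assumes a single-unit GRU whose final hidden state summarizes the temporal behaviour of the signal, one 1-D convolution kernel with ReLU and non-overlapping max pooling for waveform features, and plain concatenation of both with the auxiliary inputs (for example distance, hydrophone depth, and channel depth) before the classifier. Dropout, batch normalization, and the classifier are omitted. All parameter names and the names `gru_last_state`, `conv1d_features`, and `fused_features` are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gru_last_state(signal: Sequence[float], p: Dict[str, float]) -> float:
    """Run a single-unit GRU over the signal and return the final hidden state."""
    h = 0.0
    for x in signal:
        z = _sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])         # update gate
        r = _sigmoid(p["wr"] * x + p["ur"] * h + p["br"])         # reset gate
        n = math.tanh(p["wn"] * x + p["un"] * (r * h) + p["bn"])  # candidate state
        h = (1.0 - z) * n + z * h
    return h


def conv1d_features(signal: Sequence[float], kernel: Sequence[float], pool: int) -> List[float]:
    """'Valid' 1-D convolution (cross-correlation, as in DL frameworks) + ReLU,
    followed by non-overlapping max pooling."""
    k = len(kernel)
    conv = [max(0.0, sum(w * signal[i + j] for j, w in enumerate(kernel)))
            for i in range(len(signal) - k + 1)]
    return [max(conv[i:i + pool]) for i in range(0, len(conv) - pool + 1, pool)]


def fused_features(signal: Sequence[float], gru_params: Dict[str, float],
                   kernel: Sequence[float], pool: int, aux: Sequence[float]) -> List[float]:
    """Concatenate the GRU temporal state, the CNN waveform features and the auxiliary inputs."""
    return [gru_last_state(signal, gru_params)] + conv1d_features(signal, kernel, pool) + list(aux)
```

A real model would use many GRU units and convolution channels, but the concatenated vector already shows how the temporal and waveform views, plus the auxiliary inputs, reach the classifier side by side.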

© 2025 PatSnap. All rights reserved.