Dynamic gesture recognition method based on dual-channel deep convolutional neural network

A deep convolutional neural network technology applied in the fields of computer vision and machine learning. It can solve problems such as influence and difference, and achieves increased feature information, elimination of differences, and a high recognition rate.


Embodiment

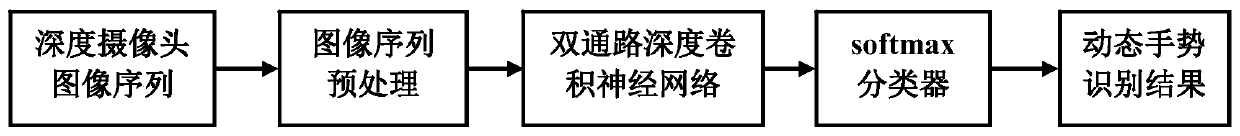

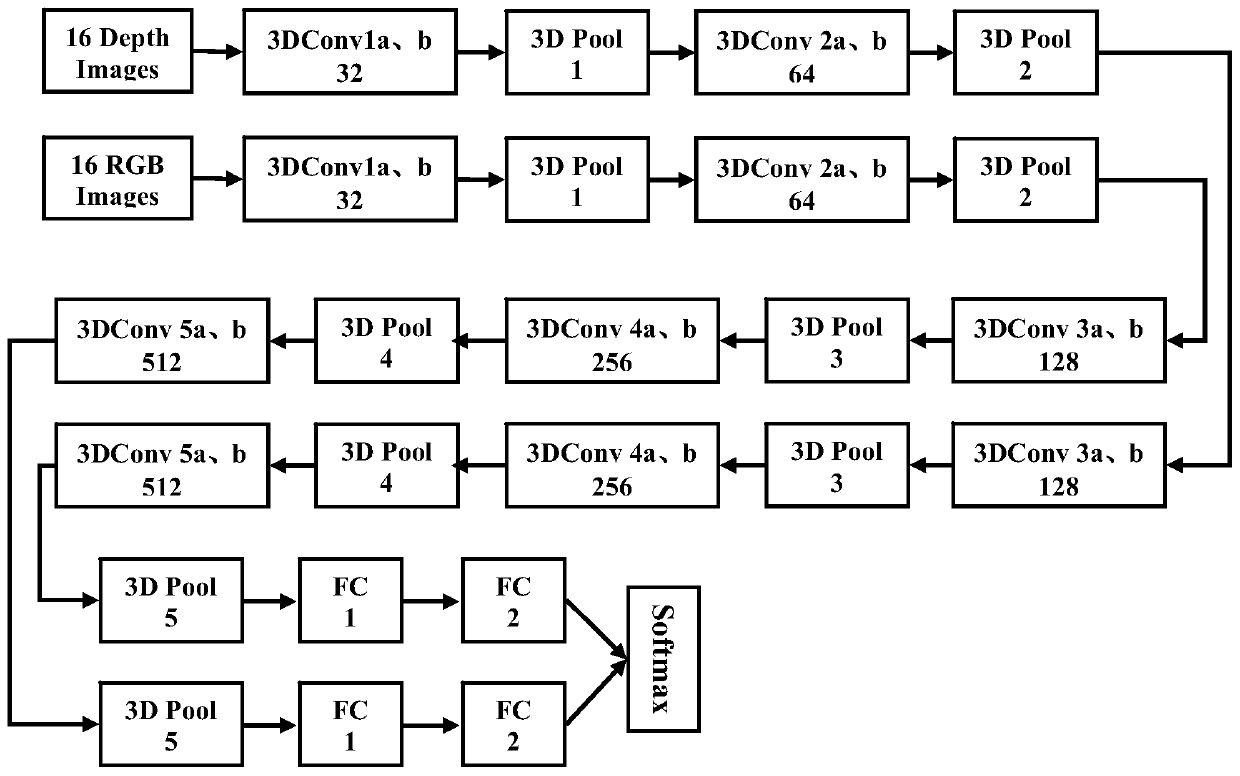

[0022] As shown in Figure 1, the dynamic gesture recognition method of this embodiment, based on a dual-channel deep convolutional neural network, comprises the following steps:

[0023] S1. Collect image sequences of the dynamic gesture from a depth camera, comprising a depth image sequence and a color (RGB) image sequence;

[0024] The data output by the depth camera include depth and color (RGB) image sequences, at a resolution of either 640×480 or 320×240 pixels.
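To make the structure of the S1 data concrete, here is a minimal Python sketch, assuming each depth frame is stored as rows of integer depth values and each color frame as rows of (R, G, B) tuples. The names `GestureCapture`, `frame_size`, and `validate_capture` are hypothetical and not part of the patent. The check encodes the two stated constraints (paired depth and RGB sequences, 640×480 or 320×240 resolution) plus one assumption of ours: that both streams have the same number of frames and the same resolution.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Resolutions (width, height) stated in the text for the depth camera output.
SUPPORTED_RESOLUTIONS = {(640, 480), (320, 240)}

DepthFrame = List[List[int]]                 # H rows x W columns of depth values
RGBFrame = List[List[Tuple[int, int, int]]]  # H rows x W columns of (R, G, B) pixels


@dataclass
class GestureCapture:
    """Hypothetical container for one dynamic-gesture recording (step S1)."""
    depth: List[DepthFrame]
    rgb: List[RGBFrame]


def frame_size(frame) -> Tuple[int, int]:
    """Return (width, height) of a frame stored as a list of rows."""
    height = len(frame)
    width = len(frame[0]) if height else 0
    return width, height


def validate_capture(capture: GestureCapture) -> Tuple[int, int]:
    """Check that both streams are non-empty, equally long (assumption),
    and share one supported resolution; return that resolution."""
    if not capture.depth or len(capture.depth) != len(capture.rgb):
        raise ValueError("depth and RGB sequences must be non-empty and the same length")
    size = frame_size(capture.depth[0])
    if size not in SUPPORTED_RESOLUTIONS:
        raise ValueError(f"unsupported resolution {size}")
    for d, c in zip(capture.depth, capture.rgb):
        if frame_size(d) != size or frame_size(c) != size:
            raise ValueError("all frames must share the same resolution")
    return size
```

Keeping both streams in one record makes it harder to accidentally pair a depth frame with the wrong color frame in later steps.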

[0025] S2. Preprocess the depth image sequence and the color image sequence to obtain a 16-frame depth foreground image sequence and a 16-frame color foreground image sequence of the dynamic gesture;
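The text fixes the output length at 16 frames but does not say how those frames are chosen from a recording of arbitrary length. One common option, assumed here and not confirmed by the source, is uniform temporal sampling, applying the same indices to both streams so that depth and color frames stay aligned. `sample_indices` and `sample_pair` are hypothetical names.

```python
from typing import Any, List, Sequence, Tuple

TARGET_LEN = 16  # frames per sequence, as stated in step S2


def sample_indices(num_frames: int, target: int = TARGET_LEN) -> List[int]:
    """Pick `target` evenly spaced frame indices from `num_frames` frames.

    Longer sequences are subsampled; shorter ones repeat frames, so the
    returned indices are non-decreasing and always within range.
    """
    if num_frames <= 0:
        raise ValueError("sequence must contain at least one frame")
    if target == 1:
        return [0]
    step = (num_frames - 1) / (target - 1)
    return [round(i * step) for i in range(target)]


def sample_pair(depth: Sequence[Any], rgb: Sequence[Any],
                target: int = TARGET_LEN) -> Tuple[List[Any], List[Any]]:
    """Apply the same sampled indices to the depth and RGB streams."""
    if len(depth) != len(rgb):
        raise ValueError("depth and RGB sequences must have the same length")
    idx = sample_indices(len(depth), target)
    return [depth[i] for i in idx], [rgb[i] for i in idx]
```

For example, a 31-frame recording yields every second frame (indices 0, 2, …, 30), while an 8-frame recording repeats most frames twice.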

[0026] The preprocessing of the image sequences includes obtaining the foreground image sequence by subtracting the pixels of the preceding frame from those of the following frame (calculated by formula (1)), which repre...
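Formula (1) itself is not reproduced in this excerpt, so the following is only a sketch of generic frame differencing, assuming the foreground is the pixel-wise absolute difference between consecutive frames with no thresholding. It is not the patent's formula. Color pixels are differenced channel by channel. Note that N input frames produce N − 1 difference frames, so the order of sampling and differencing affects how many raw frames are needed to end up with 16 foreground frames; the source does not specify that order. The function names are hypothetical.

```python
from typing import List, Sequence, Union

Pixel = Union[int, tuple]          # depth value or (R, G, B) tuple
Frame = Sequence[Sequence[Pixel]]  # list of rows


def abs_diff_pixel(a: Pixel, b: Pixel) -> Pixel:
    """Absolute difference of two pixels; tuples are handled per channel."""
    if isinstance(a, tuple):
        return tuple(abs(x - y) for x, y in zip(a, b))
    return abs(a - b)


def frame_difference(prev: Frame, curr: Frame) -> List[List[Pixel]]:
    """Pixel-wise |curr - prev| for two frames of identical size."""
    if len(prev) != len(curr) or any(len(p) != len(c) for p, c in zip(prev, curr)):
        raise ValueError("frames must have the same size")
    return [[abs_diff_pixel(p, c) for p, c in zip(row_p, row_c)]
            for row_p, row_c in zip(prev, curr)]


def foreground_sequence(frames: Sequence[Frame]) -> List[List[List[Pixel]]]:
    """Difference each frame with the one before it: N frames -> N - 1 outputs."""
    return [frame_difference(frames[t - 1], frames[t]) for t in range(1, len(frames))]
```

Static background pixels cancel to zero, while pixels that change between frames (typically the moving hand) keep non-zero values. This is why differencing is a cheap way to isolate motion.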
