411 results about "Voice analysis" patented technology

Voice analysis is the study of speech sounds for purposes other than recovering linguistic content, which is the concern of speech recognition. Such studies are mostly medical analyses of the voice (phoniatrics), but they also include speaker identification. More controversially, some believe that the truthfulness or emotional state of speakers can be determined using voice stress analysis or layered voice analysis.

Multi-party conversation analyzer & logger

Active | US20070071206A1 | High precision | Facilitate interruption | Interconnection arrangements | Substation speech amplifiers | Graphics | Survey instrument

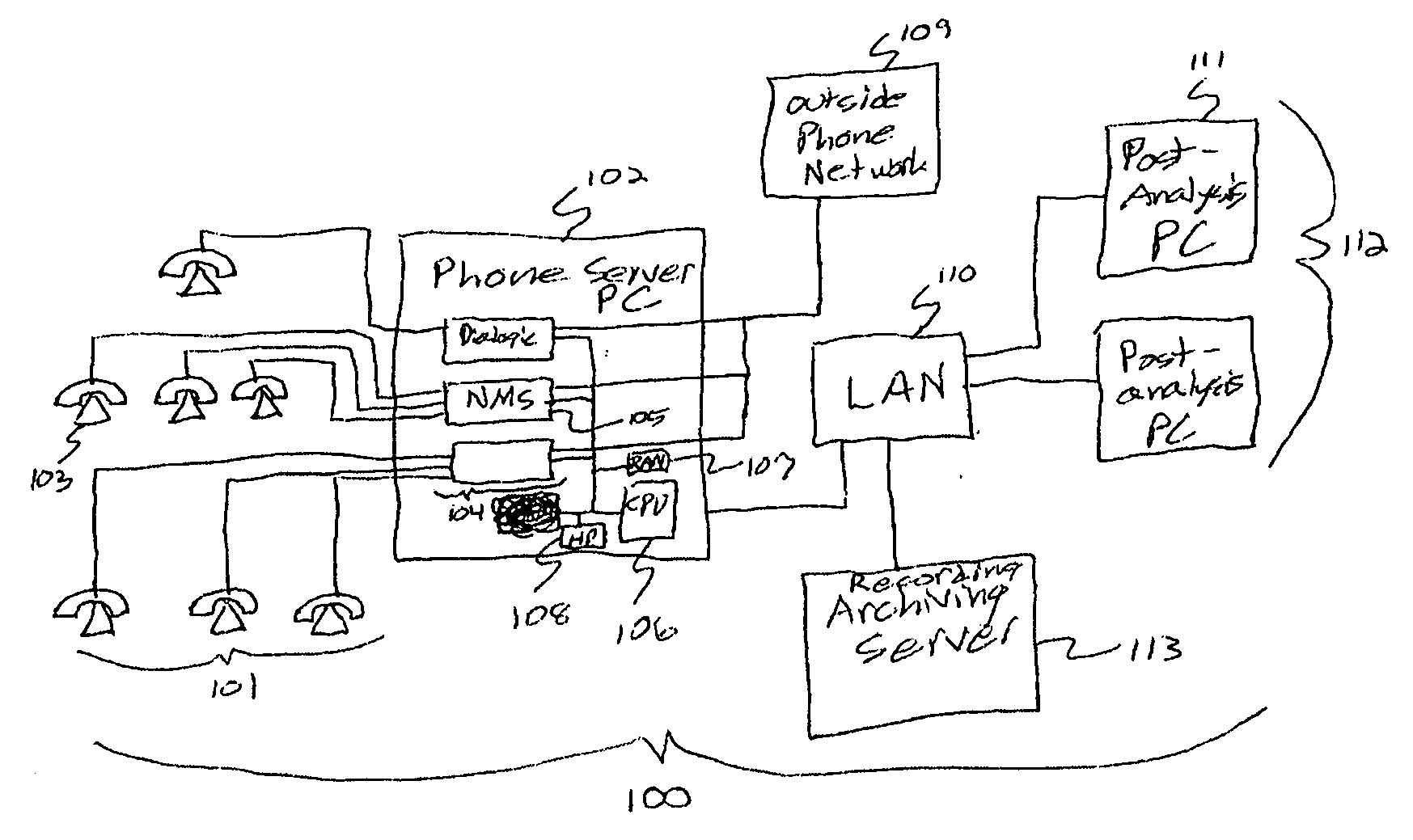

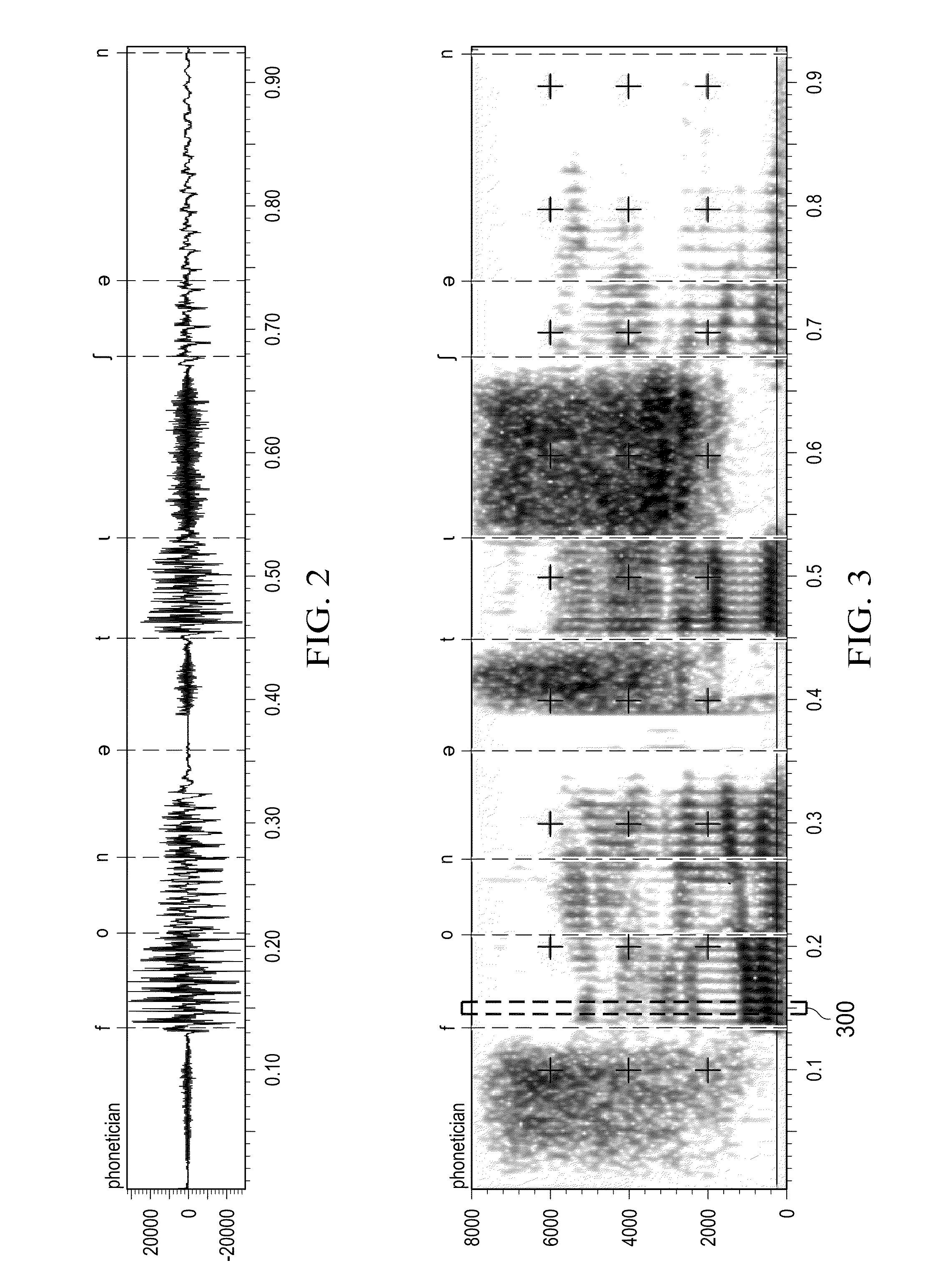

A multi-party conversation analyzer and logger uses a variety of techniques including spectrographic voice analysis, absolute loudness measurements, directional microphones, and telephonic directional separation to determine the number of parties who take part in a conversation, and segment the conversation by speaking party. In one aspect, the invention monitors telephone conversations in real time to detect conditions of interest (for instance, calls to non-allowed parties or calls of a prohibited nature from prison inmates). In another aspect, automated prosody measurement algorithms are used in conjunction with speaker segmentation to extract emotional content of the speech of participants within a particular conversation, and speaker interactions and emotions are displayed in graphical form. A conversation database is generated which contains conversation recordings, and derived data such as transcription text, derived emotions, alert conditions, and correctness probabilities associated with derived data. Investigative tools allow flexible queries of the conversation database.

Owner:SECURUS TECH LLC

Detecting emotions using voice signal analysis

Inactive | US7222075B2 | Speech analysis | Automatic call-answering/message-recording/conversation-recording | Voice analysis | Fundamental frequency

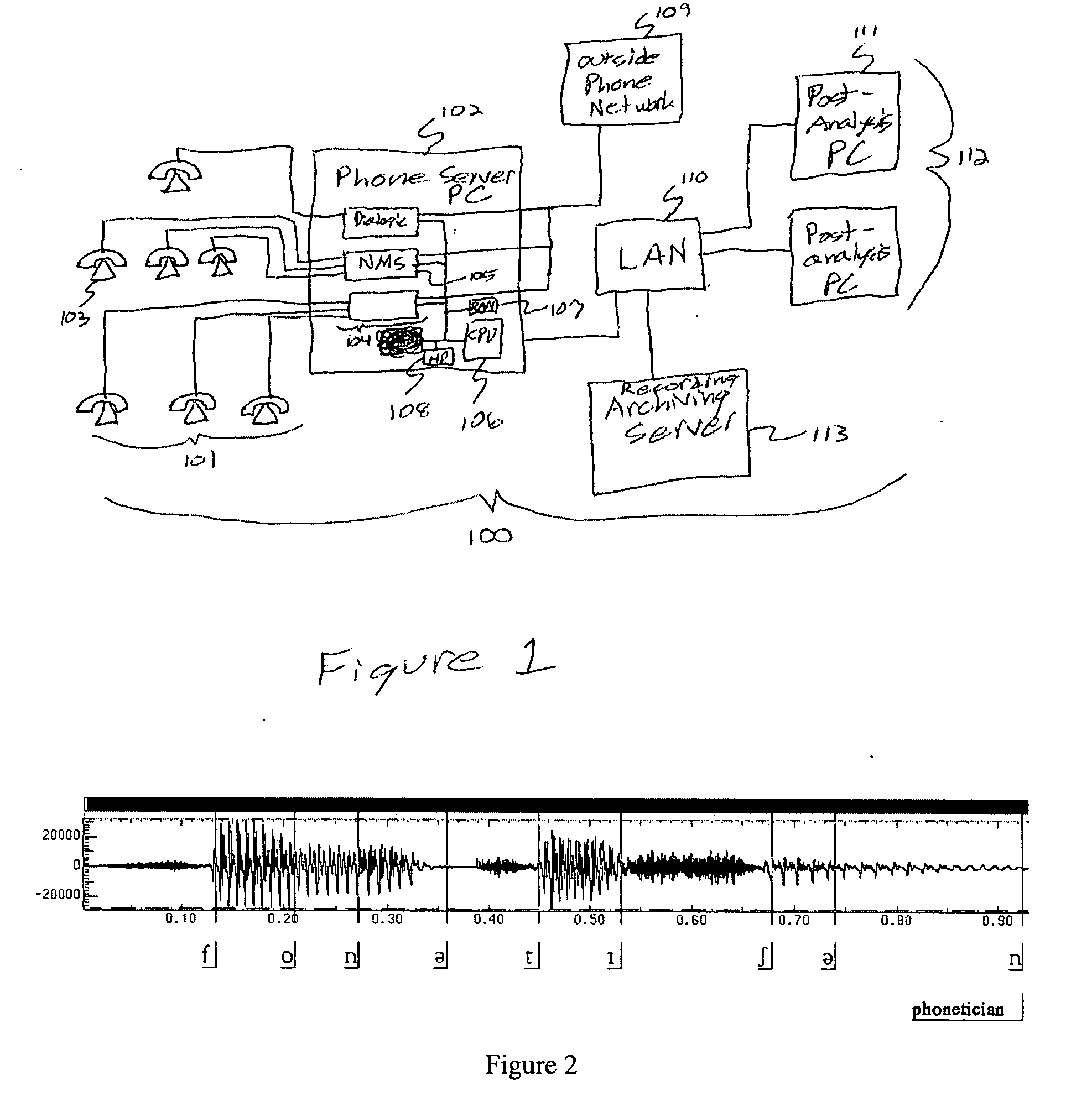

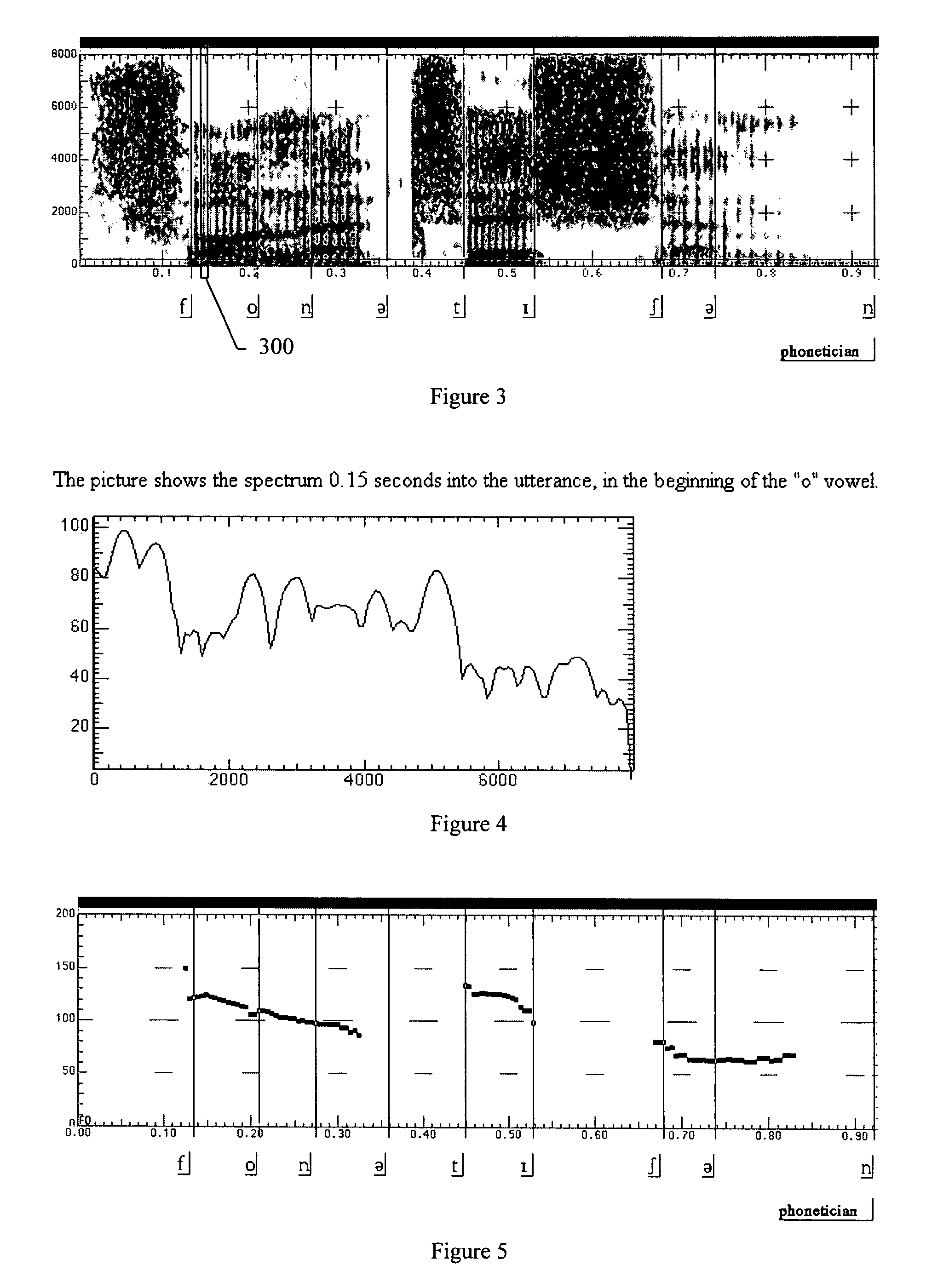

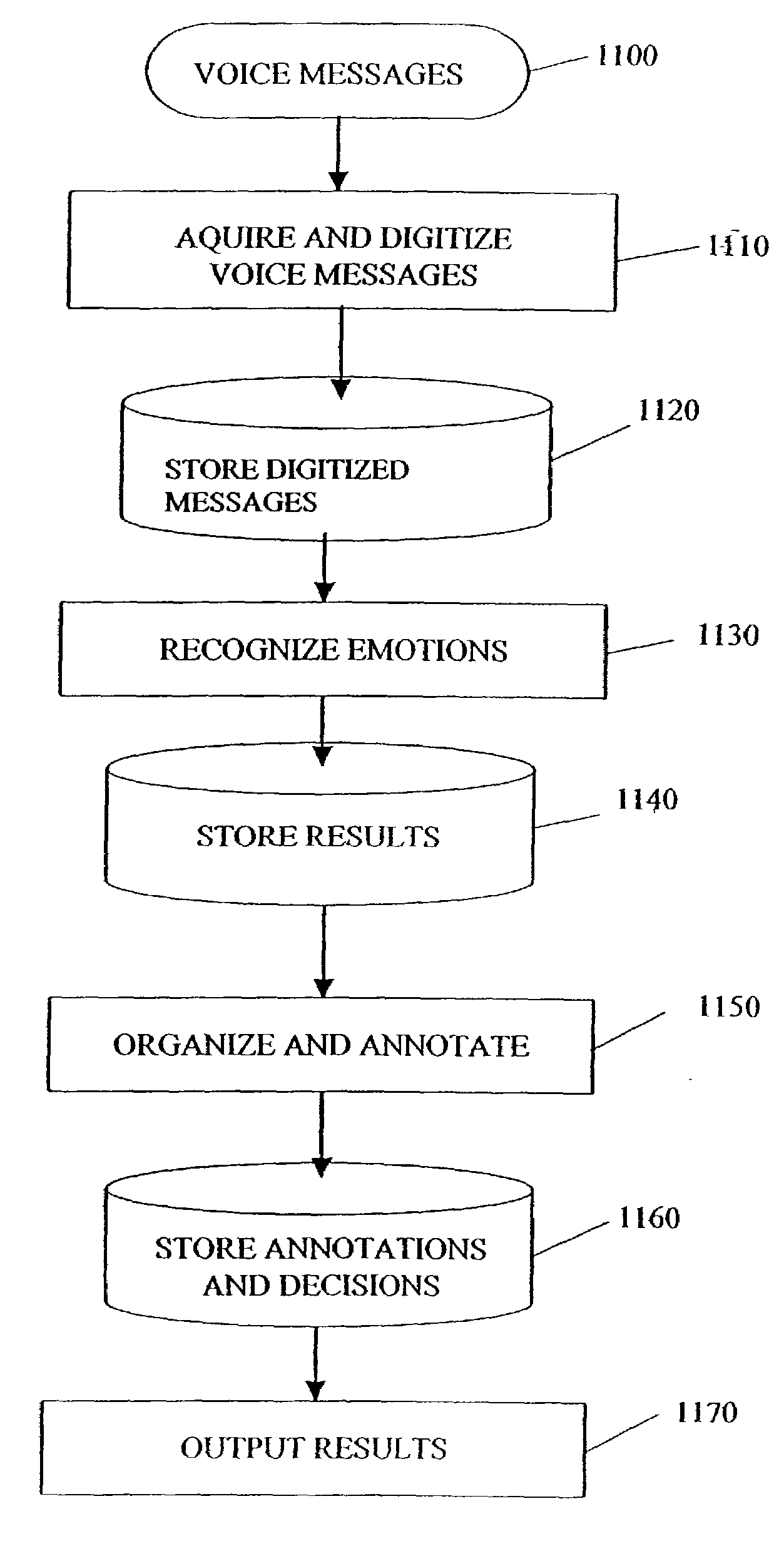

A system and method are provided for detecting emotional states using statistics. First, a speech signal is received. At least one acoustic parameter is extracted from the speech signal. Then statistics or features from samples of the voice are calculated from extracted speech parameters. The features serve as inputs to a classifier, which can be a computer program, a device or both. The classifier assigns at least one emotional state from a finite number of possible emotional states to the speech signal. The classifier also estimates the confidence of its decision. Features that are calculated may include a maximum value of a fundamental frequency, a standard deviation of the fundamental frequency, a range of the fundamental frequency, a mean of the fundamental frequency, and a variety of other statistics.

Owner:ACCENTURE GLOBAL SERVICES LTD
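The pipeline in the abstract above (fundamental-frequency statistics fed to a classifier that also reports a confidence) can be illustrated with a minimal sketch. This is not the patented method: the nearest-centroid classifier, the inverse-distance confidence, the convention that `0` marks an unvoiced frame, and the names `f0_features` and `classify` are all assumptions made for illustration.

```python
import math
import statistics

FEATURES = ("max", "mean", "std", "range")

def f0_features(f0_hz):
    """Summarise a fundamental-frequency contour (Hz) with the statistics
    named in the abstract. Assumption: a value of 0 marks an unvoiced frame."""
    voiced = [f for f in f0_hz if f > 0]
    if not voiced:
        raise ValueError("contour contains no voiced frames")
    return {
        "max": max(voiced),
        "mean": statistics.fmean(voiced),
        "std": statistics.pstdev(voiced),
        "range": max(voiced) - min(voiced),
    }

def classify(features, centroids):
    """Hypothetical nearest-centroid classifier over a finite set of labels.
    Returns (label, confidence); confidence is the winning label's share of
    the inverse-distance weights, an illustrative choice only."""
    weights = {}
    for label, centroid in centroids.items():
        distance = math.dist(
            [features[k] for k in FEATURES],
            [centroid[k] for k in FEATURES],
        )
        weights[label] = 1.0 / (distance + 1e-9)
    best = max(weights, key=weights.get)
    return best, weights[best] / sum(weights.values())

# Made-up centroids; a real system would learn these from labelled speech.
centroids = {
    "calm": {"max": 180.0, "mean": 160.0, "std": 8.0, "range": 30.0},
    "agitated": {"max": 270.0, "mean": 230.0, "std": 25.0, "range": 70.0},
}
label, confidence = classify(f0_features([0, 200, 220, 0, 260, 240]), centroids)
```

The features are left unscaled here for brevity; in practice they would normally be normalised before any distance is computed.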

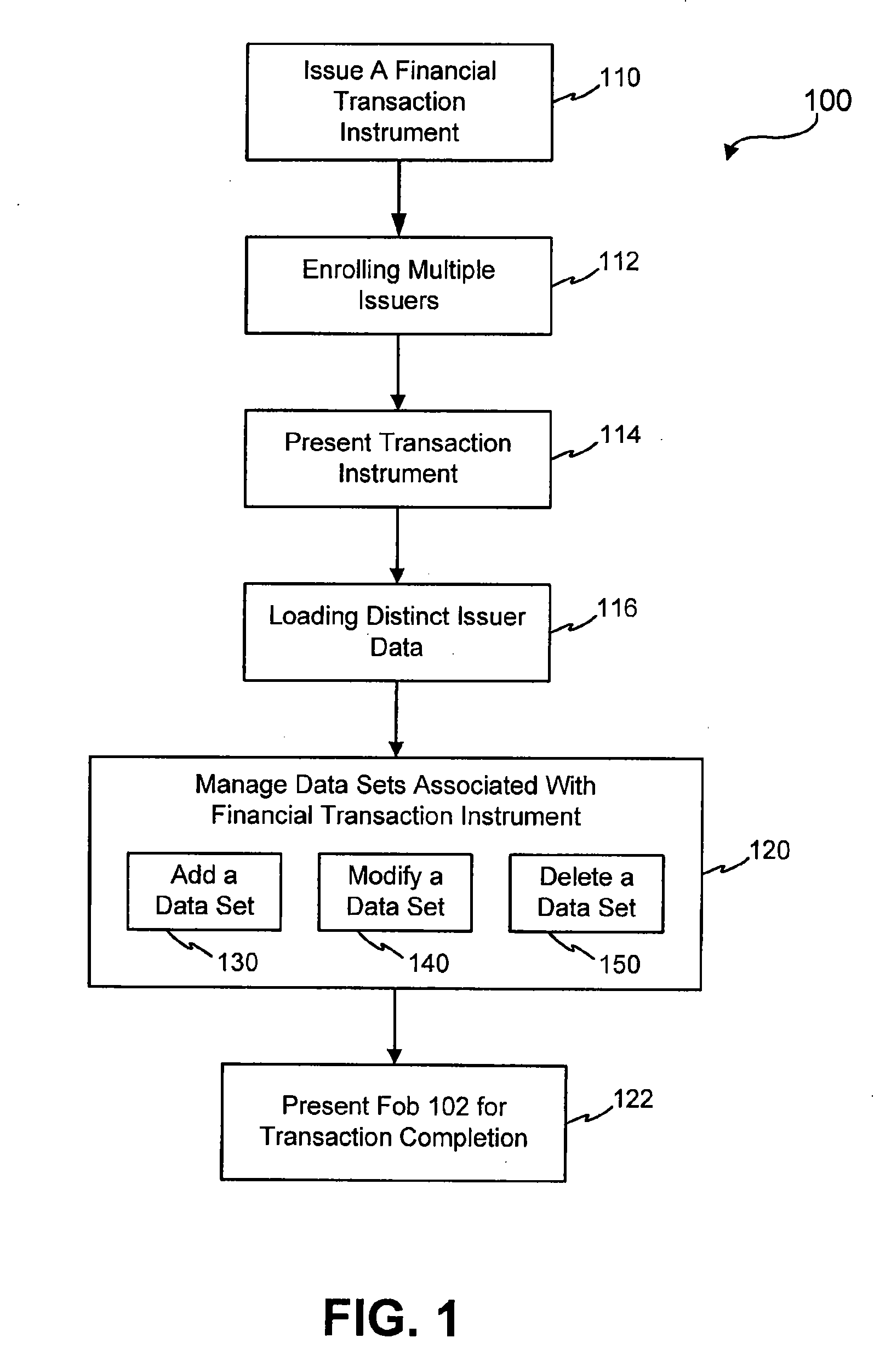

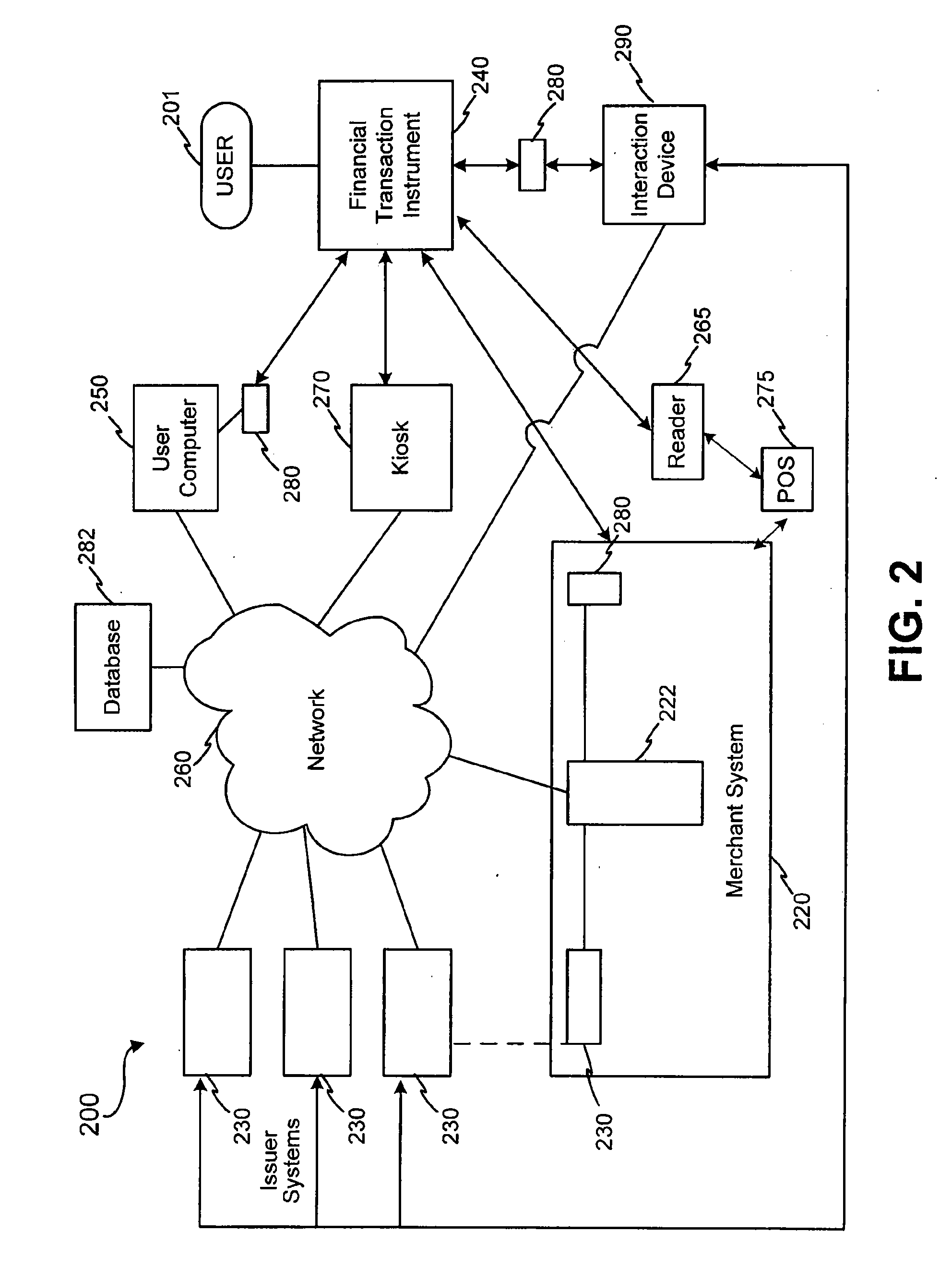

Systems and methods for non-traditional payment using biometric data

Inactive | US20070052517A1 | Convenient transaction | Facilitate payment transaction | Electric signal transmission systems | Multiple keys/algorithms usage | Computer hardware | Biometric data

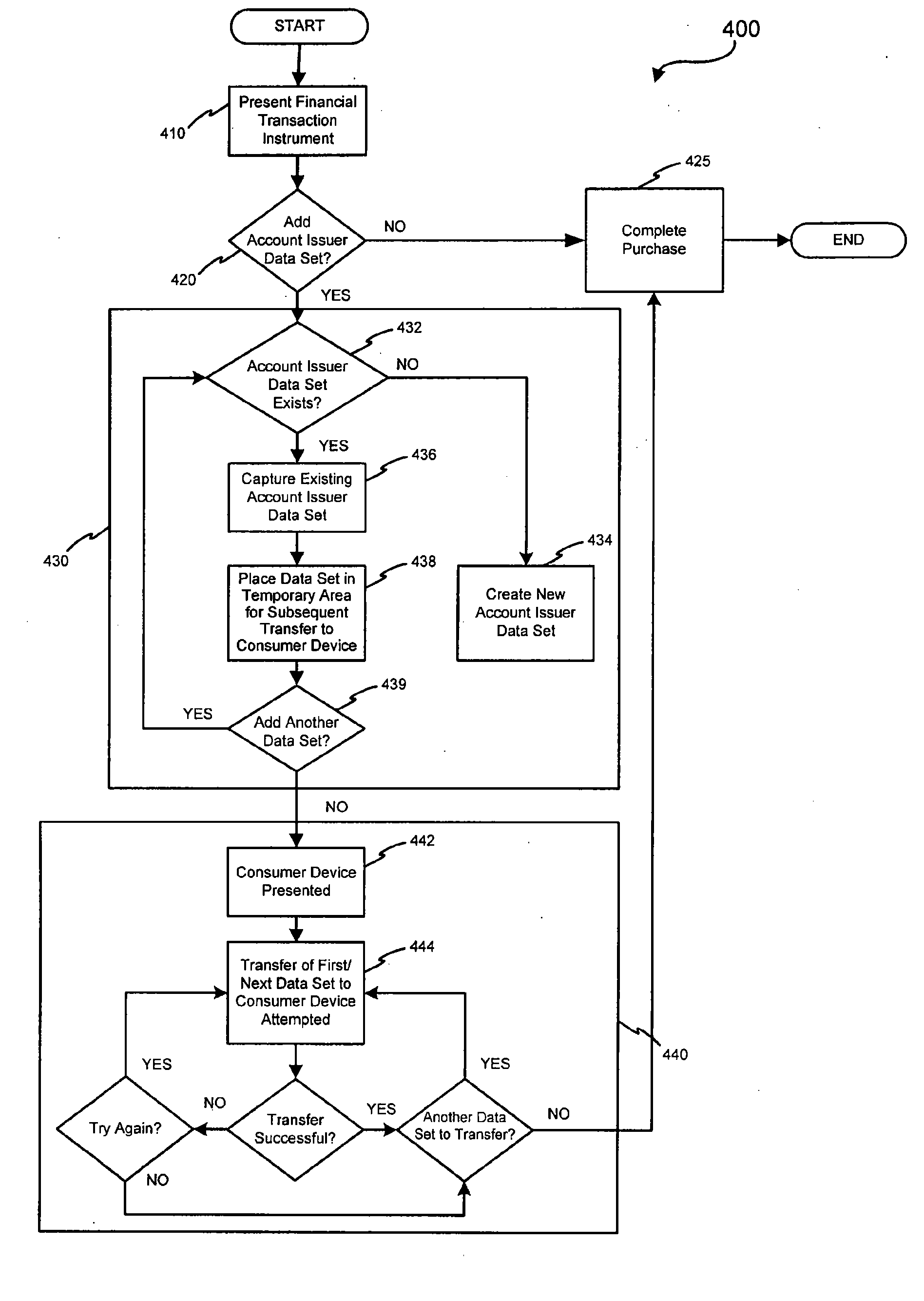

Facilitating transactions using non-traditional devices and biometric data to activate a transaction device is disclosed. A transaction request is formed at a non-traditional device, and communicated to a reader, wherein the non-traditional device may be configured with an RFID device. The RFID device is not operable until a biometric voice analysis has been executed to verify that the carrier of the RFID-equipped non-traditional device is the true owner of account information stored thereon. The non-traditional device provides a conduit between a user and a verification system to perform biometric voice analysis of the user. When the verification system has determined that the user is the true owner of one or more accounts stored at the verification system, a purchase transaction is facilitated between the verification system. Transactions may further be carried out through a non-RF device such as a cellular telephone in direct communication with an acquirer / issuer or payment processor.

Owner:LIBERTY PEAK VENTURES LLC

Smart phone customer service system based on speech analysis

Inactive | CN103811009A | Realize objective management | Easy to handle | Speech analysis | Special service for subscribers | Opinion analysis | Conversational speech

The invention provides a smart phone customer service system based on speech analysis. First, the conversation speech produced when a client calls a human customer service agent is recorded in real time and checked for validity. Next, the client's relevant personal information is extracted, voiceprint recognition is performed on the client's speech within the conversation, the client is verified, and the result is recorded as the client's identity for this consultation or complaint. Meanwhile, speech content recognition converts the conversation speech into a written record, on which text public opinion analysis is performed, and speech emotion analysis is performed on the conversation speech to record speech emotion data. The outcome of the consultation or complaint is analyzed by combining the result of the text public opinion analysis with the emotion data, and a final grade for the customer service is obtained by combining these analysis results with traditional grading evaluation for feedback assessment. Compared with traditional customer service systems, the smart customer service system can effectively improve the quality of service to clients and achieve objective management of customer service evaluation.

Owner:EAST CHINA UNIV OF SCI & TECH

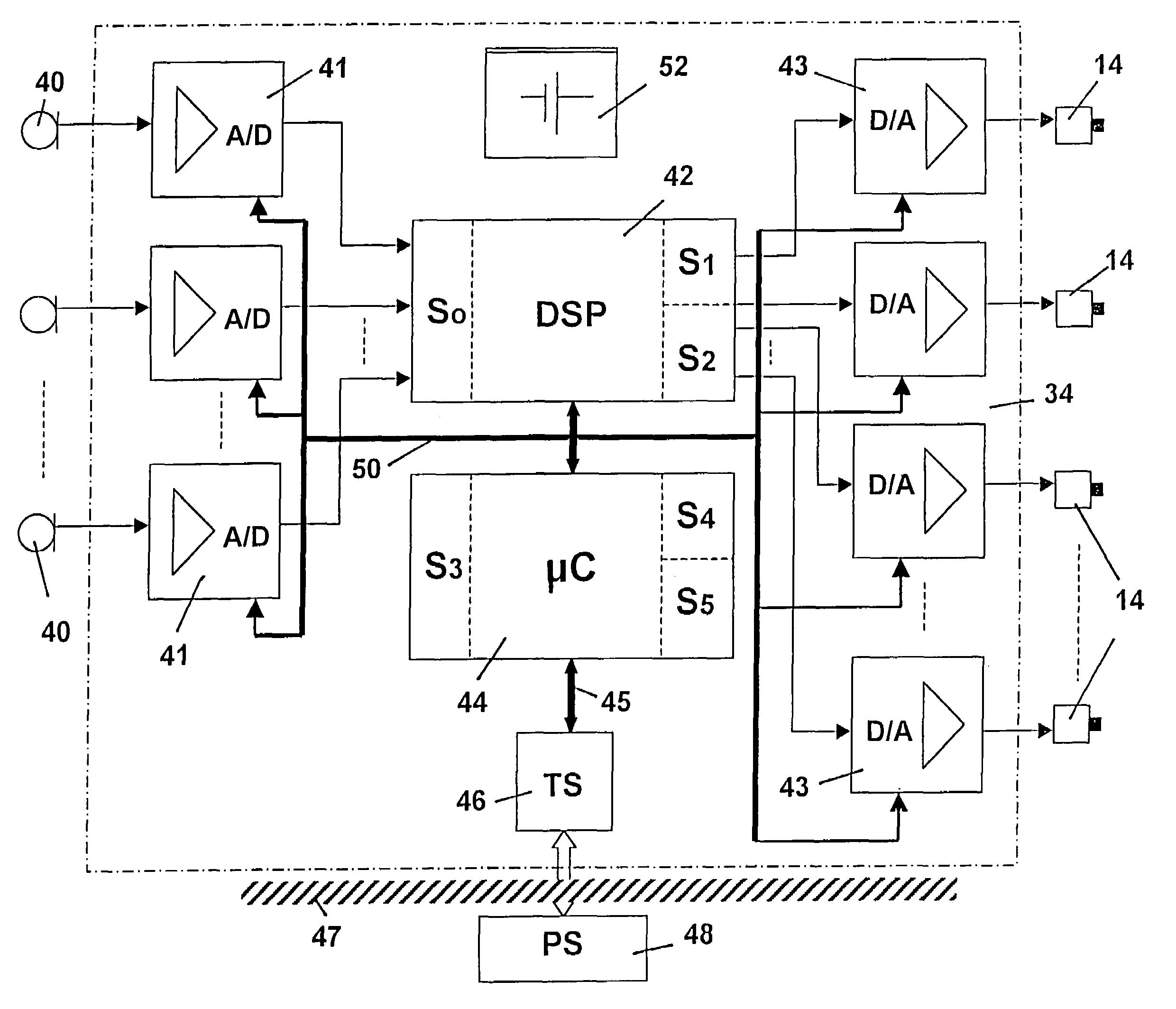

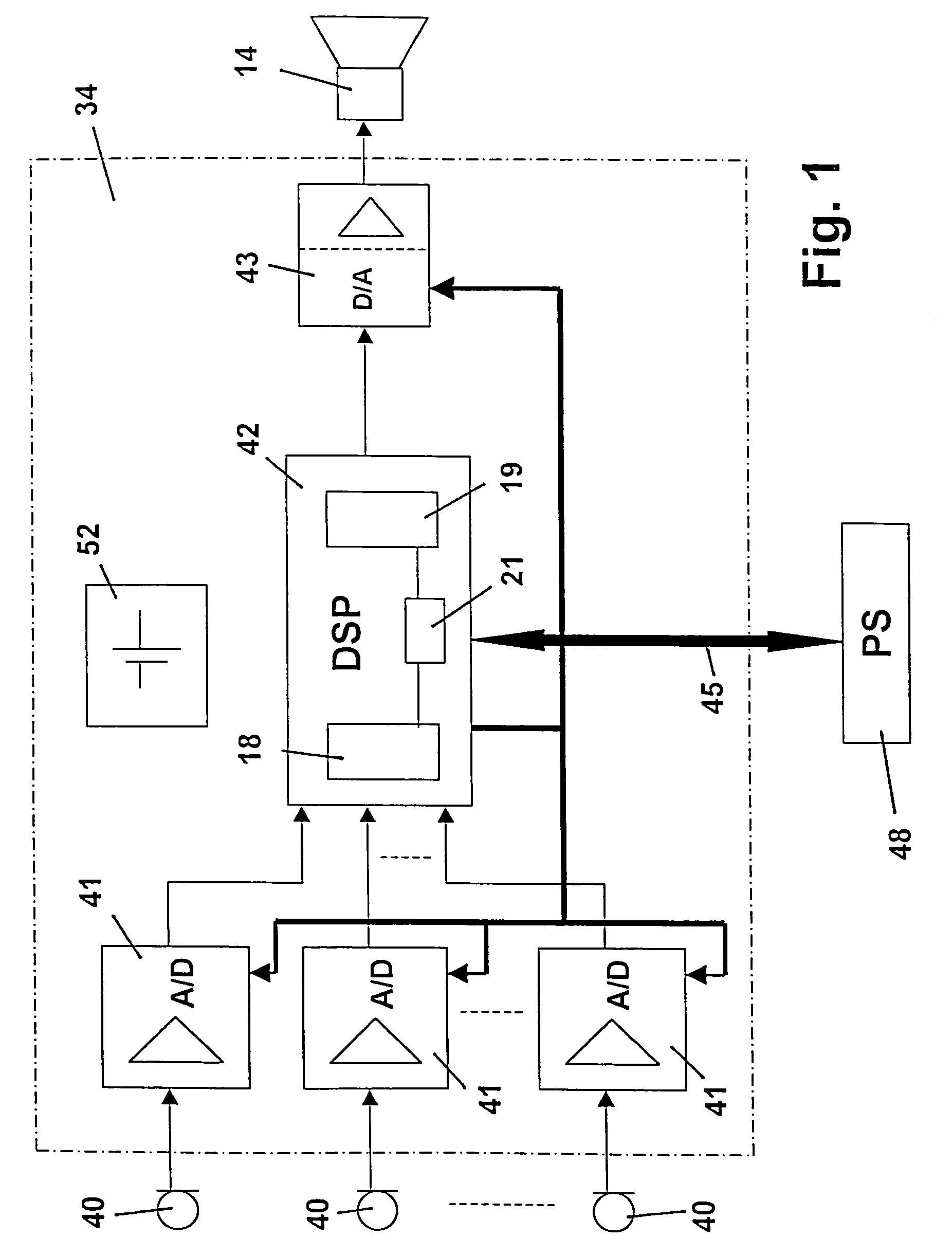

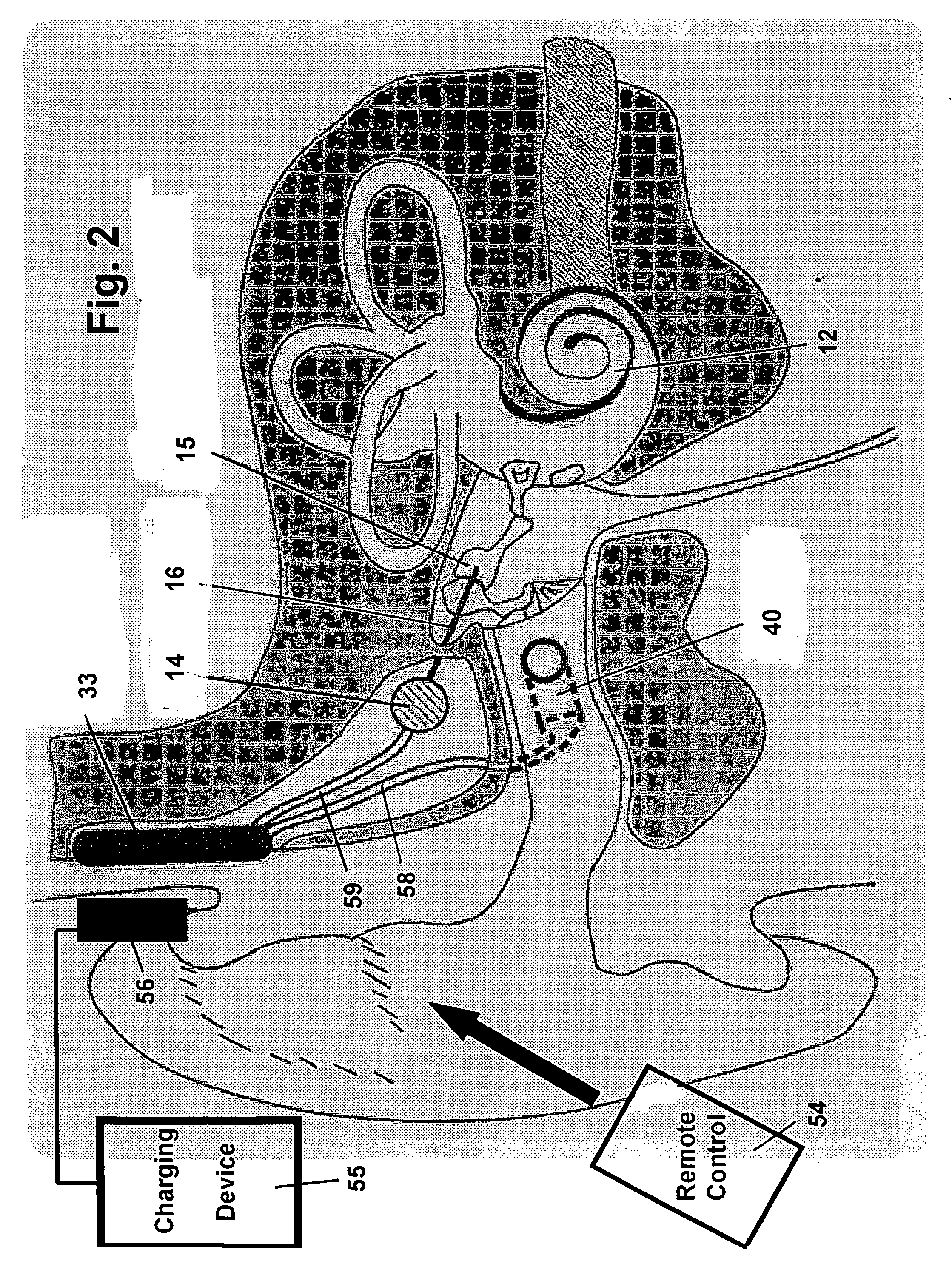

System for rehabilitation of a hearing disorder

Inactive | US7376563B2 | Improve understanding | Adverse effect | Electrotherapy | Electric tinnitus maskers | Engineering | Hearing perception

A system for rehabilitation of a hearing disorder which comprises at least one acoustic sensor for picking up an acoustic signal and converting it into an electrical audio signal, an electronic signal processing unit for audio signal processing and amplification, an electrical power supply unit which supplies individual components of the system with current, and an actuator arrangement which is provided with one or more electroacoustic, electromechanical or purely electrical output-side actuators or any combination of these actuators for stimulation of damaged hearing, wherein the signal processing unit has a speech analysis and recognition module and a speech synthesis module.

Owner:COCHLEAR LIMITED

Virtual television phone apparatus

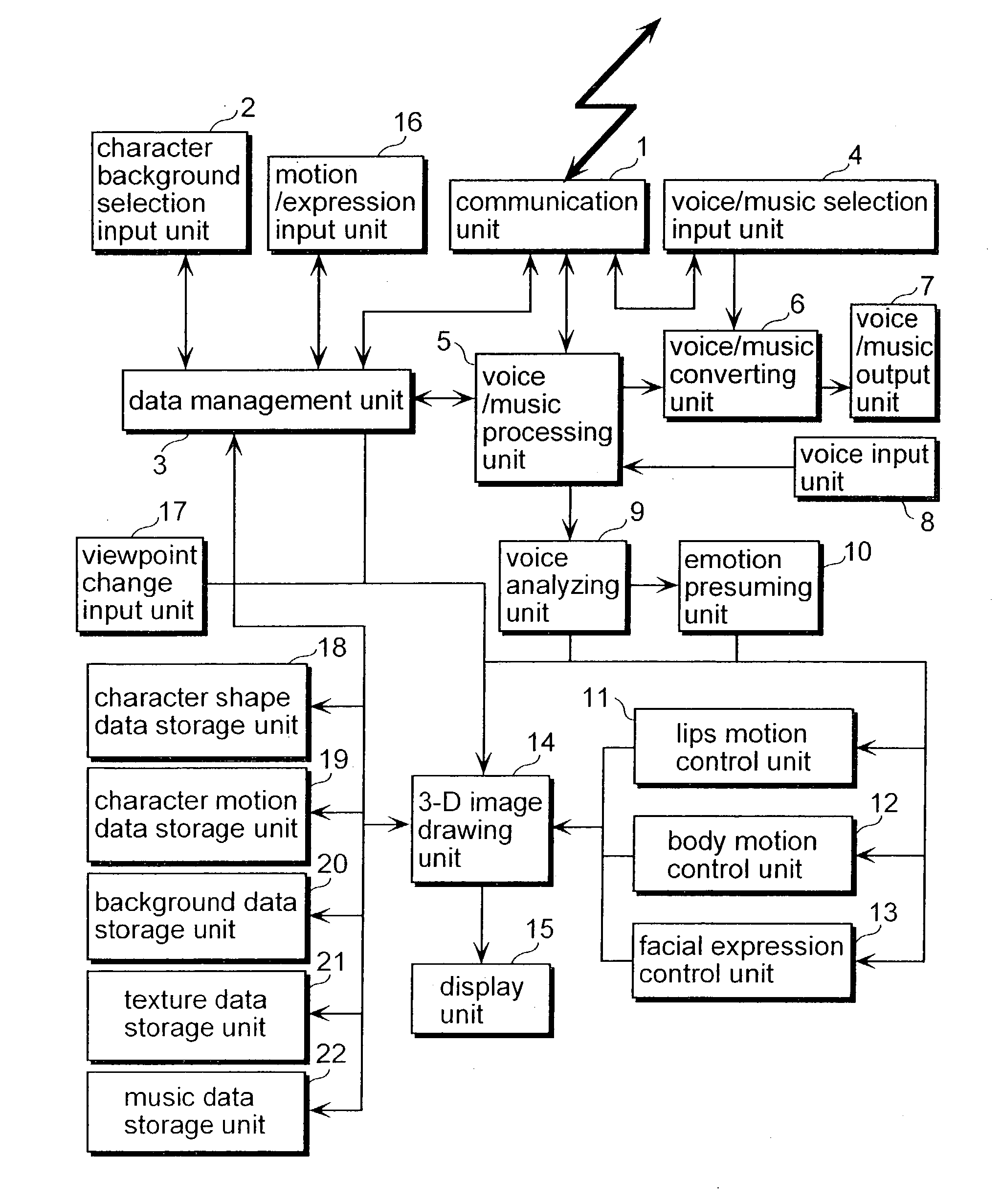

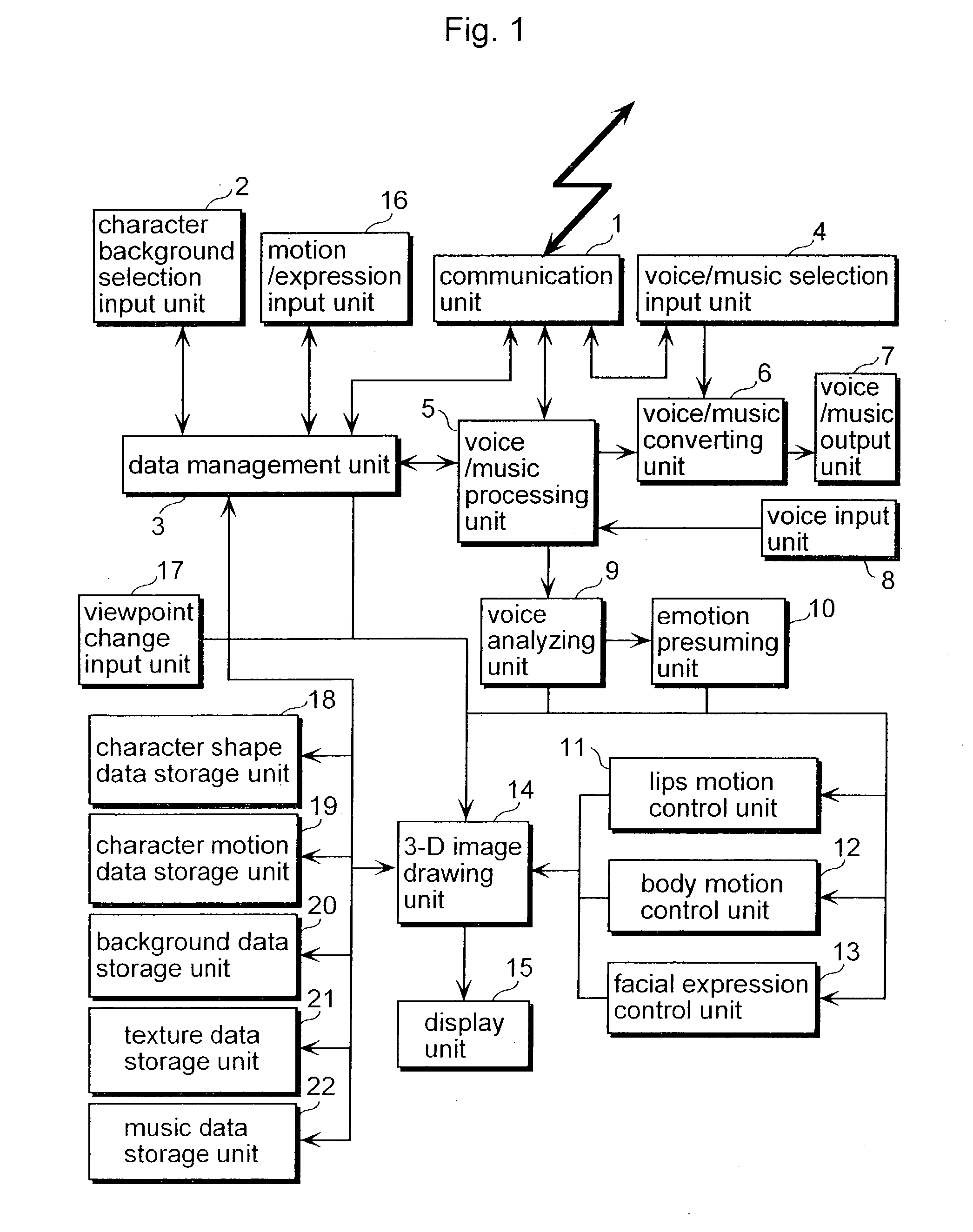

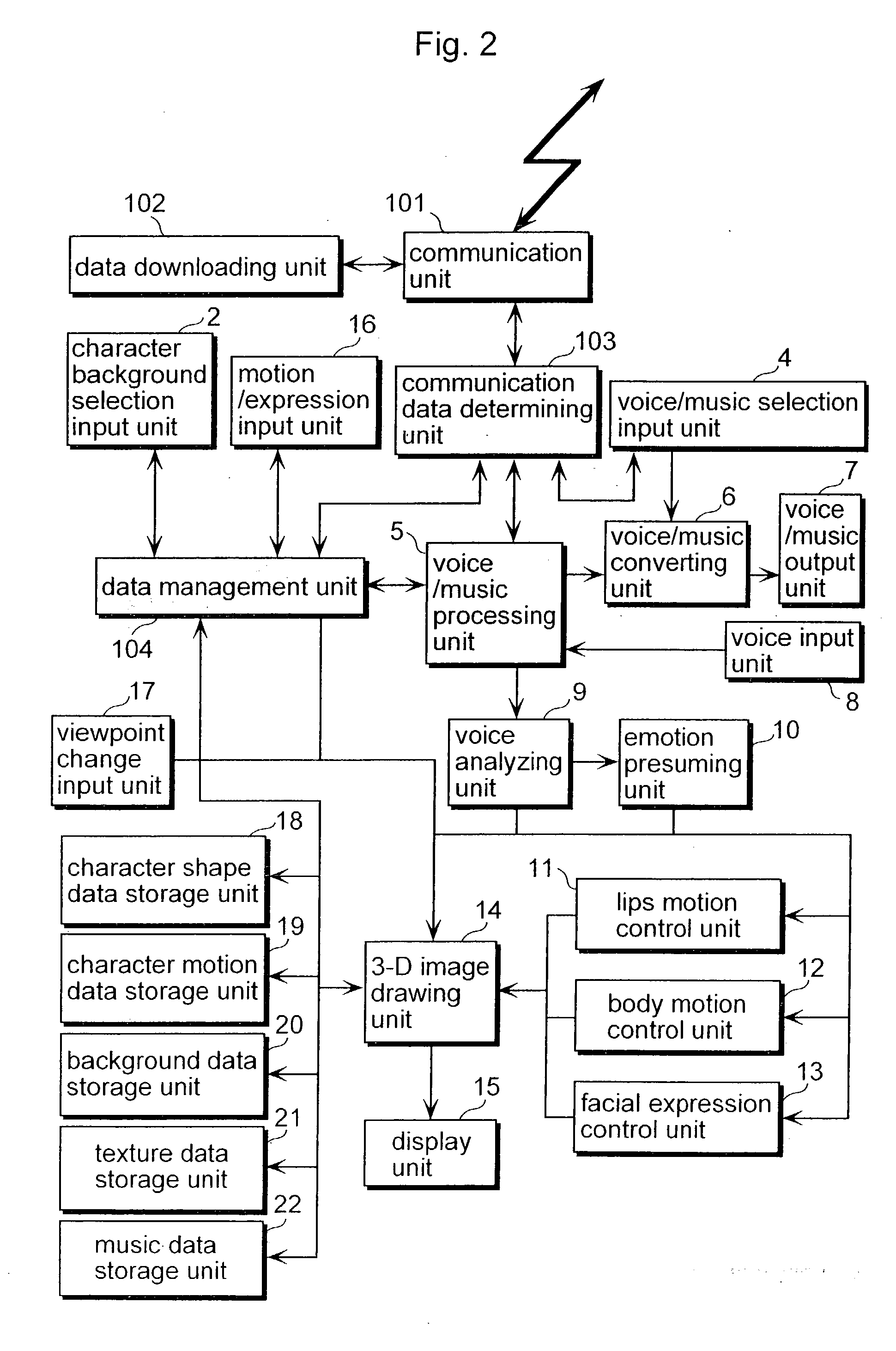

A communication unit 1 carries out voice communication, and a character background selection input unit 2 selects a CG character corresponding to a communication partner. A voice / music processing unit 5 performs voice / music processing required for the communication, a voice / music converting unit 6 converts voice and music, and a voice / music output unit outputs the voice and music. A voice input unit 8 acquires voice. A voice analyzing unit 9 analyzes the voice, and an emotion presuming unit 10 presumes an emotion based on the result of the voice analysis. A lips motion control unit 11, a body motion control unit 12 and an expression control unit 13 send control information to a 3-D image drawing unit 14 to generate an image, and a display unit 15 displays the image.

Owner:PANASONIC CORP

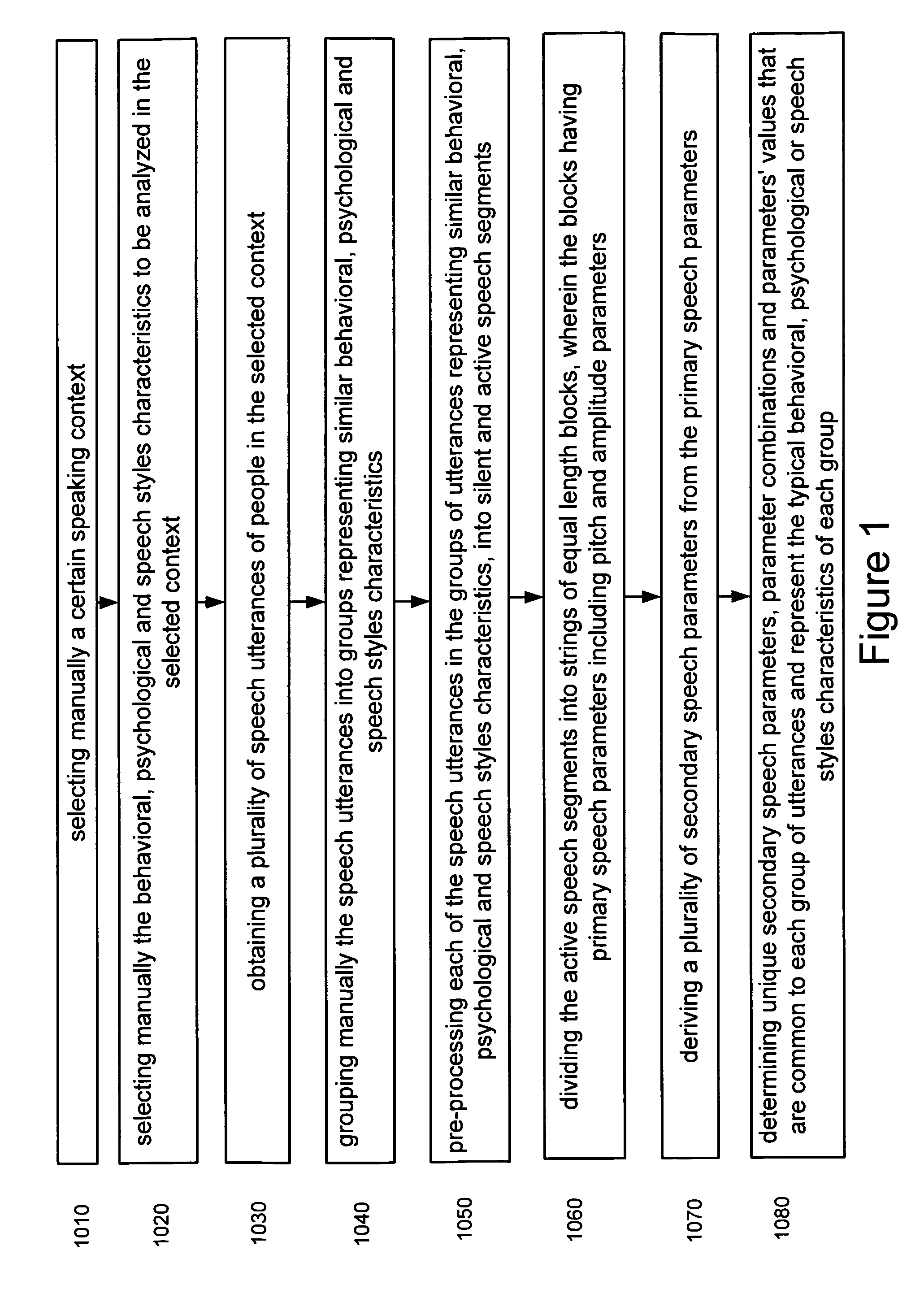

Speaker characterization through speech analysis

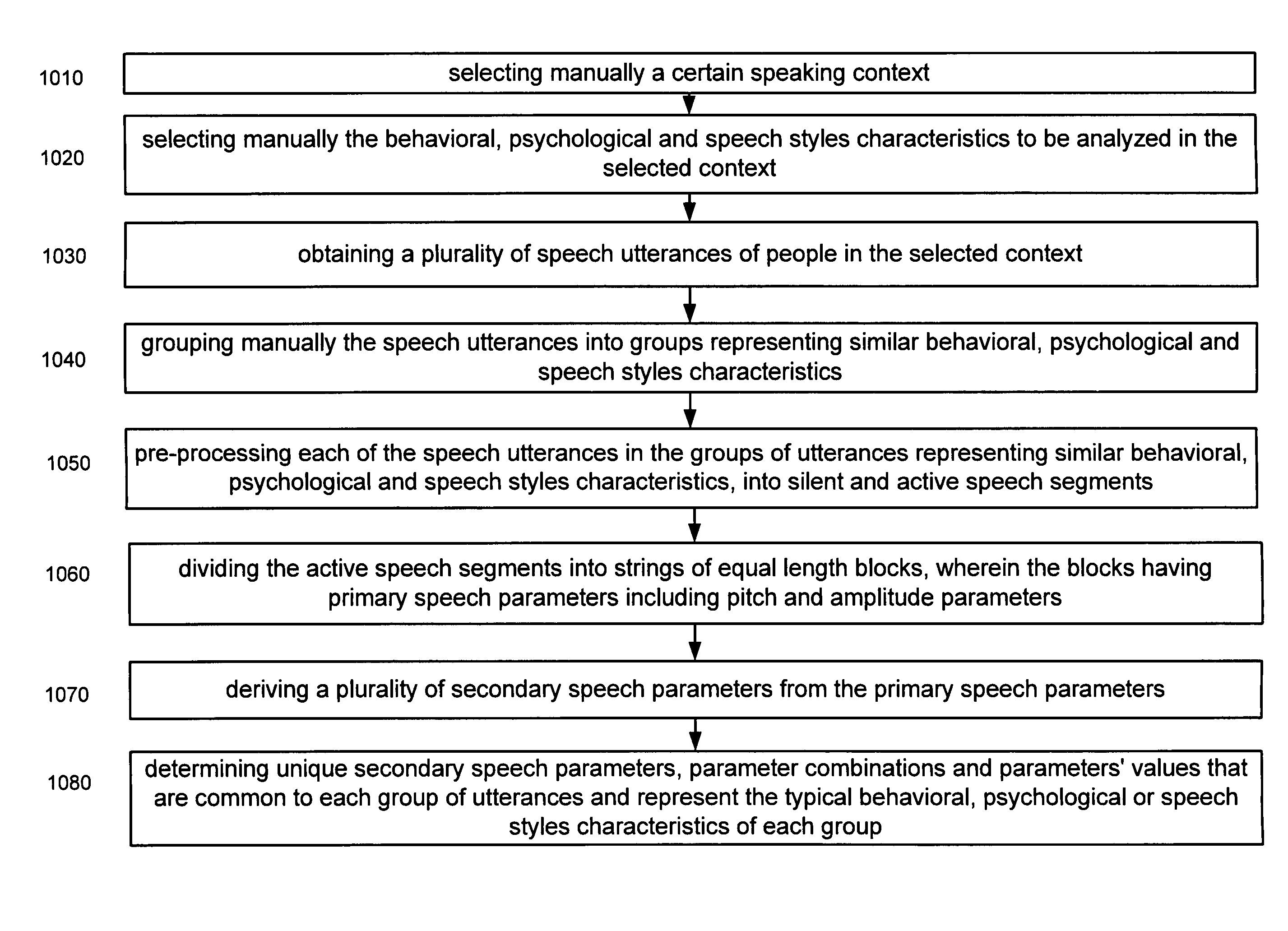

A computer implemented method, data processing system, apparatus and computer program product for determining current behavioral, psychological and speech styles characteristics of a speaker in a given situation and context, through analysis of current speech utterances of the speaker. The analysis calculates different prosodic parameters of the speech utterances, consisting of unique secondary derivatives of the primary pitch and amplitude speech parameters, and compares these parameters with pre-obtained reference speech data, indicative of various behavioral, psychological and speech styles characteristics. The method includes the formation of the classification speech parameters reference database, as well as the analysis of the speaker's speech utterances in order to determine the current behavioral, psychological and speech styles characteristics of the speaker in the given situation.

Owner:VOICESENSE LTD

Systems and methods for non-traditional payment using biometric data

Inactive | US20090140839A1 | Convenient transaction | Electric signal transmission systems | Digital data processing details | Computer hardware | Biometric data

Facilitating transactions using non-traditional devices and biometric data to activate a transaction device is disclosed. A transaction request is formed at a non-traditional device, and communicated to a reader, wherein the non-traditional device may be configured with an RFID device. The RFID device is not operable until a biometric voice analysis has been executed to verify that the carrier of the RFID-equipped non-traditional device is the true owner of account information stored thereon. The non-traditional device provides a conduit between a user and a verification system to perform biometric voice analysis of the user. When the verification system has determined that the user is the true owner of one or more accounts stored at the verification system, a purchase transaction is facilitated between the verification system. Transactions may further be carried out through a non-RF device such as a cellular telephone in direct communication with an acquirer / issuer or payment processor.

Owner:LIBERTY PEAK VENTURES LLC

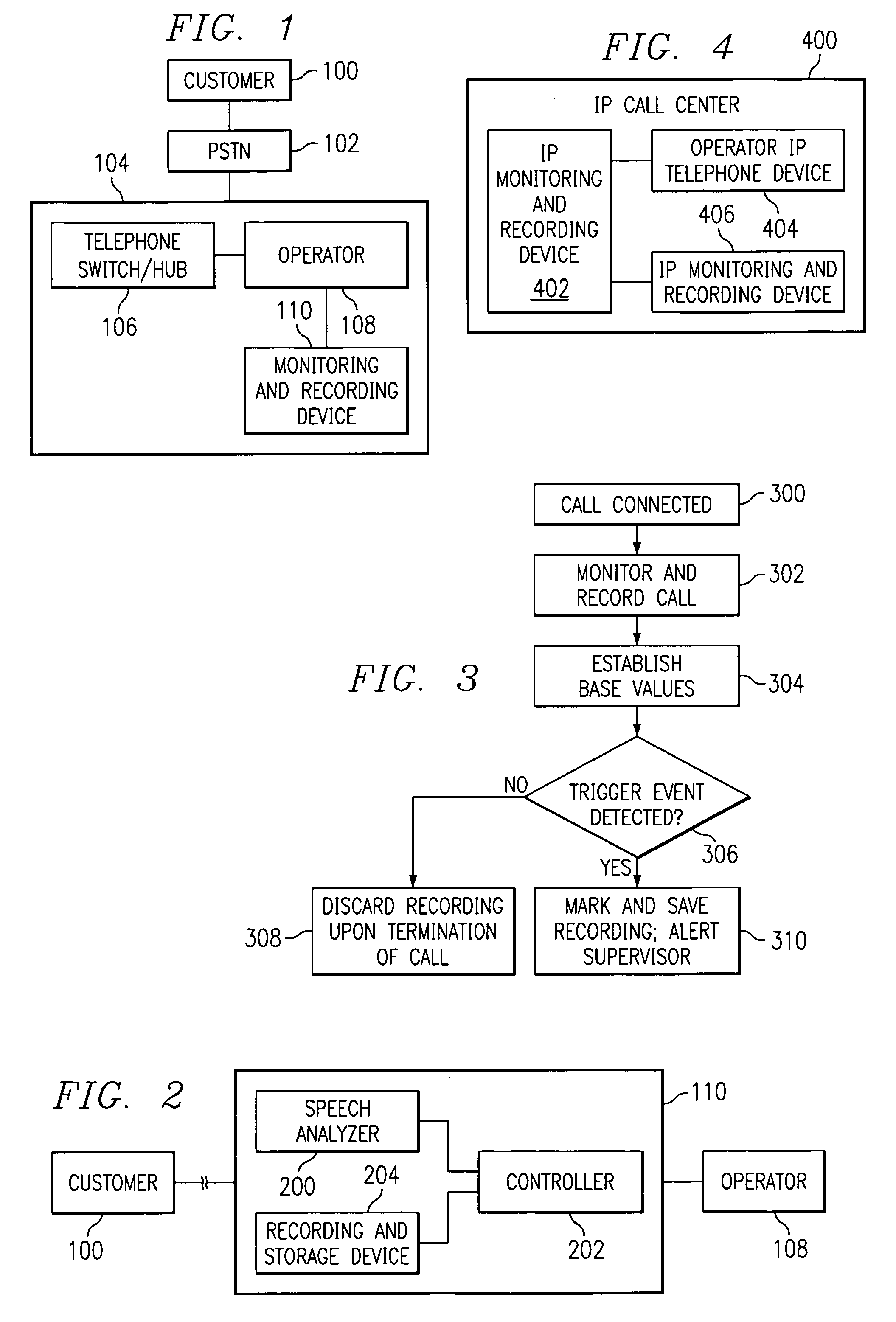

Selective conversation recording using speech heuristics

Inactive | US7043008B1 | Easy to control | Save storage space | Special service for subscribers | Manual exchanges | Heuristic | Voice analysis

A system and method for selectively monitoring, recording, storing, and handling telephone conversations through the use of speech analysis is disclosed. In particular, the present invention utilizes a speech analyzer to analyze a speech signal during a telephone conversation between two parties, and a recording and storage device to record and store the telephone conversation. Based on variations in signal characteristics related to the emotional state of the caller, the system selectively generates a trigger to keep the stored recording of the telephone conversation. The present invention also selectively determines whether to send a notification in response to said trigger.

Owner:CISCO TECH INC

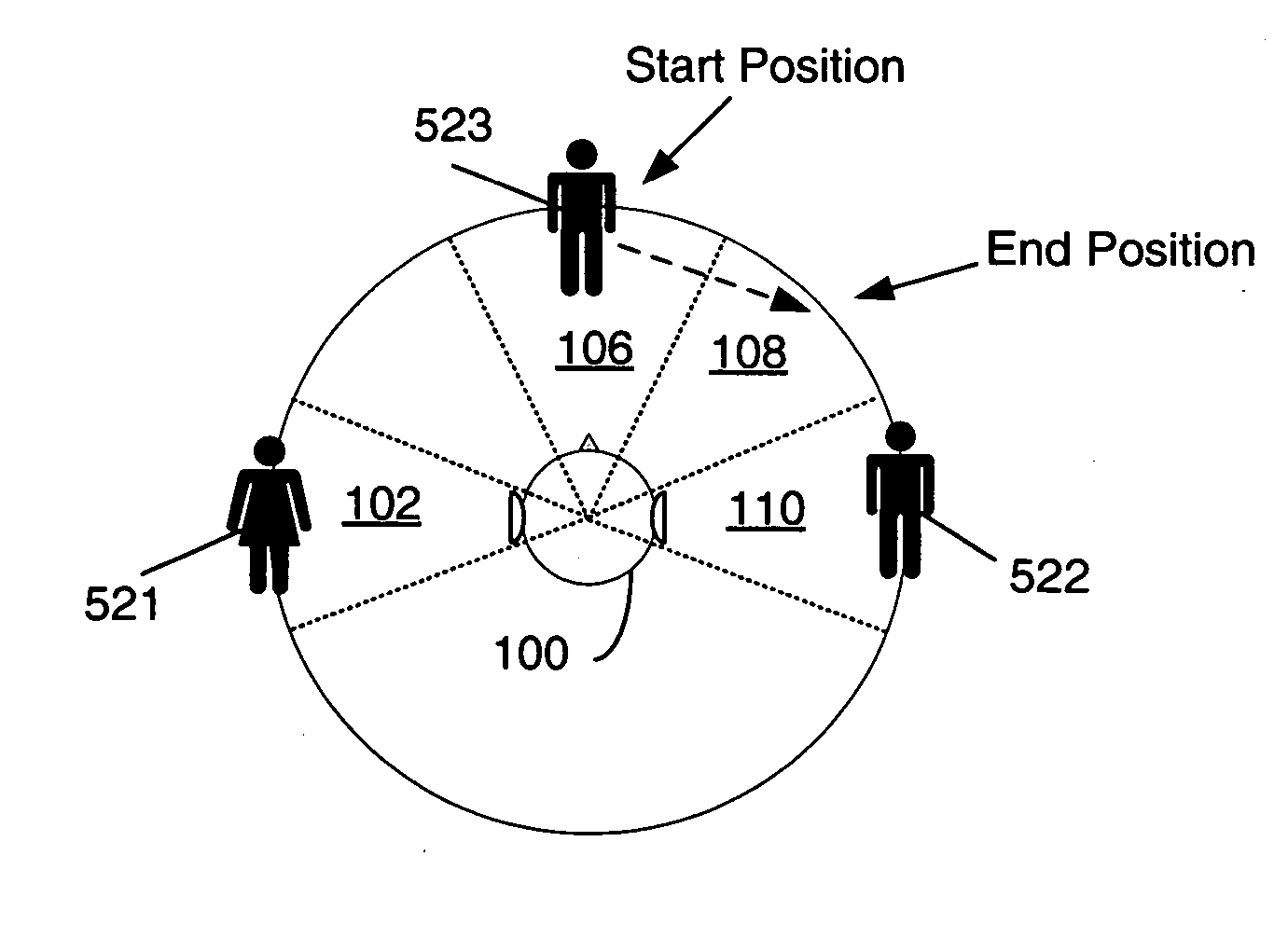

Automatic participant placement in conferencing

Inactive | US20070263823A1 | Maximizing ability | Maximize intelligibility | Special service for subscribers | Stereophonic systems | Frame based | Voice analysis

Techniques for positioning participants of a conference call in a three dimensional (3D) audio space are described. Aspects of a system for positioning include a client component that extracts speech frames of a currently speaking participant of a conference call from a transmission signal. A speech analysis component determines a voice fingerprint of the currently speaking participant based upon any of a number of factors, such as a pitch value of the participant. A control component determines a category position of the currently speaking participant in a three dimensional audio space based upon the voice fingerprint. An audio engine outputs audio signals of the speech frame based upon the determined category position of the currently speaking participant. The category position of one or more participants may be changed as new participants are added to the conference call.

Owner:NOKIA CORP

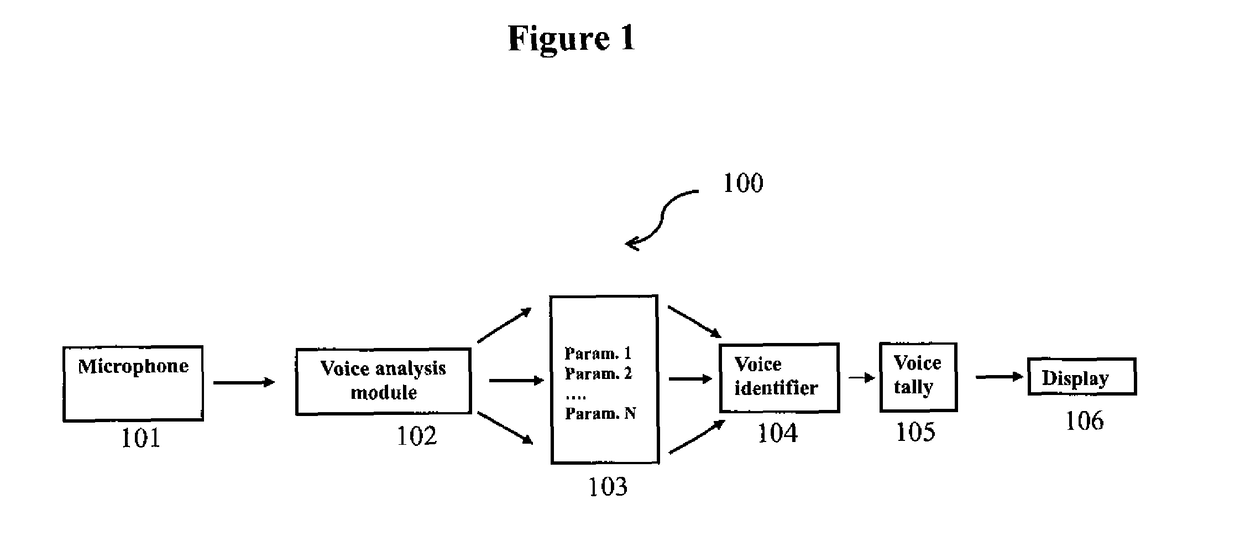

Voice tallying system

Inactive | US20170270930A1 | Television system details | Television conference systems | Voice analysis | Speech sound

Owner:FLAGLER LLC

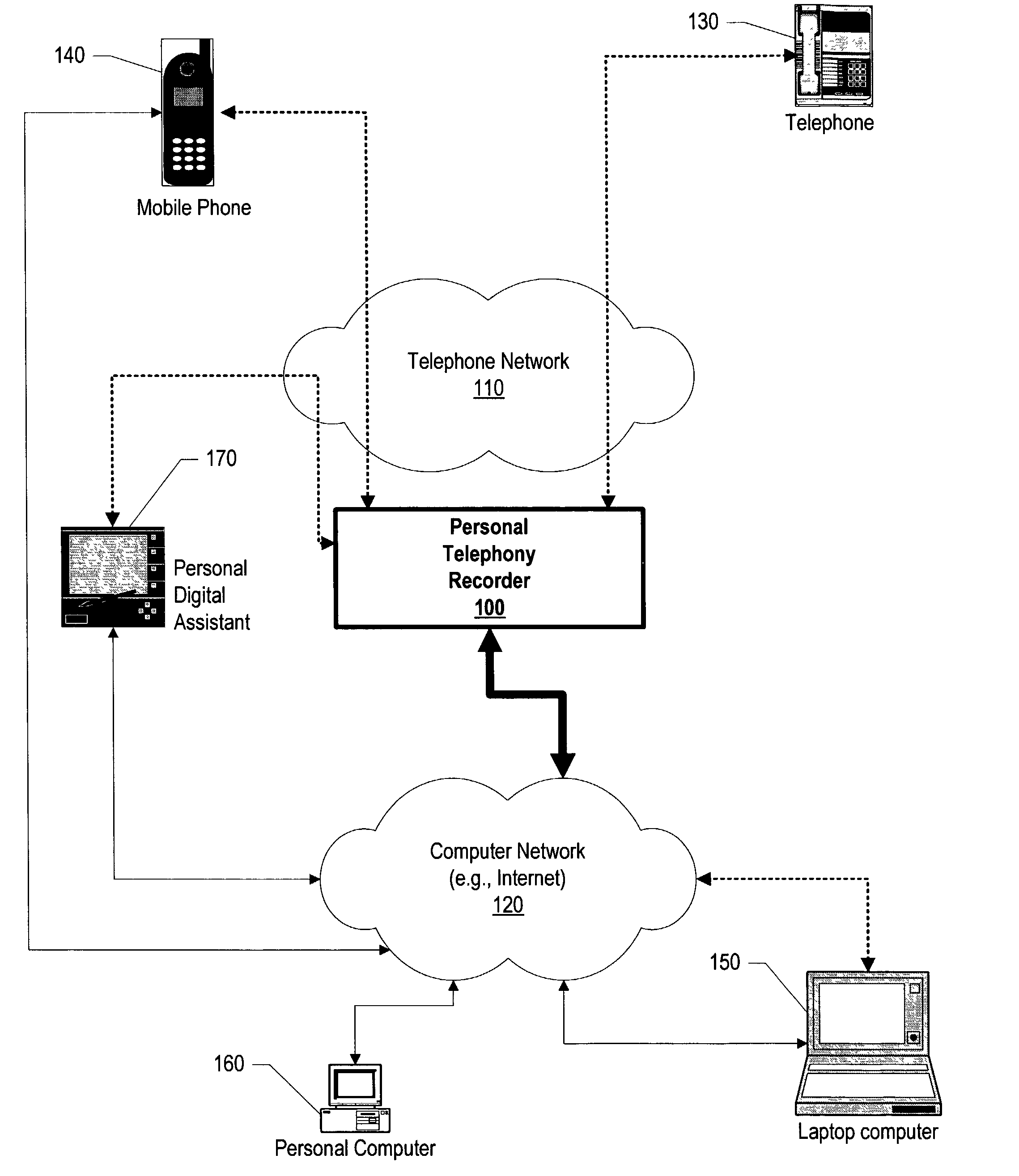

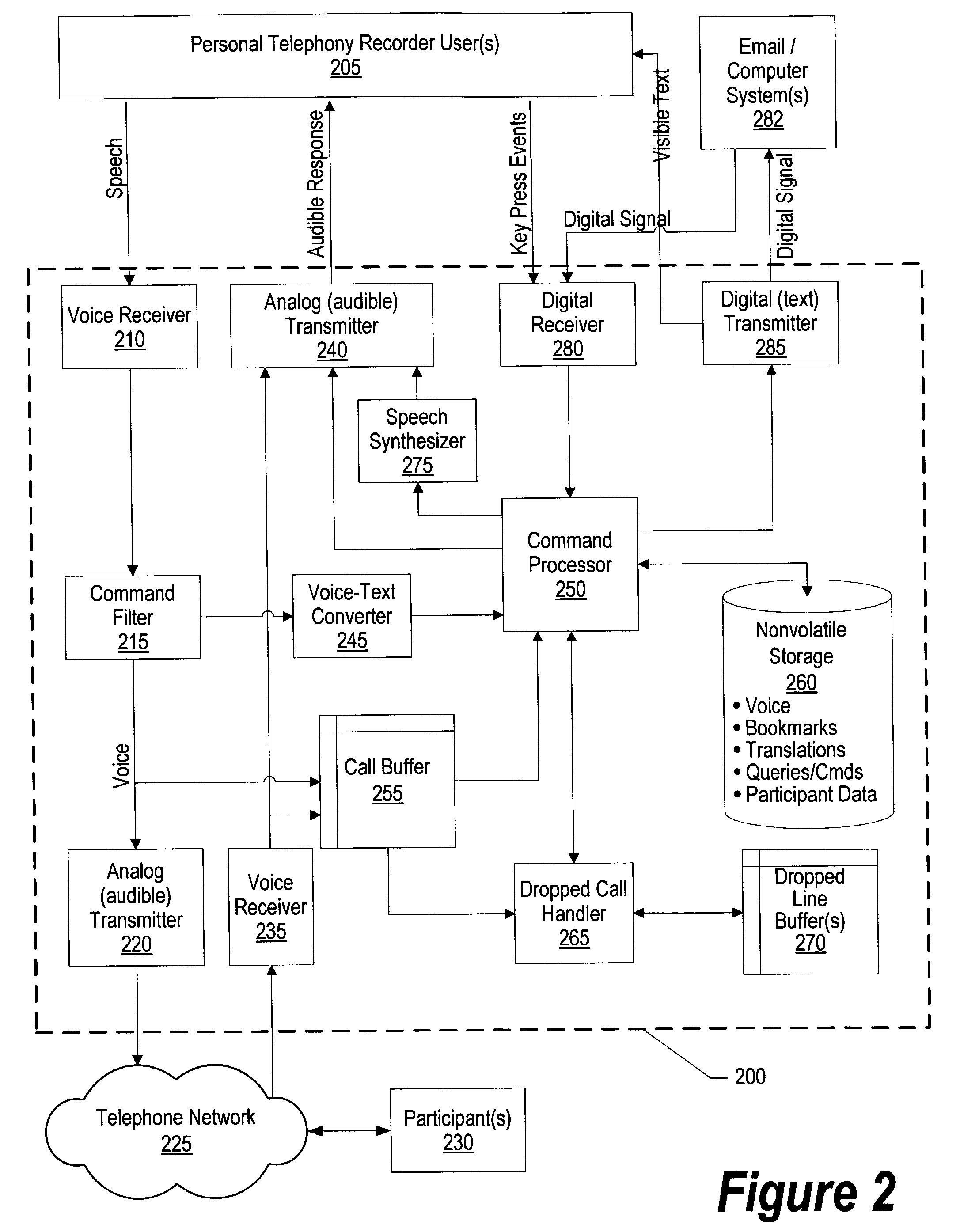

System and method for volume control management in a personal telephony recorder

Active | US7065198B2 | Save storage space | Special service for subscribers | Automatic call-answering/message-recording/conversation-recording | Network connection | Voice analysis

A system and method for recording a telephone conference and replaying a portion of the recording during the conference. Users participate by connecting through different types of networks using a device having a communication line connection. The recording can be in audio format, text format, or both. Thus, users can recall and replay textual information in addition to the recorded audio. Other information, such as time and user data, may also be recorded along with the audio and text. Users in the conference are identified so that each user's contribution to the conference can be associated with that user. The user or the user's device can assist by providing identification information. User identification may also be accomplished by associating each user's contribution with the particular line the user is calling from. Caller ID information may also be used to identify the user. Voice analysis may also be performed to accomplish user identification.

Owner:IBM CORP

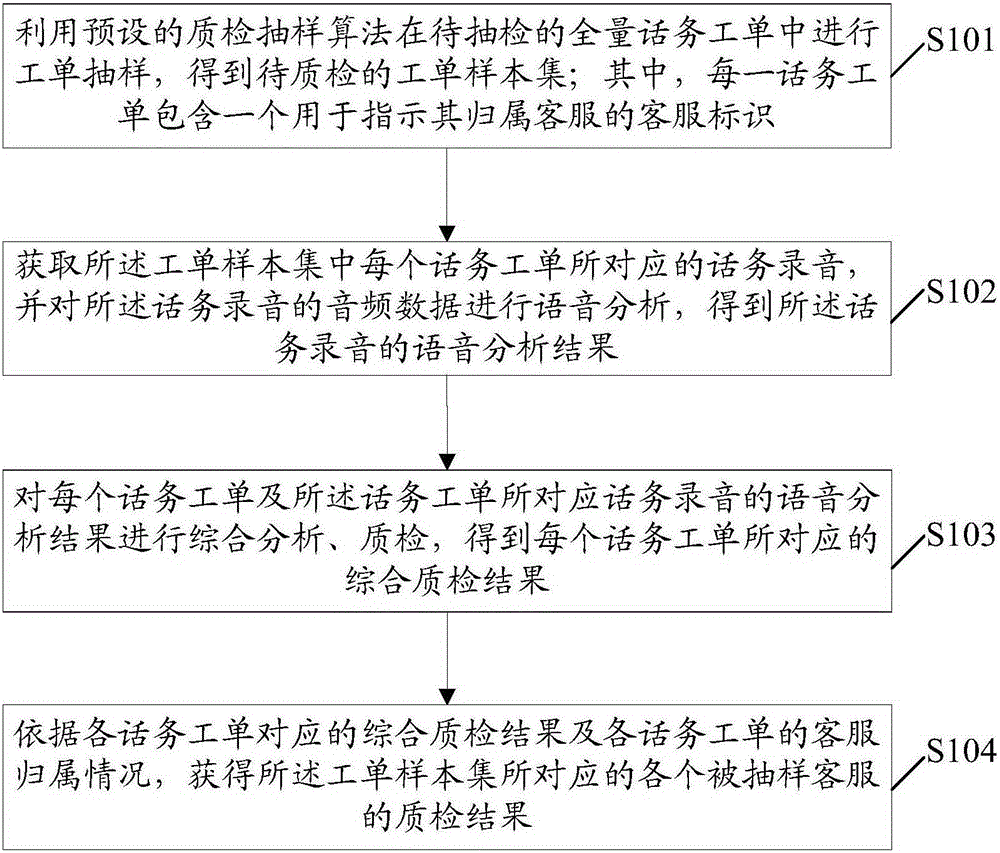

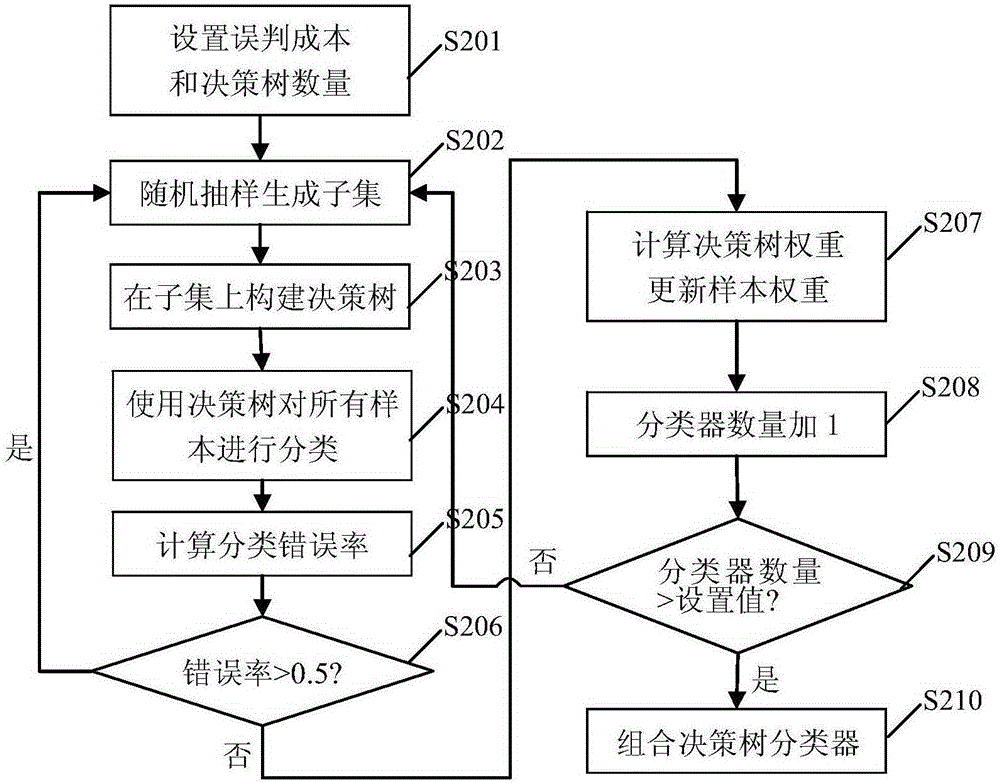

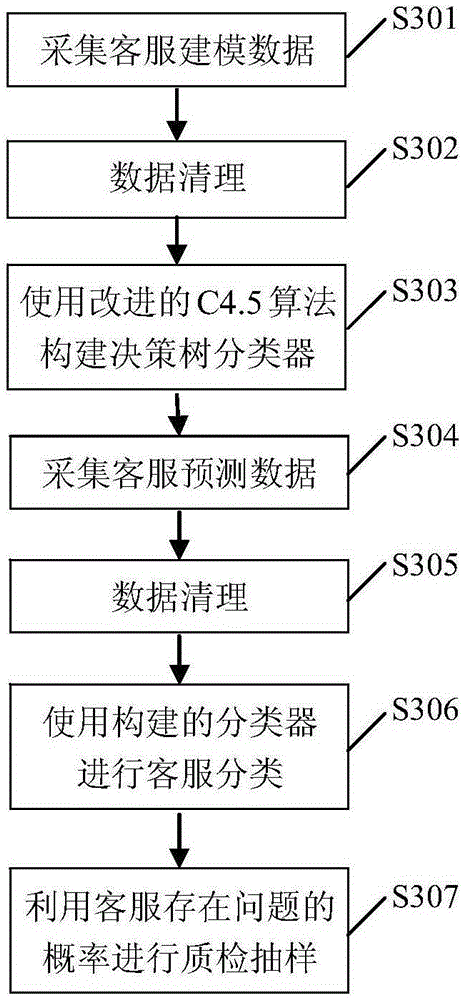

Quality inspection treatment method and system

Active | CN105184315A | Fully automated | Overcome resources | Character and pattern recognition | Resources | Visual inspection | Voice analysis

The present application provides a quality inspection method and system. After work order sampling, all traffic orders to be quality-inspected are obtained. Voice analysis is then performed on the recorded audio file corresponding to each traffic order. Each traffic order and the voice analysis result of its recorded audio file are then analyzed and inspected together to obtain a comprehensive quality inspection result. Finally, according to the comprehensive quality inspection result of each traffic order and the customer service agent to which the order belongs, a quality inspection result is obtained for each sampled customer service agent. In this way, quality inspection of customer service is carried out automatically, which addresses the problems of existing manual quality inspection: heavy use of human resources, low efficiency, and results that are not objective.

Owner:BEIJING CHINA POWER INFORMATION TECH +3

Method and Apparatus for Monitoring Contact Center Performance

Inactive | US20100158237A1 | Massive potential call center efficiency | Massive potential performance optimization | Manual exchanges | Automatic exchanges | Event generator | Event data

A system for monitoring a communication session in a contact center comprises a store of one or more defined speech events which may occur in a communication session for a contact being handled by an agent operating an agent station of the contact center, a speech event comprising at least one occurrence of at least one word in an audio stream of a communication session. A speech analyser is operable, during a communication session involving an agent station of the contact center, to detect the occurrence of one of the speech events. An event generator is responsive to detection of one of the speech events, for issuing an event notification during the communication session identifying the speech event to a reporting component of the contact center which has been configured to receive such event notifications. A display displays event data for contacts being handled by the contact center in real-time at a supervisor station of the contact center, the displayed event data including the speech event identified in the event notification.

Owner:AVAYA INC

Device, system and method for providing targeted advertisements and content

An aspect of the present invention is drawn to an audio data processing device for use by a user to control a system and for use with a microphone, a user demographic profiles database and a content / ad database. The microphone may be operable to detect speech and to generate speech data based on the detected speech. The user demographic profiles database may be capable of having demographic data stored therein. The content / ad database may be capable of having at least one of content data and advertisement data stored therein. The audio data processing device includes a voice recognition portion, a voice analysis portion and a speech to text portion. The voice recognition portion may be operable to process user instructions based on the speech data. The voice analysis portion may be operable to determine characteristics of the user based on the speech data. The speech to text portion may be operable to determine interests of the user.

Owner:GOOGLE TECH HLDG LLC

Computer system for assisting spoken language learning

Inactive | CN101551947A | Fully complete concept | Improve interactivity | Speech recognition | Electrical appliances | Spoken language | Computerized system

The invention discloses a computer system for assisting spoken language learning, comprising the following components: a user interface for prompting a user to complete a given piece of spoken language learning content and for collecting the user's voice response data; a database comprising a group of mode features that describe the acoustic and linguistic aspects of the user's performance related to spoken language learning; a voice analysis system for analyzing the voice response data and extracting acoustic mode features or linguistic mode features; a mode matching system for matching one or more subsets of the mode features extracted from the voice response data against the mode features in the database and generating feedback data according to the matching result; and a feedback system for feeding the data back to the user and assisting the user in mastering the spoken language learning content. The computer system has higher interactivity and self-adaptability and, in particular, can heuristically capture user errors and intelligently feed back rich teaching instruction information, thereby making up for the deficiencies of the prior art in this respect.

Owner:AISPEECH CO LTD

Information Retrieving System

Inactive | US20090210411A1 | Accurate information | Digital data information retrieval | Digital data processing details | Voice analysis | Data mining

A user speech analyzing component poses, to a user, question sentences for respective ones of a plurality of attributes, and analyzes an attribute value for each of the attributes from the user's answer sentence to the question sentence. A user data holding component, as a result of the analysis, holds user data that associates the plurality of attributes with the respective user attribute values for those attributes. A matching component, when an acquisition ratio of the attribute values from the user with respect to all of the attributes is a predetermined value or greater, selects at least one target data candidate that matches each of the attributes and each of the attribute values of the user data, from a plurality of target data. A dialogue control component outputs each of the selected target data candidates to the user's side.

Owner:OKI ELECTRIC IND CO LTD
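The matching rule described in the Information Retrieving System abstract above can be sketched as follows. This is only an illustration under stated assumptions: the function name `select_candidates`, the dictionary layout, the `None` marker for attributes not yet answered, and the default threshold of 0.5 are all hypothetical and are not taken from the patent.

```python
def select_candidates(user_data, attributes, targets, min_ratio=0.5):
    """Return target records matching every attribute value acquired so far,
    but only once enough attributes have been acquired from the user.

    user_data  -- dict attribute -> value (None or missing = not yet answered)
    attributes -- list of all attributes the dialogue asks about
    targets    -- list of dicts describing the candidate target data
    min_ratio  -- hypothetical "predetermined value" for the acquisition ratio
    """
    acquired = {
        a: user_data[a] for a in attributes if user_data.get(a) is not None
    }
    ratio = len(acquired) / len(attributes)
    if ratio < min_ratio:
        return []  # not enough answers yet; the dialogue would keep asking
    return [
        t for t in targets
        if all(t.get(a) == v for a, v in acquired.items())
    ]

attributes = ["cuisine", "area", "price"]
targets = [
    {"name": "A", "cuisine": "sushi", "area": "north", "price": "low"},
    {"name": "B", "cuisine": "sushi", "area": "south", "price": "high"},
    {"name": "C", "cuisine": "pasta", "area": "north", "price": "low"},
]
answers = {"cuisine": "sushi", "area": None, "price": "low"}
matches = select_candidates(answers, attributes, targets)
```

Here two of three attributes are known, so the ratio clears the assumed threshold and only record `A` matches both acquired values.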

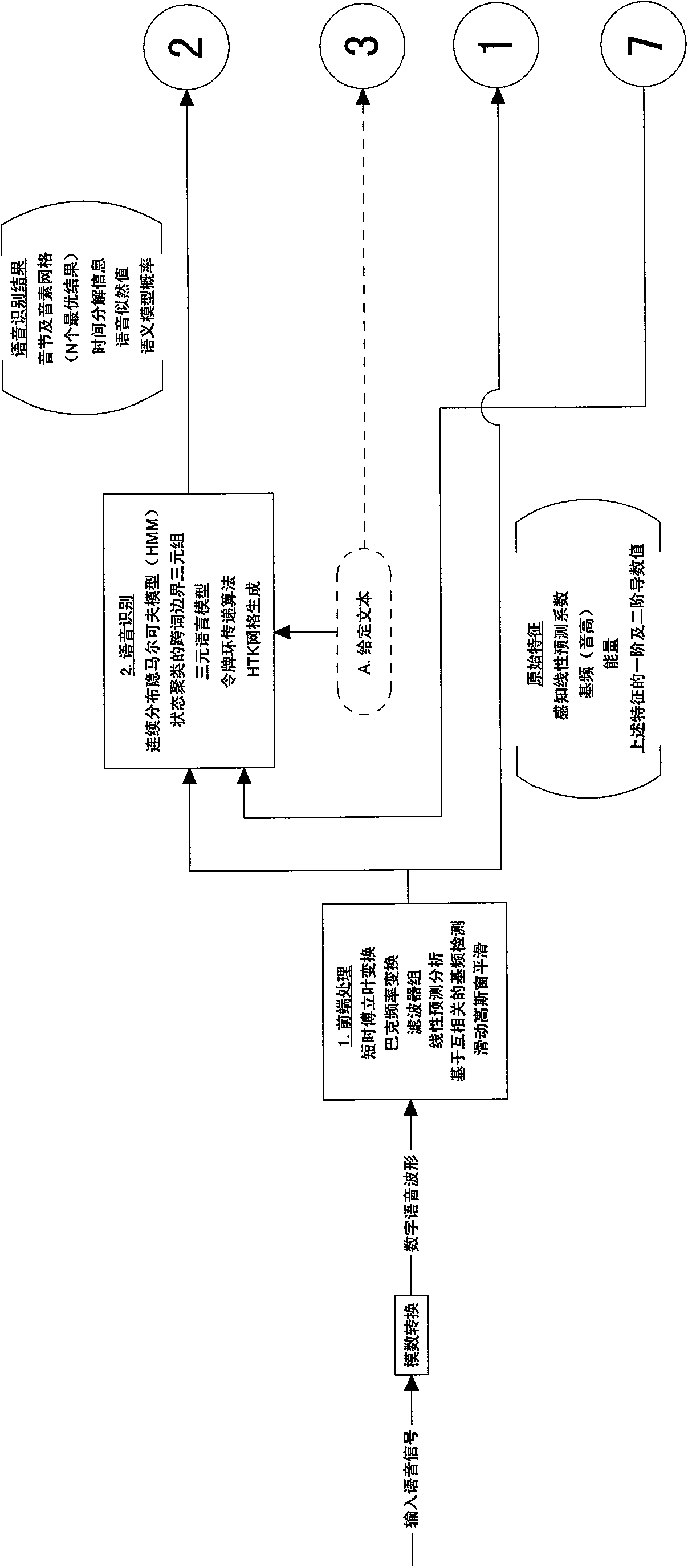

Voice quality analysis technique

Active | US20050015253A1 | Suitable for use | Digital computer details | Supervisory/monitoring/testing arrangements | Speech sound | Voice analysis

One or more methods and systems of analyzing, assessing, and reporting voice quality scores are presented. In one embodiment, voice quality scores are generated by querying one or more computing devices responsible for processing a reference speech sample input into a voice communication system. In one embodiment, voice quality scores are transmitted by a voice analysis platform and subsequently analyzed. In one embodiment, a single voice analysis platform is used to measure voice quality of a voice communication system. In one embodiment, multiple voice analysis platforms are used to measure voice quality at multiple endpoints of one or more voice communication systems. In one embodiment, the method comprises a user determining one or more points along a communication system where transmitted reference speech samples are to be tapped. The tapped reference speech samples are ported to a voice analysis platform where a voice quality score is generated and graphically displayed.

Owner:AVAGO TECH INT SALES PTE LTD

Multi-party conversation analyzer and logger

Active | US9300790B2 | High precision | Facilitate interruption | Interconnection arrangements | Substation speech amplifiers | Graphics | Voice analysis

Owner:SECURUS TECH LLC

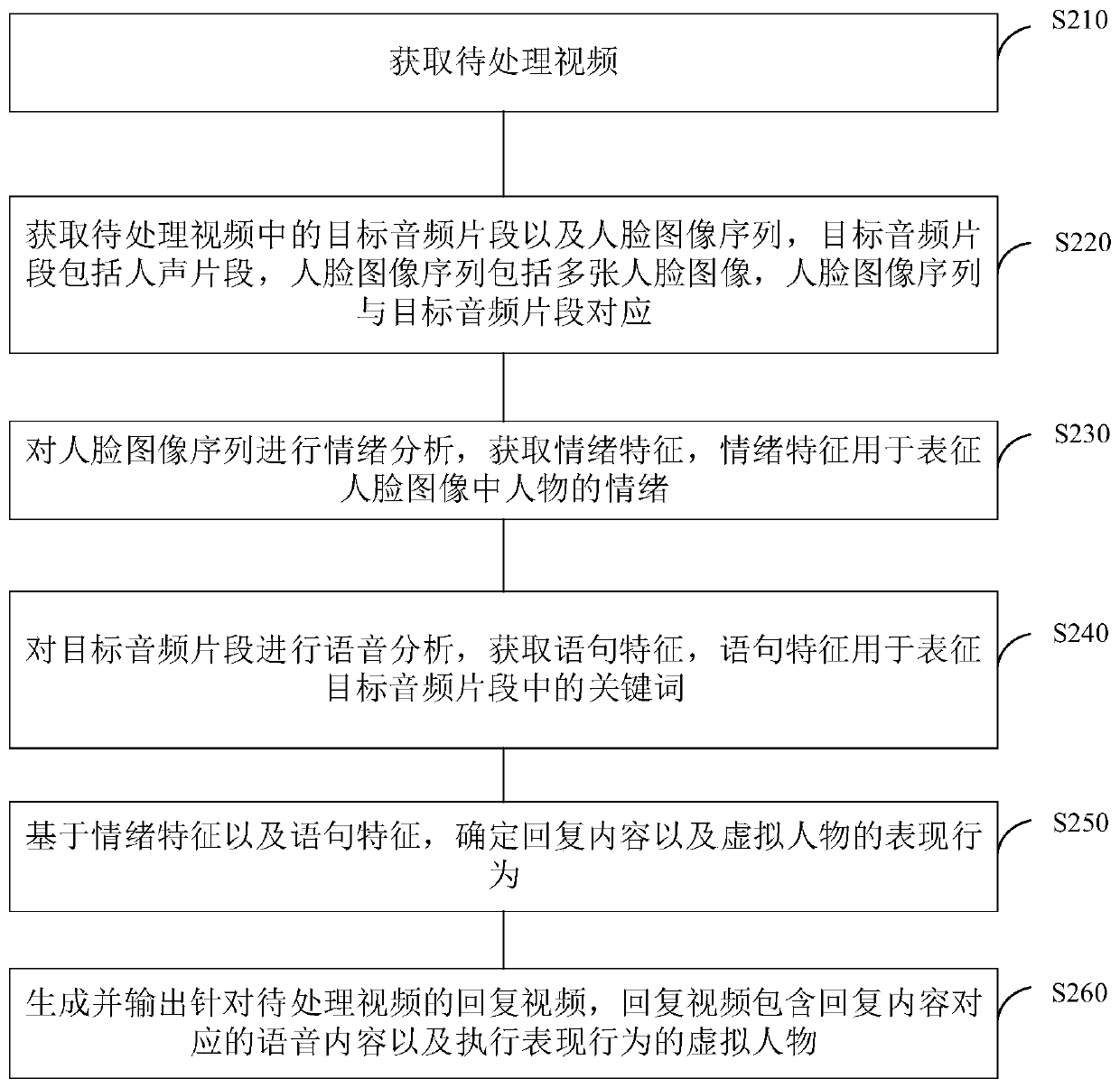

Video processing method, device and system, terminal equipment and storage medium

Active | CN110688911A | Improve the accuracy of semantic understanding | Improve realism | Character and pattern recognition | Speech recognition | Computer graphics (images) | Video processing

The embodiment of the invention discloses a video processing method and device, terminal equipment and a storage medium. The method comprises the steps of obtaining a to-be-processed video; obtaining a target audio clip and a face image sequence in the to-be-processed video; performing emotion analysis on the face image sequence to obtain emotion features; performing voice analysis on the target audio clip to obtain statement features which are used for representing keywords in the target audio clip; based on the emotional characteristics and the statement characteristics, determining reply contents and expression behaviors of the virtual character; and generating and outputting a reply video for the to-be-processed video, wherein the reply video comprises voice content corresponding to the reply content and a virtual character for executing the expression behavior. According to the embodiment of the invention, the emotional characteristics and the statement characteristics of the character can be obtained according to the speaking video of the character, and the virtual character video matched with the emotional characteristics and the statement characteristics is generated as the reply, so that the sense of reality and naturalness of human-computer interaction are improved.

Owner:SHENZHEN ZHUIYI TECH CO LTD

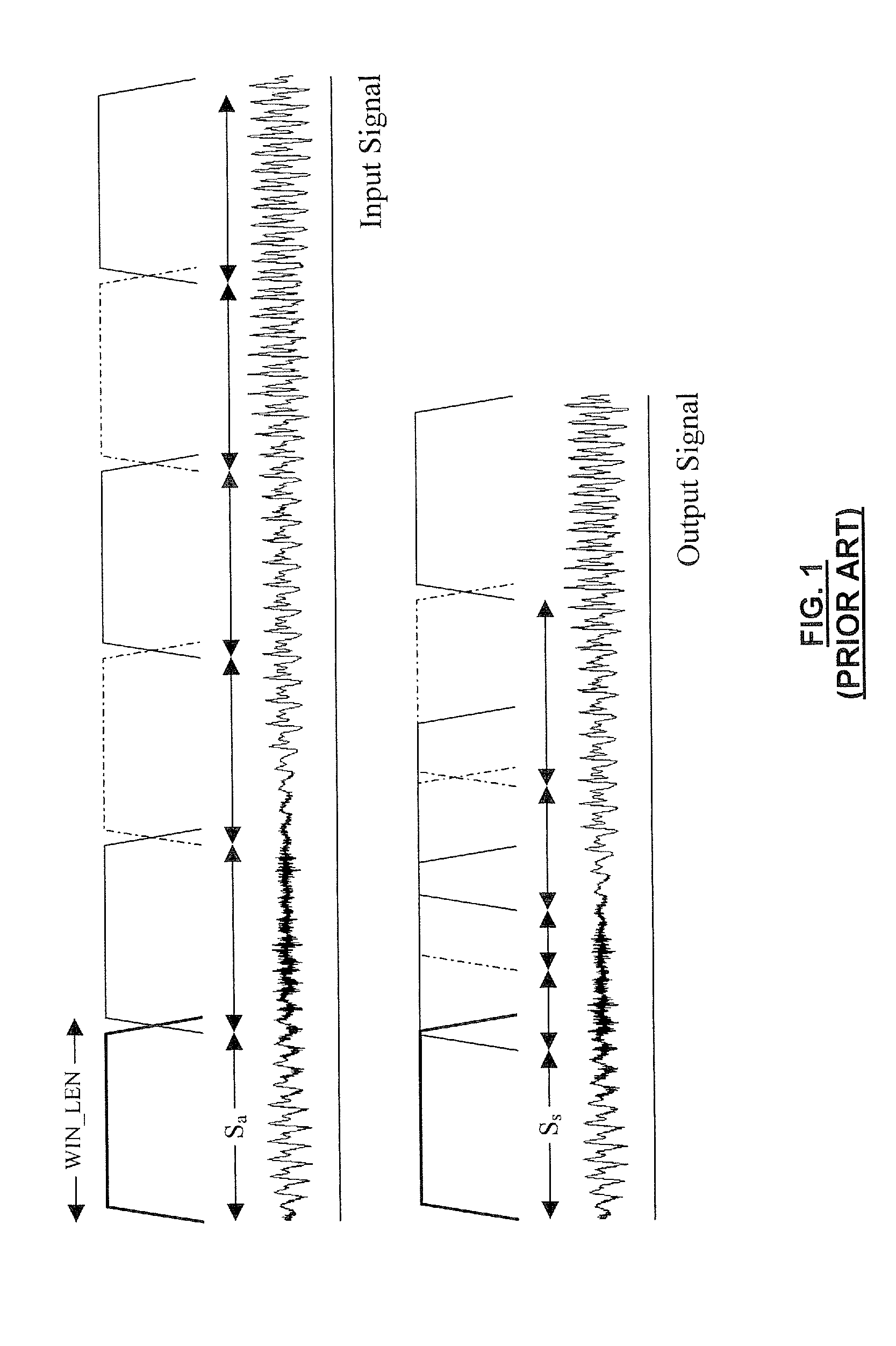

Method for the time scaling of an audio signal

A method for the time scaling of a sampled audio signal is presented. The method includes a first step of performing a pitch and voicing analysis of each frame of the signal in order to determine if a given frame is voiced or unvoiced and to evaluate a pitch profile for voiced frames. The results of this analysis are used to determine the length and position of analysis windows along each frame. Once an analysis window is determined, it is overlap-added to previously synthesized windows of the output signal.

Owner:TECH HUMANWARE
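The overlap-add step in the time-scaling abstract above can be illustrated with a deliberately simplified sketch. It does not implement the patented method: it skips the pitch and voicing analysis entirely and uses a fixed window length and fixed positions, whereas the abstract derives both from the pitch profile of each frame. The names `ola_time_scale`, `rate`, and `win` are hypothetical.

```python
import math

def periodic_hann(n):
    """Periodic Hann window; copies shifted by n/2 sum to exactly 1."""
    return [0.5 - 0.5 * math.cos(2.0 * math.pi * i / n) for i in range(n)]

def ola_time_scale(signal, rate, win=256):
    """Plain overlap-add time scaling with a fixed analysis window.

    rate > 1 shortens the signal (faster playback), rate < 1 lengthens it.
    Analysis windows are read every hop_in samples and overlap-added into
    the output every hop_out samples."""
    if len(signal) < win:
        return []
    hop_out = win // 2                       # 50% overlap on the output side
    hop_in = max(1, round(hop_out * rate))   # stride through the input
    window = periodic_hann(win)
    n_frames = (len(signal) - win) // hop_in + 1
    out = [0.0] * (hop_out * (n_frames - 1) + win)
    for k in range(n_frames):
        src, dst = k * hop_in, k * hop_out
        for i in range(win):
            out[dst + i] += signal[src + i] * window[i]
    return out

tone = [math.sin(2.0 * math.pi * 220.0 * n / 8000.0) for n in range(2048)]
faster = ola_time_scale(tone, rate=2.0)   # roughly half the duration
```

Fixed-window overlap-add like this introduces audible phase artefacts on voiced speech, which is the kind of problem that aligning windows to the pitch profile, as the abstract describes, is meant to avoid.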

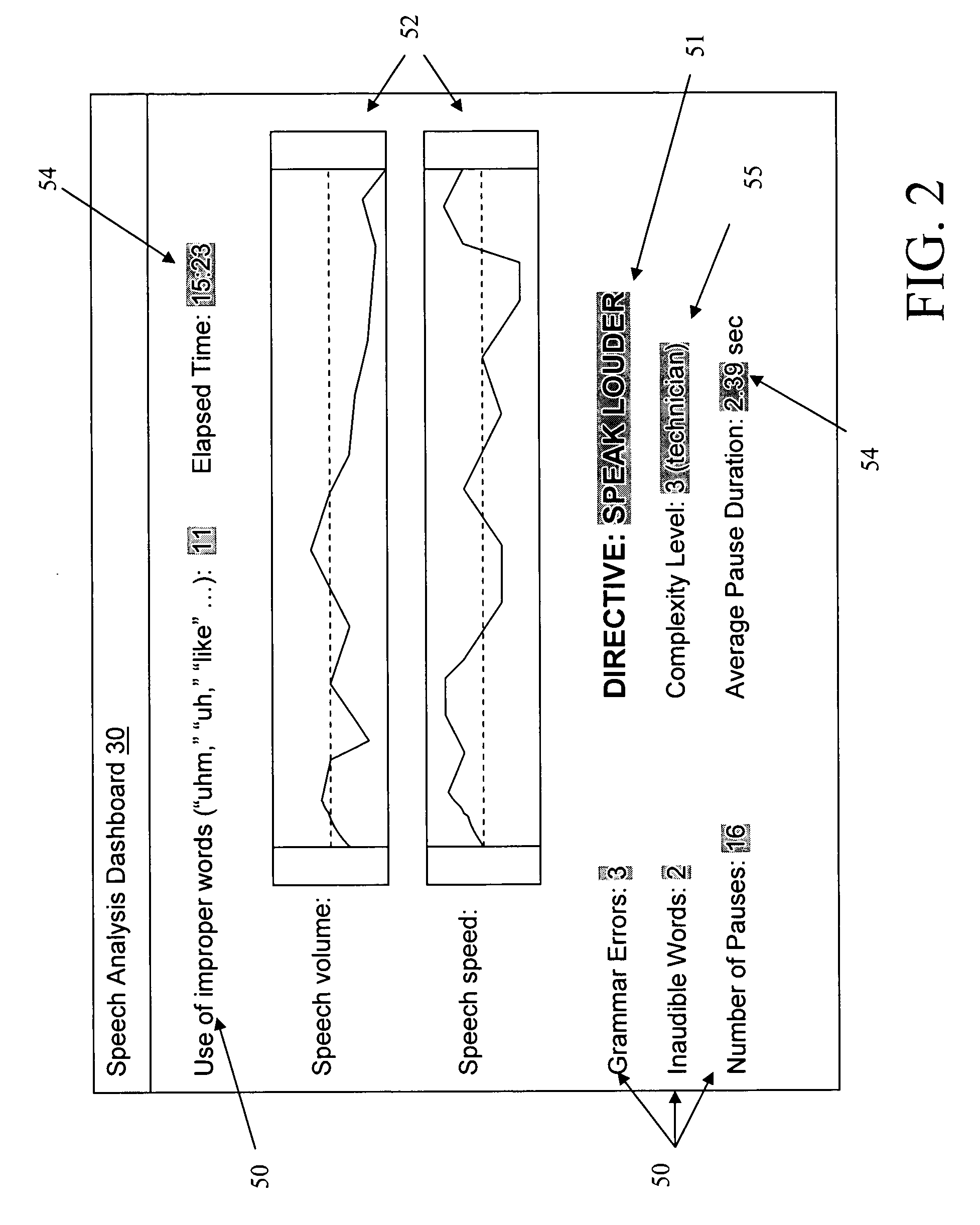

System and method for improving speaking ability

Active | US20070100626A1 | Enhance speech | Provide feedback | Speech recognition | Speech synthesis | Voice analysis | Acoustics

A speech analysis system and method for analyzing speech. The system includes: a voice recognition system for converting inputted speech to text; an analytics system for generating feedback information by analyzing the inputted speech and text; and a feedback system for outputting the feedback information.

Owner:NUANCE COMM INC

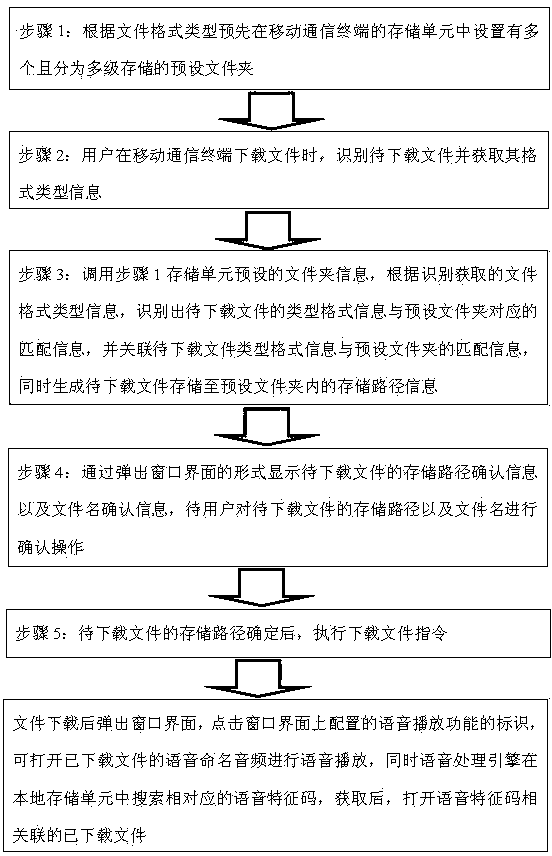

Method and device for identifying and saving file to be downloaded and quickly searching for downloaded file through mobile terminal

Active | CN104199897A | Improve experience | Improve download efficiency | Execution for user interfaces | File system functions | Document Identifier | Document handling

The invention discloses a method for identifying and saving a file to be downloaded and quickly searching for the downloaded file through a mobile terminal. The method comprises the steps that a preset folder is set in advance; in the downloading process of the file, the file to be downloaded is identified, and format type information of the file to be downloaded is obtained; the format type information of the file to be downloaded and matching information corresponding to the preset folder are judged and correlated to generate storage path information; storage path confirmation information and file name confirmation information are displayed, and a file downloading instruction is executed after the storage path confirmation information and the file name confirmation information are confirmed by a user; after the file is downloaded, a window interface is popped up, and then voice named audio of the downloaded file and the downloaded file can be opened. A device for identifying and saving the file to be downloaded and quickly searching for the downloaded file through the mobile terminal comprises a storage unit, a file identifying unit, a file judgment unit, a file processing unit, a path display unit, a path providing unit, a voice analysis engine and a voice processing engine. The method and device can facilitate downloading of the file and search for the downloaded file by the user.

Owner:宁波高智创新科技开发有限公司

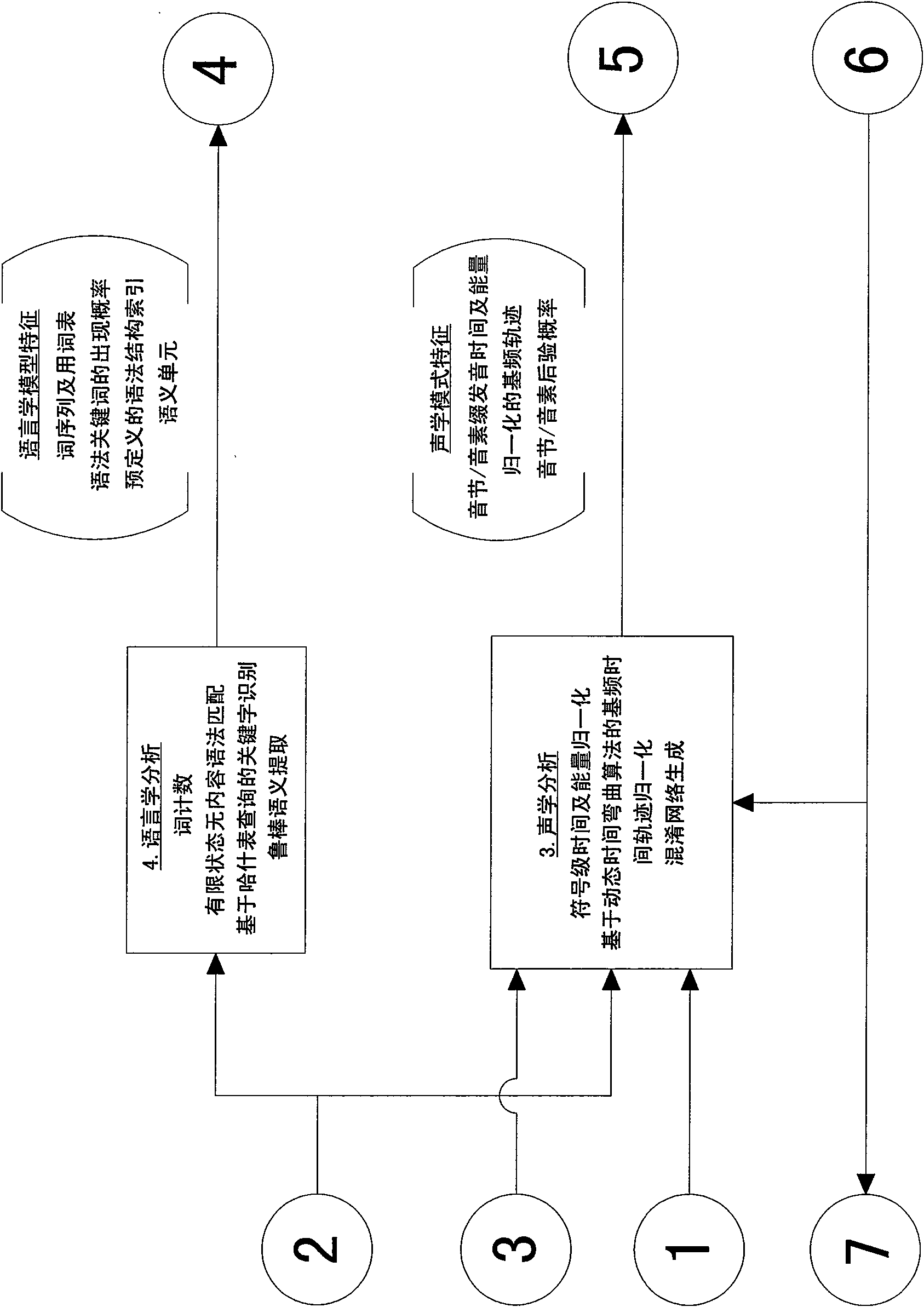

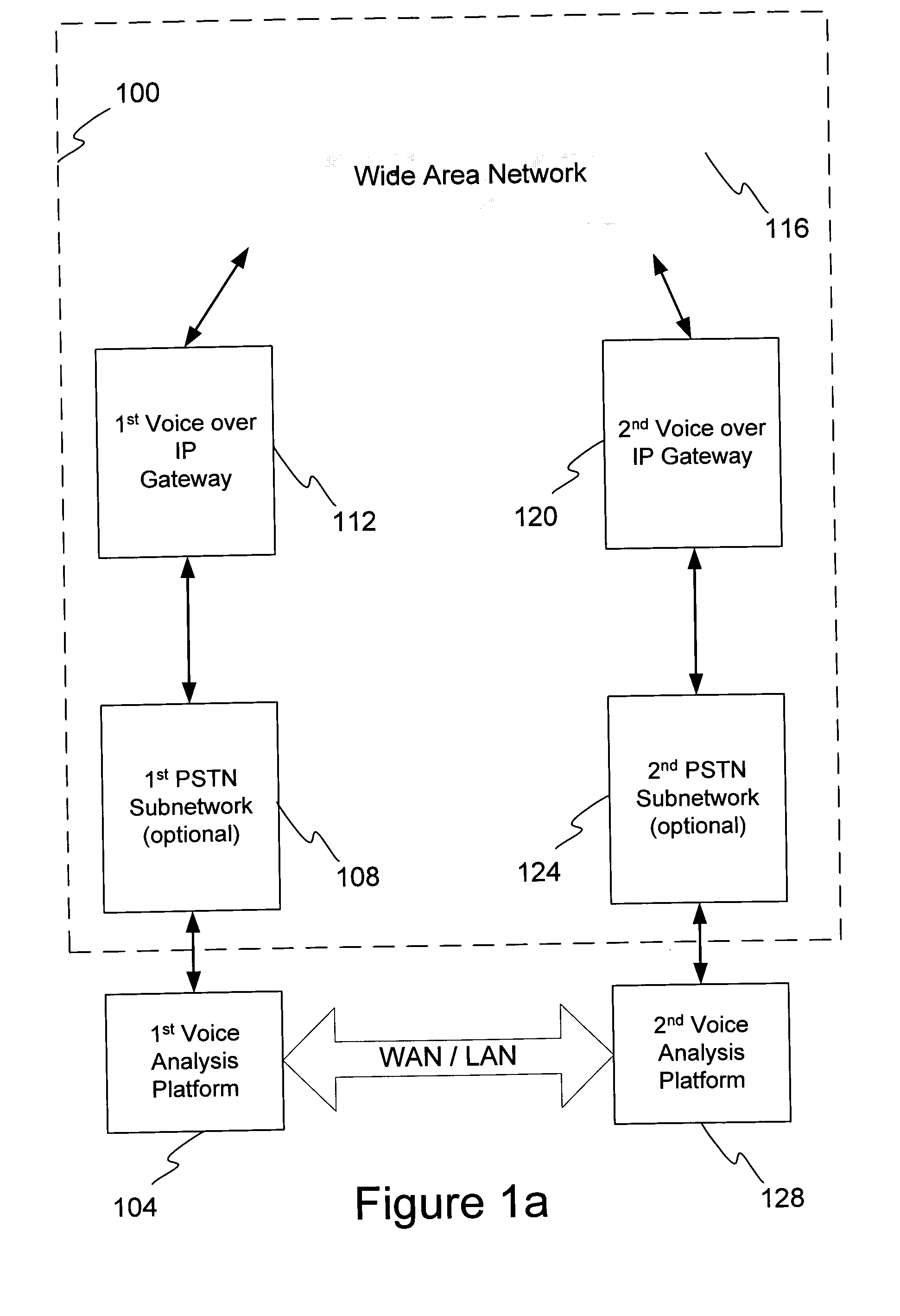

Man-machine interactive system and method for digital television voice recognition

Inactive | CN102013254A | Achieve interaction | Speech recognition is accurate | Speech recognition | Sound input/output | Data compression | Speech identification

The invention discloses a man-machine interactive system and method for digital television voice recognition. The system comprises a target voice acquisition module, a voice analysis module, a semantic computation module and an intelligent control module, wherein the target voice acquisition module comprises a signal amplification module, a forward filtering module, a signal sampling module and a data compression and coding module; and the voice analysis module comprises a noise removal module, a feature extraction module and a decoding device. The method comprises the processes of target voice acquisition, voice noise removal, voice recognition processing, command recognition conversion and intelligent control processing. In the invention, through the cooperative work of the modules, a digital TV man-machine interactive technology for anti-interference voice intelligent identification and voice analysis and interaction under the digital TV reverberation acoustic environment of digital home life is achieved, and an advanced digital TV voice language interaction mode is provided.

Owner:广东中大讯通信息有限公司 +1

Methods and system for distributing information via multiple forms of delivery services

A content distribution facilitation system is described comprising configured servers and a network interface configured to interface with a plurality of terminals in a client server relationship and optionally with a cloud-based storage system. A request from a first source for content comprising content criteria is received, the content criteria comprising content subject matter. At least a portion of the content request content criteria is transmitted to a selected content contributor. If recorded content is received from the first content contributor, the first source is provided with access to the received recorded content. The recorded content may be transmitted via one or more networks to one or more destination devices. Optionally, a voice analysis and / or facial recognition engine are utilized to determine if the recorded content is from the first content contributor.

Owner:GREENFLY

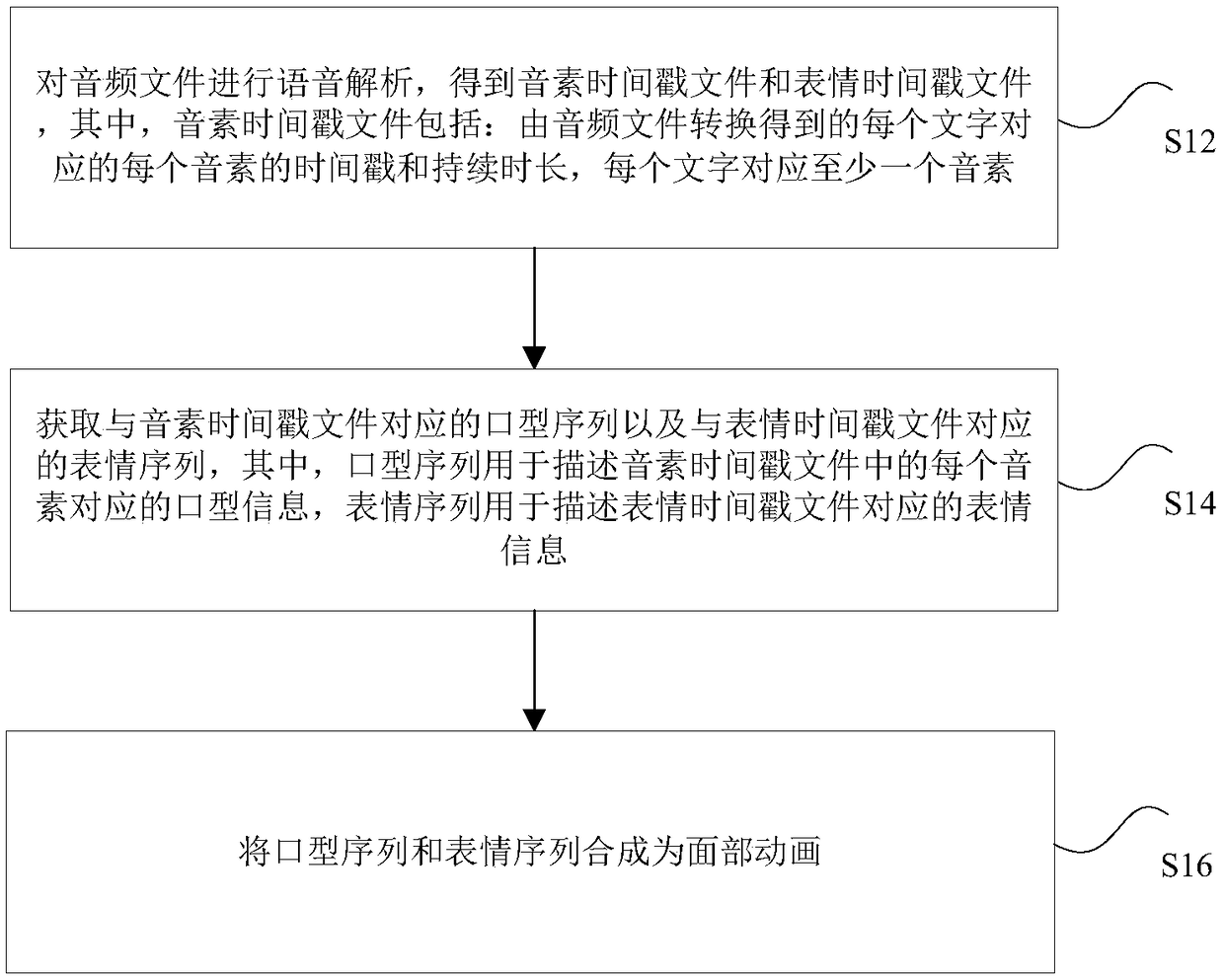

Facial animation synthesis method, a device, a storage medium, a processor and a terminal

Pending | CN109377540A | Troubleshoot technical issues with the experience | Animation | Synthesis methods

The invention discloses a facial animation synthesis method, a device, a storage medium, a processor and a terminal. The method comprises: performing voice analysis on the audio file to obtain a phoneme time stamp file and an expression time stamp file, wherein the phoneme time stamp file comprises a timestamp and a duration of each phoneme corresponding to each character converted from the audio file, and each character corresponds to at least one phoneme; acquiring a mouth shape sequence corresponding to a phoneme timestamp file, wherein the mouth shape sequence is used for describing mouth shape information corresponding to each phoneme in the phoneme timestamp file; acquiring an expression sequence corresponding to the expression timestamp file, wherein the expression sequence is used for describing expression information corresponding to the expression timestamp file; and synthesizing mouth and expression sequences into facial animation. The invention solves the technical problem that the speech analysis mode provided in the related art is easy to cause the large error of the speech animation synthesized in the follow-up, and affects the user experience.

Owner:NETEASE (HANGZHOU) NETWORK CO LTD

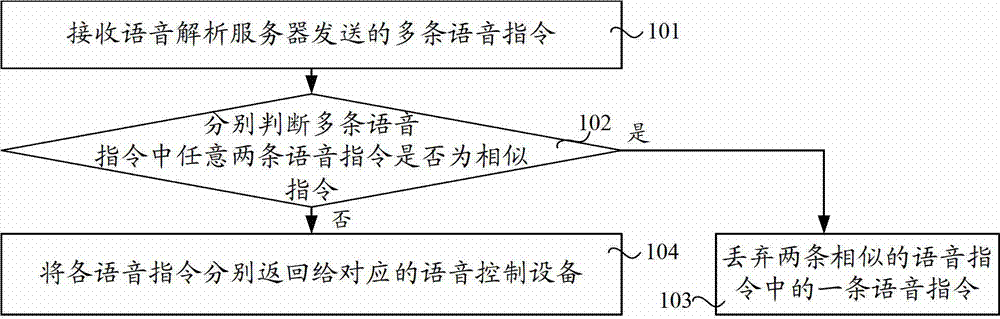

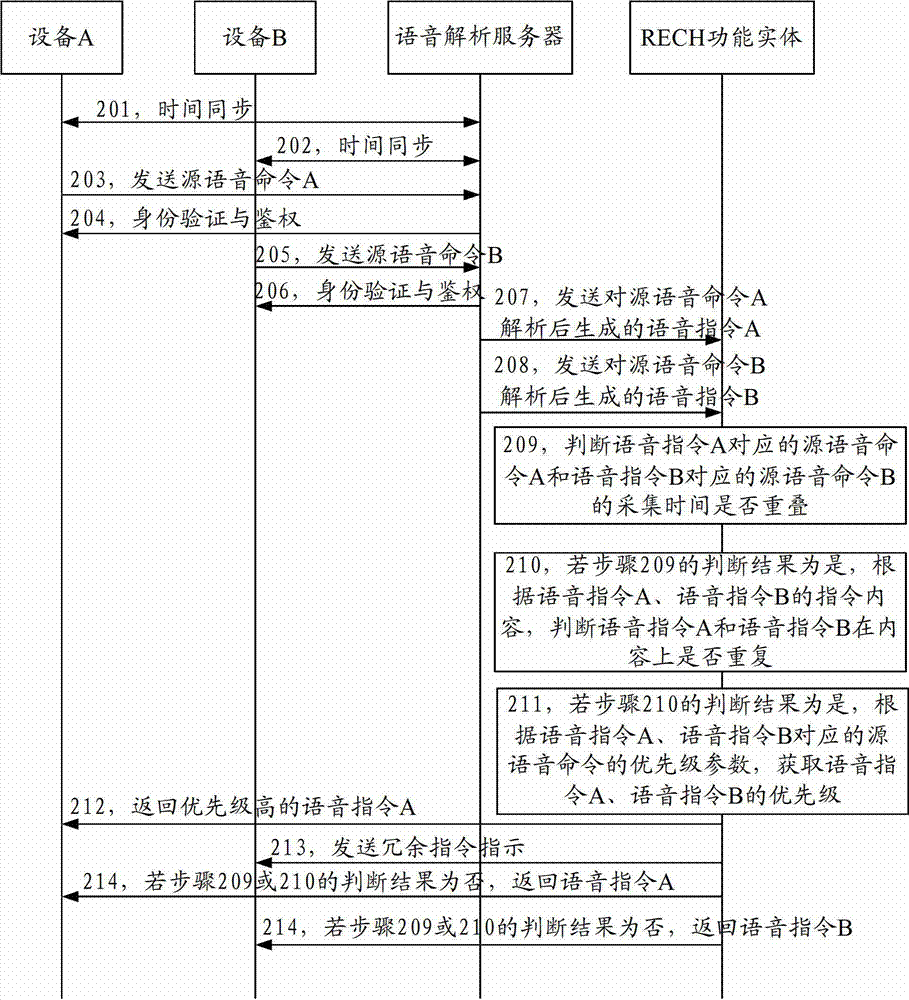

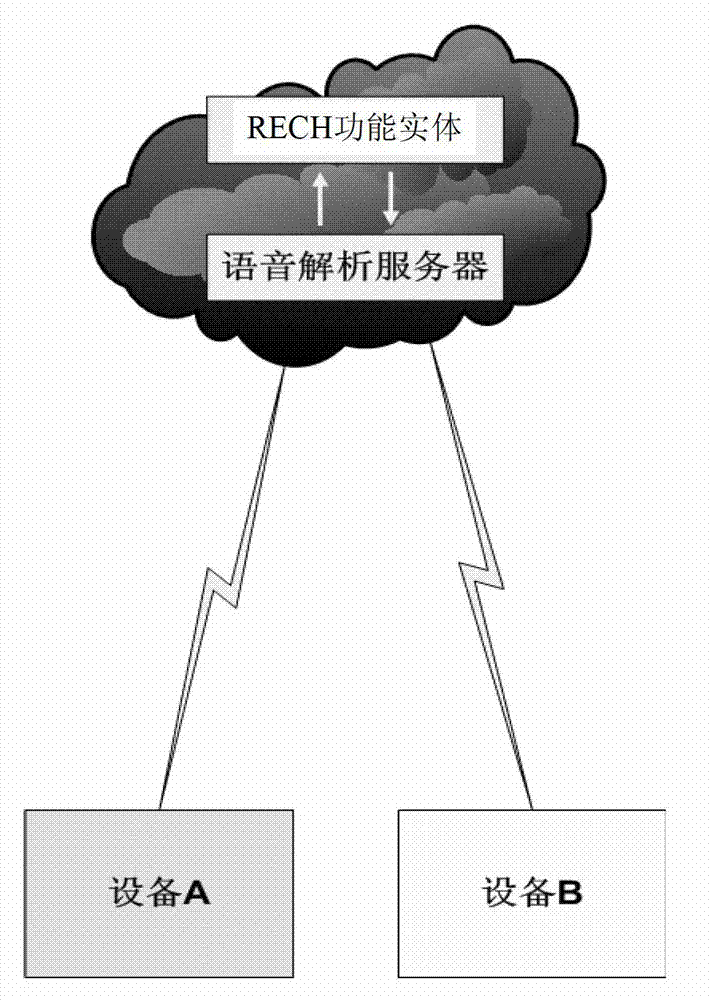

Command processing method, command processing device and command processing system

Active | CN102831894A | Time synchronization | Avoid repeated execution | Speech recognition | Voice analysis | Speech sound

The embodiment of the invention provides a command processing method, a command processing device and a command processing system. The method comprises the steps of: receiving multiple voice commands sent by a voice analysis server, wherein the multiple voice commands are generated after source voice commands coming from different voice control devices are analyzed by the voice analysis server; respectively judging if any two voice commands in the multiple voice commands are similar commands, wherein the similar command is a voice command corresponding to the source voice command obtained by using different voice control devices to capture same voice information; and discarding one voice command in two similar voice commands when two voice commands existing in the multiple voice commands are similar commands. The embodiment of the invention further provides a command processing device and a command processing system. With the adoption of the embodiment, the control mistake brought by the repetitive execution of commands is eliminated.

Owner:HUAWEI DEVICE CO LTD
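The discard step in the command processing abstract above can be sketched as follows. The abstract does not say how two commands are judged similar, so the rule used here (same recognised text, different capturing device, capture times within a tolerance) is an assumption, as are the names `VoiceCommand`, `is_similar`, `drop_duplicates`, and `max_skew`.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class VoiceCommand:
    device_id: str      # voice control device that captured the source command
    text: str           # command text produced by the voice analysis server
    captured_at: float  # capture time in seconds (assumed to be synchronised)

def is_similar(a, b, max_skew=1.0):
    """Assumed similarity rule: two different devices heard the same
    utterance at (nearly) the same time."""
    return (
        a.device_id != b.device_id
        and a.text == b.text
        and abs(a.captured_at - b.captured_at) <= max_skew
    )

def drop_duplicates(commands, max_skew=1.0):
    """Keep the first command of each group of similar commands and discard
    the rest, so that one utterance is executed only once."""
    kept = []
    for cmd in commands:
        if not any(is_similar(cmd, k, max_skew) for k in kept):
            kept.append(cmd)
    return kept

received = [
    VoiceCommand("tv", "volume up", 10.0),
    VoiceCommand("speaker", "volume up", 10.3),  # same utterance, second device
    VoiceCommand("tv", "volume up", 20.0),       # a later, separate utterance
]
to_execute = drop_duplicates(received)
```

The third command is kept because it comes from the same device ten seconds later, which under the assumed rule is a new utterance rather than a duplicate capture.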

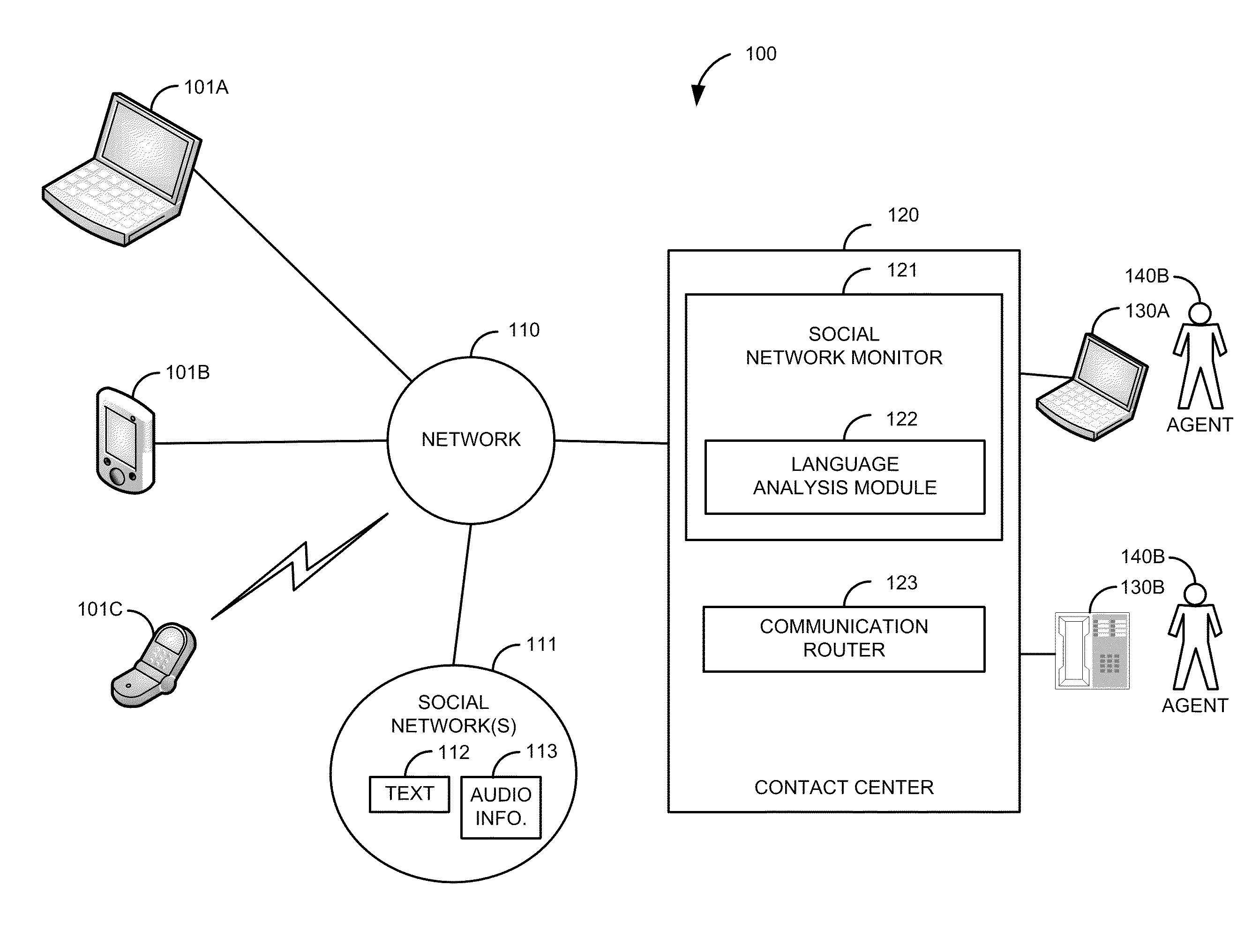

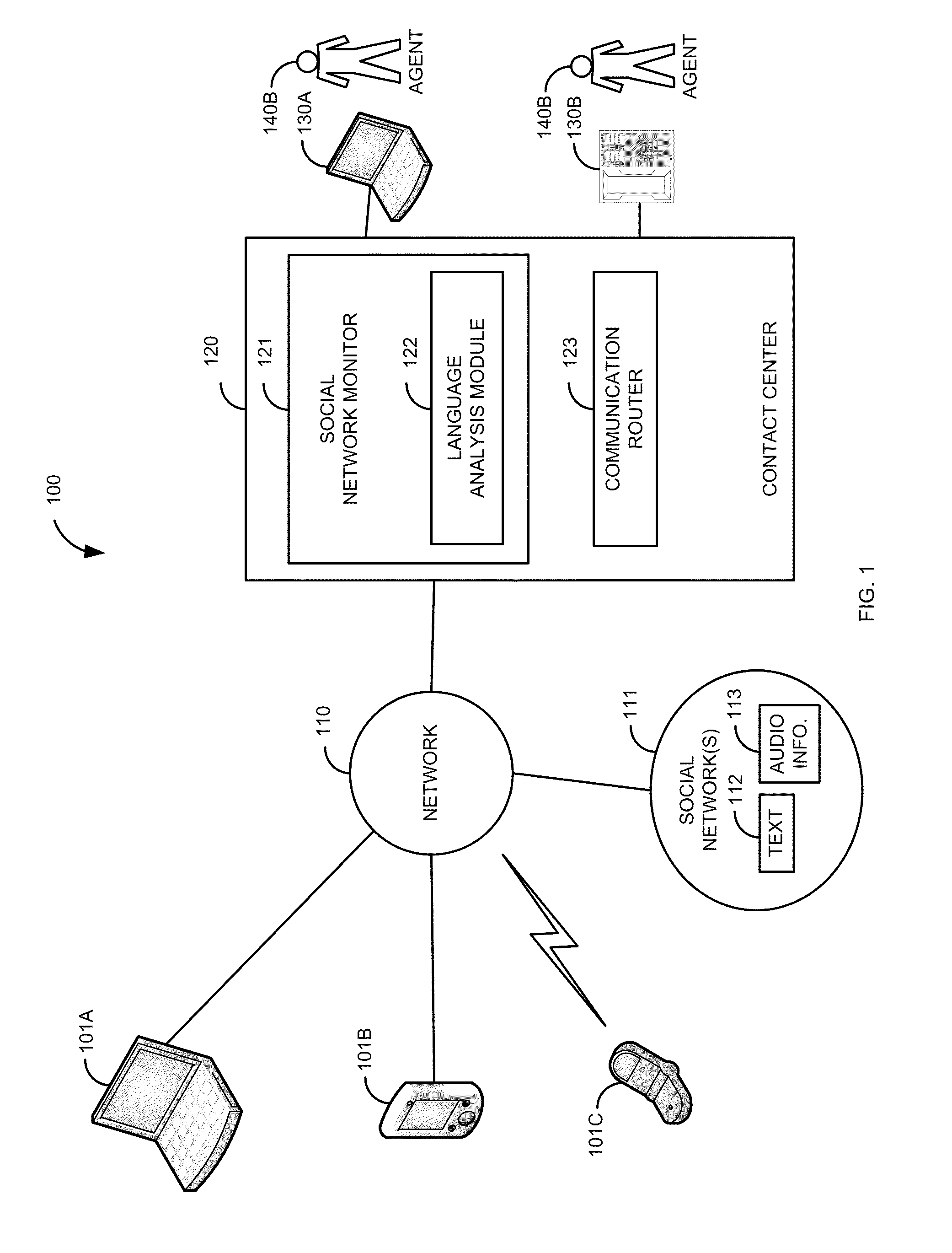

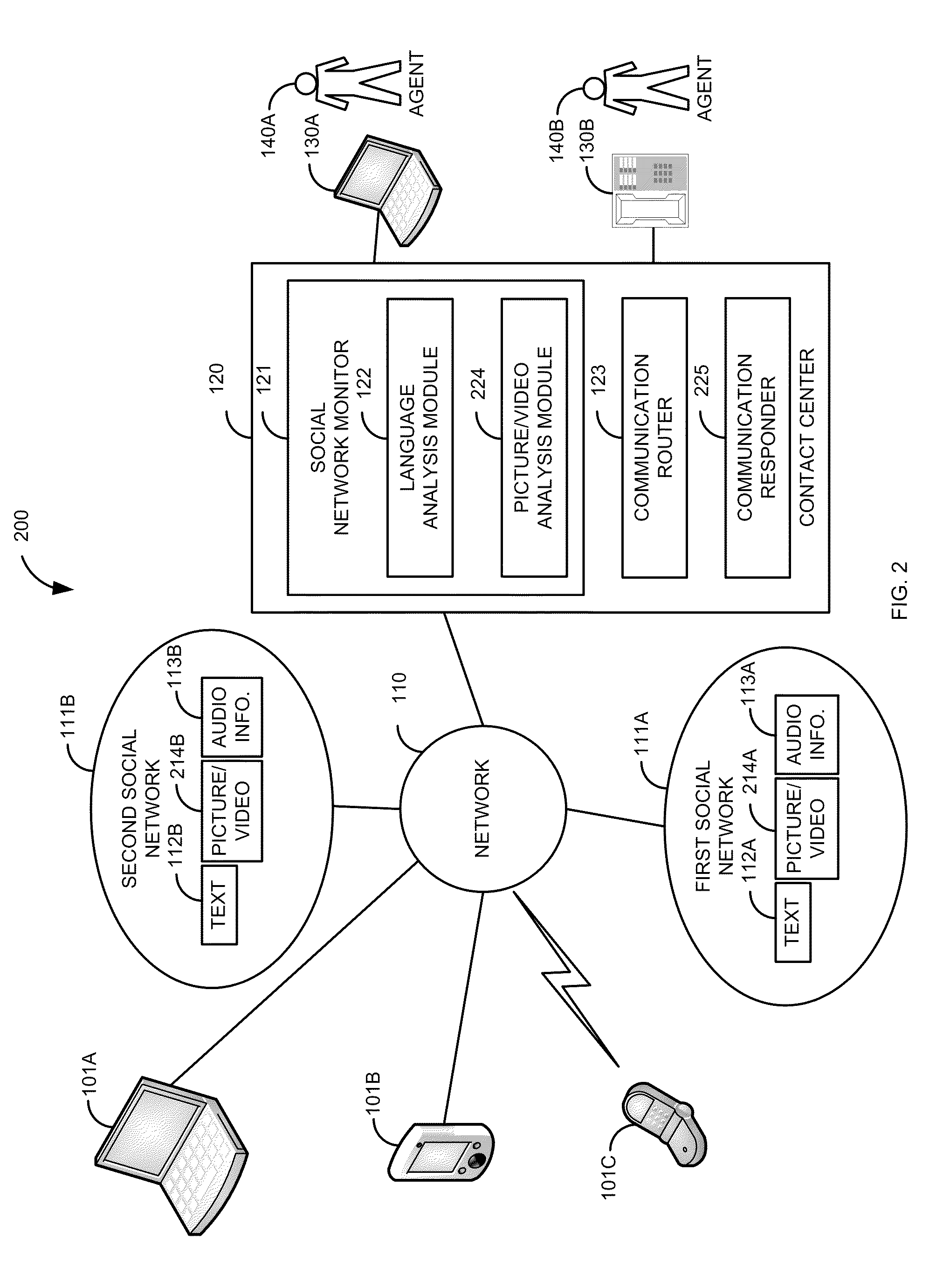

Social media language identification and routing

A communication from a person in a first language is received at a contact center. A social network that the person frequents is searched to determine if the person can converse in a second language. The determination that the person can converse in the second language can be done through text analysis, voice analysis, picture analysis, video analysis, or different combinations of these. Based on the person being able to converse in the second language, the communication is routed differently within the contact center. In a second embodiment, the system and method searches the first social network to determine an issue in a first language. A second social network is searched to determine if the person can converse in a second language. Based on the person being able to converse in the second language, the issue is responded to based on the second language.

Owner:AVAYA INC

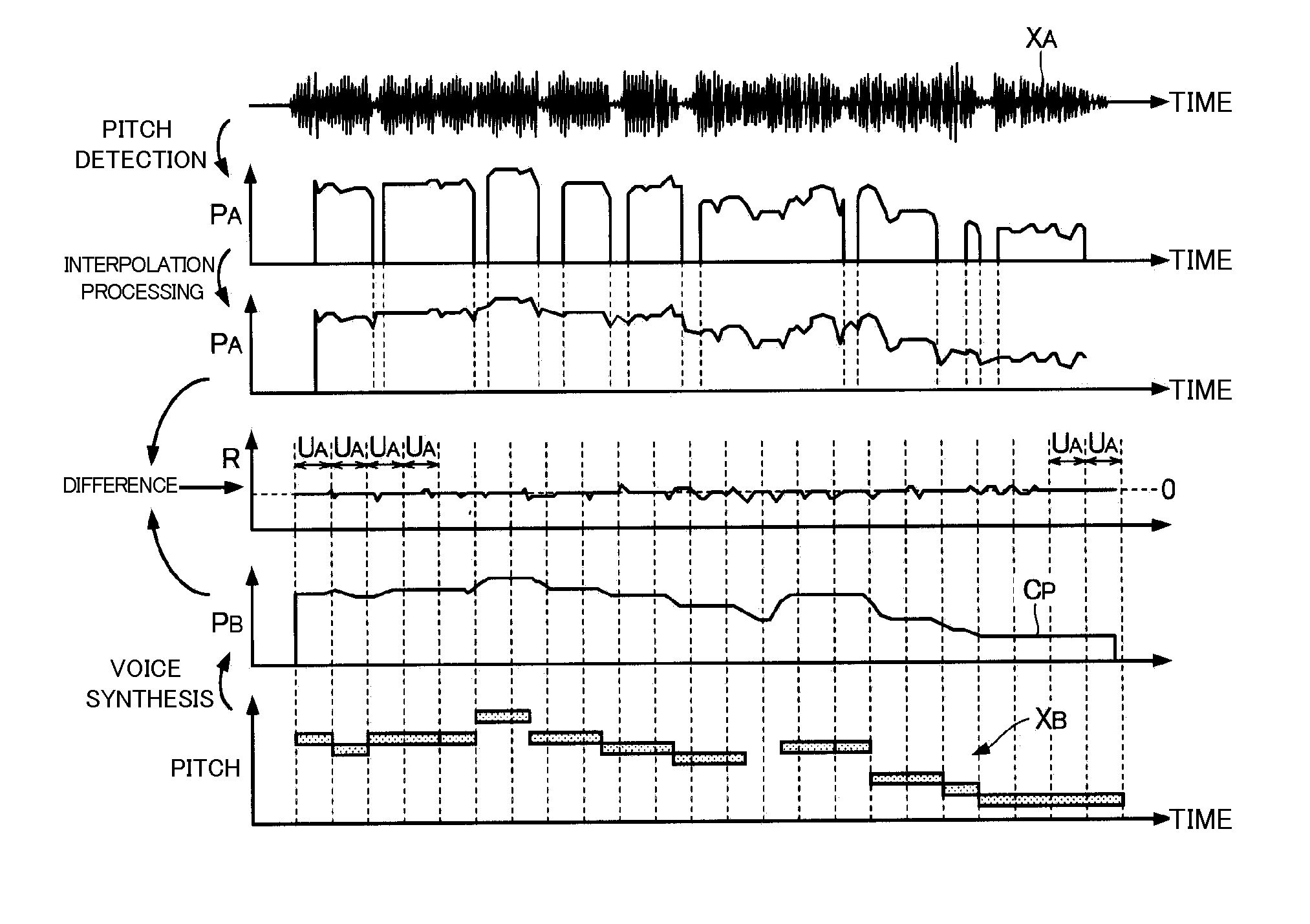

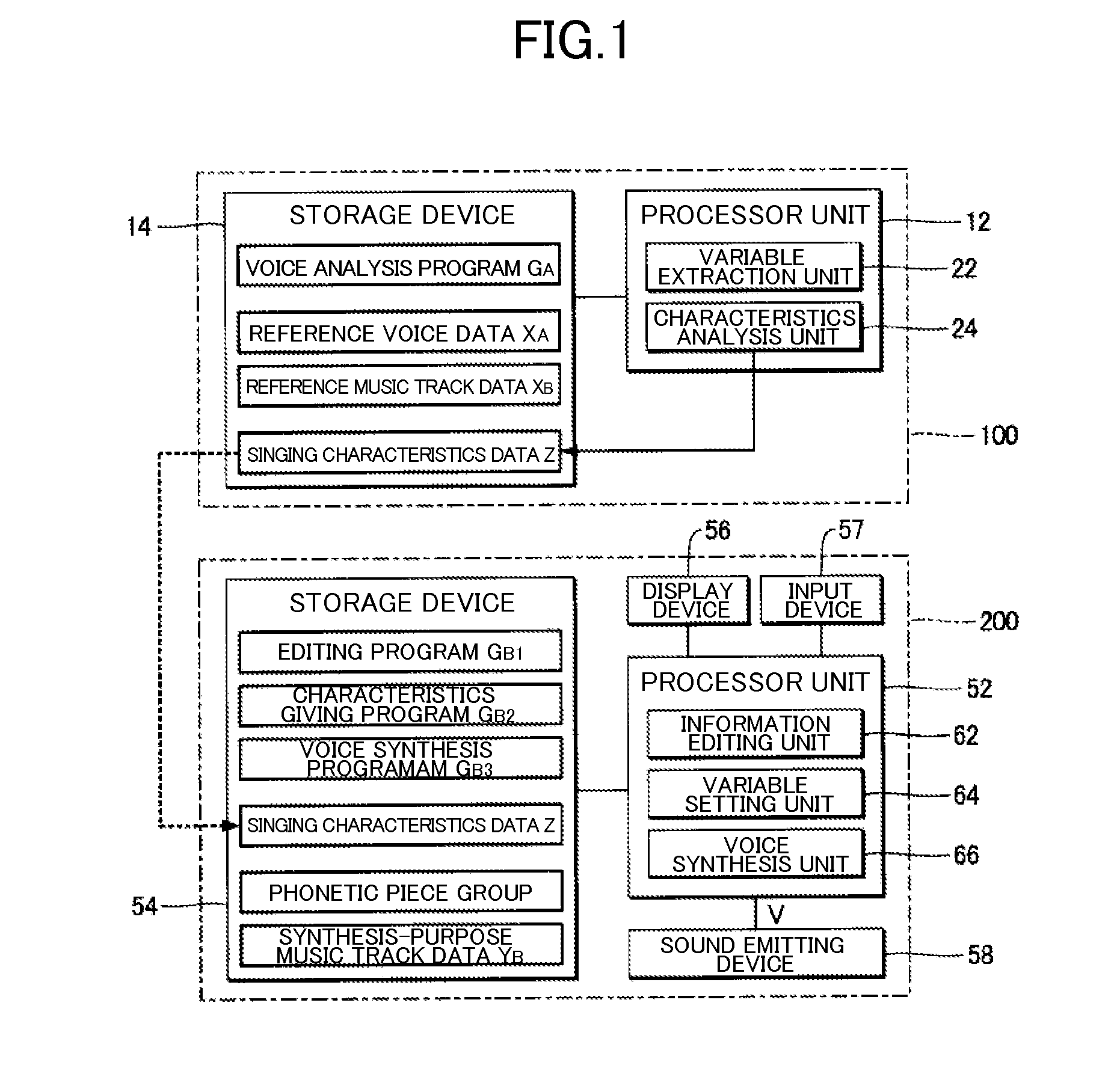

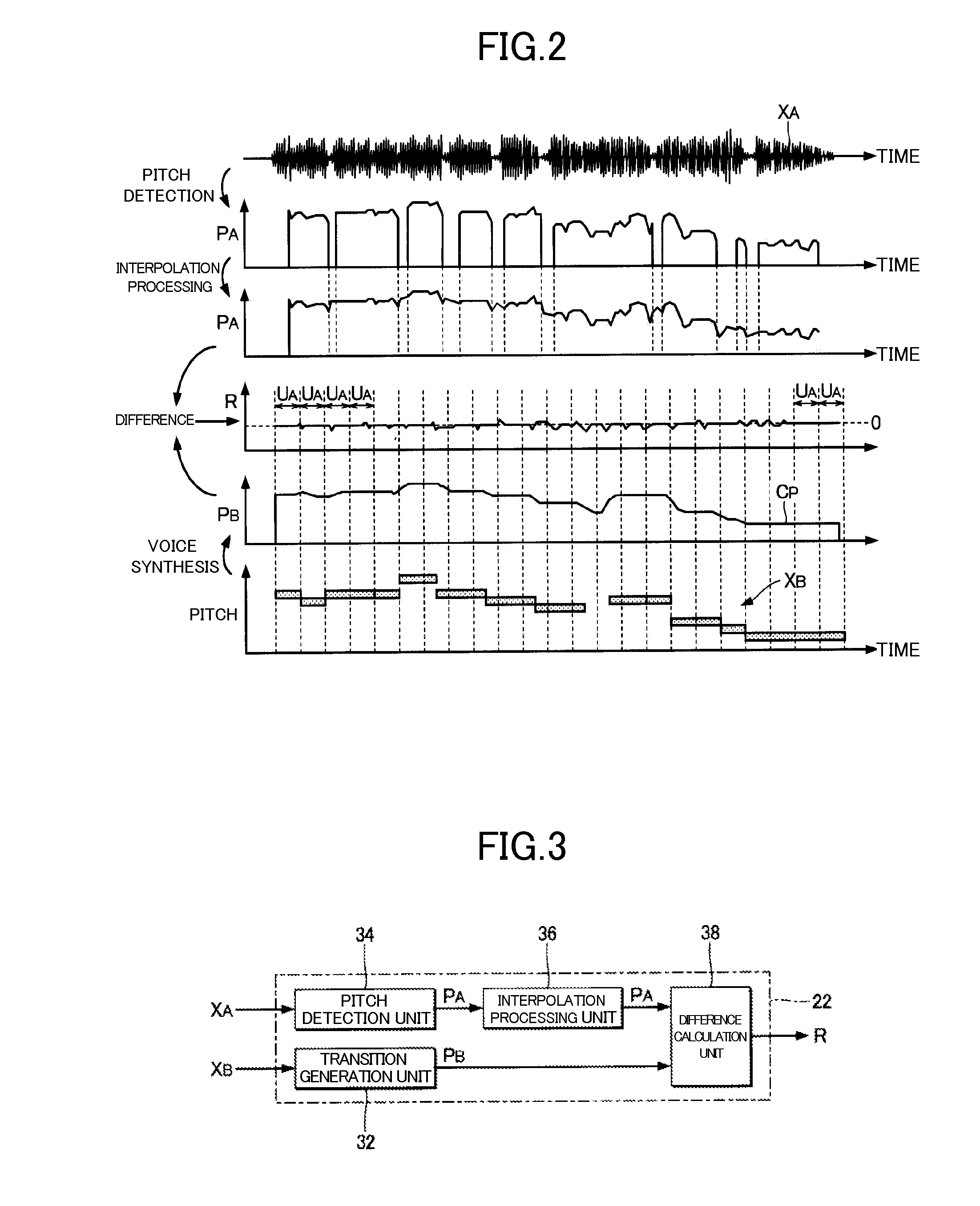

Voice analysis method and device, voice synthesis method and device, and medium storing voice analysis program

Active | US20150040743A1 | Suppressing discontinuous fluctuation | Low section | Electrophonic musical instruments | Speech synthesis | Relative pitch | Synthesis methods

A voice analysis method includes a variable extraction step of generating a time series of a relative pitch. The relative pitch is a difference between a pitch generated from music track data, which continuously fluctuates on a time axis, and a pitch of a reference voice. The music track data designate respective notes of a music track in time series. The reference voice is a voice obtained by singing the music track. The pitch of the reference voice is processed by an interpolation processing for a voiceless section from which no pitch is detected. The voice analysis method also includes a characteristics analysis step of generating singing characteristics data that define a model for expressing the time series of the relative pitch generated in the variable extraction step.

Owner:YAMAHA CORP
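The variable extraction step in the voice analysis abstract above (interpolate the reference voice pitch across voiceless sections, then take its difference from the pitch generated from the music track data) can be sketched as follows. Several details are assumptions not given in the abstract: pitches are expressed in semitones per frame, voiceless frames are marked with `None`, the interpolation is linear, and the sign convention is reference voice minus score. The function names are hypothetical.

```python
def interpolate_unvoiced(pitch):
    """Fill None entries (frames with no detected pitch) by linear
    interpolation between neighbouring voiced frames; leading and trailing
    gaps are held at the nearest voiced value."""
    known = [i for i, p in enumerate(pitch) if p is not None]
    if not known:
        raise ValueError("no voiced frames to interpolate from")
    out = list(pitch)
    for i in range(known[0]):
        out[i] = pitch[known[0]]
    for i in range(known[-1] + 1, len(pitch)):
        out[i] = pitch[known[-1]]
    for a, b in zip(known, known[1:]):
        for i in range(a + 1, b):
            t = (i - a) / (b - a)
            out[i] = pitch[a] + t * (pitch[b] - pitch[a])
    return out

def relative_pitch(score_pitch, voice_pitch):
    """Time series of (interpolated reference voice pitch - score pitch)."""
    if len(score_pitch) != len(voice_pitch):
        raise ValueError("pitch tracks must have the same number of frames")
    filled = interpolate_unvoiced(voice_pitch)
    return [v - s for v, s in zip(filled, score_pitch)]

score = [60.0, 60.0, 62.0, 62.0]   # pitch generated from the note data
voice = [60.5, None, None, 62.0]   # sung pitch with a voiceless gap
rel = relative_pitch(score, voice)
```

Interpolating before subtracting keeps the relative pitch continuous through the gap, which is the stated reason the abstract processes the voiceless section at all.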

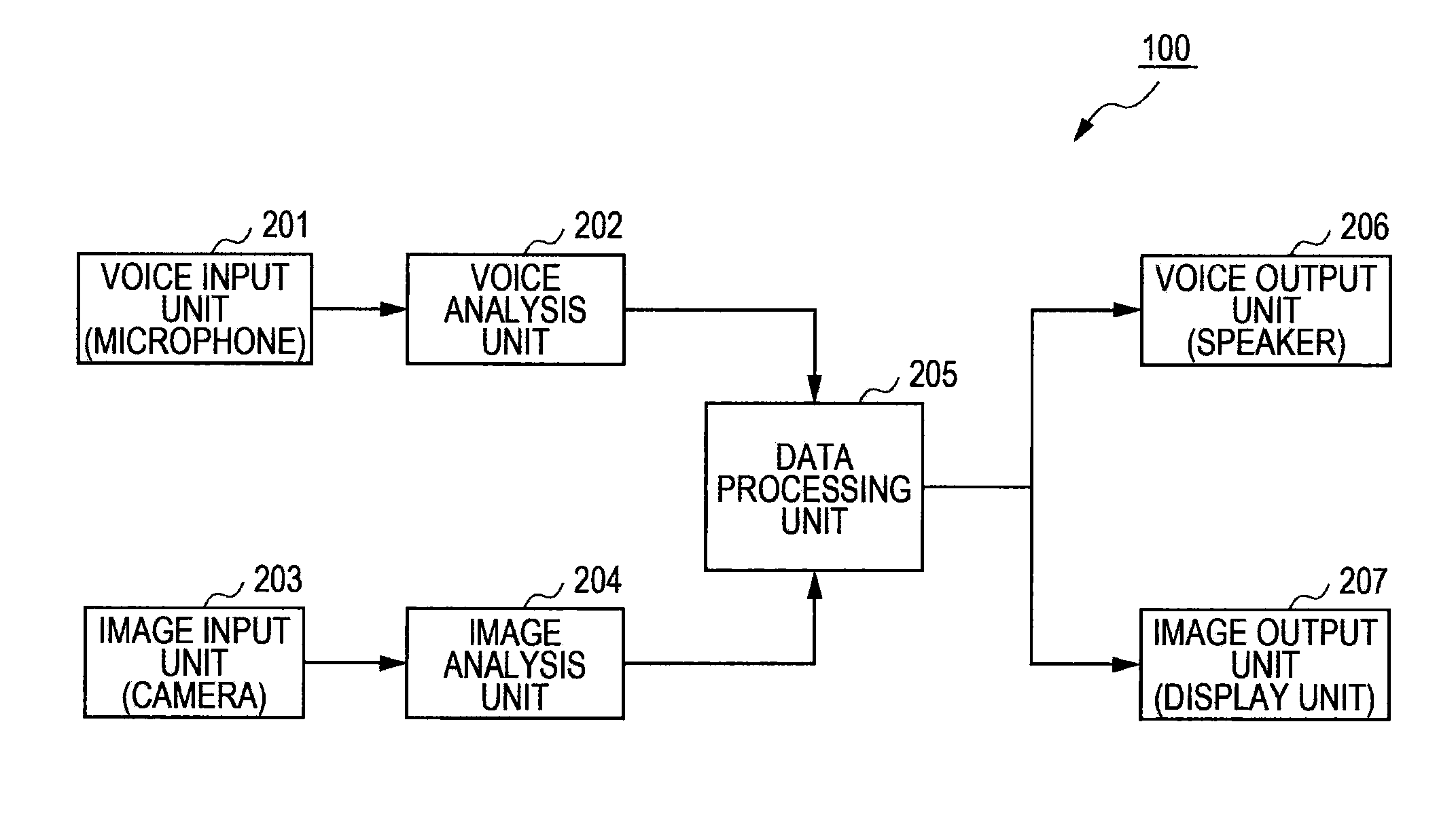

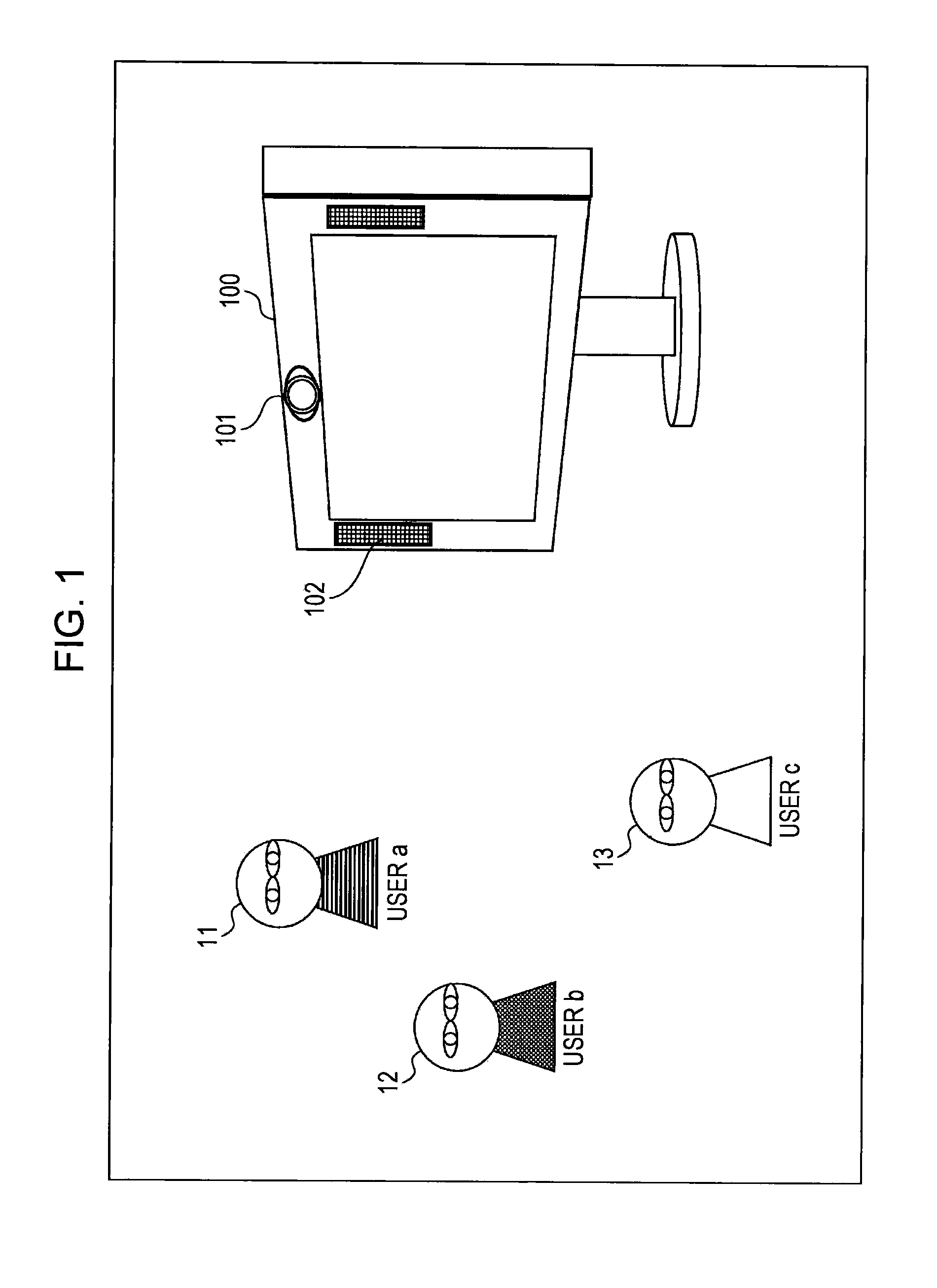

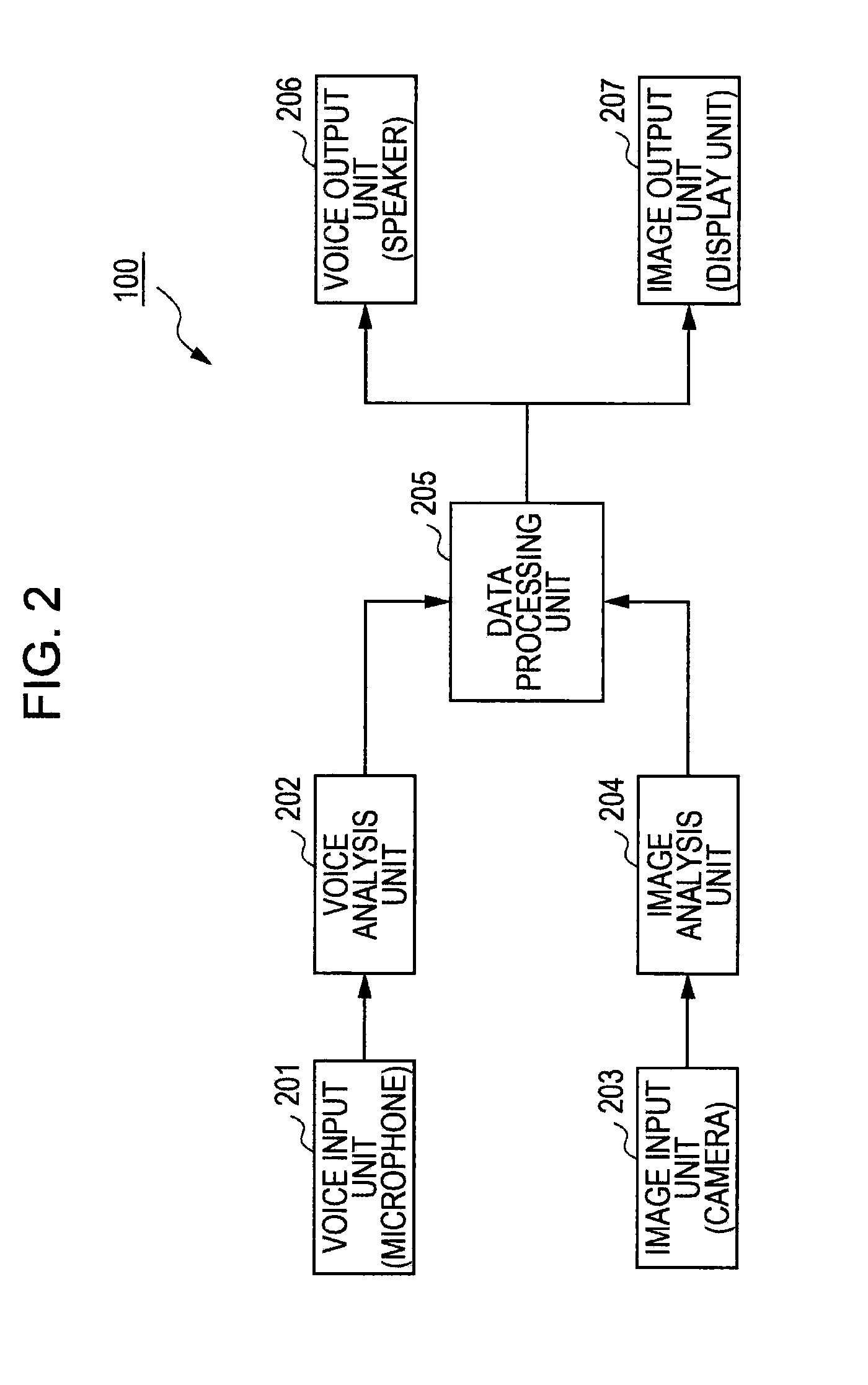

Information processing apparatus, information processing method, and program

Inactive | US20110282673A1 | Difficult be continuously process | High degree | Television system details | Cathode-ray tube indicators | Information processing | Continuation

Provided is an information processing apparatus including: a voice analysis unit which performs an analysis process for a user speech; and a data processing unit which is input with analysis results of the voice analysis unit to determine a process which is to be performed by the information processing apparatus, wherein in the case where a factor of inhibiting process continuation occurs in a process based on the user speech, the data processing unit performs a process of generating and outputting feedback information corresponding to a process stage in which the factor of inhibiting occurs.

Owner:SONY CORP
