263 results about "Instruction pipeline" patented technology

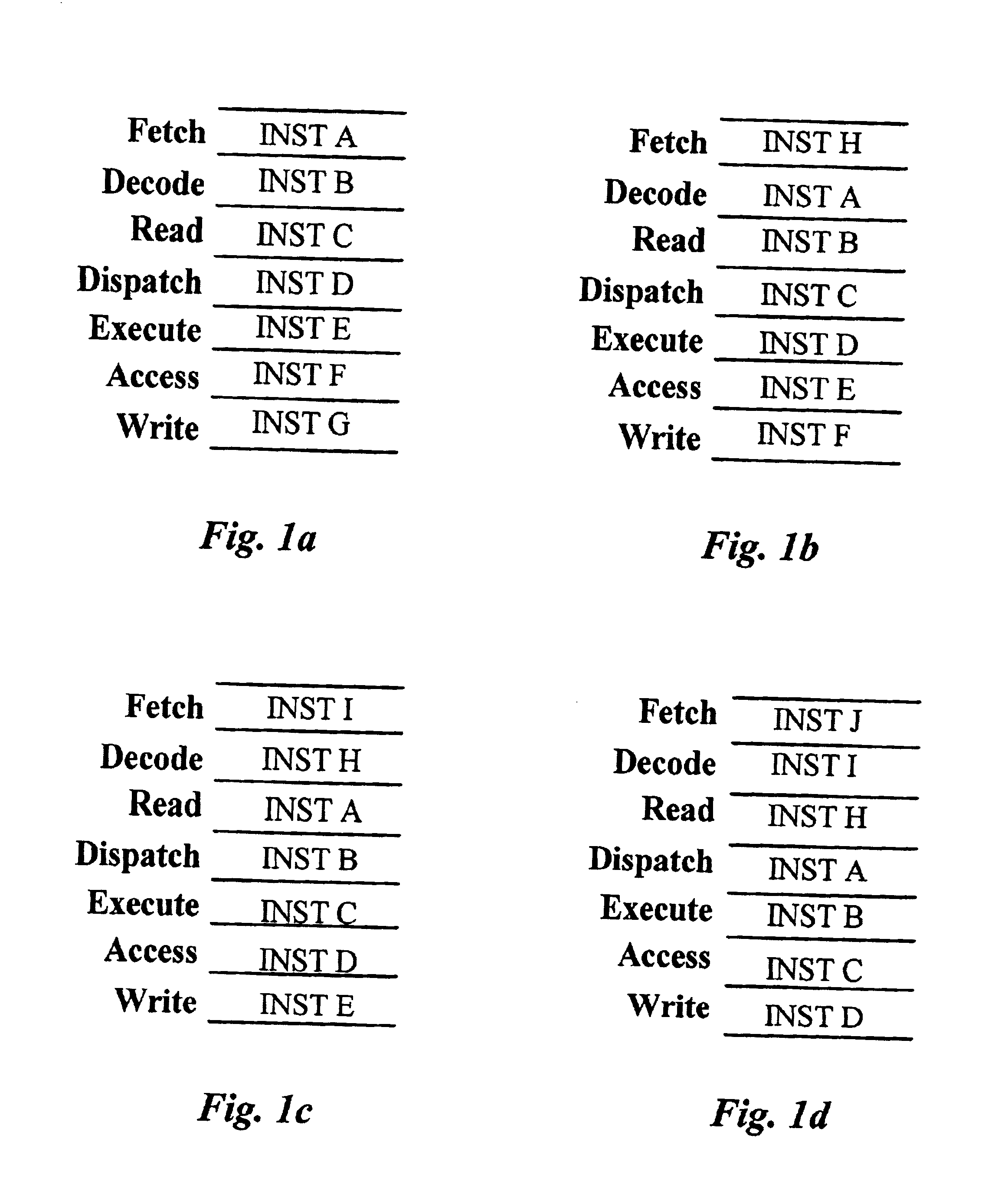

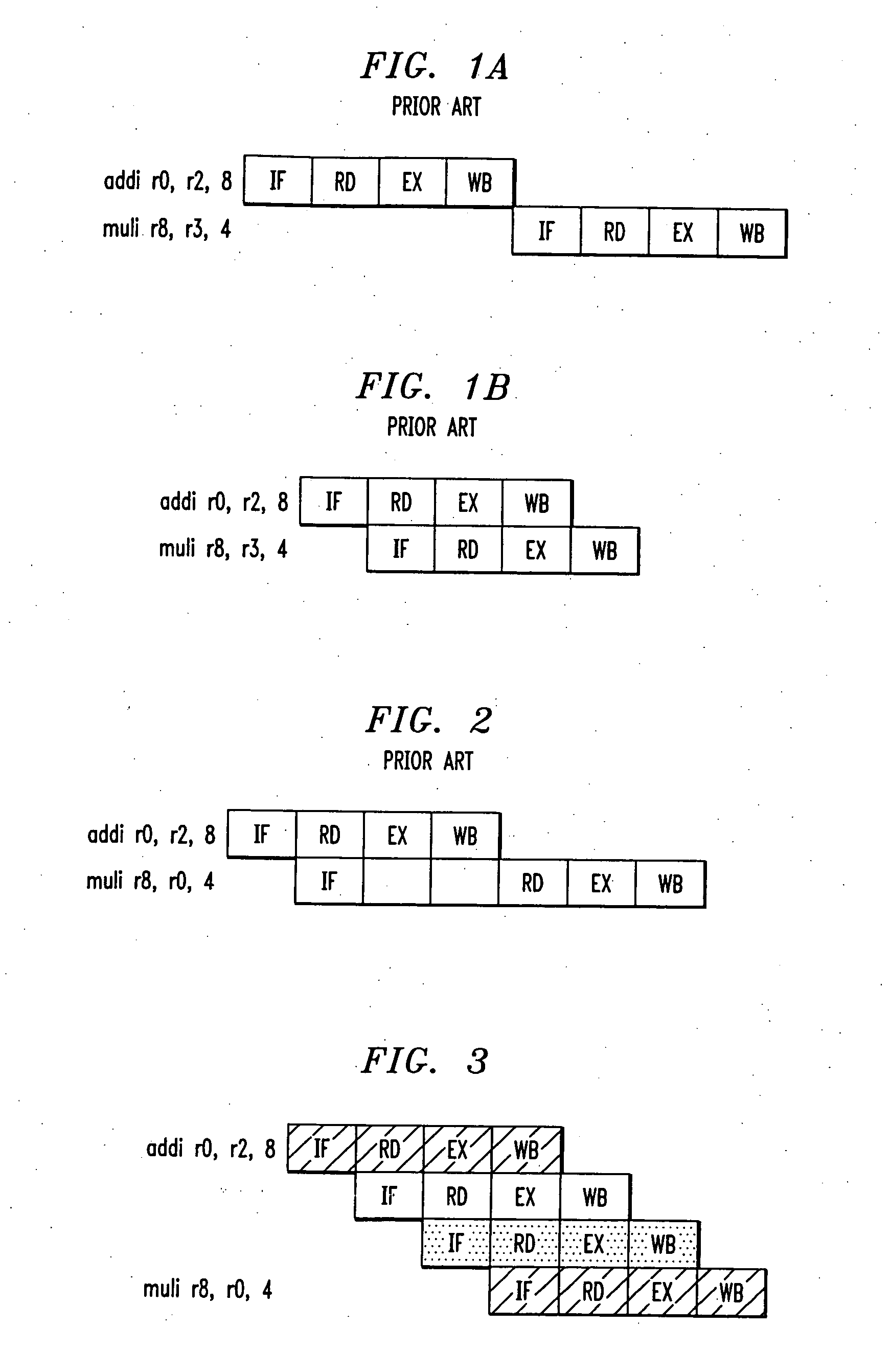

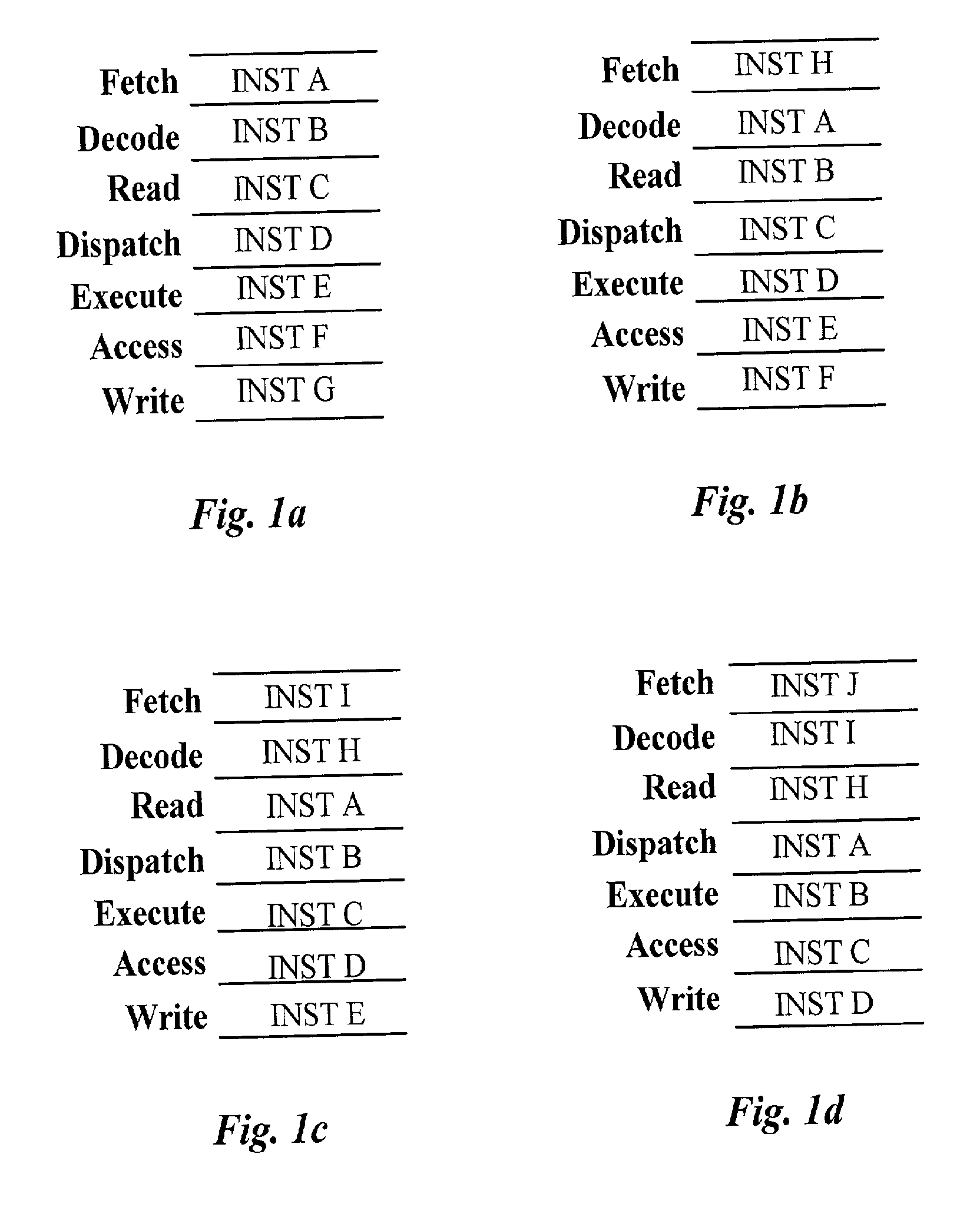

In computer science, instruction pipelining is a technique for implementing instruction-level parallelism within a single processor. Pipelining attempts to keep every part of the processor busy with some instruction by dividing incoming instructions into a series of sequential steps (the eponymous "pipeline") performed by different processor units with different parts of instructions processed in parallel. It allows faster CPU throughput than would otherwise be possible at a given clock rate, but may increase latency due to the added overhead of the pipelining process itself.
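As a rough illustration of the throughput claim above, the sketch below compares cycle counts with and without pipelining. It assumes an idealized pipeline (one cycle per stage, no stalls or hazards); the function names are hypothetical and chosen only for this example.

```python
def cycles_unpipelined(n_instructions: int, n_stages: int) -> int:
    """Each instruction occupies the whole datapath for n_stages cycles."""
    return n_instructions * n_stages


def cycles_pipelined(n_instructions: int, n_stages: int) -> int:
    """The first instruction takes n_stages cycles to fill the pipeline;
    each later instruction completes one cycle after its predecessor."""
    if n_instructions == 0:
        return 0
    return n_stages + (n_instructions - 1)


if __name__ == "__main__":
    # 100 instructions on a 5-stage pipeline (assumed values).
    print(cycles_unpipelined(100, 5), cycles_pipelined(100, 5))  # prints: 500 104
```

Note that a single instruction still needs all five cycles in both cases, so the gain is in throughput rather than per-instruction latency.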

Communications system using rings architecture

Inactive | US20030167348A1 | Raise count | Multiple digital computer combinations | Loop networks | Auto-configuration | Structure of Management Information

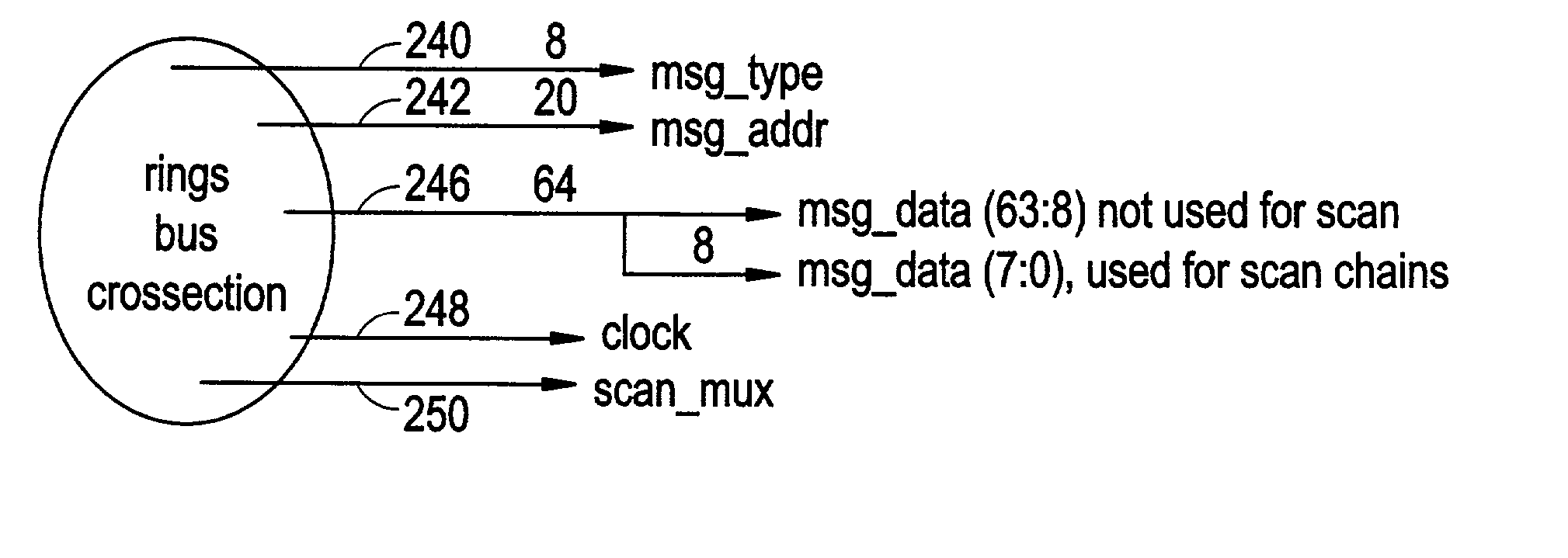

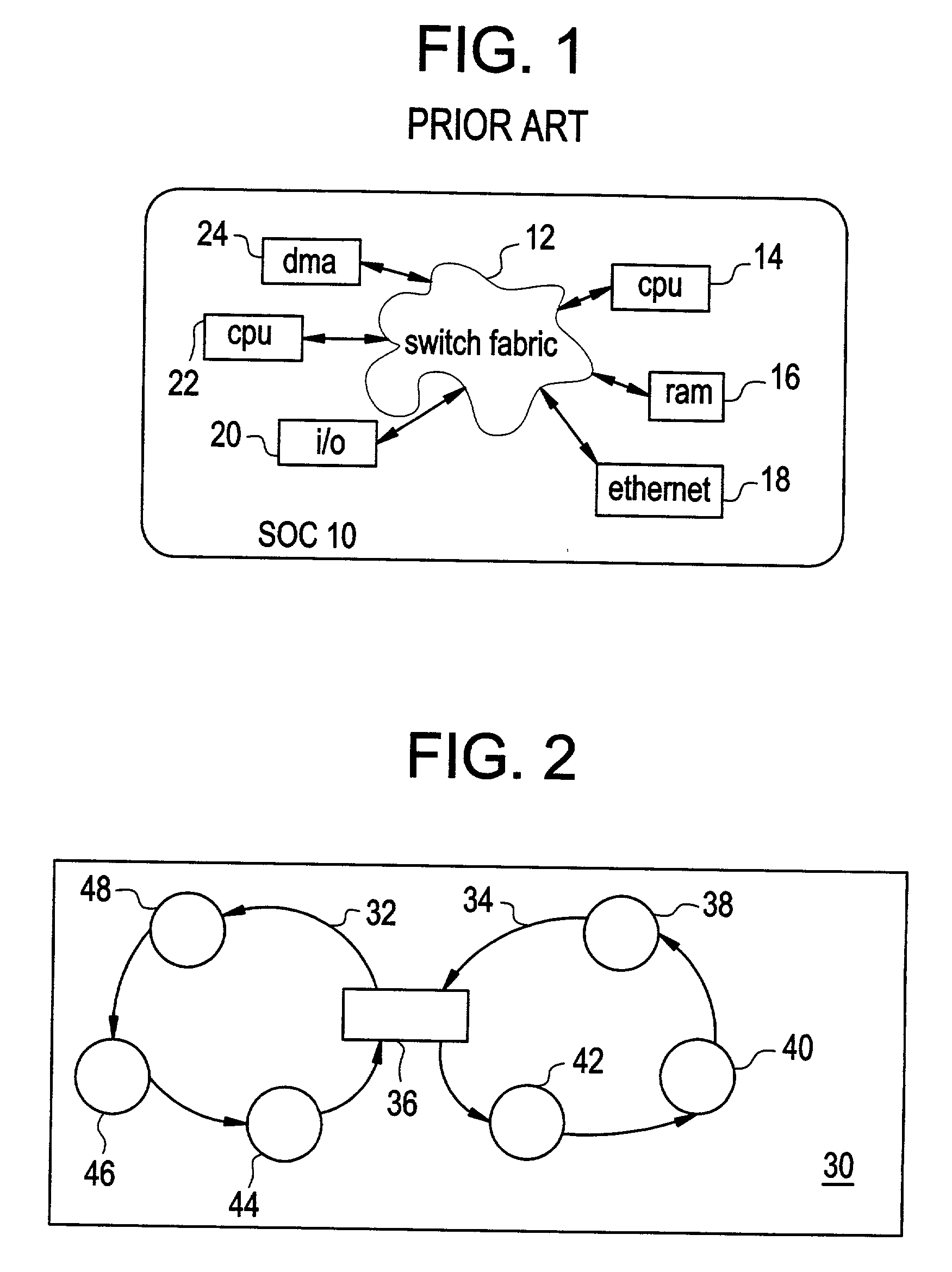

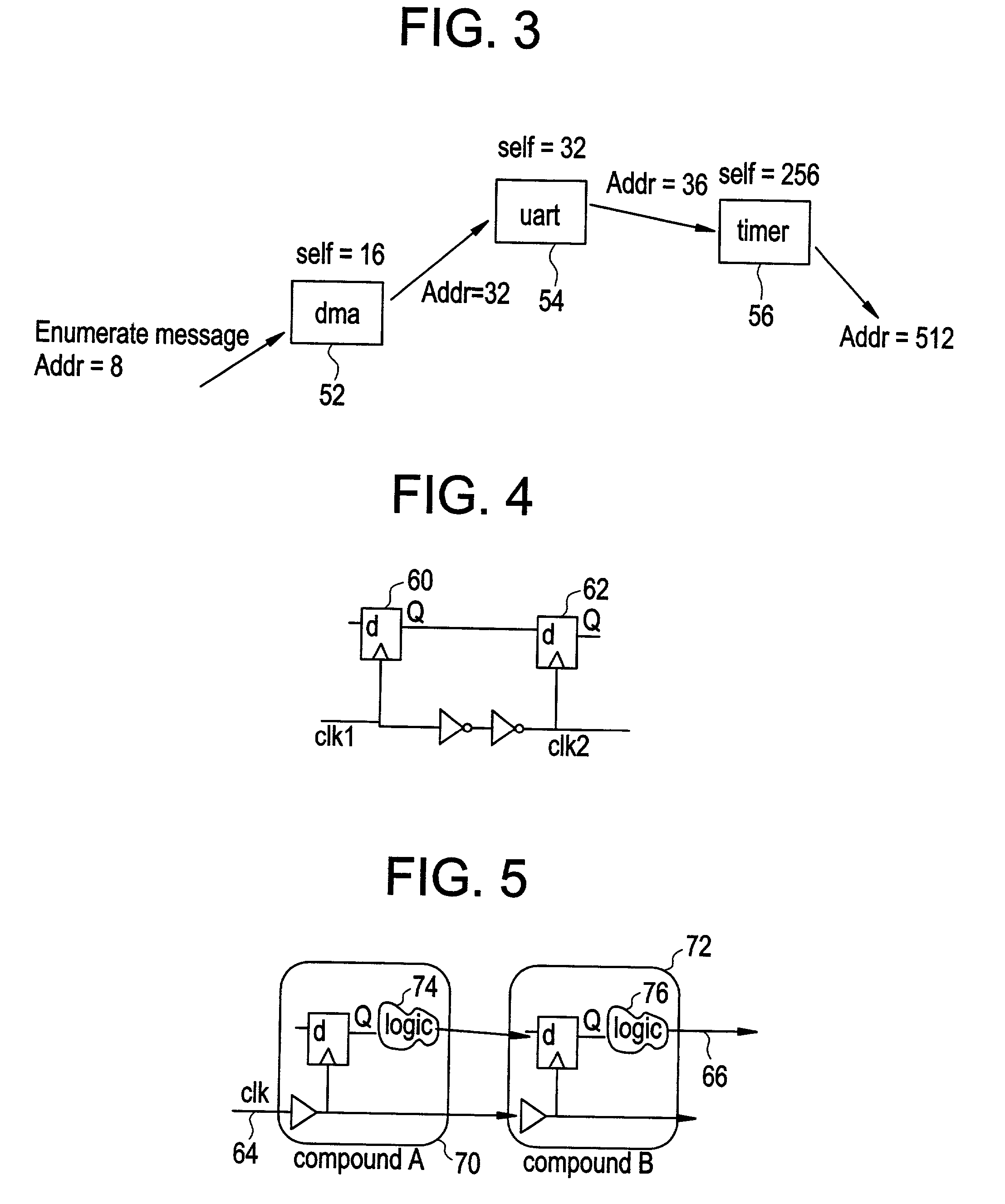

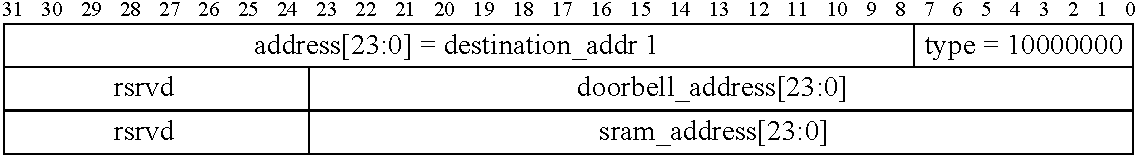

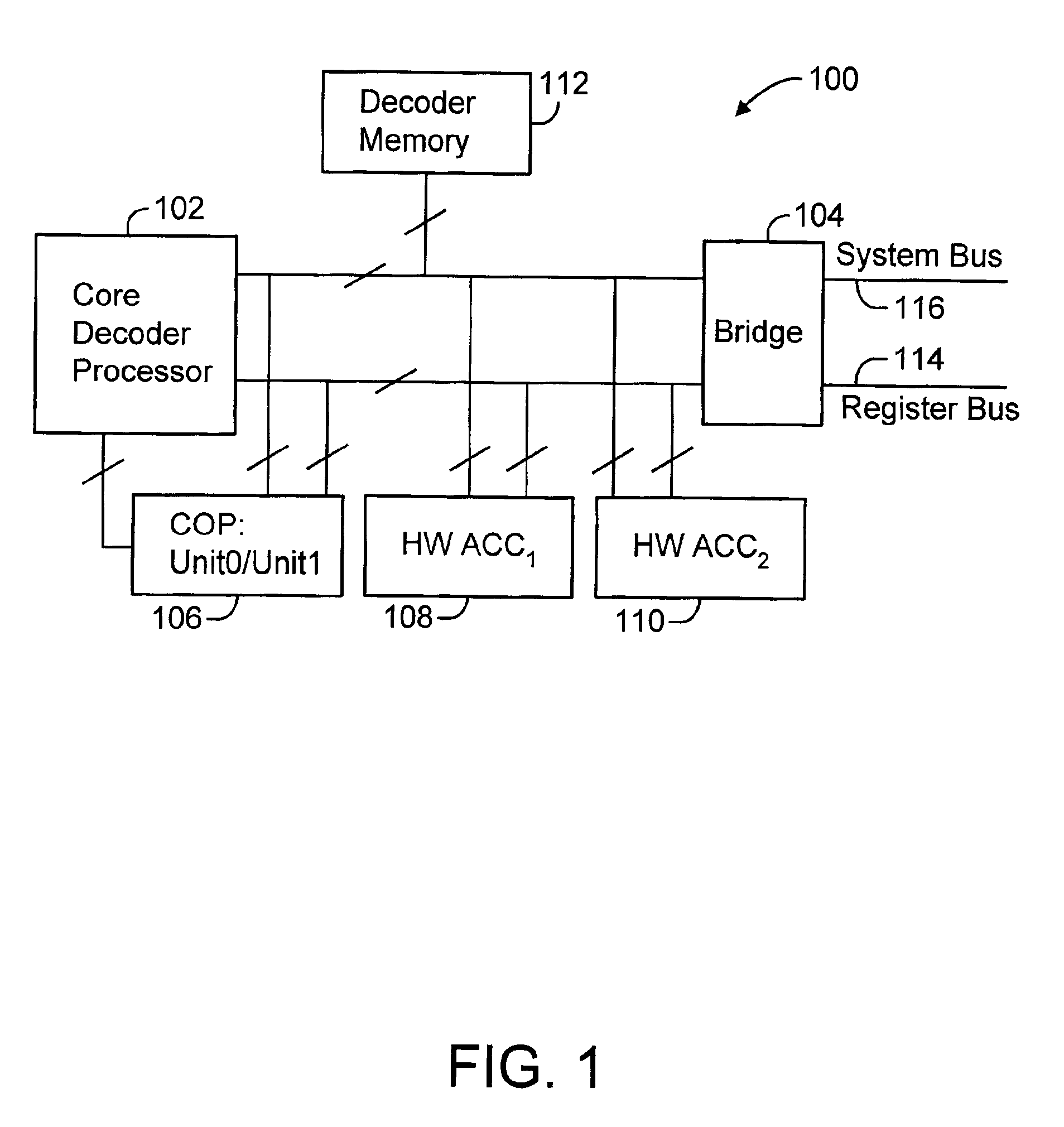

Systems and methods are provided for implementing: a rings architecture for communications and data handling systems; an enumeration process for automatically configuring the ring topology; automatic routing of messages through bridges; extending a ring topology to external devices; write-ahead functionality to promote efficiency; wait-till-reset operation resumption; in-vivo scan through rings topology; staggered clocking arrangement; and stray message detection and eradication. Other inventive elements conveyed include: an architectural overview of a packet processor; a programming model for a packet processor; an instruction pipeline for a packet processor; and use of a packet processor as a module on a rings-based architecture. Additional inventive elements conveyed include: an architectural overview of a communications processor; a data path protocol support model for a communications processor; an exemplary network processor employed as the core packet processor for the communications processor; an exemplary rings-based SOC switch fabric architecture; and a variety of quality of support features.

Owner:GLOBESPANVIRATA

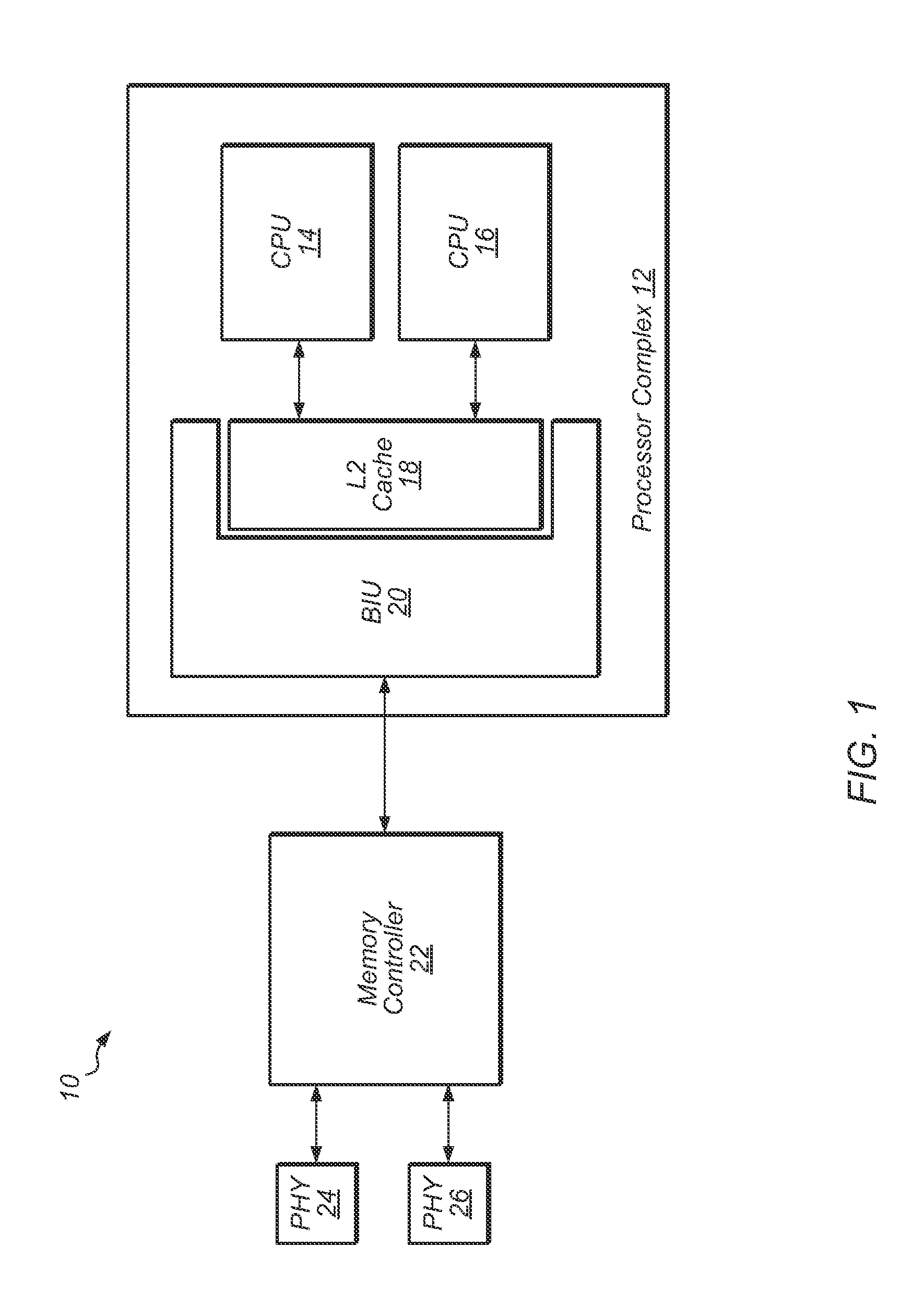

Hardware-enabled instruction tracing

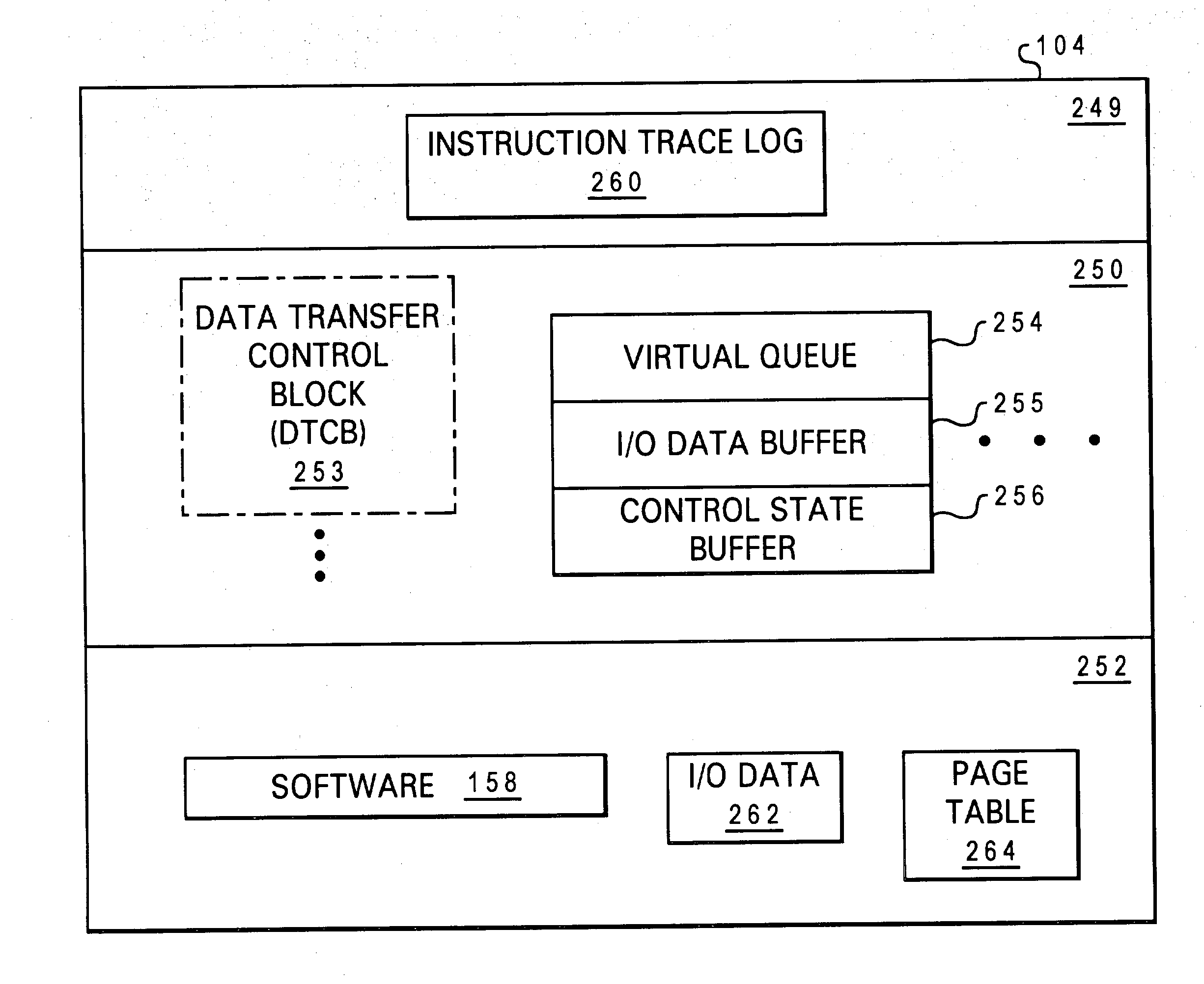

Inactive | US20040139305A1 | Error detection/correction | Digital computer details | Data processing system | Execution unit

A data processing system includes an instruction pipeline, including one or more execution units that execute instructions and an instruction sequencing unit that dispatches instructions to the execution units for execution. The data processing system further includes a memory controller for a memory containing an instruction trace log and an interconnect coupled to the instruction pipeline and to the memory controller. The interconnect transmits to the memory controller for storage in the instruction trace log instructions processed within the instruction pipeline.

Owner:IBM CORP

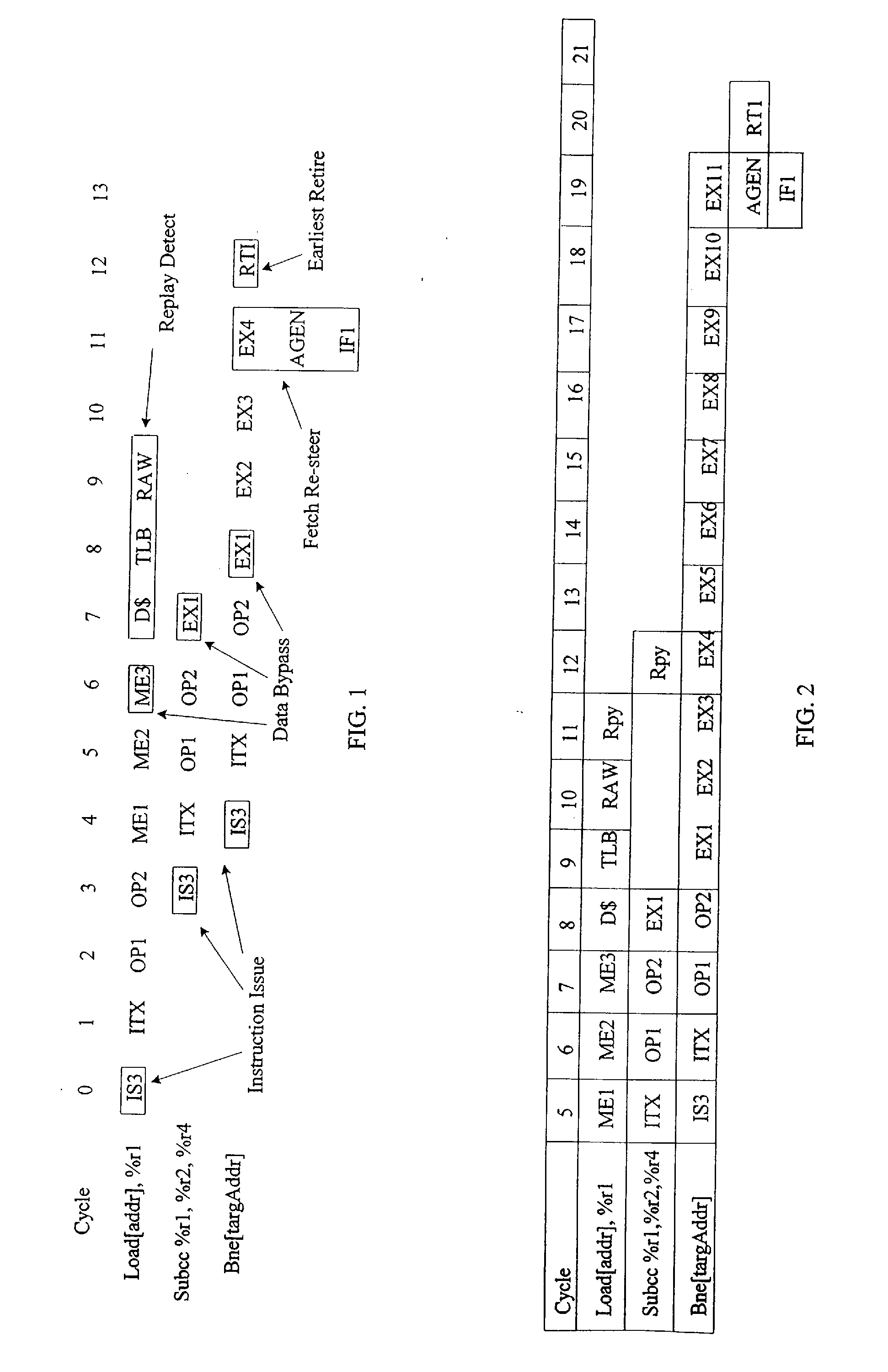

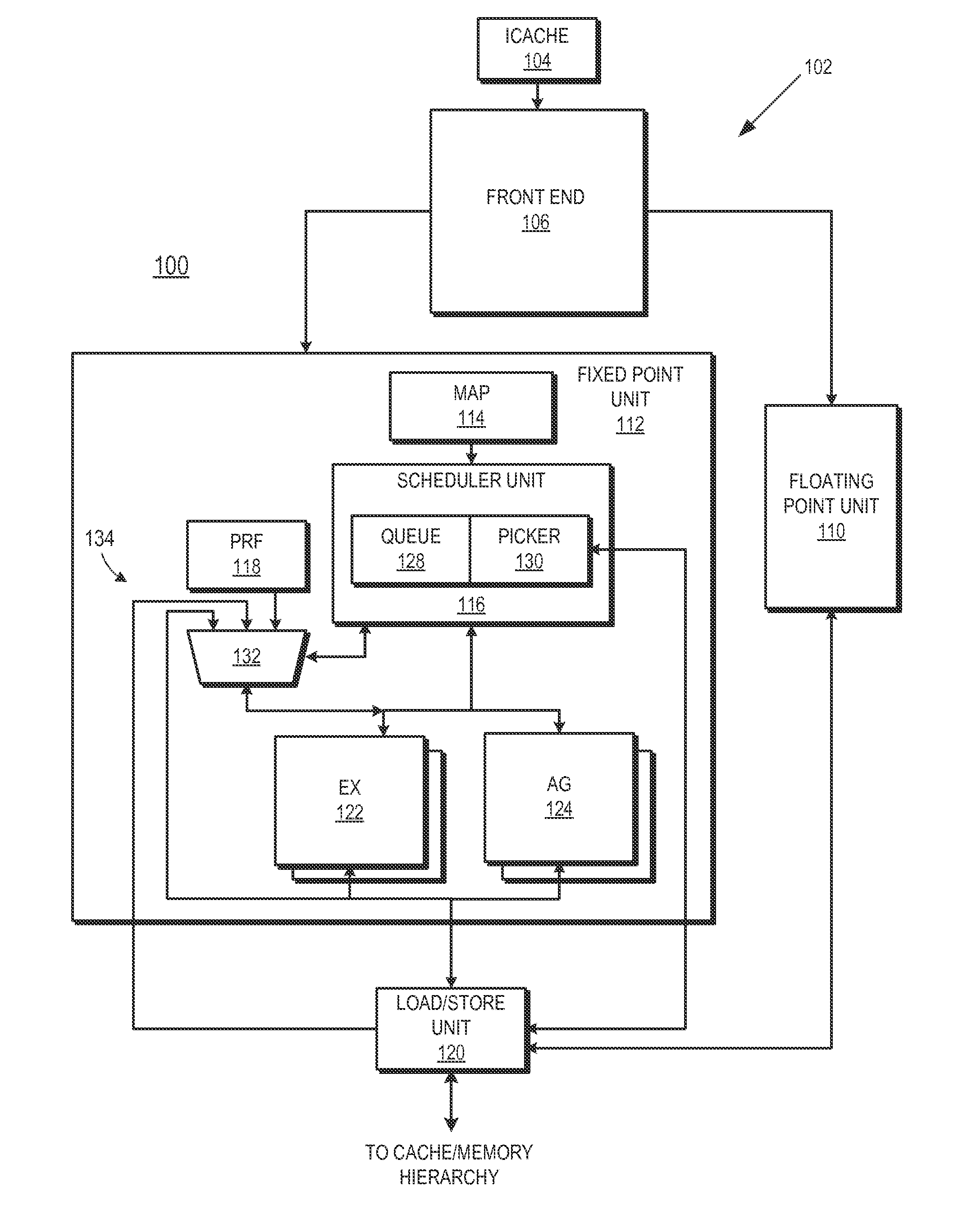

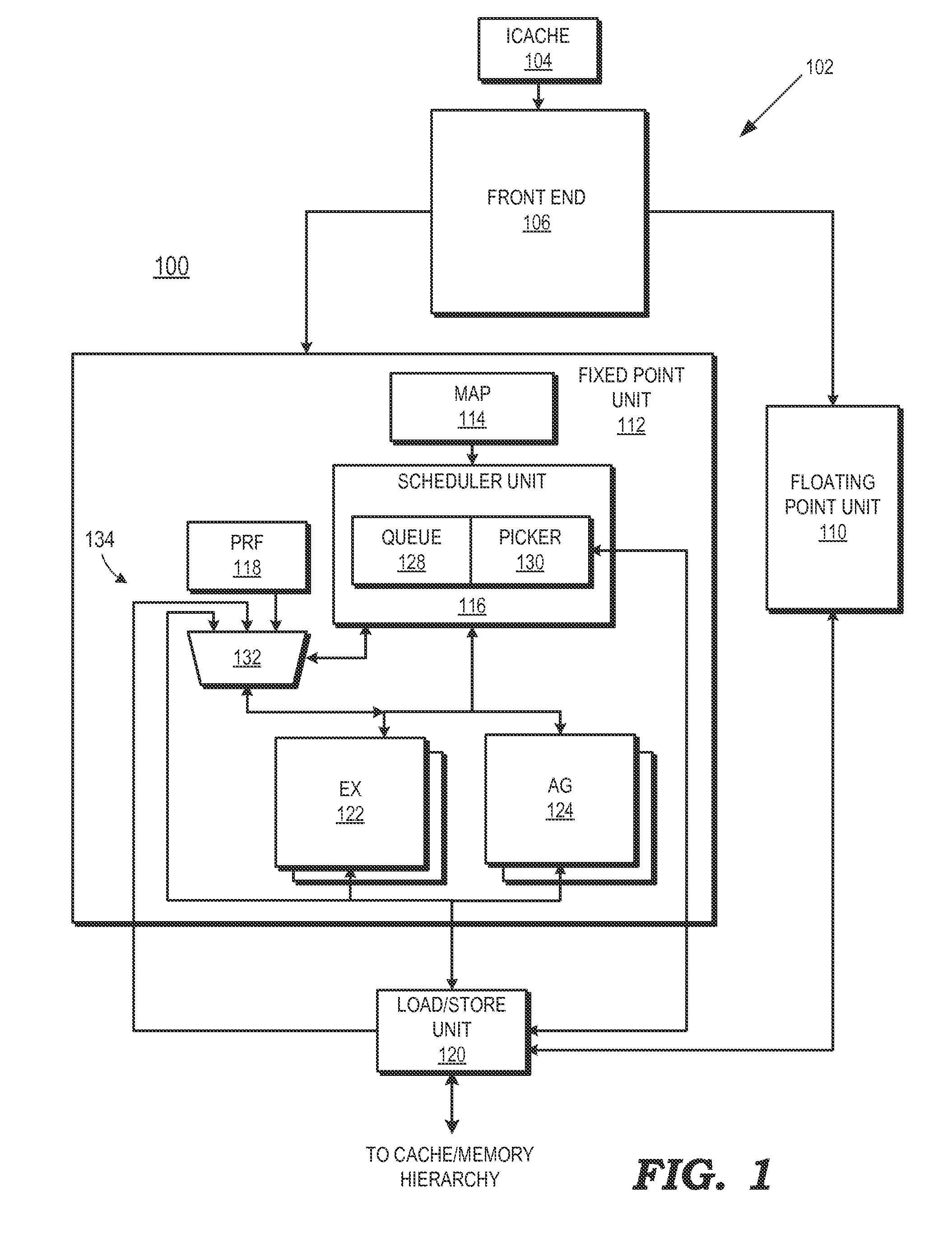

Dependence-based replay suppression

Active | US20140380023A1 | Digital computer details | Concurrent instruction execution | Load instruction | Execution unit

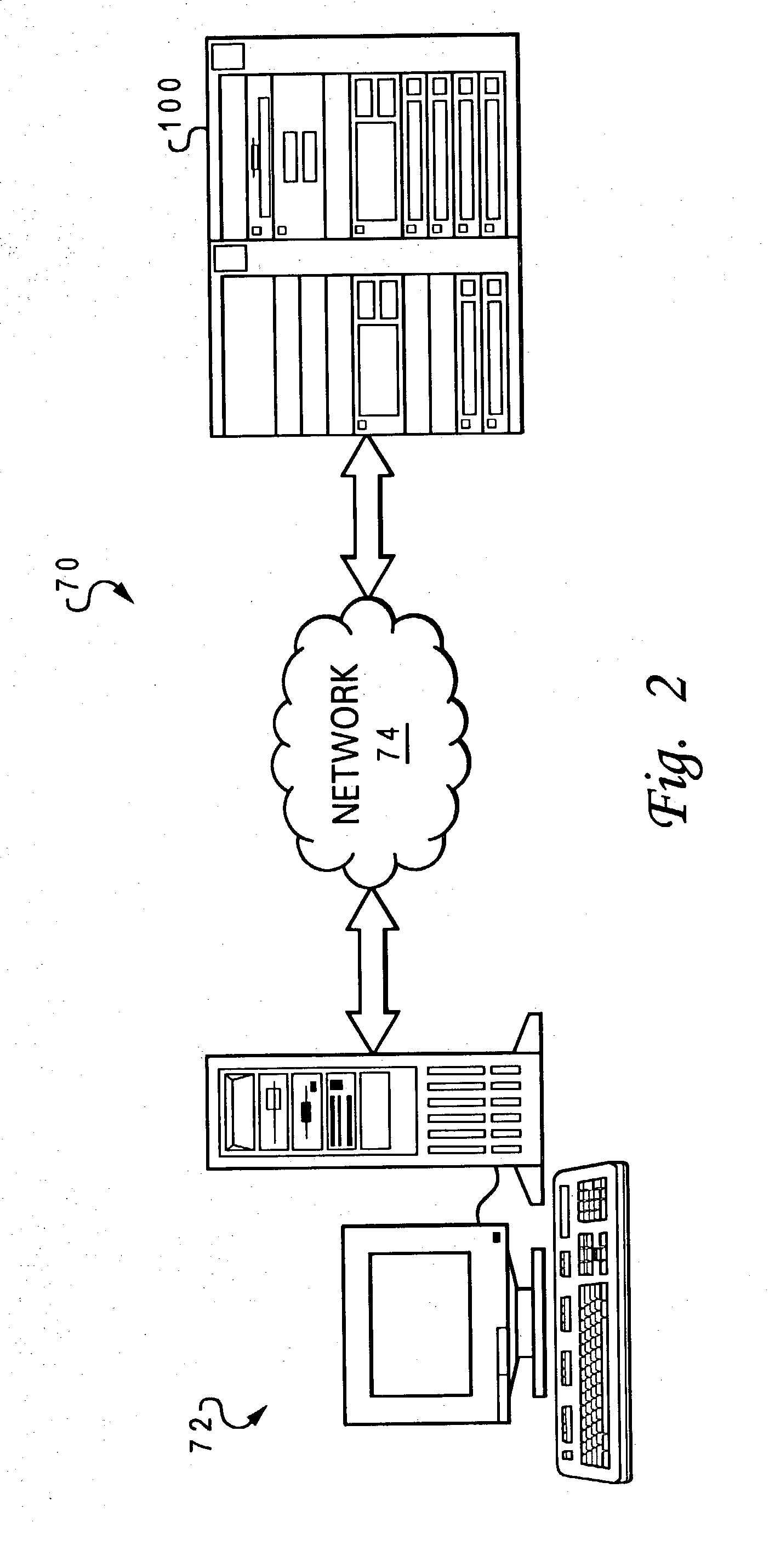

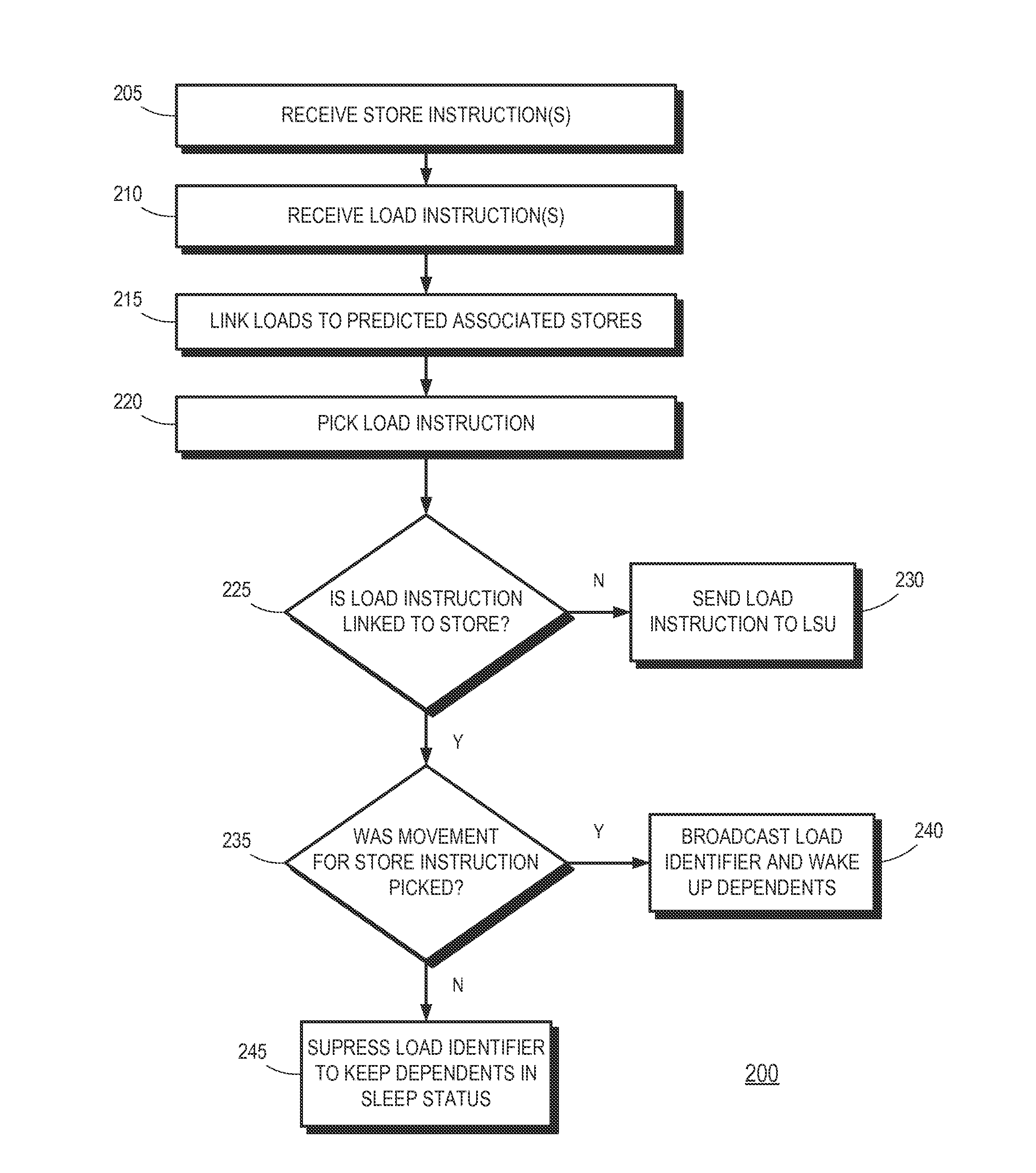

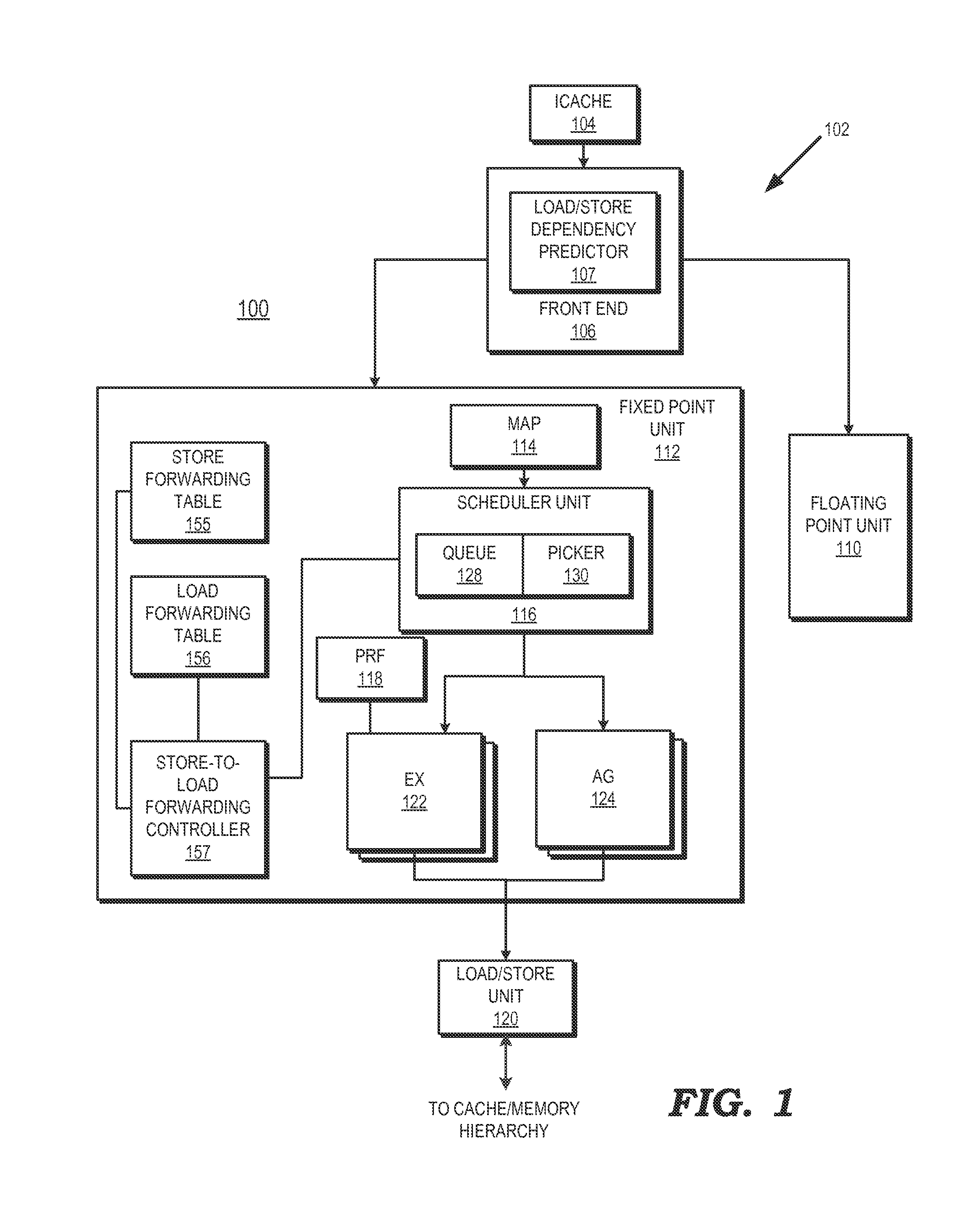

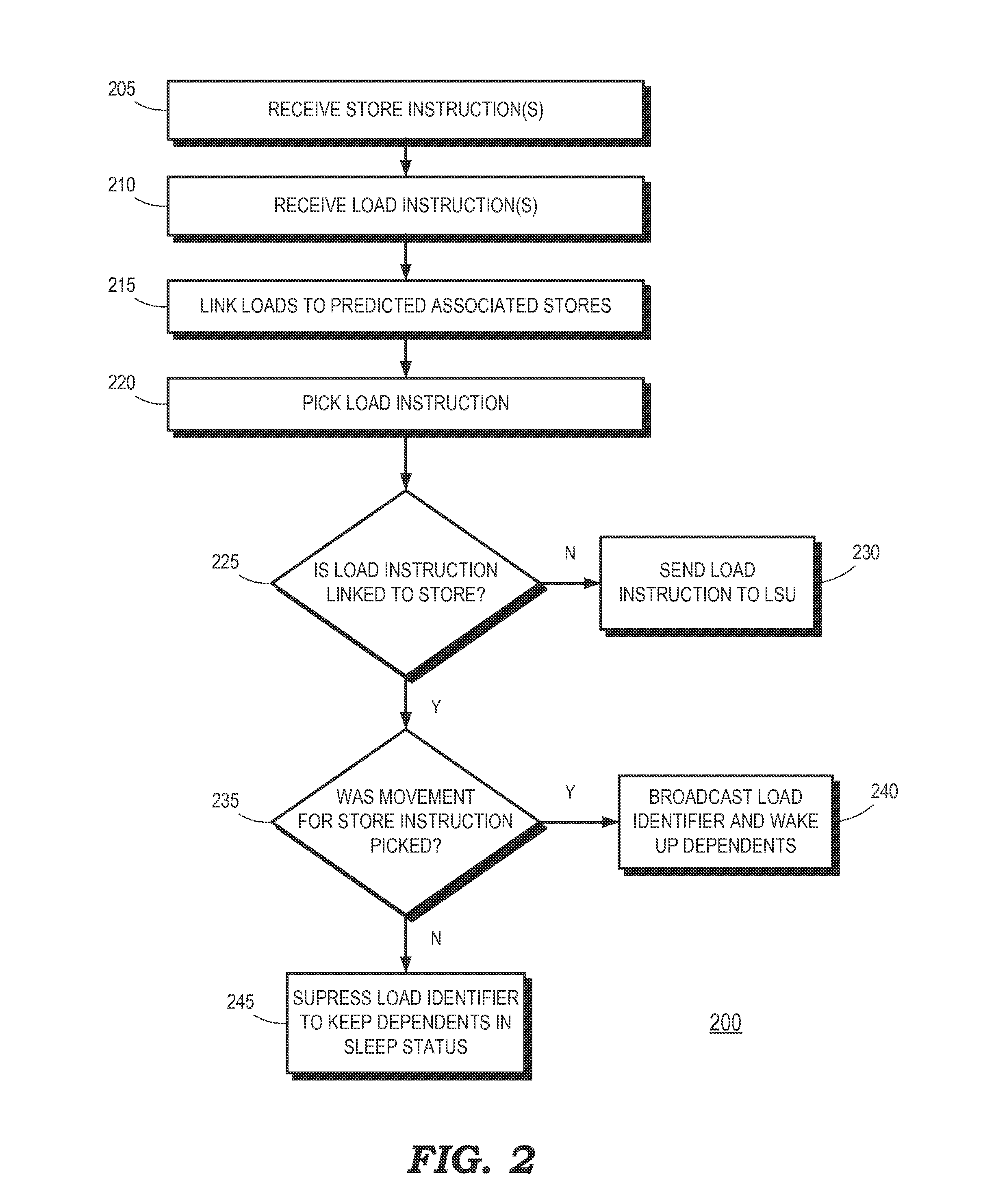

A method includes selecting for execution in a processor a load instruction having at least one dependent instruction. Responsive to selecting the load instruction, the at least one dependent instruction is selectively awakened based on a status of a store instruction associated with the load instruction to indicate that the at least one dependent instruction is eligible for execution. A processor includes an instruction pipeline having an execution unit to execute instructions, a scheduler, and a controller. The scheduler selects for execution in the execution unit a load instruction having at least one dependent instruction. The controller, responsive to the scheduler selecting the load instruction, selectively awakens the at least one dependent instruction based on a status of a store instruction associated with the load instruction to indicate that the at least one dependent instruction is eligible for execution by the execution unit.

Owner:ADVANCED MICRO DEVICES INC

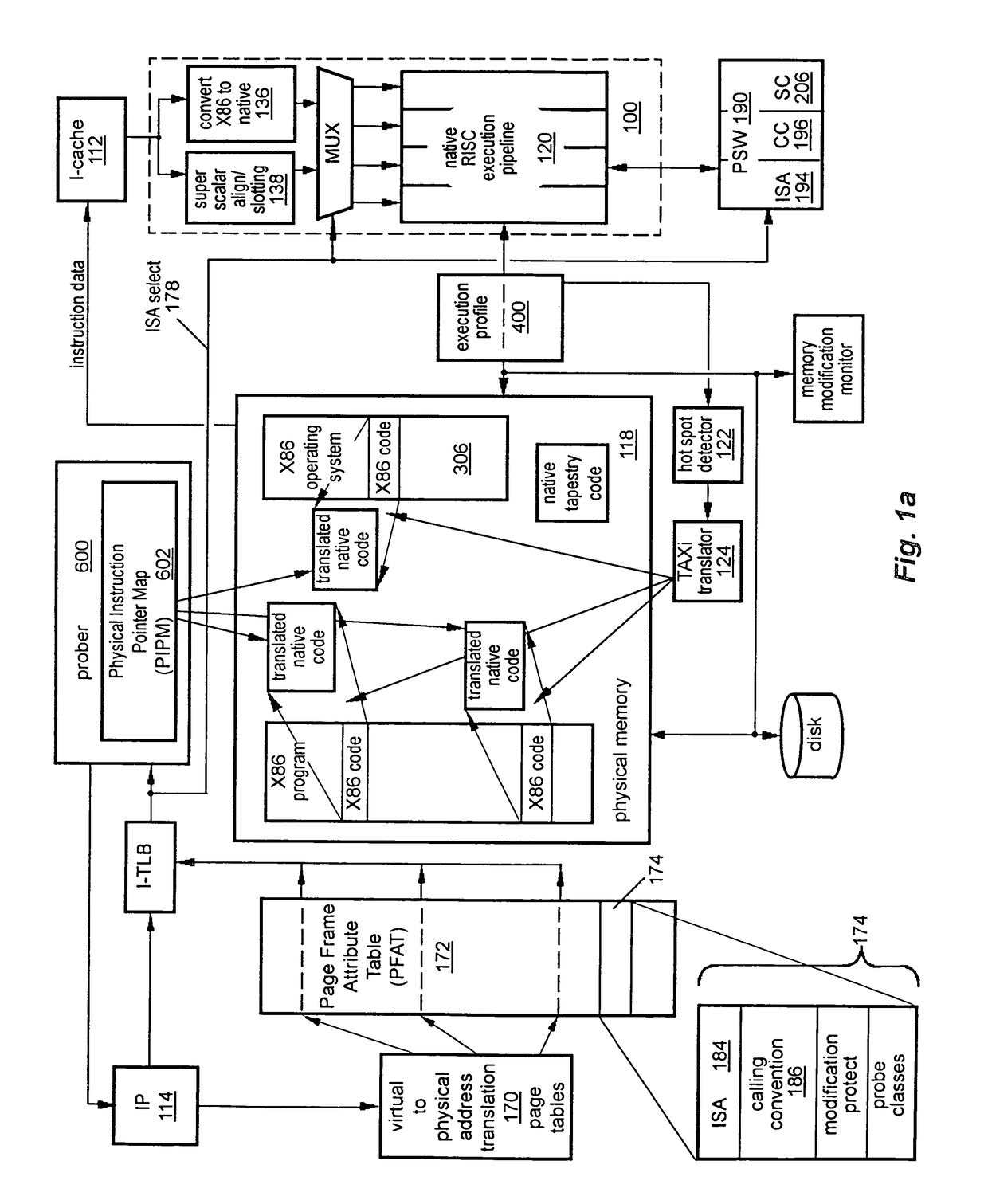

Detecting conditions for transfer of execution from one computer instruction stream to another and executing transfer on satisfaction of the conditions

Inactive | US8121828B2 | Efficiently tailored | Easy to operate | General purpose stored program computer | Software simulation/interpretation/emulation | Instruction pipeline | Instruction stream

A computer has instruction pipeline circuitry capable of executing two instruction set architectures (ISAs). A binary translator translates at least a selected portion of a computer program from the lower-performance ISA to the higher-performance ISA. Hardware initiates a query when about to execute a program region coded in the lower-performance ISA, to determine whether a higher-performance translation exists. If so, the about-to-be-executed instruction is aborted, and control transfers to the higher-performance translation. After execution of the higher-performance translation, execution of the lower-performance region is reestablished at a point downstream from the aborted instruction, in a context logically equivalent to that which would have prevailed had the code of the lower-performance region been allowed to proceed.

Owner:ADVANCED SILICON TECH

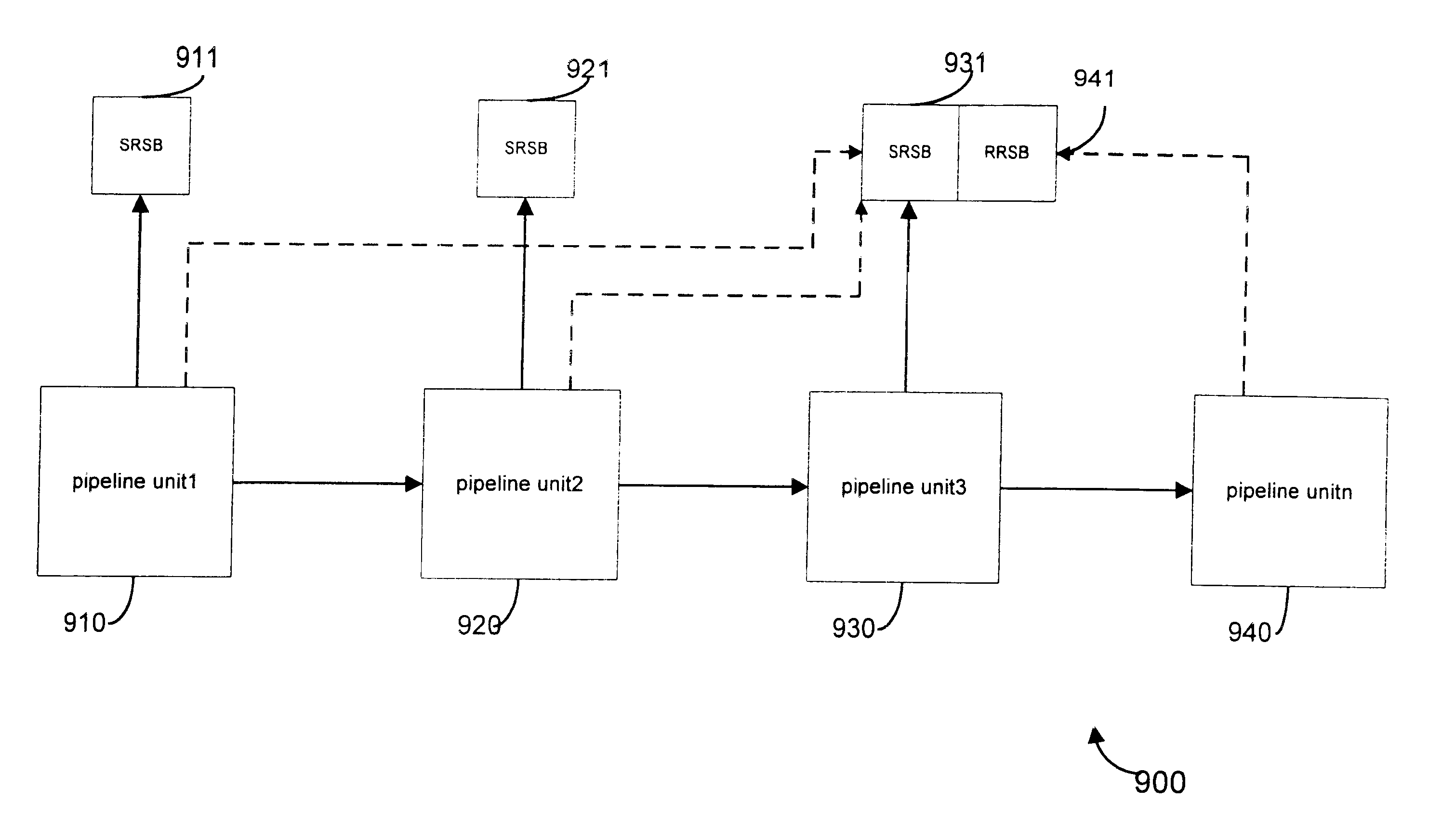

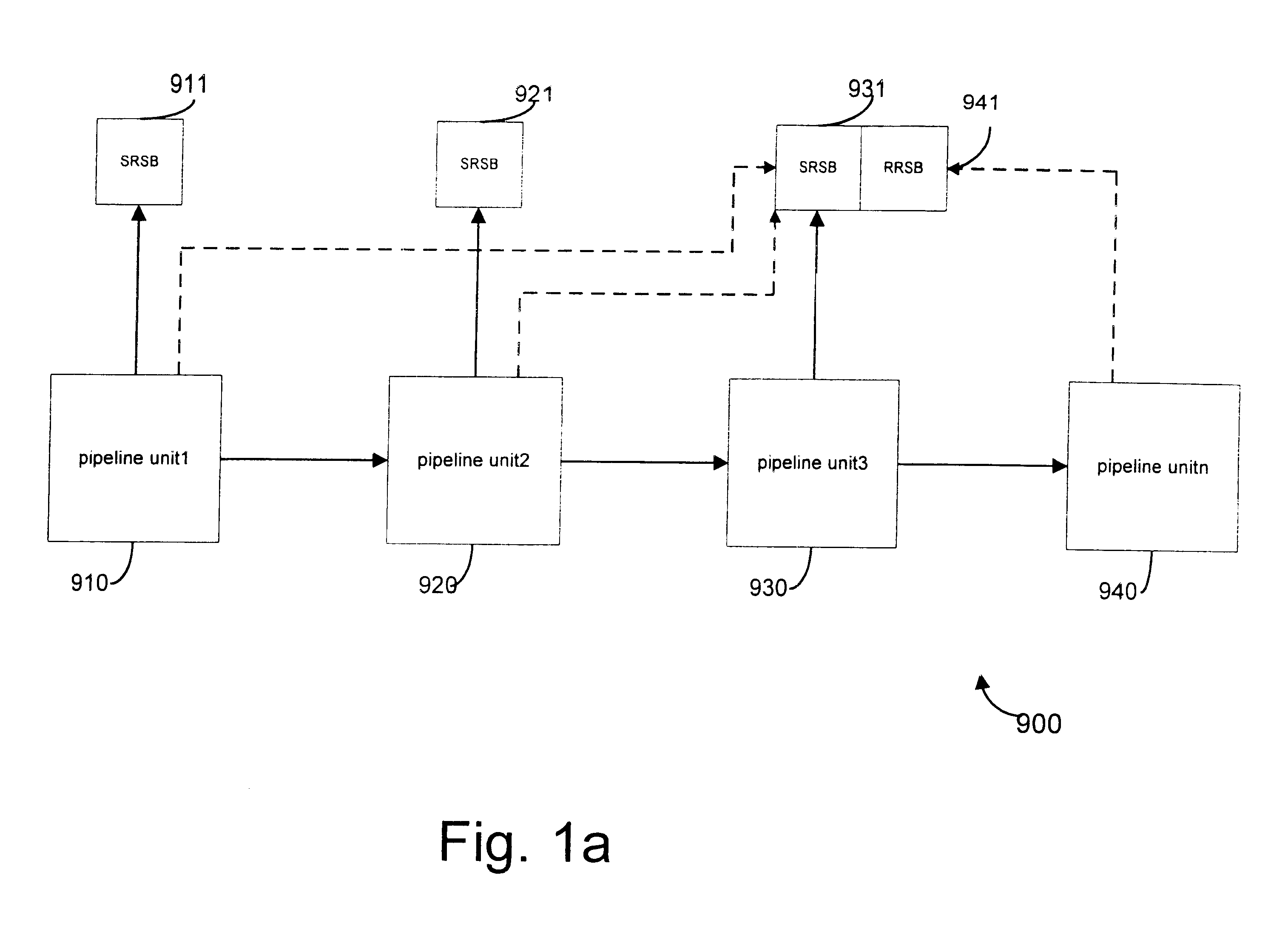

System and method of maintaining and utilizing multiple return stack buffers

Inactive | US6374350B1 | Digital computer details | Concurrent instruction execution | Return address stack | Instruction pipeline

An instruction pipeline in a microprocessor is provided. The instruction pipeline includes a plurality of pipeline units, each of the plurality of pipeline units processing a plurality of instructions. At least two of the plurality of pipeline units are a source of at least some of the instructions for the pipeline. The pipeline further includes at least two speculative return address stacks, each of which is coupled to at least one of the instruction source units. Each of the speculative return address stacks is capable of storing at least two speculative return addresses.

Owner:INTEL CORP

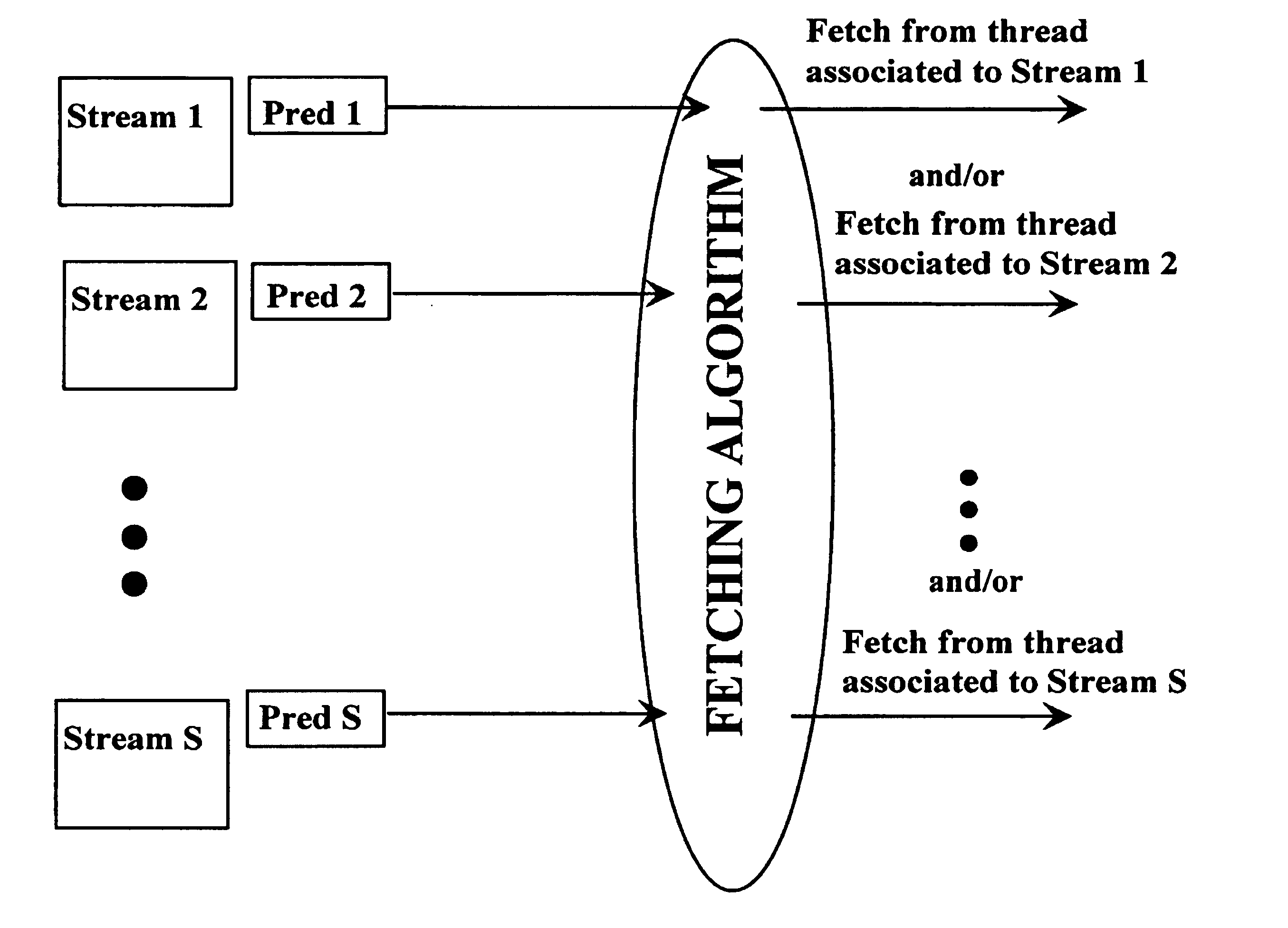

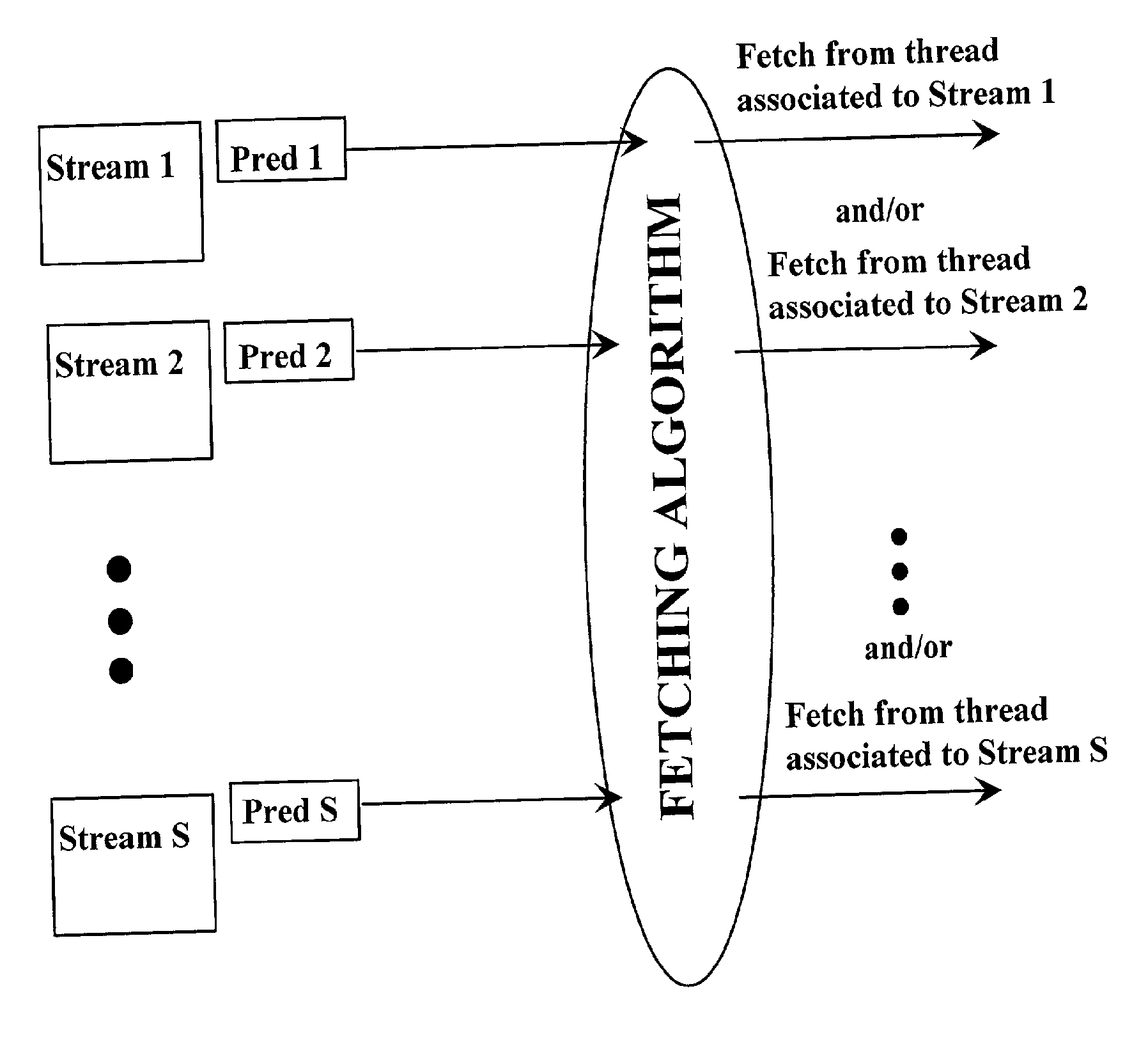

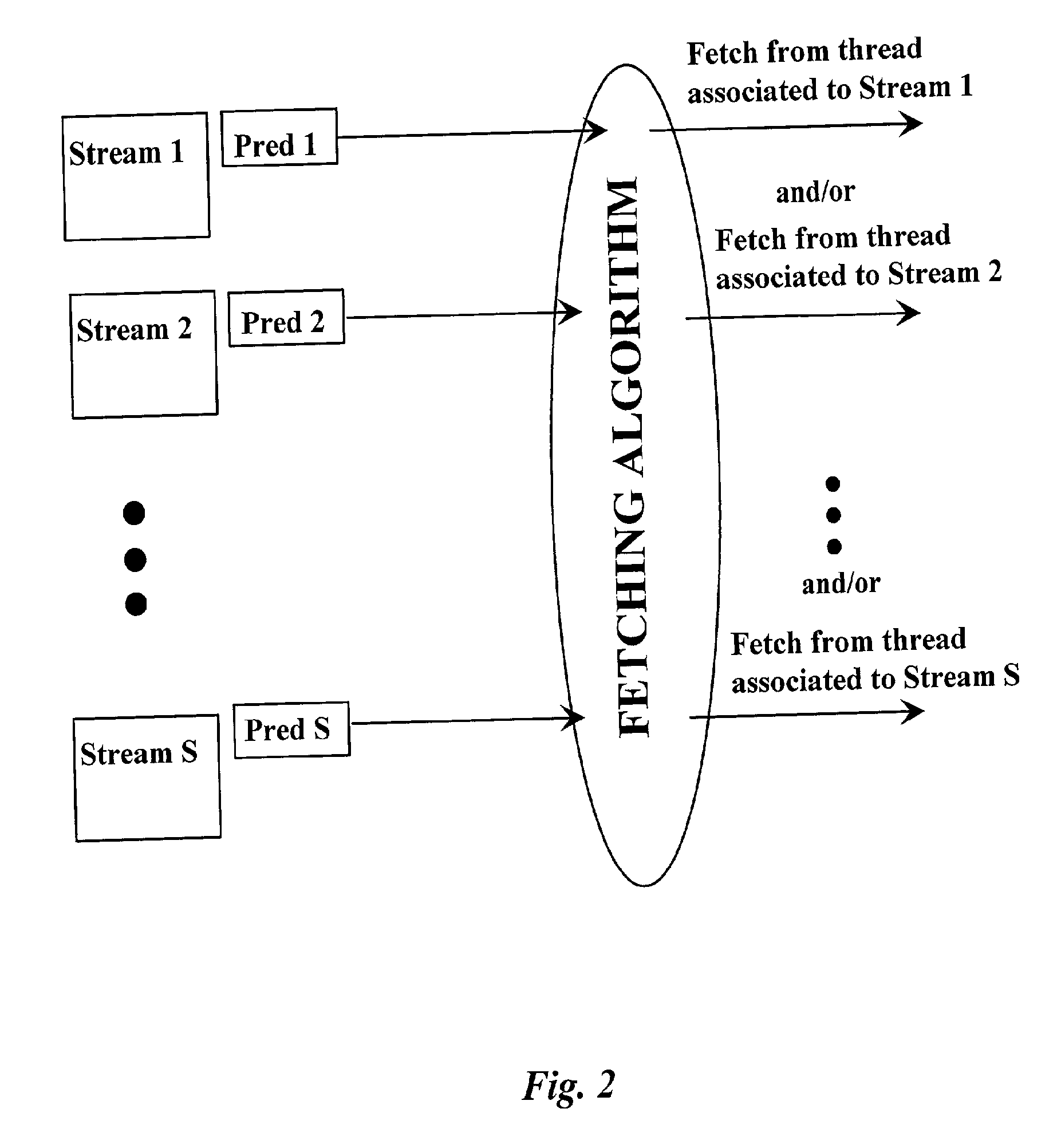

Methods and apparatus for improving fetching and dispatch of instructions in multithreaded processors

Inactive | US7035997B1 | Improve performance | Digital computer details | Memory systems | Load instruction | Instruction pipeline

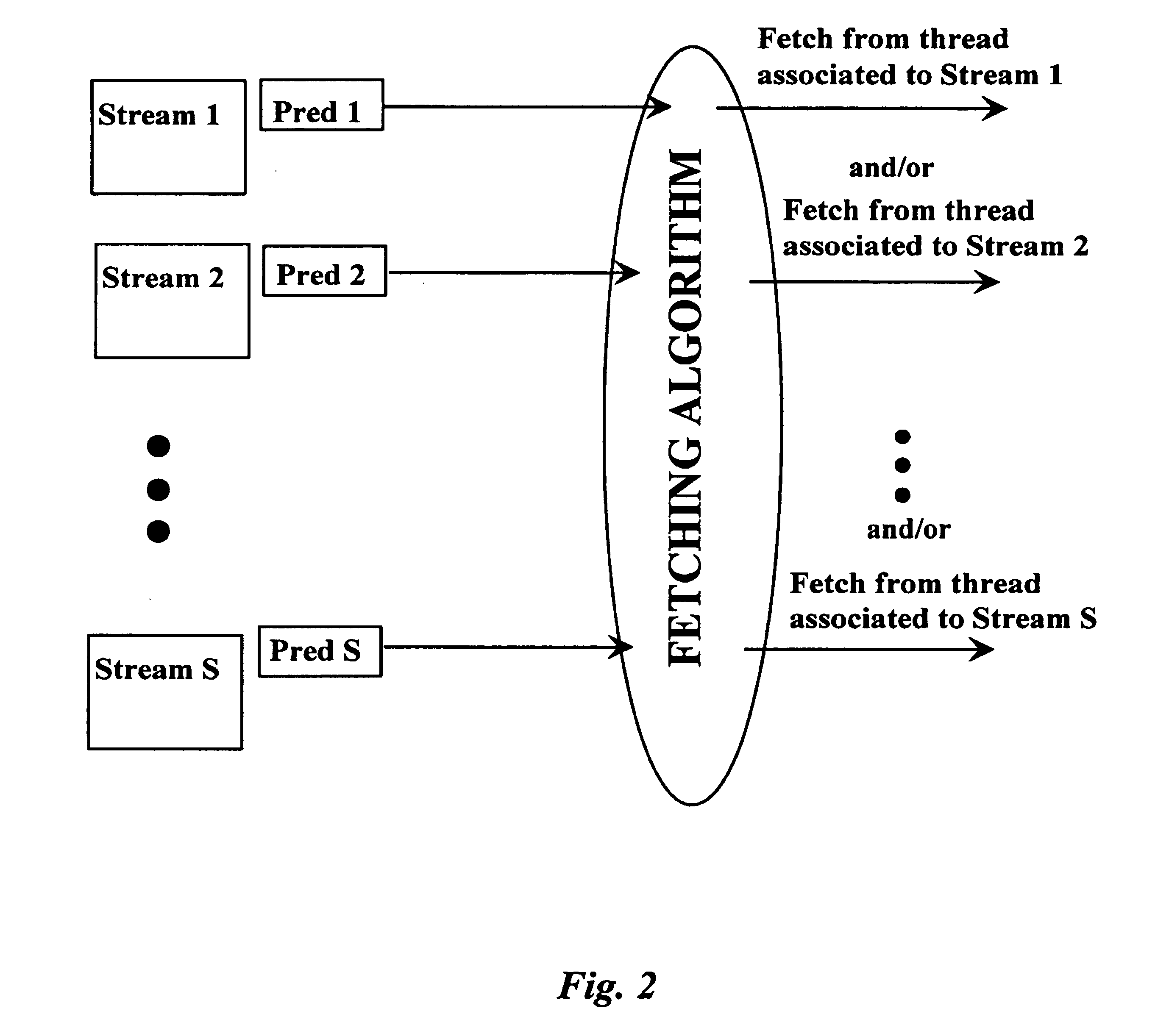

In a multi-streaming processor, a system for fetching instructions from individual ones of multiple streams to an instruction pipeline is provided, comprising a fetch algorithm for selecting from which stream to fetch an instruction, and one or more predictors for forecasting whether a load instruction will hit or miss the cache or a branch will be taken. The prediction or predictions are used by the fetch algorithm in determining from which stream to fetch. In some cases probabilities are determined and also used in decisions, and predictors may be used at either or both of fetch and dispatch stages.

Owner:ARM FINANCE OVERSEAS LTD

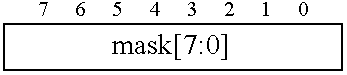

Method and apparatus for register file port reduction in a multithreaded processor

Inactive | US6904511B2 | Reduce in quantity | Decreasing processor power consumption | Memory addressing/allocation/relocation | Interprogram communication | Processor register | Least significant bit

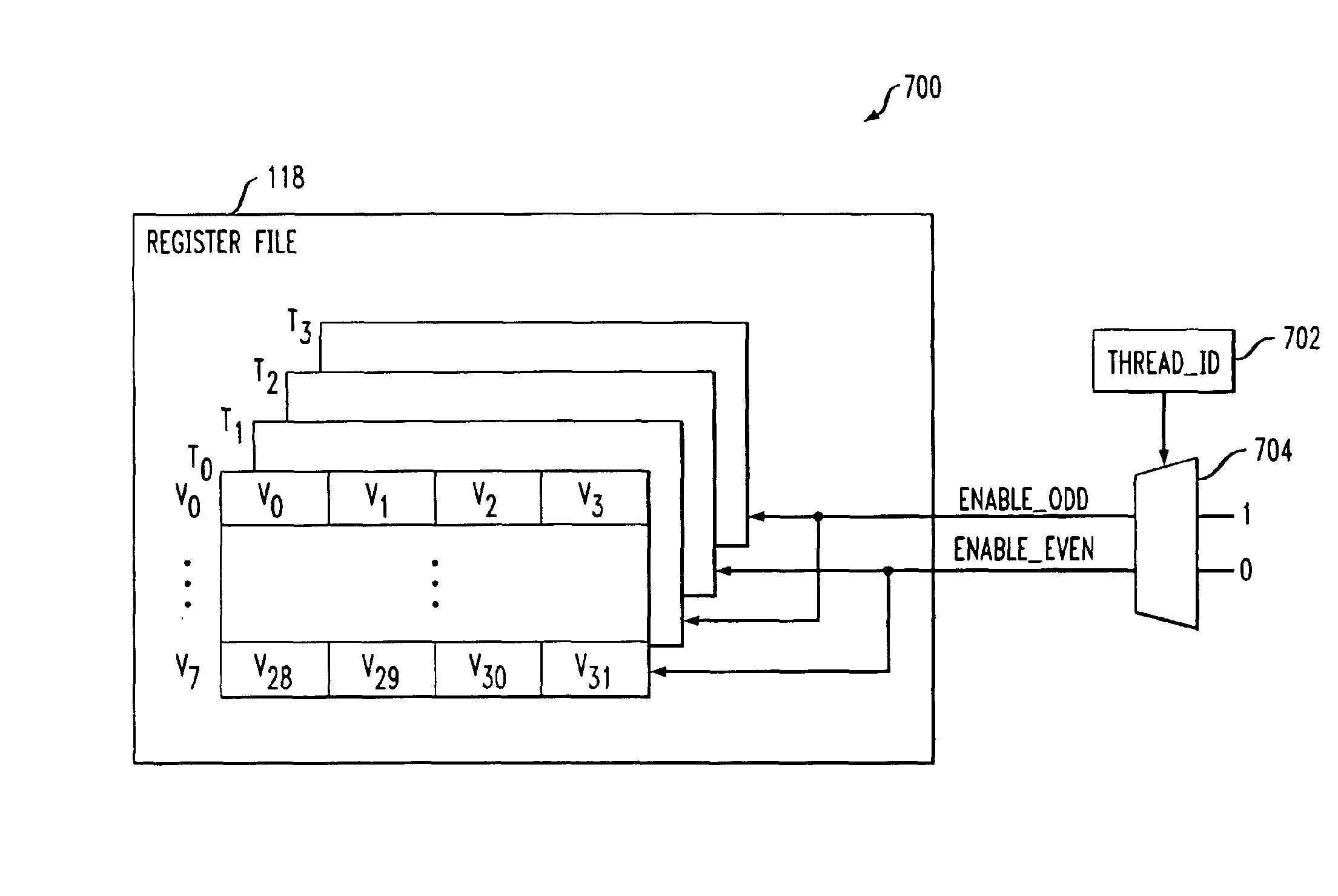

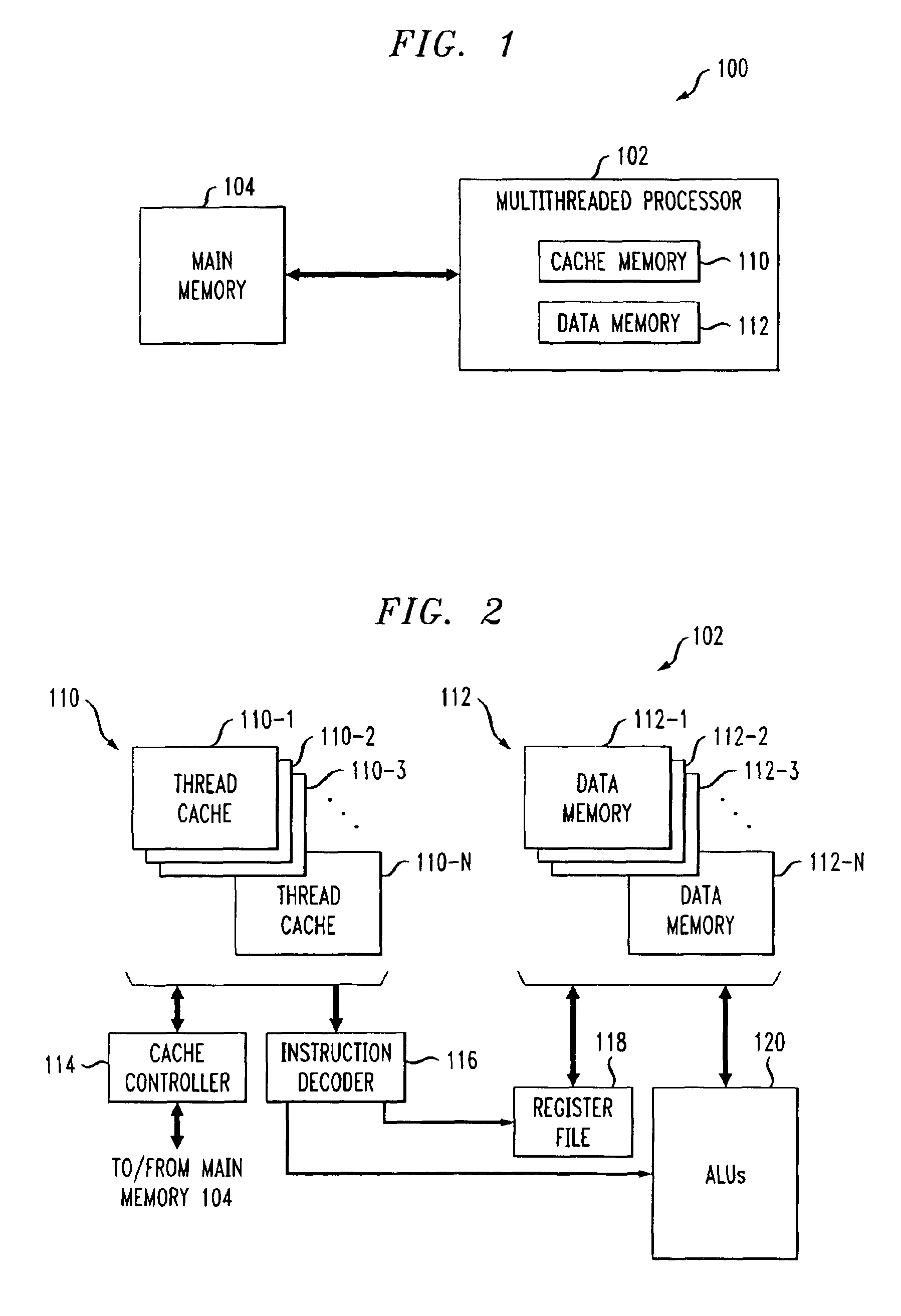

Techniques for thread-based register file access by a multithreaded processor are disclosed. The multithreaded processor determines a thread identifier associated with a particular processor thread, and utilizes at least a portion of the thread identifier to select a particular portion of an associated register file to be accessed by the corresponding processor thread. In an illustrative embodiment, the register file is divided into even and odd portions, with a least significant bit or other portion of the thread identifier being used to select either the even or the odd portion for use by a given processor thread. The thread-based register file selection may be utilized in conjunction with token triggered threading and instruction pipelining. Advantageously, the invention reduces register file port requirements and thus processor power consumption, while maintaining desired levels of concurrency.

Owner:QUALCOMM INC
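The even/odd selection described in the abstract above can be sketched as follows. This is a minimal model under stated assumptions: the class and function names are hypothetical, each bank is modelled as a dictionary keyed by `(thread_id, register_index)`, and nothing here reflects the actual port structure of the patented design.

```python
def select_bank(thread_id: int) -> str:
    """Use the least significant bit of the thread identifier to pick a bank."""
    return "odd" if thread_id & 1 else "even"


class BankedRegisterFile:
    """Register file split into an even and an odd portion (illustrative only)."""

    def __init__(self):
        # Each bank maps (thread_id, register_index) -> value.
        self.banks = {"even": {}, "odd": {}}

    def write(self, thread_id: int, index: int, value: int) -> None:
        self.banks[select_bank(thread_id)][(thread_id, index)] = value

    def read(self, thread_id: int, index: int) -> int:
        # Unwritten registers read as 0 in this sketch.
        return self.banks[select_bank(thread_id)].get((thread_id, index), 0)


if __name__ == "__main__":
    rf = BankedRegisterFile()
    rf.write(2, 3, 7)   # thread 2 -> even bank
    rf.write(5, 3, 9)   # thread 5 -> odd bank
    print(rf.read(2, 3), rf.read(5, 3))  # prints: 7 9
```

Because a thread only ever touches one bank, each bank needs ports for fewer simultaneous accessors, which is the intuition behind the port reduction the abstract mentions.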

Communications system using rings architecture

Active | US20030172257A1 | Energy efficient ICT | Network traffic/resource management | Auto-configuration | Automatic routing

Systems and methods are provided for implementing: a rings architecture for communications and data handling systems; an enumeration process for automatically configuring the ring topology; automatic routing of messages through bridges; extending a ring topology to external devices; write-ahead functionality to promote efficiency; wait-till-reset operation resumption; in-vivo scan through rings topology; staggered clocking arrangement; and stray message detection and eradication. Other inventive elements conveyed include: an architectural overview of a packet processor; a programming model for a packet processor; an instruction pipeline for a packet processor; and use of a packet processor as a module on a rings-based architecture. Additional inventive elements conveyed include: an architectural overview of a communications processor; a data path protocol support model for a communications processor; an exemplary network processor employed as the core packet processor for the communications processor; an exemplary rings-based SOC switch fabric architecture; and a variety of quality of support features.

Owner:CONEXANT +1

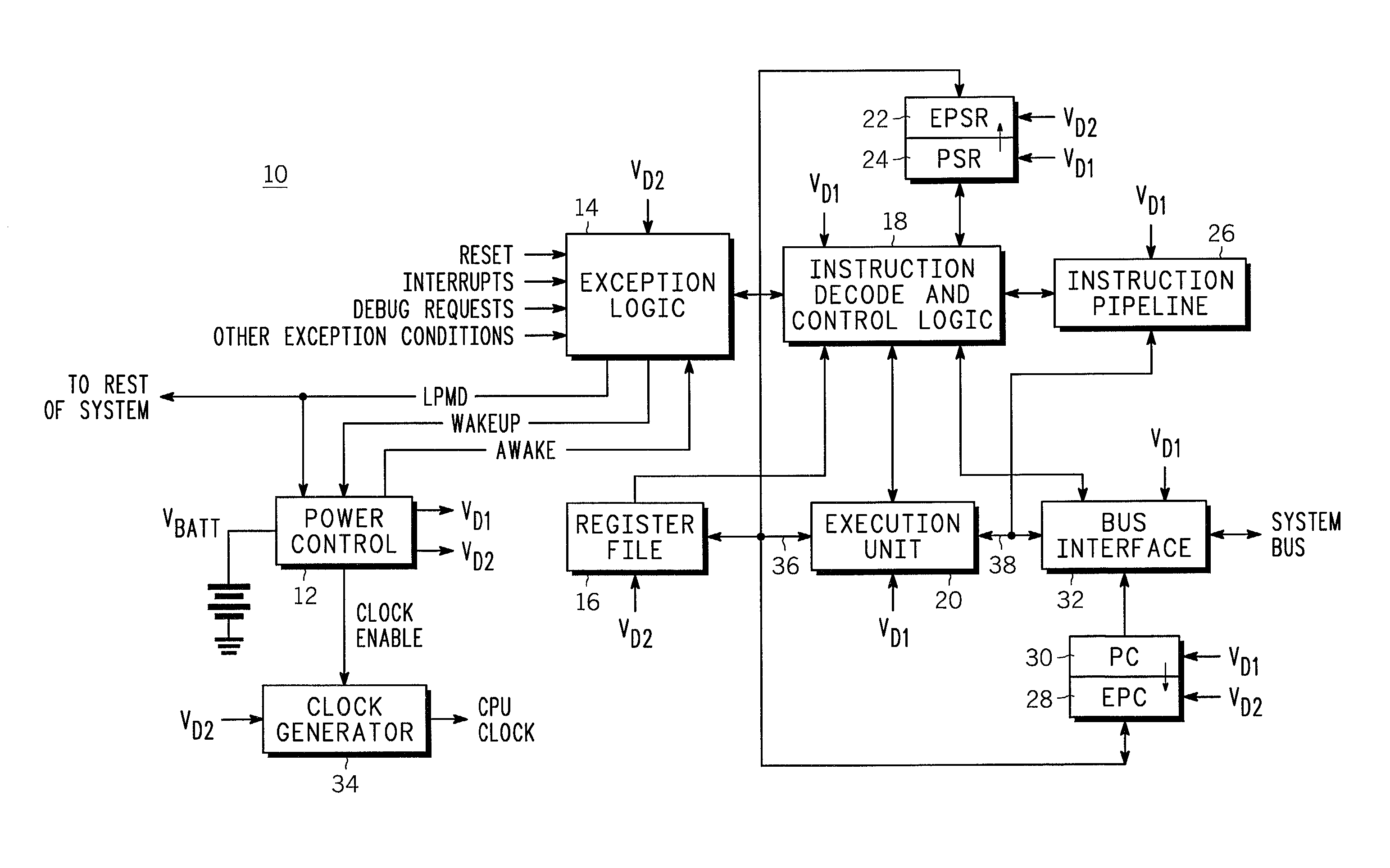

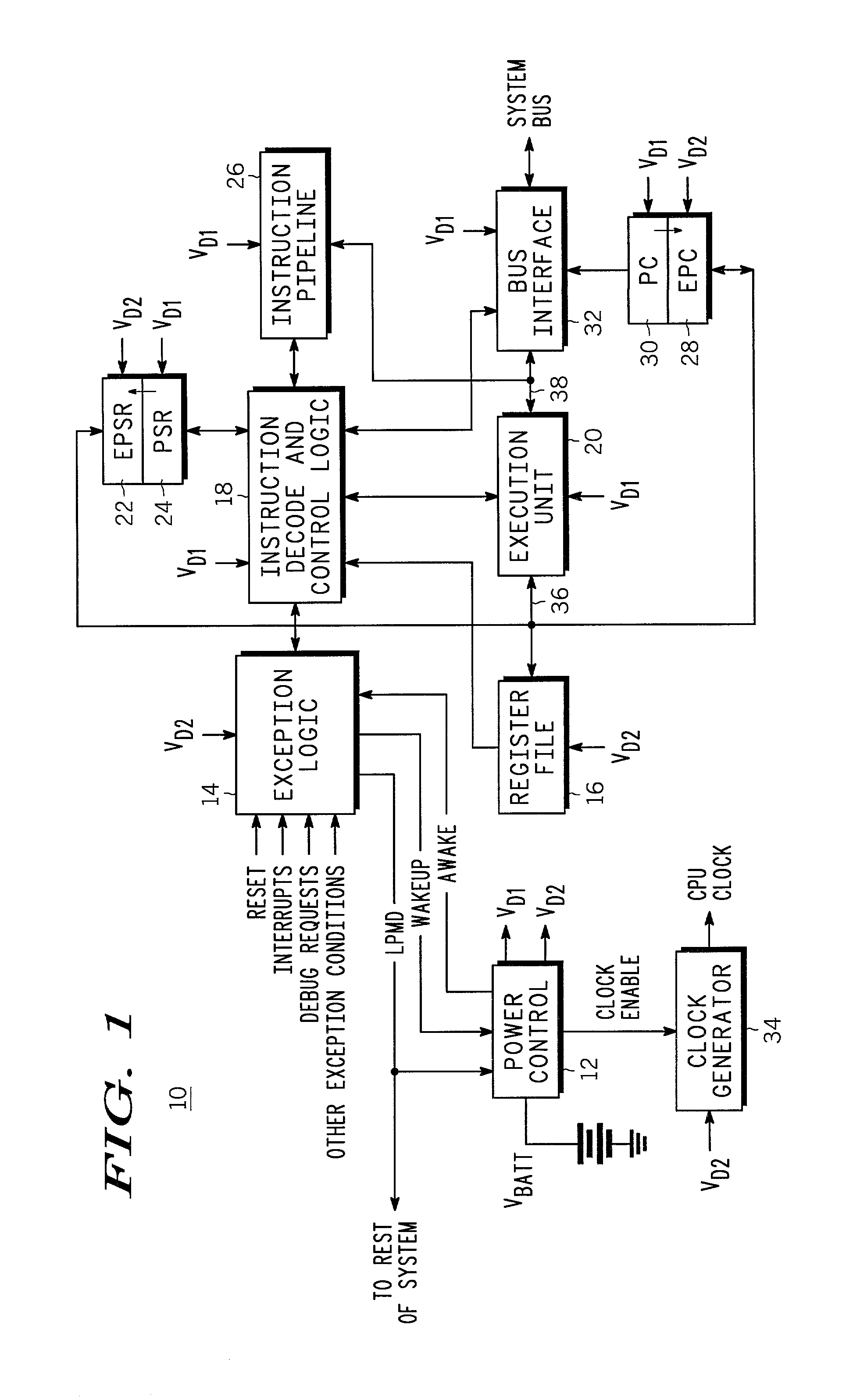

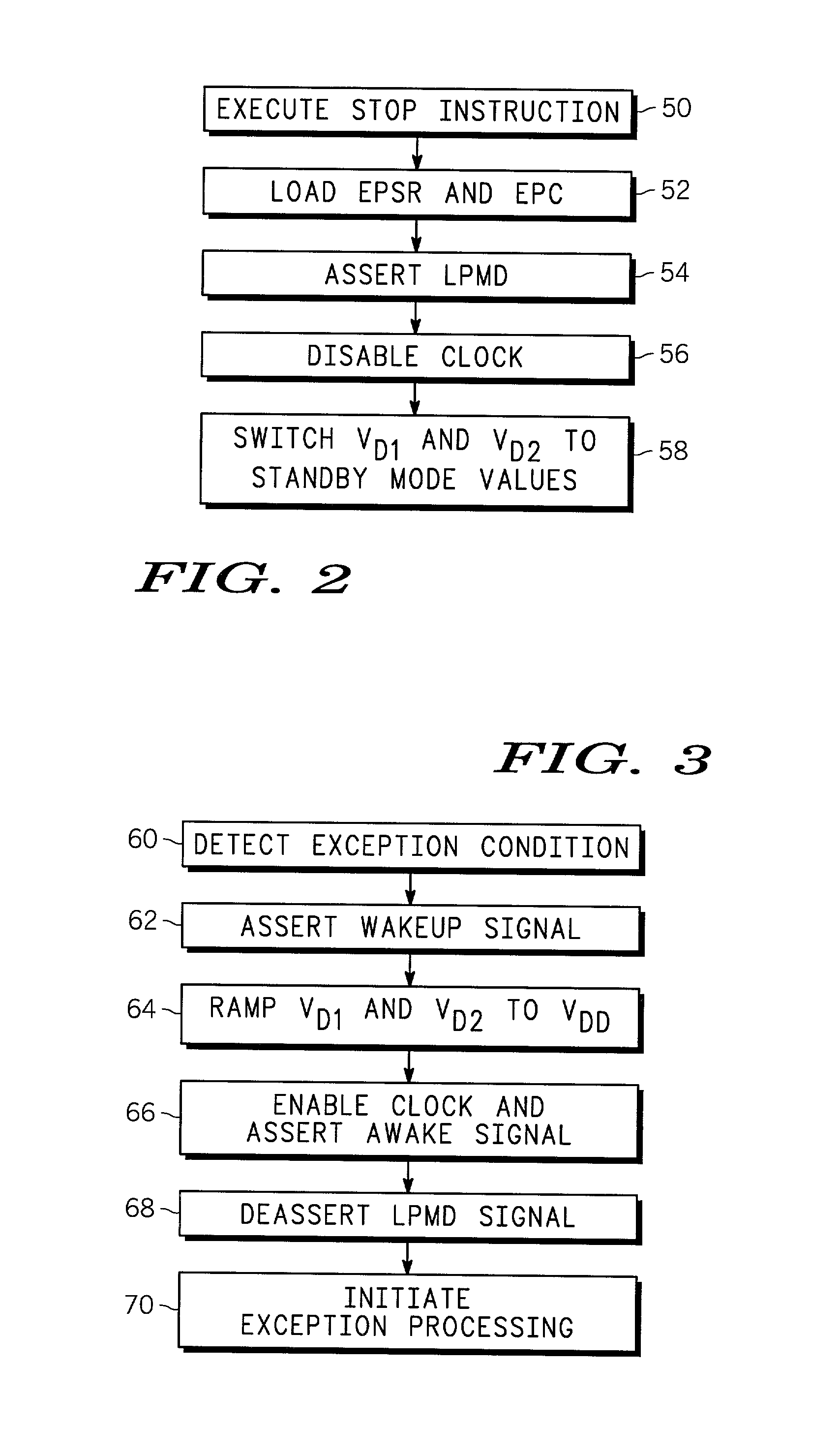

CPU powerdown method and apparatus therefor

A CPU has a powerdown mode in which most of the circuitry does not receive power. Power-up, coming out of powerdown, is achieved in response to receiving an exception. Because most of the state information that is present in the CPU is not needed in response to an exception, there is no problem in removing power to most of the CPU during powerdown. The programmer's model register file and a few other circuits in the CPU are maintained in powerdown, but the vast majority of the circuits that make up the CPU (the execution unit, the instruction decode and control logic, the instruction pipeline, and the bus interface) do not need to receive power. Removing power from these non-critical circuits results in significant power savings during powerdown. The powered circuits are provided with a reduced power supply voltage to provide additional power savings.

Owner:APPLE INC

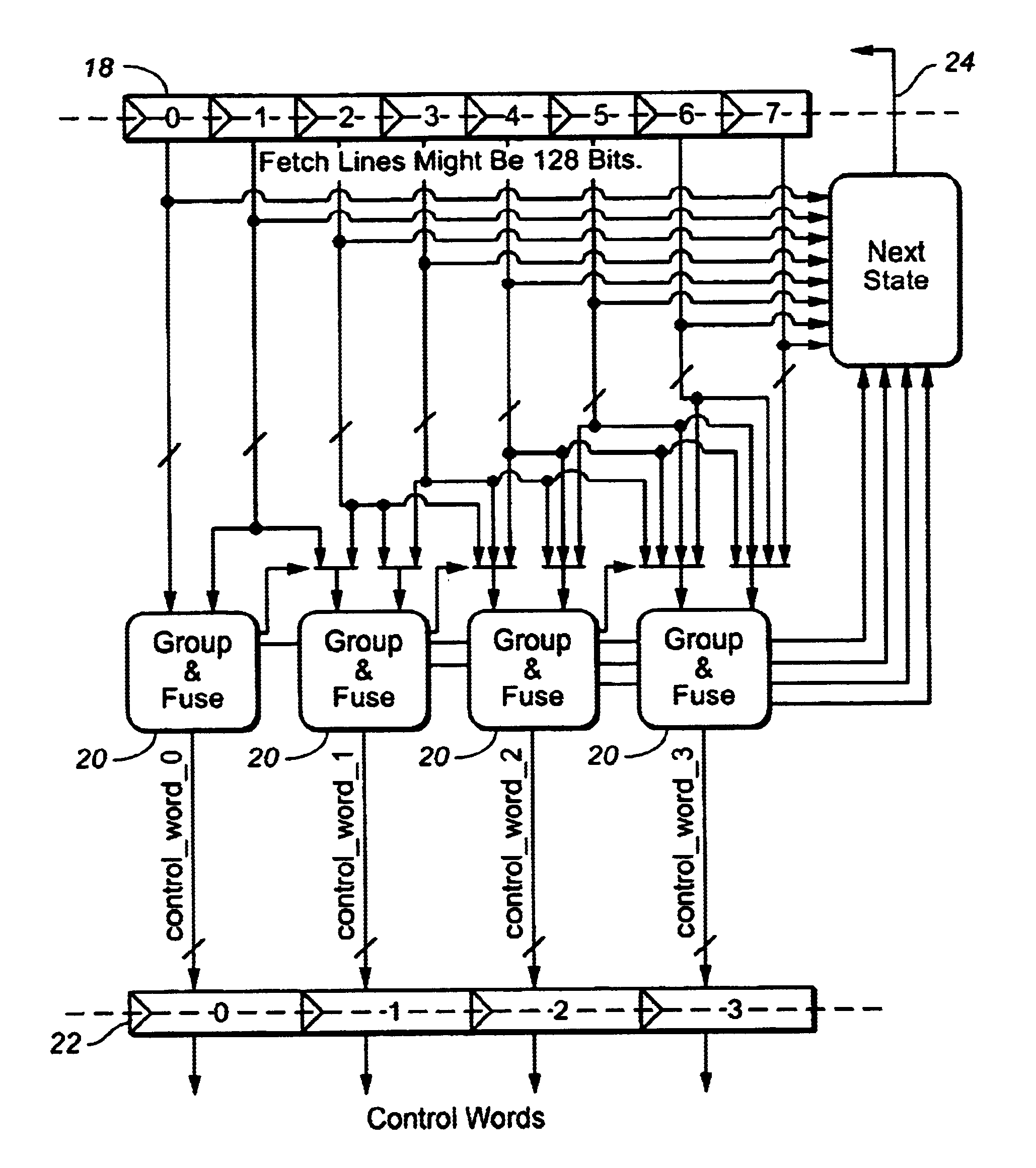

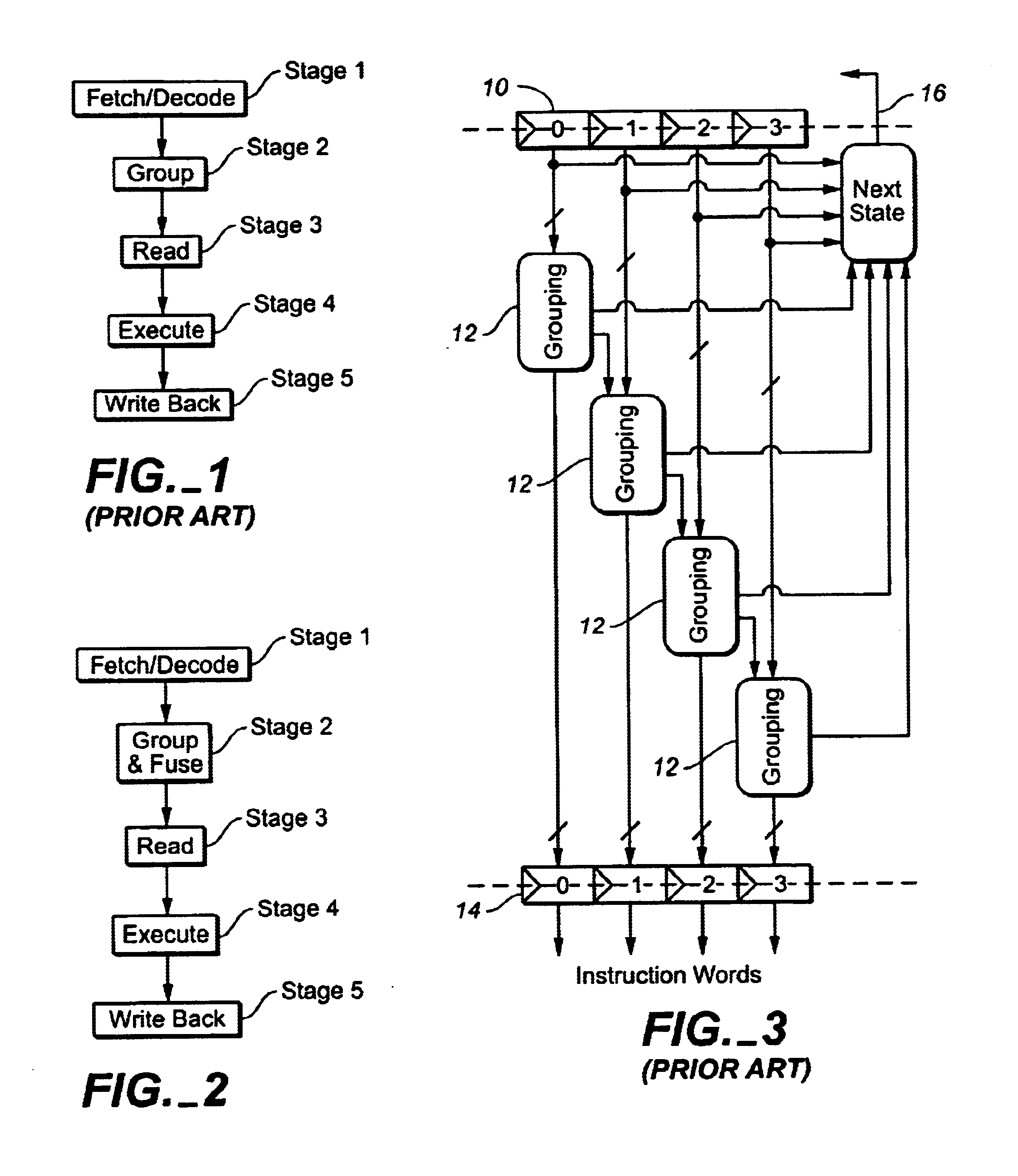

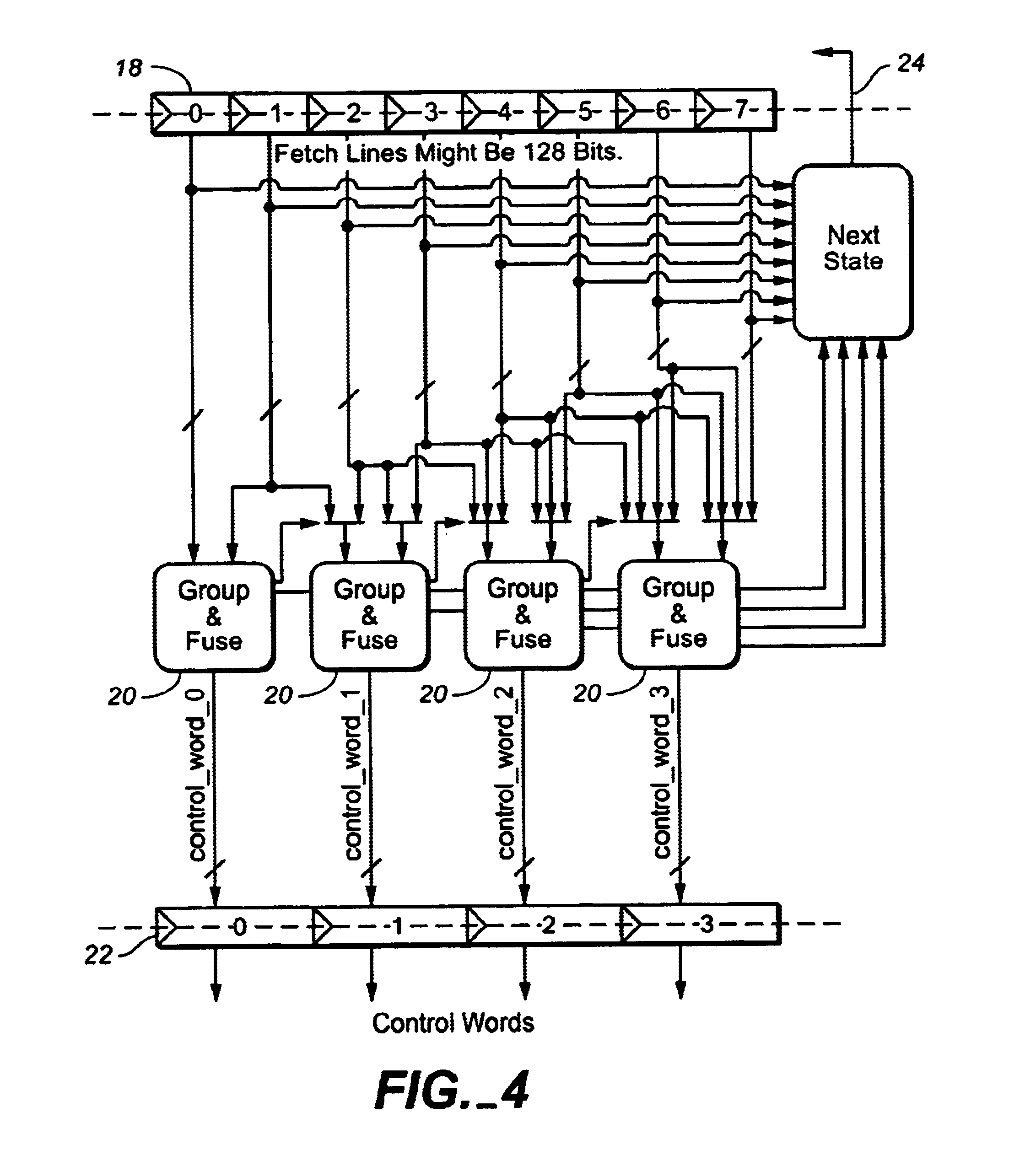

Instruction fusion for digital signal processor

Inactive | US6889318B1 | Digital computer details | Concurrent instruction execution | Digital signal processing | Instruction unit

An instruction pipeline for a DSP with fusing logic for combining multiple instructions into a single control word which can be executed by one execution unit. The pipeline fetches a greater number of instructions than the number of execution units to which it can issue instructions. It applies grouping rules to the instructions and also identifies pairs, or larger groups, of instructions which can be combined, or fused, into a single control word which can be executed by one execution unit. Issuance of a fused control word to a single execution unit effectively allows two or more instructions to be executed simultaneously in one execution unit.

Owner:VERISILICON HLDGCO LTD
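To make the idea of fusing in the abstract above concrete, here is a small sketch that scans an instruction list and merges adjacent pairs into one control word. The fusing rule (a `mul` followed by an `add` that consumes and overwrites the product becomes a hypothetical `mac`) and all names are assumptions for illustration; the patent's actual grouping rules are not given in the abstract.

```python
from typing import NamedTuple


class Instr(NamedTuple):
    op: str
    dst: str
    srcs: tuple


def fuse(instrs):
    """Merge mul/add pairs into a single 'mac' control word (assumed rule)."""
    out = []
    i = 0
    while i < len(instrs):
        cur = instrs[i]
        nxt = instrs[i + 1] if i + 1 < len(instrs) else None
        fusable = (
            nxt is not None
            and cur.op == "mul"
            and nxt.op == "add"
            and nxt.dst == cur.dst               # product is overwritten
            and nxt.srcs.count(cur.dst) == 1     # and consumed exactly once
        )
        if fusable:
            addend = tuple(s for s in nxt.srcs if s != cur.dst)
            out.append(Instr("mac", cur.dst, cur.srcs + addend))
            i += 2  # both instructions were consumed by the fused word
        else:
            out.append(cur)
            i += 1
    return out


if __name__ == "__main__":
    prog = [
        Instr("mul", "r1", ("r2", "r3")),
        Instr("add", "r1", ("r1", "r4")),
        Instr("sub", "r5", ("r1", "r2")),
    ]
    for word in fuse(prog):
        print(word)
```

Three fetched instructions become two control words here, so two execution units could cover what would otherwise need three issue slots.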

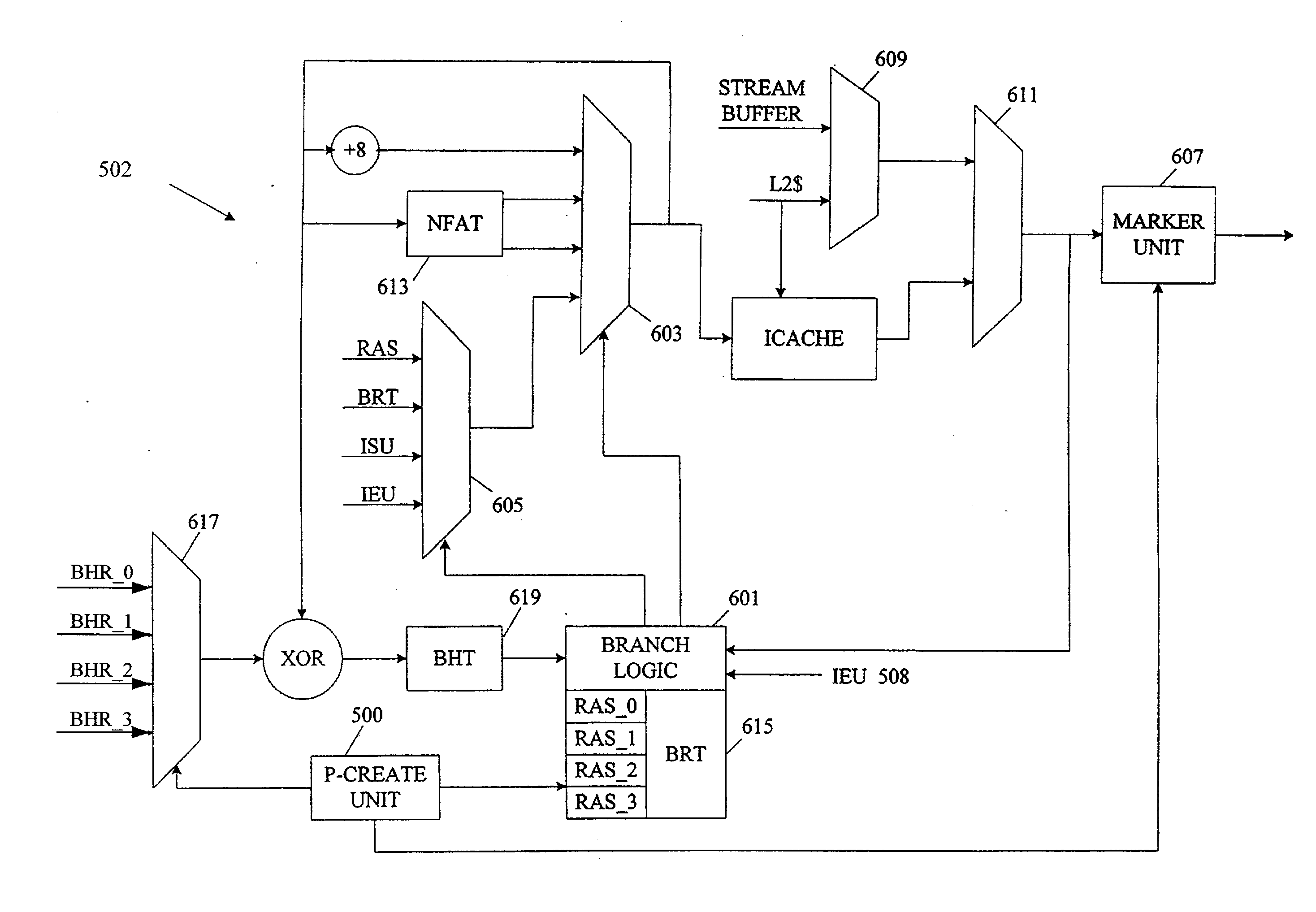

Validating branch resolution to avoid mis-steering instruction fetch

Inactive | US20060248319A1 | Avoids and eliminates repetitive replay condition | Resolve delay | Digital computer details | Specific program execution arrangements | Load instruction | Image resolution

A processor avoids or eliminates repetitive replay conditions and frequent instruction resteering through various techniques, including resteering the fetch after the branch instruction retires and delaying branch resolution. A processor resolves conditional branches and avoids repetitive resteering by delaying branch resolution. The processor has an instruction pipeline with inserted delay in branch condition and replay control pathways. For example, for an instruction sequence that includes a load instruction followed by a subtract instruction and then a conditional branch, the processor delays branch resolution to allow time for analysis to determine whether the conditional branch has resolved correctly. Eliminating incorrect branch resolutions prevents flushing of correctly predicted branches.

Owner:SUN MICROSYSTEMS INC

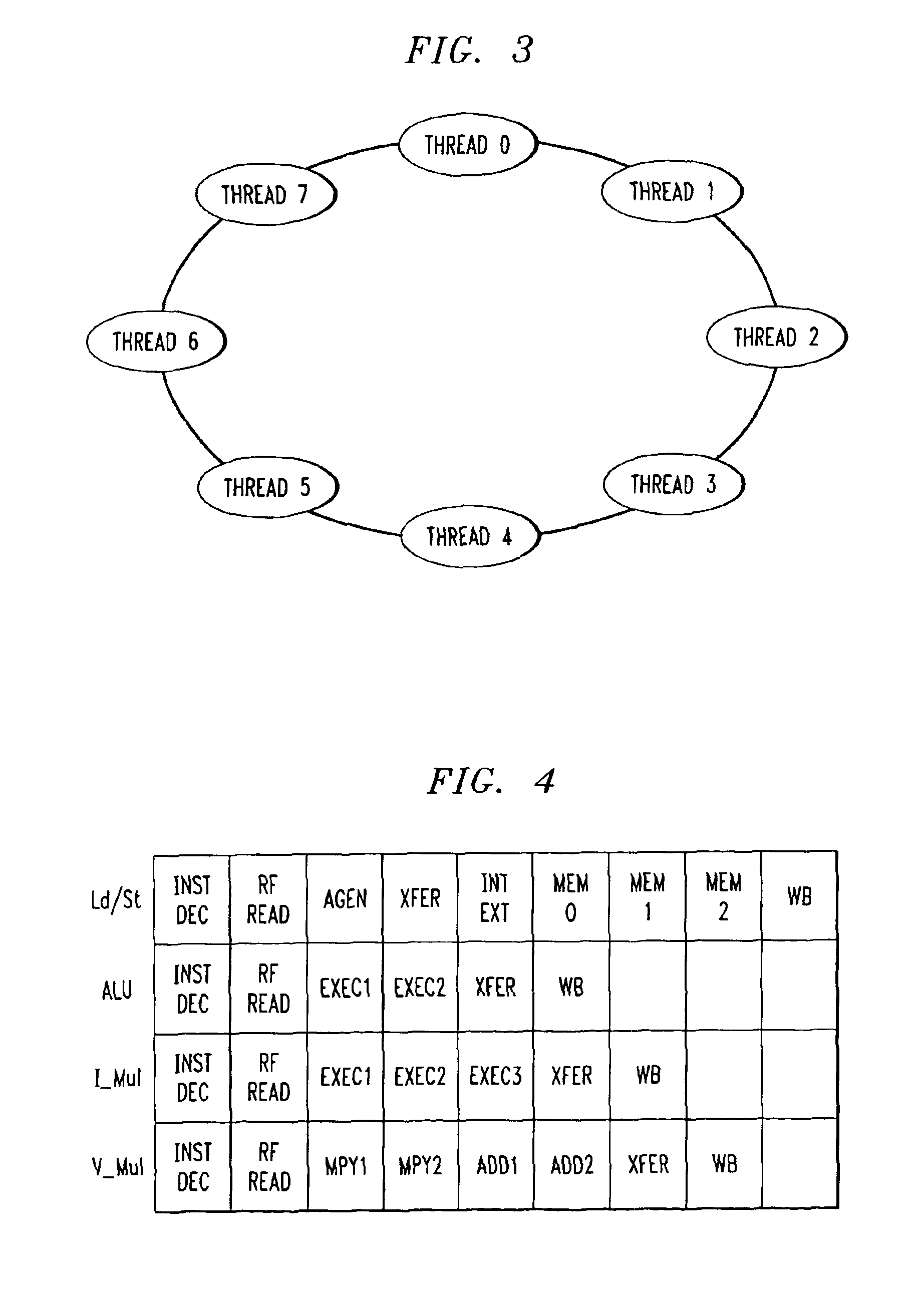

Multithreaded processor architecture with operational latency hiding

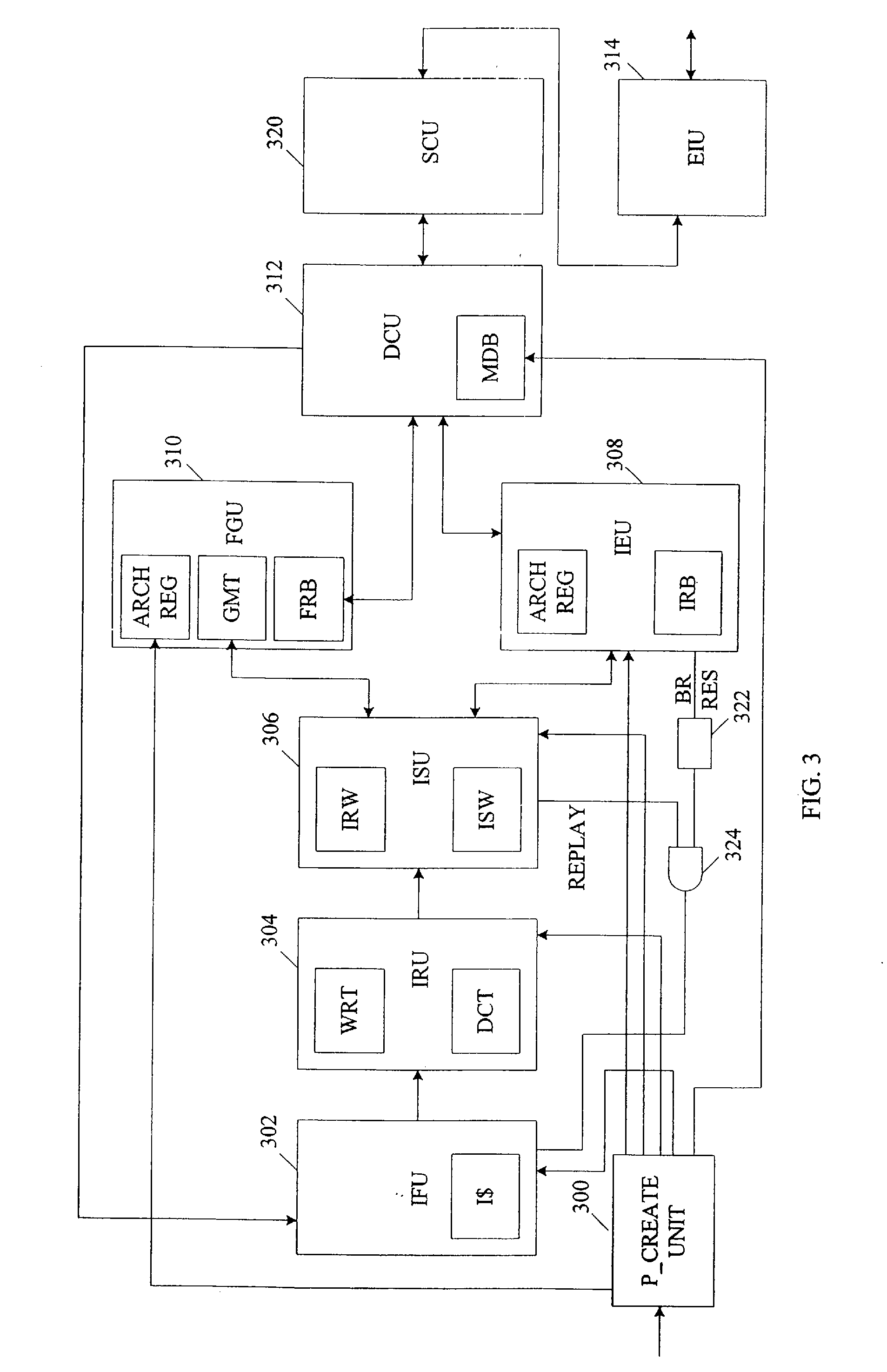

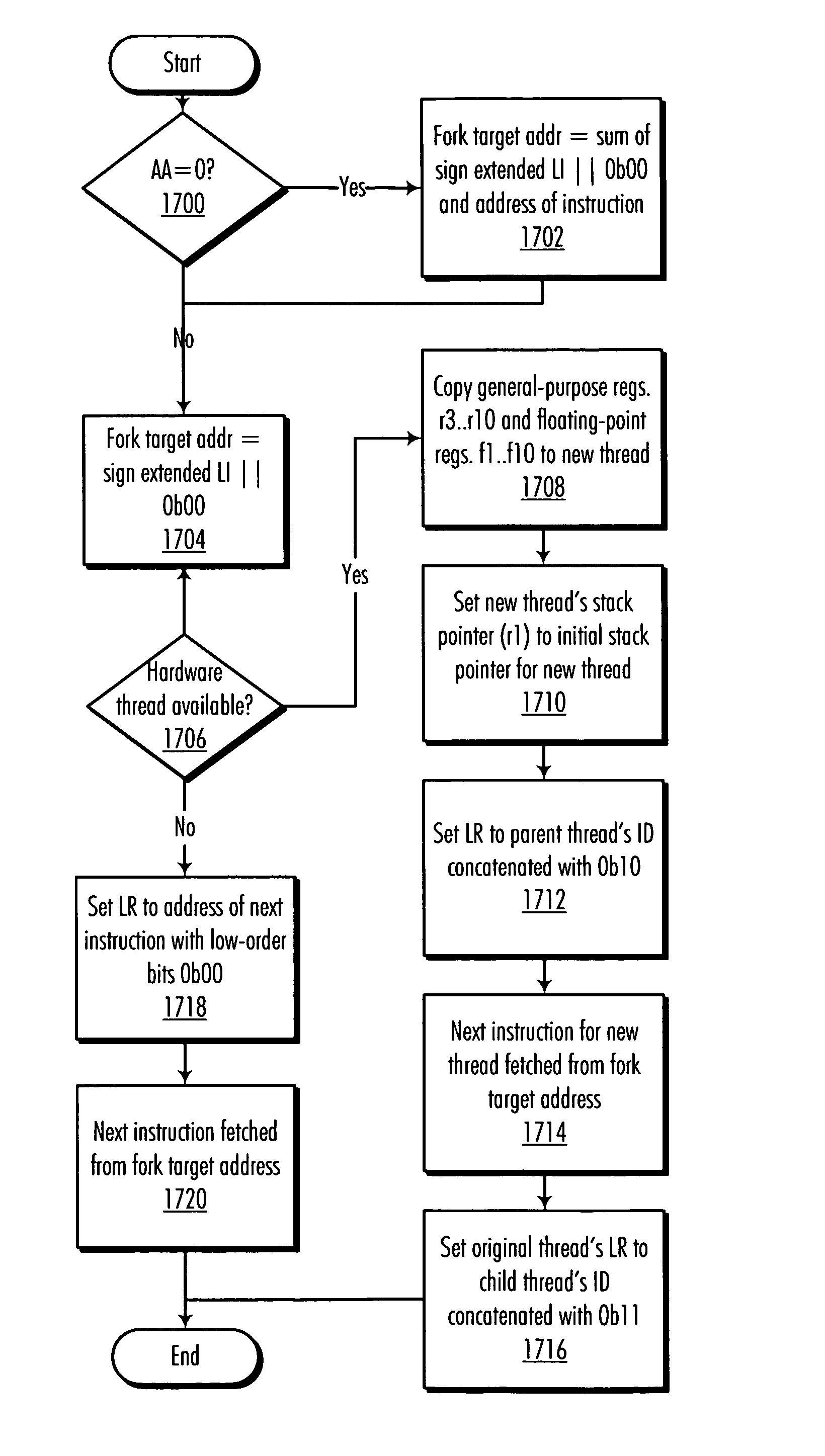

Active | US8230423B2 | High of latency | High level | Error detection/correction | Runtime instruction translation | Hardware thread | Logical operations

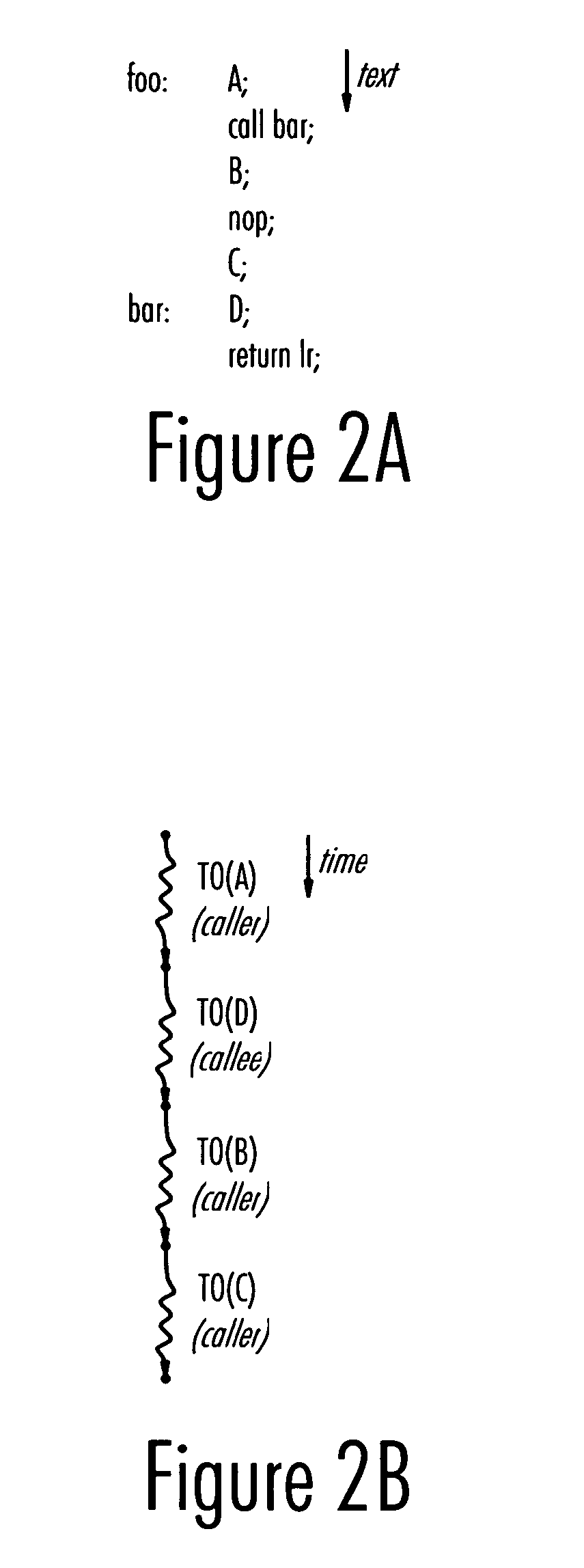

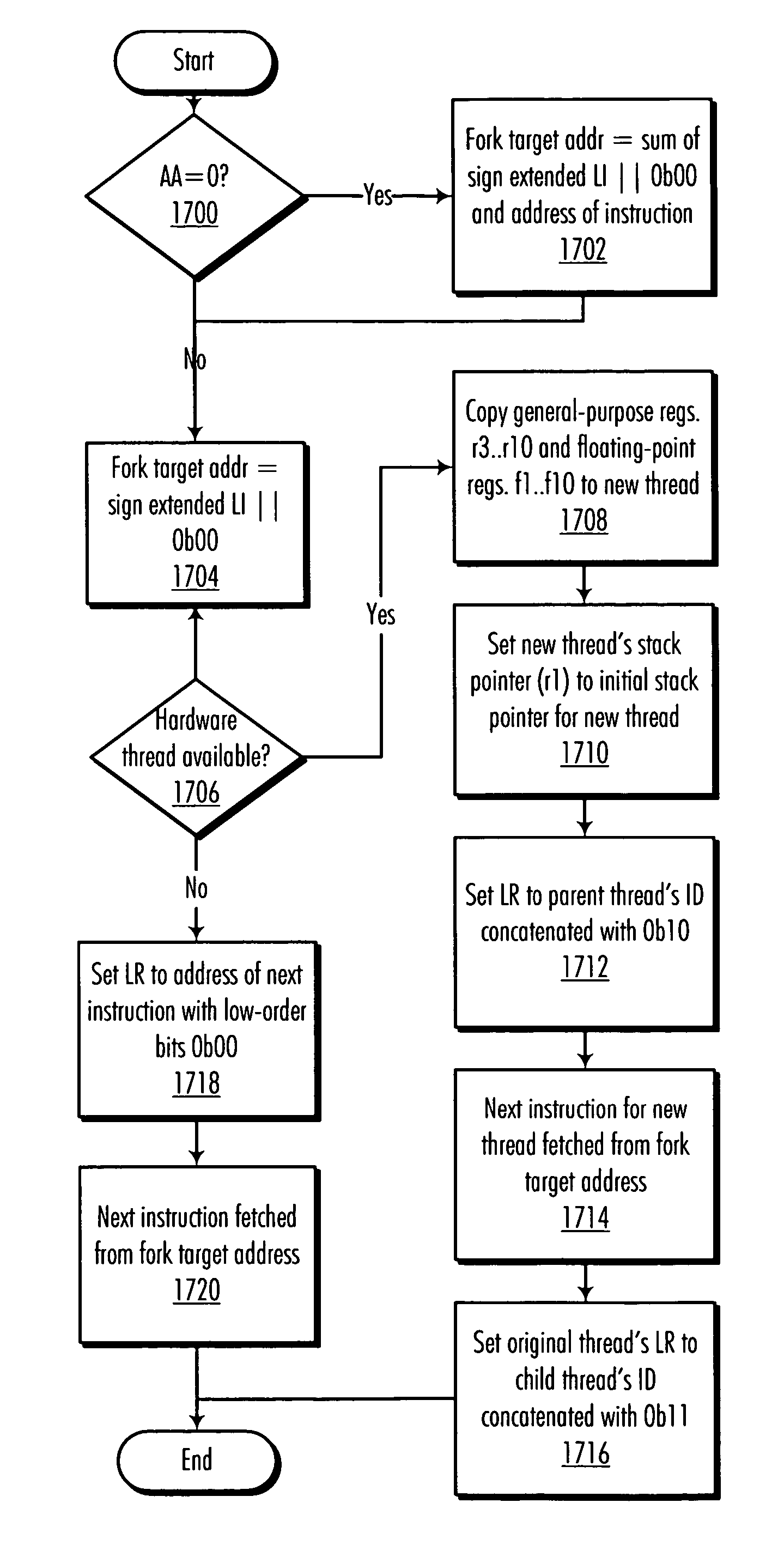

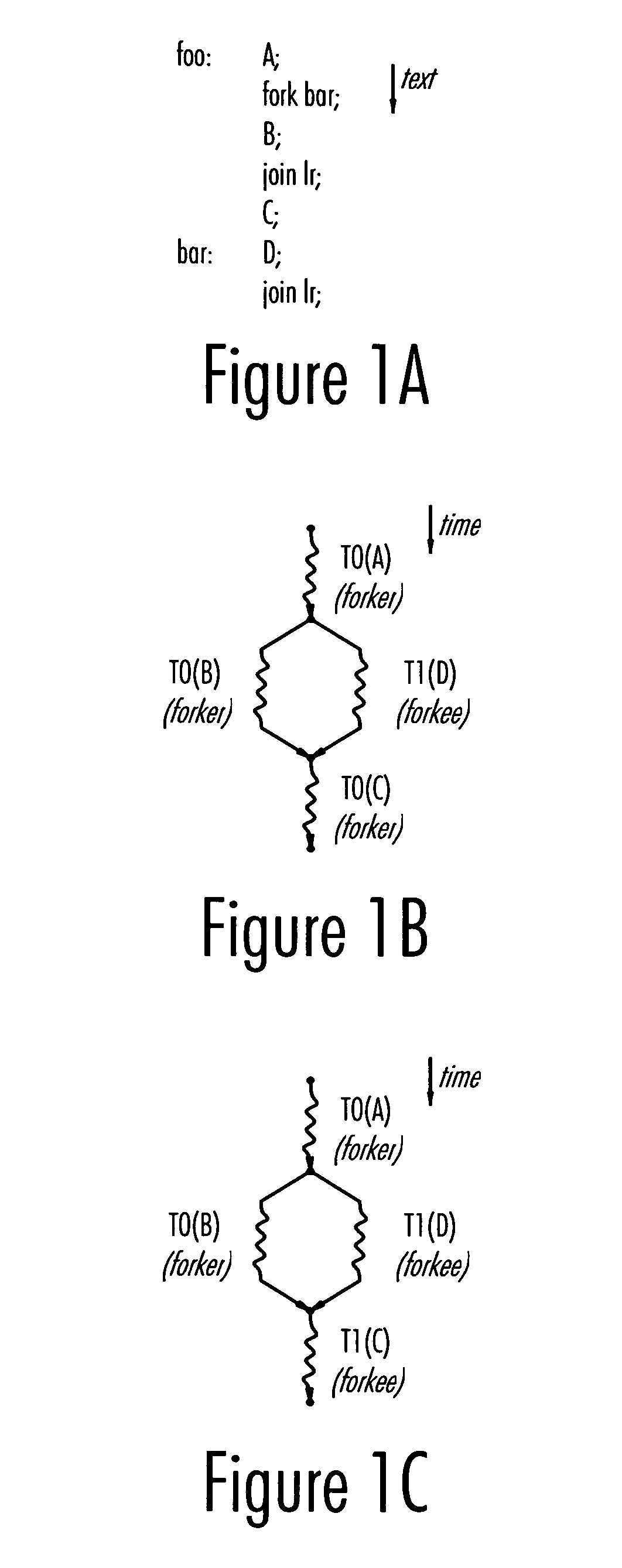

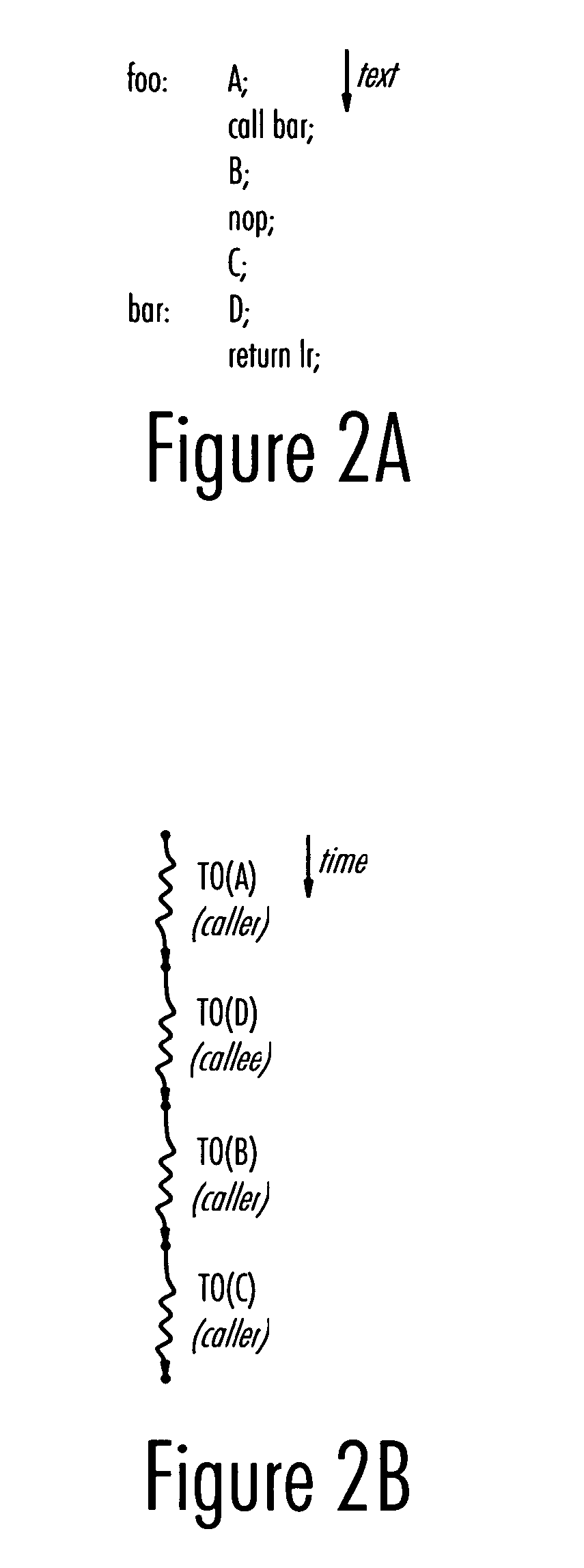

A method and processor architecture for achieving a high level of concurrency and latency hiding in an “infinite-thread processor architecture” with a limited number of hardware threads is disclosed. A preferred embodiment defines “fork” and “join” instructions for spawning new context-switched threads. Context switching is used to hide the latency of both memory-access operations (i.e., loads and stores) and arithmetic / logical operations. When an operation executing in a thread incurs a latency having the potential to delay the instruction pipeline, the latency is hidden by performing a context switch to a different thread. When the result of the operation becomes available, a context switch back to that thread is performed to allow the thread to continue.

Owner:IBM CORP

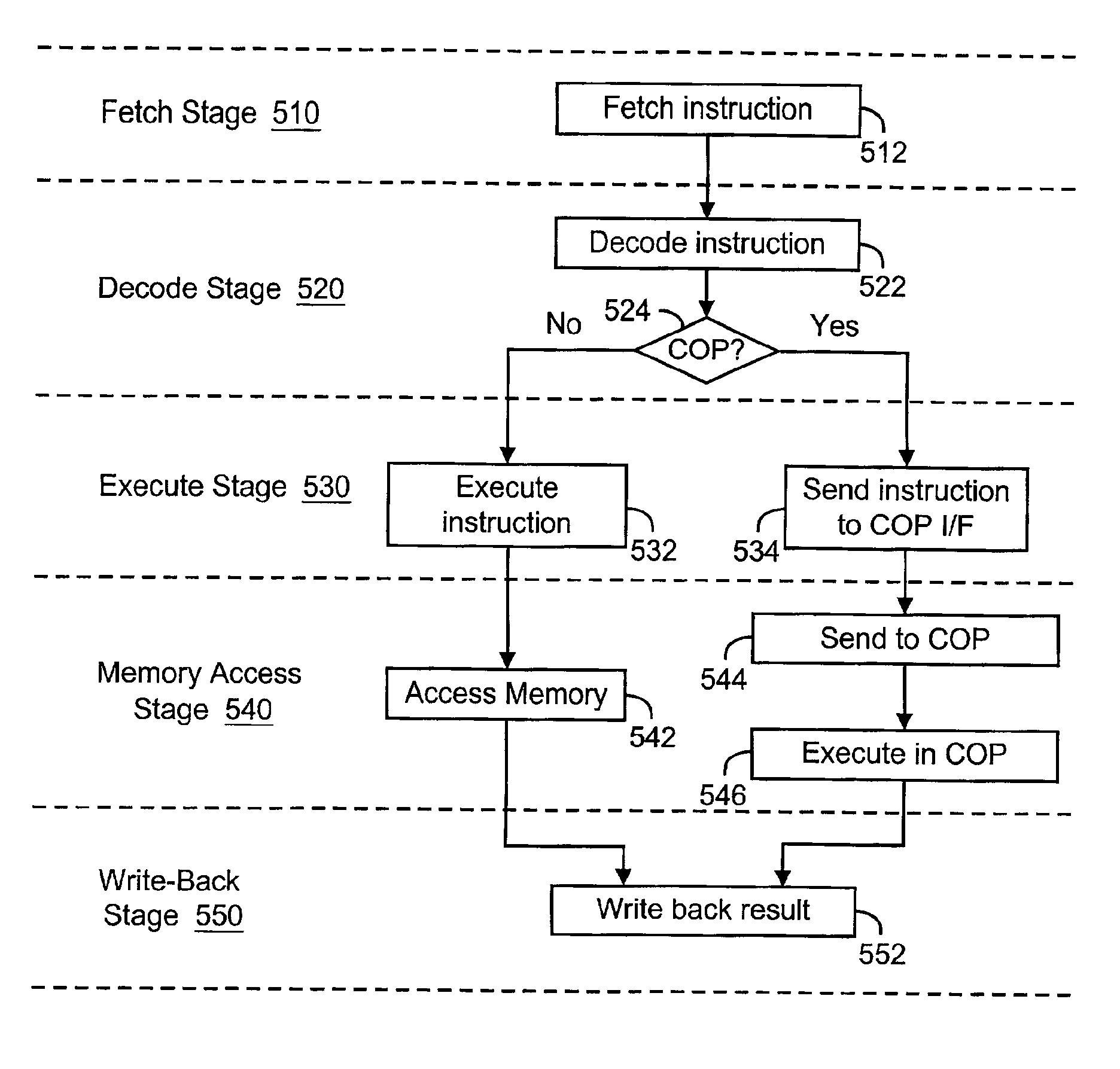

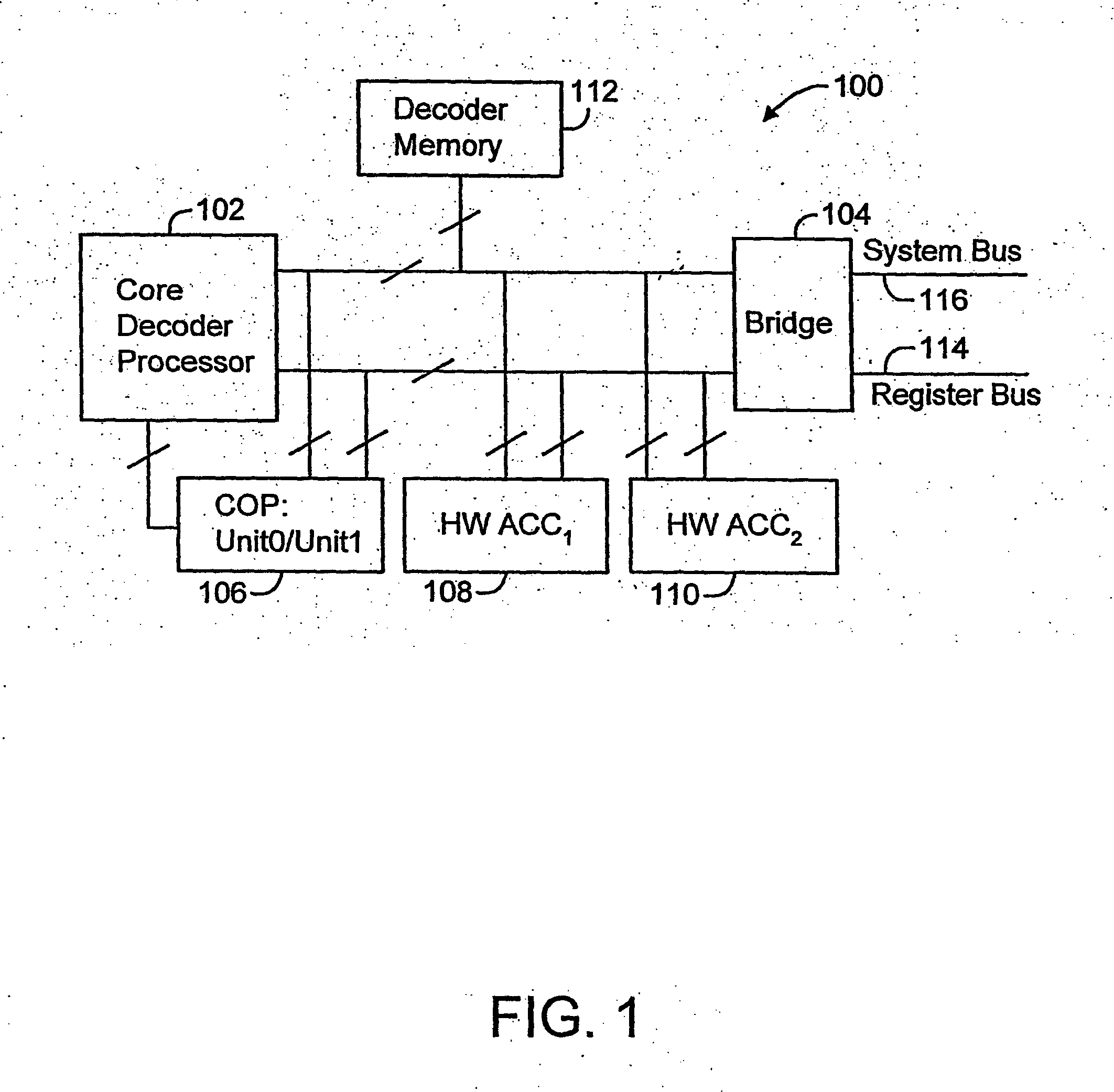

RISC processor supporting one or more uninterruptible co-processors

Active | US6944746B2 | General purpose stored program computer | Image coding | Processing Instruction | Computer architecture

A system and method for processing instructions in a computer system comprising a processor and a co-processor communicatively coupled to the processor. Instructions are processed in the processor in an instruction pipeline. In the instruction pipeline, instructions are processed sequentially by an instruction fetch stage, an instruction decode stage, an instruction execute stage, a memory access stage and a result write-back stage. If a co-processor instruction is received by the processor, the co-processor instruction is held in the core processor until the co-processor instruction reaches the memory access stage, at which time the co-processor instruction is transmitted to the co-processor.

Owner:QUALCOMM INC
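The five-stage flow in the abstract above can be traced with a toy cycle-by-cycle model. This is only a sketch: it assumes one stage per cycle with no stalls, and it marks co-processor instructions with a hypothetical `cop_` name prefix.

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]


def coprocessor_dispatches(program):
    """Return (cycle, instruction) pairs for co-processor hand-offs.

    Instruction i enters IF at cycle i and advances one stage per cycle.
    A co-processor instruction is held in the core pipeline and is only
    handed to the co-processor when it reaches the MEM stage.
    """
    events = []
    total_cycles = len(program) + len(STAGES) - 1
    for cycle in range(total_cycles):
        for idx, instr in enumerate(program):
            stage = cycle - idx
            if 0 <= stage < len(STAGES):
                if STAGES[stage] == "MEM" and instr.startswith("cop_"):
                    events.append((cycle, instr))
    return events


if __name__ == "__main__":
    # The second instruction enters at cycle 1 and reaches MEM at cycle 4.
    print(coprocessor_dispatches(["add", "cop_fft", "sub"]))  # prints: [(4, 'cop_fft')]
```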

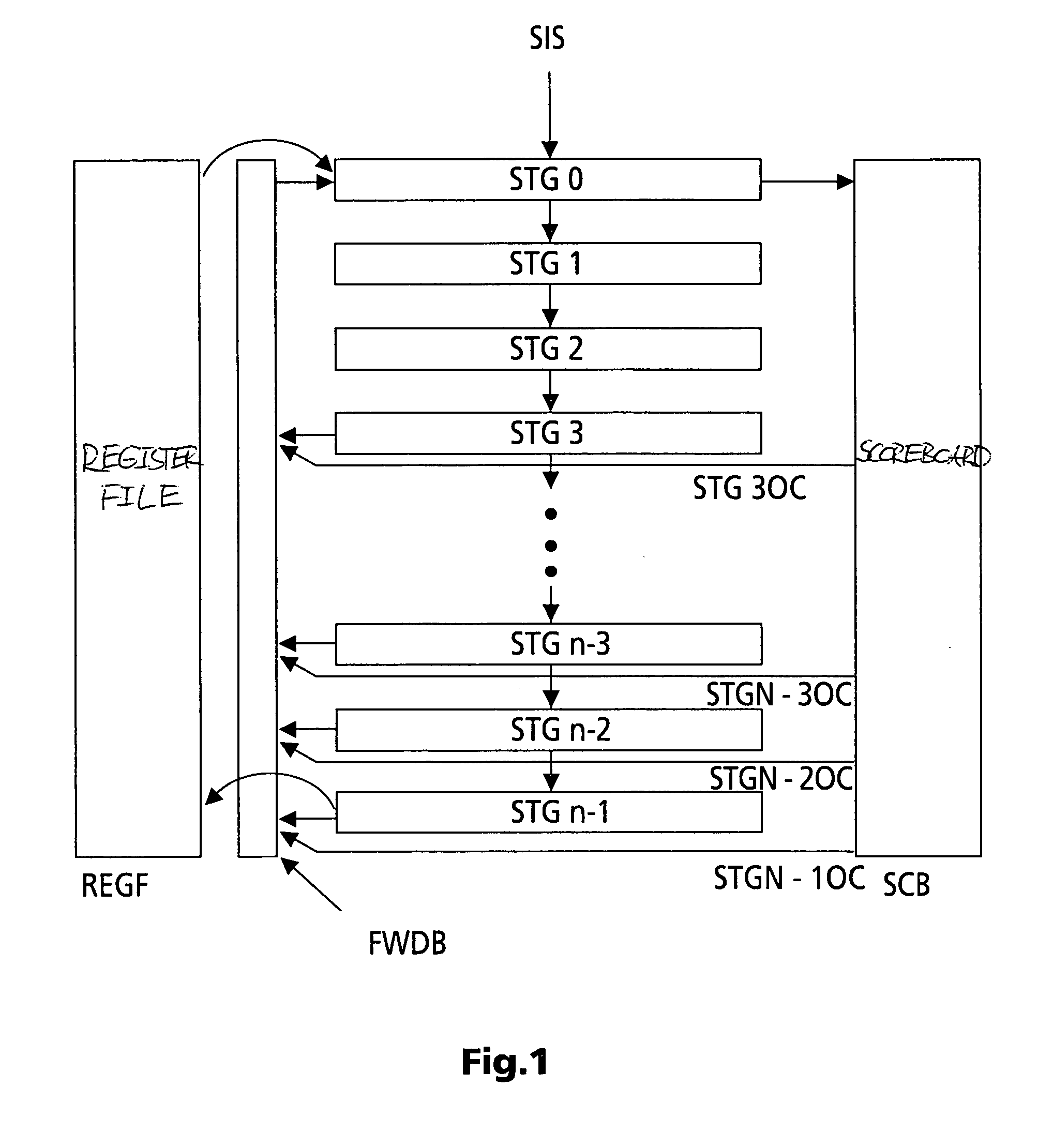

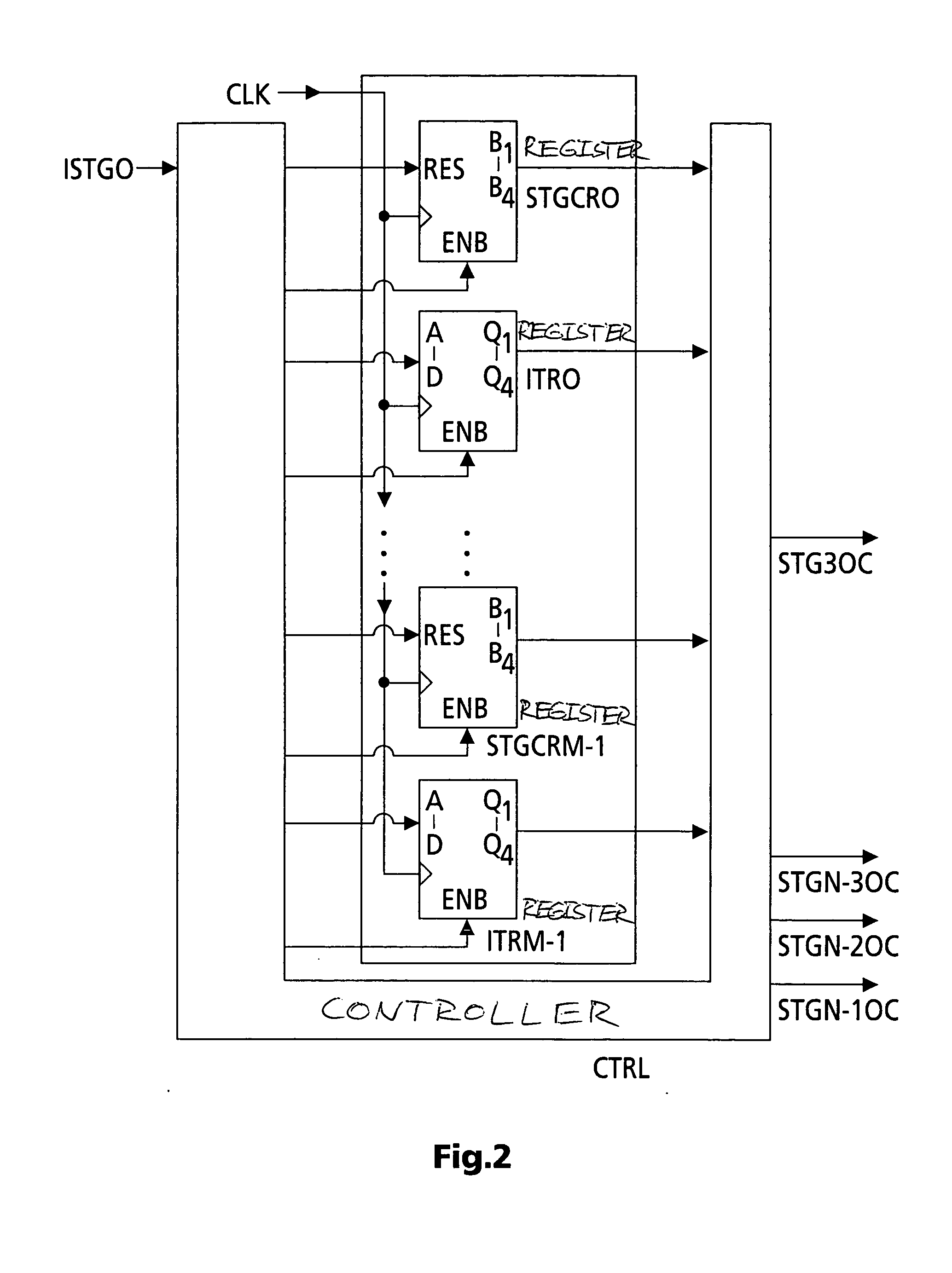

Method and apparatus for pipeline processing a chain of processing instructions

Inactive | US20050076189A1 | Improve reading efficiency | Improve efficiency | Digital computer details | Concurrent instruction execution | Processing Instruction | Operand

Processor instruction pipelines, which split the processing of individual instructions into several sub-stages and thus reduce the complexity of each stage while simultaneously increasing the clock speed, are typical features of RISC architectures. Operands required by the processing are read from a register file. Read-after-write access problems in the pipeline processing can be avoided by using a scoreboard that has an individual entry per address of the register file. Once an instruction enters the pipeline, a flag is set in the scoreboard entry for the destination address of that instruction. This flag signals that an instruction inside the pipeline wants to write its result to the respective register address. Hence the result is unavailable as long as the flag is set. The flag is cleared after the instruction has successfully written the result into the register file. According to the invention, the scoreboard entry stores not only a single flag but also the number of the pipeline stage that currently carries the instruction that wants to write its result to a particular register file address, together with the type of that instruction.

Owner:THOMSON LICENSING SA
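A scoreboard of the kind the abstract above describes, with a stage number and instruction type per entry rather than a single flag, might be modelled like this. The class name, method names, and the dictionary layout are assumptions for illustration, not the patent's implementation.

```python
class Scoreboard:
    """One entry per register-file address; None means no write is pending."""

    def __init__(self, n_registers):
        self.entries = [None] * n_registers

    def issue(self, dst, instr_type):
        # Record the pipeline stage (0 on entry) and the instruction type.
        self.entries[dst] = {"stage": 0, "type": instr_type}

    def advance(self, last_stage):
        # Move every pending writer one stage; clear entries that wrote back.
        for reg, entry in enumerate(self.entries):
            if entry is None:
                continue
            if entry["stage"] == last_stage:
                self.entries[reg] = None
            else:
                entry["stage"] += 1

    def is_ready(self, src):
        """A source register is readable when no in-flight write targets it."""
        return self.entries[src] is None


if __name__ == "__main__":
    sb = Scoreboard(8)
    sb.issue(3, "load")
    for _ in range(2):
        sb.advance(last_stage=2)
    print(sb.entries[3], sb.is_ready(3))  # prints: {'stage': 2, 'type': 'load'} False
    sb.advance(last_stage=2)
    print(sb.is_ready(3))  # prints: True
```

Keeping the stage number lets a consumer estimate how many cycles remain before the value is available, which a single busy flag cannot express.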

Multithreaded processor architecture with operational latency hiding

Active | US20060230408A1 | High of latency | Hide latency | Error detection/correction | Runtime instruction translation | Hardware thread | Logical operations

A method and processor architecture for achieving a high level of concurrency and latency hiding in an “infinite-thread processor architecture” with a limited number of hardware threads is disclosed. A preferred embodiment defines “fork” and “join” instructions for spawning new context-switched threads. Context switching is used to hide the latency of both memory-access operations (i.e., loads and stores) and arithmetic / logical operations. When an operation executing in a thread incurs a latency having the potential to delay the instruction pipeline, the latency is hidden by performing a context switch to a different thread. When the result of the operation becomes available, a context switch back to that thread is performed to allow the thread to continue.

Owner:IBM CORP

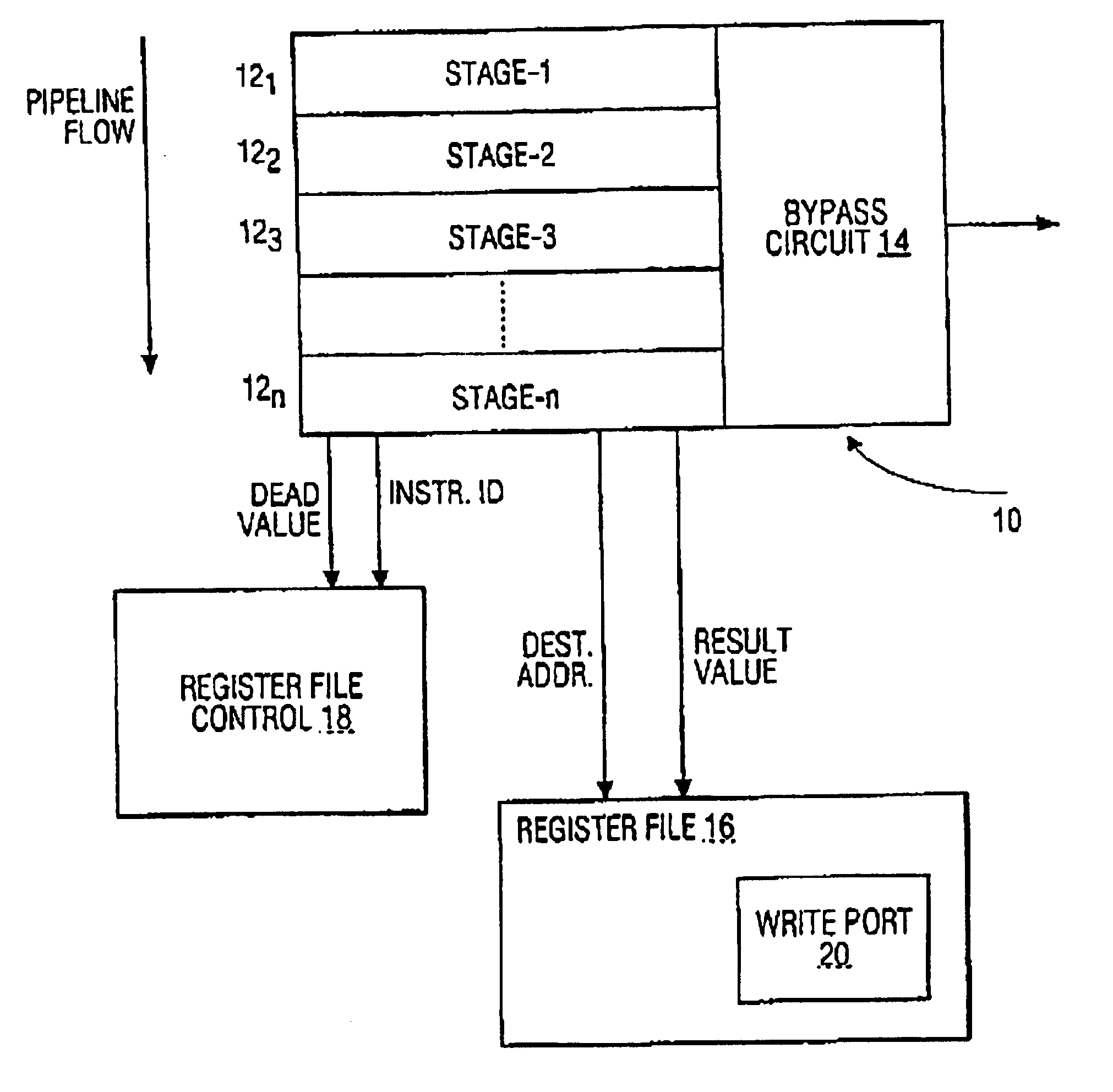

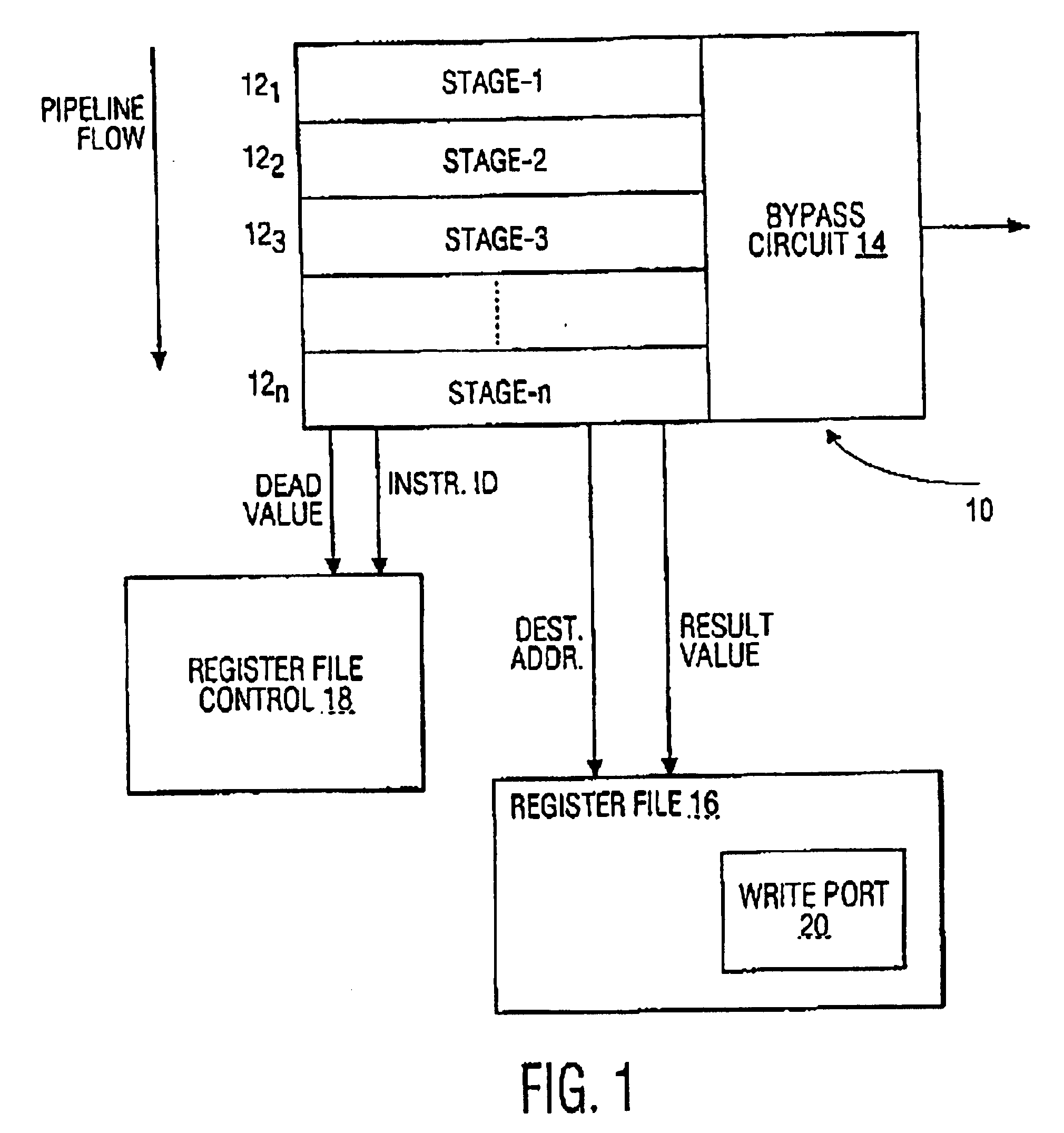

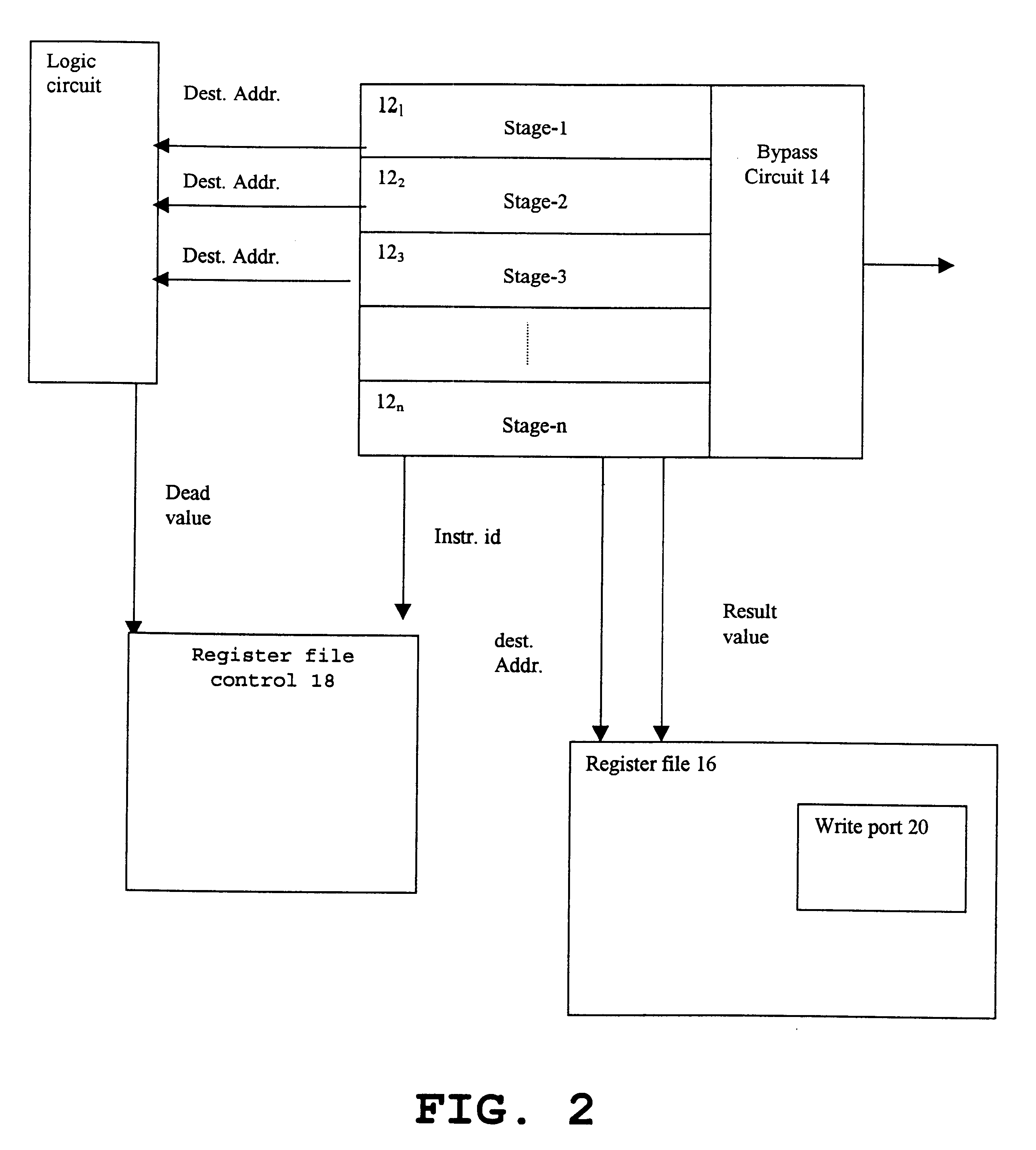

System and method for eliminating write back to register using dead field indicator

Inactive | US6862677B1 | Reduce in quantity | Reduce power consumption | Digital computer details | Concurrent instruction execution | Processor register | Instruction pipeline

An instruction execution device and method are disclosed for reducing register write traffic within a processor. The instruction execution device includes an instruction pipeline for producing a result for an instruction, a register file that includes at least one write port for storing the result, a bypass circuit for allowing access to the result, a means for indicating whether the result is used by only one other instruction, and a register file control for preventing the result from being stored in the write port when the result has been accessed via the bypass circuit and is used by only one other instruction.

Owner:INVENSAS CORP

Multithreaded processor with multiple concurrent pipelines per thread

Active | US20060095729A1 | Improve concurrency | Control consumption | General purpose stored program computer | Concurrent instruction execution | Hardware thread | Execution unit

A multithreaded processor comprises a plurality of hardware thread units, an instruction decoder coupled to the thread units for decoding instructions received therefrom, and a plurality of execution units for executing the decoded instructions. The multithreaded processor is configured for controlling an instruction issuance sequence for threads associated with respective ones of the hardware thread units. On a given processor clock cycle, only a designated one of the threads is permitted to issue one or more instructions, but the designated thread that is permitted to issue instructions varies over a plurality of clock cycles in accordance with the instruction issuance sequence. The instructions are pipelined in a manner which permits at least a given one of the threads to support multiple concurrent instruction pipelines.

Owner:QUALCOMM INC

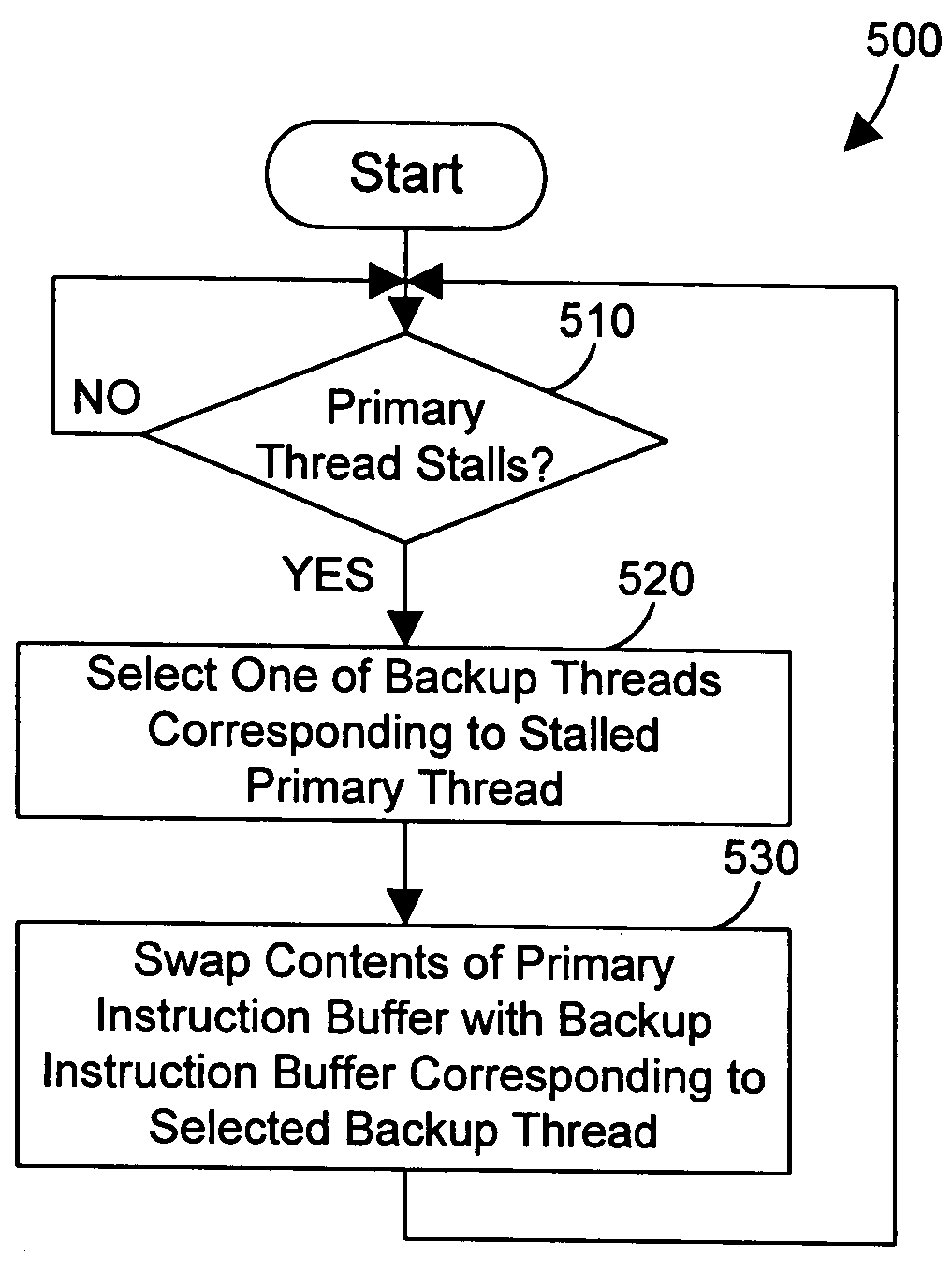

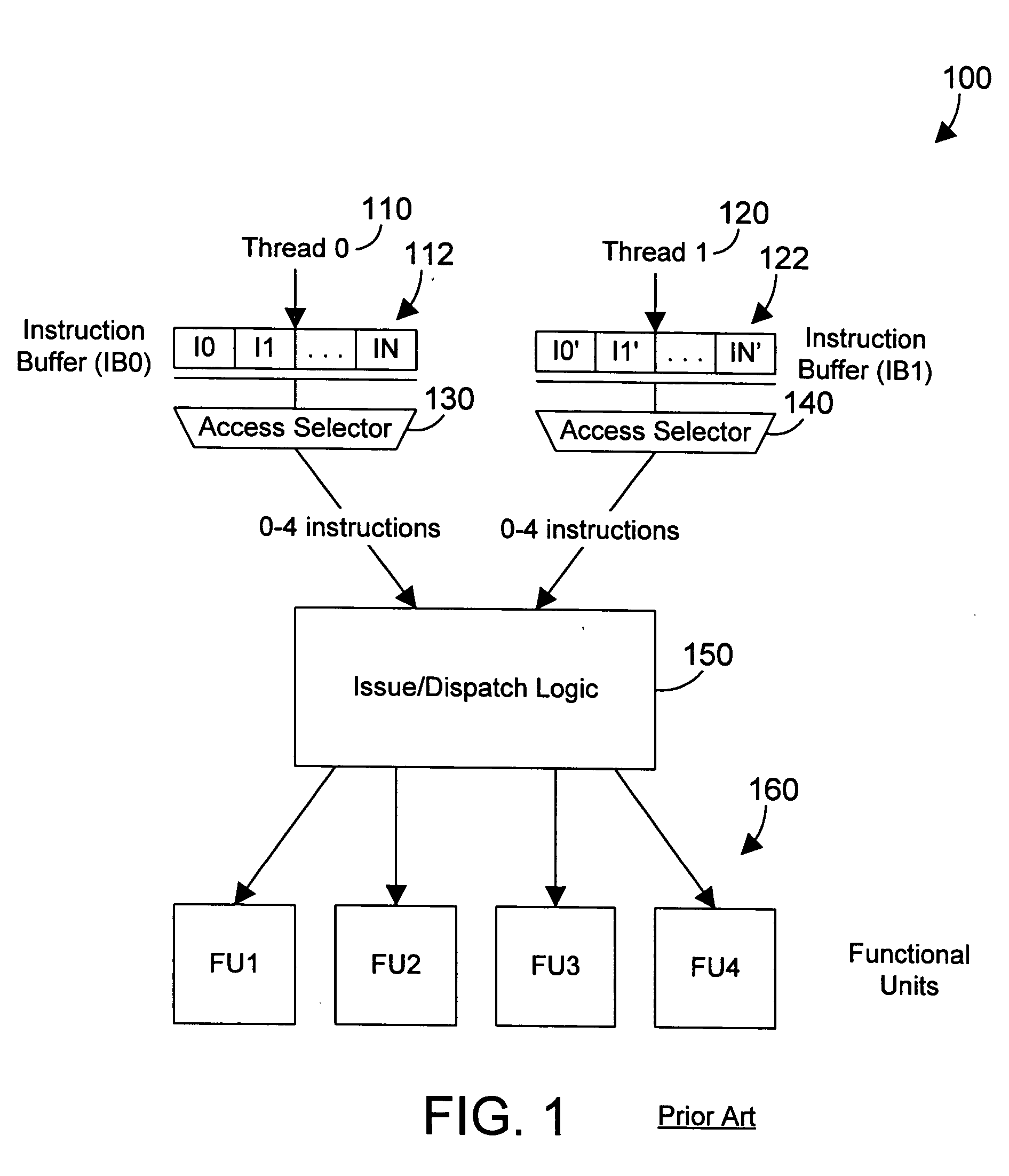

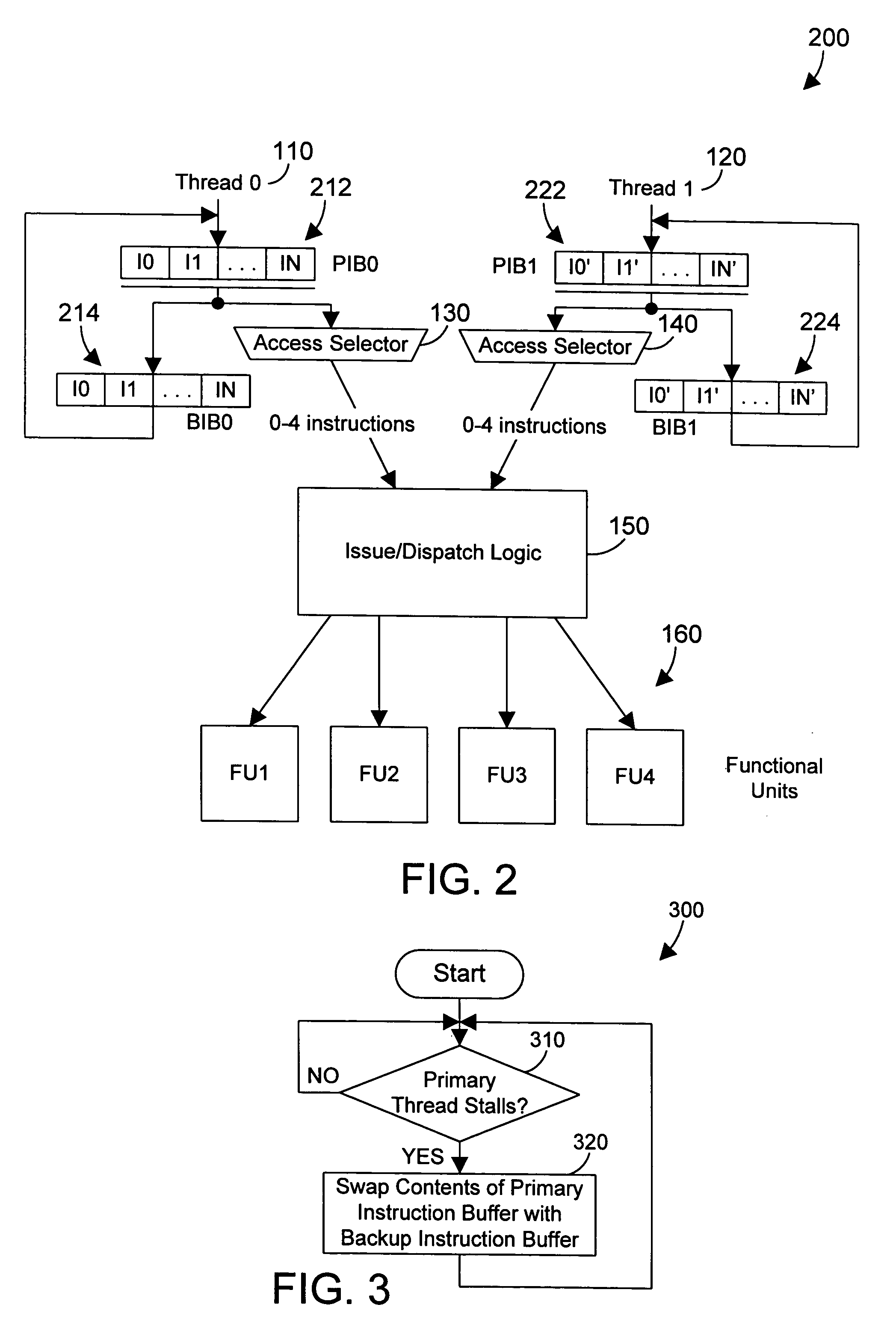

Multithreaded processor and method for switching threads

Inactive | US20050114856A1 | Improve throughput | Quick switch | Program initiation/switching | Digital computer details | Instruction pipeline | Instruction buffer

A processor includes primary threads of execution that may simultaneously issue instructions, and one or more backup threads. When a primary thread stalls, the contents of its instruction buffer may be switched with the instruction buffer for a backup thread, thereby allowing the backup thread to begin execution. This design allows two primary threads to issue simultaneously, which allows for overlap of instruction pipeline latencies. This design further allows a fast switch to a backup thread when a primary thread stalls, thereby providing significantly improved throughput in executing instructions by the processor.

Owner:IBM CORP
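The buffer swap described in the abstract above can be pictured with a very small model. The names `IssueBuffer` and `switch_on_stall` are hypothetical, and the sketch ignores how a stall is detected or how the backup thread is chosen.

```python
class IssueBuffer:
    """An instruction buffer tagged with the thread that currently owns it."""

    def __init__(self, thread, instructions):
        self.thread = thread
        self.instructions = list(instructions)


def switch_on_stall(primary, backup):
    """Swap the contents of a stalled primary buffer with a backup buffer.

    After the swap the primary issue slot holds the backup thread's
    instructions and can issue at once; the stalled thread waits in
    the backup buffer.
    """
    primary.thread, backup.thread = backup.thread, primary.thread
    primary.instructions, backup.instructions = (
        backup.instructions,
        primary.instructions,
    )


if __name__ == "__main__":
    primary = IssueBuffer("T0", ["ld r1, [r9]"])
    backup = IssueBuffer("T2", ["add r2, r3", "sub r4, r5"])
    switch_on_stall(primary, backup)
    print(primary.thread, primary.instructions)  # prints: T2 ['add r2, r3', 'sub r4, r5']
```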

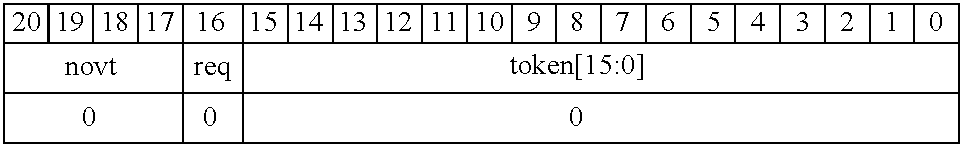

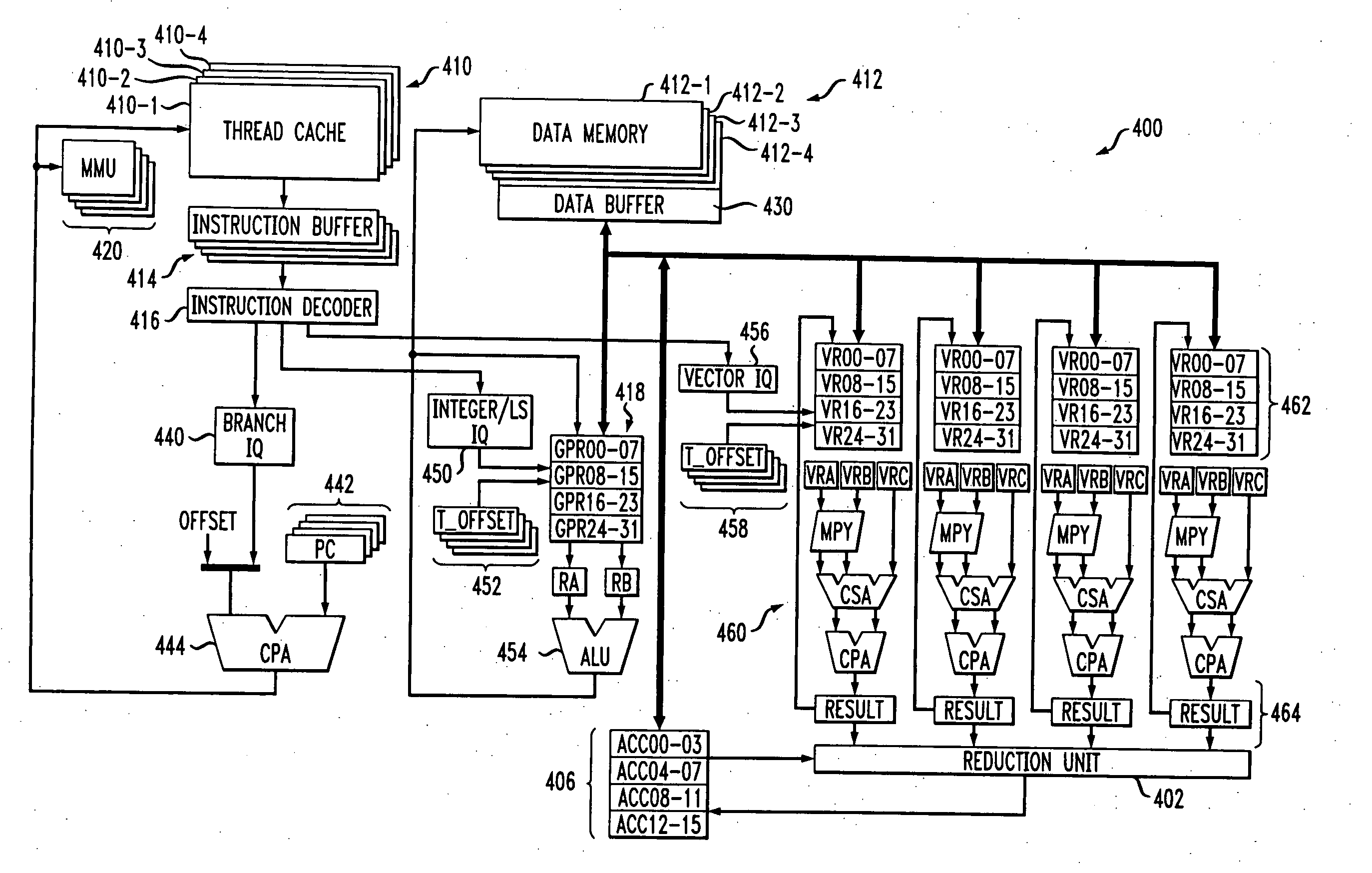

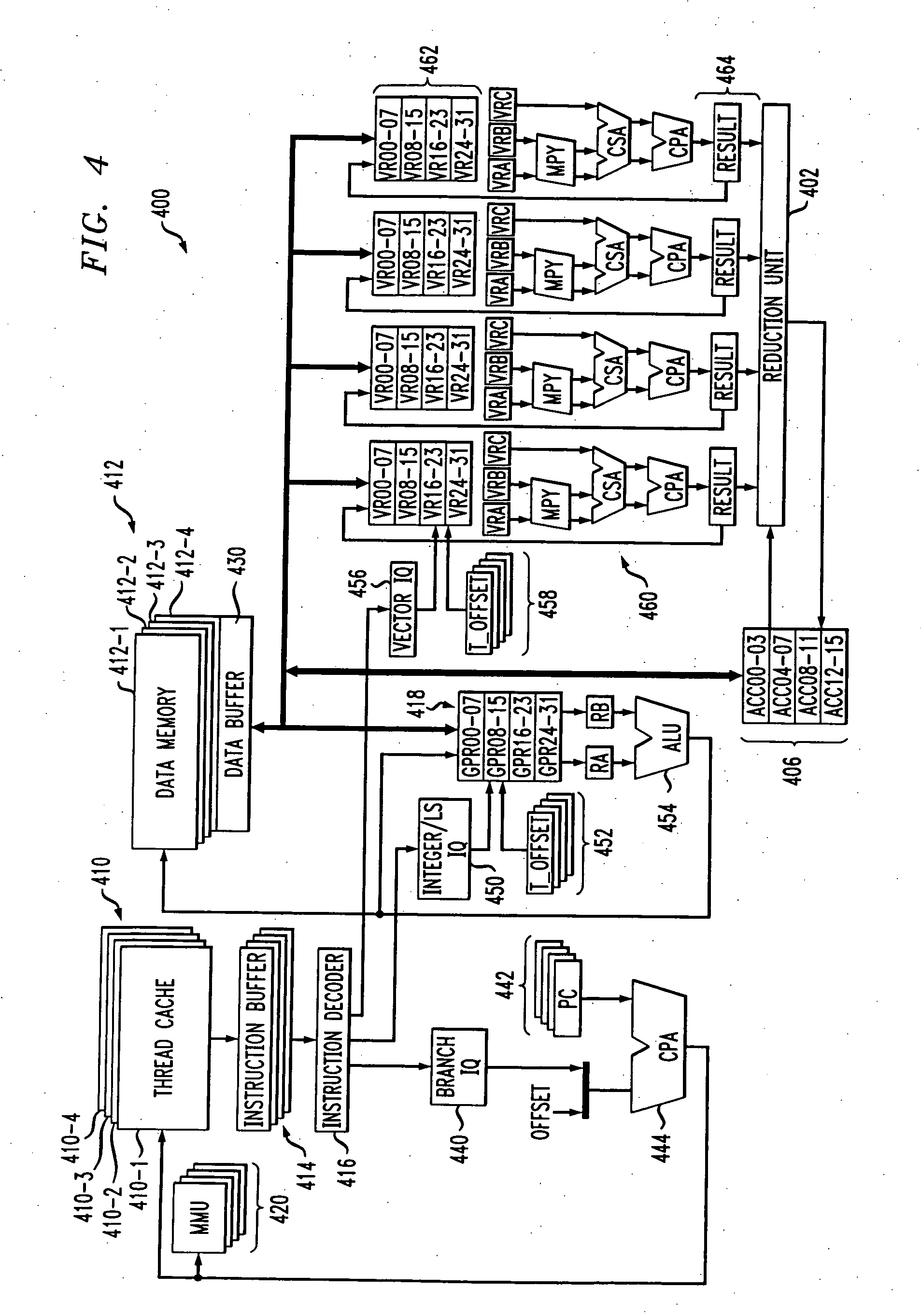

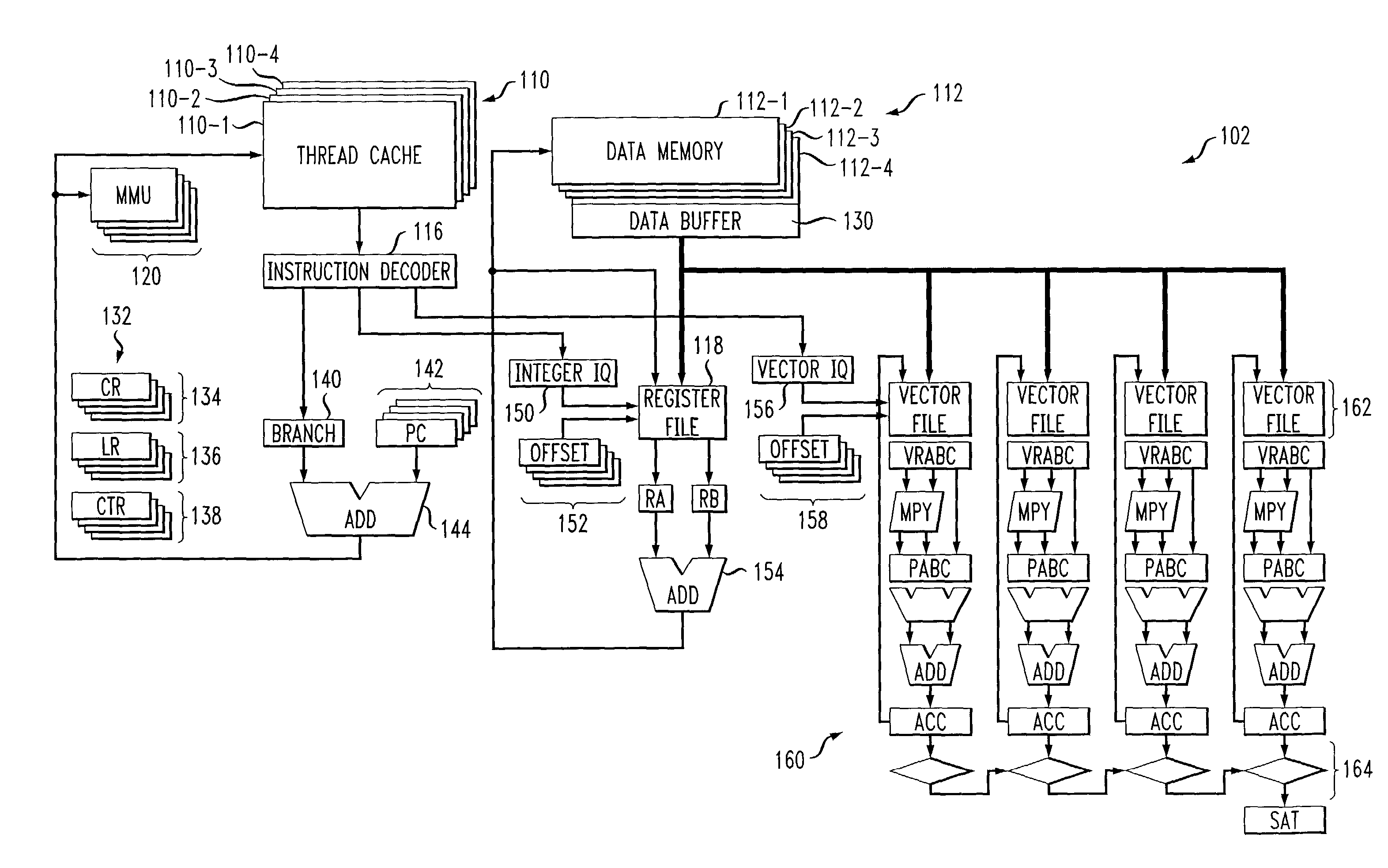

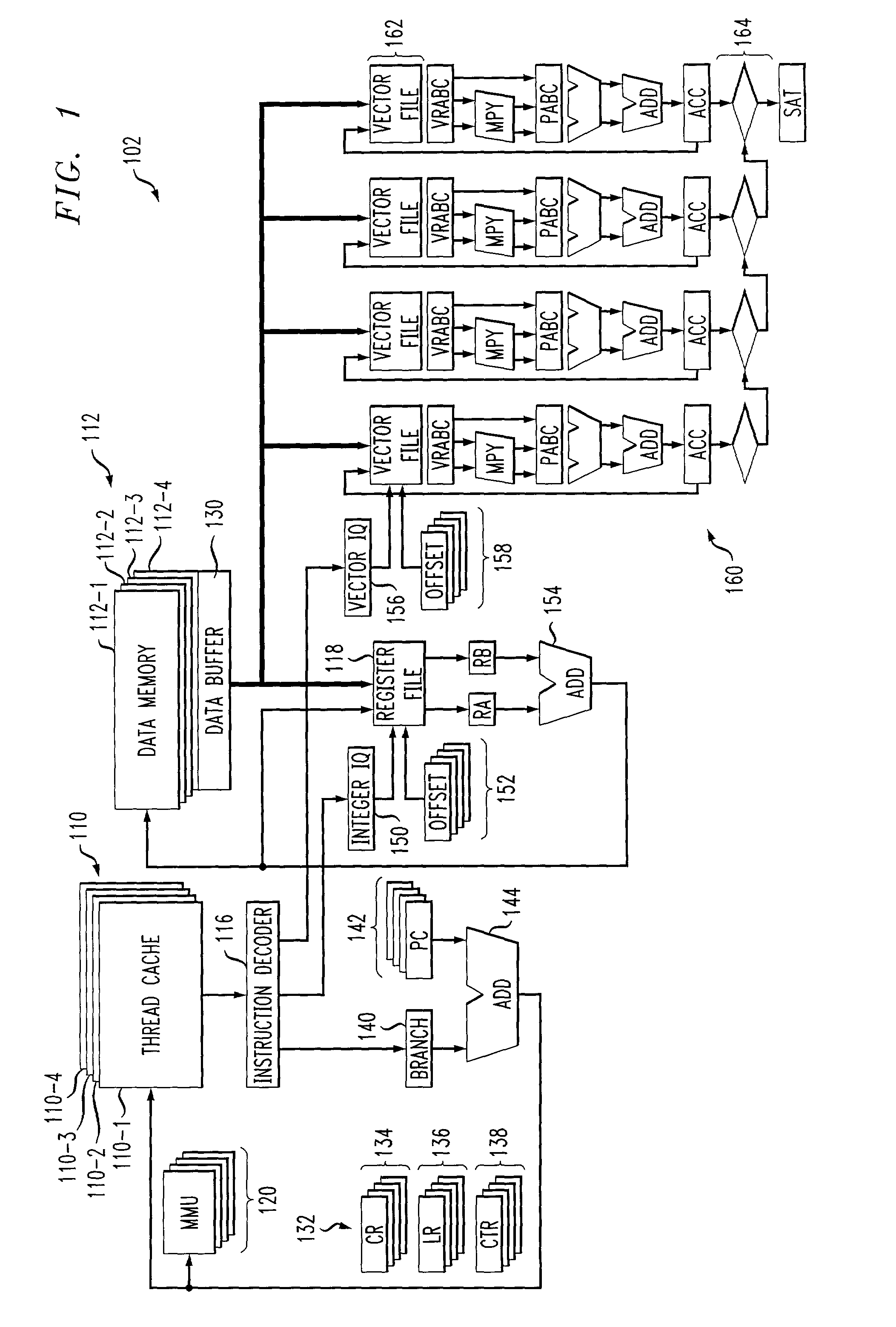

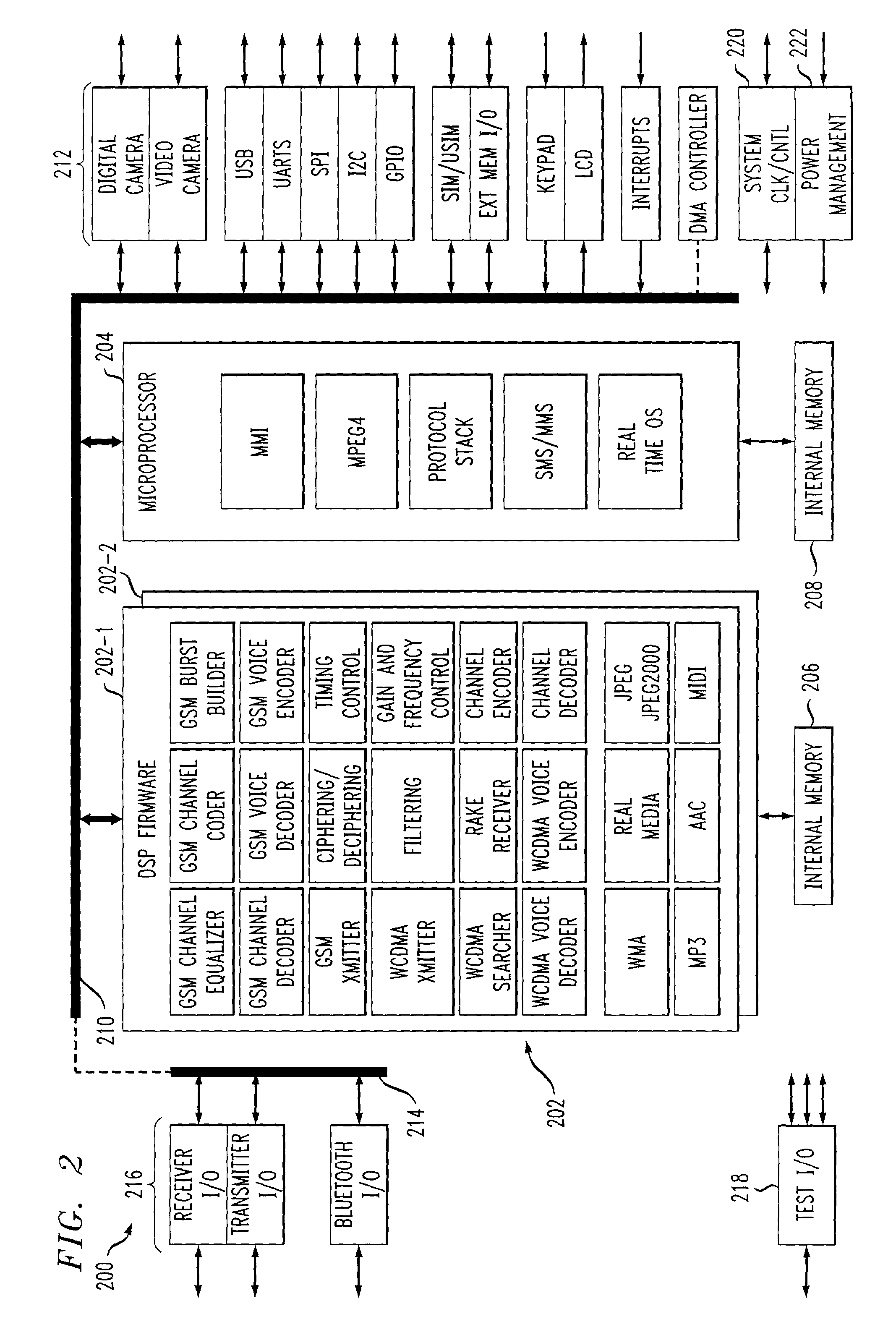

Multithreaded processor with efficient processing for convergence device applications

Active | US6968445B2 | Executing control | Efficient execution | Multiprogramming arrangements | Concurrent instruction execution | Instruction unit | Execution control

A multithreaded processor includes an instruction decoder for decoding retrieved instructions to determine an instruction type for each of the retrieved instructions, an integer unit coupled to the instruction decoder for processing integer type instructions, and a vector unit coupled to the instruction decoder for processing vector type instructions. A reduction unit is preferably associated with the vector unit and receives parallel data elements processed in the vector unit. The reduction unit generates a serial output from the parallel data elements. The processor may be configured to execute at least control code, digital signal processor (DSP) code, Java code and network processing code, and is therefore well-suited for use in a convergence device. The processor is preferably configured to utilize token triggered threading in conjunction with instruction pipelining.

Owner:QUALCOMM INC

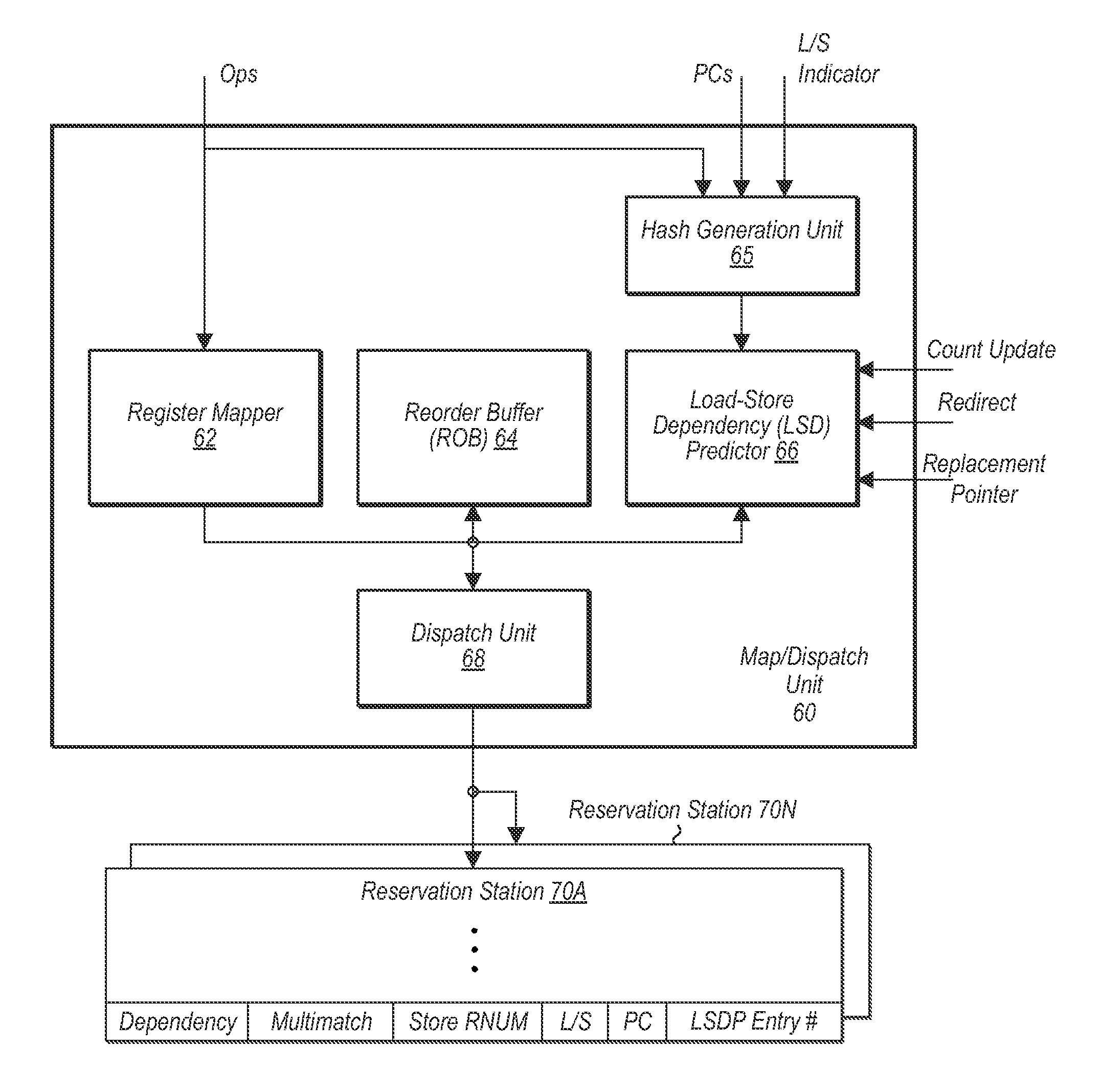

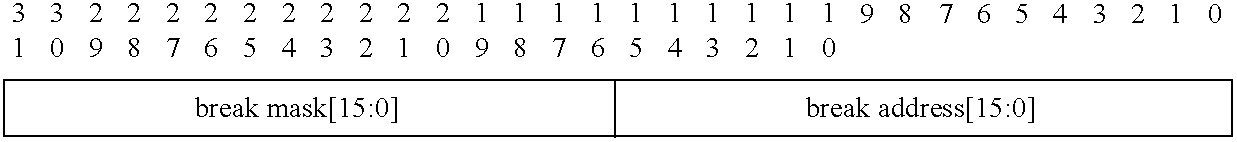

Load-store dependency predictor PC hashing

Methods and processors for managing load-store dependencies in an out-of-order instruction pipeline. A load store dependency predictor includes a table for storing entries for load-store pairs that have been found to be dependent and execute out of order. Each entry in the table includes hashed values to identify load and store operations. When a load or store operation is detected, the PC and an architectural register number are used to create a hashed value that can be used to uniquely identify the operation. Then, the load store dependency predictor table is searched for any matching entries with the same hashed value.

Owner:APPLE INC
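The table lookup in the abstract above can be sketched as follows. The hash function here (shifted PC XORed with the shifted register number, truncated to 12 bits) is an assumption made up for this example; the abstract does not specify the actual hash, and any truncated hash like this one can alias, so identification is only approximately unique.

```python
def hash_op(pc: int, arch_reg: int, bits: int = 12) -> int:
    """Combine a PC and an architectural register number (hypothetical hash)."""
    return ((pc >> 2) ^ (arch_reg << 5)) & ((1 << bits) - 1)


class LoadStoreDependencyPredictor:
    """Table of (load_hash, store_hash) pairs observed to be dependent."""

    def __init__(self):
        self.table = []

    def record_pair(self, load_pc, load_reg, store_pc, store_reg):
        # Called when a load-store pair is found dependent and out of order.
        entry = (hash_op(load_pc, load_reg), hash_op(store_pc, store_reg))
        if entry not in self.table:
            self.table.append(entry)

    def matching_stores(self, load_pc, load_reg):
        """Return the store hashes a newly detected load is predicted to depend on."""
        h = hash_op(load_pc, load_reg)
        return [store for load, store in self.table if load == h]


if __name__ == "__main__":
    lsd = LoadStoreDependencyPredictor()
    lsd.record_pair(load_pc=0x1004, load_reg=3, store_pc=0x0FF0, store_reg=7)
    print(lsd.matching_stores(0x1004, 3))
    print(lsd.matching_stores(0x1008, 3))  # prints: []
```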

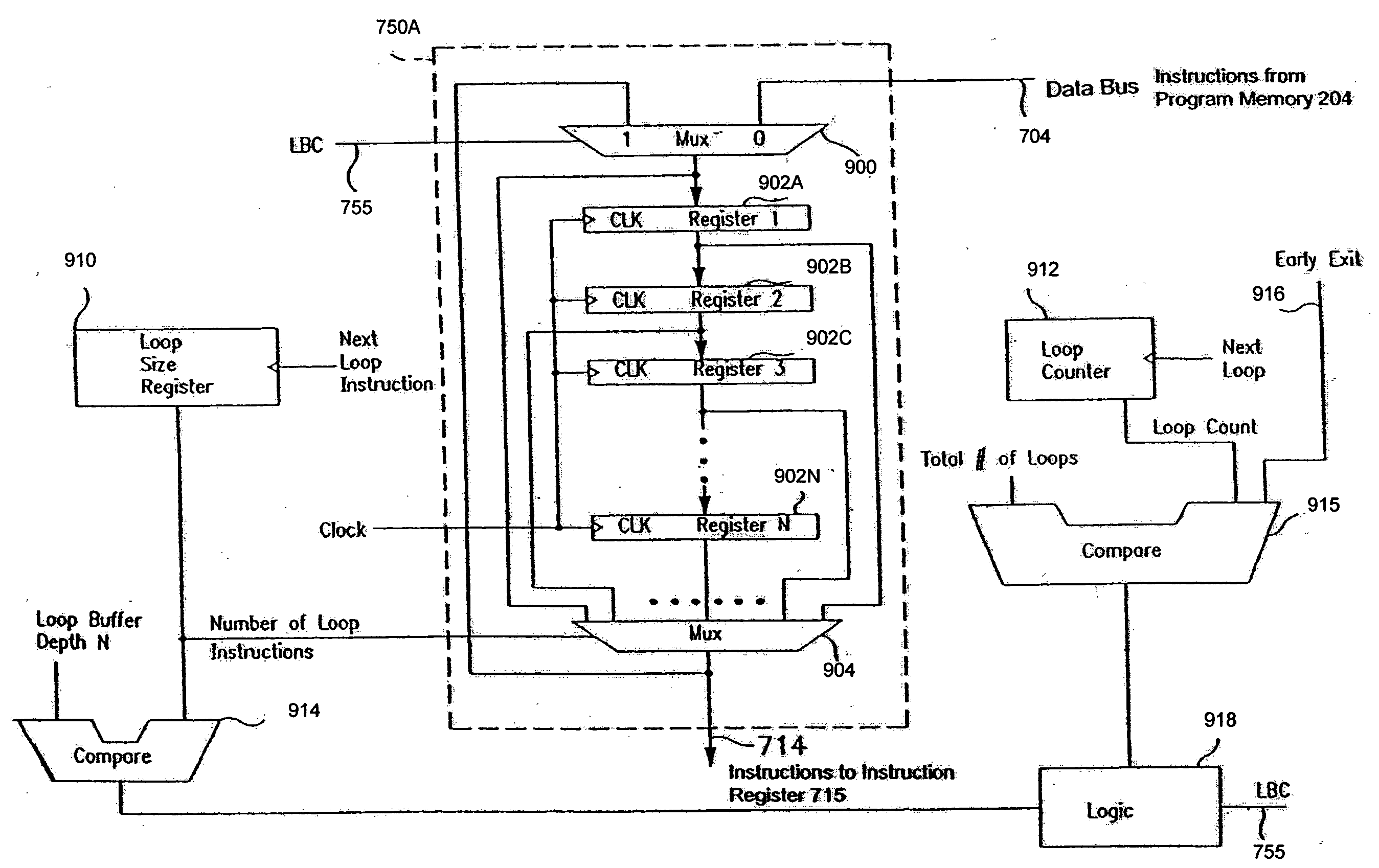

Unified instruction pipeline for power reduction in a digital signal processor integrated circuit

Method and apparatus for reducing power consumption in a digital specific signal processor integrated circuit. Data buses are routed through multiplexers to reduce the number of buses routed across an integrated circuit and maintain their prior state. Global memory is clustered into memory clusters. The memory cluster having a memory block to be accessed is activated without activating other memory clusters in the global memory. Inactive data buses retain their state by use of bus state keepers. A loop buffer stores instructions within program loops to avoid memory accesses. Functional blocks can have their clocks gated instruction by instruction to lower power consumption. RISC and DSP units swap circuit activity to reduce power consumption. Local data memory includes self-timed memory access activation and provides for off-boundary access to further lower power consumption.

Owner:INTEL CORP

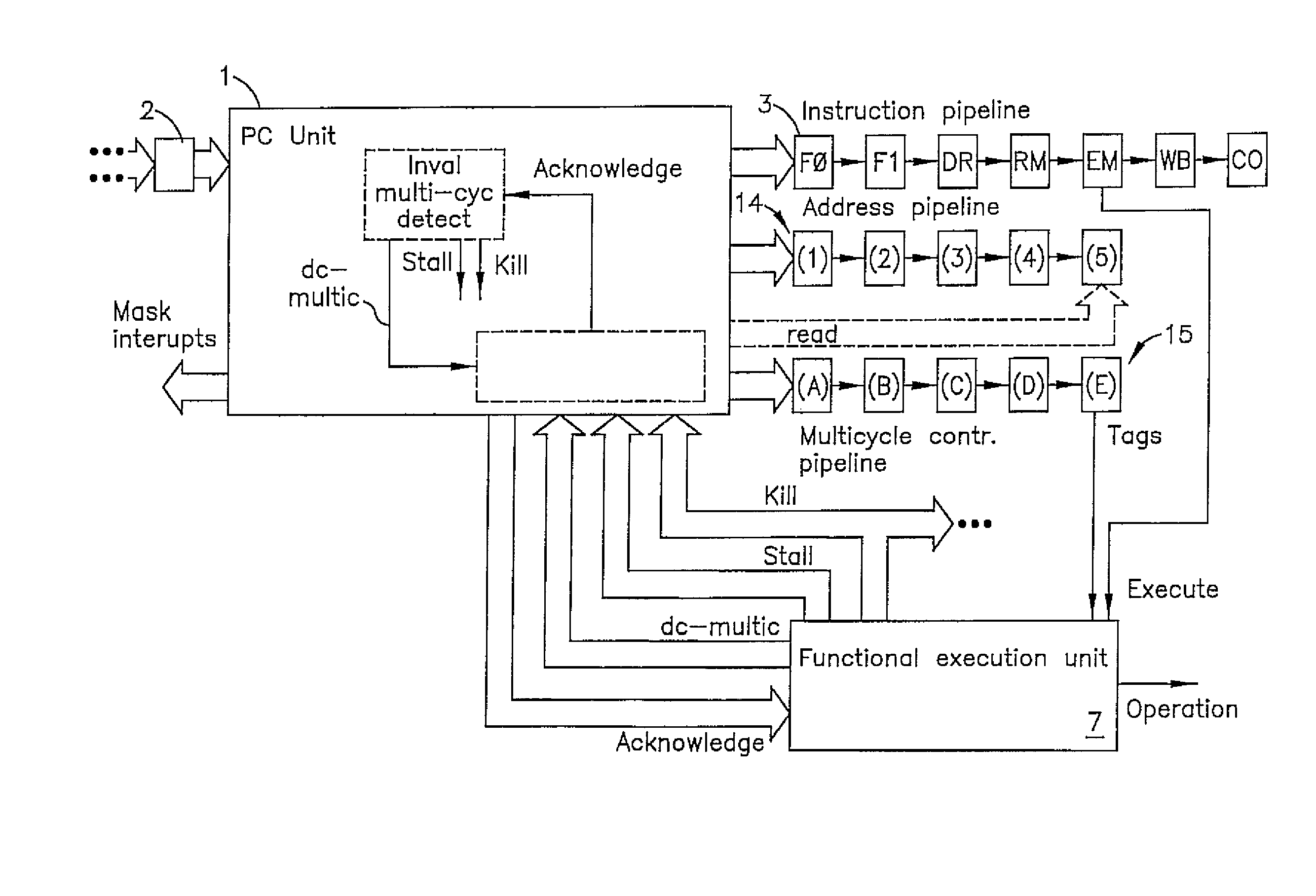

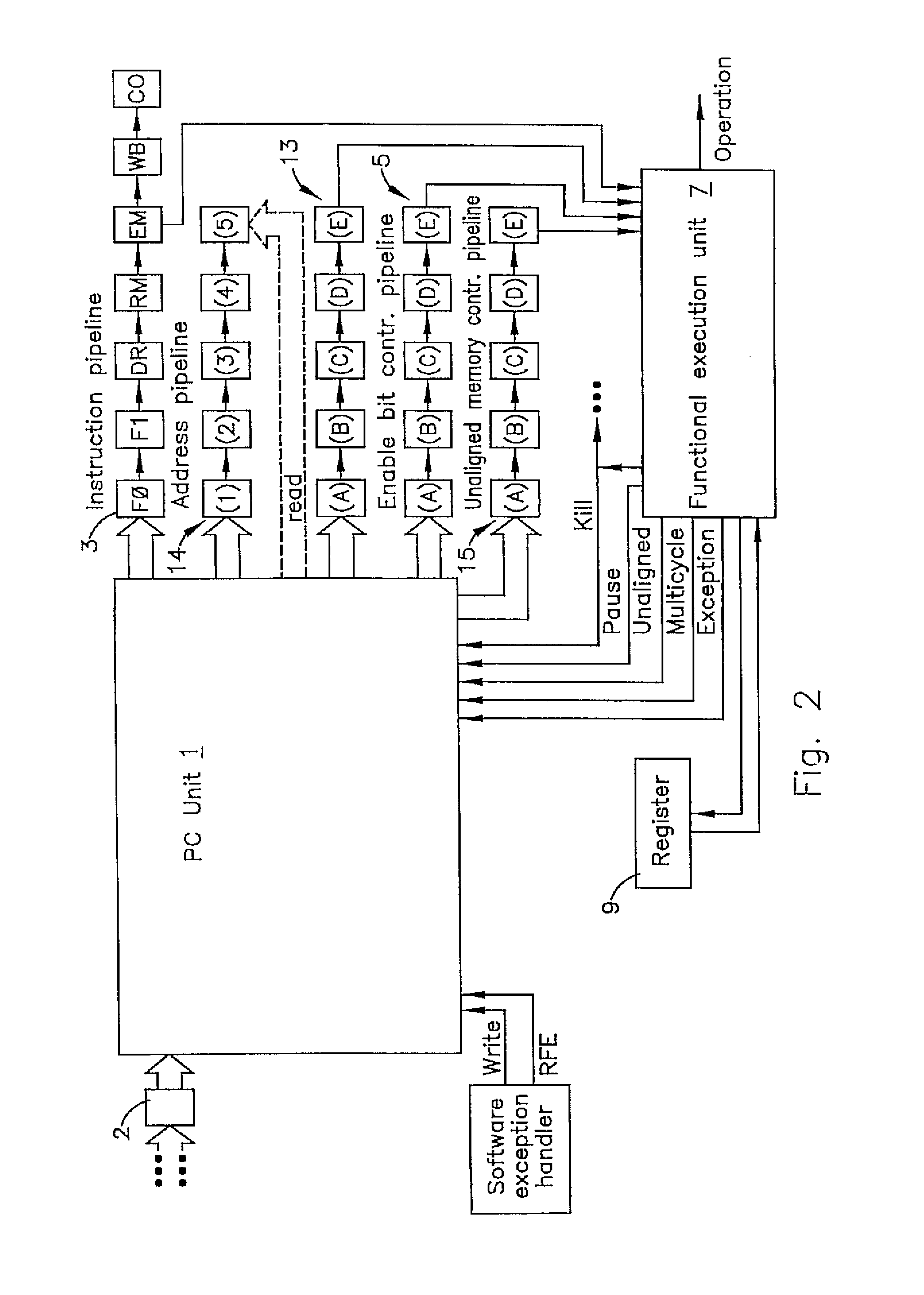

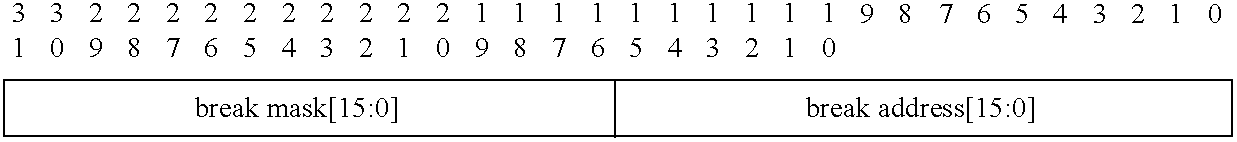

Pipeline replay support for multi-cycle operations

Inactive | US8117423B1 | General purpose stored program computer | Next instruction address formation | Execution unit | Instruction pipeline

Instructions asserted in the instruction pipeline (3) of the microprocessor are accompanied by control information, comprising a group of bits, asserted within a control information pipeline (15) of the processor. The control information pipeline is synchronized to the instruction pipeline so that the control information for an instruction progresses in synchronism with the instruction. The control information may identify, directly or indirectly, the type of operation called for by the instruction and, if the operation is to be performed in parts, indicate the part to be performed. Means are included in the processor, such as a number of functional execution units (7), to interpret that control information and take appropriate action. Applied in a VLIW processor to an atom operation that requires multiple cycles to complete, in which the first part of the operation is permitted to complete and the atom then reasserted, the control information identifies the second assertion of the atom as the second part of a multi-cycle operation.

Owner:INTELLECTUAL VENTURES HOLDING 81 LLC

Communications system using rings architecture

Inactive | US20030189940A1 | Energy efficient ICT | Network traffic/resource management | Auto-configuration | Automatic routing

Systems and methods are provided for implementing: a rings architecture for communications and data handling systems; an enumeration process for automatically configuring the ring topology; automatic routing of messages through bridges; extending a ring topology to external devices; write-ahead functionality to promote efficiency; wait-till-reset operation resumption; in-vivo scan through rings topology; staggered clocking arrangement; and stray message detection and eradication. Other inventive elements conveyed include: an architectural overview of a packet processor; a programming model for a packet processor; an instruction pipeline for a packet processor; and use of a packet processor as a module on a rings-based architecture. Additional inventive elements conveyed include: an architectural overview of a communications processor; a data path protocol support model for a communications processor; an exemplary network processor employed as the core packet processor for the communications processor; an exemplary rings-based SOC switch fabric architecture; and a variety of quality of support features.

Owner:GLOBESPANVIRATA

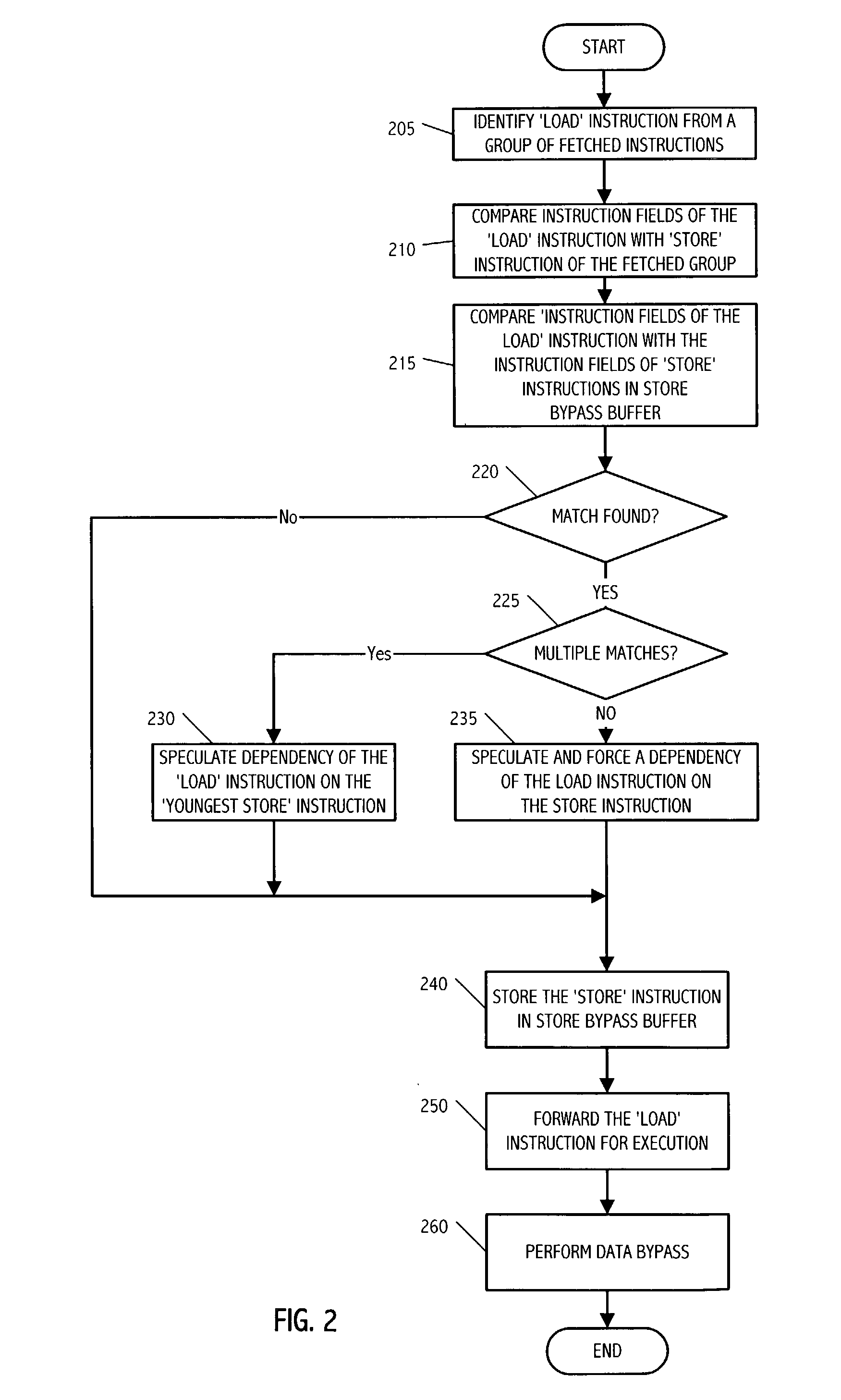

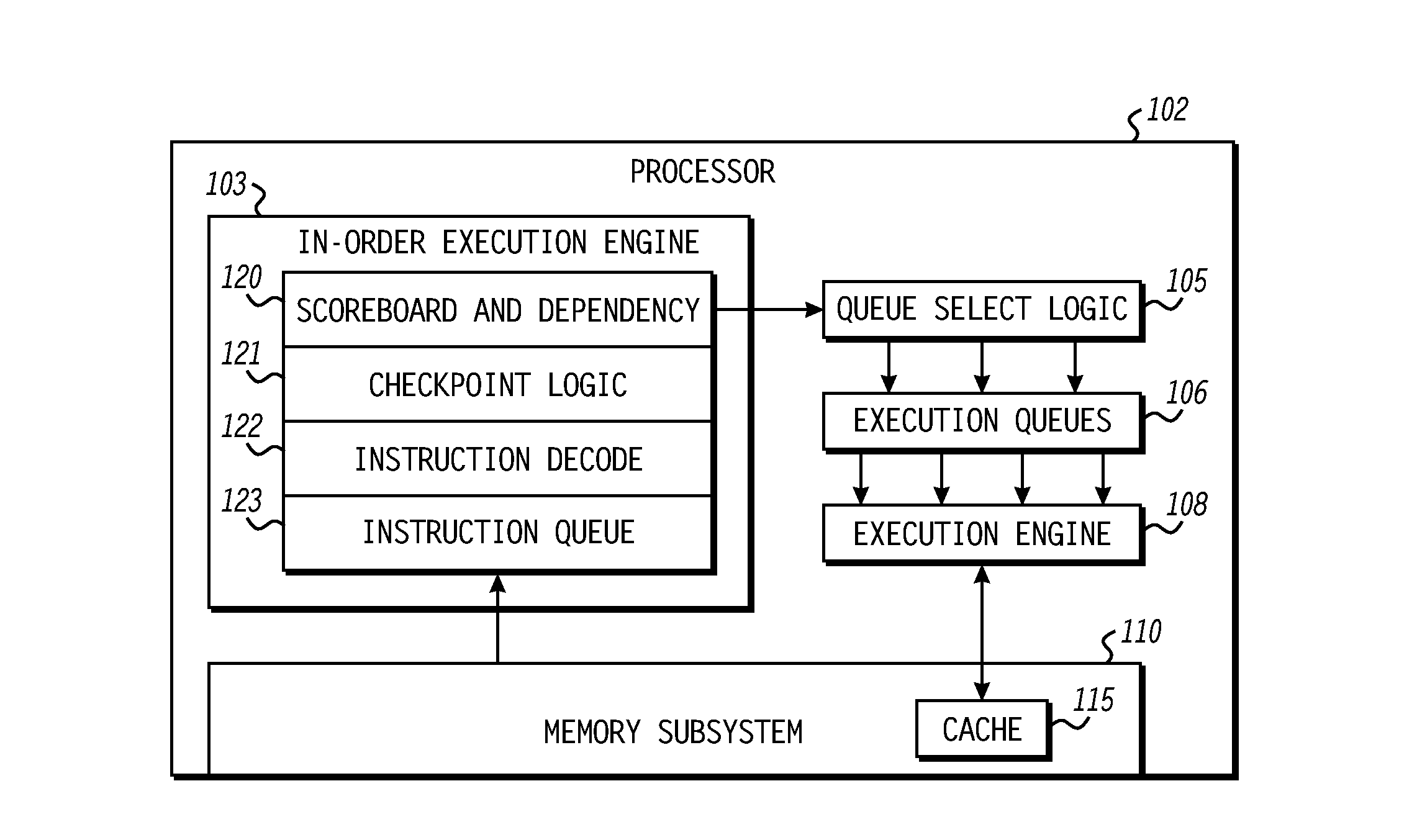

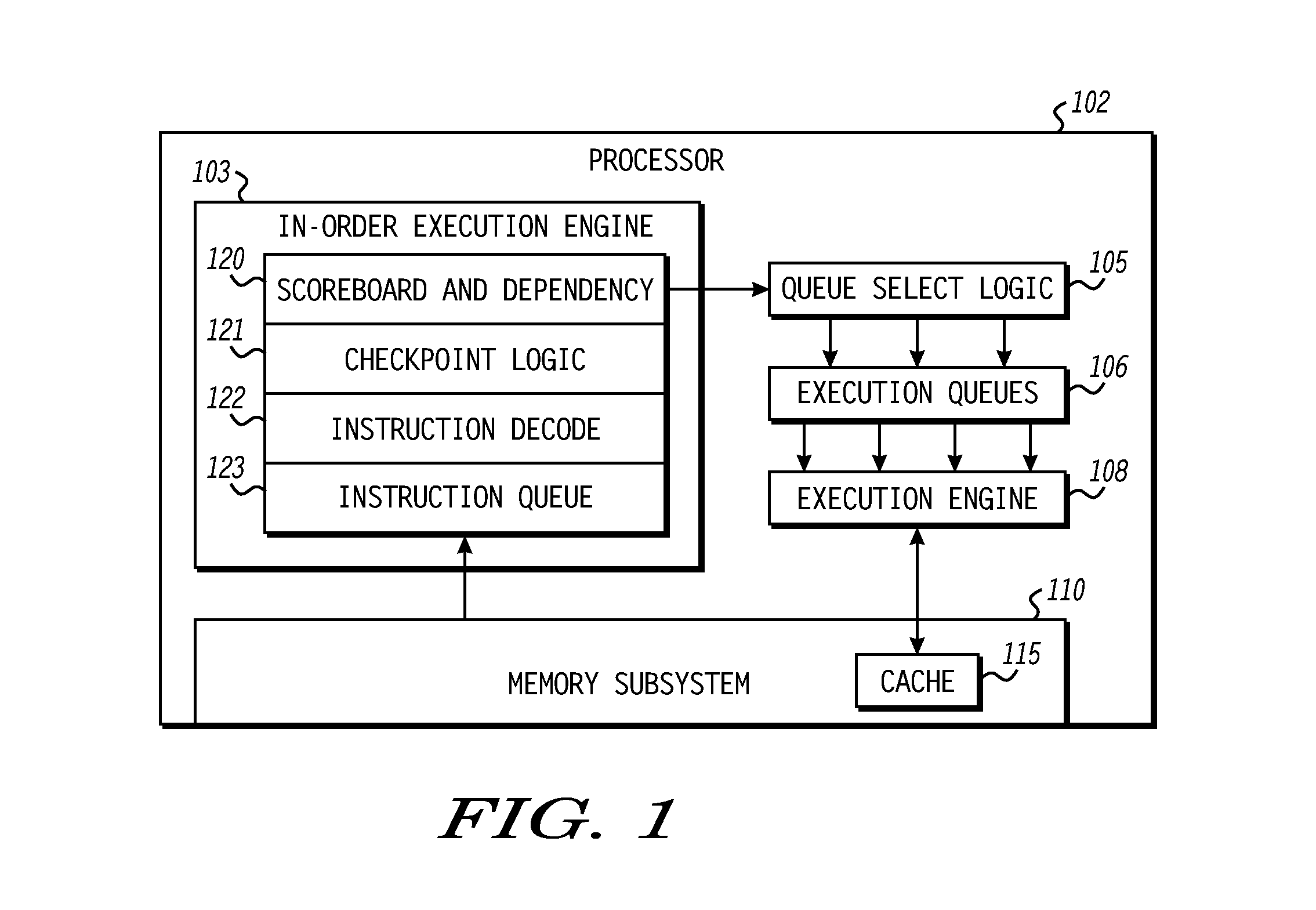

Method and system for early speculative store-load bypass

Inactive | US20040044881A1 | Digital computer details | Concurrent instruction execution | Load instruction | Instruction pipeline

In an embodiment, the present invention describes a method and apparatus for detecting a read-after-write (RAW) condition earlier in an instruction pipeline. The store instructions are stored in a special store bypass buffer (SBB) within an instruction decode unit (IDU). The IDU compares the instruction fields that are used for address generation of all 'load' instructions against 'store' instructions within a group of fetched instructions and 'store' instructions previously stored in the SBB. If a match of instruction fields is found, the IDU 'speculates' that the load instruction has a dependency on the 'store' instruction. A data cache unit (DCU) validates the dependency of the load instruction 'speculated' by the IDU. If a false dependency is 'speculated' by the IDU, the DCU forces a re-fetch of the load instruction.

Owner:SUN MICROSYSTEMS INC
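The field comparison in the abstract above can be illustrated with a small buffer model. It assumes the address-generation fields are a base register name and an immediate offset, and that the SBB is a fixed-capacity FIFO; both are assumptions for this sketch, as are the class and method names.

```python
from collections import deque


class StoreBypassBuffer:
    """Holds the address-generation fields of recently decoded stores."""

    def __init__(self, capacity: int = 4):
        # Oldest entries fall out automatically once capacity is reached.
        self.entries = deque(maxlen=capacity)

    def record_store(self, base_reg: str, offset: int) -> None:
        self.entries.append((base_reg, offset))

    def speculate_dependency(self, base_reg: str, offset: int) -> bool:
        """True if a load's fields match a buffered store's fields.

        This compares instruction fields, not computed addresses, so the
        result is only a speculation that must be validated later.
        """
        return (base_reg, offset) in self.entries


if __name__ == "__main__":
    sbb = StoreBypassBuffer(capacity=2)
    sbb.record_store("r1", 8)
    print(sbb.speculate_dependency("r1", 8))   # prints: True
    print(sbb.speculate_dependency("r1", 12))  # prints: False
```

A match can be wrong, for example if the base register was rewritten between the store and the load, which is why the abstract has the data cache unit validate the speculation.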

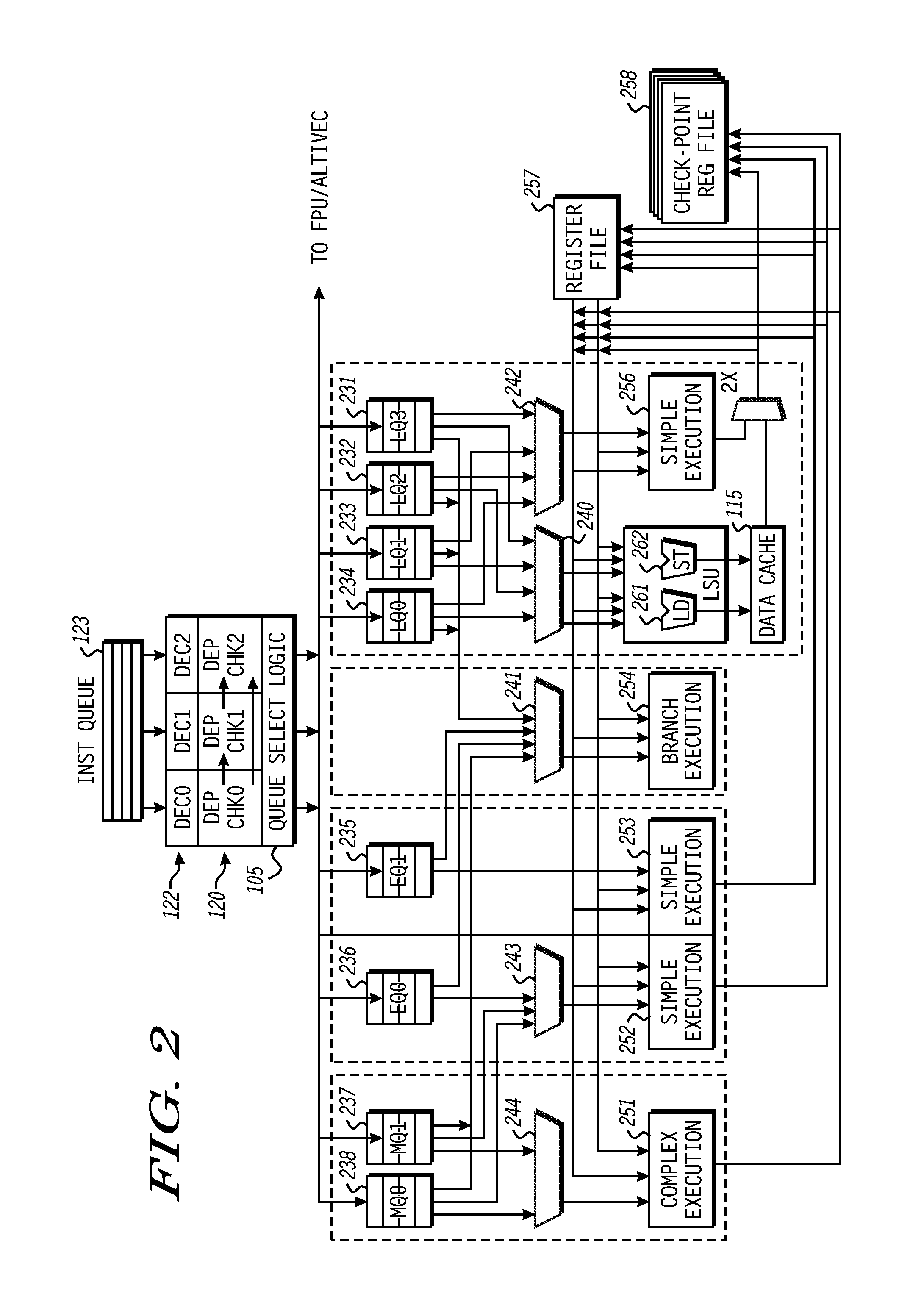

Apparatus and method for dynamic allocation of execution queues

A processor reduces the likelihood of stalls at an instruction pipeline by dynamically extending the size of a full execution queue. To extend the full execution queue, the processor temporarily repurposes another execution queue to store instructions on behalf of the full execution queue. The execution queue to be repurposed can be selected based on a number of factors, including the type of instructions it is generally designated to store, whether it is empty of other instruction types, and the rate of cache hits at the processor. By selecting the repurposed queue based on dynamic factors such as the cache hit rate, the likelihood of stalls at the dispatch stage is reduced for different types of program flows, improving overall efficiency of the processor.

Owner:FREESCALE SEMICON INC
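The queue-extension idea in the abstract above might look like the sketch below. The selection policy used here (borrow any other queue that is empty or already holds only overflow of the same instruction type) is a simplifying assumption; the abstract also mentions factors such as the cache hit rate, which this example does not model. All names are hypothetical.

```python
class ExecutionQueues:
    """Per-type execution queues that can temporarily lend space to each other."""

    def __init__(self, capacity, types):
        self.capacity = capacity
        self.queues = {t: [] for t in types}

    def dispatch(self, itype, instr):
        """Place instr in a queue; return the queue name used, or None on stall."""
        home = self.queues[itype]
        if len(home) < self.capacity:
            home.append((itype, instr))
            return itype
        # Home queue is full: try to repurpose another queue.
        for name, queue in self.queues.items():
            if name == itype or len(queue) >= self.capacity:
                continue
            # Only borrow a queue holding nothing but this type's overflow.
            if all(t == itype for t, _ in queue):
                queue.append((itype, instr))
                return name
        return None  # dispatch stage would stall


if __name__ == "__main__":
    eq = ExecutionQueues(capacity=2, types=["int", "fp"])
    used = [eq.dispatch("int", f"i{n}") for n in range(5)]
    print(used)  # prints: ['int', 'int', 'fp', 'fp', None]
```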

Dependent instruction suppression in a load-operation instruction

Active | US20150026686A1 | Program initiation/switching | Digital computer details | Cell scheduling | Execution unit

A method includes suppressing execution of an operation portion of a load-operation instruction in a processor responsive to an invalid status of a load portion of the load-operation instruction. A processor includes an instruction pipeline including an execution unit operable to execute instructions and a scheduler unit. The scheduler unit includes a scheduler queue and is operable to store a load-operation instruction in the scheduler queue. The load-operation instruction includes a load portion and an operation portion. The scheduler unit schedules the load portion for execution in the execution unit, marks the operation portion in the scheduler queue as eligible for execution responsive to scheduling the load portion, receives an indication of an invalid status of the load portion, and suppresses execution of the operation portion responsive to the indication of the invalid status.

Owner:ADVANCED MICRO DEVICES INC

Interrupt verification support mechanism

Inactive | US20050060577A1 | Instruction analysis | Runtime instruction translation | Instruction pipeline | Embedded system

The present invention relates to a device for an interrupt verification support mechanism and a method for operating said device, the device comprising a processor and an input for external interrupt requests or interrupt pseudo-instructions communicatively coupled to the processor. The method comprises the steps of processing at least one actual instruction in the processor in an instruction pipeline and, if an external interrupt request is received by the processor, replacing the actual instruction with the pseudo-instruction. Pursuant to the method, instructions are concurrently processed in the processor in an instruction pipeline with several stages. In the instruction pipeline, instructions are processed by an instruction fetch stage, an instruction decode stage, an instruction issue stage, an execute stage and a result write-back stage. Thereby, interrupt requests are only processed at the fetch stage of the instruction pipeline. The device and the method for operating it provide the advantage of simplified interrupt verification.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

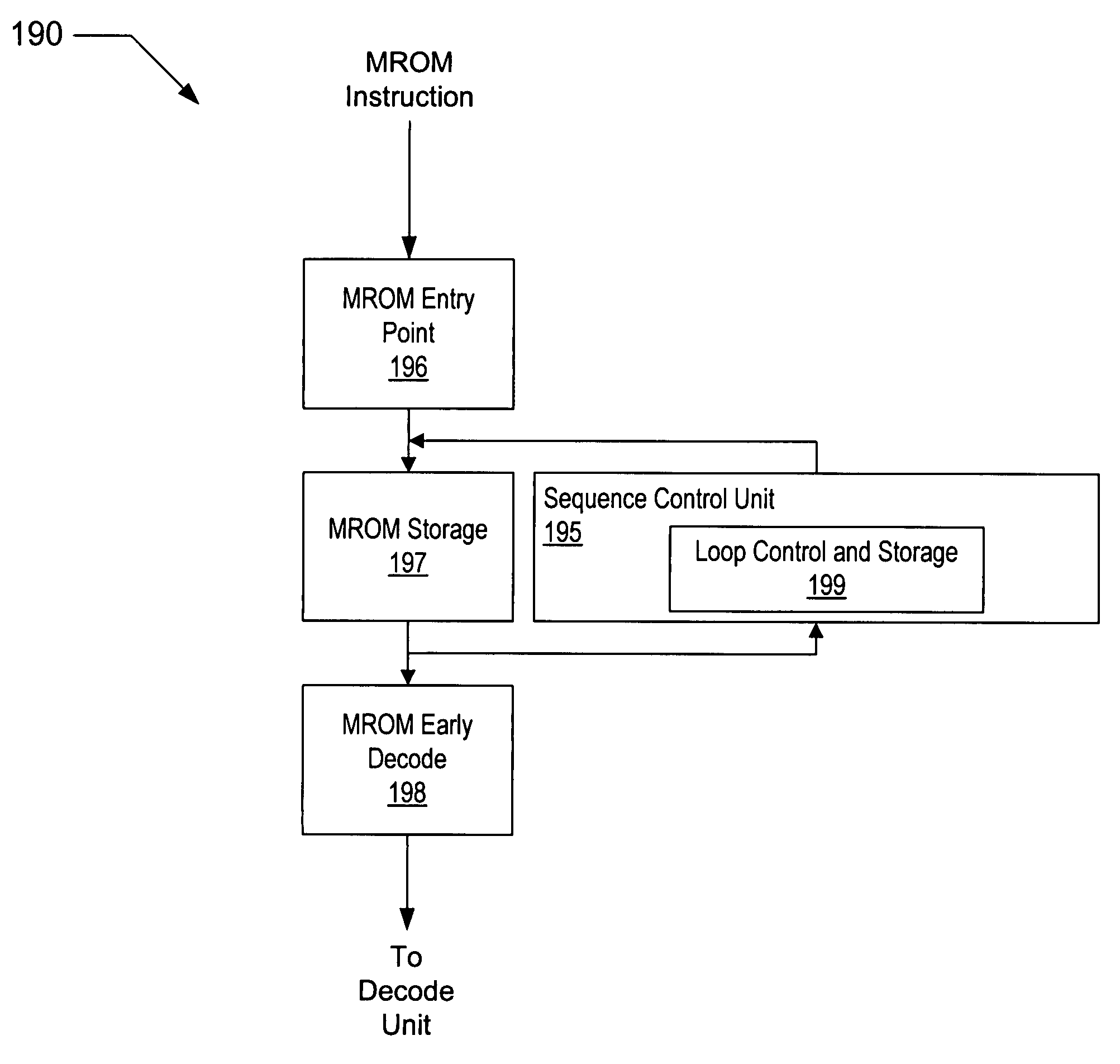

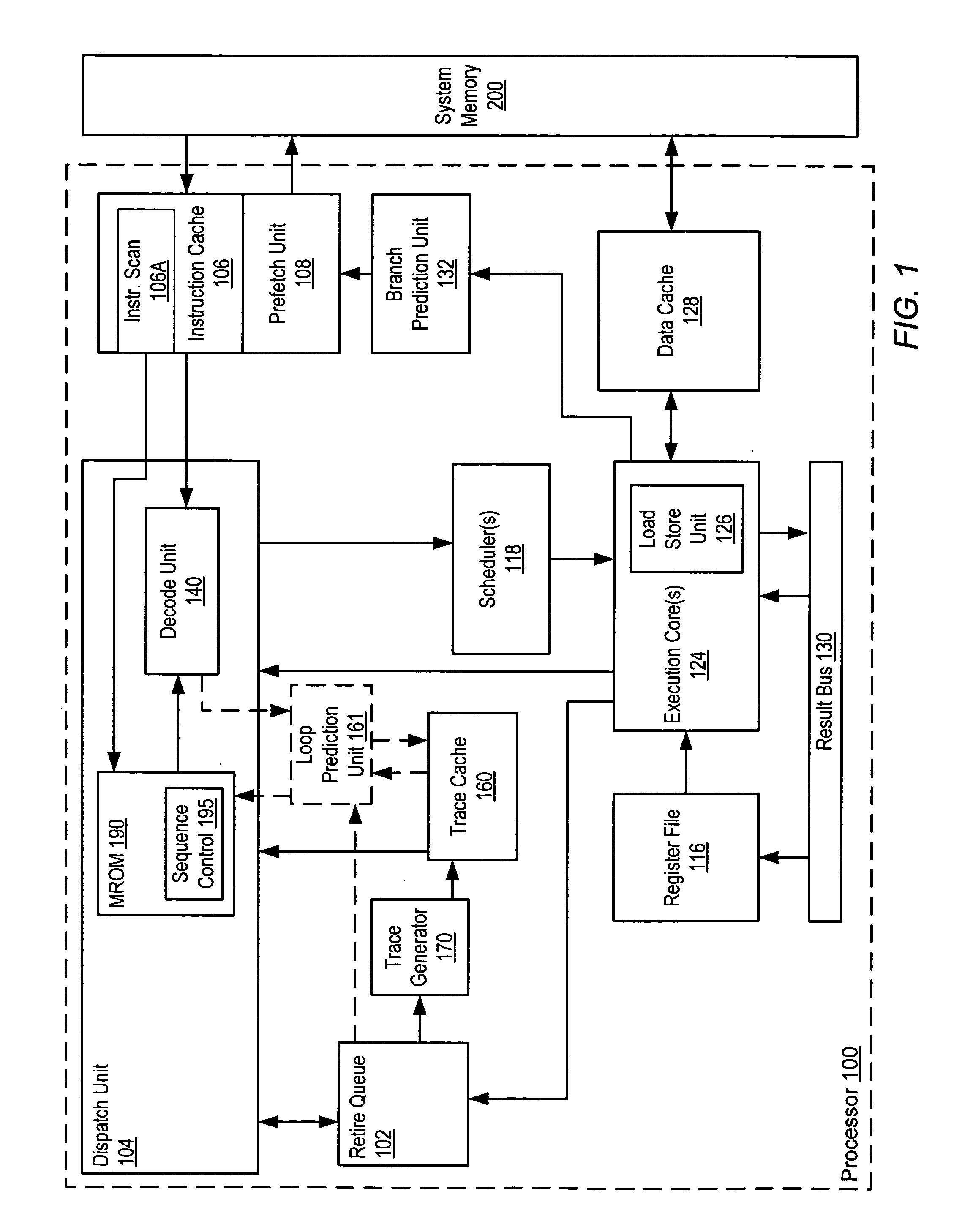

Method for optimizing loop control of microcoded instructions

Owner:MEDIATEK INC

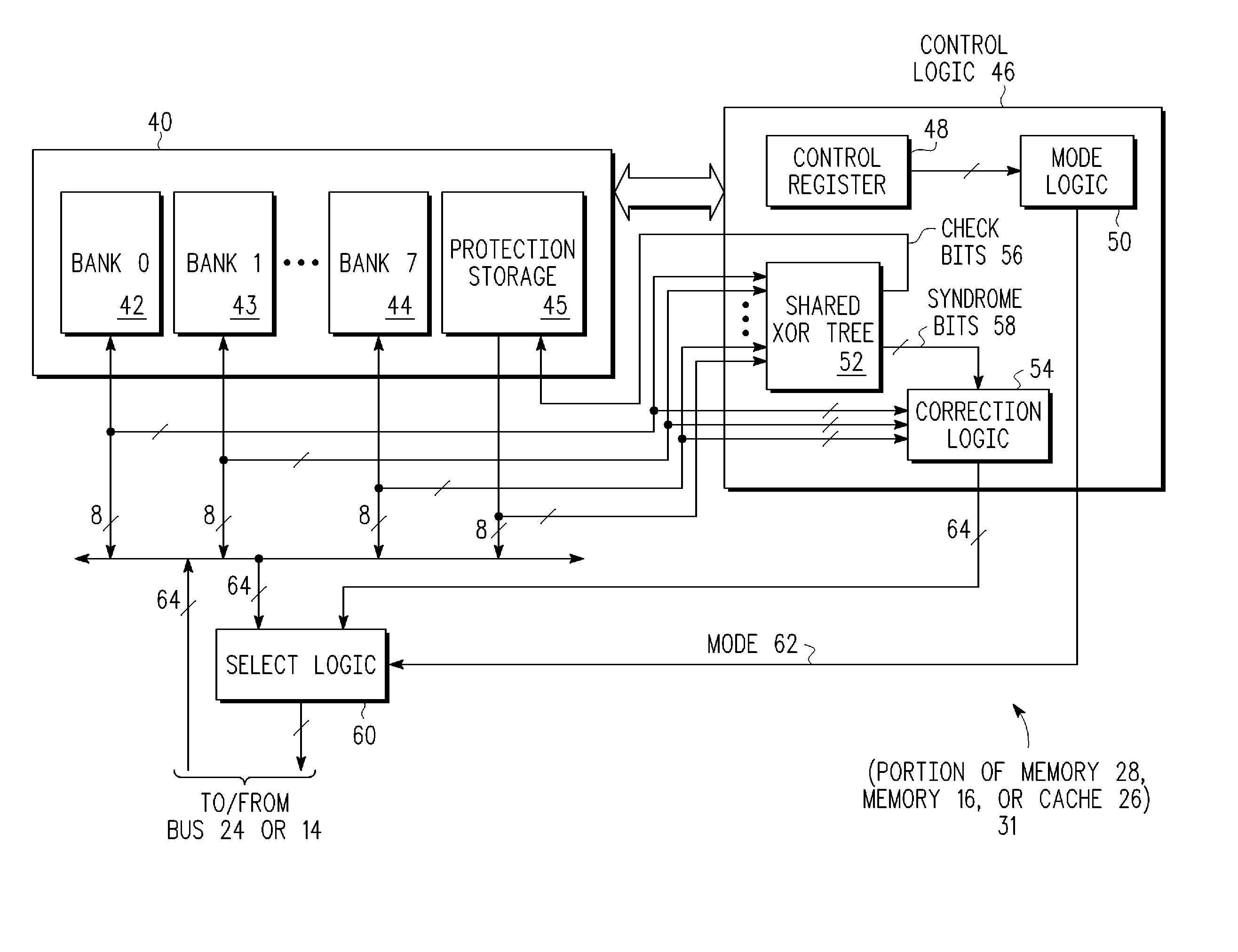

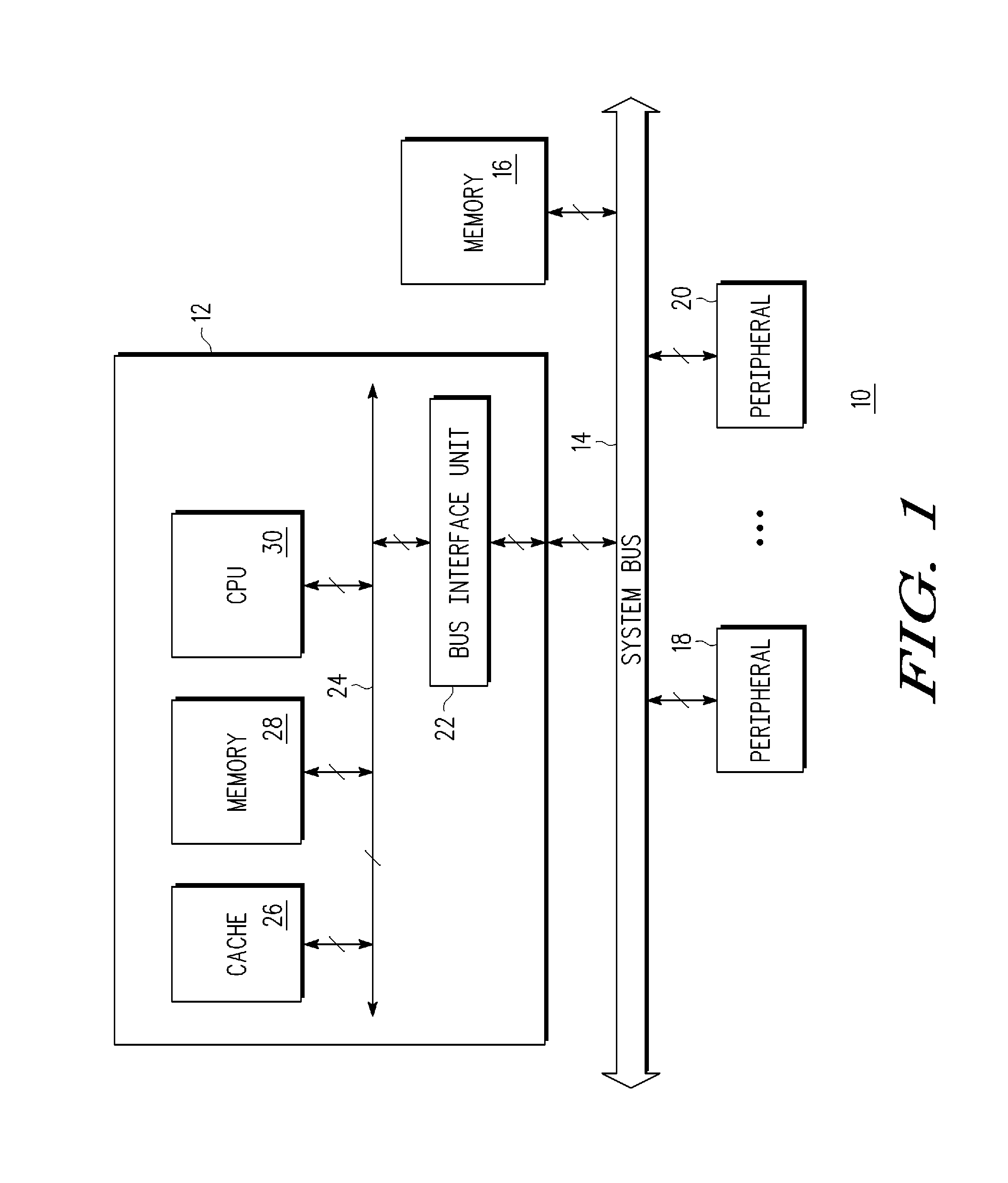

Configurable pipeline based on error detection mode in a data processing system

Inactive | US20090276609A1 | Error detection/correction | Digital computer details | Data processing system | Instruction pipeline

A method includes providing a data processor having an instruction pipeline, where the instruction pipeline has a plurality of instruction pipeline stages, and where the plurality of instruction pipeline stages includes a first instruction pipeline stage and a second instruction pipeline stage. The method further includes providing a data processor instruction that causes the data processor to perform a first set of computational operations during execution of the data processor instruction, performing the first set of computational operations in the first instruction pipeline stage if the data processor instruction is being executed and a first mode has been selected, and performing the first set of computational operations in the second instruction pipeline stage if the data processor instruction is being executed and a second mode has been selected.

Owner:RAMBUS INC

Methods and apparatus for improving fetching and dispatch of instructions in multithreaded processors

Inactive | US20070143580A1 | Improve performance | Digital computer details | Multiprogramming arrangements | Load instruction | Instruction pipeline

In a multi-streaming processor, a system for fetching instructions from individual ones of multiple streams to an instruction pipeline is provided, comprising a fetch algorithm for selecting from which stream to fetch an instruction, and one or more predictors for forecasting whether a load instruction will hit or miss the cache or a branch will be taken. The prediction or predictions are used by the fetch algorithm in determining from which stream to fetch. In some cases probabilities are determined and also used in decisions, and predictors may be used at either or both of fetch and dispatch stages.

Owner:ARM FINANCE OVERSEAS LTD
