210 results about "Address generation unit" patented technology

The address generation unit (AGU), sometimes also called the address computation unit (ACU), is an execution unit inside a central processing unit (CPU) that calculates the addresses the CPU uses to access main memory. Because address calculations are handled by separate circuitry that operates in parallel with the rest of the CPU, the number of CPU cycles needed to execute various machine instructions can be reduced, which improves performance.
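
As an illustration of the kind of calculation an AGU offloads, the following Python sketch computes an x86-style effective address, base + index × scale + displacement, wrapped to the address width. The function name and the 64-bit default width are illustrative assumptions; a real AGU does this in hardware and may also handle segmentation or other addressing modes.

```python
def effective_address(base: int, index: int = 0, scale: int = 1,
                      displacement: int = 0, width: int = 64) -> int:
    """Compute base + index * scale + displacement, wrapped to `width` bits."""
    if scale not in (1, 2, 4, 8):
        raise ValueError("scale must be 1, 2, 4 or 8")
    return (base + index * scale + displacement) & ((1 << width) - 1)


# Address of element 5 of a 4-byte-element array that starts at 0x1000:
print(hex(effective_address(0x1000, index=5, scale=4)))  # 0x1014
```

Offloading this add-shift-add to a dedicated unit is what lets the main ALUs keep executing other instructions while a load or store address is being formed.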

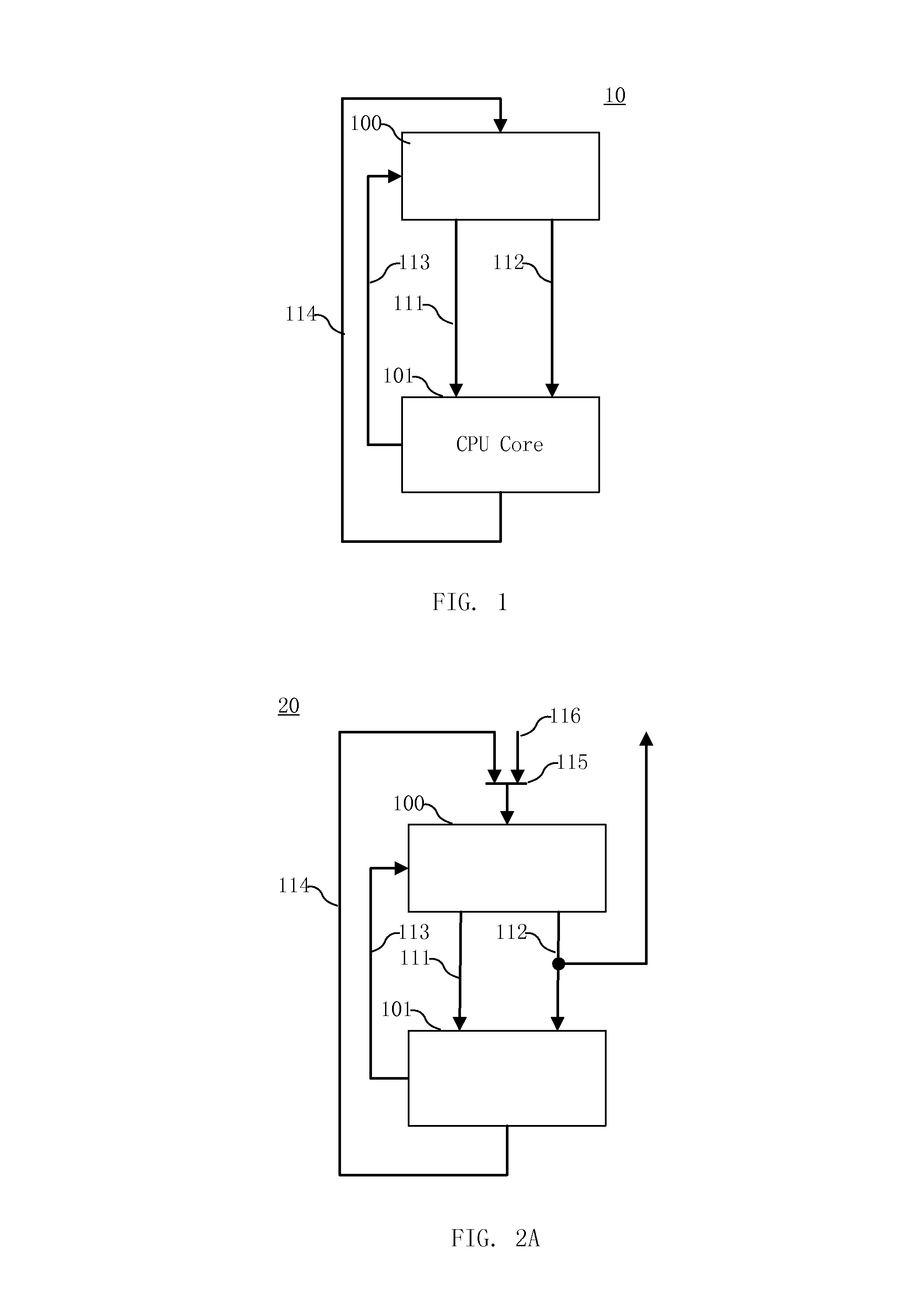

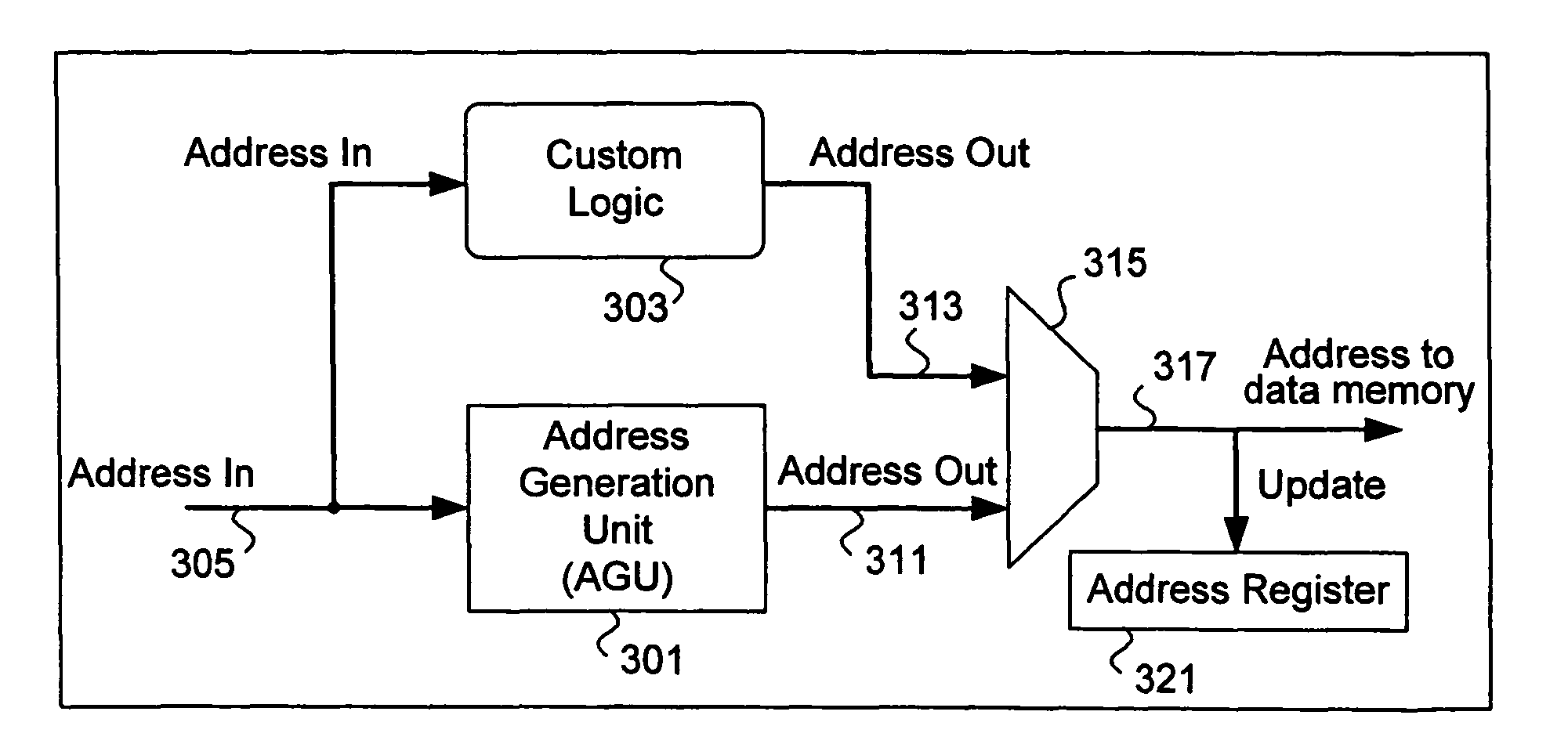

Processor-cache system and method

Status: Active · Publication: US9047193B2 · Tags: Shorten the counting process; Efficient and uniform structure; Energy efficient ICT; Register arrangements; Address generation unit; Processor register

A digital system is provided. The digital system includes an execution unit, a level-zero (L0) memory, and an address generation unit. The execution unit is coupled to a data memory containing data to be used in operations of the execution unit. The L0 memory is coupled between the execution unit and the data memory and configured to receive a part of the data in the data memory. The address generation unit is configured to generate address information for addressing the L0 memory. Further, the L0 memory provides at least two operands of a single instruction from the part of the data to the execution unit directly, without loading the at least two operands into one or more registers, using the address information from the address generation unit.

Owner:SHANGHAI XINHAO MICROELECTRONICS

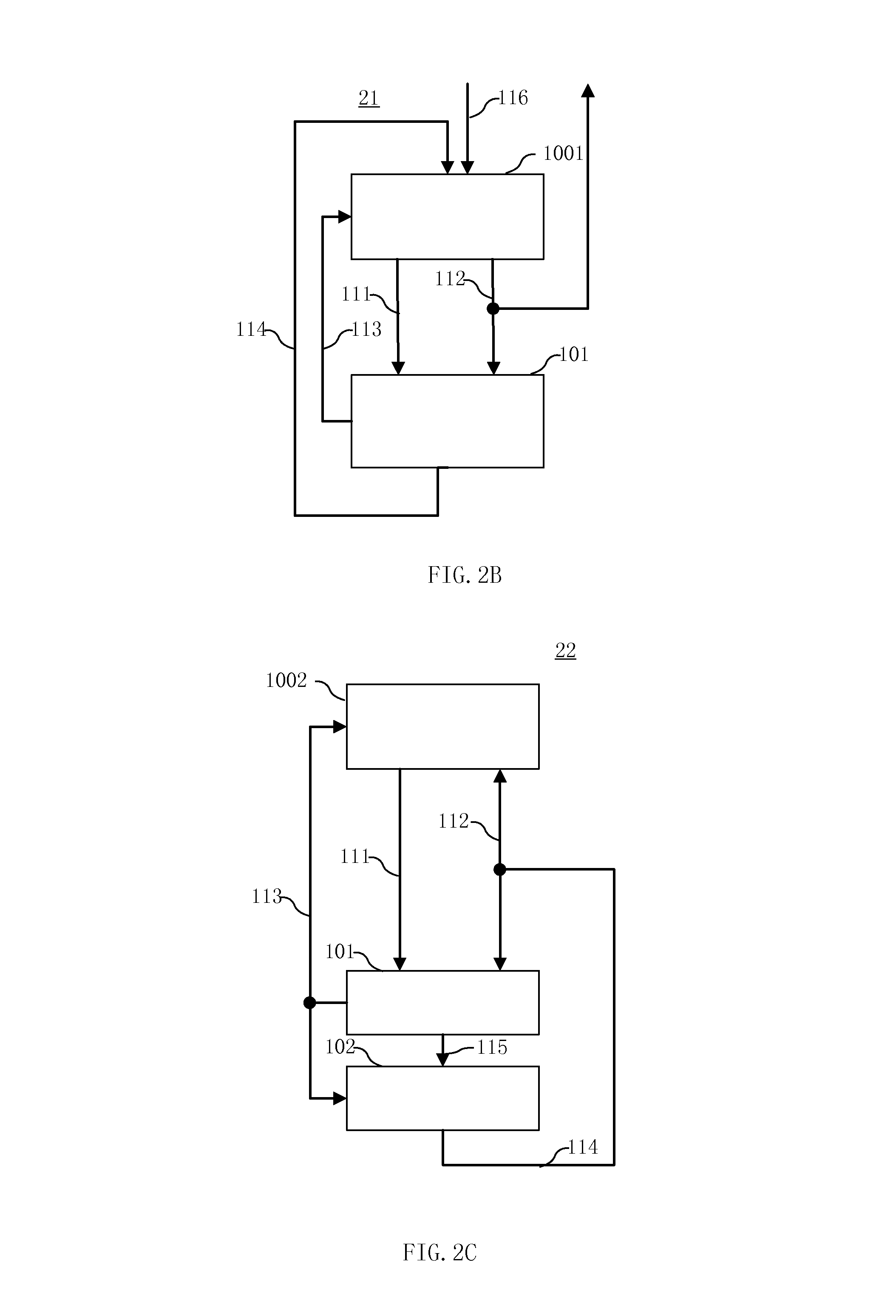

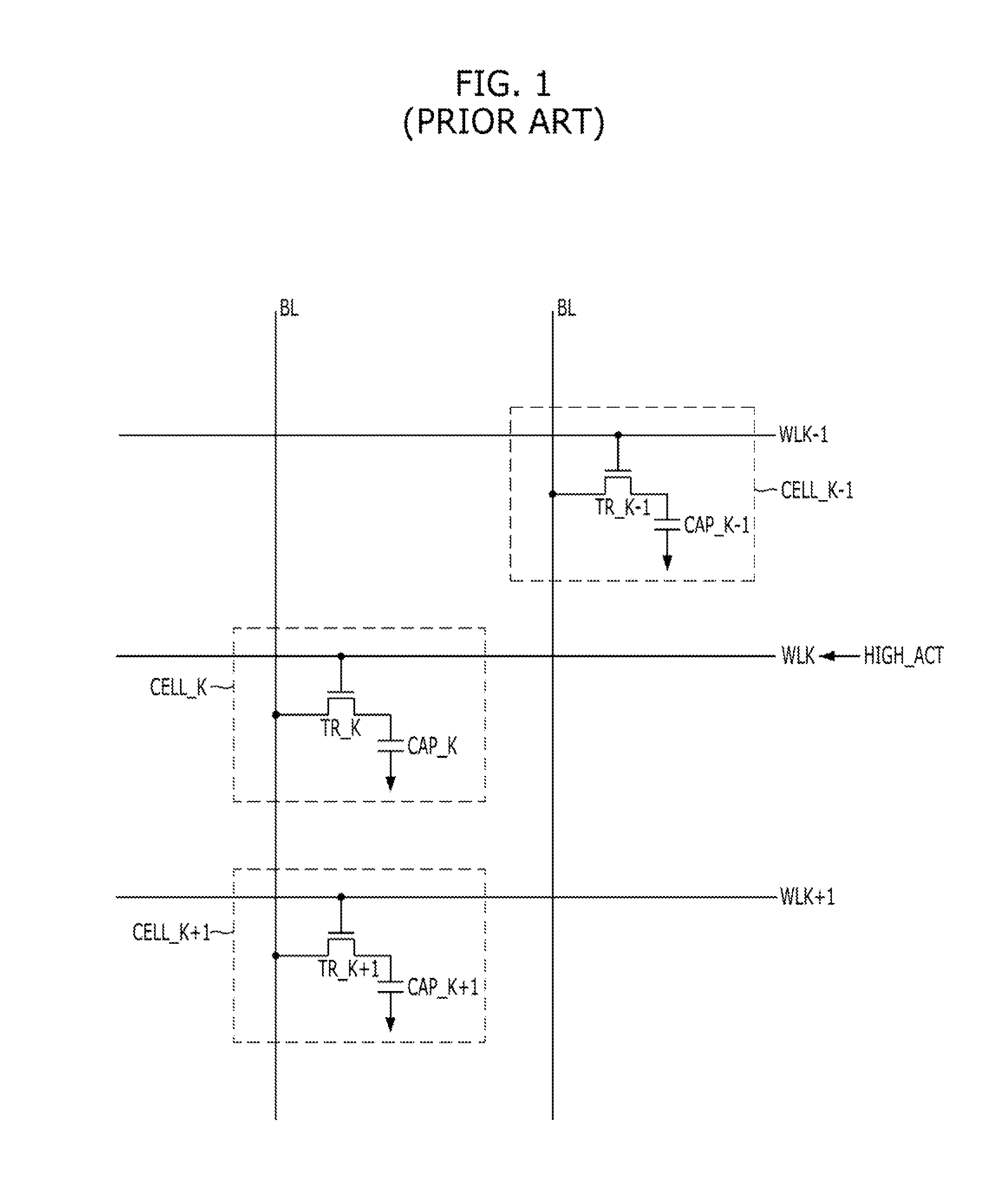

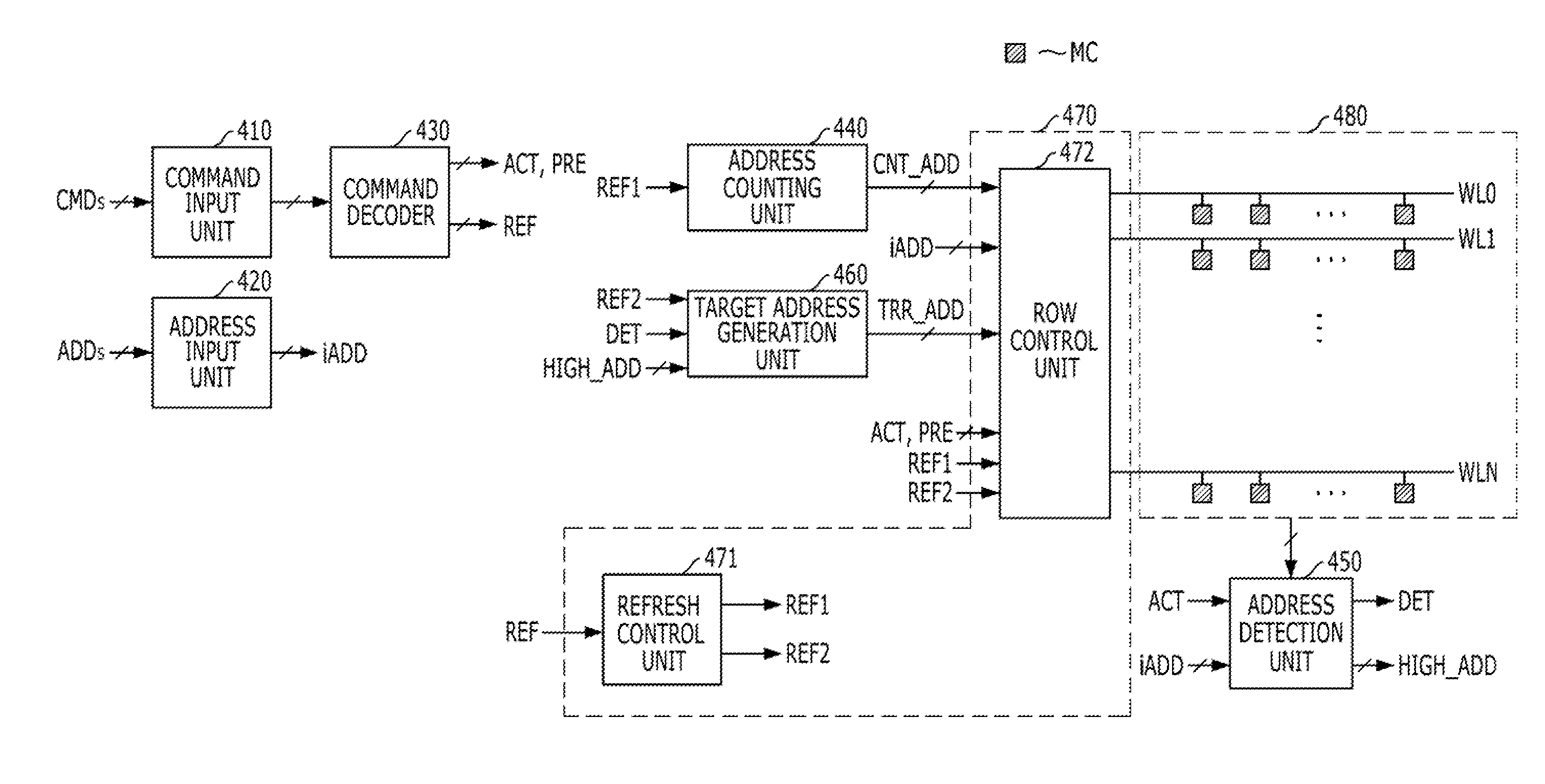

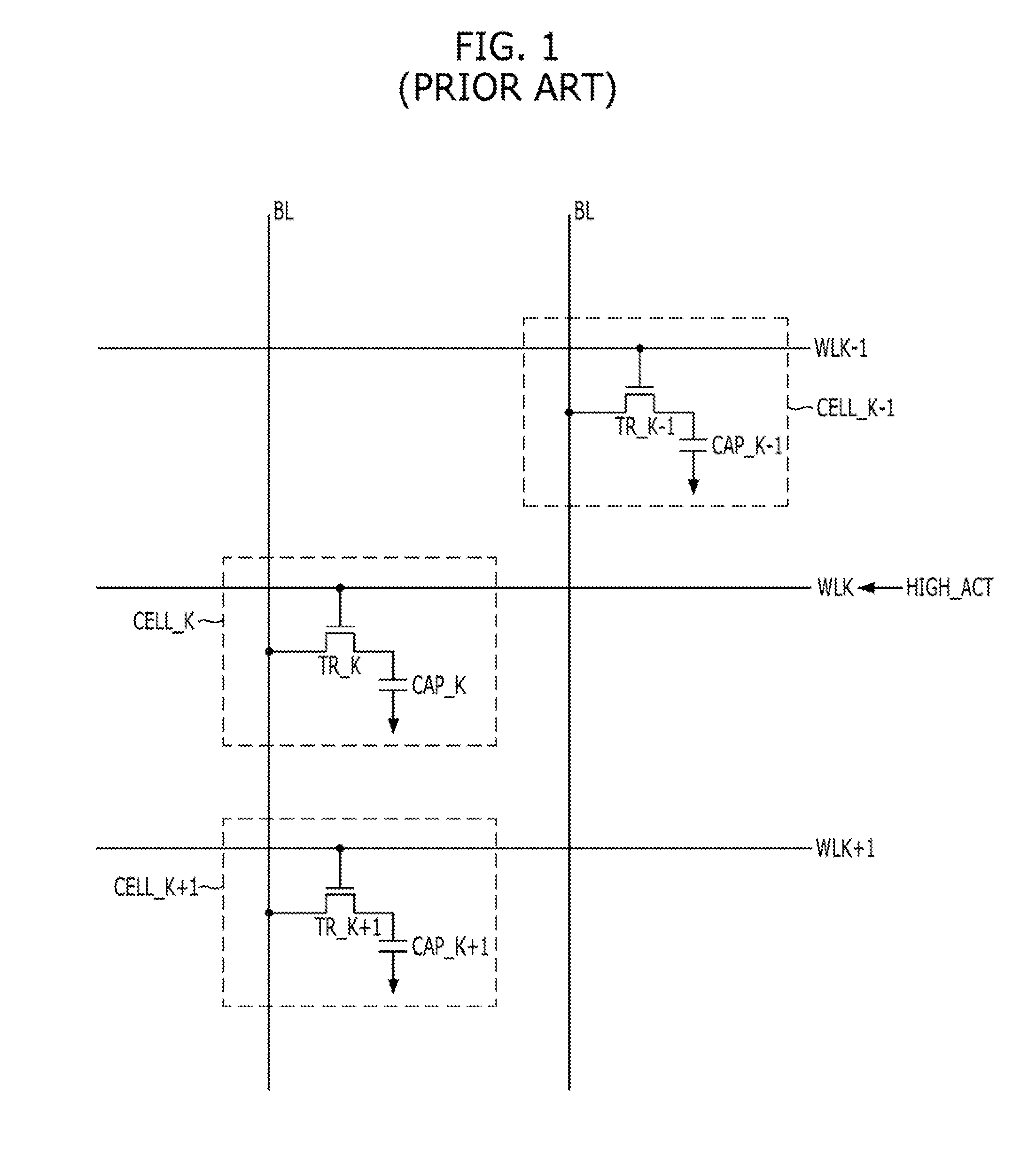

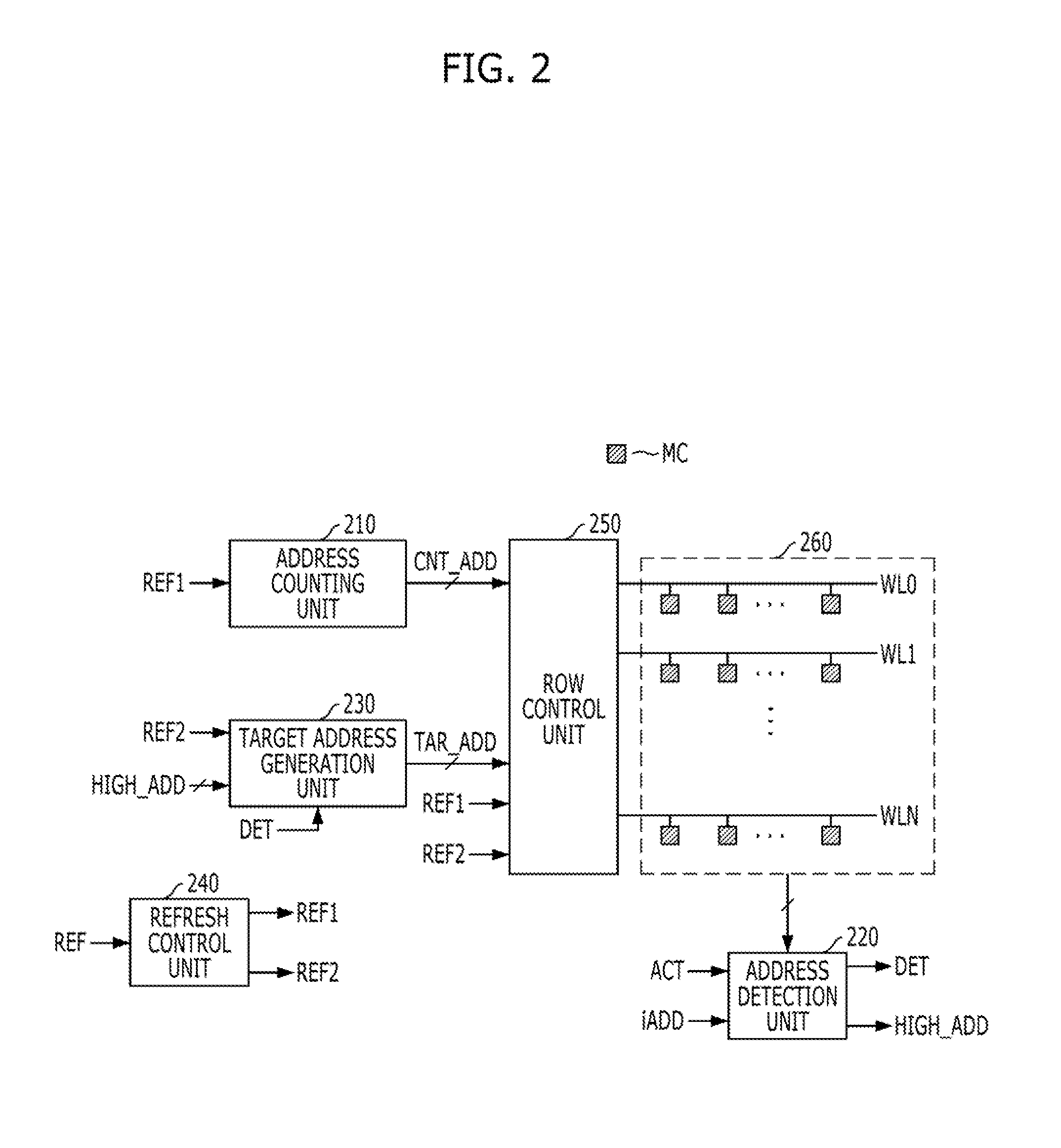

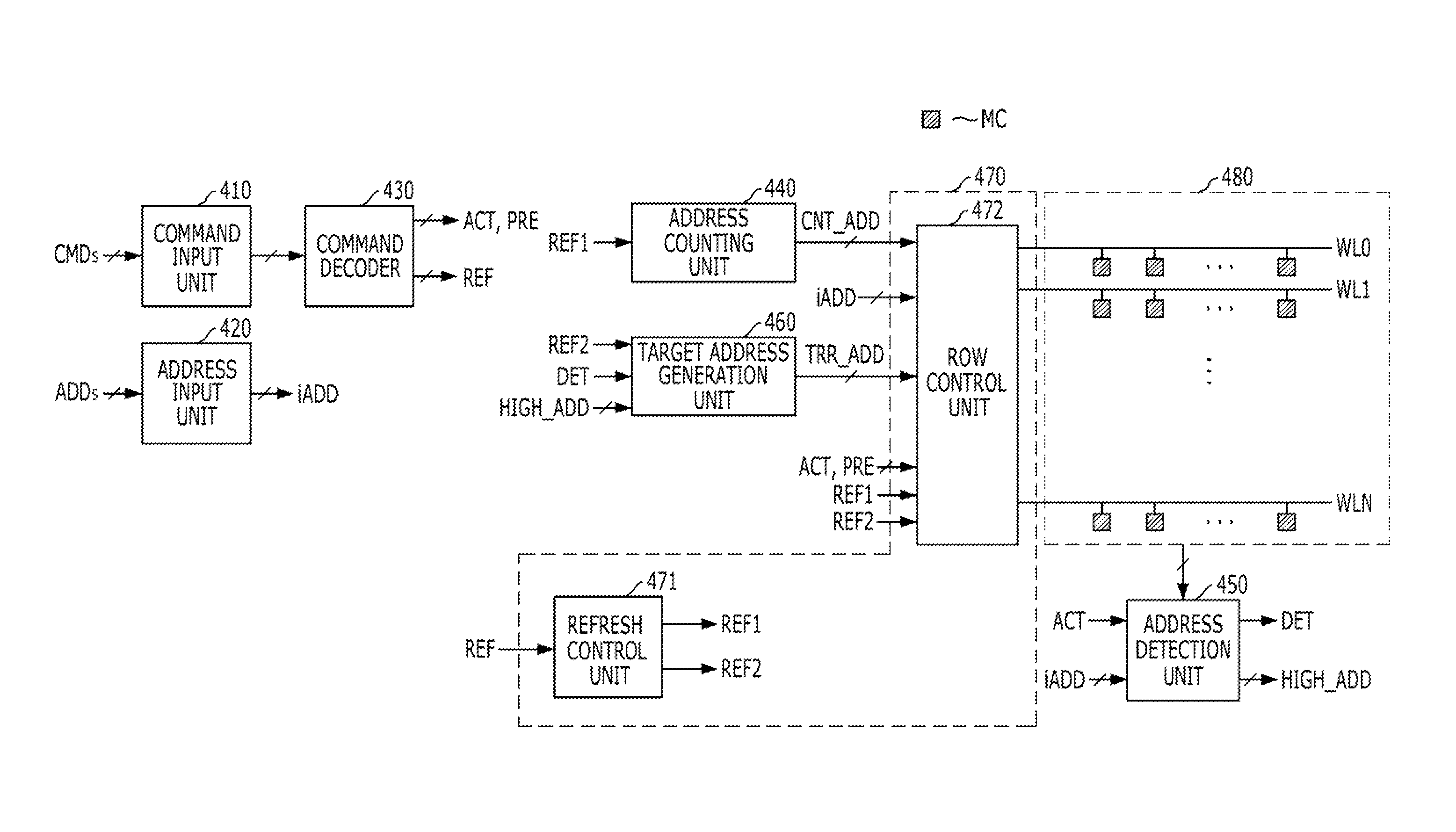

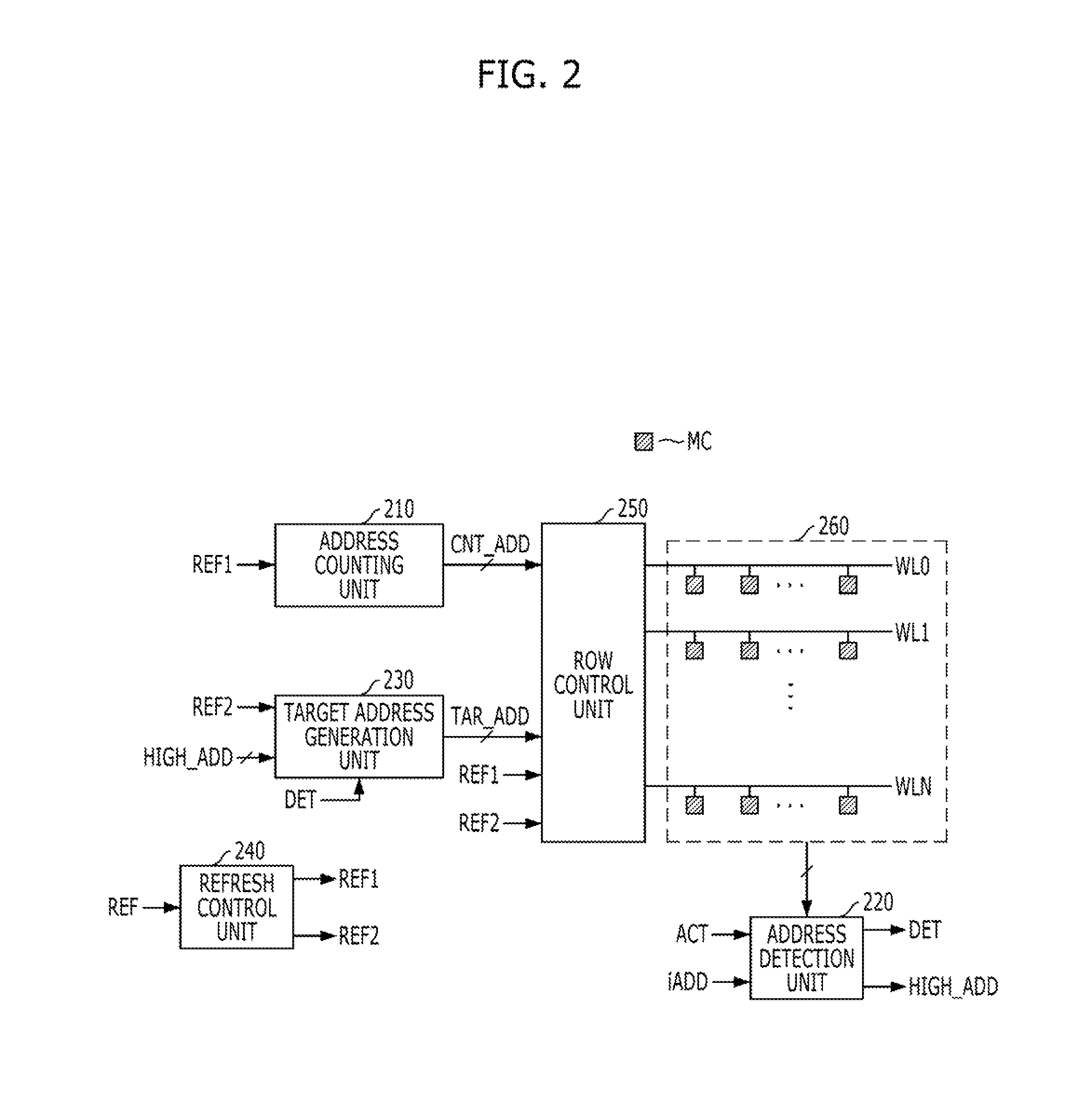

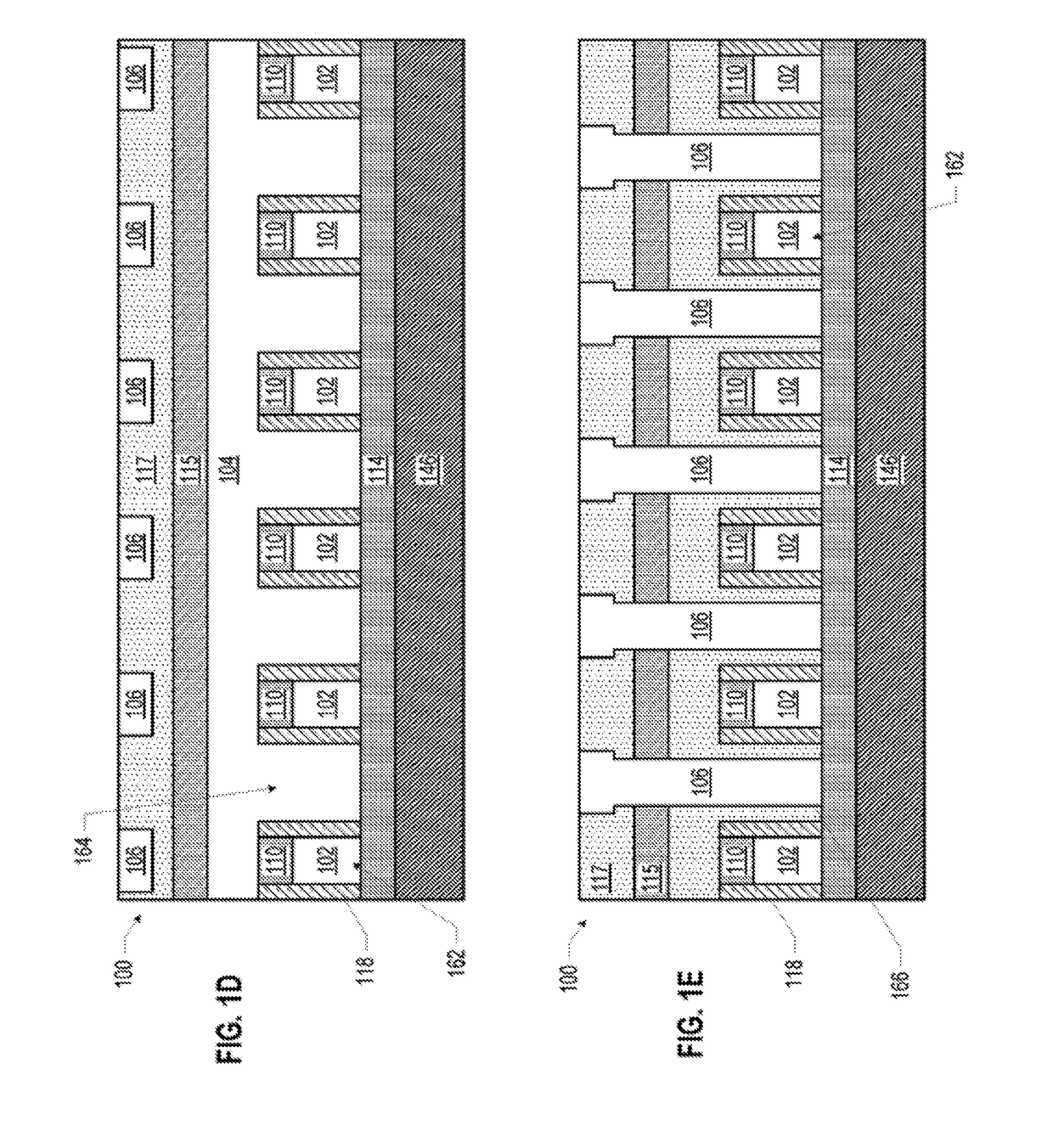

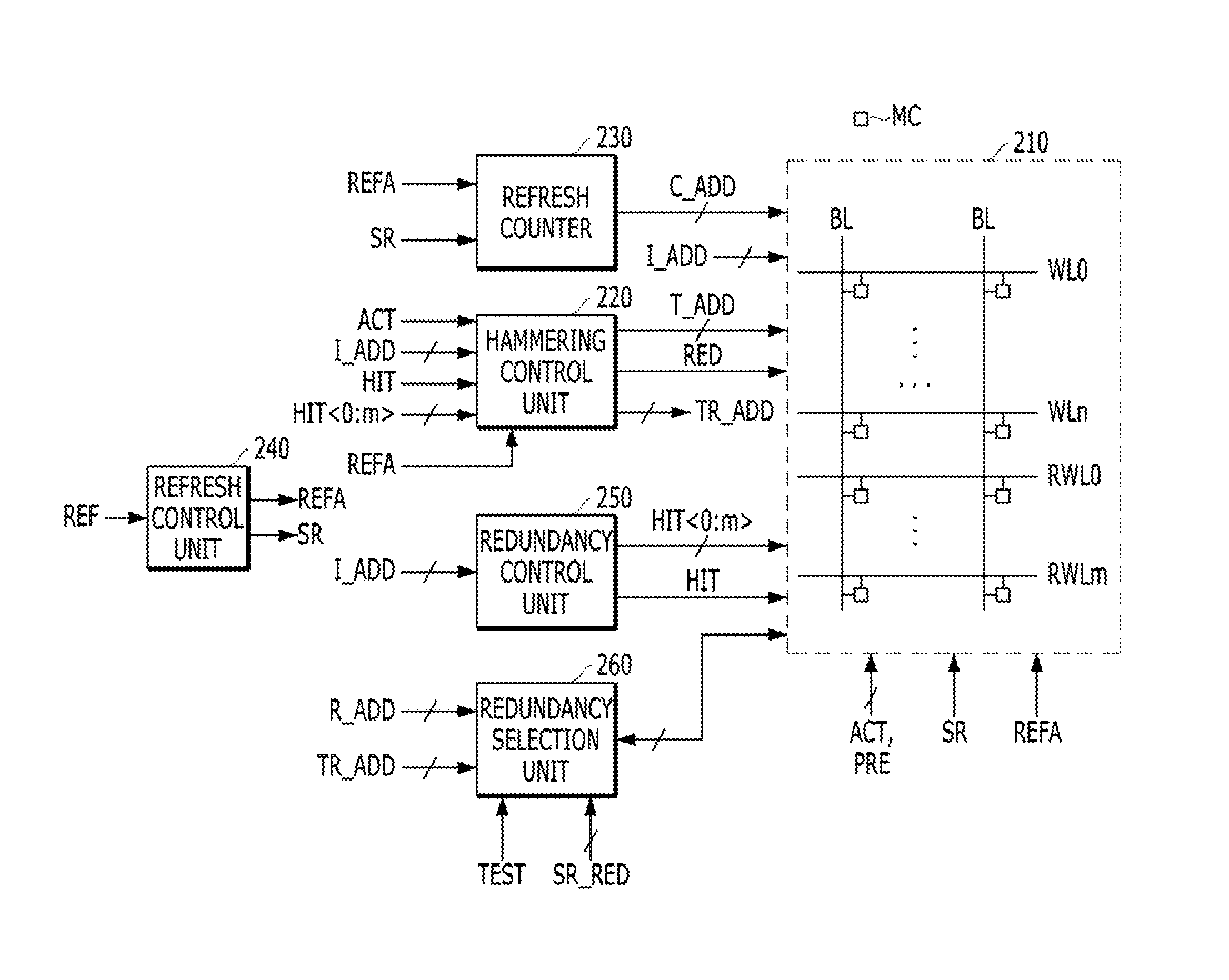

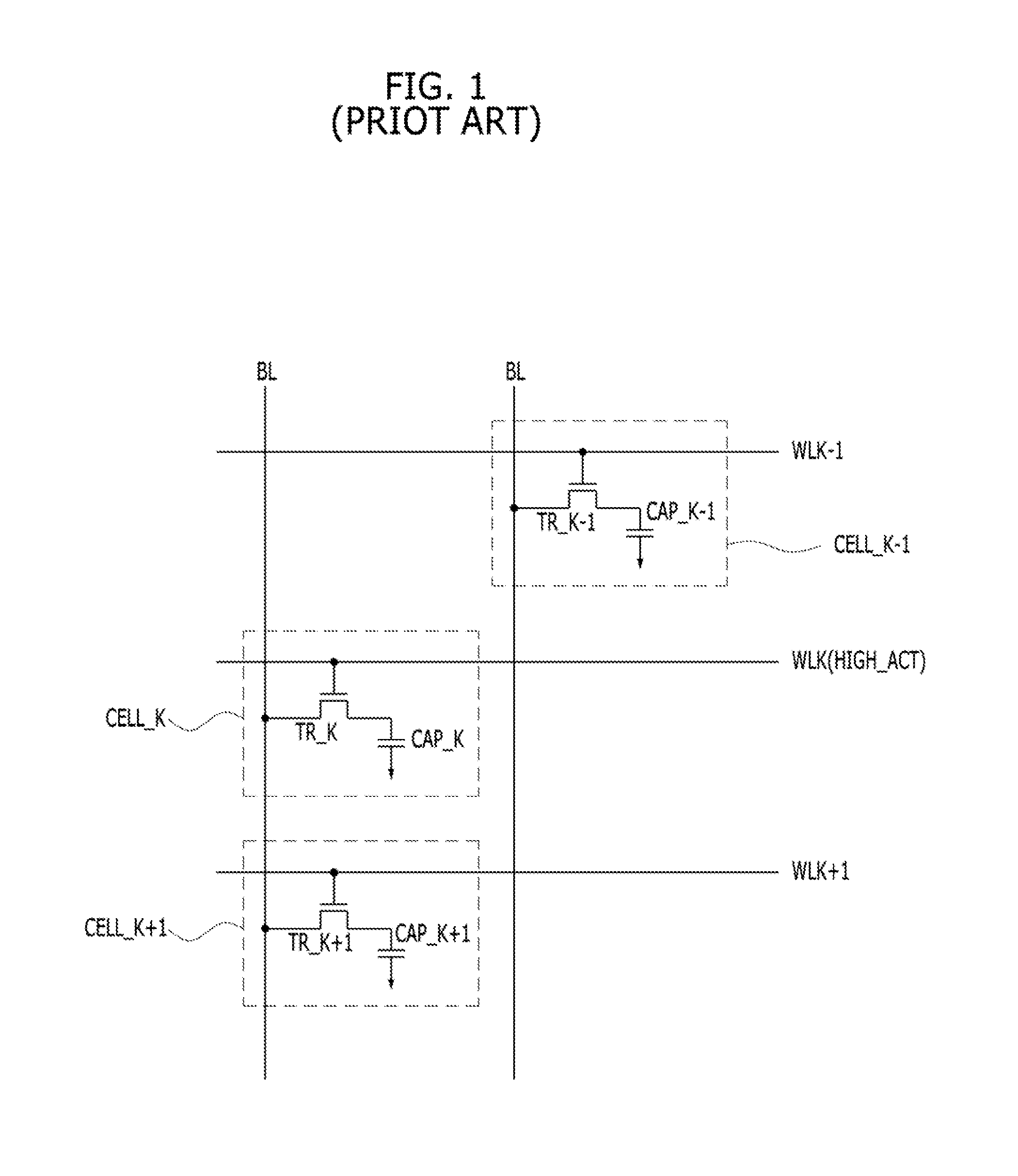

Memory and memory system including the same

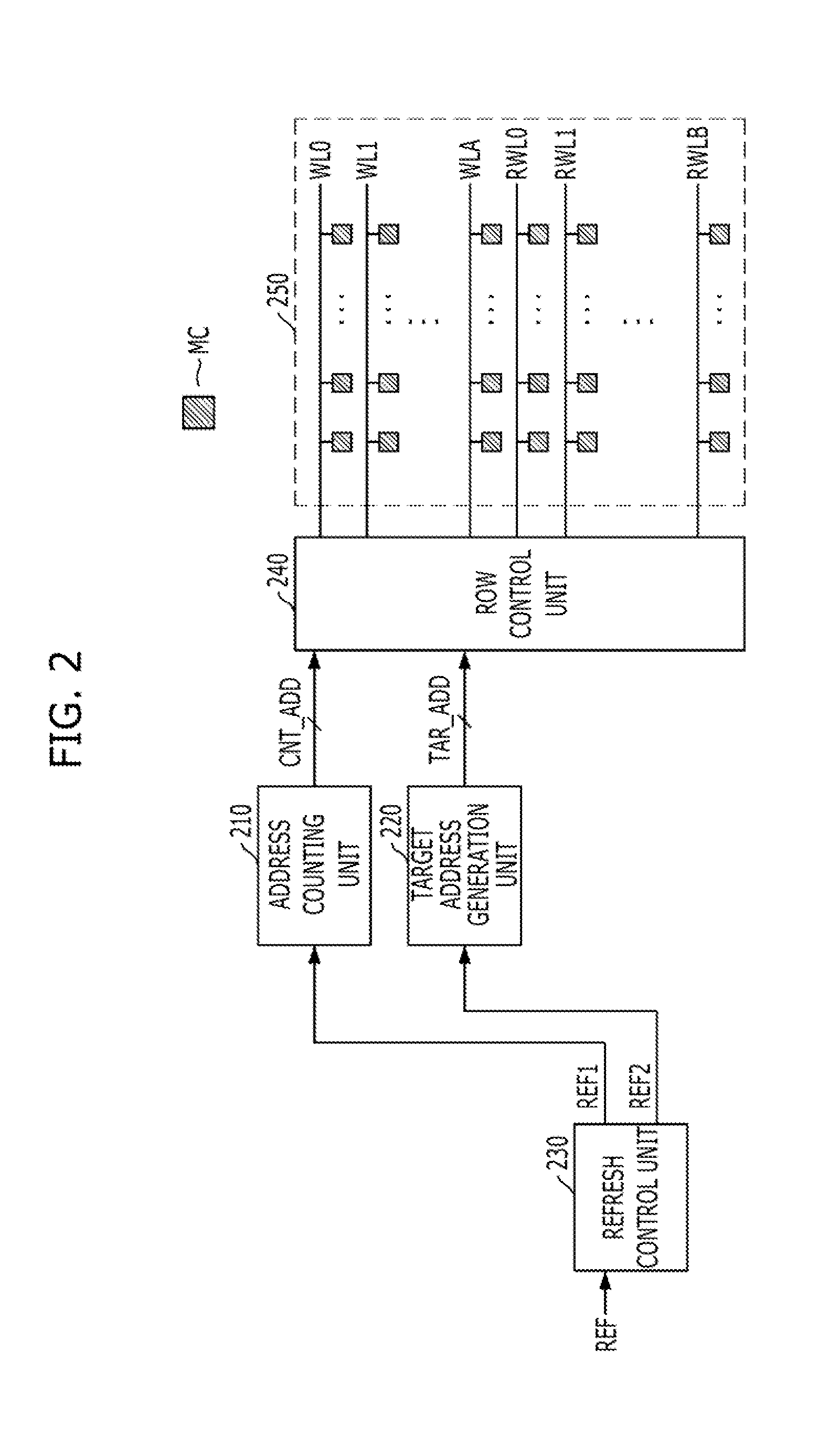

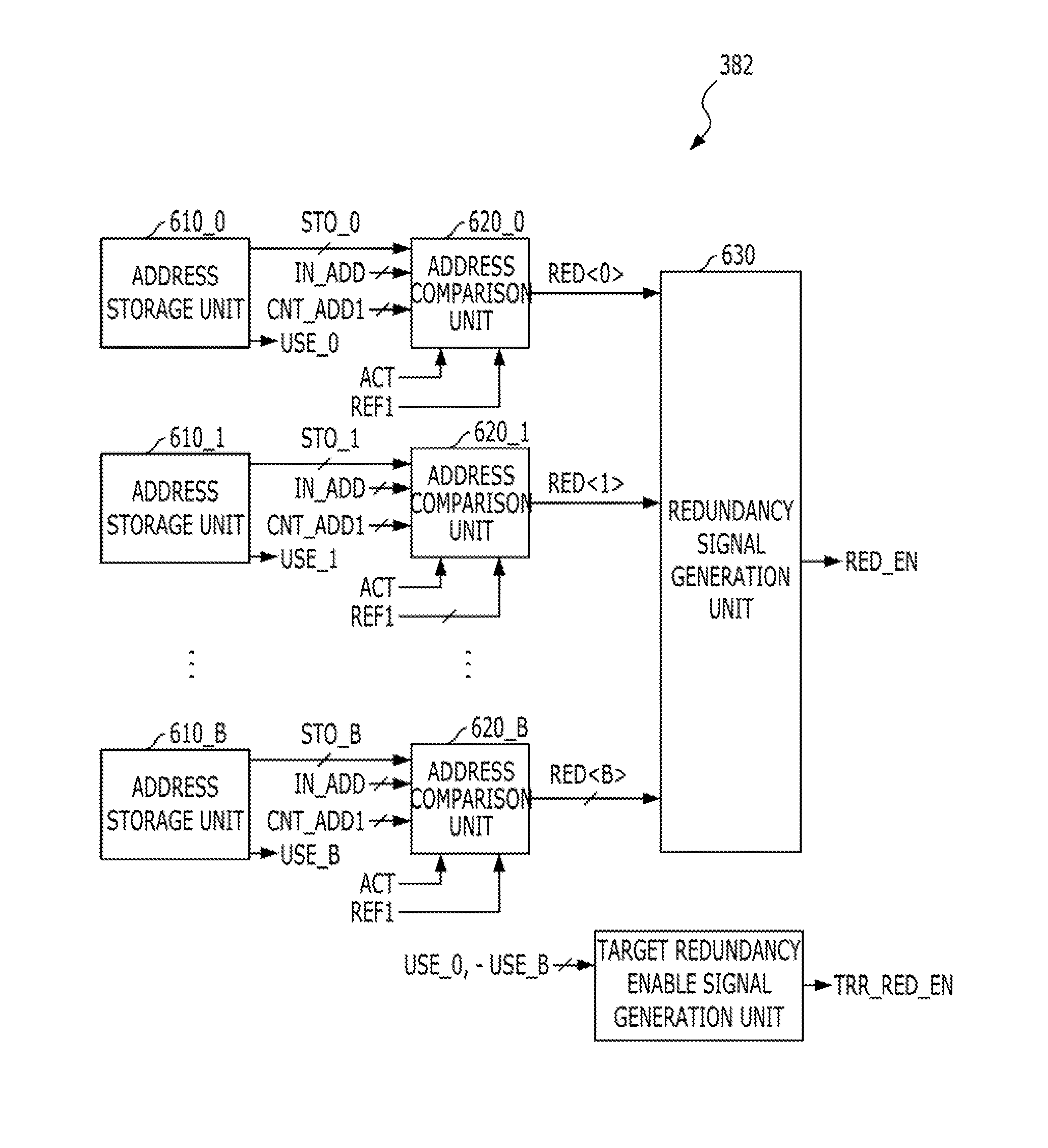

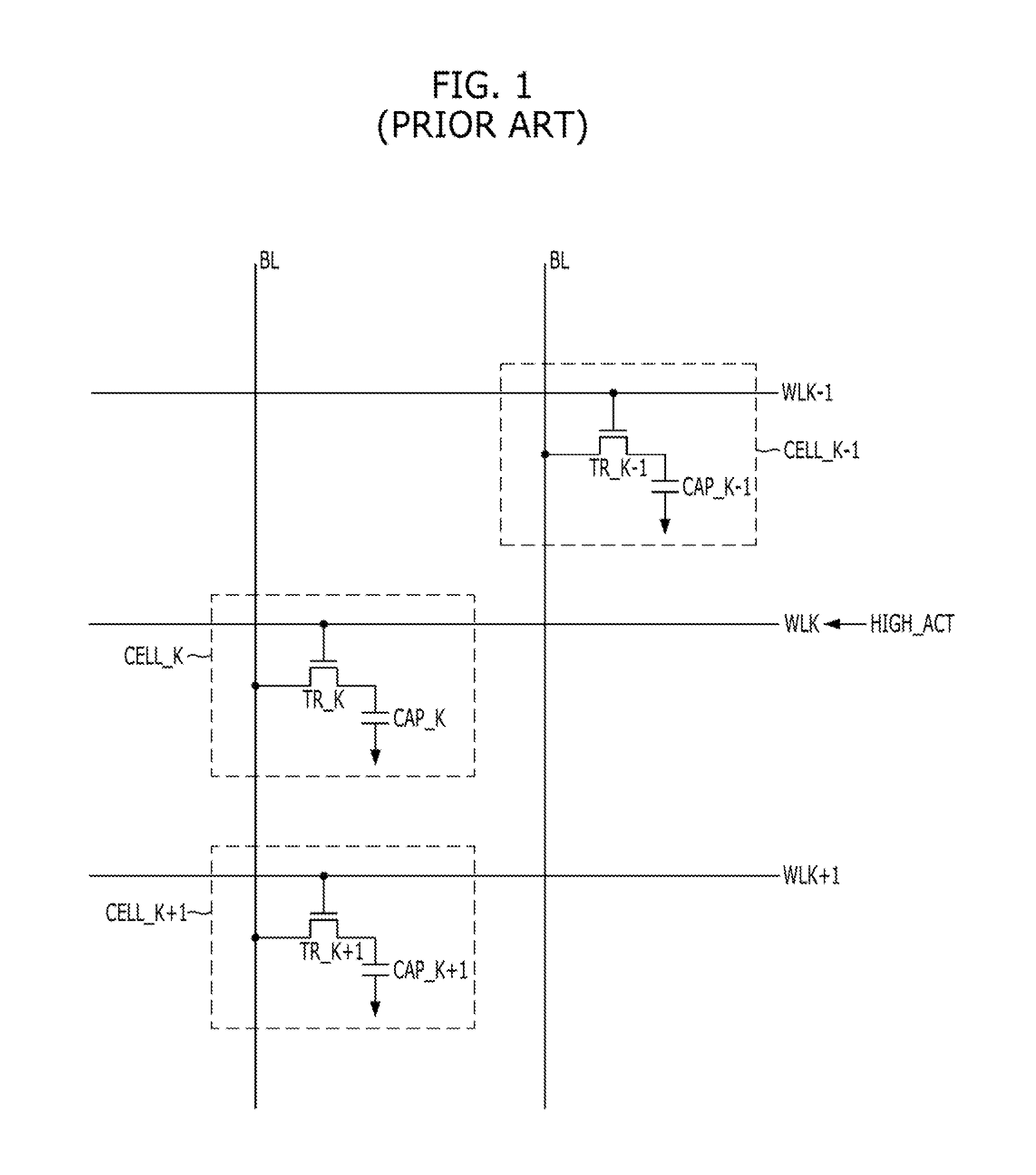

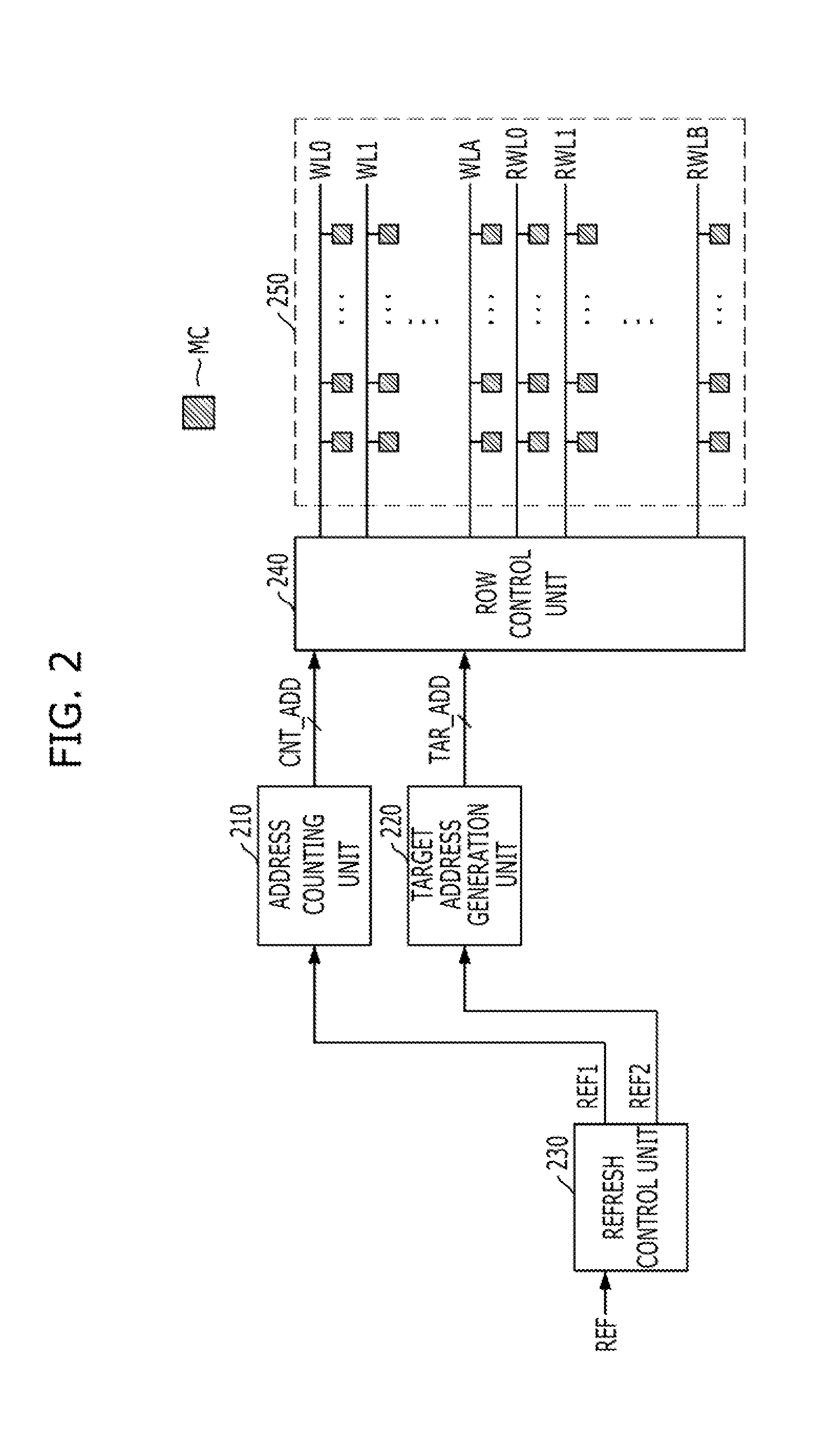

A memory may include a plurality of word lines; one or more redundancy word lines for replacing one or more of the word lines; a target address generation unit suitable for generating one or more target addresses using a stored address; and a control unit suitable for sequentially refreshing the plurality of word lines in response to a periodically input refresh command, refreshing a word line selected based on the target address each time the refresh command has been input M times, and refreshing the one or more redundancy word lines each time the refresh command has been input N times, where M and N are natural numbers.

Owner:SK HYNIX INC
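
The refresh policy in this abstract can be modelled in software. The sketch below is a hypothetical toy model, not SK Hynix's circuit: it assumes that every refresh command refreshes the next normal word line in sequence, and that the target-row refresh (every M commands) and the redundancy refresh (every N commands) are issued in addition to that normal refresh. The class and field names are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RefreshScheduler:
    """Hypothetical toy model of periodic normal, target, and redundancy refresh."""
    num_rows: int                 # normal word lines, refreshed in sequence
    m: int                        # refresh the target row on every M-th command
    n: int                        # refresh the redundancy rows on every N-th command
    target_row: int               # row derived from the stored address
    redundancy_rows: list[int] = field(default_factory=list)
    _count: int = field(default=0, init=False)
    _next_row: int = field(default=0, init=False)

    def on_refresh_command(self) -> list[str]:
        """Return the refresh operations triggered by one periodic refresh command."""
        self._count += 1
        ops = [f"row {self._next_row}"]
        self._next_row = (self._next_row + 1) % self.num_rows
        if self._count % self.m == 0:
            ops.append(f"target row {self.target_row}")
        if self._count % self.n == 0:
            ops.extend(f"redundancy row {r}" for r in self.redundancy_rows)
        return ops


sched = RefreshScheduler(num_rows=8, m=4, n=6, target_row=3, redundancy_rows=[100])
history = [sched.on_refresh_command() for _ in range(6)]
```

With M = 4 and N = 6, the fourth command adds the target row and the sixth adds the redundancy row, while every command still advances the normal sequential refresh.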

Memory and memory system including the same

A memory may include a plurality of word lines, one or more redundancy word lines for replacing one or more word lines among the plurality of word lines, a target address generation unit suitable for generating one or more target addresses using a stored address, and a control unit suitable for sequentially refreshing the plurality of word lines in response to a refresh command which is periodically inputted, refreshing a word line selected based on the target address when the refresh command is inputted M times, and refreshing the one or more redundancy word lines whenever the refresh command is inputted N times, wherein the M and N are natural numbers.

Owner:SK HYNIX INC

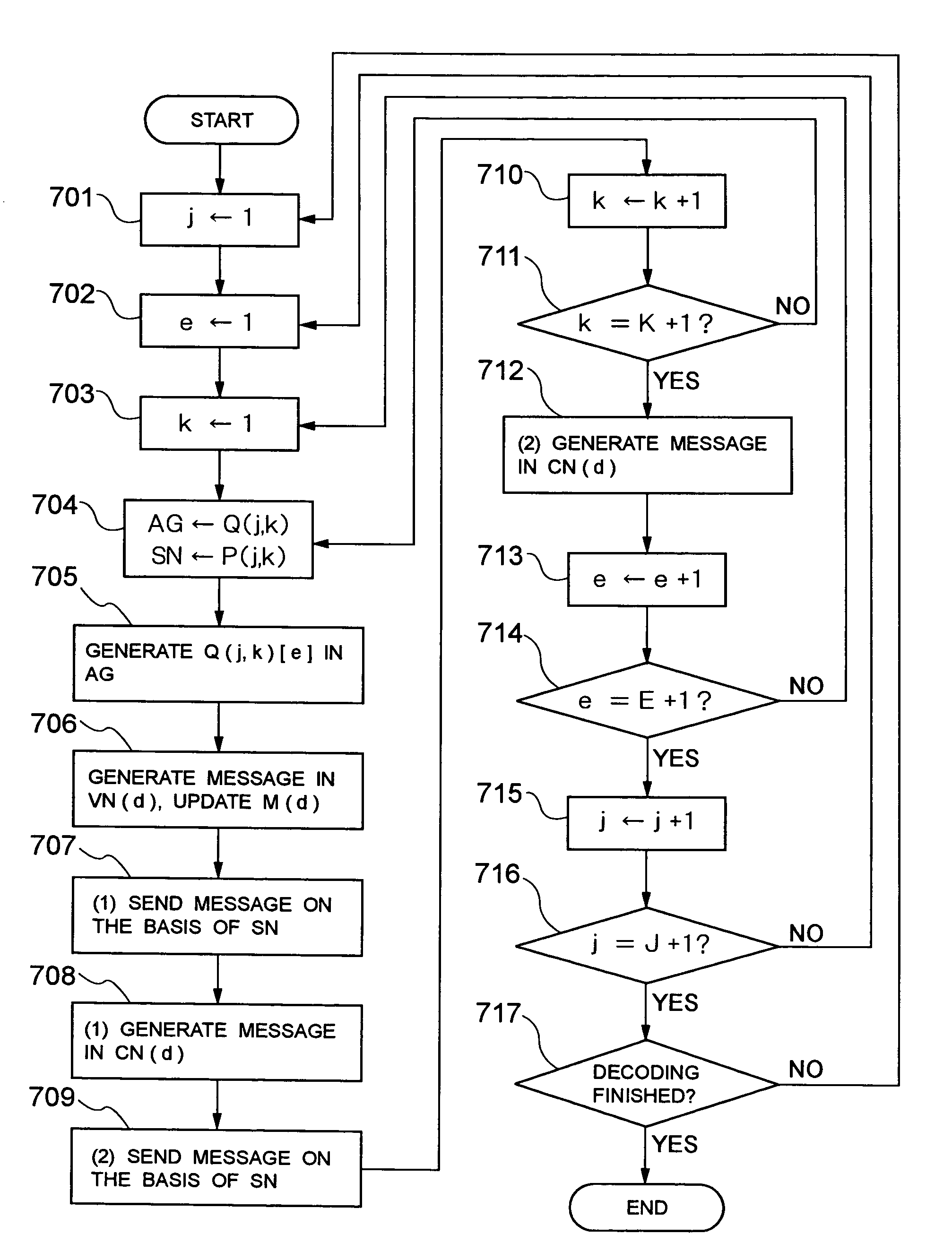

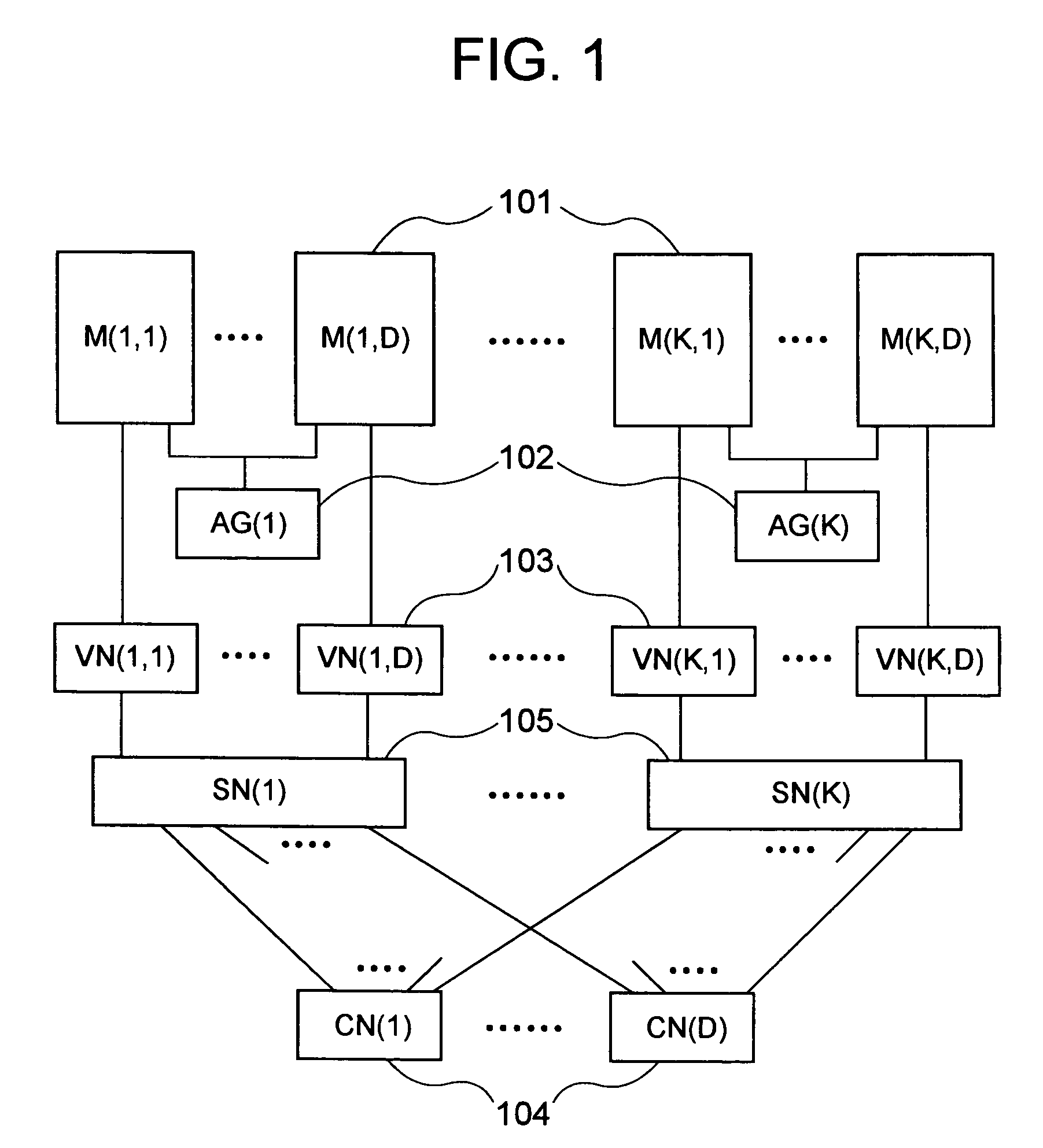

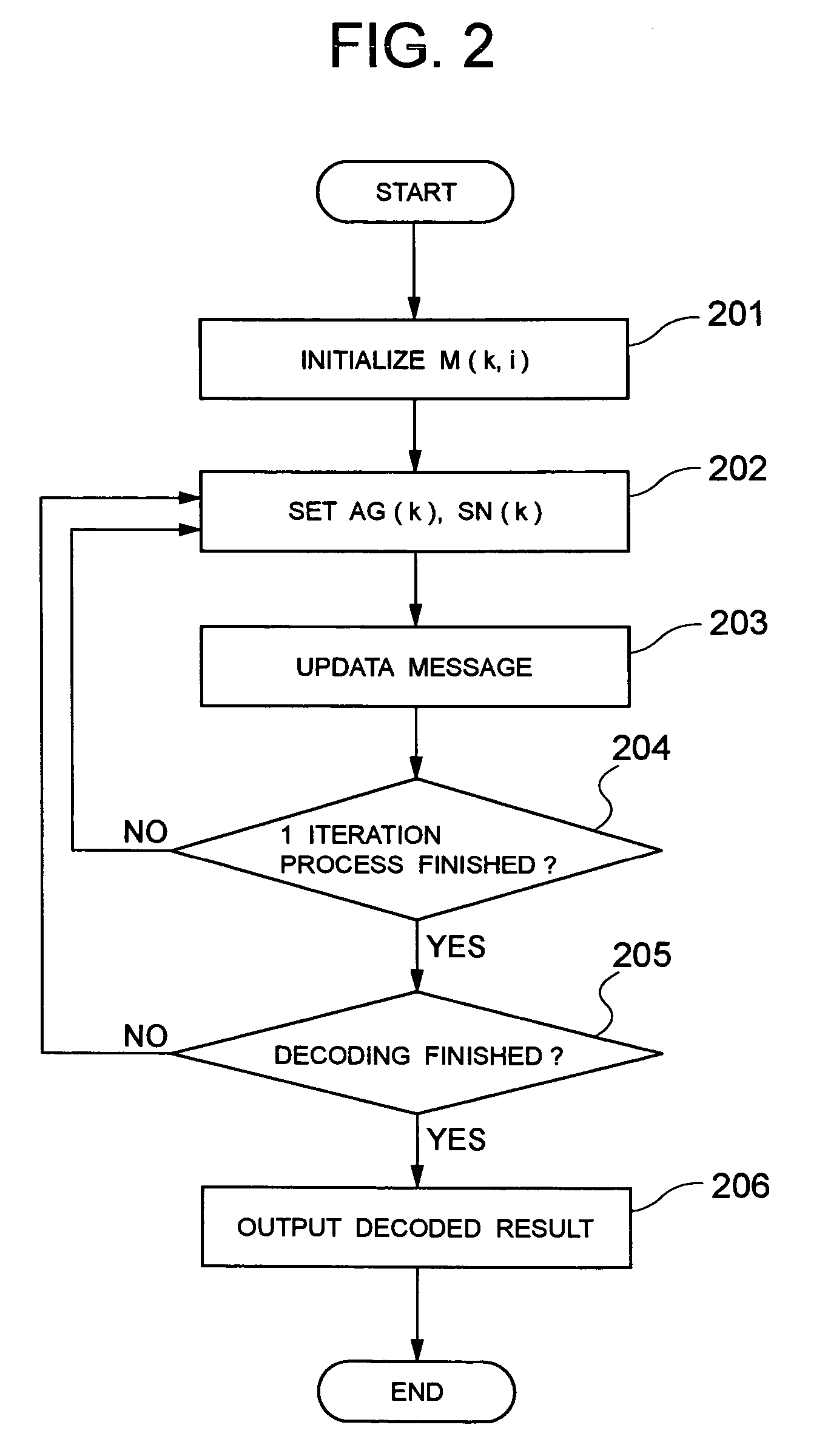

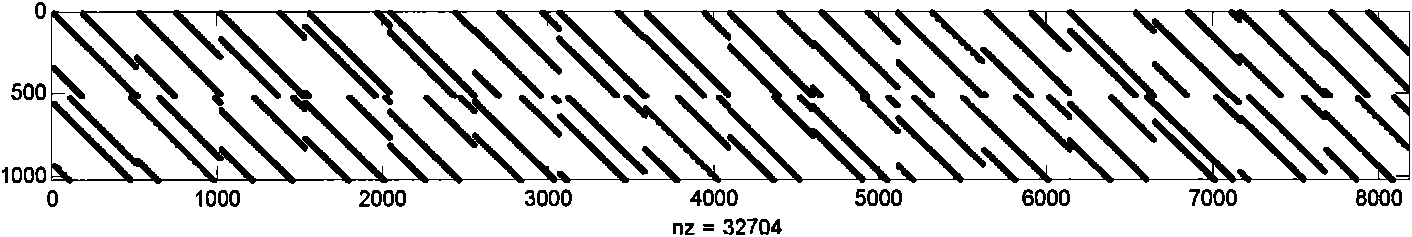

Device, program, and method for decoding LDPC codes

Status: Active · Publication: US7373581B2 · Tags: Simpler and flexible; Error prevention; Transmission systems; Address generation unit; Message passing decoding

A parallel decoder that is simpler and more flexible than conventional devices is provided in a decoding device for an LDPC code. The present invention includes a plurality of memory units for storing a received value and a message generated during message-passing decoding, a plurality of variable node function units, a plurality of check node function units, a plurality of address generation units for generating an address for each memory unit, and a plurality of shuffle network units for determining the connections between the variable node function units and the check node function units. An address generation unit generates an address on the basis of a plurality of permutations. Each shuffle network unit is connected to some of the variable node function units, and this connection is likewise determined on the basis of a plurality of permutations. The permutations in the address generation units and the permutations in the shuffle network units are changed in the same cycle of the decoding process.

Owner:NEC CORP

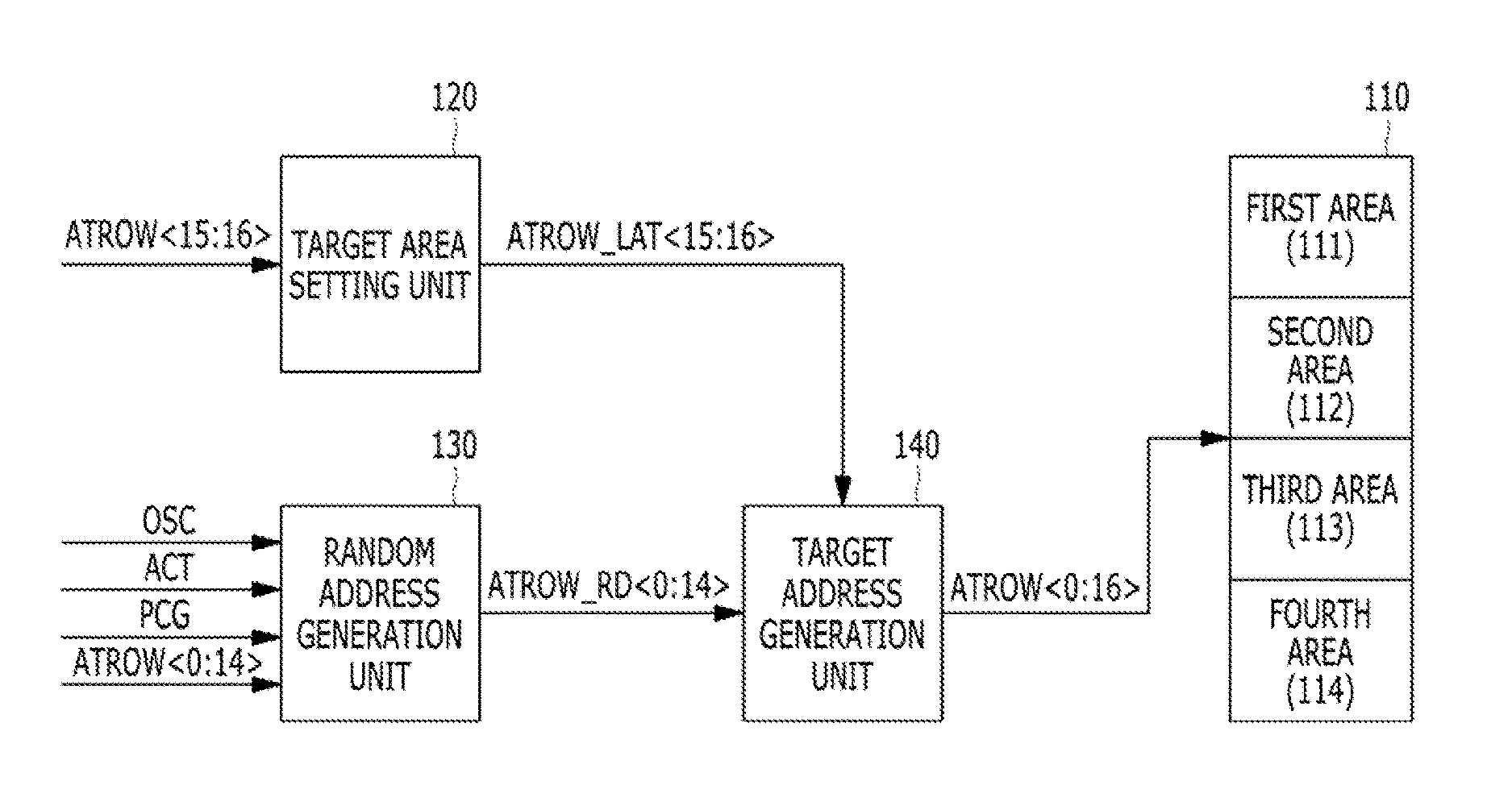

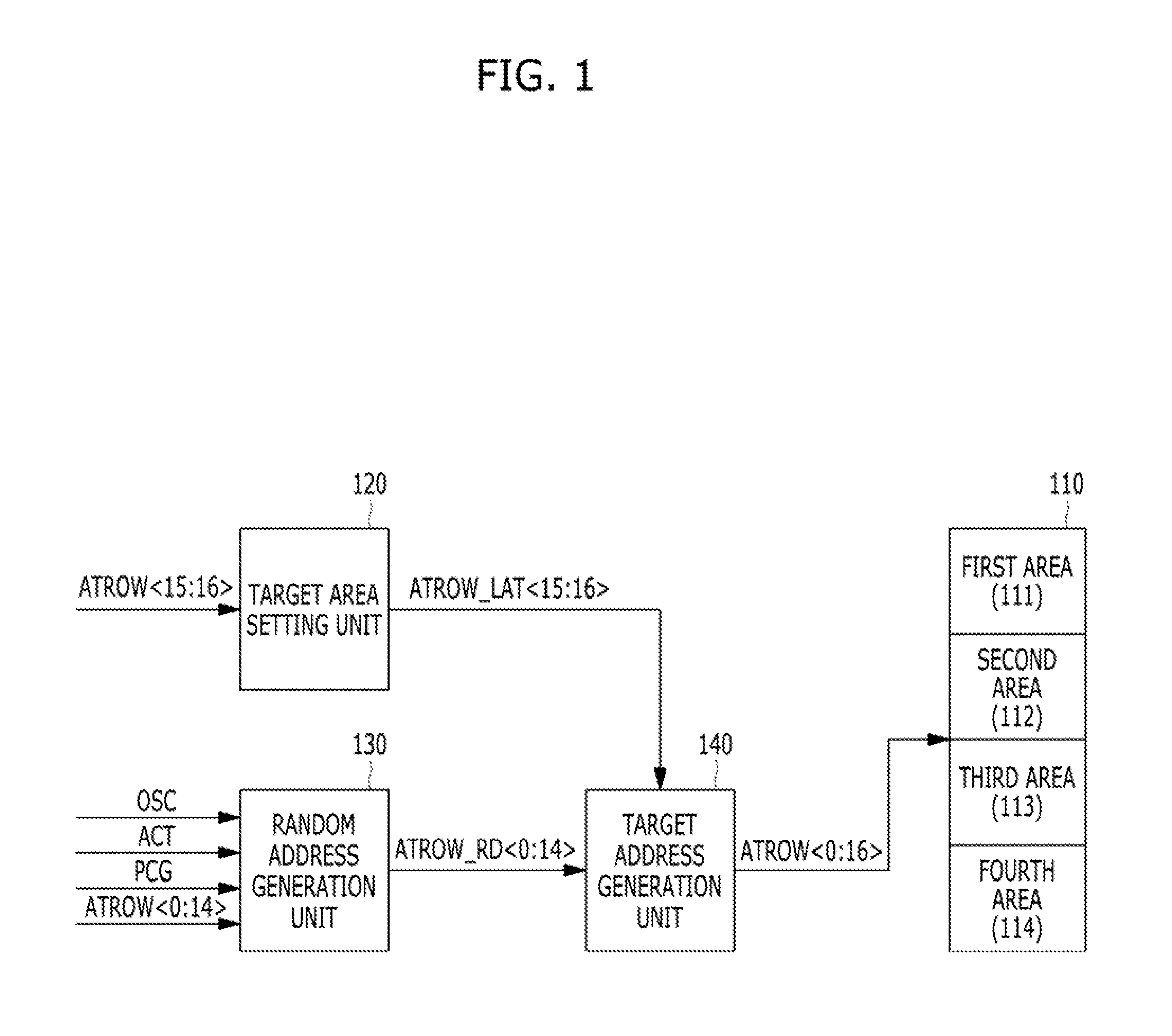

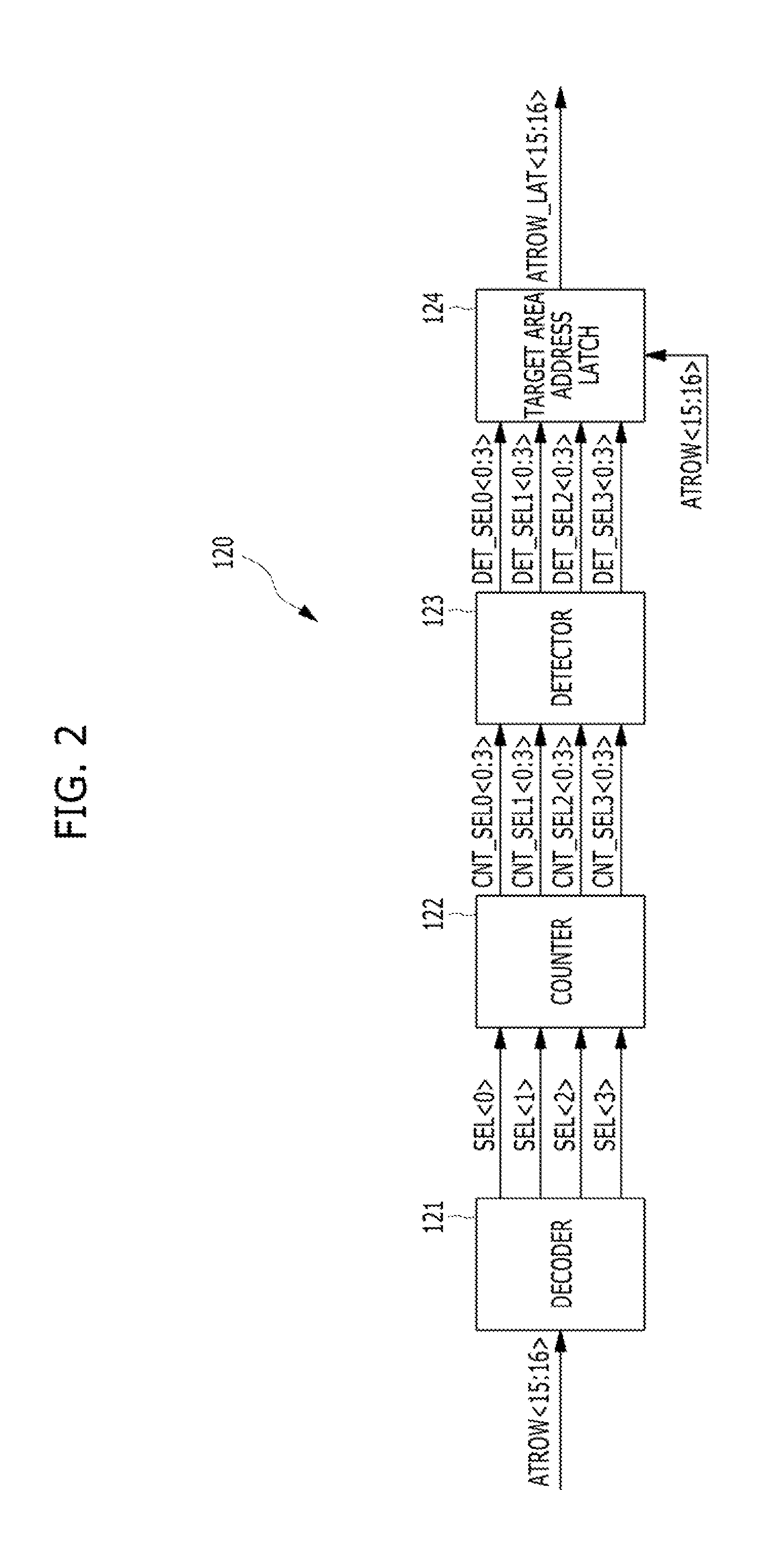

Semiconductor memory device for performing refresh operation

A semiconductor memory device includes: a plurality of memory areas; a target area setting unit suitable for designating a target area according to a number of accesses to the respective memory areas; a random address generation unit suitable for generating a random address within the respective memory areas in a random manner; a target address generation unit suitable for generating a target address based on the target area and the random address; and a driving unit suitable for performing a smart refresh operation according to the target address.

Owner:SK HYNIX INC

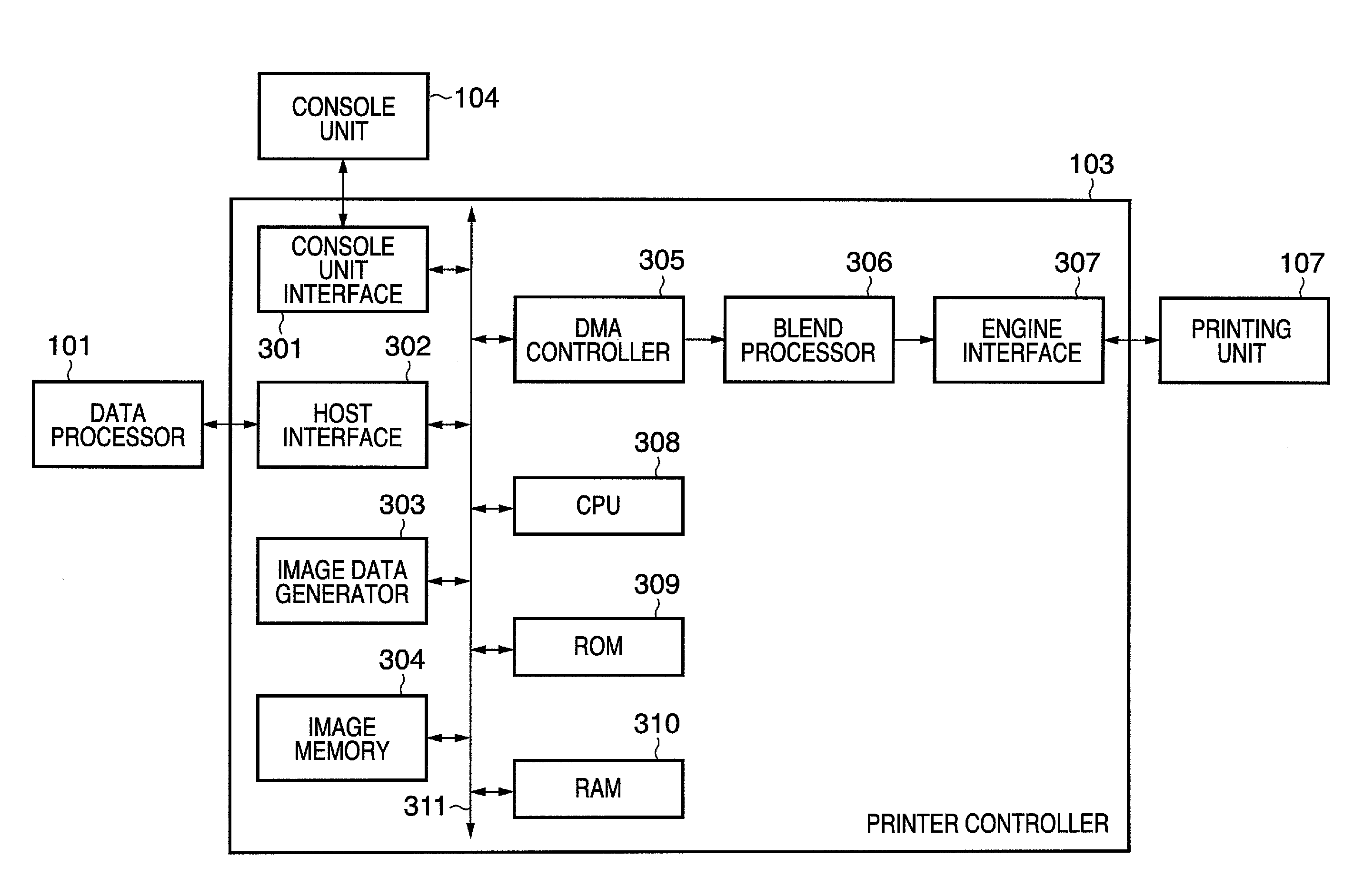

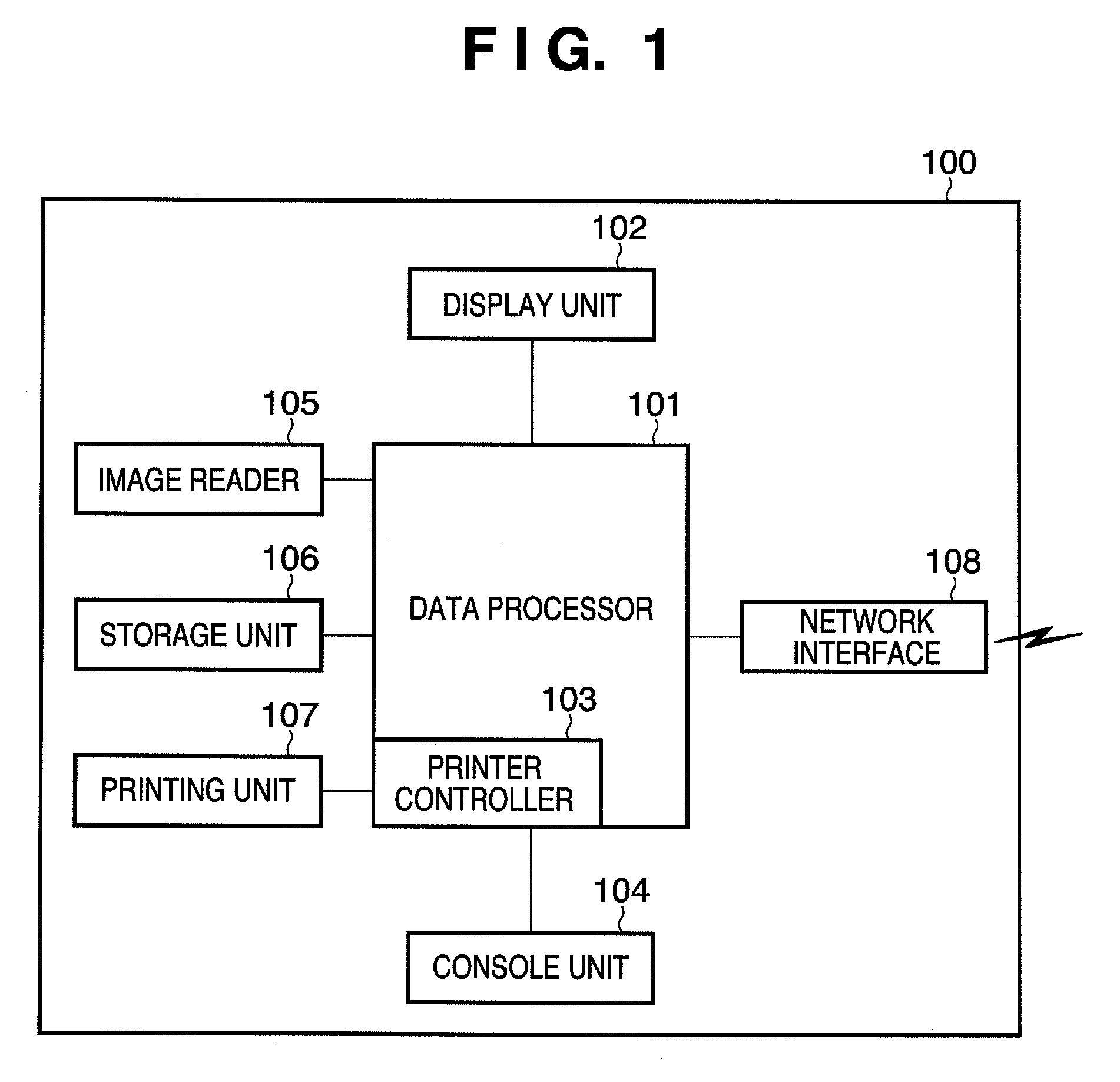

Image forming apparatus and method thereof

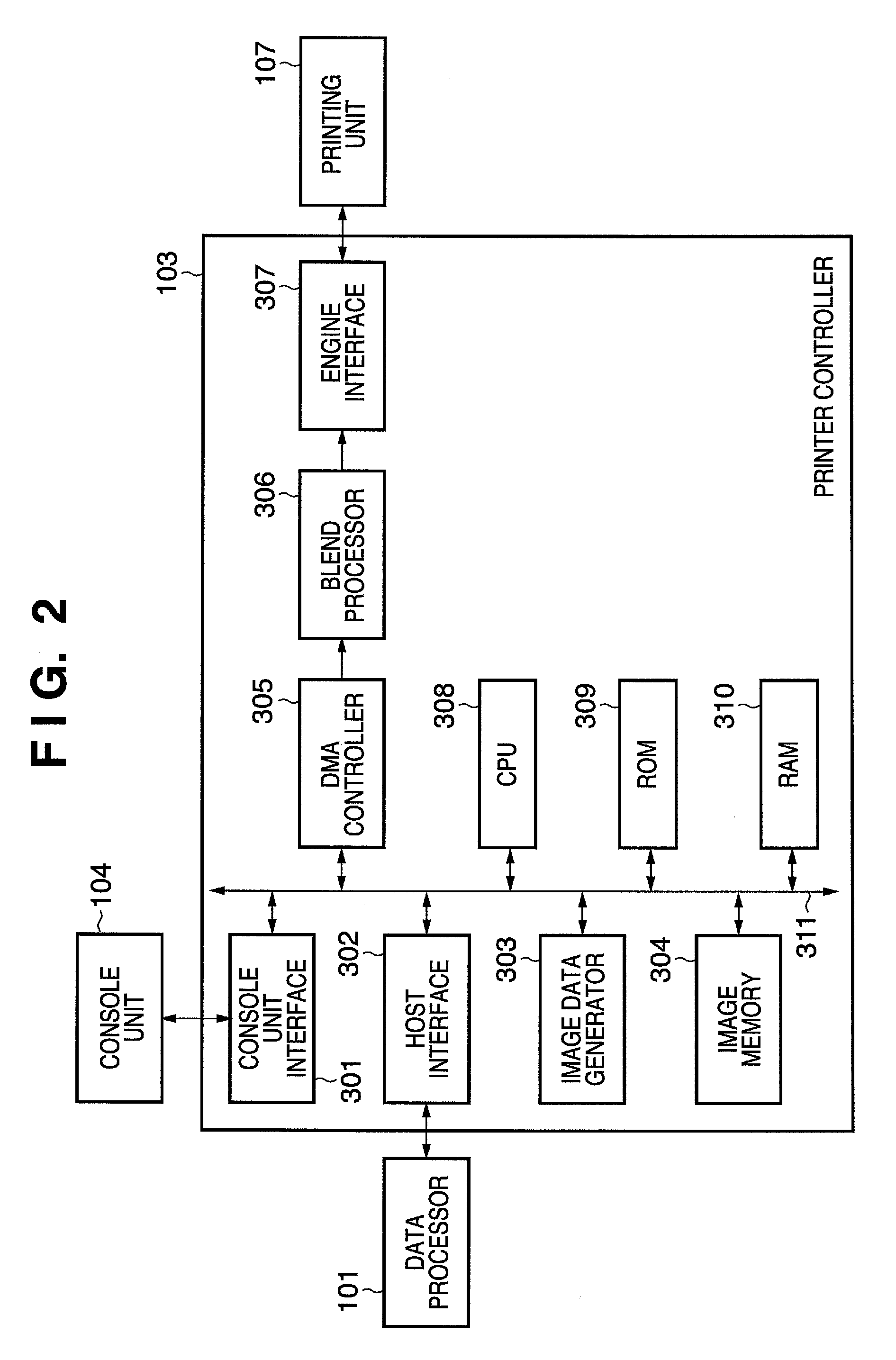

Status: Active · Publication: US20080112012A1 · Tags: Simple and cost-effective configuration; Digitally marking record carriers; Visual presentation using printers; Address generation unit; Computer architecture

In a line by line image forming apparatus, line switching information which depends on scan line curve and an overlap data length to be read that overlaps across a plurality of lines before and after a switching position instructed by line switching information are set in a register. If switching to the line above or below is instructed by the line switching information, an address generating unit, when reading image data from an image memory, generates the read address and read data length of the image memory in accordance with the line switching information and the overlap data length, and reads image data corresponding to a current line and the line above or below the current line in accordance with the generated data.

Owner:CANON KK

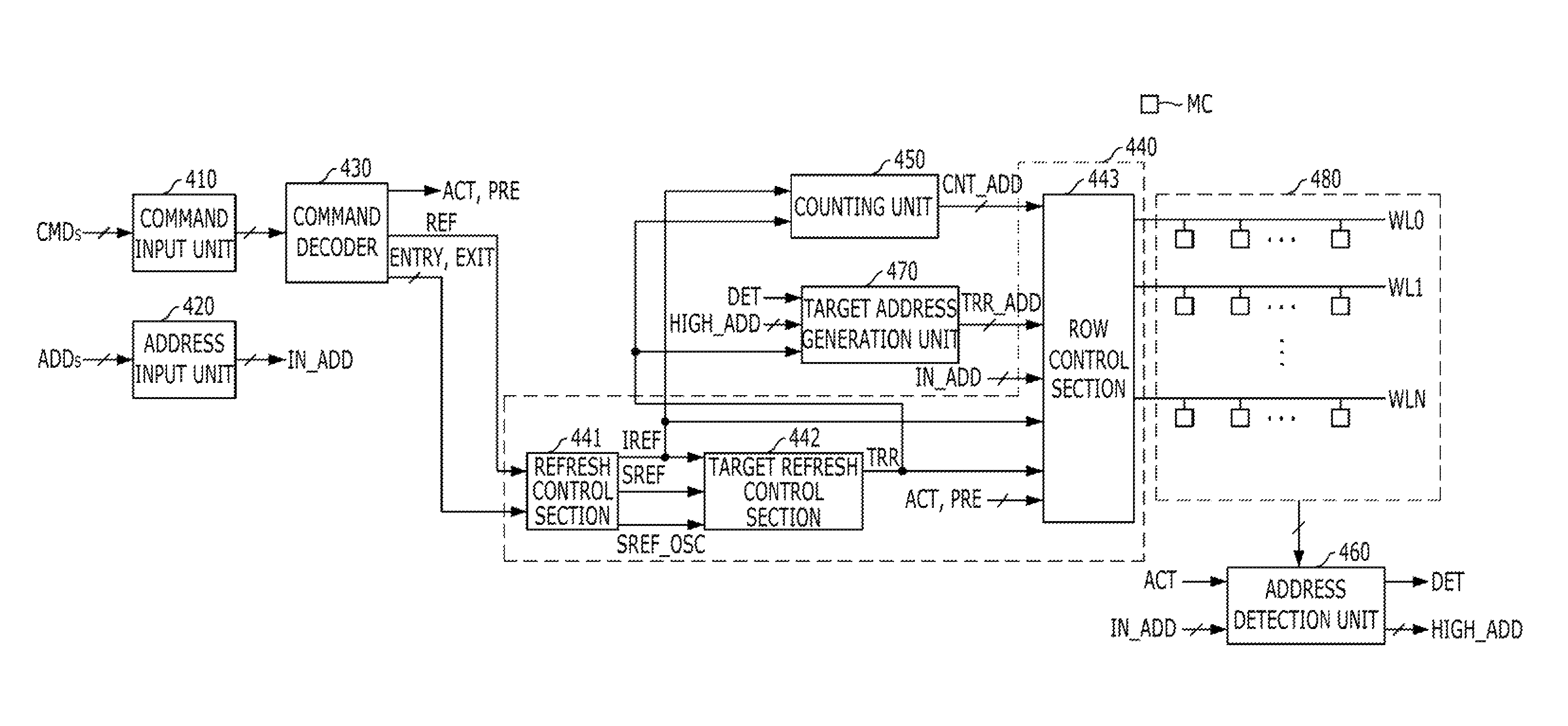

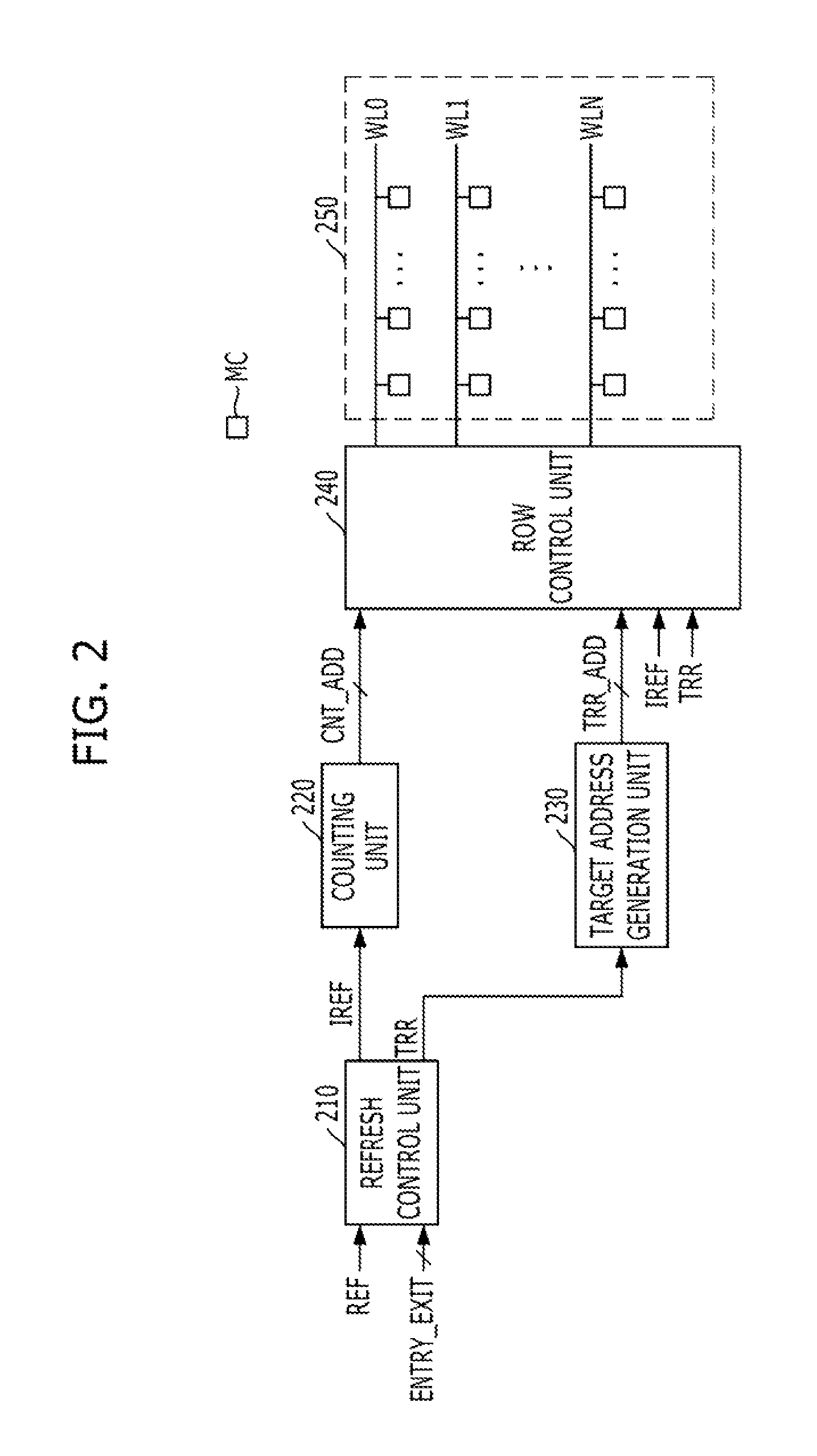

Memory and memory system for periodic targeted refresh

Status: Active · Publication: US9311985B2 · Tags: Avoid data loss; Reduce the number of times; Digital storage; Address generation unit; Memory systems

A memory includes a plurality of word lines; a target address generation unit generating one or more target addresses by using a stored address; a refresh control section activating a refresh signal in response to a periodically input refresh command and periodically activating the refresh signal in a self-refresh mode; a target refresh control section activating a target refresh signal when the refresh signal has been activated M times, where M is a natural number, and deactivating the target refresh signal in the self-refresh mode; and a row control section sequentially refreshing a plurality of first word lines in response to the refresh signal and refreshing a second word line corresponding to the target address in response to the refresh signal when the target refresh signal is activated.

Owner:SK HYNIX INC

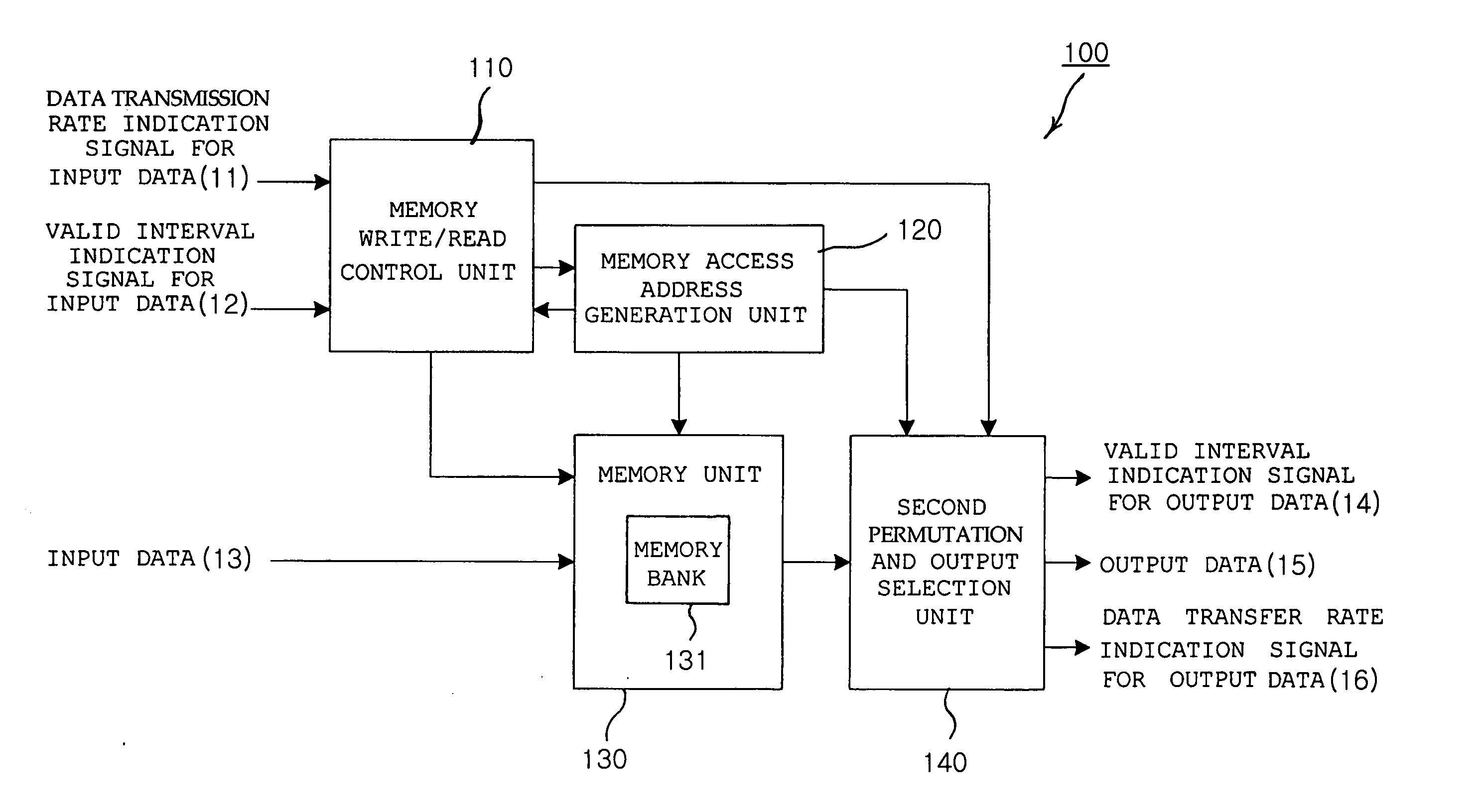

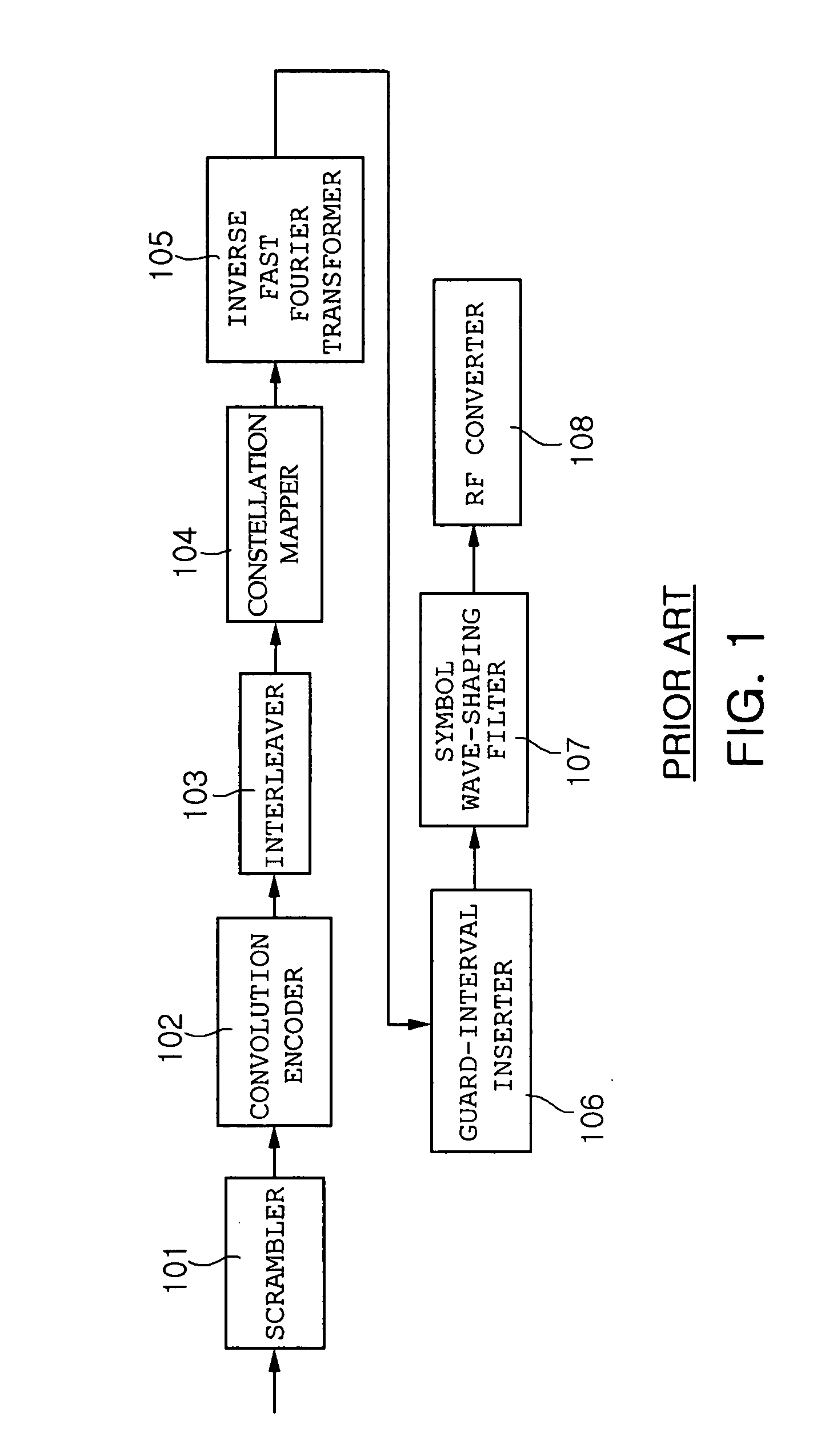

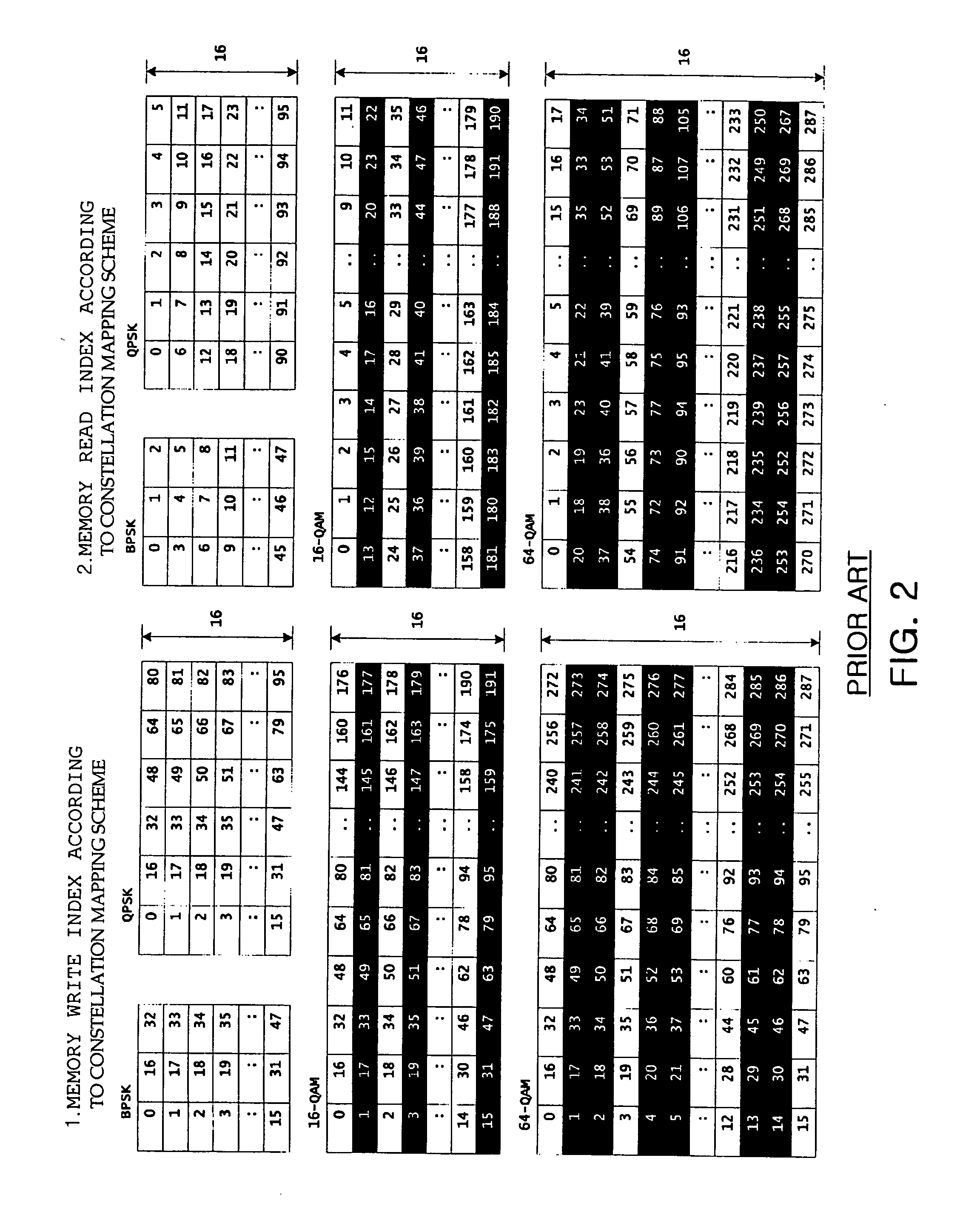

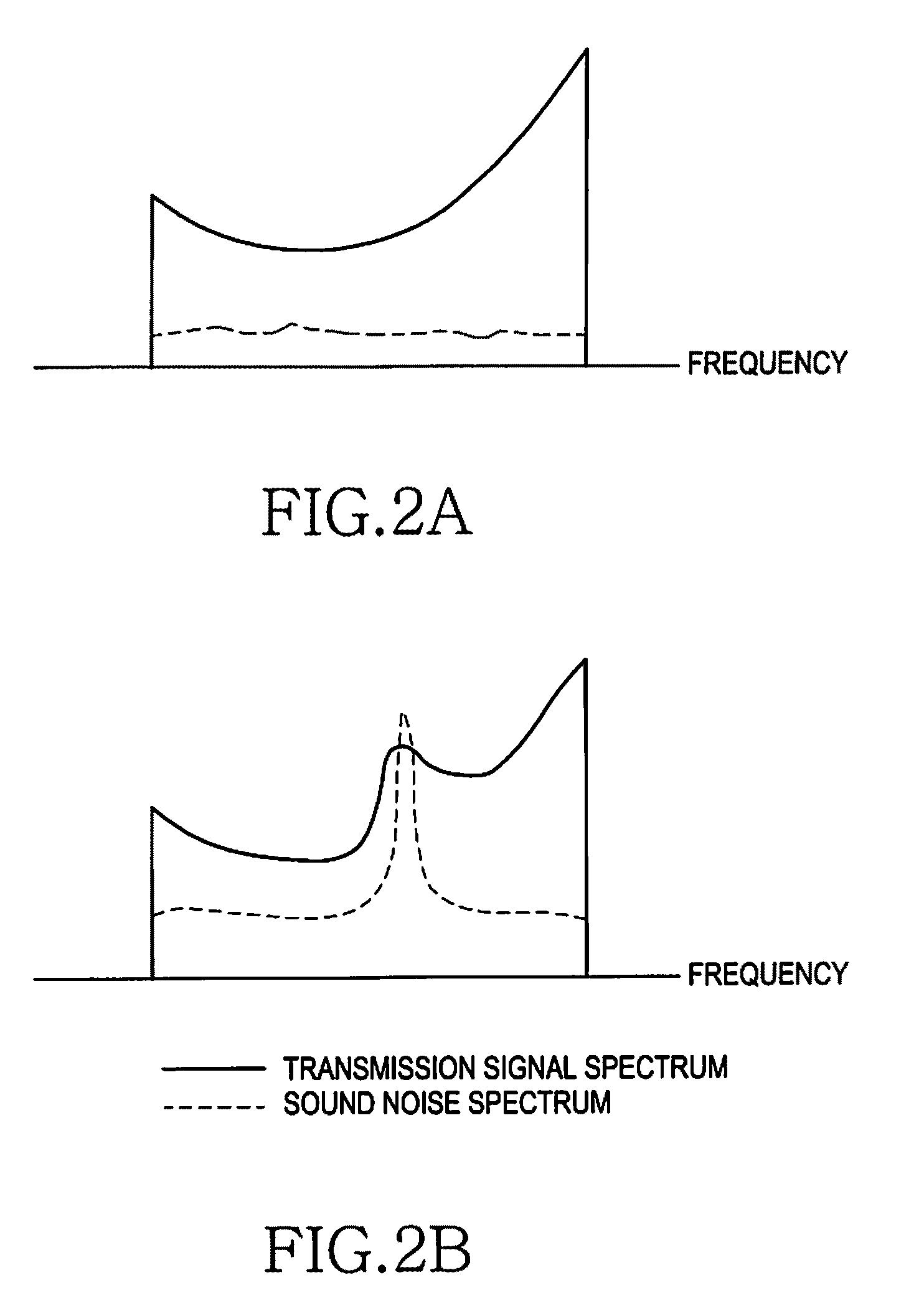

Interleaving apparatus and method for orthogonal frequency division multiplexing transmitter

Status: Active · Publication: US20060123323A1 · Tags: Digital storage; Multi-frequency code systems; Address generation unit; Control signal

An interleaving apparatus and method for an OFDM transmitter are provided. The interleaving apparatus comprises a memory unit, a memory write / read control unit, a memory access address generation unit, and a second permutation and output selection unit. The memory unit includes a plurality of memory banks, which are capable of being independently controlled so that data can be written or read in / from the memory banks, each having memory cells arranged in an NxM matrix structure. The memory write / read control unit generates control signals to write / read data in / from the memory unit. The memory access address generation unit generates a memory access address used to write / read data in / from the memory unit in response to the memory write / read control signals. The second permutation and output selection unit rearranges the positions of data bits output from the memory unit and outputs the position-rearranged data bits.

Owner:ELECTRONICS & TELECOMM RES INST
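
The core job of a memory access address generation unit in an interleaver is to turn a simple write order into a permuted read order. The sketch below shows the textbook row-in/column-out block interleaver over an array stored row-major; it is an assumption-level illustration only and does not model the multi-bank memory, the write/read control signals, or the second permutation stage described in the abstract. Function names are hypothetical.

```python
def interleaver_read_addresses(rows: int, cols: int) -> list[int]:
    """Read addresses for a rows x cols block written row by row (row-major).

    Reading column by column yields the classic block-interleaver permutation.
    """
    return [r * cols + c for c in range(cols) for r in range(rows)]


def interleave(bits: list[int], rows: int, cols: int) -> list[int]:
    if len(bits) != rows * cols:
        raise ValueError("input length must equal rows * cols")
    return [bits[a] for a in interleaver_read_addresses(rows, cols)]


def deinterleave(bits: list[int], rows: int, cols: int) -> list[int]:
    out = [0] * (rows * cols)
    for i, a in enumerate(interleaver_read_addresses(rows, cols)):
        out[a] = bits[i]   # undo the permutation by writing back to the original address
    return out


print(interleaver_read_addresses(2, 3))  # [0, 3, 1, 4, 2, 5]
```

Adjacent input bits end up `rows` positions apart in the output, which is what spreads a burst of errors across many OFDM subcarriers.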

Memory and memory system including the same

Status: Active · Publication: US20150162067A1 · Tags: Prevents word line disturbance; Digital storage; Address generation unit; Embedded system

A memory may include a plurality of word lines coupled to one or more memory cells; a target address generation unit suitable for generating one or more target addresses using a stored address; and a control unit suitable for sequentially refreshing the plurality of word lines in response to a refresh command which is periodically inputted, and refreshing a word line corresponding to the target address in response to the refresh command at a random time.

Owner:SK HYNIX INC

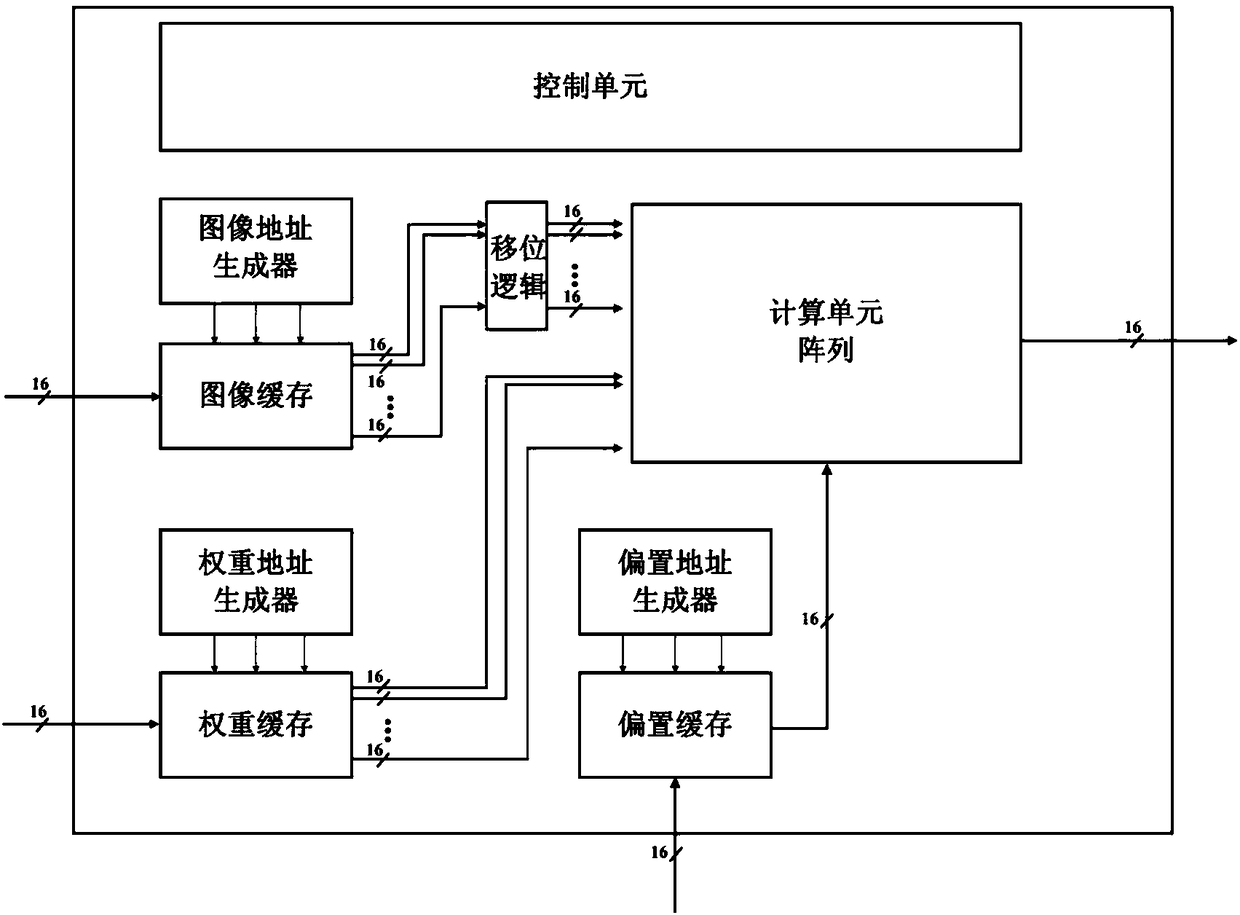

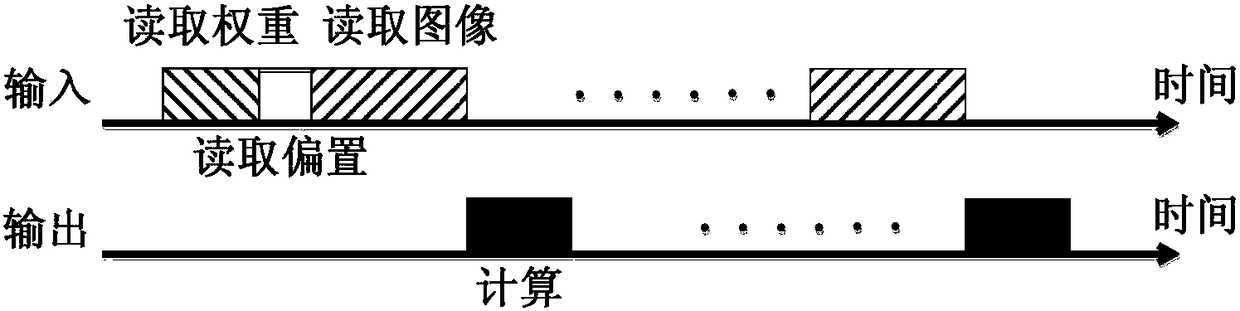

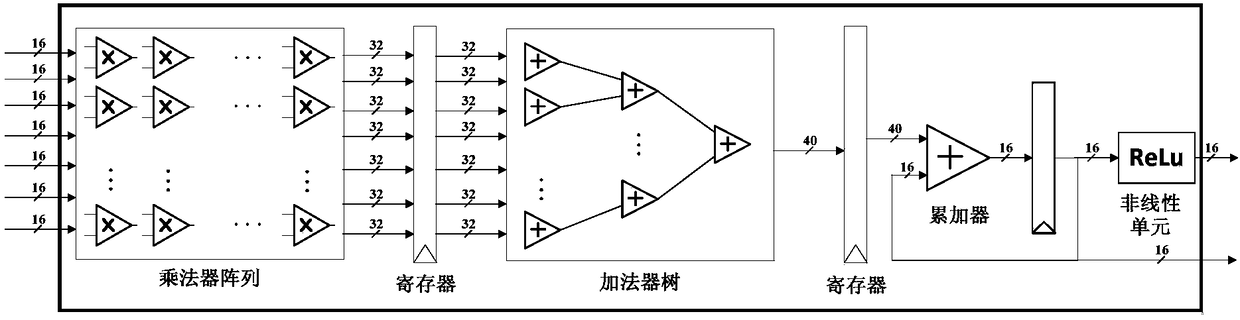

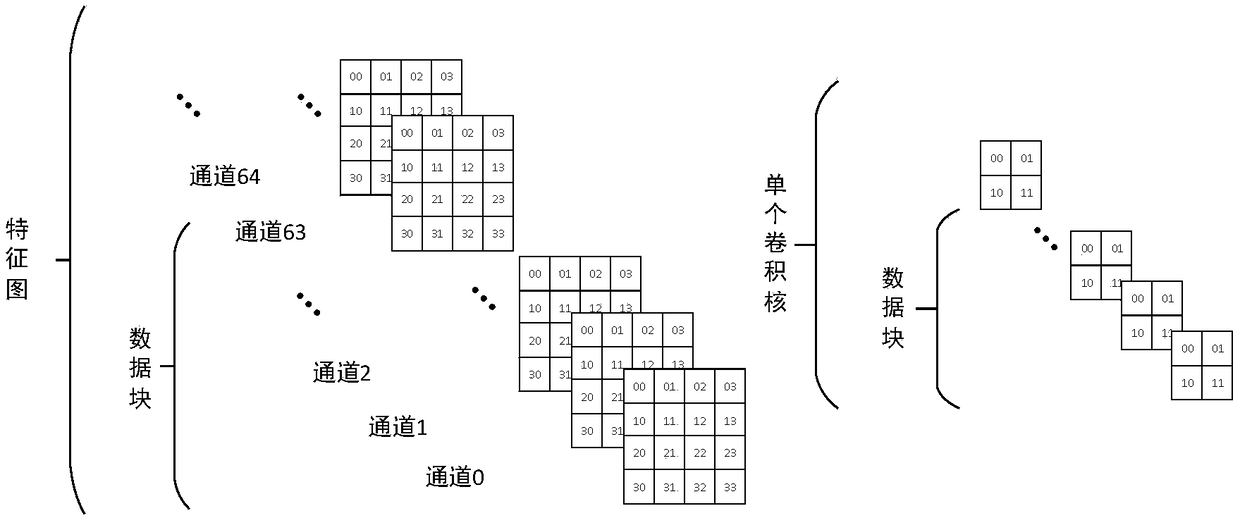

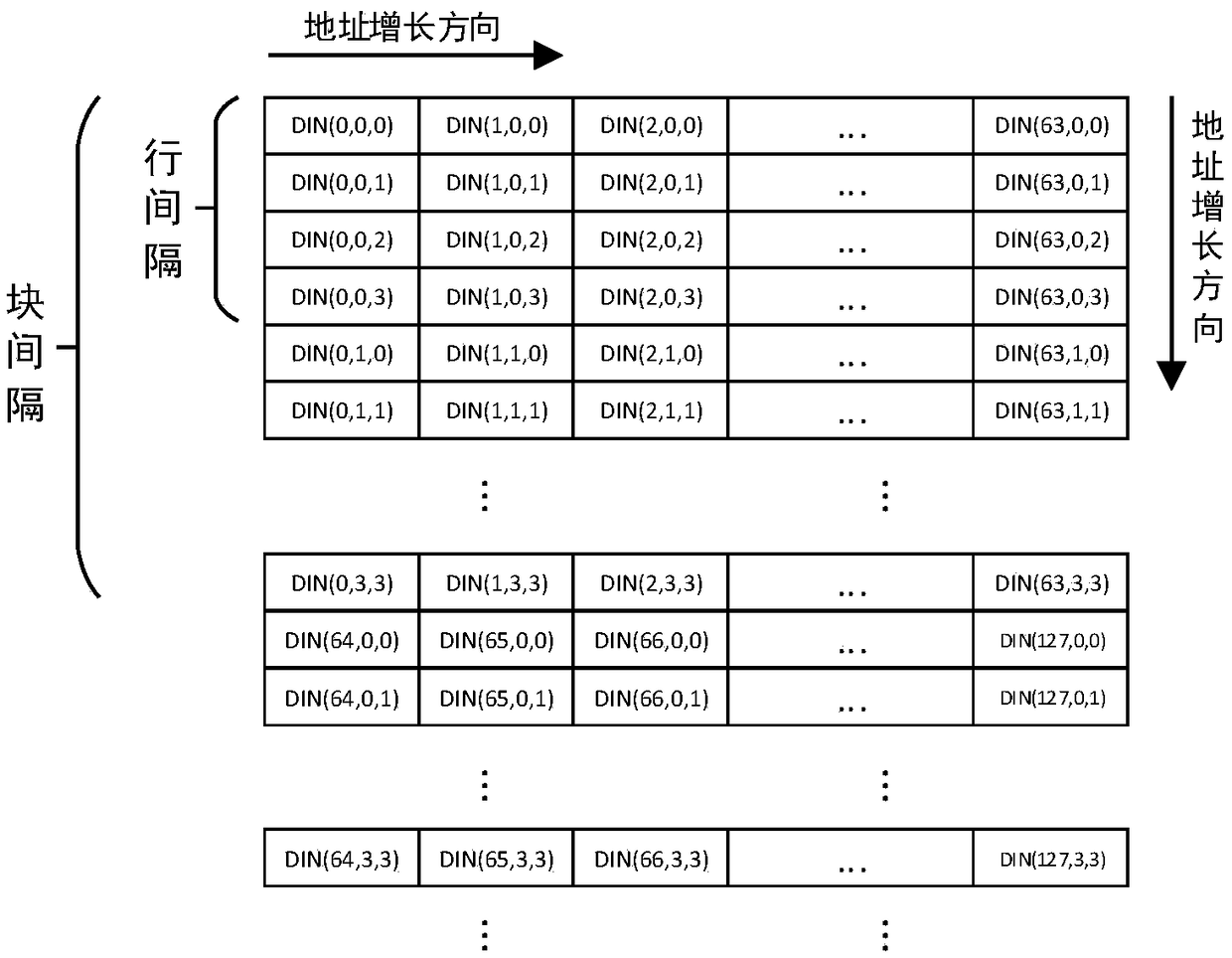

SOC-based data reuse convolutional neural network accelerator

Status: Active · Publication: CN108171317A · Tags: Improve computing efficiency; Reduce latency; Image memory management; Neural architectures; Address generation unit; Read through

The invention provides an SOC-based data reuse convolutional neural network accelerator. The input data of the convolutional neural network, such as image inputs, weight parameters and offset (bias) parameters, are grouped; the large volume of input data is partitioned into reusable data blocks; and the reusable data blocks are read through a control state machine. Because a convolutional neural network has a large number of parameters and requires substantial computing capability, the accelerator must provide very high data bandwidth and computing throughput. The large workload is therefore split into reusable segments, and data reuse is realized through a control unit and an address generation unit, which reduces the computation latency and the required bandwidth of the convolutional neural network and improves computing efficiency.

Owner:BEIJING MXTRONICS CORP +1

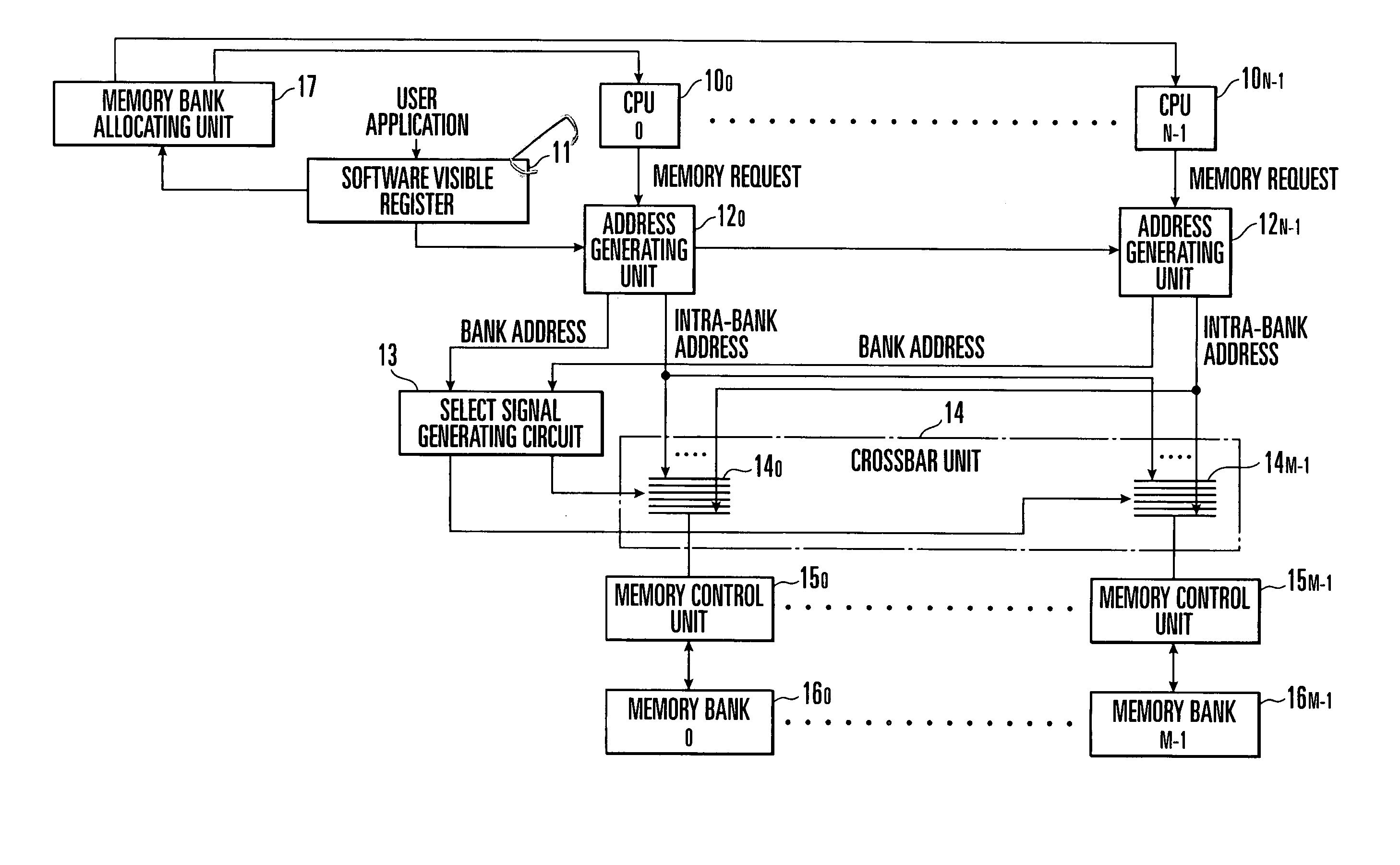

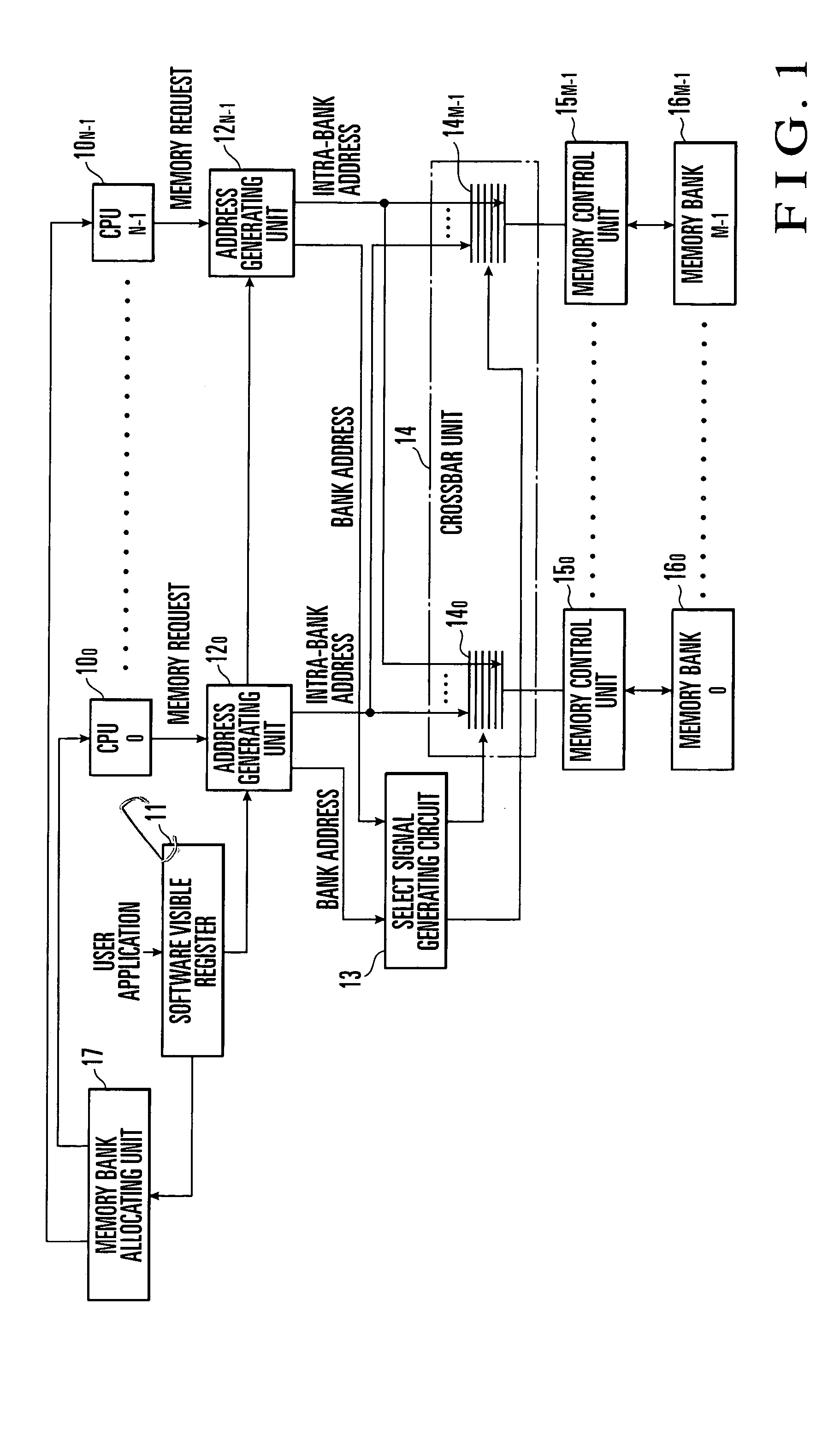

Memory interleave system

Status: Inactive · Publication: US7346750B2 · Tags: Avoid contention; Improve throughput; Memory addressing/allocation/relocation; Digital storage; Address generation unit; Memory address

A memory interleave system includes M memory banks (M = 2^p, where p is a natural number), M memory control units (MCUs) corresponding respectively to the M memory banks, N CPUs (N a natural number), and N address generating units (AGUs) corresponding respectively to the N CPUs. Each memory bank includes a plurality of memories. The CPUs output memory requests, each containing a first bank address (the address of a memory bank) and a first intra-bank address (the address of a memory within that bank). Each AGU receives a memory request from its corresponding CPU and generates and outputs a second intra-bank address and a second bank address from the first intra-bank address and the first bank address. Each MCU performs memory bank access control on the basis of the second intra-bank address, and the MCU that performs the access control is selected on the basis of the second bank address.

Owner:NEC CORP
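
With M = 2^p banks, splitting an address into a bank address and an intra-bank address reduces to bit slicing. The sketch below assumes low-order interleaving (bank = address mod M) and, as a stand-in for the AGU's remapping from first to second addresses, uses a common XOR skewing scheme in which the bank bits are XORed with the low bits of the intra-bank address. The abstract does not specify this mapping; the XOR rule and the function names are hypothetical.

```python
def split_address(addr: int, p: int) -> tuple[int, int]:
    """Split a word address into (bank, intra_bank) for M = 2**p low-order interleaved banks."""
    bank = addr & ((1 << p) - 1)   # addr mod M
    intra = addr >> p              # addr // M
    return bank, intra


def skew(bank: int, intra: int, p: int) -> tuple[int, int]:
    """Hypothetical AGU remap: XOR the bank bits with the low bits of the intra-bank address.

    For a fixed intra-bank address this permutes the banks, so the overall
    mapping stays one-to-one.
    """
    return bank ^ (intra & ((1 << p) - 1)), intra


# A stride-4 access pattern hits a single bank out of 4 without skewing...
plain = [split_address(a, 2)[0] for a in (0, 4, 8, 12)]
# ...but is spread over all four banks after skewing.
skewed = [skew(*split_address(a, 2), 2)[0] for a in (0, 4, 8, 12)]
print(plain, skewed)  # [0, 0, 0, 0] [0, 1, 2, 3]
```

Spreading a power-of-two stride over all banks is one way such a remapping can reduce contention when several CPUs issue requests at once.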

Memory performing target refresh operation and memory system including the same

A memory may include a plurality of word lines coupled to one or more memory cells; a target address generation unit suitable for generating one or more target addresses using a stored address; and a control unit suitable for sequentially refreshing the plurality of word lines in response to a refresh command which is periodically inputted, and refreshing a word line corresponding to the target address in response to the refresh command at a random time.

Owner:SK HYNIX INC

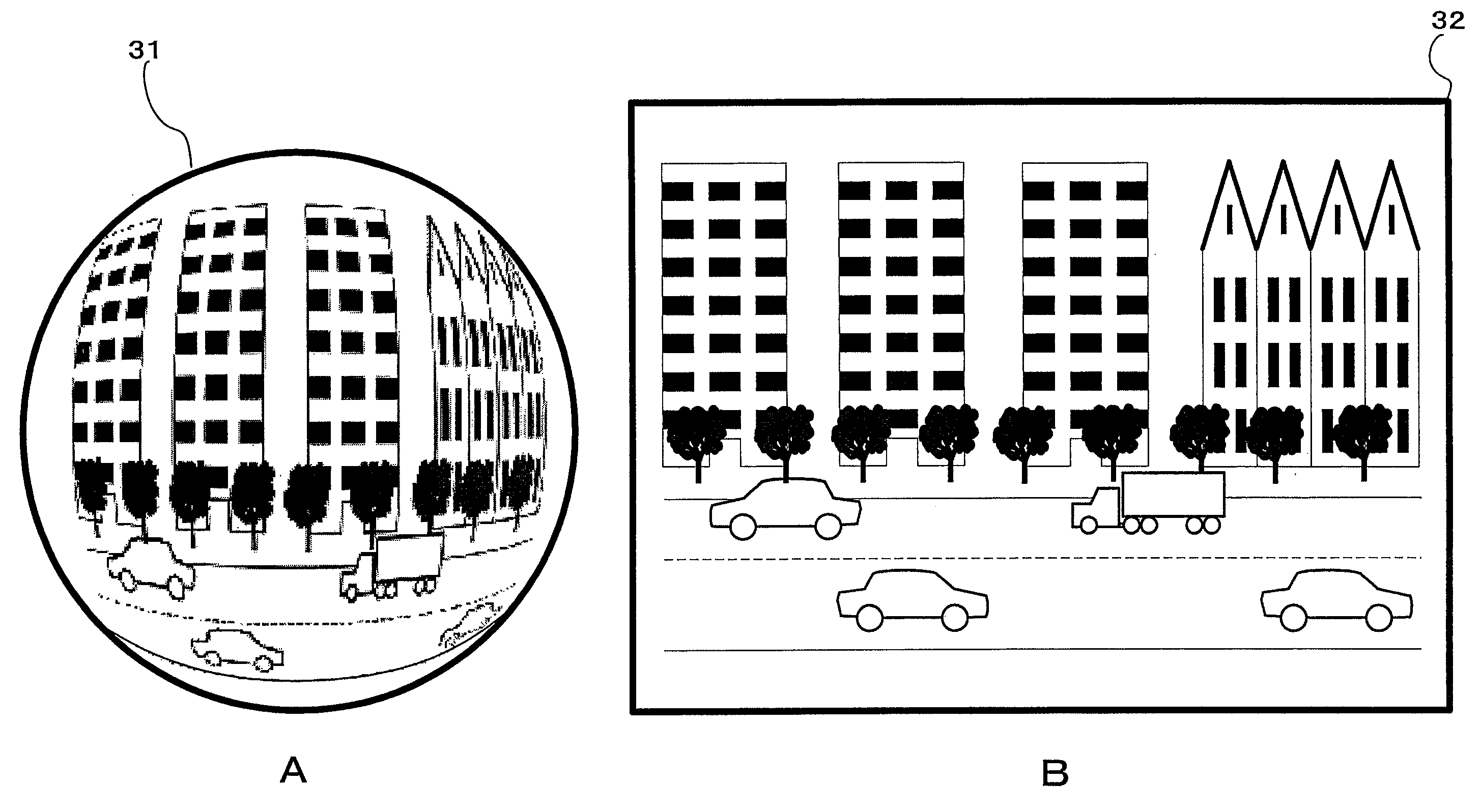

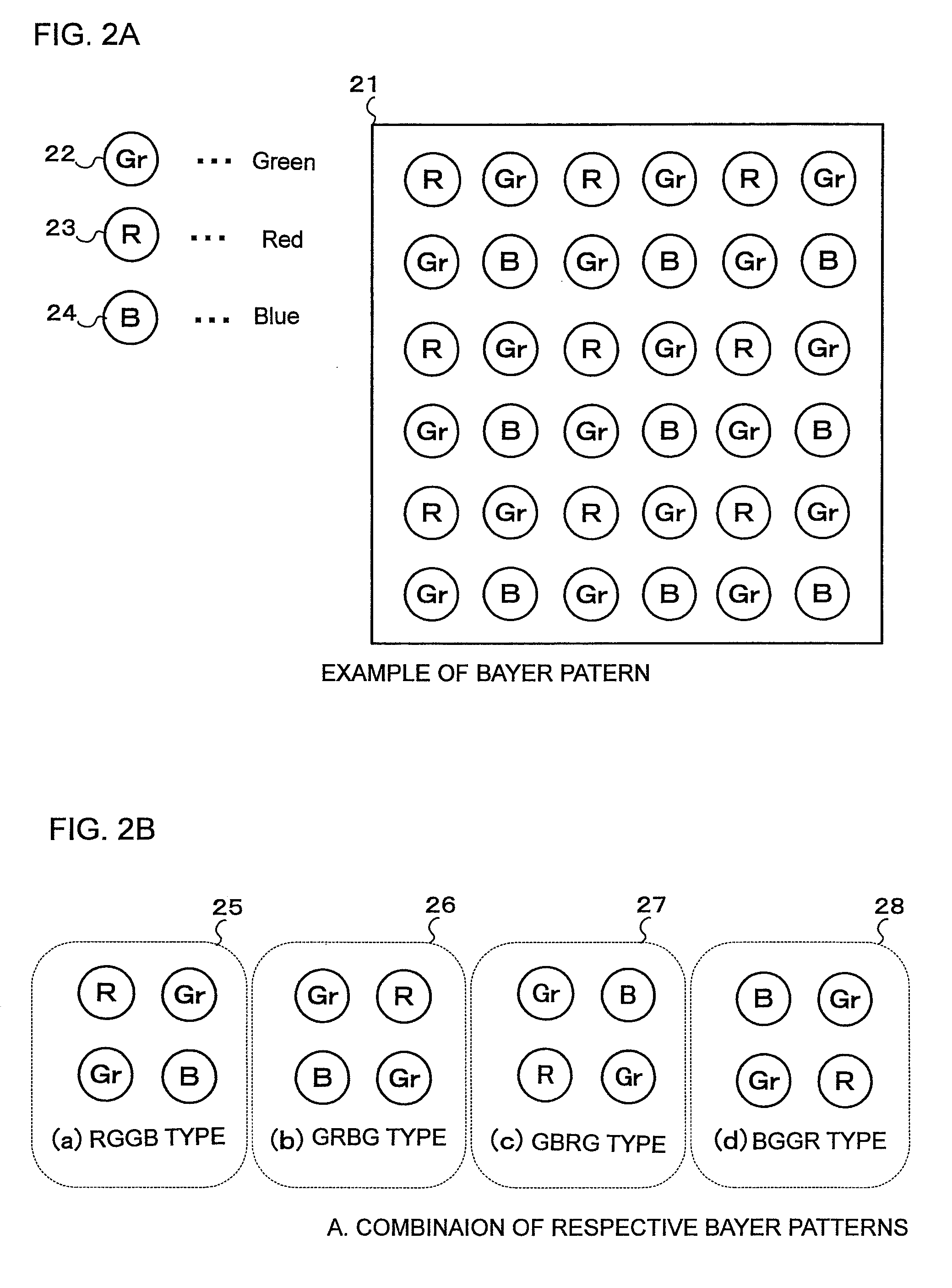

Image processing apparatus and method and a computer-readable recording medium on which an image processing program is stored

Status: Inactive · Publication: US20100254602A1 · Tags: Improve image quality; Simple structure; Television system details; Geometric image transformation; Pattern recognition; Address generation unit

An image processing apparatus converts an input data indicative of a fish-eye image into an output data indicative of a plane image. The apparatus includes an input data conversion unit that converts the fish-eye image into the input data including an input data pixel information and an input data color information; a fish-eye image corrected address generating unit that generates, based on the input data pixel information, an output data pixel information; an input data color information acquiring unit that acquires the input data color information based on a pattern of a color filter array; an output data color information calculating unit that calculates, based on the input data color information, an output data color information; an output data generating unit that generates the output data including the output data pixel information and the output data color information; and a storage unit that stores the input and output data.

Owner:DAI NIPPON PRINTING CO LTD
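
The idea behind a fish-eye corrected address is that, for each pixel of the output plane image, the apparatus computes where to read in the fisheye image. The following sketch assumes an equidistant fisheye lens model (r = f·θ) and a pinhole output view looking along the optical axis; these models, the parameter names, and the nearest-neighbour rounding are assumptions, since the abstract does not state the projection it uses, and colour-filter-array handling is omitted.

```python
import math


def fisheye_source(u: float, v: float, f_fish: float, f_plane: float,
                   cx: float, cy: float) -> tuple[float, float]:
    """Return fisheye-image coordinates for output-plane offset (u, v) from the image centre.

    Assumes an equidistant fisheye (r = f_fish * theta) and a pinhole output view
    with focal length f_plane looking along the optical axis.
    """
    theta = math.atan2(math.hypot(u, v), f_plane)   # angle of the viewing ray from the axis
    phi = math.atan2(v, u)                          # azimuth around the axis
    r = f_fish * theta                              # equidistant projection radius
    return cx + r * math.cos(phi), cy + r * math.sin(phi)


def corrected_address(u: float, v: float, width: int, **lens) -> int:
    """Row-major read address in the fisheye buffer (nearest-neighbour sampling)."""
    x, y = fisheye_source(u, v, **lens)
    return round(y) * width + round(x)


lens = dict(f_fish=100.0, f_plane=100.0, cx=320.0, cy=240.0)
print(corrected_address(0, 0, 640, **lens))     # 153920: the centre maps to the centre
print(corrected_address(100, 0, 640, **lens))   # 153999
```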

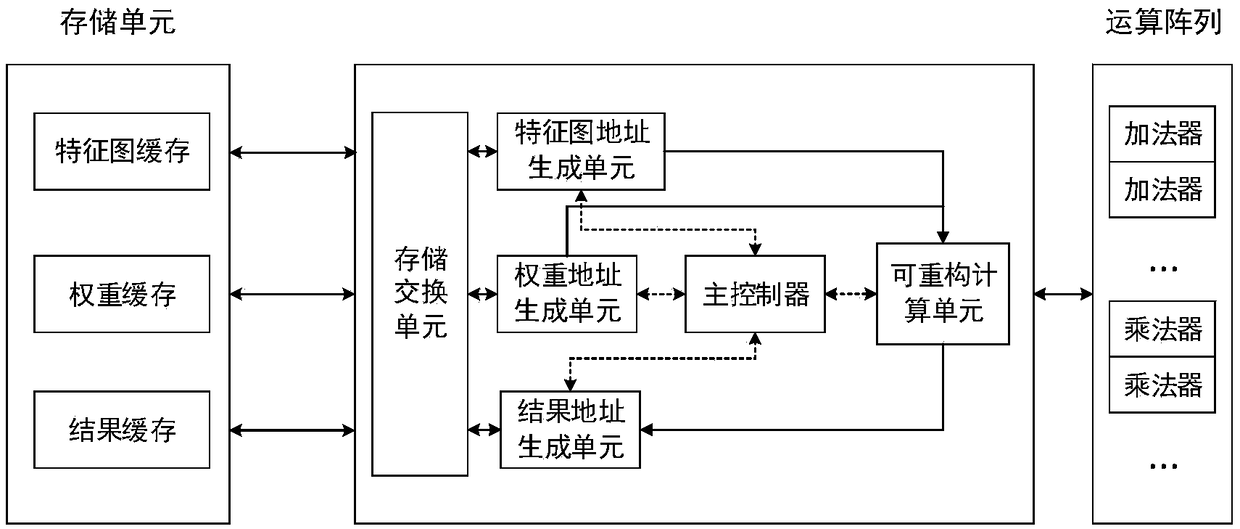

Reconfigurable CNN (Convolutional Neural Network) high concurrency convolution accelerator

Status: Active · Publication: CN108805266A · Tags: Increase profit; Improve parallelism; Neural architectures; Address generation unit; Reconfigurable computing

The invention provides a reconfigurable CNN (Convolutional Neural Network) high-concurrency convolution accelerator comprising a weight address generation unit, a result address generation unit, a reconfigurable calculation unit, a feature map address generation unit, a master controller and a memory exchange unit. The weight address generation unit generates the addresses of convolution kernel data in a cache; the result address generation unit generates the addresses of result data in the cache; the reconfigurable calculation unit can reconfigure its calculation array into two multiply-accumulate tree circuits of different granularities; the feature map address generation unit generates the addresses of feature map data in the cache; the master controller generates an accumulator reset signal synchronized with the addresses, gates the corresponding circuits in the reconfigurable calculation unit, and generates an interrupt signal at the end of the whole operation; and the memory exchange unit converts valid feature map read addresses and weight read addresses into read operations on a memory unit, and converts valid result write addresses and data into write operations on the memory unit. The claimed benefits are a simplified control section, greatly improved parallelism of multi-channel convolution and memory access efficiency, and reduced resource usage.

Owner:NANJING UNIV
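
The three address generation units in this accelerator can be pictured as counters that walk the convolution loop nest. The sketch below assumes a CHW row-major layout, no padding, and square K×K kernels, and emits per step a weight address, a feature-map address, a result address, and an accumulator-reset flag aligned with those addresses, loosely mirroring the master controller's synchronized reset signal. Addresses are offsets relative to each buffer's base; the loop order and layout are illustrative choices, not the Nanjing University design.

```python
from typing import Iterator, NamedTuple


class Step(NamedTuple):
    weight: int      # offset into the weight (kernel) buffer
    fmap: int        # offset into the input feature-map buffer
    result: int      # offset into the result buffer
    reset_acc: bool  # True on the first multiply-accumulate of each output element


def conv_addresses(c_in: int, h: int, w: int, c_out: int, k: int,
                   stride: int = 1) -> Iterator[Step]:
    """Walk a valid (unpadded) convolution and yield synchronized addresses."""
    oh = (h - k) // stride + 1
    ow = (w - k) // stride + 1
    for oc in range(c_out):
        for oy in range(oh):
            for ox in range(ow):
                first = True
                for c in range(c_in):
                    for ky in range(k):
                        for kx in range(k):
                            yield Step(
                                weight=((oc * c_in + c) * k + ky) * k + kx,
                                fmap=(c * h + oy * stride + ky) * w + ox * stride + kx,
                                result=(oc * oh + oy) * ow + ox,
                                reset_acc=first,
                            )
                            first = False


steps = list(conv_addresses(c_in=1, h=3, w=3, c_out=1, k=2))
```

Because the reset flag travels with the addresses, the datapath never needs a separate controller decision to know when one output sum ends and the next begins.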

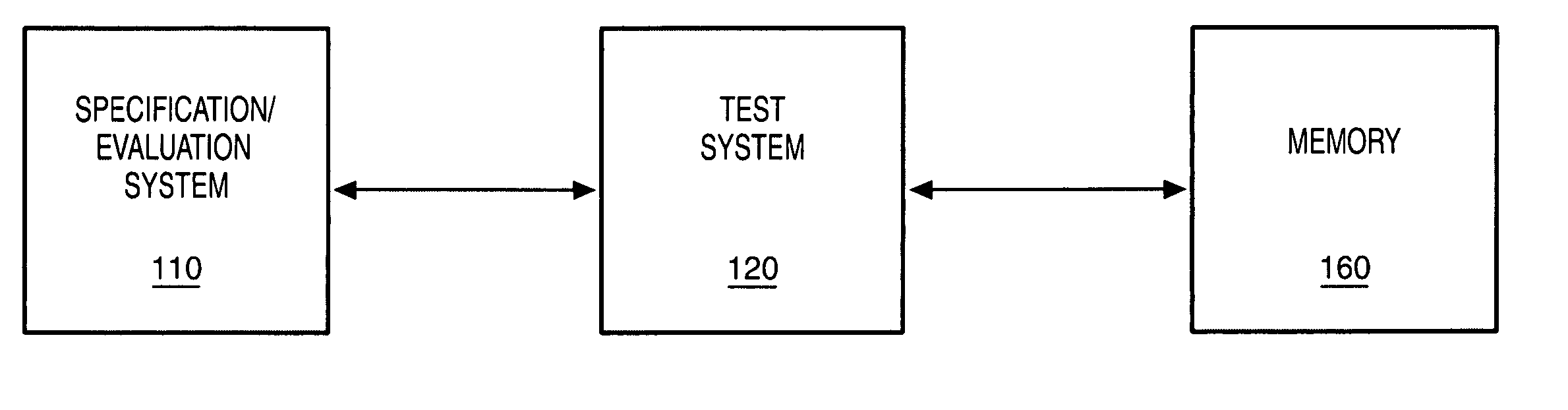

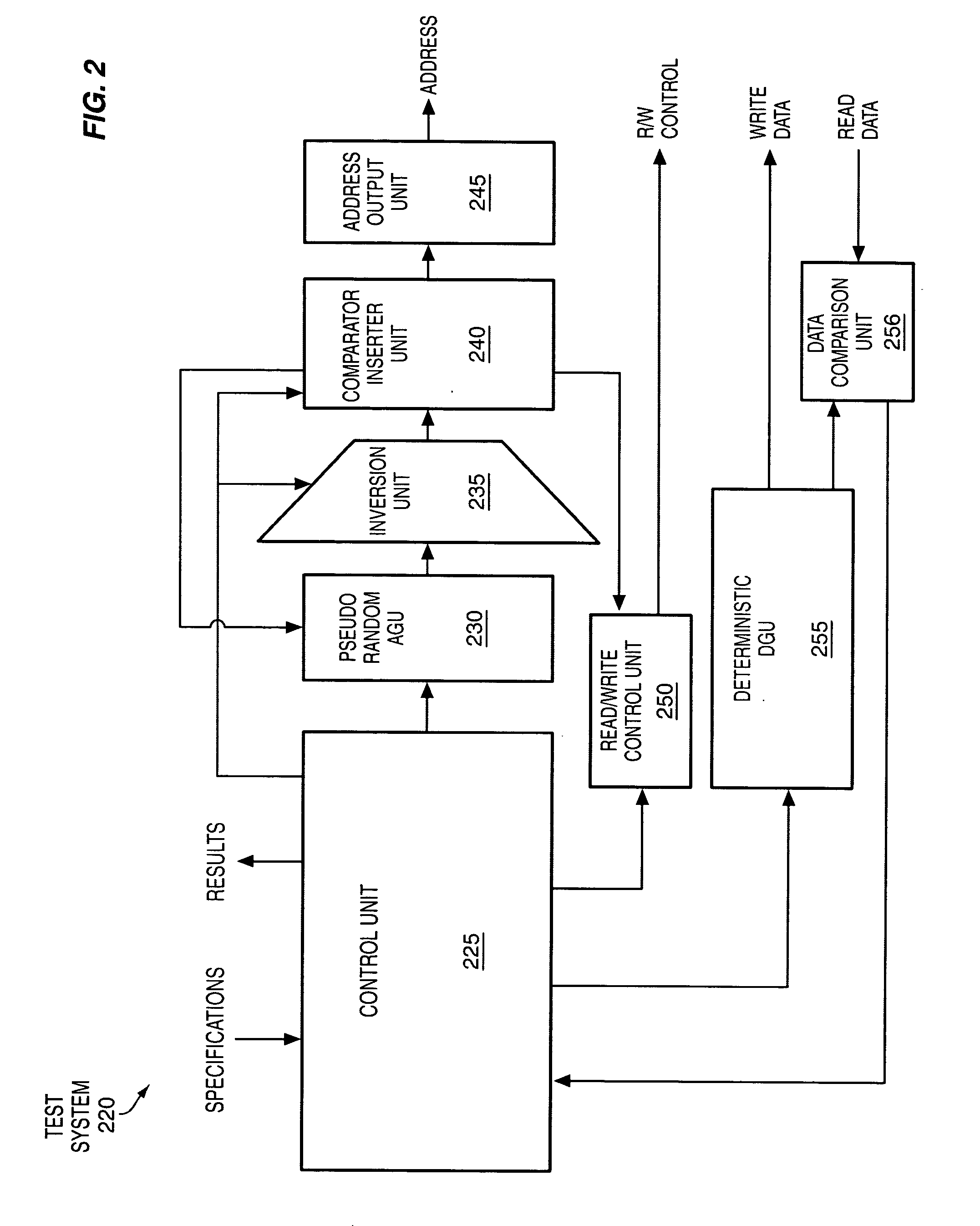

Testing memories

Status: Inactive · Publication: US20060085710A1 · Tags: Electronic circuit testing; Static storage; Memory address; Address generation unit

Methods and apparatus to test memories, such as, for example, caches of processors, are disclosed. In one aspect, an apparatus may include a pseudo random address generation unit, such as, for example, including a linear feedback shift register, to generate pseudo random memory addresses, and a deterministic data generation unit, such as, for example, including a state machine, to generate deterministic data to be written to the pseudo random memory addresses. Computer systems and other electronic systems including such apparatus are also disclosed.

Owner:INTEL CORP
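
A linear feedback shift register produces a pseudo-random but repeatable address sequence that, with a maximal-length (primitive) feedback polynomial, visits every non-zero address exactly once per period. The sketch below is a Fibonacci-style LFSR in Python; the 4-bit width and the taps for x^4 + x^3 + 1 are example choices, not values from the Intel patent, and the deterministic data generator is not modelled.

```python
from itertools import islice
from typing import Iterator


def lfsr_addresses(width: int, taps: tuple[int, ...], seed: int = 1) -> Iterator[int]:
    """Yield pseudo-random non-zero `width`-bit addresses from a Fibonacci LFSR.

    `taps` are 1-based bit positions of the feedback polynomial; with a primitive
    polynomial the sequence repeats only after 2**width - 1 addresses.
    """
    mask = (1 << width) - 1
    state = seed & mask
    if state == 0:
        raise ValueError("seed must be non-zero (the all-zero state locks up)")
    while True:
        yield state
        feedback = 0
        for t in taps:
            feedback ^= (state >> (t - 1)) & 1
        state = ((state << 1) | feedback) & mask


addrs = list(islice(lfsr_addresses(4, (4, 3)), 15))
print(addrs)  # [1, 2, 4, 9, 3, 6, 13, 10, 5, 11, 7, 15, 14, 12, 8]
```

Since the sequence is fully determined by the seed, a tester can replay it to read back and compare exactly the addresses it wrote.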

Image encoding apparatus and image decoding apparatus

Status: Inactive · Publication: US20070237412A1 · Tags: Efficient execution; Large storage capacity; Geometric image transformation; Code conversion; Coding block; Address generation unit

A conversion control unit divides a transform coefficient into code blocks. A storing device has a storage capacity corresponding to data size of the code blocks. To the conversion control unit, rotation / inversion control information including information of the rotation angle and the inverting direction is input. The conversion control unit generates a control signal on the basis of the rotation / inversion control information and sub-band information of the transform coefficient. An address generating unit generates a write address on the basis of a control signal. By accessing the storing device in predetermined order on the basis of the write address at the time of writing the code block to the storing device, an image rotating / inverting process is performed.

Owner:MEGACHIPS
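
Performing rotation or inversion purely through the write address means each coefficient is read in normal raster order and written to the position it occupies after the transform. The sketch below computes row-major write addresses for a single h×w block; the mode names are hypothetical, and the sub-band handling mentioned in the abstract (and how rotation interacts with it) is not modelled.

```python
def write_address(r: int, c: int, h: int, w: int, mode: str) -> int:
    """Row-major write address of source pixel (r, c) of an h x w block after `mode`."""
    if mode == "none":
        return r * w + c
    if mode == "rot90":    # clockwise; the output block is w x h
        return c * h + (h - 1 - r)
    if mode == "rot180":
        return (h - 1 - r) * w + (w - 1 - c)
    if mode == "rot270":   # counter-clockwise; the output block is w x h
        return (w - 1 - c) * h + r
    if mode == "hflip":    # mirror left-right
        return r * w + (w - 1 - c)
    if mode == "vflip":    # mirror top-bottom
        return (h - 1 - r) * w + c
    raise ValueError(f"unknown mode: {mode}")


def write_block(block: list[list[int]], mode: str) -> list[int]:
    """Read the block in normal raster order and scatter each value to its write address."""
    h, w = len(block), len(block[0])
    store = [0] * (h * w)
    for r in range(h):
        for c in range(w):
            store[write_address(r, c, h, w, mode)] = block[r][c]
    return store


print(write_block([[1, 2, 3], [4, 5, 6]], "rot90"))  # [4, 1, 5, 2, 6, 3]
```

No separate transform pass is needed: the storage buffer simply ends up holding the rotated or mirrored block.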

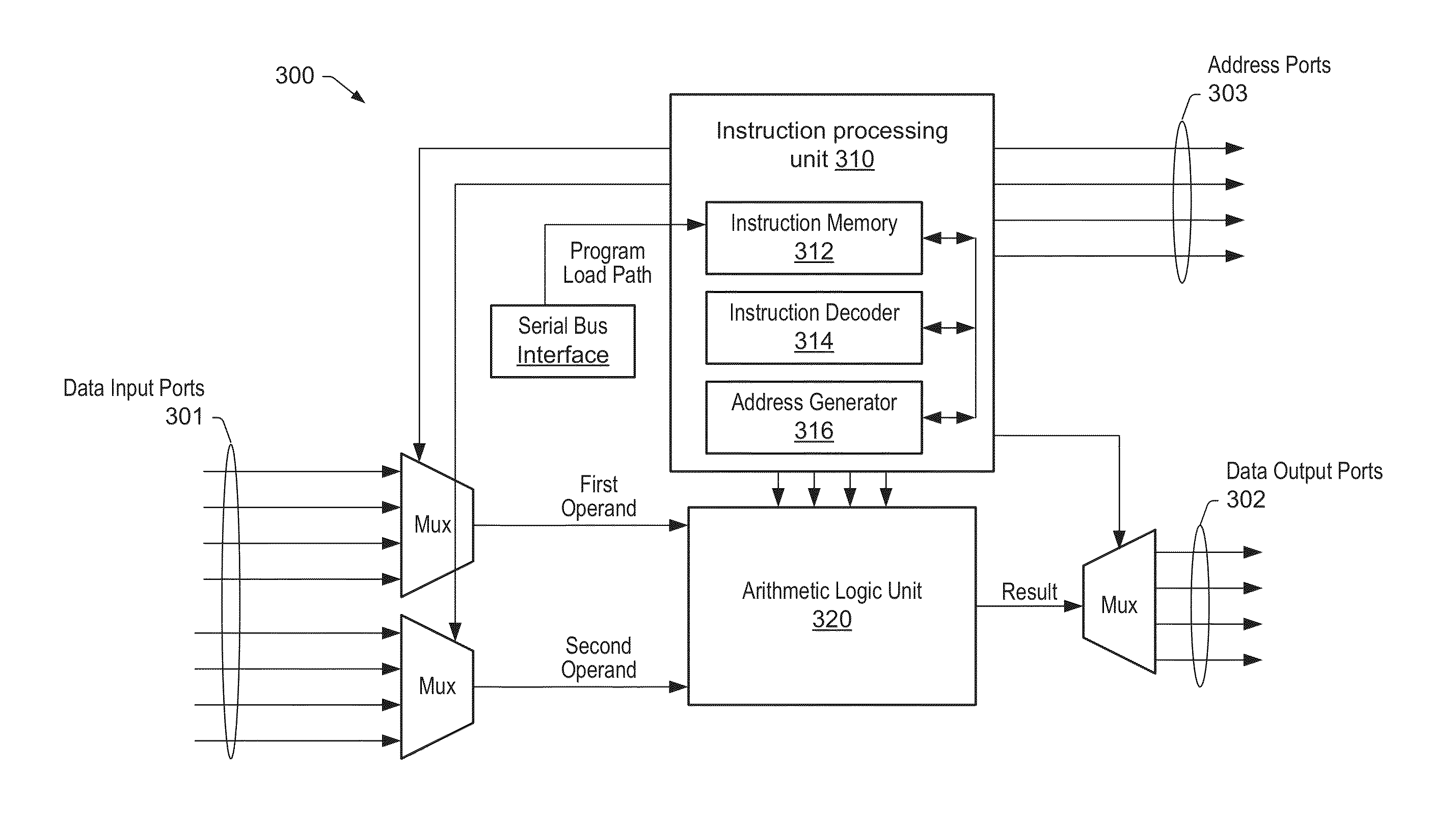

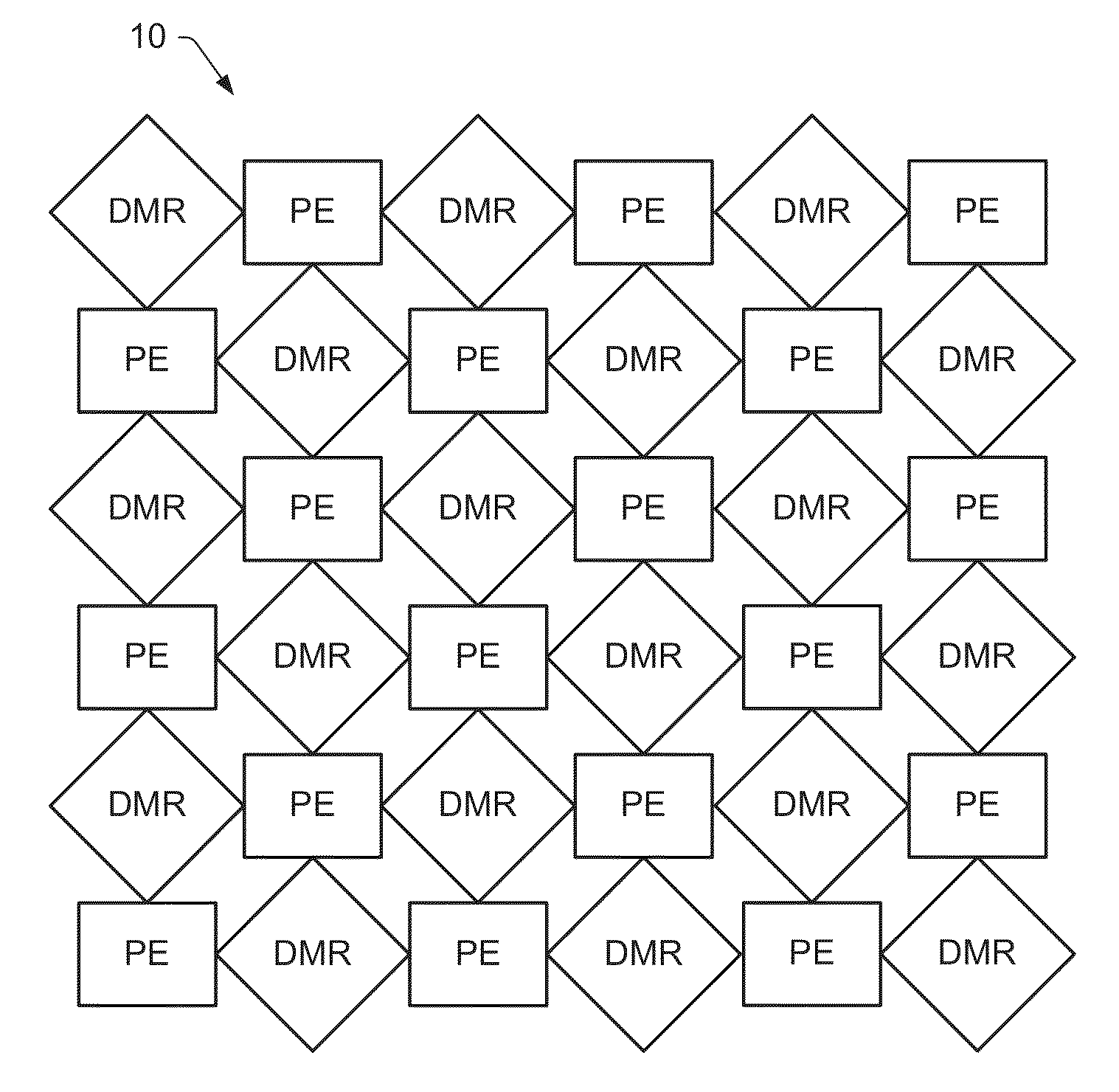

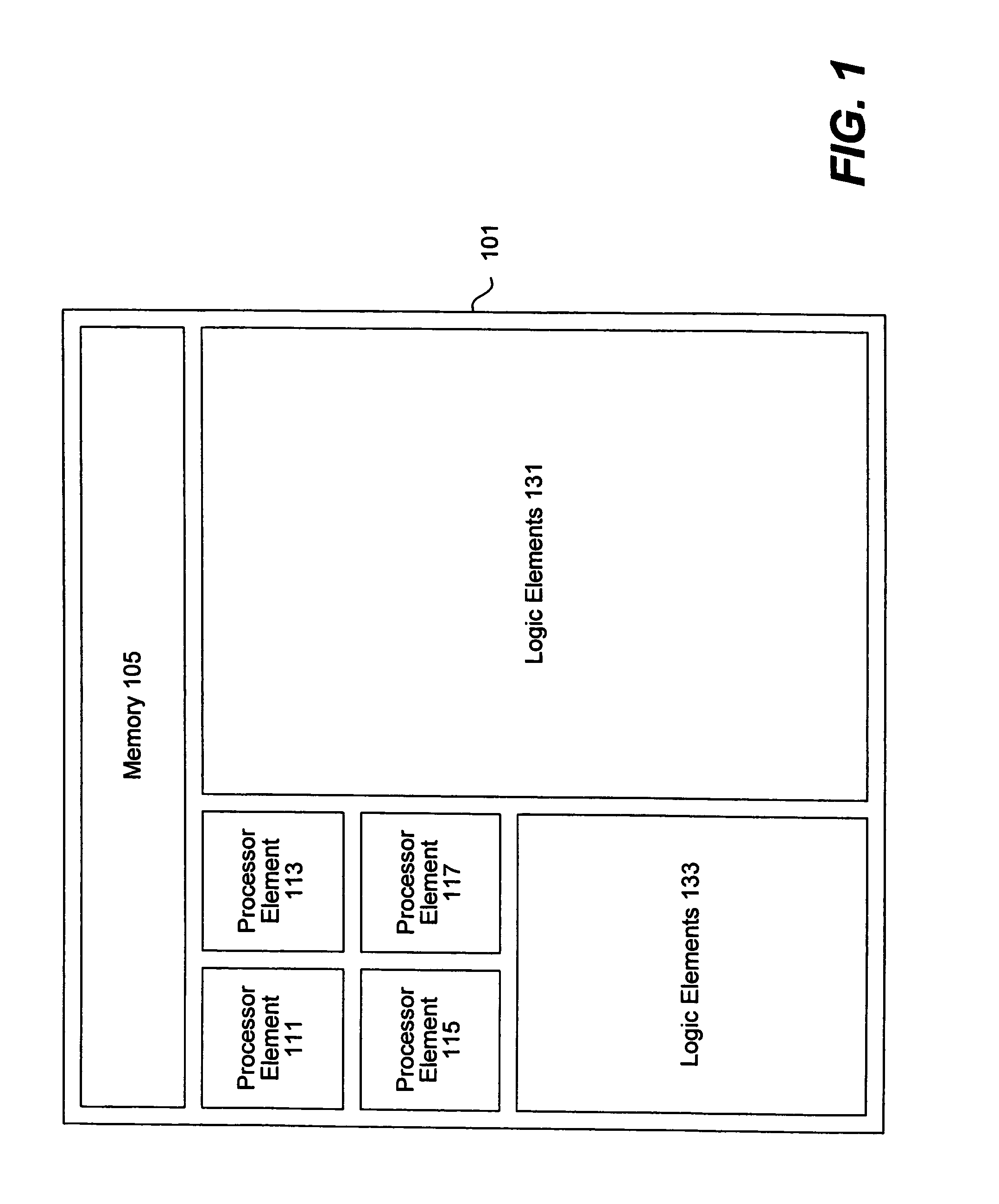

Memory-network processor with programmable optimizations

Status: Active · Publication: US20140351551A1 · Tags: Efficient execution; Instruction analysis; Memory addressing/allocation/relocation; Address generation unit; Multi processor

Various embodiments are disclosed of a multiprocessor system with processing elements optimized for high performance and low power dissipation and an associated method of programming the processing elements. Each processing element may comprise a fetch unit and a plurality of address generator units and a plurality of pipelined datapaths. The fetch unit may be configured to receive a multi-part instruction, wherein the multi-part instruction includes a plurality of fields. A first address generator unit may be configured to perform an arithmetic operation dependent upon a first field of the plurality of fields. A second address generator unit may be configured to generate at least one address of a plurality of addresses, wherein each address is dependent upon a respective field of the plurality of fields. A parallel assembly language may be used to control the plurality of address generator units and the plurality of pipelined datapaths.

Owner:COHERENT LOGIX

Memory-network processor with programmable optimizations

Status: Active · Publication: US9430369B2 · Tags: Efficient execution; Instruction analysis; Memory addressing/allocation/relocation; Address generation unit; Multi processor

Various embodiments are disclosed of a multiprocessor system with processing elements optimized for high performance and low power dissipation and an associated method of programming the processing elements. Each processing element may comprise a fetch unit and a plurality of address generator units and a plurality of pipelined datapaths. The fetch unit may be configured to receive a multi-part instruction, wherein the multi-part instruction includes a plurality of fields. A first address generator unit may be configured to perform an arithmetic operation dependent upon a first field of the plurality of fields. A second address generator unit may be configured to generate at least one address of a plurality of addresses, wherein each address is dependent upon a respective field of the plurality of fields. A parallel assembly language may be used to control the plurality of address generator units and the plurality of pipelined datapaths.

Owner:COHERENT LOGIX

Apparatus and method for scalable qubit addressing

Status: Active · Publication: US20190042971A1 · Tags: Quantum computers; Multiple digital computer combinations; Memory address; Address generation unit

An apparatus and method for scalable qubit addressing. For example, one embodiment of a processor comprises: a decoder comprising quantum instruction decode circuitry to decode quantum instructions to generate quantum microoperations (uops) and non-quantum decode circuitry to decode non-quantum instructions to generate non-quantum uops; execution circuitry comprising: an address generation unit (AGU) to generate a system memory address responsive to execution of one or more of the non-quantum uops; and quantum index generation circuitry to generate quantum index values responsive to execution of one or more of the quantum uops, each quantum index value uniquely identifying a quantum bit (qubit) in a quantum processor; wherein to generate a first quantum index value for a first quantum uop, the quantum index generation circuitry is to read the first quantum index value from a first architectural register identified by the first quantum uop.

Owner:INTEL CORP

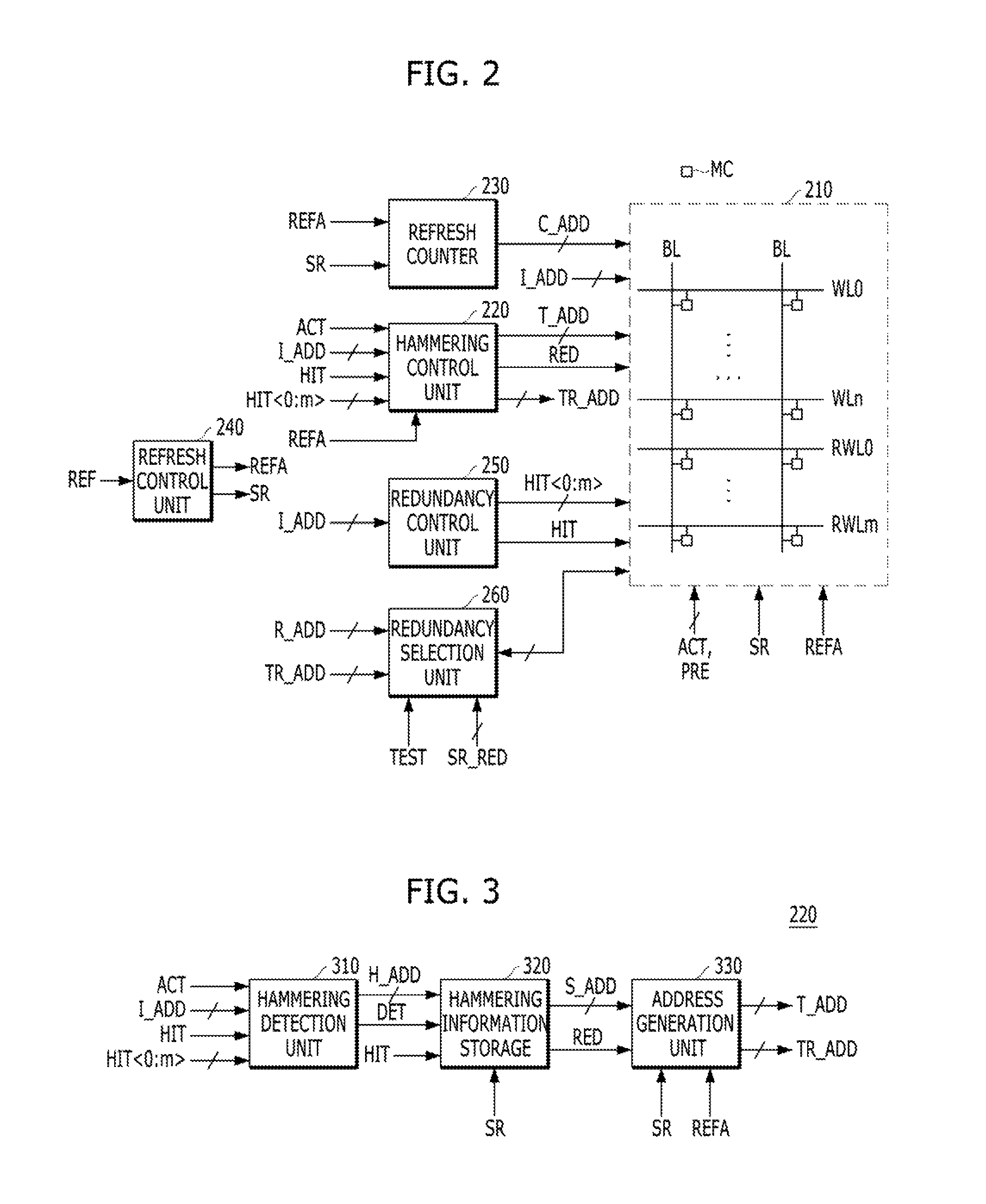

Memory device

A memory device may include a plurality of normal word lines; a plurality of redundancy word lines capable of replacing the word lines; a hammering information storage unit capable of storing an address of a row hammering word line of the normal word lines and the redundancy word lines; an address generation unit capable of generating an address of a normal word line or redundancy word line adjacent to a normal word line or redundancy word line corresponding to the address stored in the hammering information storage unit; and a refresh control unit capable of selecting the normal word line or redundancy word line corresponding to the address generated by the address generation unit for performing an additional refresh operation.

Owner:SK HYNIX INC

Address generation unit for a processor

Status: Active · Publication: US20060010255A1 · Tags: Good suit; Improve processor performance; Memory addressing/allocation/relocation; Image memory management; Address generation unit; Address control

A processor includes a memory port for accessing a physical memory under control of an address. A processing unit executes instructions stored in the memory and/or operates on data stored in the memory. An address generation unit (AGU) generates addresses for controlling access to the memory; the AGU is associated with N registers that enable it to generate the address under control of an address generation mechanism. A memory unit is operative to save or load k of the N registers, where 2 ≤ k ≤ N, triggered by a single operation. To this end, the memory unit includes a concatenator for concatenating the k registers into one memory word to be written to the memory through the memory port, and a splitter for separating a word read from the memory through the memory port into the k registers.

Owner:TELEFON AB LM ERICSSON (PUBL)
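
The concatenator and splitter amount to packing k register values into one memory word and unpacking them again. The sketch assumes fixed-width registers with register 0 in the least significant bits and a memory word wide enough for all k registers; the register widths and ordering are illustrative assumptions, not details from the Ericsson patent.

```python
def concat_registers(regs: list[int], reg_bits: int) -> int:
    """Pack k register values into one memory word; regs[0] goes in the lowest bits."""
    word = 0
    for i, value in enumerate(regs):
        if not 0 <= value < (1 << reg_bits):
            raise ValueError(f"register {i} does not fit in {reg_bits} bits")
        word |= value << (i * reg_bits)
    return word


def split_word(word: int, k: int, reg_bits: int) -> list[int]:
    """Unpack a memory word back into k register values."""
    mask = (1 << reg_bits) - 1
    return [(word >> (i * reg_bits)) & mask for i in range(k)]


# Hypothetical example: four 16-bit AGU registers saved with one 64-bit store.
regs = [0x1000, 0x0004, 0x0100, 0x0000]
word = concat_registers(regs, 16)
print(hex(word))                          # 0x10000041000
assert split_word(word, 4, 16) == regs
```

Saving the k registers as one word costs one memory access instead of k, which matters when AGU state must be swapped on a context switch or function call.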

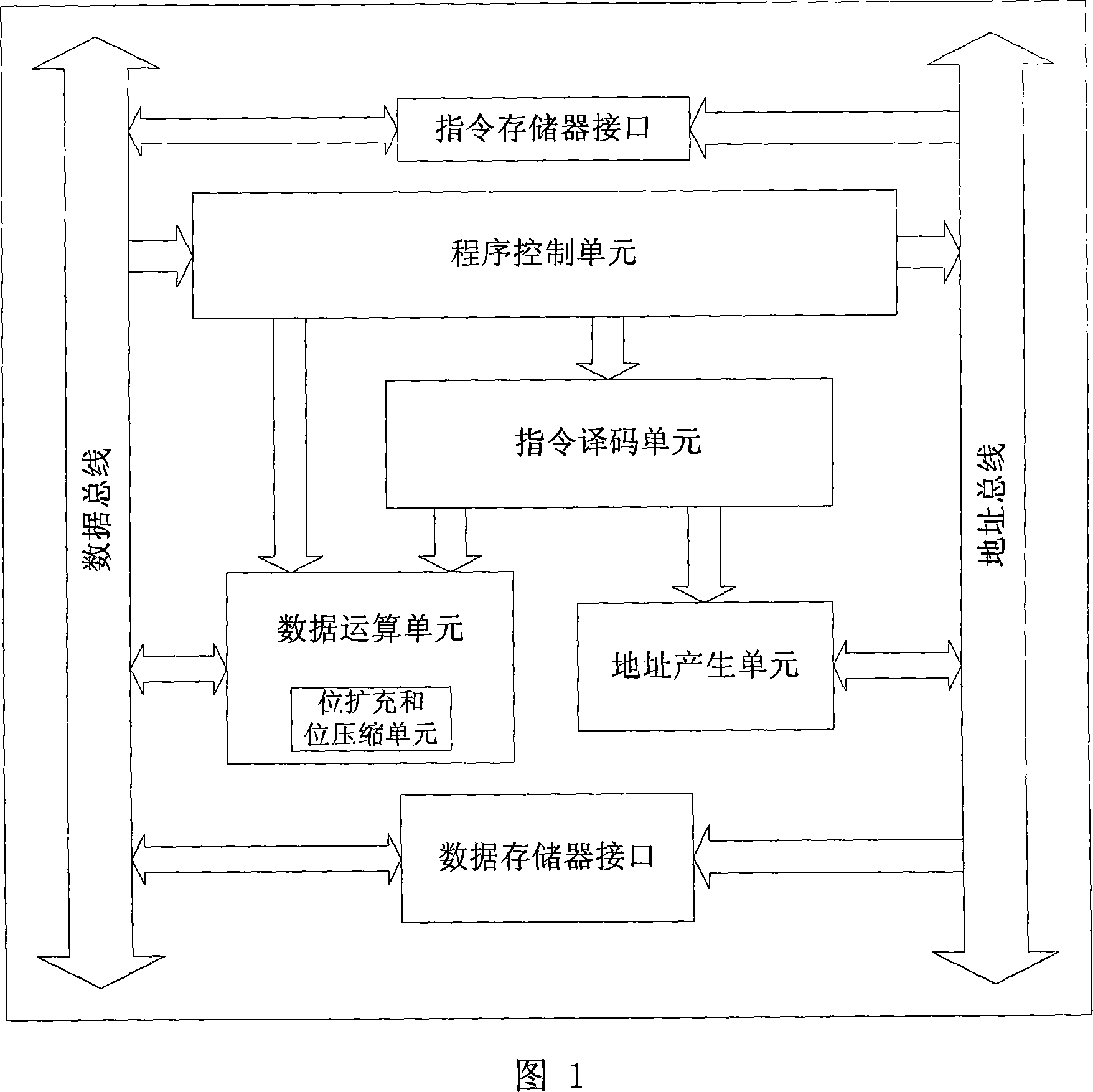

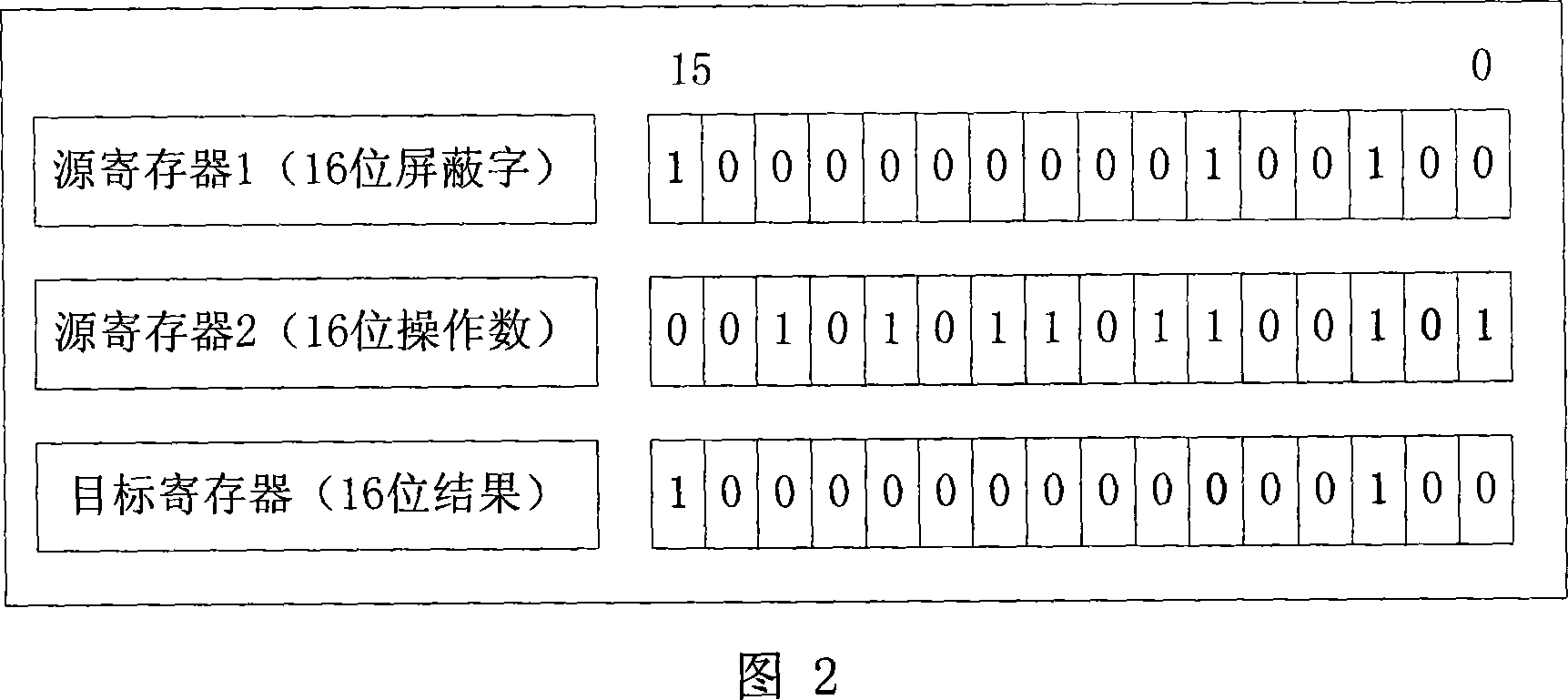

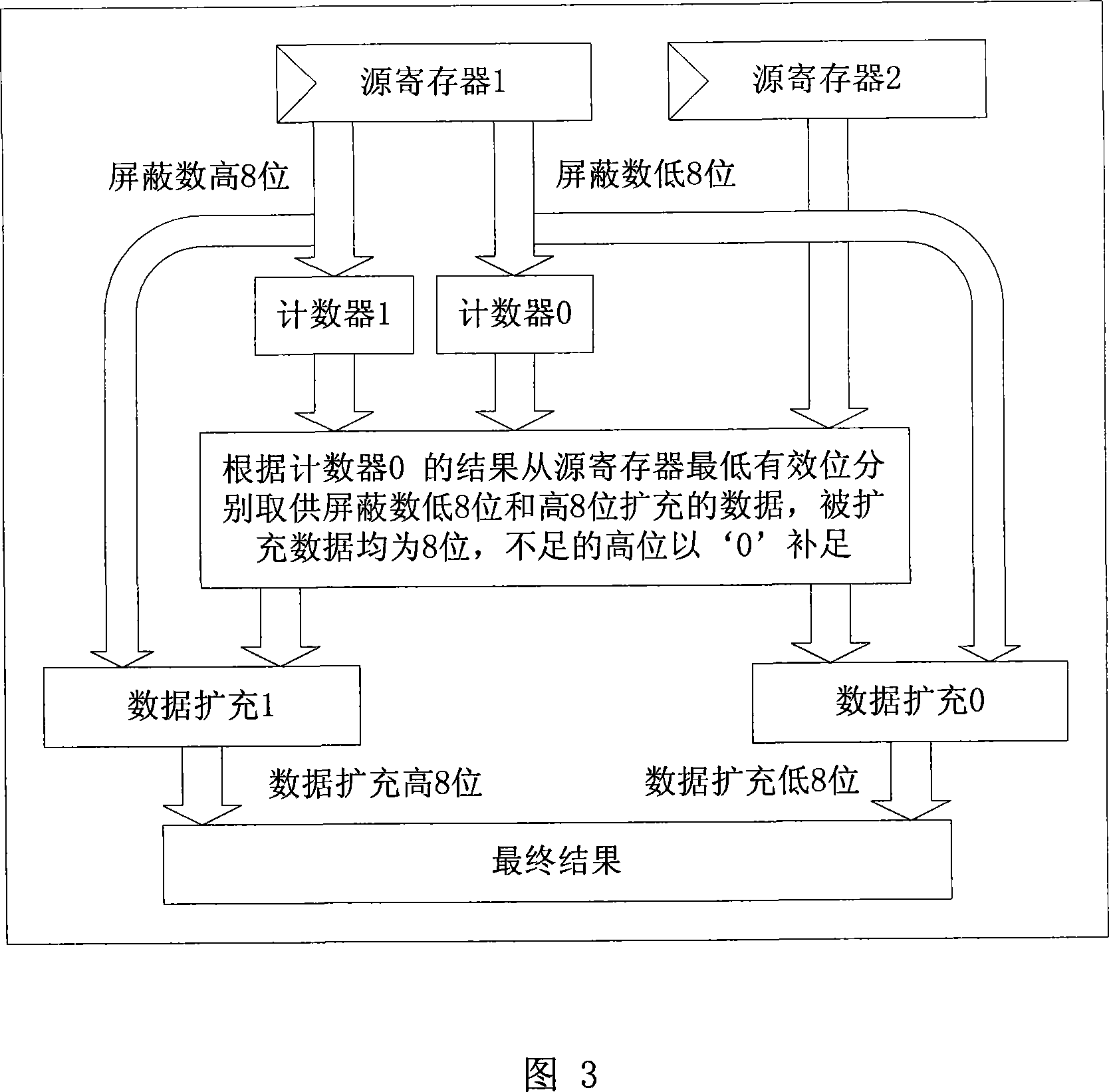

Digital signal processor with bit expansion and bit compression cell

Status: Inactive · Publication: CN101042640A · Tags: Improve scalability; Facilitation of mathematics application; Program control; Memory systems; Digital signal processing; Instruction memory

This invention discloses a digital signal processor with a bit expansion and bit compression unit, comprising a digital signal processor core, an instruction memory and a data memory, wherein the core comprises a program control unit, an instruction decoding unit, an address generation unit and a data computation unit. The invention is characterized in that a bit expansion and bit compression unit is integrated into the computation unit; this unit is composed of a timer, a shifter, two source operand registers and one destination register.

Owner:SHANGHAI HUALONG INFORMATION TECH DEV CENT

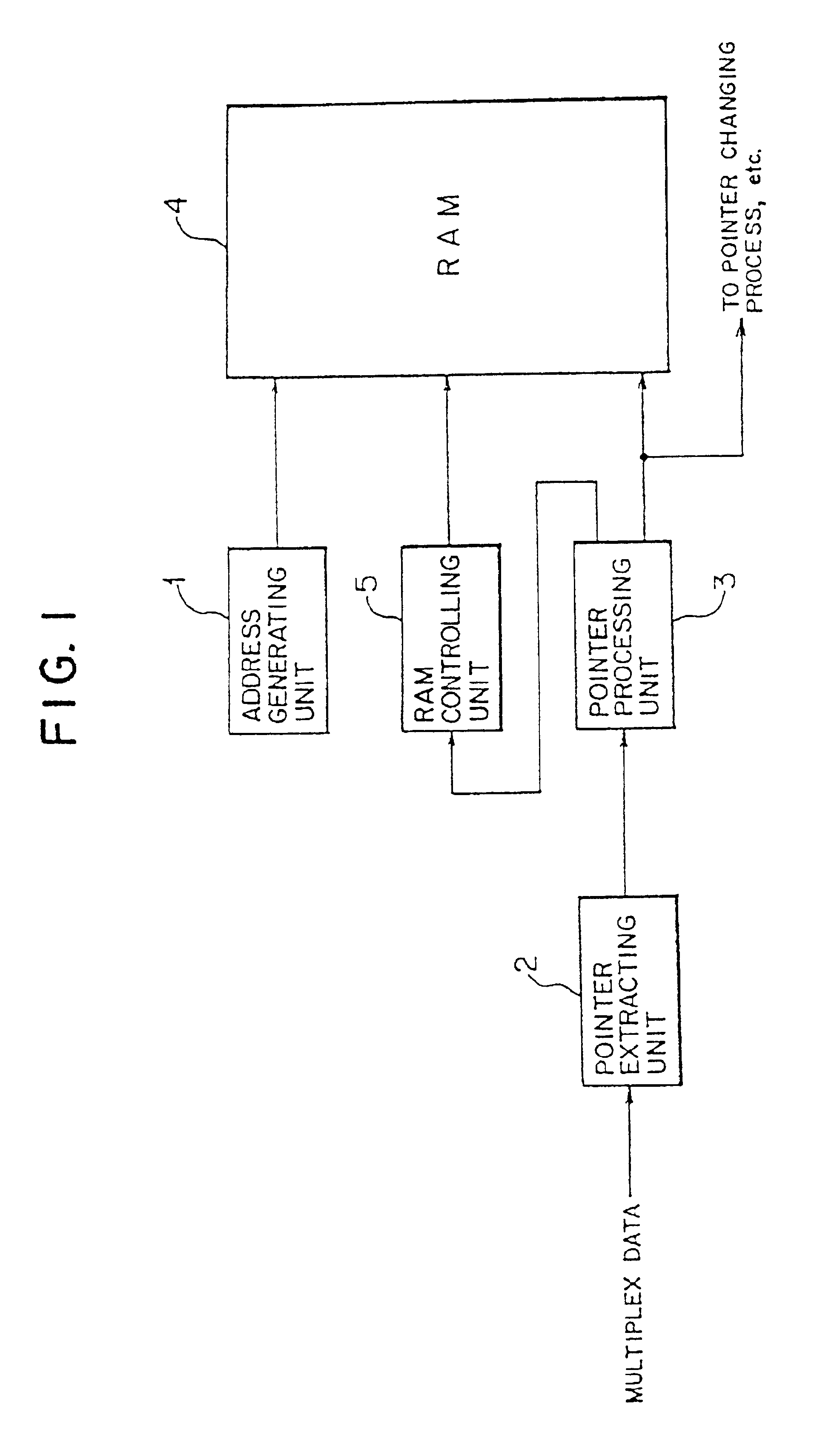

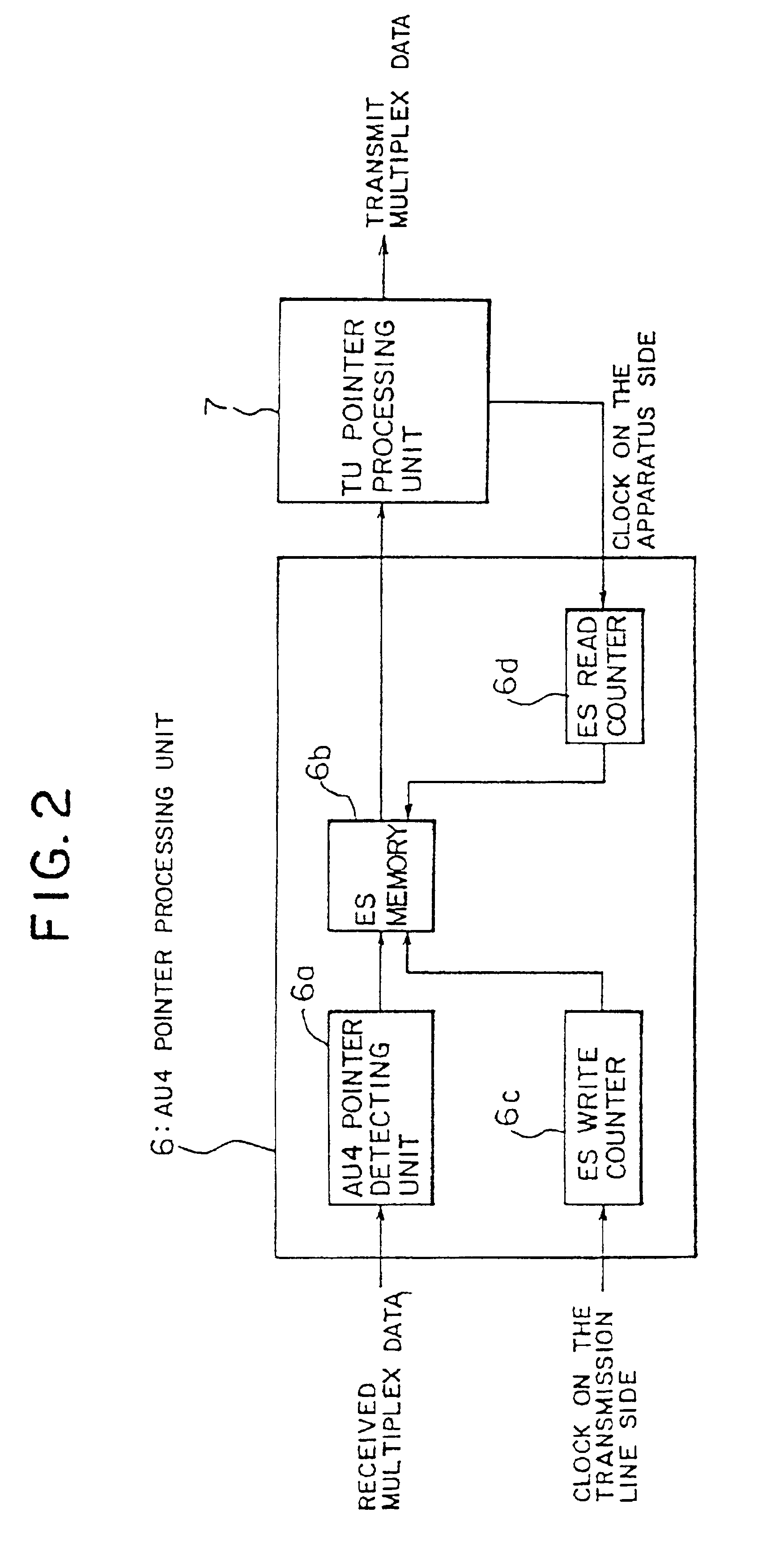

Pointer processing apparatus, POH terminating process apparatus, method of POH terminating process and pointer/POH terminating process apparatus in SDH transmission system

Status: Inactive · Publication: US6912201B1 · Tags: Minimum scale; Minimum power consumption; Error prevention; Frequency-division multiplex details; Multiplexing; Address generation unit

A pointer processing apparatus in an SDH transmission system, used to serially conduct a pointer process on input multiplexed data, has an address generating unit that allocates an address to each channel of the multiplexed data, a RAM that holds the information group obtained by the pointer extraction process and the pointer process, and a RAM controlling unit that controls the sequence of write/read operations on the RAM so that the pointer process is conducted serially on the received multiplexed data, thereby greatly reducing the circuit scale, the power consumption, the number of distributions and the like. A POH terminating operation process is conducted in a POH terminating operation process unit, and the result is stored in the storage area for the corresponding channel of a storage unit, so the POH terminating operation process can be conducted on the multiplexed signal without separating it into channels. This also greatly reduces the scale and power consumption of the apparatus.

Owner:FUJITSU LTD

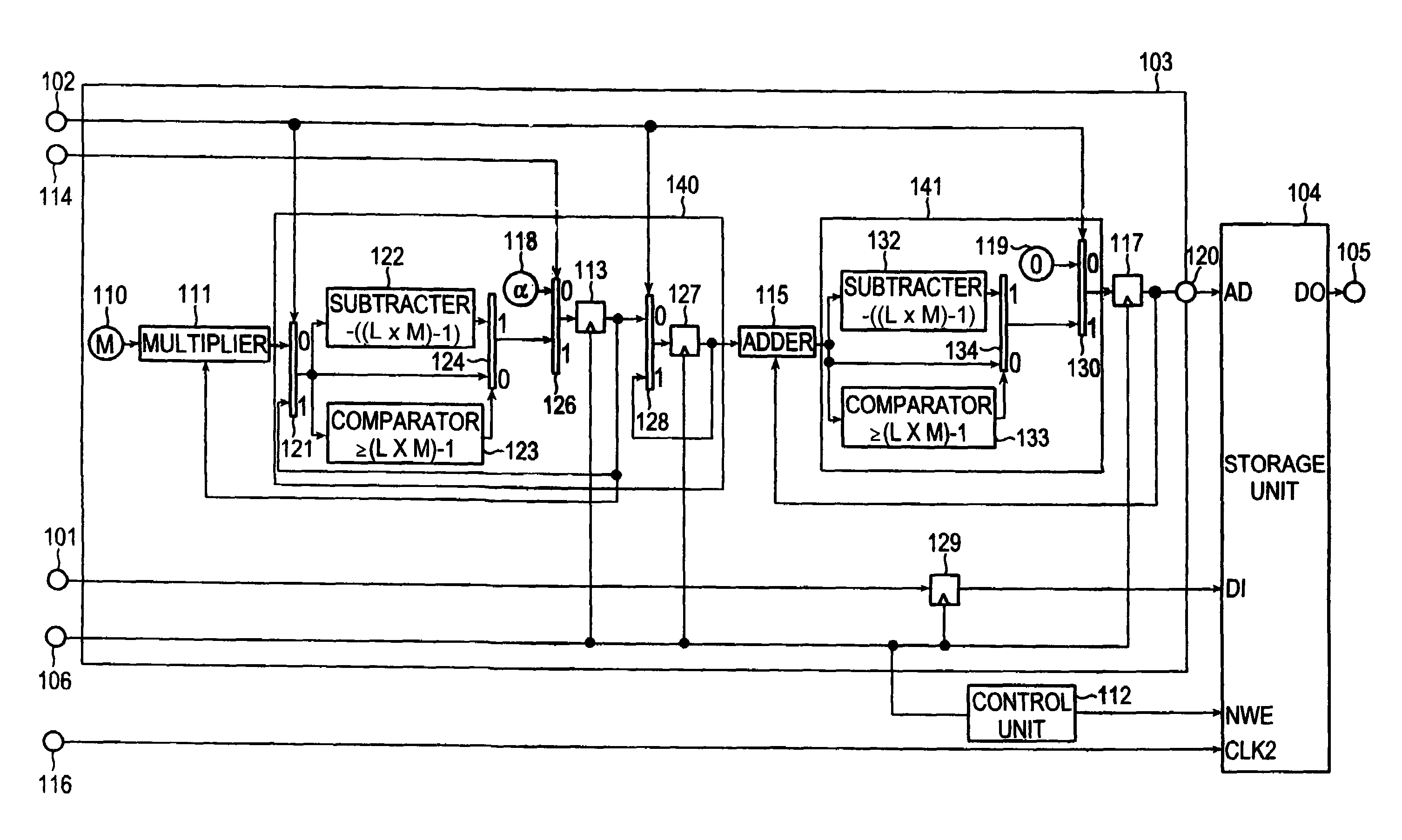

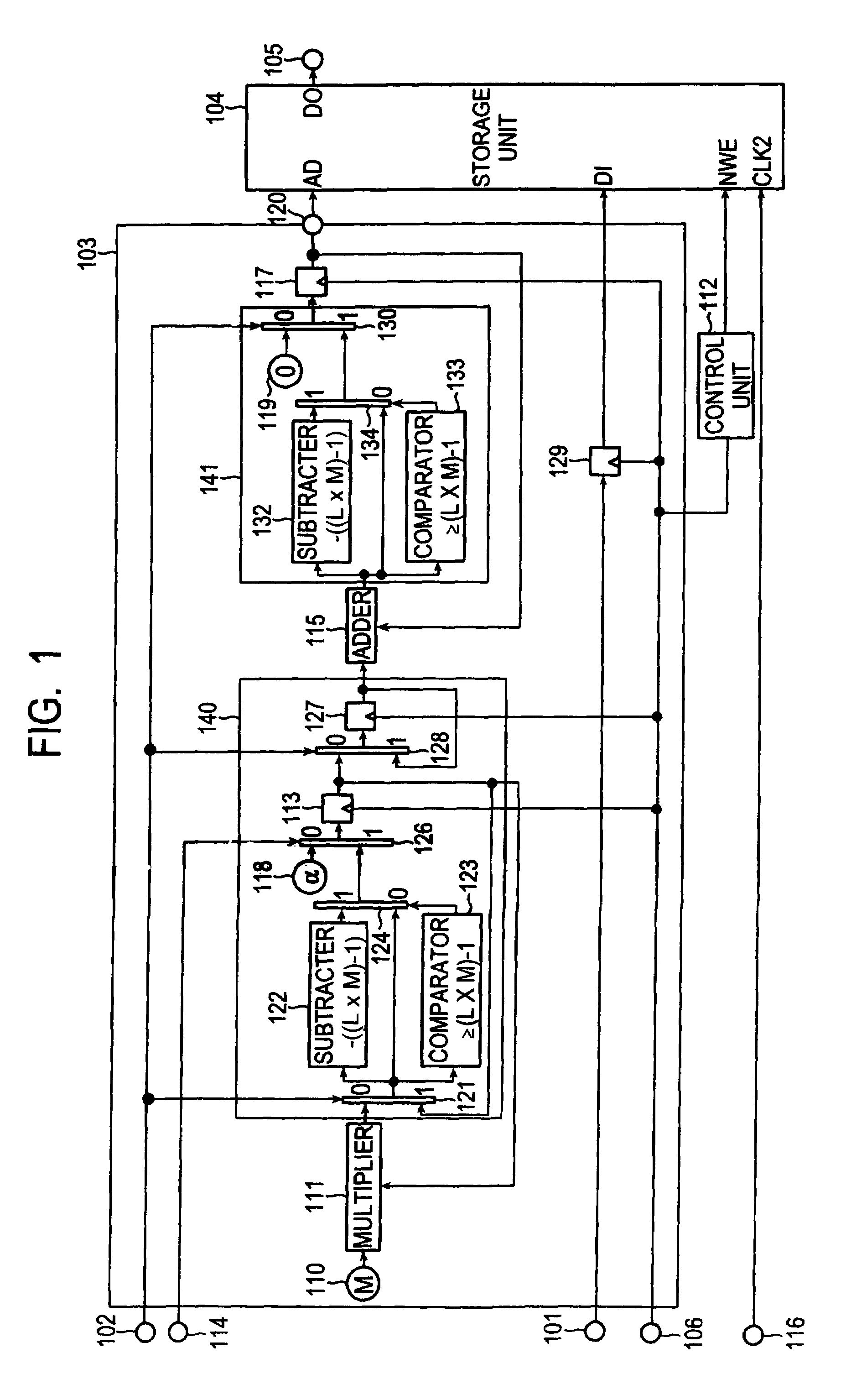

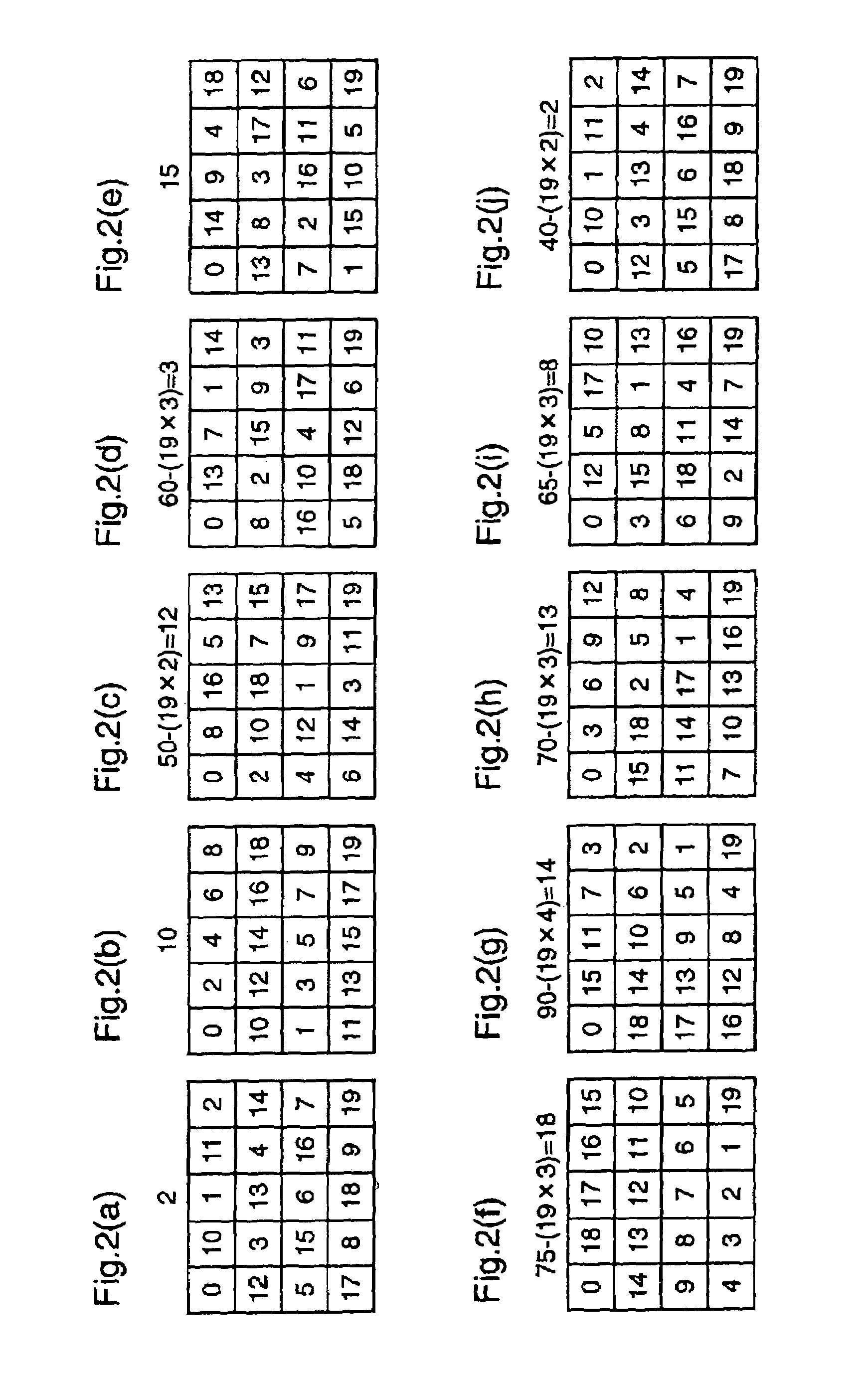

Block interleaving apparatus, block deinterleaving apparatus, block interleaving method and block deinterleaving method

Status: Inactive · Publication: US6986081B1 · Tags: Reduce circuit size; Number; Error detection/correction; Memory addressing/allocation/relocation; Address generation unit; Comparator

In a block interleaving apparatus, a block deinterleaving apparatus, a block interleaving method and a block deinterleaving method that perform block interleaving and deinterleaving using a single plane of a storage unit with a storage area of one block, further reductions in circuit scale and power consumption are achieved as follows: the comparison reference value of a comparator 123 in the address generation unit 103, which generates addresses for the storage unit 104, is set to the minimum value that appears in the output of a multiplier 111 and is larger than L×M−1, thereby reducing the scale of the comparator.

Owner:SOCIONEXT INC

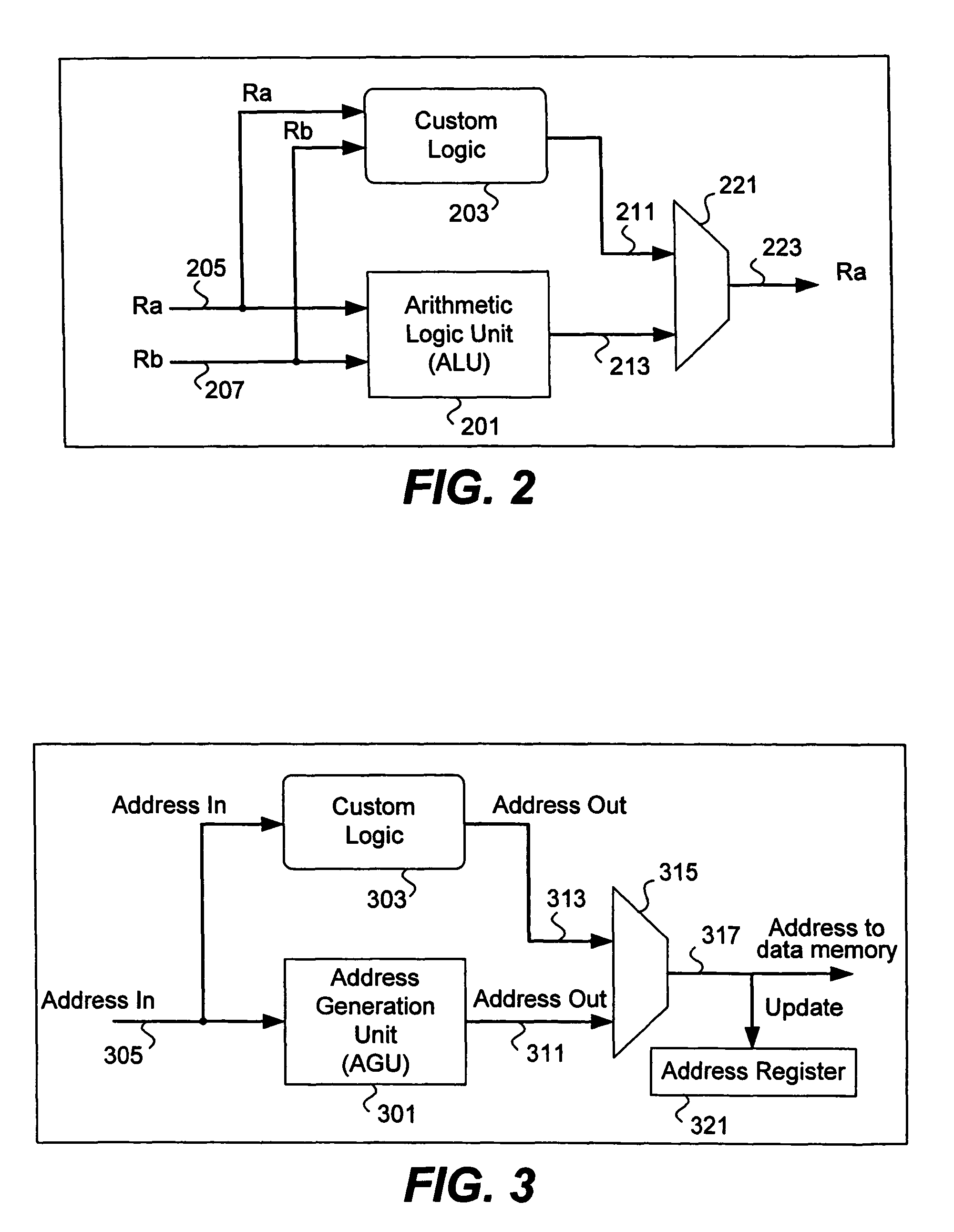

Methods and apparatus for executing extended custom instructions

Status: Active · Publication: US8006074B1 · Tags: Efficient execution; General purpose stored program computer; Specific program execution arrangements; Address generation unit; Arithmetic logic unit

Methods and apparatus are provided for efficiently executing extended custom instructions on a programmable chip. Components of a processor core such as arithmetic logic units, program sequencer units, and address generation units are integrated with customizable logic blocks. Various customizable logic blocks can be invoked in a pipelined manner using an available customized instruction set while allowing a processor to continue simultaneous operation. Program counter snooping is also provided to add custom instruction functionality to a processor with no additional provisions for adding custom instructions.

Owner:ALTERA CORP

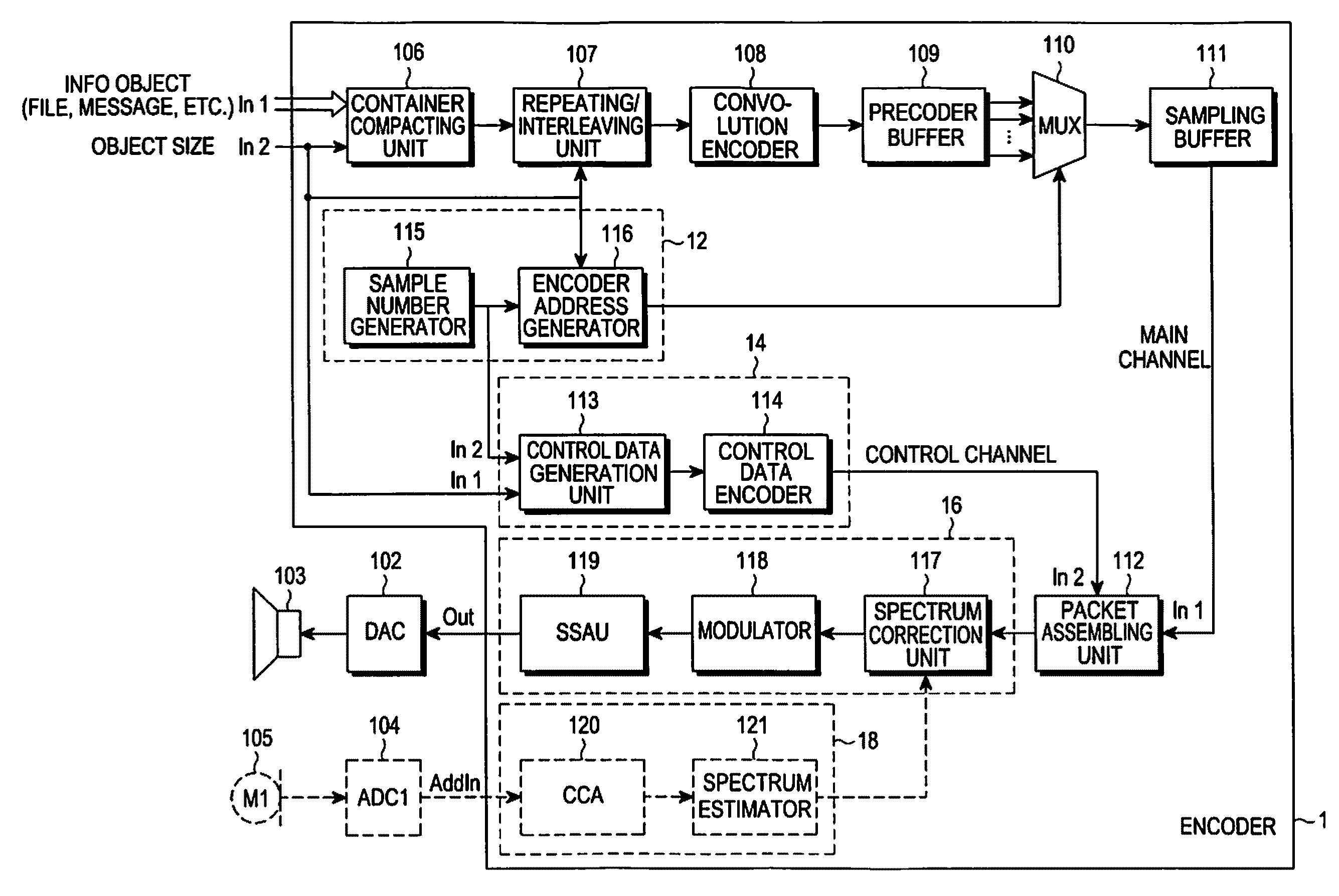

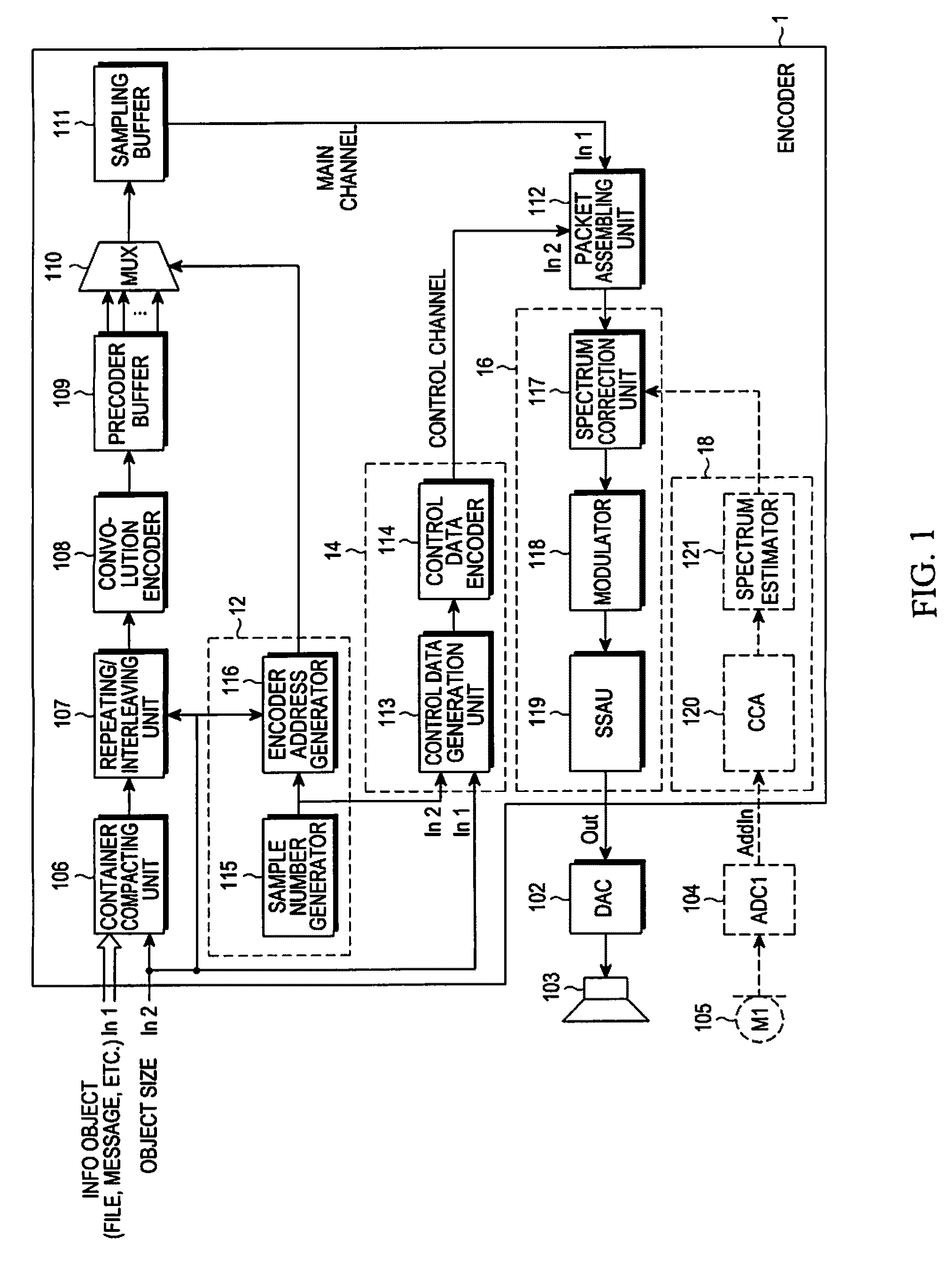

Encoder, decoder, encoding method, and decoding method

Status: Inactive · Publication: US20100290484A1 · Tags: Optimal volume of transmitted; Increase transfer speed; Error prevention; Code conversion; Address generation unit; Encoder decoder

An encoder includes a precoder for encoding an input information object according to a preset encoding scheme and storing the encoded information object in a precoder buffer, a sample number / address generation unit for generating a sample number of each sample and an address, which corresponds to each bit of each sample and the address of the precoder buffer, a multiplexer for selecting a bit of the precoder buffer corresponding to the address generated by the sample number / address generation module, a sampling buffer for storing a bit of each sample output from the multiplexer, a control packet generation module for generating a control packet including information on the sample number generated by the sample number / address generation module, a packet assembling unit for assembling the sample stored in the sampling buffer with the control packet generated by the control data generation module, and a modulation module for modulating the packet output from the packet assembling unit into a sound signal according to a preset scheme.

Owner:SAMSUNG ELECTRONICS CO LTD

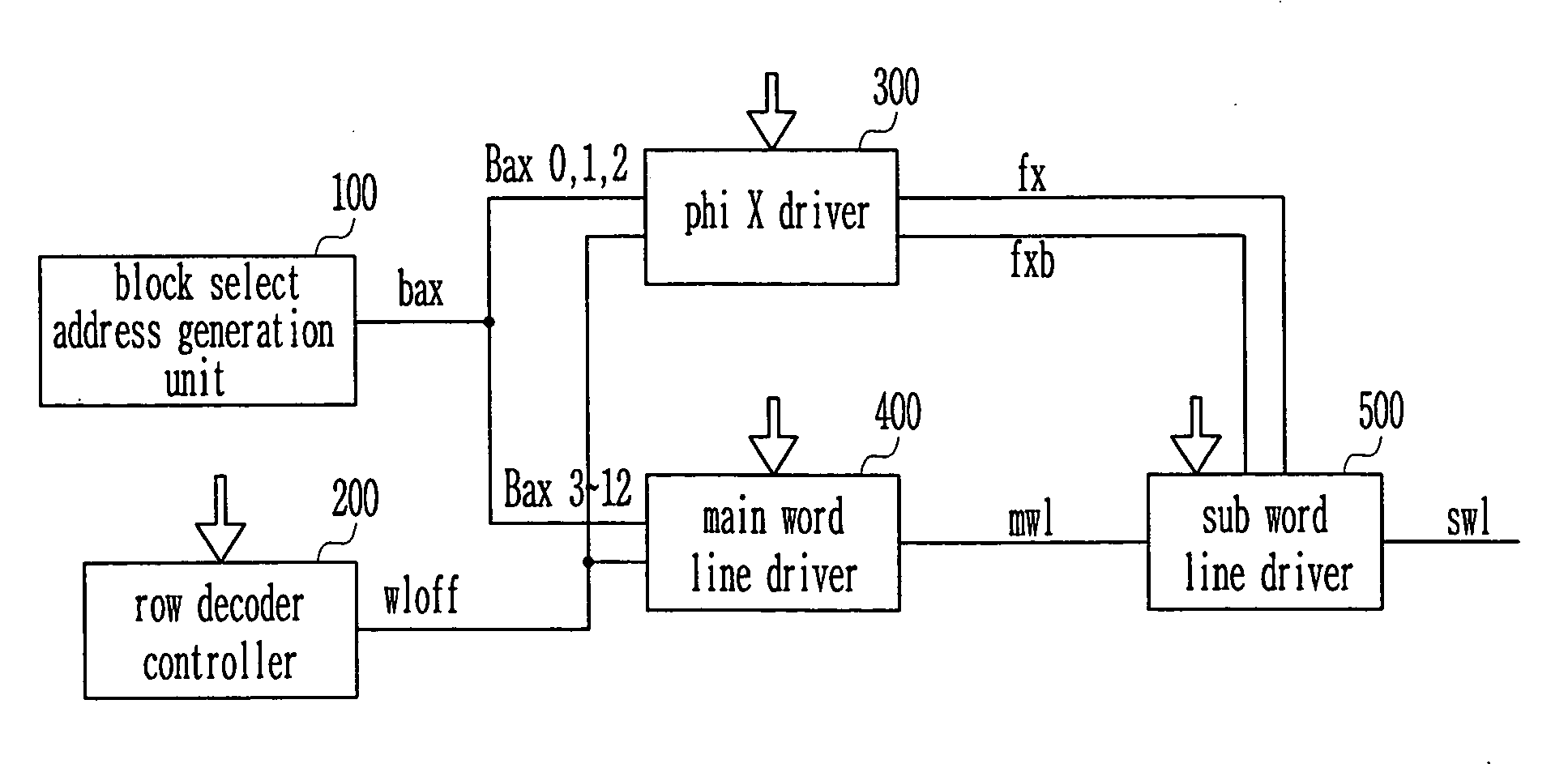

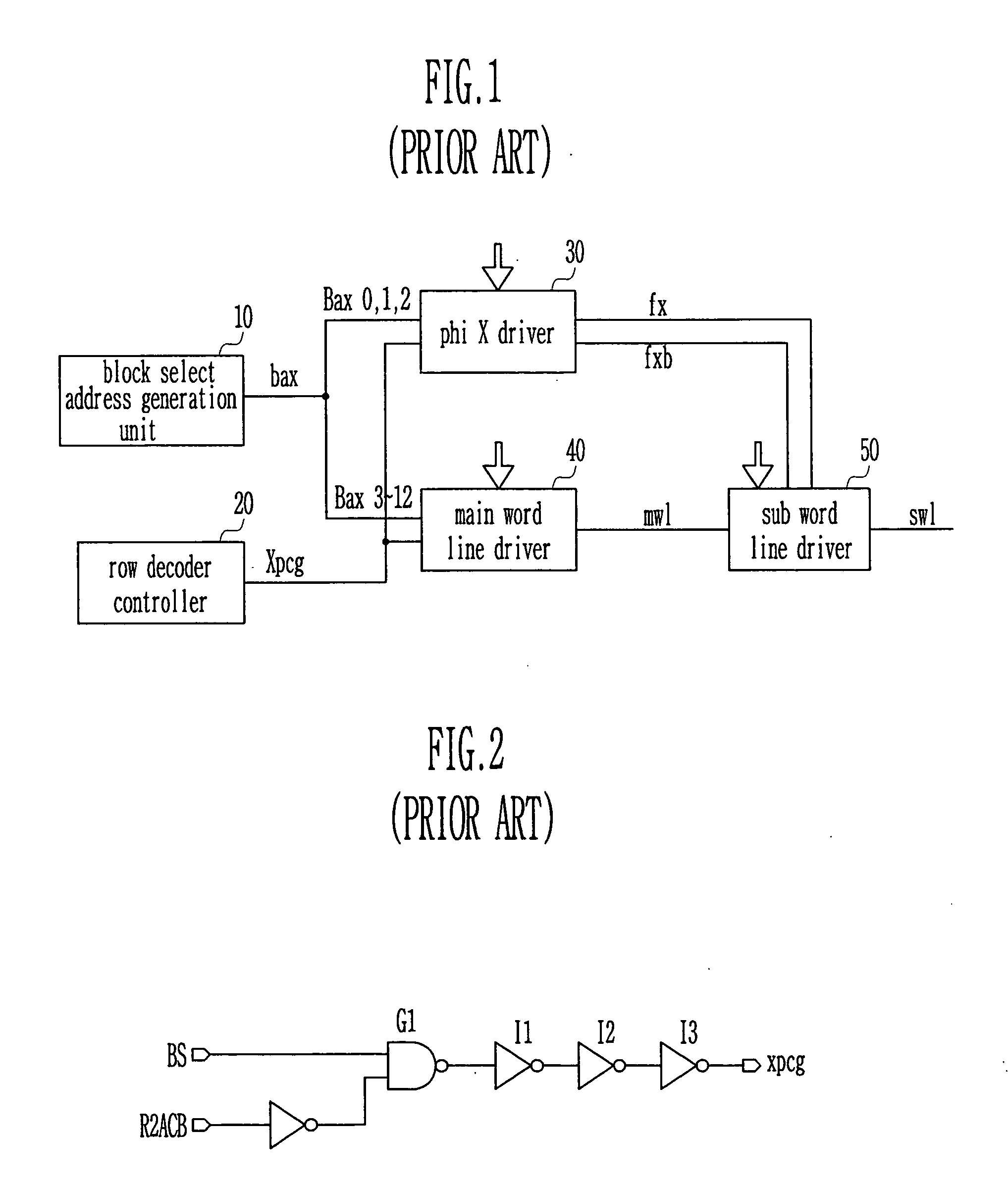

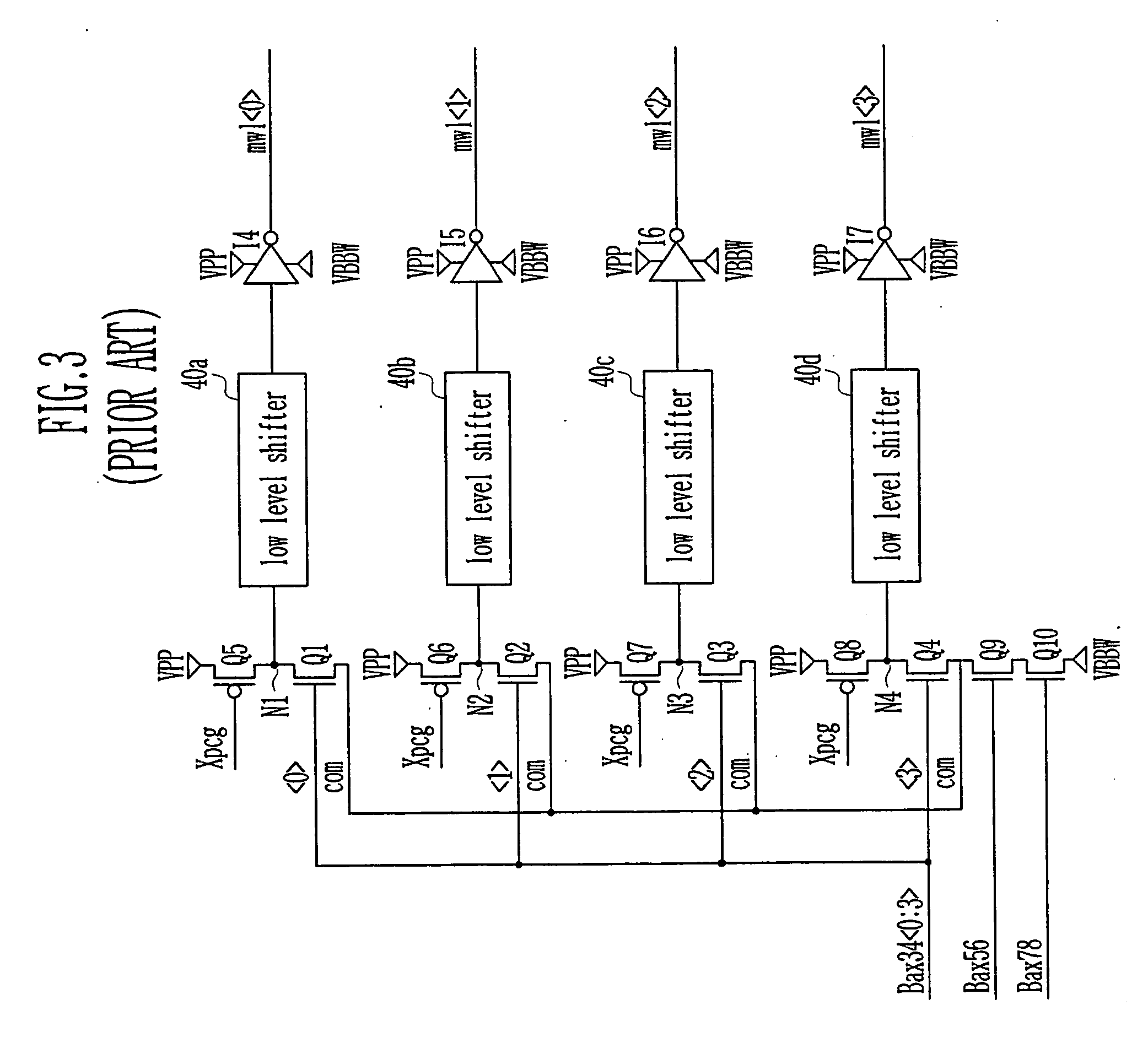

Negative word line driver

A negative word line driver is provided, including: a block select address generation unit for generating first and second block select addresses carrying block information according to an active signal; a row decoder controller for generating a control signal to disable a word line; a main word line driver for accessing a main word line, driven in response to a signal coding the first block select address and the control signal; and a phi X driver for accessing a sub word line, driven in response to a signal coding the second block select address and the control signal wloff.

Owner:SK HYNIX INC

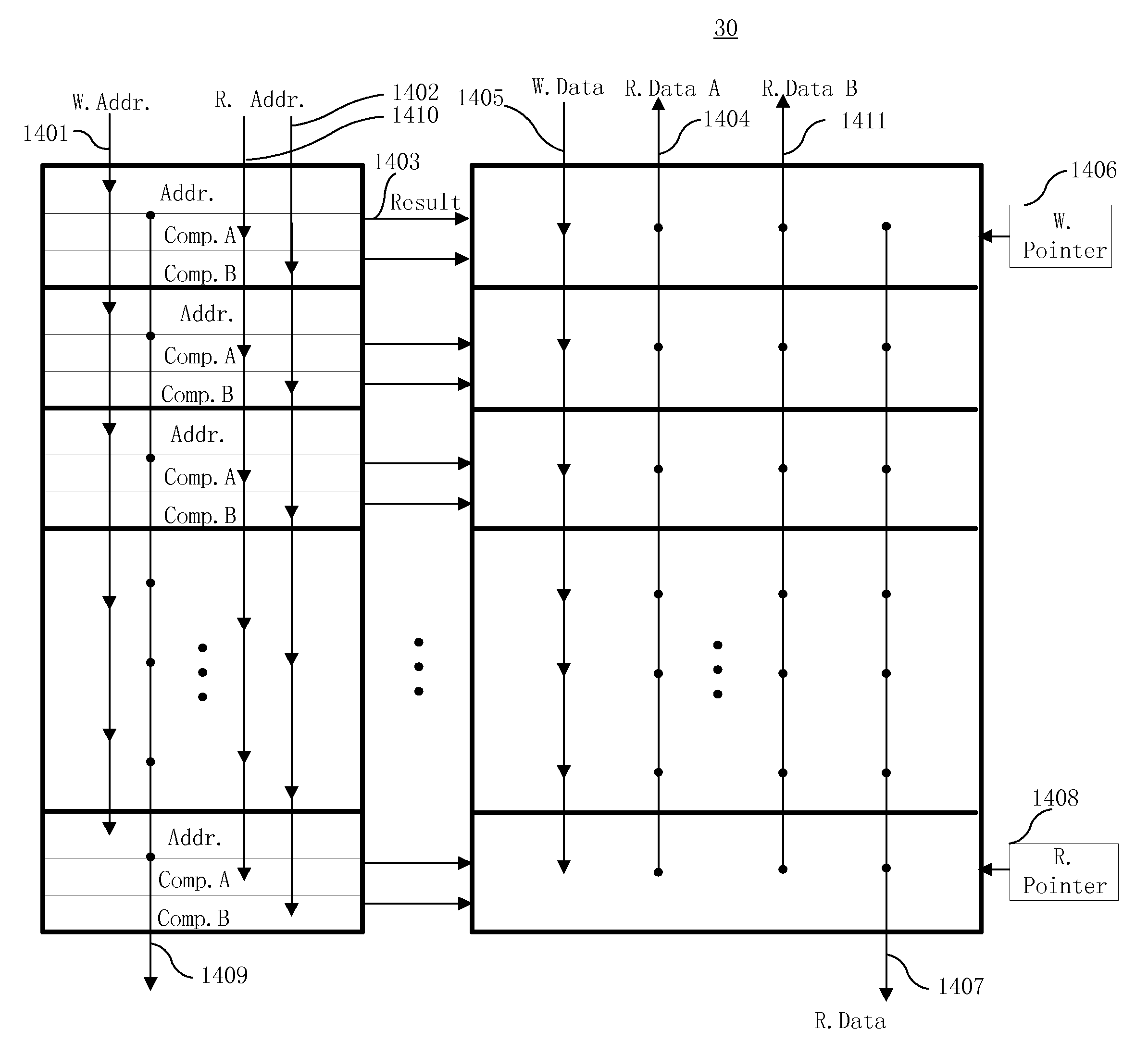

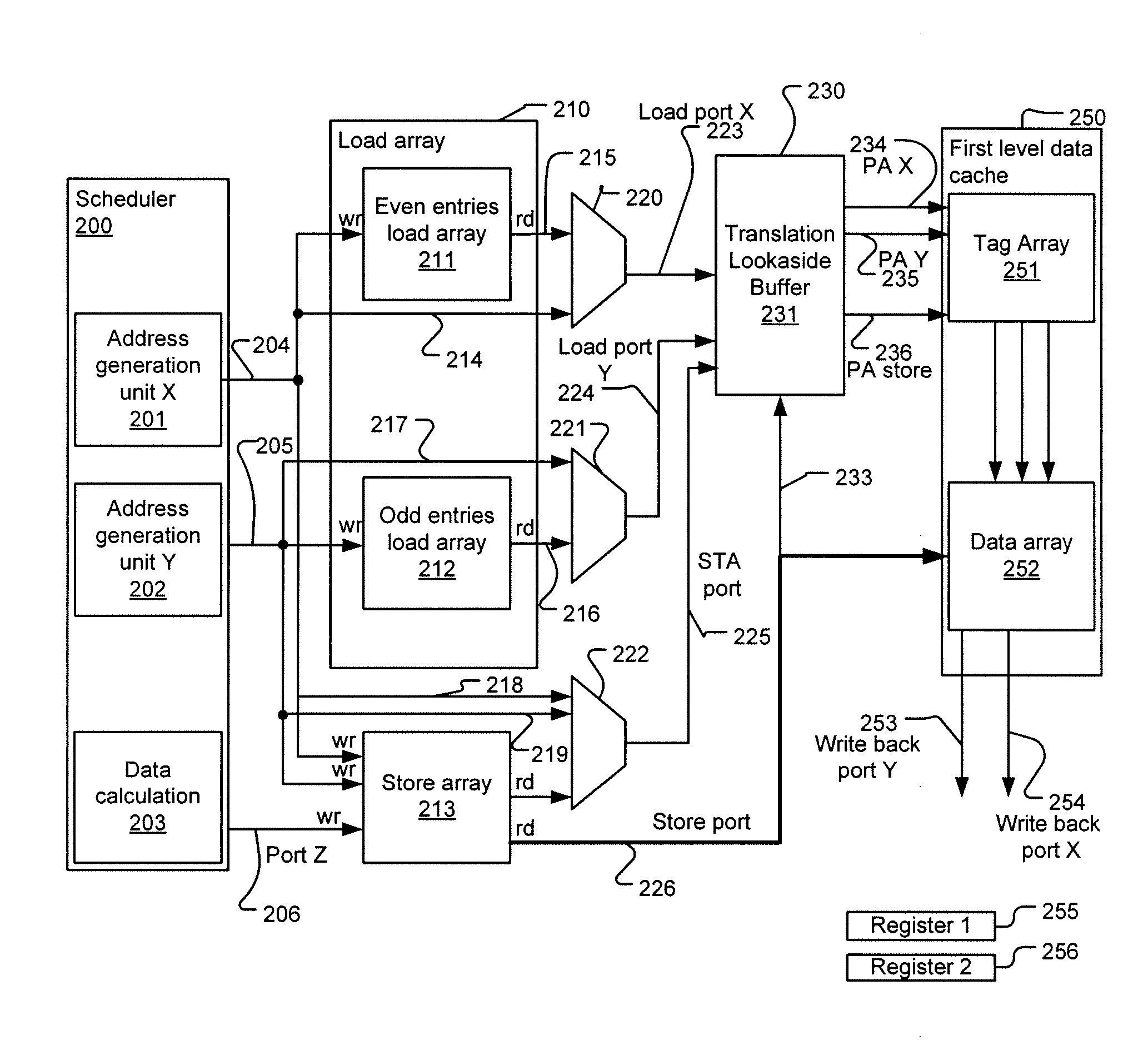

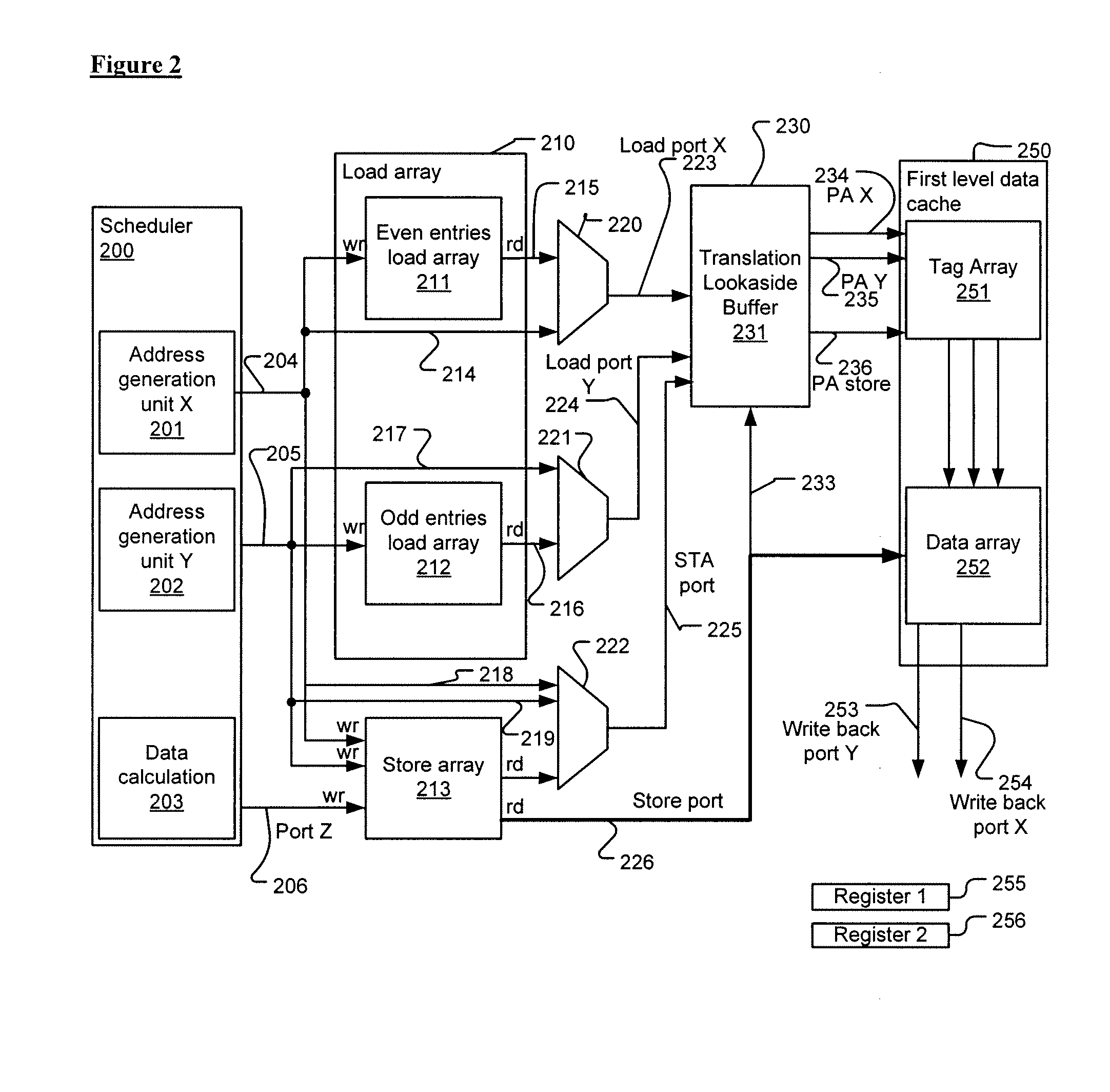

Apparatus and method for memory structure to handle two load operations

Status: Inactive · Publication: US20090300319A1 · Tags: Memory addressing/allocation/relocation; Micro-instruction address formation; Address generation unit; Memory bandwidth

An apparatus and method to increase memory bandwidth is presented. In one embodiment, the apparatus comprises a load array having: a first array to store a plurality of load operation entries and a second array to store a second plurality of load operation entries. The apparatus further comprises: a store array having a plurality of store operation entries; a first address generation unit coupled to send a linear address of a first load operation to the first array and to send a linear address of a first store operation to the store array; and a second address generation unit coupled to send a linear address of a second load operation to the second array and to send a linear address of a second store operation to the store array.

Owner:INTEL CORP

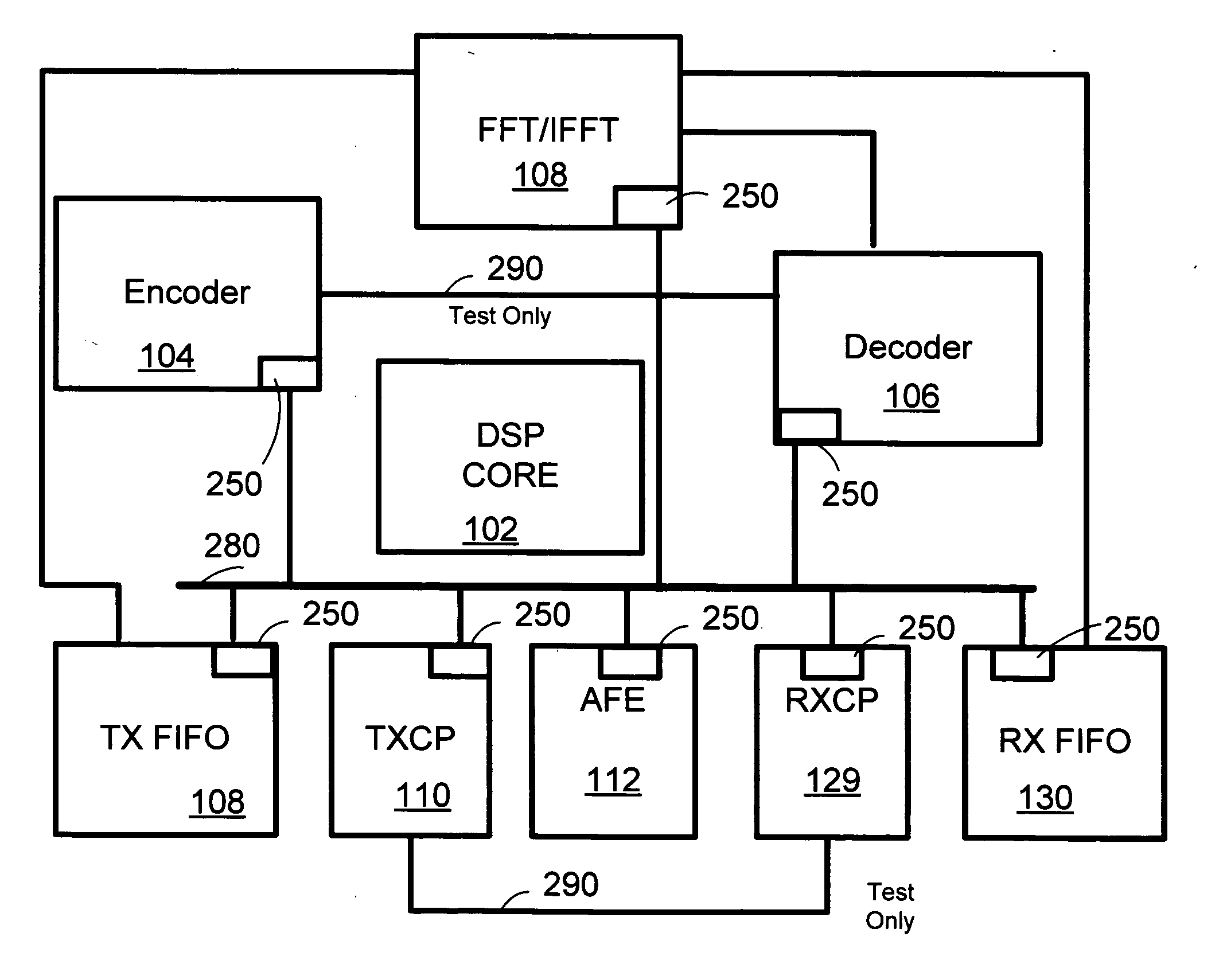

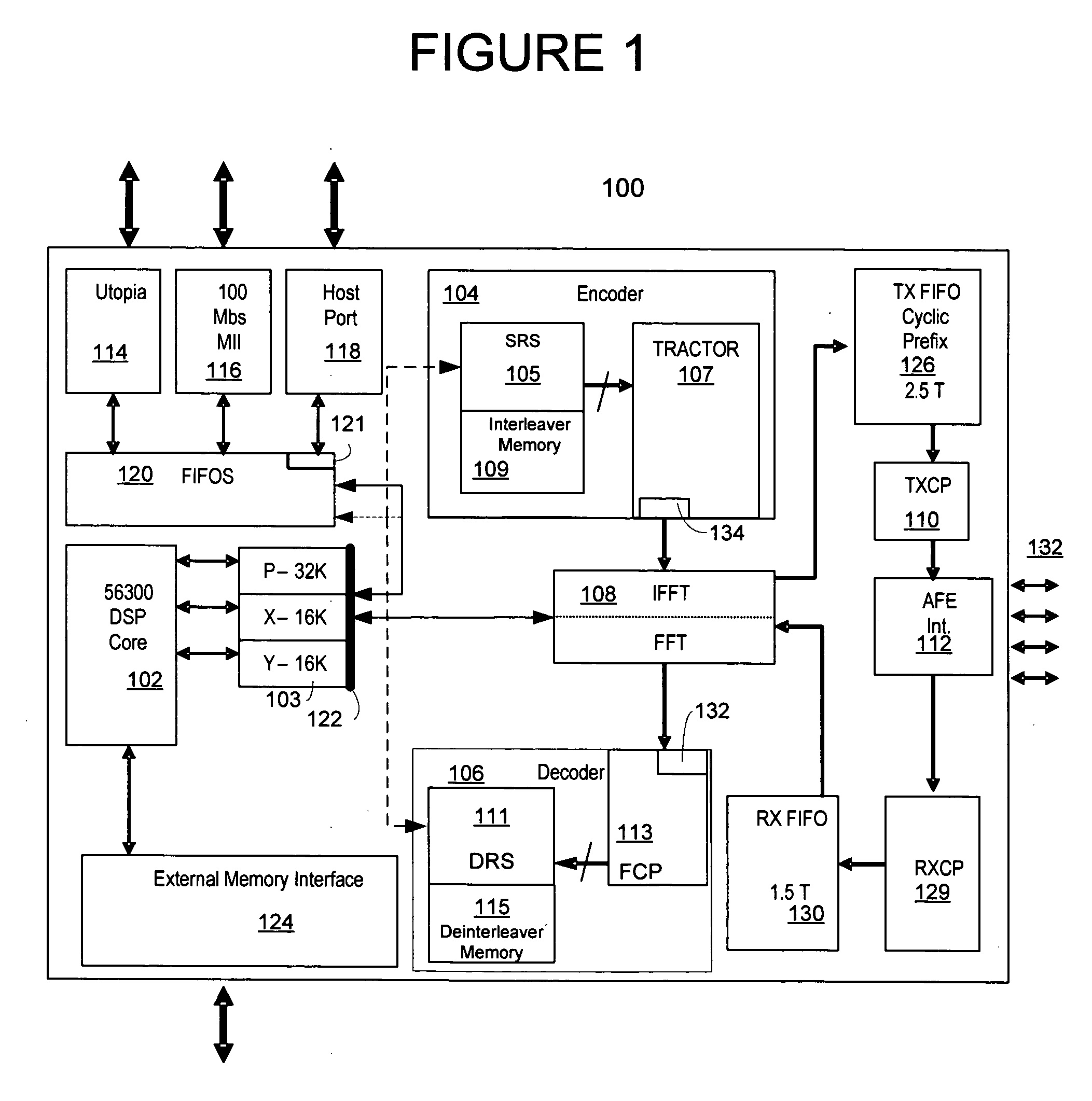

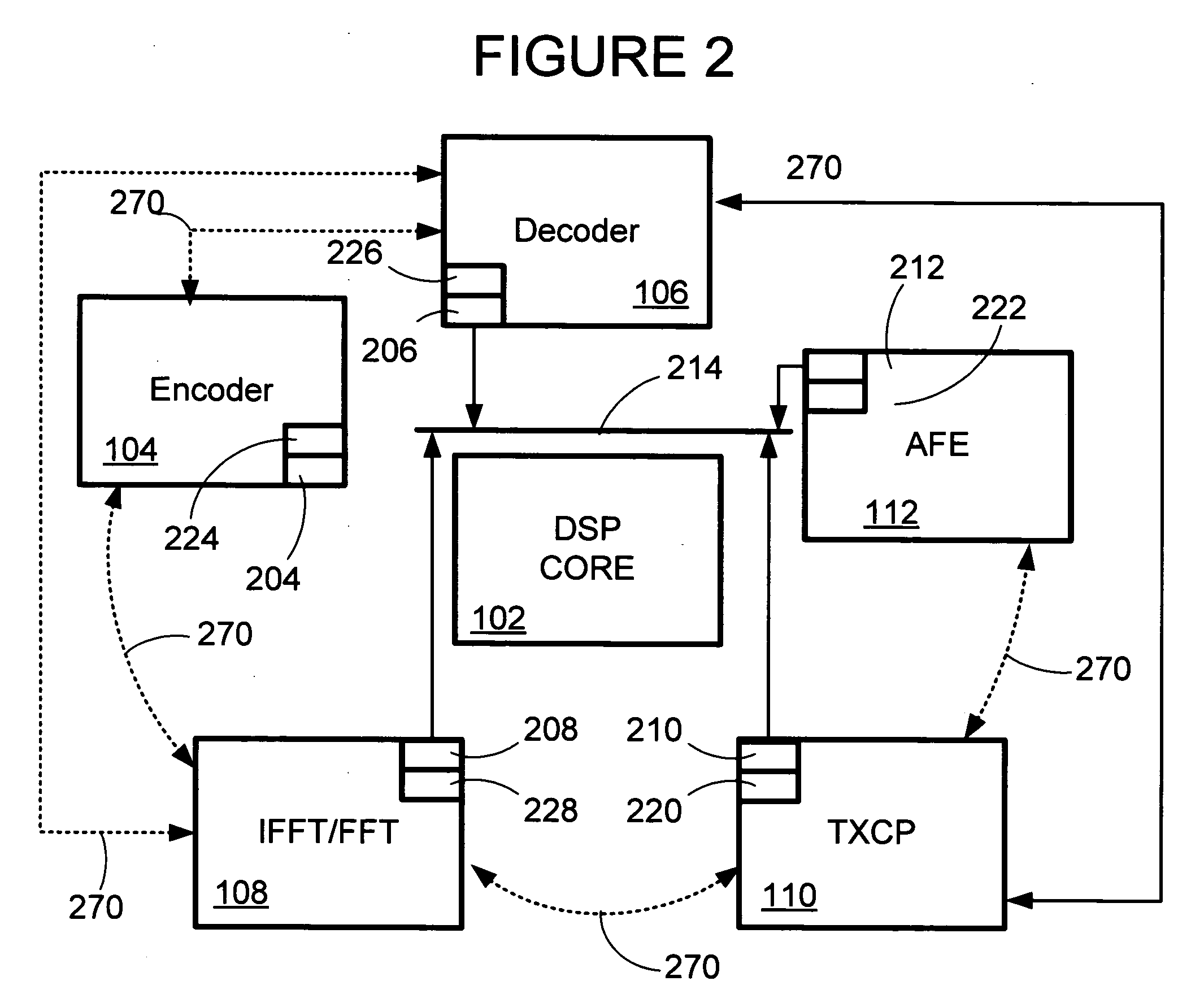

System and method for a fast Fourier transform architecture in a multicarrier transceiver

Status: Inactive · Publication: US20060095492A1 · Tags: Avoid collision; Avoiding latency; Modulated-carrier systems; Digital computer details; Fast Fourier transform; Address generation unit

A Fourier transform architecture and system for FFT and IFFT processing within multicarrier transceiver is disclosed that includes a programmable butterfly component, a memory and a programmable address generation unit. The architecture includes a butterfly component configured to perform a plurality of radix butterfly calculations, and a four bank memory configured to operate on sample data. The architecture further includes a programmable address generation unit coupled to the pipeline to enable the architecture to perform calculations independent of Fourier-based algorithms. A method for addressing memory banks for an FFT pipeline includes expressing an index in radix notation, computing a bank address for a bank memory, converting the bank address to a reduced size by ignoring one or more bits, and calculating the bank address within the reduced memory bank.

Owner:METANOIA TECH
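
One well-known way to make a radix-r FFT conflict-free across r memory banks, which matches the steps listed in the abstract, is to express each sample index in radix-r digits, take the digit sum modulo r as the bank number, and drop one digit to obtain the address inside the smaller bank. The sketch below implements that generic scheme under the assumption N = r^s; it is not necessarily the exact mapping used in the patent. Because the inputs of one radix-r butterfly differ in exactly one digit, their digit sums differ modulo r and they land in distinct banks.

```python
def radix_digits(n: int, radix: int, num_digits: int) -> list[int]:
    """Digits of n in the given radix, least significant first."""
    return [(n // radix**i) % radix for i in range(num_digits)]


def bank_and_offset(n: int, radix: int, num_digits: int) -> tuple[int, int]:
    """Map sample index n of an N = radix**num_digits point FFT to (bank, address in bank)."""
    bank = sum(radix_digits(n, radix, num_digits)) % radix
    offset = n // radix   # dropping the lowest digit: each bank holds N / radix samples
    return bank, offset


# Inputs of one radix-4 butterfly in a 64-point FFT (they differ only in digit 1):
butterfly = [1 + j * 4 for j in range(4)]          # indices 1, 5, 9, 13
print([bank_and_offset(n, 4, 3) for n in butterfly])  # [(1, 0), (2, 1), (3, 2), (0, 3)]
```

Each of the four banks then delivers one butterfly operand per cycle, so the four-bank memory can feed the butterfly without stalls at every stage.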

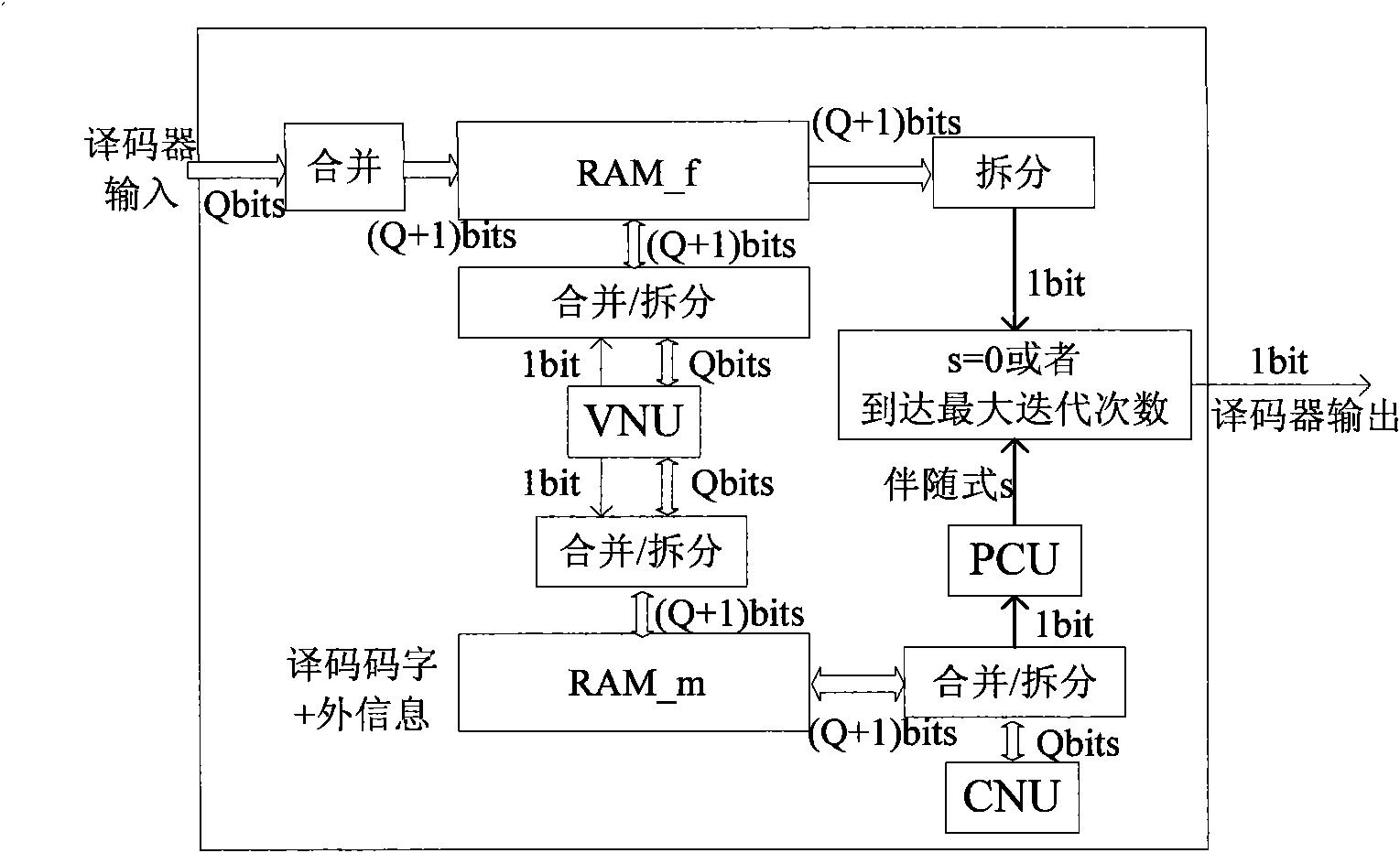

High efficiency storing method for coding digit of LDPC coder based on FPGA

Status: Active · Publication: CN101599302A · Tags: Save logic resources; Reduce the quantity required; Data representation error detection/correction; Read-only memories; Production line; Address generation unit

The invention discloses a high-efficiency storage method for the coding digits of an FPGA-based LDPC coder. In the method, the coding digits and the extrinsic information (or channel information) share one storage block, effectively reducing the coder's demand for storage resources, and both the coding digits and the extrinsic information can be retrieved when the storage block is read. Therefore, the check equation computation unit (PCU) and the check node update unit (CNU) can share one group of address information, and the PCU needs no additional address generation units. Finally, a stepwise-refined pipeline design is adopted to implement the processing units VNU, CNU and PCU, effectively reducing the critical path delay of the coder and providing the basis for improving its throughput. When the method is implemented on an FPGA, it saves a large amount of the resources otherwise needed to store the coding digits separately and the logic resources the PCU would need for address generation, while also improving the throughput of the coder.

Owner:XIAN INSTITUE OF SPACE RADIO TECH

© 2025 PatSnap. All rights reserved.