156 results about How to "Avoid contention" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

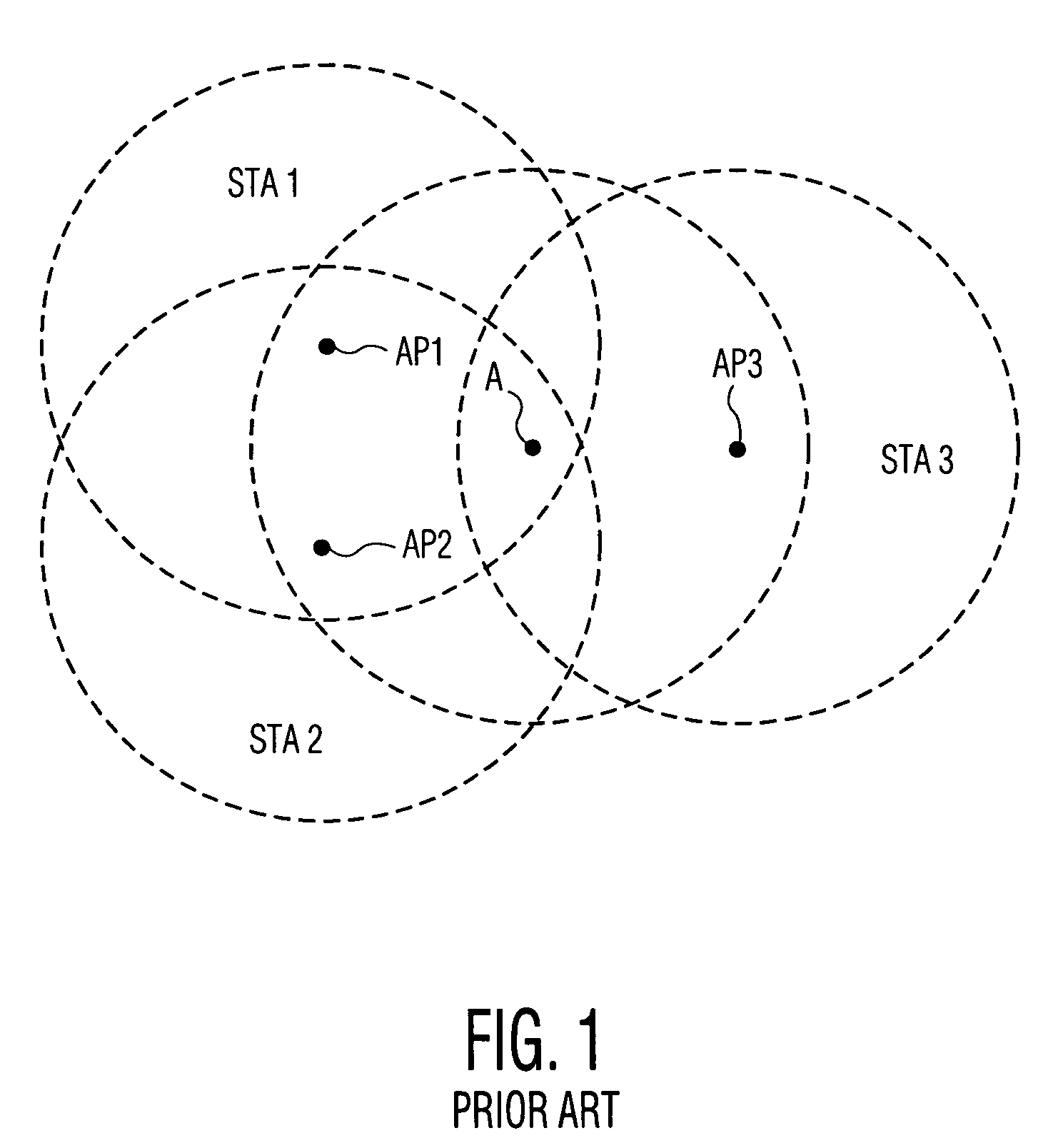

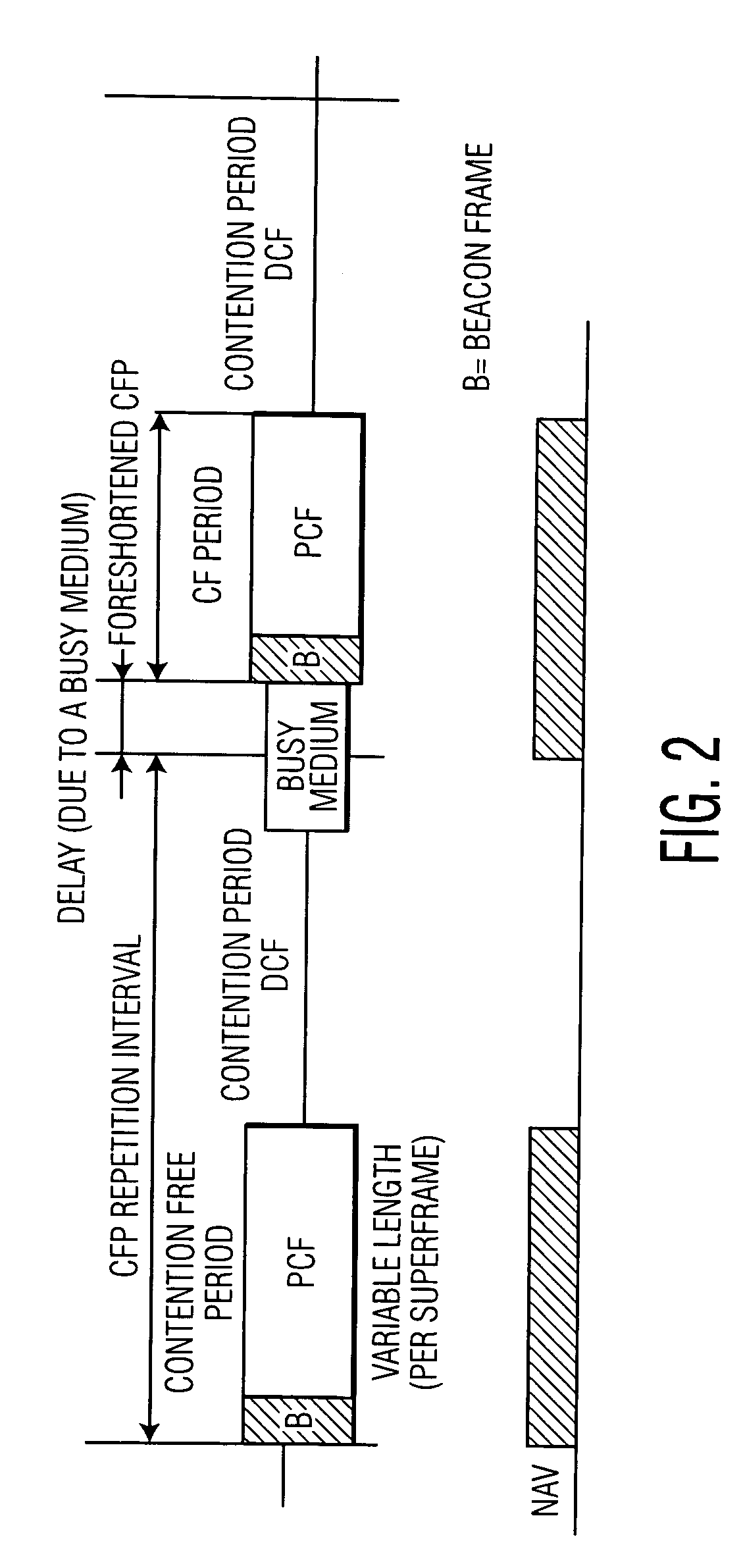

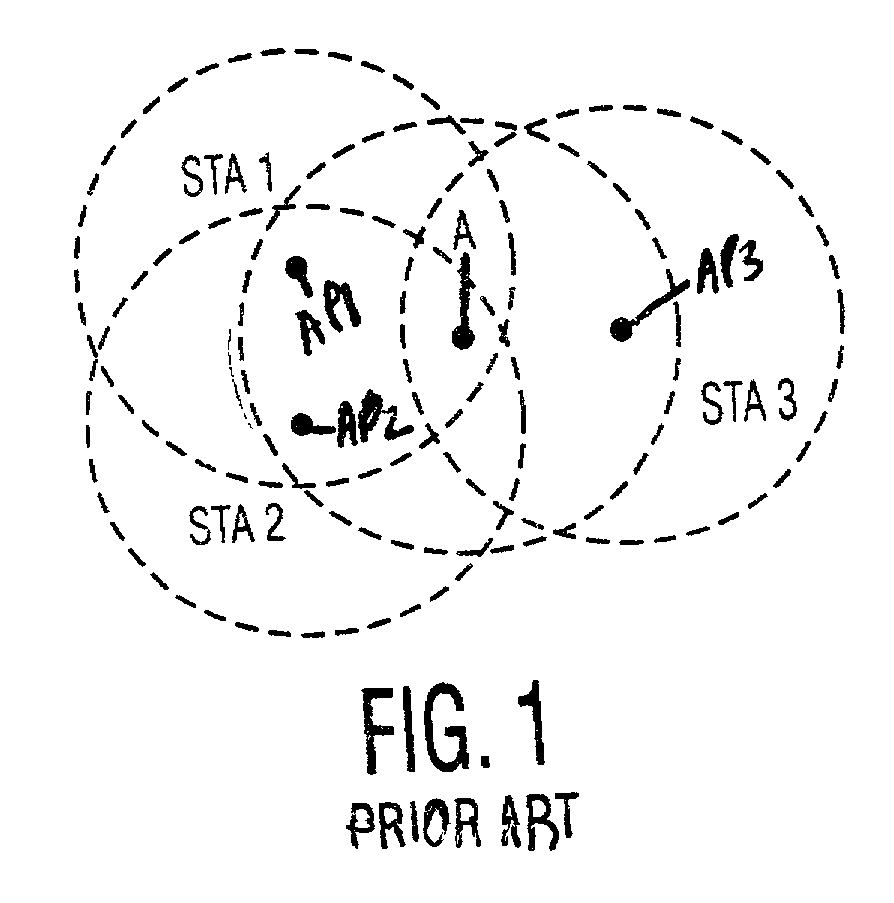

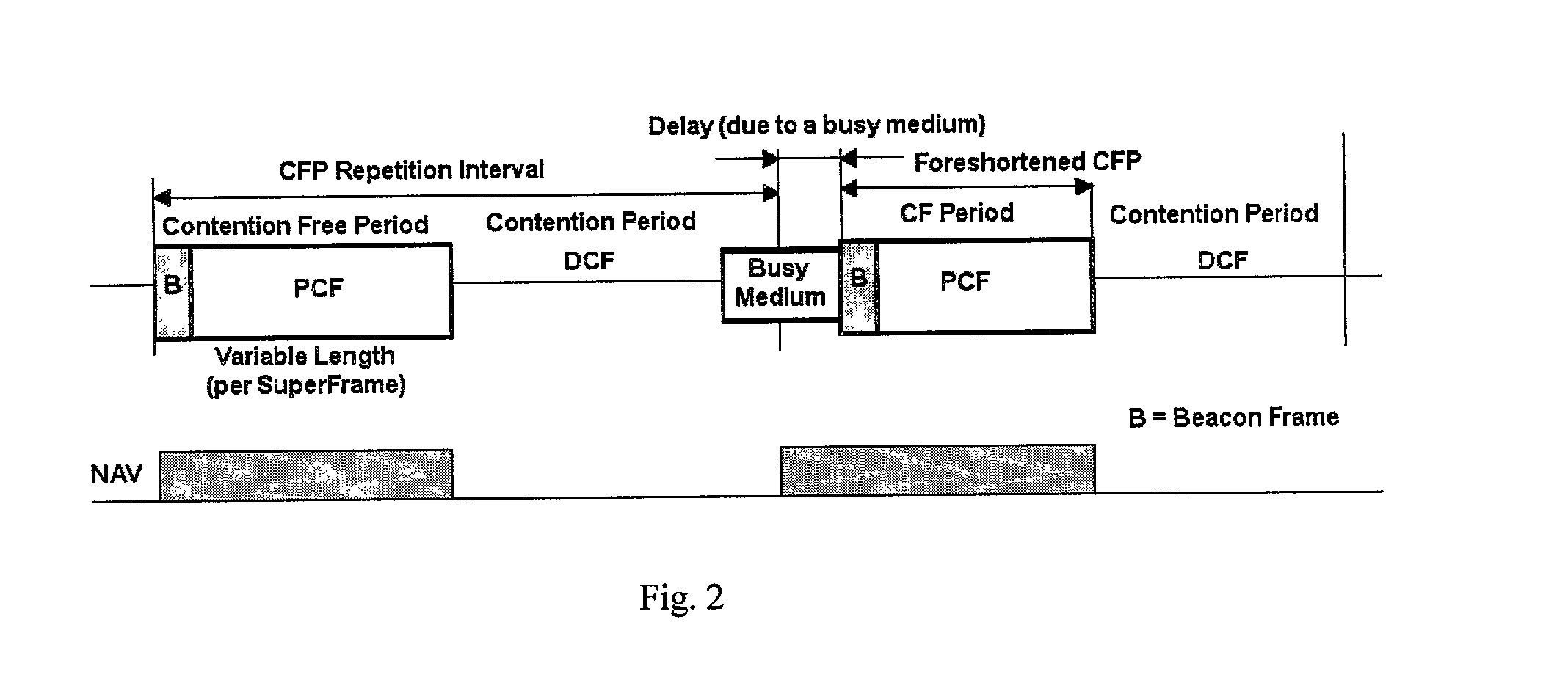

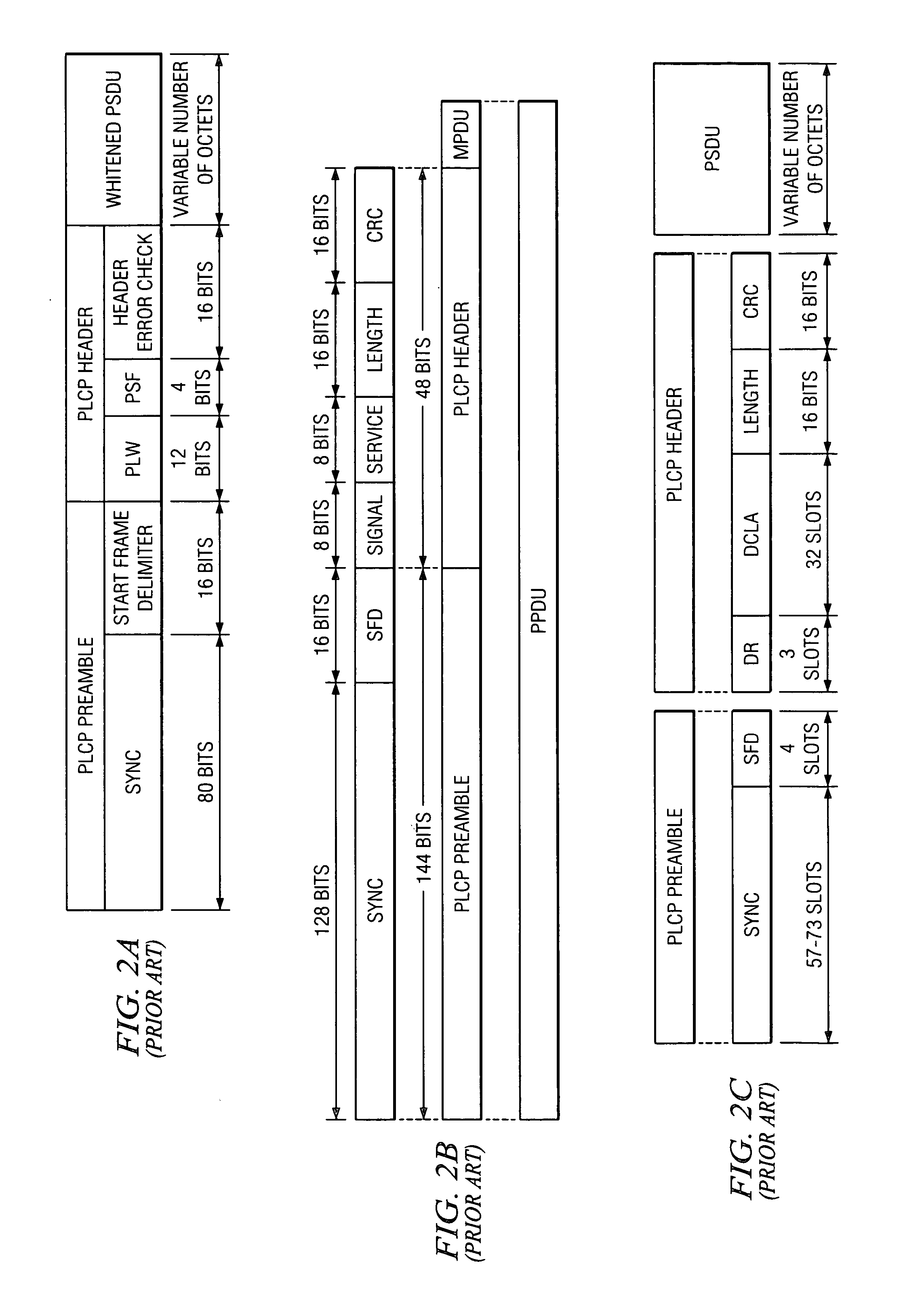

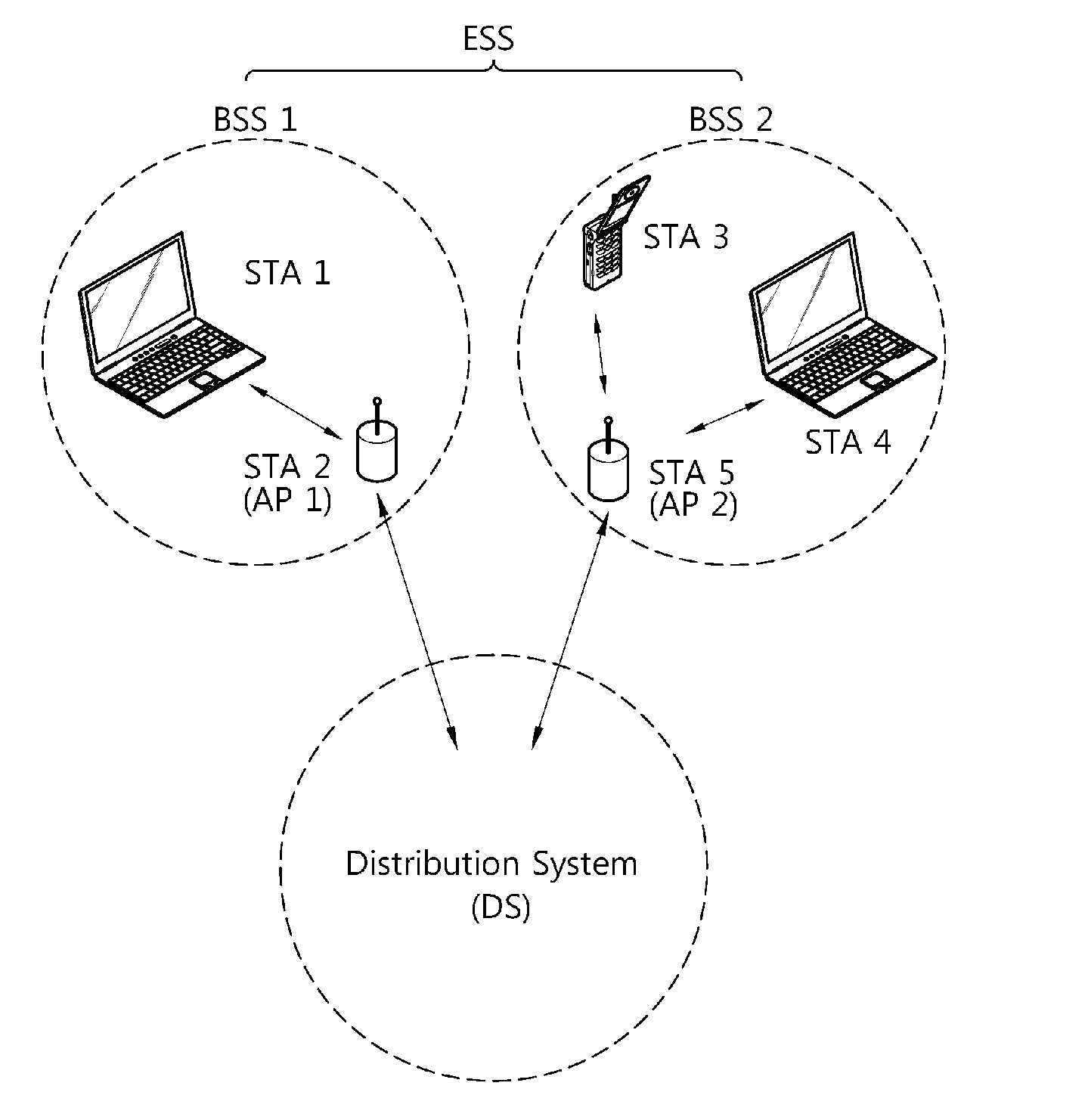

Collision avoidance in IEEE 802.11 contention free period (CFP) with overlapping basic service sets (BSSs)

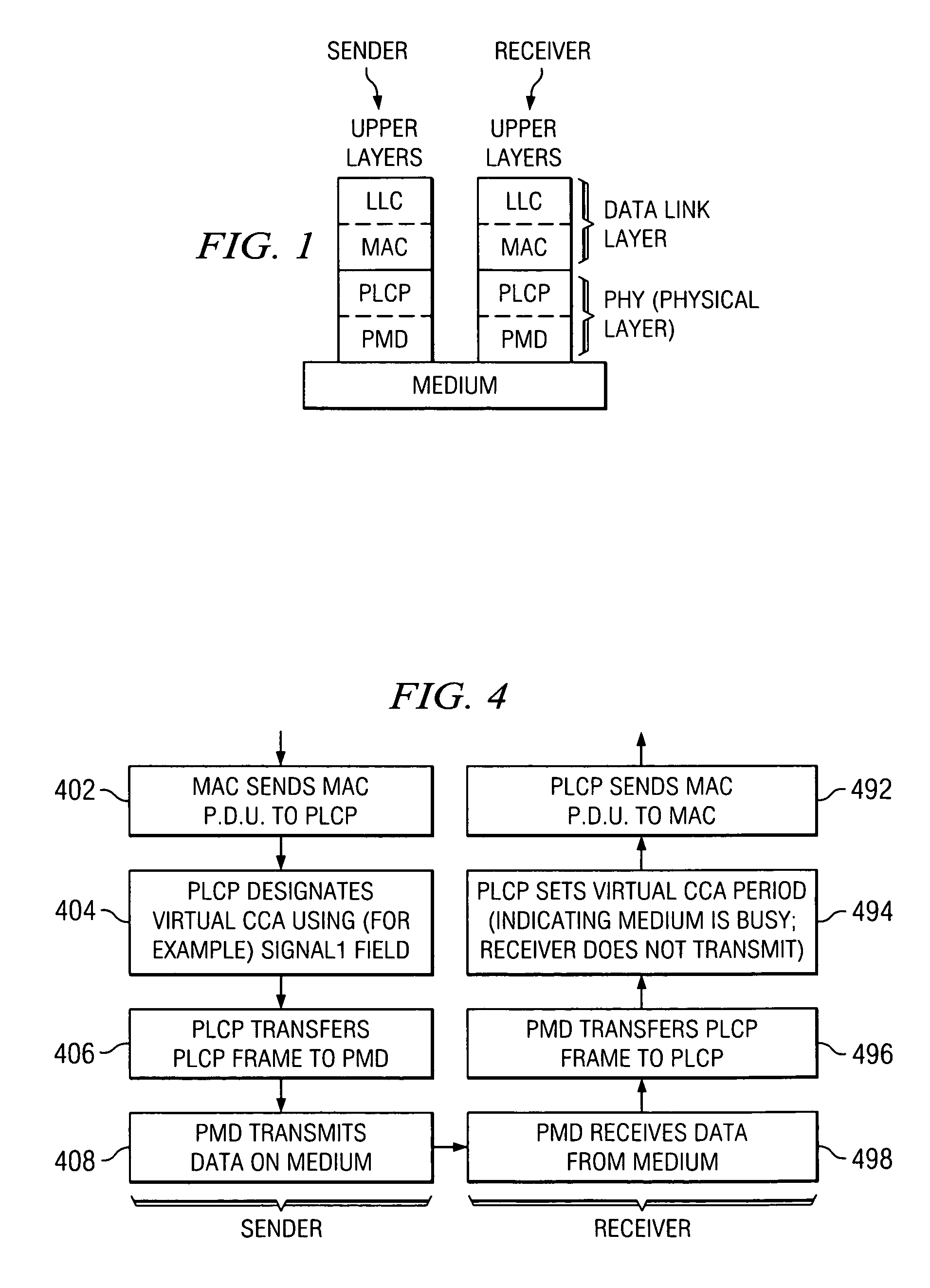

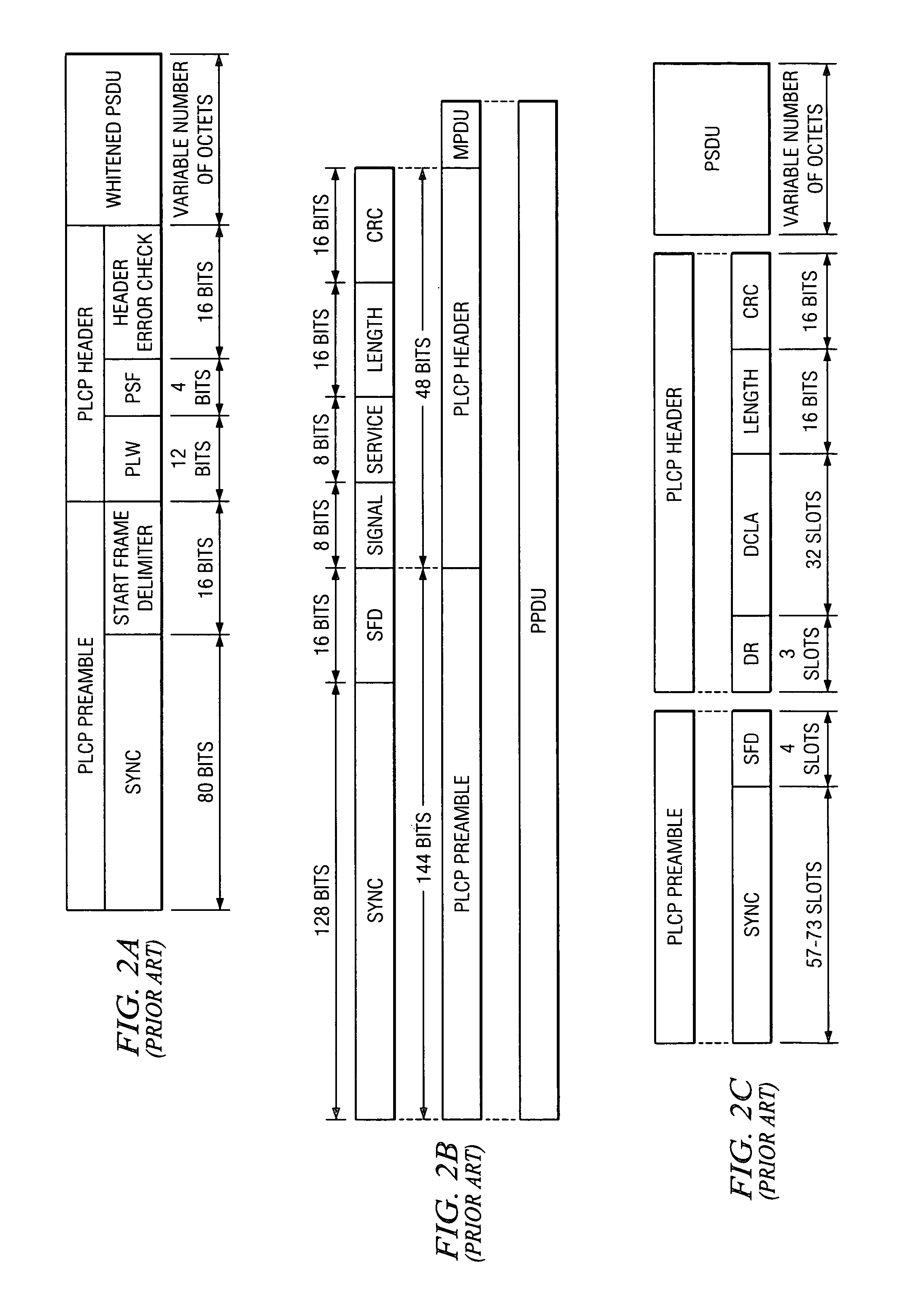

Inactive | US20060029073A1 | Improve efficiency | Avoid contention | Network topologies | Data switching by path configuration | Clear to send | Basic service

A medium access control (MAC) protocol is provided for avoiding collisions among stations (STAs) in two or more IEEE 802.11 basic service sets (BSSs) that are collocated and operate in the same channel during contention free periods (CFPs). The MAC protocol includes hardware/software for using a request-to-send (RTS)/clear-to-send (CTS) exchange during CFPs to avoid potential collisions from STAs in overlapping BSSs, and hardware/software for providing an overlapping network allocation vector (ONAV) in addition to the network allocation vector (NAV); the ONAV makes the RTS/CTS exchange more effective during CFPs.

Owner: CERVELLO GERARD +1
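
The NAV/ONAV bookkeeping in this abstract can be pictured with a small Python sketch. It is an illustration under stated assumptions, not the patented protocol: the `Station` class, its field names, and the rule that a station answers an RTS with a CTS during a CFP only when its ONAV has expired are hypothetical simplifications.

```python
from dataclasses import dataclass


@dataclass
class Station:
    """Hypothetical model of a STA that keeps a NAV and a separate ONAV."""

    bssid: str                 # BSS this station belongs to
    nav_until: float = 0.0     # medium reserved by the station's own BSS until this time
    onav_until: float = 0.0    # medium reserved by an overlapping BSS until this time

    def on_frame(self, frame_bssid: str, now: float, duration: float) -> None:
        """Record the reservation announced by an overheard frame."""
        end = now + duration
        if frame_bssid == self.bssid:
            self.nav_until = max(self.nav_until, end)
        else:
            # Frame came from a different (overlapping) BSS: update the ONAV only.
            self.onav_until = max(self.onav_until, end)

    def may_send_cts(self, now: float) -> bool:
        """Assumed rule: during a CFP, reply to an RTS only if the ONAV is idle."""
        return now >= self.onav_until


sta = Station(bssid="bss-A")
sta.on_frame("bss-B", now=0.0, duration=5.0)   # overheard traffic from a neighbour BSS
print(sta.may_send_cts(3.0), sta.may_send_cts(5.0))
```

Keeping the two timers separate is what lets the station tell "my own coordinator reserved the medium" apart from "a neighbouring BSS is transmitting", which a single NAV cannot express.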

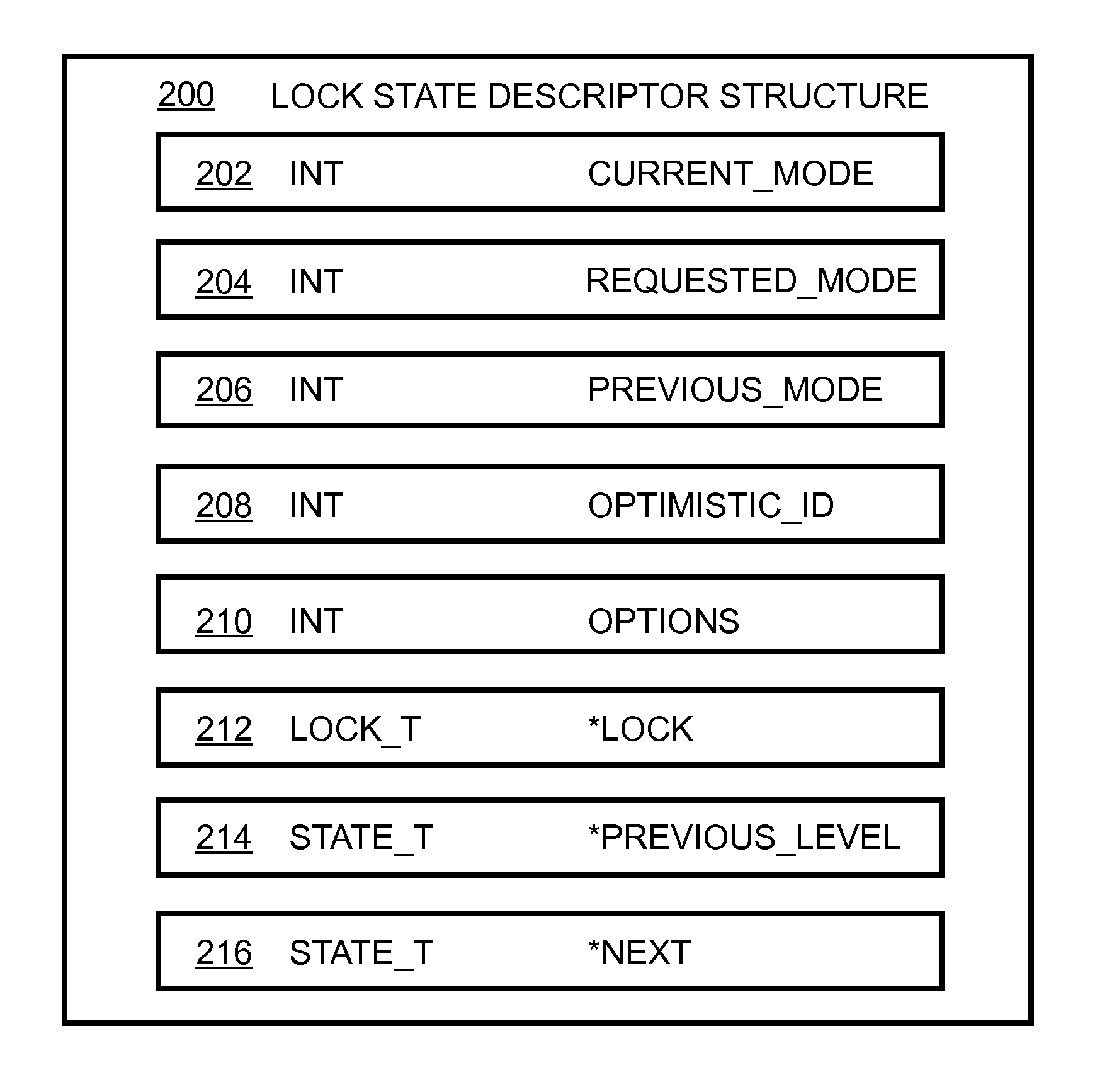

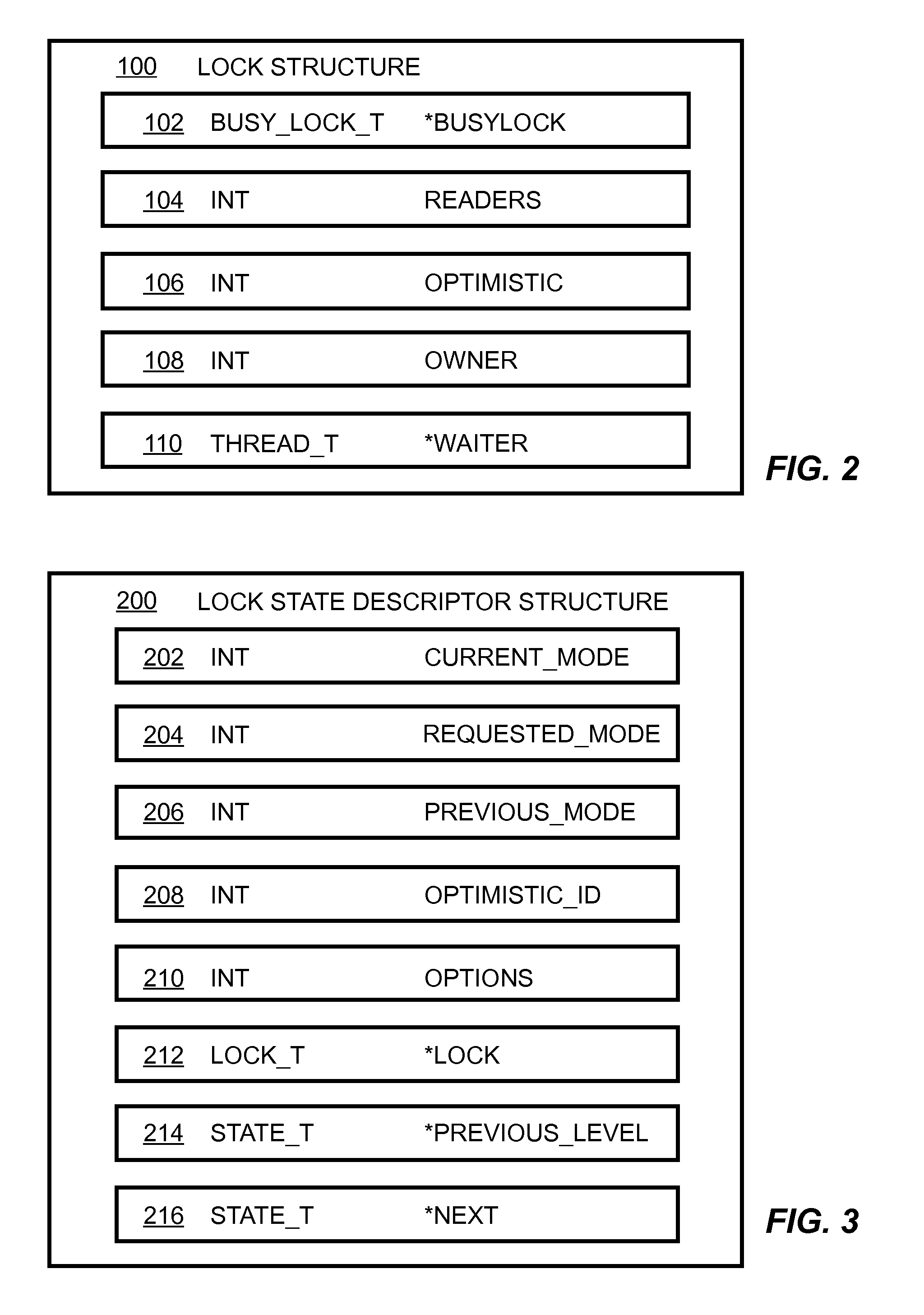

Reentrant read-write lock algorithm

Inactive | US9471400B1 | Avoiding further contention | Significant bottleneck | Resource allocation | Program synchronisation | Real-time computing | Current mode

Access to a shareable resource between threads is controlled by a lock having shared, optimistic and exclusive modes and maintaining a list of threads requesting ownership of said lock. A shared optimistic mode is provided. A lock state descriptor is provided for each desired change of mode comprising a current mode in which a thread has already acquired the lock. When a thread acquires the lock in shared optimistic mode, other threads are allowed to acquire the lock in shared or optimistic mode. When a thread which acquired the lock in shared optimistic mode wants to acquire the lock in exclusive mode, other threads which have acquired the lock in shared or optimistic mode are prevented from acquiring the lock in exclusive mode until the thread which acquired the lock in shared optimistic mode and requested to acquire the lock in exclusive mode releases the lock.

Owner: IBM CORP

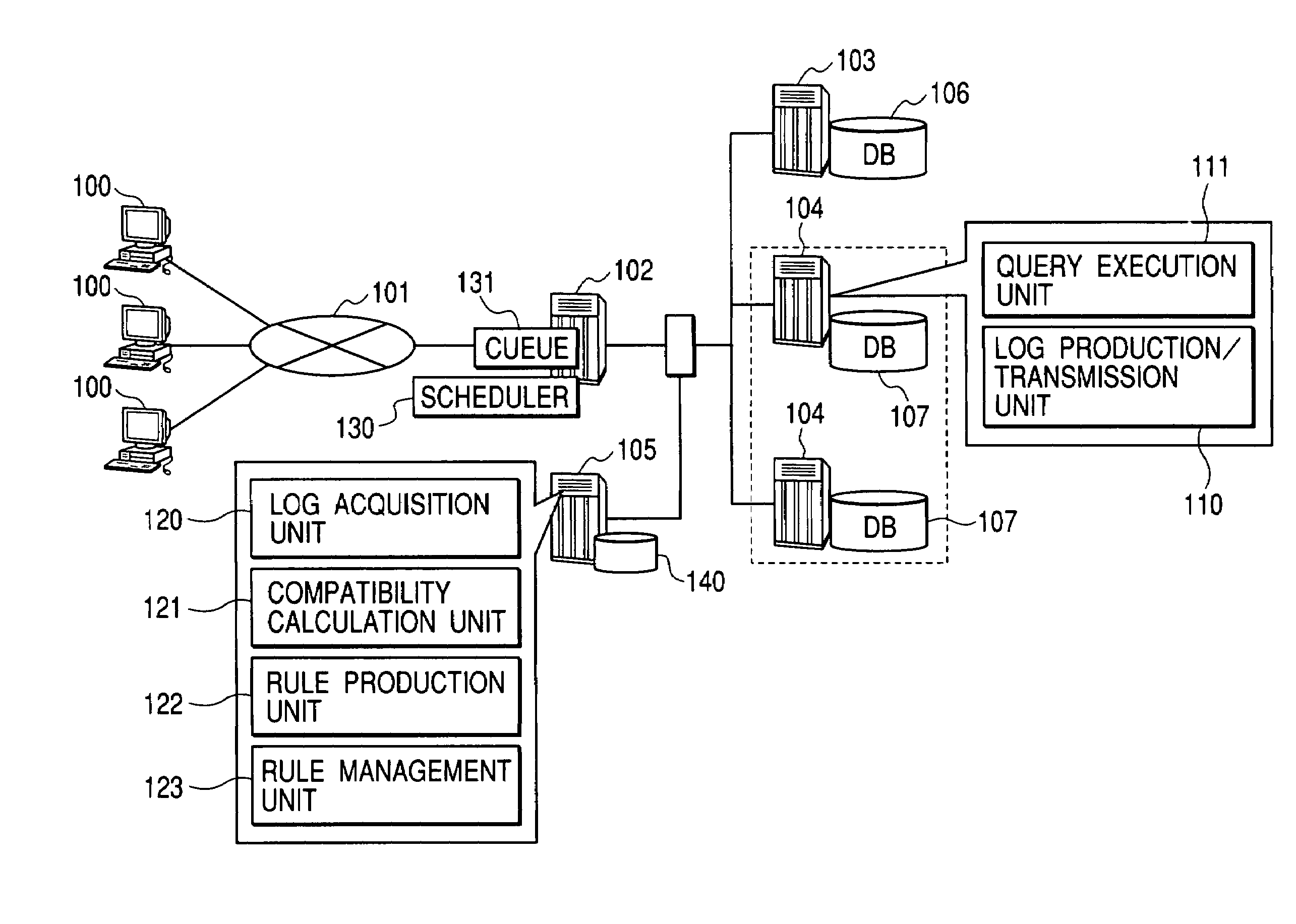

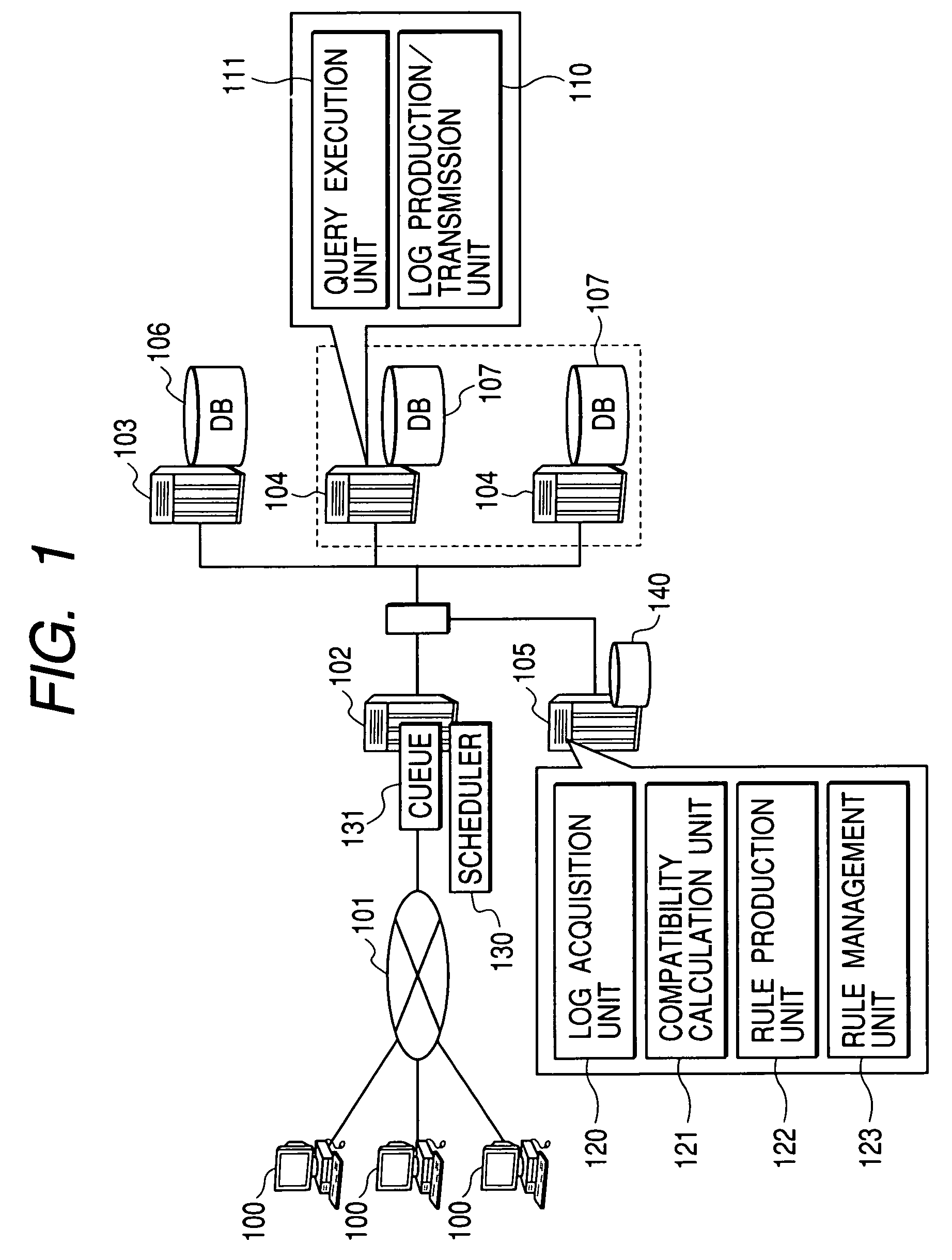

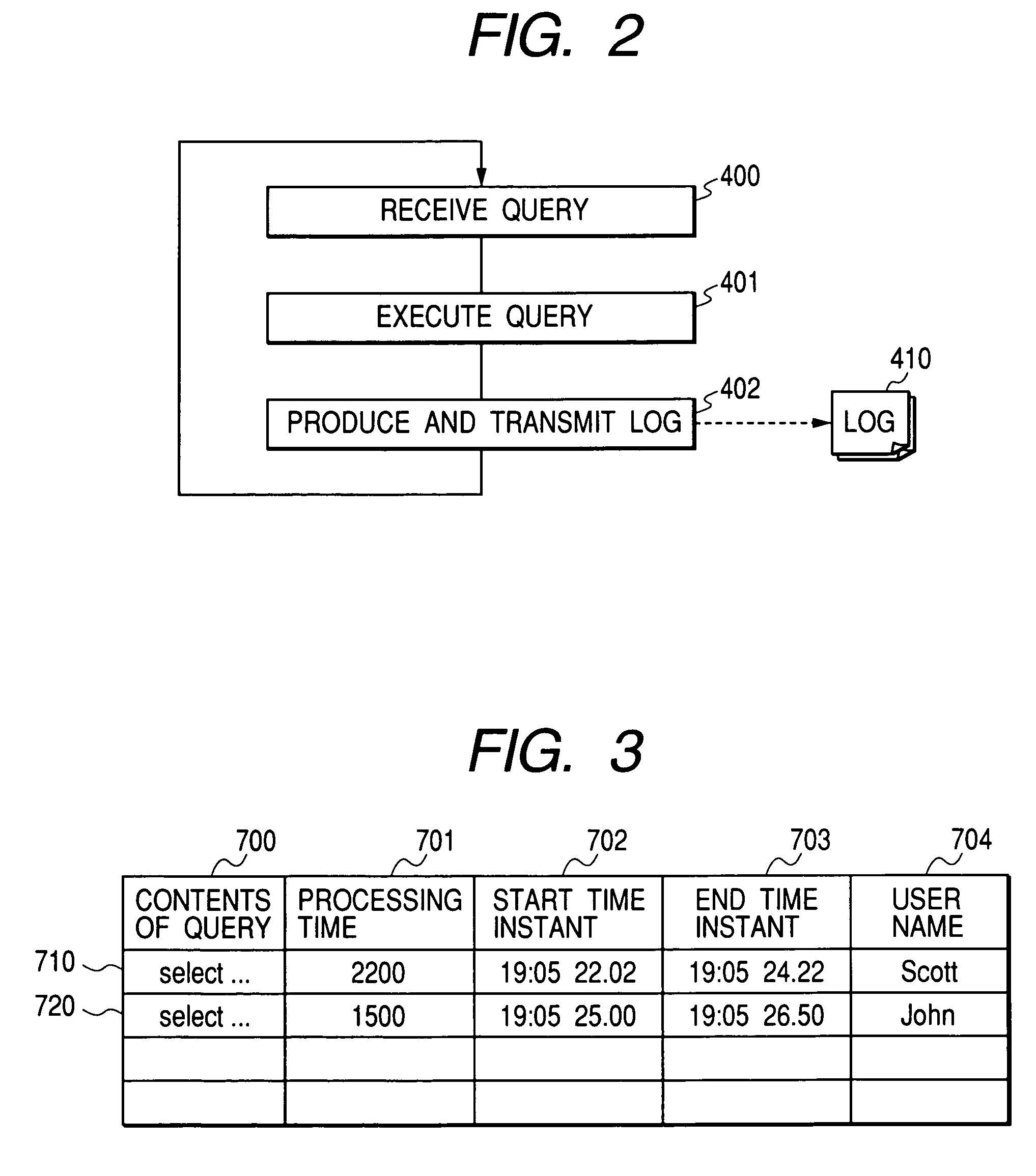

Database system, server, query posing method, and data updating method

Inactive | US7146357B2 | Improve throughput | Low cost | Digital data information retrieval | Data processing applications | Database server | Query log

An object of the present invention is to avoid contention for resources in a parallel/decentralized database system so as to improve the performance of the system. A query posing method is implemented in a database system comprising: a plurality of database servers, each of which includes a database from which the same content can be retrieved and searches the database in response to a query request; a front-end server that poses a query according to a predetermined rule; and a management server that manages the rules to be used by the front-end server. The management server acquires a processed query log relevant to a database server and produces a rule according to the compatibility value of a query calculated using the acquired processed query log. The front-end server then poses the query according to the rule produced by the management server.

Owner: HITACHI LTD

Collision avoidance in IEEE 802.11 contention free period (CFP) with overlapping basic service sets (BSSs)

Inactive | US7054329B2 | Improve efficiency | Avoid contention | Network topologies | Time-division multiplex | Clear to send | Telecommunications

Owner: KONINK PHILIPS ELECTRONICS NV

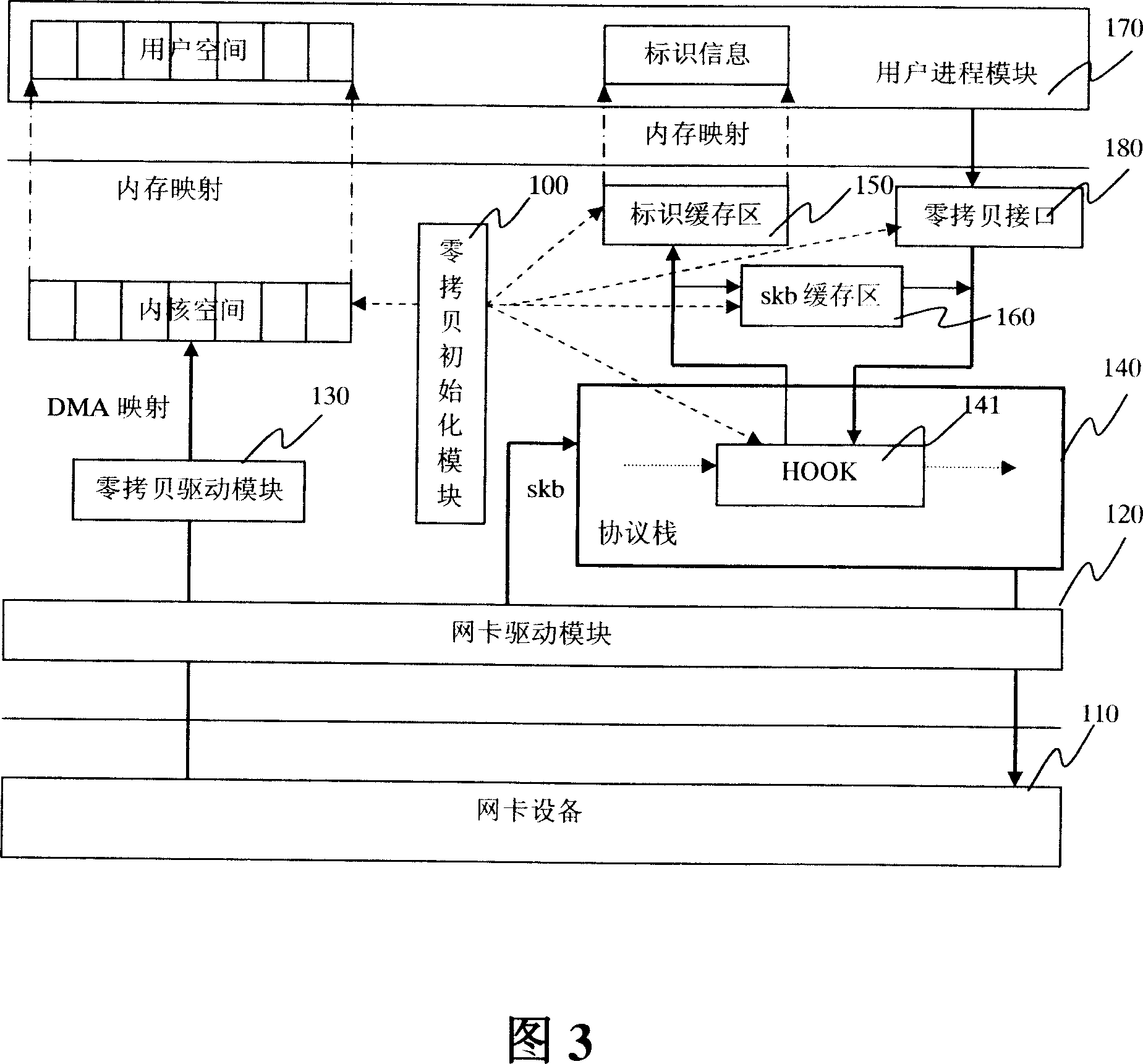

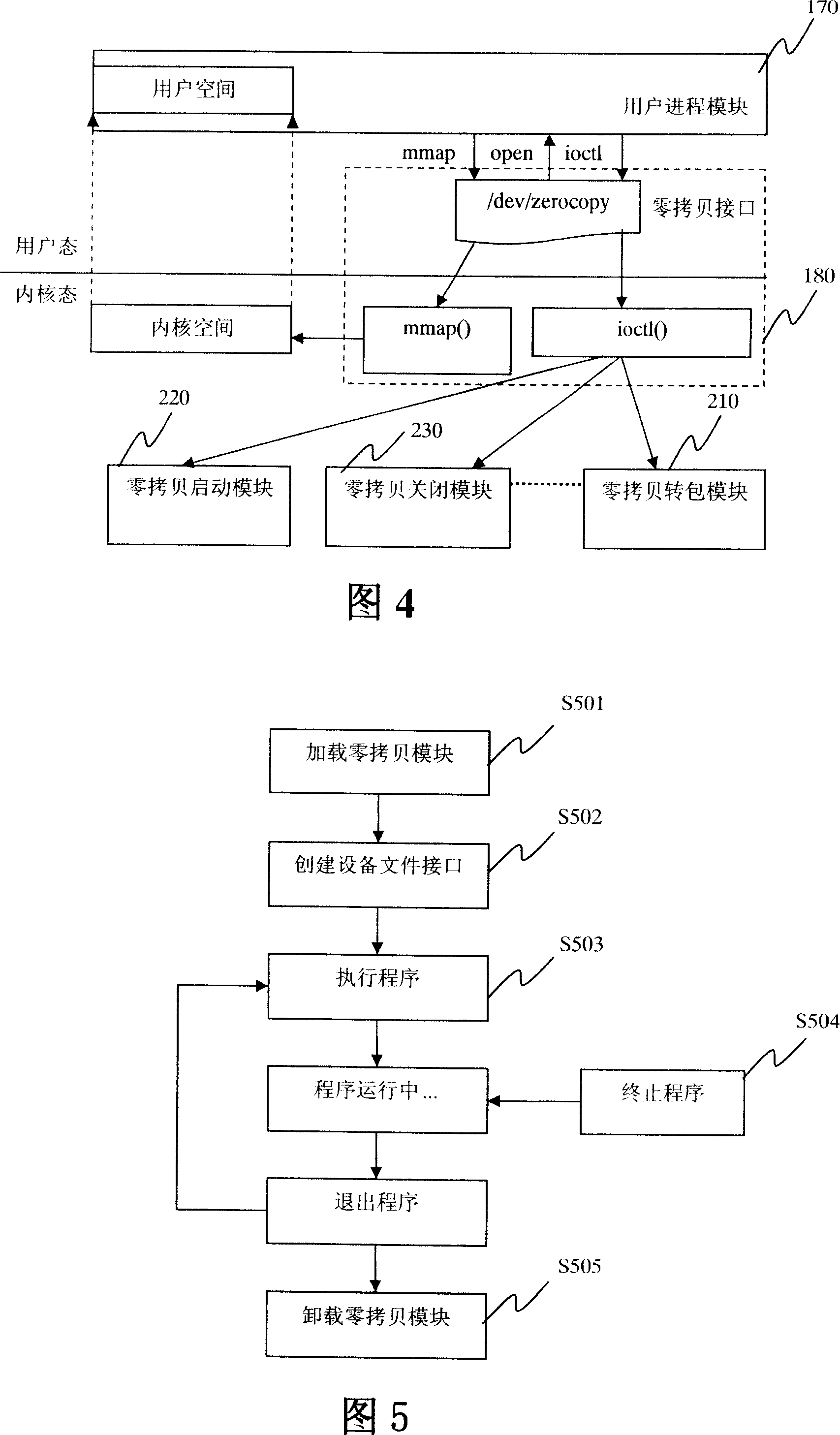

Apparatus and method for realizing zero copy based on Linux operating system

Active | CN101135980A | Realize zero copy of data | Registration is flexible | Multiprogramming arrangements | Transmission | Analysis data | Zero-copy

The apparatus comprises: a zero-copy initializing module that allocates a segment of kernel space, divides it into multiple data blocks, and assigns an identifier to each block; a network card driver module that stores each received data packet in a data block in kernel space, records the identifier of that data block in the packet's skb, and sends the skb to the protocol stack; a protocol stack that receives and parses the skb of the data packet to obtain the identifier of the data block; an identifier buffer that is connected to the protocol stack and mapped directly to the user process module, and that stores the data block identifiers obtained by the protocol stack; and a user process module that reads an identifier from the identifier buffer and retrieves the data packet from the corresponding data block in kernel space.

Owner: FORTINET
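
The block-and-identifier handoff in this abstract can be mimicked in user-space Python. This is only a rough analogy under stated assumptions: a `bytearray` stands in for the kernel region, a `deque` stands in for the identifier buffer, and all names (`pool`, `driver_receive`, `user_read`, `release`) and sizes are hypothetical. The point it illustrates is that only a small identifier is passed between stages while the payload stays where it was first written.

```python
from collections import deque

BLOCK_SIZE = 2048   # assumed block size
NUM_BLOCKS = 4      # assumed number of blocks

pool = bytearray(BLOCK_SIZE * NUM_BLOCKS)   # stands in for the pre-allocated kernel region
free_ids = deque(range(NUM_BLOCKS))         # identifiers of unused blocks
lengths = [0] * NUM_BLOCKS                  # payload length stored in each block
id_buffer = deque()                         # stands in for the identifier buffer


def driver_receive(packet: bytes) -> int:
    """Store a packet in a free block and publish only the block identifier."""
    if len(packet) > BLOCK_SIZE:
        raise ValueError("packet larger than one block")
    block_id = free_ids.popleft()
    start = block_id * BLOCK_SIZE
    pool[start:start + len(packet)] = packet   # the single write of the payload
    lengths[block_id] = len(packet)
    id_buffer.append(block_id)
    return block_id


def user_read():
    """Take the next identifier and return a view of the block, without copying it."""
    block_id = id_buffer.popleft()
    start = block_id * BLOCK_SIZE
    return block_id, memoryview(pool)[start:start + lengths[block_id]]


def release(block_id: int) -> None:
    """Hand the block back once the consumer is done with it."""
    free_ids.append(block_id)


driver_receive(b"hello")
block_id, view = user_read()
print(block_id, bytes(view))
release(block_id)
```

The `memoryview` slice refers to the same memory as `pool`, so the consumer sees the payload without a second copy; `bytes(view)` in the usage lines copies only for printing.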

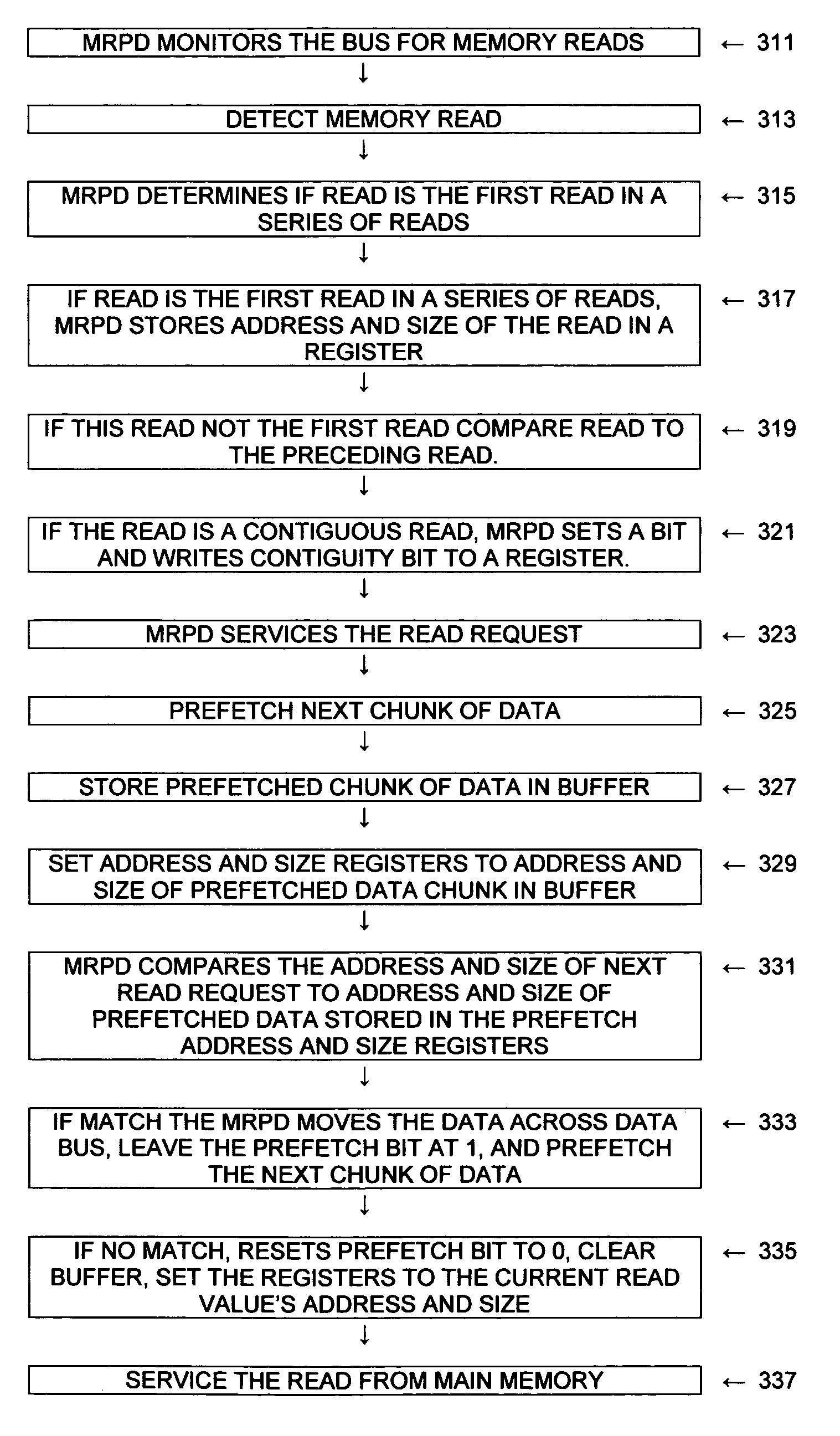

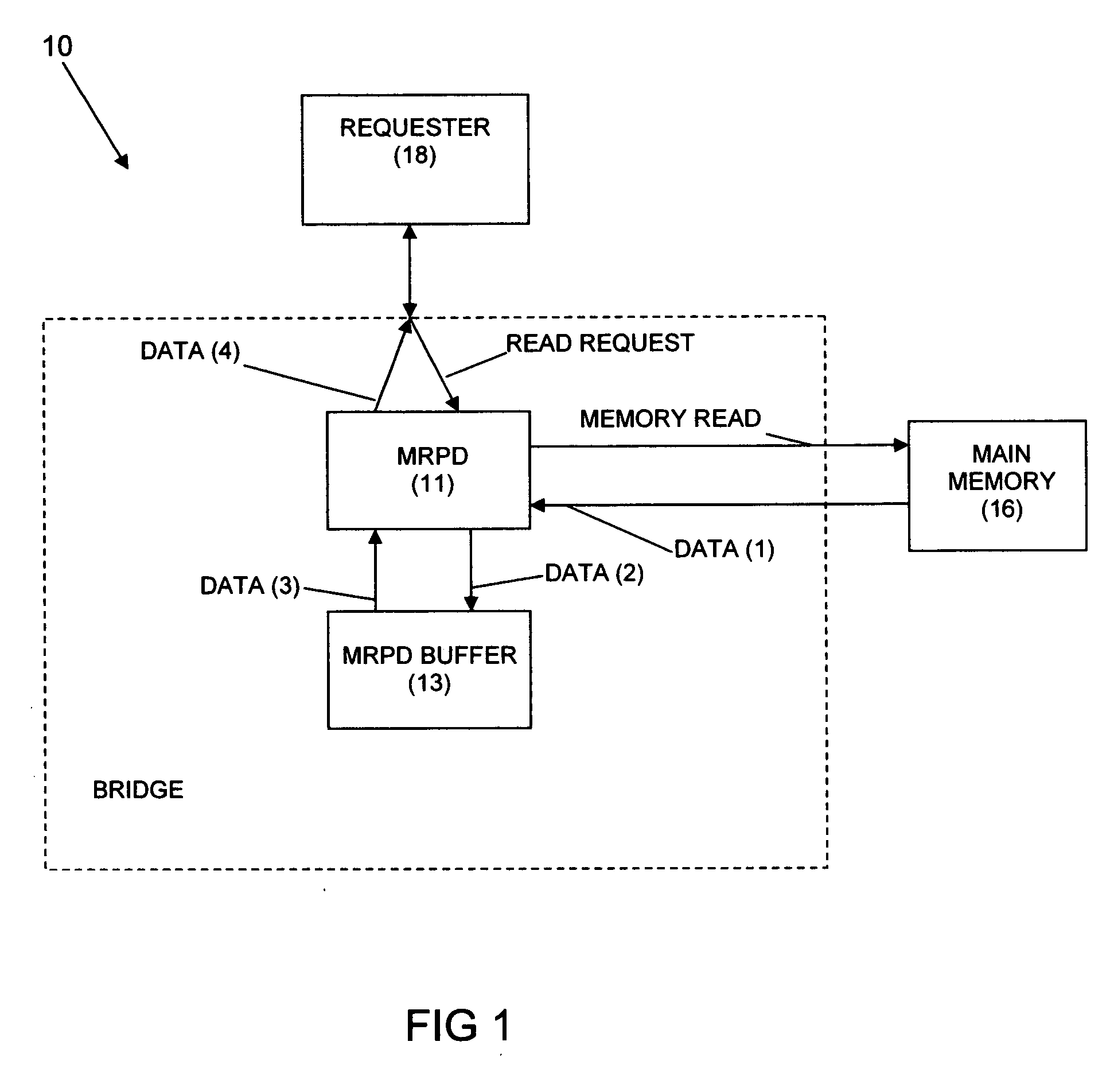

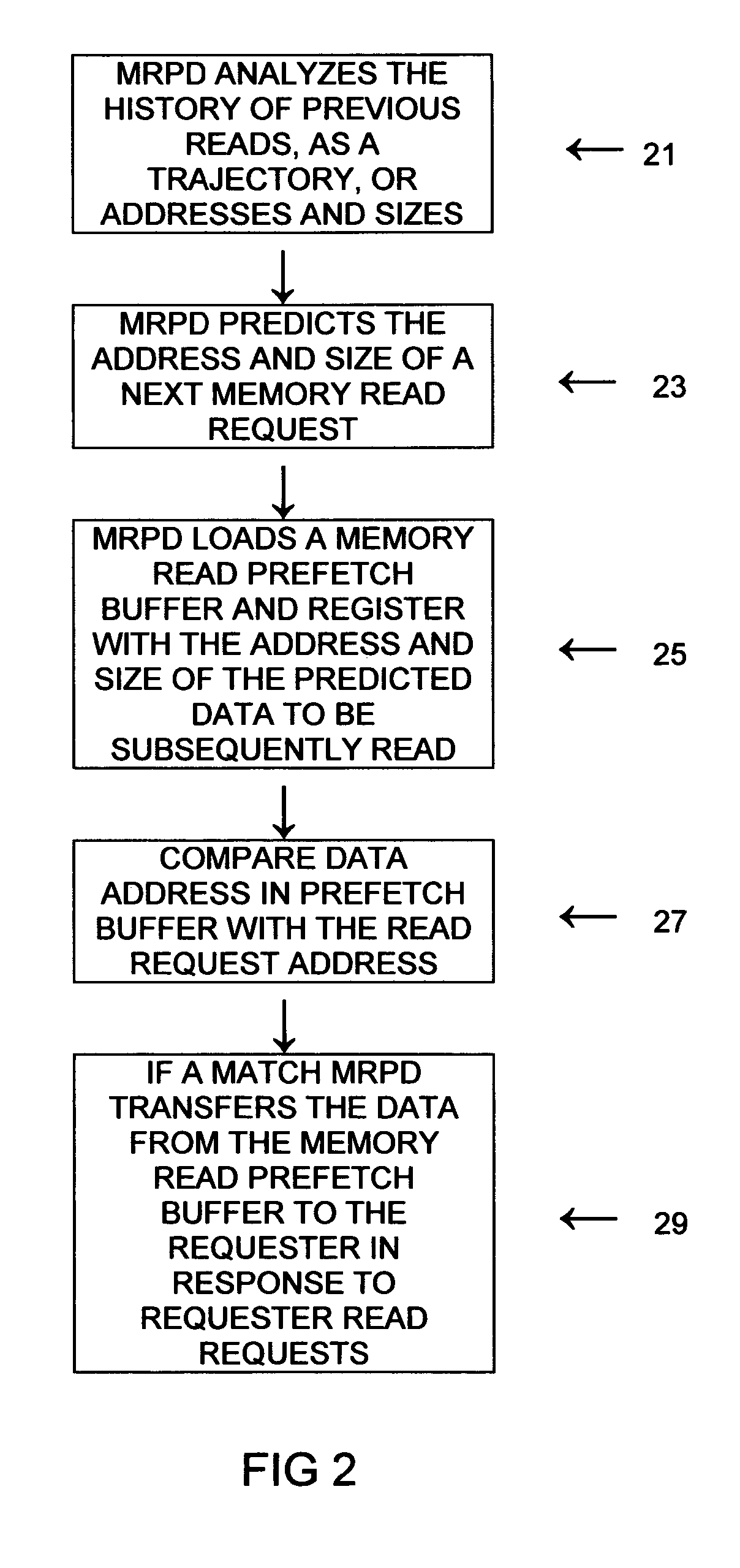

Memory prefetch method and system

Inactive | US20050223175A1 | Reduce data latency | Reducing cache miss | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Parallel computing

Owner: IBM CORP

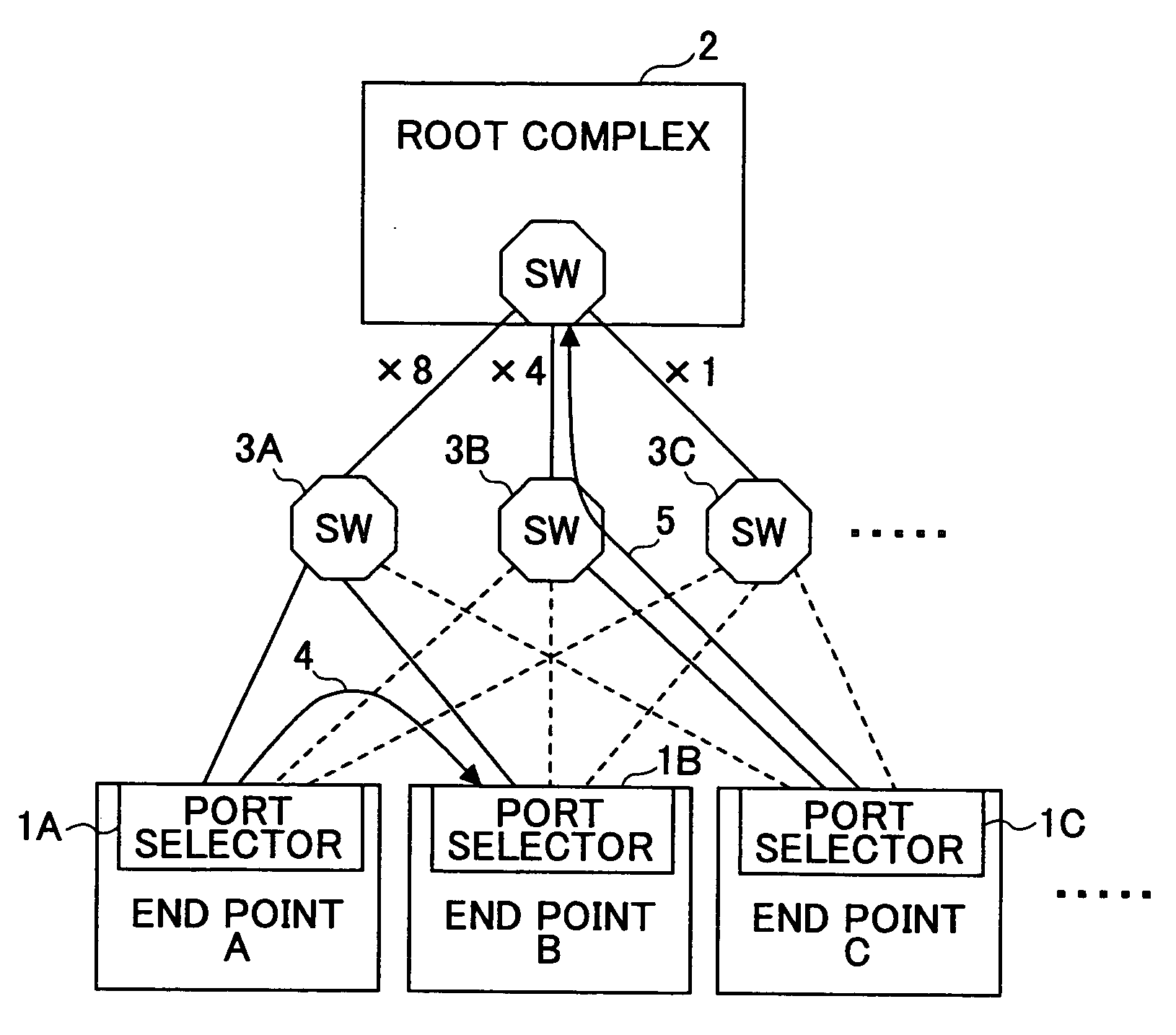

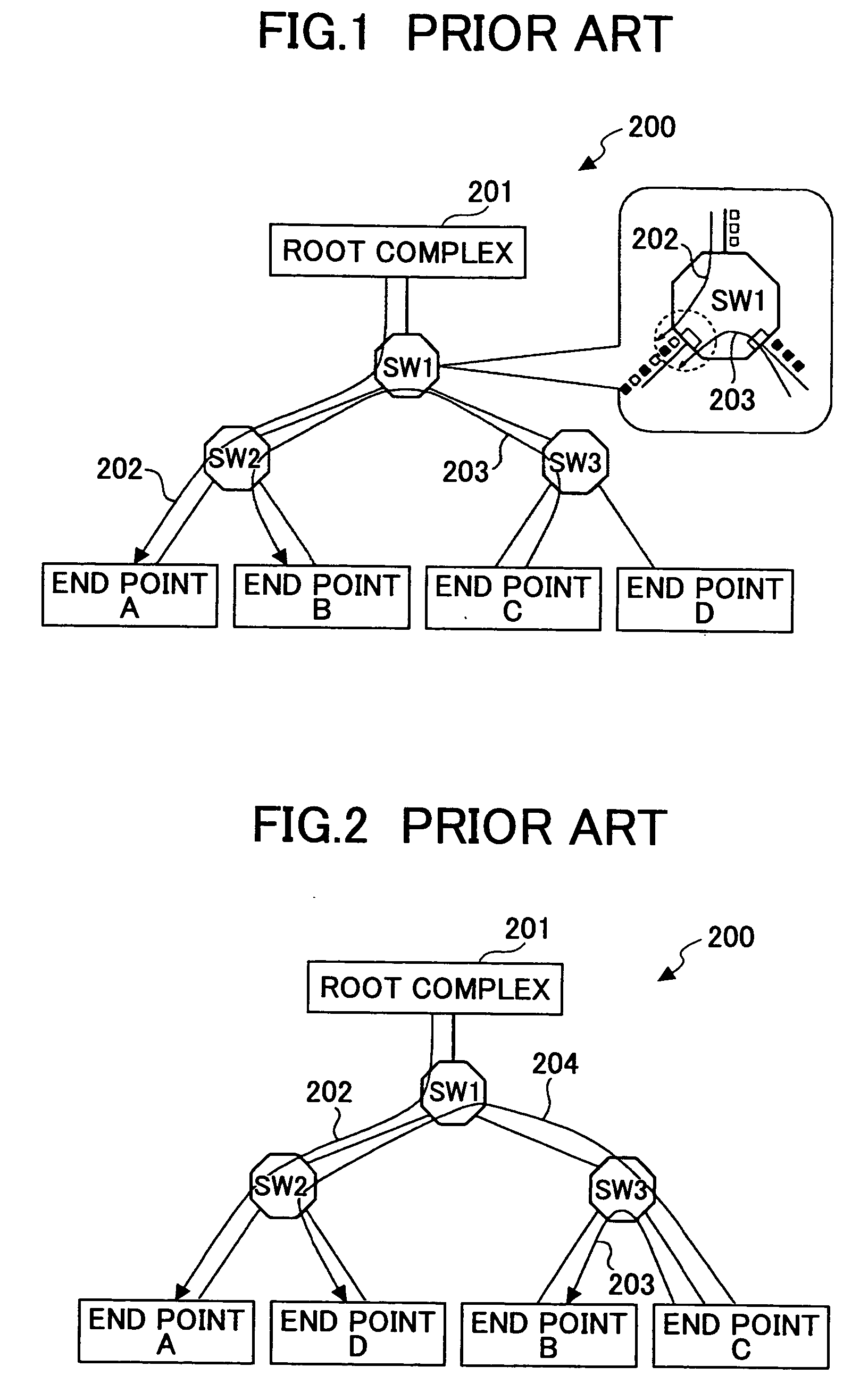

Data transfer system, data transfer method, and image apparatus system

Inactive | US20060114918A1 | Improve data transfer efficiency | Avoid contention | Data switching by path configuration | Transfer system | Operation mode

A data transfer system using a high-speed serial interface system that forms a tree structure in which point-to-point communication channels are established for data sending and data receiving independently is provided. The data transfer system includes plural end points each having plural upper ports each of which is connected to a switch of an upper side, wherein each end point includes a port selecting part for selecting a port to be used according to an operation mode of the data transfer system so as to dynamically change the tree structure.

Owner: RICOH KK

Recording medium, reproduction apparatus, recording method, integrated circuit, program and reproduction method

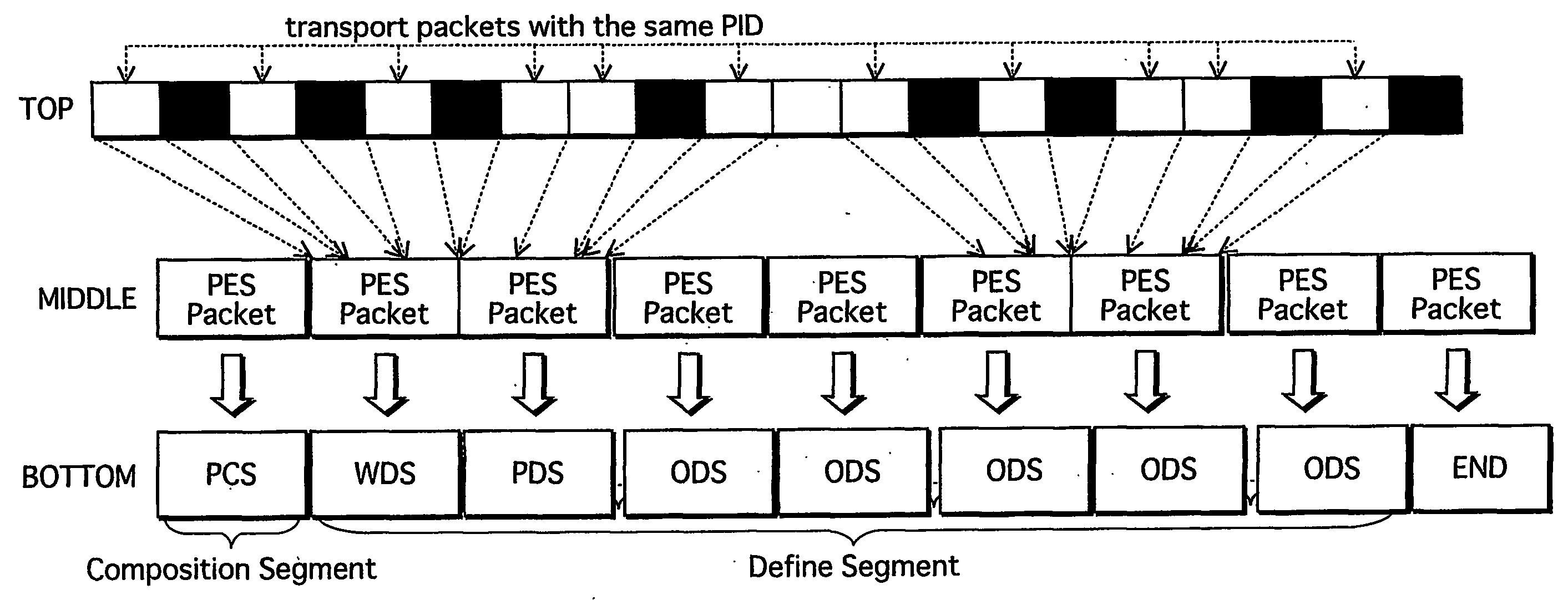

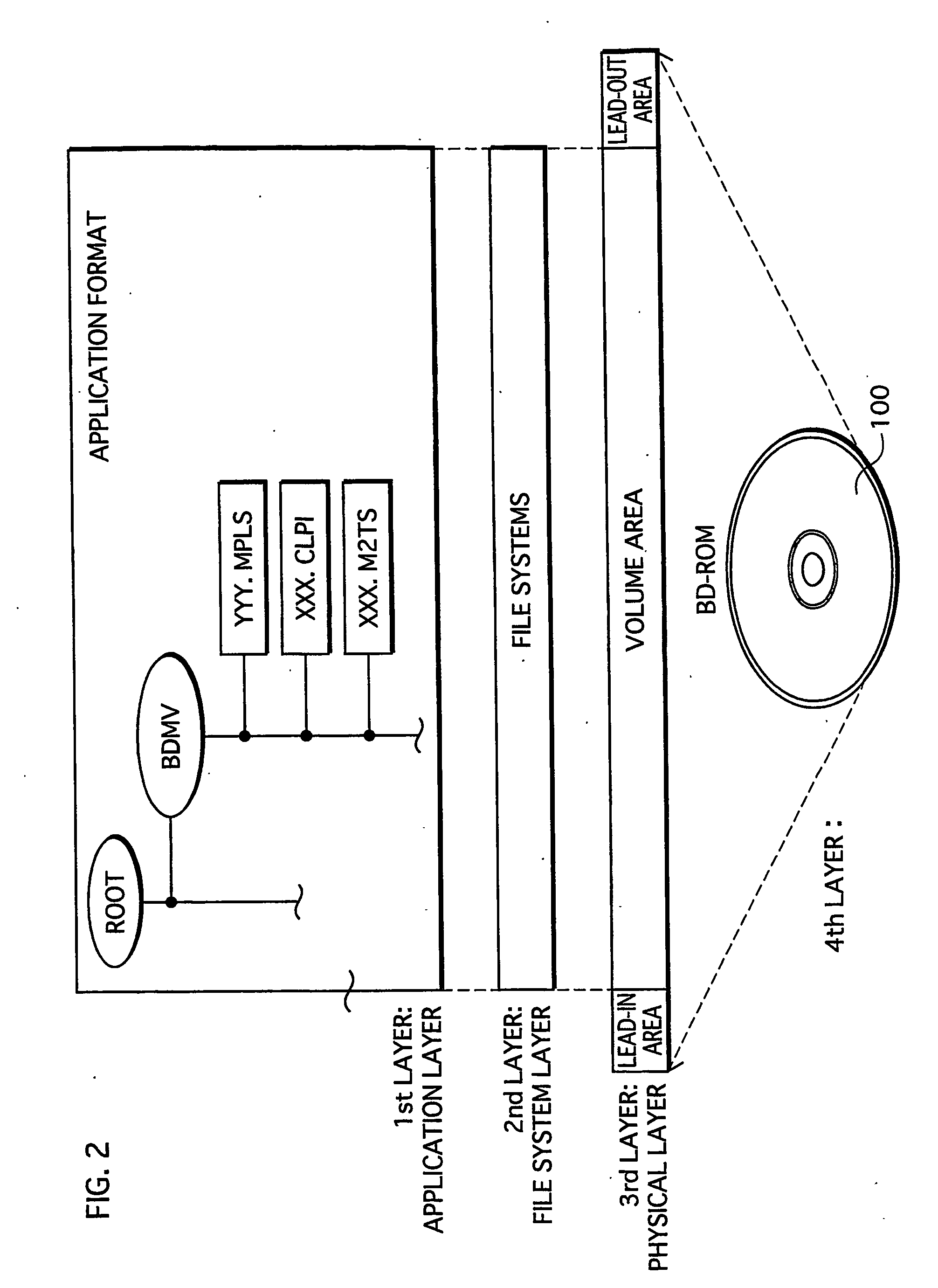

Active | US20060153532A1 | High definition level | Avoid rising production costs | Television system details | Color television signals processing | Multiplexing | Graphics

AVClip recorded in BD-ROM is obtained by multiplexing a graphics stream and a video stream. The graphics stream is a PES packet sequence that includes 1) PES packets storing graphics data (ODS) and 2) PES packets storing control information (PCS). In each ODS, values of DTS and PTS indicate a timing of decoding start for corresponding graphics data, and a timing of decoding end for corresponding graphics data, respectively. In each PCS, a value of PTS indicates a display timing of corresponding decoded graphics data combined with the video stream.

Owner: PANASONIC CORP

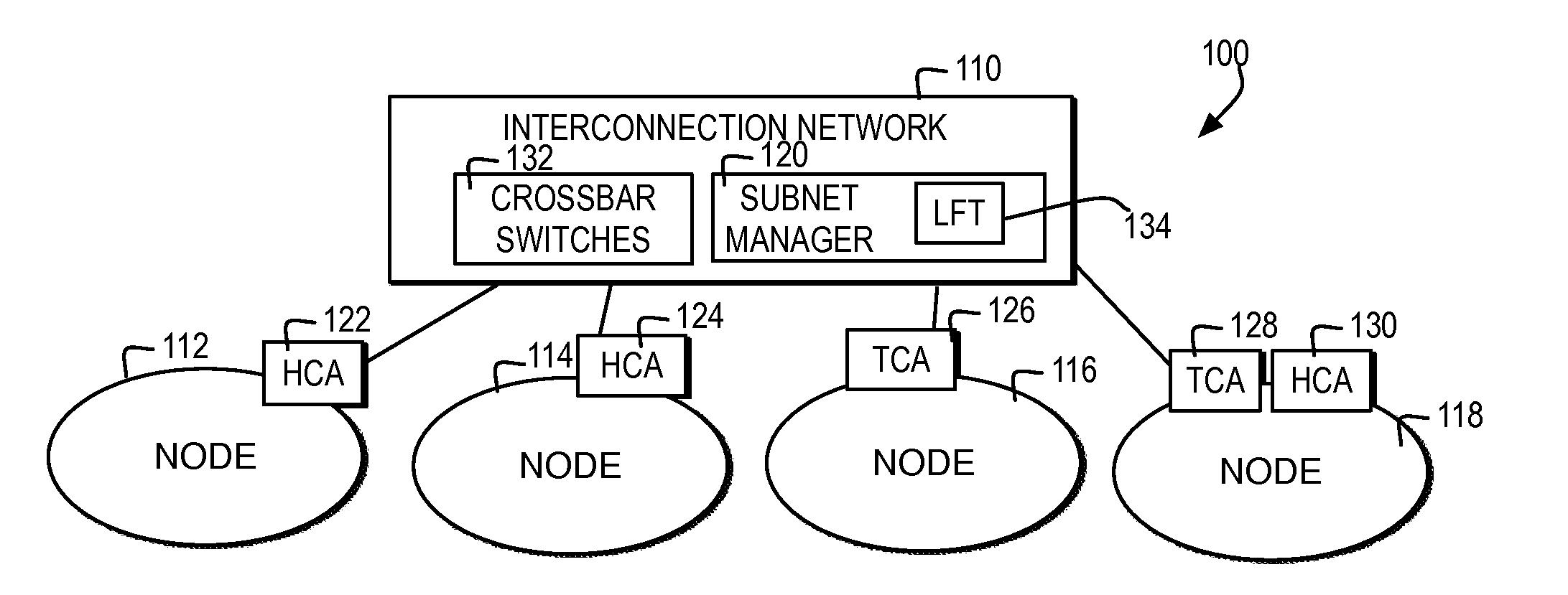

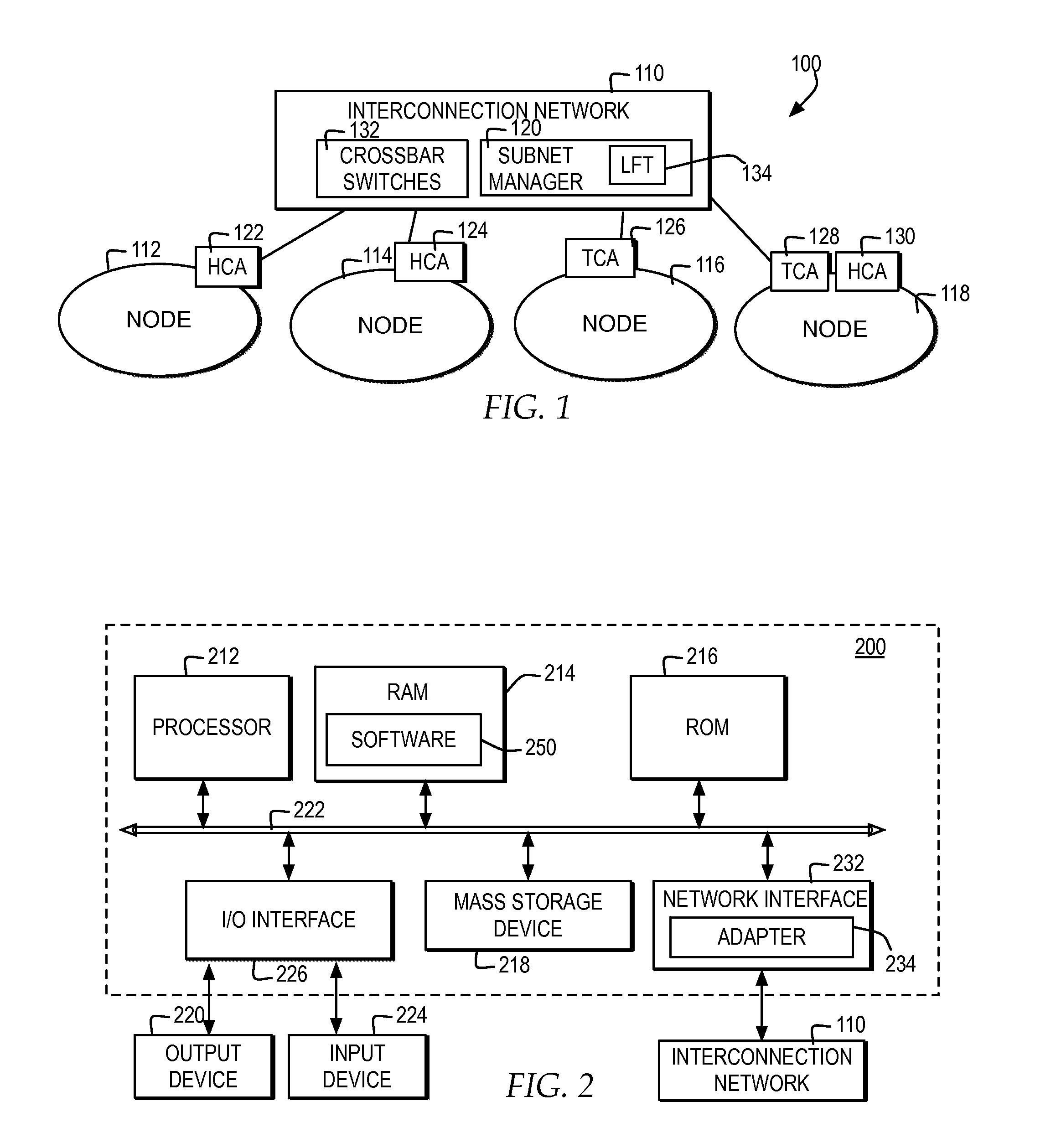

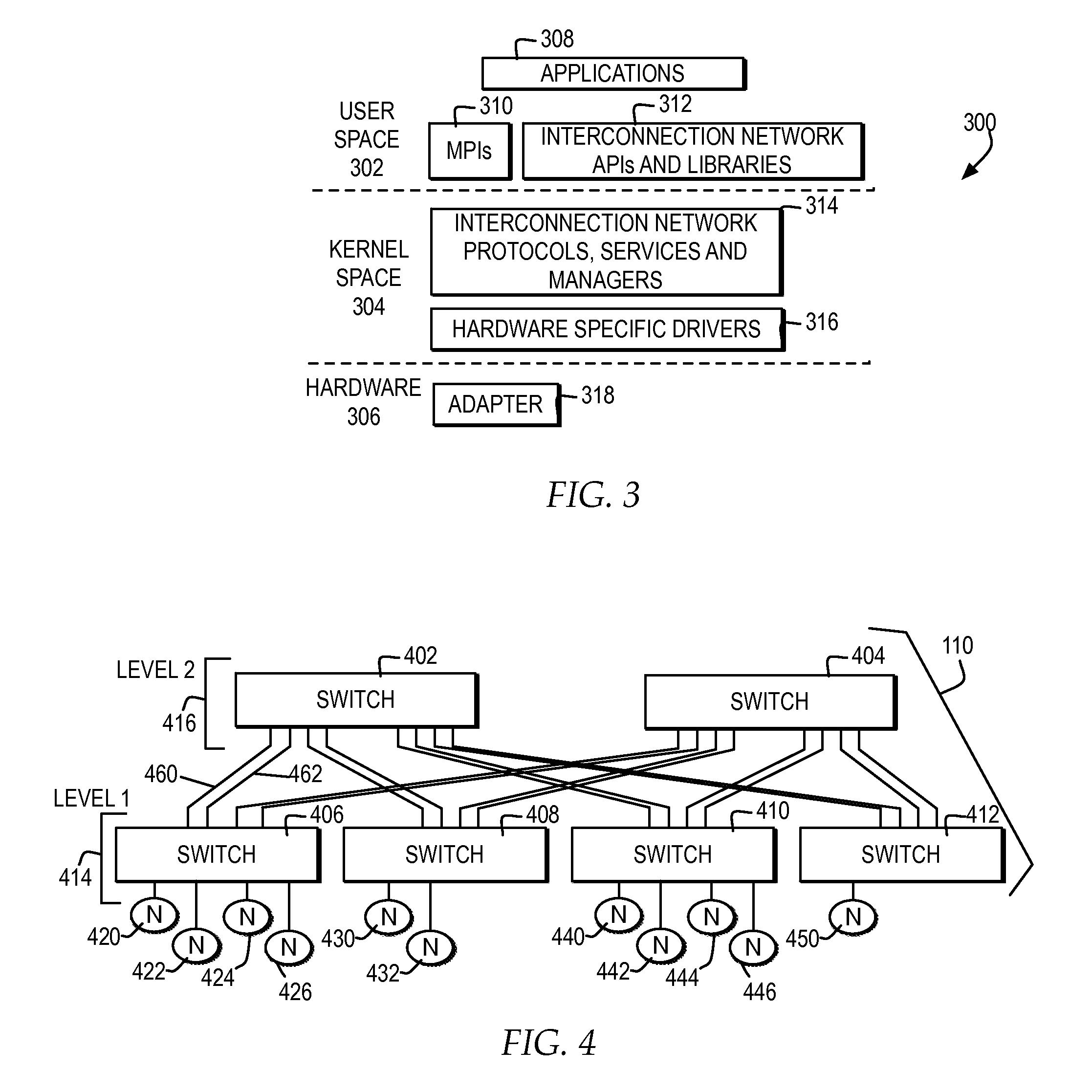

Contention free pipelined broadcasting within a constant bisection bandwidth network topology

Inactive | US20110228789A1 | Avoiding contention latency | Easy to use | Data switching by path configuration | Broadcasting | Computer science

In an interconnection network, multiple nodes are each connected to one of a first layer of switches. The switches of the first layer are connected to one another through a second layer of switches, wherein each of the nodes is connected through one of multiple shared links connecting the first layer of switches and the second layer of switches. A pipelined broadcast manager schedules a hierarchical pipelined broadcast through at least one switch of the first layer of switches comprising multiple non-root nodes by selecting two nodes of the multiple non-root nodes connected to the at least one switch and scheduling each of multiple broadcast steps for the pipelined broadcast with at least one of an inter-switch broadcast phase and an intra-switch broadcast phase. The inter-switch broadcast phase is scheduled with a first node of the two nodes receiving a first data packet from another switch from among the first layer of switches and a second node of the two nodes sending a second data packet, previously received from another switch, to one other switch from among the first layer of switches. The intra-switch broadcast phase is scheduled with the first node acting as a source for sending a previously received data packet to at least one other non-root node and the second node acting as a sink for receiving the previously received data packet, wherein each of the non-root nodes sends and receives the previously received data packet once throughout the broadcast steps and the sink receives the previously received data packet last. In scheduling the hierarchical pipelined broadcast, the first node and the second node alternate roles at each broadcast step.

Owner: IBM CORP

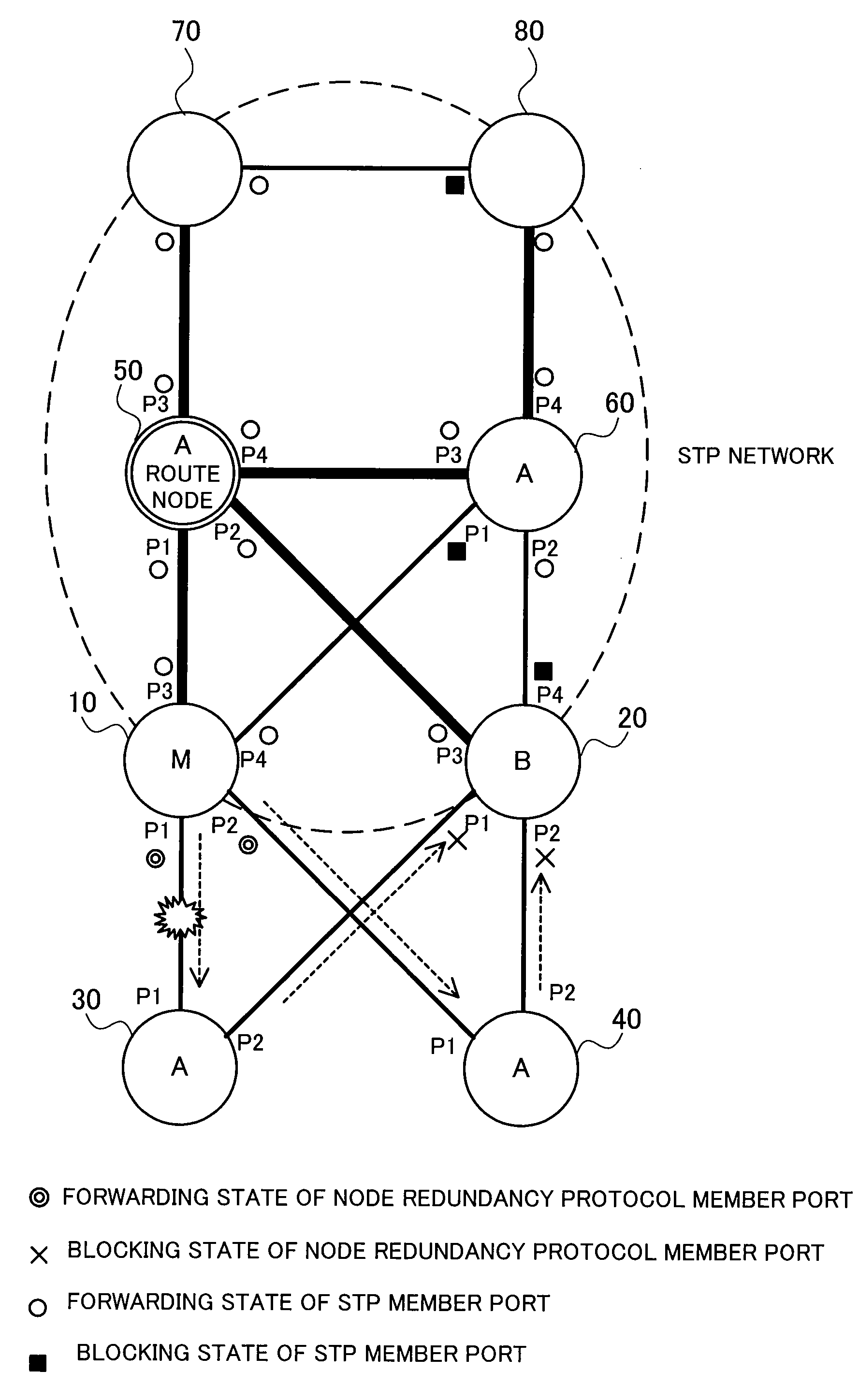

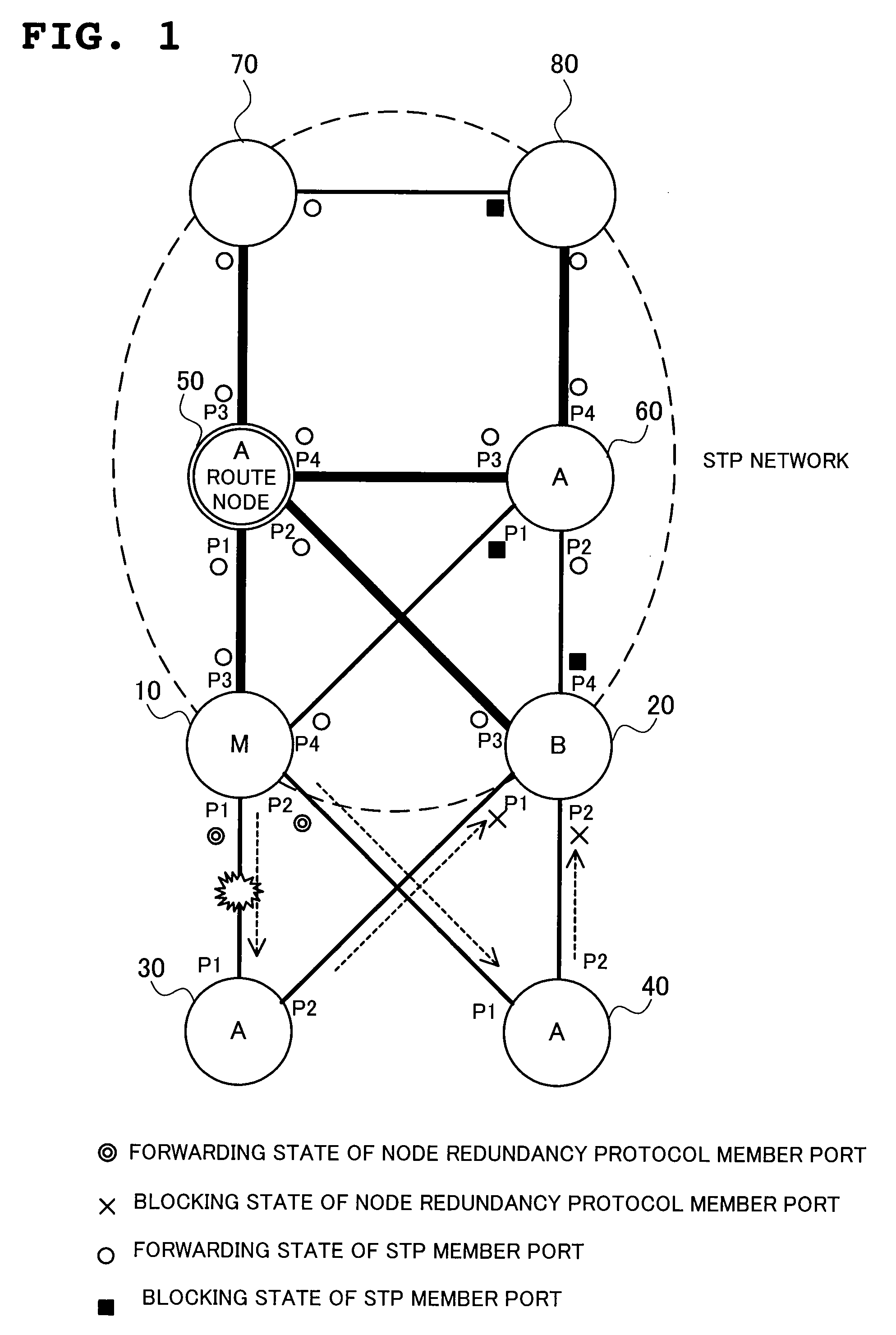

Network system, node, node control program, and network control method

Inactive | US20070258359A1 | Avoid contention | Error prevention | Frequency-division multiplex details | Network control | Networked system

A network system in which a network adopting a node redundancy protocol coexists with a network adopting STP as another protocol for managing the state of a port. A port that belongs to a master node 10 or a backup node 20 forming the STP network, and that is under the management of both the node redundancy protocol and STP, has its state managed by STP. The master node 10 or the backup node 20 transmits a Hello message, a control frame for monitoring a node and a link, to all or some of the nodes connected to the port under the management of the node redundancy protocol. At the time of switching to master mode, it also transmits a Flush message, a control frame for rewriting a forwarding database, to all or some of those nodes.

Owner: NEC CORP

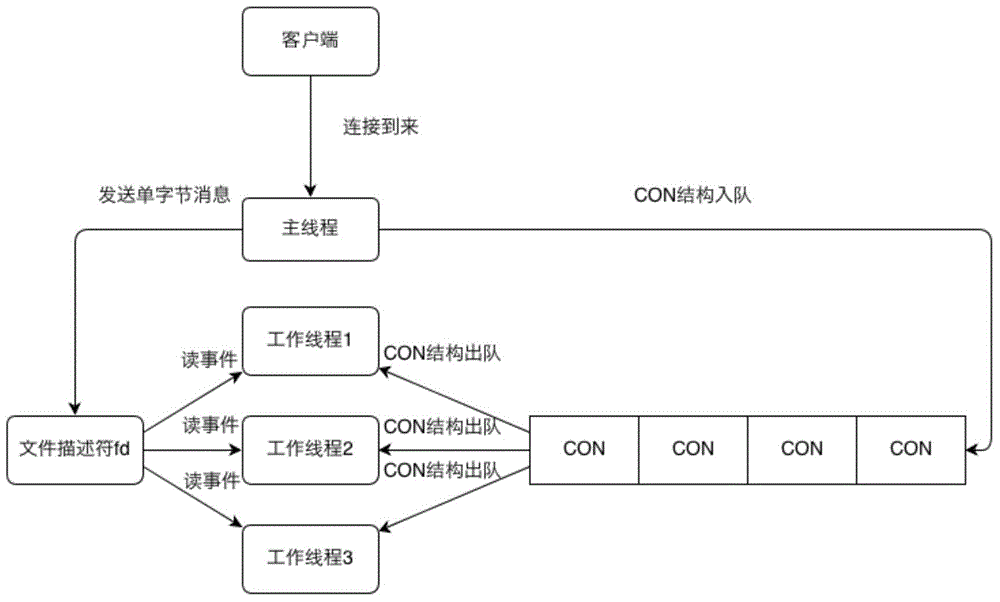

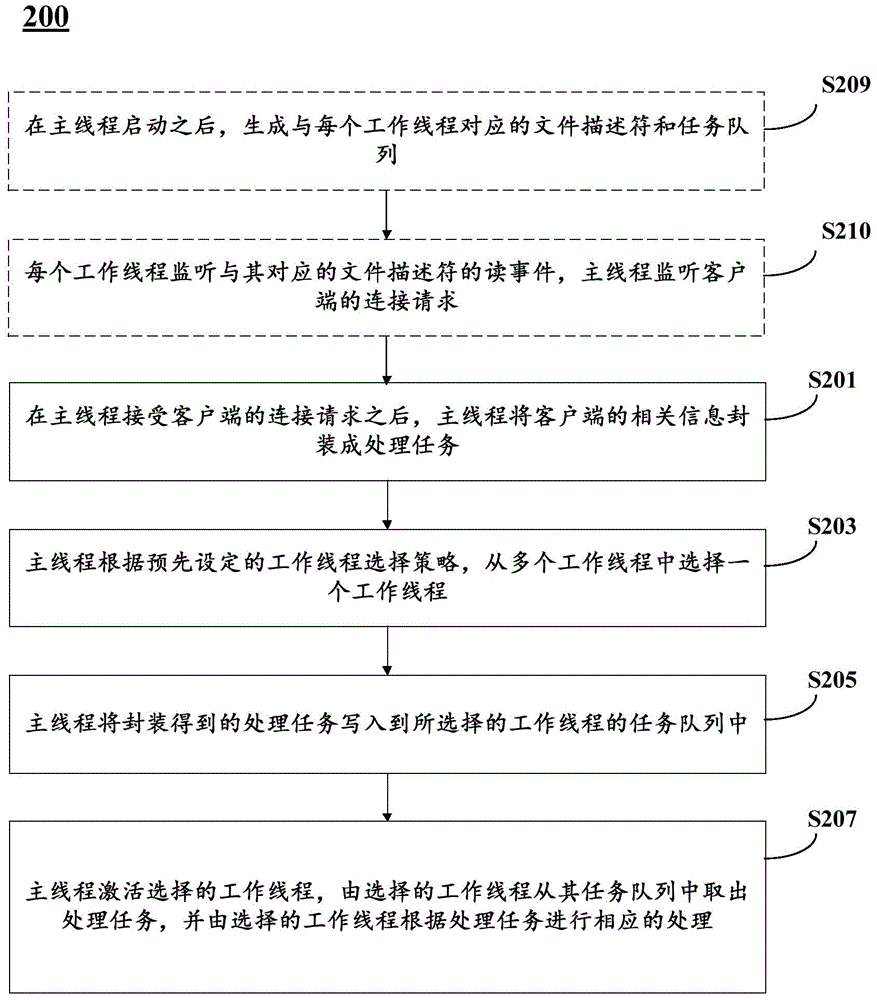

Multithread management method and device

Active | CN103605568A | Avoid wasting | Avoid contention | Program initiation/switching | Resource allocation | Relevant information | Operating system

The invention discloses a multithread management method and device applicable to a framework in which an operating system controls a plurality of threads, comprising a main thread and a plurality of working threads. In the method, after the main thread receives a connection request from a client, it packages the relevant information of the client into a processing task; the main thread selects a working thread from the plurality of working threads according to a preset working thread selection strategy; the main thread writes the packaged processing task into the task queue of the selected working thread; and the main thread activates the selected working thread, which takes the processing task from its task queue and processes it accordingly. The method and device avoid the waste of system resources that otherwise occurs when the main thread distributes tasks to the working threads.

Owner: BEIJING QIHOO TECH CO LTD
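
The dispatch flow in this abstract maps naturally onto one queue per working thread. The Python sketch below is a minimal illustration, not the patented implementation: round-robin is assumed as the "preset selection strategy", a blocking `queue.Queue.get` stands in for "activating" the worker, and the names `Worker` and `dispatch` are hypothetical.

```python
import itertools
import queue
import threading


class Worker(threading.Thread):
    """A working thread that consumes only its own task queue."""

    def __init__(self, name, results):
        super().__init__(name=name, daemon=True)
        self.tasks = queue.Queue()   # private queue: no other worker reads from it
        self.results = results

    def run(self):
        while True:
            task = self.tasks.get()  # blocks until the main thread enqueues a task
            if task is None:         # sentinel: shut down
                break
            self.results.put((self.name, task["client"]))


def dispatch(clients, num_workers=3):
    """Main-thread logic: package each request and hand it to one chosen worker."""
    results = queue.Queue()
    workers = [Worker(f"w{i}", results) for i in range(num_workers)]
    for worker in workers:
        worker.start()
    chooser = itertools.cycle(workers)      # assumed strategy: round-robin
    for client in clients:
        task = {"client": client}           # "package" the client information
        next(chooser).tasks.put(task)       # write into the selected worker's queue
    for worker in workers:
        worker.tasks.put(None)
    for worker in workers:
        worker.join()
    handled = []
    while not results.empty():
        handled.append(results.get())
    return sorted(handled)


print(dispatch(["a", "b", "c", "d"], num_workers=2))
```

Because each worker reads only from its own queue, workers never compete with one another for the same task, which is the contention the listing's "Avoid contention" tag refers to.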

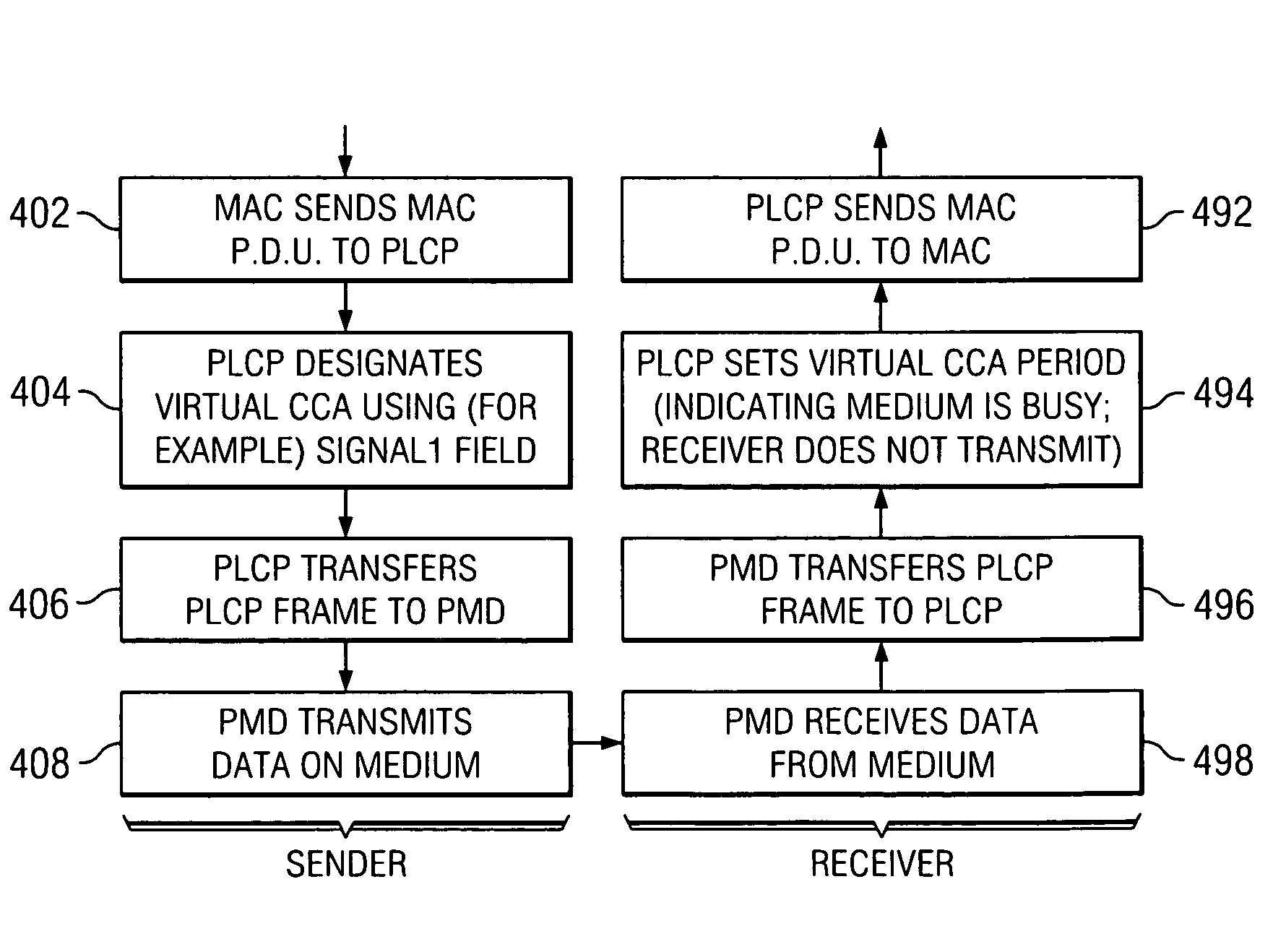

Virtual clear channel avoidance (CCA) mechanism for wireless communications

Active | US20050226270A1 | Avoid contention | Assess restriction | Time-division multiplex | Communications media | Transmitter

An arrangement avoids contention on a communication medium among devices including at least a transmitter and a receiver. The arrangement involves a first portion configured to instruct a receiver to indicate that the communication medium is busy for a time period substantially longer than an actual frame transmission period being sent from the transmitter to the receiver, and a second portion configured to prohibit the receiver from transmitting on the communication medium during the time period.

Owner: TEXAS INSTR INC

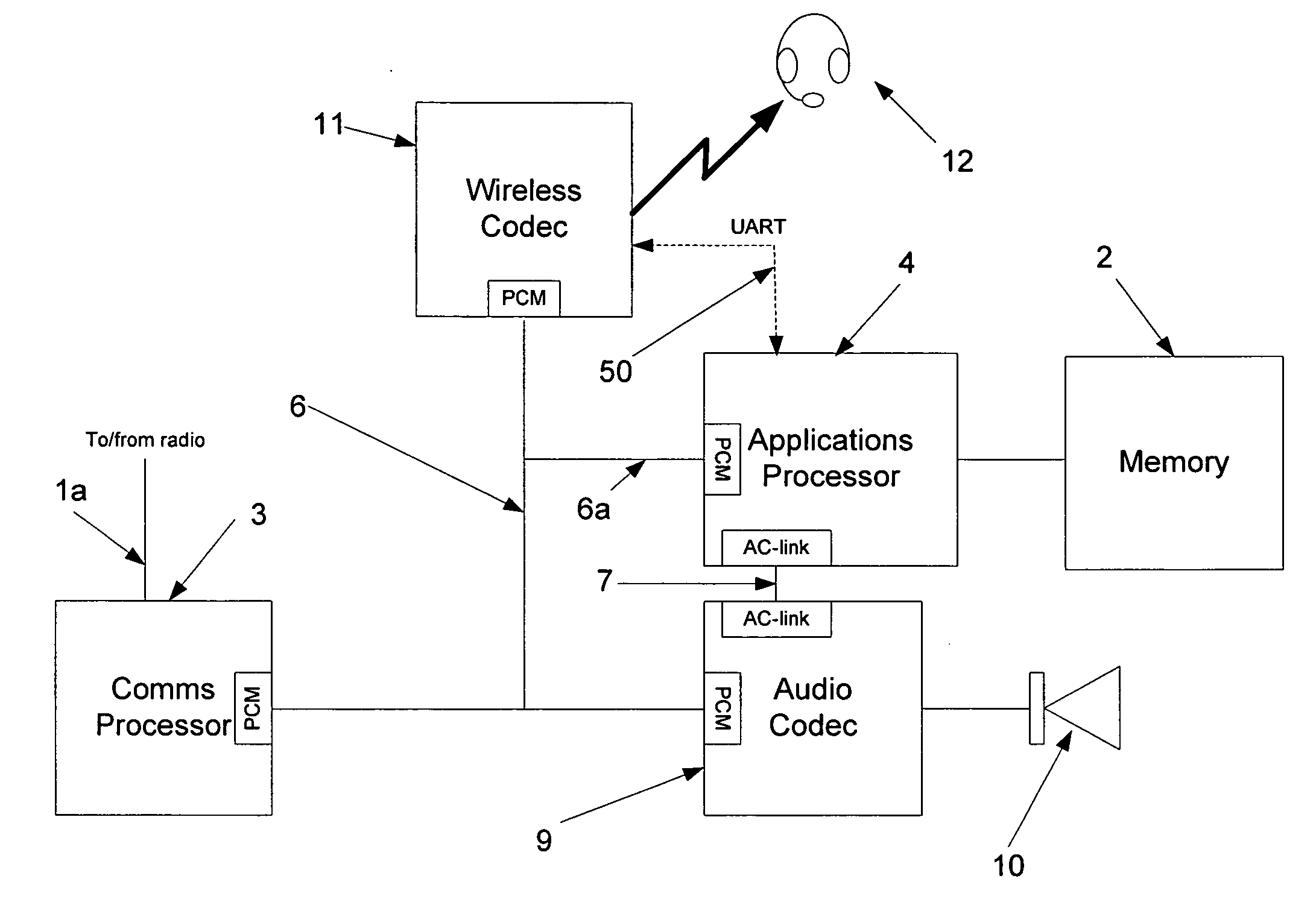

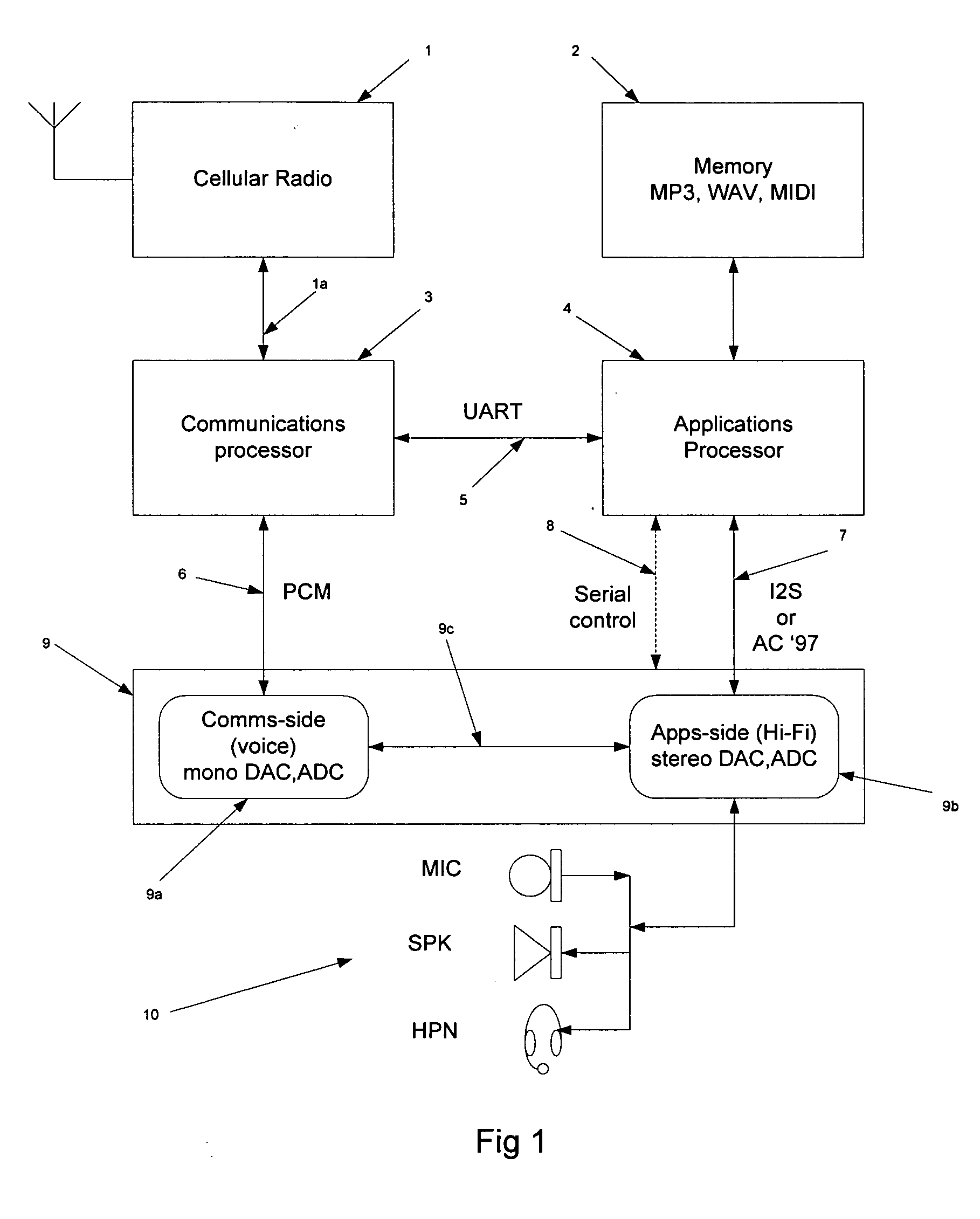

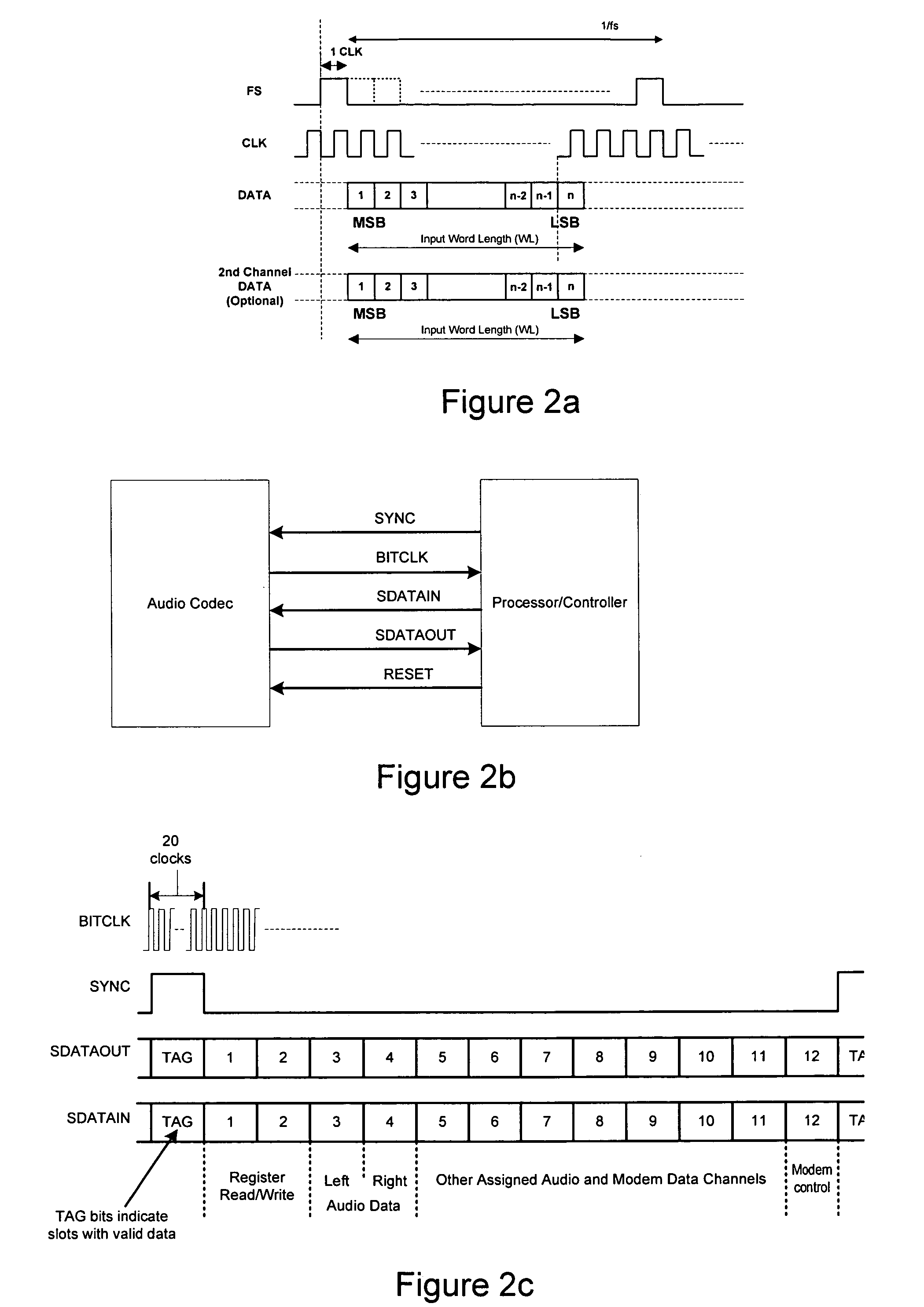

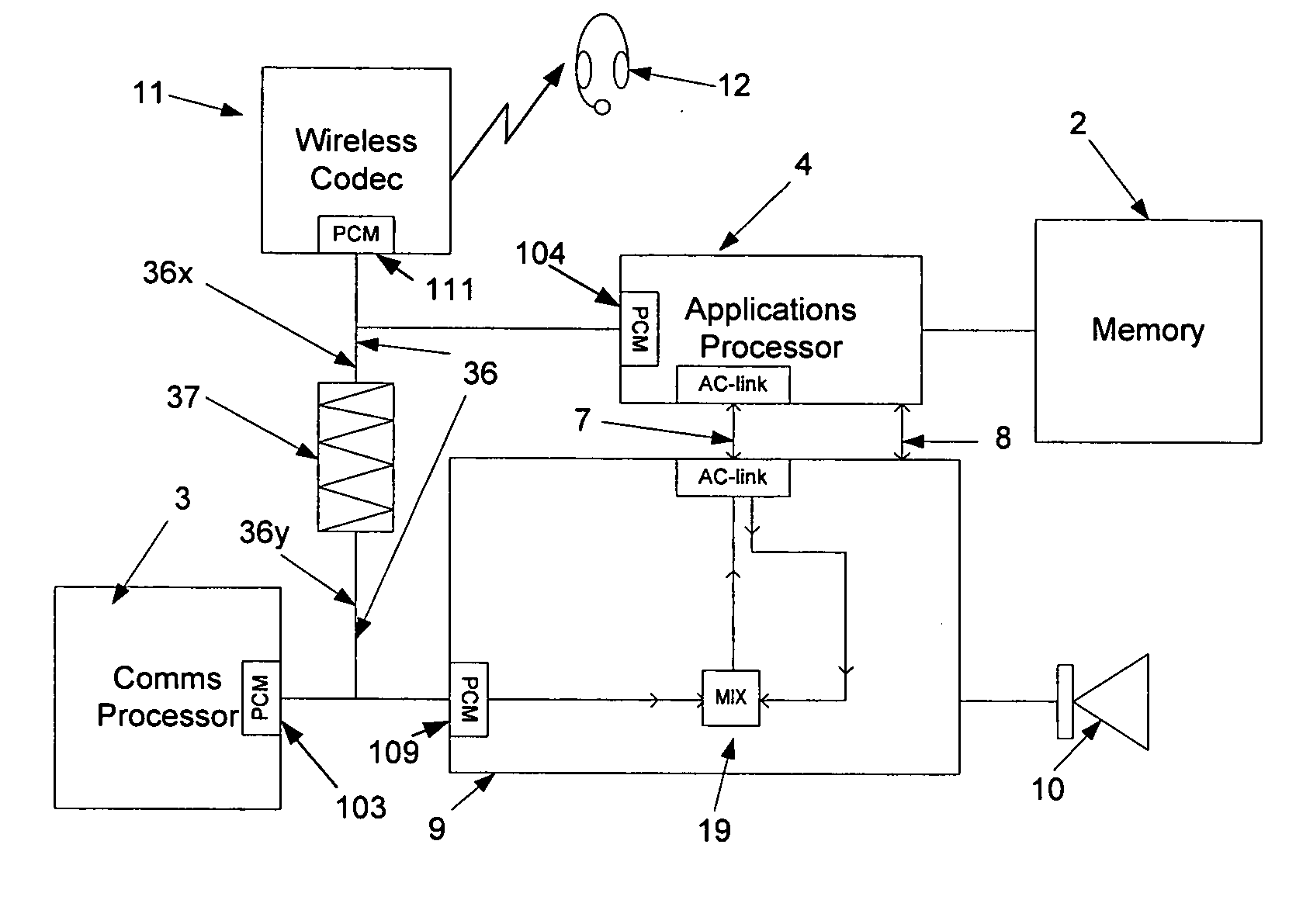

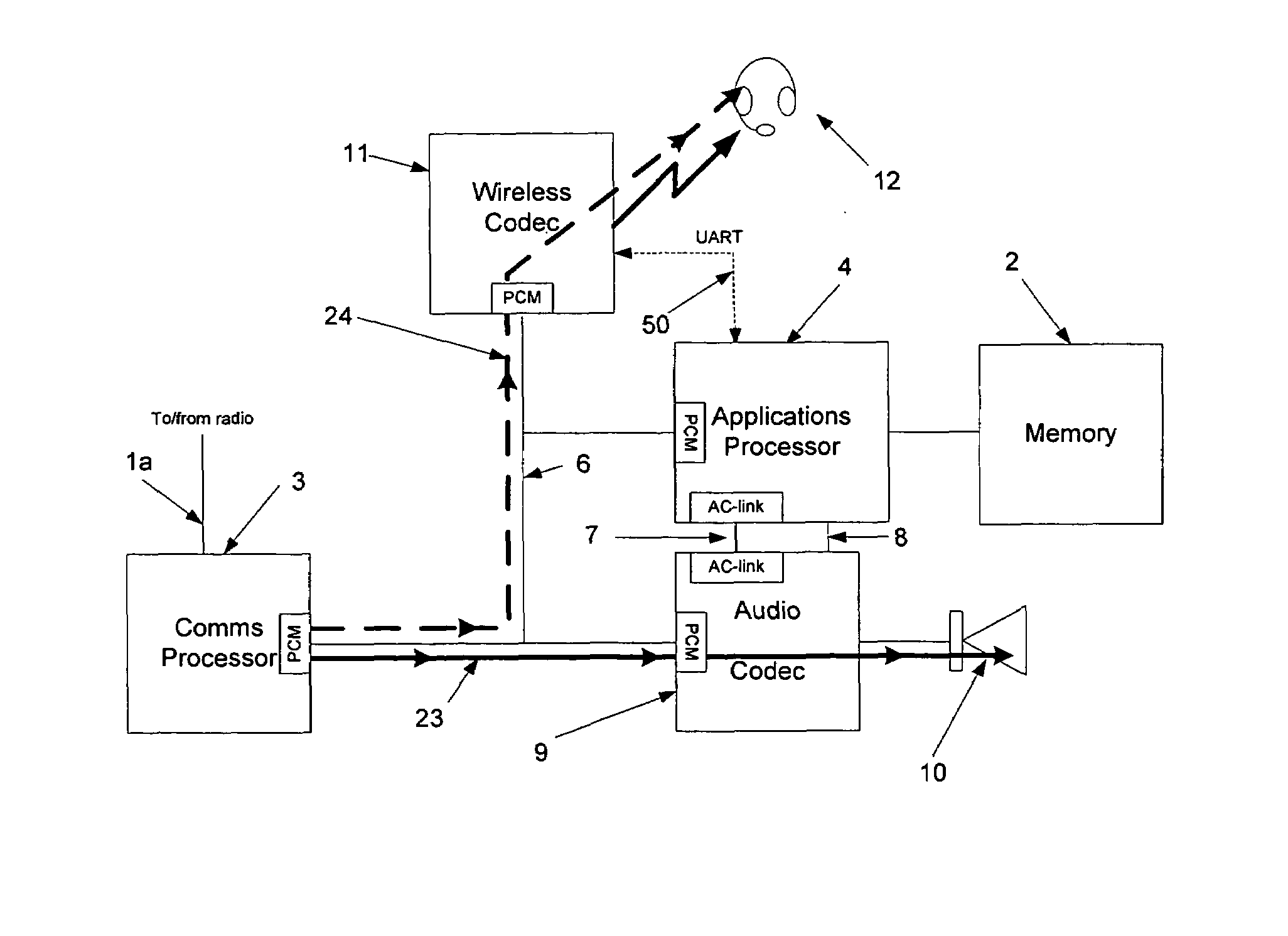

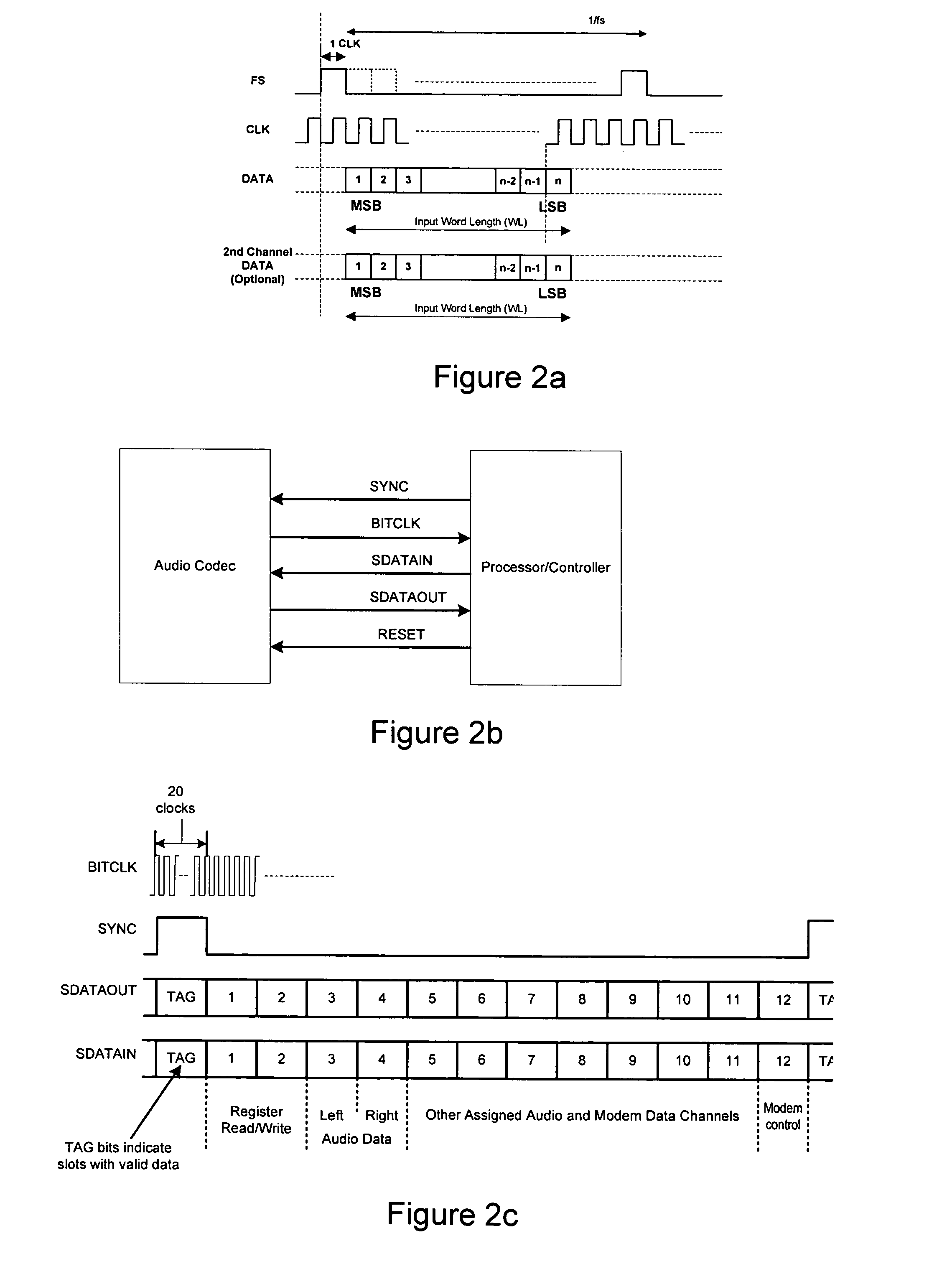

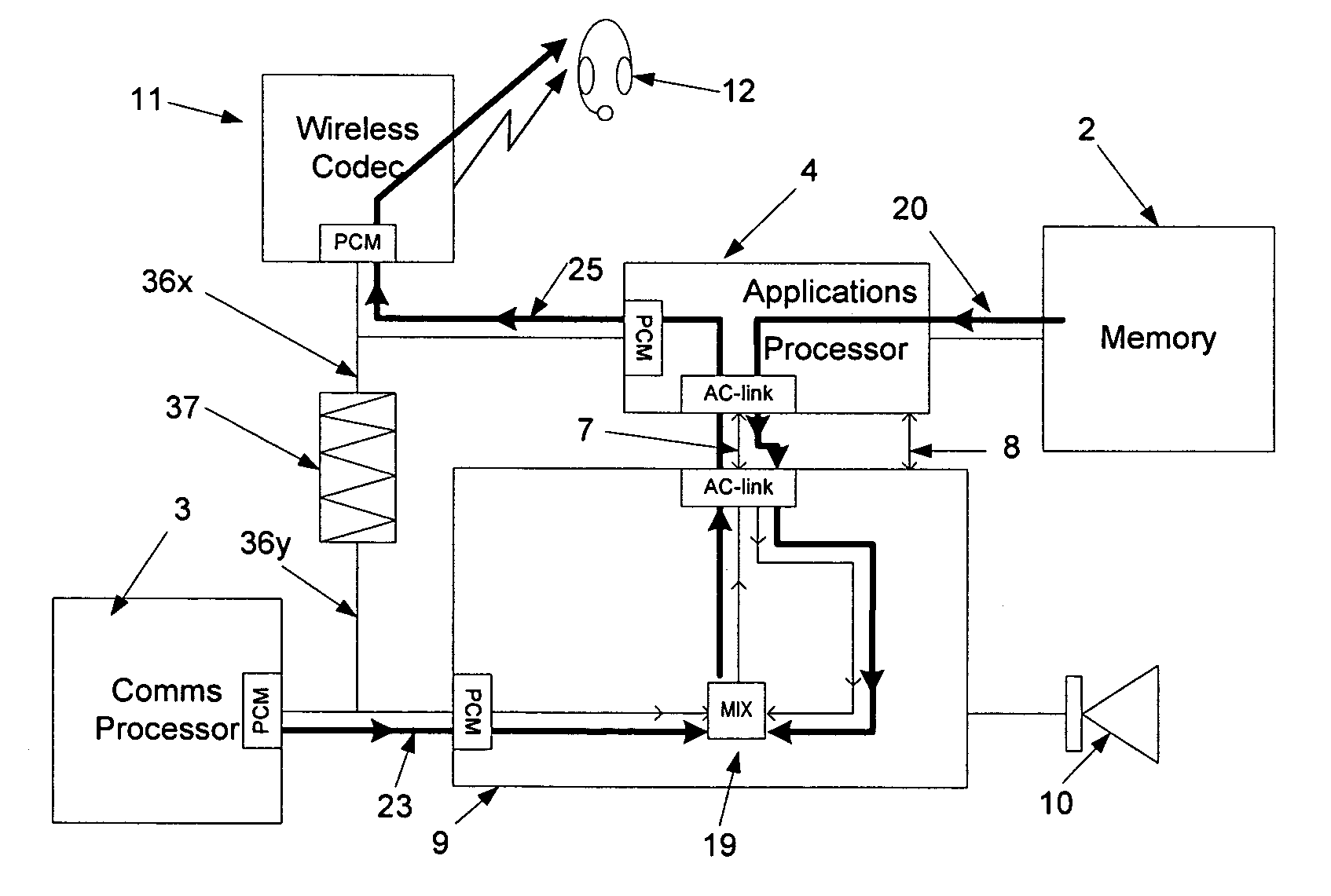

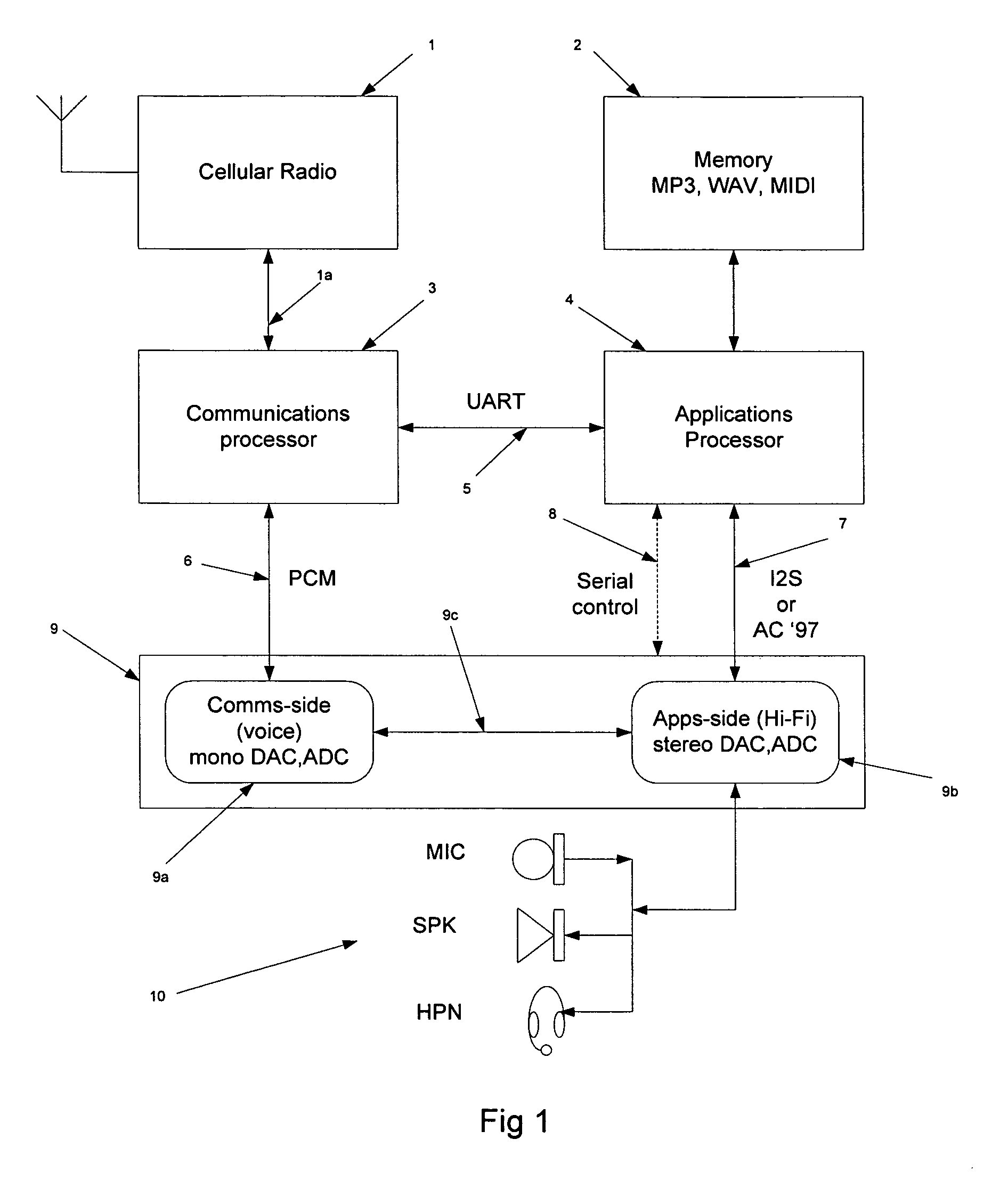

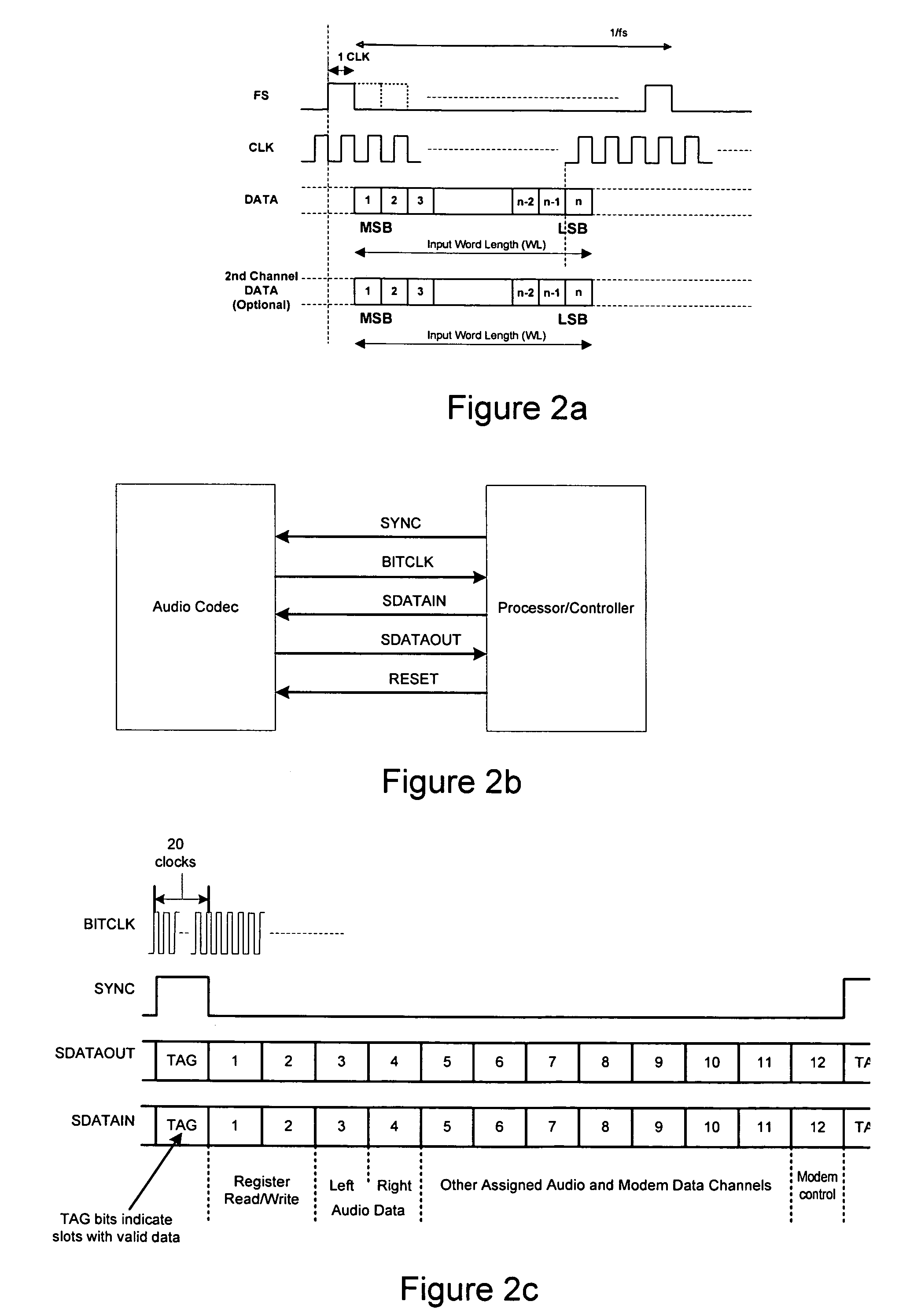

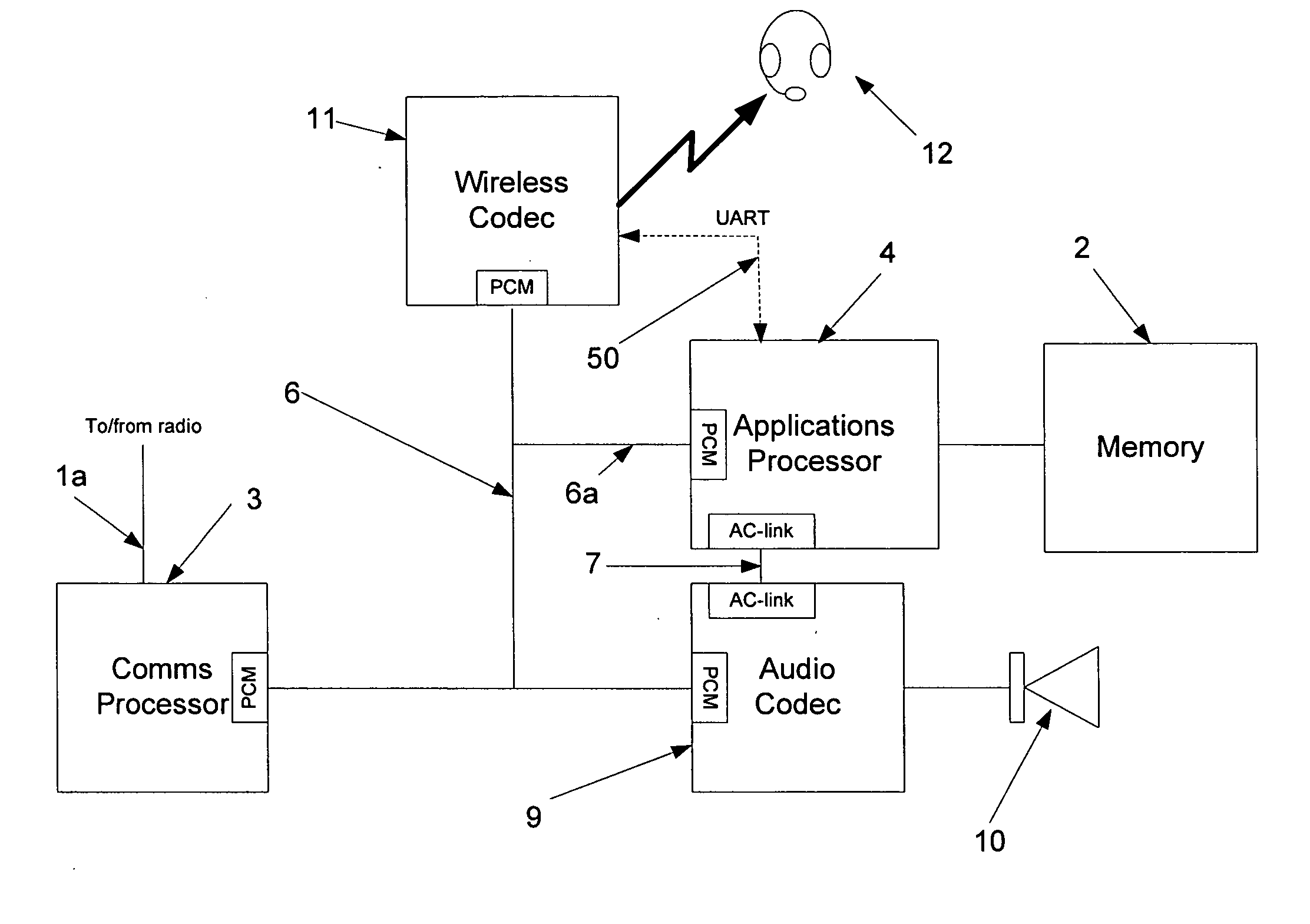

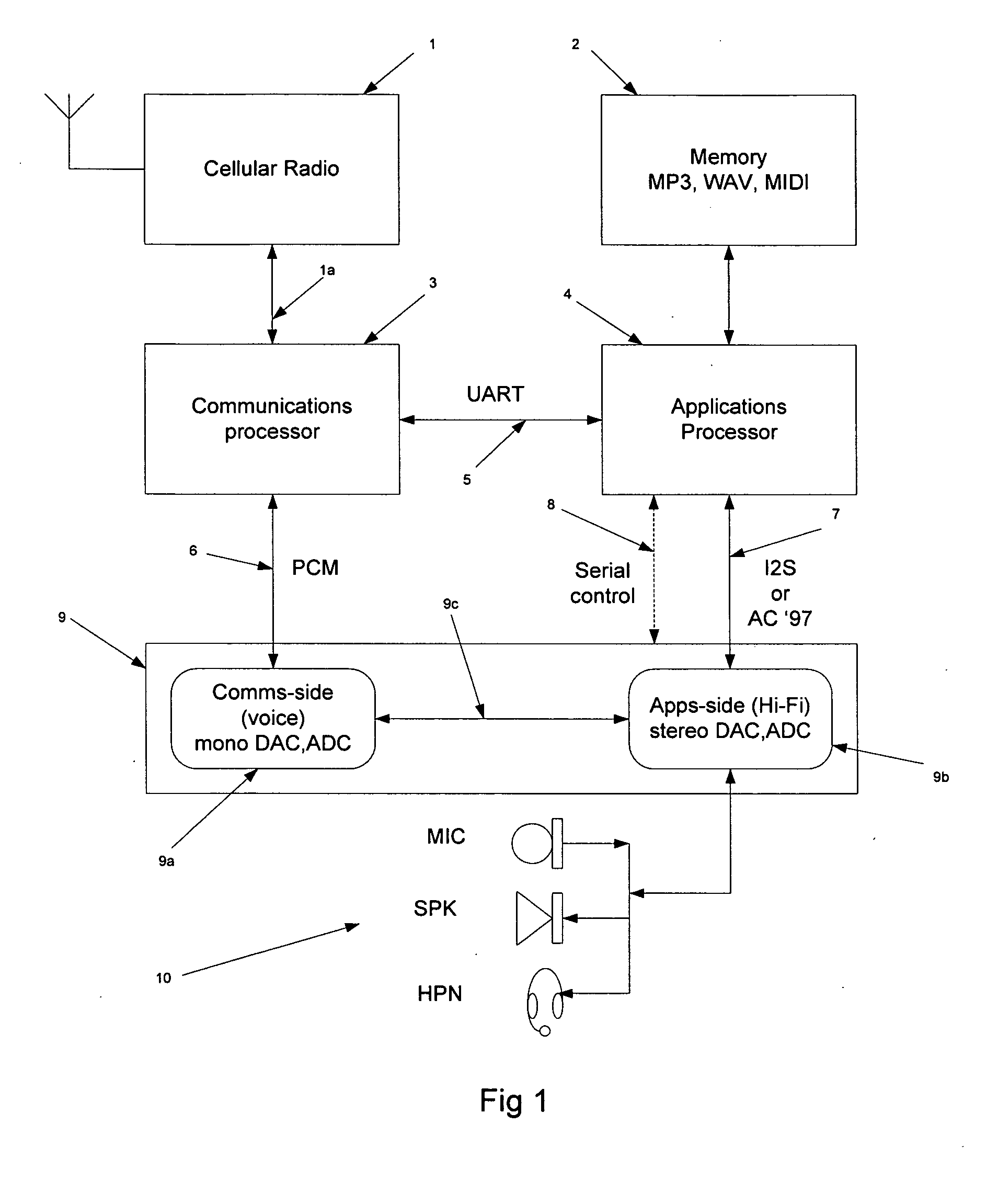

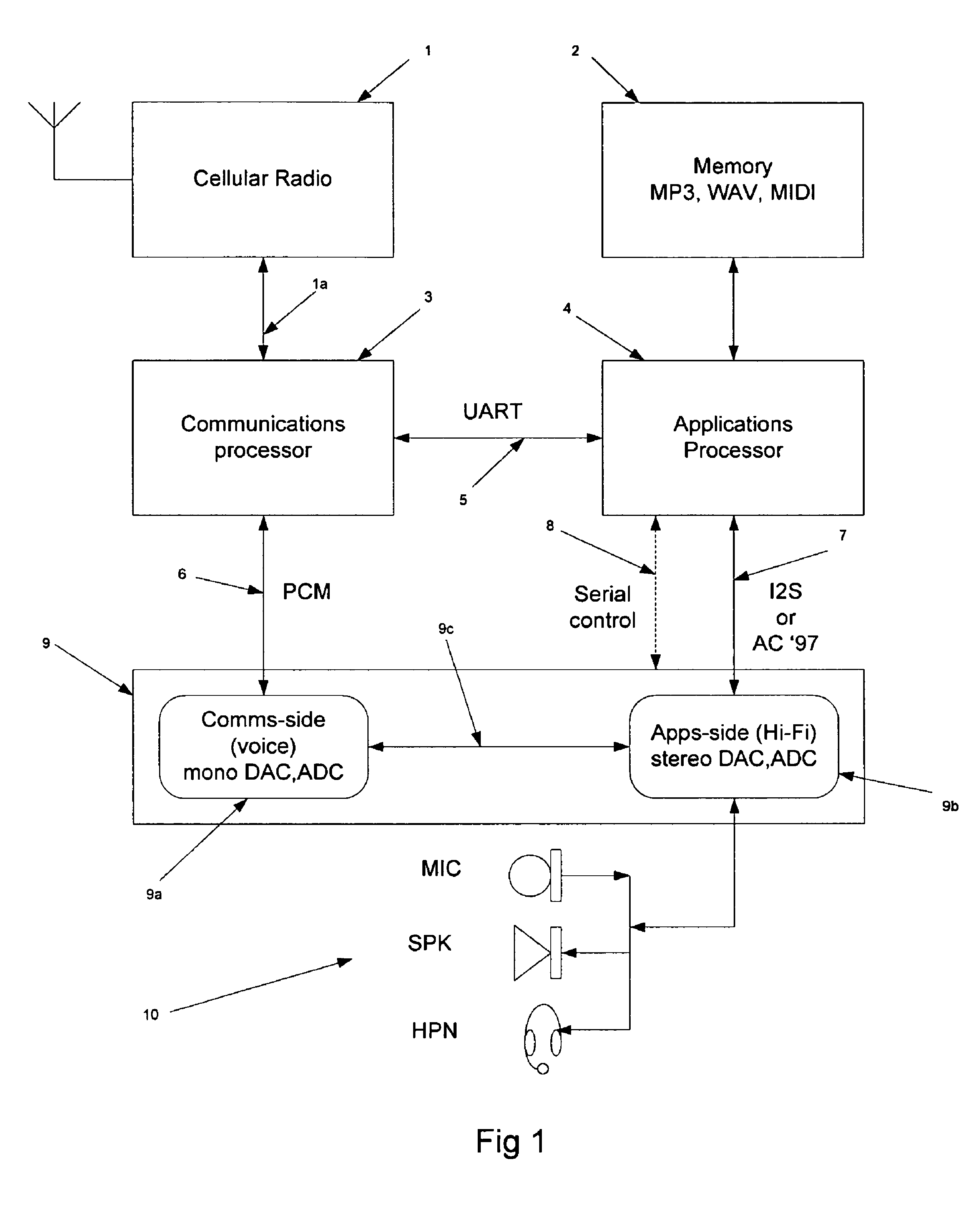

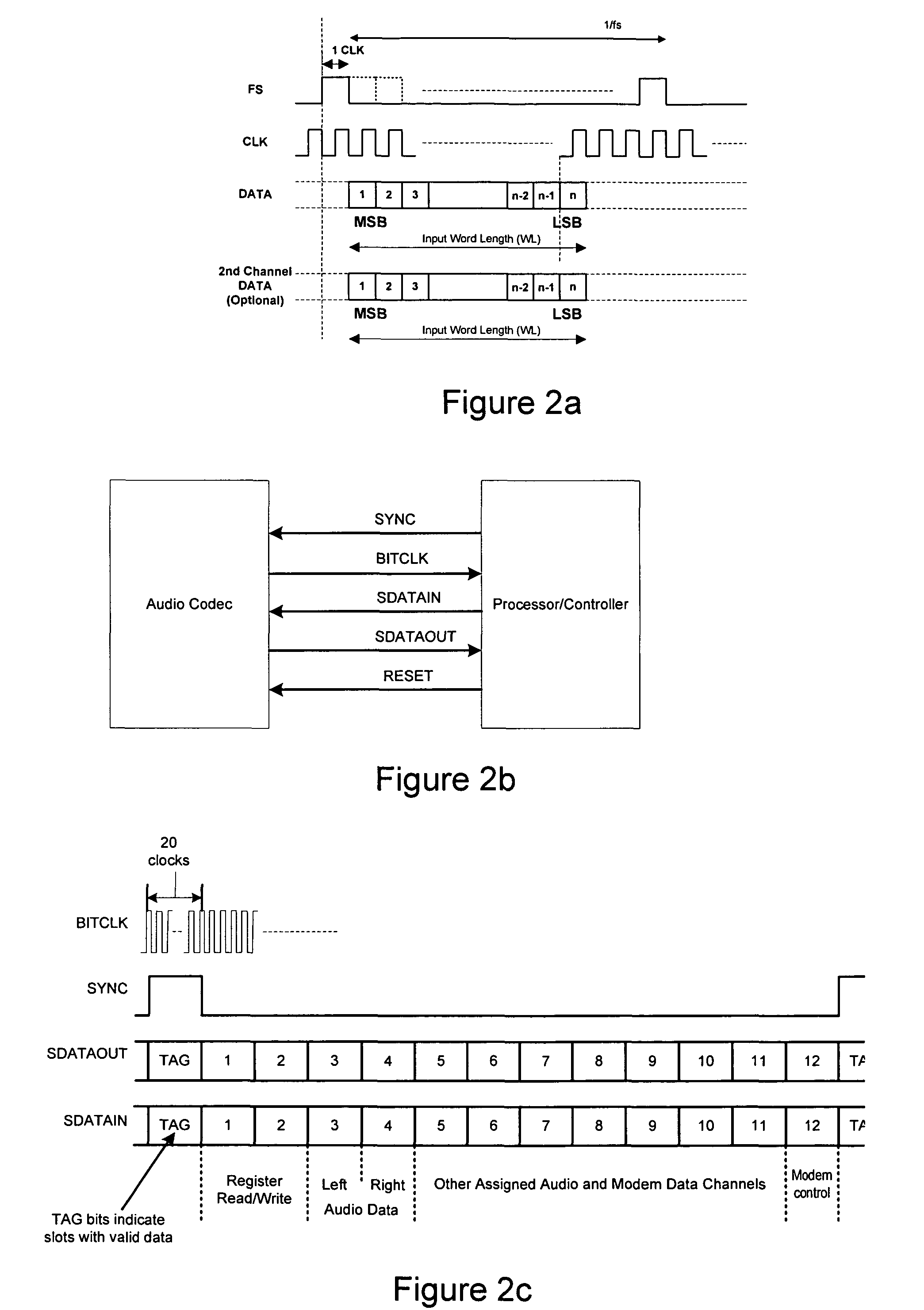

Audio device

Active | US20070124150A1 | Reduce in quantity | Increase flexibility | Speech analysis | Digital computer details | Bus interface | Digital audio signals

The present invention provides an audio codec for converting digital audio signals to analogue audio signals, the audio codec comprising: two digital audio bus interfaces for coupling to respective digital audio buses; a digital-only signal path between the two digital audio bus interfaces, such that no analogue processing of the audio signals occurs in the digital-only signal path.

Owner: CIRRUS LOGIC INC

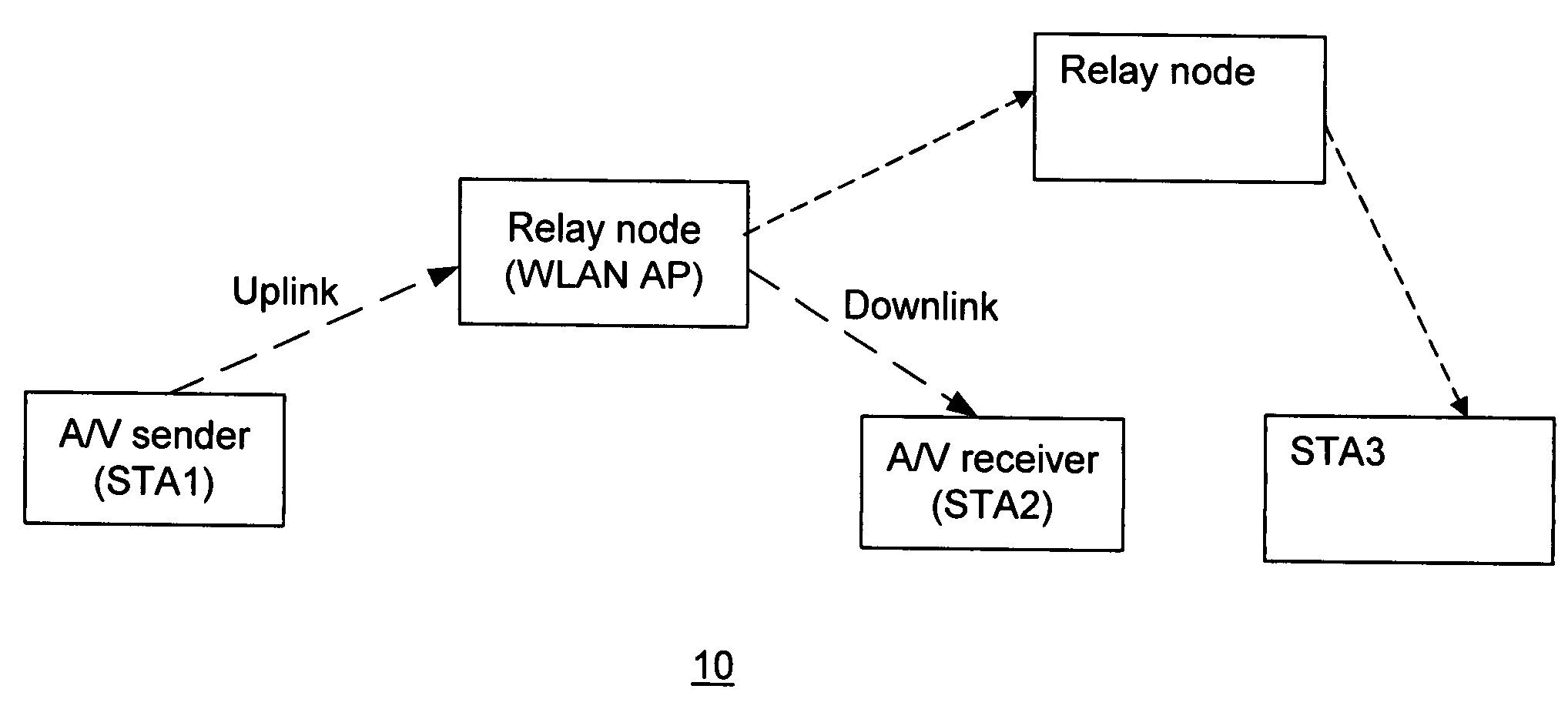

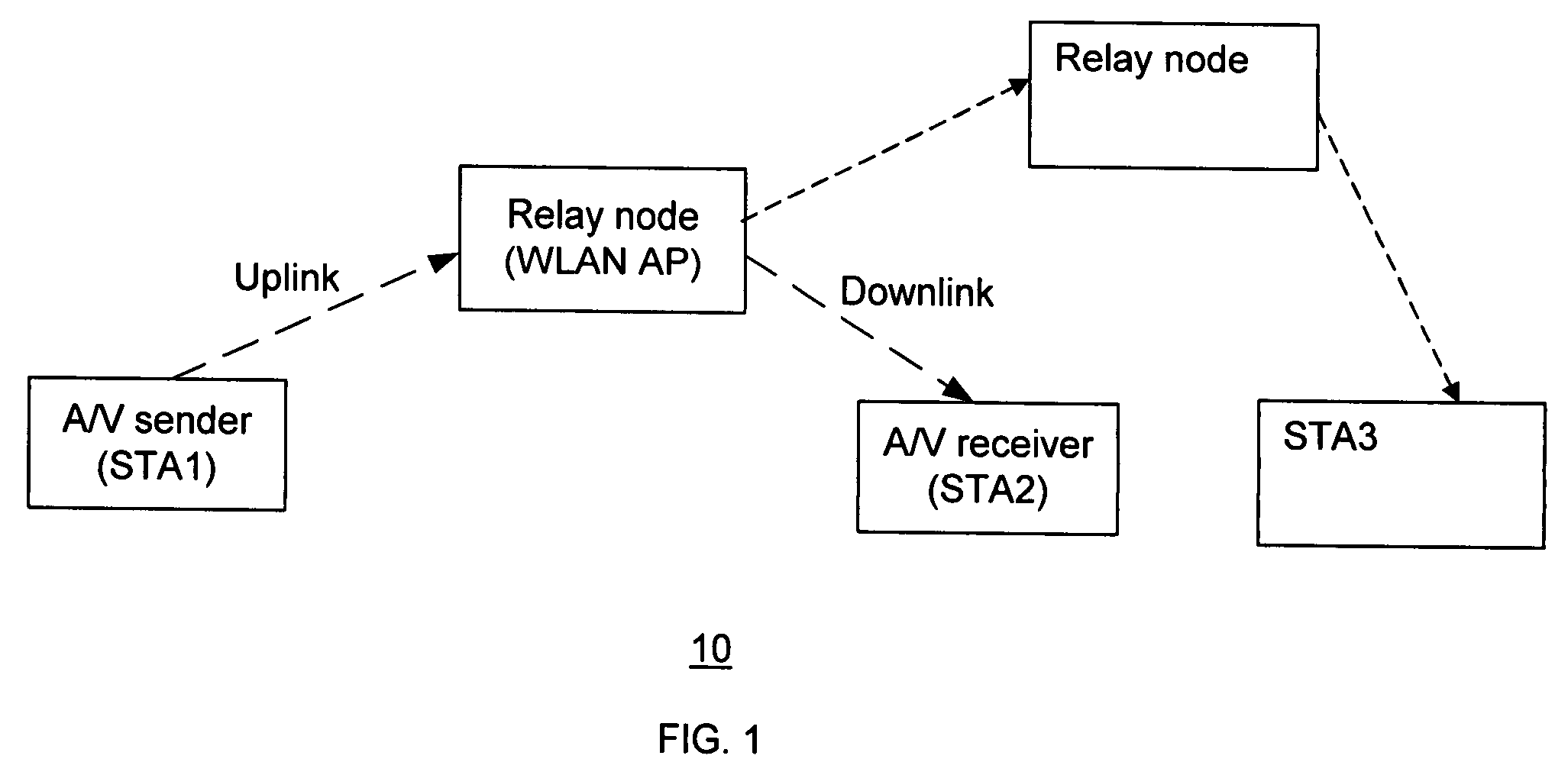

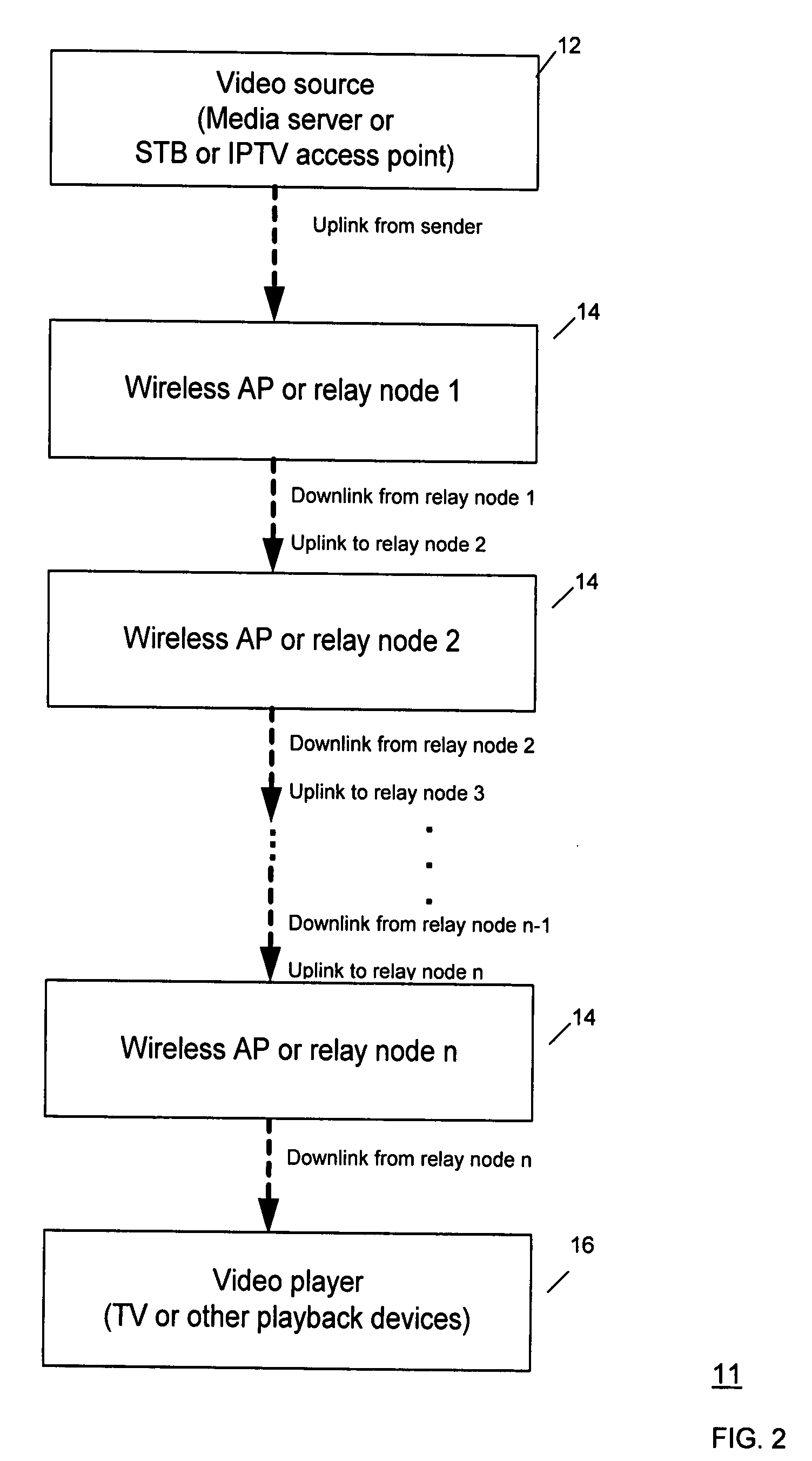

Method and system for alternate wireless channel selection for uplink and downlink data communication

Inactive | US20090080366A1 | Avoid contention | Frequency-division multiplex details | Time-division multiplex | Telecommunications | Uplink transmission

A method and system for alternate wireless channel selection for uplink and downlink data communication is provided. In a wireless communication network including a wireless relay node, a communication path is established via the relay node for transmission of the data. A wireless channel is selected as an uplink channel for uplink transmission of the data to the relay node, and an alternate wireless channel is selected as a downlink channel for downlink transmission of the data from the relay node.

Owner: SAMSUNG ELECTRONICS CO LTD

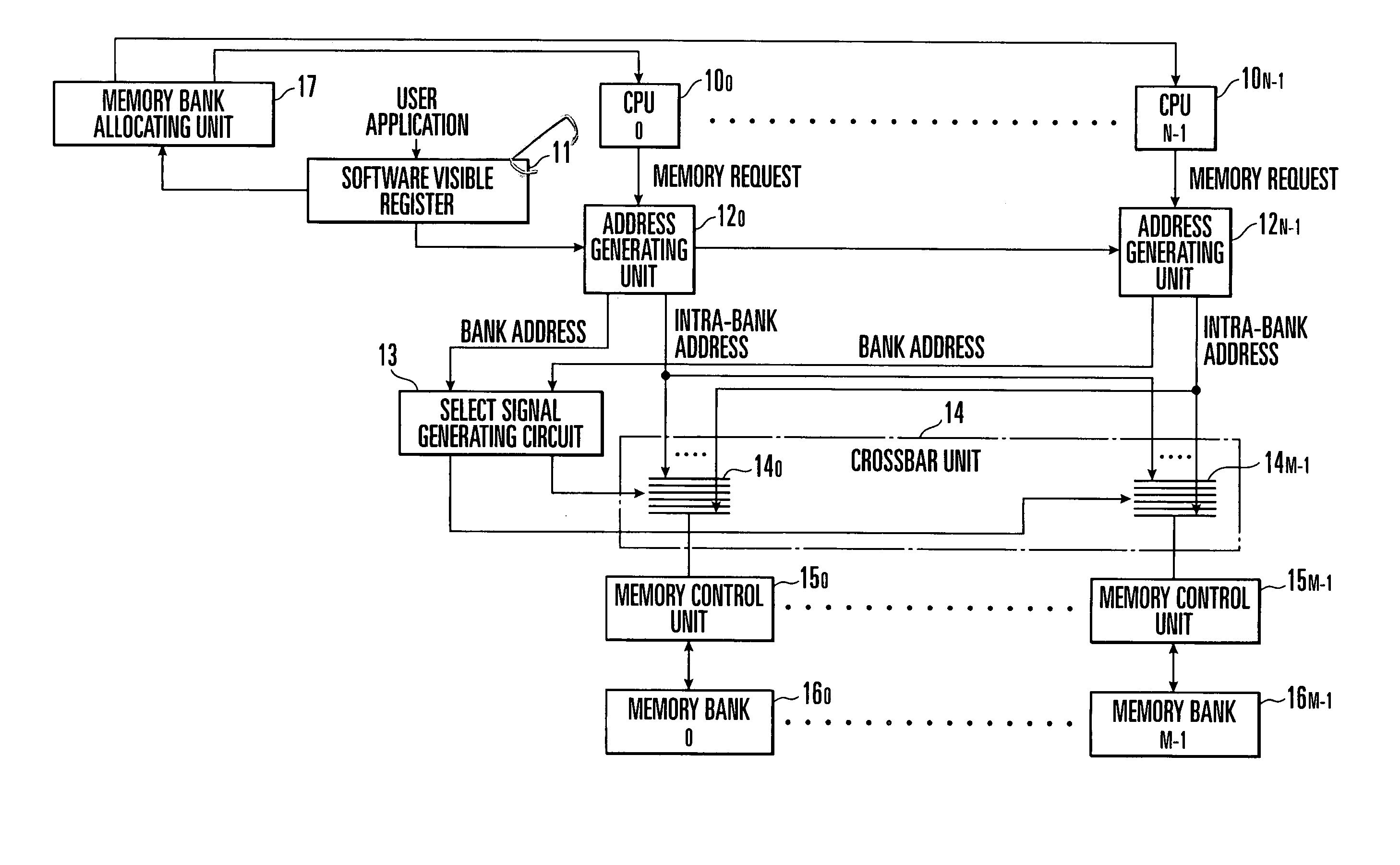

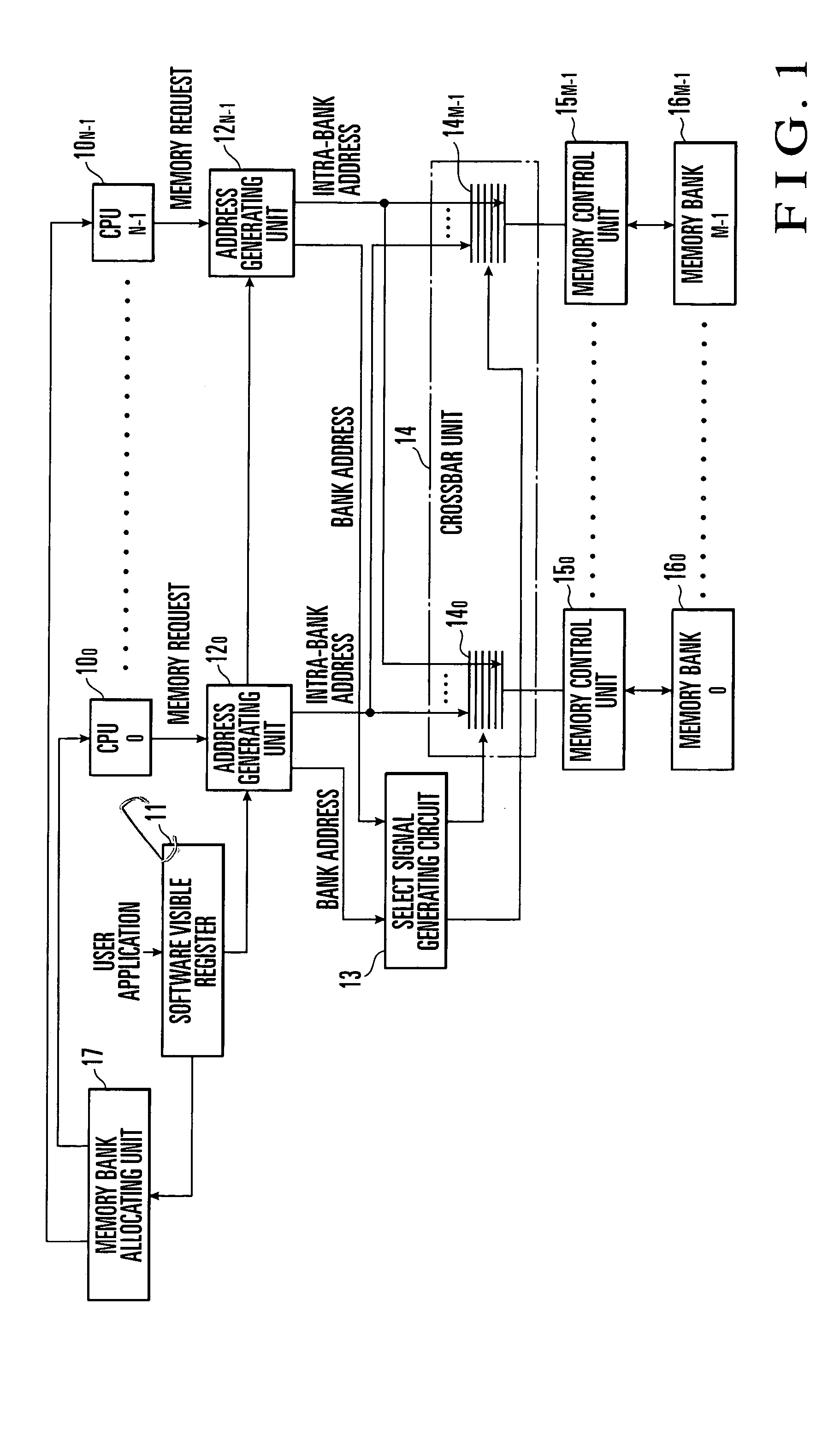

Memory interleave system

Inactive | US7346750B2 | Avoid contention | Improve throughput | Memory addressing/allocation/relocation | Digital storage | Address generation unit | Memory address

A memory interleave system includes M (M = 2^p, where p is a natural number) memory banks, M memory control units (MCUs) corresponding respectively to the M memory banks, N (a natural number) CPUs, and N address generating units (AGUs) corresponding respectively to the N CPUs. Each memory bank includes a plurality of memories. The CPUs output memory requests, each containing a first bank address (the address of a memory bank) and a first intra-bank address (the address of a memory in the memory bank). Each AGU receives a memory request from its corresponding CPU, and generates and outputs a second intra-bank address and a second bank address by using the first intra-bank address and the first bank address. Each MCU performs memory bank access control on the basis of the second intra-bank address. The MCU that performs access control is selected on the basis of the second bank address.

Owner: NEC CORP

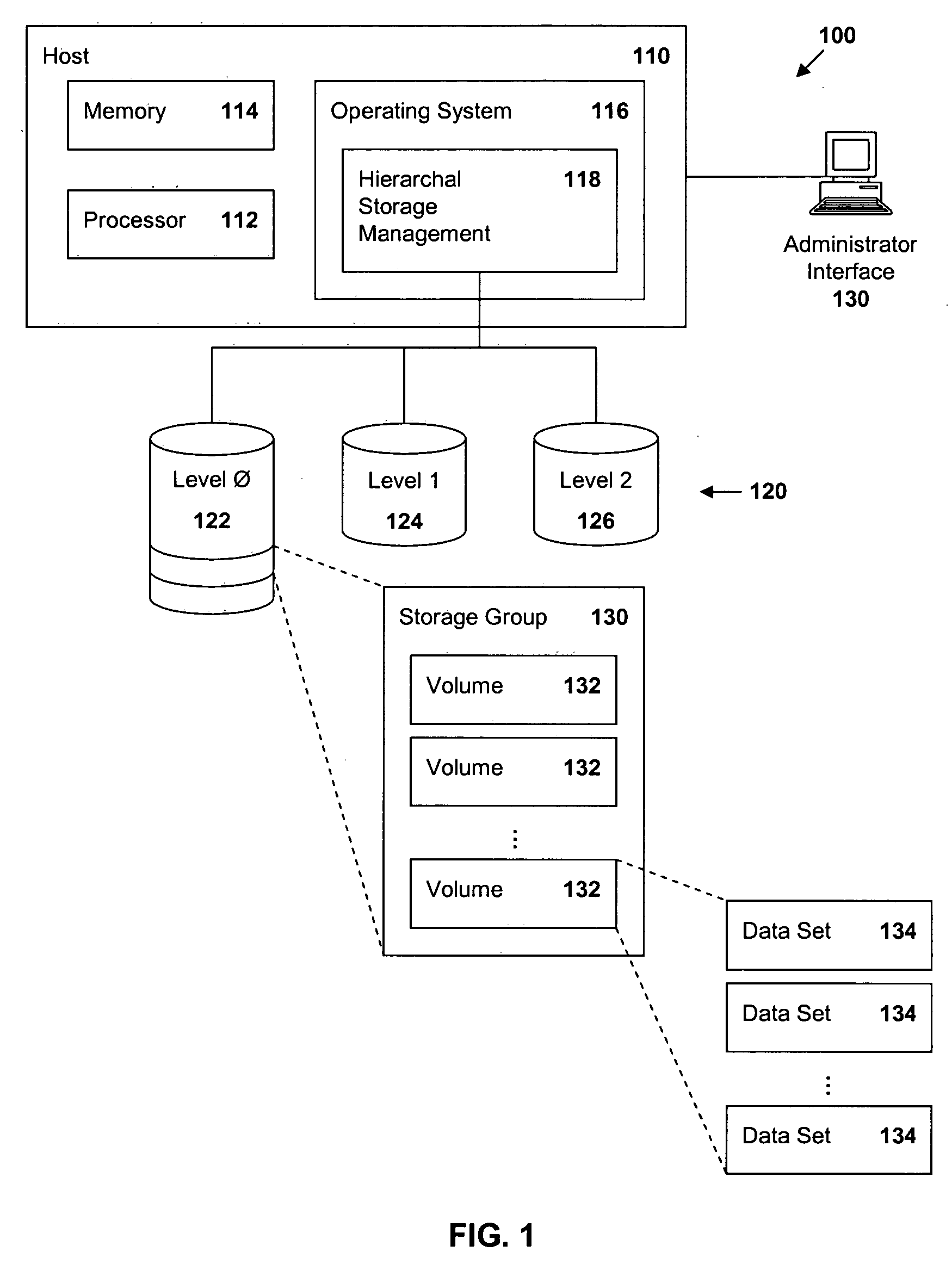

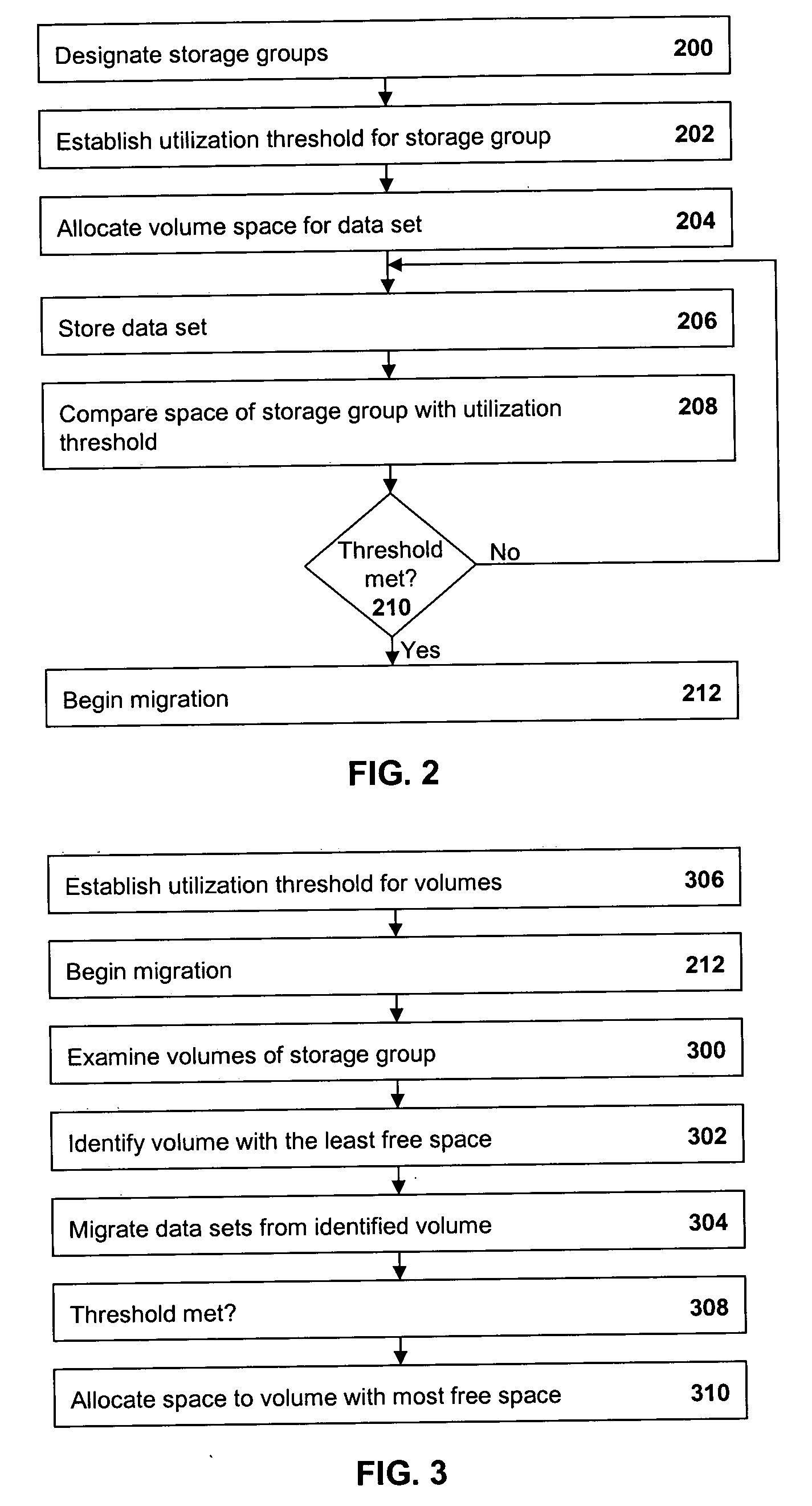

Data migration with reduced contention and increased speed

Inactive | US20060155950A1 | Contention | Reduce contention | Memory systems | Input/output processes for data processing | Data set | Space allocation

Methods and apparatus are provided for managing data in a hierarchical storage subsystem. A plurality of volumes is designated as a storage group for Level 0 storage; a high threshold is established for the storage group; space for a data set is allocated on a volume of the storage group and the data set is stored to that volume; the high threshold is compared with the total amount of space consumed by all data sets stored to volumes in the storage group; and data sets are migrated from the storage group to Level 1 storage if the high threshold is less than or equal to the total amount of space used by all of the data sets stored to volumes in the storage group. Optionally, a high threshold is assigned to each storage group and, when the space used in a storage group reaches or exceeds the high threshold, migration of data begins from volumes in the storage group, starting with the volume having the least free space. Thus, contention between migration and space allocation is reduced. Also optionally, when a volume is selected for migration, a flag is set which prevents space in the volume from being allocated to new data sets. Upon completion of the migration, the flag is cleared and allocation is allowed again. Thus, contention between migration and space allocation is avoided.

Owner: IBM CORP
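
The threshold check, the least-free-space selection, and the allocation-blocking flag from this abstract can be sketched in a few lines of Python. This is a single-threaded illustration under stated assumptions: the `Volume` class, the first-fit allocation policy, and the use of a plain dictionary as Level 1 storage are hypothetical, and the flag matters in practice only when allocation runs concurrently with migration.

```python
from dataclasses import dataclass, field


@dataclass
class Volume:
    name: str
    capacity: int
    data_sets: dict = field(default_factory=dict)   # data set name -> size
    migrating: bool = False                         # flag: no new allocations while set

    @property
    def used(self) -> int:
        return sum(self.data_sets.values())

    @property
    def free(self) -> int:
        return self.capacity - self.used


def allocate(group, name, size):
    """Assumed first-fit policy that skips volumes flagged for migration."""
    for vol in group:
        if not vol.migrating and vol.free >= size:
            vol.data_sets[name] = size
            return vol
    return None


def migrate_if_needed(group, high_threshold, level1):
    """Migrate one volume to Level 1 once group usage reaches the high threshold."""
    total_used = sum(vol.used for vol in group)
    if high_threshold > total_used:
        return None
    victim = min(group, key=lambda vol: vol.free)   # start with the least free space
    victim.migrating = True                         # block allocation on this volume
    try:
        level1.update(victim.data_sets)
        victim.data_sets.clear()
    finally:
        victim.migrating = False                    # allocation allowed again
    return victim


group = [Volume("v0", 100), Volume("v1", 100)]
level1 = {}
allocate(group, "a", 80)
allocate(group, "b", 30)
moved = migrate_if_needed(group, high_threshold=100, level1=level1)
print(moved.name, level1)
```

Starting with the fullest volume frees the most space per migration, and the flag keeps the allocator from writing new data sets into a volume that is being emptied.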

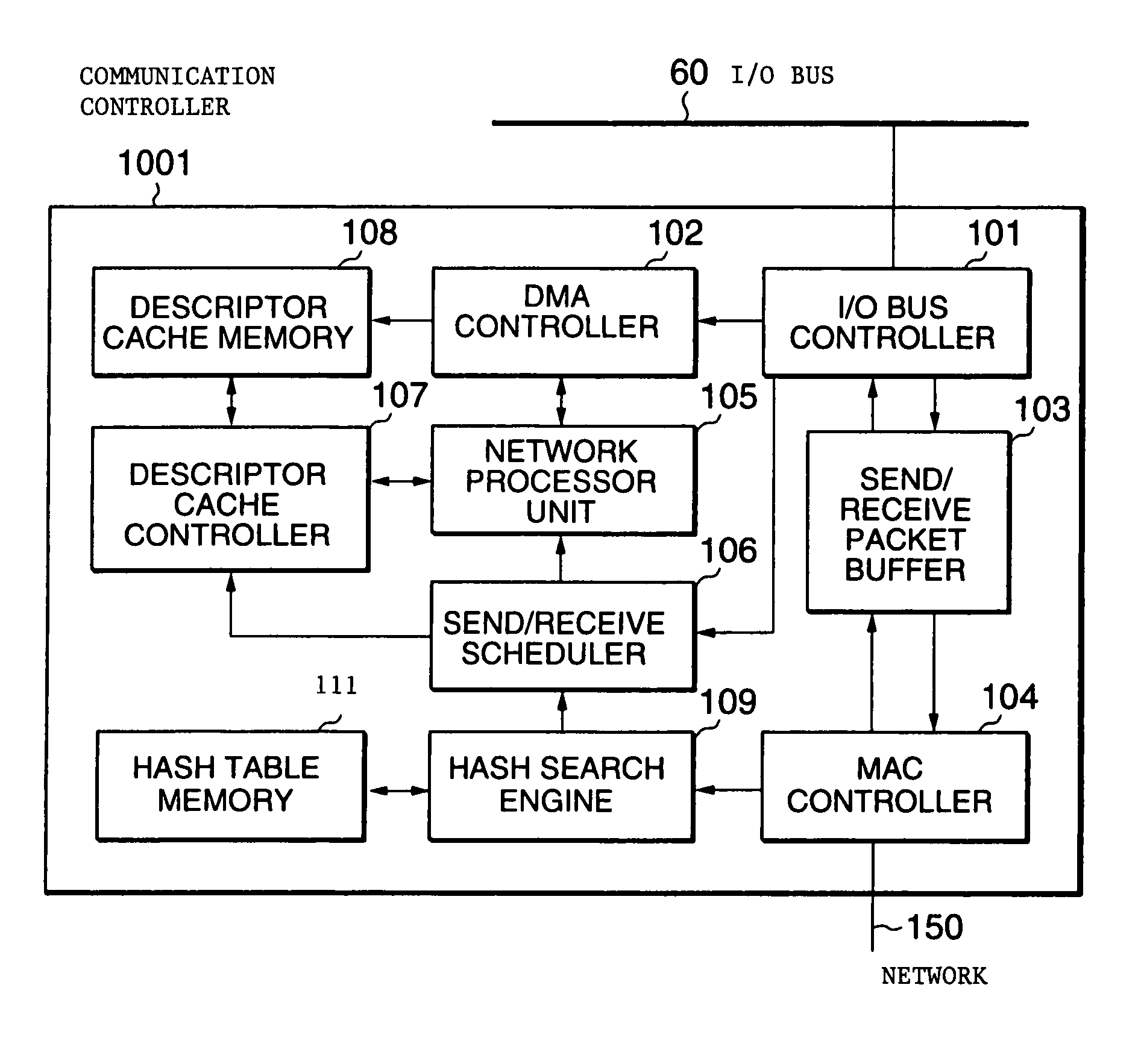

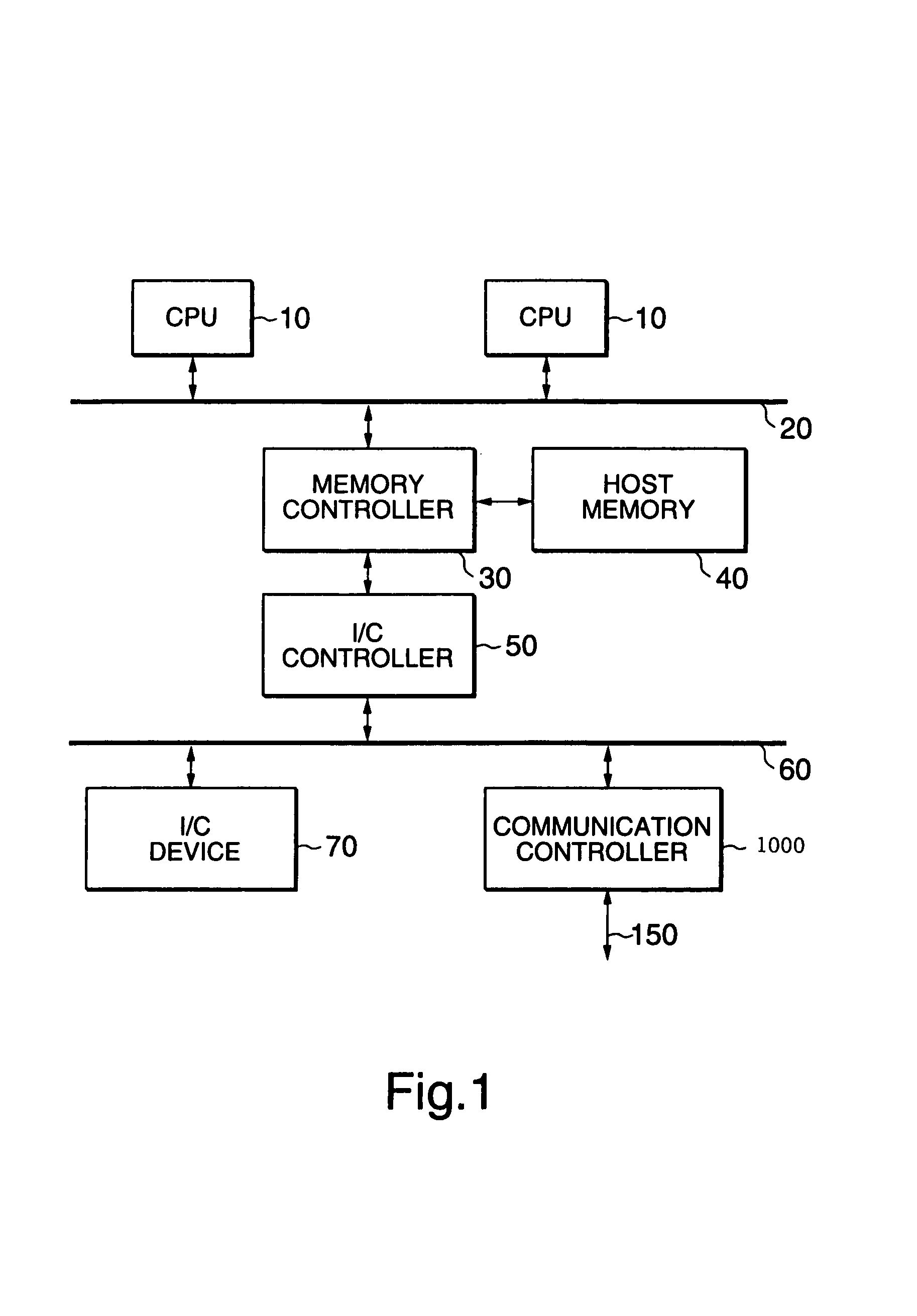

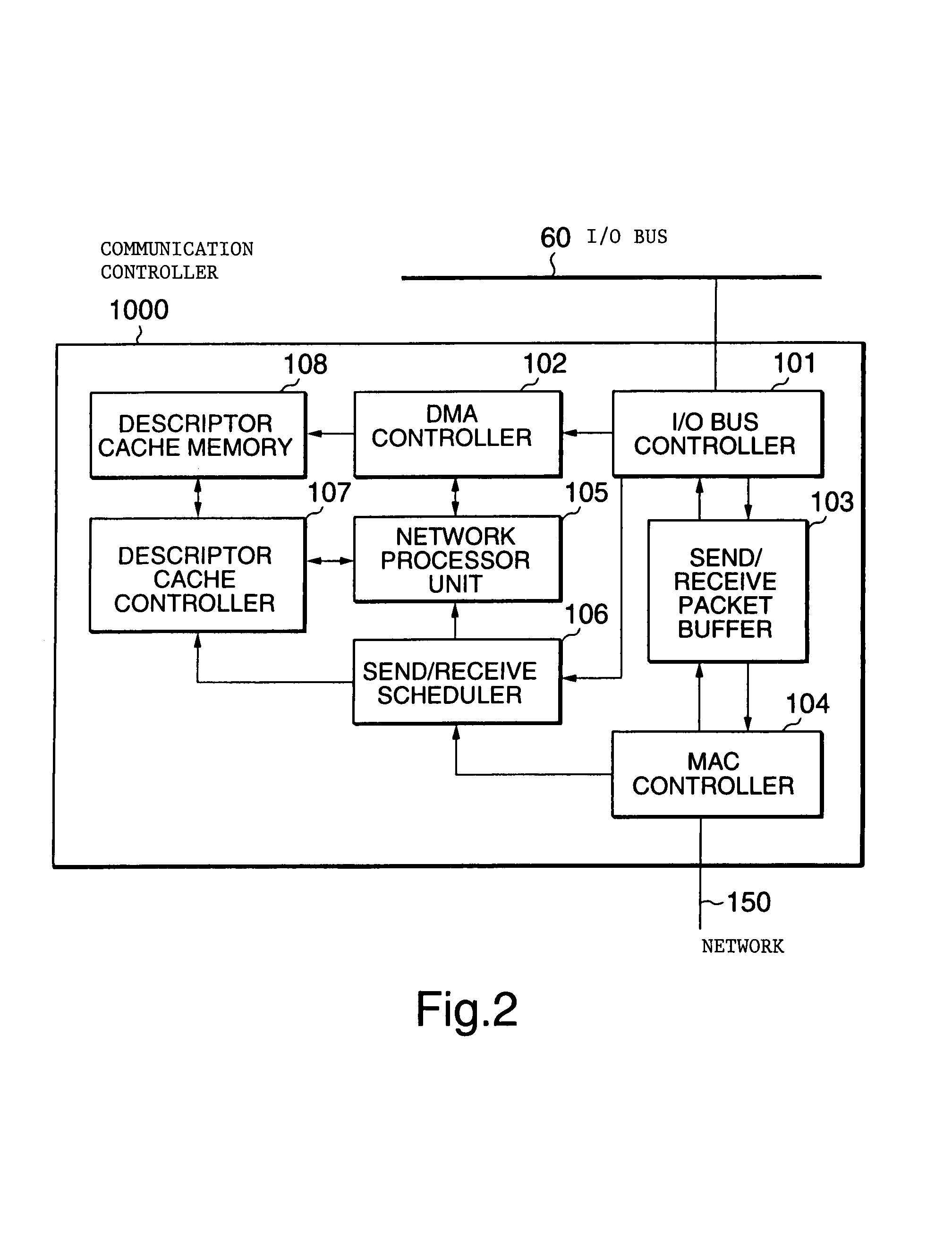

Communication control apparatus which has descriptor cache controller that builds list of descriptors

Inactive | US7472205B2 | Reduce descriptor control overhead | Reduce access latency | Character and pattern recognition | Multiple digital computer combinations | Communication unit | Parallel computing

A communication controller of the present invention includes a descriptor cache mechanism which builds a virtual descriptor gather list from the descriptors indicated by a host, and which allows a processor to refer to a portion of the virtual descriptor gather list in a descriptor cache window. Another communication controller of the present invention includes a second processor which allocates any of the communication processes related to a first communication unit to a first one of the first processors, and any of the communication processes related to a second communication unit to a second one of the first processors. Another communication controller includes a first memory which stores control information. The first memory includes a first area accessed by the associated one of the processors to refer to the control information and a second area which stores the control information during the access.

Owner: NEC CORP

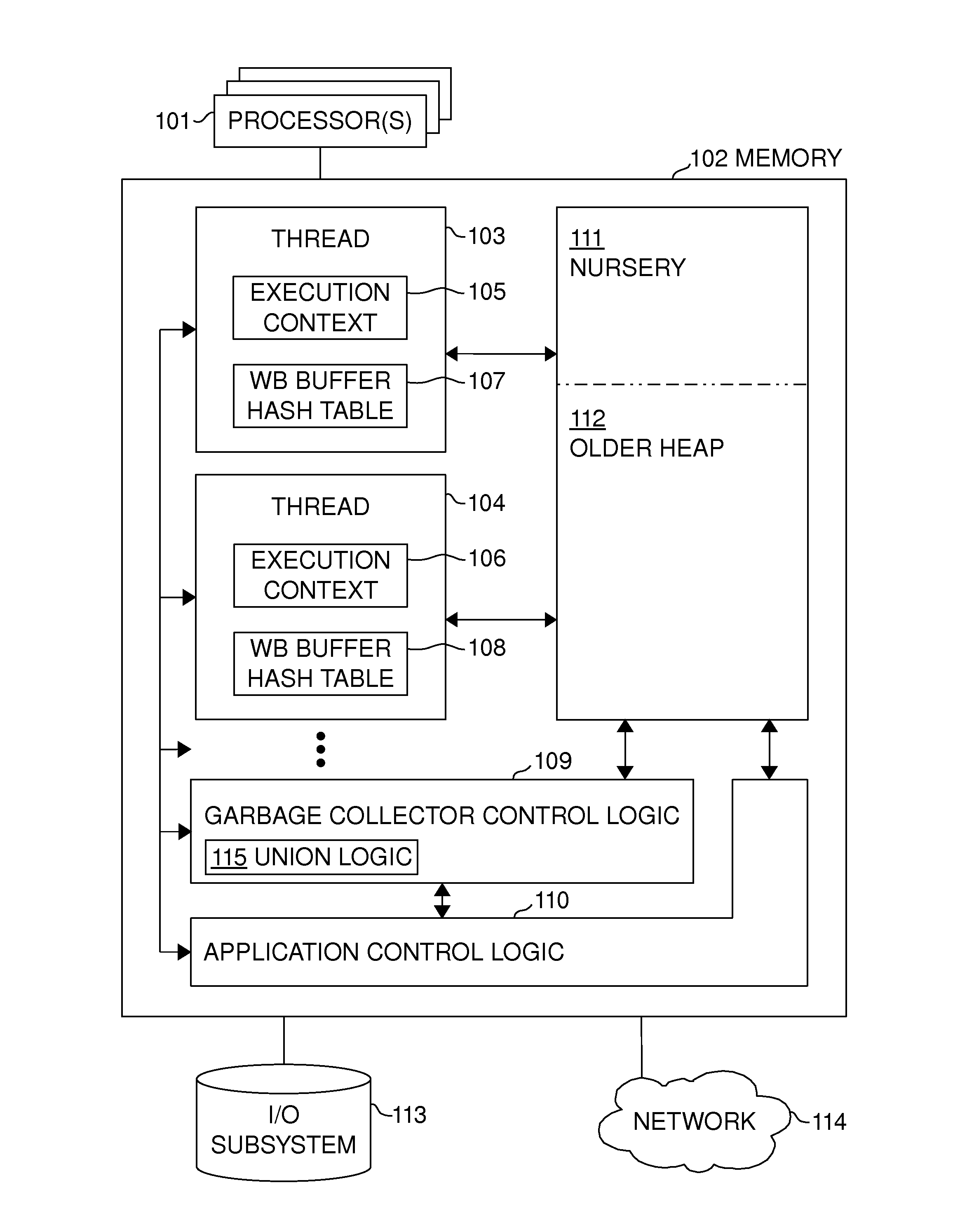

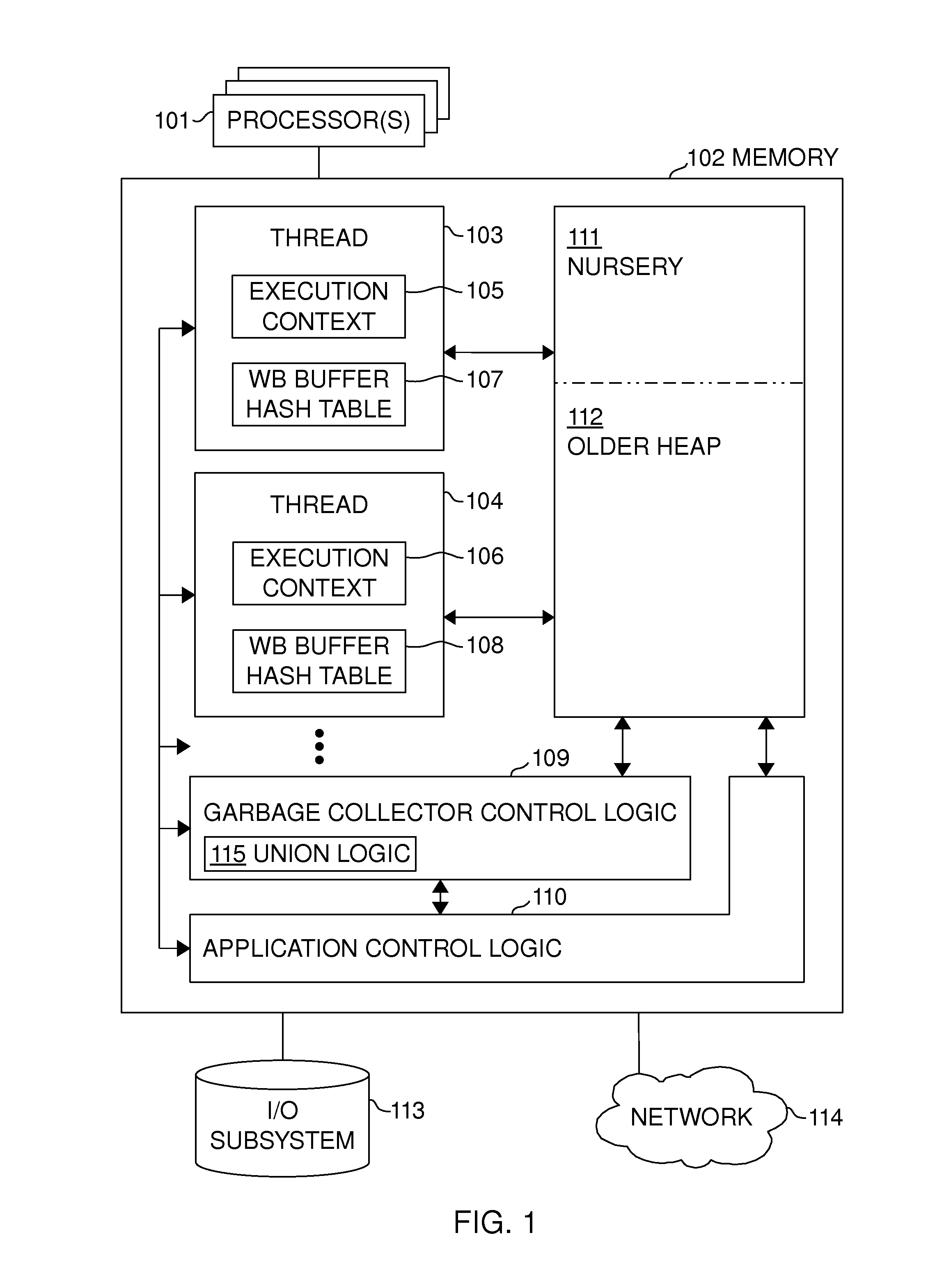

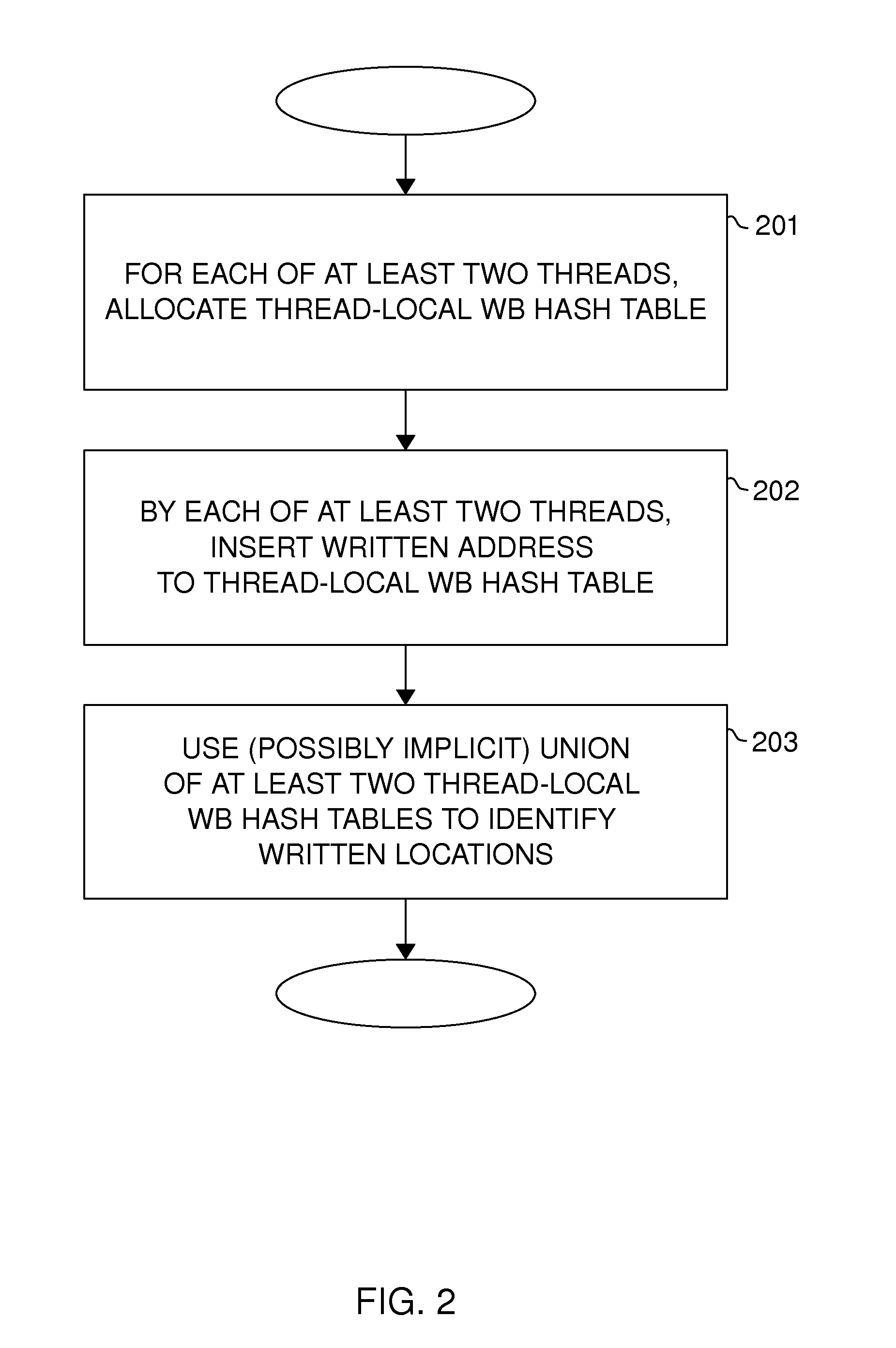

Thread-local hash table based write barrier buffers

Inactive | US20110252216A1 | Quick insert | Address calculations | Program synchronisation | Memory addressing/allocation/relocation | Computer hardware | Hash table

A write barrier is implemented using thread-local hash table based write barrier buffers. The write barrier, executed by mutator threads, stores addresses of written memory locations or objects in the thread-local hash tables, and during garbage collection, an explicit or implicit union of the addresses in each hash table is used in a manner that is tolerant to an address appearing in more than one hash table.

Owner: CLAUSAL COMPUTING
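
The idea in this abstract, one hash table per mutator thread with a union taken at collection time, can be illustrated in Python. This is a sketch under stated assumptions rather than the patented design: Python `set` objects stand in for the hash tables, integers stand in for addresses, the function names are hypothetical, and the collector is assumed to run only while mutator threads are paused or finished.

```python
import threading

_local = threading.local()
_all_buffers = []                  # every thread's buffer, for the collector to scan
_registry_lock = threading.Lock()


def _buffer():
    """Return this thread's private buffer, registering it on first use."""
    buf = getattr(_local, "buf", None)
    if buf is None:
        buf = _local.buf = set()
        with _registry_lock:       # taken once per thread, not once per write
            _all_buffers.append(buf)
    return buf


def write_barrier(address):
    """Record a written location in the calling thread's own table (no shared lock)."""
    _buffer().add(address)


def collect_written():
    """Collector side: union all tables; an address in several tables appears once."""
    with _registry_lock:
        merged = set().union(*_all_buffers)
        for buf in _all_buffers:
            buf.clear()
    return merged


def mutator(addresses):
    for address in addresses:
        write_barrier(address)


threads = [
    threading.Thread(target=mutator, args=([1, 2],)),
    threading.Thread(target=mutator, args=([2, 3],)),
]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(sorted(collect_written()))
```

The hot path (`write_barrier`) touches only thread-private state, so mutators never contend on a shared buffer; duplicates across threads are resolved once, when the union is taken.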

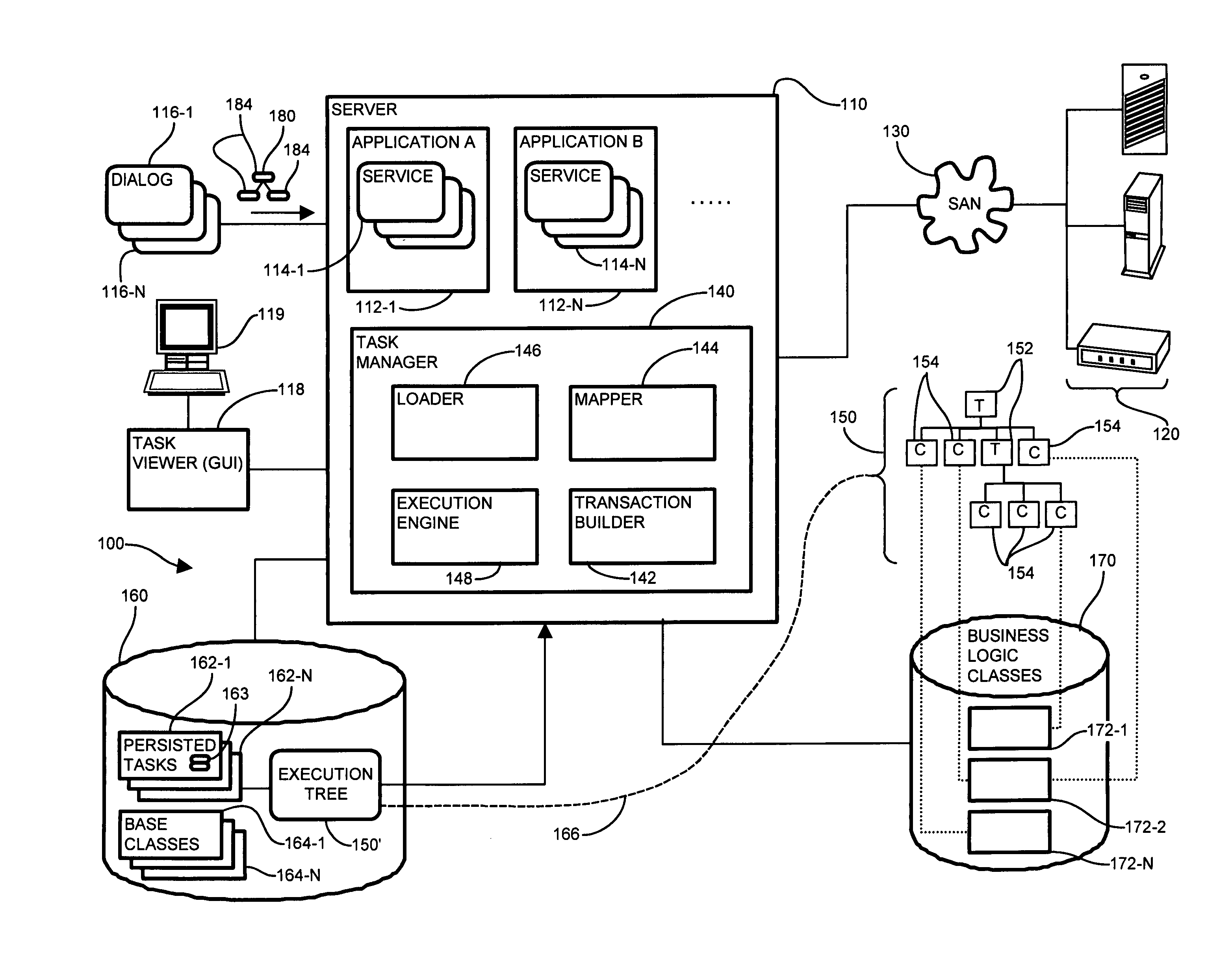

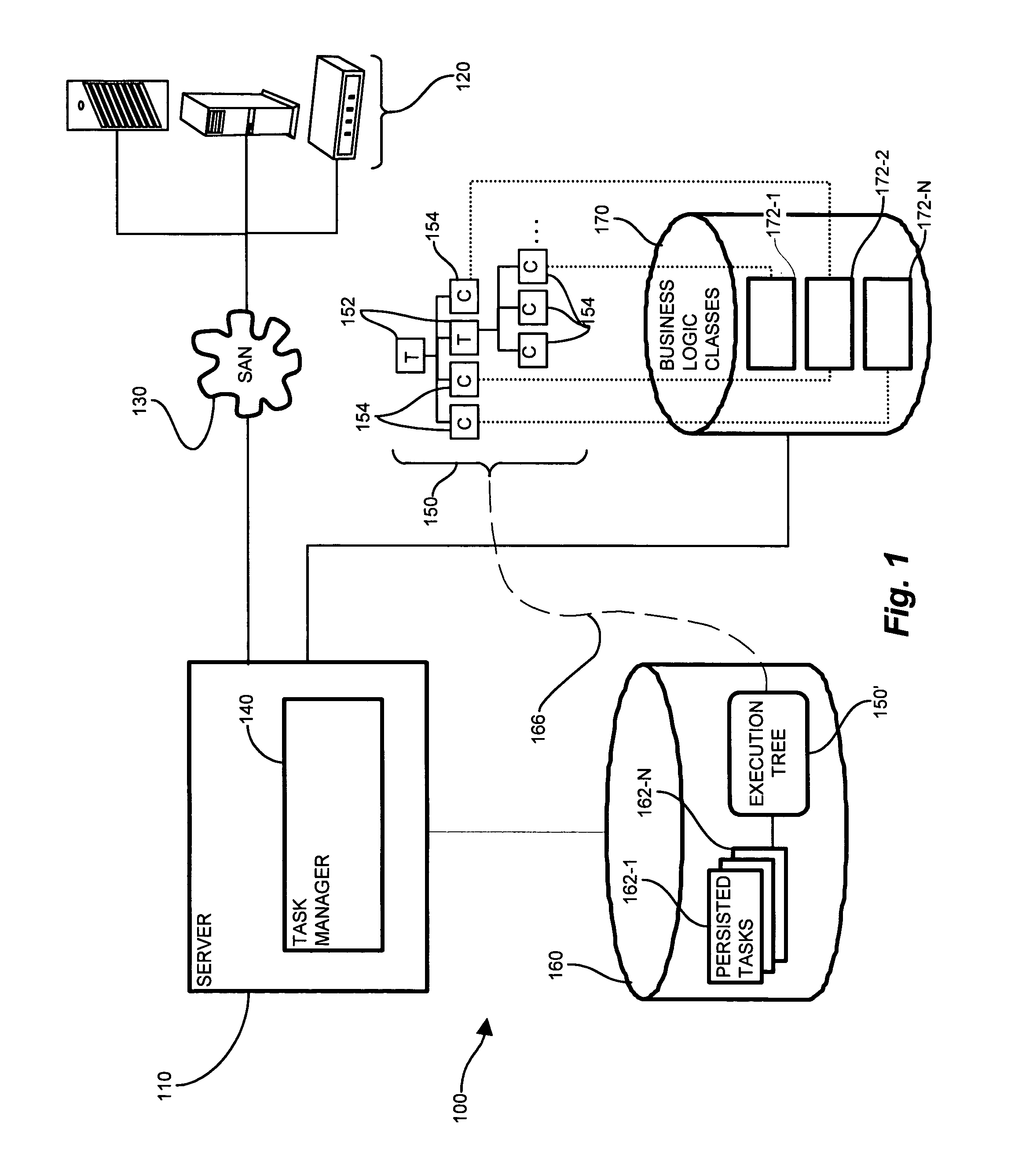

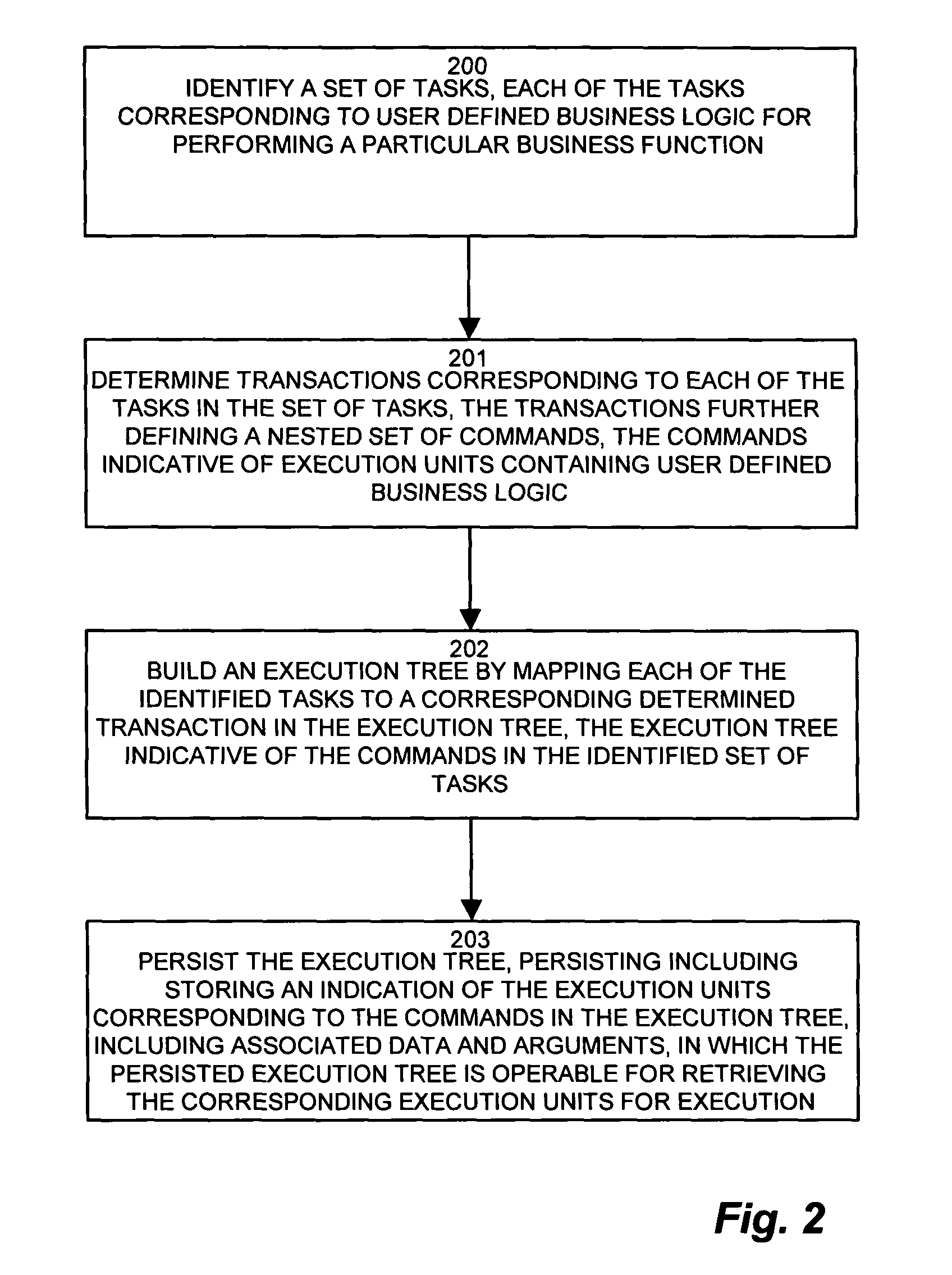

System and methods for task management

Active | US7836448B1 | Reduce redundancy | Avoid collision | Digital computer details | Multiprogramming arrangements | Storage area network | Logfile

In a storage area network (SAN), different tasks may expect different types of commands for commencing execution, such as interactive or offline, and may have different formats for reporting status and completion, such as log files or message based. A framework for defining the business logic enveloped in a particular task, and providing a common manner of deploying, or enabling invocation, of the task provides consistent operator control for scheduling, monitoring, ensuring completion, and tracking errors and other events. Business logic modules are identified as commands corresponding to a task. Transactions including a set of the commands define an ordered sequence for completing the task. The operator requests a particular set of tasks, using a selection tree, and the task manager builds a corresponding execution tree to identify and map the transactions and commands of the task to the execution tree to optimize execution and mitigate redundancies.

Owner: EMC IP HLDG CO LLC

Virtual clear channel avoidance (CCA) mechanism for wireless communications

Active | US7680150B2 | Avoid contention | Assess restriction | Time-division multiplex | Busy time | Communications media

Owner: TEXAS INSTR INC

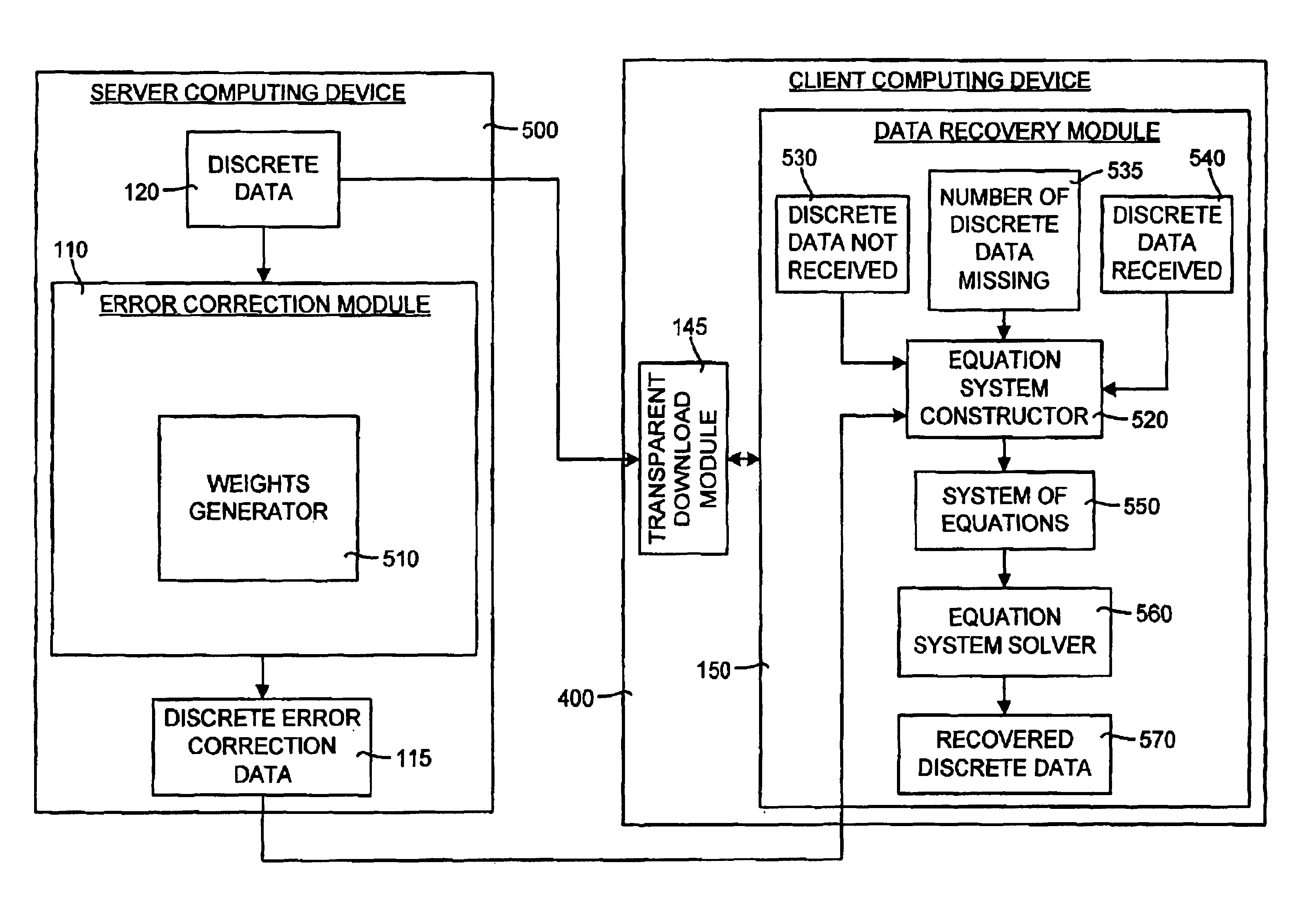

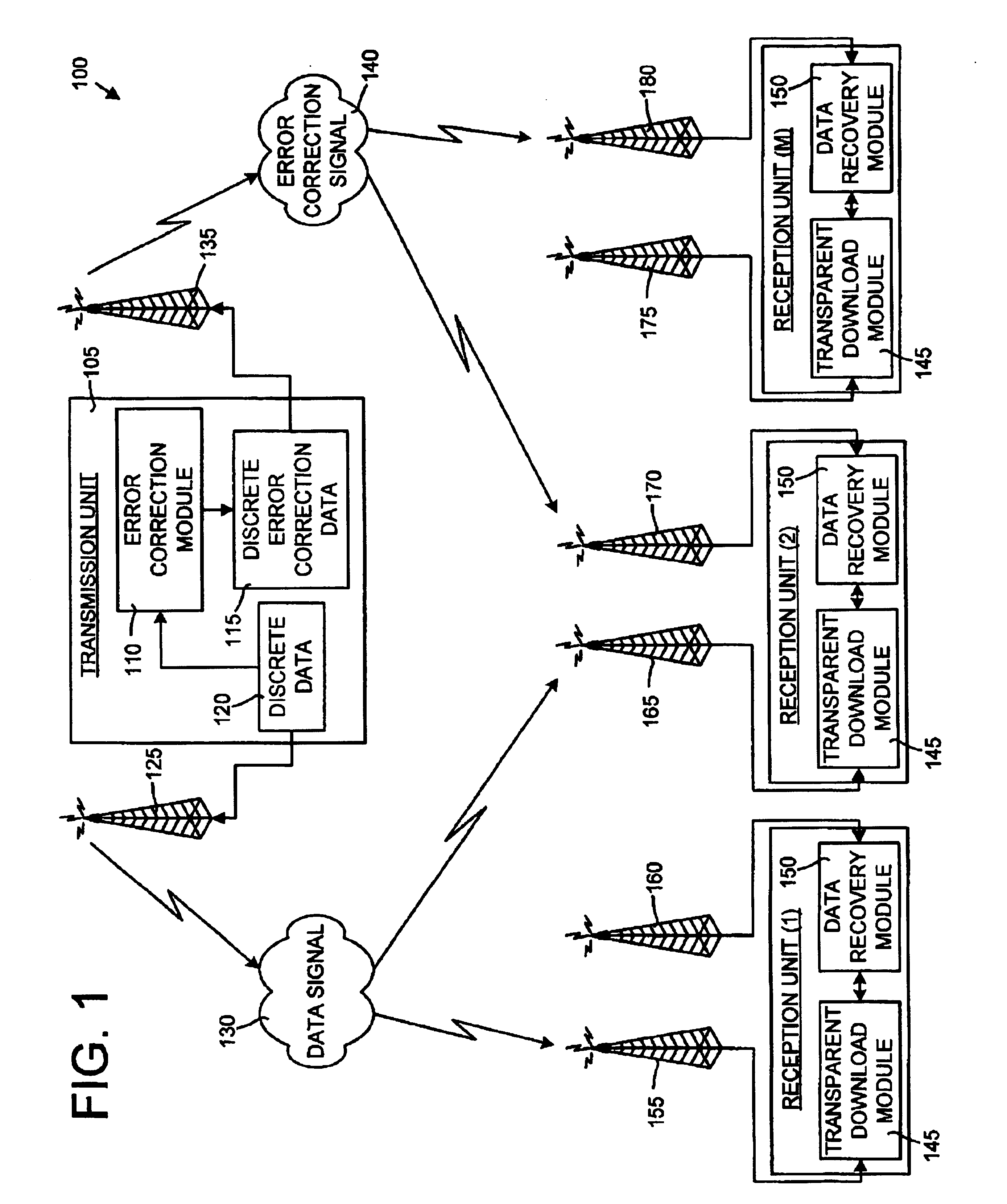

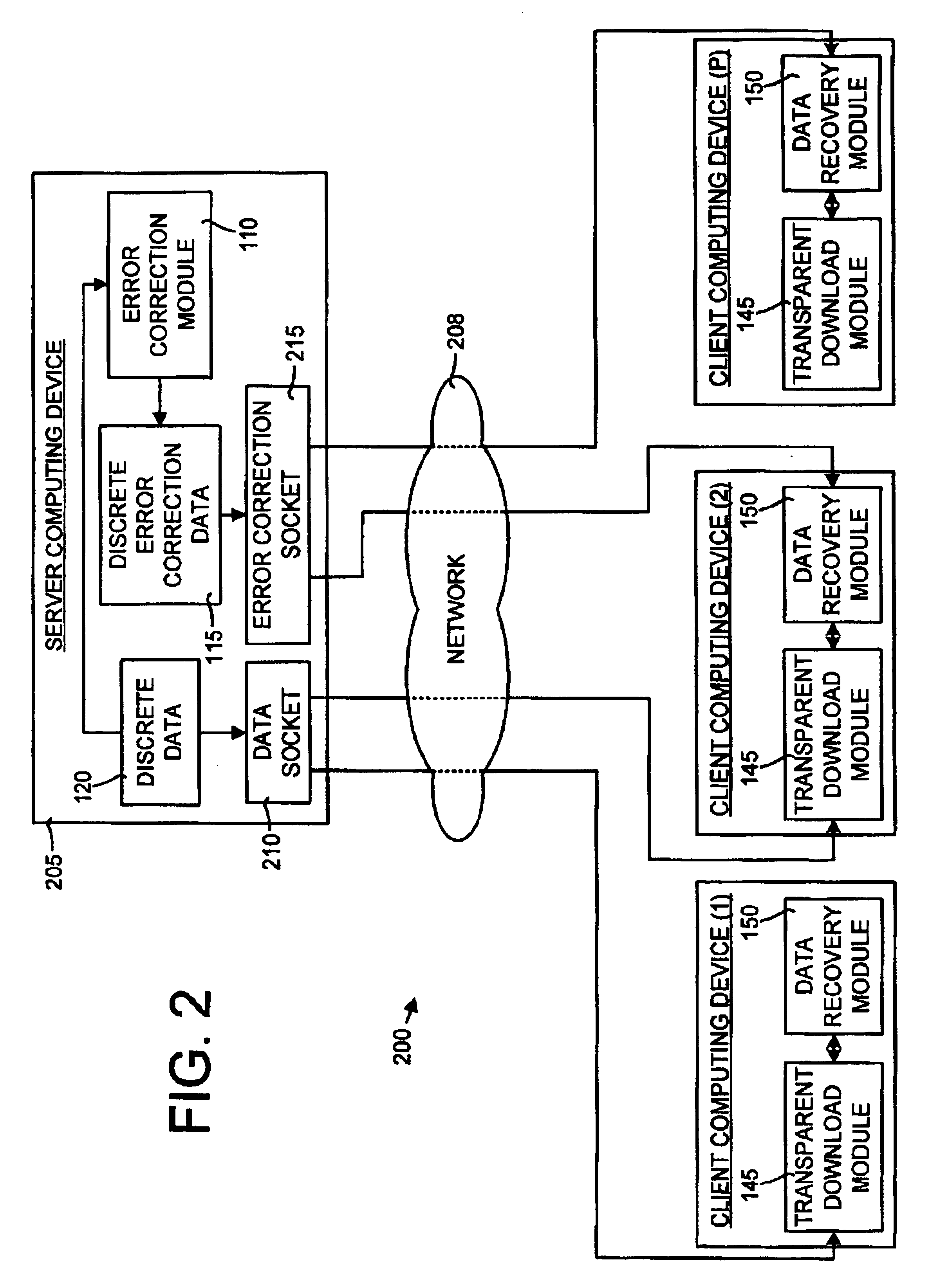

System and method for transparent electronic data transfer using error correction to facilitate bandwidth-efficient data recovery

Inactive | US6948104B2 | Promote recovery | Avoid contention | Error prevention/detection by using return channel | Transmission systems | Traffic capacity | Missing data

The invention disclosed herein includes a system and method for electronically transferring data through a communications connection in a transparent manner, such that the data transfer does not interfere with other traffic sharing the connection. The invention transfers data using bandwidth of the connection that other traffic is not using. If other traffic needs the bandwidth currently being used by the invention, the invention relinquishes the bandwidth to the other traffic and retreats to avoid bandwidth contention. Although a retreat may cause gaps in the data transferred, a key aspect of the invention is that any data missing because of these gaps is recovered easily and in a bandwidth-efficient way using novel error correction and recovery.

Owner: MICROSOFT TECH LICENSING LLC

Method of allocating radio resource

Inactive | US20120002634A1 | Avoid overhead | Prevent waste of resources | Network topologies | Optical multiplex | Data stream | Wireless resource allocation

A method of allocating a radio resource is provided. The method includes: receiving space division multiple access (SDMA) information for downlink transmission; transmitting a result of channel estimation performed on channels corresponding to data streams transmitted in downlink according to the SDMA information; and receiving the data streams through the respective channels according to the result of channel estimation. Accordingly, radio resource request states of stations can be collectively considered.

Owner: LG ELECTRONICS INC

Audio device

Active | US20070124526A1 | Reduce in quantity | Increase flexibility | Digital computer details | Substation equipment | Electrical conductor | Dual bus

The present invention provides a digital bus circuit comprising: a bus conductor having two sections each connected to a pass circuit, each bus section being connected to two bus interfaces for respective circuits; at least three of the bus interfaces comprising a tri-state output buffer having a tri-state mode and one or more logic output modes; wherein in a unitary bus mode the tri-state output buffers are arranged such that only one of said output buffers is not in a tri-state mode, and the pass circuit is arranged to substantially couple said bus sections; and wherein in a dual bus mode the tri-state output buffers are arranged such that only one of the output buffers connected to each bus section is not in a tri-state mode, and wherein the pass circuit is arranged to substantially isolate said bus sections.

Owner: CIRRUS LOGIC INC

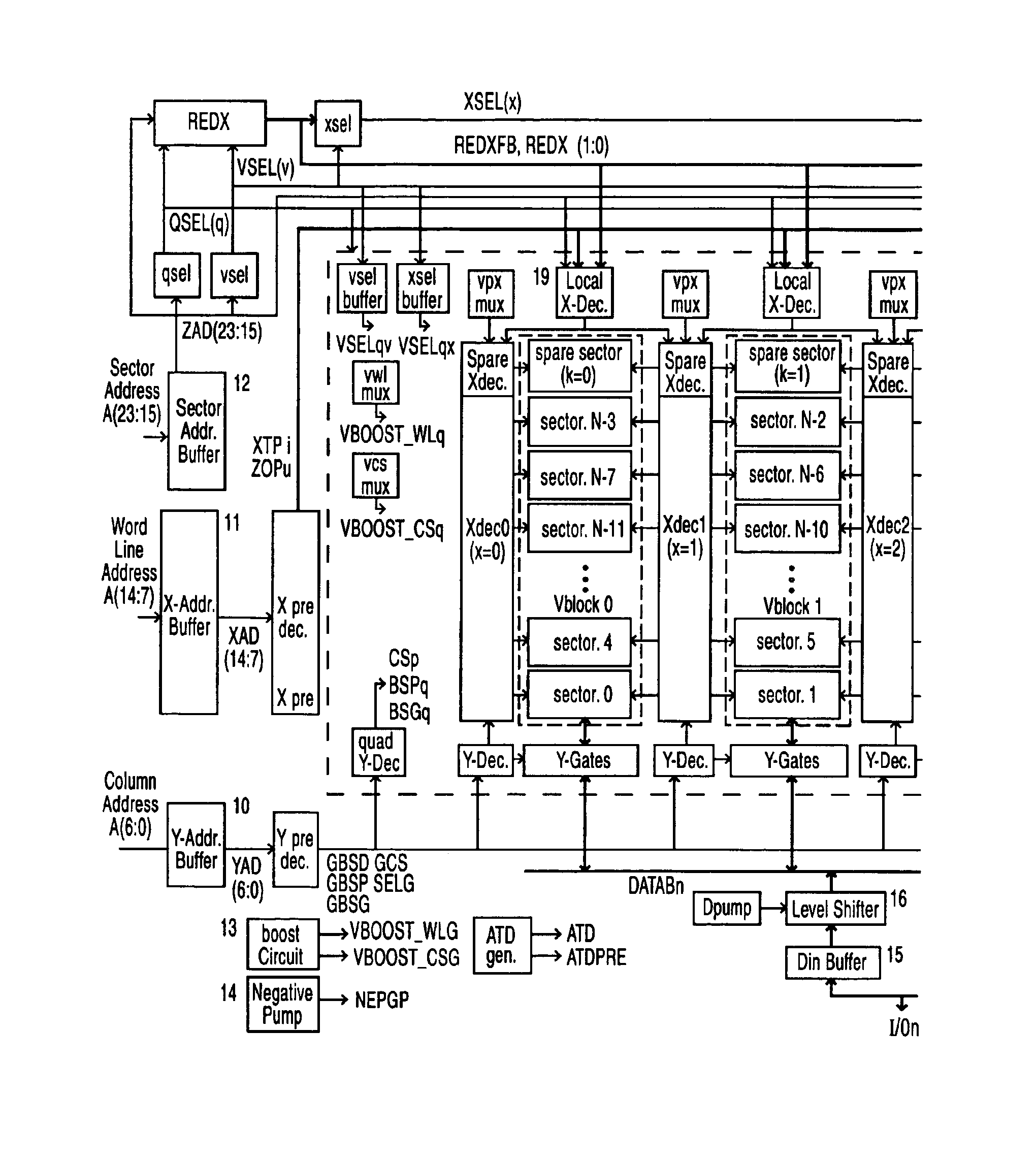

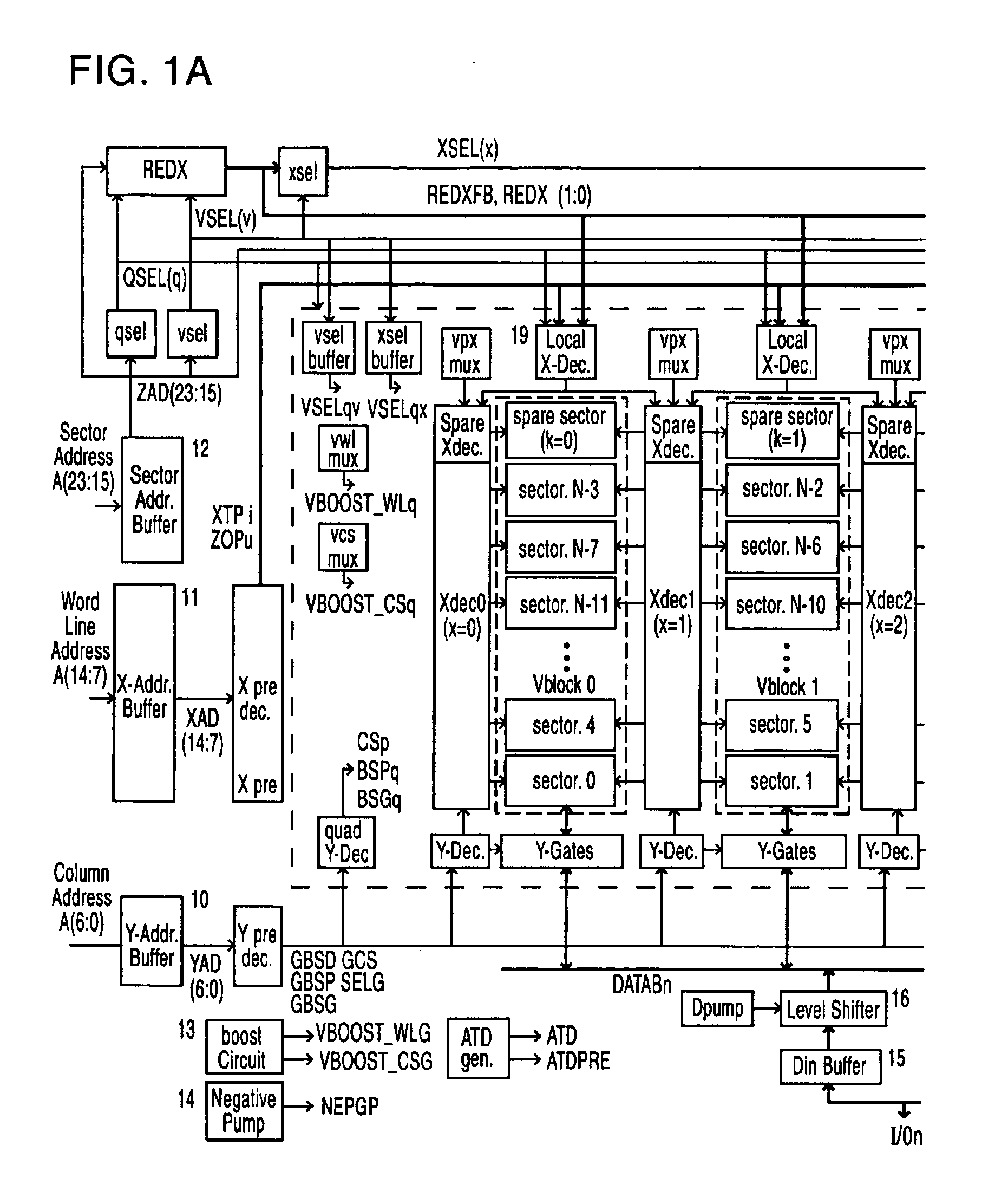

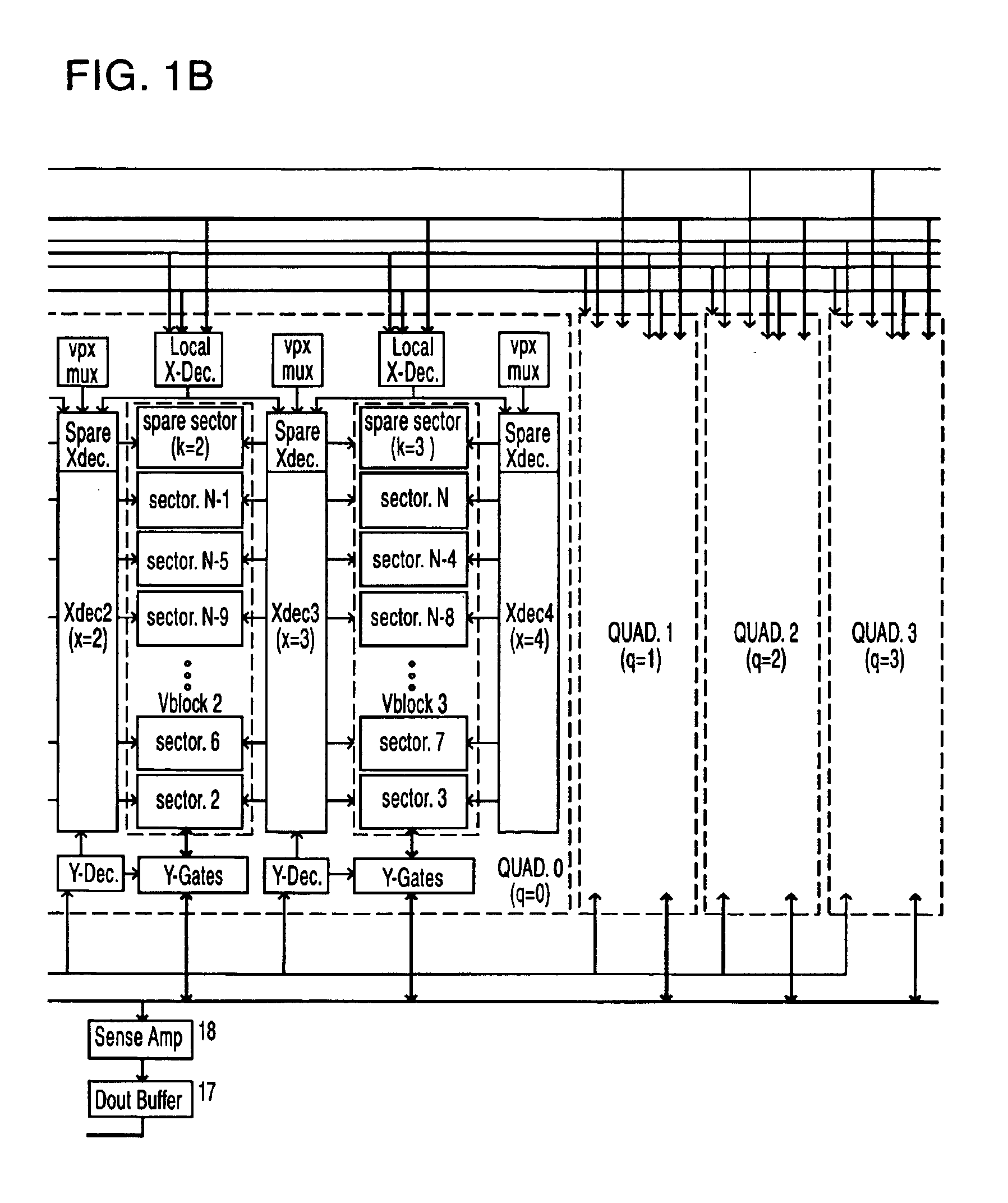

Memory circuit with redundant configuration

Active | US6865133B2 | Prevent falling | Drop in access speed due to redundancy judgment is prevented | Read-only memories | Digital storage | Memory circuits | Reliability engineering

A memory circuit has a plurality of blocks, each of which comprises a plurality of regular sectors and a spare sector, and each sector comprises a plurality of memory cells. When a regular sector in a first block is defective, the defective regular sector is replaced with a spare sector in a second block. In response to a supplied address, the regular sector corresponding to that address in the first block and the spare sector in the second block are selected simultaneously during a first period; after the first period, selection of either the regular sector or the spare sector is maintained according to the result of a redundancy judgment on whether the supplied address matches the redundant address indicating the defective sector. Because the regular sector in the first block and its paired spare sector in the second block are both set to the selected state during the first period, when the access operation starts, regardless of the result of the redundancy judgment, a drop in access speed due to the redundancy judgment operation can be suppressed.

Owner: INFINEON TECH LLC

Audio device

Active | US7376778B2 | Reduce in quantity | Increase flexibility | Digital computer details | Substation equipment | Dual bus | Bus interface

The present invention provides a digital bus circuit comprising: a bus conductor having two sections each connected to a pass circuit, each bus section being connected to two bus interfaces for respective circuits; at least three of the bus interfaces comprising a tri-state output buffer having a tri-state mode and one or more logic output modes; wherein in a unitary bus mode the tri-state output buffers are arranged such that only one of said output buffers is not in a tri-state mode, and the pass circuit is arranged to substantially couple said bus sections; and wherein in a dual bus mode the tri-state output buffers are arranged such that only one of the output buffers connected to each bus section is not in a tri-state mode, and wherein the pass circuit is arranged to substantially isolate said bus sections.

Owner: CIRRUS LOGIC INC

Audio device and method

Active | US7885422B2 | Reduce in quantity | Increase flexibility | Devices with voice recognition | Automatic call-answering/message-recording/conversation-recording | Digital audio signals | Speech sound

The present invention provides a method of operating a digital audio device, the method comprising: receiving a voice call; receiving another digital audio signal which is not a voice call; mixing the two received signals; transmitting the mixed signal wirelessly to another device.

Owner: CIRRUS LOGIC INC

Biologically-safe quality control material for blood tester and preparation method thereof

Active | CN103454410A | Simple process | Guaranteed stability | Biological testing | Human platelet | White blood cell

The invention discloses a biologically-safe quality control material for a blood tester and a preparation method thereof. The preparation method comprises: using animal red blood cells similar in size to human red blood cells to simulate human red blood cells; using animal red blood cells similar in size to the large cells among human white blood cells, and animal red blood cells similar in size to the small cells among human white blood cells, to simulate human white blood cells; using animal red blood cells similar in size to human blood platelets to simulate human blood platelets; curing the animal red blood cells that simulate human white blood cells and human blood platelets with formaldehyde at a content of 2-8%; cleaning the cured animal red blood cells and then adding bovine serum albumin so that the curing agent is removed; and blending, as required, to obtain a single-component or whole-blood quality control material. The biologically-safe quality control material has good stability, low cost and high safety, and is suitable for a blood cell analyzer, a urinary sediment analyzer and a hemoglobin analyzer.

Owner: URIT MEDICAL ELECTRONICS CO LTD

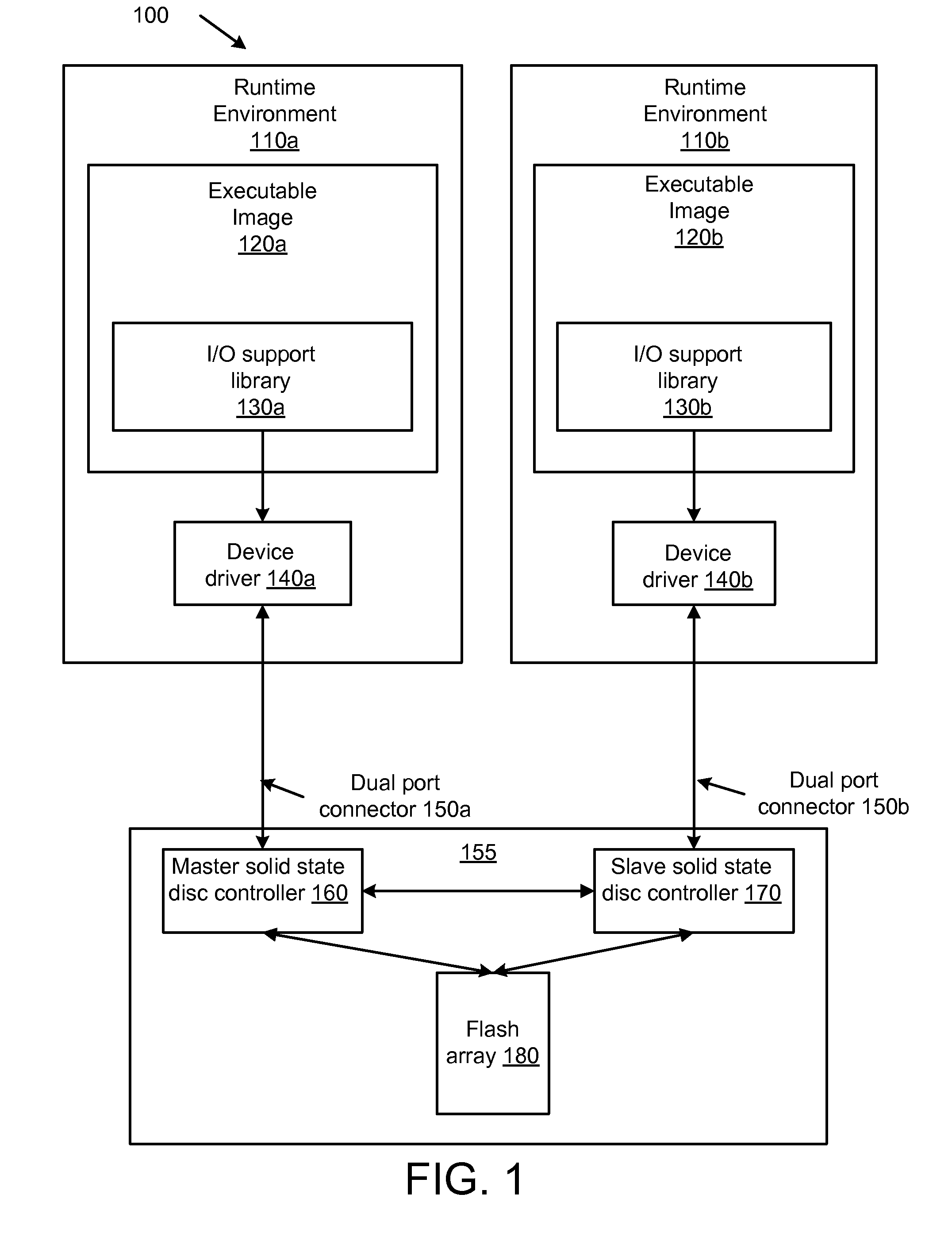

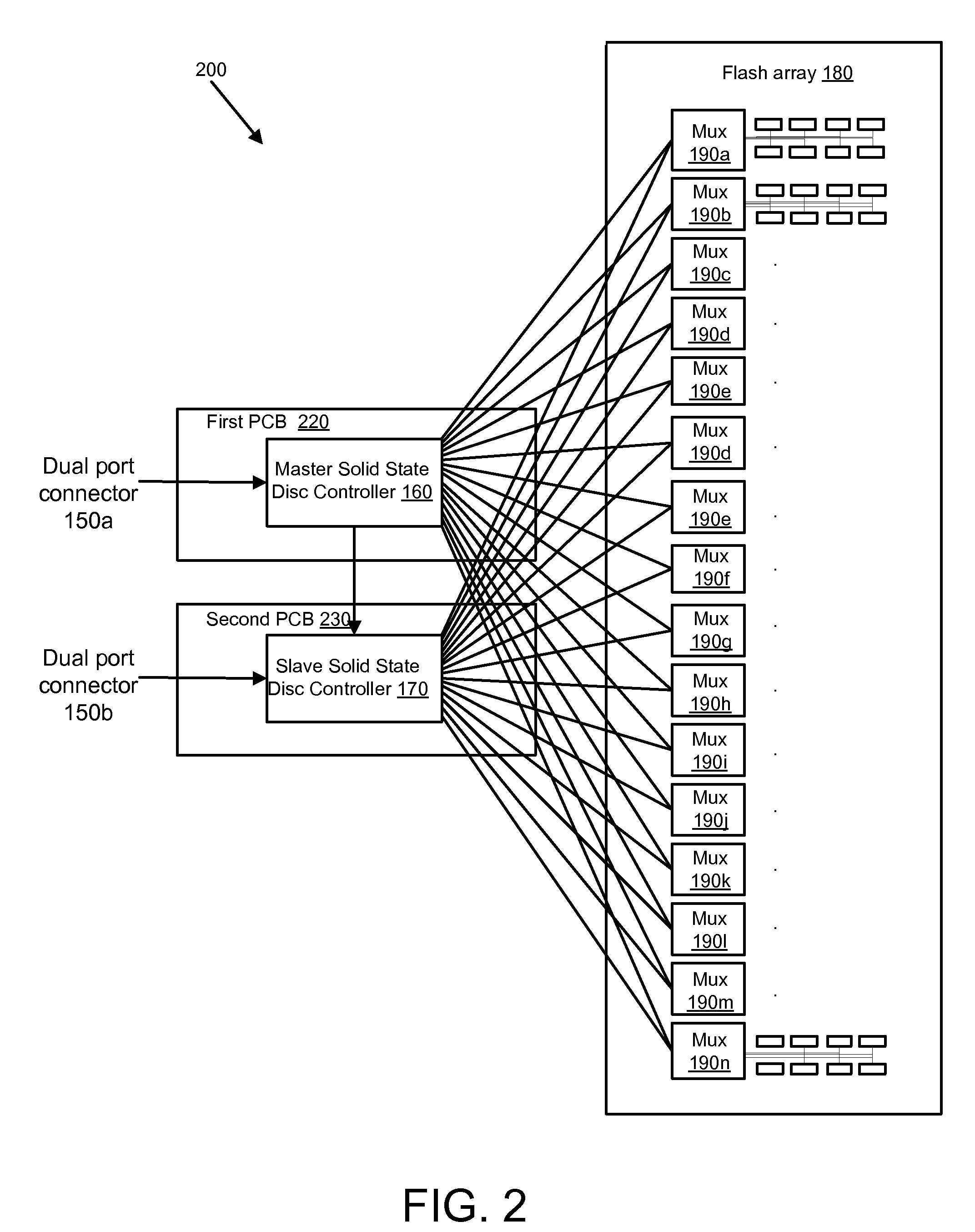

Method Apparatus and System for a Redundant and Fault Tolerant Solid State Disk

Active | US20110113279A1 | Avoid contention | Memory addressing/allocation/relocation | Redundant hardware error correction | Failover | Computer science

A redundant and fault tolerant solid state disk (SSDC) includes a determination module configured to identify a first SSDC configured to connect to a flash array and a second SSDC configured to connect to the flash array. A capture module is configured to capture a copy of an I/O request received by the first SSDC from a port of a dual port connector, and/or capture a copy of an I/O request received by the second SSDC from a port of the dual port connector, and identify a write I/O request from the I/O request. A detection module is configured to detect a failure in the first SSDC. A management module is configured to manage access to the flash array by the first SSDC and the second SSDC. An error recovery and failover module is configured to automatically reassign work from the first SSDC to the second SSDC.

Owner: IBM CORP

Audio device and method

Active | US20070123192A1 | Reduce in quantity | Increase flexibility | Devices with voice recognition | Subscriber signalling identity devices | Digital audio signals | Speech sound

The present invention provides a method of operating a digital audio device, the method comprising: receiving a voice call; receiving another digital audio signal which is not a voice call; mixing the two received signals; transmitting the mixed signal wirelessly to another device.

Owner: CIRRUS LOGIC INC

Portable wireless telephony device

Active | US7765019B2 | Reduce in quantity | Increase flexibility | Interconnection arrangements | Calling subscriber number recording/indication | Bus interface | Audio signal flow

The present invention provides an audio codec for converting digital audio signals to analogue audio signals, the audio codec comprising: two digital audio bus interfaces for coupling to respective digital audio buses; a digital-only signal path between the two digital audio bus interfaces, such that no analogue processing of the audio signals occurs in the digital-only signal path.

Owner: CIRRUS LOGIC INC

© 2025 PatSnap. All rights reserved.