214 results about "Zero-copy" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

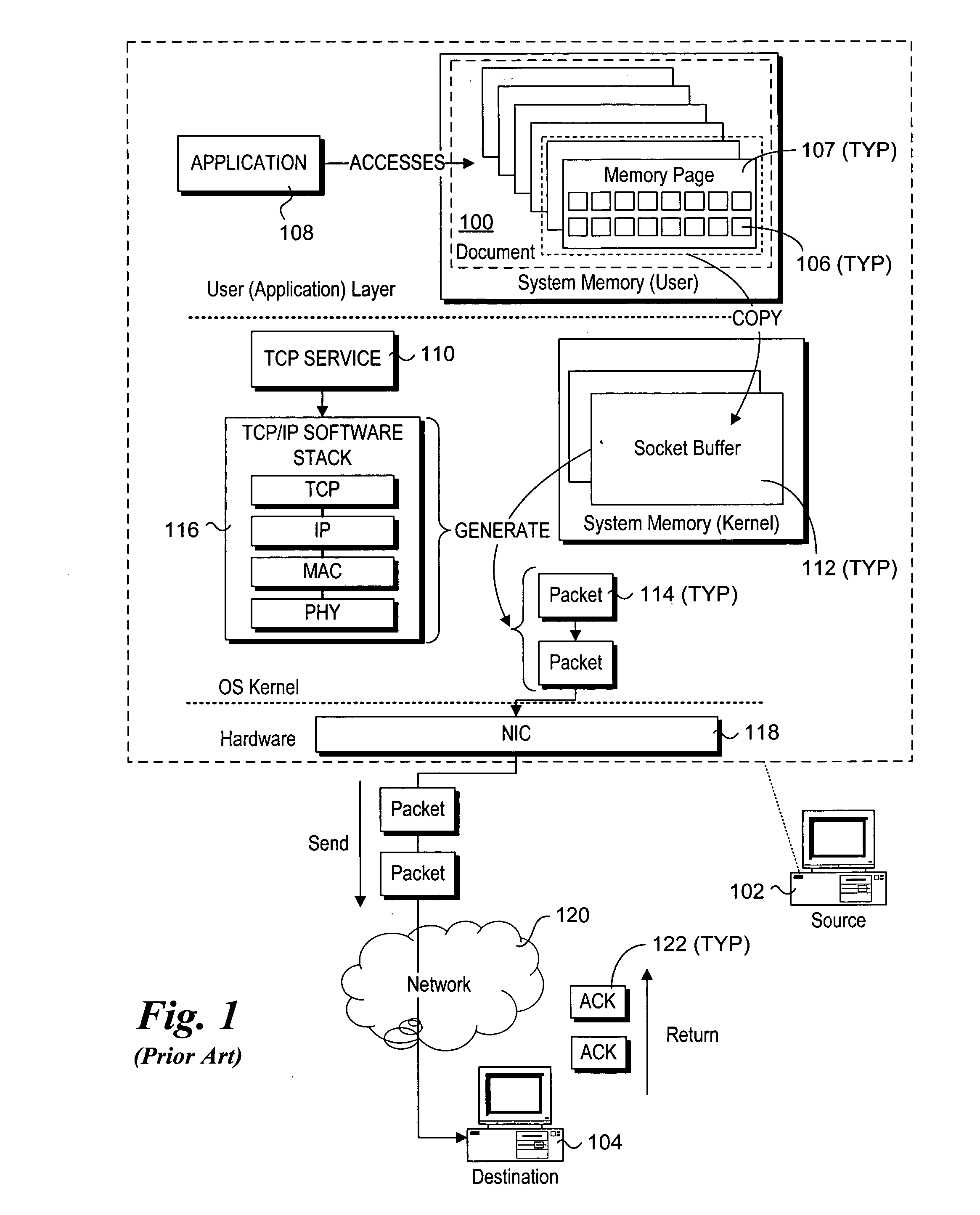

"Zero-copy" describes computer operations in which the CPU does not perform the task of copying data from one memory area to another. This is frequently used to save CPU cycles and memory bandwidth when transmitting a file over a network.

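A minimal Python sketch of the file-to-network case, assuming a connected, blocking stream socket. The standard-library method `socket.socket.sendfile()` hands the transfer to `os.sendfile()` where the platform supports it, so the file's pages reach the socket inside the kernel without passing through a user-space buffer. Where `sendfile` is unavailable it falls back to a `send()` loop, which does copy. The helper name `send_file` and the demo values are illustrative.

```python
import os
import socket
import tempfile


def send_file(sock: socket.socket, path: str) -> int:
    """Send the file at `path` over a connected, blocking SOCK_STREAM socket.

    socket.sendfile() uses os.sendfile() where available, so the kernel moves
    the file's pages to the socket without a read() into a user-space buffer.
    Where sendfile is unavailable it falls back to a send() loop, which copies.
    """
    with open(path, "rb") as f:
        return sock.sendfile(f)


if __name__ == "__main__":
    data = b"zero-copy " * 100
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(data)
    tx, rx = socket.socketpair()
    sent = send_file(tx, tmp.name)
    tx.close()  # signal EOF to the reader
    received = b"".join(iter(lambda: rx.recv(4096), b""))
    rx.close()
    os.unlink(tmp.name)
    print(sent, received == data)
```

The demo uses `socket.socketpair()` only so the example runs without a network; with a TCP connection the call is the same.
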
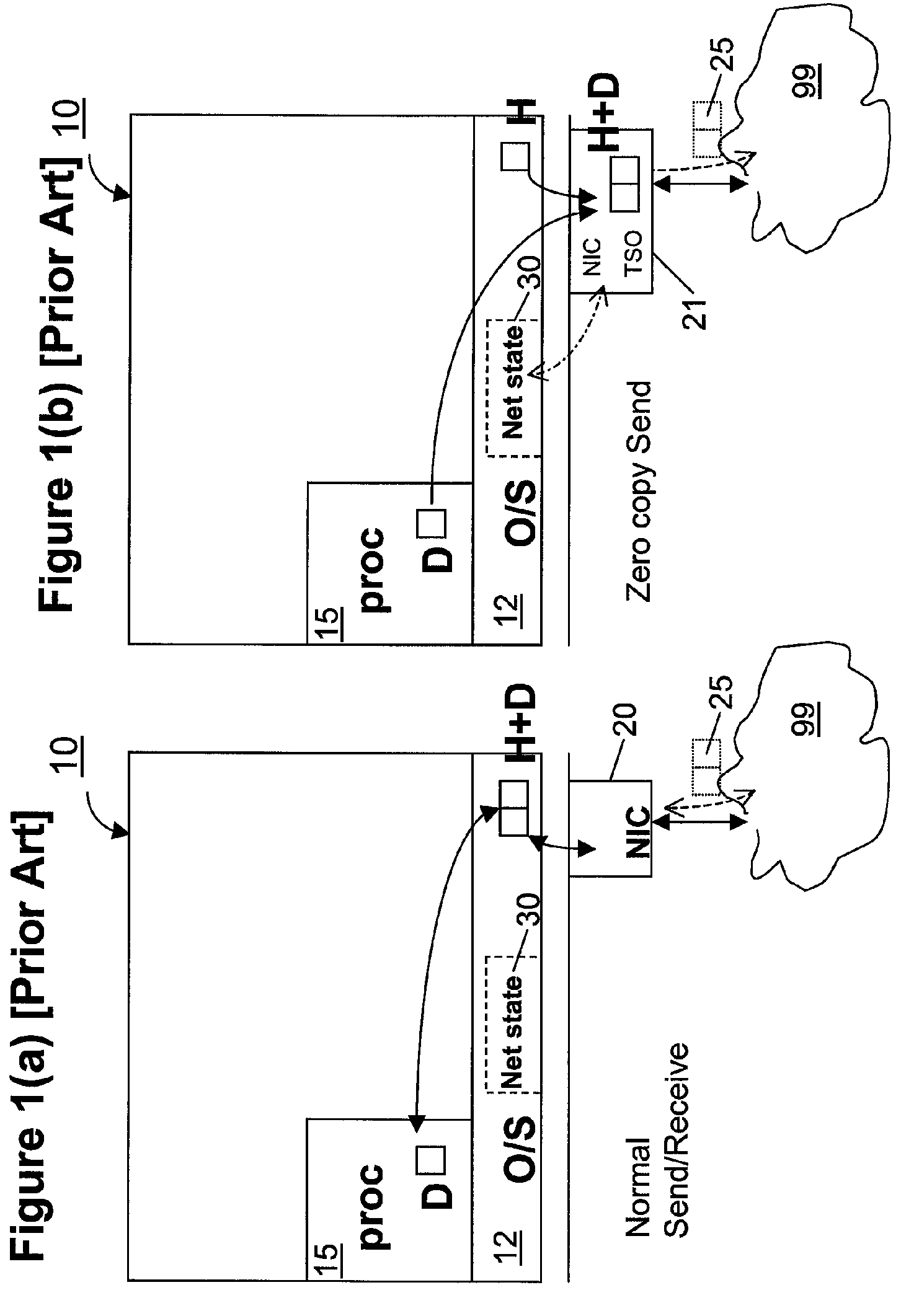

Zero-copy network I/O for virtual hosts

Active | US20070061492A1 | Tags: Eliminate the problem; Solve excessive overhead; Digital computer details; Transmission; Data pack; Virtualization

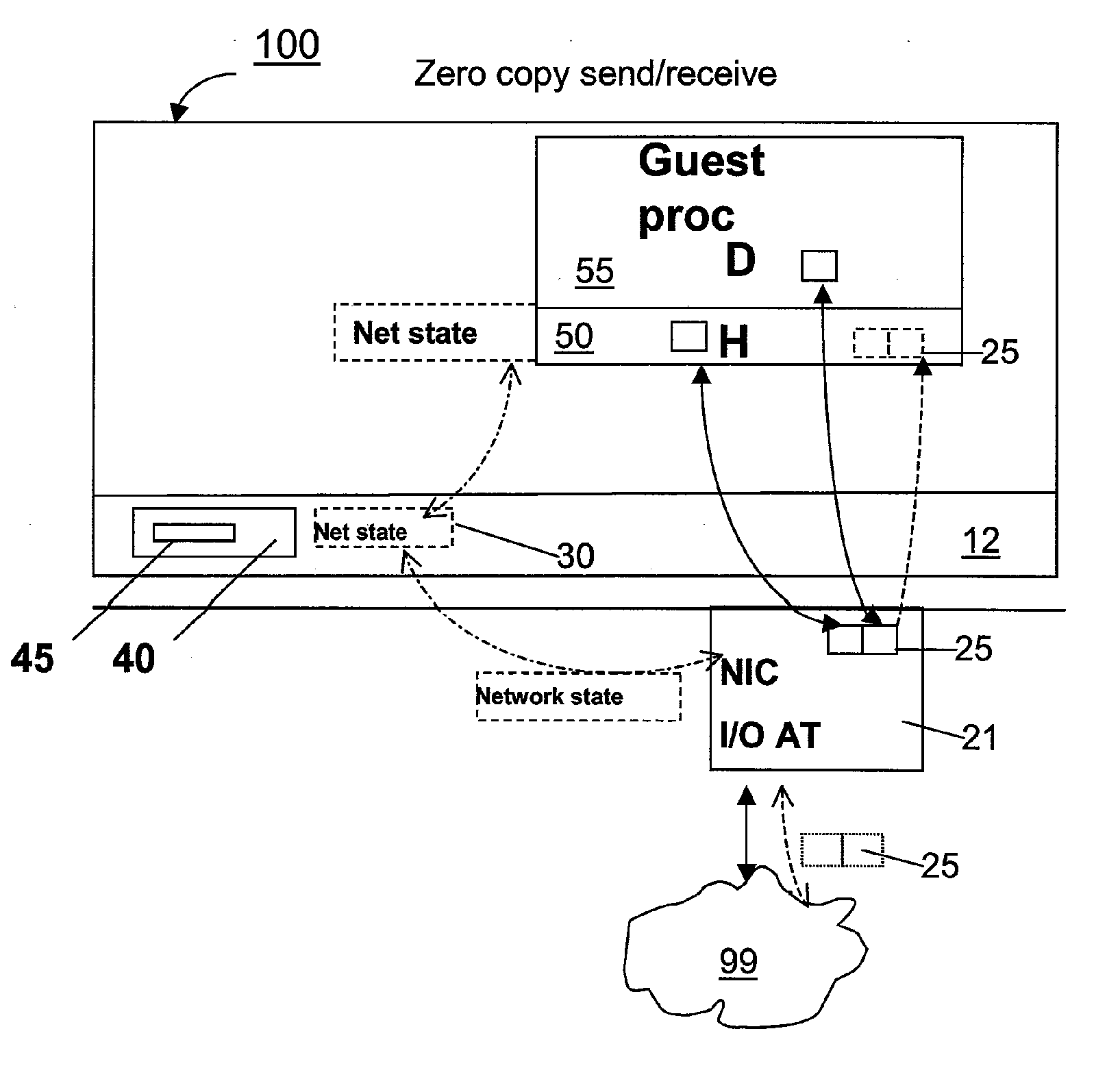

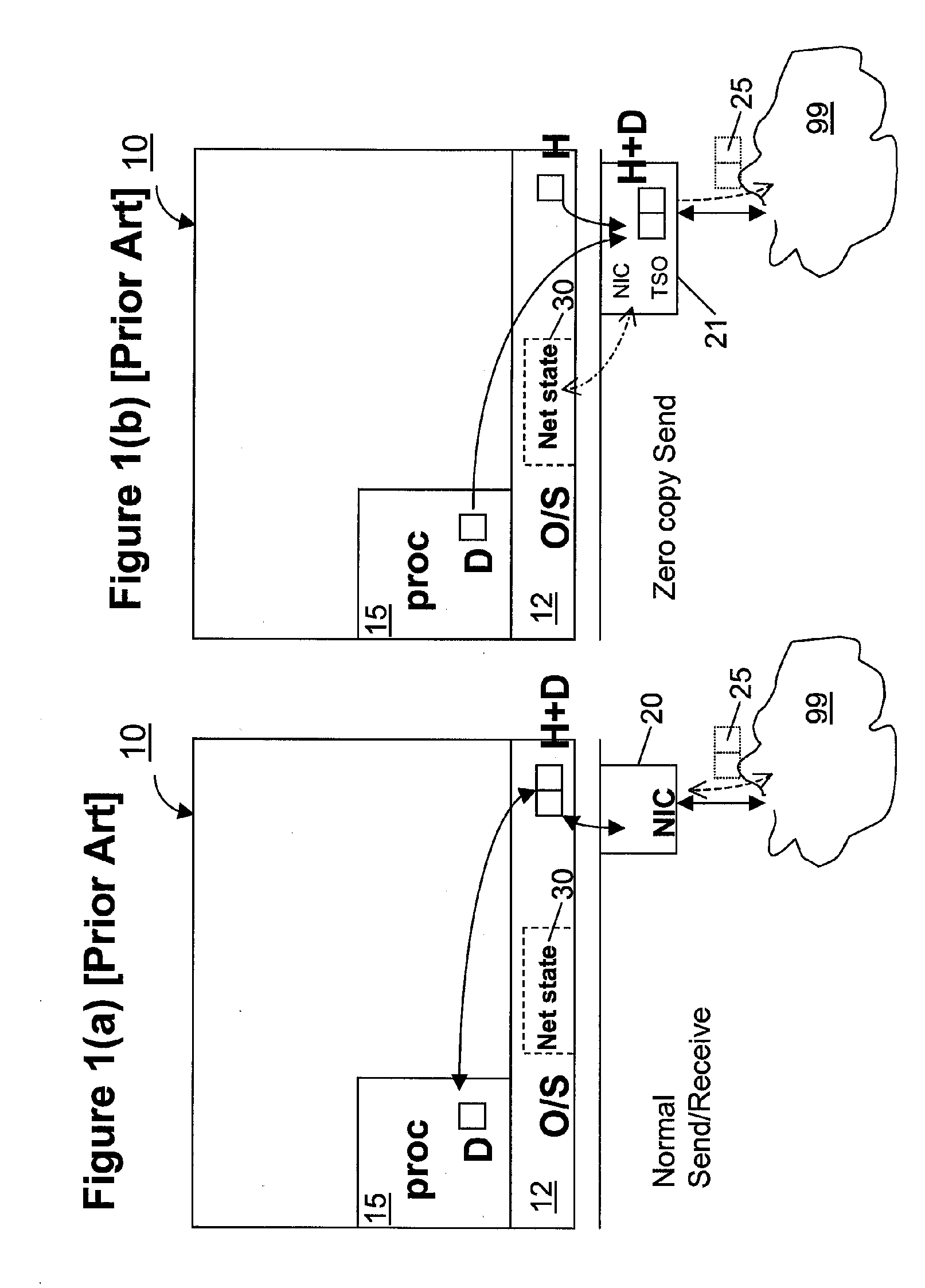

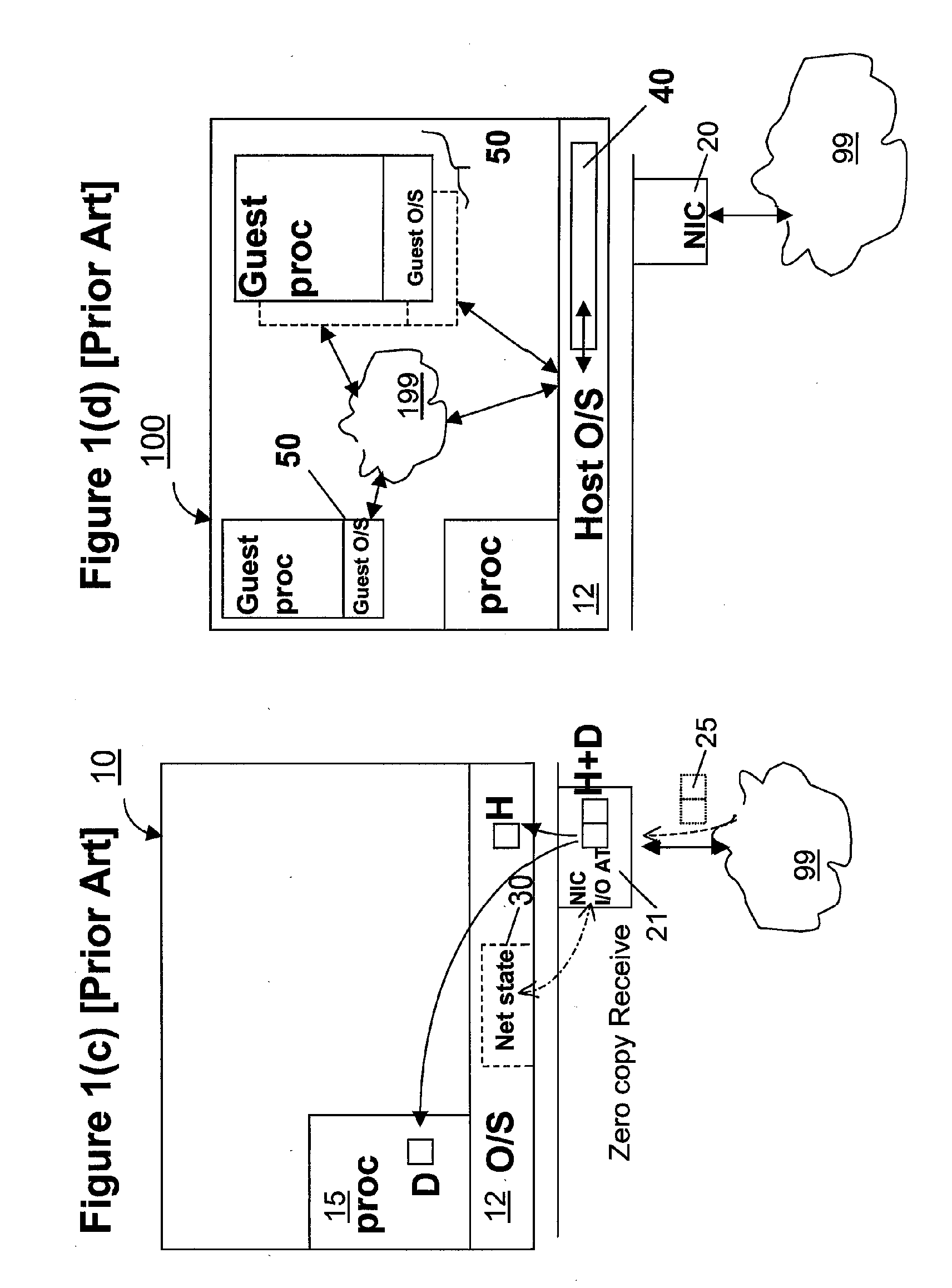

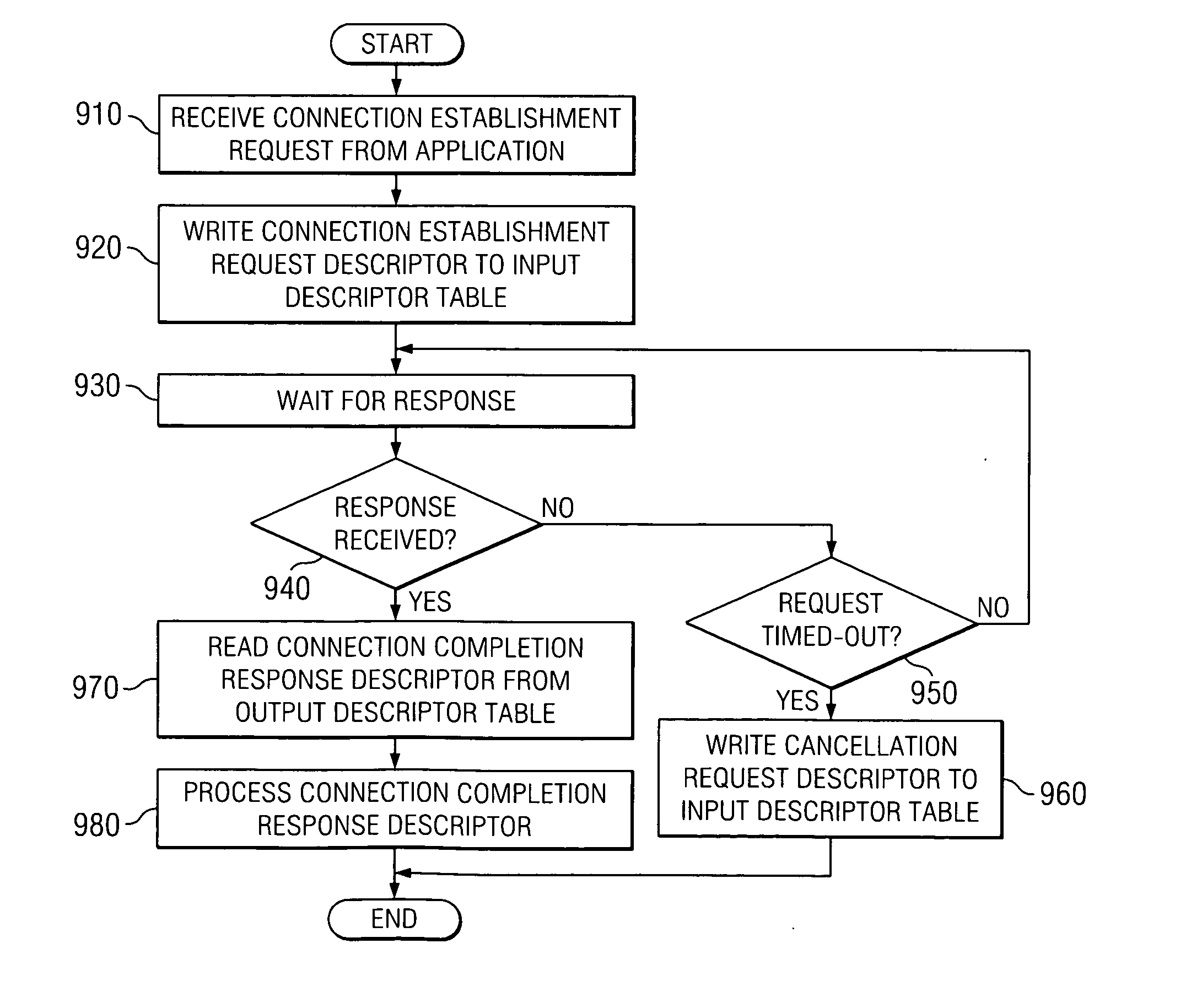

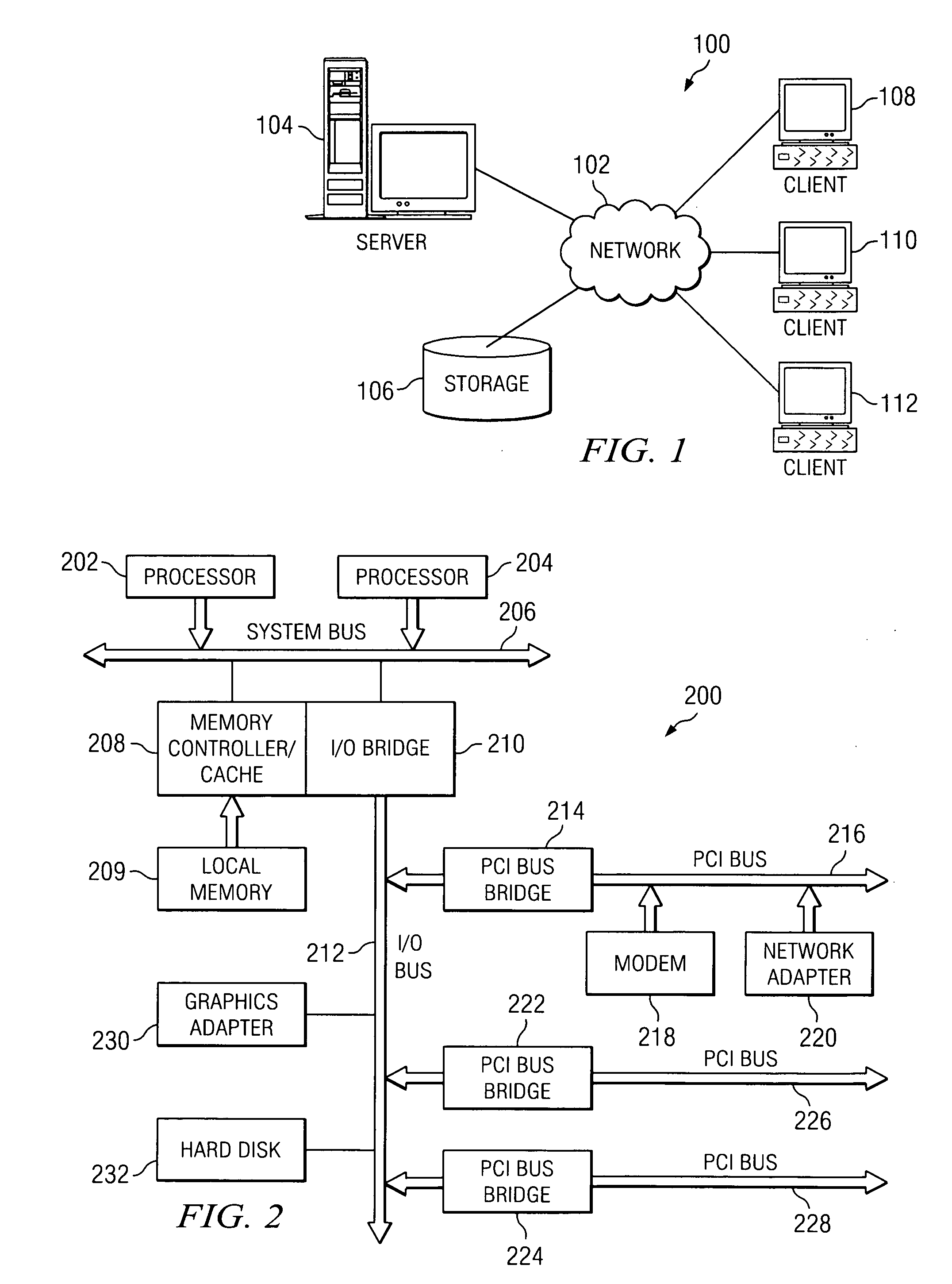

Techniques for virtualized computer system environments running one or more virtual machines that obviate the extra host operating system (O/S) copying steps required for sending and receiving packets of data over a network connection, thus eliminating major performance problems in virtualized environments. Such techniques include methods for emulating network I/O hardware device acceleration-assist technology providing zero-copy I/O sending and receiving optimizations. Implementation of these techniques requires a host O/S to perform actions including, but not limited to: checking the address translations (ensuring availability and data residency in physical memory); checking whether the destination of a network packet is local (another virtual machine within the computing system) or across an external network; and, if local, checking whether the sending VM, the receiving VM process, or both support emulated hardware acceleration-assist on the same physical system. In particular, this allows packet-data checksumming operations to be omitted when sending packets between virtual machines in the same physical system.
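
The host-side delivery decision described above can be modelled in a few lines. This is a hypothetical sketch, not the patented implementation: `GuestVM`, `accel_assist` and `route_packet` are invented names. It assumes the fast path requires both VMs to support the emulated acceleration-assist device, uses CRC-32 as a stand-in for the real packet checksum, and omits the address-translation and memory-residency checks.

```python
import zlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple, Union

Buffer = Union[bytes, bytearray]


@dataclass
class GuestVM:
    name: str
    accel_assist: bool  # hypothetical flag: VM uses the emulated accel-assist device
    rx: List[Tuple[Buffer, Optional[int]]] = field(default_factory=list)


def route_packet(packet: bytearray, src: GuestVM, dst: str,
                 local_vms: Dict[str, GuestVM],
                 wire: List[Tuple[Buffer, Optional[int]]]) -> str:
    """Decide how the host delivers one outgoing guest packet."""
    target = local_vms.get(dst)
    if target is not None and src.accel_assist and target.accel_assist:
        # Same physical host and both ends support accel-assist: pass the
        # guest's own buffer by reference and omit the checksum (None).
        target.rx.append((packet, None))
        return "local-zero-copy"
    # Conventional path: the host copies the packet into its own buffer and
    # computes a checksum (CRC-32 here as a stand-in) before delivery.
    copy = bytes(packet)
    entry = (copy, zlib.crc32(copy))
    if target is not None:
        target.rx.append(entry)
        return "local-copy"
    wire.append(entry)
    return "external"
```

Only the first branch avoids both the copy and the checksum; a destination VM without acceleration-assist still receives a host-made copy.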

Owner:RED HAT

Apparatus and method for supporting memory management in an offload of network protocol processing

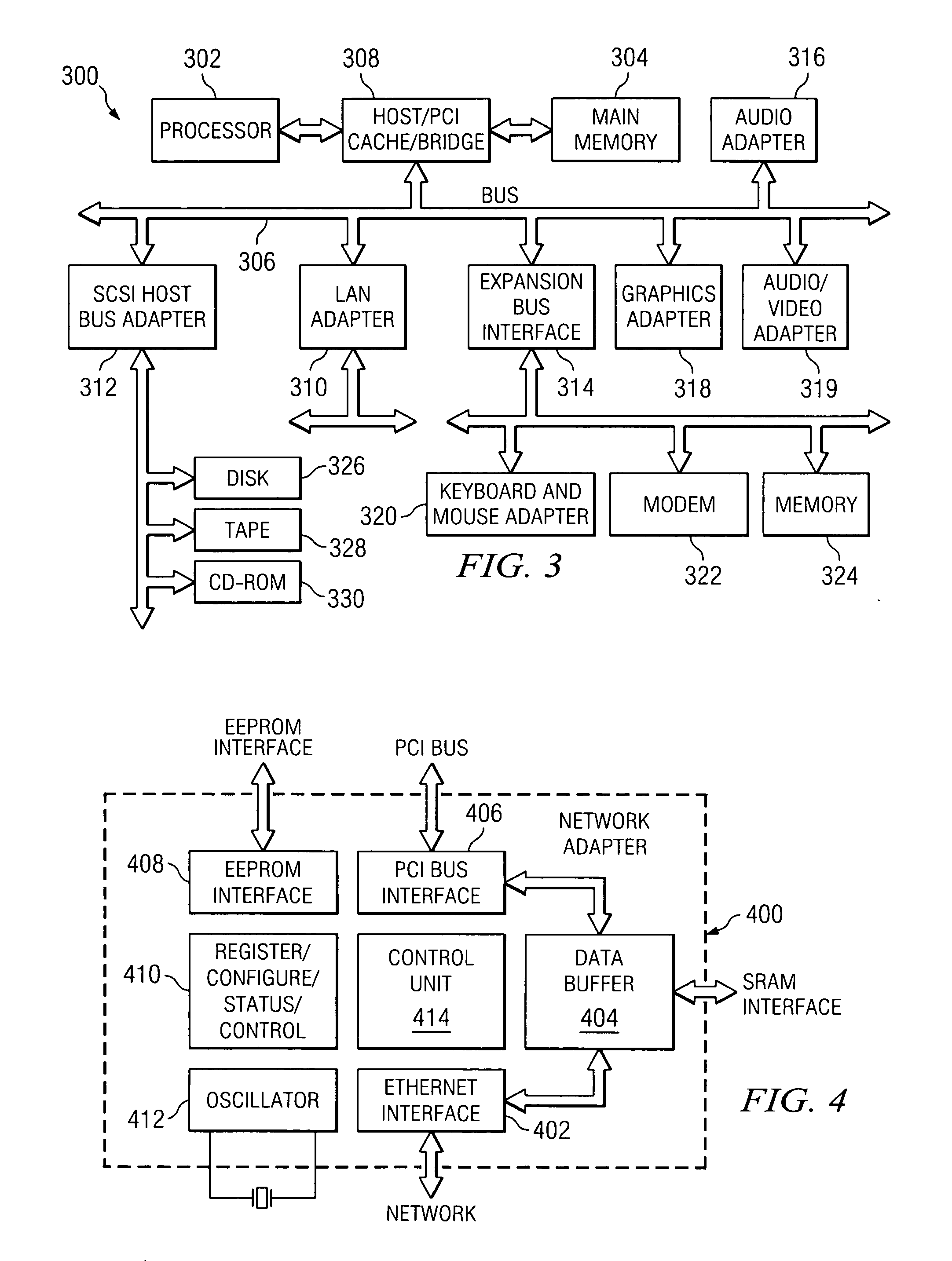

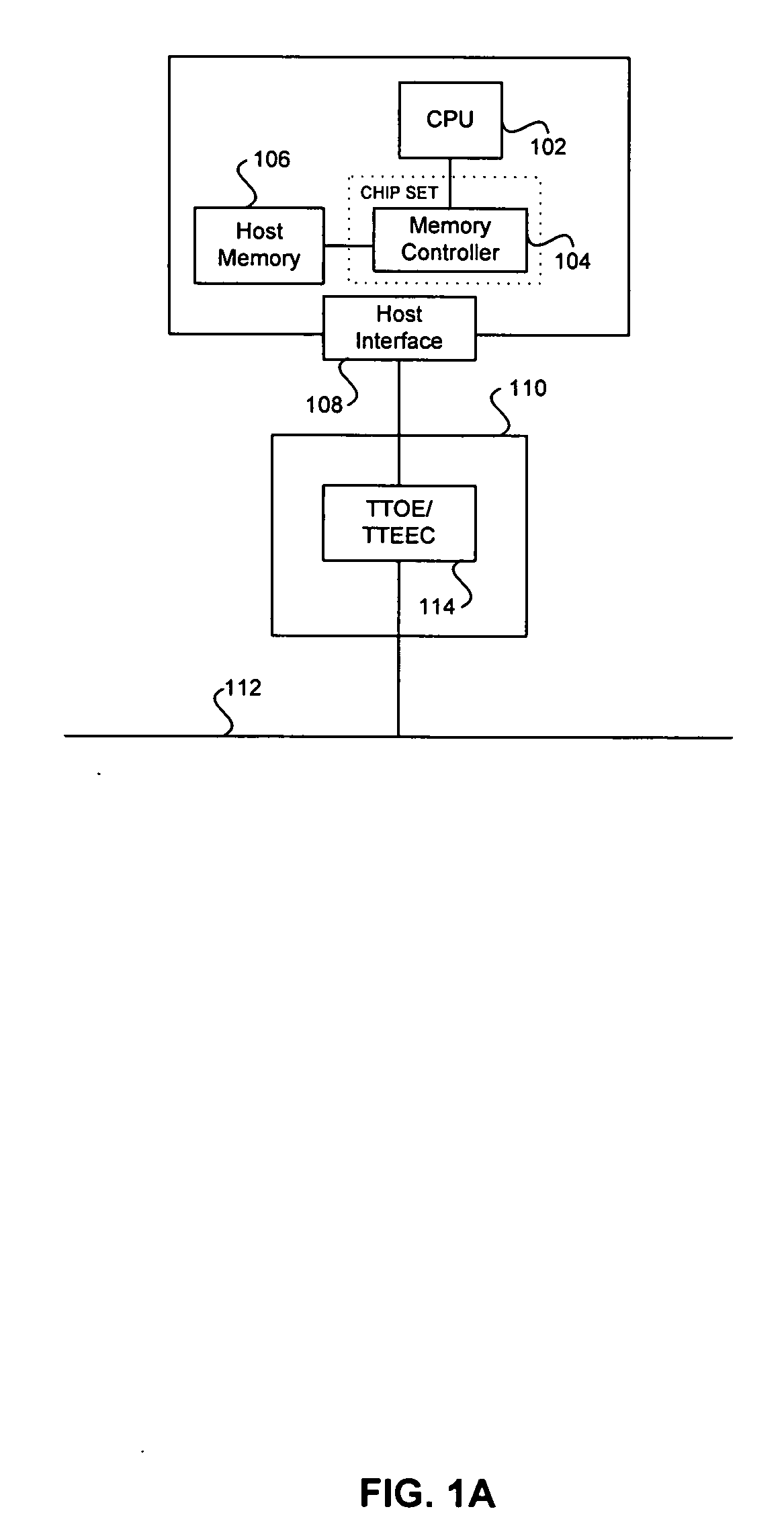

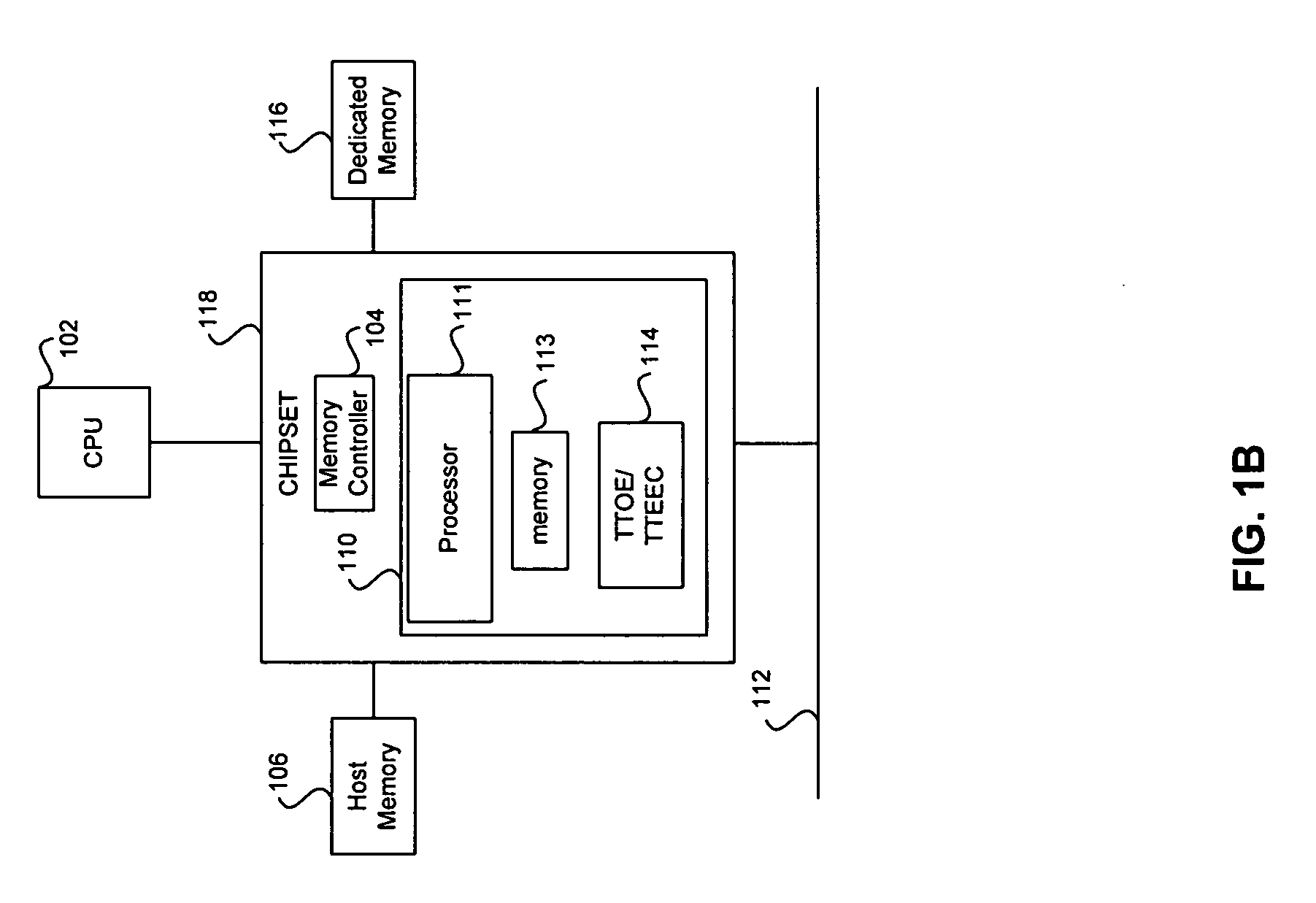

Active | US20060015651A1 | Tags: Firmly connected; Number of needed; Multiple digital computer combinations; Memory systems; Networking protocol; Zero-copy

A number of improvements in network adapters that offload protocol processing from the host processor are provided. Specifically, mechanisms for handling memory management and optimization within a system utilizing an offload network adapter are provided. The memory management mechanism permits both buffered sending and receiving of data as well as zero-copy sending and receiving of data. In addition, the memory management mechanism permits grouping of DMA buffers that can be shared among specified connections based on any number of attributes. The memory management mechanism further permits partial send and receive buffer operation, delaying of DMA requests so that they may be communicated to the host system in bulk, and expedited transfer of data to the host system.

Owner:IBM CORP

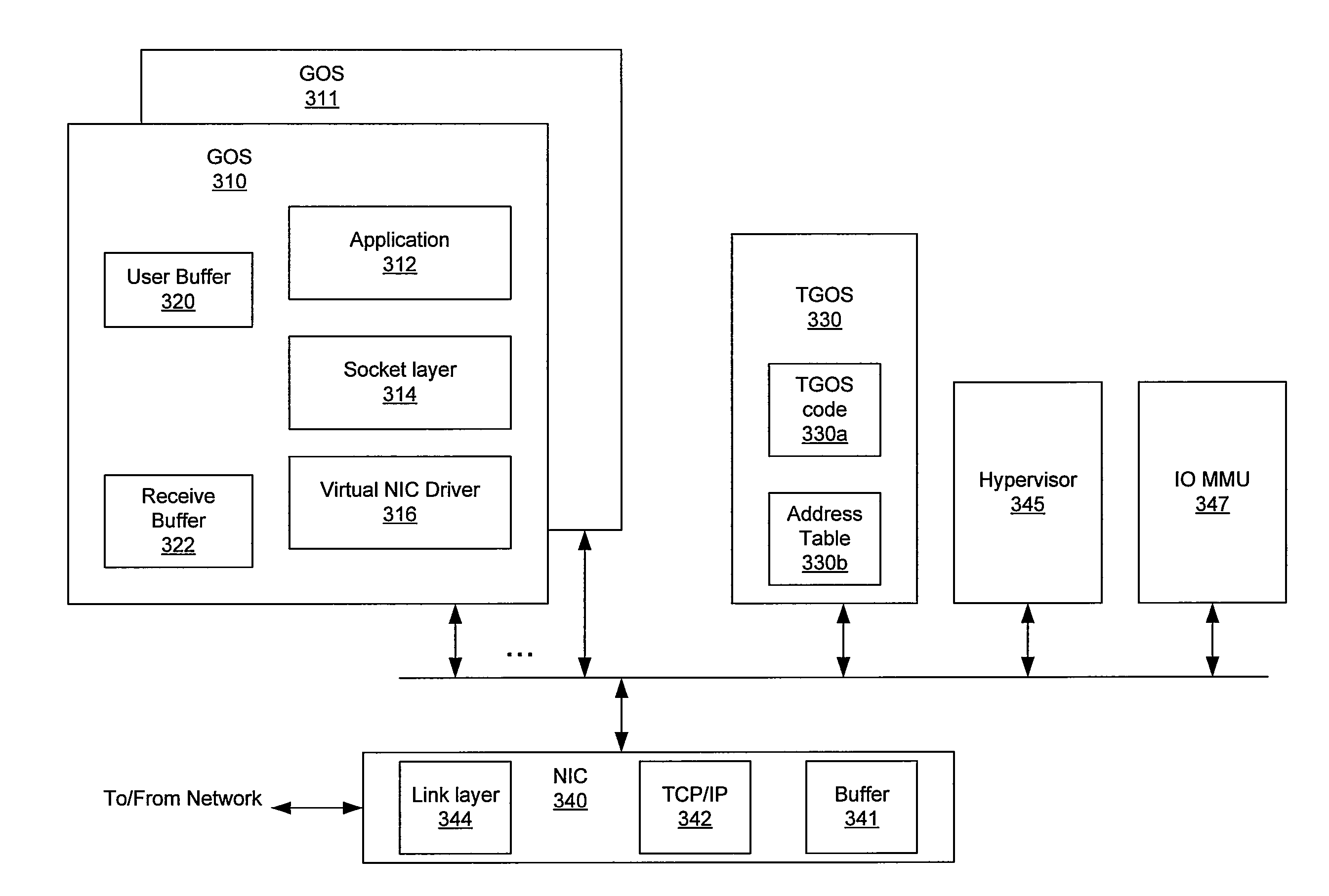

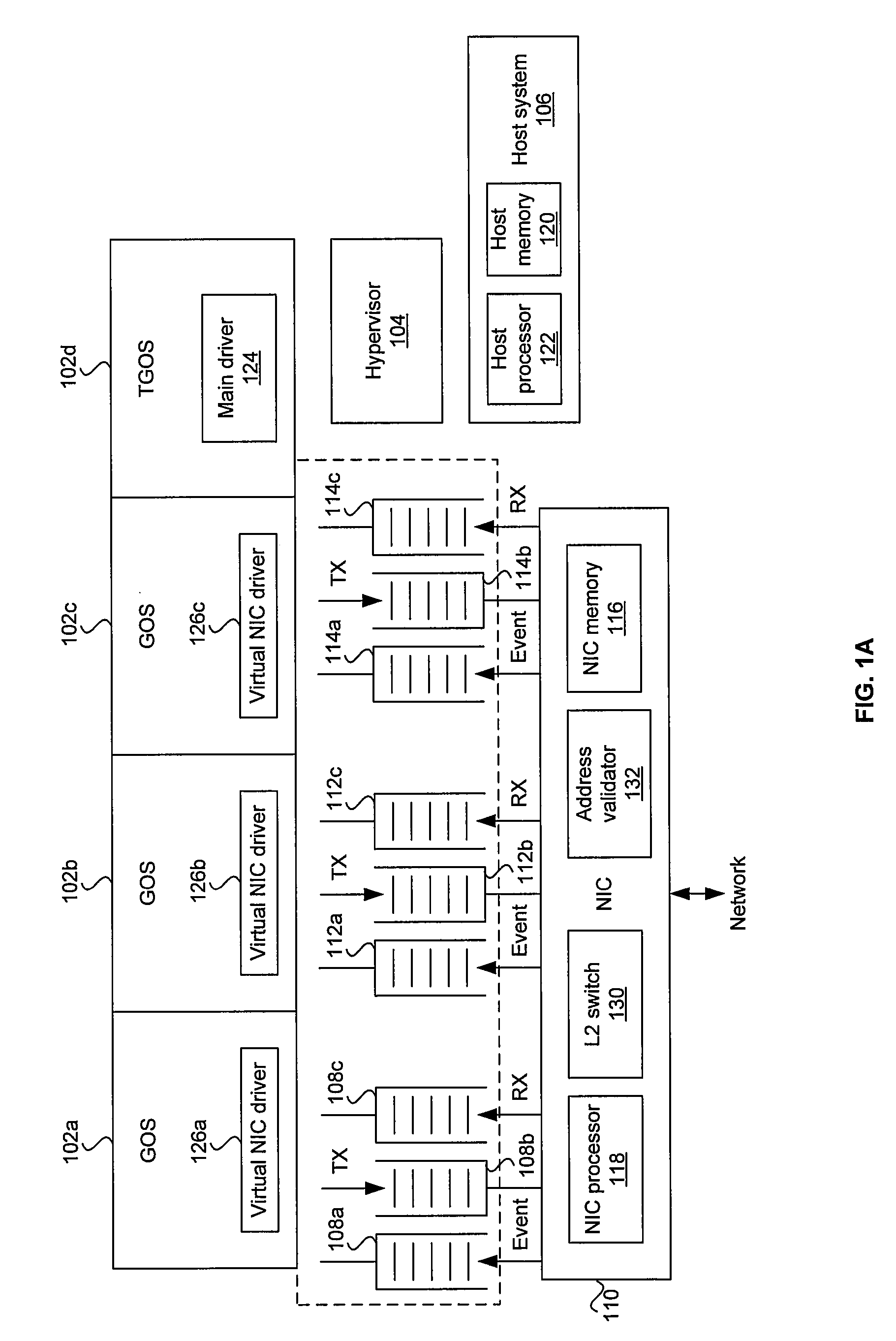

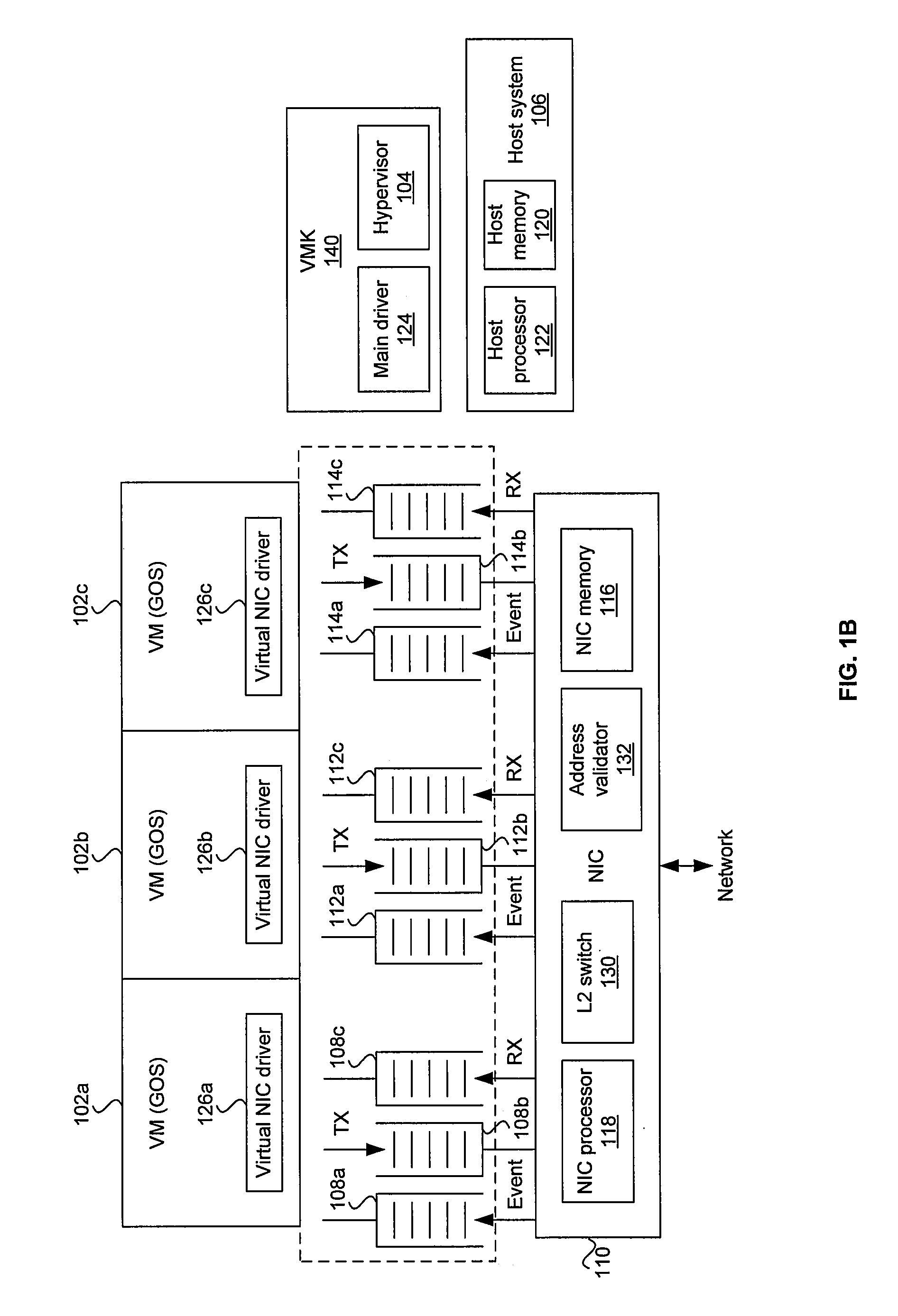

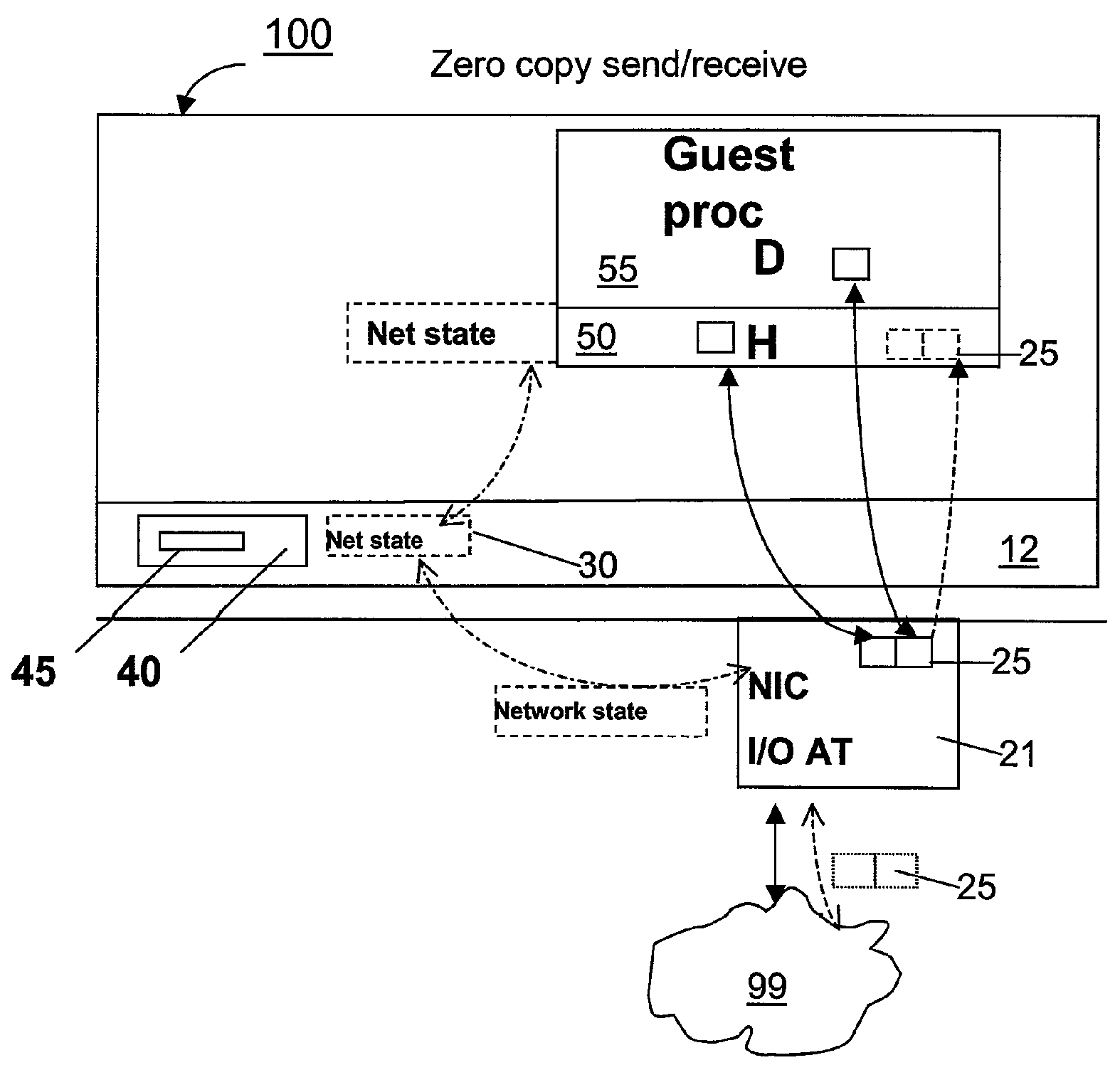

Method and System for Zero Copy in a Virtualized Network Environment

Methods and systems for zero copy in a virtualized network environment are disclosed. Aspects of one method may include a plurality of guest operating systems (GOSs) that share a single NIC. The NIC may switch communication to a GOS to allow that GOS access to a network via the NIC. The NIC may offload, for example, OSI layer 3, 4, and/or 5 protocol operations from a host system and/or the GOSs. The data received from, or to be transmitted to, the network by the NIC may be copied directly between the NIC's buffer and a corresponding application buffer for one of the GOSs without copying the data to a trusted GOS (TGOS). The NIC may access the GOS buffer via a virtual address, a buffer offset, or a physical address. The virtual address and the buffer offset may be translated to a physical address.
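
The last step, translating a GOS virtual address or buffer offset into a physical address the NIC can DMA to, reduces to a page-table lookup. A minimal sketch, assuming 4 KiB pages and a flat dictionary from virtual page number to physical frame number; real systems use multi-level (and, for guests, nested) page tables, and the function names are hypothetical.

```python
from typing import Mapping

PAGE_SIZE = 4096  # assumed page size


def va_to_pa(va: int, page_table: Mapping[int, int]) -> int:
    """Translate a guest virtual address through a page table.

    page_table maps virtual page numbers to physical frame numbers.
    """
    vpn, offset = divmod(va, PAGE_SIZE)
    if vpn not in page_table:
        raise LookupError(f"virtual page {vpn:#x} is not mapped")
    return page_table[vpn] * PAGE_SIZE + offset


def buffer_offset_to_pa(buffer_va: int, offset: int,
                        page_table: Mapping[int, int]) -> int:
    """Resolve 'buffer base + offset' to the physical address the NIC would DMA to."""
    return va_to_pa(buffer_va + offset, page_table)
```

A buffer that is contiguous in guest virtual memory can cross a page boundary into a non-adjacent physical frame, so placing a large payload has to be translated page by page.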

Owner:BROADCOM ISRAEL R&D

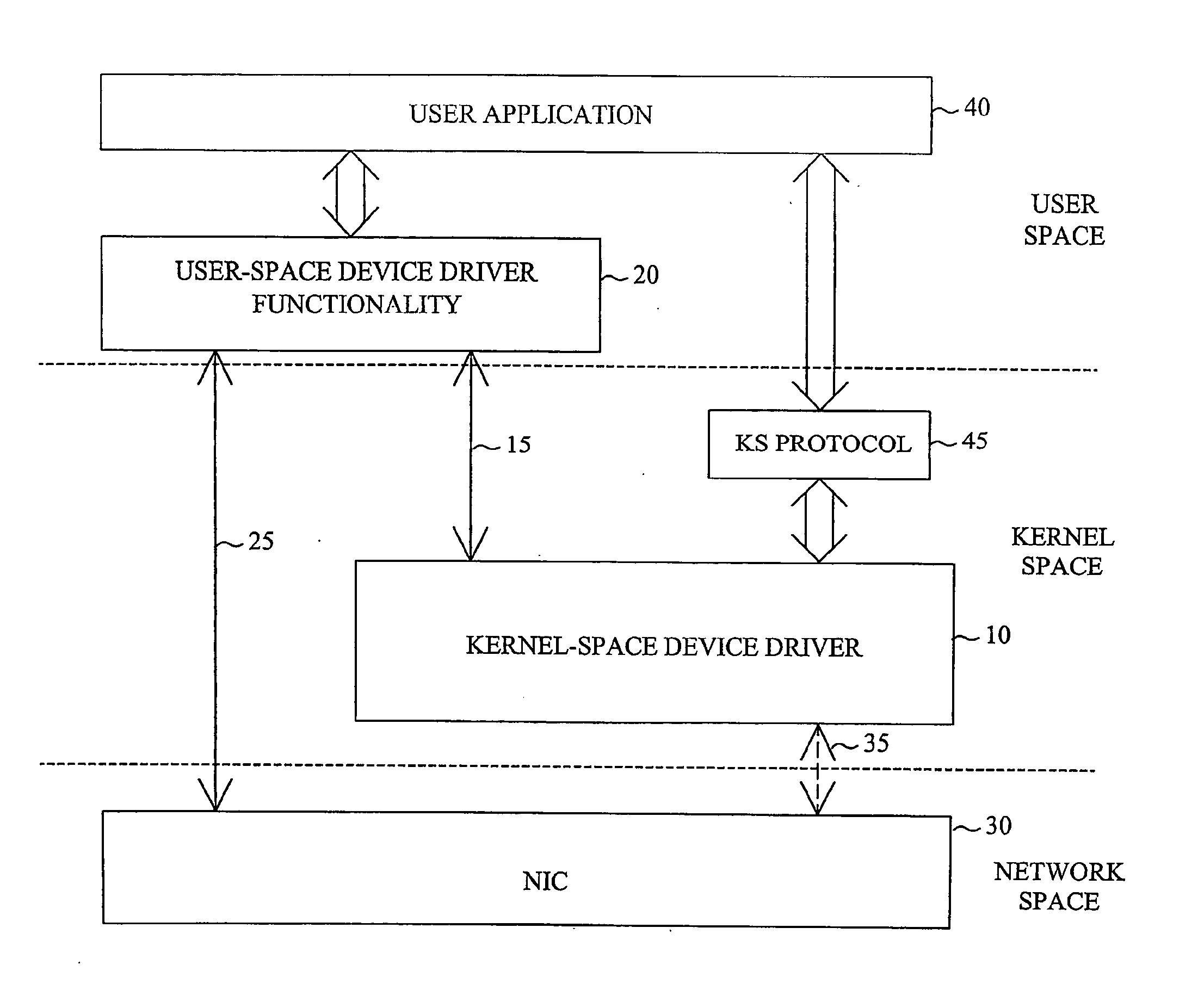

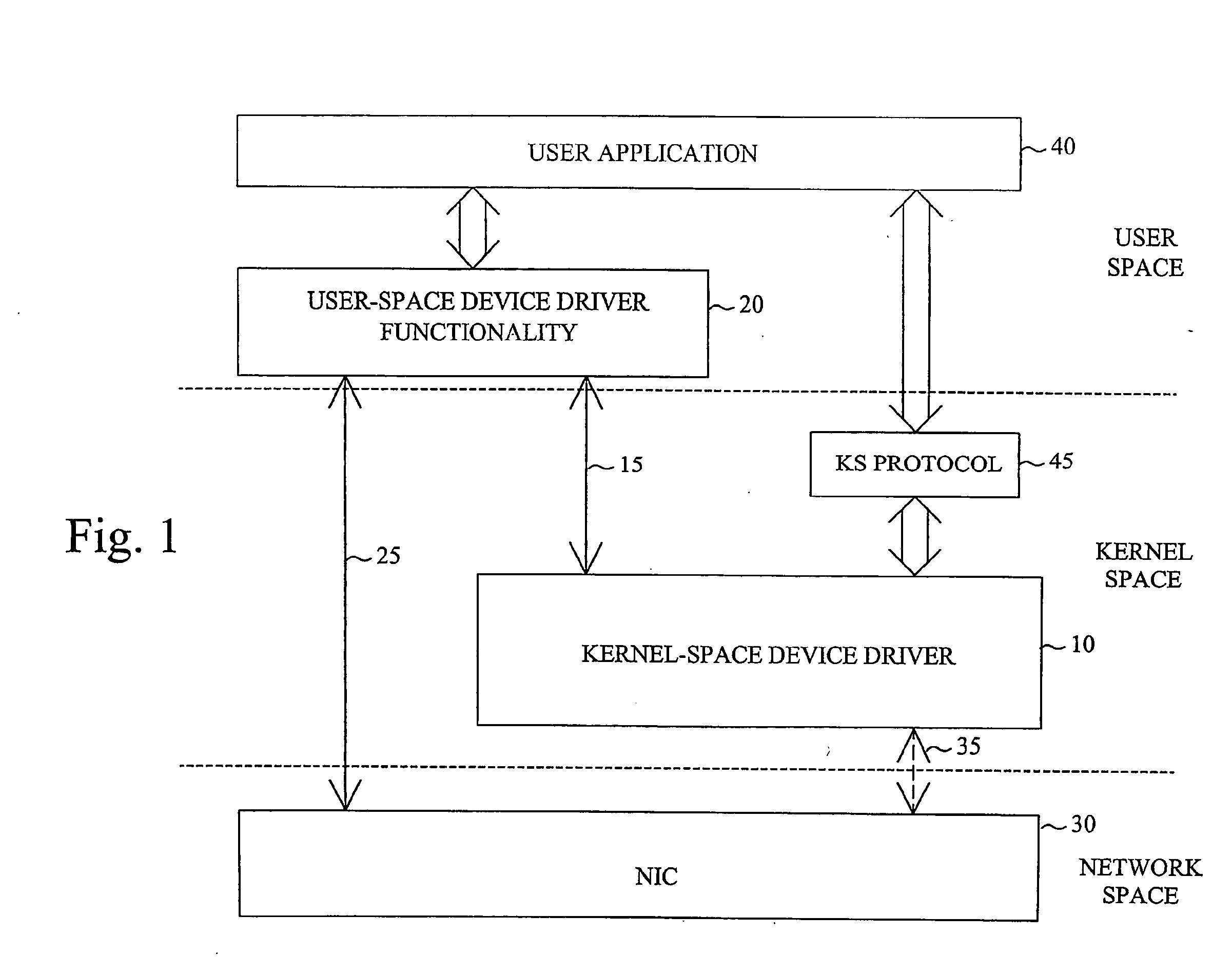

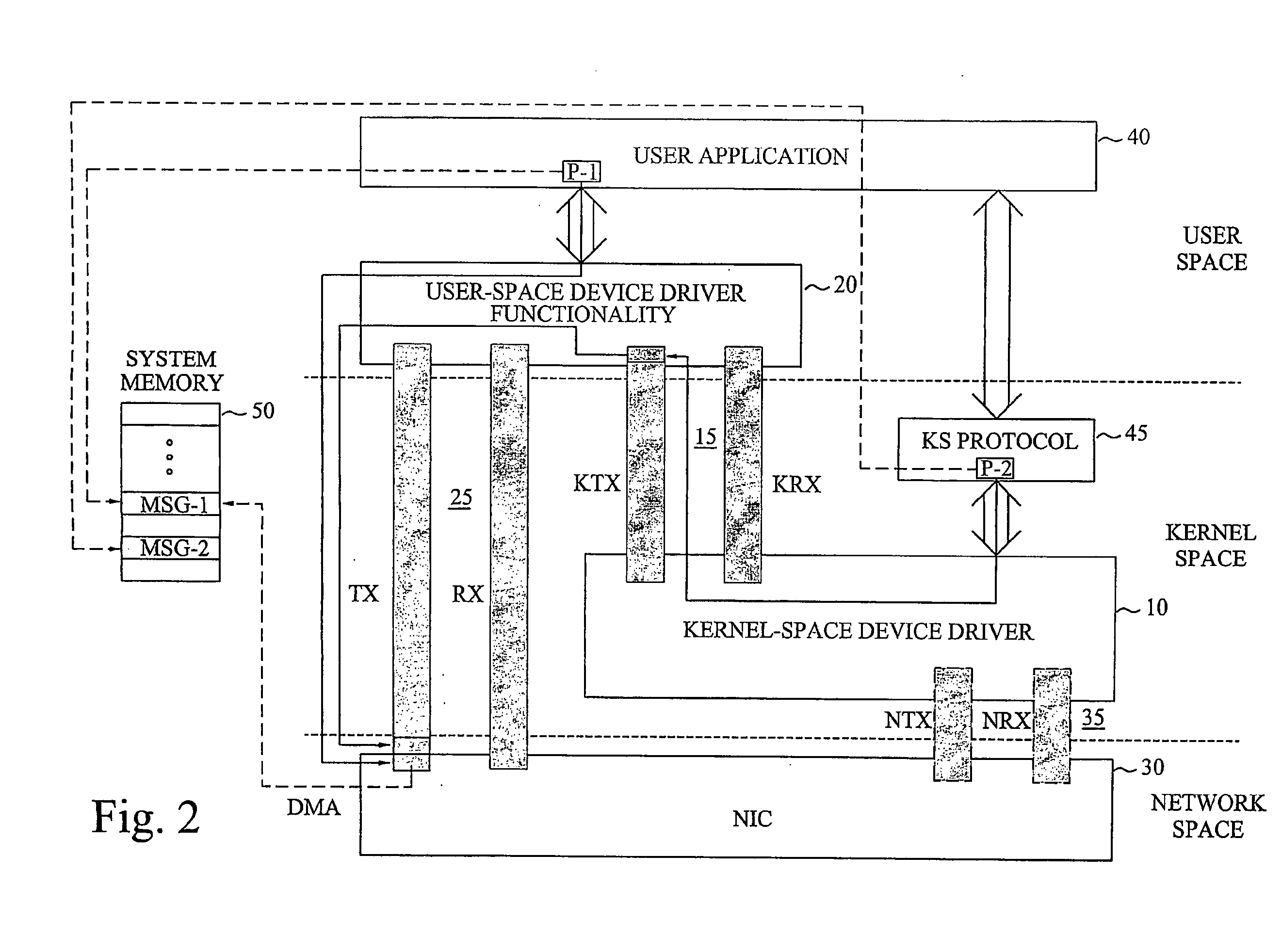

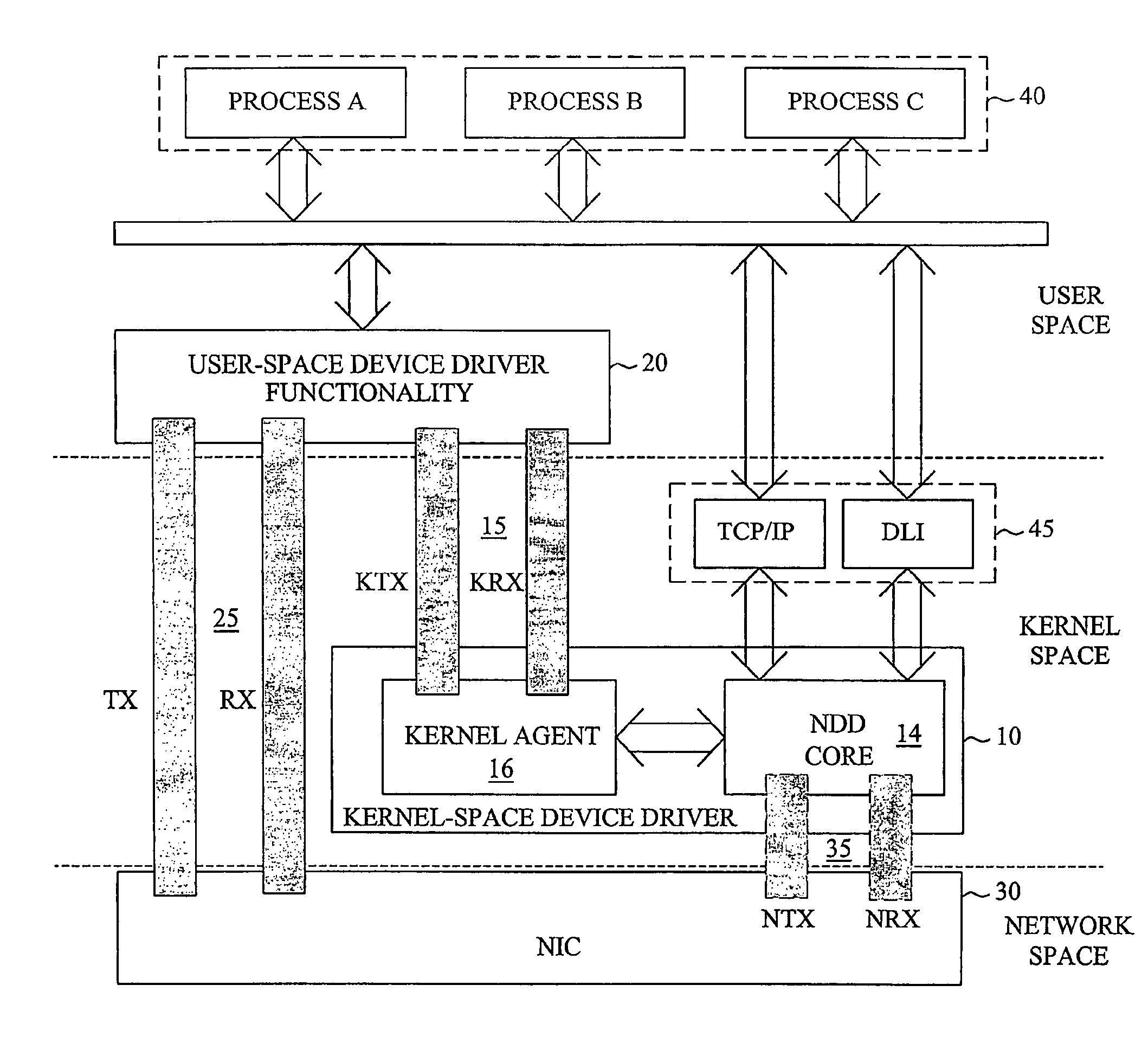

Network device driver architecture

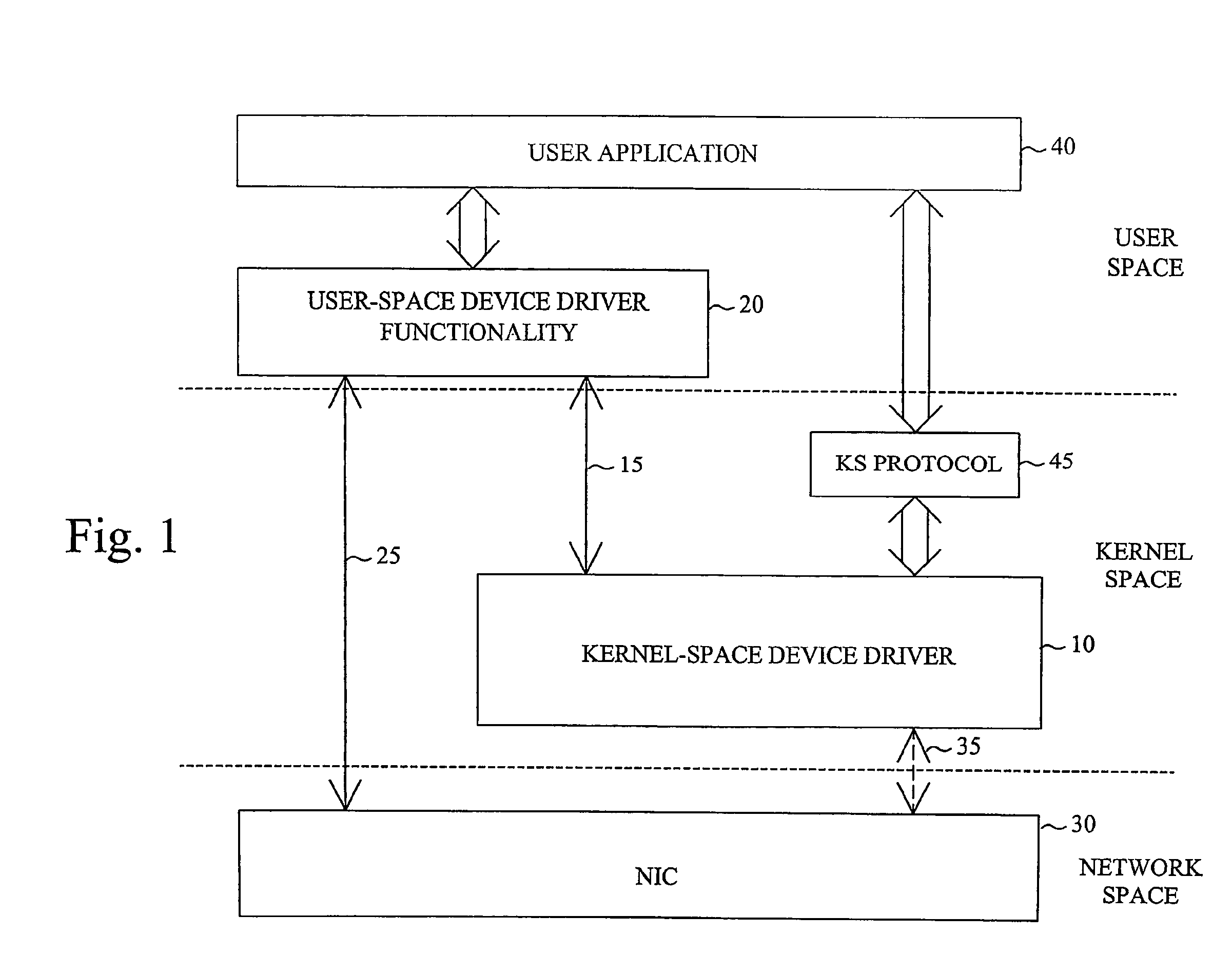

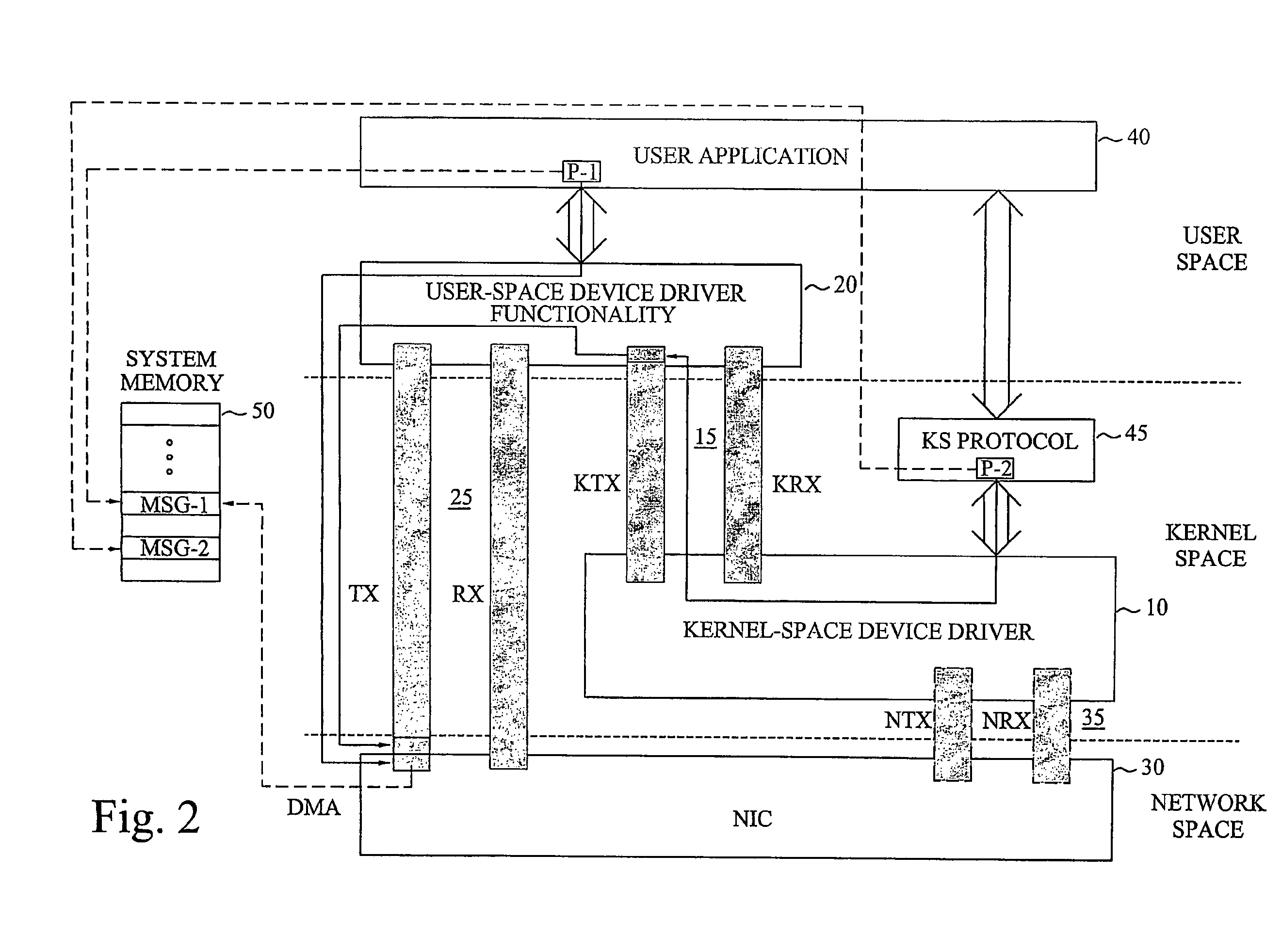

Inactive | US20050210479A1 | Tags: Easy to adapt; Reduce in quantity; Multiprogramming arrangements; Transmission; Zero-copy; Combined use

The invention proposes a network device driver architecture with functionality distributed between kernel space and user space. The overall network device driver comprises a kernel-space device driver (10) and user-space device driver functionality (20). The kernel-space device driver (10) is adapted for enabling access to the user-space device driver functionality (20) via a kernel-space-user-space interface (15). The user-space device driver functionality (20) is adapted for enabling direct access between user space and the NIC (30) via a user-space-NIC interface (25), and also adapted for interconnecting the kernel-space-user-space interface (15) and the user-space-NIC interface (25) to provide integrated kernel-space access and user-space access to the NIC (30). The user-space device driver functionality (20) provides direct, zero-copy user-space access to the NIC, whereas information to be transferred between kernel space and the NIC will be “tunneled” through user space by combined use of the kernel-space device driver (10), the user-space device driver functionality (20) and the two associated interfaces (15,25).

Owner:TELEFON AB LM ERICSSON (PUBL)

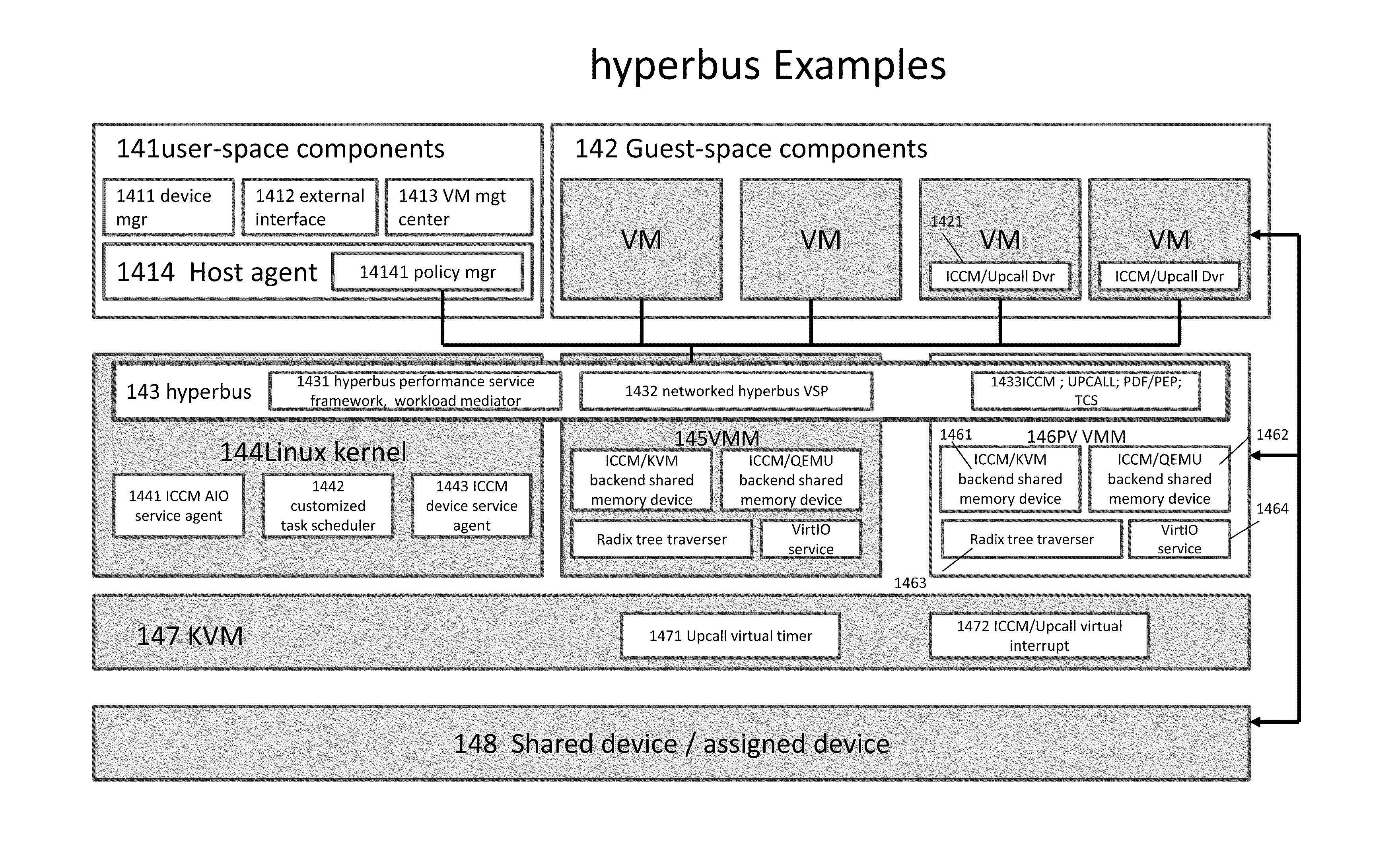

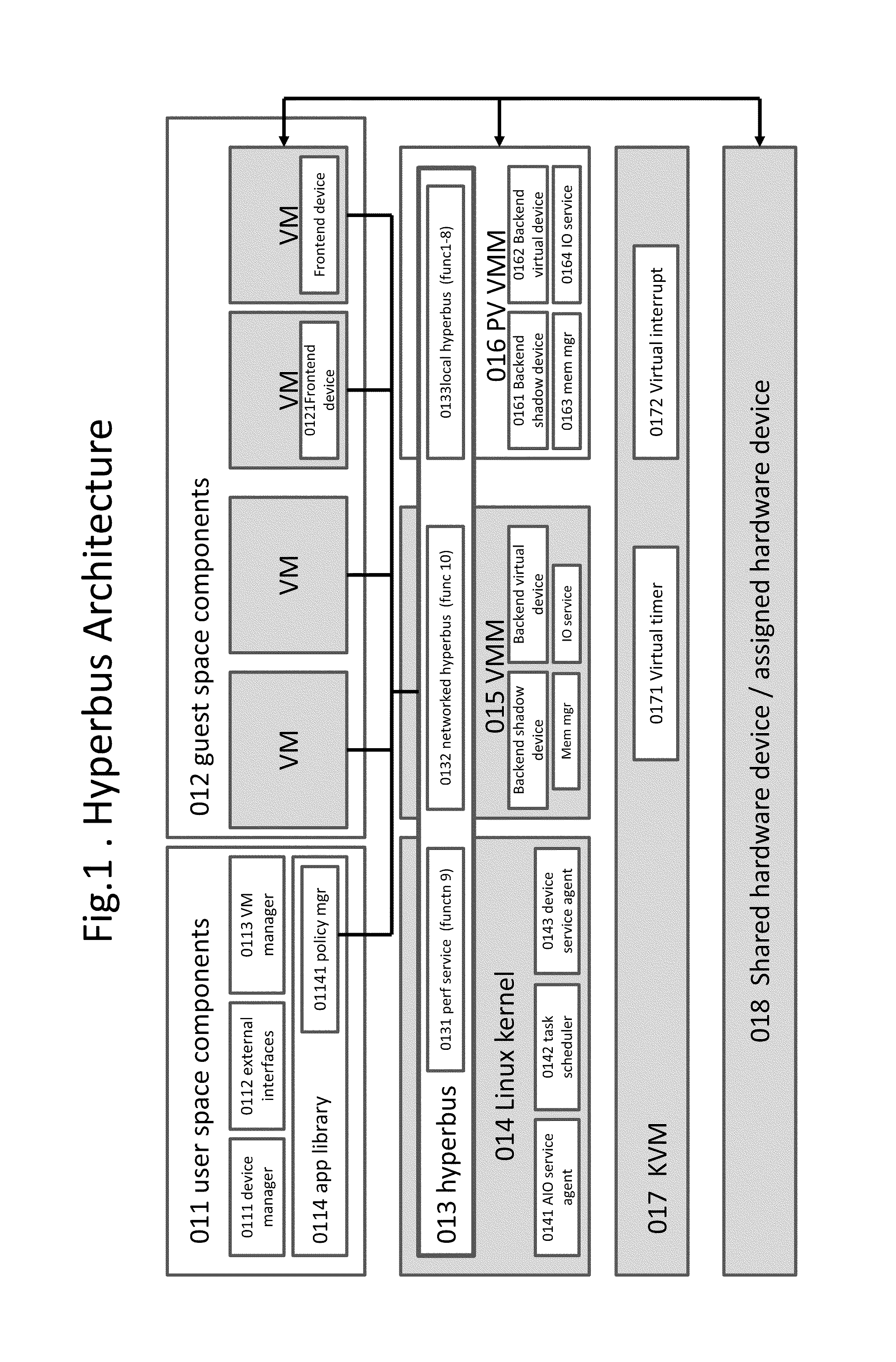

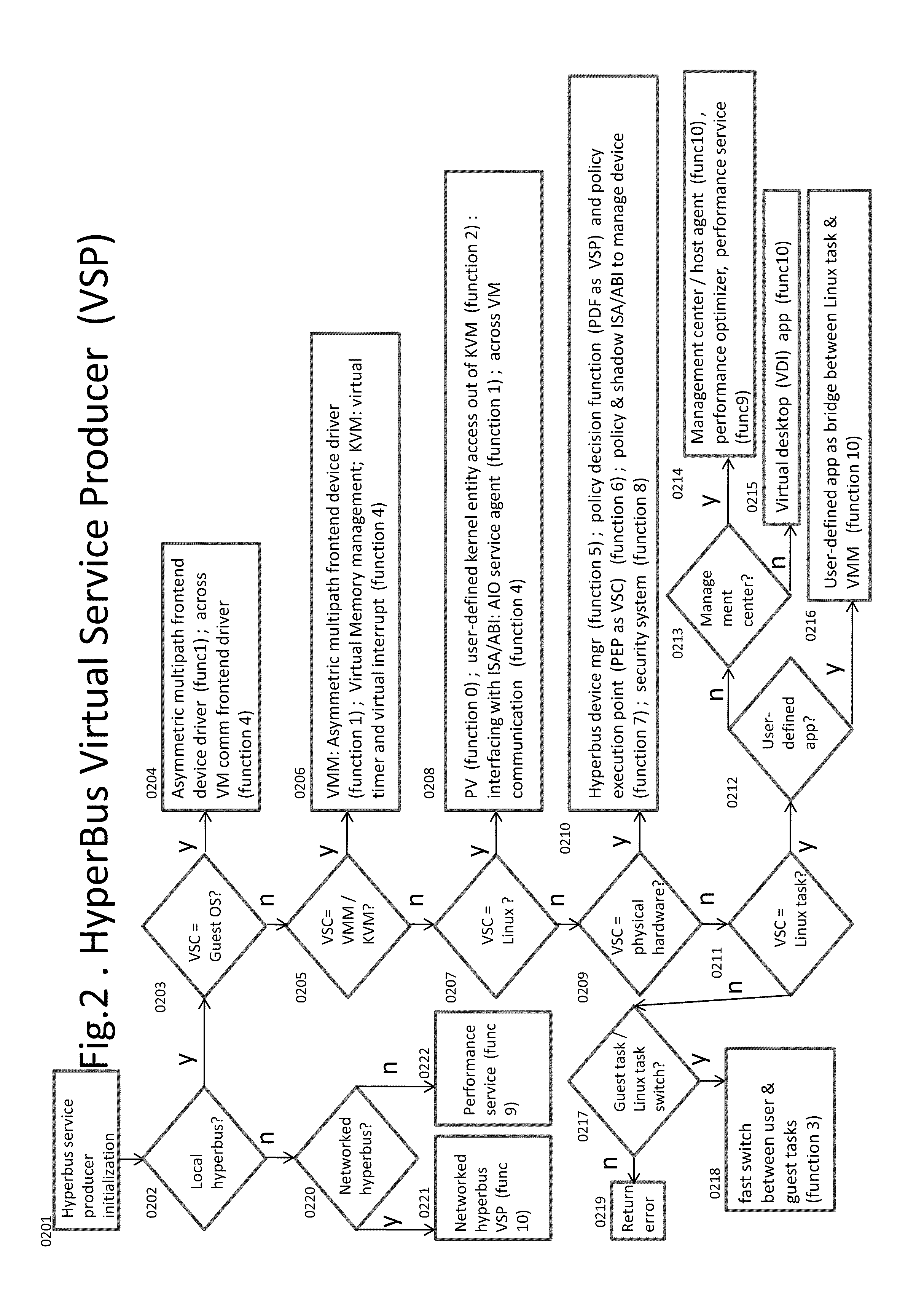

Kernel bus system with a hyberbus and method therefor

Inactive | US8832688B2 | Tags: Fast delivery; Improve performance; Multiprogramming arrangements; Software simulation/interpretation/emulation; Virtualization; Zero-copy

Some embodiments concern a kernel bus system for building at least one virtual machine monitor. The kernel bus system can include: (a) a hyperbus; (b) user space components; (c) guest space components configured to interact with the user space components via the hyperbus; (d) VMM components having frontend devices configured to perform I/O operations with the hardware devices of the host computer using a zero-copy method or non-pass-thru method; (e) para-virtualization components having (1) a virtual interrupt module configured to use processor instructions to swap the processors of the host computer between a kernel space and a guest space, and (2) a virtual I/O driver configured to enable synchronous I/O signaling, asynchronous I/O signaling and payload delivery, and pass-through delivery independent of QEMU emulation; and (f) KVM components. Other embodiments are disclosed.

Owner:TRANSOFT

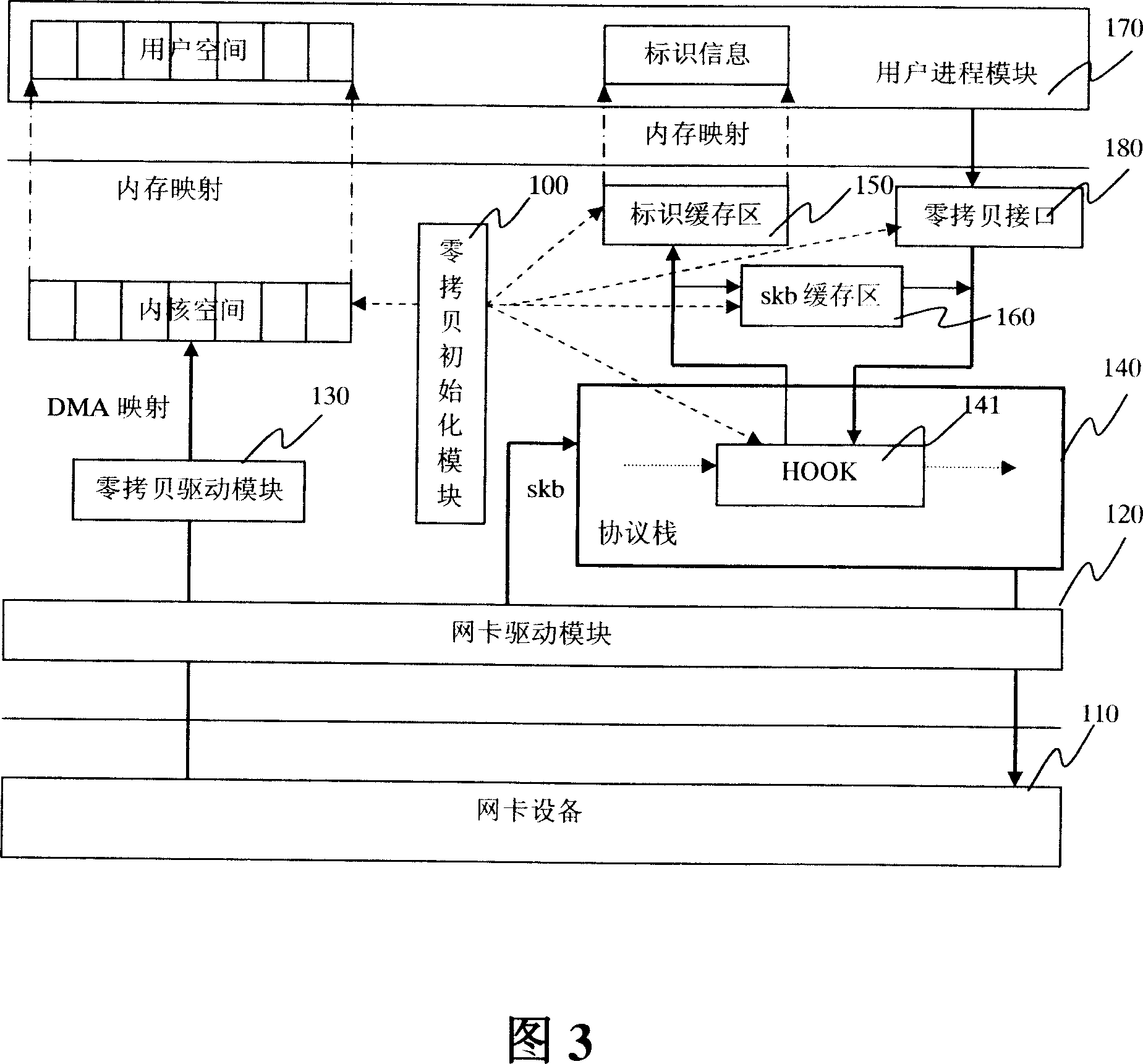

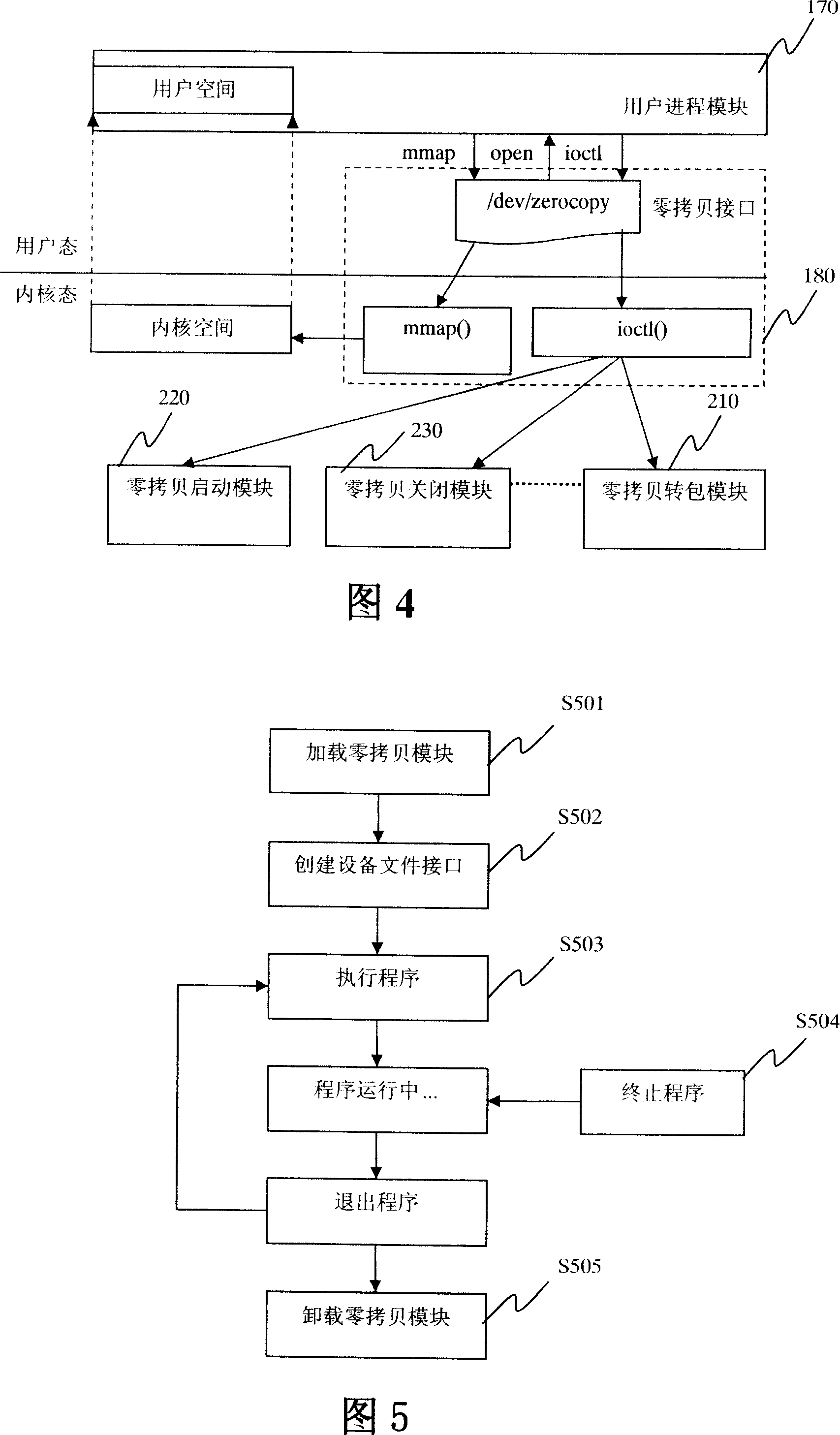

Apparatus and method for realizing zero copy based on Linux operating system

Active | CN101135980A | Tags: Realize zero copy of data; Registration is flexible; Multiprogramming arrangements; Transmission; Analysis data; Zero-copy

The apparatus comprises: a zero-copy initialization module, which allocates a segment of kernel space, divides it into multiple data blocks, and assigns each block an identifier; a network card driver module, which stores each received data packet in a data block in kernel space, records the block's identifier in the packet's skb, and passes the skb to the protocol stack; a protocol stack, which receives and parses the packet's skb and obtains the data block identifier; an identifier buffer, connected to the protocol stack and mapped directly into the user process module, which stores the data block identifiers obtained by the protocol stack; and a user process module, which reads identifiers from the identifier buffer and retrieves the data packets from the corresponding data blocks in kernel space.
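
The flow above (numbered blocks, only the identifier passed upward, the user process reading the block in place) can be sketched in Python with a `bytearray` standing in for the kernel region and `memoryview` slices standing in for the user mapping. All names are hypothetical; the real design uses a kernel allocation, an skb field and a shared identifier buffer rather than Python objects.

```python
from collections import deque
from typing import Deque, Dict, Tuple


class BlockPool:
    """Hypothetical model of the preallocated region split into numbered blocks."""

    def __init__(self, n_blocks: int, block_size: int) -> None:
        self.block_size = block_size
        self.region = bytearray(n_blocks * block_size)  # stands in for kernel memory
        self.free: Deque[int] = deque(range(n_blocks))

    def block(self, block_id: int) -> memoryview:
        start = block_id * self.block_size
        return memoryview(self.region)[start:start + self.block_size]


def driver_receive(pool: BlockPool, frame: bytes) -> Dict[str, int]:
    """Driver: place the frame in a free block; record the block id in an skb-like dict."""
    if len(frame) > pool.block_size:
        raise ValueError("frame larger than a block")
    block_id = pool.free.popleft()  # IndexError if the pool is exhausted
    pool.block(block_id)[:len(frame)] = frame  # models the DMA write
    return {"block_id": block_id, "len": len(frame)}


def stack_deliver(skb: Dict[str, int], id_buffer: Deque[Tuple[int, int]]) -> None:
    """Protocol stack: publish only the block id and length, never the bytes."""
    id_buffer.append((skb["block_id"], skb["len"]))


def user_receive(pool: BlockPool,
                 id_buffer: Deque[Tuple[int, int]]) -> Tuple[int, memoryview]:
    """User process: take an id and view the packet where it already lies."""
    block_id, length = id_buffer.popleft()
    return block_id, pool.block(block_id)[:length]
```

When the user process is done with a packet it releases the view and returns the block id to `pool.free`. The packet bytes are written once, into the block, and every later stage passes only identifiers or views.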

Owner:FORTINET

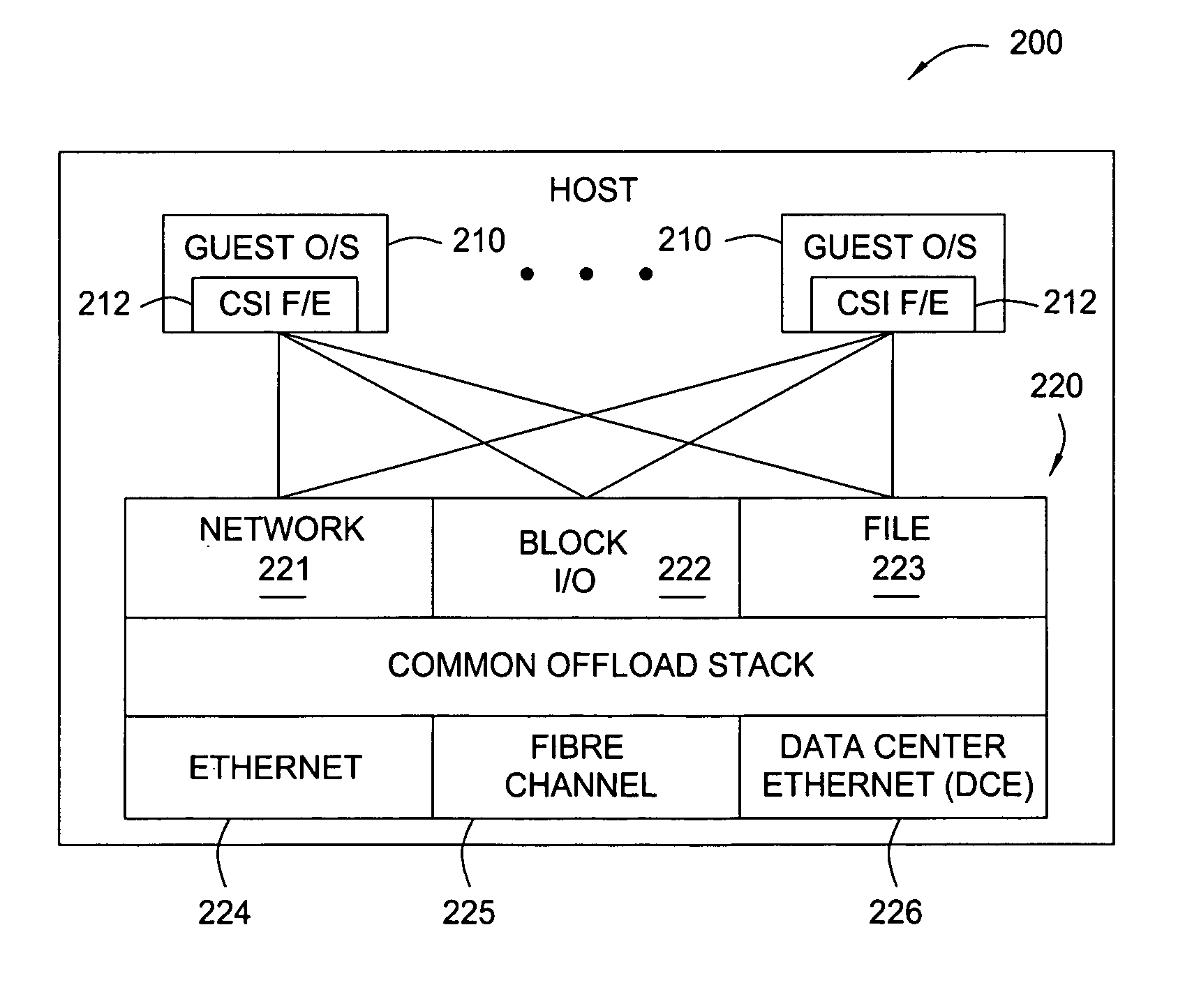

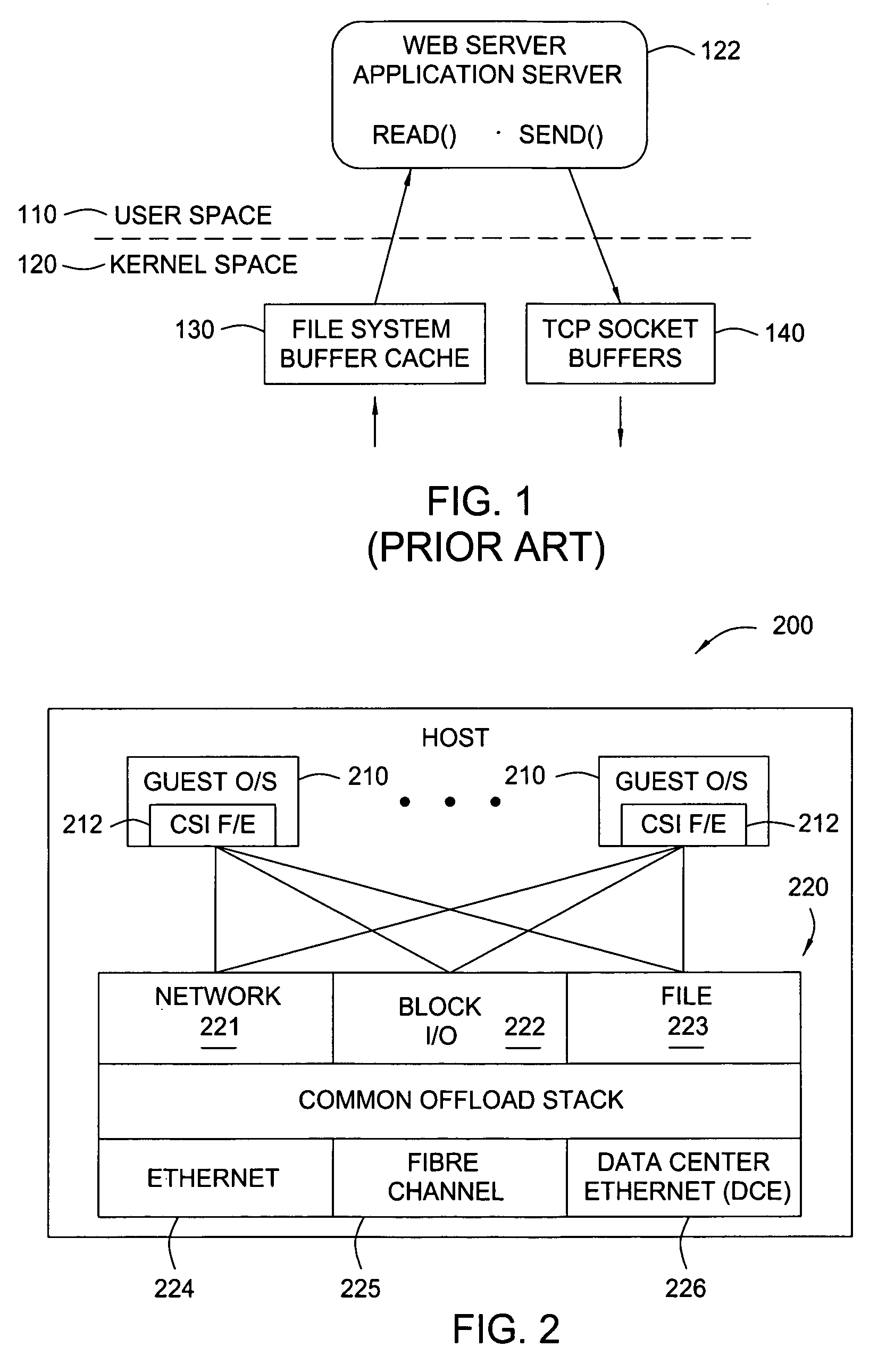

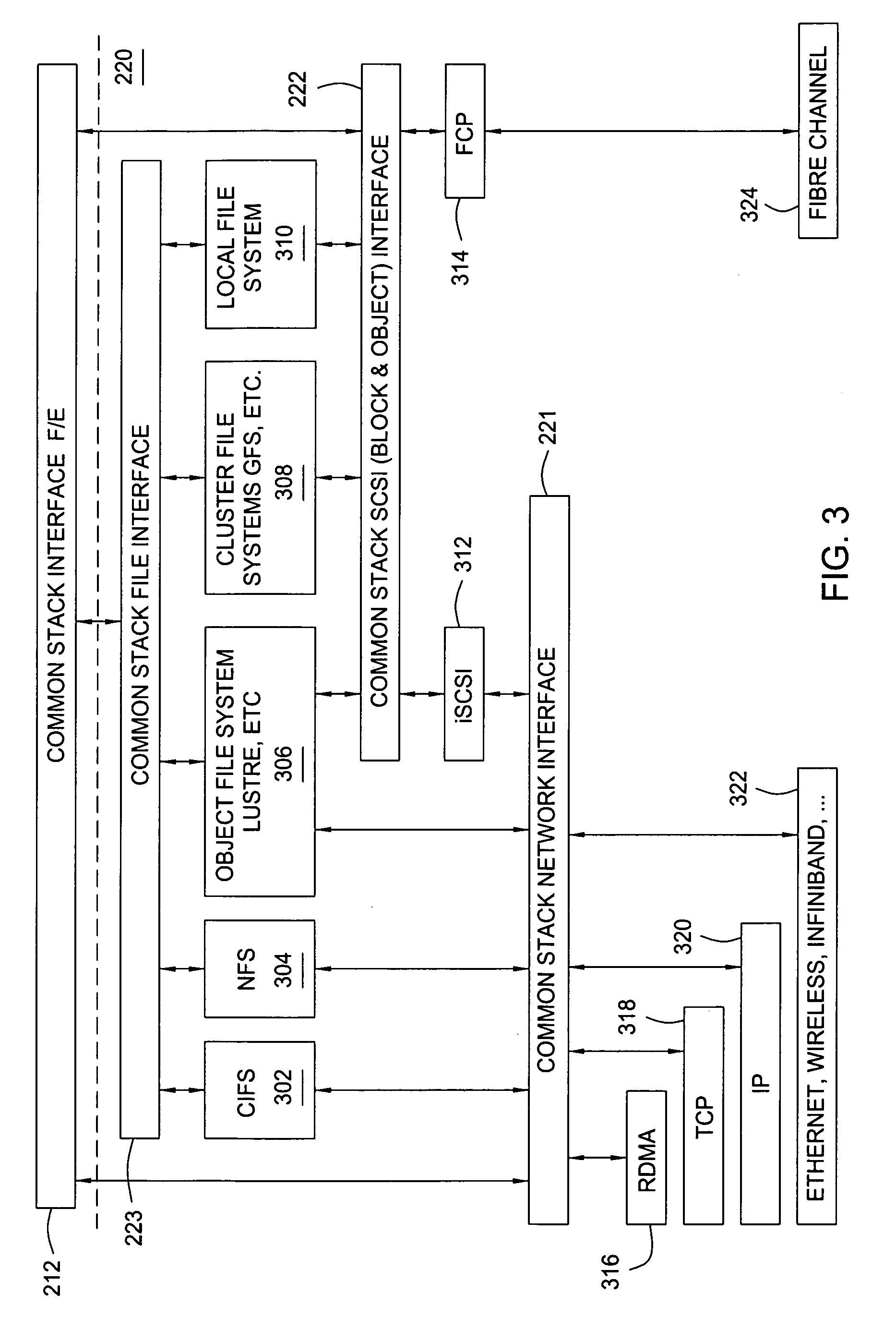

Zero-copy network and file offload for web and application servers

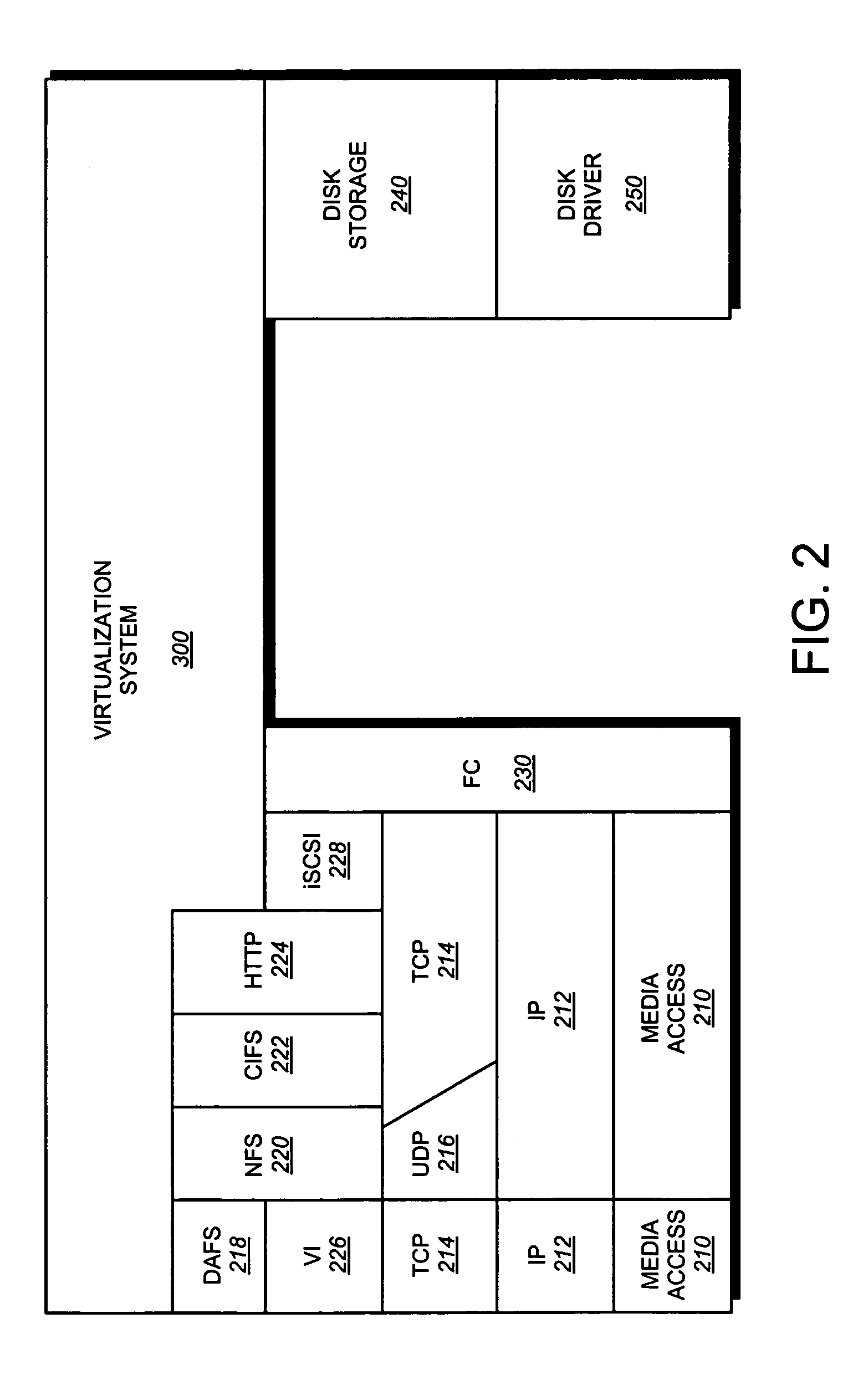

Active | US20060294234A1 | Tags: Provide support; Digital computer details; Transmission; Application server; Operational system

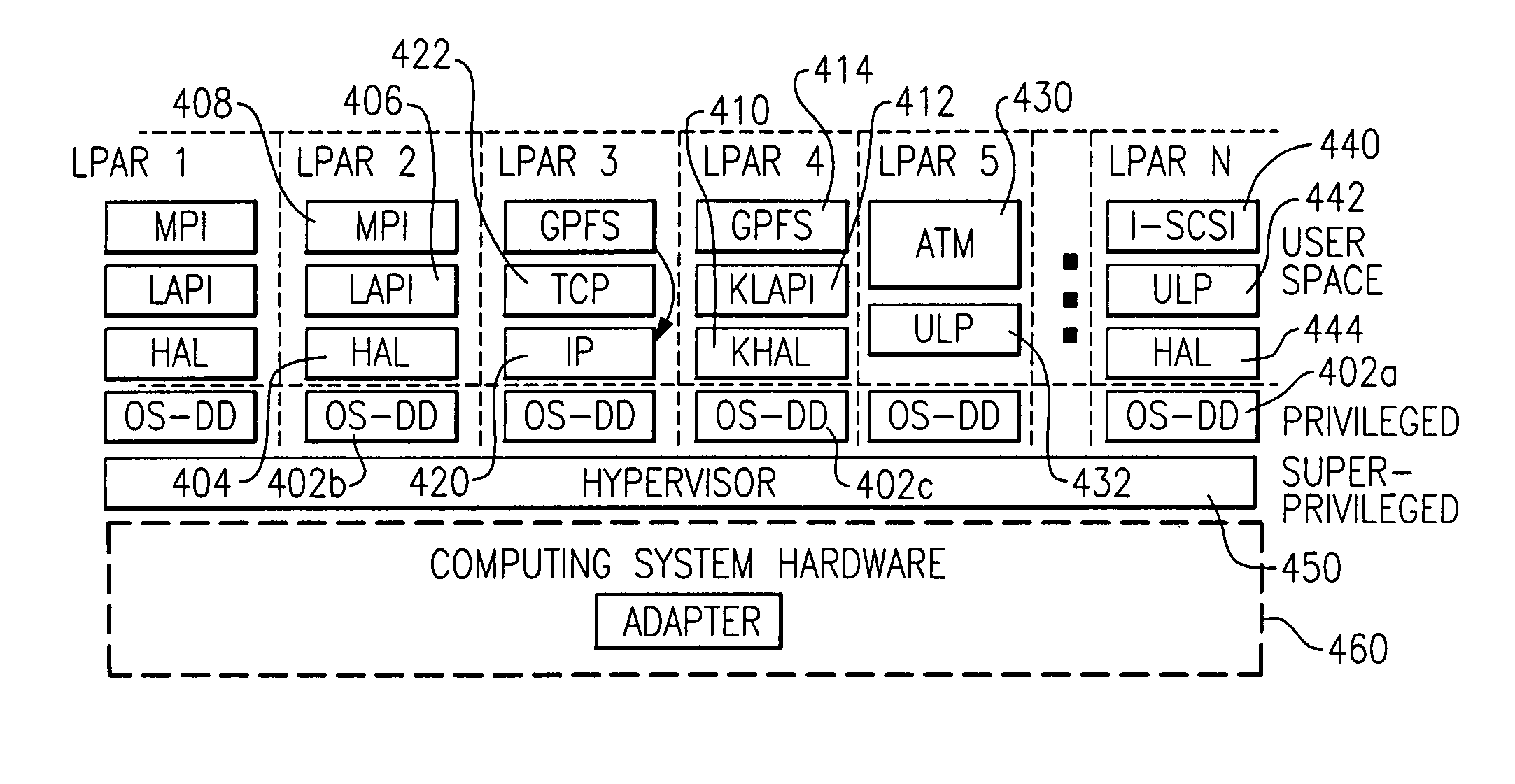

Methods and apparatus for transferring data from an application server are provided. By offloading network and file system stacks to a common stack accessible by multiple operating systems in a virtual computing system, embodiments of the present invention may achieve data transfer support for web and application servers without the data needing to be copied to or reside in the address space of the server operating systems.

Owner:CISCO TECH INC

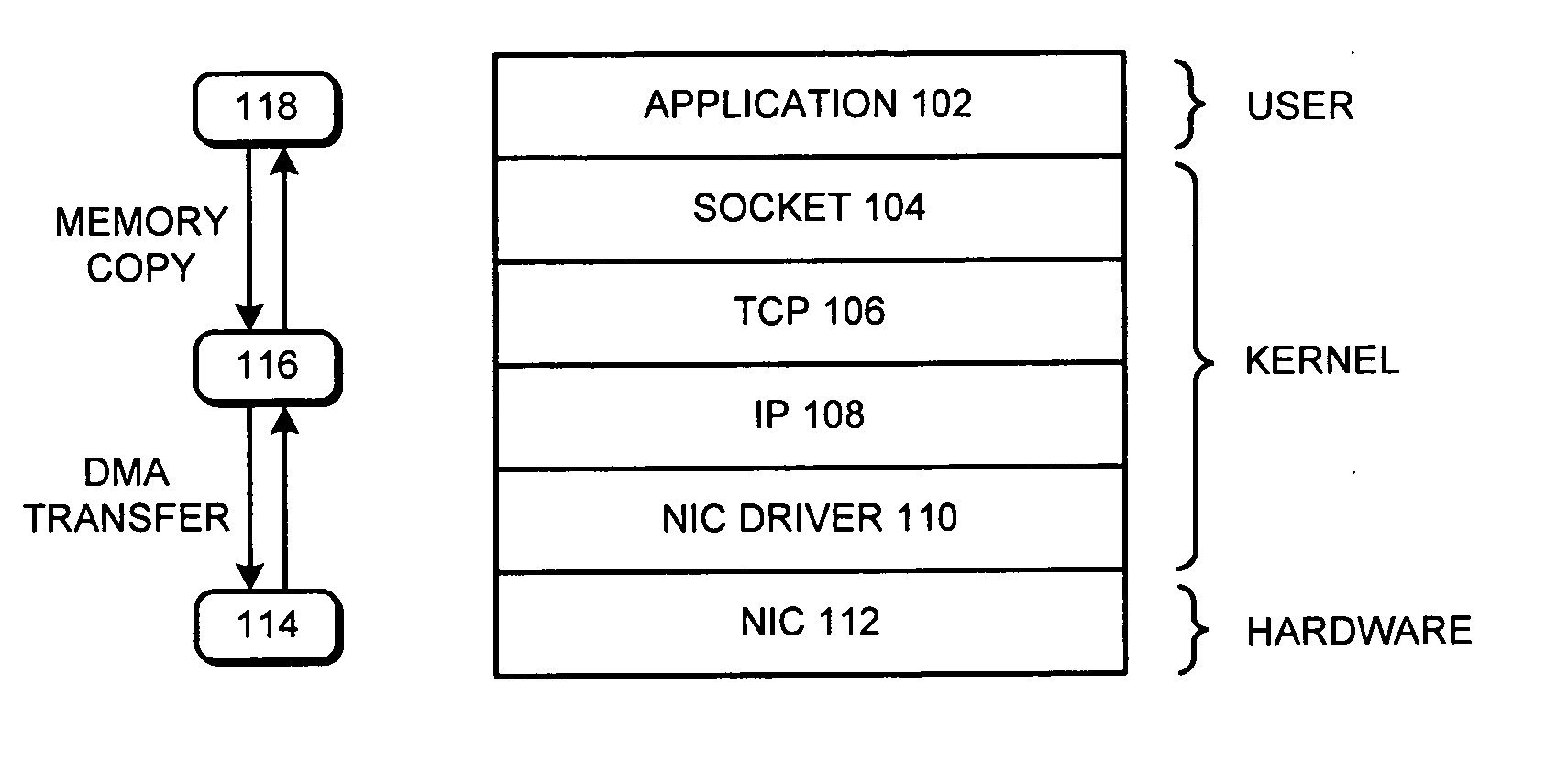

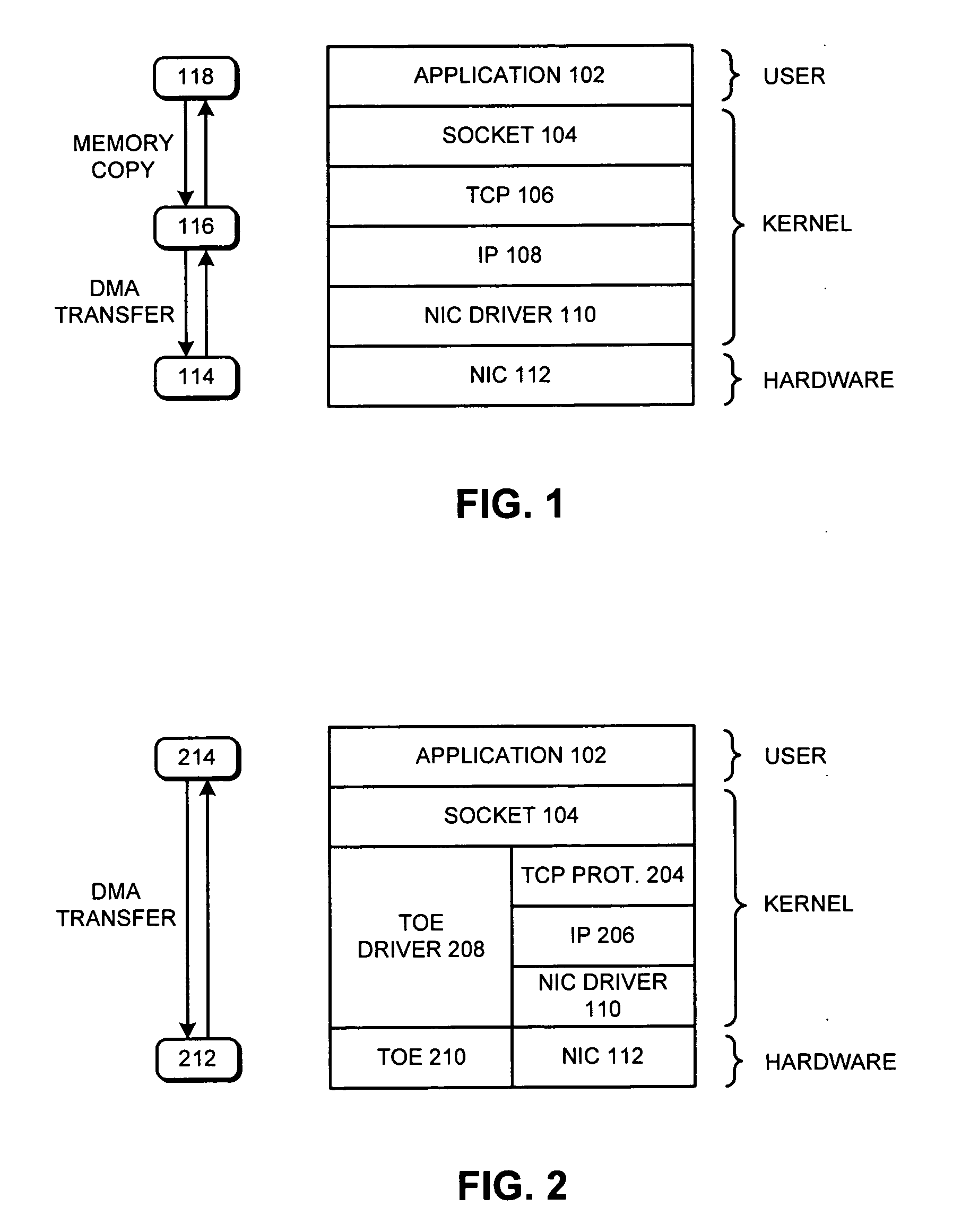

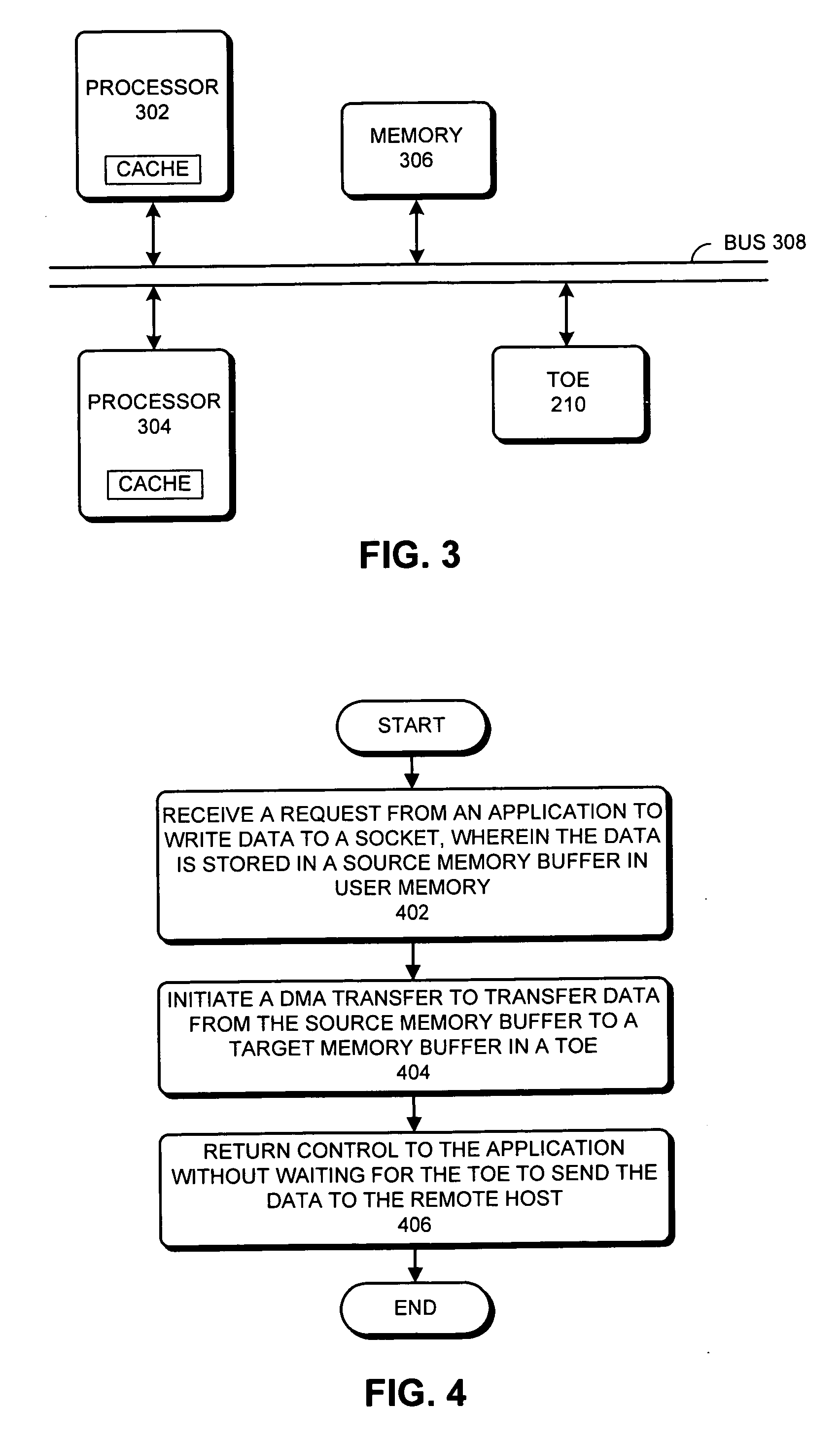

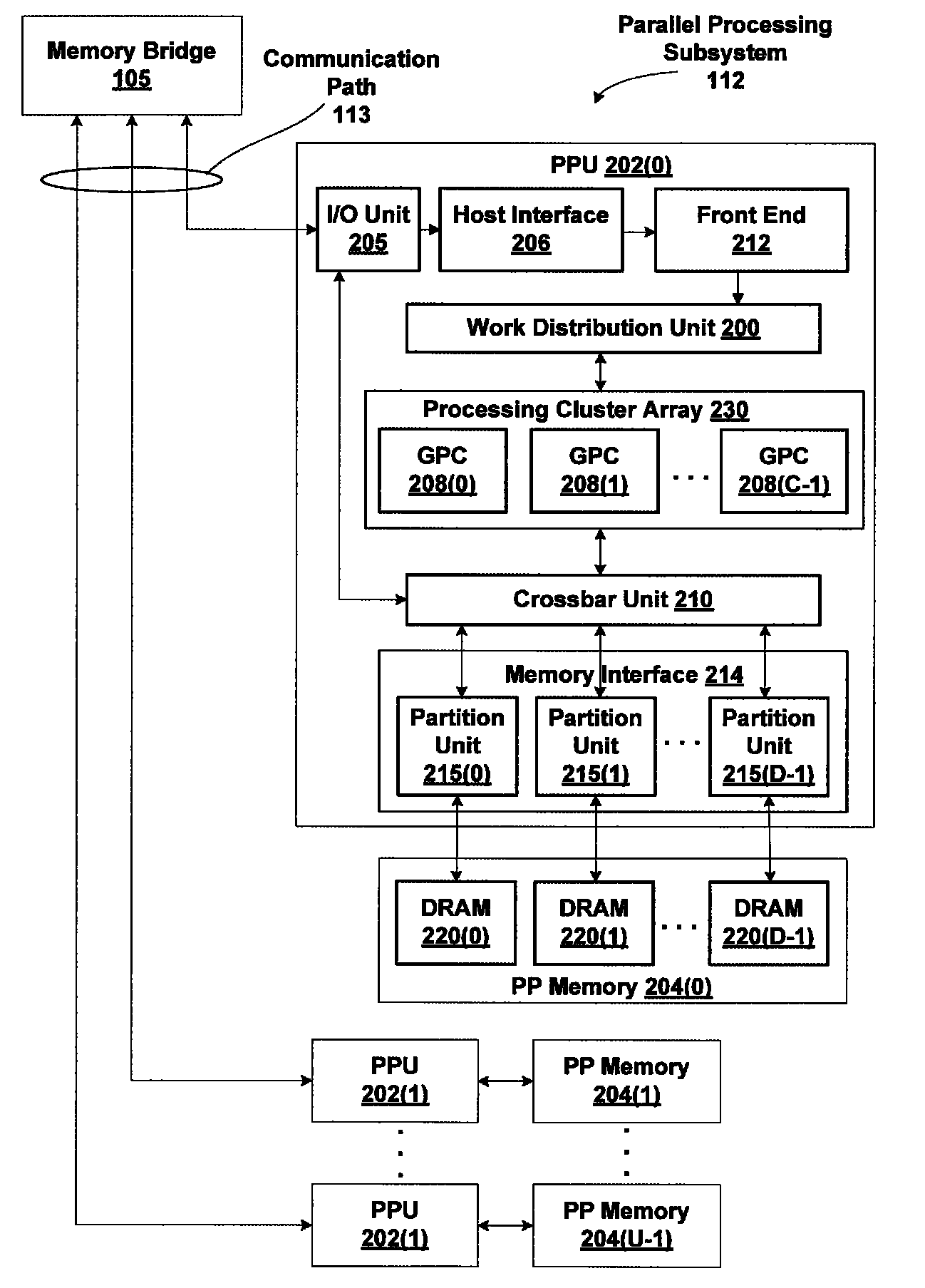

TCP-offload-engine based zero-copy sockets

One embodiment of the present invention provides a system for sending data to a remote host using a socket. During operation the system receives a request from an application to write data to the socket, wherein the data is stored in a source memory buffer in user memory. Next, the system initiates a DMA (Direct Memory Access) transfer to transfer the data from the source memory buffer to a target memory buffer in a TCP (Transmission Control Protocol) Offload Engine. The system then returns control to the application without waiting for the TCP Offload Engine to send the data to the remote host.

Owner:SUN MICROSYSTEMS INC

Zero-copy data sharing by cooperating asymmetric coprocessors

Active | US8645634B1 | Tags: Reduce data duplication; General purpose stored program computer; Memory systems; Coprocessor; Zero-copy

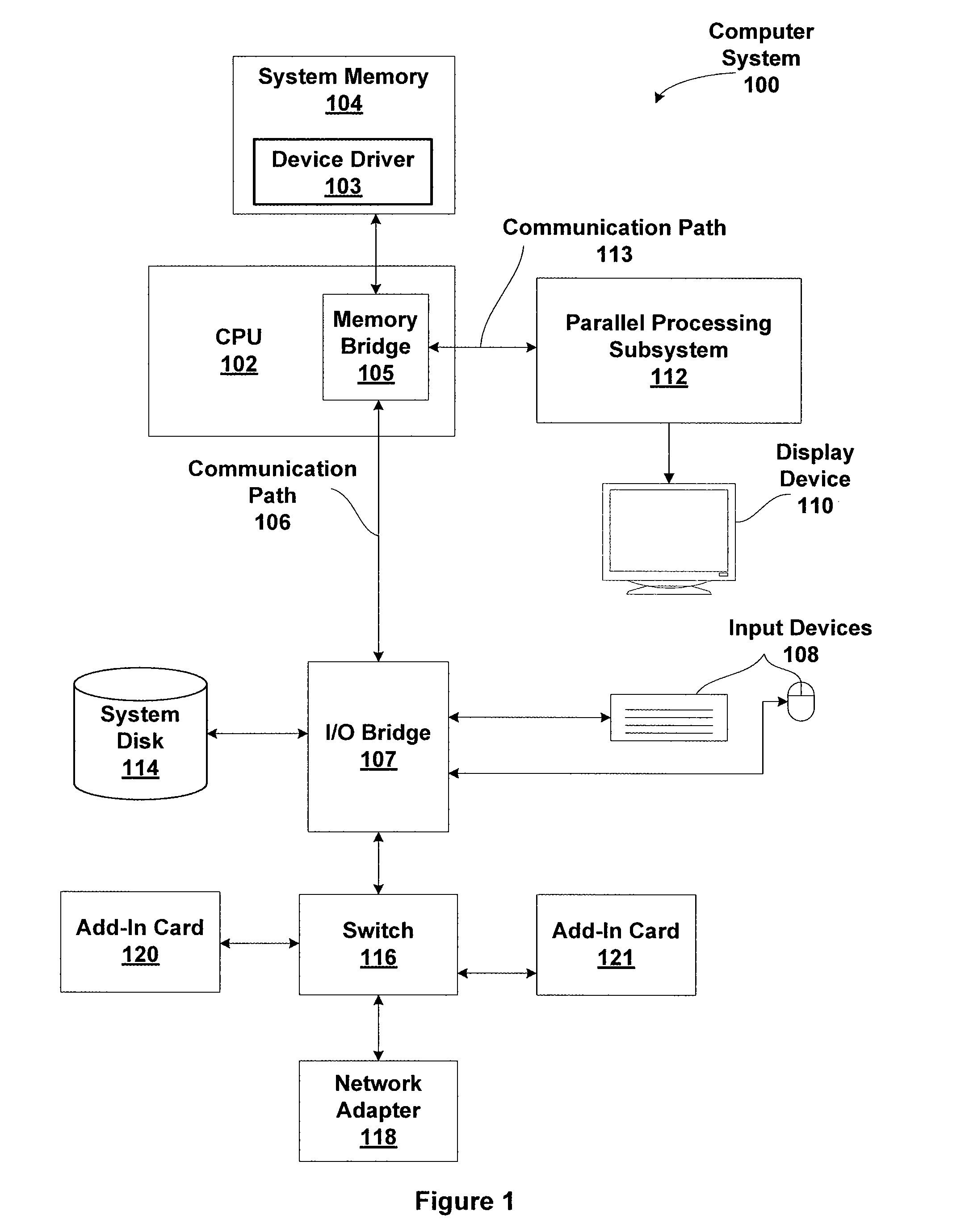

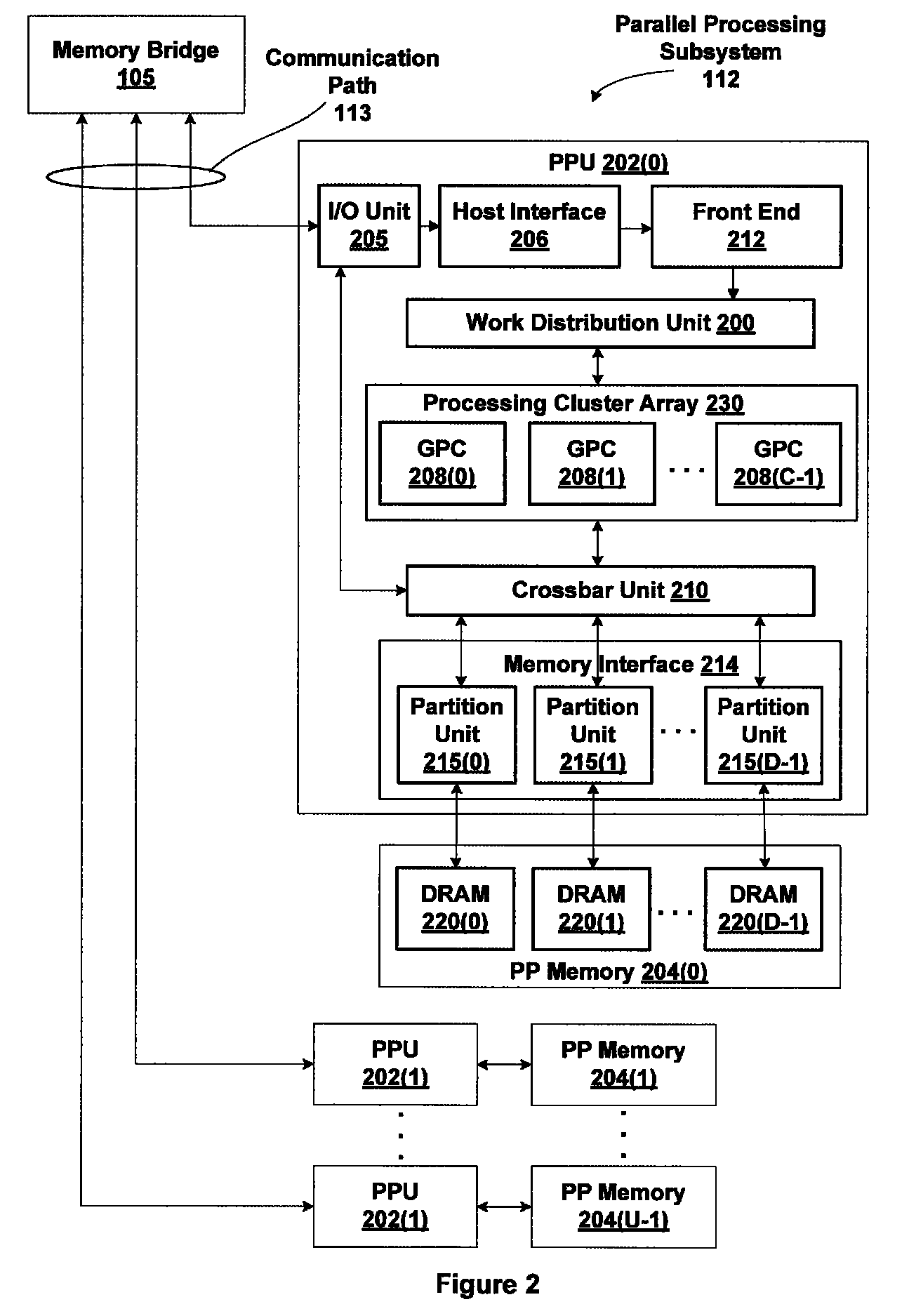

One embodiment of the present invention sets forth a technique for reducing the copying of data between memory allocated to a primary processor and memory allocated to a coprocessor. The system memory is aliased as device memory to allow the coprocessor and the primary processor to share the same portion of memory. Either device may write and/or read the shared portion of memory to transfer data between the devices, rather than copying data from a portion of memory that is only accessible by one device to a different portion of memory that is only accessible by the other device. Removing the need for explicit copies from primary-processor memory to coprocessor memory, and from coprocessor memory to primary-processor memory, improves the performance of the application and reduces its physical memory requirements, since one portion of memory is shared rather than separate private portions being allocated.

Owner:NVIDIA CORP

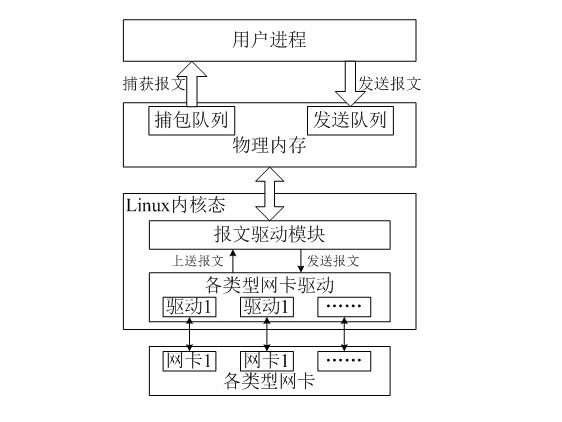

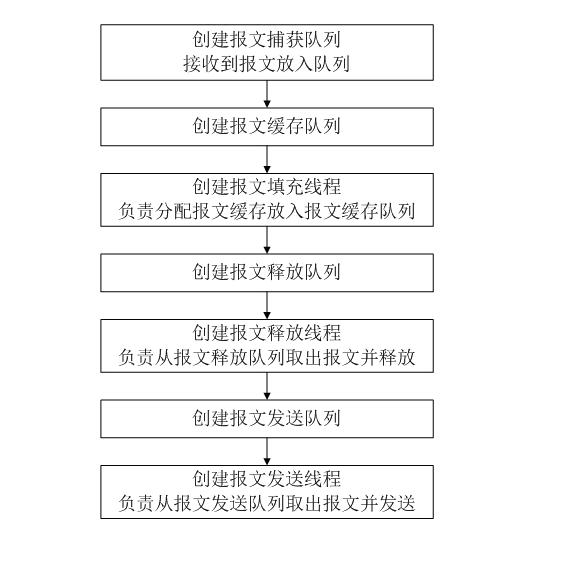

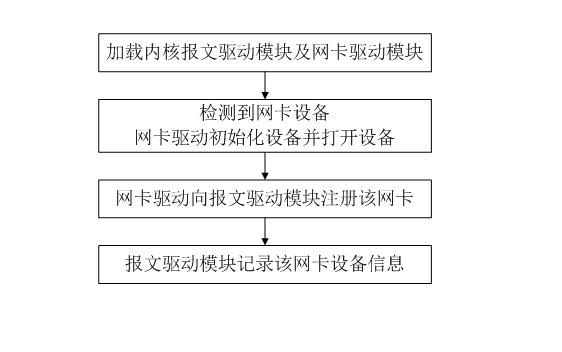

Zero-copy Ethernet packet capture and transmission method under Linux based on the network card driver

Active | CN101917350A | Tags: Easy to capture; Increase sending rate; Data switching networks; Traffic capacity; Zero-copy

The invention discloses a network-card-driver-based method for zero-copy capture and transmission of Ethernet packets under Linux. The method comprises the following steps: 1, a user process maps the whole of physical memory into its process space; 2, a packet driver module manages received packets and packets to be transmitted in queues; 3, the packet driver module isolates the user process from the differences between the various network card drivers; and 4, after each network card driver has loaded successfully, it registers its device and the related operations with the packet driver module. Because the method is layered, it can support multiple types of network cards simultaneously, and support for new network cards is easy to add. It achieves high-performance packet capture and transmission without a single copy along the capture and transmission path, and suits scenarios with demanding packet-transceiving requirements, such as network traffic monitoring and analysis.

Owner:南京中新赛克科技有限责任公司

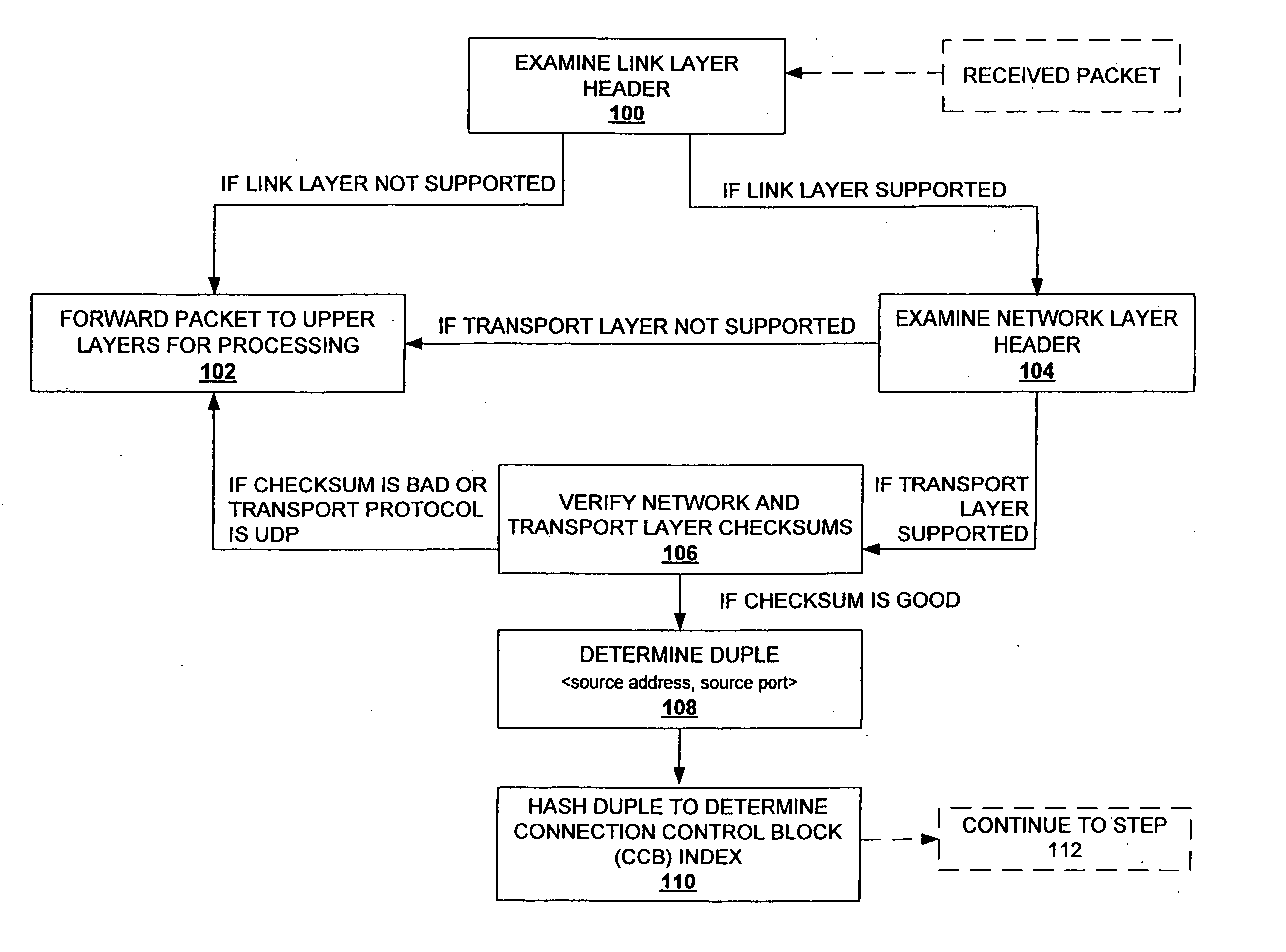

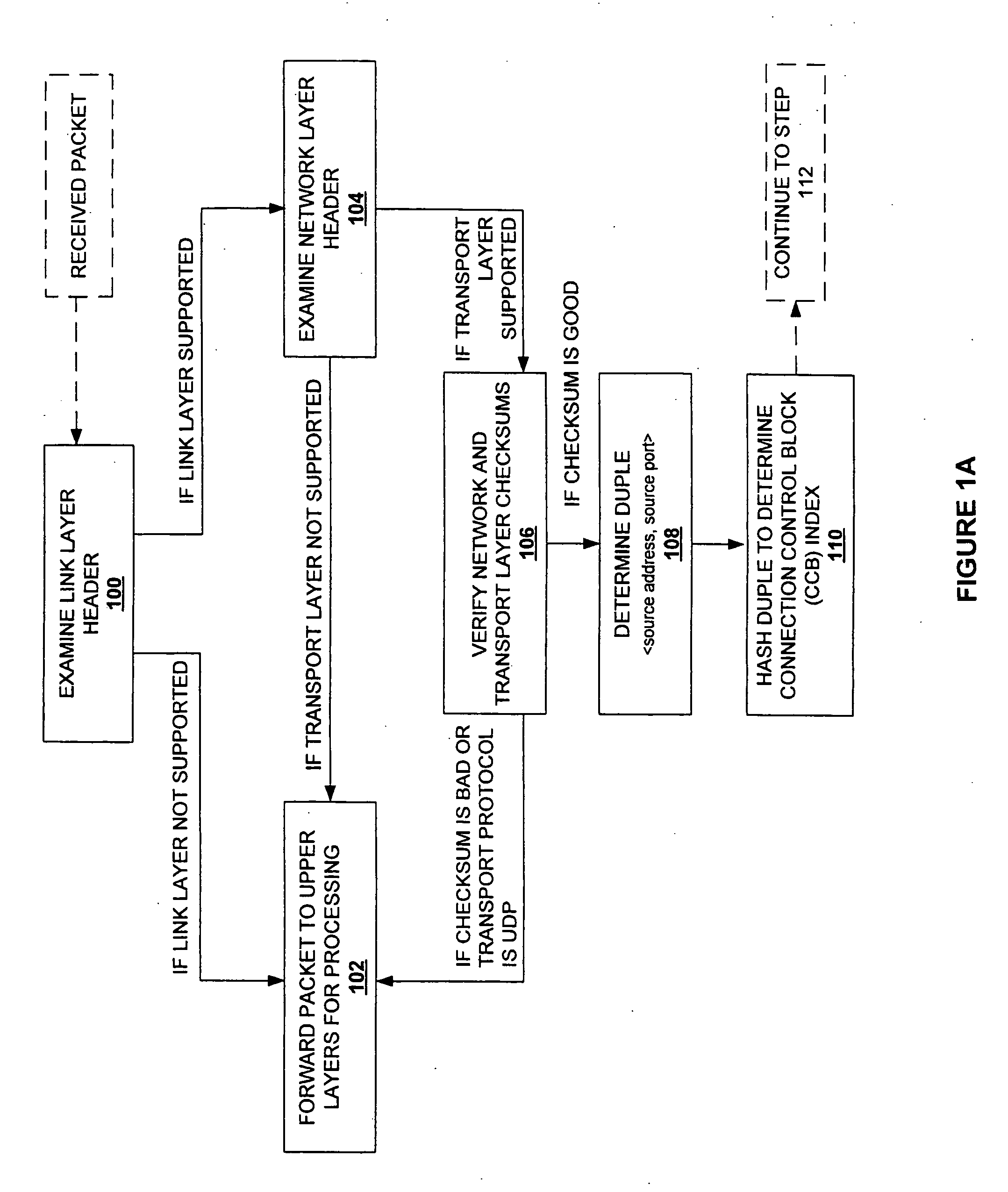

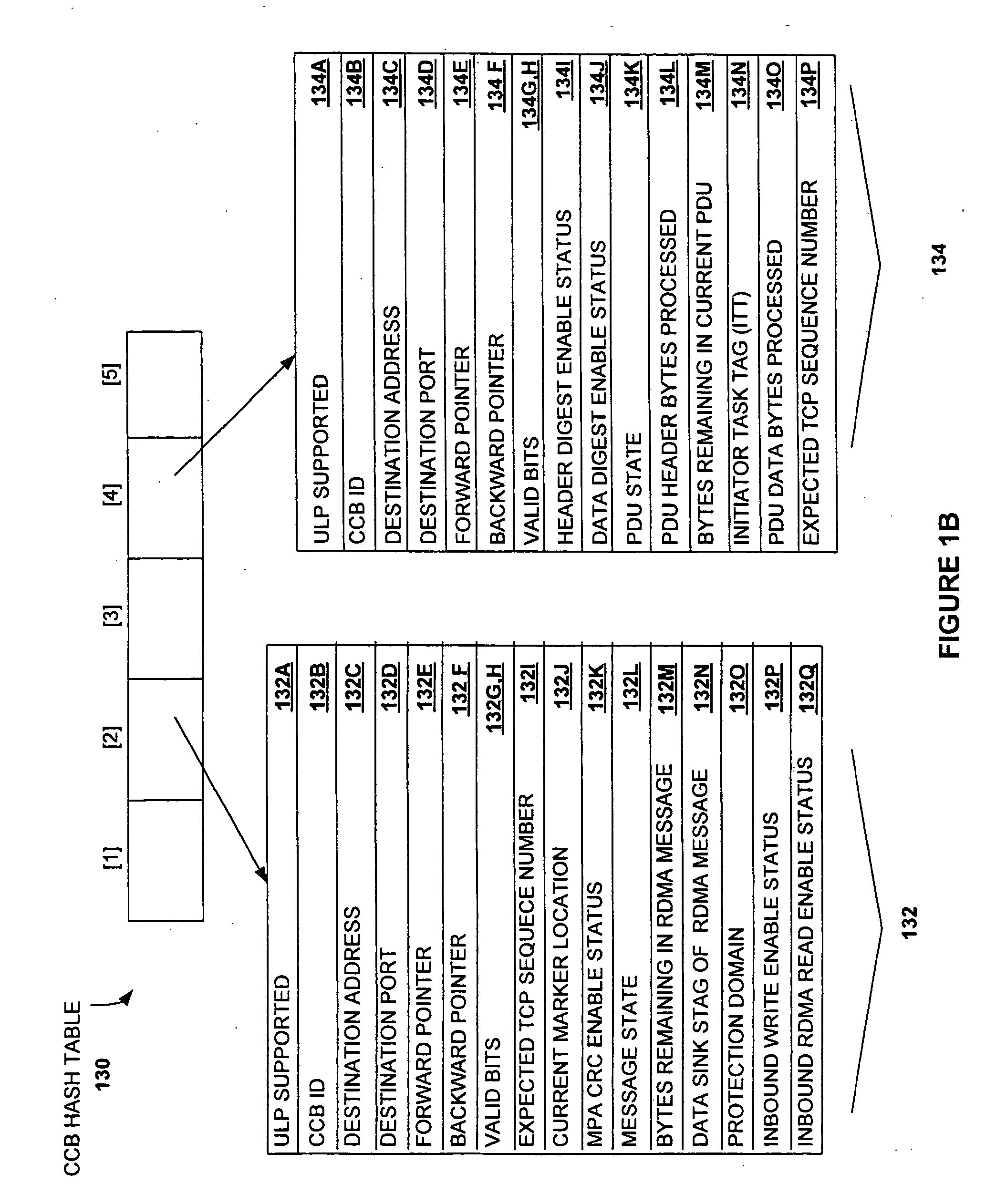

Method and system for providing direct data placement support

Inactive | US20060034283A1 | Tags: Reduction of CPU processing overhead; Reduced space requirements; Time-division multiplex; Data switching by path configuration; Wire speed; Zero-copy

A system and method for reducing the overhead associated with direct data placement is provided. Processing time overhead is reduced by implementing packet-processing logic in hardware. Storage space overhead is reduced by combining results of hardware-based packet-processing logic with ULP software support; parameters relevant to direct data placement are extracted during packet-processing and provided to a control structure instantiation. Subsequently, payload data received at a network adapter is directly placed in memory in accordance with parameters previously stored in a control structure. Additionally, packet-processing in hardware reduces interrupt overhead by issuing system interrupts in conjunction with packet boundaries. In this manner, wire-speed direct data placement is approached, zero copy is achieved, and per byte overhead is reduced with respect to the amount of data transferred over an individual network connection. Movement of ULP data between application-layer program memories is thereby accelerated without a fully offloaded TCP protocol stack implementation.
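
Direct data placement amounts to looking up a previously registered destination and writing each segment's payload straight to its final offset, so out-of-order segments need no reassembly buffer. A minimal sketch under stated assumptions: the integer tag, `PlacementTable` and the `(tag, offset)` addressing are hypothetical, and the `memoryview` slice assignment stands in for the adapter's DMA write.

```python
from typing import Dict


class PlacementTable:
    """Hypothetical control structure: tag -> pre-registered application buffer."""

    def __init__(self) -> None:
        self._buffers: Dict[int, bytearray] = {}

    def register(self, tag: int, buffer: bytearray) -> None:
        self._buffers[tag] = buffer

    def place(self, tag: int, offset: int, payload: bytes) -> None:
        """Write one segment's payload straight into its final position."""
        buffer = self._buffers[tag]
        end = offset + len(payload)
        if offset < 0 or end > len(buffer):
            raise ValueError("segment falls outside the registered buffer")
        memoryview(buffer)[offset:end] = payload  # models the adapter's DMA write
```

Because every segment carries enough information to find its destination, the second half of a message can arrive first and still land in the right place.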

Owner:IBM CORP

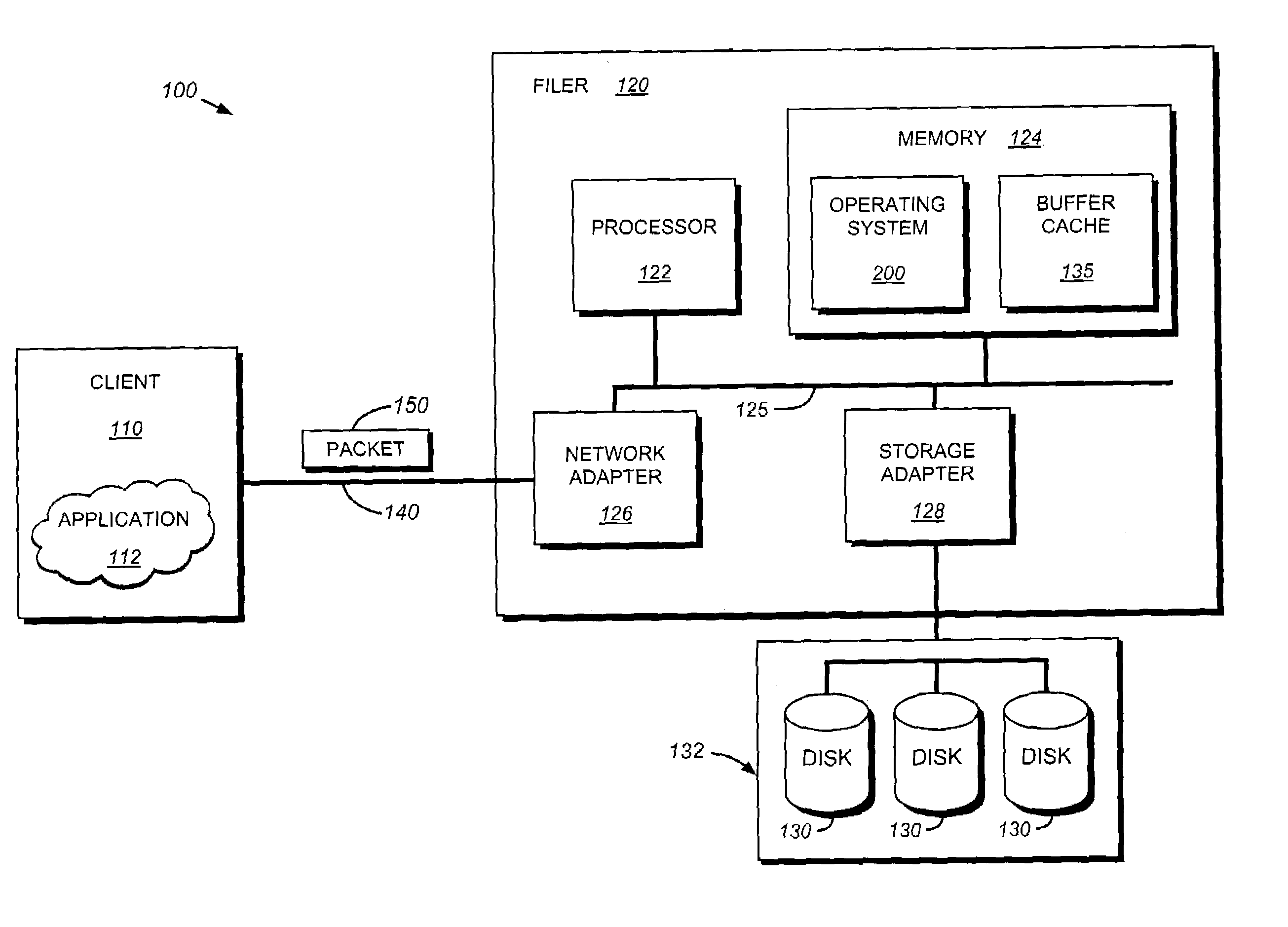

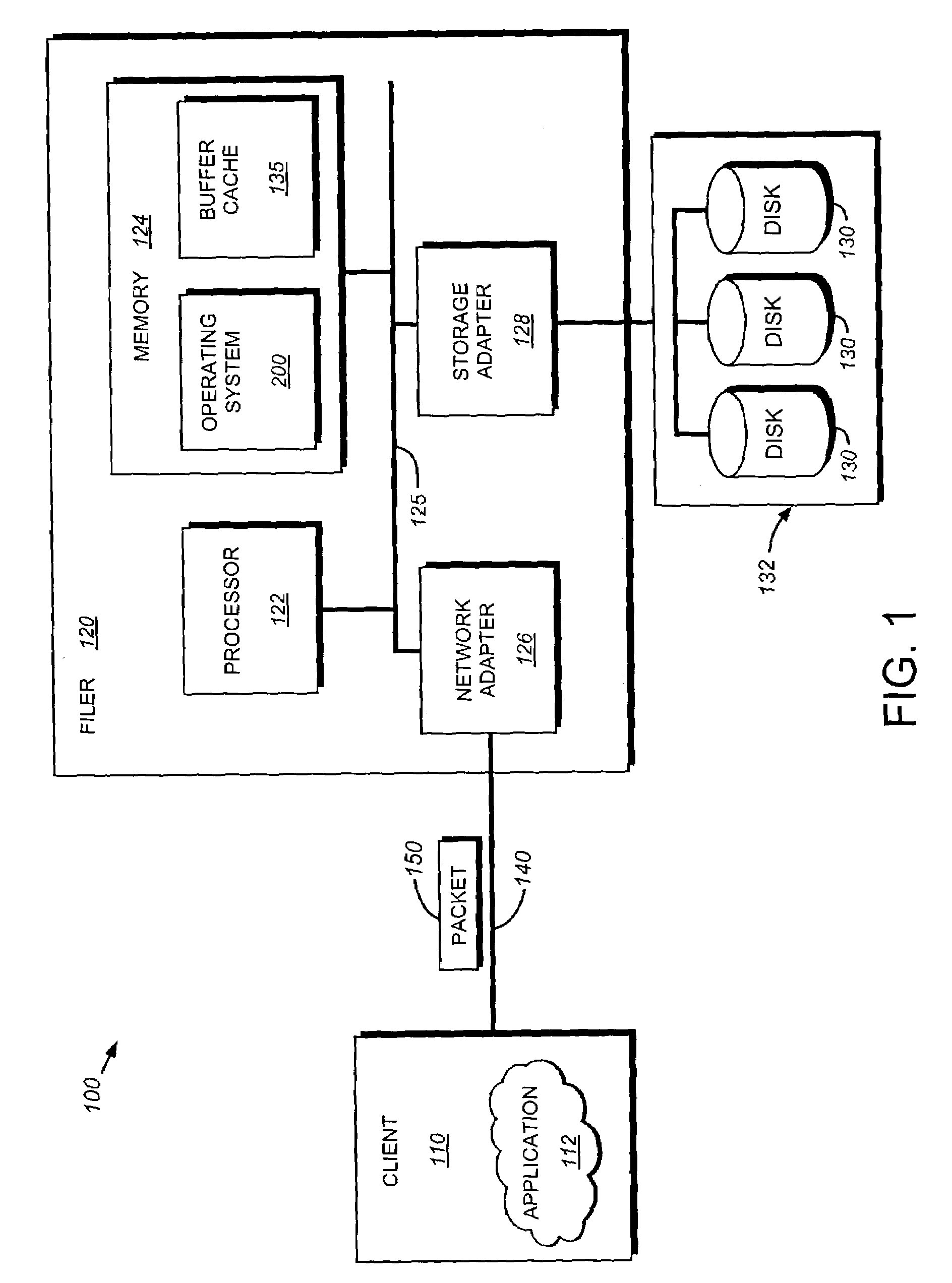

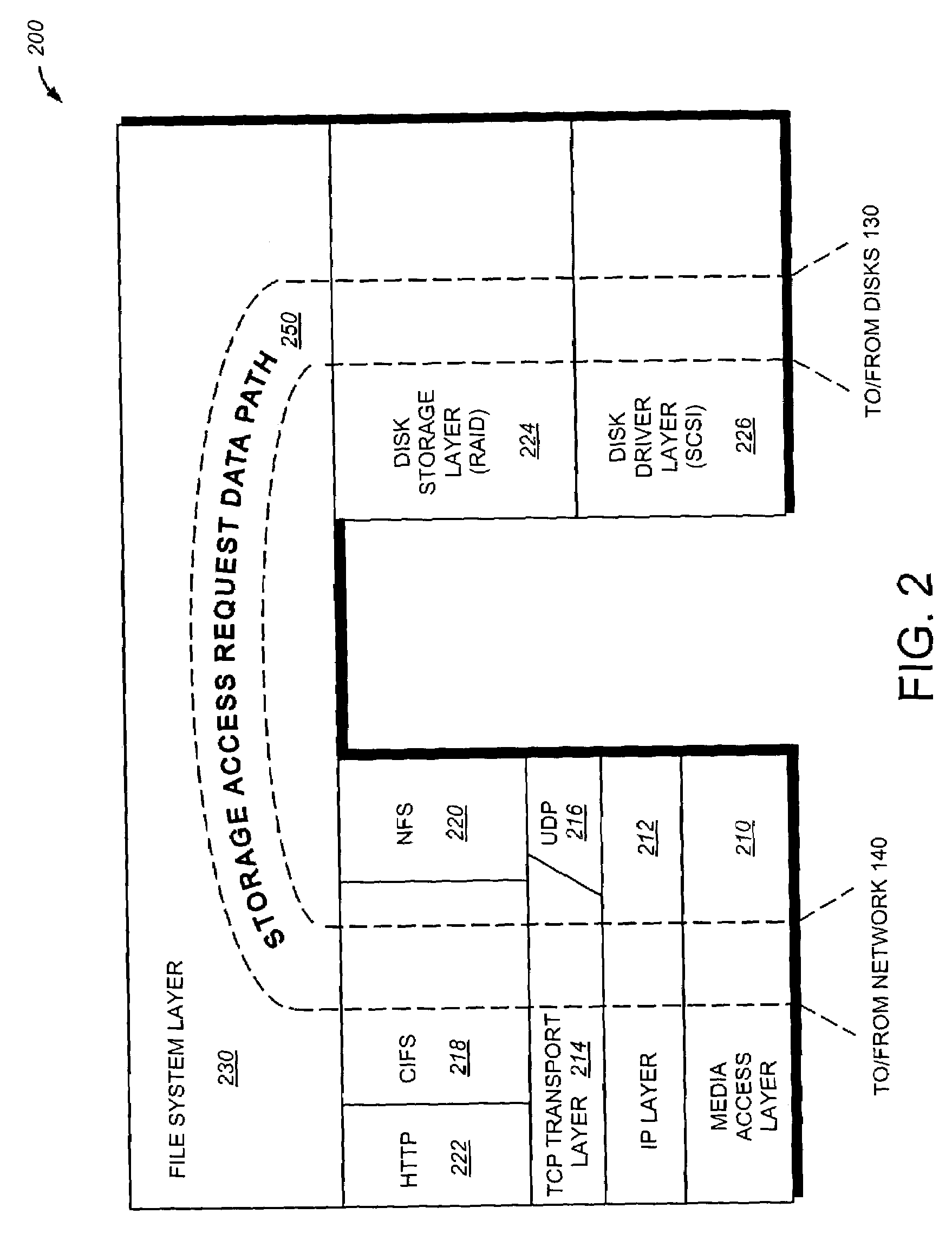

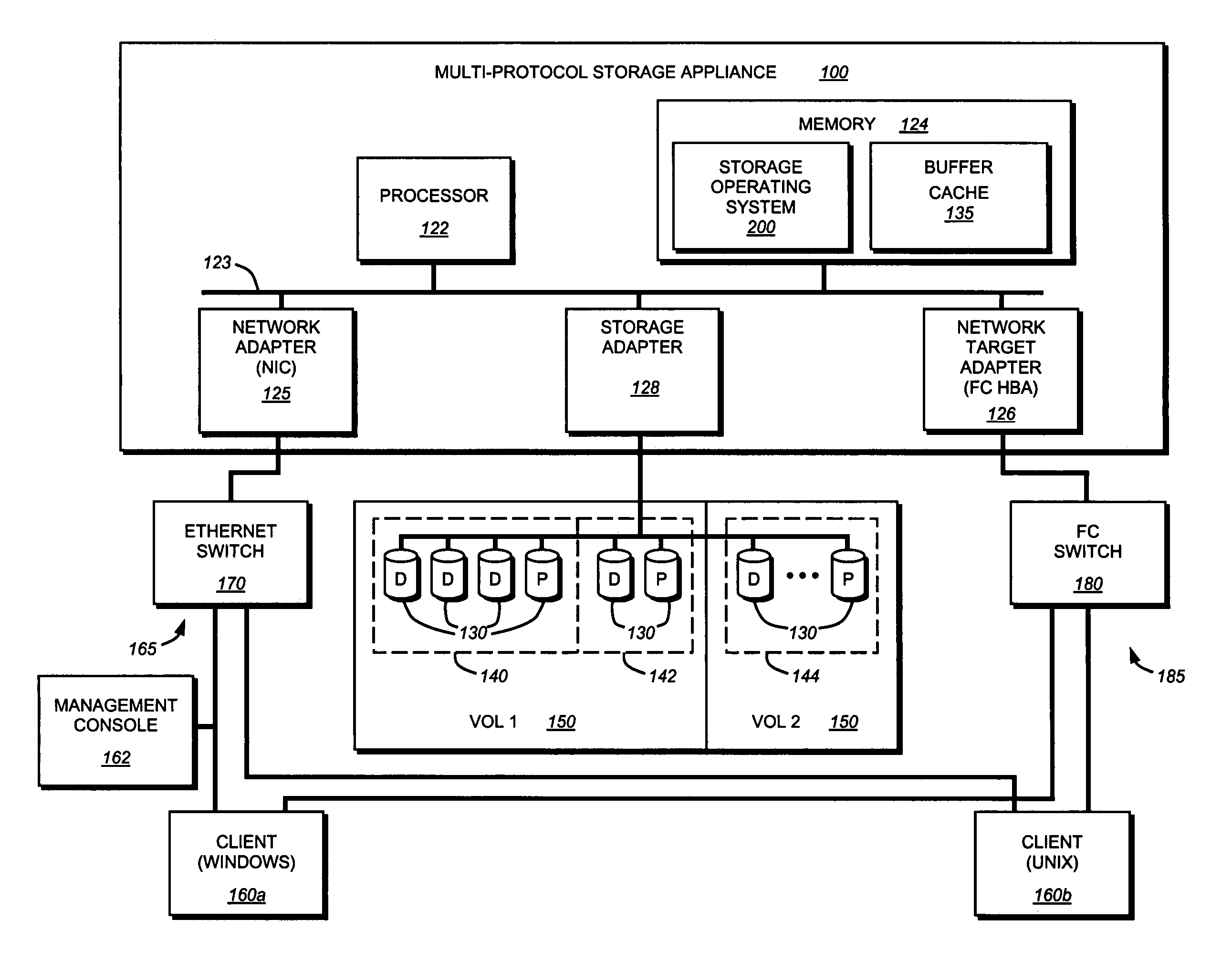

Zero copy writes through use of mbufs

Active | US7152069B1 | Tags: Minimize system memory usage; Consume resource; Special data processing applications; File systems; Operational system; Zero-copy

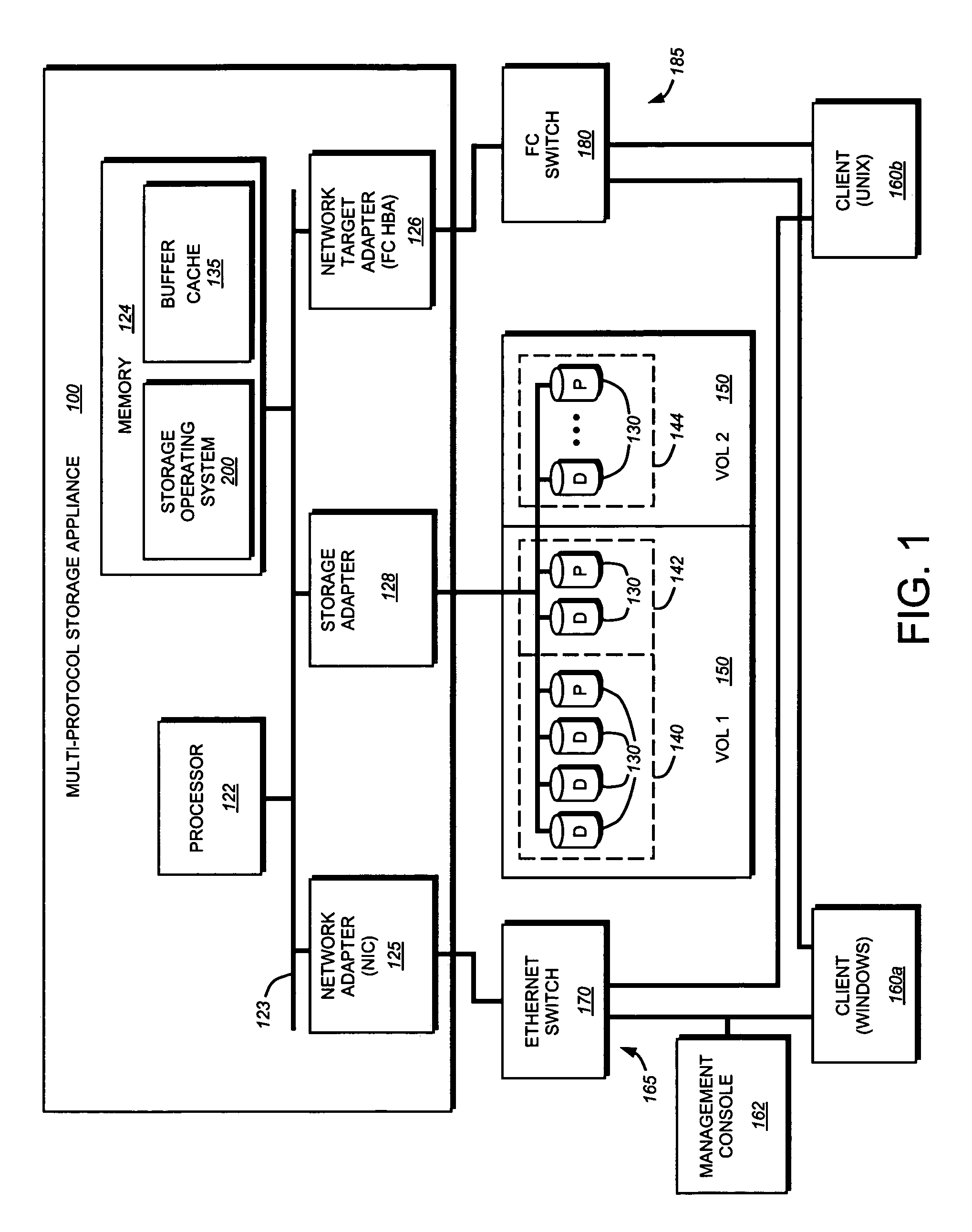

A system and method enable a storage operating system to partition data into fixed sized data blocks that can be written to disk without having to copy the contents of memory buffers (mbufs). The storage operating system receives data from a network and stores the data in chains of mbufs having various lengths. However, the operating system implements a file system that manipulates data in fixed sized data blocks. Therefore, a set of buffer pointers is generated by the file system to define a fixed sized block of data stored in the mbufs. The set of buffer pointers address various portions of data stored in one or more mbufs, and the union of the data portions form a single fixed sized data block. A buffer header stores the set of pointers associated with a given data block, and the buffer header is passed among different layers in the storage operating system. Thus, received data is partitioned into one or more fixed sized data blocks each defined by a set of buffer pointers stored in a corresponding buffer header. Because the buffer pointers address data directly in one or more mbufs, the file system does not need to copy data out of the mbufs when partitioning the data into fixed sized data blocks.
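
The key idea, describing a fixed-size block as a list of pointers into variable-length mbufs instead of copying it, maps naturally onto Python `memoryview` slices. A minimal sketch: `partition_mbufs` is a hypothetical name, each inner list plays the role of one buffer header's pointer set, and a final short block is returned for any remainder.

```python
from typing import List, Sequence


def partition_mbufs(mbufs: Sequence[bytes], block_size: int) -> List[List[memoryview]]:
    """Describe an mbuf chain as fixed-size blocks without copying any data.

    Each block is a list of memoryview slices (the "buffer pointers") into the
    original mbufs; a final short block holds any remainder.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    blocks: List[List[memoryview]] = []
    current: List[memoryview] = []
    filled = 0
    for mbuf in mbufs:
        view = memoryview(mbuf)
        pos = 0
        while pos < len(view):
            # Take as much of this mbuf as still fits in the current block.
            take = min(block_size - filled, len(view) - pos)
            current.append(view[pos:pos + take])
            pos += take
            filled += take
            if filled == block_size:
                blocks.append(current)
                current, filled = [], 0
    if current:
        blocks.append(current)
    return blocks
```

A block that straddles mbufs simply has several slices. Only a consumer that needs contiguous bytes would join them, which is the one place a copy would occur.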

Owner:NETWORK APPLIANCE INC

Network device driver architecture

Inactive | US7451456B2 | Tags: Efficient and flexible access; Eliminate bottlenecks; Digital computer details; Multiprogramming arrangements; Zero-copy; Combined use

The invention proposes a network device driver architecture with functionality distributed between kernel space and user space. The overall network device driver comprises a kernel-space device driver (10) and user-space device driver functionality (20). The kernel-space device driver (10) is adapted for enabling access to the user-space device driver functionality (20) via a kernel-space-user-space interface (15). The user-space device driver functionality (20) is adapted for enabling direct access between user space and the NIC (30) via a user-space-NIC interface (25), and also adapted for interconnecting the kernel-space-user-space interface (15) and the user-space-NIC interface (25) to provide integrated kernel-space access and user-space access to the NIC (30). The user-space device driver functionality (20) provides direct, zero-copy user-space access to the NIC, whereas information to be transferred between kernel space and the NIC will be “tunneled” through user space by combined use of the kernel-space device driver (10), the user-space device driver functionality (20) and the two associated interfaces (15,25).

Owner:TELEFON AB LM ERICSSON (PUBL)

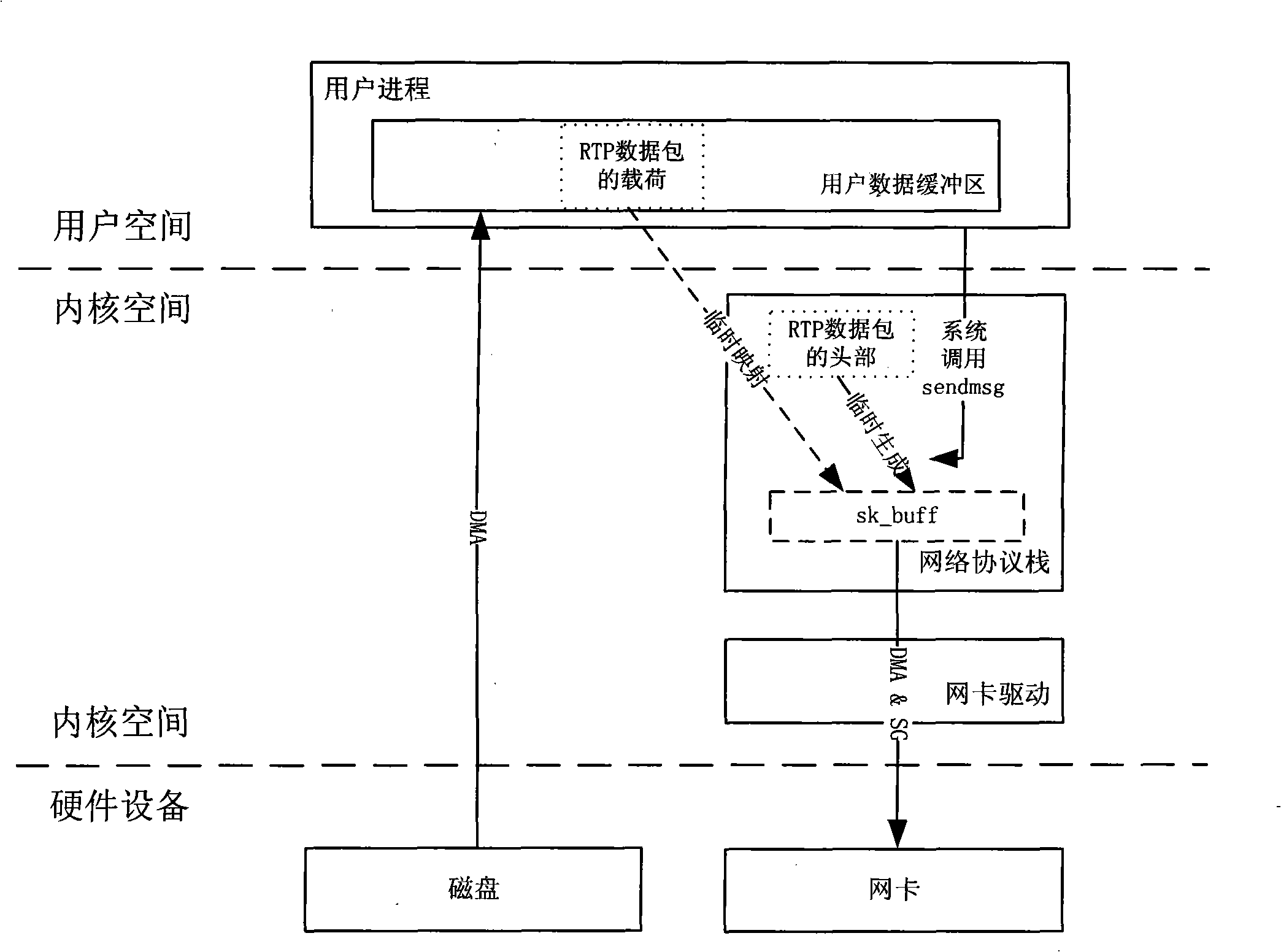

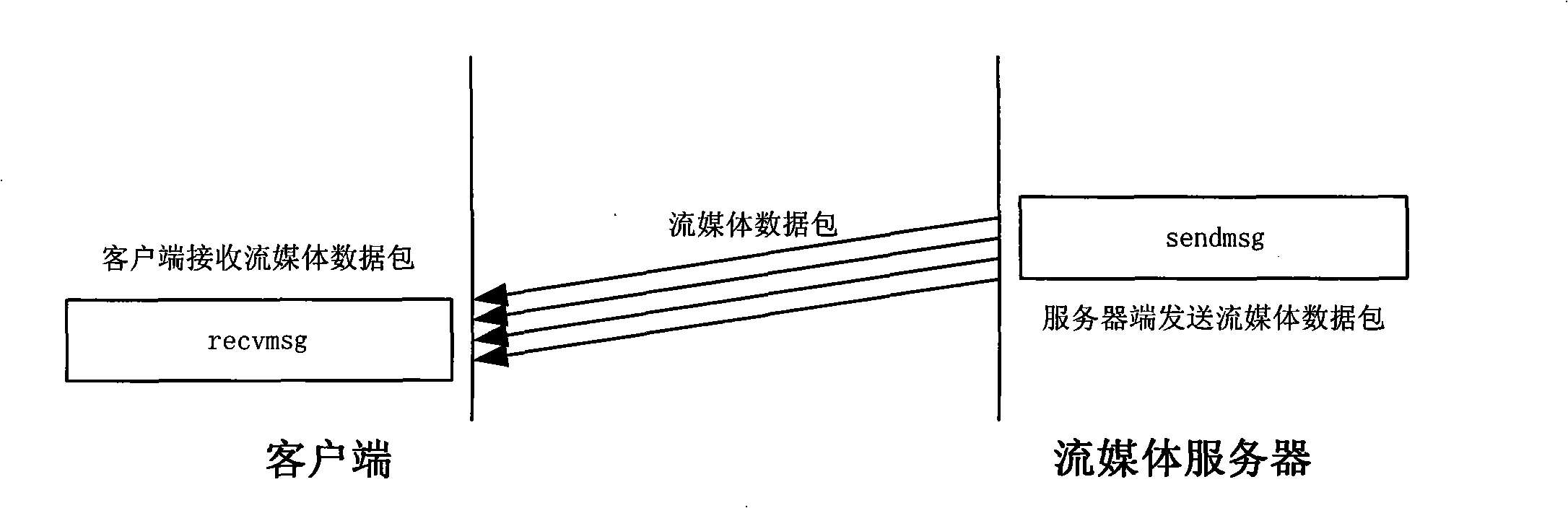

Method and system realizing zero-copy transmission of stream media data

Inactive | CN101340574A | Tags: Realize sending; Pulse modulation television signal transmission; Two-way working systems; Zero-copy; System call

The invention discloses a method and a system for zero-copy transmission of streaming media data, based on the Linux network protocol stack. The method comprises the following steps: when a streaming media server receives a data request from user equipment, a system call is made for the data to be transmitted; the streaming media data is read from the hard disk into a user data cache; the streaming media data stored in the user data cache is encapsulated into Real-time Transport Protocol (RTP) packets; and the RTP packets are transmitted with their headers and payloads kept separate. The method and system make full use of the network card's DMA and SG (scatter/gather) capabilities to achieve zero-copy transmission of streaming media data. Compared with the existing Linux kernel network protocol stack, they transmit streaming media packets with separated headers and payloads, eliminating the one data copy otherwise required when the streaming media data is packaged into RTP packets.
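
The header/payload separation can be shown from user space with gather I/O. A minimal Python sketch, assuming a connected stream socket on a Unix-like system (`socket.sendmsg` is not available on Windows): the 12-byte RTP fixed header from RFC 3550 and the payload are passed to `sendmsg()` as separate buffers, so they are never concatenated in user space. This only illustrates the separation; the zero-copy path in the abstract relies on the network card's DMA and scatter/gather support inside the kernel, which ordinary sockets do not expose. The function names and the default payload type 96 are assumptions.

```python
import socket
import struct


def rtp_header(seq: int, timestamp: int, ssrc: int,
               payload_type: int = 96, marker: bool = False) -> bytes:
    """Build the 12-byte fixed RTP header (RFC 3550) with no CSRCs or extension."""
    byte0 = 2 << 6  # version 2; padding, extension and CSRC count all zero
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)
    return struct.pack("!BBHII", byte0, byte1, seq & 0xFFFF,
                       timestamp & 0xFFFFFFFF, ssrc & 0xFFFFFFFF)


def send_rtp(sock: socket.socket, seq: int, timestamp: int, ssrc: int,
             payload: bytes) -> int:
    """Send header and payload as two separate buffers (gather write)."""
    header = rtp_header(seq, timestamp, ssrc)
    # The payload is never concatenated with the header in user space;
    # the kernel receives both buffers in a single sendmsg() call.
    return sock.sendmsg([header, memoryview(payload)])
```

For RTP over UDP the same call sends one datagram; the stream socket here just keeps the example easy to run locally.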

Owner:ZTE CORP

Communication resource reservation system for improved messaging performance

Inactive | US20060034167A1 | Tags: Facilitating zero-copy communication; Meet the blocking requirements; Error prevention; Frequency-division multiplex details; Resource pool; Zero-copy

A system and method are provided for facilitating zero-copy communications between computing systems of a group of computing systems. The method includes allocating, in a first computing system of the group of computing systems, a pool of privileged communication resources from a privileged resource controller to a communications controller. The communications controller designates the privileged communication resources from the pool for use in handling individual ones of the zero-copy communications, thereby avoiding a requirement to obtain individual ones of the privileged resources from the owner of the privileged resources at setup time for each zero-copy communication.

Owner:IBM CORP

Method and system for transparent TCP offload with dynamic zero copy sending

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE +1

Apparatus and method for supporting memory management in an offload of network protocol processing

Active | US7930422B2 | Tags: Firmly connected; Number of needed; Multiple digital computer combinations; Memory systems; Protocol processing; Zero-copy

A number of improvements in network adapters that offload protocol processing from the host processor are provided. Specifically, mechanisms for handling memory management and optimization within a system utilizing an offload network adapter are provided. The memory management mechanism permits both buffered sending and receiving of data as well as zero-copy sending and receiving of data. In addition, the memory management mechanism permits grouping of DMA buffers that can be shared among specified connections based on any number of attributes. The memory management mechanism further permits partial send and receive buffer operation, delaying of DMA requests so that they may be communicated to the host system in bulk, and expedited transfer of data to the host system.

Owner:INT BUSINESS MASCH CORP

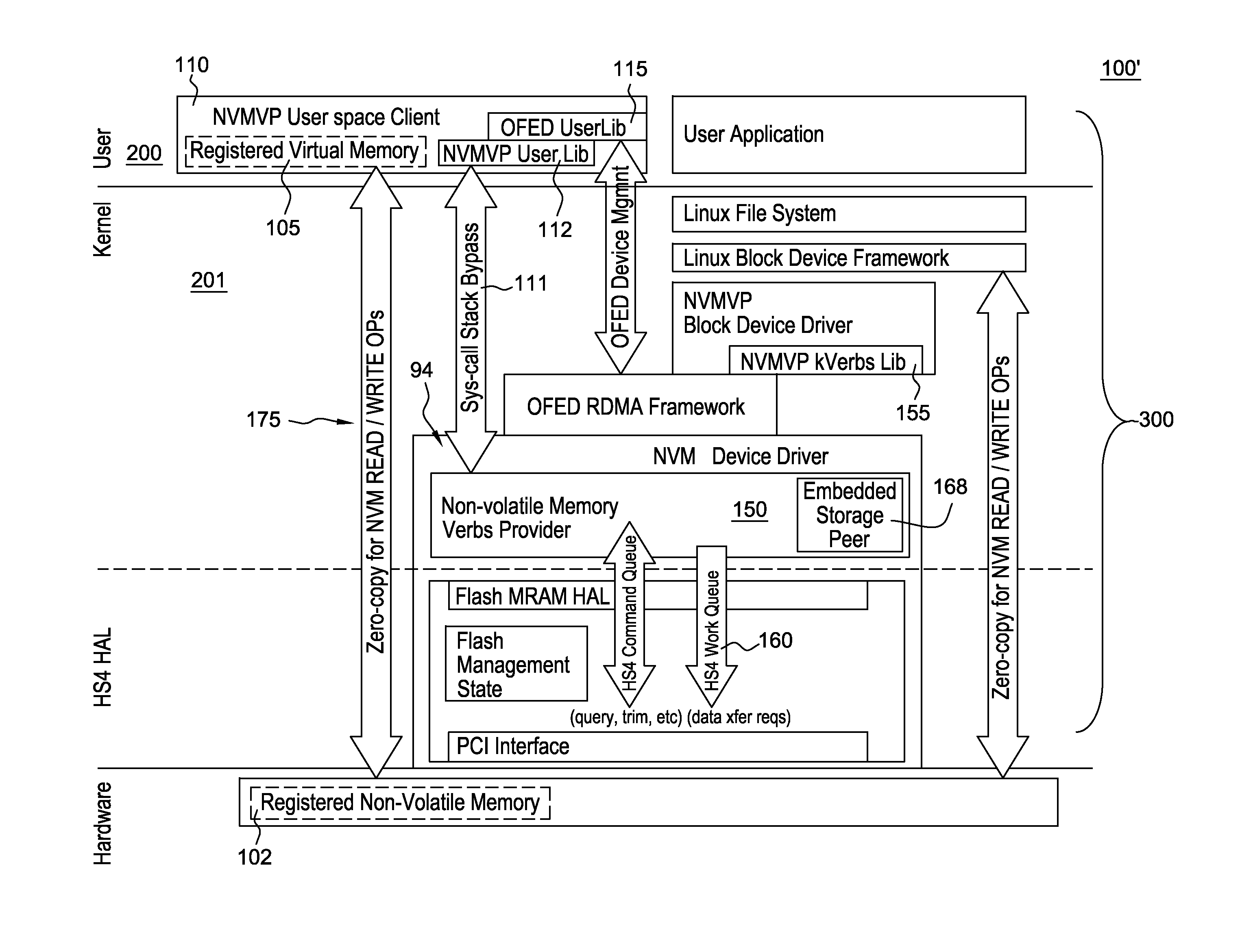

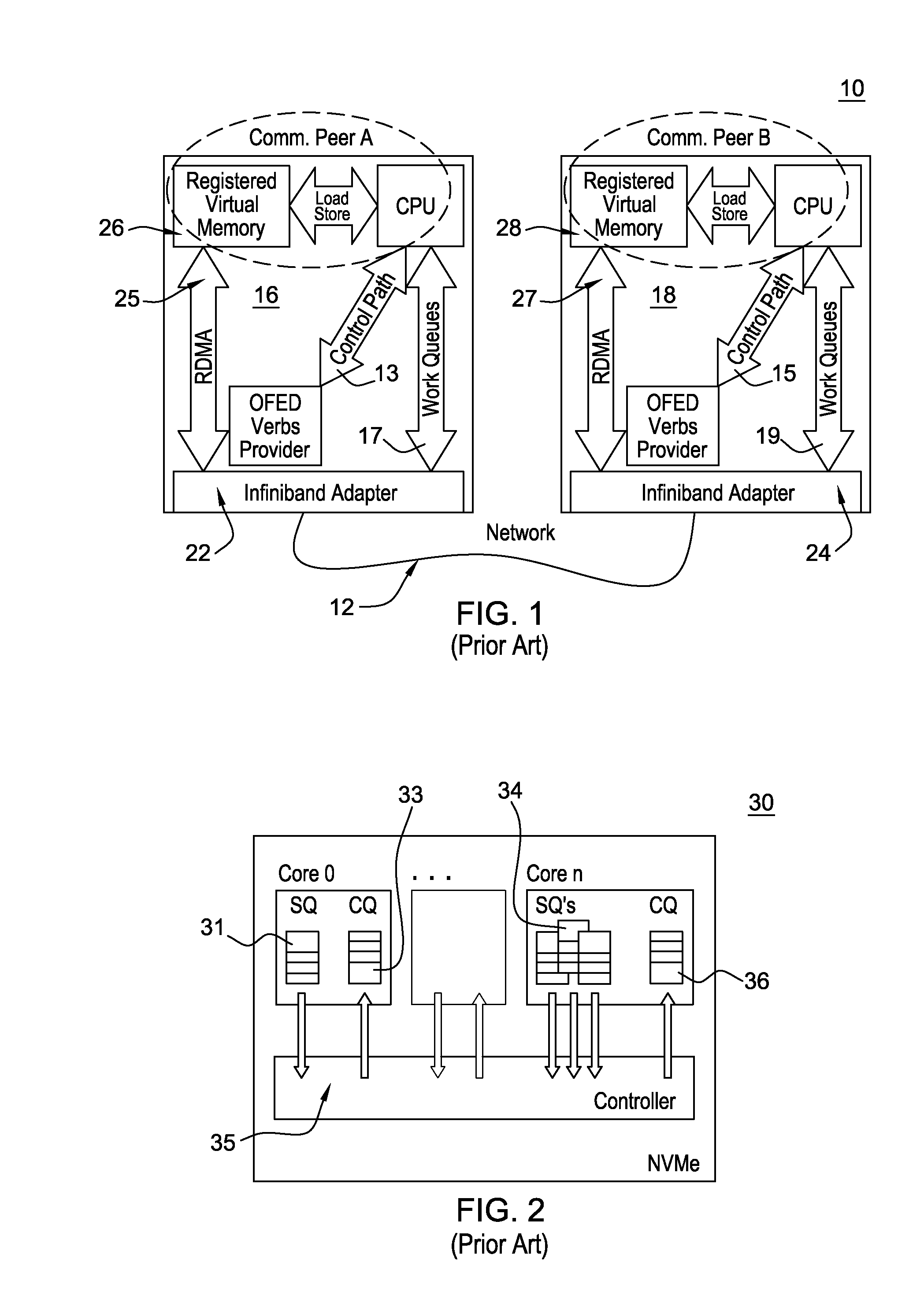

Local direct storage class memory access

Active | US20140317336A1 | Tags: Reduce the burden on; Easy access; Memory addressing/allocation/relocation; Transmission; Zero-copy; Granularity

A queued, byte-addressed system and method for accessing flash memory and other non-volatile storage class memory, and potentially other types of non-volatile memory (NVM) storage systems. In a host device (e.g., a standalone or networked computer), attached NVM device storage is integrated into a switching fabric in which the NVM device appears as an industry-standard OFED™ RDMA verbs provider. The verbs provider enables communication with a ‘local storage peer’ using the existing OpenFabrics RDMA host functionality. User applications issue RDMA Read/Write directives to the ‘local peer’ (seen as persistent storage) in NVM, enabling NVM memory access at byte granularity. The queued, byte-addressed system and method provides zero-copy NVM access. The methods enable operations that establish application-private Queue Pairs to provide asynchronous NVM memory access operations at byte-level granularity.

Owner:GLOBALFOUNDRIES US INC

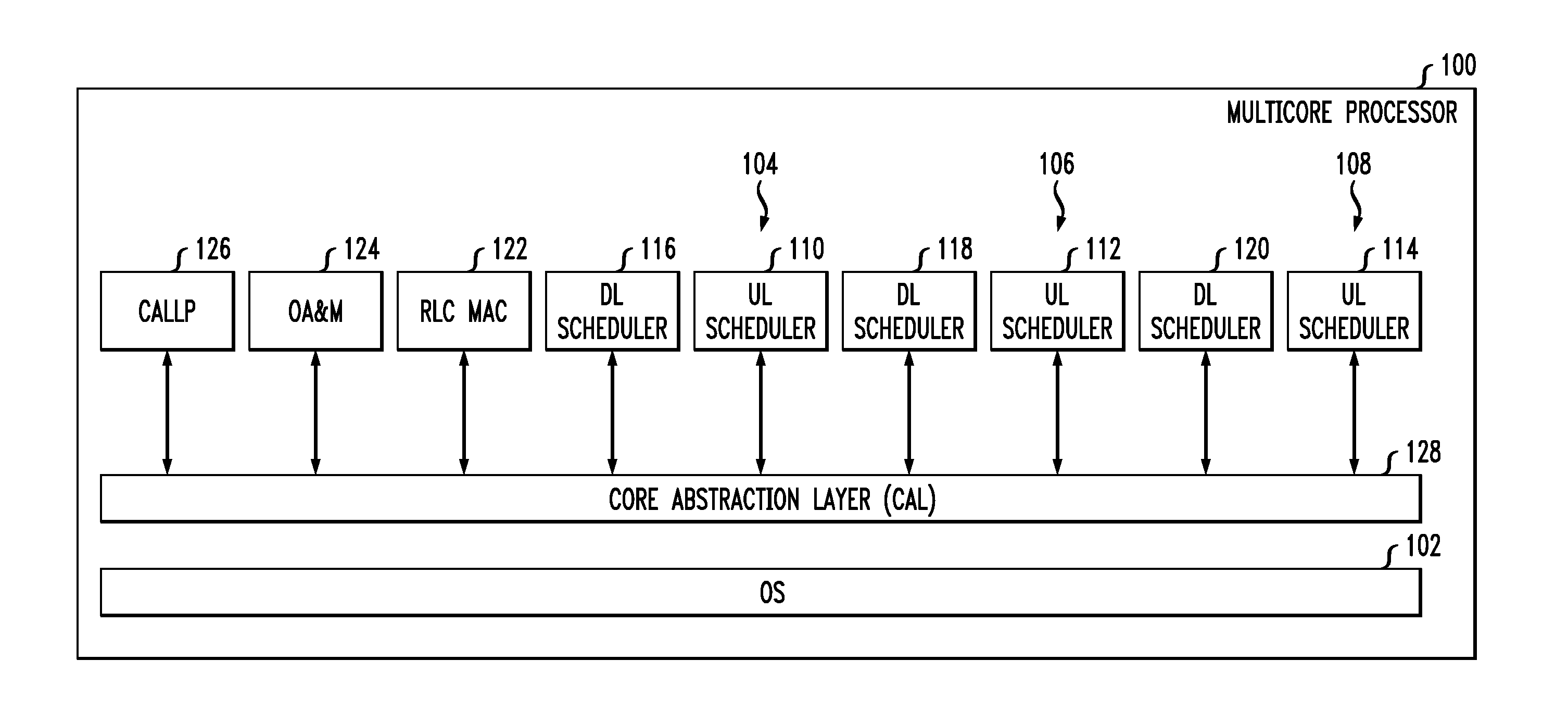

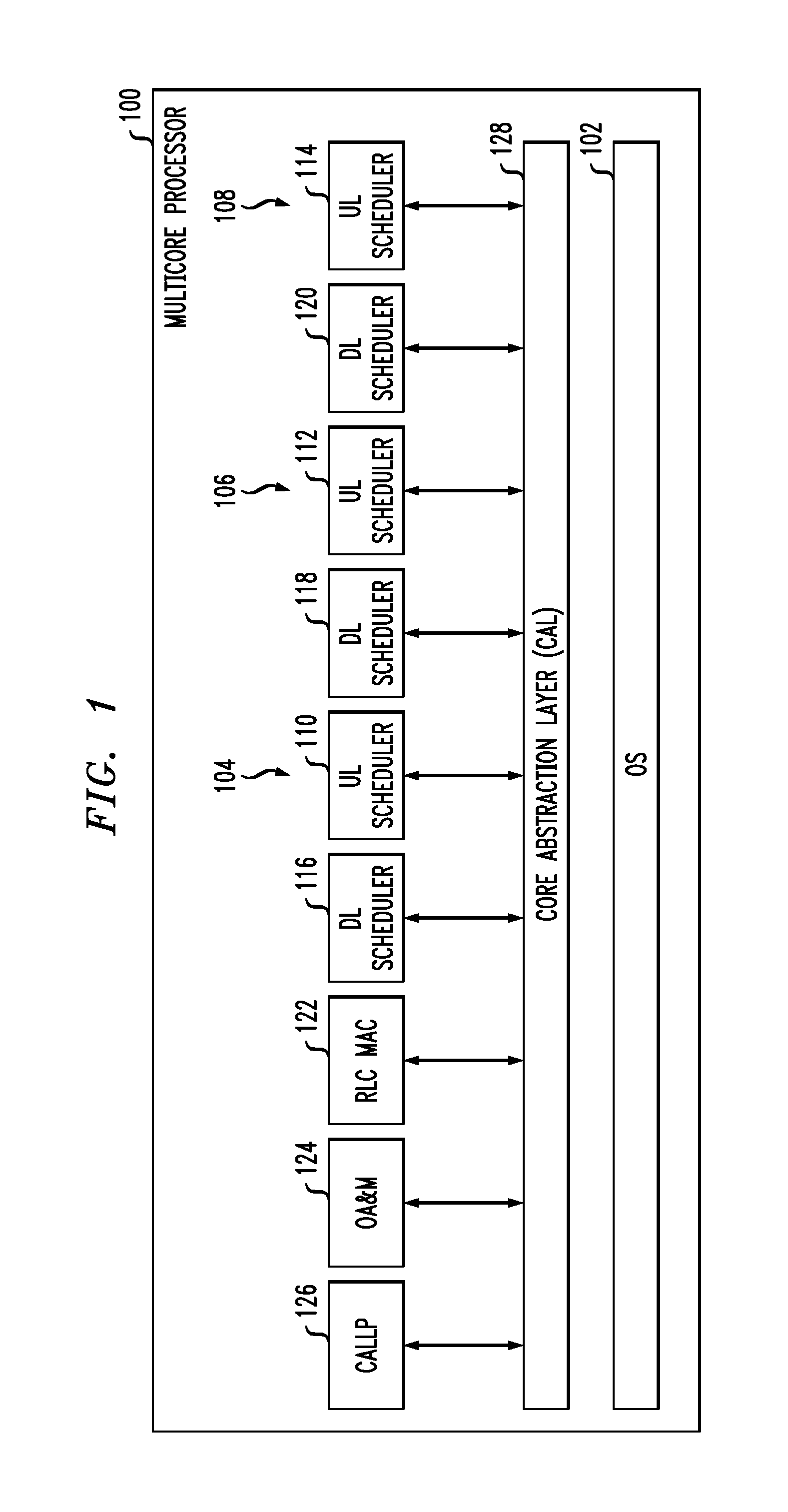

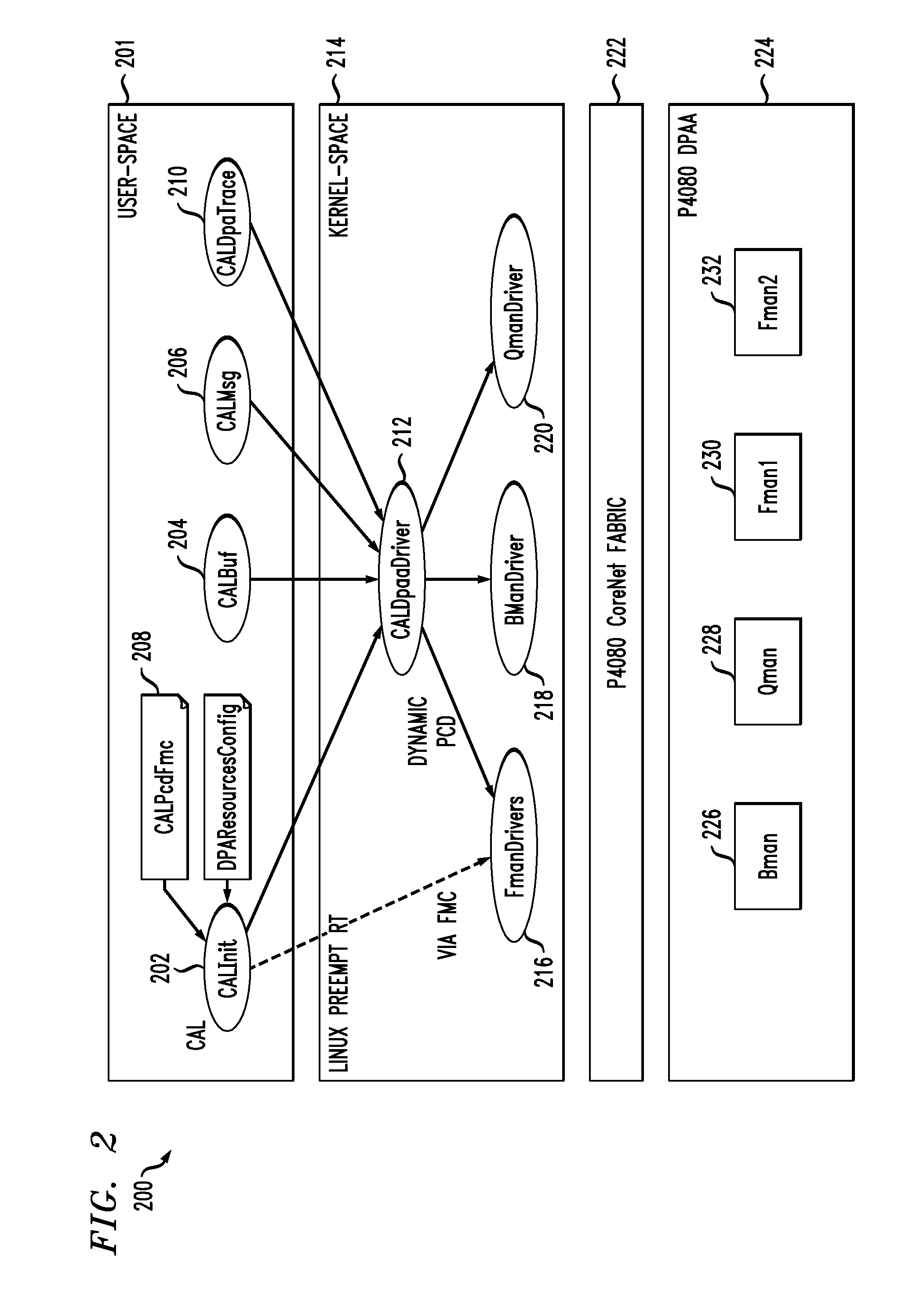

Lock-less and zero copy messaging scheme for telecommunication network applications

A computer-implemented system and method for a lock-less, zero data copy messaging mechanism in a multi-core processor for use on a modem in a telecommunications network are described herein. The method includes, for each of a plurality of processing cores, acquiring a kernel to user-space (K-U) mapped buffer and corresponding buffer descriptor, inserting a data packet into the buffer; and inserting the buffer descriptor into a circular buffer. The method further includes creating a frame descriptor containing the K-U mapped buffer pointer, inserting the frame descriptor onto a frame queue specified by a dynamic PCD rule mapping IP addresses to frame queues, and creating a buffer descriptor from the frame descriptor.

Owner:ALCATEL LUCENT SAS

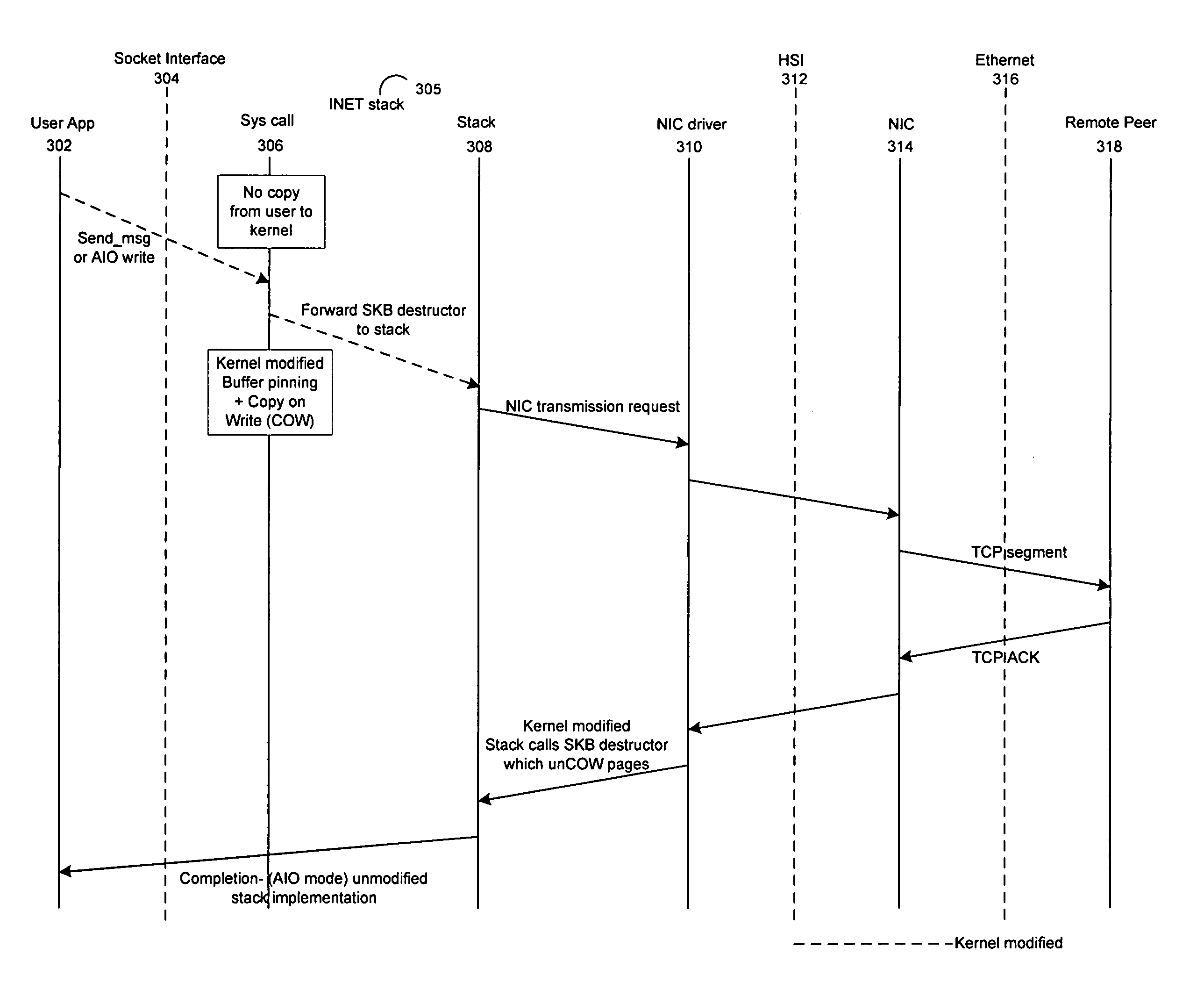

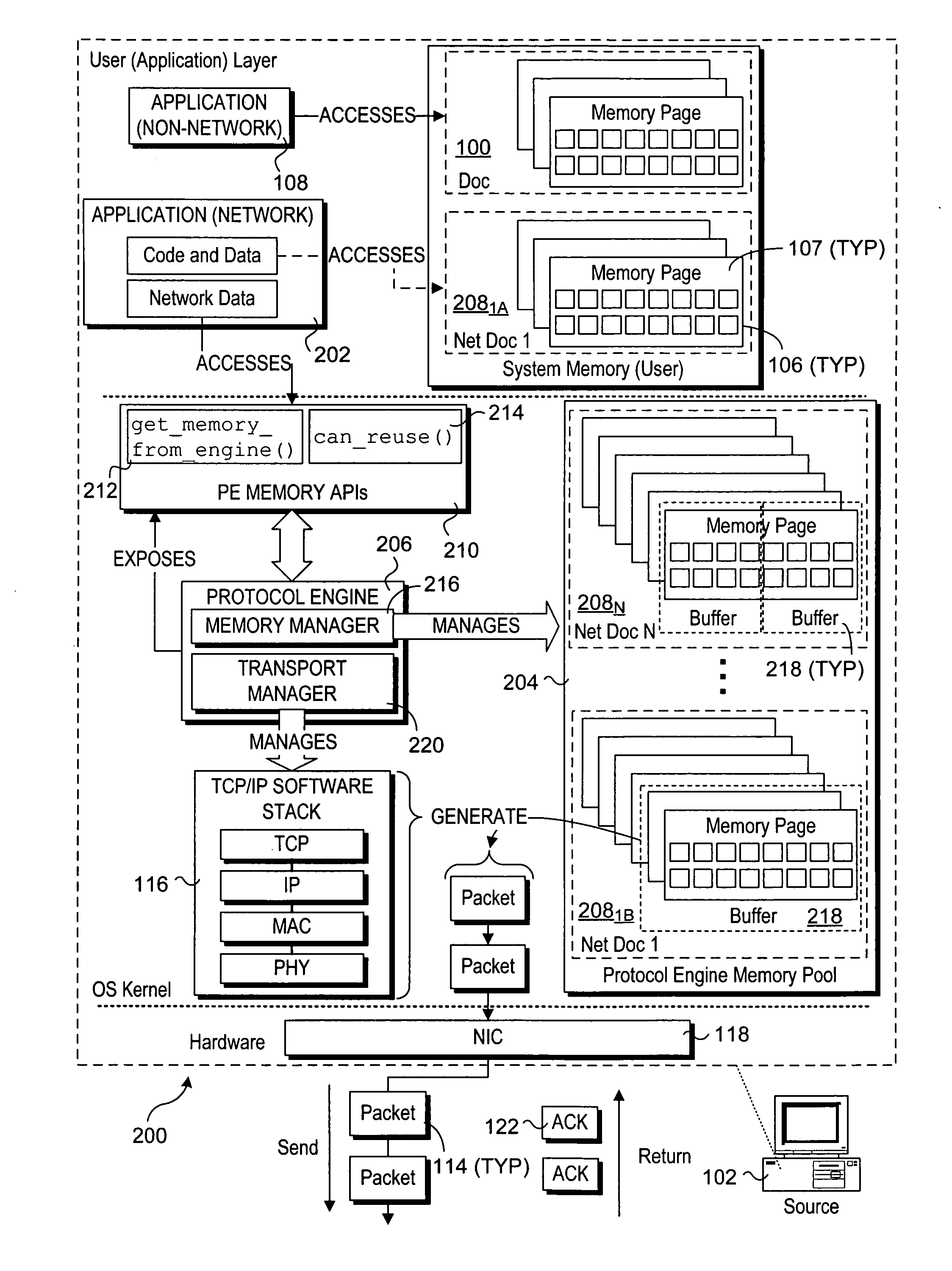

Mechanisms to implement memory management to enable protocol-aware asynchronous, zero-copy transmits

Inactive | US20070011358A1 | Tags: Multiple digital computer combinations; Transmission; Zero-copy; Operational system

Mechanisms to implement memory management to enable protocol-aware asynchronous, zero-copy transmits. A transport protocol engine exposes interfaces via which memory buffers from a memory pool in operating system (OS) kernel space may be allocated to applications running in an OS user layer. The memory buffers may be used to store data that is to be transferred to a network destination using a zero-copy transmit mechanism, wherein the data is directly transmitted from the memory buffers to the network via a network interface controller. The transport protocol engine also exposes a buffer reuse API to the user layer to enable applications to obtain buffer availability information maintained by the protocol engine. In view of the buffer availability information, the application may adjust its data transfer rate.

Owner:INTEL CORP

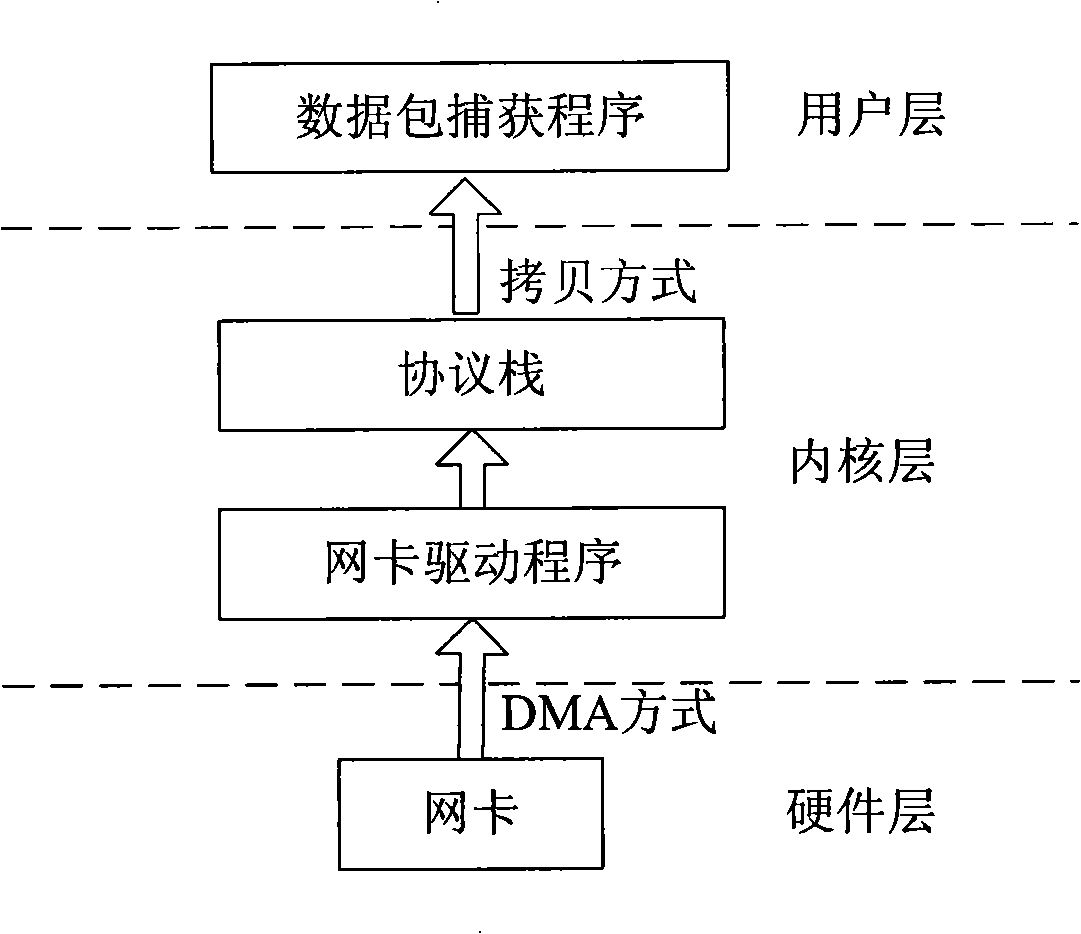

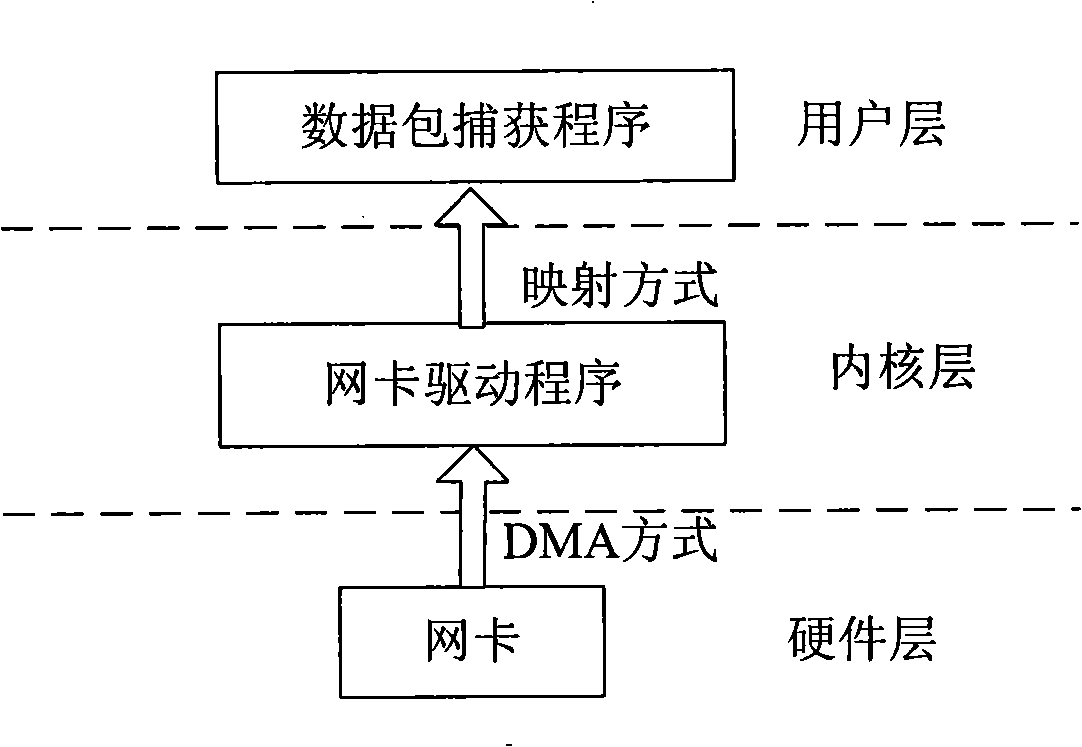

A high-speed network data packet capturing method based on zero-copy technology

Inactive | CN101267361A | Tags: Improve capture efficiency; Reduce overhead; Data switching networks; Traffic capacity; Operational system

A method for capturing high-speed network data packets based on zero-copy technology, in the field of network traffic monitoring and analysis. The method uses a computer with at least one network card, on which an operating system and a packet-capture program are installed; the packet-capture program captures data packets via the network card. The method comprises the following steps: 1) initializing the network card; 2) initializing the packet-capture program; and 3) starting data capture, characterized in that data capture proceeds as follows: a) the network card in the hardware layer passes each received data packet to the network card driver in the kernel layer via DMA; b) the packet-capture program in the user layer obtains the data packets received by the network card driver through memory mapping. The method implements high-speed network packet capture based on the hardware platform and zero-copy technology, and offers low system overhead, high capture efficiency, and low reclaiming cost.
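
Step b, the capture program reaching packets through a memory mapping instead of `read()`, can be sketched with Python's `mmap`. Assumption: a regular file mapped `MAP_SHARED` stands in for the region a real capture driver would expose through its own `mmap` handler. `open_shared_region`, `driver_store` and `capture_view` are hypothetical names, and a Unix-like system is assumed.

```python
import mmap
import os

REGION_BYTES = 4096  # assumed size of the shared capture region


def open_shared_region(path: str, size: int = REGION_BYTES) -> mmap.mmap:
    """Map `path` with MAP_SHARED so every mapping sees the same physical pages."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.ftruncate(fd, size)
        return mmap.mmap(fd, size, flags=mmap.MAP_SHARED,
                         prot=mmap.PROT_READ | mmap.PROT_WRITE)
    finally:
        os.close(fd)  # the mapping stays valid after the descriptor is closed


def driver_store(region: mmap.mmap, offset: int, packet: bytes) -> None:
    """Stands in for the NIC DMA plus driver writing a packet into the region."""
    region[offset:offset + len(packet)] = packet


def capture_view(region: mmap.mmap, offset: int, length: int) -> memoryview:
    """Capture program: look at the packet in place, without a read() copy."""
    return memoryview(region)[offset:offset + length]
```

Both mappings refer to the same pages, so the capture side sees a packet as soon as it is written. A `memoryview` on an `mmap` must be released before the mapping is closed, otherwise `close()` raises `BufferError`.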

Owner:武汉飞思科技有限公司

Intel e1000 zero copy method

The invention relates to a data communication method within a computer in which a user application communicates directly with the network card driver. The kernel and the application share one ring-shaped memory buffer, which is divided into a number of slots (effectively an array able to buffer several messages), each slot holding one message. The network card driver writes messages into the buffer by DMA, and the application reads the data from the buffer. When a socket is configured to use the zero-copy mechanism, the e1000 driver's receive path starts writing data into the ring buffer; after binding the socket and obtaining the mapped memory, the upper-layer application can access the data in the ring buffer directly.
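
The slot ring described above can be modelled as a fixed array of equal-sized slots with a producer index (the driver) and a consumer index (the application). A minimal single-threaded sketch; `SlotRing`, `peek` and `advance` are hypothetical names, and a real kernel/user ring also needs memory barriers or atomic index updates, which this model does not show. Splitting a read into `peek()` and `advance()` keeps the slot reserved while the application still holds a view of it.

```python
from typing import List, Optional


class SlotRing:
    """Hypothetical model of the ring shared by the driver and the application.

    The driver (producer) fills slots; the application (consumer) reads a slot
    in place with peek() and hands it back with advance().
    """

    def __init__(self, n_slots: int, slot_size: int) -> None:
        self.n_slots = n_slots
        self.slot_size = slot_size
        self.mem = bytearray(n_slots * slot_size)  # the shared ring memory
        self.lengths: List[int] = [0] * n_slots
        self.head = 0  # total messages written by the driver
        self.tail = 0  # total messages released by the application

    def _slot(self, index: int) -> memoryview:
        start = index * self.slot_size
        return memoryview(self.mem)[start:start + self.slot_size]

    def produce(self, message: bytes) -> bool:
        """Driver side: store one message; returns False (drop) if the ring is full."""
        if len(message) > self.slot_size:
            raise ValueError("message larger than a slot")
        if self.head - self.tail == self.n_slots:
            return False
        i = self.head % self.n_slots
        self._slot(i)[:len(message)] = message
        self.lengths[i] = len(message)
        self.head += 1
        return True

    def peek(self) -> Optional[memoryview]:
        """Application side: view the oldest unread message without copying it."""
        if self.tail == self.head:
            return None
        i = self.tail % self.n_slots
        return self._slot(i)[:self.lengths[i]]

    def advance(self) -> None:
        """Application side: release the oldest slot back to the driver."""
        if self.tail == self.head:
            raise IndexError("ring is empty")
        self.tail += 1
```

When the ring is full the driver drops the message instead of overwriting a slot the application has not yet released.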

Owner:莱克斯科技(北京)有限公司

Zero-copy network I/O for virtual hosts

Active | US7721299B2 | Tags: Eliminate the problem; Solve excessive overhead; Digital computer details; Transmission; Computer hardware; Virtualization

Techniques for virtualized computer system environments running one or more virtual machines that obviate the extra host operating system (O/S) copying steps required for sending and receiving packets of data over a network connection, thus eliminating major performance problems in virtualized environments. Such techniques include methods for emulating network I/O hardware device acceleration-assist technology providing zero-copy I/O sending and receiving optimizations. Implementation of these techniques requires a host O/S to perform actions including, but not limited to: checking the address translations (ensuring availability and data residency in physical memory); checking whether the destination of a network packet is local (another virtual machine within the computing system) or across an external network; and, if local, checking whether the sending VM, the receiving VM process, or both support emulated hardware acceleration-assist on the same physical system. In particular, this allows packet-data checksumming operations to be omitted when sending packets between virtual machines in the same physical system.

Owner:RED HAT

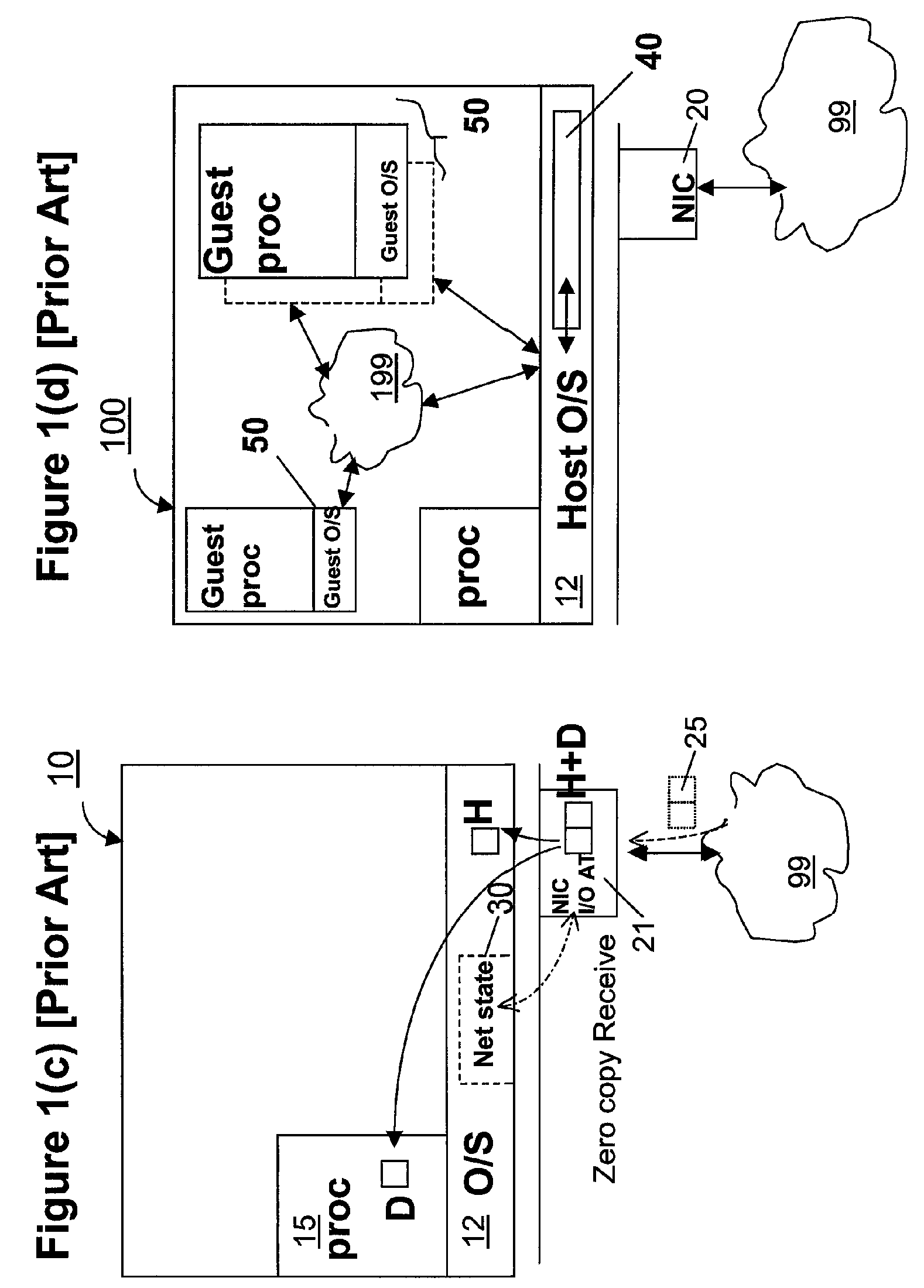

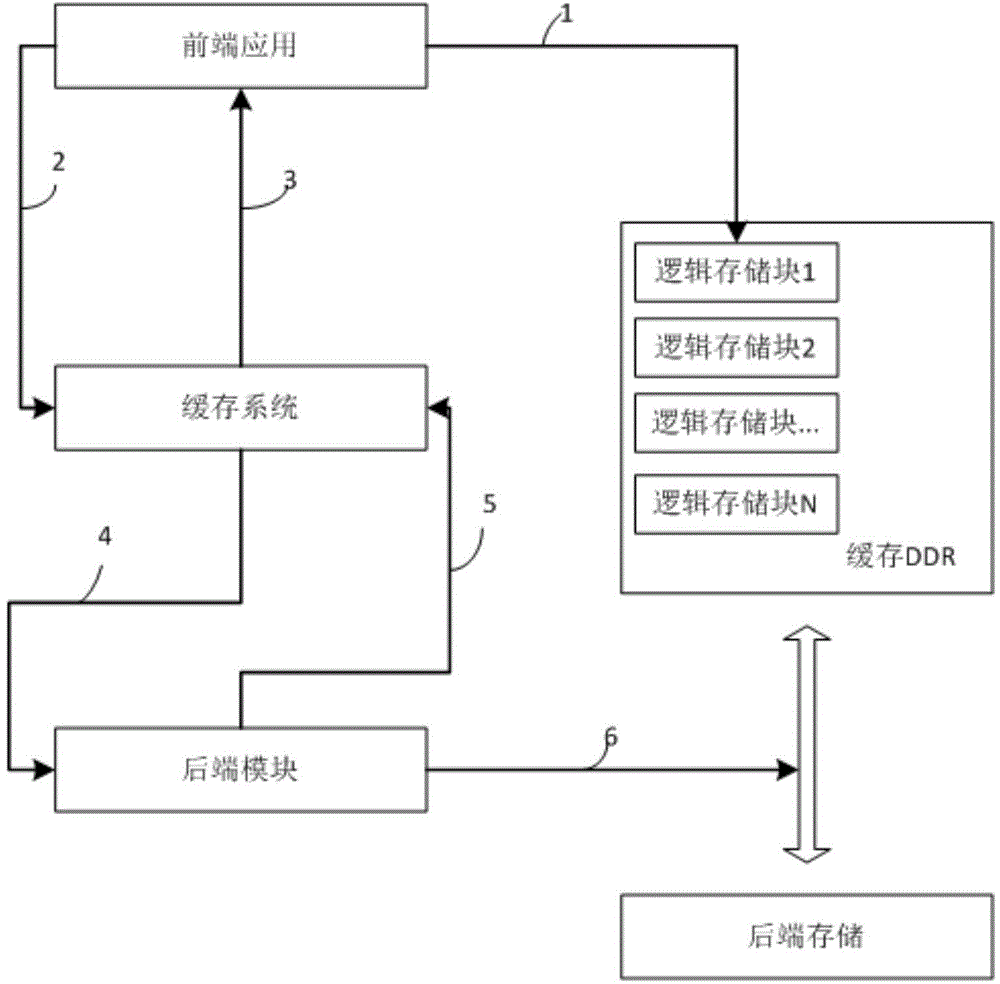

Flash memory storage system and reading, writing and deleting method thereof

Active | CN104636285A | Tags: Achieve zero copy; Write less; Memory addressing/allocation/relocation; Zero-copy; Metadata record

The invention discloses a flash memory storage system and its read, write, and delete methods. The flash memory storage system comprises a cache, a main control module, a cache metadata record table, a read mapping table, and a write mapping table. The write mapping table is stored in the cache and records the correspondence between logical storage blocks and physical storage blocks for writes. The read mapping table is stored in the cache and records the correspondence between logical storage blocks and physical storage blocks for reads. The cache metadata record table stores the correspondences among metadata table addresses, physical storage blocks, and back-end flash memory addresses. The system reduces unnecessary writes to and reads from the back-end flash memory and achieves zero copy on the read/write data path, omitting unnecessary intermediate copies and thereby improving read/write efficiency; the read/write access size of the front-end application can also be matched to the size of the back-end flash memory.

Owner:BEIJING ZETTASTONE TECH CO LTD

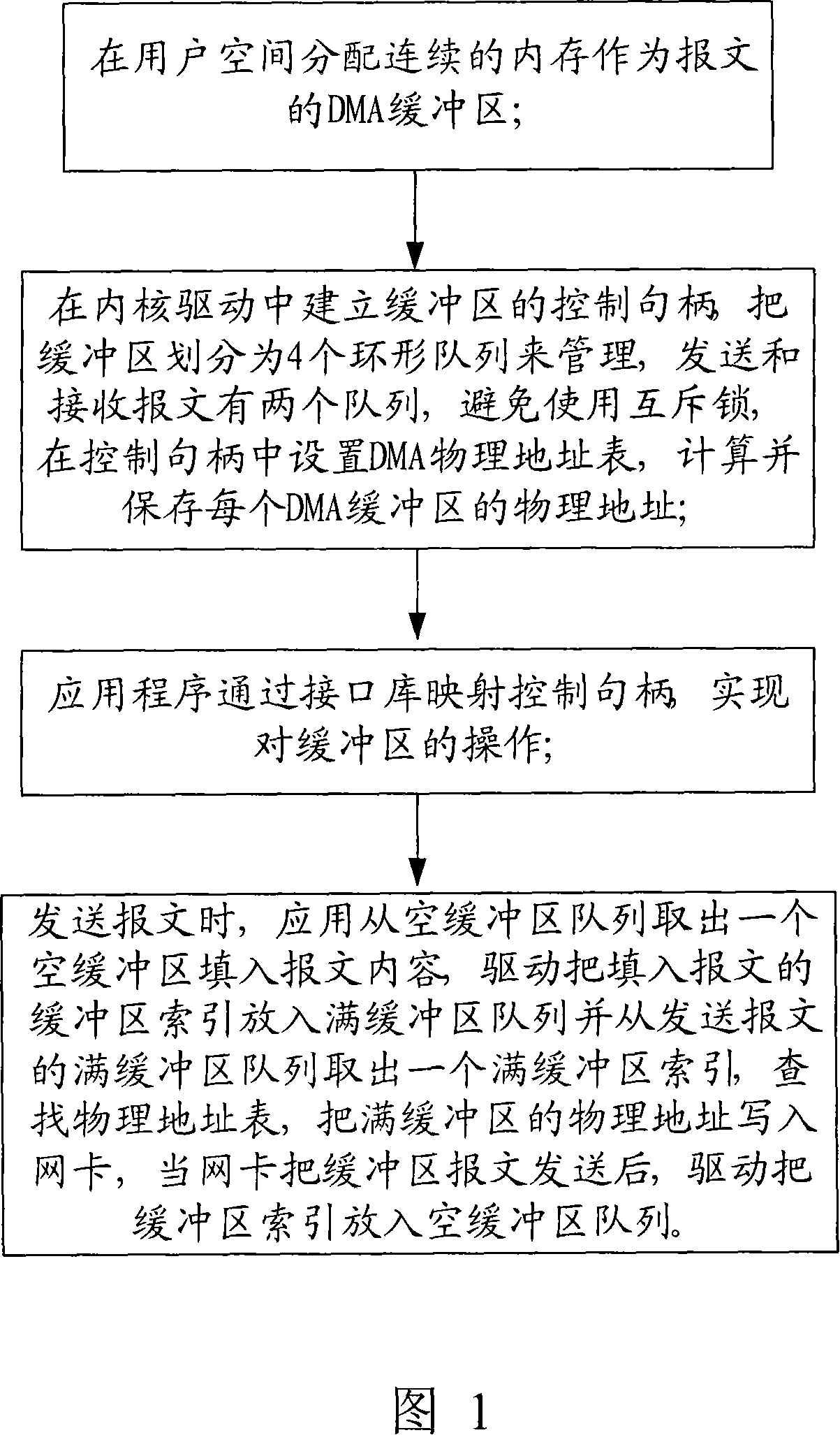

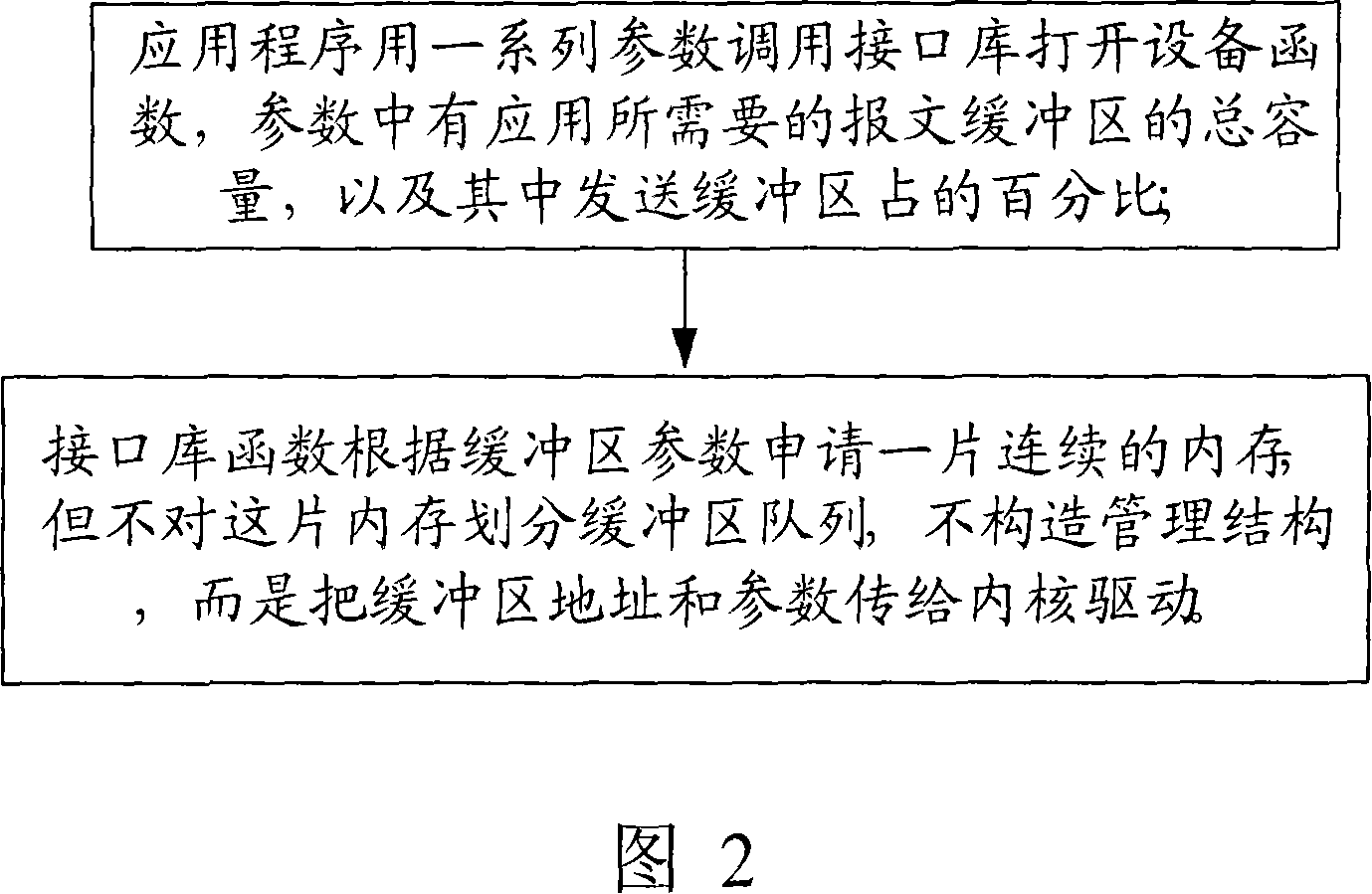

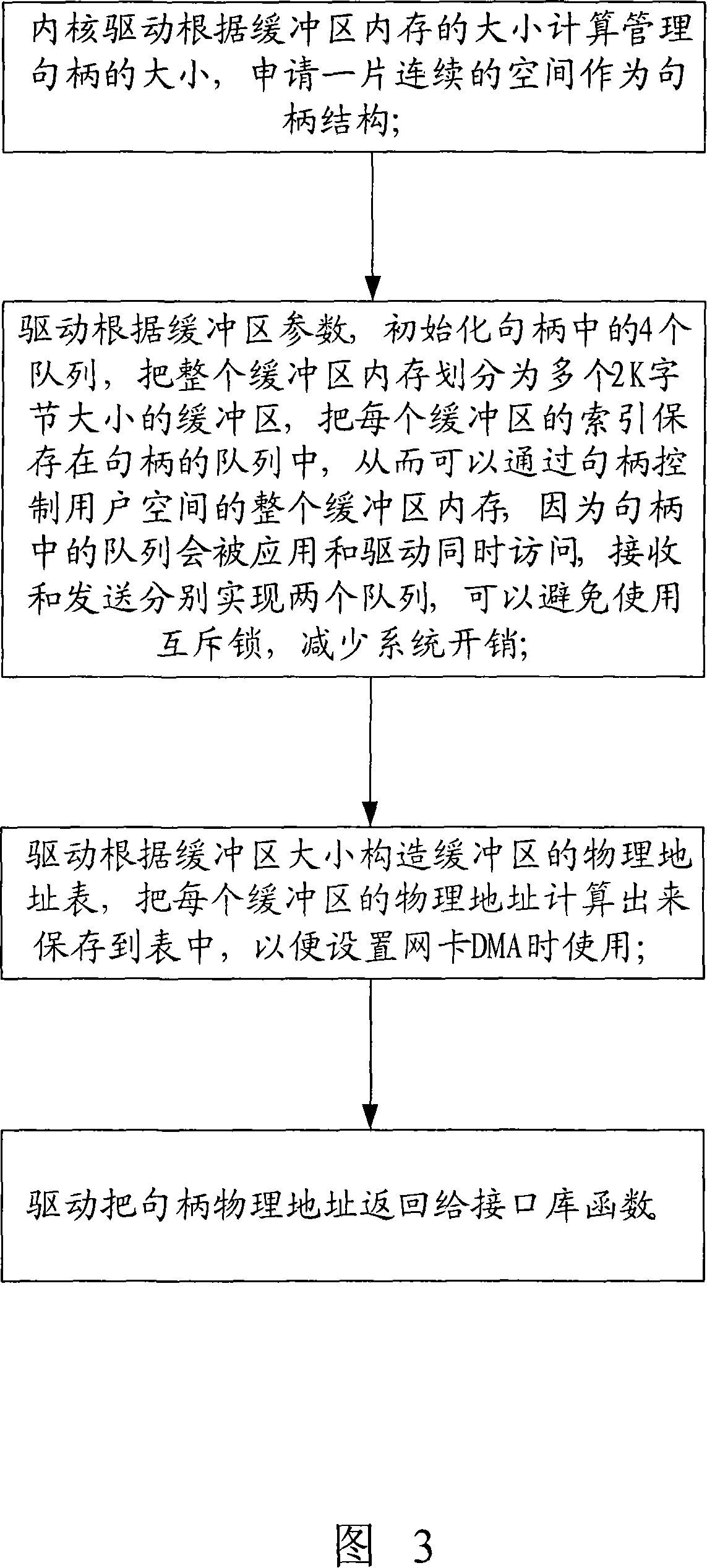

A management method for network data transmission of zero copy buffer queue

Inactive | CN101150485A | Tags: Improve timeliness; Increase flexibility; Store-and-forward switching systems; Electric digital data processing; Zero-copy; Physical address

The invention discloses a method for managing a zero-copy buffer queue for network data transmission, in the field of buffer-queue management for zero-copy network data. The technical solution comprises the following steps: A. a contiguous memory region allocated in user space is used as the DMA buffer for messages; B. a control handle for the buffer is created inside a kernel-mode driver; C. the application program maps the control handle through an interface library in order to operate on the buffer; and D. when messages are transmitted, the driver writes the physical address of a full buffer to the network card; a buffer filled with messages is taken from the full-buffer queue and, once its messages have been transmitted, is placed in the empty-buffer queue. The invention enables a zero-copy driver to manage message buffers flexibly.
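
Step D recycles a fixed set of buffers between a full queue and an empty queue. A minimal sketch under these assumptions: buffers are identified by index, `nic_send` is a hypothetical callback standing in for the driver writing the buffer's physical address to the network card, and it is assumed to return only after transmission completes, at which point the buffer goes back to the empty queue.

```python
from collections import deque
from typing import Callable, Deque, List, Optional


class TxBufferQueues:
    """Hypothetical model of step D: buffers cycle between empty and full queues."""

    def __init__(self, n_buffers: int, buf_size: int) -> None:
        self.buffers: List[bytearray] = [bytearray(buf_size) for _ in range(n_buffers)]
        self.lengths: List[int] = [0] * n_buffers
        self.empty: Deque[int] = deque(range(n_buffers))
        self.full: Deque[int] = deque()

    def fill(self, messages: bytes) -> Optional[int]:
        """Application: write messages into an empty buffer and queue it as full."""
        if not self.empty:
            return None
        idx = self.empty.popleft()
        if len(messages) > len(self.buffers[idx]):
            self.empty.appendleft(idx)  # put the buffer back untouched
            raise ValueError("messages do not fit in one buffer")
        self.buffers[idx][:len(messages)] = messages
        self.lengths[idx] = len(messages)
        self.full.append(idx)
        return idx

    def transmit_one(self, nic_send: Callable[[memoryview], None]) -> bool:
        """Driver: hand a full buffer to the NIC, then recycle it as empty."""
        if not self.full:
            return False
        idx = self.full.popleft()
        view = memoryview(self.buffers[idx])[:self.lengths[idx]]
        nic_send(view)  # assumed to return only once transmission has completed
        view.release()
        self.empty.append(idx)
        return True
```

Because the buffers are allocated once and only change queues, the steady state needs no allocation and no copy between the application and the driver.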

Owner:DAWNING INFORMATION IND BEIJING

System and method for zero copy block protocol write operations

Active | US7249227B1 | Tags: Overcome disadvantages; Improve system performance; Transmission; Memory systems; SCSI; Zero-copy

A system and method for zero copy block protocol write operations obviates the need to copy the contents of memory buffers (mbufs) at a storage system. A storage operating system of the storage system receives data from a network and stores the data in chains of mbufs having various lengths. An iSCSI driver processes (interprets) the received mbufs and passes the appropriate data and interpreted command to a SCSI target module of the storage operating system. The SCSI target module utilizes the mbufs and the data contained therein to perform appropriate write operations in response to write requests received from clients.

Owner:NETWORK APPLIANCE INC

Packet capture engine for commodity network interface cards in high-speed networks

Active | US20160127276A1 | Tags: Better able to address; Digital computer details; Data switching networks; Zero-copy; Multicore architecture

Methods and systems for a packet capture engine for commodity network interface cards (NICs) in high-speed networks, which provides lossless zero-copy packet capture and delivery services by exploiting multi-queue NICs and multicore architectures. The methods and systems include a ring-buffer-pool mechanism and a buddy-group-based offloading mechanism.

Owner:FERMI RESEARCH ALLIANCE LLC

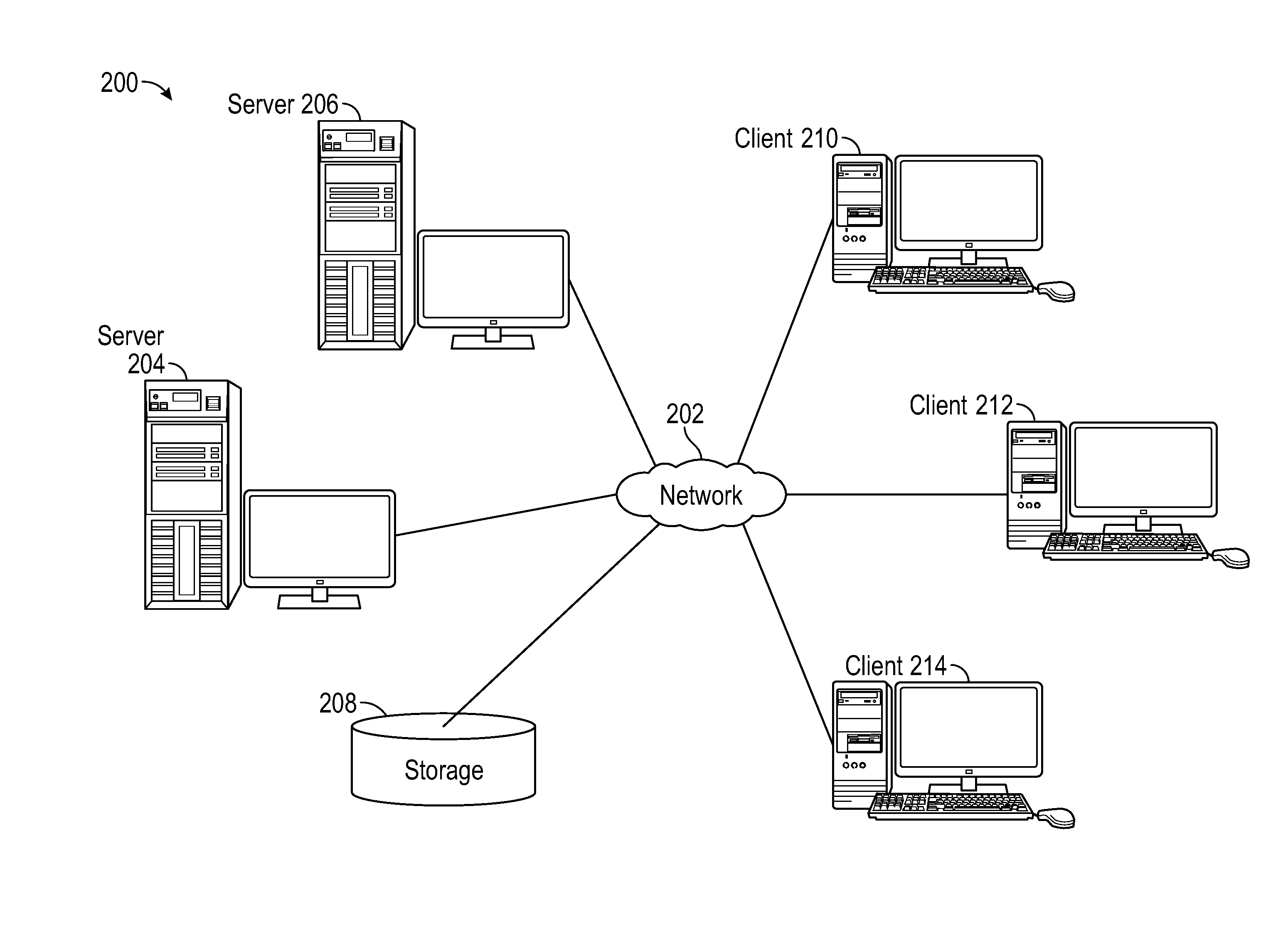

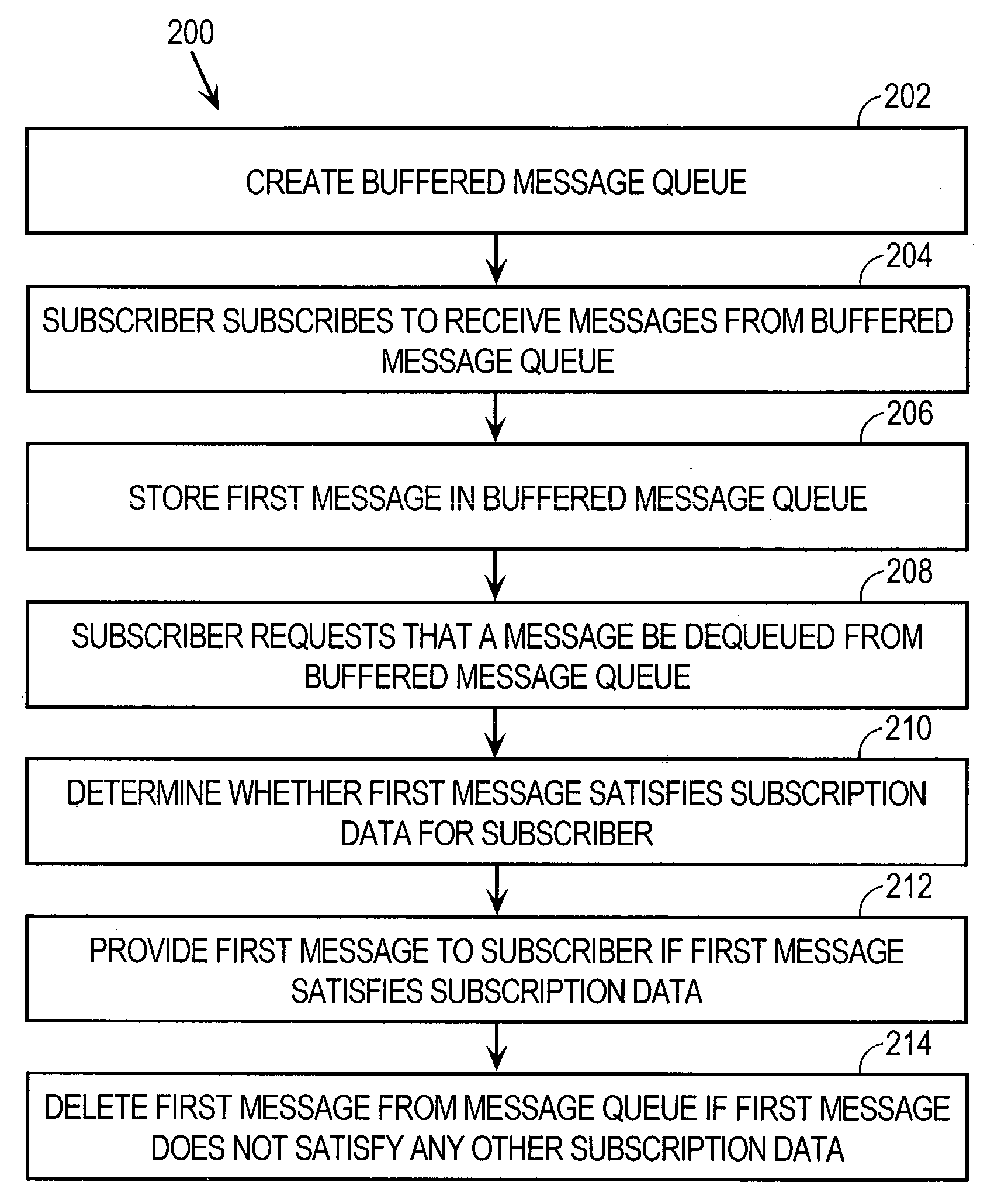

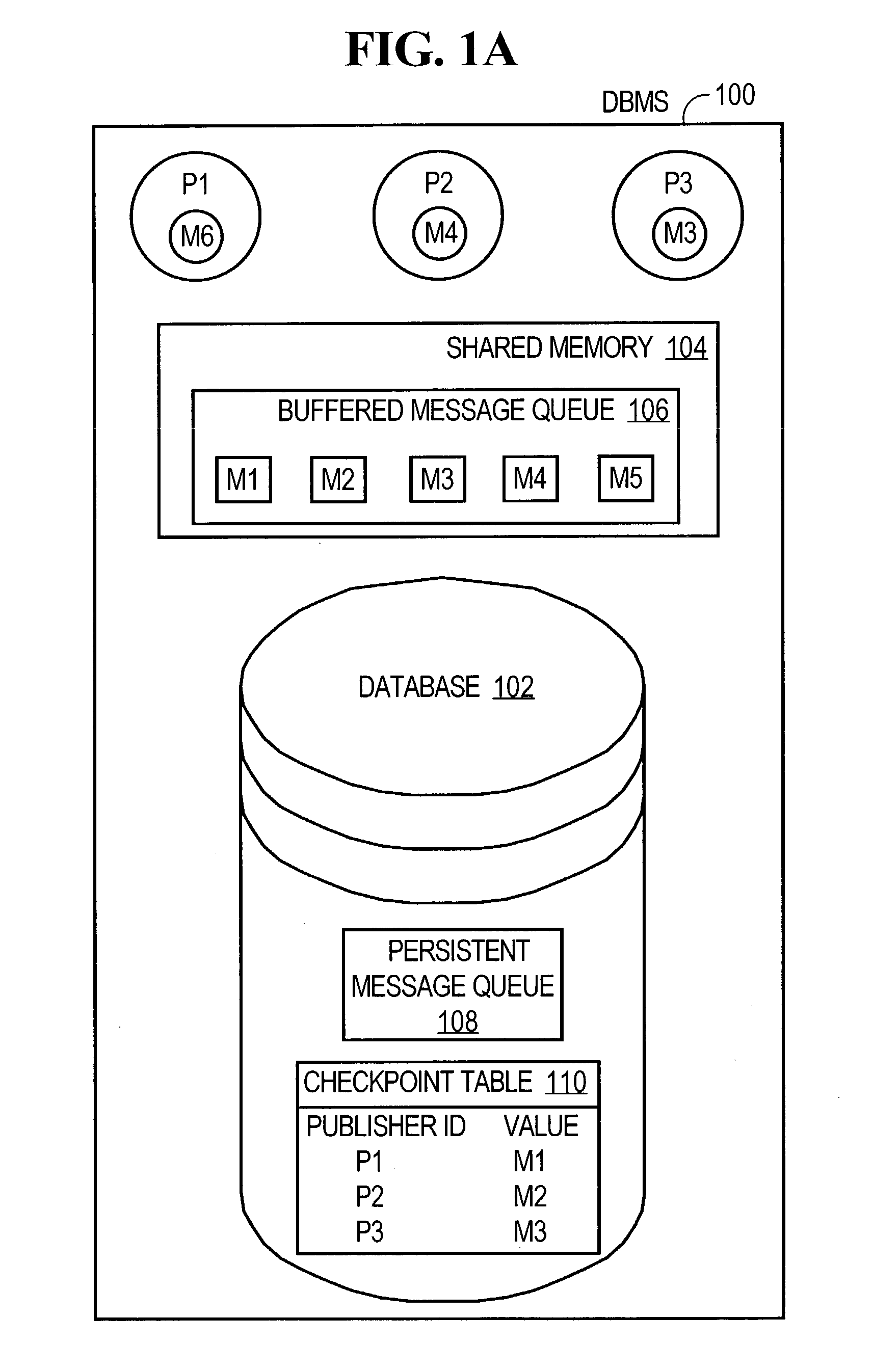

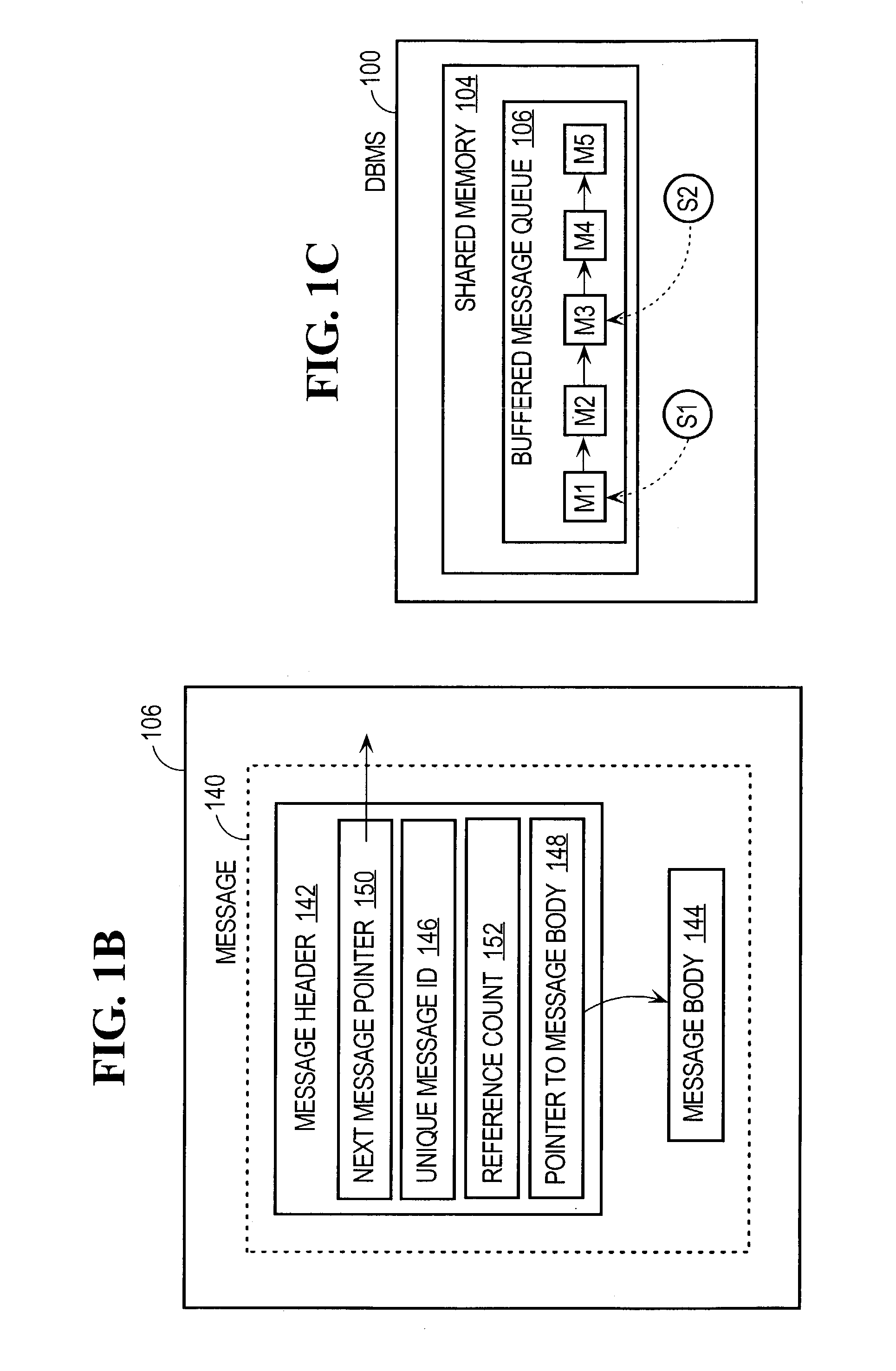

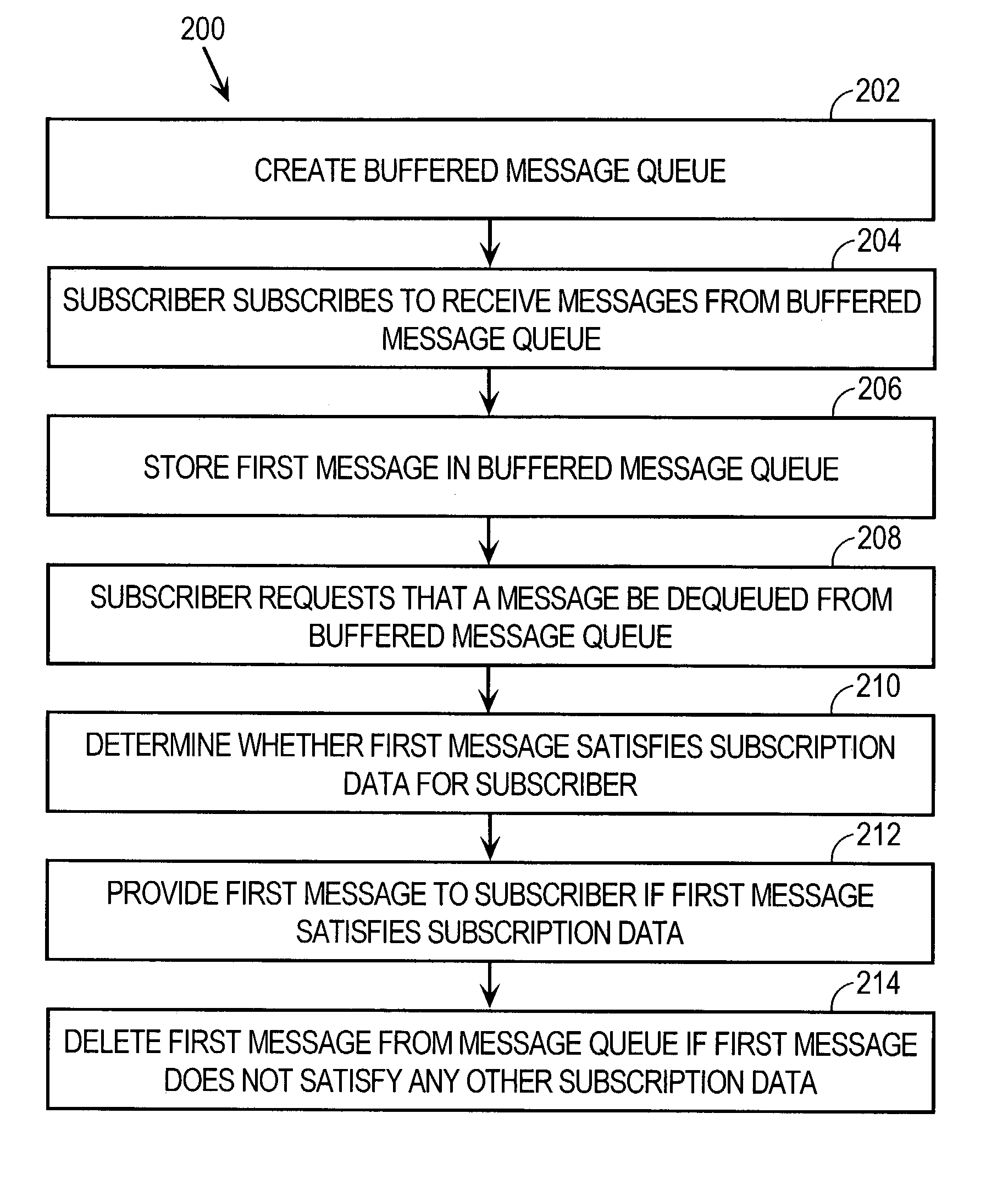

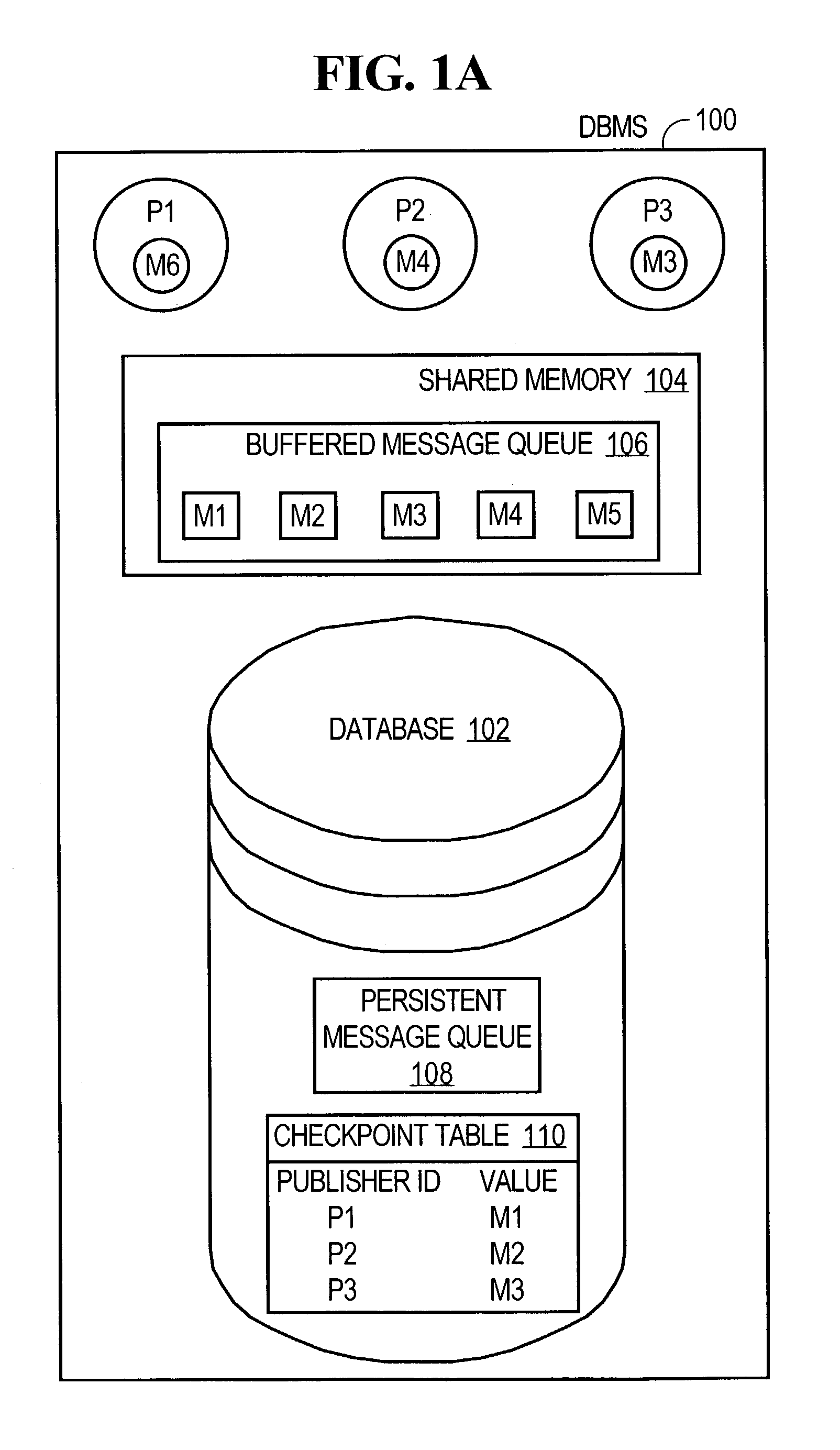

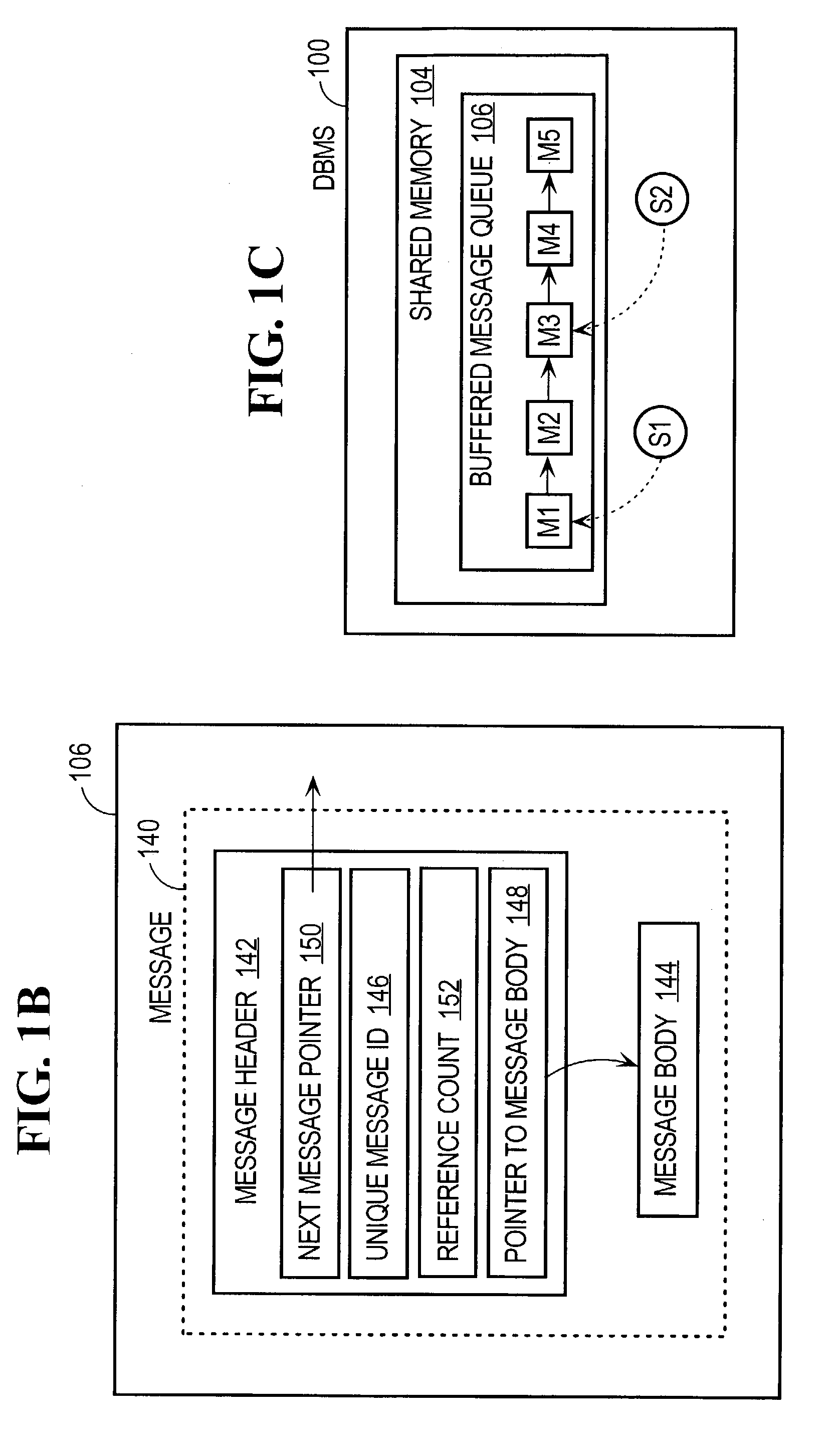

Buffered message queue architecture for database management systems with unlimited buffered message queue with limited shared memory

Active | US7185033B2 | Tags: Data processing applications; Error detection/correction; Zero-copy; Application software

A buffered message queue architecture for managing messages in a database management system is disclosed. A “buffered message queue” refers to a message queue implemented in a volatile memory, such as a RAM. The volatile memory may be a shared volatile memory that is accessible by a plurality of processes. The buffered message queue architecture supports a publish and subscribe communication mechanism, where the message producers and message consumers may be decoupled from and independent of each other. The buffered message queue architecture provides all the functionality of a persistent publish-subscribe messaging system, without ever having to store the messages in persistent storage. The buffered message queue architecture provides better performance and scalability since no persistent operations are needed and no UNDO/REDO logs need to be maintained. Messages published to the buffered message queue are delivered to all eligible subscribers at least once, even in the event of failures, as long as the application is “repeatable.” The buffered message queue architecture also includes management mechanisms for performing buffered message queue cleanup and also for providing unlimited-size buffered message queues when limited amounts of shared memory are available. The architecture also includes “zero copy” buffered message queues and provides for transaction-based enqueue of messages.

Owner:ORACLE INT CORP

Buffered message queue architecture for database management systems with guaranteed at least once delivery

Active | US7185034B2 | Data processing applications | Special data processing applications | Zero-copy | Application software

A buffered message queue architecture for managing messages in a database management system is disclosed. A “buffered message queue” refers to a message queue implemented in a volatile memory, such as a RAM. The volatile memory may be a shared volatile memory that is accessible by a plurality of processes. The buffered message queue architecture supports a publish and subscribe communication mechanism, where the message producers and message consumers may be decoupled from and independent of each other. The buffered message queue architecture provides all the functionality of a persistent publish-subscribe messaging system, without ever having to store the messages in persistent storage. The buffered message queue architecture provides better performance and scalability since no persistent operations are needed and no UNDO / REDO logs need to be maintained. Messages published to the buffered message queue are delivered to all eligible subscribers at least once, even in the event of failures, as long as the application is “repeatable.” The buffered message queue architecture also includes management mechanisms for performing buffered message queue cleanup and also for providing unlimited-size buffered message queues when limited amounts of shared memory are available. The architecture also includes “zero copy” buffered message queues and provides for transaction-based enqueue of messages.
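This patent's title emphasizes guaranteed at-least-once delivery. One common way to get that property from an in-memory queue is acknowledge-then-delete with redelivery on failure, sketched below under that assumption. `receive` moves a message into an in-flight table instead of deleting it, only `ack` deletes it, and `recover` (standing in for consumer-failure handling) re-queues everything unacknowledged, so a message may arrive twice but is never lost while the queue itself survives. The names are hypothetical, the "repeatable" application condition is not modeled, and this is not Oracle's mechanism.

```python
from collections import deque


class AtLeastOnceQueue:
    """Hypothetical toy in-memory queue that redelivers messages that were never acknowledged."""

    def __init__(self) -> None:
        self._ready: deque = deque()            # (id, payload) pairs awaiting delivery
        self._in_flight: dict[int, bytes] = {}  # delivered but not yet acknowledged
        self._next_id = 0

    def enqueue(self, payload: bytes) -> int:
        msg_id = self._next_id
        self._next_id += 1
        self._ready.append((msg_id, payload))
        return msg_id

    def receive(self):
        """Deliver the next message, keeping it in flight until it is acknowledged."""
        if not self._ready:
            return None
        msg_id, payload = self._ready.popleft()
        self._in_flight[msg_id] = payload
        return msg_id, payload

    def ack(self, msg_id: int) -> None:
        """The consumer has finished with the message, so it can be deleted."""
        del self._in_flight[msg_id]

    def recover(self) -> None:
        """After a consumer failure, put unacknowledged messages back at the head, oldest first."""
        for msg_id in sorted(self._in_flight, reverse=True):
            self._ready.appendleft((msg_id, self._in_flight.pop(msg_id)))
```

The trade-off is duplicates: a consumer that processed a message but crashed before `ack` sees it again, which is why such schemes expect the application to tolerate or detect redelivery.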

Owner:ORACLE INT CORP

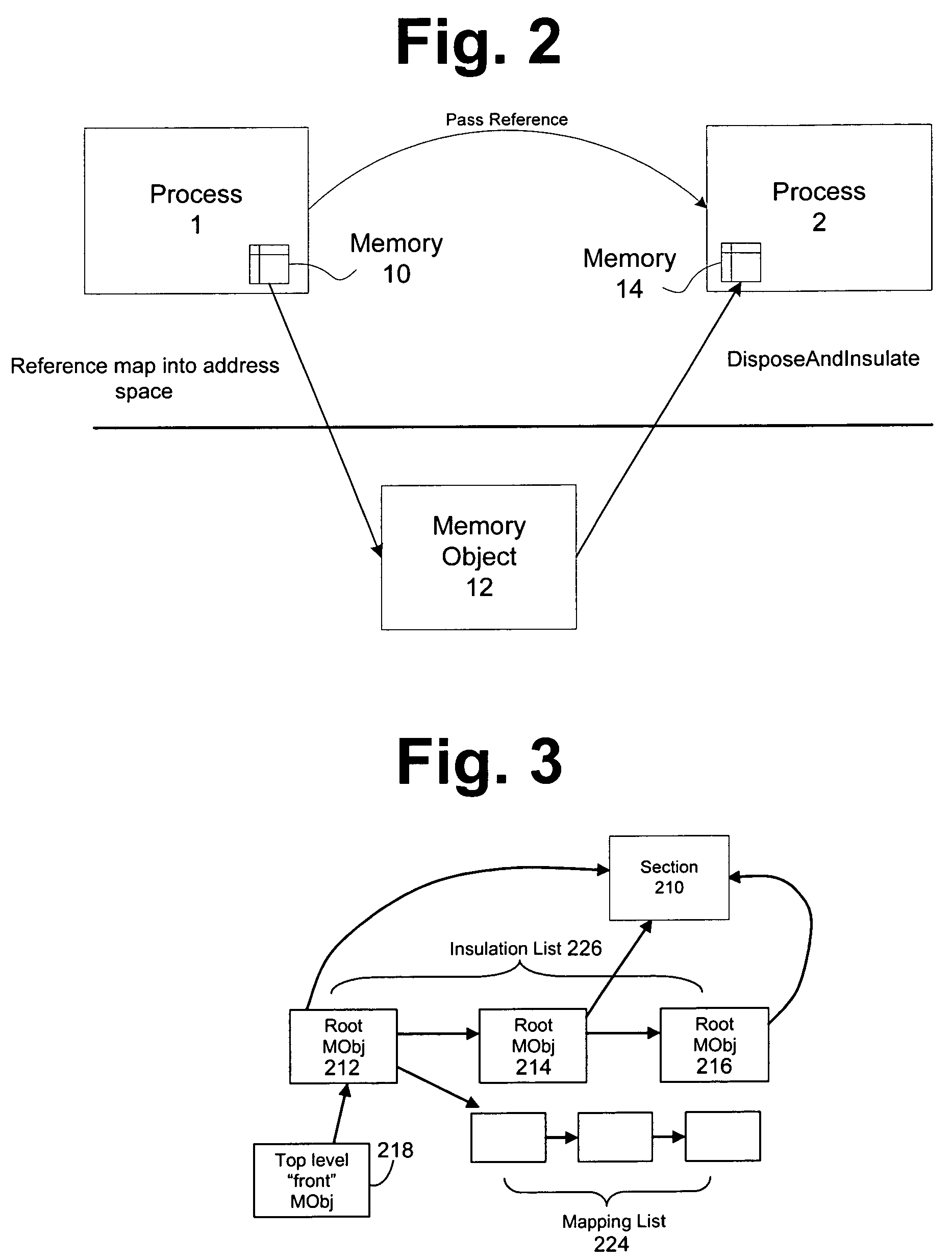

Zero-copy transfer of memory between address spaces

Methods for performing zero-copy memory transfers between processes or services using shared memory without the overhead of current schemes. An IPC move semantic may be used that allows a sender to combine passing a reference and releasing it within the same IPC call. An insulate method removes all references to the original object and creates a new object pointing to the original memory if a receiver requires exclusive access. Alternatively, if a receiving process or service seeks read-only access, the sender unmaps its access to the buffer before sending to the receiver. When the insulate operation is initiated, the kernel detects an object with multiple active references but no active mappings and provides a mapping to the memory without taking a copy or copy-on-write.
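The move semantic and the insulate operation can be modeled in user space as bookkeeping over references, as in the minimal sketch below. It assumes a `MemoryObject` (a buffer plus the set of handles pointing at it) stands in for the kernel object and a `Handle` stands in for a process's reference; `ipc_move` hands a reference to the receiver and releases the sender's in the same call, and `insulate` revokes every reference to the old object and wraps the same bytes in a fresh object without copying them. All names are hypothetical, read-only access is shown only as a read-only `memoryview`, and this is not Microsoft's implementation.

```python
class MemoryObject:
    """Hypothetical kernel-side object: a buffer plus the set of handles referencing it."""

    def __init__(self, buffer: bytearray) -> None:
        self.buffer = buffer
        self.refs: set = set()


class Handle:
    """One process's reference to a MemoryObject; unusable once released."""

    def __init__(self, obj: MemoryObject, owner: str) -> None:
        self.obj = obj
        self.owner = owner
        self.valid = True
        obj.refs.add(self)

    def release(self) -> None:
        self.obj.refs.discard(self)
        self.valid = False

    def view(self, writable: bool = True) -> memoryview:
        """Map the buffer for this owner without copying it."""
        if not self.valid:
            raise PermissionError(f"{self.owner} no longer holds a reference")
        mv = memoryview(self.obj.buffer)
        return mv if writable else mv.toreadonly()


def ipc_move(handle: Handle, receiver: str) -> Handle:
    """Move semantic: pass the reference to `receiver` and drop the sender's in one call."""
    moved = Handle(handle.obj, receiver)
    handle.release()
    return moved


def insulate(handle: Handle) -> Handle:
    """Grant exclusive access: revoke every reference to the old object, then wrap
    the same underlying bytes in a fresh object (the memory itself is not copied)."""
    old = handle.obj
    for ref in list(old.refs):
        ref.release()
    return Handle(MemoryObject(old.buffer), handle.owner)
```

Because the sender's reference is gone as soon as `ipc_move` returns, the receiver can write through its view without a defensive copy or copy-on-write, which is the overhead the abstract says these methods avoid.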

Owner:MICROSOFT TECH LICENSING LLC

© 2025 PatSnap. All rights reserved.