471 results about "Wire speed" patented technology

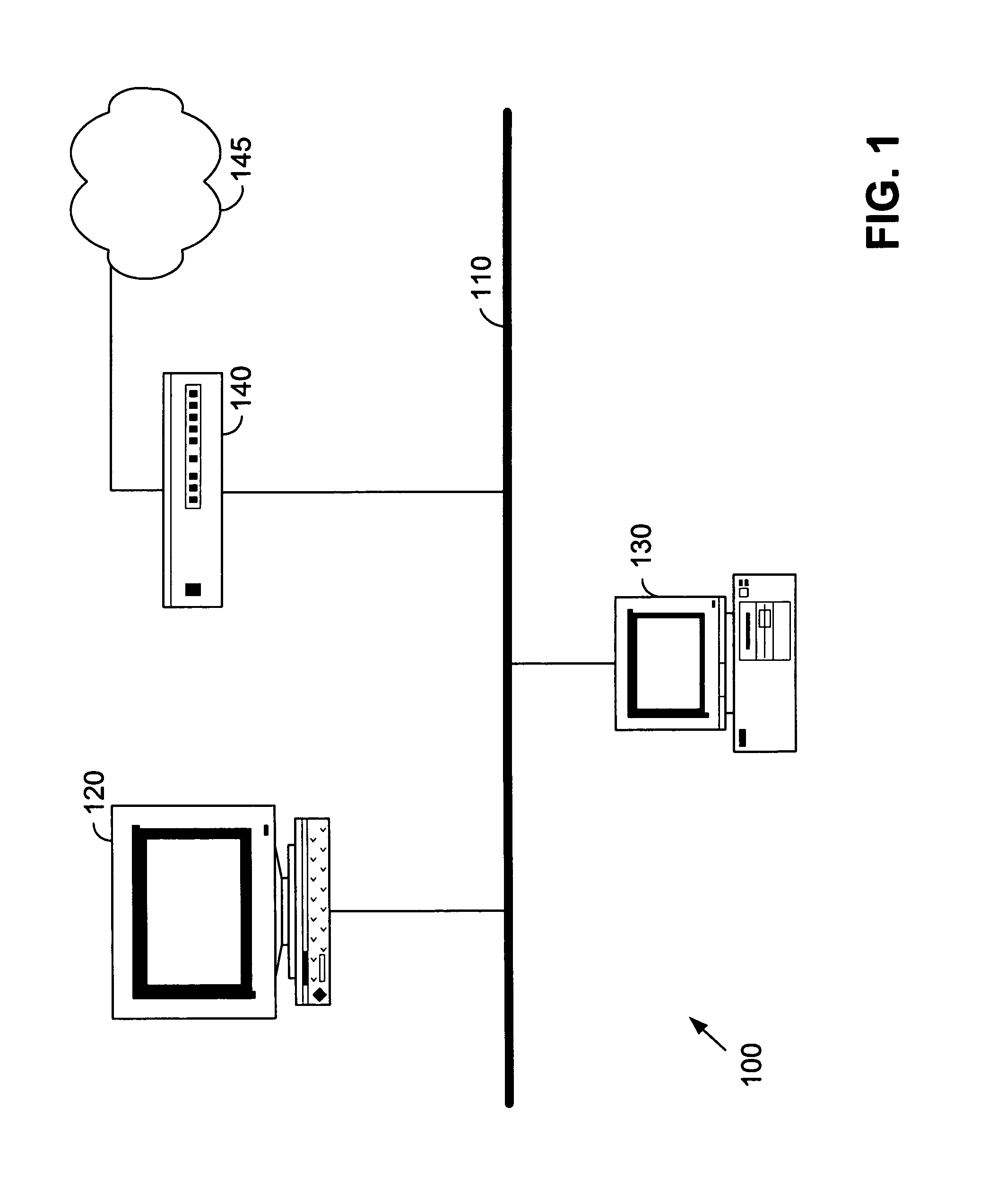

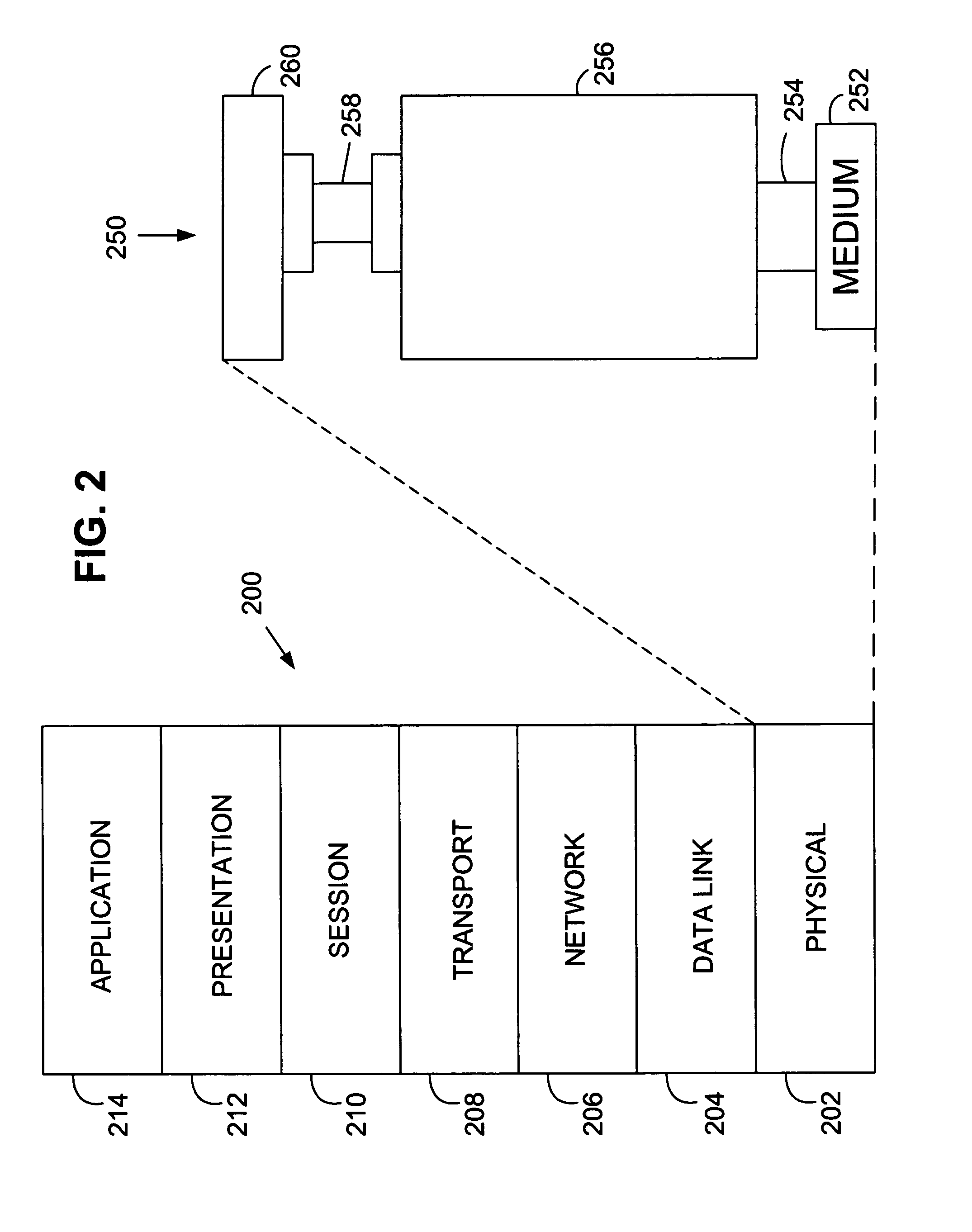

In computer networking, wire speed (or wirespeed) is the hypothetical peak physical-layer net bit rate (useful information rate) of a cable (fiber-optic or copper) combined with a particular digital communication device, interface, or port. For example, the wire speed of Fast Ethernet is 100 Mbit/s, also known as the peak bit rate, connection speed, useful bit rate, information rate, or digital bandwidth capacity. The wire speed is the data transfer rate that a telecommunications standard provides at a reference point between the physical layer and the data link layer.
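As a rough illustration of what keeping up with wire speed demands of a device, the sketch below computes the highest frame rate a link can carry for a given frame size. It assumes classic Ethernet framing (7-byte preamble, 1-byte start-of-frame delimiter, 12-byte inter-frame gap); the function name is hypothetical.

```python
# Per-frame overhead on the wire for classic Ethernet, in bytes:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 7 + 1 + 12


def max_frames_per_second(wire_speed_bps: float, frame_bytes: int) -> float:
    """Upper bound on frames per second for one frame size (FCS included)."""
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return wire_speed_bps / bits_on_wire


if __name__ == "__main__":
    fast_ethernet_bps = 100e6  # 100 Mbit/s, the Fast Ethernet figure quoted above
    for size in (64, 1518):
        rate = max_frames_per_second(fast_ethernet_bps, size)
        print(f"{size}-byte frames: {rate:.1f} frames/s")
```

Under these assumptions, minimum-size frames are the demanding case: a per-packet operation has to finish in roughly 6.7 microseconds at 100 Mbit/s to avoid falling behind.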

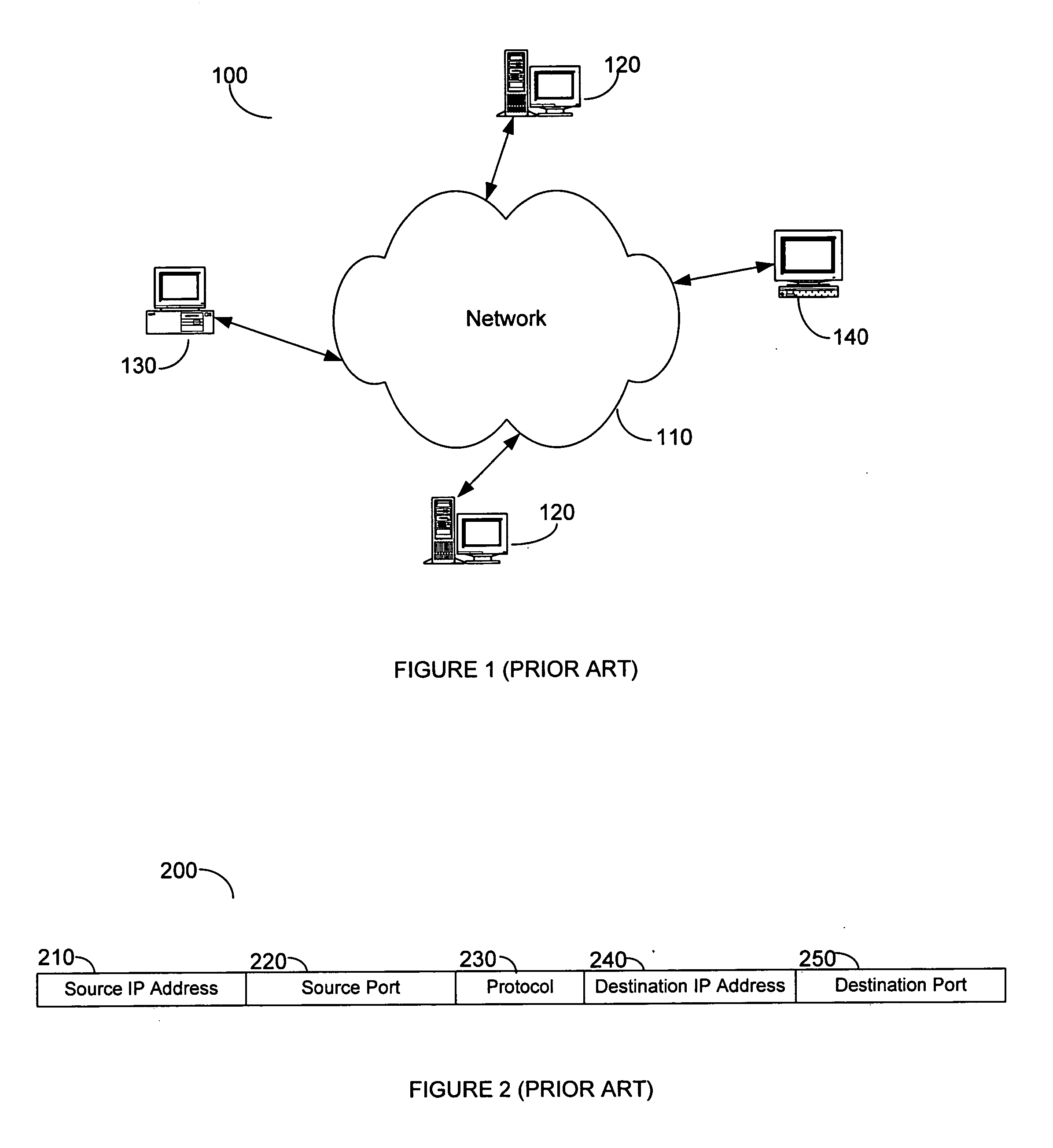

Statistical classification of high-speed network data through content inspection

Inactive | US20050060295A1 | Good margin | Easy to separate | Data switching networks | Special data processing applications | Feature extraction | Statistical classification

A network data classifier statistically classifies received data at wire-speed by examining, in part, the payloads of packets in which such data are disposed and without having a priori knowledge of the classification of the data. The network data classifier includes a feature extractor that extracts features from the packets it receives. Such features include, for example, textual or binary patterns within the data or profiling of the network traffic. The network data classifier further includes a statistical classifier that classifies the received data into one or more pre-defined categories using the numerical values representing the features extracted by the feature extractor. The statistical classifier may generate a probability distribution function for each of a multitude of classes for the received data. The data so classified are subsequently processed by a policy engine. Depending on the policies, different categories may be treated differently.

Owner:INTEL CORP +1

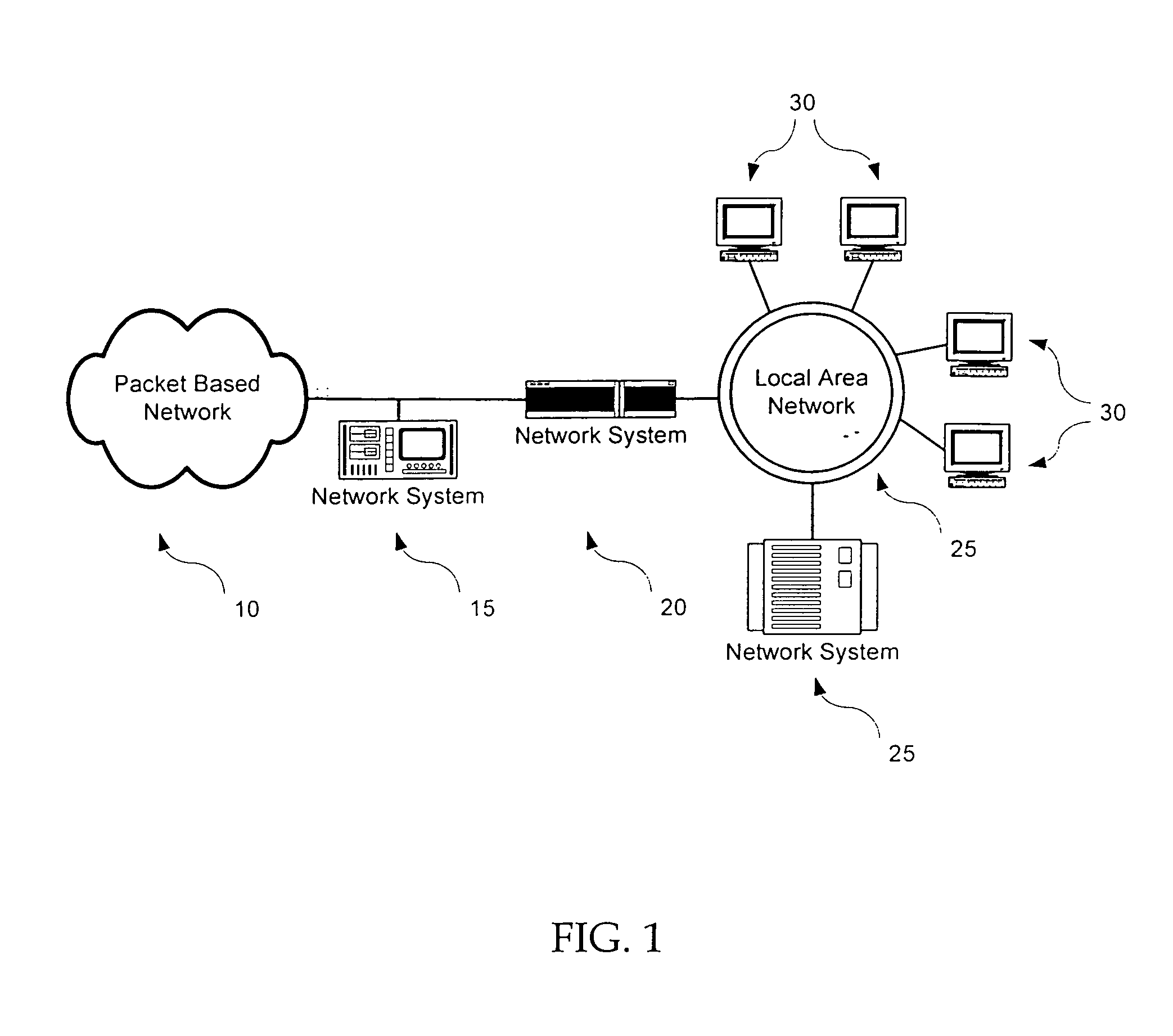

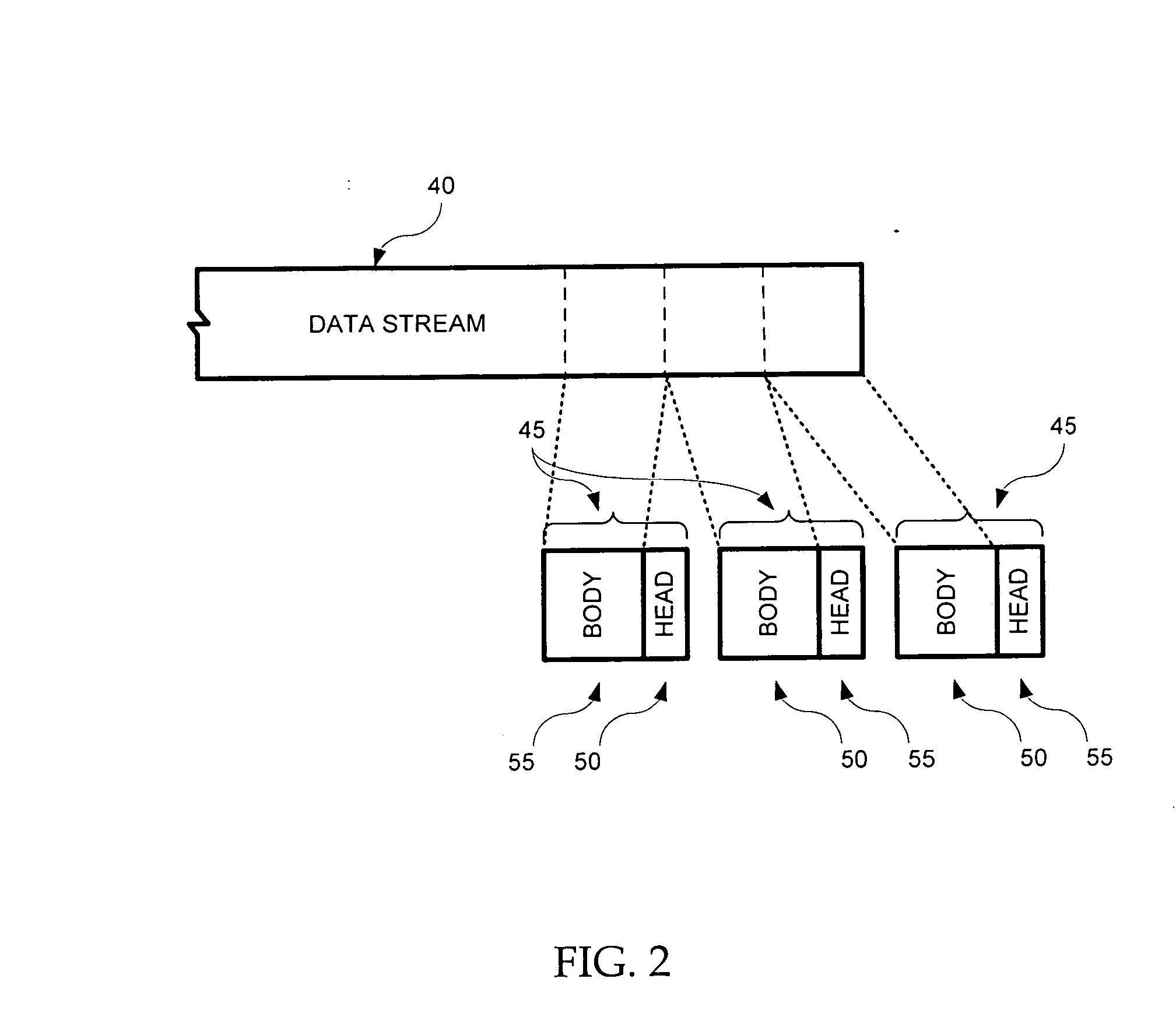

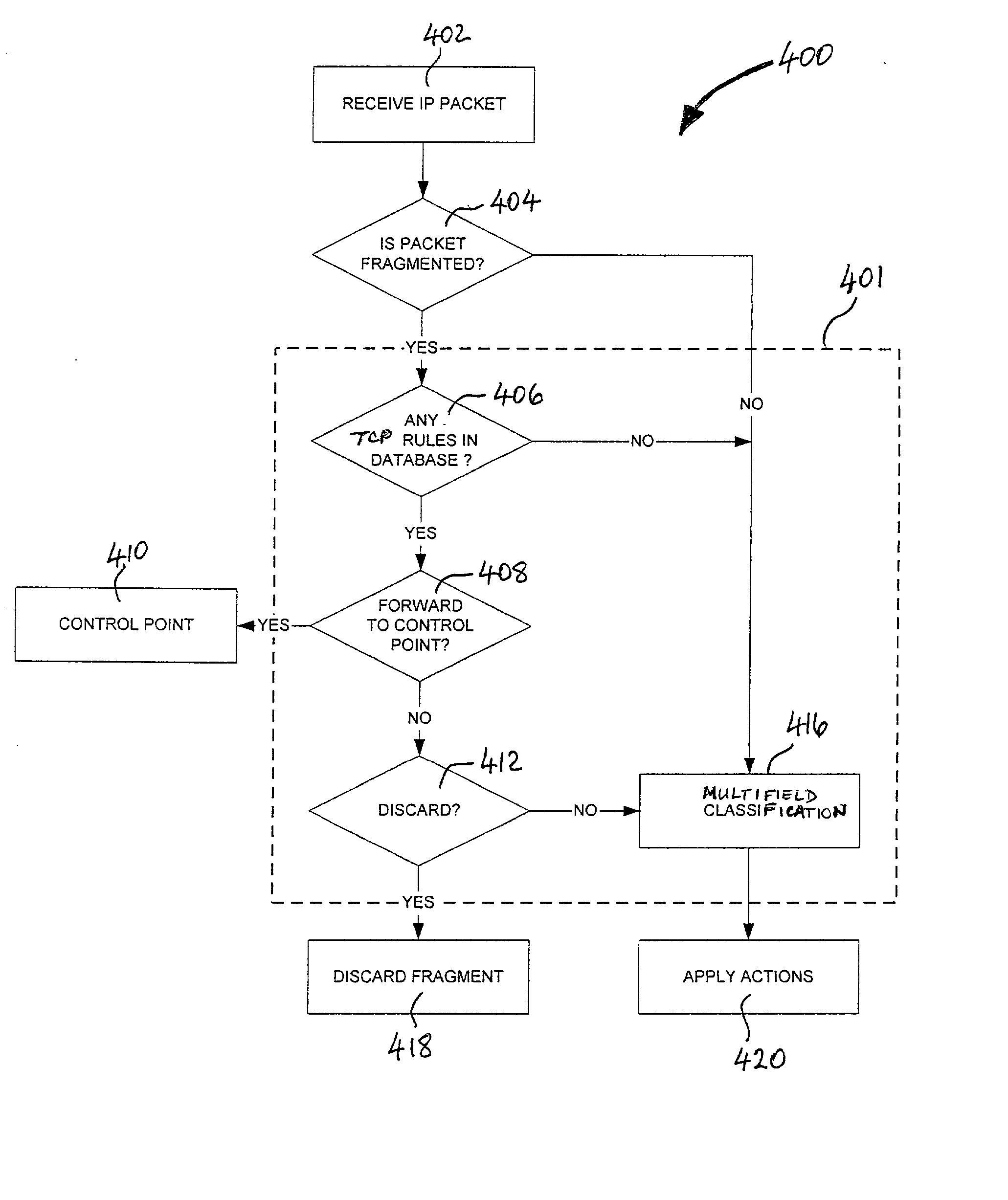

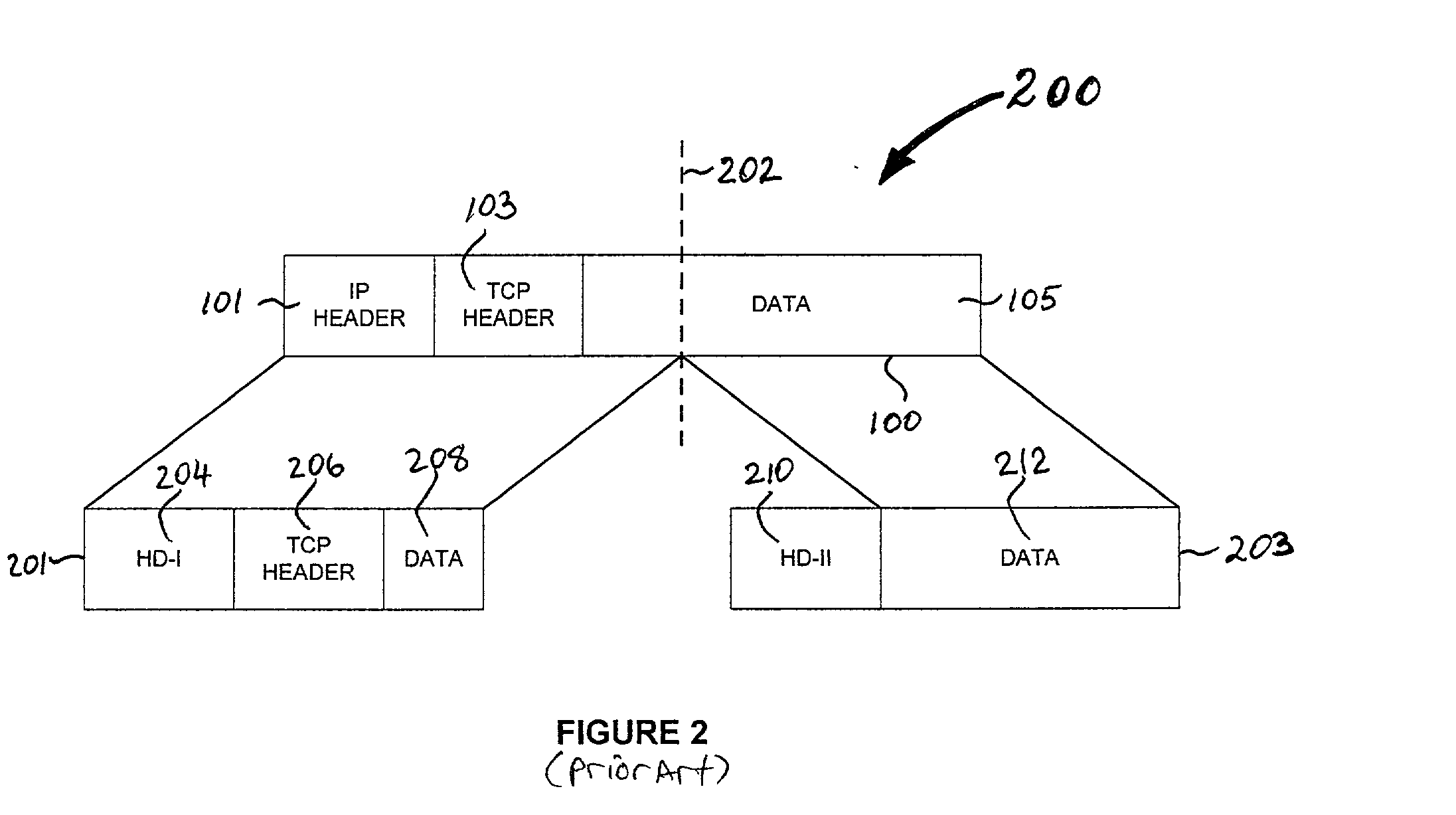

Classification support system and method for fragmented IP packets

Inactive | US20030126272A1 | Data processing applications | Multiple digital computer combinations | Supporting system | Wire speed

Owner:IBM CORP

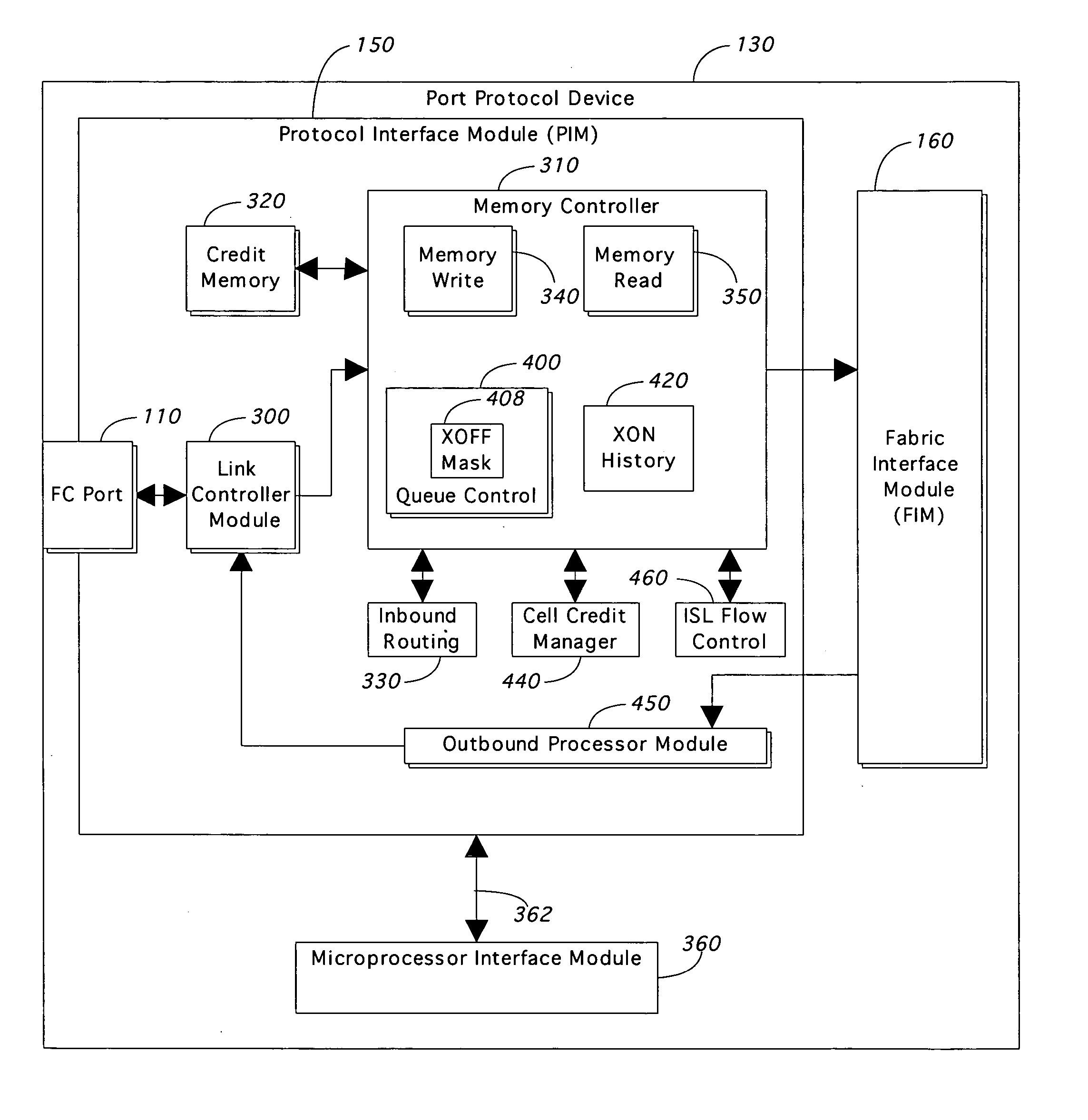

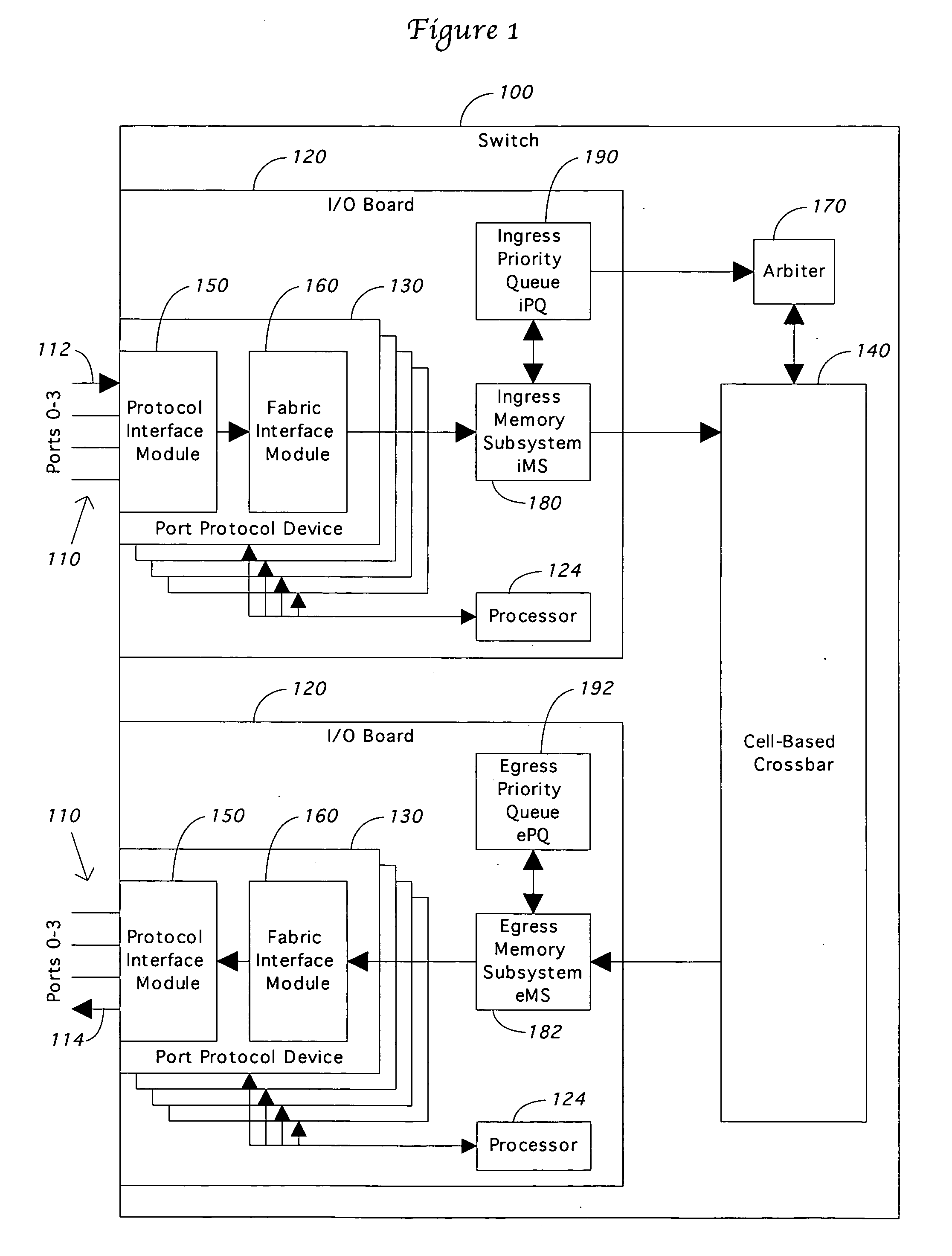

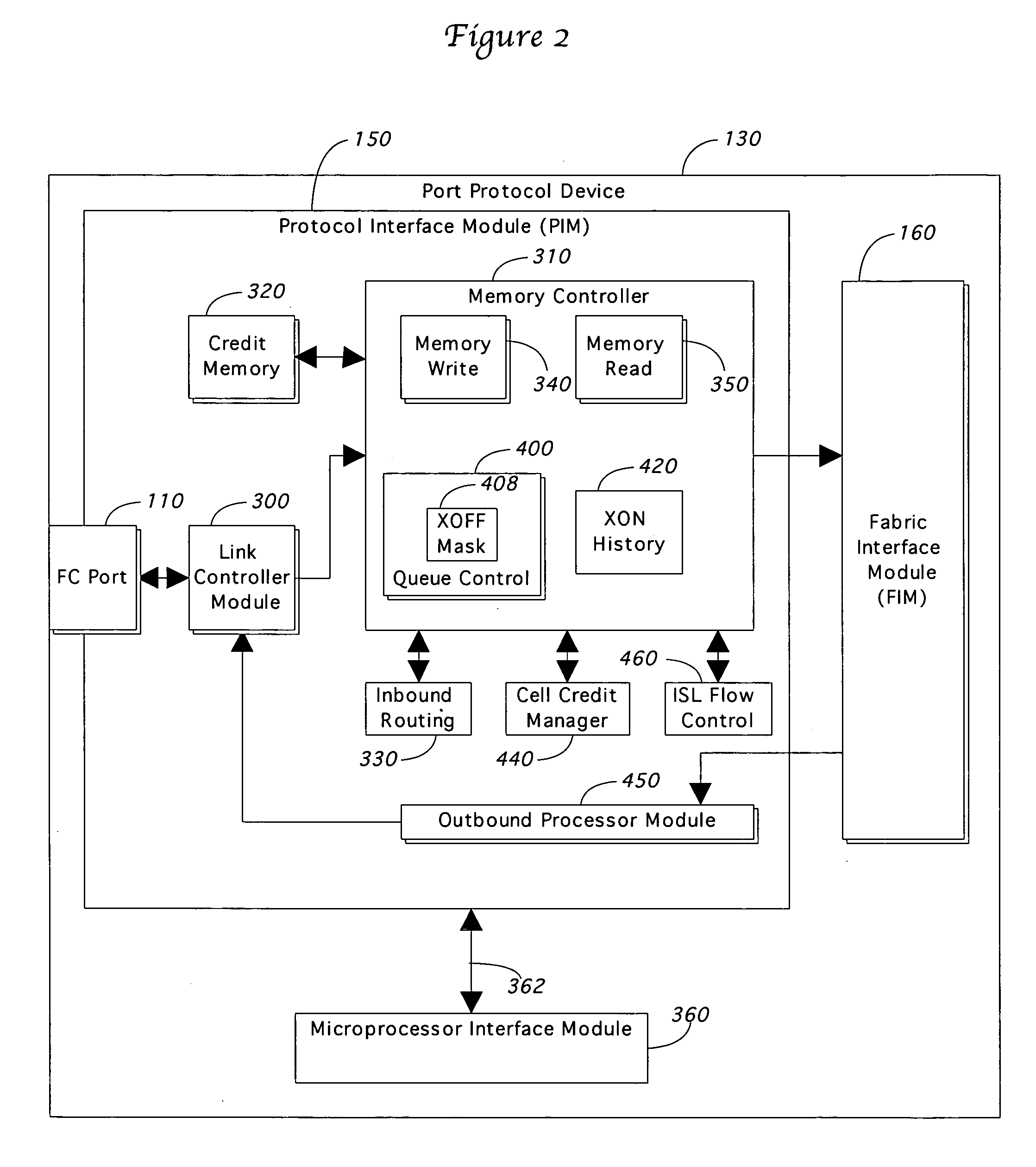

Fibre channel switch

Inactive | US20050047334A1 | Error prevention | Frequency-division multiplex details | Crossbar switch | Wire speed

A Fibre Channel switch is presented that tracks the congestion status of destination ports in an XOFF mask at each input. A mapping is maintained between virtual channels on an ISL and the destination ports to allow changes in the XOFF mask to trigger a primitive to an upstream port that provides virtual channel flow control. The XOFF mask is also used to avoid sending frames to a congested port. Instead, these frames are stored on a single deferred queue and later processed in a manner designed to maintain frame ordering. A routing system is provided that applies multiple routing rules in parallel to perform line speed routing. The preferred switch fabric is cell based, with techniques used to manage path maintenance for variable length frames and to adapt to varying transmission rates in the system. Finally, the switch allows data and microprocessor communication to share the same crossbar network.
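The XOFF mask and single deferred queue can be pictured with a small model. This is only a sketch under assumptions: the class and method names are hypothetical, frames are plain strings, and ordering is preserved by also deferring any frame whose destination already has frames waiting, which is one plausible policy rather than the one claimed in the patent.

```python
from collections import deque


class InputPort:
    """Toy model of one switch input with an XOFF mask and one deferred queue."""

    def __init__(self):
        self.xoff_mask = 0       # bit i set => destination port i is congested
        self.deferred = deque()  # single FIFO shared by all held-back frames
        self.sent = []           # (dest, frame) pairs in transmit order

    def is_congested(self, dest: int) -> bool:
        return bool((self.xoff_mask >> dest) & 1)

    def receive(self, dest: int, frame: str) -> None:
        # Defer when the destination is congested, or when earlier frames for
        # the same destination are still waiting (assumed ordering rule).
        waiting = any(d == dest for d, _ in self.deferred)
        if self.is_congested(dest) or waiting:
            self.deferred.append((dest, frame))
        else:
            self.sent.append((dest, frame))

    def set_xoff(self, dest: int, congested: bool) -> None:
        if congested:
            self.xoff_mask |= 1 << dest
        else:
            self.xoff_mask &= ~(1 << dest)
            self._drain()

    def _drain(self) -> None:
        # Walk the deferred queue in arrival order, releasing frames whose
        # destination is no longer congested and keeping the rest.
        still_waiting = deque()
        for dest, frame in self.deferred:
            if self.is_congested(dest):
                still_waiting.append((dest, frame))
            else:
                self.sent.append((dest, frame))
        self.deferred = still_waiting
```

Frames for uncongested ports bypass the queue entirely, so one slow destination does not stall traffic to the others.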

Owner:MCDATA SERVICES CORP +1

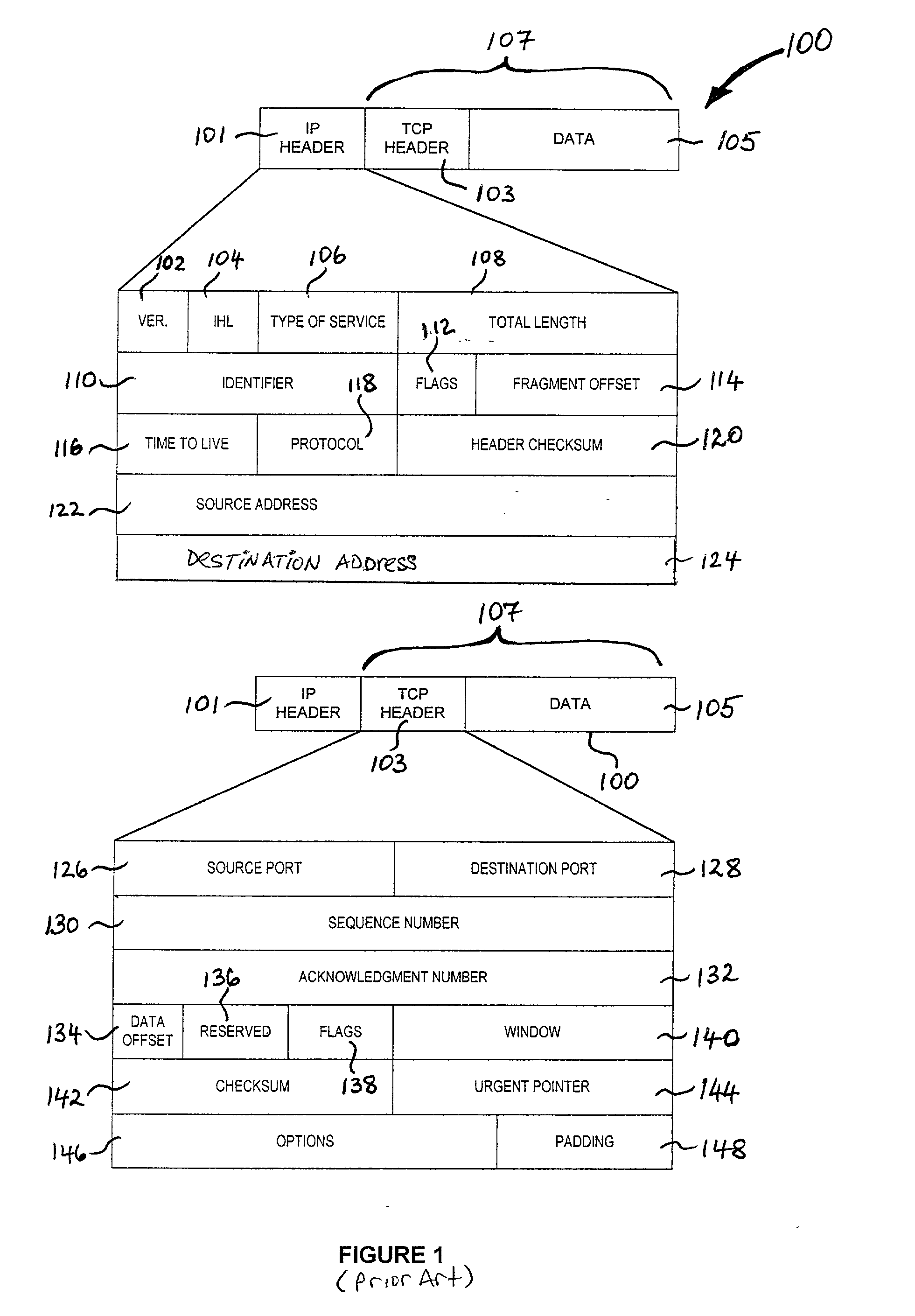

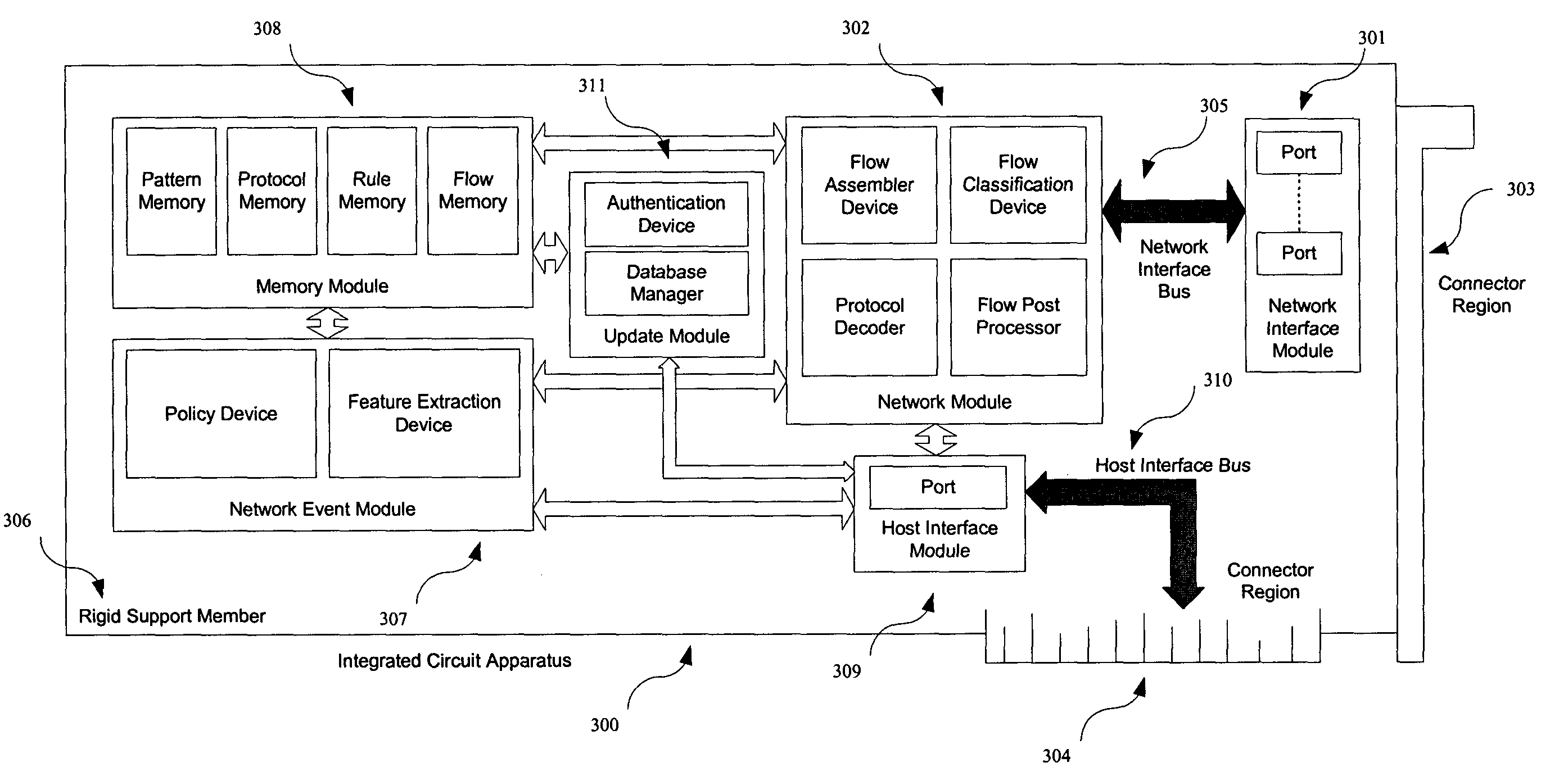

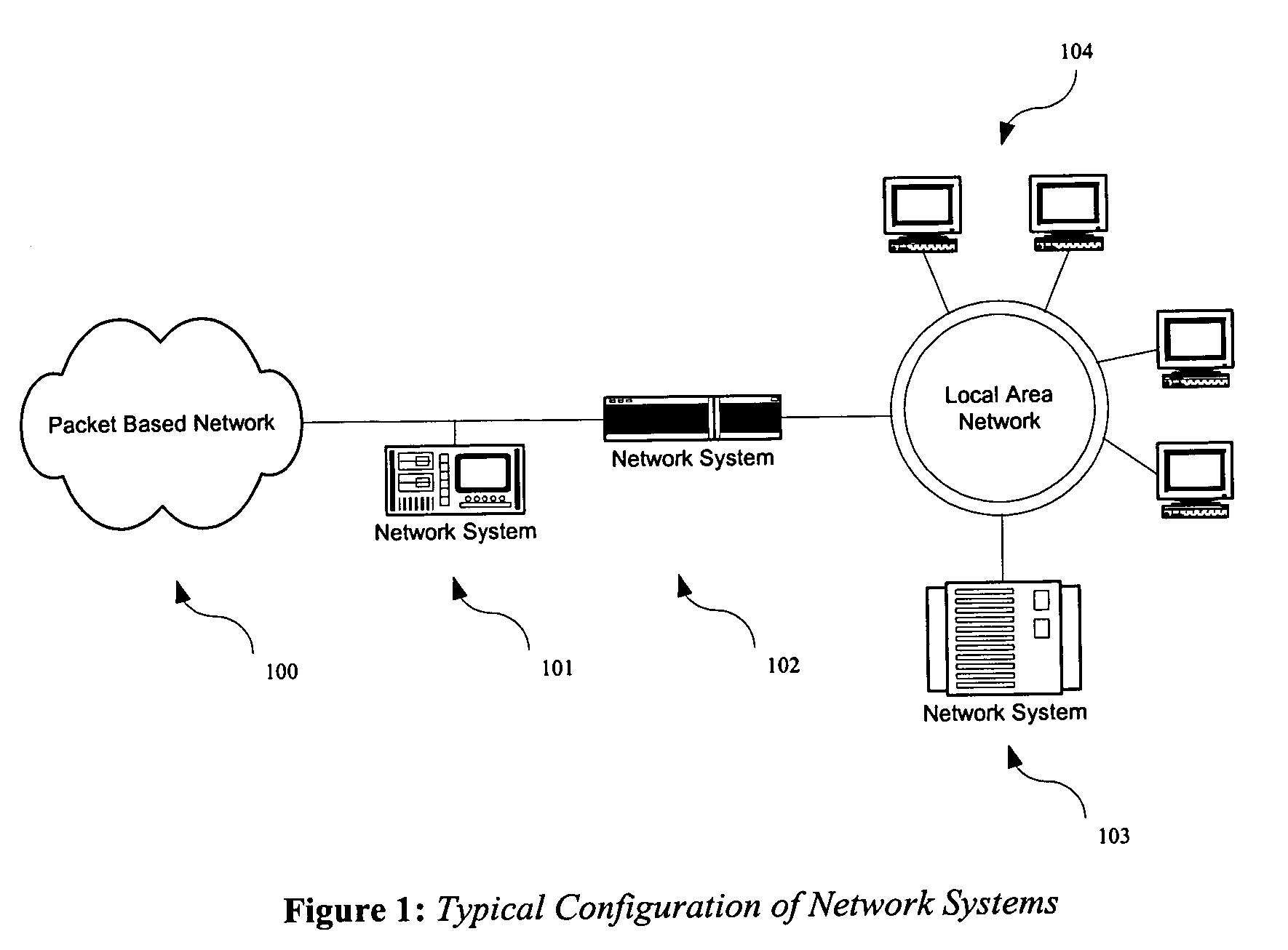

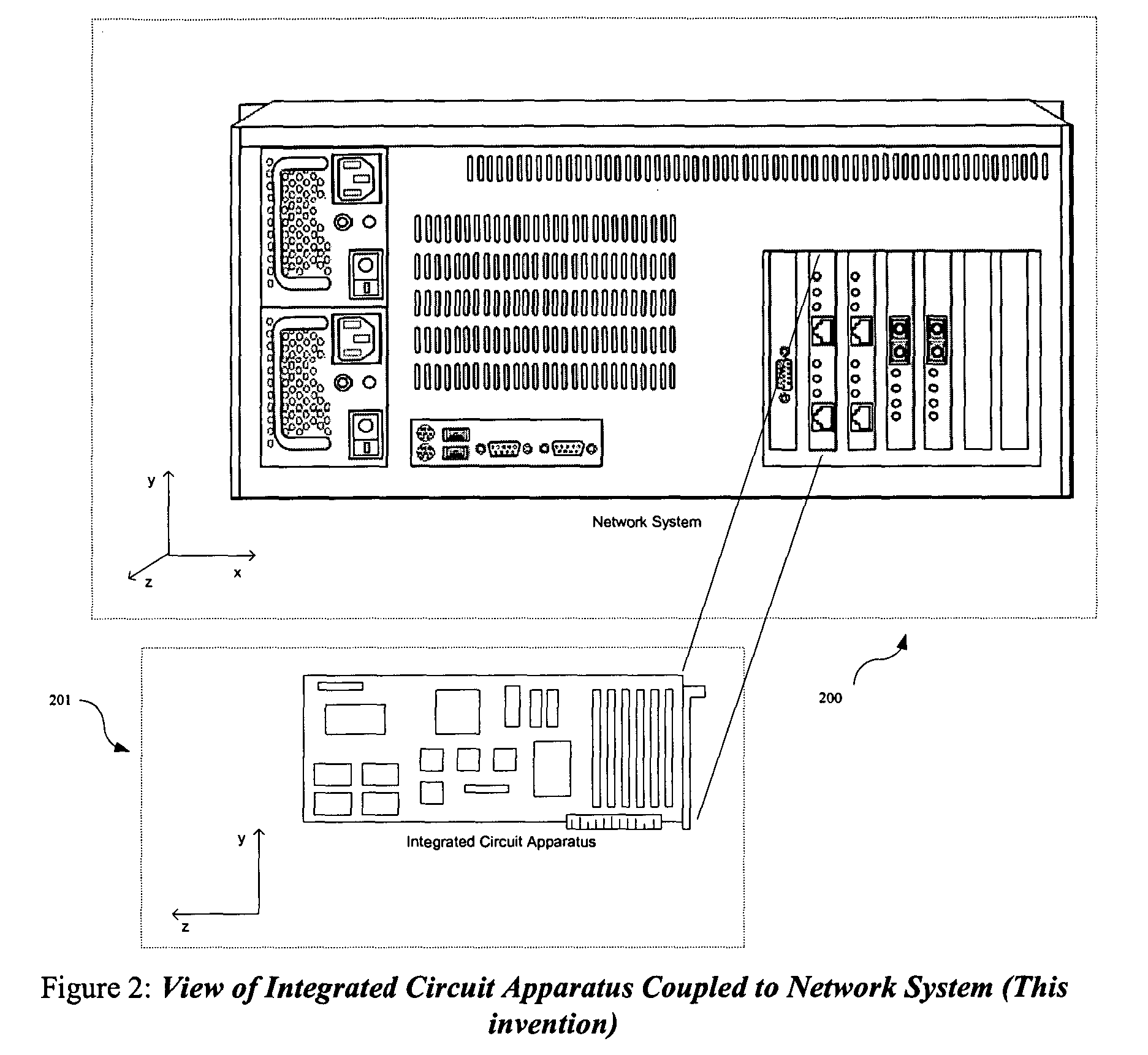

Integrated circuit apparatus and method for high throughput signature based network applications

Inactive | US20050114700A1 | Overcome quality of service problem | Overcome deficiencies | Digital data processing details | Character and pattern recognition | Wire speed | Pattern matching

An architecture for an integrated circuit apparatus and method that allows significant performance improvements for signature based network applications. In various embodiments the architecture allows high throughput classification of packets into network streams, packet reassembly of such streams, filtering and pre-processing of such streams, pattern matching on header and payload content of such streams, and action execution based upon rule-based policy for multiple network applications, simultaneously at wire speed. The present invention is improved over the prior art designs, in performance, flexibility and pattern database size.

Owner:INTEL CORP

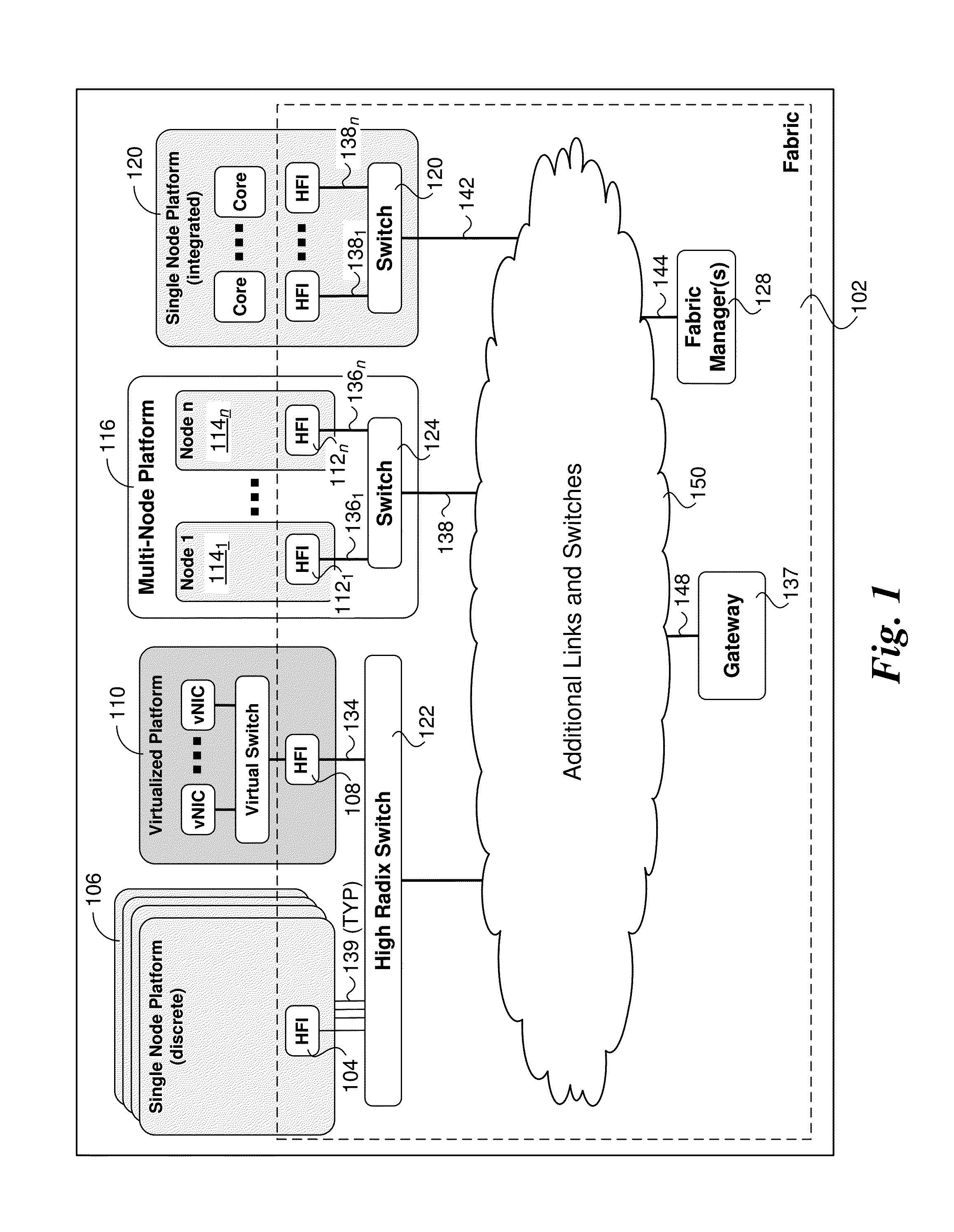

Transport of ethernet packet data with wire-speed and packet data rate match

Active | US20150222533A1 | Multiplex system selection arrangements | Signal allocation | Reliable transmission | Wire speed

Method, apparatus, and systems for reliably transferring Ethernet packet data over a link layer and facilitating fabric-to-Ethernet and Ethernet-to-fabric gateway operations at matching wire speed and packet data rate. Ethernet header and payload data is extracted from Ethernet frames received at the gateway and encapsulated in fabric packets to be forwarded to a fabric endpoint hosting an entity to which the Ethernet packet is addressed. The fabric packets are divided into flits, which are bundled in groups to form link packets that are transferred over the fabric at the Link layer using a reliable transmission scheme employing implicit ACKnowledgements. At the endpoint, the fabric packet is regenerated, and the Ethernet packet data is de-encapsulated. The Ethernet frames received from and transmitted to an Ethernet network are encoded using 64b/66b encoding, having an overhead-to-data bit ratio of 1:32. Meanwhile, the link packets have the same ratio, including one overhead bit per flit and a 14-bit CRC plus a 2-bit credit return field or sideband used for credit-based flow control.
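The matching 1:32 ratio can be checked with a few lines of arithmetic. The abstract gives the per-flit overhead bit, the 14-bit CRC, and the 2-bit credit field, but not the flit size or the number of flits per link packet; the values of 64 data bits per flit and 16 flits per link packet used below are assumptions chosen because they reproduce the stated ratio.

```python
from fractions import Fraction

# 64b/66b encoding: every 64 payload bits carry a 2-bit sync header.
ethernet_ratio = Fraction(2, 64)

# Link-packet layout. The first two values are ASSUMPTIONS (not in the abstract).
FLIT_DATA_BITS = 64          # assumed data bits per flit
FLITS_PER_LINK_PACKET = 16   # assumed flits bundled into one link packet
PER_FLIT_OVERHEAD_BITS = 1   # from the abstract: one overhead bit per flit
CRC_BITS = 14                # from the abstract
CREDIT_BITS = 2              # from the abstract

overhead_bits = (FLITS_PER_LINK_PACKET * PER_FLIT_OVERHEAD_BITS
                 + CRC_BITS + CREDIT_BITS)
data_bits = FLITS_PER_LINK_PACKET * FLIT_DATA_BITS
link_ratio = Fraction(overhead_bits, data_bits)

if __name__ == "__main__":
    print("Ethernet overhead:data =", ethernet_ratio)
    print("Link packet overhead:data =", link_ratio)
```

With equal ratios on both sides, a gateway can in principle move payload between the two encodings without either side outrunning the other.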

Owner:INTEL CORP

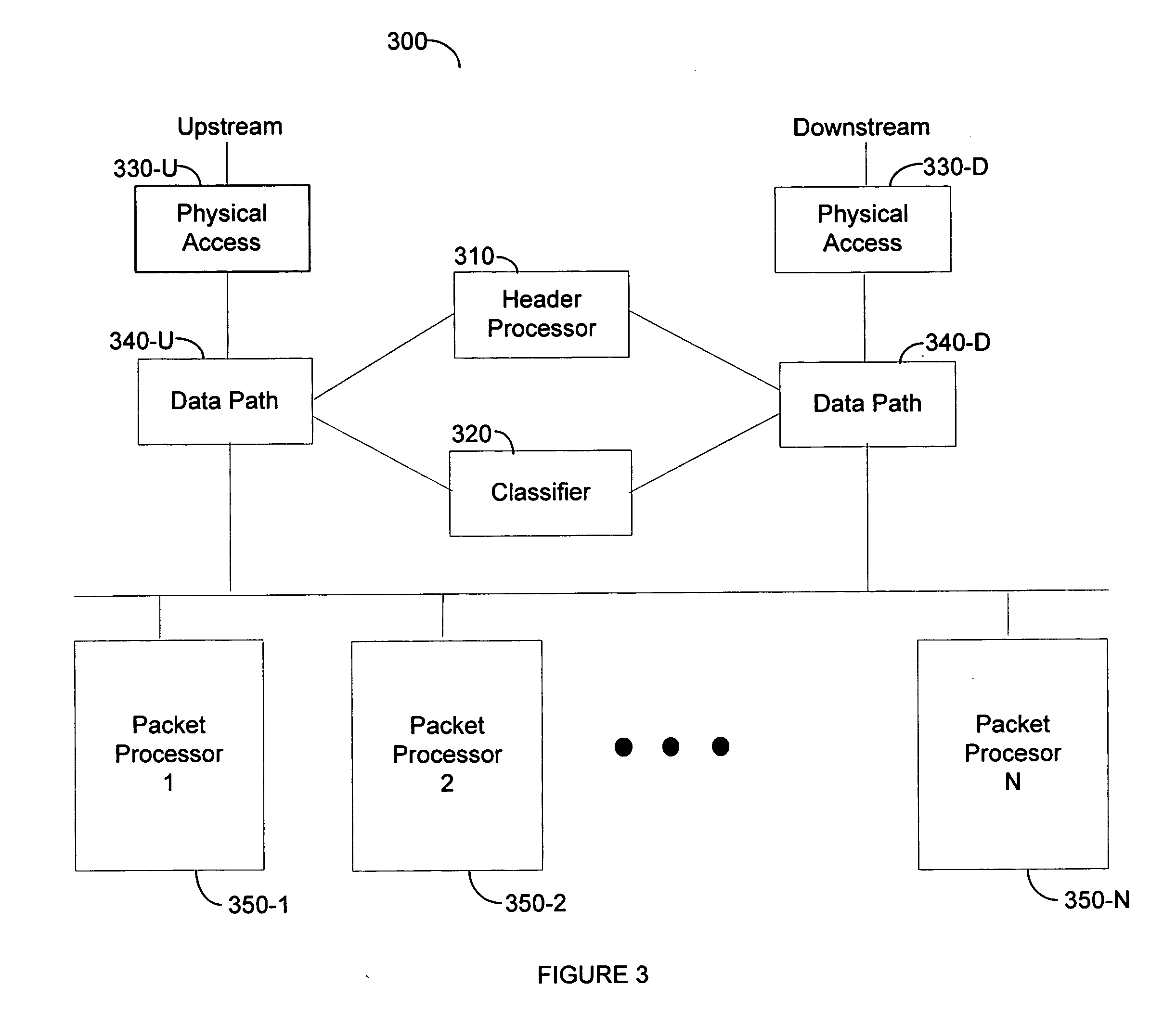

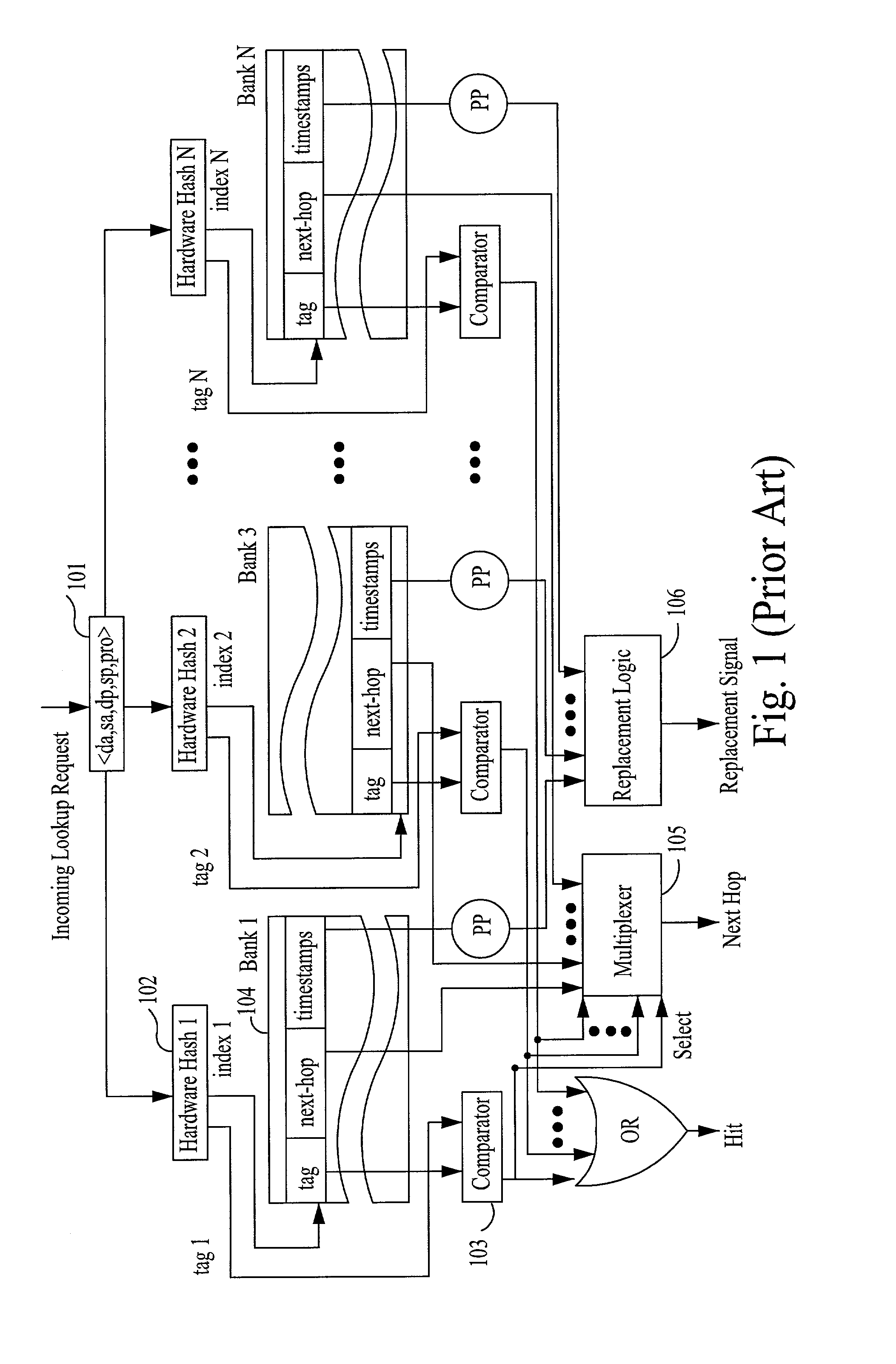

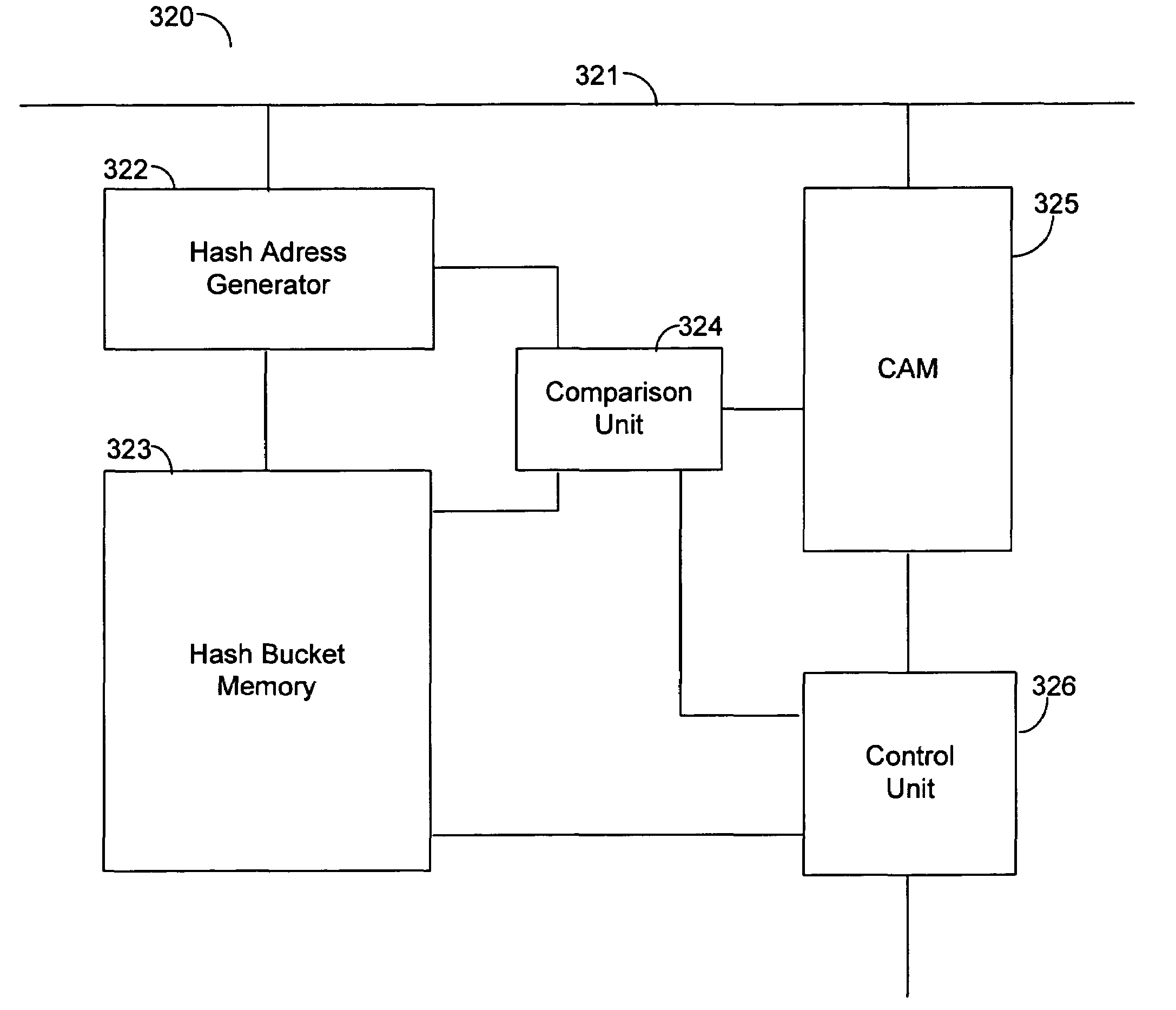

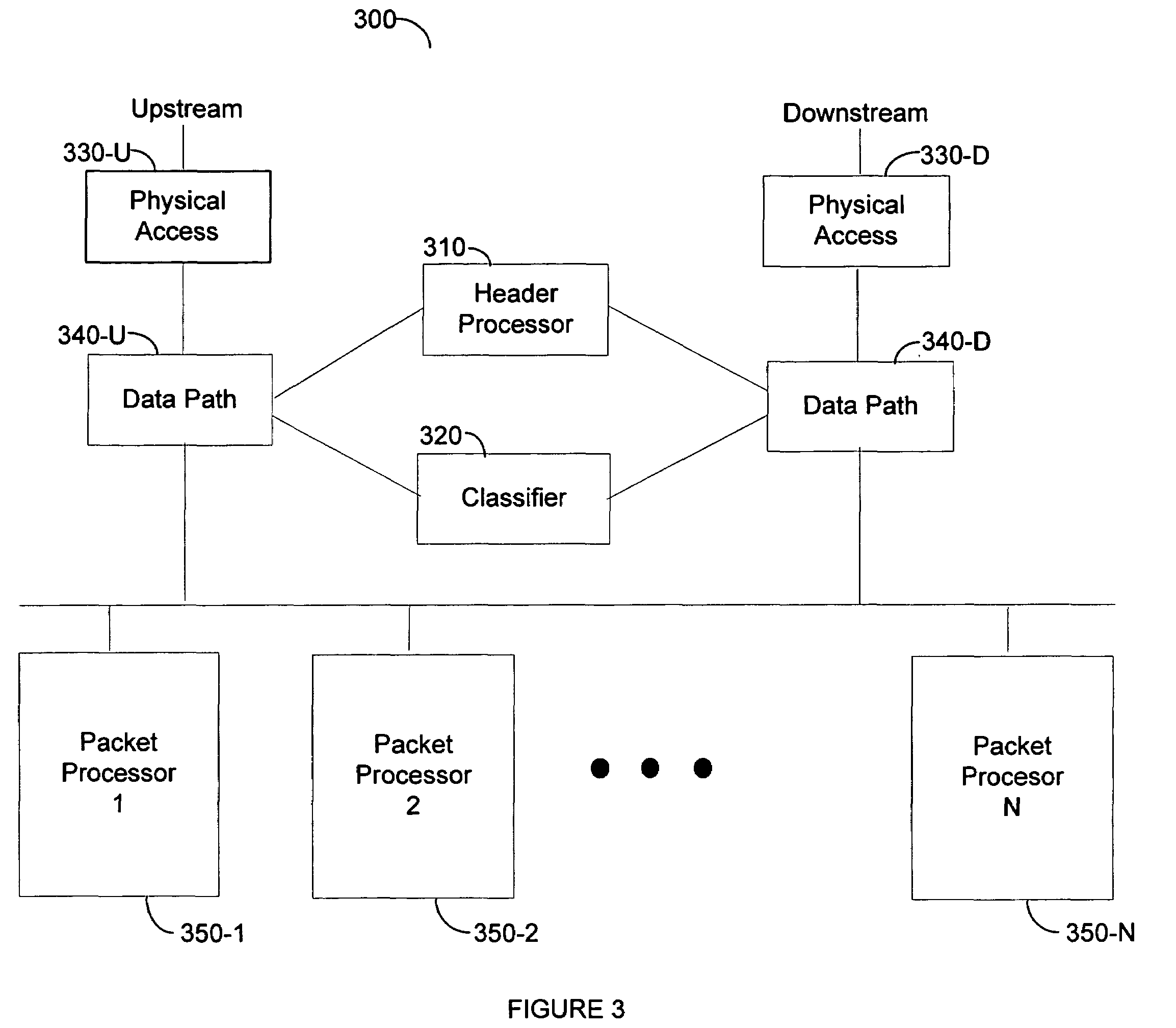

Method and apparatus for wire-speed application layer classification of upstream and downstream data packets

Inactive | US20050190694A1 | Efficient data processing | Error prevention | Frequency-division multiplex details | Wire speed | Address generator

A data packet classifier to classify a plurality of N-bit input tuples, said classifier comprising a hash address generator, a memory and a comparison unit. The hash address generator generates a plurality of M-bit hash addresses from said plurality of N-bit input tuples, wherein M is significantly smaller than N. The memory has a plurality of memory entries and is addressable by said plurality of M-bit hash addresses, each such address corresponding to a plurality of memory entries, each of said plurality of memory entries capable of storing one of said plurality of N-bit tuples and an associated process flow information. The comparison unit determines if an incoming N-bit tuple can be matched with a stored N-bit tuple. The associated process flow information is output if a match is found, and a new entry is created in the memory for the incoming N-bit tuple if a match is not found.
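The three parts named in the abstract (hash address generator, multi-entry memory, comparison unit) map naturally onto a small set-associative table. The sketch below is illustrative only: CRC-32 stands in for the unspecified hash, the flow information is a hypothetical integer ID, and evicting the oldest entry when a bucket is full is an assumption the abstract does not address.

```python
import zlib


class TupleClassifier:
    """Hash-addressed table: each M-bit address holds several N-bit tuples."""

    def __init__(self, m_bits: int = 8, entries_per_address: int = 4):
        self.m_bits = m_bits
        self.entries_per_address = entries_per_address
        self.memory = [[] for _ in range(1 << m_bits)]
        self.next_flow_id = 0  # hypothetical "process flow information"

    def hash_address(self, tuple_bytes: bytes) -> int:
        # Hash address generator: fold the N-bit tuple down to M bits.
        return zlib.crc32(tuple_bytes) & ((1 << self.m_bits) - 1)

    def classify(self, tuple_bytes: bytes):
        """Return (flow_info, matched) for an incoming tuple."""
        bucket = self.memory[self.hash_address(tuple_bytes)]
        # Comparison unit: compare against every entry at this address.
        for stored_tuple, flow_info in bucket:
            if stored_tuple == tuple_bytes:
                return flow_info, True
        # No match: create a new entry (oldest entry evicted if full, assumed).
        if len(bucket) >= self.entries_per_address:
            bucket.pop(0)
        flow_info = self.next_flow_id
        self.next_flow_id += 1
        bucket.append((tuple_bytes, flow_info))
        return flow_info, False
```

Storing the full tuple next to the flow information is what lets the comparison step reject hash collisions, which are unavoidable when M is much smaller than N.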

Owner:CISCO SYST ISRAEL

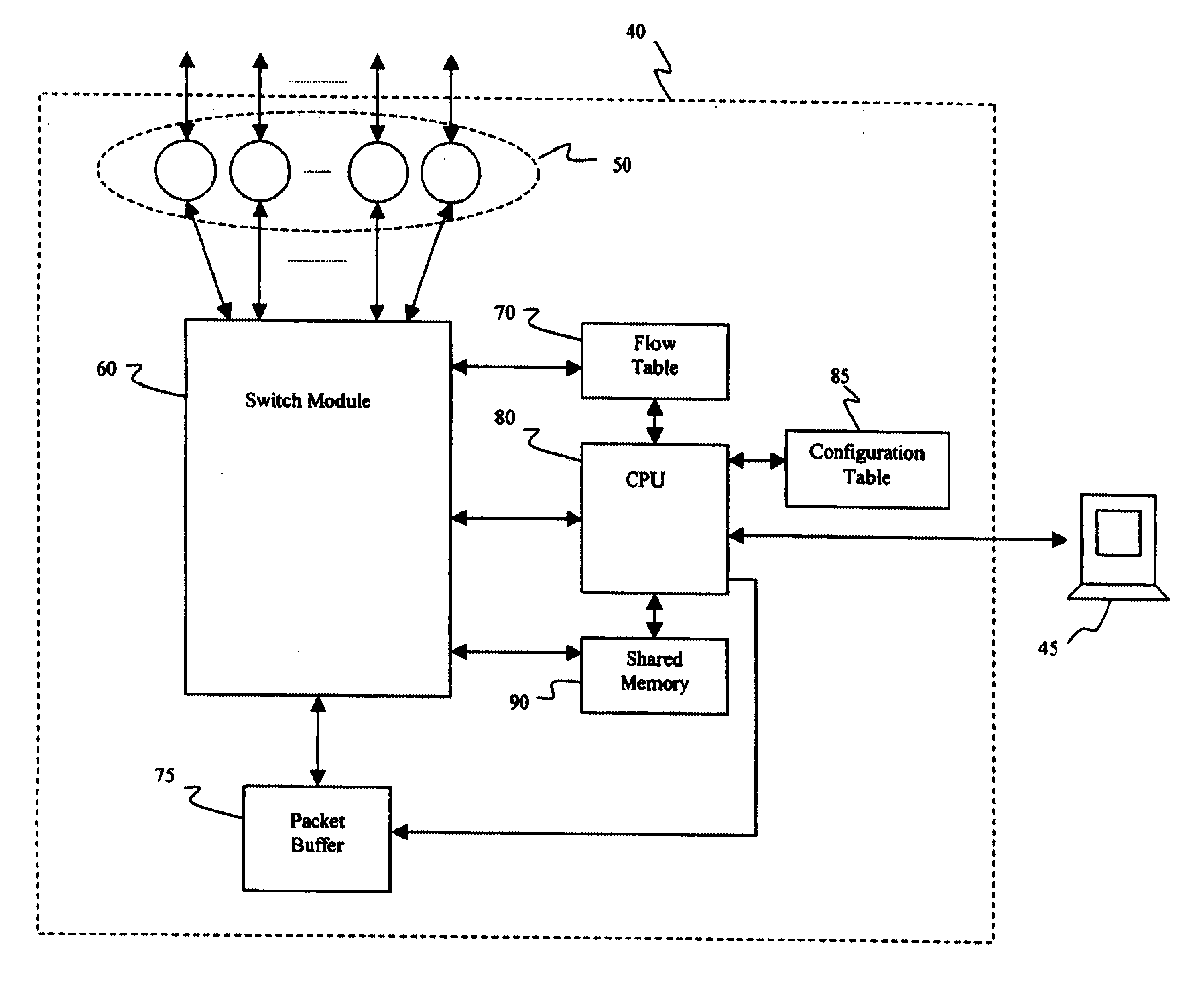

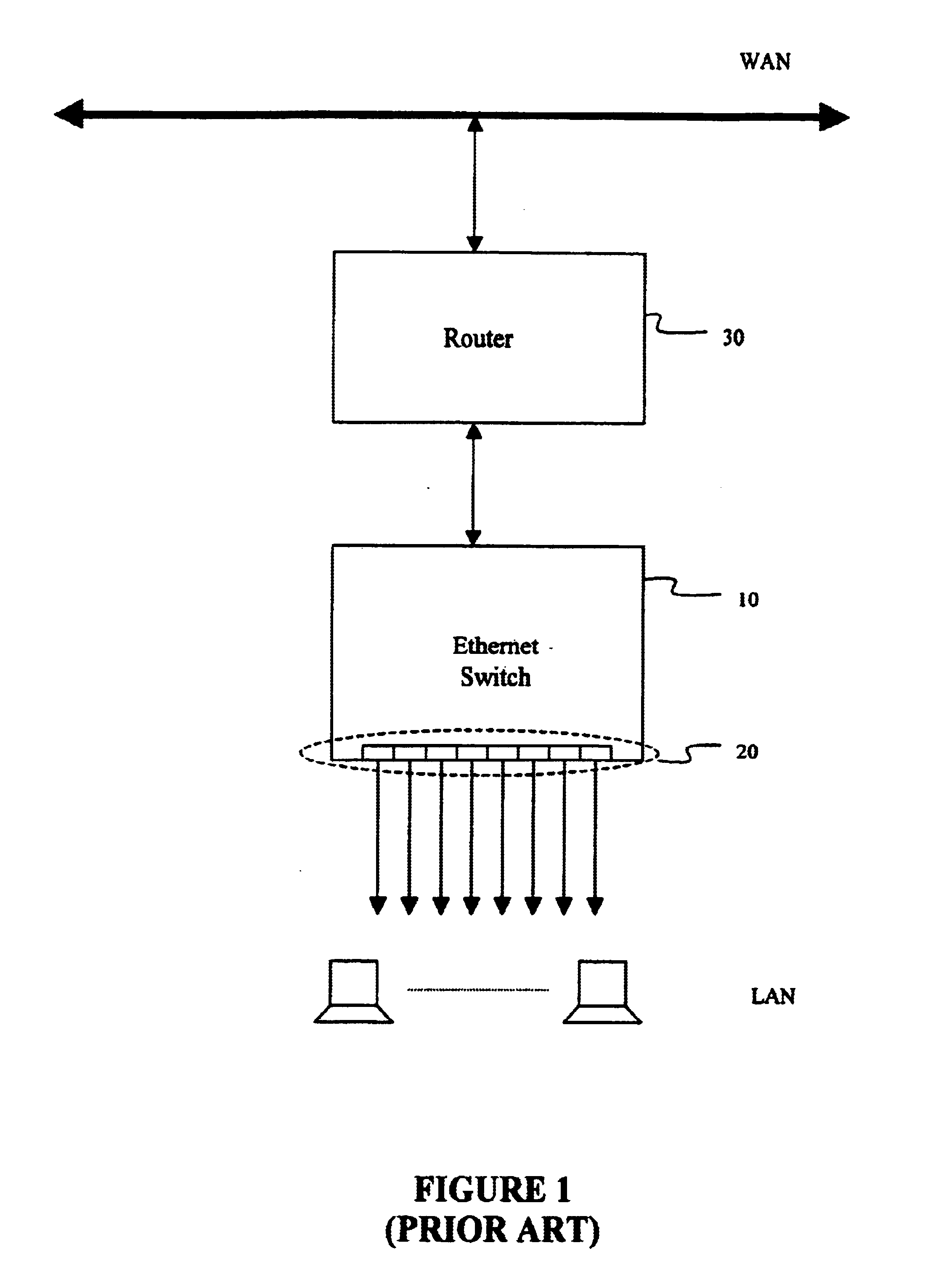

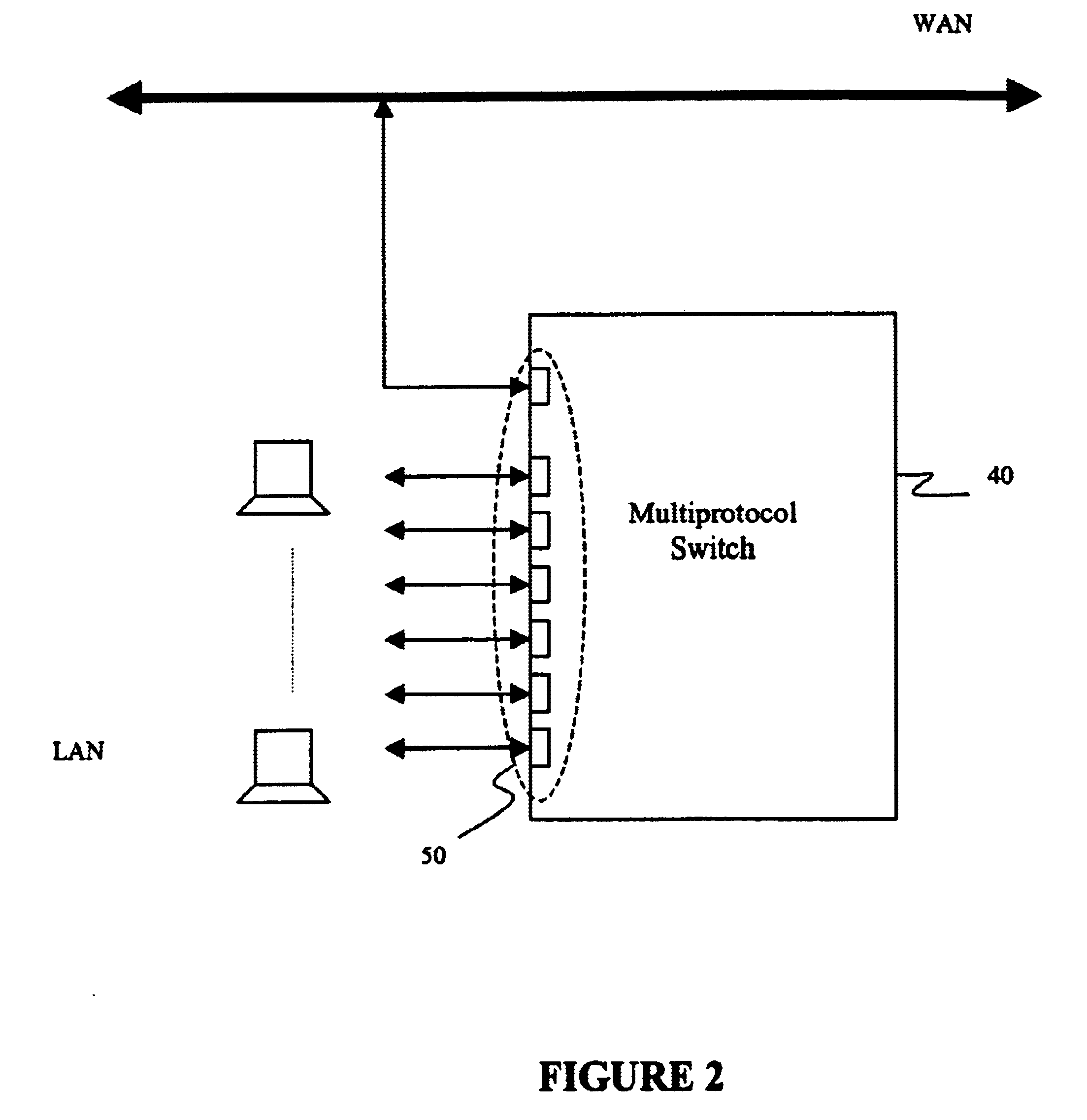

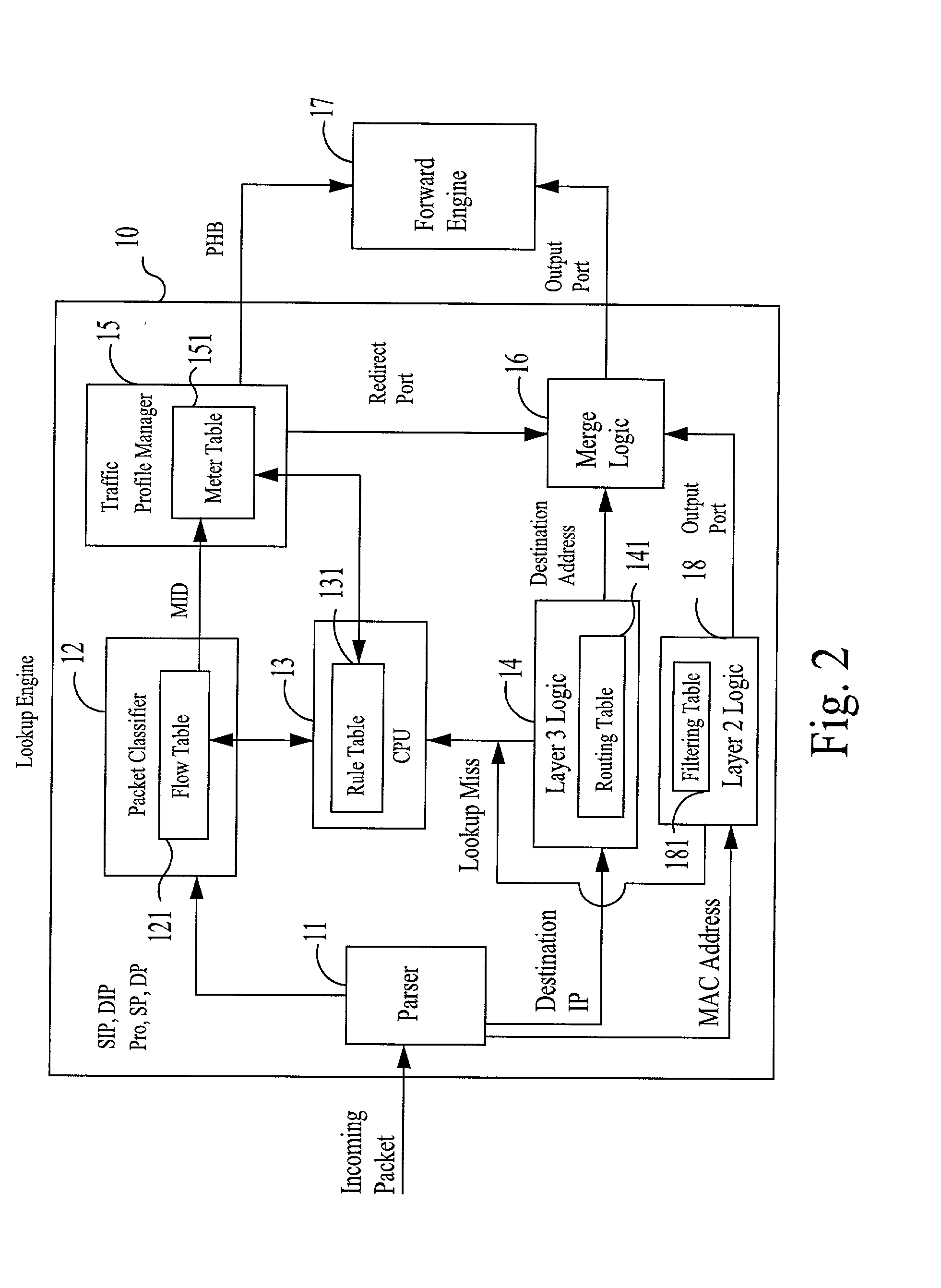

Method and apparatus for multiprotocol switching and routing

Inactive | US6876654B1 | Low implementation cost | Partly effective | Time-division multiplex | Networks interconnection | Multiprotocol Label Switching | Wire speed

A packet forwarding method and apparatus performs multiprotocol routing (for IP and IPX protocols) and switching. Incoming data packets are examined and the flow (i.e., source and destination addresses and source and destination socket numbers) with which they are associated is determined. A flow table contains forwarding information that can be applied to the flow. If an entry is not present in the table for the particular flow, the packet is forwarded to the CPU to be processed. The CPU can then update the table with new forwarding information to be applied to all future packets belonging to the same flow. When the forwarding information is already present in the table, packets can thus be forwarded at wire speed. A dedicated ASIC is preferably employed to contain the table, as well as the engine for examining the packets and forwarding them according to the stored information. Decision-making tasks are thus more efficiently partitioned between the switch and the CPU so as to minimize processing overhead.
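The split between the table-driven fast path and the CPU slow path described above might look like the following in software. It is a sketch, not the patented design: the `routes` dictionary stands in for whatever routing knowledge the CPU holds, and all names are hypothetical.

```python
from typing import Dict, NamedTuple


class Flow(NamedTuple):
    """Flow identity as described in the abstract: addresses and sockets."""
    src_addr: str
    dst_addr: str
    src_socket: int
    dst_socket: int


class Forwarder:
    def __init__(self, routes: Dict[str, int]):
        self.routes = routes      # hypothetical CPU-side routing knowledge
        self.flow_table = {}      # fast-path table: Flow -> output port
        self.cpu_packets = 0      # packets that had to go to the CPU

    def _cpu_resolve(self, flow: Flow) -> int:
        # Slow path: the CPU works out the forwarding decision.
        self.cpu_packets += 1
        return self.routes[flow.dst_addr]

    def forward(self, flow: Flow) -> int:
        port = self.flow_table.get(flow)
        if port is None:
            # Table miss: punt to the CPU, then cache the result so later
            # packets of this flow stay on the fast path.
            port = self._cpu_resolve(flow)
            self.flow_table[flow] = port
        return port
```

Only the first packet of each flow pays the CPU cost; the counter makes that visible when experimenting with different traffic mixes.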

Owner:INTEL CORP

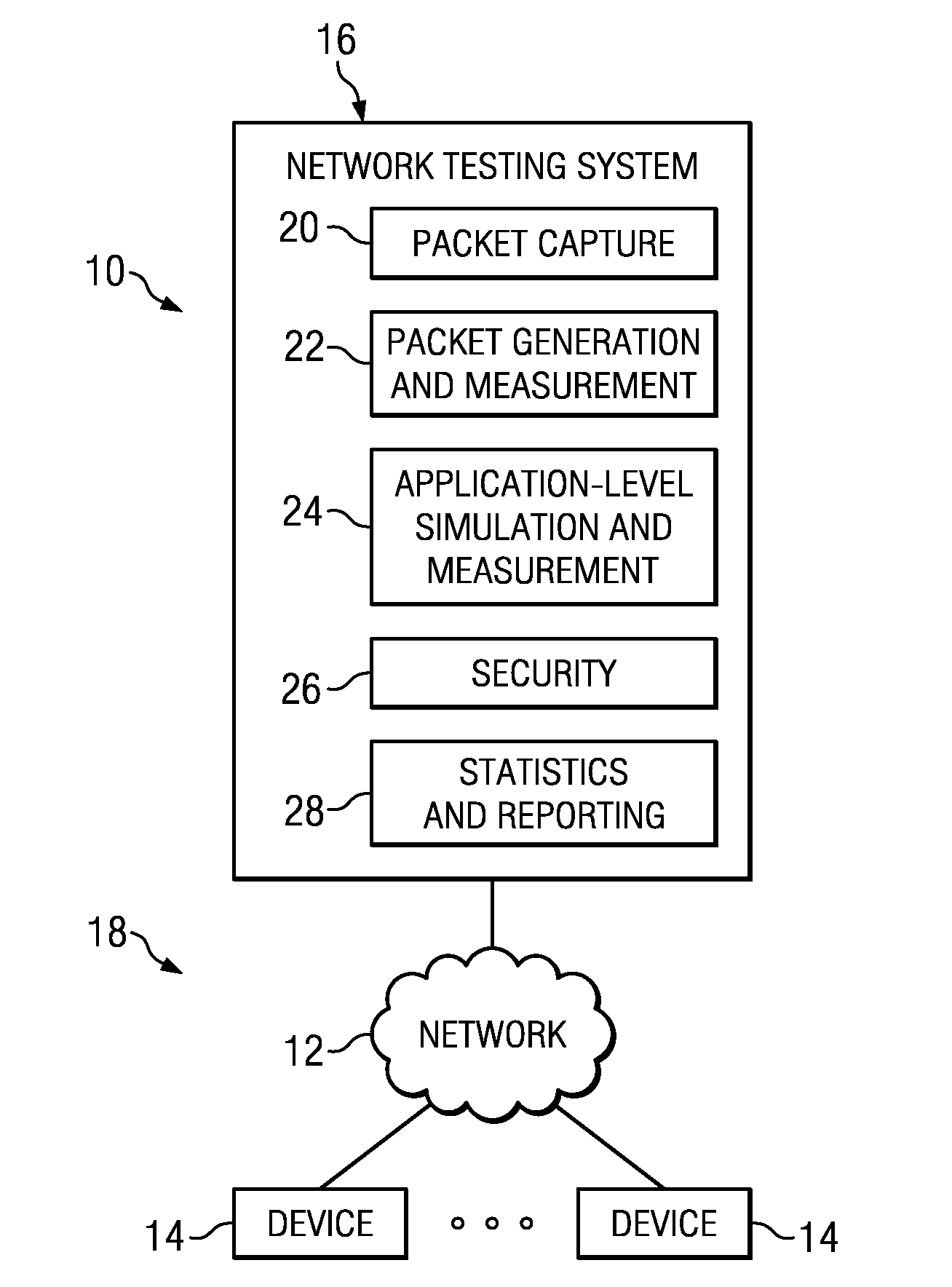

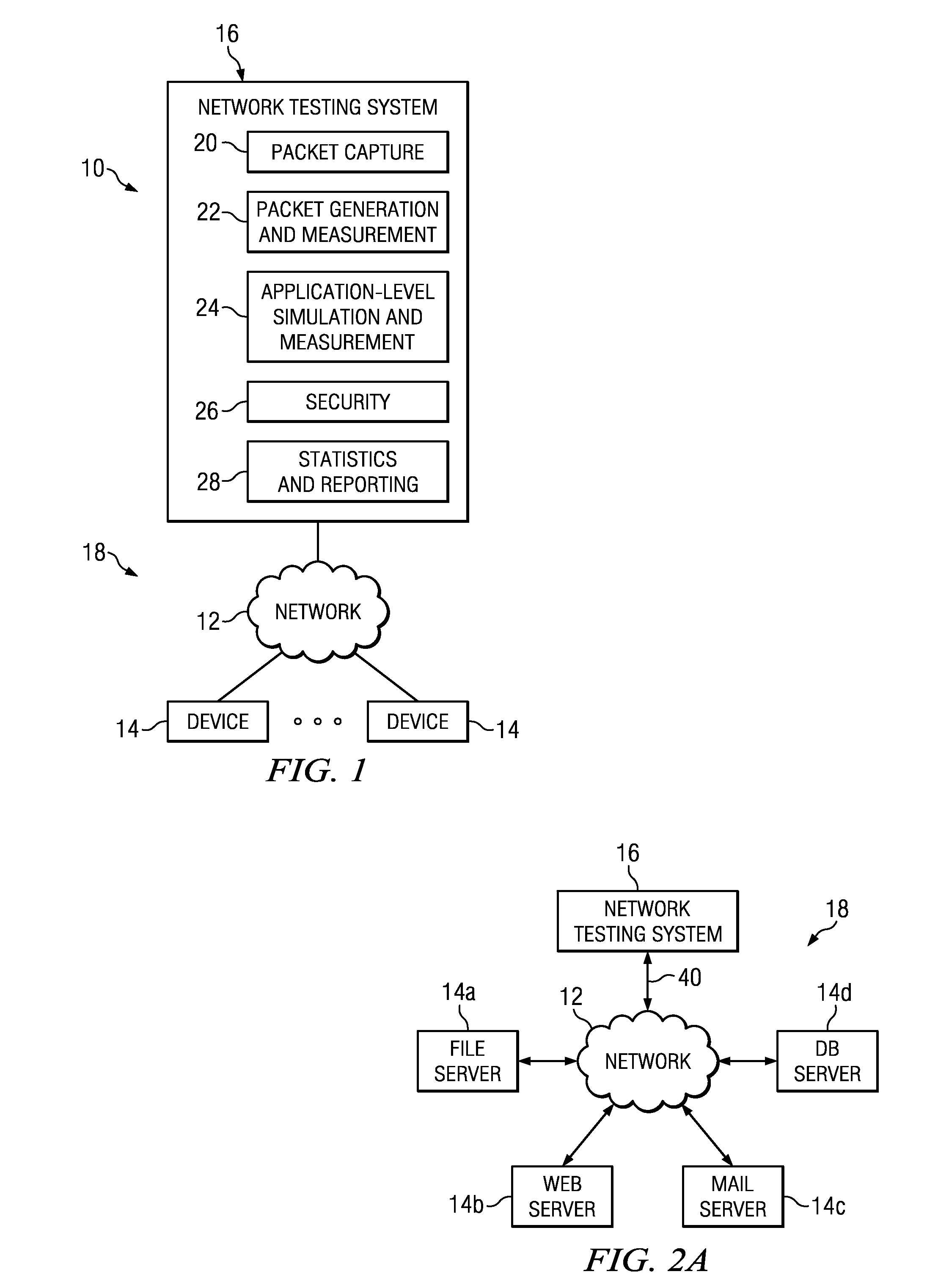

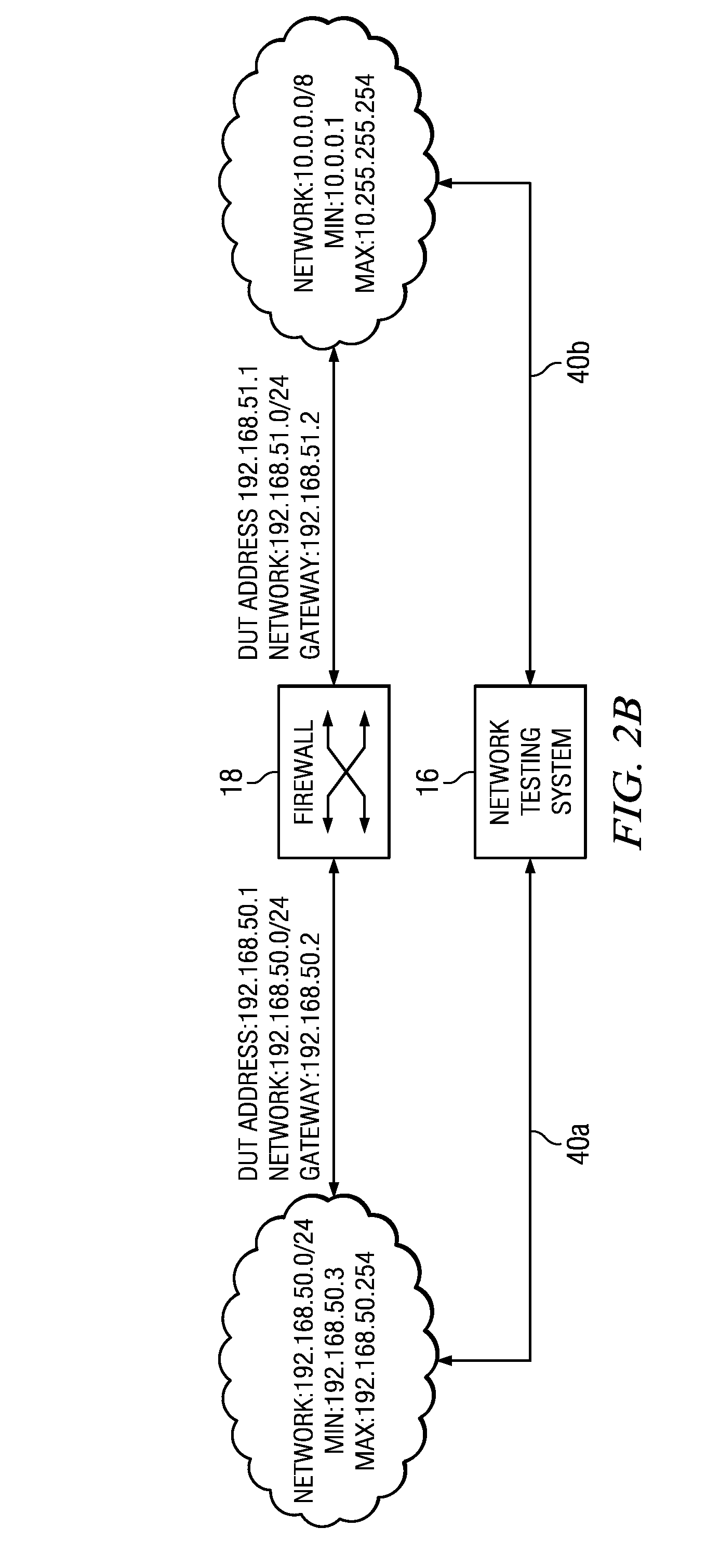

Systems and methods of data processing using an fpga-implemented hash function

Inactive | US20130343181A1 | Error prevention | Frequency-division multiplex details | Computer hardware | Hash function

A method for processing data packets in a computer system may include receiving a data packet at a configurable logic device (e.g., an FPGA), each packet including header information regarding the data packet, the configurable logic device automatically identifying particular information elements in the header information of the data packet, the configurable logic device automatically executing a hash function programmed on the configurable logic device to calculate a hash value for the data packet based on the particular information elements, and processing the data packet based on the calculated hash value for the data packet. The calculated hash value may be used for various purposes, e.g., routing and/or load balancing of traffic across multiple interfaces. The configurable logic device may be able to execute the hash function at line rate, thus freeing up processor cycles in one or more related processors.
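A software analogue of the header-hash idea is shown below for the load-balancing use case. The abstract does not say which header fields or which hash function are used; the 5-tuple key and CRC-32 here are assumptions, and the function names are hypothetical.

```python
import ipaddress
import struct
import zlib


def flow_hash(src_ip: str, dst_ip: str, src_port: int, dst_port: int,
              protocol: int) -> int:
    """Hash selected header fields (an assumed 5-tuple) to a 32-bit value."""
    key = (ipaddress.ip_address(src_ip).packed
           + ipaddress.ip_address(dst_ip).packed
           + struct.pack("!HHB", src_port, dst_port, protocol))
    return zlib.crc32(key)  # CRC-32 is a stand-in for the unspecified hash


def pick_interface(hash_value: int, num_interfaces: int) -> int:
    """Map a hash value onto one of the available output interfaces."""
    return hash_value % num_interfaces


if __name__ == "__main__":
    h = flow_hash("192.0.2.1", "198.51.100.7", 40000, 443, 6)
    print("interface", pick_interface(h, 4))
```

Because the hash depends only on header fields, every packet of a flow lands on the same interface, which keeps packets in order within a flow.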

Owner:BREAKINGPOINT SYST

Real-time network monitoring and security

Active | US20050108573A1 | Easy programming | Easy maintenance | Web data indexing | Memory loss protection | High bandwidth | Wire speed

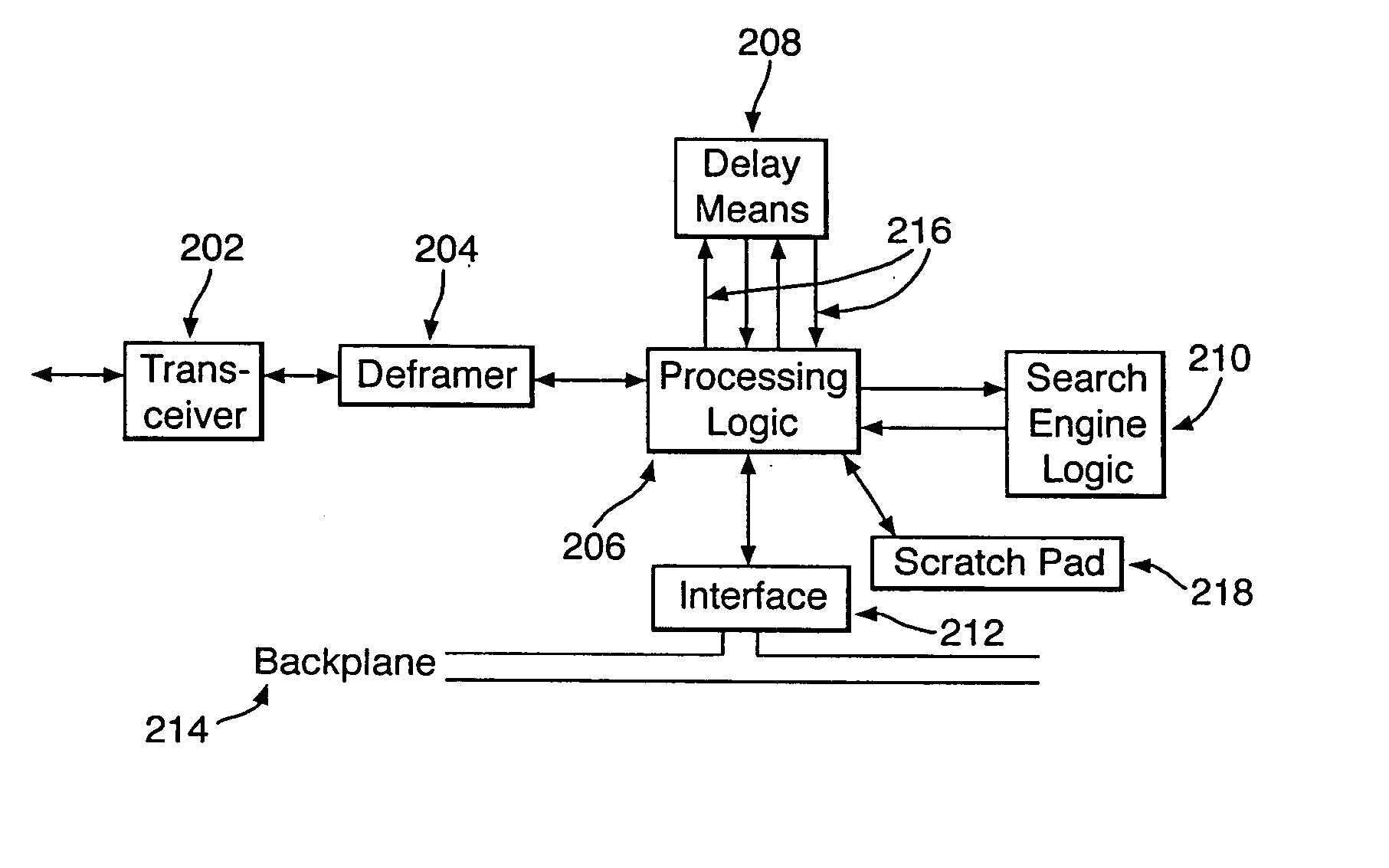

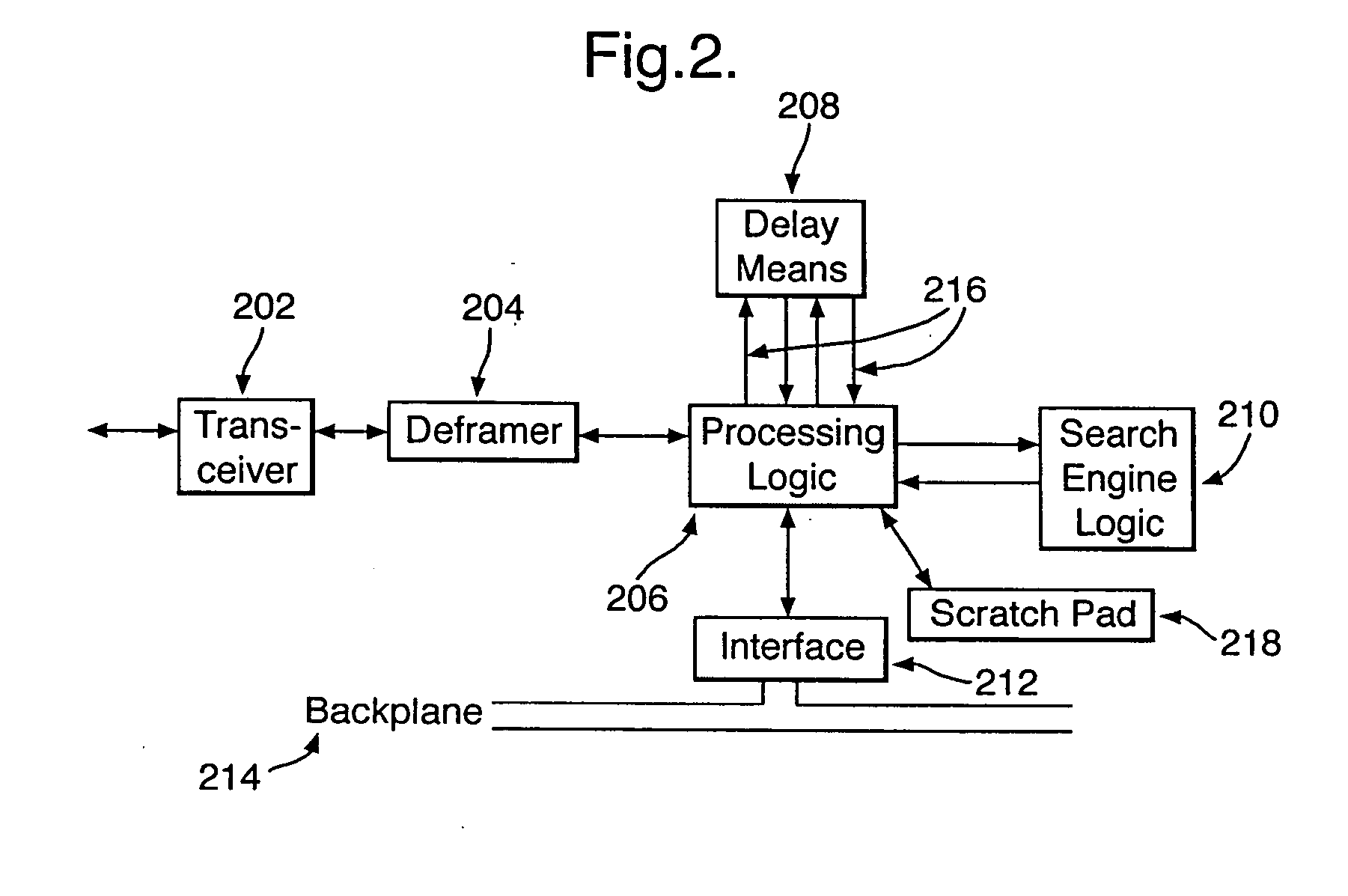

There is provided a hardware device for monitoring and intercepting packetized data traffic at full line rate. In preferred high bandwidth embodiments, full line rate corresponds to rates that exceed 100 Mbytes/s and in some cases 1000 Mbytes/s. Monitoring and intercepting software, alone, is not able to operate on such volumes of data in real-time. A preferred embodiment comprises: a data delay buffer (208) with multiple delay outputs (216); a search engine logic (210) for implementing a set of basic search tools that operate in real-time on the data traffic; a programmable gate array (206); an interface (212) for passing data quickly to software sub-systems; and control means for implementing software control of the operation of the search tools. The programmable gate array (206) inserts the data packets into the delay buffer (208), extracts them for searching at the delay outputs and formats and schedules the operation of the search engine logic (210). One preferred embodiment uses an IP co-processor as the search engine logic.

Owner:BAE SYSTEMS PLC

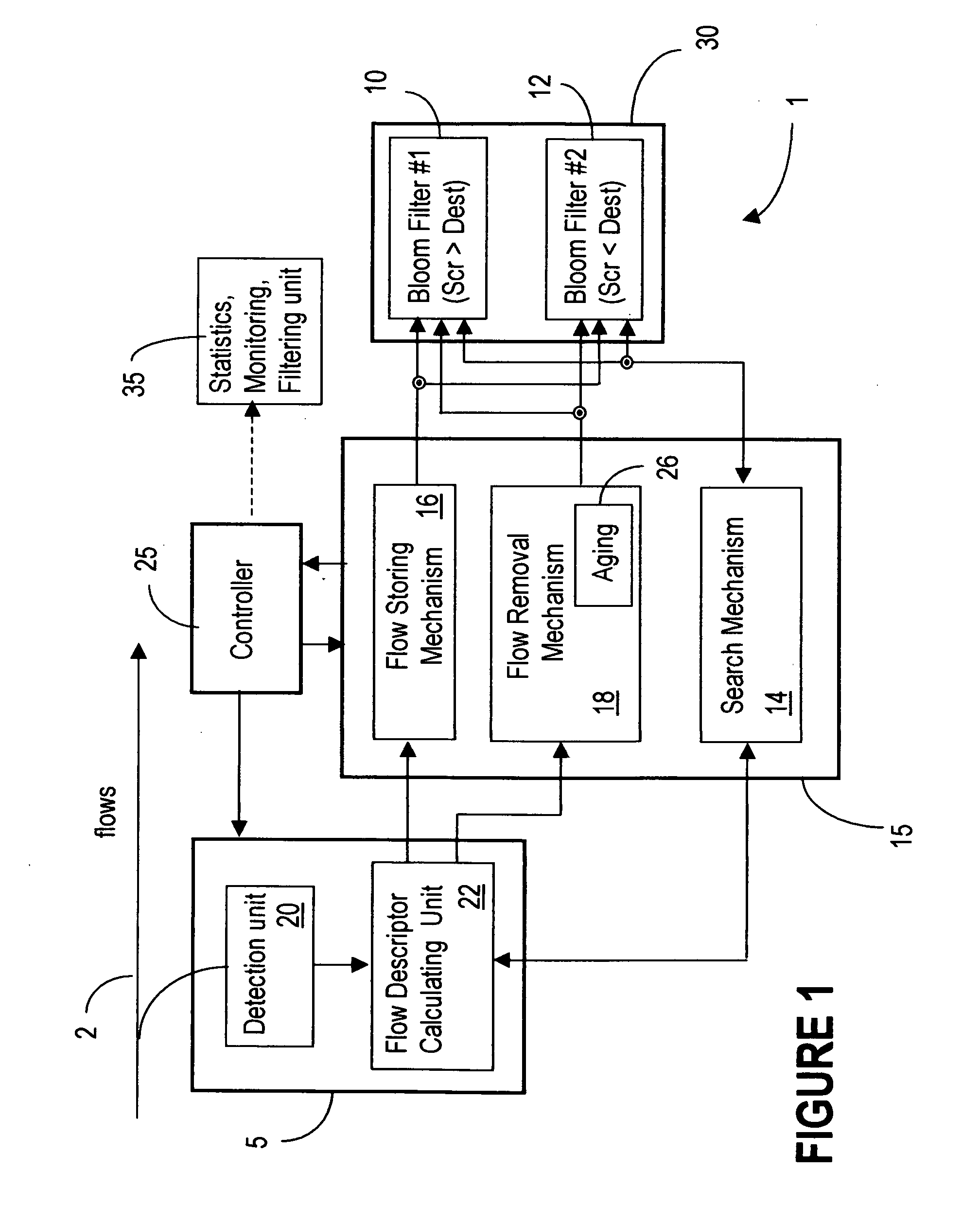

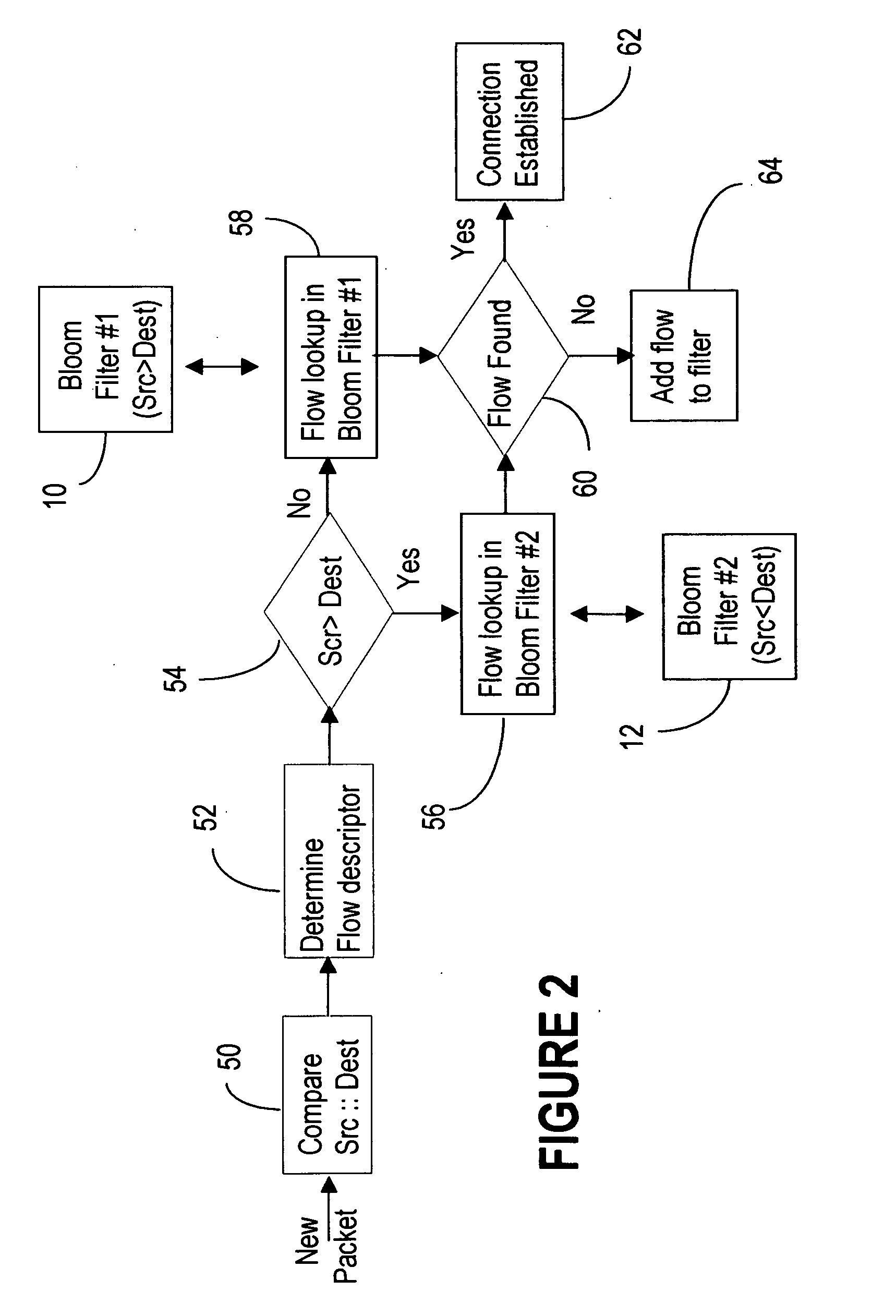

Symmetric connection detection

Inactive | US20070248084A1 | Mitigate such drawback | Alleviates totally | Error prevention | Transmission systems | Computer hardware | Wire speed

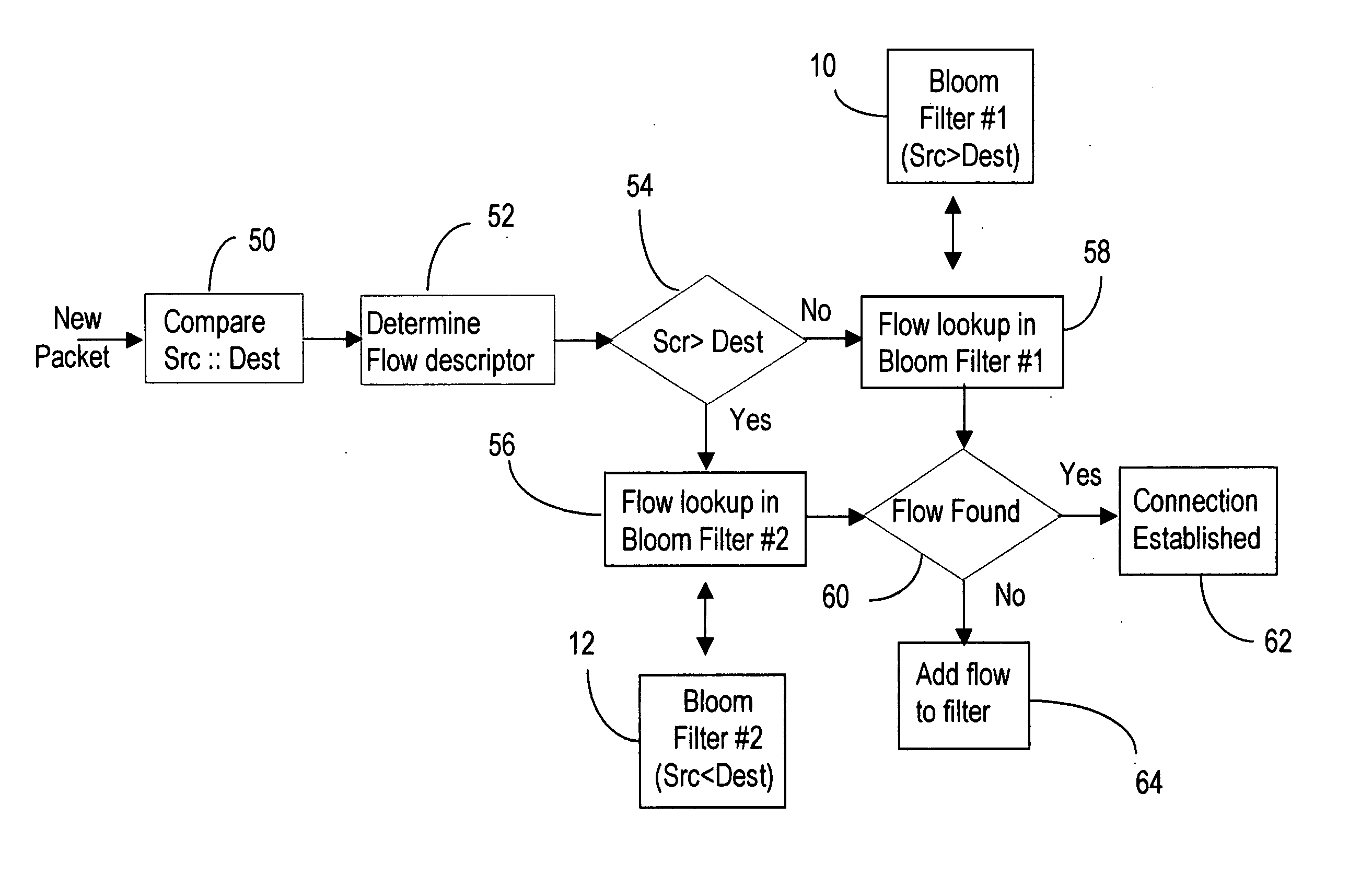

Symmetric Connection Detection (SCD) is a method of detecting when a connection has been fully established in a resource-constrained environment, and works in high-speed routers, at line speed. Many network monitoring applications are only interested in connections that become fully established, so other connection attempts, such as port scanning attempts, simply waste resources if not filtered. SCD filters out unsuccessful connection attempts using a simple combination of Bloom filters to track the state of connection establishment for every flow in the network. Unsuccessful flows can be filtered out to a very high degree of accuracy, depending on the size of the Bloom filter and traffic rate. The SCD methodology can also easily be adapted to accomplish port scan detection, and to detect or filter other types of invalid TCP traffic.
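The abstract does not spell out how the Bloom filters are combined, so the following is one assumed arrangement rather than the patented method: one filter remembers flows for which a SYN was seen, and a second remembers flows for which the matching SYN-ACK came back. All names are hypothetical, and SHA-256 is used only as a convenient source of hash bits.

```python
import hashlib


class BloomFilter:
    def __init__(self, size_bits: int = 1 << 16, num_hashes: int = 4):
        self.size = size_bits          # must be a multiple of 8
        self.num_hashes = num_hashes
        self.bits = bytearray(size_bits // 8)

    def _positions(self, key: bytes):
        # Derive num_hashes bit positions by salting the key with an index.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(bytes([i]) + key).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, key: bytes) -> None:
        for p in self._positions(key):
            self.bits[p >> 3] |= 1 << (p & 7)

    def __contains__(self, key: bytes) -> bool:
        return all(self.bits[p >> 3] & (1 << (p & 7))
                   for p in self._positions(key))


def flow_key(src: str, sport: int, dst: str, dport: int) -> bytes:
    return f"{src}:{sport}>{dst}:{dport}".encode()


class HandshakeTracker:
    """Assumed two-filter scheme for spotting completed TCP handshakes."""

    def __init__(self):
        self.syn_seen = BloomFilter()
        self.synack_seen = BloomFilter()

    def observe(self, src, sport, dst, dport, flags: str) -> bool:
        """Return True if an ACK belongs to a flow whose SYN and SYN-ACK were seen."""
        forward = flow_key(src, sport, dst, dport)
        reverse = flow_key(dst, dport, src, sport)
        if flags == "SYN":
            self.syn_seen.add(forward)
        elif flags == "SYN-ACK" and reverse in self.syn_seen:
            # Record under the client-to-server key so the final ACK matches.
            self.synack_seen.add(reverse)
        elif flags == "ACK":
            return forward in self.synack_seen
        return False
```

A port scan that never receives a SYN-ACK leaves no trace in the second filter, so its packets are never reported as an established connection. Bloom filters can return false positives, which is why the abstract ties accuracy to filter size and traffic rate.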

Owner:ALCATEL LUCENT SAS

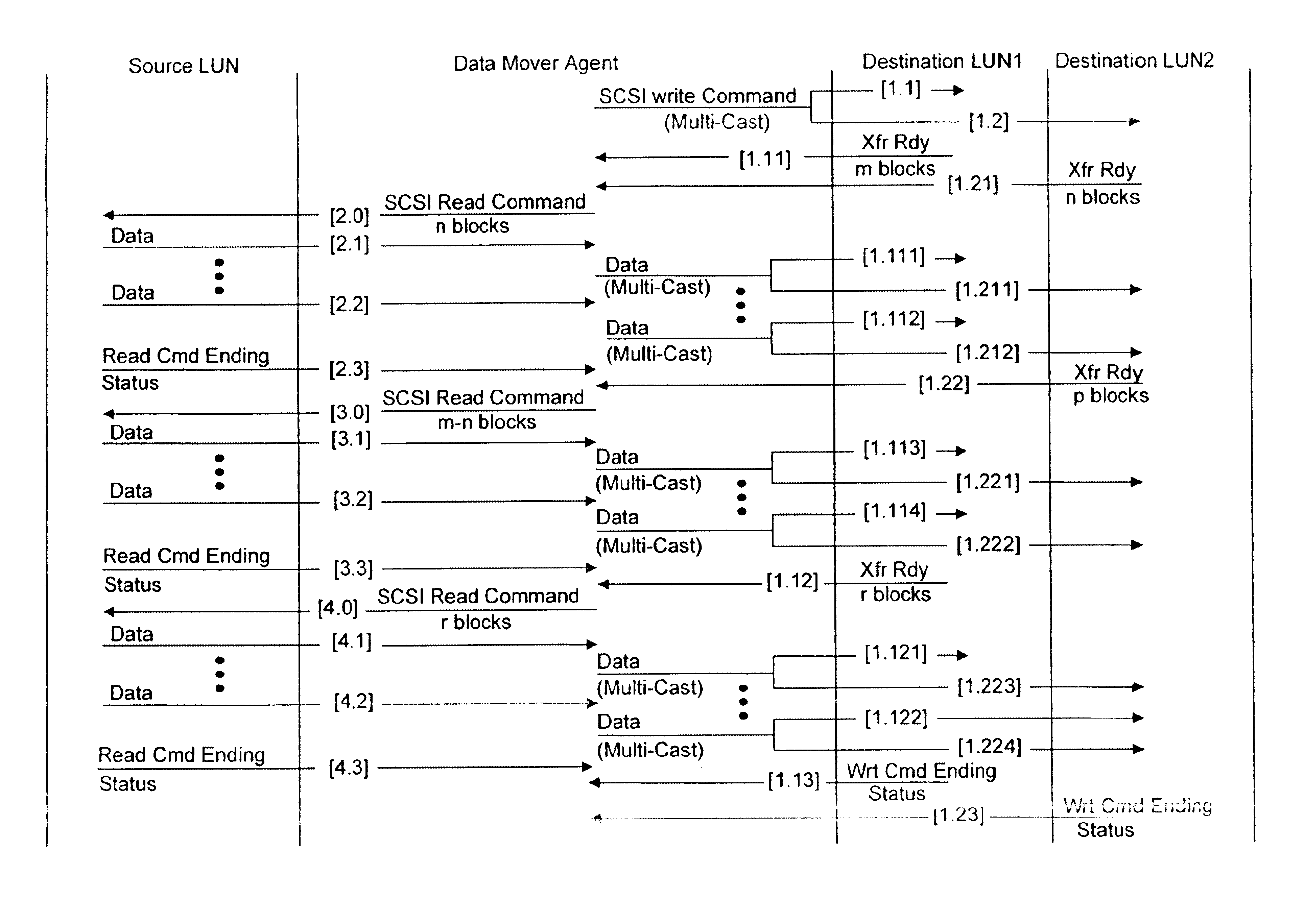

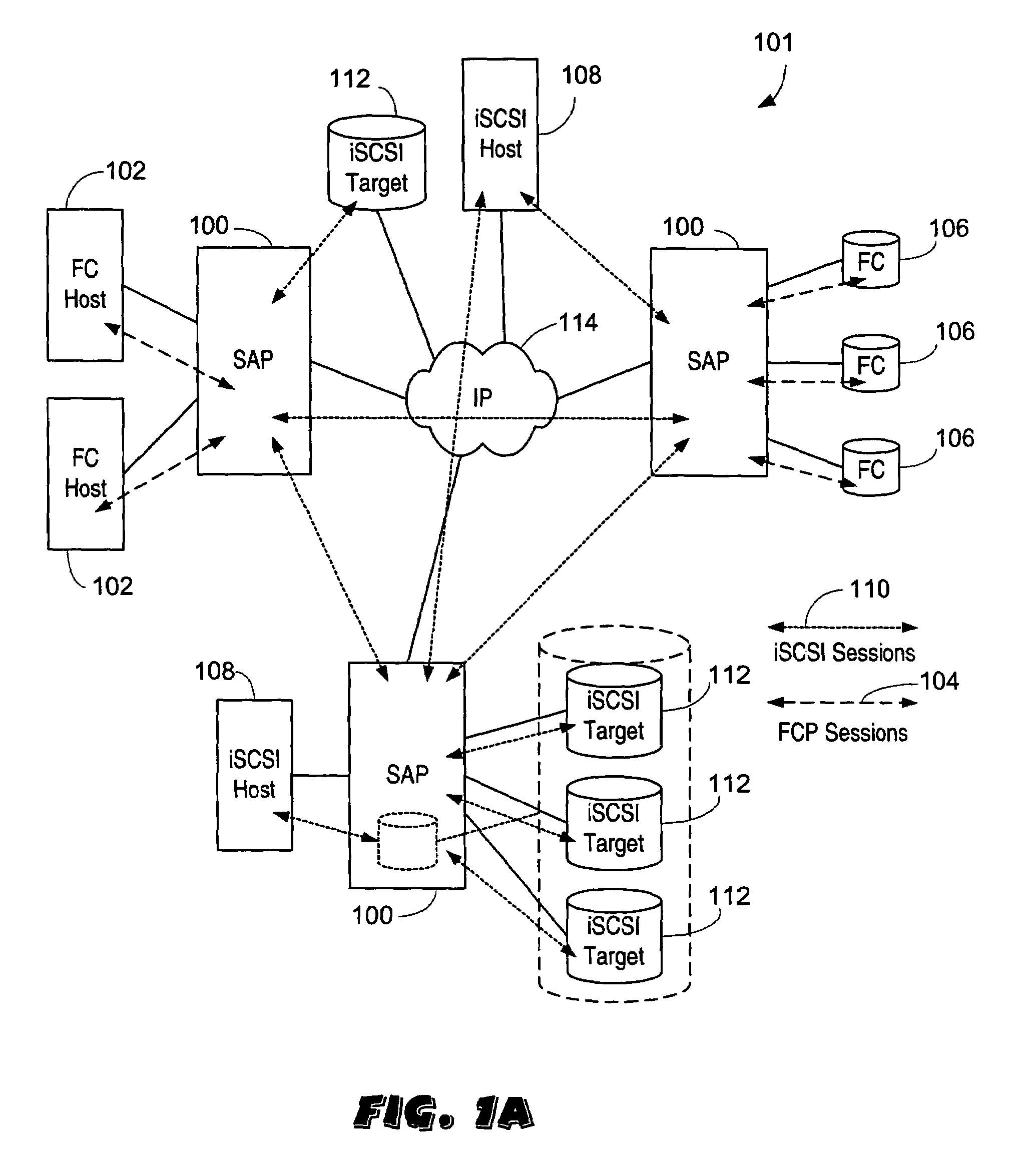

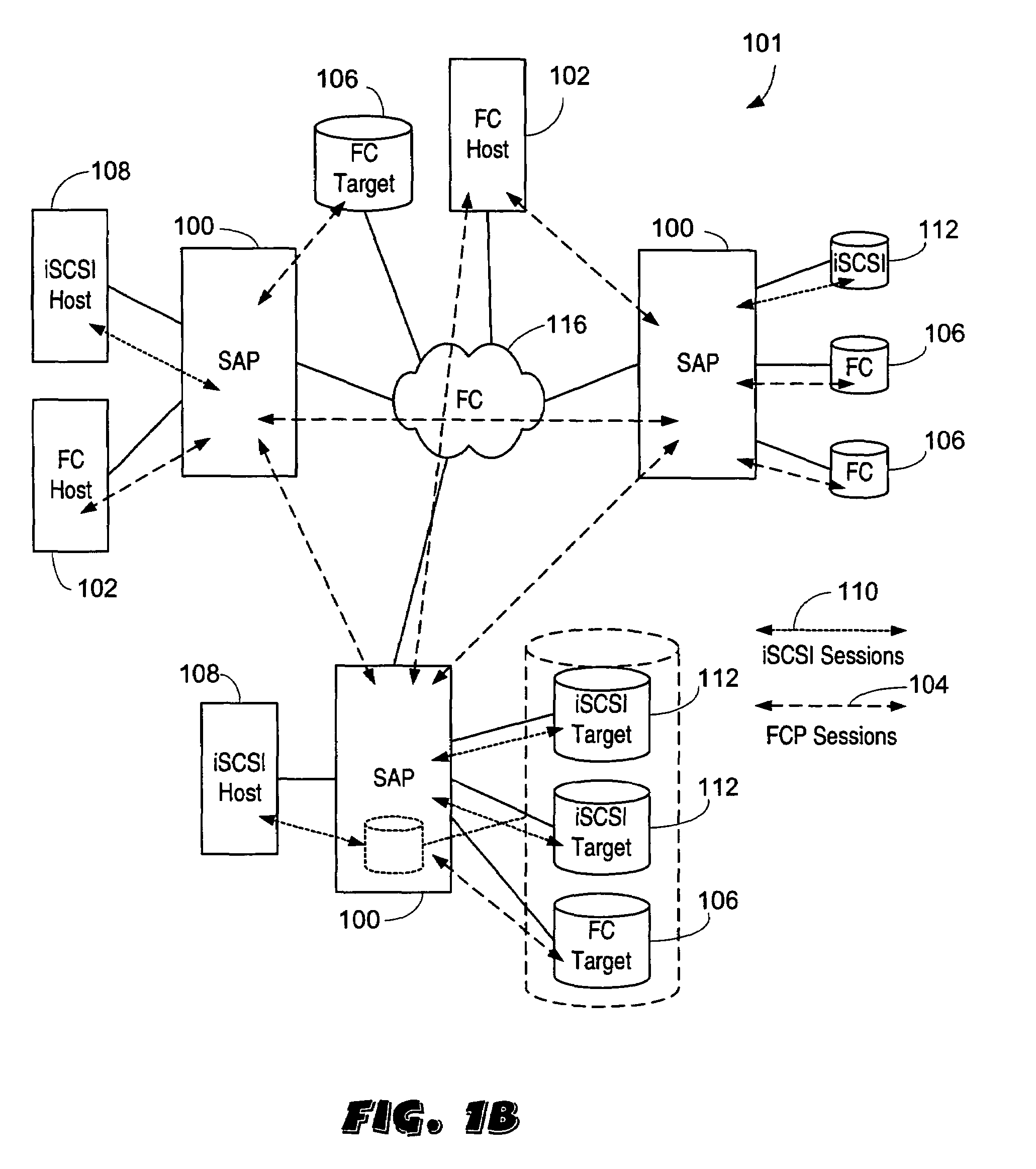

Data mover mechanism to achieve SAN RAID at wire speed

Inactive | US6880062B1 | Avoid data | Avoid errors | Multiple digital computer combinations | Transmission | RAID | Wire speed

A Virtual Storage Server is provided for transferring data between a source storage device and one or more destination storage devices. A write command is issued to the one or more destinations for an amount of data. In response, one or more Transfer Ready Responses are returned indicating the amount of data the destinations are prepared to receive. The Virtual Storage Server then sends a read command to the source for an amount of data based on the amounts of data in the Transfer Ready Responses. The data is then transferred from the source storage device through the Virtual Storage Server to the one or more destination storage devices. Because data is transferred only in amounts that the destination is ready to receive, the Virtual Storage Server does not need a large buffer, and can basically send data received at wire speed. This process continues until the amount of data in the write command is transferred to the one or more destination storage devices.
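The write-then-read ordering described above can be simulated in a few lines. This is a sketch under assumptions: the classes are hypothetical stand-ins for storage devices, and reading the minimum of the amounts reported by the destinations is one simple way to honor every Transfer Ready Response, not necessarily the patent's rule.

```python
class Source:
    def __init__(self, data: bytes):
        self.data = data
        self.offset = 0

    def read(self, n: int) -> bytes:
        chunk = self.data[self.offset:self.offset + n]
        self.offset += len(chunk)
        return chunk


class Destination:
    def __init__(self, window: int):
        self.window = window      # hypothetical max bytes per Transfer Ready
        self.stored = bytearray()

    def transfer_ready(self, remaining: int) -> int:
        # How much of the outstanding write this device can accept right now.
        return min(self.window, remaining)

    def write(self, chunk: bytes) -> None:
        self.stored += chunk


def mirror_write(source: Source, destinations, total: int) -> int:
    """Copy `total` bytes to every destination; return the peak bytes buffered."""
    sent = 0
    peak_buffered = 0
    while sent < total:
        # Read only as much as every destination has said it is ready for.
        ready = min(d.transfer_ready(total - sent) for d in destinations)
        chunk = source.read(ready)
        if not chunk:
            break  # source exhausted or no destination ready
        peak_buffered = max(peak_buffered, len(chunk))
        for d in destinations:
            d.write(chunk)
        sent += len(chunk)
    return peak_buffered
```

The returned peak shows the point the abstract makes: the intermediary never holds more than the smallest ready amount, so it needs no large buffer.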

Owner:NETWORK APPLIANCE INC

Lookup engine for network devices

Inactive | US20020116527A1 | Multiple digital computer combinations | Data switching networks | Wire speed | Control logic

A lookup engine for a network device is provided to look up an address table. The lookup engine includes a parser for getting address information of an incoming packet. A predetermined number of shift control logic units are provided for generating an I.I.D. hash index for the incoming packet in response to the address information of the incoming packet. The output of each shift control logic unit is selected by a selector for looking up the address table, thereby generating forwarding information. With the shift control logic, the lookup engine can construct each flow entry and classify the incoming packets belonging to that flow at wire speed.

Owner:ACUTE COMM CORP

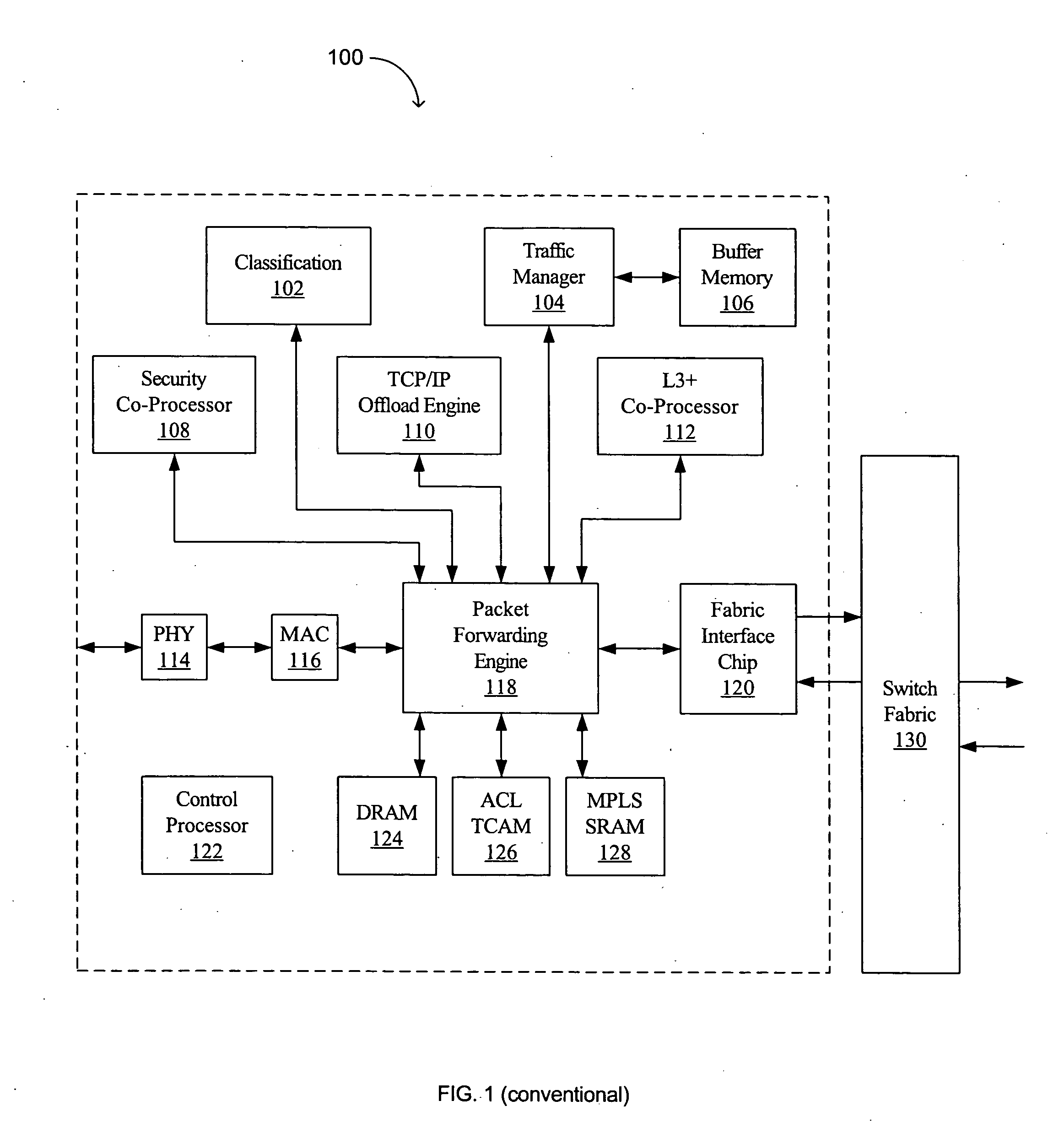

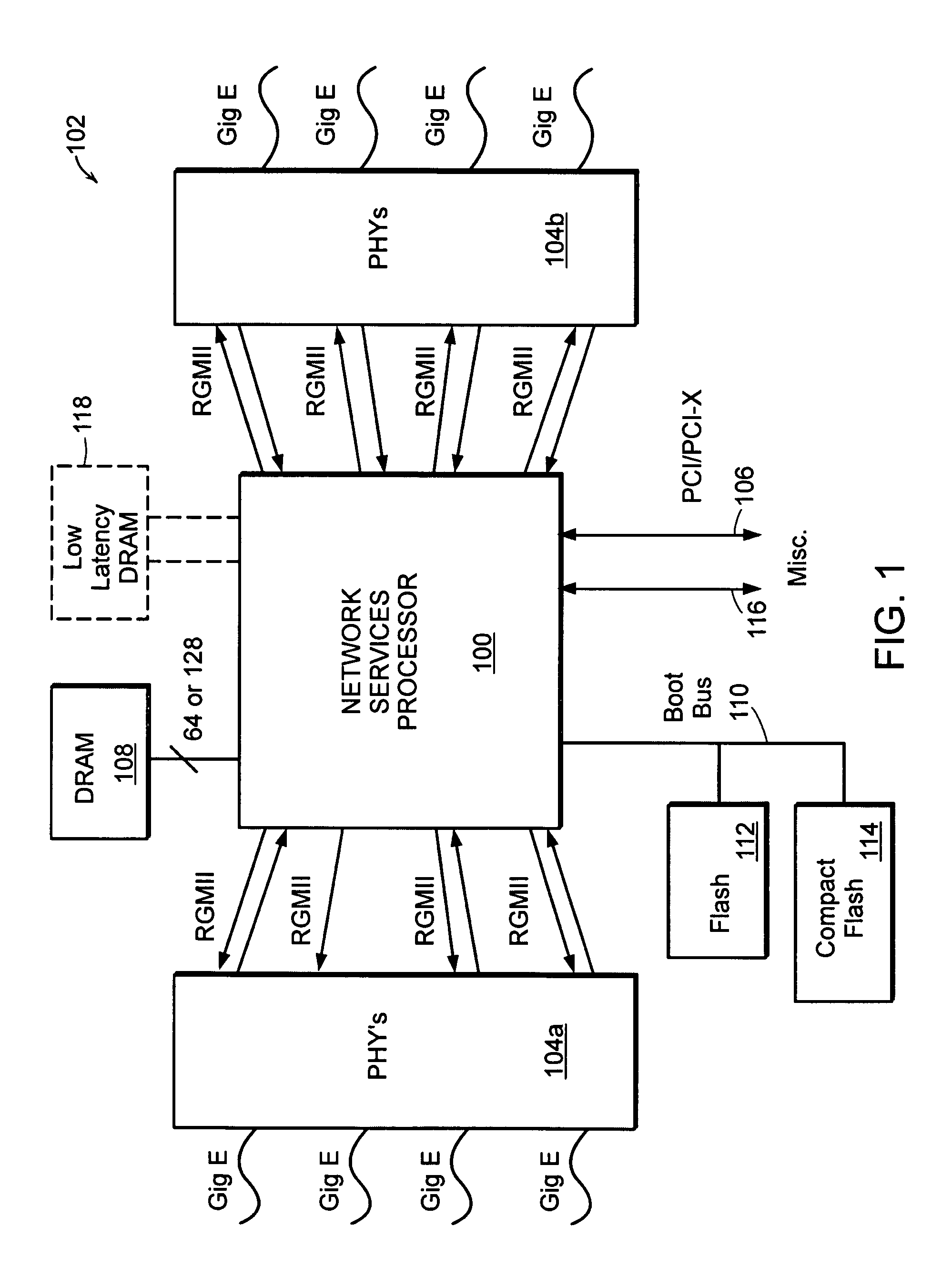

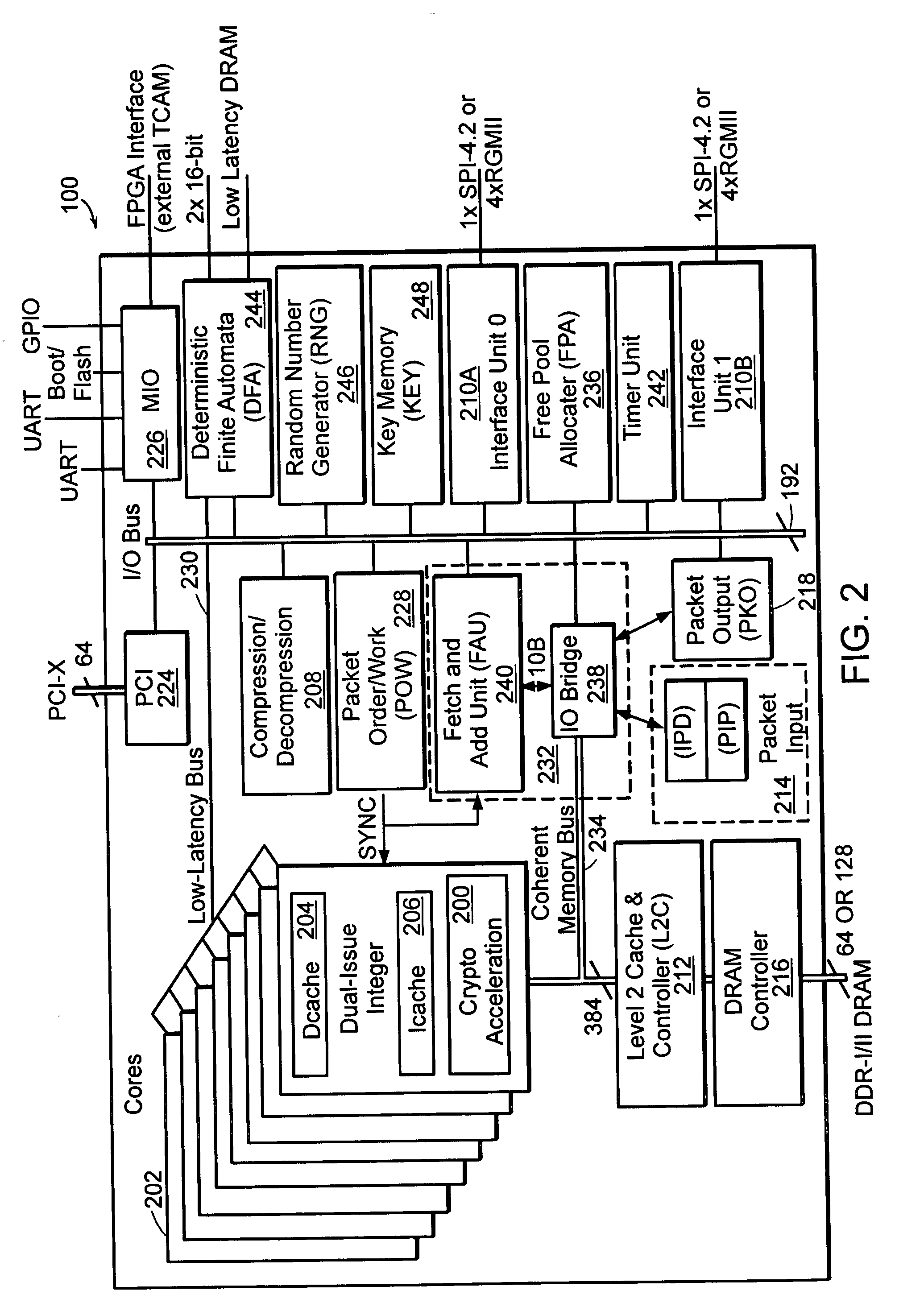

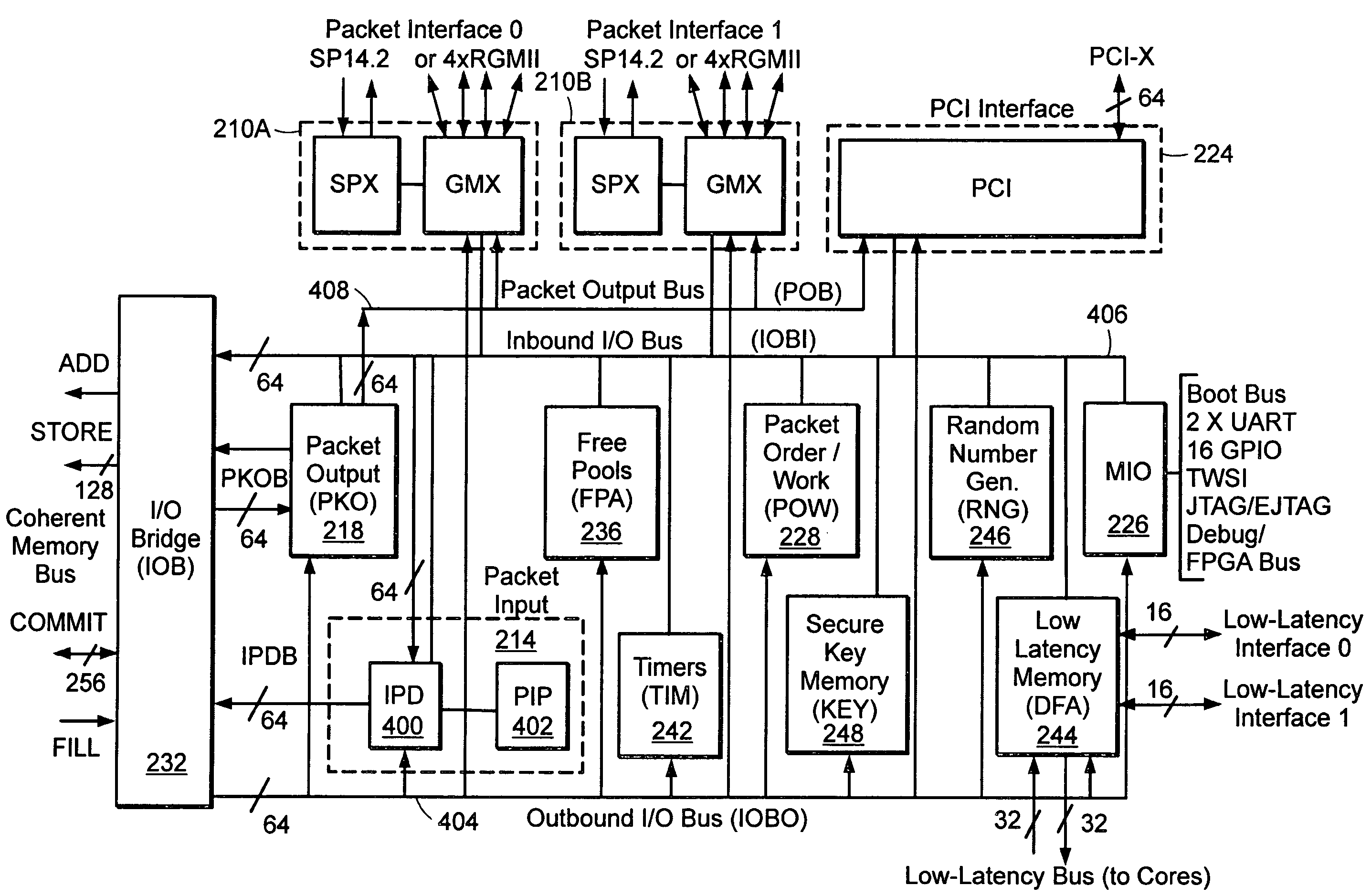

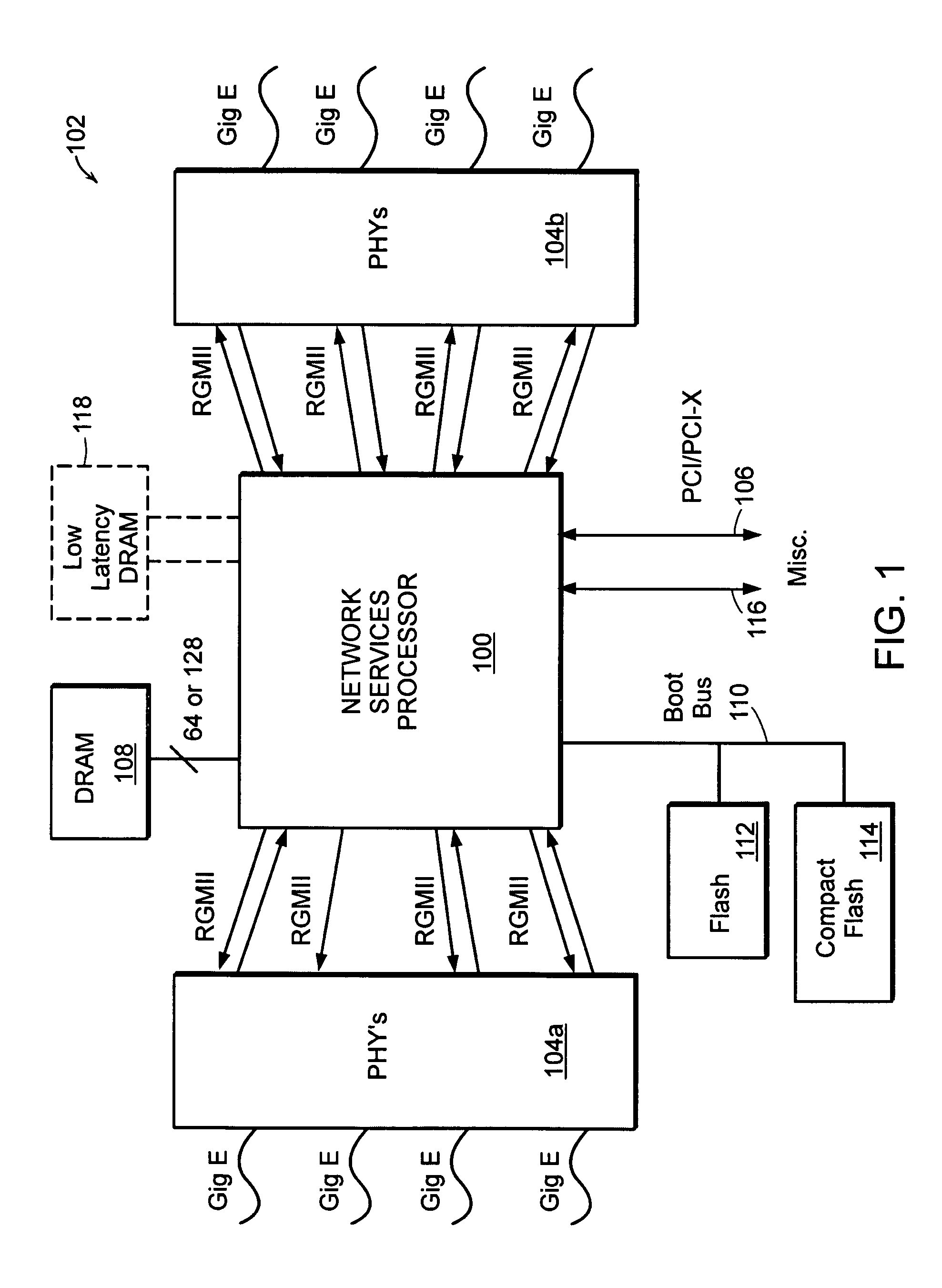

Advanced processor with mechanism for packet distribution at high line rate

Inactive | US20050027793A1 | High bandwidth communication | Efficient and cost-effective | Memory addressing/allocation/relocation | General purpose stored program computer | High bandwidth | Wire speed

An advanced processor comprises a plurality of multithreaded processor cores each having a data cache and instruction cache. A data switch interconnect is coupled to each of the processor cores and configured to pass information among the processor cores. A messaging network is coupled to each of the processor cores and a plurality of communication ports. In one aspect of an embodiment of the invention, the data switch interconnect is coupled to each of the processor cores by its respective data cache, and the messaging network is coupled to each of the processor cores by its respective message station. Advantages of the invention include the ability to provide high bandwidth communications between computer systems and memory in an efficient and cost-effective manner.

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

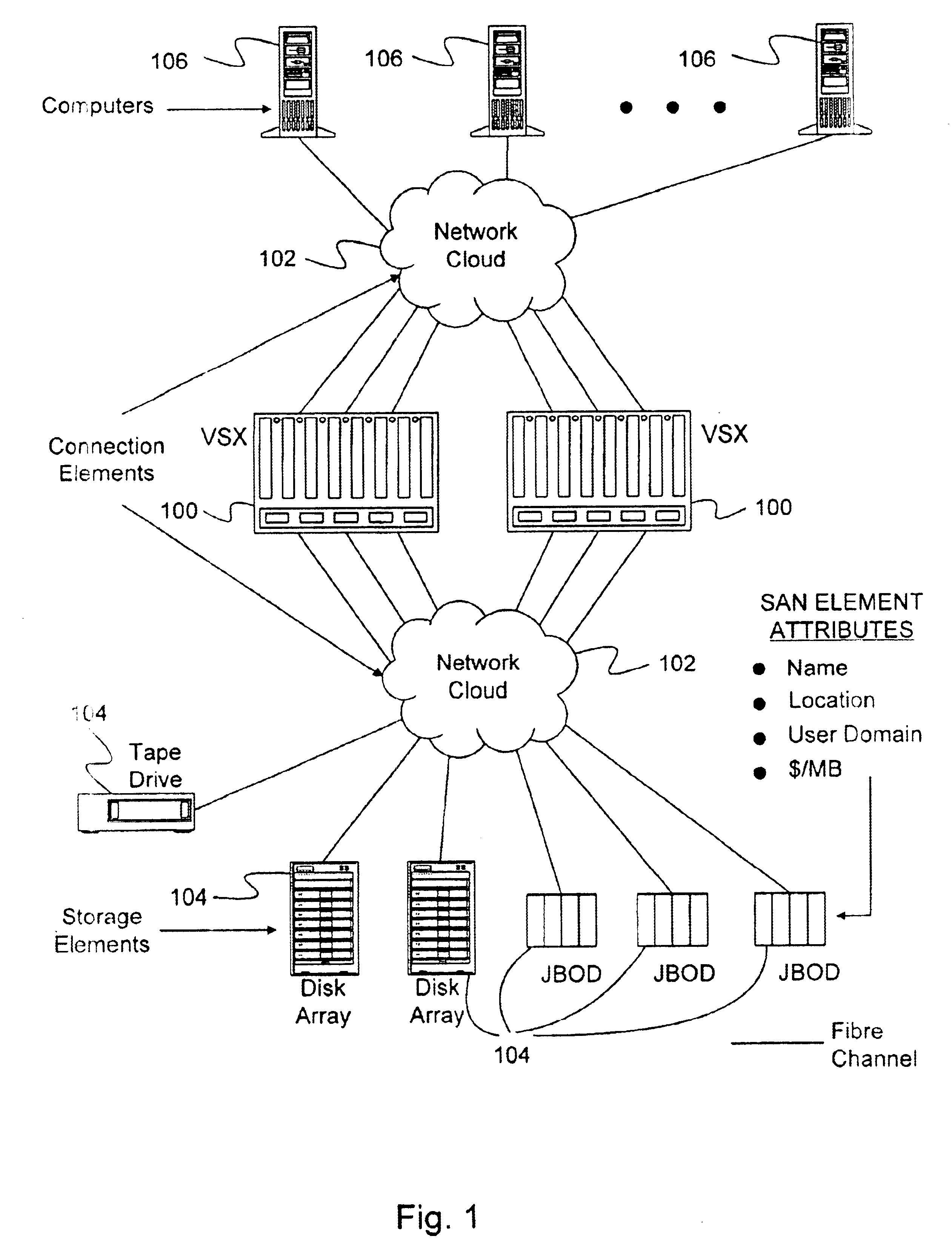

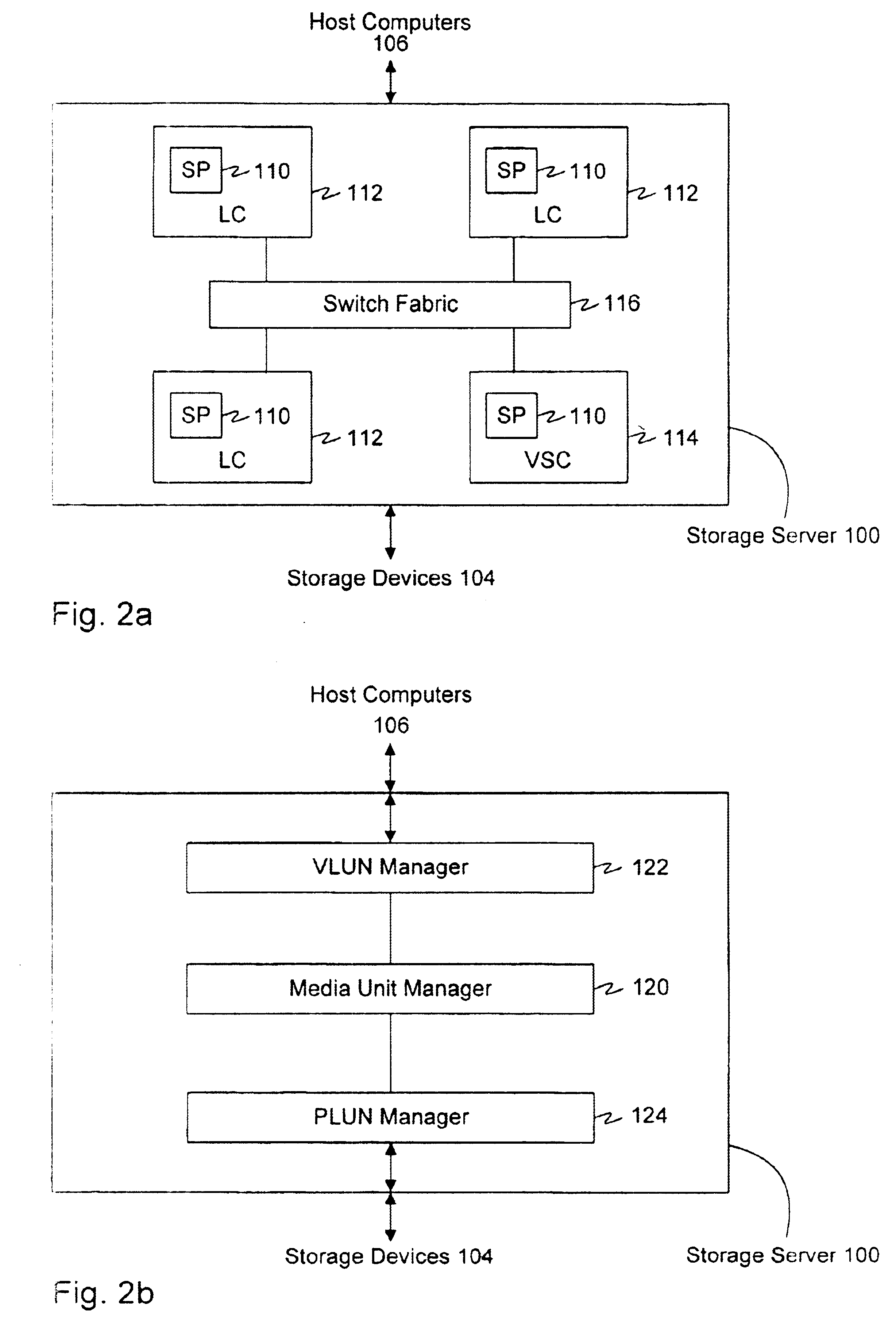

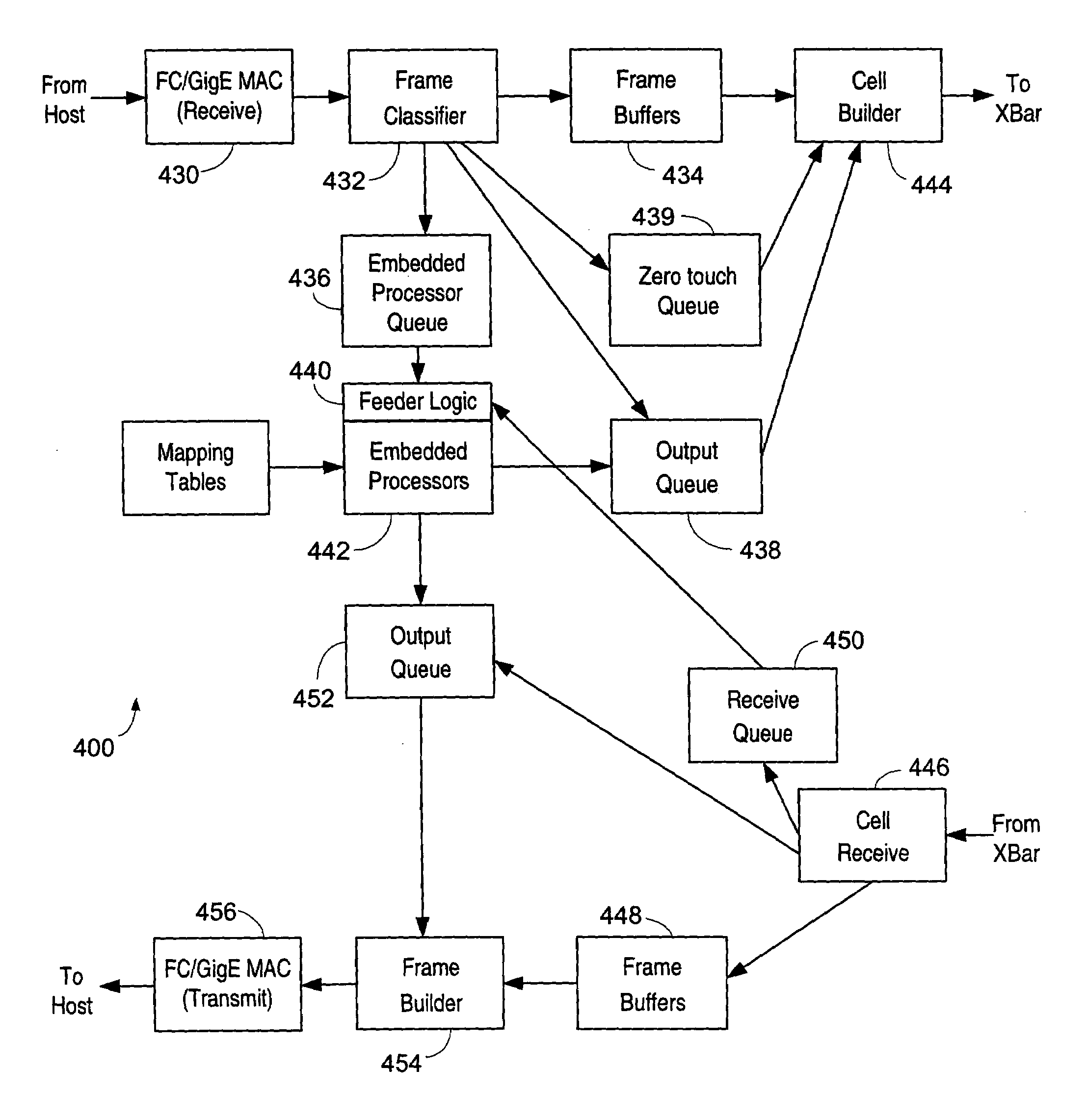

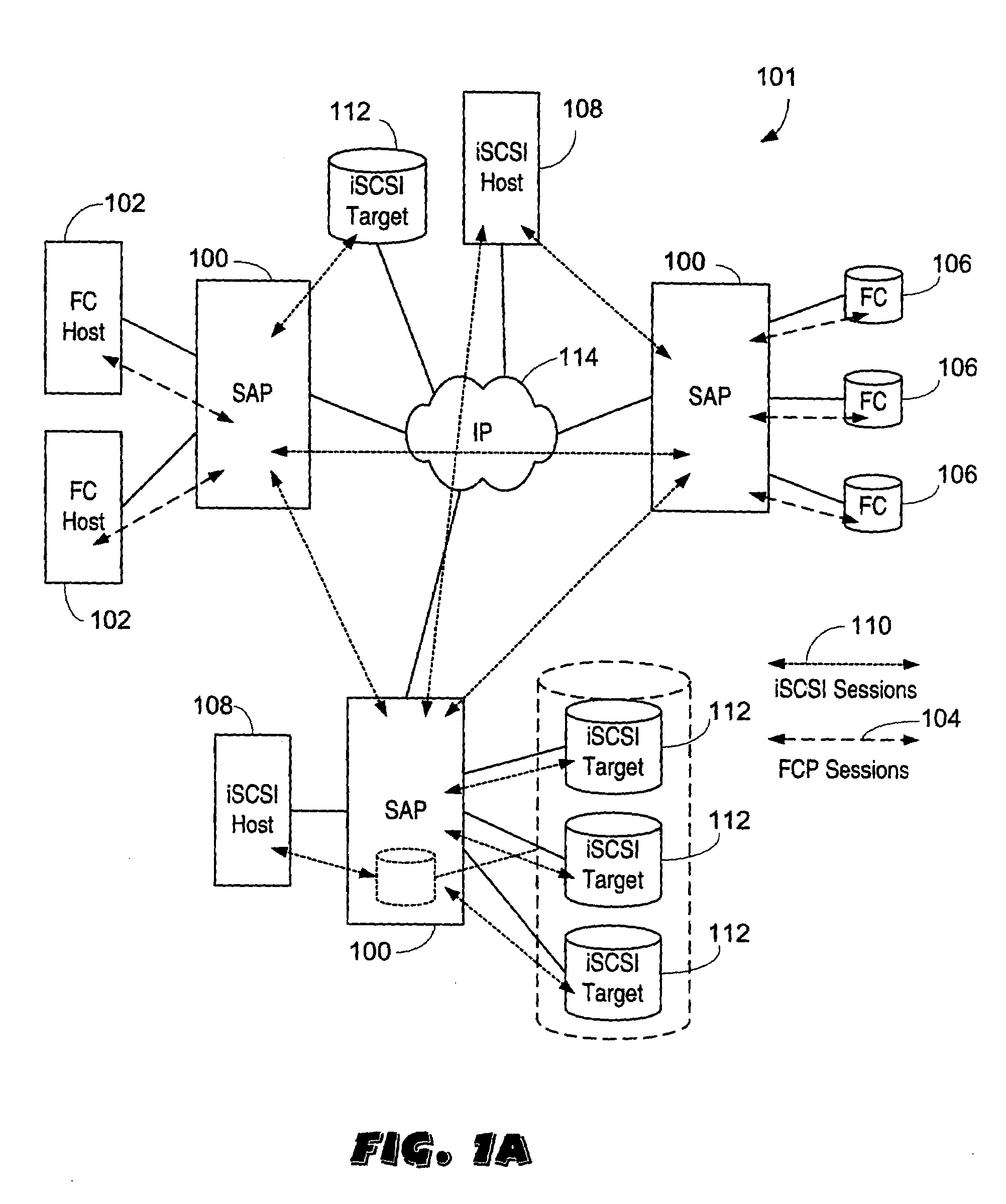

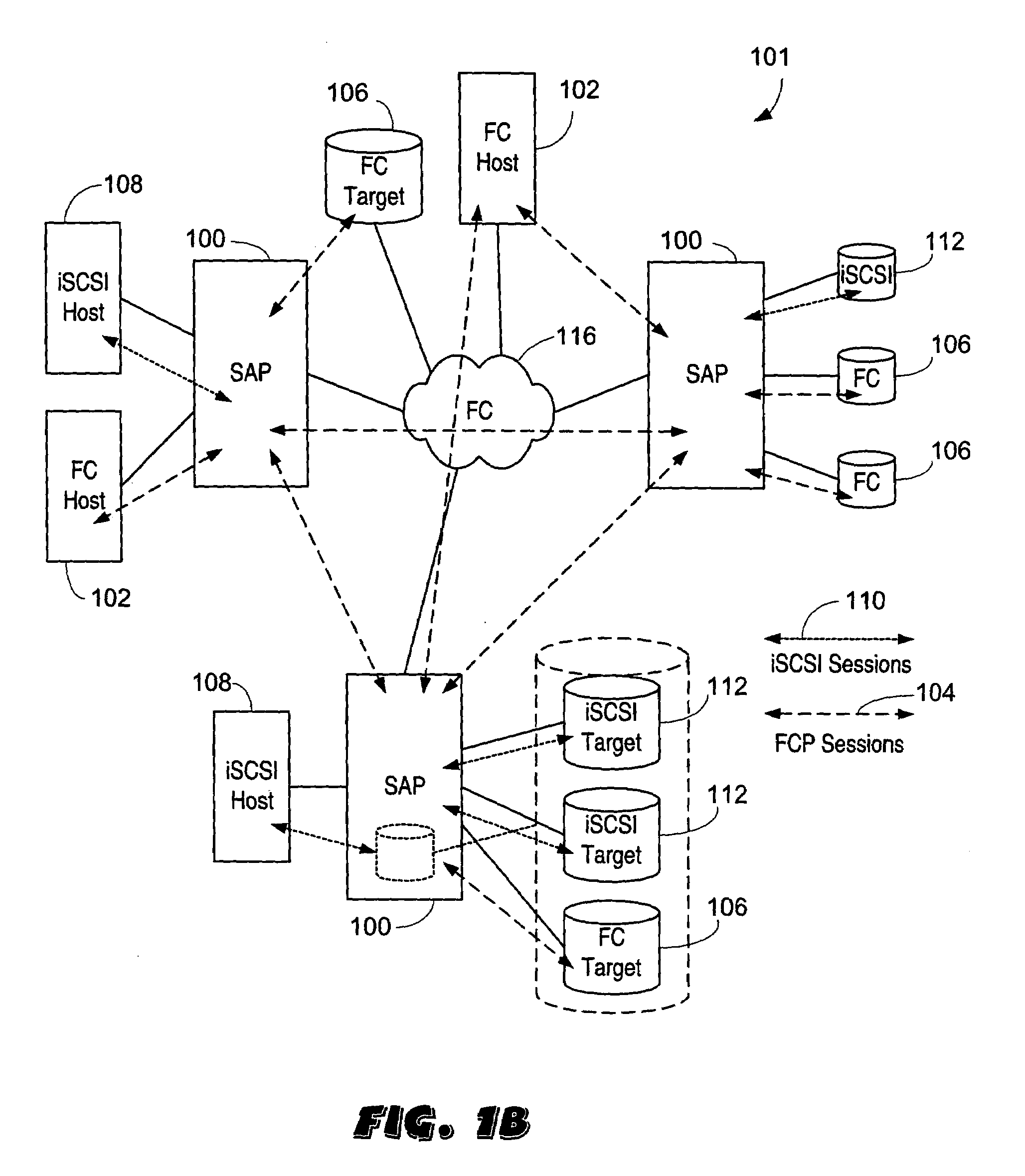

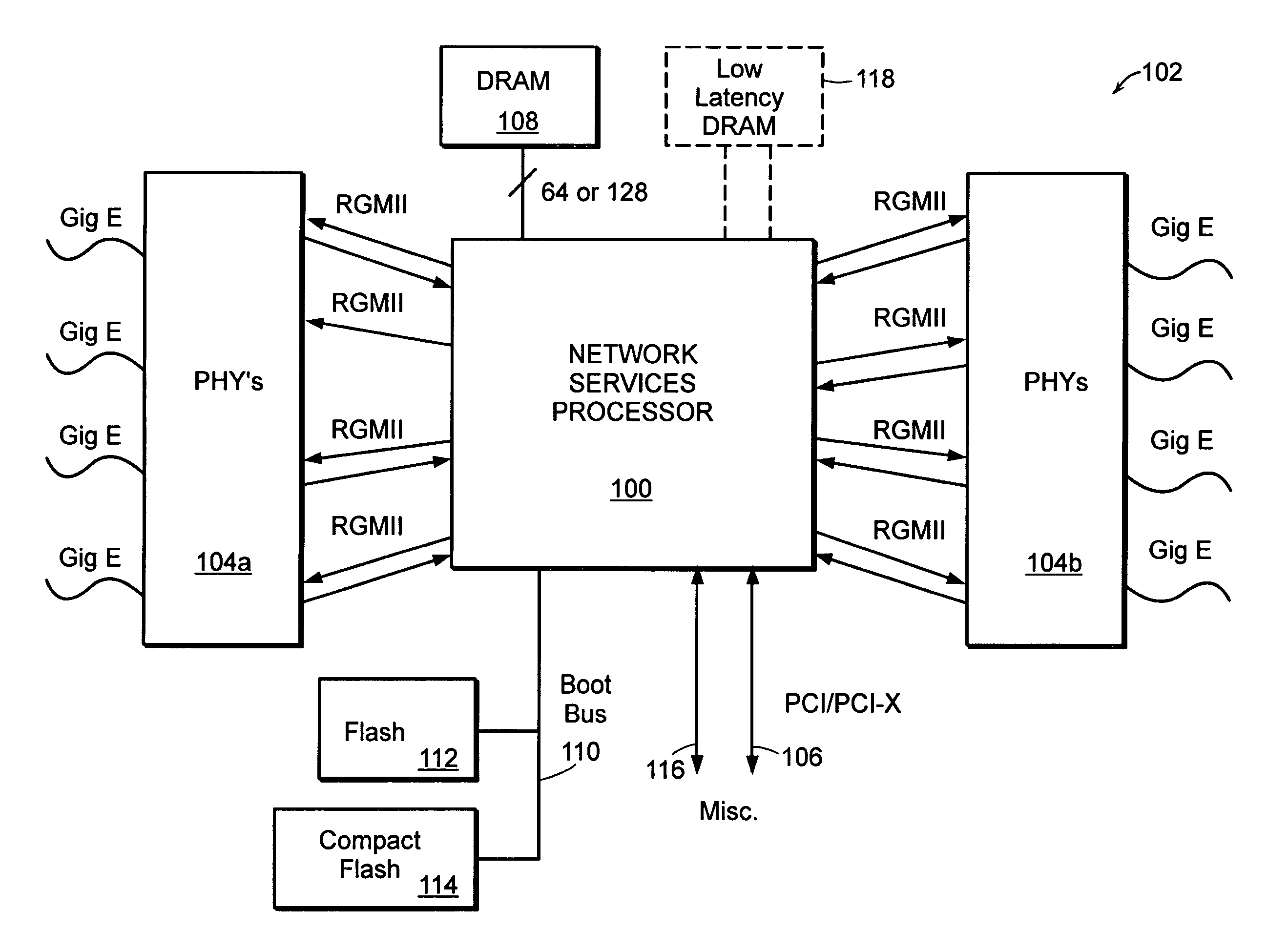

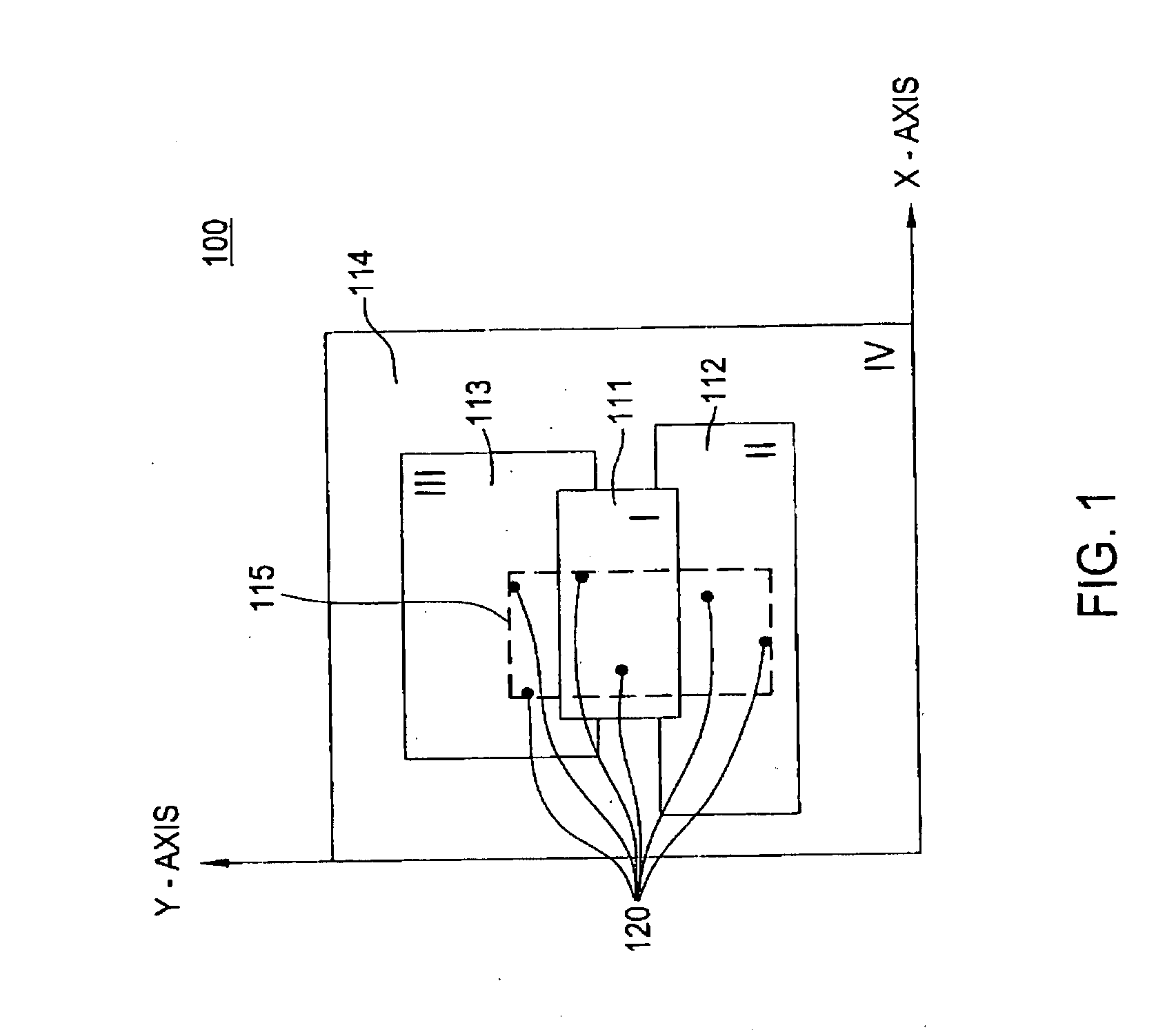

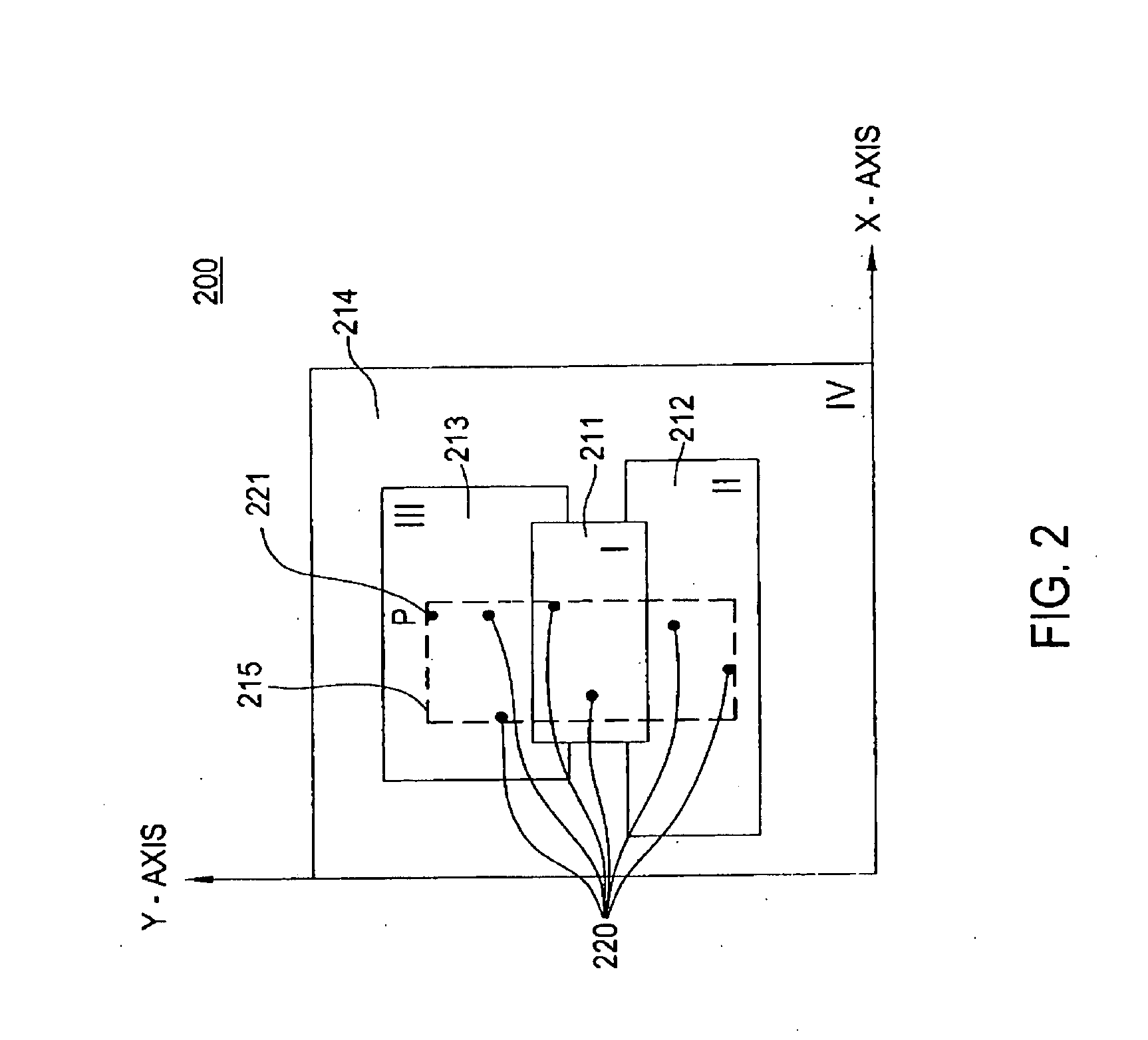

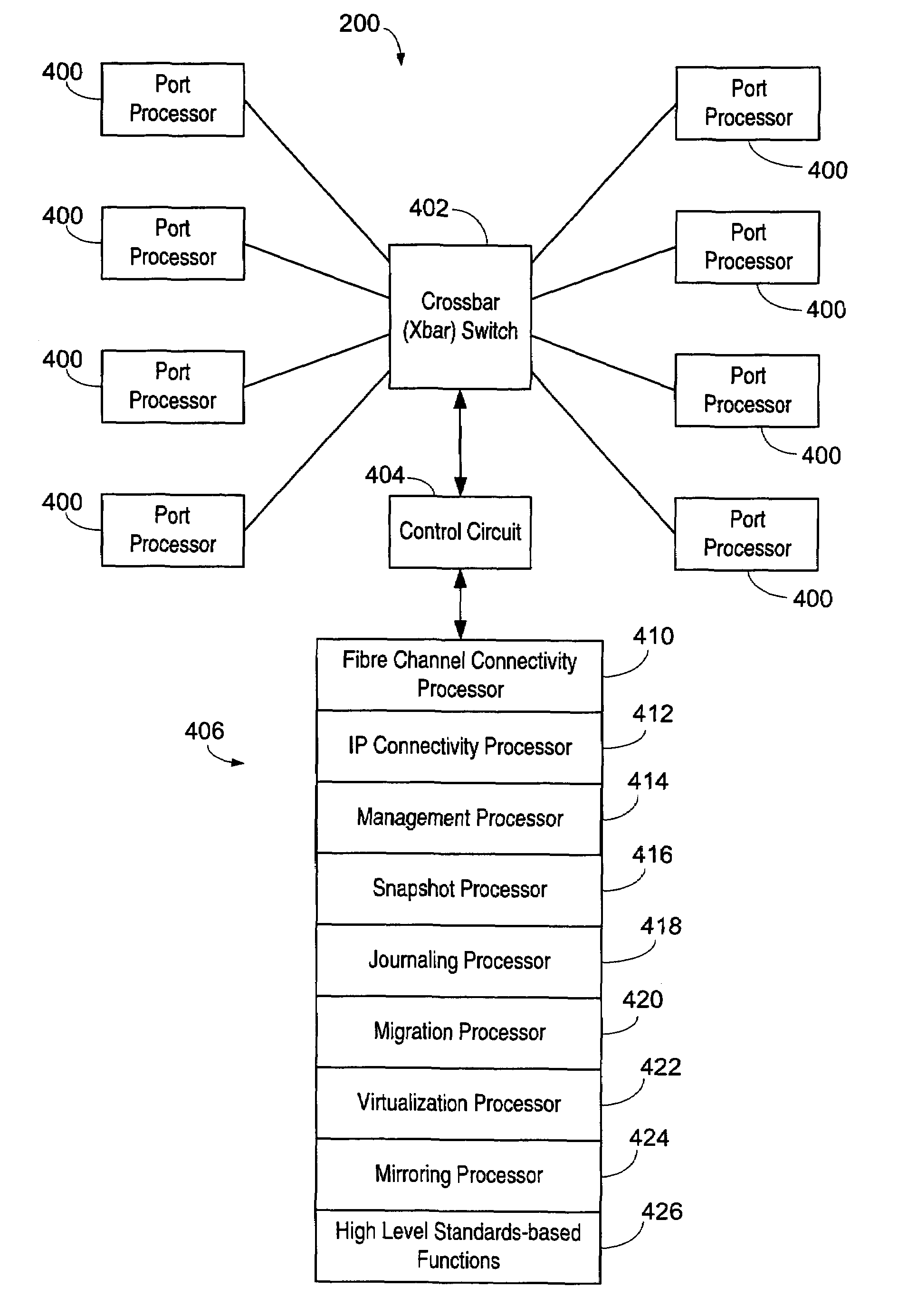

Apparatus and method for storage processing through scalable port processors

Inactive | US7237045B2 | Achieve scale | Provide flexibility | Multiplex system selection arrangements | Input/output to record carriers | Wire speed | Multi protocol

A system including a storage processing device with an input/output module. The input/output module has port processors to receive and transmit network traffic. The input/output module also has a switch connecting the port processors. Each port processor categorizes the network traffic as fast path network traffic or control path network traffic. The switch routes fast path network traffic from an ingress port processor to a specified egress port processor. The storage processing device also includes a control module to process the control path network traffic received from the ingress port processor. The control module routes processed control path network traffic to the switch for routing to a defined egress port processor. The control module is connected to the input/output module. The input/output module and the control module are configured to interactively support data virtualization, data migration, data journaling, and snapshotting. The distributed control and fast path processors achieve scaling of storage network software. The storage processors provide line-speed processing of storage data using a rich set of storage-optimized hardware acceleration engines. The multi-protocol switching fabric provides a low-latency, protocol-neutral interconnect that integrally links all components with any-to-any non-blocking throughput.

Owner:AVAGO TECH INT SALES PTE LTD

TCP engine

Active | US20060227811A1 | Speed up the process | Time-division multiplex | Transmission | Transport layer | Wire speed

A network transport layer accelerator accelerates processing of packets so that packets can be forwarded at wire-speed. To accelerate processing of packets, the accelerator performs pre-processing on a network transport layer header encapsulated in a packet for a connection and performs in-line network transport layer checksum insertion prior to transmitting a packet. A timer unit in the accelerator schedules processing of the received packets. The accelerator also includes a free pool allocator which manages buffers for storing the received packets and a packet order unit which synchronizes processing of received packets for a same connection.
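The checksum that such an accelerator inserts in-line is the standard 16-bit one's-complement Internet checksum. The sketch below computes it in software and writes it into a segment; it deliberately omits the TCP pseudo-header that a real TCP checksum also covers, and the function names are hypothetical.

```python
def internet_checksum(data: bytes) -> int:
    """16-bit one's-complement checksum over `data` (padded to even length)."""
    if len(data) % 2:
        data += b"\x00"
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
    # Fold any carries above bit 15 back into the low 16 bits.
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def insert_checksum(segment: bytes, field_offset: int) -> bytes:
    """Zero the 2-byte checksum field, compute the checksum, and write it back.

    `field_offset` must be even so the field is 16-bit aligned
    (the checksum field of a TCP header starts at byte 16).
    """
    zeroed = segment[:field_offset] + b"\x00\x00" + segment[field_offset + 2:]
    checksum = internet_checksum(zeroed)
    return (zeroed[:field_offset] + checksum.to_bytes(2, "big")
            + zeroed[field_offset + 2:])


if __name__ == "__main__":
    segment = insert_checksum(bytes(range(20)), 16)
    # A receiver summing the whole segment, checksum included, gets zero.
    print(hex(internet_checksum(segment)))
```

Doing this per packet in software costs a pass over every byte, which is one reason checksum insertion is a common candidate for hardware offload.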

Owner:MARVELL ASIA PTE LTD

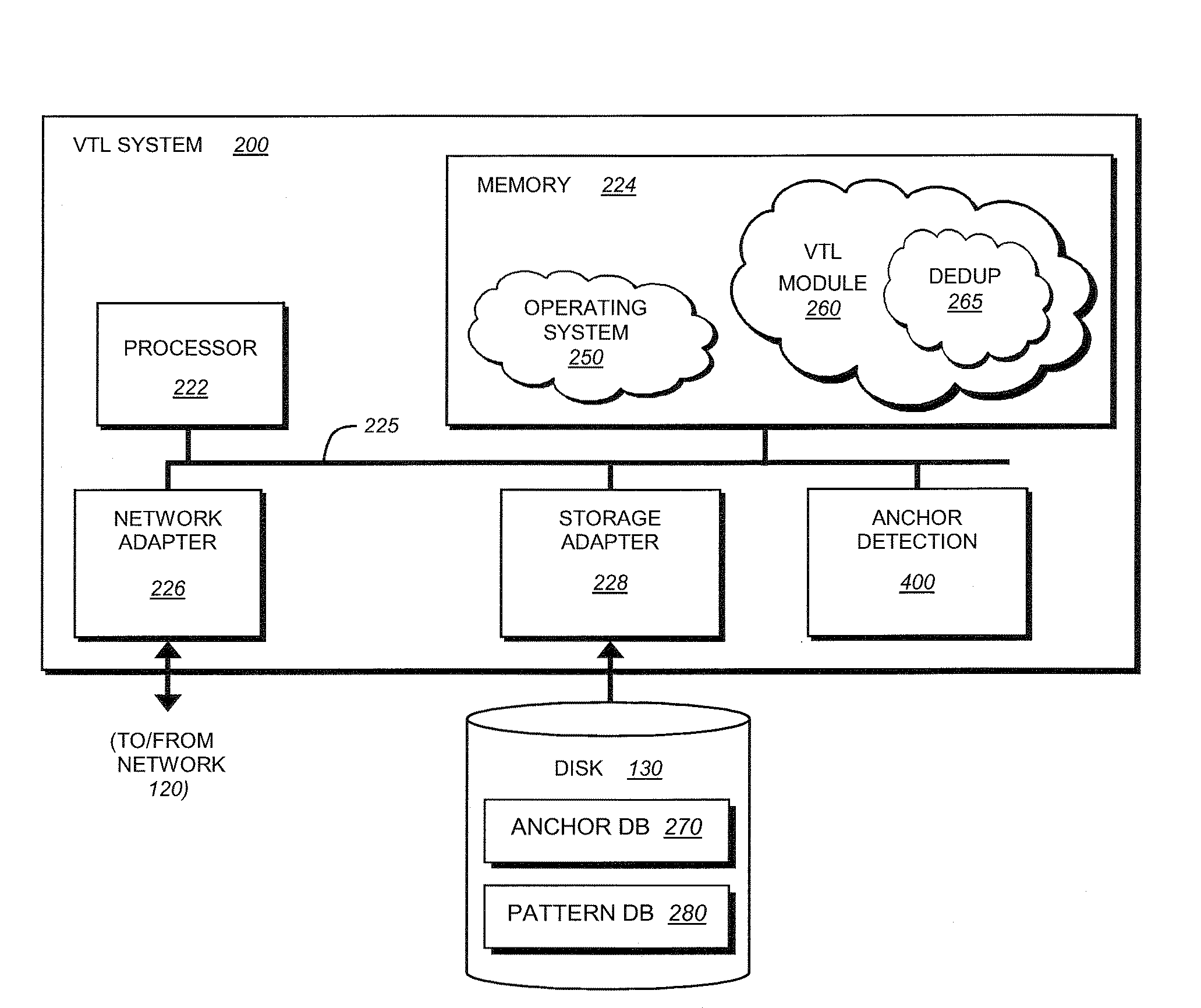

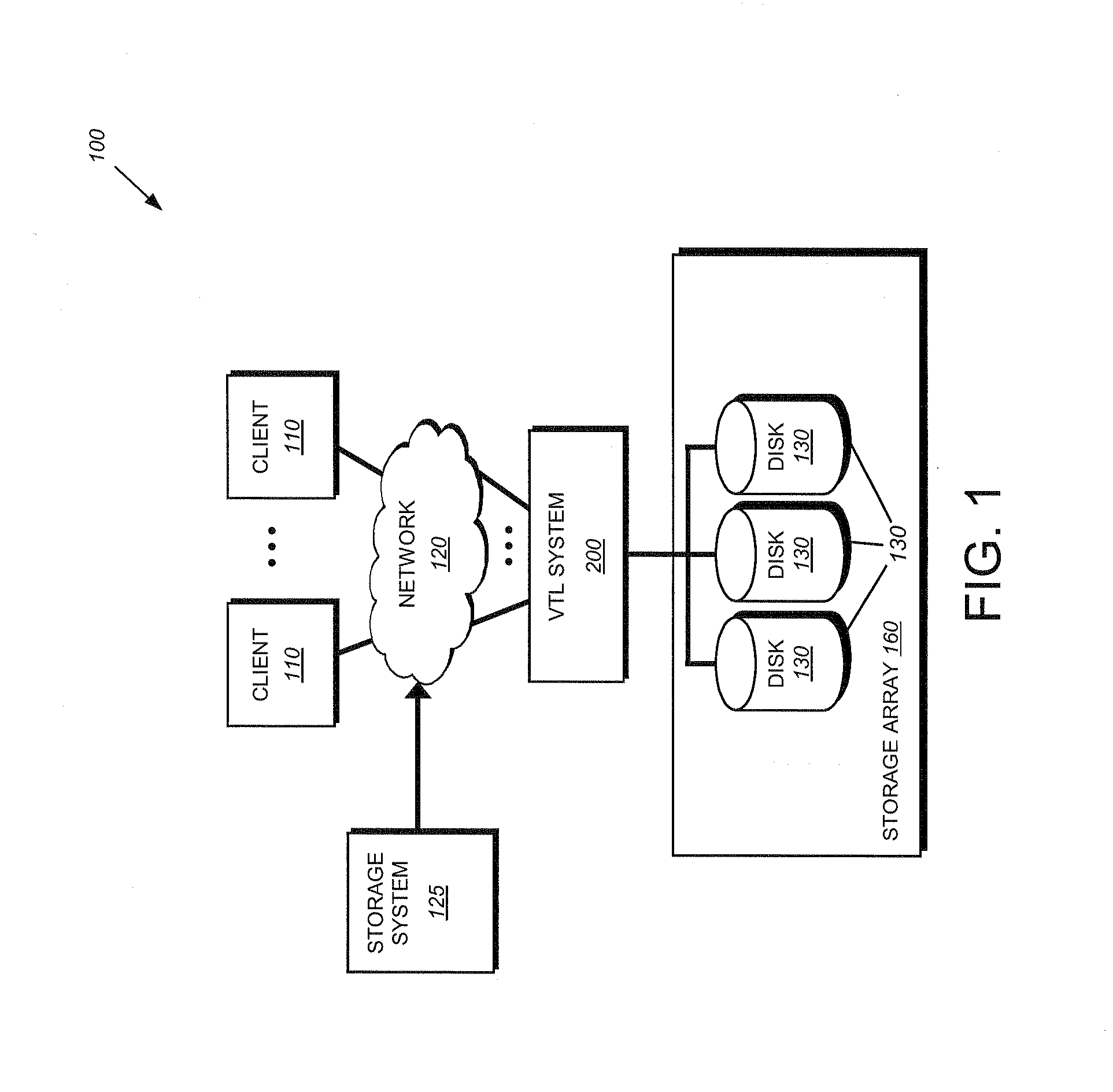

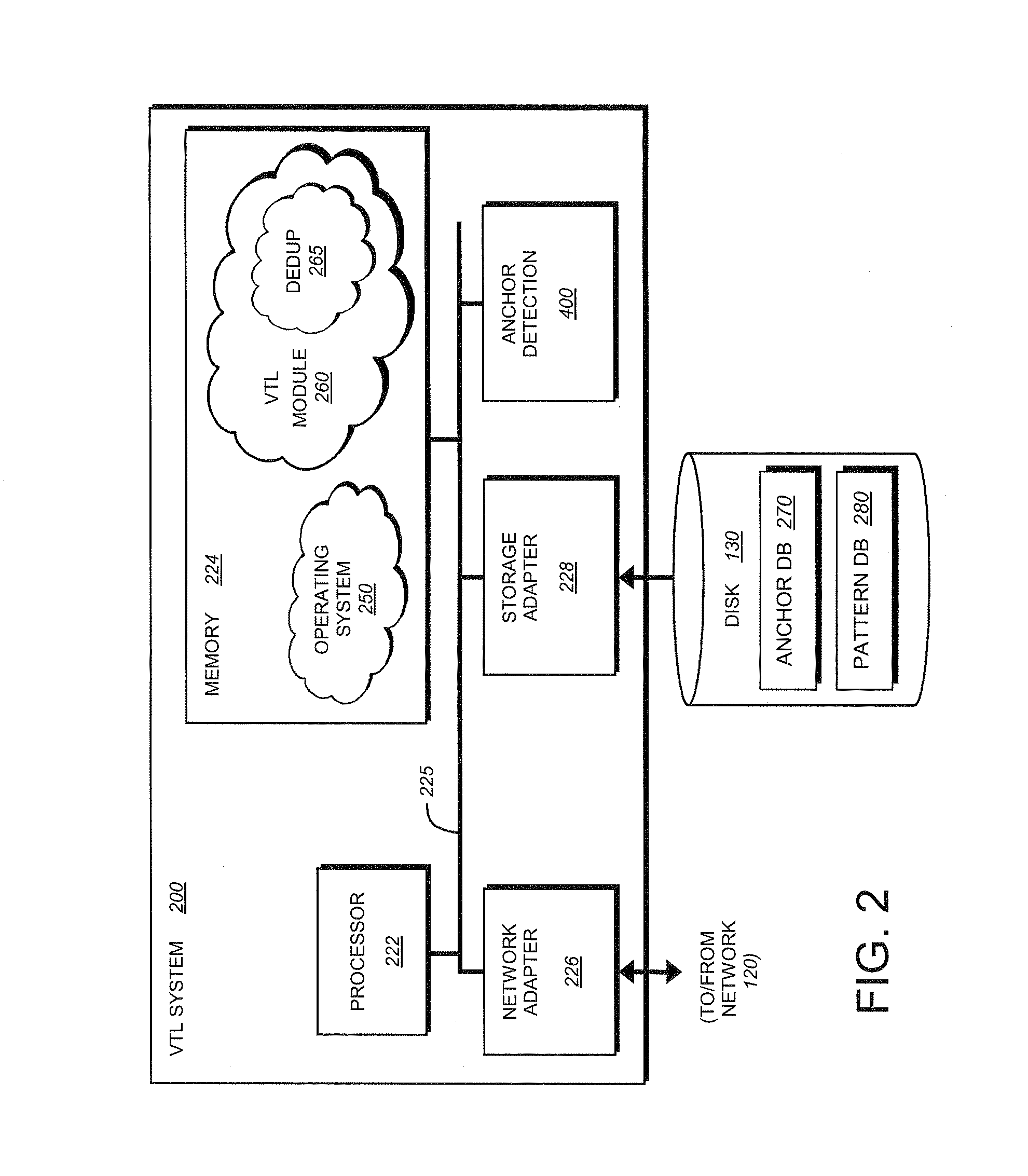

System and method for accelerating anchor point detection

Active | US20080301134A1 | Reduce load | Quick identification | Digital data information retrieval | Code conversion | Computer hardware | Data stream

A sampling based technique for eliminating duplicate data (de-duplication) stored on storage resources is provided. According to the invention, when a new data set, e.g., a backup data stream, is received by a server, e.g., a storage system or virtual tape library (VTL) system implementing the invention, one or more anchors are identified within the new data set. The anchors are identified using a novel anchor detection circuitry in accordance with an illustrative embodiment of the present invention. Upon receipt of the new data set by, for example, a network adapter of a VTL system, the data set is transferred using direct memory access (DMA) operations to a memory associated with an anchor detection hardware card that is operatively interconnected with the storage system. The anchor detection hardware card may be implemented as, for example, an FPGA to quickly identify anchors within the data set. As the anchor detection process is performed using a hardware assist, the load on a main processor of the system is reduced, thereby enabling line speed de-duplication.

Owner:NETWORK APPLIANCE INC

TCP engine

Active | US7535907B2 | Speed up the process | Synchronization | Time-division multiplex | Data switching by path configuration | Transport layer | Wire speed

A network transport layer accelerator accelerates processing of packets so that packets can be forwarded at wire-speed. To accelerate processing of packets, the accelerator performs pre-processing on a network transport layer header encapsulated in a packet for a connection and performs in-line network transport layer checksum insertion prior to transmitting a packet. A timer unit in the accelerator schedules processing of the received packets. The accelerator also includes a free pool allocator which manages buffers for storing the received packets and a packet order unit which synchronizes processing of received packets for a same connection.

Owner:MARVELL ASIA PTE LTD

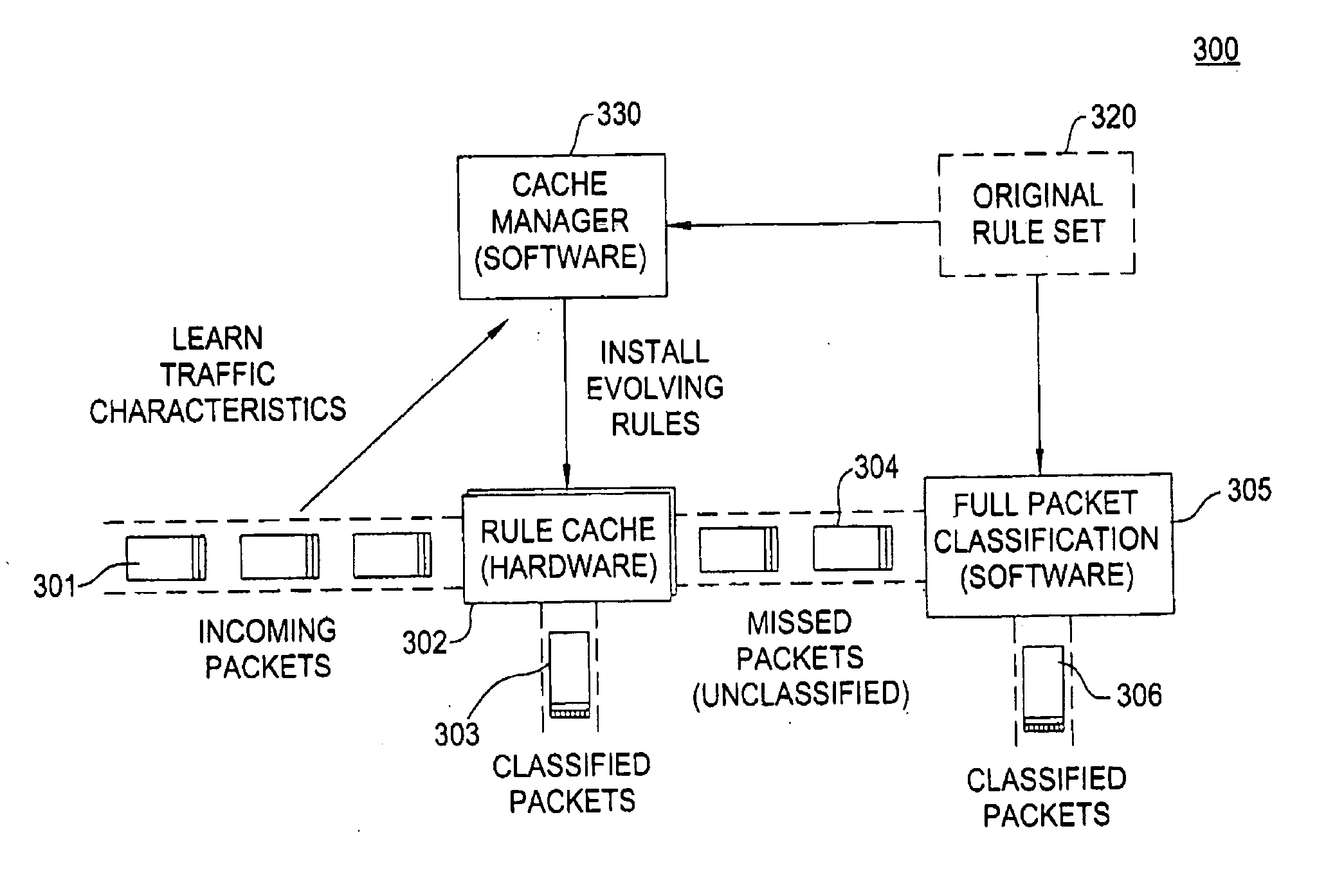

Method and apparatus for classifying packets

A method and apparatus for classifying packets, e.g., at wire speed, are disclosed. The method receives a packet and processes the packet through a hardware-based packet classifier having at least one evolving rule. The method then processes the packet through a software-based packet classifier if the hardware-based packet classifier is unable to classify the packet. In one embodiment, the at least one evolving rule is continuously modified in accordance with learned traffic characteristics of the received packets.

Owner:WISCONSIN ALUMNI RES FOUND +1

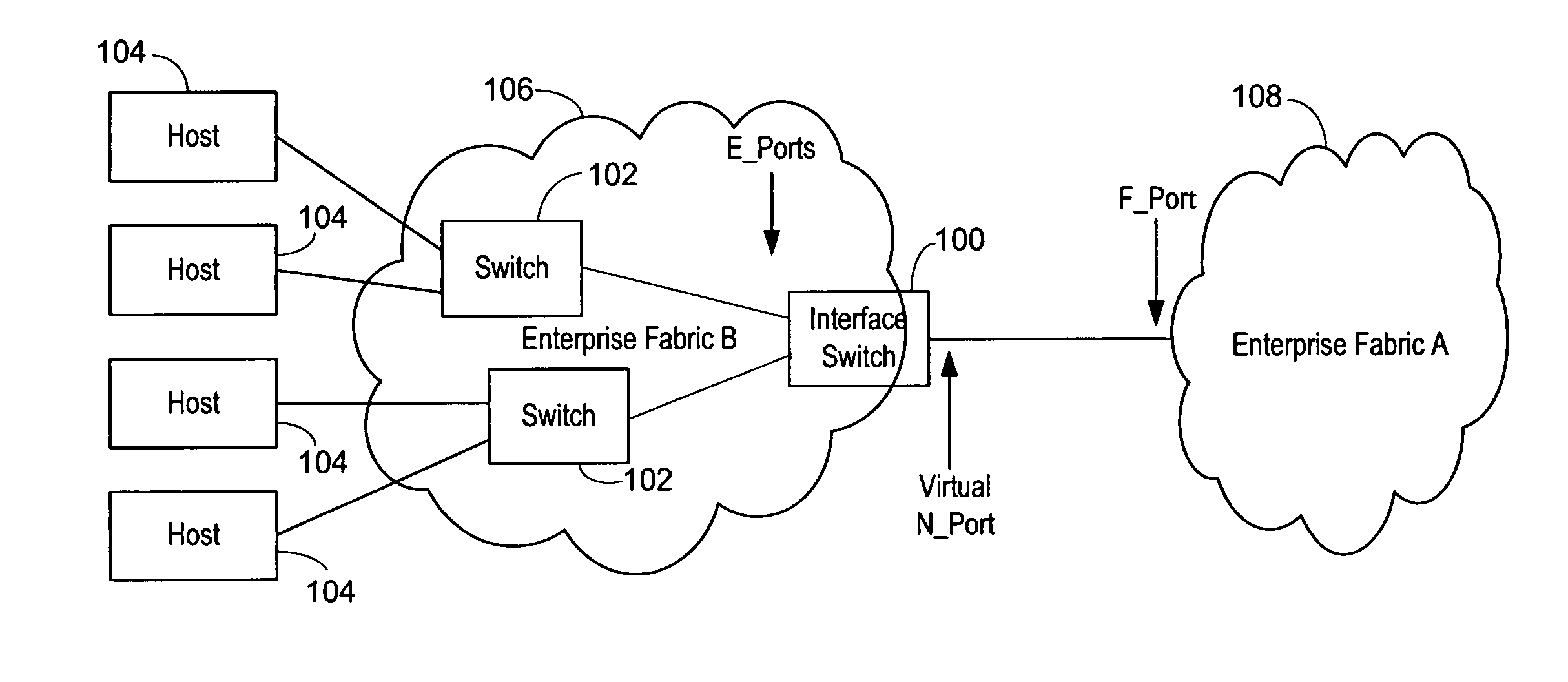

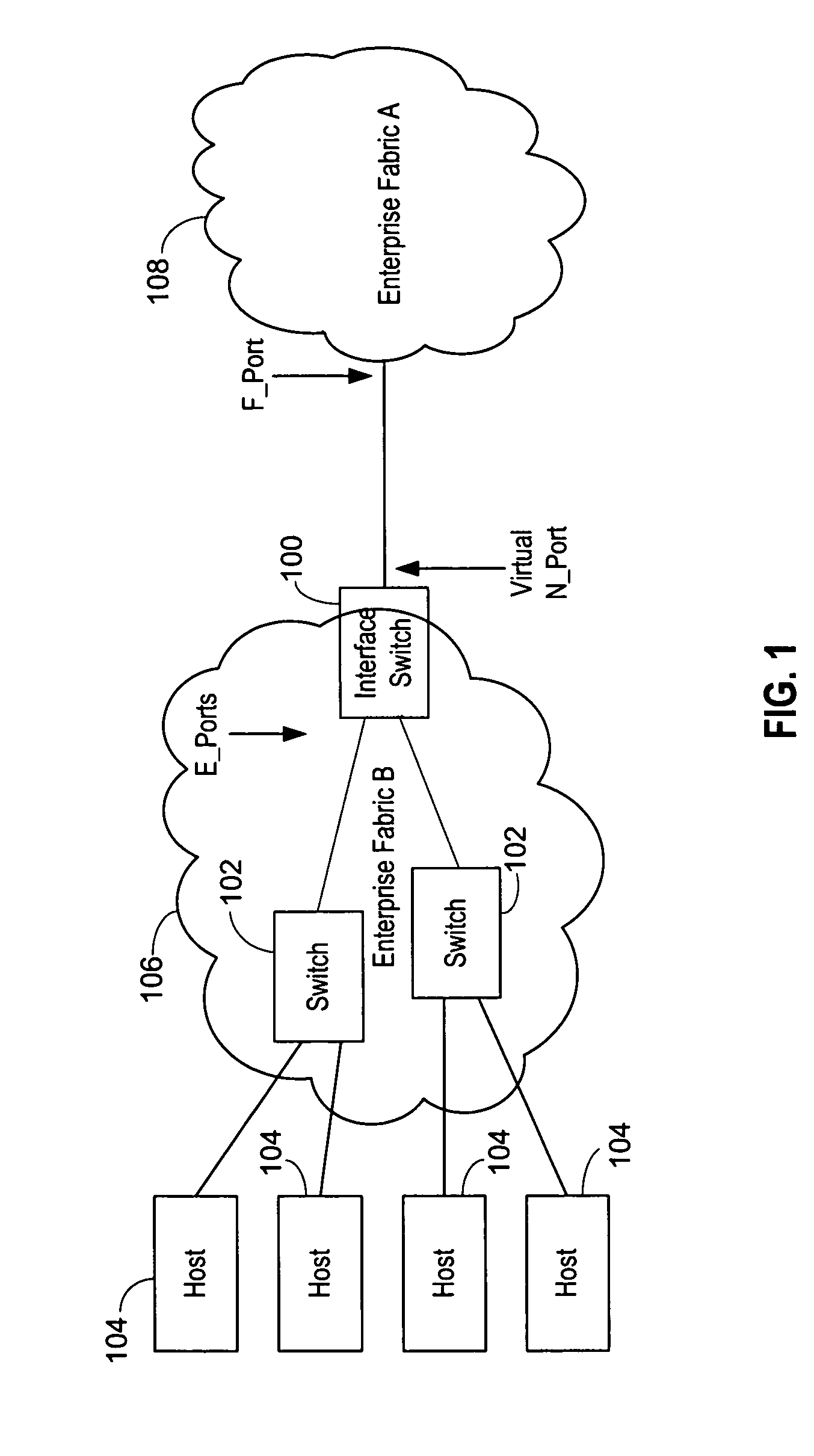

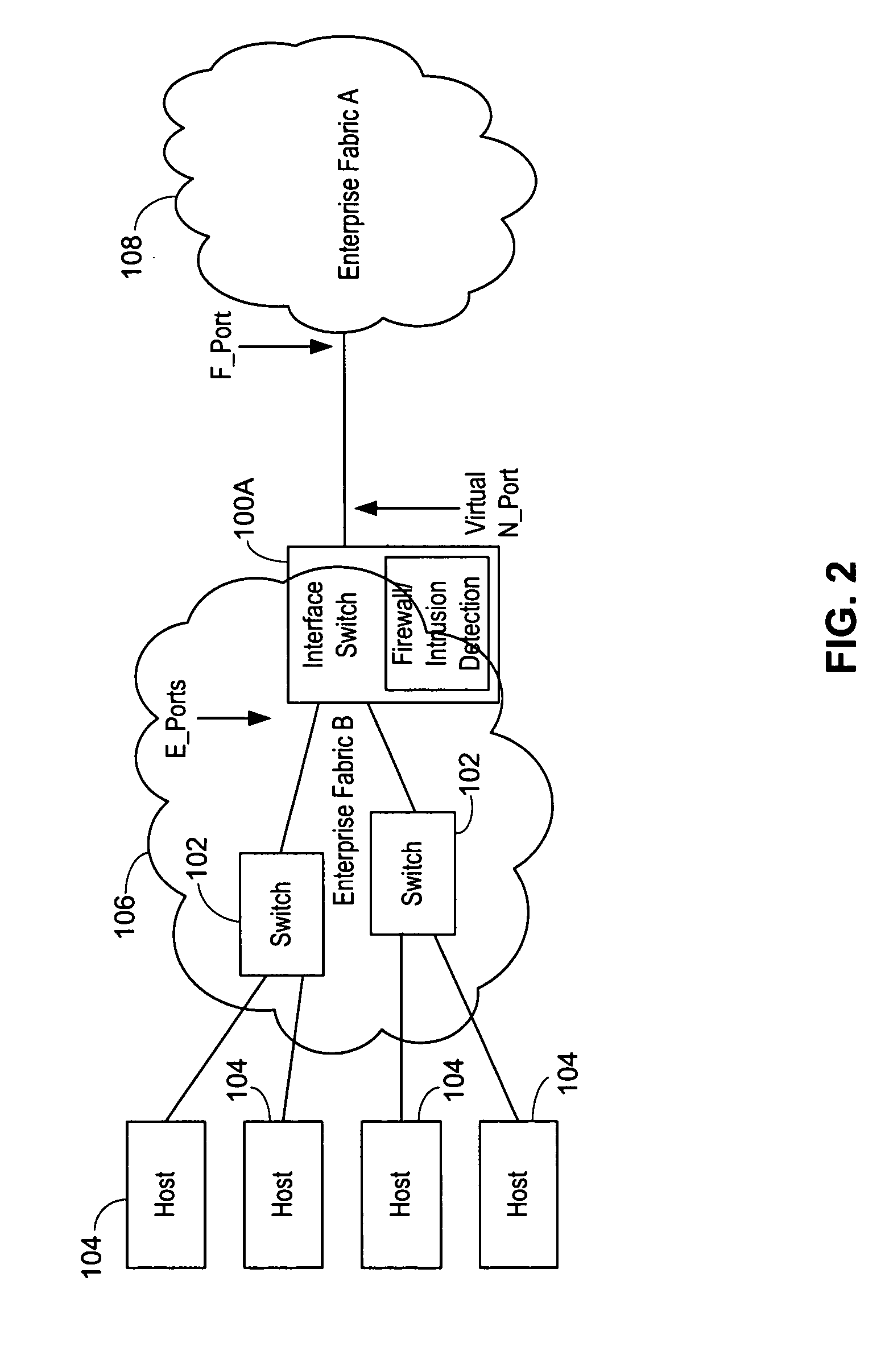

Interface switch for use with fibre channel fabrics in storage area networks

Inactive | US20070091903A1 | Improve scalability | Avoid merging | Multiplex system selection arrangements | Time-division multiplex | Multiplexing | Virtualization

An interface switch presents itself as a switch to an enterprise fabric formed of devices from the same manufacturer as the interface switch, and as a host or node to an enterprise fabric from a different manufacturer. This allows each enterprise fabric to remain in a higher performance operating mode. The multiplexing of multiple streams of traffic between the N_ports on the first enterprise fabric and the second enterprise fabric is accomplished by N_port Virtualization. The interface switch can be connected to multiple enterprise fabrics. All control traffic address mappings between virtual and physical addresses may be mediated and translated by the CPU of the interface switch and address mappings for data traffic performed at wire speed. Since the interface switch may preferably be a single conduit between the enterprise fabrics, it is also a good point to enforce perimeter defenses against attacks.

Owner:AVAGO TECH INT SALES PTE LTD

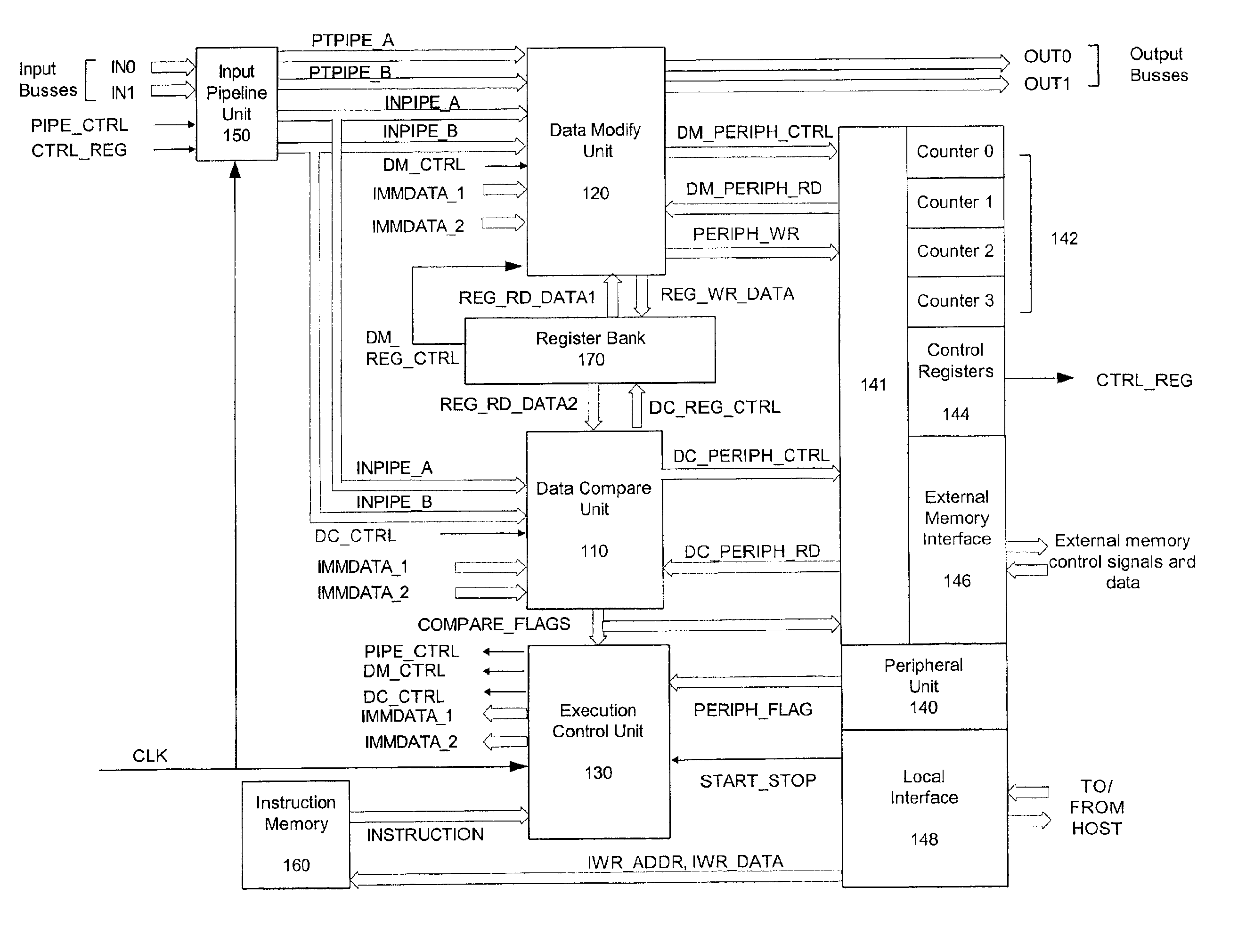

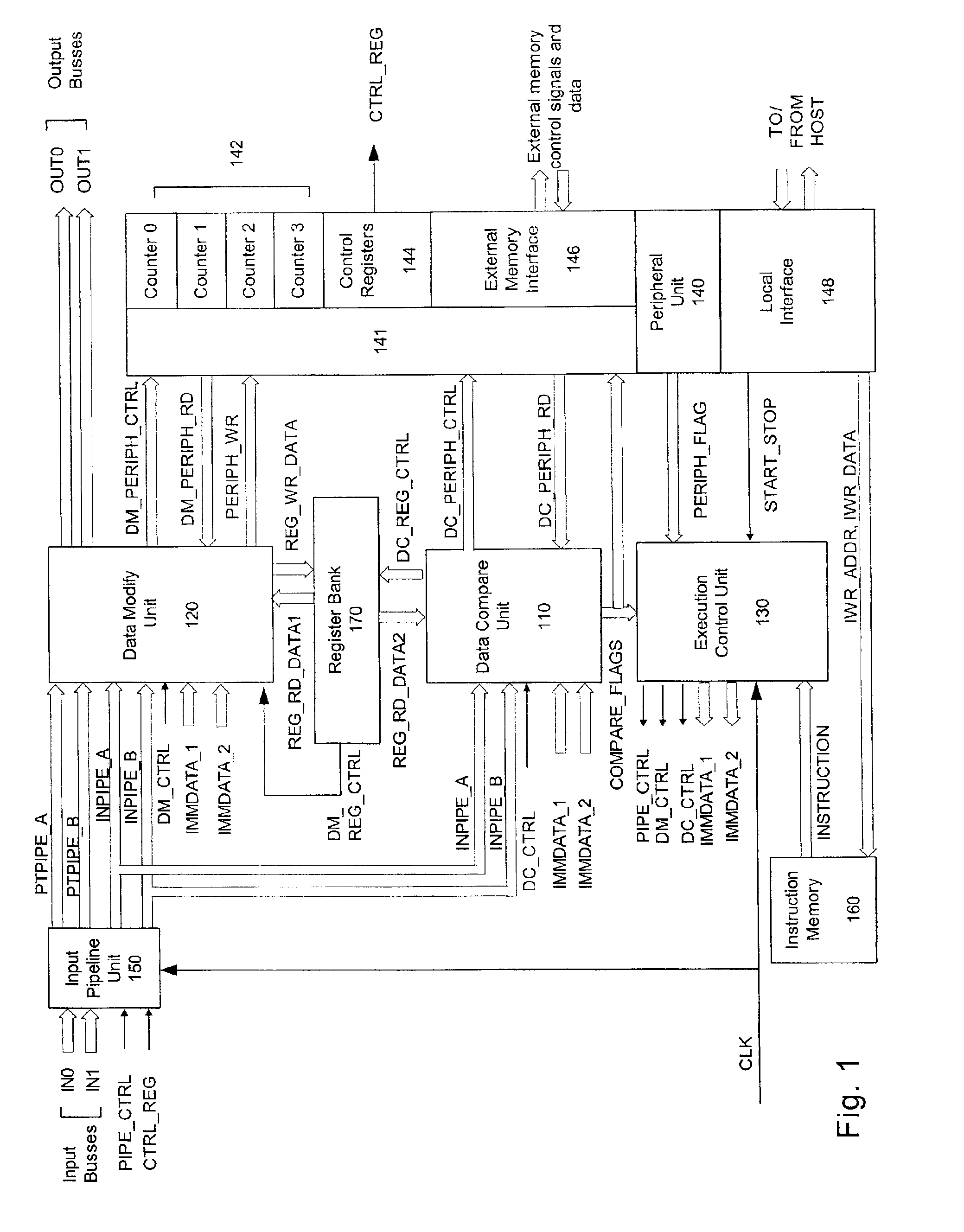

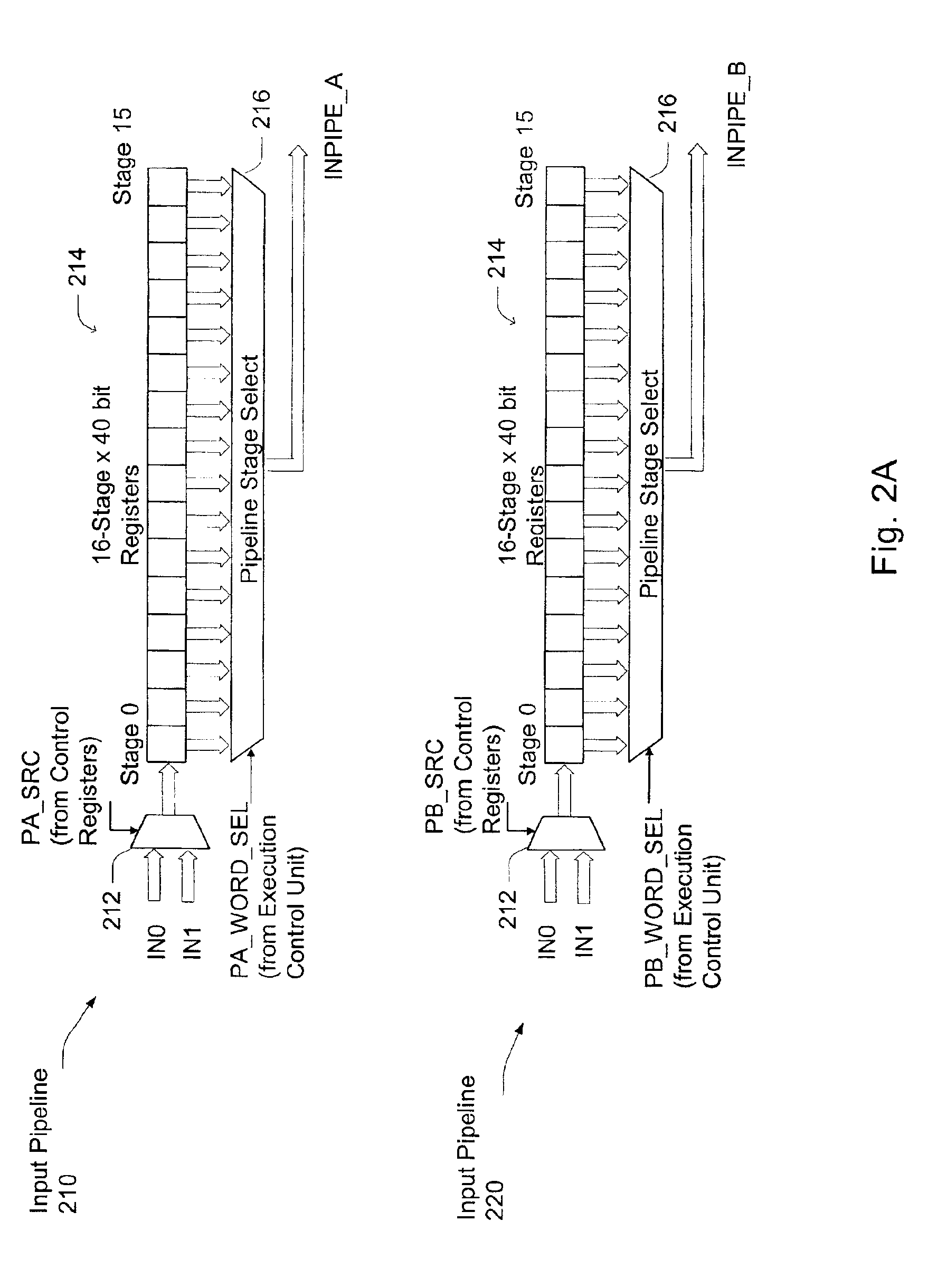

Synchronous network traffic processor

Inactive | US6880070B2 | Low cost | Reduce development | Digital computer details | Next instruction address formation | Traffic capacity | Wire speed

A synchronous network traffic processor that synchronously processes, analyzes and generates data for high-speed network protocols, on a wire-speed, word-by-word basis. The synchronous network processor is protocol independent and may be programmed to convert protocols on the fly. An embodiment of the synchronous network processor described has a low gate count and can be easily implemented using programmable logic. An appropriately programmed synchronous network traffic processor may replace modules traditionally implemented with hard-wired logic or ASIC.

Owner:VIAVI SOLUTIONS INC

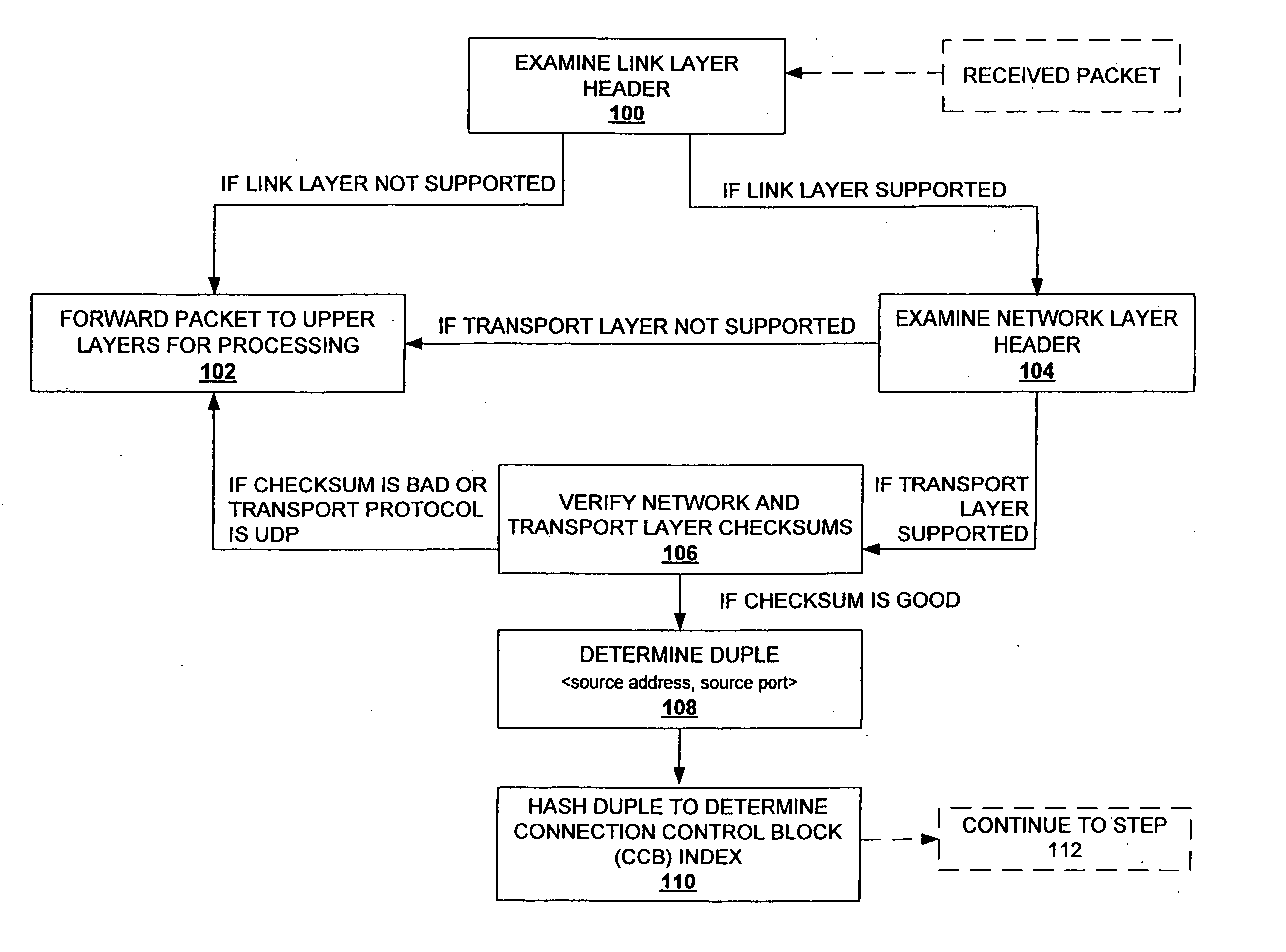

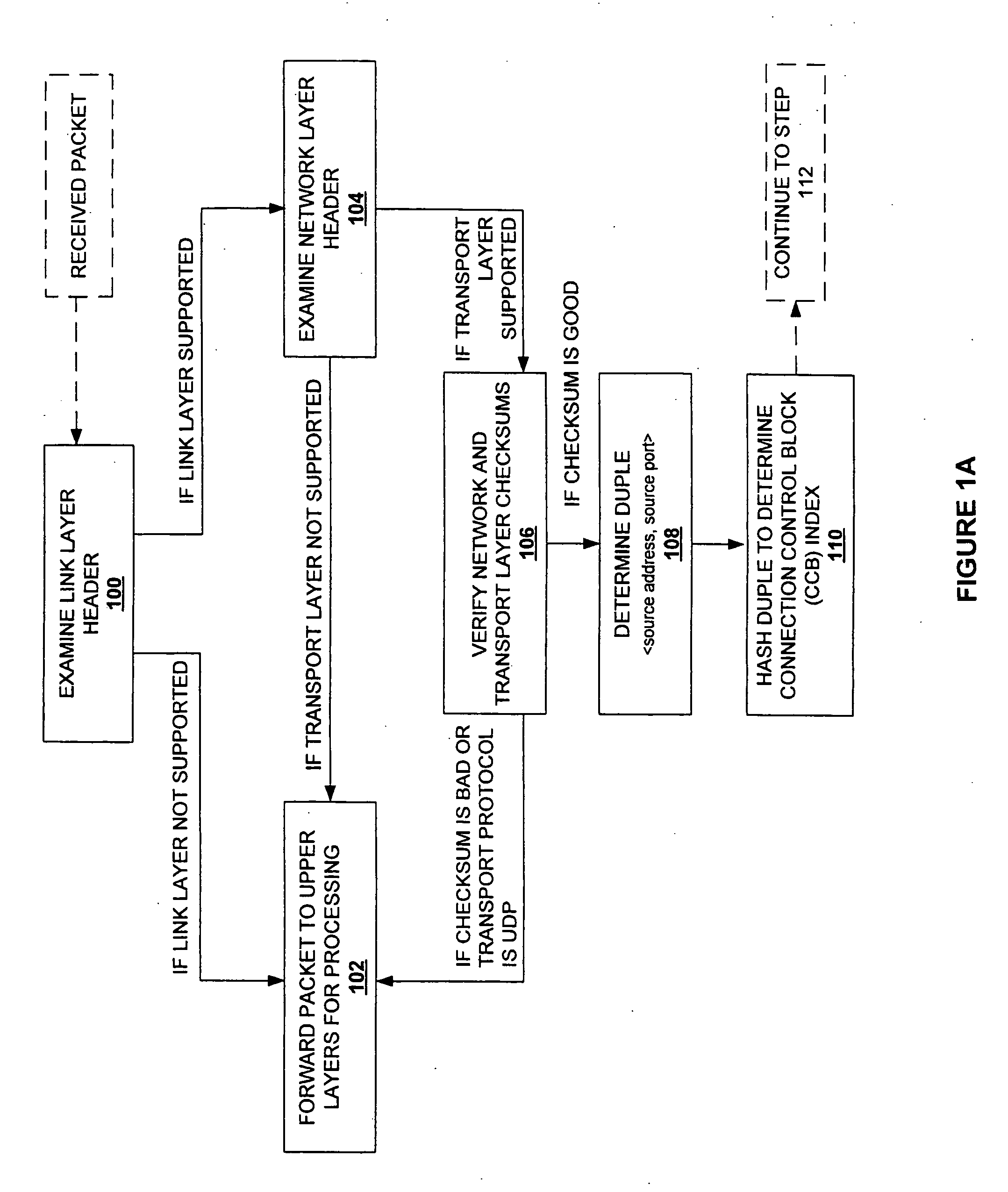

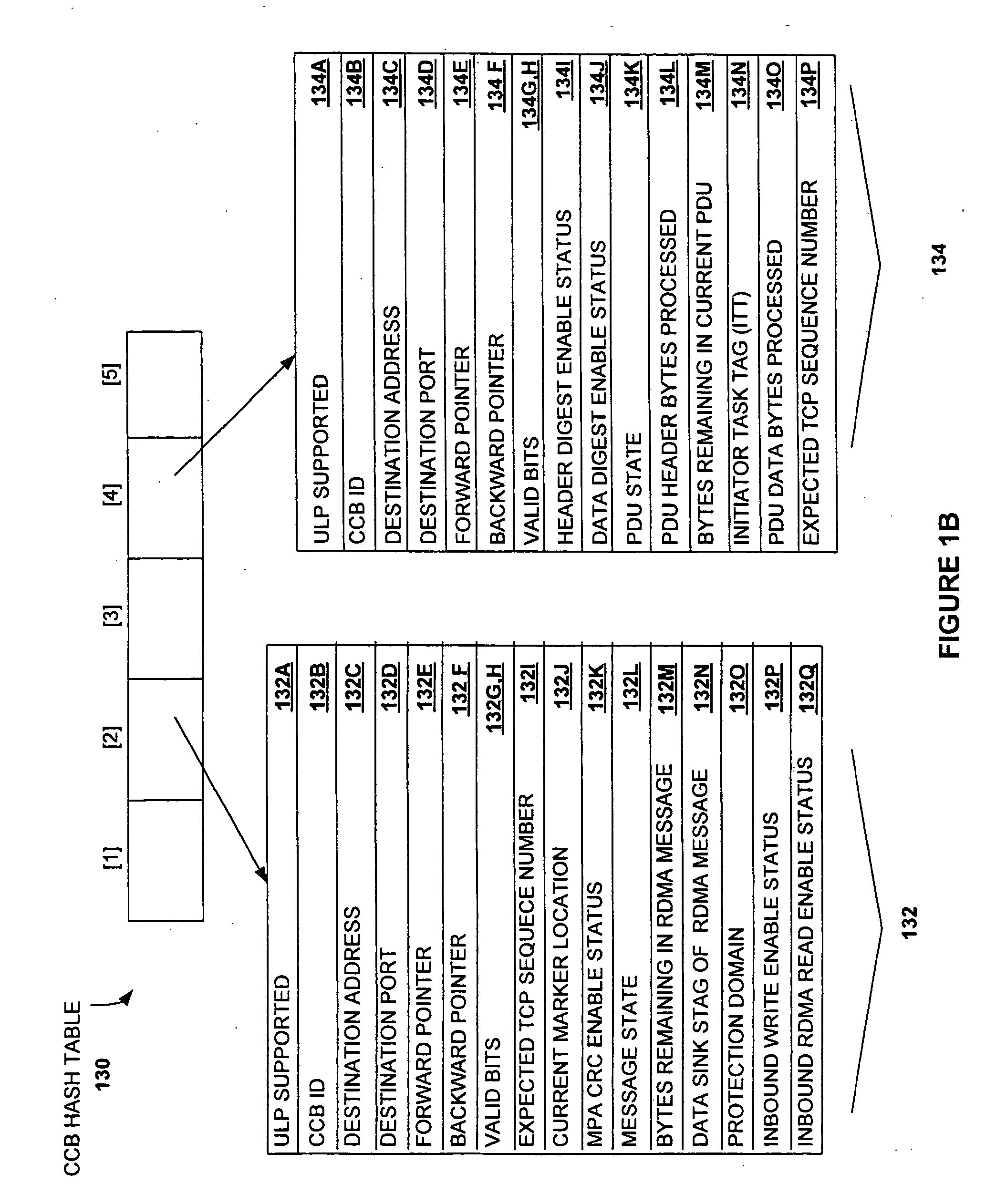

Method and system for providing direct data placement support

Inactive | US20060034283A1 | Reduction of CPU processing overhead | Reduced space requirements | Time-division multiplex | Data switching by path configuration | Wire speed | Zero-copy

A system and method for reducing the overhead associated with direct data placement is provided. Processing time overhead is reduced by implementing packet-processing logic in hardware. Storage space overhead is reduced by combining results of hardware-based packet-processing logic with ULP software support; parameters relevant to direct data placement are extracted during packet-processing and provided to a control structure instantiation. Subsequently, payload data received at a network adapter is directly placed in memory in accordance with parameters previously stored in a control structure. Additionally, packet-processing in hardware reduces interrupt overhead by issuing system interrupts in conjunction with packet boundaries. In this manner, wire-speed direct data placement is approached, zero copy is achieved, and per byte overhead is reduced with respect to the amount of data transferred over an individual network connection. Movement of ULP data between application-layer program memories is thereby accelerated without a fully offloaded TCP protocol stack implementation.

Owner:IBM CORP

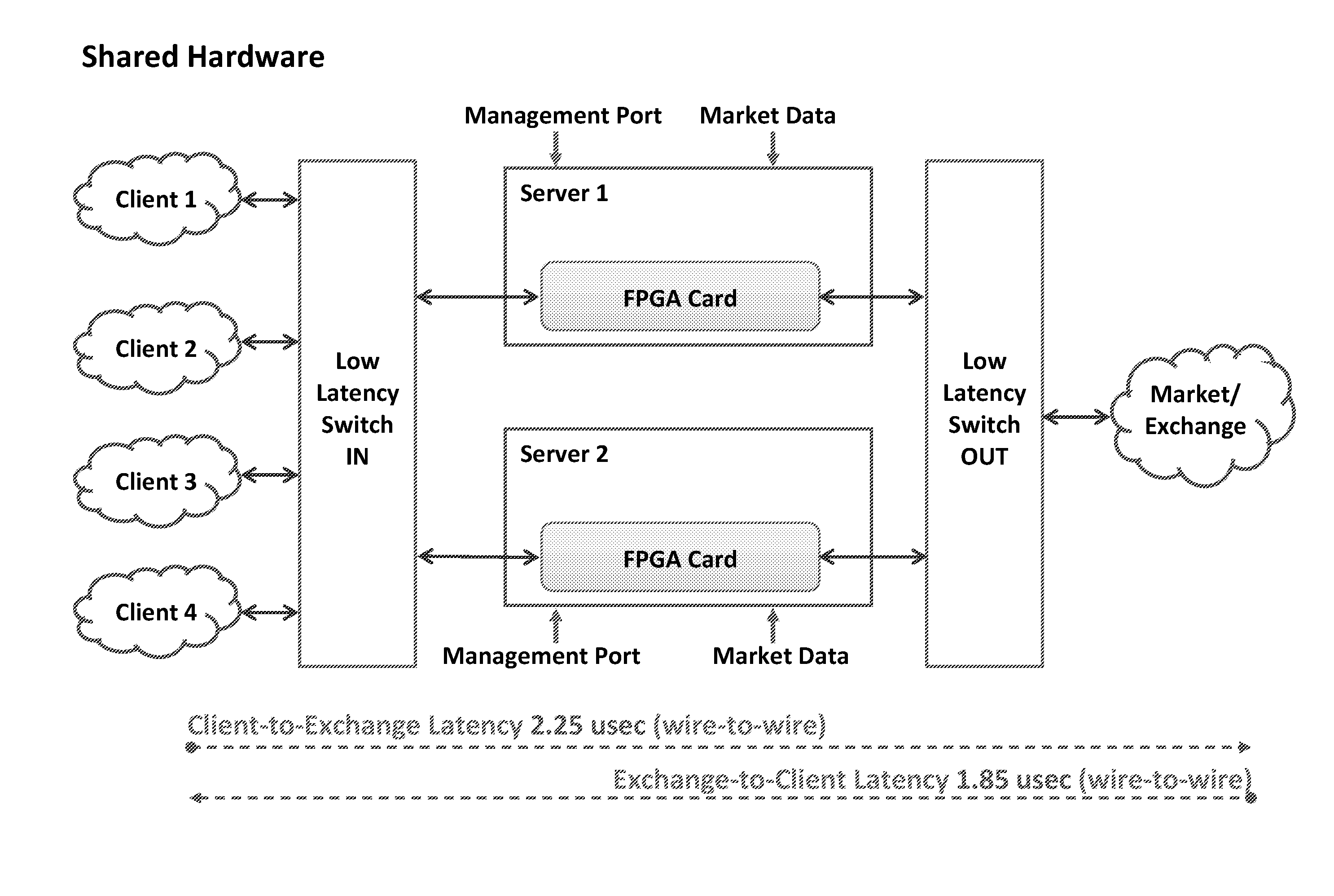

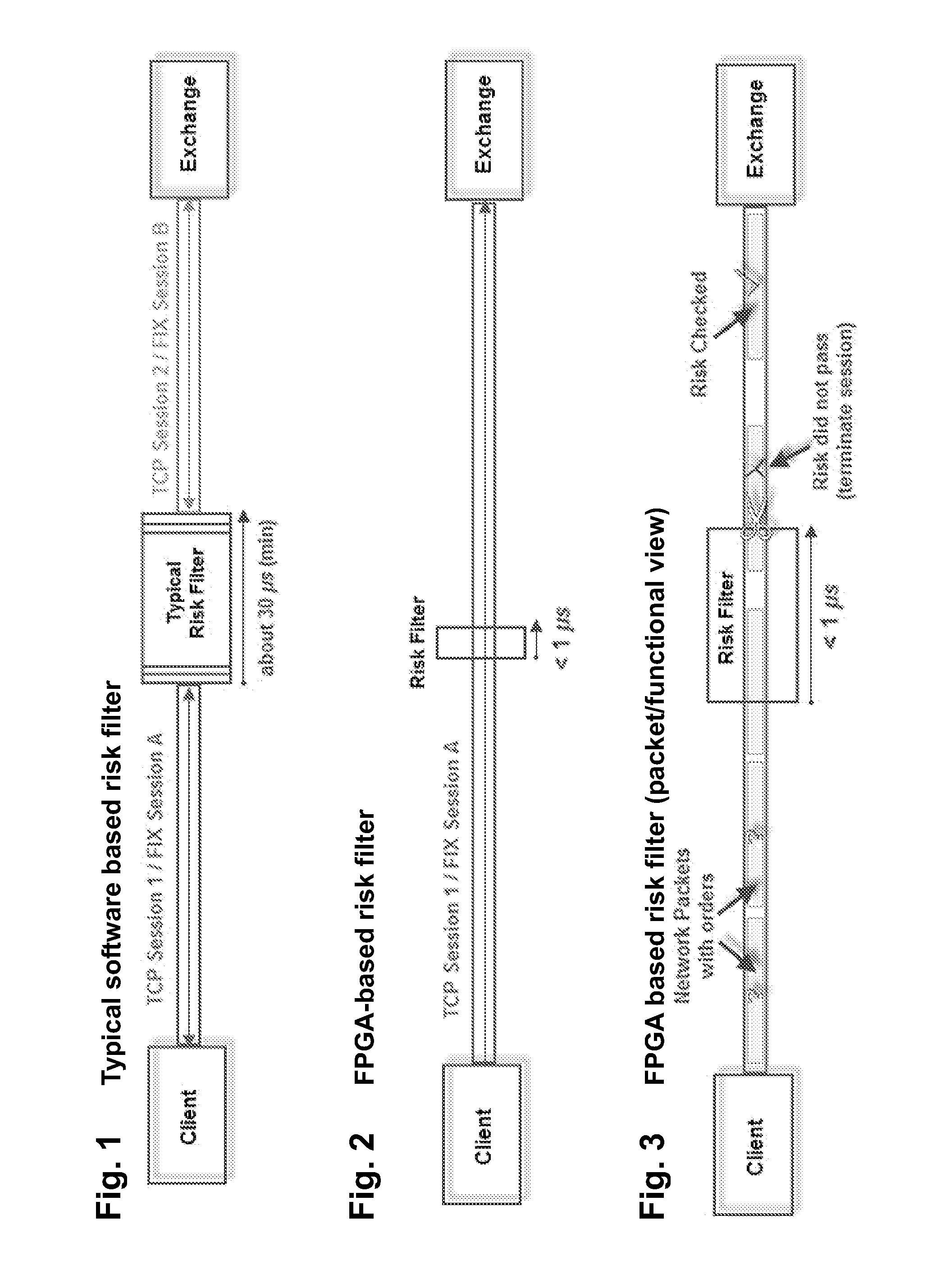

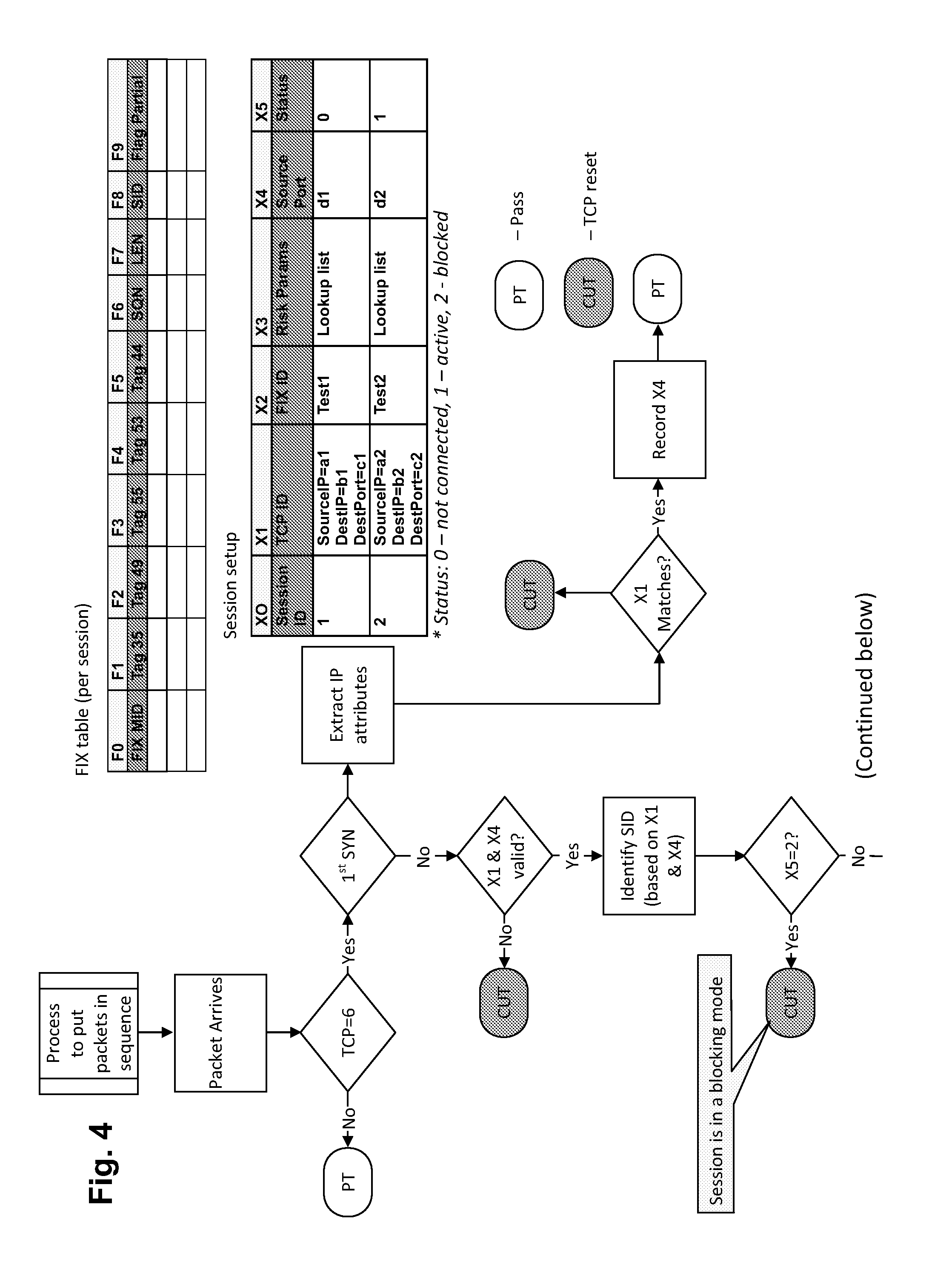

Wire Speed Monitoring and Control of Electronic Financial Transactions

Inactive | US20140289094A1 | Faster message processing | Speed maximization | Finance | Computer hardware | Traffic capacity

An in-line hardware message filter device inspects incoming securities transactions. The invention is implemented as an integrated circuit (IC) device which contains computer code in the form of on-chip hardware instructions. Data messages comprising orders enter the device in exchange-specific formats. Messages that satisfy pre-determined risk assessment filters are allowed to pass through the device to the appropriate securities exchange for execution. The system functions as a passive device for all legitimate network traffic passing directly or indirectly between a customer's computer and a securities exchange's order-acceptance computer. Advantageously, the invention allows the broker-dealer to check and pass messages or orders as they come through the system without having to store the full message before making a risk assessment decision. The hardware-only nature of the invention serves to maximize the speed of order validation and to perform pre-trade checks in a cut-through or store-and-forward mode.

Owner:DEUTSCHE BANK

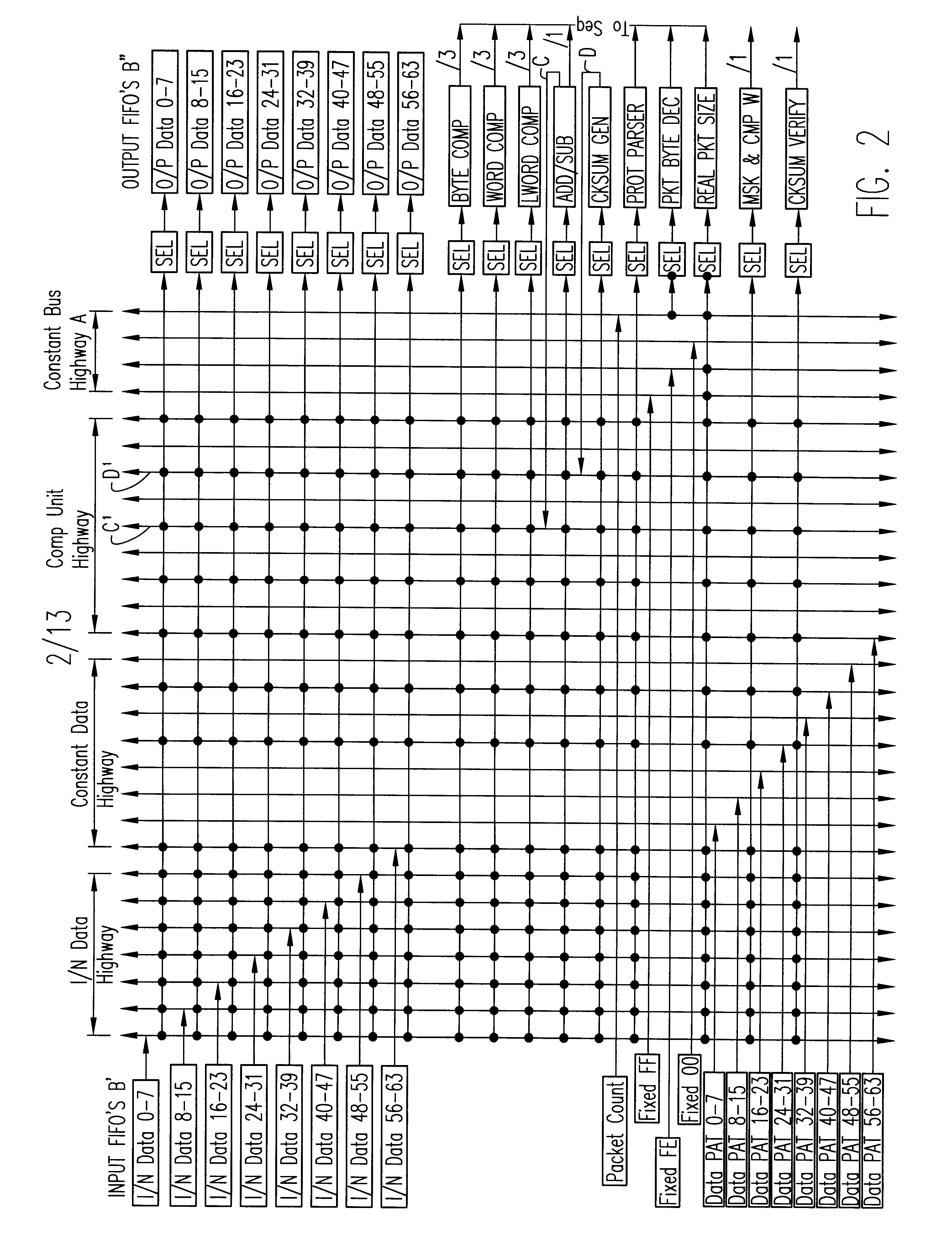

Method of and system for processing datagram headers for high speed computer network interfaces at low clock speeds, utilizing scalable algorithms for performing such network header adaptation (SAPNA)

A technique and system for manipulating, converting or adapting datagram headers as required during traverse from one interface of a networking device to another, by novel clocked micro-sequencing and selection amongst input and output FIFO data streams, through dividing input serial data streams into small groups of FIFO input data streams and feeding the groups in parallel into a matrix of a multilane highway of header unit input data, constant data pattern and computational unit busses controlled by such micro-sequencing so as to enable such processing of packet datagram headers and the like at very high wire speed, but using low clock speeds, and in a scalable manner.

Owner:NEXABIT NETWORKS

Apparatus and method for data virtualization in a storage processing device

Active | US7353305B2 | Provide flexibility | Provides management | Multiplex system selection arrangements | Input/output to record carriers | Wire speed | Multi protocol

A system including a storage processing device with an input/output module. The input/output module has port processors to receive and transmit network traffic. The input/output module also has a switch connecting the port processors. Each port processor categorizes the network traffic as fast path network traffic or control path network traffic. The switch routes fast path network traffic from an ingress port processor to a specified egress port processor. The storage processing device also includes a control module to process the control path network traffic received from the ingress port processor. The control module routes processed control path network traffic to the switch for routing to a defined egress port processor. The control module is connected to the input/output module. The input/output module and the control module are configured to interactively support data virtualization, data migration, data journaling, and snapshotting. The distributed control and fast path processors achieve scaling of storage network software. The storage processors provide line-speed processing of storage data using a rich set of storage-optimized hardware acceleration engines. The multi-protocol switching fabric provides a low-latency, protocol-neutral interconnect that integrally links all components with any-to-any non-blocking throughput.

Owner:AVAGO TECH INT SALES PTE LTD

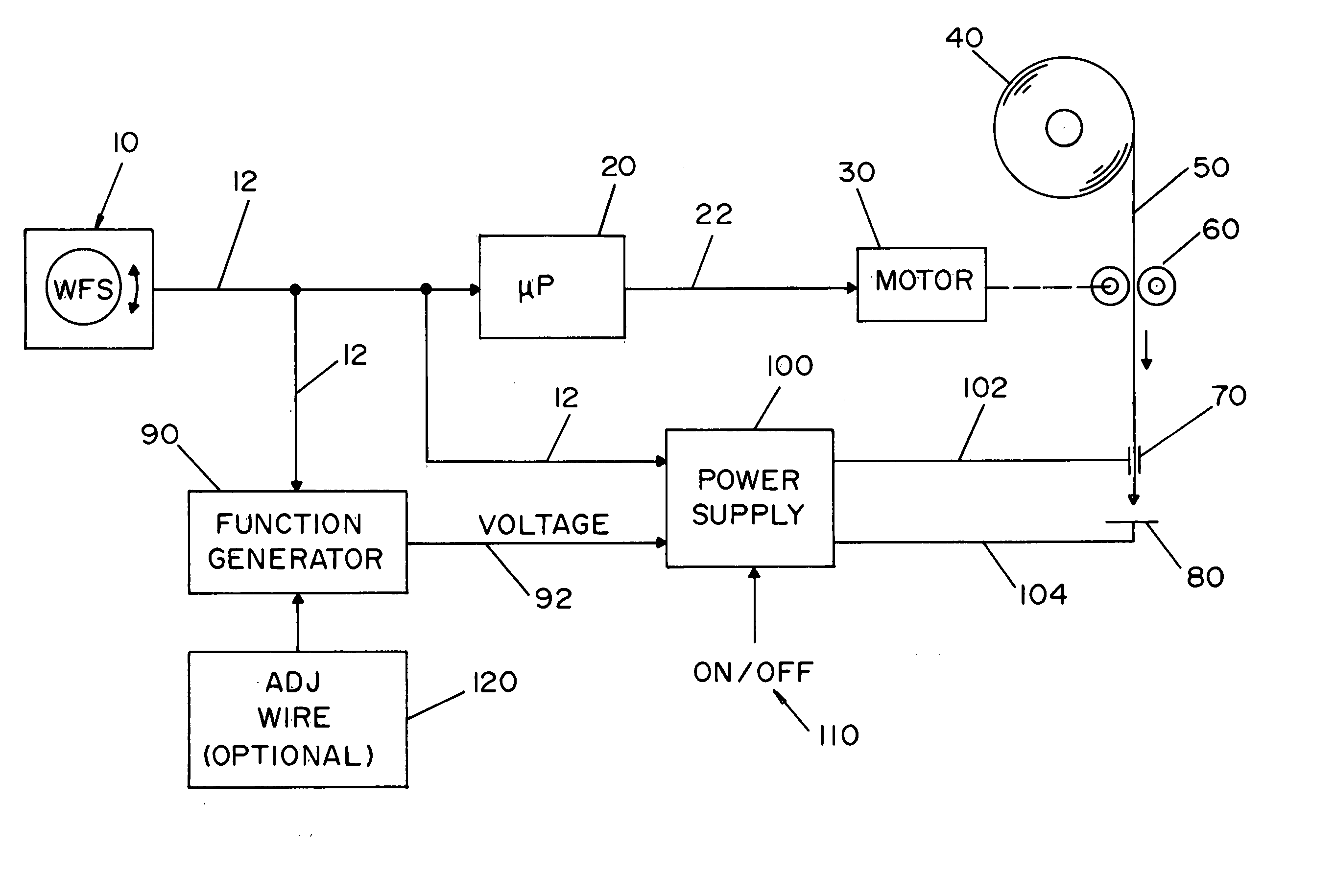

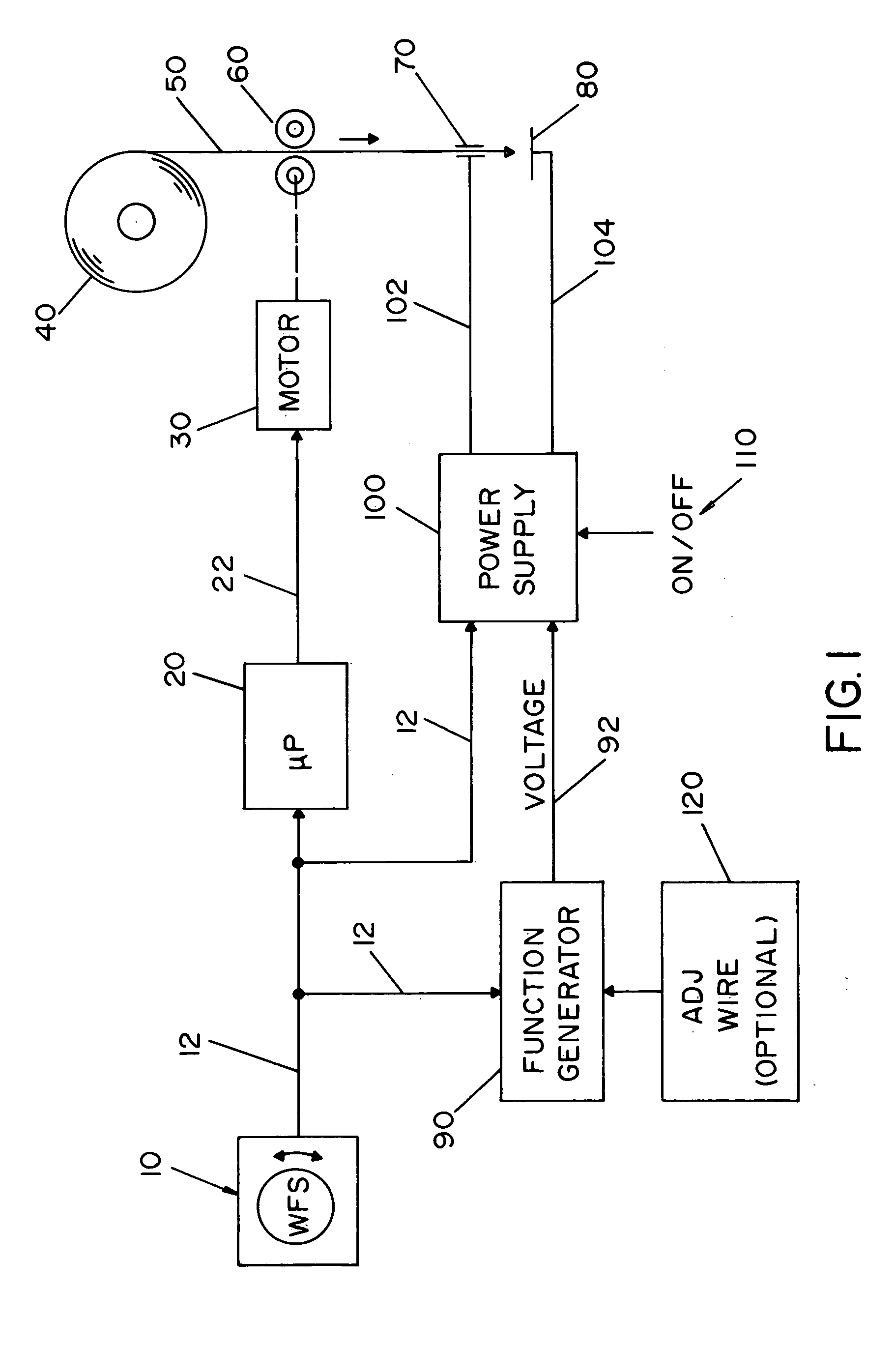

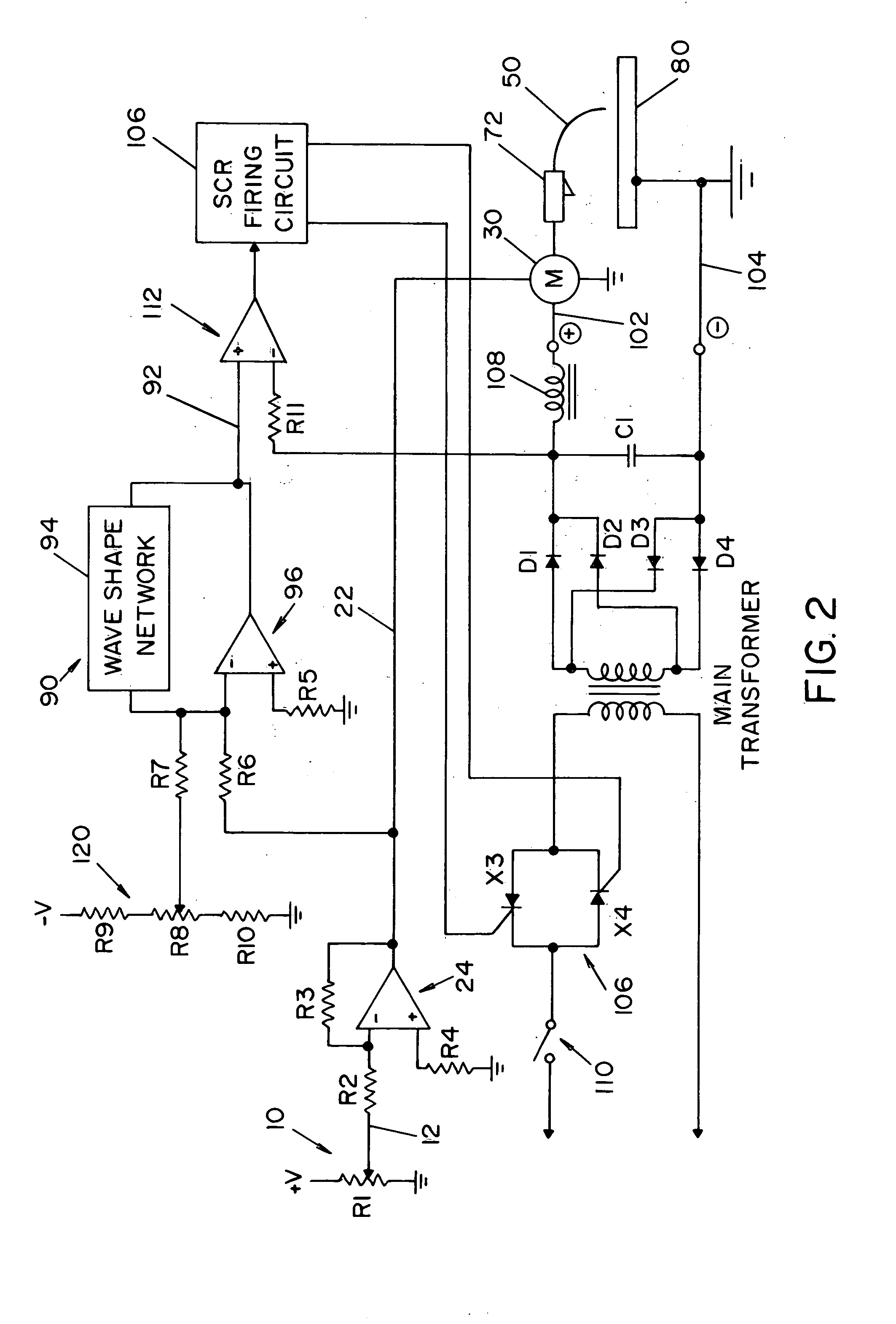

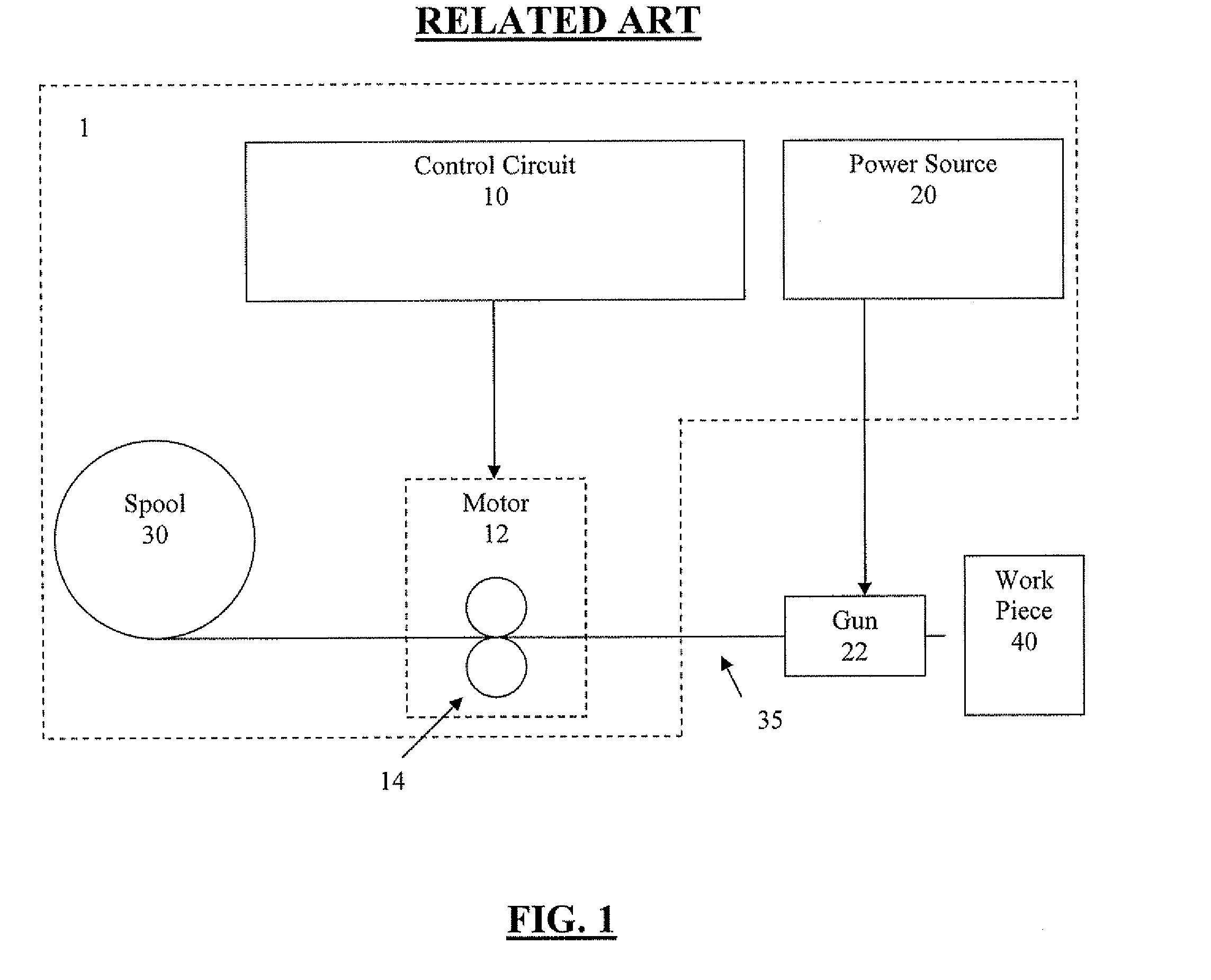

Dedicated wire feed speed control

Inactive | US20050161448A1 | Simpler to use | Simplify welding procedure | Arc welding apparatus | Wire speed | Control signal

A MIG welder and method of controlling a MIG welder which includes a wire speed selector that is used to generate a control signal which controls both the wire feed speed of a consumable electrode and the output current level of the power supply.

Owner:LINCOLN GLOBAL INC

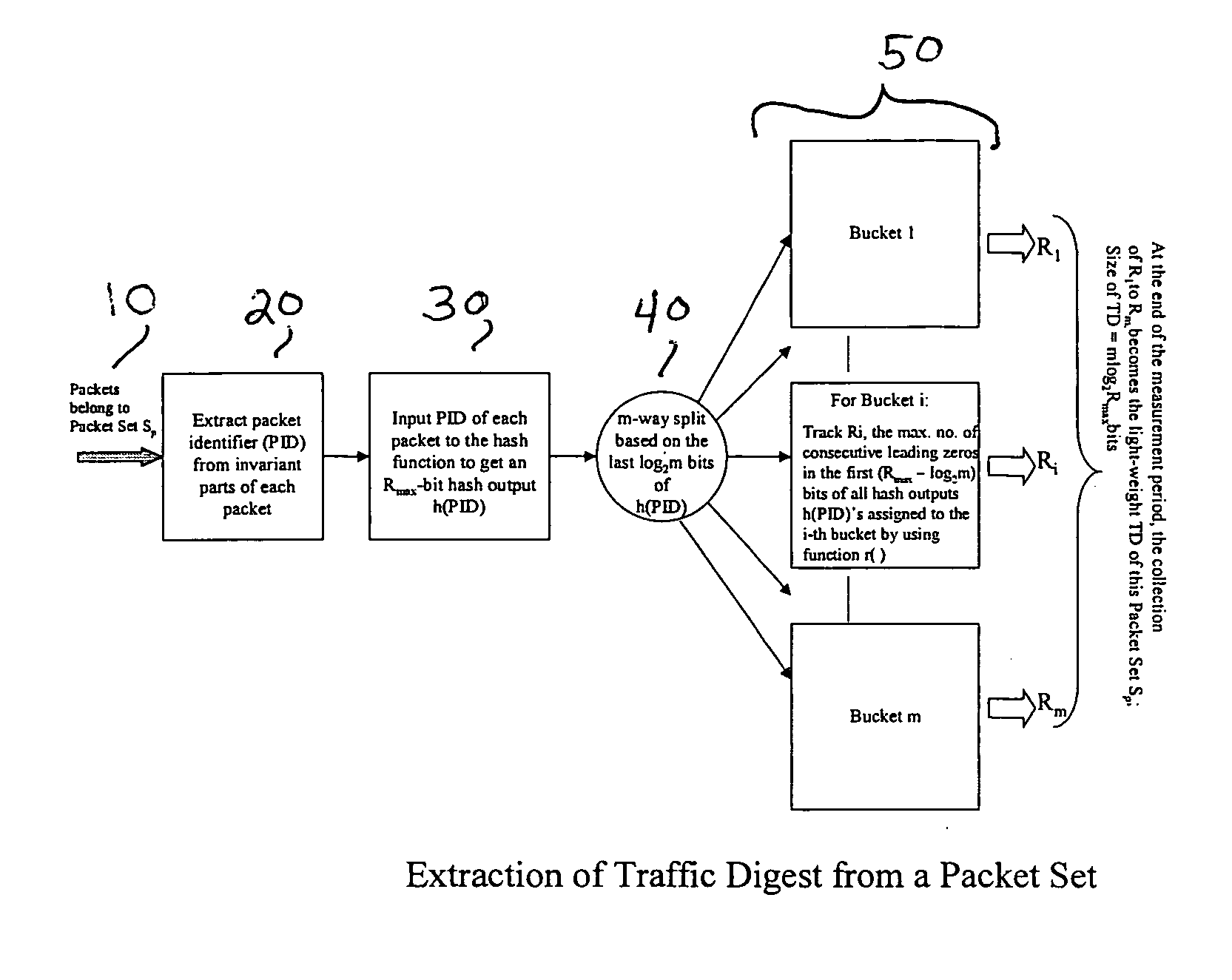

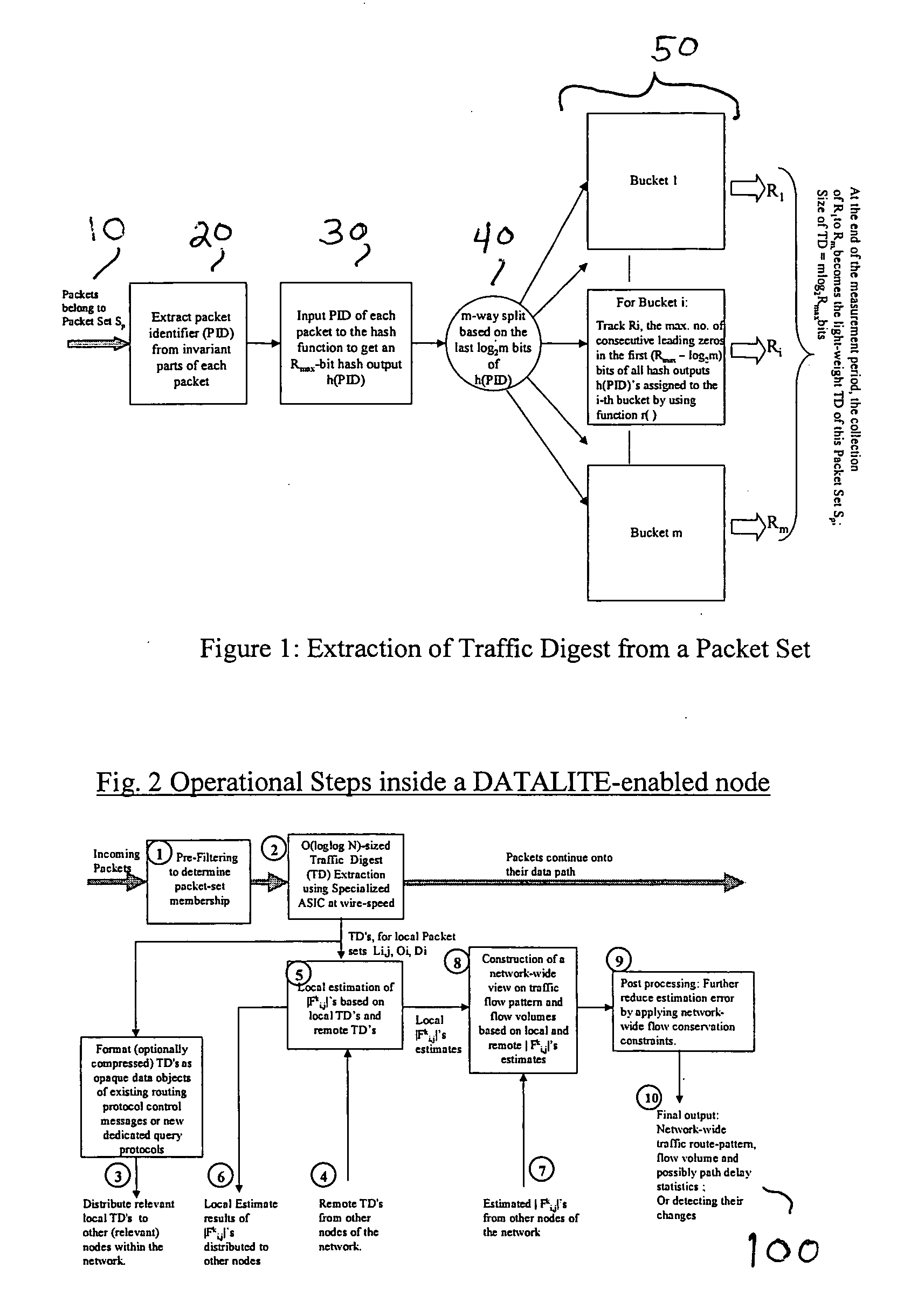

High-speed traffic measurement and analysis methodologies and protocols

Inactive | US20050220023A1 | Minimal communication overhead | More bandwidth is required | Error prevention | Frequency-division multiplex details | NODAL | Wire speed

We formulate the network-wide traffic measurement/analysis problem as a series of set-cardinality-determination (SCD) problems. By leveraging recent advances in probabilistic distinct sample counting techniques, the set-cardinalities, and thus the network-wide traffic measurements of interest, can be computed in a distributed manner via the exchange of extremely light-weight traffic digests (TDs) amongst the network nodes, i.e., the routers. A TD for N packets only requires O(loglog N) bits of memory storage. The computation of such an O(loglog N)-sized TD is also amenable to efficient hardware implementation at wire-speed of 10 Gbps and beyond. Given the small size of the TDs, it is possible to distribute nodal TDs to all routers within a domain by piggybacking them as opaque data objects inside existing control messages, such as OSPF link-state packets (LSPs) or I-BGP control messages. Once the required TDs are received, a router can estimate the traffic measurements of interest for each of its local links by solving a series of set-cardinality-determination problems. The traffic measurements of interest are typically in the form of per-link, per-traffic-aggregate packet counts (or flow counts) where an aggregate is defined by the group of packets sharing the same originating and/or destination nodes (or links) and/or some intermediate nodes (or links). The local measurement results are then distributed within the domain so that each router can construct a network-wide view of routes/flow patterns of different traffic commodities where a commodity is defined as a group of packets sharing the same origination and/or termination nodes or links. After the initial network-wide traffic measurements are received, each router can further reduce the associated measurement/estimation errors by locally conducting a minimum square error (MSE) optimization based on network-wide commodity-flow conservation constraints.
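A traffic digest of this kind can be sketched with a LogLog-style counter: each register stores only the position of the first 1 bit seen in a hash, which is where the O(loglog N) size comes from. The code below is an illustration, not the patent's algorithm. Register count, SHA-1 as the hash, and the class name are assumptions, and the estimator is the HyperLogLog harmonic-mean formula (a later refinement of LogLog) swapped in for brevity, without small-range correction.

```python
import hashlib


class TrafficDigest:
    """LogLog-style sketch: m small registers, each holding a maximum rank."""

    def __init__(self, num_registers: int = 256):
        if num_registers & (num_registers - 1):
            raise ValueError("num_registers must be a power of two")
        self.m = num_registers
        self.p = num_registers.bit_length() - 1   # bits used to pick a register
        self.registers = [0] * num_registers

    def add(self, packet_id: bytes) -> None:
        h = int.from_bytes(hashlib.sha1(packet_id).digest()[:8], "big")
        index = h >> (64 - self.p)                # top p bits select a register
        rest = h & ((1 << (64 - self.p)) - 1)     # remaining 64 - p bits
        # Rank = position of the first 1 bit in `rest`, counted from the left.
        rank = (64 - self.p) - rest.bit_length() + 1
        self.registers[index] = max(self.registers[index], rank)

    def merge(self, other: "TrafficDigest") -> "TrafficDigest":
        """Digest of the union of two packet sets: register-wise maximum."""
        merged = TrafficDigest(self.m)
        merged.registers = [max(a, b)
                            for a, b in zip(self.registers, other.registers)]
        return merged

    def estimate(self) -> float:
        # HyperLogLog raw estimator; the constant is valid for m >= 128.
        alpha = 0.7213 / (1 + 1.079 / self.m)
        z = sum(2.0 ** -r for r in self.registers)
        return alpha * self.m * self.m / z
```

Because merging is a register-wise maximum, two routers can combine their digests to get the digest of the union of their packet sets without exchanging the packets themselves. Under that reading, the number of packets seen at both nodes can be approximated by inclusion-exclusion: estimate(A) + estimate(B) - estimate(A merged with B).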

Owner:LUCENT TECH INC

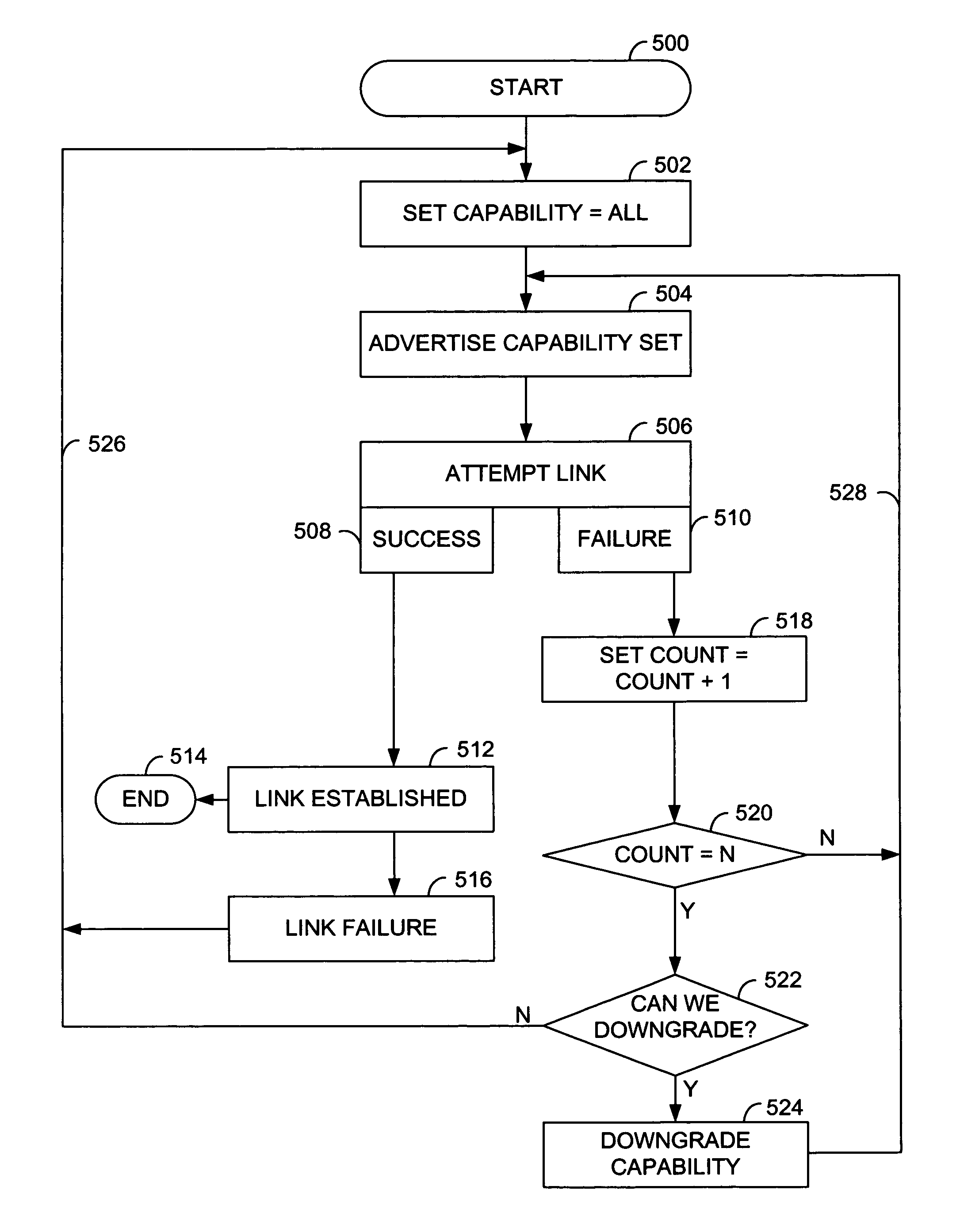

Method and apparatus for performing wire speed auto-negotiation

Owner:AVAGO TECH INT SALES PTE LTD

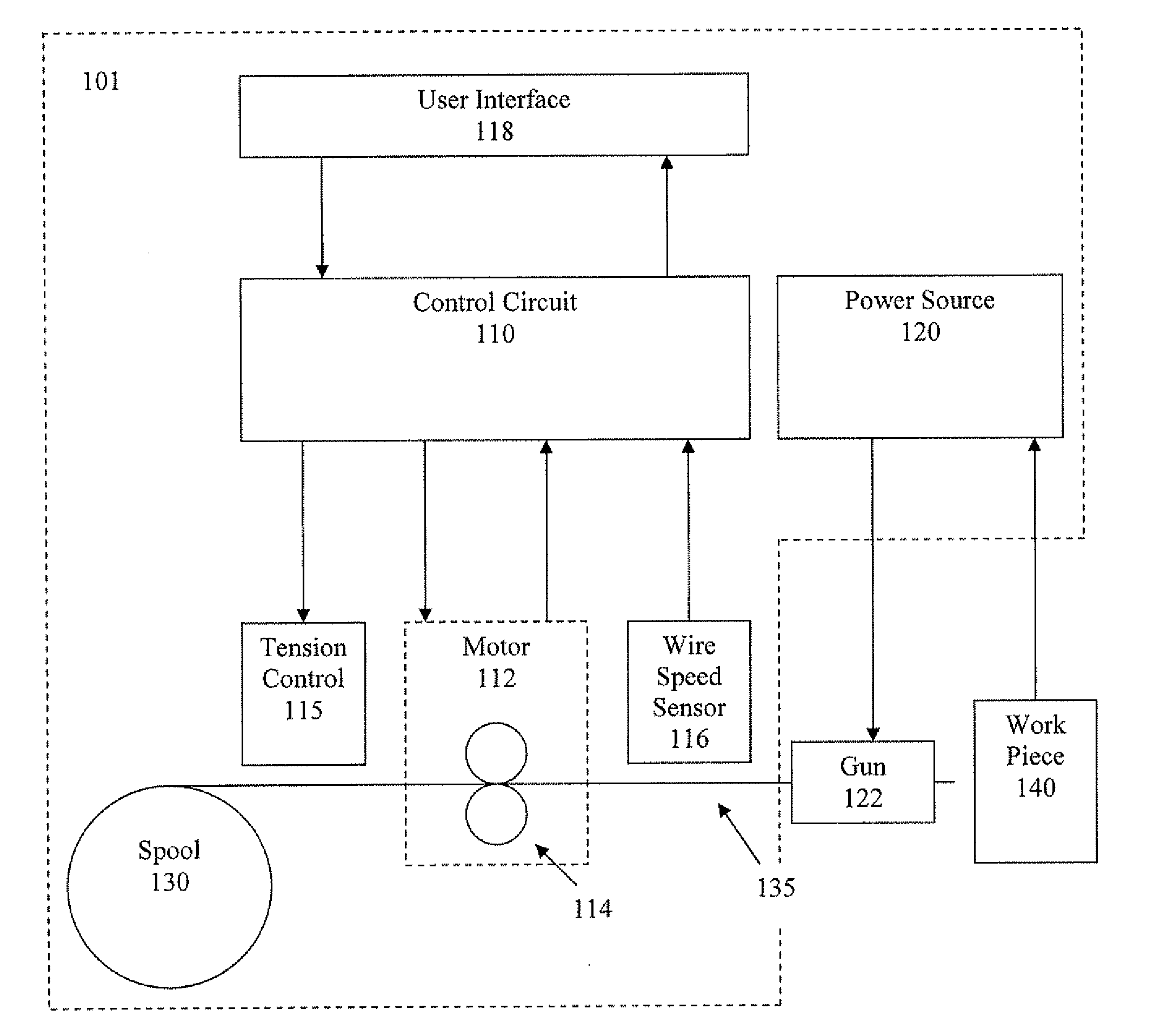

Automatic welding wire feed adjuster

An automatic wire feed adjuster, including a feeding mechanism including a pair of rollers that feeds a wire therebetween, a tension controller that adjusts a tension between the pair of rollers, a wire speed sensor that measures a fed speed of the wire after exiting the feeding mechanism, and a control circuit that compares a driven speed of the wire with the fed speed of the wire, and that decides whether to instruct the tension controller to adjust the tension between the pair of rollers.
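The comparison performed by the control circuit can be written as a single decision function. The abstract does not give a threshold or a control law, so the 2% slip tolerance and the rule of only ever raising tension are assumptions made for illustration; the function name is hypothetical.

```python
def tension_adjustment(driven_speed: float, fed_speed: float,
                       slip_tolerance: float = 0.02) -> int:
    """Return 1 to ask the tension controller for more tension, else 0.

    driven_speed: speed commanded at the rollers
    fed_speed:    speed measured by the wire speed sensor after the rollers
    slip_tolerance: assumed acceptable fractional slip (hypothetical value)
    """
    if driven_speed <= 0:
        return 0  # rollers stopped: nothing to compare
    slip = (driven_speed - fed_speed) / driven_speed
    return 1 if slip > slip_tolerance else 0


if __name__ == "__main__":
    print(tension_adjustment(10.0, 9.0))   # wire lags the rollers noticeably
    print(tension_adjustment(10.0, 9.9))   # within the assumed tolerance
```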

Owner:LINCOLN GLOBAL INC

Method and apparatus for wire-speed application layer classification of upstream and downstream data packets

Inactive | US7436830B2 | Efficient data processing | Error prevention | Transmission systems | Wire speed | Address generator

A data packet classifier to classify a plurality of N-bit input tuples, said classifier comprising a hash address generator, a memory and a comparison unit. The hash address generator generates a plurality of M-bit hash addresses from said plurality of N-bit input tuples, wherein M is significantly smaller than N. The memory has a plurality of memory entries and is addressable by said plurality of M-bit hash addresses, each such address corresponding to a plurality of memory entries, each of said plurality of memory entries capable of storing one of said plurality of N-bit tuples and an associated process flow information. The comparison unit determines if an incoming N-bit tuple can be matched with a stored N-bit tuple. The associated process flow information is output if a match is found, and a new entry is created in the memory for the incoming N-bit tuple if a match is not found.

Owner:CISCO SYST ISRAEL

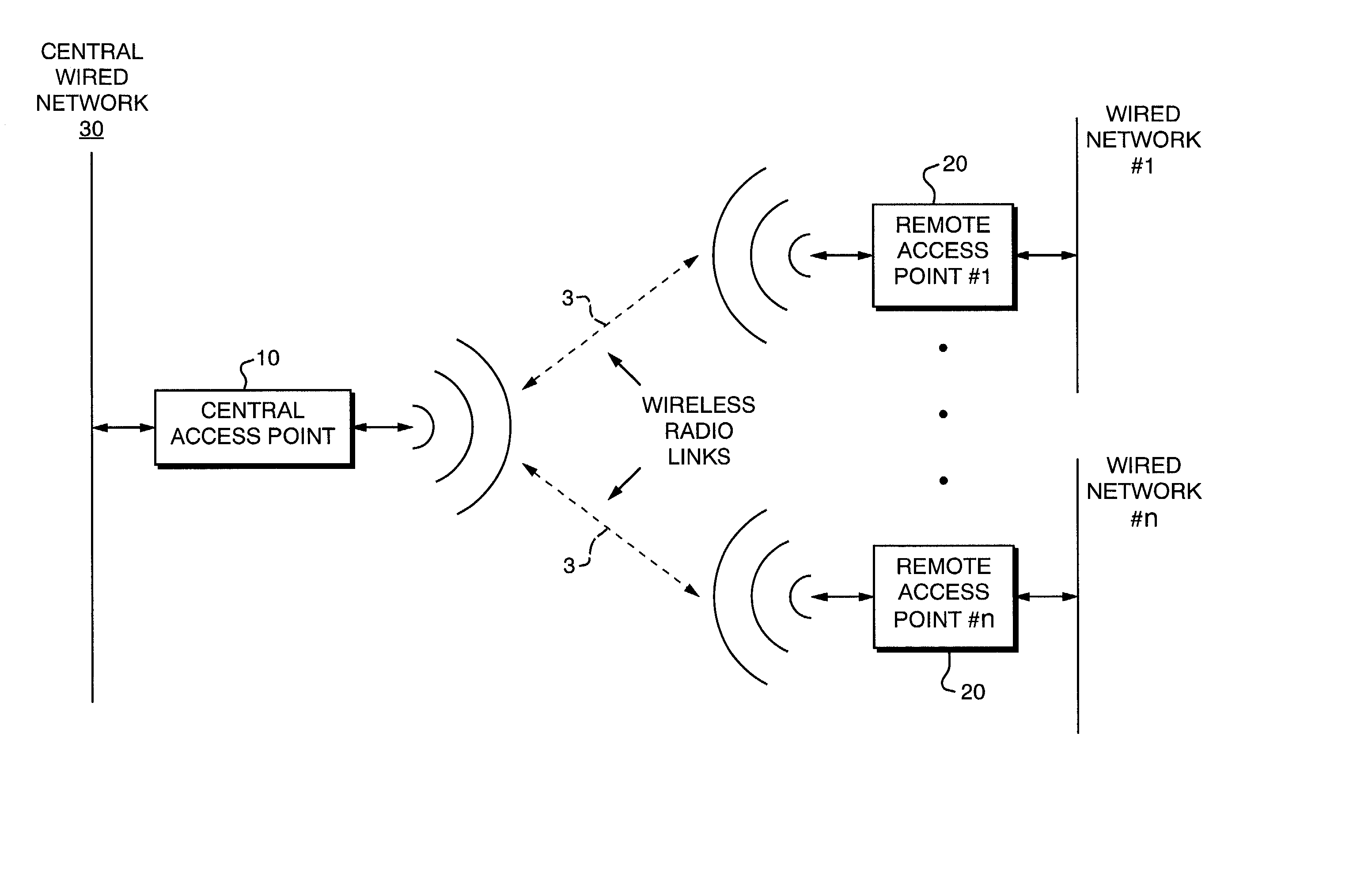

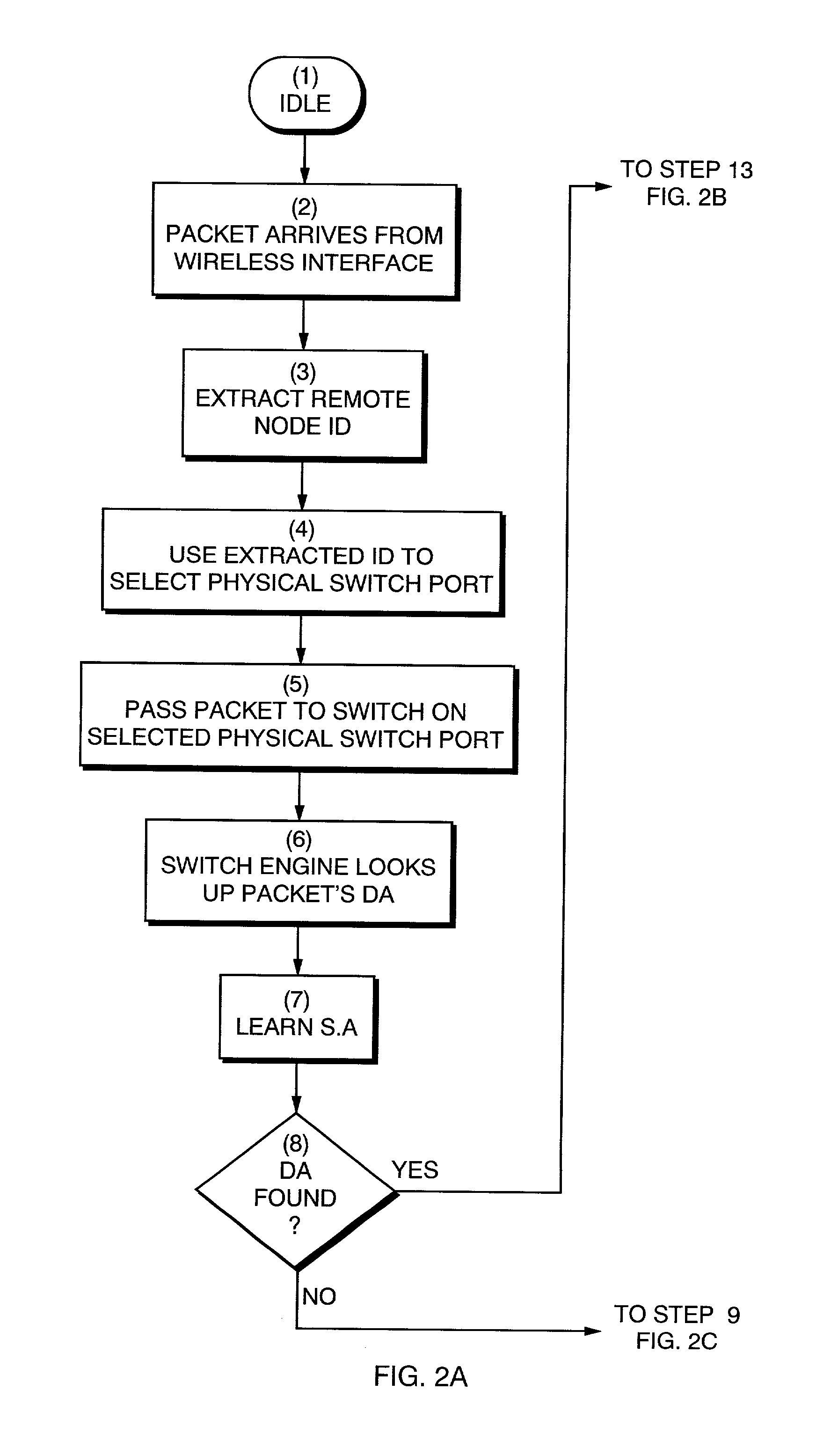

Method and apparatus for scalable, line-rate protocol-independent switching between multiple remote access points in a wireless local area network

The invention is a system and method to uniquely associate remote wireless Access Points using a switch engine. This is necessary in a multi-point wireless network where a central wireless Access Point must be able to receive packets from remote Access Points and then retransmit them, with a modified identifier, back to the wireless port from which they were received. By using available switch engine hardware this invention takes advantage of lower cost, higher performance and increased features in commodity parts. The invention resolves differences in common Ethernet switch engines that make them unsuitable for direct interface to wireless networks such as that defined by IEEE Standard 802.11 by using an aggregated set of physical ports on the switch engine. The method allows the switch engine to operate in its normal manner while satisfying the requirements for switching data packets between the Access Points in a multi-point configuration.

Owner:ENTERASYS NETWORKS
