Efficient network IO processing method based on NUMA and hardware-assisted technology

A hardware-assisted processing method, applied in the field of network virtualization, that addresses problems such as unknowable running status, delayed processing of interrupt information, and time-consuming interrupts. Its effects are to reduce VM-Exit operations, improve interrupt processing efficiency, and reduce interrupt delivery delay.

Embodiment Construction

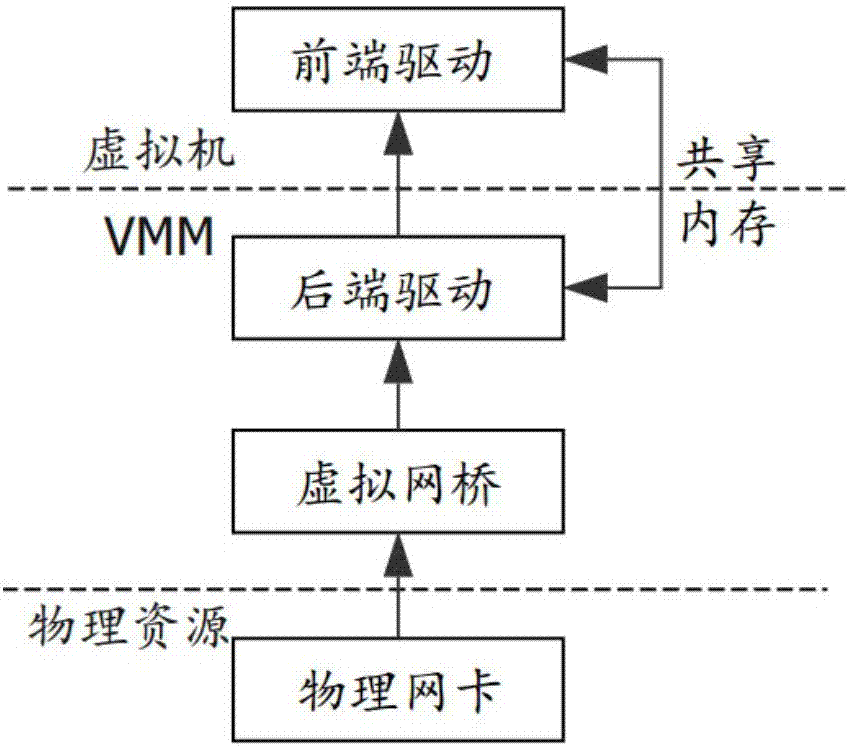

[0029] As shown in Figure 1, the virtual machine software maintains a series of emulated devices for each virtual machine. The front end of each device runs inside the virtual machine, and the back end runs in the virtual machine management software. The back end communicates with the real physical device through the virtual network bridge. The arrows in the figure show the path a data packet takes when a virtual machine receives it. The virtual machine management software receives a new data packet from the physical network card, and the virtual network bridge classifies the packet to identify the virtual machine it belongs to. The packet is then forwarded to the memory shared by that virtual machine's back-end and front-end devices, and an interrupt is triggered to notify the front-end device to process the packet. On different CPUs, this notification interrupt is often an ...
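The receive path described in this paragraph can be illustrated with a minimal sketch. It is not the patented implementation: the class and function names (`SharedRing`, `VirtualBridge`, `front_end_handle_interrupt`) are hypothetical, the bridge is assumed to classify packets by destination MAC address (the text does not say which key it uses), and the interrupt is modeled as a simple flag rather than a real virtual interrupt.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class SharedRing:
    """Hypothetical stand-in for the memory shared by a VM's back end and front end."""
    packets: deque = field(default_factory=deque)
    interrupt_pending: bool = False  # models the notification interrupt as a flag


@dataclass
class VirtualBridge:
    """Hypothetical bridge; assumes packets are classified by destination MAC."""
    rings: dict = field(default_factory=dict)

    def attach(self, mac: str) -> SharedRing:
        # Register a VM's back-end device and return its shared ring.
        ring = SharedRing()
        self.rings[mac] = ring
        return ring

    def receive(self, dst_mac: str, payload: bytes) -> bool:
        # Step 1: classify the packet to find the owning virtual machine.
        ring = self.rings.get(dst_mac)
        if ring is None:
            return False  # no attached VM owns this address; drop the packet
        # Step 2: place the packet in the memory shared with the front end.
        ring.packets.append(payload)
        # Step 3: raise the notification interrupt for the front end.
        ring.interrupt_pending = True
        return True


def front_end_handle_interrupt(ring: SharedRing) -> list:
    # Front-end side: acknowledge the interrupt and drain the shared ring.
    ring.interrupt_pending = False
    drained = list(ring.packets)
    ring.packets.clear()
    return drained


if __name__ == "__main__":
    bridge = VirtualBridge()
    vm_ring = bridge.attach("52:54:00:00:00:01")
    bridge.receive("52:54:00:00:00:01", b"payload")
    print(front_end_handle_interrupt(vm_ring))
```

In this sketch the interrupt is only a boolean on the shared ring. In a real hypervisor, delivering it to a virtual CPU is the step whose cost the method aims to reduce.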
